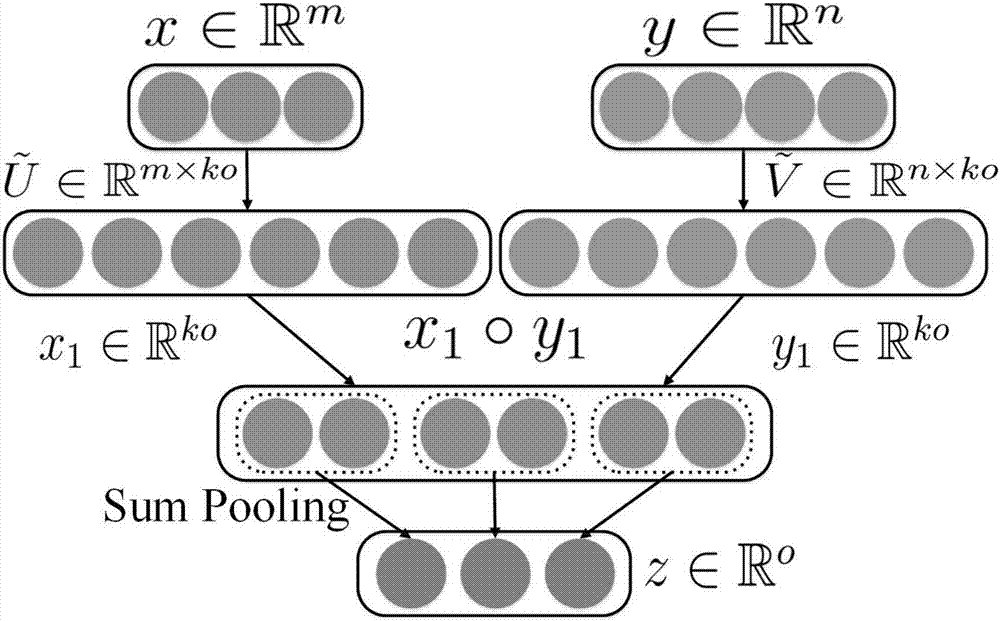

Image content question answering method based on multimodal low-rank bilinear pooling

A bilinear pooling technique for image content question answering, applied in the field of deep neural networks, that addresses the problem of high computational complexity.

Embodiment Construction

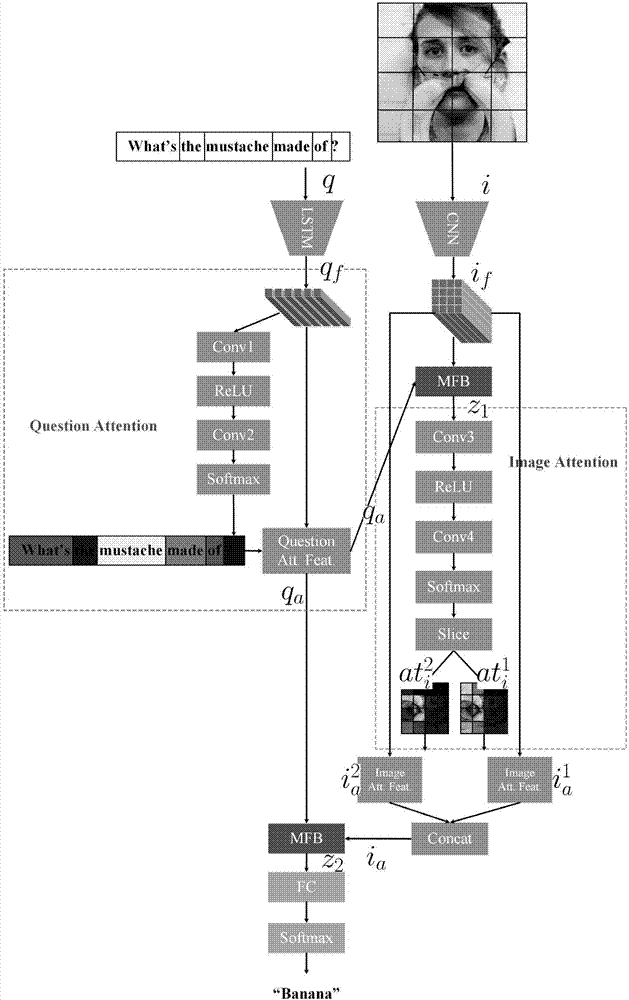

[0102] The detailed parameters of the present invention are described further below.

[0103] As shown in Figure 1, the present invention provides a deep neural network structure for image content question answering (Image Question Answering, IQA). The specific steps are as follows:

[0104] The data preprocessing and the feature extraction for images and text described in step (1) are carried out as follows:

[0105] The COCO-VQA dataset is used here as training and testing data.

[0106] 1-1. For image data, the existing 152-layer deep residual network (ResNet-152) model is used to extract image features. Specifically, we uniformly scale each image to 448×448, input it into the deep residual network, and take the output of its res5c layer as the image feature.
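To make the size of the res5c feature concrete, the following is a minimal sketch that traces the spatial resolution of a 448×448 input through the standard ResNet-152 downsampling stages. The layer hyperparameters come from the commonly published ResNet architecture, not from this patent text, and the helper names `conv_out` and `res5c_shape` are hypothetical.

```python
def conv_out(size, kernel, stride, pad):
    """Spatial output size of a convolution or pooling layer (floor mode)."""
    return (size + 2 * pad - kernel) // stride + 1


def res5c_shape(input_size=448):
    """Return (channels, height, width) of the last residual stage output.

    Assumes the standard ResNet-152 layout: a 7x7 stride-2 stem convolution,
    a 3x3 stride-2 max pool, then four residual stages of which the last
    three each halve the resolution.
    """
    size = conv_out(input_size, kernel=7, stride=2, pad=3)  # conv1
    size = conv_out(size, kernel=3, stride=2, pad=1)        # max pool
    # res2 keeps the resolution; res3, res4 and res5 each downsample by 2.
    for _ in range(3):
        size = conv_out(size, kernel=1, stride=2, pad=0)
    channels = 2048  # output width of the final bottleneck stage
    return channels, size, size


print(res5c_shape(448))
```

Under these assumptions, each image yields a grid of 14×14 local feature vectors with 2048 channels each, since the network's total stride is 32.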

[0107] 1-2. For question text data, we first split each question into words and build a word dictionary over the questions. Only the first 15 words of each question are kept, and if the ...
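The question preprocessing described in step 1-2 can be sketched as follows. This is an illustration only: the regular-expression tokenizer, the zero-based word indices, and the function names `tokenize`, `build_vocab`, and `encode` are assumptions, and the handling that the truncated sentence above goes on to describe is deliberately left out.

```python
import re

MAX_WORDS = 15  # each question keeps only its first 15 words


def tokenize(question):
    """Lowercase a question and split it into word tokens (assumed scheme)."""
    return re.findall(r"[a-z0-9]+", question.lower())


def build_vocab(questions):
    """Map each distinct word to an integer index in first-seen order."""
    vocab = {}
    for question in questions:
        for word in tokenize(question):
            if word not in vocab:
                vocab[word] = len(vocab)
    return vocab


def encode(question, vocab):
    """Convert the first MAX_WORDS words to indices, skipping unknown words."""
    words = tokenize(question)[:MAX_WORDS]
    return [vocab[word] for word in words if word in vocab]


questions = ["What color is the cat?", "Is the cat on the mat?"]
vocab = build_vocab(questions)
print(encode("What is on the mat?", vocab))
```

Truncation is applied before the dictionary lookup, so a question never contributes more than 15 indices regardless of its original length.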
