
130 results about "Viseme" patented technology


A viseme is any of several speech sounds that look the same, for example when lip reading (Fisher 1968). Visemes and phonemes do not share a one-to-one correspondence. Often several phonemes correspond to a single viseme, because they look the same on the face when produced: for example /k, ɡ, ŋ/ (viseme: /k/), /t͡ʃ, ʃ, d͡ʒ, ʒ/ (viseme: /ch/), /t, d, n, l/ (viseme: /t/), and /p, b, m/ (viseme: /p/). Thus words such as pet, bell, and men are difficult for lip-readers to distinguish, as all look like /pet/. However, in actual speech there may be differences in the timing and duration of a gesture's visual 'signature' that cannot be captured in a single photograph. Conversely, some sounds that are hard to distinguish acoustically are clearly distinguished by the face (Chen 2001). For example, English /l/ and /r/ can sound quite similar (especially in clusters, such as 'grass' vs. 'glass'), yet visual information can show a clear contrast. This is reflected in words being misheard more often on the telephone than in person. Some linguists have argued that speech is best understood as bimodal (aural and visual), and that comprehension can be compromised if one of these two domains is absent (McGurk and MacDonald 1976).
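
The many-to-one grouping above can be expressed as a lookup table. The sketch below is illustrative only: it uses ASCII labels in an assumed ARPAbet-like notation (`ng`, `ch`, `sh`, `jh`, `zh`, `eh`) in place of the IPA symbols, and assumes that any phoneme not listed (such as a vowel) forms its own viseme class.

```python
# Viseme classes from the groupings above, written with assumed ASCII labels.
VISEME_GROUPS = {
    "k": ["k", "g", "ng"],
    "ch": ["ch", "sh", "jh", "zh"],
    "t": ["t", "d", "n", "l"],
    "p": ["p", "b", "m"],
}

# Invert the groups into a phoneme -> viseme lookup table.
PHONEME_TO_VISEME = {
    phoneme: viseme
    for viseme, phonemes in VISEME_GROUPS.items()
    for phoneme in phonemes
}


def to_visemes(phonemes):
    """Map a phoneme sequence to visemes; unlisted phonemes (e.g. vowels)
    are assumed to form their own class and pass through unchanged."""
    return [PHONEME_TO_VISEME.get(p, p) for p in phonemes]


# "pet", "bell" and "men" collapse to the same visible sequence.
for word, phones in [("pet", ["p", "eh", "t"]),
                     ("bell", ["b", "eh", "l"]),
                     ("men", ["m", "eh", "n"])]:
    print(word, to_visemes(phones))
```

All three words map to `['p', 'eh', 't']`, so nothing in this representation separates them; the distinction has to come from audio or context.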

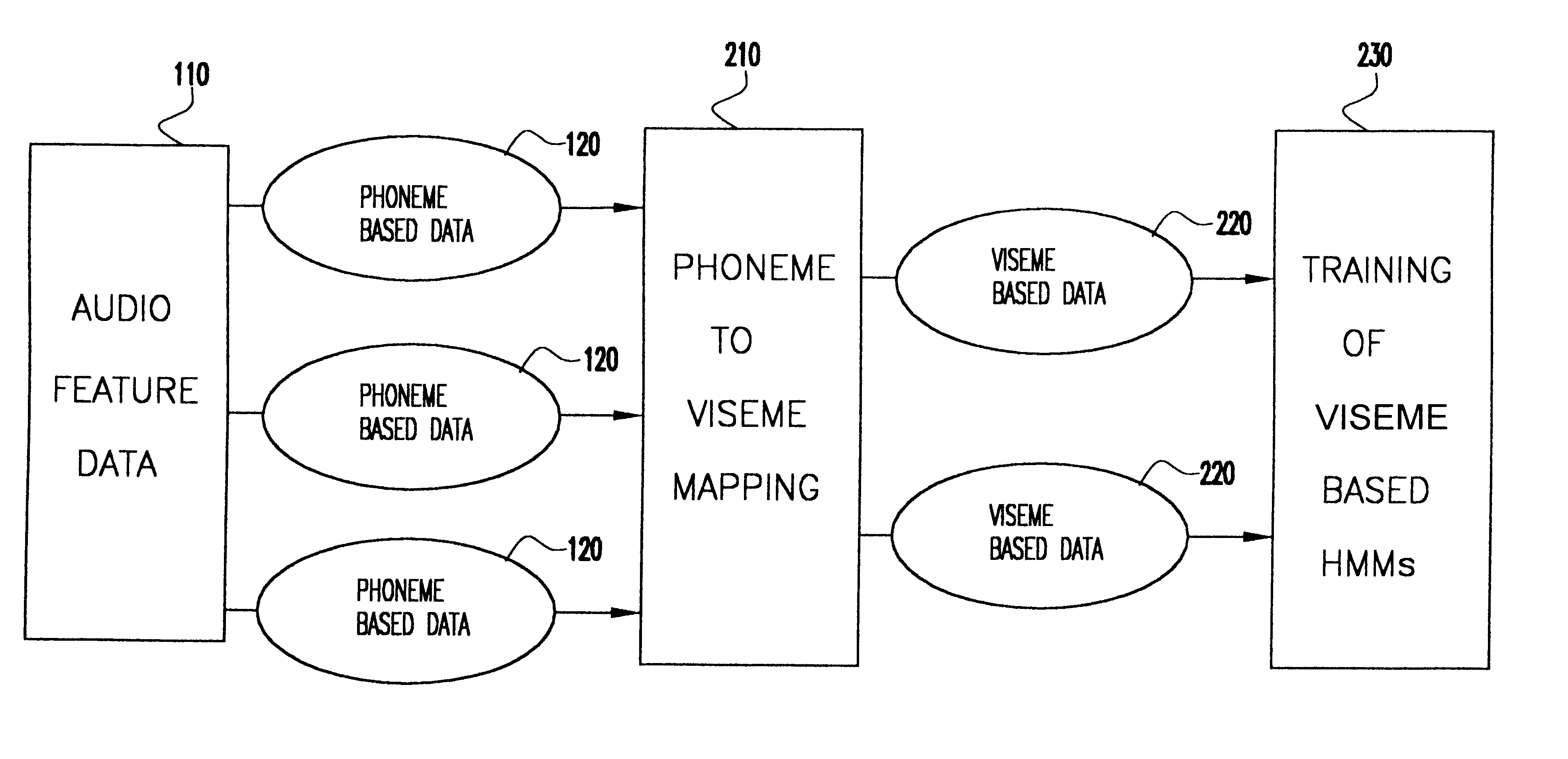

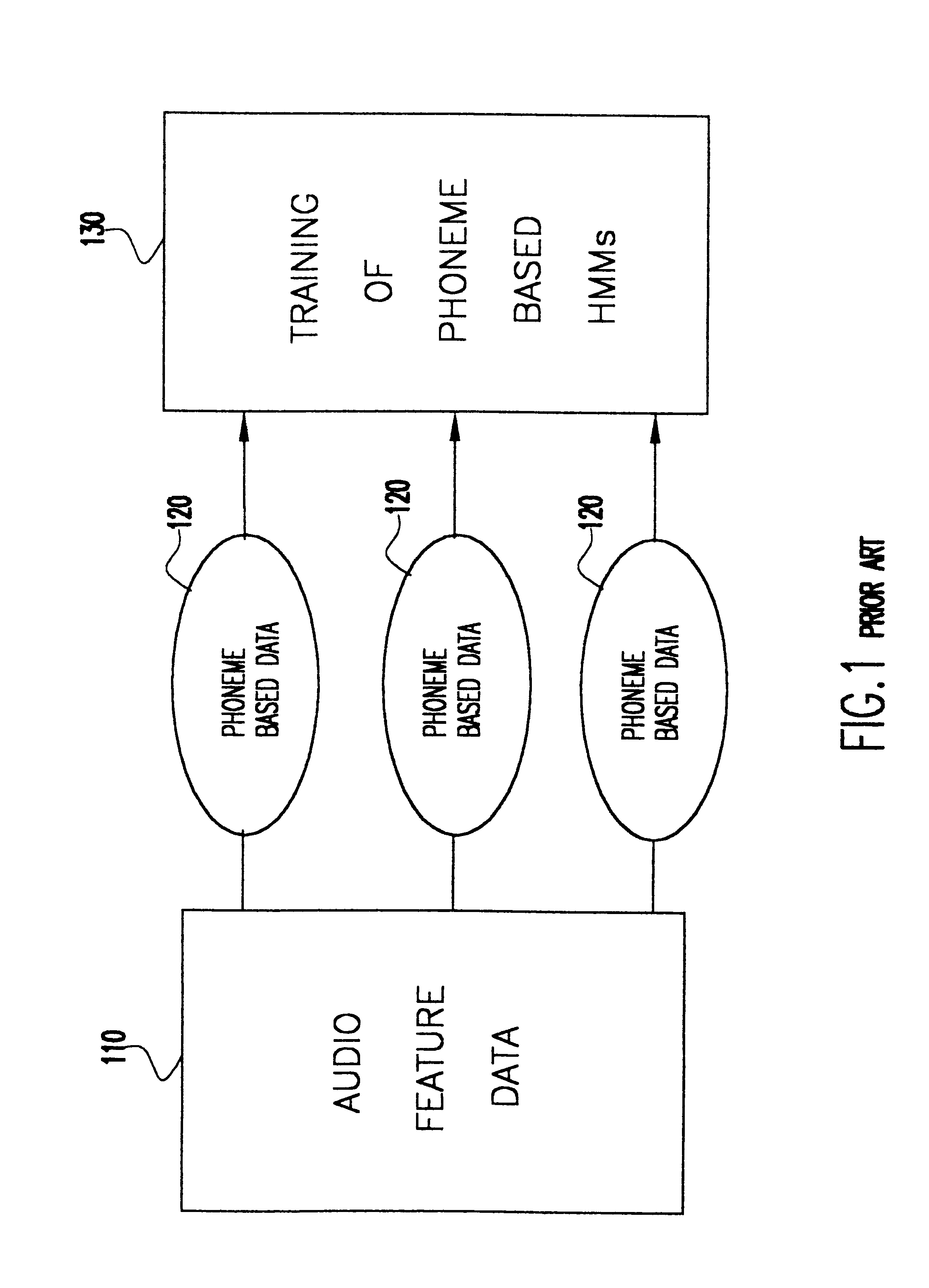

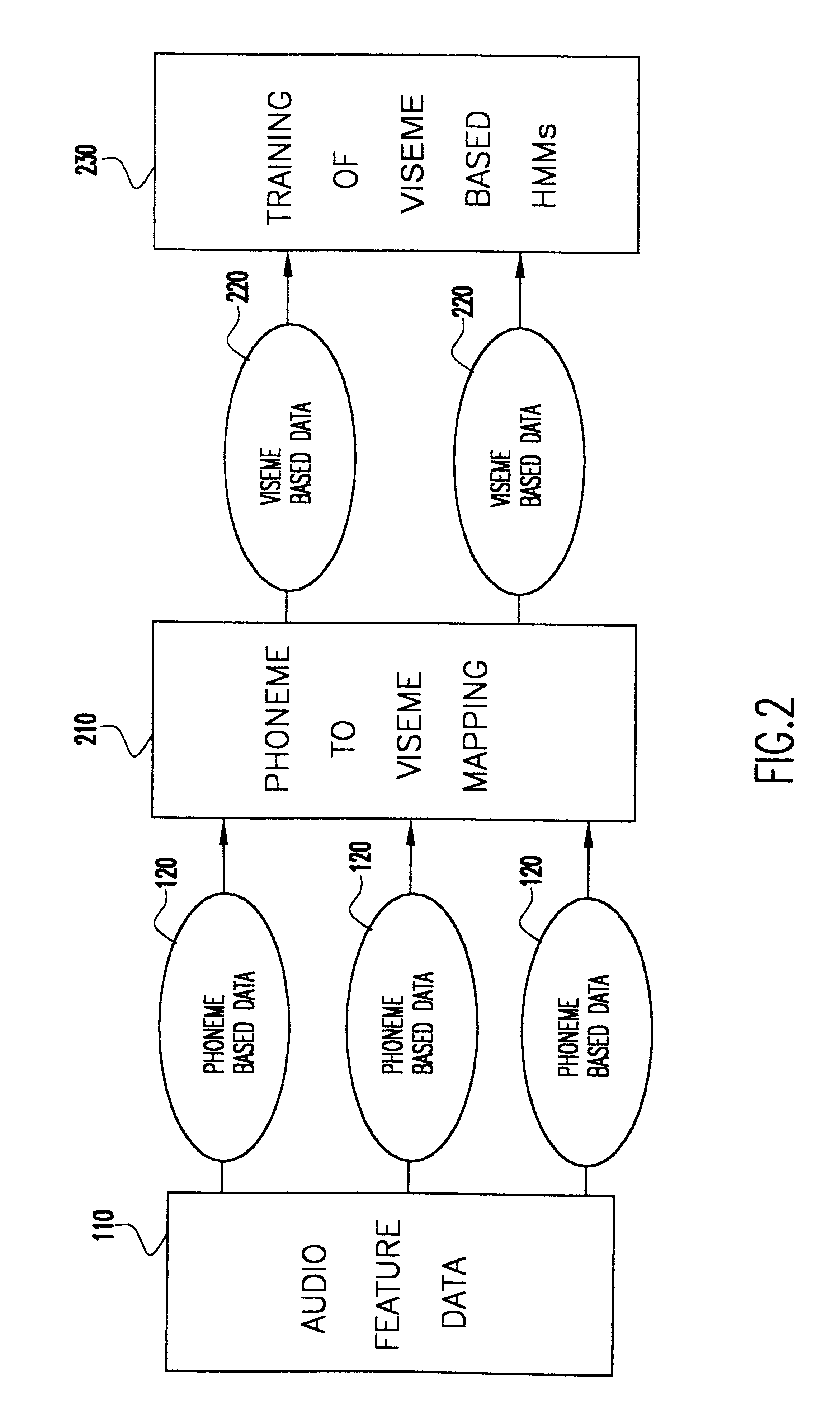

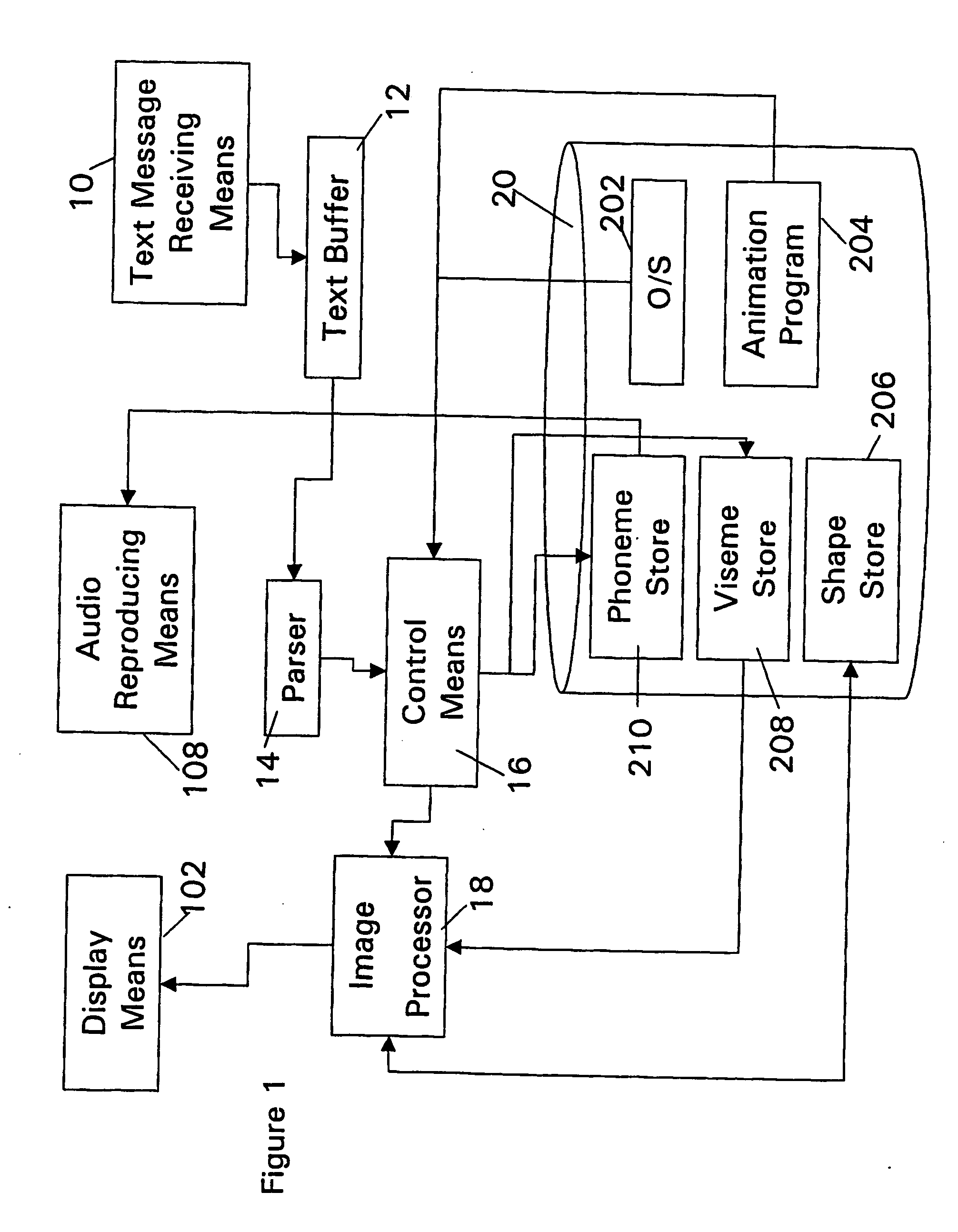

Speech driven lip synthesis using viseme based hidden markov models

Inactive · US6366885B1 · Shorten the time · Small size · Electronic editing digitised analogue information signals · Record information storage · NODAL · Training phase

A method of speech-driven lip synthesis that applies viseme-based training models to units of visual speech. The audio data is grouped into a smaller number of visually distinct visemes rather than the larger number of phonemes. These visemes then form the basis for a hidden Markov model (HMM) state sequence or for the output nodes of a neural network. During the training phase, audio and visual features are extracted from input speech and aligned according to the apparent viseme sequence; the corresponding audio features are used to calculate the HMM state output probabilities or the outputs of the neural network. During the synthesis phase, the acoustic input is aligned with the most likely viseme HMM sequence (for an HMM-based model) or with the nodes of the network (for a neural-network-based system), and the result is used for animation.

Owner:UNILOC 2017 LLC
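
The synthesis-phase step of finding the most likely viseme sequence for the acoustic input is the classic Viterbi search. A minimal sketch, assuming discrete (vector-quantized) acoustic symbols and hand-set toy probabilities; a real system would use continuous features with output densities learned in training, and the state and symbol names here are hypothetical.

```python
import math


def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return the most likely hidden state sequence (here: visemes)
    for a sequence of discrete acoustic observations."""
    # Each column maps state -> (best log-probability ending there, backpointer).
    best = [{s: (math.log(start_p[s]) + math.log(emit_p[s][observations[0]]), None)
             for s in states}]
    for obs in observations[1:]:
        prev = best[-1]
        column = {}
        for s in states:
            # Pick the predecessor that maximizes the path probability into s.
            score, back = max((prev[r][0] + math.log(trans_p[r][s]), r) for r in states)
            column[s] = (score + math.log(emit_p[s][obs]), back)
        best.append(column)
    # Start from the best final state and follow the backpointers.
    state = max(states, key=lambda s: best[-1][s][0])
    path = [state]
    for column in reversed(best[1:]):
        state = column[state][1]
        path.append(state)
    return list(reversed(path))


# Toy model: two viseme states and two quantized acoustic symbols.
states = ["p", "t"]
start_p = {"p": 0.5, "t": 0.5}
trans_p = {"p": {"p": 0.7, "t": 0.3}, "t": {"p": 0.3, "t": 0.7}}
emit_p = {"p": {"a": 0.9, "b": 0.1}, "t": {"a": 0.2, "b": 0.8}}
print(viterbi(["a", "a", "b", "b"], states, start_p, trans_p, emit_p))  # ['p', 'p', 't', 't']
```

The decoded viseme sequence (one label per acoustic frame) is what would then drive the animation.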

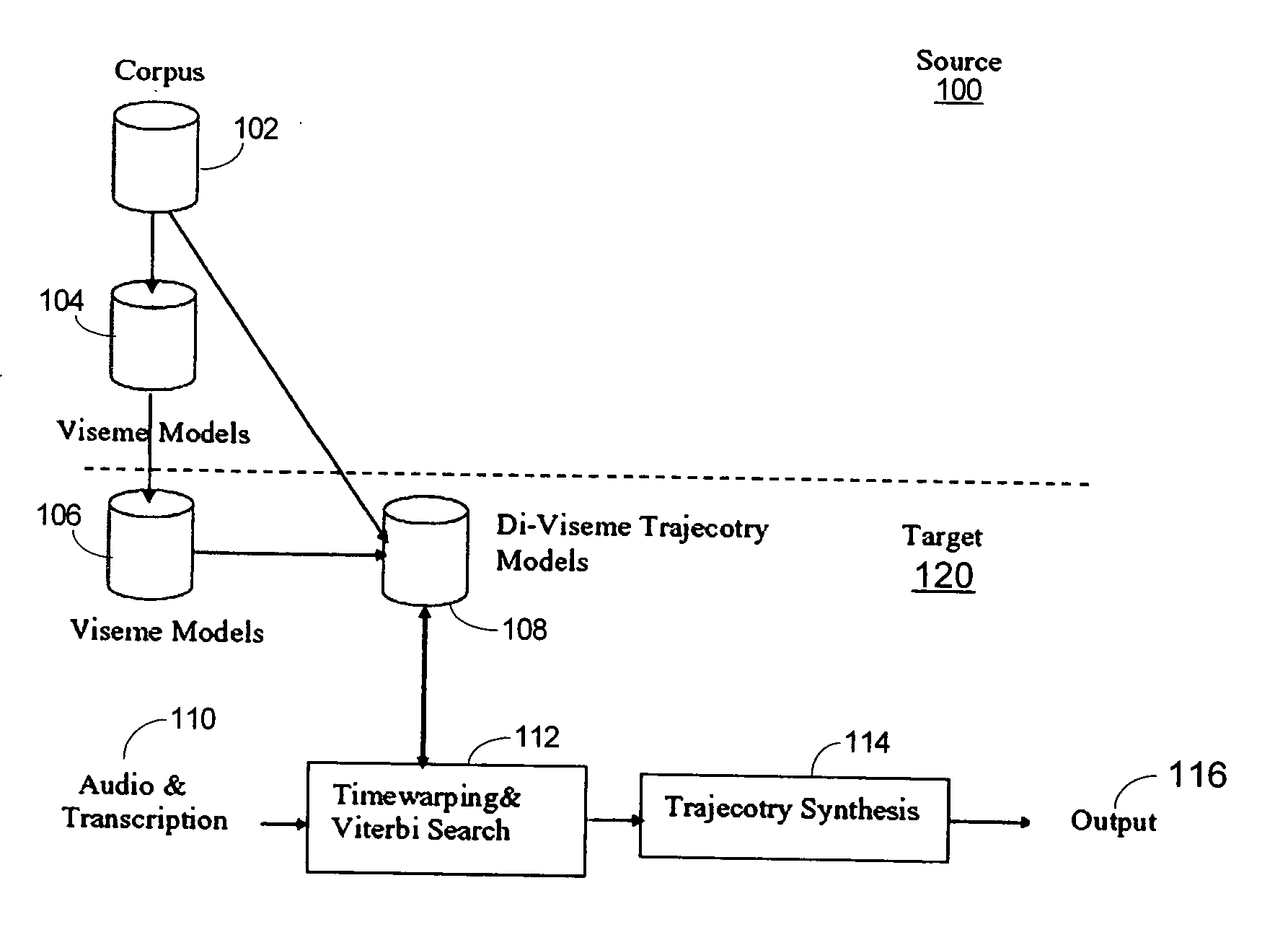

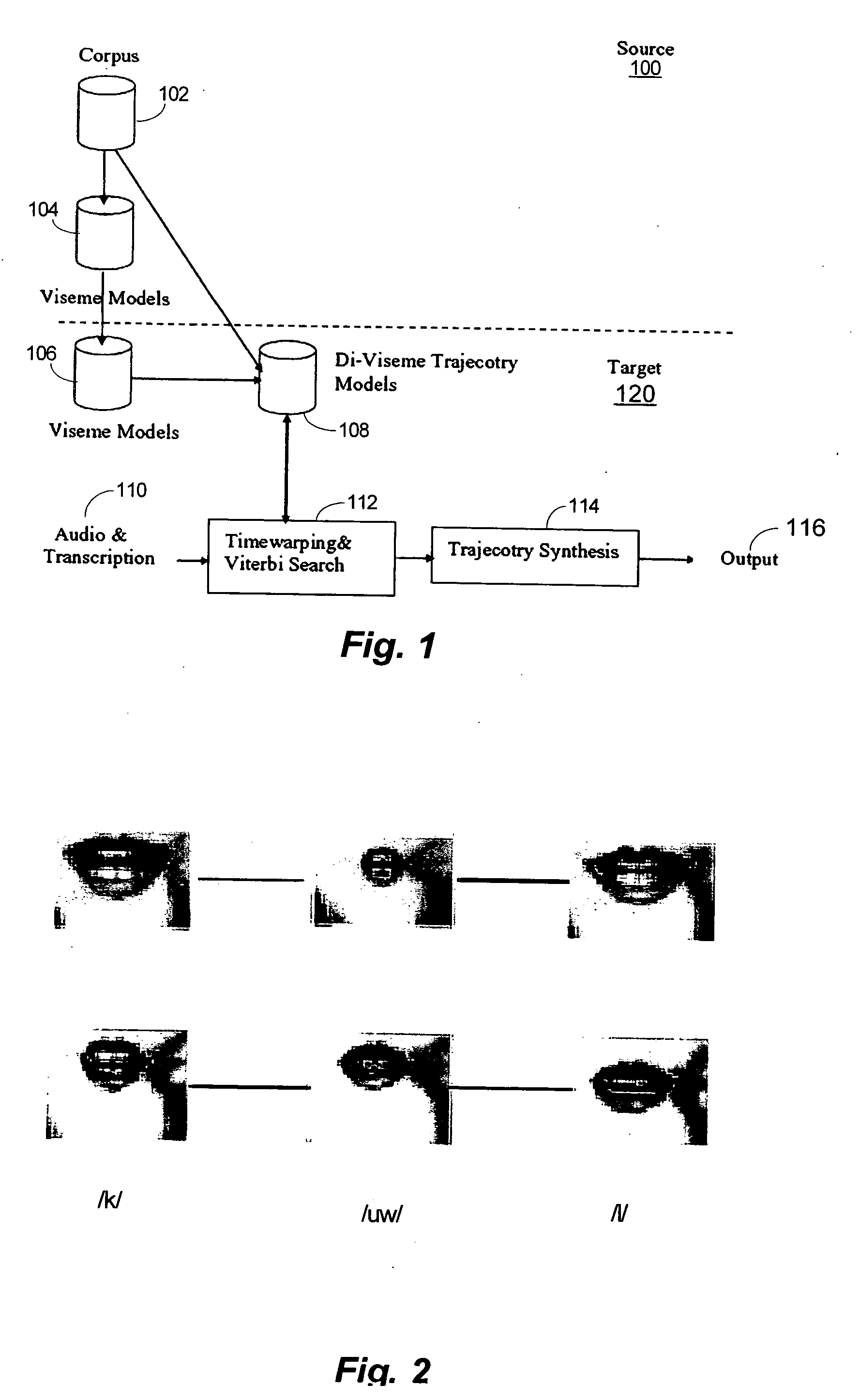

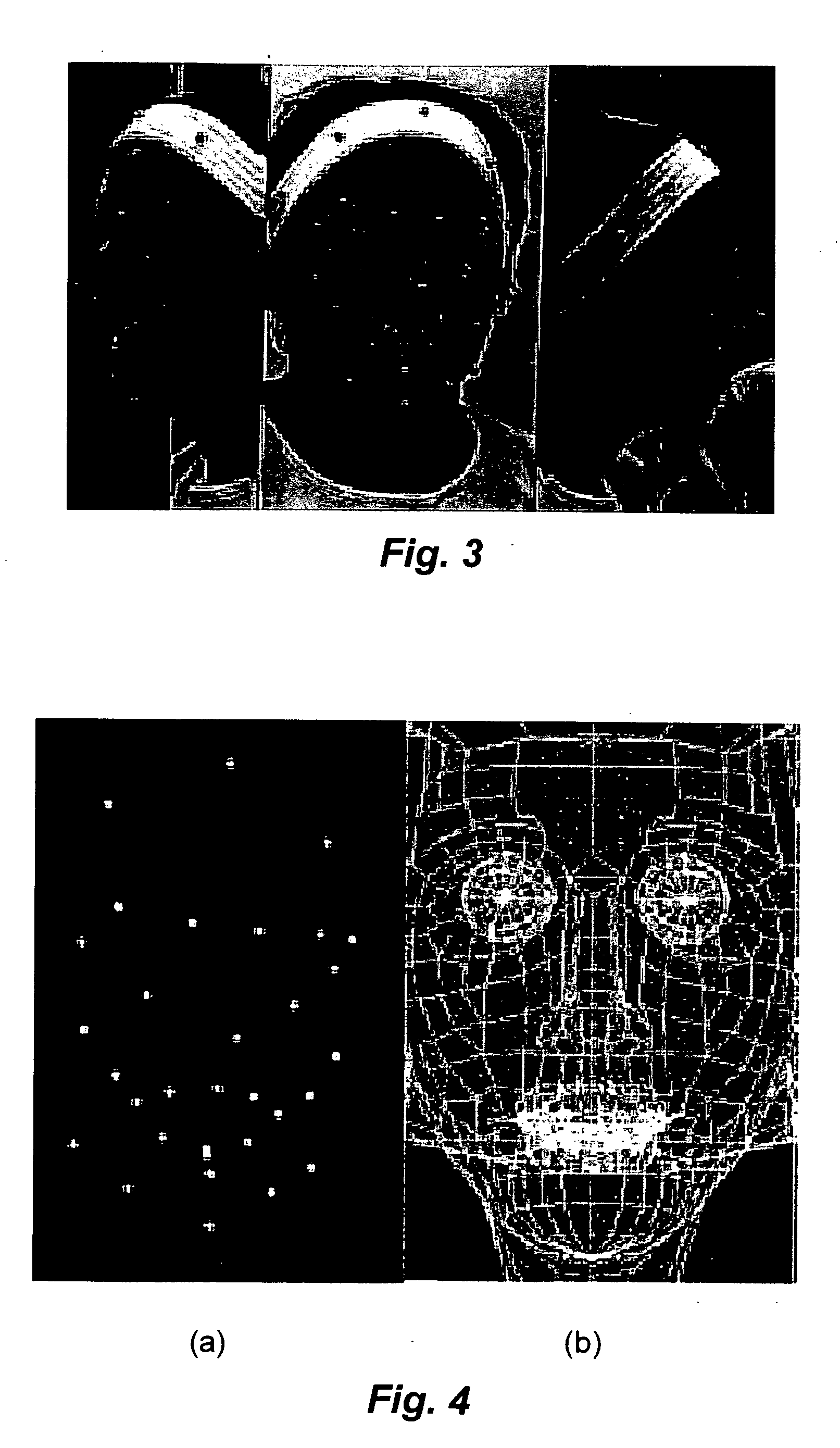

Methods and systems for synthesis of accurate visible speech via transformation of motion capture data

The disclosure describes methods for synthesizing accurate visible speech using transformations of motion-capture data. Methods are provided for synthesizing visible speech on a three-dimensional face. A sequence of visemes, each associated with one or more phonemes, is mapped onto a three-dimensional target face and concatenated. The sequence may include divisemes corresponding to pairwise sequences of phonemes, where each diviseme comprises the motion trajectories of a set of facial points. The sequence may also include multi-units corresponding to words and sequences of words. Various mapping and concatenation techniques are also addressed.

Owner:UNIV OF COLORADO THE REGENTS OF
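
The abstract leaves the concatenation technique open. One simple possibility, sketched here as an assumption rather than the patented method, is to overlap adjacent diviseme trajectories by a few frames and cross-fade them linearly, so that the shared viseme at each boundary does not produce a jump. Frames are flattened lists of facial-point coordinates, and `overlap` is a hypothetical parameter.

```python
def concatenate(units, overlap=2):
    """Join motion trajectories (lists of frames, each frame a flat list of
    facial-point coordinates), linearly cross-fading `overlap` frames at
    every boundary between consecutive units."""
    result = [list(frame) for frame in units[0]]
    for unit in units[1:]:
        n = min(overlap, len(result), len(unit))
        for i in range(n):
            w = (i + 1) / (n + 1)              # weight given to the incoming unit
            k = len(result) - n + i            # frame of the previous unit being blended
            result[k] = [(1 - w) * a + w * b for a, b in zip(result[k], unit[i])]
        result.extend(list(frame) for frame in unit[n:])
    return result


# A 3-frame unit at 0.0 followed by a 3-frame unit at 1.0, blended over 2 frames.
print(concatenate([[[0.0], [0.0], [0.0]], [[1.0], [1.0], [1.0]]], overlap=2))
```

Each boundary shortens the total by `overlap` frames, which a real system would account for when timing the result against audio.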

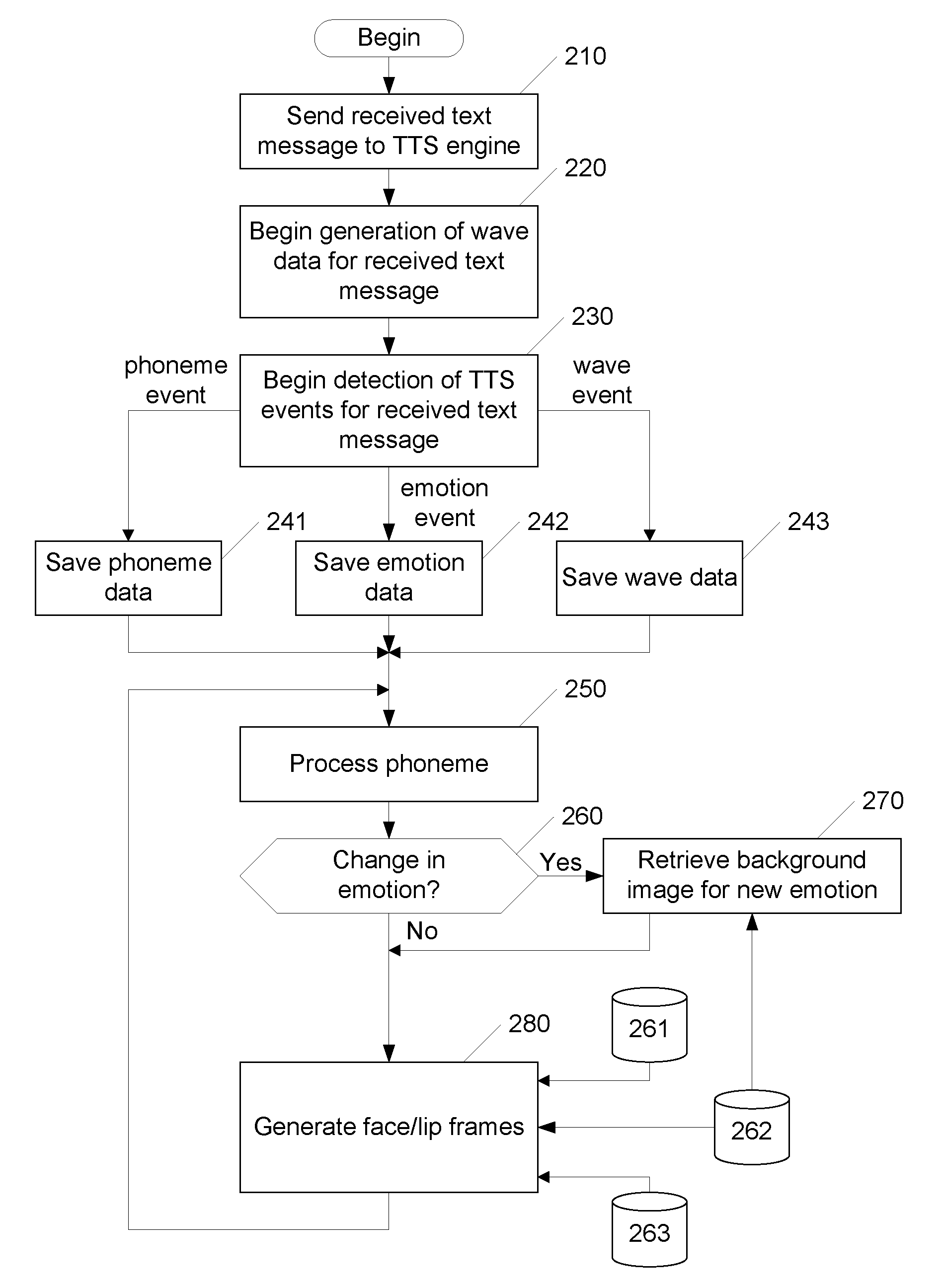

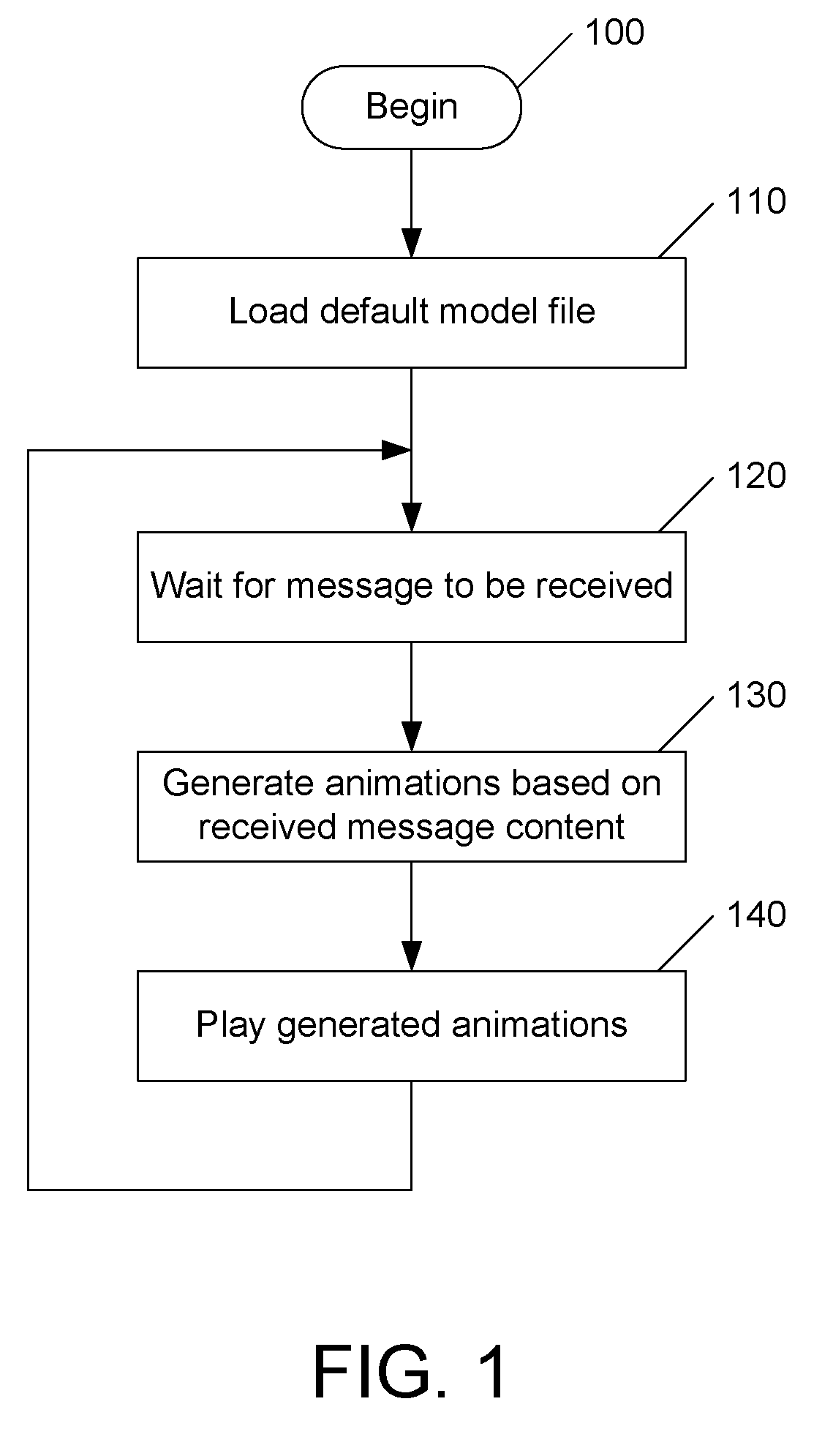

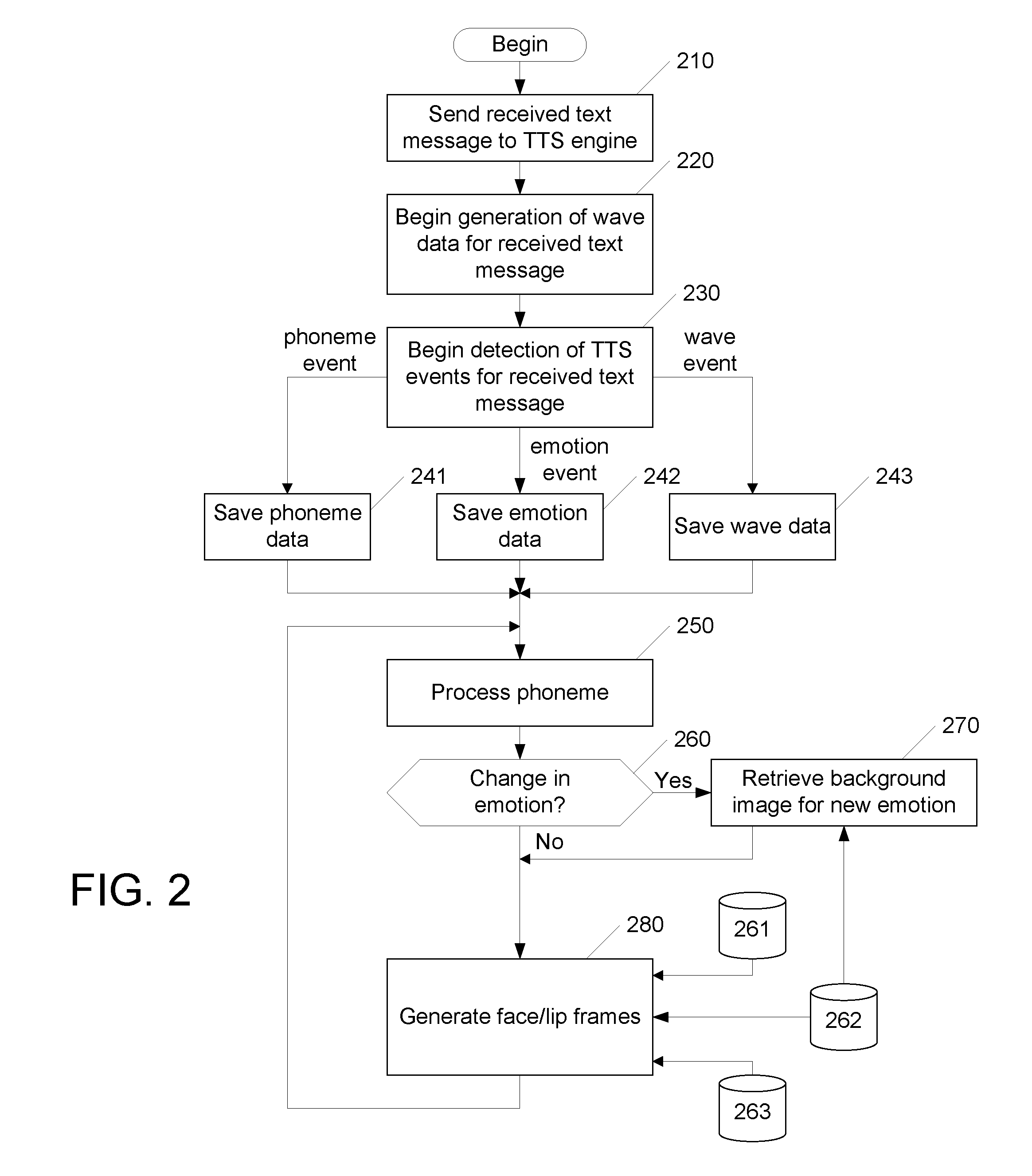

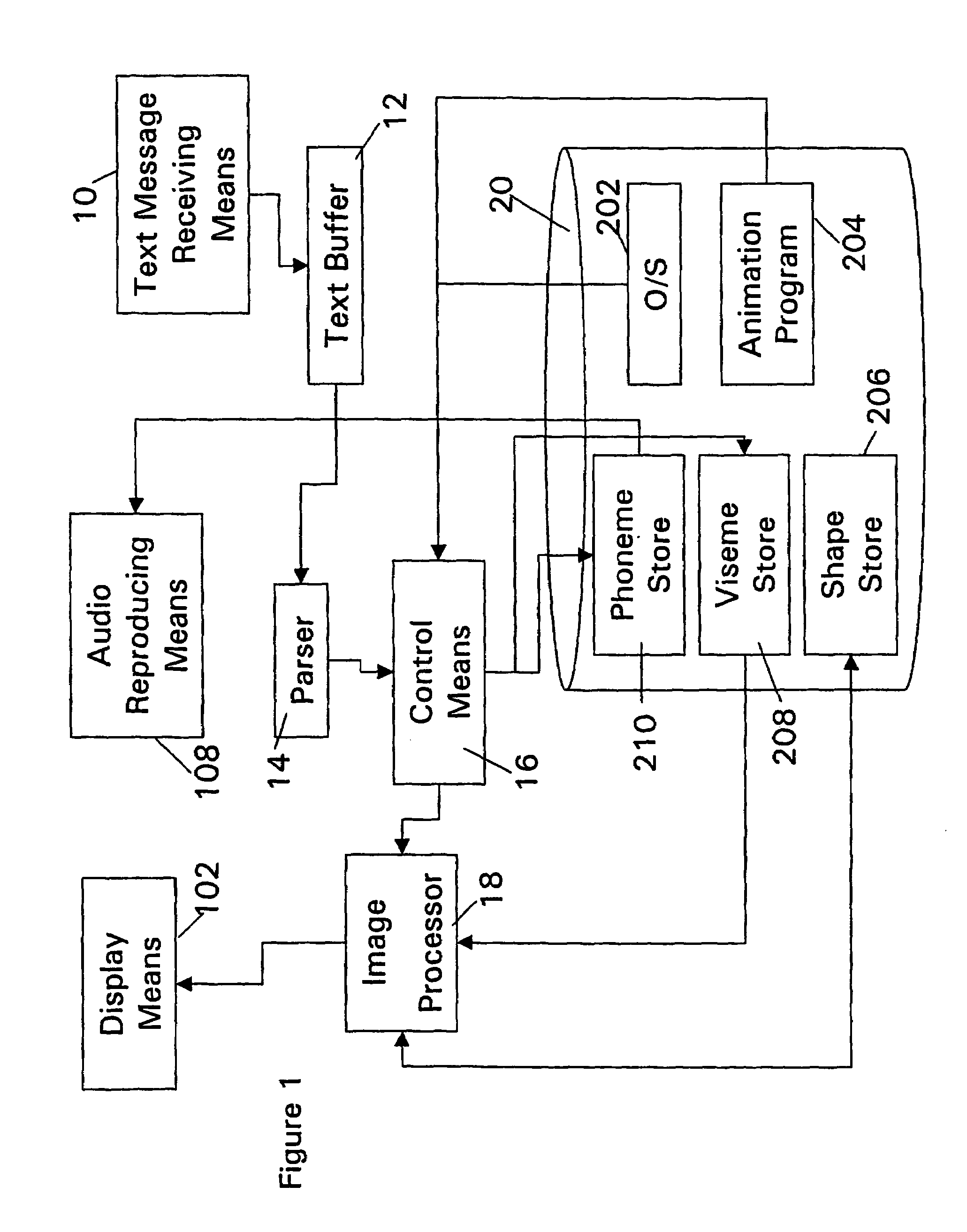

Image-based instant messaging system for providing expressions of emotions

Emotions can be expressed in the user interface for an instant messaging system based on the content of a received text message. The received text message is analyzed using a text-to-speech engine to generate phoneme data and wave data based on the text content. Emotion tags embedded in the message by the sender are also detected. Each emotion tag indicates the sender's intent to change the emotion being conveyed in the message. A mapping table is used to map phoneme data to viseme data. The number of face / lip frames required to represent viseme data is determined based on at least the length of the associated wave data. The required number of face / lip frames is retrieved from a stored set of such frames and used in generating an animation. The retrieved face / lip frames and associated wave data are presented in the user interface as synchronized audio / video data.

Owner:CERENCE OPERATING CO
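
Determining the number of face/lip frames from the wave data amounts to converting each segment's duration to a frame count at the display rate. A minimal sketch, assuming 16 kHz audio, 25 frames per second, and a small hypothetical phoneme-to-viseme table (the patent's actual mapping table is not given).

```python
# Hypothetical phoneme -> viseme mapping table; unknown phonemes fall back to "neutral".
MAPPING_TABLE = {"m": "closed", "b": "closed", "p": "closed",
                 "aa": "open", "iy": "spread", "uw": "rounded"}


def frames_for_segment(num_samples, sample_rate=16000, fps=25):
    """Frames needed to cover num_samples of audio, rounded up (integer
    arithmetic avoids floating-point rounding surprises); at least one."""
    return max(1, (num_samples * fps + sample_rate - 1) // sample_rate)


def build_animation(segments, sample_rate=16000, fps=25):
    """segments: (phoneme, num_samples) pairs from the TTS engine.
    Returns one viseme label per video frame."""
    frames = []
    for phoneme, num_samples in segments:
        viseme = MAPPING_TABLE.get(phoneme, "neutral")
        frames.extend([viseme] * frames_for_segment(num_samples, sample_rate, fps))
    return frames


# 0.2 s of /m/ followed by 0.4 s of /aa/ at 16 kHz -> 5 + 10 frames at 25 fps.
print(len(build_animation([("m", 3200), ("aa", 6400)])))  # 15
```

Rounding each segment up can make the animation slightly longer than the audio over many segments; rounding a running total of elapsed time instead is one alternative.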

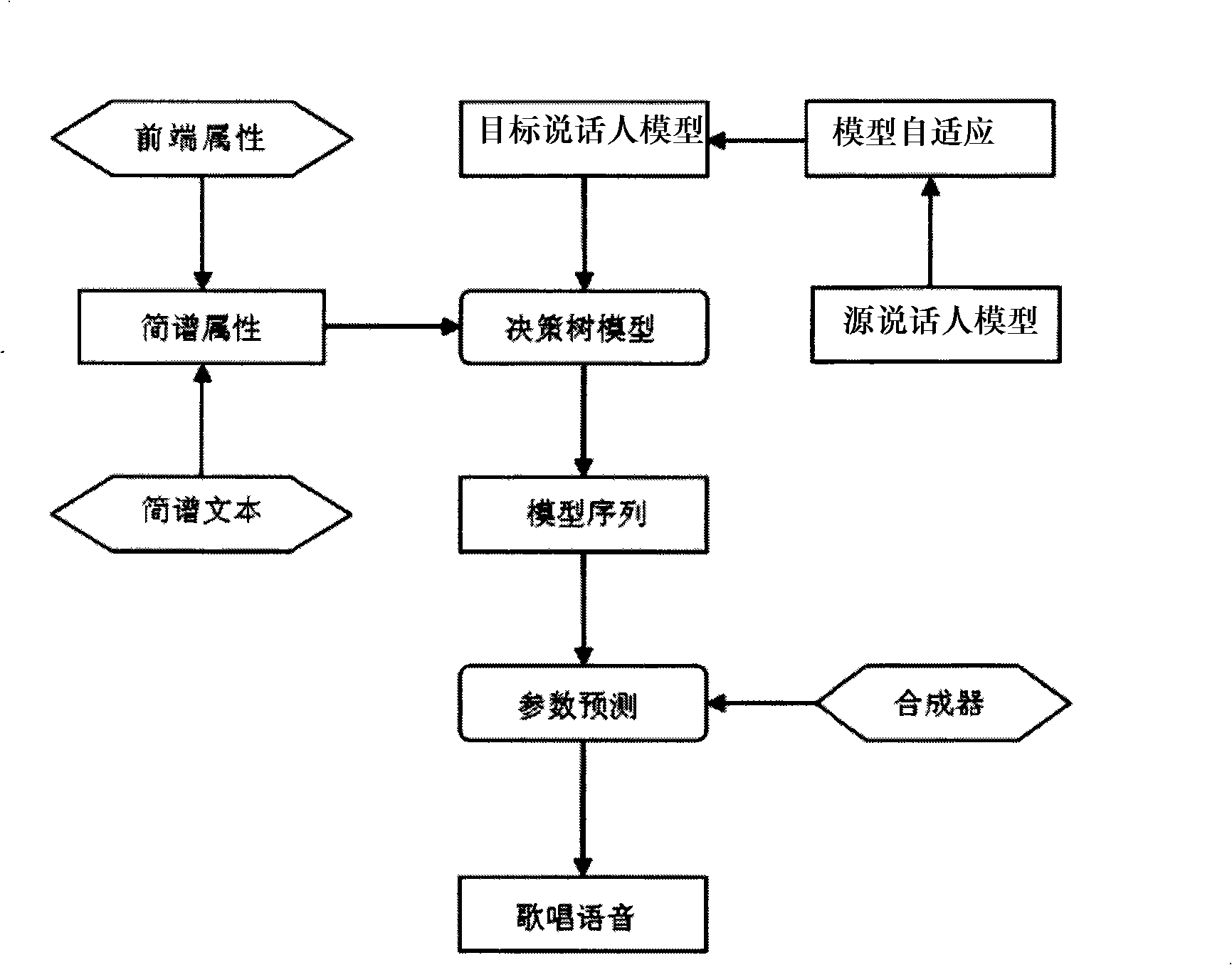

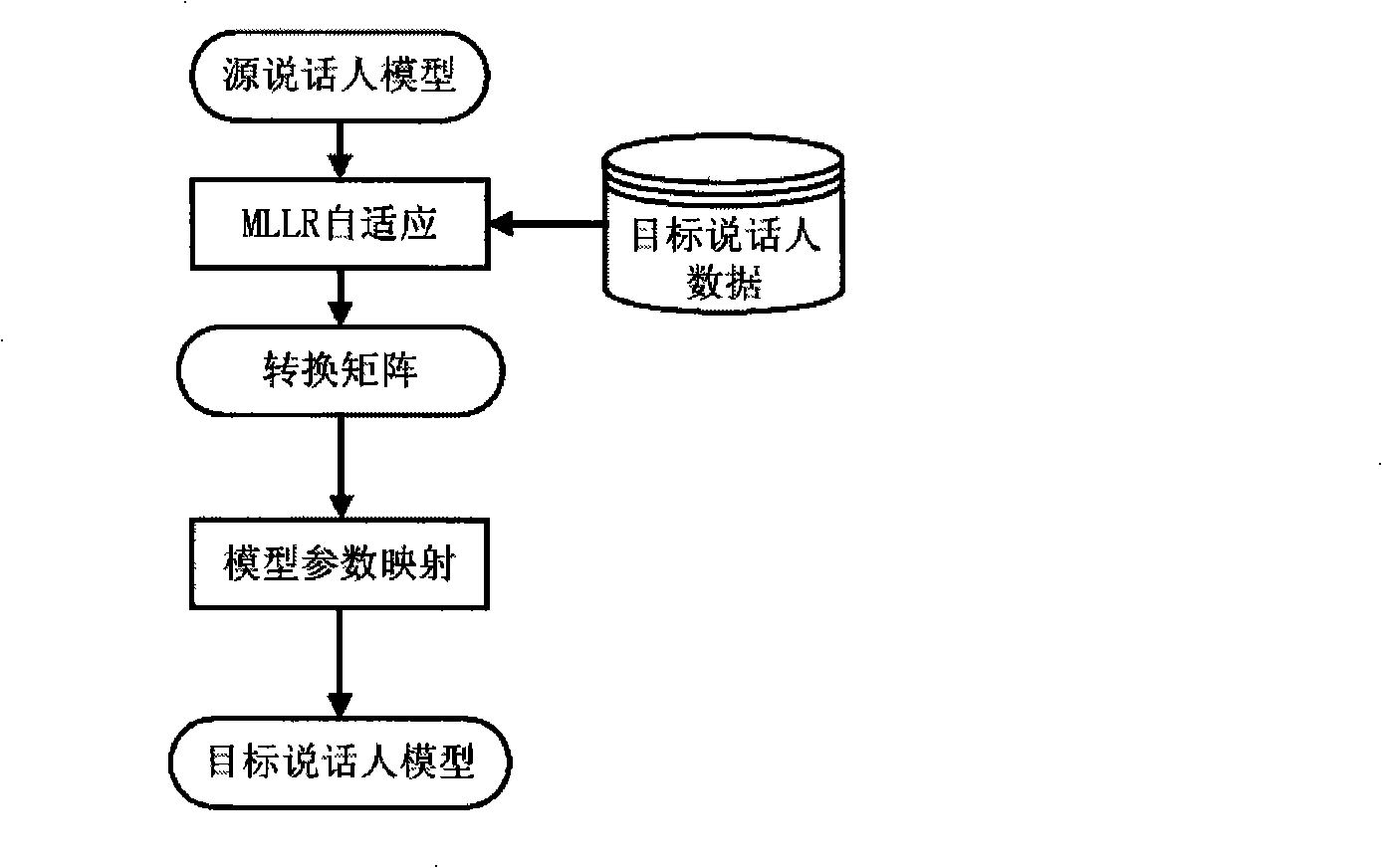

Synthesizing method of personalized singing voice

Active · CN101308652A · Improve acceleration performance · Increase entertainment · Speech synthesis · Personalization · Frequency spectrum

The invention relates to a personalized singing voice synthesis method comprising the following steps: building a model of the line spectral frequency (LSF) coefficients of speech and obtaining an associated decision-tree model through training; recording read speech from a specific user to obtain that user's LSF coefficient model; obtaining the context-related attribute set of the lyrics written in numbered musical notation, and predicting the spectral parameters and durations of the initial consonants and vowels (finals) of the lyrics from the decision-tree model and the personalized LSF coefficient model; building fundamental-frequency data from the numbered notes and combining it with the durations and spectral parameters to obtain synthesis parameters; and feeding these parameters into a parametric speech synthesis vocoder to synthesize a personalized singing voice. The method can produce synthetic speech in a singing style by adjusting only a few prosodic parameters, and can synthesize singing from only a small recorded corpus of read speech.

Owner:IFLYTEK CO LTD
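
The step of building fundamental-frequency data from numbered musical notation (jianpu), in which the digits 1 to 7 name scale degrees, can be illustrated with equal-temperament arithmetic: f0 = f_tonic · 2^(s/12), where s is the number of semitones above the tonic. This sketch assumes a major key on C4 (261.63 Hz), a fixed tempo of one beat per second, and 5 ms frames; the patent does not state these choices.

```python
# Semitone offsets of scale degrees 1-7 in a major key (equal temperament).
MAJOR_SCALE = [0, 2, 4, 5, 7, 9, 11]


def note_to_f0(degree, octave_shift=0, tonic_hz=261.63):
    """Convert a numbered-notation note (1-7; octave dots expressed as
    octave_shift) to a fundamental frequency in Hz. tonic_hz assumes key C4."""
    if not 1 <= degree <= 7:
        raise ValueError("scale degree must be 1-7")
    semitones = MAJOR_SCALE[degree - 1] + 12 * octave_shift
    return tonic_hz * 2 ** (semitones / 12)


def f0_contour(notes, frame_rate=200):
    """Expand (degree, octave_shift, beats) notes into a per-frame F0 track,
    assuming 1 beat = 1 second and frame_rate frames per second."""
    contour = []
    for degree, octave_shift, beats in notes:
        f0 = note_to_f0(degree, octave_shift)
        contour.extend([f0] * round(beats * frame_rate))
    return contour
```

For example, degree 6 in C major comes out at about 440 Hz (A4), and the contour would then be combined with the predicted durations and spectral parameters before vocoding.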

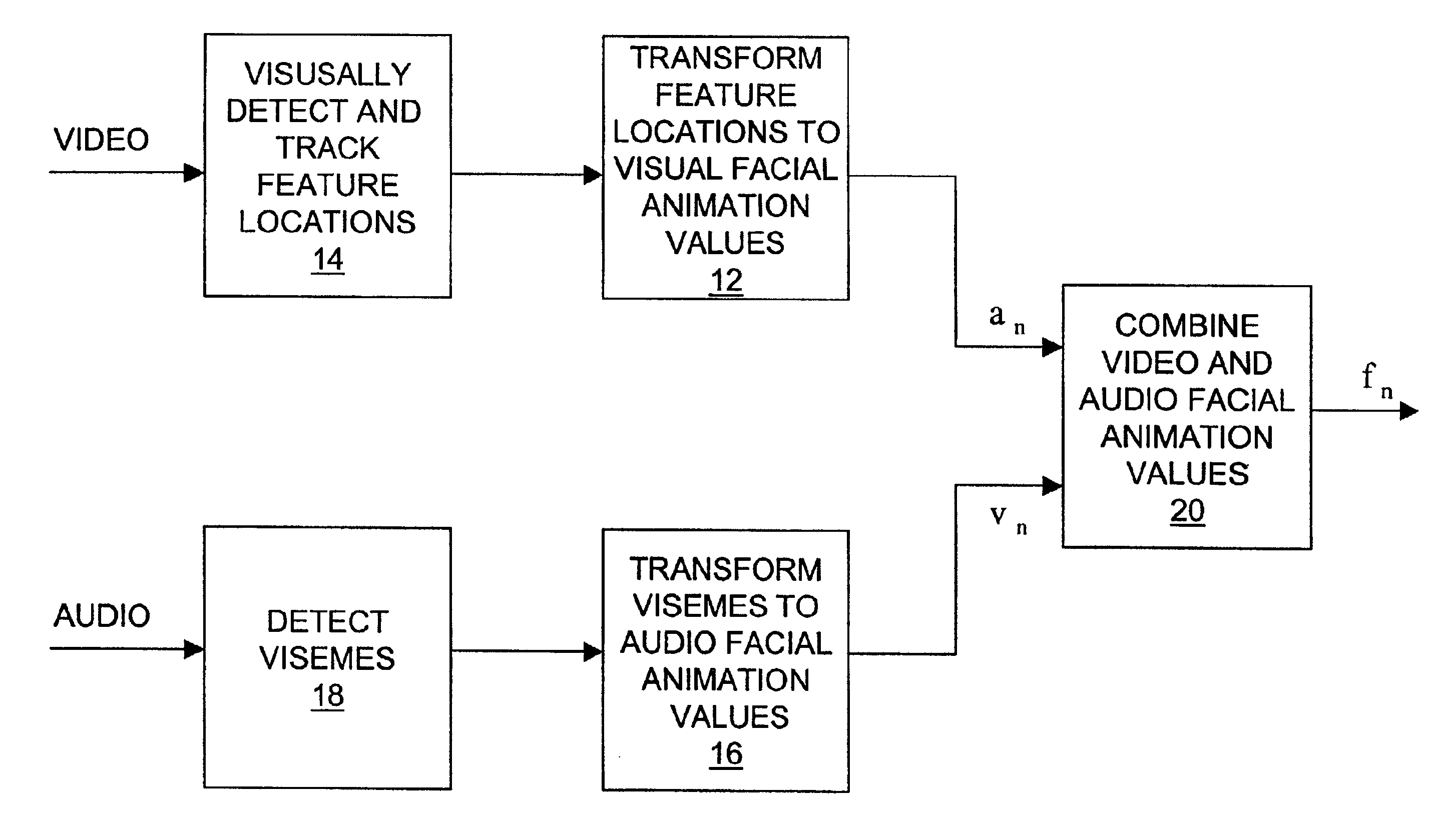

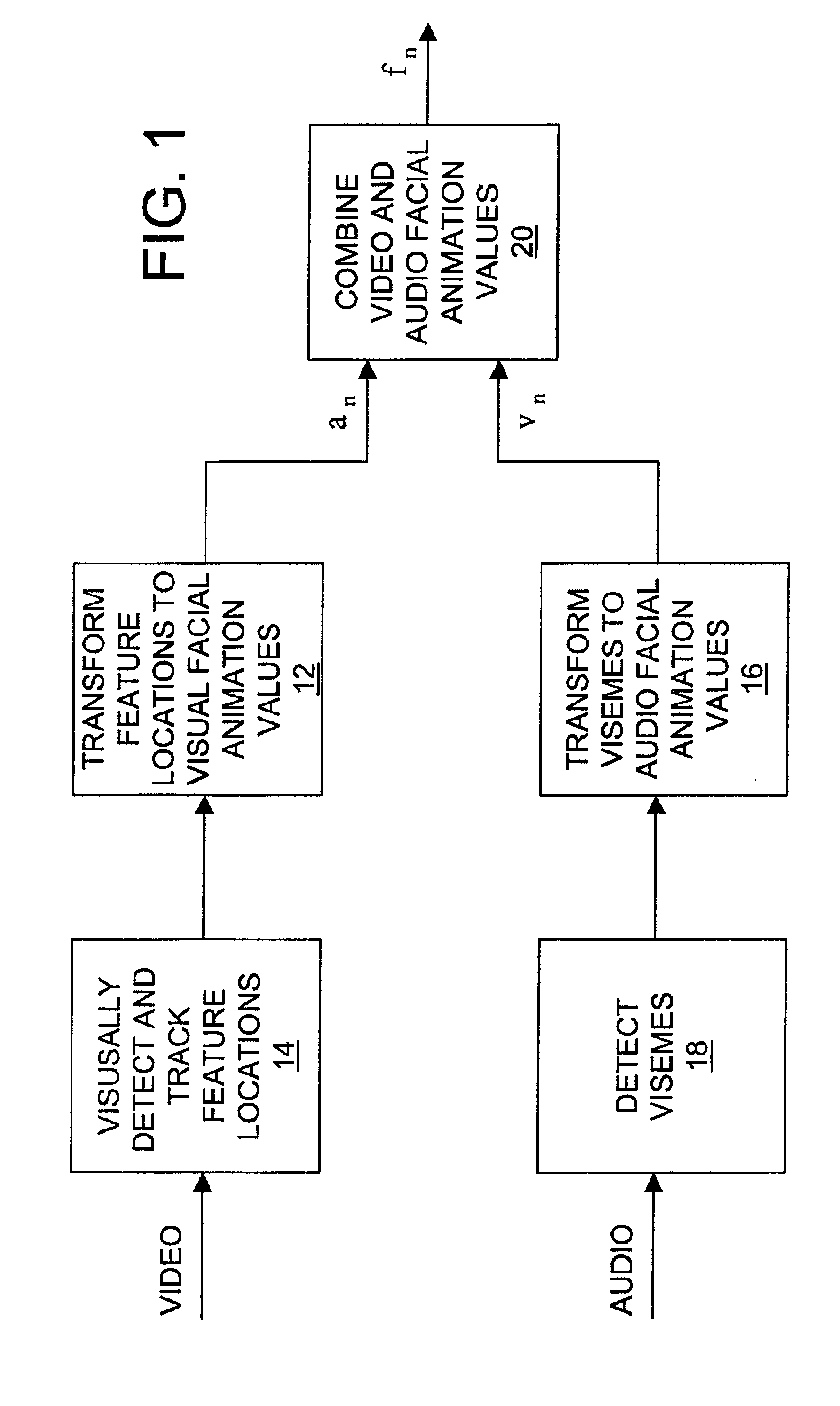

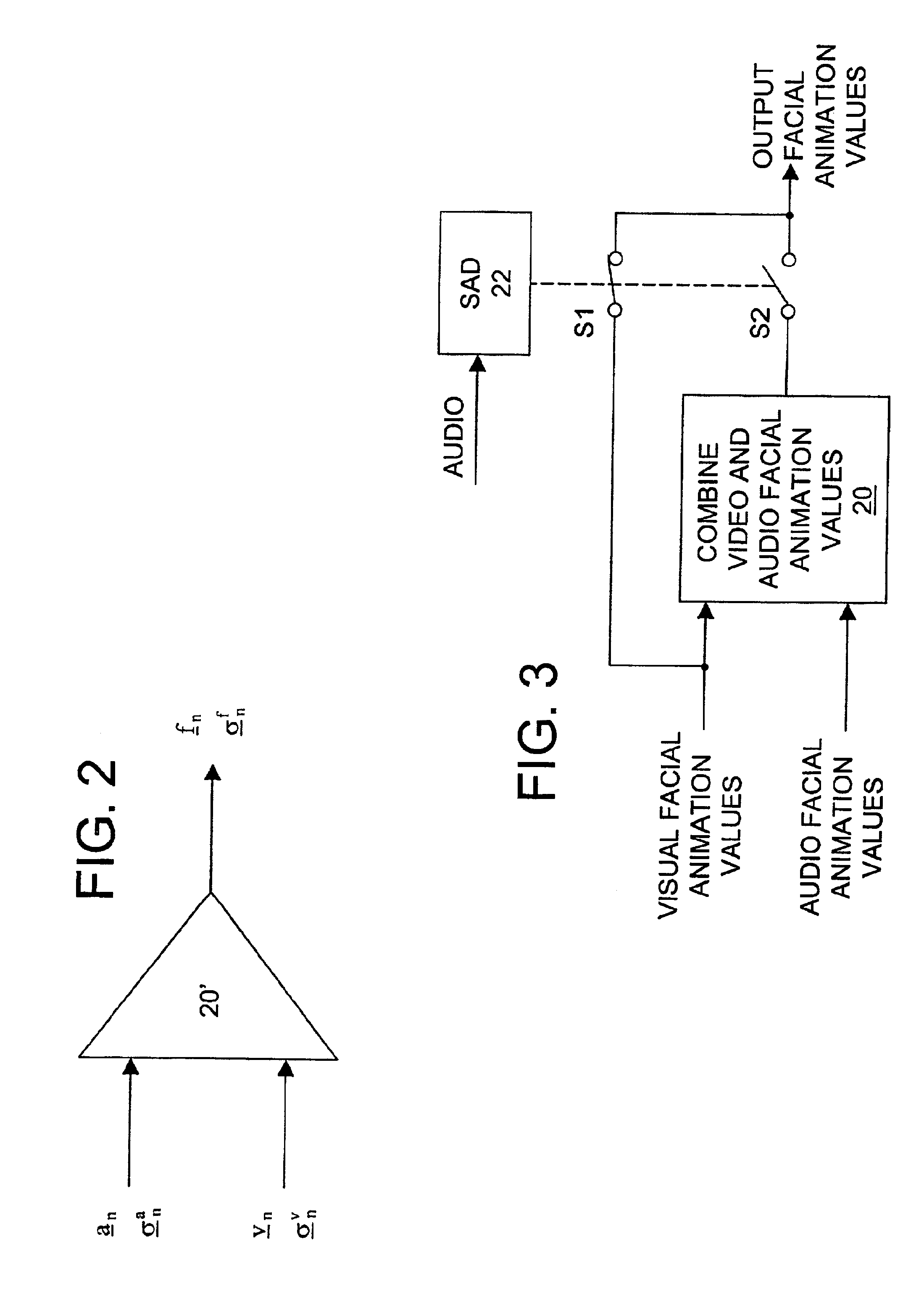

Method and system for generating facial animation values based on a combination of visual and audio information

Facial animation values are generated using a sequence of facial image frames and synchronously captured audio data of a speaking actor. In the technique, a plurality of visual-facial-animation values are provided based on tracking of facial features in the sequence of facial image frames of the speaking actor, and a plurality of audio-facial-animation values are provided based on visemes detected using the synchronously captured audio voice data of the speaking actor. The plurality of visual facial animation values and the plurality of audio facial animation values are combined to generate output facial animation values for use in facial animation.

Owner:GOOGLE LLC
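
The abstract does not say how the two sets of values are combined. One plausible scheme, shown here as an assumption, is a per-frame weighted average in which a confidence value for the visual tracker (low, for example, when the mouth is occluded) decides how much weight the audio-derived values receive.

```python
def combine(visual, audio, visual_confidence):
    """Blend per-frame facial animation values from video tracking with those
    derived from audio viseme detection. visual_confidence[i] (clamped to
    [0, 1]) is how much to trust the tracker on frame i."""
    if not (len(visual) == len(audio) == len(visual_confidence)):
        raise ValueError("inputs must cover the same frames")
    output = []
    for v_frame, a_frame, c in zip(visual, audio, visual_confidence):
        c = min(max(c, 0.0), 1.0)
        output.append([c * v + (1.0 - c) * a for v, a in zip(v_frame, a_frame)])
    return output


# One frame with two animation values, trusting the tracker at 75 %.
print(combine([[1.0, 0.0]], [[0.0, 1.0]], [0.75]))  # [[0.75, 0.25]]
```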

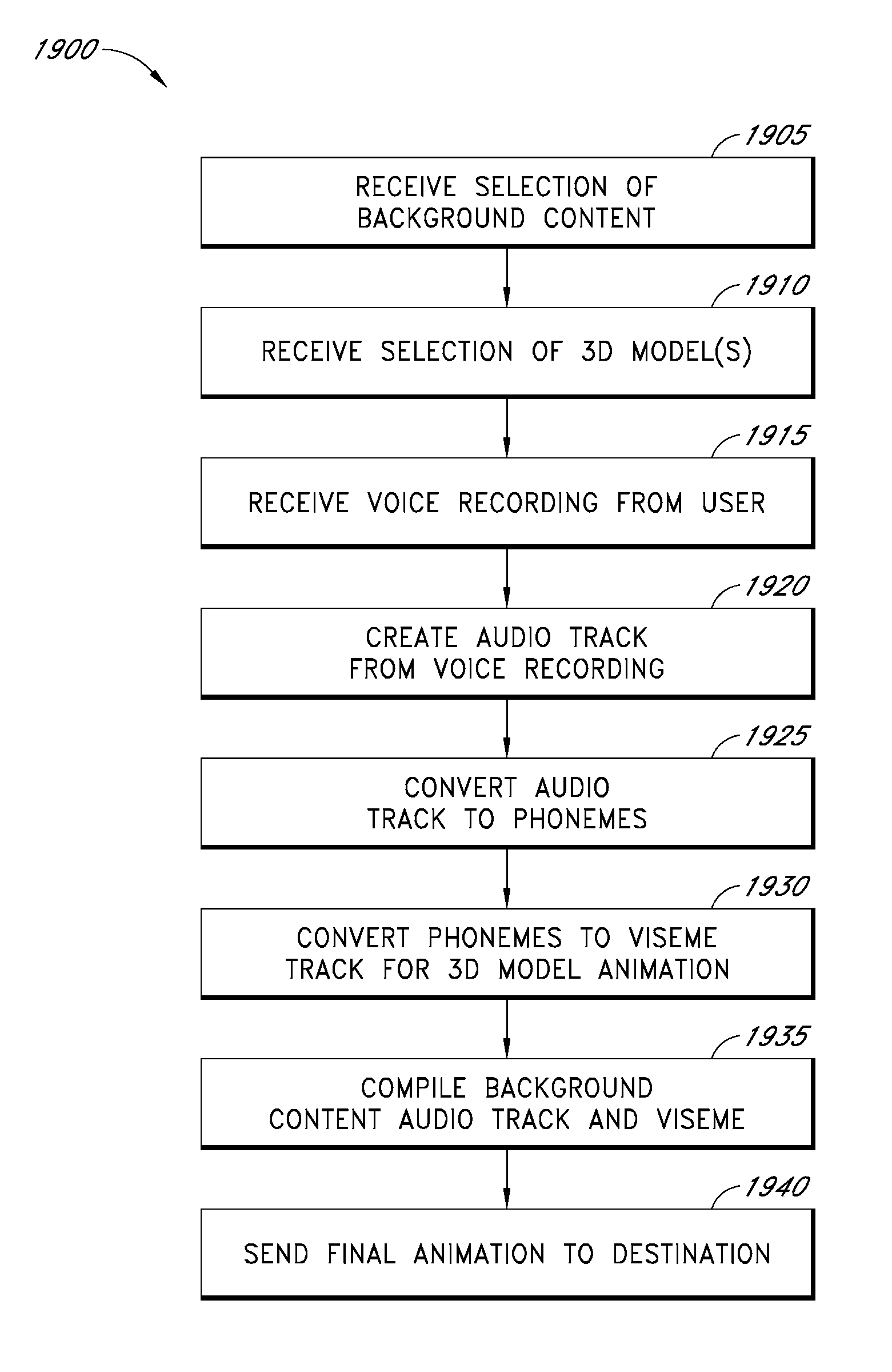

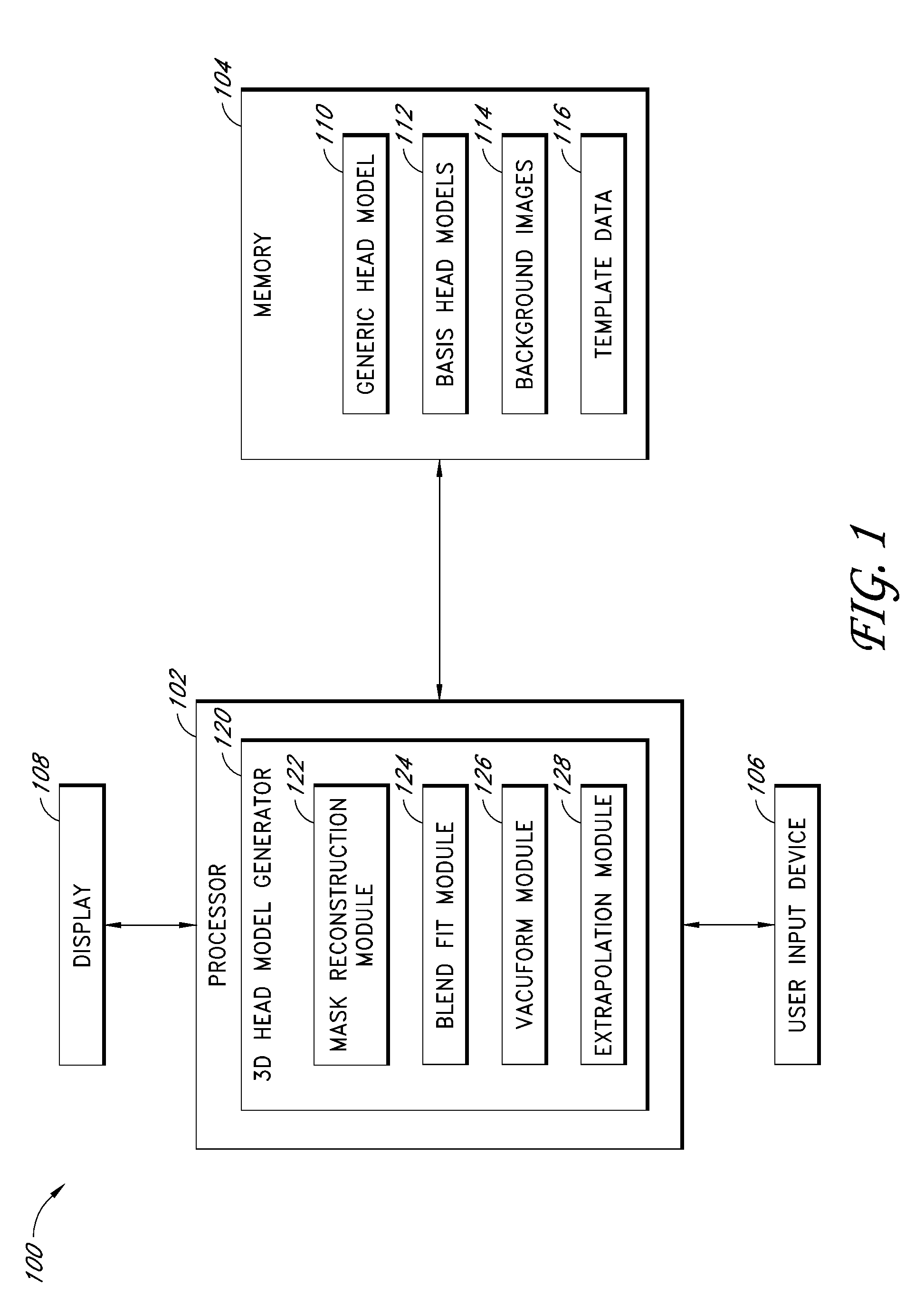

Systems and methods for voice personalization of video content

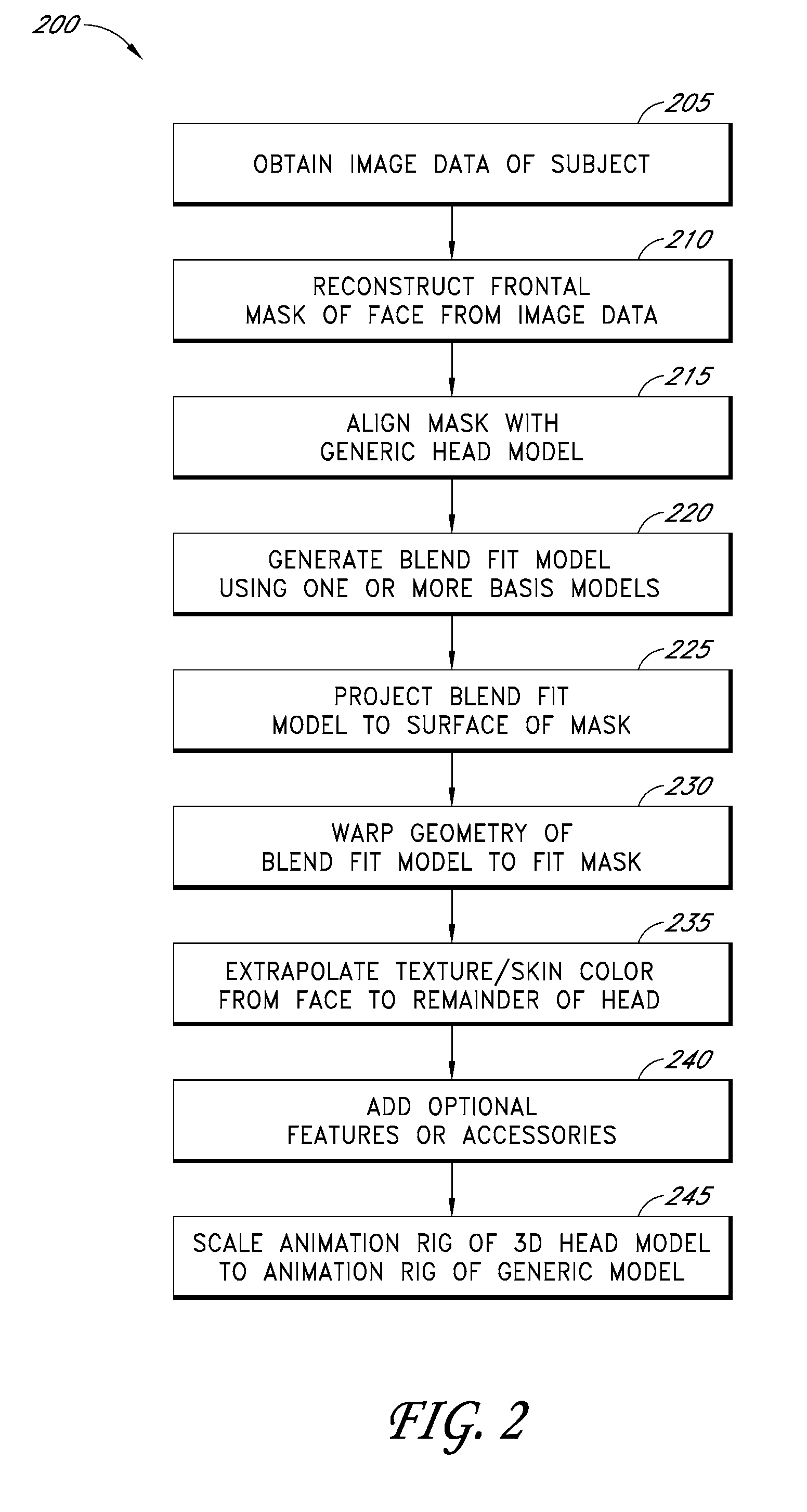

Inactive · US20090135177A1 · Rapidly and easily generate · Facilitates compositing a 2D or 3D representation · Character and pattern recognition · Animation · Personalization · Voice transformation

Systems and methods are disclosed for performing voice personalization of video content. The personalized media content may include a composition of a background scene having a character, head model data representing an individualized three-dimensional (3D) head model of a user, audio data simulating the user's voice, and a viseme track containing instructions for causing the individualized 3D head model to lip sync the words contained in the audio data. The audio data simulating the user's voice can be generated using a voice transformation process. In certain examples, the audio data is based on a text input or selected by the user (e.g., via a telephone or computer) or a textual dialogue of a background character.

Owner:IMAGE METRICS INC
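
A viseme track can be represented as time-stamped events that a renderer samples once per frame. The sketch below is a hypothetical data structure, not Image Metrics' format: each event starts a viseme that stays active until the next event, and a binary search finds the active viseme at any time.

```python
import bisect


class VisemeTrack:
    """Time-ordered (start_seconds, viseme) events; a viseme stays active
    until the next event starts."""

    def __init__(self, events):
        self.events = sorted(events)
        self.starts = [start for start, _ in self.events]

    def viseme_at(self, t):
        """Return the viseme active at time t, or 'rest' before the first event."""
        i = bisect.bisect_right(self.starts, t) - 1
        return self.events[i][1] if i >= 0 else "rest"


track = VisemeTrack([(0.0, "p"), (0.12, "ah"), (0.30, "t")])
print([track.viseme_at(t) for t in (0.05, 0.12, 1.0)])  # ['p', 'ah', 't']
```

A renderer running at a fixed frame rate would call `viseme_at` with each frame's timestamp to decide which mouth shape to apply to the head model.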

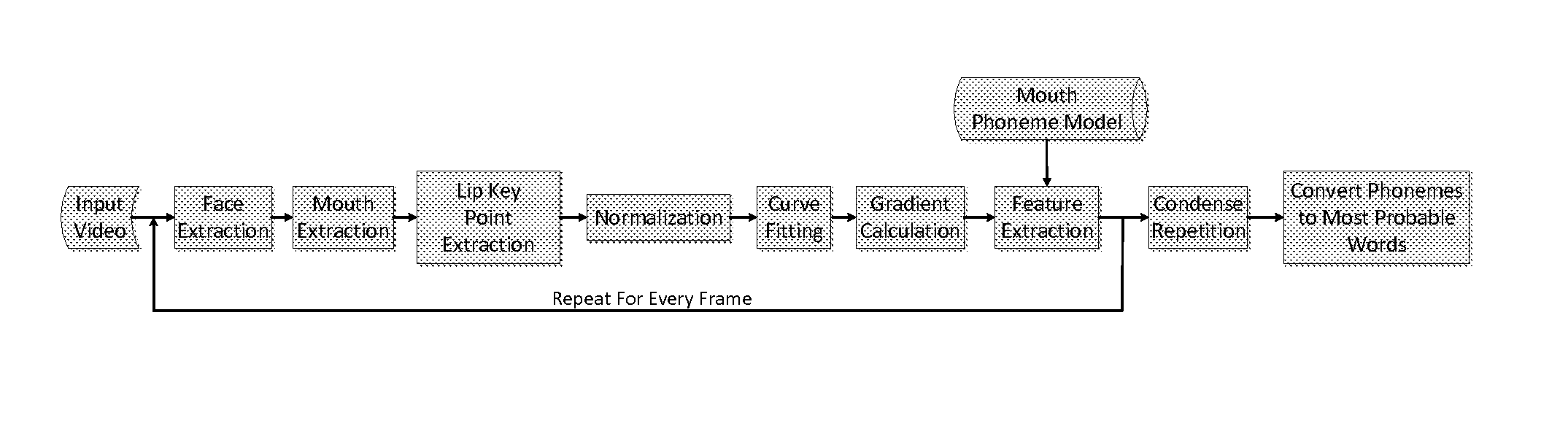

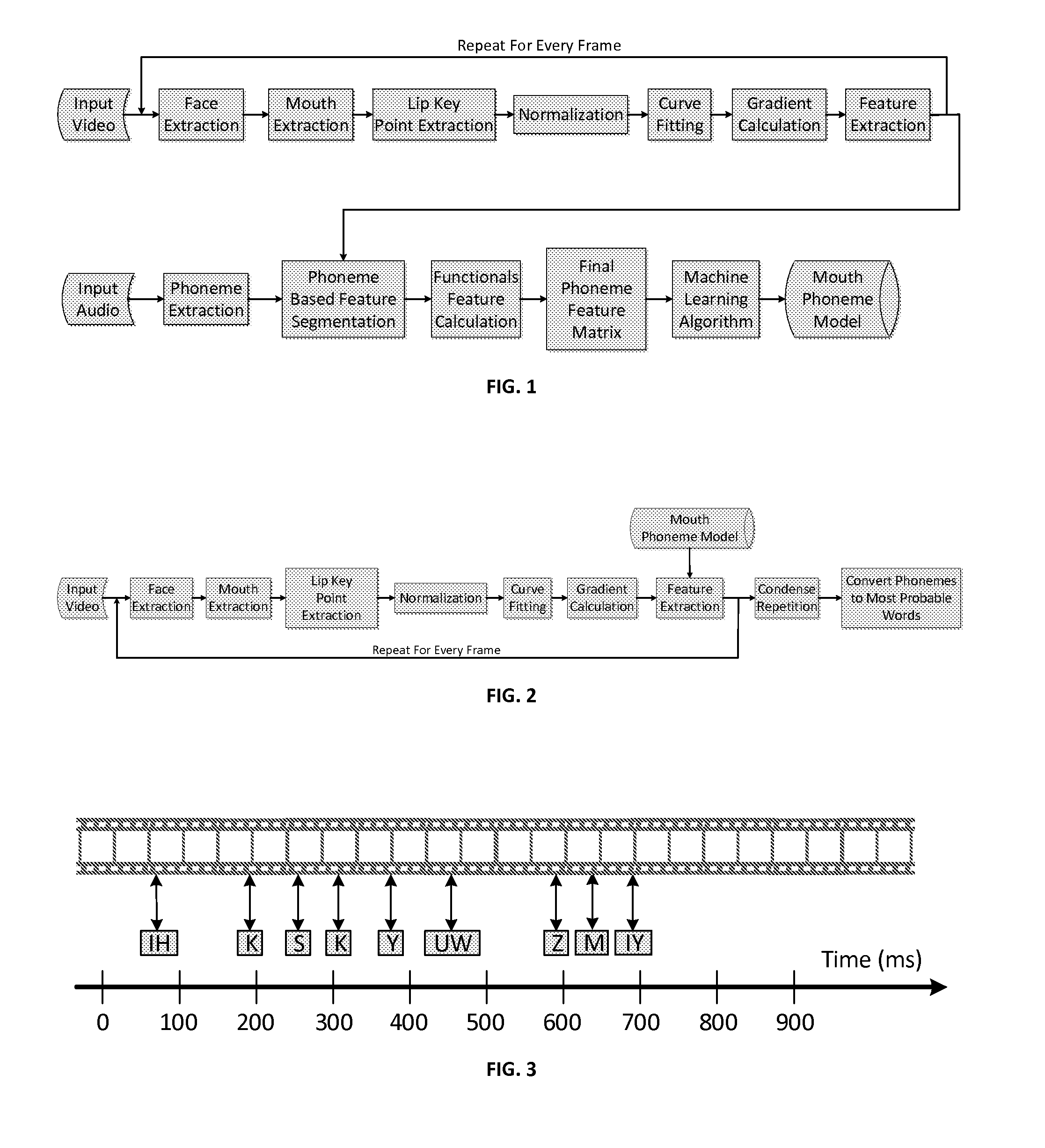

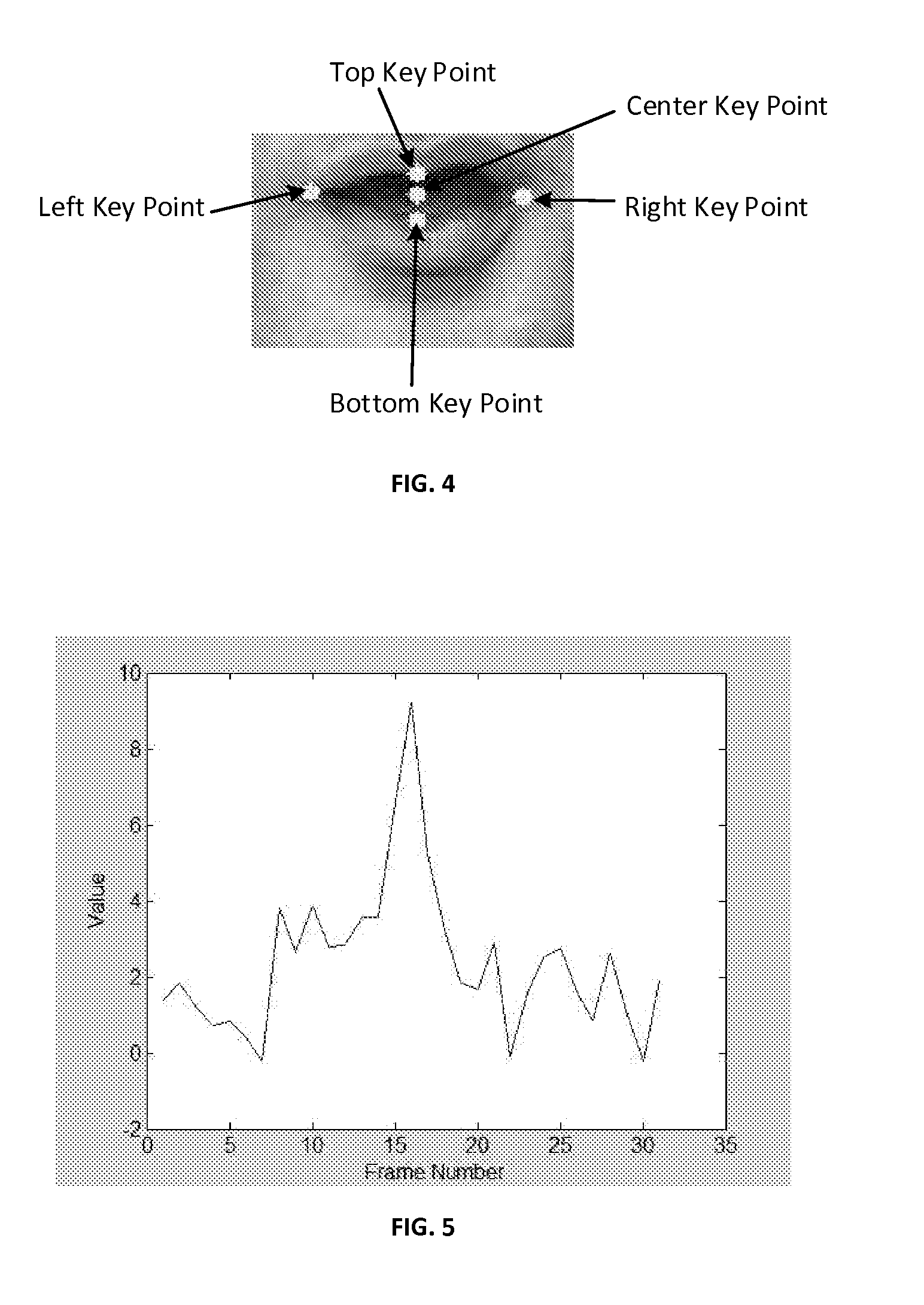

Mouth-Phoneme Model for Computerized Lip Reading

Inactive · US20150279364A1 · Character and pattern recognition · Speech recognition · Audio frequency · Visual perception

The invention described here uses a Mouth Phoneme Model that relates phonemes and visemes using audio and visual information. This allows direct conversion between lip movements and phonemes and, furthermore, lip reading of any word in the English language. A speech API was used to extract phonemes from audio data taken from a database of video and audio recordings of people speaking words in different accents. A machine-learning tool similar to WEKA (Waikato Environment for Knowledge Analysis) was used to train the lip-reading system.

Owner:KRISHNAN AJAY +1
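
One simple way to relate visemes and phonemes statistically is to count how often each phoneme co-occurs with each viseme in time-aligned audio and video, then normalize the counts into P(phoneme | viseme). The sketch below uses this counting approach as a stand-in for the WEKA-style classifier described in the abstract; the viseme labels are hypothetical.

```python
from collections import Counter, defaultdict


def train_mouth_phoneme_model(aligned_pairs):
    """aligned_pairs: iterable of (viseme, phoneme) observed at the same time.
    Returns P(phoneme | viseme) as nested dicts."""
    counts = defaultdict(Counter)
    for viseme, phoneme in aligned_pairs:
        counts[viseme][phoneme] += 1
    return {
        viseme: {ph: n / sum(c.values()) for ph, n in c.items()}
        for viseme, c in counts.items()
    }


def most_likely_phoneme(model, viseme):
    """Pick the phoneme with the highest conditional probability for a viseme."""
    return max(model[viseme], key=model[viseme].get)


model = train_mouth_phoneme_model(
    [("bilabial", "p"), ("bilabial", "b"), ("bilabial", "p"), ("open", "aa")])
print(most_likely_phoneme(model, "bilabial"))  # p
```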

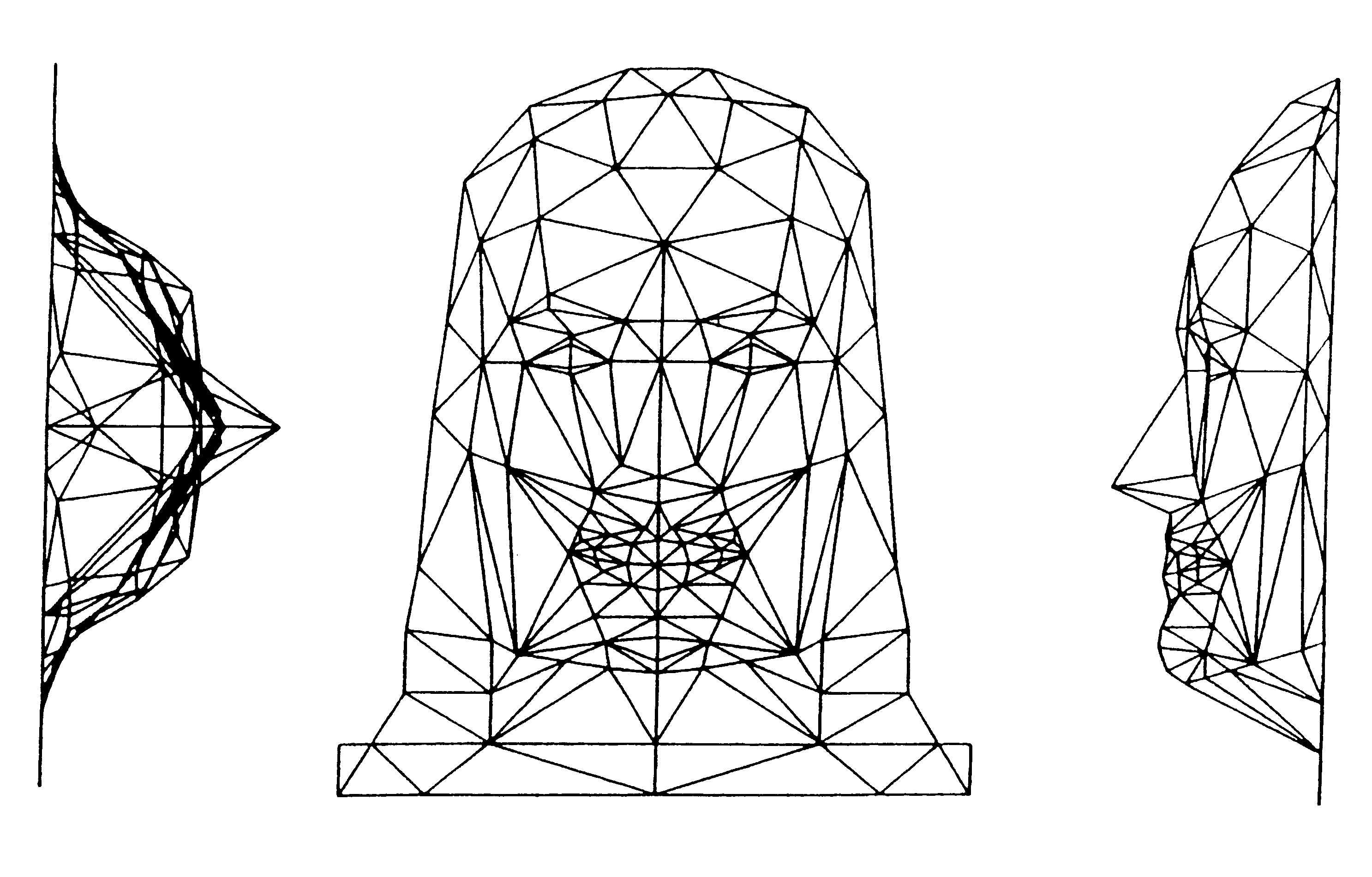

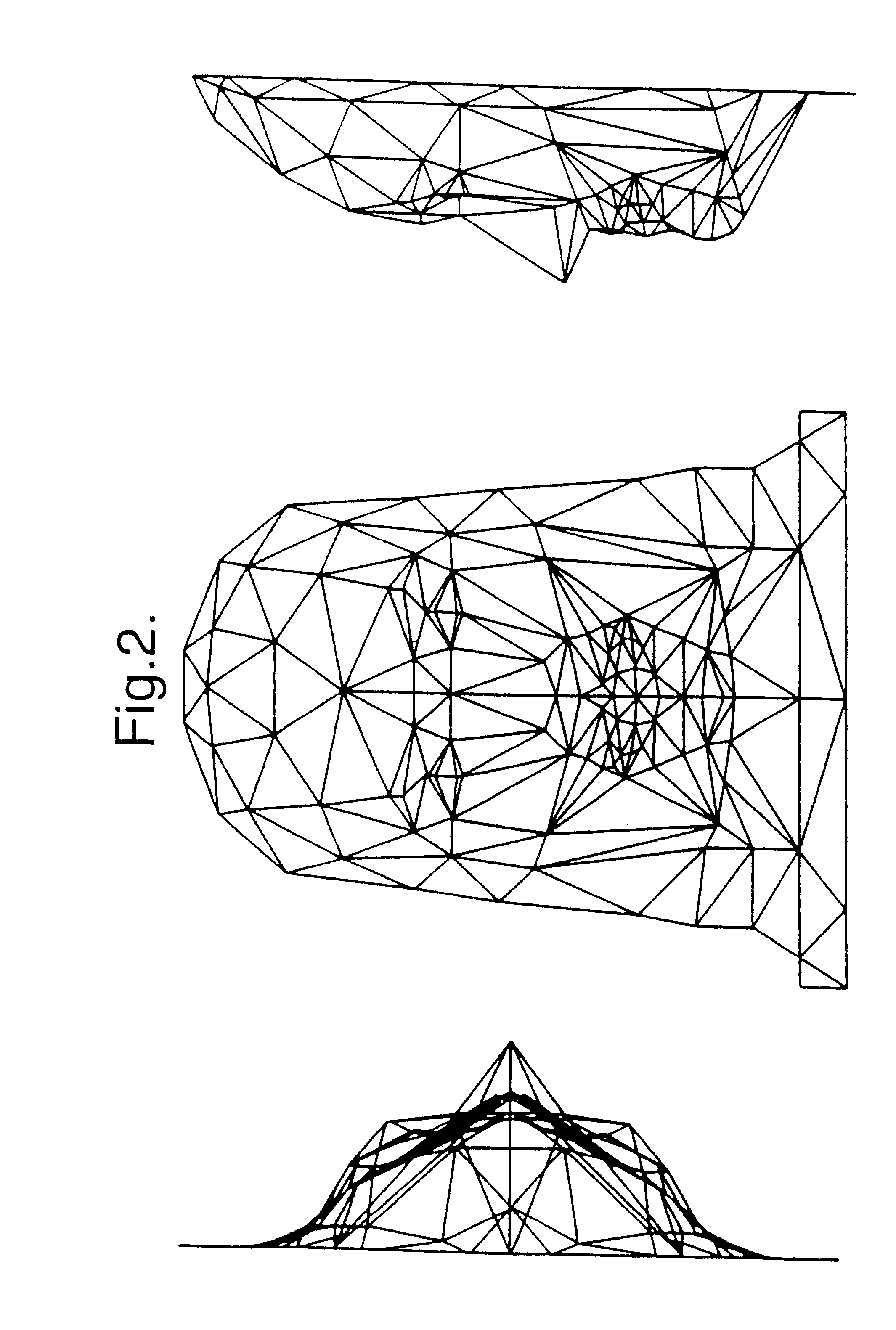

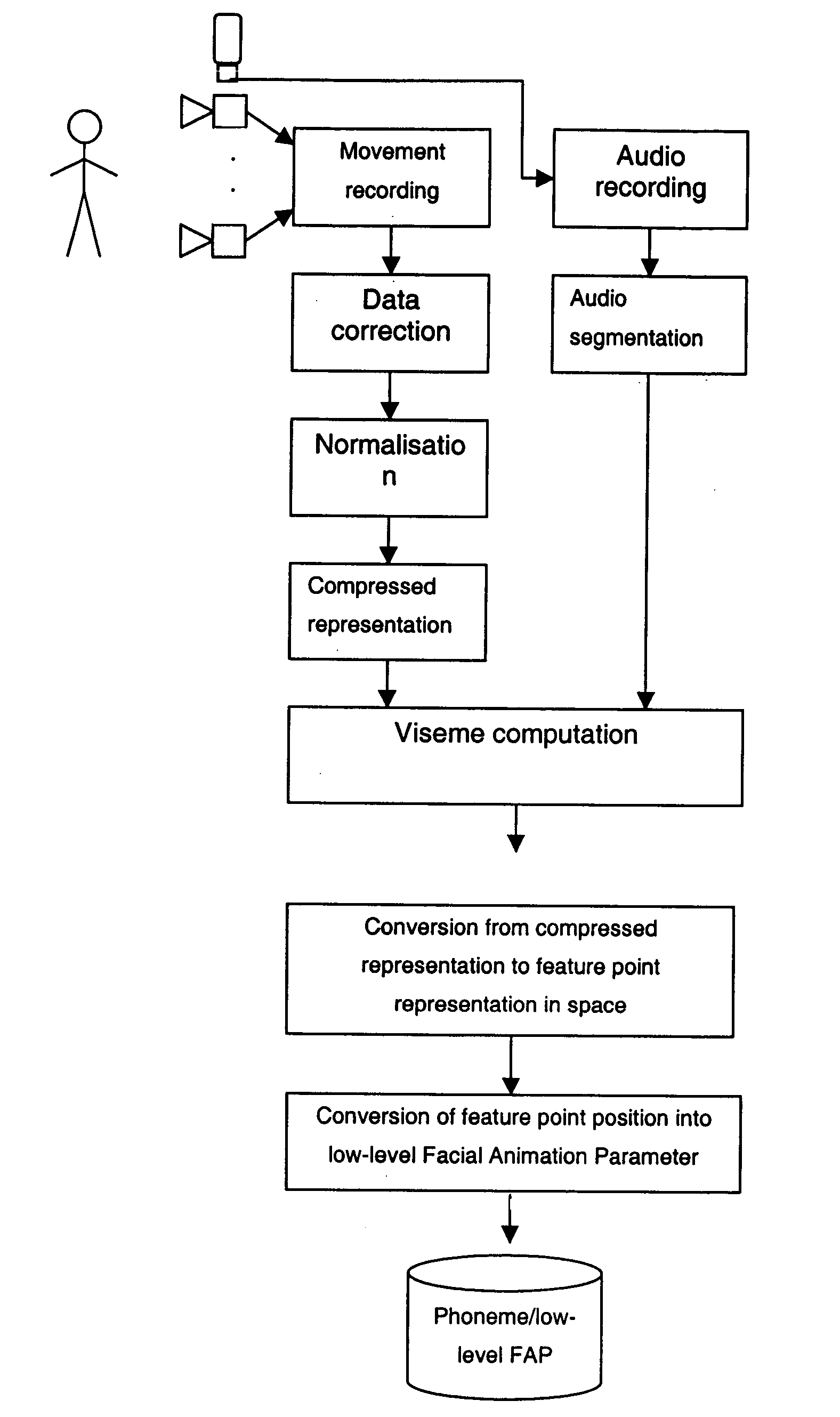

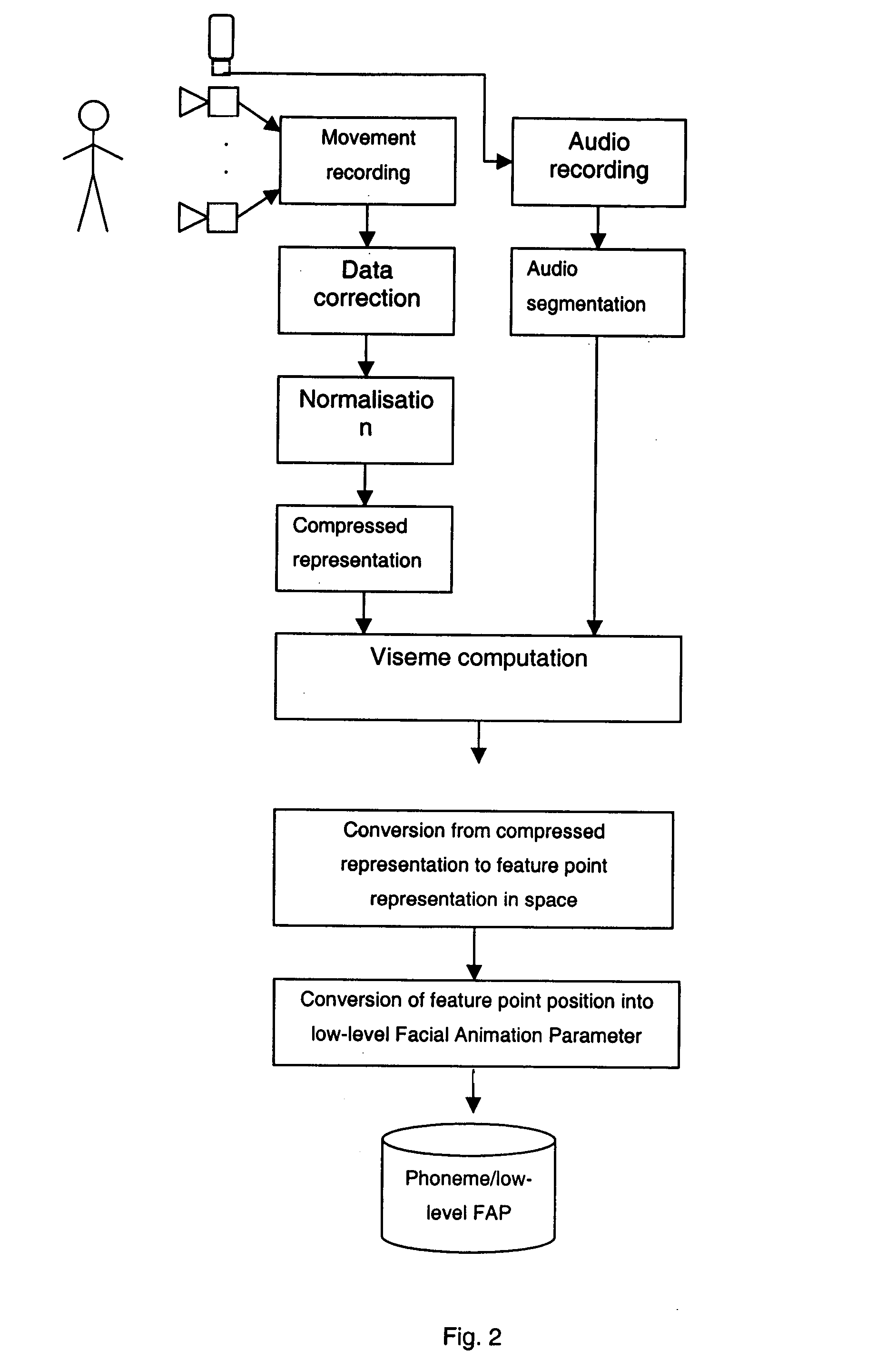

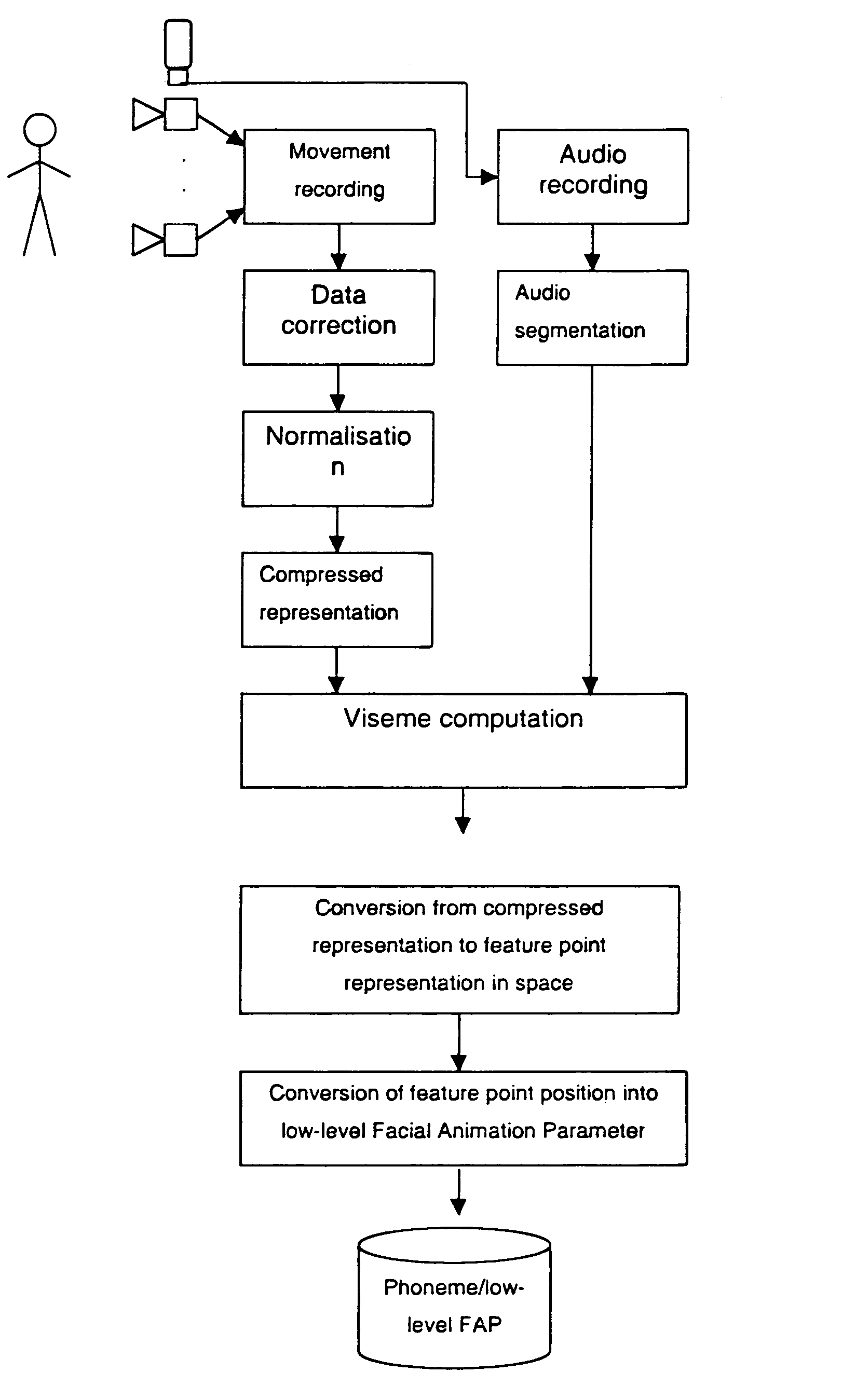

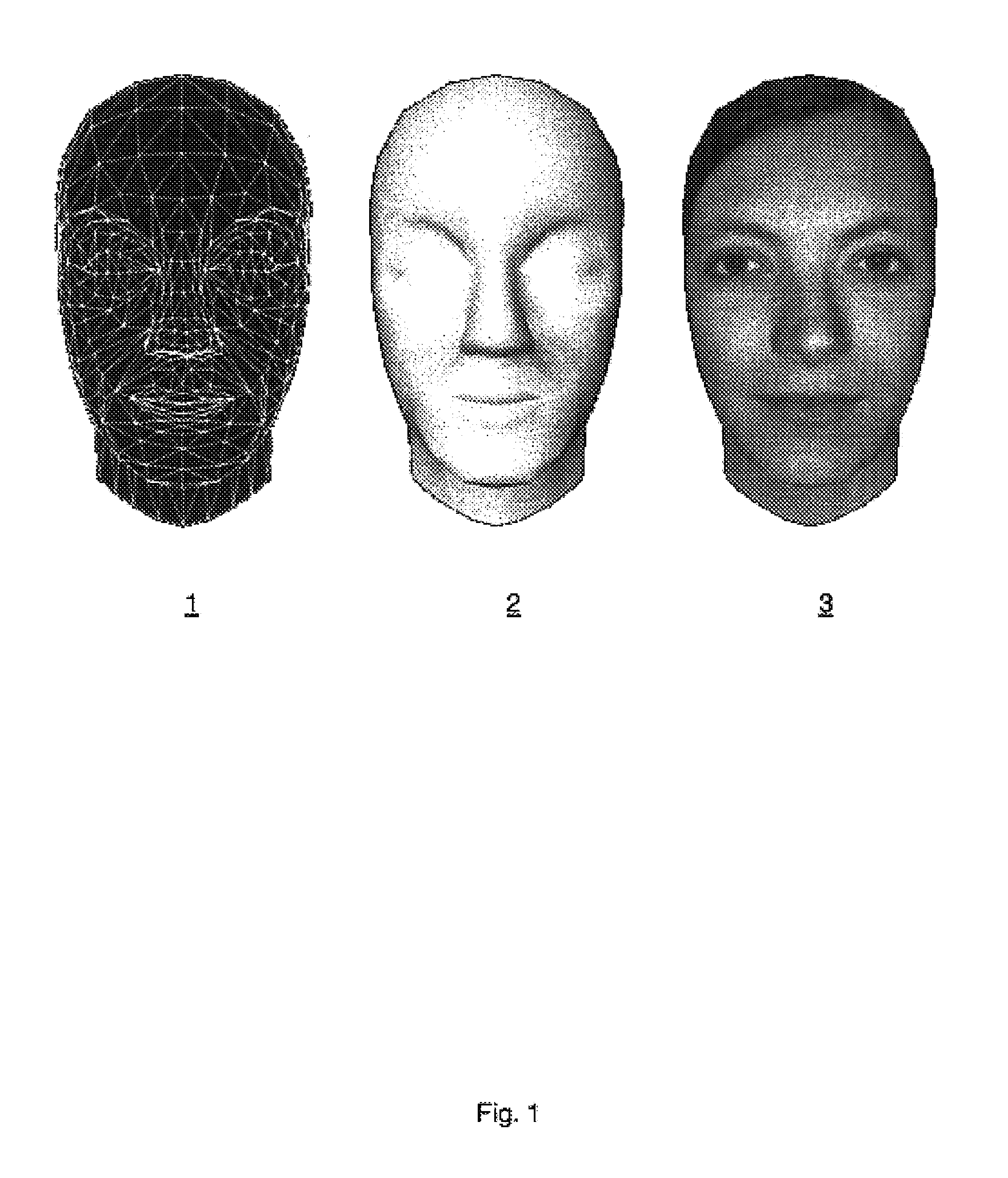

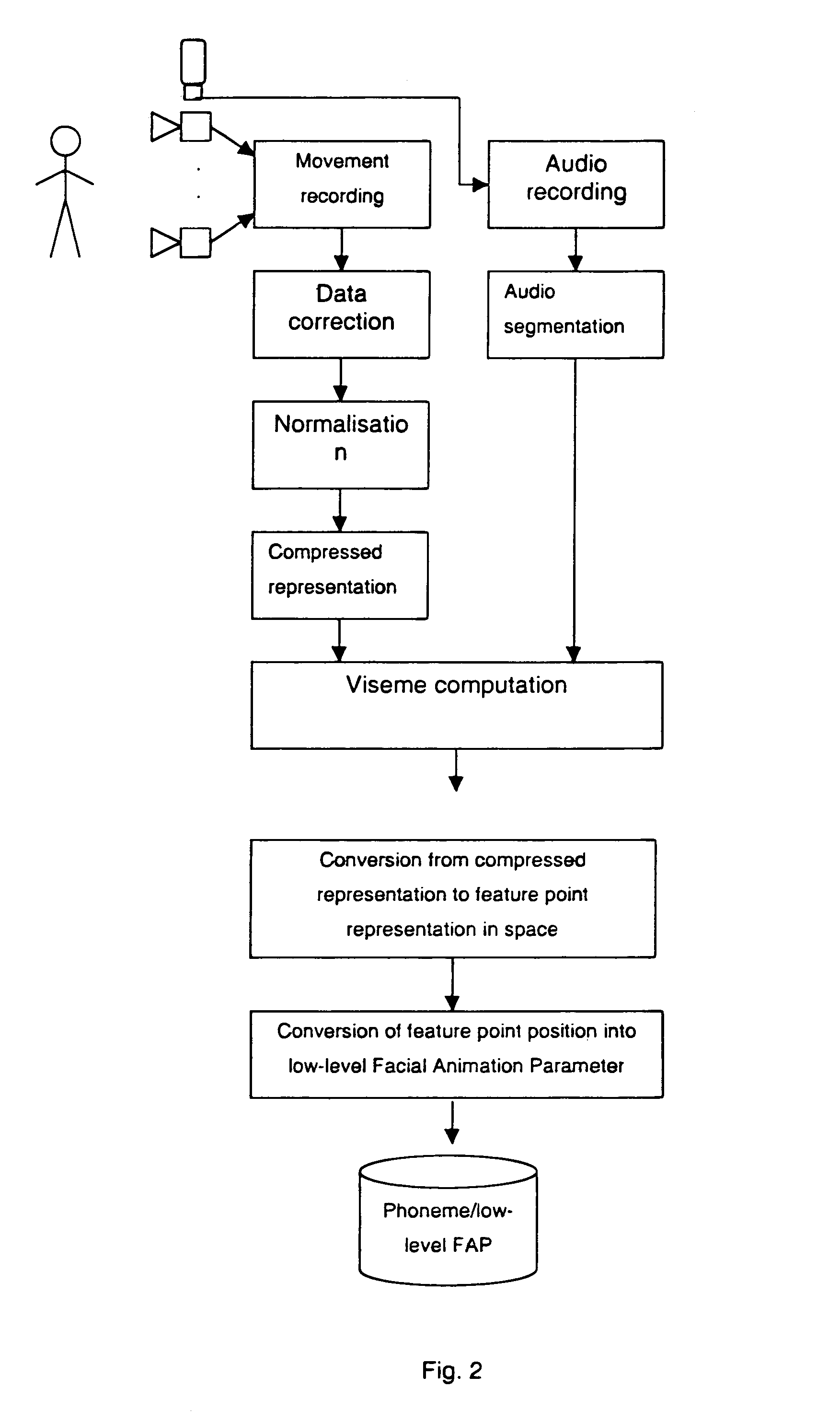

Method of animating a synthesised model of a human face driven by an acoustic signal

The method permits the animation of a synthesised model of a human face in relation to an audio signal. The method is not language dependent and provides a very natural animated synthetic model, since it is based on the simultaneous analysis of voice and facial movements tracked on real speakers and on the extraction of suitable visemes. The subsequent animation consists of transforming the sequence of visemes corresponding to the phonemes of the driving text into the sequence of movements applied to the model of the human face.

Owner:TELECOM ITALIA SPA

Method of animating a synthesized model of a human face driven by an acoustic signal

The method permits the animation of a synthesised model of a human face in relation to an audio signal. The method is not language dependent and provides a very natural animated synthetic model, since it is based on the simultaneous analysis of voice and facial movements tracked on real speakers and on the extraction of suitable visemes. The subsequent animation consists of transforming the sequence of visemes corresponding to the phonemes of the driving text into the sequence of movements applied to the model of the human face.

Owner:TELECOM ITALIA SPA

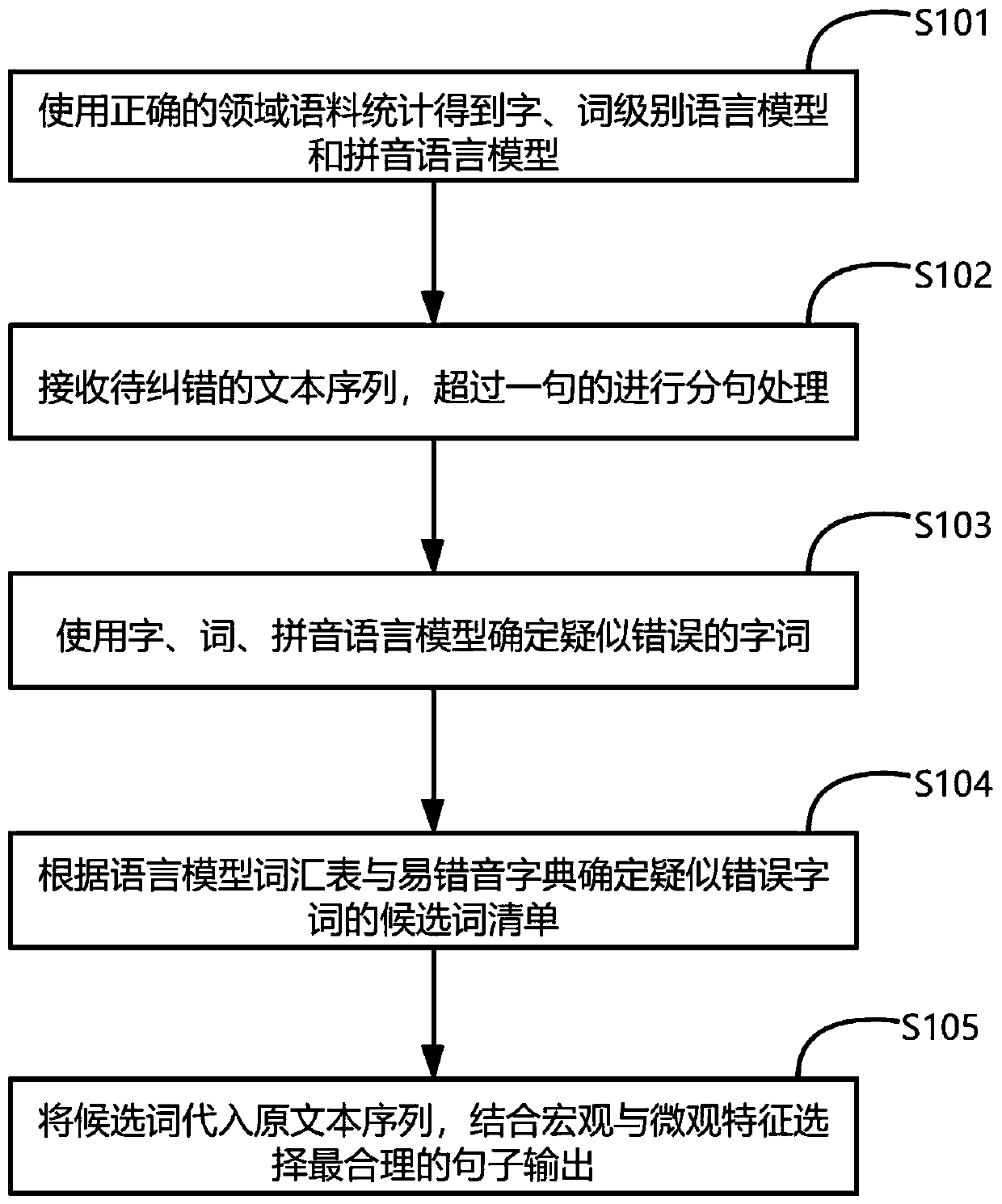

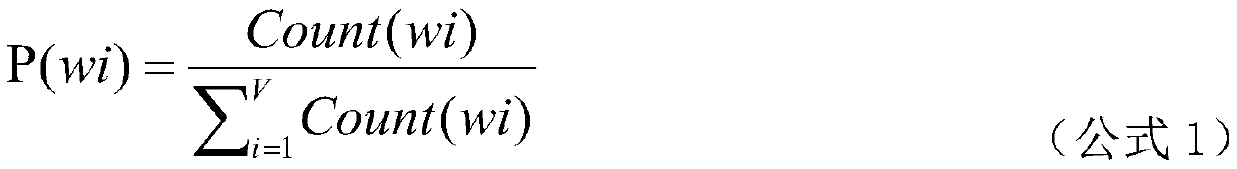

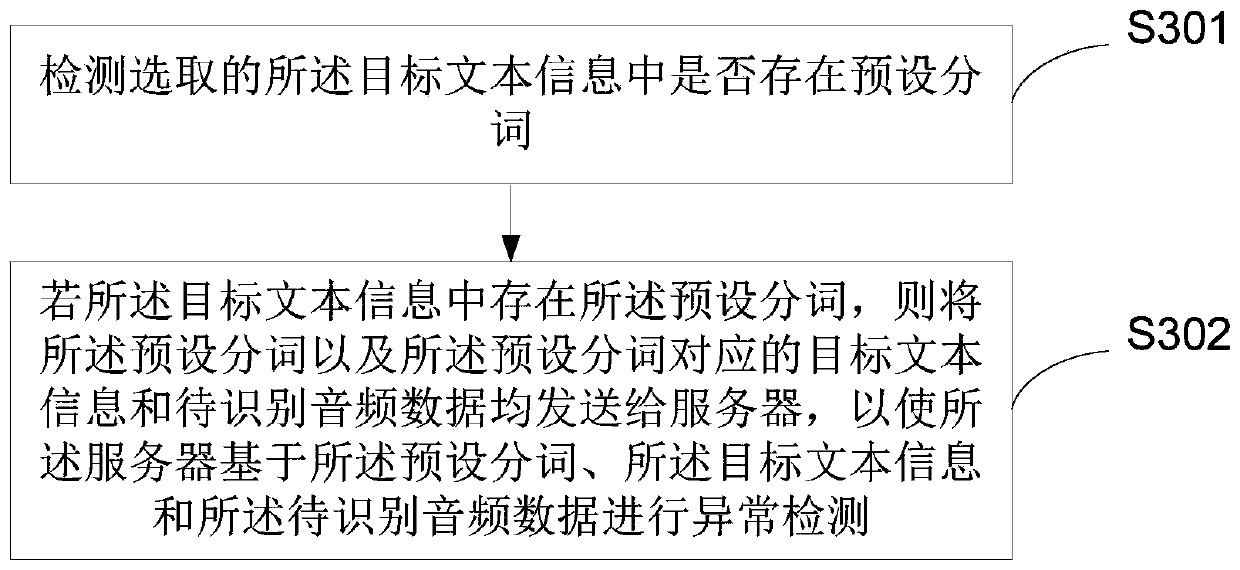

Voice recognition text error correction method in specific field

Active · CN111369996A · Improve the detection rate · Avoid being mishandled · Natural language data processing · Speech recognition · Word list · Speech sound

The invention relates to a method for correcting speech recognition text in a specific domain, comprising the following steps: first, compiling statistics from correct in-domain corpora to obtain character-level and word-level language models and a pinyin language model; then receiving a text sequence to be corrected and splitting it into clauses when it contains more than one sentence; identifying suspected erroneous words with the character, word, and pinyin language models; determining a candidate list for each suspected erroneous word from the language-model vocabulary and a dictionary of easily confused pronunciations; and finally substituting the candidates into the original text sequence and outputting the most plausible sentence based on combined macro- and micro-level scores. Basic units of different granularities and dimensions, such as characters, words, pinyin, and initials and finals, are used to build the language models, which reduces interference from word-segmentation errors caused by wrong characters; isolated character errors are handled with the word language model, while consecutive recognition errors caused by pronunciation deviations are identified with the pinyin language model; and the candidate sentences with replaced words are evaluated comprehensively by macro- and micro-level scores that measure their fluency.

Owner:Wangjing Technology (Suzhou) Co., Ltd. (网经科技(苏州)有限公司)
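
The final step, substituting candidates and keeping the most plausible sentence, can be illustrated with a single suspected character and a character bigram model. This is a simplified sketch under stated assumptions: the log-probabilities and confusion candidates are made-up toy values, and the patent's combination of character, word, and pinyin models with macro- and micro-level scores is reduced here to one bigram score.

```python
# Toy character-bigram log-probabilities (made-up values for illustration).
BIGRAMS = {("语", "音"): -1.0, ("音", "识"): -1.5, ("识", "别"): -0.5}


def bigram_score(text, bigram_logp, unseen=-10.0):
    """Sum of log P(c_i | c_{i-1}) over adjacent characters, with a fixed
    penalty for character pairs the model has never seen."""
    return sum(bigram_logp.get(pair, unseen) for pair in zip(text, text[1:]))


def correct(sentence, position, candidates, bigram_logp):
    """Substitute each candidate at the suspected error position and return
    the highest-scoring variant (the original wins ties)."""
    best, best_score = sentence, bigram_score(sentence, bigram_logp)
    for cand in candidates:
        variant = sentence[:position] + cand + sentence[position + 1:]
        score = bigram_score(variant, bigram_logp)
        if score > best_score:
            best, best_score = variant, score
    return best


# 鳖 and 别 are both read "bie", a typical recognition confusion.
print(correct("语音识鳖", 3, ["别", "憋"], BIGRAMS))  # 语音识别
```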

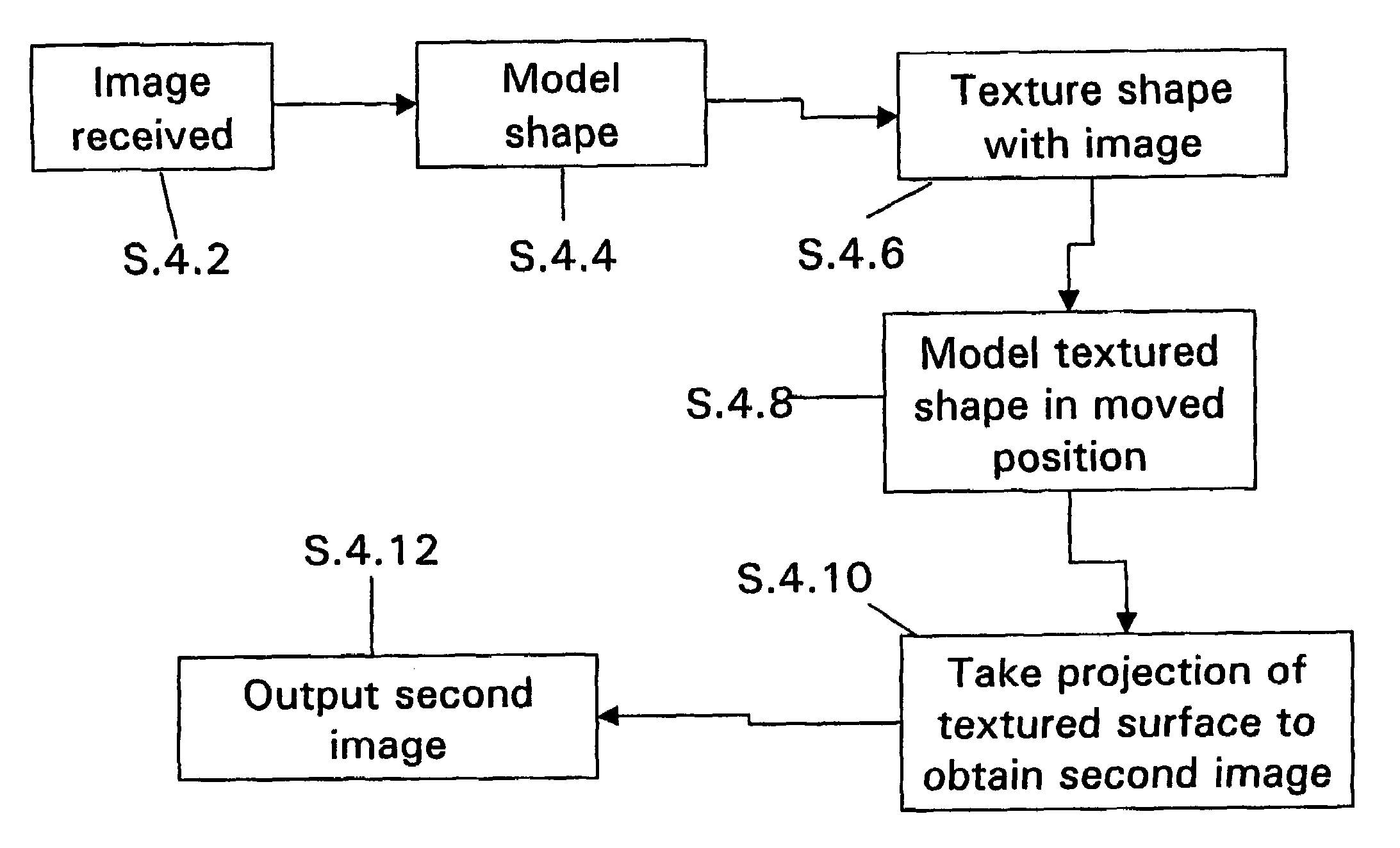

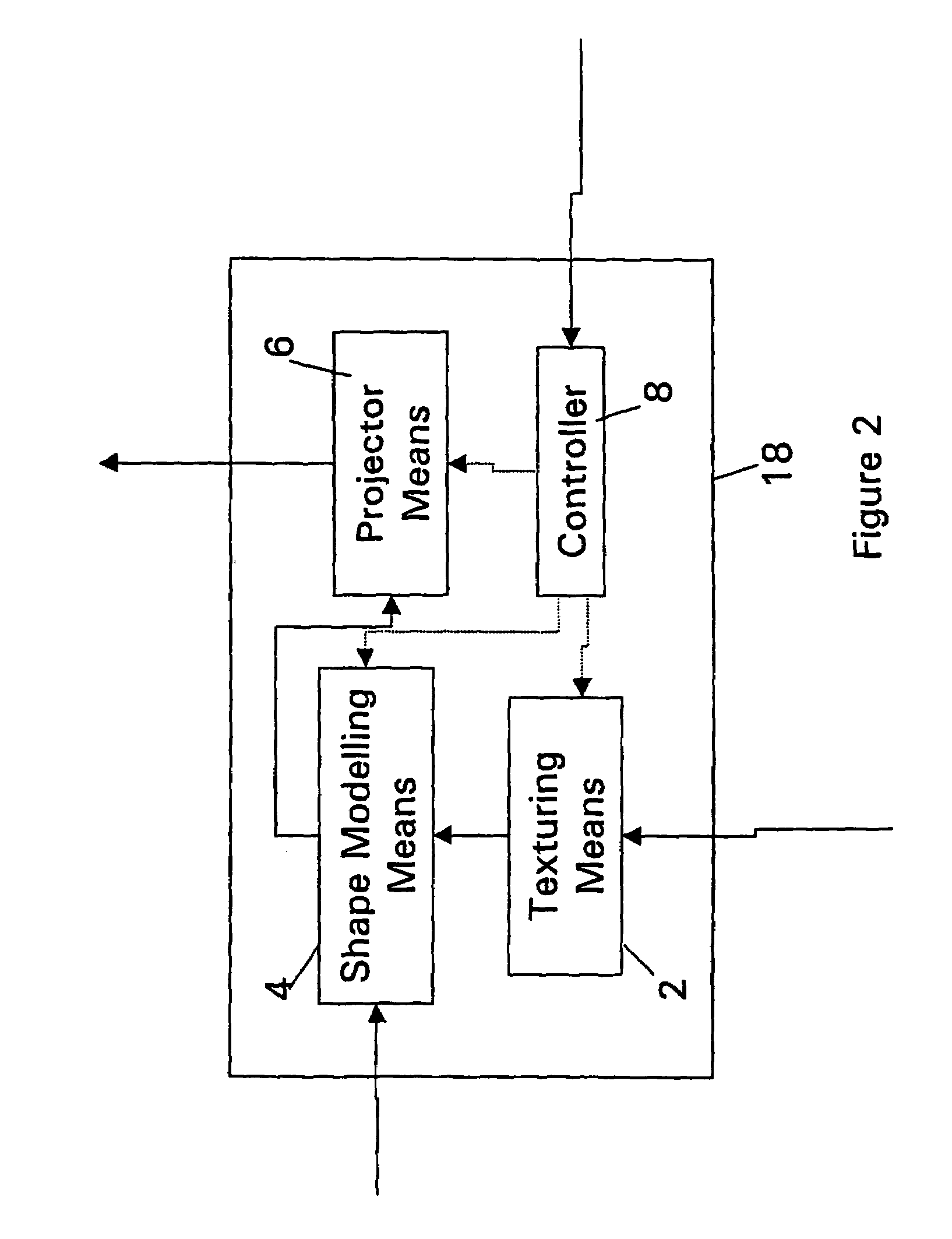

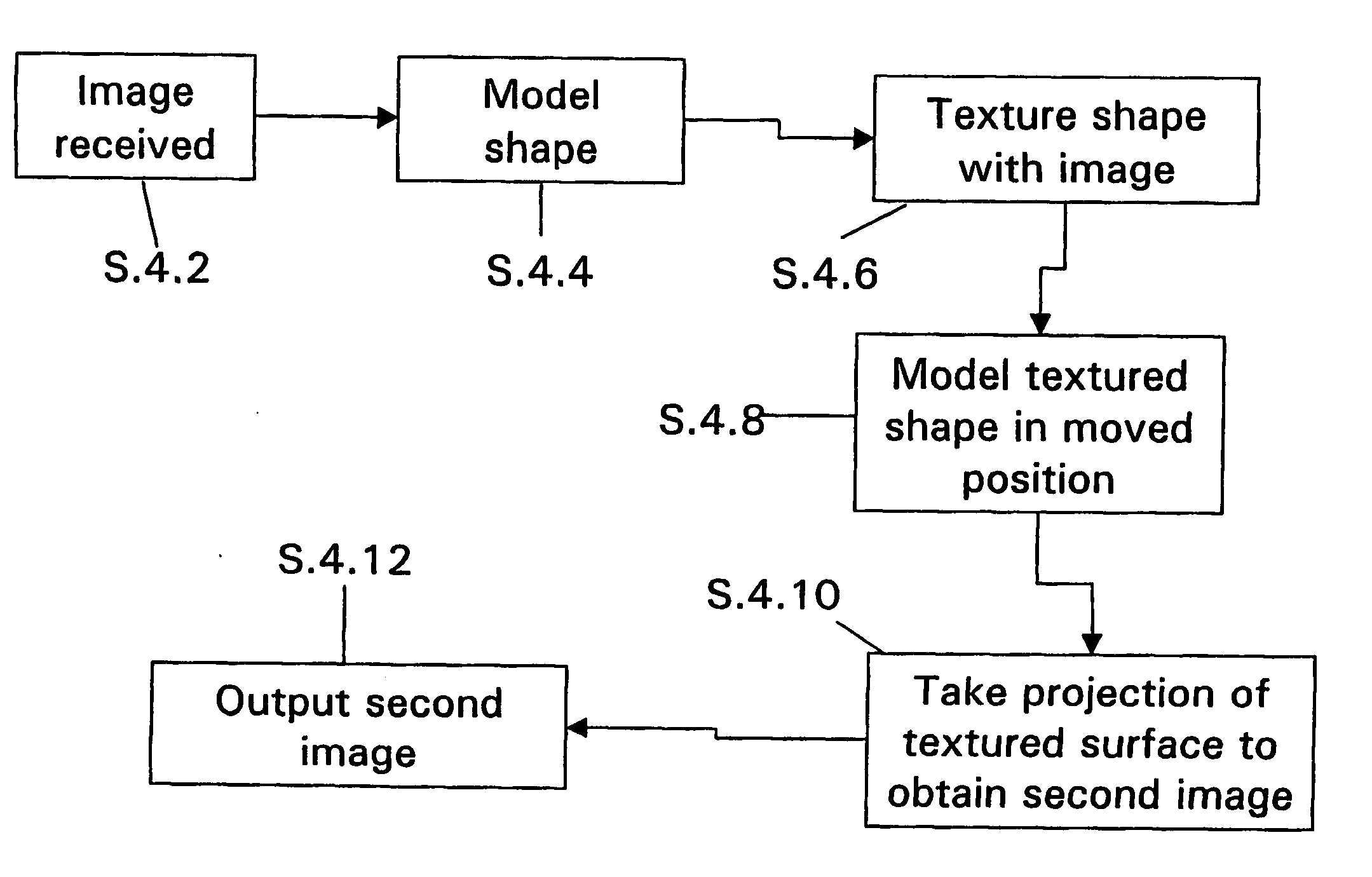

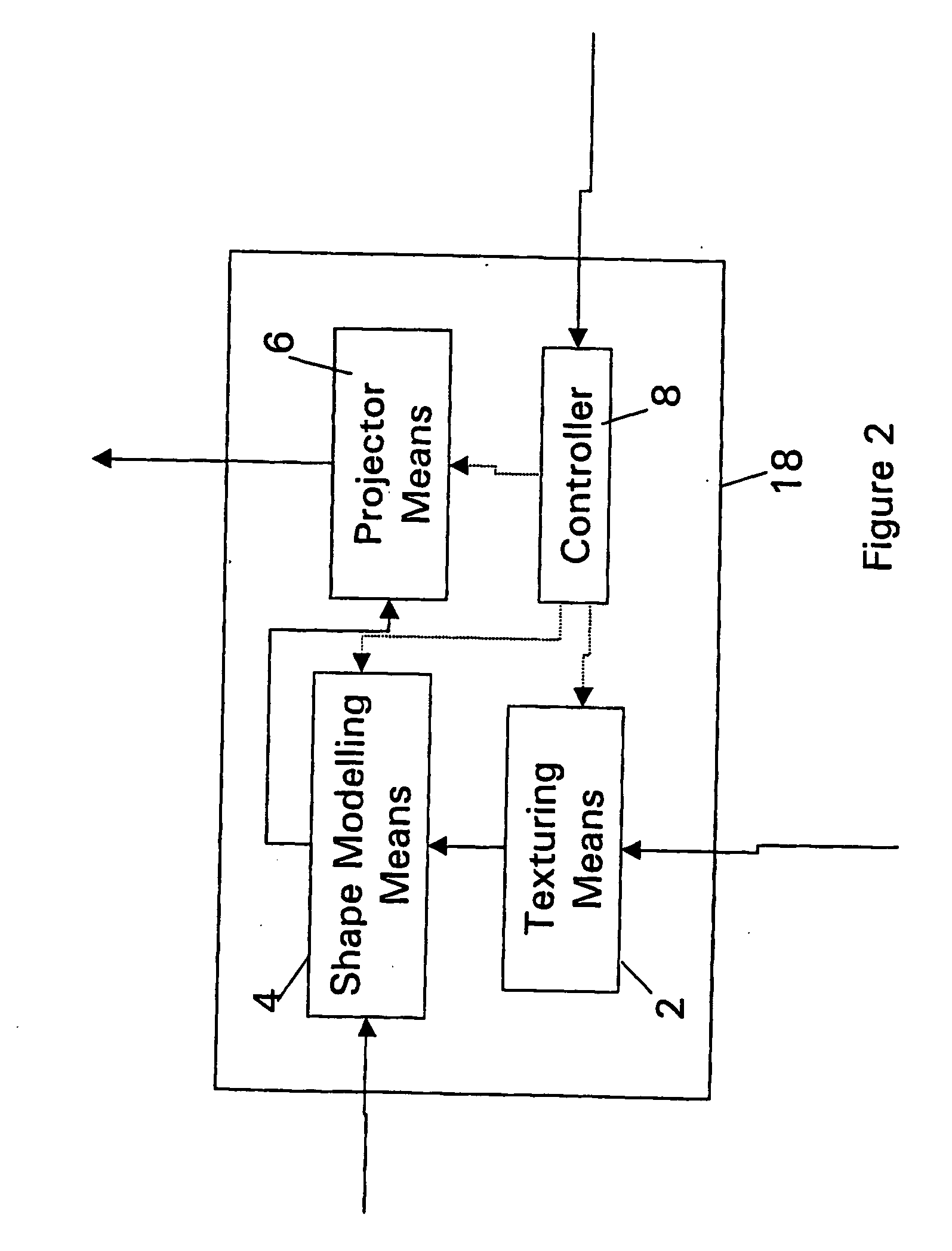

Image processing method and system

Inactive · US7184049B2 · Easy to operate · Reduce computational intensity · Geometric image transformation · Cathode-ray tube indicators · Imaging processing · Computer graphics (images)

The invention provides an image processing method and system wherein an image is conceptually textured onto the surface of a three dimensional shape via a projection thereonto. The shape and / or the image position are then moved relative to each other, preferably by a rotation about one or more axes of the shape, and a second projection taken of the textured surface back to the image position to obtain a second, processed image. The view displayed within the processed image will be seen to have undergone an aspect ratio change as a result of the processing. The invention is of particular use in simulating the small movements of humans when speaking, and in particular of processing viseme images to simulate such small movements when displayed as a sequence.

Owner:BRITISH TELECOMM PLC
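
The aspect-ratio change can be seen with a concrete choice of shape. Assuming (the patent does not specify) a vertical cylinder of radius r and orthographic projection, an image column at horizontal position x lies on the surface at angle φ = arcsin(x / r); rotating the cylinder by θ and projecting back moves it to x' = r·sin(φ + θ). Two columns at ±a end up 2a·cos θ apart, so the central region narrows by a factor of cos θ. The sketch ignores columns that rotate onto the hidden back of the shape.

```python
import math


def rotate_on_cylinder(xs, radius, theta):
    """Texture image columns x (|x| <= radius) onto a vertical cylinder by
    orthographic projection, rotate the cylinder by theta radians about its
    axis, and project back. Returns the new x position of each column."""
    out = []
    for x in xs:
        phi = math.asin(x / radius)       # angle of the surface point
        out.append(radius * math.sin(phi + theta))
    return out


# Columns at -0.5 and +0.5 on a unit cylinder, rotated by 0.1 rad.
left, right = rotate_on_cylinder([-0.5, 0.5], 1.0, 0.1)
print(right - left, math.cos(0.1))  # both about 0.995
```

Applying small, varying rotations to successive viseme images would produce the slight head-movement effect the abstract describes.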

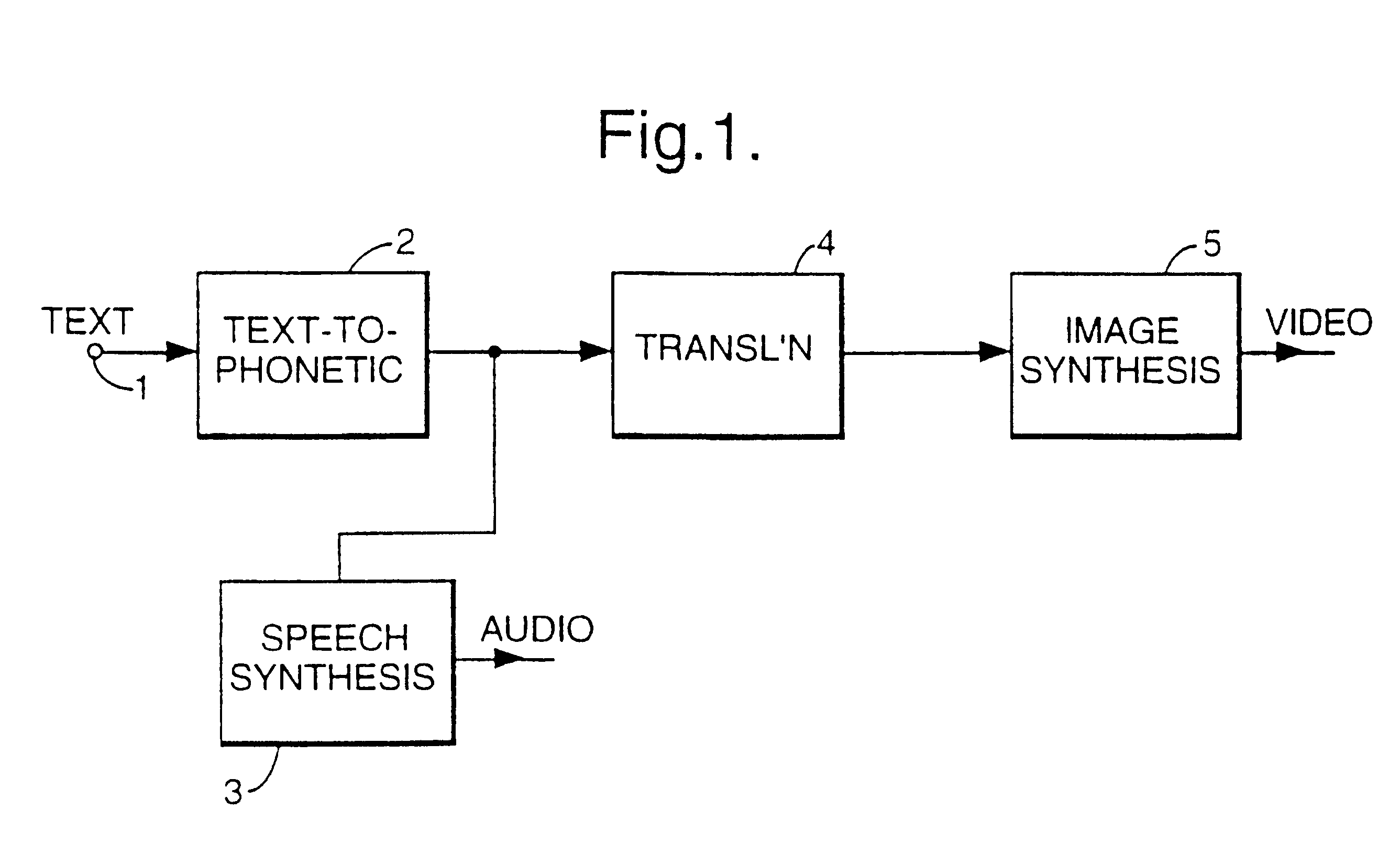

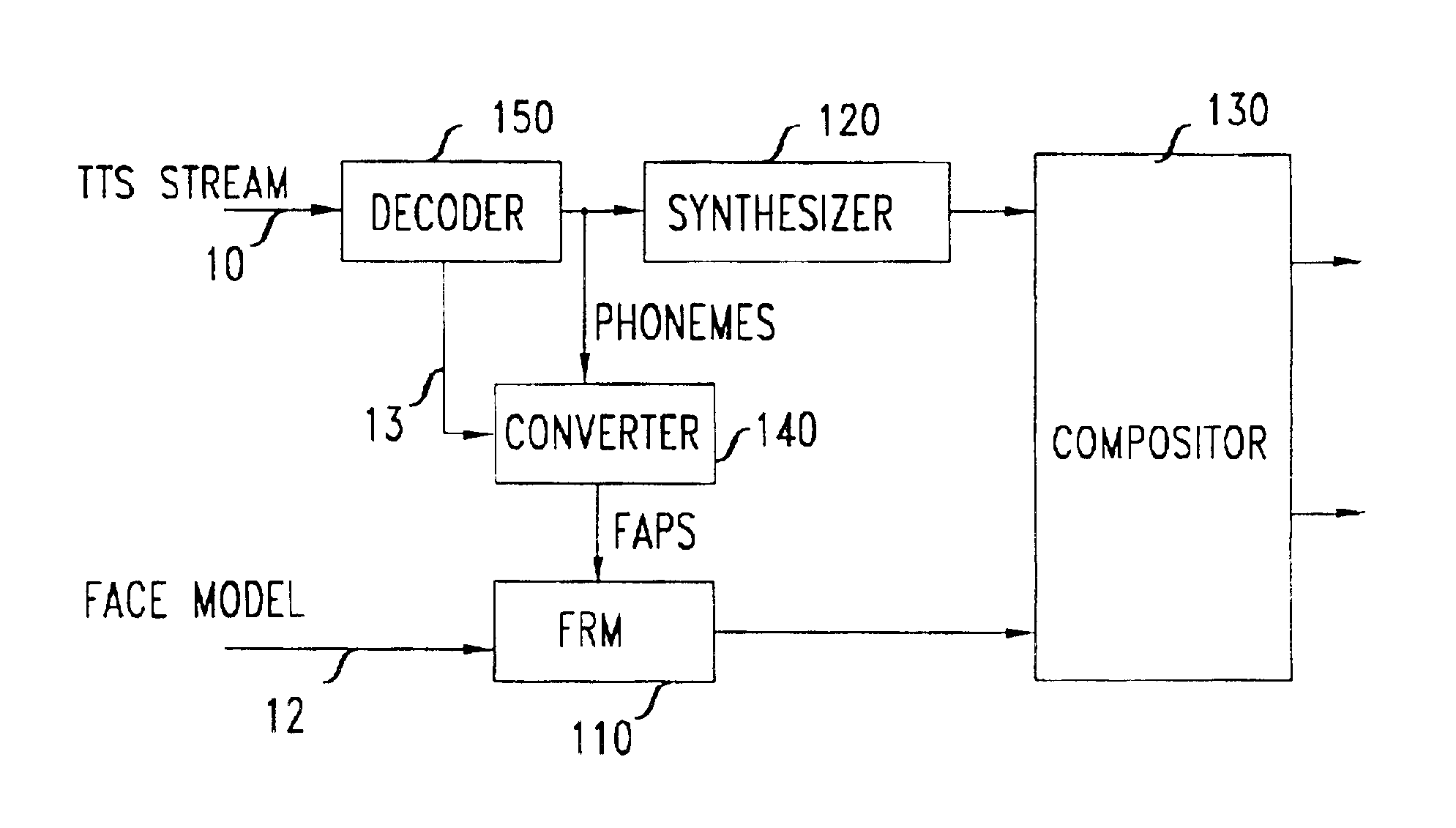

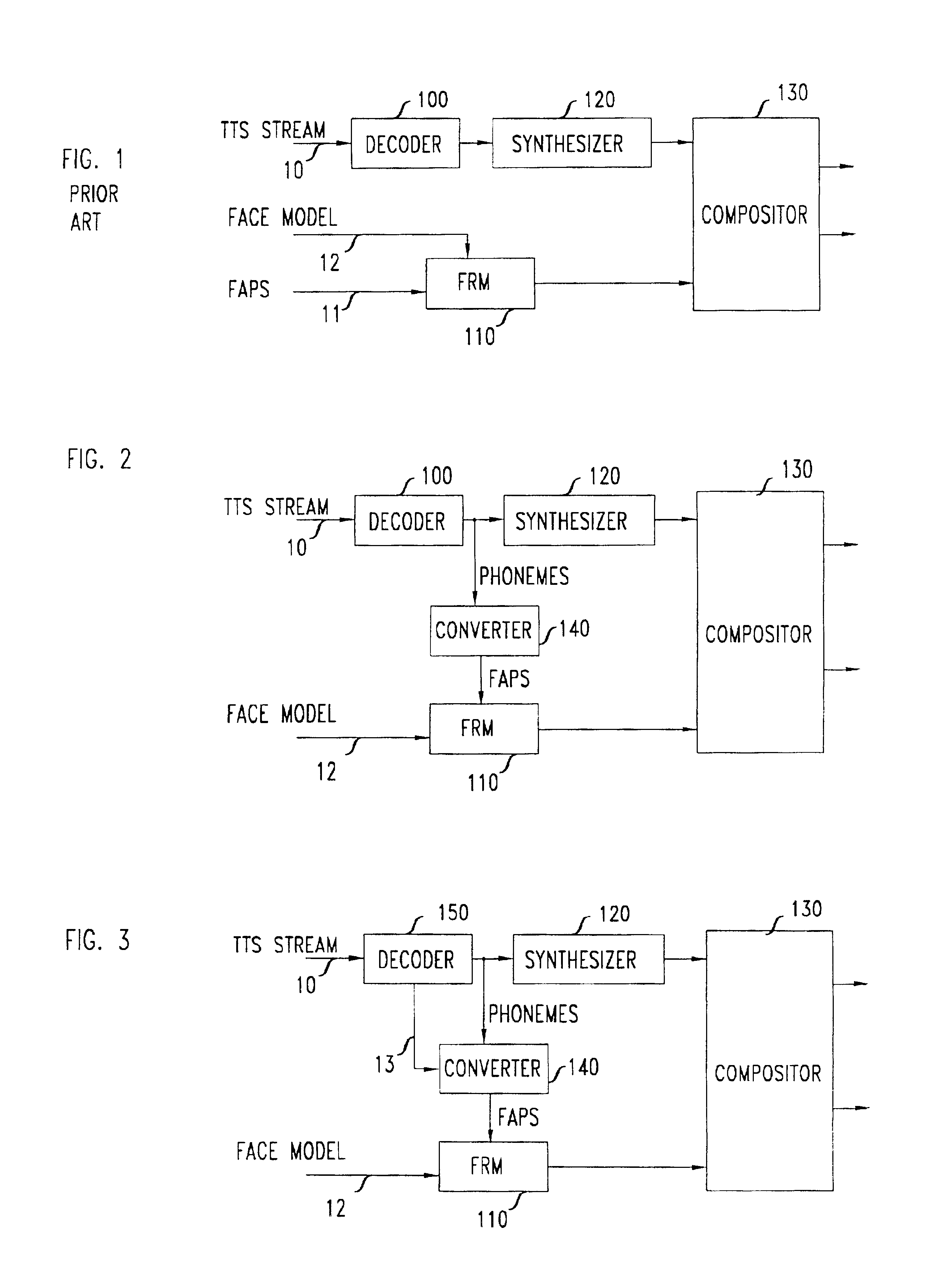

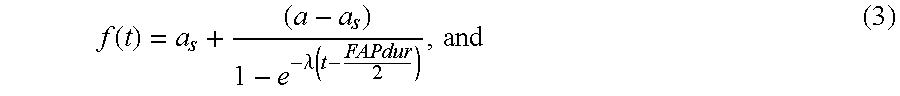

Integration of talking heads and text-to-speech synthesizers for visual TTS

Inactive · US6839672B1 · Convenient Arrangement · Smooth transition · Selective content distribution · Speech synthesis · Computer science

An enhanced arrangement for a text-driven talking head sends facial animation parameter (FAP) information to a rendering arrangement, which employs the received FAPs in synchrony with the synthesized speech. In one embodiment, FAPs corresponding to visemes that can be derived from the phonemes generated by a TTS synthesizer in the rendering arrangement are omitted from the transmitted FAPs, so that they can be generated locally. In a further enhancement, the rendering arrangement includes a process for creating a smooth transition from one FAP specification to the next; this transition can follow any selected function. In one embodiment, a separate FAP value is evaluated for each rendered video frame.

Owner:NUANCE COMM INC
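
The per-frame transition between two FAP specifications can be written as interpolation driven by an easing function of normalized time, which matches the abstract's point that the transition can follow any selected function. The sketch assumes FAPs are plain numeric vectors; the linear and cosine easing functions are illustrative choices.

```python
import math


def linear(u):
    return u


def cosine(u):
    """Ease-in/ease-out: zero slope at both ends of the transition."""
    return 0.5 - 0.5 * math.cos(math.pi * u)


def fap_frames(start, end, num_frames, easing=cosine):
    """Evaluate one FAP vector per rendered frame while moving from `start`
    to `end`. The first frame equals `start`, the last equals `end`."""
    if num_frames < 2:
        return [list(end)]
    frames = []
    for i in range(num_frames):
        w = easing(i / (num_frames - 1))
        frames.append([s + w * (e - s) for s, e in zip(start, end)])
    return frames


print([f[0] for f in fap_frames([0.0], [100.0], 5, easing=linear)])  # [0.0, 25.0, 50.0, 75.0, 100.0]
```

Swapping `easing` changes the character of the motion without touching the per-frame evaluation loop.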

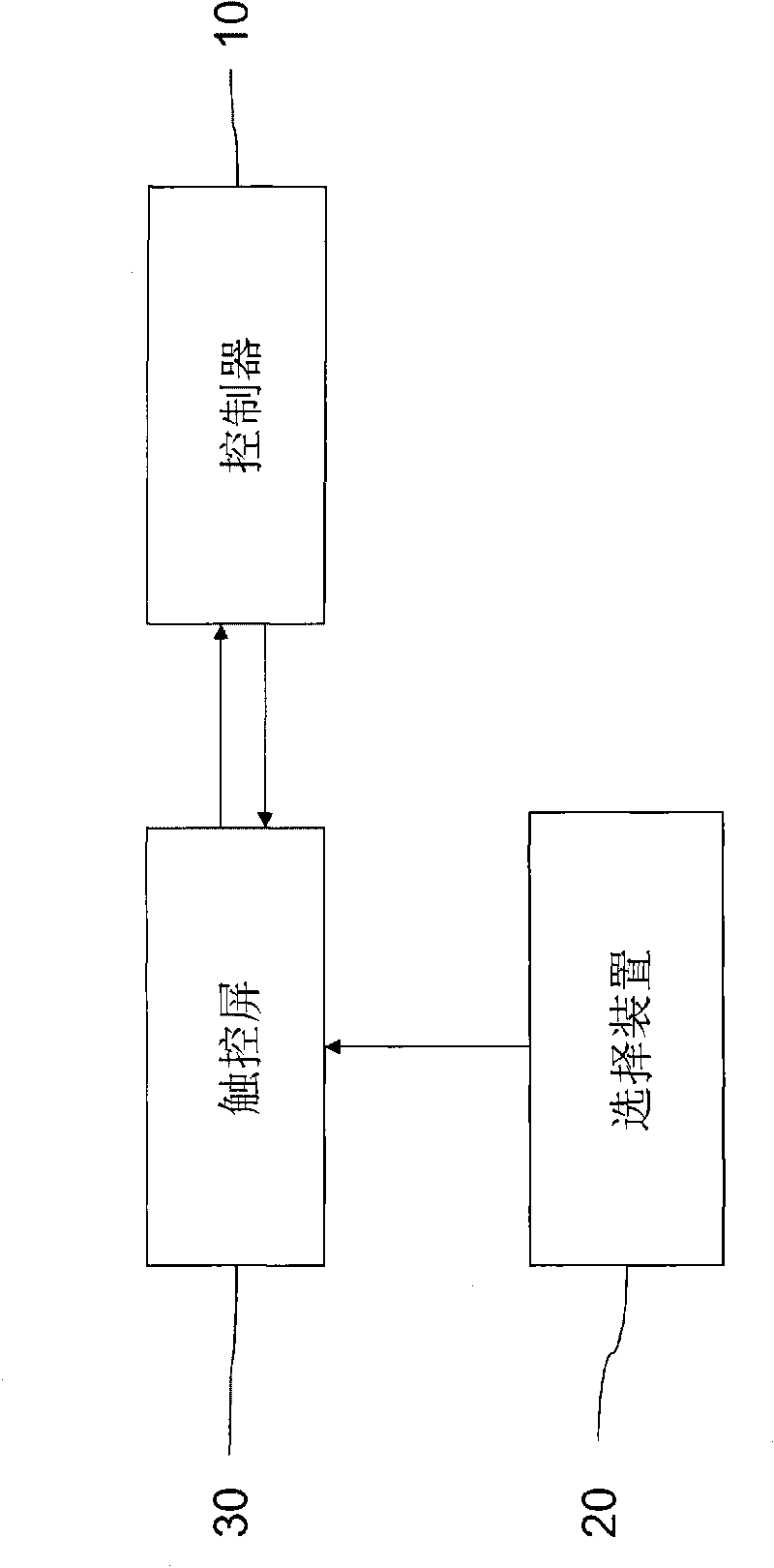

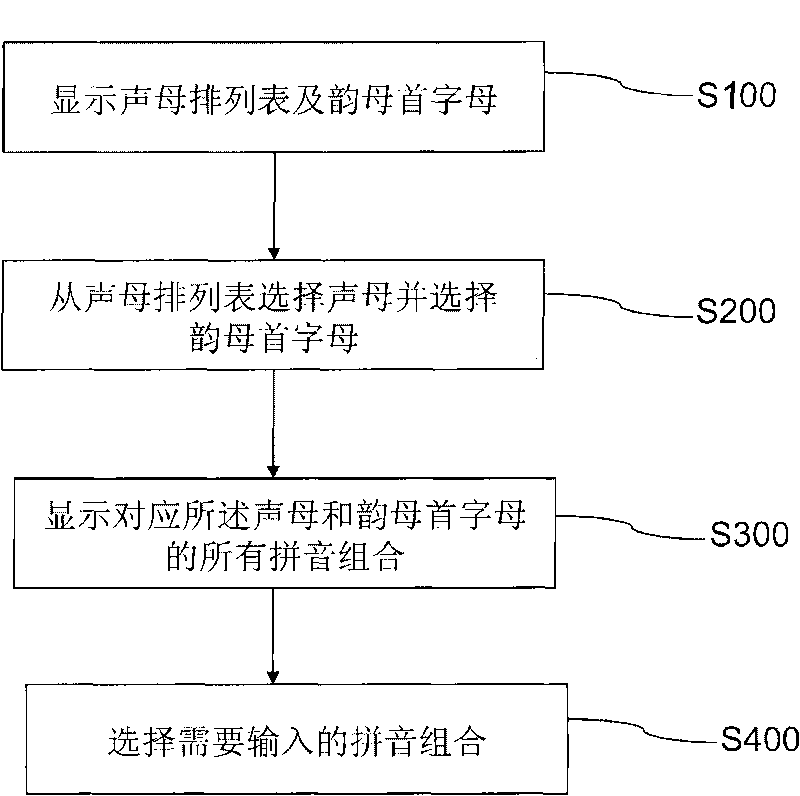

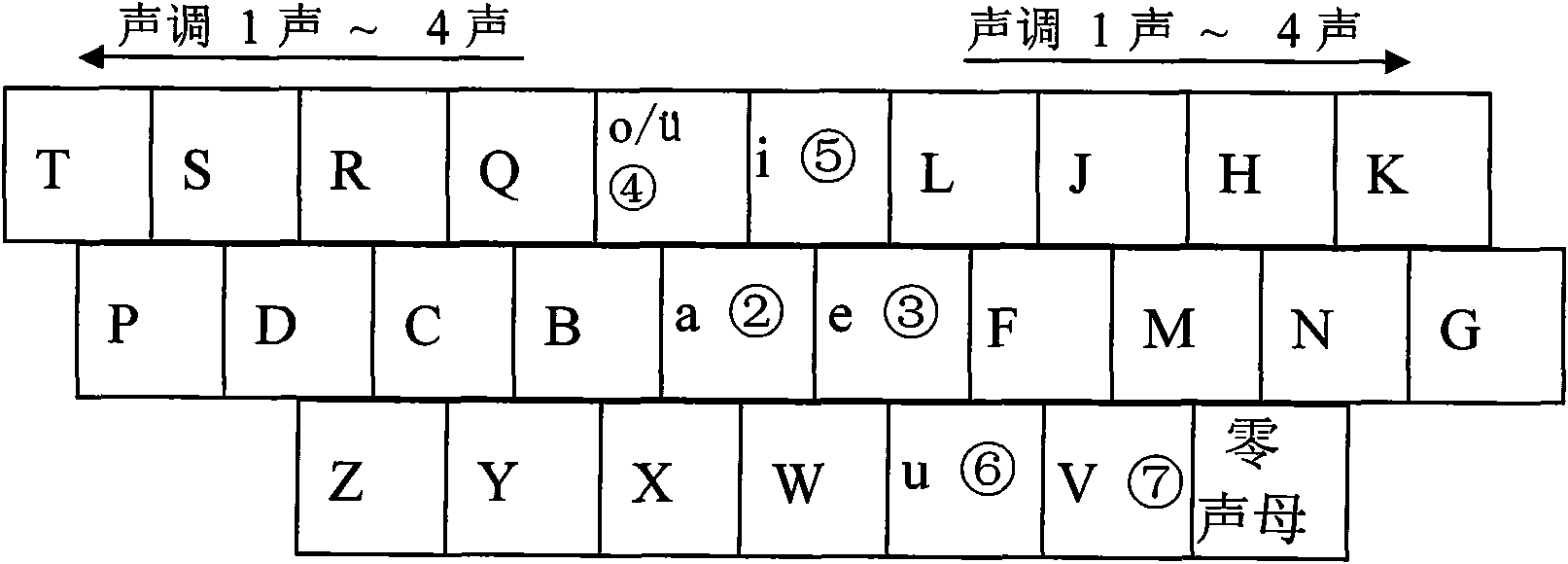

Pinyin input method and terminal thereof

Inactive · CN101714049A · Extended service life · Type fast · Input/output processes for data processing · Acoustics · Pinyin input method

The invention relates to a Pinyin input method and a terminal using it. The method is applied to a portable terminal with a full touch screen and comprises the following steps: on entering Pinyin input mode, displaying a table of initial consonants and, around each initial-consonant key, the leading vowels of the finals that can combine with that initial; selecting an initial consonant from the table on the touch screen and selecting the needed leading vowel from those displayed around it; displaying all Pinyin combinations that begin with the selected initial consonant and vowel; and selecting the Pinyin combination to be input from those displayed.

Owner:BEIJING SAMSUNG TELECOM R&D CENT +1

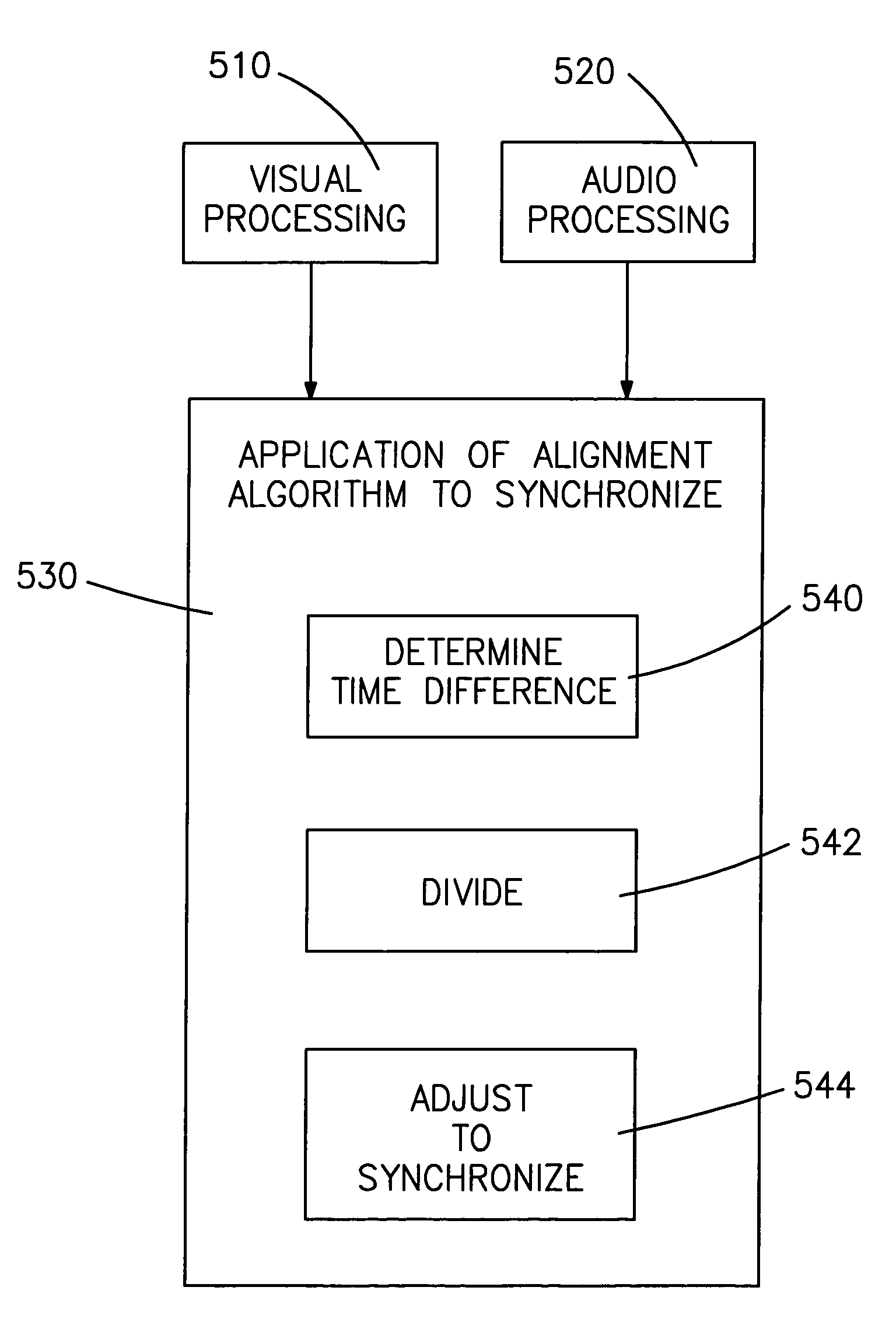

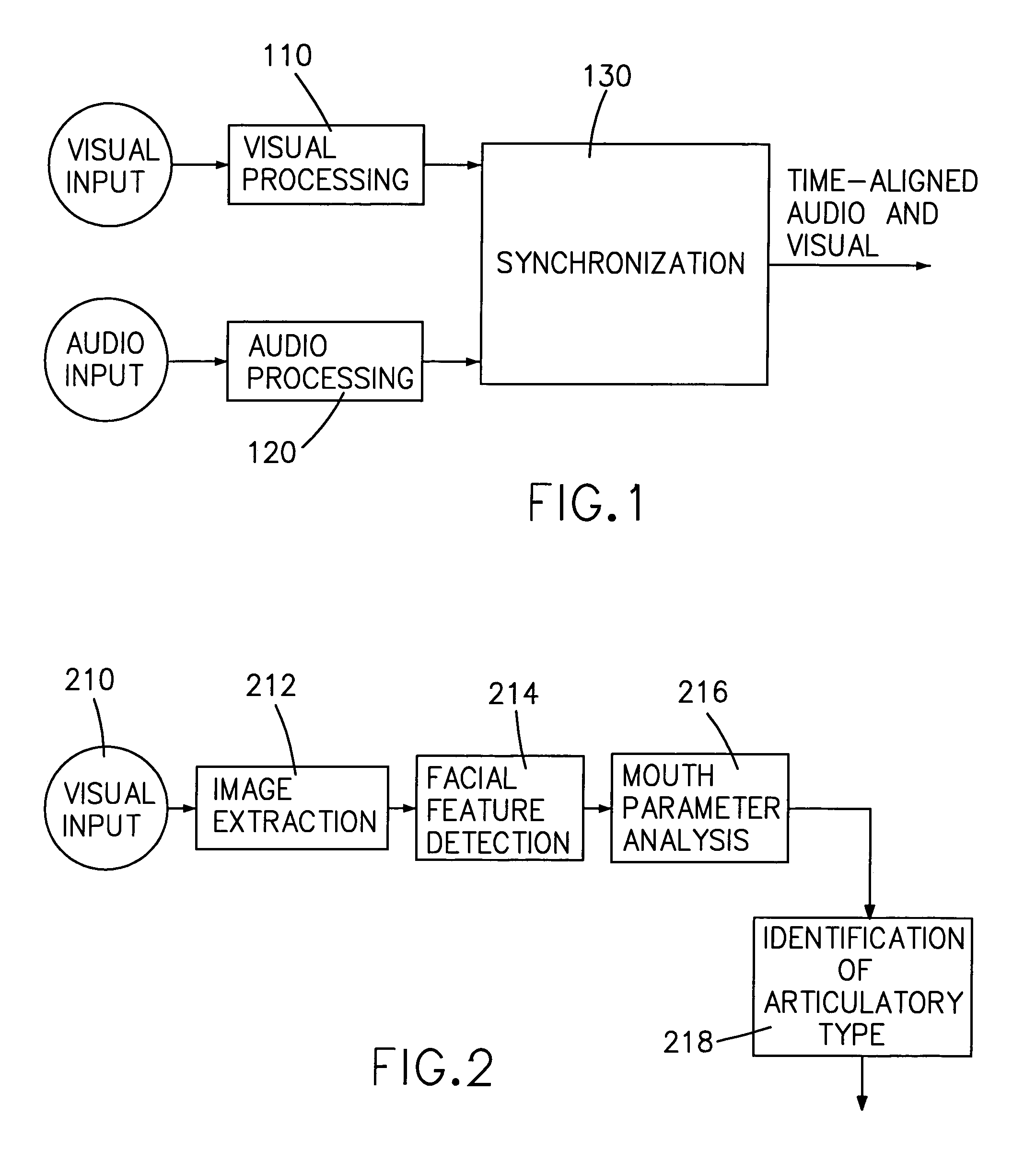

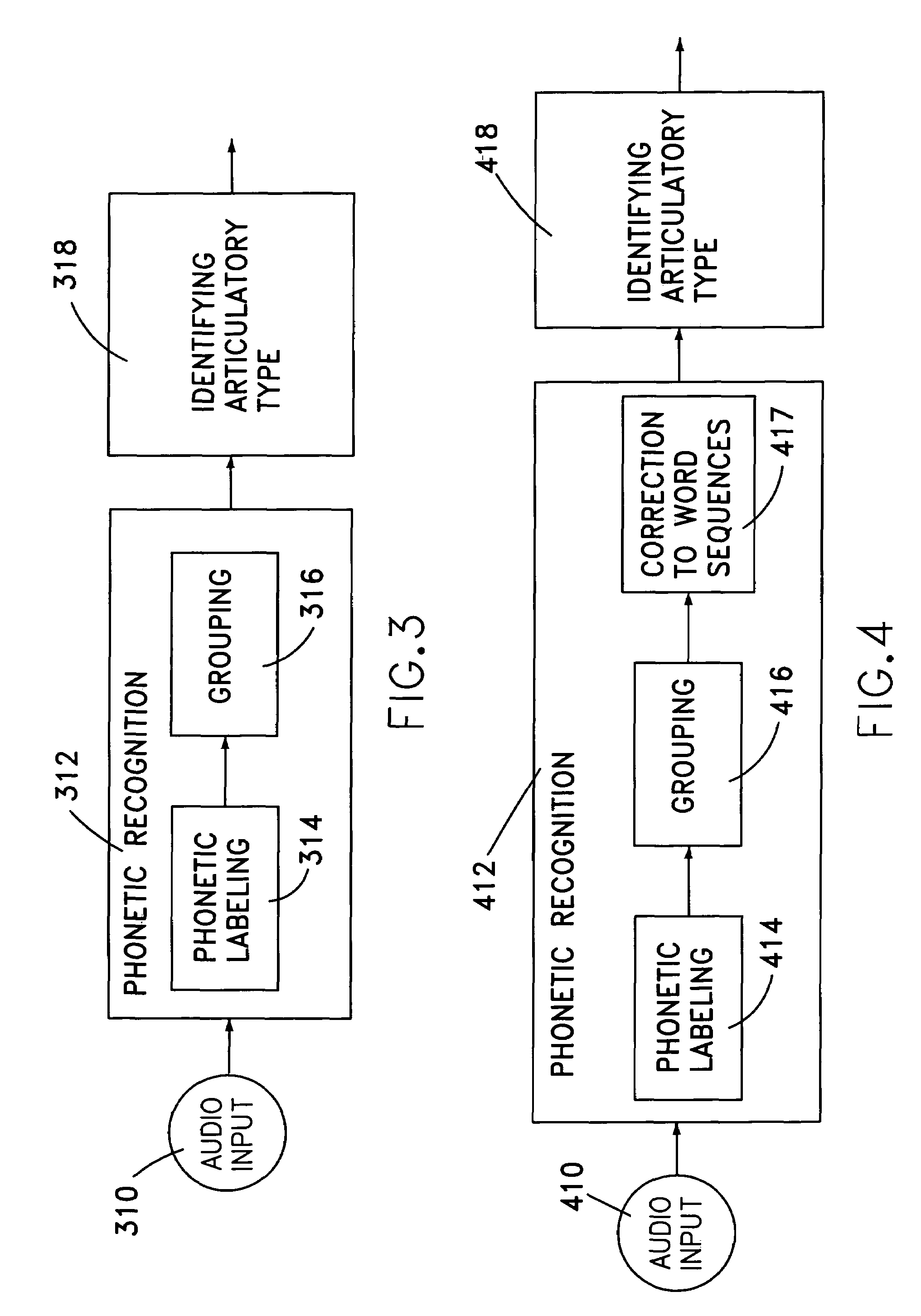

System and method for eliminating synchronization errors in electronic audiovisual transmissions and presentations

Inactive · US7149686B1 · Speech recognition · Selective content distribution · Speech identification · Speech sound

A system and method for eliminating synchronization errors using speech recognition. Using separate audio and visual speech recognition techniques, the inventive system and method identifies visemes, or visual cues which are indicative of articulatory type, in the video content, and identifies phones and their articulatory types in the audio content. Once the two recognition techniques have been applied, the outputs are compared to determine the relative alignment and, if not aligned, a synchronization algorithm is applied to time-adjust one or both of the audio and the visual streams in order to achieve synchronization.

Owner:IBM CORP
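
The comparison step can be sketched by reducing both recognizer outputs to one articulatory class per frame and searching for the time lag at which they agree best. This agreement-over-lags search is an assumption for illustration; the patent's own comparison and synchronization algorithm is not described in the abstract.

```python
def best_offset(video_classes, audio_classes, max_lag):
    """Per-frame articulatory classes from visual (viseme) and acoustic (phone)
    recognition. Returns the lag, in frames, at which the audio best matches
    the video; a positive lag means the audio is late by that many frames."""
    best_lag, best_score = 0, -1.0
    for lag in range(-max_lag, max_lag + 1):
        matches = total = 0
        for i, v in enumerate(video_classes):
            j = i + lag
            if 0 <= j < len(audio_classes):
                total += 1
                matches += v == audio_classes[j]
        if total and matches / total > best_score:
            best_lag, best_score = lag, matches / total
    return best_lag


video = ["p", "p", "o", "o", "o", "t", "t", "p", "p"]
audio = ["s", "s"] + video          # the same classes, delayed by two frames
print(best_offset(video, audio, 3))  # 2
```

Once the lag is known, one stream can be shifted by that many frames to restore synchronization.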

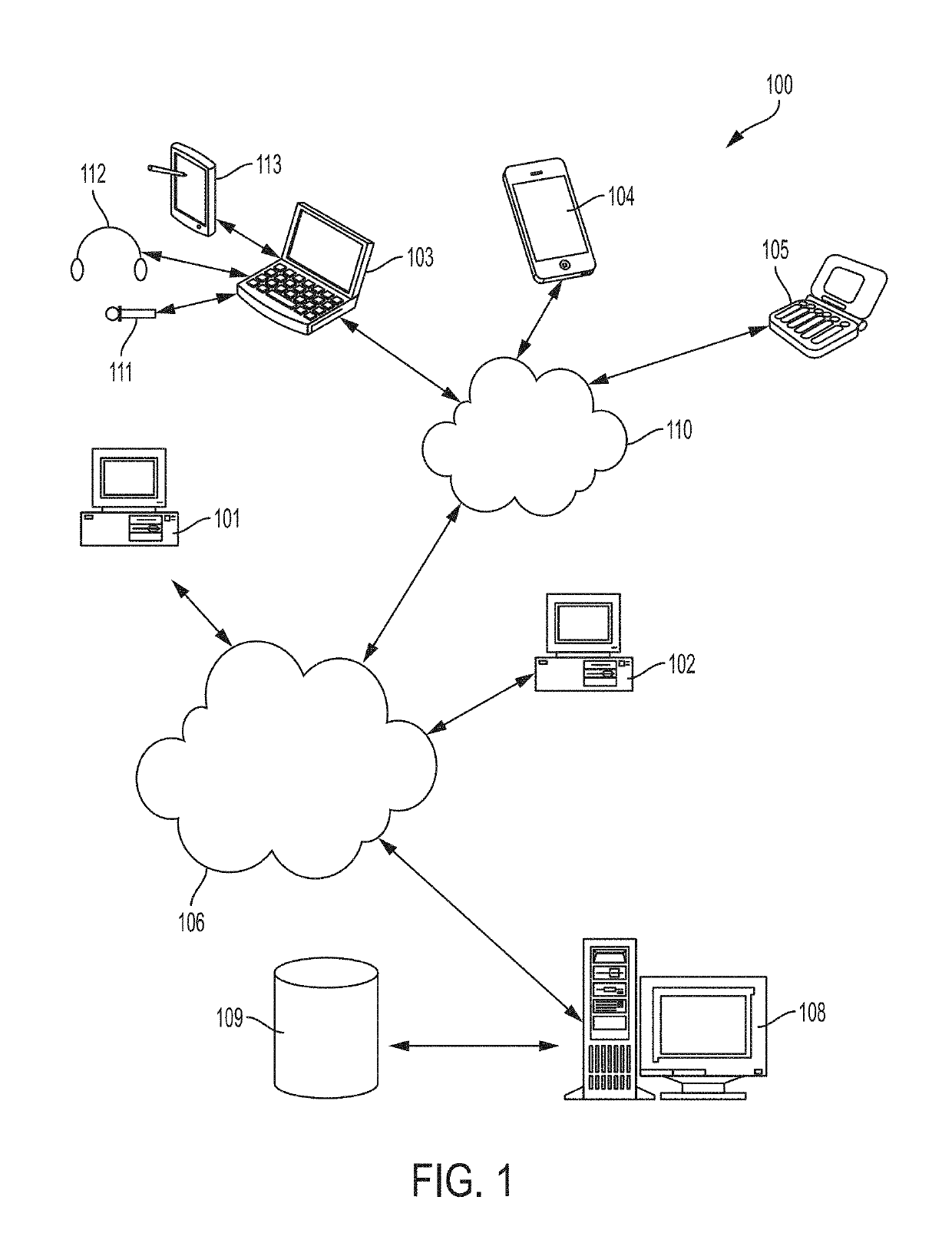

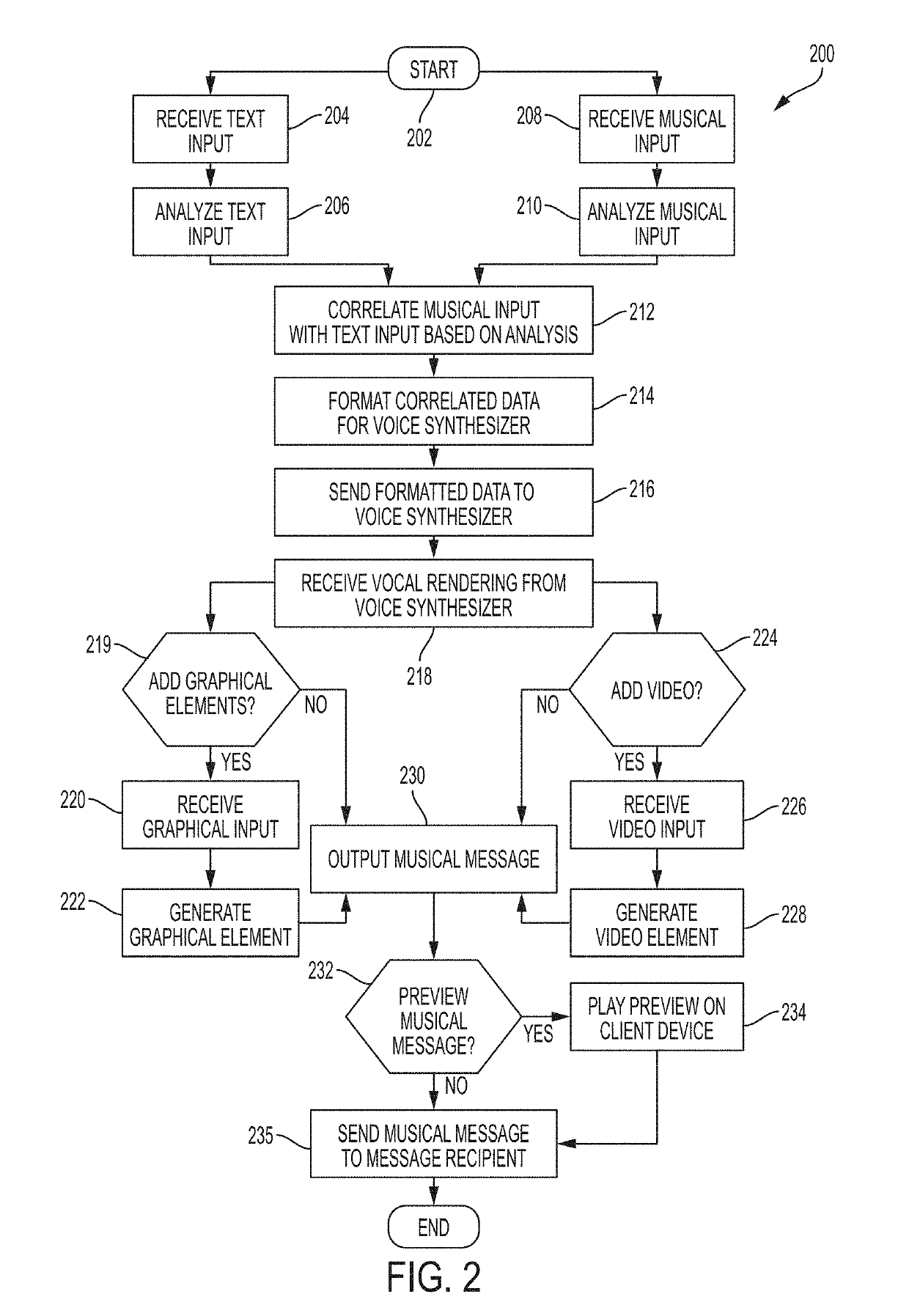

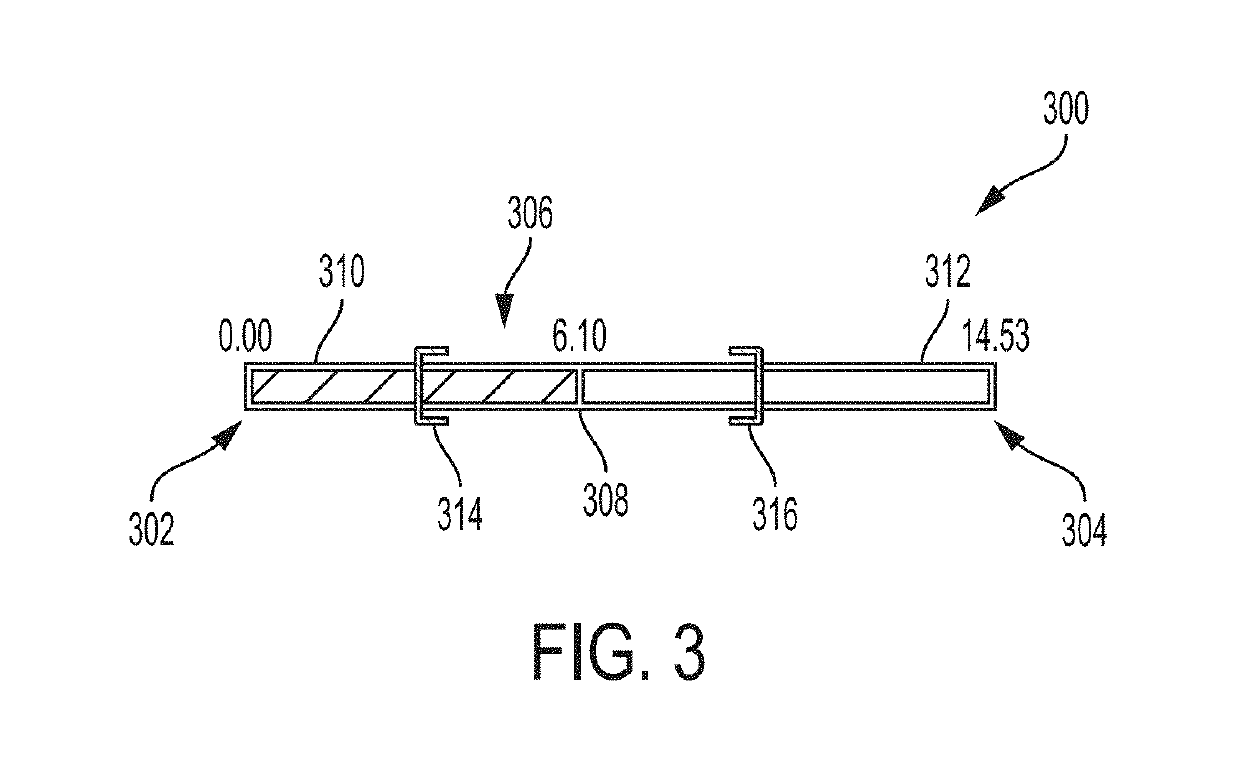

Systems and methods for generating animated multimedia compositions

Inactive · US20190147838A1 · Electrophonic musical instruments · Color television signals processing · Animation · Time line

A method for generating multimedia output. The method comprises receiving a text input and receiving an animated character input corresponding to an animated character including at least one movement characteristic. The method includes analyzing the text input to determine at least one text characteristic of the text input. The method includes generating a viseme timeline by applying at least one viseme characteristic to each of the at least one text characteristic. Based on the viseme timeline, the method includes generating a multimedia output coordinating the at least one character movement of the animated character with the at least one viseme characteristic.

Owner:MUSIC MASTERMIND
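
A viseme timeline can be built by laying the visemes derived from the text end to end using per-unit durations. The sketch assumes the durations are already known (for example, from a TTS engine or the song's timing) and merges consecutive repeats so that the character holds one mouth shape instead of re-triggering it.

```python
def viseme_timeline(units, start=0.0):
    """units: list of (viseme, duration_seconds) derived from the text.
    Returns (start, end, viseme) entries laid end to end, merging
    consecutive repeats of the same viseme into one entry."""
    timeline = []
    t = start
    for viseme, duration in units:
        end = t + duration
        if timeline and timeline[-1][2] == viseme:
            timeline[-1] = (timeline[-1][0], end, viseme)
        else:
            timeline.append((t, end, viseme))
        t = end
    return timeline


print(viseme_timeline([("p", 0.5), ("p", 0.25), ("ah", 0.25)]))
# [(0.0, 0.75, 'p'), (0.75, 1.0, 'ah')]
```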

Image processing method and system

Active · US20050162432A1 · Exact reproduction · Easy to operate · Geometric image transformation · Cathode-ray tube indicators · Imaging processing · Three dimensional shape

The invention provides an image processing method and system wherein an image is conceptually textured onto the surface of a three dimensional shape via a projection thereonto. The shape and / or the image position are then moved relative to each other, preferably by a rotation about one or more axes of the shape, and a second projection taken of the textured surface back to the image position to obtain a second, processed image. The view displayed within the processed image will be seen to have undergone an aspect ratio change as a result of the processing. The invention is of particular use in simulating the small movements of humans when speaking, and in particular of processing viseme images to simulate such small movements when displayed as a sequence.

Owner:BRITISH TELECOMM PLC

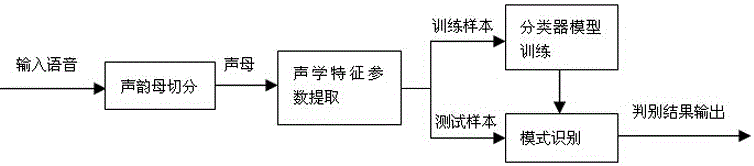

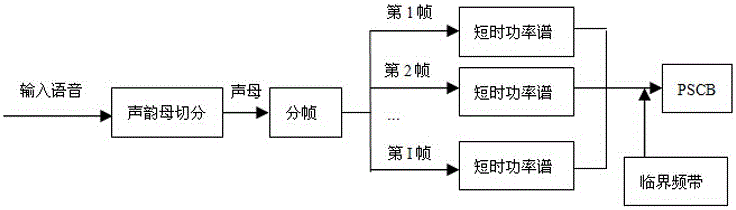

Cleft palate voice glottal stop automatic identification algorithm and device

Inactive · CN104992707A · Realize automatic identification · Improve recognition accuracy · Speech recognition · Syllable · Glottal stop

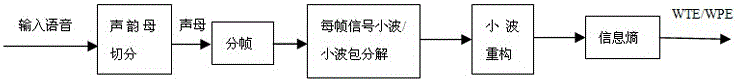

The invention discloses an algorithm and a device for automatically identifying glottal stops in cleft palate speech. It belongs to the technical field of speech analysis and recognition and aims to provide an automatic glottal stop identification method and device. A computer automatically identifies glottal stops in cleft palate speech, providing effective and objective auxiliary diagnosis for patients and speech therapists and facilitating the wide adoption of cleft palate speech assessment and therapy. The method comprises the following steps: 1, collecting speech signals of the syllables to be tested; 2, segmenting each syllable's speech signal into its initial and final and retaining the initial; 3, extracting feature values from the initial's speech signal; and 4, feeding the feature values into a trained recognition model, which judges from them whether the syllable contains a glottal stop.

Owner:SICHUAN UNIV
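
Steps 3 and 4 need numeric features of the retained initial. The abstract does not list them; short-time energy and zero-crossing rate, two standard time-domain speech features, are used here purely as illustrative examples, and the trained recognition model that consumes them is not shown. `frame_len` = 160 corresponds to 10 ms at an assumed 16 kHz sampling rate.

```python
def initial_features(samples, frame_len=160):
    """Mean short-time energy and mean zero-crossing rate over the complete
    frames of a syllable's initial segment."""
    energies, zcrs = [], []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        energies.append(sum(x * x for x in frame) / frame_len)
        # Count sign changes between neighbouring samples.
        crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
        zcrs.append(crossings / (frame_len - 1))
    if not energies:
        raise ValueError("segment shorter than one frame")
    return sum(energies) / len(energies), sum(zcrs) / len(zcrs)


print(initial_features([0.5] * 160))  # (0.25, 0.0)
```

The resulting feature vector would be passed to whatever trained classifier decides whether a glottal stop is present.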

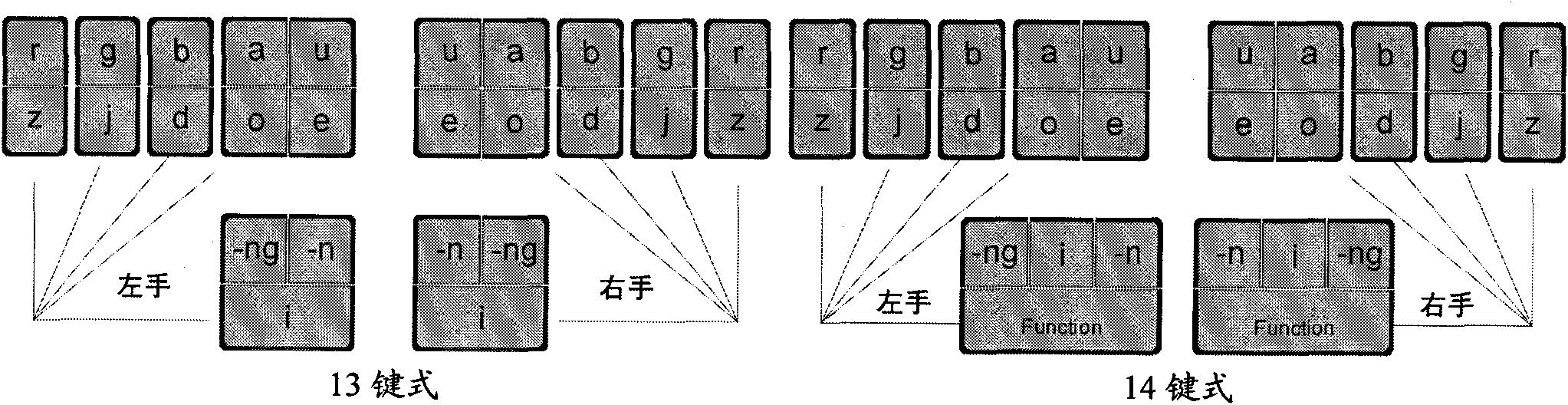

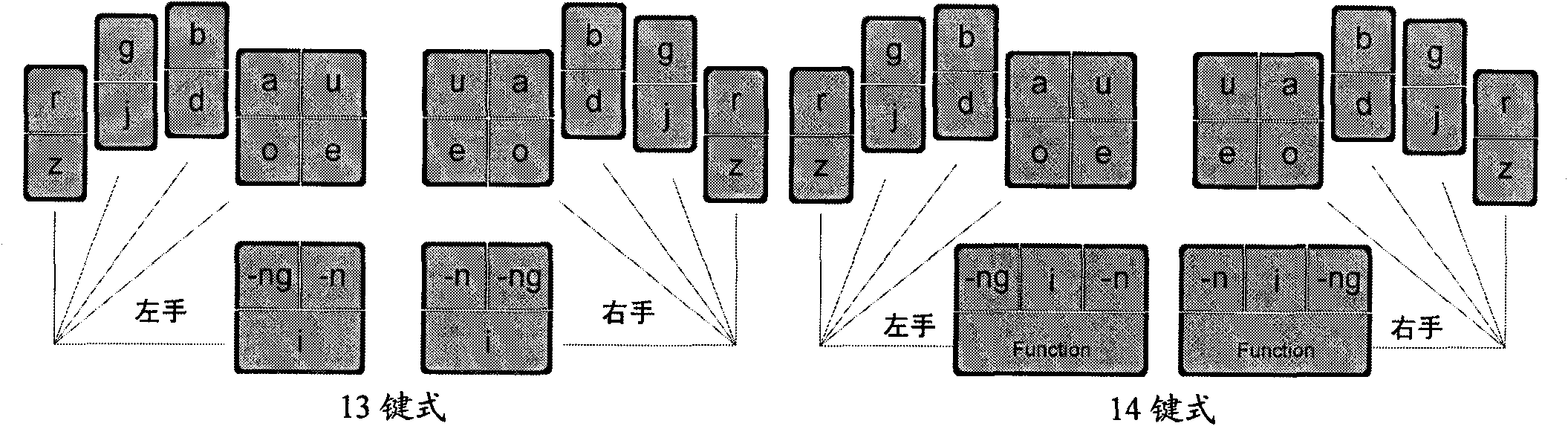

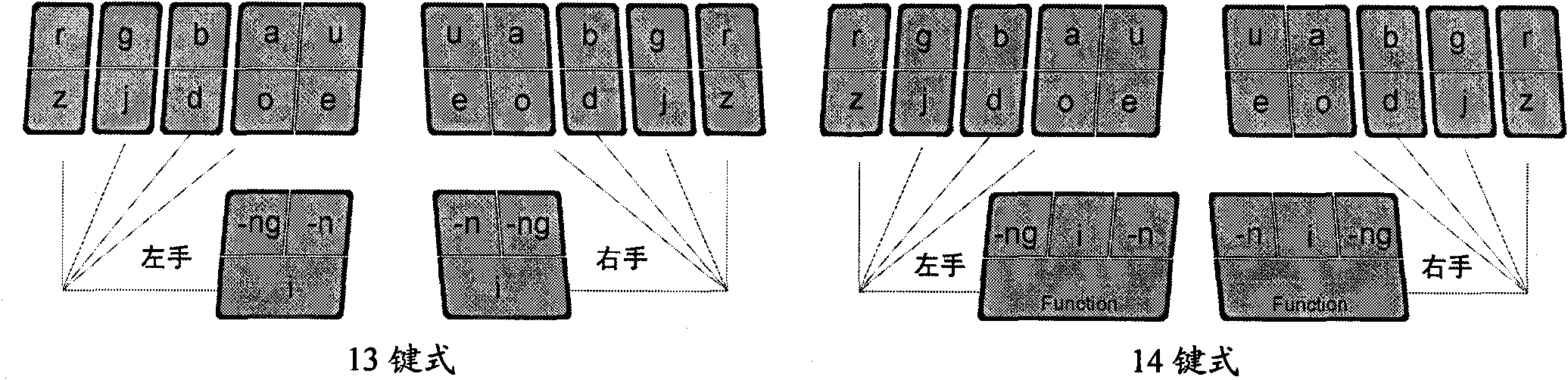

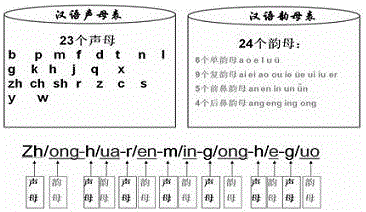

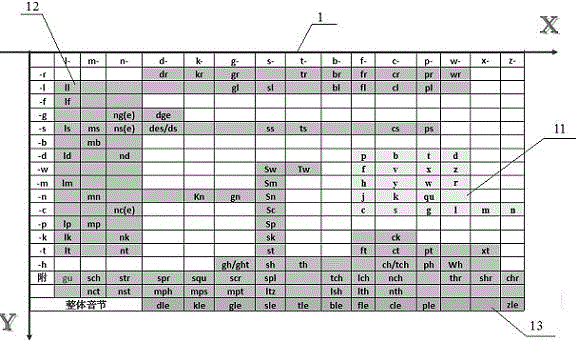

Input method of multi-language general multi-key co-striking type and keyboard device

Inactive · CN101957660A · Same code problem solved · Improve accuracy · Input/output processes for data processing · Syllable · Engineering

The invention relates to a general-purpose, multi-language, multi-key chording input method and keyboard device, particularly suitable for inputting languages such as Chinese, English, Japanese, Korean, German, and Russian. Following the phonetic rules of each language, the invention uses a general multi-language keyboard, or a keyboard body, with fourteen or thirteen mutually symmetrical keys on the left and right sides, so that several syllables (or consonants that must be entered with one hand) can be entered at once by pressing multiple keys simultaneously with one hand. This lets keyboard input in various languages keep pace with the rhythm of speech or thought, so that what you think and say is what you get. The layout of the consonant/initial keys on the left and the vowel/final keys on the right fully considers the physiology of the fingers, making chording easy and natural; the combinations of consonants/initials and vowels/finals resemble the common phonetic notations of the various languages as closely as possible, so the method is easy to understand, rule-based, and quick to learn, allowing everyone to input text rapidly and conveniently.

Owner:Wang Daoping (王道平)

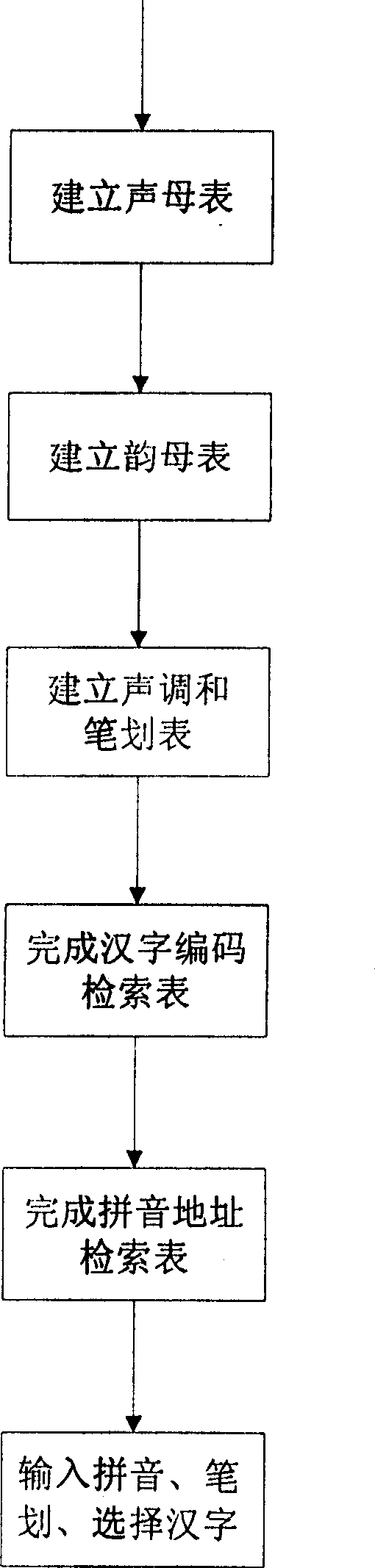

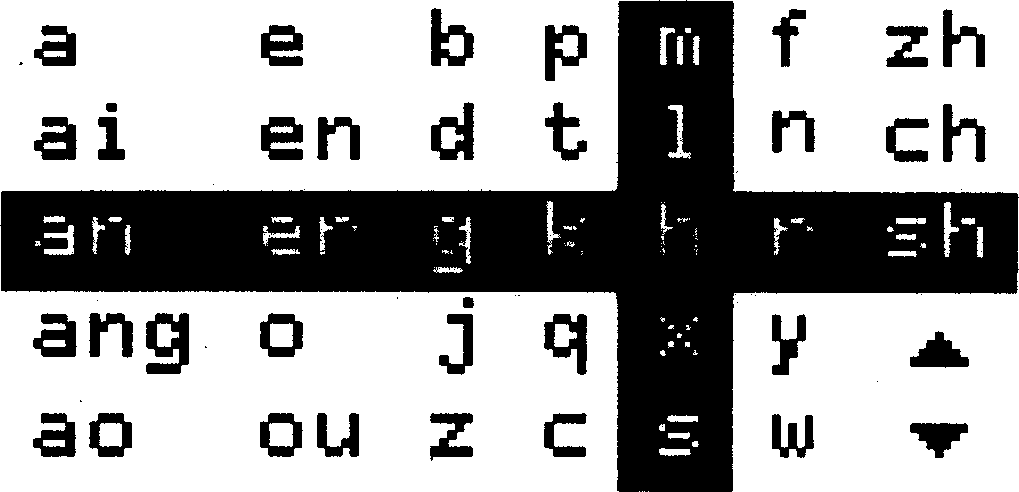

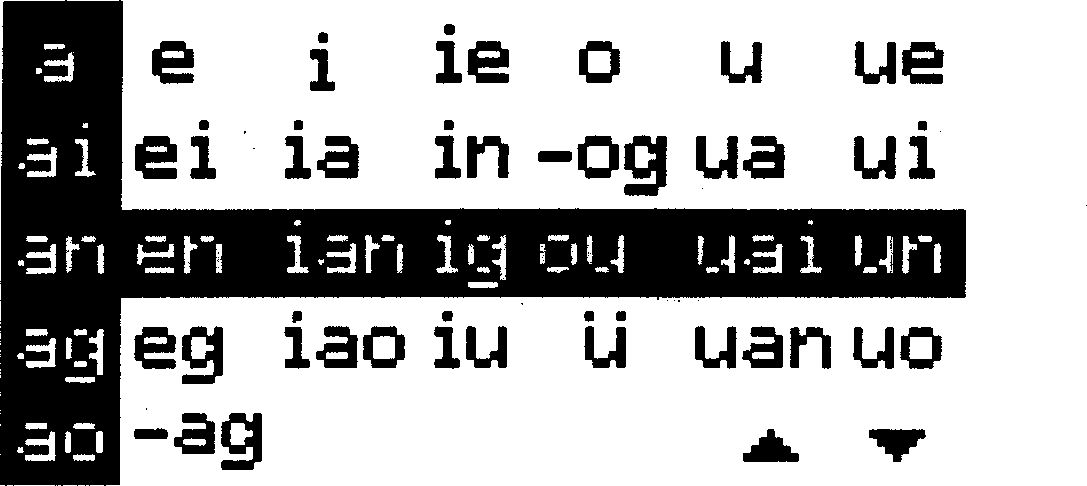

Embedded applied Chinese character inputting method

Inactive · CN1510554A · Simple input · Improve input efficiency · Input/output processes for data processing · User input · Object definition

The method includes the following steps: 1) the initial consonant, the vowel (final), the tone, and the writing stroke of the Chinese phonetic transcription are each used as a coding element, and a table for them is set up according to the double-pinyin (shuangpin) scheme; 2) a coding index list is defined with the phonetic transcription as the index object; 3) a phonetic-transcription address index list is defined; and 4) the system first looks up the storage address of the Chinese characters matching the double-pinyin code entered by the user, then searches the relevant offset addresses for all characters with the entered tone and stroke and displays them in the selection area, and finally uses a determinant matrix to pick the Chinese character to be input.

Owner:ZTE CORP
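
Step 4's search, which finds the characters for a syllable code and then filters them by tone and stroke, can be sketched with an in-memory dictionary standing in for the patent's coding and address index lists. The index contents are illustrative, and the stroke element is assumed here to be the character's first stroke in the common five-category scheme (1 horizontal, 2 vertical, 3 left-falling, 4 dot, 5 turning).

```python
# Illustrative index: syllable code -> (character, tone, first-stroke category).
INDEX = {
    "ma": [("妈", 1, 5), ("麻", 2, 4), ("马", 3, 5), ("骂", 4, 2), ("码", 3, 1)],
}


def lookup(code, tone, stroke):
    """Find the characters stored under a syllable code, then keep only those
    with the requested tone and first stroke for the selection area."""
    return [ch for ch, t, s in INDEX.get(code, []) if t == tone and s == stroke]


print(lookup("ma", 3, 5))  # ['马']
```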

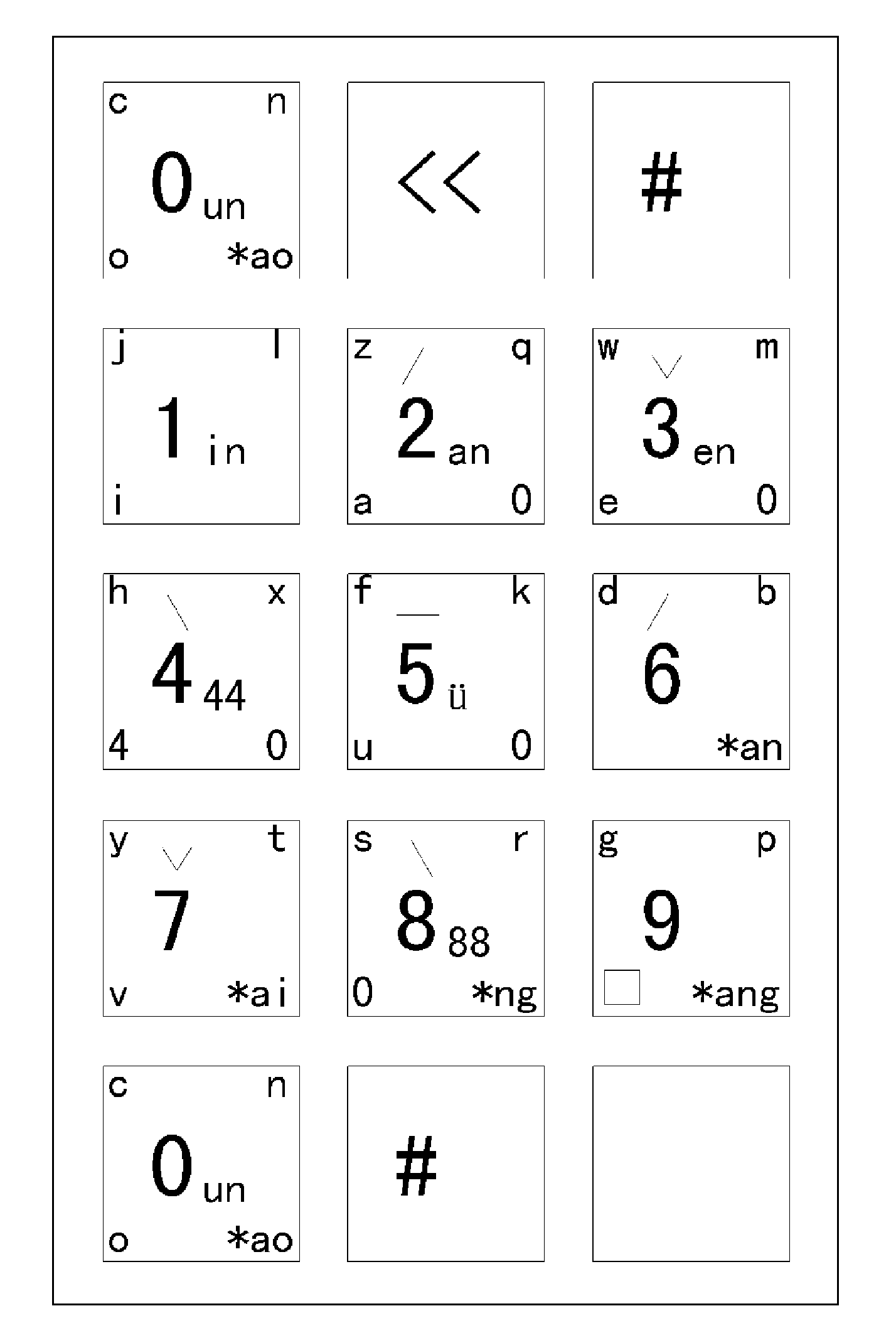

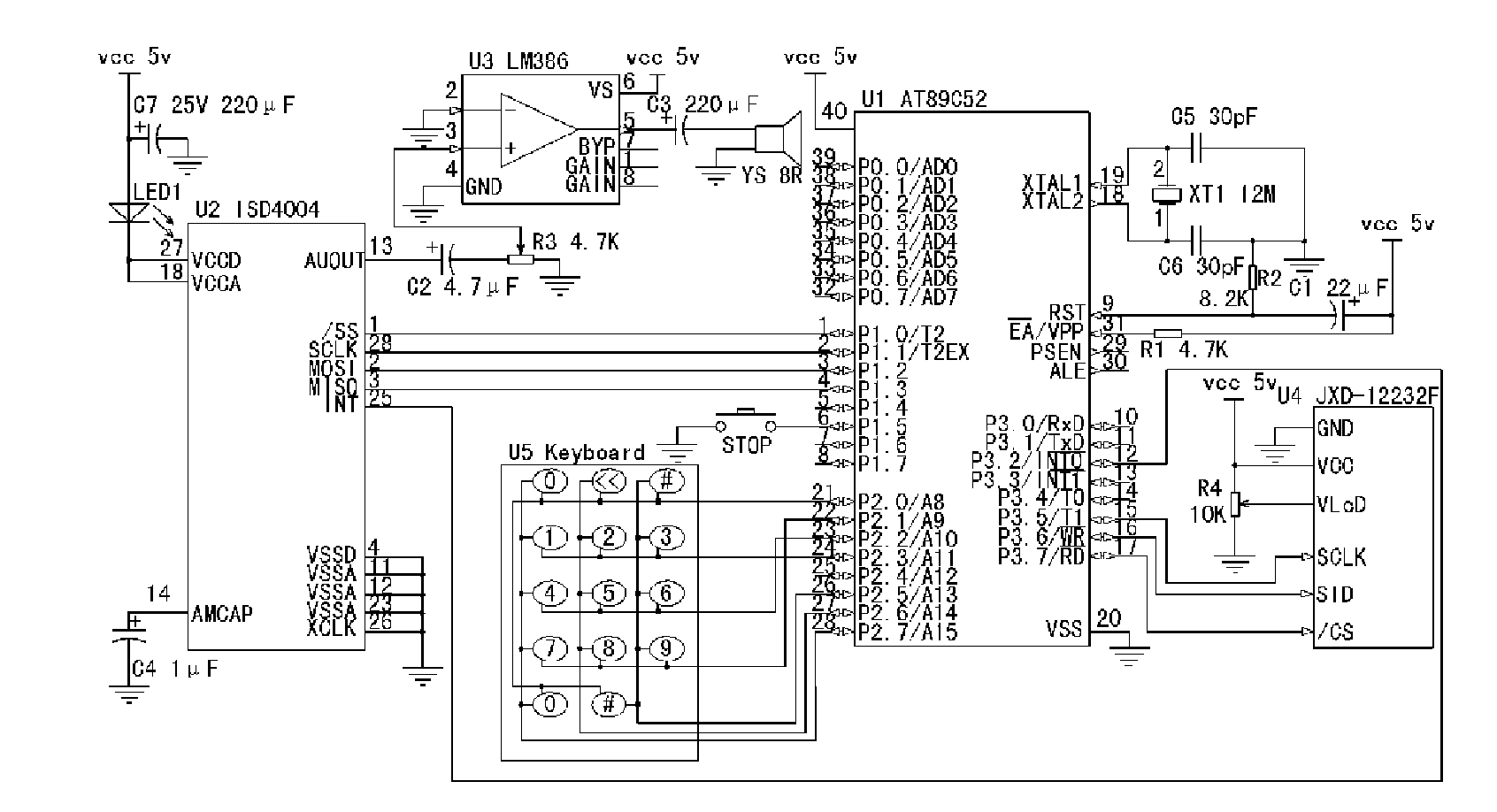

Four-number Chinese character syllable code coding method, numeric keyboard and pronouncing device

Inactive · CN101876856A · Simple method · No repeat code · Input/output for user-computer interaction · Syllable · Microcontroller

The invention relates to a four-digit Chinese character syllable-code input method. The four-digit syllable codes consist of initial-consonant codes, vowel (final) codes, and tone codes. The numeric keyboard has ten keys labeled 0, 1, 2, 3, 4, 5, 6, 7, 8, and 9. Each key is marked with a first group of consonants, a second group of consonants, a simple vowel, compound vowels, and tone marks, all represented by the digits 0 to 9. The method enters characters using digits and a pictographic alphabet, and its advantages are simplicity, no duplicate codes, and ease of learning, remembering, and mastering.

Owner:Zang Guangshu (臧广树)

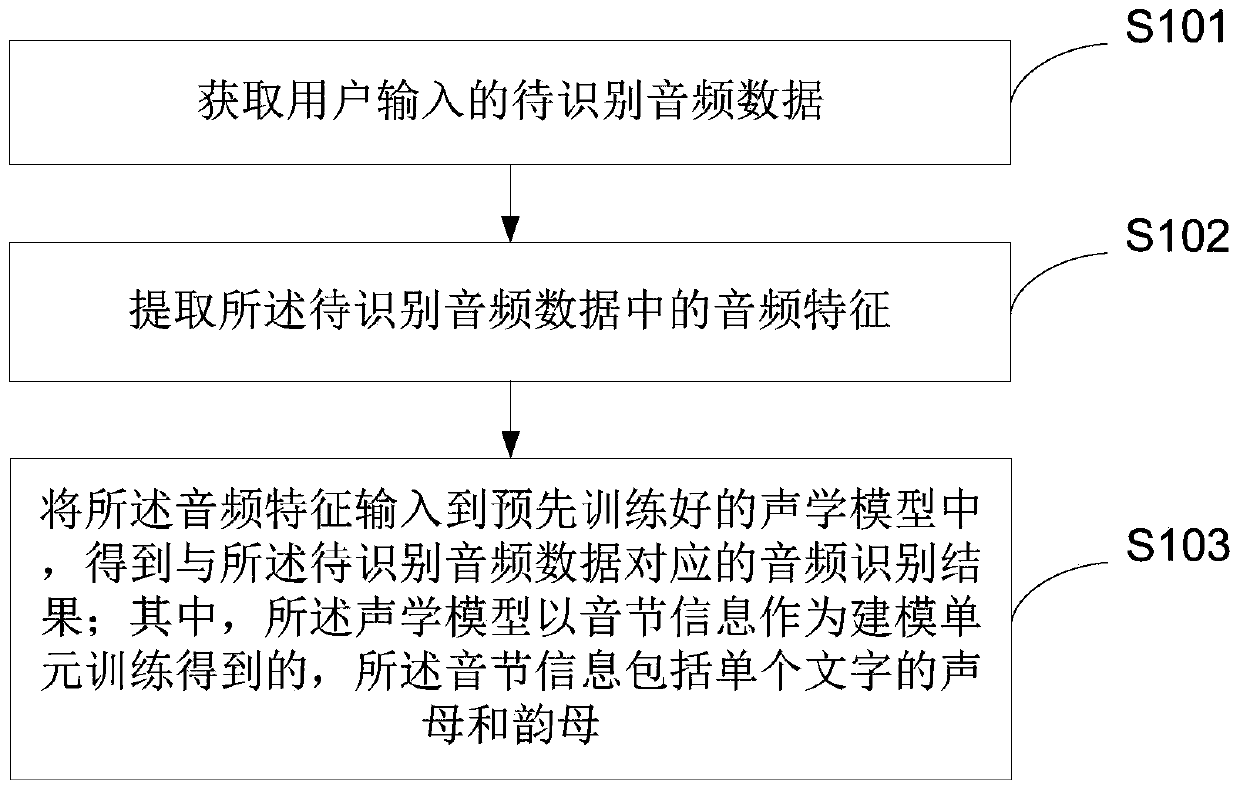

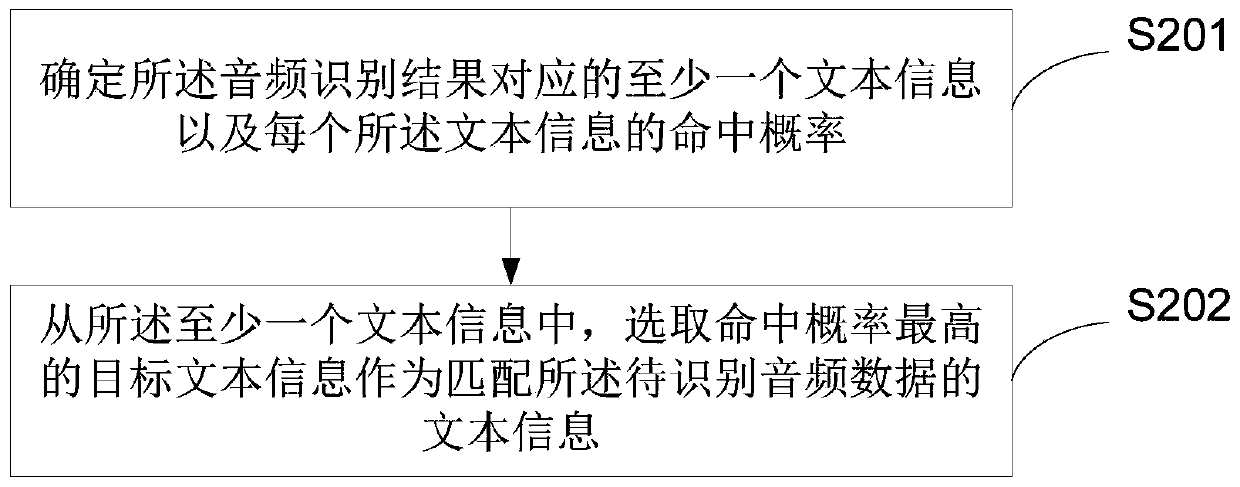

Audio recognition method and device and acoustic model training method and device

Pending · CN111415654A · Save resource space · Speed up audio recognition · Speech recognition · Syllable · Speech sound

The invention provides an audio recognition method and device and an acoustic model training method and device, and relates to the technical field of audio processing. The audio recognition method comprises: obtaining audio data to be recognized that is input by a user; extracting audio features from that data; and inputting the audio features into a pre-trained acoustic model to obtain the corresponding recognition result, where the acoustic model is trained with syllable information as its modeling unit and the syllable information comprises the initials and finals of individual characters. Because the acoustic model is trained with syllable information as the modeling unit, it occupies little storage, which saves resources on the mobile terminal, speeds up audio recognition there, and enables fast speech recognition on the mobile terminal.

Owner:BEIJING DIDI INFINITY TECH & DEV
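
Decomposing each character's syllable into an initial and a final is what makes these modeling units small. The decomposition itself can be sketched as follows; the sketch assumes toneless pinyin input and treats y and w as part of zero-initial syllables (one common convention), and the acoustic model is not shown.

```python
# The 21 standard Mandarin initials, two-letter ones first so "zh" wins over "z".
INITIALS = ["zh", "ch", "sh", "b", "p", "m", "f", "d", "t", "n", "l",
            "g", "k", "h", "j", "q", "x", "r", "z", "c", "s"]


def split_syllable(pinyin):
    """Split a toneless pinyin syllable into (initial, final); zero-initial
    syllables such as "an" or "yan" return an empty initial."""
    for initial in INITIALS:
        if pinyin.startswith(initial) and len(pinyin) > len(initial):
            return initial, pinyin[len(initial):]
    return "", pinyin


print([split_syllable(s) for s in ("zhang", "shi", "an")])
# [('zh', 'ang'), ('sh', 'i'), ('', 'an')]
```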

Storage method for inputting Chinese word into computer by calculation functional encoding phoneme of word

Inactive · CN101004640A · Intuitive lock · Perfect lock · Input/output processes for data processing · Chinese characters · Upper Case Letter

A method for inputting and storing Chinese characters on a computer uses English capital letters in four fixed positions (row, column, longitudinal, and order) as a unique identifier for each Chinese character. Based on the standard stroke attributes and the pronunciation attributes (initial consonant, vowel, and the four tones), the 26 English capital letters plus two ASCII symbols can spell out modern Chinese characters and phrases directly, in one-to-one correspondence.

Owner:Beijing Hanma Mofang Technology Co., Ltd. (北京汉码魔方科技有限公司)

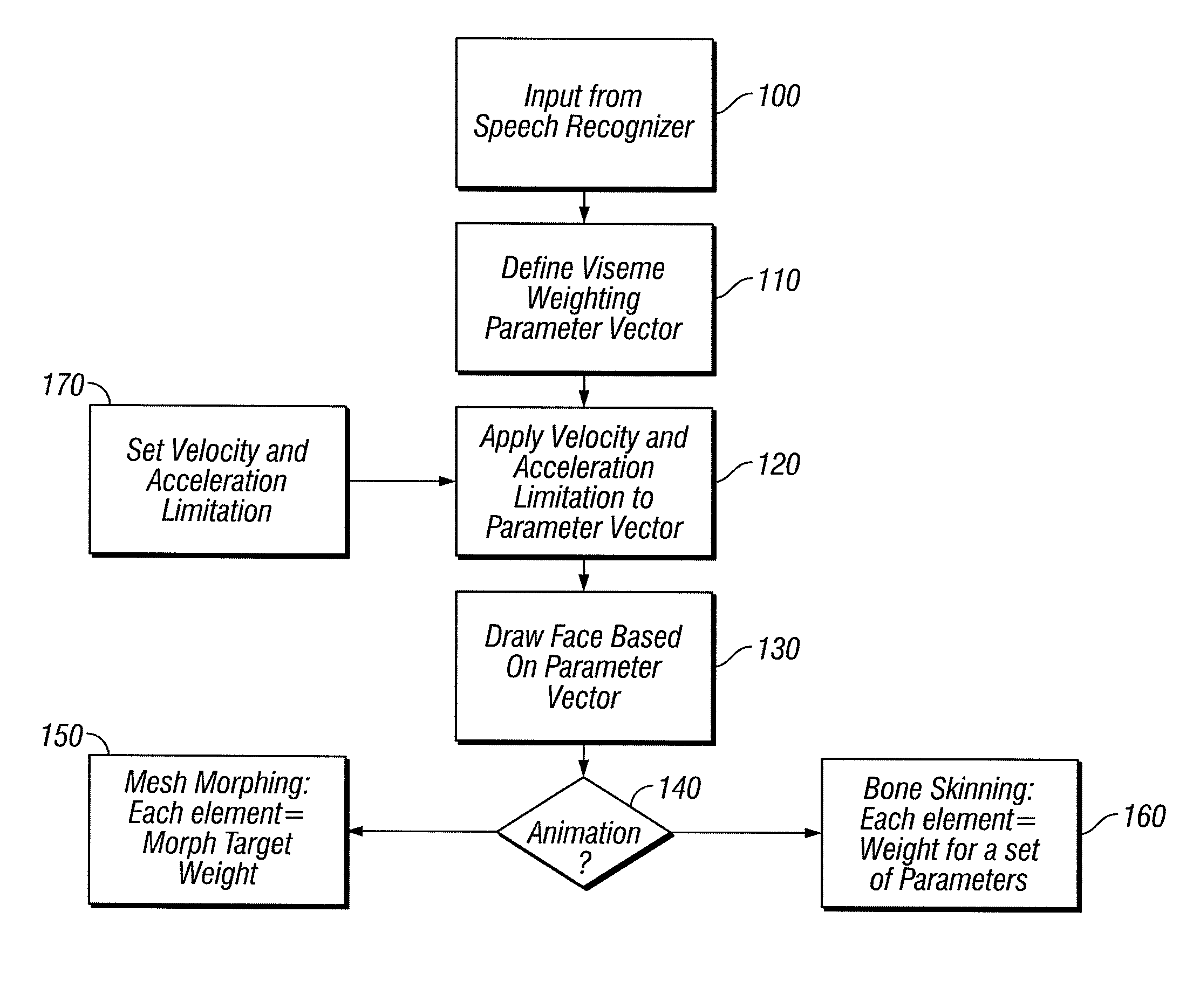

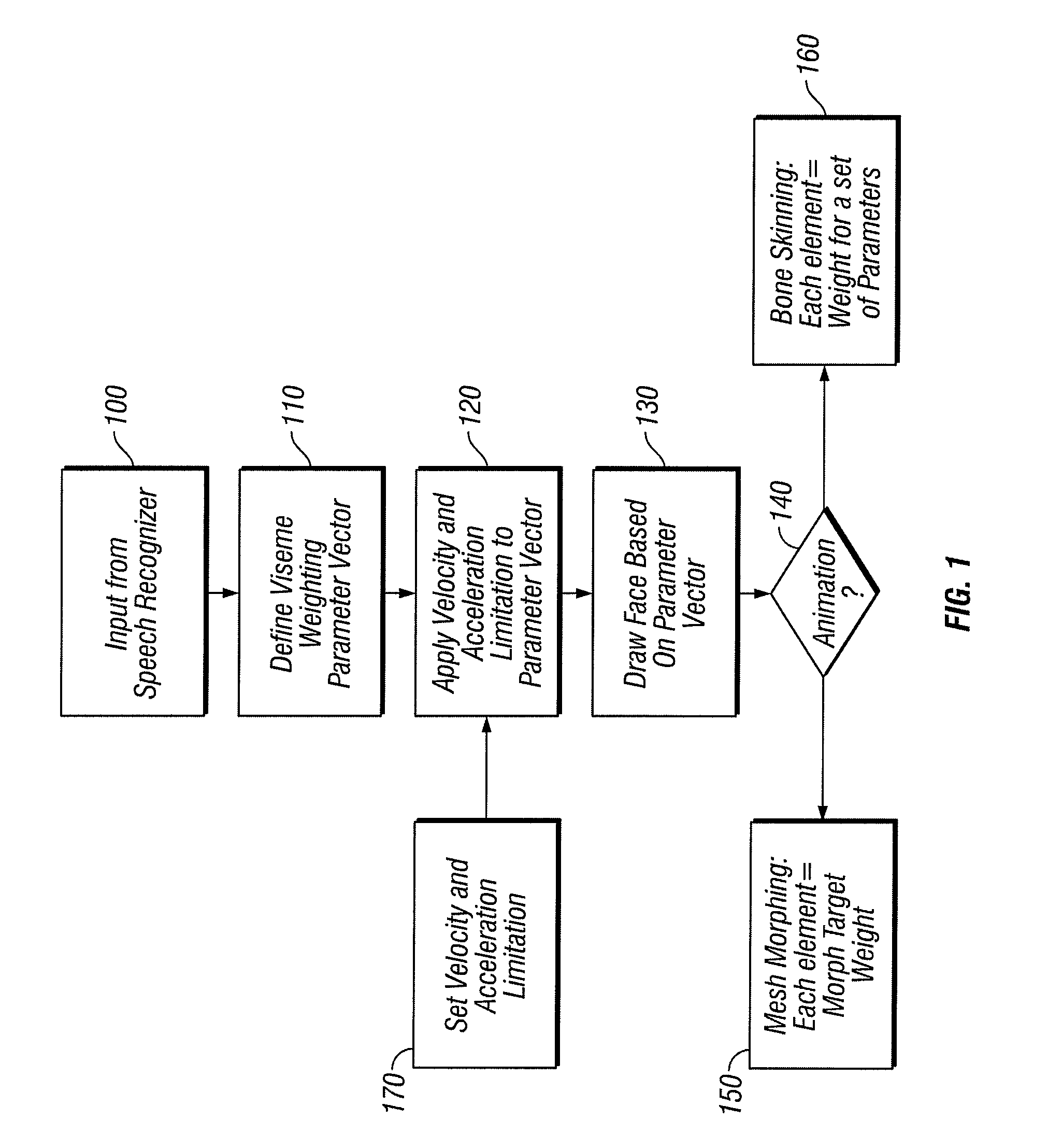

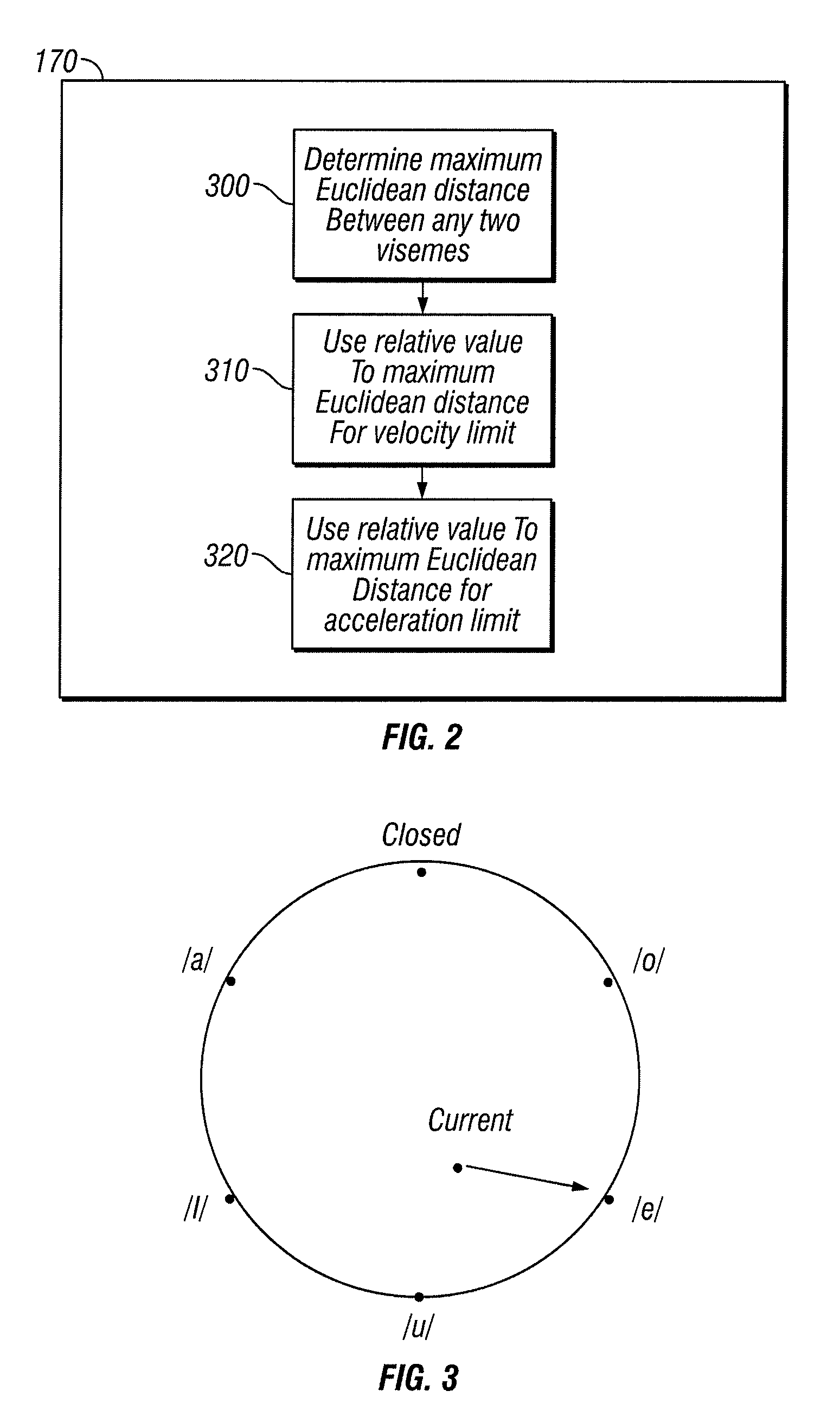

Method and apparatus for providing natural facial animation

Natural inter-viseme animation of 3D head model driven by speech recognition is calculated by applying limitations to the velocity and / or acceleration of a normalized parameter vector, each element of which may be mapped to animation node outputs of a 3D model based on mesh blending and weighted by a mix of key frames.

Owner:SONY COMPUTER ENTERTAINMENT INC
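
Limiting velocity and acceleration can be sketched for one normalized parameter: at each frame the parameter moves toward its target, but the change in velocity is clamped to the acceleration limit and the velocity to the velocity limit. This is an assumed, simplified formulation; it does not plan deceleration, so it can overshoot a target under a tight acceleration limit.

```python
def limit_motion(targets, max_vel, max_acc, dt=1.0 / 30):
    """Follow per-frame target values of one normalized animation parameter
    while clamping velocity (units/s) and acceleration (units/s^2)."""
    pos, vel = targets[0], 0.0
    out = [pos]
    for target in targets[1:]:
        desired_vel = (target - pos) / dt
        # Clamp the velocity change (acceleration), then the velocity itself.
        dv = max(-max_acc * dt, min(max_acc * dt, desired_vel - vel))
        vel = max(-max_vel, min(max_vel, vel + dv))
        pos += vel * dt
        out.append(pos)
    return out


# A step from 0 to 1 is spread over several frames instead of one.
print([round(p, 3) for p in limit_motion([0.0] + [1.0] * 5, max_vel=3.0, max_acc=1e9, dt=0.1)])
```

Each element of the normalized parameter vector would be filtered this way before being mapped to the model's animation nodes.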

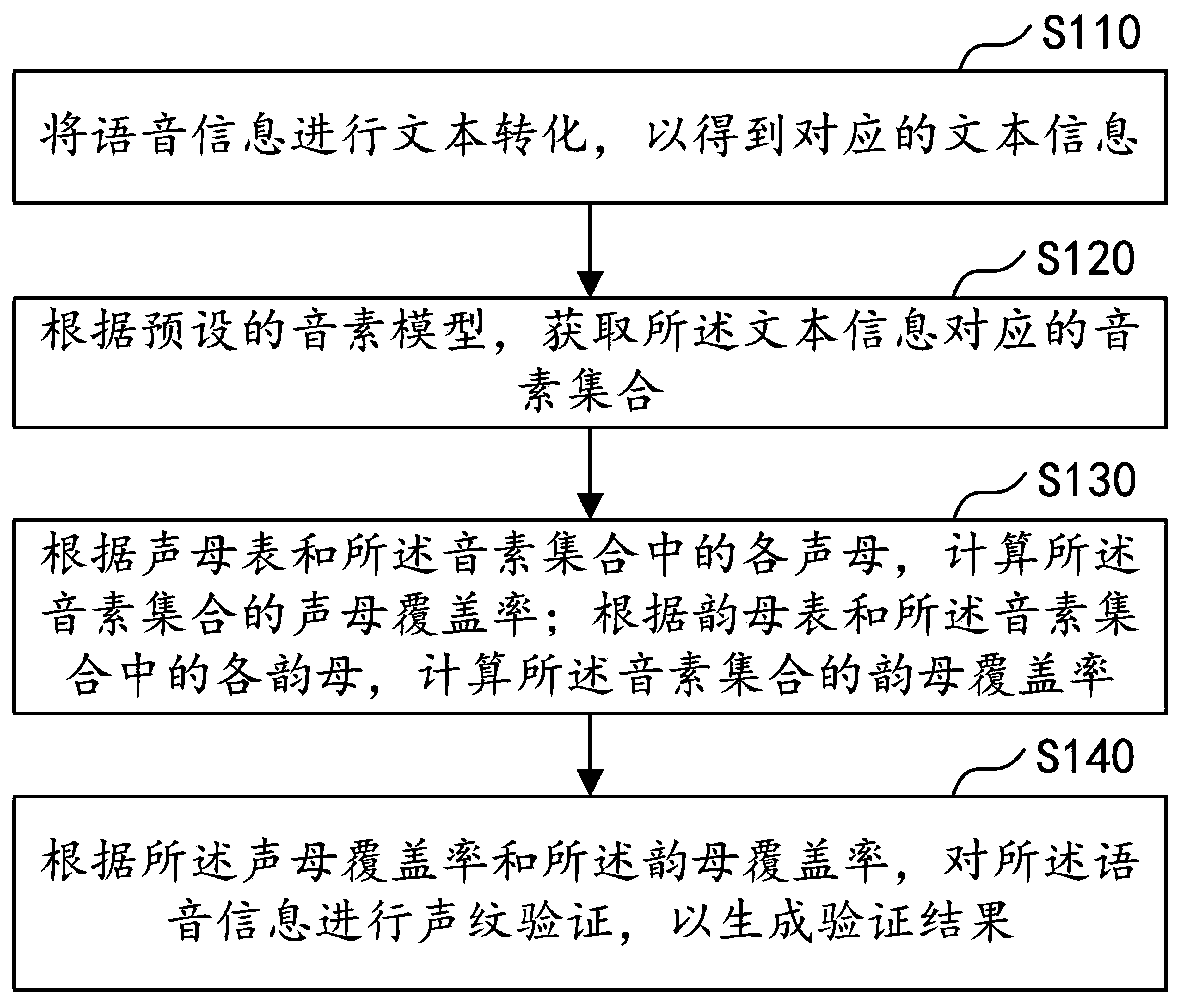

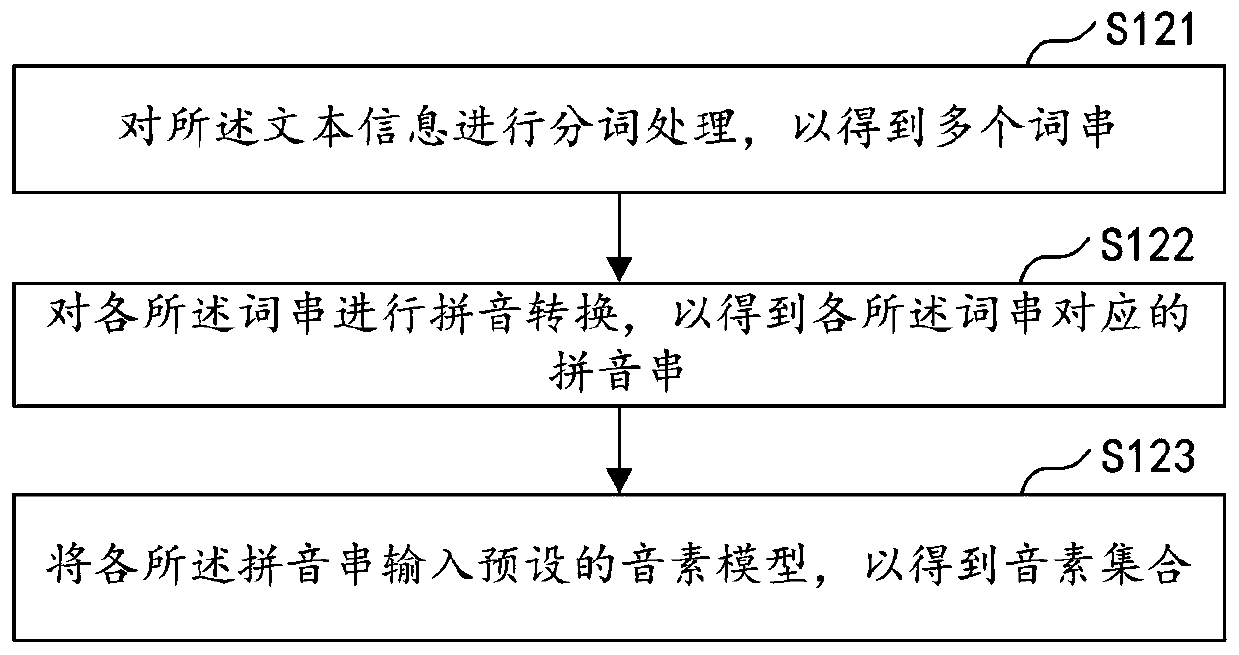

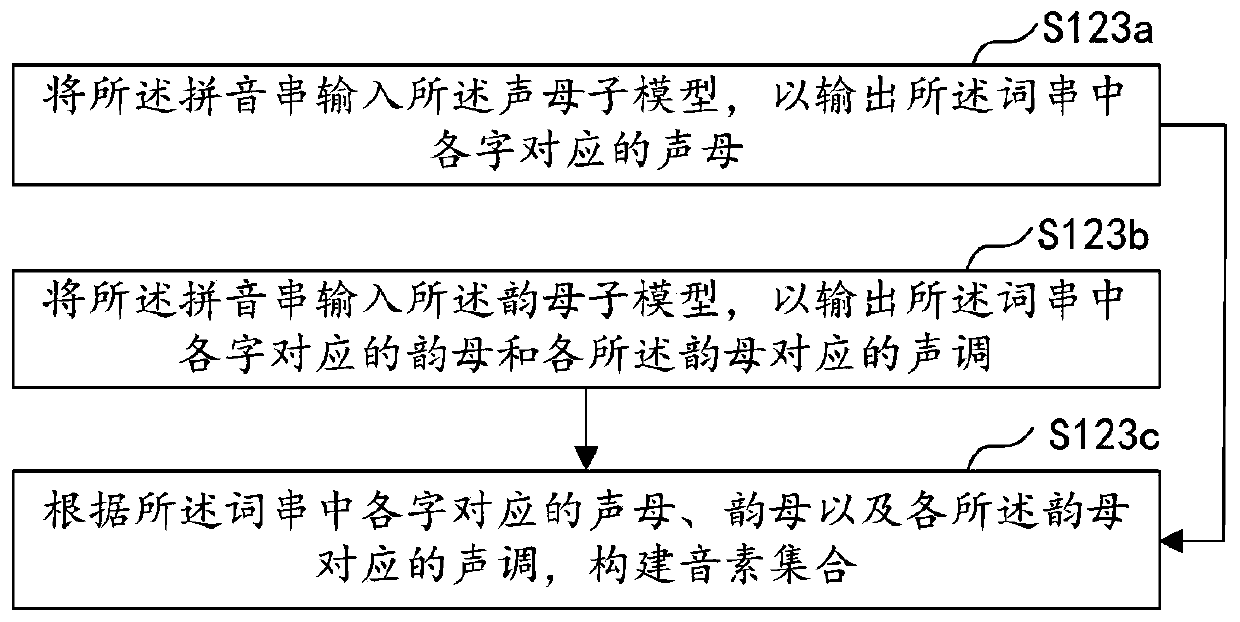

Voiceprint verification method, apparatus, device, and storage medium

The invention relates to the field of biometric recognition and, specifically, uses a pre-trained phoneme model for speech processing. It discloses a voiceprint verification method, apparatus, device, and storage medium. The method comprises: converting voice information into text to obtain the corresponding text information; obtaining, according to a preset phoneme model, the phoneme set corresponding to the text information, where the phoneme set comprises the initials and finals of each character in the text; calculating the initial coverage rate of the phoneme set from an initial table and the initials in the phoneme set; calculating the final coverage rate of the phoneme set from a final table and the finals in the phoneme set; and performing voiceprint verification on the voice information according to the initial and final coverage rates to generate a verification result. In this way, voice information in which the user's voice features are highly complete is identified, providing an important reference for voiceprint identity verification.

Owner:PING AN TECH (SHENZHEN) CO LTD
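
Each coverage rate is the fraction of a reference table that appears in the utterance's phoneme set. The sketch uses the 21 standard Mandarin initials as the initial table and takes the final table as a parameter; the threshold rule in `passes_coverage` and its default values are hypothetical, since the abstract only says verification is performed according to the two rates.

```python
# The 21 standard Mandarin initials (y and w treated as spelling conventions).
INITIAL_TABLE = {"b", "p", "m", "f", "d", "t", "n", "l", "g", "k", "h",
                 "j", "q", "x", "zh", "ch", "sh", "r", "z", "c", "s"}


def coverage(units, table):
    """Fraction of the reference table that occurs at least once in units."""
    return len(set(units) & set(table)) / len(table)


def passes_coverage(initials, finals, final_table, min_initial=0.5, min_final=0.5):
    """Hypothetical rule: accept the utterance for voiceprint verification only
    if both its initial and final coverage rates reach the thresholds."""
    return (coverage(initials, INITIAL_TABLE) >= min_initial
            and coverage(finals, final_table) >= min_final)


print(coverage(["b", "p", "b", "zh"], INITIAL_TABLE))  # 3 of 21 initials covered
```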

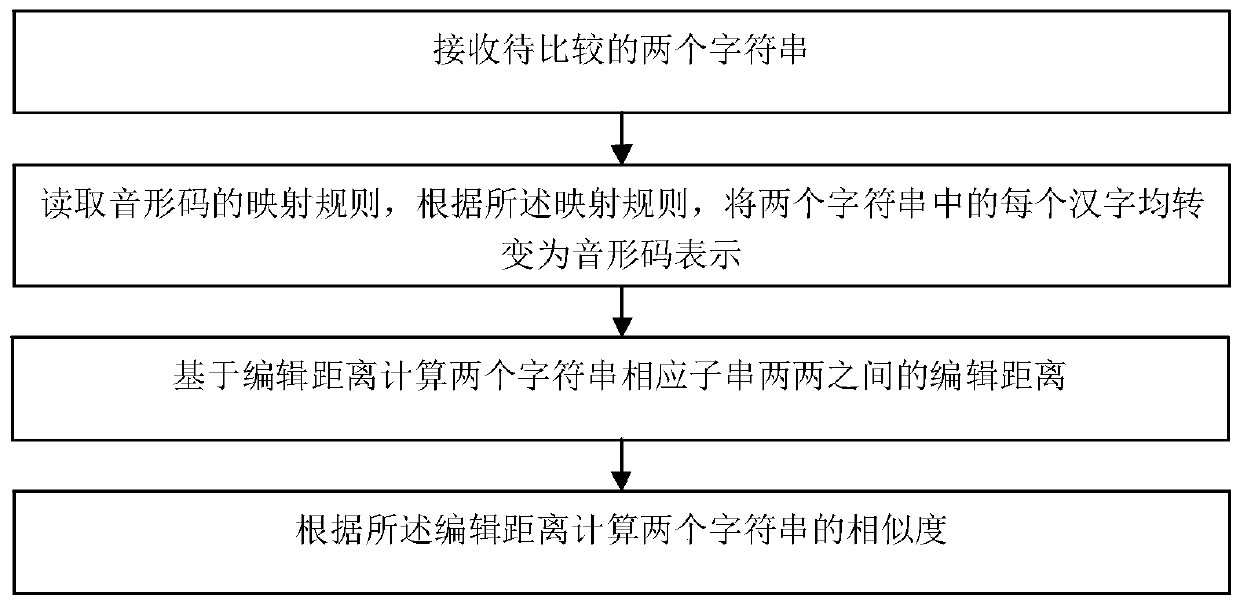

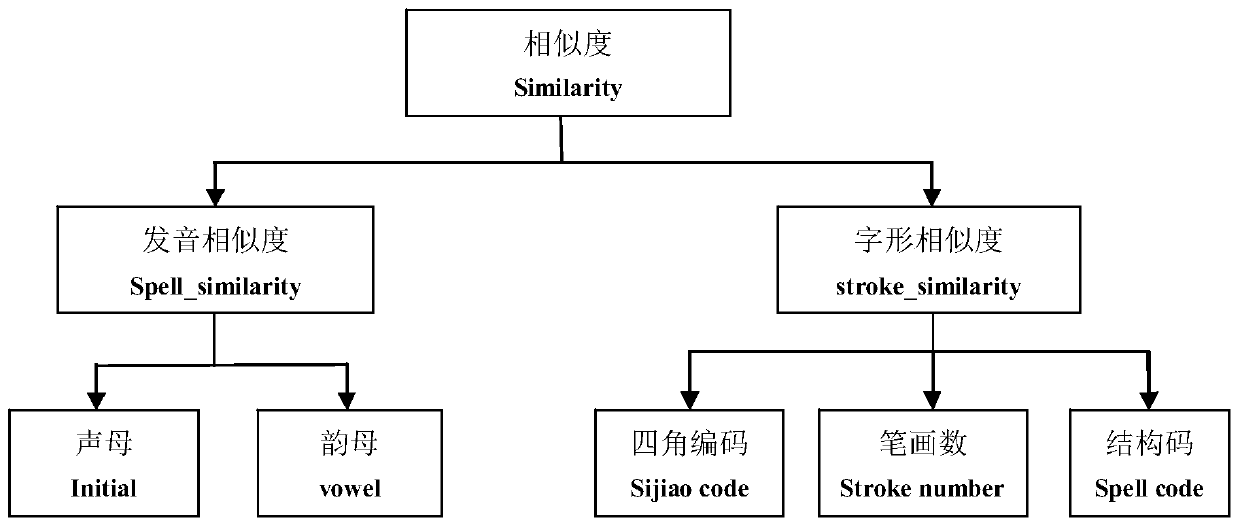

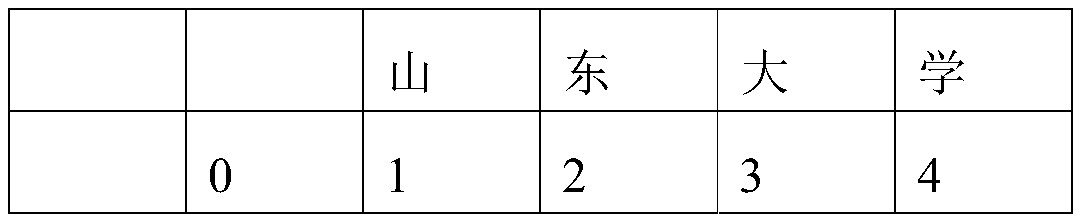

Chinese character string similarity calculation method and device based on phonetic and morphological codes

Pending · CN111209447A · The similarity is accurate and comprehensive · Improve conversion efficiency · Other databases querying · Chinese characters · Edit distance

Owner:SHANDONG UNIV

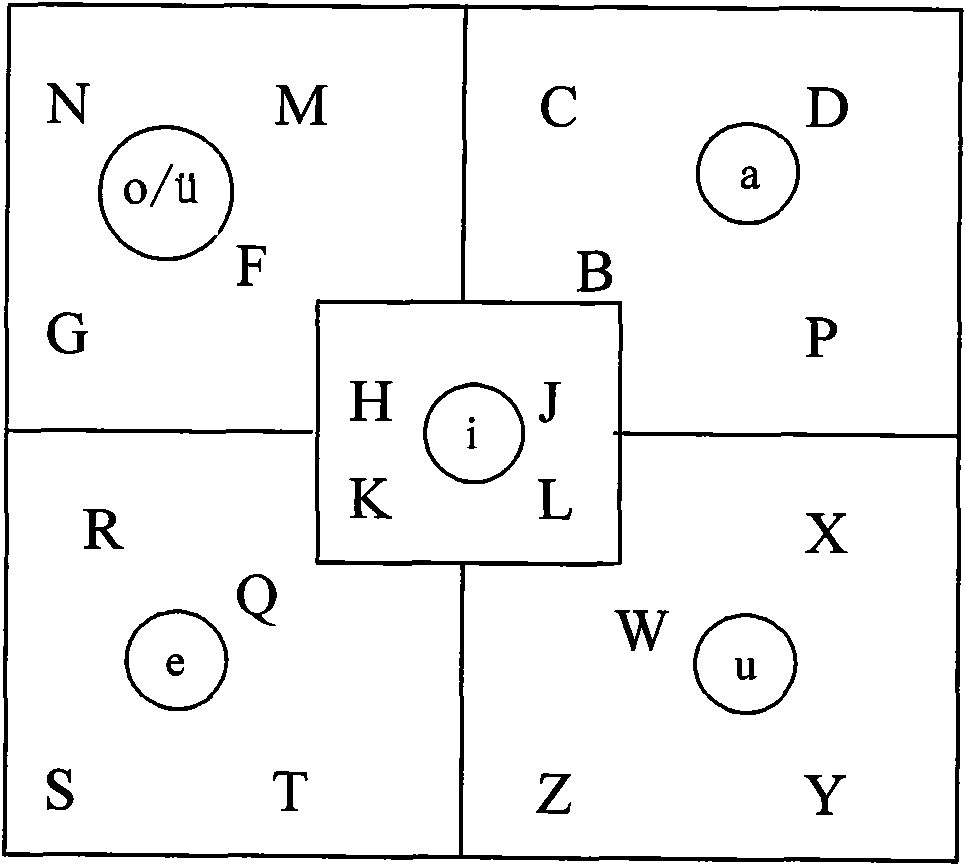

Input keyboard and pinyin input method thereof

The present invention discloses an easy-to-memorize keyboard layout that enables fast word input, together with a corresponding pinyin input method. In this layout, the 26 letters are partitioned according to the simple finals: each subarea contains four initial consonants and one simple final, and the one remaining simple final shares a subarea with another simple final. The letter layout roughly follows the order of the English alphabet, with adjustments to aid memorization. When the pinyin input method is used with this layout, each initial-consonant key also stands for the simple final of its subarea, and the simple-final key serves as a candidate-selection key (or for punctuation, etc.), reducing finger movement and increasing input efficiency. On a touch screen, the whole subarea can act as the key for its simple final; the larger key area makes input convenient and reduces the error rate. The initial-consonant keys can also be assigned to a simple final together with its tone, greatly reducing the rate of duplicate codes.

Owner:Li Ling (李玲)

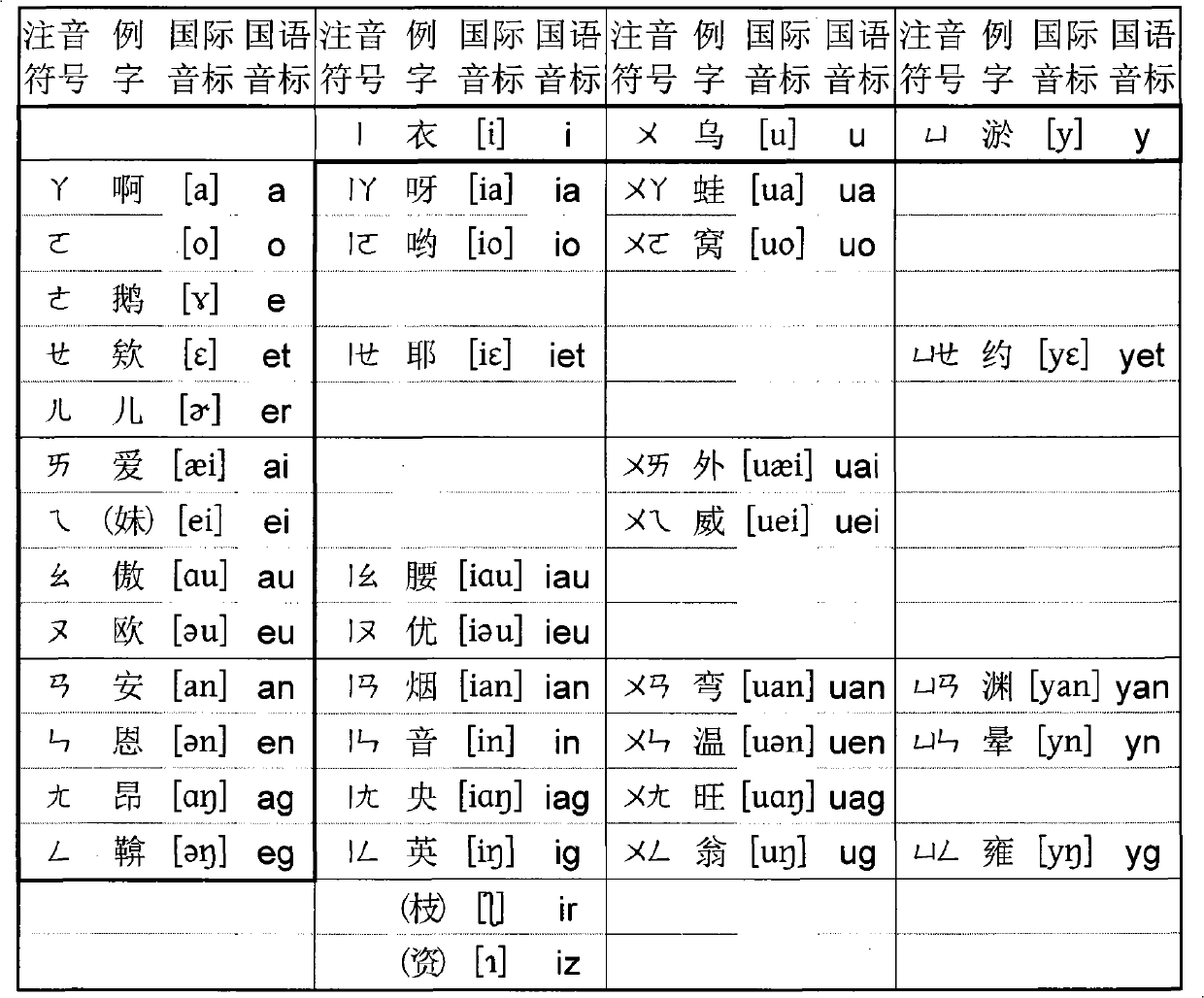

Chinese phonetic symbol, simplified phonetic symbol, English keyboard and tiny keyboard Chinese character input method

Inactive · CN101556509A · Fast learning · Increase typing speed · Input/output processes for data processing · Syllable · Lettering

The invention discloses a Chinese phonetic notation and a Chinese character input method based on it. The method comprises the following steps: the basic vowels and consonants of the International Phonetic Alphabet transcription of Chinese are mapped systematically onto the 26 English letters; the syllable ending ng of Hanyu Pinyin is changed to g, following the shape of the IPA symbol; the finals v, ou, and ao are changed to y, eu, and au respectively; iong and yong are both changed to yg; ong and weng are both changed to ug; o serves both as an initial consonant and as a final; the initial consonants zh, ch, and sh are changed to v, w, and o respectively; syllables beginning with i, u, and y are unchanged; and each syllable needs at most 4 letters. On this basis, the finals can be simplified further so that each syllable needs at most 3 letters: for example, iau and uai are simplified to io and uy; ieu, uei, and uen to iu, ui, and un; an and ag preceded by i, u, or y to m and h; ian, uan, and yan to im, um, and ym; and iag and uag to ih and uh.

Owner:Guo Hengxun (郭恒勋)

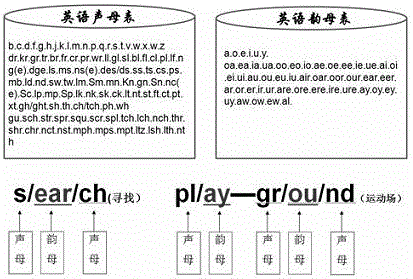

Method and tool for training English learner to read and memorize words

Inactive · CN105679105A · Combination operation is convenient · Deepen memory · Mechanical appliances · Computer science · Syllable

The invention discloses a method and tool for training English learners to read and memorize words. It analyzes English words as wholes in terms of "English initials", "English finals", and "whole syllables", which the learner's brain forms through memory, and uses an English word-combination board to practice splicing and combining the consonants and finals that make up words. Building on the chunked nature of English vocabulary, it applies the laws of memory psychology to the cognitive process; through this chunking effect, combined with the tool, English learners' efficiency in reading and memorizing words can be greatly improved. The tool has a simple structure and makes combining words convenient. Intuitive training with the tool makes full use of the chunking effect, which both deepens the learner's memory and makes learning more fun.

Owner:Zhang Nan (张楠)

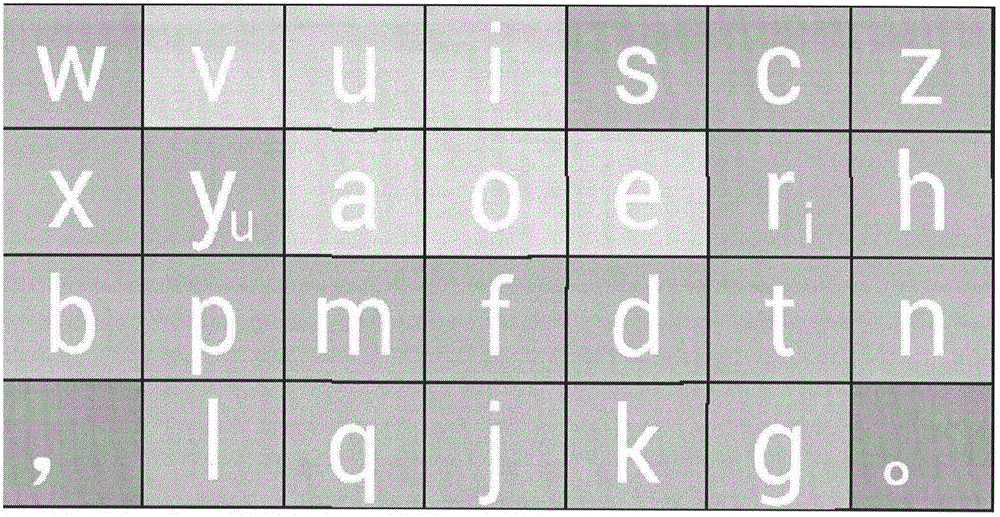

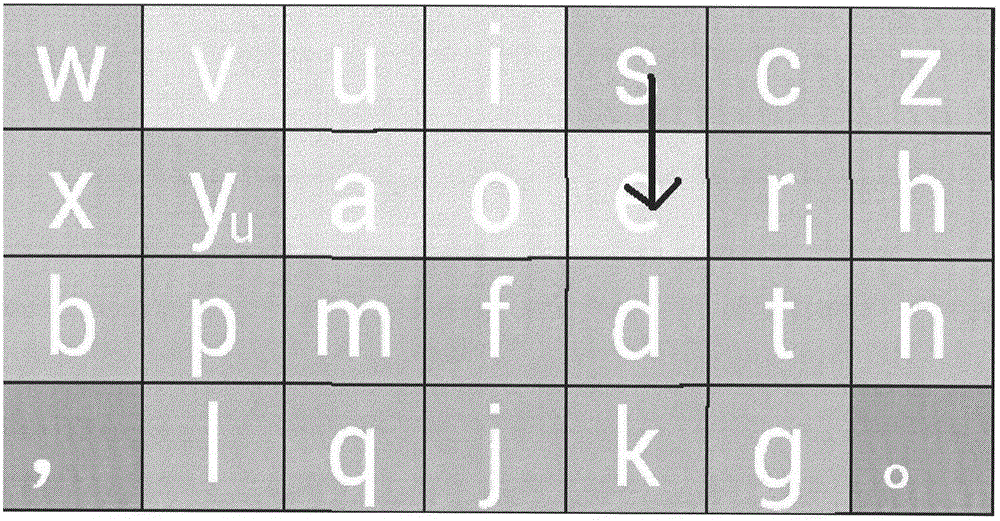

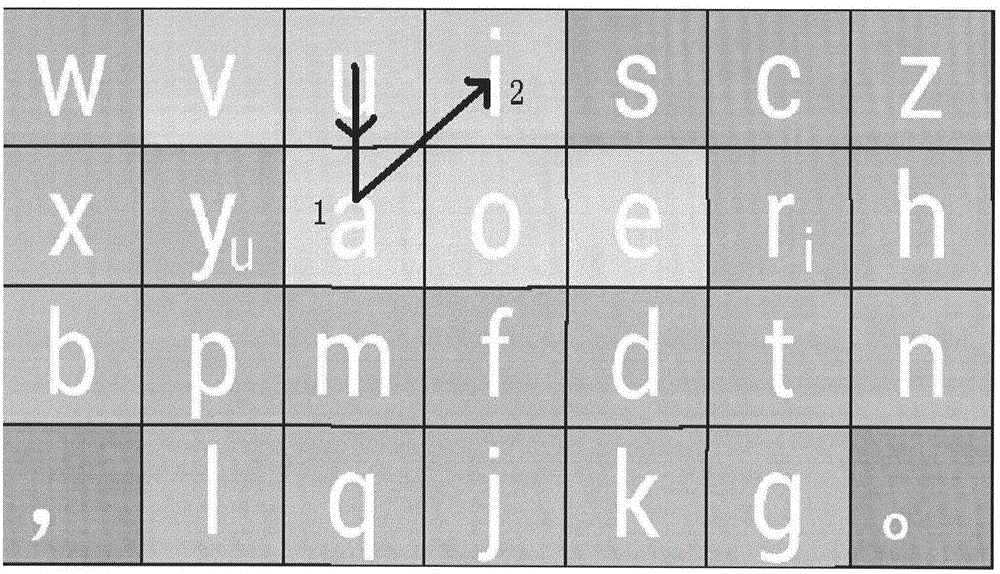

Virtual keyboard design and input method for touch screen device

Active · CN104536583A · Simple and few operations · Improve efficiency · Input/output for user-computer interaction · Computer science · Viseme

A virtual keyboard design and input method for touch screen devices are provided. The virtual keyboard is laid out in four rows and seven columns and comprises 26 letters and two punctuation marks. The first row must contain the letters u, i, and v, where v represents the vowel ü; u and i must be adjacent. The second row must contain the letters a, o, e, and r, with a immediately to the left of o and e immediately to the left of r. The second row must also contain the letters u and i, each sharing a virtual key with one of the other consonants in that row, forming shared vowel-consonant keys. The remaining nineteen consonants, z, c, s, b, d, f, g, h, j, k, l, m, n, p, q, t, w, x, and y, can be placed anywhere from the first to the fourth row. All short consonants and short vowels are entered by tapping, and all long consonants and long vowels by sliding. The method enables quick and convenient Chinese pinyin input on any touch screen device; it is easy to learn, efficient, and has a low error rate.

Owner:Huang Bairu (黄柏儒)

© 2025 PatSnap. All rights reserved.