210 results about "Voice engine" patented technology

A voice engine is a software subsystem for bidirectional audio communication, typically used as part of a telecommunications system to simulate a telephone. It functions like a data pump for audio data, specifically voice data. The voice engine is typically used in an embedded system.

Systems and methods for dynamically improving user intelligibility of synthesized speech in a work environment

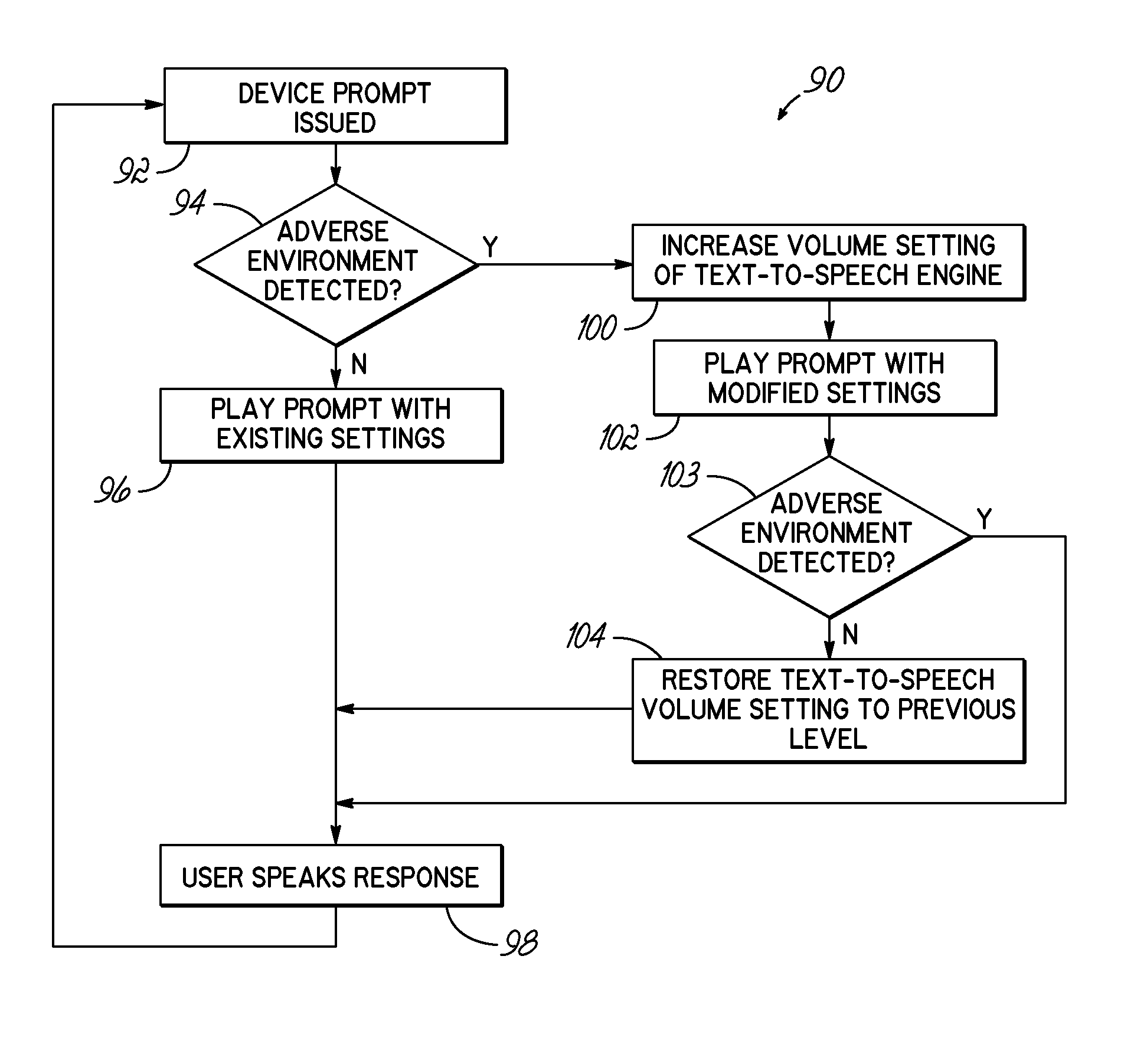

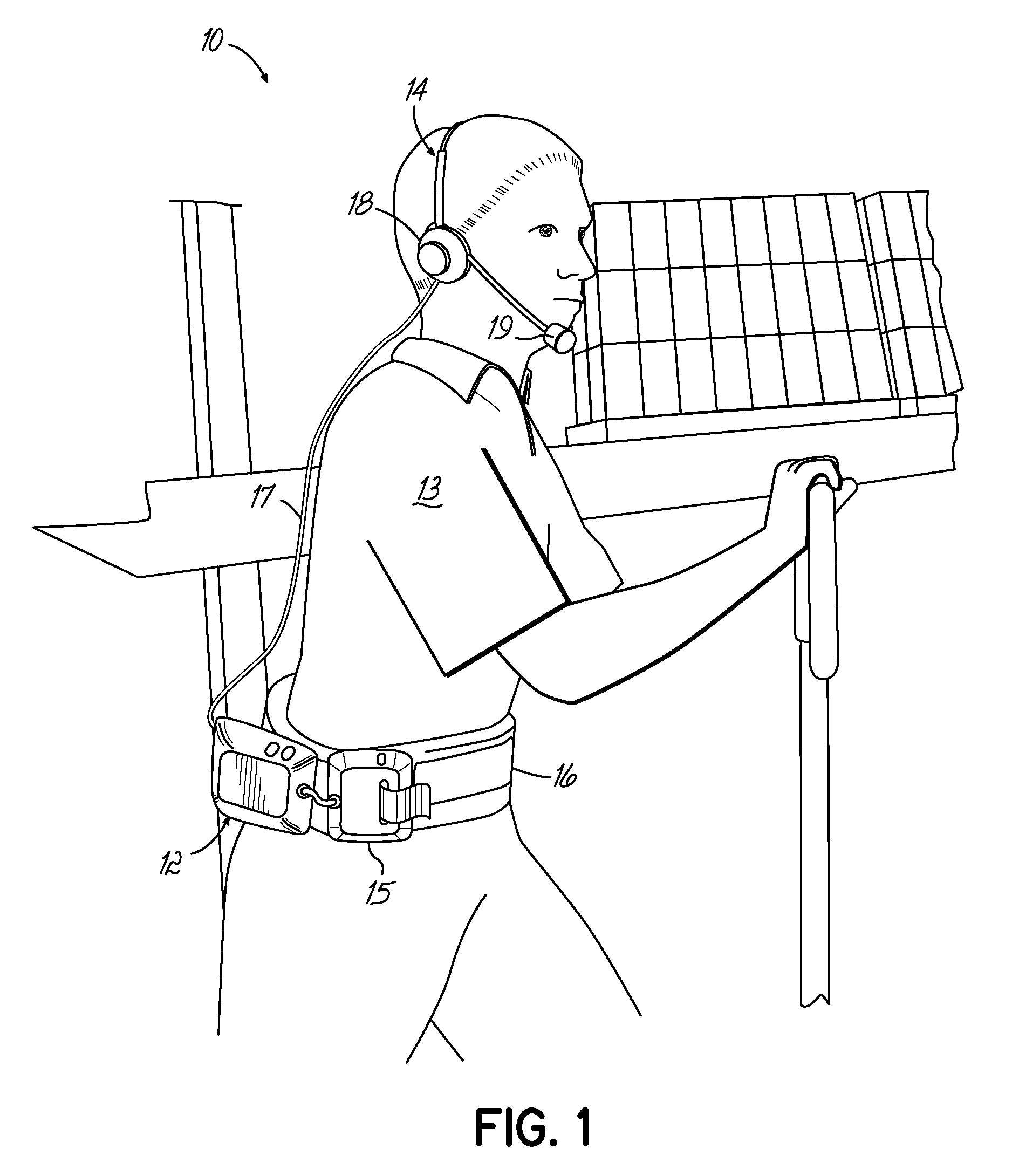

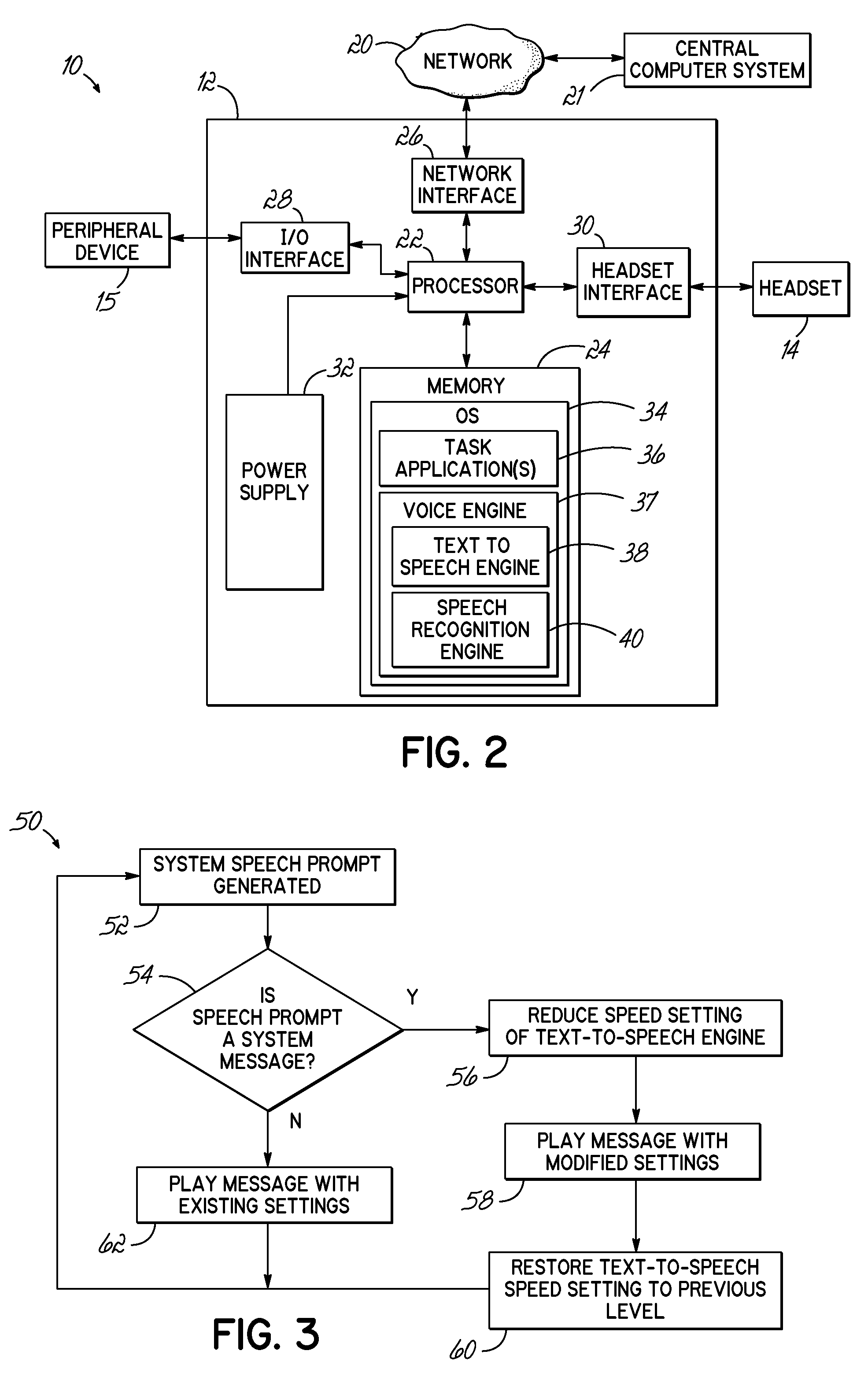

Method and apparatus that dynamically adjusts operational parameters of a text-to-speech engine in a speech-based system. A voice engine or other application of a device provides a mechanism to alter the adjustable operational parameters of the text-to-speech engine. In response to one or more environmental conditions, the adjustable operational parameters of the text-to-speech engine are modified to increase the intelligibility of synthesized speech.

Owner:VOCOLLECT
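The adjustment described in this abstract can be pictured with a minimal sketch. The abstract does not name the parameters or the conditions, so the `TtsParams` fields, the use of ambient noise in dB as the environmental condition, and the 60 dB / 80 dB thresholds are all illustrative assumptions.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class TtsParams:
    """Hypothetical adjustable parameters of a text-to-speech engine."""
    rate_wpm: int = 180   # speaking rate in words per minute
    volume: float = 0.6   # output gain in [0.0, 1.0]


def adjust_for_noise(params: TtsParams, noise_db: float) -> TtsParams:
    """Slow the speech and raise the volume as ambient noise increases.

    The thresholds below are assumptions chosen for illustration only.
    """
    if noise_db >= 80:
        return replace(params, rate_wpm=140, volume=1.0)
    if noise_db >= 60:
        return replace(params, rate_wpm=160, volume=0.8)
    return params


quiet = adjust_for_noise(TtsParams(), noise_db=45)
loud = adjust_for_noise(TtsParams(), noise_db=85)
```

Keeping the parameters in an immutable dataclass means each adjustment returns a new configuration, so the defaults can be restored when the environment becomes quiet again.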

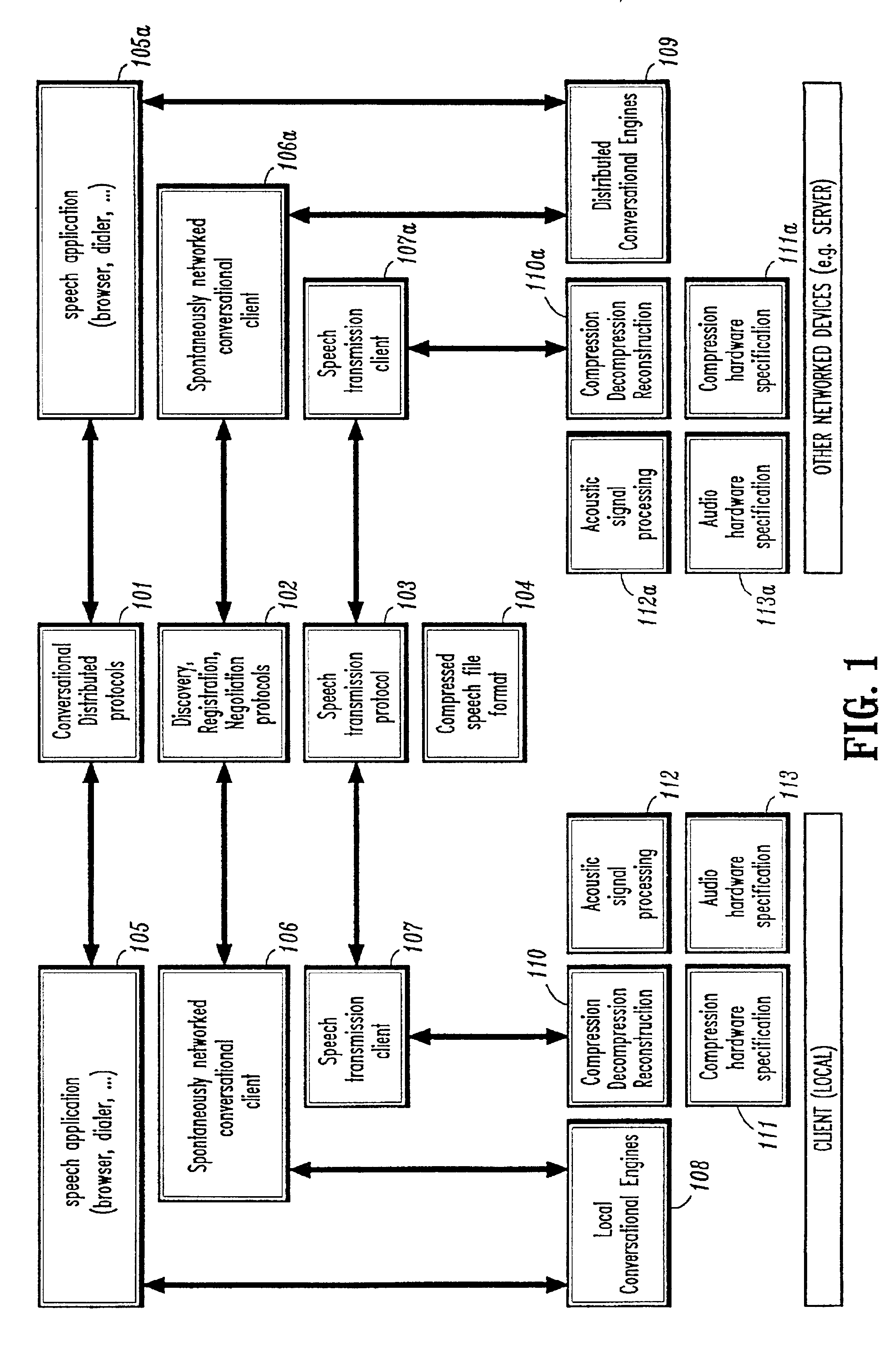

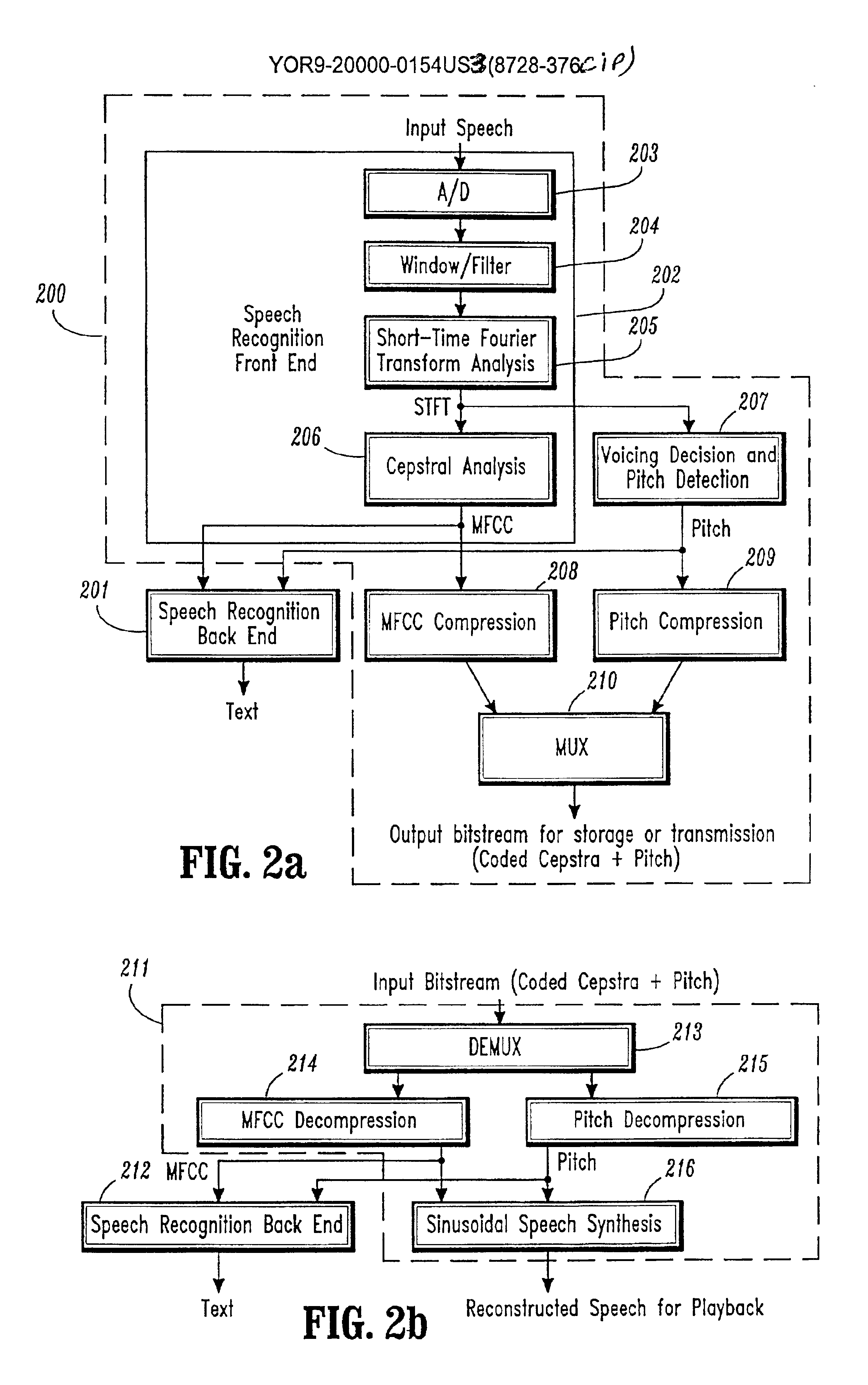

Conversational networking via transport, coding and control conversational protocols

Inactive | US6934756B2 | Broadcast transmission systems | Multiple digital computer combinations | Session control | Remote control

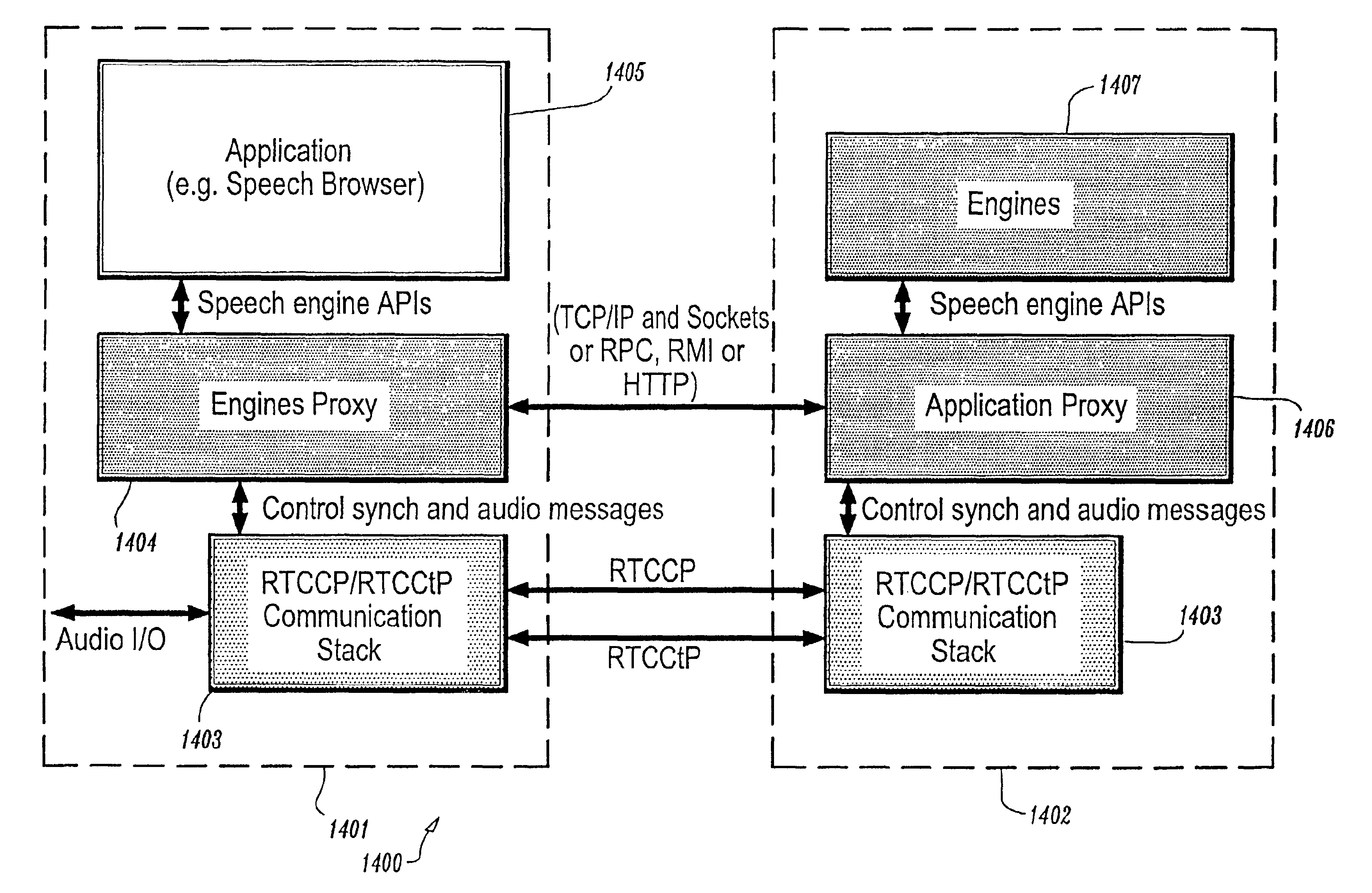

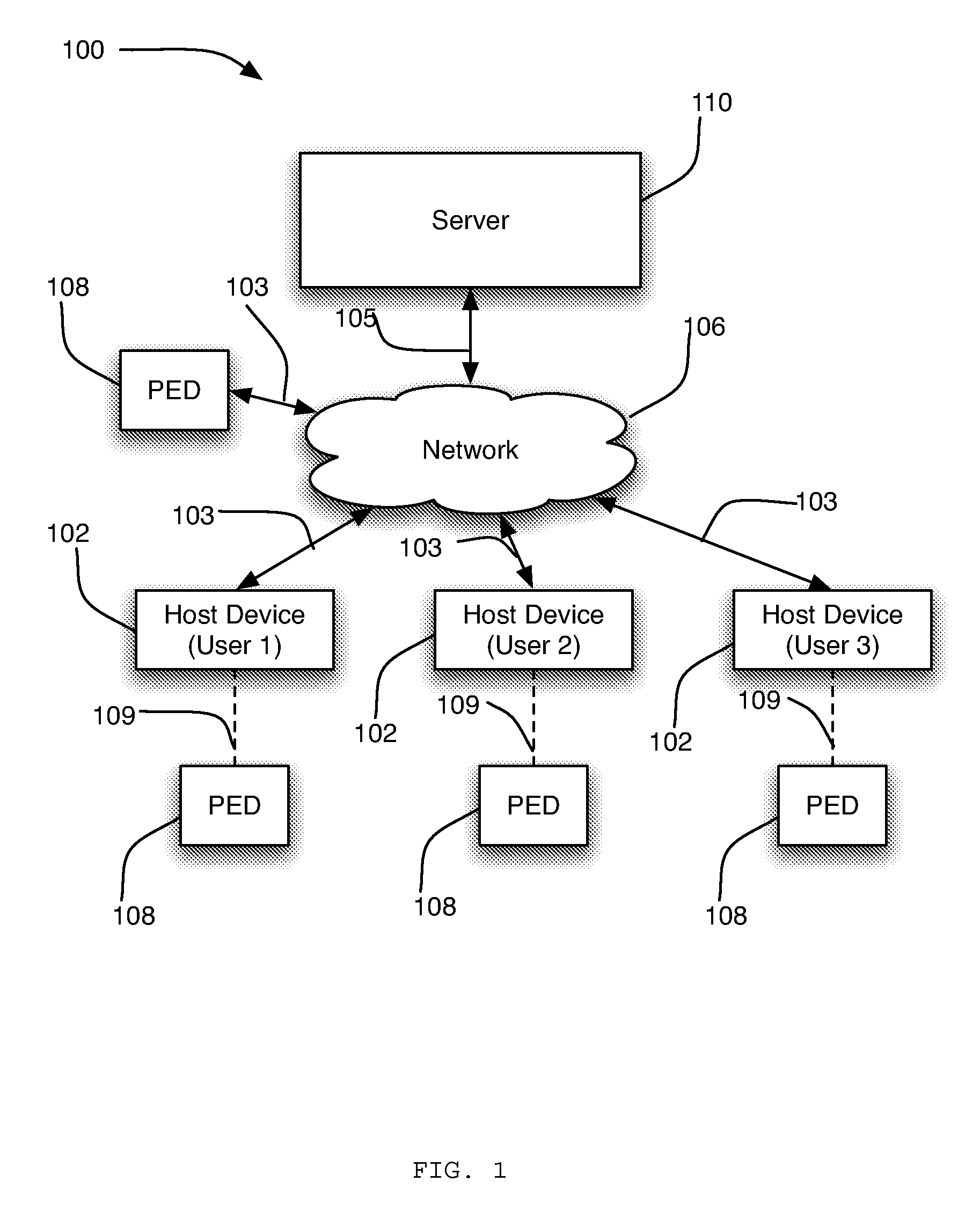

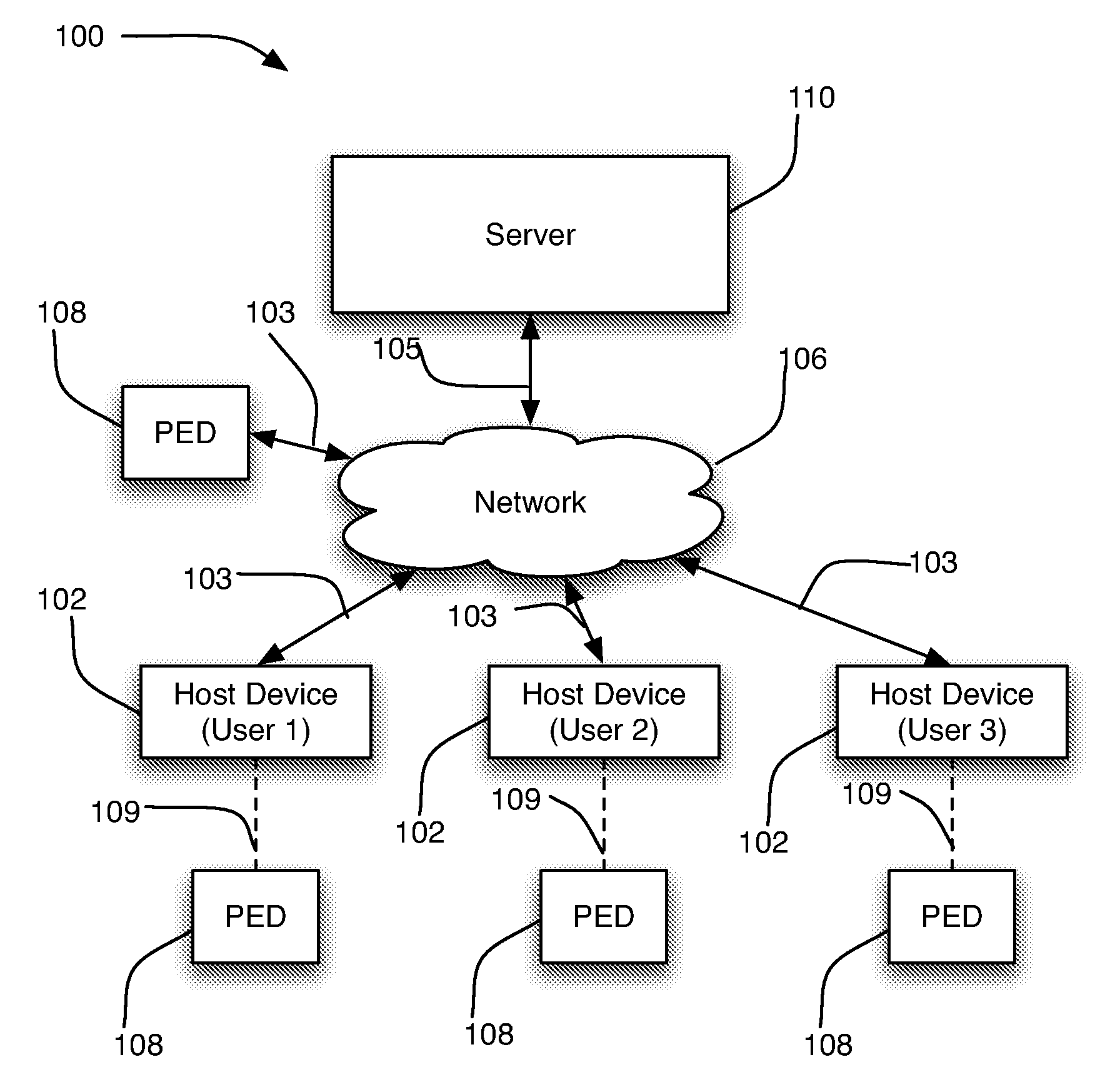

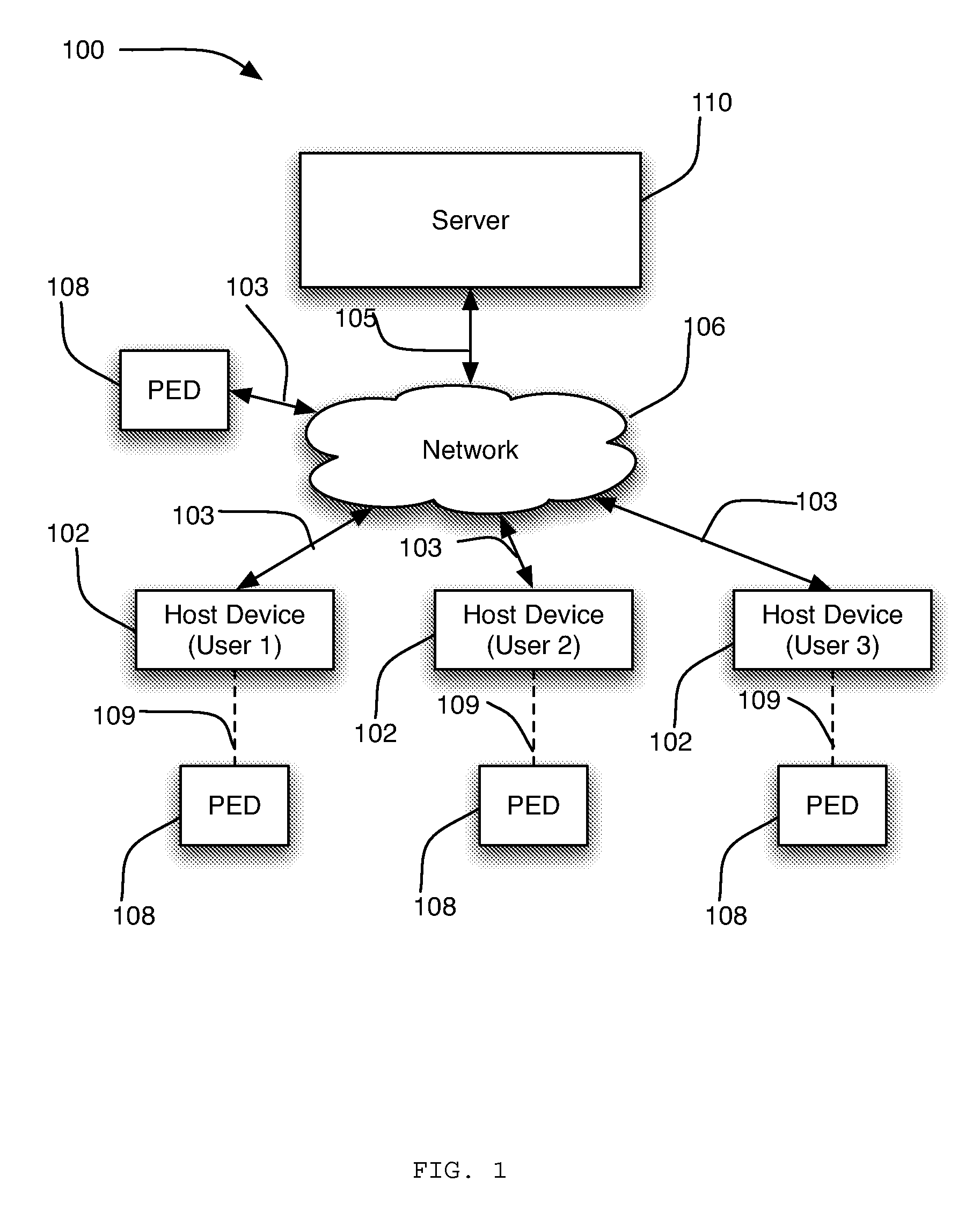

A system and method for implementing conversational protocols for distributed conversational networking architectures and / or distributed conversational applications, as well as real-time conversational computing between network-connected pervasive computing devices and / or servers over a computer network. The implementation of distributed conversational systems / applications according to the present invention is based, in part, on a suitably defined conversational coding, transport and control protocols. The control protocols include session control protocols, protocols for exchanging of speech meta-information, and speech engine remote control protocols.

Owner:IBM CORP

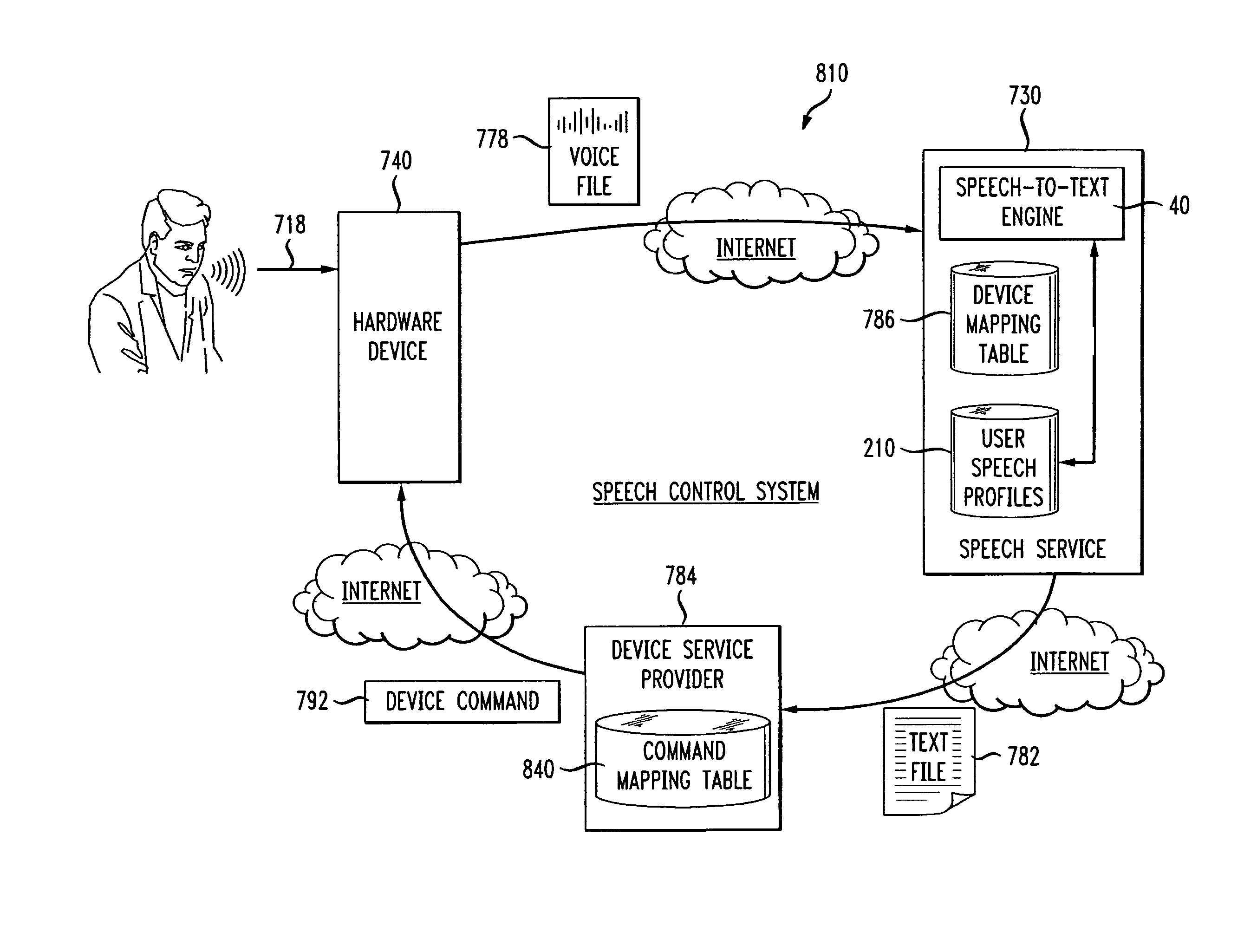

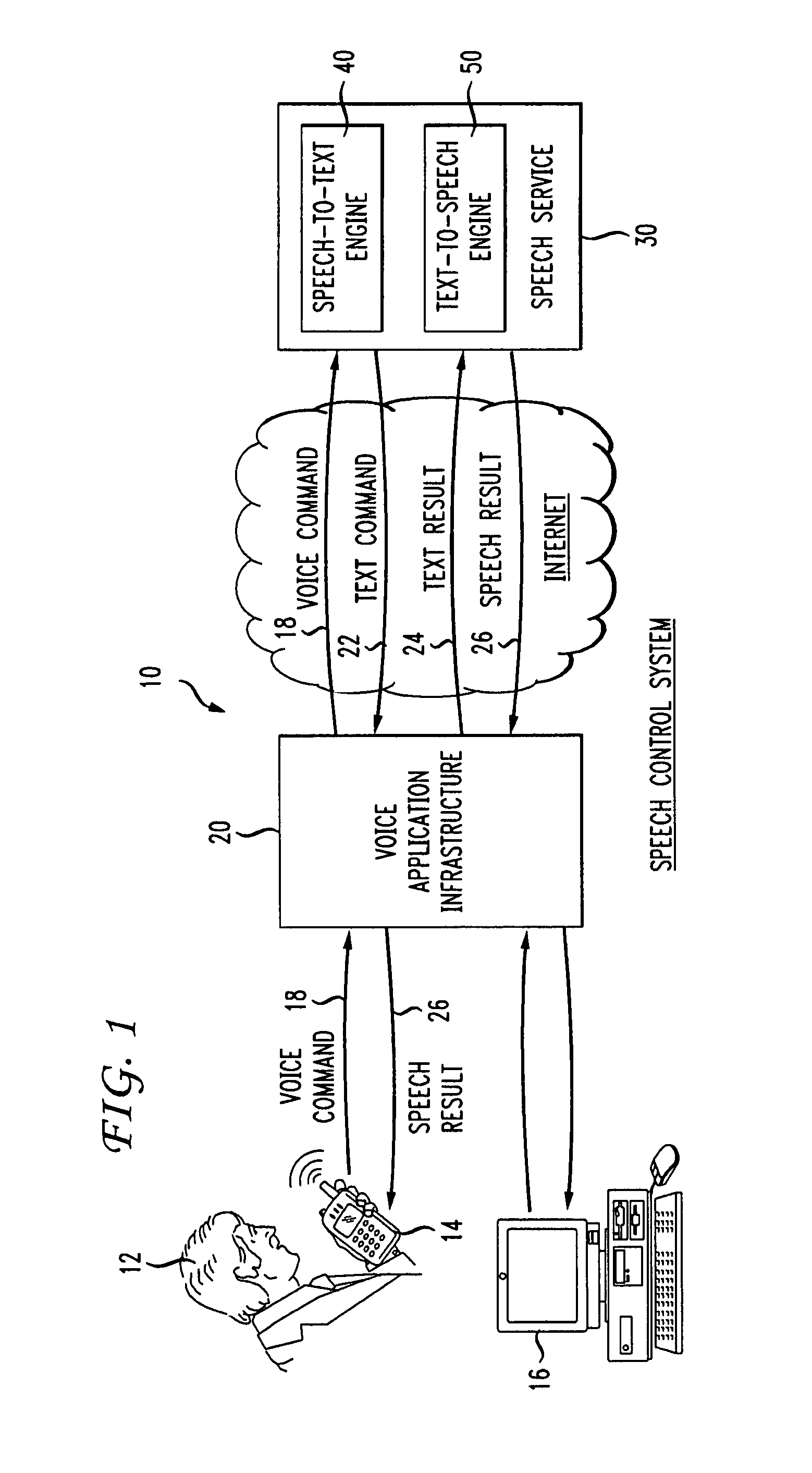

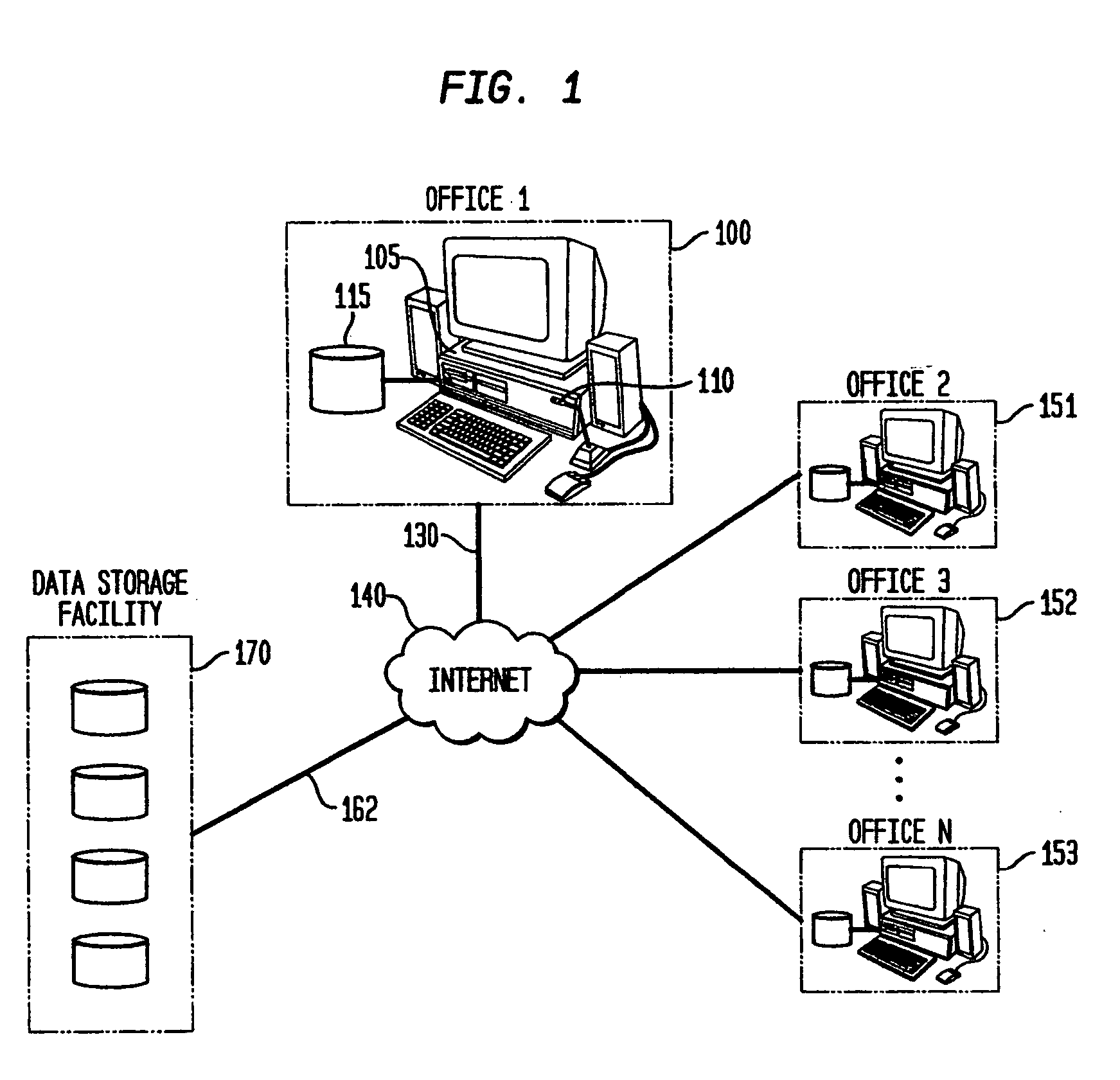

Speech controlled services and devices using internet

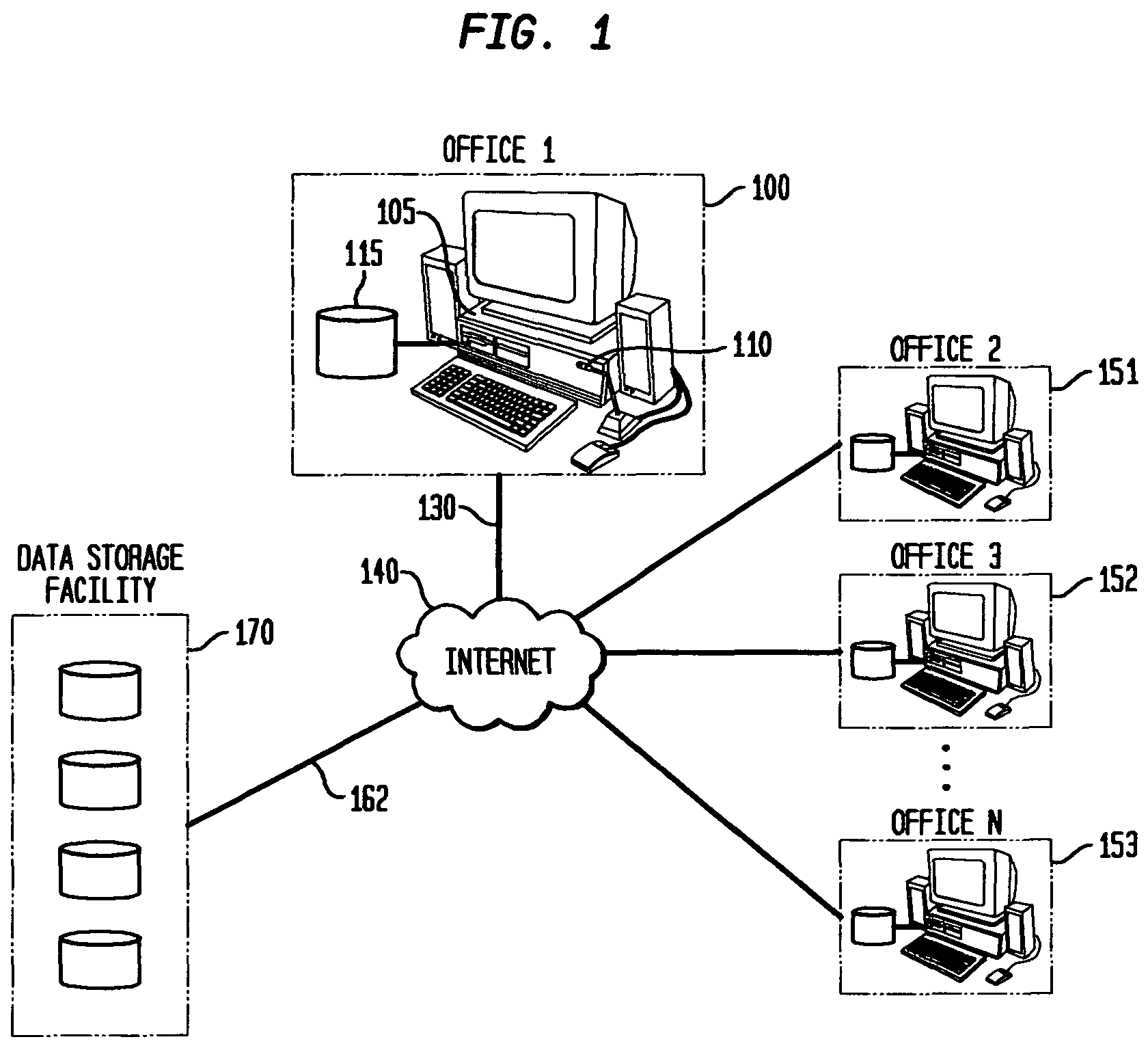

A speech service, including a speech-to-text engine and a text-to-speech engine, creates and maintains user profiles at a central location accessible over the Internet. A user connects to a software application over a mobile telephone and delivers a voice command. The speech service transcribes the voice command into a text command for the software application. The software application performs a service desired by the user and delivers a text result to the speech service that is converted into a speech result that is delivered to the user. A user speaks to a hardware device to perform a function. The hardware device sends the speech to the speech service over the Internet that transcribes the speech into a text command that is sent over the Internet to a device service provider. The device service provider maps the text command into a device command that is then sent back over the Internet to the hardware device to perform the function. A remote hardware device can be controlled using the software application.

Owner:FONEWEB

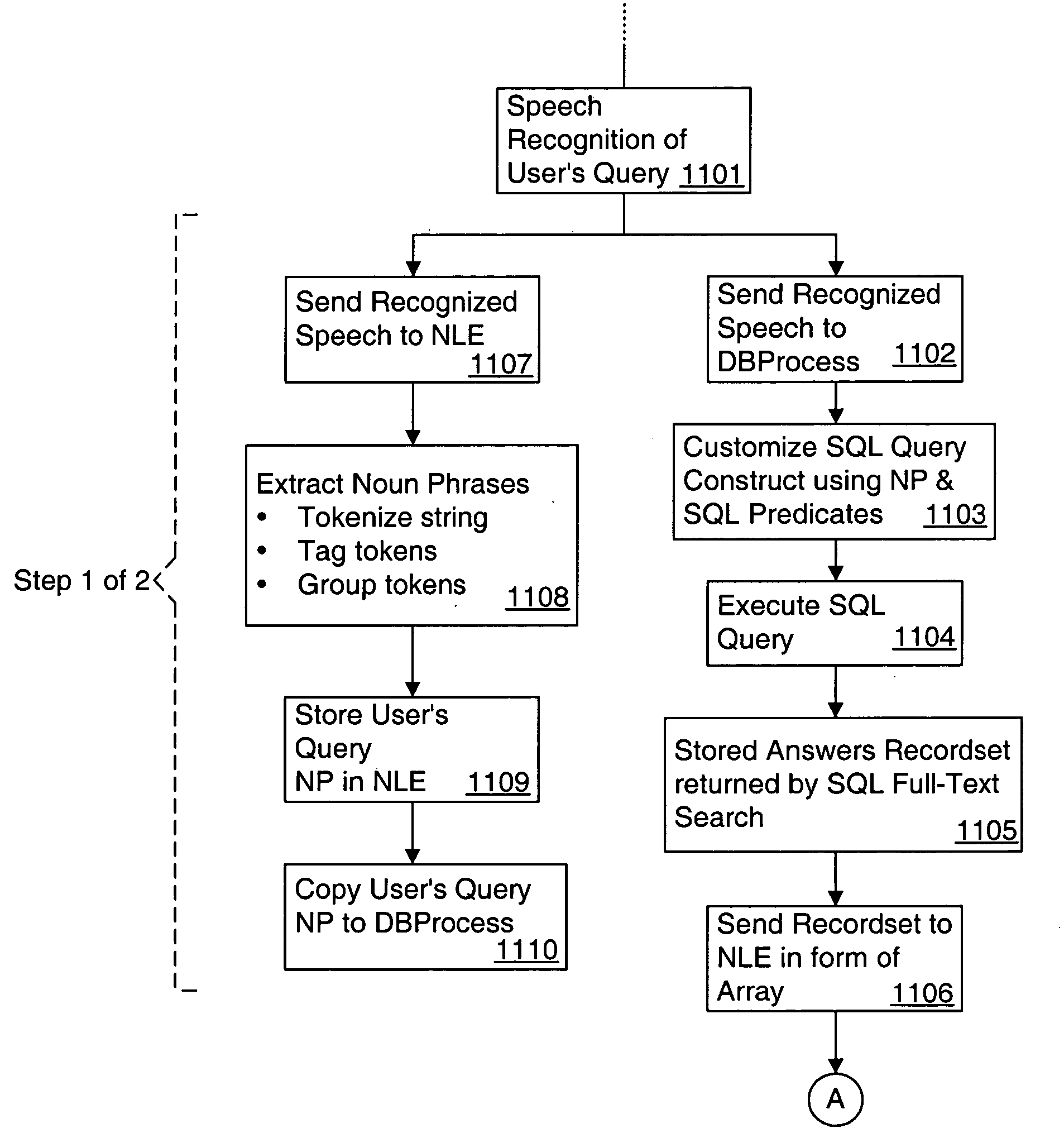

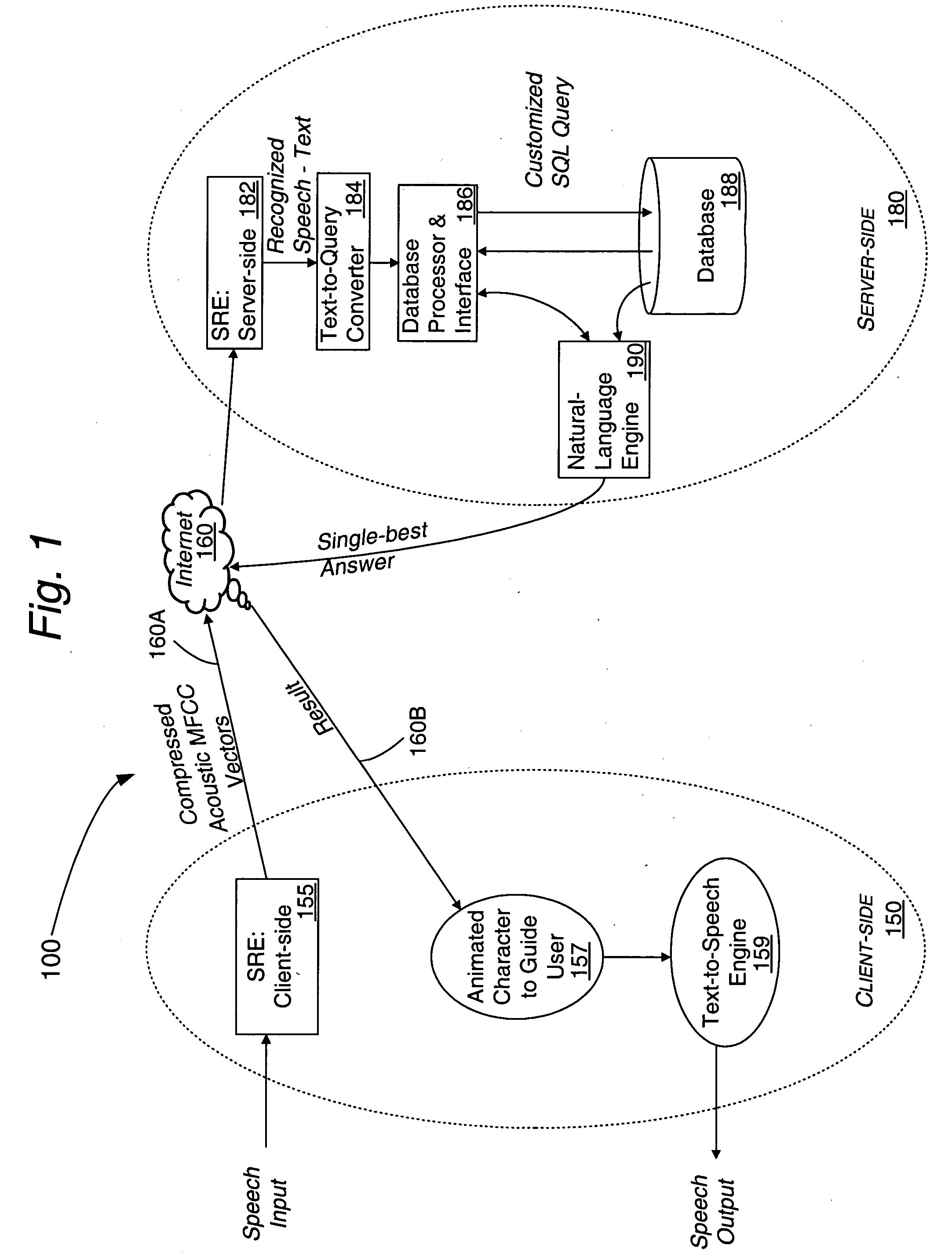

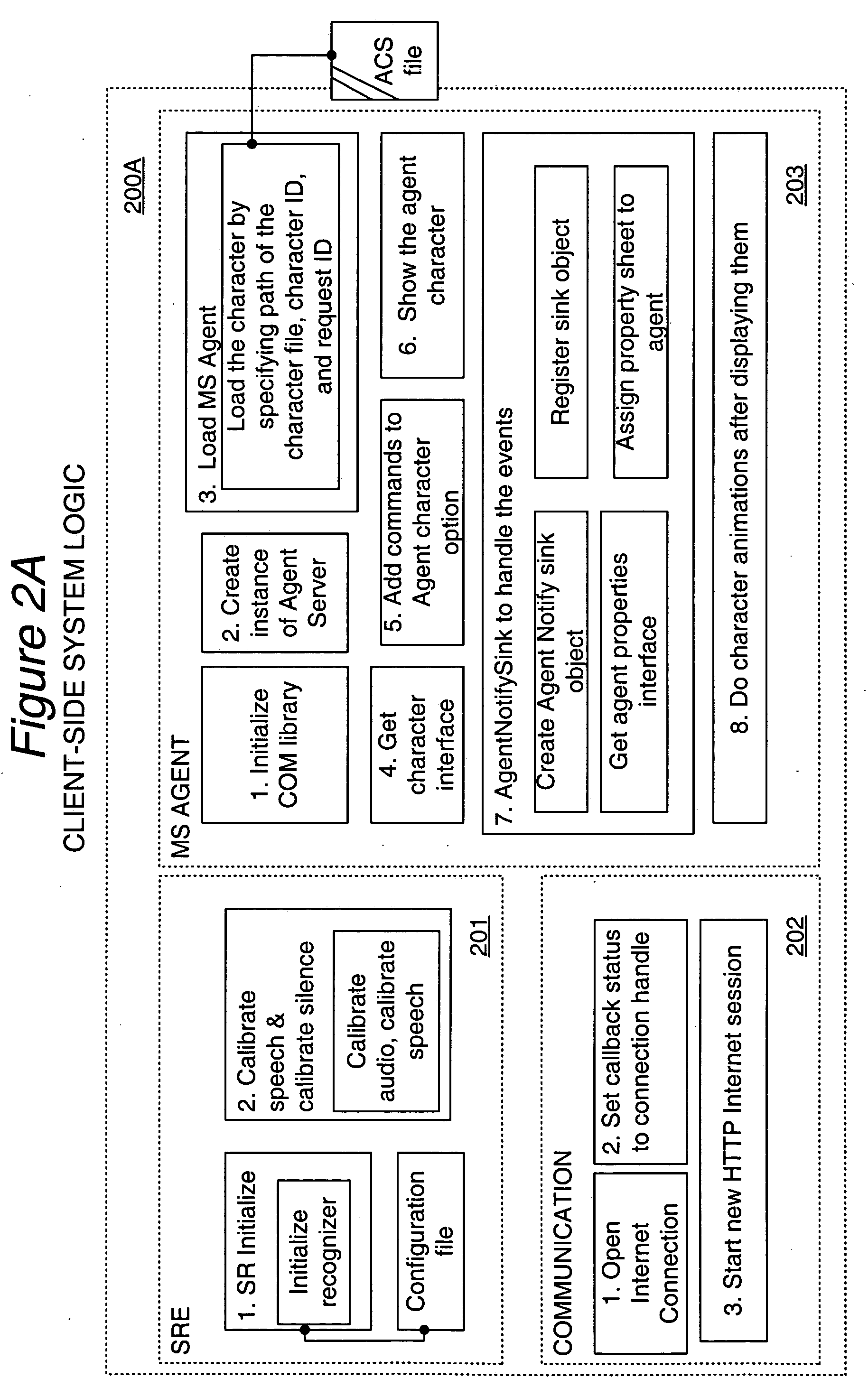

Distributed real time speech recognition system

Inactive | US20050080625A1 | Facilitates query recognition | Accurate best response | Natural language translation | Data processing applications | Full text search | Time system

A real-time system incorporating speech recognition and linguistic processing for recognizing a spoken query by a user and distributed between client and server, is disclosed. The system accepts user's queries in the form of speech at the client where minimal processing extracts a sufficient number of acoustic speech vectors representing the utterance. These vectors are sent via a communications channel to the server where additional acoustic vectors are derived. Using Hidden Markov Models (HMMs), and appropriate grammars and dictionaries conditioned by the selections made by the user, the speech representing the user's query is fully decoded into text (or some other suitable form) at the server. This text corresponding to the user's query is then simultaneously sent to a natural language engine and a database processor where optimized SQL statements are constructed for a full-text search from a database for a recordset of several stored questions that best matches the user's query. Further processing in the natural language engine narrows the search to a single stored question. The answer corresponding to this single stored question is next retrieved from the file path and sent to the client in compressed form. At the client, the answer to the user's query is articulated to the user using a text-to-speech engine in his or her native natural language. The system requires no training and can operate in several natural languages.

Owner:NUANCE COMM INC

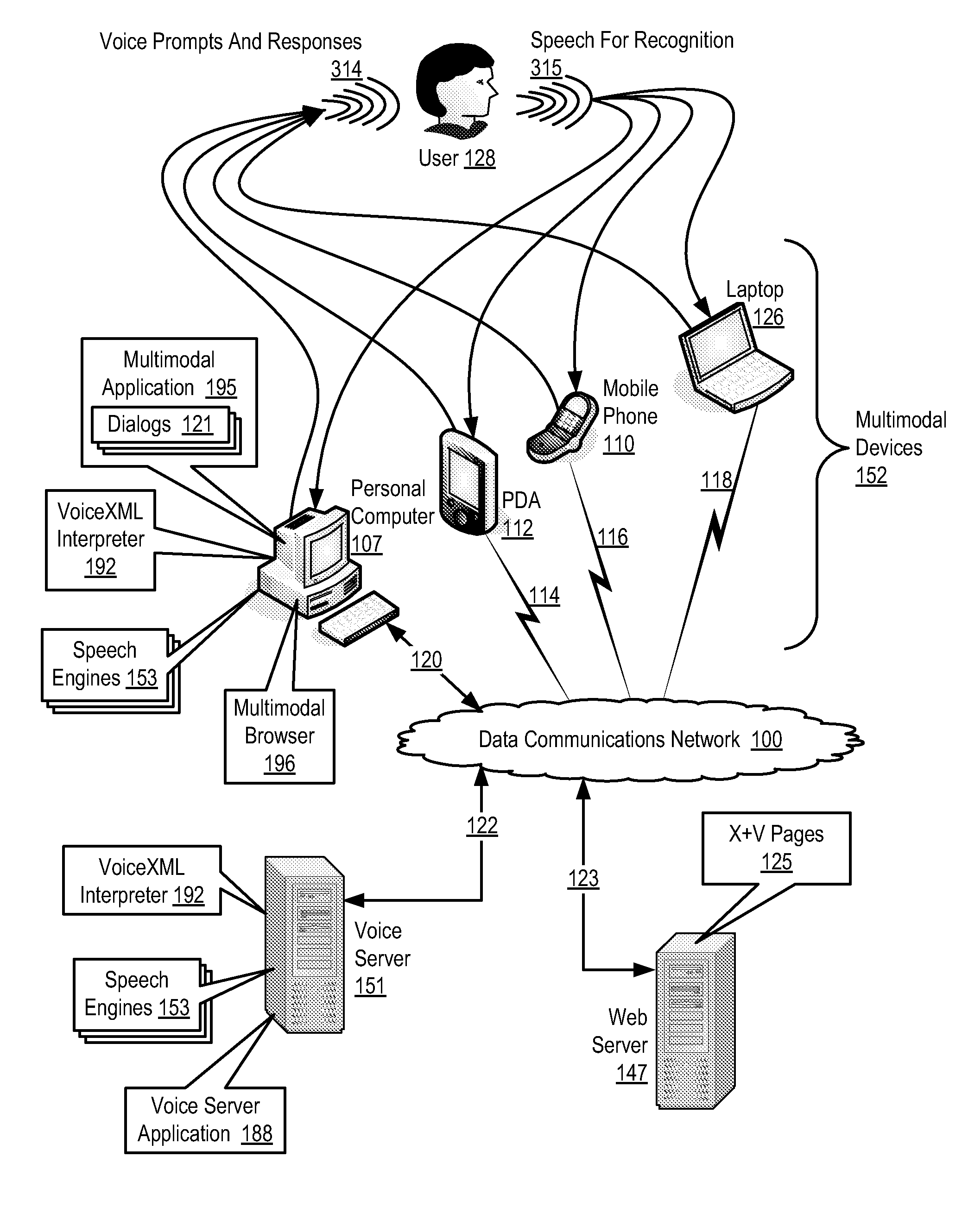

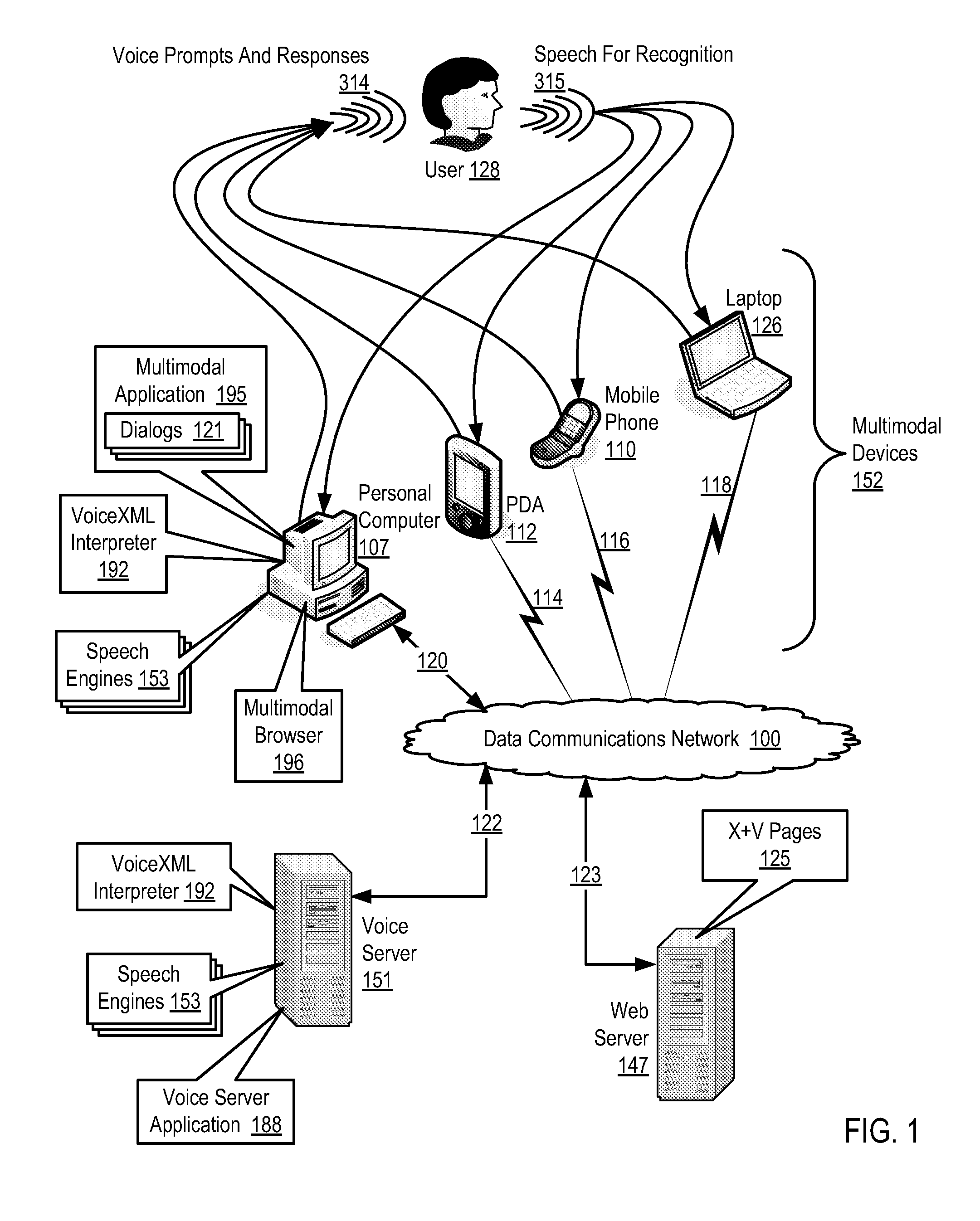

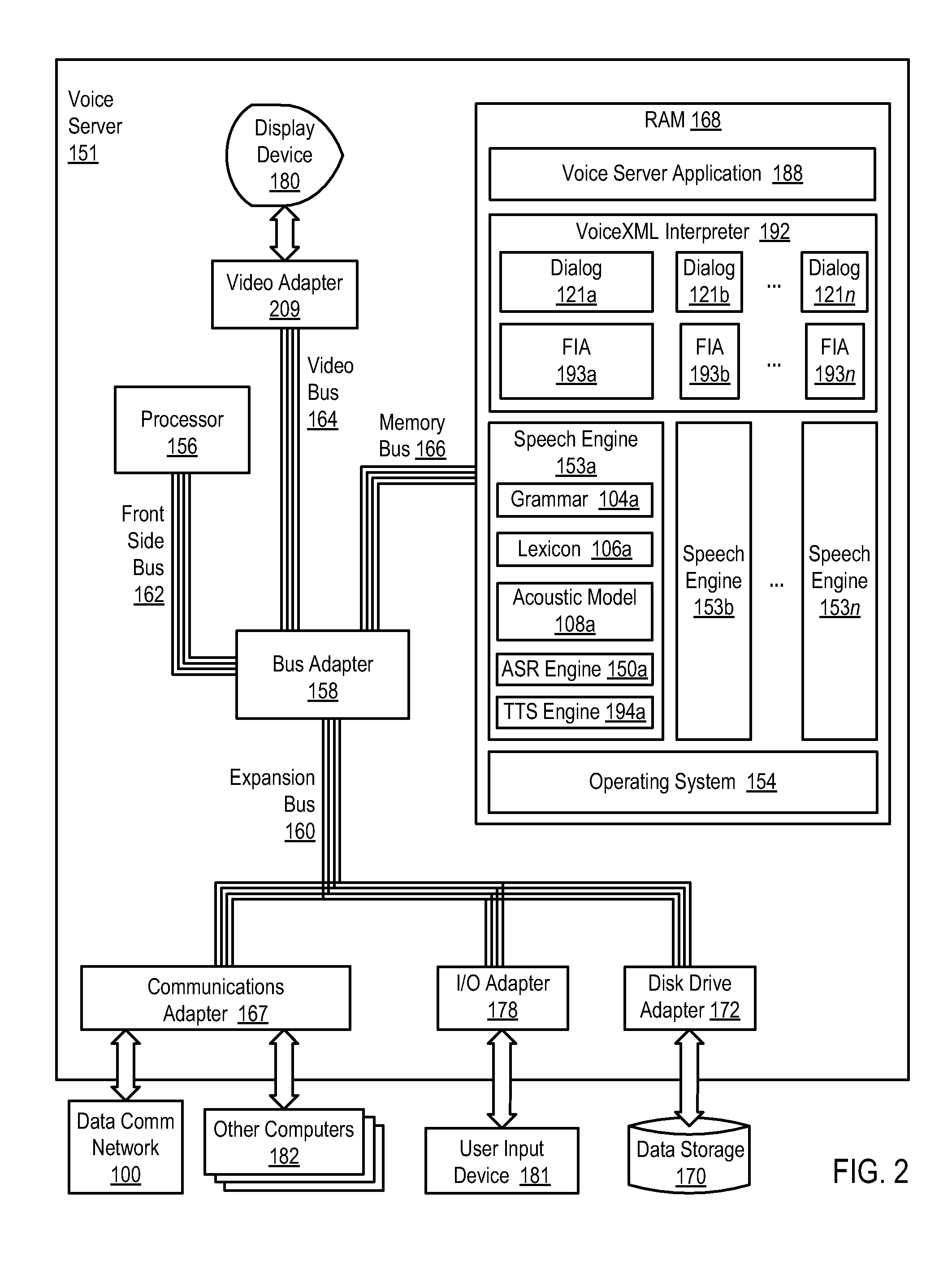

Supporting Multi-Lingual User Interaction With A Multimodal Application

Methods, apparatus, and products are disclosed for supporting multi-lingual user interaction with a multimodal application, the application including a plurality of VoiceXML dialogs, each dialog characterized by a particular language, supporting multi-lingual user interaction implemented with a plurality of speech engines, each speech engine having a grammar and characterized by a language corresponding to one of the dialogs, with the application operating on a multimodal device supporting multiple modes of interaction including a voice mode and one or more non-voice modes, the application operatively coupled to the speech engines through a VoiceXML interpreter, the VoiceXML interpreter: receiving a voice utterance from a user; determining in parallel, using the speech engines, recognition results for each dialog in dependence upon the voice utterance and the grammar for each speech engine; administering the recognition results for the dialogs; and selecting a language for user interaction in dependence upon the administered recognition results.

Owner:NUANCE COMM INC
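The parallel recognition and language selection described above might look roughly like the sketch below. It is not the VoiceXML interpreter from the abstract: the "engines" are toy stand-ins that score a text string against a small vocabulary, and `make_toy_engine` and `select_language` are hypothetical names.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Set, Tuple

# A recognizer maps an utterance to (recognized text, confidence in [0, 1]).
Recognizer = Callable[[str], Tuple[str, float]]


def make_toy_engine(vocabulary: Set[str]) -> Recognizer:
    """Toy engine: confidence is the share of words found in its grammar."""
    def recognize(utterance: str) -> Tuple[str, float]:
        words = utterance.lower().split()
        hits = sum(1 for word in words if word in vocabulary)
        confidence = hits / len(words) if words else 0.0
        return utterance, confidence
    return recognize


def select_language(utterance: str, engines: Dict[str, Recognizer]):
    """Run every engine in parallel and pick the most confident language."""
    with ThreadPoolExecutor() as pool:
        futures = {lang: pool.submit(engine, utterance)
                   for lang, engine in engines.items()}
        results = {lang: future.result() for lang, future in futures.items()}
    best = max(results, key=lambda lang: results[lang][1])
    return best, results


engines = {
    "en": make_toy_engine({"open", "the", "door"}),
    "es": make_toy_engine({"abre", "la", "puerta"}),
}
language, results = select_language("abre la puerta", engines)
```

Selecting by highest confidence is one simple way to "administer" the recognition results; the abstract does not say which criterion the interpreter uses.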

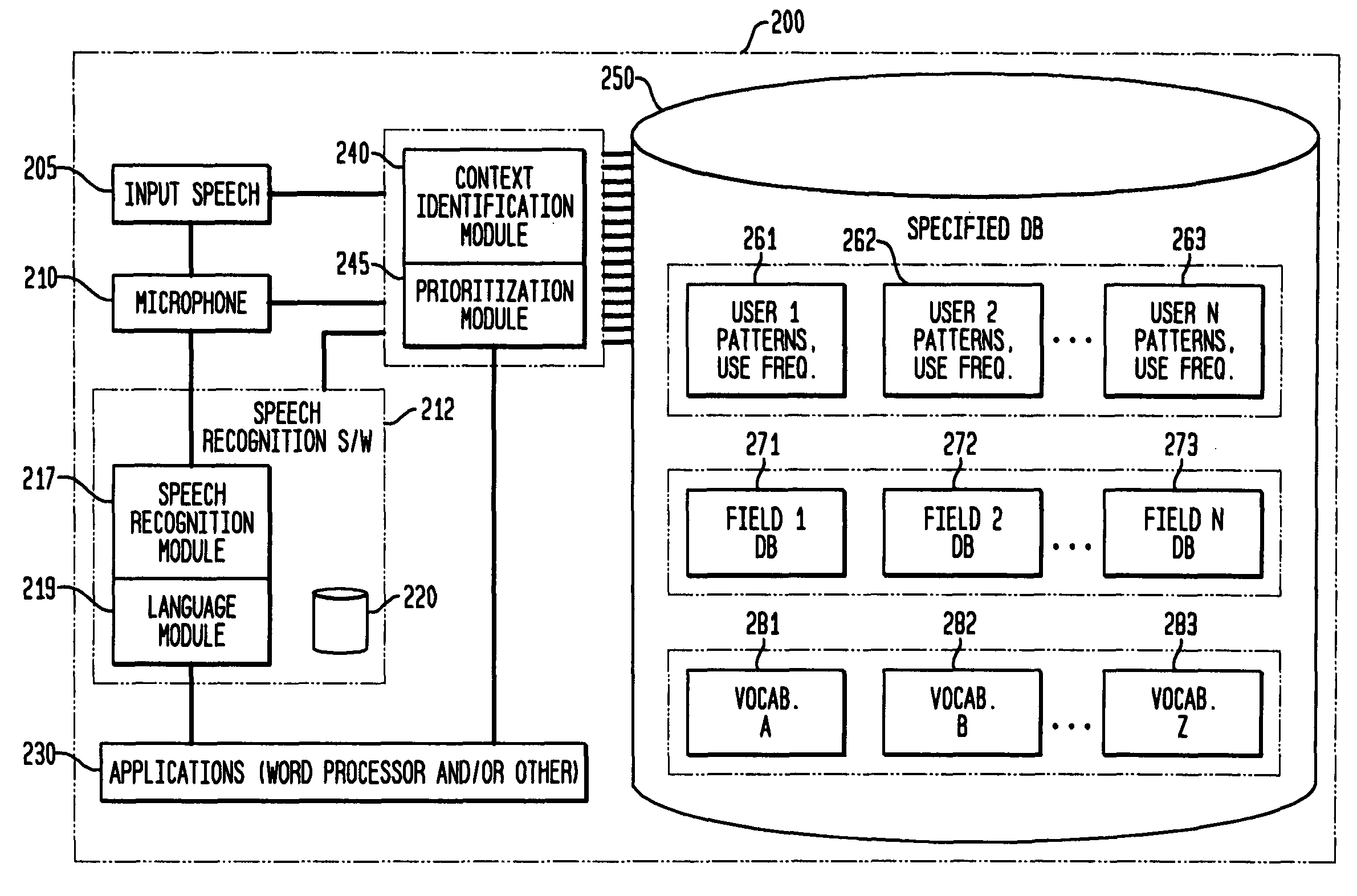

Method and apparatus for improving the transcription accuracy of speech recognition software

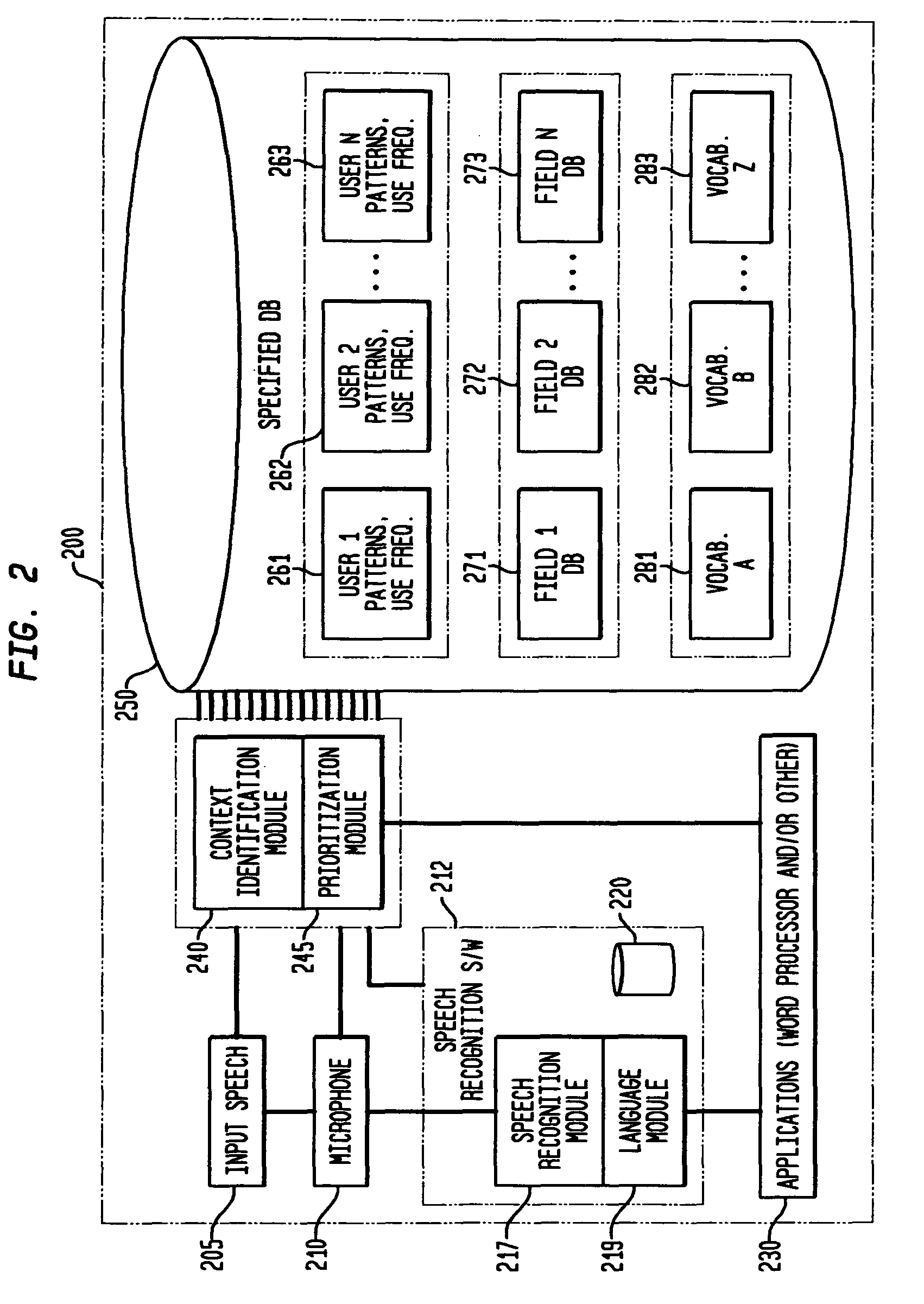

Active | US7805299B2 | Improve accuracy | Easy to identify | Speech recognition | Digital data | Speech identification

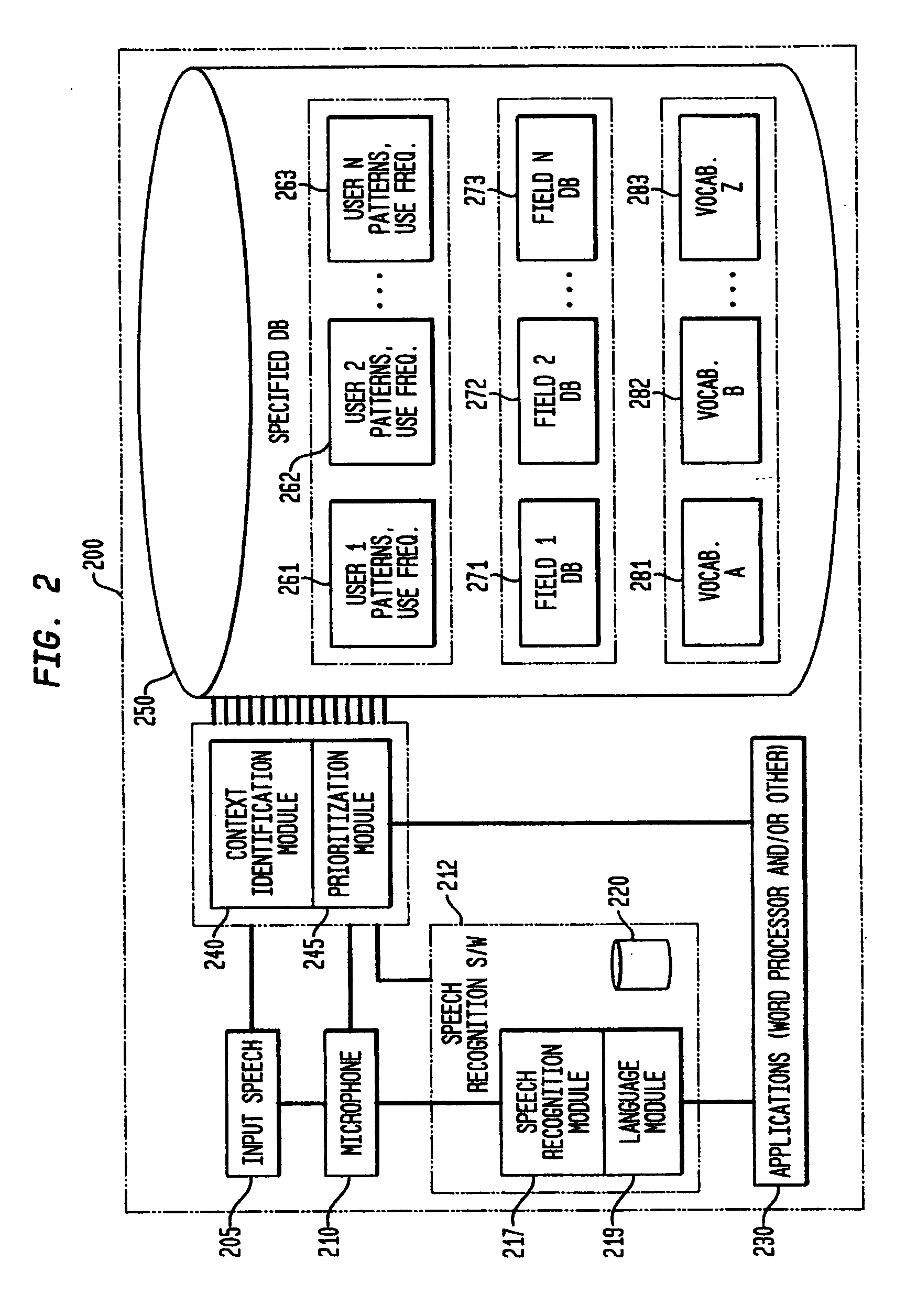

A virtual vocabulary database is provided for use with a particular user database as part of a speech recognition system. Vocabulary elements within the virtual database are imported from the user database and are tagged to include numerical data corresponding to the historical use of the vocabulary element within the user database. For each speech input, potential vocabulary element matches from the speech recognition system are provided to the virtual database software which creates virtual sub-vocabularies from the criteria according to predefined criteria templates. The software then applies vocabulary element weighting adjustments according to the virtual sub-vocabulary weightings and applies the adjustment to the default weighting provided by the speech recognition system. The modified weightings are returned with the associated vocabulary elements to the speech engine for selection of an appropriate match to the input speech.

Owner:COIFMAN ROBERT E
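As a rough illustration of the weighting adjustment, the sketch below boosts each candidate's default weight by its share of historical use. The multiplicative formula, the `boost` factor, and the sample words and counts are assumptions; the abstract does not specify how the adjustment is computed.

```python
from typing import Dict


def reweight(candidates: Dict[str, float],
             usage_counts: Dict[str, int],
             boost: float = 1.0) -> Dict[str, float]:
    """Scale each default weight by the candidate's share of past usage."""
    total = sum(usage_counts.get(word, 0) for word in candidates)
    if total == 0:
        # No usage history for any candidate: keep the default weights.
        return dict(candidates)
    return {
        word: weight * (1.0 + boost * usage_counts.get(word, 0) / total)
        for word, weight in candidates.items()
    }


# Default weights from the recognizer slightly favor "ilium",
# but this (hypothetical) user has dictated "ileum" far more often.
defaults = {"ileum": 0.48, "ilium": 0.52}
history = {"ileum": 30, "ilium": 2}
adjusted = reweight(defaults, history)
best_match = max(adjusted, key=adjusted.get)
```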

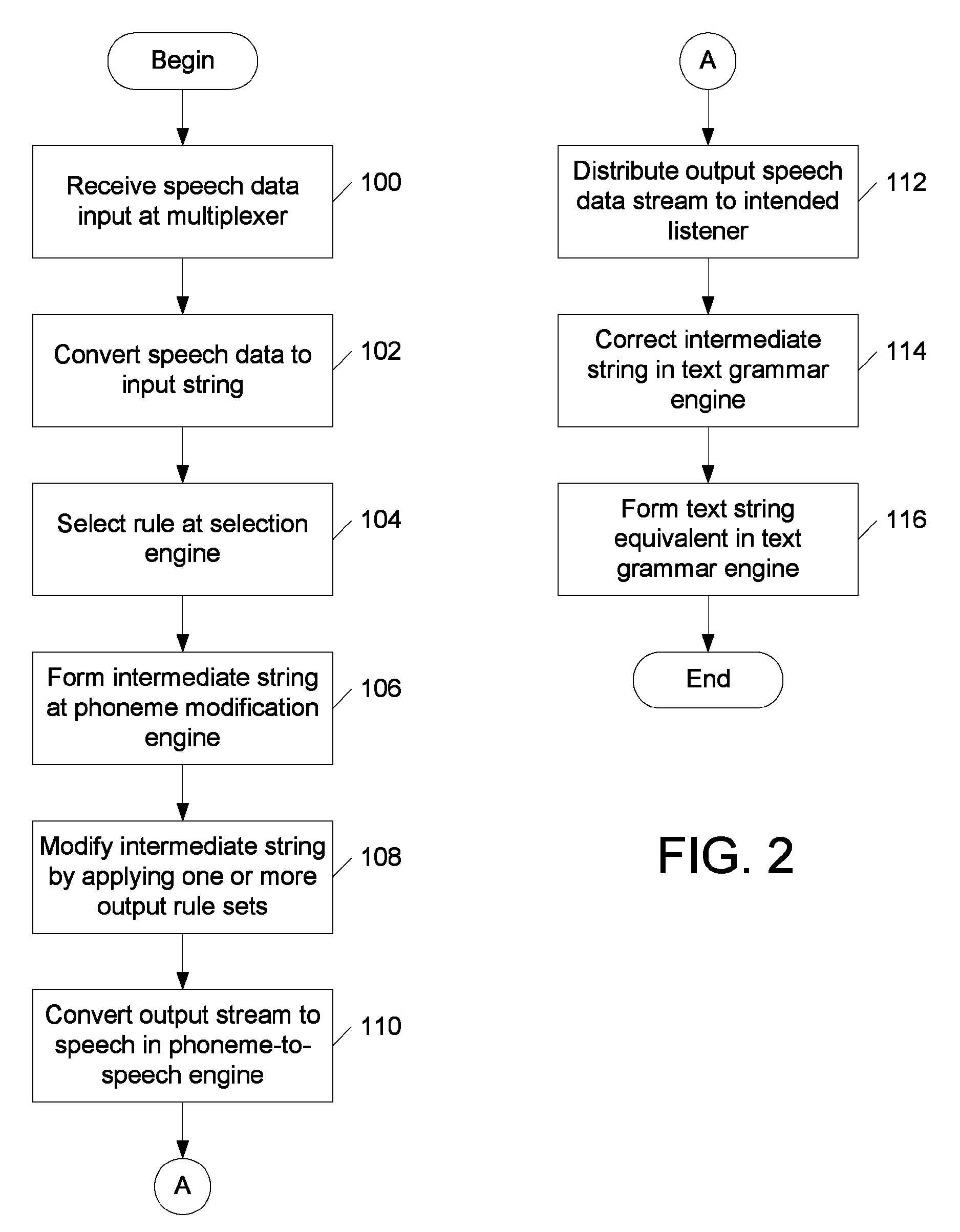

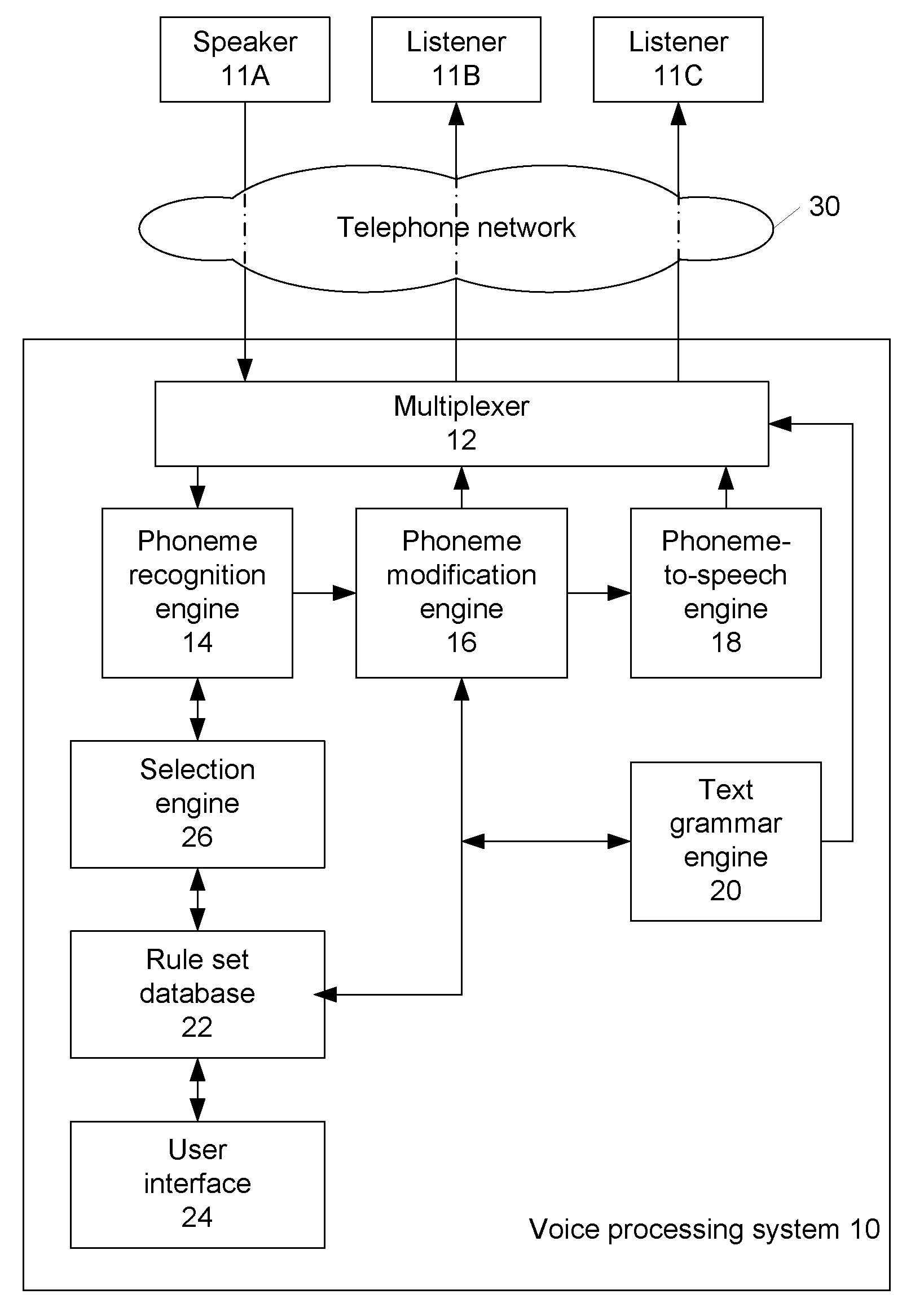

Phonetic decoding and concatentive speech synthesis

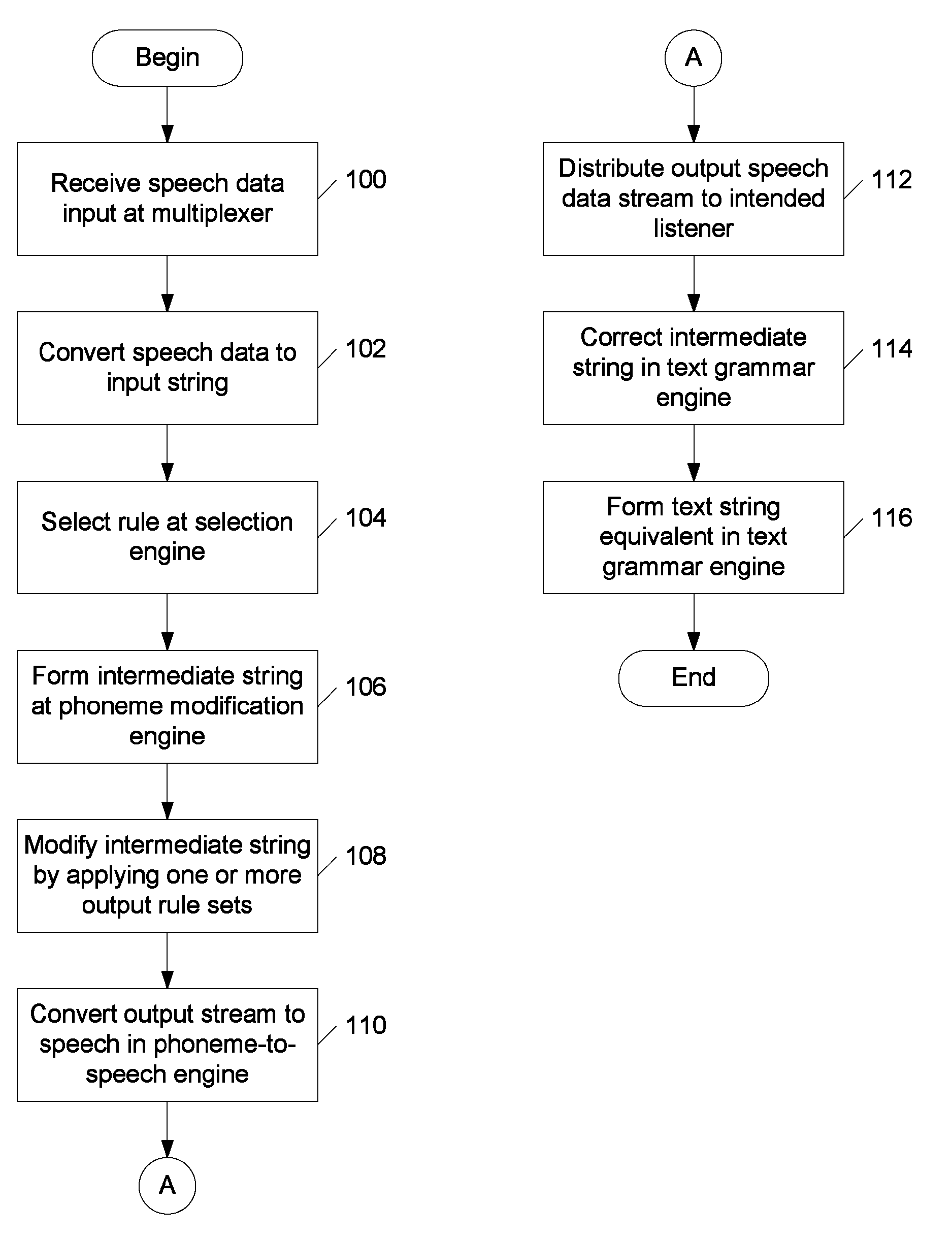

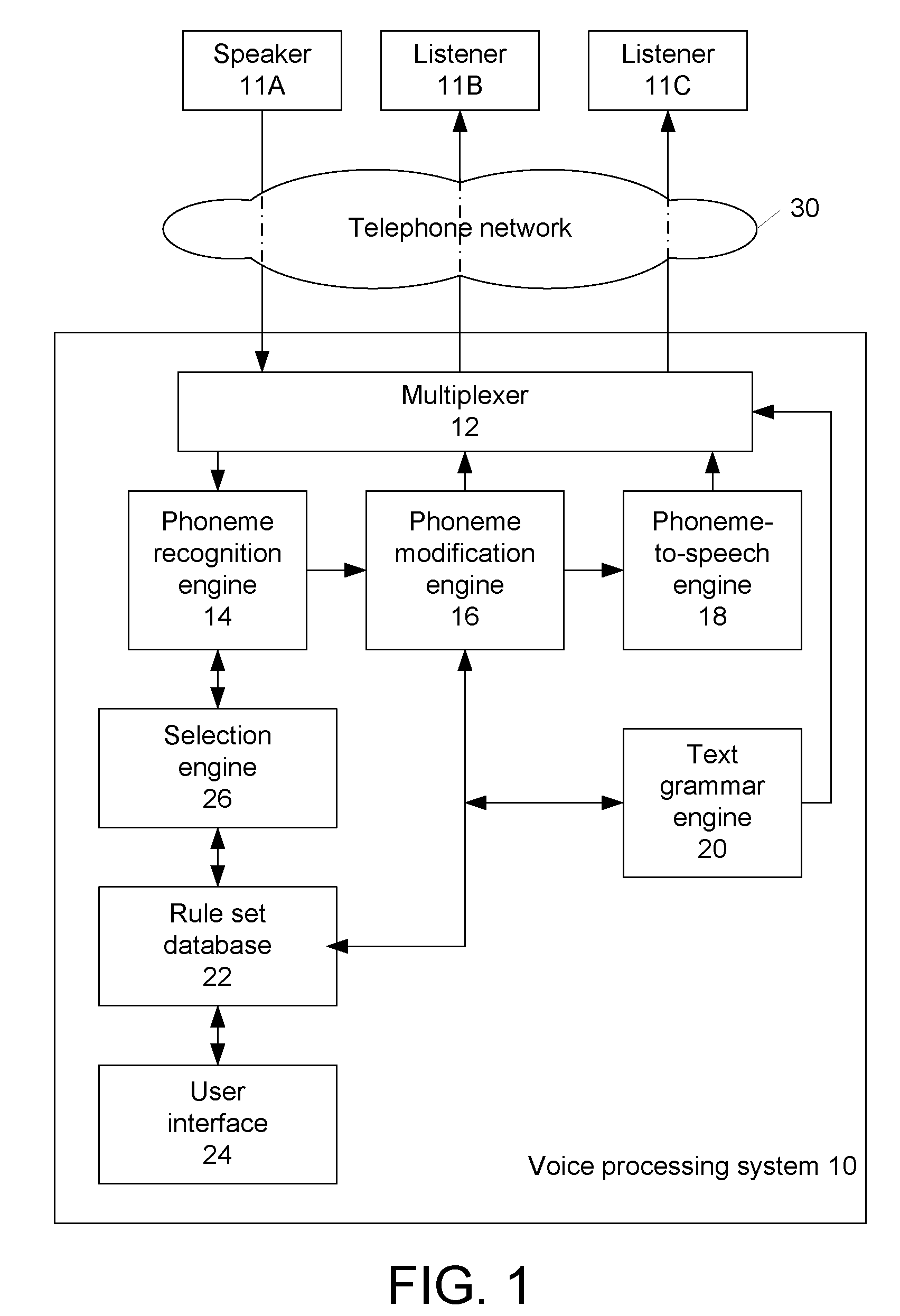

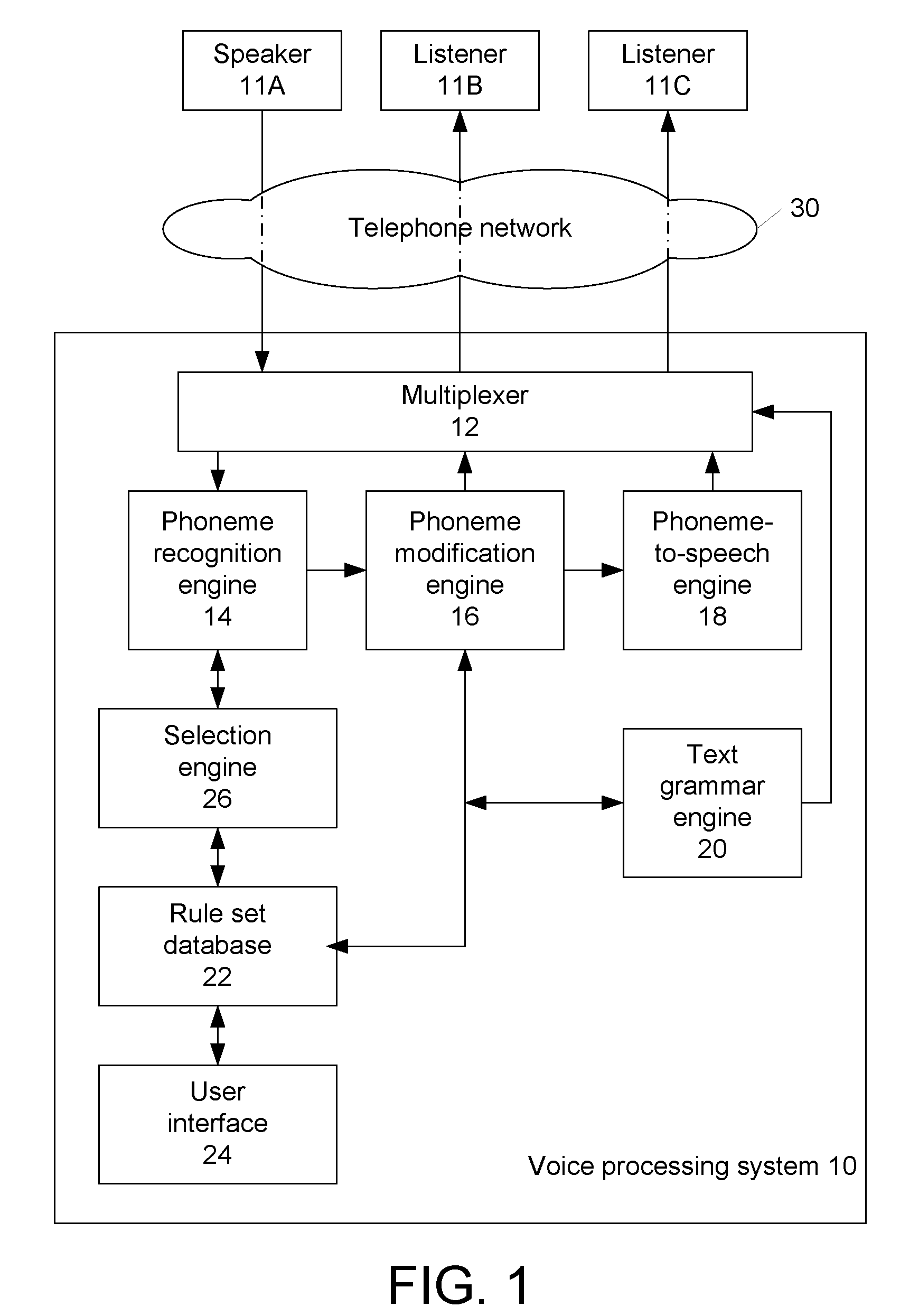

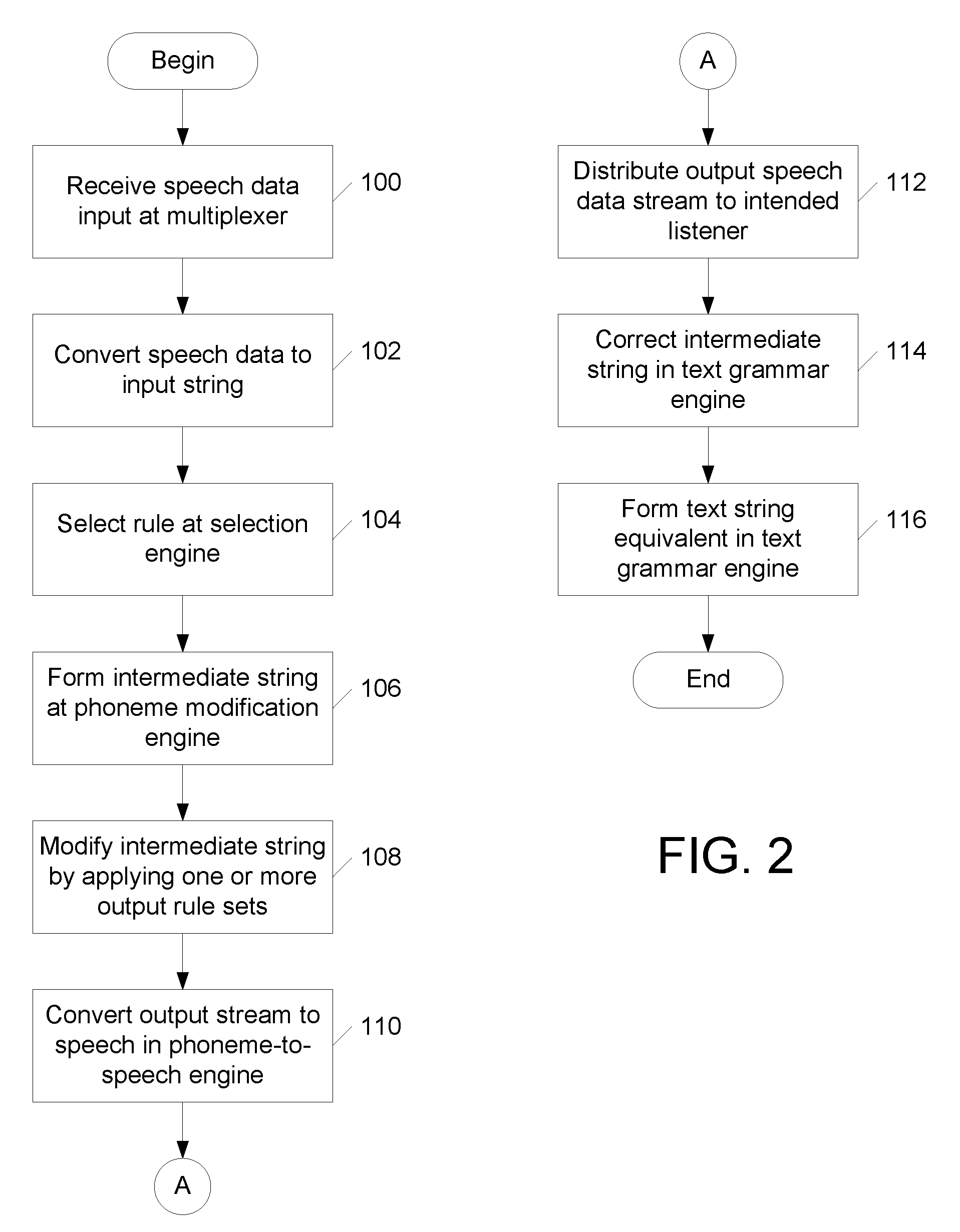

A speech processing system includes a multiplexer that receives speech data input as part of a conversation turn in a conversation session between two or more users where one user is a speaker and each of the other users is a listener in each conversation turn. A speech recognizing engine converts the speech data to an input string of acoustic data while a speech modifier forms an output string based on the input string by changing an item of acoustic data according to a rule. The system also includes a phoneme speech engine for converting the first output string of acoustic data including modified and unmodified data to speech data for output via the multiplexer to listeners during the conversation turn.

Owner:CERENCE OPERATING CO

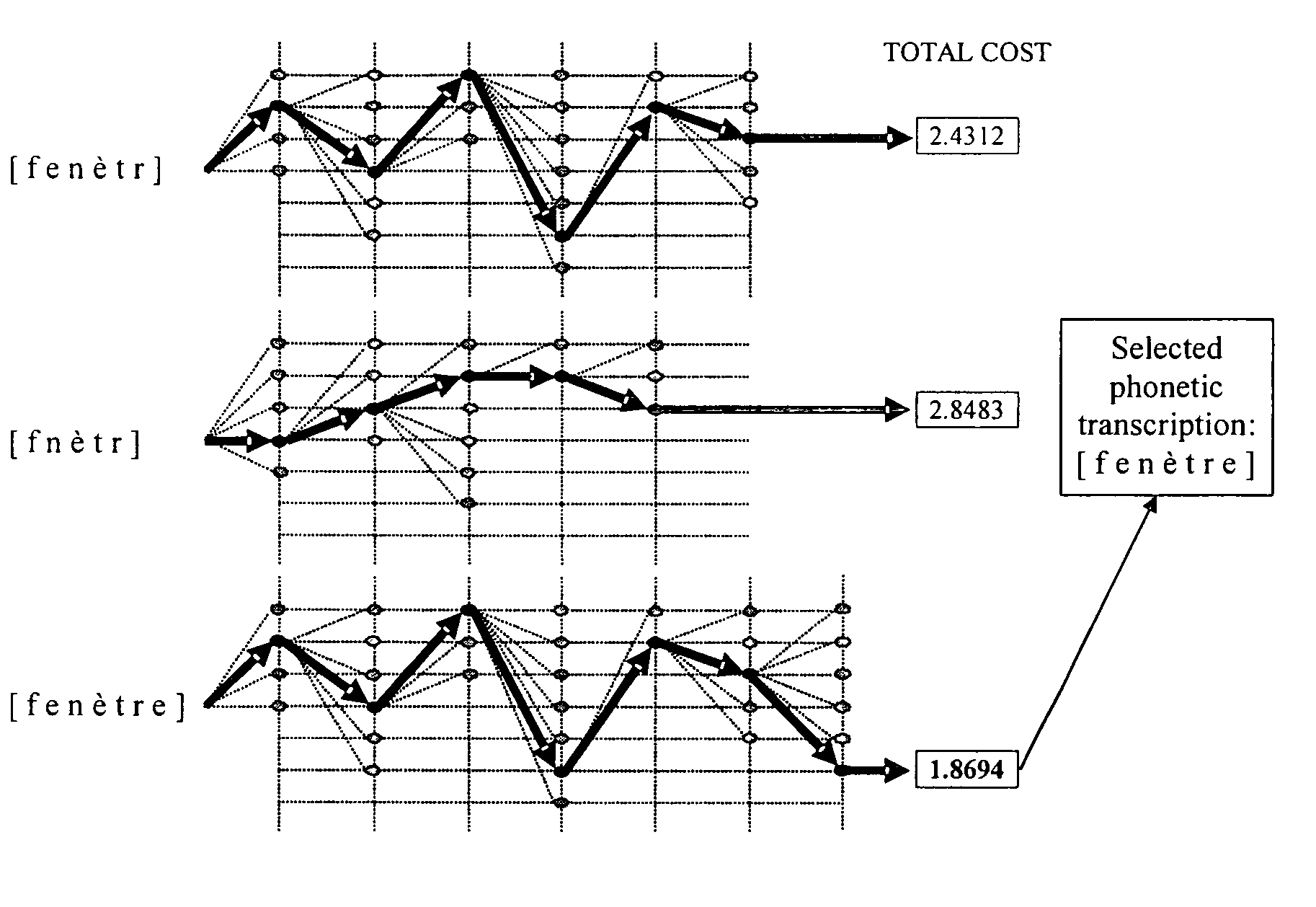

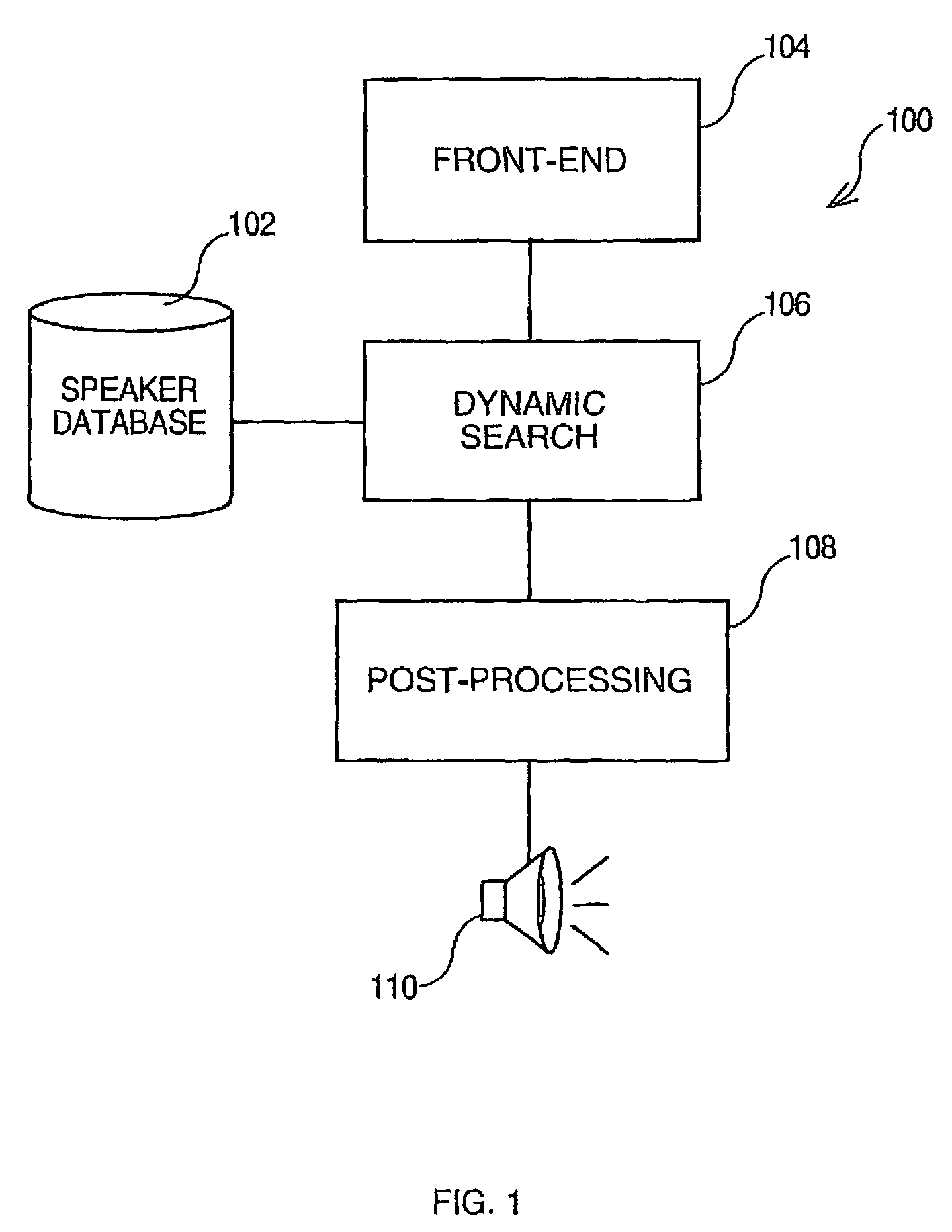

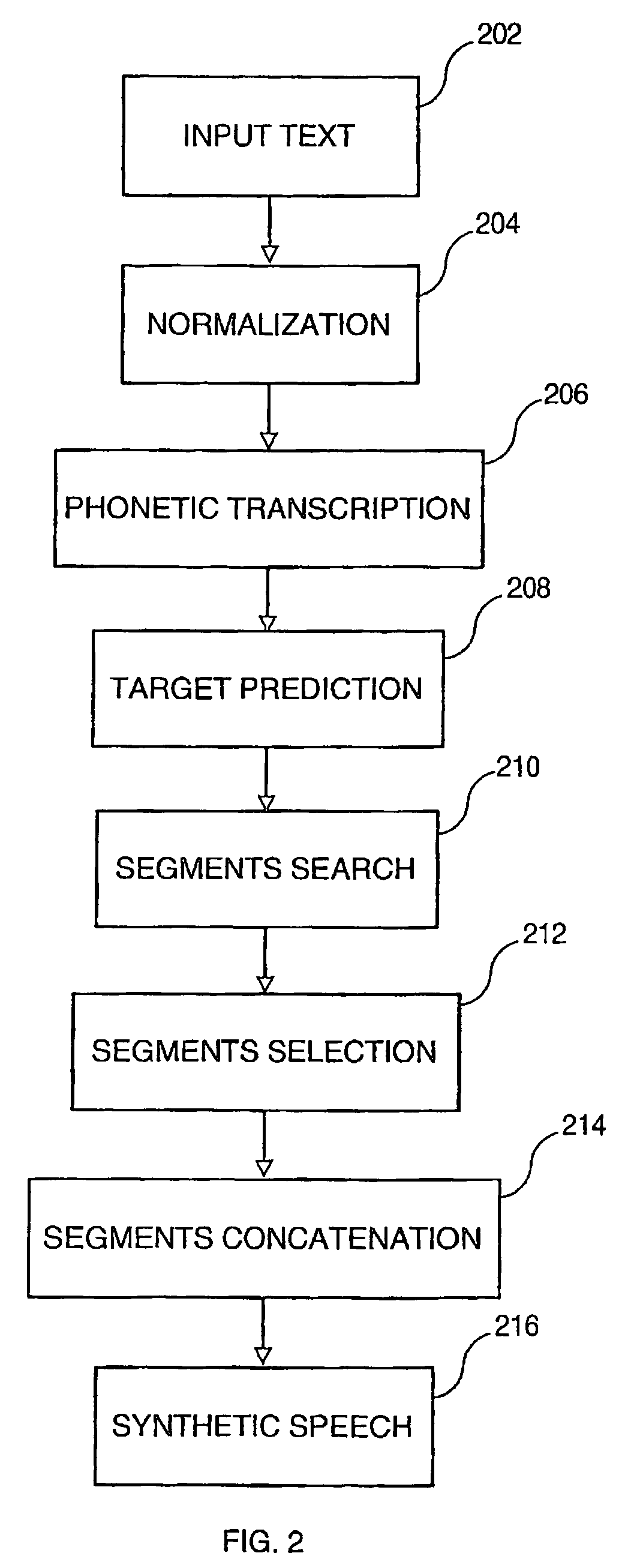

Systems and methods for selecting from multiple phonectic transcriptions for text-to-speech synthesis

Active | US7869999B2 | Quality improvement | Reduce in quantity | Speech synthesis | End system | Text to speech synthesis

A system and method for generating synthetic speech, which operates in a computer implemented Text-To-Speech system. The system comprises at least a speaker database that has been previously created from user recordings, a Front-End system to receive an input text and a Text-To-Speech engine. The Front-End system generates multiple phonetic transcriptions for each word of the input text, and the TTS engine uses a cost function to select which phonetic transcription is the more appropriate for searching the speech segments within the speaker database to be concatenated and synthesized.

Owner:CERENCE OPERATING CO
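The selection step can be sketched with a deliberately simple cost function: count the diphones in each candidate transcription that the speaker database cannot supply, then pick the cheapest candidate. This cost is an assumption made for illustration; the abstract does not describe the cost function itself, and the phoneme symbols are made up.

```python
from typing import List, Sequence, Set, Tuple

Diphone = Tuple[str, str]


def diphones(phonemes: Sequence[str]) -> List[Diphone]:
    """Adjacent phoneme pairs, the concatenation units assumed here."""
    return list(zip(phonemes, phonemes[1:]))


def cost(transcription: Sequence[str], inventory: Set[Diphone]) -> int:
    """Number of diphones missing from the speaker database inventory."""
    return sum(1 for unit in diphones(transcription) if unit not in inventory)


def pick_transcription(candidates: List[List[str]],
                       inventory: Set[Diphone]) -> List[str]:
    """Choose the candidate transcription with the lowest cost."""
    return min(candidates, key=lambda t: cost(t, inventory))


variant_a = ["t", "ah", "m", "ey", "t", "ow"]
variant_b = ["t", "ah", "m", "aa", "t", "ow"]
# Hypothetical speaker database that only covers the units of variant_a.
inventory = set(diphones(variant_a))
chosen = pick_transcription([variant_b, variant_a], inventory)
```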

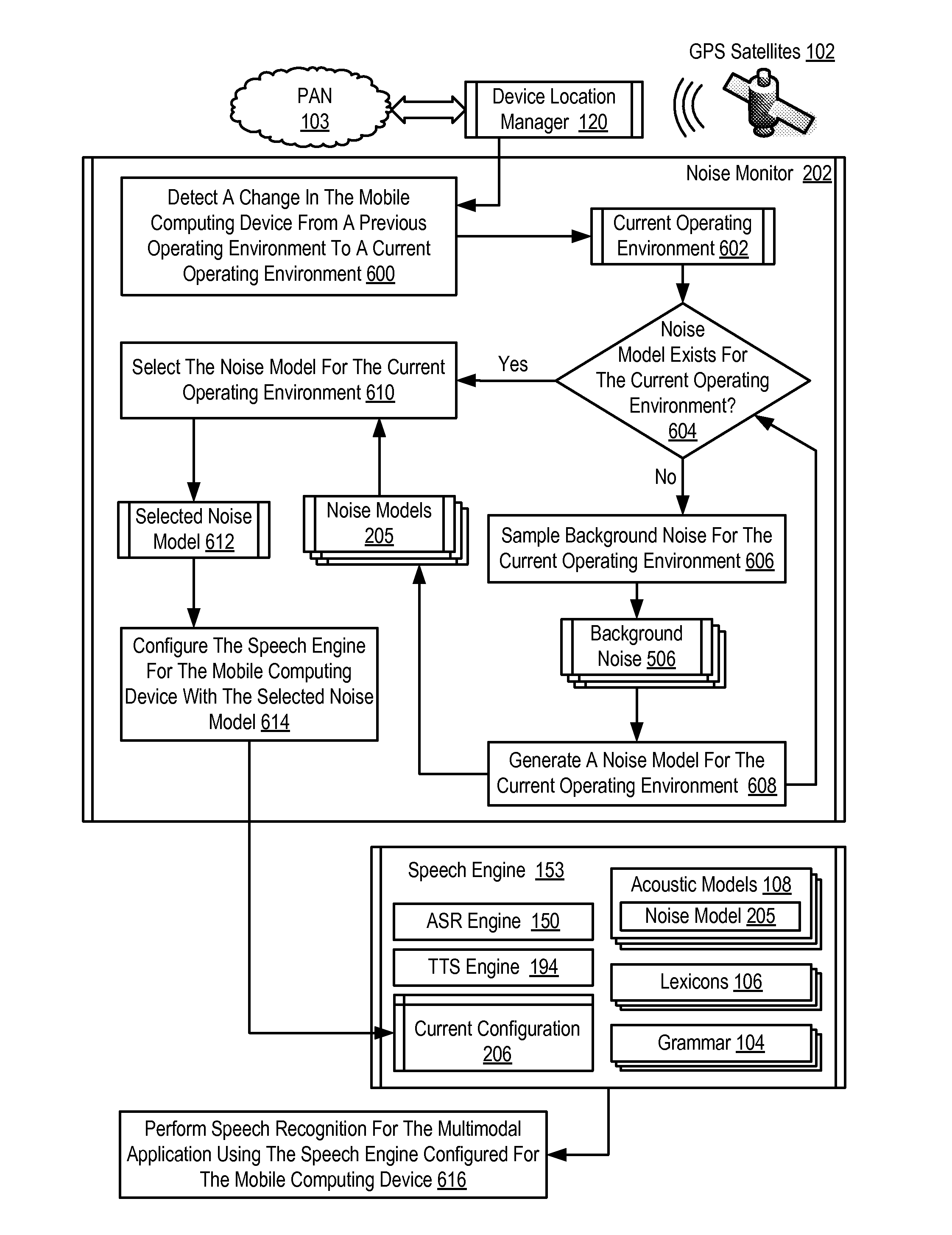

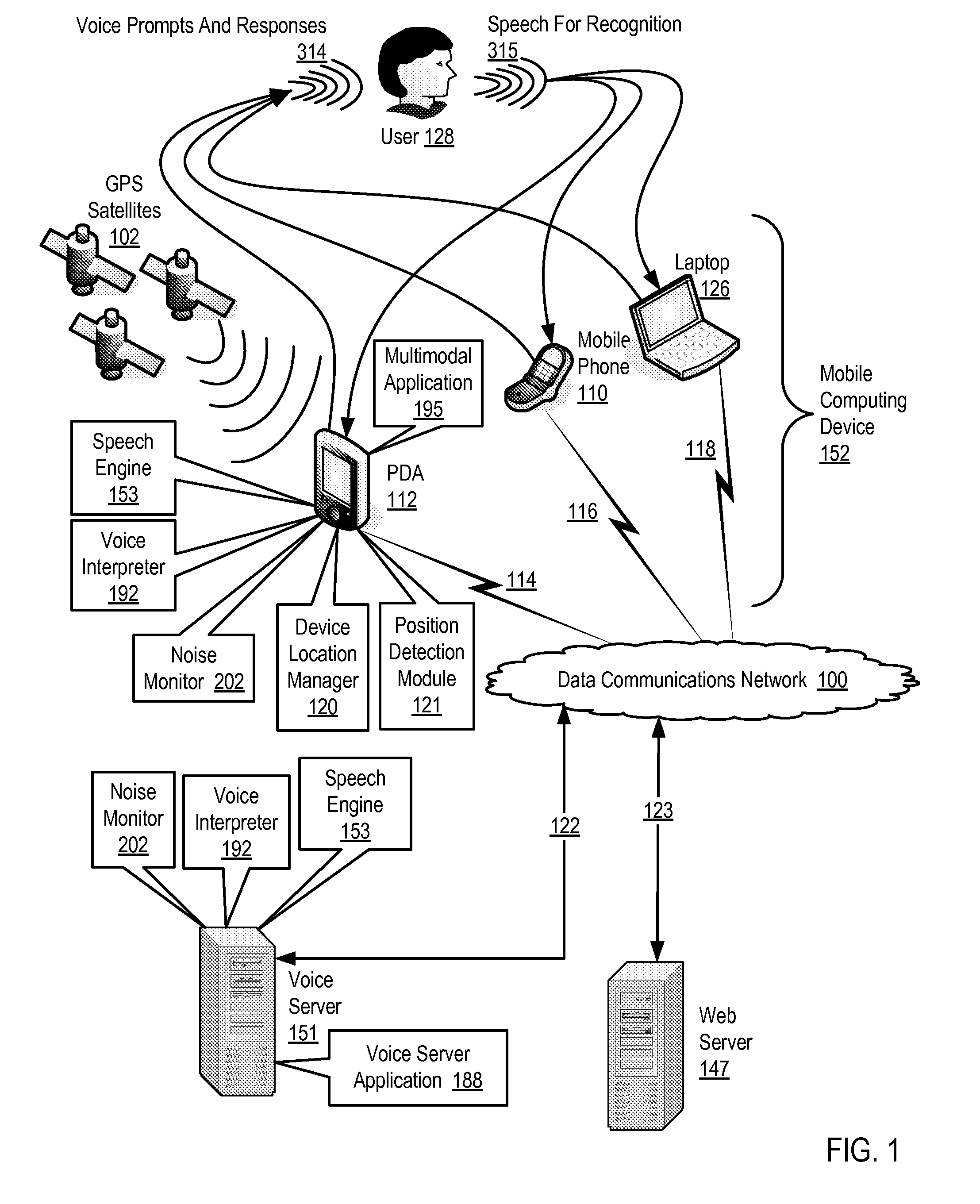

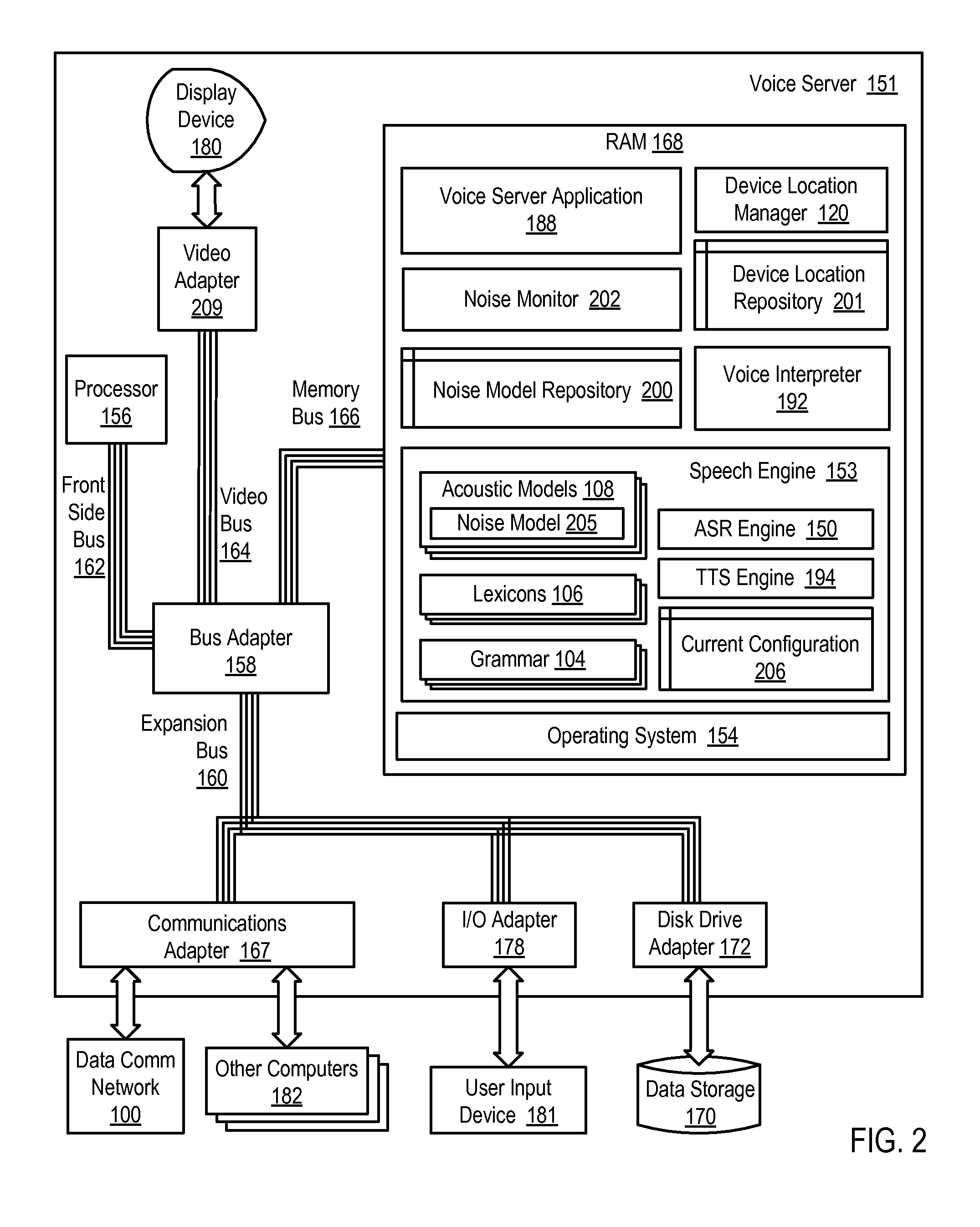

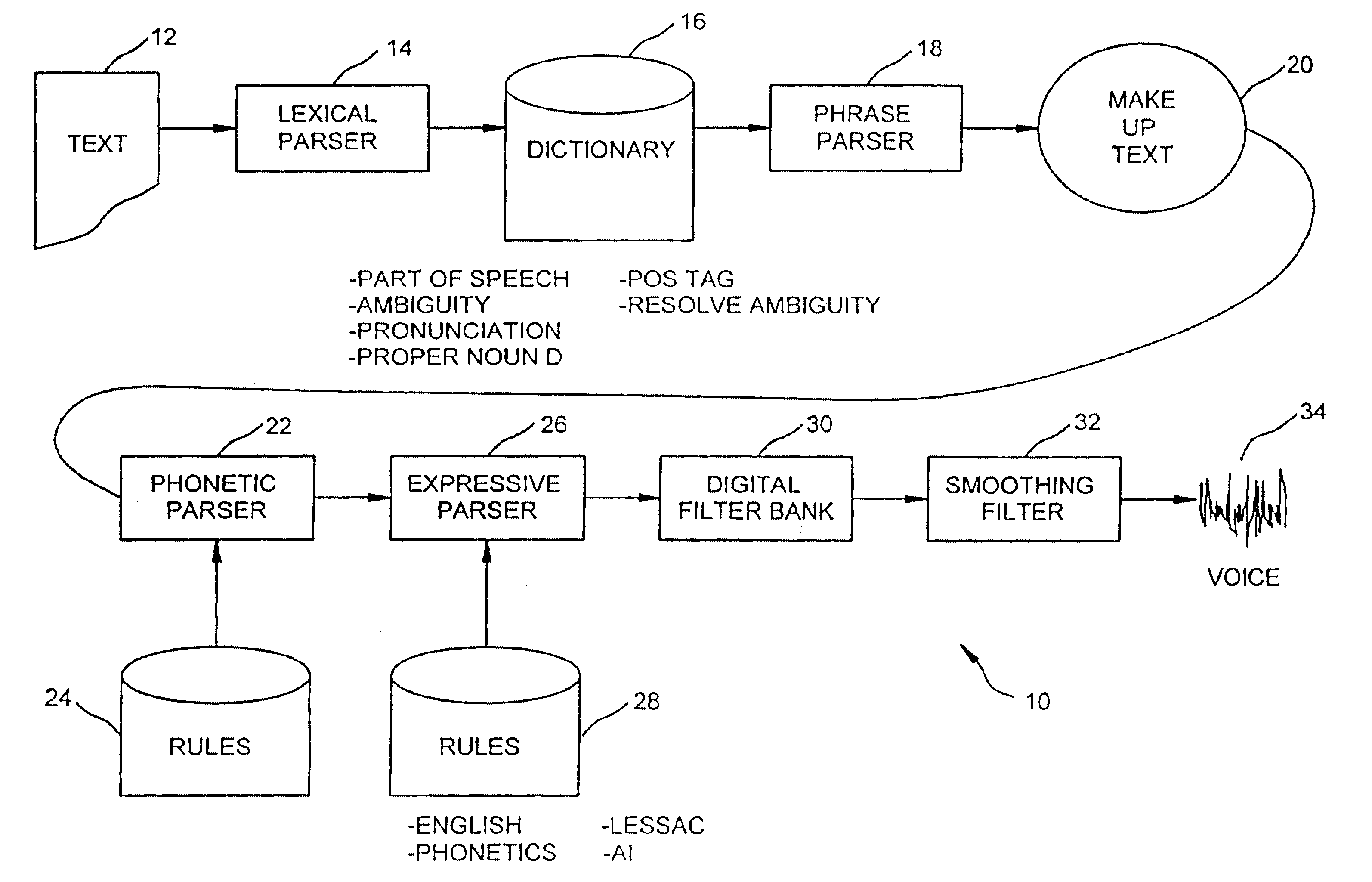

Text to speech

Inactive | US6865533B2 | Avoiding conditioned pitfall | Small size | Speech recognition | Electrical appliances | Spoken language | Speech sound

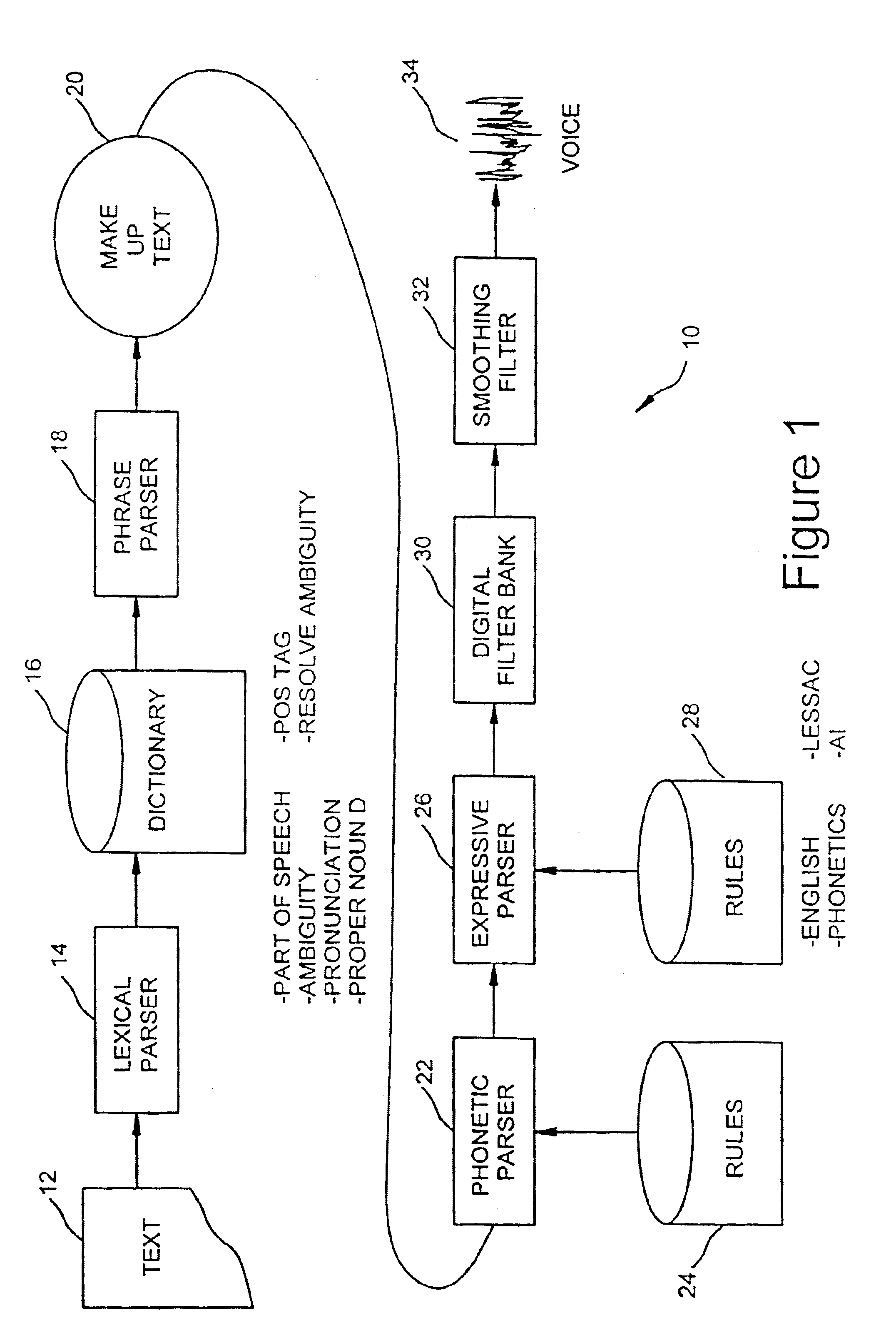

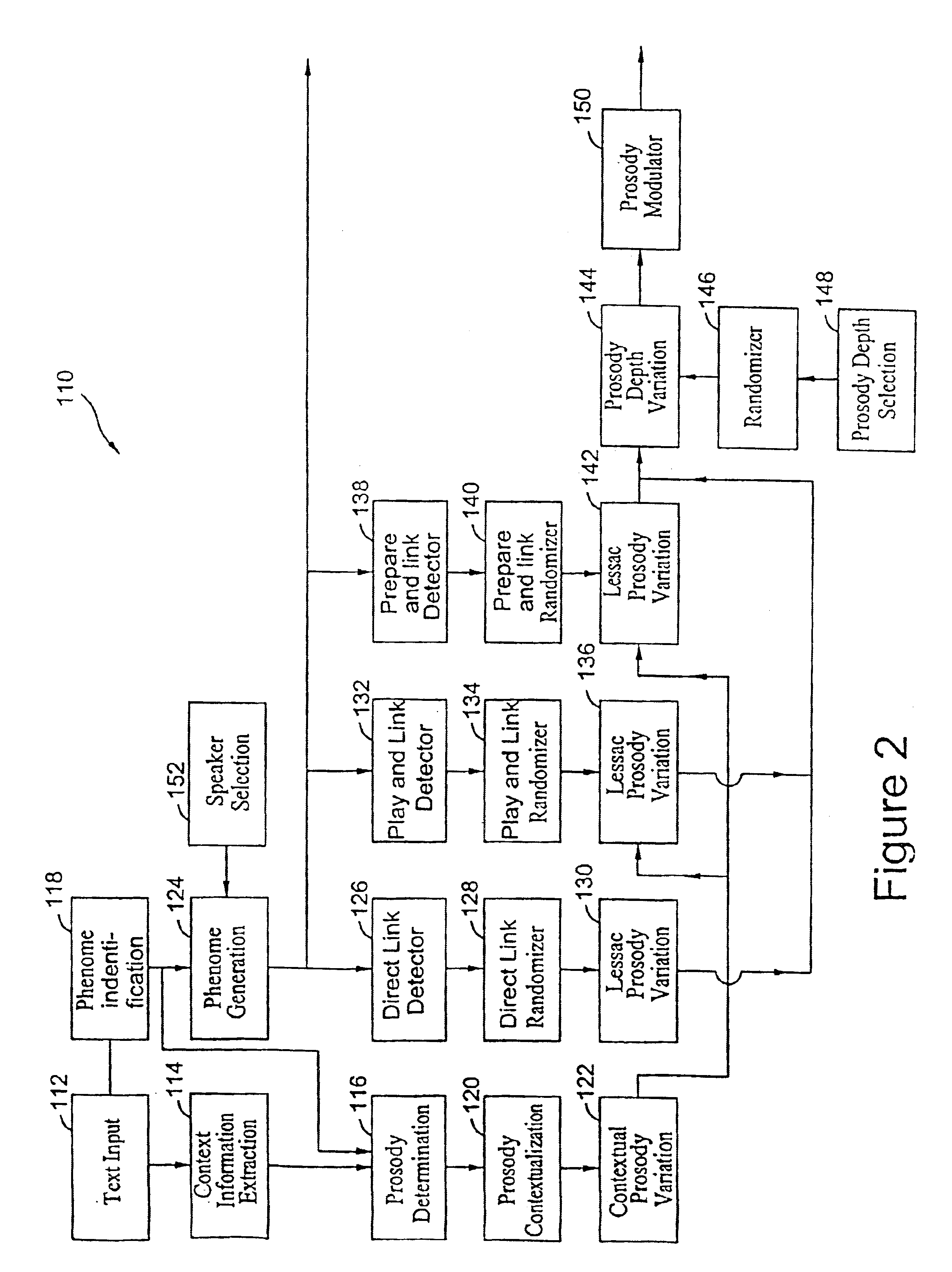

A preferred embodiment of the method for converting text to speech using a computing device having a memory is disclosed. The inventive method comprises examining a text to be spoken to an audience for a specific communications purpose, followed by marking up the text according to a phonetic markup system such as the Lessac System pronunciation rules notation. A set of rules controls a speech to text generator based on speech principles, such as Lessac principles. Such rules are of the type normally implemented on prior art text-to-speech engines, and control the operation of the software and the characteristics of the speech generated by a computer using the software. A computer is used to speak the marked-up text expressively. The step of using a computer to speak the marked-up text expressively is repeated using alternative pronunciations of the selected style of expression, where each of the tonal, structural, and consonant energies has a different balance in the speech; these are also spoken to trained speech practitioners who listen to the spoken speech generated by the computer. The spoken speech generated by the computer is then evaluated for consistency with style criteria and / or expressiveness. An audience is then assembled and the spoken speech generated by the computer is played back to the audience. Audience comprehension of spoken speech generated by the computer is evaluated and correlated to a particular implemented rule or rules, and those rules which resulted in relatively high audience comprehension are selected.

Owner:LESSAC TECH INC

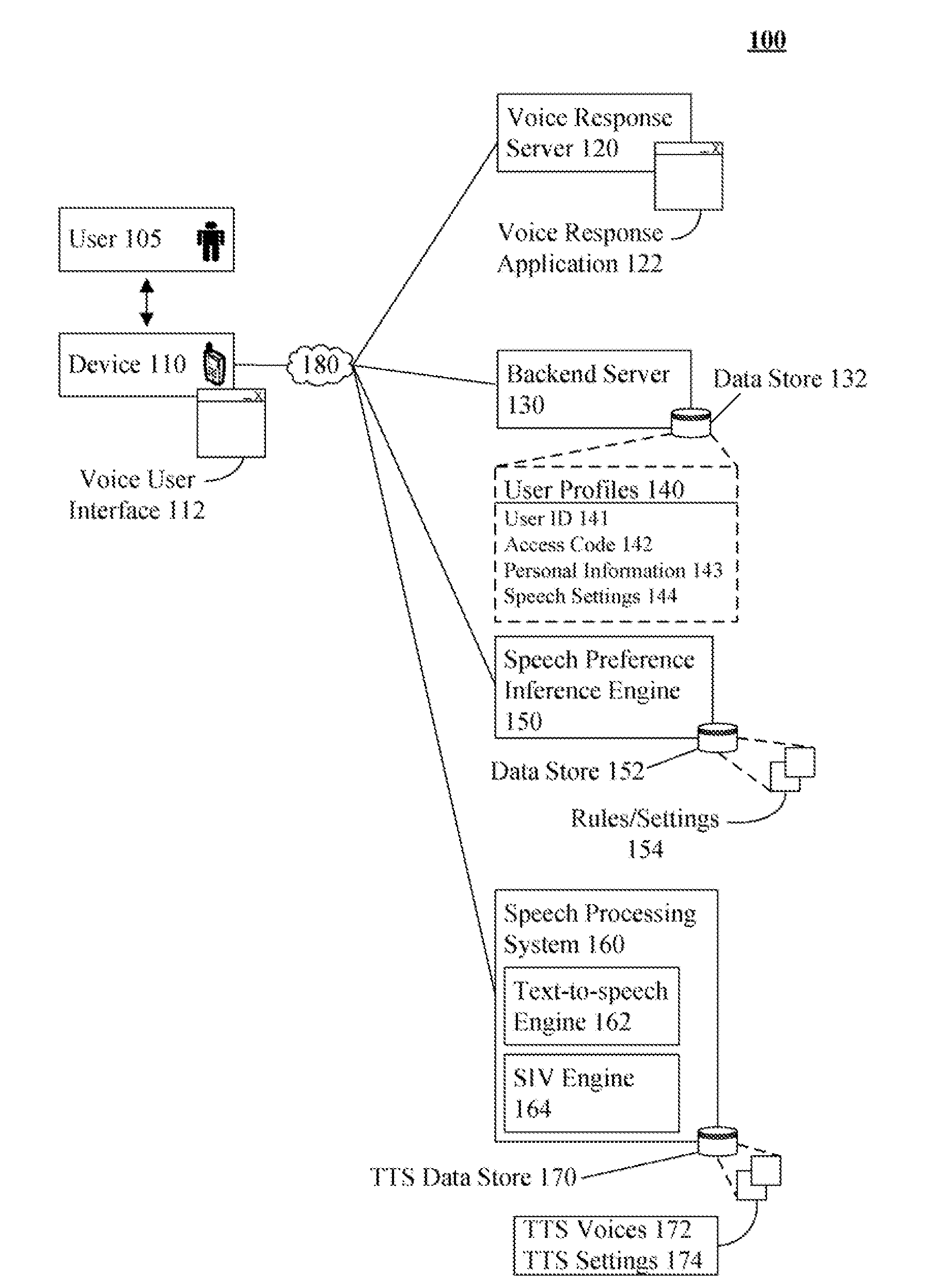

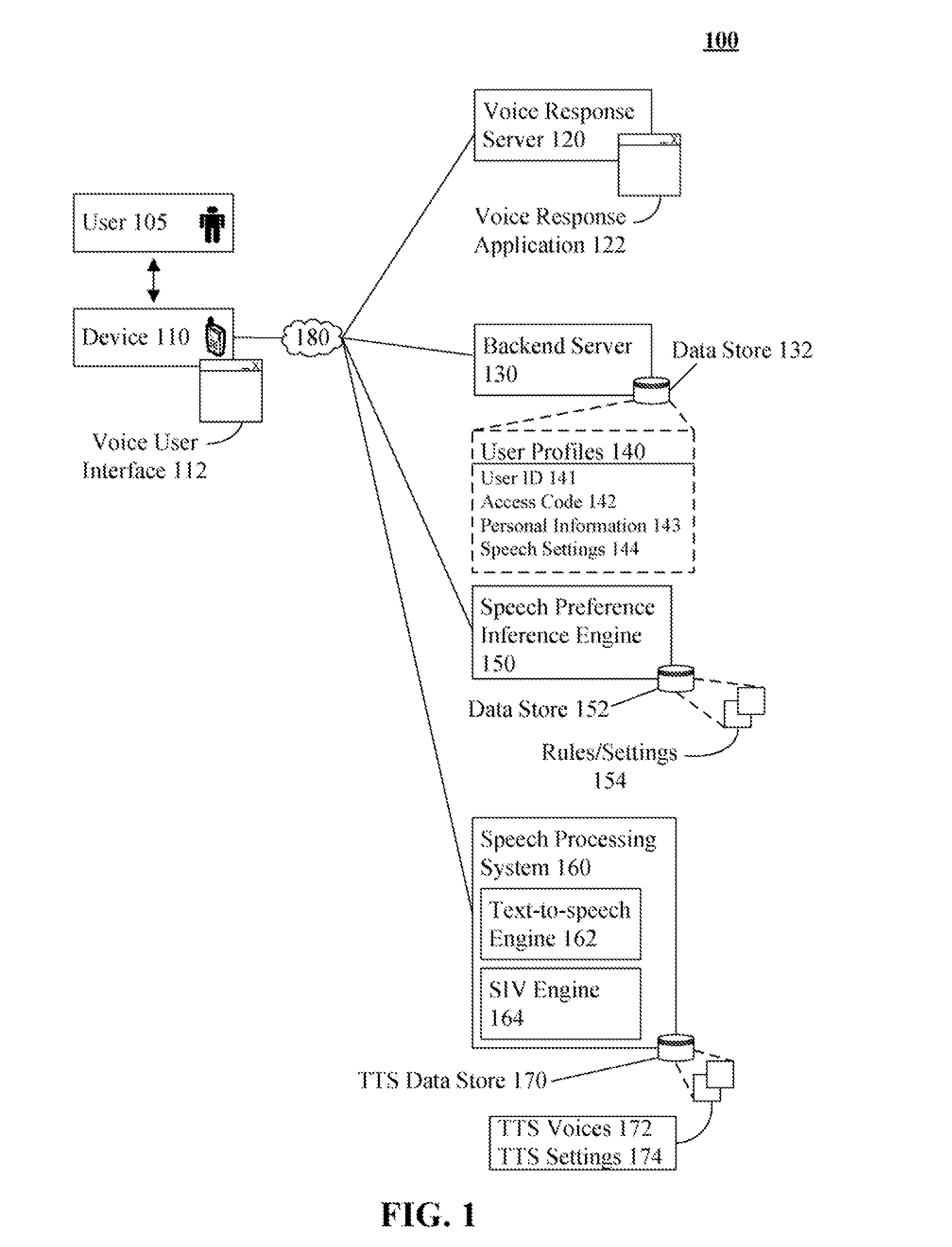

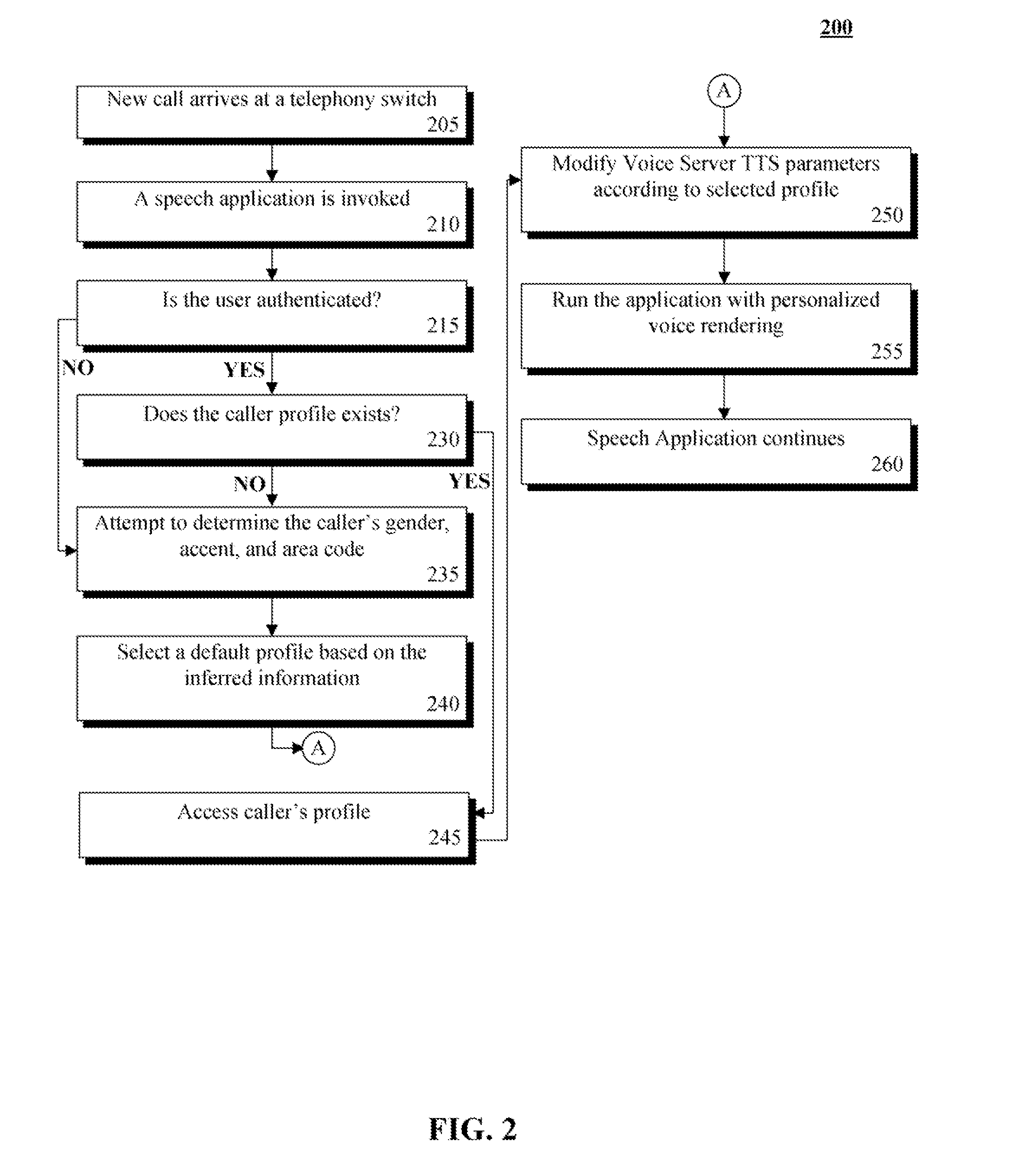

Dynamic modification of voice selection based on user specific factors

The present invention discloses a solution for customizing synthetic voice characteristics in a user specific fashion. The solution can establish a communication between a user and a voice response system. A data store can be searched for a speech profile associated with the user. When a speech profile is found, a set of speech output characteristics established for the user from the profile can be determined. Parameters and settings of a text-to-speech engine can be adjusted in accordance with the determined set of speech output characteristics. During the established communication, synthetic speech can be generated using the adjusted text-to-speech engine. Thus, each detected user can hear a synthetic speech generated by a different voice specifically selected for that user. When no user profile is detected, a default voice or a voice based upon a user's speech or communication details can be used.

Owner:IBM CORP

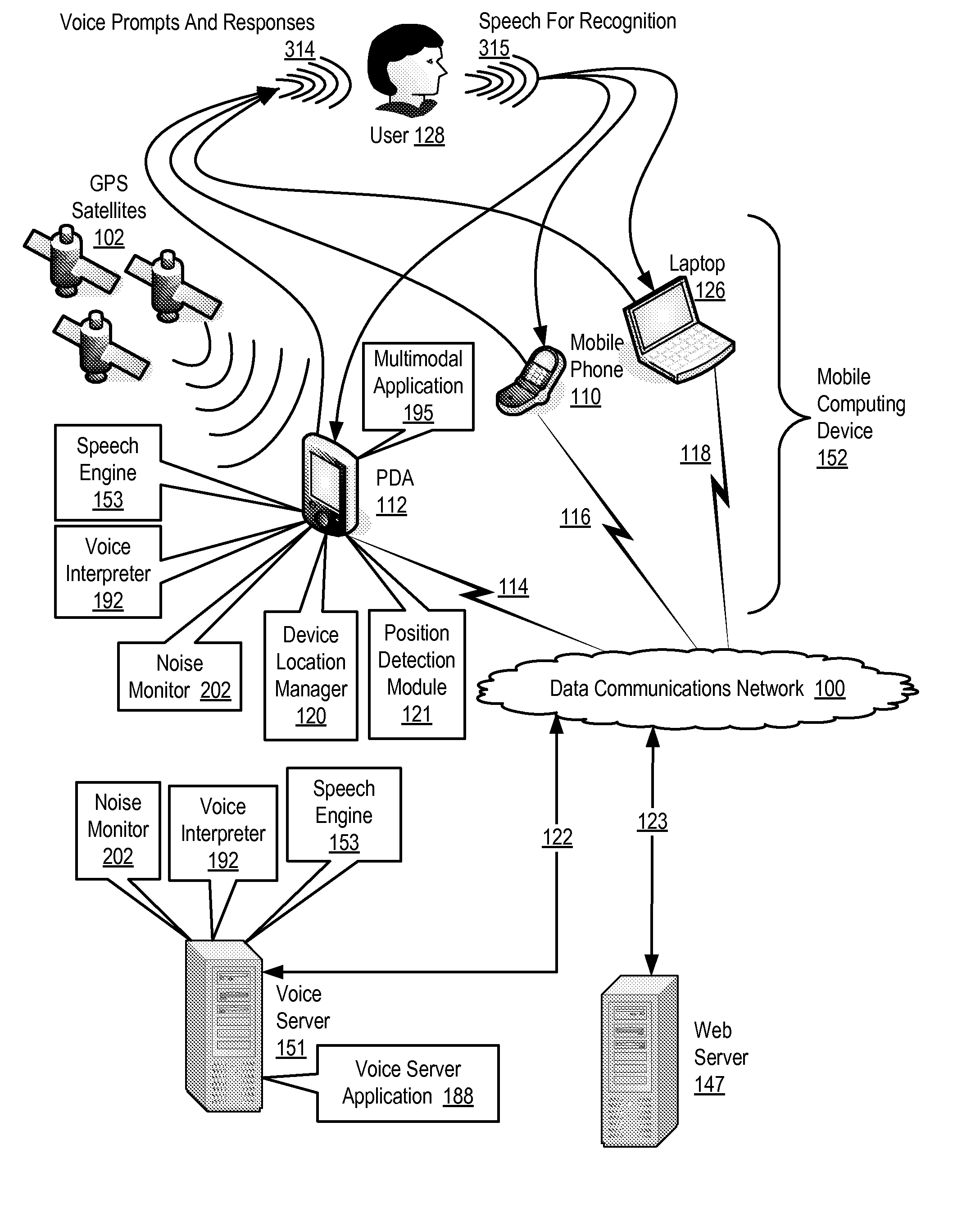

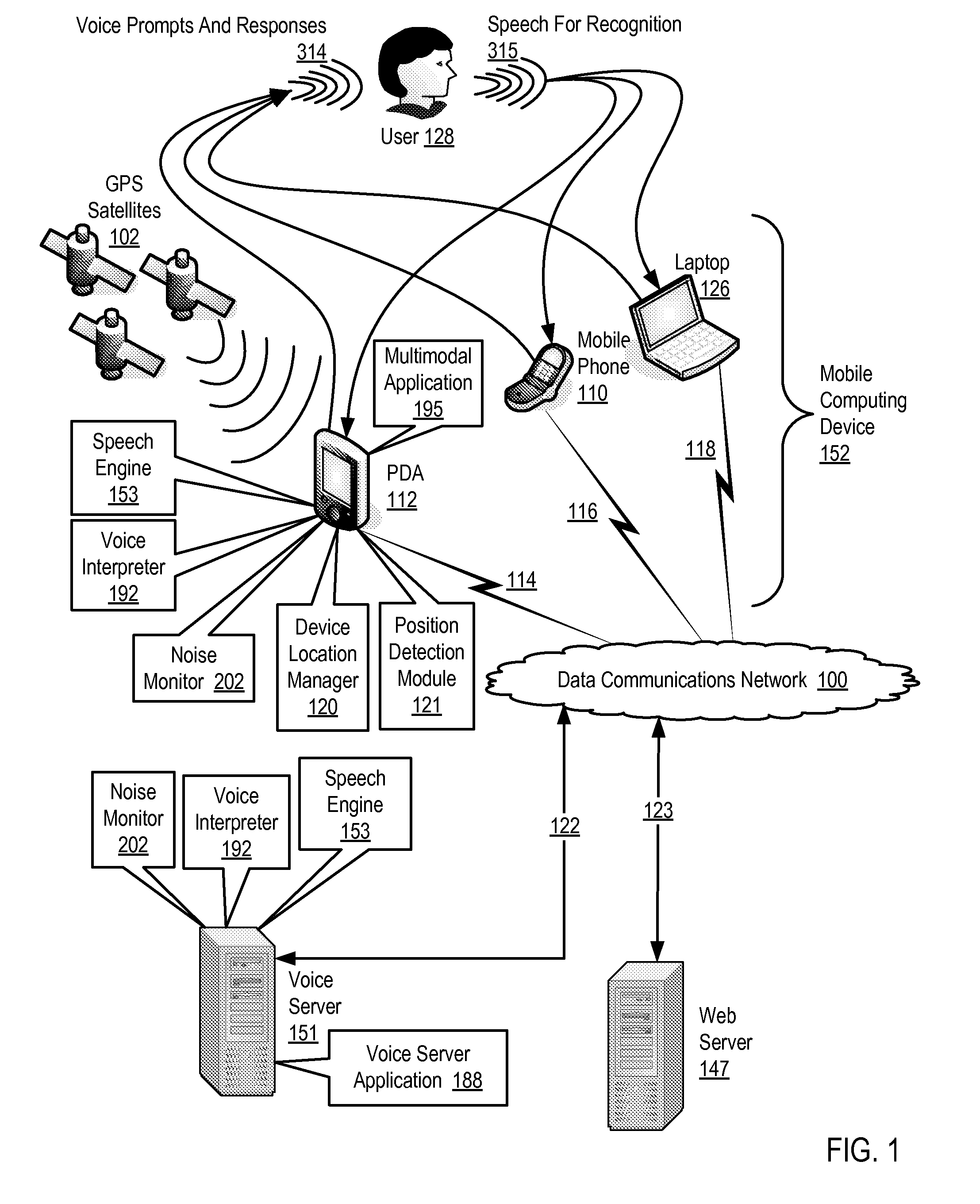

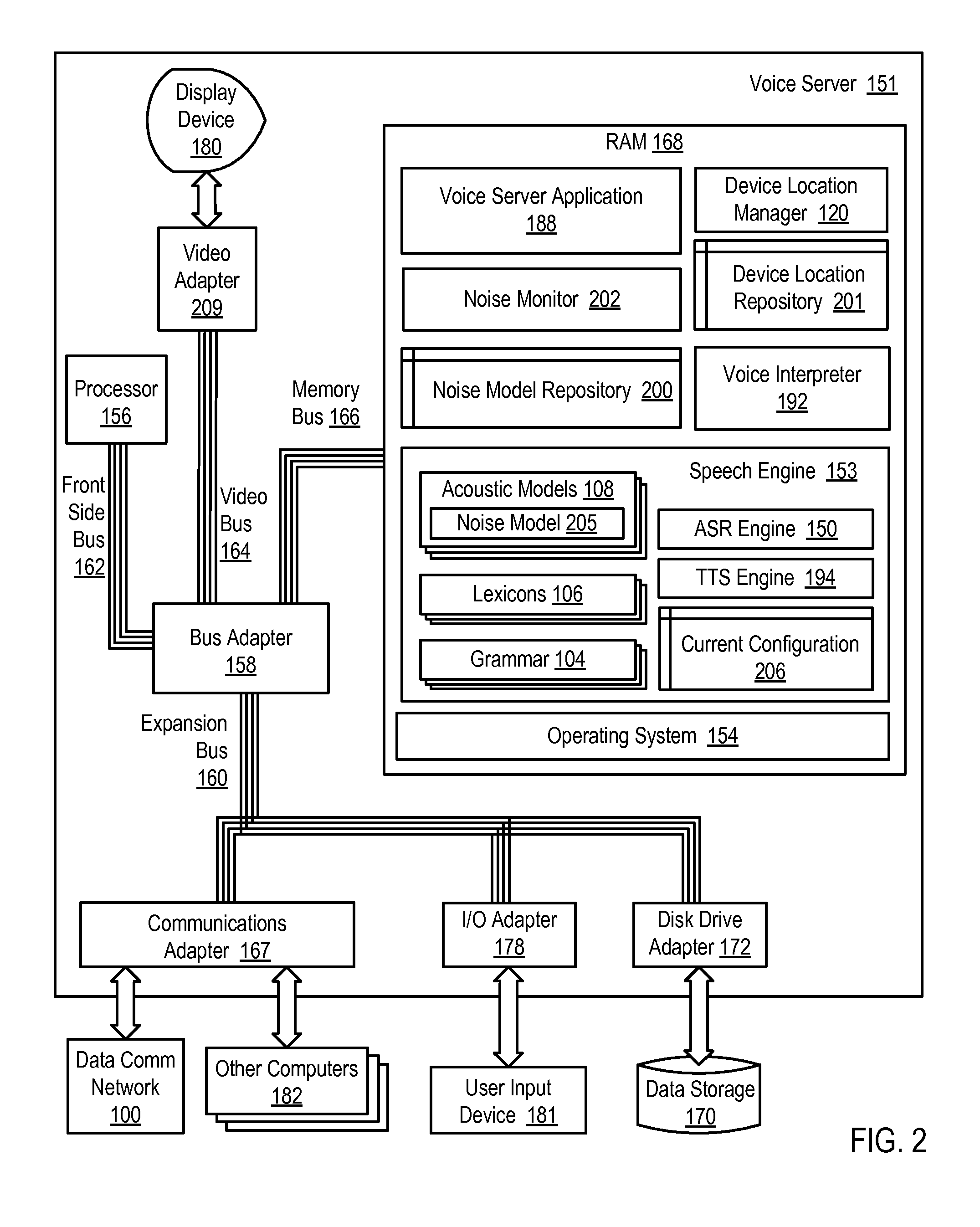

Adjusting A Speech Engine For A Mobile Computing Device Based On Background Noise

Methods, apparatus, and products are disclosed for adjusting a speech engine for a mobile computing device based on background noise, the mobile computing device operatively coupled to a microphone, that include: sampling, through the microphone, background noise for a plurality of operating environments in which the mobile computing device operates; generating, for each operating environment, a noise model in dependence upon the sampled background noise for that operating environment; and configuring the speech engine for the mobile computing device with the noise model for the operating environment in which the mobile computing device currently operates.

Owner:CERENCE OPERATING CO
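The three steps in this abstract (sample noise per environment, build a noise model for each, configure the engine with the current one) can be sketched as follows. The `NoiseModel` fields, the `SpeechEngine` class, and the sample values are hypothetical; a real noise model would be far richer than a mean and a spread.

```python
from dataclasses import dataclass
from statistics import mean, pstdev
from typing import Dict, List, Optional


@dataclass
class NoiseModel:
    """Toy noise model: average level and spread of sampled noise."""
    mean_level: float
    spread: float


def build_noise_model(samples: List[float]) -> NoiseModel:
    return NoiseModel(mean_level=mean(samples), spread=pstdev(samples))


class SpeechEngine:
    """Stand-in for a speech engine that accepts a noise model."""
    def __init__(self) -> None:
        self.noise_model: Optional[NoiseModel] = None

    def configure(self, model: NoiseModel) -> None:
        self.noise_model = model


# Background noise sampled through the microphone, per environment.
samples_by_environment: Dict[str, List[float]] = {
    "car": [0.30, 0.34, 0.32],
    "office": [0.05, 0.07, 0.06],
}
models = {env: build_noise_model(samples)
          for env, samples in samples_by_environment.items()}

engine = SpeechEngine()
engine.configure(models["car"])  # the device is currently in the car
```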

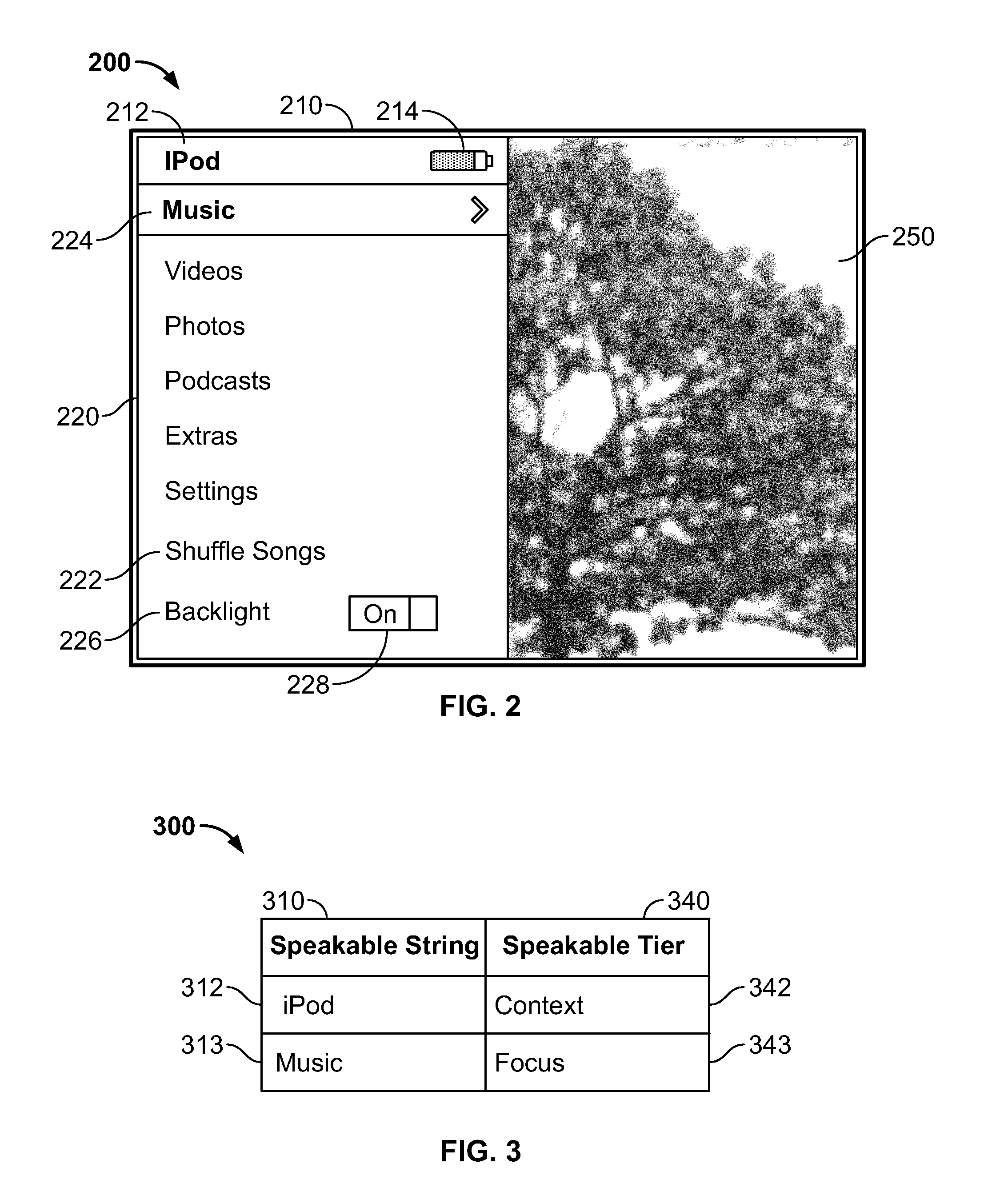

Multi-Tiered Voice Feedback in an Electronic Device

This invention is directed to providing voice feedback to a user of an electronic device. Because each electronic device display may include several speakable elements (i.e., elements for which voice feedback is provided), the elements may be ordered. To do so, the electronic device may associate a tier with the display of each speakable element. The electronic device may then provide voice feedback for displayed speakable elements based on the associated tier. To reduce the complexity in designing the voice feedback system, the voice feedback features may be integrated in a Model View Controller (MVC) design used for displaying content to a user. For example, the model and view of the MVC design may include additional variables associated with speakable properties. The electronic device may receive audio files for each speakable element using any suitable approach, including for example by providing a host device with a list of speakable elements and directing a text to speech engine of the host device to generate and provide the audio files.

Owner:APPLE INC

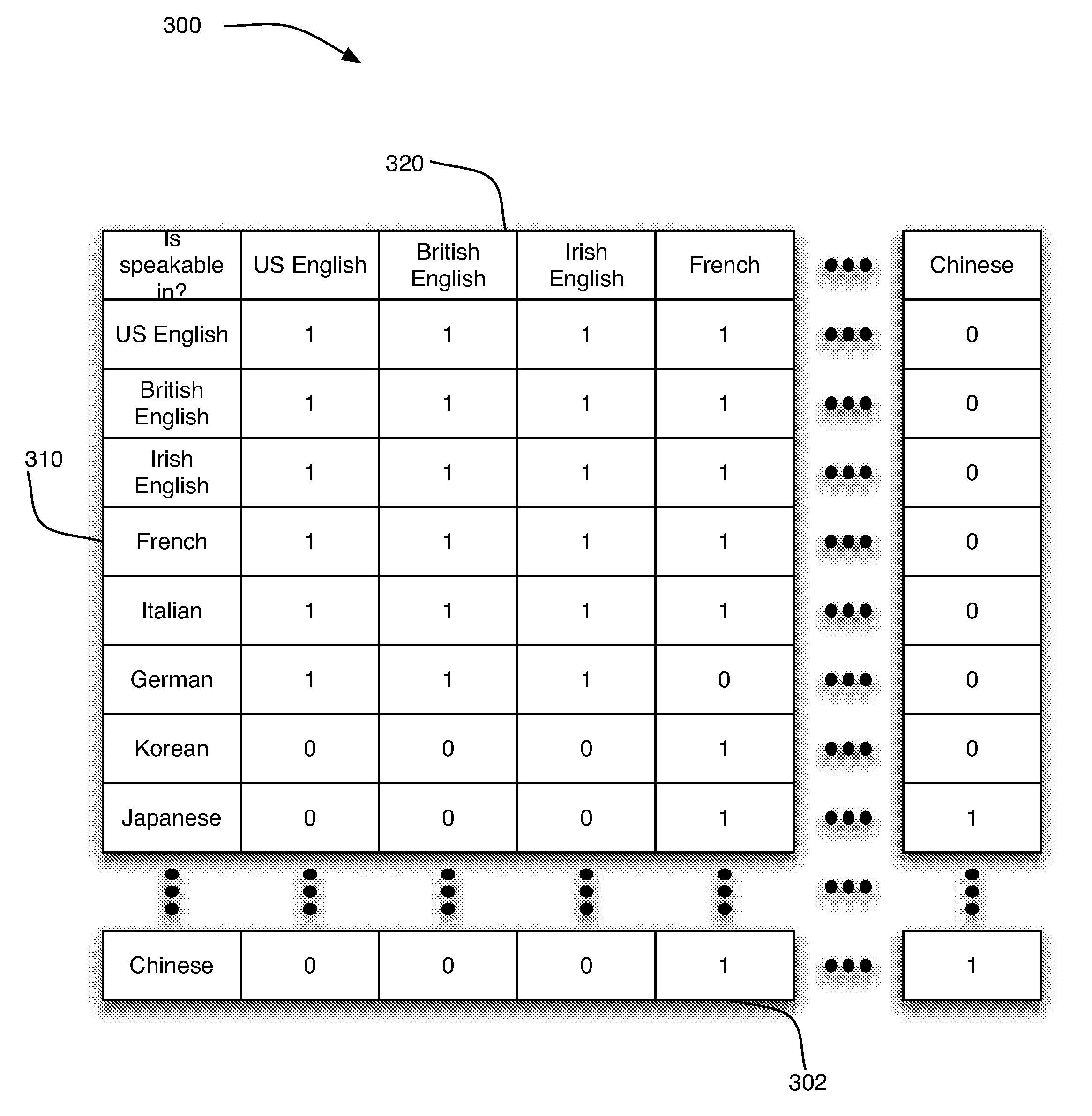

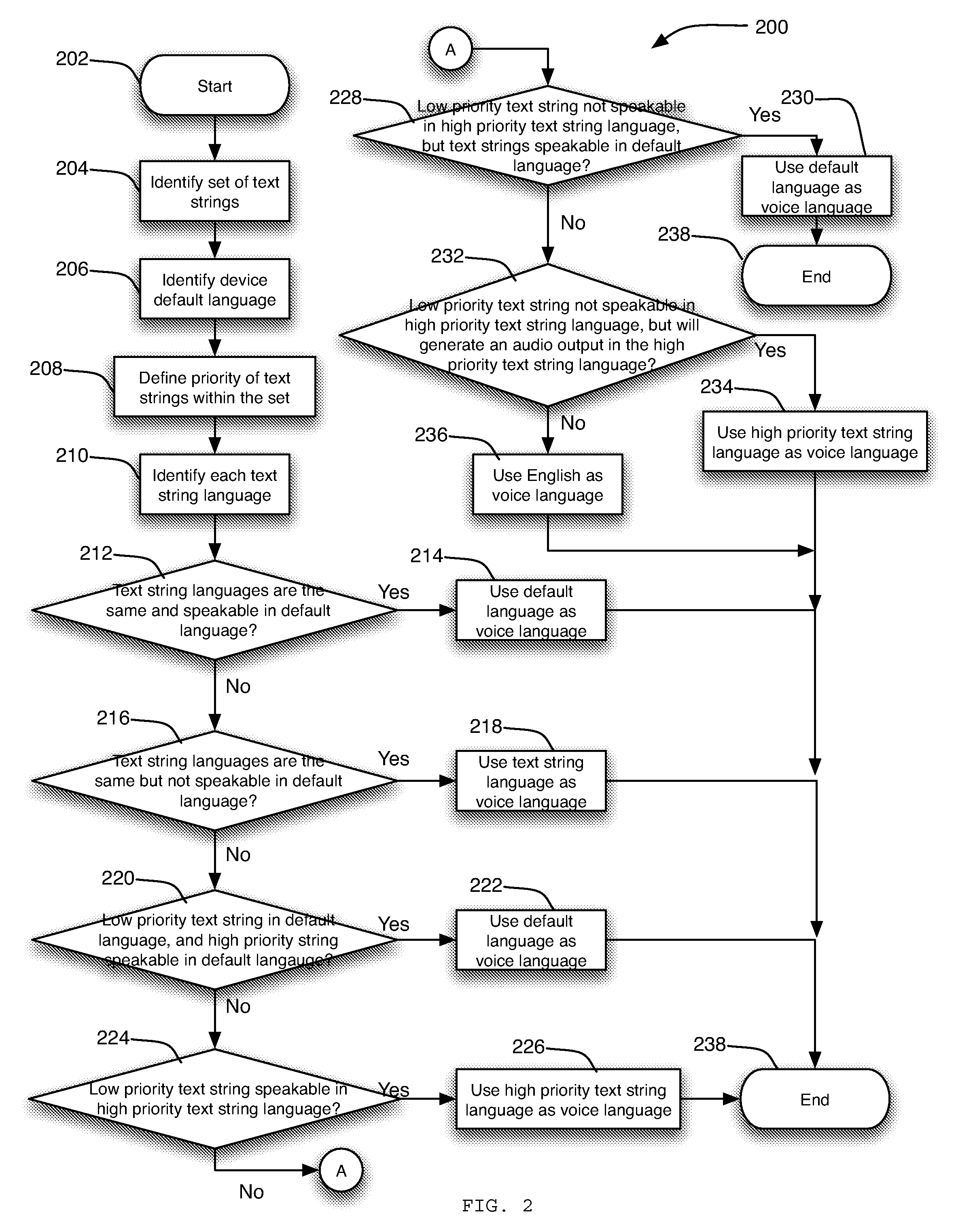

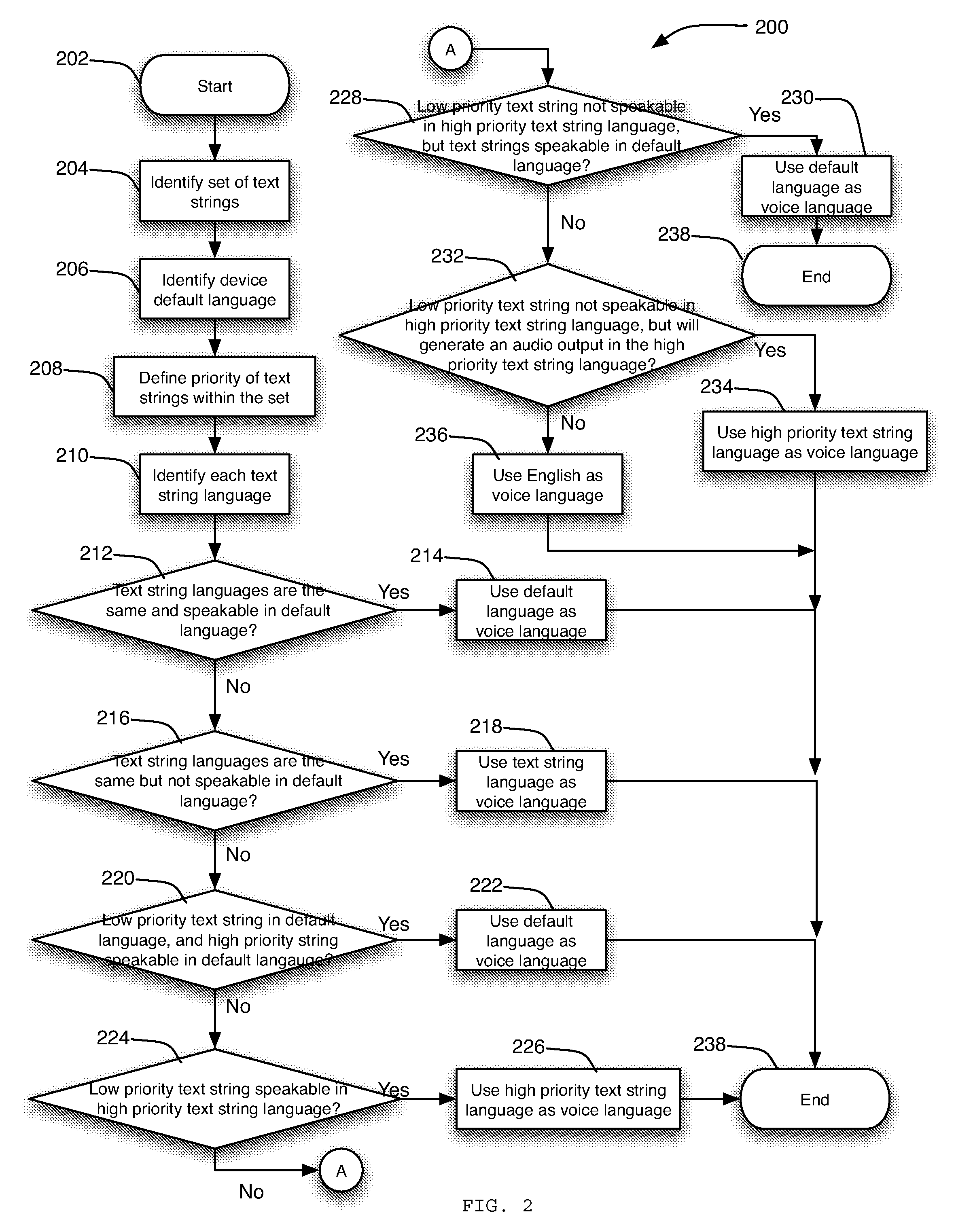

Systems and methods for determining the language to use for speech generated by a text to speech engine

Algorithms for synthesizing speech used to identify media assets are provided. Speech may be selectively synthesized from text strings associated with media assets, where each text string can be associated with a native string language (e.g., the language of the string). When several text strings are associated with at least two distinct languages, a series of rules can be applied to the strings to identify a single voice language to use for synthesizing the speech content from the text strings. In some embodiments, a prioritization scheme can be applied to the text strings to identify the more important text strings. The rules can include, for example, selecting a voice language based on the prioritization scheme, a default language associated with an electronic device, the ability of a voice language to speak text in a different language, or any other suitable rule.

Owner:APPLE INC
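One way to read the prioritization rule is sketched below: walk the text strings from most to least important and use the first language for which the device has a voice, falling back to the device default. The field names, their order, and the language codes are assumptions, not details from the abstract.

```python
from typing import Dict, Set

# Assumed priority order of the text strings attached to a media asset.
PRIORITY = ["title", "artist", "album"]


def choose_voice_language(string_languages: Dict[str, str],
                          available_voices: Set[str],
                          default_language: str) -> str:
    """Return a single voice language for all strings of one asset."""
    for field in PRIORITY:
        language = string_languages.get(field)
        if language in available_voices:
            return language
    # No prioritized string has a usable voice: use the device default.
    return default_language


asset = {"title": "fr", "artist": "en", "album": "en"}
with_french = choose_voice_language(asset, {"en", "fr"}, "en")
without_french = choose_voice_language(asset, {"en"}, "en")
```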

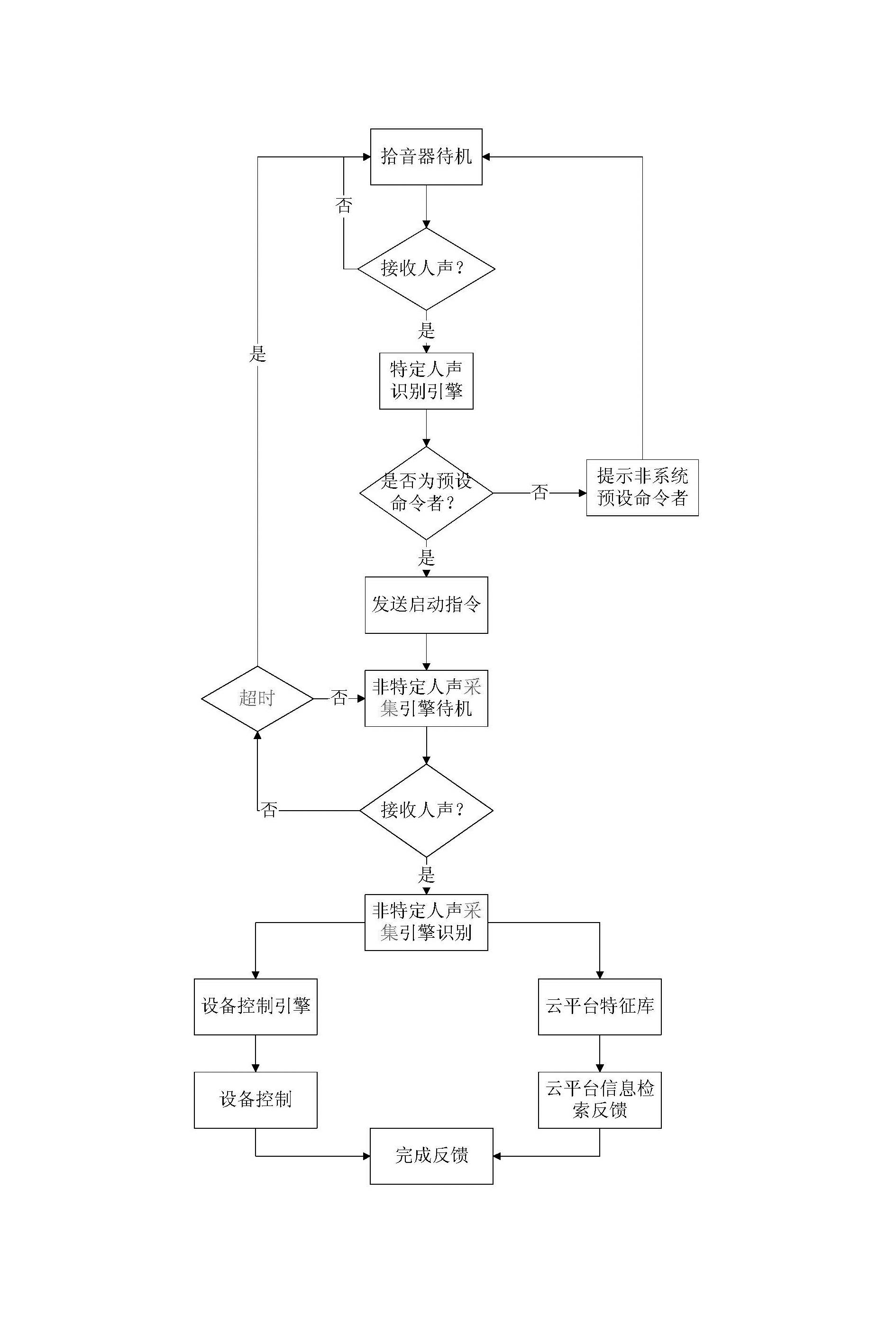

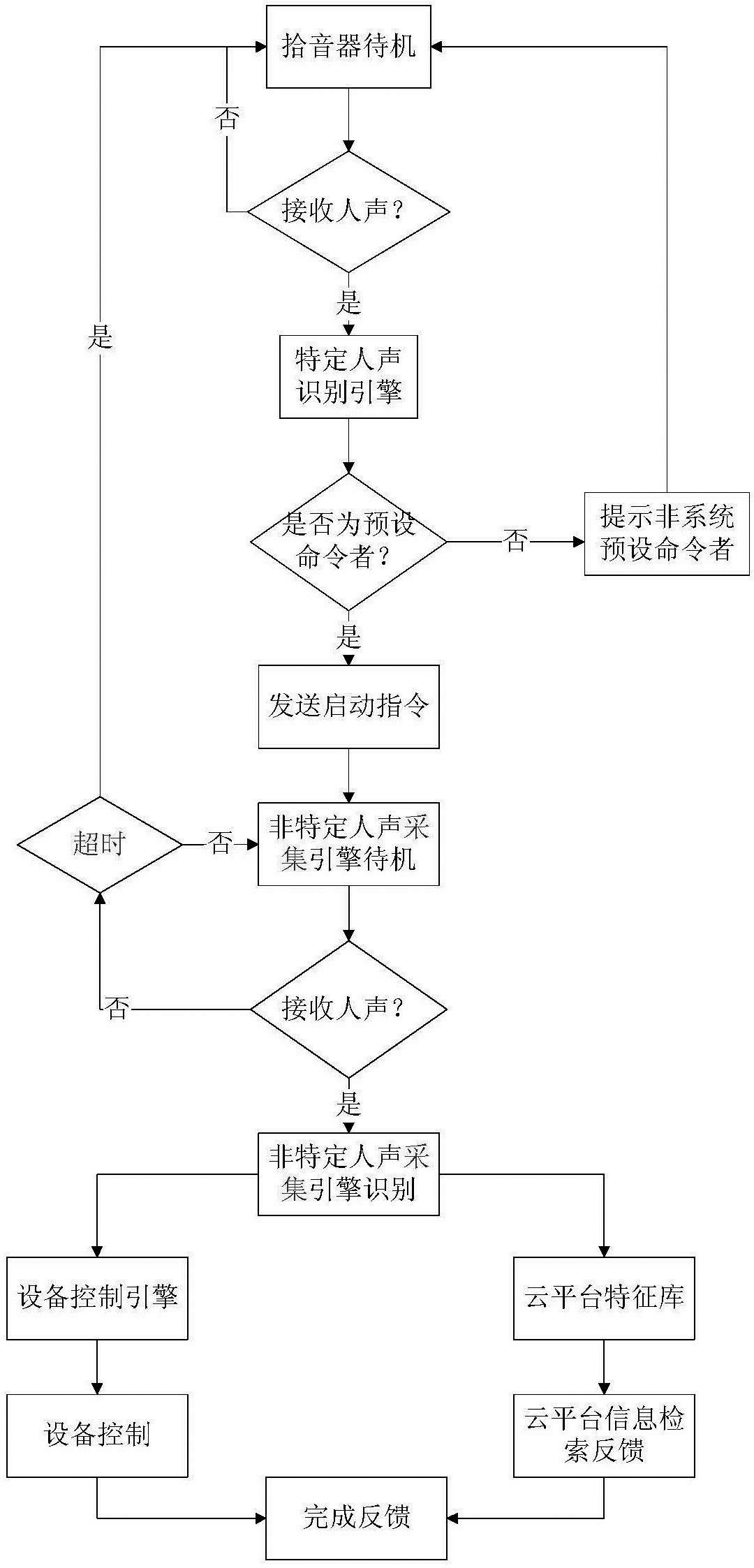

Multi-speech control method suitable for cloud platform

Owner:厦门云聚智能科技有限公司

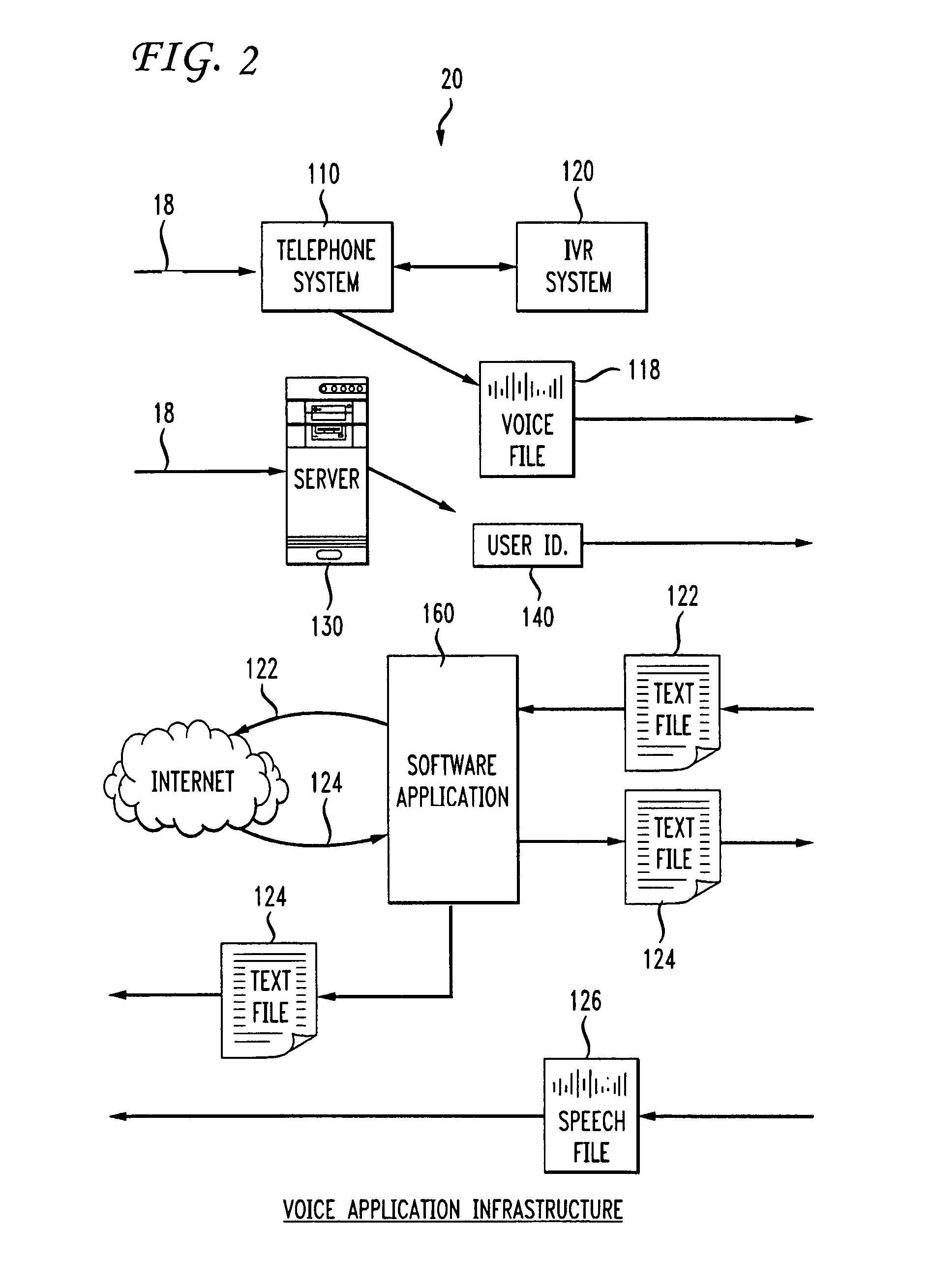

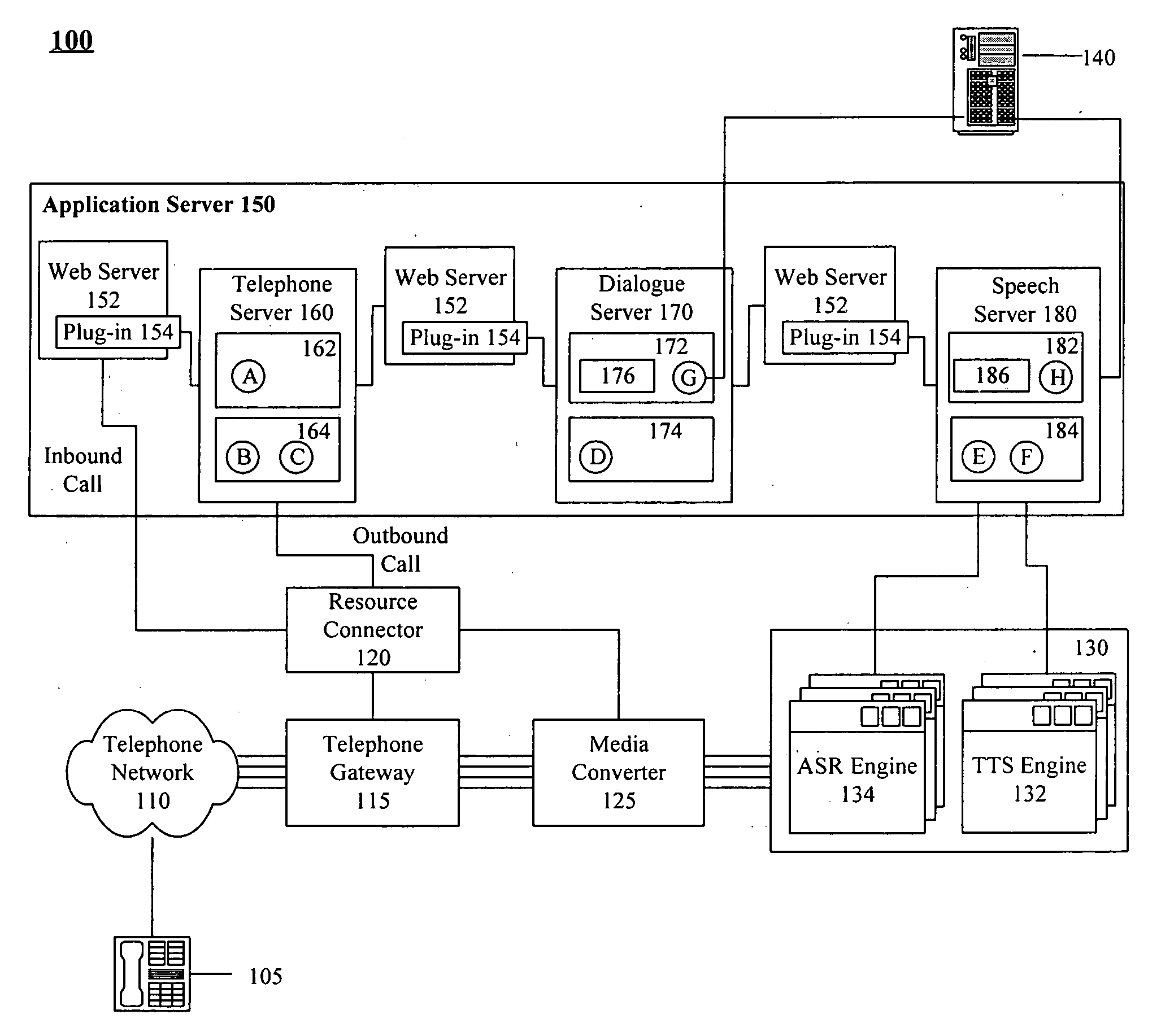

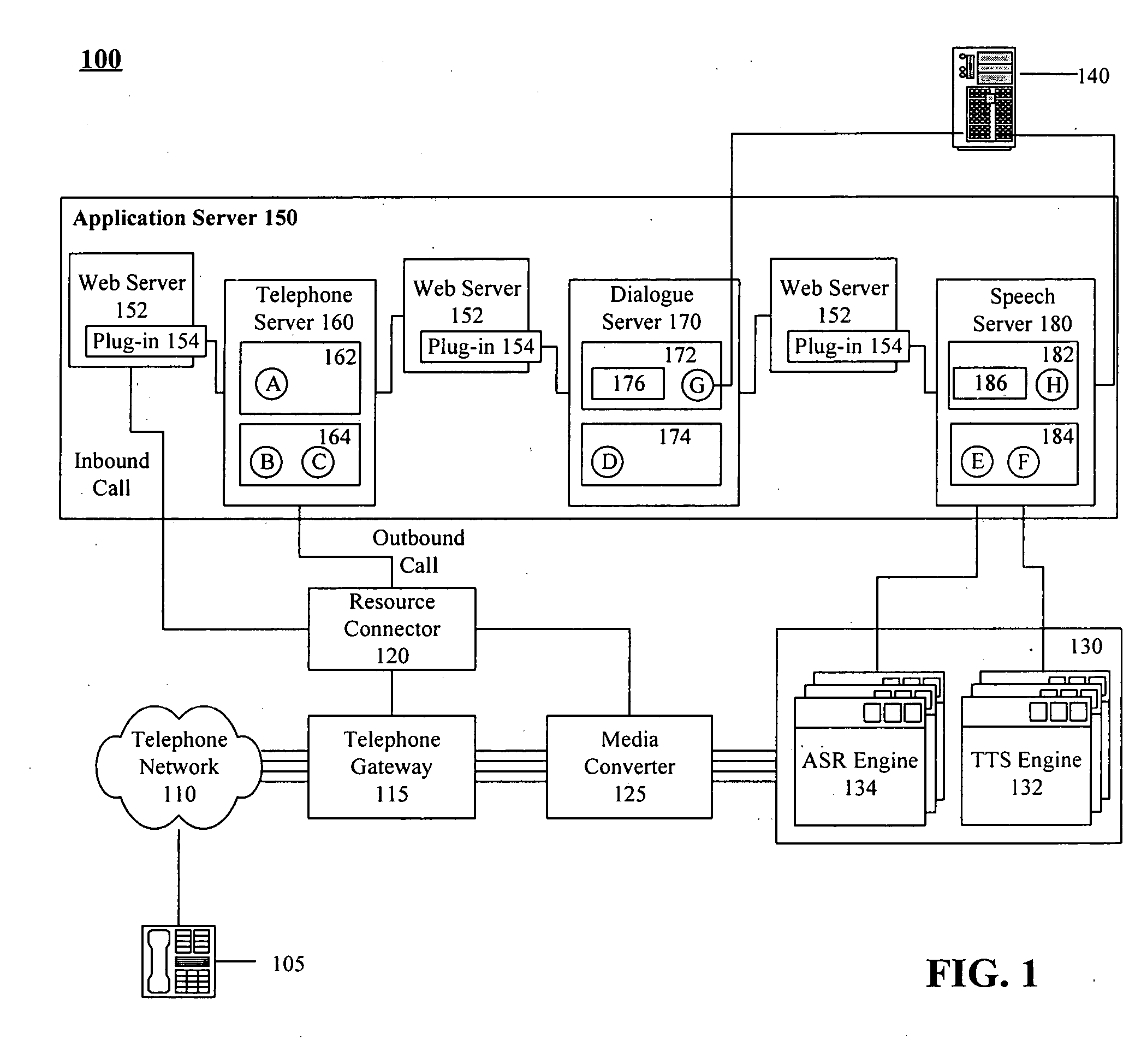

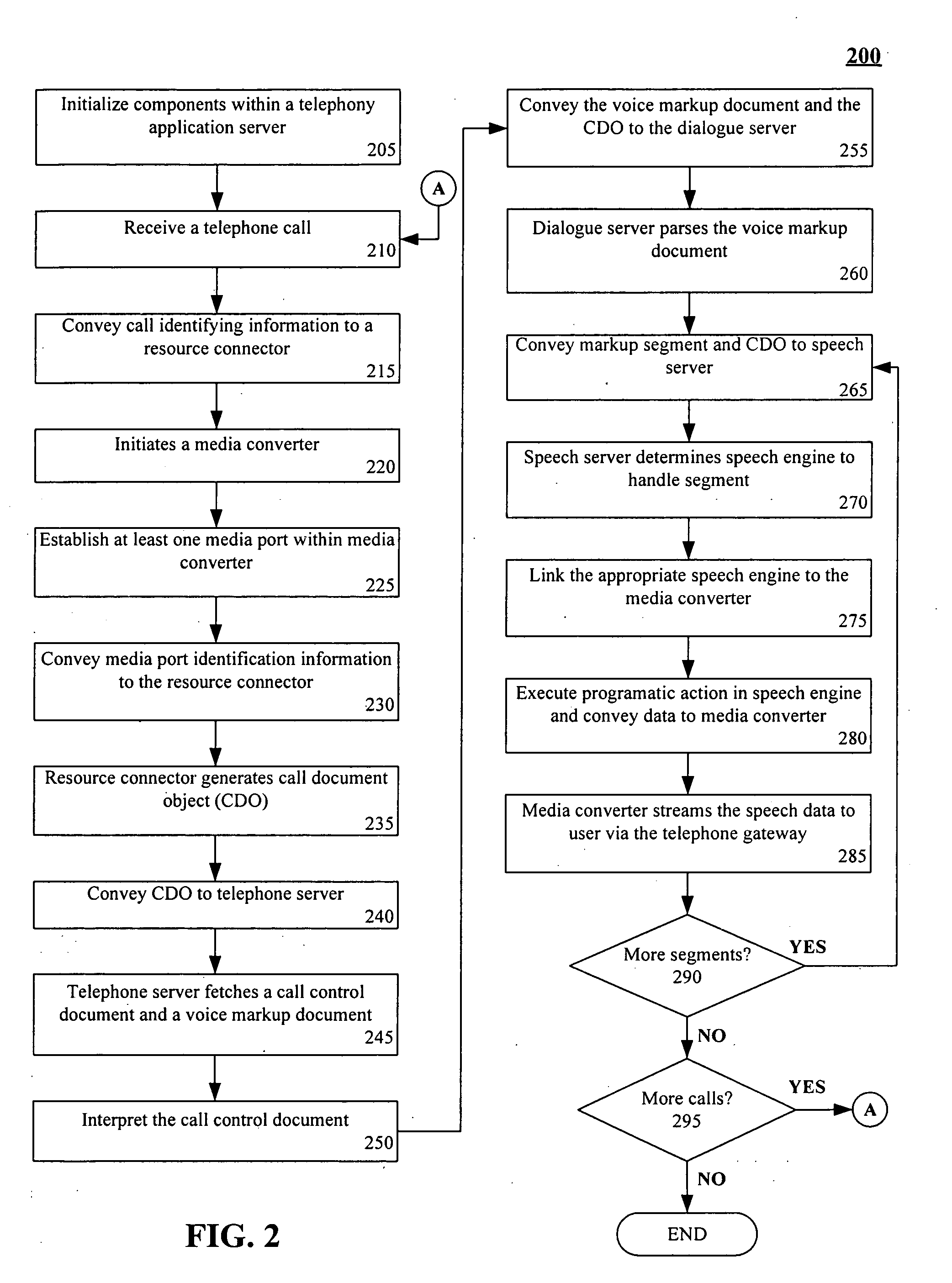

Telecommunications voice server leveraging application web-server capabilities

Active | US20050243977A1 | Minimizes code development | Reduce maintenance costs | Multiplex system selection arrangements | Special service provision for substation | Application server | Speech sound

A method for providing voice telephony services can include the step of receiving a call via a telephone gateway. The telephone gateway can convey call identifying data to a resource connector. A media port can be responsively established within a media converter that is communicatively linked to the telephone gateway through a port associated with the call. A call description object can be constructed that includes the call identifying data and an identifier for the media port. The call description object can be conveyed to a telephony application server that provides at least one speech service for the call. The telephony application server can initiate at least one programmatic action of a communicatively linked speech engine. The speech engine can convey results of the programmatic action to the media converter through the media port. The media converter can stream speech signals for the call based upon the results.

Owner:NUANCE COMM INC

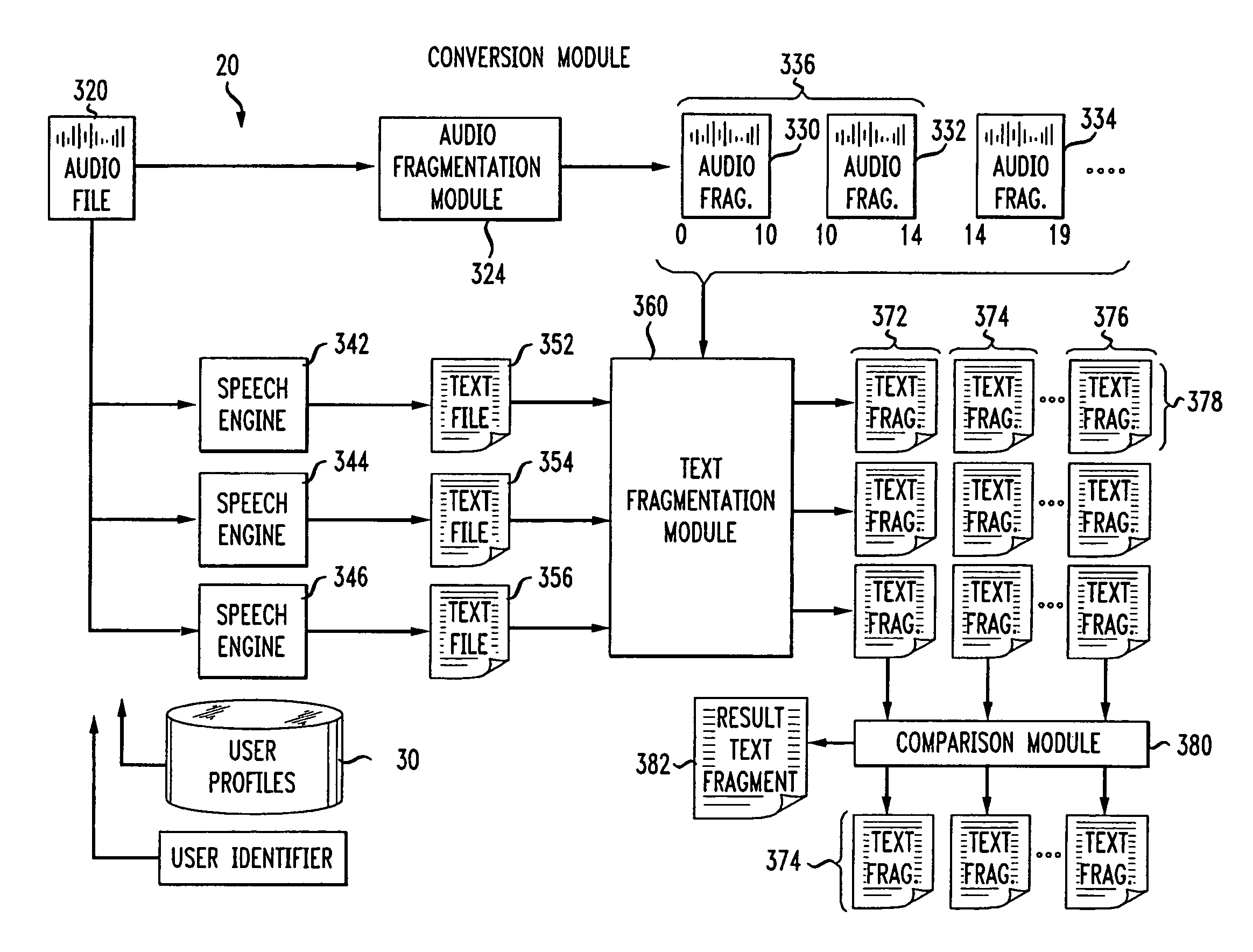

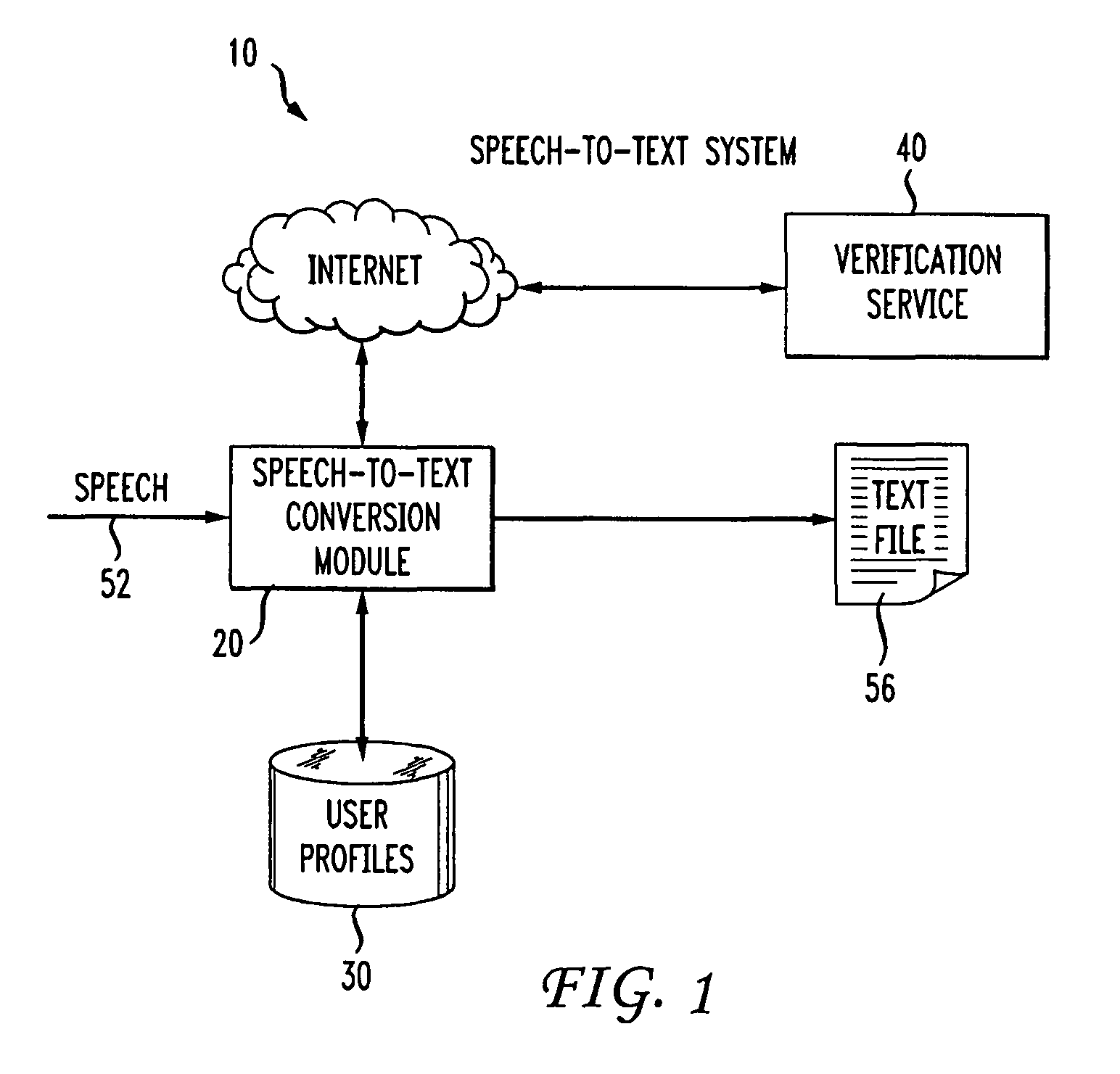

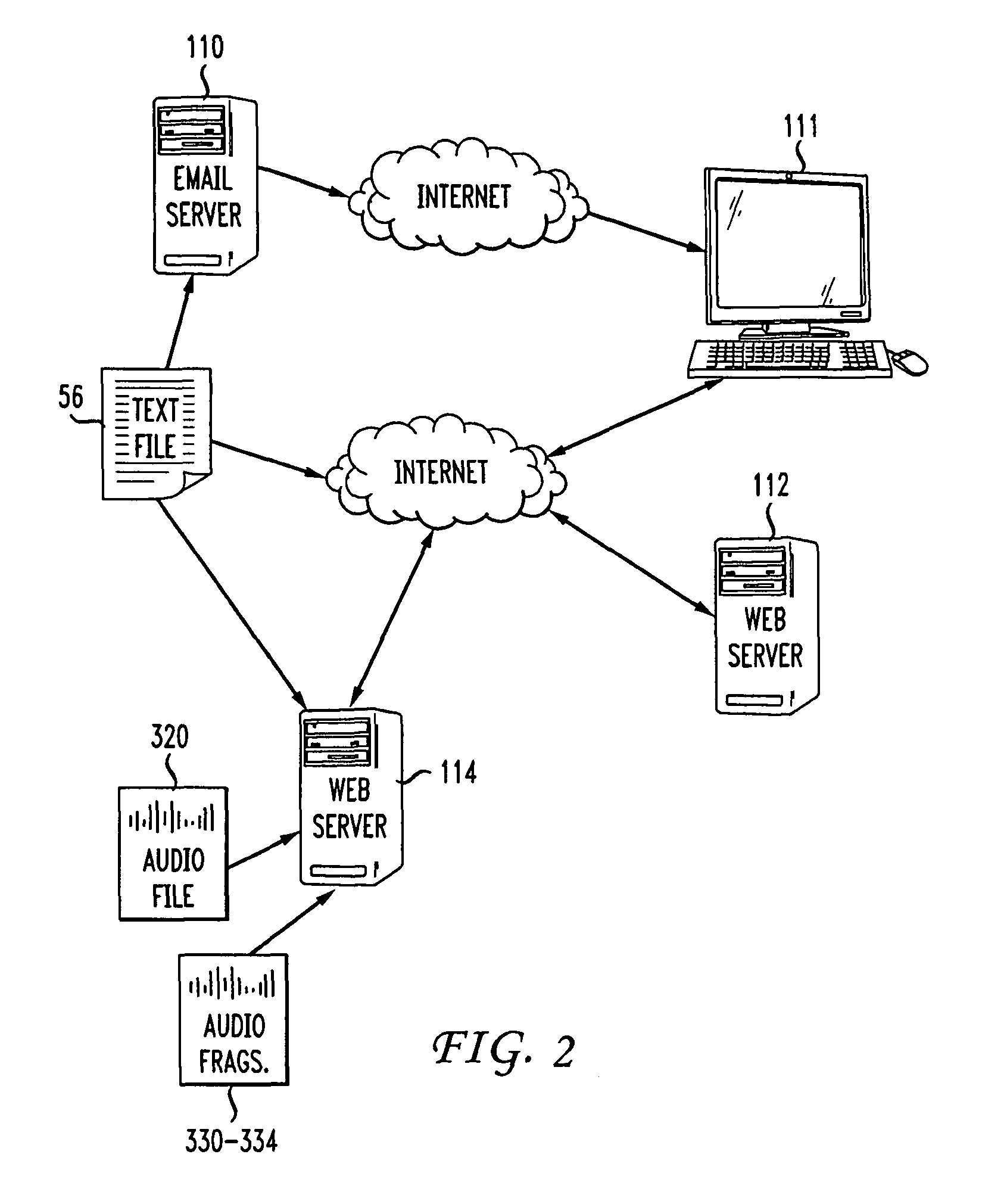

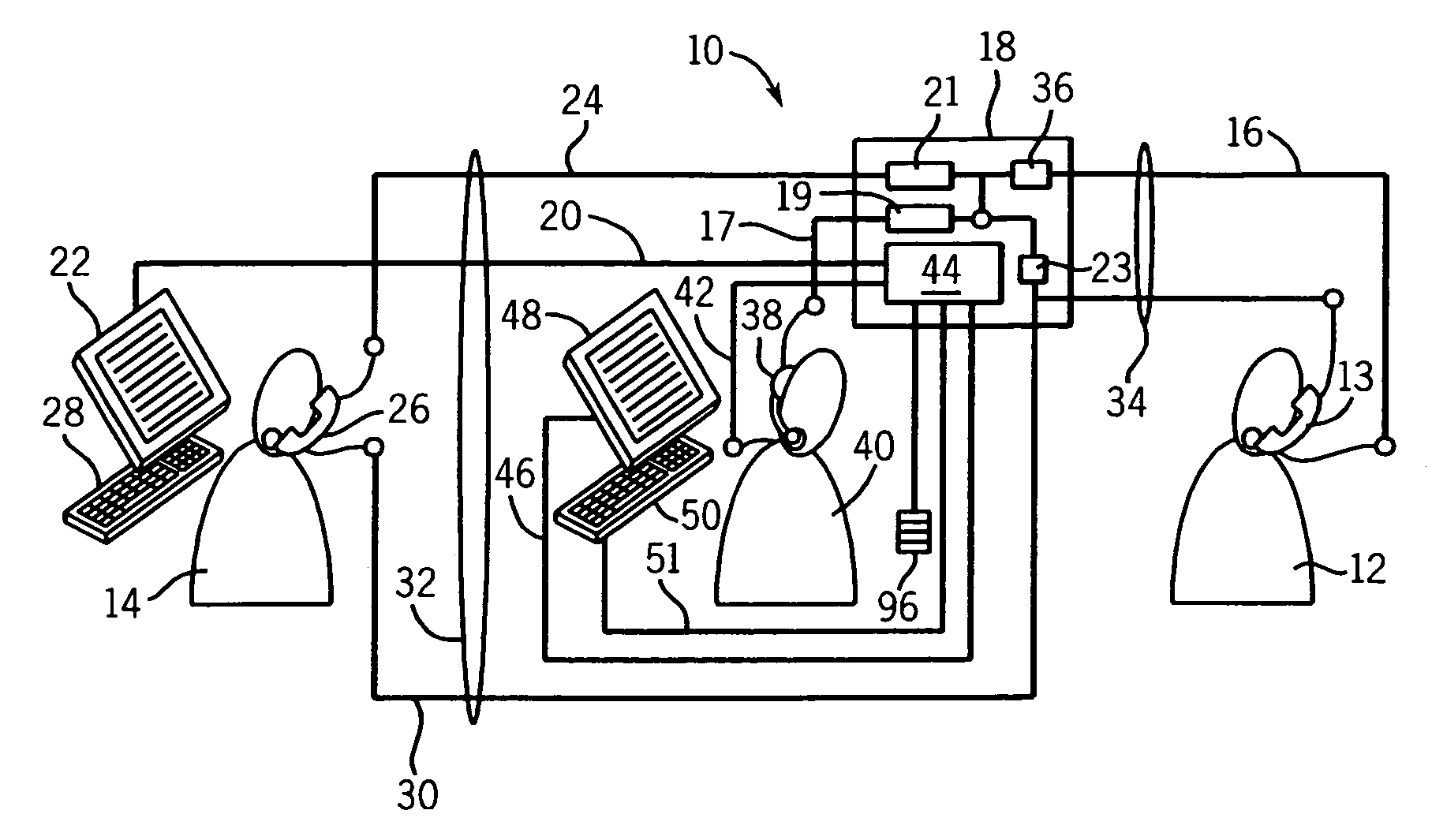

Precision speech to text conversion

Inactive | US8041565B1 | Improve productivity | Long pipeline delay | Speech recognition | Transformation of text | Human agent

A speech-to-text conversion module uses a central database of user speech profiles to convert speech to text. Incoming audio information is fragmented into numerous audio fragments based upon detecting silence. The audio information is also converted to numerous text files by any number of speech engines. Each text file is then fragmented into numerous text fragments based upon the boundaries established during the audio fragmentation. Each set of text fragments from the different speech engines corresponding to a single audio fragment is then compared. The best approximation of the audio fragment is produced from the set of text fragments; a hybrid may be produced. If no agreement is reached, the audio fragment and the set of text fragments are sent to human agents who verify and edit to produce a final edited text fragment that best corresponds to the audio fragment. Fragmentation that produces overlapping audio fragments requires splicing of the final text fragments to produce the output text file.

Owner:FONEWEB
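Two steps of this pipeline lend themselves to a short sketch: splitting audio on silence, and reconciling the text fragments that different engines produce for one audio fragment. The amplitude threshold, the minimum silence length, and the simple majority vote are assumptions; the abstract does not say how agreement is measured, and hybrid results are not modeled here.

```python
from collections import Counter
from typing import List, Optional, Sequence, Tuple


def split_on_silence(samples: Sequence[float], threshold: float = 0.05,
                     min_silence: int = 3) -> List[Tuple[int, int]]:
    """Return (start, end) index pairs, end exclusive, of voiced fragments."""
    fragments = []
    start = None
    quiet_run = 0
    for i, sample in enumerate(samples):
        if abs(sample) < threshold:
            quiet_run += 1
            if start is not None and quiet_run >= min_silence:
                fragments.append((start, i - quiet_run + 1))
                start = None
        else:
            if start is None:
                start = i
            quiet_run = 0
    if start is not None:
        fragments.append((start, len(samples) - quiet_run))
    return fragments


def reconcile(candidates: List[str]) -> Optional[str]:
    """Majority vote across engines; None means 'send to a human agent'."""
    text, votes = Counter(candidates).most_common(1)[0]
    return text if votes > len(candidates) / 2 else None


audio = [0.5, 0.6, 0.0, 0.0, 0.0, 0.4, 0.3, 0.0]
boundaries = split_on_silence(audio)
agreed = reconcile(["hello world", "hello world", "hollow word"])
escalated = reconcile(["see you", "sea ewe", "c u"])
```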

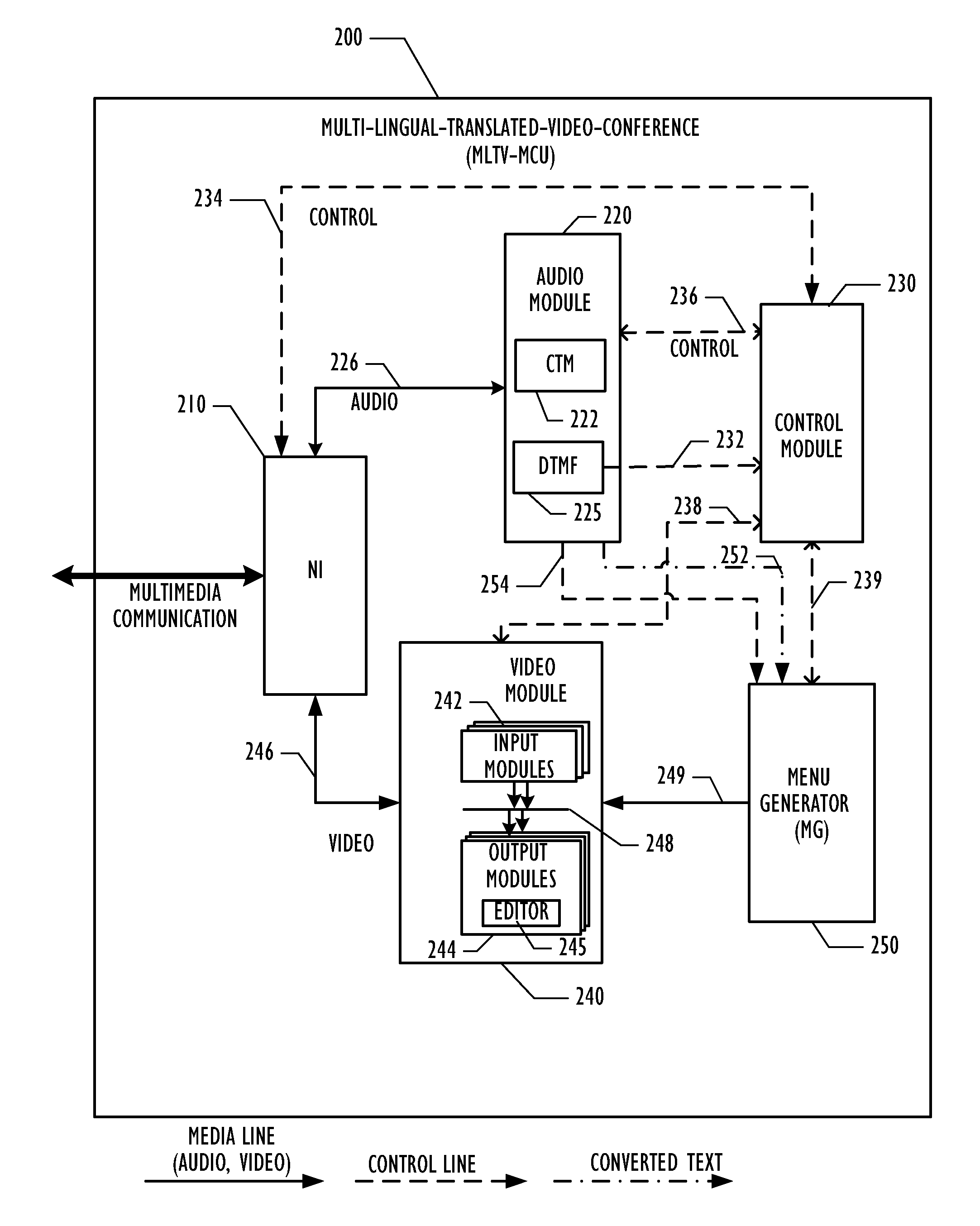

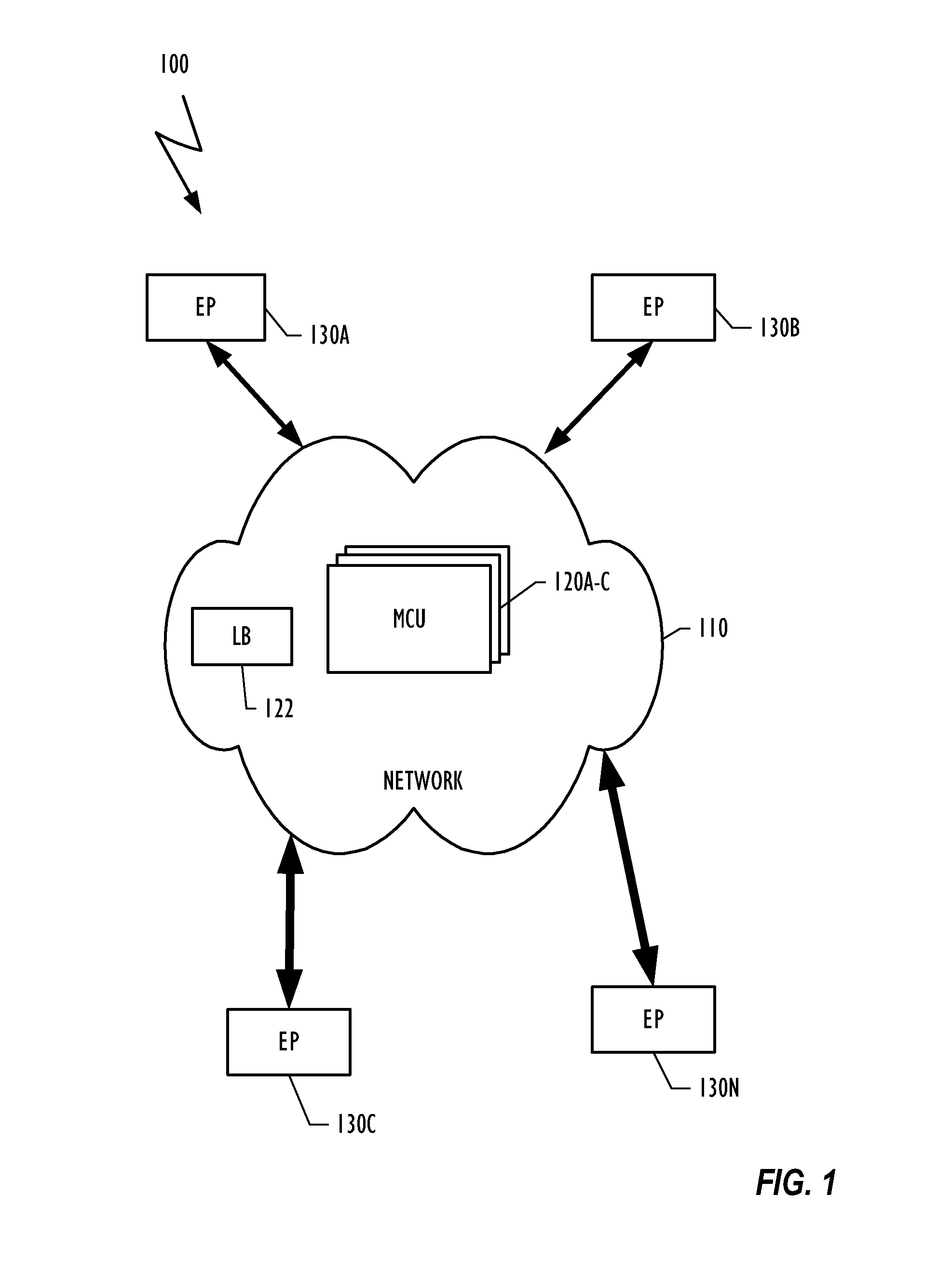

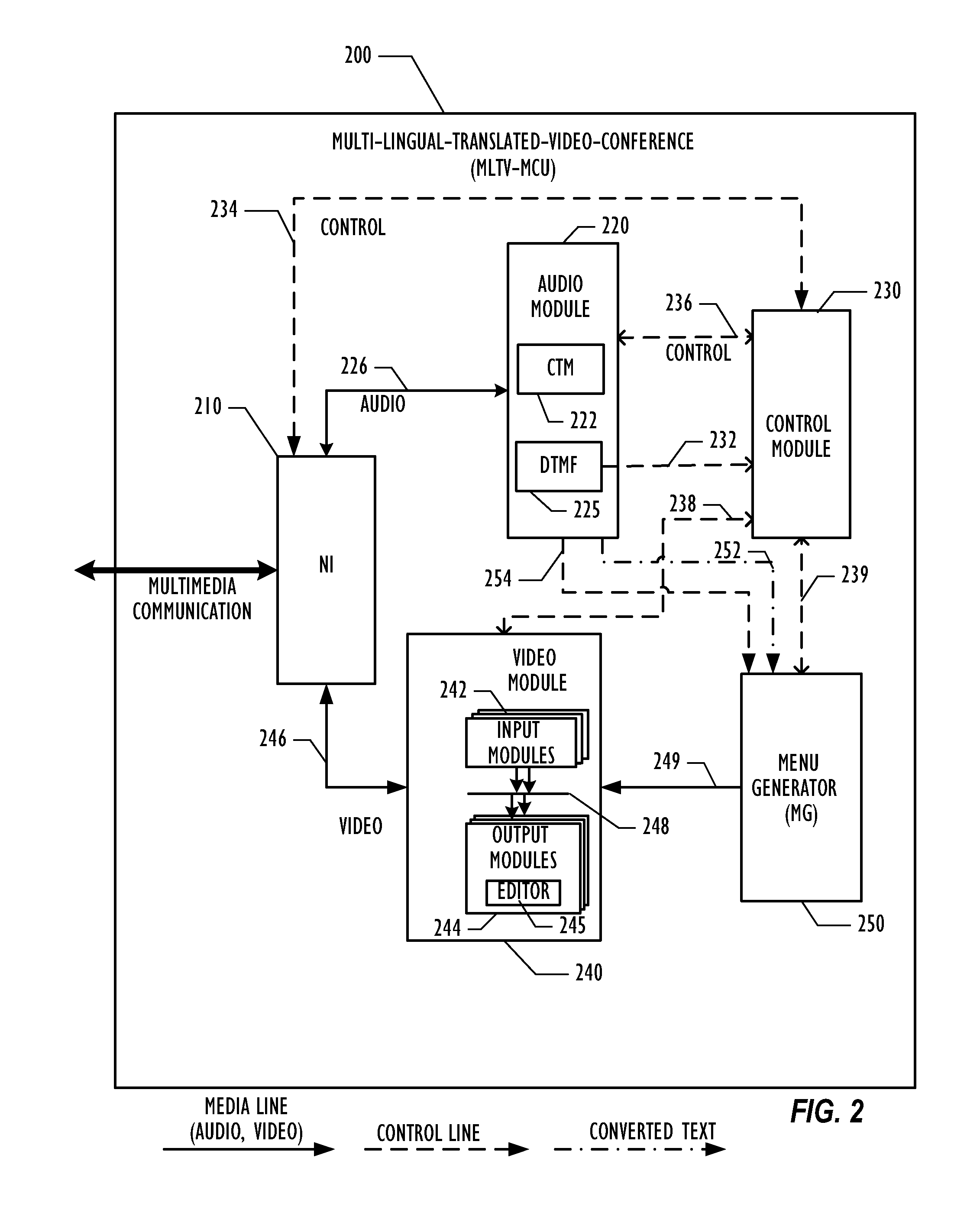

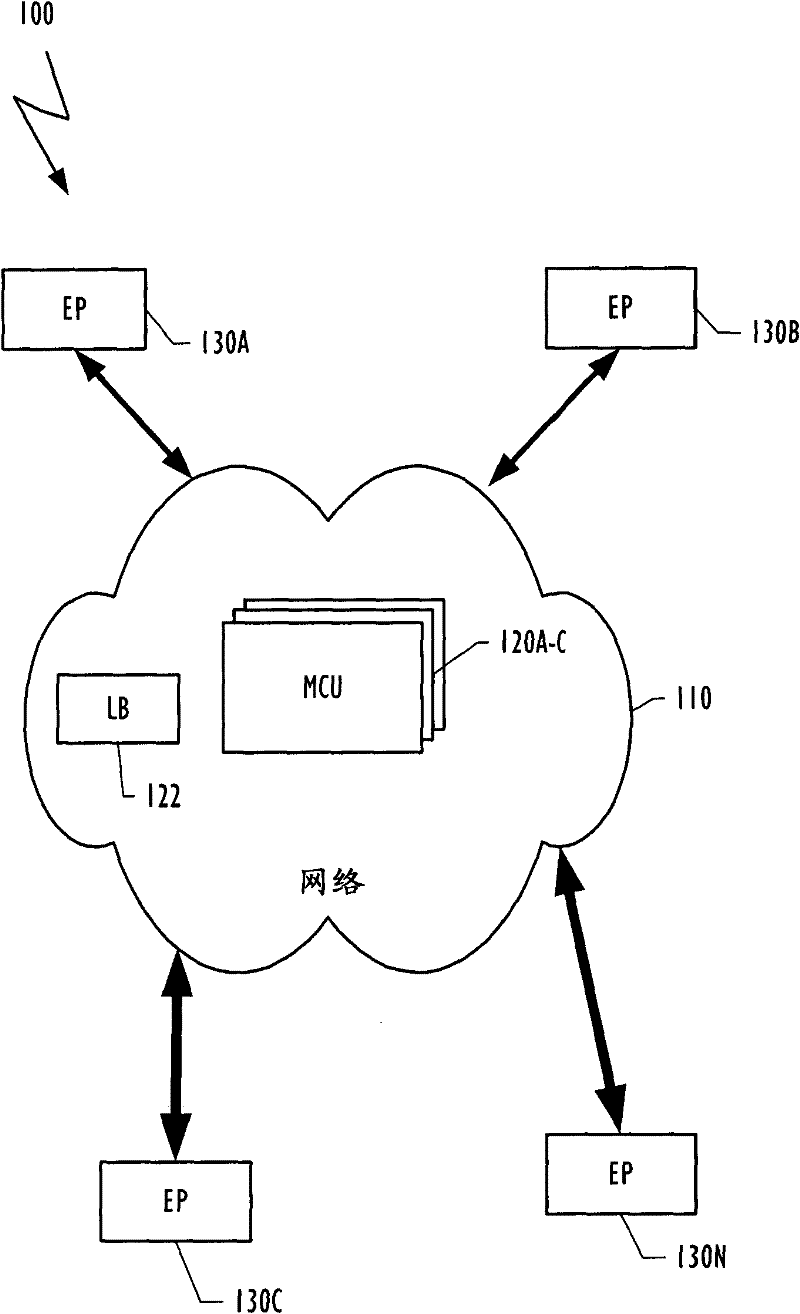

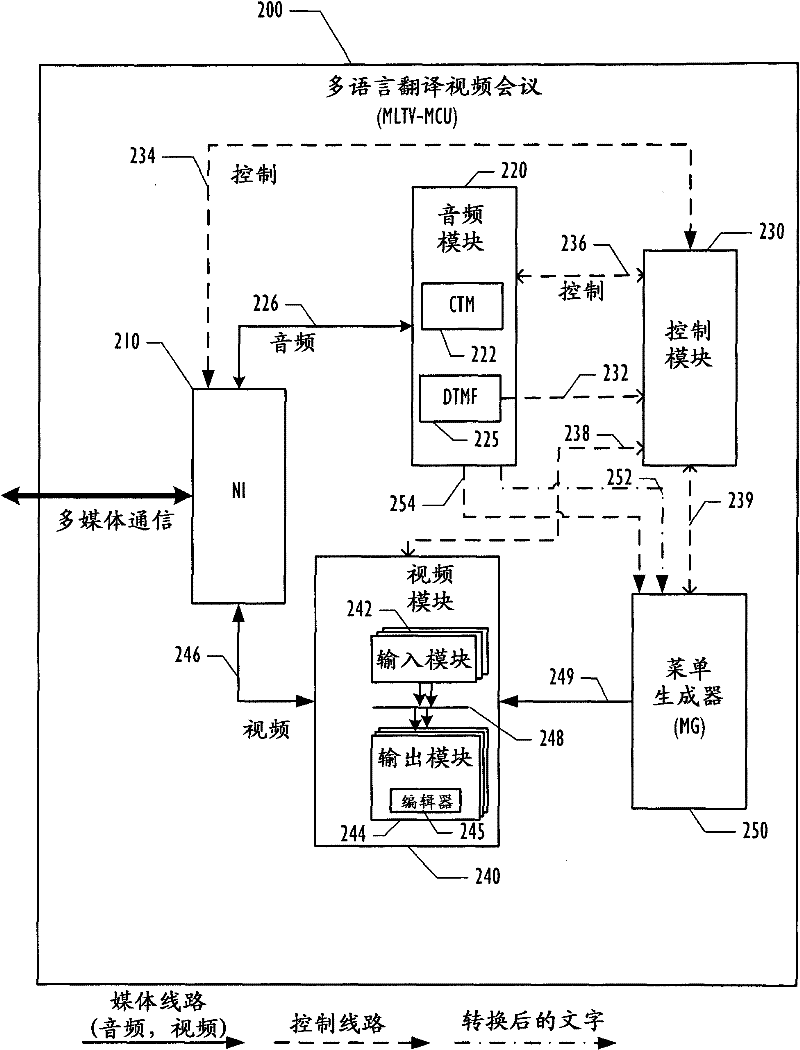

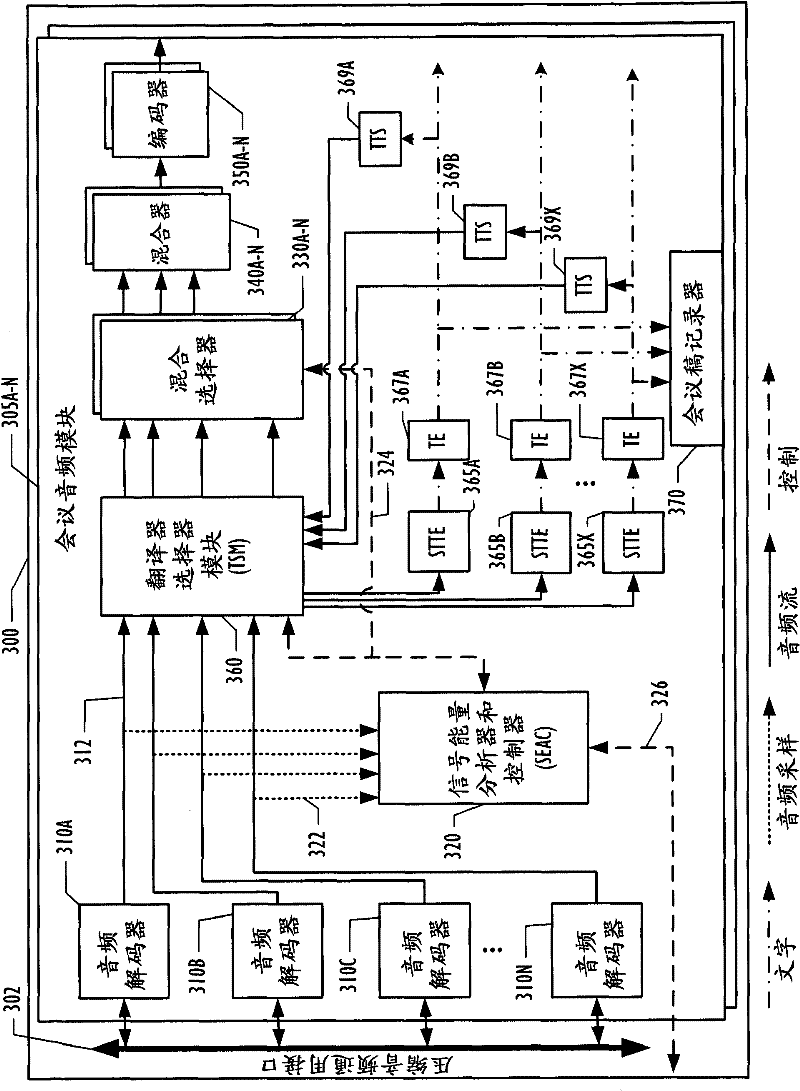

Method and System for Adding Translation in a Videoconference

Inactive | US20110246172A1 | Enabling synchronization | Quality improvement | Natural language translation | Television conference systems | Speech sound | Human language

A multilingual multipoint videoconferencing system provides real-time translation of speech by conferees. Audio streams containing speech may be converted into text and inserted as subtitles into video streams. Speech may also be translated from one language to another, with the translated speech inserted into video streams as subtitles or replacing the original audio stream with speech in the other language generated by a text to speech engine. Different conferees may receive different translations of the same speech based on information provided by the conferees on desired languages.

Owner:POLYCOM INC

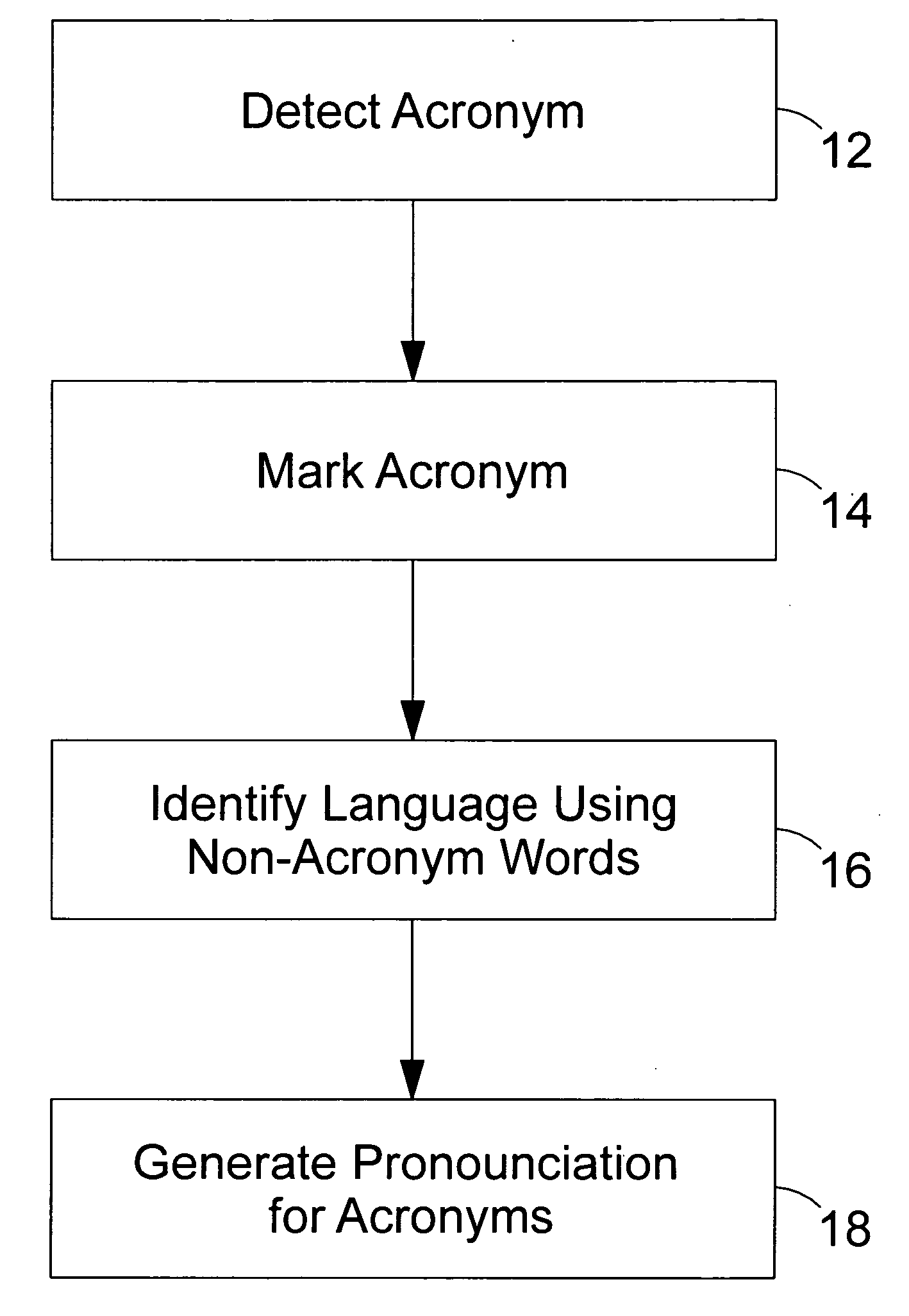

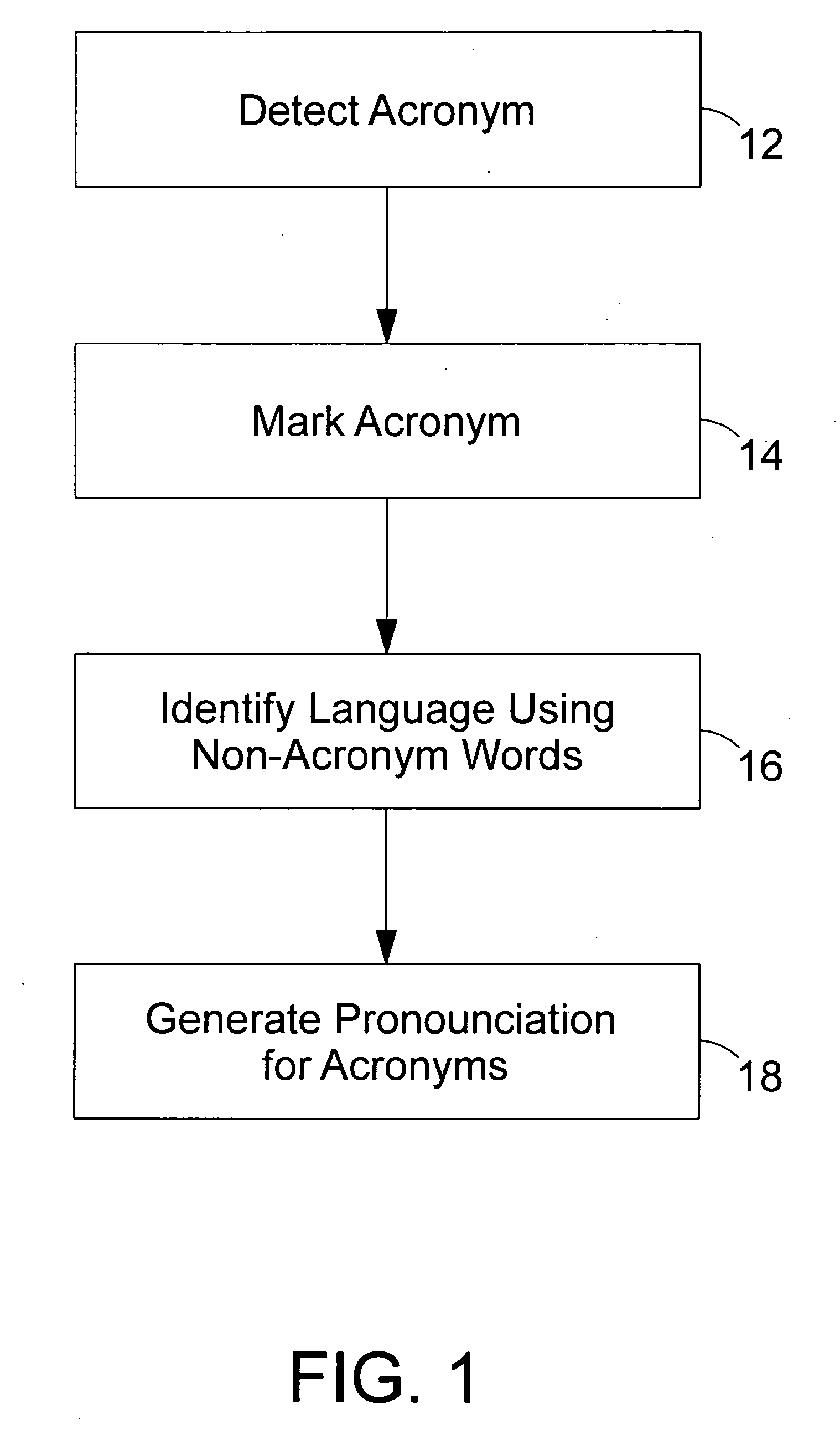

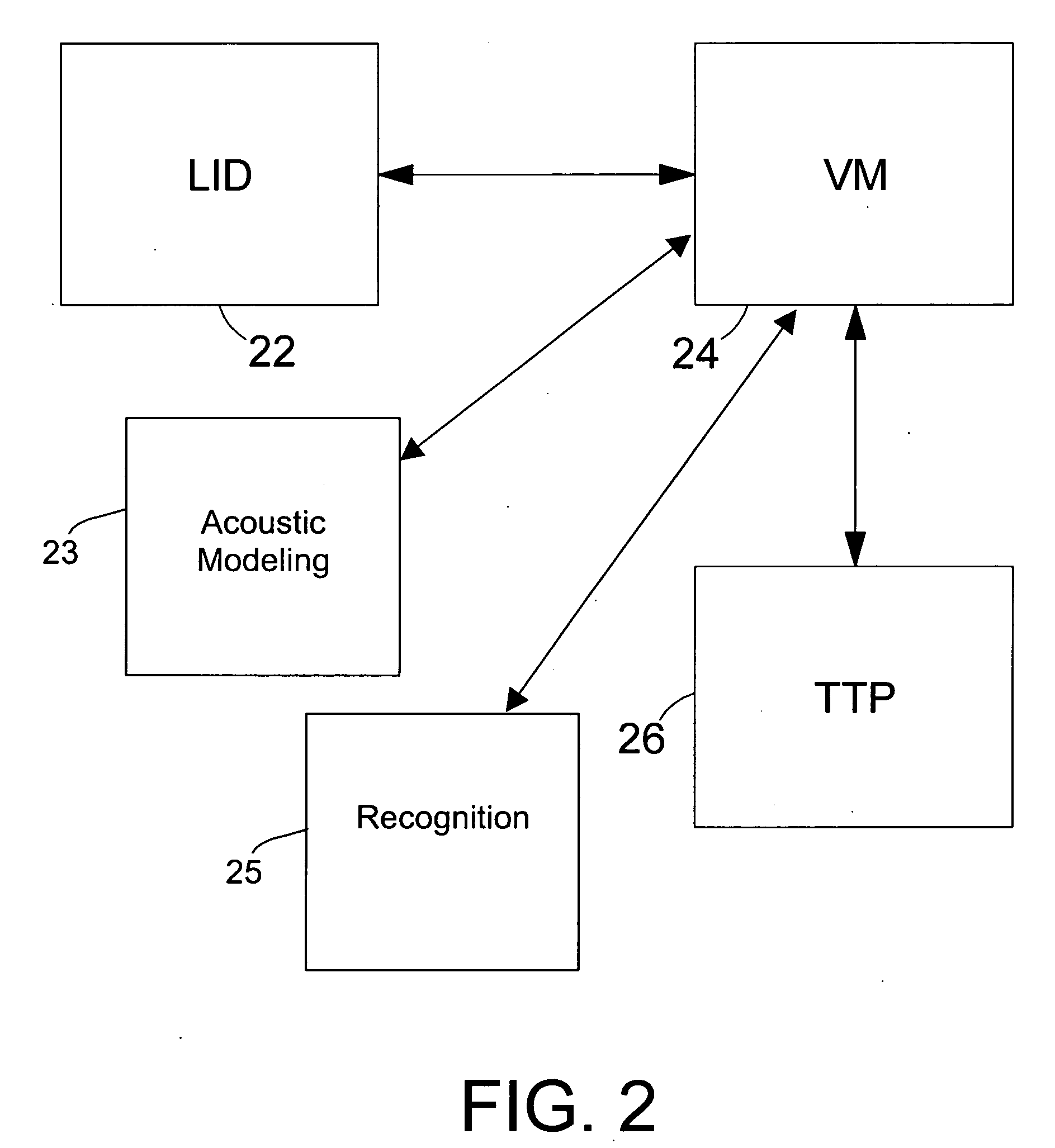

Handling of acronyms and digits in a speech recognition and text-to-speech engine

A method is disclosed for the detection of acronyms and digits and for finding the pronunciations for them. The method can be incorporated as part of an Automatic Speech Recognition (ASR) and Text-to-Speech (TTS) system. Moreover, the method can be part of Multi-Lingual Automatic Speech Recognition (ML-ASR) and TTS systems. The method of handling of acronyms in a speech recognition and text-to-speech system can include detecting an acronym from text, identifying a language of the text based on non-acronym words in the text, and utilizing the identified language in acronym pronunciation generation to generate a pronunciation for the detected acronym.

Owner:NOKIA CORP
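The three steps named in the abstract (detect the acronym, identify the language from the non-acronym words, generate a language-specific pronunciation) can be sketched as below. The regular expression, the tiny stop-word lists, and the letter-name tables are illustrative assumptions and cover only this example.

```python
import re
from typing import Dict, List, Set

ACRONYM = re.compile(r"\b[A-Z]{2,}\b")

# Tiny illustrative resources; real systems would use far larger ones.
STOPWORDS: Dict[str, Set[str]] = {
    "en": {"the", "is", "at", "of"},
    "de": {"der", "die", "das", "ist", "und"},
}
LETTER_NAMES: Dict[str, Dict[str, str]] = {
    "en": {"B": "bee", "M": "em", "W": "double-u"},
    "de": {"B": "beh", "M": "em", "W": "veh"},
}


def detect_acronyms(text: str) -> List[str]:
    return ACRONYM.findall(text)


def identify_language(text: str) -> str:
    """Score each language by stop words among the non-acronym words."""
    words = [w.lower() for w in re.findall(r"[^\W\d_]+", text)
             if not ACRONYM.fullmatch(w)]
    scores = {lang: sum(w in stop for w in words)
              for lang, stop in STOPWORDS.items()}
    return max(scores, key=scores.get)


def pronounce(acronym: str, language: str) -> str:
    """Spell the acronym letter by letter in the identified language."""
    return " ".join(LETTER_NAMES[language][letter] for letter in acronym)


sentence = "Der BMW ist schnell"
acronyms = detect_acronyms(sentence)
language = identify_language(sentence)
spoken = pronounce(acronyms[0], language)
```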

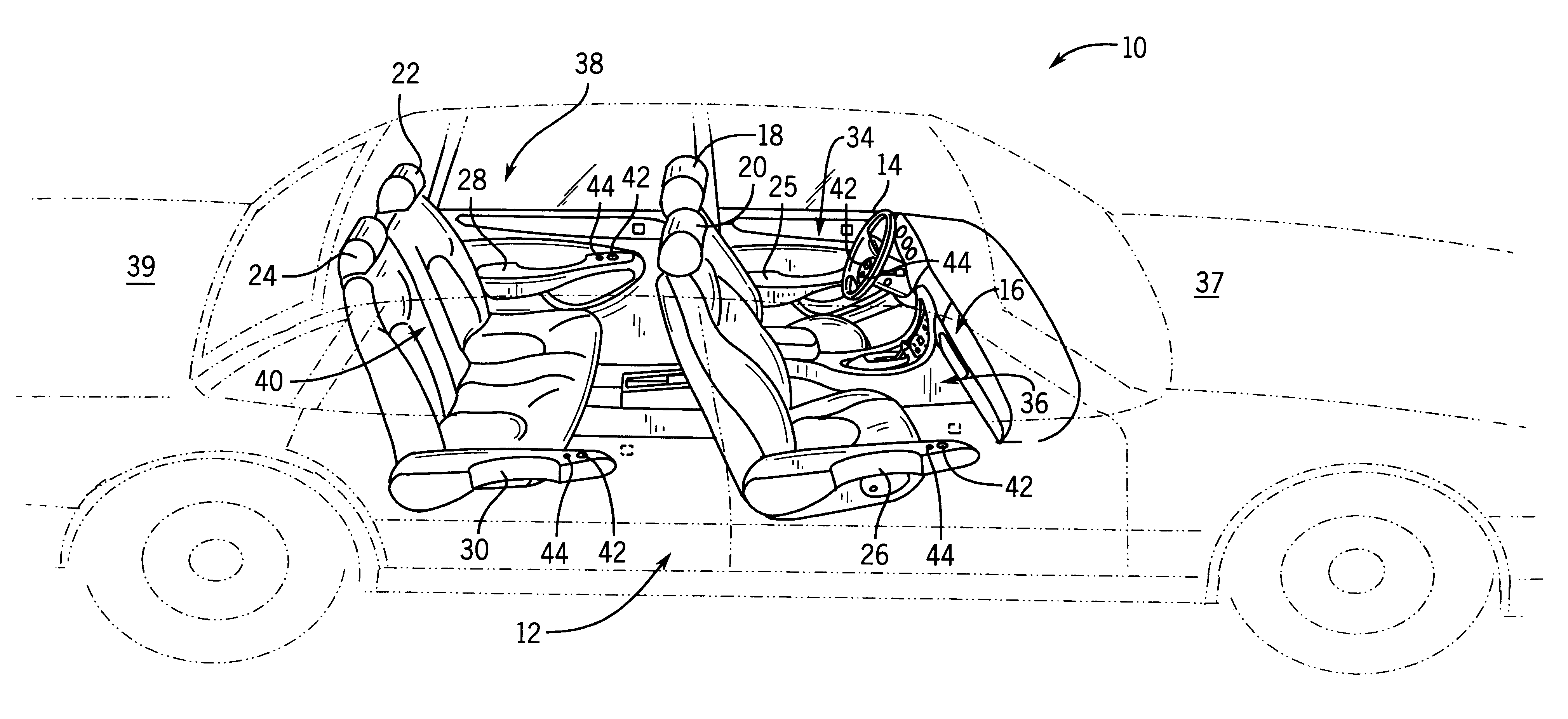

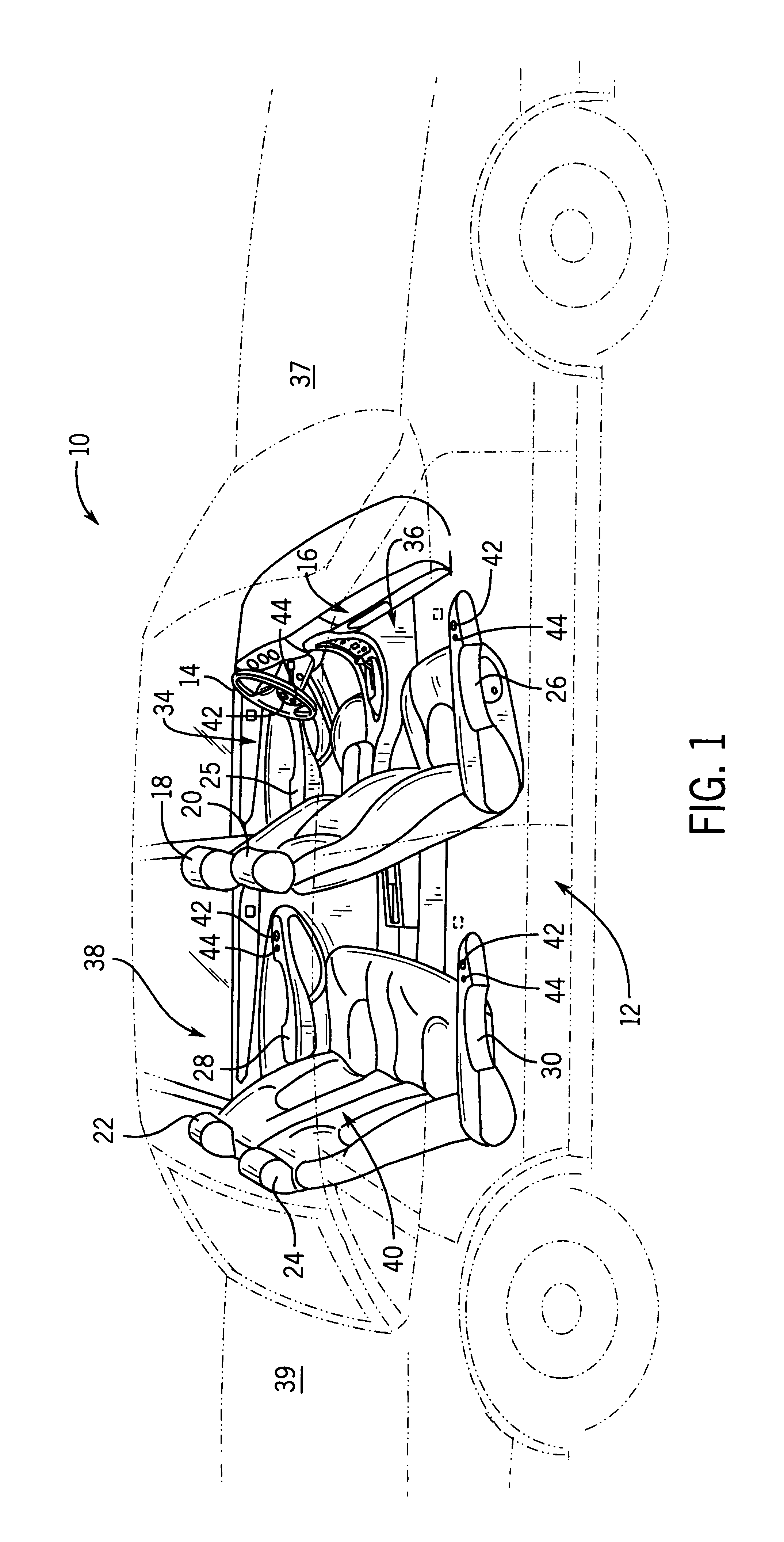

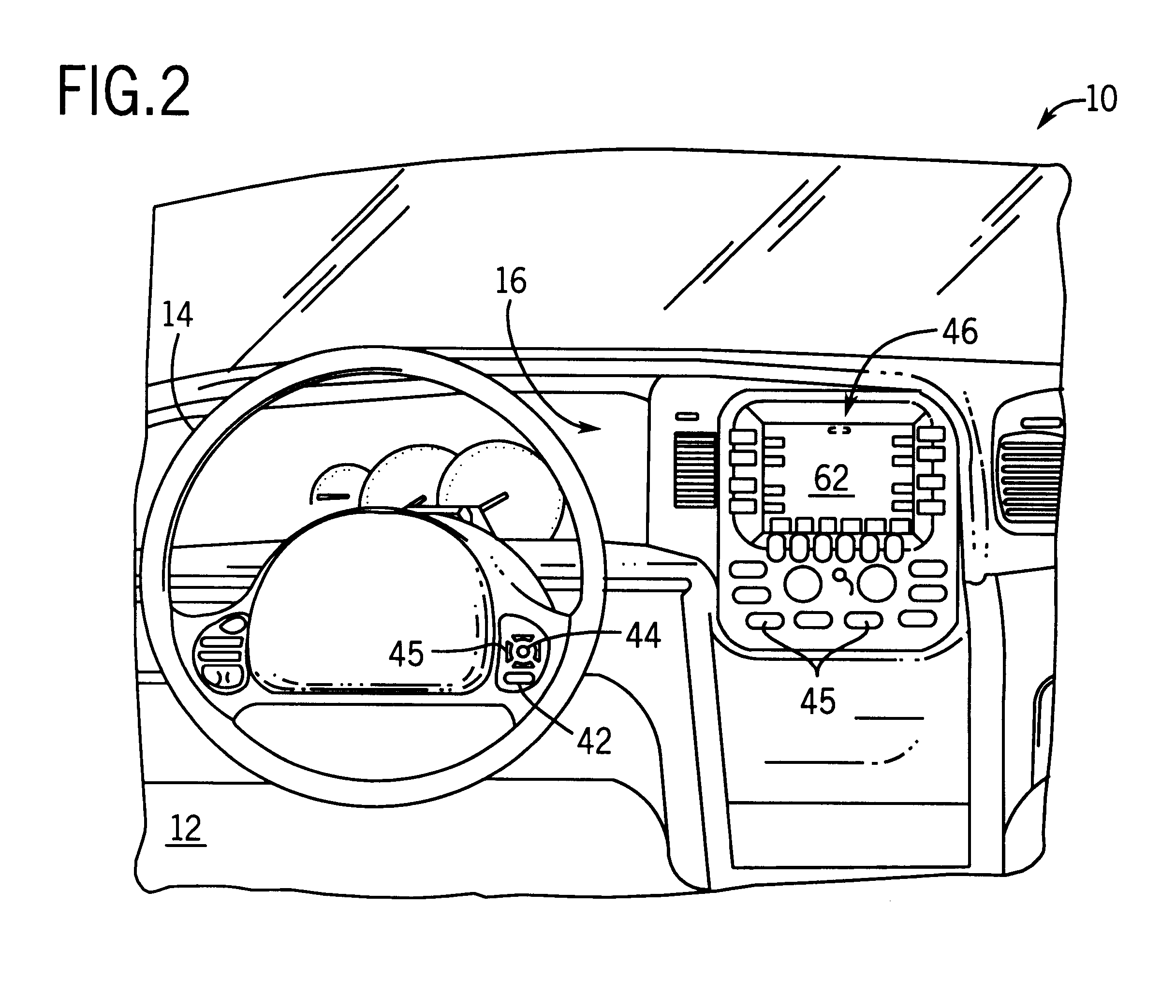

Method and apparatus for controlling multiple speech engines in an in-vehicle speech recognition system

Inactive | US6230138B1 | Accurately recognizing speech | Accurate identification | Air-treatment apparatus arrangements | Speech recognition | Speech Processor | On board

Disclosed herein is a method and apparatus for controlling a speech recognition system on board an automobile. The automobile has one or more voice activated accessories and a passenger cabin with a number of seating locations. The speech recognition system has a plurality of microphones and push-to-talk controls corresponding to the seating locations for inputting speech commands and location identifying signals, respectively. The speech recognition system also includes multiple speech engines recognizing speech commands for operating the voice activated accessories. A selector is coupled to the speech engines and push-to-talk controls for selecting the speech engine best suited for the current speaking location. A speech processor coupled to the speech engine selector is used to recognize the speech commands and transmit the commands to the voice activated accessory.

Owner:VISTEON GLOBAL TECH INC
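A minimal sketch of the selector: the push-to-talk control identifies the seat, and that location picks which engine handles the utterance. Giving each seat its own command set is an assumption made to keep the example small; the abstract only says the selected engine is the one best suited to the speaking location. `ToyEngine` and the seat names are hypothetical.

```python
from typing import Dict, Optional, Set


class ToyEngine:
    """Stand-in for a speech engine tuned to one seating location."""
    def __init__(self, commands: Set[str]) -> None:
        self.commands = commands

    def recognize(self, utterance: str) -> Optional[str]:
        phrase = utterance.lower().strip()
        return phrase if phrase in self.commands else None


# One engine per seating location (seat names are illustrative).
ENGINES_BY_SEAT: Dict[str, ToyEngine] = {
    "driver": ToyEngine({"radio on", "defrost on"}),
    "rear_left": ToyEngine({"window down", "radio on"}),
}


def on_push_to_talk(seat: str, utterance: str) -> Optional[str]:
    """The push-to-talk signal selects the engine for that seat."""
    engine = ENGINES_BY_SEAT[seat]
    return engine.recognize(utterance)


driver_command = on_push_to_talk("driver", "Defrost on")
rear_command = on_push_to_talk("rear_left", "defrost on")
```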

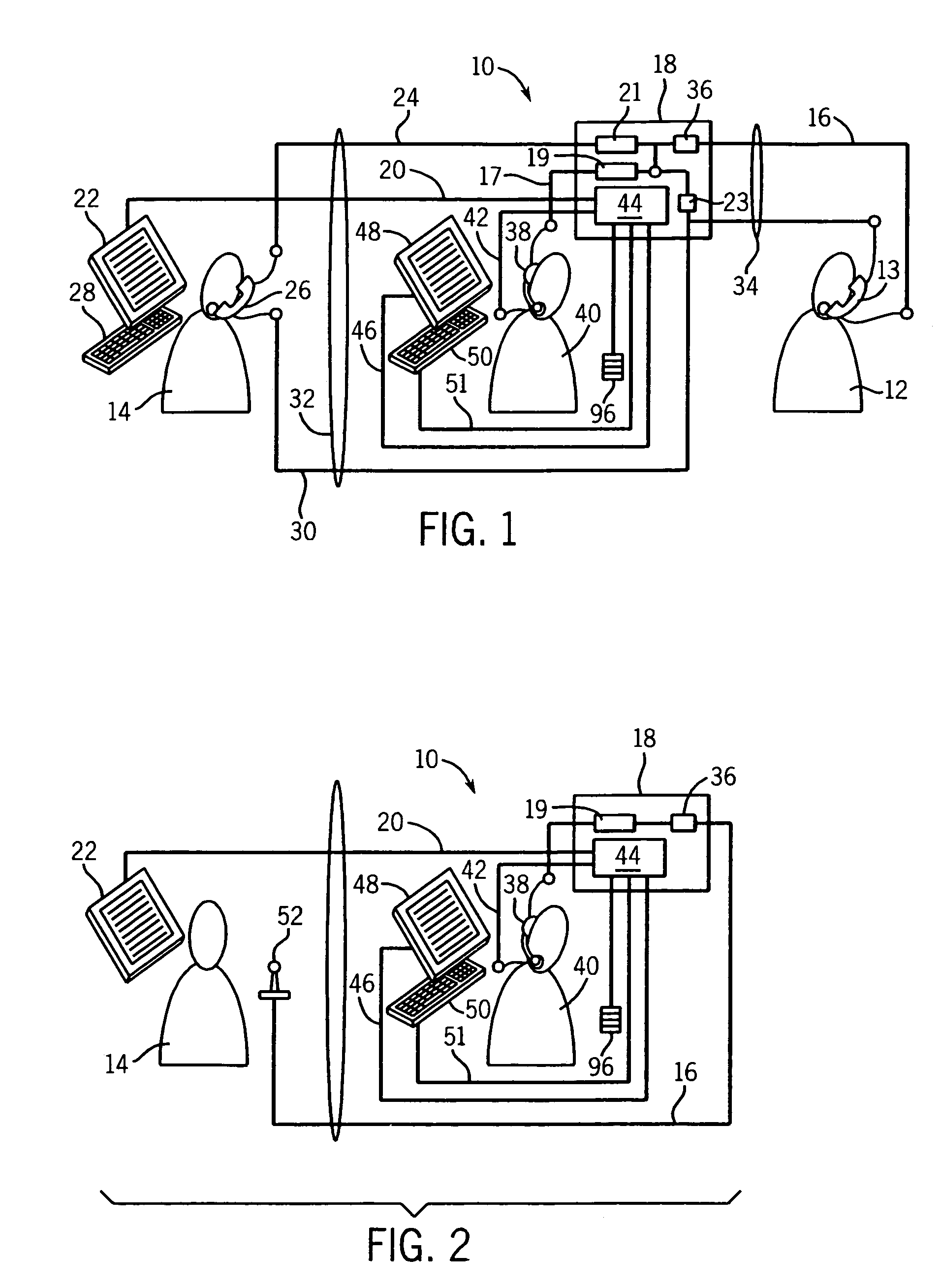

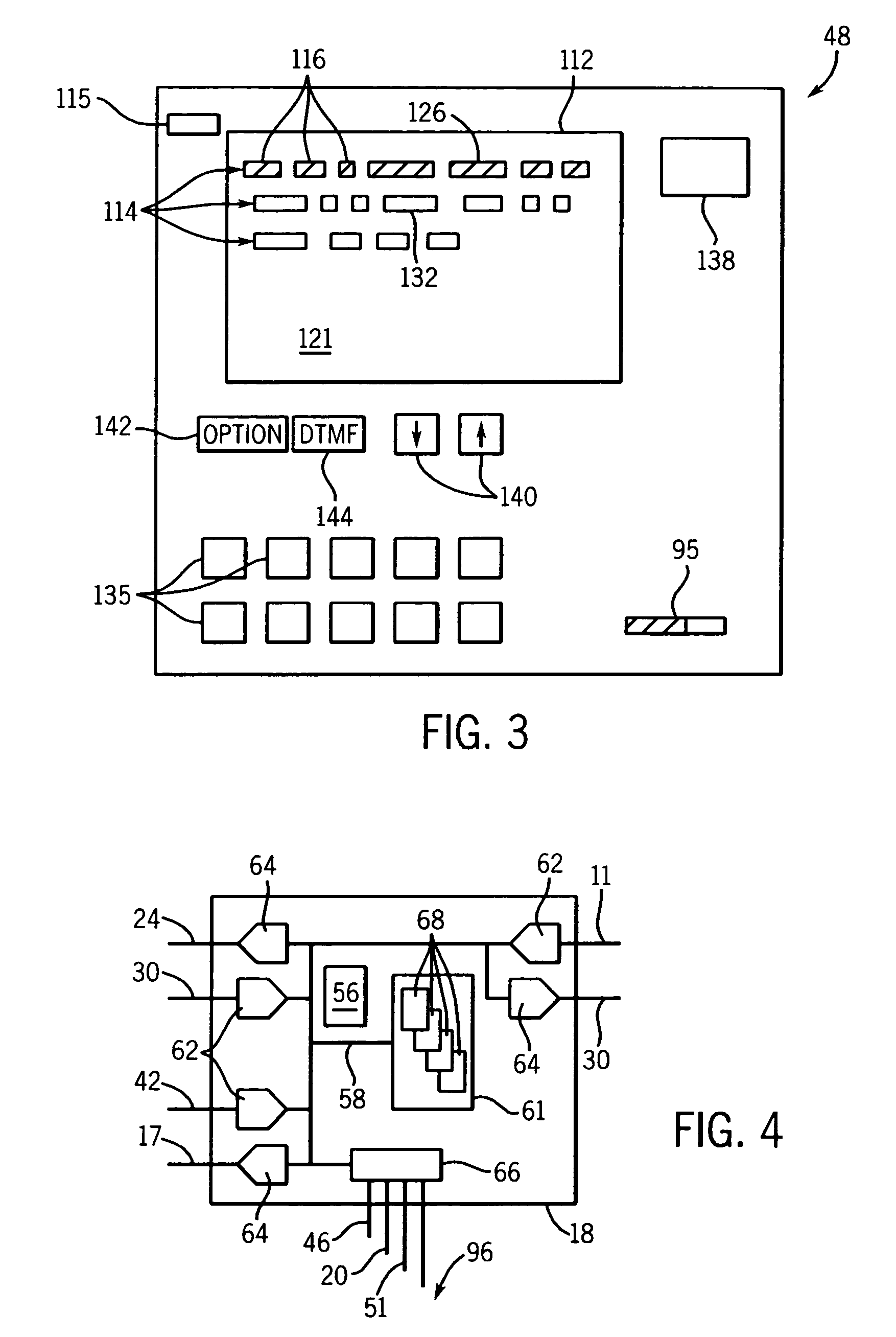

Real-time transcription correction system

Inactive | US7164753B2 | Simplify the editing process | Improve accuracy | Special service for subscribers | Automatic call-answering/message-recording/conversation-recording | Spoken language | Common word

A voice transcription system employing a speech engine to transcribe spoken words, detects the spelled entry of words via keyboard or voice to invoke a database of common words attempting to complete the word before all the letters have been input. This database is separate from the database of words used by the speech engine. A voice level indicator is presented to the operator to help the operator keep his or her voice in the ideal range of the speech engine.

Owner:ULTRATEC INC

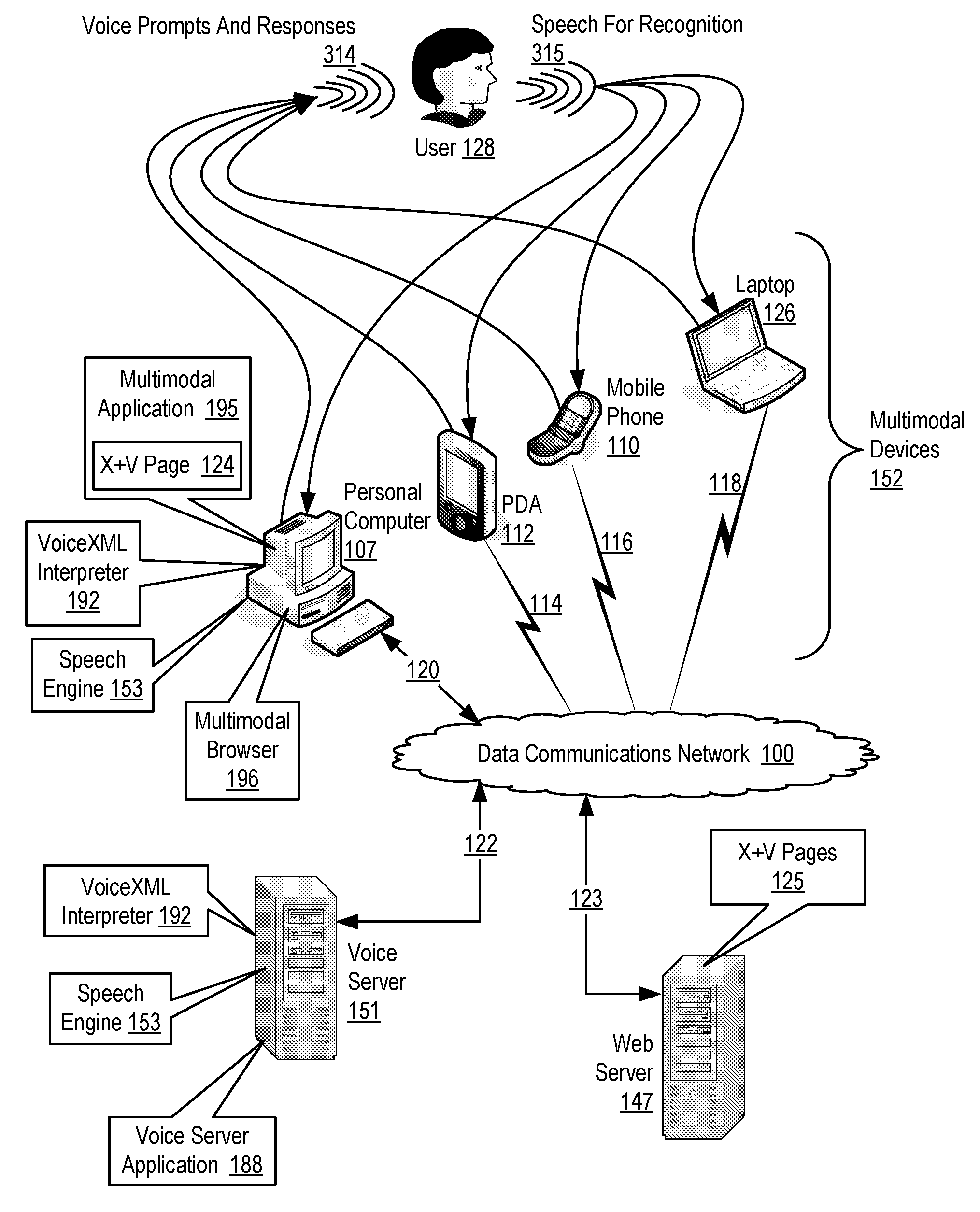

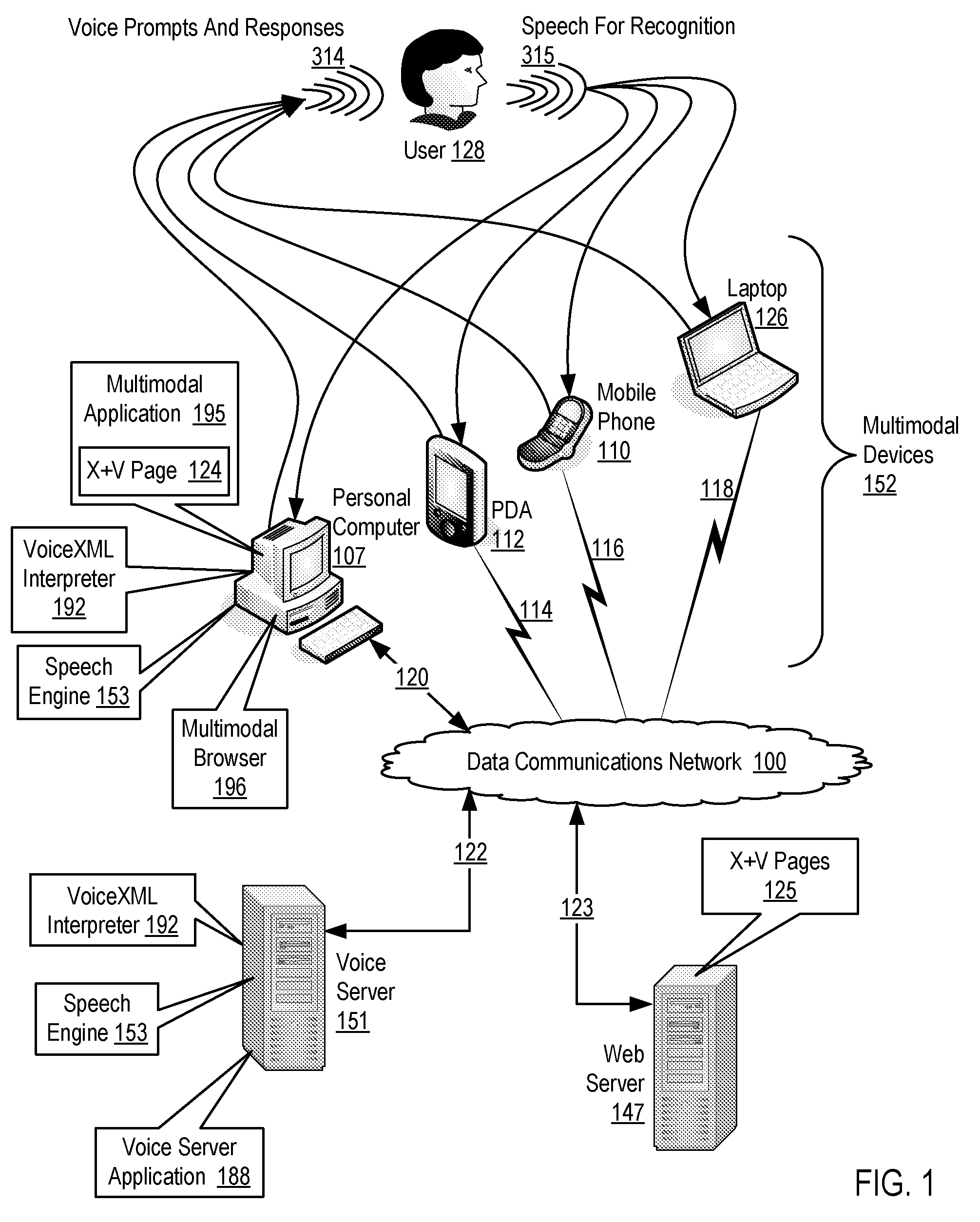

Providing Expressive User Interaction With A Multimodal Application

Methods, apparatus, and products are disclosed for providing expressive user interaction with a multimodal application, the multimodal application operating in a multimodal browser on a multimodal device supporting multiple modes of user interaction including a voice mode and one or more non-voice modes, the multimodal application operatively coupled to a speech engine through a VoiceXML interpreter, including: receiving, by the multimodal browser, user input from a user through a particular mode of user interaction; determining, by the multimodal browser, user output for the user in dependence upon the user input; determining, by the multimodal browser, a style for the user output in dependence upon the user input, the style specifying expressive output characteristics for at least one other mode of user interaction; and rendering, by the multimodal browser, the user output in dependence upon the style.

Owner:NUANCE COMM INC

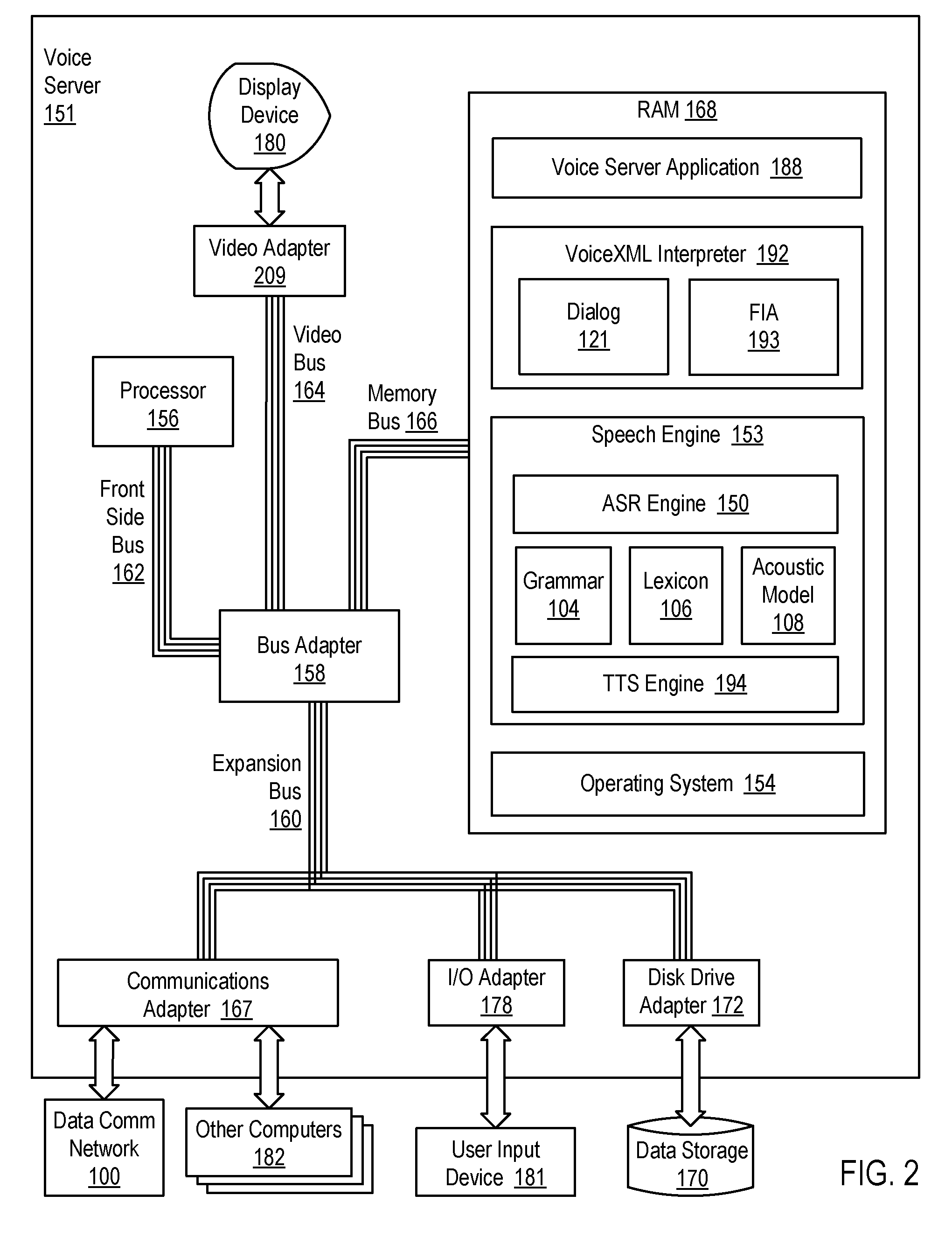

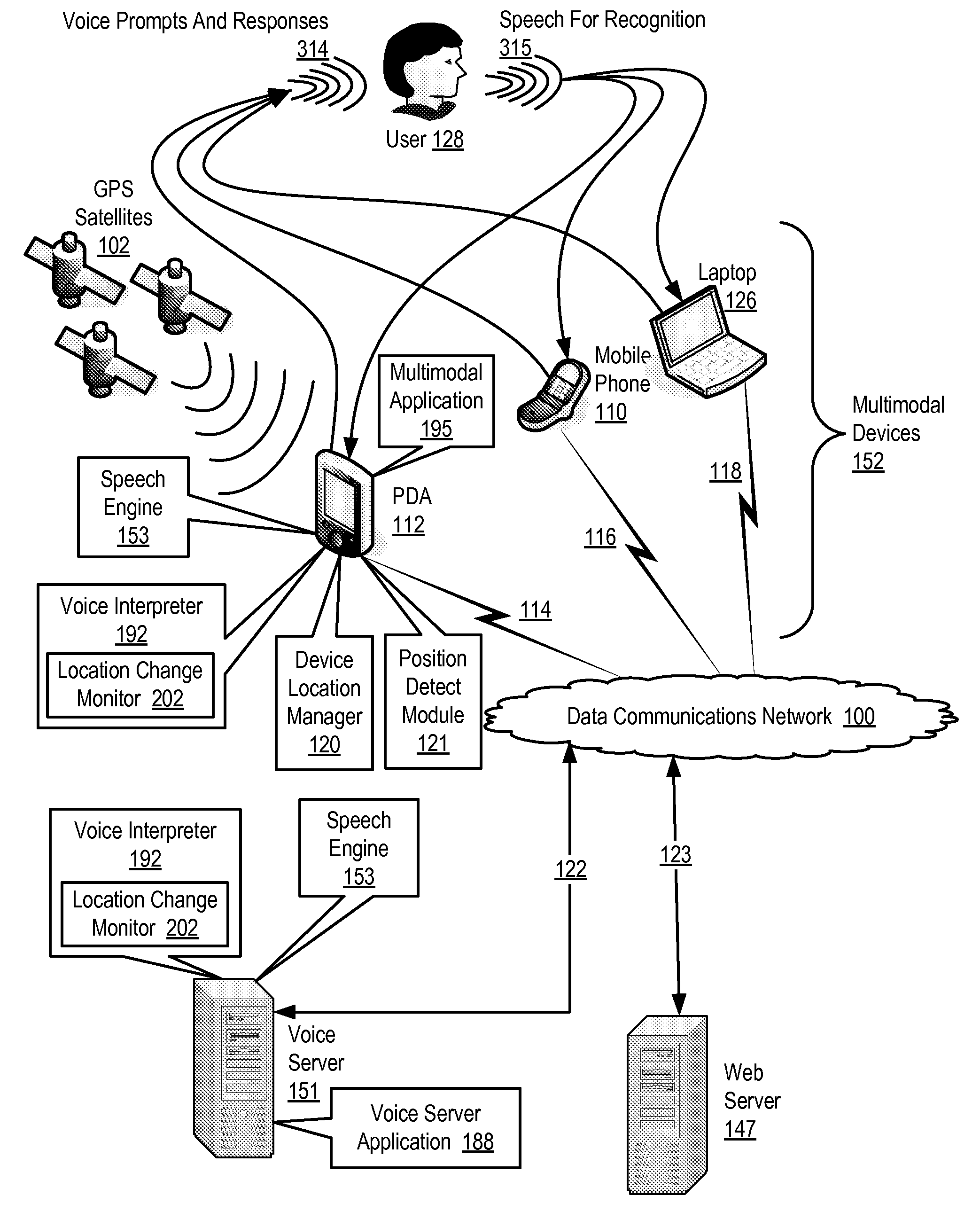

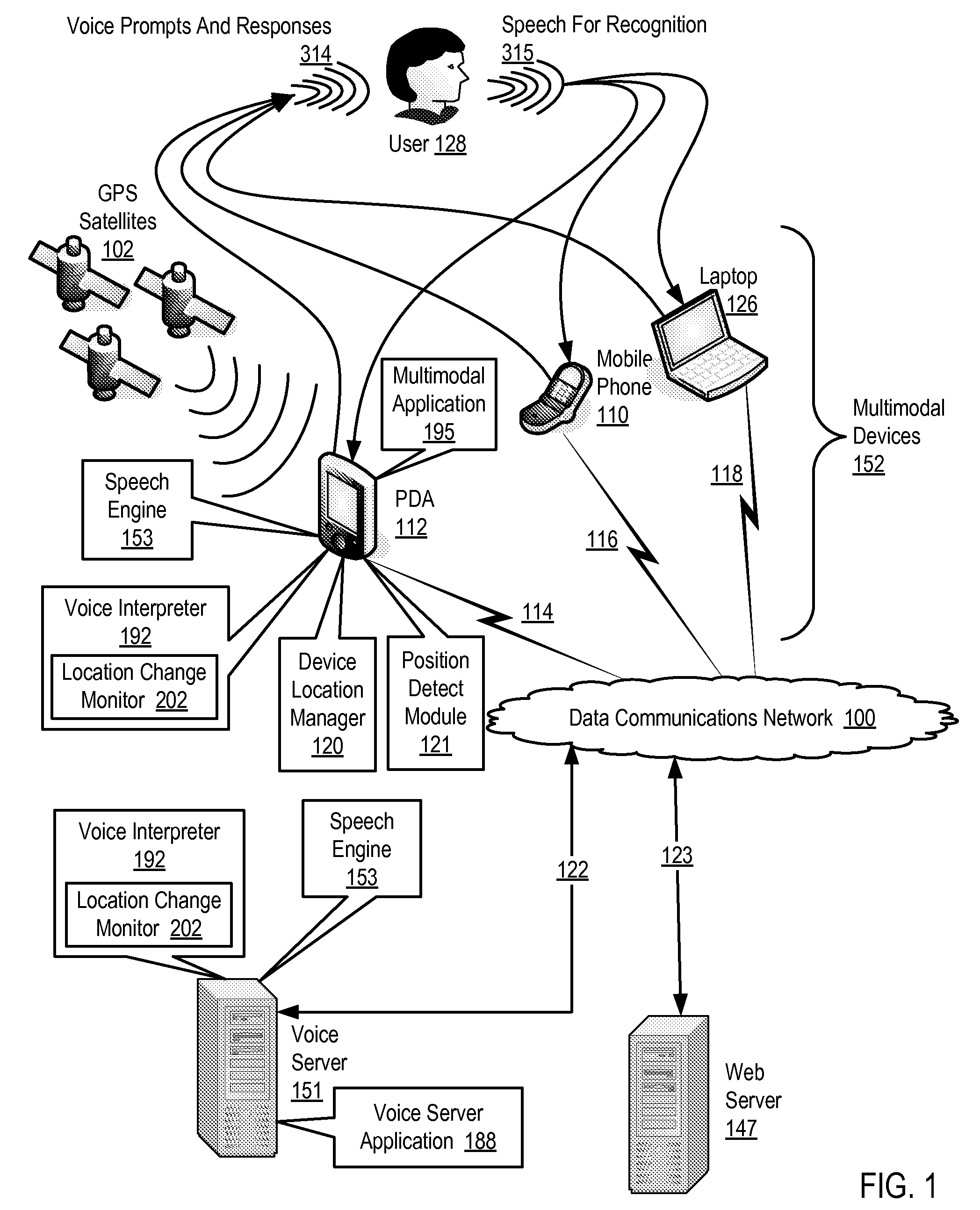

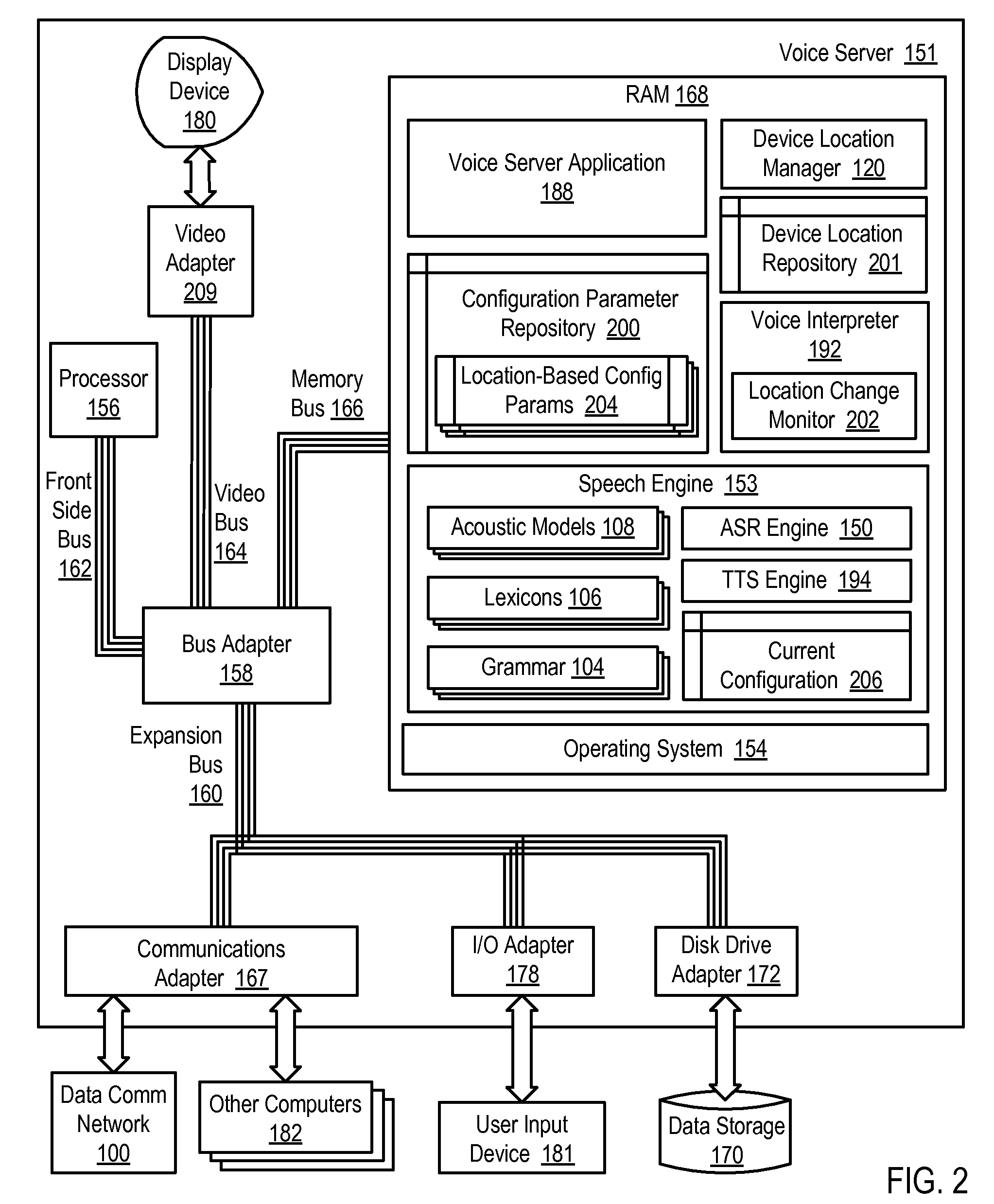

Configuring A Speech Engine For A Multimodal Application Based On Location

Methods, apparatus, and products are disclosed for configuring a speech engine for a multimodal application based on location. The multimodal application operates on a multimodal device supporting multiple modes of user interaction with the multimodal application. The multimodal application is operatively coupled to a speech engine. Configuring a speech engine for a multimodal application based on location includes: receiving a location change notification in a location change monitor from a device location manager, the location change notification specifying a current location of the multimodal device; identifying, by the location change monitor, location-based configuration parameters for the speech engine in dependence upon the current location of the multimodal device, the location-based configuration parameters specifying a configuration for the speech engine at the current location; and updating, by the location change monitor, a current configuration for the speech engine according to the identified location-based configuration parameters.

Owner:NUANCE COMM INC

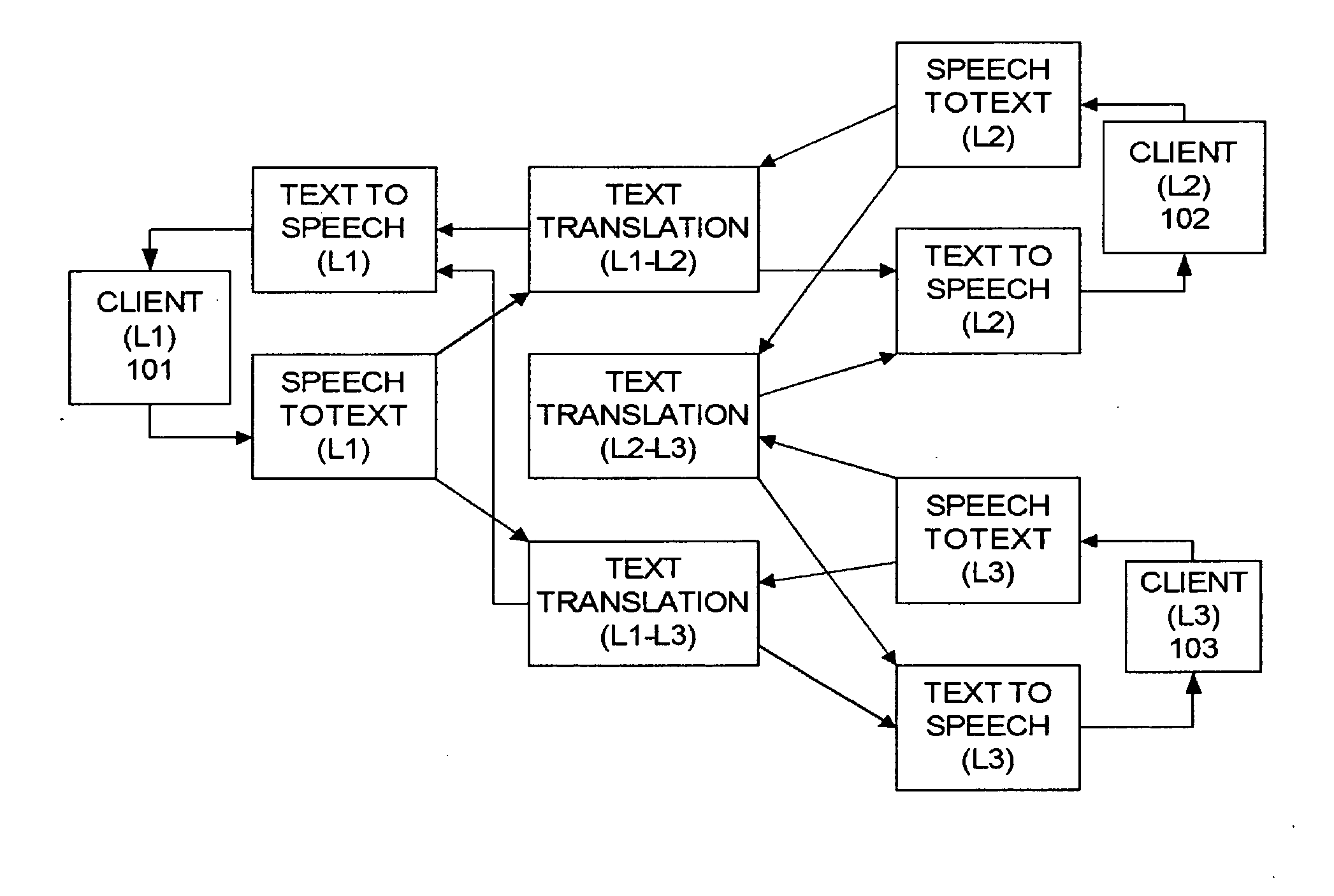

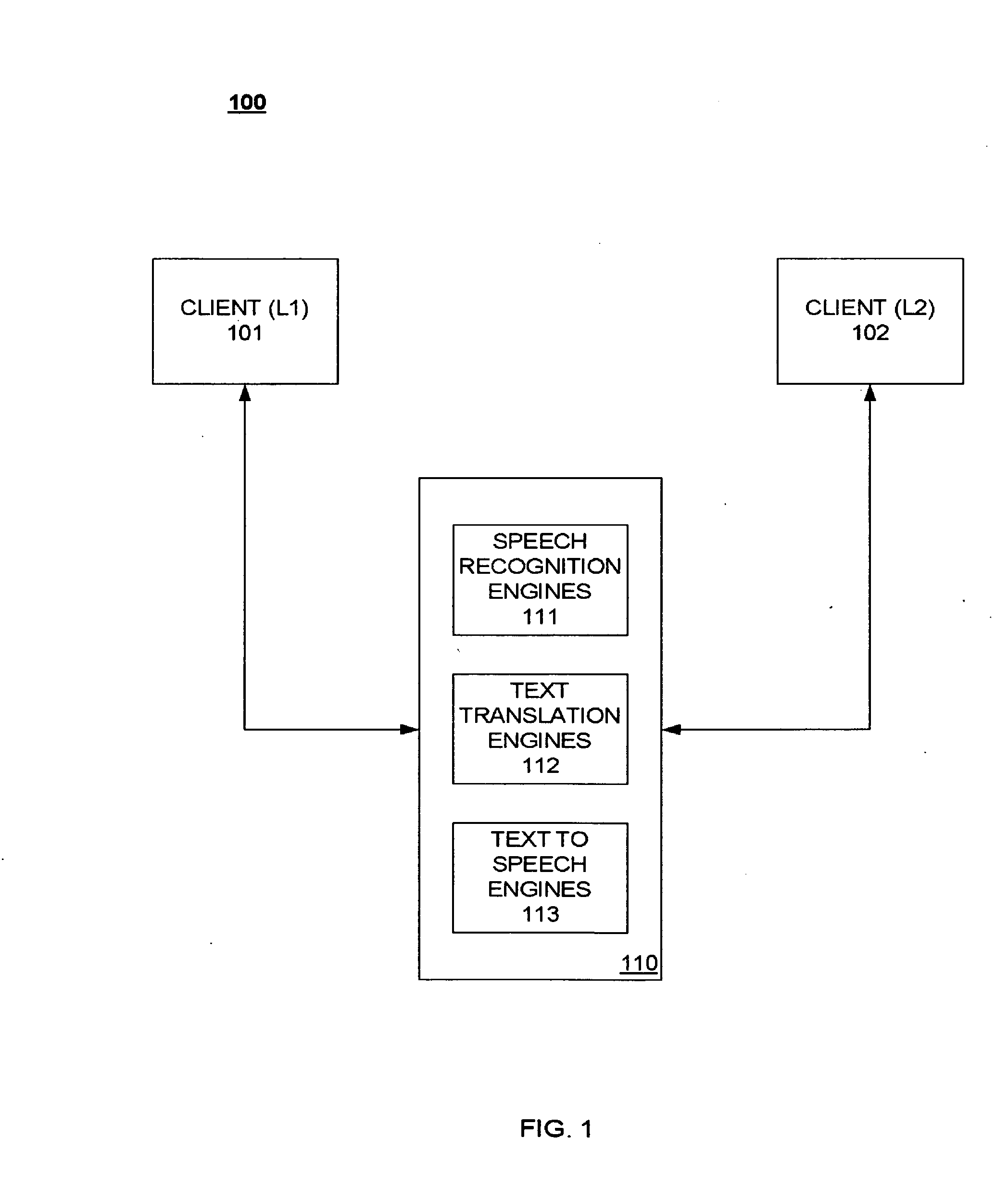

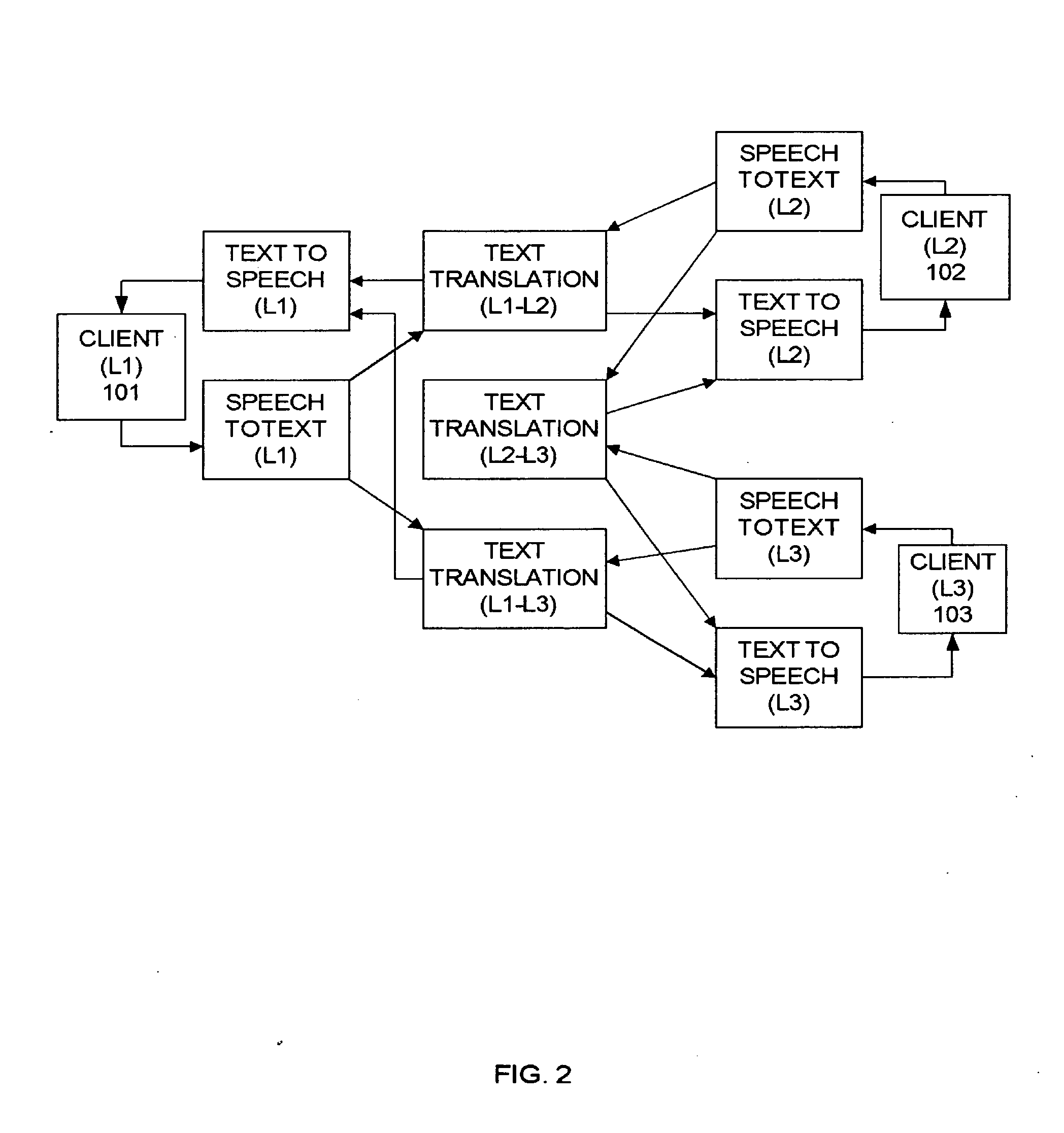

Method and apparatus for translating speech during a call

Inactive | US20100121629A1 | Improve abilities | Natural language translation | Complete banking machines | Client-side | Speech sound

A translation platform allows a client using a first language to communicate via translated voice and / or text to at least a second client using a second language. A control server uses various speech recognition engines, text translation engines and text to speech engines to accomplish real-time or near-real time translations.

Owner:COHEN SANFORD H

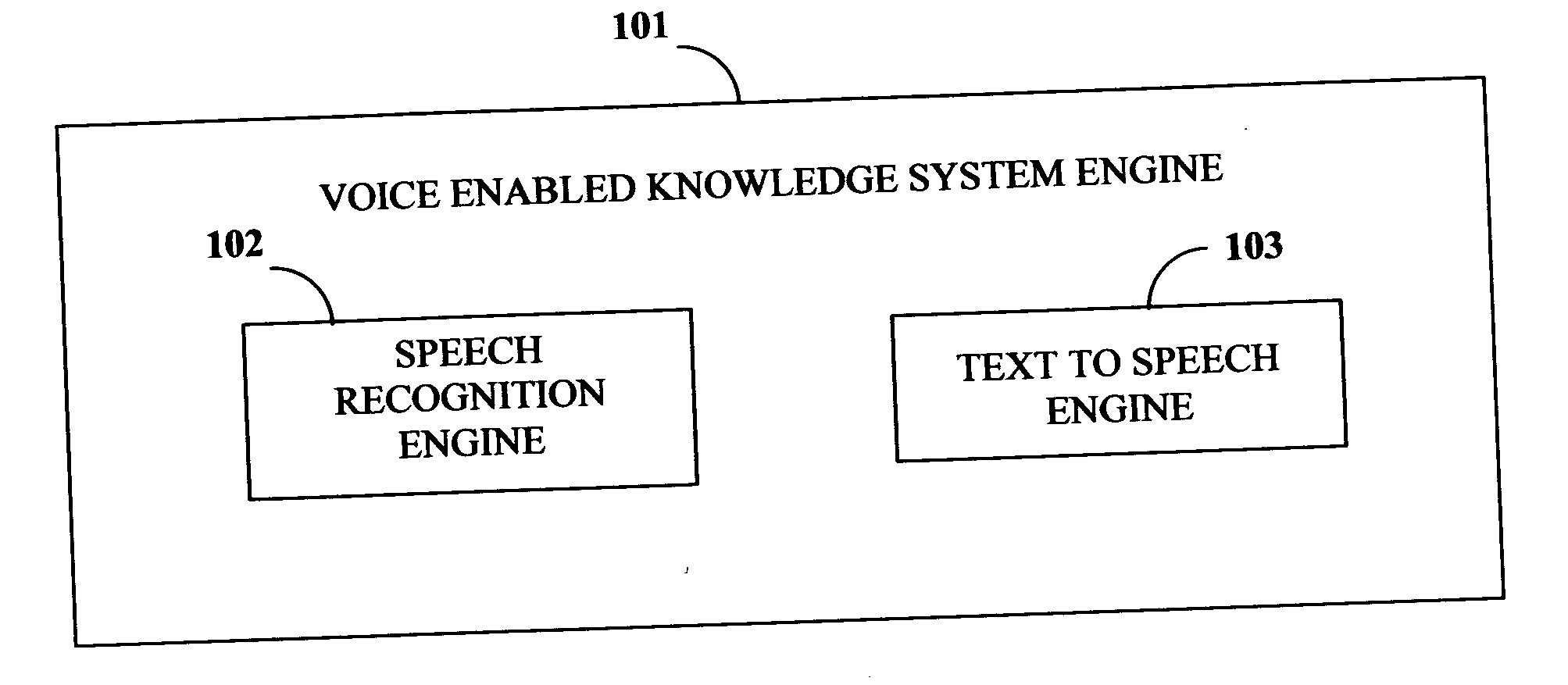

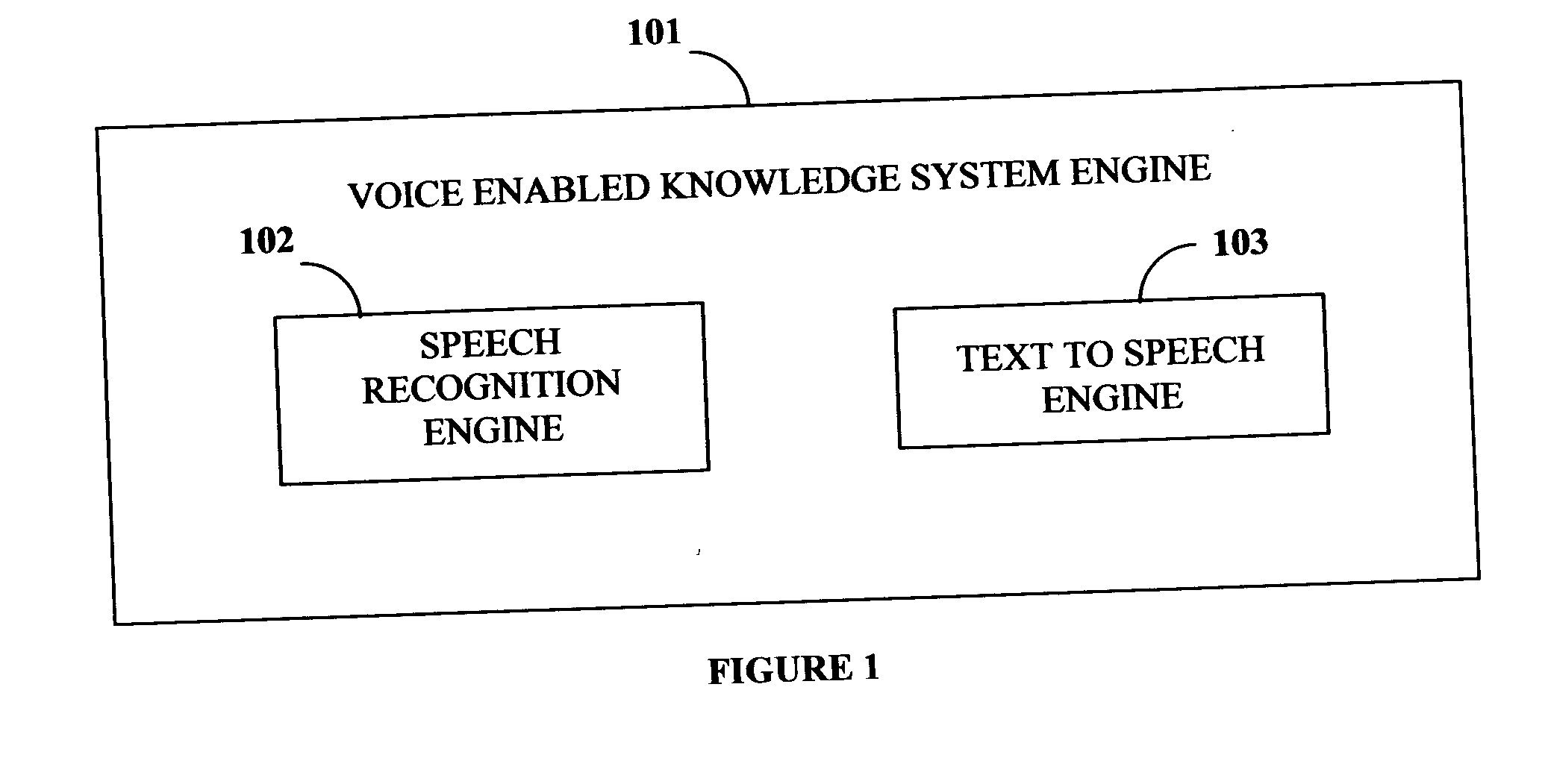

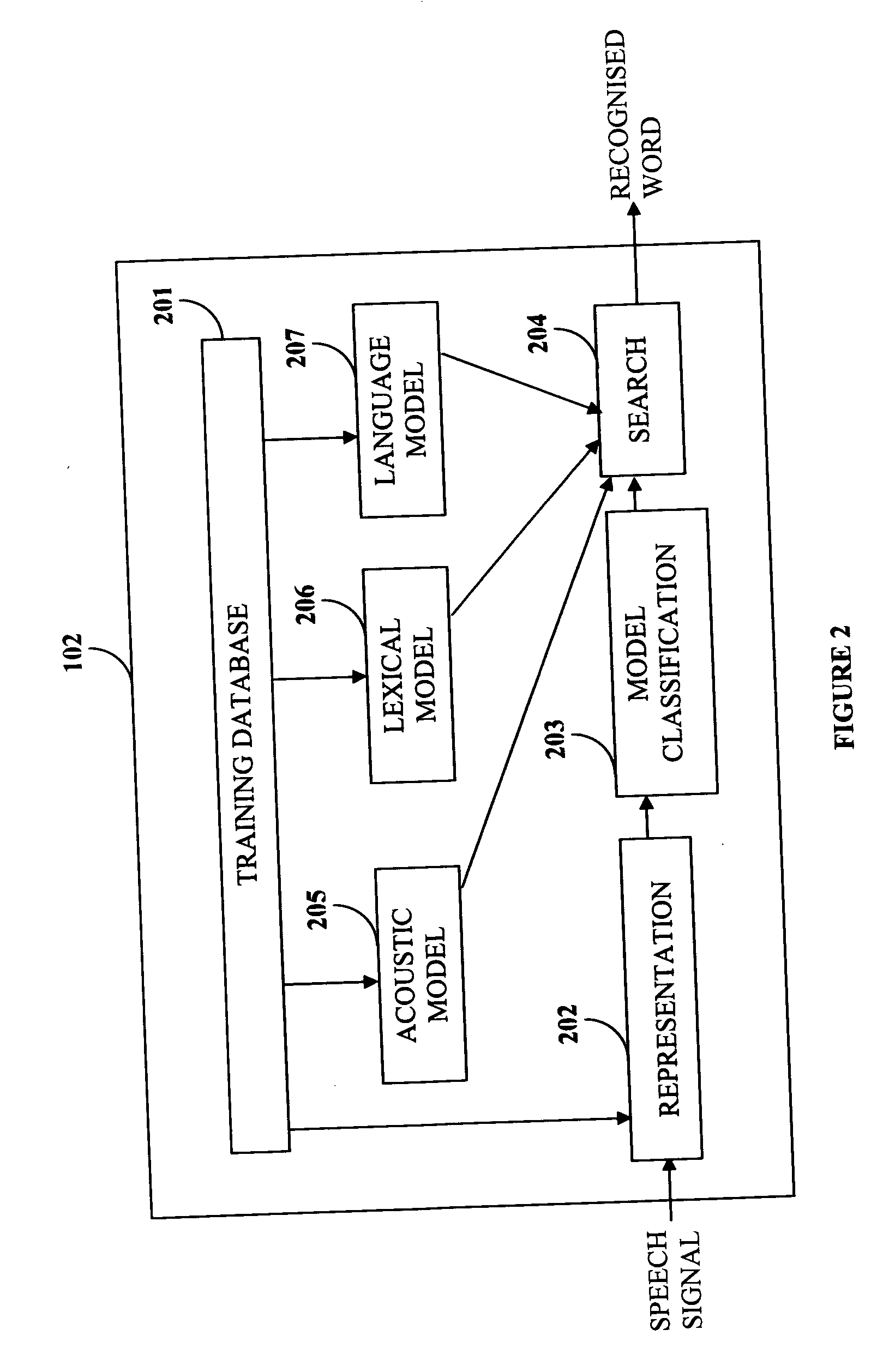

Voice enabled knowledge system

Inactive | US20070124142A1 | Convenient dictation | Speech recognition | Speech synthesis | Spoken language | Transformation of text

This invention discloses a voice enabled knowledge system, comprising a speech recognition engine and text to speech engine. The speech recognition engine further comprises a representation unit to represent the spoken words, a model classification unit to classify the spoken words, a training database to match the spoken words with preset words and a search unit to search for the spoken word in said training database, based on the results of said model classification. The text to speech engine for conversion of an input text to speech, comprises a text pre-processing unit for analyzing the input text in a sentence form, a prosody unit for word recognition using said acoustic model, a concatenation unit for converting the diphone equivalents into words and thereafter into a sentence and an audio output device for speech output.

Owner:MUKHERJEE SANTOSH KUMAR

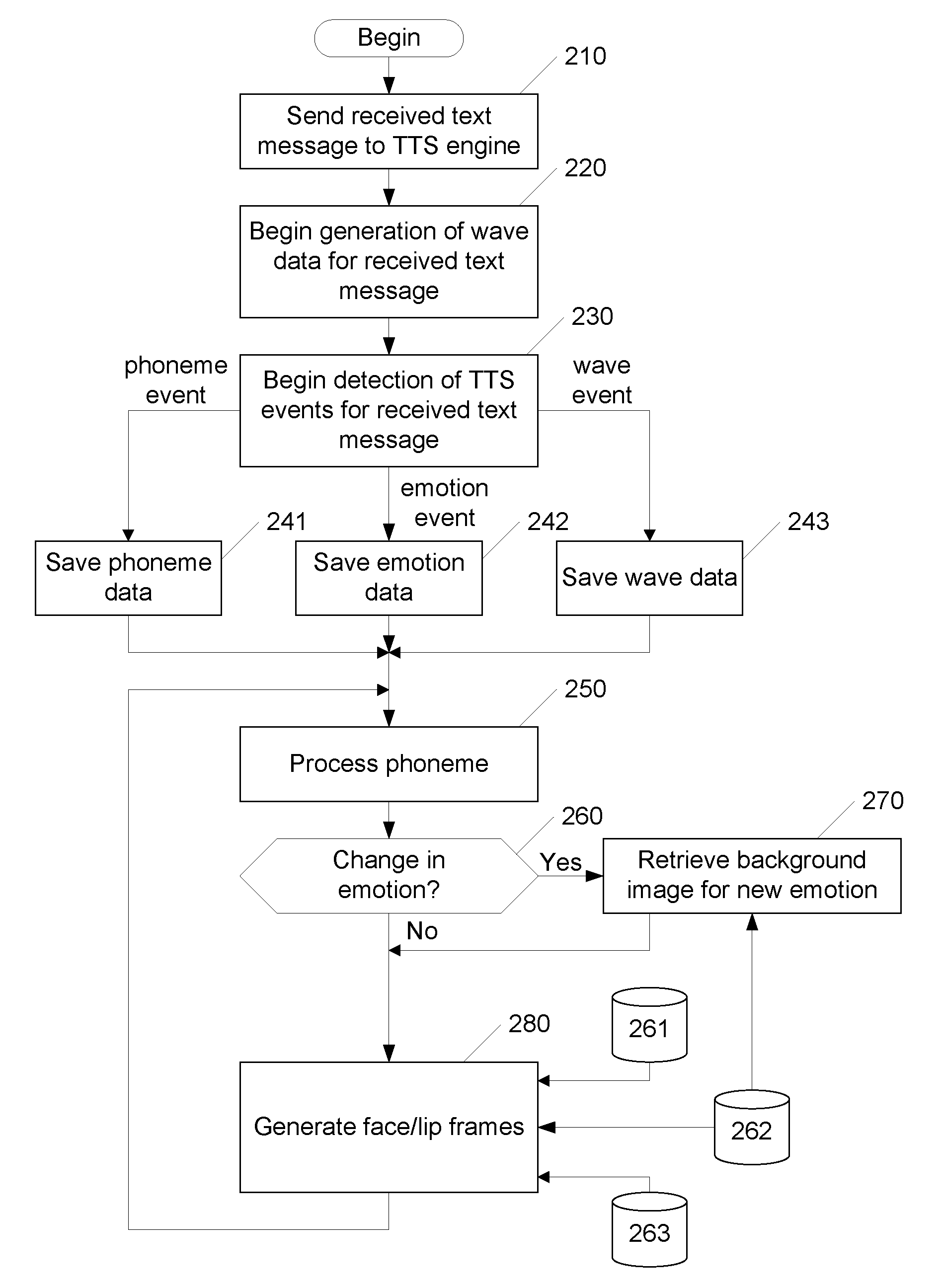

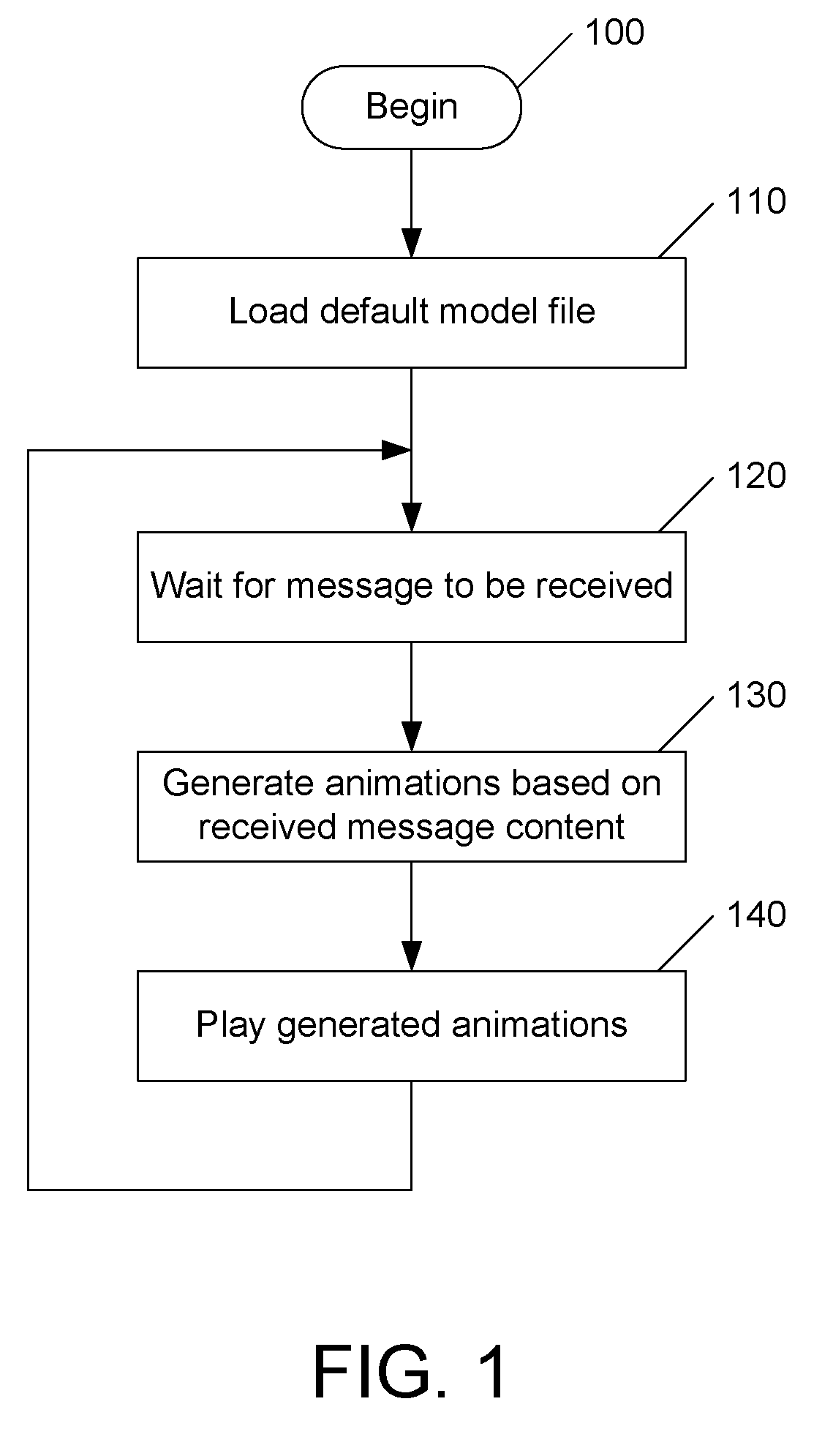

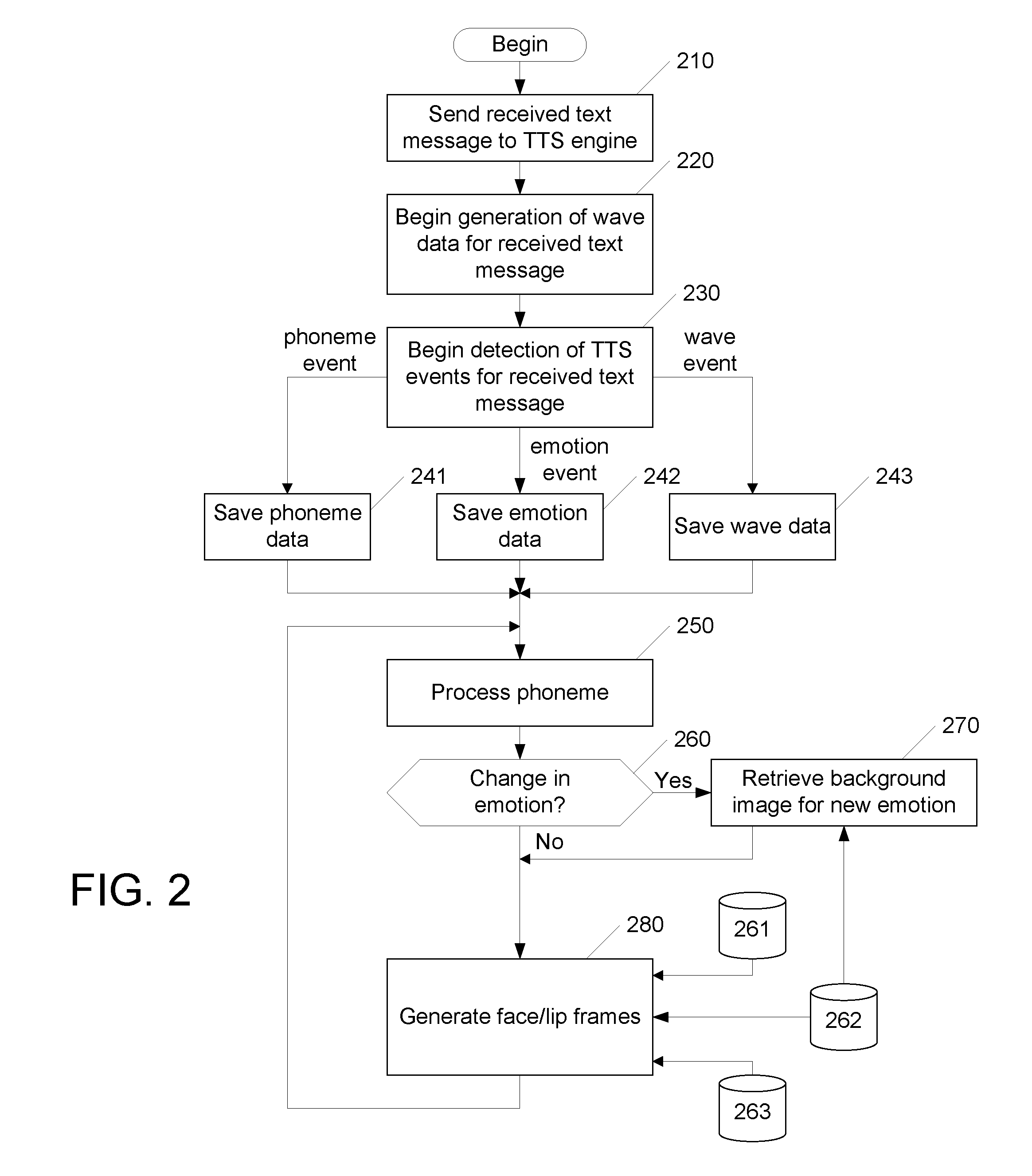

Image-based instant messaging system for providing expressions of emotions

Emotions can be expressed in the user interface for an instant messaging system based on the content of a received text message. The received text message is analyzed using a text-to-speech engine to generate phoneme data and wave data based on the text content. Emotion tags embedded in the message by the sender are also detected. Each emotion tag indicates the sender's intent to change the emotion being conveyed in the message. A mapping table is used to map phoneme data to viseme data. The number of face / lip frames required to represent viseme data is determined based on at least the length of the associated wave data. The required number of face / lip frames is retrieved from a stored set of such frames and used in generating an animation. The retrieved face / lip frames and associated wave data are presented in the user interface as synchronized audio / video data.

Owner:CERENCE OPERATING CO
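The phoneme-to-viseme mapping and the frame count derived from the wave data length can be sketched as follows. The mapping table entries, the 25 frames-per-second rate, and the "neutral" fallback are assumptions; emotion tags are not modeled.

```python
from typing import Dict, List

# Illustrative mapping table from phonemes to mouth shapes (visemes).
PHONEME_TO_VISEME: Dict[str, str] = {
    "p": "closed", "b": "closed", "m": "closed",
    "aa": "open", "iy": "spread", "uw": "rounded",
}
FRAME_RATE = 25  # assumed animation rate in frames per second


def frames_for(phoneme: str, wave_ms: int) -> List[str]:
    """Face/lip frames for one phoneme, sized by its audio duration."""
    viseme = PHONEME_TO_VISEME.get(phoneme, "neutral")
    # Integer ceiling of wave_ms * FRAME_RATE / 1000, with at least 1 frame.
    count = max(1, -(-wave_ms * FRAME_RATE // 1000))
    return [viseme] * count


def animate(segments: List[tuple]) -> List[str]:
    """Concatenate frames for a sequence of (phoneme, duration_ms) pairs."""
    frames: List[str] = []
    for phoneme, wave_ms in segments:
        frames.extend(frames_for(phoneme, wave_ms))
    return frames


animation = animate([("m", 80), ("aa", 100)])
```

Working in whole milliseconds keeps the frame count in integer arithmetic, which avoids off-by-one frames caused by floating-point rounding.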

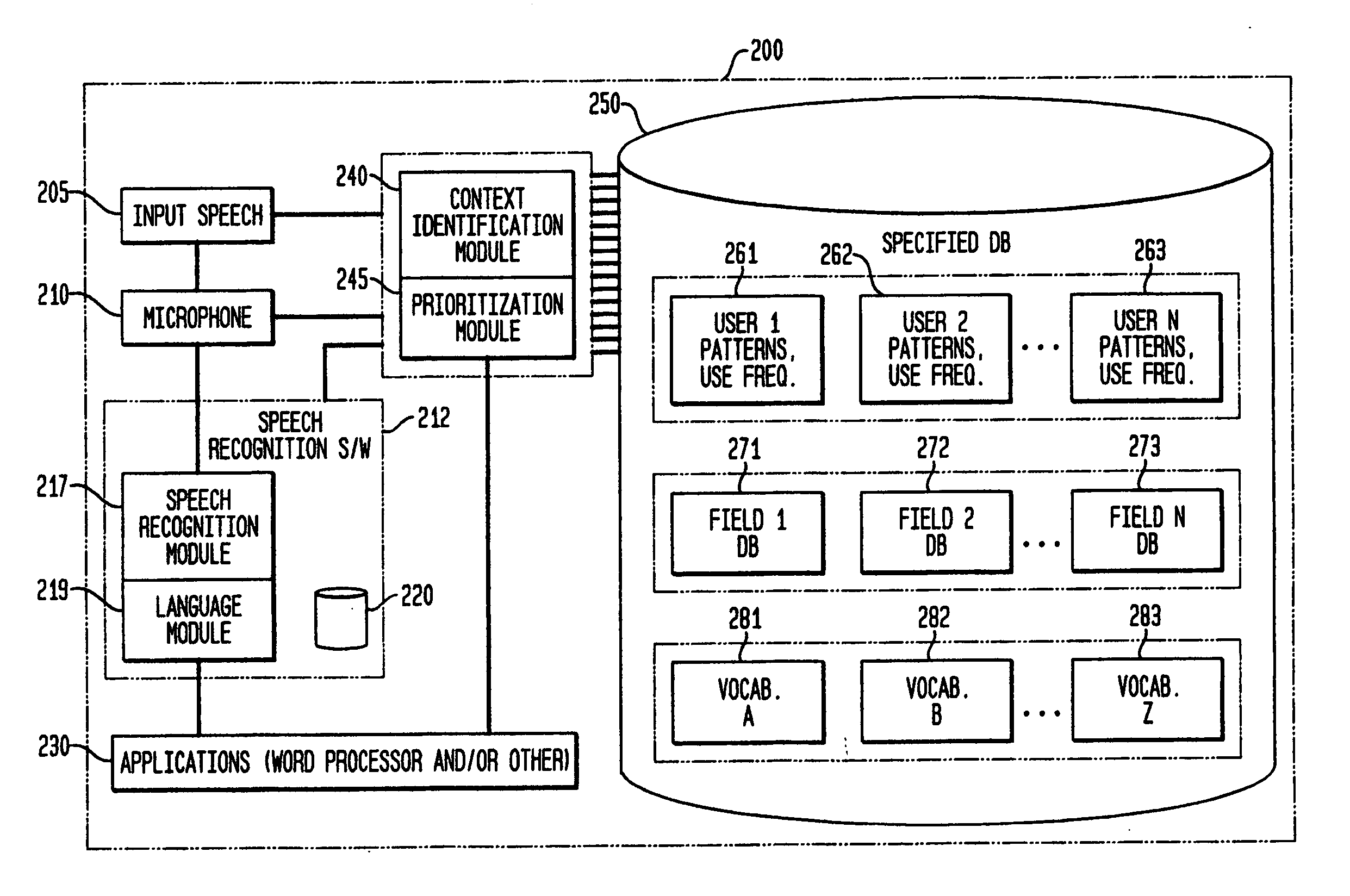

Method and apparatus for improving the transcription accuracy of speech recognition software

Active | US20070038449A1 | Improve accuracy | Improve speech recognition performance | Speech recognition | Digital data | Speech identification

A virtual vocabulary database is provided for use with a particular user database as part of a speech recognition system. Vocabulary elements within the virtual database are imported from the user database and are tagged to include numerical data corresponding to the historical use of the vocabulary element within the user database. For each speech input, potential vocabulary element matches from the speech recognition system are provided to the virtual database software which creates virtual sub-vocabularies from the criteria according to predefined criteria templates. The software then applies vocabulary element weighting adjustments according to the virtual sub-vocabulary weightings and applies the adjustment to the default weighting provided by the speech recognition system. The modified weightings are returned with the associated vocabulary elements to the speech engine for selection of an appropriate match to the input speech.

Owner:COIFMAN ROBERT E
