951 results about "Mouth shape" patented technology

Interactive virtual teacher system having intelligent error correction function

Inactive | CN102169642A | Make up for boring | Improve recognition rate | Electrical appliances | Personalization | Spoken language

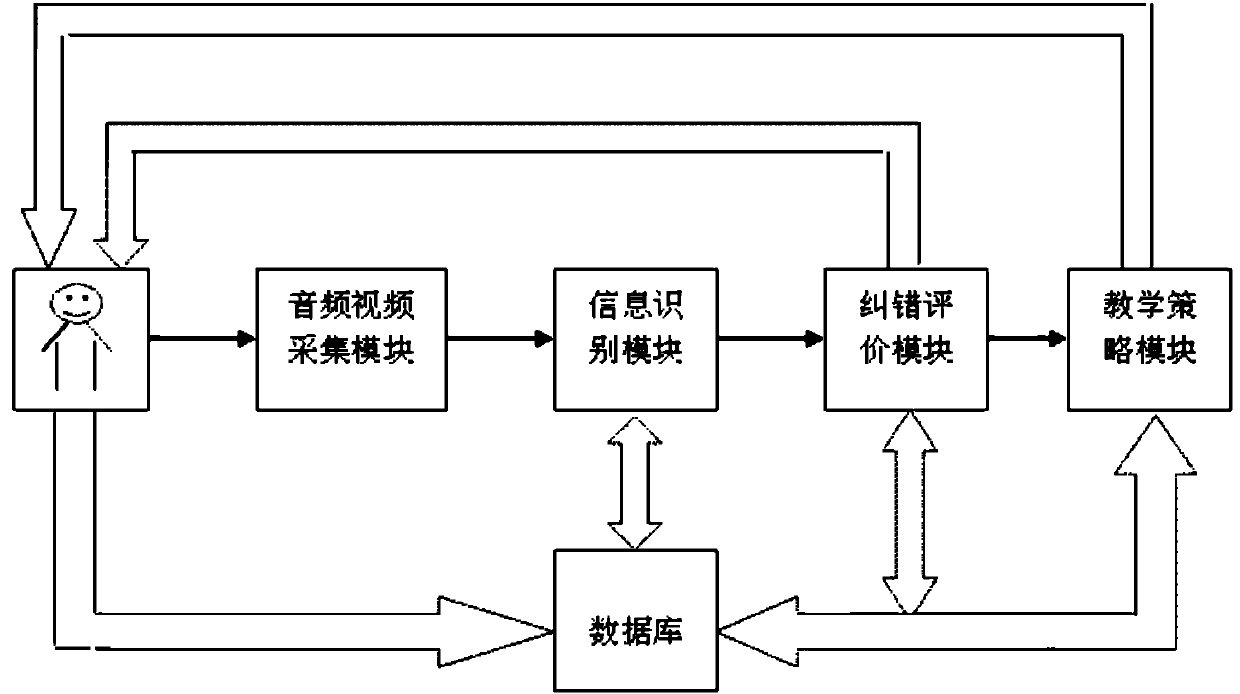

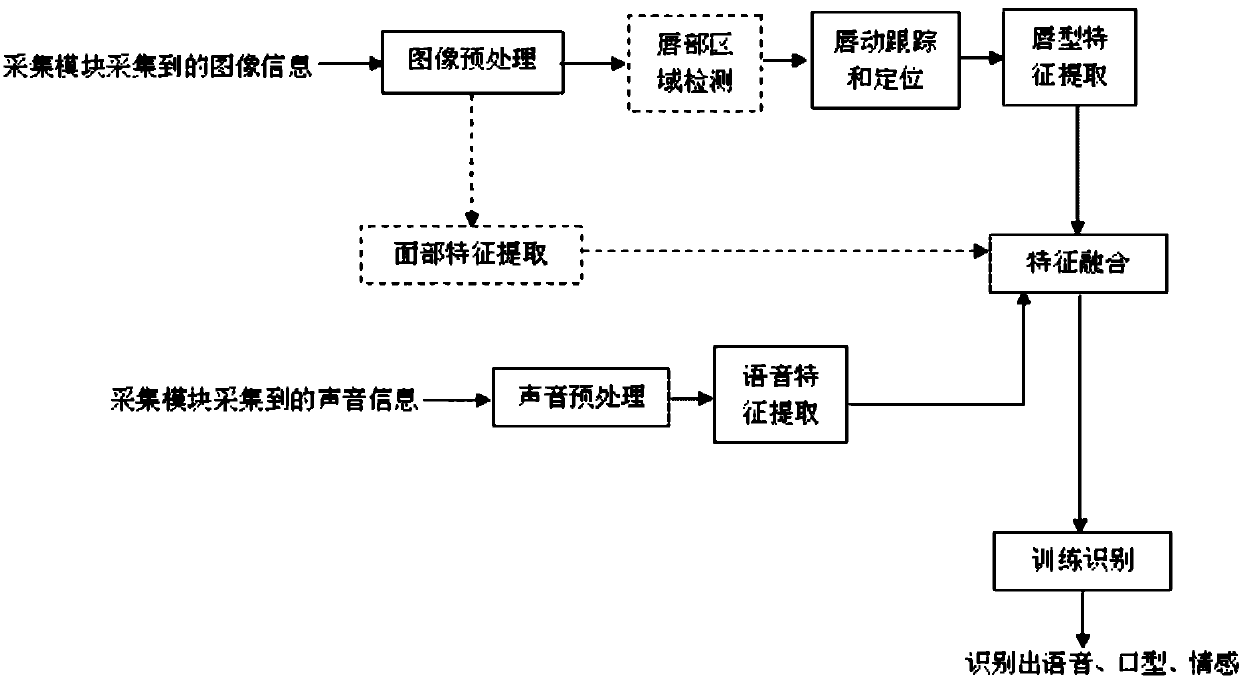

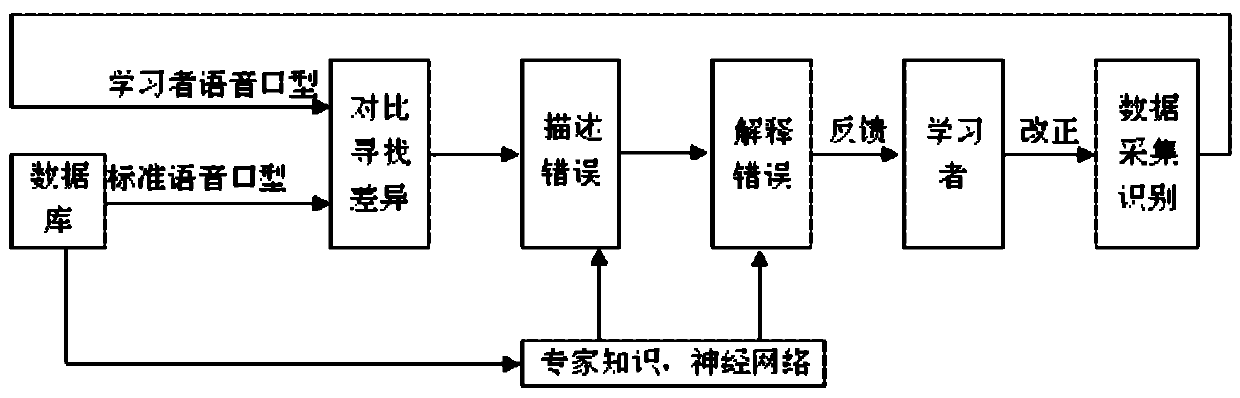

The invention discloses an interactive virtual teacher system with an intelligent error correction function. It addresses the technical problem that conventional human-machine interactive teaching is limited to speech synthesis and spoken language evaluation and cannot meet the need for intelligent error-correcting interaction in learning and communication. The system comprises an audio and video data acquisition module, an information identification module, an error correction evaluation module, a teaching policy module and other modules. An audio sensor and a video sensor acquire the face images and voice signals of learners; the information identification module identifies the fused mouth shape, pronunciation and emotion of the learners; the error correction evaluation module automatically evaluates the learners' pronunciation mouth shapes, detects the differences between the pronunciation mouth shape data and the standard data stored in a standard pronunciation mouth shape database, automatically selects a suitable time to show the causes of incorrect pronunciation and the means of correction, and provides the correct pronunciation mouth shape with an animated demonstration; and the teaching policy module produces a one-to-one teacher-student interactive personalized teaching plan according to the evaluation data and emotional state. The invention achieves real-time communication and animated demonstration by fusing multi-source voice and emotion information and by intelligent error correction and simulation through video interaction with a virtual teacher, thereby improving the accuracy of pronunciation teaching.

Owner:SHENYANG AEROSPACE UNIVERSITY

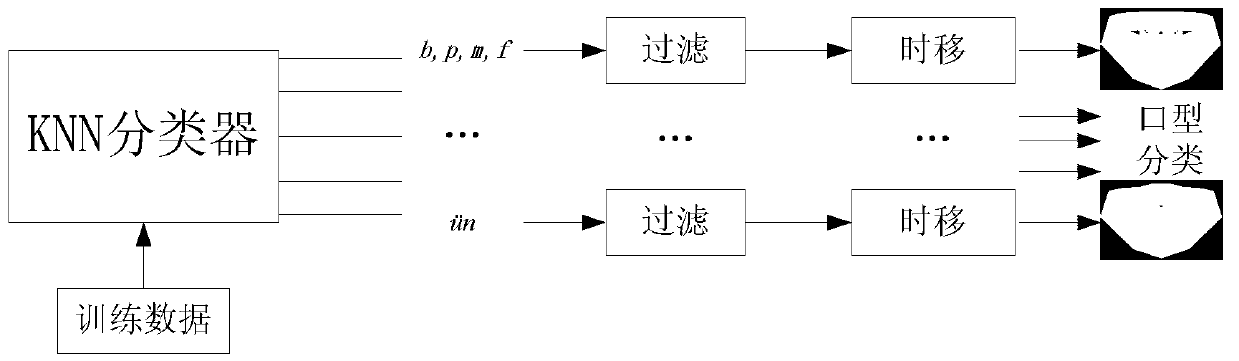

Voice synchronous-drive three-dimensional face mouth shape and face posture animation method

Inactive | CN103218842A | Promote generation | Reduced intelligibility | Character and pattern recognition | Animation | Neighbor algorithm | Mouth shape

The invention discloses a voice-synchronized method for driving three-dimensional face mouth shape and face posture animation. A user inputs new voice information, which is preprocessed so that mouth shape and face posture animations synchronized with the voice are synthesized on the head of a virtual human. The method comprises two stages. In the training stage, voice visualization modeling is achieved through a mixed model that combines a k-nearest neighbor (KNN) algorithm with a hidden Markov model (HMM). In the synthesis stage, the user inputs new voice information, features of the voice signal are extracted, the face posture and mouth shape sequence parameters corresponding to the voice signal are generated by the KNN-HMM mixed model and smoothed by transition processing, and the Xface open source software is used to synthesize detailed, rich three-dimensional face animations. The method has significant theoretical value and broad application prospects in visual communication, virtual meetings, games, entertainment, teaching assistance and similar fields.

Owner:SOUTHWEST JIAOTONG UNIV

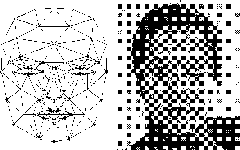

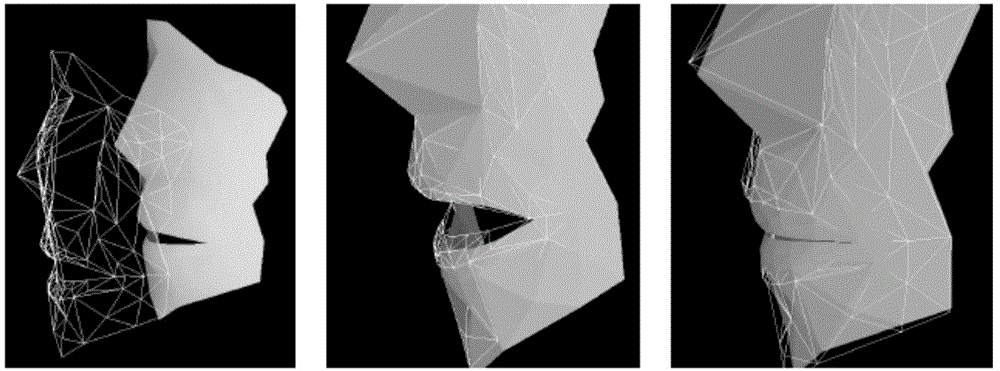

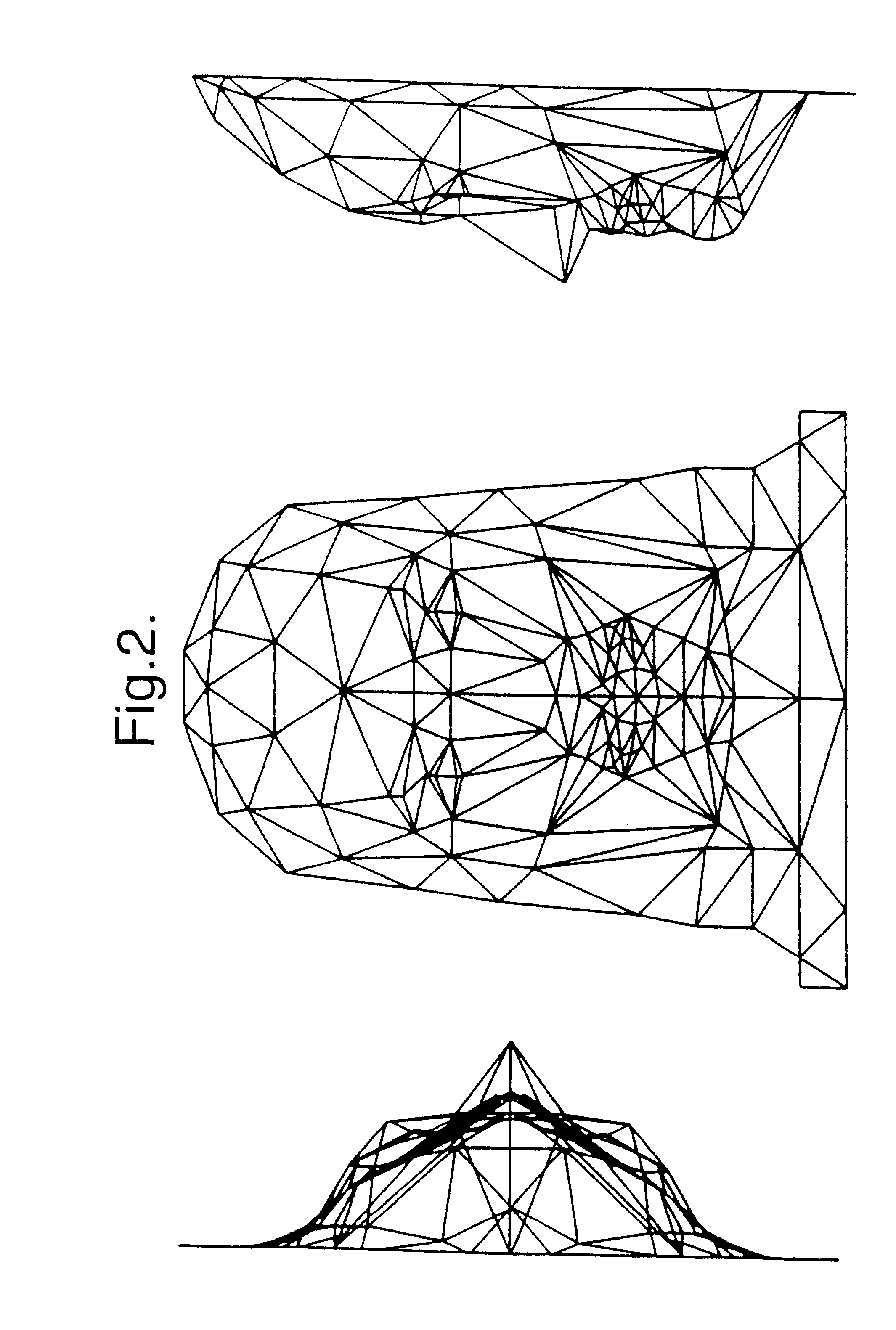

Rapid generation method for facial animation

Inactive | CN101826217A | Improve descriptive power | Quick change | Image analysis | 3D-image rendering | Pattern recognition | Imaging processing

The invention relates to a rapid generation method for facial animation, belonging to the image processing technical field. The method comprises the following steps: firstly detecting coordinates of a plurality of feature points matched with a grid model face in the original face pictures by virtue of an improved active shape model algorithm; completing fast matching between a grid model and a facial photo according to information of the feature points; performing refinement treatment on the mouth area of the matched grid model; and describing a basic facial mouth shape and expression change via facial animation parameters and driving the grid model by a parameter flow, and deforming the refined grid model by a grid deformation method based on a thin plate spline interpolation so as to generate animation. The method can quickly realize replacement of animated characters to generate vivid and natural facial animation.

Owner:SHANGHAI JIAO TONG UNIV

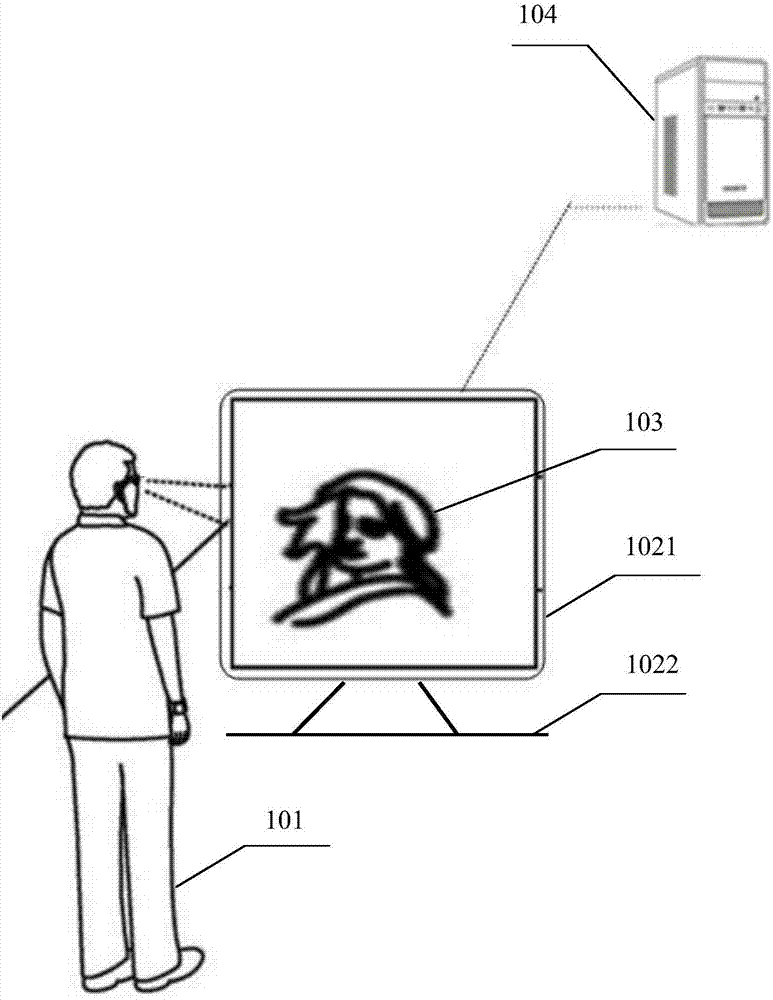

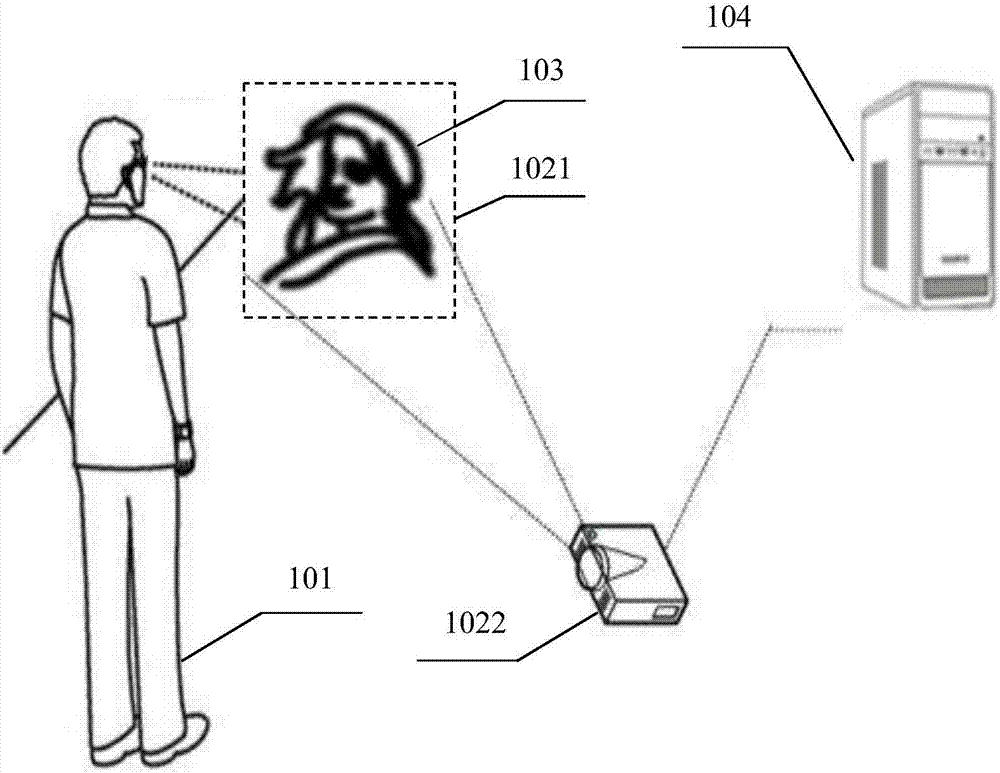

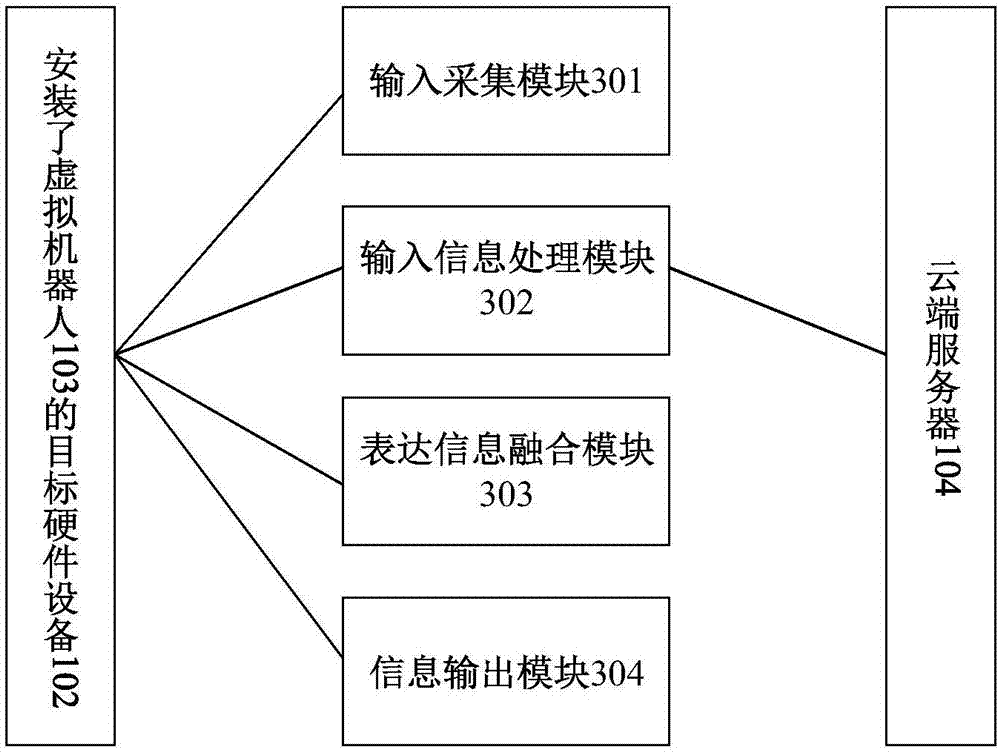

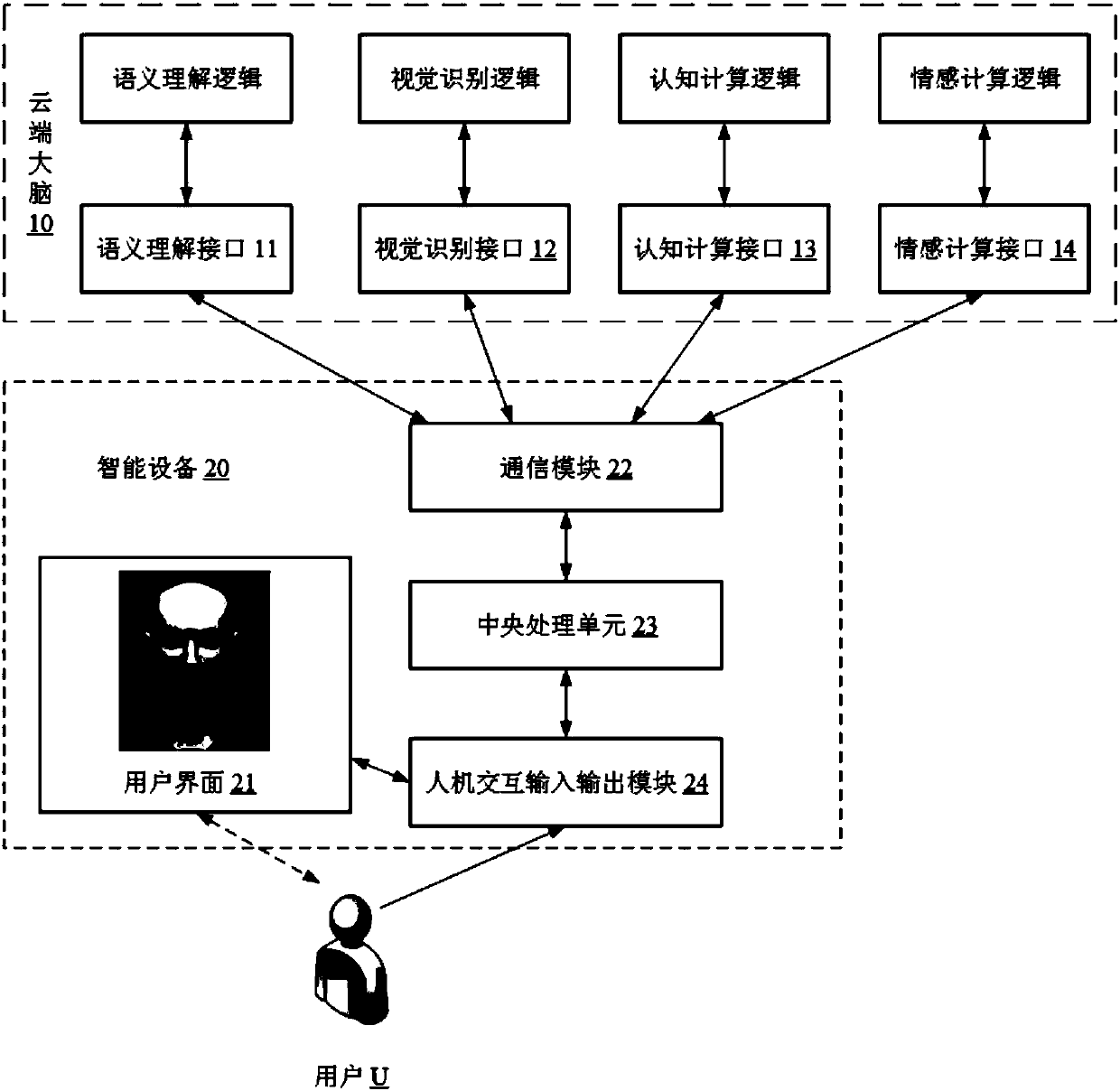

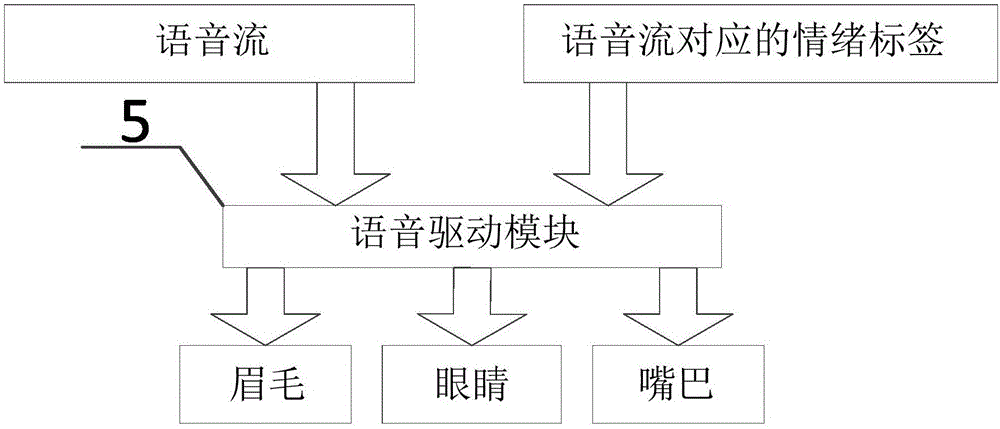

Multi-mode interaction method and system for multi-mode virtual robot

Active | CN107340859A | High viscosity | Improve fluency | Input/output for user-computer interaction | Artificial life | Animation | Virtual robot

The invention provides a multi-mode interaction method for a multi-mode virtual robot. An image of the virtual robot is displayed in a preset display region of a target hardware device; and the constructed virtual robot has preset role attributes. The method comprises the following steps of obtaining a single-mode and / or multi-mode interaction instruction sent by a user; calling interfaces of a semantic comprehension capability, an emotion recognition capability, a visual capability and a cognitive capability to generate response data of all modes, wherein the response data of all the modes is related to the preset role attributes; fusing the response data of all the modes to generate multi-mode output data; and outputting multi-mode output data through the image of the virtual robot. The virtual robot is adopted for performing conversation interaction; on one hand, an individual with an image can be displayed on a man-machine interaction interface through a high-modulus 3D modeling technology; and on the other hand, the effect of natural fusion of voices and mouth shapes as well as expressions and body actions can be achieved through an animation of a virtual image.

Owner:BEIJING GUANGNIAN WUXIAN SCI & TECH

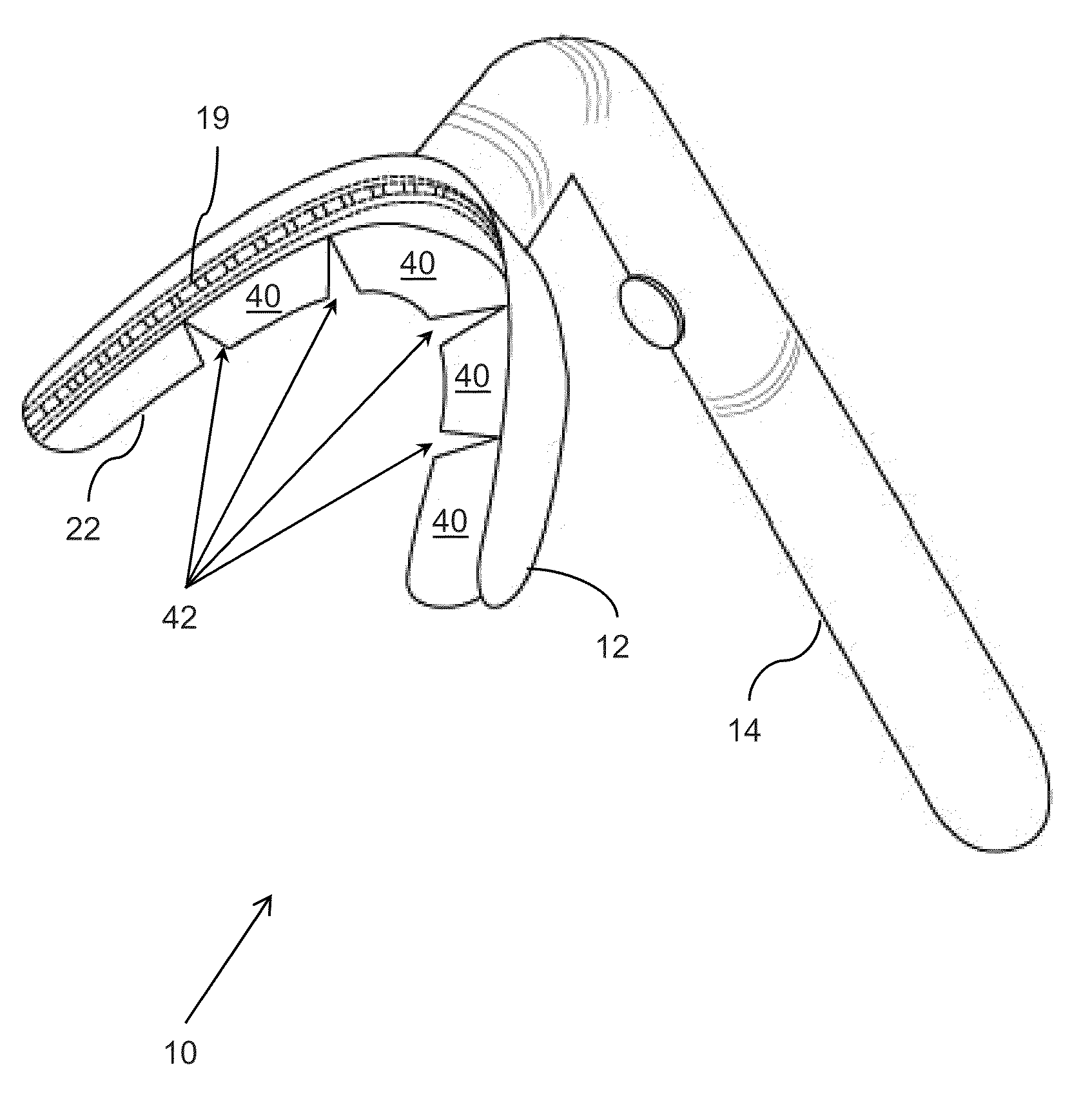

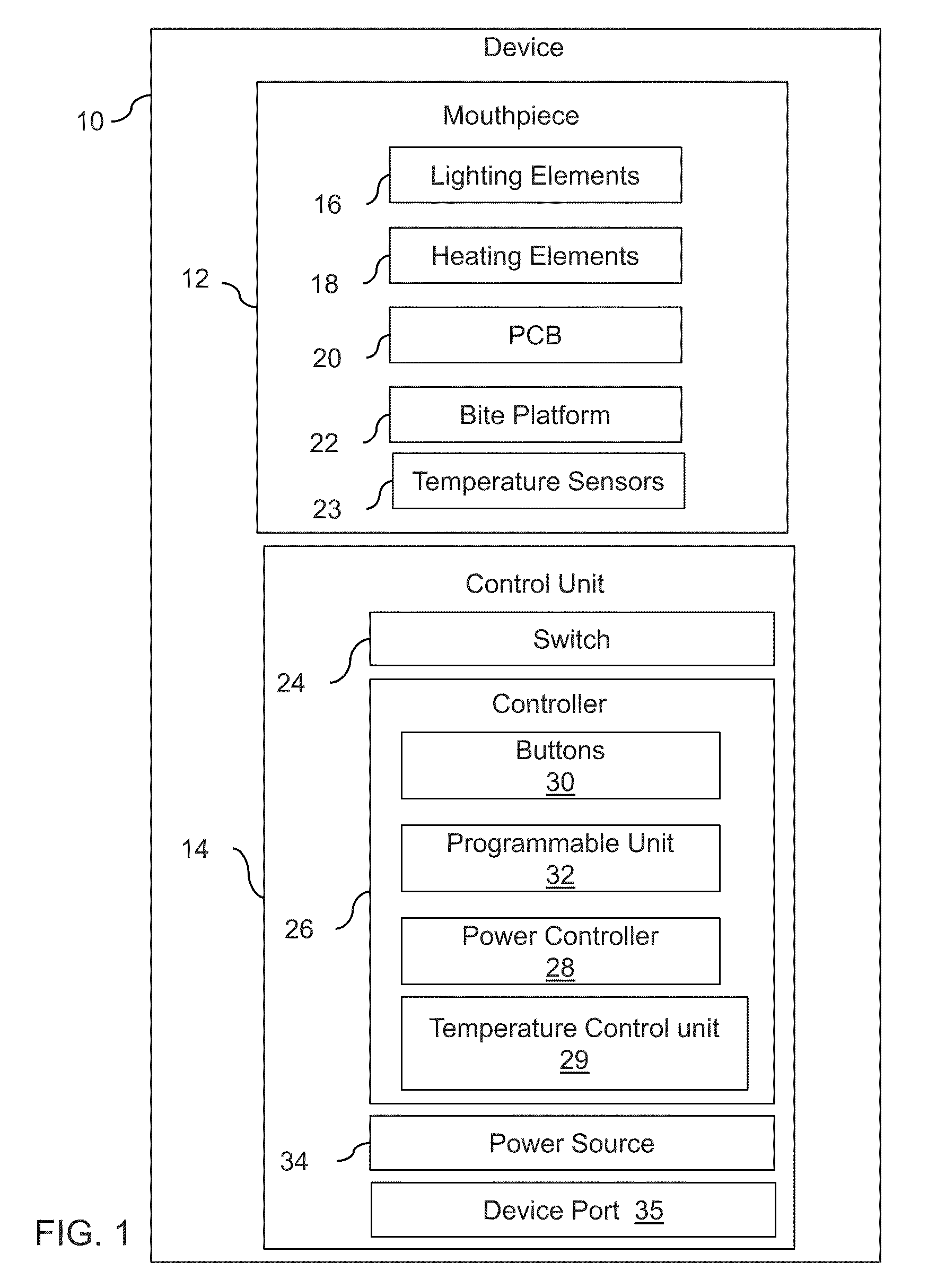

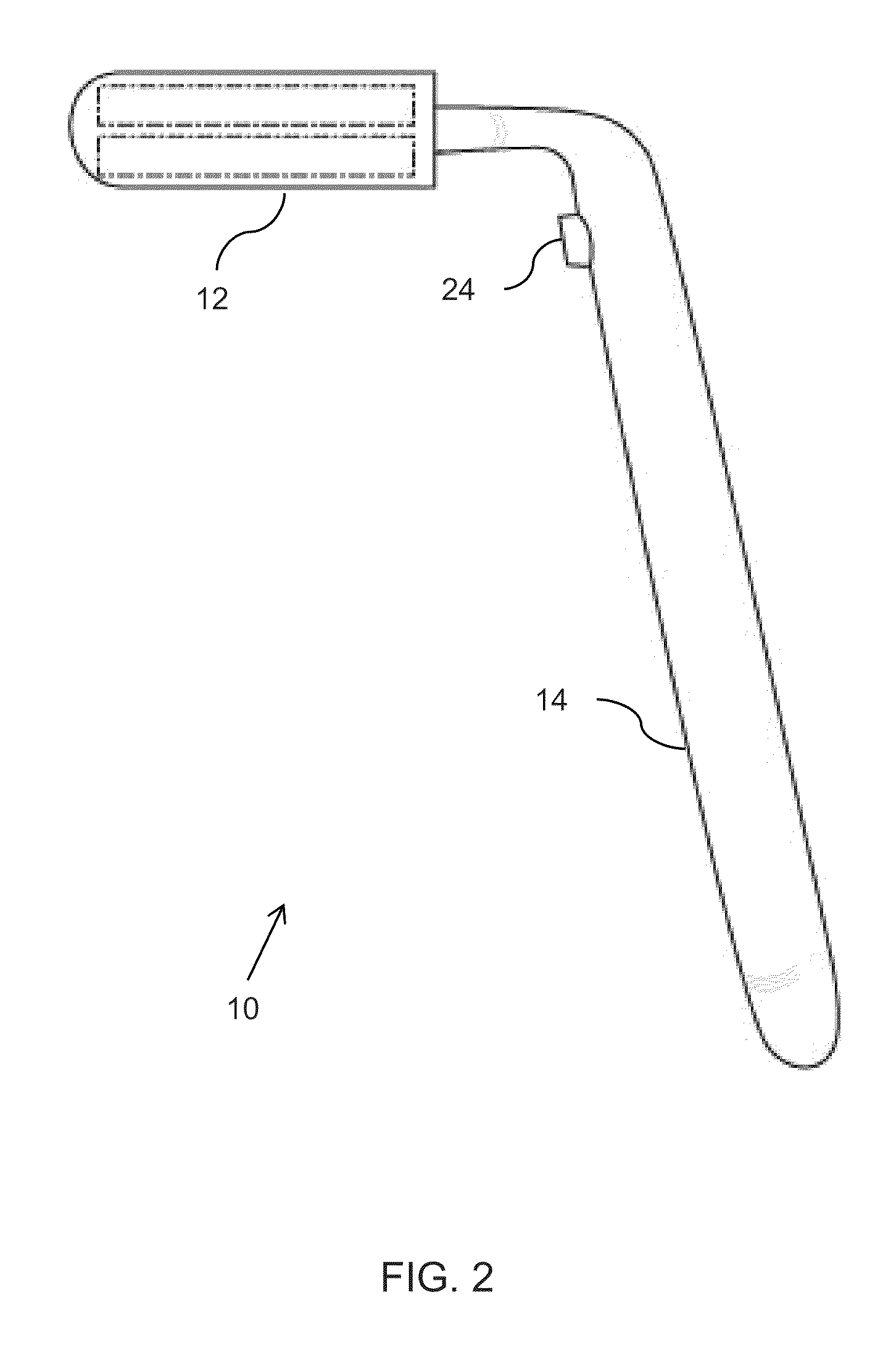

Device and method for dental whitening

Embodiments of the invention provide a portable dental whitening device. The device comprises a flexible mouthpiece including a bite platform with a horseshoe shape adapted to accommodate a plurality of mouth shapes. The mouthpiece comprises at least one lighting element for generating light, at least one heating element for generating heat, a flexible printed circuit board, and at least one temperature sensor for detecting the temperature of the heat generated by the at least one heating element. The device further comprises a control unit for selectively powering the lighting element and the heating element. Dental whitening is achieved by simultaneously applying the light, the heat and the chemical activity of a whitening agent provided in the mouthpiece.

Owner:WOLFF ANDY +1

Virtual person-based multi-mode interactive processing method and system

Pending | CN107765852A | Meet needs | Improve experience | Input/output for user-computer interaction | Graph reading | Data matching | User needs

The invention discloses a virtual person-based multi-mode interactive processing method and system. A virtual person runs in an intelligent device. The method comprises the following steps: awakening the virtual person so that it is displayed in a preset display region, wherein the virtual person has specific characters and attributes; obtaining multi-mode data, wherein the multi-mode data includes data from the surrounding environment and multi-mode input data from interaction with a user; calling a virtual person ability interface to analyze the multi-mode data and decide the multi-mode output data; matching the multi-mode output data with executive parameters of the mouth shape, facial expression, head action and limb and body action of the virtual person; and presenting the executive parameters in the preset display region. When the virtual person interacts with the user, voice, facial expression, emotion, head action and limb and body action are fused to present a vivid and fluent character interaction effect, thereby meeting user demands and improving user experience.

Owner:BEIJING GUANGNIAN WUXIAN SCI & TECH

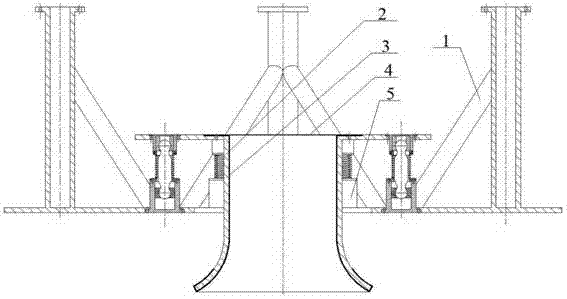

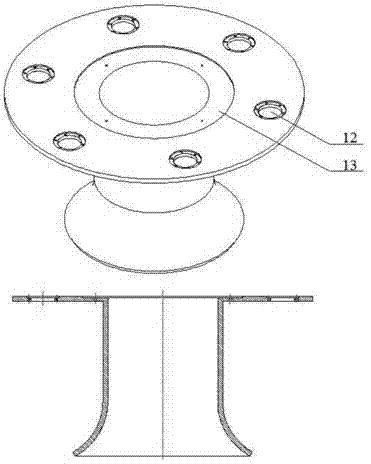

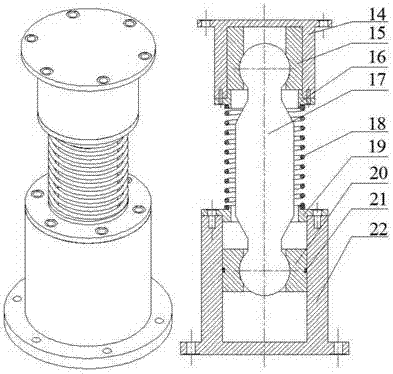

Anti-blocking-and-hanging drilling well water-secluding pipe recovery guiding device

Active | CN105443058A | Avoid hanging problems | Inhibit shedding | Borehole/well accessories | Well drilling | Mouth shape

The invention relates to an anti-blocking-and-hanging drilling well water-secluding pipe recovery guiding device. The device is characterized by comprising a support, a guiding chamber, a rubber sleeve, a rubber sleeve pressing ring and a supporting device; the support is provided with multiple connecting legs, multiple supporting device lower portion mounting holes are formed in the outer side of the support, and a guiding chamber mounting hole is formed in the middle of the support; the guiding chamber is in a horn-mouth shape, the rubber sleeve is arranged inside the guiding chamber, and the rubber sleeve pressing ring is arranged at the top of the guiding chamber; the supporting device comprises an upper supporting barrel, a wearproof bushing, an upper supporting barrel cover, a connecting rod, a spring, a lower supporting barrel cover, a piston, a piston embracing clamp and a lower supporting barrel; the wearproof bushing is arranged inside the upper supporting barrel, and the piston is arranged inside the lower supporting barrel; a groove is formed at the outer side of the piston, and the groove is matched with the piston embracing clamp; the upper end and the lower end of the connecting rod are both spherical bodies, and the spring is arranged at the outer side of the connecting rod; the spring is provided with an installing ring. The device is simple in structure, convenient to operate, high in reliability and easy and convenient to assemble and can be widely applied to all types of maritime water-secluding pipe anti-blocking-and-hanging recycling operation.

Owner:CHINA UNIV OF PETROLEUM (EAST CHINA)

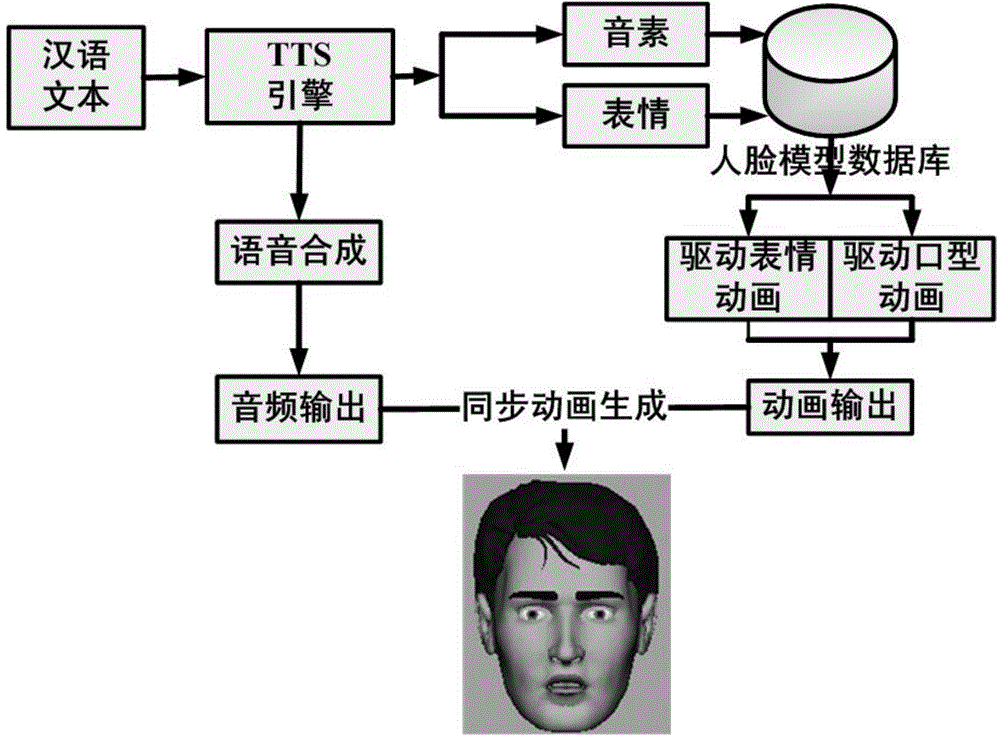

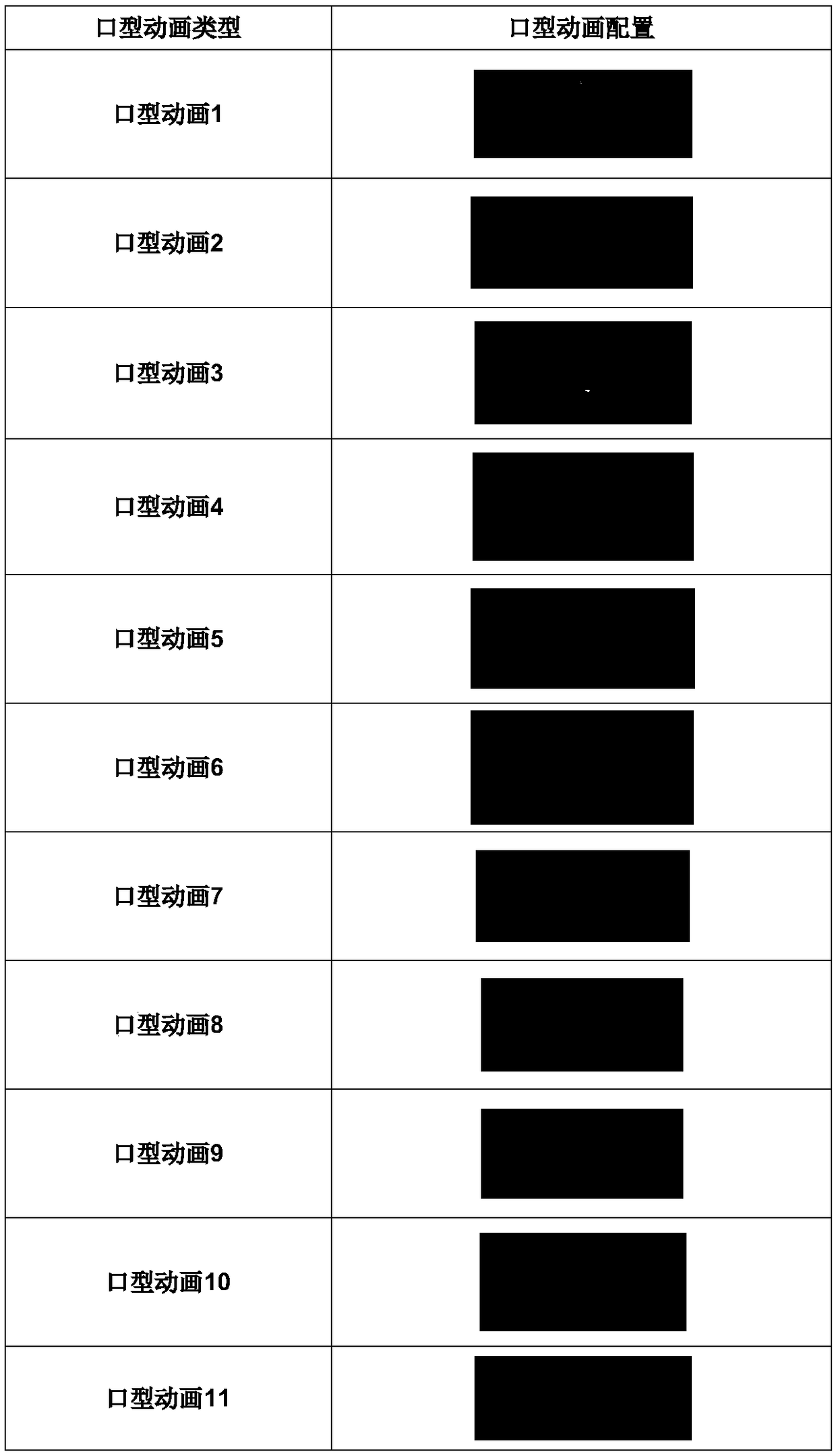

Mouth shape animation synthesis method based on comprehensive weighted algorithm

Active | CN104361620A | Good effect | Flexible synthesis | Animation | Speech synthesis | Pattern recognition | Chinese characters

The invention discloses a mouth shape animation synthesis method based on a comprehensive weighted algorithm. The mouth shape animation synthesis method comprises the following steps: analyzing input Chinese texts, splitting Chinese characters into different Chinese visual phonemes, sending the phonemes to a voice synthesis system, synthesizing the phonemes to form a basic visual phoneme flow, establishing a parameter human face model with reality sense based on an MPEG-4 standard, driving the deformation of the model by using visual phoneme animation frame parameters, adding background images, and performing hierarchical processing and addition on noises, so that the mouth shape animation synthesis with vividness, reality and good effect can be achieved.

Owner:韩慧健

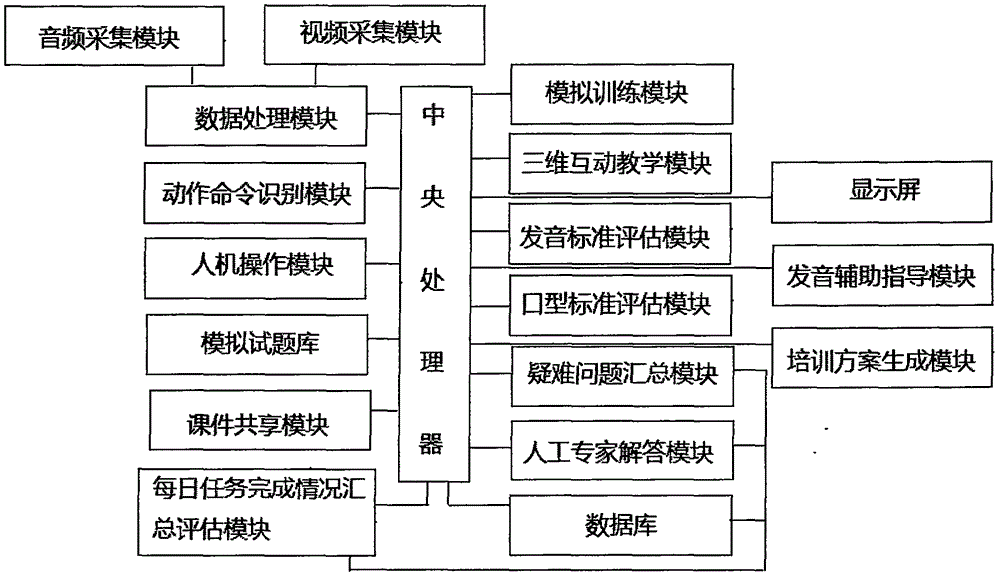

Customized foreign spoken language learning system

Inactive | CN106409030A | Increase interest in learning | Improve learning efficiency | Electrical appliances | Personalization | Spoken language

The invention discloses a customized foreign spoken language learning system which comprises a human-machine operation module, a video acquisition module, an audio acquisition module, a data processing module, a pronunciation standard evaluation module, a mouth shape standard evaluation module, a pronunciation aiding and guiding module, a training scheme generating module, an action instruction recognizing module, a central processor, a three-dimensional interactive teaching module, a daily task completion gathering and evaluating module, a simulated training module, a hard question gathering module, a human expert module and a class teaching material sharing module. While learning, students can interact with figures and scenes in different moving forms through the three-dimensional simulated teaching module, and can take part in the dialogues and actions of the figures throughout the simulated process. All simulated training programs are randomized, so students cannot answer their questions by rote. Students are therefore led to understand the knowledge points of the programs more thoroughly, which raises their study efficiency.

Owner:HENAN UNIV OF ANIMAL HUSBANDRY & ECONOMY

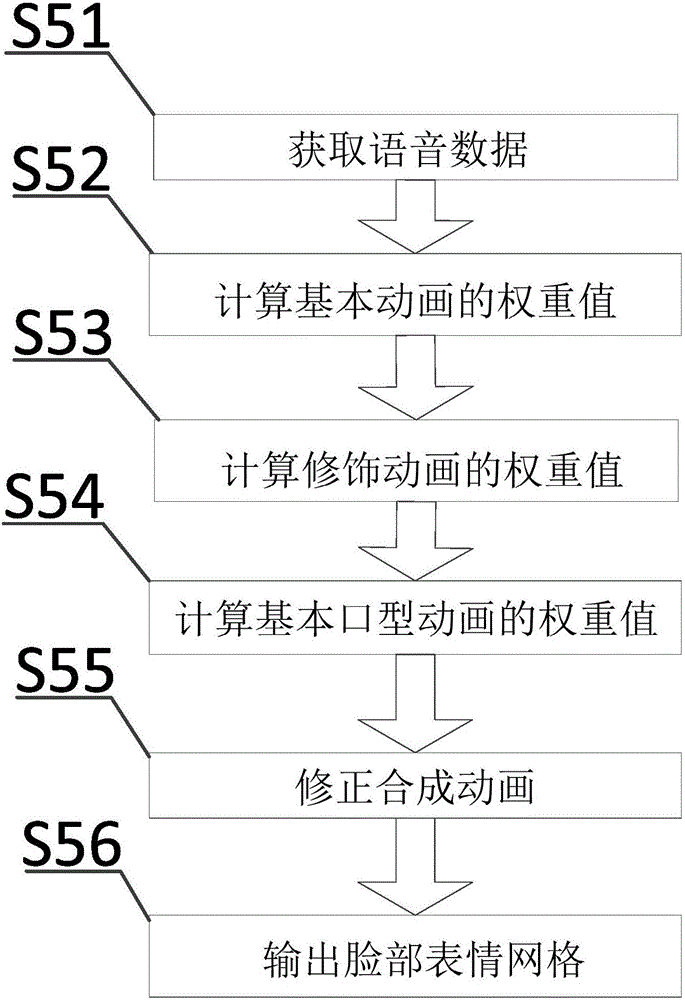

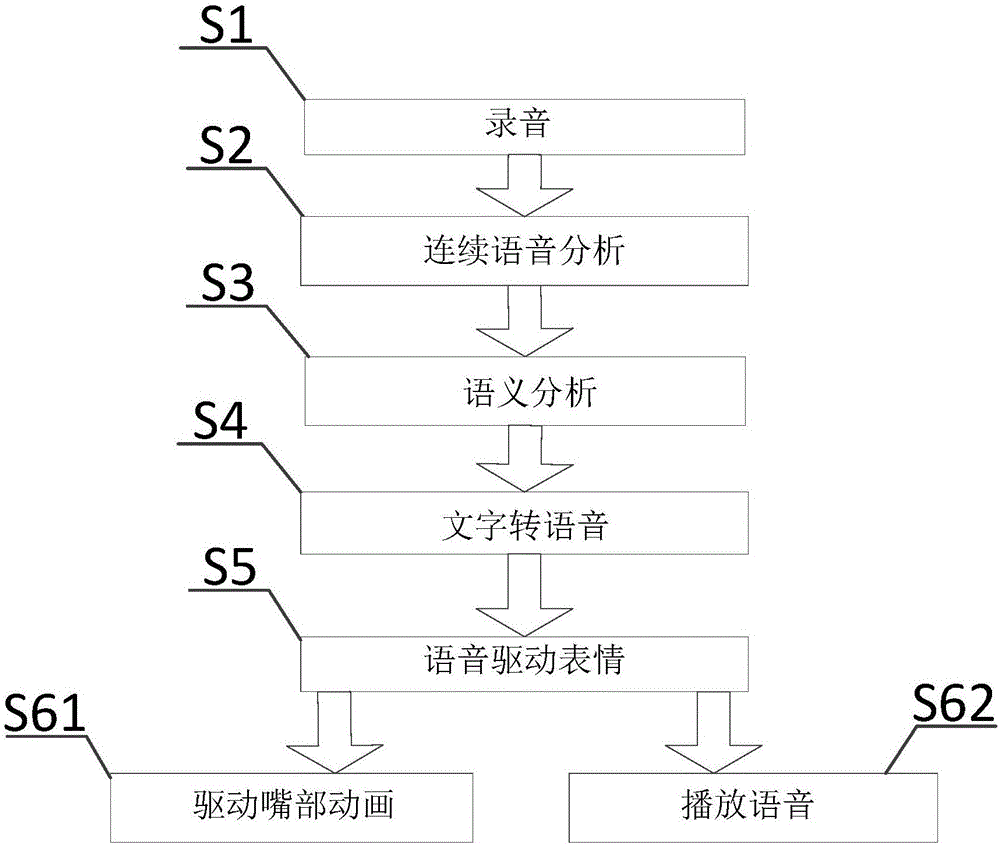

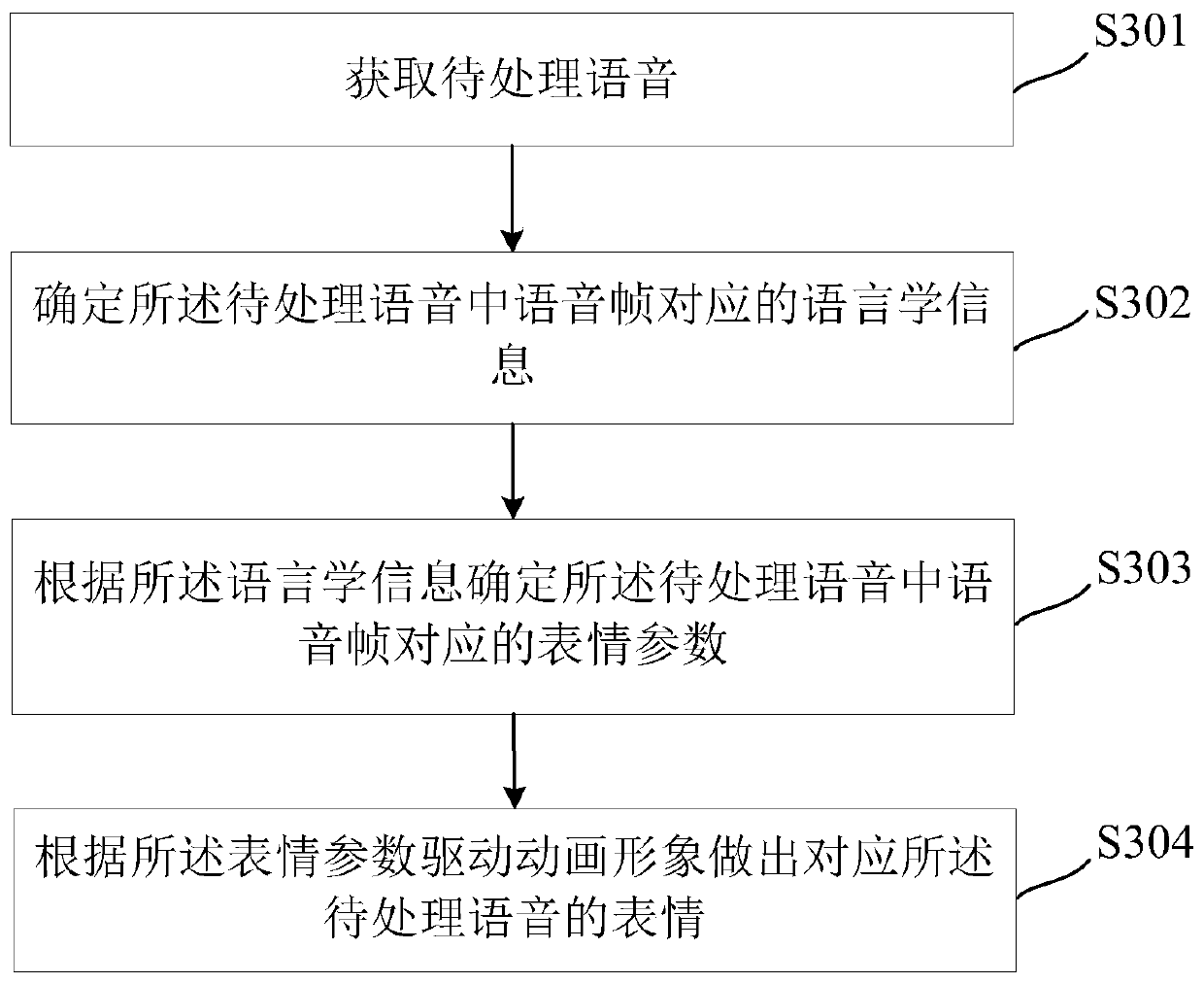

Method of driving expression and gesture of character model in real time based on voice

The invention discloses a method of driving an expression and a gesture of a character model in real time based on voice for driving the expression and the gesture of a speaking virtual reality character model. The method comprises steps: voice data are acquired; the weight values of basic animations are calculated; the weight values of decorative animations are calculated; the weight values of basic mouth shape animations are calculated; a synthesized animation is modified; and a facial expression grid is outputted. Through driving the facial expression and the mouth expression of the current virtual reality character by sound wave information of the voice, the virtual image can automatically generate an expression as natural as a real person, no virtual reality character images need to be made, the cost is low, and time and labor are saved.

Owner:北京五一视界数字孪生科技股份有限公司
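The abstract above lists its steps as computing weight values for several groups of animations and then outputting a facial expression grid, but it does not say how the weighted animations are combined. A minimal sketch of one common way to do this, linear blend-shape mixing, is shown below. The function name `blend_mesh`, the animation names and the vertex values are all hypothetical, and the weights are assumed to have been computed from the voice already.

```python
from typing import Dict, List, Tuple

Vertex = Tuple[float, float, float]


def blend_mesh(
    neutral: List[Vertex],
    targets: Dict[str, List[Vertex]],
    weights: Dict[str, float],
) -> List[Vertex]:
    """Return neutral + sum_i w_i * (target_i - neutral), vertex by vertex.

    Assumption: every target mesh has the same vertex order as `neutral`.
    """
    blended: List[Vertex] = []
    for index, base in enumerate(neutral):
        offset = [0.0, 0.0, 0.0]
        for name, weight in weights.items():
            target = targets[name][index]
            for axis in range(3):
                # Accumulate the weighted displacement of this animation.
                offset[axis] += weight * (target[axis] - base[axis])
        blended.append(tuple(b + o for b, o in zip(base, offset)))
    return blended


if __name__ == "__main__":
    neutral = [(0.0, 0.0, 0.0)]
    targets = {"mouth_open": [(0.0, -1.0, 0.0)], "smile": [(1.0, 0.0, 0.0)]}
    print(blend_mesh(neutral, targets, {"mouth_open": 0.5, "smile": 0.25}))
```

Keeping the mouth shape weights and the expression weights in one dictionary means the basic, decorative and mouth shape animations named in the abstract could all pass through the same mixing step.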

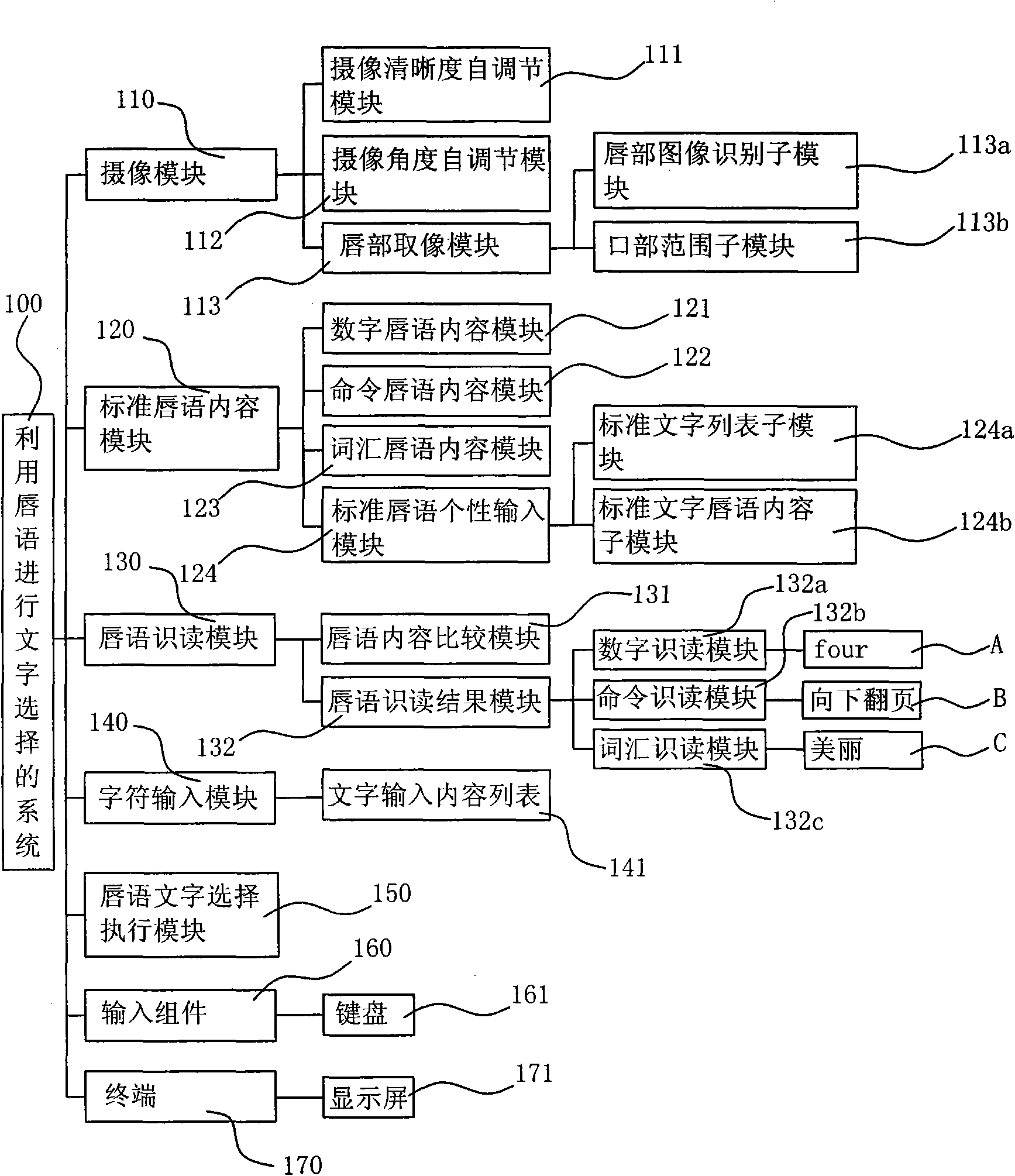

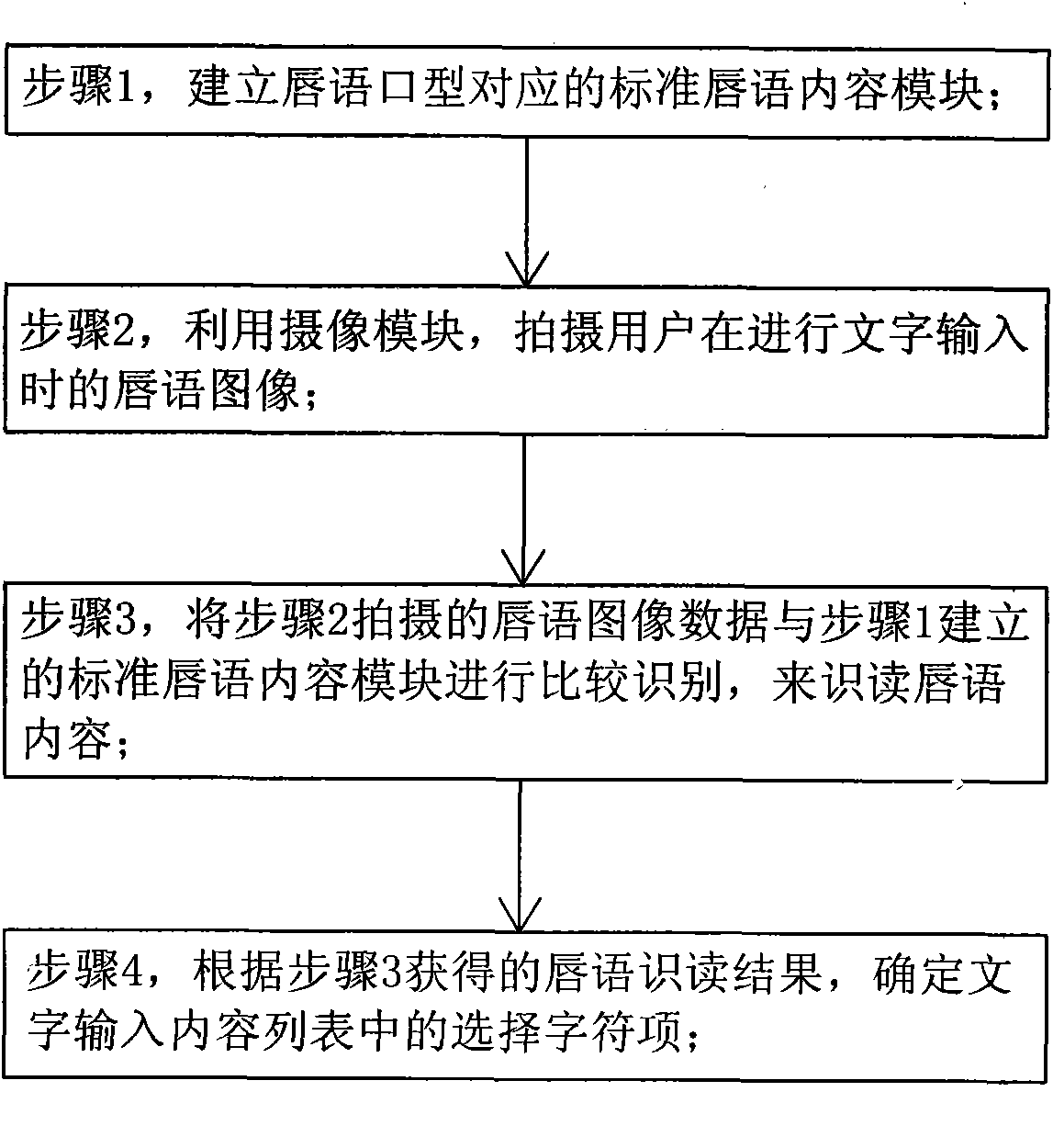

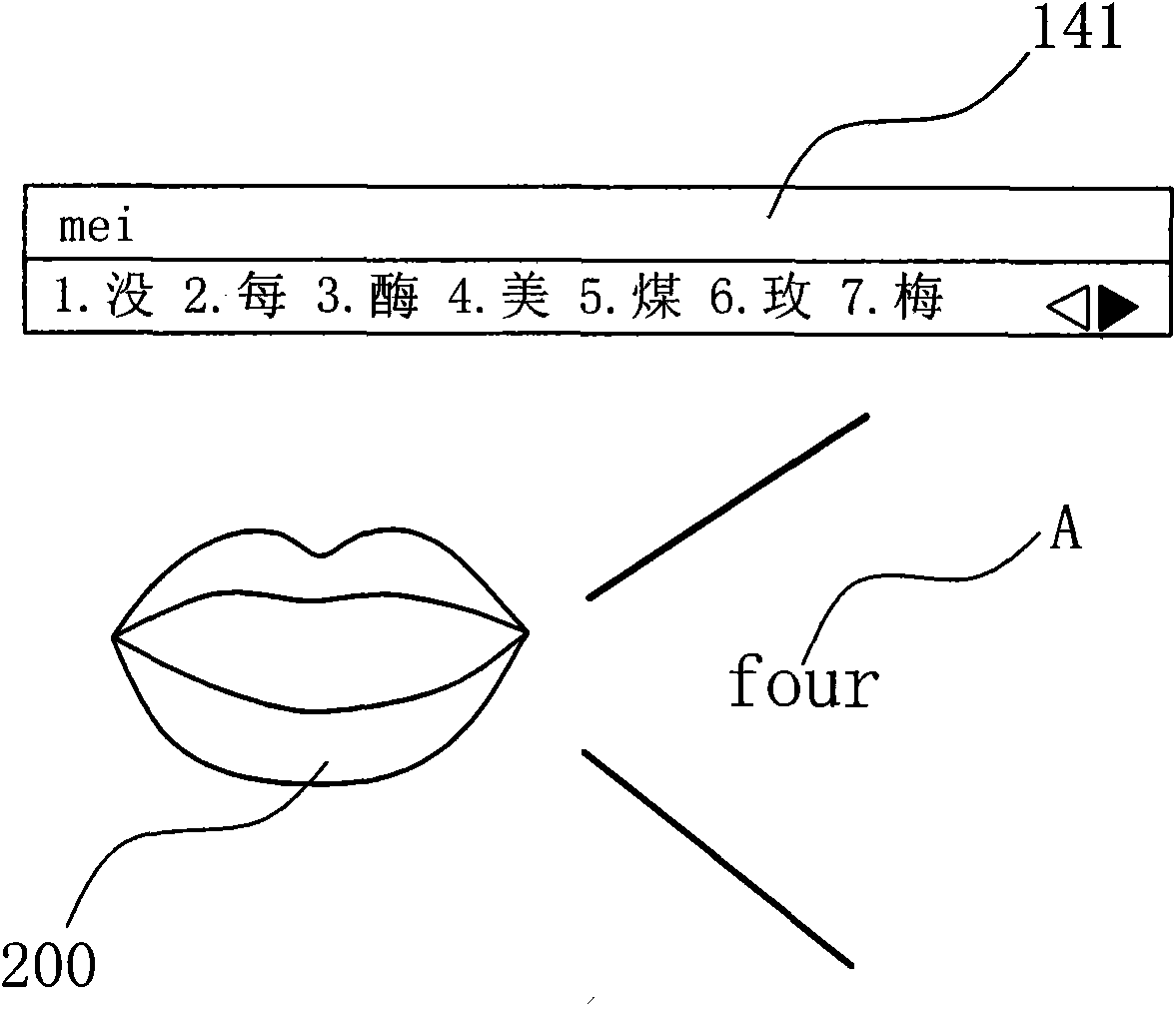

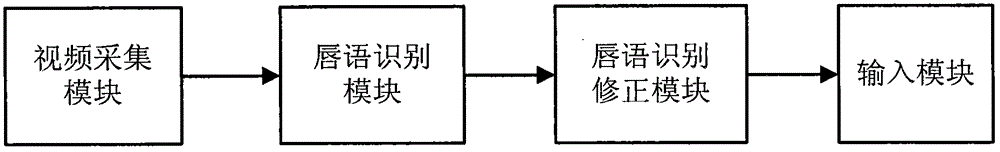

System for realizing text entry selection by using lip-language and realization method thereof

Active | CN102117115A | High speed | Improve work efficiency | Input/output for user-computer interaction | Character and pattern recognition | Text entry | Camera module

The invention provides a system for realizing text entry selection by using lip-language and a realization method thereof, belonging to the application of electronic technologies in the technical field of computers and software. On the basis of the traditional input assembly, the system is provided with a camera module, a standard lip-language content module, a lip-language reading module and a lip-language text selection executing module; the standard lip-language content module is established to correspond to the lip-language mouth shapes. The realization method comprises the following steps: shooting lip-language pictures of a user during text entry with the camera module; comparing the shot lip-language pictures with the standard lip-language content module and identifying the lip-language content that was read; and determining the selected character option in the text entry content list according to the lip-language reading result, thereby realizing the user's text entry selection. The method helps the user improve text entry speed and working efficiency and makes text entry more engaging.

Owner:SHANGHAI LIANGKE ELECTRONICS TECH

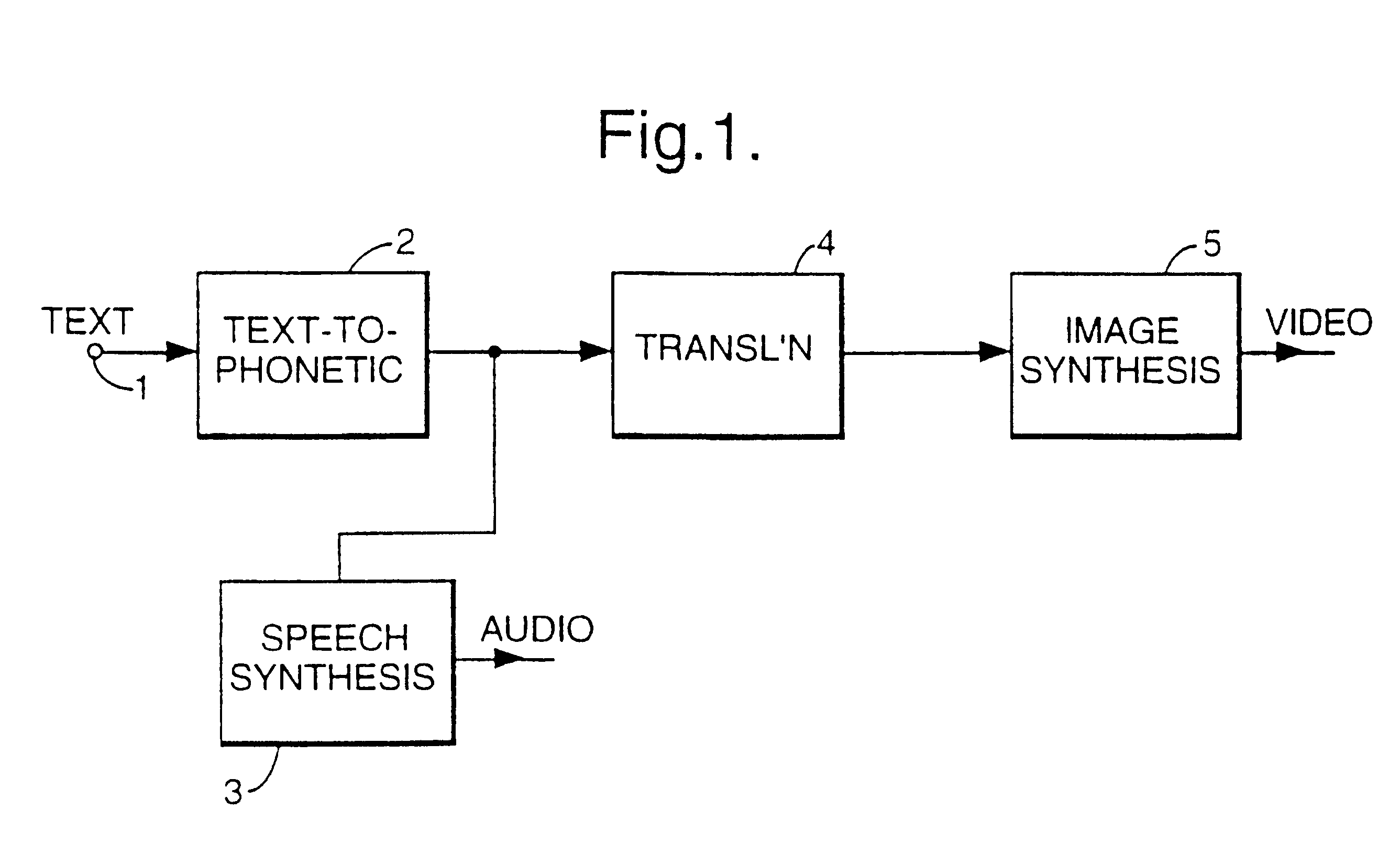

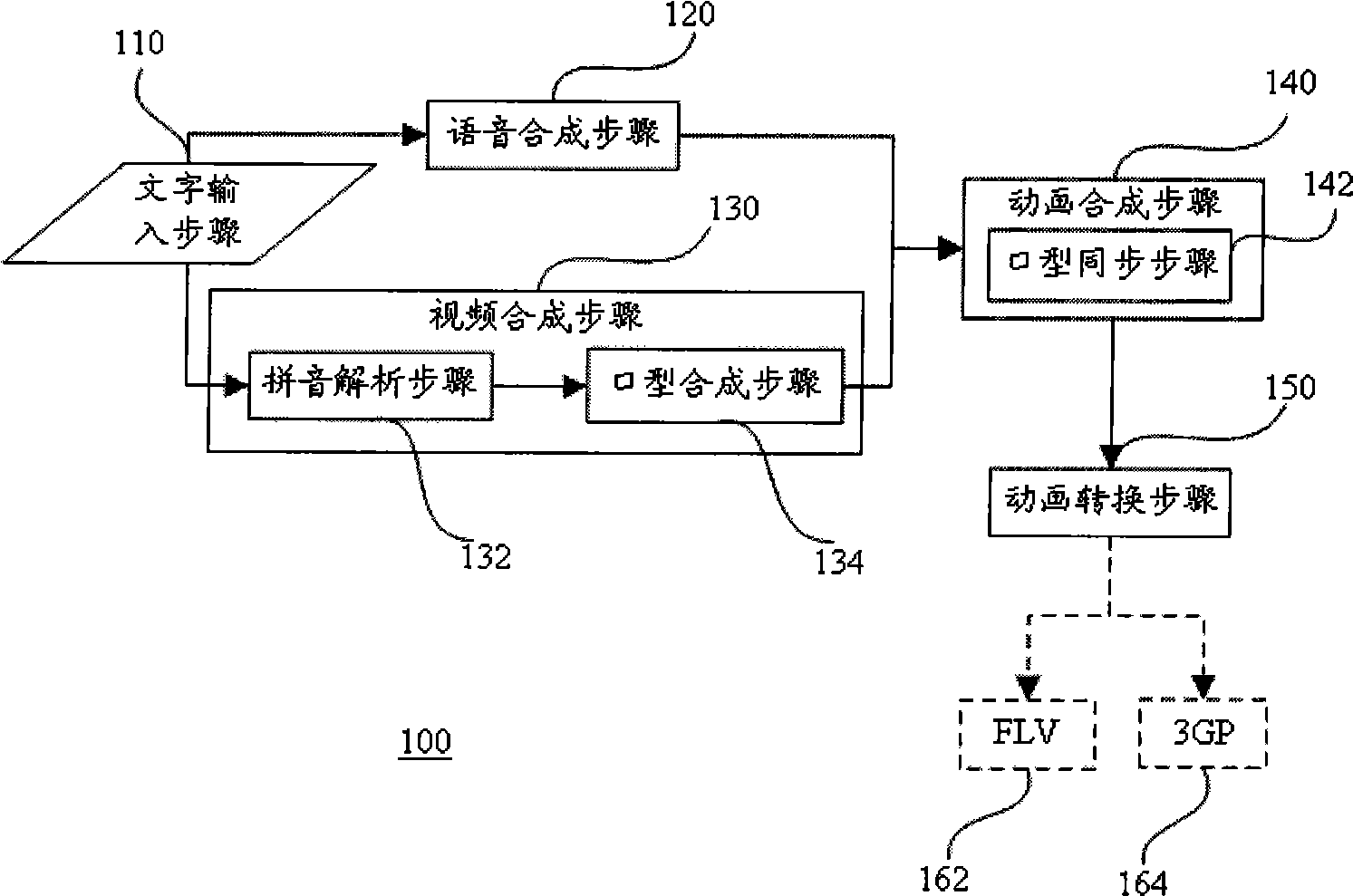

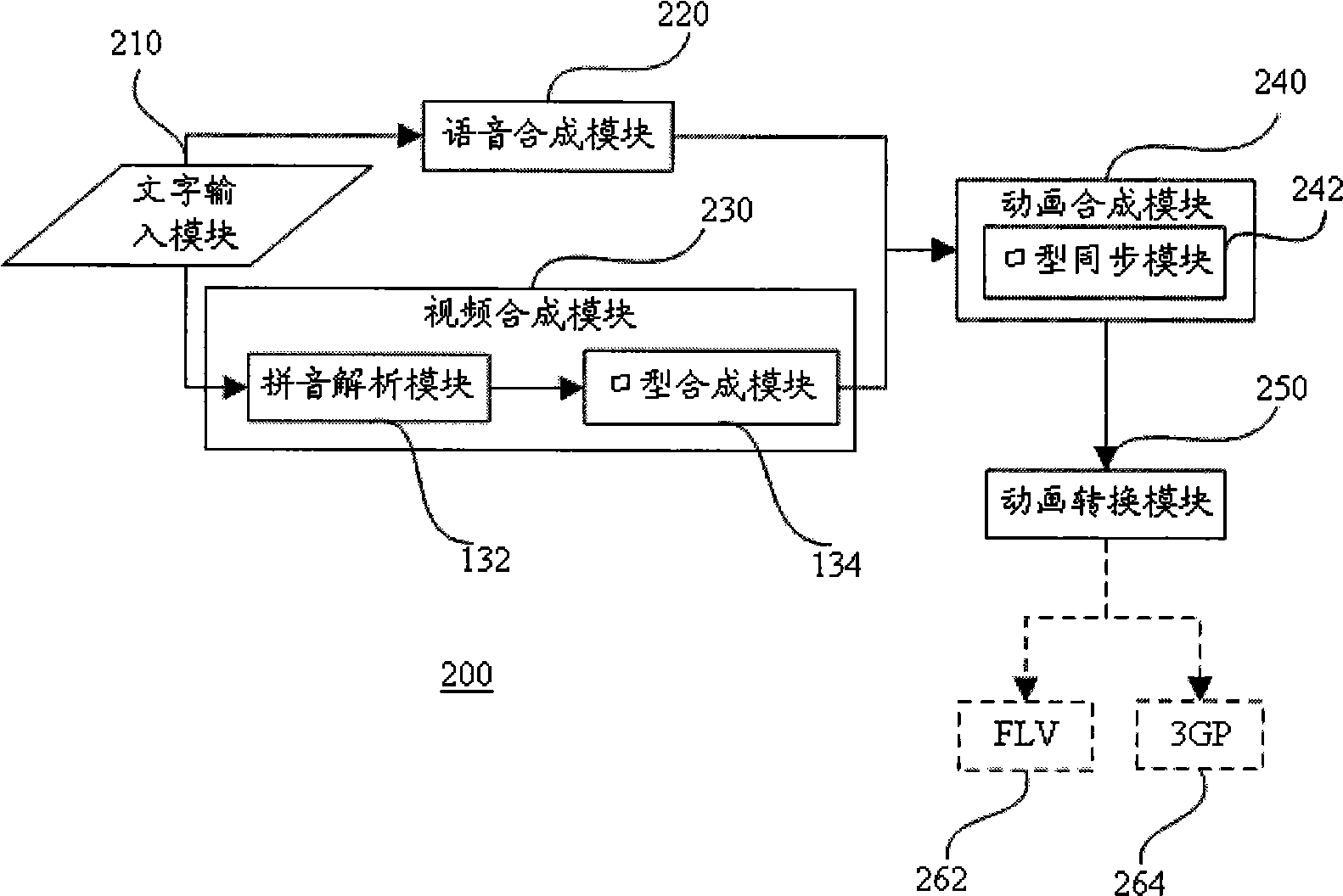

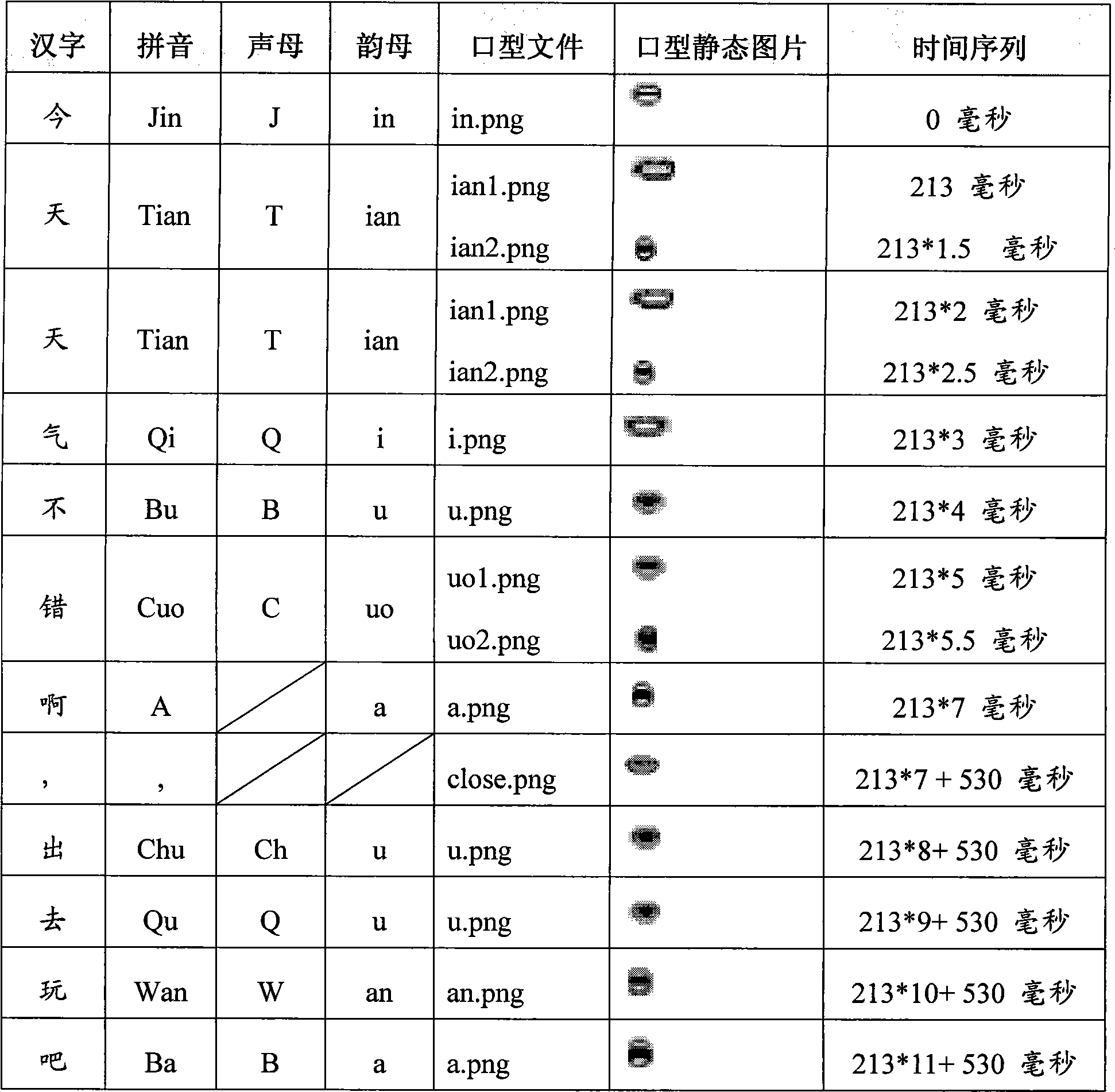

Method and apparatus for converting words into animation

Inactive | CN101482975A | Reasonable handling | Efficient treatment method | Television system details | Color television details | Transformation of text | Animation

A method and device for converting text into a corresponding animation. The method comprises the steps of: text inputting; speech synthesizing, in which the input text is synthesized to obtain a corresponding audio file; video synthesizing, which includes spelling analysis and mouth shape synthesis, namely performing phonetic analysis on the text to obtain its corresponding spellings, extracting the images corresponding to the spellings from a preset database of spelling mouth shapes, and synthesizing the images into a video file; and animation synthesizing, in which the audio file and the video file are synthesized into an animation. The device comprises a text inputting module, a speech synthesizing module, a video synthesizing module and an animation synthesizing module. Text input by users is processed by these modules to obtain the animation that corresponds to the text, achieving the conversion of text to animation.

Owner:李嘉辉

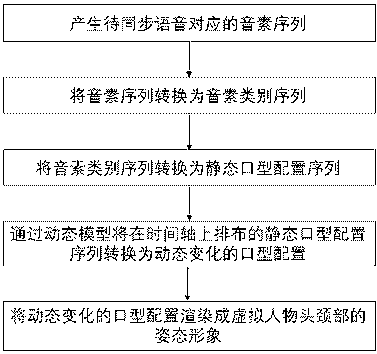

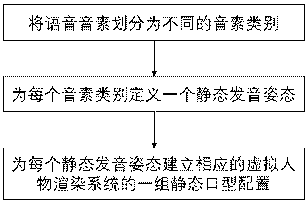

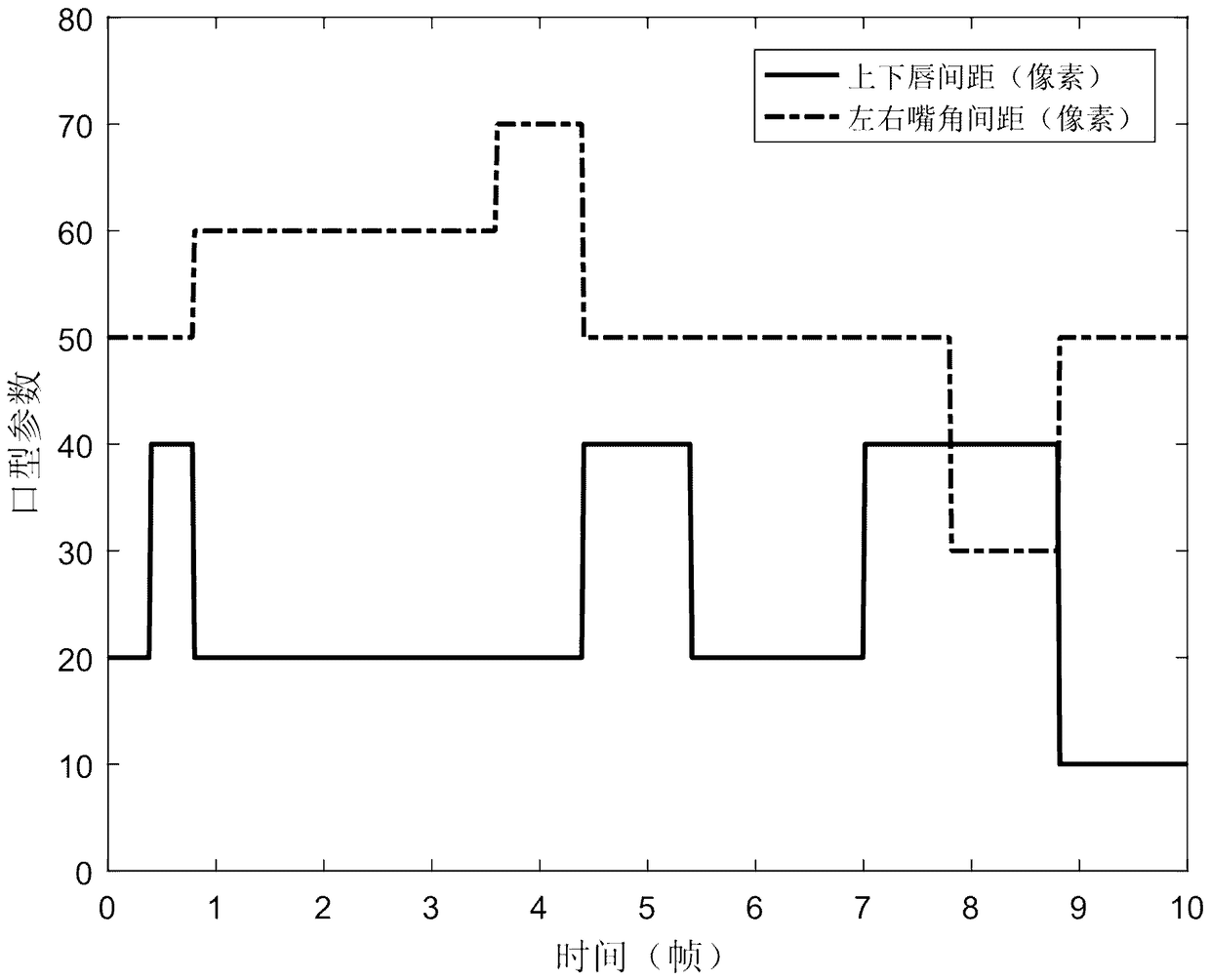

Modeling and controlling method for synchronizing voice and mouth shape of virtual character

Active | CN108447474A | Efficient natural lip-sync control | Efficient natural synchronization control | Speech recognition | Speech synthesis | Attitude control | Synchronous control

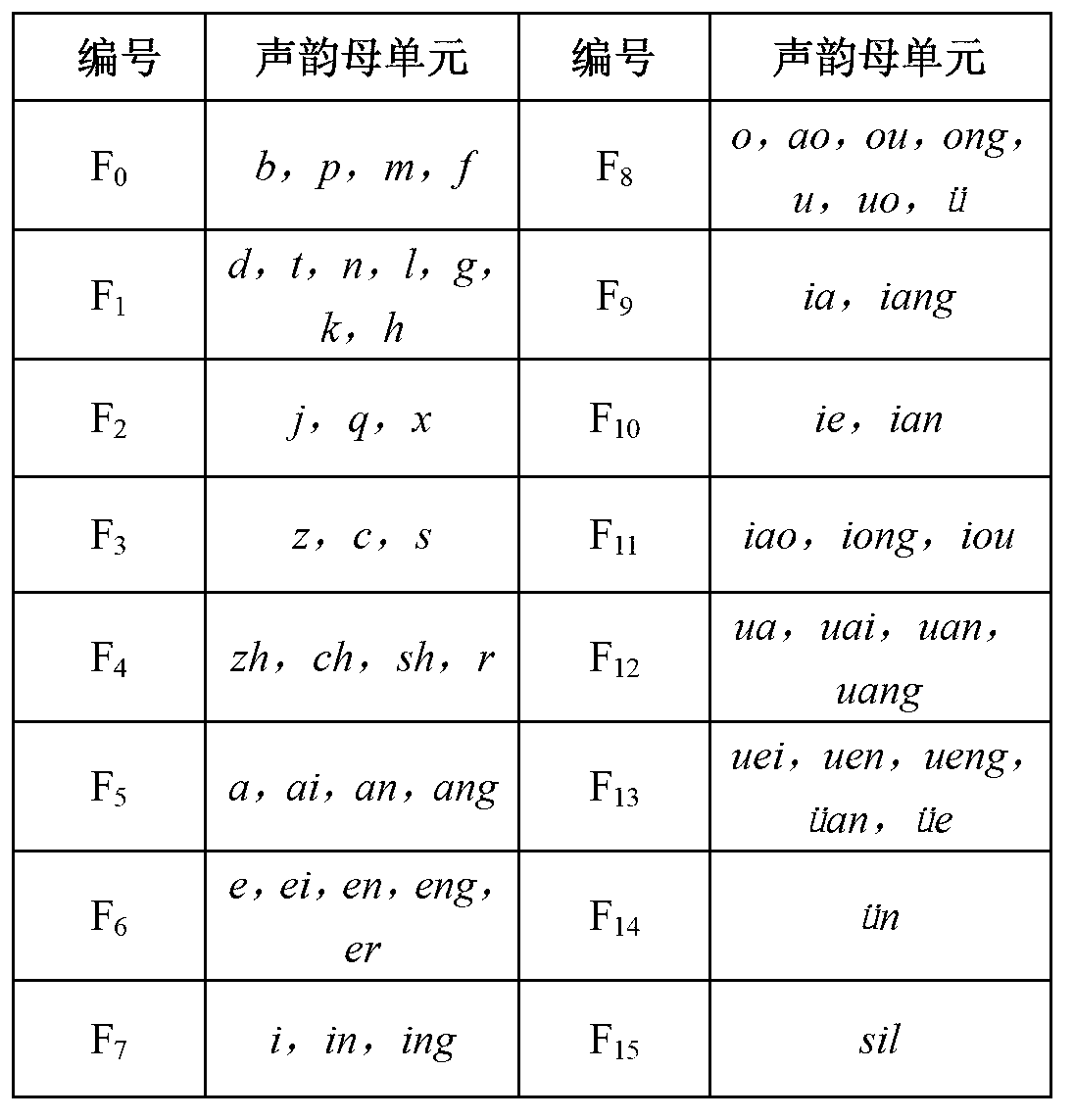

The invention belongs to virtual character attitude control in the field of speech synthesis, and particularly relates to a modeling and controlling method for synchronizing the voice and the mouth shape of a virtual character. The object of the invention is to reduce the amount of mouth shape animation data annotation and to achieve accurate, naturally smooth mouth motion synchronized with the voice. The method comprises: generating a phoneme sequence corresponding to the voice to be synchronized; converting the phoneme sequence into a phoneme category sequence; converting the phoneme category sequence into a static mouth shape configuration sequence; converting the static mouth shape configuration sequence distributed on a time axis into a dynamically changing mouth shape configuration by means of a dynamic model; and rendering the dynamically changing mouth shape configuration into an attitude image of the head and neck of the virtual character, which is displayed in synchronization with the voice signal. The method realizes efficient and natural mouth shape synchronization control for virtual characters without mouth shape animation data, relying on phonetic prior knowledge and the dynamic model.

Owner:北京灵伴未来科技有限公司
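The conversion chain in the abstract above (phonemes, then phoneme categories, then static mouth shape configurations, then a dynamic model) can be sketched in a few lines. This is only an illustration under stated assumptions: the category table, the two-parameter configuration `(opening, width)` and the first-order smoothing used as the "dynamic model" are all hypothetical stand-ins, since the abstract does not specify any of them.

```python
from typing import Dict, List, Tuple

Shape = Tuple[float, float]  # hypothetical (opening, width) configuration

# Hypothetical phoneme-to-category table (phonetic prior knowledge).
PHONEME_CATEGORY: Dict[str, str] = {
    "b": "bilabial", "p": "bilabial", "m": "bilabial",
    "a": "open", "o": "round", "u": "round", "i": "spread", "sil": "rest",
}

# Hypothetical static mouth shape configuration for each category.
CATEGORY_SHAPE: Dict[str, Shape] = {
    "bilabial": (0.0, 0.5), "open": (1.0, 0.6), "round": (0.4, 0.2),
    "spread": (0.3, 1.0), "rest": (0.0, 0.5),
}


def static_sequence(phonemes: List[Tuple[str, int]]) -> List[Shape]:
    """Expand (phoneme, frame_count) pairs into one static shape per frame."""
    frames: List[Shape] = []
    for phoneme, frame_count in phonemes:
        shape = CATEGORY_SHAPE[PHONEME_CATEGORY[phoneme]]
        frames.extend([shape] * frame_count)
    return frames


def smooth(targets: List[Shape], alpha: float = 0.5) -> List[Shape]:
    """First-order low-pass filter: each frame moves a fraction alpha
    of the way from the current state toward its static target."""
    if not targets:
        return []
    state = targets[0]
    output: List[Shape] = []
    for target in targets:
        state = tuple(s + alpha * (t - s) for s, t in zip(state, target))
        output.append(state)
    return output


if __name__ == "__main__":
    for frame in smooth(static_sequence([("m", 1), ("a", 3), ("sil", 2)])):
        print(frame)
```

A smaller `alpha` gives slower, smoother transitions between mouth shapes; a value of 1.0 reproduces the static sequence unchanged.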

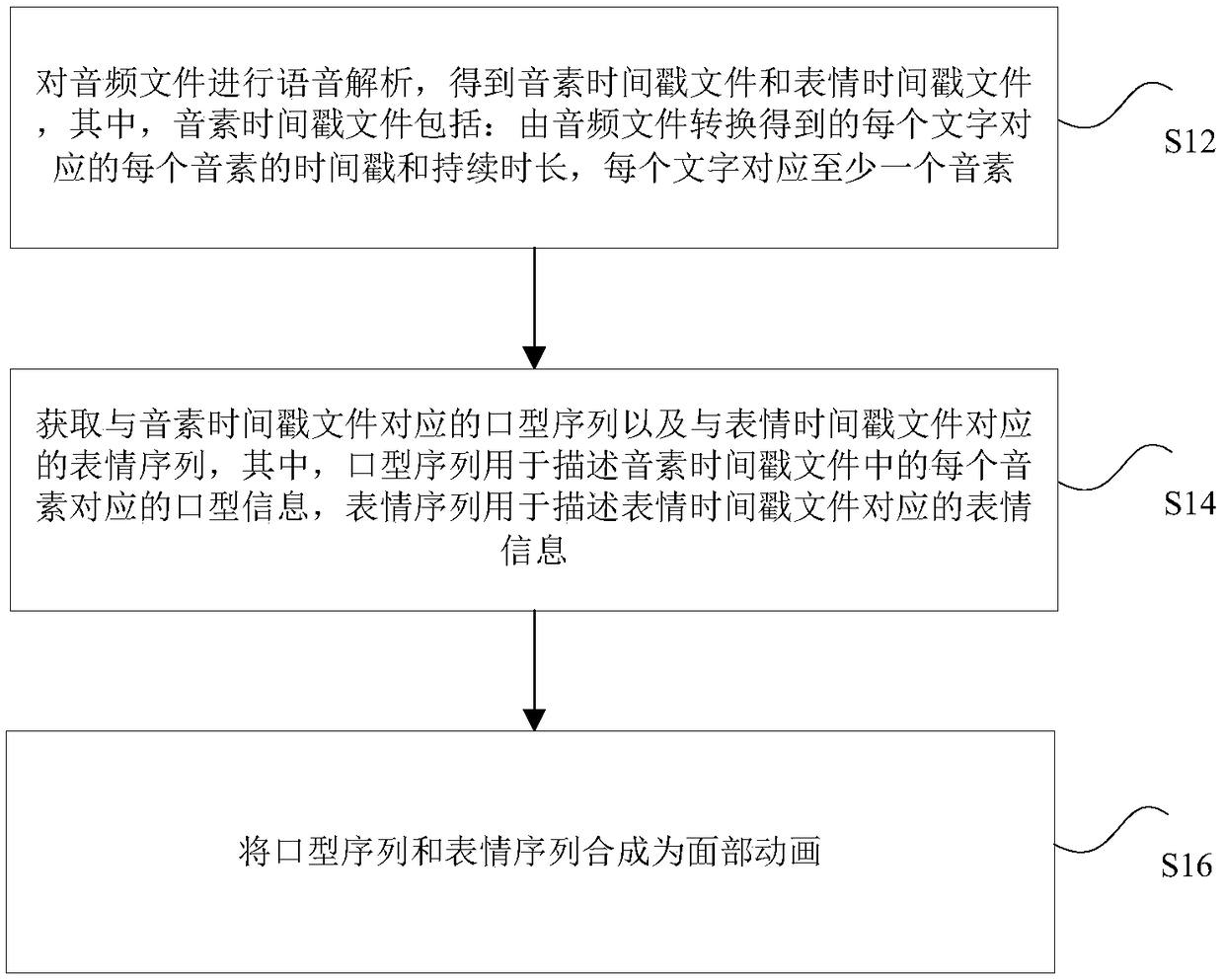

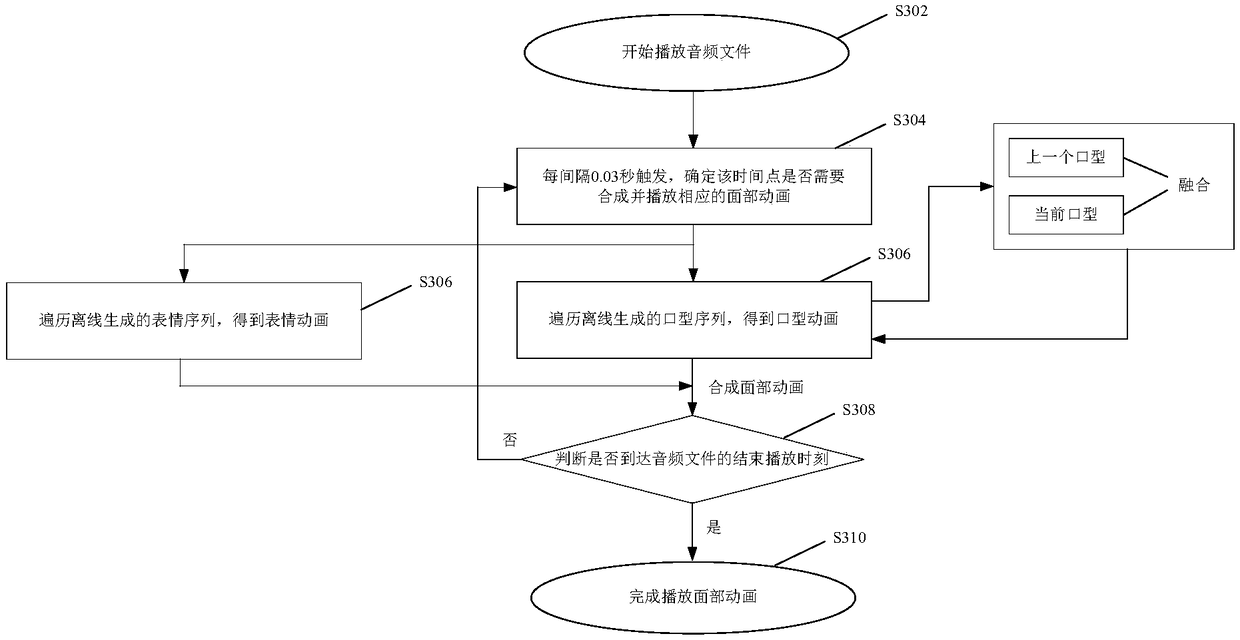

Facial animation synthesis method, a device, a storage medium, a processor and a terminal

Pending | CN109377540A | Troubleshoot technical issues with the experience | Animation | Synthesis methods

The invention discloses a facial animation synthesis method, a device, a storage medium, a processor and a terminal. The method comprises: performing voice analysis on an audio file to obtain a phoneme timestamp file and an expression timestamp file, wherein the phoneme timestamp file comprises the timestamp and duration of each phoneme corresponding to each character converted from the audio file, and each character corresponds to at least one phoneme; acquiring a mouth shape sequence corresponding to the phoneme timestamp file, wherein the mouth shape sequence describes the mouth shape information corresponding to each phoneme in the phoneme timestamp file; acquiring an expression sequence corresponding to the expression timestamp file, wherein the expression sequence describes the expression information corresponding to the expression timestamp file; and synthesizing the mouth shape sequence and the expression sequence into a facial animation. The invention solves the technical problem that the speech analysis approach provided in the related art tends to cause large errors in the subsequently synthesized speech animation and degrades the user experience.

Owner:NETEASE (HANGZHOU) NETWORK CO LTD
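The abstract above describes a phoneme timestamp file holding a timestamp and duration per phoneme, from which a mouth shape sequence is derived. A minimal sketch of looking up the mouth shape that is active at a given playback time is shown below. The record layout `PhonemeStamp`, the `MOUTH_SHAPE` table and the shape names are hypothetical; the actual file format is not given in the abstract.

```python
import bisect
from typing import Dict, List, NamedTuple, Optional


class PhonemeStamp(NamedTuple):
    start_ms: int      # timestamp of the phoneme
    duration_ms: int   # how long the phoneme lasts
    phoneme: str


# Hypothetical phoneme-to-mouth-shape table.
MOUTH_SHAPE: Dict[str, str] = {
    "n": "tongue_up", "i": "spread", "h": "open_slight", "ao": "round_open",
}


def mouth_shape_at(stamps: List[PhonemeStamp], time_ms: int) -> Optional[str]:
    """Return the mouth shape active at time_ms, or None during silence.

    Assumption: `stamps` is sorted by start_ms and entries do not overlap.
    """
    starts = [stamp.start_ms for stamp in stamps]
    position = bisect.bisect_right(starts, time_ms) - 1
    if position < 0:
        return None  # before the first phoneme
    stamp = stamps[position]
    if time_ms >= stamp.start_ms + stamp.duration_ms:
        return None  # in a gap after this phoneme has ended
    return MOUTH_SHAPE.get(stamp.phoneme)


if __name__ == "__main__":
    stamps = [
        PhonemeStamp(0, 80, "n"),
        PhonemeStamp(80, 120, "i"),
        PhonemeStamp(300, 100, "h"),
    ]
    for t in (0, 100, 250, 300):
        print(t, mouth_shape_at(stamps, t))
```

Sampling this function once per video frame would yield a mouth shape sequence aligned to the audio; an expression timestamp file could be handled the same way with its own lookup table.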

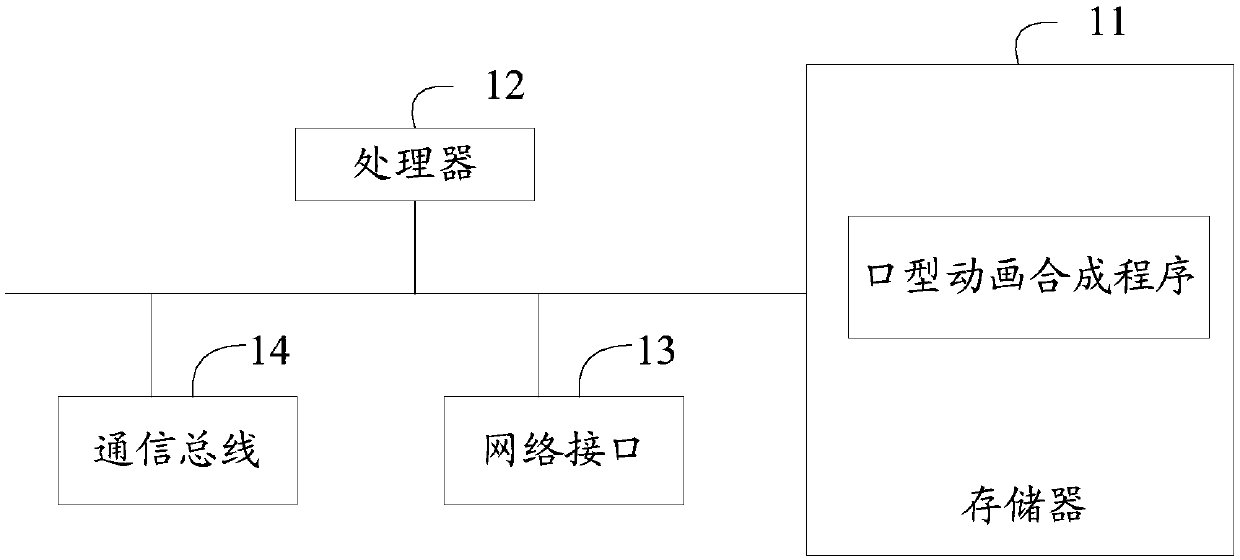

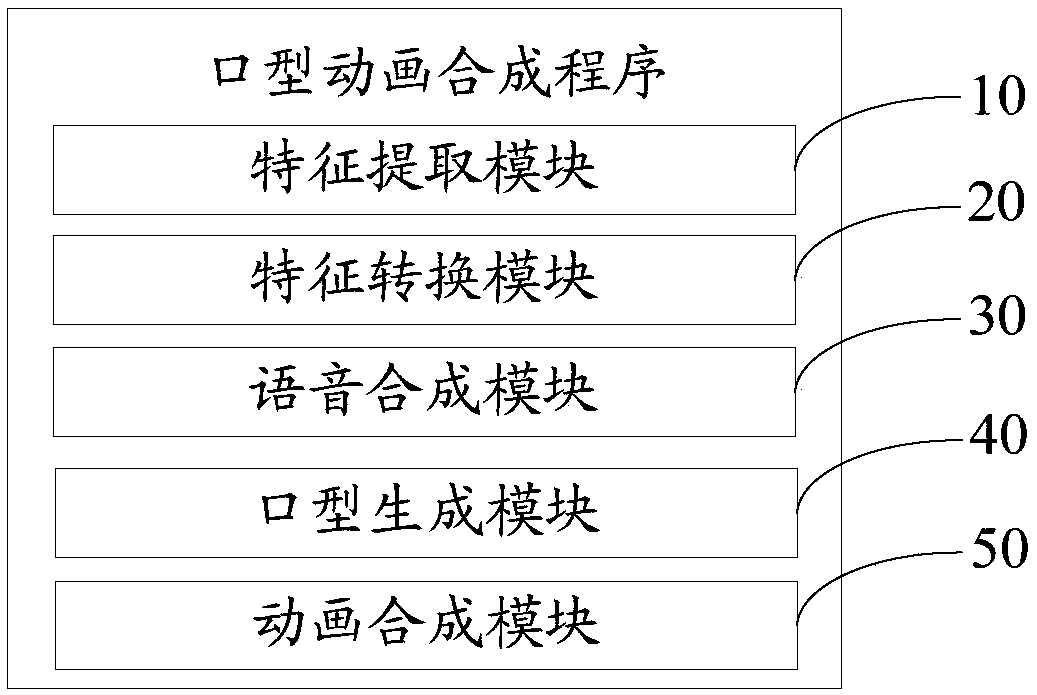

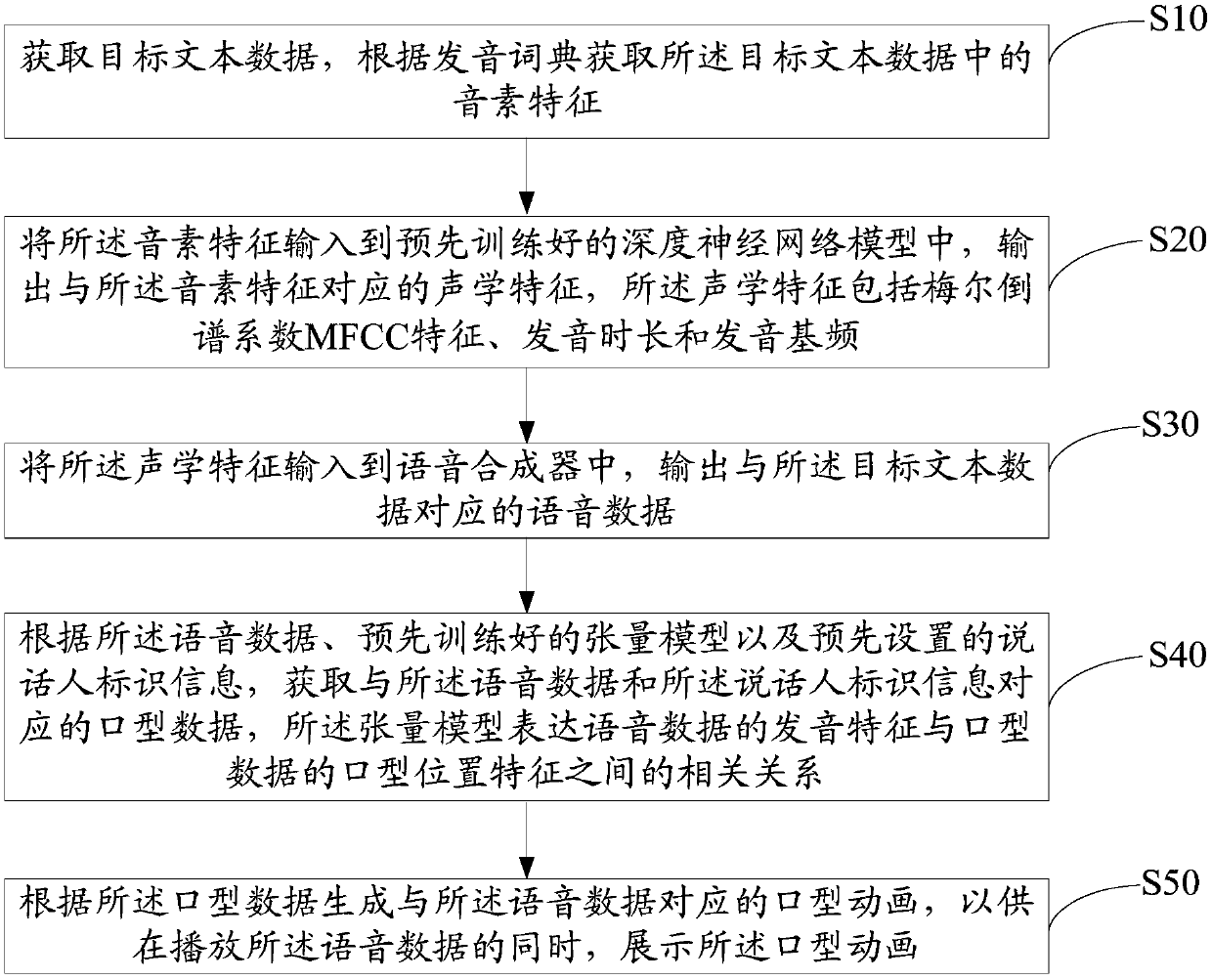

Voice-based mouth shape animation synthesis device and method and readable storage medium

Active | CN108763190A | The output is accurate | Natural output | Natural language data processing | Animation | Data matching | Synthesis methods

The invention discloses a voice-based mouth shape animation synthesis device. The device comprises a memory and a processor, wherein a mouth shape animation synthesis program capable of running on the processor is stored in the memory. When executed by the processor, the program performs the following steps: target text data is acquired, and phonemic characteristics in the target text data are acquired according to a pronunciation dictionary; the phonemic characteristics are input into a pre-trained deep neural network model to output acoustic characteristics, and the acoustic characteristics are input into a voice synthesizer to output voice data; mouth shape data is acquired according to the voice data, a pre-trained tensor model and speaker identification information; and a mouth shape animation corresponding to the voice data is generated according to the mouth shape data. The invention further provides a voice-based mouth shape animation synthesis method and a computer readable storage medium. The device, method and storage medium solve the technical problem that the prior art cannot display a realistic mouth shape animation that matches synthesized voice data.

Owner:PING AN TECH (SHENZHEN) CO LTD

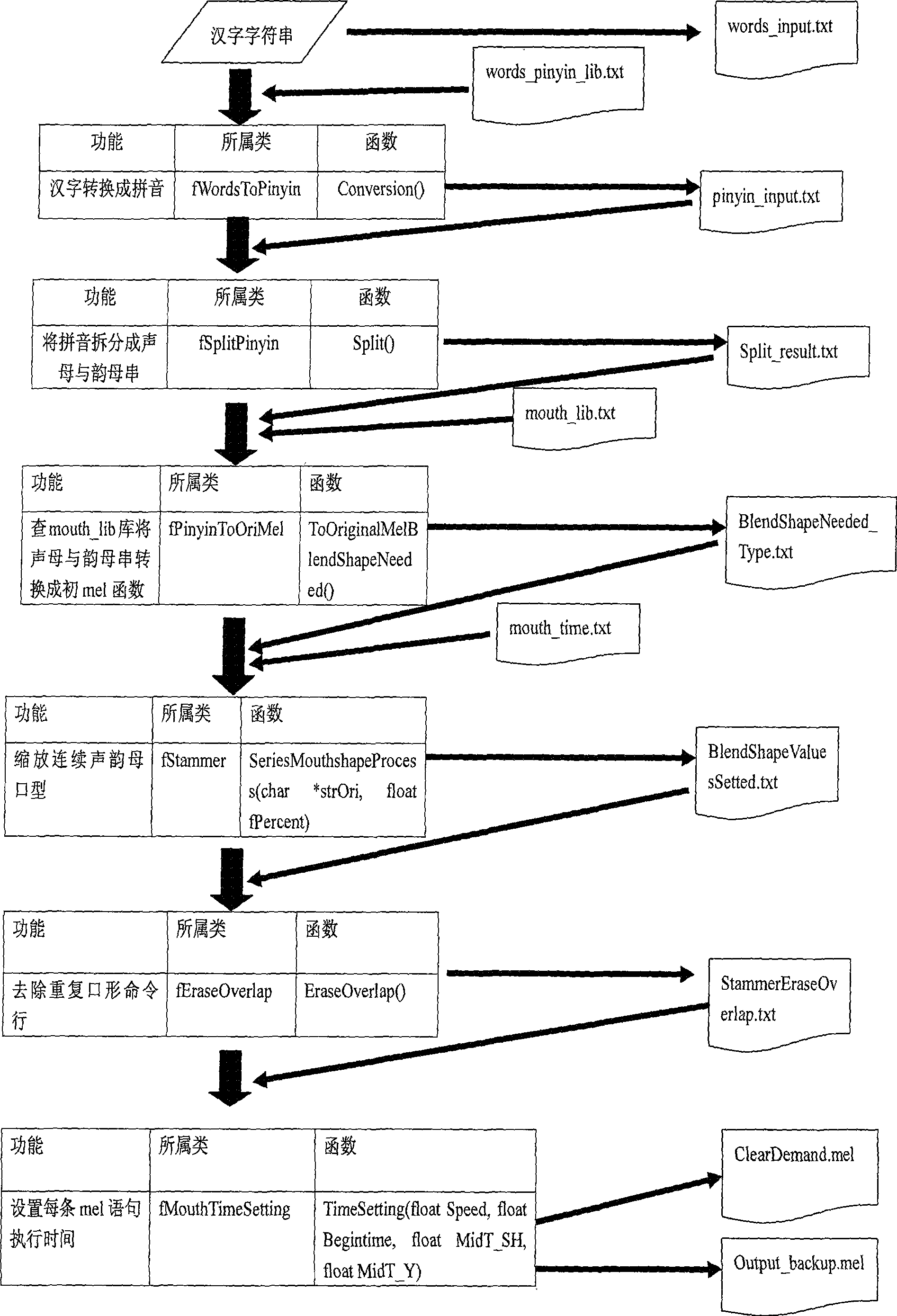

Cartoon generating method for mouth shape of source virtual characters

Active | CN101364309A | Address usability issues | Improve production efficiency | Animation | 3D-image rendering | Mouth shape

The invention provides a mouth-shape animation generating method used in a source virtual role, which comprises the following steps: (1) a text is received and is split into individual characters, and the individual characters are split into textual phonemes; (2) the phonemes are transformed into corresponding mouth-shape commands according to a phoneme mouth-shape matching set, wherein, initial mouth-shapes and terminative mouth-shapes corresponding to the phonemes are set in the phoneme mouth-shape matching set; (3) the mouth-shapes corresponding to the textual phonemes in the mouth-shape commands are zoomed according to a phoneme duration table, wherein, the phoneme duration table comprises mouth-shape amplitude marks; (4) initial frames and terminative frames which respectively correspond to the initial mouth-shapes and the terminative mouth-shapes corresponding to the textual phonemes are calculated according to the phoneme duration table, and the execution time of the mouth-shape commands is set, wherein, the phoneme duration table comprises the corresponding mouth-shape duration of the phonemes; (5) the mouth-shape commands are executed, and a mouth-shape animation used in the source virtual role is generated. The mouth-shape animation generating method can solve the applicability problem on the aspect of mouth-shape animation copying in a RBF neural network.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI
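Steps (2) to (4) of the abstract above turn phonemes into mouth-shape commands with initial and terminative shapes, an amplitude, and start and end frames derived from a phoneme duration table. A minimal sketch of that bookkeeping follows. It starts from an already split phoneme list, and the table contents, the shape names, the 25 fps default and the `MouthCommand` record are hypothetical illustrations rather than values from the patent.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class MouthCommand:
    phoneme: str
    start_shape: str   # initial mouth shape
    end_shape: str     # terminative mouth shape
    amplitude: float   # mouth-shape amplitude mark used for scaling
    start_frame: int
    end_frame: int


# Hypothetical phoneme mouth-shape matching set: (initial, terminative).
MATCHING_SET: Dict[str, Tuple[str, str]] = {
    "b": ("closed", "closed"),
    "a": ("open_wide", "open_mid"),
    "o": ("round", "round"),
}

# Hypothetical phoneme duration table: (duration in ms, amplitude mark).
DURATION_TABLE: Dict[str, Tuple[int, float]] = {
    "b": (80, 0.6),
    "a": (200, 1.0),
    "o": (160, 0.8),
}


def build_commands(phonemes: List[str], fps: int = 25) -> List[MouthCommand]:
    """Assign shapes, amplitude and frame ranges to each phoneme in order."""
    commands: List[MouthCommand] = []
    elapsed_ms = 0
    for phoneme in phonemes:
        start_shape, end_shape = MATCHING_SET[phoneme]
        duration_ms, amplitude = DURATION_TABLE[phoneme]
        # Integer arithmetic keeps frame boundaries free of float rounding.
        start_frame = elapsed_ms * fps // 1000
        elapsed_ms += duration_ms
        end_frame = elapsed_ms * fps // 1000
        commands.append(MouthCommand(
            phoneme, start_shape, end_shape, amplitude, start_frame, end_frame
        ))
    return commands


if __name__ == "__main__":
    for command in build_commands(["b", "a", "o"]):
        print(command)
```

Because each command ends on the frame where the next one starts, executing the commands in order gives a continuous animation with no gaps between phonemes.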

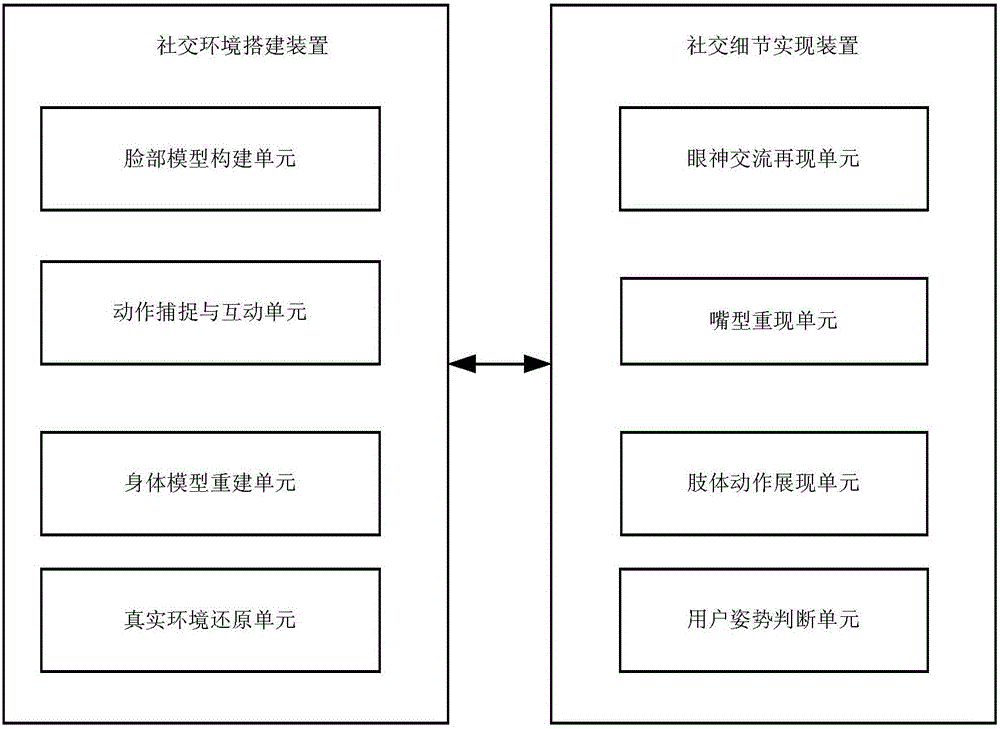

Social implementation system for virtual reality

Active | CN106648071A | Improve retention | High activity | Input/output for user-computer interaction | Details involving processing steps | Animation | Facial expression

The invention discloses a social implementation system for virtual reality. The system comprises a social environment building device and a social detail implementation device, wherein the social environment building device is used for realizing real-time tracing and reappearance of facial expression and mouth shape as well as human face three-dimensional model building, simultaneously building the body model of a user by tracing the head and hands of the user and the action and position of the body in real time and presenting in the social scenes of the virtual reality, and carrying out reduction and reconstruction of true environment on the scenes; the social detail implementation device is used for truly presenting the action of the mouth shape when the user speaks according to the mood, statement content, tone and volume when the user speaks by sensing the position of a focal point that eyes watch, and simultaneously strengthening the sense of reality in virtual reality social contact of the user by showing the action of the head, the actions of the hands and the action postures of others.

Owner:欧马腾会展科技(上海)有限公司

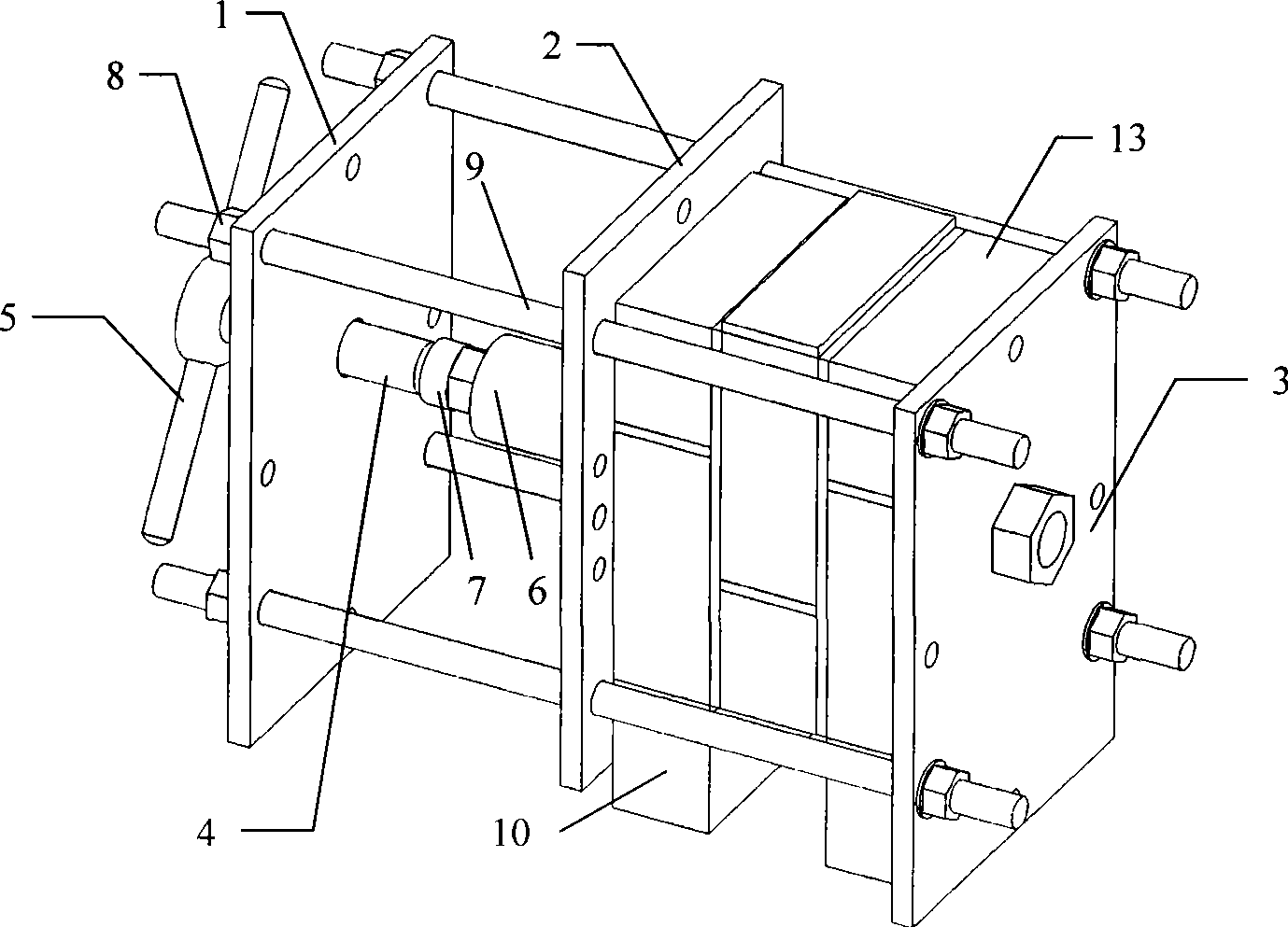

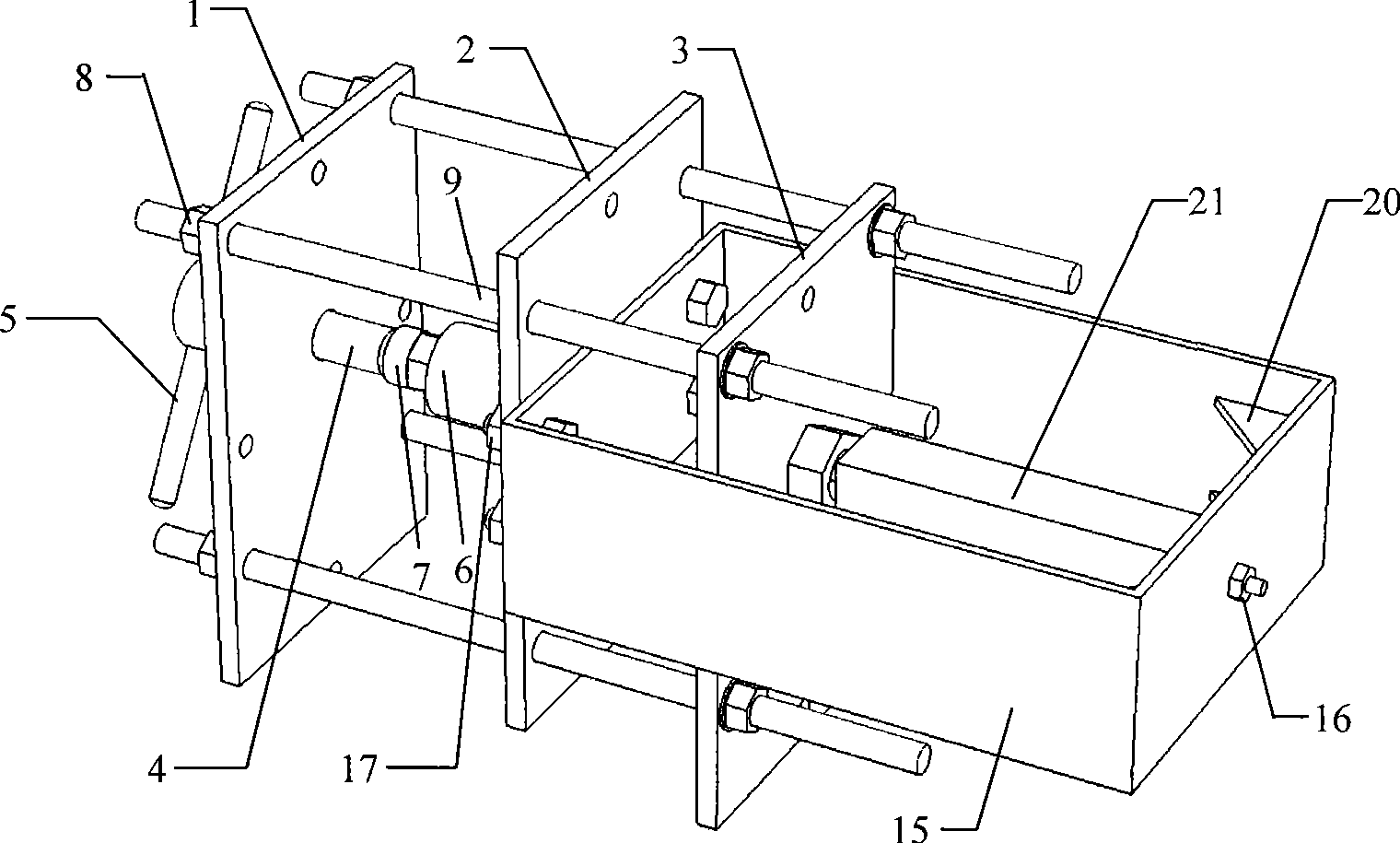

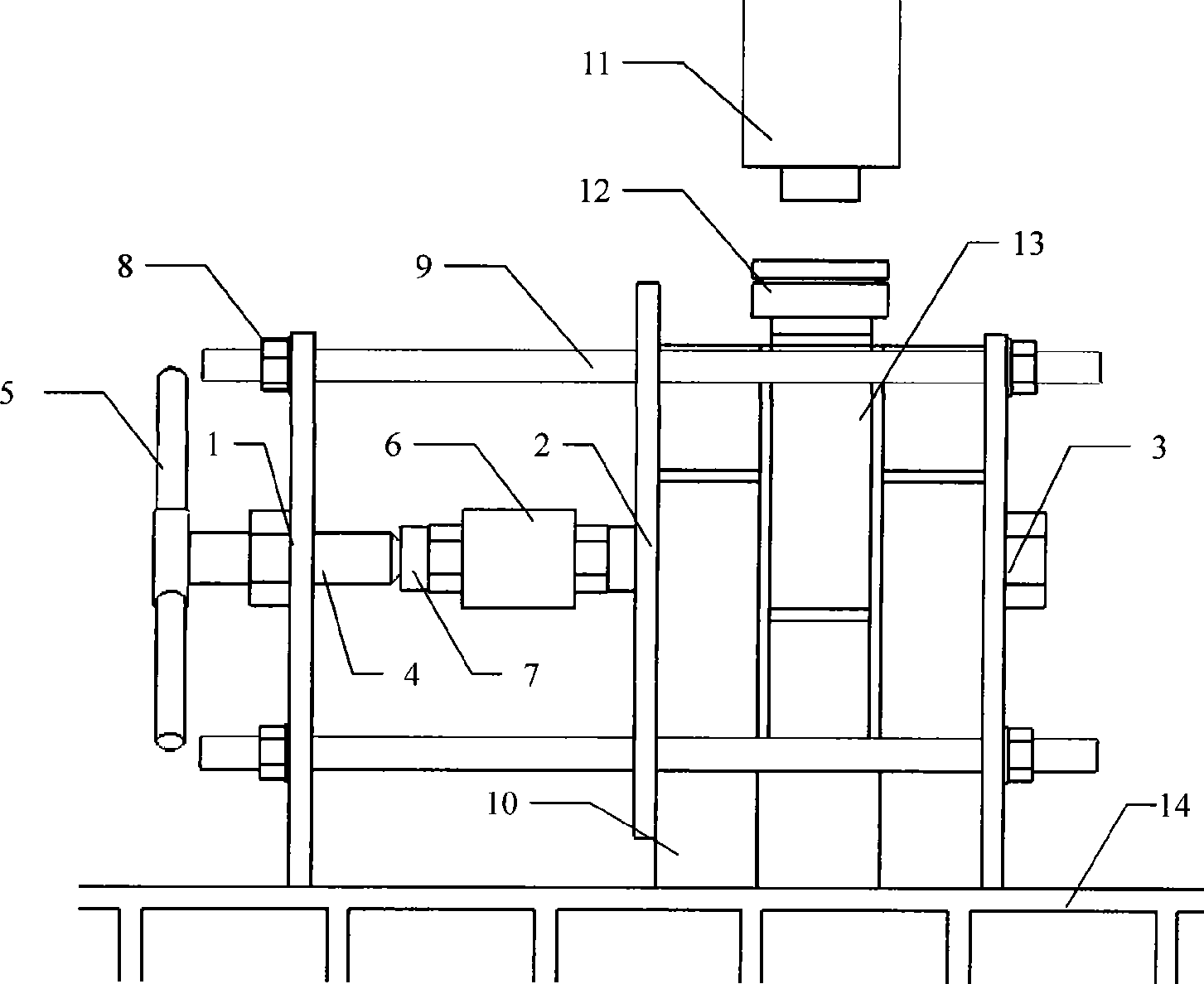

Bidirectional composite force loading test device for masonry test piece

Inactive | CN101419143A | Simple structure | Easy to assemble and disassemble | Strength properties | Sheet steel | Brick

The invention relates to a bidirectional composite stress loading test device for a test piece of a brick body and belongs to the field of civil engineering. The device mainly comprises an adjustable reaction frame, a pressurization screw stem and a mouth-shaped frame; the steel reaction frame which can adjust distance and the pressurization screw stem with a force transducer are utilized to realize the loading of transverse pressure or tensile force of the test piece of the brick body; and the largest loading value of the pressurization screw stem can reach 150 kN. The steel reaction frame consists of a steel plate and a screw stem; and the relative distance of the steel plate of the reaction frame can be flexibly adjusted so as to meet the requirement of the test pieces with different specifications. The vertical direction of the test piece is matched with the prior hydraulic jack to realize a full-process bidirectional loading test on the test piece of the brick body. The loading device has a simple structure, convenient disassembly and assembly and small occupied area, can be repeatedly used, can move, is convenient to load and control, has accurate and reliable tested data and can fully meet the loading requirement of the bidirectional composite stress loading test on the test piece of the brick body.

Owner:TONGJI UNIV

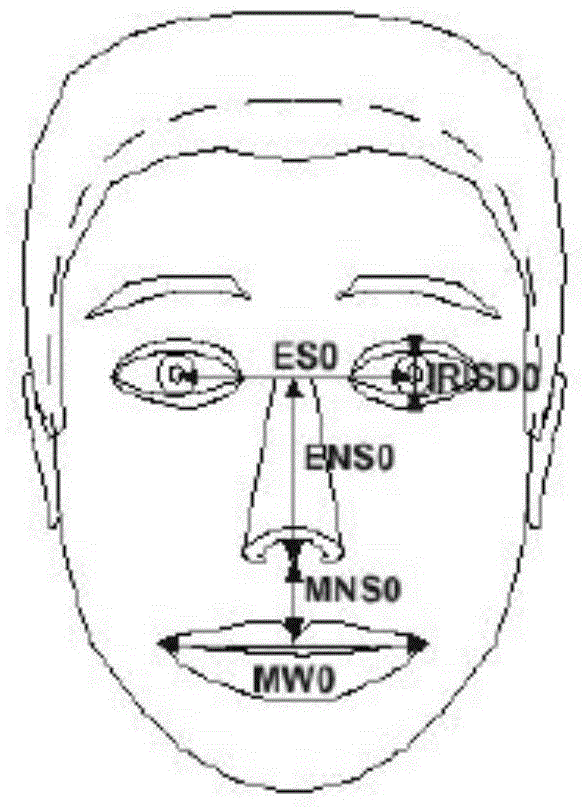

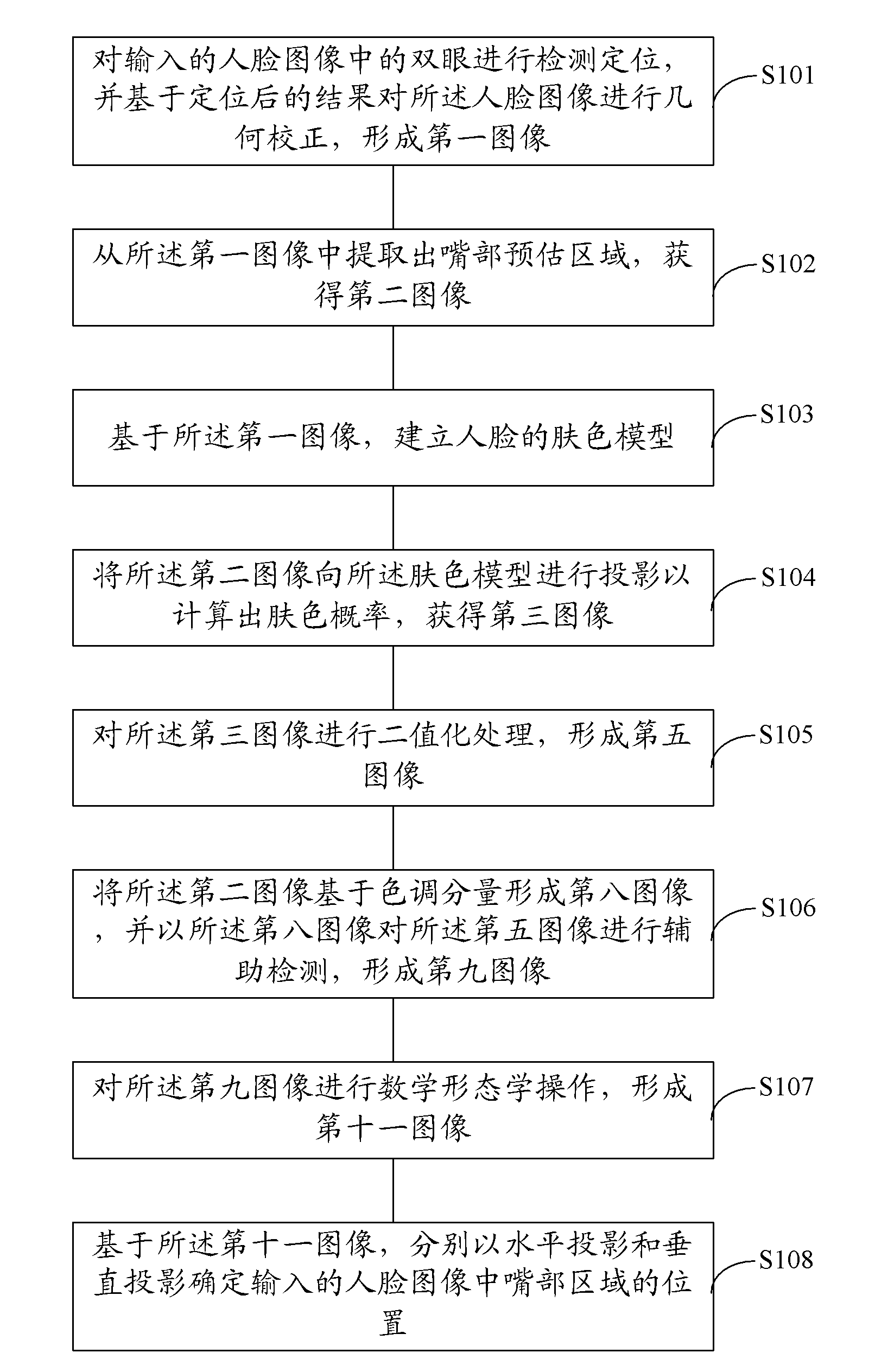

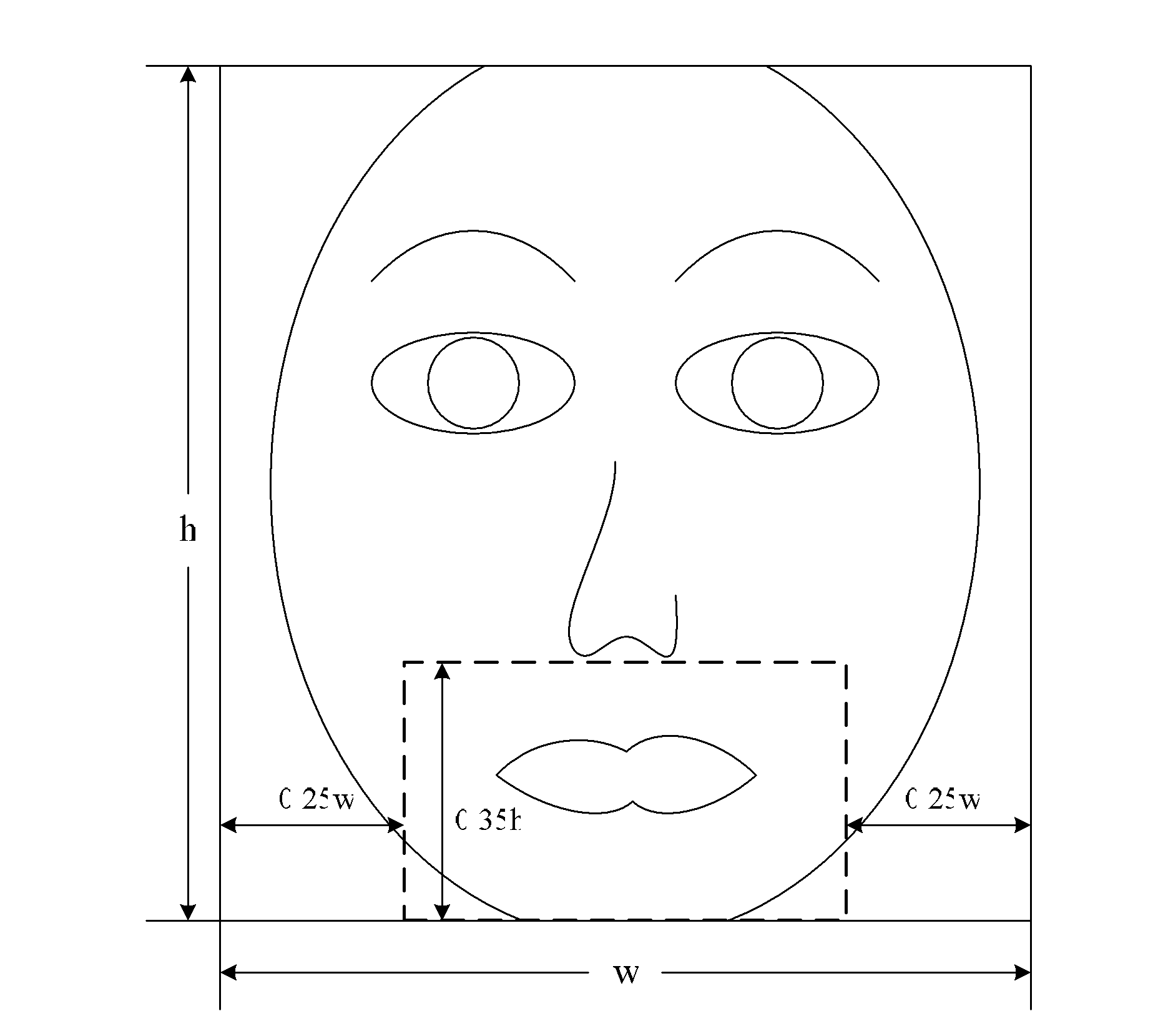

Method and device for positioning mouth part of human face image as well as method and system for recognizing mouth shape

Inactive | CN103077368A | Achieve precise positioning | Improve accuracy | Character and pattern recognition | Pattern recognition | Vertical projection

The invention discloses a method and a device for positioning a mouth part of a human face image and a method and a system for recognizing the mouth shape. The method for positioning the mouth part comprises the following steps of: detecting and positioning two eyes in an input human face image; carrying out geometric rectification on the human face image on the basis of the positioned result to form a first image; extracting a mouth part pre-estimation area from the first image to obtain a second image; establishing a skin color model of human face on the basis of the first image; projecting the second image to the skin color model so as to calculate the probability of skin color to obtain a third image; carrying out binarization processing on the third image to form a fifth image; forming an eighth image by the second image on the basis of a color tone component; carrying out assistant detection on the fifth image by the eighth image to form a ninth image; carrying out mathematical morphology operation on the ninth image to form an eleventh image; and determining the position of a mouth part area in the input human face image by a horizontal projection and a vertical projection on the basis of the eleventh image. According to the technical scheme, accurate positioning of the mouth part can be effectively realized.

Owner:SHANGHAI ISVISION INTELLIGENT RECOGNITION TECH
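The final step of the abstract above locates the mouth region from a binarized image by horizontal and vertical projection. A minimal sketch of that step alone is shown below, using plain nested lists as the binary mask. The function name `projection_bounds` and the `min_count` noise threshold are hypothetical; the earlier stages (eye detection, skin color model, morphology) are assumed to have produced the mask already.

```python
from typing import List, Optional, Tuple


def projection_bounds(
    mask: List[List[int]], min_count: int = 1
) -> Optional[Tuple[int, int, int, int]]:
    """Locate a region in a binary mask by projection.

    Returns (top, bottom, left, right), inclusive, or None if no row or
    column contains at least `min_count` foreground pixels.
    """
    # Horizontal projection: foreground pixel count in each row.
    row_profile = [sum(row) for row in mask]
    # Vertical projection: foreground pixel count in each column.
    column_profile = [sum(column) for column in zip(*mask)]

    def span(profile: List[int]) -> Optional[Tuple[int, int]]:
        hits = [i for i, count in enumerate(profile) if count >= min_count]
        return (hits[0], hits[-1]) if hits else None

    rows = span(row_profile)
    columns = span(column_profile)
    if rows is None or columns is None:
        return None
    return (rows[0], rows[1], columns[0], columns[1])


if __name__ == "__main__":
    mask = [
        [0, 0, 0, 0, 1],  # stray noise pixel
        [0, 1, 1, 1, 0],
        [0, 1, 1, 1, 0],
        [0, 0, 0, 0, 0],
    ]
    print(projection_bounds(mask, min_count=2))
```

Raising `min_count` above 1 discards rows and columns that contain only isolated pixels, which is one simple way to tolerate residual noise after the morphology step.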

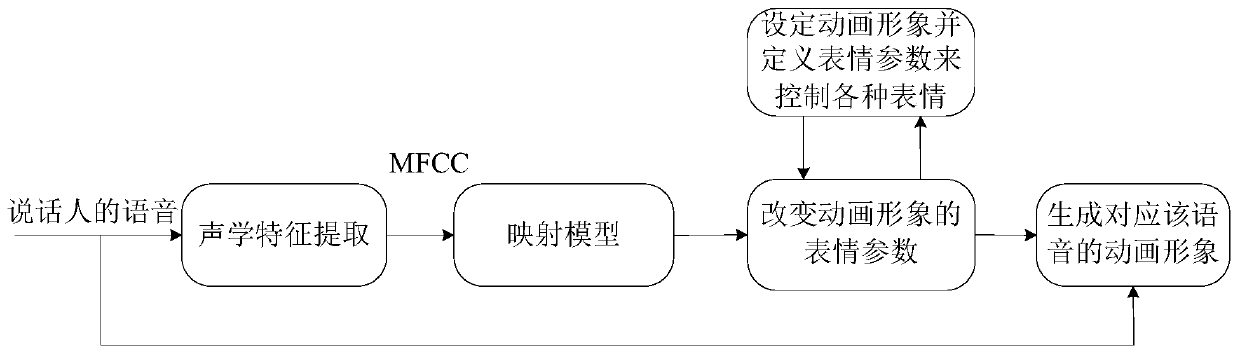

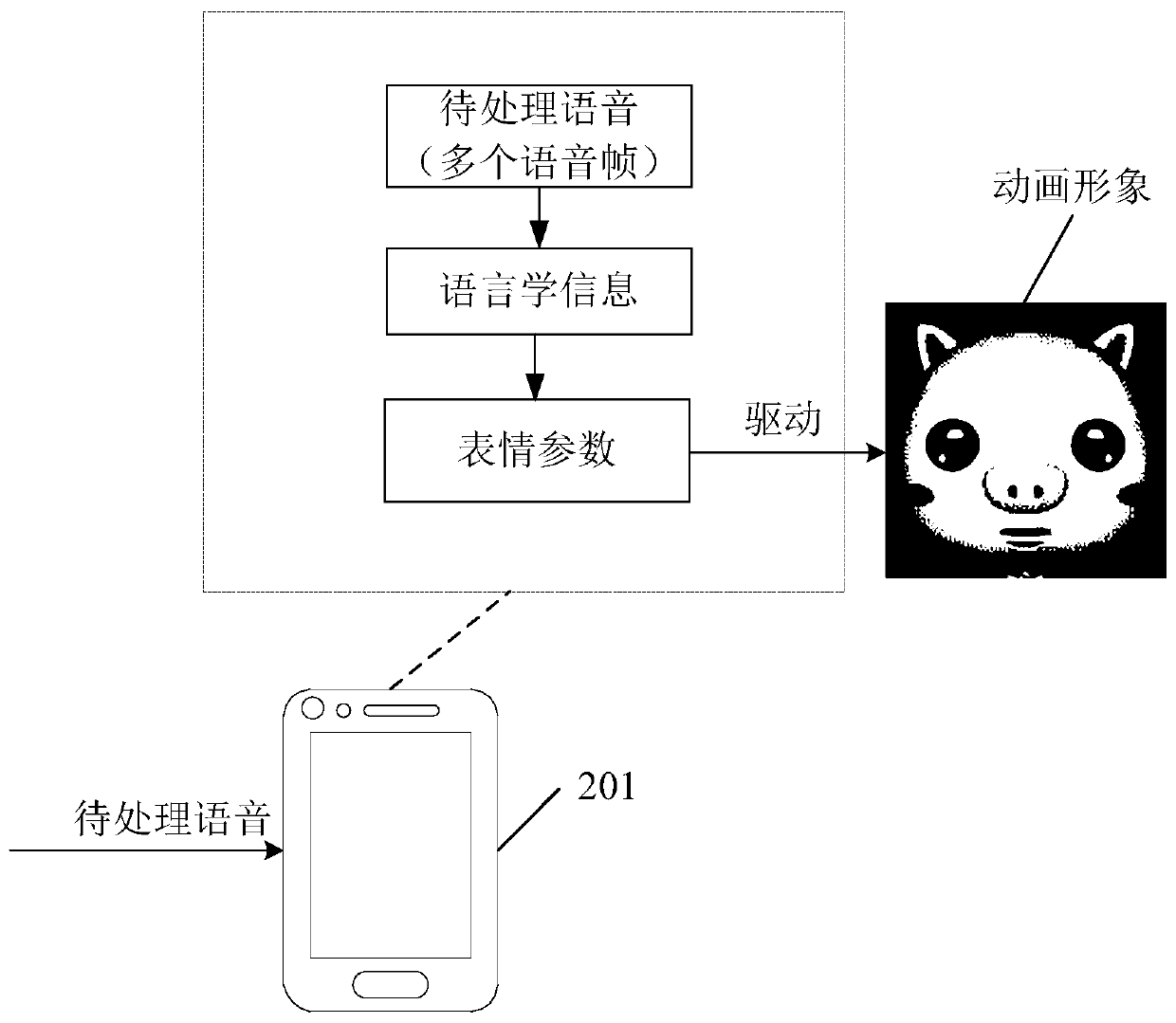

Voice-driven animation method and device based on artificial intelligence

Pending | CN110503942A | Improve interactive experience | Biological models | Animation | Pattern recognition | Algorithm

An embodiment of the invention discloses a voice-driven animation method based on artificial intelligence. When a to-be-processed voice comprising a plurality of voice frames is obtained, the linguistic information corresponding to the voice frames can be determined, wherein each piece of linguistic information identifies the probability distribution over the phonemes to which the corresponding voice frame belongs, that is, how likely the content of the voice frame is to belong to each phoneme. The linguistic information is independent of the actual speaker of the to-be-processed voice, so the influence of different speakers' pronunciation habits on the subsequent determination of expression parameters is counteracted. According to the expression parameters determined from the linguistic information, an animated character can be accurately driven to make the expression, such as a mouth shape, that corresponds to the to-be-processed voice, so that voice from any speaker is effectively supported and the interactive experience is improved.

Owner:TENCENT TECH (SHENZHEN) CO LTD
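The abstract above maps a per-frame phoneme probability distribution to expression parameters but does not say how. As a rough illustration only, the sketch below takes the probability-weighted average of per-phoneme mouth parameters. This is a deliberately simple stand-in and not the patented mapping; the parameter table and the `(opening, width)` pair are hypothetical.

```python
from typing import Dict, Tuple

# Hypothetical per-phoneme mouth parameters: (opening, width).
PHONEME_PARAMS: Dict[str, Tuple[float, float]] = {
    "a": (1.0, 0.5),
    "m": (0.0, 0.5),
    "i": (0.25, 1.0),
}


def expression_from_posterior(
    posterior: Dict[str, float],
    table: Dict[str, Tuple[float, float]] = PHONEME_PARAMS,
) -> Tuple[float, float]:
    """Probability-weighted average of per-phoneme mouth parameters.

    `posterior` maps phoneme -> probability for one voice frame; it is
    normalized here in case the values do not sum exactly to one.
    """
    total = sum(posterior.values())
    if total <= 0:
        raise ValueError("posterior must contain positive probability mass")
    opening = sum(p * table[ph][0] for ph, p in posterior.items()) / total
    width = sum(p * table[ph][1] for ph, p in posterior.items()) / total
    return (opening, width)


if __name__ == "__main__":
    print(expression_from_posterior({"a": 0.75, "m": 0.25}))
```

Since the input is a distribution over phonemes and not raw audio features, two speakers who produce the same phoneme with different voices would yield similar parameters, which is the speaker independence the abstract emphasizes.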

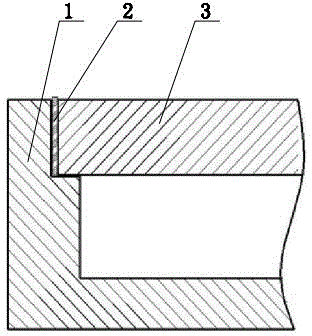

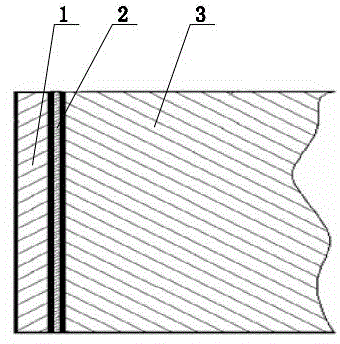

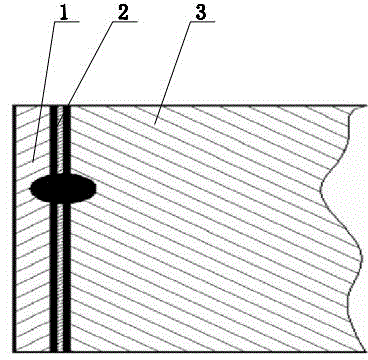

Sealing method for laser filler welding of hybrid integrated circuit package

Active | CN103331520A | Improve sealing | Meet various requirements of packaging | Semiconductor/solid-state device manufacturing | Laser beam welding apparatus | Crack free | Shielding gas

The invention discloses a sealing method for laser filler welding of a hybrid integrated circuit package. The sealing method comprises the following steps: 1, an aluminum alloy casing and an aluminum alloy cover plate are rinsed with acetone; 2, the joints are assembled: a stepped groove is arranged in the aluminum alloy casing, the aluminum alloy cover plate is mounted on the aluminum alloy casing, the casing and the cover plate are butted using a tool in a corner joint configuration, and a joint gap is formed at the joints; 3, pre-filler is filled into the joint gap; 4, spot welding is carried out with a Nd:YAG (neodymium-doped yttrium aluminum garnet) laser under protective gas to fix the relative positions of the aluminum alloy casing, the aluminum alloy cover plate and the pre-filler; 5, the overall welding is carried out with the Nd:YAG laser in a mouth-shaped welding pattern, again under protective gas. With this sealing method, welding reliability is good, the seal is air-tight, and the weld seam is attractive and crack-free.

Owner:WUXI HUACE ELECTRONICS SYST

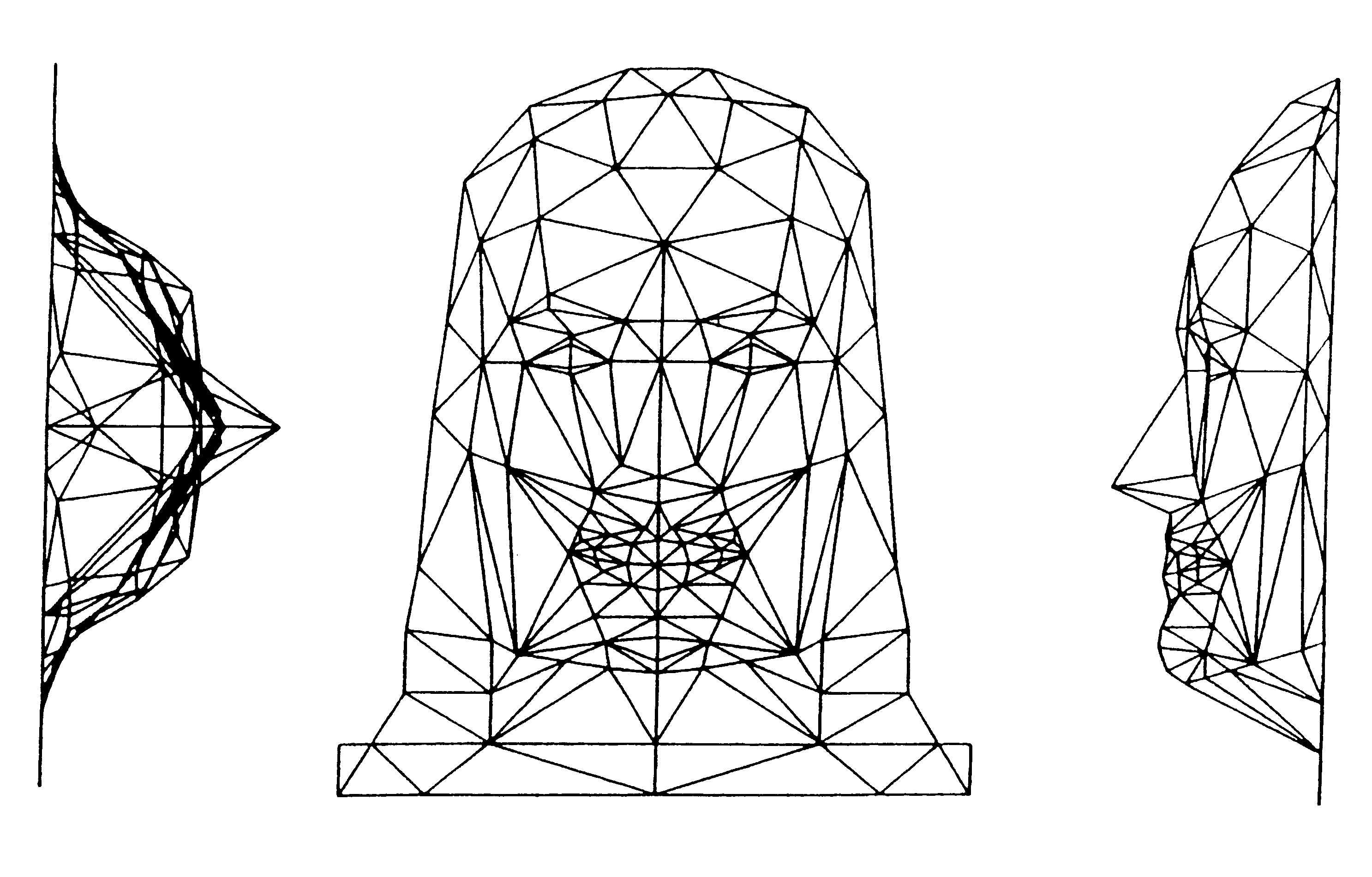

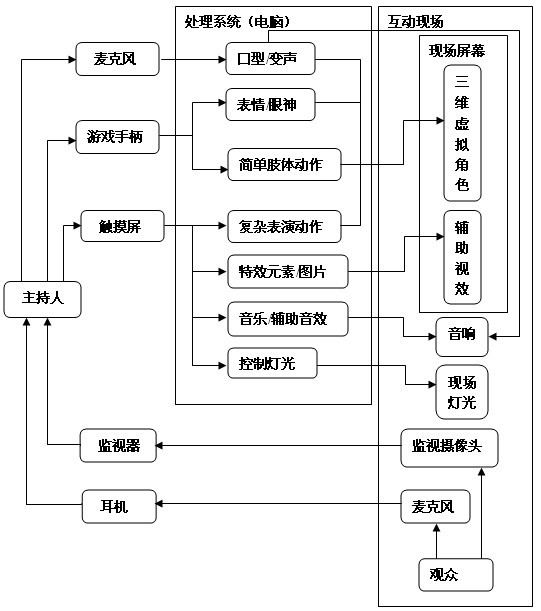

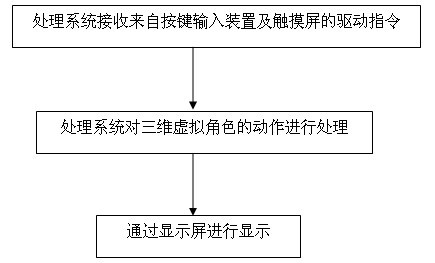

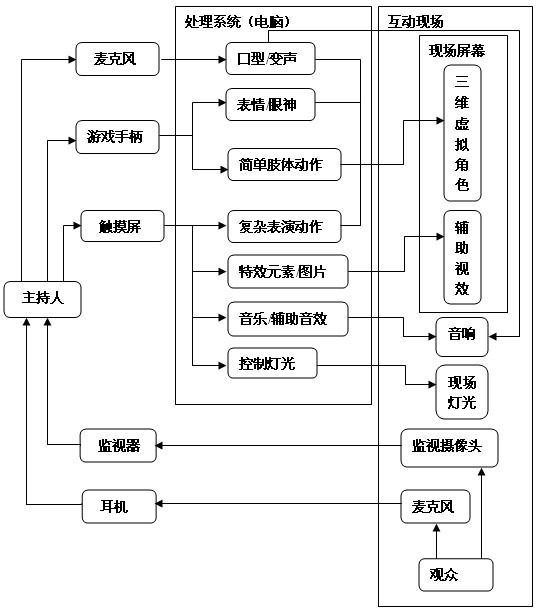

Method for realizing three dimensional virtual characters and system thereof

Inactive | CN102693091A | Easy to control | Image data processing | Input/output processes for data processing | Process systems | Touchscreen

The invention provides a method for realizing three-dimensional virtual characters and a system thereof. The system comprises a display screen disposed on a stage and a processing system that displays images of the three-dimensional characters on the display screen. The processing system is communicatively connected to a key-press input apparatus, which inputs drive orders for the facial expression, eye expression and body movement of the three-dimensional virtual characters, and to a touch screen, which inputs performance action drive orders for the three-dimensional characters. By using pre-configured action-control key presses and mouth shape simulation processing, the method and system make it easy for a host to operate and control the three-dimensional virtual characters while hosting on stage, providing a vivid real-time interactive entertainment system and method in which the three-dimensional characters realistically simulate the actions of a real person.

Owner:深圳市环球数码创意科技有限公司

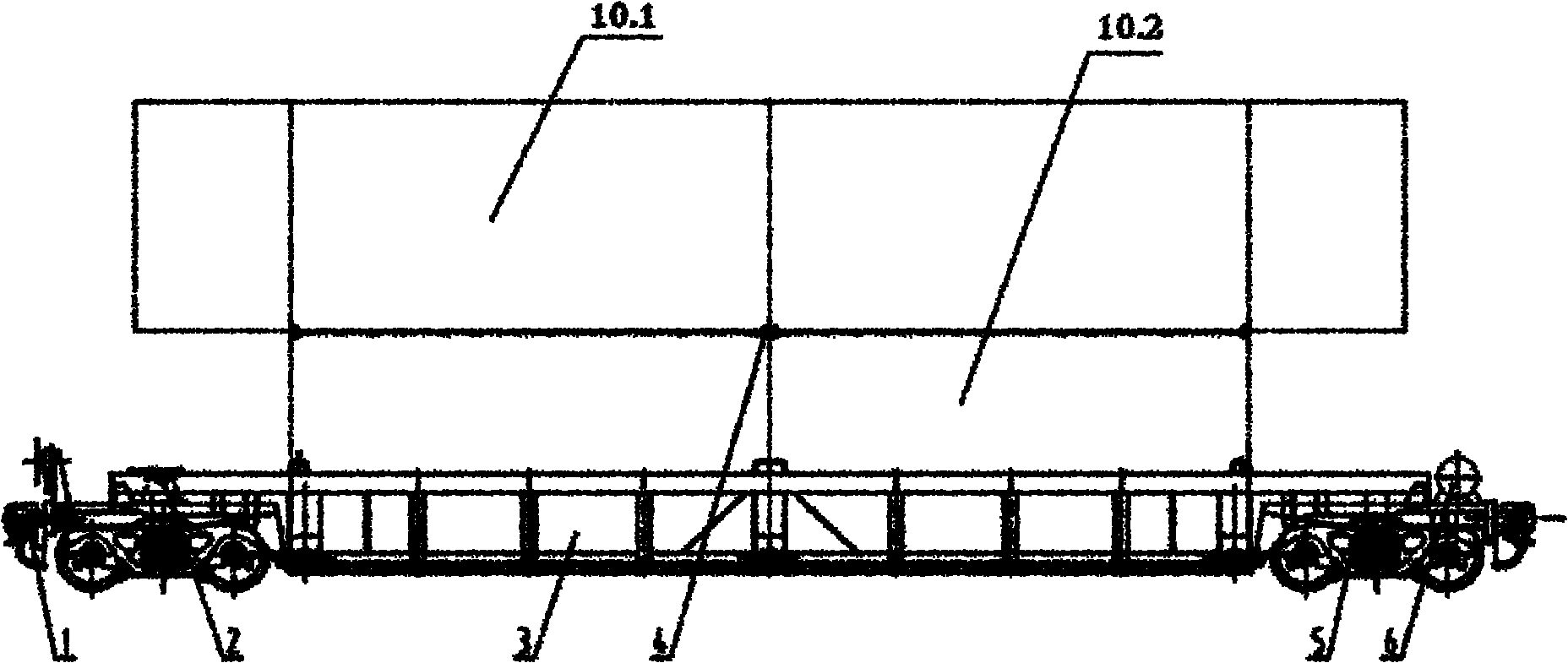

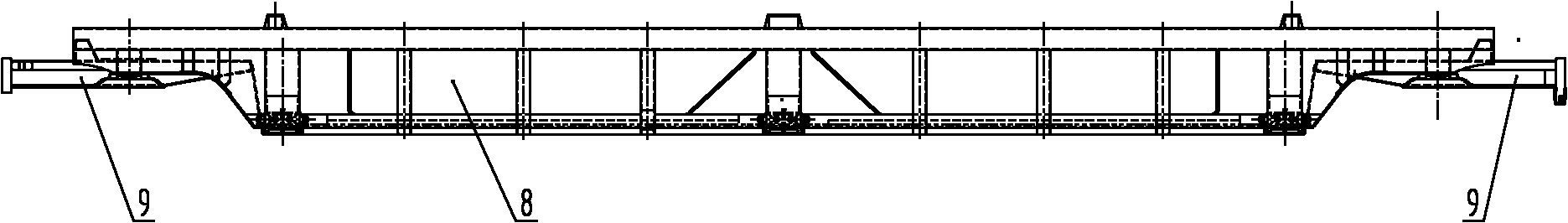

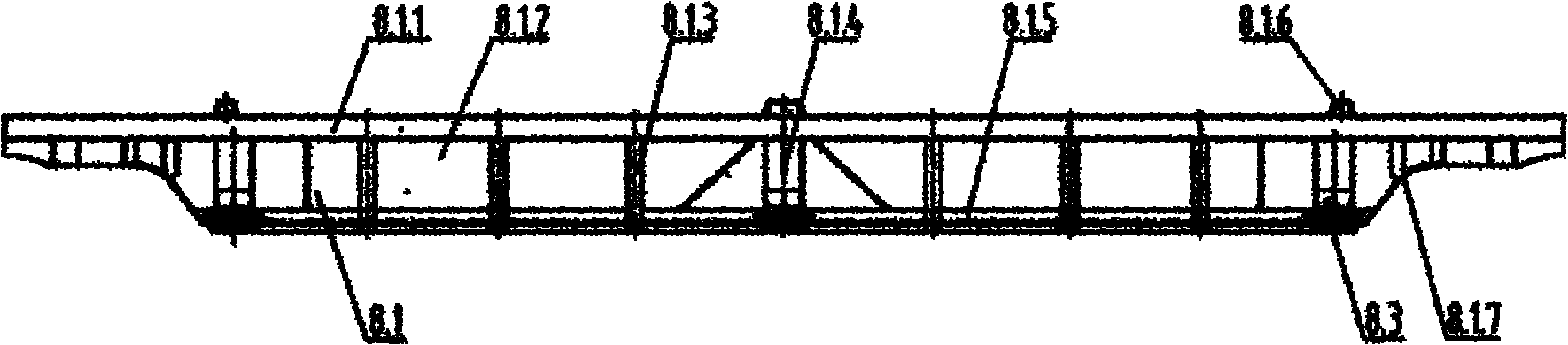

Double-deck container flat car

Active | CN101927770A | Eliminate/reduce deformation stress | Eliminate/reduce concentrated stress | Wagons/vans | Bogie | Mode of transport

The invention discloses a double-deck container flat car, which comprises a car coupler buffer device, a semi-trailer bearing seat, a car body, a bogie, a braking device and a container fixing and locking mechanism. The car body comprises a large underframe and a traction device. The cross section and the vertical section of the large underframe are both all-steel welded concave structures, and the upper opening of the concave structure forms a well-mouth-shaped structure of the large underframe. The large underframe comprises side walls and a concave bottom; underframe bearing seats are symmetrically arranged in the middle and at the two ends of the large underframe and are connected with the side walls and the concave bottom. The concave-bottom well-type structure makes maximum use of the line clearance limits and sets the car-bottom bearing surface at the minimum allowable value; the bottom of the whole car, the traction device and an upper side beam form the concave-bottom structure. With the container fixing and locking mechanism and the corresponding observation ports, double-deck containers of multiple sizes can be carried conveniently and safely. The flat car has a low self weight and a large load capacity, can carry containers of multiple sizes at the same time, and greatly enhances the capacity of railway transportation in multimodal transportation.

Owner:CRRC MEISHAN

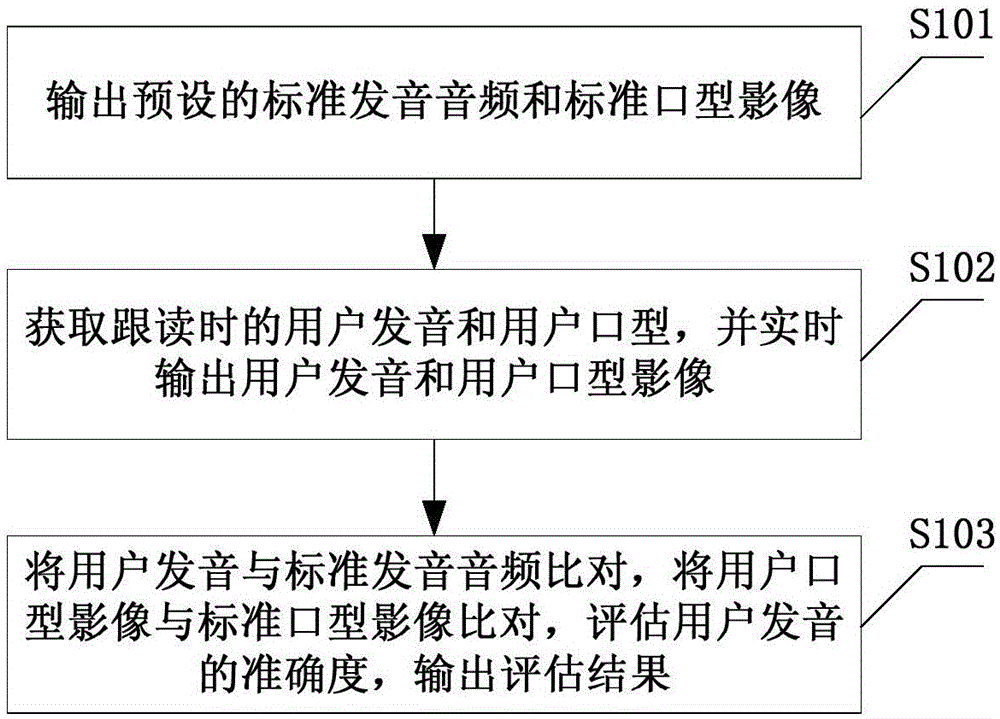

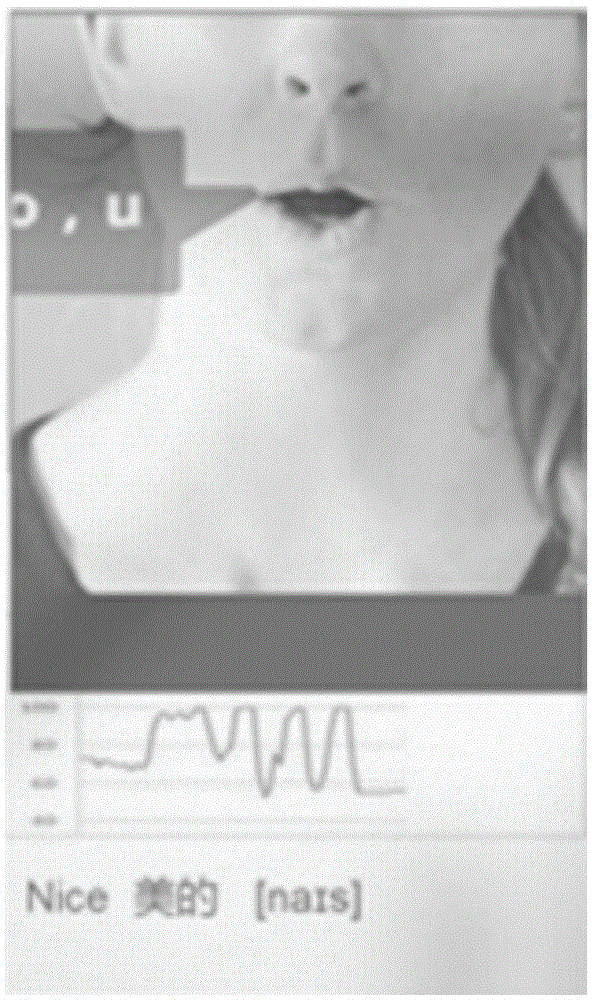

Method of correcting pronunciation aiming at language class learning and device of correcting pronunciation aiming at language class learning

Active | CN105070118A | Correct mispronunciation | Easy to adjust | Electrical appliances | Mouth shape | Human language

The present invention discloses a method and a device for correcting pronunciation in language learning. The method comprises the steps of: outputting a preset standard pronunciation audio and a standard mouth shape image; capturing the user's pronunciation and mouth shape while the user imitates the standard pronunciation, and outputting the user pronunciation and a user mouth shape image in real time; and comparing the user pronunciation with the standard pronunciation audio, comparing the user mouth shape image with the standard mouth shape image, assessing the accuracy of the user pronunciation and outputting an assessment result. With this technical scheme, users can visually compare their own mouth shape with the standard mouth shape and conveniently adjust it while pronouncing, and the accuracy of their pronunciation can be assessed, thereby correcting users' wrong pronunciation.
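The comparison and assessment step described above can be illustrated with a minimal sketch. The abstract does not say how mouth shapes are compared, so everything here is an assumption: mouth shapes are taken to be equal-length lists of (x, y) contour landmarks, similarity is a normalized mean landmark distance, and the names `mouth_shape_similarity` and `assess`, the equal weighting and the 0.8 threshold are hypothetical.

```python
import math


def _normalize(points):
    """Center a contour on its centroid and scale it to unit RMS radius."""
    n = len(points)
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    centered = [(x - cx, y - cy) for x, y in points]
    rms = math.sqrt(sum(x * x + y * y for x, y in centered) / n)
    scale = rms if rms > 0 else 1.0
    return [(x / scale, y / scale) for x, y in centered]


def mouth_shape_similarity(user_points, standard_points):
    """Return a similarity in [0, 1]; 1 means identical up to shift and scale.

    Assumption: both contours list the same landmarks in the same order.
    """
    if not user_points or len(user_points) != len(standard_points):
        raise ValueError("contours must be non-empty and of equal length")
    user = _normalize(user_points)
    standard = _normalize(standard_points)
    mean_dist = sum(
        math.hypot(ux - sx, uy - sy)
        for (ux, uy), (sx, sy) in zip(user, standard)
    ) / len(user)
    return max(0.0, 1.0 - mean_dist)


def assess(audio_score, mouth_score, threshold=0.8):
    """Combine an audio score and a mouth shape score (both in [0, 1]).

    The equal weights and the threshold are illustrative assumptions.
    """
    combined = 0.5 * audio_score + 0.5 * mouth_score
    return {"score": combined, "passed": combined >= threshold}
```

Normalizing for position and size means a user who sits closer to or further from the camera is not penalized; only the shape of the contour affects the score.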

Owner:GUANGDONG XIAOTIANCAI TECH CO LTD

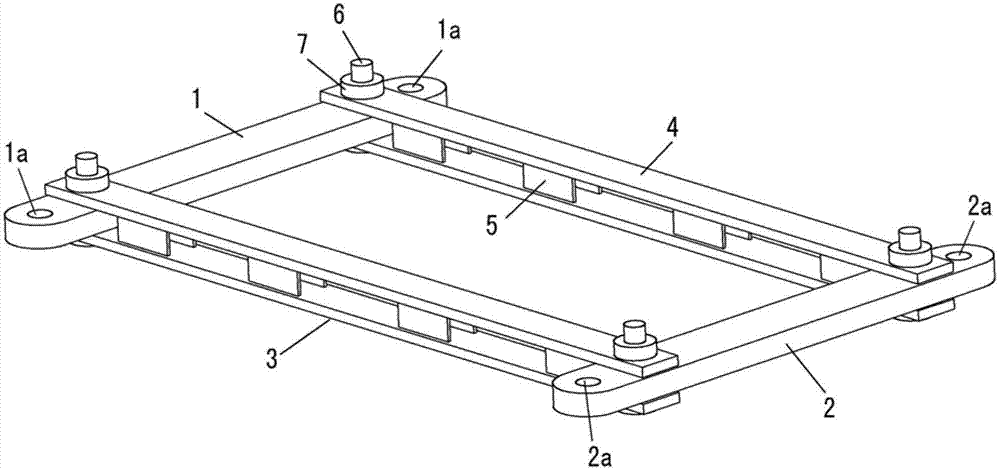

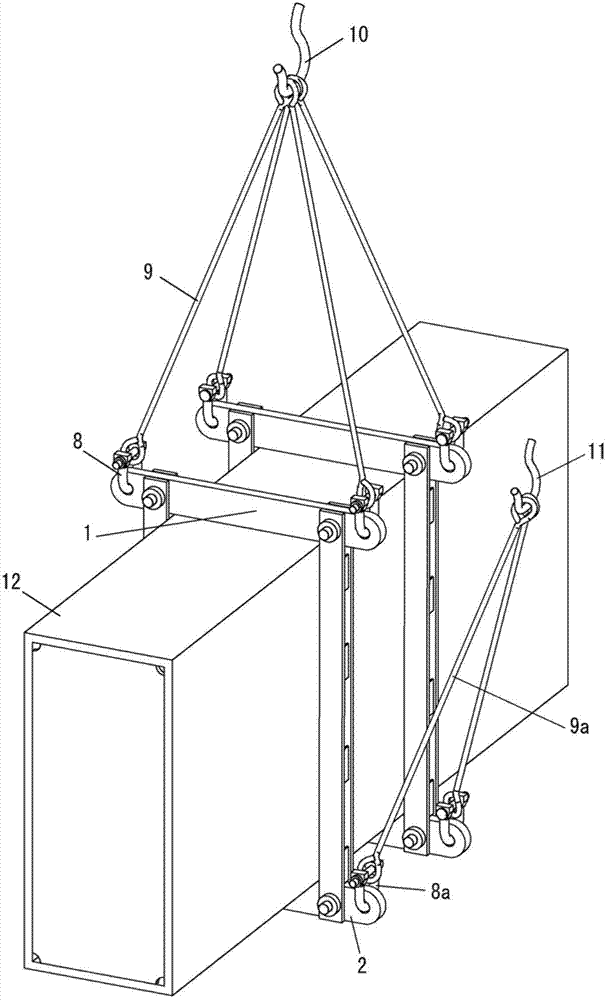

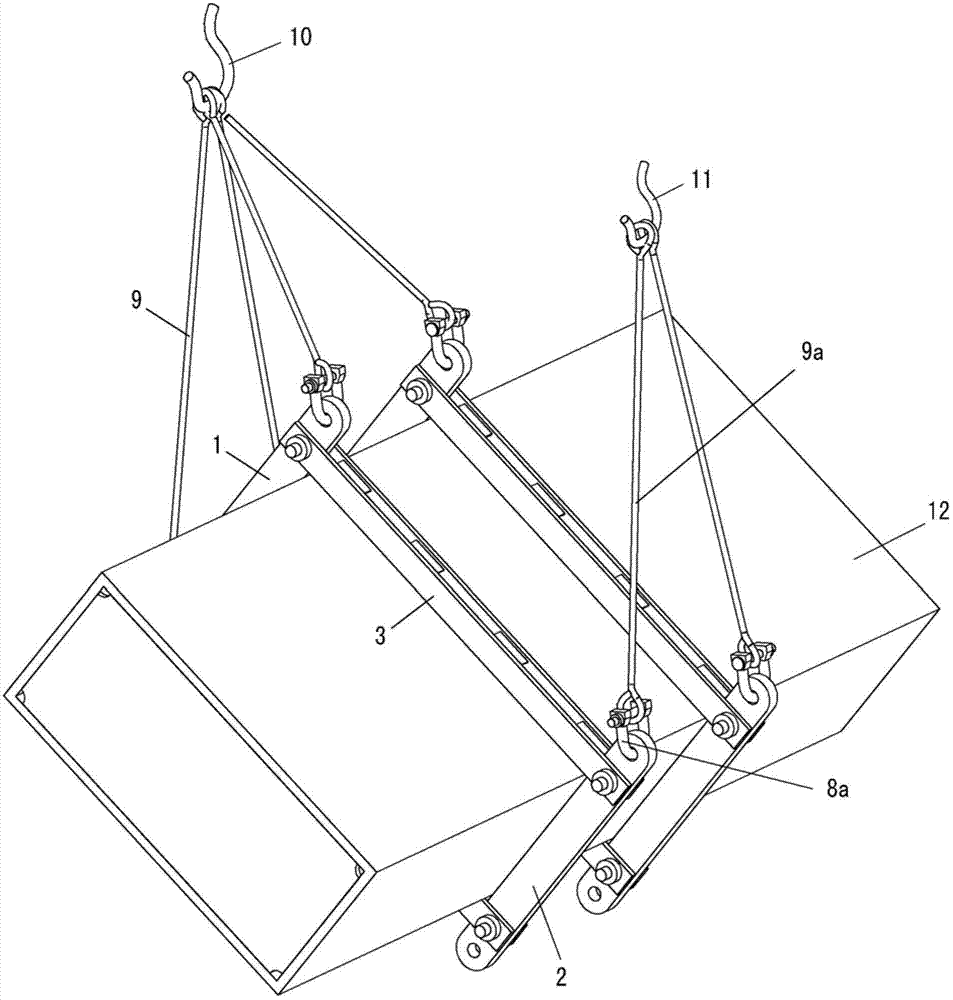

Box-type member turn-over lifting appliance with oversized cross section and application method thereof

Owner:ANHUI FUHUANG STEEL STRUCTURE

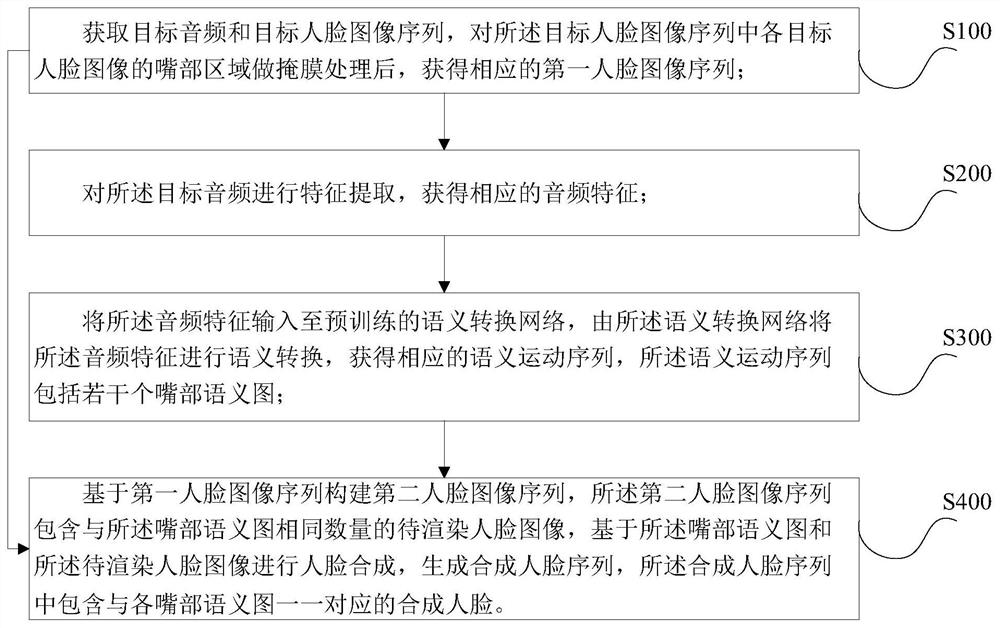

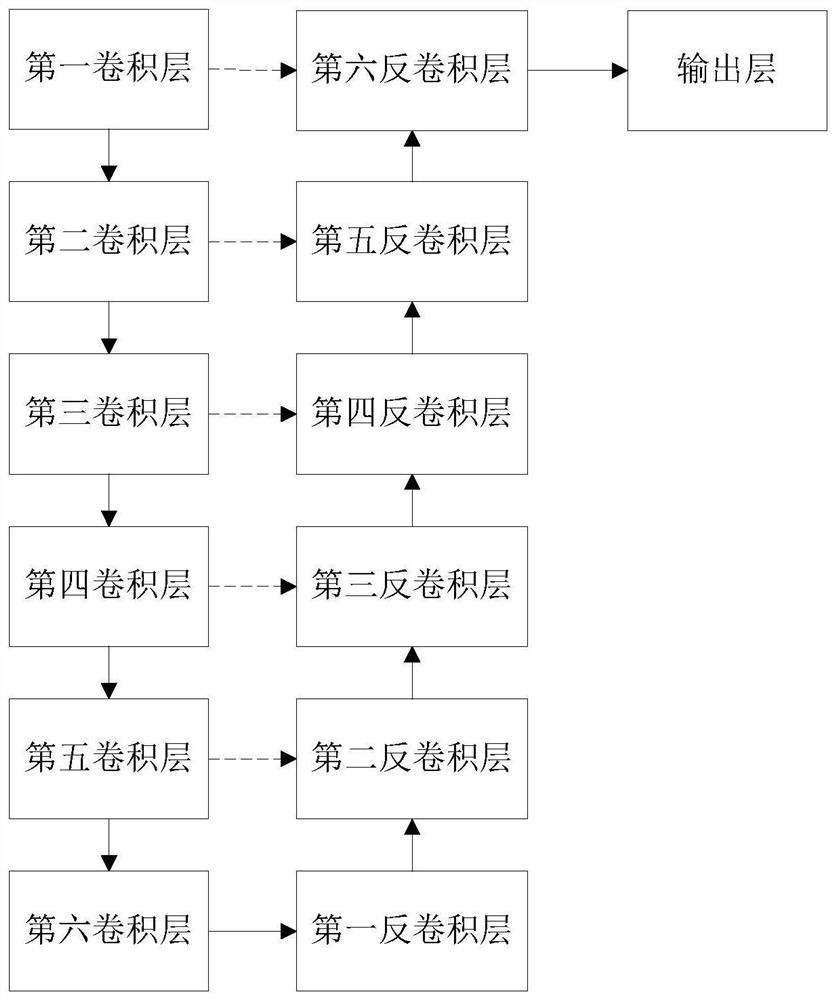

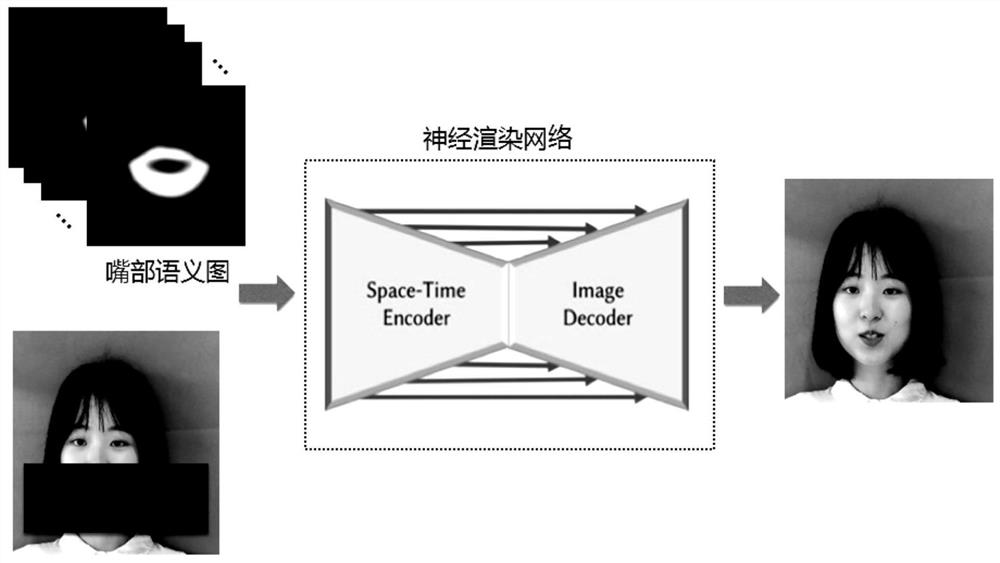

Semantic-based audio-driven digital human generation method and system

Pending | CN112562722A | Good technical effect | Accurate and fine expression | Character and pattern recognition | Speech recognition | Feature extraction | Semantic translation

The invention discloses a semantic-based audio-driven digital human generation method and system. The generation method comprises the following steps: obtaining a target audio and a first face image sequence; performing feature extraction on the target audio to obtain the corresponding audio features; inputting the audio features into a pre-trained semantic conversion network, which performs semantic conversion on the audio features to obtain a corresponding semantic motion sequence comprising a plurality of mouth semantic graphs; and acquiring, based on the first face image sequence, as many to-be-rendered face images as there are mouth semantic graphs, masking the mouth areas of the to-be-rendered face images, performing face synthesis based on the mouth semantic graphs and the to-be-rendered face images, and generating a synthesized face sequence. According to the invention, conversion between audio and facial semantics is realized through the semantic conversion network, and accurate expression of mouth shapes is realized by utilizing the facial semantics.
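The frame preparation step (taking as many face images as there are mouth semantic graphs, then masking their mouth areas) can be sketched as follows. This is only an illustration under stated assumptions: frames are plain 2D lists of pixel values, the mouth area is a fixed rectangle `(top, left, bottom, right)`, and the source sequence is cycled when it is shorter than the number of mouth semantic graphs. The abstract specifies none of these, and the function names are hypothetical.

```python
from itertools import cycle, islice


def select_frames(face_sequence, count):
    """Pick `count` frames, cycling through the sequence if it is too short."""
    if not face_sequence:
        raise ValueError("face_sequence must not be empty")
    return list(islice(cycle(face_sequence), count))


def mask_mouth(frame, box, fill=0):
    """Return a copy of `frame` with the rectangle `box` overwritten by `fill`.

    `box` is (top, left, bottom, right) with exclusive bottom/right bounds.
    """
    top, left, bottom, right = box
    masked = [row[:] for row in frame]  # copy rows so the input stays intact
    for r in range(top, bottom):
        for c in range(left, right):
            masked[r][c] = fill
    return masked


def prepare_frames(face_sequence, mouth_maps, box):
    """Produce one mouth-masked frame per mouth semantic graph."""
    frames = select_frames(face_sequence, len(mouth_maps))
    return [mask_mouth(frame, box) for frame in frames]
```

Each masked frame would then be paired with its mouth semantic graph and passed to the face synthesis stage, which is not sketched here.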

Owner:新华智云科技有限公司

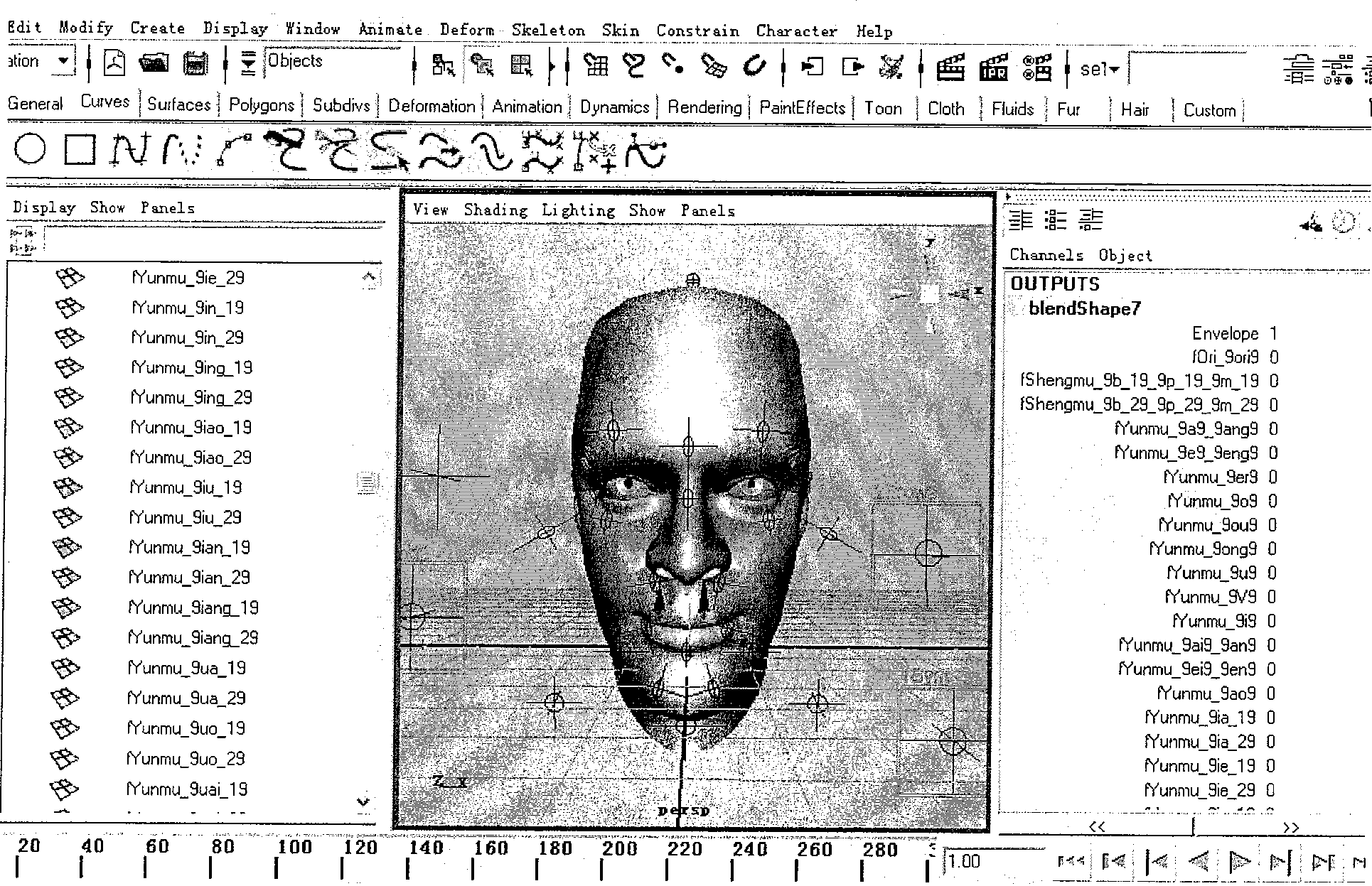

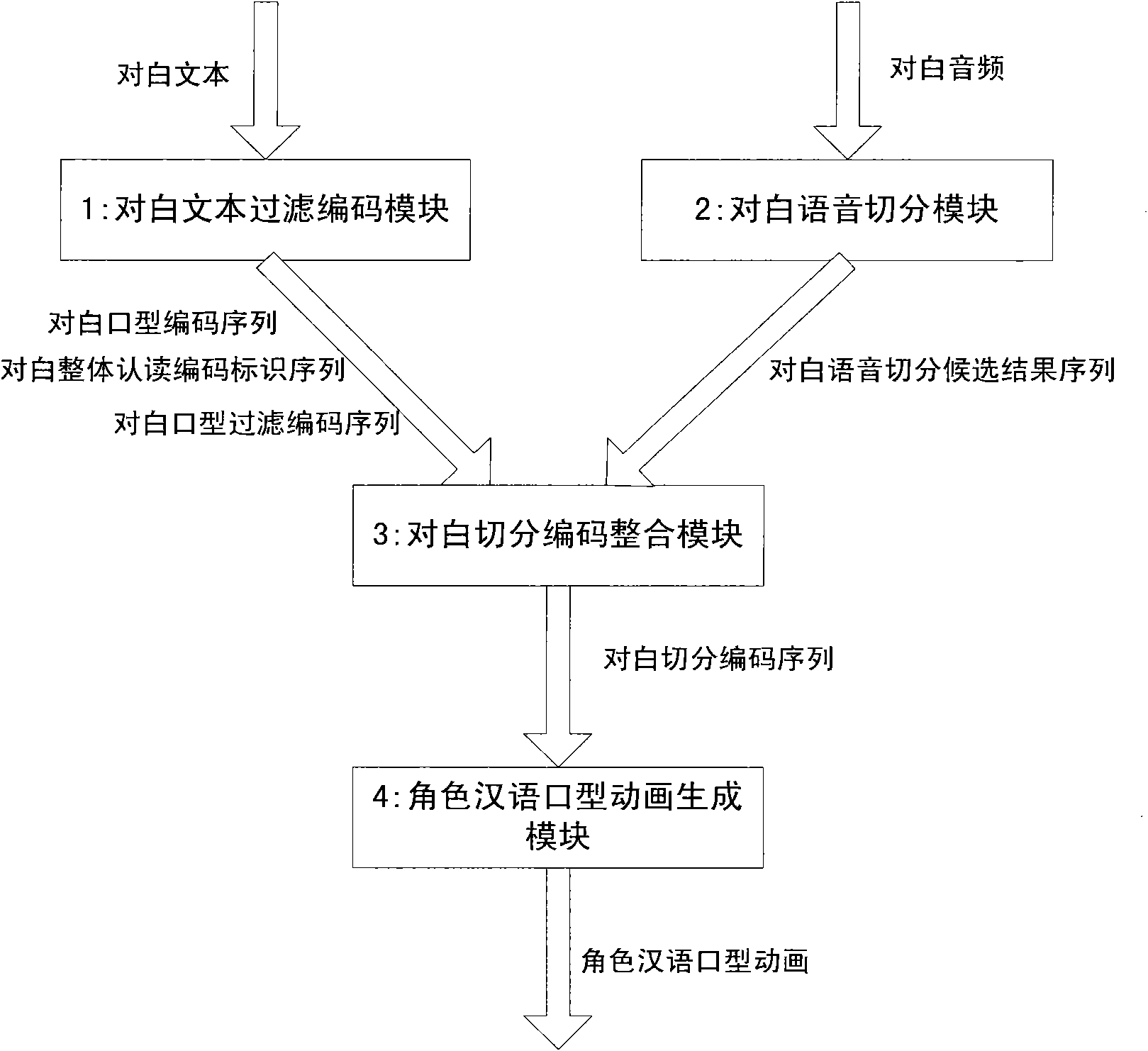

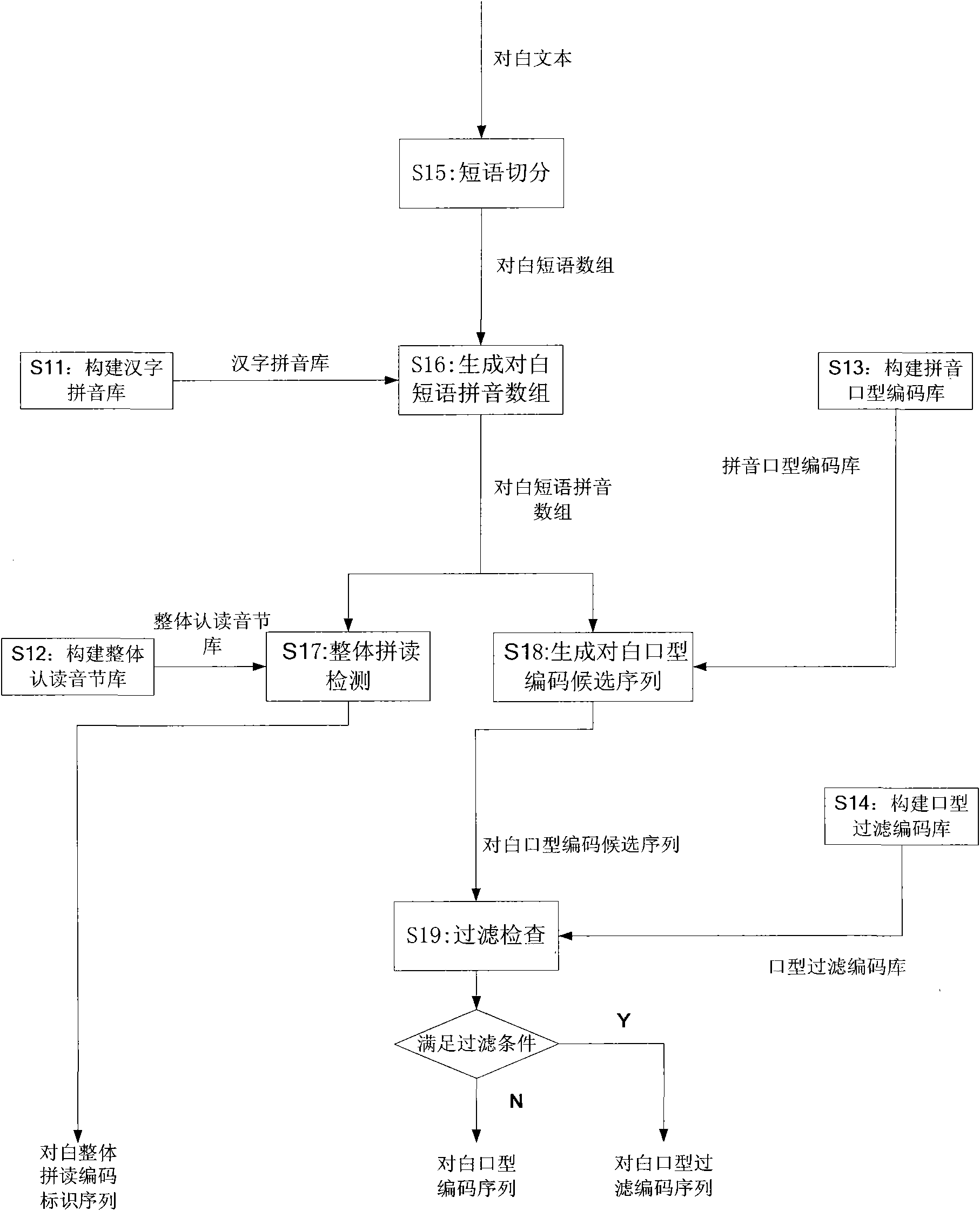

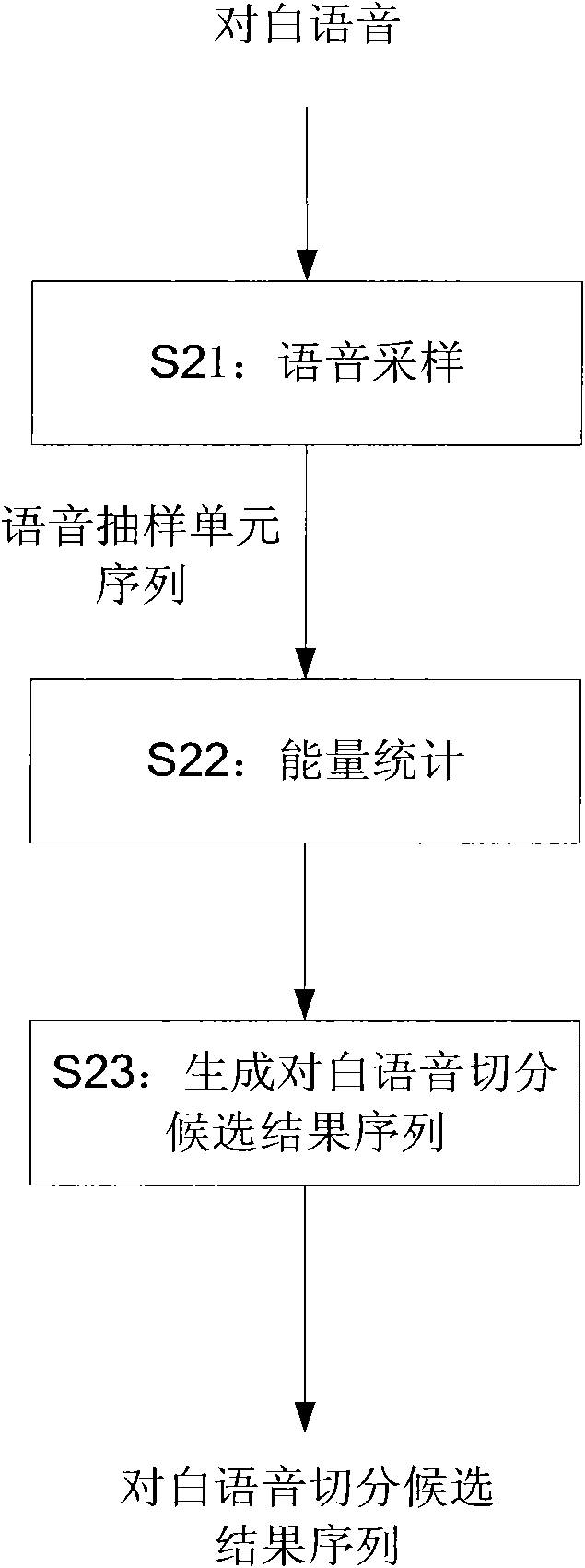

Automatic generating system for role Chinese mouth shape cartoon

Inactive | CN101826216A | Improve production efficiency | Improve practicality | 3D-image rendering | Code module | Energy statistics

The invention discloses an automatic generating system for a role Chinese mouth shape cartoon, which comprises a dialogue text filtering and coding module, a dialogue phonetic segmentation module, a dialogue segmentation code integrating module and a role Chinese mouth shape cartoon generating module, wherein the dialogue text filtering and coding module performs phrase segmentation, pinyin mouth shape coding, integral recognition mark setting and coding and filtering on a dialogue text to generate and output a dialogue mouth shape code, an integral dialogue recognition coding mark and a dialogue mouth shape filtering and coding sequence; the dialogue phonetic segmentation module performs phonetic sampling and phonetic energy statistics on dialogue audio to generate and output dialogue phonetic segmentation candidate result sequences; the dialogue segmentation code integrating module is connected with the dialogue text filtering and coding module and the dialogue phonetic segmentation module and used for integrating and correcting the dialogue phonetic segmentation candidate result sequences to generate and output a dialogue segmentation code sequence; and the role Chinese mouth shape cartoon generating module is connected with the dialogue segmentation code integrating module and used for generating and outputting the role Chinese mouth shape cartoon according to the dialogue segmentation code sequence. The system can automatically finish the manufacture of the whole role Chinese mouth shape cartoon without loading a corresponding phonetic library during processing.
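The dialogue phonetic segmentation module is described as combining phonetic sampling with phonetic energy statistics. One common way to obtain candidate segments from energy alone is short-time energy thresholding, sketched below. Nothing here is taken from the patent: the frame length, the fixed threshold and the function names are illustrative assumptions.

```python
def short_time_energy(samples, frame_len):
    """Sum of squared samples for each consecutive, non-overlapping frame."""
    return [
        sum(s * s for s in samples[i:i + frame_len])
        for i in range(0, len(samples), frame_len)
    ]


def candidate_segments(samples, frame_len, threshold):
    """Return (start, end) sample ranges whose frame energy reaches `threshold`.

    `end` is exclusive. Adjacent high-energy frames are merged into one range.
    """
    segments = []
    start = None
    for idx, energy in enumerate(short_time_energy(samples, frame_len)):
        if energy >= threshold and start is None:
            start = idx  # a voiced run begins at this frame
        elif energy < threshold and start is not None:
            segments.append((start * frame_len, idx * frame_len))
            start = None
    if start is not None:  # audio ended while still inside a voiced run
        segments.append((start * frame_len, len(samples)))
    return segments
```

In a system like the one described, such candidate ranges would then be reconciled with the mouth shape codes derived from the dialogue text, which is the role the abstract assigns to the dialogue segmentation code integrating module.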

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

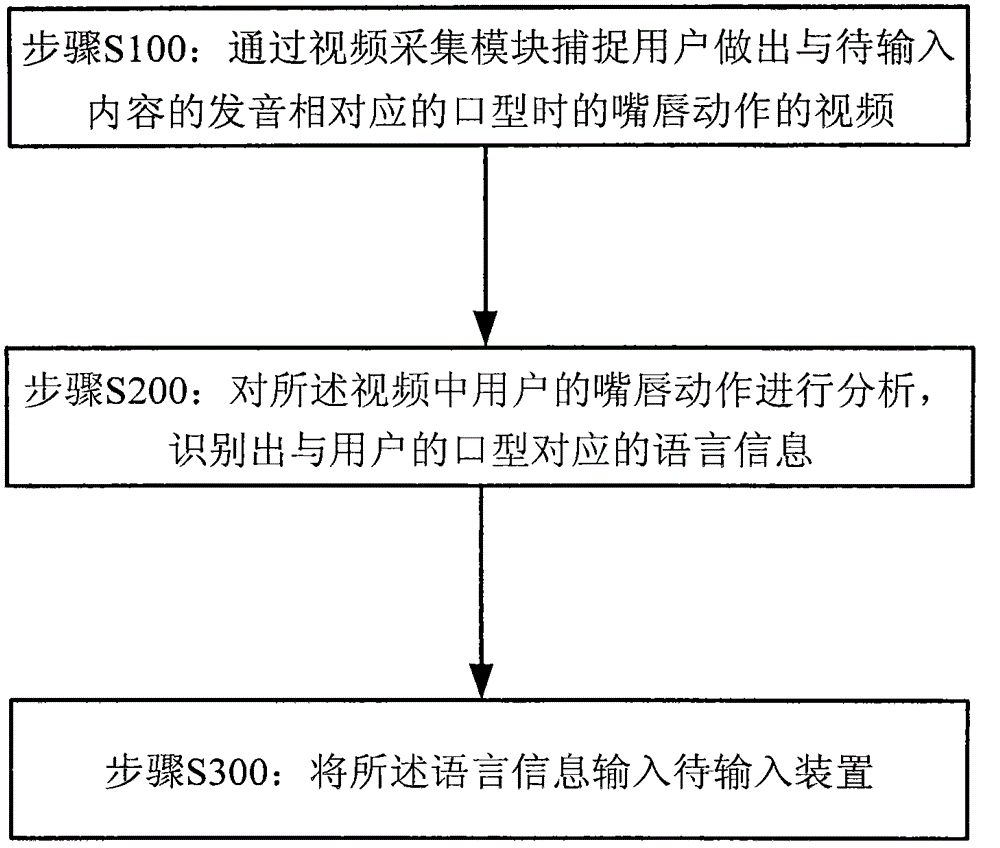

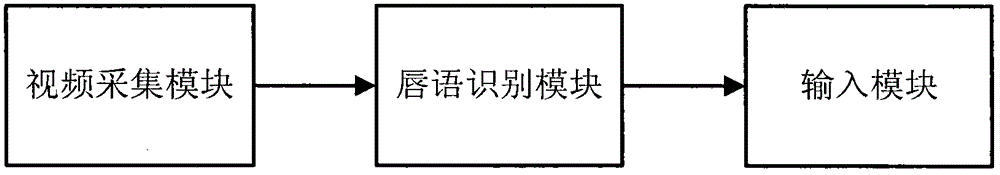

Method and system for inputting lip language

Active | CN104808794A | Easy input | Avoid exposing privacy | Input/output for user-computer interaction | Graph reading | Computer vision | Mouth shape

The invention relates to a method and a system for lip-language input. The method comprises the following steps: step S100, capturing, through a video capturing module, a video of the user's lip movements, whose mouth shapes correspond to the pronunciation of the content to be input; step S200, analyzing the user's lip movements in the video and identifying the language information corresponding to the user's mouth shapes; step S300, inputting the language information into the device that is to receive the input. The system comprises the video capturing module, a lip-language identifying module and an input module. The user only needs to make the lip movements of speech in front of the video capturing module of the device to input the corresponding language information, so the method and the system make input convenient.

Owner:BEIJING KUANGSHI TECH +1

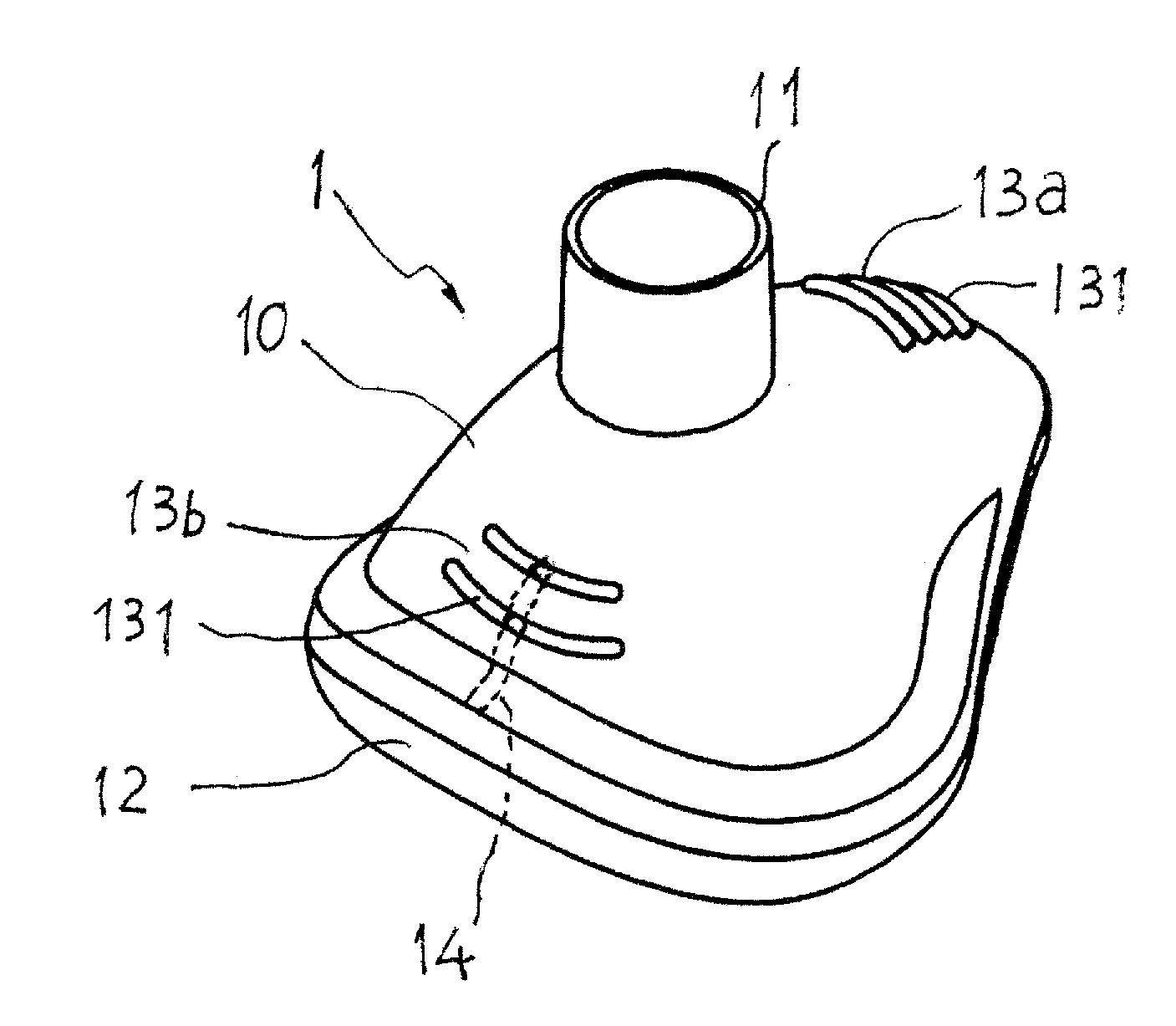

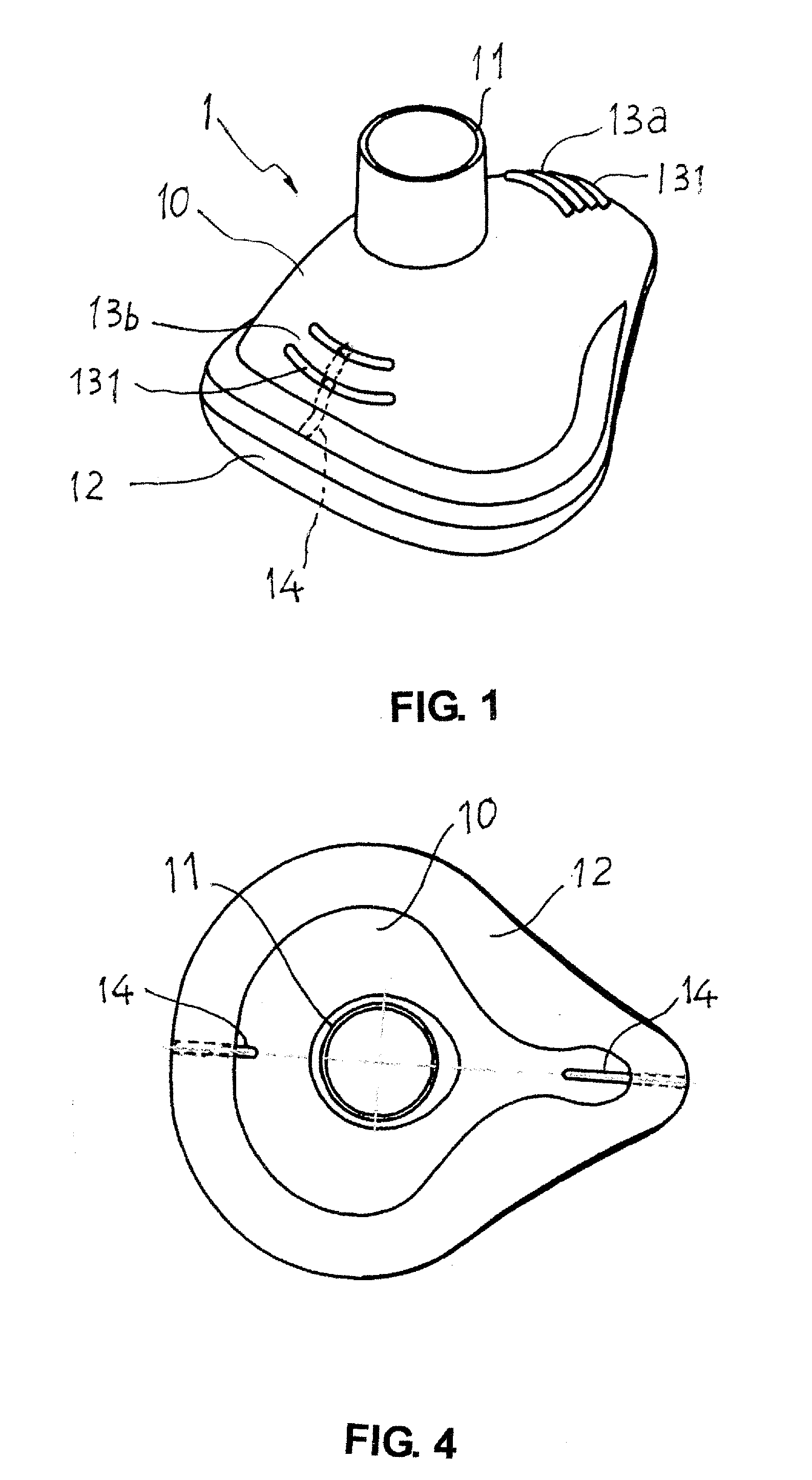

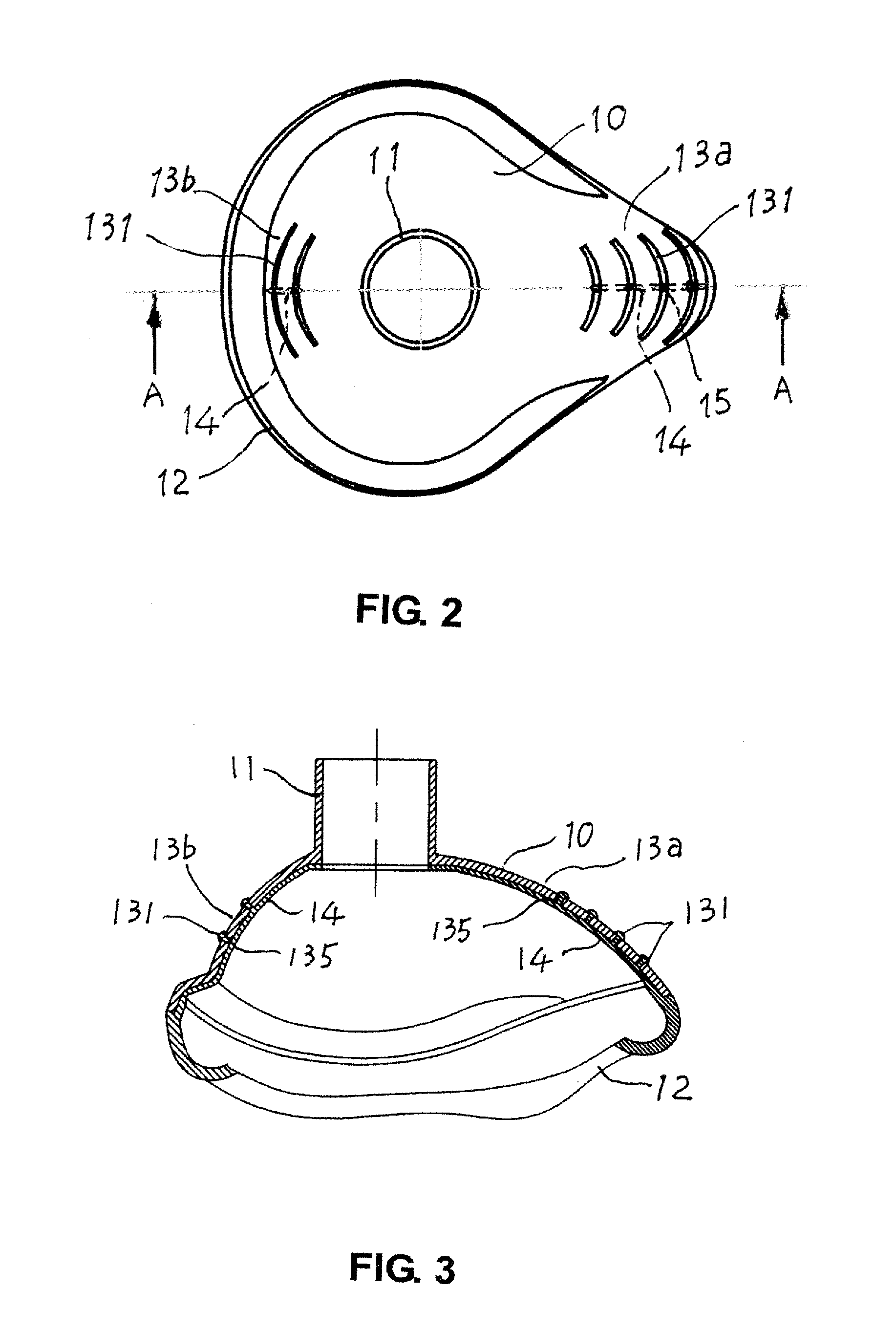

Anti-slip face mask

A face mask for medical use, respiratory anesthesia or first aid use. The face mask consists of a main body casing made of hard plastic, with a tubular air inlet protruding from the surface of the casing, and a mouth-shaped adhering piece made of soft elastic plastic. The adhering piece is formed on the peripheral edge of the opening of the casing and adheres to the user's face. Anti-slip portions, formed integrally with the soft adhering piece as protruding strips, sheets, protruding points or a mixture thereof, are provided on at least one portion of the surface of the casing. The anti-slip portions enable health care personnel to hold the face mask easily and prevent it from slipping when fitting it to a patient or removing it from the patient for cleaning and sterilizing.

Owner:BESMED HEALTH BUSINESS CORP
