Virtual person-based multi-mode interactive processing method and system

A virtual human-based multi-modal technology, applied in the field of human-computer interaction, addresses the problem that existing virtual humans cannot carry out lifelike, smooth, anthropomorphic multi-modal interaction. Its effects are an improved user experience, better satisfaction of user needs, and smoother interaction with the character.


Examples

Example 1

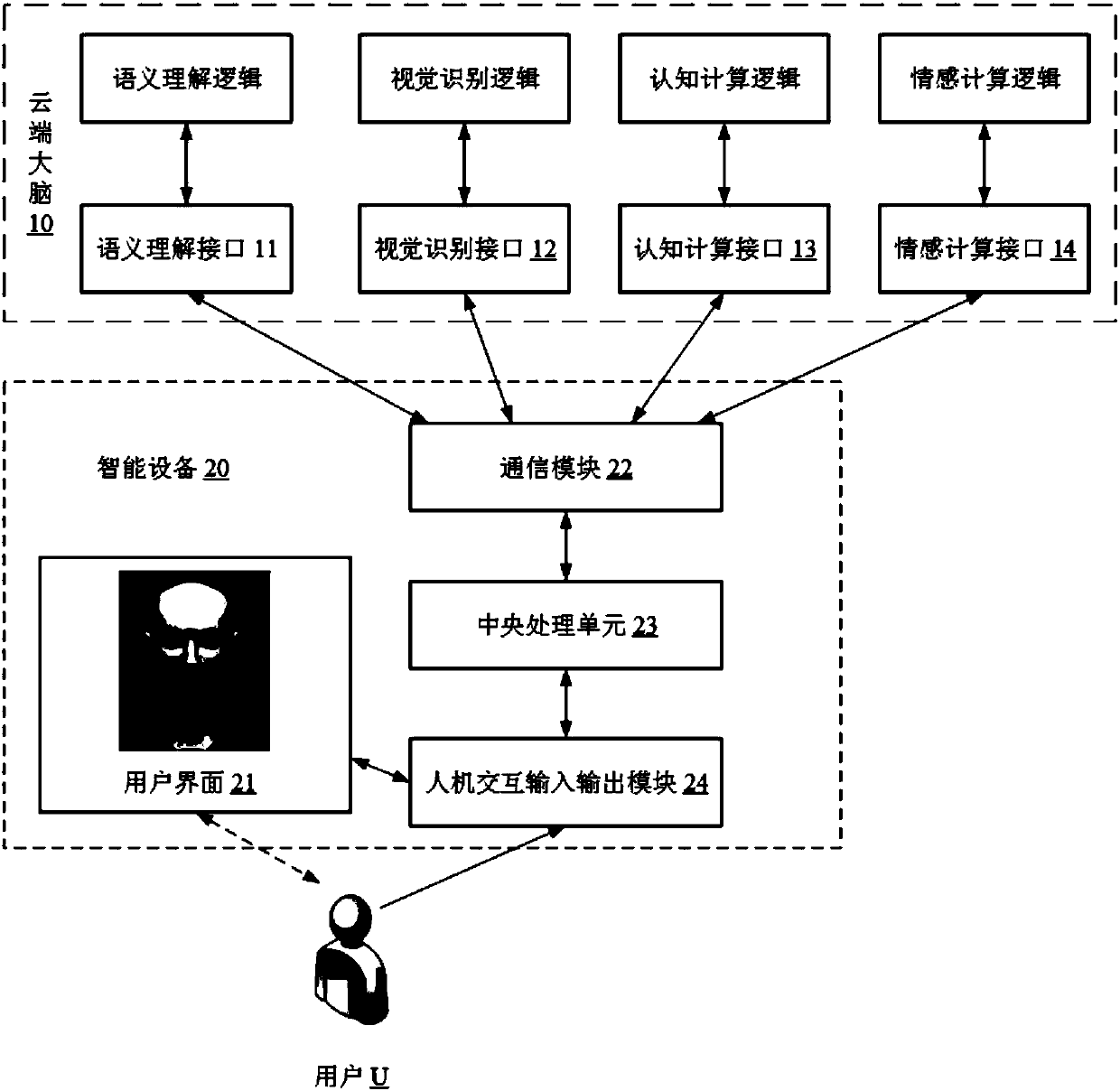

[0043] Figure 1 is a schematic diagram of the application scenario of the virtual human-based multi-modal interaction system according to the first embodiment of the present application. The virtual person A can be displayed to the user as a holographic image or on a display interface through the smart device that carries it, and the virtual person A can coordinate its voice, facial expression, emotion, head, and body. In this embodiment, the system mainly includes a cloud brain (cloud server) 10 and a smart device 20 that performs multi-modal interaction with users. The smart device 20 can be a conventional PC or laptop computer, or a portable terminal device that can access the Internet through a wireless local area network, a mobile communication network, or other wireless means. In the embodiments of the present application, wireless terminals include but are not limited to mobile phones, netbooks, etc., and ...

Example 2

[0086] In this example, the virtual person A can be displayed to the user as a holographic image or on a display interface through the smart device that carries it. The difference from the first embodiment is that the virtual person A can carry out multi-modal interaction with the user in different scenarios, such as family scenes, stage scenes, playground scenes, etc.

[0087] In this example, content that is the same as or similar to the first embodiment is omitted, and the description focuses on what differs from the first embodiment. As shown in Figure 5, the cloud brain 10 side has the function of obtaining scene information. The specific operations are as follows: obtain the scene information of the current virtual person, which includes application scene information and external scene information; then, in the process of deciding the multi-modal output data, extract the scene information and use it to filt...
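The paragraph above is cut off in the source, so the exact filtering rule is not available. As a minimal sketch only, assuming that each candidate output carries a set of application scenes it suits, scene-based filtering of multi-modal output data might look like the following. The names `SceneInfo`, `CandidateOutput`, and `filter_by_scene` are hypothetical and do not come from the patent text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SceneInfo:
    """Scene information: application scene plus external scene (per the text)."""
    application_scene: str  # e.g. "family", "stage", "playground"
    external_scene: str     # hypothetical free-form label, e.g. "evening"


@dataclass(frozen=True)
class CandidateOutput:
    """One candidate piece of multi-modal output data (hypothetical structure)."""
    speech: str
    expression: str
    suitable_scenes: frozenset  # application scenes this candidate is tagged for


def filter_by_scene(candidates, scene):
    """Keep only candidates tagged as suitable for the current application scene.

    Assumption: filtering uses the application scene only; the source does not
    say how external scene information enters the decision.
    """
    return [c for c in candidates if scene.application_scene in c.suitable_scenes]


candidates = [
    CandidateOutput("Welcome home!", "smile", frozenset({"family"})),
    CandidateOutput("Let's hear it for the band!", "excited", frozenset({"stage"})),
    CandidateOutput("Shall we play a game?", "playful", frozenset({"family", "playground"})),
]

scene = SceneInfo(application_scene="family", external_scene="evening")
selected = filter_by_scene(candidates, scene)
print([c.speech for c in selected])
```

Tagging candidates with the scenes they suit keeps the filter a simple membership test; a real system could just as plausibly rank candidates by scene instead of discarding them.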

Example 3

[0092] In this example, the virtual person A can be displayed to the user as a holographic image or on a display interface through the smart device that carries it. The difference from the first embodiment is that the virtual person A can carry out multi-modal interaction with the user in different fields, such as finance, education, etc.

[0093] The field is the application field preset for and associated with the virtual person. For example, a virtual person with the image of a star may have the field of entertainment; a virtual person with the image of a teacher may have the field of education; a virtual person with the image of a white-collar worker may have the field of finance, and so on. This domain information is preset for virtual humans with different images, and during multi-modal interaction with virtual humans in different fields, the multi-modal data output by the virtual human also matches the field, for example, in an entertainment field On t...
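The preset association between a virtual person's image and its field can be pictured as a lookup table. The sketch below uses the three image-to-field pairs named in the paragraph above; the function names, the dictionary layout of the candidates, and the fallback for unknown images are assumptions for illustration, not details from the patent.

```python
# Preset image-to-field associations, taken from the examples in the text.
DOMAIN_BY_IMAGE = {
    "star": "entertainment",
    "teacher": "education",
    "white-collar worker": "finance",
}


def domain_for(image):
    """Return the preset field for a virtual person image, or None if unset."""
    return DOMAIN_BY_IMAGE.get(image)


def match_domain(candidates, image):
    """Keep candidate outputs whose field matches the virtual person's field.

    Assumption: each candidate is a dict with a "domain" key. If no field is
    preset for the image, all candidates are returned unchanged.
    """
    domain = domain_for(image)
    if domain is None:
        return list(candidates)
    return [c for c in candidates if c["domain"] == domain]


candidates = [
    {"domain": "entertainment", "speech": "Want to hear my new song?"},
    {"domain": "education", "speech": "Let's review today's lesson."},
    {"domain": "finance", "speech": "Here is a summary of your account."},
]

teacher_outputs = match_domain(candidates, "teacher")
print([c["speech"] for c in teacher_outputs])
```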
