84 patent results for "Multimodal interaction"

Multimodal interaction provides the user with multiple modes of interacting with a system. A multimodal interface provides several distinct tools for input and output of data. For example, a multimodal question answering system employs multiple modalities (such as text and photo) at both question (input) and answer (output) level.

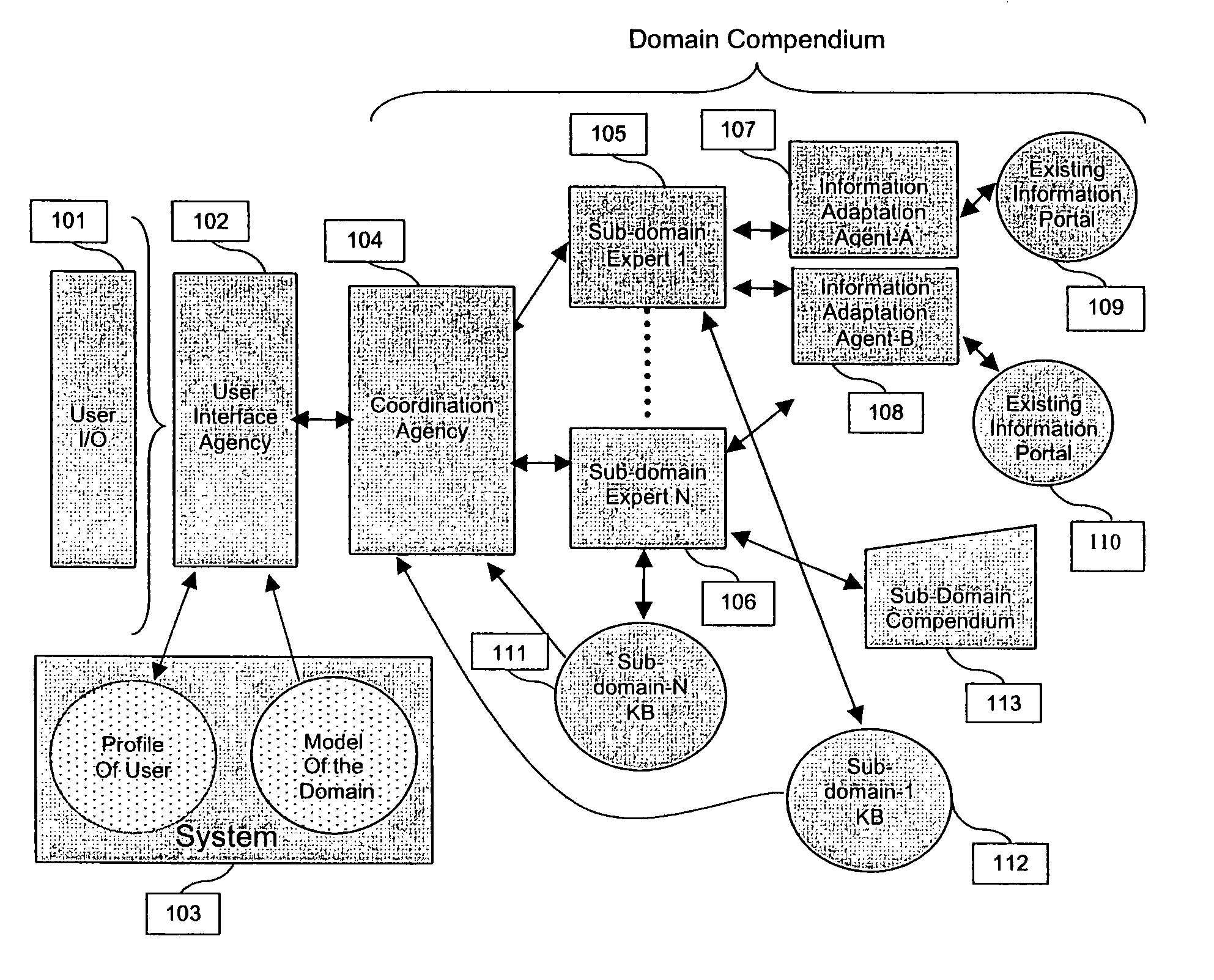

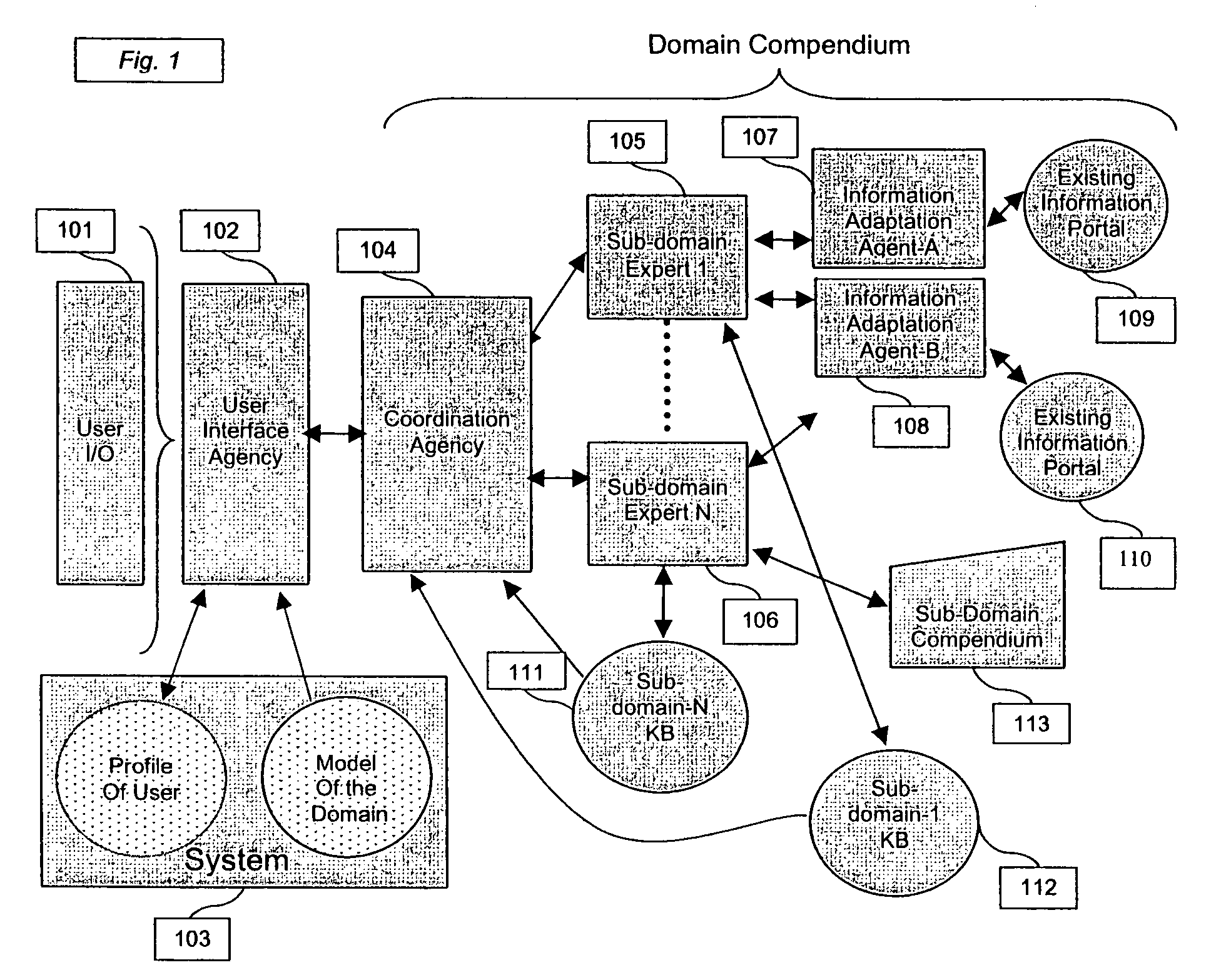

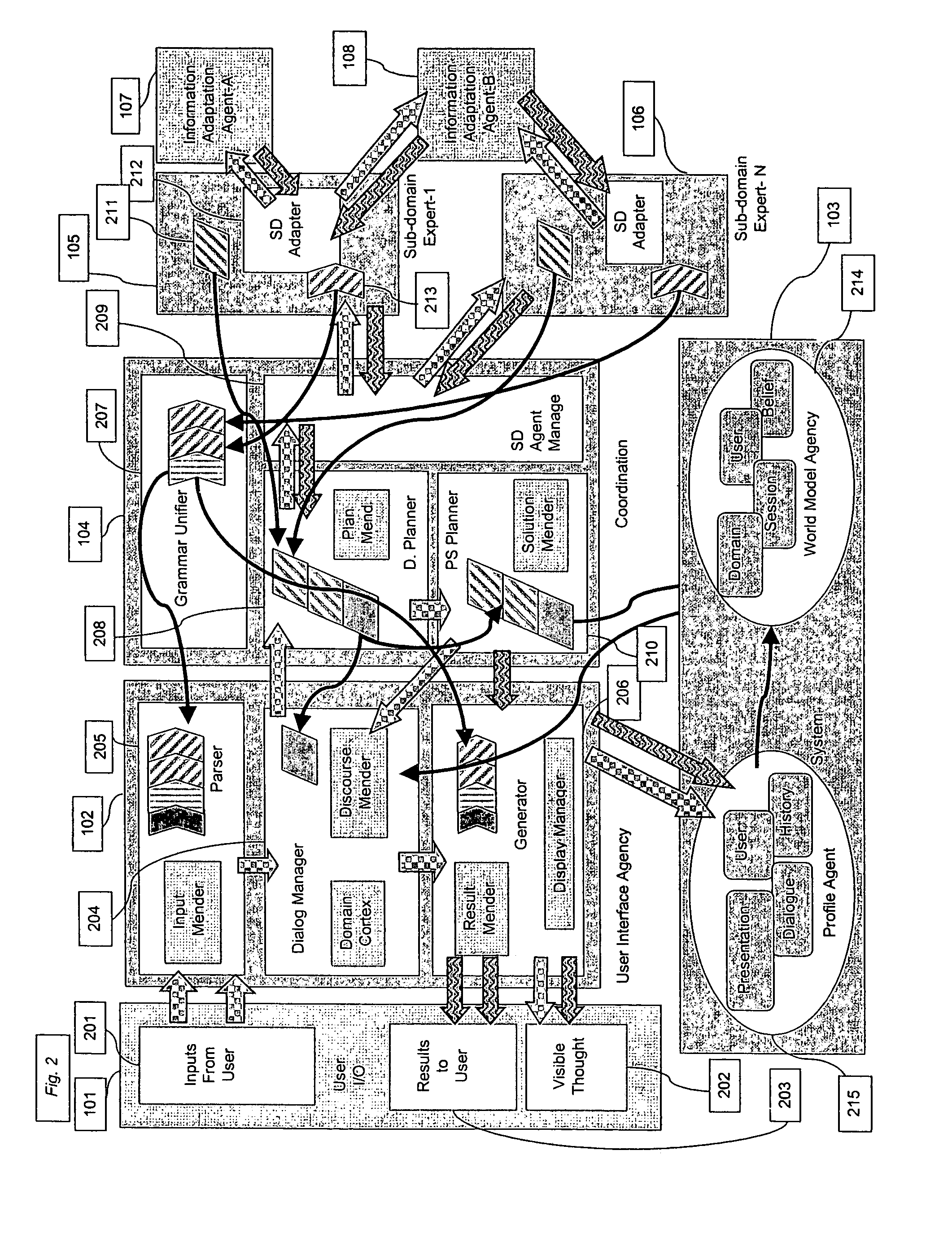

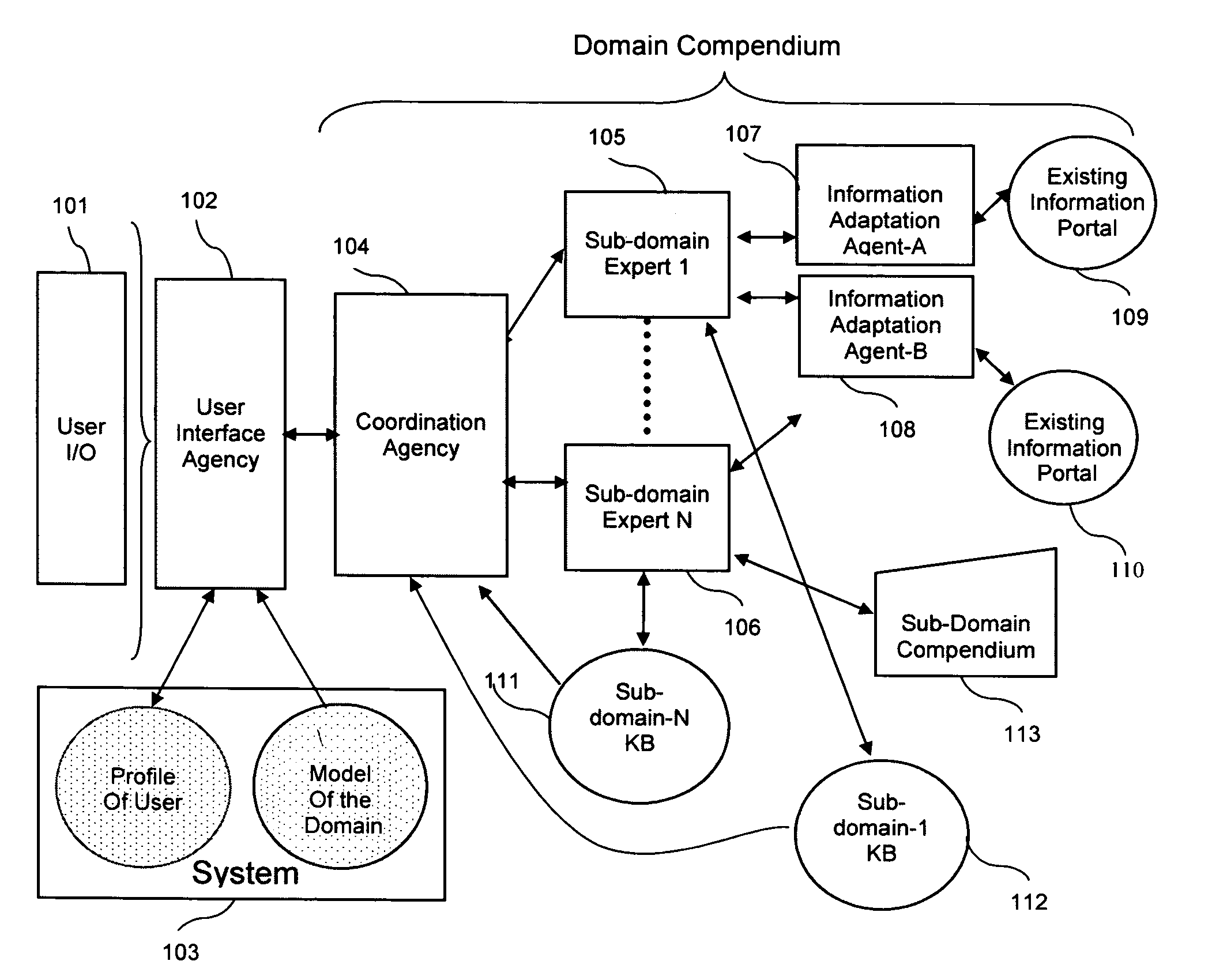

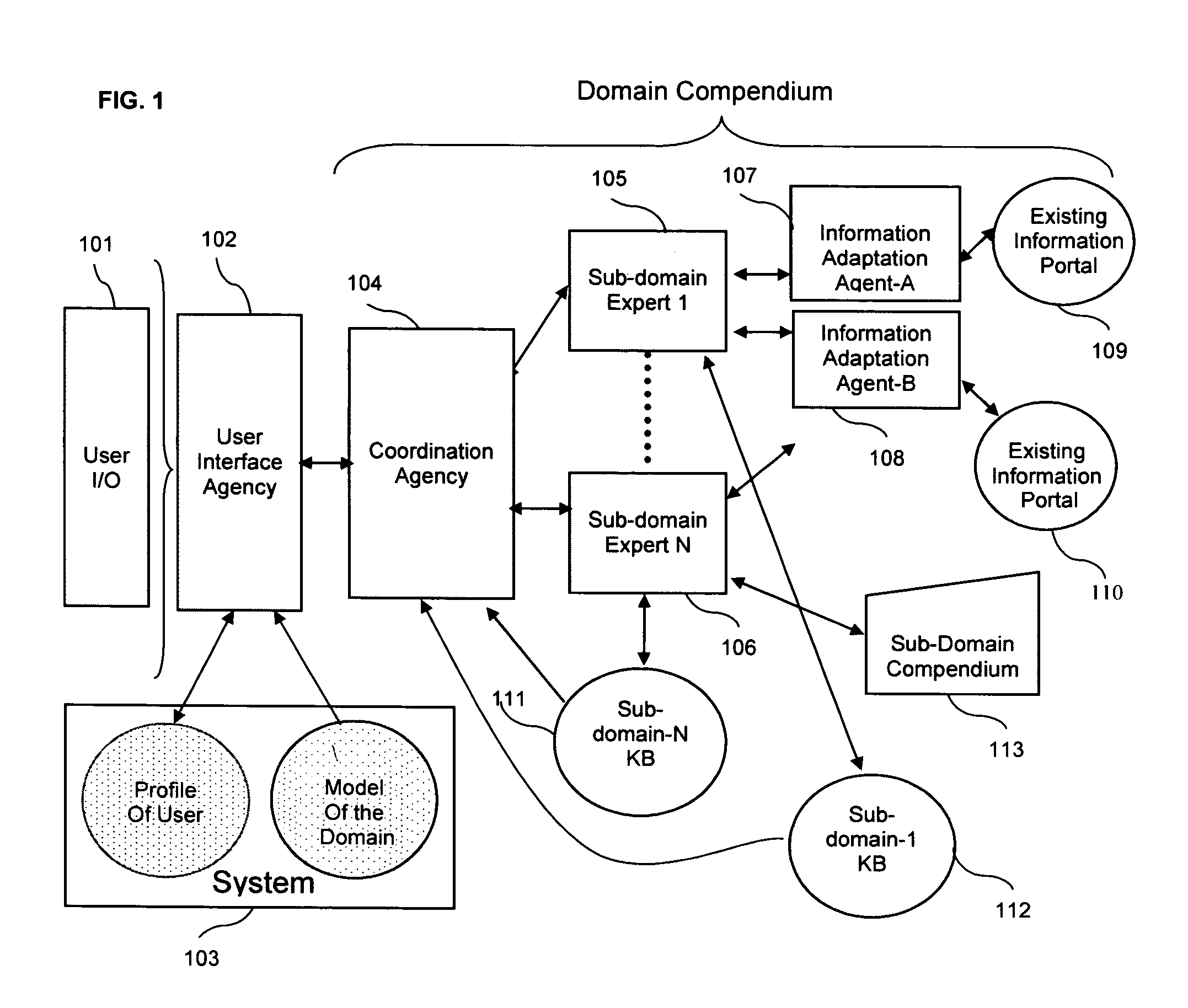

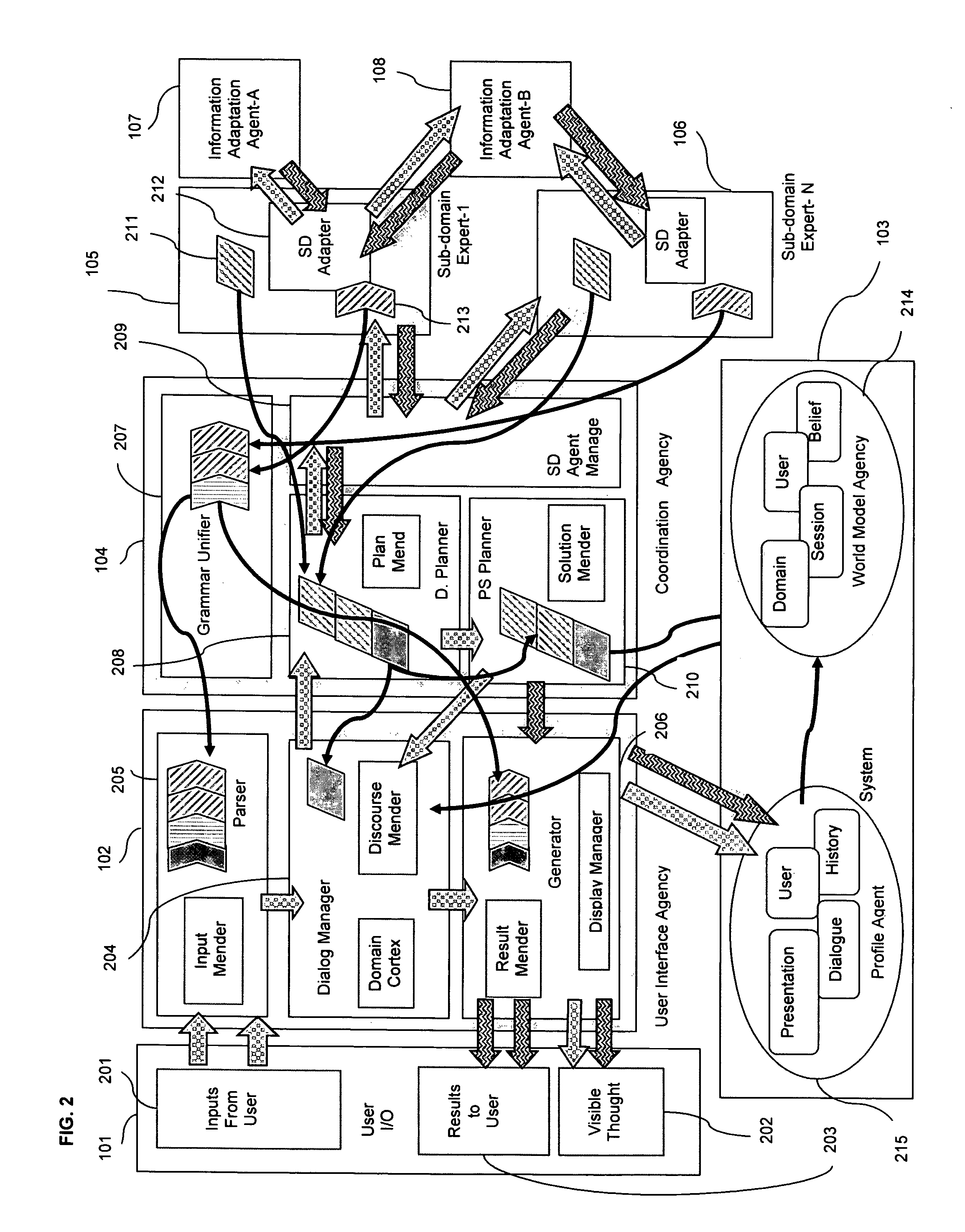

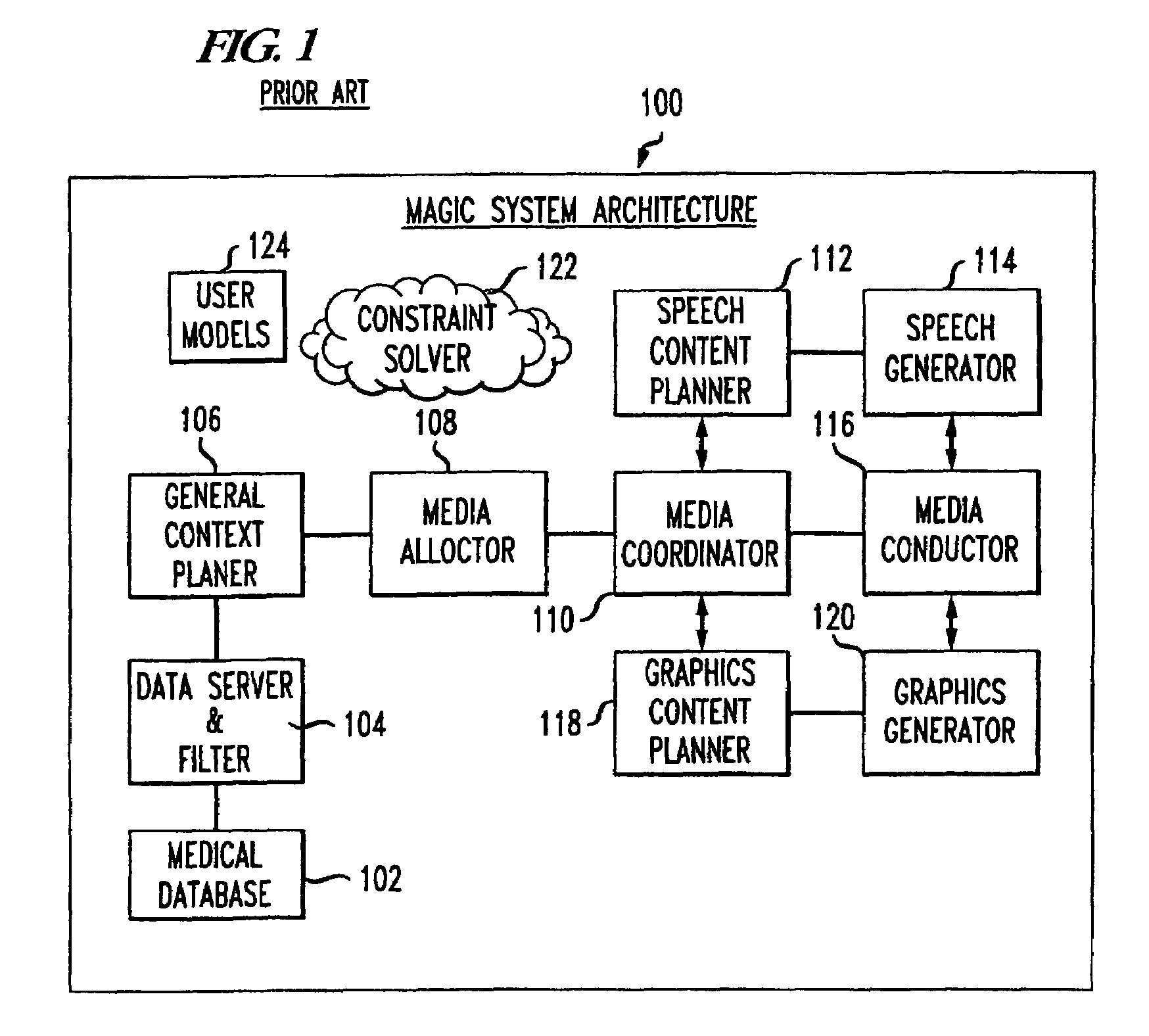

Intelligent portal engine

Active | US7092928B1 | Tags: Improve system performance, Reduce ambiguity, Digital computer details, Natural language data processing, Graphics, Human–machine interface

Owner:OL SECURITY LIABILITY CO

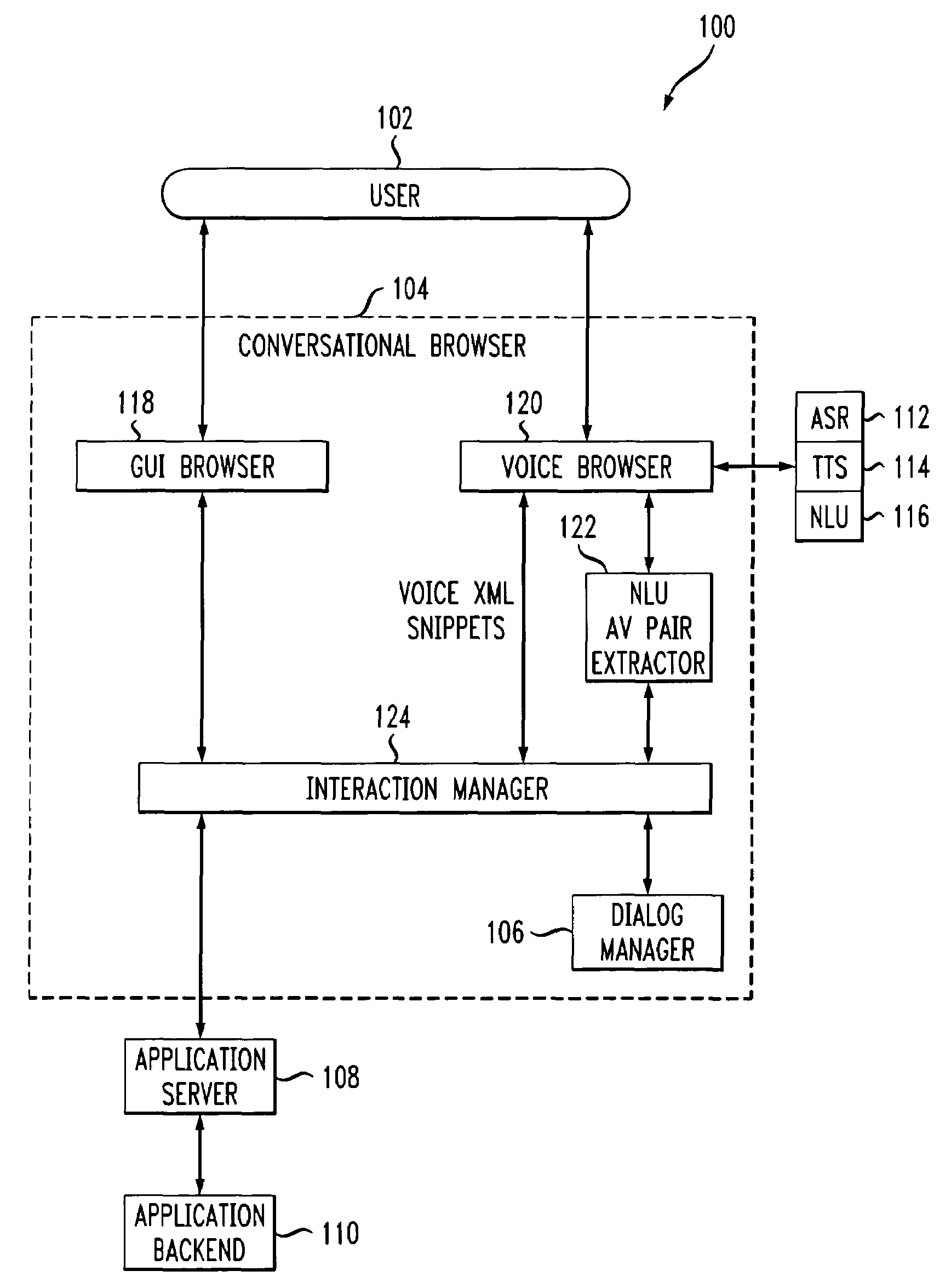

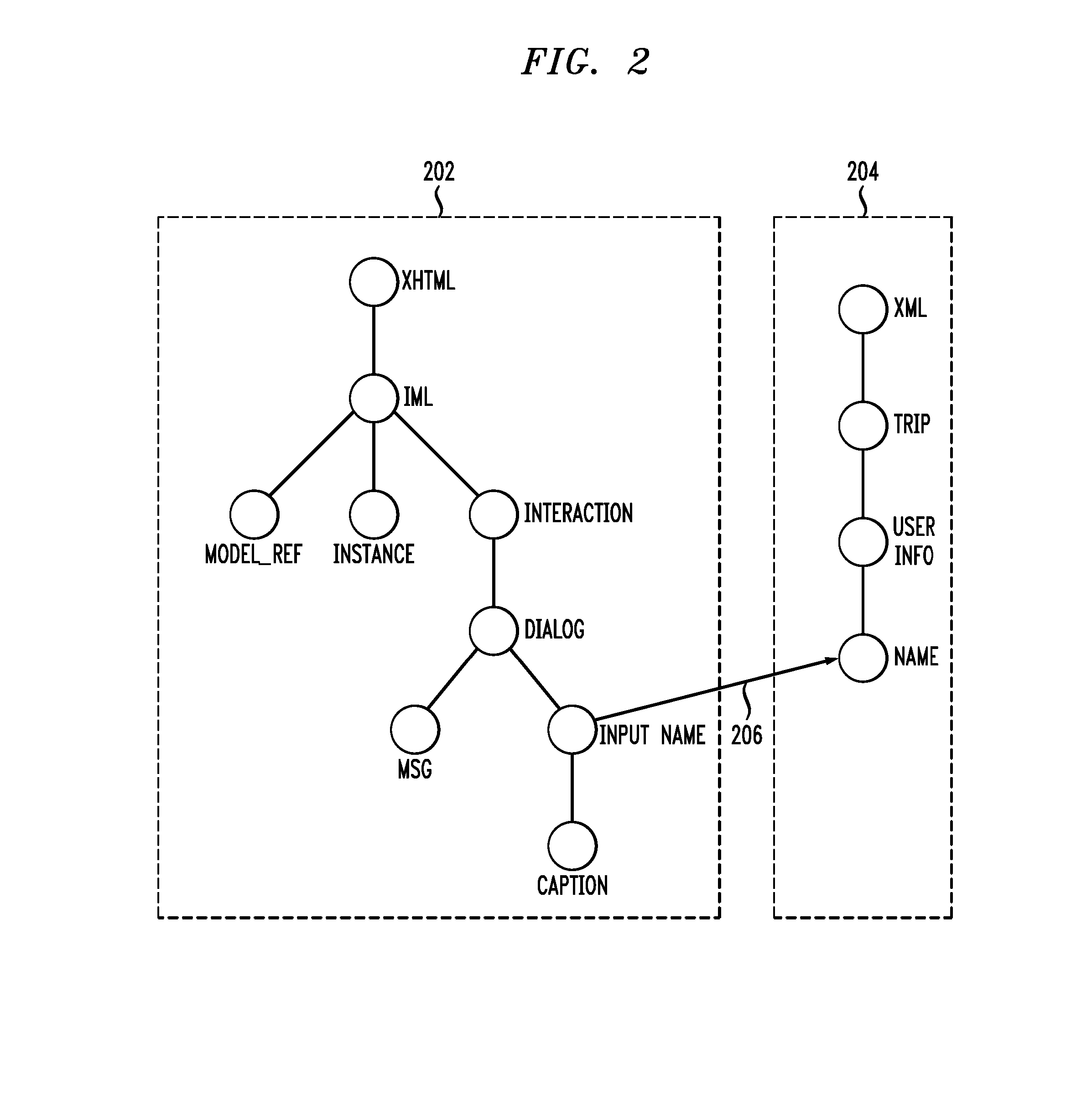

Methods and systems for authoring of mixed-initiative multi-modal interactions and related browsing mechanisms

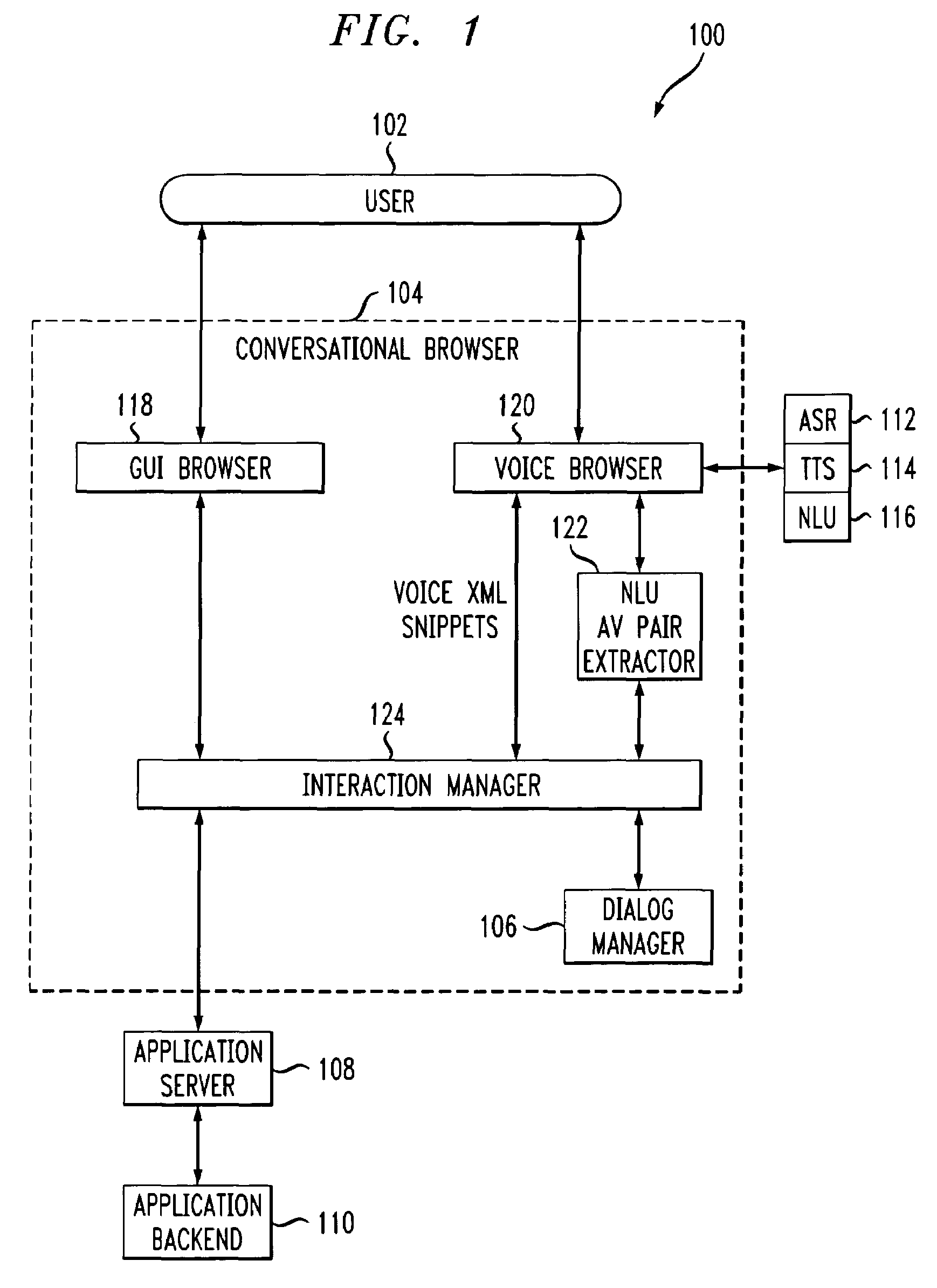

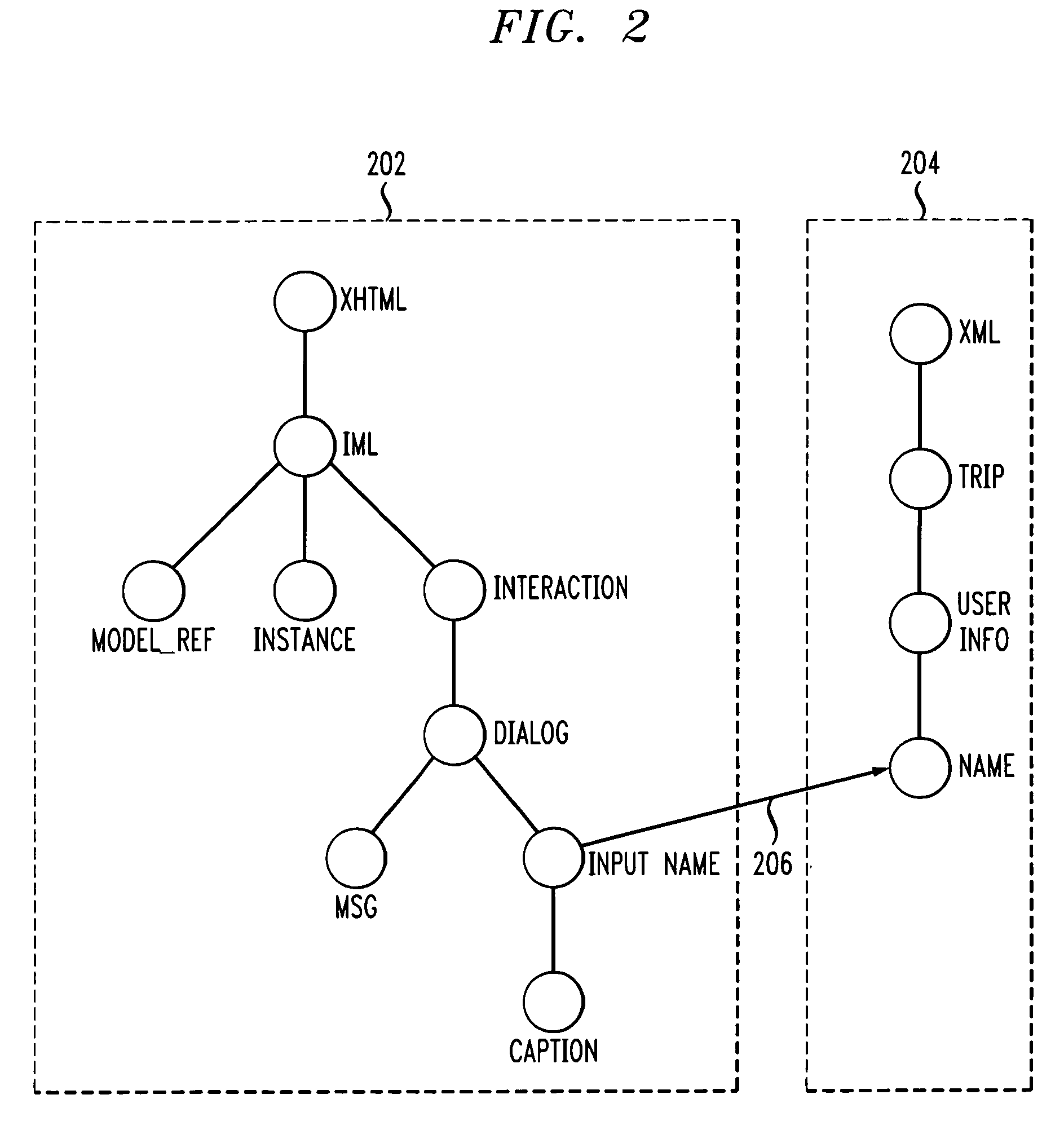

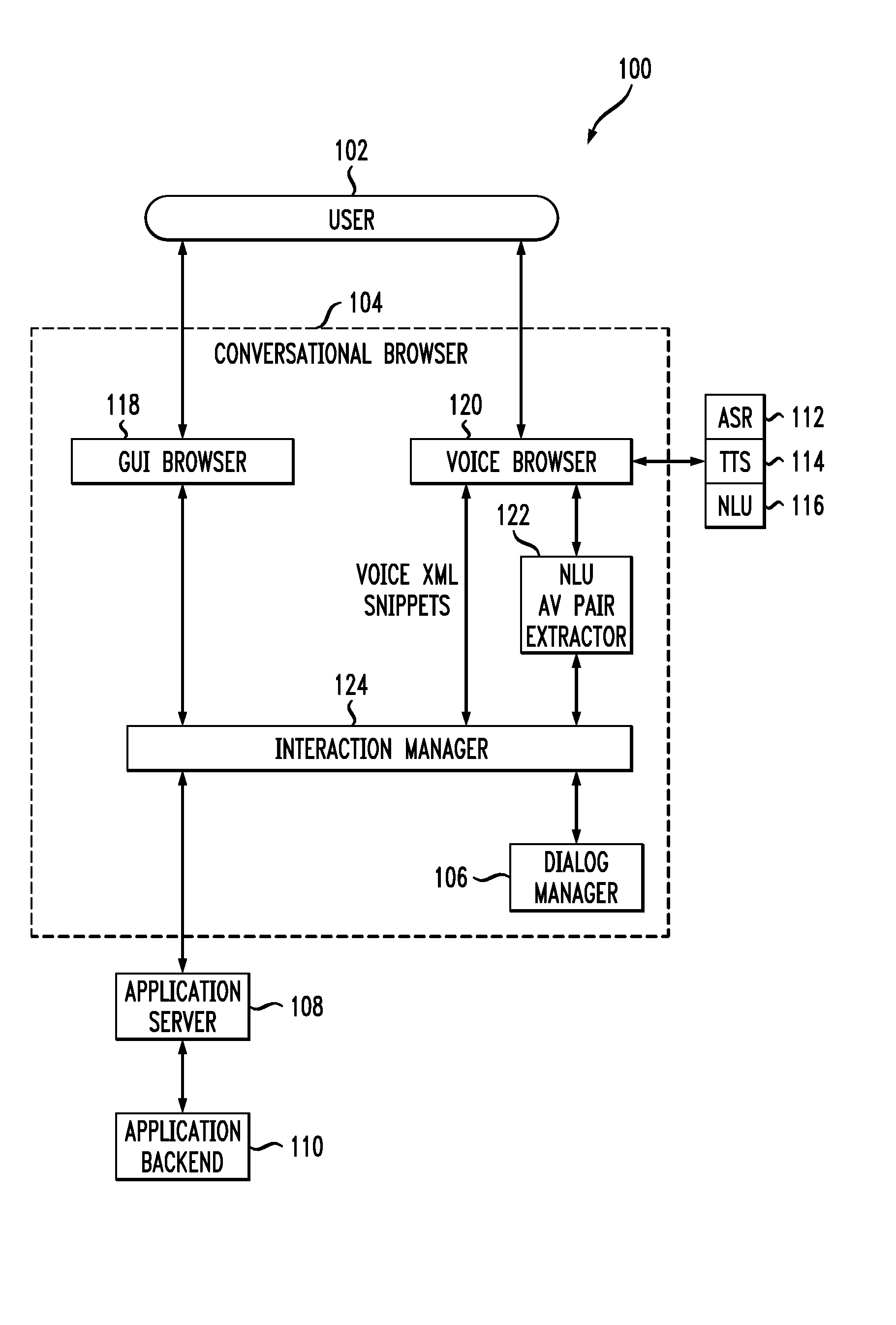

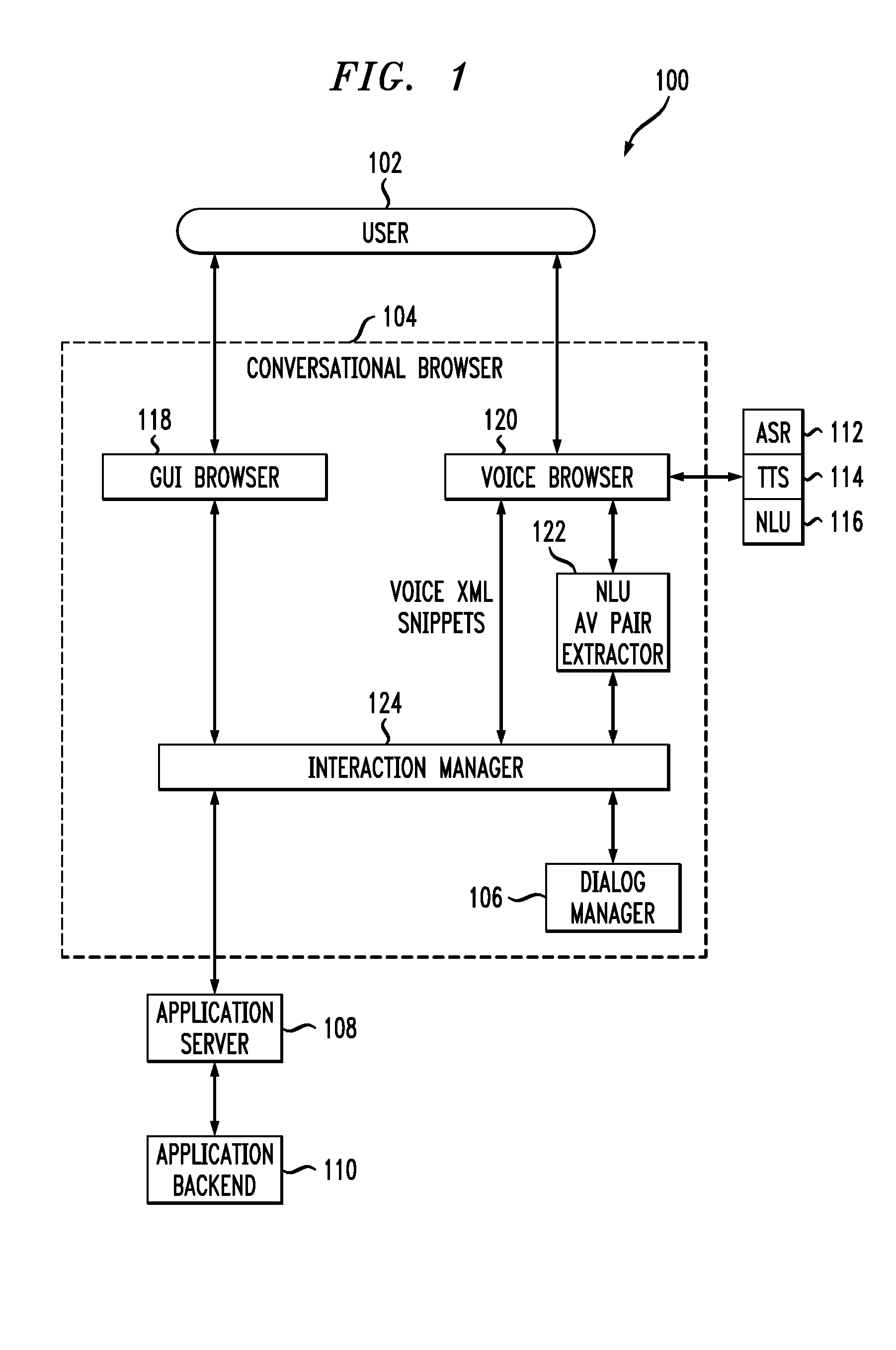

Application authoring techniques, and information browsing mechanisms associated therewith, which employ programming in association with mixed-initiative multi-modal interactions and natural language understanding for use in dialog systems. Also, a conversational browsing architecture is provided for use with these and other authoring techniques.

Owner:INT BUSINESS MASCH CORP

Methods and Systems for Authoring of Mixed-Initiative Multi-Modal Interactions and Related Browsing Mechanisms

Inactive | US20080034032A1 | Tags: Software engineering, Natural language data processing, Dialog system, Natural language understanding

Application authoring techniques, and information browsing mechanisms associated therewith, which employ programming in association with mixed-initiative multi-modal interactions and natural language understanding for use in dialog systems. Also, a conversational browsing architecture is provided for use with these and other authoring techniques.

Owner:IBM CORP

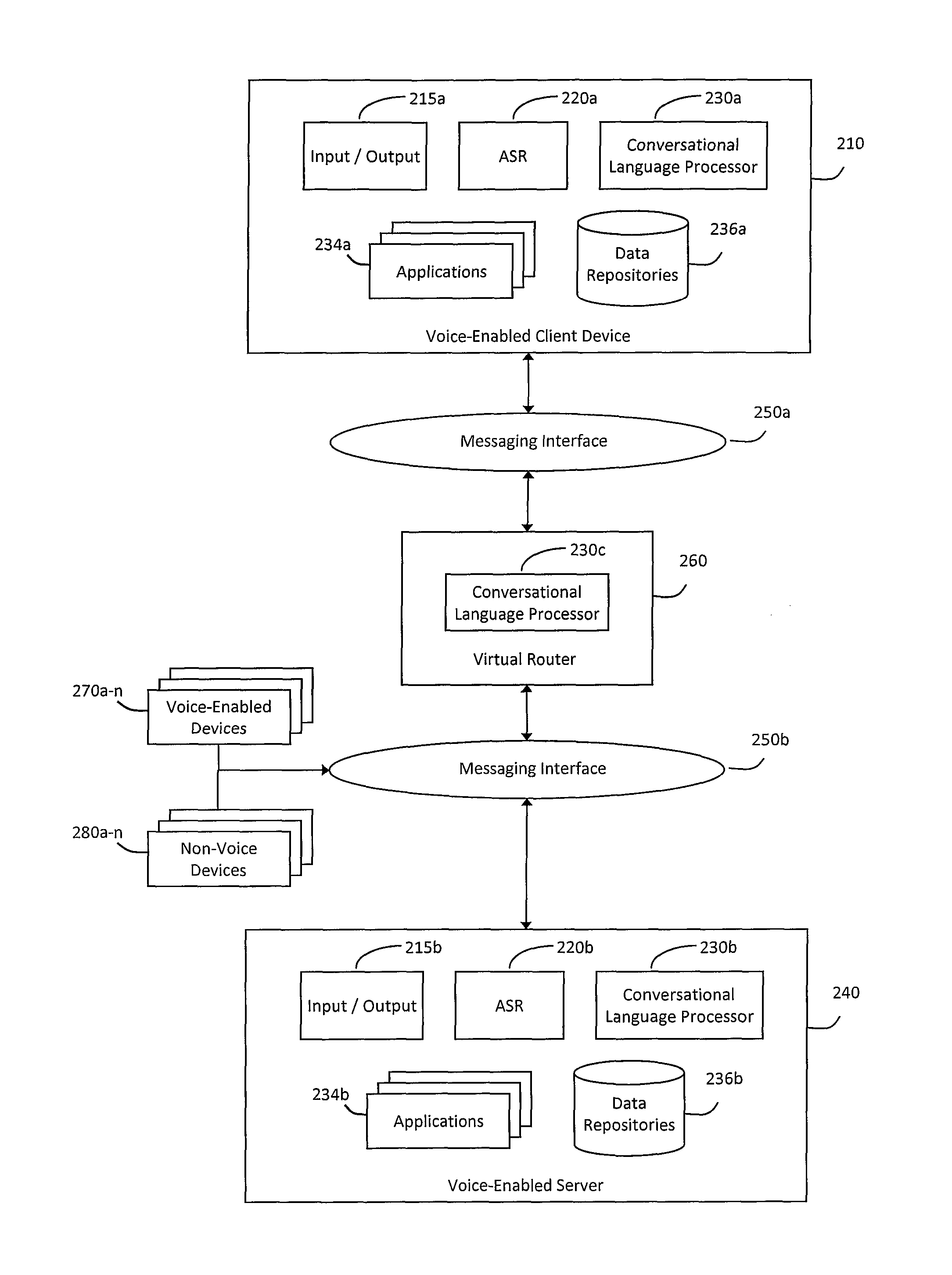

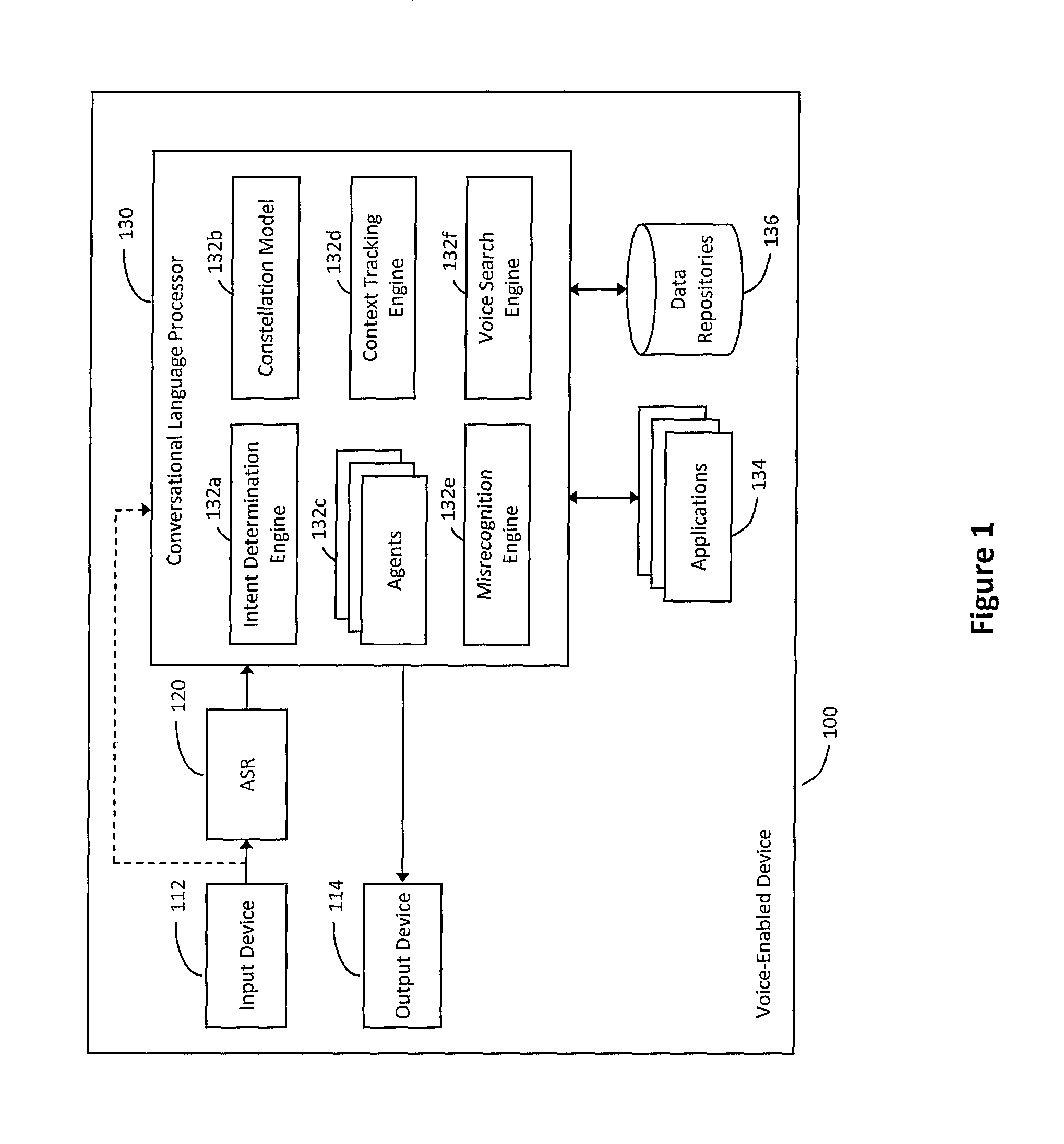

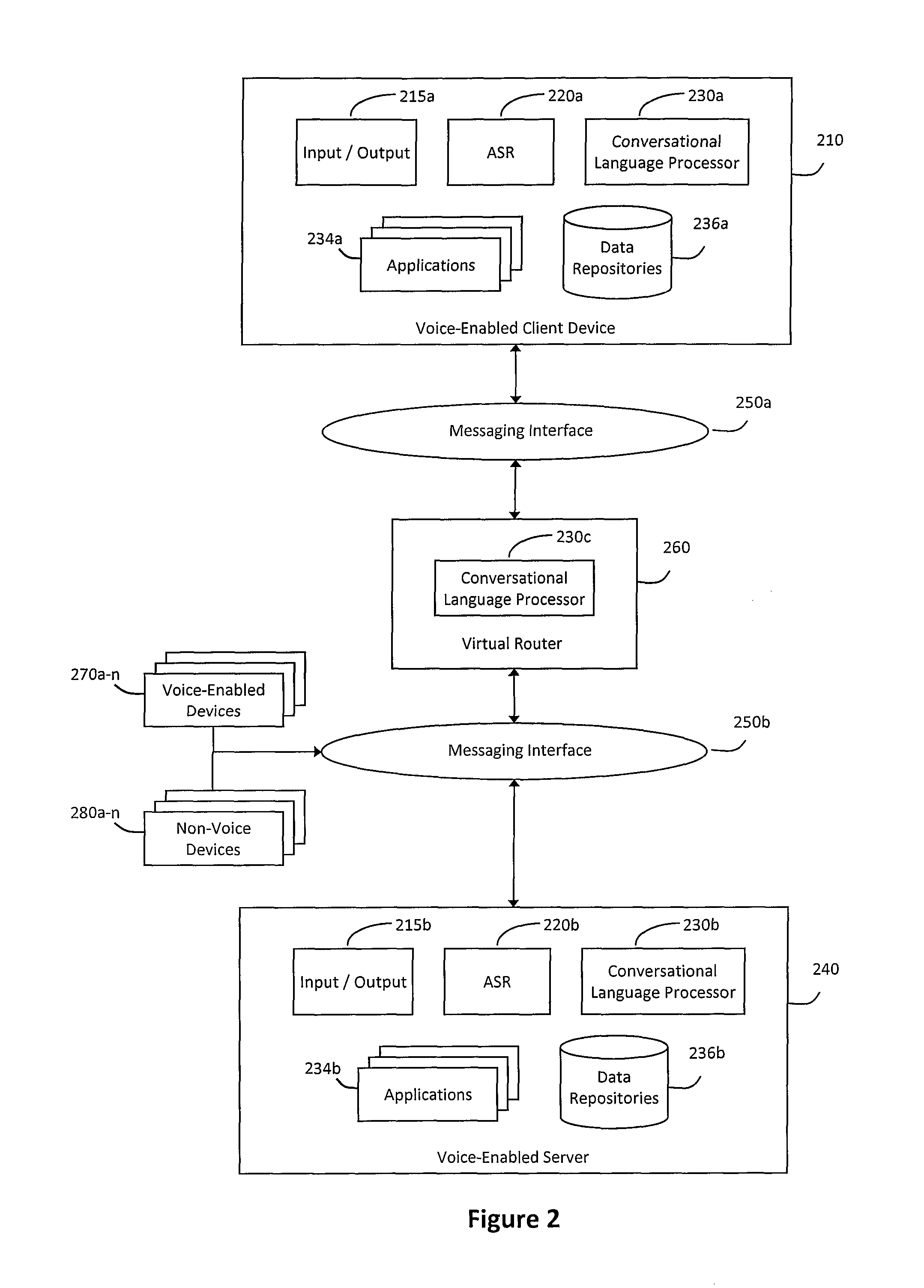

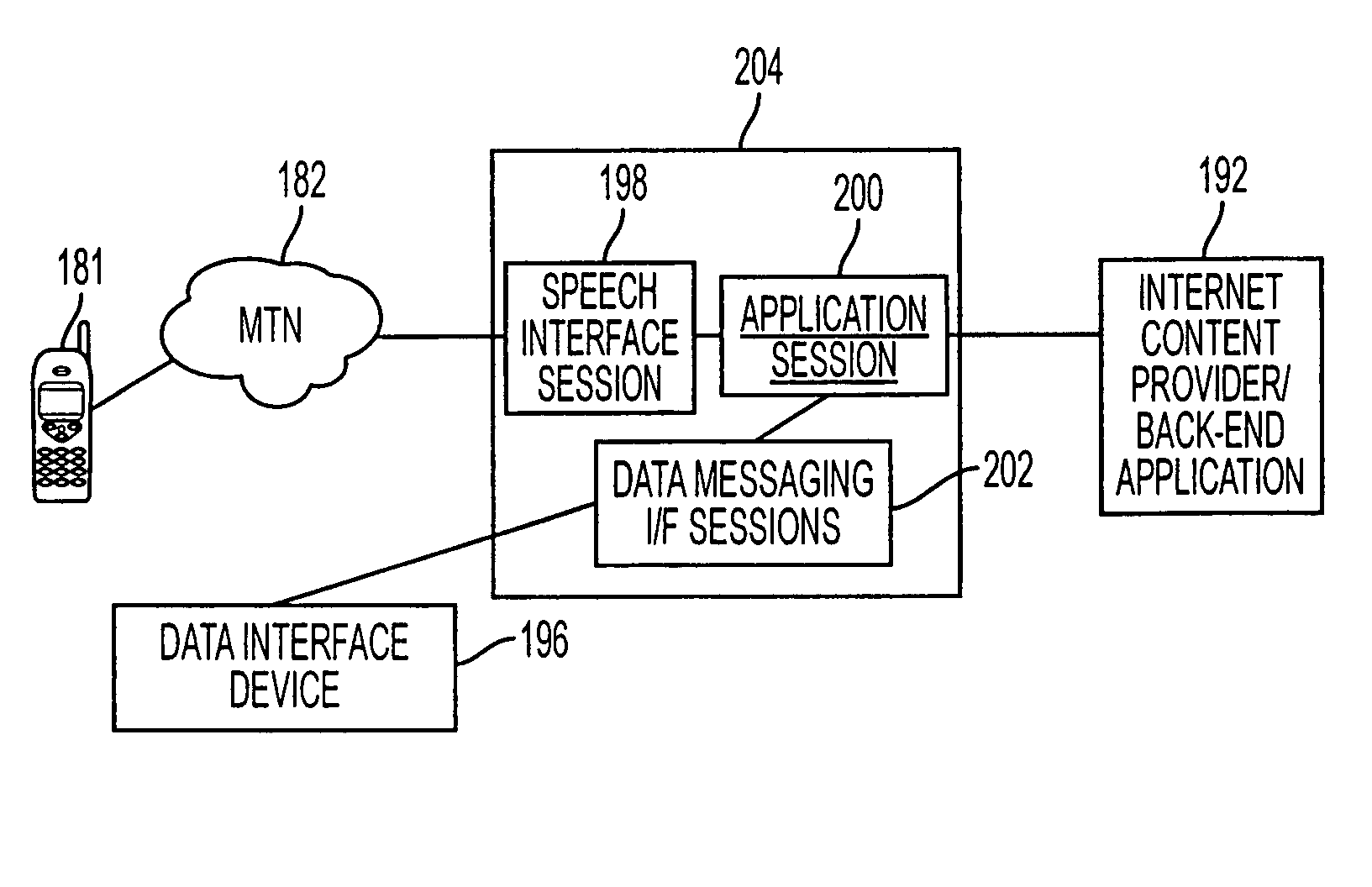

System and method for hybrid processing in a natural language voice services environment

Active | US9171541B2 | Tags: Input/output for user-computer interaction, Speech recognition, Multimodal interaction, Speech sound

A system and method for hybrid processing in a natural language voice services environment that includes a plurality of multi-modal devices may be provided. In particular, the hybrid processing may generally include the plurality of multi-modal devices cooperatively interpreting and processing one or more natural language utterances included in one or more multi-modal requests. For example, a virtual router may receive various messages that include encoded audio corresponding to a natural language utterance contained in a multi-modal interaction provided to one or more of the devices. The virtual router may then analyze the encoded audio to select a cleanest sample of the natural language utterance and communicate with one or more other devices in the environment to determine an intent of the multi-modal interaction. The virtual router may then coordinate resolving the multi-modal interaction based on the intent of the multi-modal interaction.

Owner:VOICEBOX TECH INC
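
The abstract above says the virtual router analyzes encoded audio from several devices "to select a cleanest sample" of one utterance, but it does not say how cleanliness is scored. A minimal sketch, assuming the audio has already been decoded to PCM floats and that each device reports a pre-speech noise floor, ranks the copies by a crude signal-to-noise estimate. `AudioSample`, `estimate_snr_db` and `select_cleanest` are hypothetical names, not VoiceBox's method.

```python
import math
from dataclasses import dataclass


@dataclass
class AudioSample:
    device_id: str
    samples: list[float]  # decoded PCM samples of the utterance (assumed already decoded)
    noise_floor: float    # RMS level measured before speech started (assumed reported by the device)


def rms(values: list[float]) -> float:
    """Root-mean-square amplitude of a block of samples."""
    return math.sqrt(sum(v * v for v in values) / len(values)) if values else 0.0


def estimate_snr_db(sample: AudioSample) -> float:
    """Crude SNR estimate in dB: utterance RMS relative to the device's noise floor."""
    signal = rms(sample.samples)
    if signal == 0.0:
        return -math.inf  # silent copy: never the cleanest
    if sample.noise_floor <= 0.0:
        return math.inf   # no measurable noise: treat as perfectly clean
    return 20 * math.log10(signal / sample.noise_floor)


def select_cleanest(candidates: list[AudioSample]) -> AudioSample:
    """Return the copy of the utterance with the highest estimated SNR."""
    if not candidates:
        raise ValueError("no audio samples received")
    return max(candidates, key=estimate_snr_db)


phone = AudioSample("phone", [0.5, -0.5, 0.5, -0.5], noise_floor=0.05)
car = AudioSample("car", [0.5, -0.5, 0.5, -0.5], noise_floor=0.25)
print(select_cleanest([phone, car]).device_id)  # phone
```

SNR is only a stand-in here; a real router could just as plausibly rank copies by recognizer confidence or another speech-quality score.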

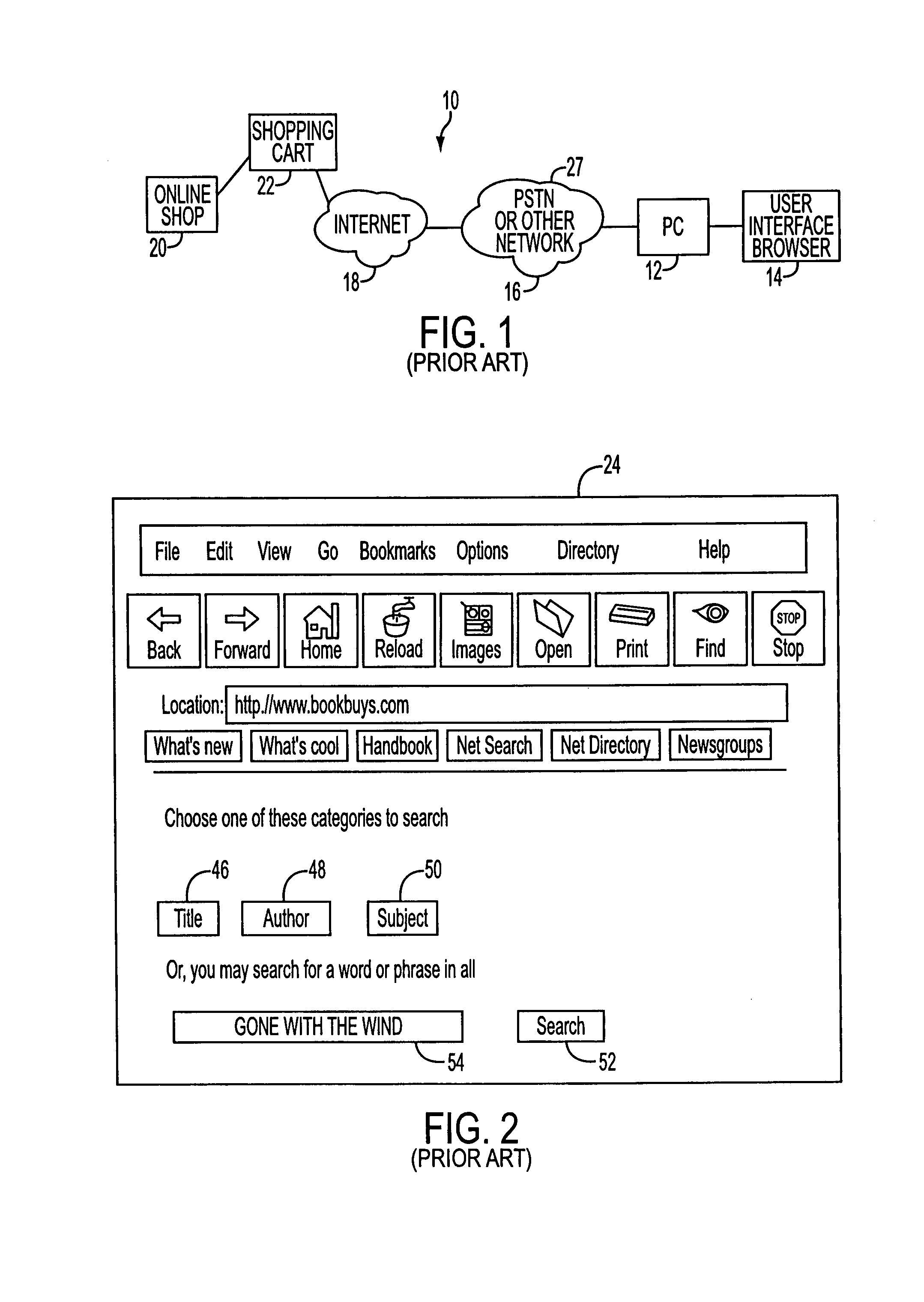

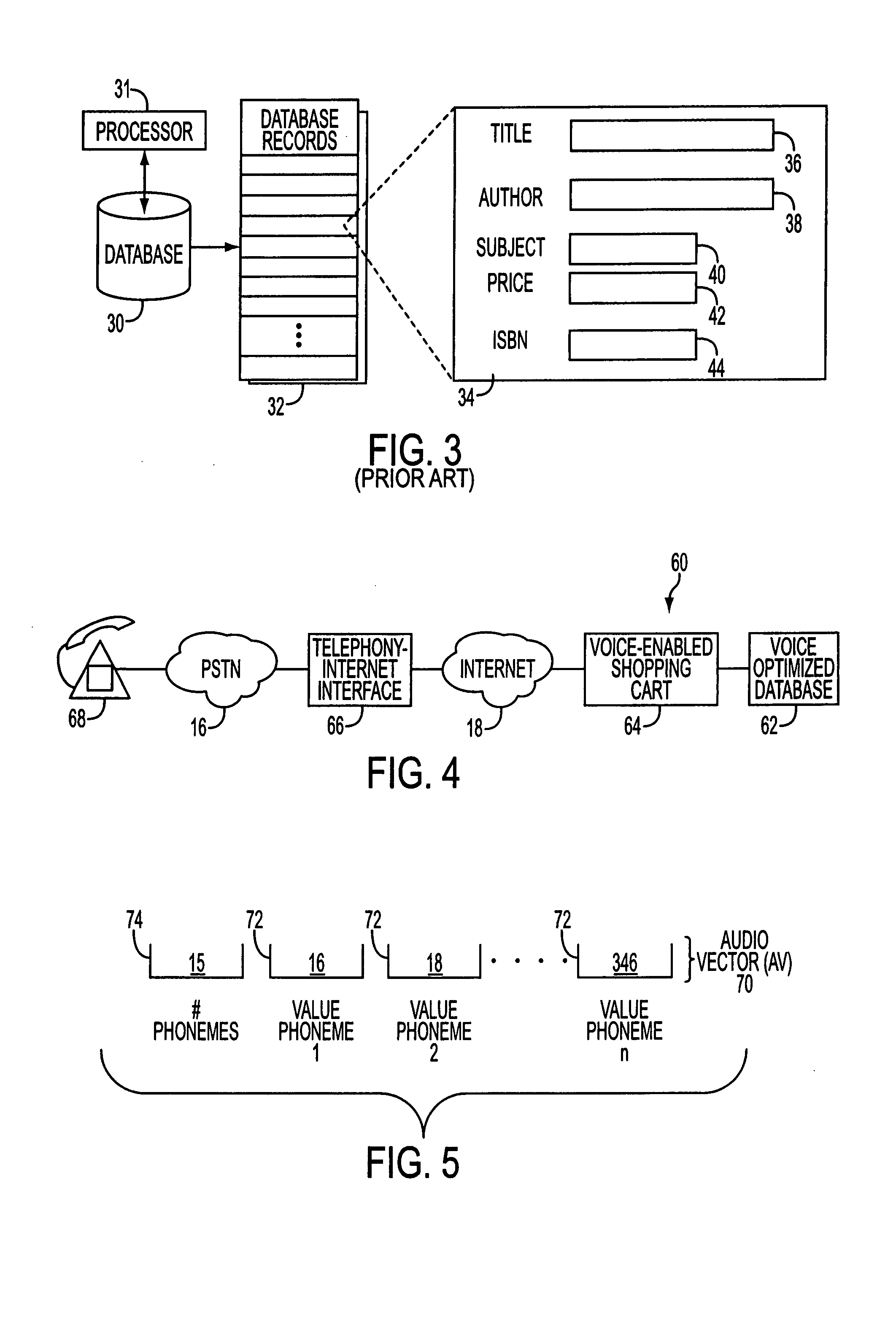

Multi-modal voice-enabled content access and delivery system

Inactive | US7283973B1 | Tags: Overcome deficiencies, Automatic call-answering/message-recording/conversation-recording, Multiple digital computer combinations, Credit card, Modal voice

A voice-enabled system for online content access and delivery provides a voice and telephony interface, as well as a text and graphic interface, for browsing and accessing requested content or shopping over the Internet using a browser or a telephone. The system allows customers to access an online data application, search for desired content items, select content items, and finally pay for selected items using a credit card, over a phone line or the Internet. A telephony-Internet interface converts spoken queries into electronic commands for transmission to an online data application. Markup language-type pages transmitted to callers from the online data application are parsed to extract selected information. The selected information is then reported to the callers via audio messaging. A voice-enabled technology for mobile multi-modal interaction is also provided.

Owner:LOGIC TREE

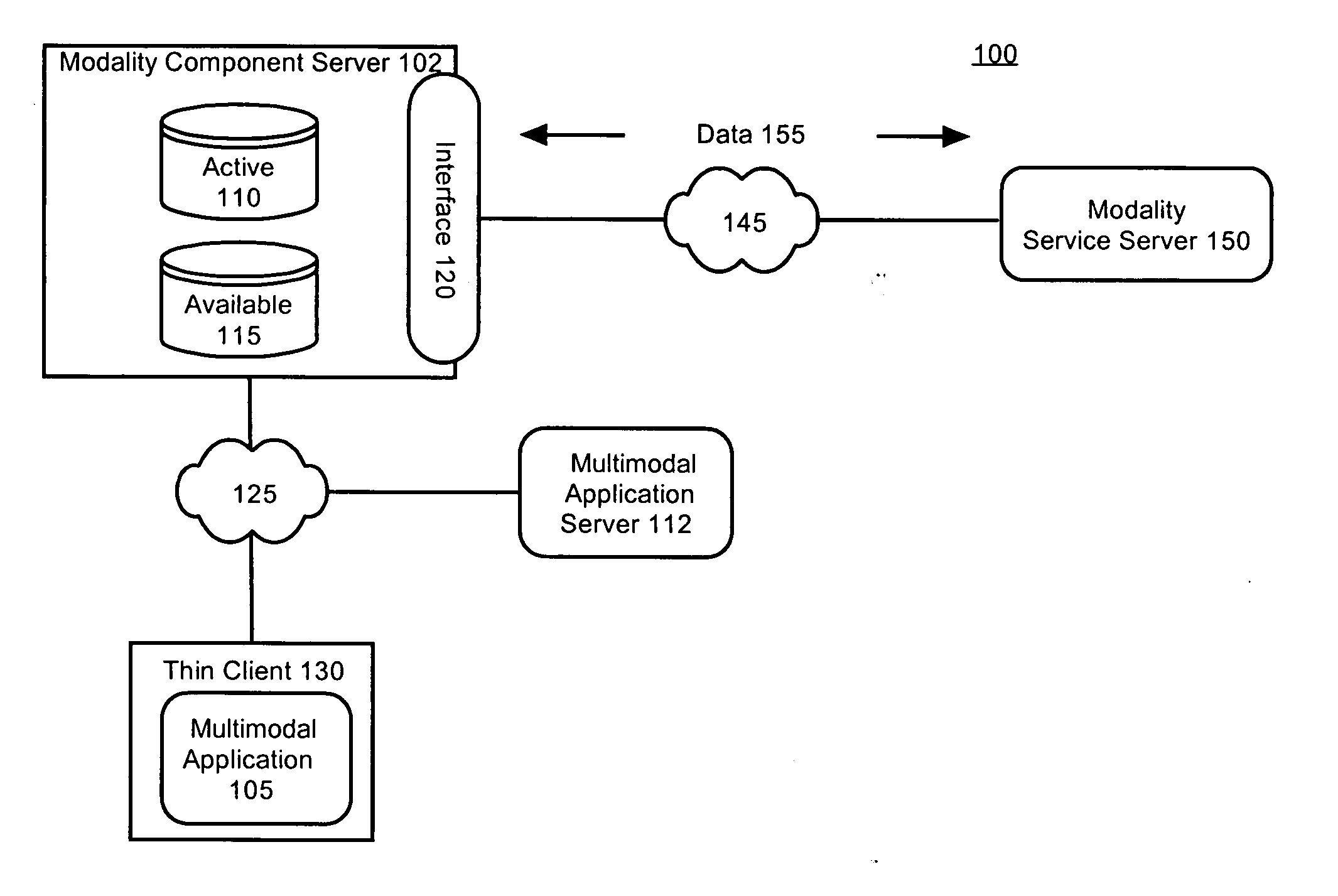

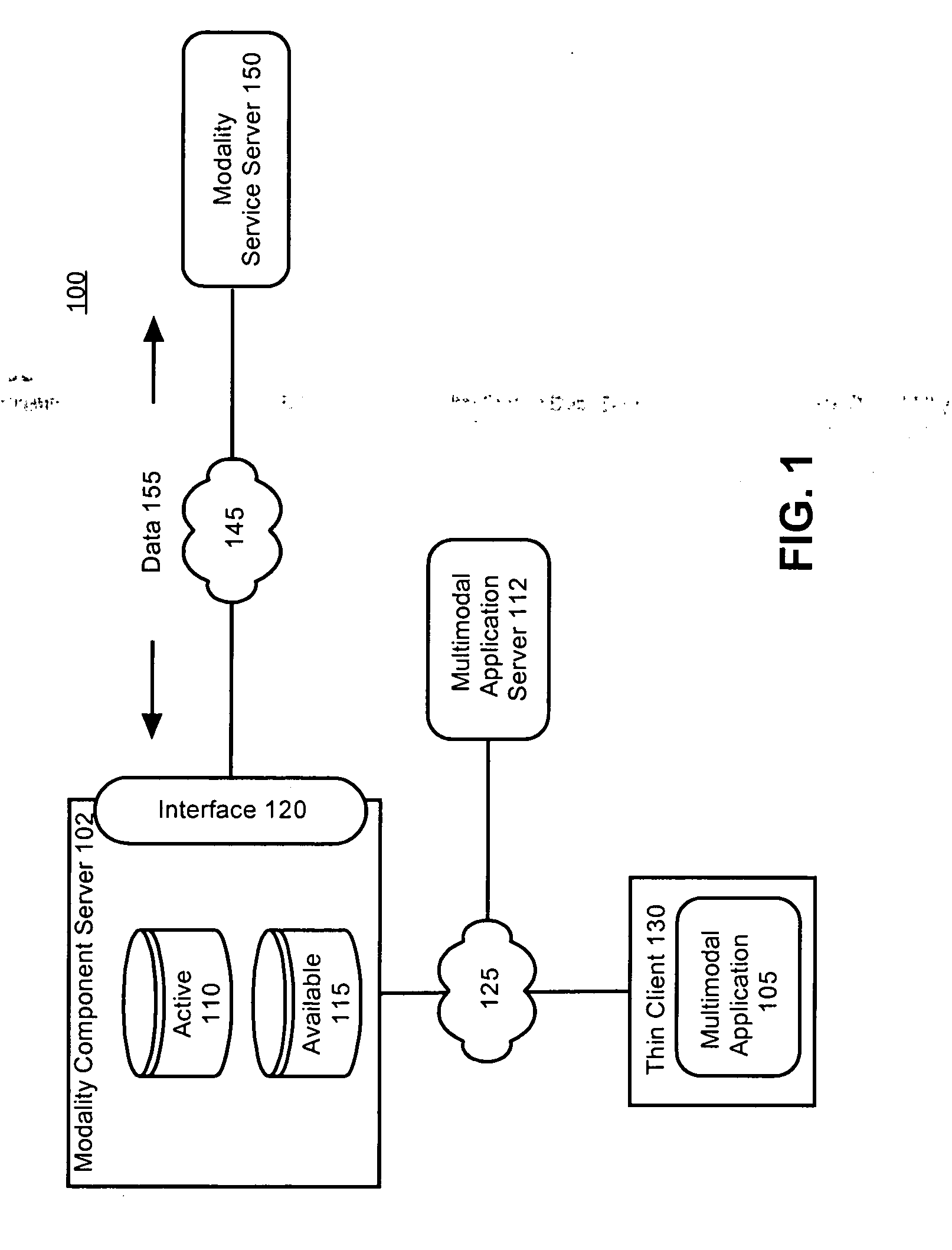

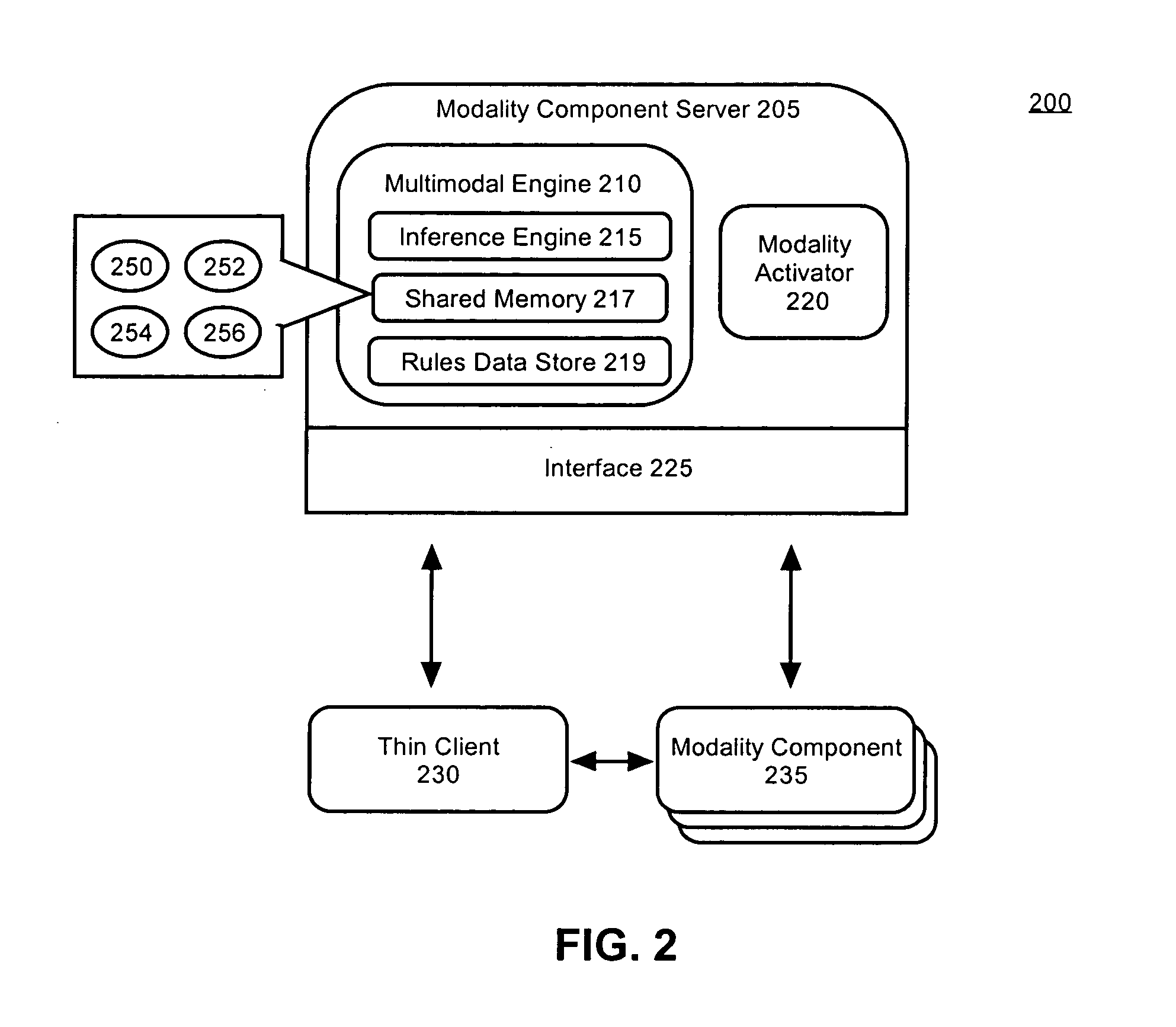

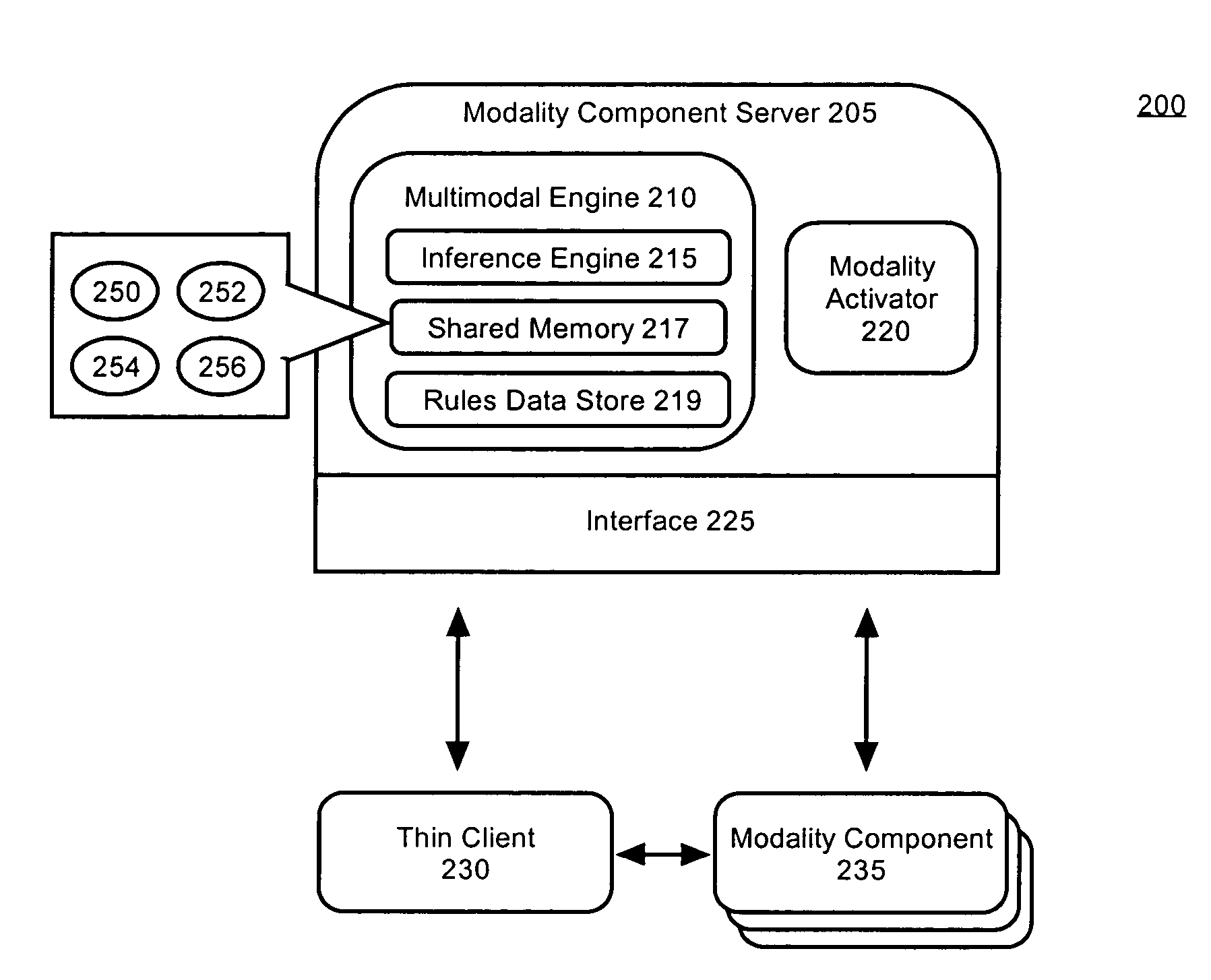

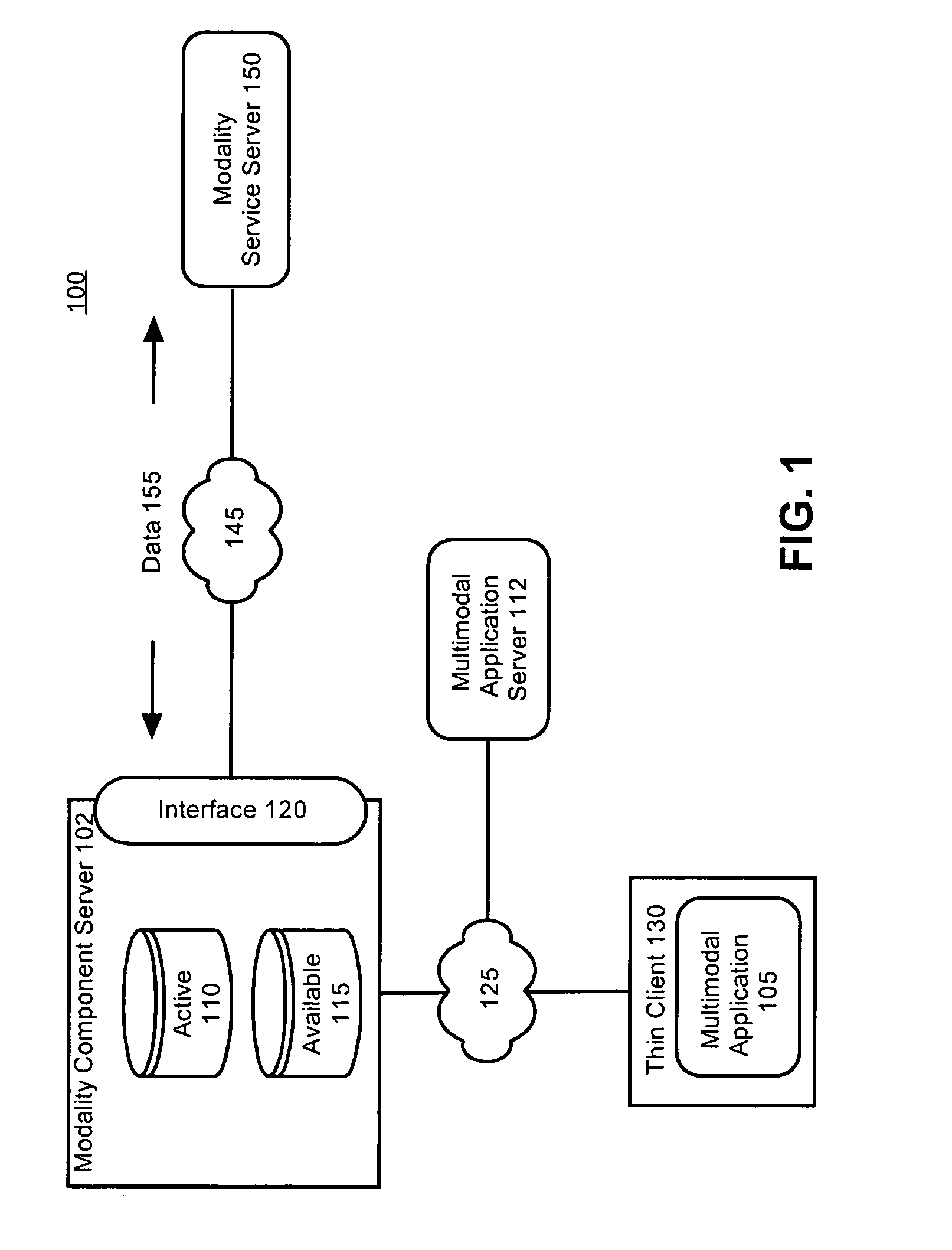

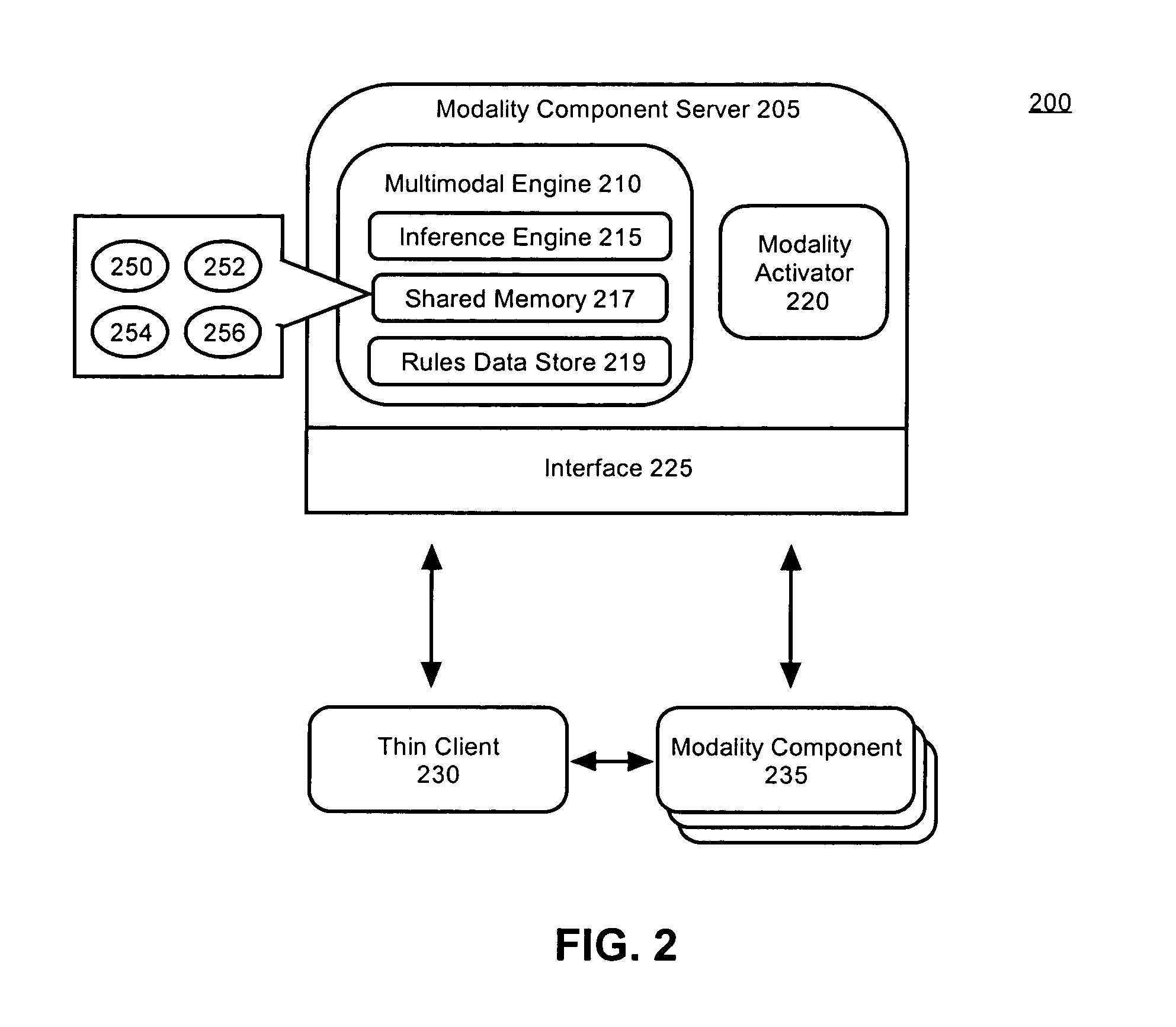

Managing application interactions using distributed modality components

Active | US20050138219A1 | Tags: Execution for user interfaces, Special data processing applications, Multimodal interaction, Computer science

A method for managing multimodal interactions can include the step of registering a multitude of modality components with a modality component server, wherein each modality component handles an interface modality for an application. The modality component can be connected to a device. A user interaction can be conveyed from the device to the modality component for processing. Results from the user interaction can be placed in a shared memory area of the modality component server.

Owner:NUANCE COMM INC
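
The steps in the abstract above (register modality components with a server, connect a component to a device, convey a user interaction to the component, place results in a shared memory area) can be sketched as a small in-process registry. This is a minimal illustration under assumptions: a component is modeled as a plain function, the shared memory area is a lock-protected dictionary, and `ModalityComponentServer` and its methods are hypothetical names rather than Nuance's design.

```python
import threading
from typing import Callable

# A modality component is modeled as a function from raw device input to a result.
ModalityHandler = Callable[[str], str]


class ModalityComponentServer:
    """Hypothetical server that registers modality components and pools their results."""

    def __init__(self) -> None:
        self._components: dict[str, ModalityHandler] = {}
        self._connections: dict[str, str] = {}          # device id -> modality name
        self._shared_memory: dict[str, list[str]] = {}  # modality name -> results
        self._lock = threading.Lock()                   # components may run concurrently

    def register(self, modality: str, handler: ModalityHandler) -> None:
        """Register the component that handles one interface modality."""
        self._components[modality] = handler

    def connect(self, device_id: str, modality: str) -> None:
        """Connect a device to a registered modality component."""
        if modality not in self._components:
            raise KeyError(f"no component registered for {modality!r}")
        self._connections[device_id] = modality

    def convey(self, device_id: str, raw_input: str) -> str:
        """Send a user interaction to the device's component and store the result."""
        modality = self._connections[device_id]
        result = self._components[modality](raw_input)
        with self._lock:
            self._shared_memory.setdefault(modality, []).append(result)
        return result

    def shared_results(self) -> dict[str, list[str]]:
        """Snapshot of the shared memory area."""
        with self._lock:
            return {name: list(items) for name, items in self._shared_memory.items()}


server = ModalityComponentServer()
server.register("voice", lambda audio: f"transcript:{audio}")
server.register("pen", lambda strokes: f"ink:{strokes}")
server.connect("handset-1", "voice")
server.convey("handset-1", "book a flight")
print(server.shared_results())  # {'voice': ['transcript:book a flight']}
```

Keeping results in one shared area lets the application read every modality's output from a single place instead of polling each component.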

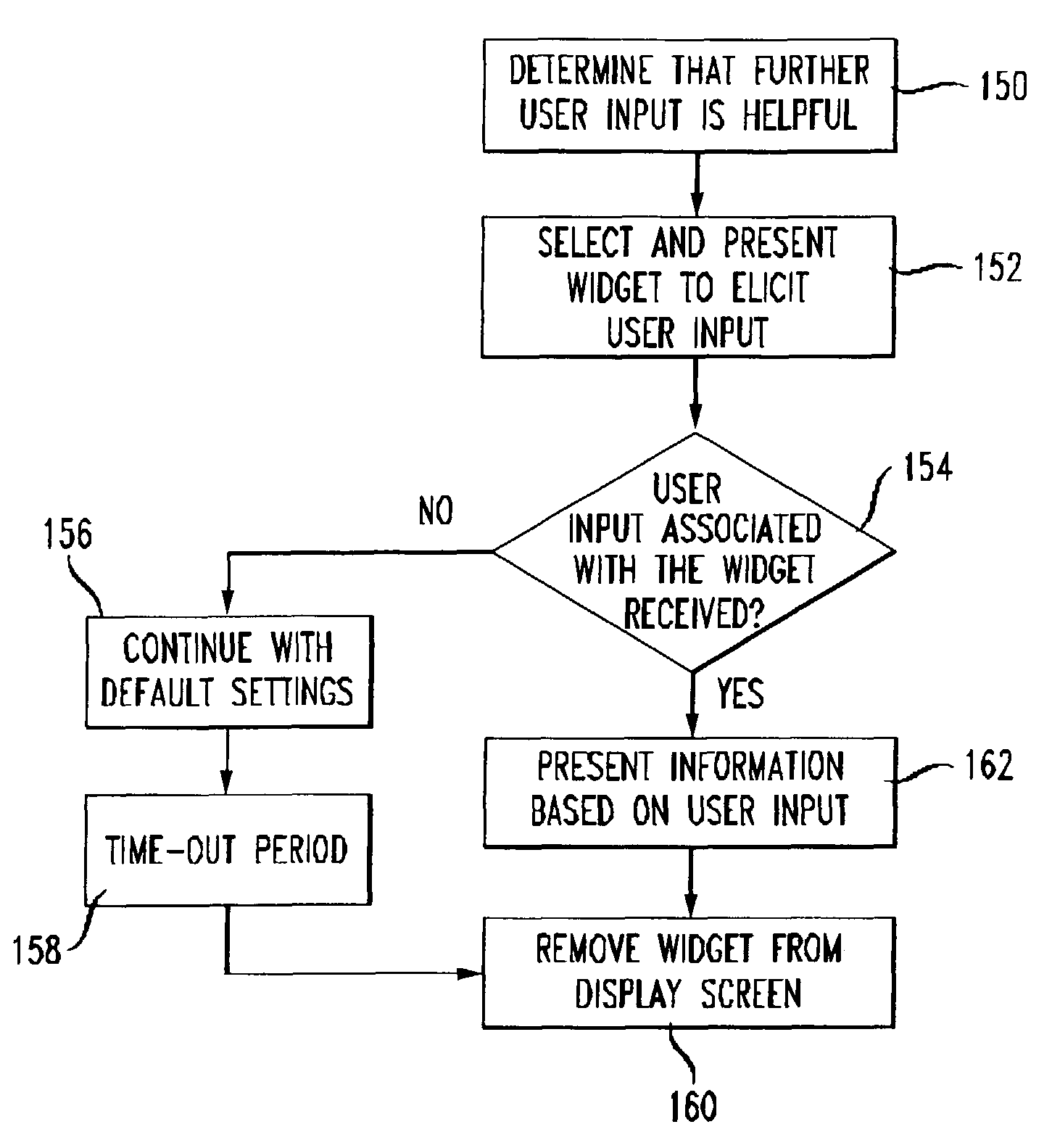

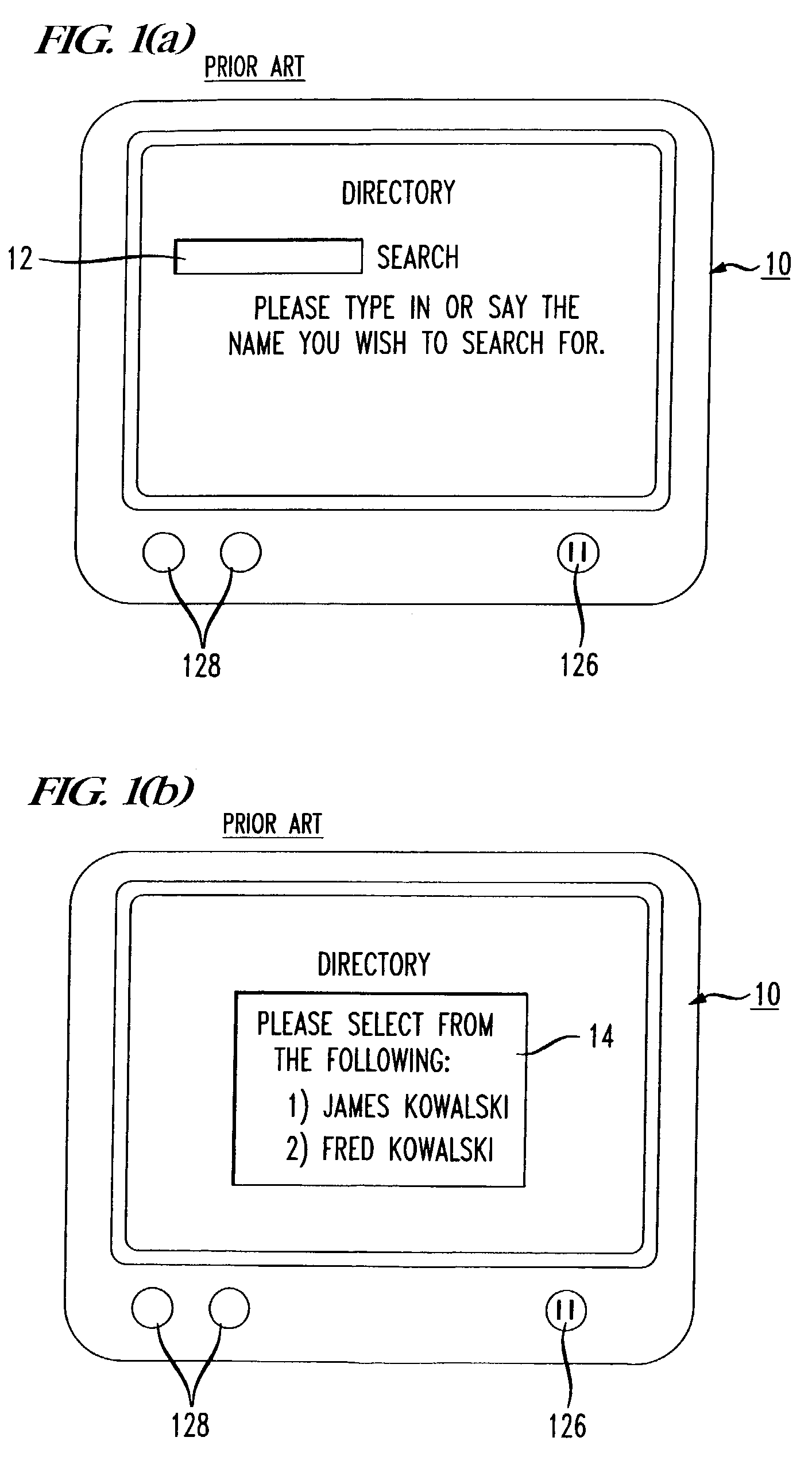

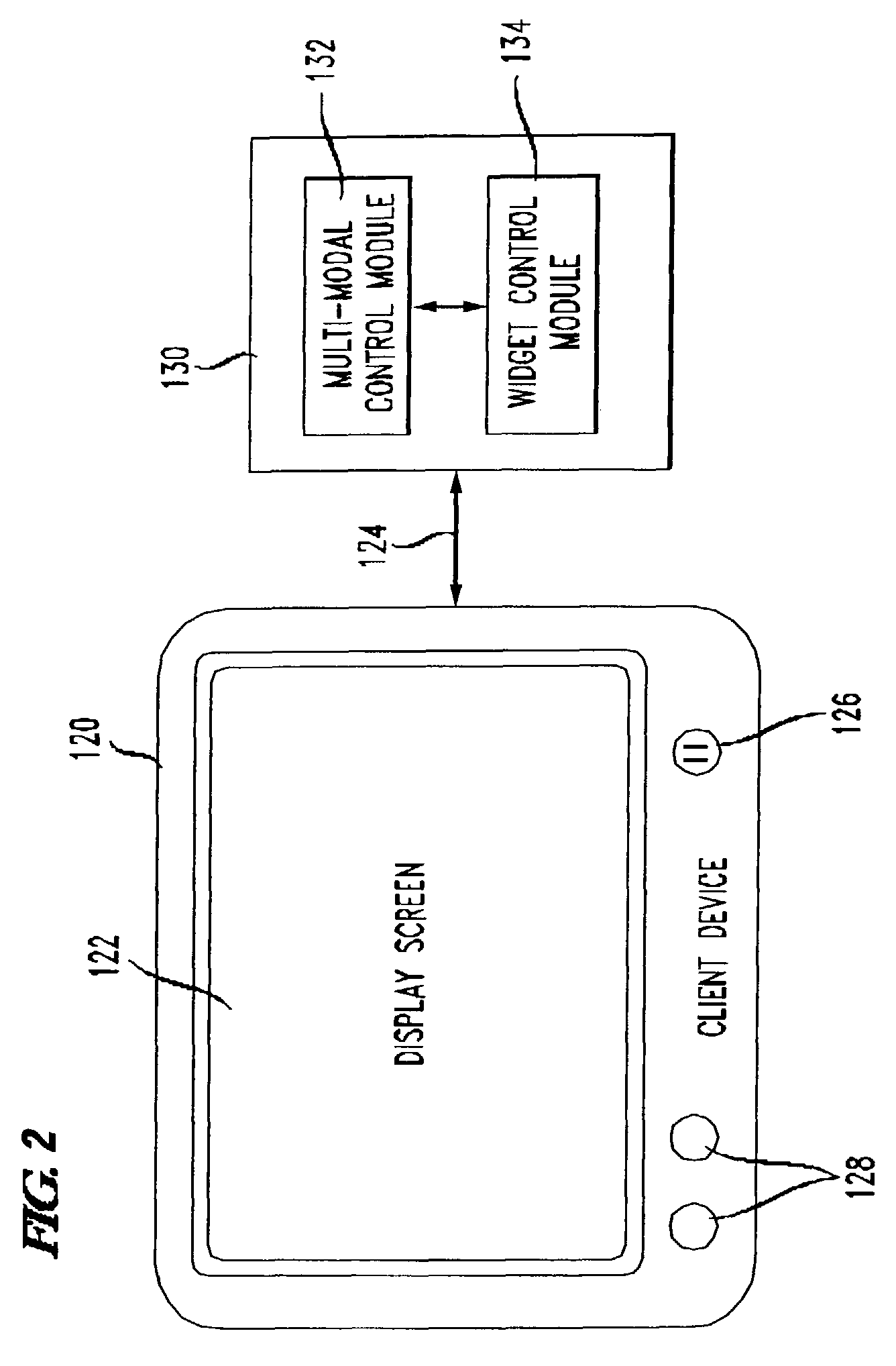

Context-sensitive interface widgets for multi-modal dialog systems

Inactive | US7890324B2 | Tags: Not with unnecessary image, Efficiency and speed of exchanging, Cathode-ray tube indicators, Multiple digital computer combinations, Information type, Dialog system

A system and method of presenting widgets to a user during a multi-modal interactive dialog between a user and a computer is presented. The system controls the multi-modal dialog; and when user input would help to clarify or speed up the presentation of requested information, the system presents a temporary widget to the user to elicit the user input in this regard. The system presents the widget on a display screen at a position that will not interfere with the dialog. Various types of widgets are available, such as button widgets, sliders and confirmation widgets, depending on the type of information that the system requires.

Owner:NUANCE COMM INC
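
The widget abstract above names two decisions: which widget type suits the information the system needs (buttons, sliders, confirmation), and where to place it so it does not interfere with the dialog. The sketch below assumes a fixed mapping from information type to widget and a simple corner-search placement; `InfoType`, `WIDGET_FOR` and `place_widget` are hypothetical, and the patent may use a different placement rule.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class InfoType(Enum):
    CHOICE = "choice"    # one of a few discrete options
    NUMERIC = "numeric"  # a value within bounds
    YES_NO = "yes_no"    # confirm or reject a proposal


# Widget kinds named in the abstract; the mapping itself is an assumption.
WIDGET_FOR = {
    InfoType.CHOICE: "buttons",
    InfoType.NUMERIC: "slider",
    InfoType.YES_NO: "confirmation",
}


@dataclass(frozen=True)
class Rect:
    x: int
    y: int
    w: int
    h: int

    def overlaps(self, other: "Rect") -> bool:
        return (self.x < other.x + other.w and other.x < self.x + self.w
                and self.y < other.y + other.h and other.y < self.y + self.h)


def place_widget(info: InfoType, dialog: Rect, screen: Rect,
                 w: int = 200, h: int = 80) -> Optional[tuple[str, Rect]]:
    """Pick a widget for the needed information and a screen corner clear of the dialog."""
    right, bottom = screen.x + screen.w - w, screen.y + screen.h - h
    corners = [Rect(right, screen.y, w, h), Rect(right, bottom, w, h),
               Rect(screen.x, bottom, w, h), Rect(screen.x, screen.y, w, h)]
    for spot in corners:
        if not spot.overlaps(dialog):
            return WIDGET_FOR[info], spot
    return None  # nowhere safe: keep the exchange spoken only


screen = Rect(0, 0, 800, 600)
dialog = Rect(400, 0, 400, 300)  # dialog occupies the top-right quadrant
print(place_widget(InfoType.NUMERIC, dialog, screen))
# ('slider', Rect(x=600, y=520, w=200, h=80))
```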

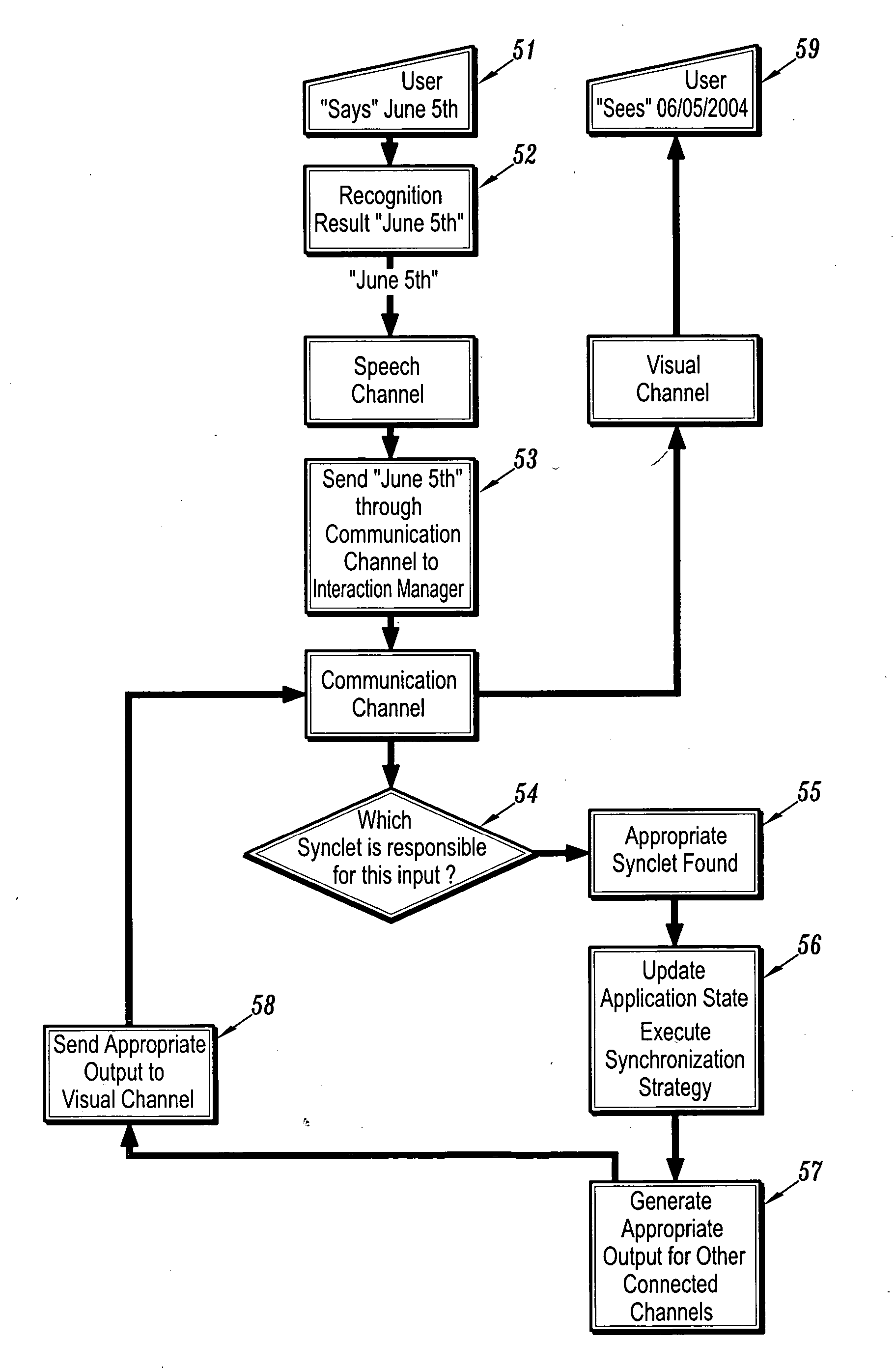

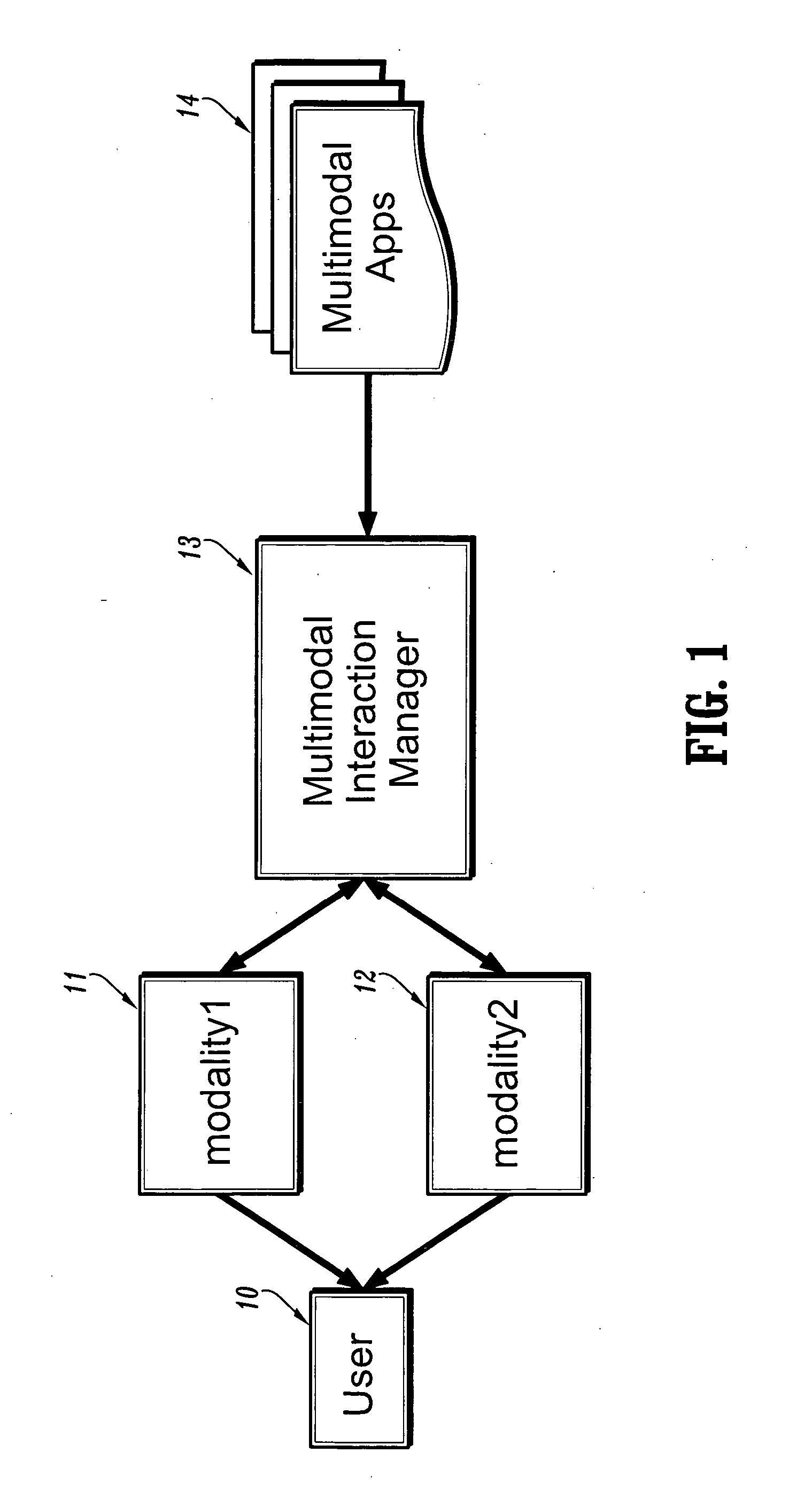

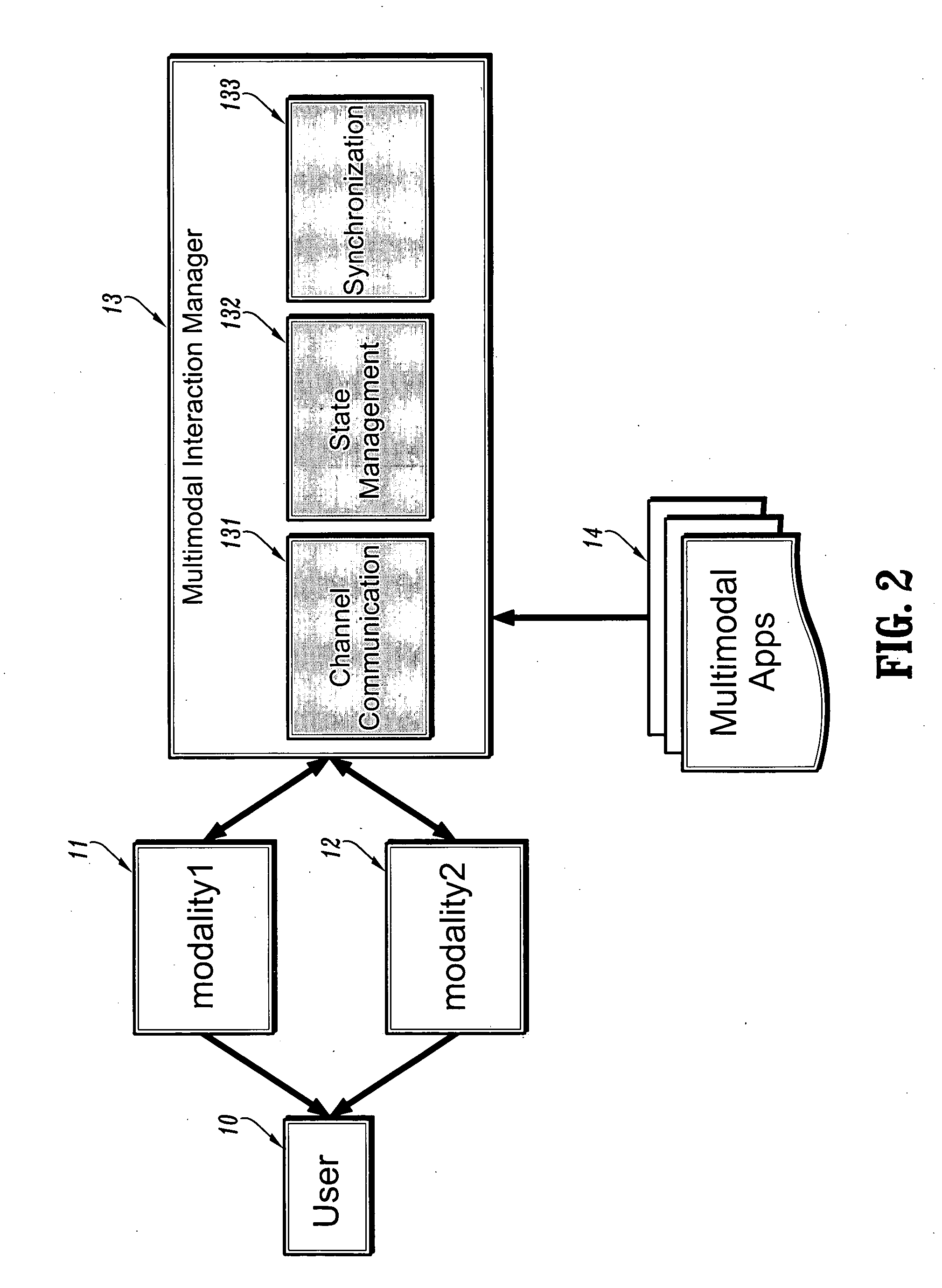

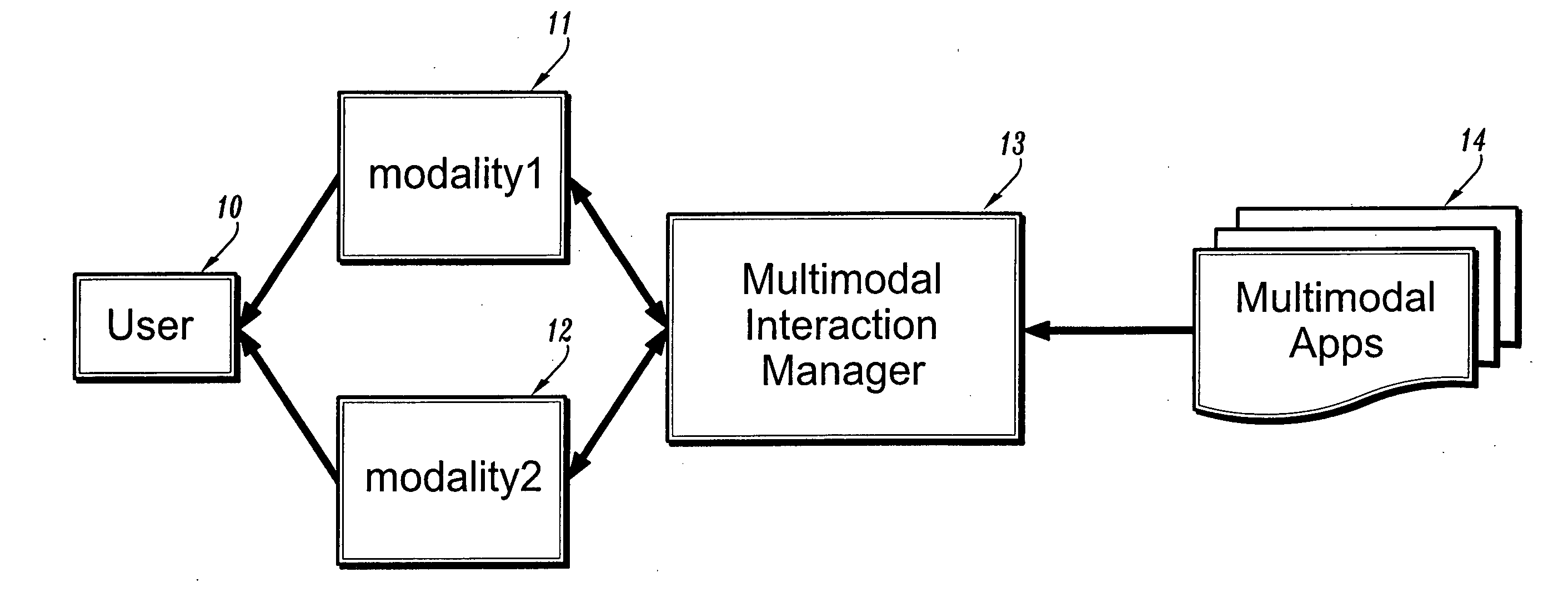

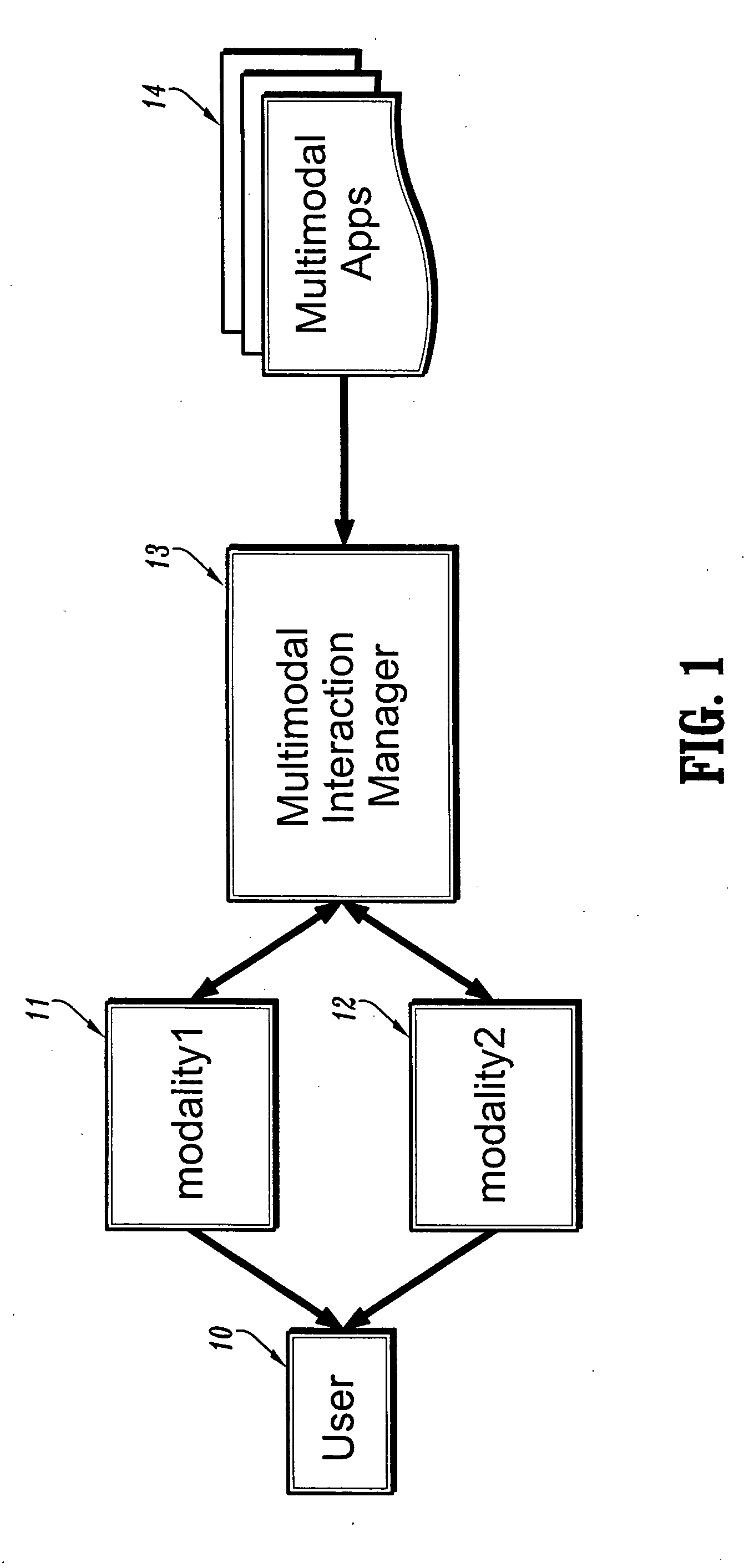

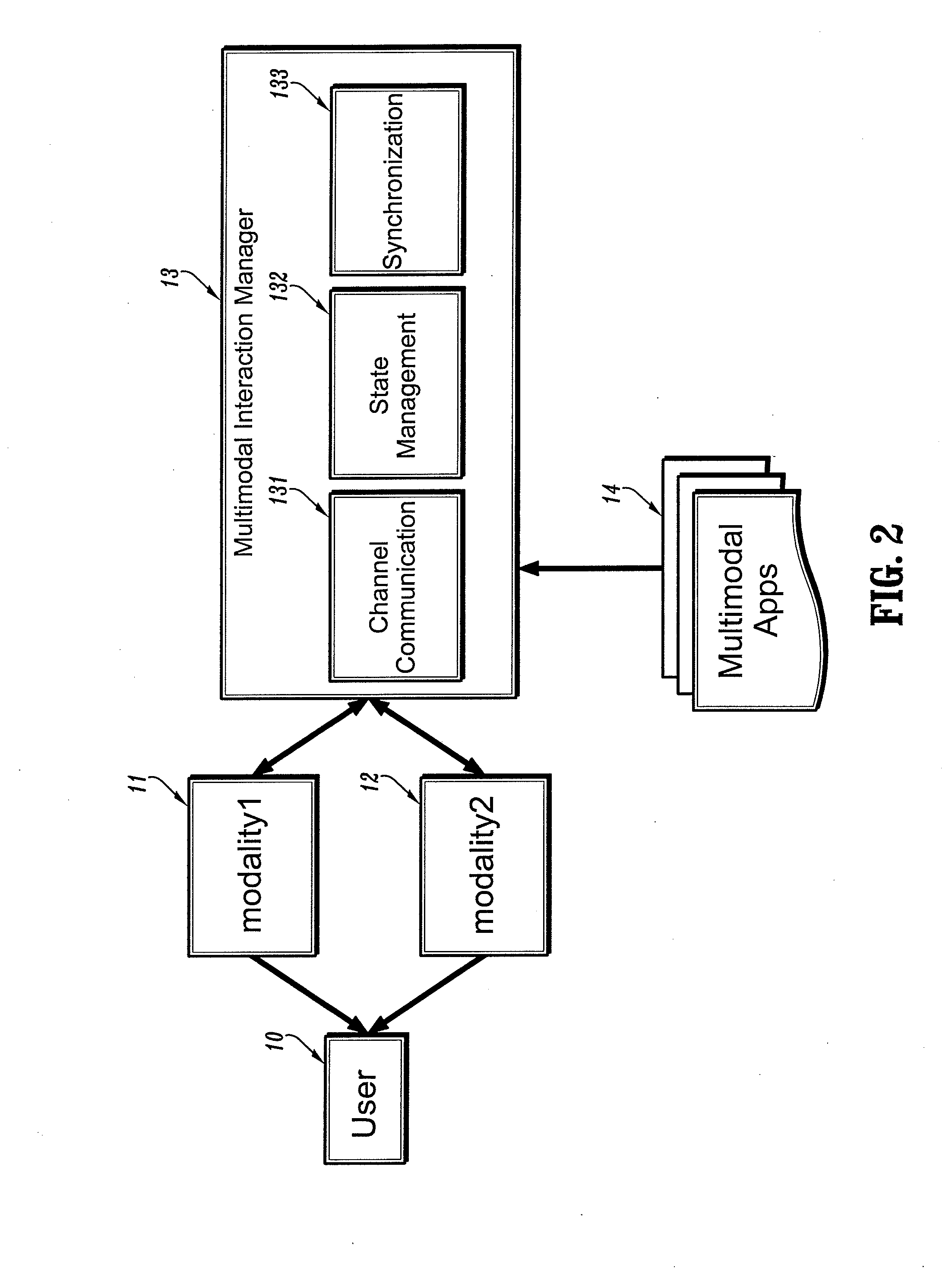

System for factoring synchronization strategies from multimodal programming model runtimes

Inactive | US20060036770A1 | Tags: Wide support, Multiple digital computer combinations, Transmission, Application server, Interaction management

A factored multimodal interaction architecture for a distributed computing system is disclosed. The distributed computing system includes a plurality of clients and at least one application server that can interact with the clients via a plurality of interaction modalities. The factored architecture includes an interaction manager with a multimodal interface, wherein the interaction manager can receive a client request for a multimodal application in one interaction modality and transmit the client request in another modality, a browser adapter for each client browser, where each browser adapter includes the multimodal interface, and one or more pluggable synchronization modules. Each synchronization module implements one of the plurality of interaction modalities between one of the plurality of clients and the server such that the synchronization module for an interaction modality mediates communication between the multimodal interface of the client browser adapter and the multimodal interface of the interaction manager.

Owner:IBM CORP
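
The factored architecture above separates the interaction manager from one pluggable synchronization module per modality, so a request arriving in one modality can be re-issued in another. A minimal sketch of that strategy-plugin idea, assuming string payloads and a single form field; the browser adapters are omitted, and `SynchronizationModule`, `VoiceSync`, `VisualSync` and `InteractionManager` are hypothetical names, not IBM's interfaces.

```python
from typing import Protocol


class SynchronizationModule(Protocol):
    """Pluggable strategy for one interaction modality (hypothetical interface)."""
    modality: str

    def to_manager(self, payload: str) -> dict[str, str]: ...
    def to_client(self, event: dict[str, str]) -> str: ...


class VoiceSync:
    modality = "voice"

    def to_manager(self, payload: str) -> dict[str, str]:
        # Assume the recognizer already filled the "city" slot from the utterance.
        return {"field": "city", "value": payload.strip().title()}

    def to_client(self, event: dict[str, str]) -> str:
        return f"<prompt>{event['field']} is {event['value']}</prompt>"


class VisualSync:
    modality = "visual"

    def to_manager(self, payload: str) -> dict[str, str]:
        field, value = payload.split("=", 1)  # e.g. a form post "city=Boston"
        return {"field": field, "value": value}

    def to_client(self, event: dict[str, str]) -> str:
        return f'<input name="{event["field"]}" value="{event["value"]}"/>'


class InteractionManager:
    """Receives a request in one modality and re-issues it in the others."""

    def __init__(self) -> None:
        self._modules: dict[str, SynchronizationModule] = {}

    def plug(self, module: SynchronizationModule) -> None:
        self._modules[module.modality] = module

    def handle(self, source: str, payload: str) -> dict[str, str]:
        event = self._modules[source].to_manager(payload)
        return {name: m.to_client(event)
                for name, m in self._modules.items() if name != source}


manager = InteractionManager()
manager.plug(VoiceSync())
manager.plug(VisualSync())
print(manager.handle("voice", " boston "))
# {'visual': '<input name="city" value="Boston"/>'}
```

Because the manager only sees the `SynchronizationModule` interface, adding a modality means plugging in a new module rather than changing the manager.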

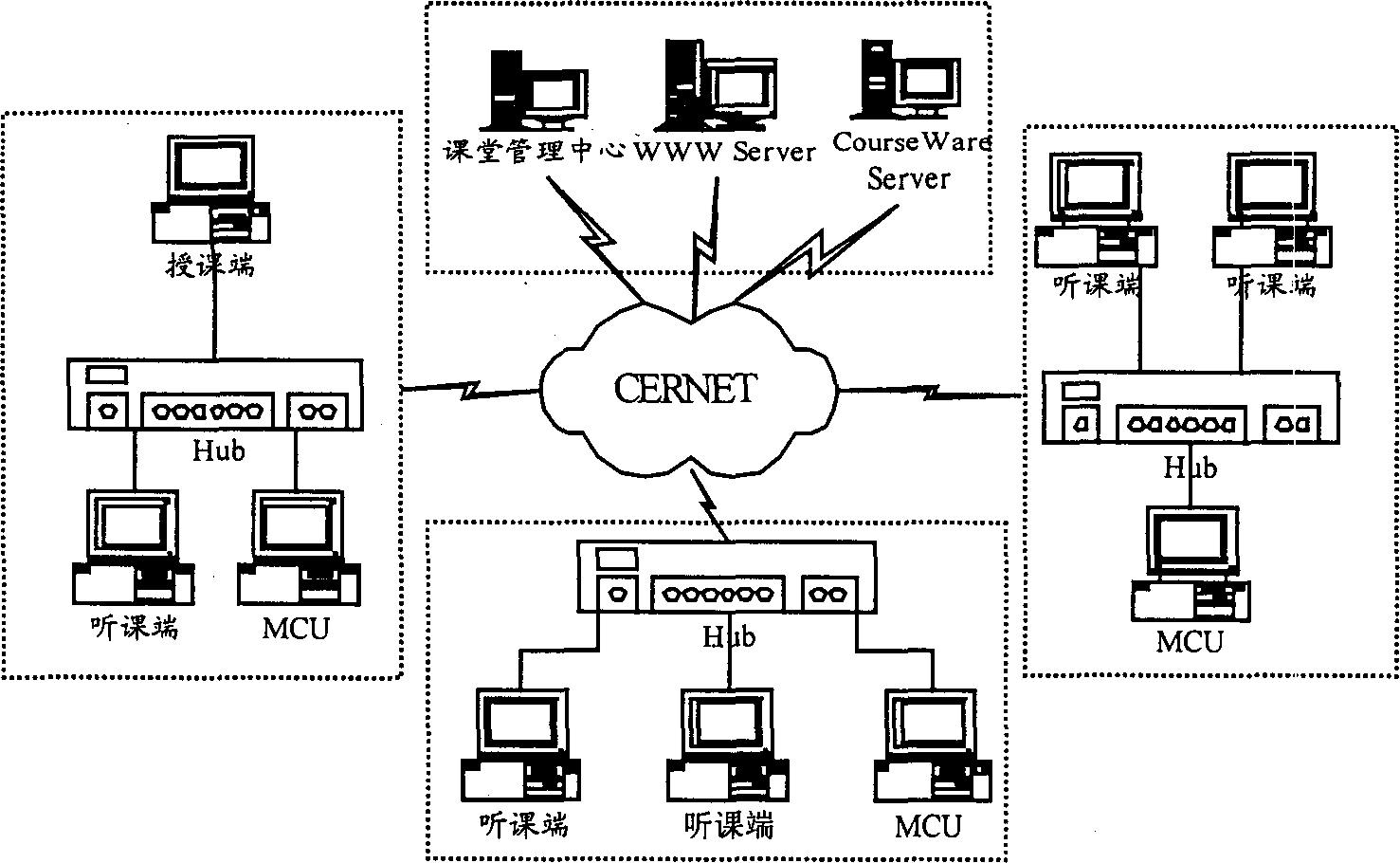

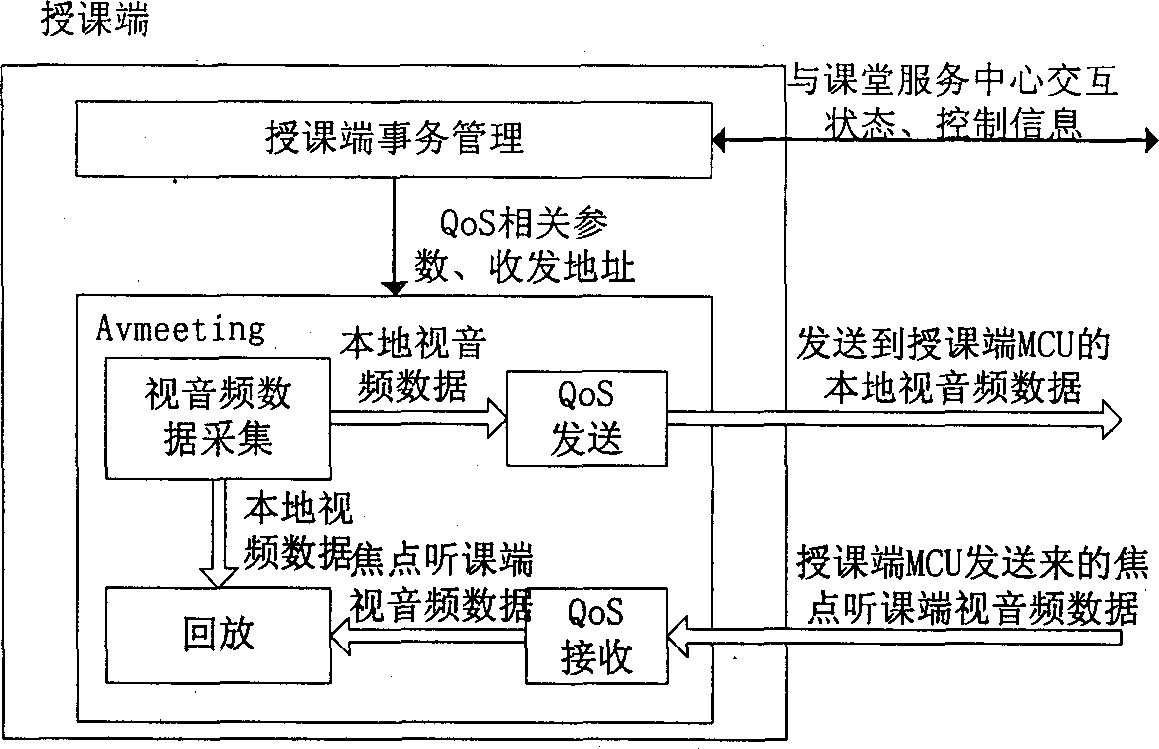

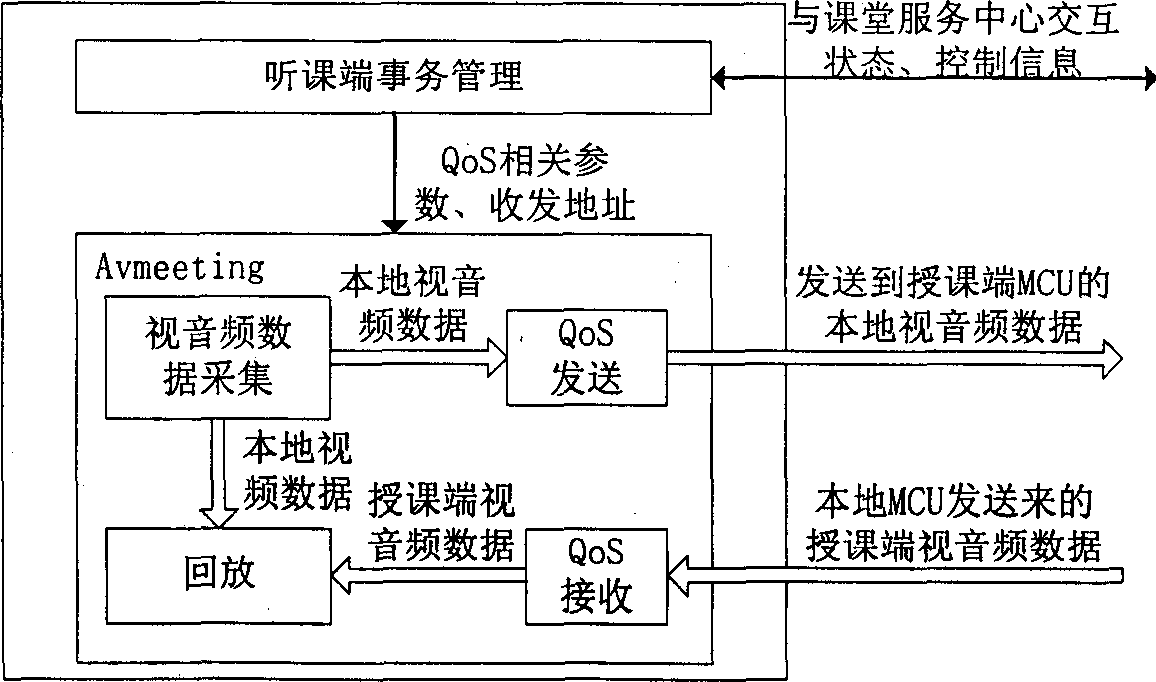

Multimedia real-time lesson-giving system based on an IP network

Inactive | CN1400541A | Tags: Achieve sharing, Resolution time, Digital computer details, Electric digital data processing, Whiteboard, Multipoint control unit

The invention discloses a multimedia real-time lesson-giving system based on an IP network, made up of a lecturing end, a listening end, a class service center and a multipoint control unit (MCU). It broadcasts teaching live by recording the classroom with multimedia equipment and transmitting the recording over the network. Through multimedia, multi-mode interactive communication over the network, it lets teachers and students interact and supports natural blackboard writing by the teacher, retrieval of teaching content, sharing of application programs, browsing of teaching software, an electronic whiteboard, real-time control of teaching software, and class management, which broadens the scale of teaching.

Owner:XI AN JIAOTONG UNIV

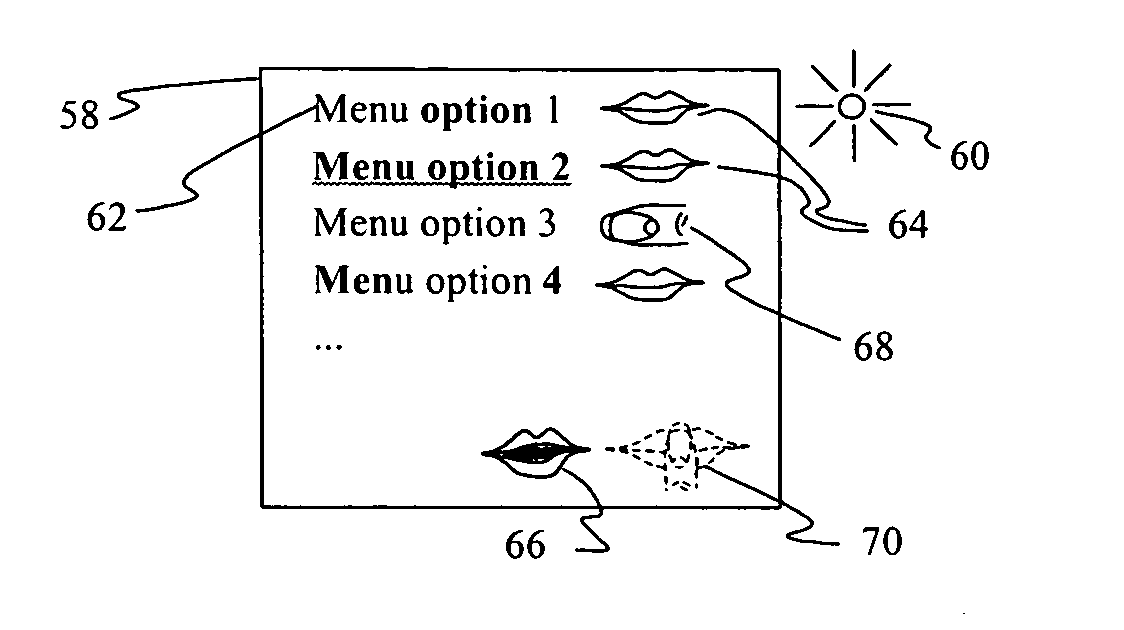

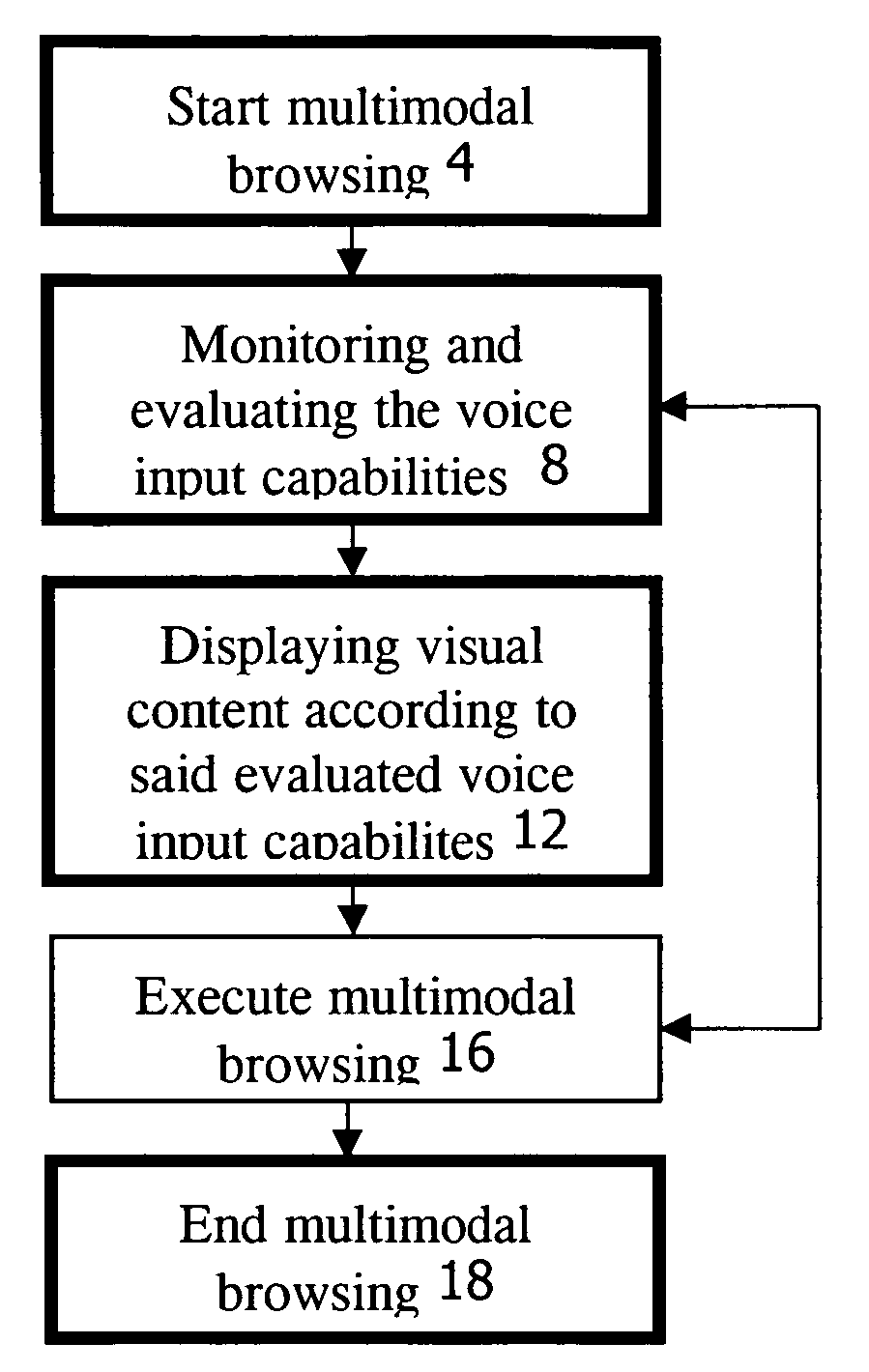

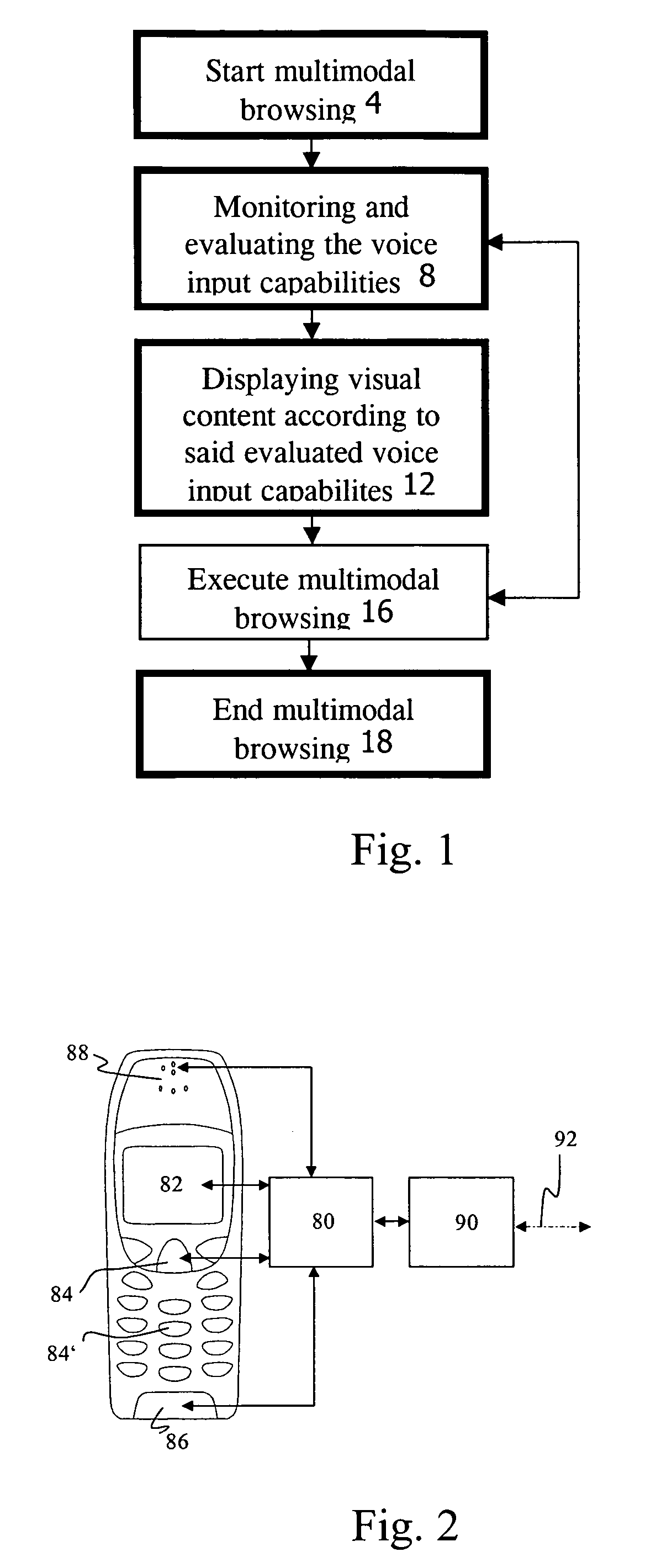

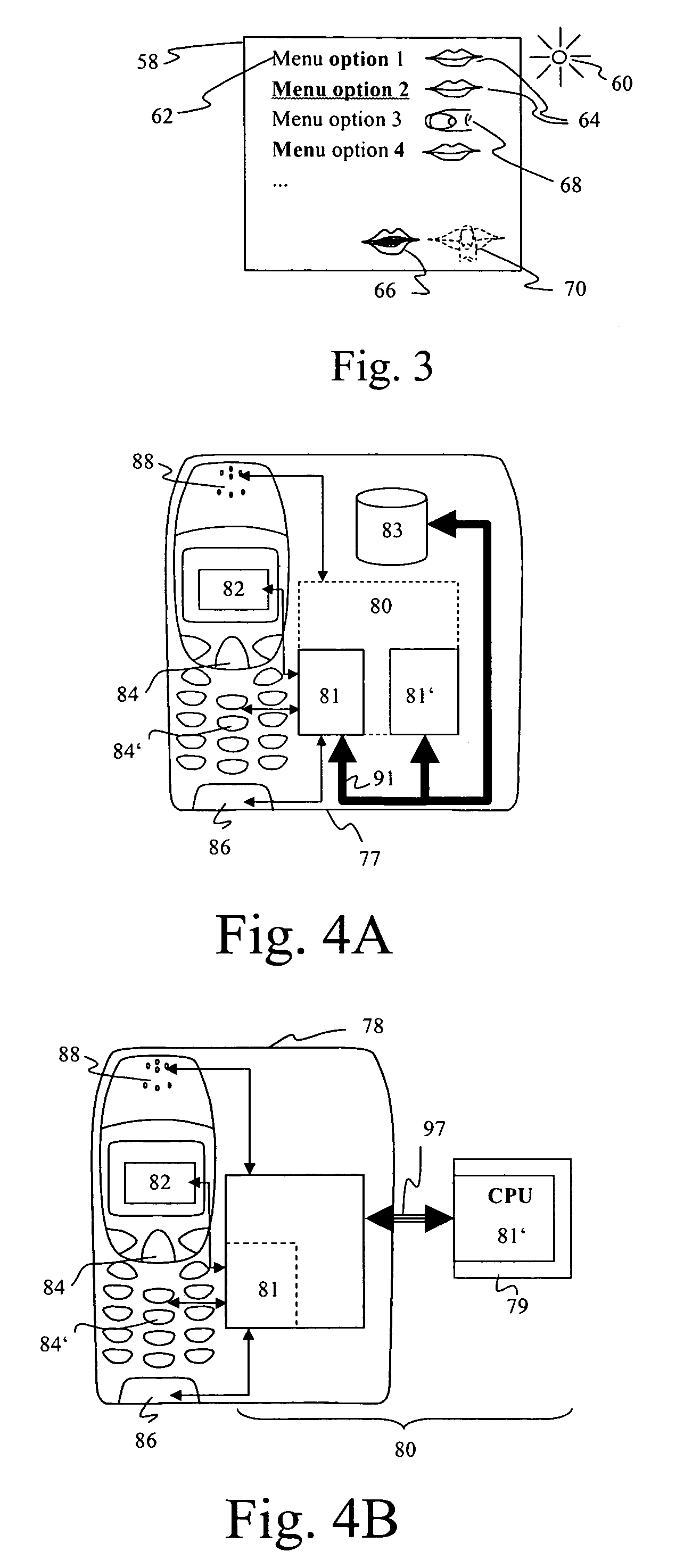

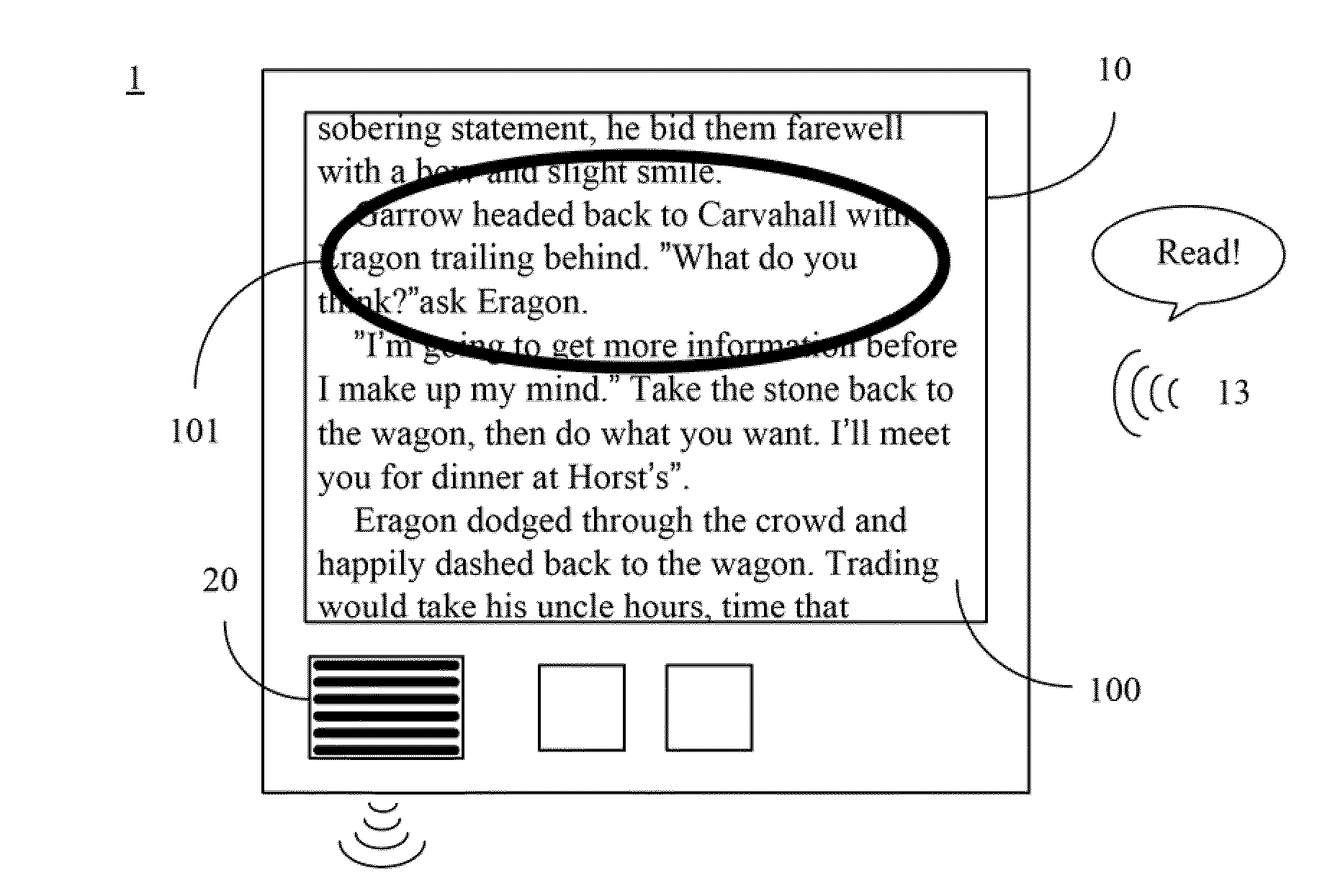

Method and device for providing speech-enabled input in an electronic device having a user interface

Inactive | US20050027538A1 | Tags: Avoid rapid changes, Little change, Devices with voice recognition, Subscriber signalling identity devices, Display device, Speech input

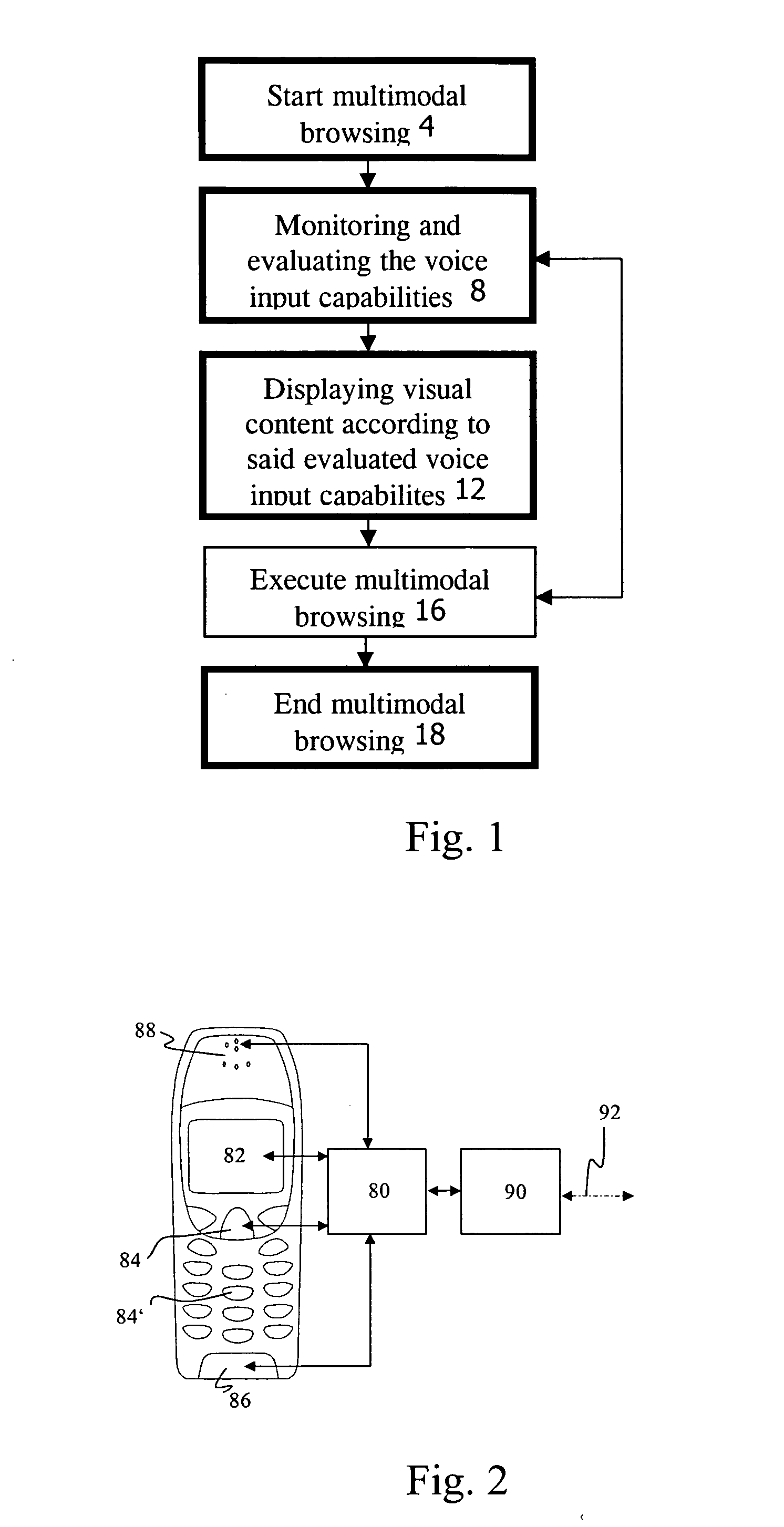

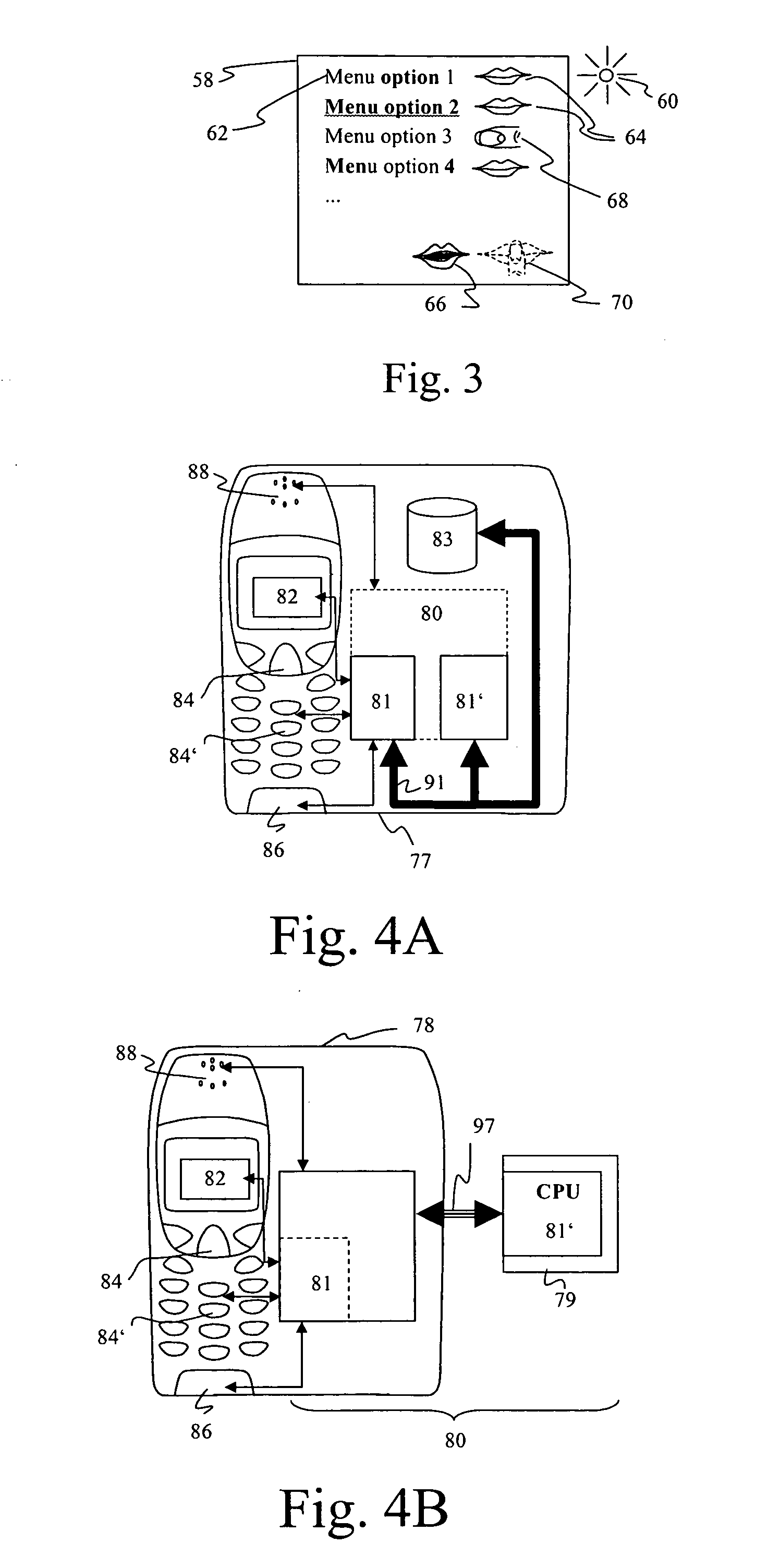

The present invention provides a method, a device and a system for multimodal interactions. The method according to the invention comprises the steps of activating a multimodal user interaction, providing at least one key input option and at least one voice input option, displaying the at least one key input option, checking if there is at least one condition affecting said voice input option, and providing voice input options and displaying indications of the provided voice input options according to the condition. The method is characterized by checking if at least one condition affecting the voice input is fulfilled and providing the at least one voice input option and displaying indications of the voice input options on the display, according to the condition.

Owner:NOKIA TECHNOLOGIES OY
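
The Nokia abstract above always displays the key input options but offers and indicates voice input options only "according to the condition", without naming the condition. A minimal sketch, assuming the condition is ambient noise and using two thresholds so the voice hints do not flicker on and off; the hysteresis is an assumption prompted by this record's "Avoid rapid changes" tag, not something the abstract states. `InputMenu` and its thresholds are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class InputMenu:
    key_options: list[str]
    voice_options: list[str]
    disable_above_db: float = 70.0  # assumed noise level that makes speech input unreliable
    enable_below_db: float = 60.0   # assumed lower threshold, so the menu does not flicker
    voice_enabled: bool = True

    def update(self, ambient_noise_db: float) -> list[str]:
        """Return the labels to display: key options always, voice hints only when allowed."""
        if self.voice_enabled and ambient_noise_db > self.disable_above_db:
            self.voice_enabled = False
        elif not self.voice_enabled and ambient_noise_db < self.enable_below_db:
            self.voice_enabled = True
        labels = list(self.key_options)
        if self.voice_enabled:
            labels += [f'Say "{option}"' for option in self.voice_options]
        return labels


menu = InputMenu(["1 Call", "2 Messages"], ["call", "messages"])
print(menu.update(50.0))  # ['1 Call', '2 Messages', 'Say "call"', 'Say "messages"']
print(menu.update(75.0))  # ['1 Call', '2 Messages']
print(menu.update(65.0))  # ['1 Call', '2 Messages']  (still disabled: between thresholds)
```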

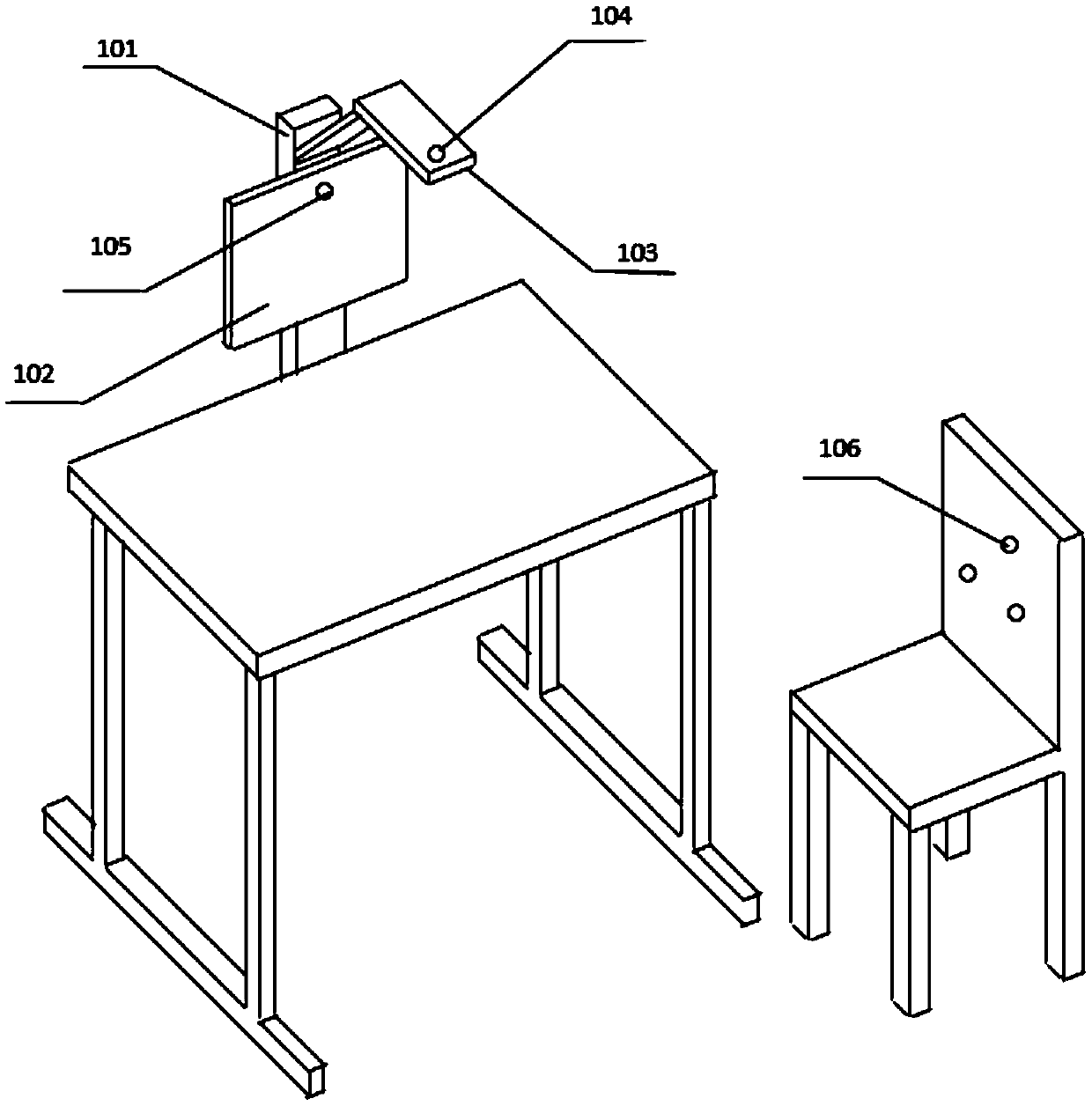

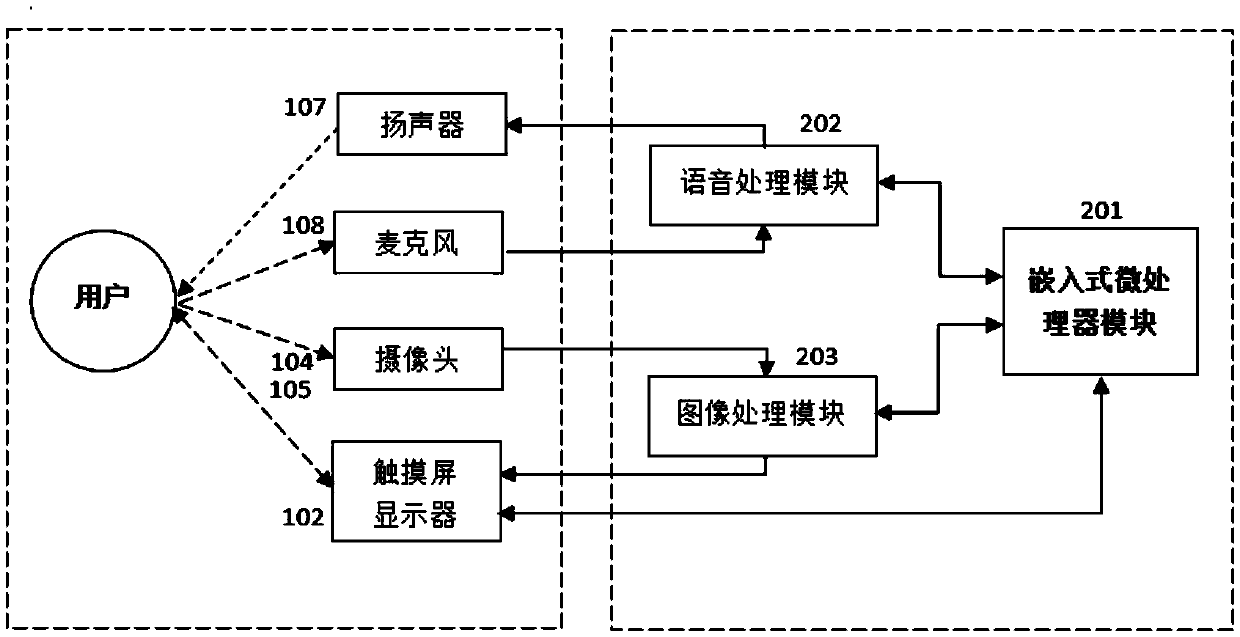

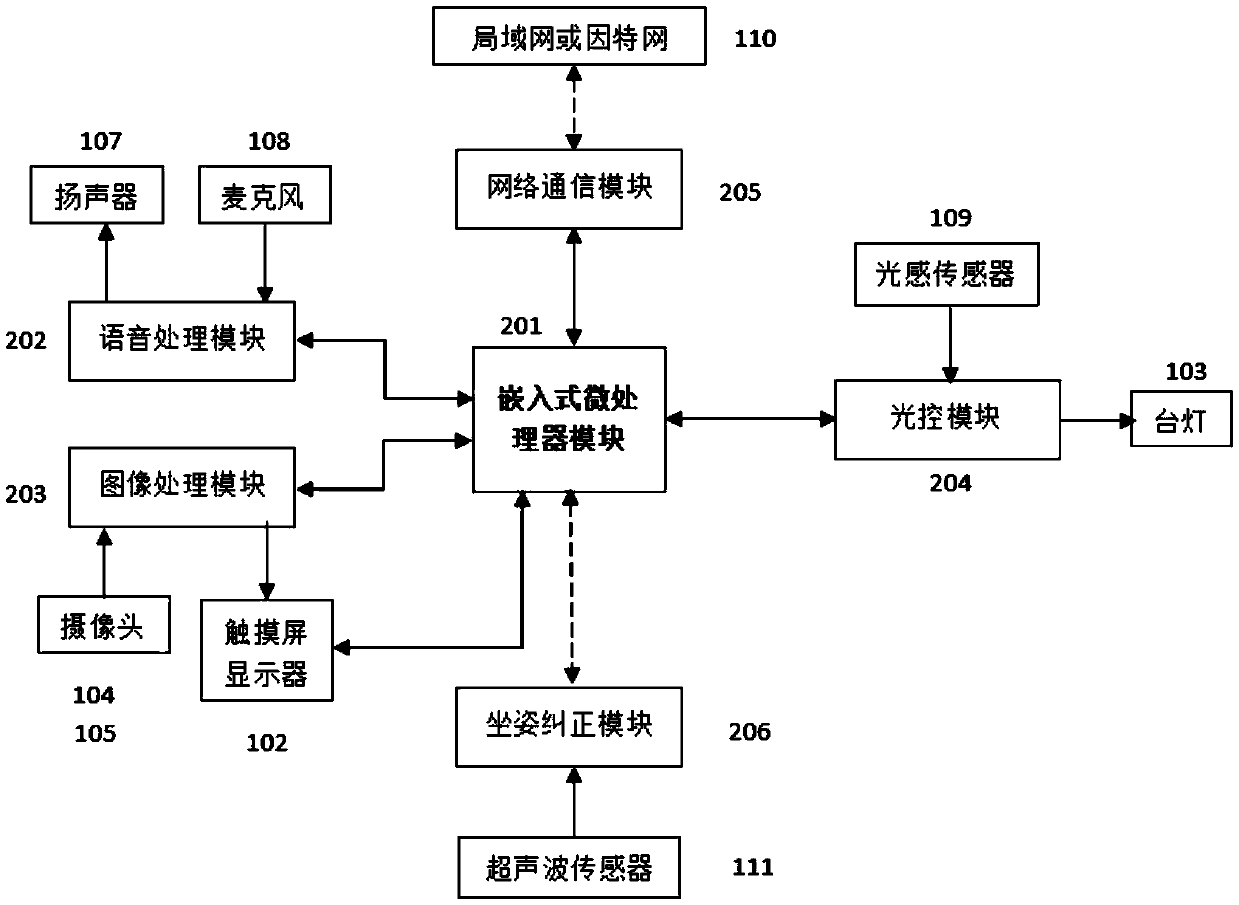

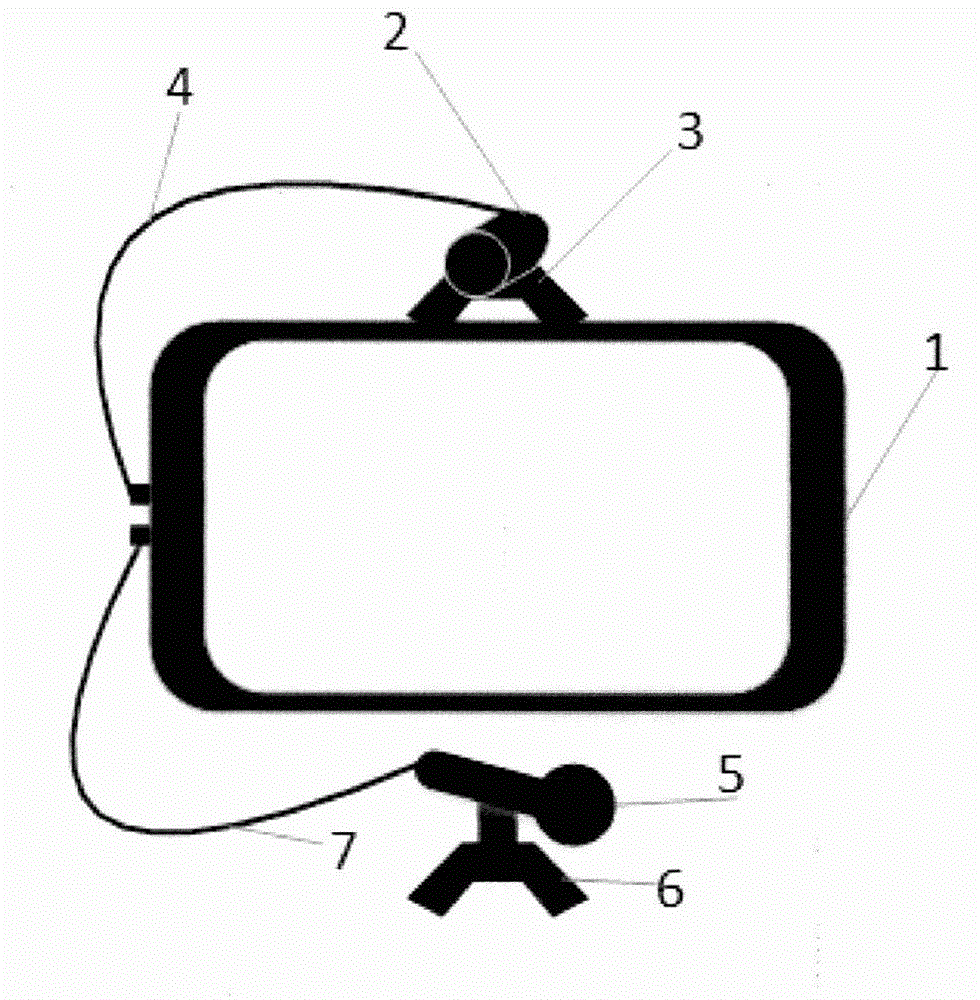

Intelligent studying platform based on multimodal interaction and interaction method of intelligent studying platform

Active | CN105361429A | Tags: Reduce complicated operations, Less worry, Office tables, Stools, Voice communication, Network communication

Owner:重庆奇趣空间科技有限公司 (Chongqing Qiqu Space Technology Co., Ltd.)

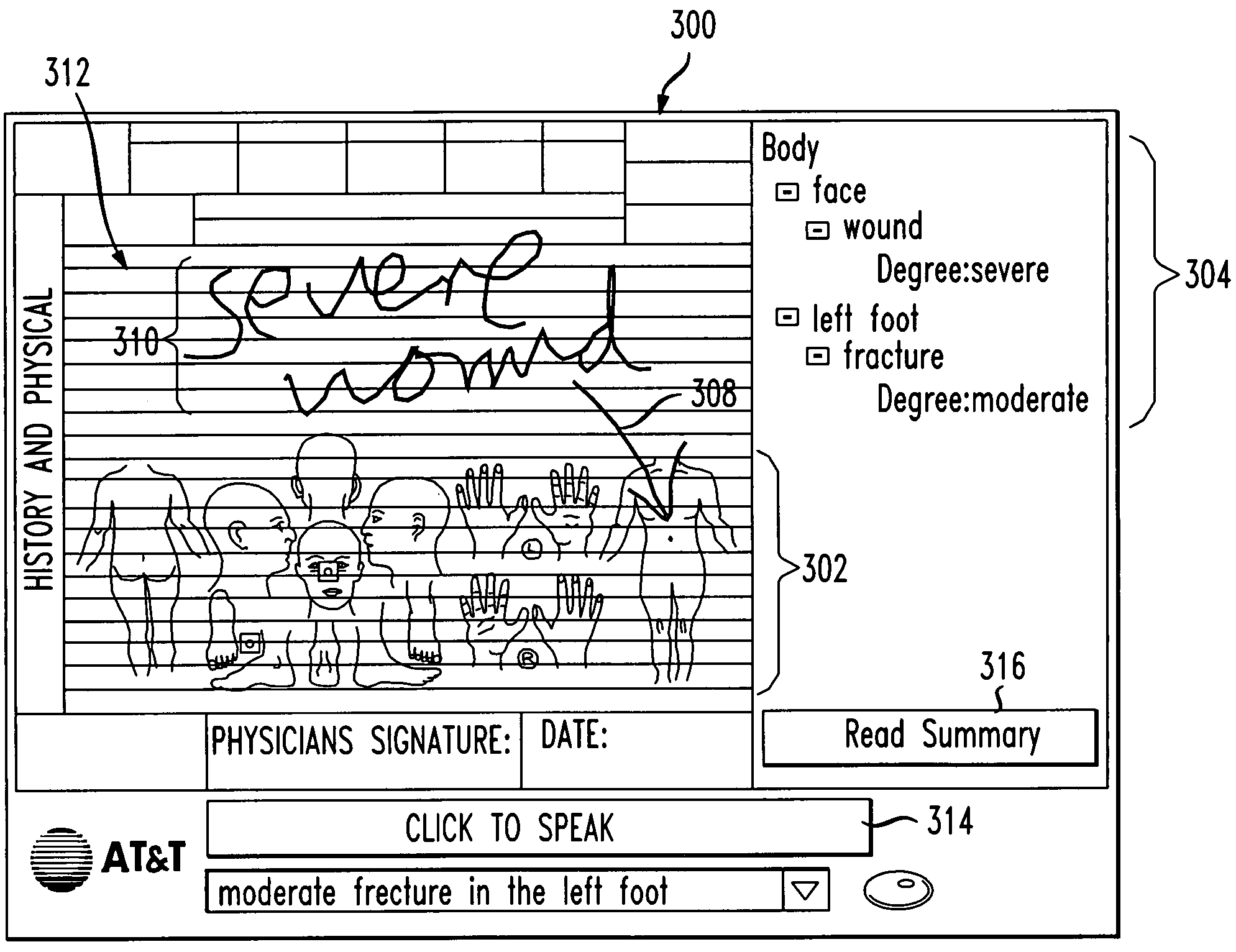

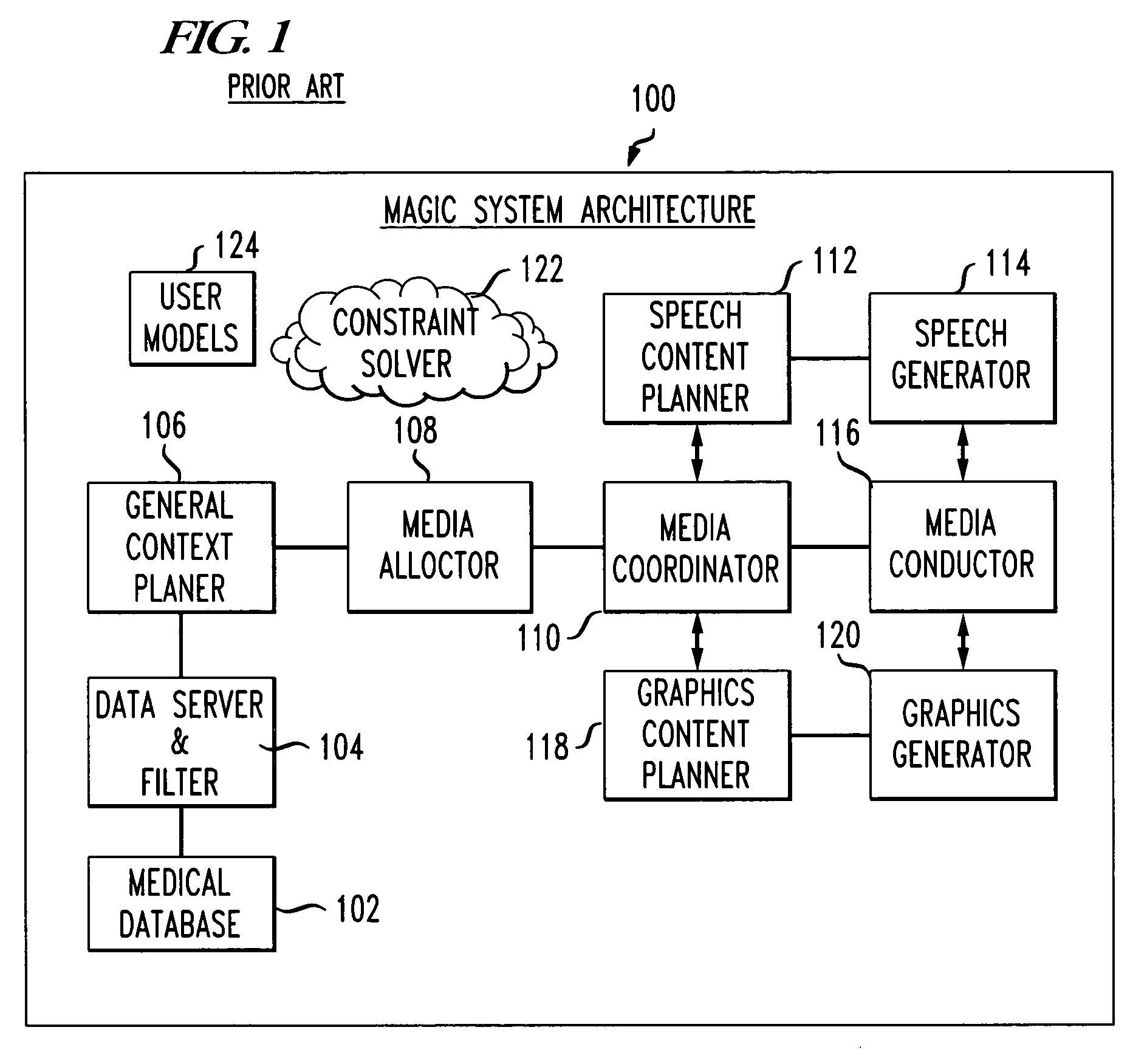

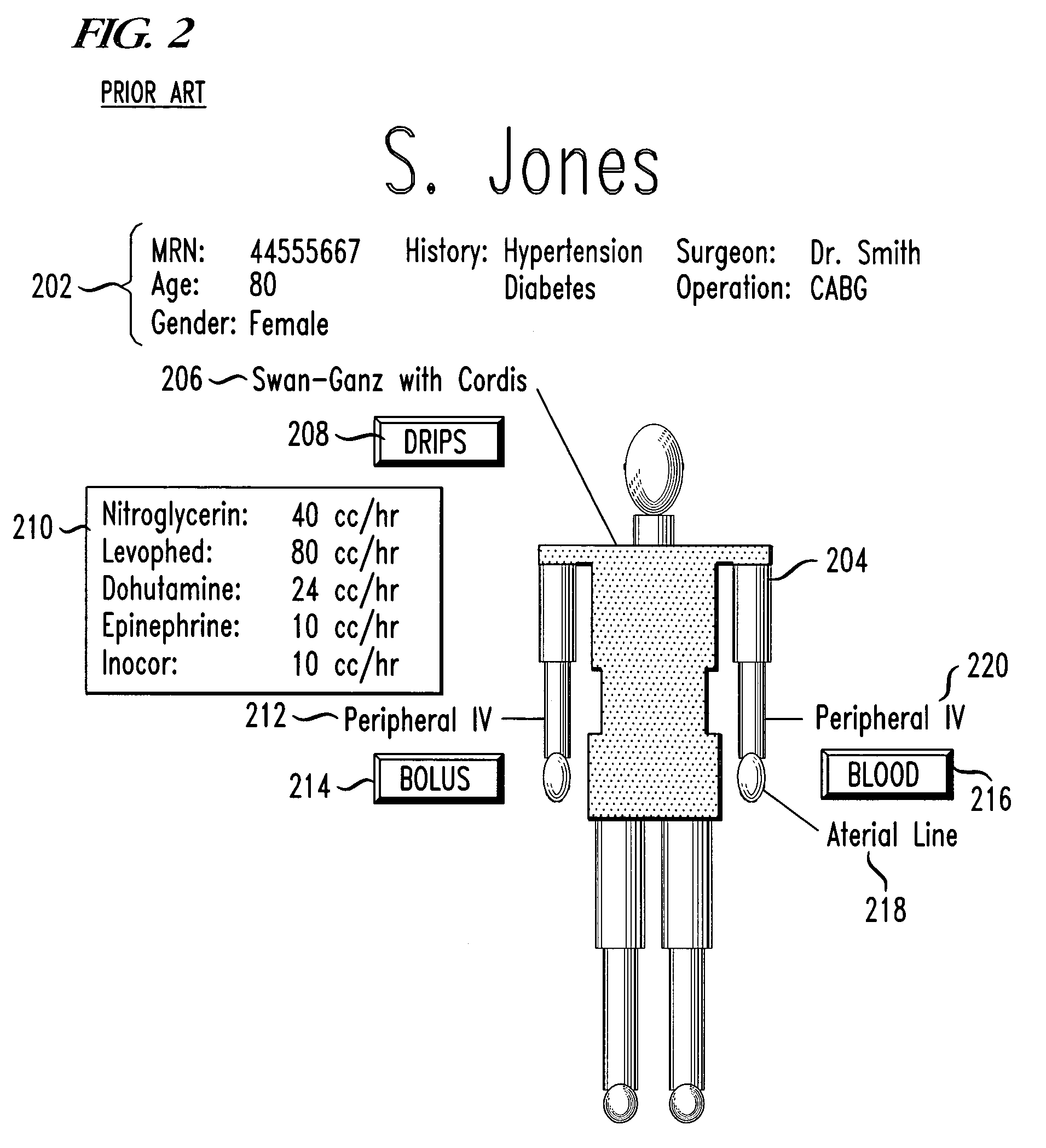

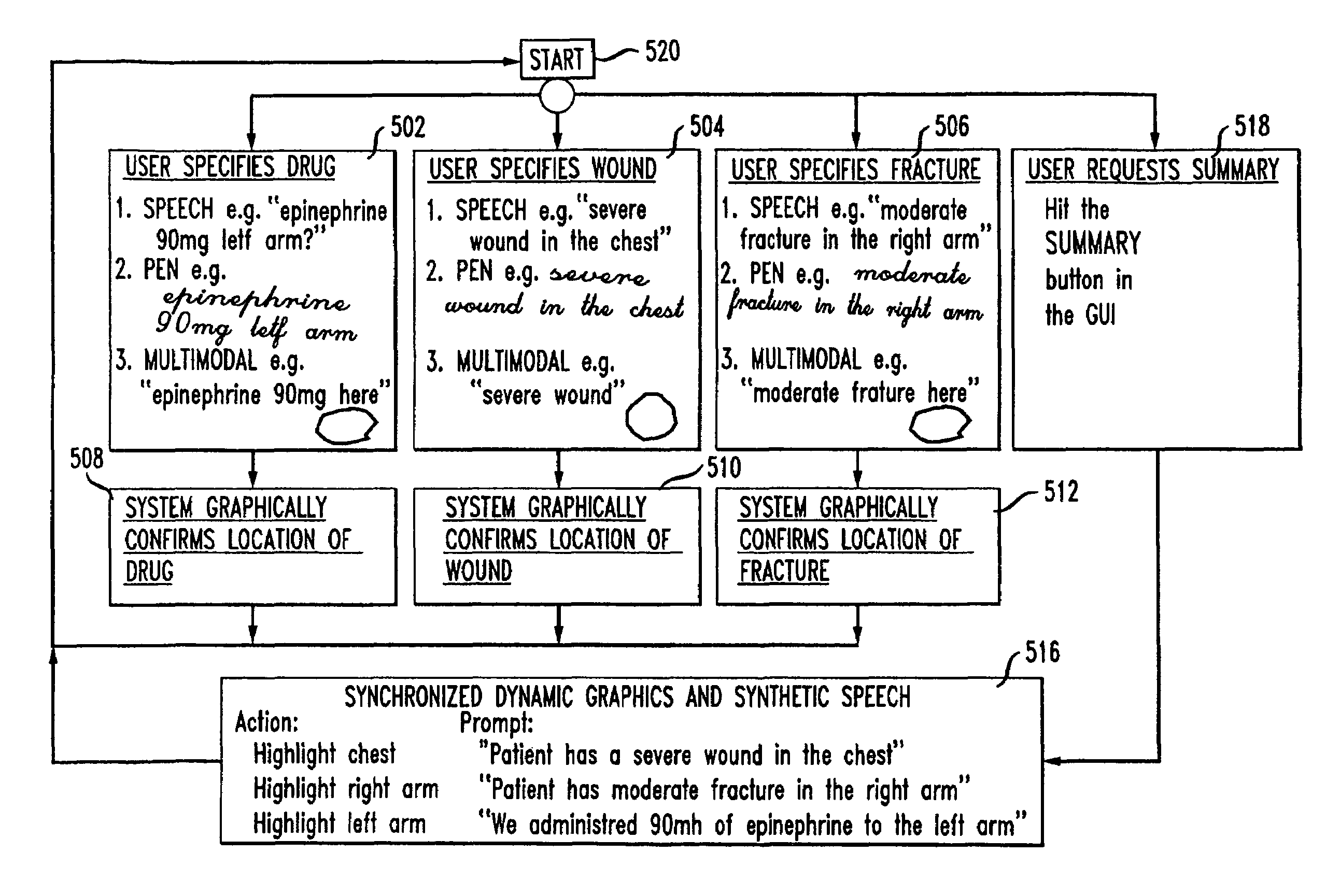

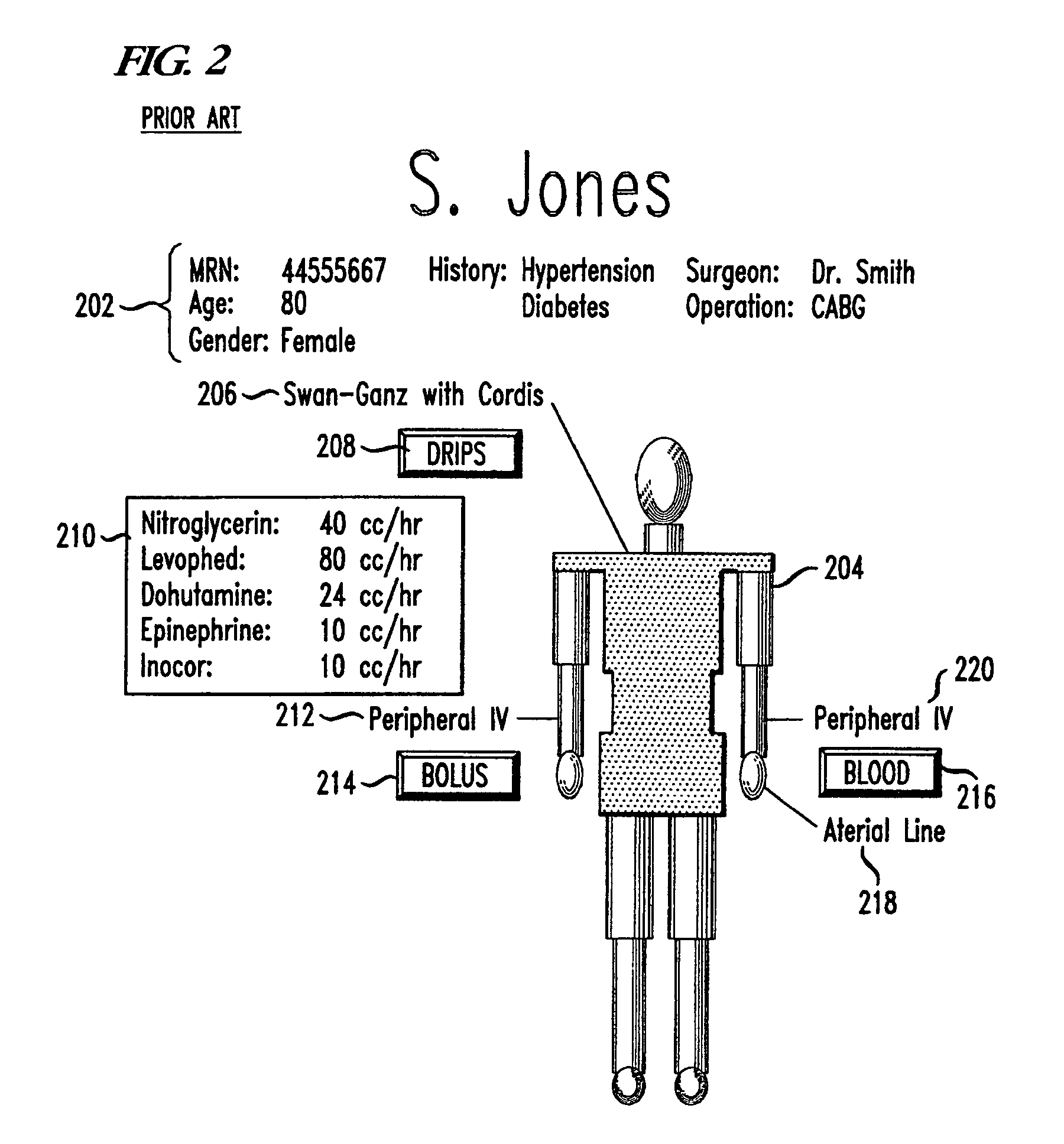

System and method for accessing and annotating electronic medical records using multi-modal interface

A system and method of exchanging medical information between a user and a computer device is disclosed. The computer device can receive user input in one of a plurality of types of user input comprising speech, pen, gesture and a combination of speech, pen and gesture. The method comprises receiving information from the user associated with a medical condition and a bodily location of the medical condition on a patient in one of a plurality of types of user input, presenting in one of a plurality of types of system output an indication of the received medical condition and the bodily location of the medical condition, and presenting to the user an indication that the computer device is ready to receive further information. The invention enables a more flexible multi-modal interactive environment for entering medical information into a computer device. The medical device also generates multi-modal output for presenting a patient's medical condition in an efficient manner.

Owner:AMERICAN TELEPHONE & TELEGRAPH CO

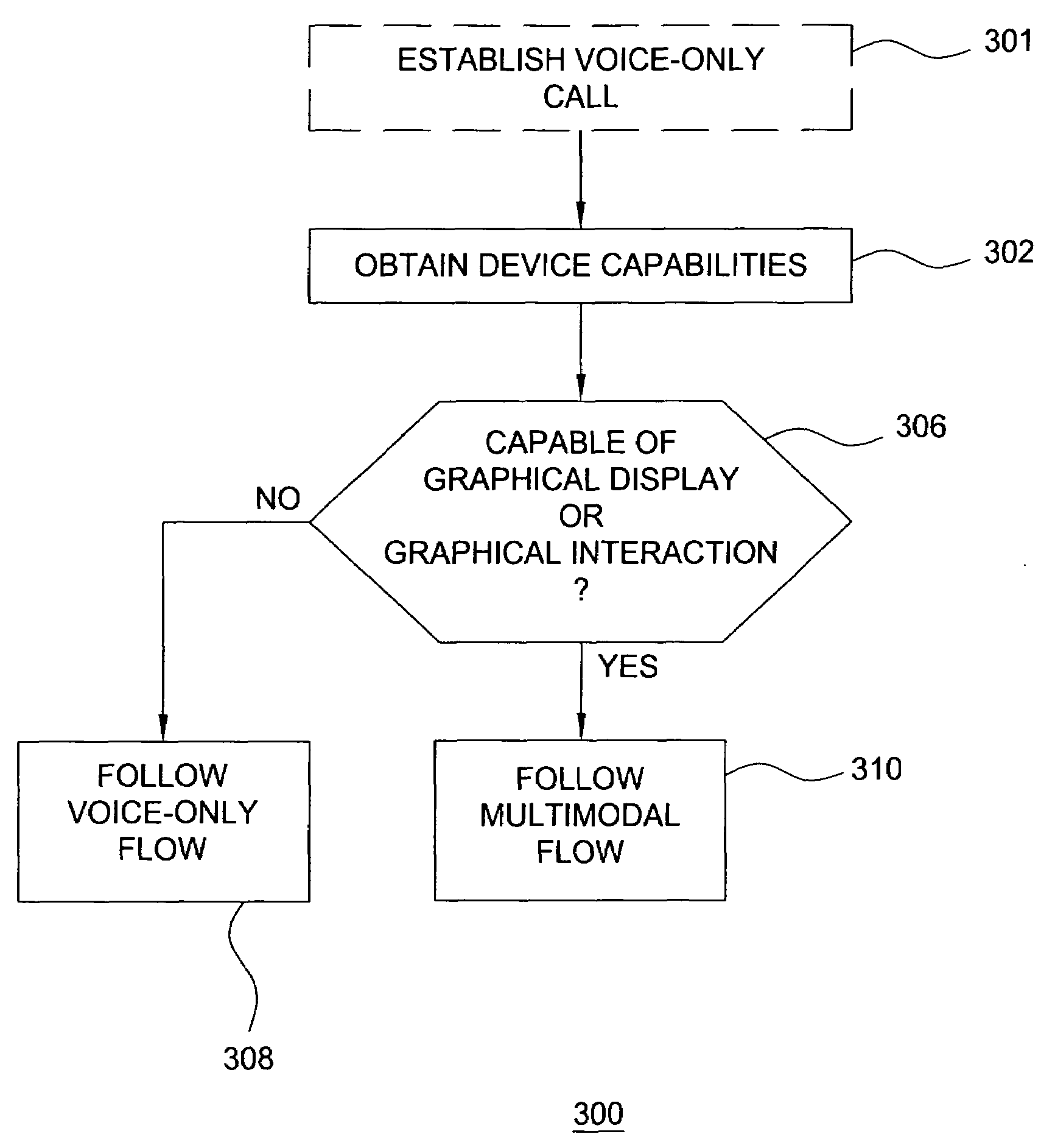

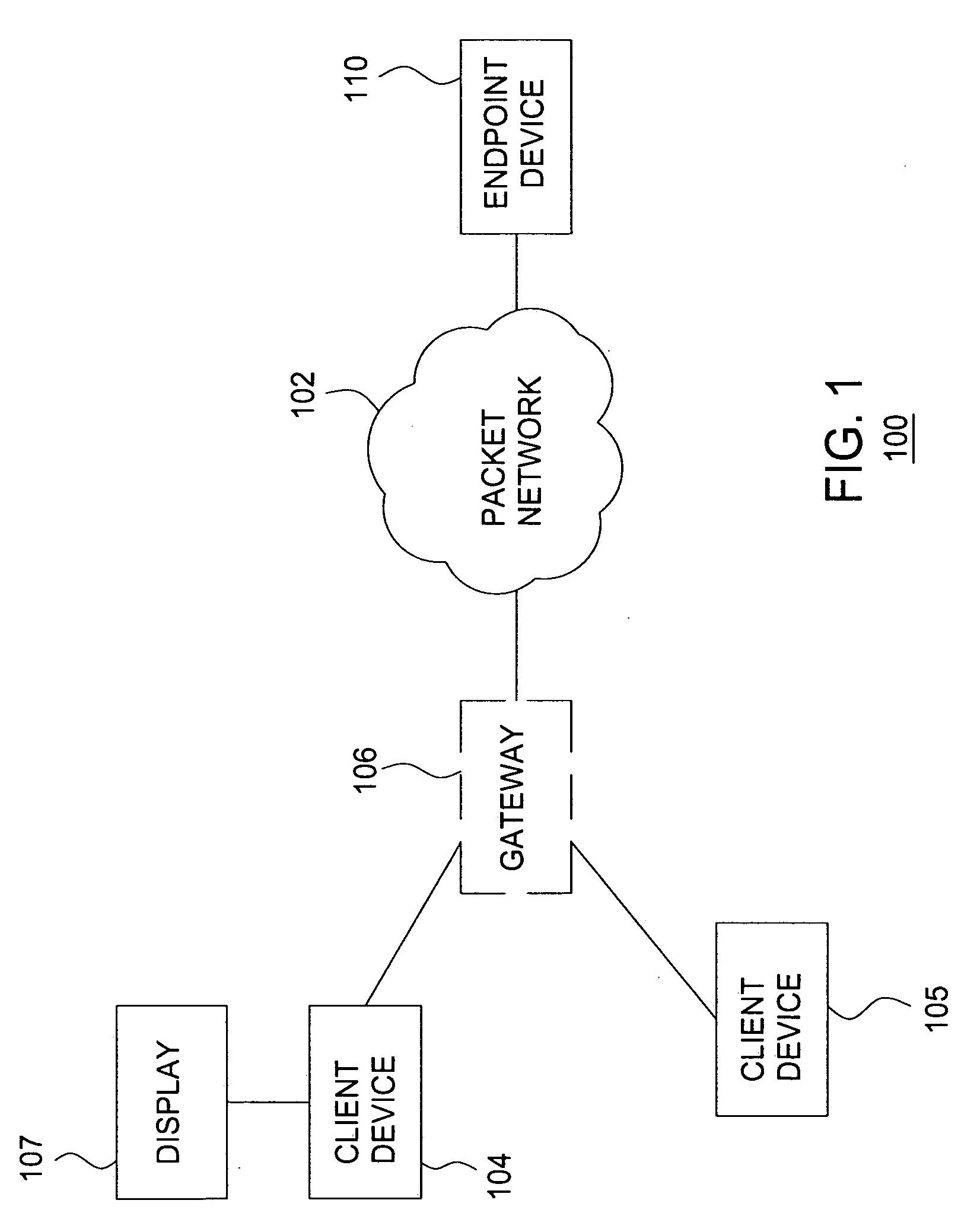

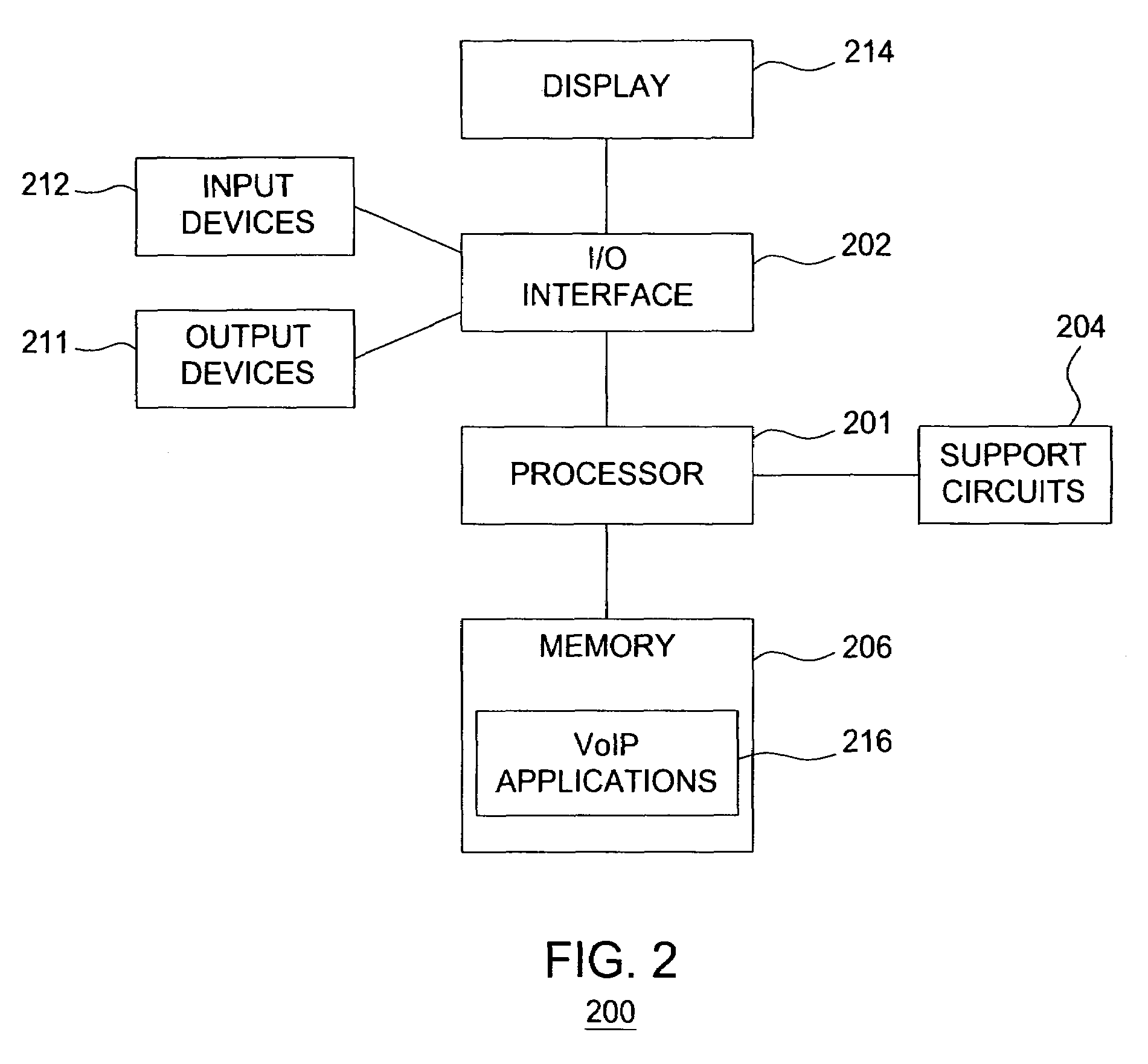

Method and apparatus for invoking multimodal interaction in a VOIP call

A method and apparatus for invoking multimodal interaction in a VOIP call are described. In one example, handling of a call at a first client device in a packet network is described. The first client device obtains device capabilities of a second client device in response to the call. The device capabilities are processed to determine whether the second client device is capable of graphical display or graphical interaction. If so, the first client device follows a multimodal call flow. Otherwise, the first client device follows a voice-only call flow.

Owner:AMERICAN TELEPHONE & TELEGRAPH CO
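
The decision in the VOIP abstract above reduces to one branch: multimodal call flow if the other device can display or interact with graphics, voice-only otherwise. The abstract does not say how capabilities are exchanged, so the sketch assumes they arrive as a set of feature strings; `capabilities_from_features`, the tag names, and `choose_call_flow` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeviceCapabilities:
    graphical_display: bool
    graphical_interaction: bool


def capabilities_from_features(features: set[str]) -> DeviceCapabilities:
    """Translate advertised feature tags into capabilities (tag names are hypothetical)."""
    return DeviceCapabilities(
        graphical_display="display" in features,
        graphical_interaction="touch" in features or "pointer" in features,
    )


def choose_call_flow(remote: DeviceCapabilities) -> str:
    """Use the multimodal flow if the other party can show or interact with graphics."""
    if remote.graphical_display or remote.graphical_interaction:
        return "multimodal"
    return "voice-only"


print(choose_call_flow(capabilities_from_features({"audio", "display"})))  # multimodal
print(choose_call_flow(capabilities_from_features({"audio"})))             # voice-only
```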

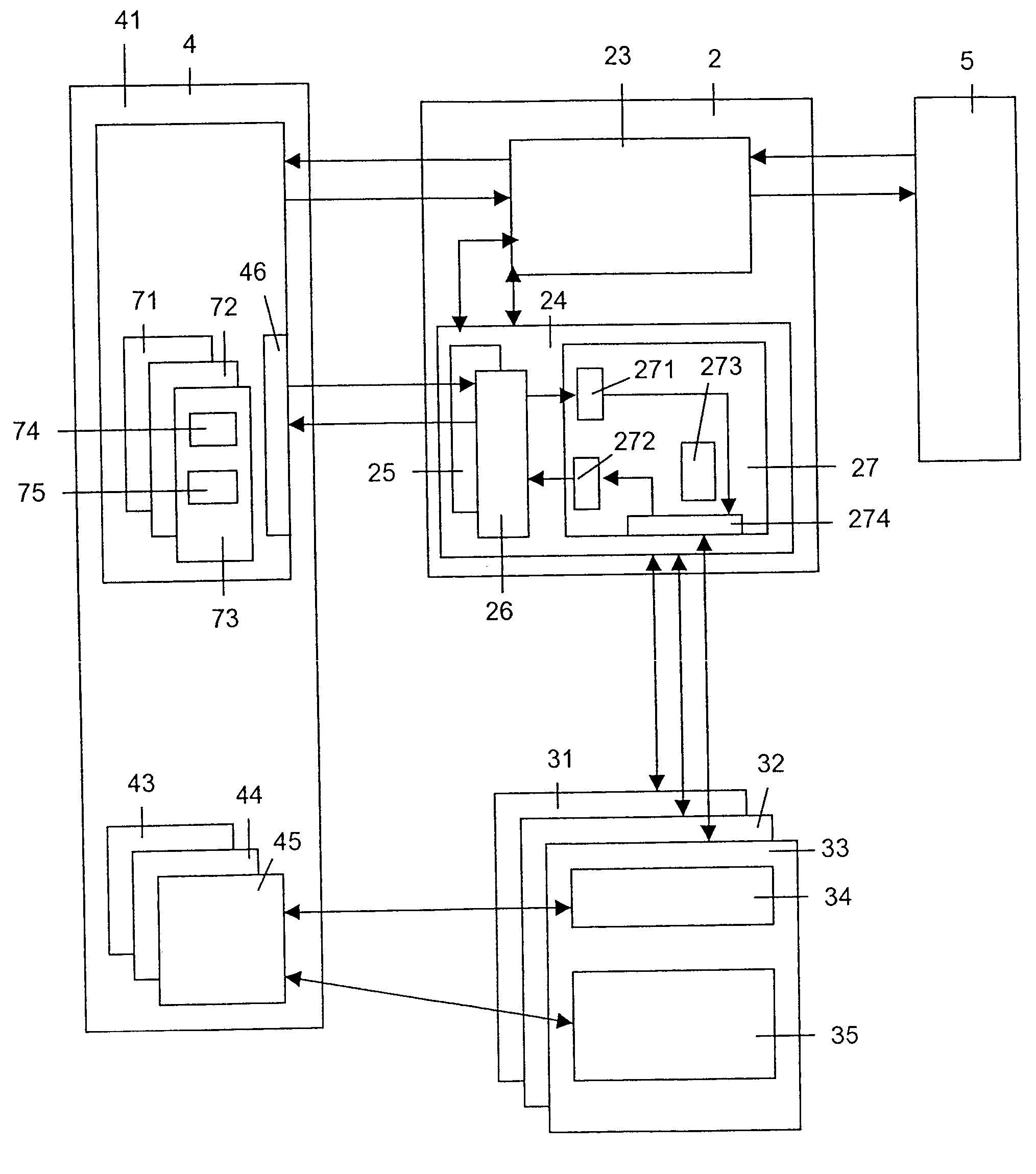

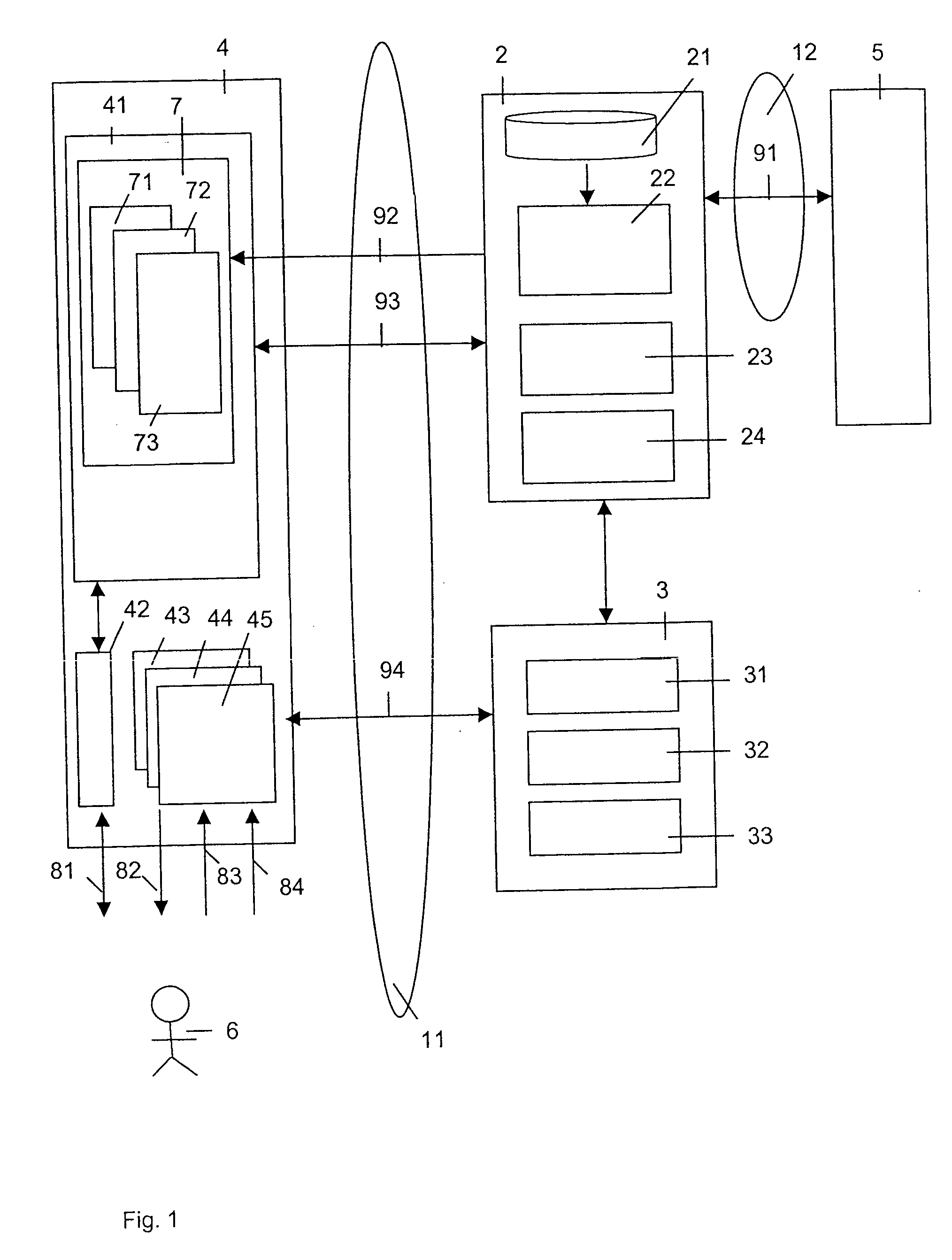

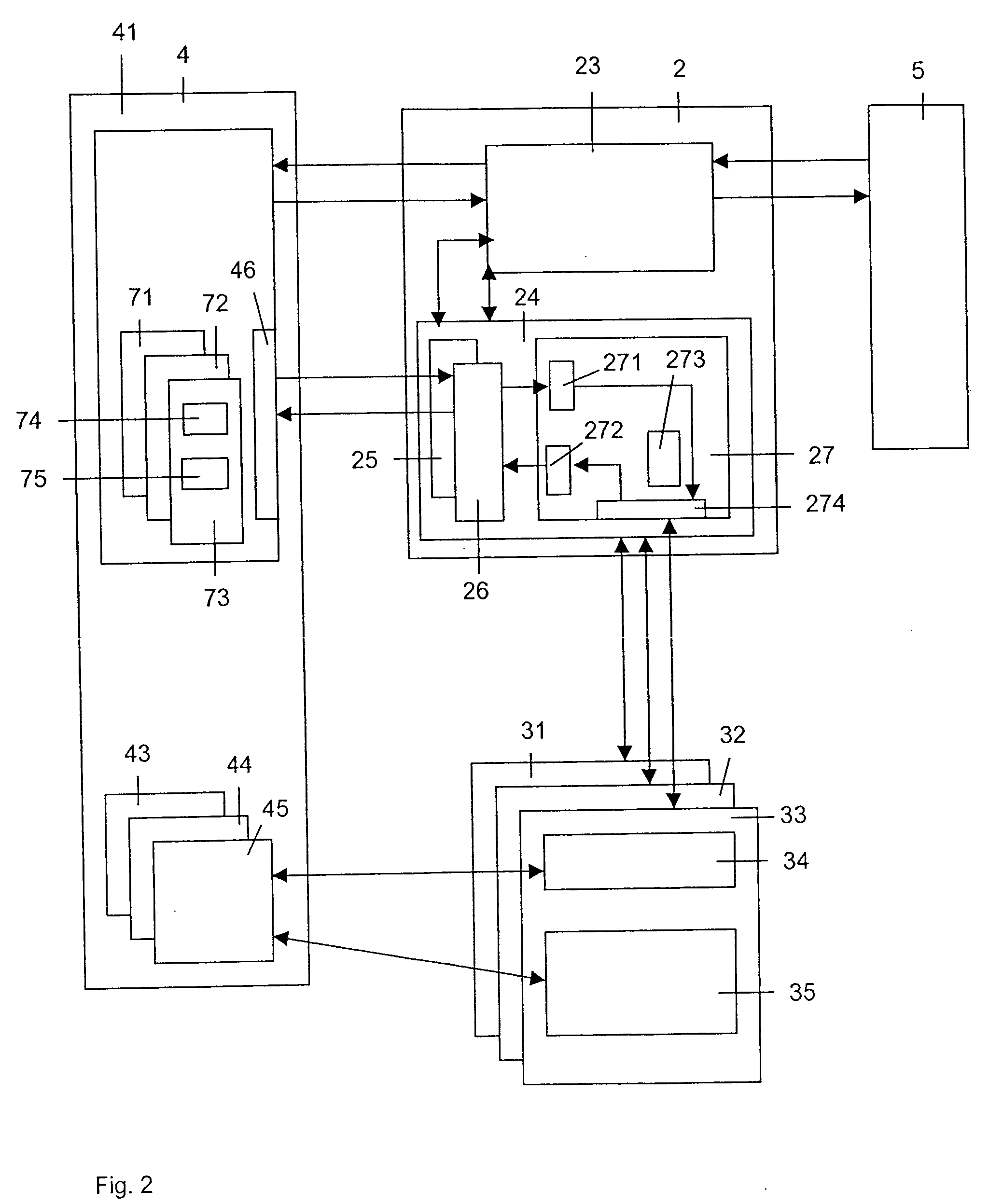

Method and server for providing a multi-modal dialog

Inactive | US20050261909A1 | Tags: Simple and efficient, Increase flexibility applicability, Transmission, Special data processing applications, Diagnostic Radiology Modality, Client-side

The invention concerns a method of providing a multi-modal dialog between a multi-modal application (5) and a user (6) communicating with the multi-modal application (5) via a client (4) suited to exchange and present documents (7) encoded in standard or extended hyper text mark-up language. The invention further concerns a proxy-server (2) for executing this method. The multi-modal dialog between the multi-modal application (5) and the user (6) is established through the proxy-server (2) interacting with the client (4) via exchange of information encoded in standard or extended hyper text mark-up language. The proxy-server (2) retrieves at least one additional resource of modality requested within the multi-modal dialog. The proxy-server (2) composes a multi-modal interaction with the user (6) based on standard or extended hyper text mark-up language interactions with the client (4) and on the retrieved additional resources (71, 72, 73, 31, 32, 33).

Owner:ALCATEL LUCENT SAS

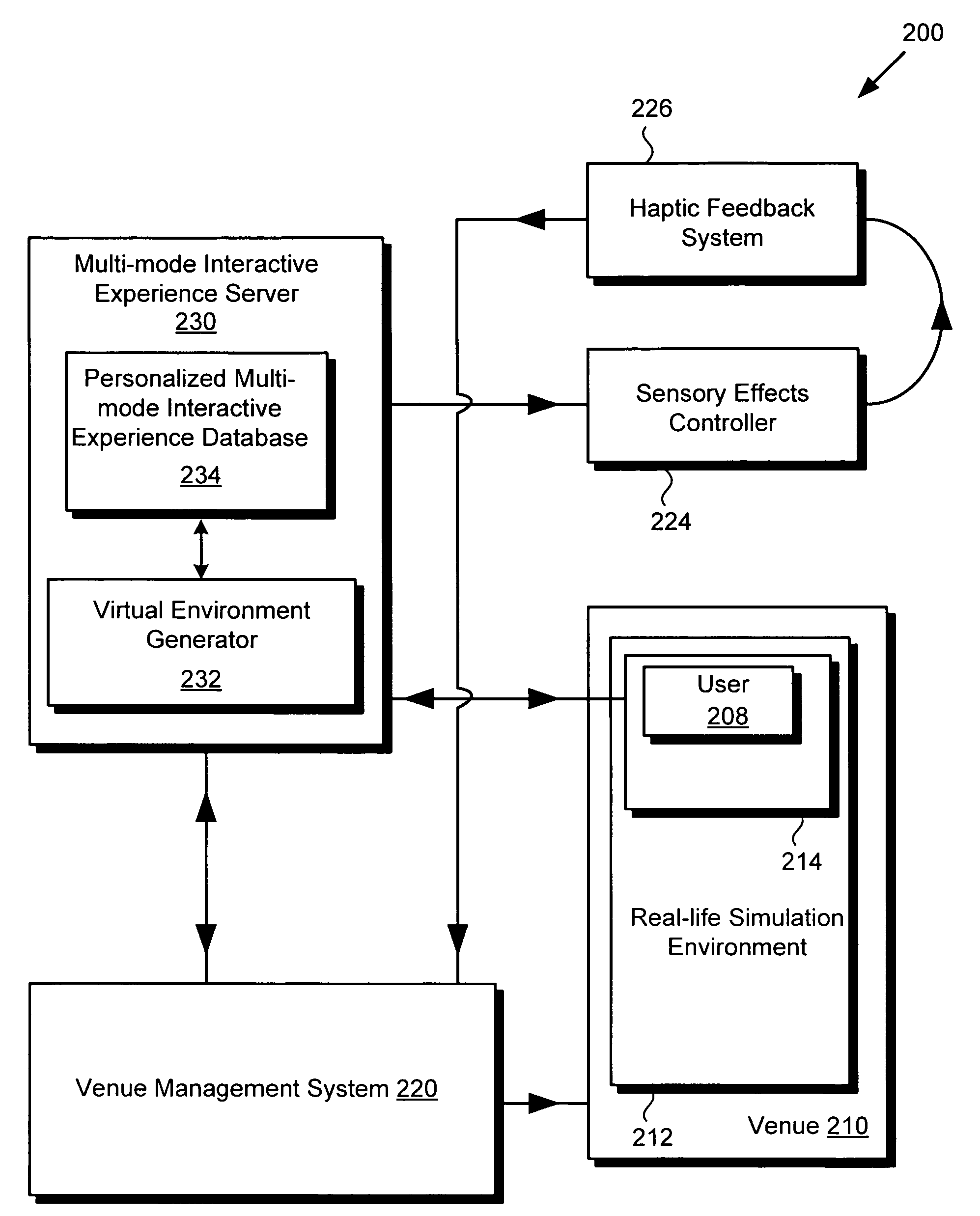

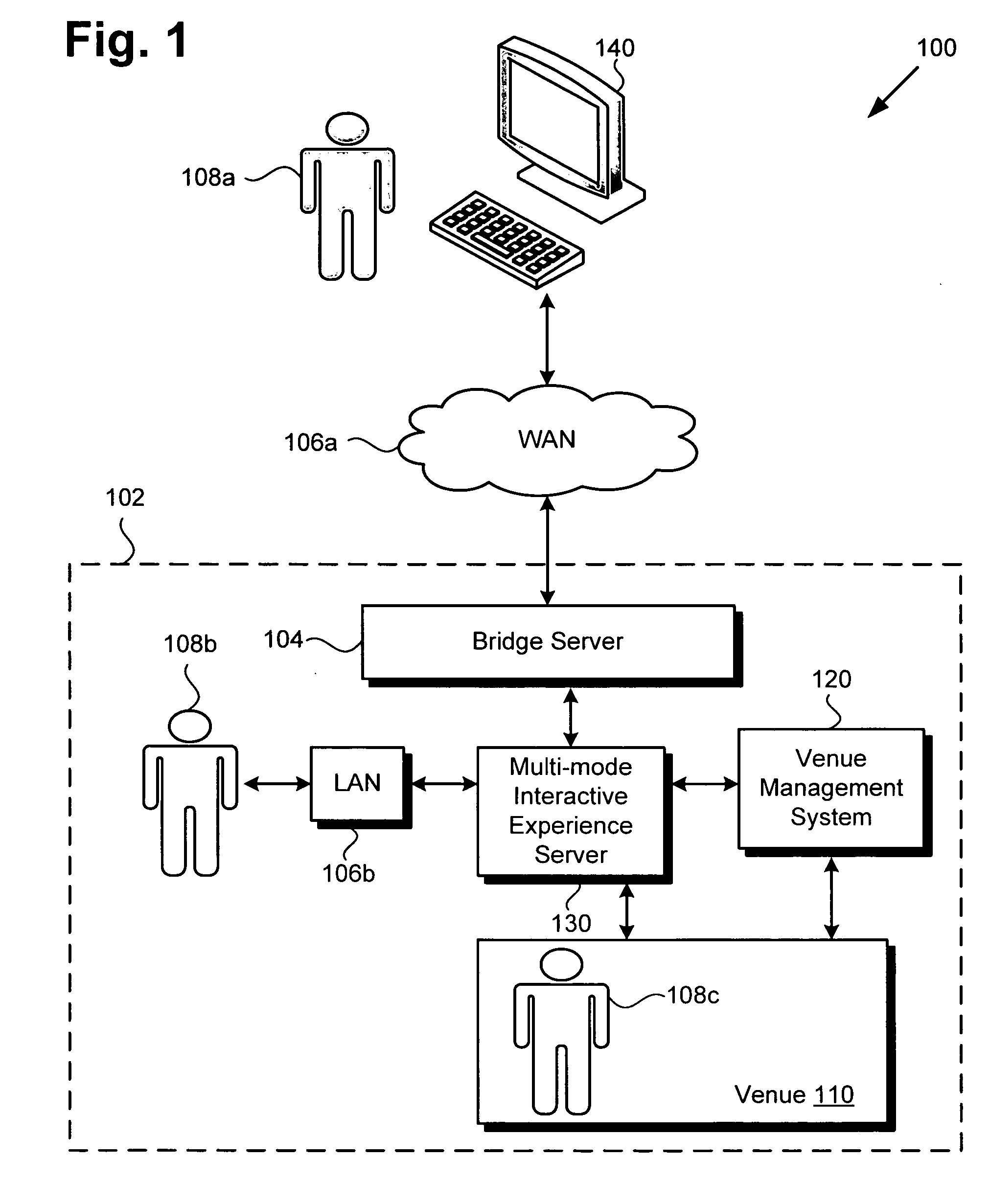

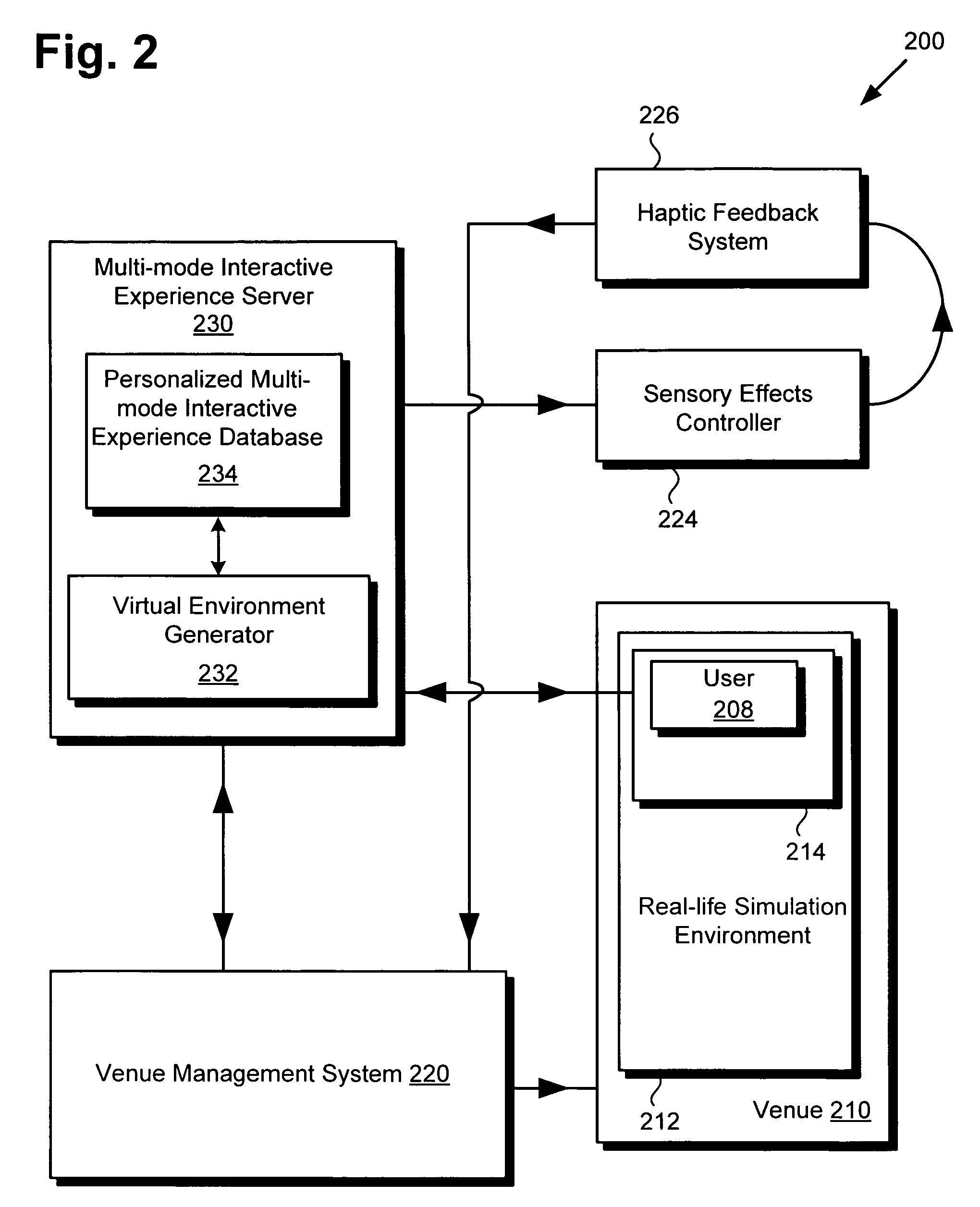

Method and system for providing a multi-mode interactive experience

Inactive | US20100131865A1 | Tags: Input/output processes for data processing, Personalization, Multimodal interaction

Disclosed are methods and systems for providing a multi-mode interactive experience. In one embodiment, a method comprises hosting a virtual environment corresponding to a real-life simulation environment, on a multi-mode interactive experience server, and networking the server and a venue management system configured to control events occurring in the real-life simulation environment. The networking enables a user to interact with the multi-mode interactive experience in a real-life simulation mode or in a virtual mode. The method further comprises associating a personalized version of the multi-mode interactive experience with an identification code assigned to the user, updating the personalized version of the multi-mode interactive experience according to events occurring in the real-life simulation mode and events occurring in the virtual mode of the multi-mode interactive experience, and providing the updated personalized version of the multi-mode interactive experience in one of the real-life simulation mode or the virtual mode.

Owner:DISNEY ENTERPRISES INC

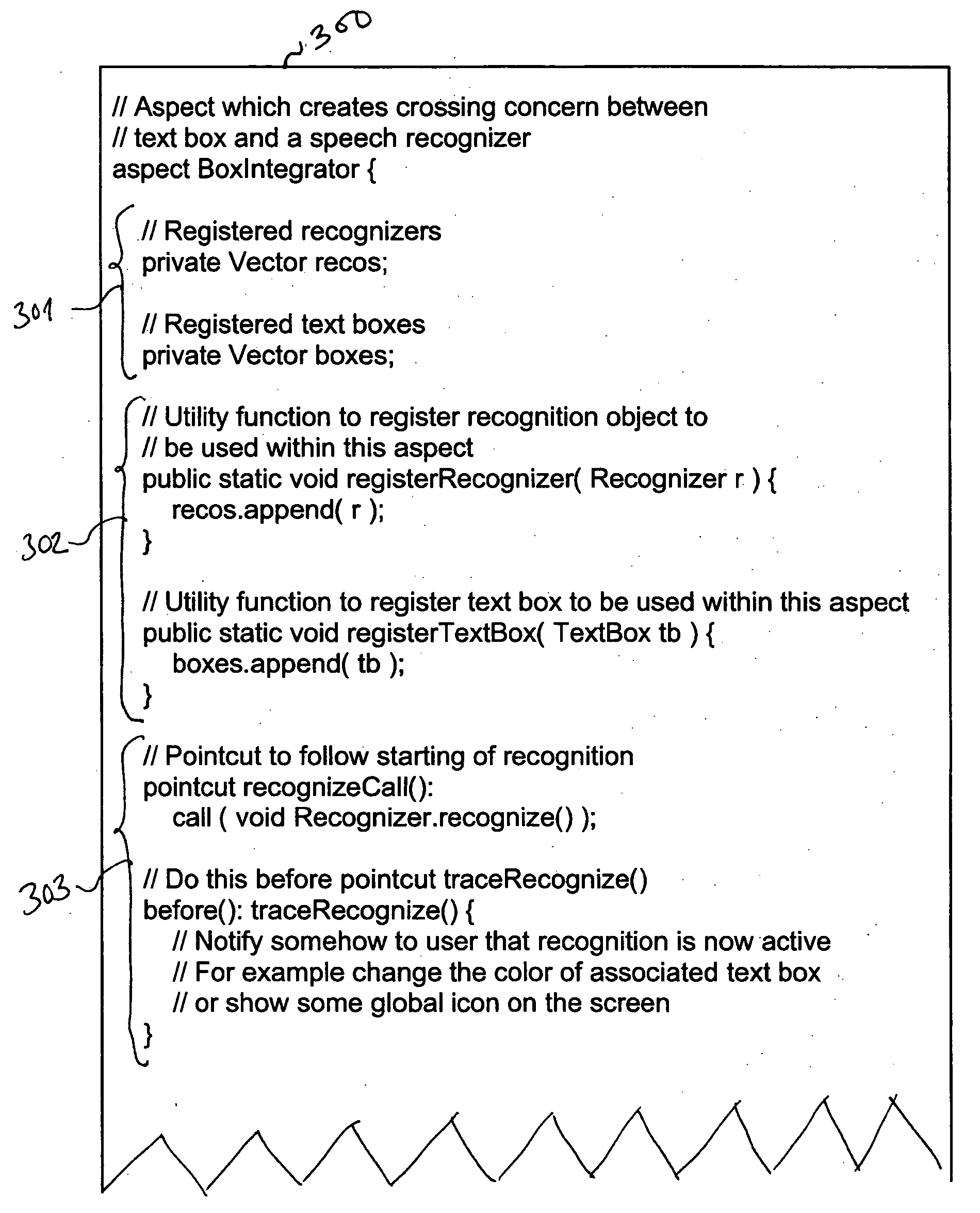

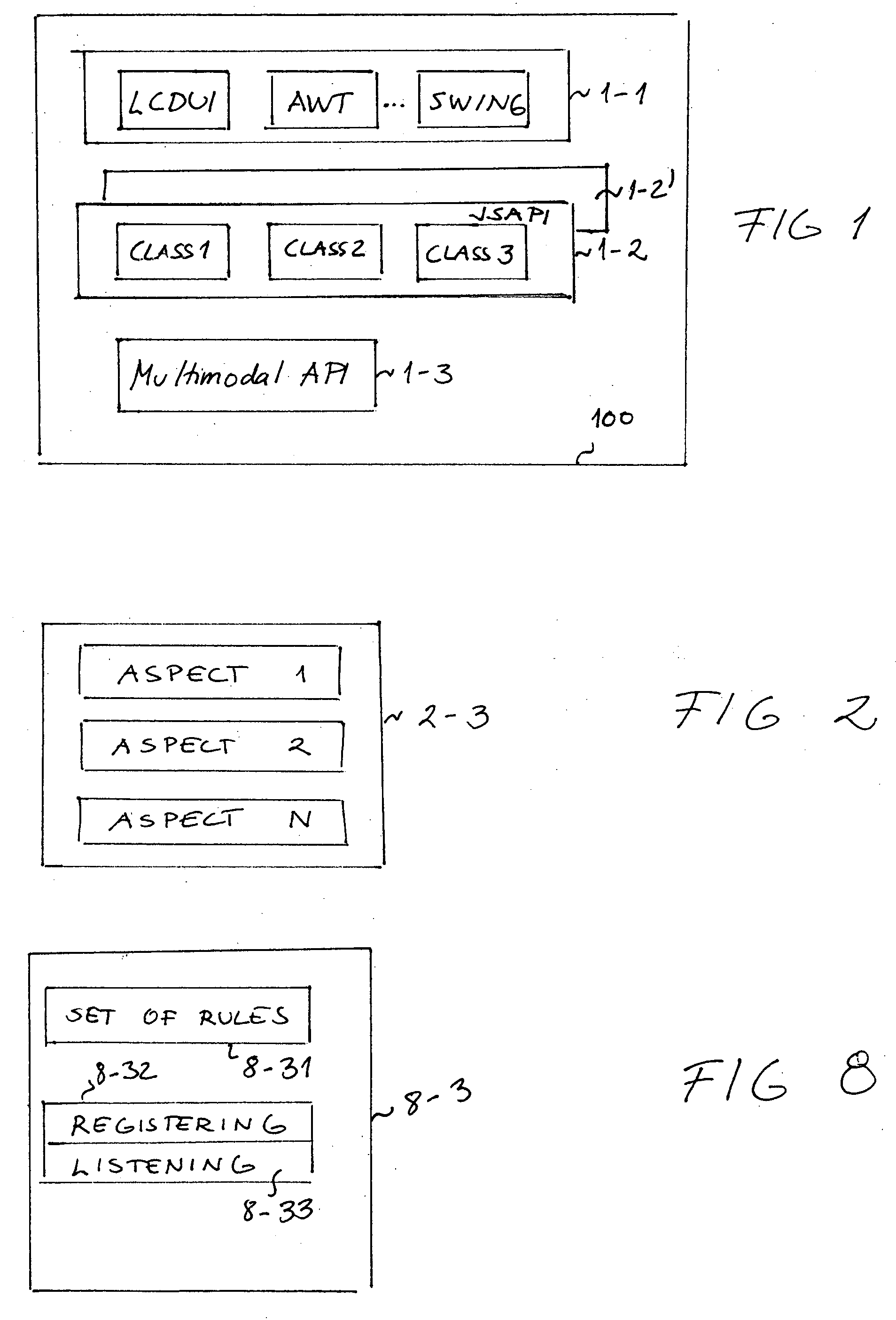

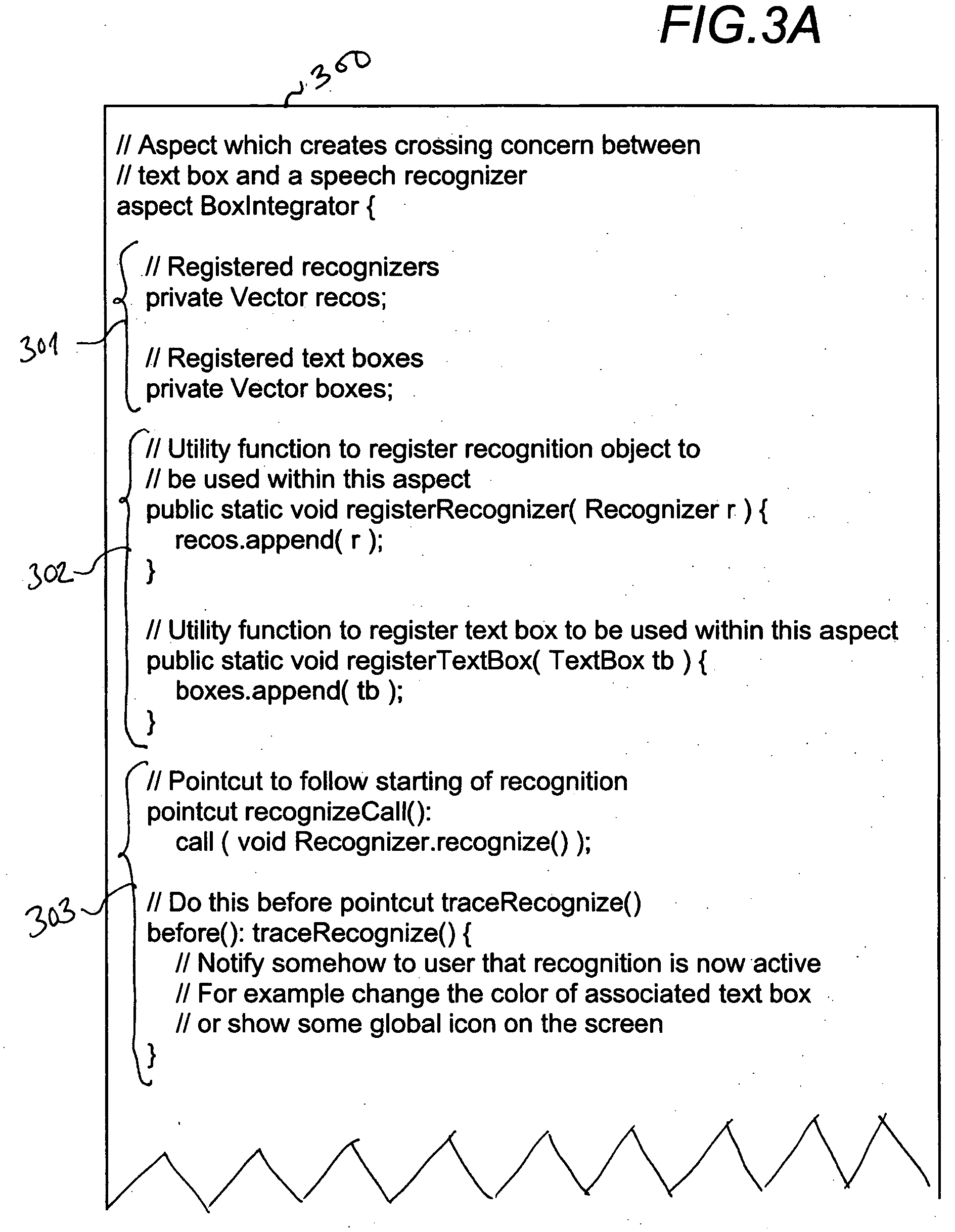

Multimodal interaction

Inactive | US20060149550A1 | Tags: Limited user interface, Speech analysis, Execution for user interfaces, Multimodal interaction

In order to enable an application to be provided with multimodal inputs, a multimodal application interface (API), which contains at least one rule for providing multimodal interaction is provided.

Owner:NOKIA CORP

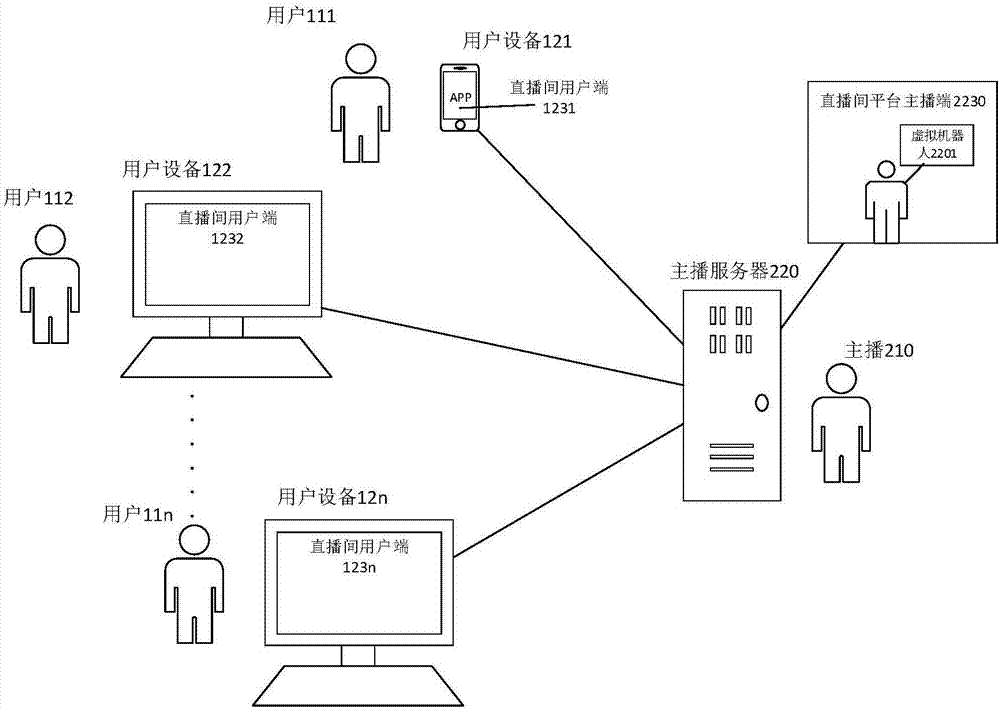

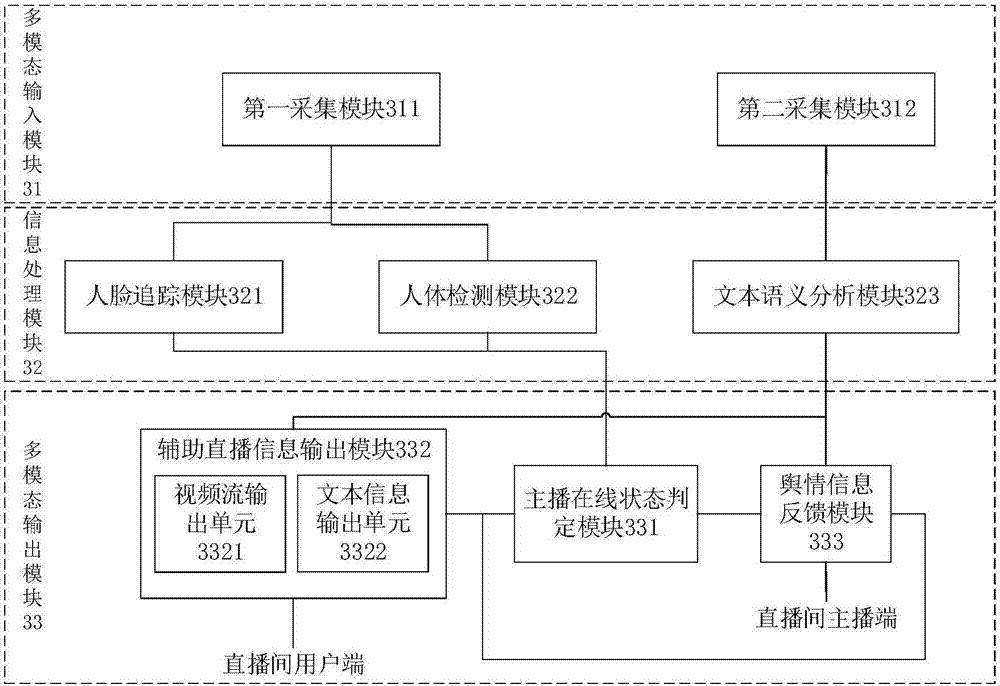

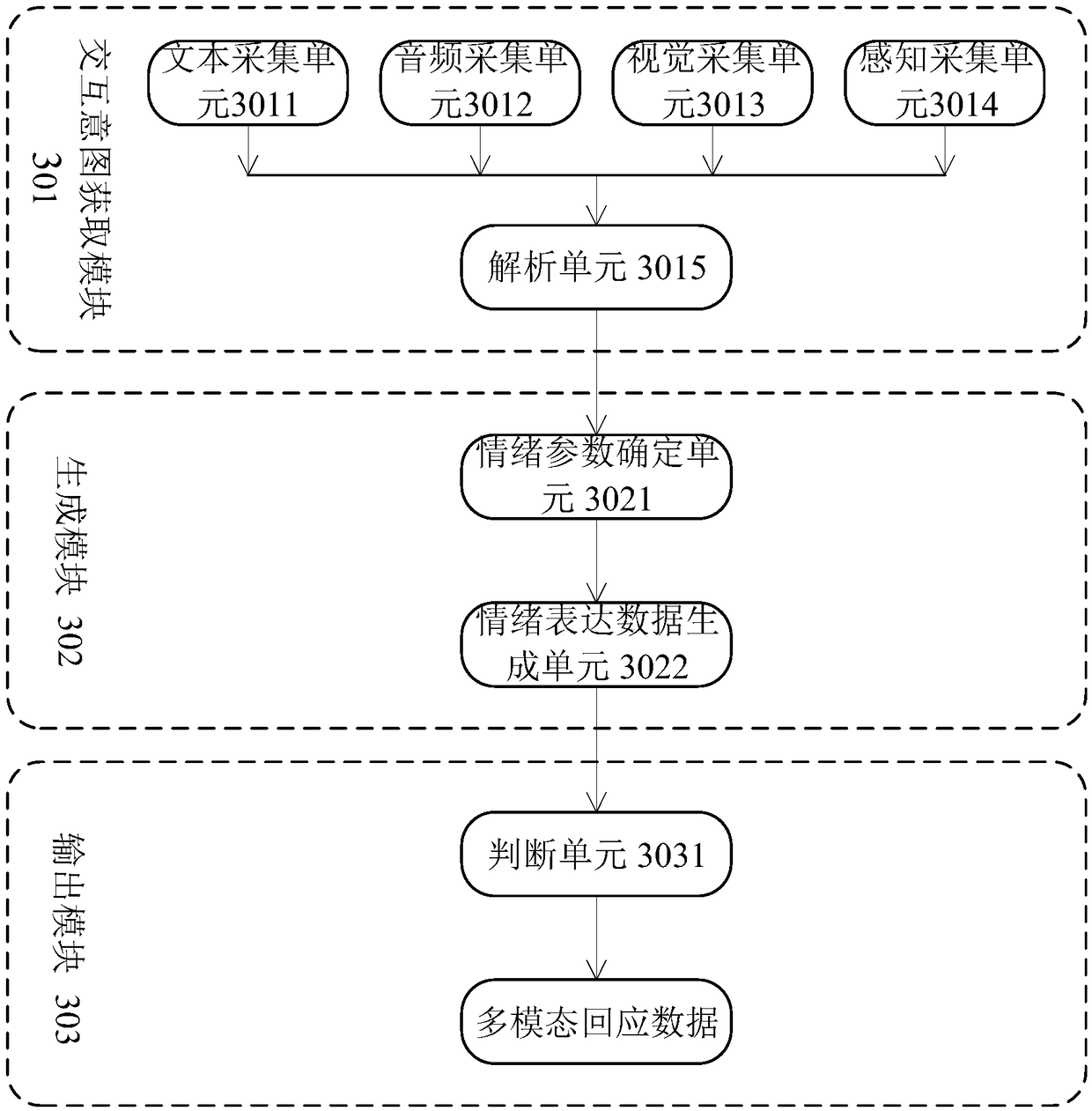

Virtual robot multimodal interaction method and system applied to live video platform

Active | CN107197384A | Tags: Guaranteed stickiness, Improve experience, Selective content distribution, Virtual robot, Multimodal interaction

The invention discloses a virtual robot multimodal interaction method and system applied to a live video platform. An application of the live video platform is provided with a virtual robot associated with the live video, and the virtual robot has multimodal interaction capability. The public opinion monitoring method comprises the following steps: an information collection step, which collects public opinion information about the live broadcast in the current live-streaming room, where the public opinion information includes viewers' text feedback; a public opinion monitoring step, which invokes a text semantic understanding capability and generates a public opinion monitoring result for that live-streaming room; and a scene event response step, which determines the event characterized by the monitoring result, invokes the multimodal interaction capability, and outputs multimodal response data through the virtual robot. With the disclosed method and system, audience feedback can be monitored and prompted in real time, and the virtual robot assists live video operation to keep users engaged consistently, which improves the user experience.

Owner:BEIJING GUANGNIAN WUXIAN SCI & TECH

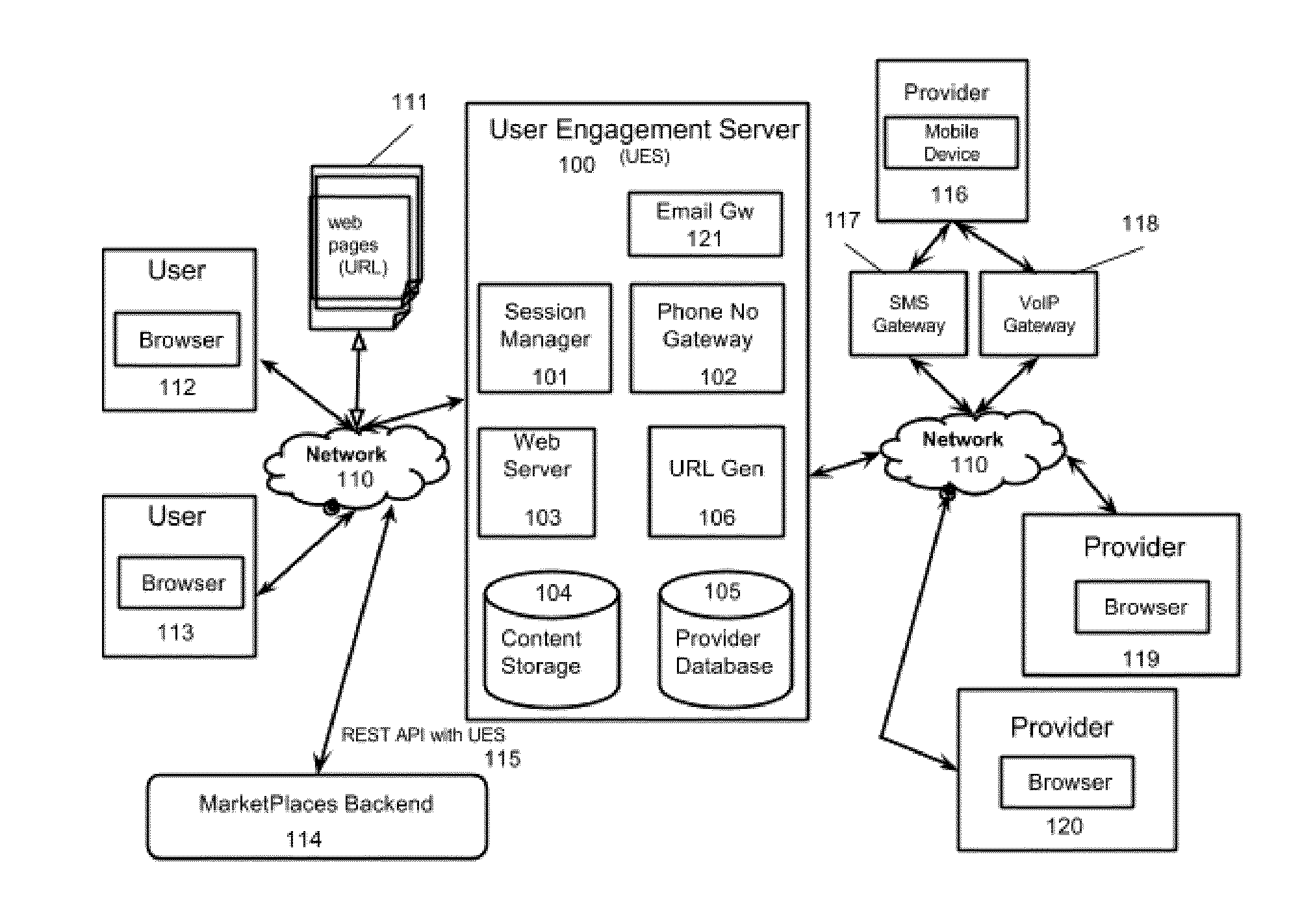

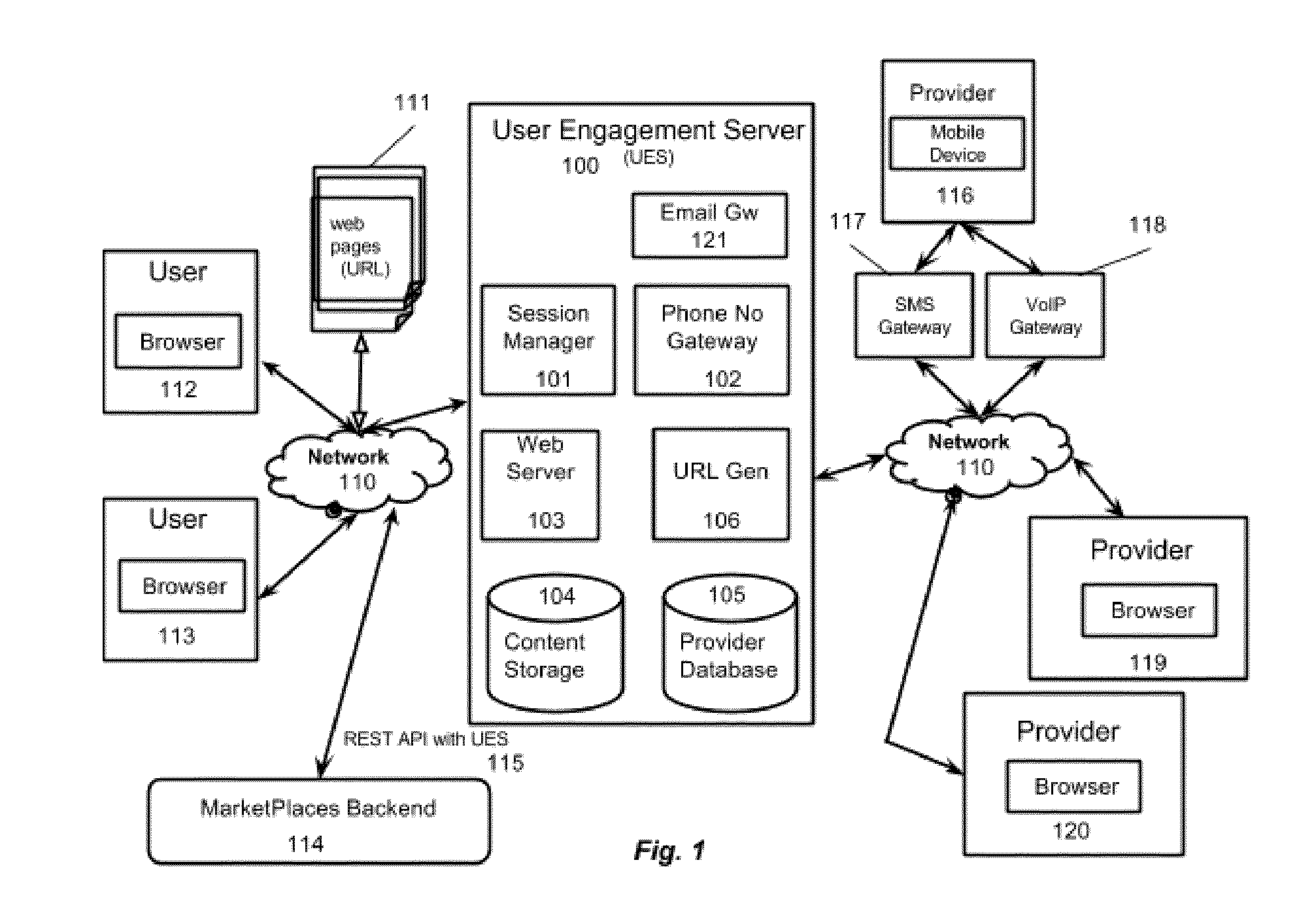

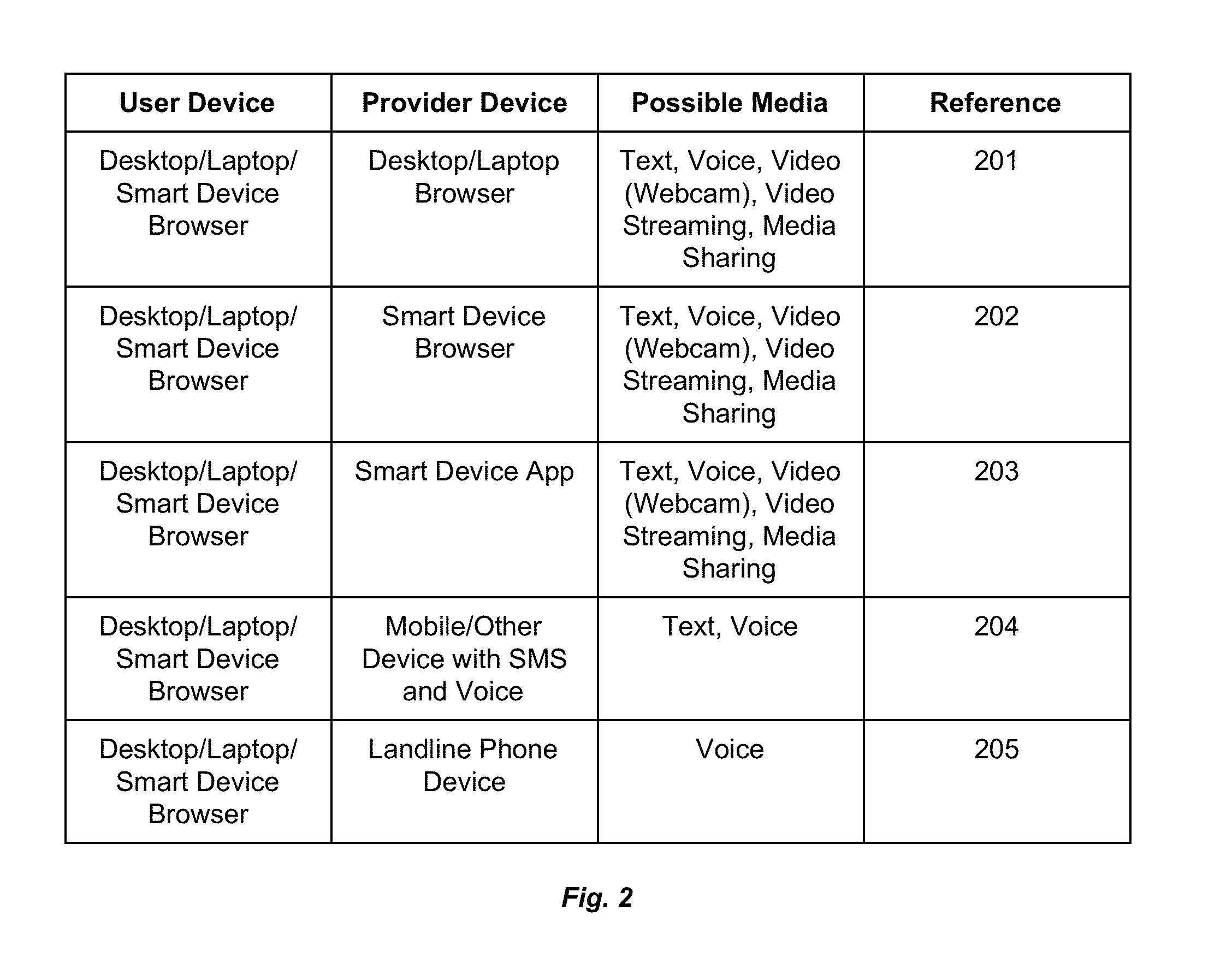

Instant generation and usage of HTTP URL based unique identity for engaging in multi-modal real-time interactions in online marketplaces, social networks and other relevant places

Active | US20150339745A1 | Tags: Increase height, Easy to use, Customer relationship, Digital data information retrieval, Hyperlink, The Internet

A system is provided that allows any person or entity to sign up with basic identity information and a service description, resulting in an instant HTTP URL that outside parties can use to contact the service provider and engage in multi-modal interactions involving voice, video, chat and media sharing. A single user or a group of users can sign up with the system and optionally provide contact information such as phone numbers. An HTTP URL is instantly generated that users can advertise as a hyperlink to the outside environment, such as on a website, in email or by any other means. Providers who have signed up for the service can also remain signed in to the system so as to engage with users who contact them via the published URL. Any user with an internet connection who clicks this URL is directed to the system and given a conversation window through which they can engage with the provider via chat, voice and video (webcam) and share media such as pictures and videos. If the provider is not signed in to the system, the provider can still engage with the user via SMS and email. The HTTP URL thus serves as the starting point for users to engage with the provider of any service behind it. The system can also advertise this URL in the provider's online marketplaces or social networks, as well as on the web, where search engines can index it.

Owner:USHUR INC
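
The Ushur abstract above describes signup producing an instant, unique HTTP URL, and a click on that URL opening live chat, voice and video when the provider is signed in, or SMS and email otherwise. A minimal sketch, assuming an unguessable random token embedded in the URL path; `BASE_URL` is a placeholder domain and `Provider`, `IdentityRegistry`, `sign_up` and `open_conversation` are hypothetical names, not Ushur's service.

```python
import secrets
from dataclasses import dataclass
from typing import Optional
from urllib.parse import urlparse

BASE_URL = "https://connect.example.com/c/"  # hypothetical domain for published links


@dataclass
class Provider:
    name: str
    service: str
    phone: Optional[str] = None
    signed_in: bool = False


class IdentityRegistry:
    """Hypothetical registry that turns a signup into a shareable contact URL."""

    def __init__(self) -> None:
        self._by_token: dict[str, Provider] = {}

    def sign_up(self, provider: Provider) -> str:
        """Issue an unguessable token and return the HTTP URL that embeds it."""
        token = secrets.token_urlsafe(8)
        while token in self._by_token:  # regenerate on the (very unlikely) collision
            token = secrets.token_urlsafe(8)
        self._by_token[token] = provider
        return BASE_URL + token

    def open_conversation(self, url: str) -> list[str]:
        """Resolve a clicked URL to its provider and list the channels available now."""
        token = urlparse(url).path.rsplit("/", 1)[-1]
        provider = self._by_token[token]
        if provider.signed_in:
            return ["chat", "voice", "video", "media sharing"]
        return ["sms", "email"]  # provider offline: reach them asynchronously


registry = IdentityRegistry()
url = registry.sign_up(Provider("Ana", "math tutoring", phone="+1-555-0100"))
print(registry.open_conversation(url))  # ['sms', 'email']
```

Using `secrets` rather than a sequential ID keeps published URLs from being enumerated by guessing.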

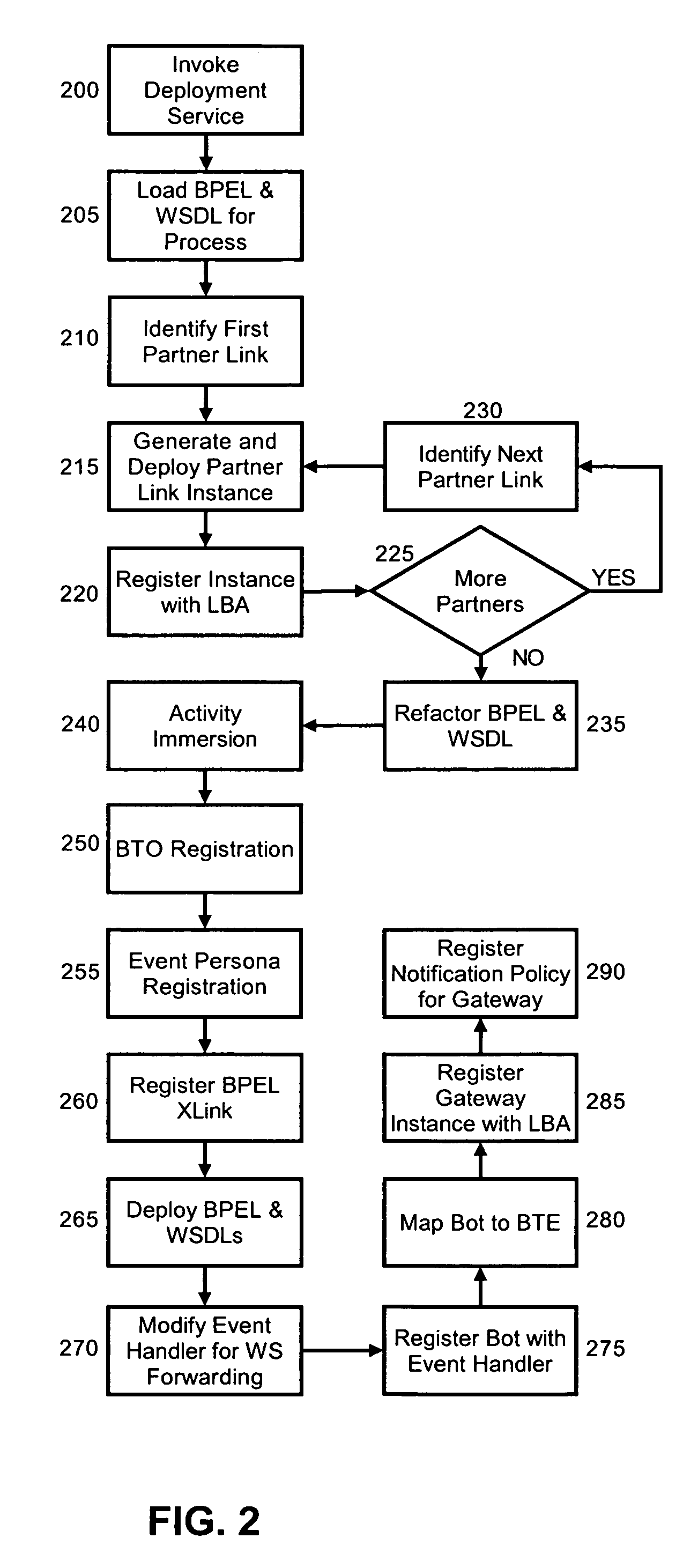

System for Factoring Synchronization Strategies From Multimodal Programming Model Runtimes

Inactive | US20090013035A1 | Tags: Wide support, Multiple digital computer combinations, Transmission, Application server, Interaction management

Owner:HOSN RAFAH A +3

Intelligent portal engine

Inactive | US8027945B1 | Tags: Reduce ambiguity, Digital computer details, Natural language data processing, Graphics, Chemical effects

Owner:AI CORE TECH LLC

Method and device for providing speech-enabled input in an electronic device having a user interface

Inactive | US7383189B2 | Tags: Avoid rapid changes, Hysteresis can, Devices with voice recognition, Automatic call-answering/message-recording/conversation-recording, Display device, Speech input

The present invention provides a method, a device and a system for multimodal interactions. The method according to the invention comprises the steps of activating a multimodal user interaction, providing at least one key input option and at least one voice input option, displaying the at least one key input option, checking if there is at least one condition affecting said voice input option, and providing voice input options and displaying indications of the provided voice input options according to the condition. The method is characterized by checking if at least one condition affecting the voice input is fulfilled and providing the at least one voice input option and displaying indications of the voice input options on the display, according to the condition.

Owner:NOKIA TECHNOLOGIES OY

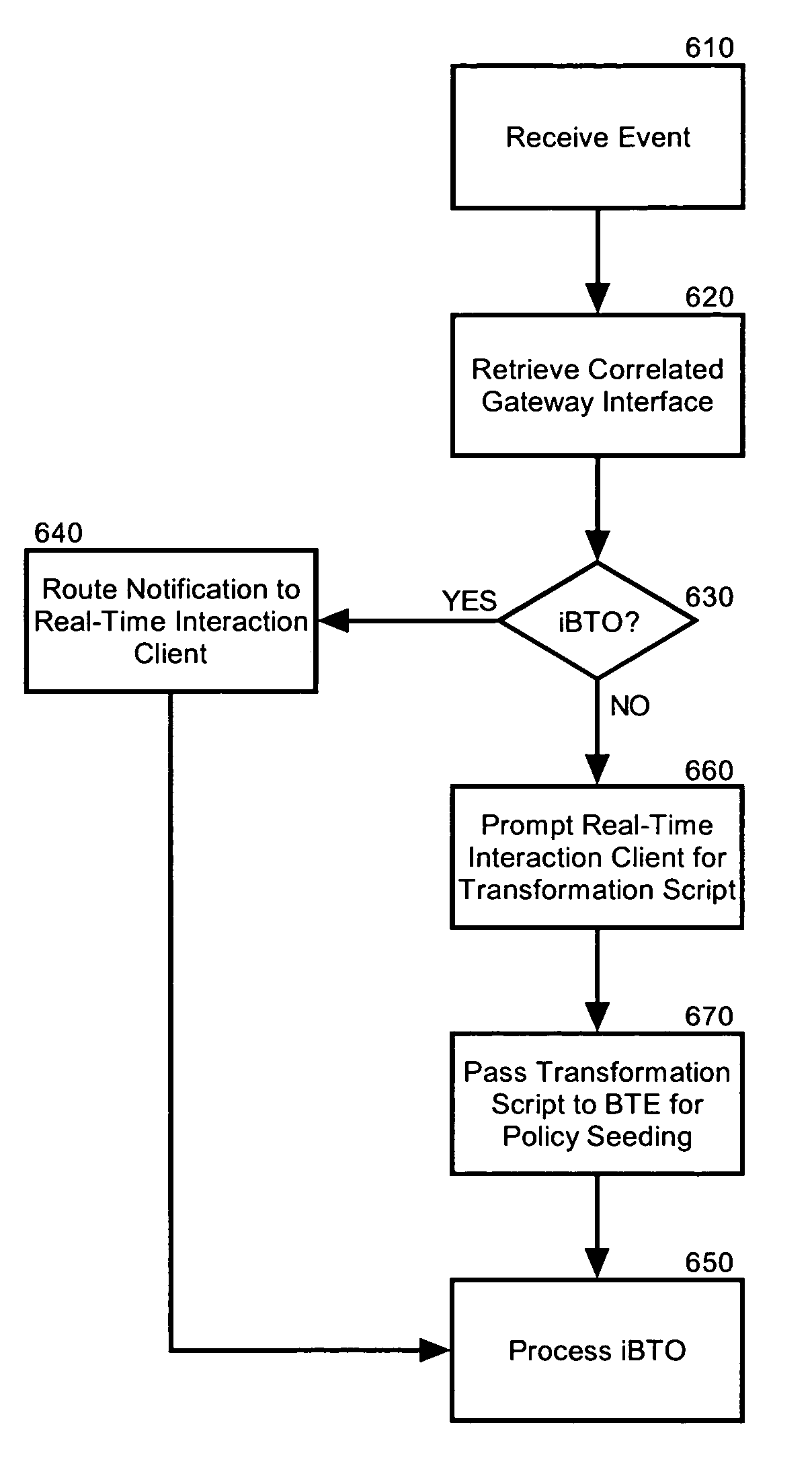

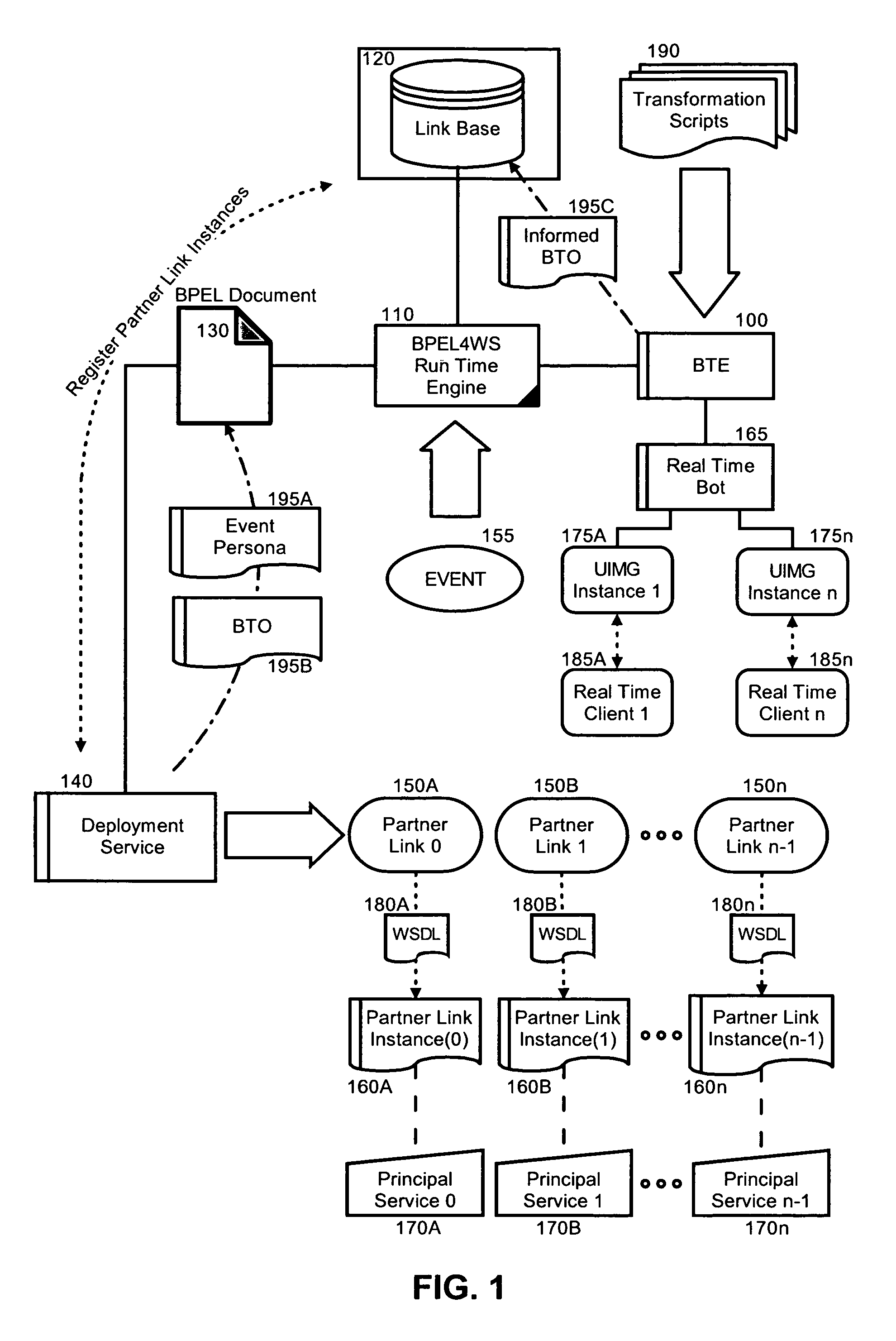

Real-time multi-modal business transformation interaction

A method for real-time multi-modal interaction in a cross-enterprise business process management system includes the steps of handling an event in a business process specification document processing engine and forwarding the event through a gateway interface to a real-time interaction client. A responsive instruction is received from the real-time interaction client through the gateway interface. In consequence, the execution of a business process transformation script is triggered based upon the responsive instruction.

Owner:IBM CORP
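
The business-process abstract above describes a round trip: the engine handles an event, forwards it through a gateway to a real-time interaction client, receives a responsive instruction back, and that instruction triggers a transformation script. The sketch models the client as a callback and scripts as functions keyed by instruction; `Gateway`, `ProcessEngine`, `approver` and the order data are hypothetical illustrations, not IBM's engine.

```python
from typing import Callable

Event = dict[str, object]
Instruction = str


class Gateway:
    """Hypothetical gateway between the process engine and a real-time interaction client."""

    def __init__(self, client: Callable[[Event], Instruction]) -> None:
        self._client = client  # e.g. a chat or voice session with a human decision maker

    def forward(self, event: Event) -> Instruction:
        return self._client(event)


class ProcessEngine:
    """Handles events and runs the transformation script the client's instruction selects."""

    def __init__(self, gateway: Gateway,
                 scripts: dict[Instruction, Callable[[Event], str]]) -> None:
        self._gateway = gateway
        self._scripts = scripts

    def handle(self, event: Event) -> str:
        instruction = self._gateway.forward(event)
        script = self._scripts.get(instruction)
        return script(event) if script else "no transformation"


def approver(event: Event) -> Instruction:
    # Stand-in for a person answering in real time.
    return "approve" if event["amount"] < 10_000 else "escalate"


engine = ProcessEngine(Gateway(approver), {
    "approve": lambda e: f"order {e['id']} released",
    "escalate": lambda e: f"order {e['id']} routed to finance",
})
print(engine.handle({"id": 17, "amount": 2500}))   # order 17 released
print(engine.handle({"id": 18, "amount": 50000}))  # order 18 routed to finance
```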

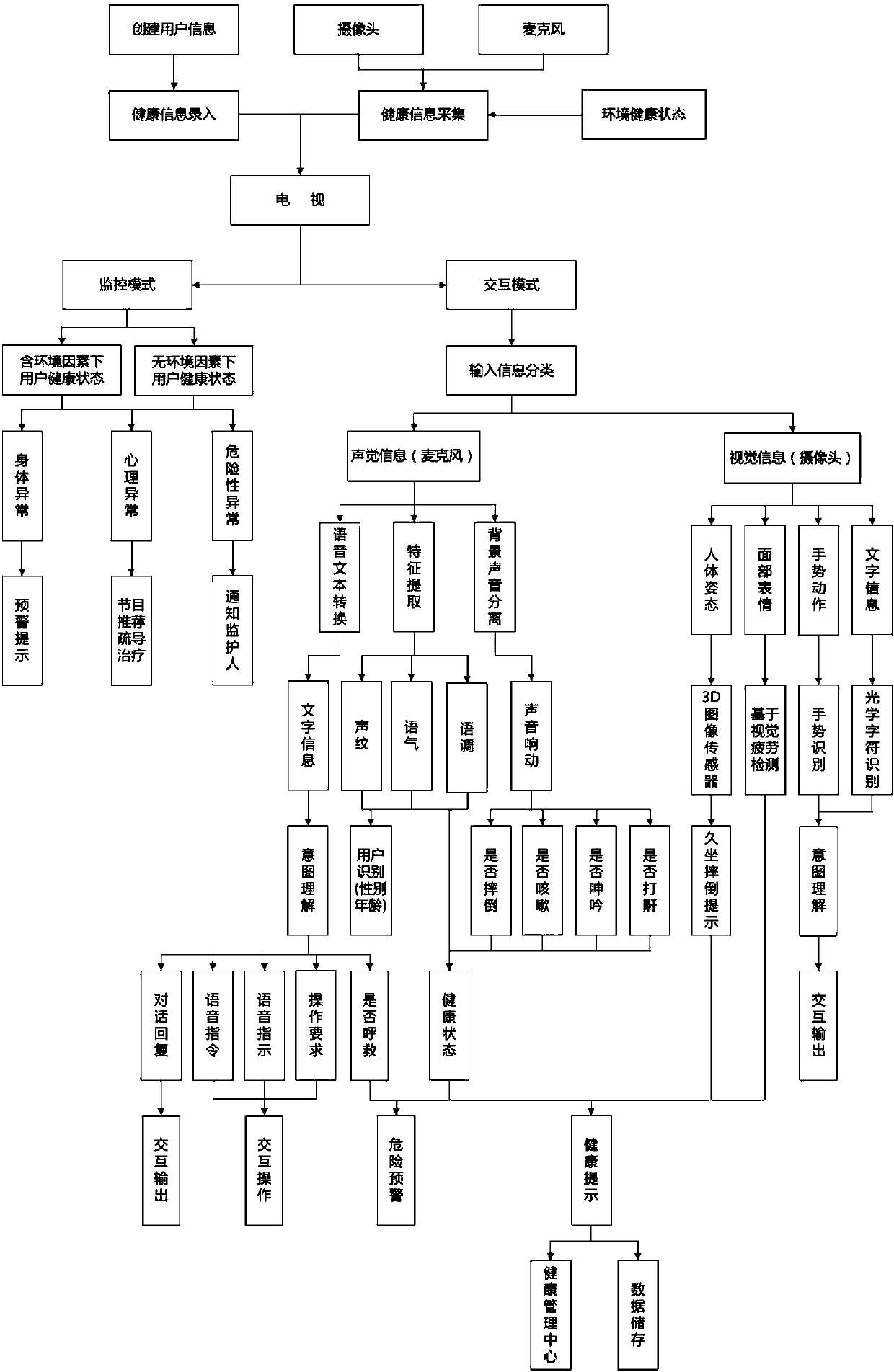

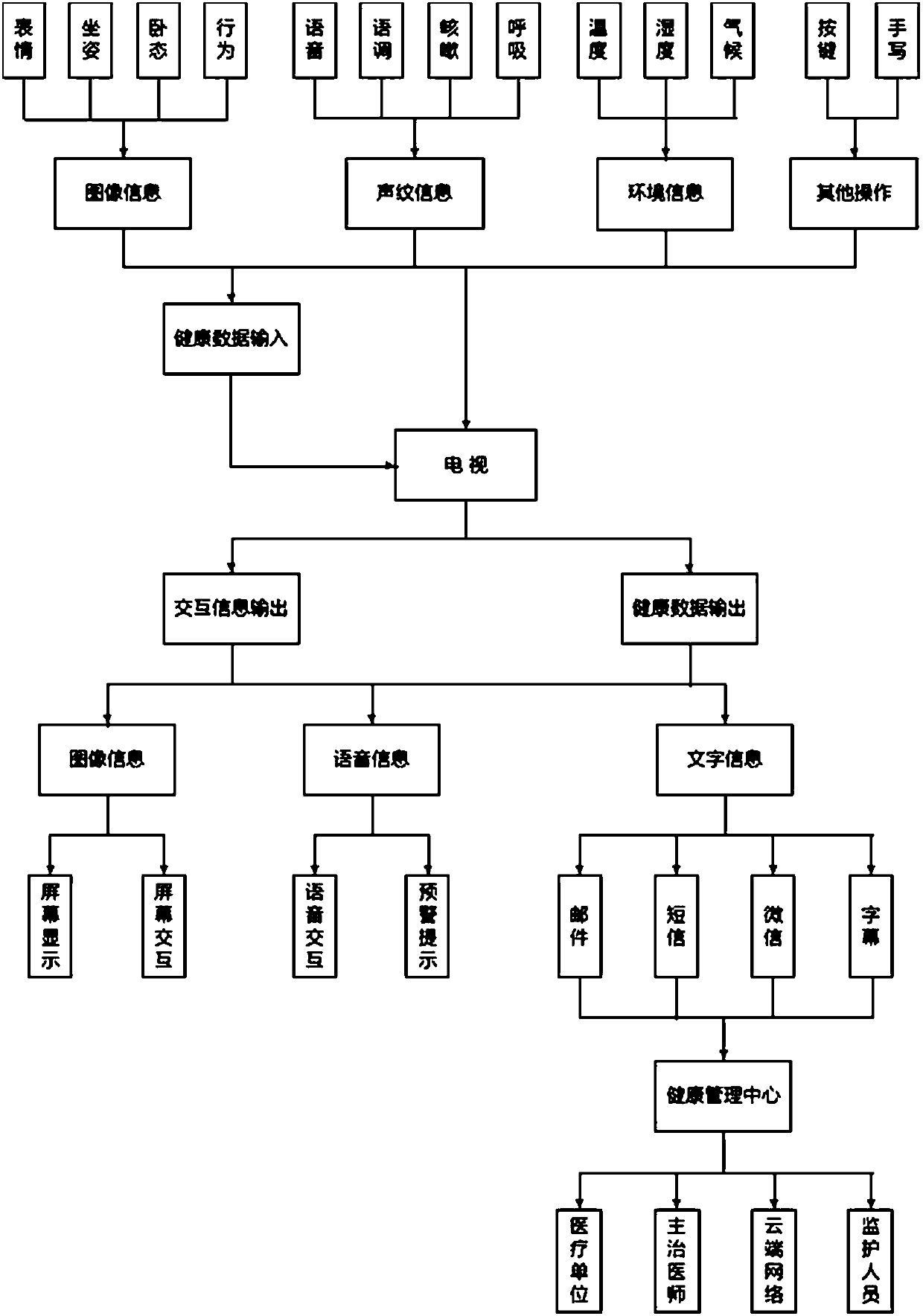

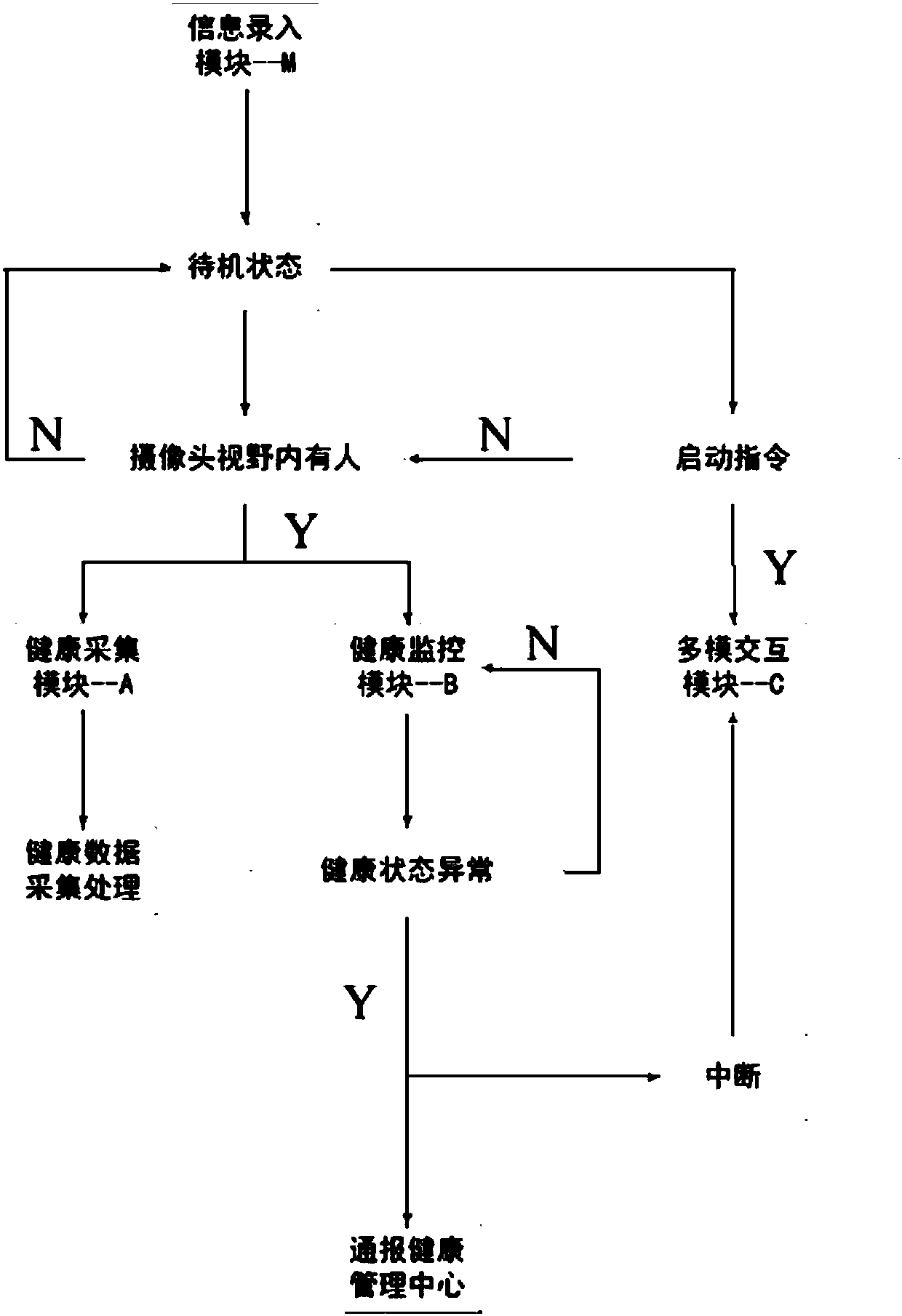

Health information management television based on multimodal human-computer interaction

Inactive | CN107635147A | Tags: Realize management, Realize supervision, Selective content distribution, Special data processing applications, Monitoring status, Multimodal interaction

The invention provides a health information management television based on multimodal human-computer interaction. The health information management television comprises an information input module used for inputting health information of a user; an input interaction module used for acquiring image information, sound information, environment information, text input information and the like; a health monitoring module used for judging the health status of the user according to the acquired image information and sound information under a monitoring state, executing a corresponding operation according to the category of health status abnormalities, and giving corresponding hints according to the acquired environment information; and an output interaction module used for interacting with the user through images, texts and voice under an interaction state according to the acquired image information, sound information, environment information and the like, and transmitting health data to a health management center in the cloud. The health information management television based on multimodal human-computer interaction provided by the invention can understand the behavior intention of the user, can realize health information management between the television and the user through multimodal interaction between the user and the television, and can realize the supervision, judgment and recognition of the user behavior.

Owner:SHANGHAI JIAO TONG UNIV

System and method for accessing and annotating electronic medical records using a multi-modal interface

A system and method of exchanging medical information between a user and a computer device is disclosed. The computer device can receive user input in one of a plurality of types of user input comprising speech, pen, gesture and a combination of speech, pen and gesture. The method comprises receiving information from the user associated with a medical condition and a bodily location of the medical condition on a patient in one of a plurality of types of user input, presenting in one of a plurality of types of system output an indication of the received medical condition and the bodily location of the medical condition, and presenting to the user an indication that the computer device is ready to receive further information. The invention enables a more flexible multi-modal interactive environment for entering medical information into a computer device. The medical device also generates multi-modal output for presenting a patient's medical condition in an efficient manner.

Owner:AMERICAN TELEPHONE & TELEGRAPH CO

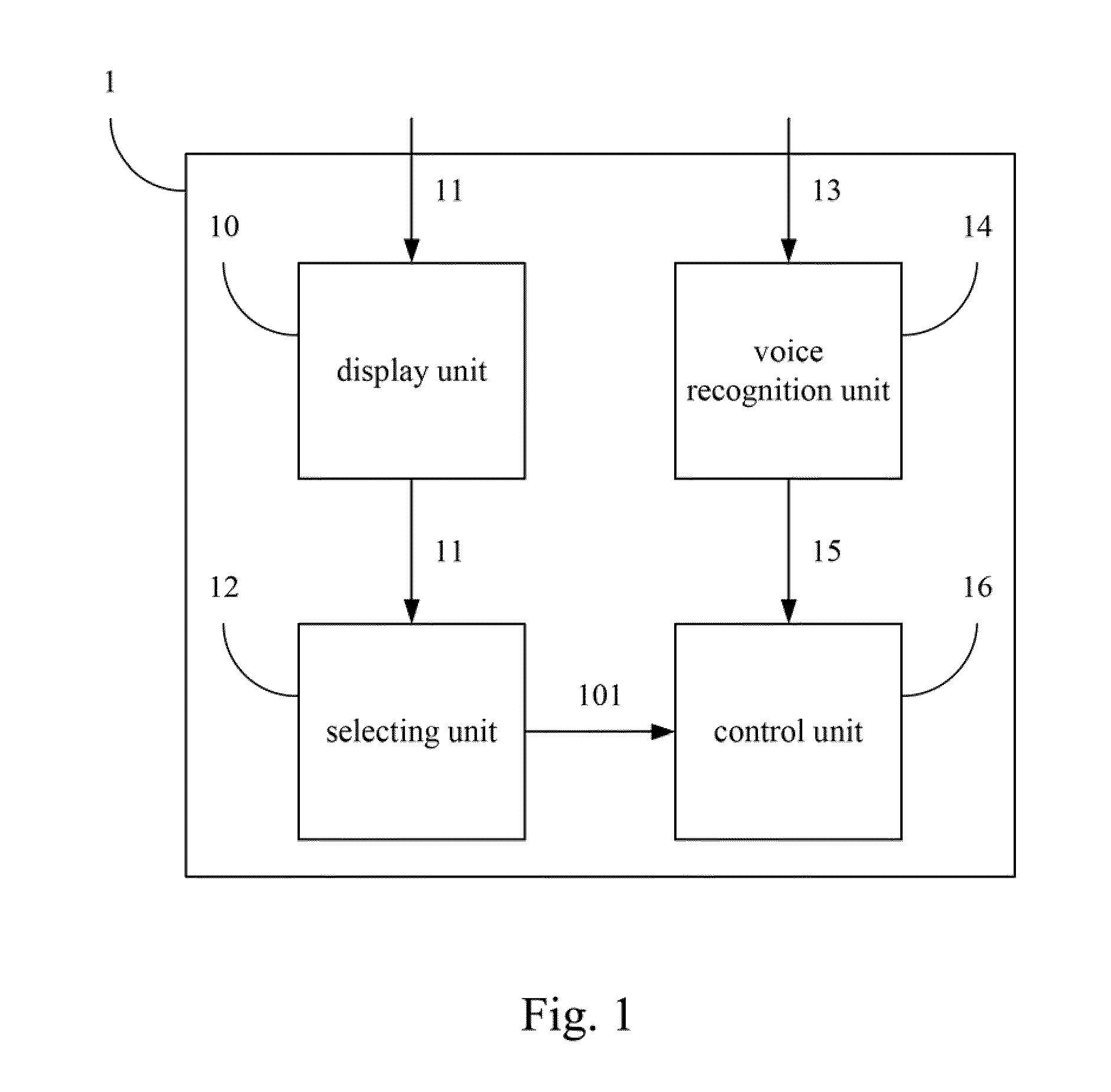

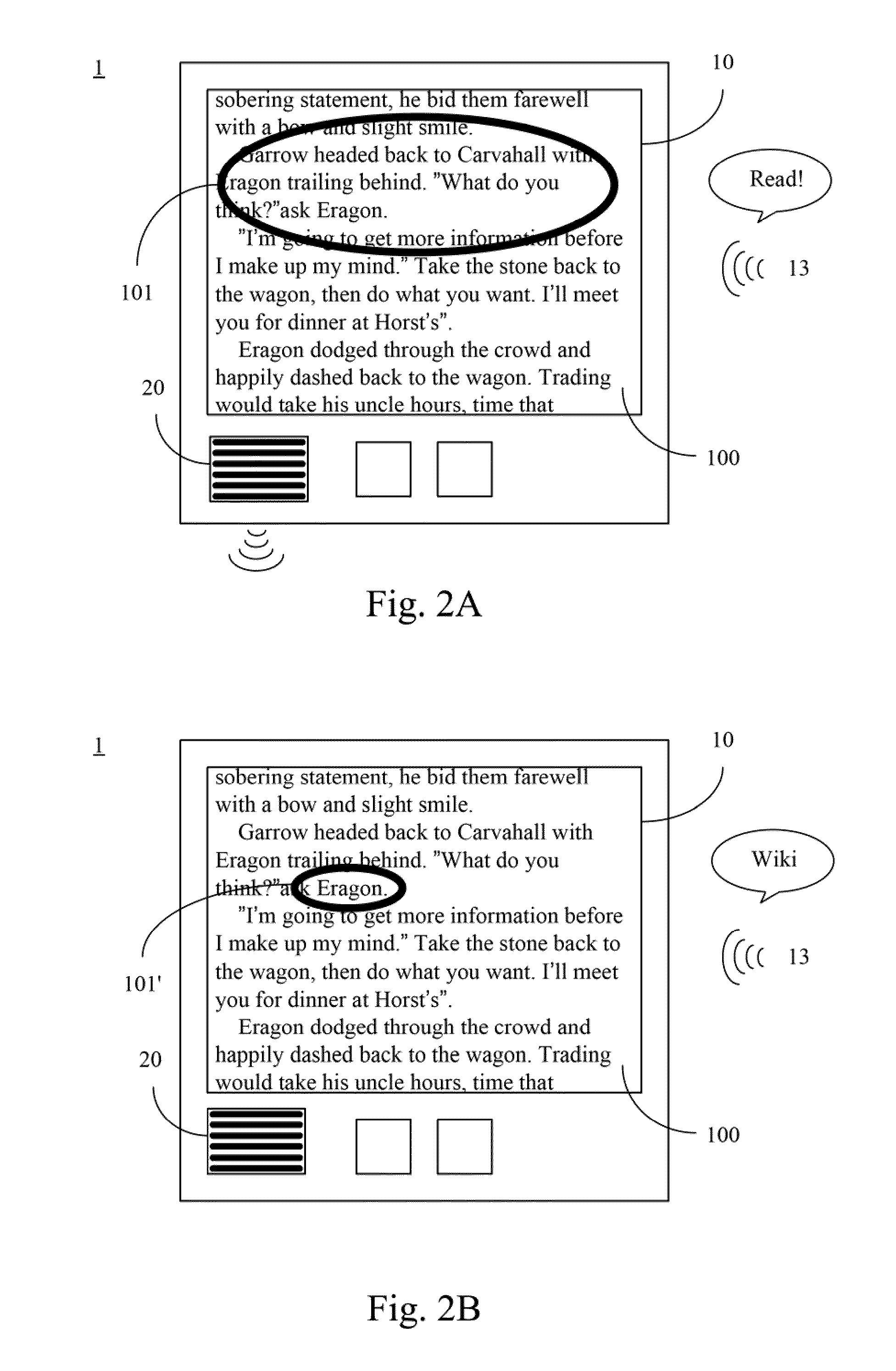

Electronic apparatus with multi-mode interactive operation method

Inactive | US20110288850A1 | Tags: Speech recognition, Special data processing applications, Multimodal interaction, Electric equipment

An electronic apparatus with a multi-mode interactive operation method is disclosed. The electronic apparatus includes a display unit, a selecting unit, a voice recognition unit and a control unit. The display unit displays a frame. The selecting unit selects an arbitrary area of the frame on the display unit. The voice recognition unit recognizes a voice signal as a control command. The control unit processes data according to the control command on the content of the arbitrary area selected. A multi-mode interactive operation method is disclosed herein as well.

Owner:DELTA ELECTRONICS INC
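
The Delta abstract above splits the work into a selecting unit (choose an arbitrary area of the displayed frame), a voice recognition unit (turn speech into a control command) and a control unit (apply the command to the selected content only). A minimal sketch, assuming speech has already been transcribed to text and the frame is modeled as lines of text; `Area`, `crop`, `COMMANDS` and `execute` are hypothetical, and the command vocabulary is invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Area:
    top: int
    left: int
    bottom: int  # exclusive
    right: int   # exclusive


def crop(frame: list[str], area: Area) -> list[str]:
    """Selecting unit: the content inside the selected rectangle of a text frame."""
    return [line[area.left:area.right] for line in frame[area.top:area.bottom]]


# Control commands keyed by the recognized phrase (the vocabulary is an assumption).
COMMANDS: dict[str, Callable[[list[str]], list[str]]] = {
    "copy": lambda lines: list(lines),
    "make uppercase": lambda lines: [line.upper() for line in lines],
    "count words": lambda lines: [str(sum(len(line.split()) for line in lines))],
}


def execute(frame: list[str], area: Area, transcript: str) -> list[str]:
    """Control unit: apply the spoken command to the selected area only."""
    command = COMMANDS.get(transcript.strip().lower())
    if command is None:
        raise ValueError(f"unrecognized command: {transcript!r}")
    return command(crop(frame, area))


frame = ["hello world", "multi mode"]
print(execute(frame, Area(0, 0, 2, 5), "Make uppercase"))  # ['HELLO', 'MULTI']
```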

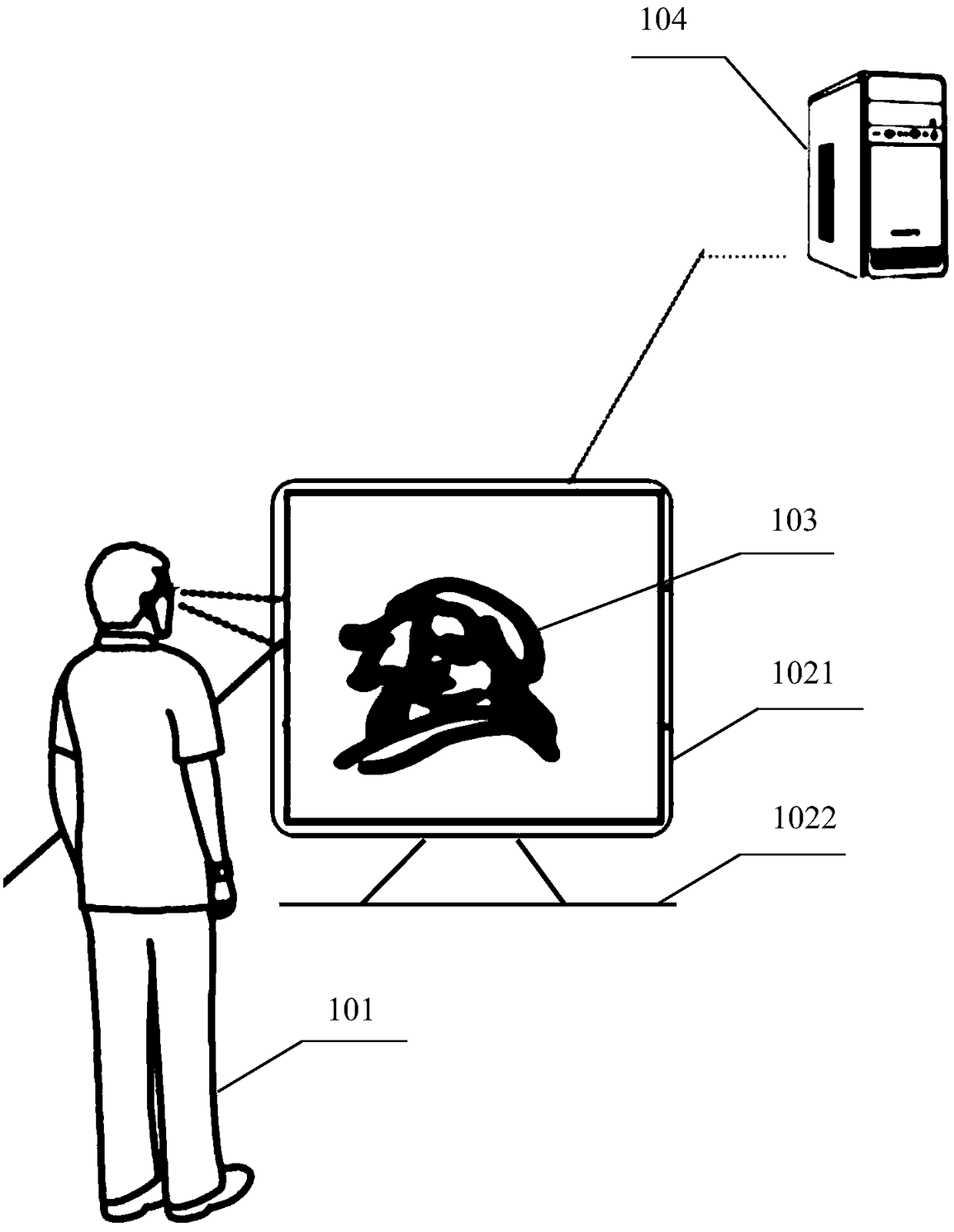

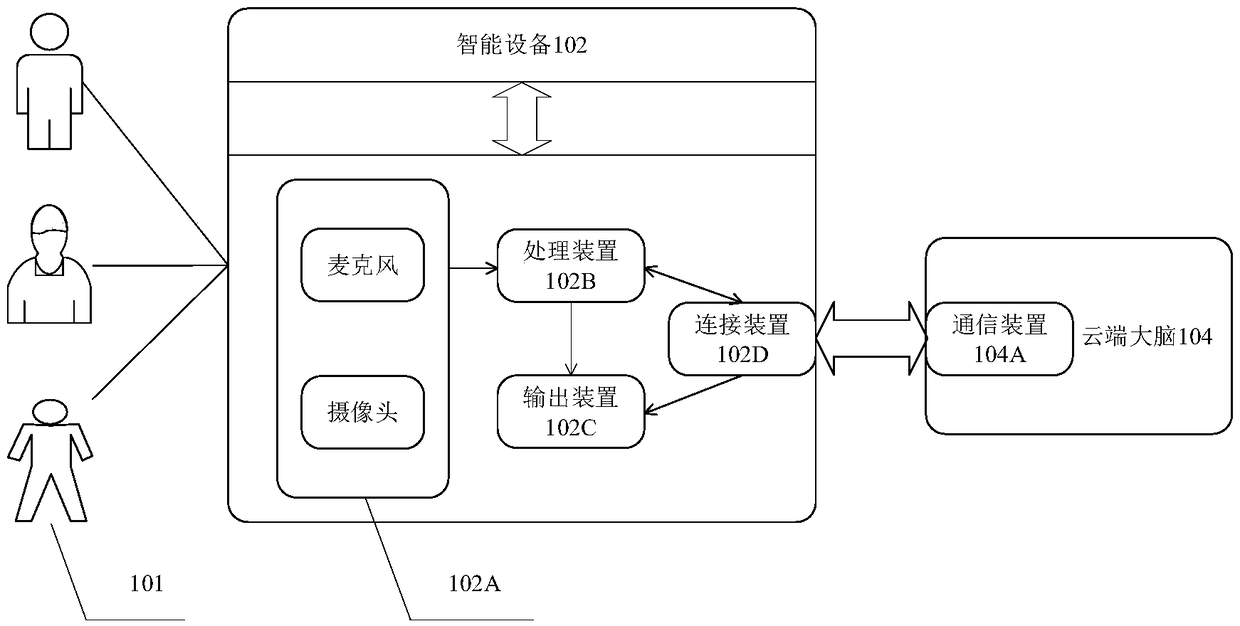

Interaction method and system based on behavior standard of virtual human

Inactive | CN109271018A | Tags: Enjoy interactive experience, Smooth communication, Input/output for user-computer interaction, Graph reading, Data matching, Pattern perception

The invention provides an interaction method based on the behavior standard of a virtual human. The virtual human is shown on the display of an intelligent device, and when it is in the interactive state it activates its voice, emotion, vision and perception capabilities. The method comprises the following steps: acquiring multi-modal interactive data and analyzing it to obtain the user's interaction intention; generating, according to the interaction intention, multi-modal response data and virtual human emotion expression data matched with the multi-modal response data, where the emotion expression data represent the virtual human's current emotion through its facial expression and body movement; and outputting the multimodal response data together with the virtual human emotion expression data. The invention provides a virtual human that can perform multimodal interaction with a user. Moreover, because the emotion expression data are output along with the multimodal response data, the virtual human's current emotion is expressed, so that the user can enjoy a human-like interactive experience.

Owner:BEIJING GUANGNIAN WUXIAN SCI & TECH

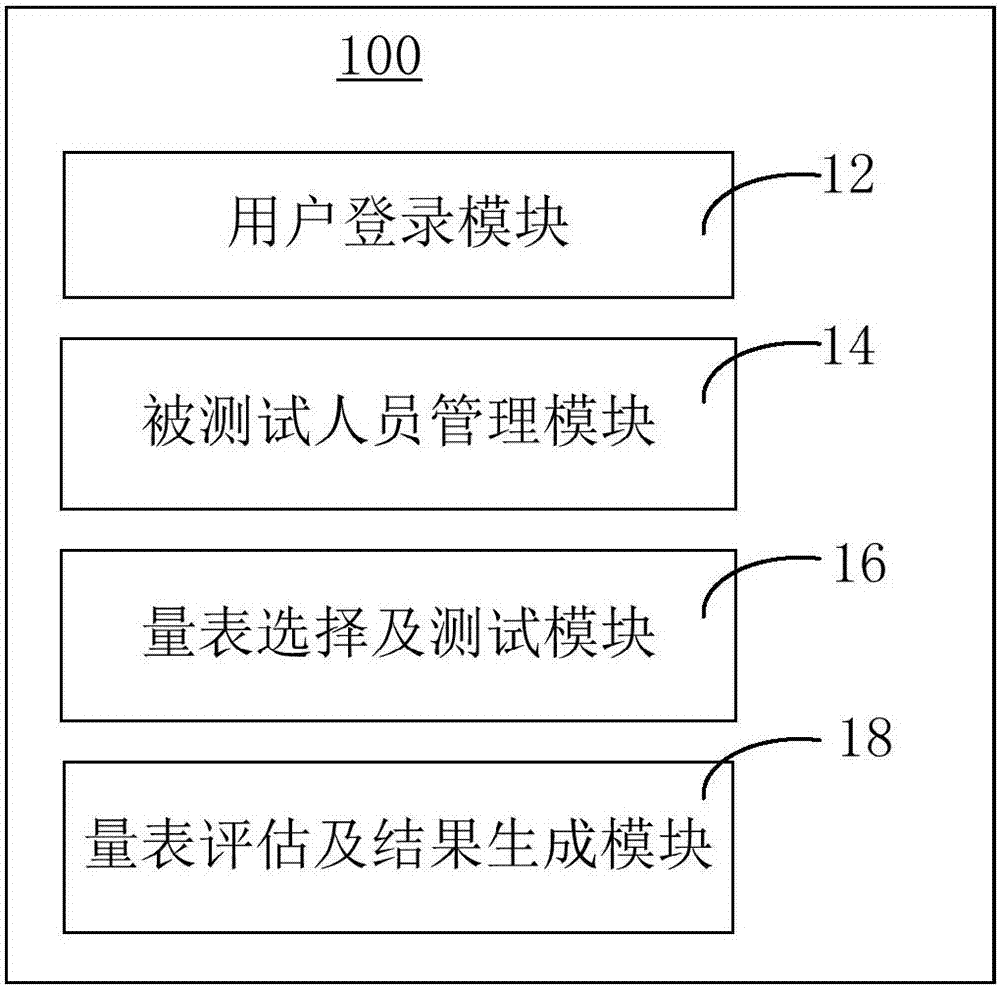

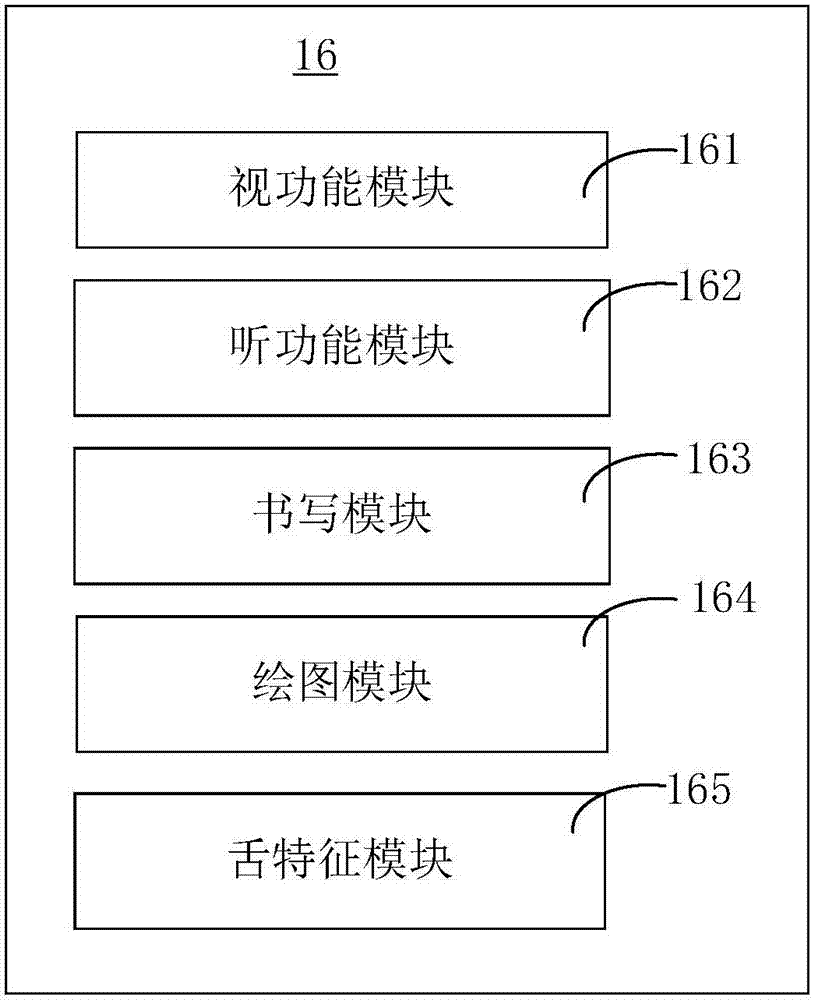

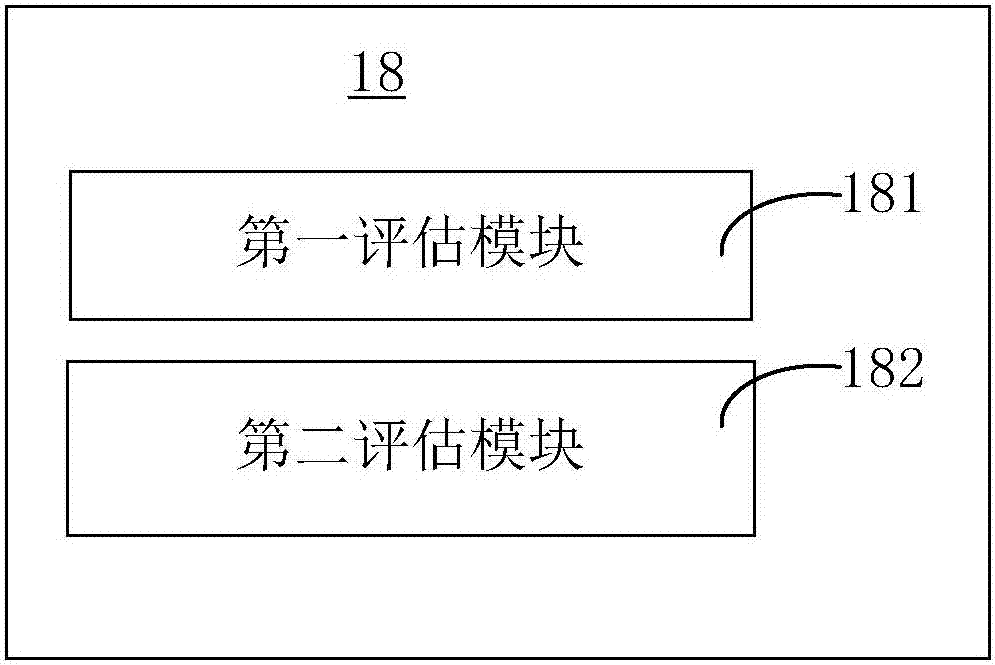

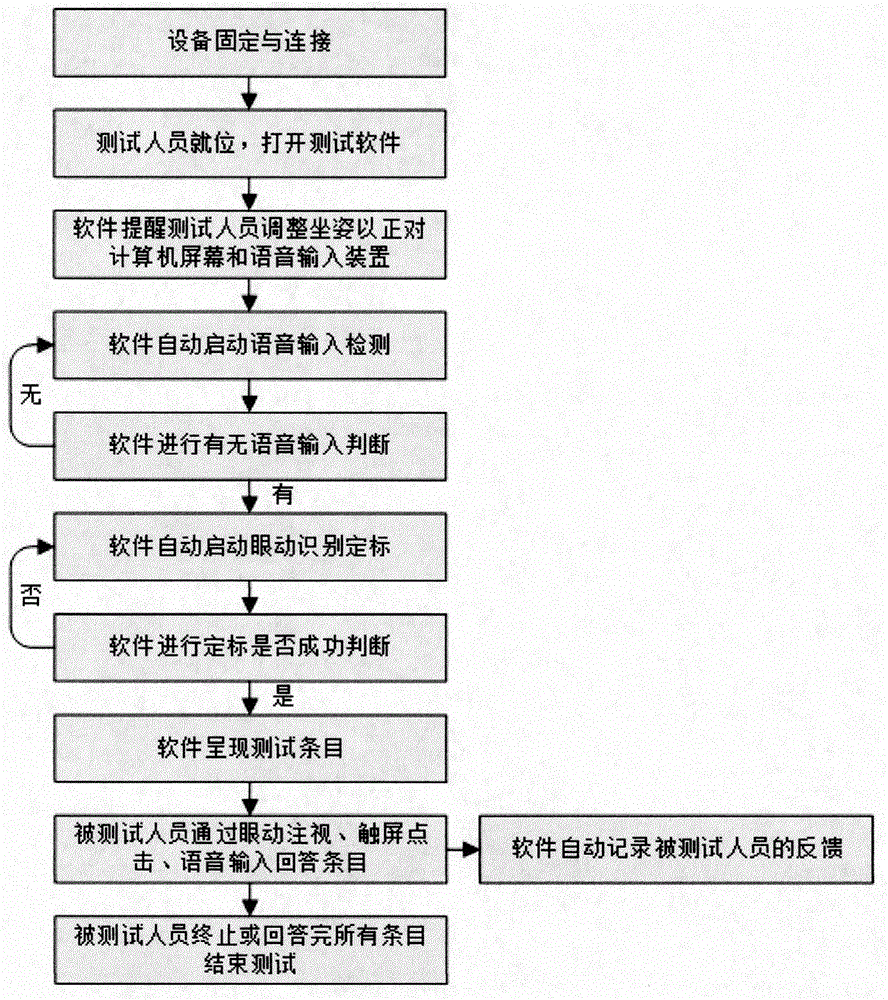

Multi-mode interactive speech language function disorder evaluation system and method

Pending | CN107456208A | Tags: Improve accuracy, Audiometering, User/patient communication for diagnostics, Visual function, Password

The invention provides a multi-mode interactive speech language function disorder evaluation system. The multi-mode interactive speech language function disorder evaluation system comprises a user login module, a tested person management module, a scale selecting and testing module and a scale assessing and result generating module, wherein the user login module is used for providing entrances for a user to log in, register and retrieve passwords; the tested person management module is used for managing the information of the tested person; the scale selecting and testing module is used for selecting a scale and performing multi-mode interactive testing according to the scale, so that test data is obtained; the scale selecting and testing module comprises a visual function module, a listening function module, a writing module and a drawing module, wherein the visual function module is used for collecting data related to the visual function of the tested person, the listening function module is used for collecting data related to the listening function of the tested person, the writing module is used for collecting writing data of the tested person, and the drawing module is used for collecting drawing data of the tested person; and the scale assessing and result generating module is used for assessing the tested data, so that an assessment result is generated. The invention further provides a corresponding multi-mode interactive speech language function disorder evaluation method.

Owner:SHENZHEN INST OF ADVANCED TECH

Questionnaire test system and method based on multimodal interactions of eye movement, voice and touch screens

Inactive | CN105204993A | Tags: Improve effectiveness, Improve accuracy, Software testing/debugging, Input/output processes for data processing, Crowds, Touchscreen

The invention belongs to the technical field of computer applications, and particularly relates to a questionnaire test system and a questionnaire test method. A questionnaire test system based on multimodal interactions of eye movement, voice and touch screens comprises a computer (1) with built-in questionnaire test software and a touch screen input function, and is characterized by further comprising an eye movement collecting device (2) and a voice input device (5). The test device can carry out questionnaire tests based on multimodal interactions of eye movement, voice and touch screens, and uses natural interaction modes to collect feedback from test subjects, which makes it easier for them to enter information and improves the effectiveness and accuracy of questionnaire tests. The test device is especially suitable for situations in which both of the test subject's hands are occupied, and it improves the continuity of the test process. The test method is also suitable for special populations with hand disabilities, which broadens the applicable scope of questionnaire test software.

Owner:SCI RES TRAINING CENT FOR CHINESE ASTRONAUTS

Managing application interactions using distributed modality components

Active | US7401337B2 | Tags: Multiprogramming arrangements, Execution for user interfaces, Application software, Multimodal interaction

A method for managing multimodal interactions can include the step of registering a multitude of modality components with a modality component server, wherein each modality component handles an interface modality for an application. The modality component can be connected to a device. A user interaction can be conveyed from the device to the modality component for processing. Results from the user interaction can be placed on a shared memory area of the modality component server.

Owner:NUANCE COMM INC

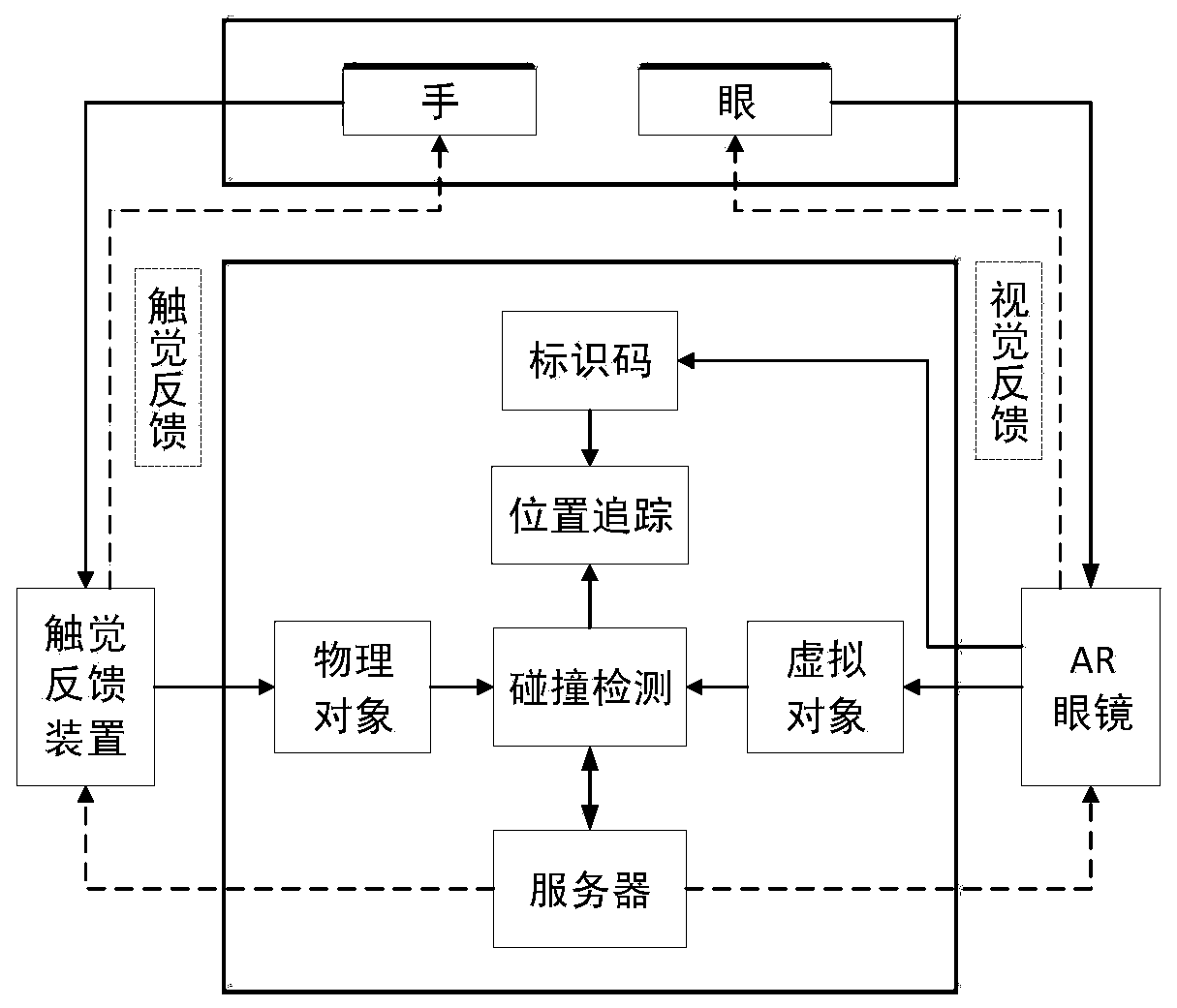

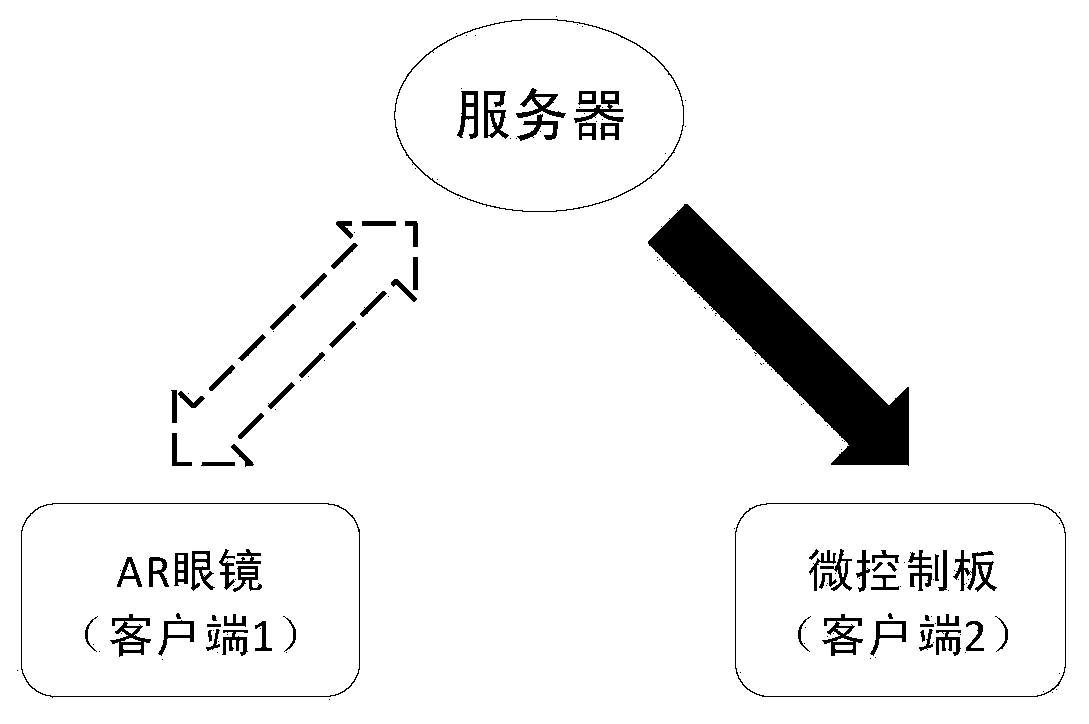

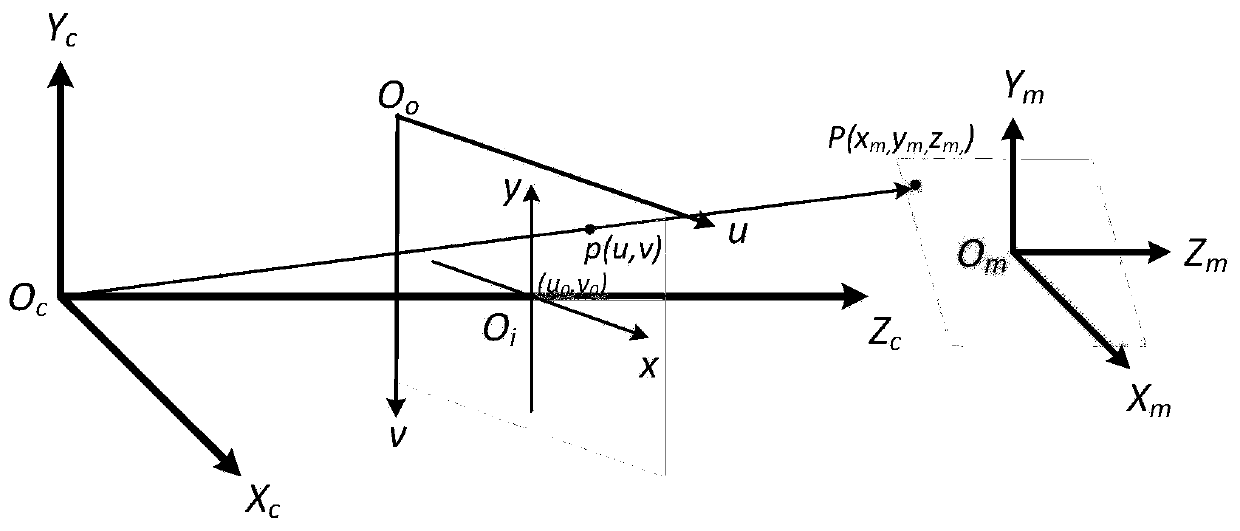

A vibration tactile feedback device design method based on information physical interaction

Active | CN109917911A | Tags: Improve multimodal interaction, Improve coordination, Input/output for user-computer interaction, Image data processing, Interaction systems, Touch Perception

The invention discloses a vibration tactile feedback device design method based on information physical interaction, characterized in that some information of a virtual-real interaction system is fed back in a non-visual mode, namely through a vibration tactile feedback device, which is coordinated with a visual feedback system. On top of the real tactile feedback felt by the hand, the device superimposes vibration as collision feedback when a real object touches a virtual object, thereby simulating collision interaction between the virtual object and a physical object. A person can thus sense contact collisions between real and virtual objects in a virtual-real fusion environment, which produces a more realistic interaction experience and improves multi-mode interaction between the person and the AR environment and between the person and the virtual object. This combined visual and tactile multi-mode interaction helps expand the information feedback channel, improve coordination between people and the system, enhance the seamless fusion of participants, the real environment and the virtual environment in an information physical fusion system, and achieve natural, harmonious human-machine interaction.

Owner:NORTHWESTERN POLYTECHNICAL UNIV
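
The abstract above superimposes vibration on the hand's real tactile feedback when a tracked real object collides with a virtual one. It does not describe the collision test or the vibration mapping, so the sketch below assumes both objects are approximated by bounding spheres and that vibration amplitude grows linearly with penetration depth up to a clamp. `Sphere`, `penetration_depth`, `vibration_amplitude` and the gain value are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Sphere:
    center: tuple[float, float, float]  # meters, in the shared AR coordinate frame
    radius: float


def penetration_depth(real: Sphere, virtual: Sphere) -> float:
    """How far the tracked real object has entered the virtual one (0.0 if apart)."""
    gap = math.dist(real.center, virtual.center) - (real.radius + virtual.radius)
    return max(0.0, -gap)


def vibration_amplitude(depth: float, gain: float = 50.0, max_amp: float = 1.0) -> float:
    """Map penetration depth to a normalized vibration motor amplitude in [0, max_amp]."""
    return min(max_amp, gain * depth)


tool = Sphere((0.0, 0.0, 0.0), 0.05)          # tracked real object held in the hand
virtual_ball = Sphere((0.09, 0.0, 0.0), 0.05)  # virtual object approximated by a sphere
print(round(vibration_amplitude(penetration_depth(tool, virtual_ball)), 3))  # 0.5
```

A proportional, clamped mapping is only one plausible choice; a real device would tune it to the motor's response and to what feels convincing alongside the visual feedback.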

© 2025 PatSnap. All rights reserved.