311 results about "Vowel" patented technology

A vowel is a syllabic speech sound pronounced without any stricture in the vocal tract. Vowels are one of the two principal classes of speech sounds, the other being the consonant. Vowels vary in quality, in loudness and also in quantity (length). They are usually voiced, and are closely involved in prosodic variation such as tone, intonation and stress.

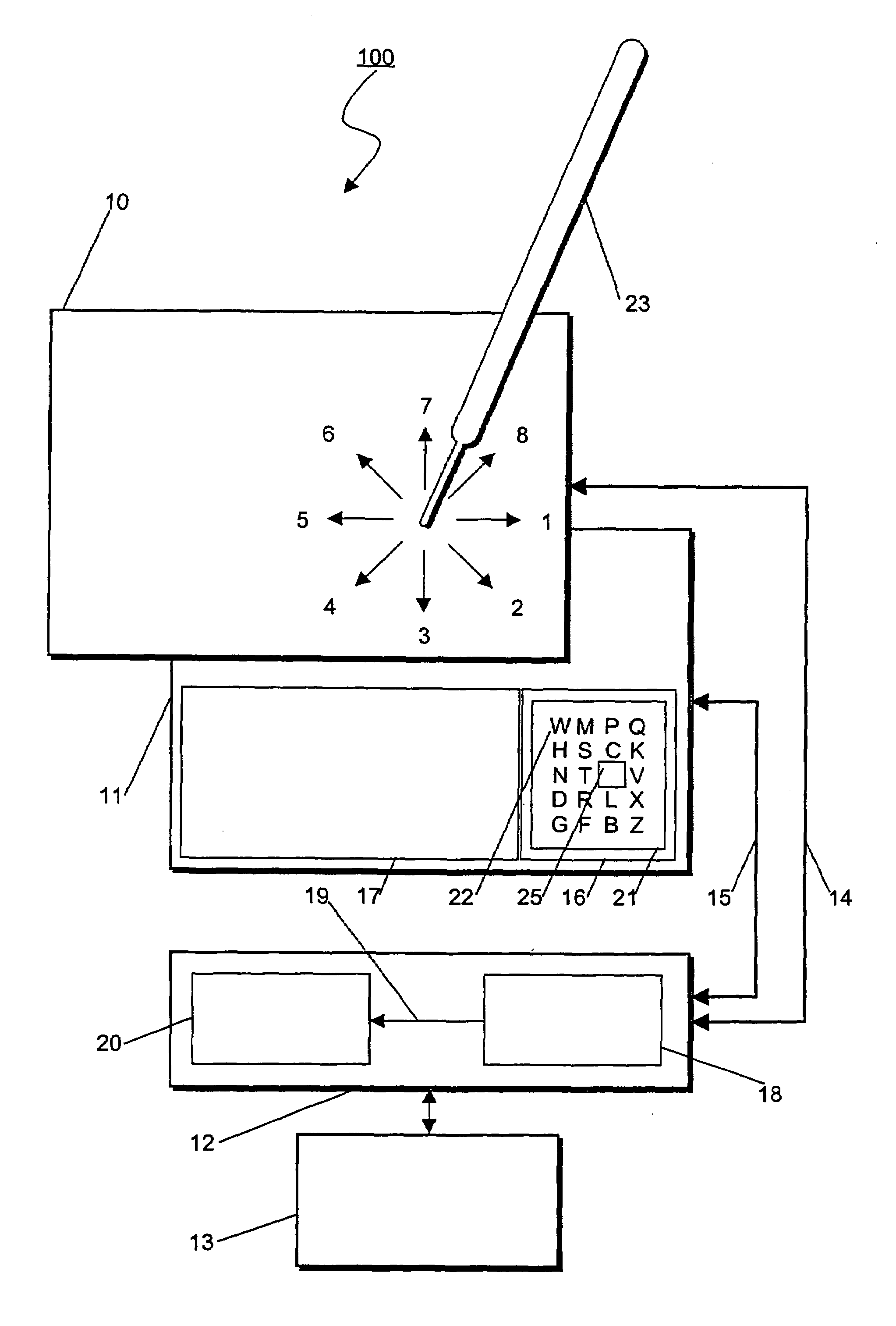

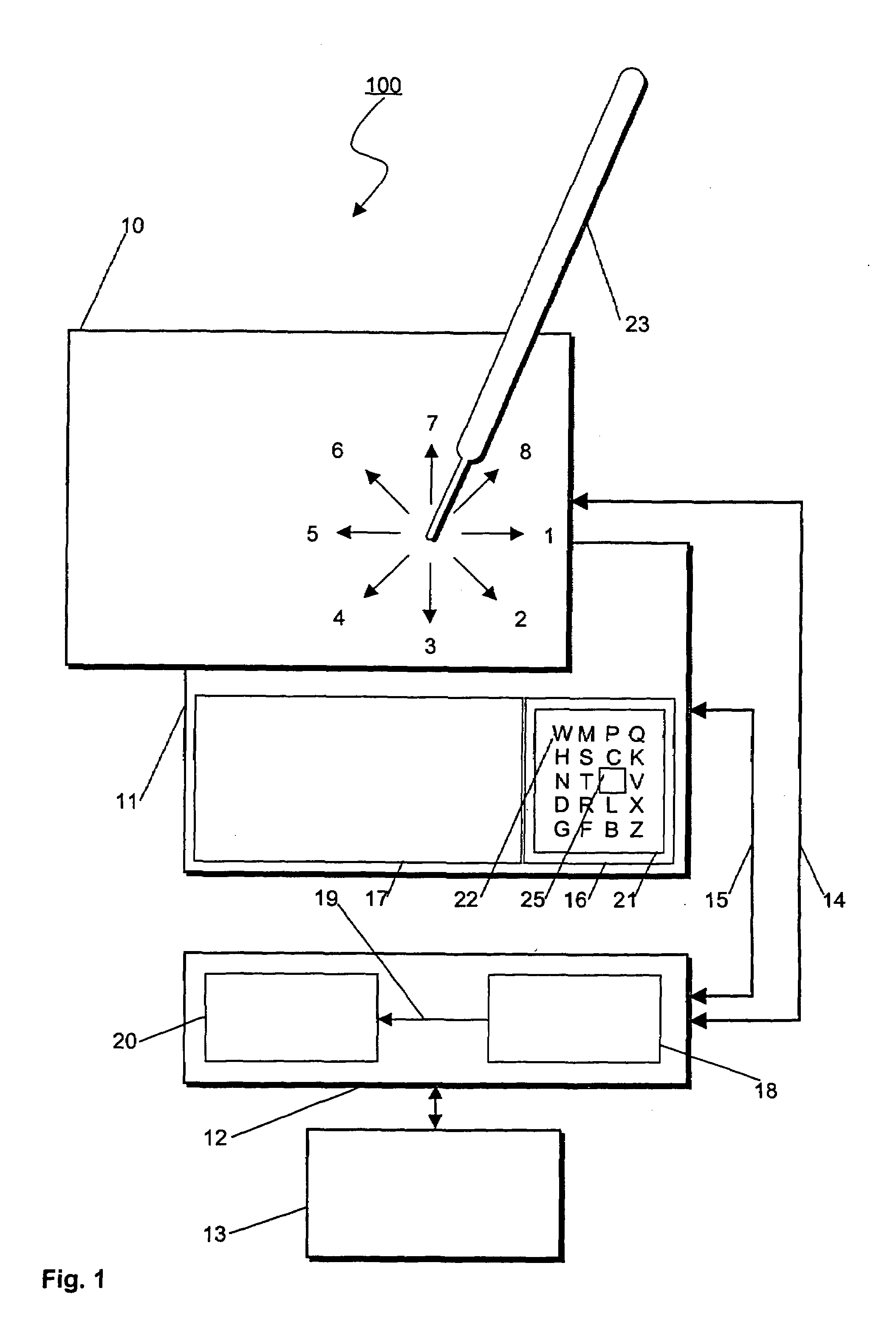

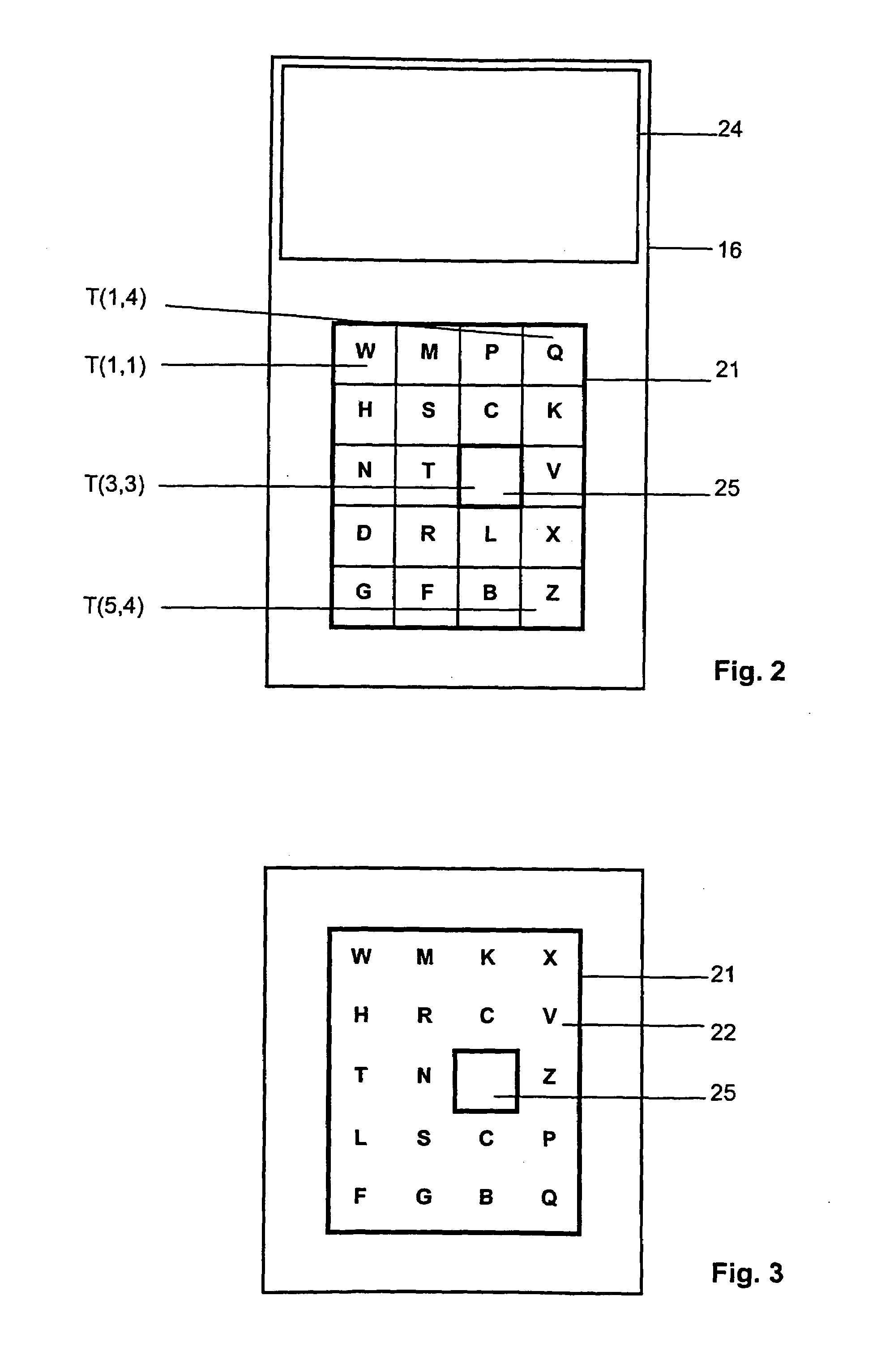

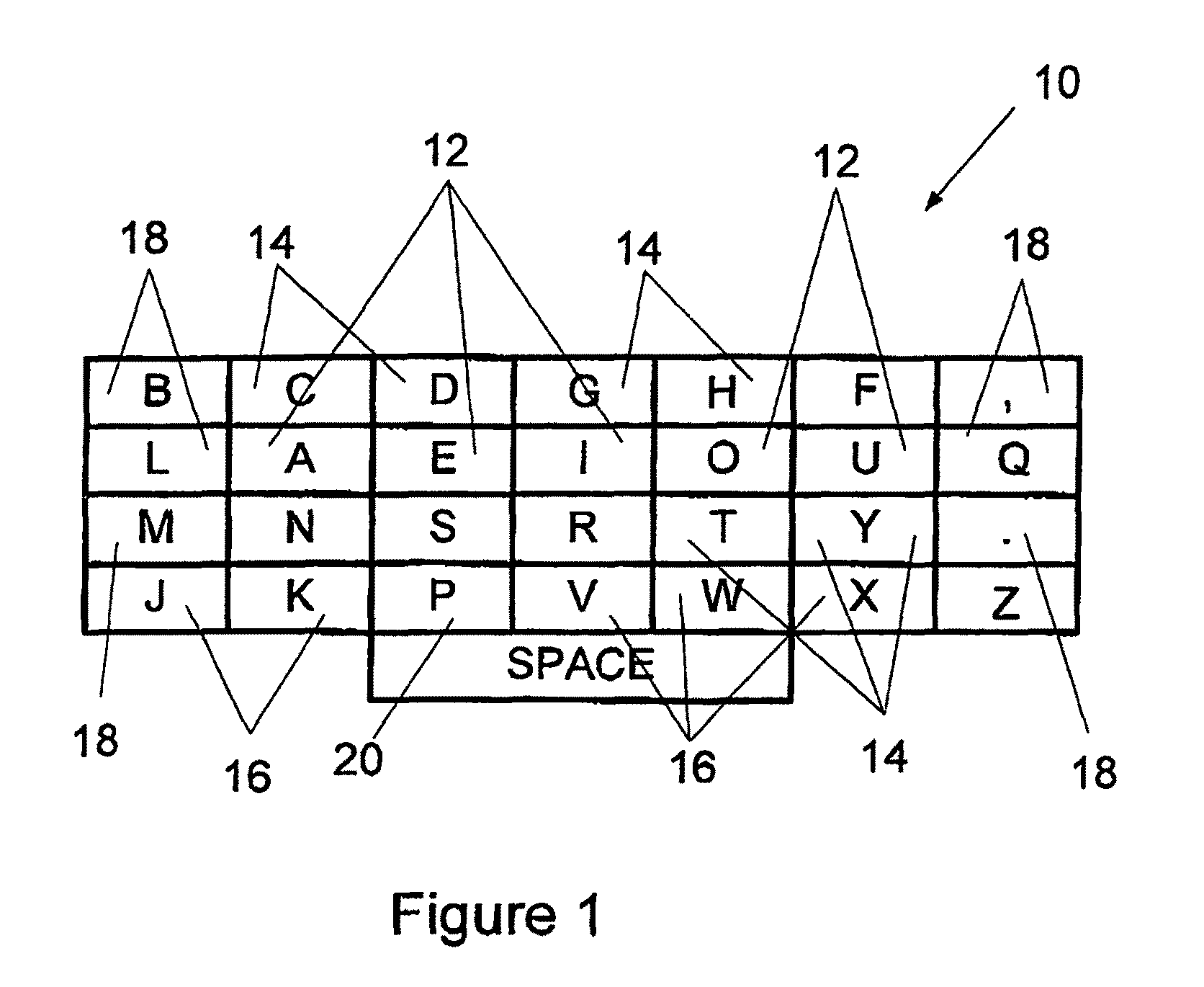

Method for a high-speed writing system and high-speed writing device

Inactive | US7145554B2 | Difficult to memorize | Increase speed | Cathode-ray tube indicators | Input/output processes for data processing | Text entry | Touchscreen

The invention concerns a high-speed writing system and a high-speed writing device with special menu selection for entering text by means of a pen and a touch-screen. This method of entering text is suitable for desktop PCs, laptop PCs, palmtop PCs, mobile phones (for SMS, WAP and e-mail), watches and other electronic devices. Significantly higher writing efficiency than on a QWERTY keyboard is reached with little investment in practicing time. The monitor screen features a key section with all consonants of the alphabet, as well as one vowel key distinguished by its arrangement and function. All elements of the writing process defining the method can be generated by pen movements such as selection of a key or guiding it in one of eight stroke directions, as well as combinations of these pen movements. The high-speed writing devices described here excel by their small and ergonomically arranged entry sections, whereby all keys for text entry can be reached from one single hand position that never needs re-setting.

Owner:SPEEDSCRIPT

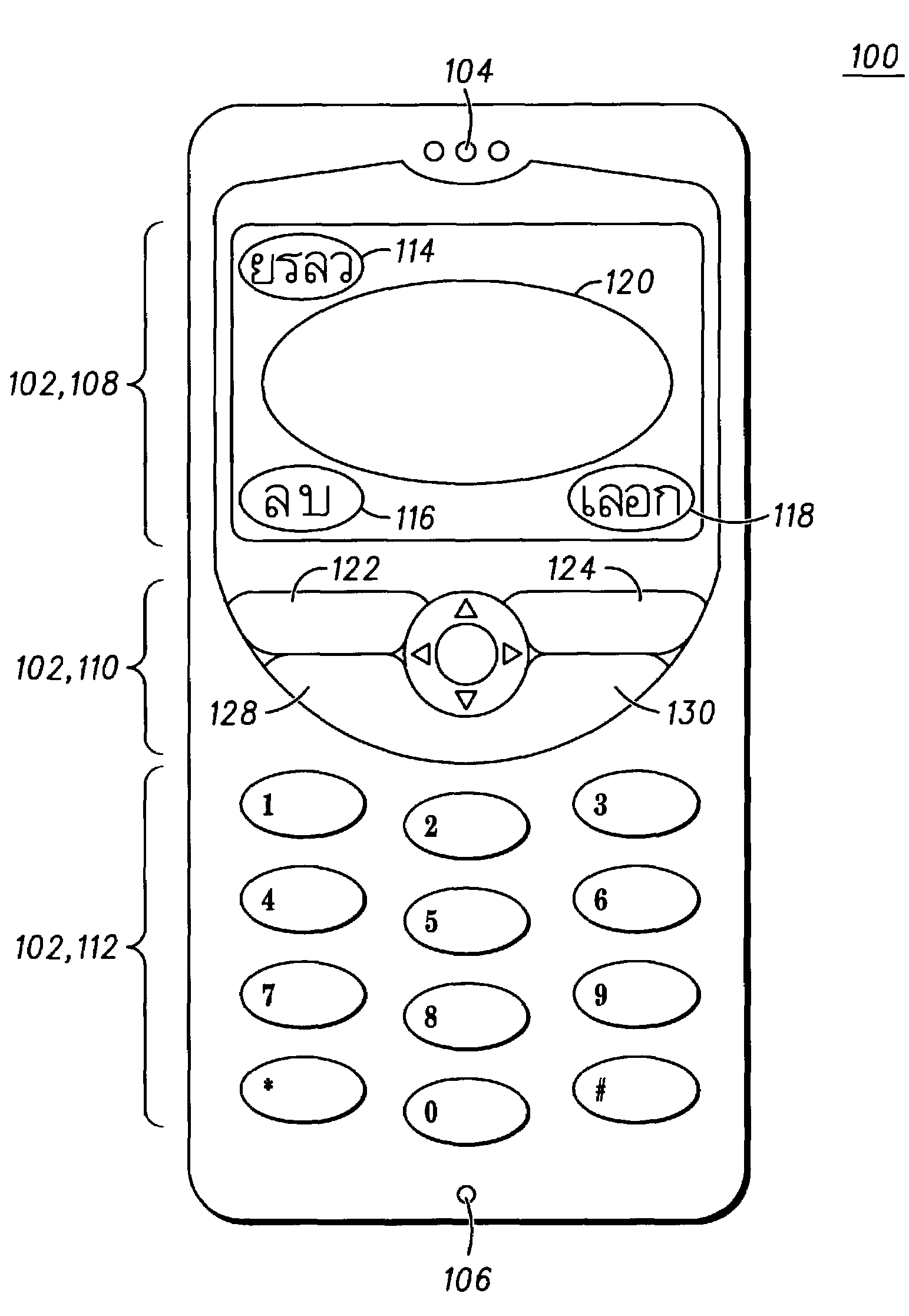

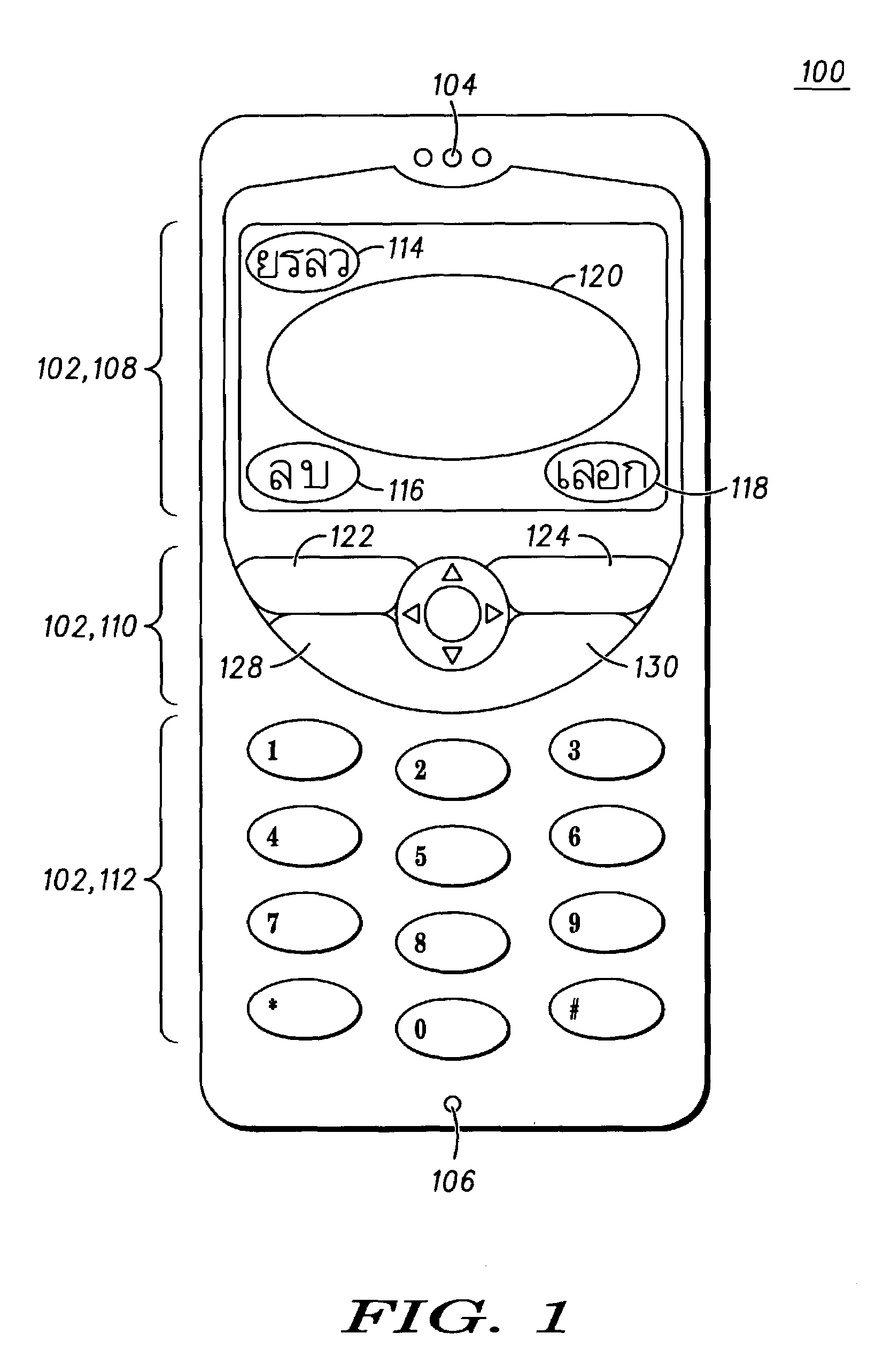

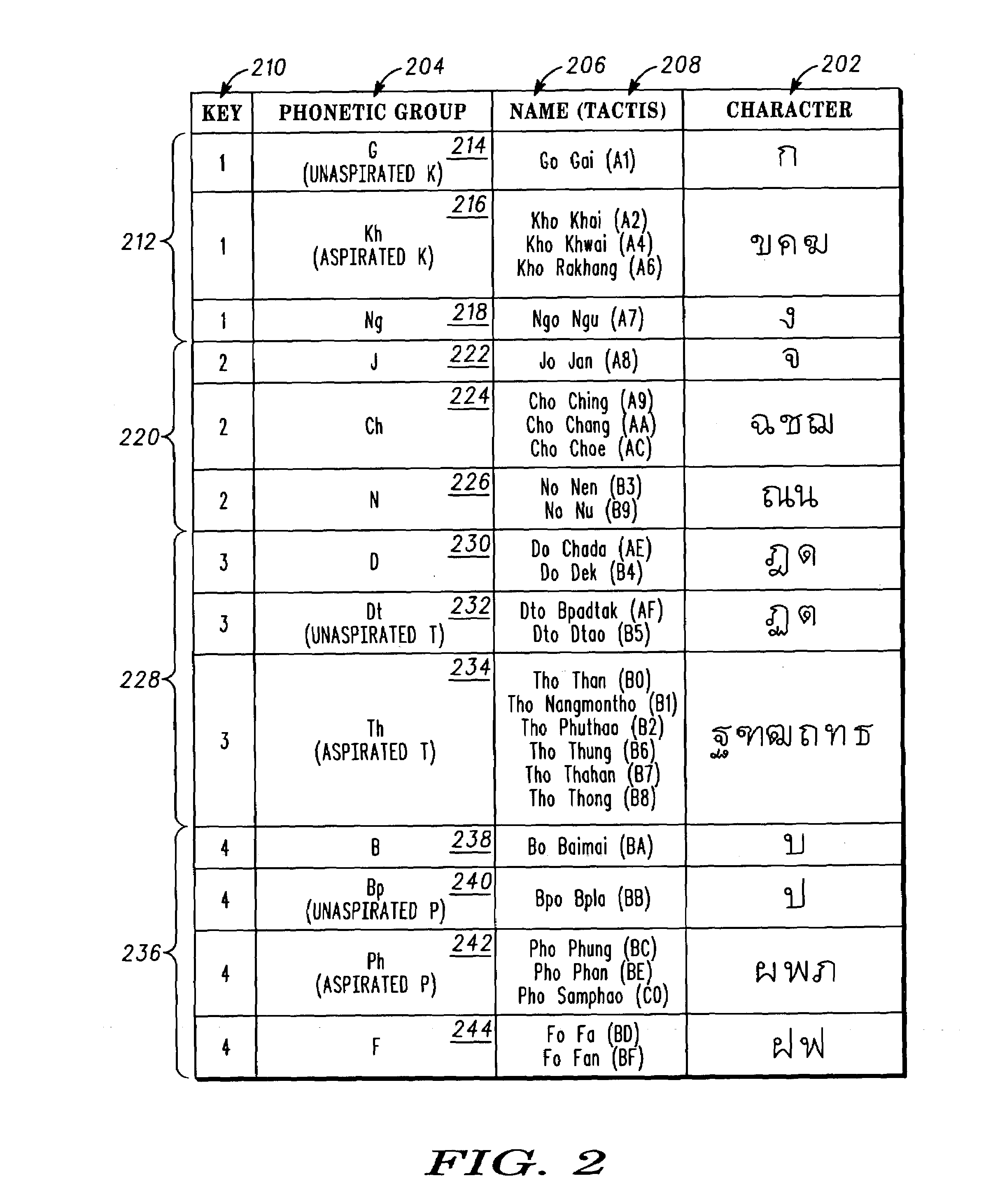

User interface of a keypad entry system for character input

A user interface (102) of a portable electronic device (100) includes a plurality of keys (112). The user interface (102) includes a first group of keys representing vowel characters (402), and a second group of keys representing consonant characters (202). The second group of keys is separate from the first group of keys. For the first group of keys, a first key represents leading vowels (422, 424, 426), a second key represents above and below vowels (428, 430), and a third key represents following vowels (432, 434, 436). The second group of keys may be subdivided into phonetic consonant groups (FIGS. 5&6) or alphabetic consonant groups (FIGS. 7&8).

Owner:GOOGLE TECH HLDG LLC

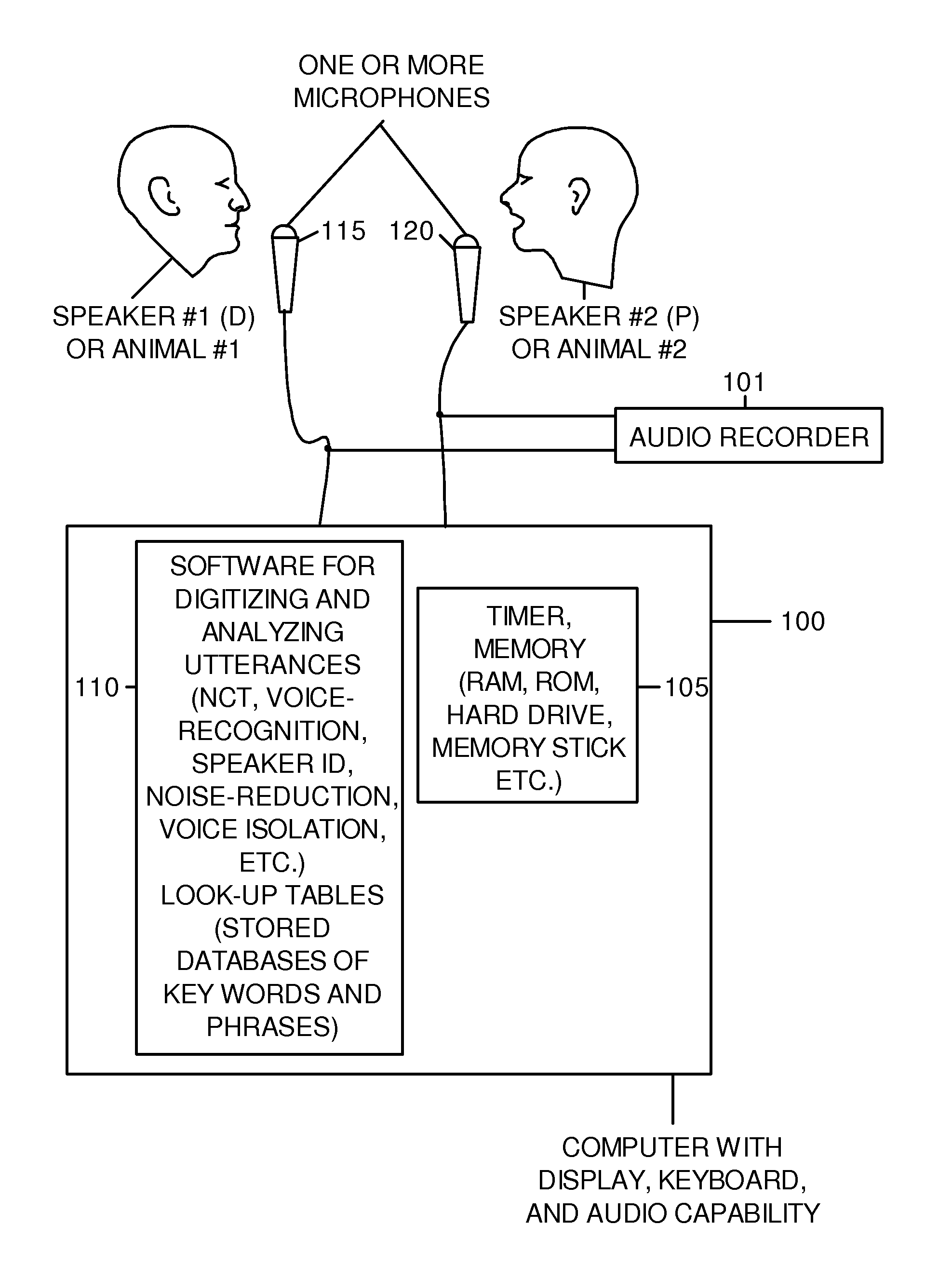

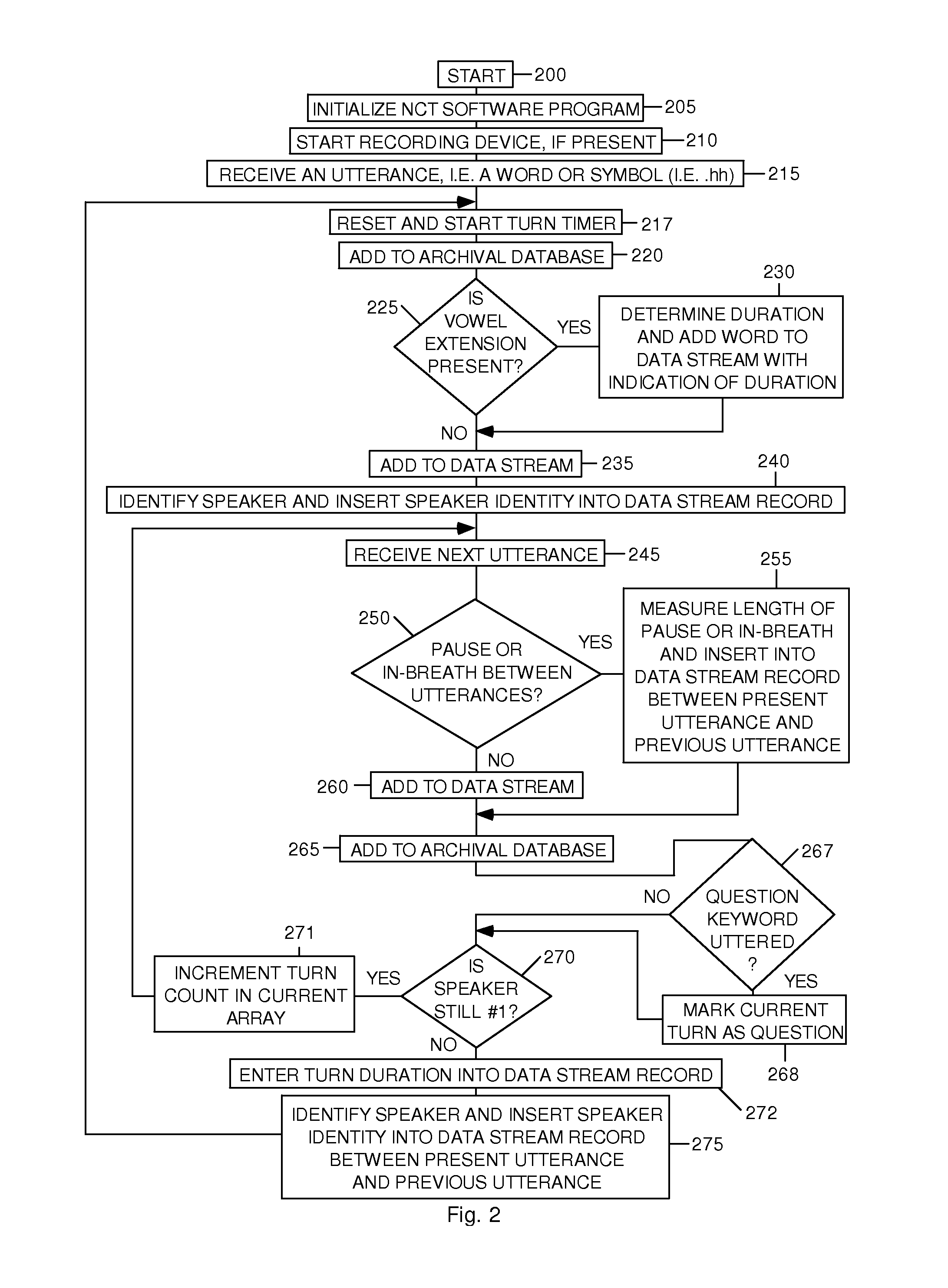

Natural conversational technology system and method

Inactive | US8843372B1 | Improve understanding | Successfully understand and respond | Natural language data processing | Speech recognition | Spoken language | Speech sound

A system for analyzing conversations or speech, especially “turns” (a point in time in a person's or an animal's talk when another may or does speak) comprises a computer (100) with a memory (105), at least one microphone (115, 120), and software (110) running in the computer. The system is arranged to recognize and quantify utterances including spoken words, pauses between words, in-breaths, vowel extensions, and the like, and to recognize questions and sentences. The system rapidly and efficiently quantifies and qualifies speech and thus offers substantial improvement over prior-art computerized response systems that use traditional linguistic approaches that depend on single words or a small number of words in grammatical sentences as a basic unit of analysis. The system and method are useful in many applications, including teaching colloquial use of turn-taking, and in forensic linguistics.

Owner:ISENBERG HERBERT M

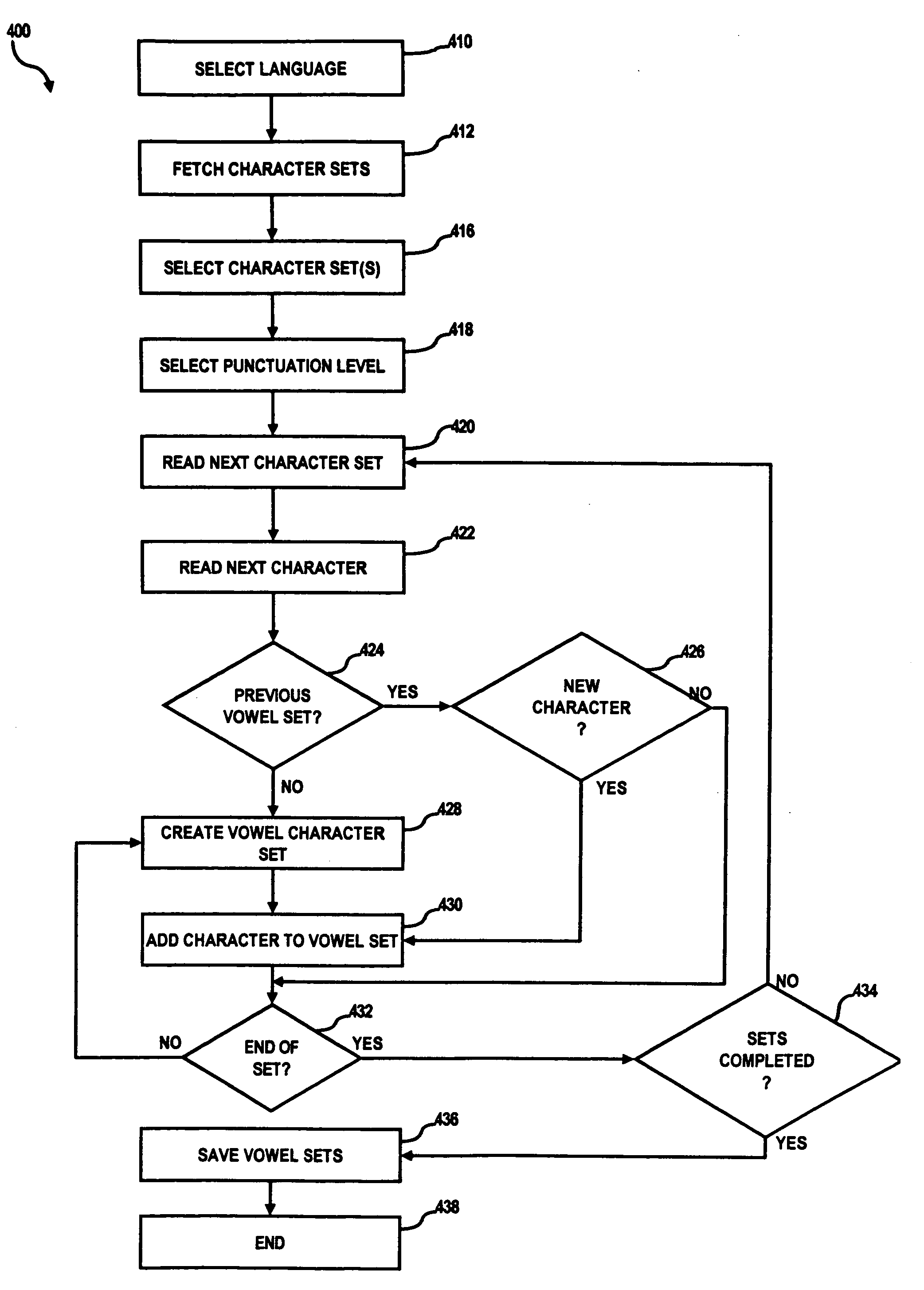

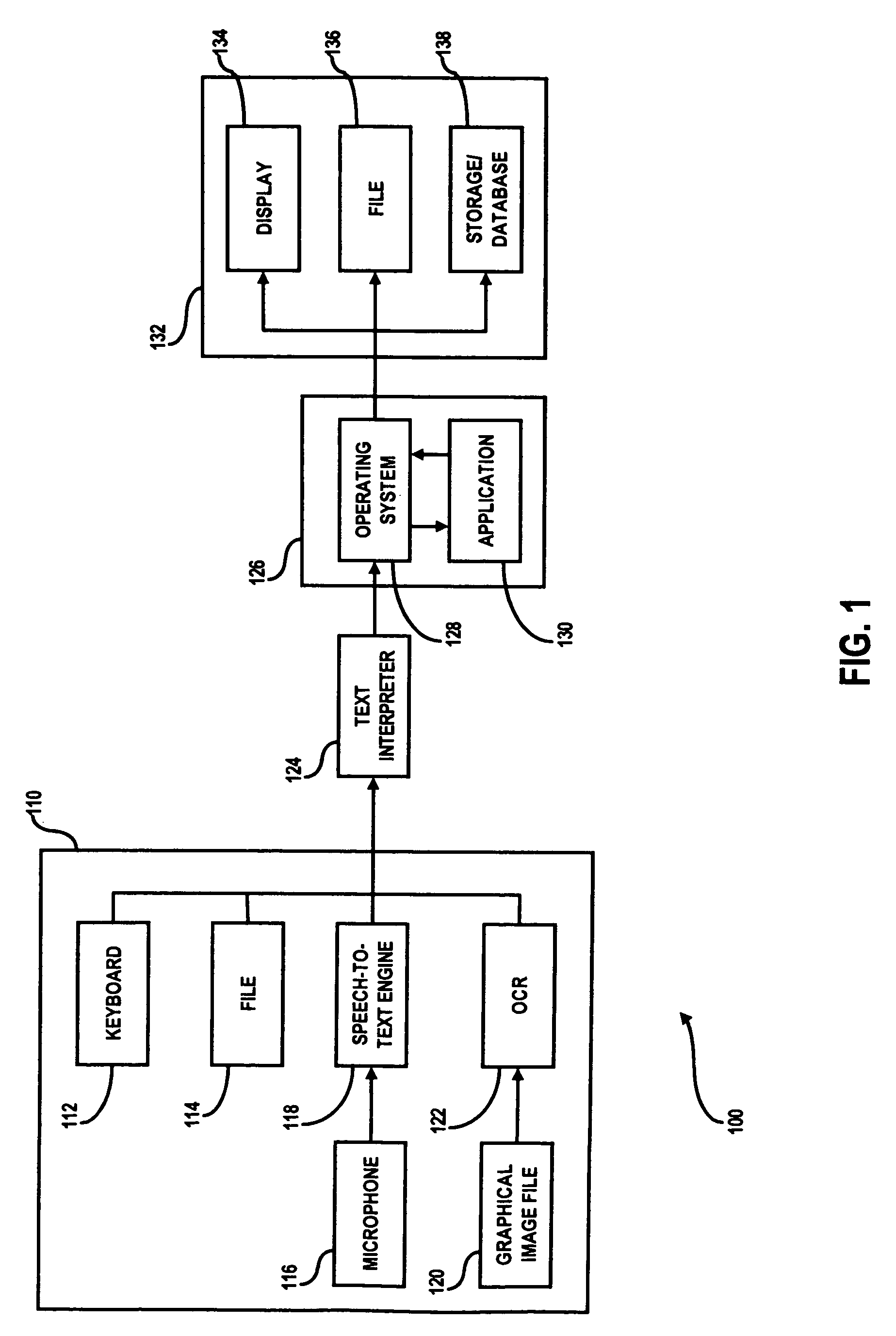

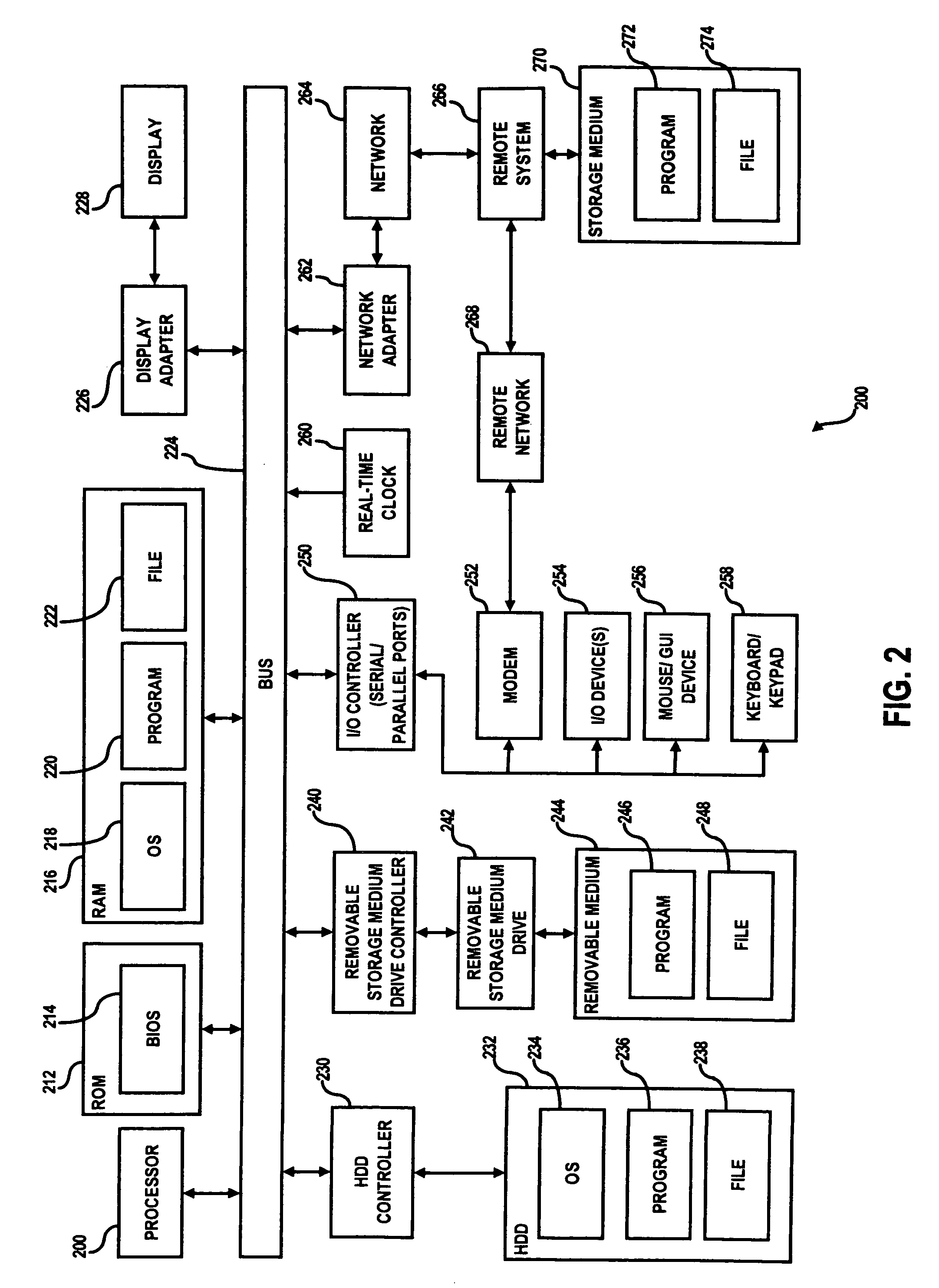

Method and apparatus for processing text and character data

Inactive | US7802184B1 | Effectively be indexed | Efficient use of | Biological models | Natural language data processing | Display device | Biological activation

An apparatus and method for processing text or character data are disclosed. A text processing system receives a character input string and determines whether to apply character processing. A non-English language such as Italian can be entered into a processing system such as a computer using a standard English based keyboard such that additional keys for providing accents or other grammatical and punctuation symbols or characters not existing in English are not required. In one mode, text is automatically accented or punctuated without requiring user intervention. In another mode, a user is provided with a list of accent or punctuation choices so that the user may select the optimum accent or punctuation. Text processing of an input may be activated by a text sequence including a possible vowel accent or apostrophe error, and may continue as an input method editor loop in response to repeated actuations of the key associated with the first activation event. When an activator event input is detected, a rules based system is utilized to select a correctly accented and punctuated character. A list of alternative accents and punctuations is optionally displayed, and a user may toggle through the list using the activator event to select a desired character. The display provides information for a level of certainty of a selected character or word.

Owner:CLOANTO CORP

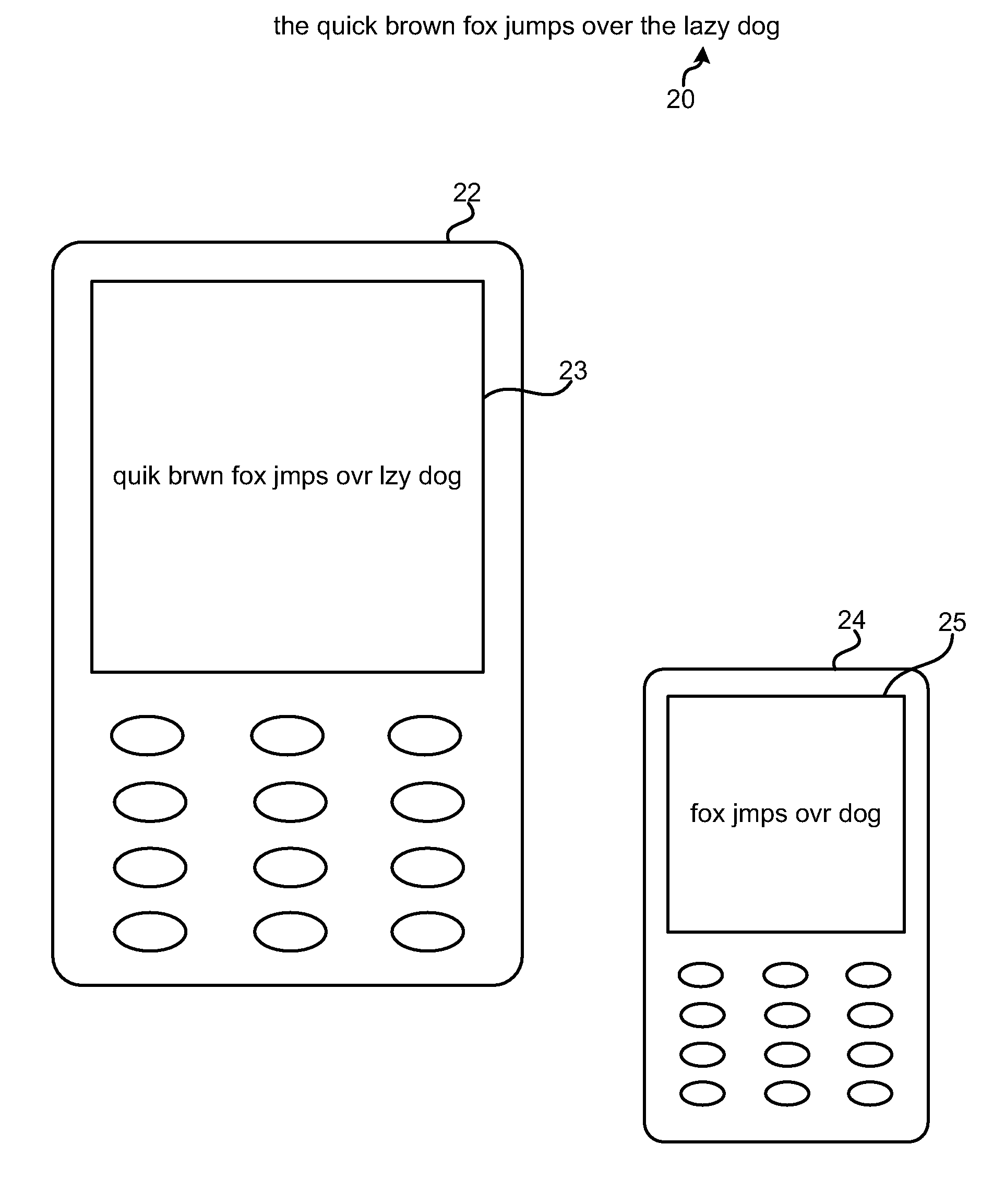

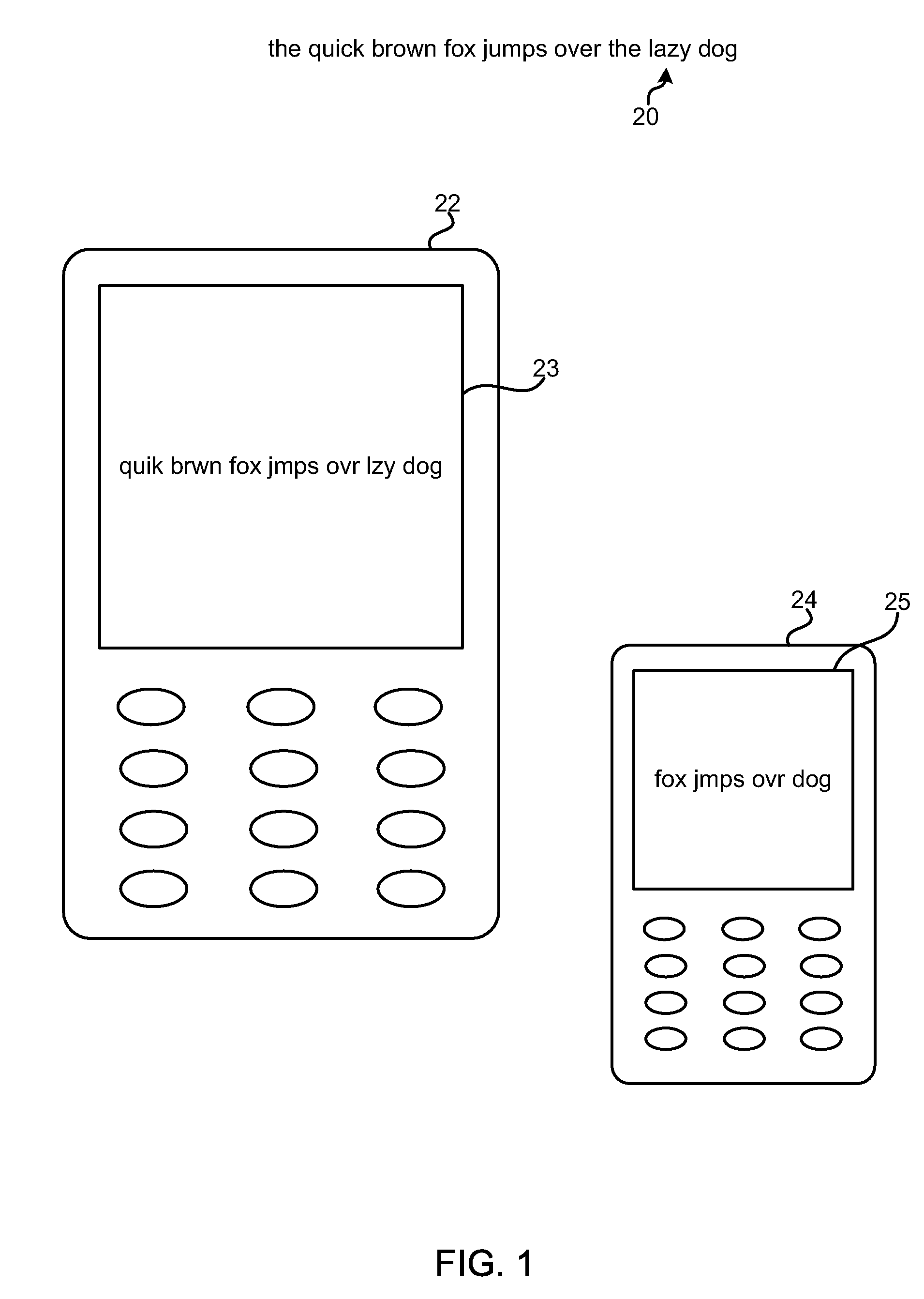

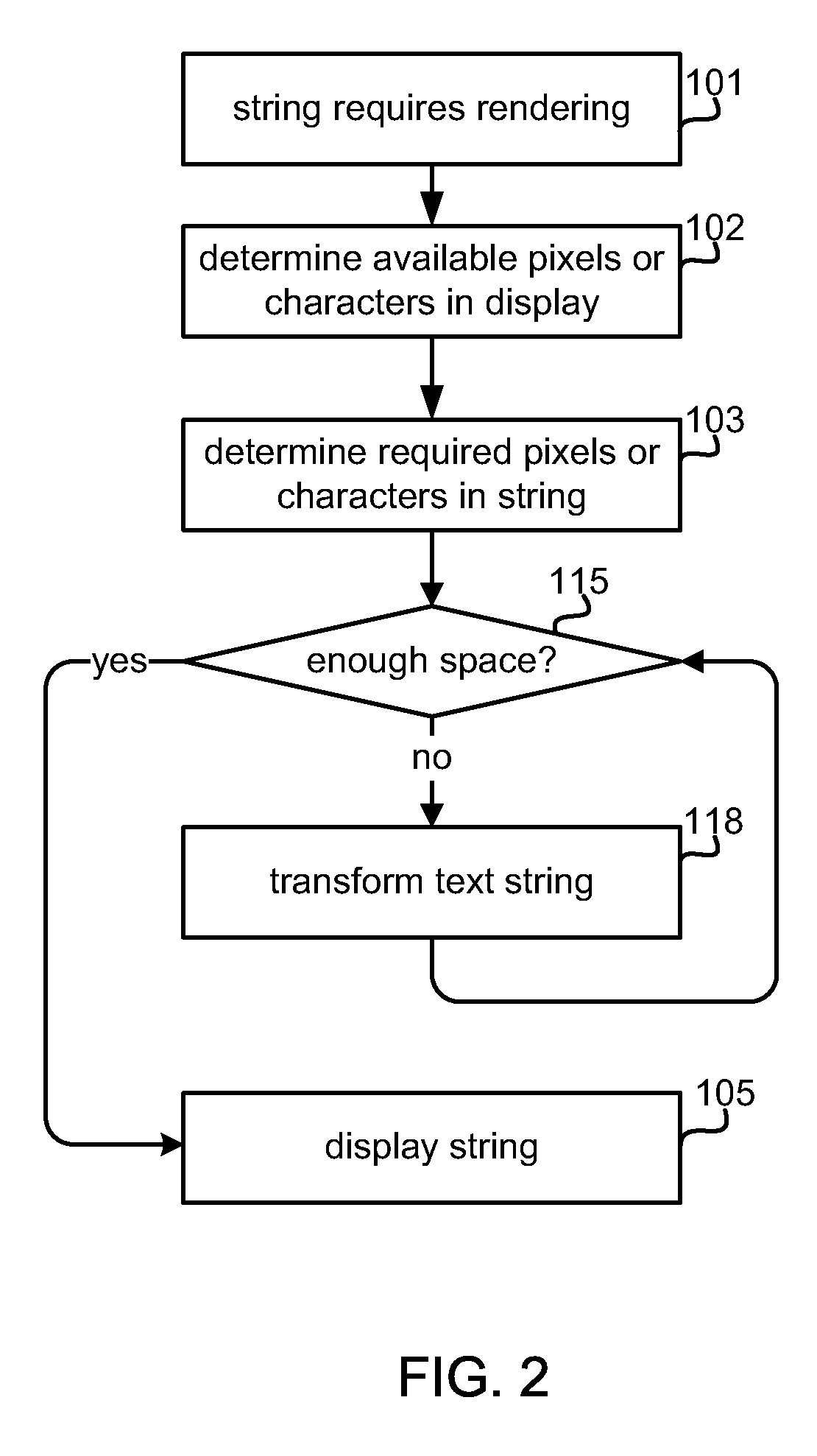

Method and apparatus for adjusting the length of text strings to fit display sizes

Inactive | US20100145676A1 | Digital data information retrieval | Natural language data processing | Algorithm | Theoretical computer science

The various aspects provide methods and devices which can reduce the length of a text string to fit dimensions of a display by identifying and deleting elements of the string that are not essential to its meaning. In the various aspects, handheld devices may be configured with software that analyzes and modifies text strings to shorten their length by adjusting font size, changing fonts, deleting unnecessary words, such as articles, abbreviating some words, deleting letters (e.g., vowels) from some words, and deleting non-critical words. The order in which transformations are effected may vary depending upon the text string according to a priority of transformations. Such transformation operations may be applied incrementally until the text string fits within the display size requirements. Similar methods may be implemented to increase the length of text strings by adding words in a manner that does not substantially change the meaning of the text string.

Owner:QUALCOMM INC
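The incremental shortening described in the abstract above can be illustrated with a minimal sketch. It assumes only two transformations (dropping articles, then dropping non-initial vowels) applied in a fixed priority order; the function names, the article list and the character-count limit are hypothetical and are not taken from the patent.

```python
import re

# Hypothetical list of "unnecessary words"; the abstract only names articles as an example.
ARTICLES = {"a", "an", "the"}


def drop_articles(text: str) -> str:
    """Remove articles from the string."""
    return " ".join(w for w in text.split() if w.lower() not in ARTICLES)


def drop_inner_vowels(text: str) -> str:
    """Keep the first letter of each word and delete the remaining vowels."""
    def squeeze(word: str) -> str:
        return word[0] + re.sub(r"[aeiouAEIOU]", "", word[1:])
    return " ".join(squeeze(w) for w in text.split())


def fit_text(text: str, max_chars: int) -> str:
    """Apply transformations one at a time, stopping as soon as the text fits."""
    for transform in (drop_articles, drop_inner_vowels):
        if len(text) <= max_chars:
            break
        text = transform(text)
    return text  # may still exceed max_chars if no transformation helps


print(fit_text("Open the settings menu", 20))
print(fit_text("Open the settings menu", 13))
```

A real implementation would measure rendered width in a given font rather than counting characters, which is why the abstract also lists font changes among the transformations.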

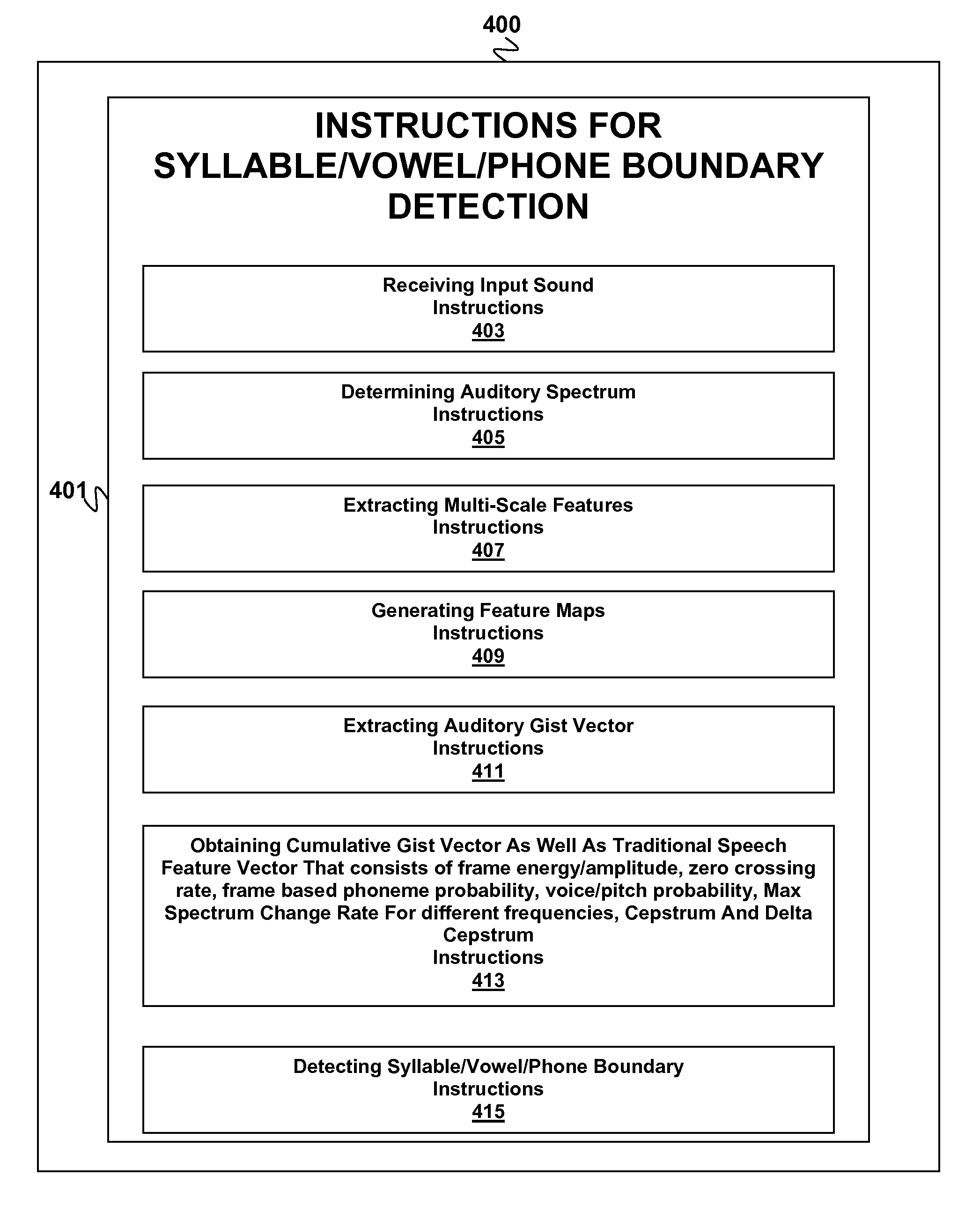

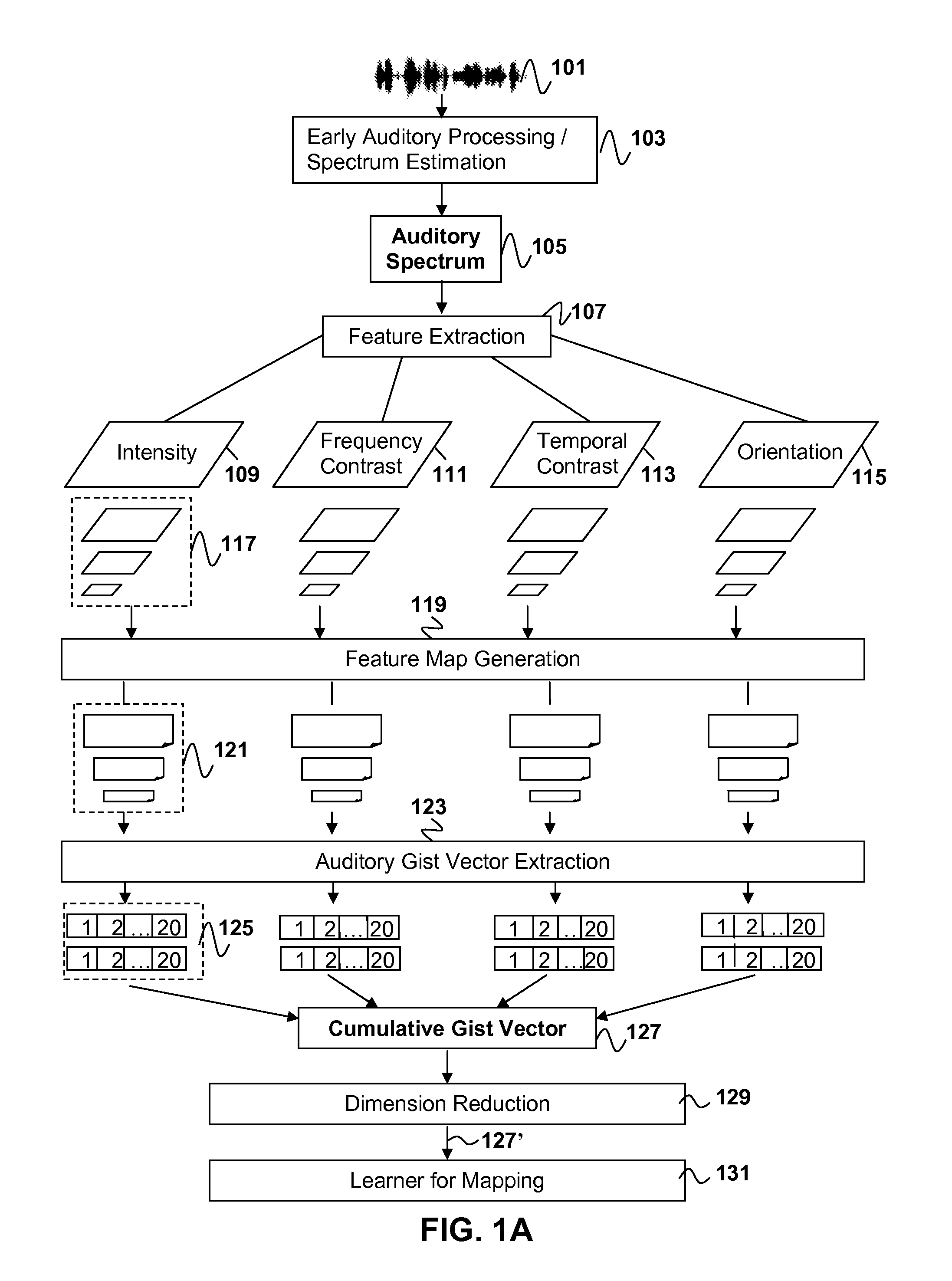

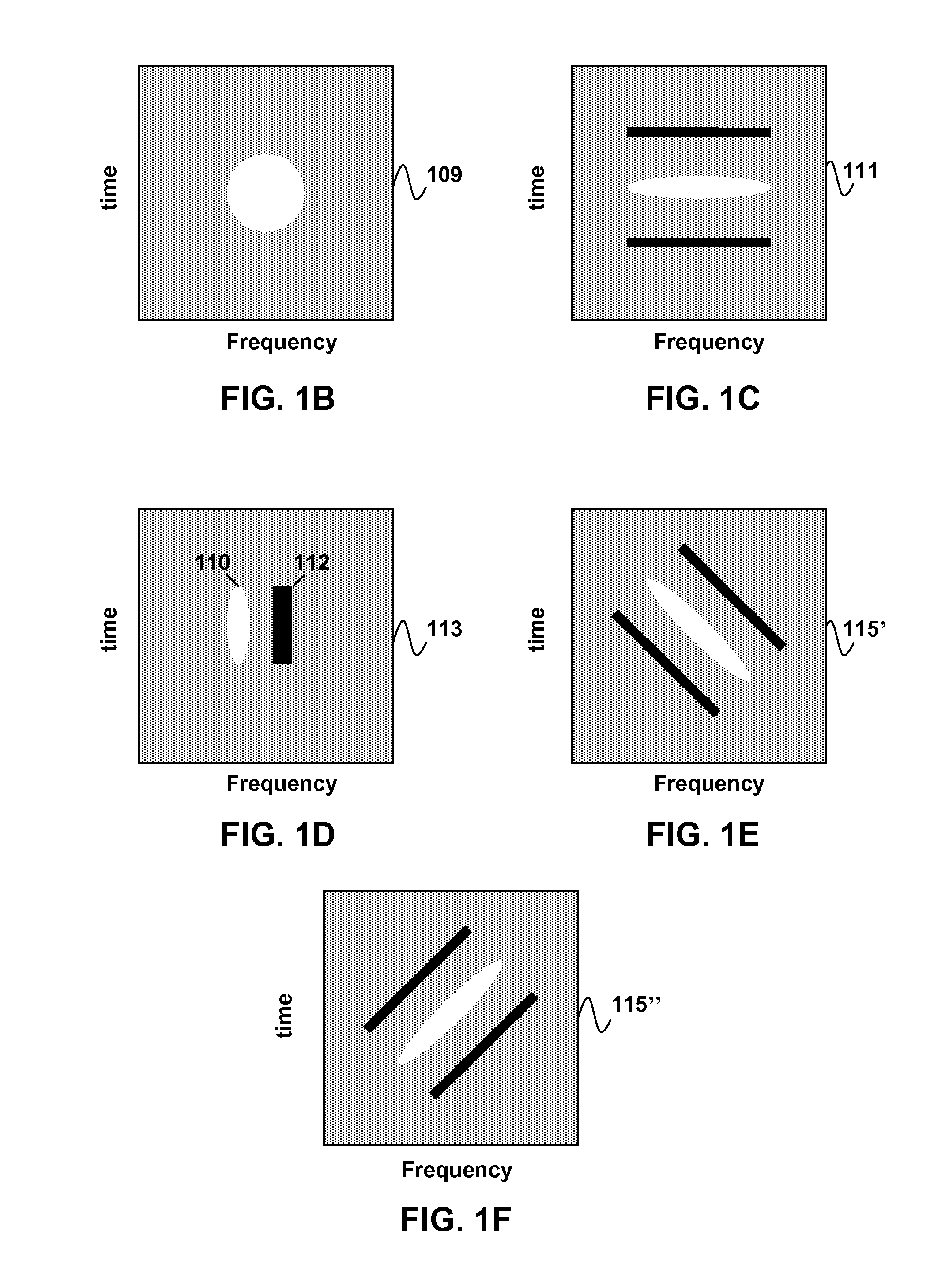

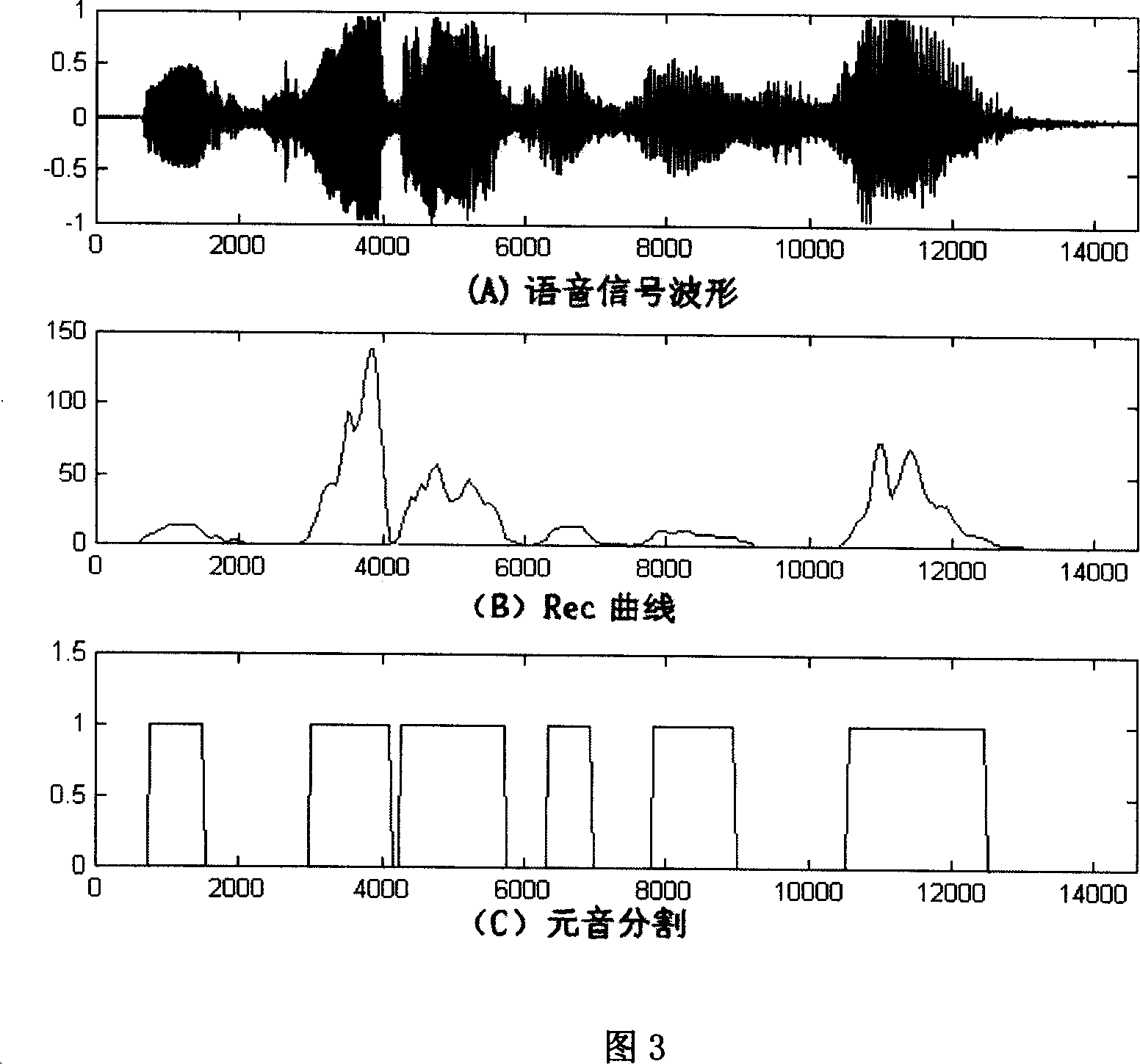

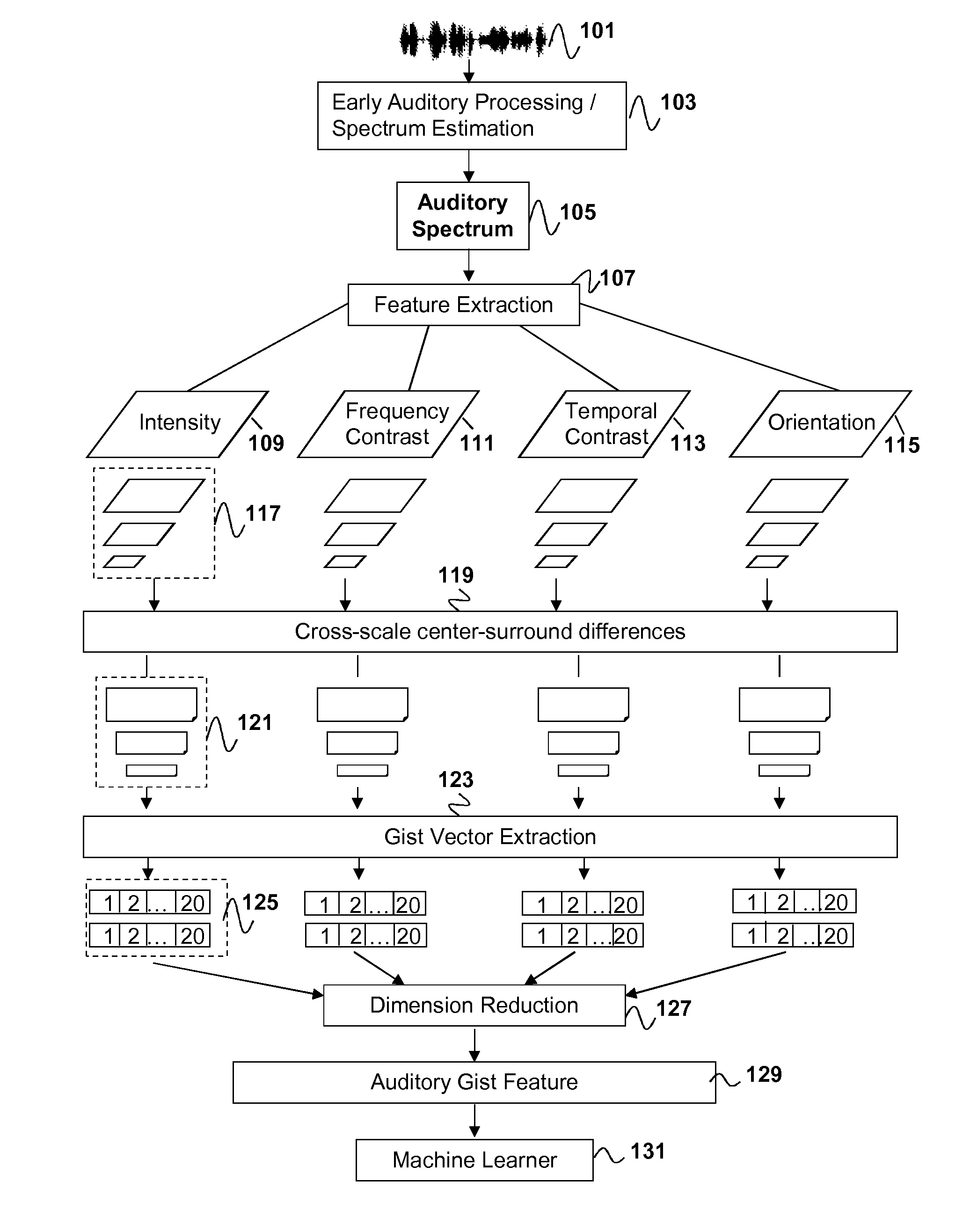

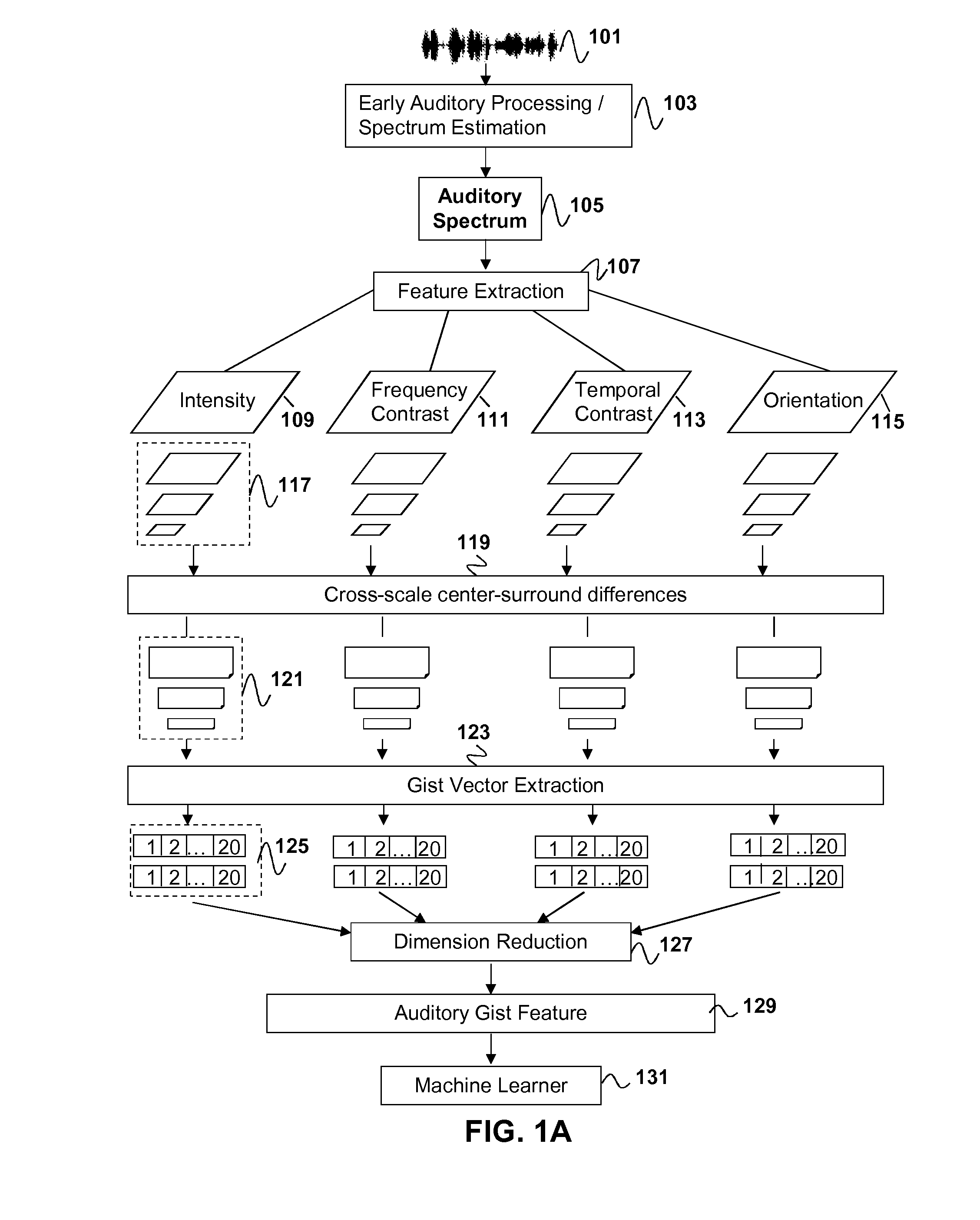

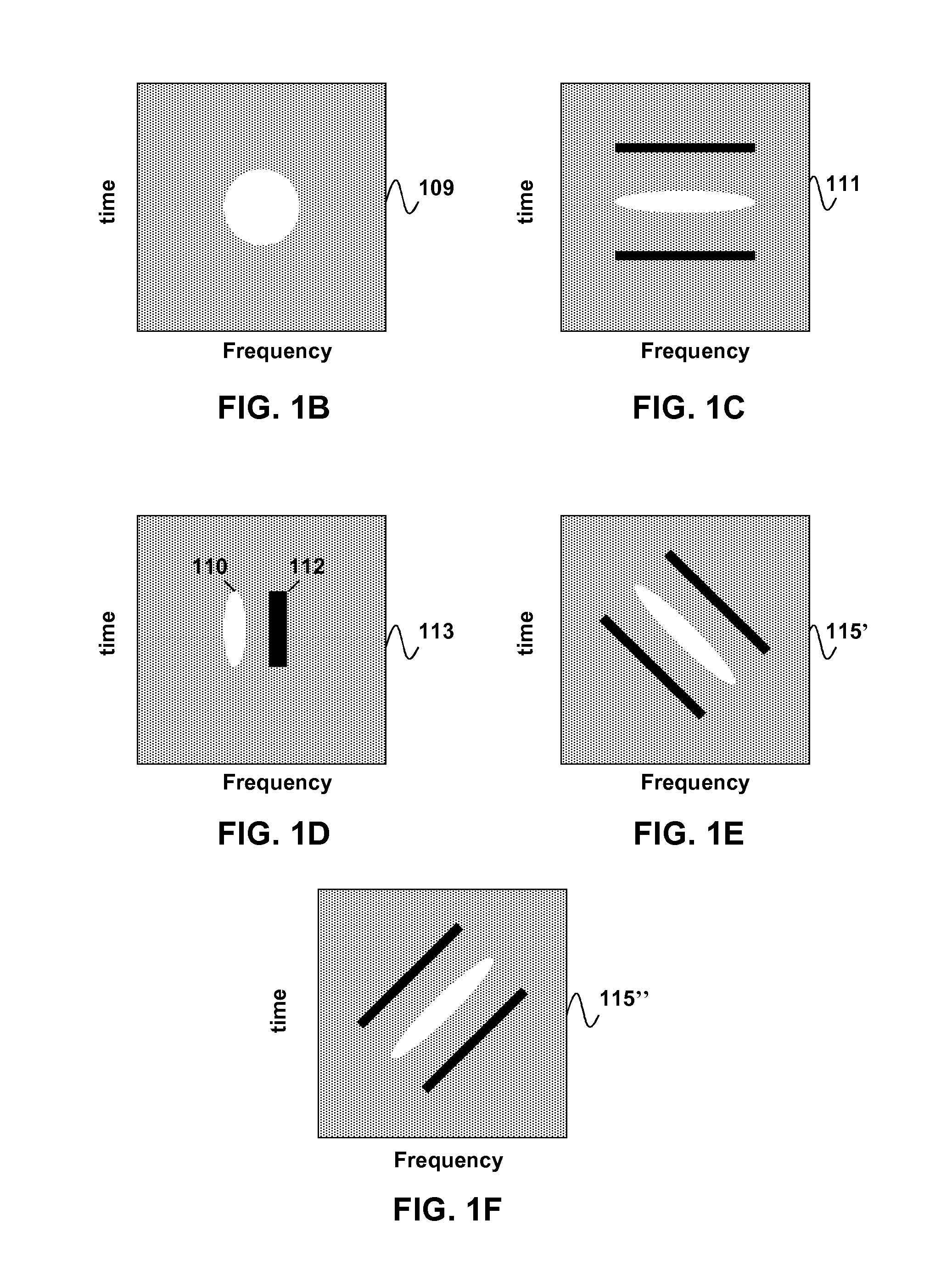

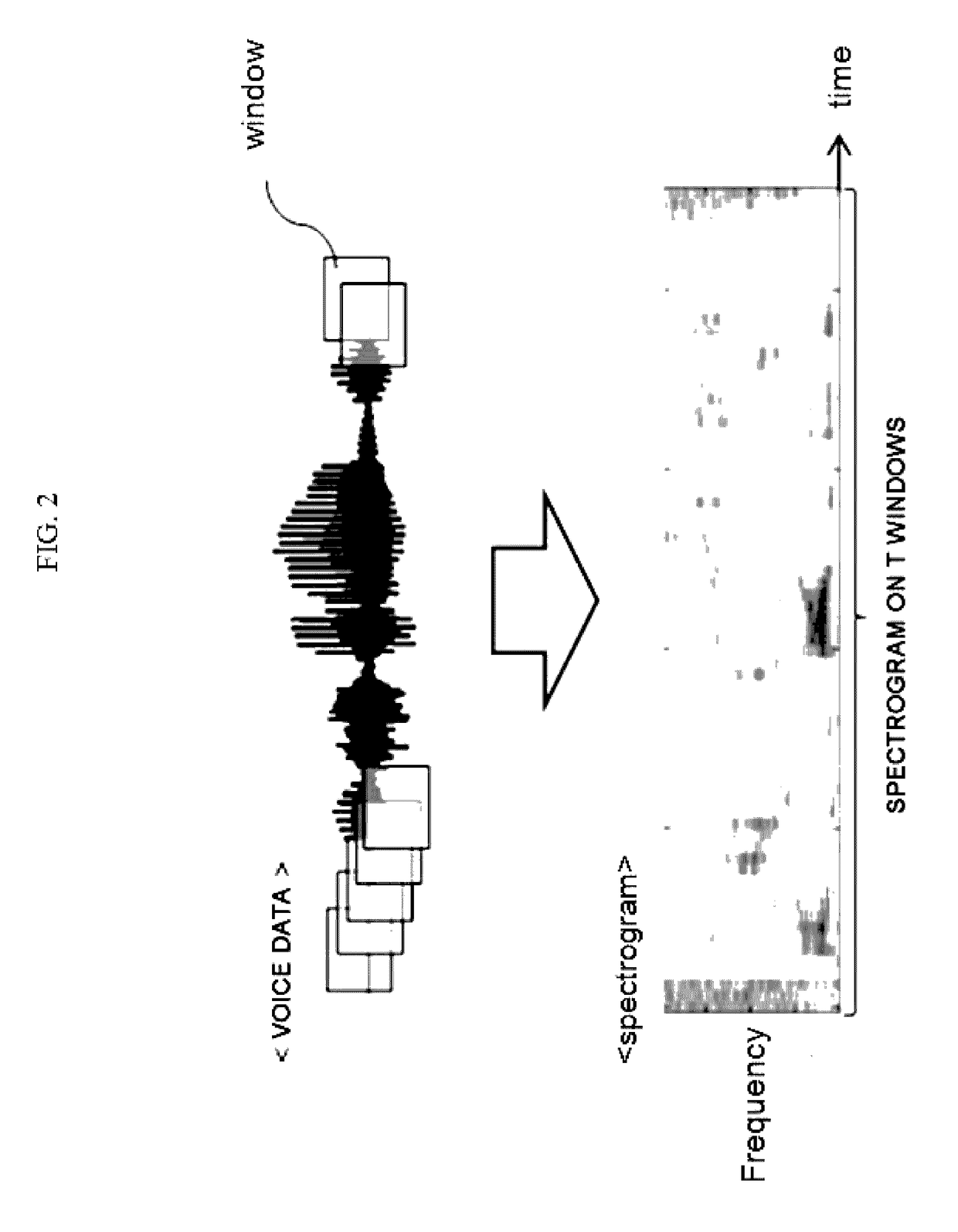

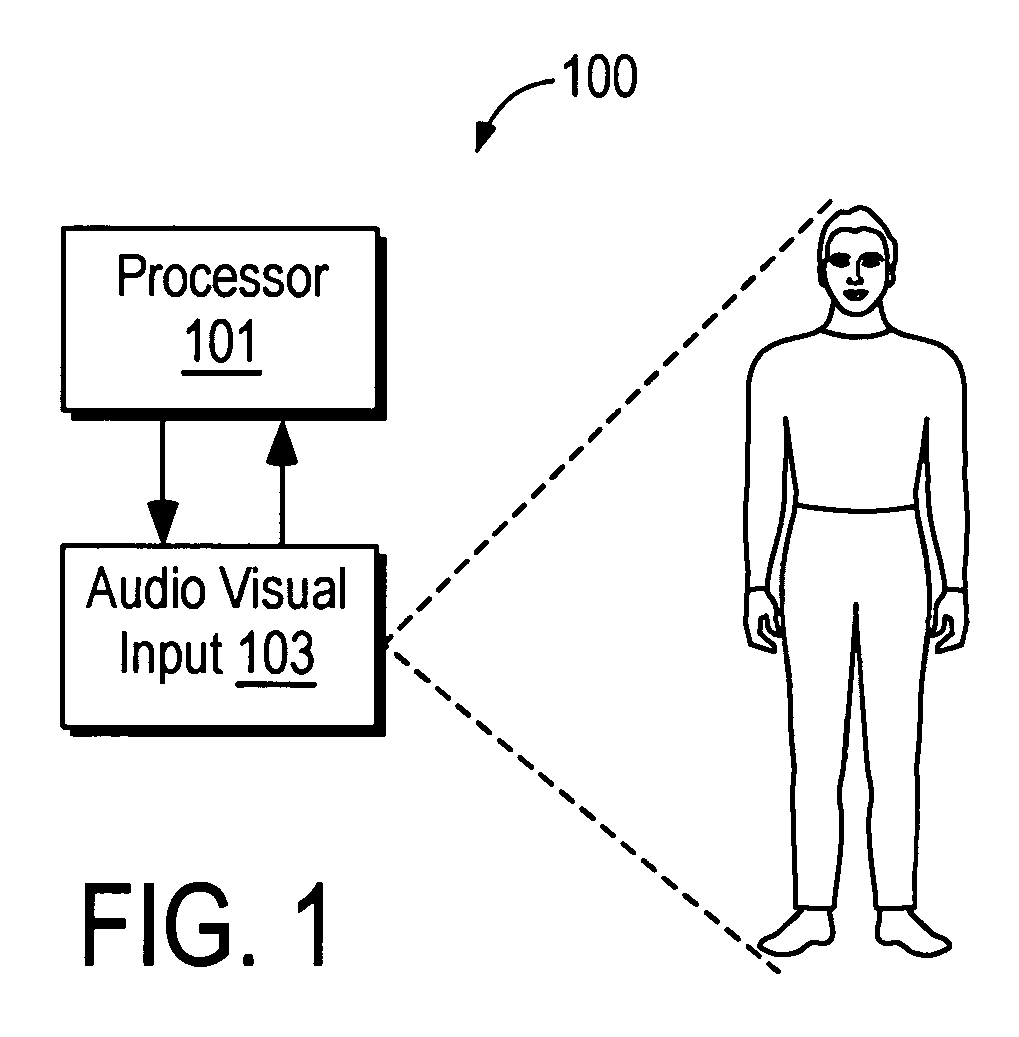

Speech syllable/vowel/phone boundary detection using auditory attention cues

In syllable or vowel or phone boundary detection during speech, an auditory spectrum may be determined for an input window of sound and one or more multi-scale features may be extracted from the auditory spectrum. Each multi-scale feature can be extracted using a separate two-dimensional spectro-temporal receptive filter. One or more feature maps corresponding to the one or more multi-scale features can be generated and an auditory gist vector can be extracted from each of the one or more feature maps. A cumulative gist vector may be obtained through augmentation of each auditory gist vector extracted from the one or more feature maps. One or more syllable or vowel or phone boundaries in the input window of sound can be detected by mapping the cumulative gist vector to one or more syllable or vowel or phone boundary characteristics using a machine learning algorithm.

Owner:SONY COMPUTER ENTERTAINMENT INC

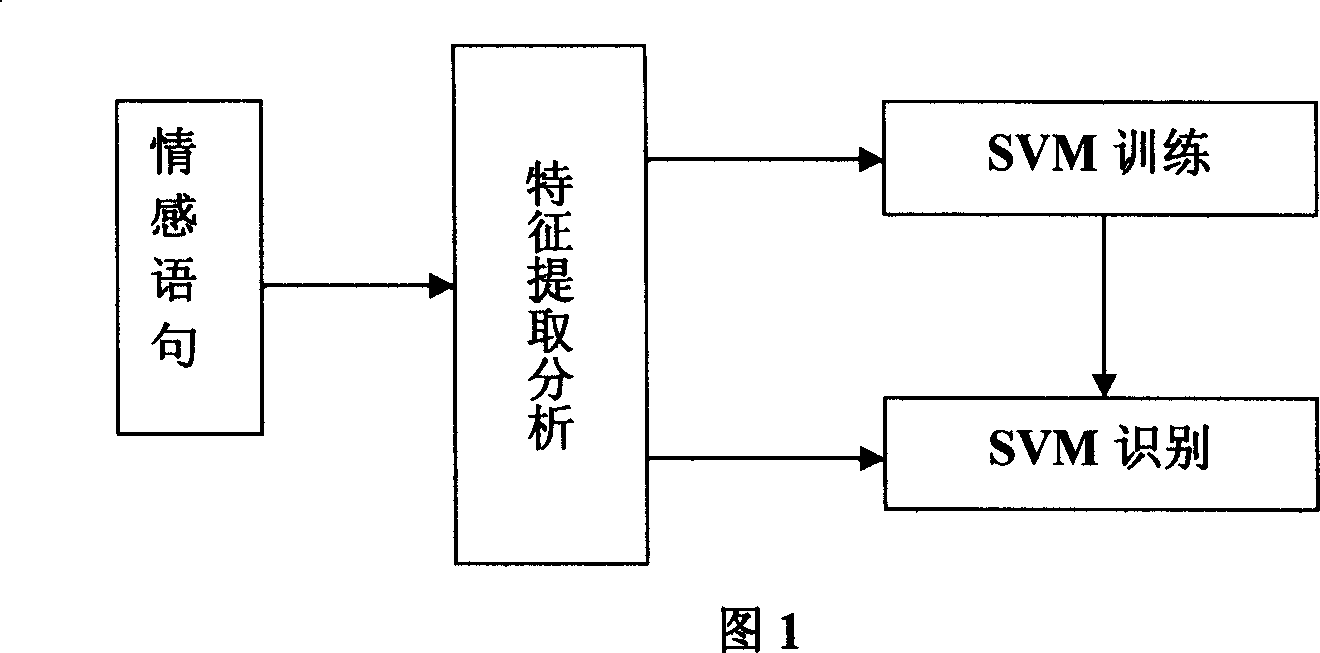

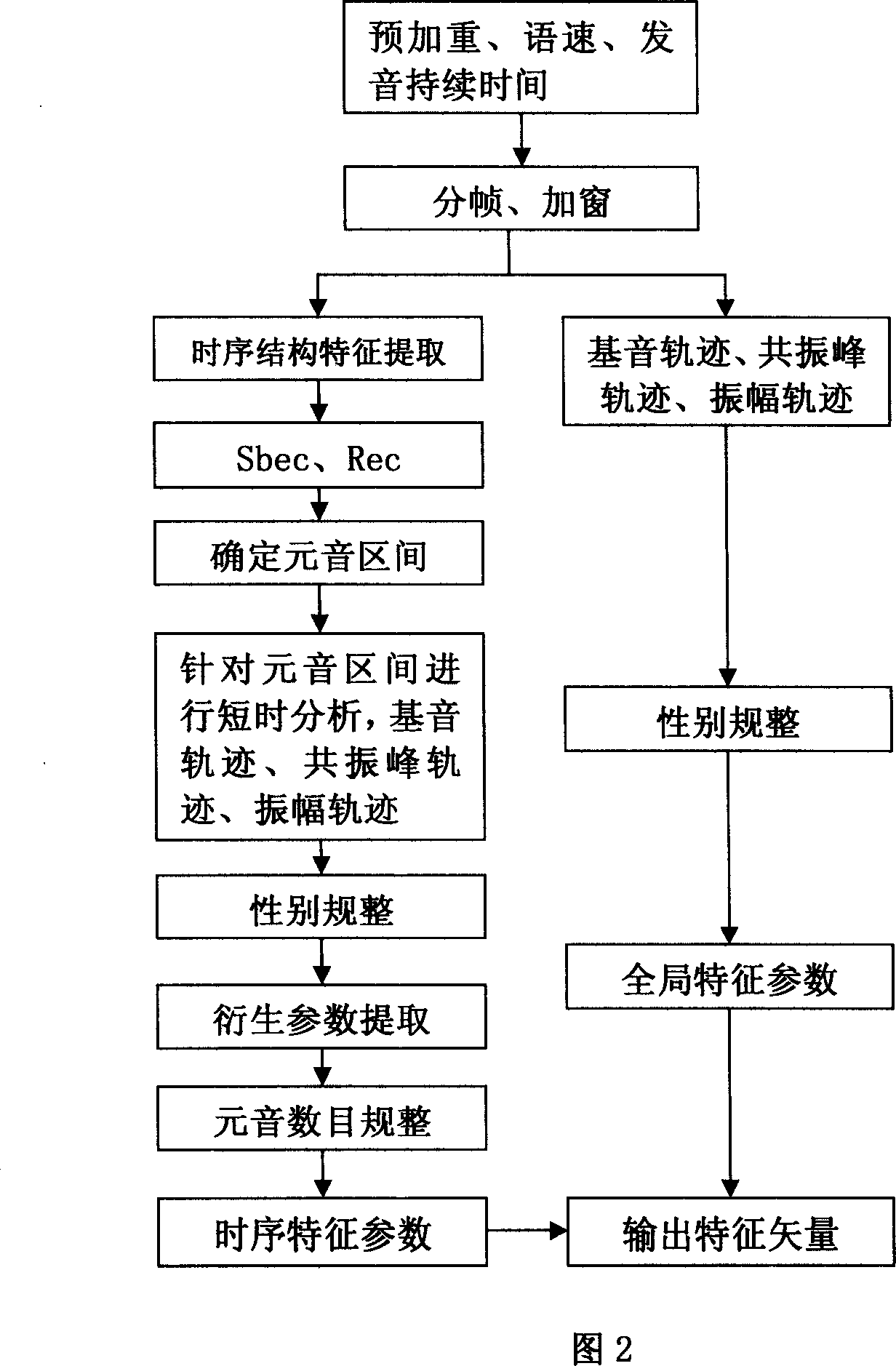

Speech emotion identifying method based on support vector machine

Inactive | CN1975856A | Improve effectiveness | Improve performance | Speech recognition | Support vector machine | Feature extraction

A method for identifying speech emotion based on a support vector machine comprises: feature extraction and analysis, covering selection of global-structure feature parameters with collation for sex, and selection of time-sequence-structure feature parameters with collation for sex and vowel number; and support vector machine training to identify five emotions: happiness, anger, sadness, fear and surprise.

Owner:邹采荣

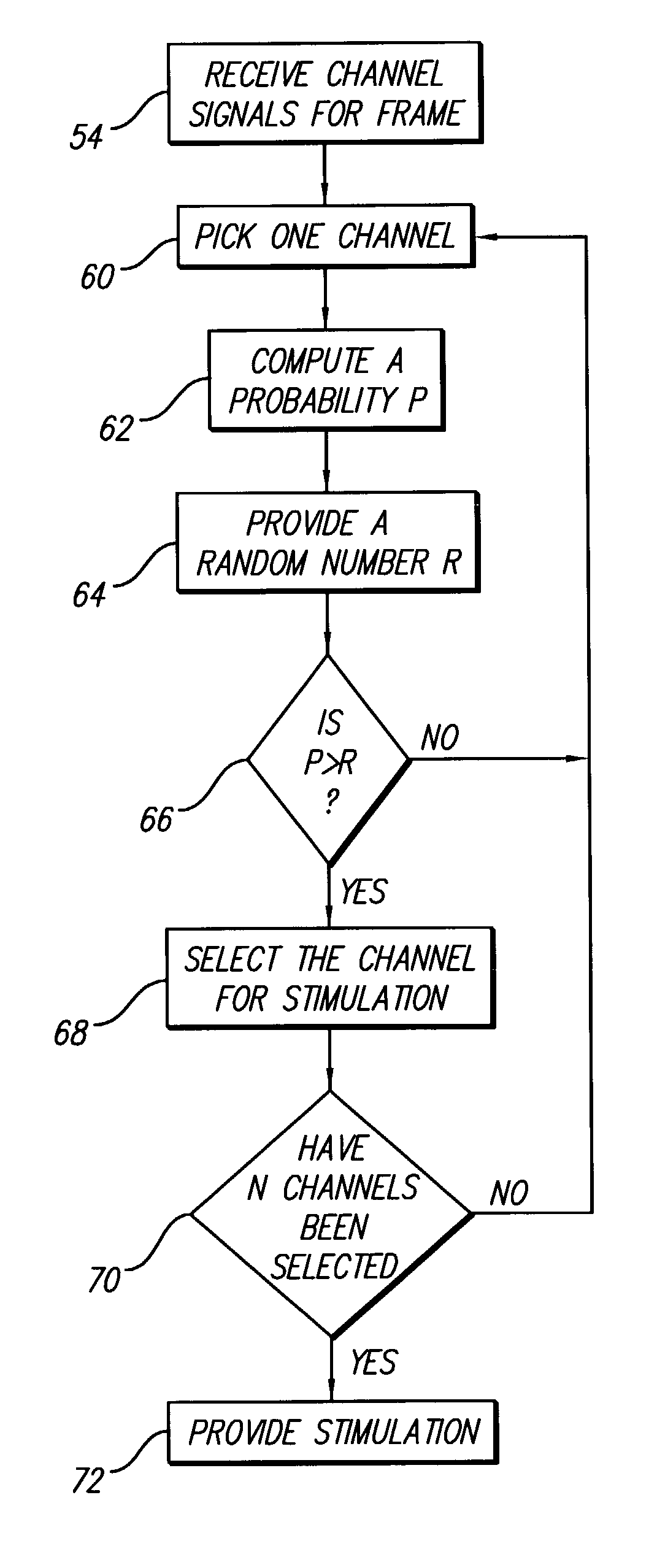

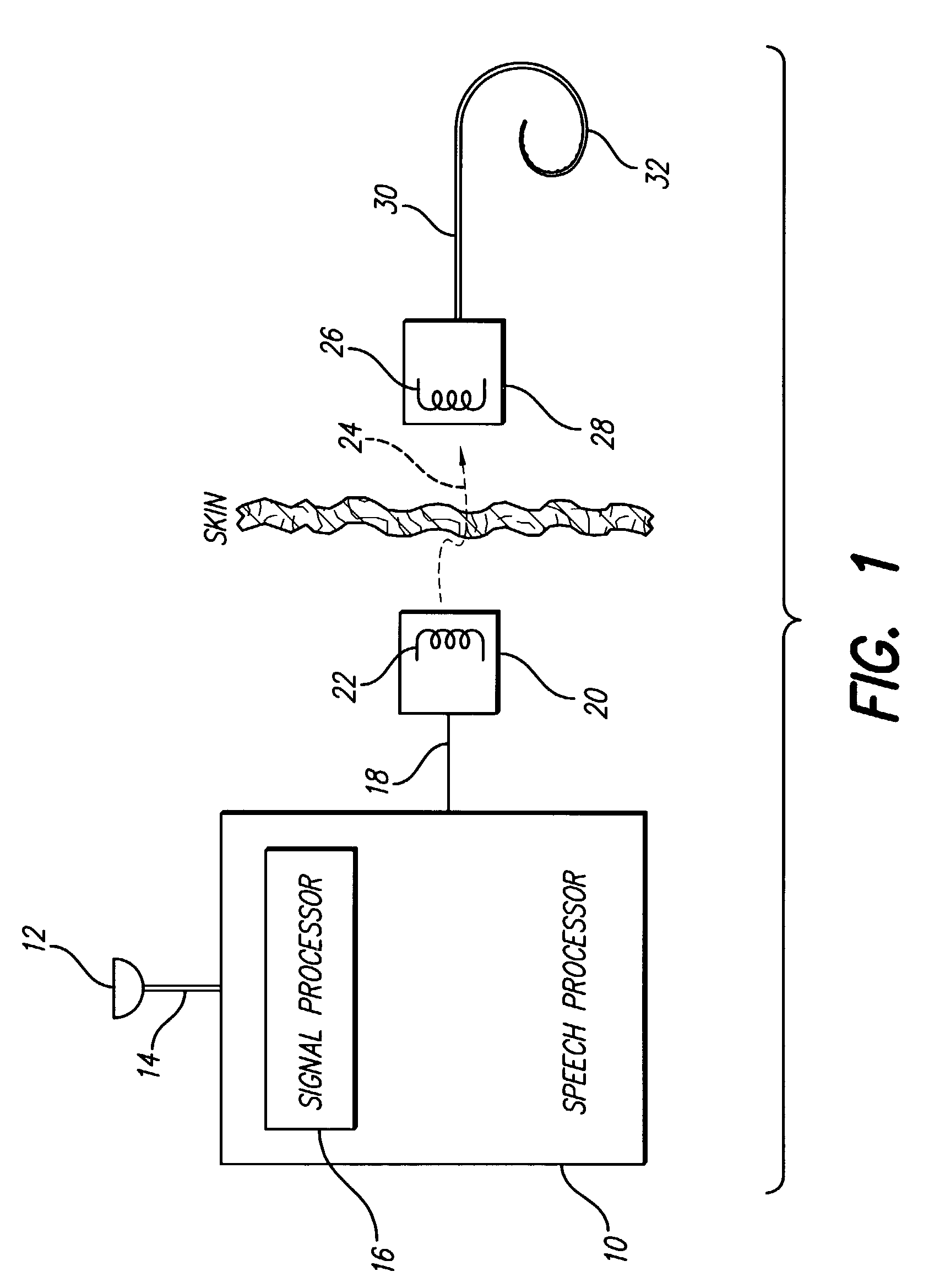

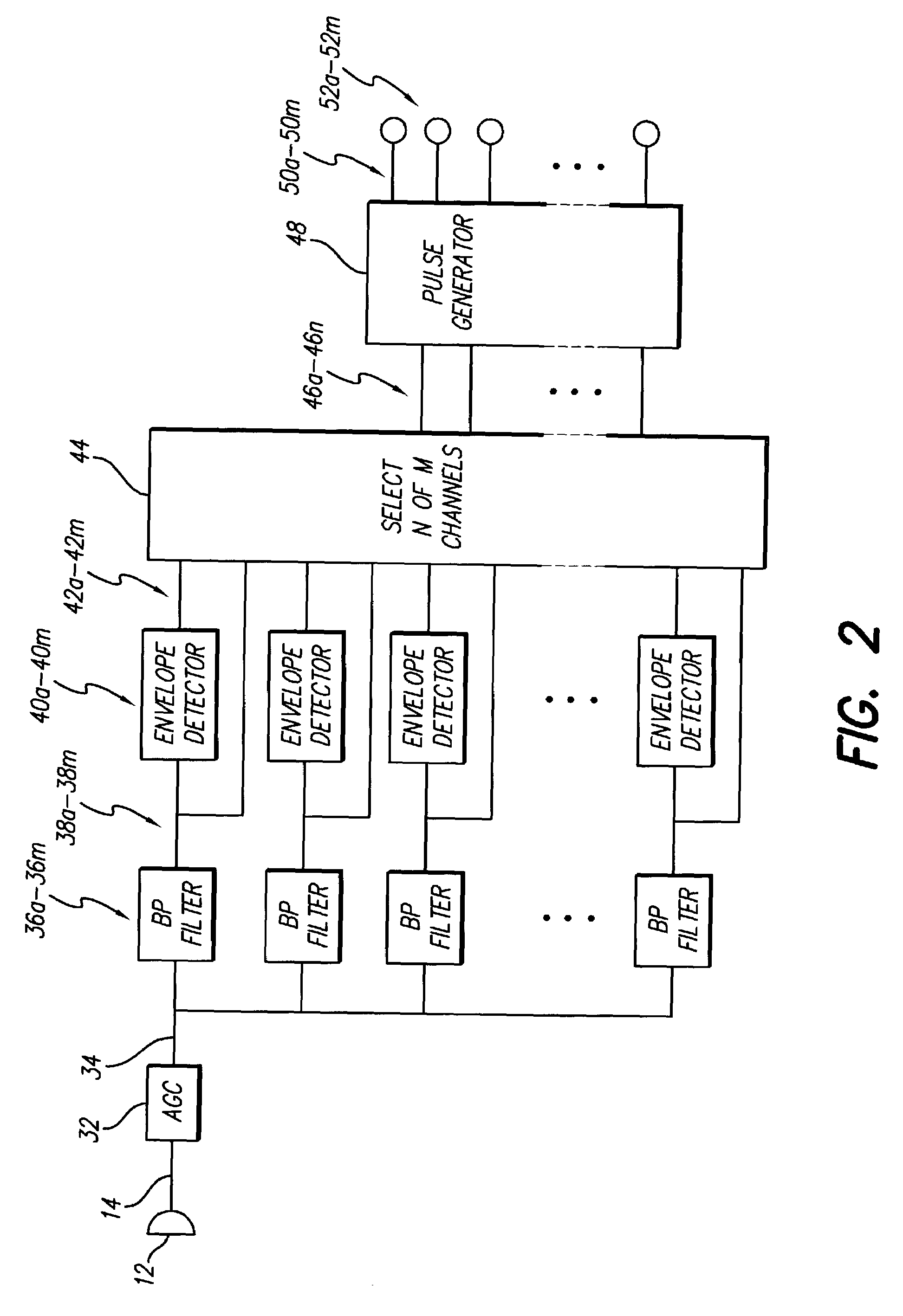

Pulse skipping strategy

Inactive | US7130694B1 | Reduce processing requirements | Save processor resources | Electrotherapy | Bandpass filtering | Acoustic energy

Improved skipping strategies for cochlear or other multi-channel neural stimulation implants select N out of M channels for stimulation during a given stimulation frame. A microphone transduces acoustic energy into an electrical signal. The electrical signal is processed by a family of bandpass filters, or the equivalent, to produce a number of frequency channels. In a first embodiment, a probability-based channel selection strategy computes a probability for each of the M channels based on the strength of each channel. N channels are probabilistically selected for stimulation based on their individual probability. The result is a randomized “stochastic” stimulus presentation to the patient. Such randomized stimulation reduces underrepresentation of weaker channels for steady-state input conditions such as vowels. In second, third and fourth embodiments, a variable threshold is adjusted to obtain the selection of N channels per frame.

Owner:ADVANCED BIONICS LLC
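The probability-based N-of-M selection in the first embodiment above can be sketched as follows. This is an illustration only: it assumes each channel's probability is proportional to its non-negative strength and that channels are drawn without replacement, neither of which the abstract specifies. The function name `select_channels` is hypothetical.

```python
import random


def select_channels(strengths, n, rng=random):
    """Pick n distinct channel indices, favouring stronger channels.

    Assumption: selection probability is proportional to channel strength,
    and strengths are non-negative.
    """
    remaining = list(range(len(strengths)))
    chosen = []
    for _ in range(min(n, len(remaining))):
        weights = [strengths[i] for i in remaining]
        if sum(weights) > 0:
            pick = rng.choices(remaining, weights=weights, k=1)[0]
        else:
            # All remaining channels are silent: fall back to a uniform draw.
            pick = rng.choice(remaining)
        chosen.append(pick)
        remaining.remove(pick)
    return sorted(chosen)


# One stimulation frame: 8 channels, 4 stimulated.
frame = [0.9, 0.8, 0.1, 0.05, 0.7, 0.02, 0.3, 0.6]
print(select_channels(frame, 4, random.Random(0)))
```

With a deterministic "pick the N strongest" rule, a steady vowel would stimulate the same channels in every frame; the random draw gives the weaker channels an occasional turn, which is the effect the abstract describes.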

Combining auditory attention cues with phoneme posterior scores for phone/vowel/syllable boundary detection

Phoneme boundaries may be determined from a signal corresponding to recorded audio by extracting auditory attention features from the signal and extracting phoneme posteriors from the signal. The auditory attention features and phoneme posteriors may then be combined to detect boundaries in the signal.

Owner:SONY COMPUTER ENTERTAINMENT INC

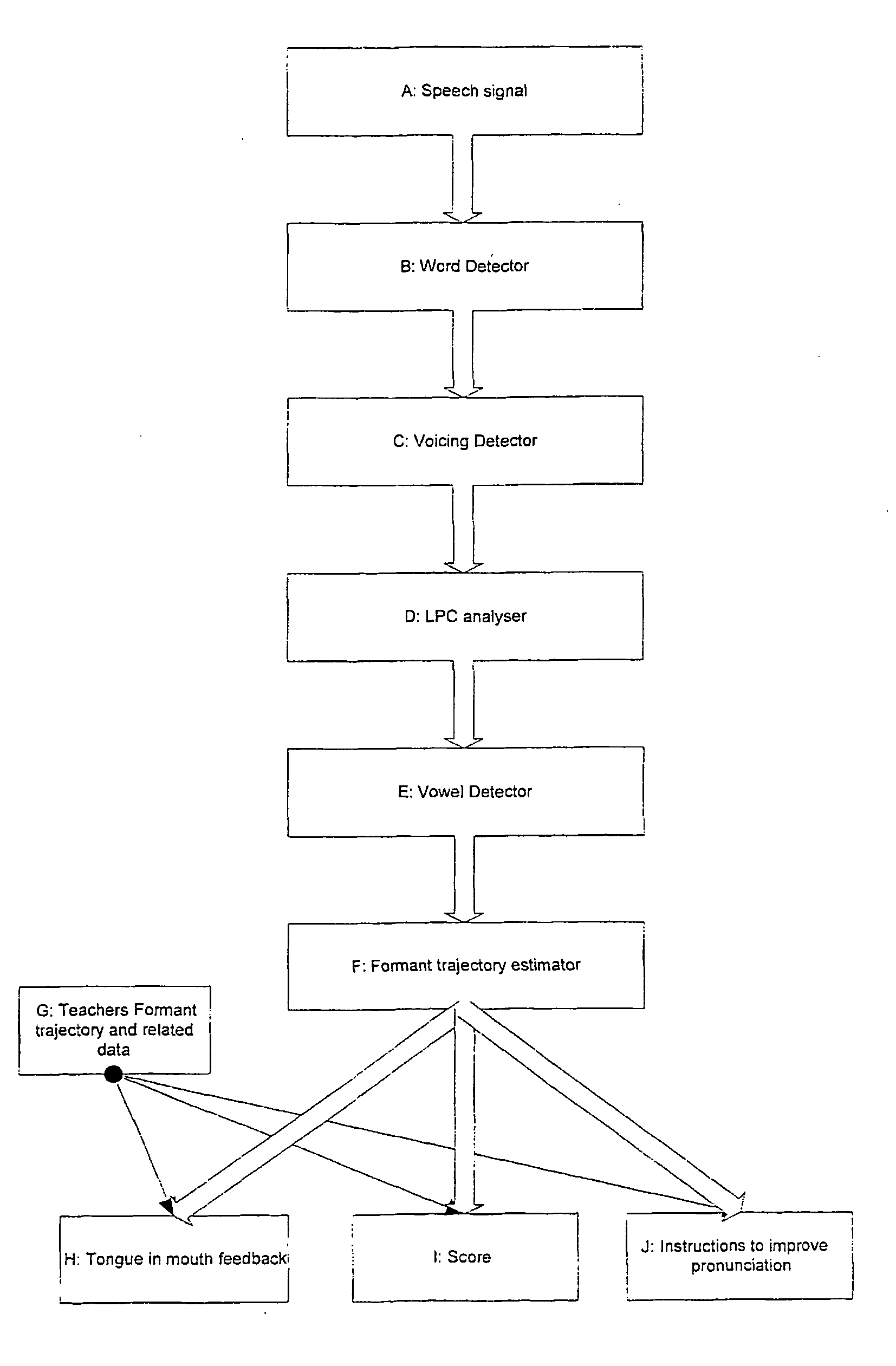

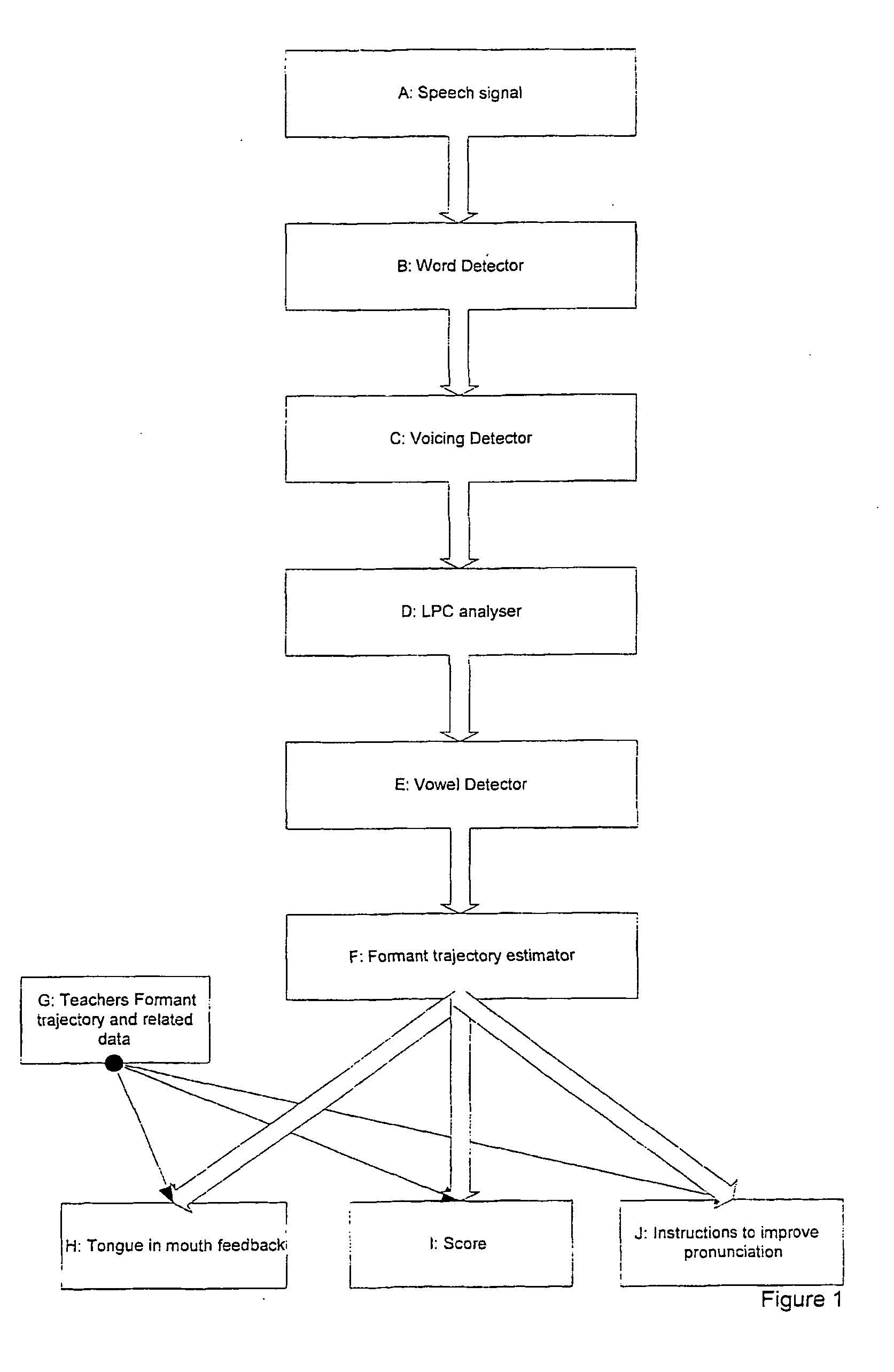

Method, system and software for teaching pronunciation

The present invention relates to a method for teaching pronunciation. More particularly, but not exclusively, the present invention relates to a method for teaching pronunciation using formant trajectories and for teaching pronunciation by splitting speech into phonemes. (A) A speech signal is received from a user; (B) one or more words are detected within the signal; (C) voiced/unvoiced segments are detected within the word(s); (D) formants of the voiced segments are calculated; (E) vowel phonemes are detected within the voiced segments; the vowel phonemes may be detected using a weighted sum of a Fourier transform measure of frequency energy and a measure based on the formants; and (F) a formant trajectory may be calculated for the vowel phonemes using the detected formants.

Owner:VISUAL PRONUNCIATION SOFTWARE

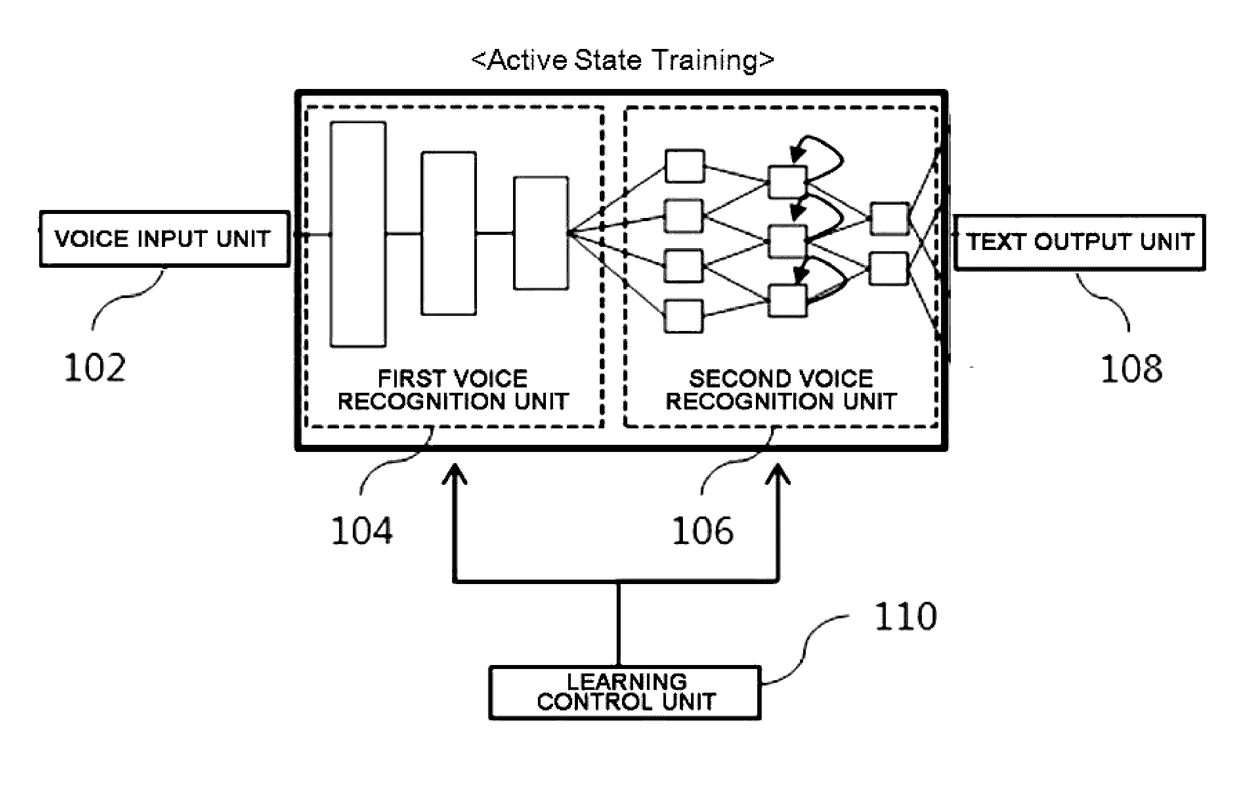

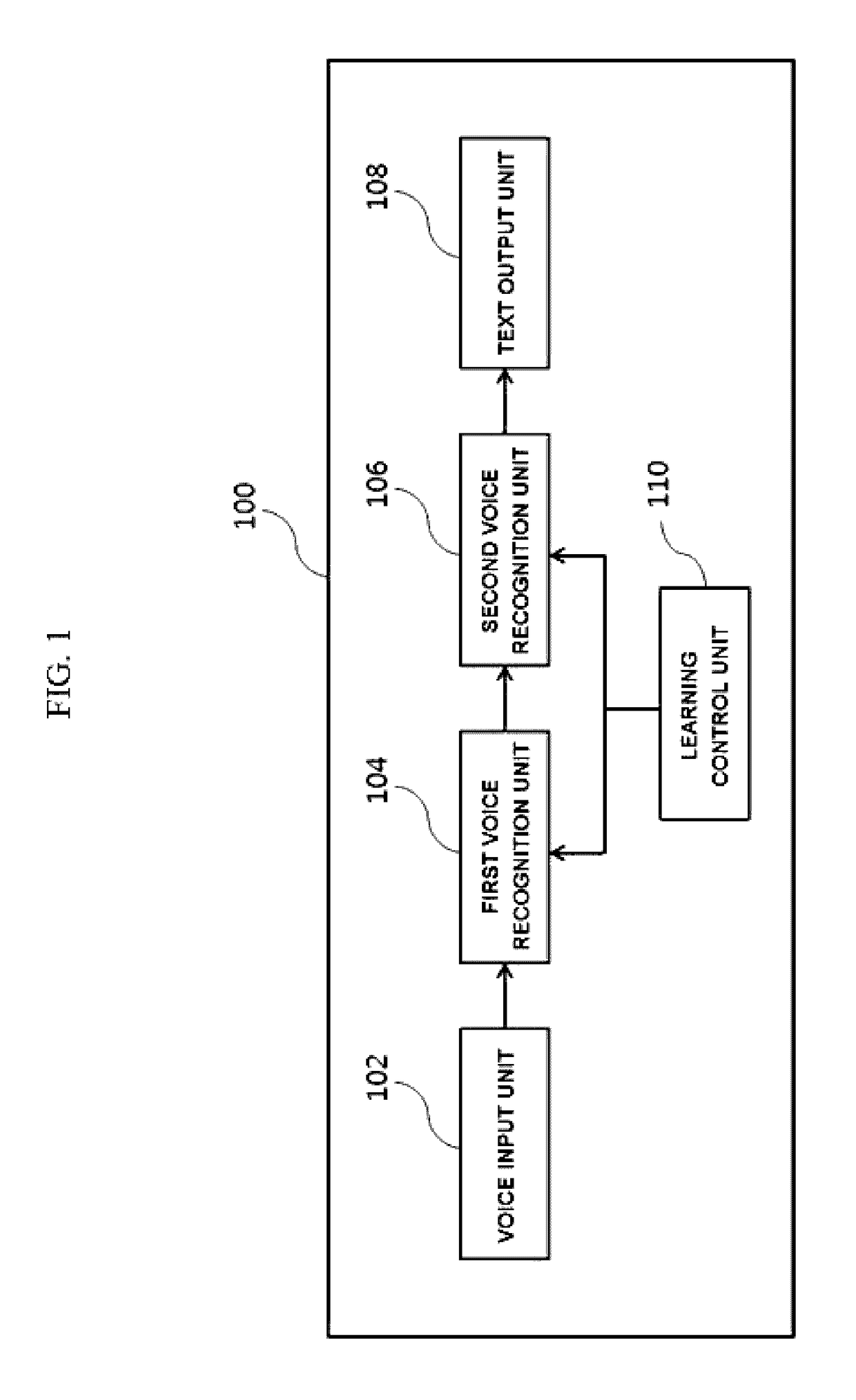

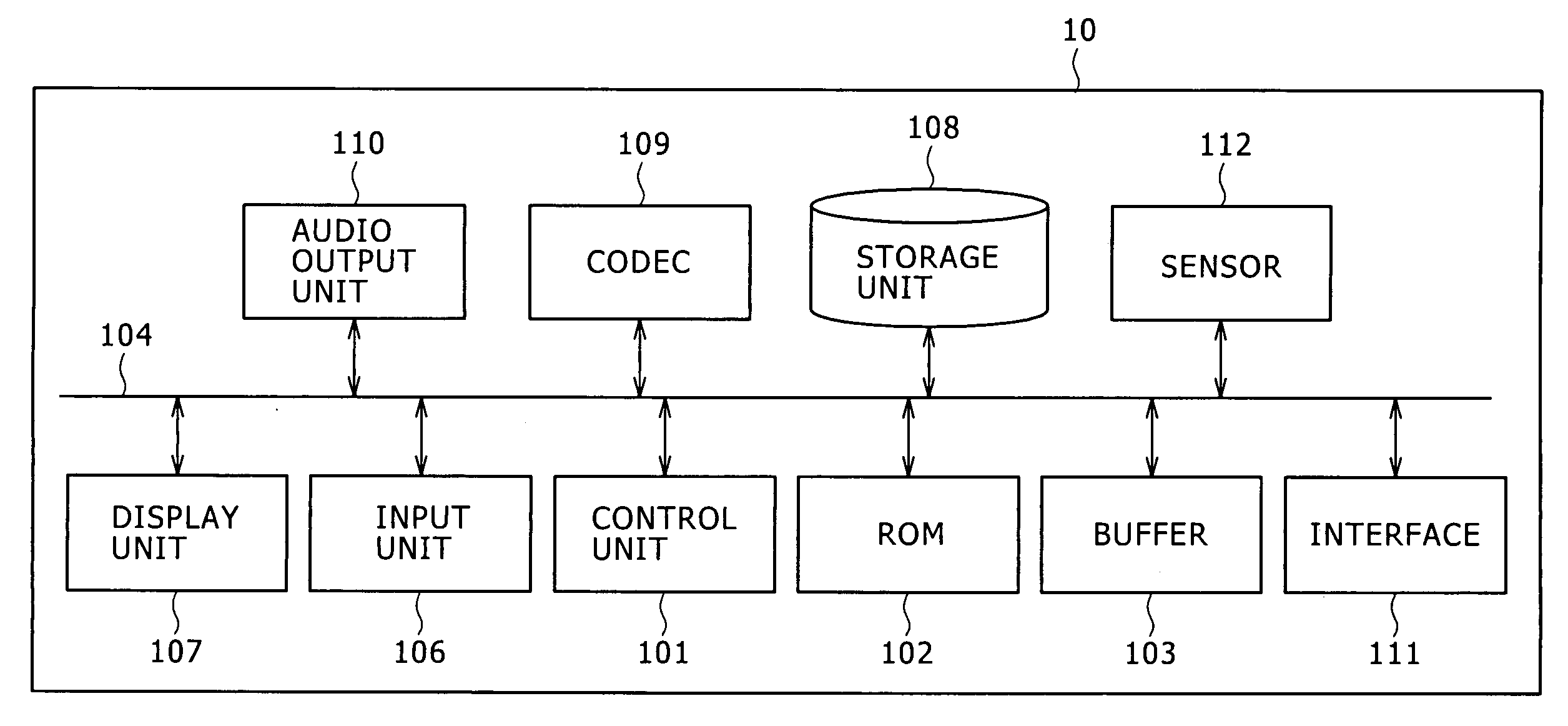

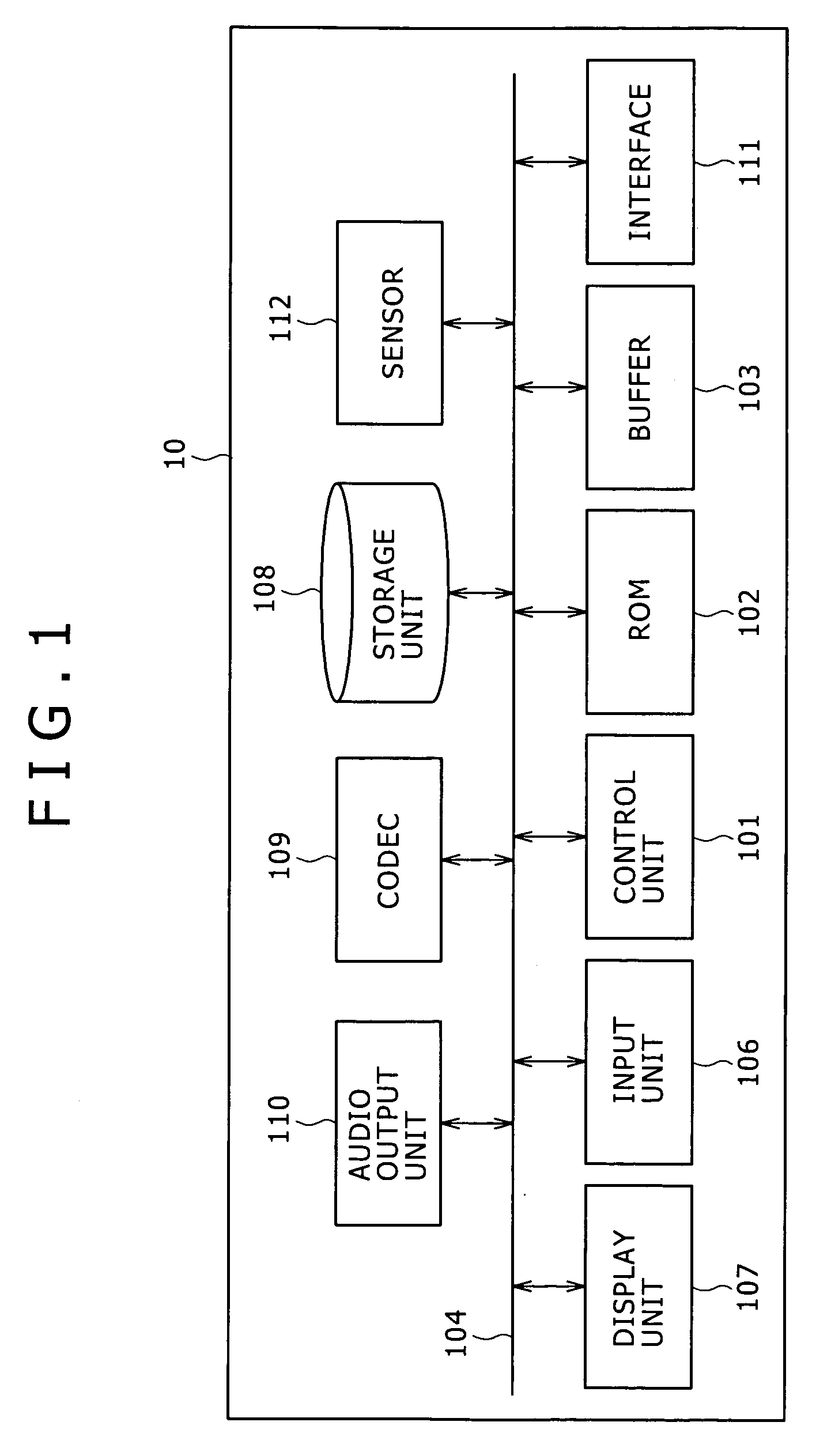

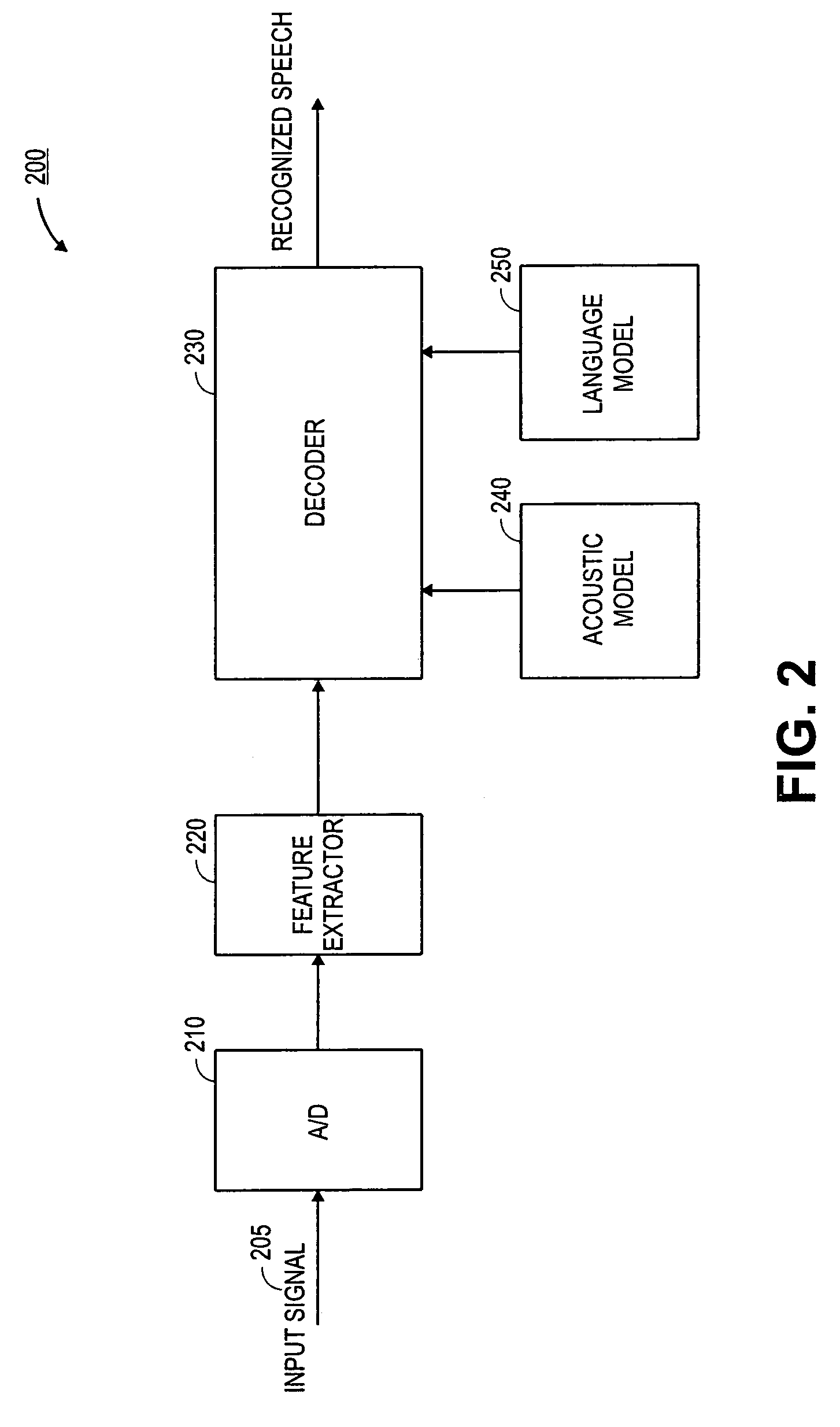

System and method for voice recognition

Active | US20170125020A1 | Reduce the number | Biological models | Speech recognition | Speech identification | Network model

A voice recognition system and method are provided. The voice recognition system includes a voice input unit configured to receive learning voice data and a target label including consonant and vowel (letter) information representing the learning voice data, and divide the learning voice data into windows having a predetermined size; a first voice recognition unit configured to learn features of the divided windows using a first neural network model and the target label; a second voice recognition unit configured to learn a time-series pattern of the extracted features using a second neural network model; and a text output unit configured to convert target voice data input to the voice input unit into a text based on learning results of the first voice recognition unit and the second voice recognition unit, and output the text.

Owner:SAMSUNG SDS CO LTD

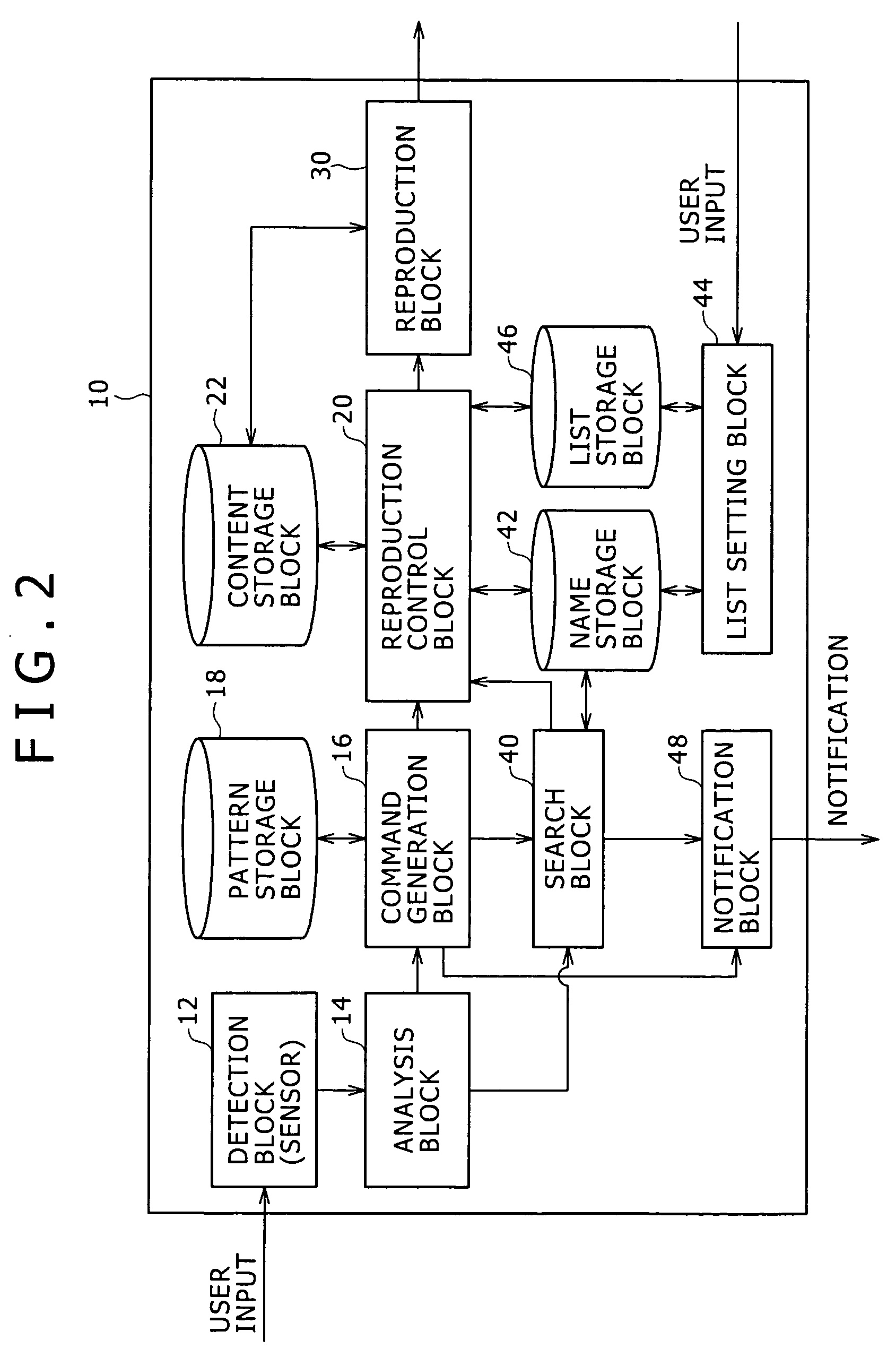

Reproducing apparatus, program, and reproduction control method

Inactive | US20060263068A1 | Executed quickly and easily | Easy to operate | Input/output for user-computer interaction | Television system details | Data matching | Potential change

The present invention provides a search apparatus that allows a user to execute search processing with ease without user's operating an operator block and checking information shown on a display block. The search apparatus has a name storage block, a vowel conversion block for converting name data stored in the name storage block into first vowel name data, a detection block for detecting an external impact applied by the user to the search apparatus or a myoelectric potential change caused by user movement as a user input signal, an analysis block for analyzing the user input signal to identify an input pattern, a vowel generation block for generating second vowel name data corresponding to the identified input pattern, an extraction block for making a comparison between the first vowel name data and the second vowel name data to extract one or more pieces of first vowel name data that match or are similar to the second vowel name data, and a list creation block for listing the name data corresponding to the extracted first vowel name data to create a candidate list.

Owner:SONY CORP
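The vowel conversion and comparison steps in the abstract above can be sketched as below. The sketch assumes the user's input pattern has already been decoded into a vowel string (the impact or myoelectric analysis is not modelled), uses the five Latin vowel letters, and measures similarity with `difflib.SequenceMatcher`; the function names and the 0.6 cutoff are hypothetical choices, not details from the patent.

```python
import difflib

VOWELS = "aeiou"  # assumption: Latin vowel letters only


def to_vowel_name(name: str) -> str:
    """Reduce a stored name to its vowel sequence ("first vowel name data")."""
    return "".join(c for c in name.lower() if c in VOWELS)


def candidate_list(names, input_vowels: str, cutoff: float = 0.6):
    """Return names whose vowel sequence matches or resembles the input."""
    scored = []
    for name in names:
        score = difflib.SequenceMatcher(
            None, to_vowel_name(name), input_vowels
        ).ratio()
        if score >= cutoff:
            scored.append((score, name))
    # Best matches first; ties keep their original order.
    return [name for score, name in sorted(scored, key=lambda p: -p[0])]


titles = ["Yesterday", "Imagine", "Help"]
print(to_vowel_name("Yesterday"))
print(candidate_list(titles, "eea"))
```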

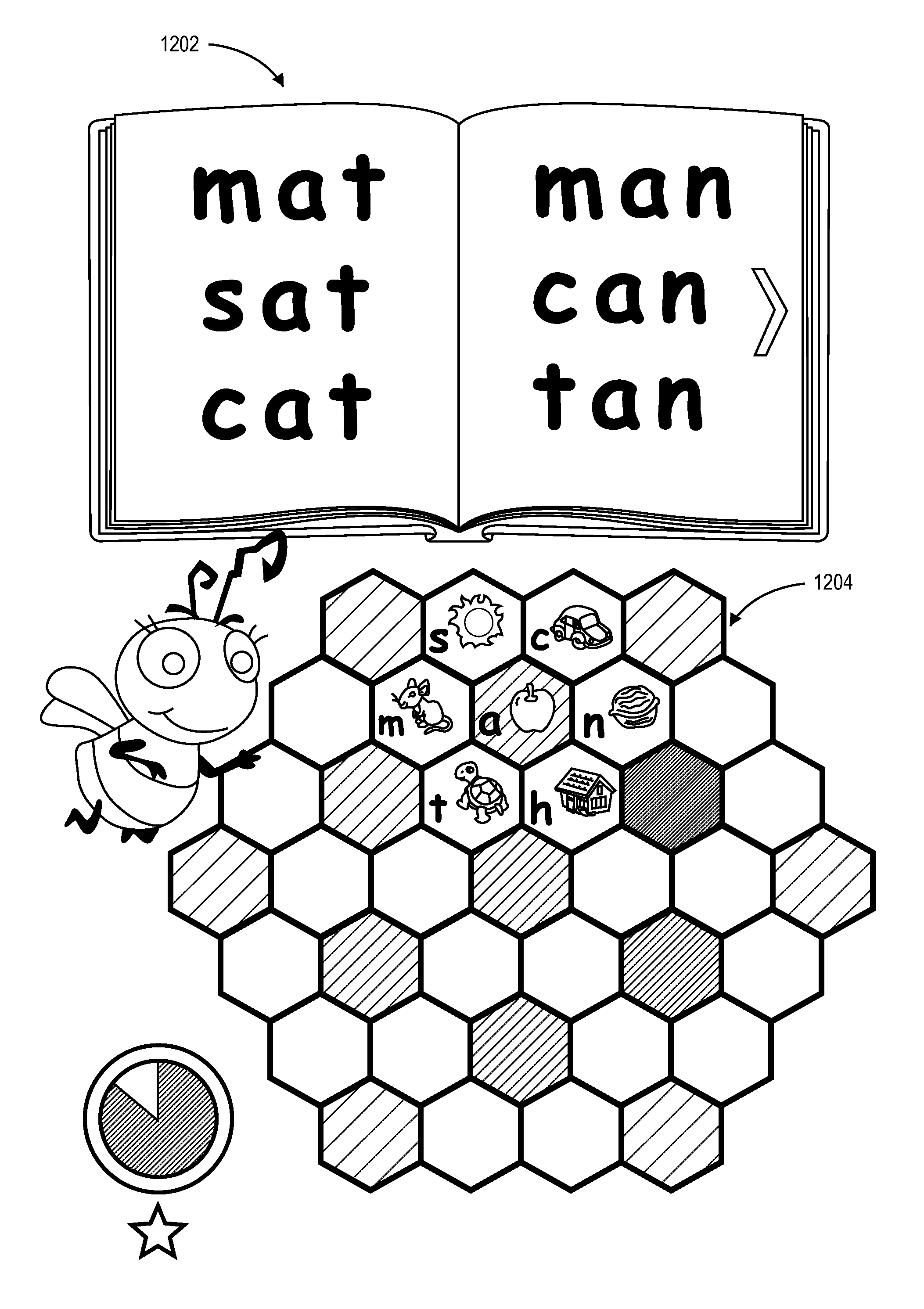

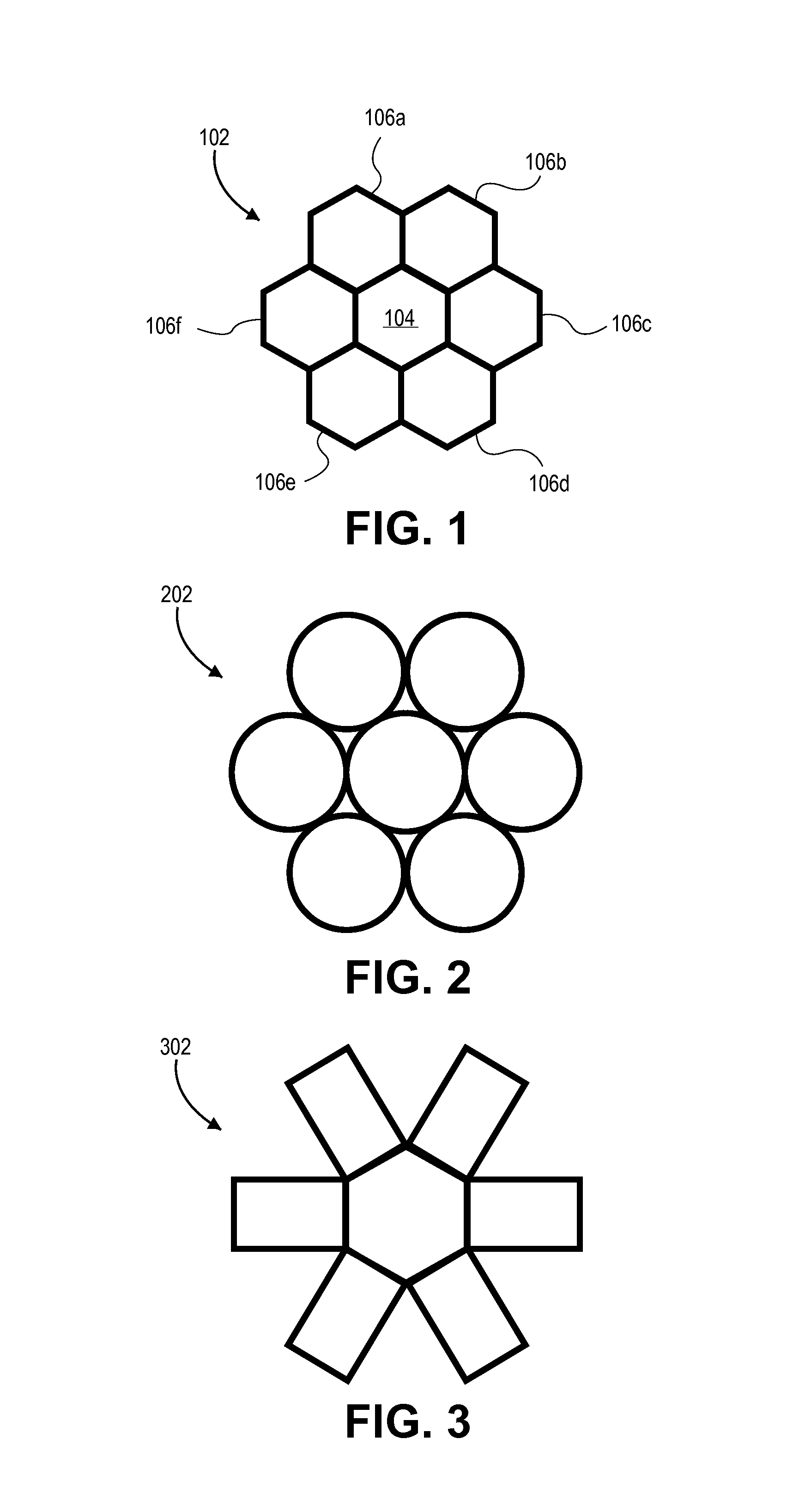

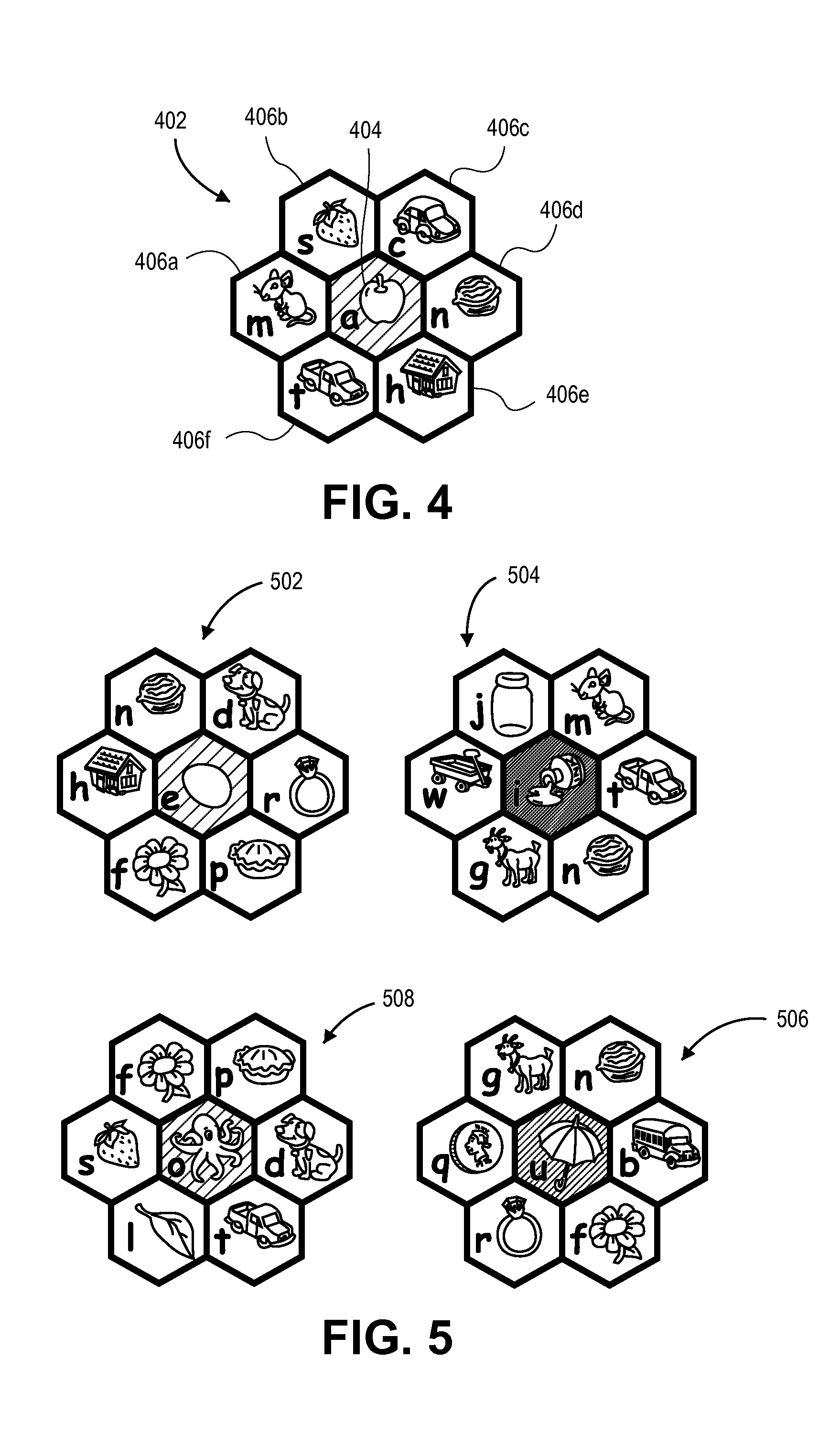

Keyboard for entering text and learning to read, write and spell in a first language and to learn a new language

A novel keyboard made up of vowel daisies is used to learn to read, write and spell in English. A story is presented in which a specific set of words that can be generated is emphasized. Upon hearing the story, a user enters a response, which is received by the device when the specific word is highlighted in the story. A letter configuration, such as a hexagonal ring of letters corresponding to the specific word, is displayed on the device. Phonic data relating to a letter in the specific word is manifested at the same time as another characteristic or attribute of the letter, specifically the shape of the letter, is displayed or manifested to the user. This is done concurrently with displaying the letter configuration that corresponds to the word, wherein the letters are all in the same daisy array.

Owner:LEARNING CIRCLE KIDS

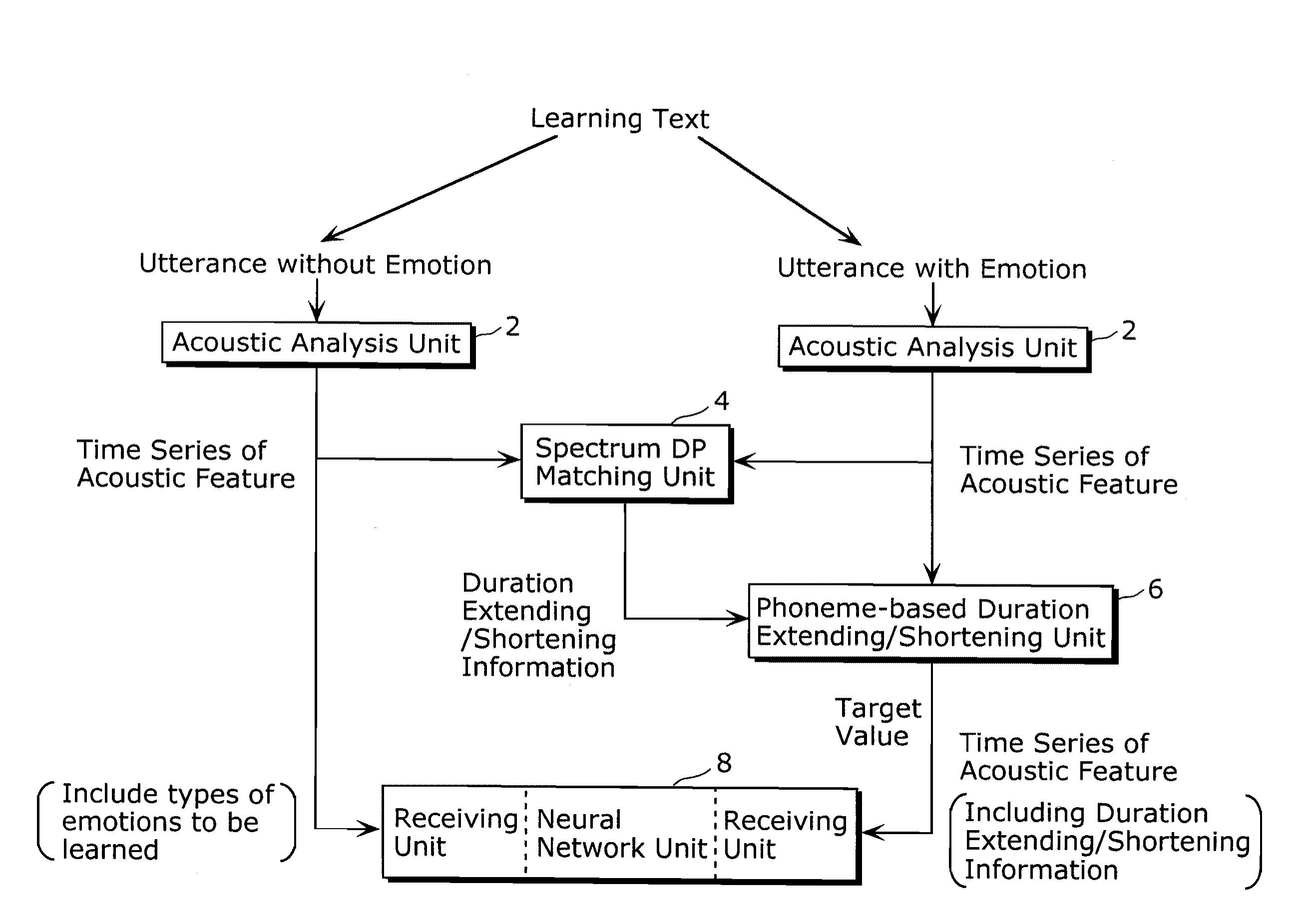

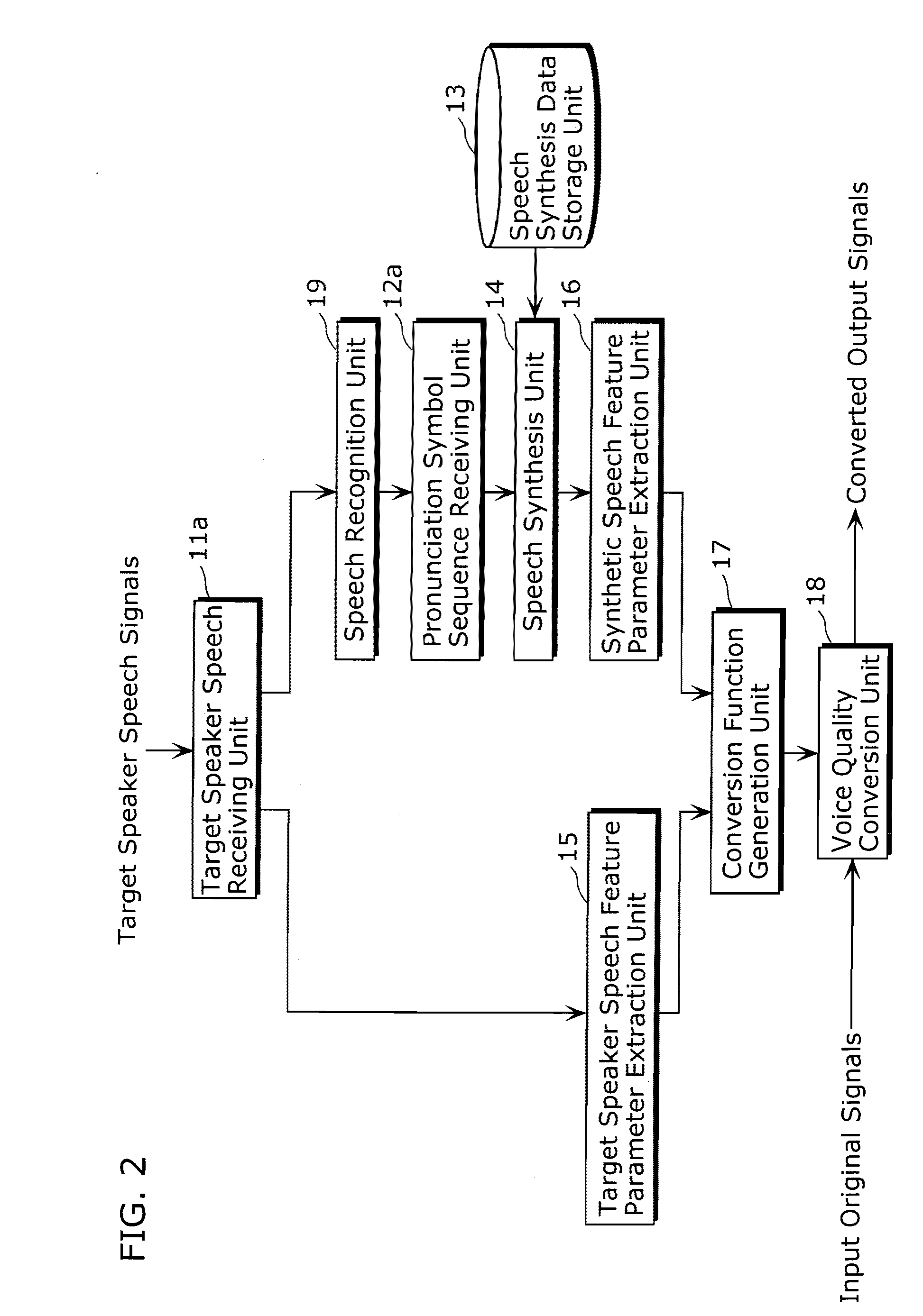

Voice quality conversion device and voice quality conversion method

Inactive | US20090281807A1 | Reduce load | Promote conversion | Speech recognition | Speech synthesis | Temporal change | Vocal tract

A voice quality conversion device converts voice quality of an input speech using information of the speech. The device includes: a target vowel vocal tract information hold unit (101) holding target vowel vocal tract information of each vowel indicating target voice quality; a vowel conversion unit (103) (i) receiving vocal tract information with phoneme boundary information of the speech including information of phonemes and phoneme durations, (ii) approximating a temporal change of vocal tract information of a vowel in the vocal tract information with phoneme boundary information applying a first function, (iii) approximating a temporal change of vocal tract information of the same vowel held in the target vowel vocal tract information hold unit (101) applying a second function, (iv) calculating a third function by combining the first function with the second function, and (v) converting the vocal tract information of the vowel applying the third function; and a synthesis unit (103) synthesizing a speech using the converted information (102).

Owner:SOVEREIGN PEAK VENTURES LLC

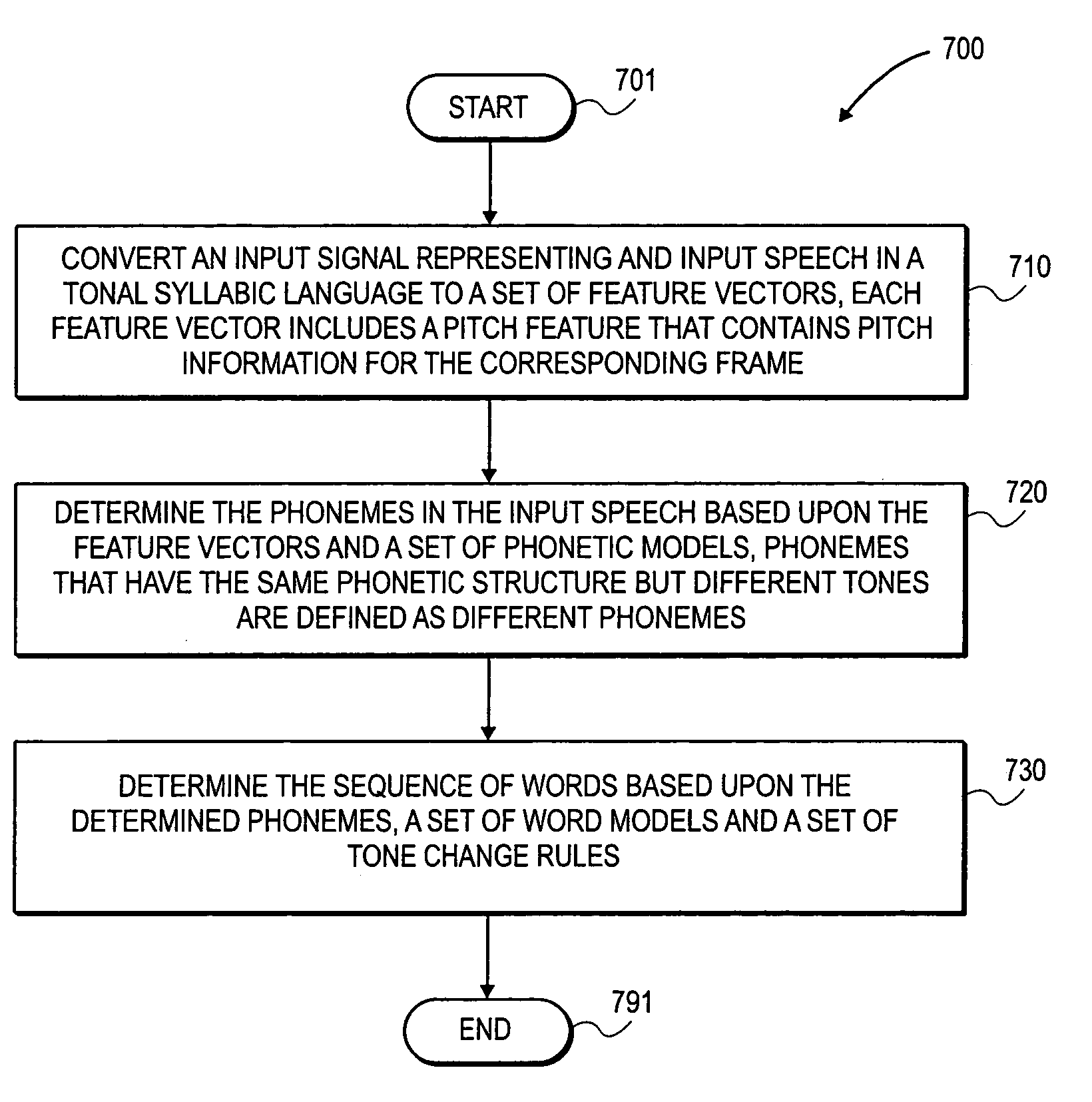

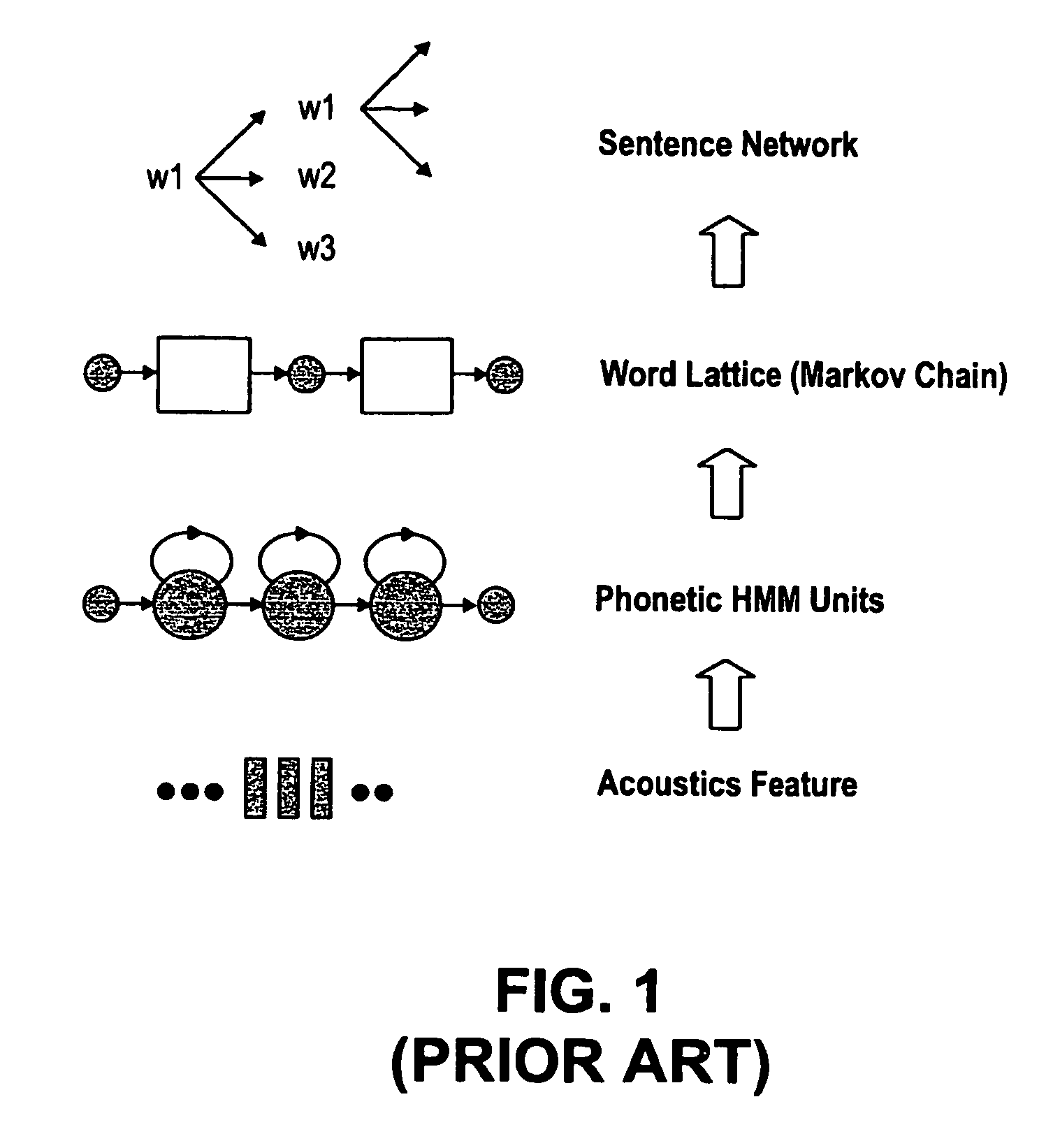

Method, apparatus, and system for bottom-up tone integration to Chinese continuous speech recognition system

Inactive | US7181391B1 | Reduce word error rate | Simple model | Natural language data processing | Speech recognition | Syllable | Feature vector

According to one aspect of the invention, a method is provided in which knowledge about tone characteristics of a tonal syllabic language is used to model speech at various levels in a bottom-up speech recognition structure. The various levels in the bottom-up recognition structure include the acoustic level, the phonetic level, the word level, and the sentence level. At the acoustic level, pitch is treated as a continuous acoustic variable and pitch information extracted from the speech signal is included as a feature component of feature vectors. At the phonetic level, main vowels having the same phonetic structure but different tones are defined and modeled as different phonemes. At the word level, a set of tone change rules is used to build transcriptions for training data and a pronunciation lattice for decoding. At the sentence level, a set of sentence-ending words with light tone is also added to the system vocabulary.

Owner:INTEL CORP

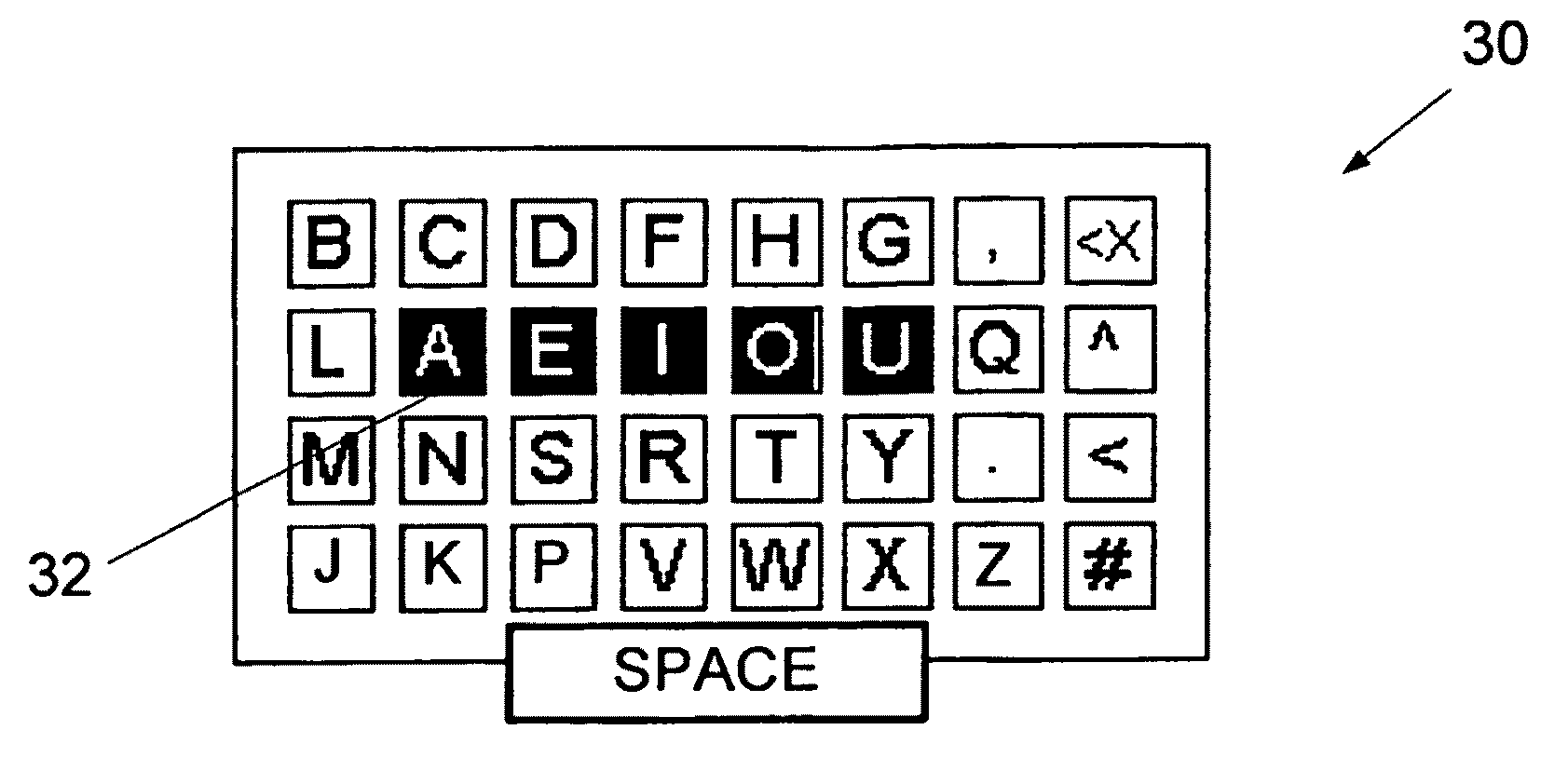

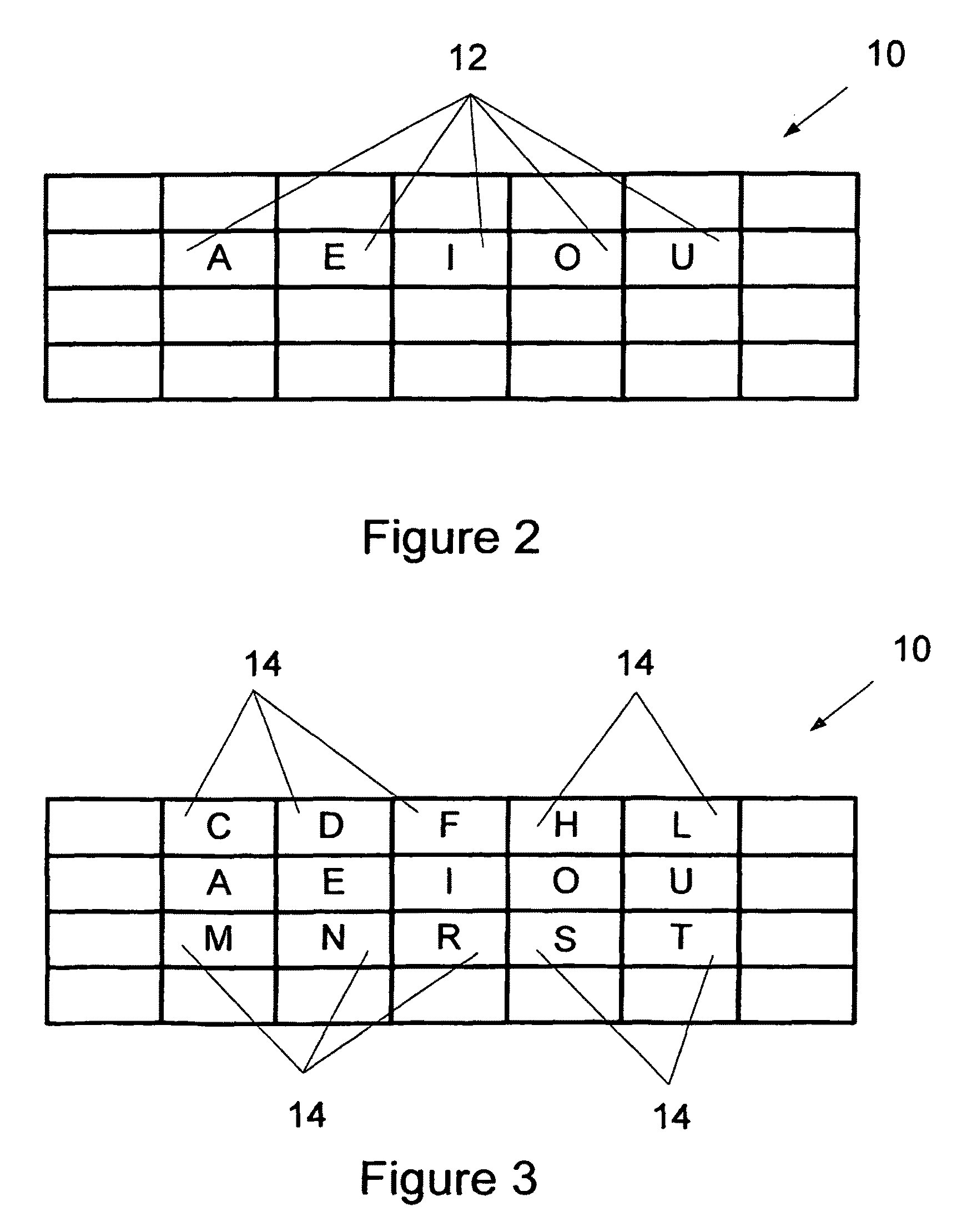

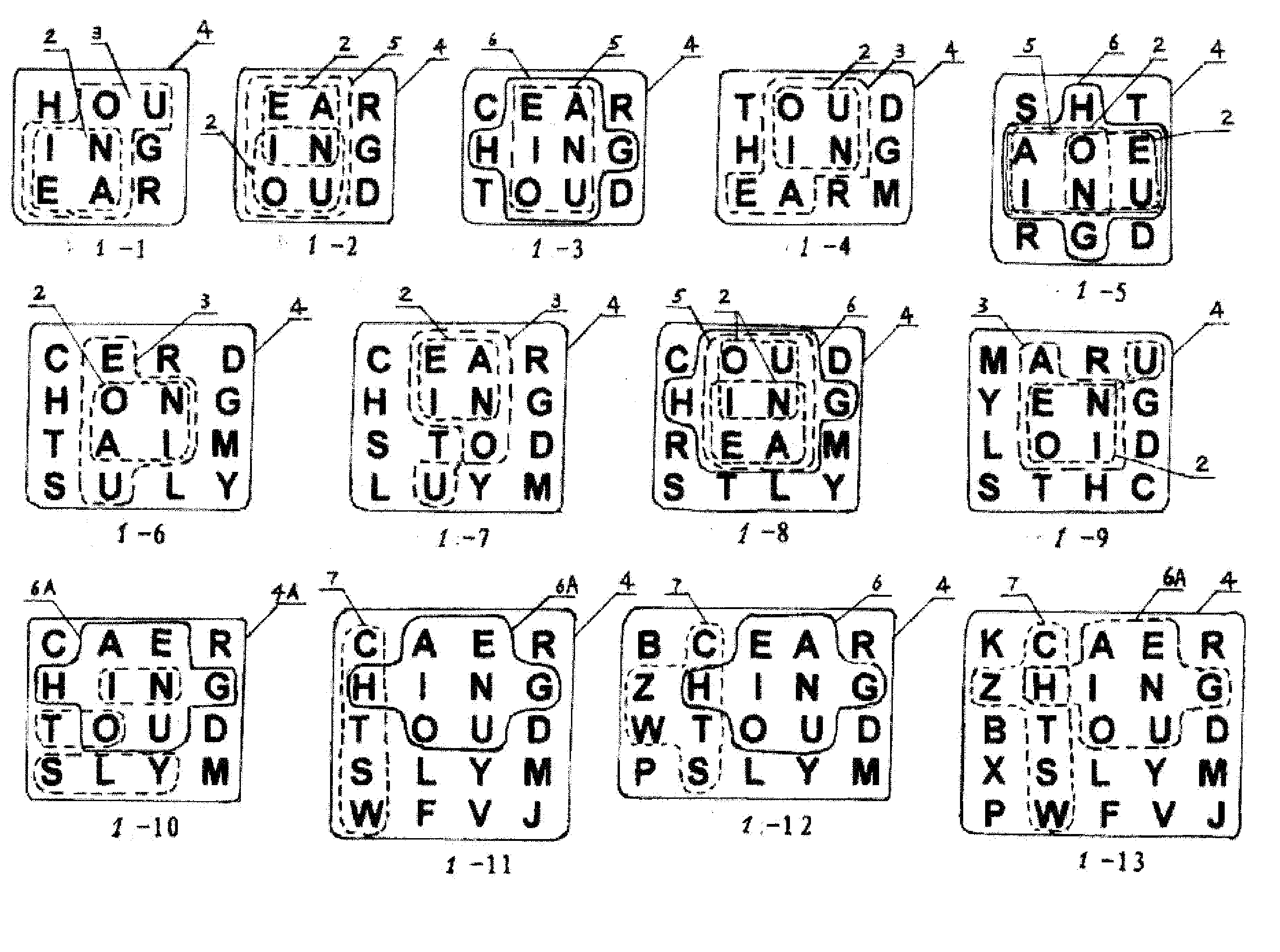

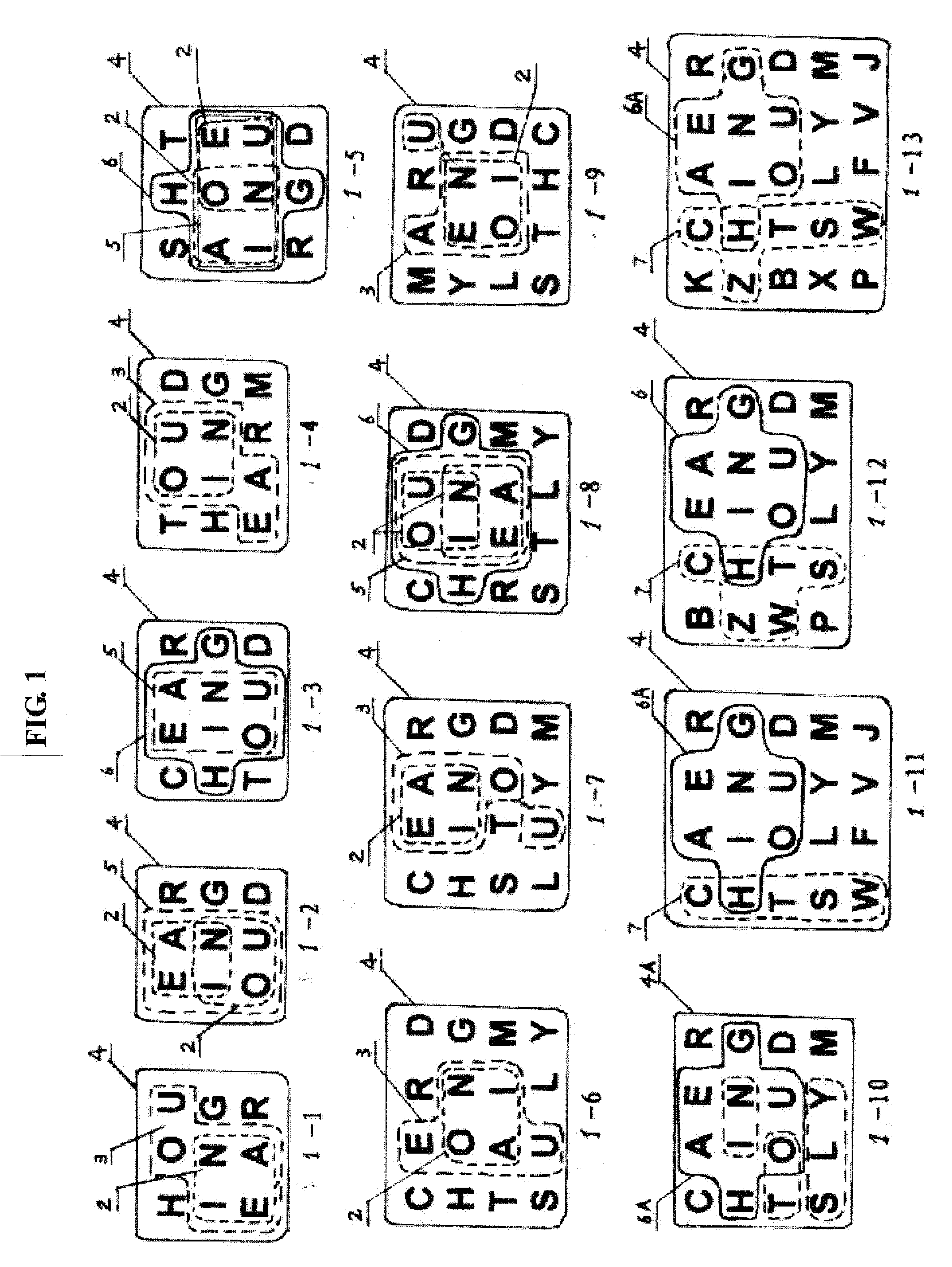

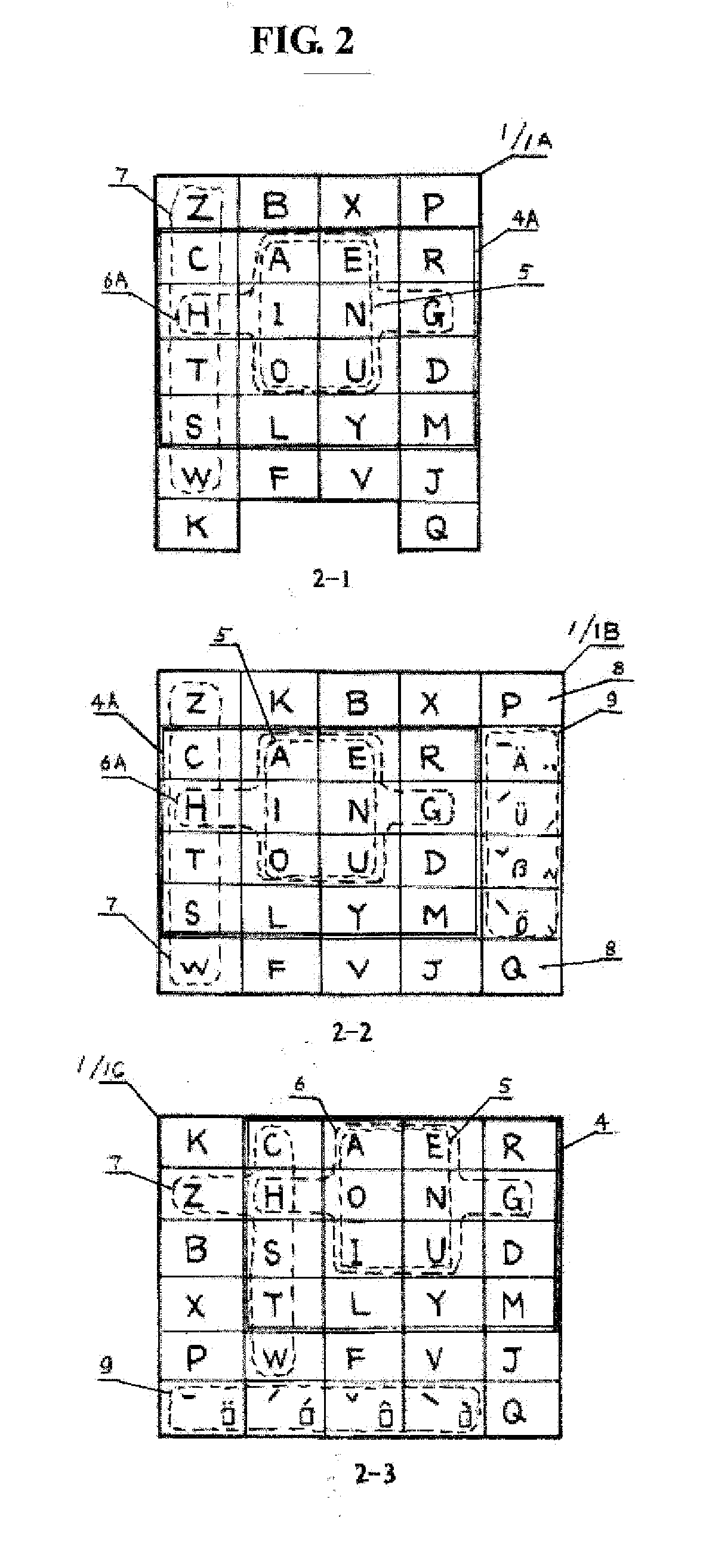

Keyboard for a handheld computer device

Inactive | US8033744B2 | Other printing apparatus | Input/output processes for data processing | Theoretical computer science | Hand Held Computer

A keyboard for a hand held computer device including an array of keys representing characters of an alphabet of a language, wherein the array includes: (a) keys representing frequently used vowel characters of said alphabet arranged together in series; (b) keys representing frequently used consonant characters of said alphabet arranged adjacent to said keys representing vowel characters; and (c) keys representing infrequently used consonant characters of said alphabet arranged in positions remote from said keys representing vowel characters, wherein the keys representing frequently used consonant characters are arranged in alphabetical order around the keys representing the vowel characters.

Owner:BAKER PAUL LLOYD

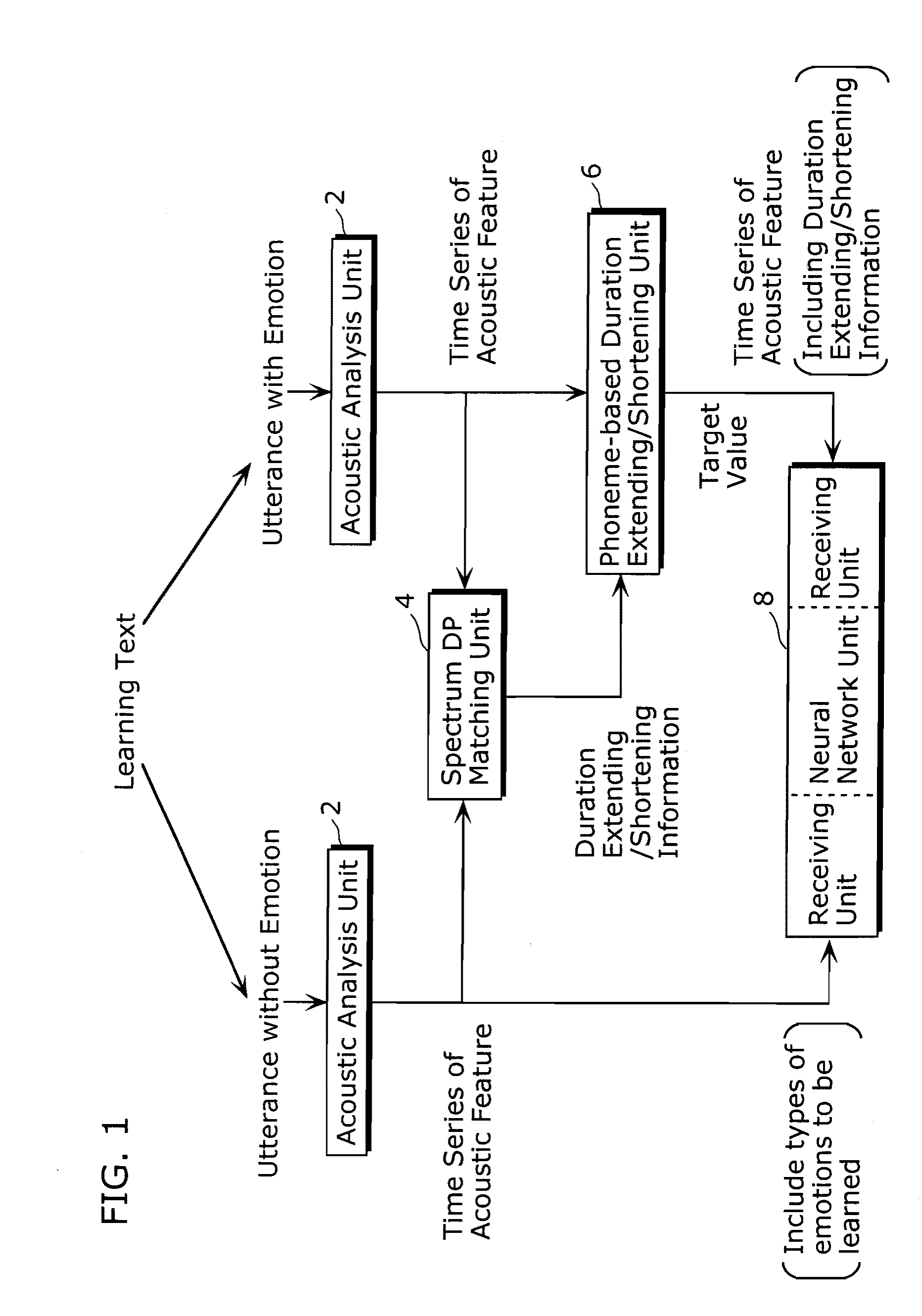

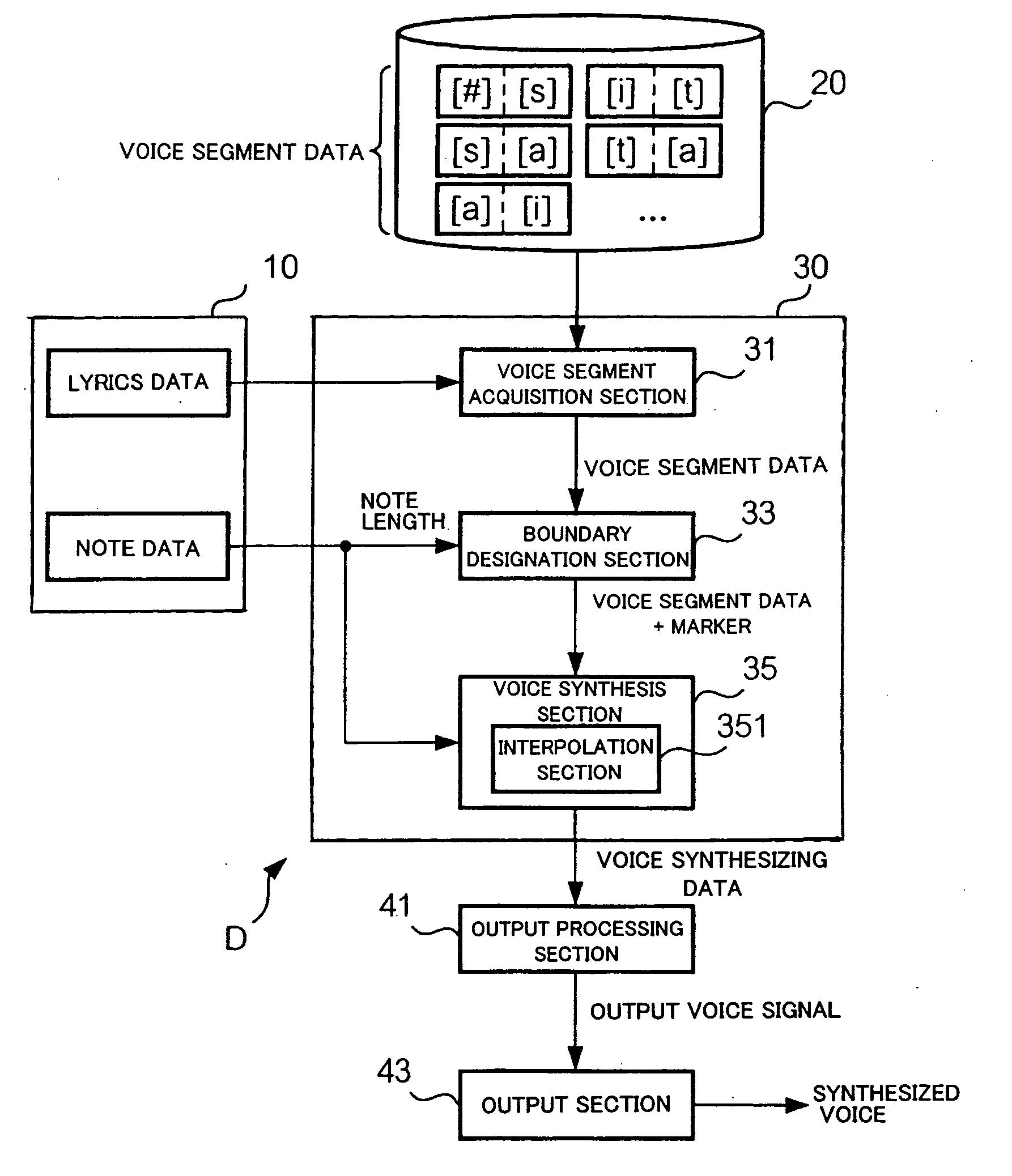

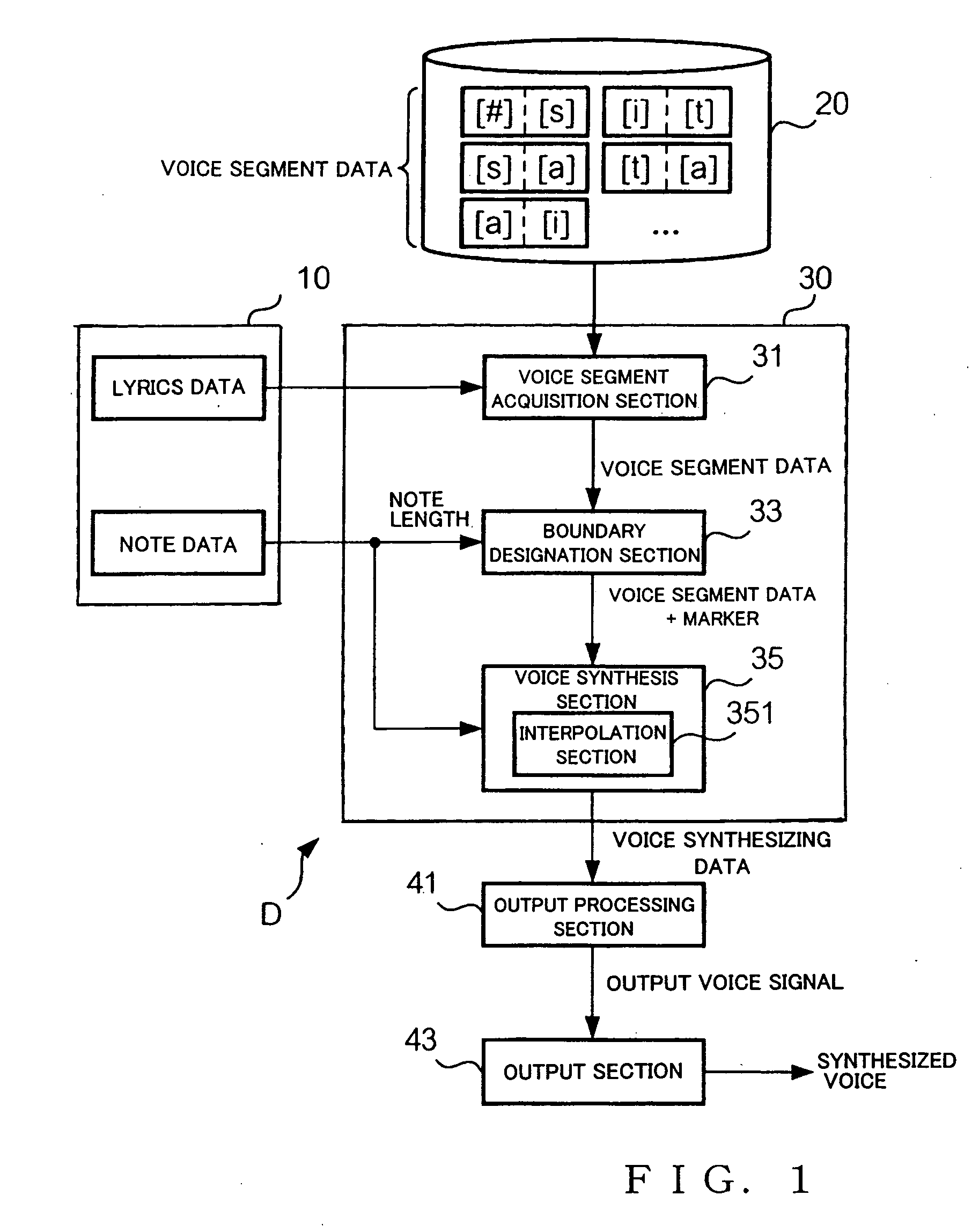

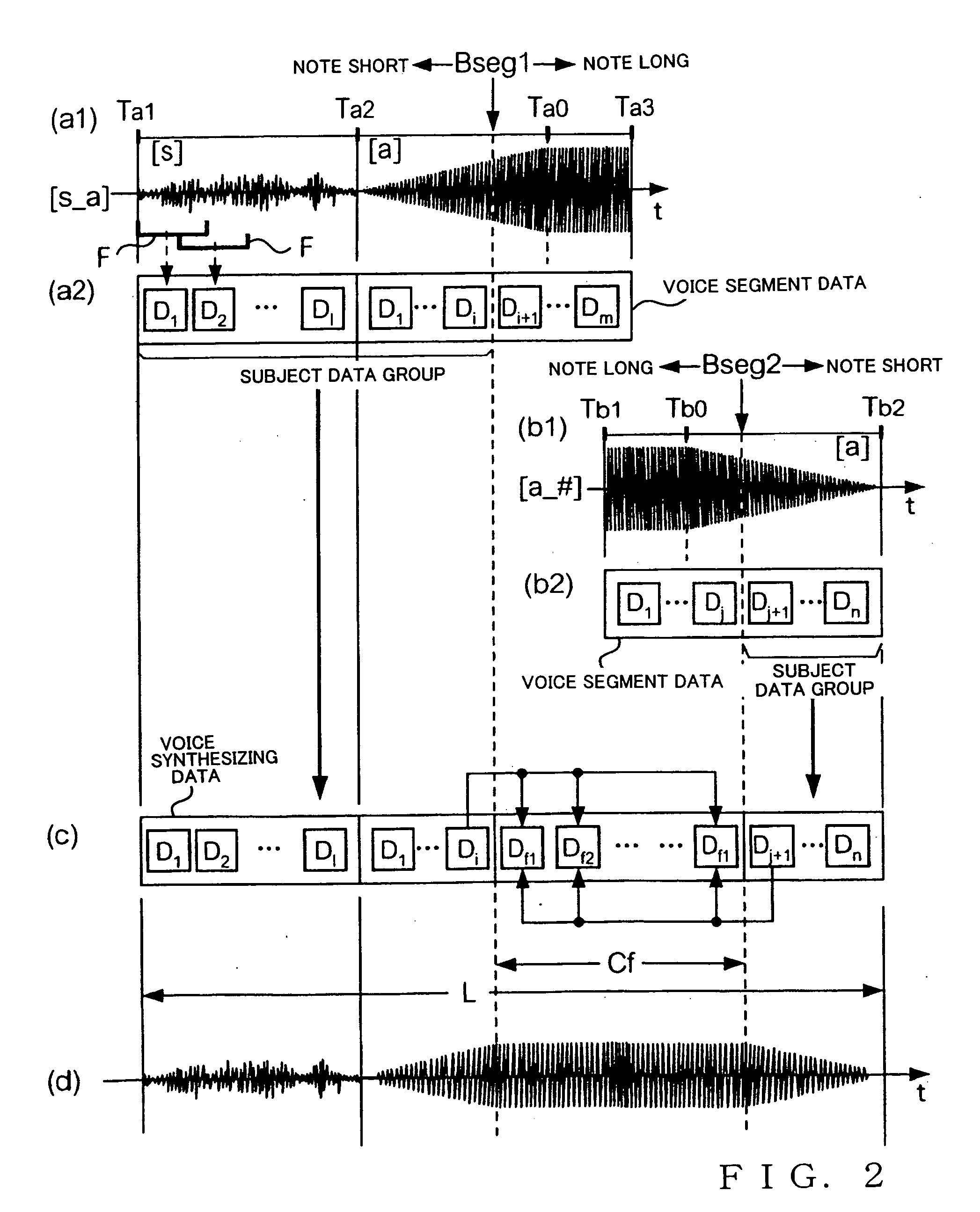

Voice synthesis apparatus and method

A plurality of voice segments, each including one or more phonemes, are acquired in a time-serial manner, in correspondence with desired singing or speaking words. As necessary, a boundary is designated between start and end points of a vowel phoneme included in any one of the acquired voice segments. Voice is synthesized for a region of the vowel phoneme that precedes the designated boundary in the vowel phoneme, or a region of the vowel phoneme that succeeds the designated boundary in the vowel phoneme. By synthesizing a voice for the region preceding the designated boundary, it is possible to synthesize a voice imitative of a vowel sound that is uttered by a person and then stopped with his or her mouth kept open. Further, by synthesizing a voice for the region succeeding the designated boundary, it is possible to synthesize a voice imitative of a vowel sound that starts with the mouth already open.

Owner:YAMAHA CORP

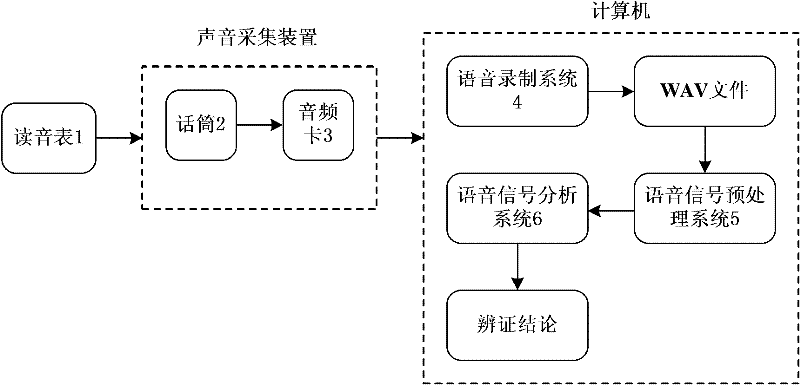

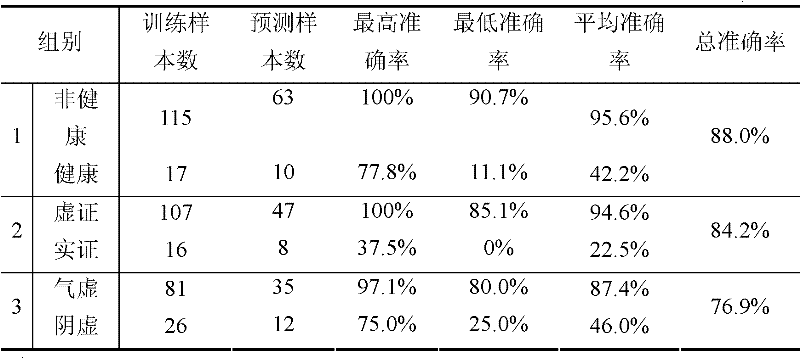

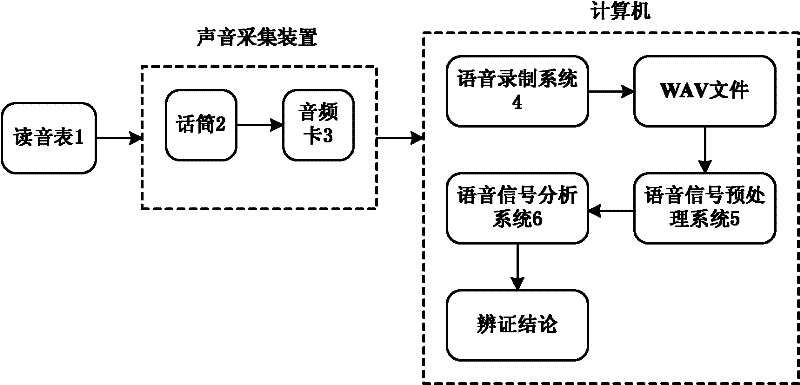

Chinese medicine sound diagnosis acquisition and analysis system

Inactive | CN102342858A | High clinical application value | Address subjectivity | Surgery | Diagnostic recording/measuring | Vowel | Disease cause

Owner:SHANGHAI UNIV OF T C M

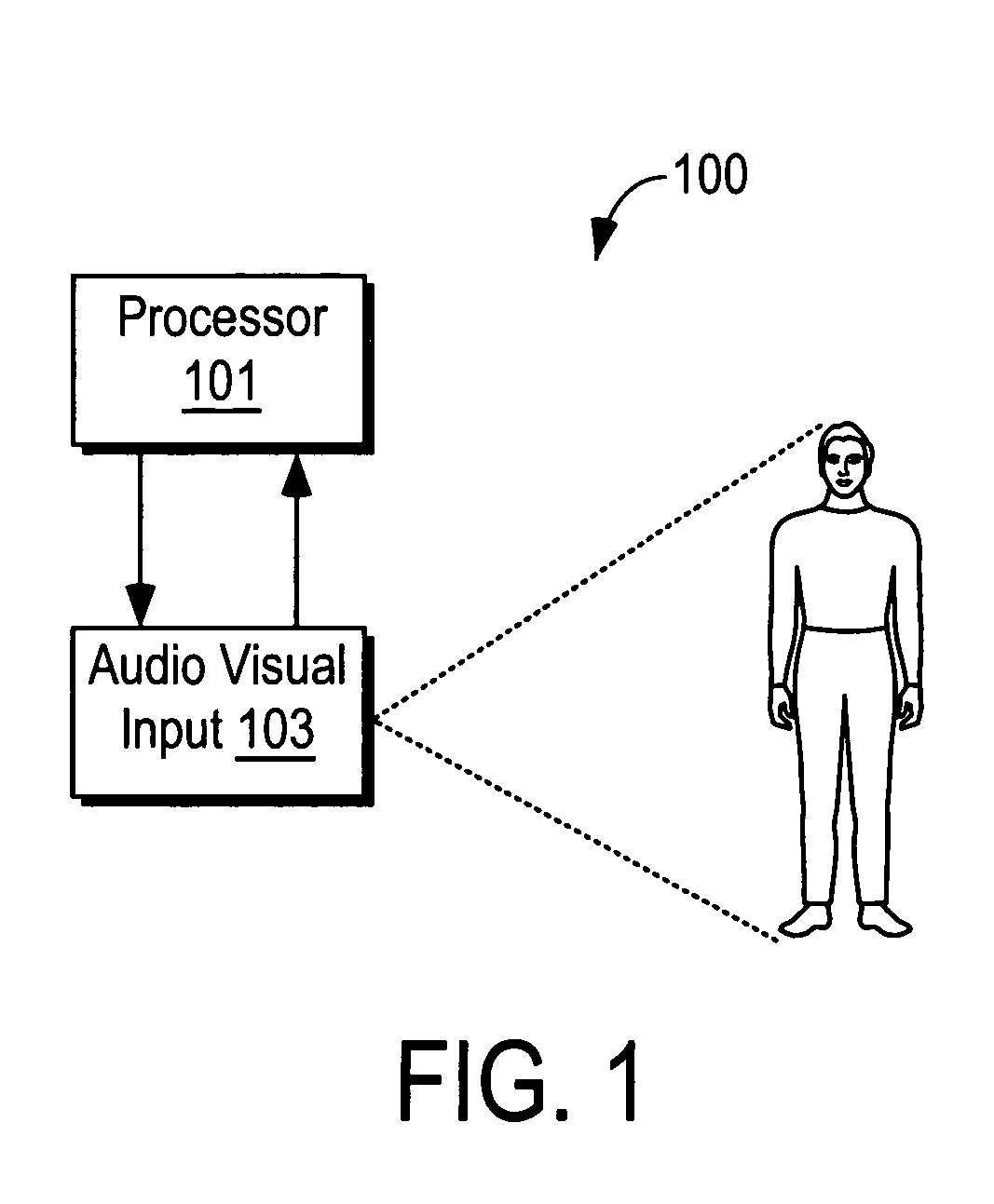

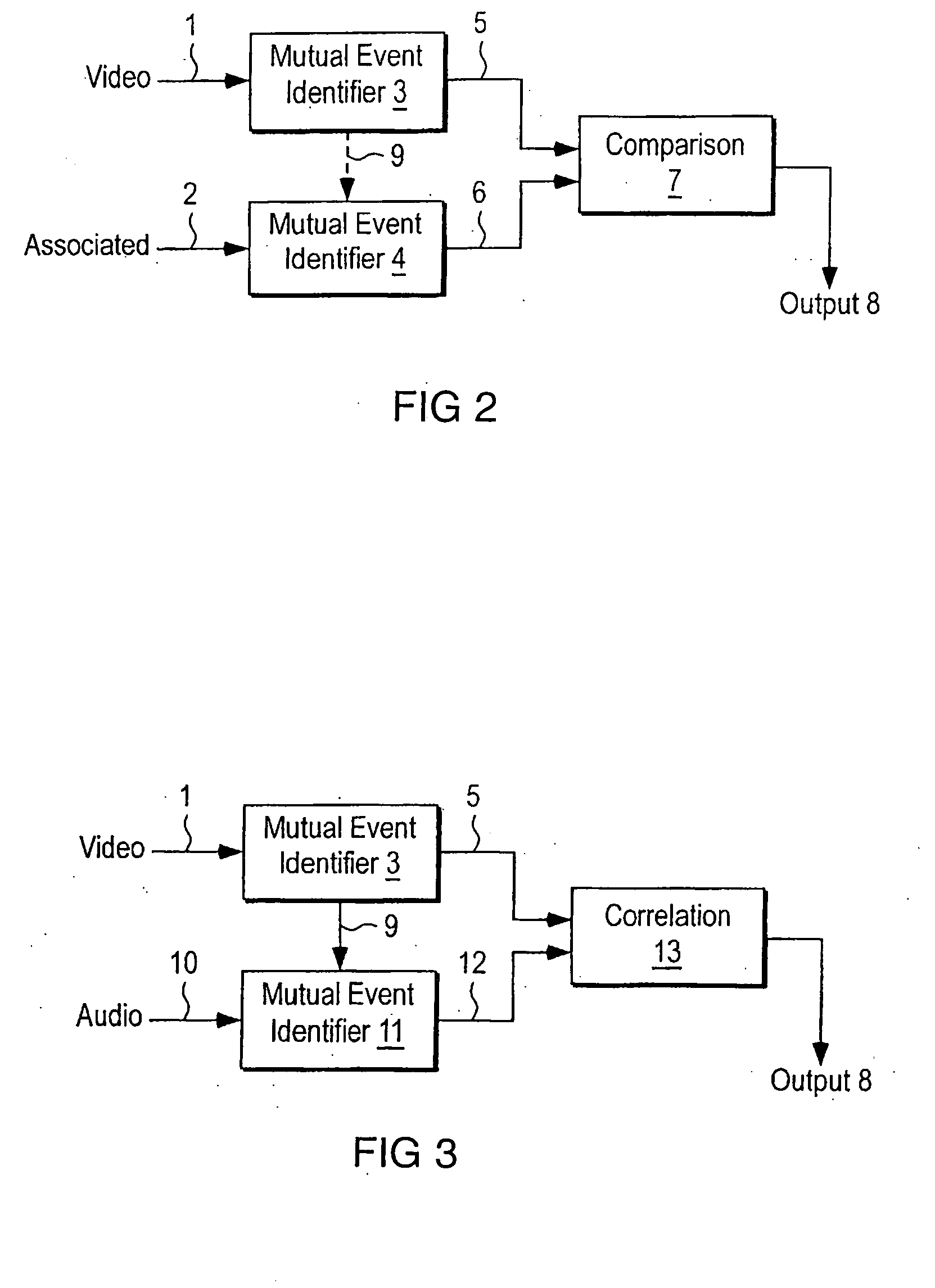

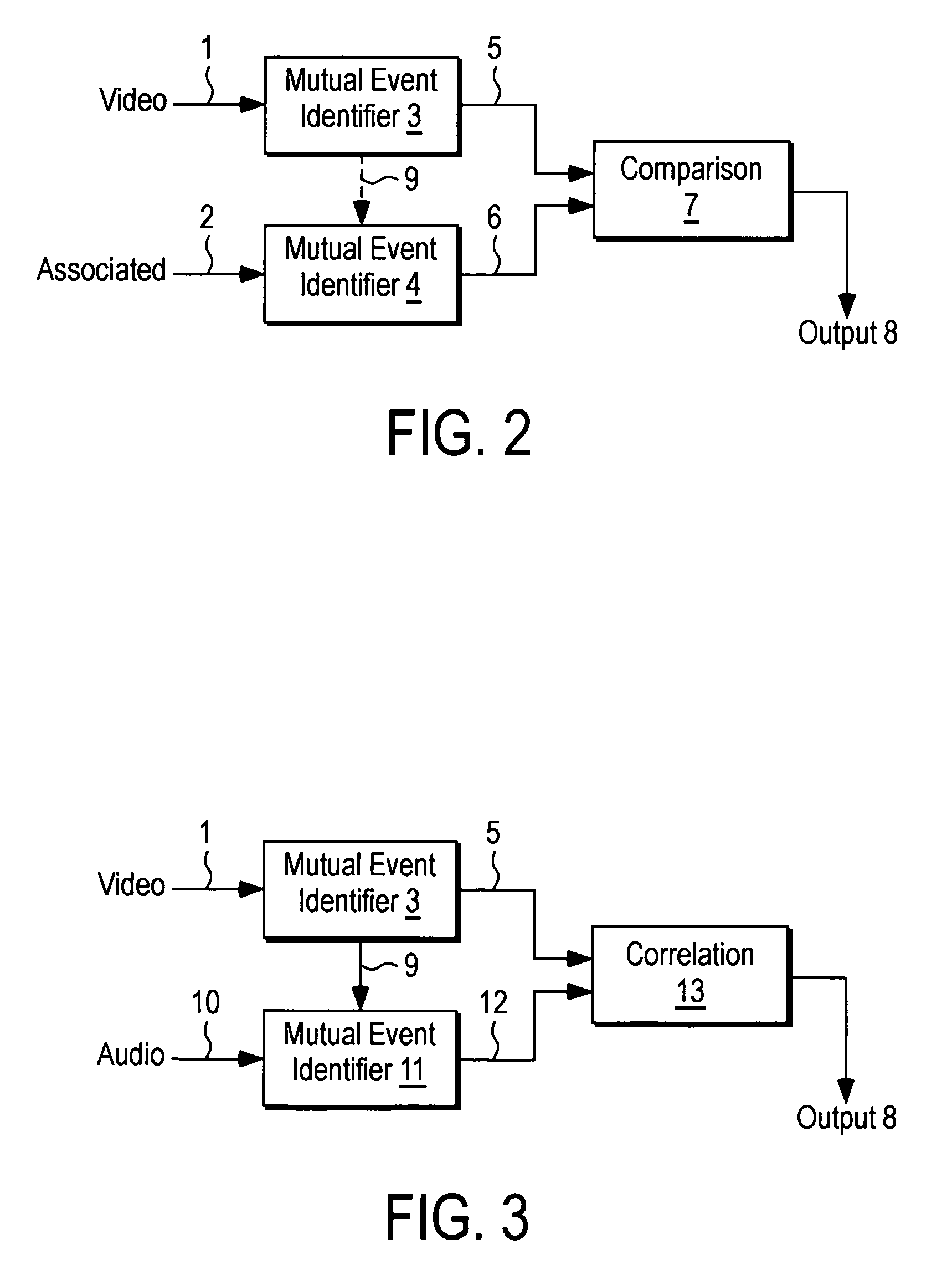

Method, system, and program product for measuring audio video synchronization independent of speaker characteristics

Inactive | US20080111887A1 | Eliminate the effects of | Reduce the impact | Television system details | Carrier indexing/addressing/timing/synchronising | Decision boundary | Phase filter

Method, system, and program product for measuring audio video synchronization. This is done by first acquiring audio video information into an audio video synchronization system. The step of data acquisition is followed by analyzing the audio information, and analyzing the video information. Next, the audio information is analyzed to locate the presence of sounds therein related to a speaker's personal voice characteristics. The audio information is then filtered by removing data related to the speaker's personal voice characteristics to produce filtered audio information. In this phase, the filtered audio information and the video information are analyzed, decision boundaries for Audio and Video MuEv-s are determined, and related Audio and Video MuEv-s are correlated. In the Analysis Phase, Audio and Video MuEv-s are calculated from the audio and video information, and the audio and video information is classified into vowel sounds including AA, EE, OO, silence, and unclassified phonemes. This information is used to determine and associate a dominant audio class in a video frame. Matching locations are determined, and the offset of video and audio is determined.

Owner:PIXEL INSTR CORP

Method for Animating an Image Using Speech Data

Inactive | US20080259085A1 | Enhance animation | Less computationally intensive | Speech analysis | Animation | Animation | Image warping

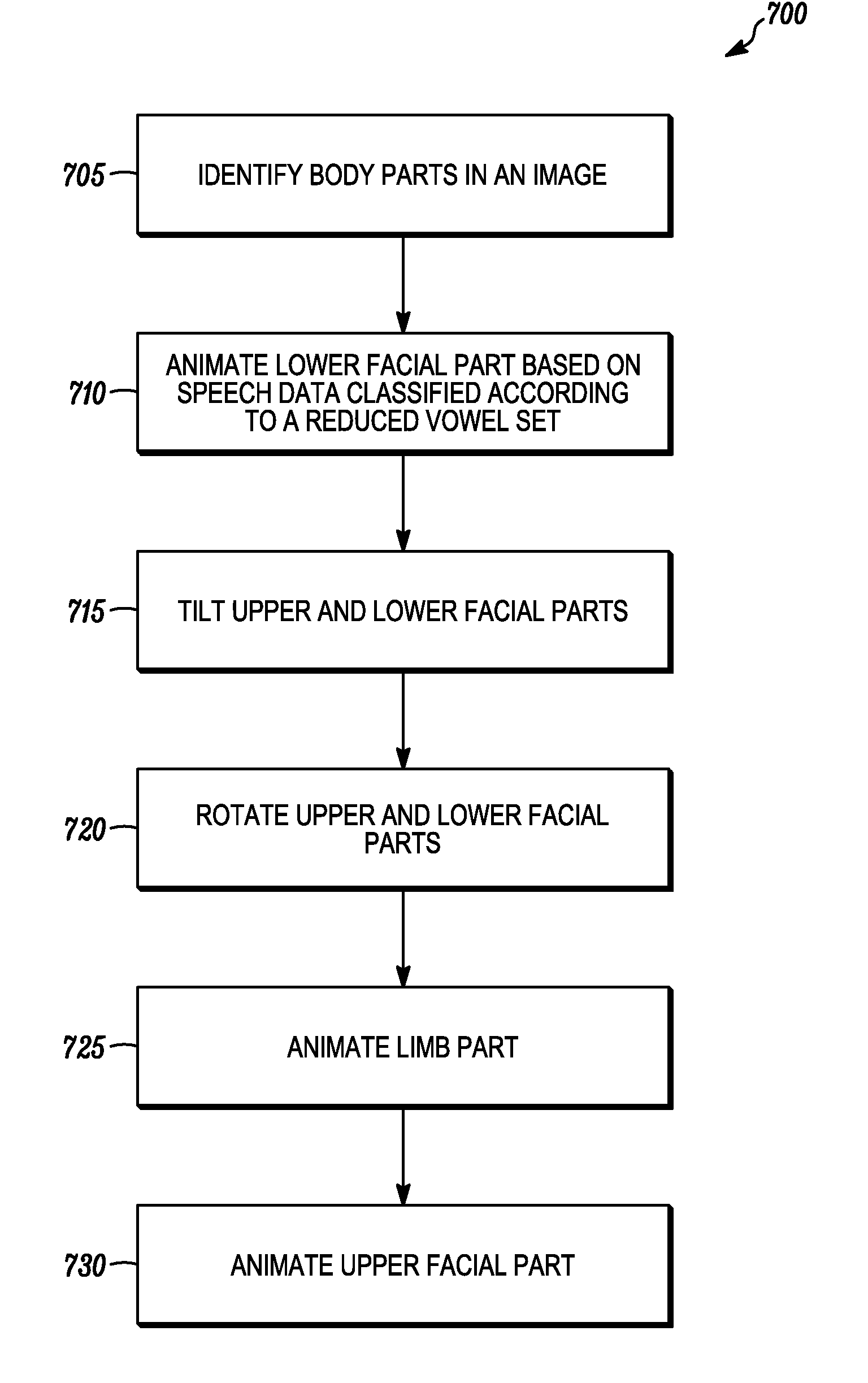

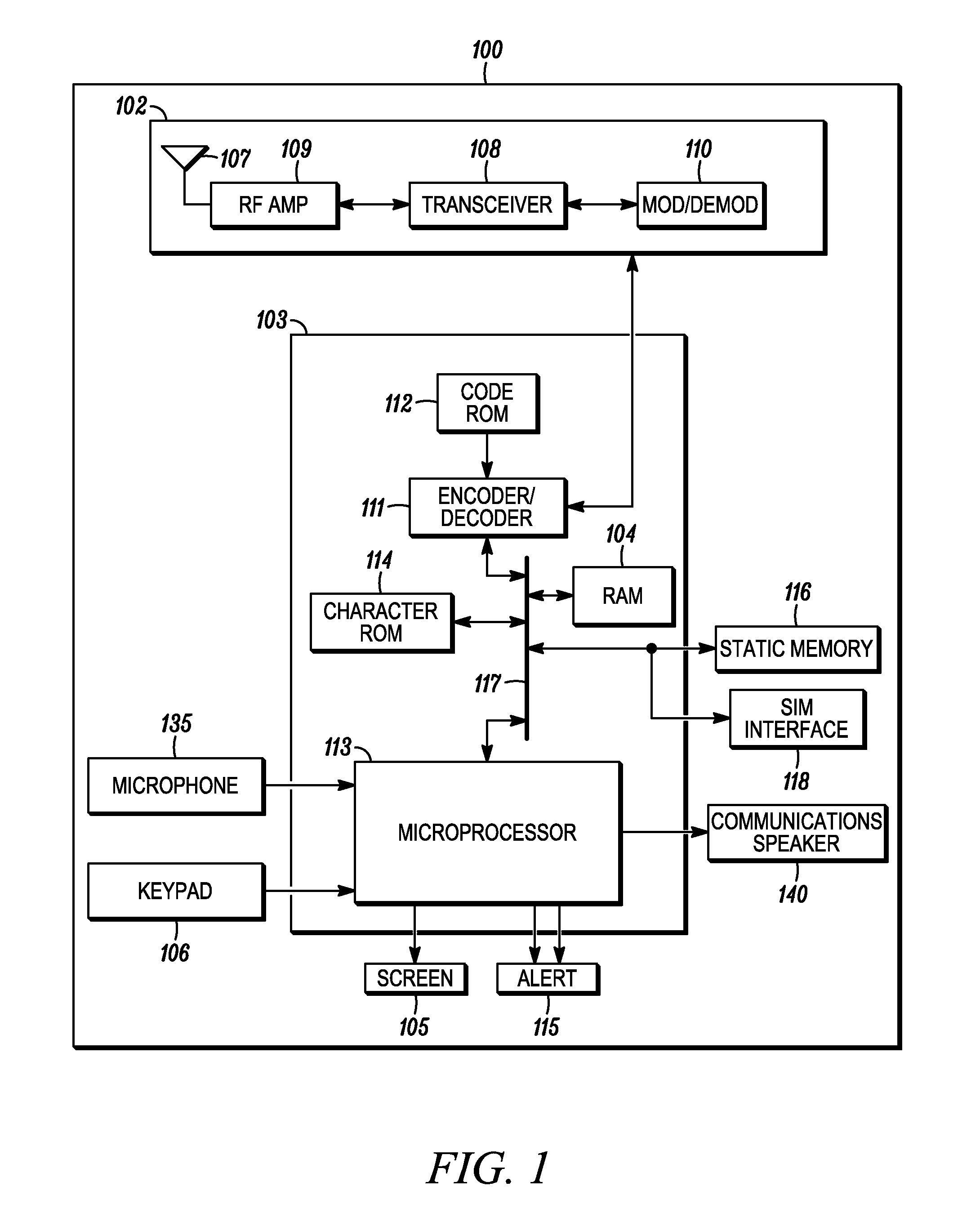

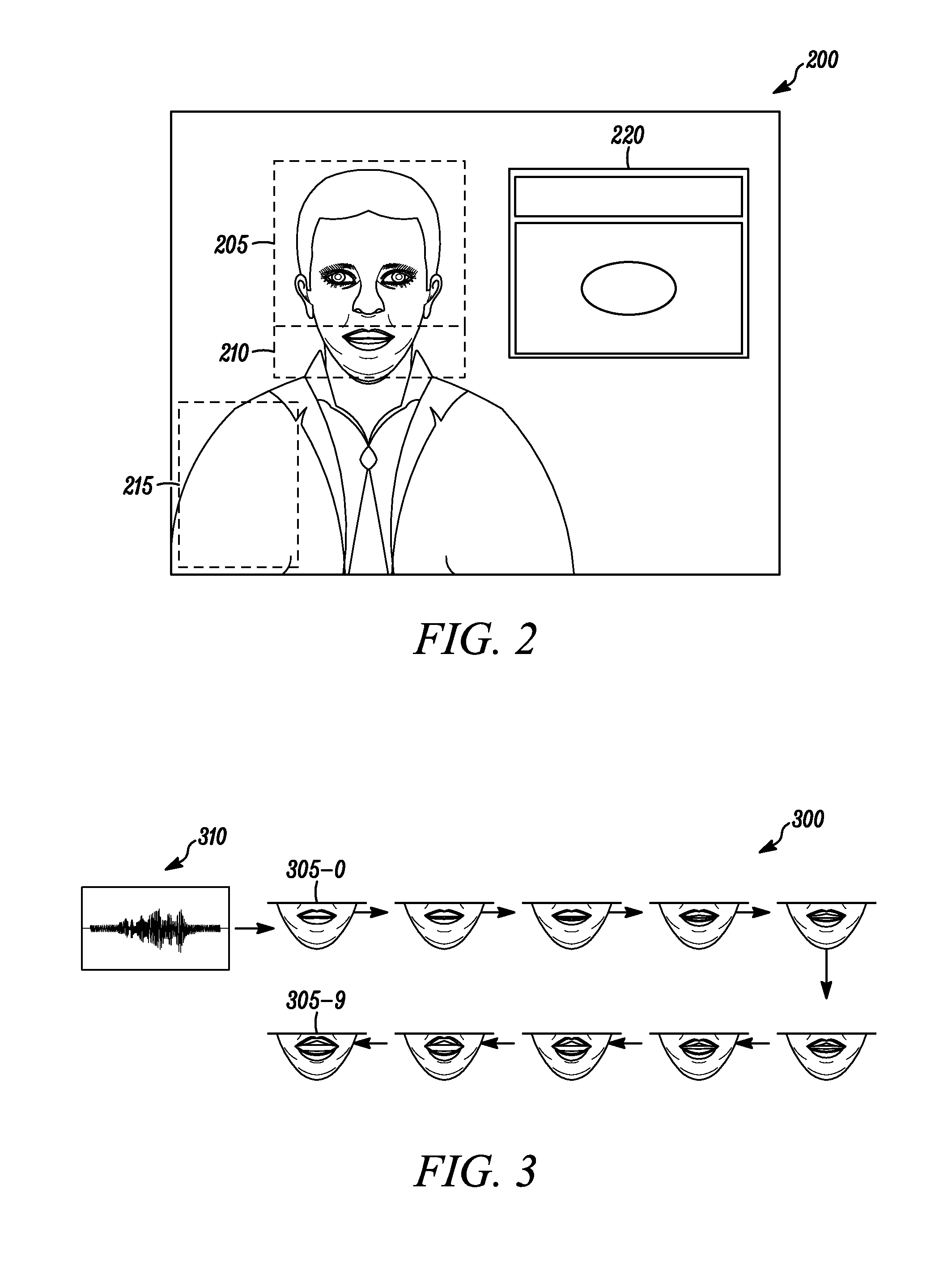

A method for animating an image is useful for animating avatars using real-time speech data. According to one aspect, the method includes identifying an upper facial part and a lower facial part of the image (step 705); animating the lower facial part based on speech data that are classified according to a reduced vowel set (step 710); tilting both the upper facial part and the lower facial part using a coordinate transformation model (step 715); and rotating both the upper facial part and the lower facial part using an image warping model (step 720).

Owner:MOTOROLA MOBILITY LLC

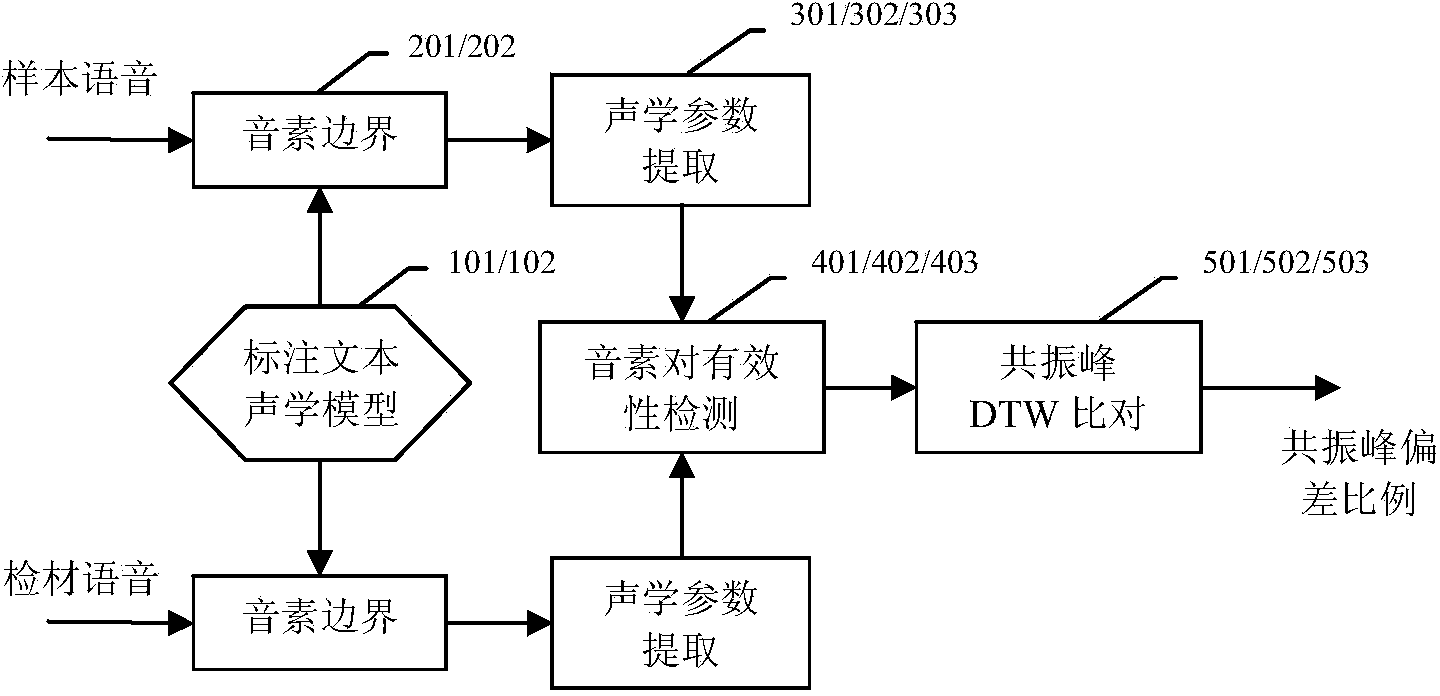

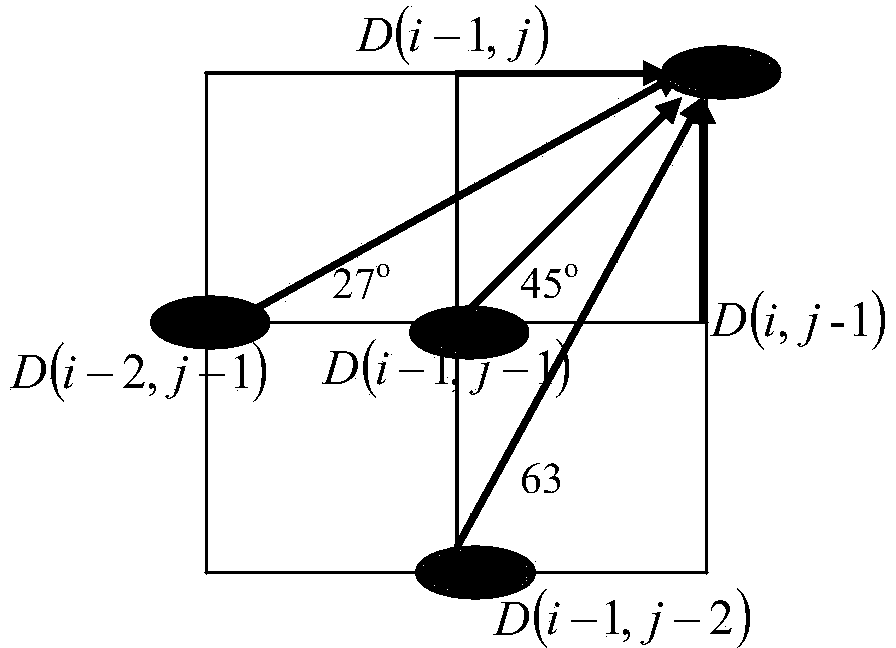

Resonance peak automatic matching method for voiceprint identification

Active | CN103714826A | High precision | Improve processing efficiency | Speech recognition | Peak alignment | Resonance

The invention provides a resonance peak automatic matching method for voiceprint identification. The method comprises the following steps that: phoneme boundary positions in an inspection material and a sample in the voiceprint identification can be automatically marked through using continuous speech recognition-based forced alignment (FA) technology; as for identical vowel phoneme segments of the inspection material and the sample, whether a current phoneme is a valid analysable phoneme is automatically judged through using fundamental frequencies, resonance peaks and power spectrum density parameters; and deviation ratios of corresponding resonance peak time-frequency areas can be automatically rendered through using a dynamic time warping (DTW) algorithm and are adopted as analysis basis of final manual voiceprint identification. With the resonance peak automatic matching method for the voiceprint identification of the invention adopted, the boundaries of phonemes can be automatically marked, and whether the pronunciation of the phonemes is valid is judged, and therefore, processing efficiency can be greatly improved; and at the same time, an automatic resonance peak deviation alignment algorithm is performed on effective phoneme pairs, and therefore, the accuracy of resonance peak alignment can be improved.

Owner:ANHUI IFLYTEK INTELLIGENT SYST
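The dynamic time warping (DTW) step named in the abstract above can be illustrated with a textbook implementation. The sketch aligns two one-dimensional resonance peak (formant) tracks, given as frequencies in Hz per frame, and returns the accumulated absolute difference along the best alignment. How the patent derives its deviation ratios from the alignment is not stated in the abstract, so that part is not modelled; `dtw_distance` is a hypothetical name.

```python
def dtw_distance(a, b):
    """Classic DTW cost between two numeric sequences.

    cost[i][j] holds the minimum accumulated |a - b| difference for
    aligning the first i frames of a with the first j frames of b.
    """
    inf = float("inf")
    n, m = len(a), len(b)
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            cost[i][j] = d + min(
                cost[i - 1][j],      # frame of a repeated against b[j-1]
                cost[i][j - 1],      # frame of b repeated against a[i-1]
                cost[i - 1][j - 1],  # both sequences advance
            )
    return cost[n][m]


# Hypothetical first-formant tracks (Hz) for the same vowel in two recordings.
questioned = [700, 710, 720, 715]
reference = [700, 705, 712, 720, 718, 715]
print(dtw_distance(questioned, reference))
```

Because DTW lets one frame match several frames of the other track, the two vowel segments do not need the same duration, which suits comparing the same phoneme spoken at different speeds.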

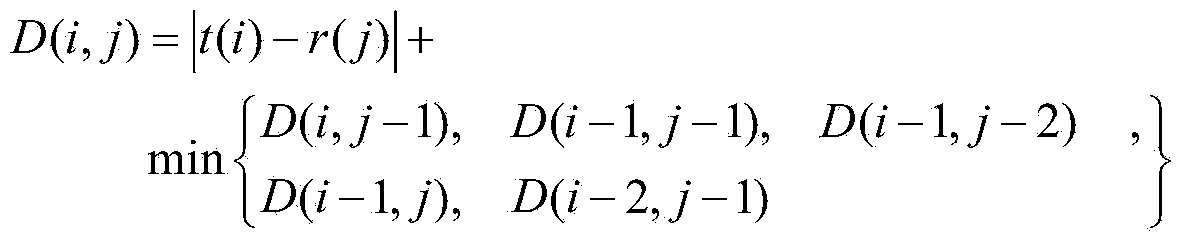

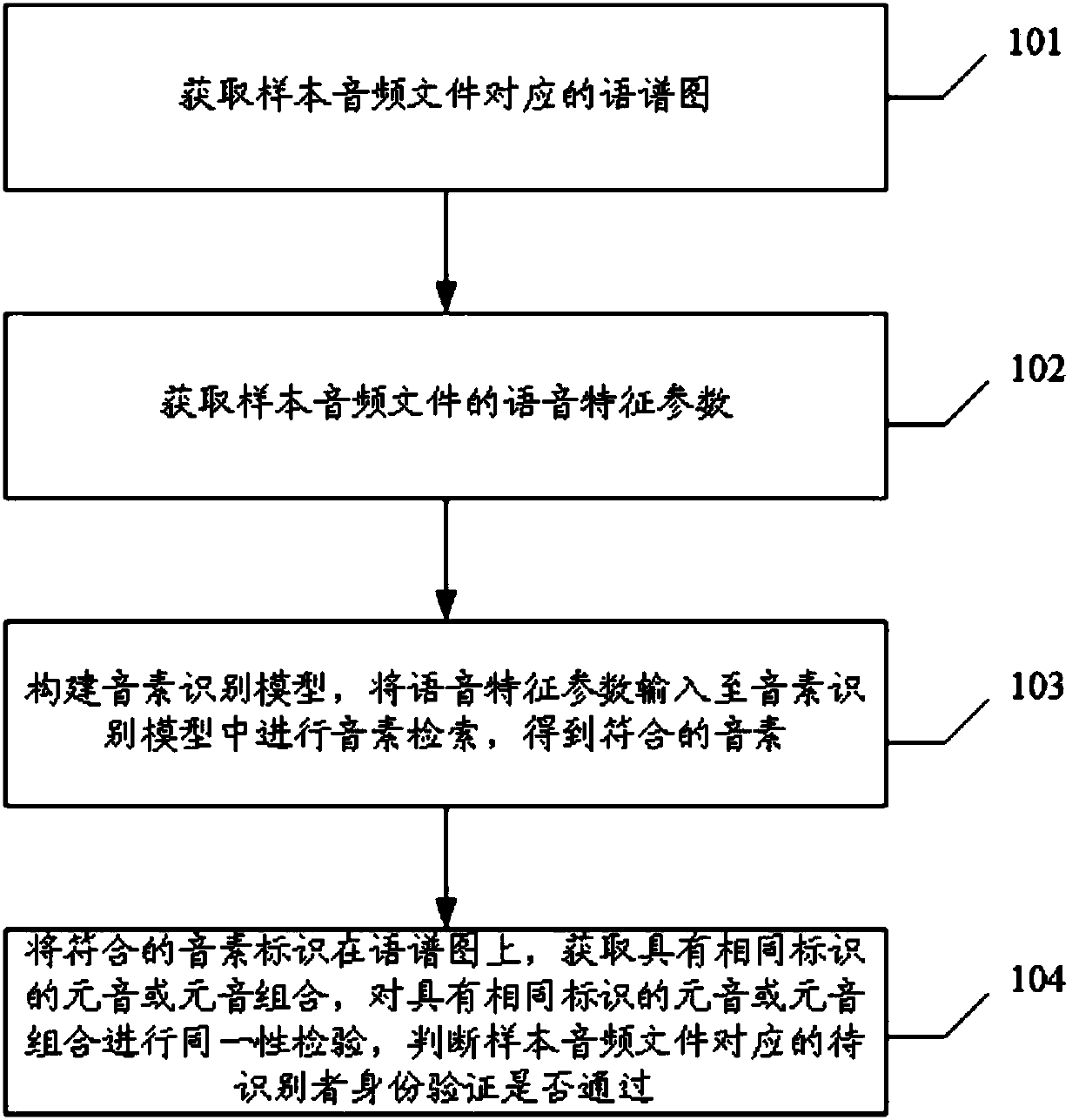

Identity uniformity check method and device based on spectrogram and phoneme retrieval

Active | CN107680601A | Improve identification efficiency | Improve accuracy | Speech analysis | Phoneme recognition | Phase retrieval

The invention provides an identity uniformity check method and device based on a spectrogram and phoneme retrieval. The method includes the following steps: acquiring a spectrogram corresponding to a sample audio file; acquiring the speech feature parameters of the sample audio file; constructing a phoneme recognition model, inputting the speech feature parameters to the phoneme recognition model and carrying out phoneme retrieval to get qualified phonemes; and marking the qualified phonemes on the spectrogram, checking the uniformity of vowels or vowel combinations with the same identifier, and judging whether a to-be-identified person corresponding to the sample audio file passes identity verification. The technical problem of searching for and finding phonemes in actual voiceprint authentication is solved. Phonemes can be displayed visually. The identification efficiency of investigators is improved.

Owner:SPEAKIN TECH CO LTD

Keyboard used in the information terminal and arrangement thereof

Inactive | US20070147933A1 | Simple design | See clearly | Input/output for user-computer interaction | Housing of computer displays | Computer terminal | Vowel

A keyboard used with information terminals/devices and a key arrangement on the keyboard. The keyboard comprises a high click frequency key group chain consisting of at least four of the five vowel keys and the ‘N’ key. Within the key group chain, each member key is next to at least one other member key along a row or along a column. The five vowel keys are A, E, I, O, U. The keyboard can be operated by a single hand, and can have an alphabet input region with 4 to 6 columns of keys, depending on the particular usage requirement, while still maintaining the same central module. The keyboard is convenient for inputting Chinese phonetic letters and alphabets of Western languages.

Owner:ZHANG LI

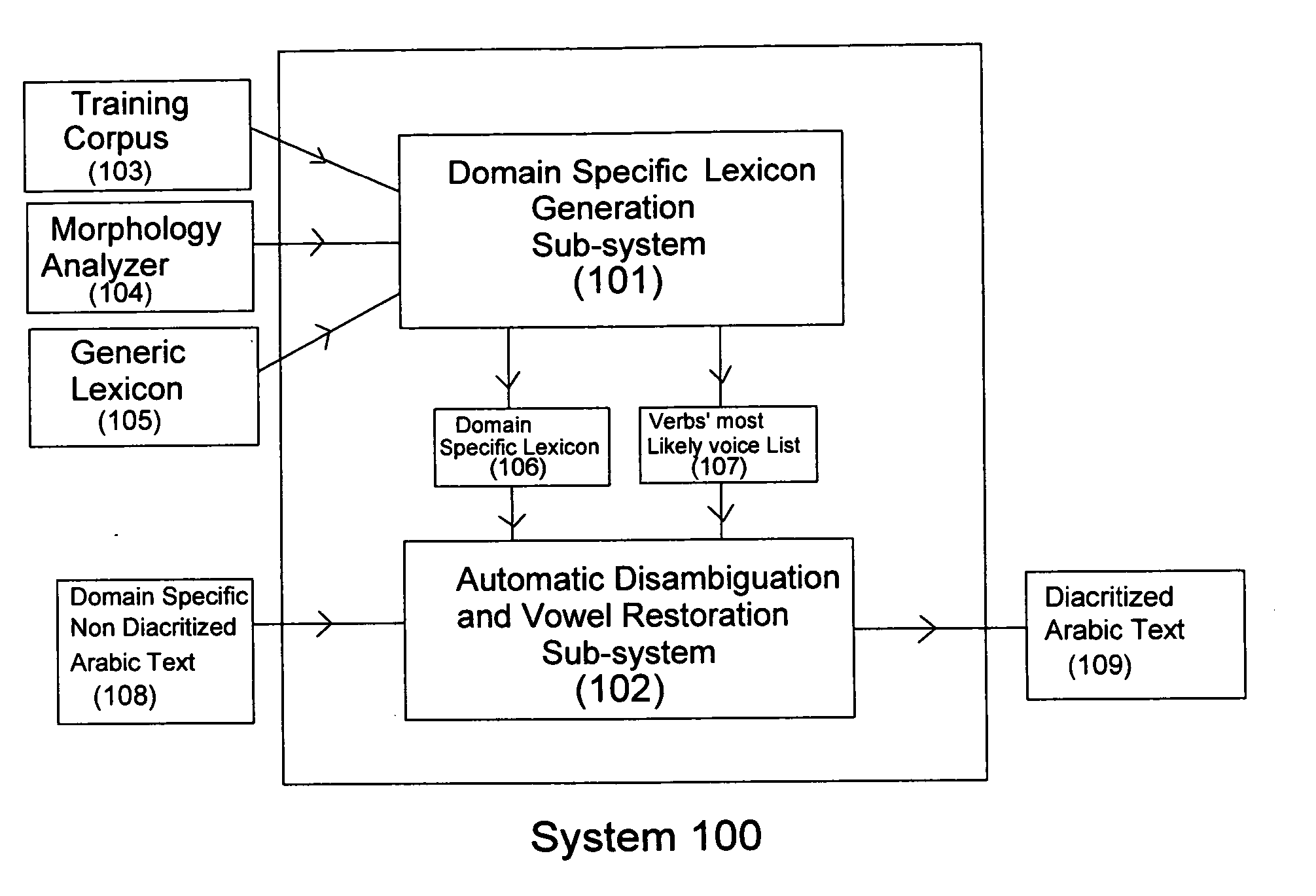

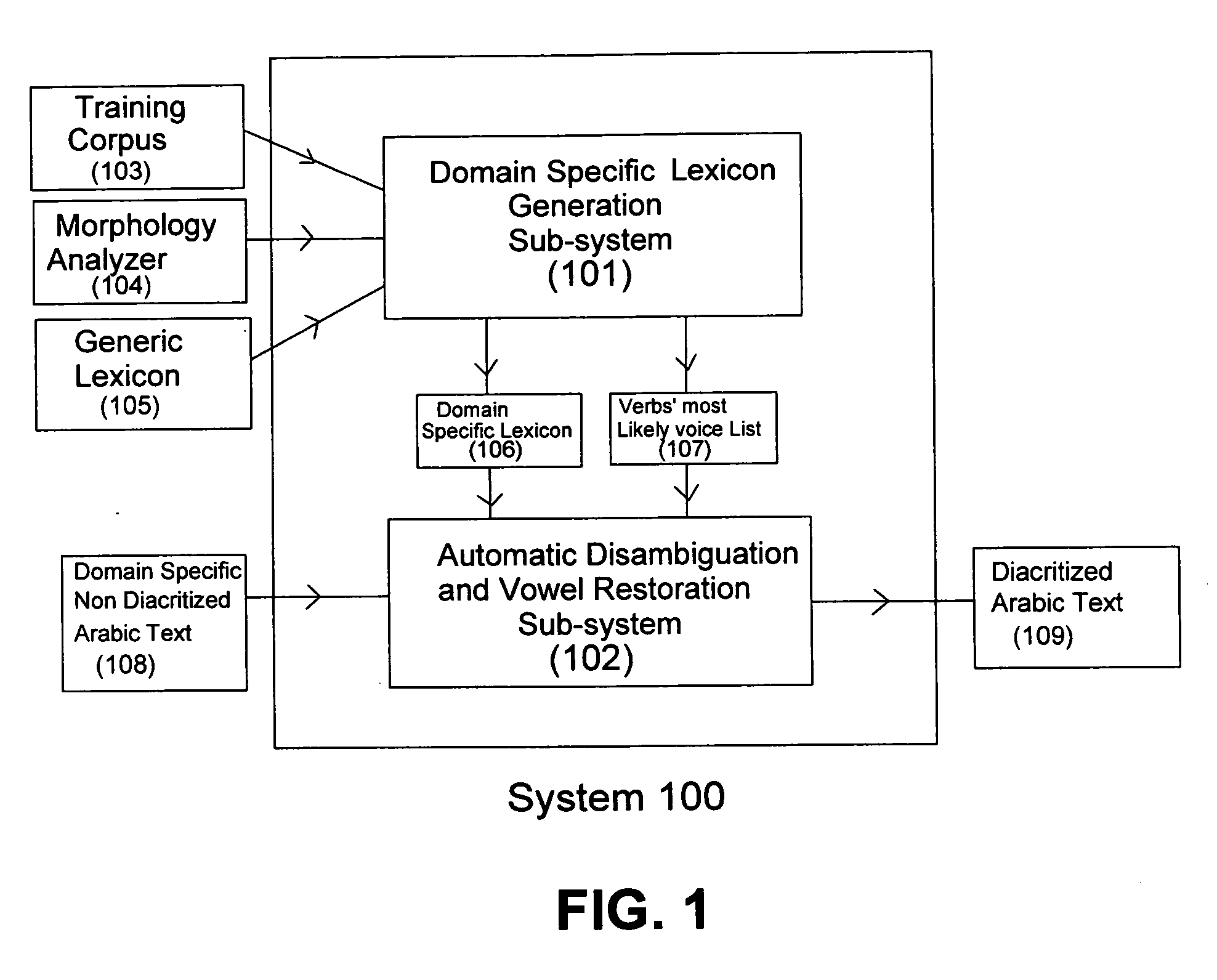

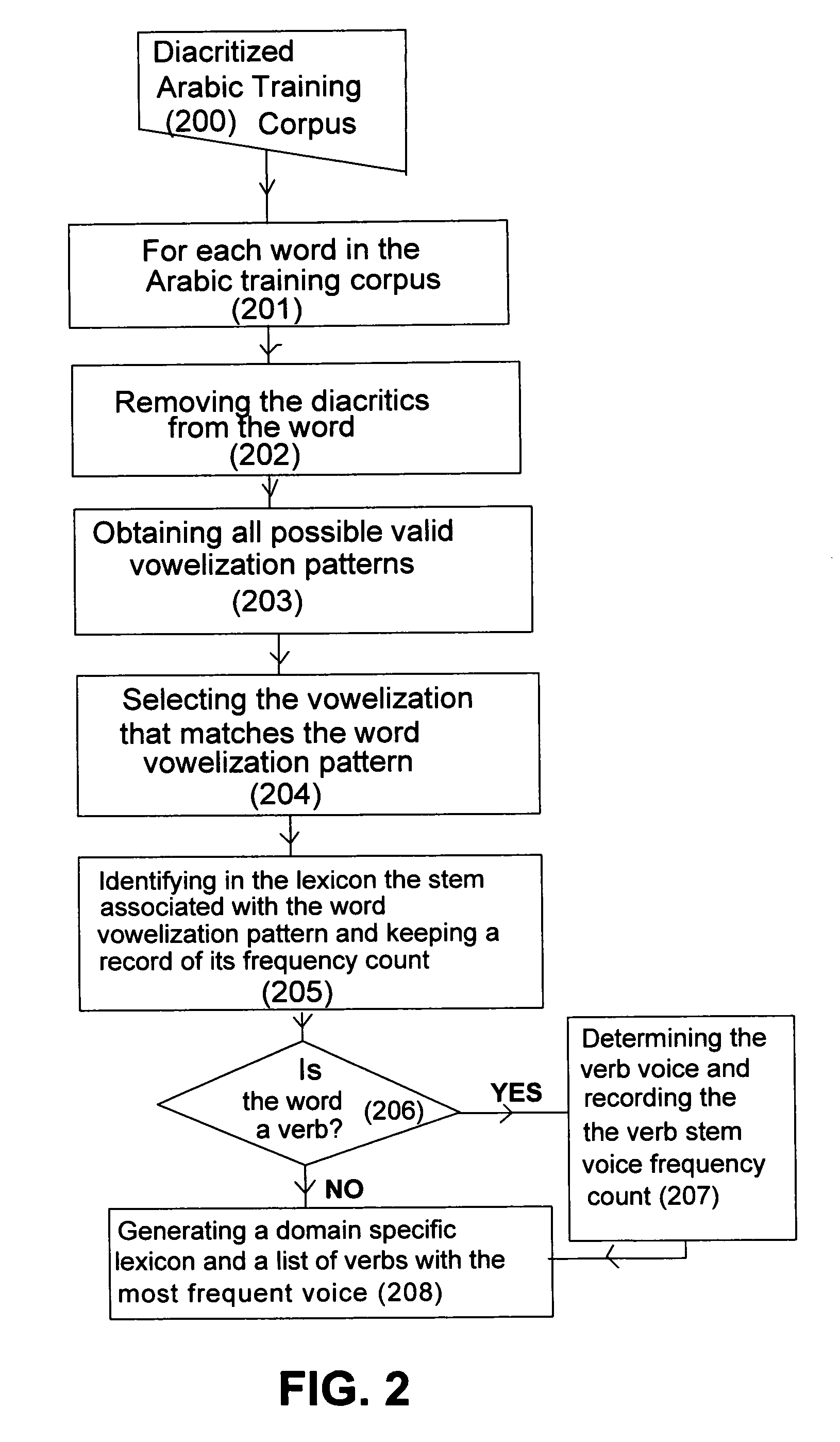

System and method for disambiguating non-diacritized Arabic words in a text

Active | US20060129380A1 | Improve accuracy | Correct vowel pattern can be identified | Natural language translation | Special data processing applications | Ambiguity | A domain

The present invention proposes a solution to the problem of word lexical disambiguation in Arabic texts. This solution is based on text domain-specific knowledge, which facilitates the automatic vowel restoration of modern standard Arabic scripts. Texts similar in their contents, restricted to a specific field or sharing a common knowledge can be grouped in a specific category or in a specific domain (examples of specific domains: sport, art, economics, science . . . ). The present invention discloses a method, system and computer program for lexically disambiguating non-diacritized Arabic words in a text based on a learning approach that exploits Arabic lexical look-up and Arabic morphological analysis to train the system on a corpus of diacritized Arabic text pertaining to a specific domain. Thereby, the contextual relationships of the words related to a specific domain are identified, based on the valid assumption that there is less lexical variability in the use of the words and their morphological variants within a domain compared to an unrestricted text.

Owner:INT BUSINESS MACHINES CORP

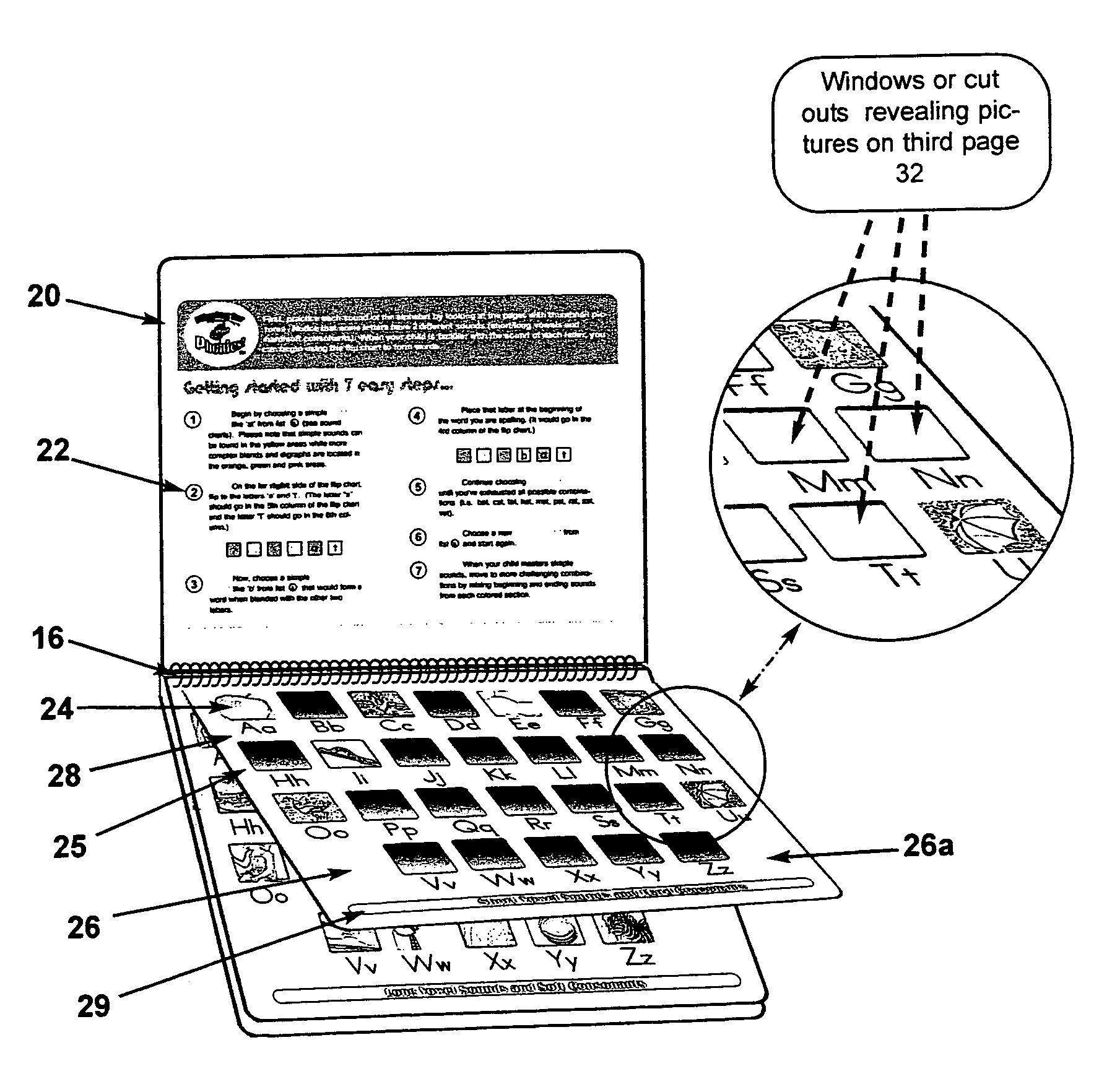

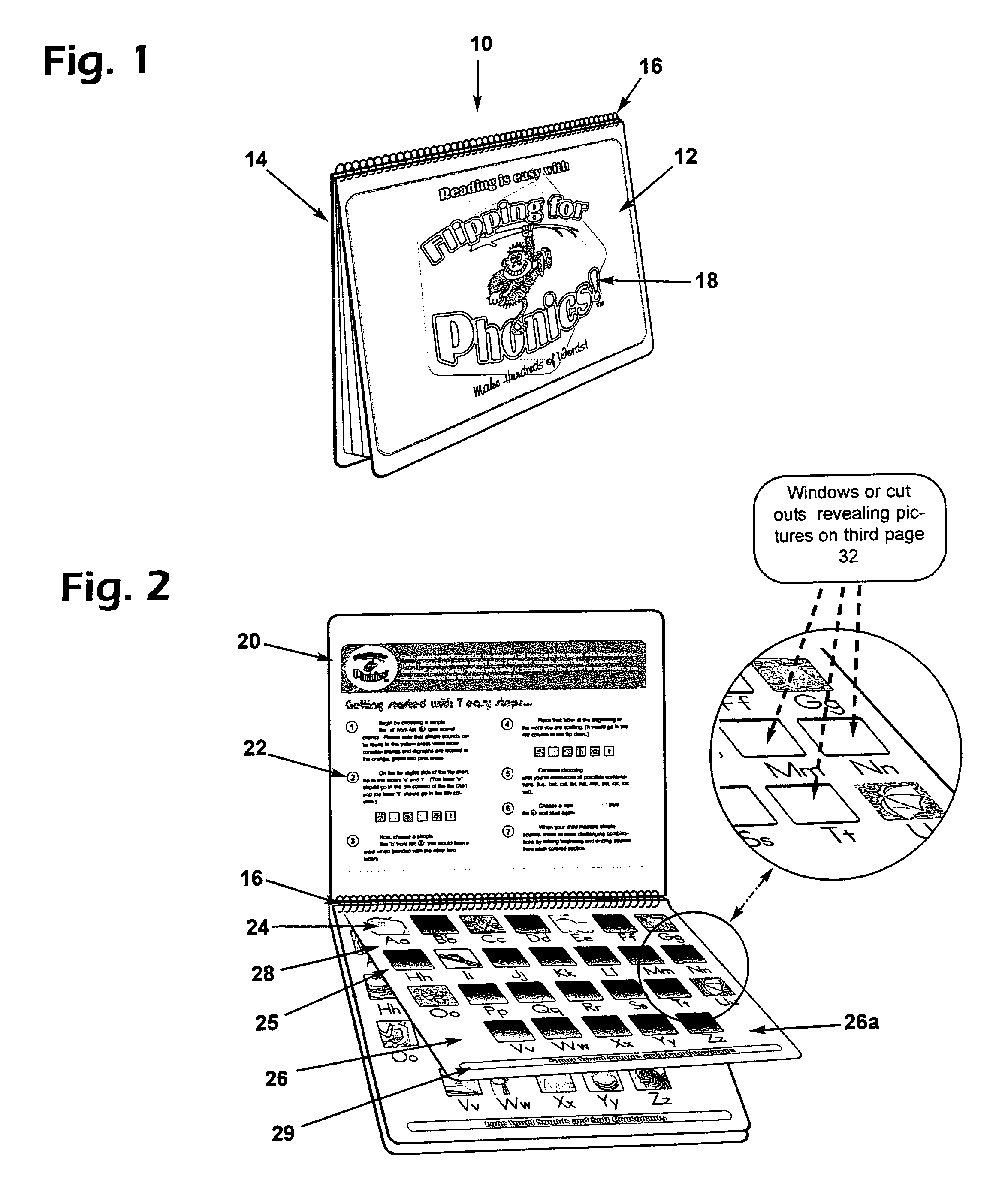

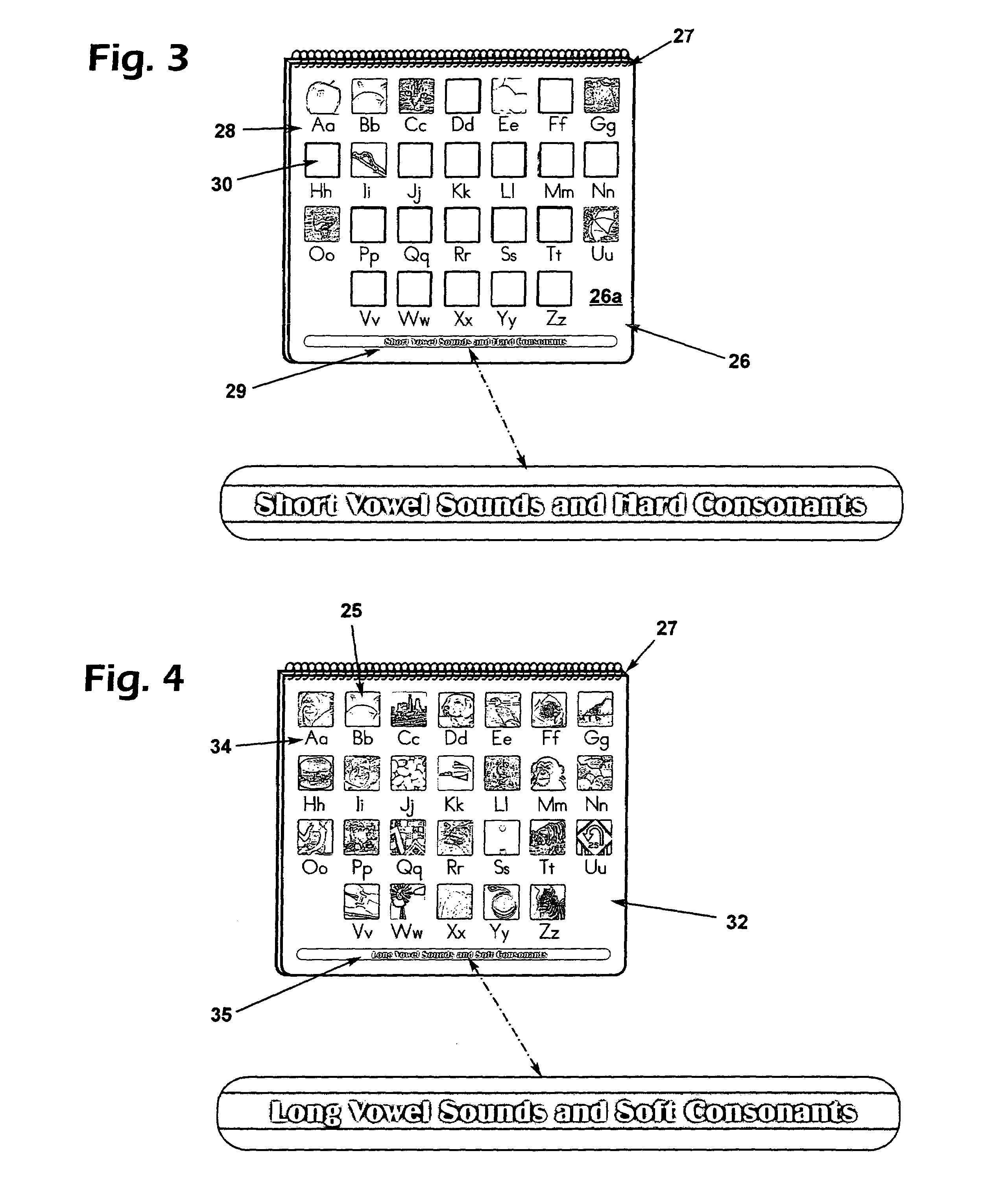

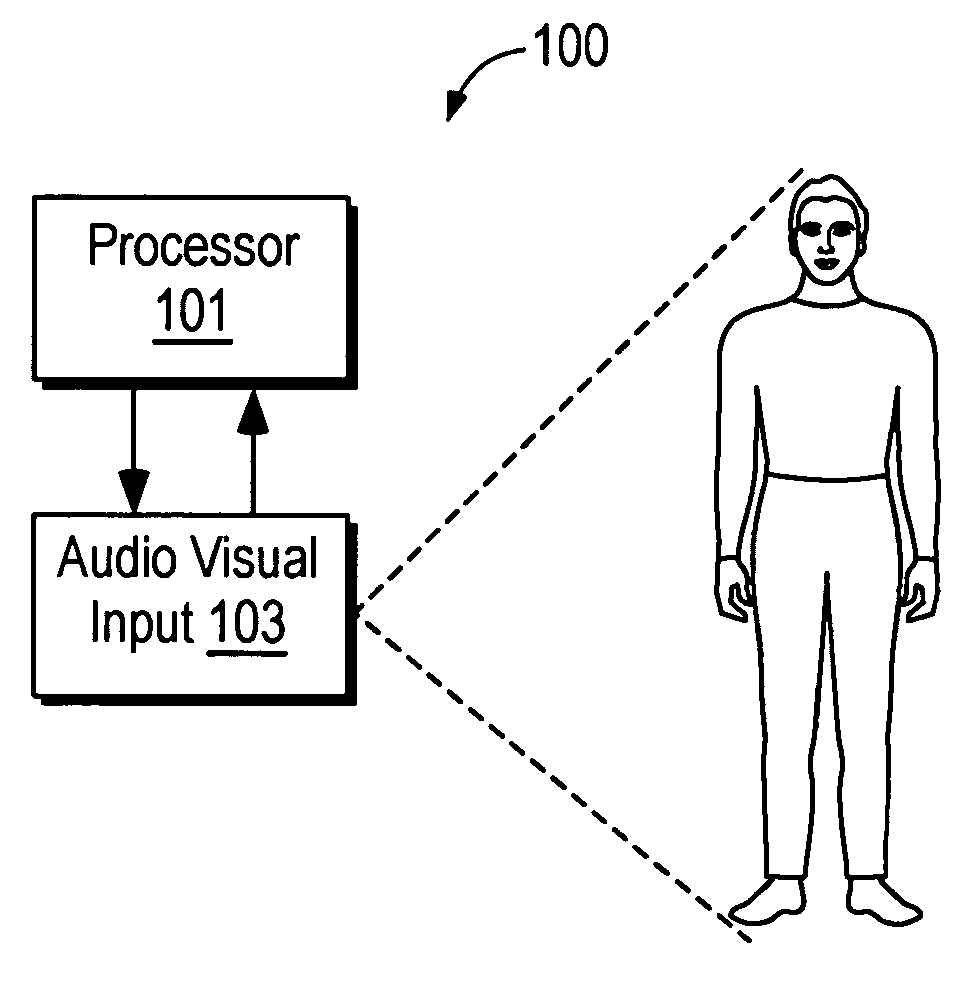

Tool device, system and method for teaching reading

Inactive | US6966777B2 | Convenient teaching | Convenient to mix | Reading | Mechanical appliances | Teaching tool | Lettering

A book-like reading teaching tool having a plurality of columns wherein each column contains each letter of the alphabet in ascending format, which are simultaneously viewable, a picture corresponding to each sound of each letter in the alphabet and structure for interchanging, mixing and matching letters and identifying and selecting long vowel and short vowel sounds. The reading tool includes a front cover, back cover, spiral binder, instructions, alphabet indicia, plurality of pictures corresponding to each letter and sound in the alphabet, plurality of windows or cutouts corresponding to selected letters and sounds, short vowel sound indicia, long vowel sound indicia and plurality of flip panels arranged in columns wherein each panel in each column contains a letter indicia. The covers, pages and panels have a plurality of apertures that are joined at coinciding ends by the spiral binder. The windows are formed in the second page over pictures corresponding to letters having only one sound or one common sound, such as the letter “B.” The instructions indicate how to form words and sounds using the panels and also include suggested beginning sounds, set of suggested complex beginning sounds, set of suggested ending sounds and set of suggested complex ending sounds.

Owner:ROBOTHAM JOHN +2

Method, system, and program product for measuring audio video synchronization using lip and teeth characteristics

Active | US20070153089A1 | Improve accuracy | Accurately extract and examines | Television system details | Pulse modulation television signal transmission | Data acquisition | Loudspeaker

Method, system, and program product for measuring audio video synchronization. This is done by first acquiring audio video information into an audio video synchronization system. The step of data acquisition is followed by analyzing the audio information, and analyzing the video information. Next, the audio information is analyzed to locate the presence of sounds therein related to a speaker's personal voice characteristics. In the Analysis Phase, Audio and Video MuEv-s are calculated from the audio and video information, and the audio and video information is classified into vowel sounds including AA, EE, OO, B, V, TH, F, silence, other sounds, and unclassified phonemes. The inner space between the lips is also identified and determined. This information is used to determine and associate a dominant audio class in a video frame. Matching locations are determined, and the offset of video and audio is determined.

Owner:PIXEL INSTR CORP

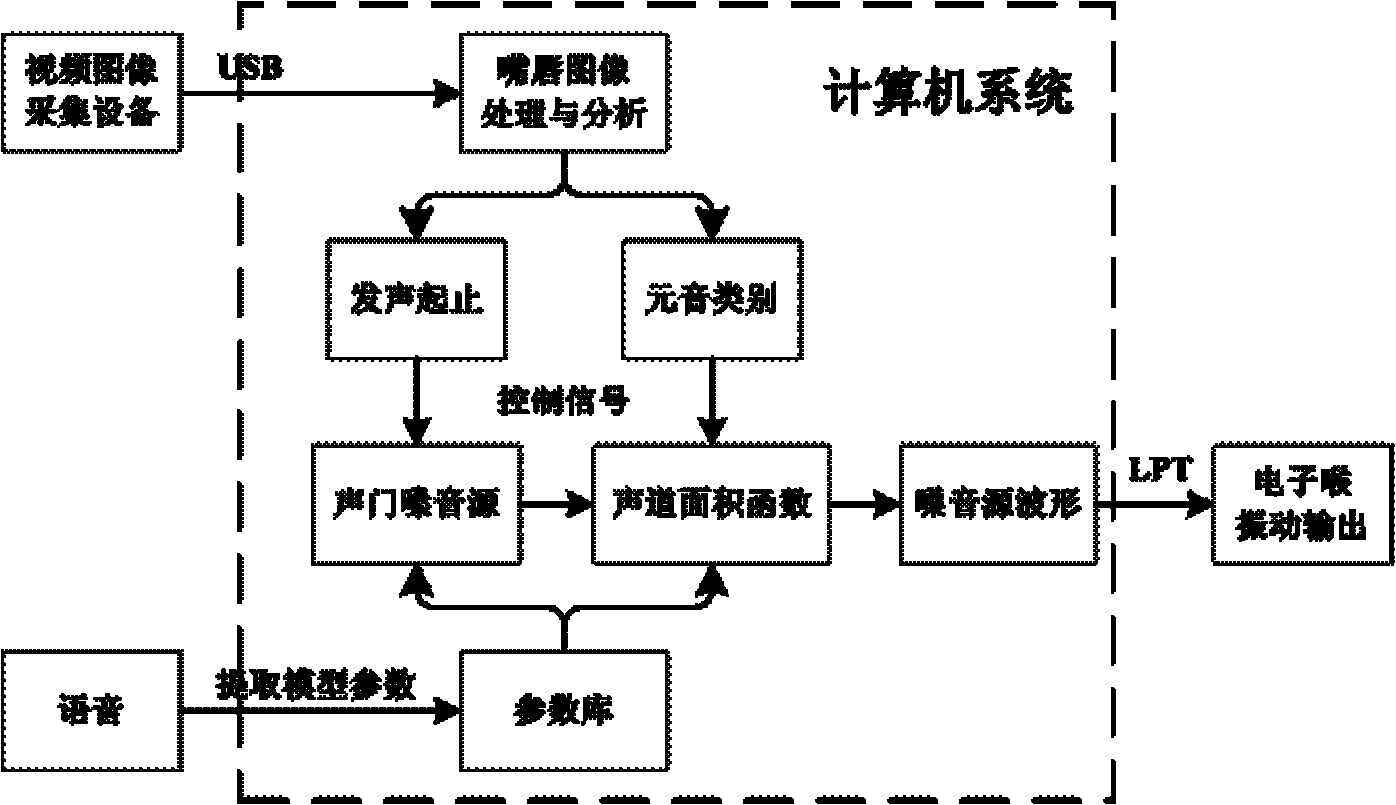

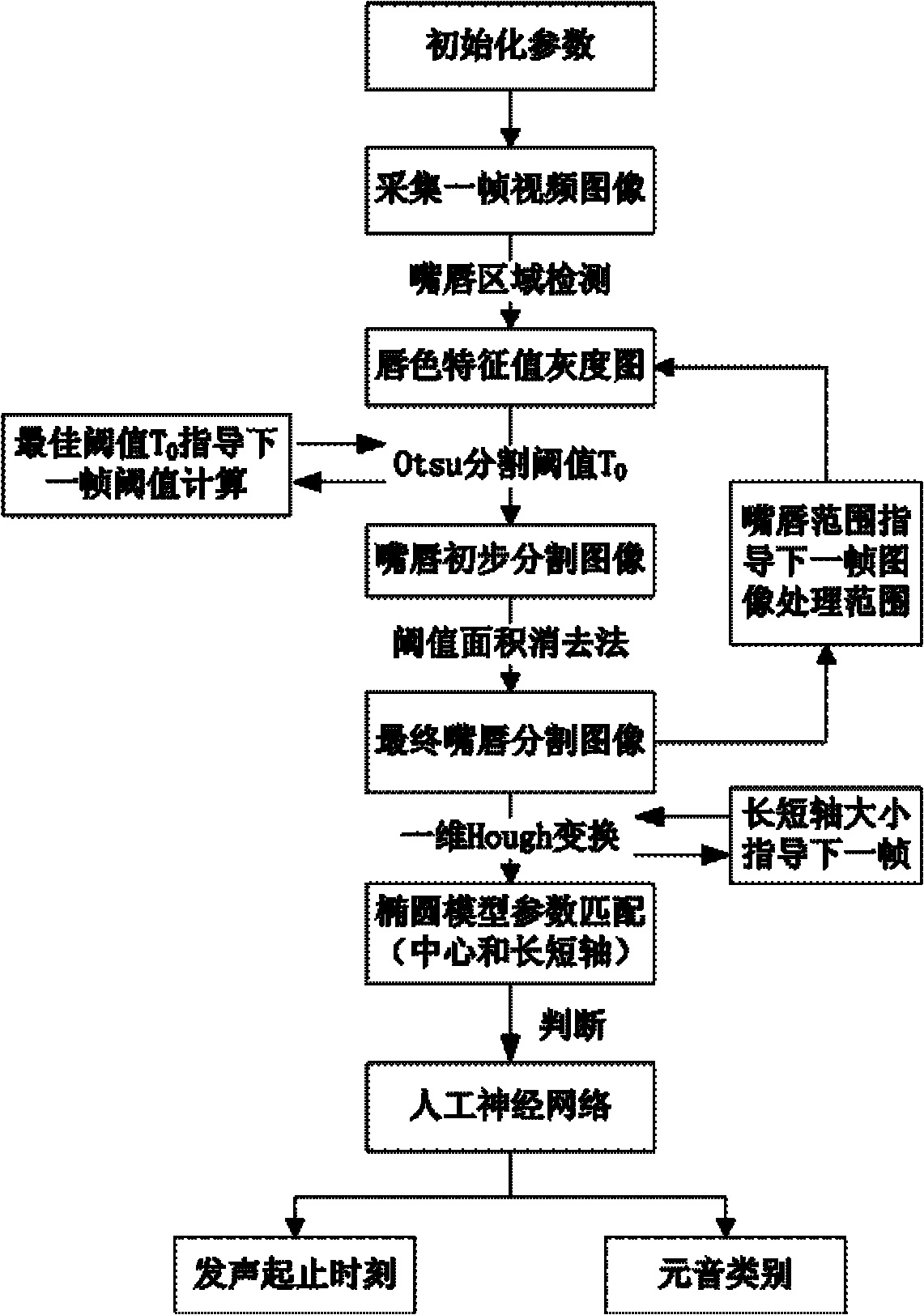

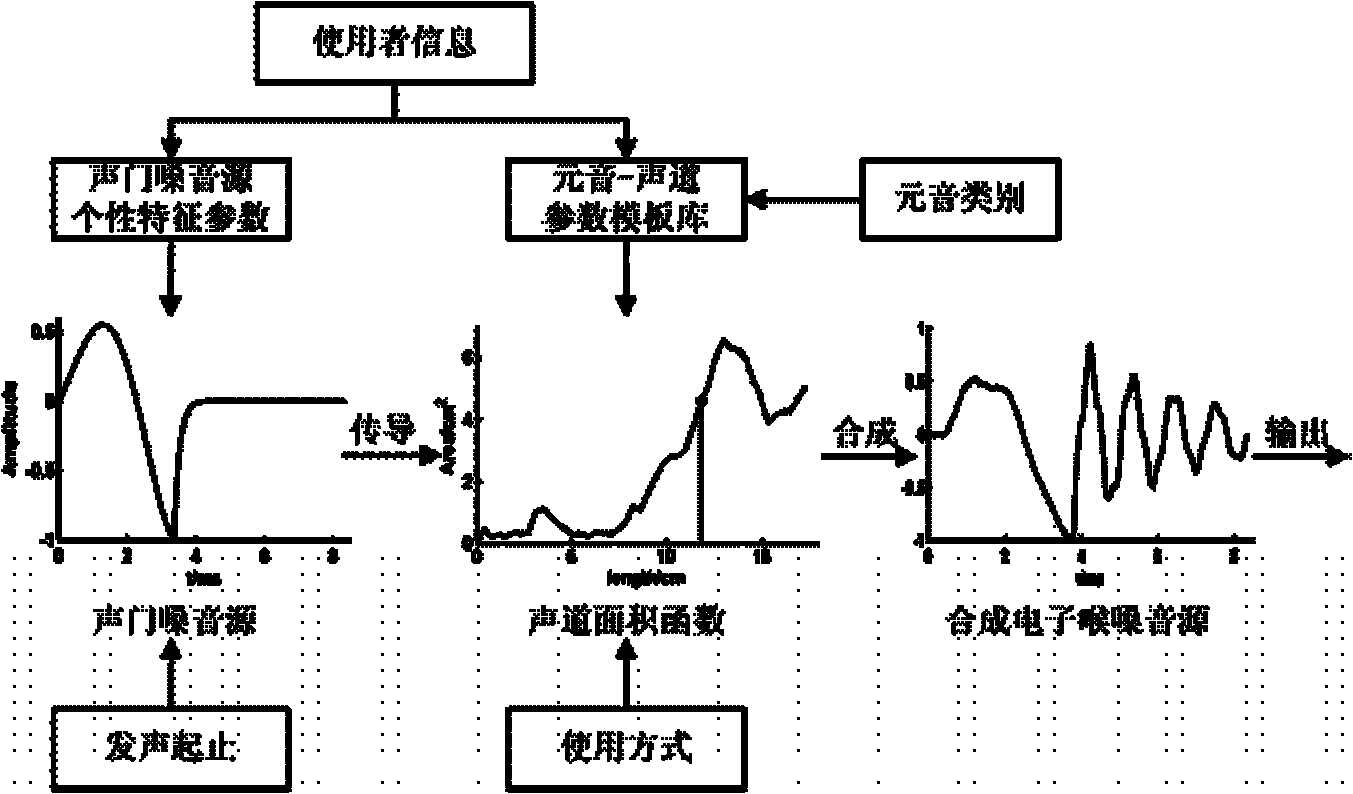

Electronic larynx speech reconstructing method and system thereof

Active | CN101916566A | Retain personality traits | Quality improvement | Character and pattern recognition | Speech recognition | Imaging analysis | Vocal tract

The invention provides an electronic larynx speech reconstructing method and a system thereof. The method comprises the following steps: firstly, extracting model parameters from collected speech as a parameter library; secondly, collecting the face image of a sounder, and transmitting the face image to an image analysis and processing module to obtain the sounding start moment, the sounding stop moment and the sounding vowel category; thirdly, synthesizing a voice source waveform through a voice source synthesizing module; and finally, outputting the voice source waveform through an electronic larynx vibration output module. The voice source synthesizing module first sets the model parameters of a glottis voice source to synthesize the glottis voice source waveform, then simulates the transmission of the sound in the vocal tract by using a waveguide model and selects the form parameters of the vocal tract according to the sounding vowel category, so as to synthesize the electronic larynx voice source waveform. The speech reconstructed by the method and the system is closer to the sound of the sounder per se.

Owner:XI AN JIAOTONG UNIV

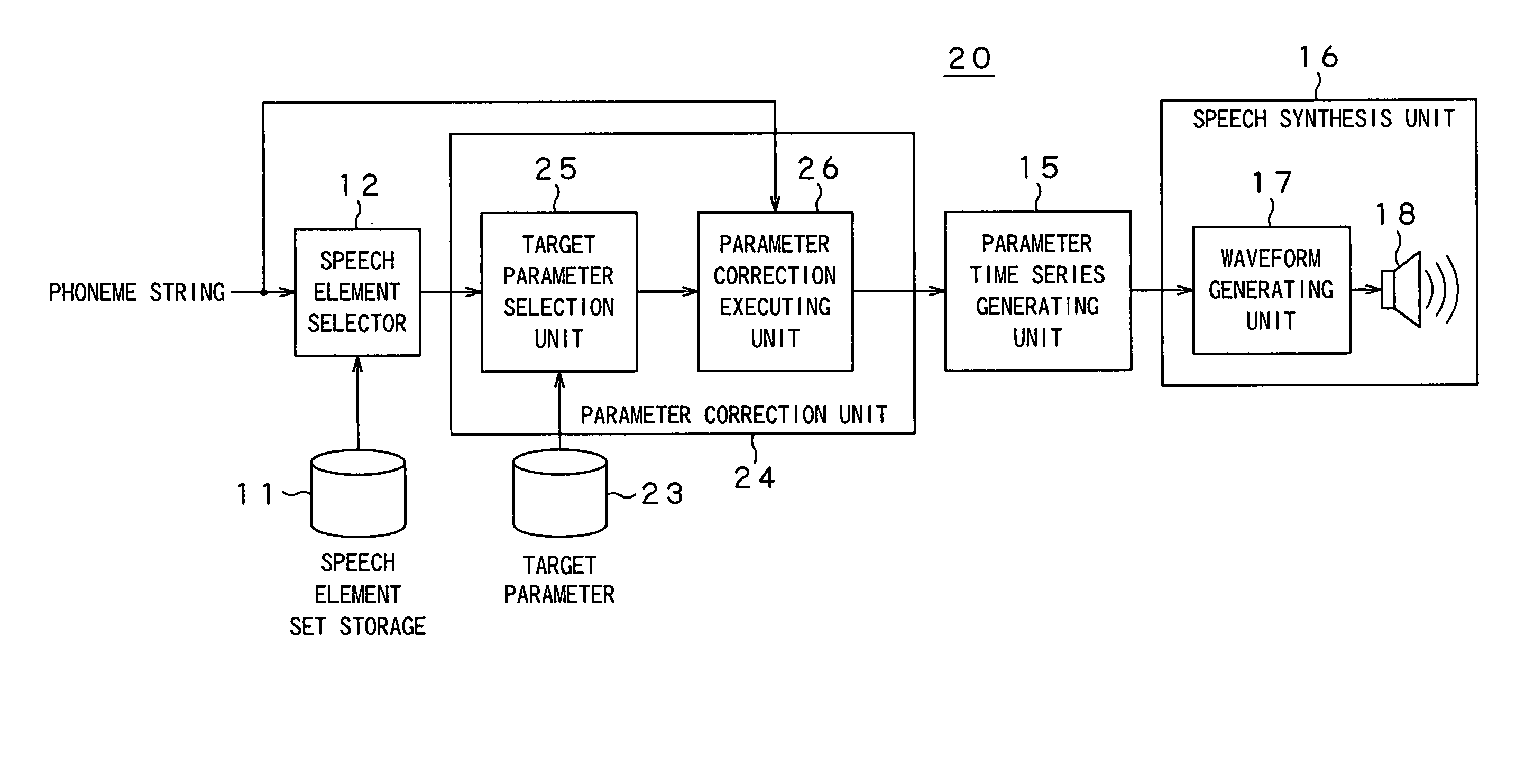

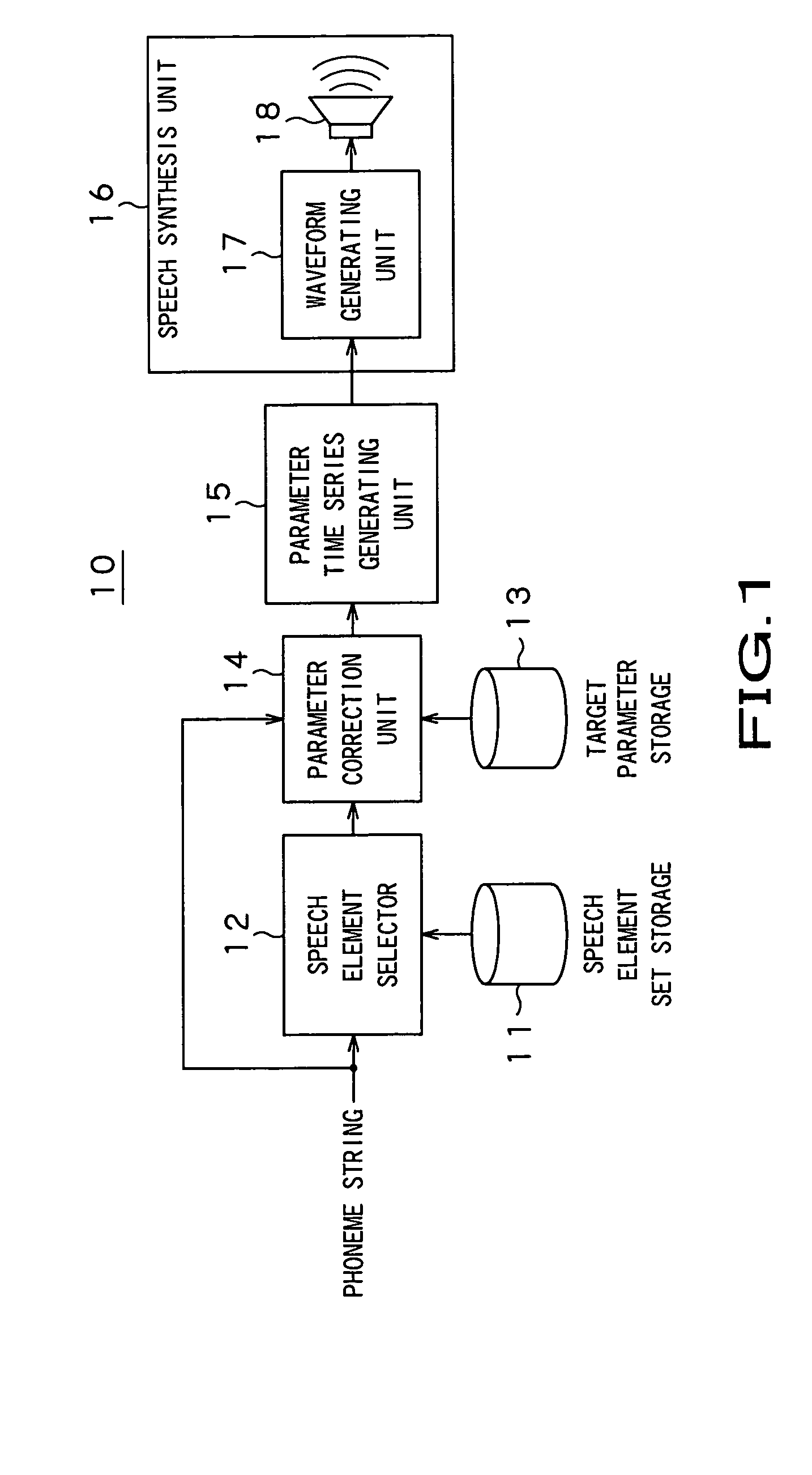

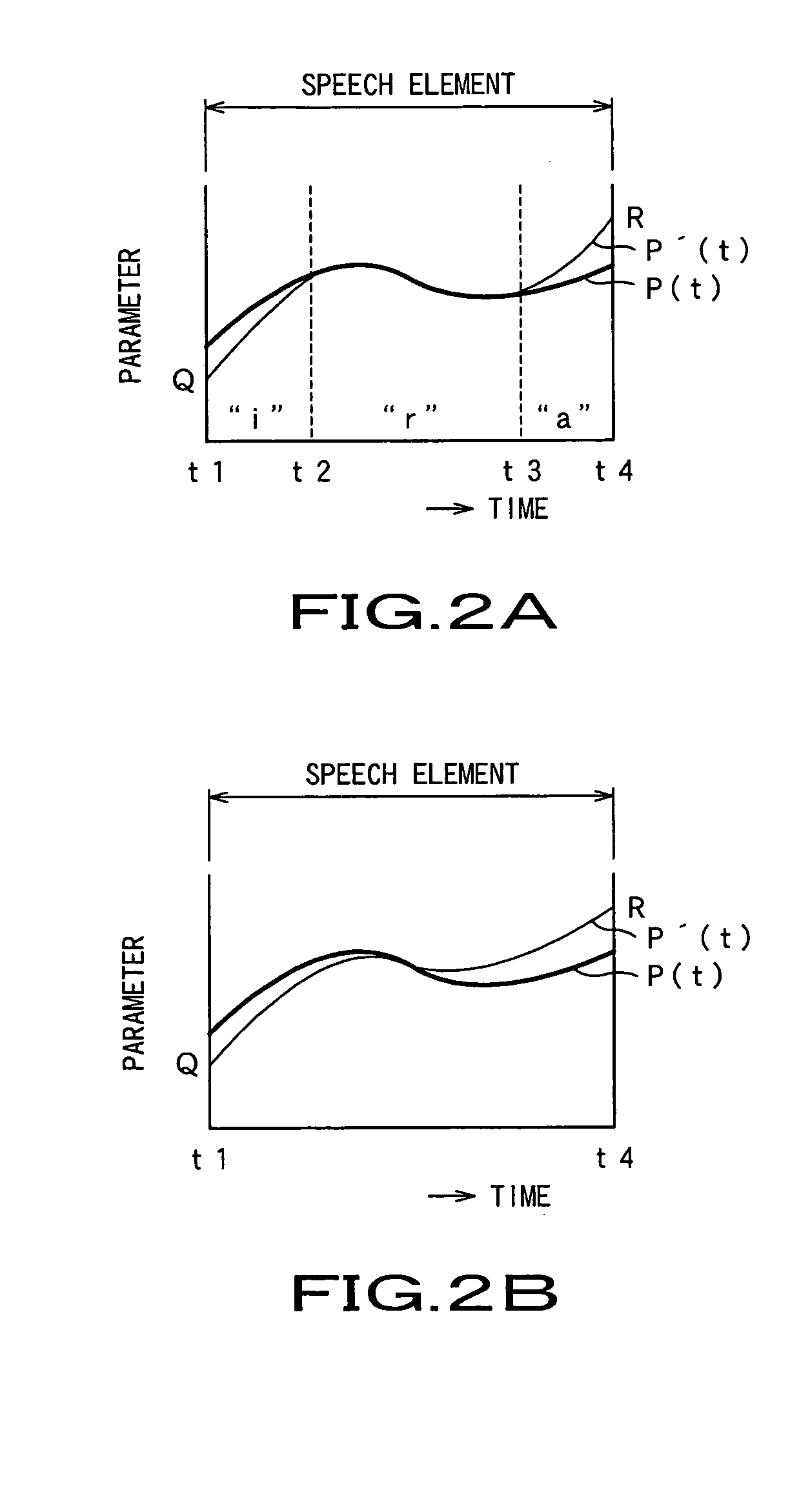

Rule based speech synthesis method and apparatus

Inactive | US20050119889A1 | Less distortion | Quality improvement | Speech recognition | Speech synthesis | Speech synthesis | Feature parameter

A rule based speech synthesis apparatus by which concatenation distortion may be less than a preset value without dependency on utterance, wherein a parameter correction unit reads out a target parameter for a vowel from a target parameter storage, responsive to the phoneme at a leading end and at a trailing end of a speech element and acoustic feature parameters output from a speech element selector, and accordingly corrects the acoustic feature parameters of the speech element. The parameter correction unit corrects the parameters, so that the parameters ahead and behind the speech element are equal to the target parameter for the vowel of the corresponding phoneme, and outputs the so corrected parameters.

Owner:SONY CORP

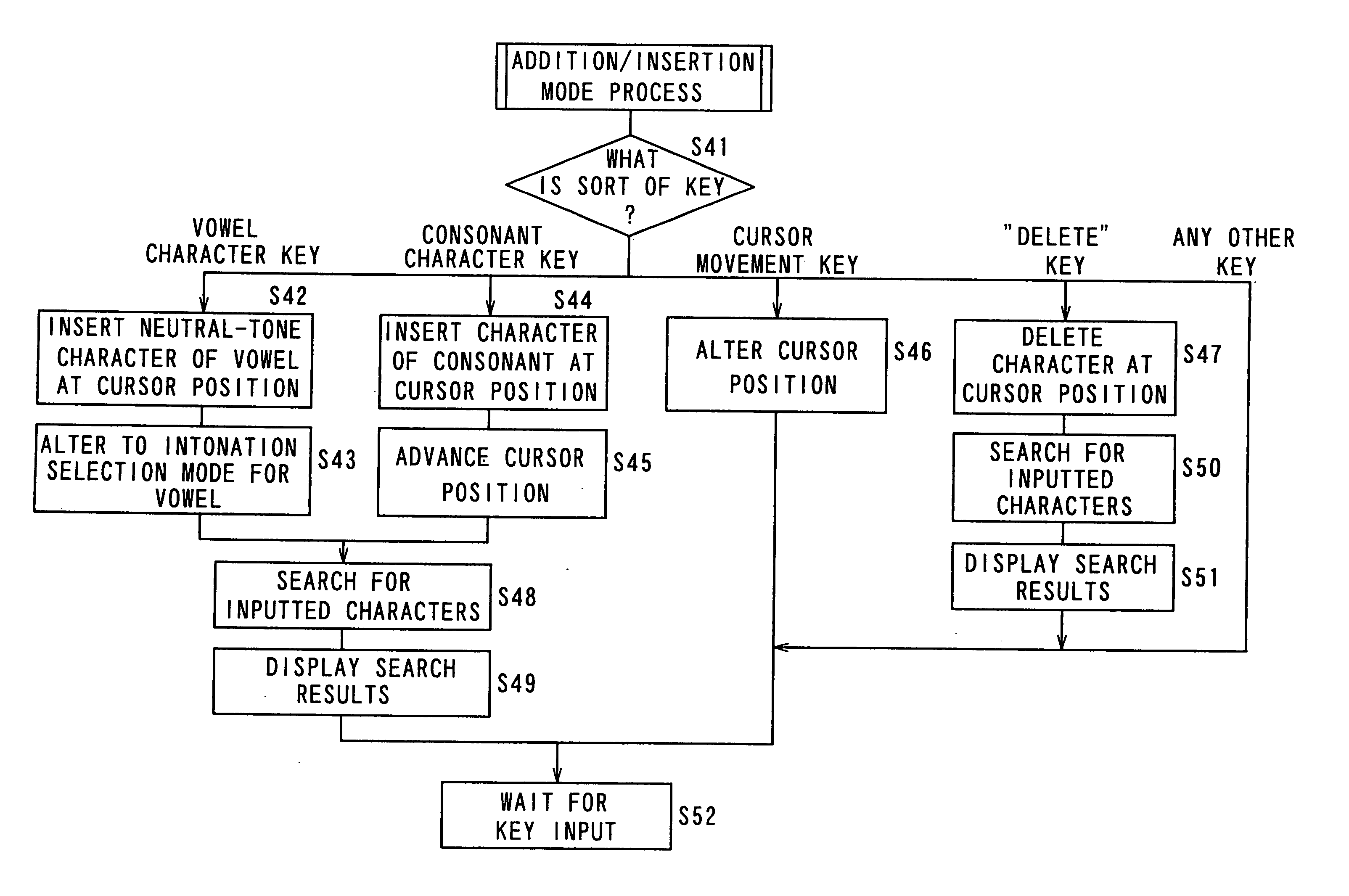

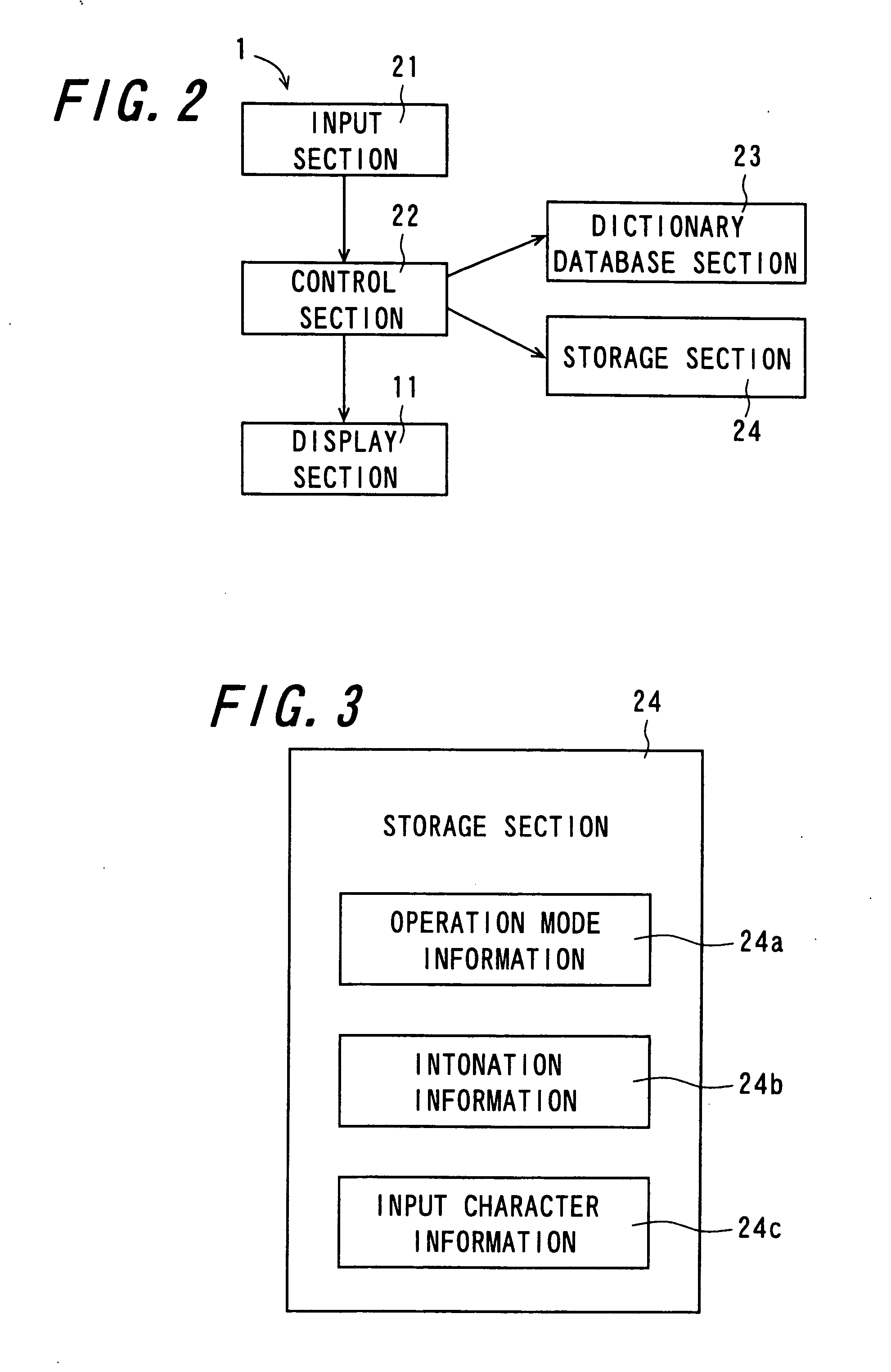

Character inputting method and character inputting apparatus

Inactive | US20050114138A1 | Easy to operate | Input/output for user-computer interaction | Natural language data processing | Vowel

When an input section is actuated by a user, a character is inputted, and a storage section stores therein the inputted character. On this occasion, when the inputted character is a vowel character, a control section causes the storage section to store therein the inputted vowel character and an intonation associated therewith. In a case where a vowel character identical to the inputted vowel character is successively inputted by the input section, the control section causes the storage section to store therein the vowel character and the initial intonation in association with each other, for a first input. At second and subsequent inputs, the control section does not cause the storage section to store therein the identical vowel character anew, but it alters only the intonation associated with the vowel character which has been already stored in the storage section.

Owner:SHARP KK
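The storage behaviour described in the abstract above can be sketched as a small input buffer. The sketch assumes that each repeated press of the same vowel advances the stored intonation to the next entry of a fixed cycle; the abstract says only that the intonation is altered, so the cycling rule, the `INTONATIONS` list and the function name `enter` are all hypothetical.

```python
VOWELS = set("aeiou")
# Hypothetical intonation cycle; index 0 is the initial intonation.
INTONATIONS = ["level", "rising", "falling-rising", "falling"]


def enter(buffer, ch):
    """Store one typed character in buffer (a list of [char, intonation] pairs).

    A vowel is stored with the initial intonation. Typing the same vowel
    again immediately afterwards stores nothing new and only changes the
    intonation attached to the vowel already in the buffer.
    """
    if ch in VOWELS and buffer and buffer[-1][0] == ch:
        buffer[-1][1] = (buffer[-1][1] + 1) % len(INTONATIONS)
    elif ch in VOWELS:
        buffer.append([ch, 0])
    else:
        buffer.append([ch, None])  # consonants carry no intonation
    return buffer


buf = []
for key in "maaa":
    enter(buf, key)
print([(c, None if t is None else INTONATIONS[t]) for c, t in buf])
```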

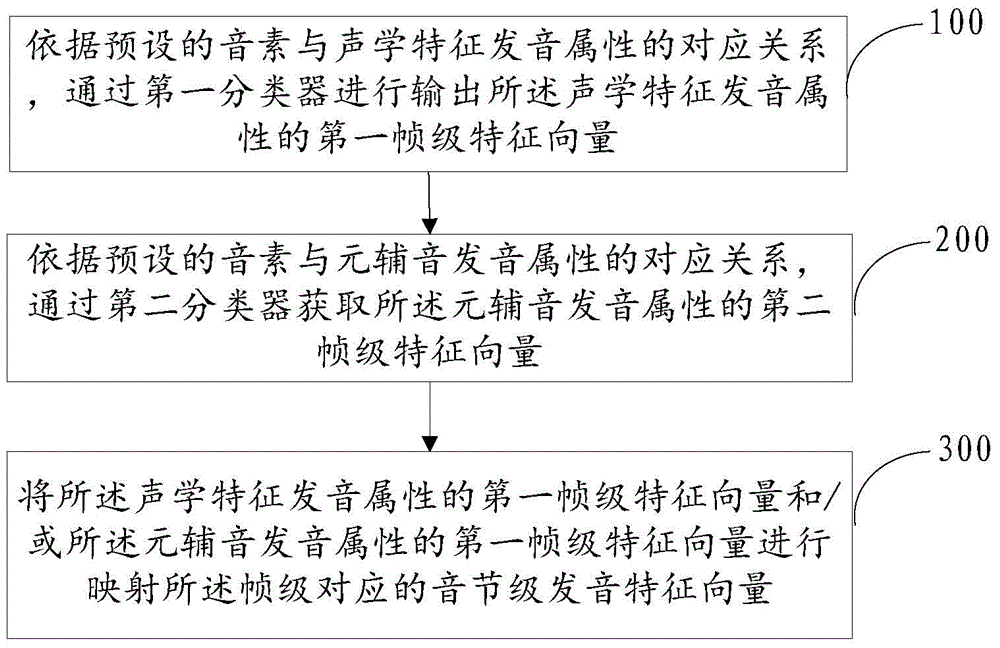

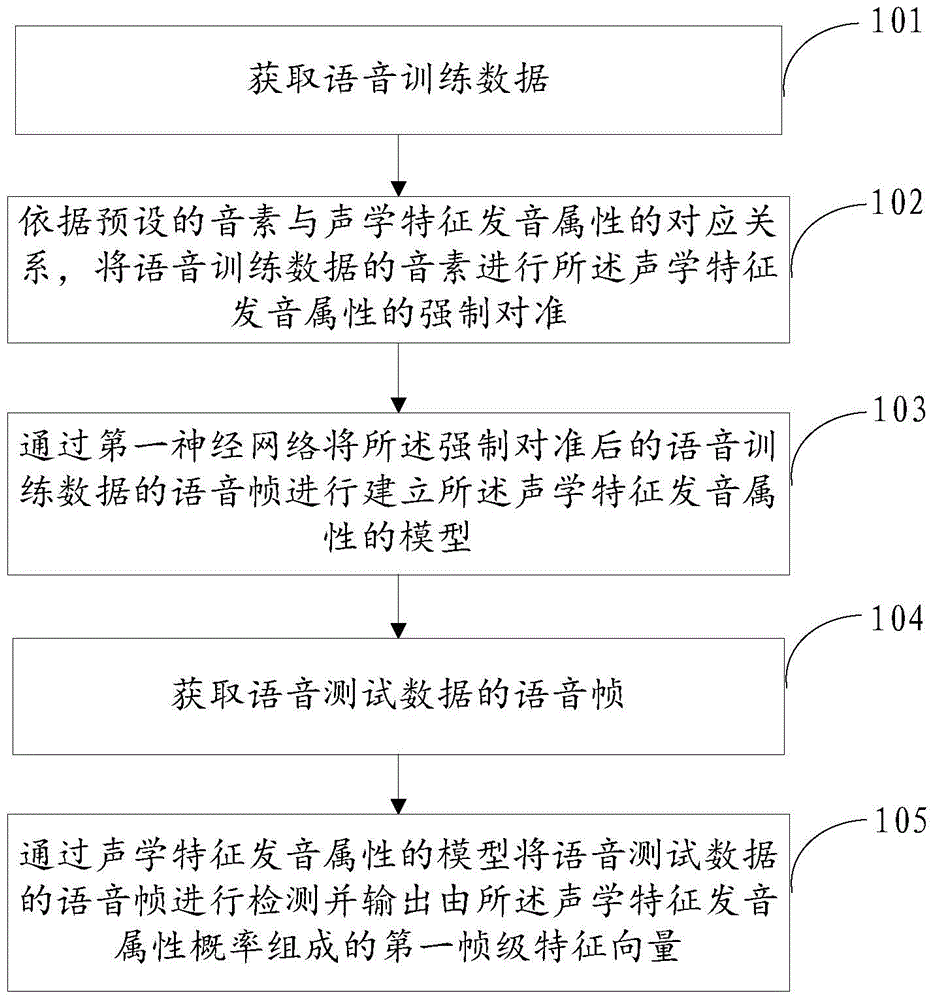

Feature extraction method and device as well as stress detection method and device

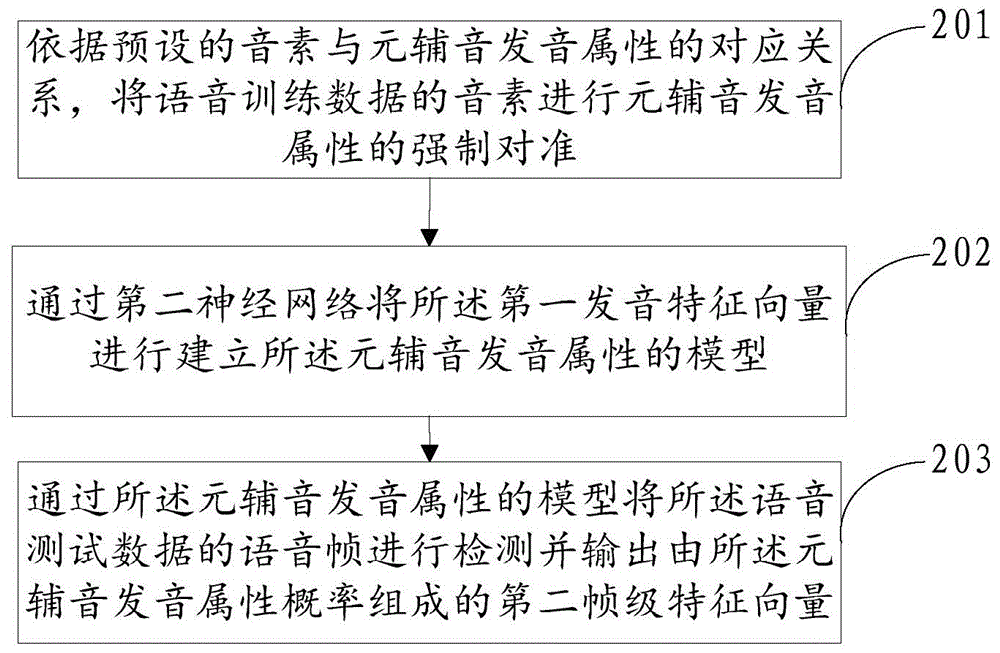

The invention discloses a feature extraction method and device as well as a stress detection method and device, relates to voice detection technology, and aims to solve the problem of low accuracy of stress detection in the prior art. The technical scheme is that the feature extraction method comprises the following steps: according to a preset corresponding relationship between phonemes and acoustic feature pronunciation attributes, a first frame-level eigenvector of the acoustic feature pronunciation attributes is output through a first classifier; a second frame-level eigenvector of vowel and consonant pronunciation attributes is output by a second classifier according to the preset corresponding relationship between preset phonemes and the vowel and consonant pronunciation attributes; the first frame-level eigenvector of the acoustic feature pronunciation attributes or the second frame-level eigenvector of the vowel and consonant pronunciation attributes is used for mapping a syllable-level pronunciation eigenvector. The scheme can be applied to a voice detection process.

Owner:TSINGHUA UNIV +1
