Feature extraction method and device as well as stress detection method and device

A feature extraction and detection technology, applied in speech analysis, speech recognition, instruments, etc., that addresses the problem of low accuracy and yields more accurate features and higher precision.


Examples

Embodiment 1

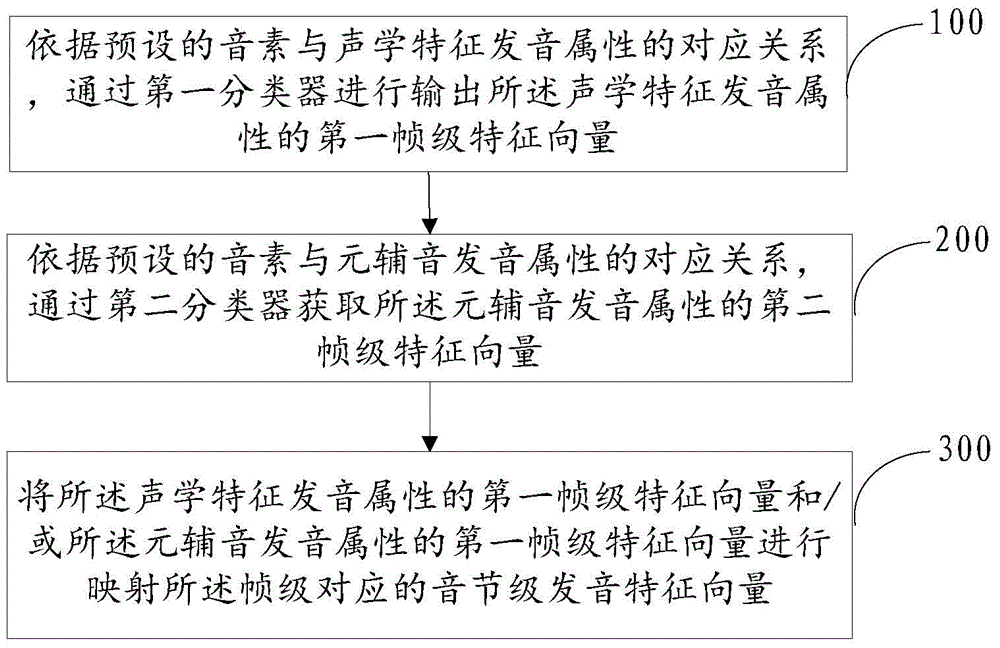

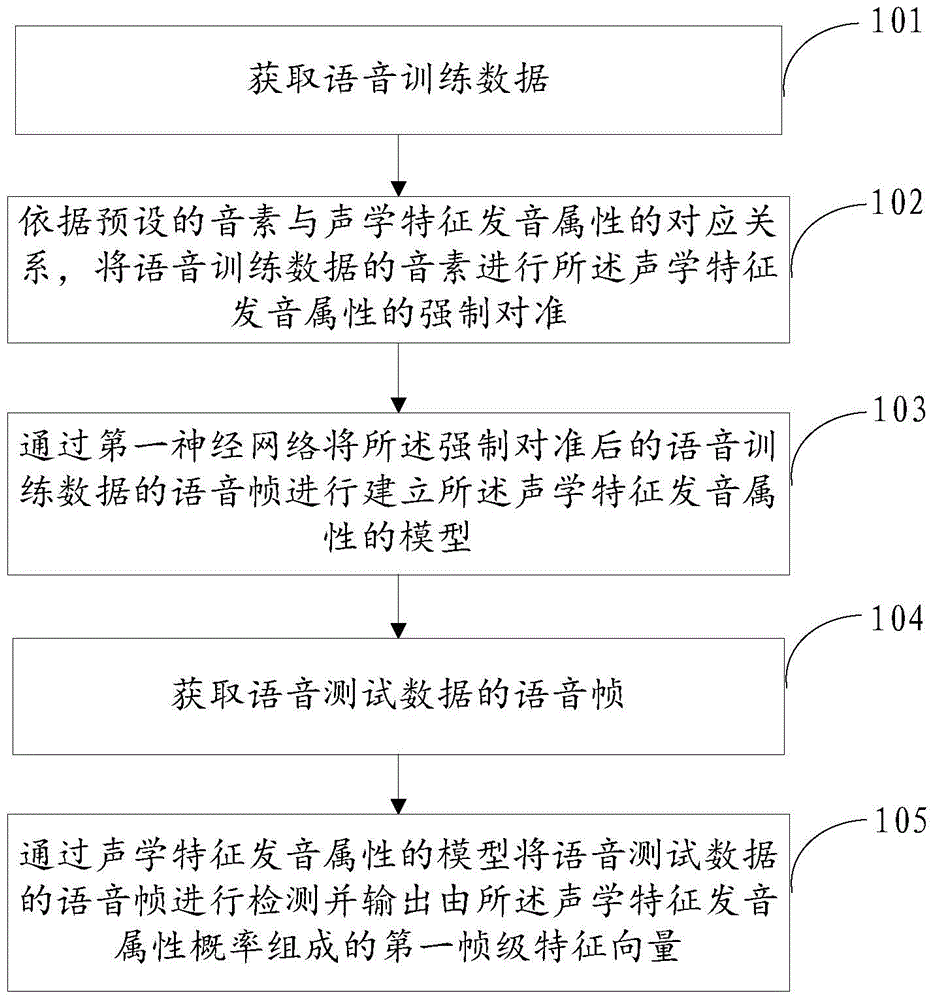

[0064] As shown in Figure 1, an embodiment of the present invention provides a feature extraction method that can be used for stress (accent) detection. The method includes:

[0065] Step 100: Using a first classifier, output a first frame-level feature vector of acoustic-feature pronunciation attributes according to a preset correspondence between phonemes and acoustic-feature pronunciation attributes.
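Step 100 does not list the acoustic-feature pronunciation attributes or say how the first classifier is trained. As a minimal sketch, assuming an ARPAbet-style phone set and a small hypothetical attribute inventory (`ATTRIBUTES` and `PHONE_TO_ATTRS` are illustrative, not from the patent), the preset phoneme-to-attribute correspondence can turn a frame-level phoneme alignment into multi-hot targets of the kind a frame-level attribute classifier could be trained on:

```python
# Hypothetical attribute inventory; the patent does not specify its attributes.
ATTRIBUTES = ["voiced", "nasal", "fricative", "stop", "vowel", "silence"]

# Hypothetical preset correspondence: phoneme -> set of acoustic-feature attributes.
PHONE_TO_ATTRS = {
    "AA": {"voiced", "vowel"},
    "IY": {"voiced", "vowel"},
    "M": {"voiced", "nasal"},
    "S": {"fricative"},
    "Z": {"voiced", "fricative"},
    "T": {"stop"},
    "D": {"voiced", "stop"},
    "SIL": {"silence"},
}


def frame_attribute_targets(frame_phones):
    """Turn a frame-level phoneme alignment into multi-hot attribute targets.

    Each frame gets one 0/1 entry per attribute in ATTRIBUTES, in that order.
    """
    targets = []
    for phone in frame_phones:
        attrs = PHONE_TO_ATTRS[phone]
        targets.append([1 if a in attrs else 0 for a in ATTRIBUTES])
    return targets
```

For example, `frame_attribute_targets(["SIL", "M", "AA"])` returns `[[0, 0, 0, 0, 0, 1], [1, 1, 0, 0, 0, 0], [1, 0, 0, 0, 1, 0]]`. A multi-hot encoding is used because one phoneme can carry several attributes at once.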

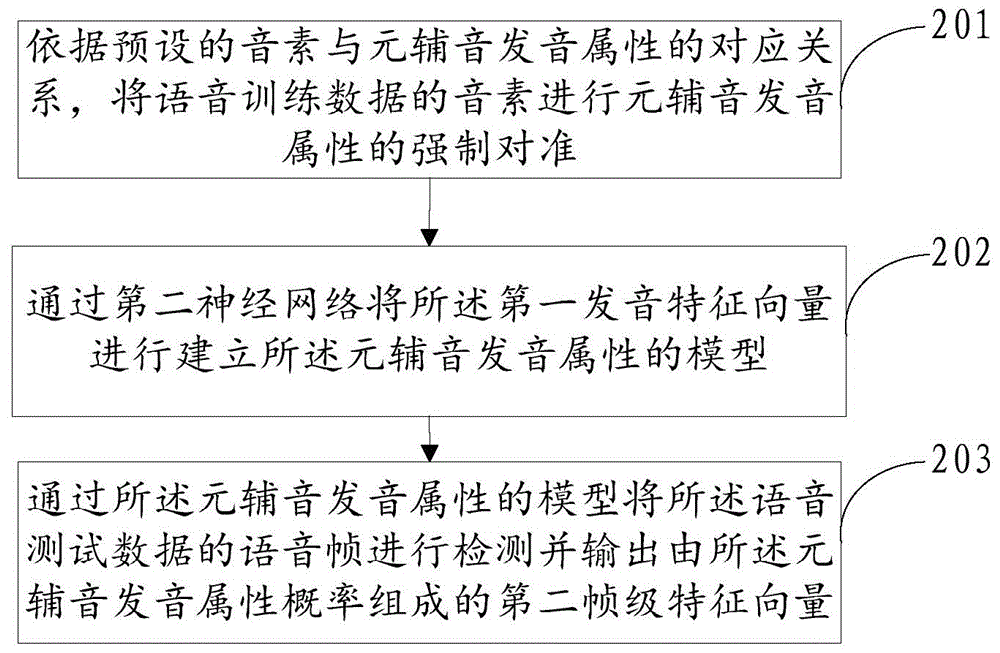

[0066] Step 200: Using a second classifier, obtain a second frame-level feature vector of vowel/consonant pronunciation attributes according to a preset correspondence between phonemes and vowel/consonant pronunciation attributes.
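The vowel/consonant correspondence in Step 200 can be sketched the same way. Below, `phone_to_vc` is a hypothetical three-class mapping (vowel, consonant, silence), and a single linear layer with softmax stands in for the second classifier, which the patent does not specify; in practice a trained network would supply the weights. Each input frame yields one posterior vector, i.e. one row of the frame-level feature vector sequence:

```python
import math

VC_CLASSES = ["vowel", "consonant", "silence"]
# Hypothetical vowel inventory (ARPAbet-style symbols, illustration only).
VOWELS = {"AA", "AE", "AH", "EH", "IH", "IY", "UW"}


def phone_to_vc(phone):
    """Preset correspondence: map a phoneme to one of VC_CLASSES."""
    if phone == "SIL":
        return "silence"
    return "vowel" if phone in VOWELS else "consonant"


def softmax(logits):
    # Subtract the max for numerical stability before exponentiating.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def frame_posteriors(frames, weights, biases):
    """Stand-in frame-level classifier: one posterior vector per input frame.

    weights[k] and biases[k] belong to class VC_CLASSES[k].
    """
    out = []
    for x in frames:
        logits = [sum(w * xi for w, xi in zip(w_k, x)) + b_k
                  for w_k, b_k in zip(weights, biases)]
        out.append(softmax(logits))
    return out
```

The phone-to-class mapping would supply training labels, while `frame_posteriors` shows the shape of the classifier's output: each row sums to 1 over the three classes.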

[0067] Step 300: Map the first frame-level feature vector of acoustic-feature pronunciation attributes or the second frame-level feature vector of vowel/consonant pronunciation attributes to the corresponding syllable-level pronunciation feature vector.
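Step 300 does not say how frames are pooled into a syllable, and the paragraph that follows is cut off. One simple possibility, assumed here rather than taken from the patent, is to average the frame-level vectors over each syllable's frame span; `frames_to_syllables` and the `(start, end)` span format (end exclusive) are hypothetical:

```python
def frames_to_syllables(frame_vectors, syllable_spans):
    """Mean-pool frame-level vectors over each syllable's frame span.

    syllable_spans: list of (start, end) frame indices, end exclusive.
    Returns one syllable-level vector per span.
    """
    dim = len(frame_vectors[0])
    syllable_vectors = []
    for start, end in syllable_spans:
        if not 0 <= start < end <= len(frame_vectors):
            raise ValueError(f"invalid syllable span ({start}, {end})")
        span = frame_vectors[start:end]
        syllable_vectors.append([sum(v[d] for v in span) / len(span) for d in range(dim)])
    return syllable_vectors
```

For instance, `frames_to_syllables([[0.0, 1.0], [1.0, 0.0], [0.5, 0.5], [1.0, 1.0]], [(0, 2), (2, 4)])` returns `[[0.5, 0.5], [0.75, 0.75]]`. Mean pooling keeps the syllable-level dimension equal to the frame-level dimension regardless of syllable length; max pooling or appending per-syllable statistics would be equally plausible choices.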

[0068] In this ...

Embodiment 2

[0103] As shown in Figure 4, an embodiment of the present invention provides a stress detection method. The method includes:

[0104] Step 401: Receive the speech data to be detected.

[0105] Step 402: Obtain a speech recognition result for the speech data to be detected using speech recognition technology.

[0106] Step 403: Divide the speech data to be detected into syllables according to the speech recognition result.

[0107] Step 404: Acquire the syllable-level pronunciation feature vectors of the syllable-segmented speech data using a stress feature extraction method.
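Step 403 leaves the syllabification rule open. A minimal sketch, assuming the recognition result has been force-aligned into `(phone, start_frame, end_frame)` tuples and that every vowel is a syllable nucleus, could group phones as follows. The rule that all consonants between two vowels join the following syllable is a deliberate simplification, and `syllable_spans` is a hypothetical name:

```python
# Hypothetical vowel inventory (ARPAbet-style symbols, illustration only).
VOWELS = {"AA", "AE", "AH", "EH", "IH", "IY", "UW"}


def syllable_spans(aligned_phones):
    """Group aligned phones into syllable frame spans (start, end), end exclusive.

    aligned_phones: list of (phone, start_frame, end_frame) in time order.
    "SIL" marks a pause. Each vowel is a nucleus; consonants before a vowel join
    its syllable, and consonants after the last vowel of a pause-free stretch
    join the last syllable. Consonant-only stretches are dropped.
    """
    spans = []
    onset_start = None  # first frame of consonants not yet attached to a syllable
    onset_end = None    # end frame (exclusive) of those consonants
    in_stretch = False  # True once the current pause-free stretch has a syllable

    def flush():
        # Attach trailing consonants to the last syllable as its coda.
        nonlocal onset_start, onset_end, in_stretch
        if onset_start is not None and in_stretch:
            spans[-1][1] = onset_end
        onset_start = onset_end = None
        in_stretch = False

    for phone, start, end in aligned_phones:
        if phone == "SIL":
            flush()
        elif phone in VOWELS:
            spans.append([onset_start if onset_start is not None else start, end])
            onset_start = onset_end = None
            in_stretch = True
        else:
            if onset_start is None:
                onset_start = start
            onset_end = end
    flush()
    return [tuple(s) for s in spans]
```

For example, `[("B", 0, 3), ("AH", 3, 8), ("N", 8, 11), ("AE", 11, 18), ("N", 18, 21)]` yields `[(0, 8), (8, 21)]`: the medial "N" is treated as the onset of the second syllable and the final "N" as its coda.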

[0108] In this embodiment, the stress feature extraction method in step 404 may be the extraction method provided in Embodiment 1. As shown in Figure 5, step 404 may further include:

[0109] Step 501: Acquire the prosodic features of the speech data to be detected.

[0110] In this embodiment, various prior-art methods may be used to extract the corresponding prosodic feat...
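The prosodic features in Step 501 are left to prior-art methods. As an illustration only, assuming frame-level energy and F0 tracks are already available (the 10 ms frame shift and the convention that F0 = 0 marks an unvoiced frame are assumptions), per-syllable duration, mean energy, and mean F0 could be computed and appended to the syllable-level pronunciation vectors. The concatenation in the hypothetical `fuse` helper is likewise an assumed way of combining the two, since the text is truncated before it says how they are used:

```python
def prosodic_features(spans, energy, f0, frame_shift_s=0.01):
    """Return [duration_s, mean_energy, mean_f0] for each (start, end) frame span.

    energy and f0 are per-frame lists of equal length. Frames with f0 <= 0 are
    treated as unvoiced and left out of the pitch mean (0.0 if none are voiced).
    """
    if len(energy) != len(f0):
        raise ValueError("energy and f0 must have the same number of frames")
    feats = []
    for start, end in spans:
        if not 0 <= start < end <= len(energy):
            raise ValueError(f"invalid syllable span ({start}, {end})")
        n = end - start
        voiced = [p for p in f0[start:end] if p > 0]
        mean_f0 = sum(voiced) / len(voiced) if voiced else 0.0
        mean_energy = sum(energy[start:end]) / n
        feats.append([n * frame_shift_s, mean_energy, mean_f0])
    return feats


def fuse(pronunciation_vectors, prosodic_vectors):
    """Concatenate each syllable's pronunciation and prosodic features."""
    if len(pronunciation_vectors) != len(prosodic_vectors):
        raise ValueError("need one prosodic vector per syllable")
    return [p + q for p, q in zip(pronunciation_vectors, prosodic_vectors)]
```

Excluding unvoiced frames from the F0 mean avoids dragging the pitch estimate toward zero in syllables with voiceless onsets or codas.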

Embodiment 3

[0115] As shown in Figure 6, an embodiment of the present invention provides a feature extraction device that can be used for stress detection. The device includes:

[0116] The acoustic feature extraction module 901 is configured to output, through a first neural network, the first frame-level feature vector of acoustic-feature pronunciation attributes according to the preset correspondence between phonemes and acoustic-feature pronunciation attributes;

[0117] The vowel and consonant pronunciation feature extraction module 902 is configured to take the first frame-level feature vector of acoustic-feature pronunciation attributes extracted by the acoustic feature extraction module 901 and, through a second neural network and according to the preset correspondence between phonemes and vowel/consonant pronunciation attributes, output a second frame-level feature vector of vowel/consonant pronunciation attributes;

[0118] The mapping module 903 is configured to map the second frame-lev...
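In this device, module 902 consumes module 901's output, so the two networks run in cascade. A minimal sketch of that wiring, assuming each network can be treated as a per-frame function and that module 903 mean-pools the second frame-level vectors over syllable spans (the pooling is an assumption, since paragraph [0118] is truncated), might look like this; the class and method names are hypothetical:

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
FrameNet = Callable[[Vector], Vector]  # maps one frame's input vector to its output vector


@dataclass
class FeatureExtractionDevice:
    first_network: FrameNet   # used by acoustic feature extraction module 901
    second_network: FrameNet  # used by vowel/consonant feature extraction module 902

    def acoustic_attributes(self, acoustic_frames: Sequence[Vector]) -> List[Vector]:
        """Module 901: first frame-level feature vectors."""
        return [self.first_network(x) for x in acoustic_frames]

    def vowel_consonant_attributes(self, first_vectors: Sequence[Vector]) -> List[Vector]:
        """Module 902: second frame-level vectors, computed from module 901's output."""
        return [self.second_network(v) for v in first_vectors]

    def map_to_syllables(self, second_vectors: Sequence[Vector],
                         spans: Sequence[Tuple[int, int]]) -> List[Vector]:
        """Module 903 (assumed mean pooling): one vector per (start, end) frame span."""
        out = []
        for start, end in spans:
            if not 0 <= start < end <= len(second_vectors):
                raise ValueError(f"invalid syllable span ({start}, {end})")
            span = second_vectors[start:end]
            out.append([sum(col) / len(span) for col in zip(*span)])
        return out

    def extract(self, acoustic_frames: Sequence[Vector],
                spans: Sequence[Tuple[int, int]]) -> List[Vector]:
        first = self.acoustic_attributes(acoustic_frames)
        second = self.vowel_consonant_attributes(first)
        return self.map_to_syllables(second, spans)
```

With toy networks `lambda x: [sum(x)]` and `lambda v: [v[0], -v[0]]`, four frames `[[1.0, 1.0], [2.0, 2.0], [3.0, 3.0], [4.0, 4.0]]` and spans `[(0, 2), (2, 4)]` give `[[3.0, -3.0], [7.0, -7.0]]`. Injecting the networks as callables keeps the module wiring independent of any particular model framework.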
