115 results for "Speech training" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

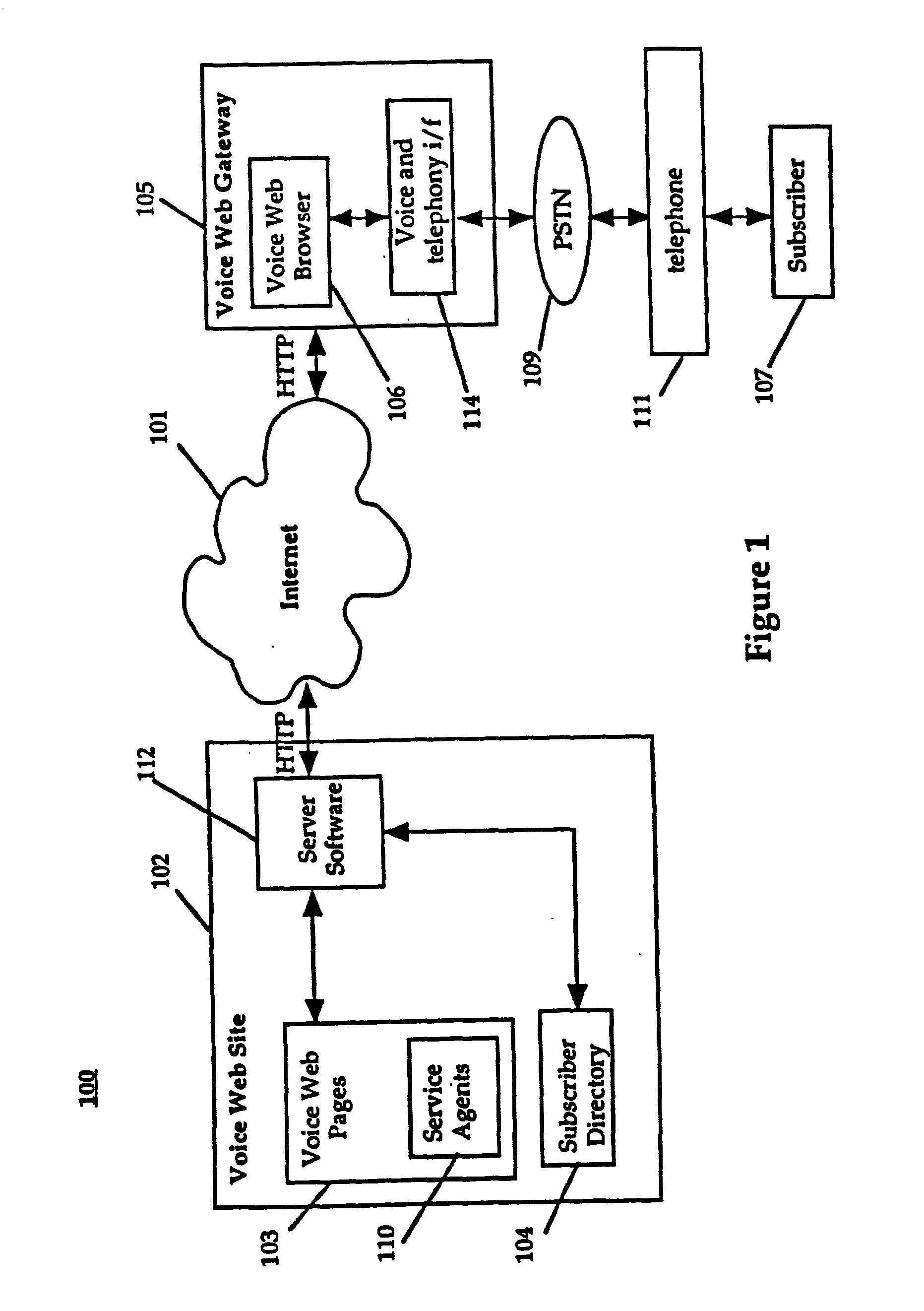

System and method for providing and using universally accessible voice and speech data files

Inactive | US6400806B1 | Automatic call-answering/message-recording/conversation-recording; Automatic exchanges; Speech training; Hyperlink

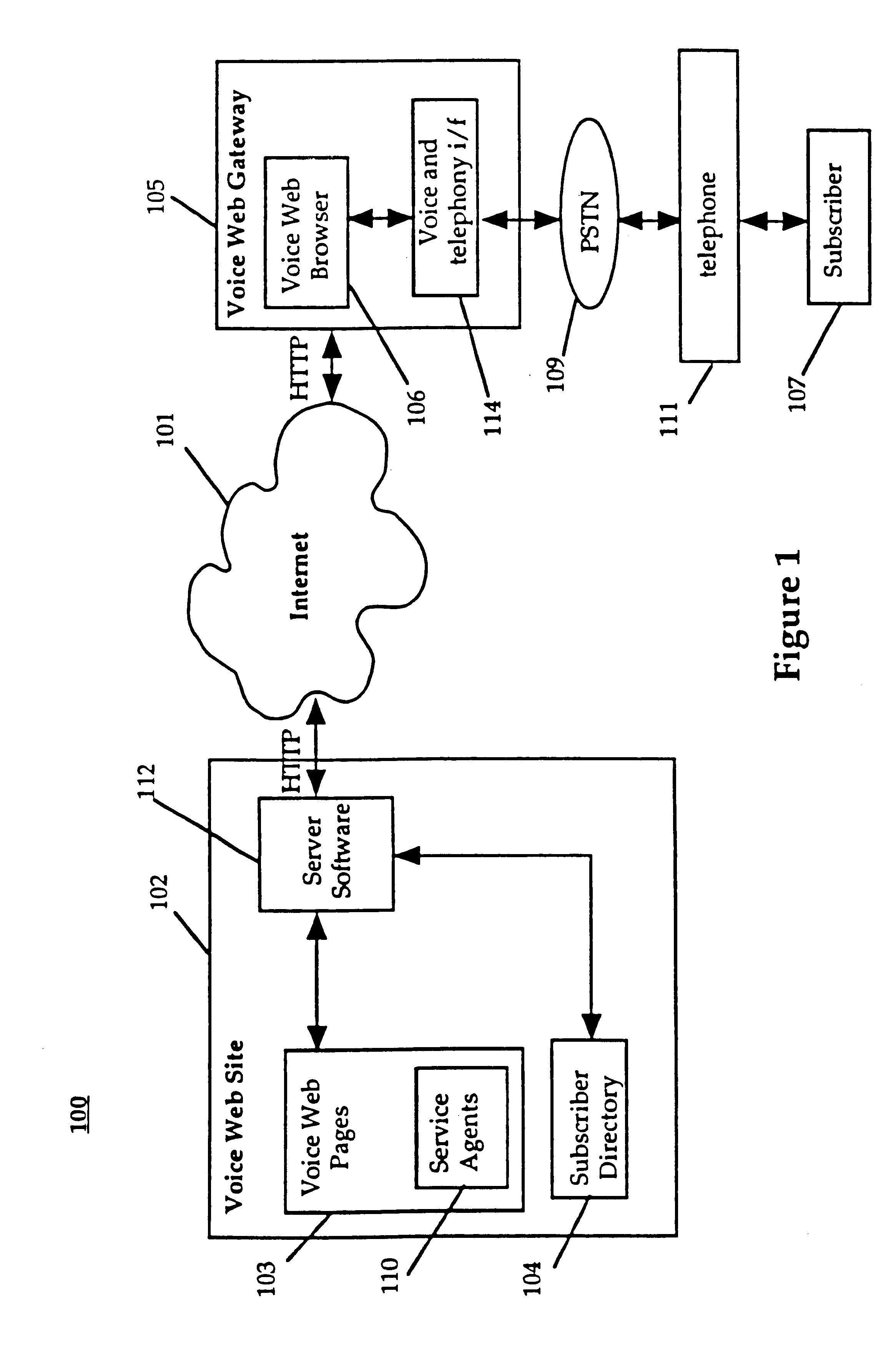

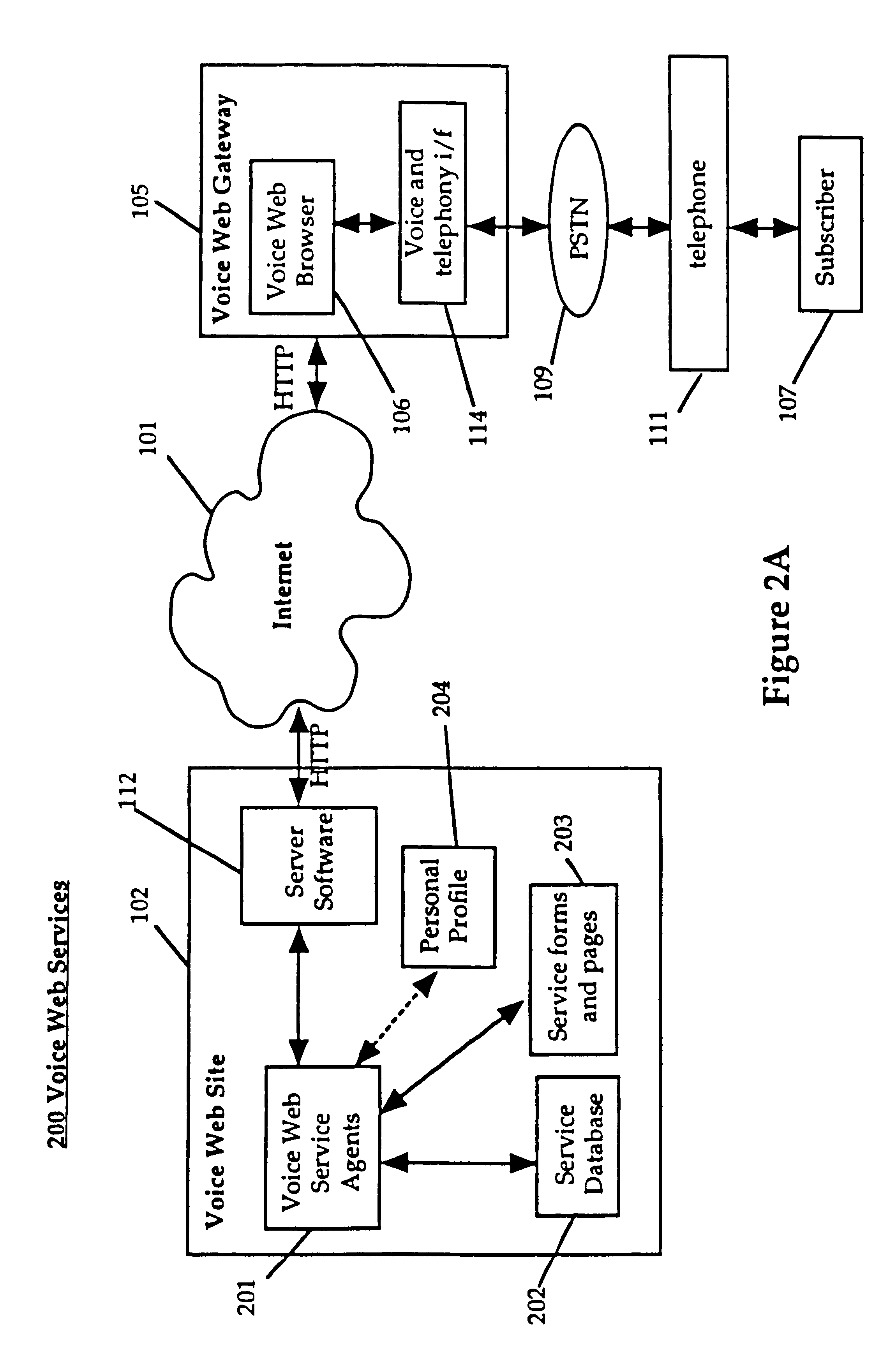

A system and method provides universal access to voice-based documents containing information formatted using MIME and HTML standards using customized extensions for voice information access and navigation. These voice documents are linked using HTML hyper-links that are accessible to subscribers using voice commands, touch-tone inputs and other selection means. These voice documents and components in them are addressable using HTML anchors embedding HTML universal resource locators (URLs) rendering them universally accessible over the Internet. This collection of connected documents forms a voice web. The voice web includes subscriber-specific documents including speech training files for speaker dependent speech recognition, voice print files for authenticating the identity of a user and personal preference and attribute files for customizing other aspects of the system in accordance with a specific subscriber.

Owner:NUANCE COMM INC

Speech training method with alternative proper pronunciation database

Inactive | US6963841B2 | Improving speech pattern; Speech recognition; Electrical appliances; Speech training; User input

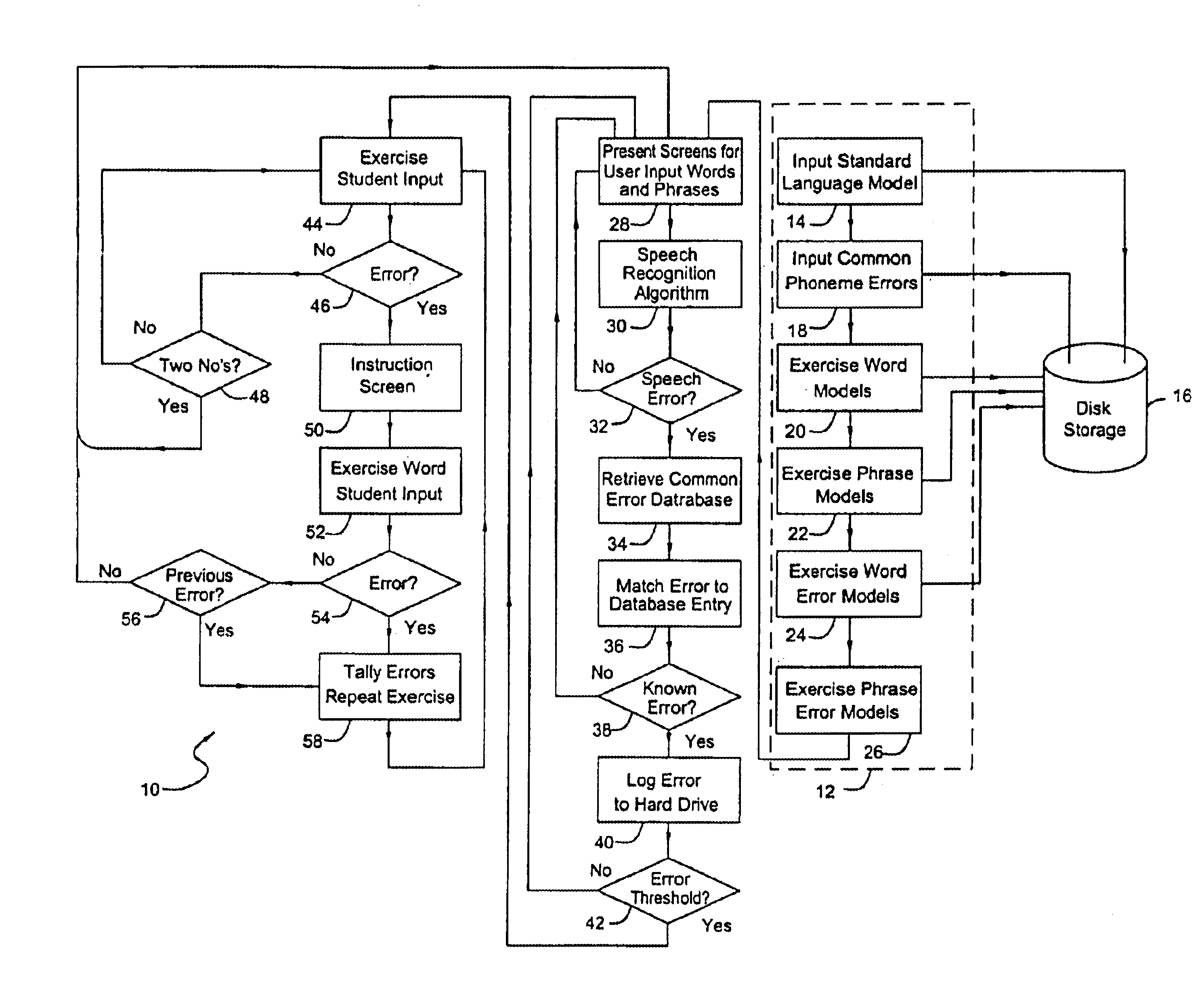

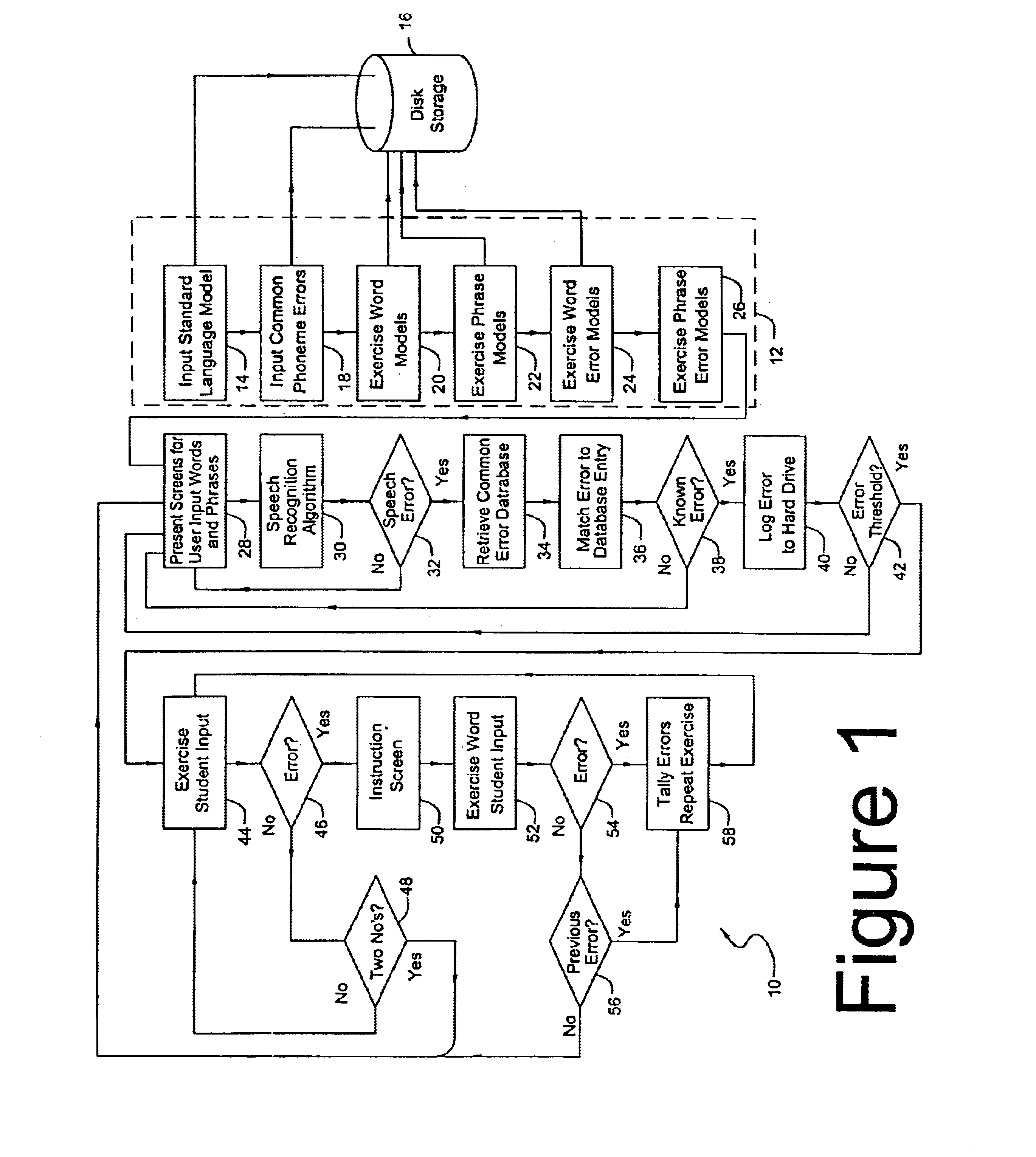

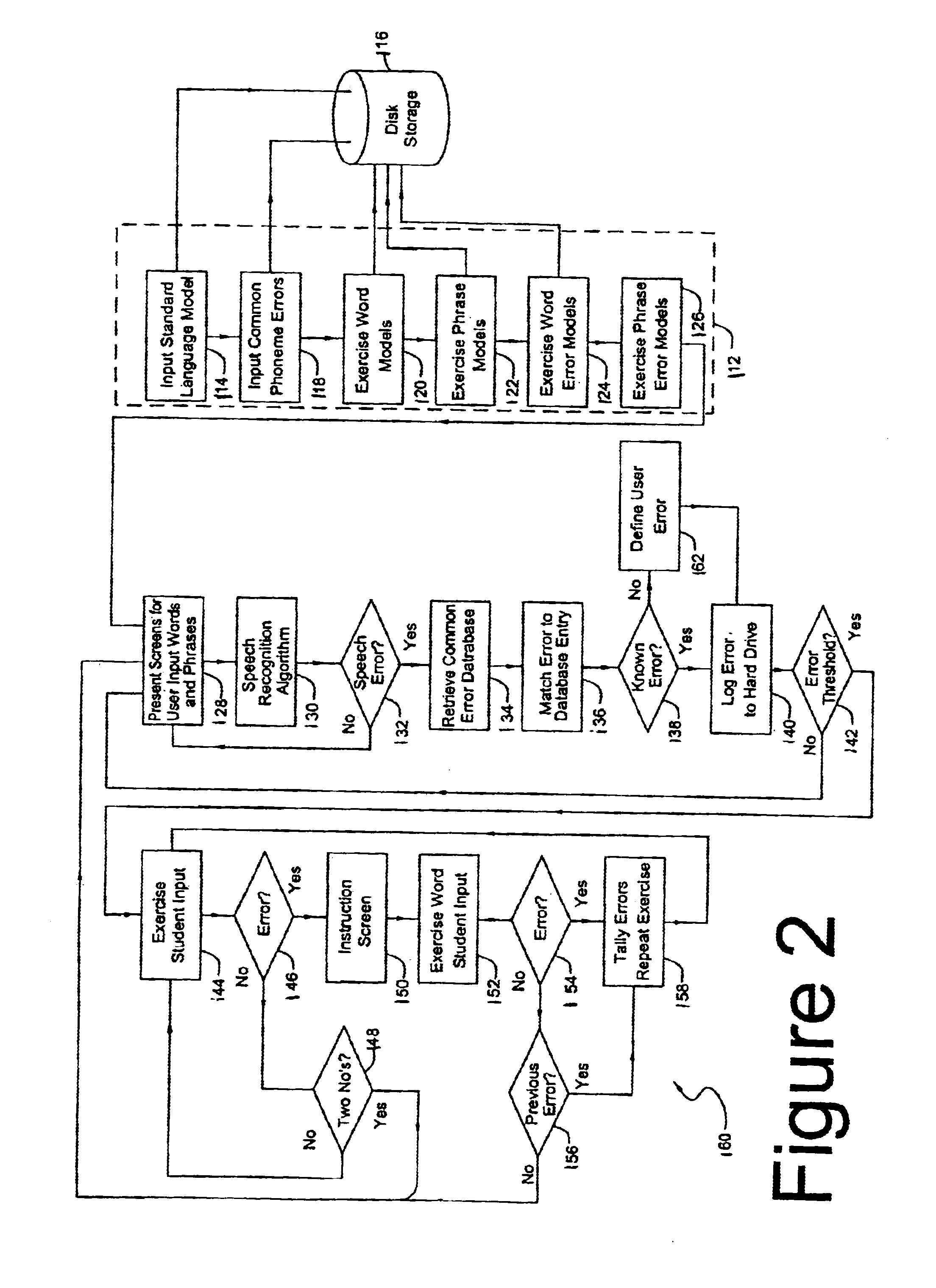

A speech training system is disclosed. It uses a microphone to receive audible sounds input by a user into a first computing device running a program with a database consisting of (i) digital representations of known audible sounds and associated alphanumeric representations of those sounds, and (ii) digital representations of known audible sounds corresponding to mispronunciations resulting from known classes of mispronounced words and phrases. The method receives the audible sounds as the electrical output of the microphone. A particular audible sound to be recognized is converted into a digital representation, which is then compared with the digital representations of the known audible sounds to determine which known sound it most likely is. When the system determines that an error corresponds to a known type or instance of mispronunciation, it presents an interactive training program to the user so that the user can correct the mispronunciation.

Owner:LESSAC TECH INC
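
The abstract does not say how the digital representations are compared. A minimal sketch, assuming each stored sound is a fixed-length feature vector and that a plain nearest-neighbour search by Euclidean distance stands in for the comparison step, shows how matching a mispronunciation entry can select a training drill. `KnownSound`, `closest_sound` and `mispronunciation_to_train` are hypothetical names, not part of the patent.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass
class KnownSound:
    features: Tuple[float, ...]                   # stand-in for the stored digital representation
    text: str                                     # associated alphanumeric representation
    mispronunciation_class: Optional[str] = None  # None marks a correct pronunciation


def closest_sound(sample: Sequence[float], database: Sequence[KnownSound]) -> KnownSound:
    """Return the stored sound nearest to the sample (Euclidean distance)."""
    return min(database, key=lambda entry: math.dist(sample, entry.features))


def mispronunciation_to_train(sample: Sequence[float],
                              database: Sequence[KnownSound]) -> Optional[str]:
    """Return the mispronunciation class to drill, or None if the best match is correct."""
    return closest_sound(sample, database).mispronunciation_class


database = [
    KnownSound((1.0, 0.0), "cat"),
    KnownSound((0.0, 1.0), "cat", mispronunciation_class="vowel substitution"),
]
drill = mispronunciation_to_train((0.1, 0.9), database)
```

Real systems compare variable-length acoustic sequences, so the fixed-length vectors here are a simplification.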

Smart training and smart scoring in SD speech recognition system with user defined vocabulary

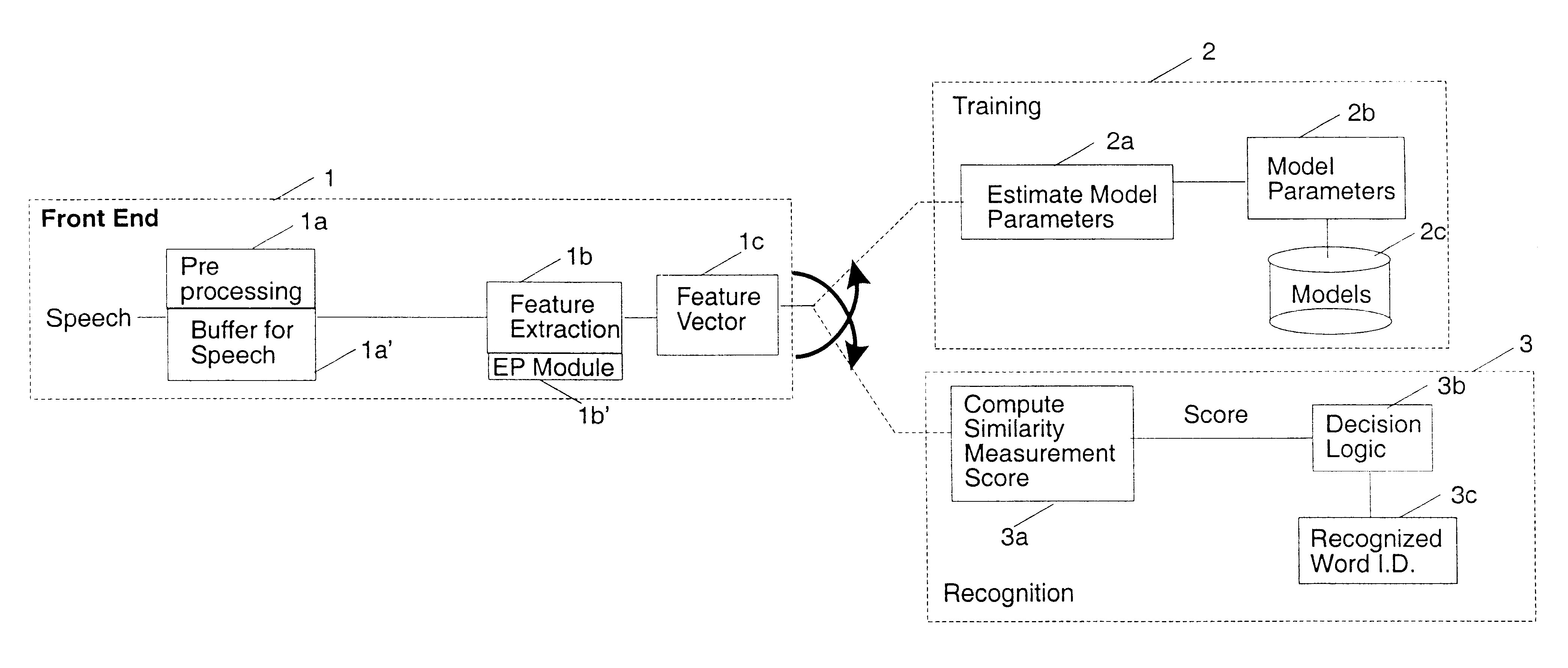

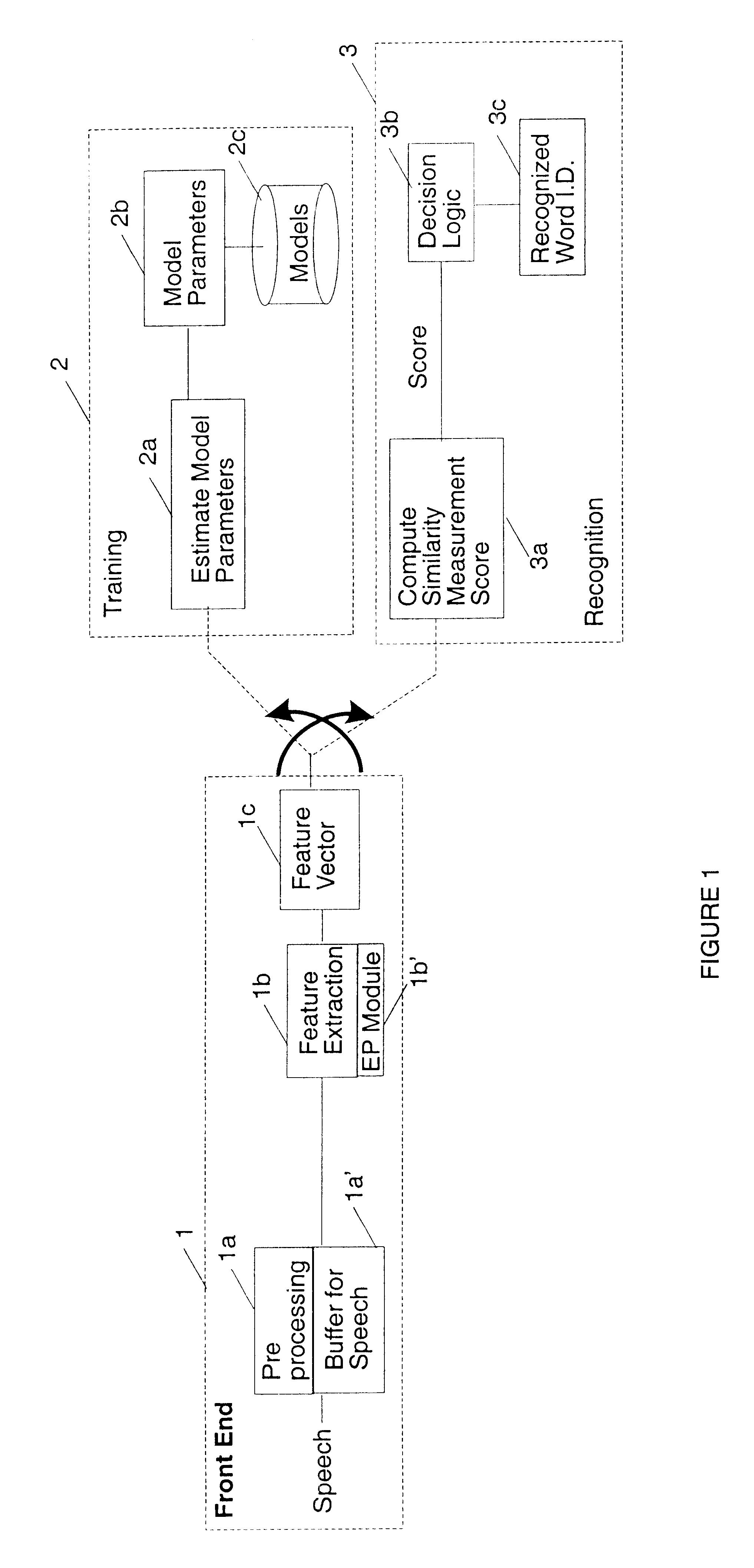

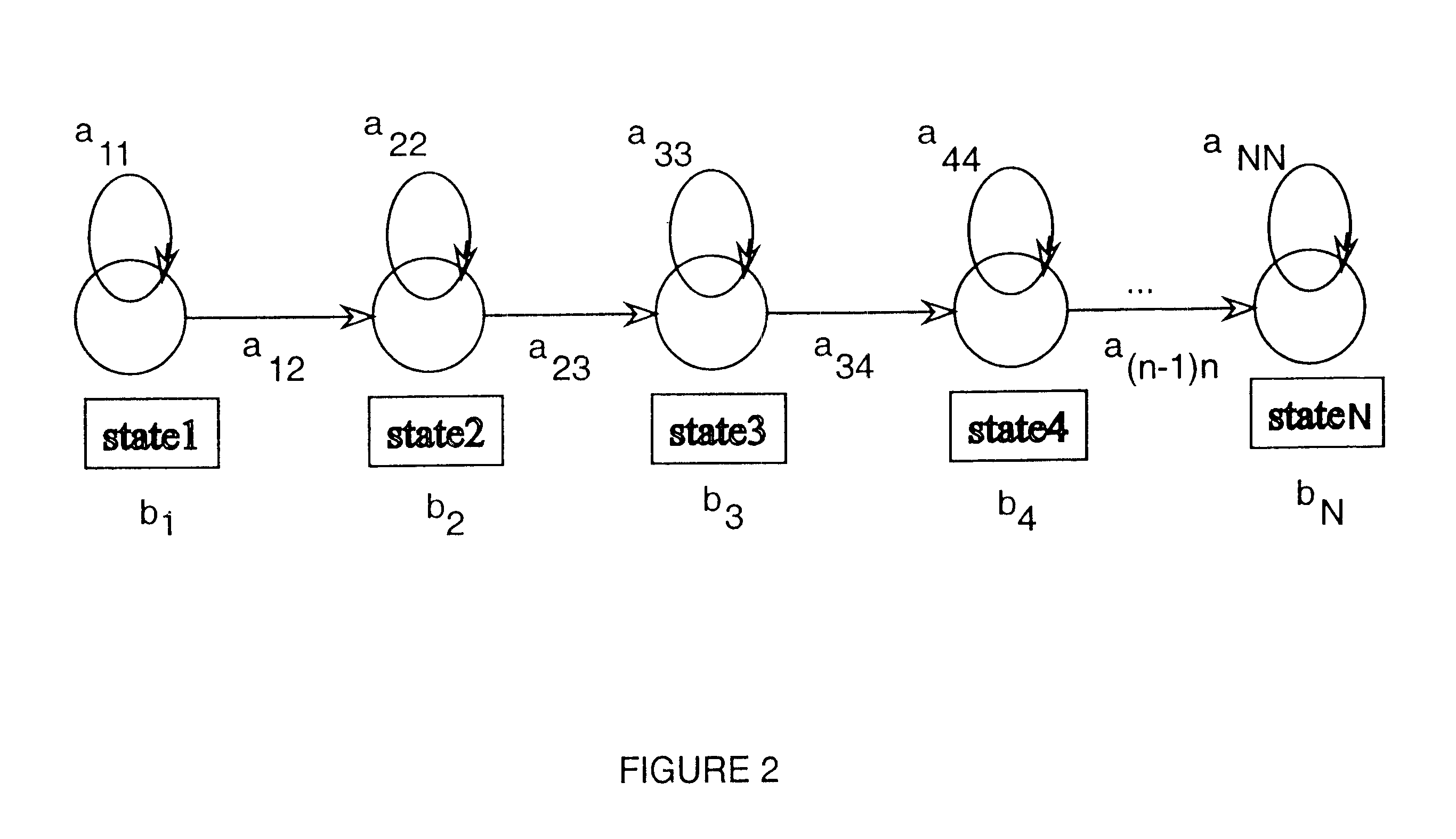

In a speech training and recognition system, the invention detects similar-sounding vocabulary entries and warns the user about them; it still permits such confusingly similar terms to be entered, marking them together with the stored similar terms so that the similar words can be identified. In addition, the states in similar words are weighted to put more emphasis on the differences between similar words than on their similarities. Another aspect of the invention uses a modified scoring algorithm to improve recognition performance when confusable entries have been added to the vocabulary despite the warning. Yet another aspect detects and warns the user about potential problems with new entries, such as short words and multi-word entries with long silences between words. Finally, in multiple-token training, the invention alerts the user when the multiple tokens of the same vocabulary item are dissimilar.

Owner:WIAV SOLUTIONS LLC
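
One way to detect similar-sounding entries is to compare a new template with every stored template and warn when the distance falls below a threshold. The sketch below assumes one-dimensional feature sequences, dynamic time warping (DTW) as the distance, and a hand-picked threshold; none of these choices is stated in the abstract, and the function names are hypothetical.

```python
from typing import Dict, List, Sequence


def dtw_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Dynamic time warping distance with absolute difference as the local cost."""
    inf = float("inf")
    n, m = len(a), len(b)
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            # Best of the insertion, deletion and match steps.
            cost[i][j] = local + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def confusable_entries(new_template: Sequence[float],
                       vocabulary: Dict[str, Sequence[float]],
                       threshold: float) -> List[str]:
    """Names of stored entries that are closer to the new template than `threshold`."""
    return sorted(name for name, template in vocabulary.items()
                  if dtw_distance(new_template, template) < threshold)


vocabulary = {"call home": [1.0, 2.0, 3.0], "play music": [5.0, 5.0, 5.0]}
warnings = confusable_entries([1.0, 2.0, 2.0, 3.0], vocabulary, threshold=1.0)
if warnings:
    print("Warning: new entry sounds like", ", ".join(warnings))
```

DTW is used here because two utterances of the same phrase rarely have the same length; the time-warped alignment lets a slower repetition still count as similar.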

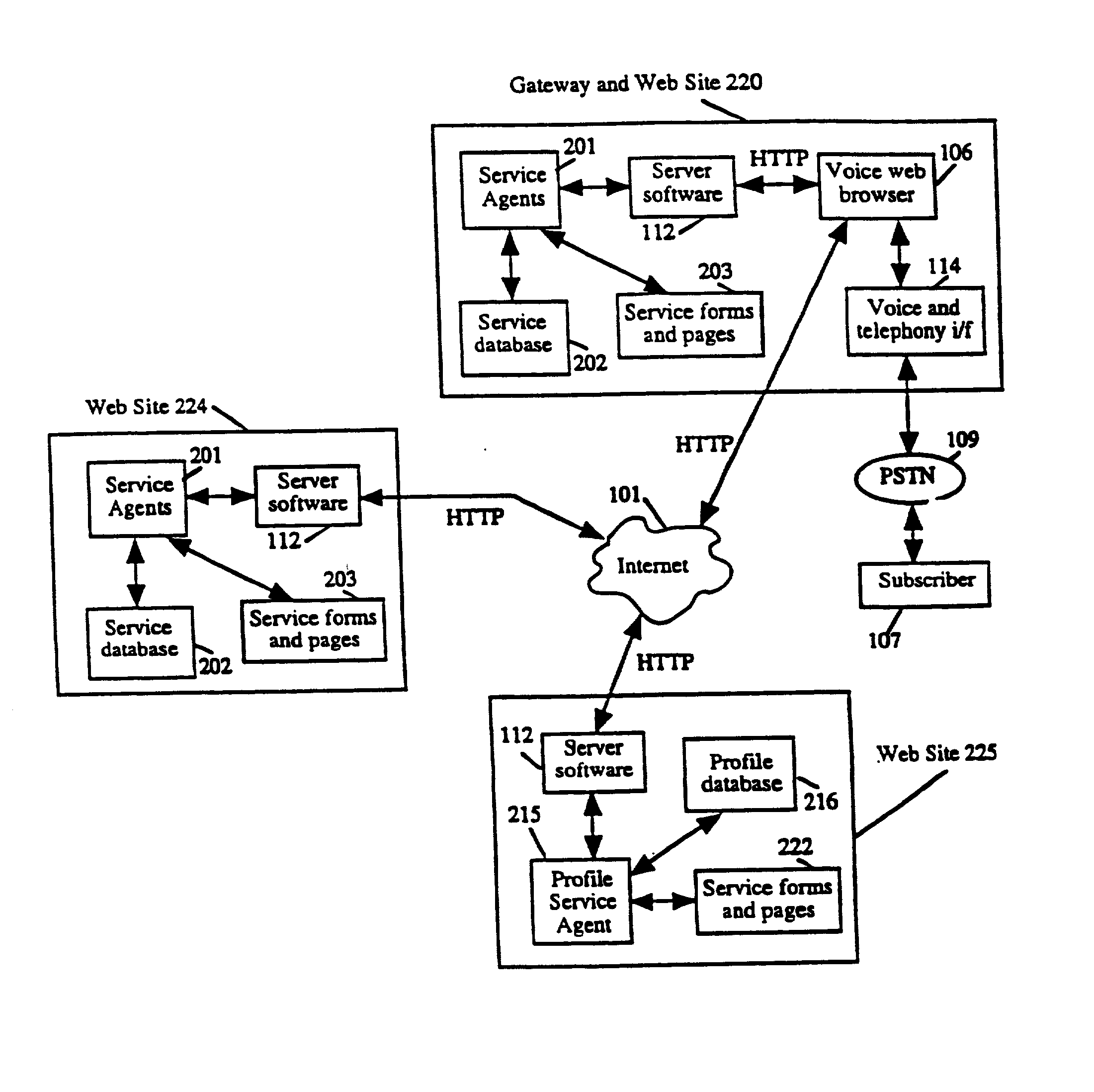

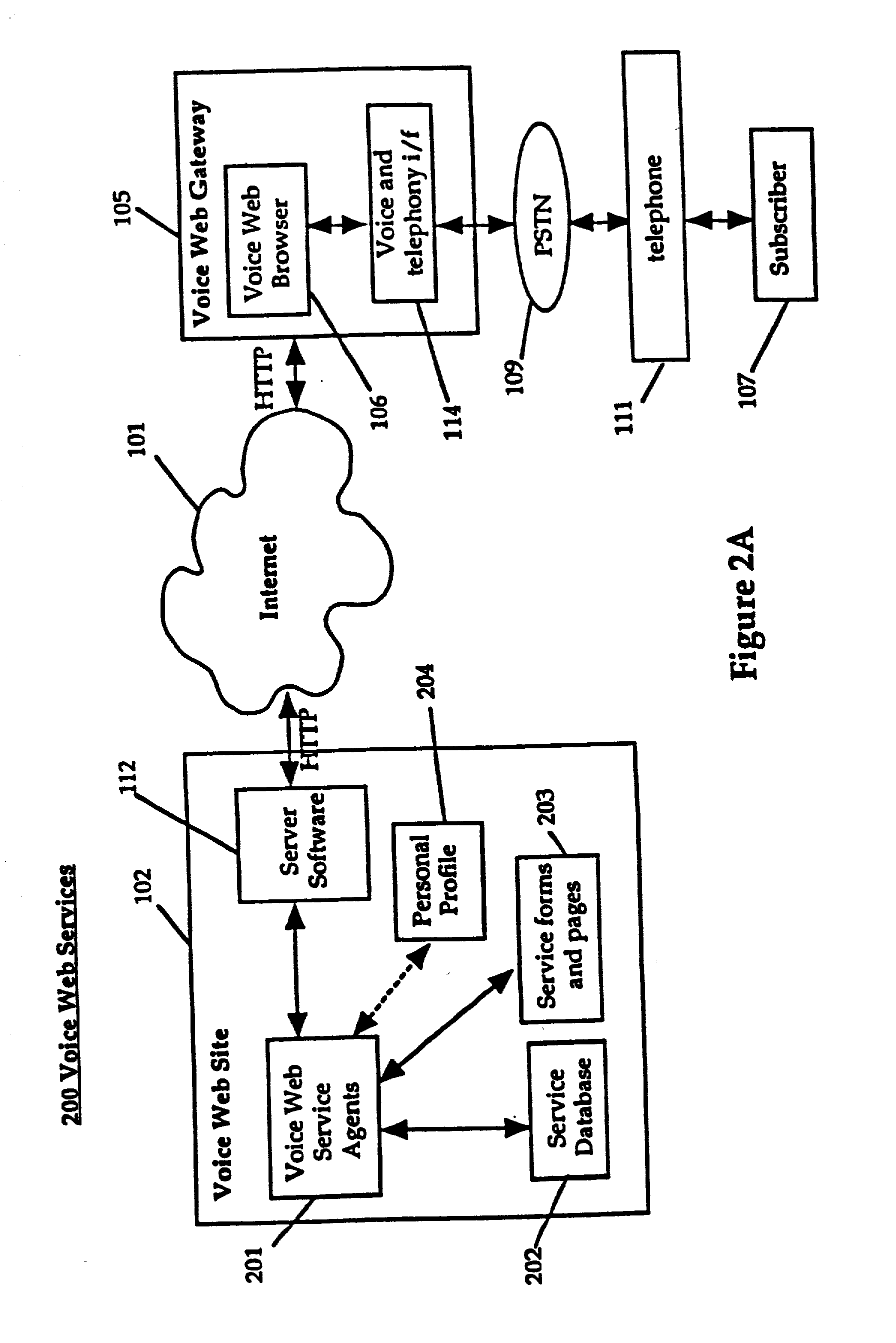

System and method for providing and using universally accessible voice and speech data files

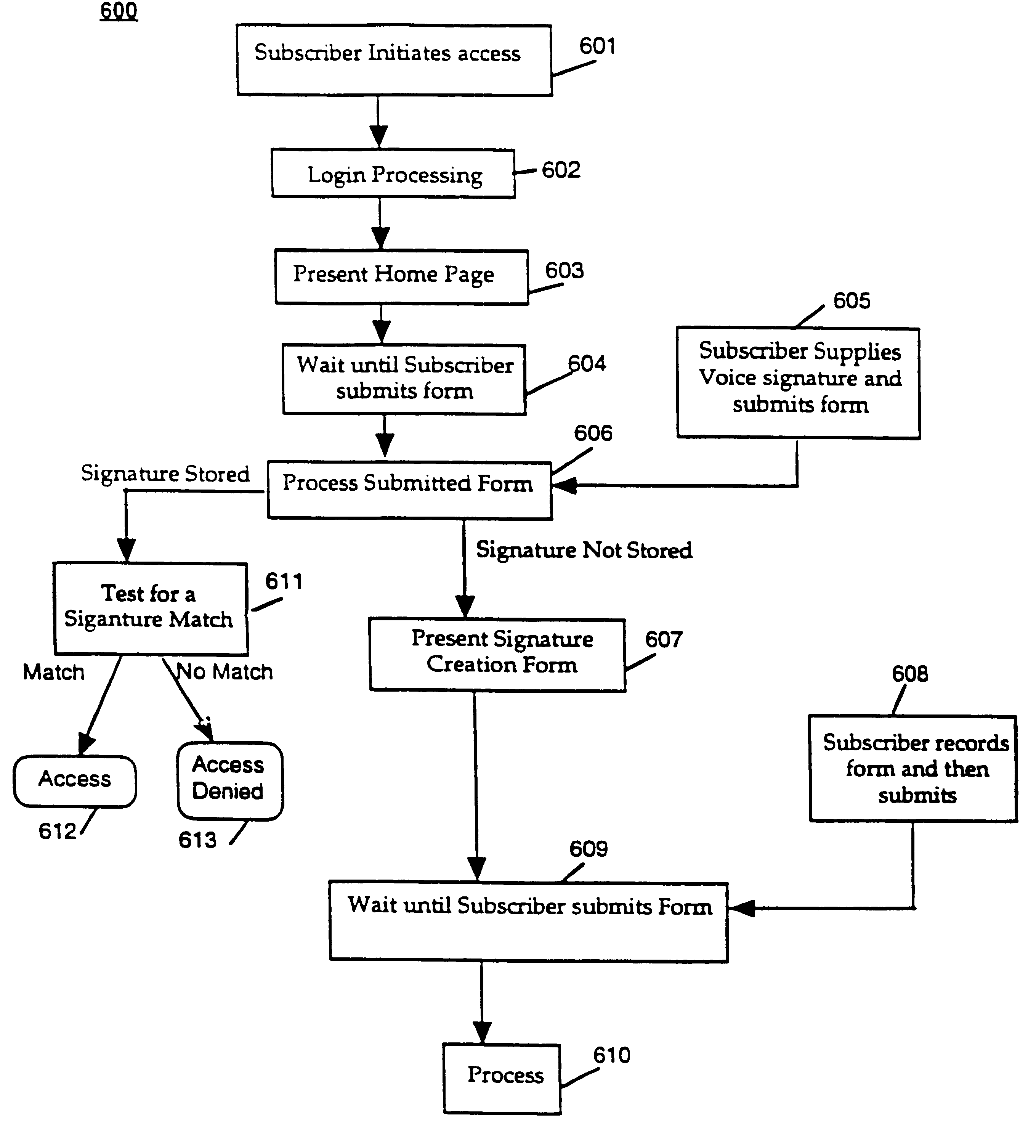

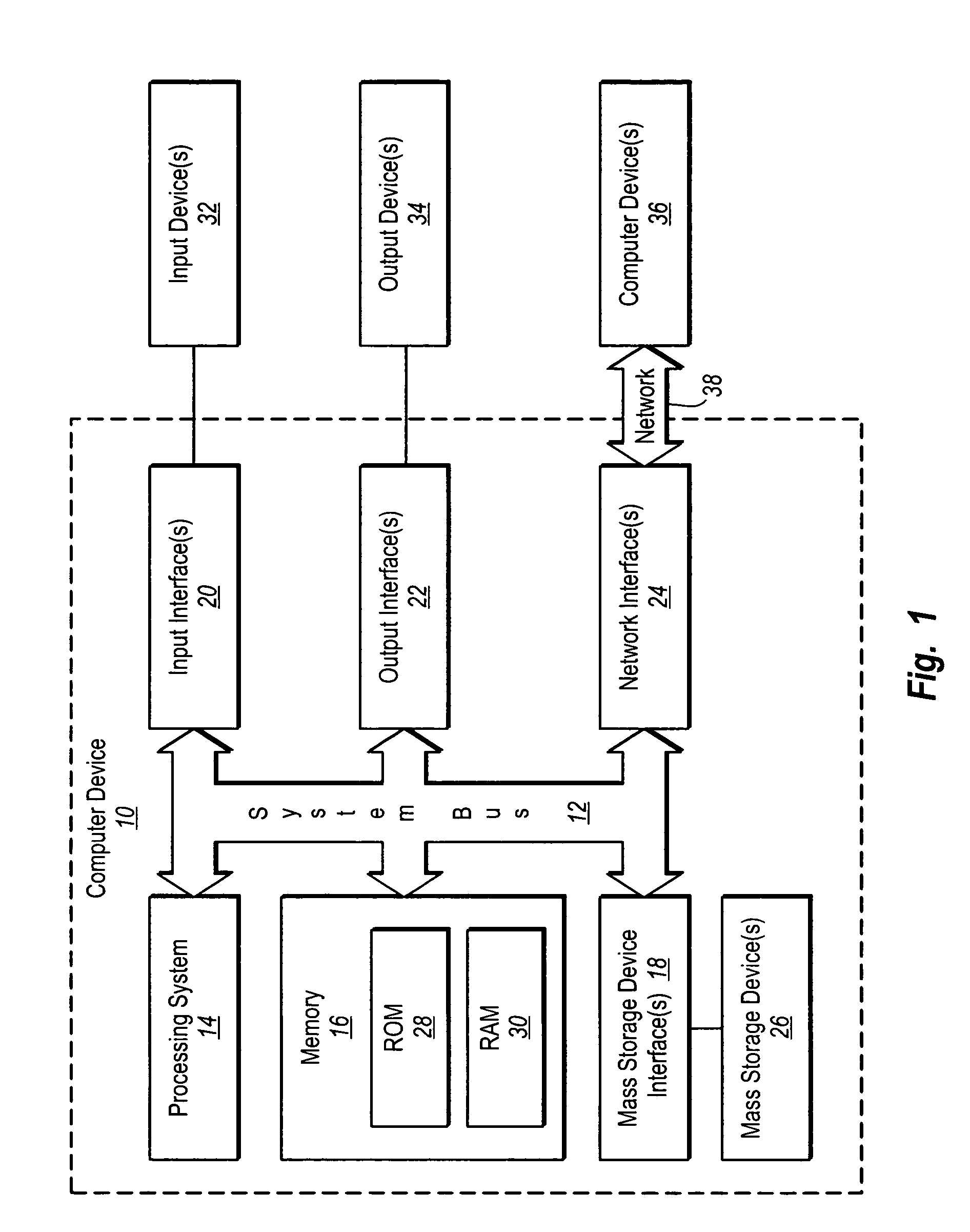

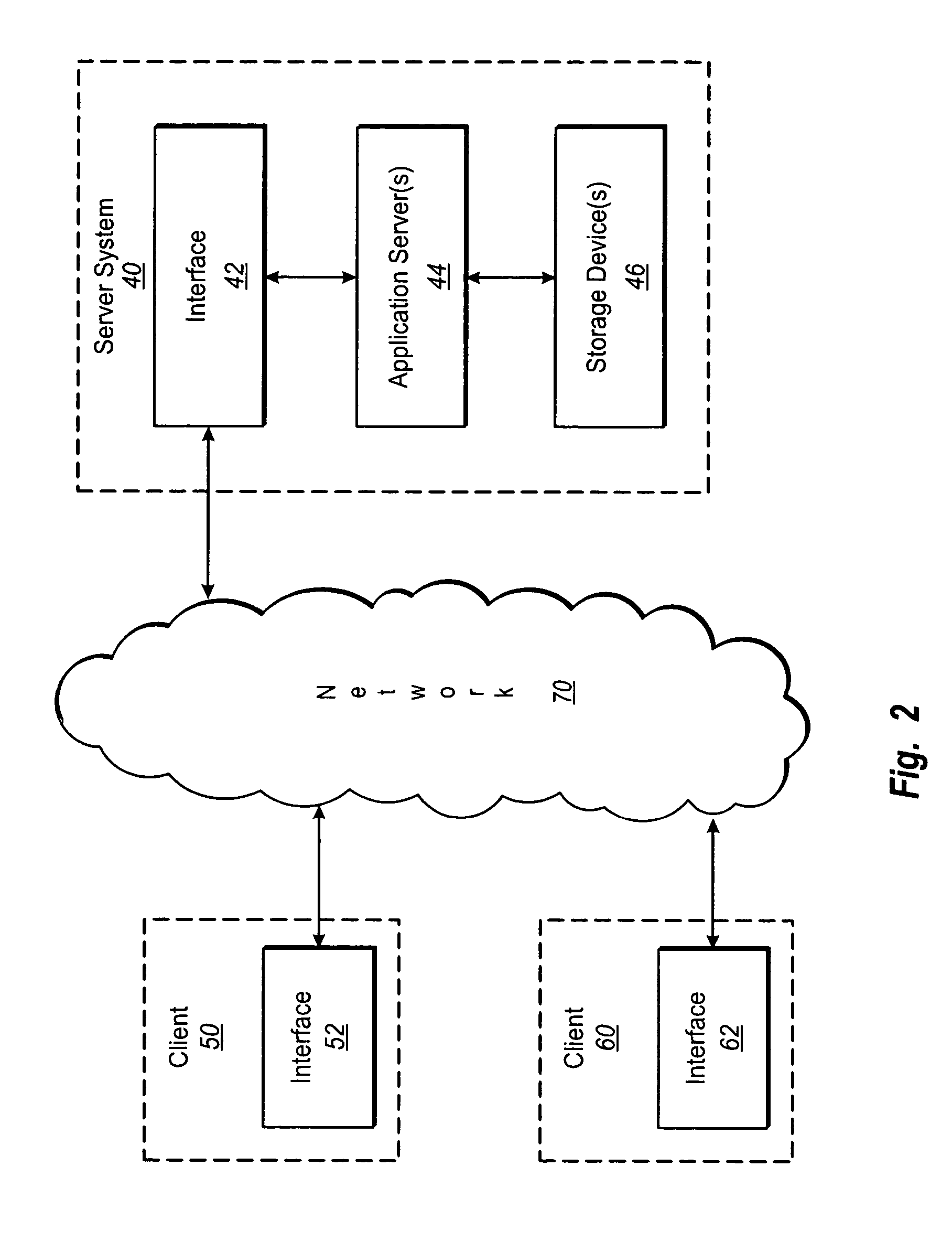

Inactive | US20020080927A1 | Automatic call-answering/message-recording/conversation-recording; Multiple digital computer combinations; Hyperlink; Speech training

Owner:NUANCE COMM INC

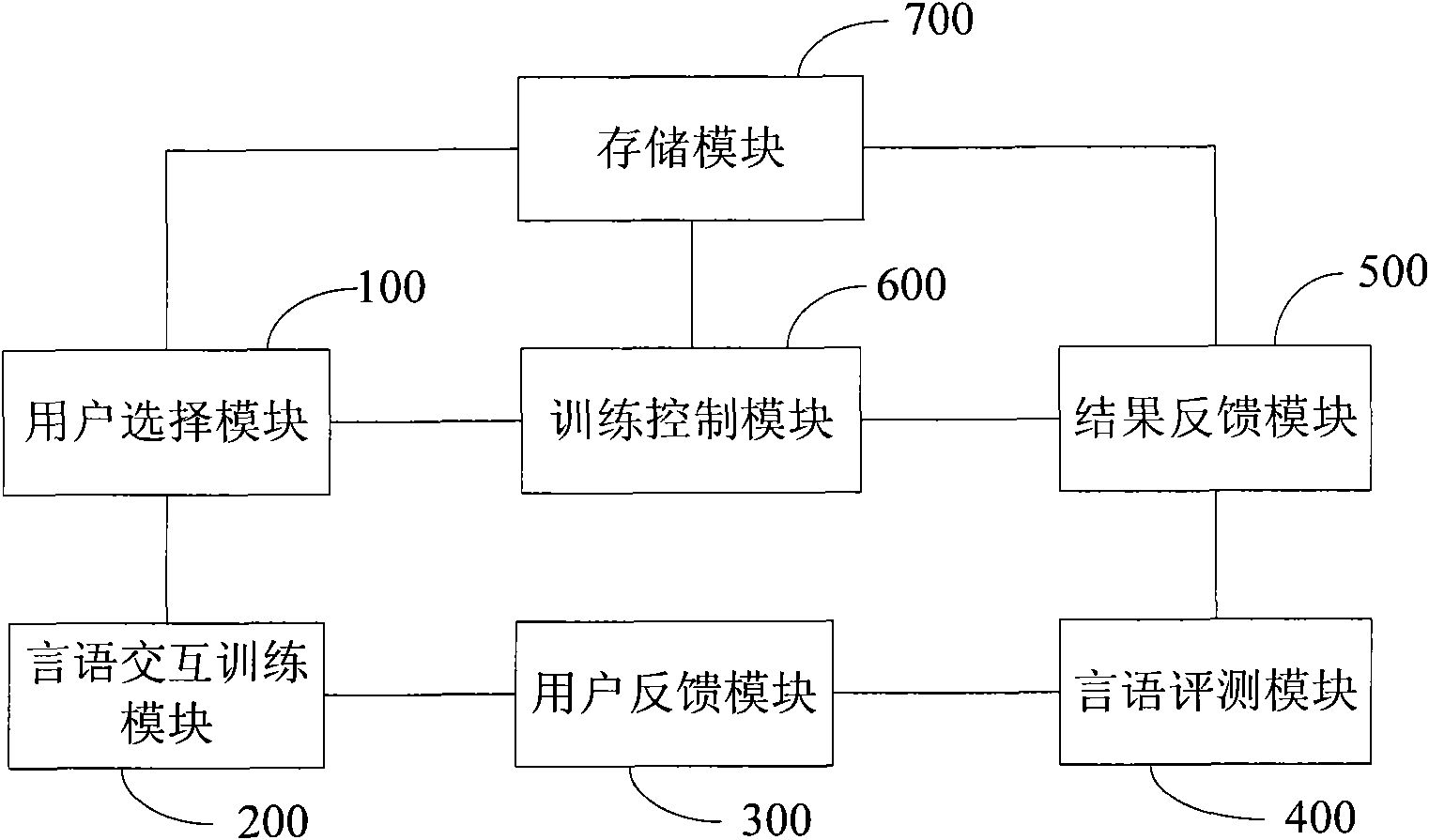

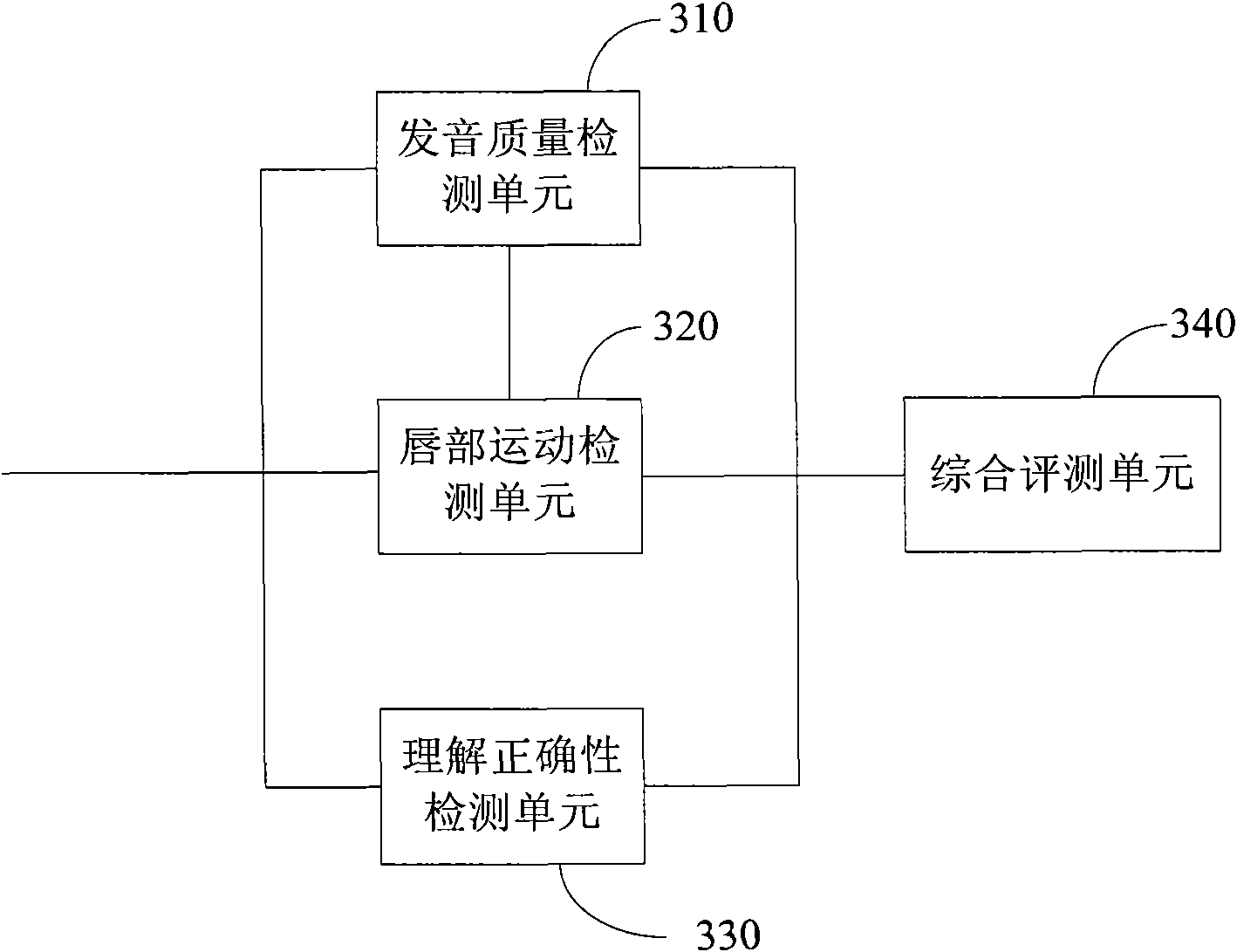

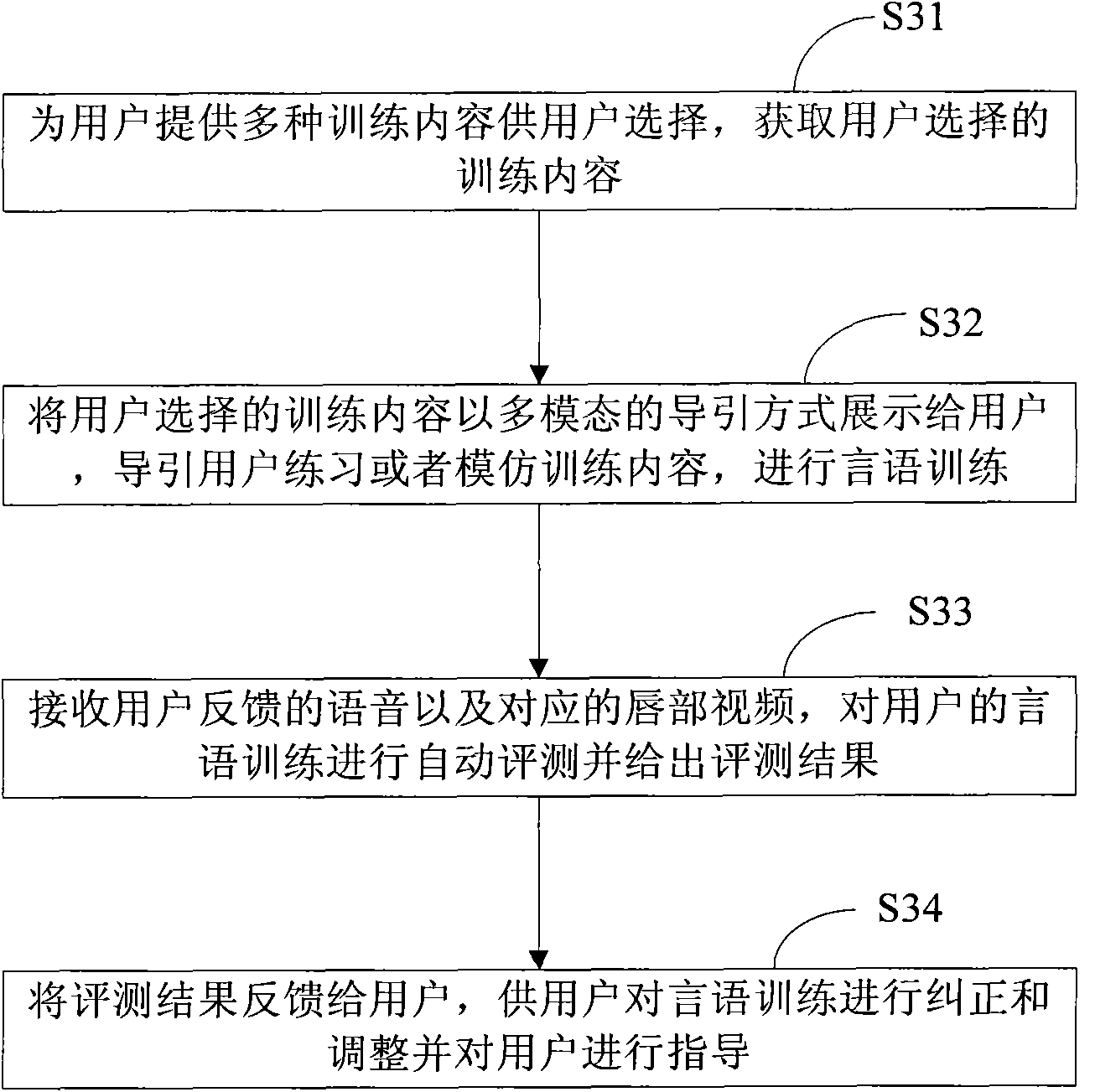

Speech interactive training system and speech interactive training method

Active | CN102063903A | Improve training effect; Improve the level of; Speech recognition; Evaluation result; Speech training

Owner:SHENZHEN INST OF ADVANCED TECH CHINESE ACAD OF SCI

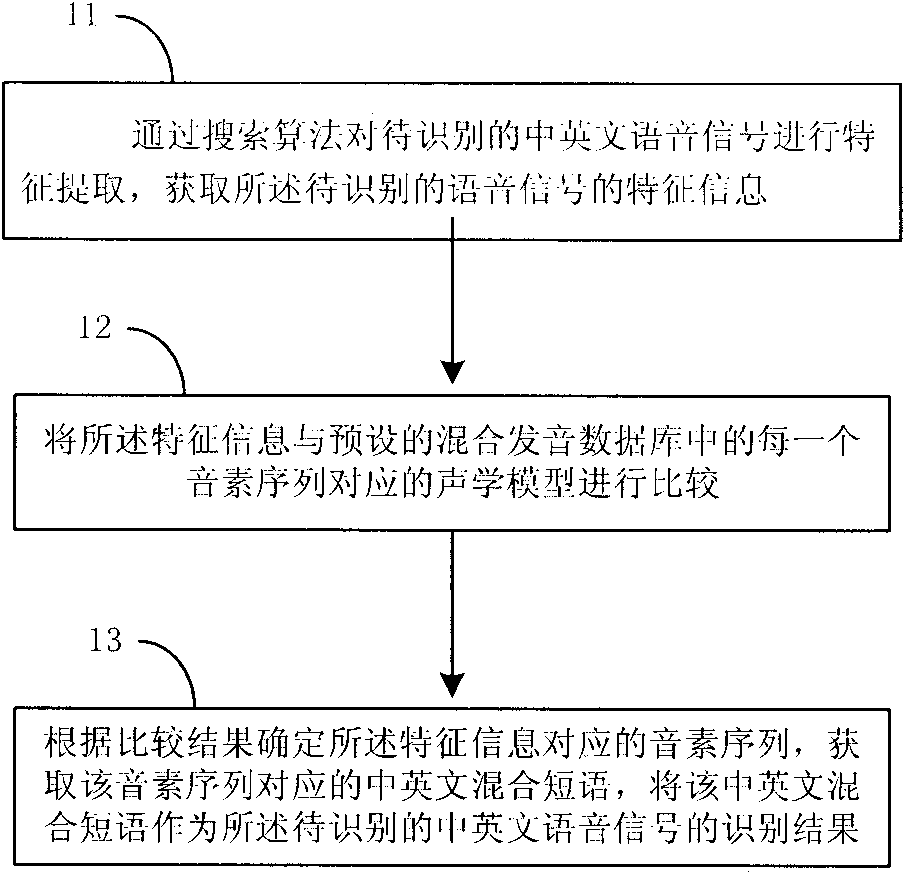

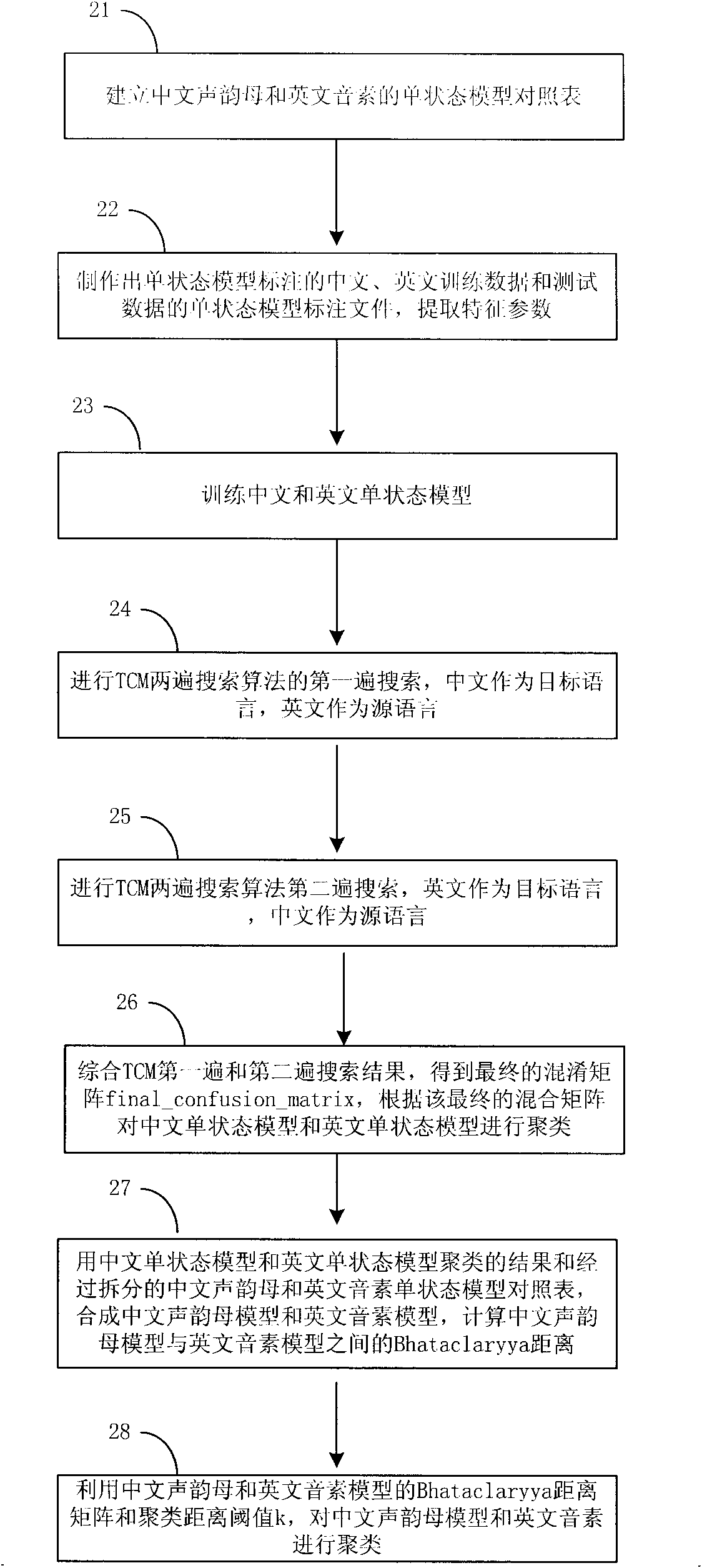

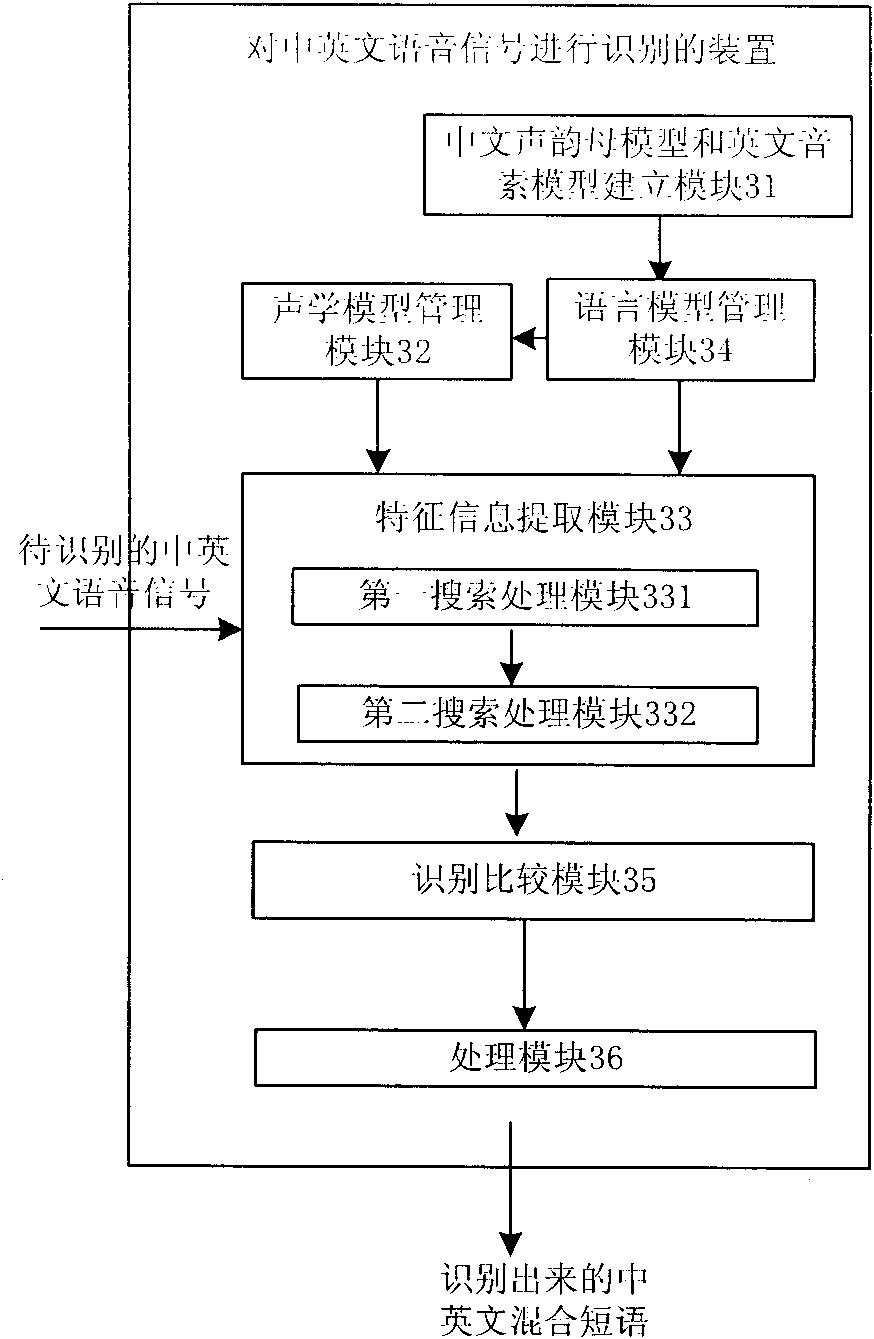

Method and device for identifying Chinese and English speech signal

The invention provides a method and a device for identifying mixed Chinese and English speech signals. The method mainly comprises: performing feature extraction on the Chinese and English speech signal to be identified by means of a search algorithm to obtain its feature information; comparing the feature information with the acoustic model corresponding to each phoneme sequence in a mixed speech database; determining the phoneme sequence that corresponds to the feature information based on the comparison result; and obtaining the mixed Chinese and English phrase that corresponds to that phoneme sequence, which is taken as the identification result for the speech signal. The invention can build an acoustic model with less confusion and does not need a large amount of labeled speech training data, thereby saving system resources. It can effectively raise the identification rate of Chinese and English speech signals.

Owner:GLOBAL INNOVATION AGGREGATORS LLC

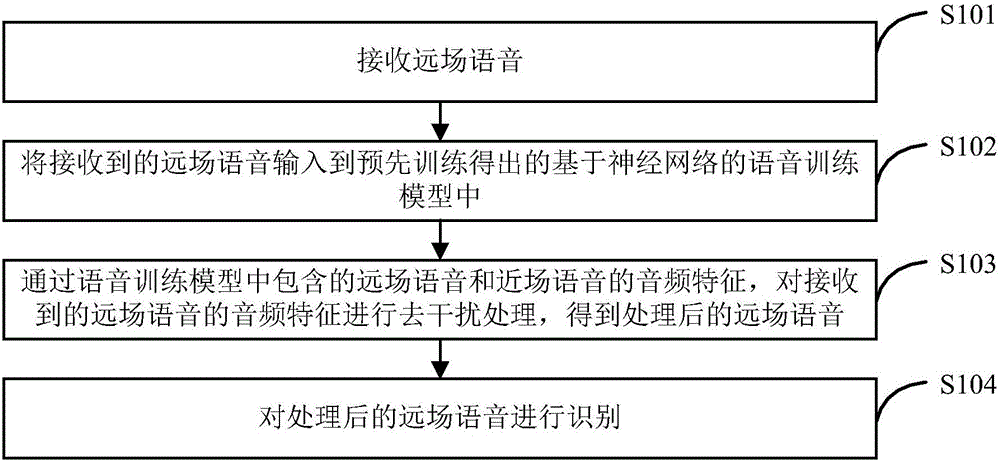

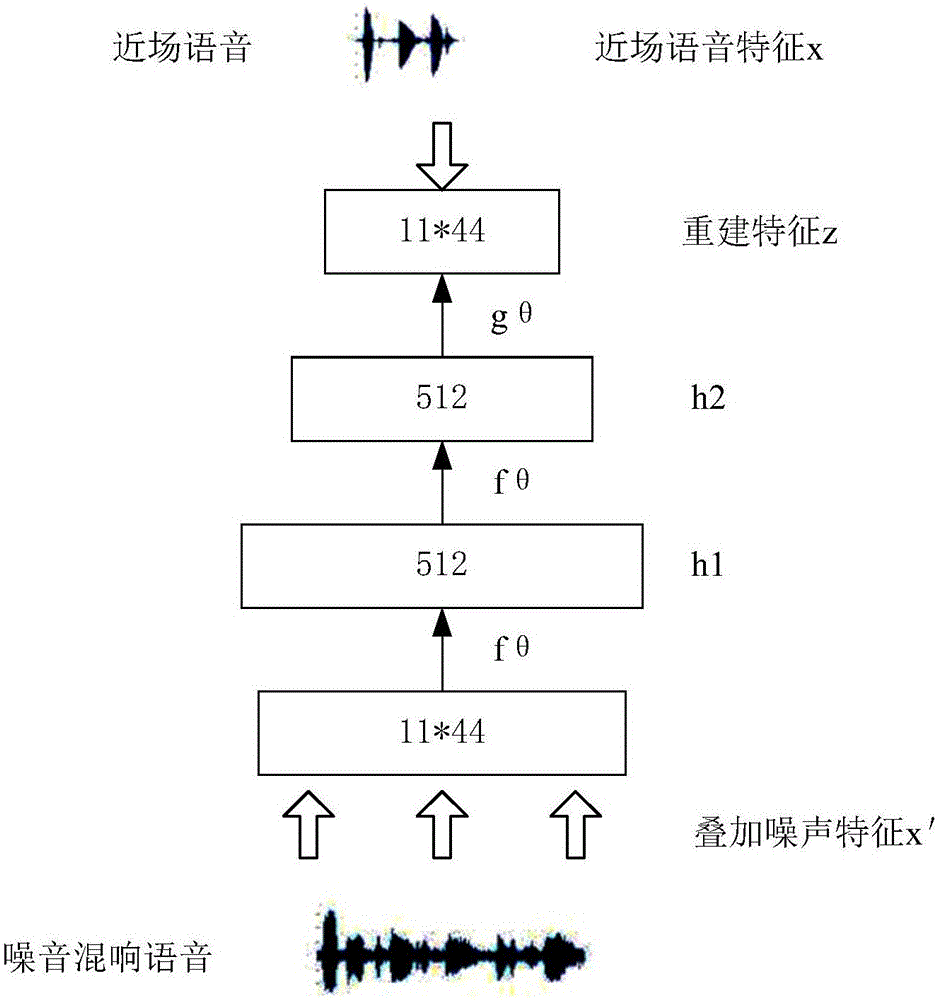

Far-field speech recognition processing method and device

Active | CN106328126A | Reduce cost input; Good denoising effect; Speech recognition; Speech training; Speech identification

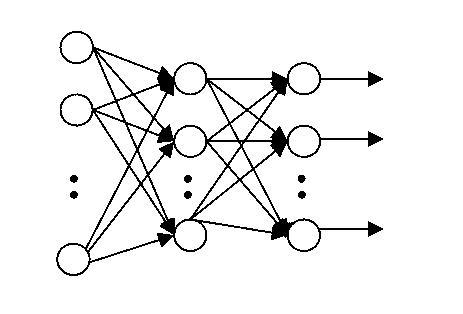

The invention discloses a far-field speech recognition processing method and device. The method comprises: receiving far-field speech; inputting the far-field speech into a neural-network-based speech training model obtained by pre-training; removing interference from the audio features of the received far-field speech using the audio features of far-field and near-field speech in the speech training model, to obtain processed far-field speech; and recognizing the processed far-field speech. The method optimizes the processing of far-field speech, achieves a better processing result, and reduces equipment costs.

Owner:BEIJING UNISOUND INFORMATION TECH +1

Method and system for training far-field speech acoustic model

Active | CN107680586A | Avoid time cost; Reduce economic costs; Speech recognition; Neural learning methods; Speech training; Acoustic model

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

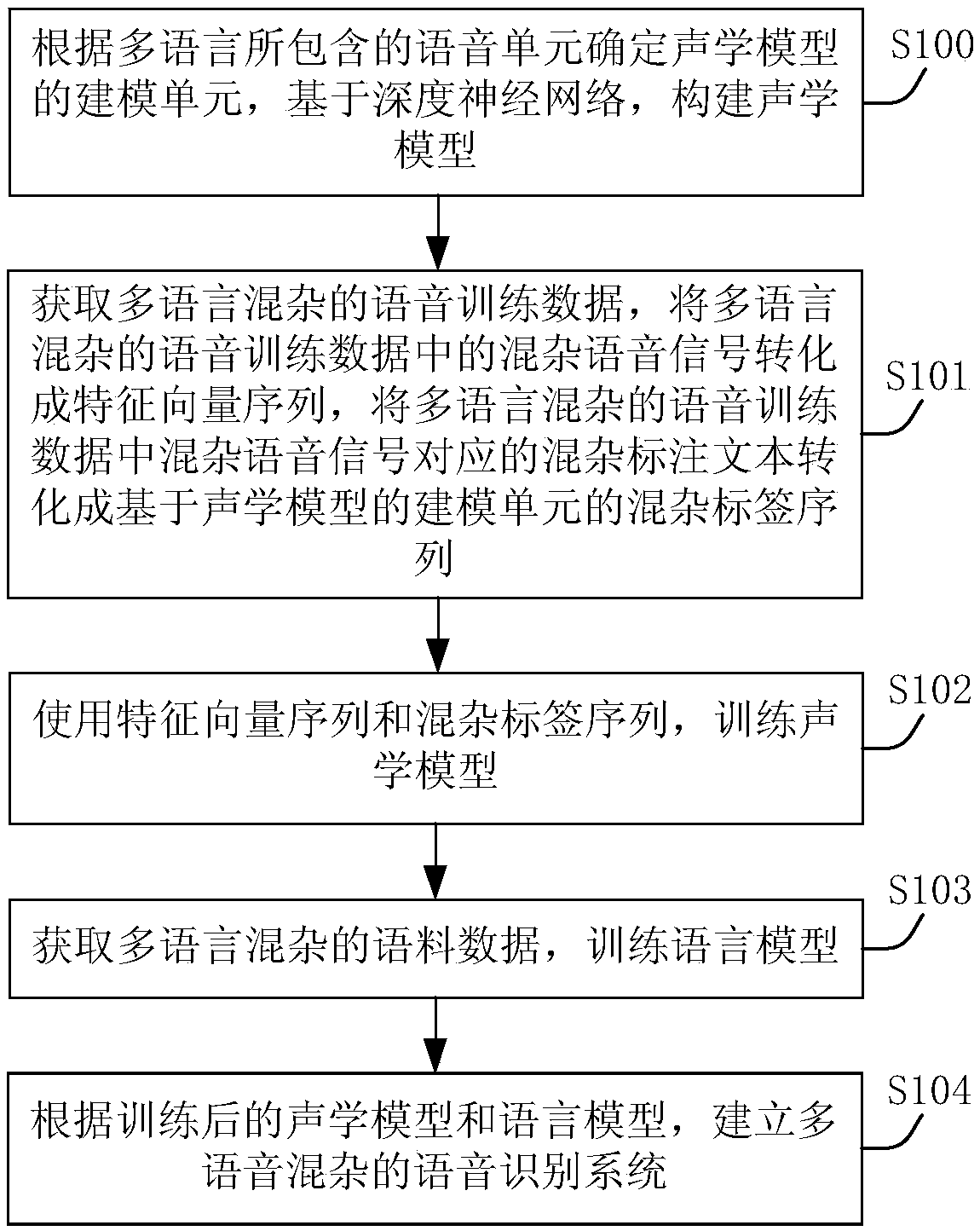

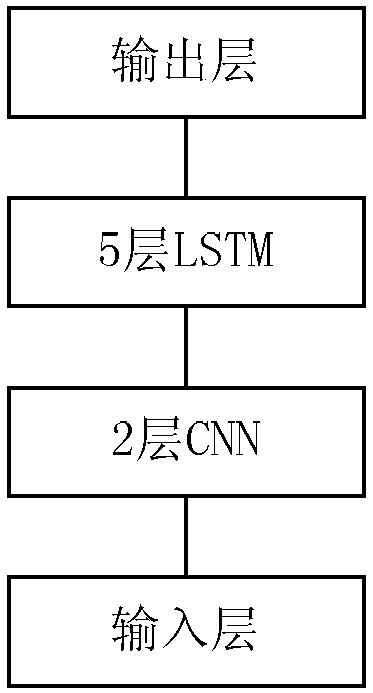

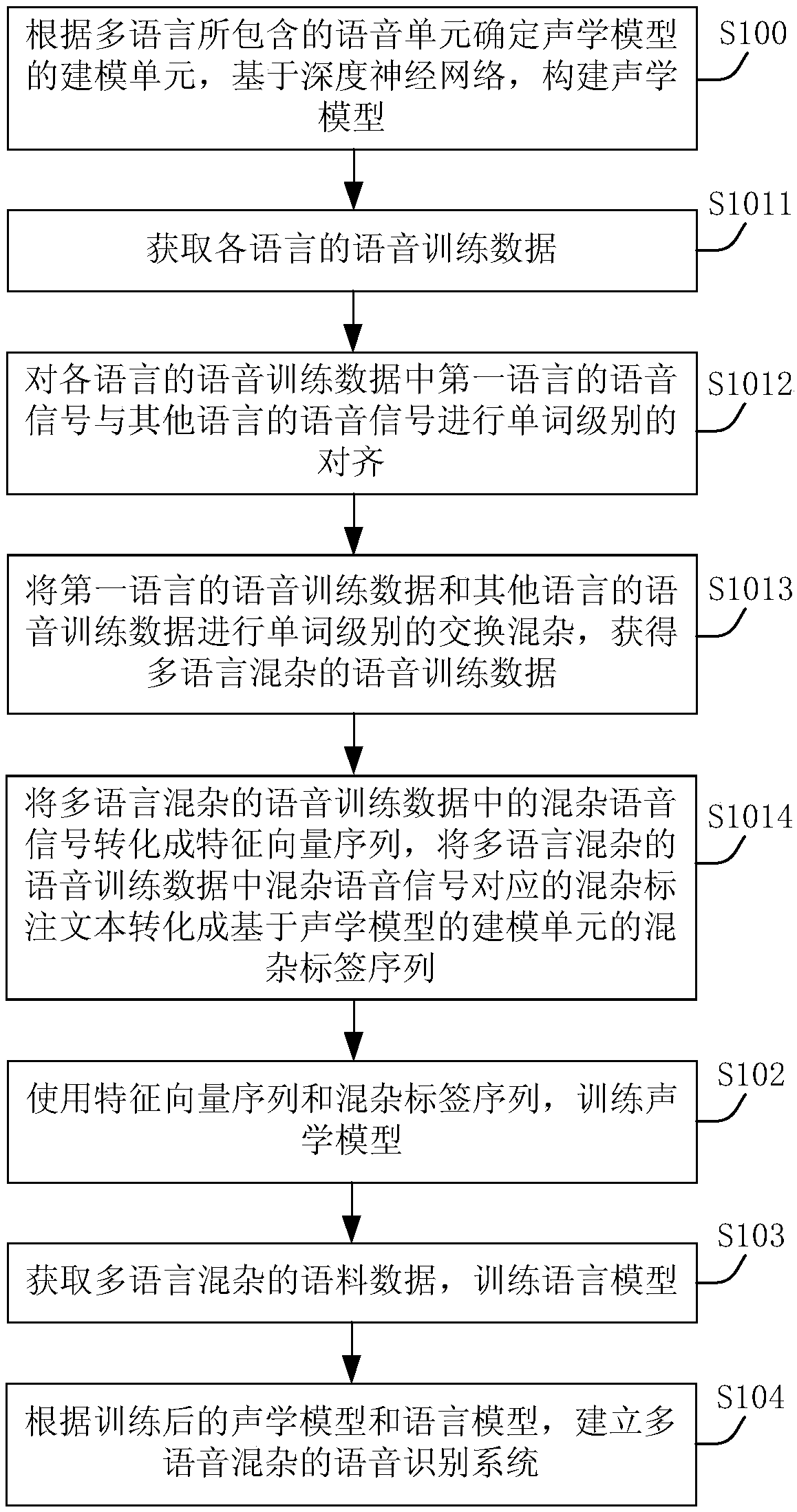

Method and device for multilingual hybrid model establishment and data acquisition, and electronic equipment

Active | CN108711420A | Solve classification problems; Improve recognition accuracy; Speech recognition; Speech synthesis; Data acquisition; Labelling

Embodiments of the invention provide a method and device for multilingual hybrid model establishment and data acquisition, and electronic equipment. The method includes: determining the modeling unit of an acoustic model according to the speech units contained in multiple languages, and building the acoustic model on a deep neural network, where the modeling unit is a context-free speech unit; obtaining multilingual hybrid speech training data, converting each hybrid speech signal in that data into a feature vector sequence, and converting the corresponding hybrid labelling text into a hybrid label sequence of modeling units based on the acoustic model; training the acoustic model with the feature vector sequence and the hybrid label sequence; obtaining multilingual hybrid corpus data to train a language model; and building a multilingual hybrid speech recognition system from the acoustic model and the language model. The embodiments can improve recognition accuracy on speech that mixes multiple languages.

Owner:BEIJING ORION STAR TECH CO LTD
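
The label conversion step maps each word of the mixed-language transcript onto the shared inventory of context-free modeling units. A minimal sketch, assuming a hand-written pronunciation lexicon (the `LEXICON` entries and unit symbols below are illustrative, not taken from the patent):

```python
from typing import Dict, List

# Illustrative lexicon: Chinese and English words share one unit inventory.
LEXICON: Dict[str, List[str]] = {
    "打开": ["d", "a3", "k", "ai1"],
    "music": ["m", "j", "u", "z", "i", "k"],
}


def to_unit_labels(words: List[str], lexicon: Dict[str, List[str]]) -> List[str]:
    """Expand a mixed-language word sequence into modeling-unit labels."""
    labels: List[str] = []
    for word in words:
        if word not in lexicon:
            raise KeyError(f"word not in lexicon: {word}")
        labels.extend(lexicon[word])
    return labels


def unit_index(lexicon: Dict[str, List[str]]) -> Dict[str, int]:
    """Assign an integer id to every distinct modeling unit (the network's output classes)."""
    units = sorted({unit for pron in lexicon.values() for unit in pron})
    return {unit: i for i, unit in enumerate(units)}


index = unit_index(LEXICON)
targets = [index[u] for u in to_unit_labels(["打开", "music"], LEXICON)]
```

Because both languages map into one unit set, a unit such as `k` receives the same target id whether it comes from a Chinese or an English word.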

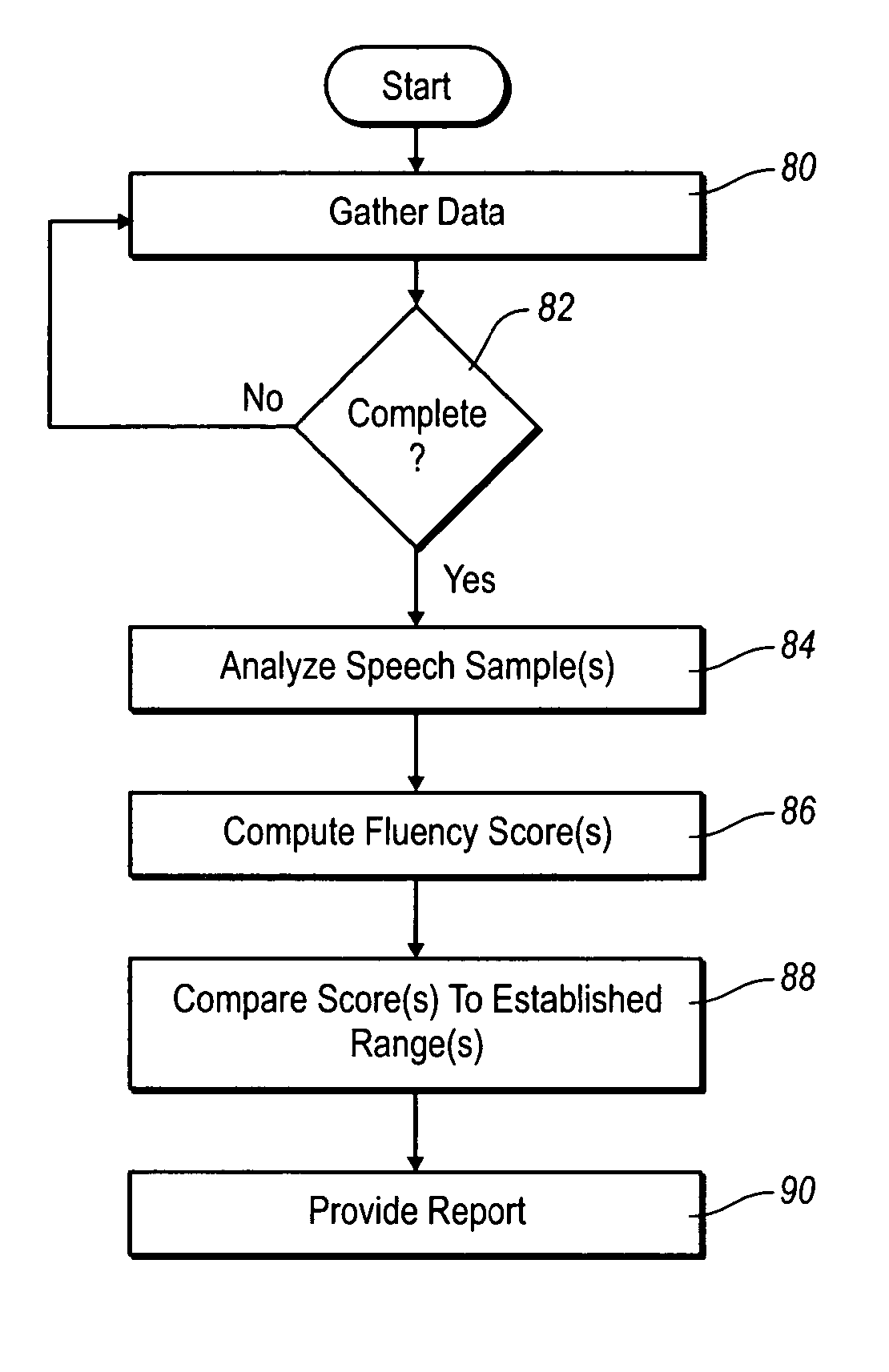

Systems and methods for dynamically analyzing temporality in speech

Systems and methods are provided for dynamically analyzing temporality in an individual's speech in order to selectively categorize the individual's speech fluency and/or to selectively provide speech training based on the results of the dynamic analysis. Temporal variables in one or more speech samples are dynamically quantified. These temporal variables, combined with a dynamic process derived from analyses of temporality in the speech of native speakers and language learners, are used to produce a fluency score that identifies the individual's proficiency. In some implementations, temporal variables are measured instantaneously.

Owner:BRIGHAM YOUNG UNIV
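
The abstract does not disclose which temporal variables are used or how the fluency score is derived. As an illustration only, the sketch below computes four temporal measures common in fluency research (phonation-time ratio, pause count, mean pause length and mean length of run) from a speech/silence segmentation that is assumed to be given; the 0.25 s pause threshold and all names are assumptions.

```python
from dataclasses import dataclass
from typing import List, Tuple

Segment = Tuple[float, float, bool]  # (start_s, end_s, is_speech)


@dataclass
class TemporalMeasures:
    phonation_ratio: float  # share of total time spent speaking
    pause_count: int        # silences at least `min_pause` seconds long
    mean_pause: float       # mean length of those silences (0.0 if none)
    mean_run: float         # mean length of stretches between counted pauses


def temporal_measures(segments: List[Segment], min_pause: float = 0.25) -> TemporalMeasures:
    """Quantify a few temporal variables from sorted, contiguous segments."""
    total = segments[-1][1] - segments[0][0]
    speech_time = sum(end - start for start, end, is_speech in segments if is_speech)
    pauses = [end - start for start, end, is_speech in segments
              if not is_speech and end - start >= min_pause]
    runs, current = [], 0.0
    for start, end, is_speech in segments:
        if is_speech or end - start < min_pause:
            current += end - start      # short silences stay inside the run
        elif current > 0.0:
            runs.append(current)        # a counted pause closes the run
            current = 0.0
    if current > 0.0:
        runs.append(current)
    return TemporalMeasures(
        phonation_ratio=speech_time / total,
        pause_count=len(pauses),
        mean_pause=sum(pauses) / len(pauses) if pauses else 0.0,
        mean_run=sum(runs) / len(runs) if runs else 0.0,
    )


segments = [(0.0, 1.0, True), (1.0, 1.5, False), (1.5, 2.5, True),
            (2.5, 2.6, False), (2.6, 3.0, True)]
measures = temporal_measures(segments)
```

Here the 0.1 s gap is too short to count as a pause, so it stays inside the second run.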

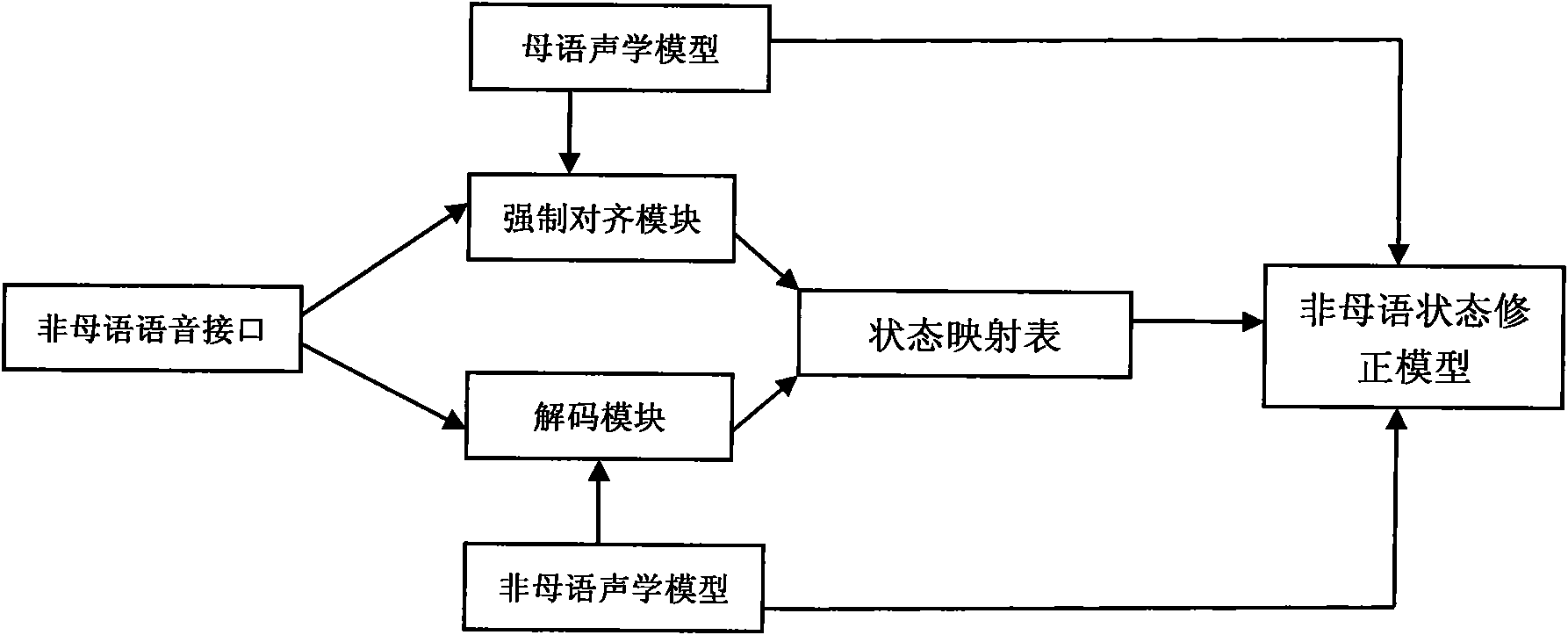

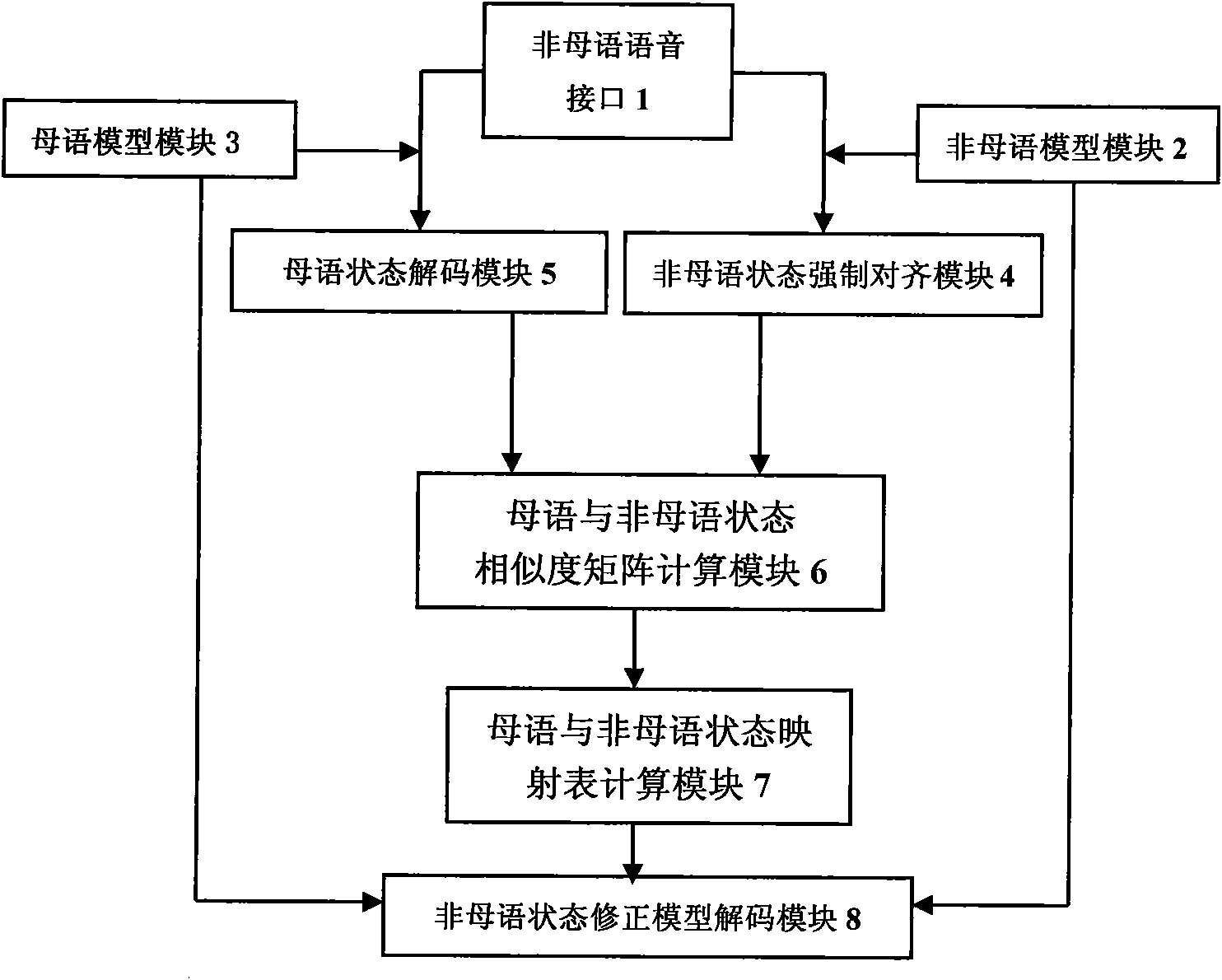

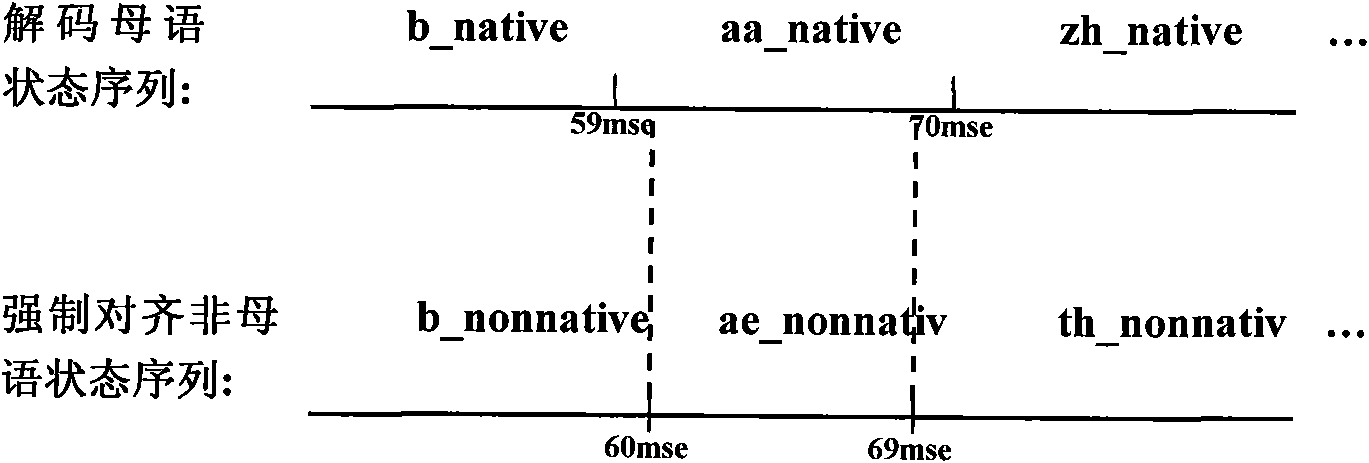

Non-native speech recognition system and method thereof

The invention relates to a non-native speech recognition system based on mixed-model state correction, and a method thereof. The system comprises a non-native speech interface, a native model module, a non-native model module, a native state decoding module, a non-native state forced-alignment module, a native/non-native state similarity matrix computation module, a native/non-native state mapping table computation module, and a non-native state correction model decoding module. The non-native acoustic model is corrected at the state level based on the speaker's native acoustic model and on state mappings between the different models, yielding a model that better matches non-native pronunciation characteristics. Compared with a recognition system not corrected in this way, the method clearly improves recognition performance using only native training data, without any additional non-native speech training data and without a noticeable drop in recognition speed, which makes it highly practical.

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI +1
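
The abstract names a native/non-native state similarity matrix and a mapping table without giving formulas. Assuming the similarity matrix is already available (rows are non-native states, columns are native states, entries non-negative), a simple hypothetical mapping rule keeps the `top_k` most similar native states per row and normalises their similarities into weights:

```python
from typing import Dict, List, Sequence, Tuple


def state_mapping_table(similarity: Sequence[Sequence[float]],
                        top_k: int = 1) -> Dict[int, List[Tuple[int, float]]]:
    """Map each non-native state (row) to its top_k most similar native states (columns)."""
    table: Dict[int, List[Tuple[int, float]]] = {}
    for i, row in enumerate(similarity):
        best = sorted(range(len(row)), key=lambda j: row[j], reverse=True)[:top_k]
        total = sum(row[j] for j in best)
        if total <= 0.0:
            raise ValueError(f"row {i} has no positive similarity")
        # Normalise the kept similarities so each row's weights sum to 1.
        table[i] = [(j, row[j] / total) for j in best]
    return table


similarity = [[0.1, 0.7, 0.2],
              [0.6, 0.3, 0.1]]
one_to_one = state_mapping_table(similarity)
soft = state_mapping_table(similarity, top_k=2)
```

With `top_k=1` the table is a hard one-to-one mapping; larger values give a soft mapping that could weight several native states when correcting a non-native state.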

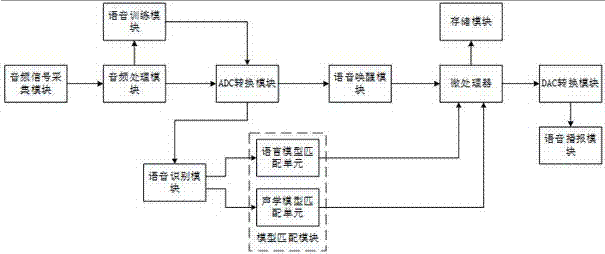

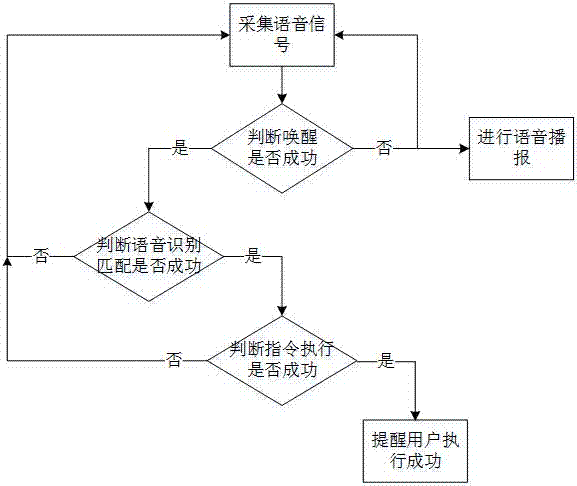

Voice interaction system and method for a smart home

Pending | CN107016993A | Problems preventing incorrect voice input; Avoid misuse; Data switching by path configuration; Speech recognition; Interaction systems; Phonological memory

The invention discloses a voice interaction system for a smart home, comprising an audio signal acquisition module, an audio processing module, a voice training module, a voice recognition module, a voice wake-up module, a model matching module, an ADC conversion module, a microprocessor, a storage module, a DAC conversion module and a voice playback module. The method comprises: S1, collecting voice audio signals; S2, determining whether the voice audio signal wakes up the smart home device; S3, determining whether voice recognition matching succeeds; S4, completing the task according to the voice command. Building a voice memory through voice training for users effectively prevents the voices of non-users from being accepted as input to smart home devices, and voice wake-up effectively prevents misoperation when users do not intend to command the devices.

Owner:成都铅笔科技有限公司 (Chengdu Pencil Technology Co., Ltd.)

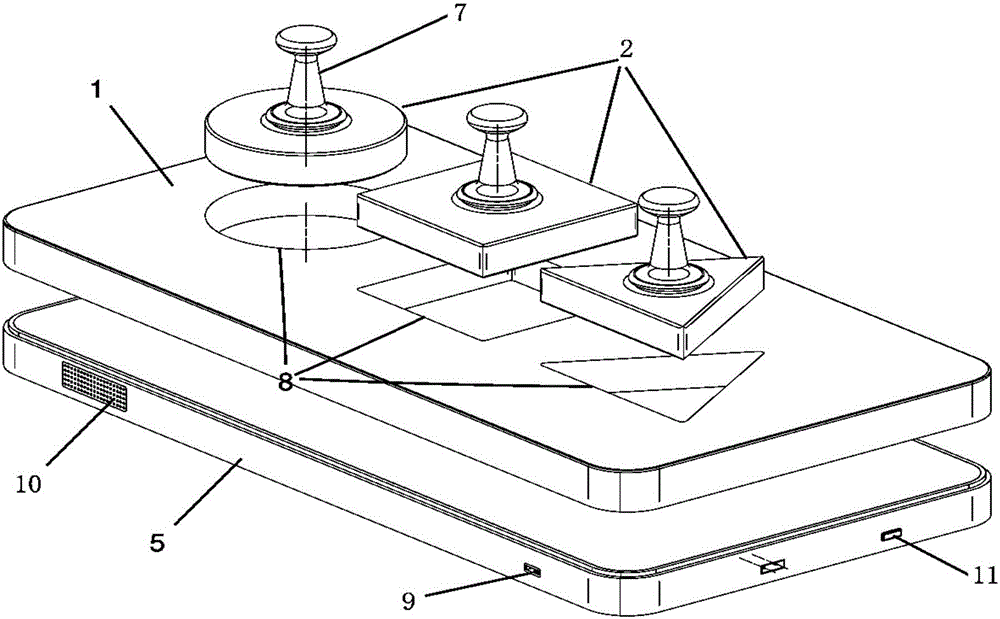

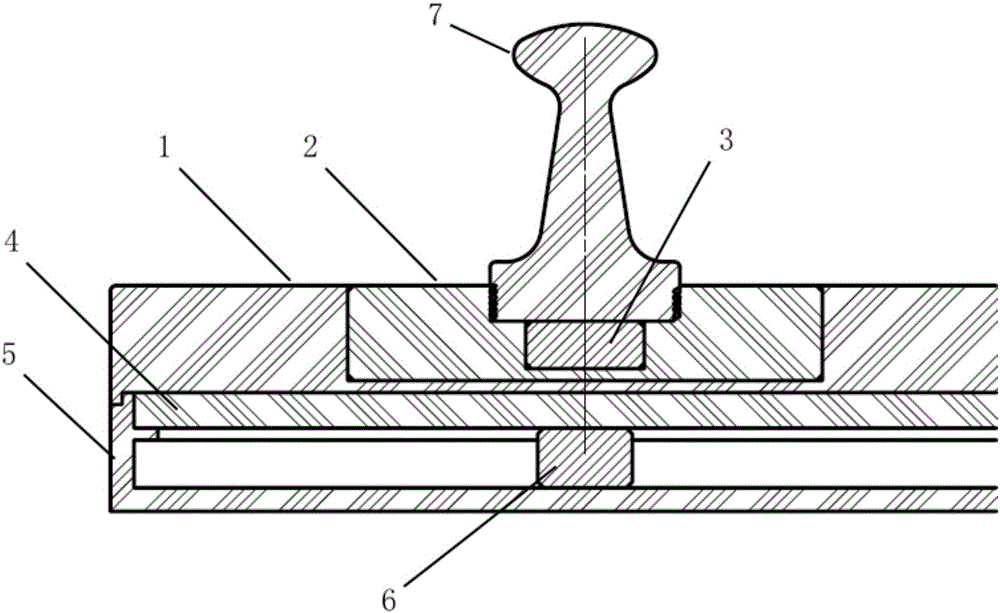

Acousto-optic embedding plate and control method thereof

Pending | CN105797368A | Promote vision development; Promote vision recovery; Musical toys; Indoor games; Speech training; LED display

The invention provides an acousto-optic embedding plate comprising a cover plate (1), an embedding block (2), a magnet (3), an LED display screen (4), a base (5) and a magnetic switch (6). A power switch (9), a loudspeaker (10) and a mode switching button (11) are arranged in the base (5). The LED display screen (4) is snap-fitted or bound into the base (5), and the cover plate (1) is snap-fitted onto the base (5). A handle (7) is screwed onto the embedding block (2), and the magnet (3) is installed between the handle (7) and the embedding block (2). The magnetic switch (6) is bonded to the lower portion of the LED display screen (4). Through rich sound, light and visual image stimulation, the plate gives children with low vision an effective way to practise hand operation and makes hand-eye coordination training possible for them; combined with corresponding cognitive and speech training, it can also effectively promote vision recovery.

Owner:NANJING MEDICAL UNIV

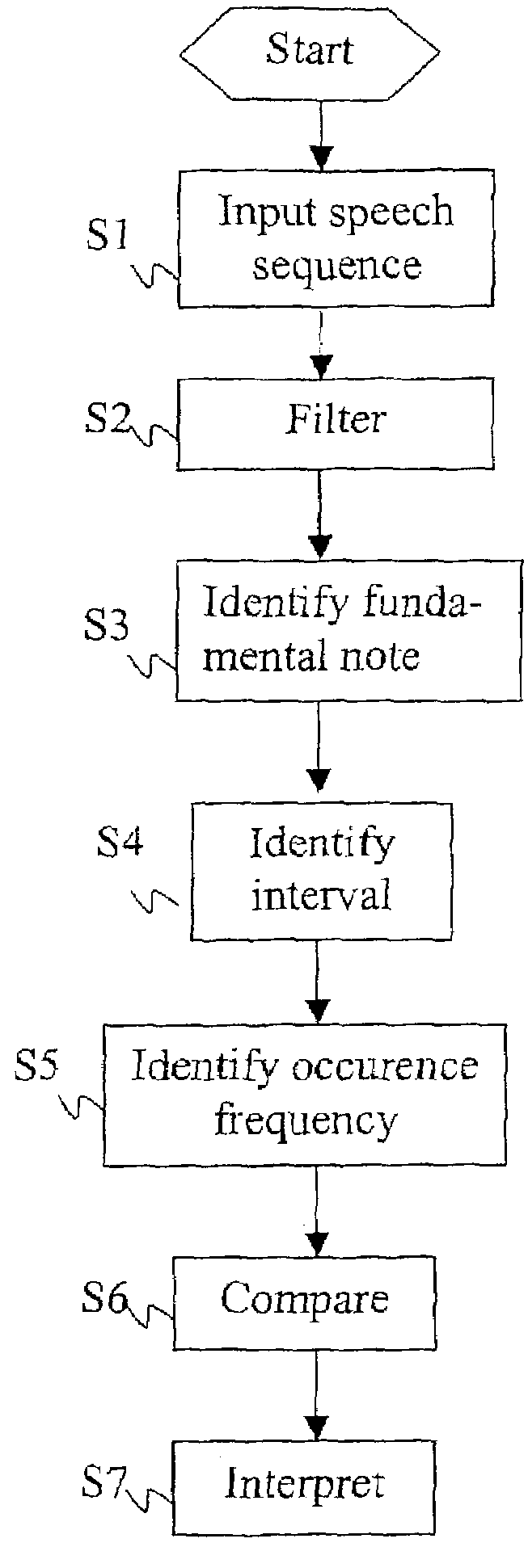

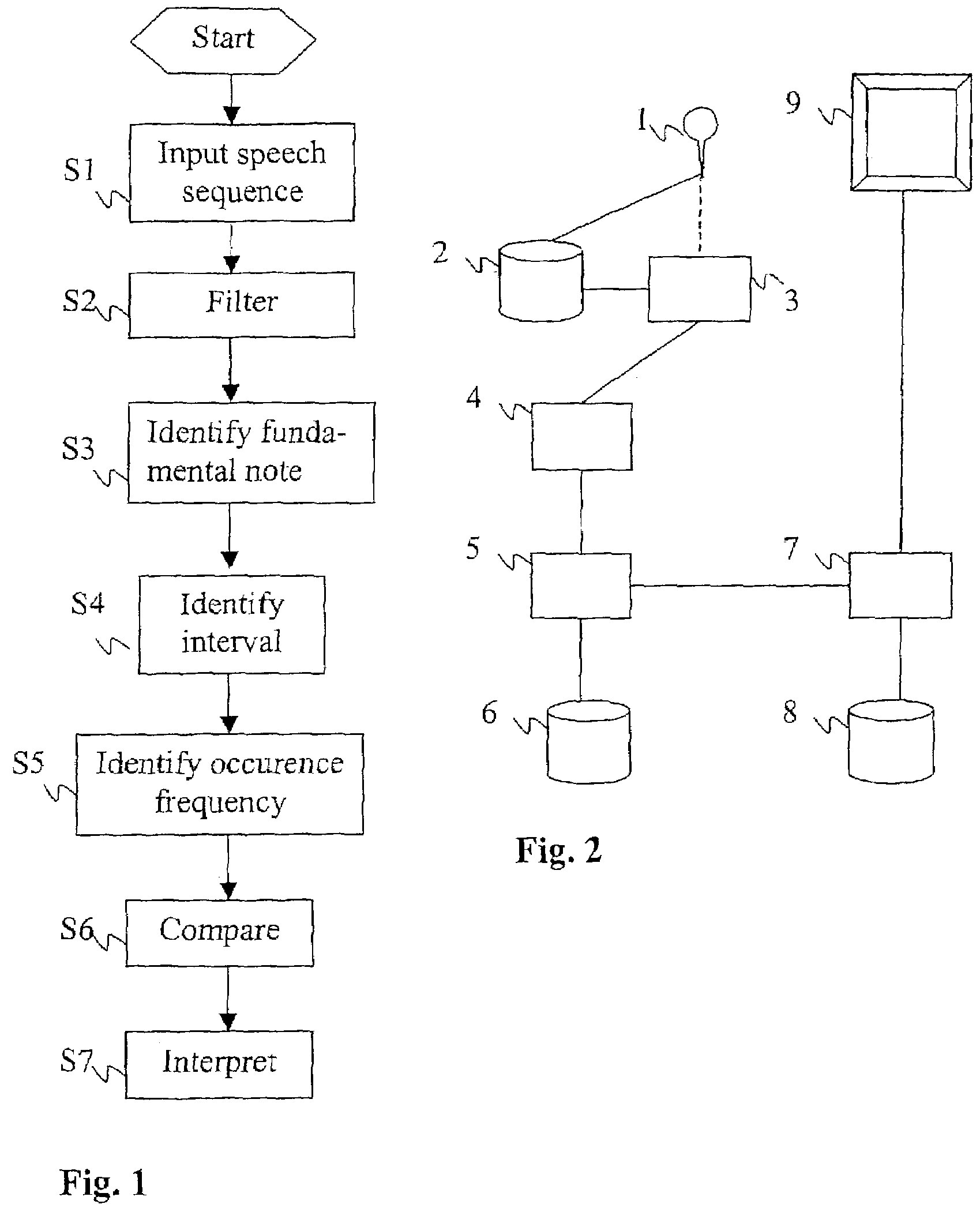

Method and device for speech analysis

A device and a method for speech analysis are provided, comprising measuring fundamental notes of a speech sequence to be analysed and identifying frequency intervals between at least some of said fundamental notes. An assessment is then made as to the frequency at which at least some of these thus identified intervals occur in the speech sequence to be analysed. Among other applications are speech training and diagnosis of pathological conditions.

Owner:TRANSPACIFIC INTELLIGENCE
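
A minimal sketch of the interval-counting step, assuming the fundamental notes are given as one F0 value in Hz per note and that intervals are expressed in rounded equal-tempered semitones (the abstract does not specify a unit; `interval_histogram` is a hypothetical name):

```python
import math
from collections import Counter
from typing import Sequence


def interval_histogram(f0_hz: Sequence[float]) -> Counter:
    """Count how often each rounded semitone interval occurs between consecutive notes."""
    if any(f <= 0 for f in f0_hz):
        raise ValueError("fundamental frequencies must be positive")
    return Counter(
        round(12 * math.log2(later / earlier))  # signed interval in semitones
        for earlier, later in zip(f0_hz, f0_hz[1:])
    )


notes = [100.0, 150.0, 100.0, 150.0]   # rising and falling fifths
histogram = interval_histogram(notes)
print(histogram.most_common())
```

Working in semitones makes the count independent of the speaker's overall pitch: a 100 to 150 Hz step and a 200 to 300 Hz step land in the same bin.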

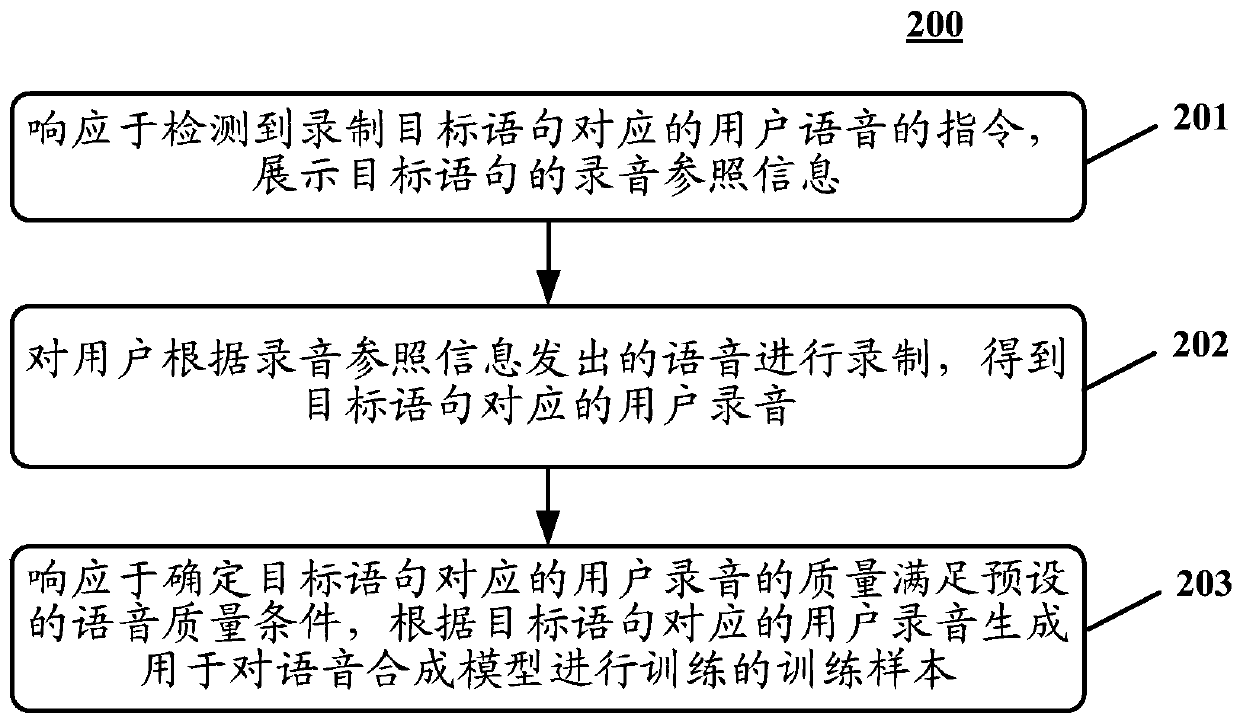

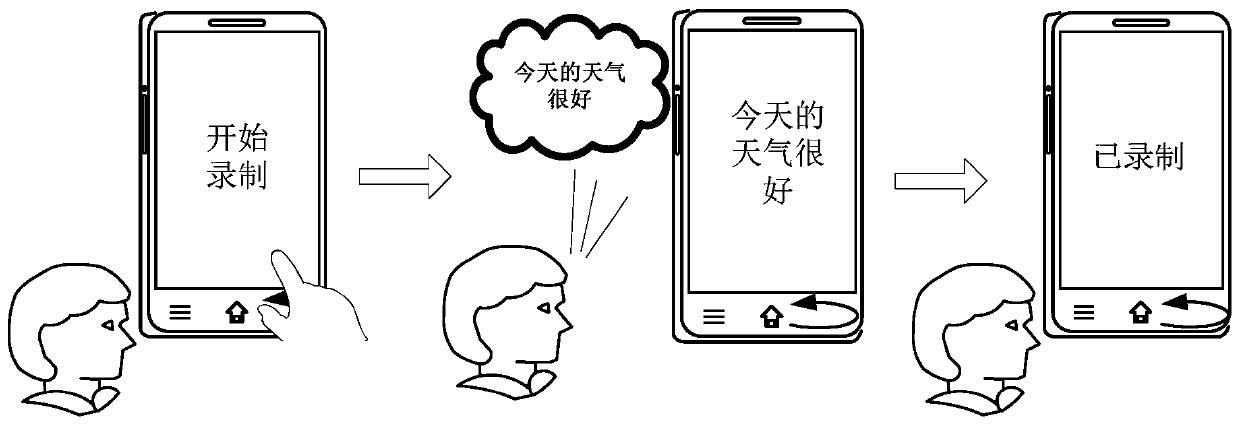

Method and device for acquiring speech training sample

Active | CN110473525A | Guaranteed Voice Quality; Improve accuracy; Speech recognition; Speech training; Speech synthesis

The embodiment of the invention relates to the technical field of speech synthesis and discloses a method and device for acquiring a speech training sample. In one implementation, the method comprises: in response to detecting a command to record user speech for a target statement, displaying record reference information for the target statement; recording the speech the user produces according to that reference information to obtain a user recording for the target statement; and, in response to determining that the quality of the user recording meets a preset speech quality condition, generating a training sample for training a speech synthesis model from that recording. Because training samples are generated only when the user recording meets the preset quality condition, subsequent speech synthesis model training is supported and the resulting model is more accurate.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

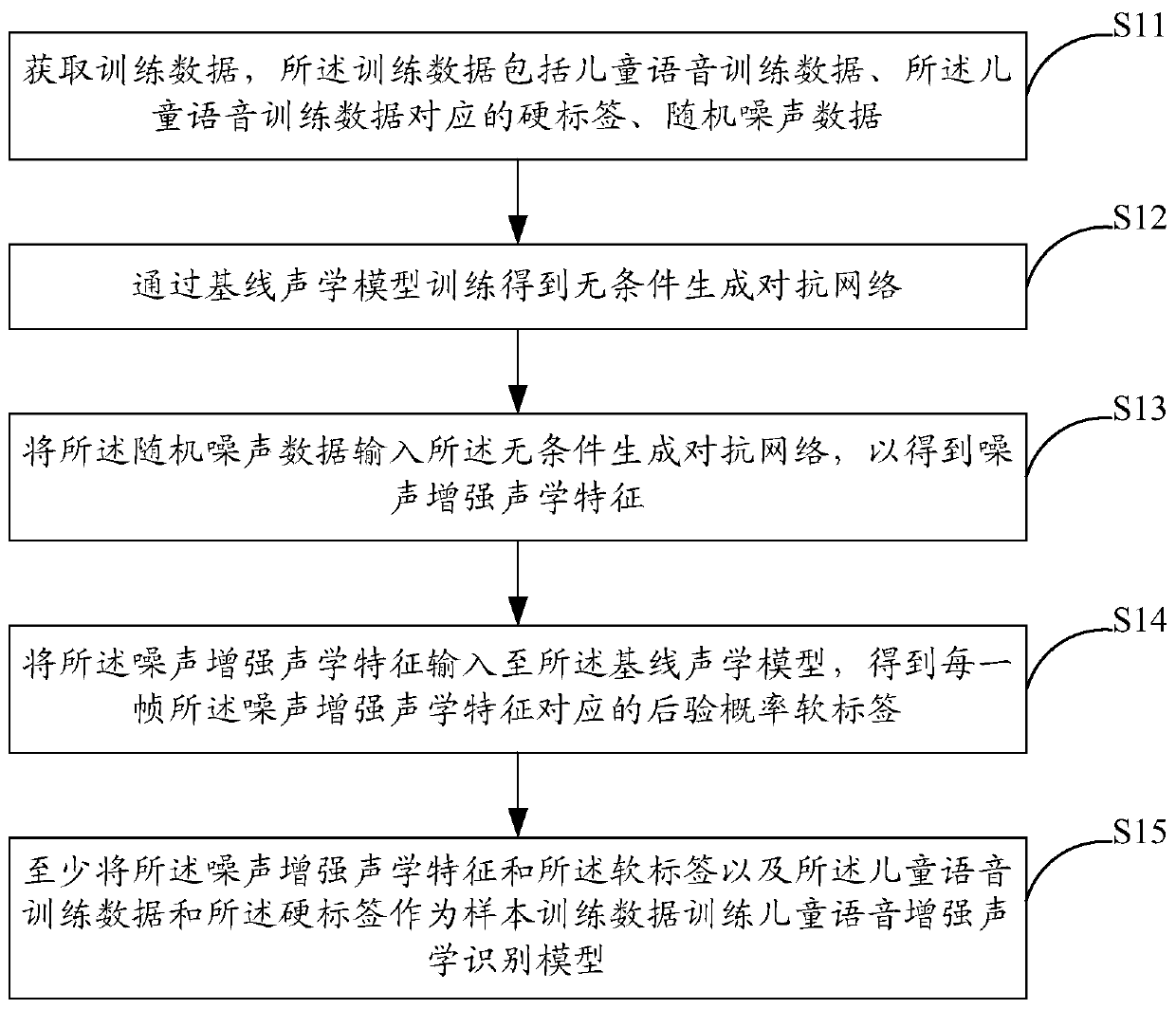

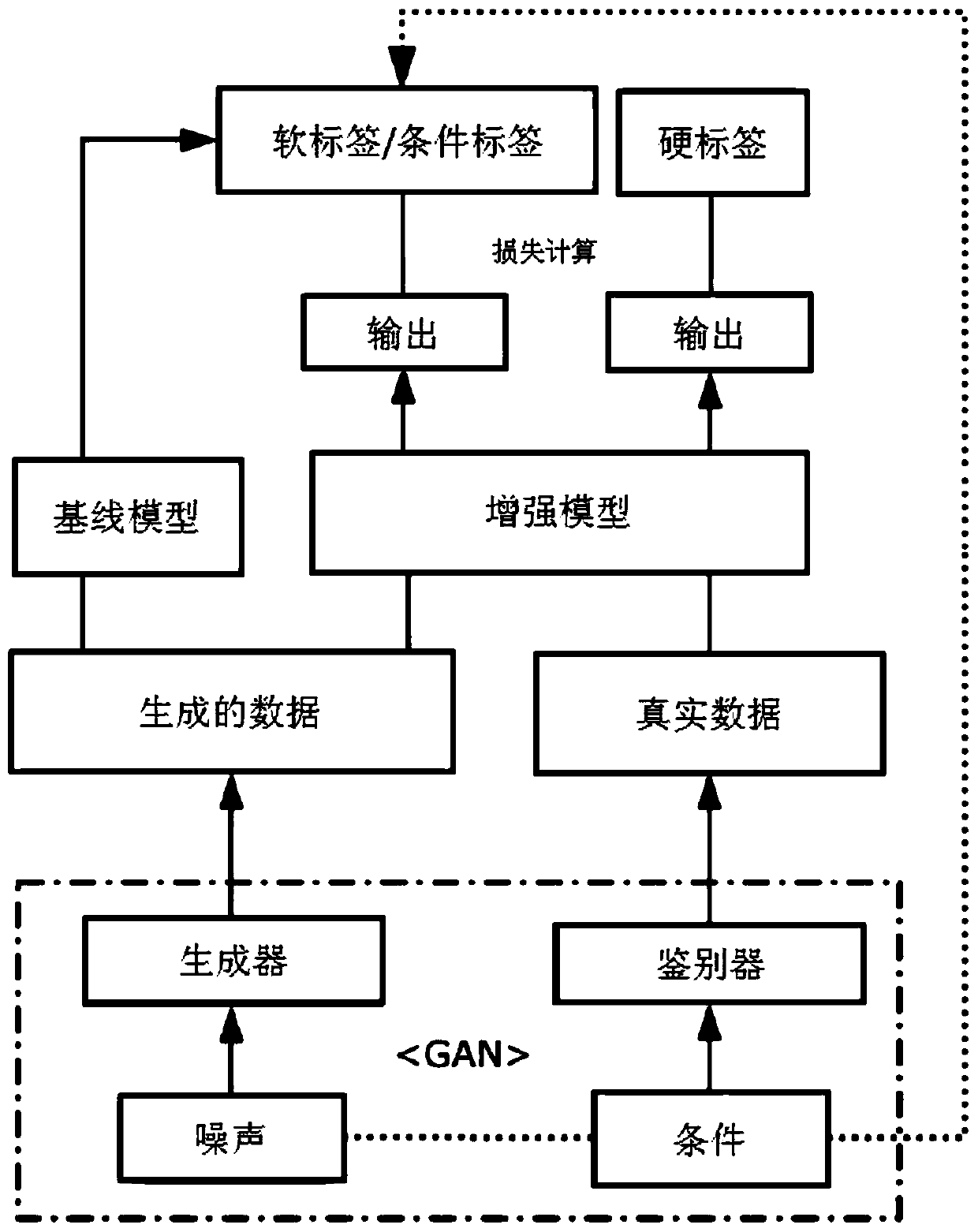

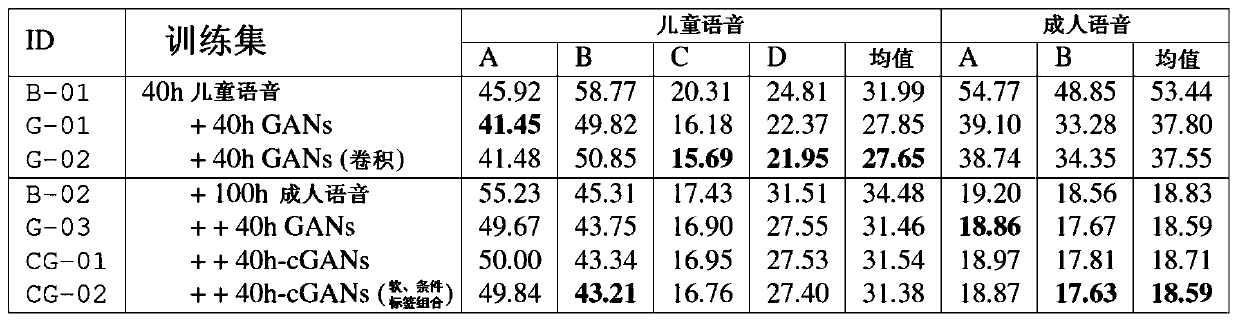

Training method and system for child speech recognition model

Active | CN110706692A | Change the nature of pronunciation; Improve recognition accuracy; Speech recognition; Speech training; Generative adversarial network

The embodiment of the invention provides a training method for a child speech recognition model. The method comprises: obtaining training data; training an unconditional generative adversarial network by means of a baseline acoustic model; inputting random noise data into the unconditional generative adversarial network to obtain noise-enhanced acoustic features; inputting the noise-enhanced acoustic features into the baseline acoustic model to obtain a posterior-probability soft label for each frame of noise-enhanced acoustic features; and training the enhanced child speech acoustic recognition model using at least the noise-enhanced acoustic features, the soft labels, the child speech training data and the hard labels as training samples. The embodiment also provides a training system for the child speech recognition model. When child speech data is limited, the embodiment changes the pronunciation characteristics of child speech to generate diversified child speech, improving the recognition accuracy of the child speech recognition model.

Owner:AISPEECH CO LTD

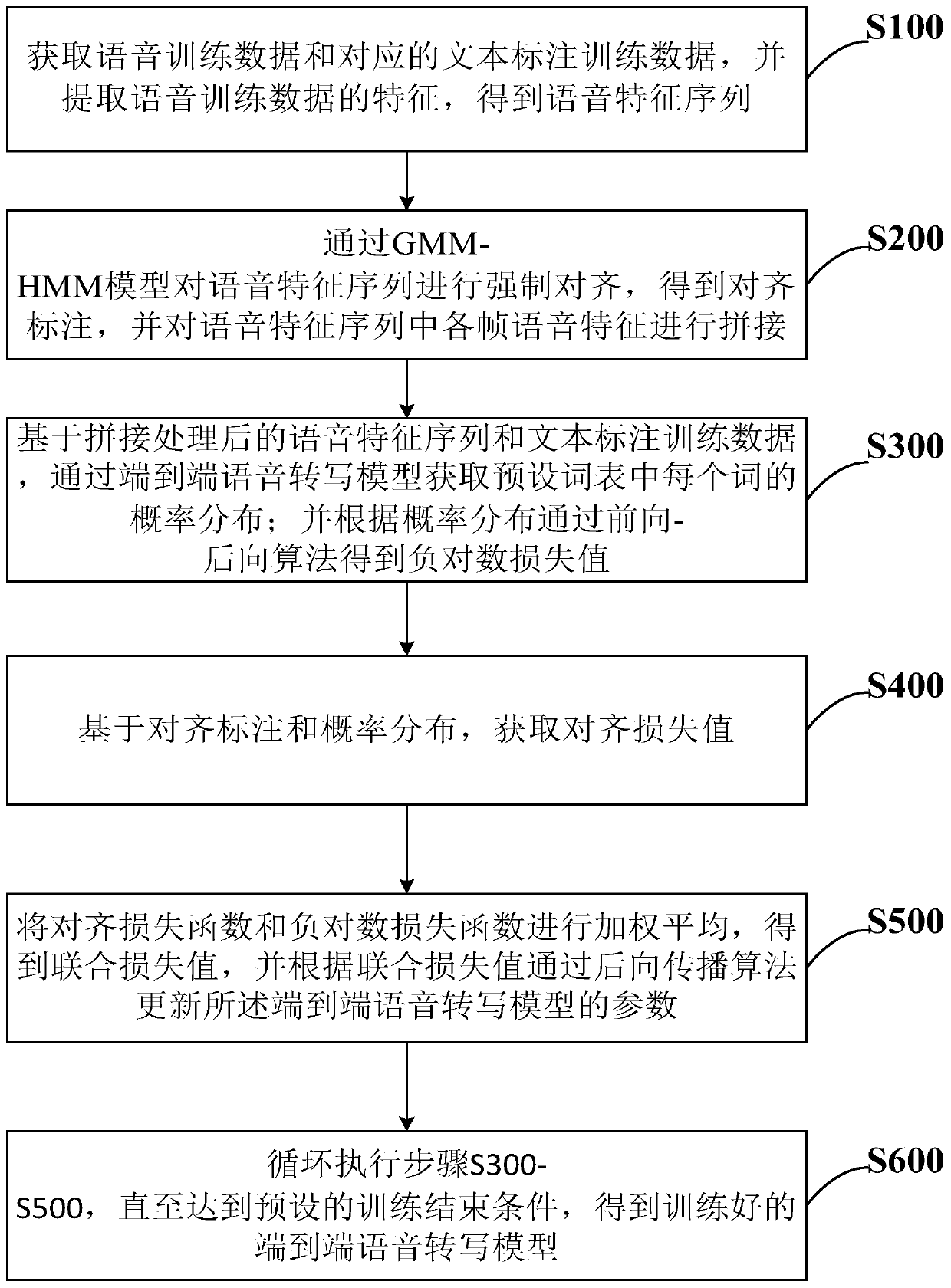

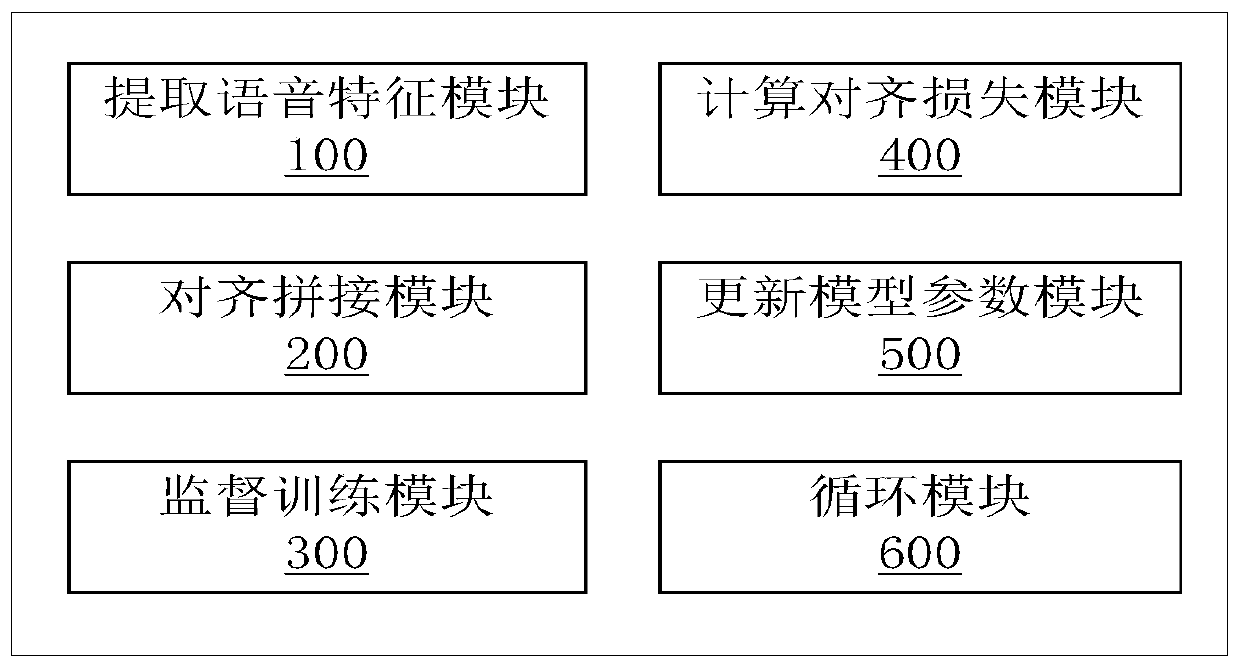

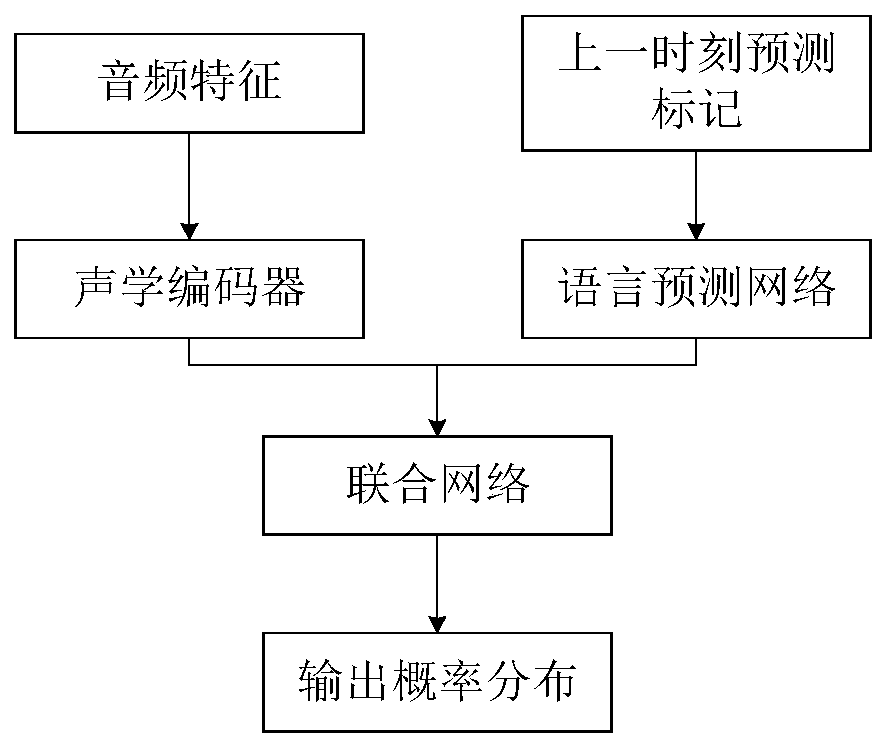

Training method and system for RNN transducer model, and device

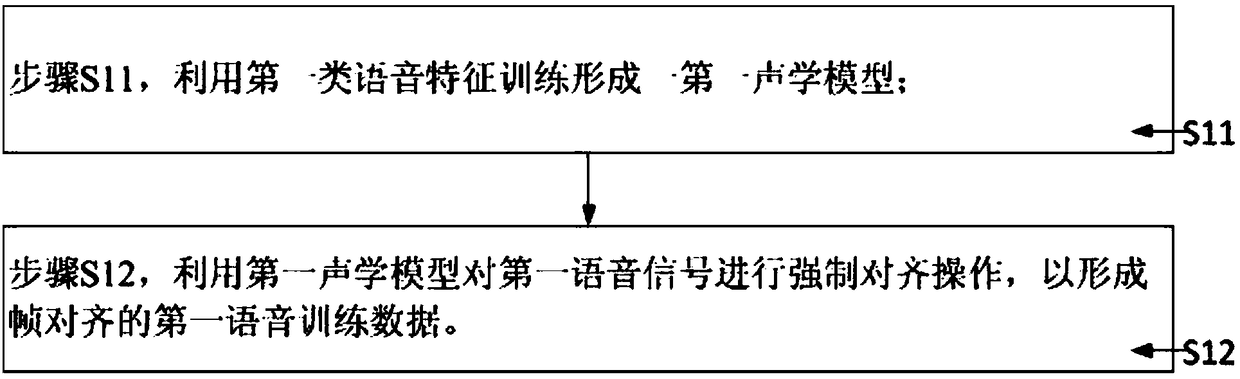

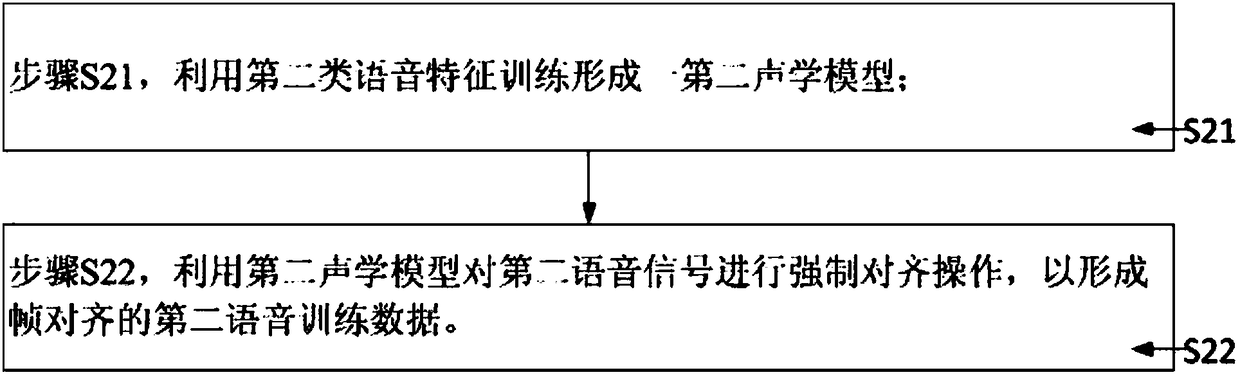

The invention belongs to the technical field of electronic signal processing and relates in particular to a training method, system and device for an RNN transducer model, aiming to solve the problem that the RNN transducer model cannot learn the alignment information of speech data well. The training method comprises: extracting features from speech training data to obtain a speech feature sequence; force-aligning the speech feature sequence with a GMM-HMM (Gaussian Mixture Model-Hidden Markov Model) to obtain alignment annotations, and splicing the speech features of different frames; training the RNN transducer model on the spliced speech feature sequence and the text annotation training data to obtain a probability distribution over the words in a preset word list and a negative log loss value; computing an alignment loss value; taking a weighted average of the alignment loss value and the negative log loss value to obtain a combined loss value, and updating the model parameters by backpropagation; and training the model iteratively. With this training method and system, the alignment information of the speech data can be learned accurately.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI
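
Two steps in the abstract are easy to illustrate: splicing neighbouring frames into one feature vector and taking a weighted average of the alignment loss and the negative log loss. The context width of one frame and the weight of 0.5 below are placeholders; the abstract gives neither value, and the function names are hypothetical.

```python
from typing import List, Sequence


def splice_frames(features: Sequence[Sequence[float]], context: int = 1) -> List[List[float]]:
    """Concatenate each frame with `context` neighbours on each side (edge frames repeated)."""
    n = len(features)
    spliced = []
    for t in range(n):
        row: List[float] = []
        for offset in range(-context, context + 1):
            idx = min(max(t + offset, 0), n - 1)  # clamp at the sequence boundaries
            row.extend(features[idx])
        spliced.append(row)
    return spliced


def combined_loss(alignment_loss: float, nll_loss: float, weight: float = 0.5) -> float:
    """Weighted average of the alignment loss and the negative log loss."""
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    return weight * alignment_loss + (1.0 - weight) * nll_loss


frames = [[1.0], [2.0], [3.0]]
spliced = splice_frames(frames, context=1)
loss = combined_loss(2.0, 4.0, weight=0.25)
```

In a real trainer both losses would be framework tensors so that backpropagation flows through the weighted sum; plain floats are used here only to show the arithmetic.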

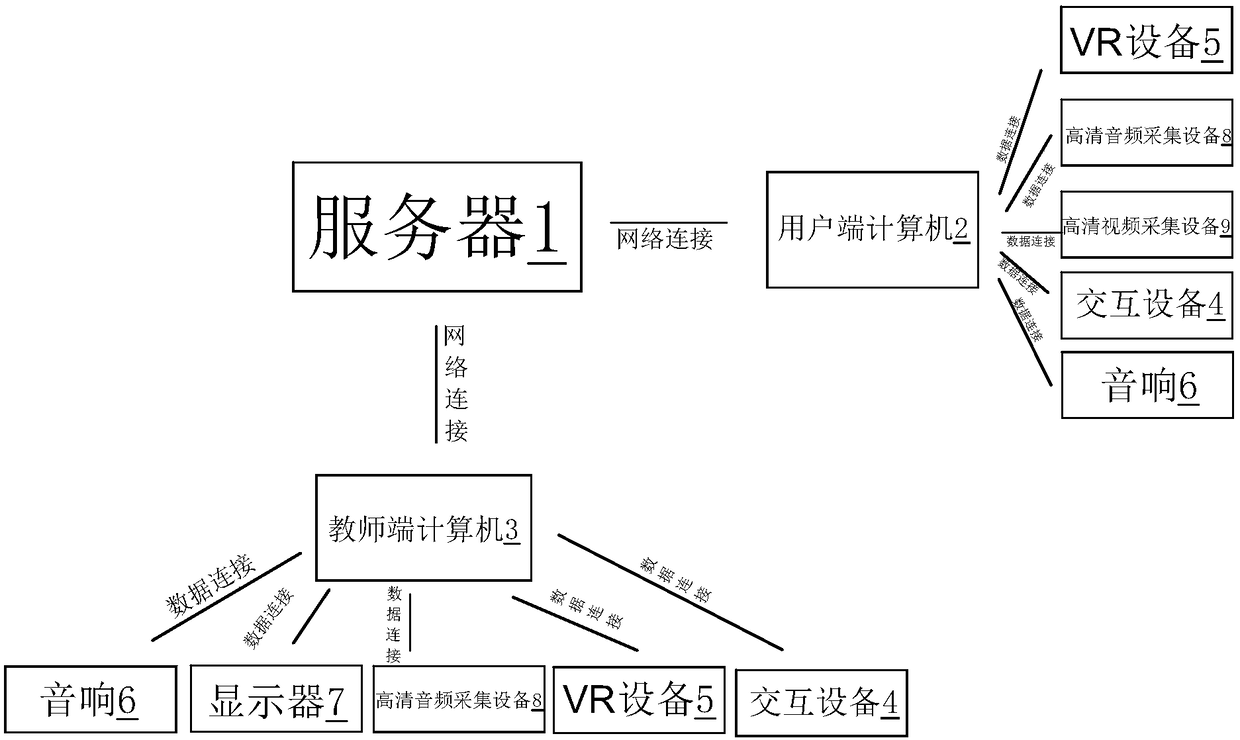

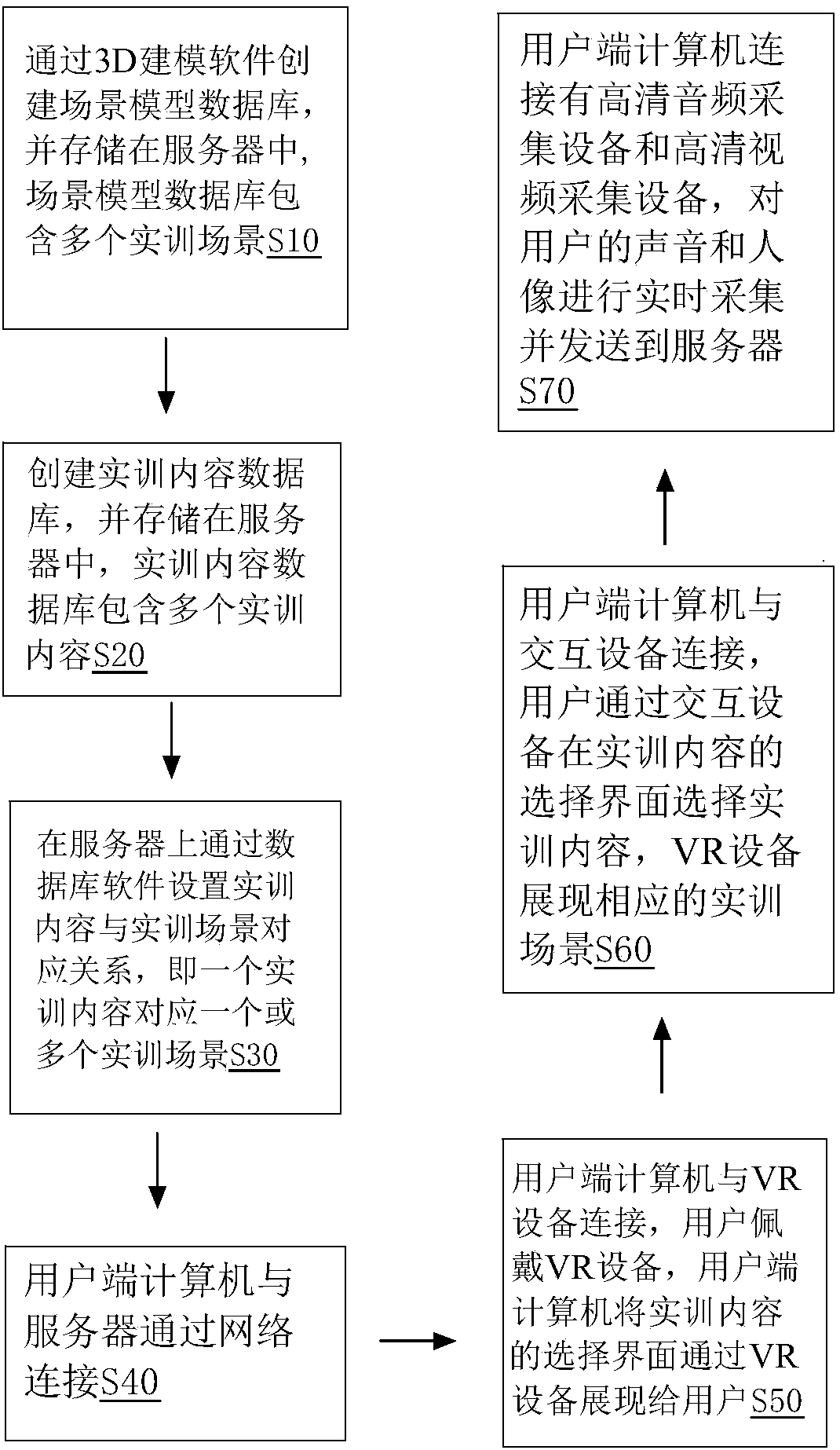

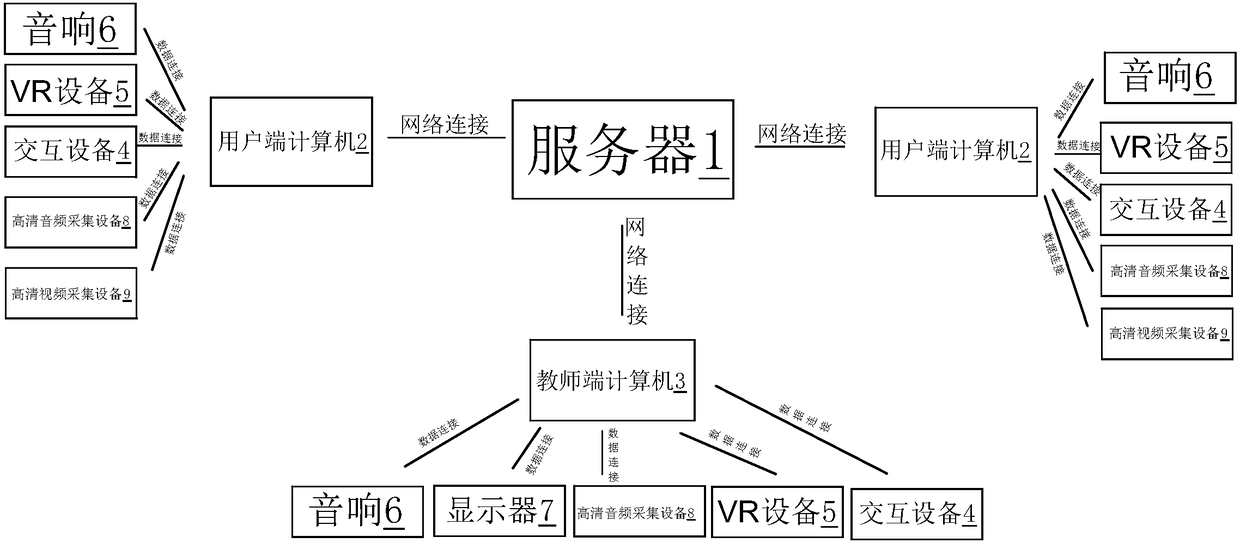

System and method for speech training by applying VR technology

Pending | CN108074431A | Improve the level of speech; Multiple heuristics; Cosmonautic condition simulations; Electrical appliances; Speech training; Network connection

The invention relates to the field of smart education, and in particular to a system and method for speech training using VR technology. The method includes: S10, creating a scene model database with 3D modeling software; S20, creating a training content database; S30, setting the correspondence between training contents and training scenes with database software; S40, connecting a user terminal computer and a server over a network; S50, the user wears a VR device, and the user terminal computer presents a training-content selection interface to the user through the VR device; S60, the user selects training contents in the selection interface with an interactive device, and the VR device shows the corresponding training scene; S70, the user terminal computer collects the user's voice and image in real time and sends them to the server. With this method, the user can practise speeches in an engaging, varied and highly realistic virtual reality environment, and the sense of presence significantly improves the user's speaking level.

Owner:HANGZHOU NORMAL UNIVERSITY +2

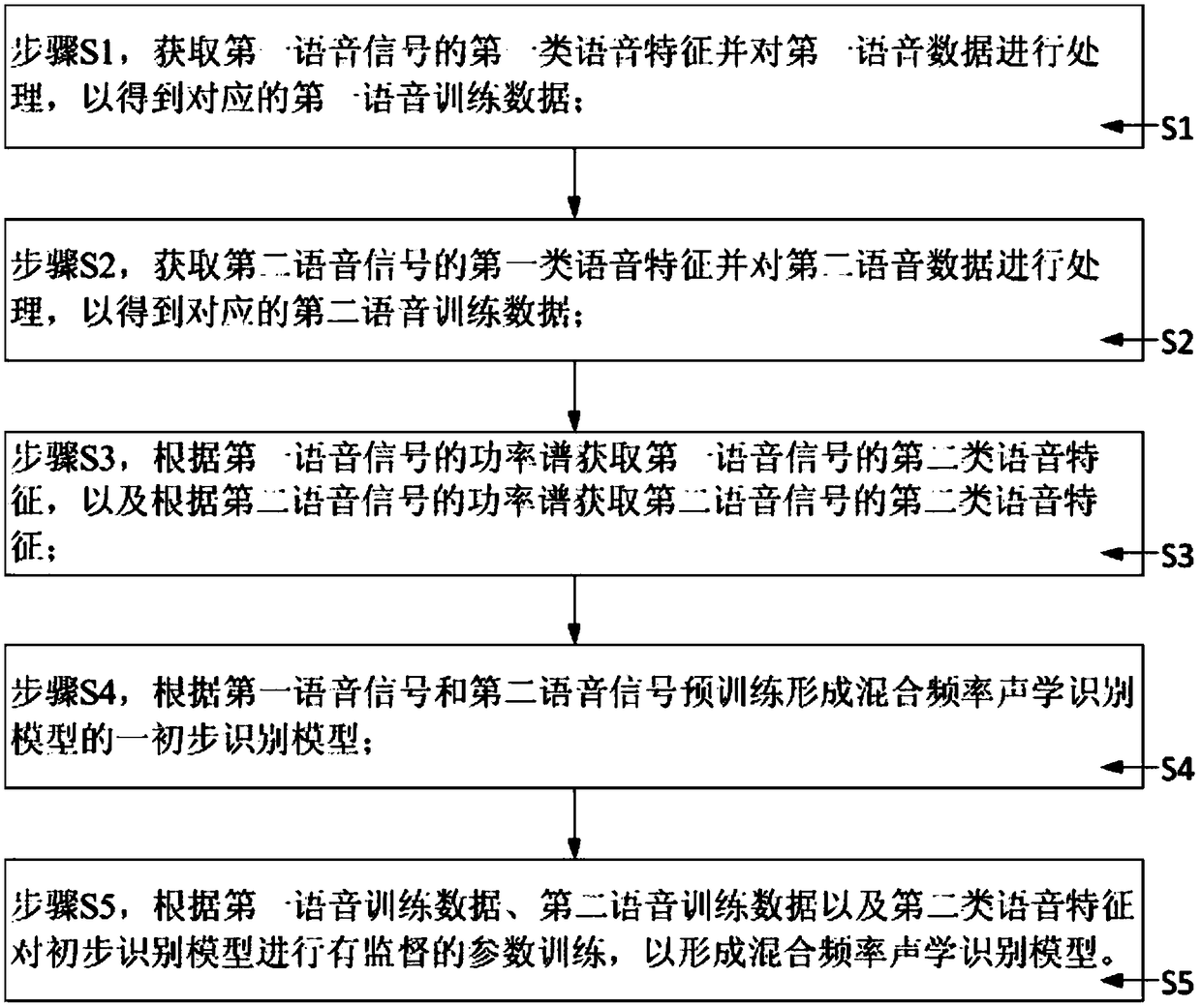

Training method for hybrid frequency acoustic recognition model and speech recognition method

Active | CN108510979A | Improve robustness; Improve generalization ability; Speech recognition; Speech training; Speech sound

Owner:YUTOU TECH HANGZHOU

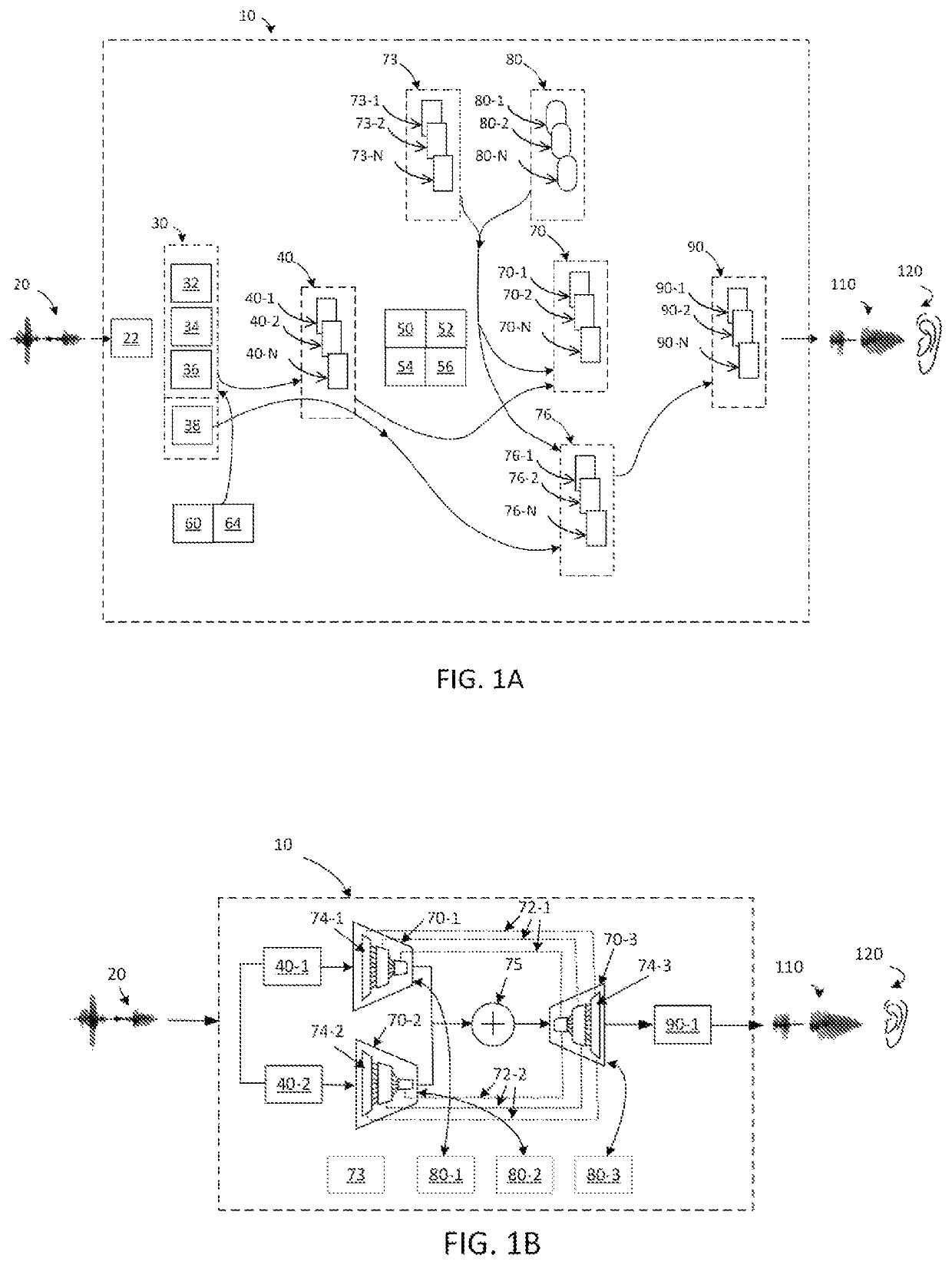

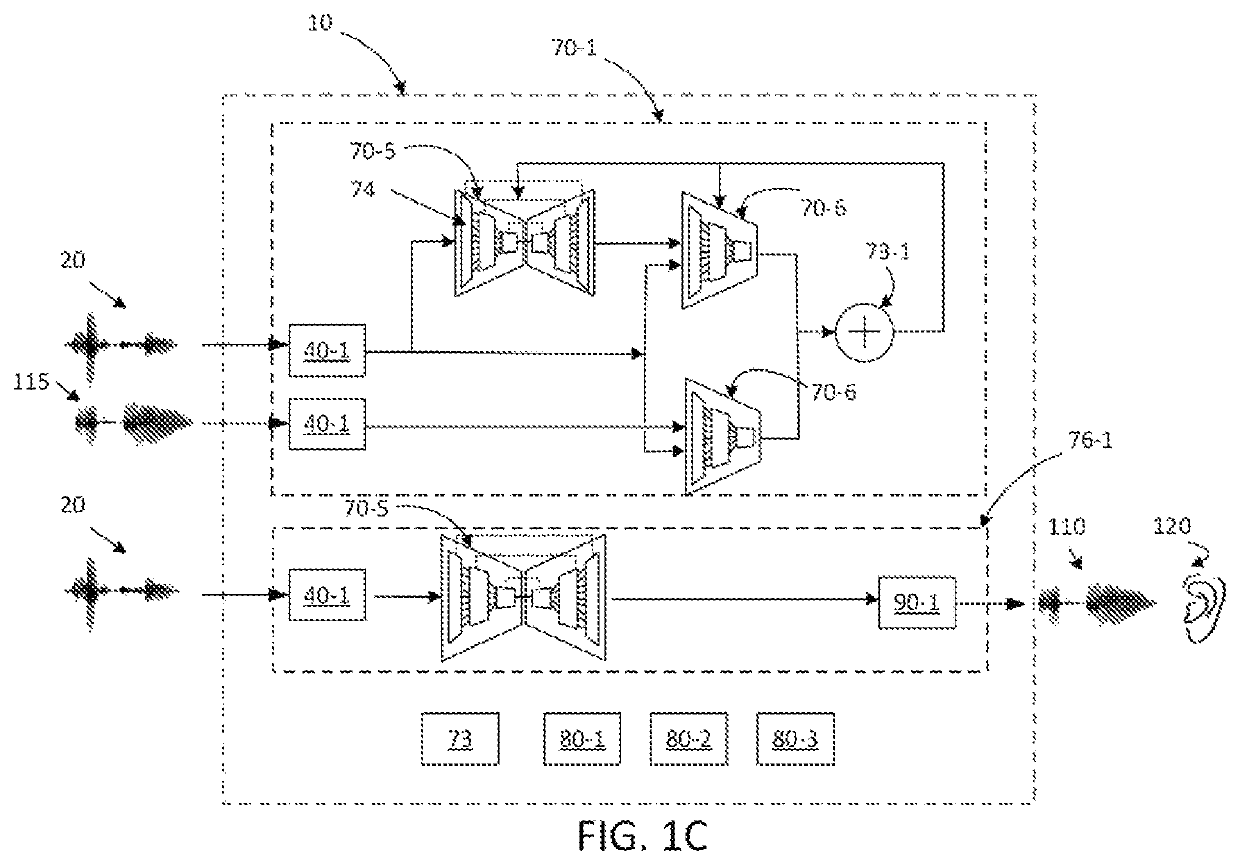

Methods and systems implementing language-trainable computer-assisted hearing aids

Active | US10997970B1 | Speed up the process; Improve voice quality; Hearing aids signal processing; Speech recognition; Speech training; Computer-aided

Owner:RAFII ABBAS

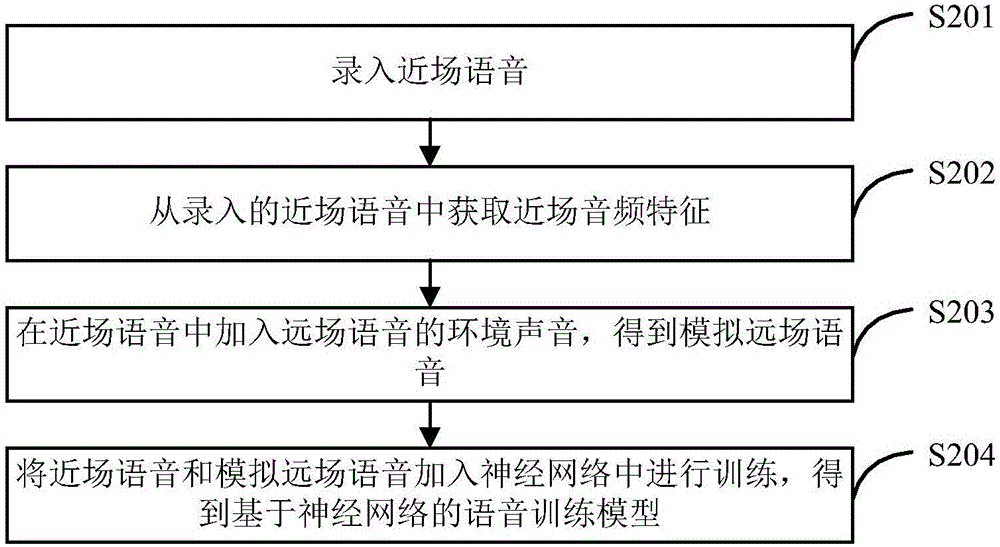

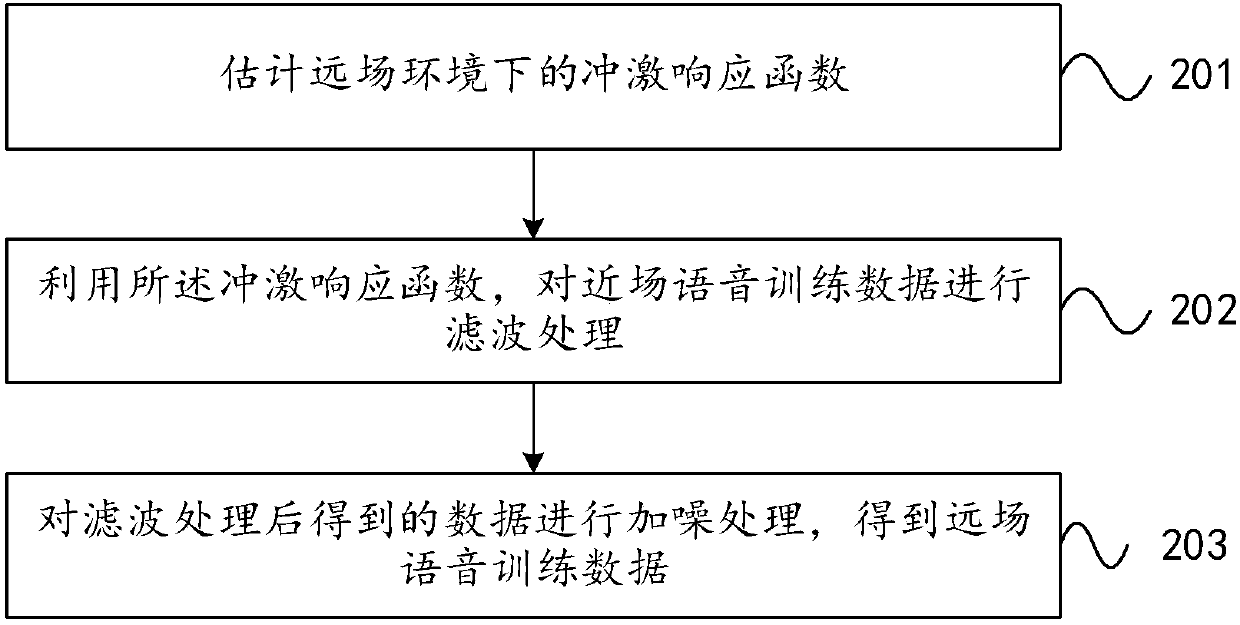

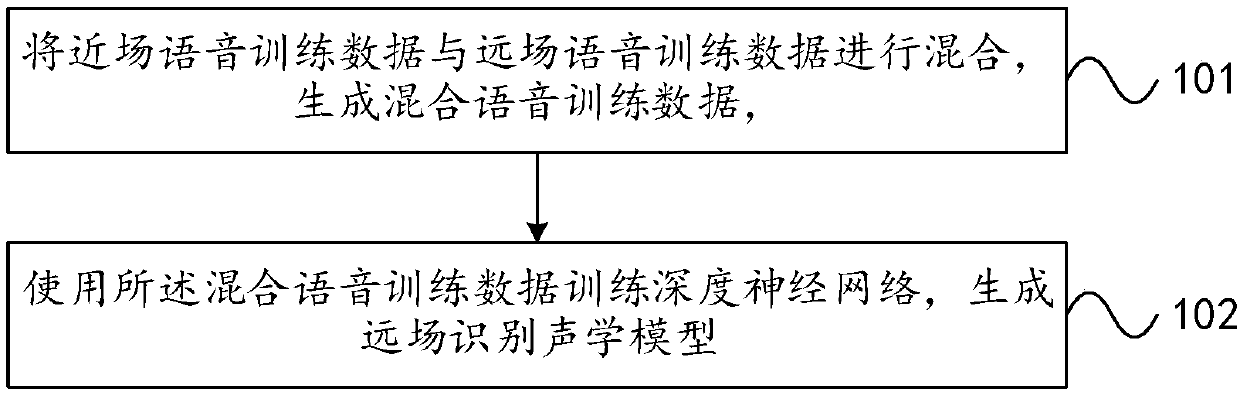

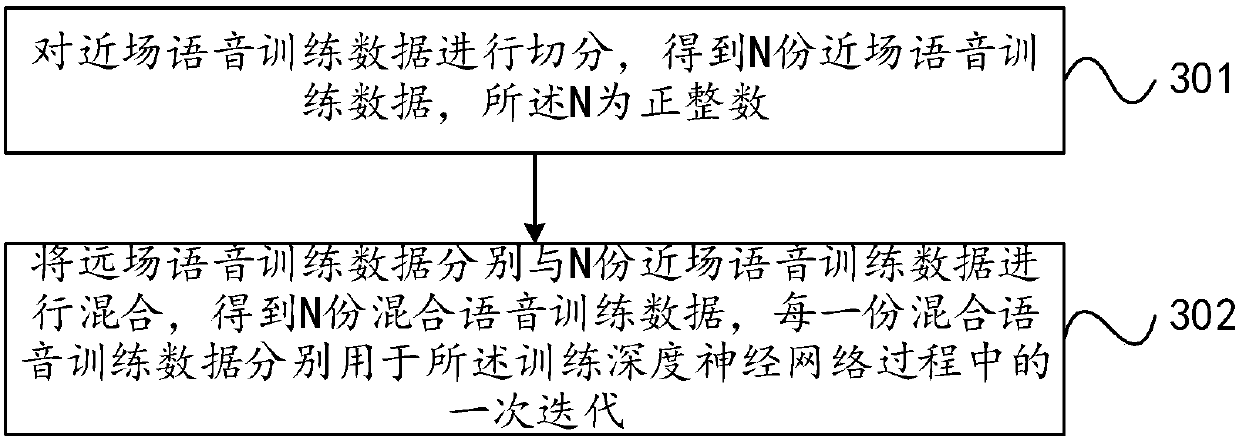

Far field speech acoustic model training method and system

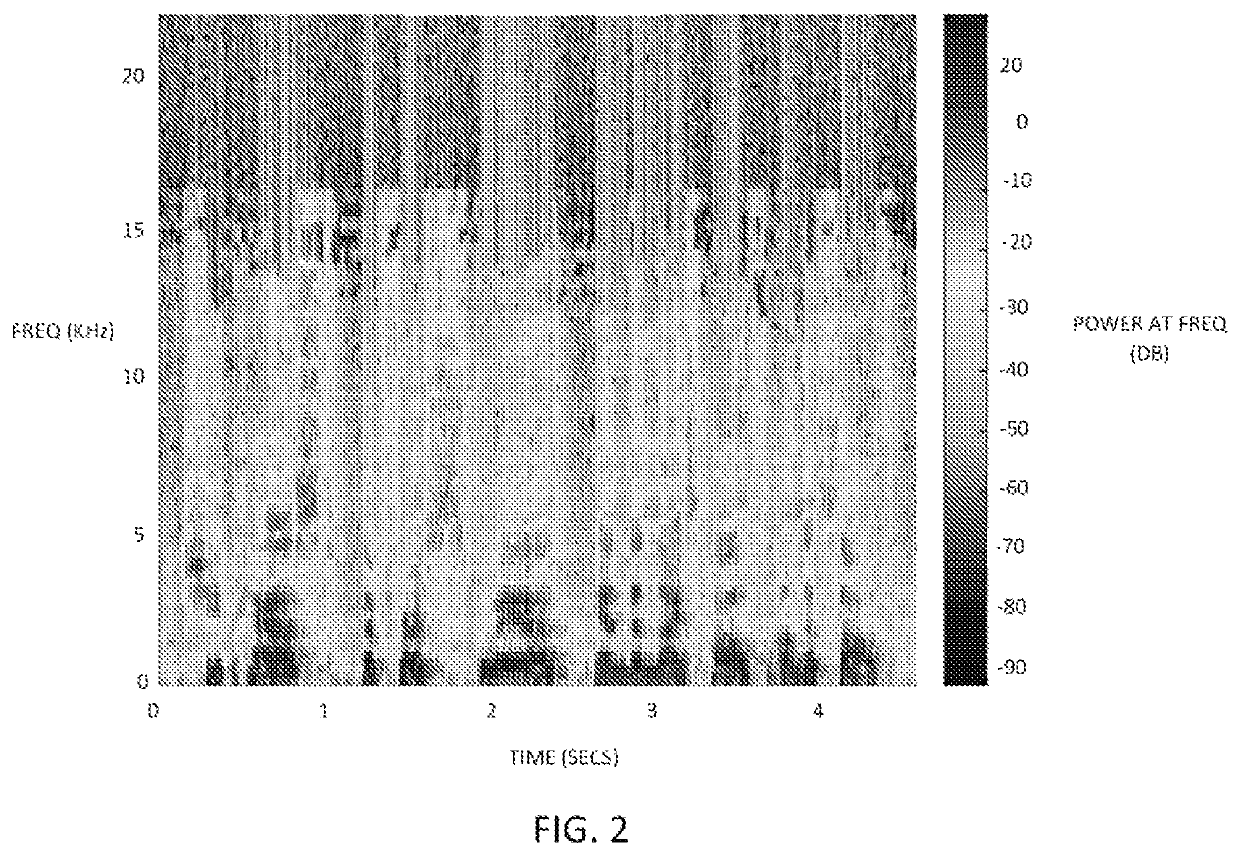

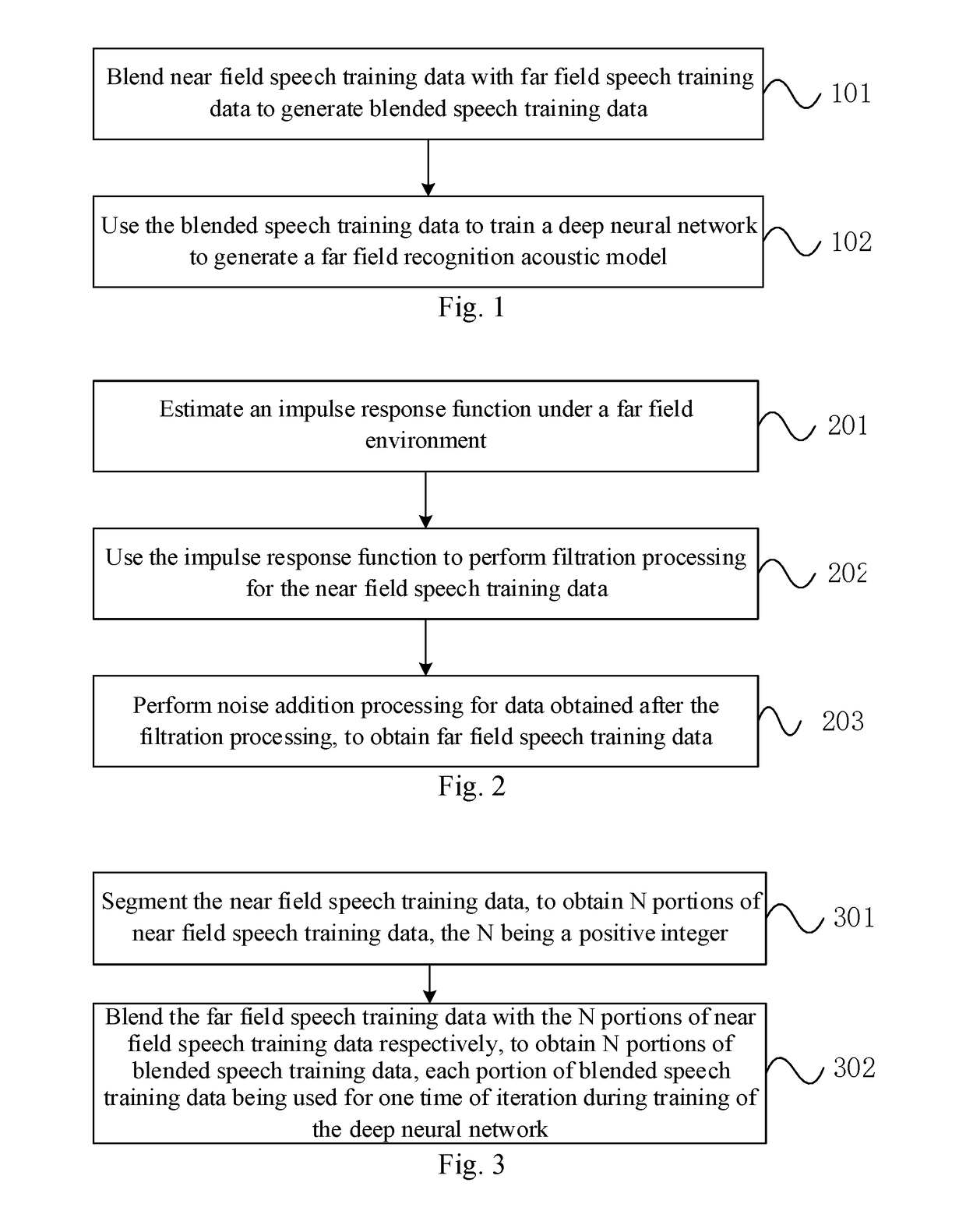

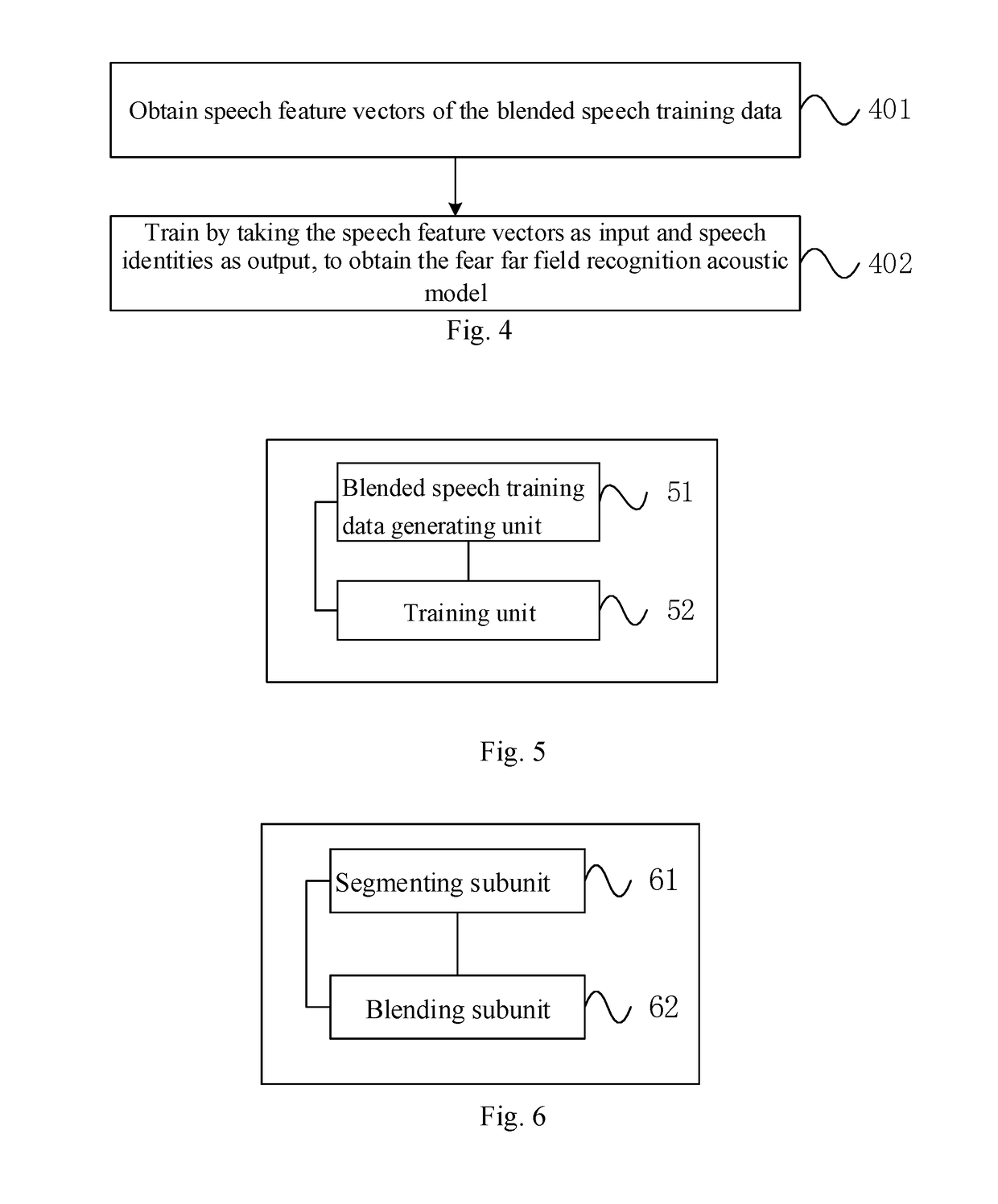

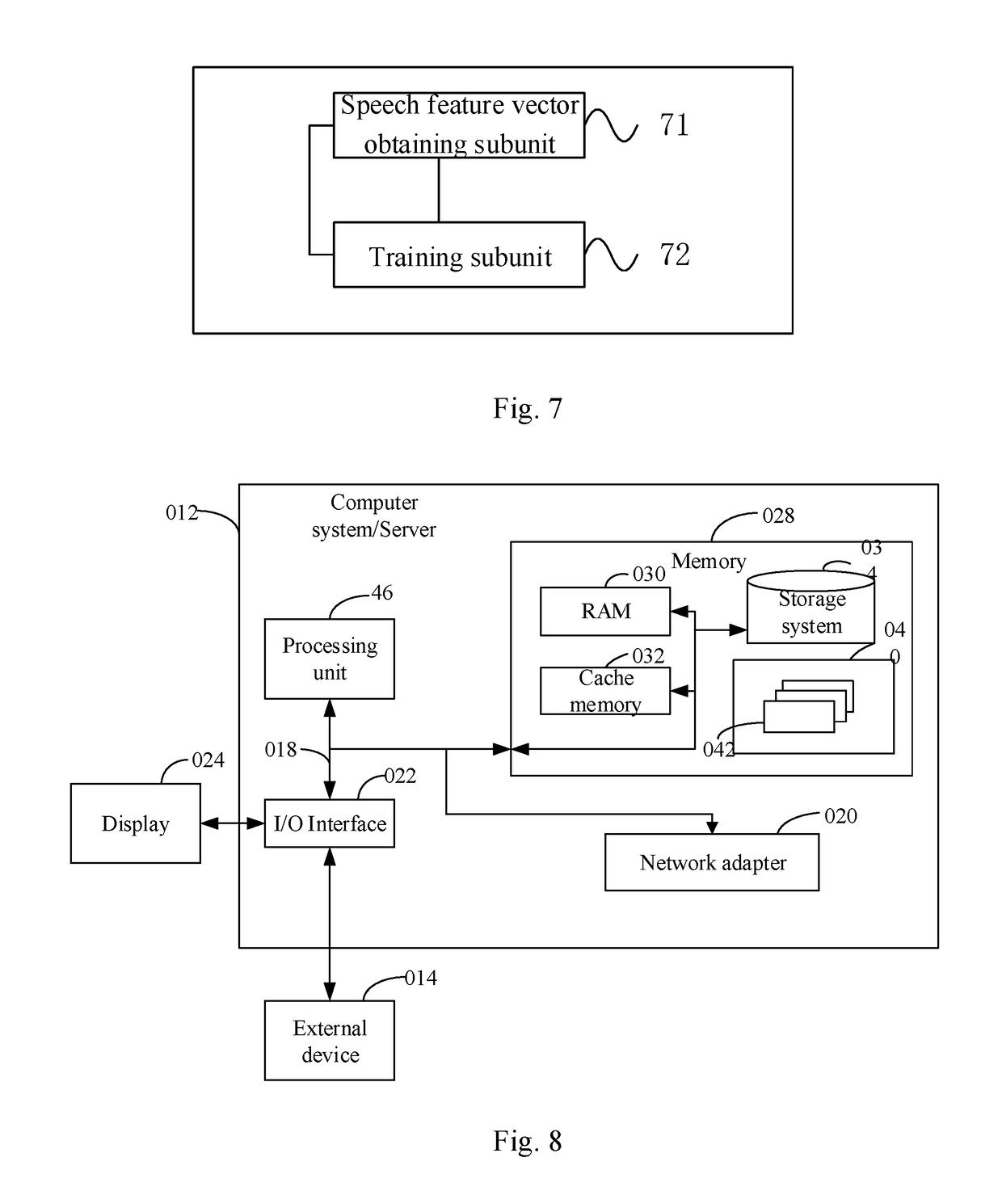

Inactive | US20190043482A1 | Shorten the time; Low cost; Speech recognition; Neural learning methods; Speech training; Acoustic model

The present disclosure provides a far-field speech acoustic model training method and system. The method comprises: blending near-field speech training data with far-field speech training data to generate blended speech training data, where the far-field speech training data is obtained by applying data augmentation to the near-field speech training data; and using the blended speech training data to train a deep neural network to generate a far-field recognition acoustic model. The disclosure avoids the large time and economic costs of recording far-field speech data in the prior art, reduces the cost of obtaining far-field speech data, and improves far-field speech recognition.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD
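
The abstract only says that far-field data is produced by data augmentation of near-field data. A common way to do this, assumed here rather than taken from the patent, is to convolve the near-field signal with a room impulse response and add noise at a chosen signal-to-noise ratio, then pool the results with the original near-field data. All function names are hypothetical.

```python
import math
import random
from typing import List, Sequence


def convolve(signal: Sequence[float], rir: Sequence[float]) -> List[float]:
    """Linear convolution with a room impulse response, truncated to the input length."""
    return [sum(rir[k] * signal[n - k] for k in range(min(len(rir), n + 1)))
            for n in range(len(signal))]


def add_noise(signal: Sequence[float], noise: Sequence[float], snr_db: float) -> List[float]:
    """Scale `noise` so that signal power / noise power matches `snr_db`, then add it."""
    if len(noise) < len(signal):
        raise ValueError("noise must be at least as long as the signal")
    noise = noise[:len(signal)]
    p_signal = sum(x * x for x in signal) / len(signal)
    p_noise = sum(x * x for x in noise) / len(signal)
    if p_noise == 0.0:
        raise ValueError("noise must not be silent")
    scale = math.sqrt(p_signal / (p_noise * 10 ** (snr_db / 10)))
    return [s + scale * v for s, v in zip(signal, noise)]


def simulate_far_field(near: Sequence[float], rir: Sequence[float],
                       noise: Sequence[float], snr_db: float) -> List[float]:
    return add_noise(convolve(near, rir), noise, snr_db)


def blend(near_set: list, far_set: list, seed: int = 0) -> list:
    """Pool near-field and simulated far-field utterances and shuffle them."""
    mixed = list(near_set) + list(far_set)
    random.Random(seed).shuffle(mixed)
    return mixed


rng = random.Random(1)
near = [math.sin(0.1 * i) for i in range(200)]
noise = [rng.gauss(0.0, 1.0) for _ in range(200)]
far = simulate_far_field(near, [1.0, 0.0, 0.3], noise, snr_db=10.0)
training_set = blend([near], [far])
```

The SNR is measured against the reverberant signal rather than the dry near-field signal, which is one of several reasonable conventions.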

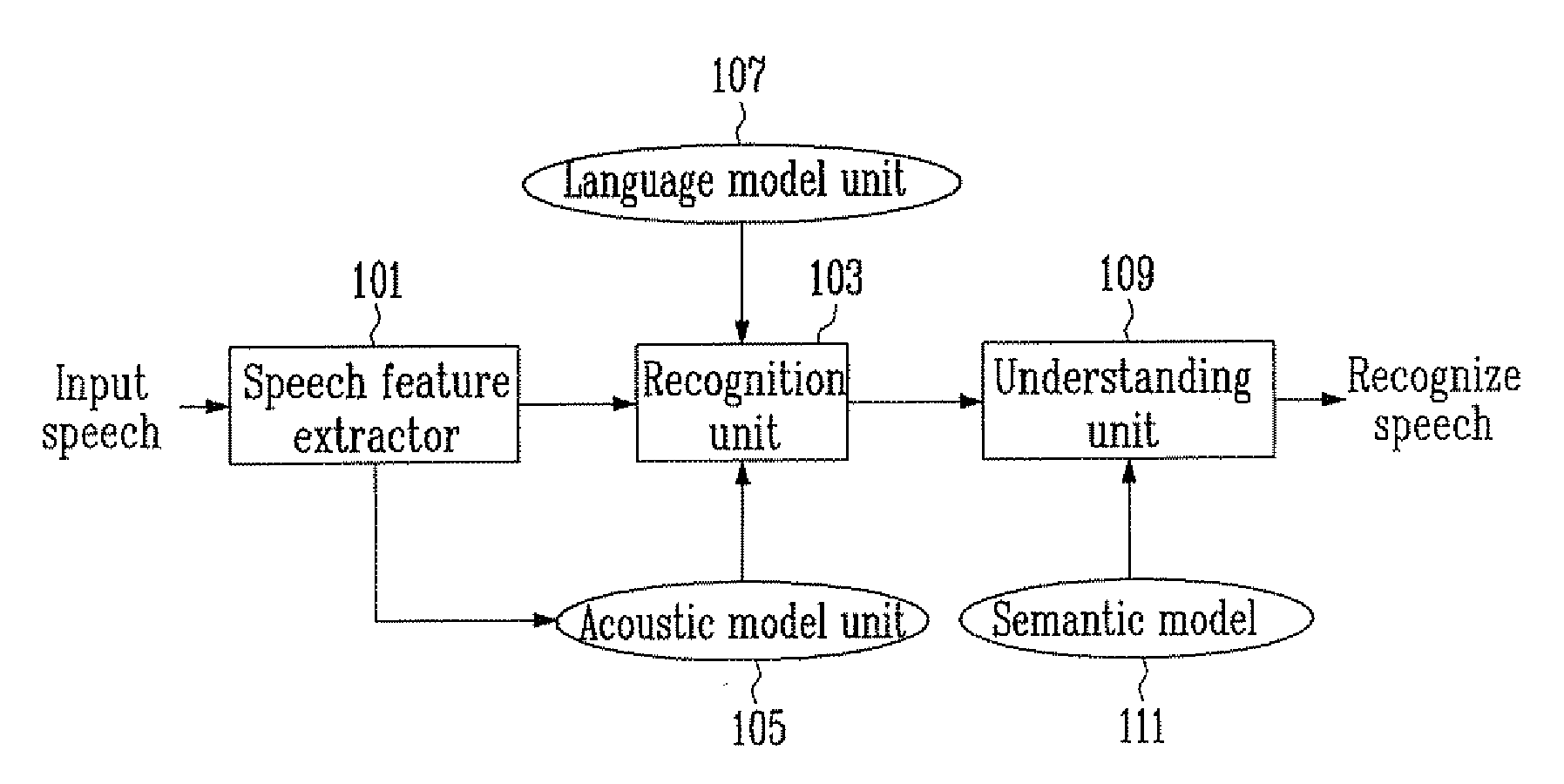

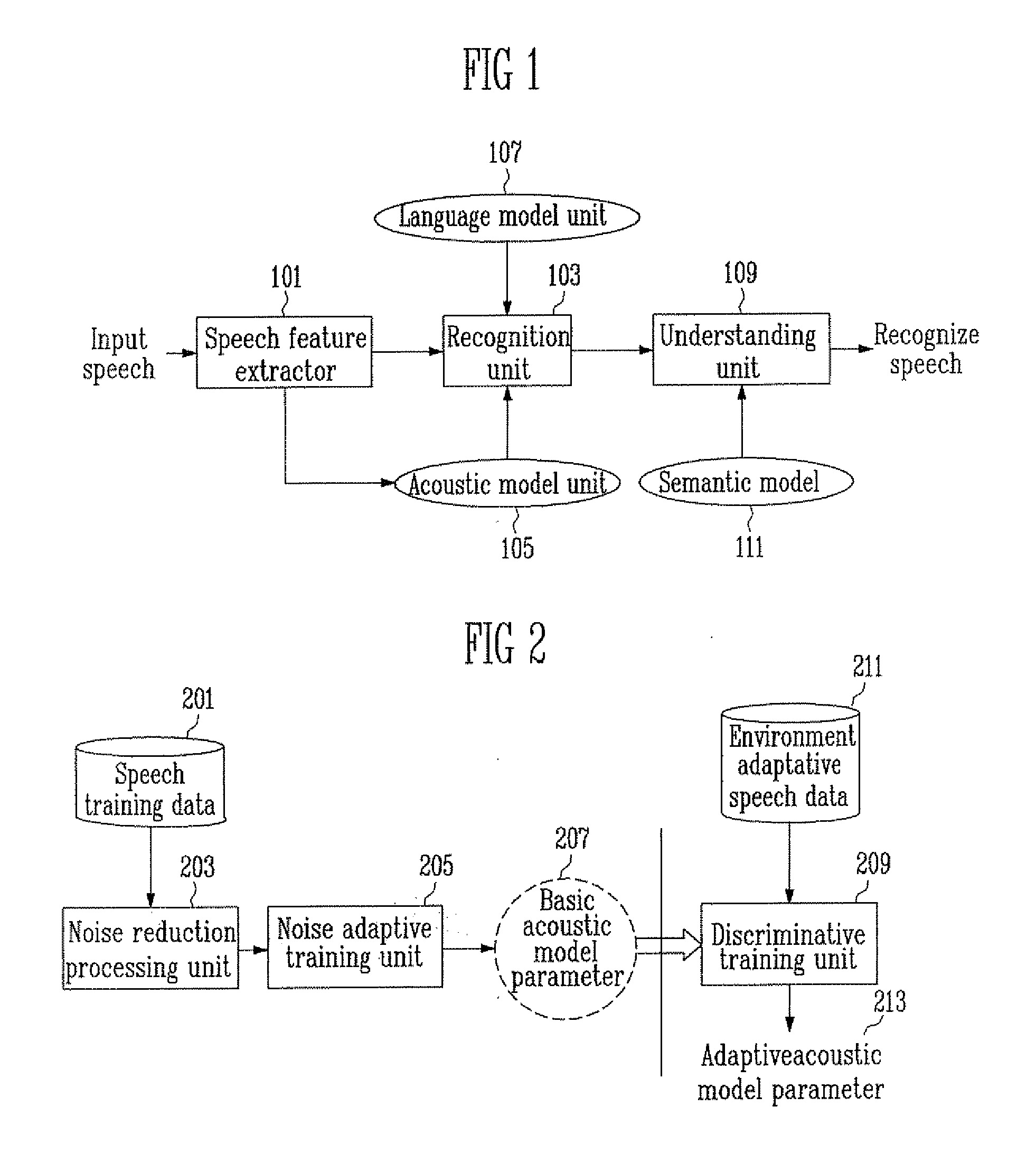

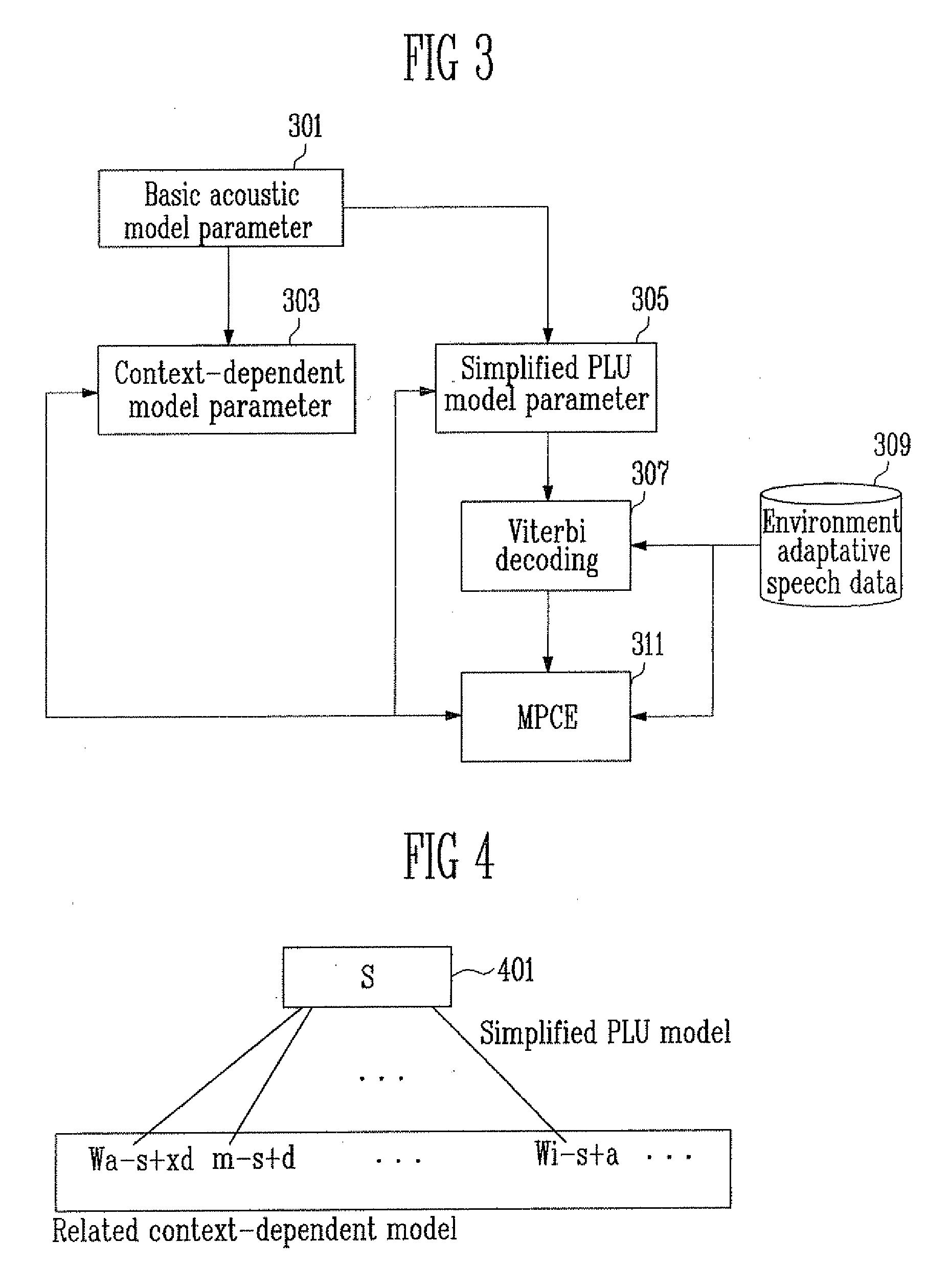

Apparatus and method for generating noise adaptive acoustic model for environment migration including noise adaptive discriminative adaptation method

Inactive | US20090055177A1 | Improve speech recognition performance; Improve performance; Speech recognition; Speech training; Pattern recognition

Provided are an apparatus and method for generating a noise-adaptive acoustic model using a noise-adaptive discriminative adaptation method. The method includes: generating baseline model parameters from large-capacity speech training data covering various noise environments; and applying a discriminative adaptation method to the generated baseline model parameters to generate migrated acoustic model parameters suited to the environment in which the model is actually used.

Owner:ELECTRONICS & TELECOMM RES INST

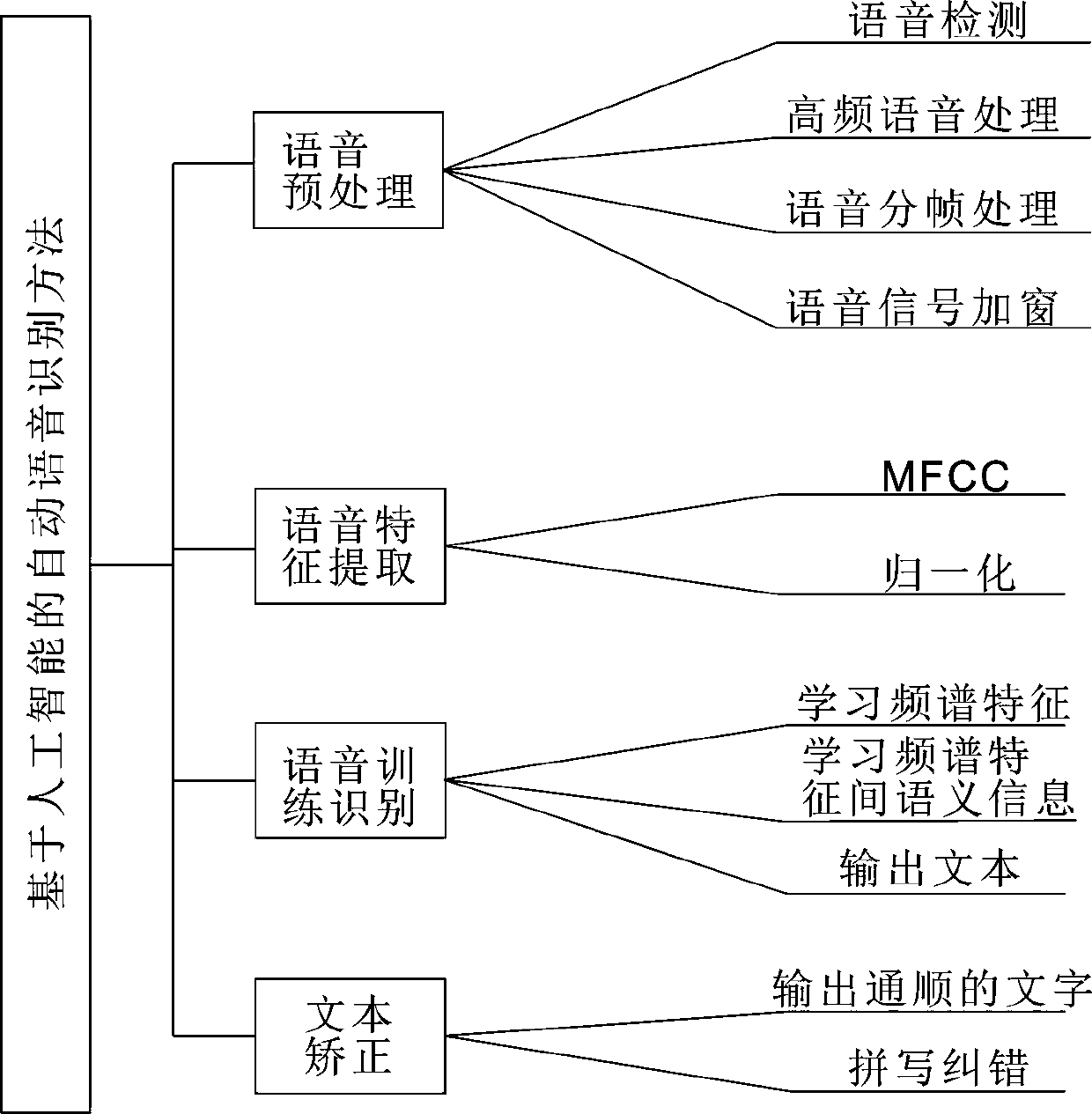

Automatic speech recognition method and automatic speech recognition system based on artificial intelligence

Active | CN110827801A | High quality parameters; Improve speech processing quality; Speech recognition; Frequency spectrum; Recognition system

The invention discloses an automatic speech recognition method and system based on artificial intelligence. The system mainly comprises four modules: a speech preprocessing module, a speech feature extraction module, a speech training recognition module and a text correction module. The speech training recognition module learns speech features and the character codes corresponding to the speech: a feature learning layer first performs convolutional learning on spectral features, a semantic learning layer then learns the semantic information among the spectral features, and an output layer finally decodes the combined learned information and outputs the corresponding text. Because labels are encoded and decoded directly with a Chinese character mapping table, no phoneme-level encoding and decoding of the text is needed, which simplifies training.

Owner:成都无糖信息技术有限公司 (Chengdu Wutang Information Technology Co., Ltd.)
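
A character mapping table of the kind described lets transcripts be turned into integer label sequences and back without any phoneme step. A minimal sketch (function names are hypothetical; a real system would also reserve symbols such as padding or blank labels):

```python
from typing import Dict, Iterable, List, Tuple


def build_char_table(transcripts: Iterable[str]) -> Tuple[Dict[str, int], List[str]]:
    """Assign one integer id per distinct Chinese character in the training transcripts."""
    id_to_char = sorted({ch for text in transcripts for ch in text})
    char_to_id = {ch: i for i, ch in enumerate(id_to_char)}
    return char_to_id, id_to_char


def encode(text: str, char_to_id: Dict[str, int]) -> List[int]:
    """Turn a transcript into the integer labels used as training targets."""
    return [char_to_id[ch] for ch in text]


def decode(ids: List[int], id_to_char: List[str]) -> str:
    """Turn predicted label ids back into text."""
    return "".join(id_to_char[i] for i in ids)


char_to_id, id_to_char = build_char_table(["打开电视", "关闭电视"])
labels = encode("关闭电视", char_to_id)
```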

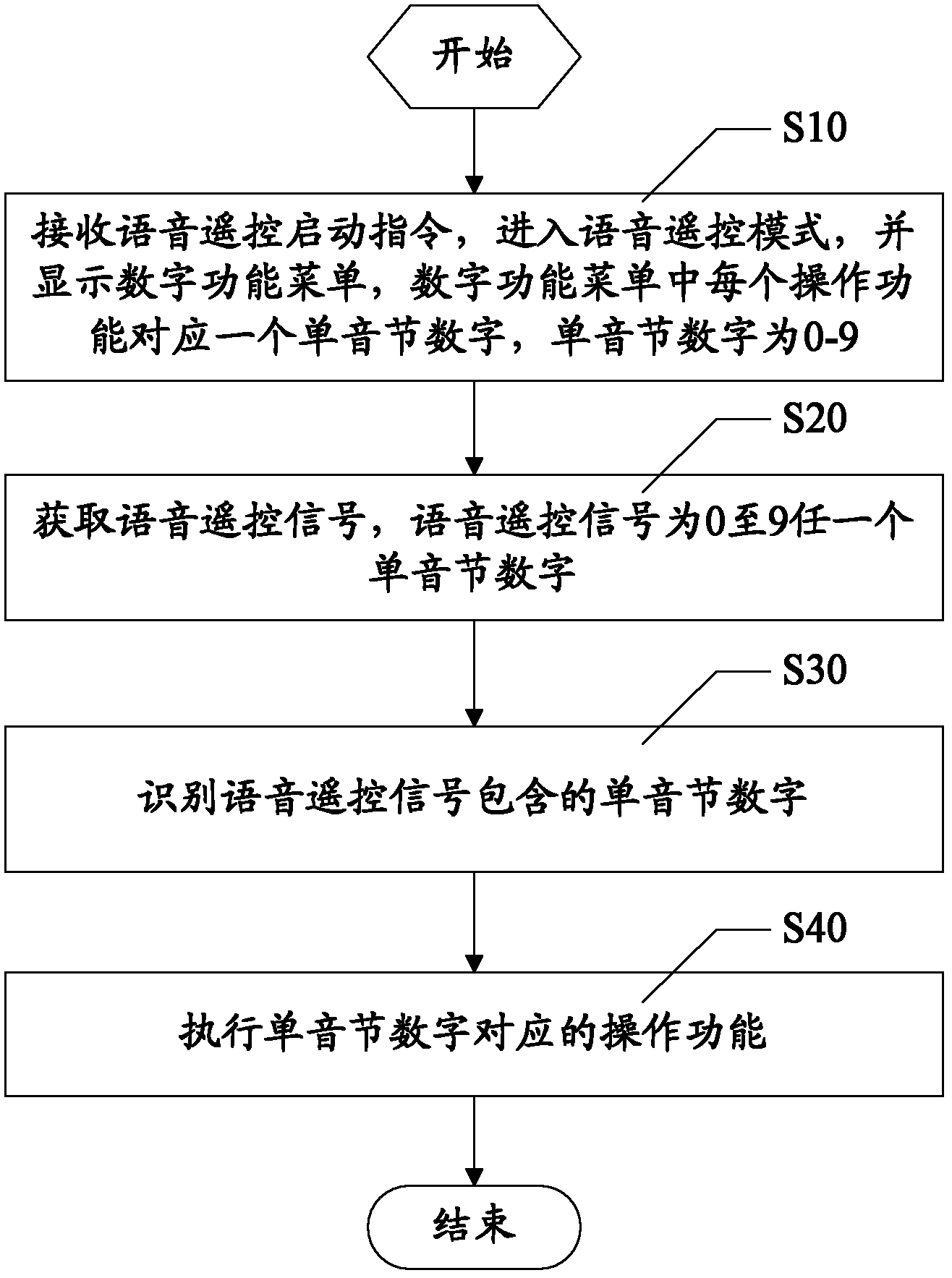

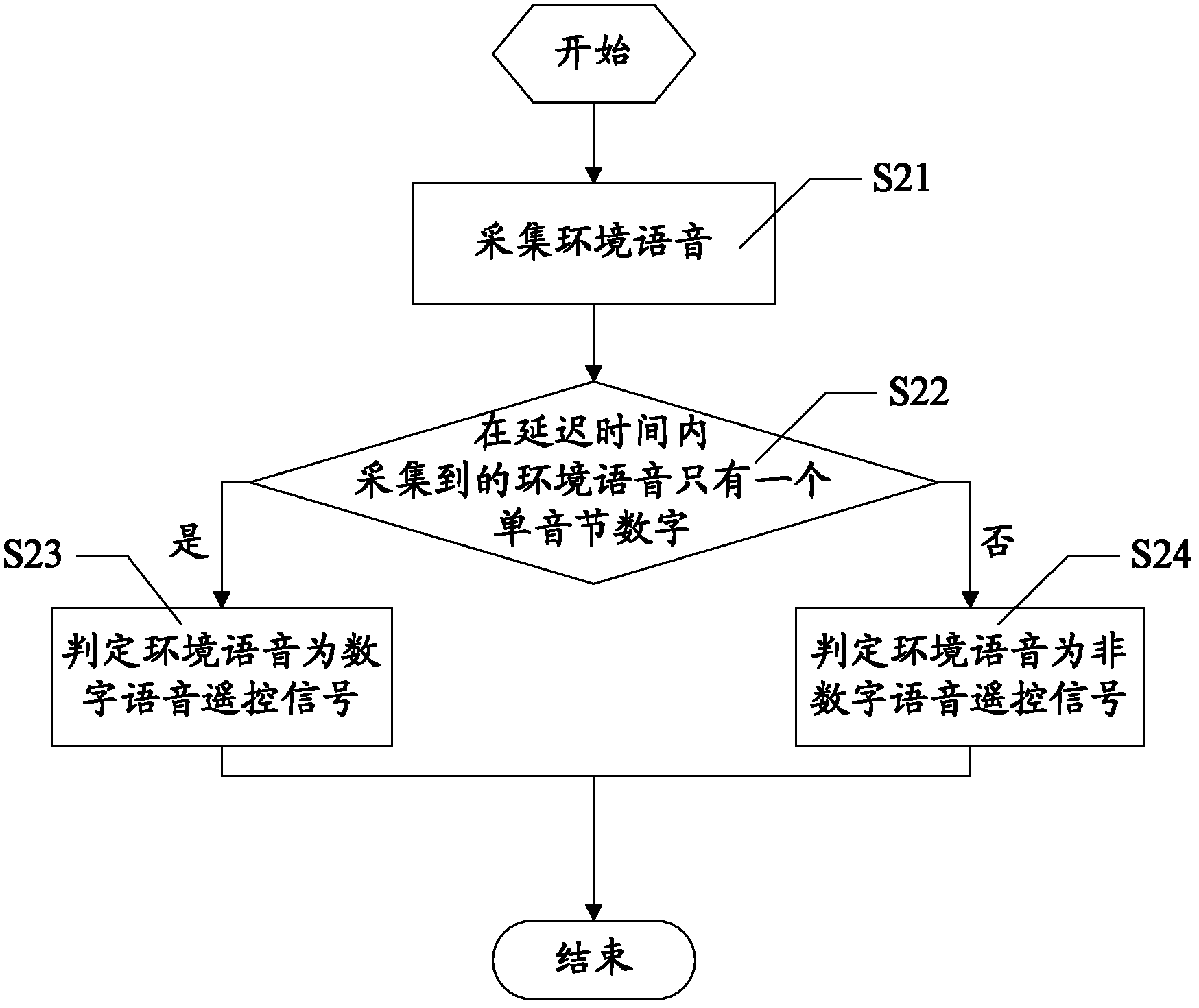

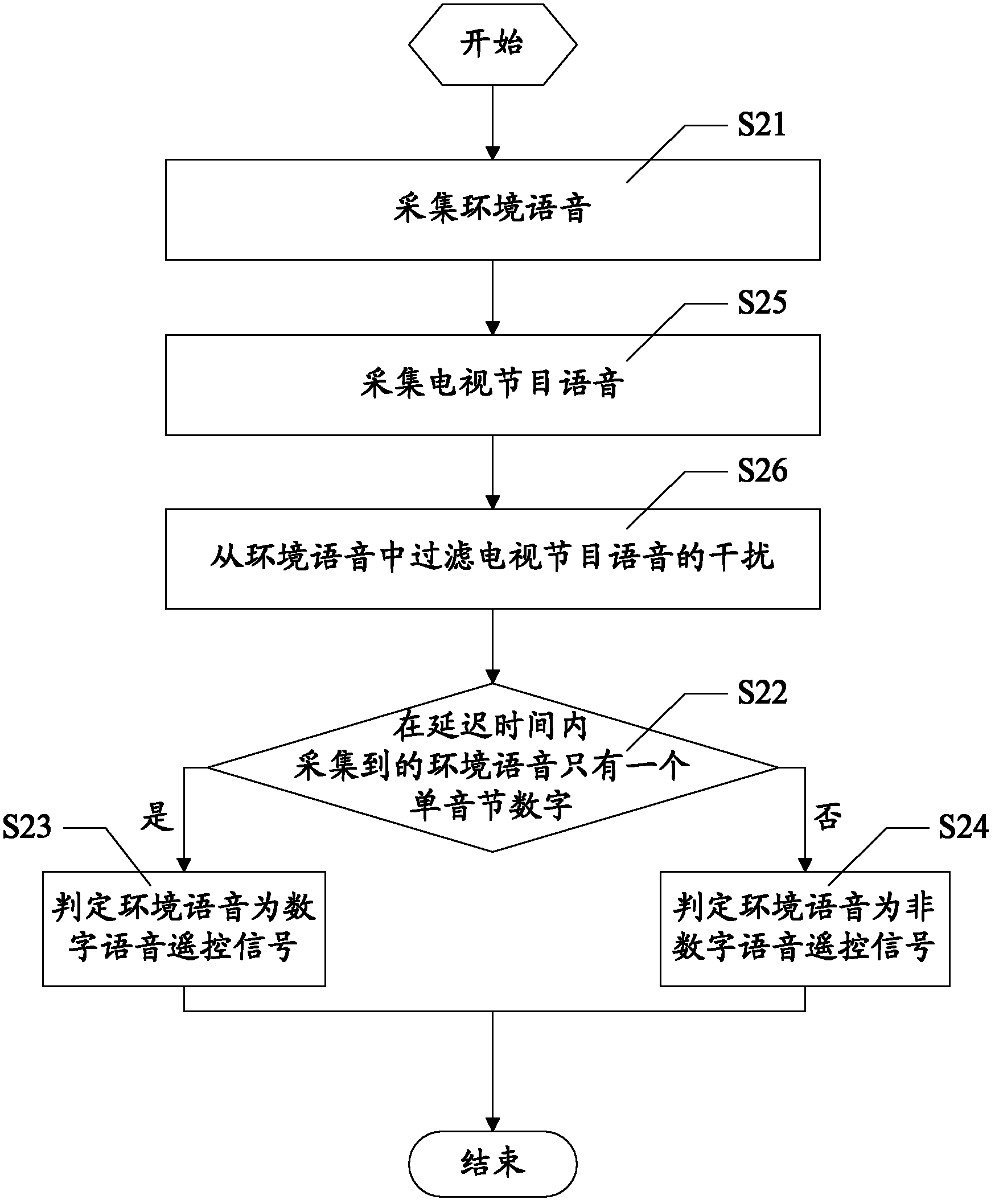

Speech remote control method for a television, and television

Active | CN102404522A | Easy to identify; Improve work efficiency; Television system details; Non-electrical signal transmission systems; Speech training; Repeated speech

The invention discloses a speech remote control method for televisions, and a television. The method comprises: receiving a command that activates speech remote control, entering speech remote control mode, and displaying a digital function menu in which each operating function corresponds to a monosyllabic digit from 0 to 9; obtaining a speech remote control signal consisting of any monosyllabic digit from 0 to 9; identifying the digit contained in the signal; and executing the operating function corresponding to that digit. Because very simple monosyllabic digits serve as the speech remote control signals, the speech commands are easy to identify, which speeds up speech recognition and processing without repeated speech training on every user's commands; this reduces the speech training workload and improves the efficiency of speech remote control.

Owner:TCL KING ELECTRICAL APPLIANCES HUIZHOU

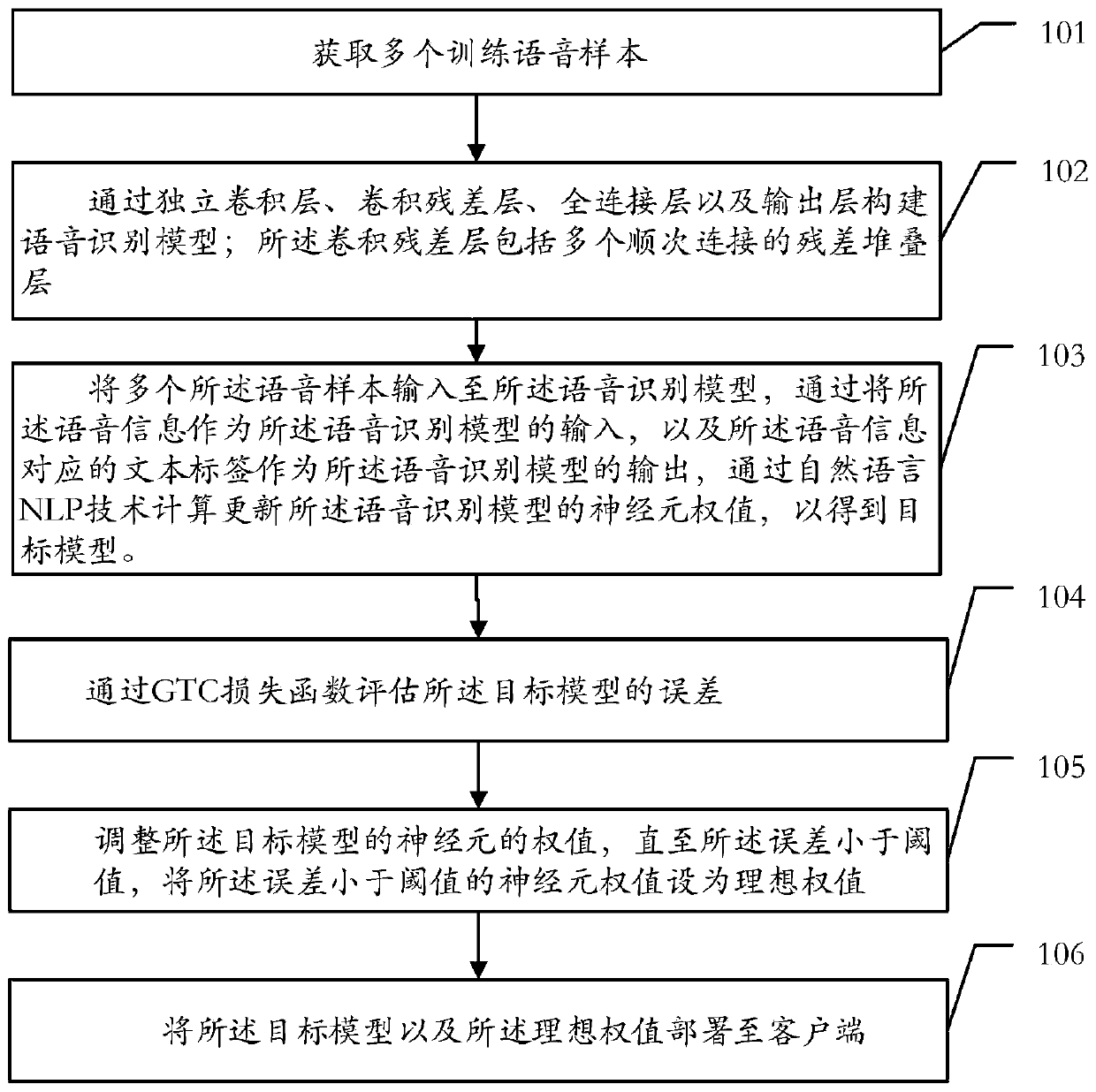

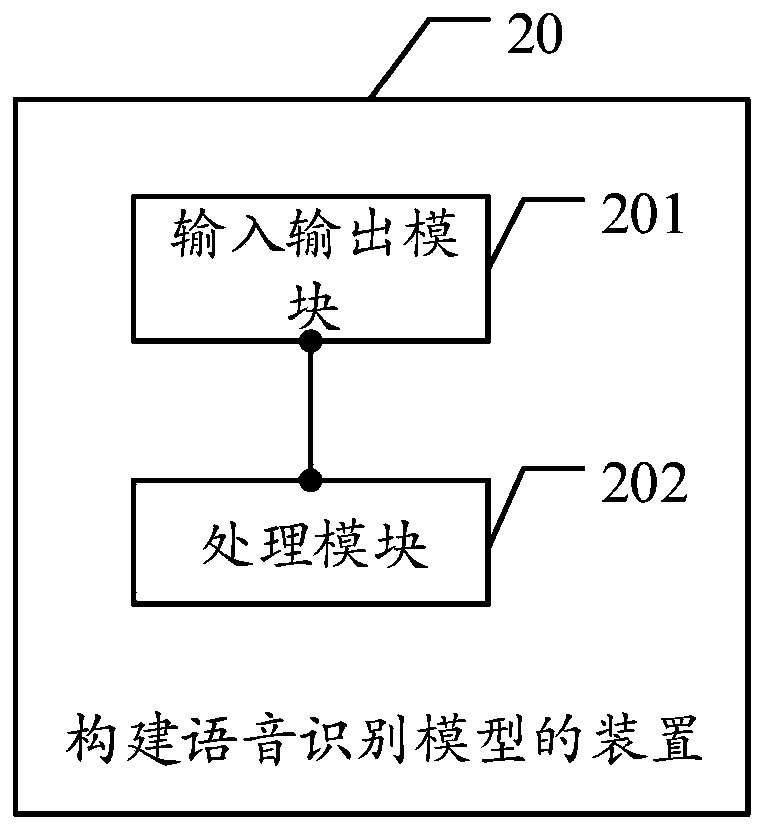

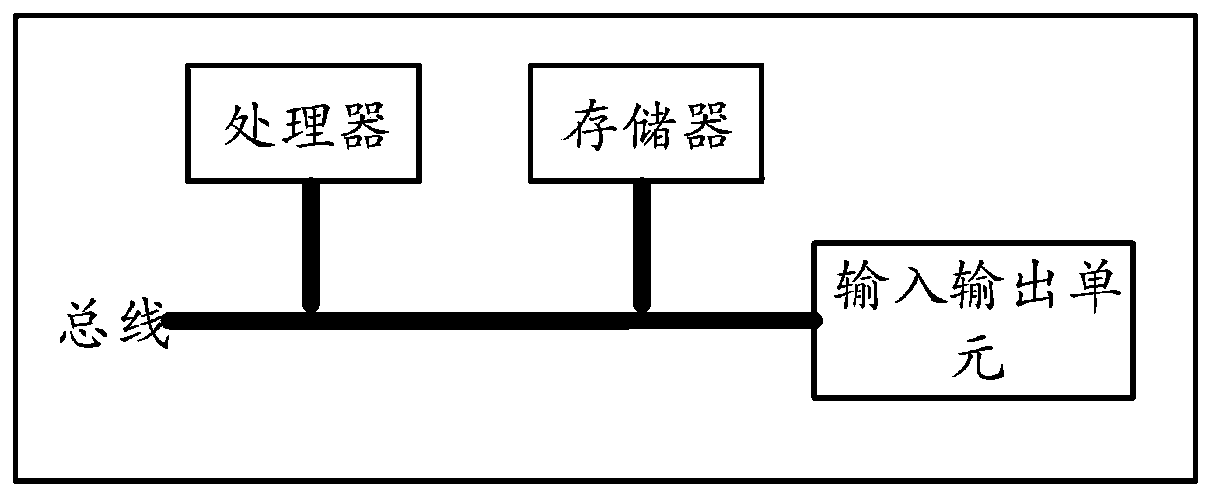

Method, device and equipment for constructing speech recognition model and storage medium

Pending | CN110751944A | Improve utilization efficiency; Improve accuracy; Speech recognition; Speech training; Engineering

The present invention relates to the field of artificial intelligence and provides a method, device and equipment for constructing a speech recognition model, and a storage medium. The method comprises: acquiring a plurality of training speech samples; constructing the speech recognition model from an independent convolution layer, a convolution residual layer, a fully connected layer and an output layer; inputting speech training information into the model and updating the neuron weights with the speech information and its corresponding text labels through natural language processing (NLP) techniques to obtain a target model; evaluating the error of the target model as $L(S) = -\ln \prod_{(h(x),z)\in S} p(z \mid h(x)) = -\sum_{(h(x),z)\in S} \ln p(z \mid h(x))$; adjusting the neuron weights of the target model until the error is below a threshold; setting the weights at which the error falls below the threshold as the ideal weights; and deploying the target model with the ideal weights on a client. The method reduces the influence of tone in the speech information on the predicted text and reduces the computational burden of the recognition process.

Owner:PING AN TECH (SHENZHEN) CO LTD
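
The error formula is a sum of negative log-probabilities over the training pairs in $S$; writing it as a sum rather than the logarithm of a product avoids numerical underflow when many small probabilities are multiplied. A minimal sketch, assuming the model has already produced $p(z \mid h(x))$ for each pair (`sequence_nll` is a hypothetical name):

```python
import math
from typing import Sequence


def sequence_nll(probabilities: Sequence[float]) -> float:
    """L(S) = -sum over (h(x), z) in S of ln p(z | h(x))."""
    if any(not 0.0 < p <= 1.0 for p in probabilities):
        raise ValueError("each p(z | h(x)) must lie in (0, 1]")
    return -sum(math.log(p) for p in probabilities)


# Three hypothetical training pairs with model probabilities 0.5, 0.25 and 0.8.
probs = [0.5, 0.25, 0.8]
loss = sequence_nll(probs)
# Same value as the product form -ln(0.5 * 0.25 * 0.8) = -ln(0.1), up to rounding.
```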

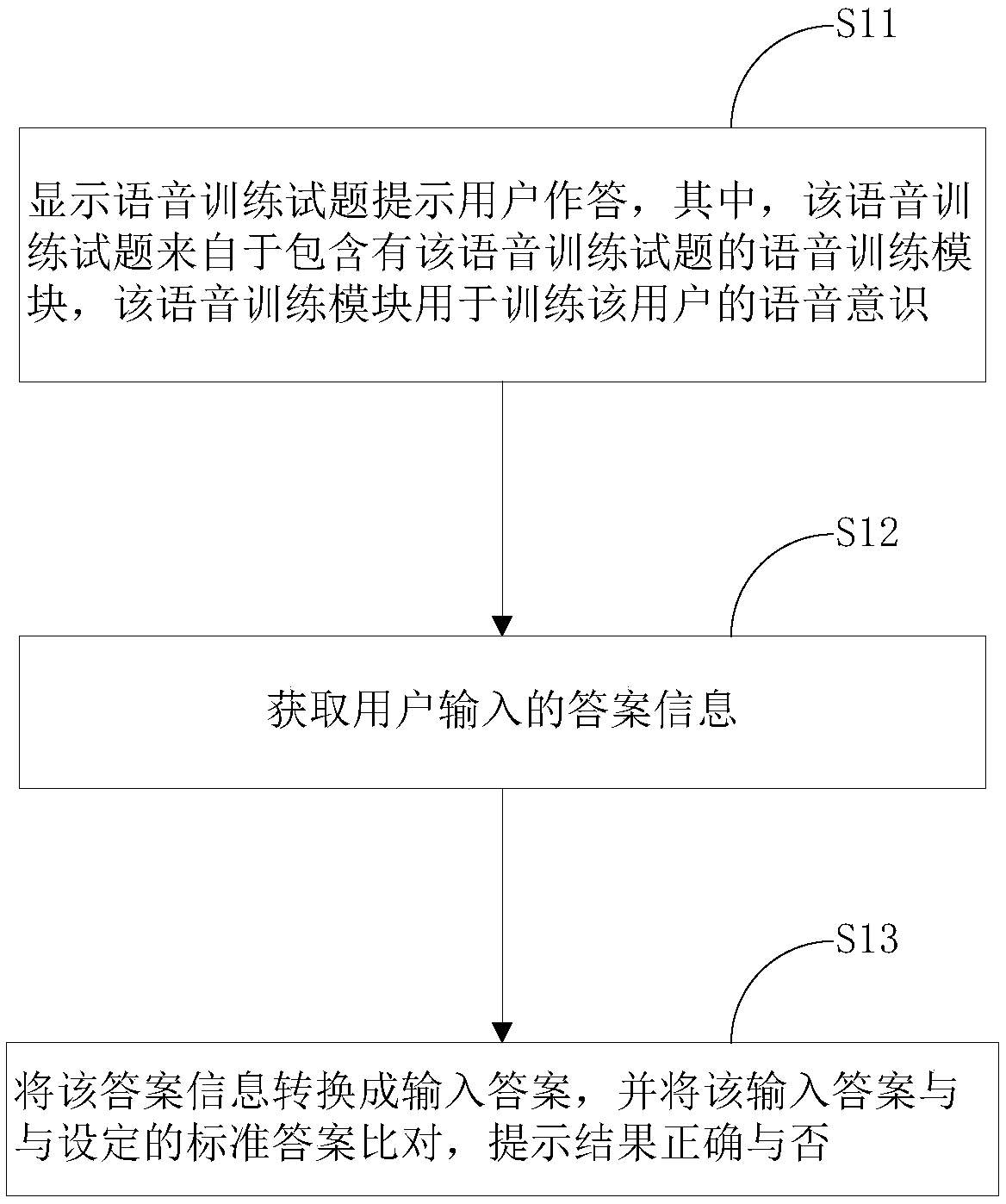

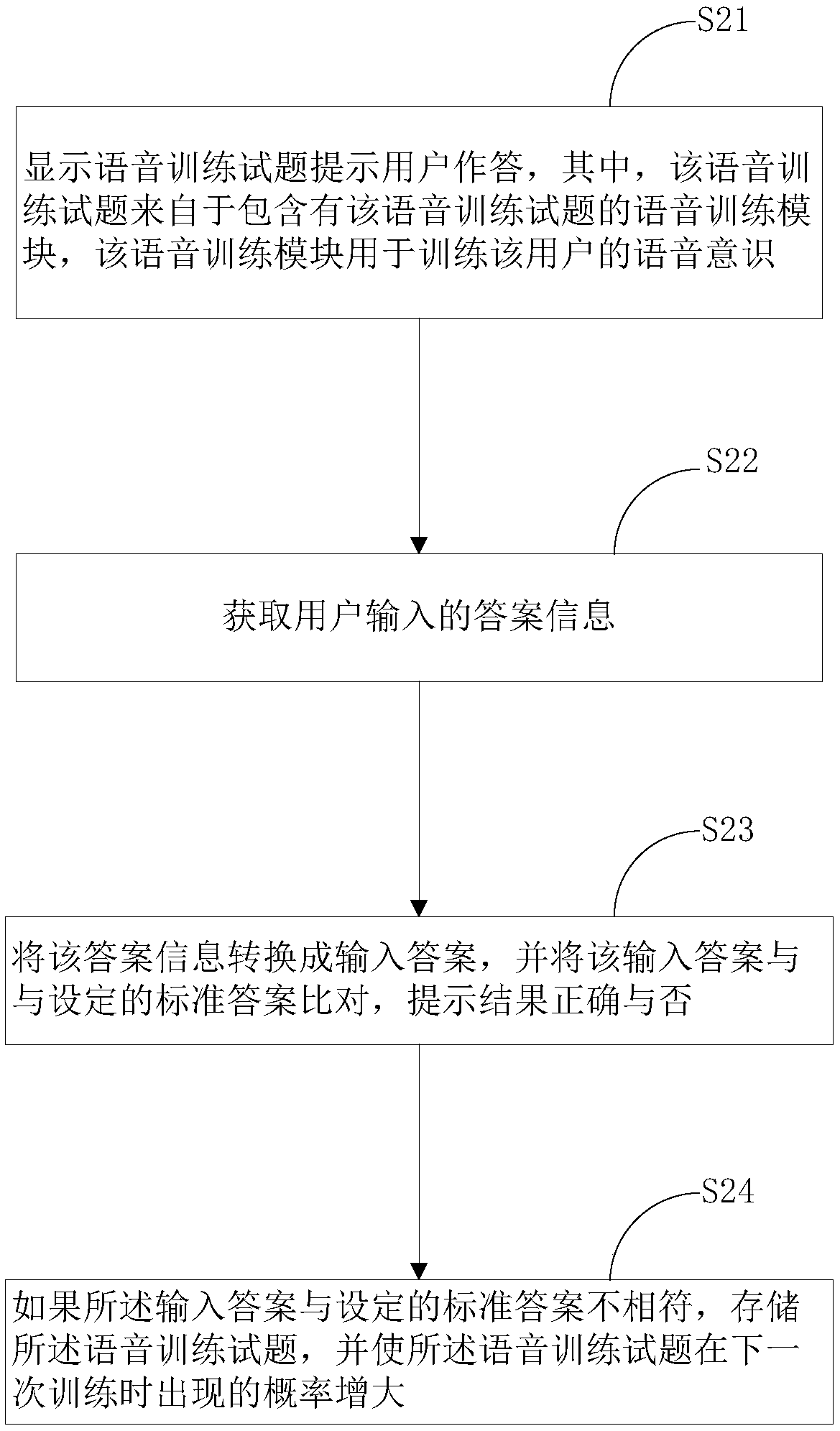

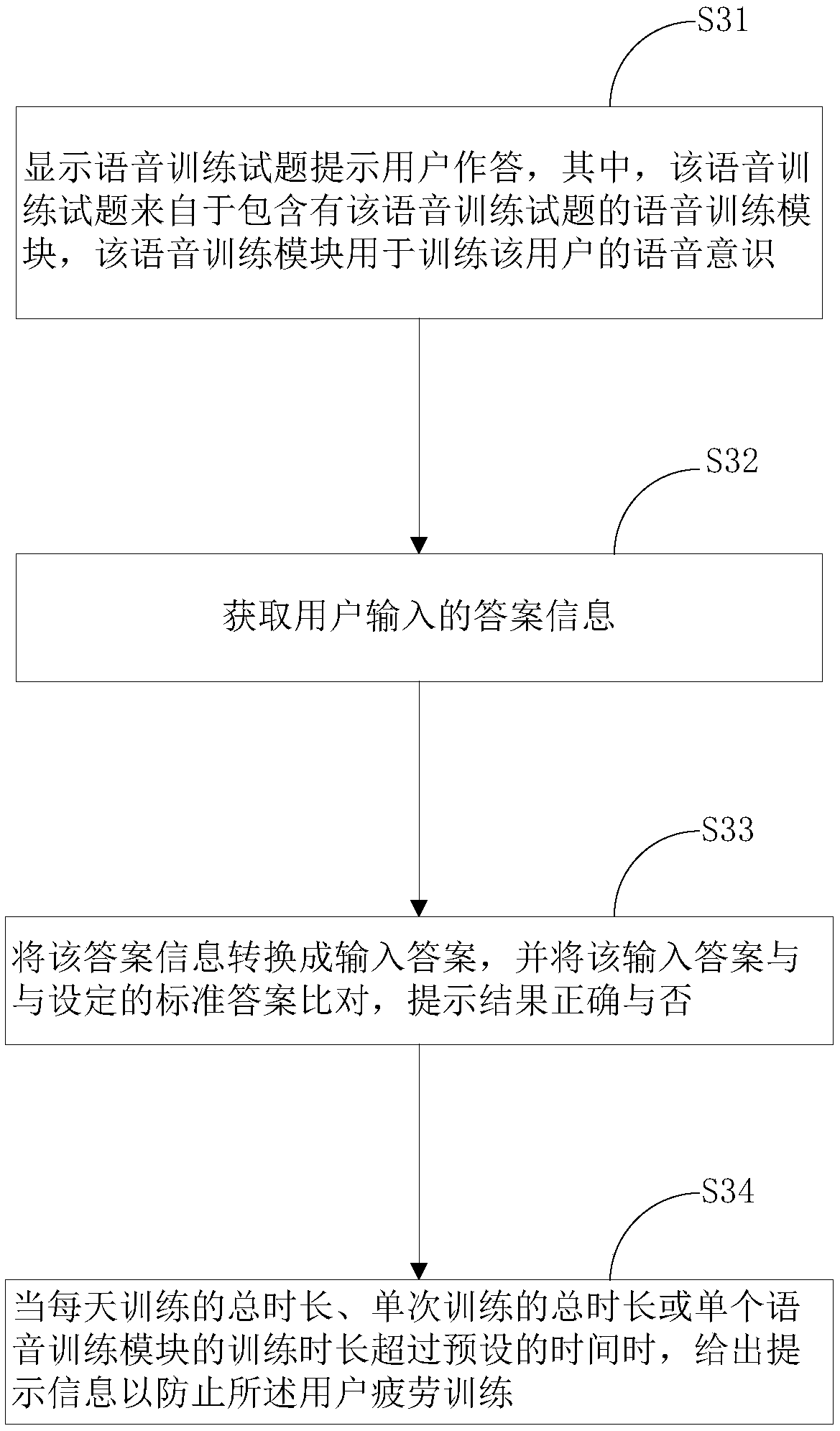

Method and system for Chinese speech training

Inactive | CN109410937A | Improve pronunciation; Easy to identify; Data processing applications; Digital data information retrieval; Speech training; Software system

The invention discloses a method and a system for Chinese speech training. The method comprises: displaying speech training test questions and prompting the user to answer; obtaining the answer information input by the user; converting the answer information into an input answer, comparing it with a preset standard answer, and indicating whether the result is correct. The test questions come from a speech training module that contains them and is used to train the user's phonological awareness. These highly targeted test questions are used for human-computer interactive speech training. The method and system address the lack, in the prior art, of a training method and software system that can effectively improve phonological awareness; they help improve the user's phonological awareness and thereby further improve the user's Chinese reading ability.

Owner:SHENZHEN INST OF NEUROSCIENCE

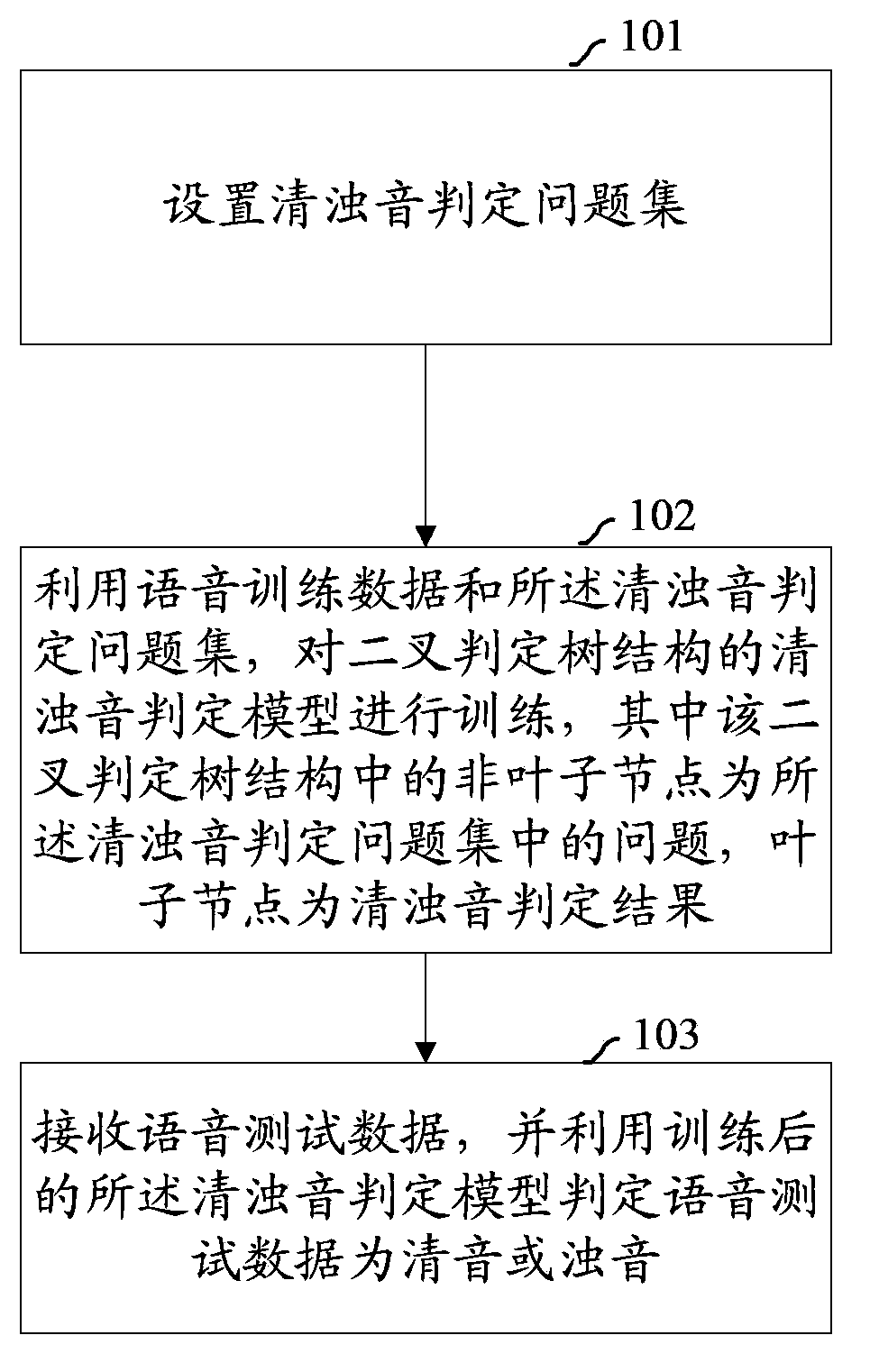

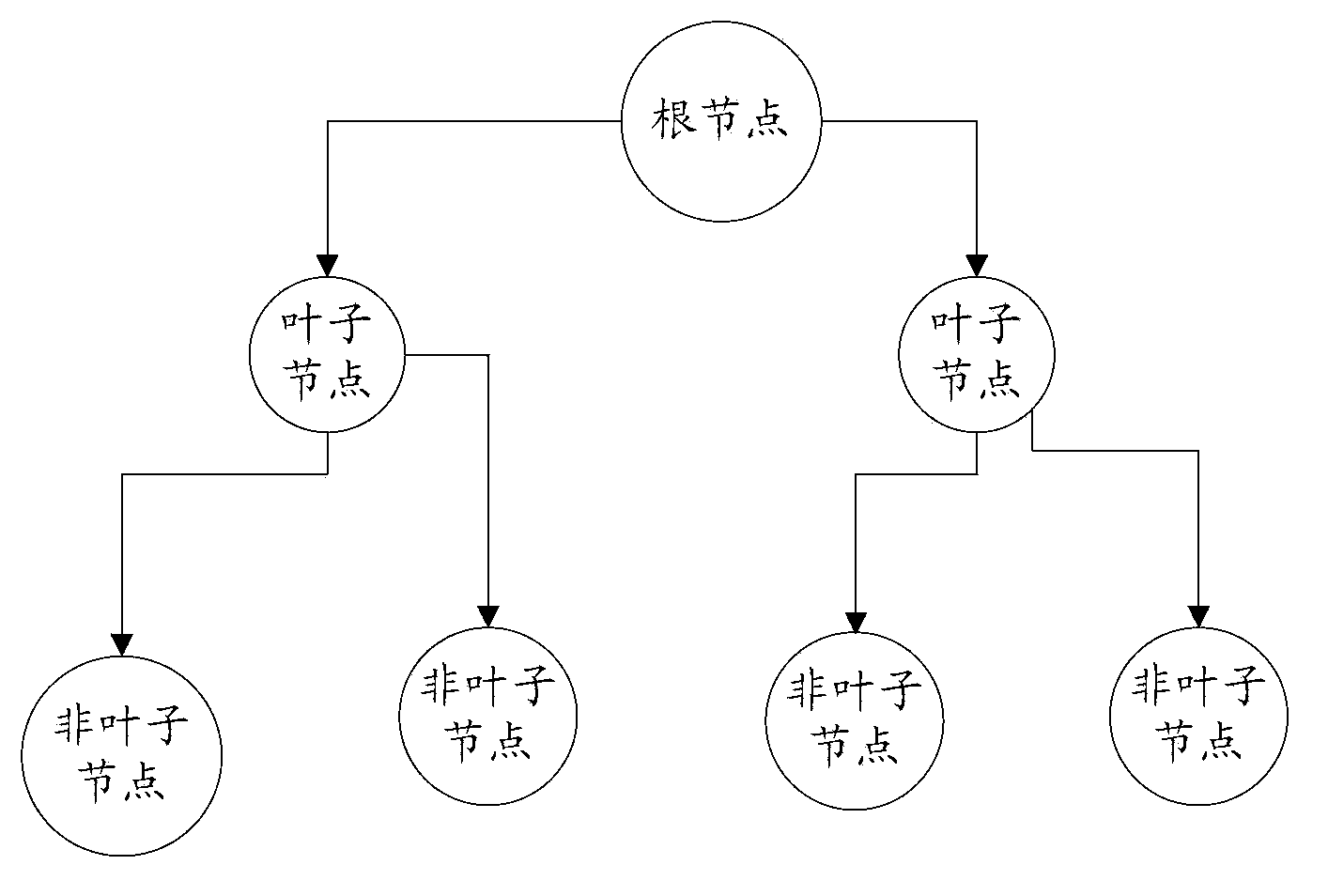

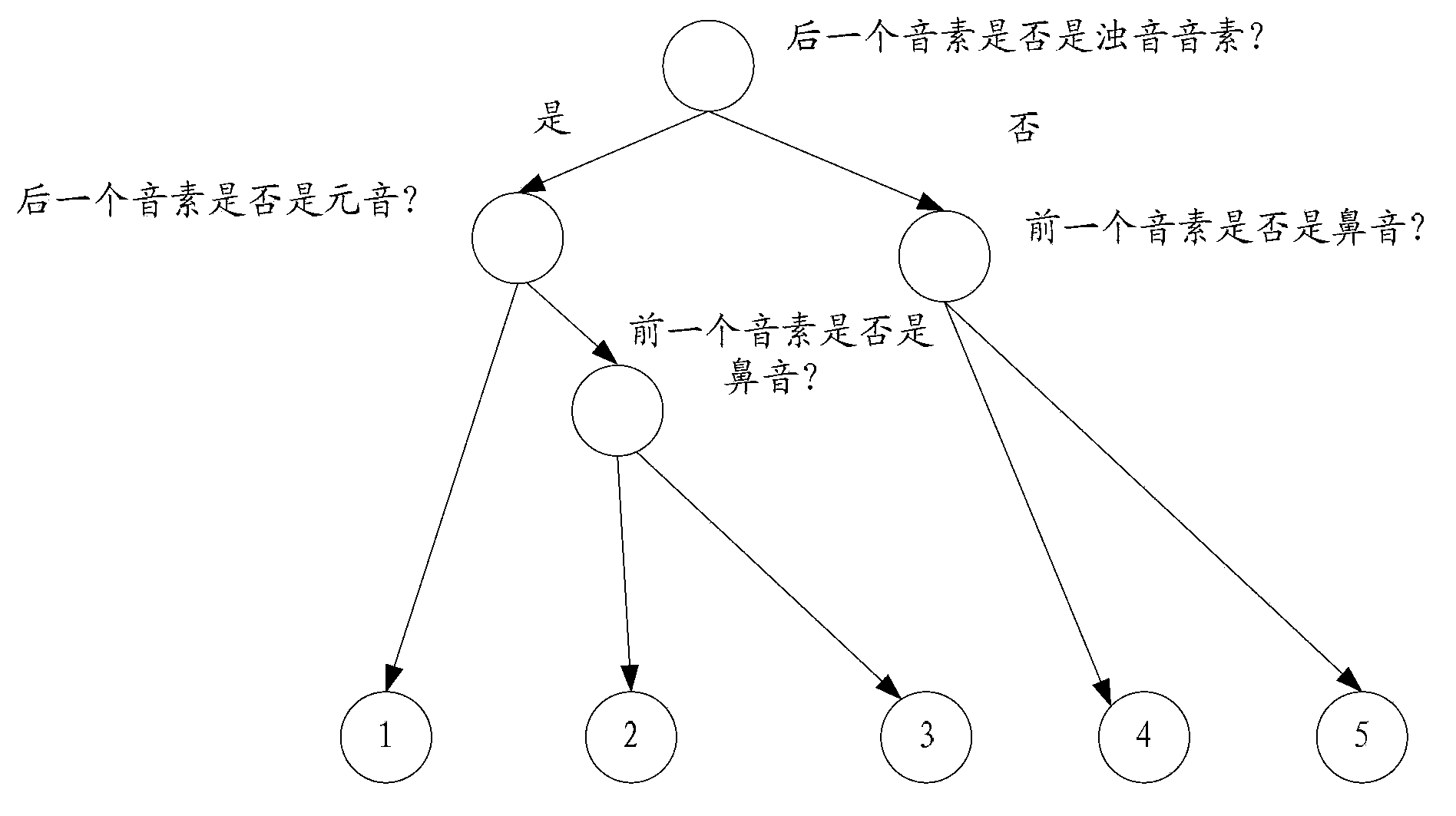

Voiceless sound and voiced sound judging method and device and voice synthesizing system

Active | CN104143342A | Improve the success rate of judgment; Solve the defect of low synthesis effect; Speech synthesis; Speech training

The embodiment of the invention provides a voiceless/voiced sound judging method and device and a speech synthesis system. The method comprises: setting up a voiceless/voiced judging question set; using speech training data and the question set to train a voiceless/voiced judging model with a binary decision tree structure; and receiving voice test data and using the trained model to judge whether the test data is voiceless or voiced. The non-leaf nodes of the binary decision tree are questions from the question set, and the leaf nodes are voiceless/voiced judging results. The method improves the success rate of voiceless/voiced judgments and the quality of speech synthesis.

Owner:TENCENT TECH (SHENZHEN) CO LTD +1
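
At decision time, a binary decision tree of this kind is walked from the root: each non-leaf node asks one question from the question set about the current context, and each leaf holds a voiceless/voiced result. The sketch below shows only that traversal; the two questions and the phone sets are hypothetical, and training the tree from speech data is not shown.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Union

Context = Dict[str, str]


@dataclass
class Leaf:
    voiced: bool                          # the voiceless/voiced result stored at a leaf


@dataclass
class Node:
    question: Callable[[Context], bool]   # one question from the question set
    yes: "Tree"
    no: "Tree"


Tree = Union[Leaf, Node]


def is_voiced(tree: Tree, context: Context) -> bool:
    """Walk from the root, answering one question per non-leaf node, until a leaf."""
    while isinstance(tree, Node):
        tree = tree.yes if tree.question(context) else tree.no
    return tree.voiced


# Hypothetical question set and phone classes, for illustration only.
VOWELS = {"a", "o", "e", "i", "u"}
VOICED_CONSONANTS = {"m", "n", "l", "r"}

tree = Node(
    question=lambda c: c["phone"] in VOWELS,
    yes=Leaf(voiced=True),
    no=Node(
        question=lambda c: c["phone"] in VOICED_CONSONANTS,
        yes=Leaf(voiced=True),
        no=Leaf(voiced=False),
    ),
)
```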

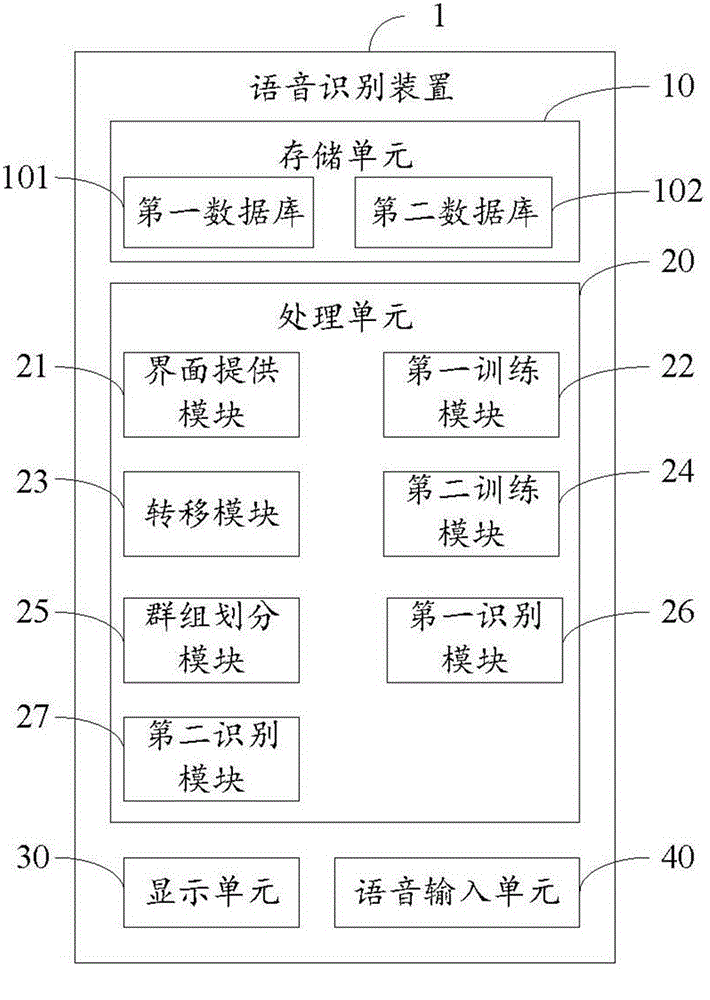

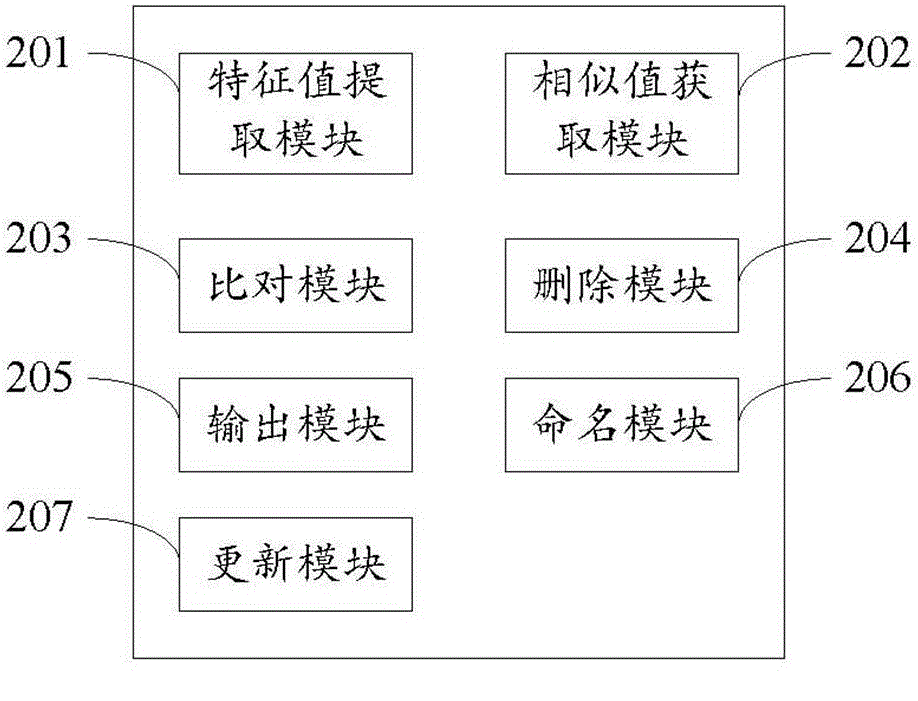

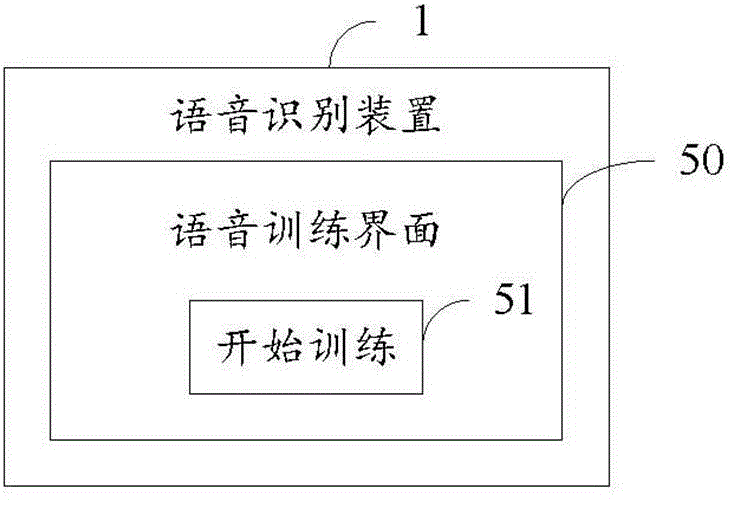

Speech recognition device and speech recognition method

Active | CN106297775A | Shorten the time; Speech recognition; Special data processing applications; Speech training; Small data

The invention provides a speech recognition device and a speech recognition method. The device comprises a storage unit and a processing unit. The storage unit stores a first database and a second database. The first database stores a preset number of speech segments, a feature value for each segment, and the mean speech feature values of various users; the second database stores historical speech data. The processing unit comprises a first training module, a transfer module and a second training module. When a speech segment is newly stored in the first database, speech training is performed on all segments in it, including the new one. When that training is finished, the earliest stored segment in the first database is transferred to the second database, after which speech training is performed on all speech in the second database. Because speech training is carried out on the small first database, the time spent on speech training is reduced.

Owner:FU TAI HUA IND SHENZHEN +1
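The two-database scheme can be sketched as follows, under loud assumptions: each speech segment is reduced to a single feature value, "training" is stood in for by computing a mean, and per-user feature means are not modeled. `TwoStageTrainer`, its attributes, and the capacity-triggered transfer (one reading of "preset number of sections") are hypothetical, not taken from the patent.

```python
from collections import deque
from statistics import fmean
from typing import Deque, List, Optional


class TwoStageTrainer:
    """Hypothetical sketch: a small first database retrained on every insert,
    and a second database that accumulates segments moved out of the first."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity                  # assumed "preset number" of segments
        self.first: Deque[float] = deque()        # one feature value per segment
        self.second: List[float] = []             # historical speech data
        self.first_model: Optional[float] = None  # stand-in "model": a mean
        self.second_model: Optional[float] = None

    def add_segment(self, feature: float) -> None:
        self.first.append(feature)
        # Train on all segments in the first database, including the new one.
        self.first_model = fmean(self.first)
        if len(self.first) > self.capacity:
            # Transfer the earliest stored segment to the second database,
            self.second.append(self.first.popleft())
            # then train on everything in the second database.
            self.second_model = fmean(self.second)


trainer = TwoStageTrainer(capacity=3)
for value in [1.0, 2.0, 3.0, 4.0, 5.0]:
    trainer.add_segment(value)
print(list(trainer.first), trainer.second)  # [3.0, 4.0, 5.0] [1.0, 2.0]
```

The retraining that runs on every insert touches only the small first database; older segments move into the second database, whose training runs only when a transfer happens.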

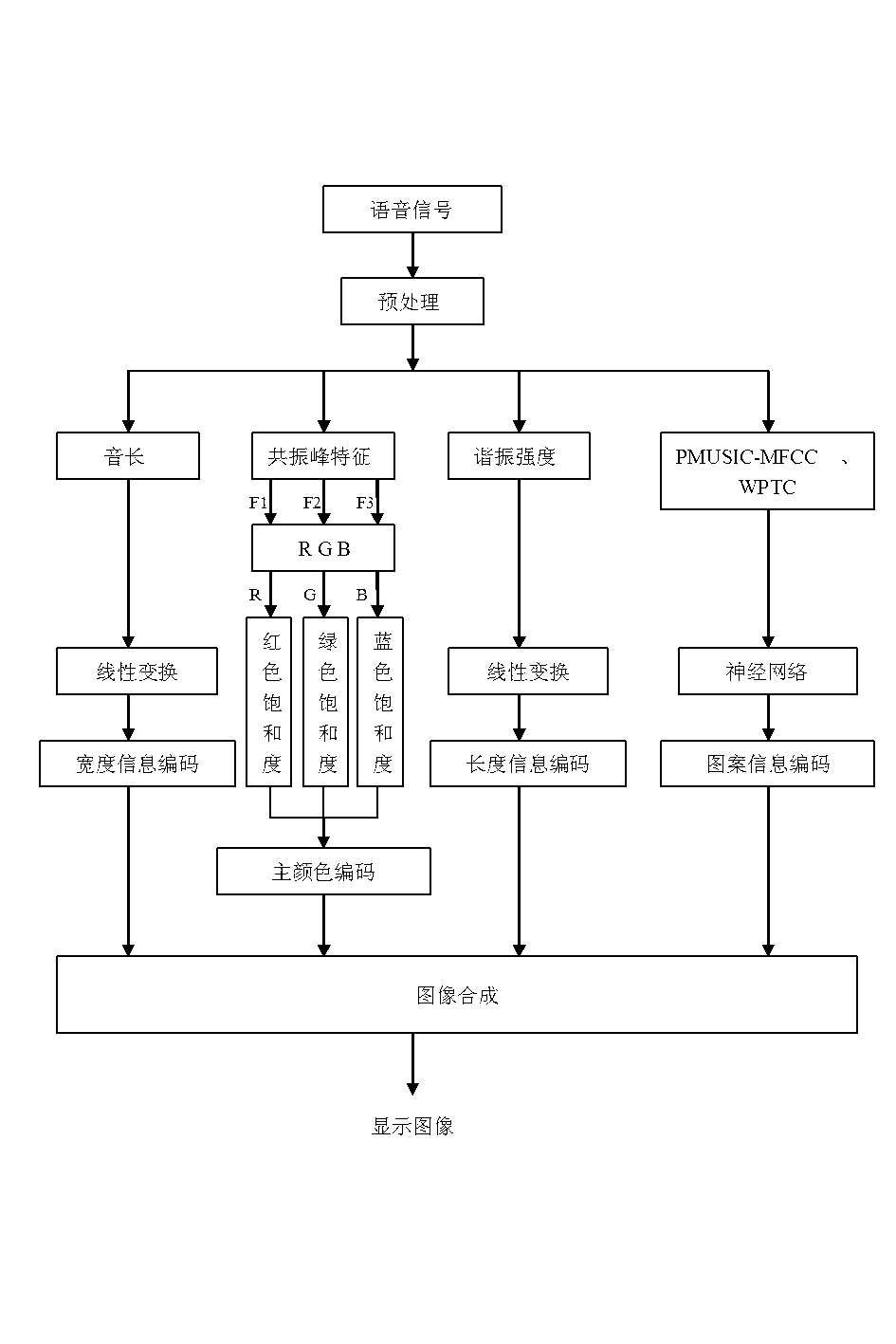

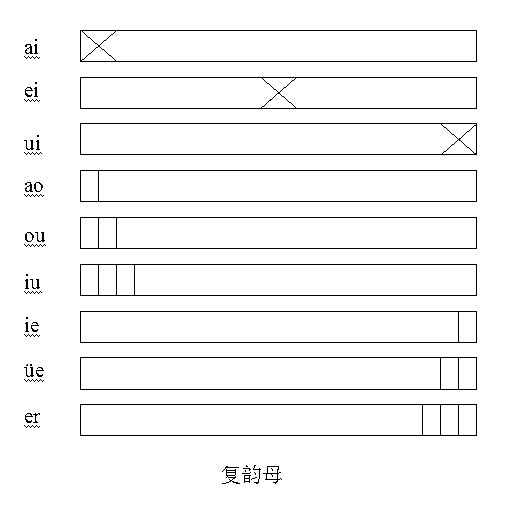

Chinese initial and final visualization method based on combination feature

Inactive | CN102820037A | Make up for indiscernibility | Make up for the shortcomings of memory | Speech analysis | Speech training | Algorithm

The invention relates to a Chinese initial and final visualization method based on combined features, which comprises the steps of: pre-processing a speech signal; taking the number of frames of the pre-processed signal as a length feature; representing a resonance-strength feature by the correlation between the frequency-domain peak amplitude and the average amplitude; obtaining a formant (resonance peak) feature value for each frame; and calculating the robust feature parameters WPTC1-WPTC20 and PMUSIC-MFCC1-PMUSIC-MFCC12. The length feature and the resonance-strength feature encode the image width and the image length, respectively, and the formant feature encodes the main color. The 32 feature parameters (20 WPTC plus 12 PMUSIC-MFCC) serve as the input of a neural network whose outputs, corresponding in order to 23 initials and 24 finals, give the pattern information. The width, length, main color, and pattern information are then fused into one image, which is shown on a display screen. The method helps deaf-mute people in speech training to establish and improve auditory perception and to form correct speech reflexes, so as to help restore their speech function.

Owner:BOHAI UNIV
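The mapping from features to a displayed image might look like the sketch below. The output counts (23 initials then 24 finals, so 47 network outputs) come from the abstract; the frame length and hop, every scaling range, the use of hue for "main color", and the `Glyph` type are hypothetical. Feature extraction (WPTC, PMUSIC-MFCC) and the network itself are not shown: the function takes already computed values and raw network scores.

```python
from dataclasses import dataclass
from typing import Sequence

N_INITIALS, N_FINALS = 23, 24  # network outputs, in this order (from the abstract)


@dataclass
class Glyph:
    width: int    # from the length (frame-count) feature
    height: int   # from the resonance-strength feature
    hue: float    # "main color", from the formant feature
    pattern: str  # the initial or final chosen by the network


def frame_count(n_samples: int, frame_len: int = 256, hop: int = 128) -> int:
    """Number of complete frames; frame_len and hop are assumed values."""
    if n_samples < frame_len:
        return 0
    return 1 + (n_samples - frame_len) // hop


def scale(x: float, lo: float, hi: float, out_lo: float, out_hi: float) -> float:
    """Clip x to [lo, hi] and map it linearly onto [out_lo, out_hi]."""
    x = min(max(x, lo), hi)
    return out_lo + (x - lo) / (hi - lo) * (out_hi - out_lo)


def decode_pattern(scores: Sequence[float]) -> str:
    """Pick the highest-scoring output; indices 0-22 are initials, 23-46 finals."""
    if len(scores) != N_INITIALS + N_FINALS:
        raise ValueError("expected 47 network outputs")
    k = max(range(len(scores)), key=lambda i: scores[i])
    return f"initial {k}" if k < N_INITIALS else f"final {k - N_INITIALS}"


def build_glyph(n_samples: int, resonance_strength: float,
                formant_hz: float, scores: Sequence[float]) -> Glyph:
    frames = frame_count(n_samples)
    return Glyph(
        width=round(scale(frames, 0, 100, 20, 200)),
        height=round(scale(resonance_strength, 0.0, 1.0, 20, 200)),
        hue=scale(formant_hz, 200.0, 3000.0, 0.0, 300.0),
        pattern=decode_pattern(scores),
    )


scores = [0.0] * 47
scores[25] = 1.0  # pretend the network picked output 25
print(build_glyph(6528, 0.5, 1600.0, scores))
# Glyph(width=110, height=110, hue=150.0, pattern='final 2')
```

Keeping each visual channel tied to exactly one feature, as the abstract does, lets a learner read duration, resonance, and formant separately from one image.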

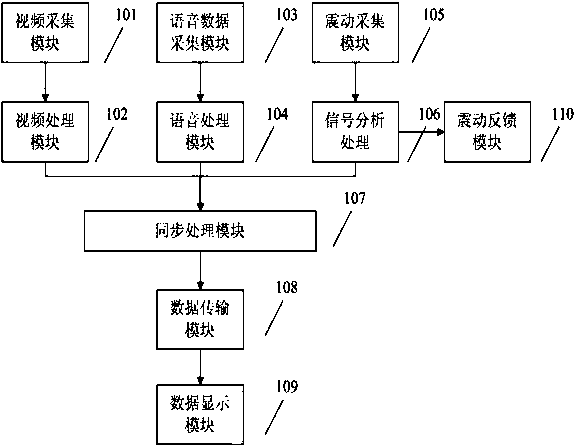

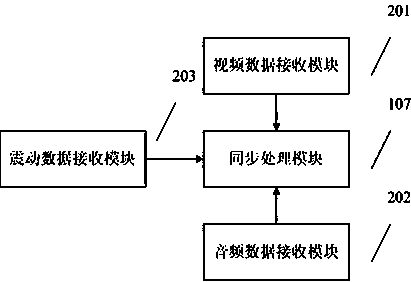

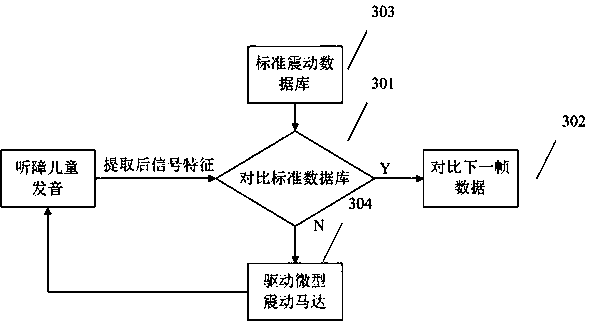

Vibration feedback system and device for speech rehabilitation

Inactive | CN108320625A | Improve the effect of rehabilitation training | Solve the problem that the rehabilitation trainer must feel by hand whether the vocal cords vibrate | Speech analysis | Acquiring/recognising facial features | Data display | Speech rehabilitation

The invention relates to a vibration feedback system and device for speech rehabilitation. The system and device comprise a video acquisition module, a video processing module, a speech acquisition module, a speech processing module, a synchronous processing module, a data transmission module, a data display module, a vibration acquisition module, a signal processing module, and a vibration feedback module. The invention provides an audio and video recognition system to which the video acquisition module and the vibration feedback module are added, so that during hearing and speech rehabilitation training deaf students are assisted by a visual three-dimensional facial model of speech production and by a simulation of vocal cord vibration. The vibration feedback module feeds back how the vocal cords of deaf children vibrate as they produce speech. The result is a rehabilitation system that combines a three-dimensional virtual speech tutor simulating the speech production process, vocal cord vibration simulation, and vibration feedback to assist the speech training of deaf students.

Owner:CHANGCHUN UNIV
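One possible shape for the signal-processing step between the vibration acquisition module and the vibration feedback module is a frame-by-frame energy check on the sensor signal. This is an assumption for illustration only: the abstract does not say how vibration is detected, and the RMS measure, the threshold, the frame length, and the `full_scale` normalization are all hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def frame_rms(signal: Sequence[float], frame_len: int) -> List[float]:
    """RMS of consecutive non-overlapping frames; a trailing partial frame is dropped."""
    out = []
    for start in range(0, len(signal) - frame_len + 1, frame_len):
        frame = signal[start:start + frame_len]
        out.append(math.sqrt(sum(s * s for s in frame) / frame_len))
    return out


def vibration_feedback(signal: Sequence[float], frame_len: int = 160,
                       threshold: float = 0.05,
                       full_scale: float = 0.5) -> List[Tuple[bool, float]]:
    """For each frame: (vocal cords judged to vibrate?, feedback drive level 0..1)."""
    result = []
    for rms in frame_rms(signal, frame_len):
        vibrating = rms >= threshold
        # Drive the (hypothetical) actuator in proportion to vibration strength.
        level = min(rms / full_scale, 1.0) if vibrating else 0.0
        result.append((vibrating, level))
    return result


# One silent frame followed by one frame of a +/-0.25 square wave (RMS 0.25).
signal = [0.0] * 160 + [0.25 if n % 2 == 0 else -0.25 for n in range(160)]
print(vibration_feedback(signal))  # [(False, 0.0), (True, 0.5)]
```

A per-frame on/off flag plus an intensity level is enough to drive both a visual display and a tactile actuator, which is the kind of feedback the abstract attributes to the vibration feedback module.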

© 2025 PatSnap. All rights reserved.