1021 results about "Syllable" patented technology

A syllable is a unit of organization for a sequence of speech sounds. It is typically made up of a syllable nucleus (most often a vowel) with optional initial and final margins (typically, consonants). Syllables are often considered the phonological "building blocks" of words. They can influence the rhythm of a language, its prosody, its poetic metre and its stress patterns. Speech can usually be divided up into a whole number of syllables: for example, the word ignite is composed of two syllables: ig and nite.

Dynamic speech sharpening

Status: Active | Publication: US7634409B2 | Tags: Enhancing automated speech interpretation; Improve accuracy; Speech recognition; Syllable; Verbal expression

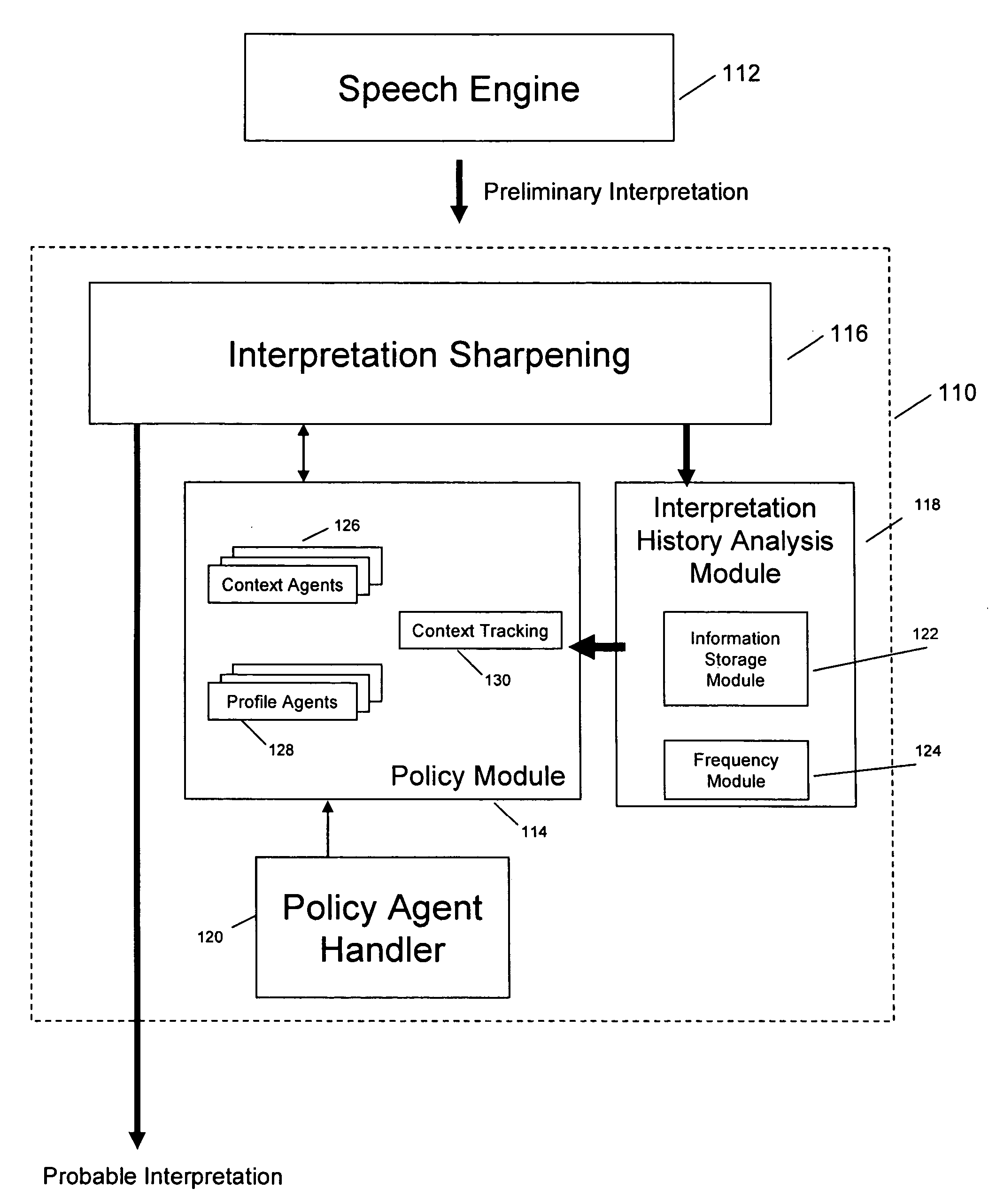

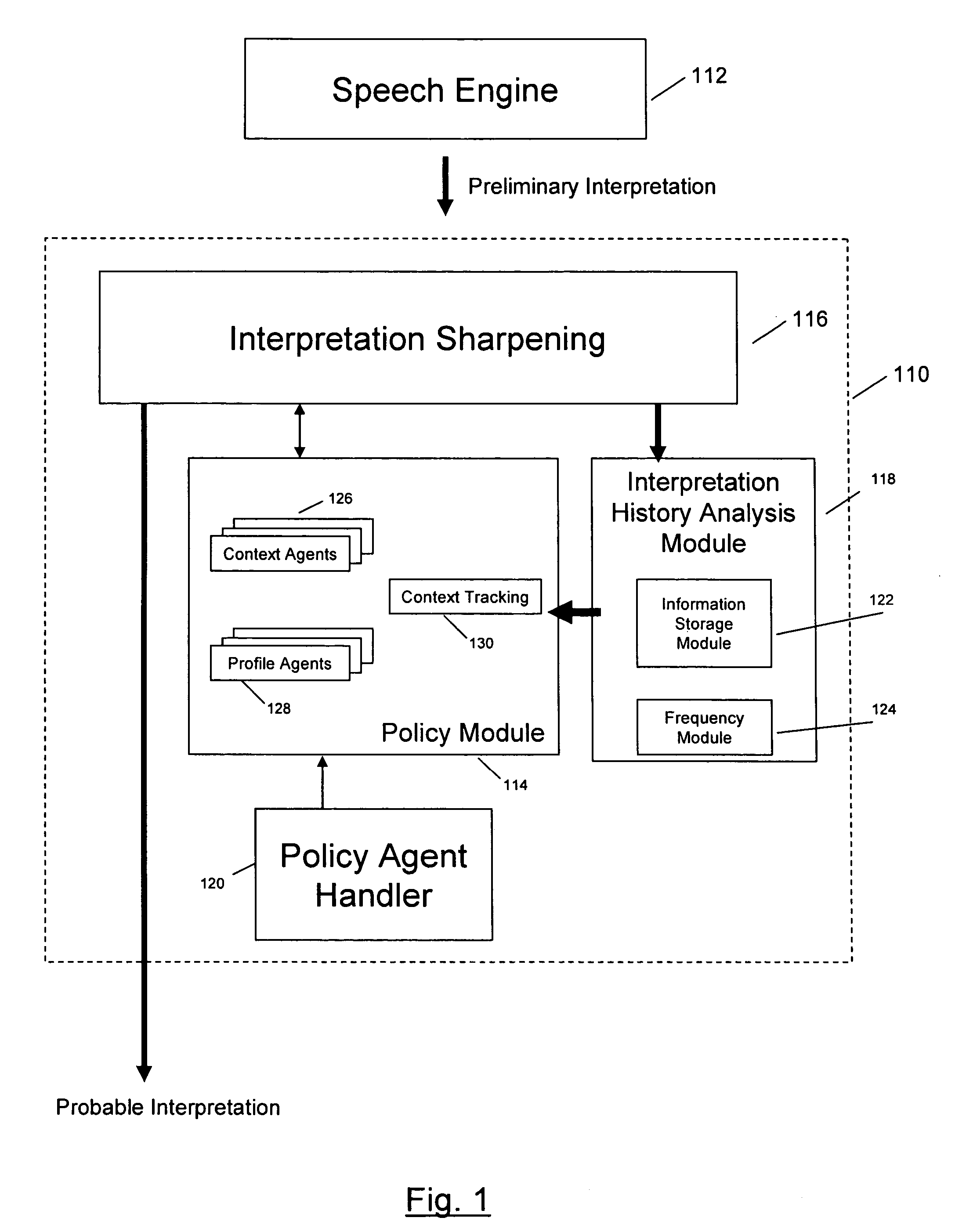

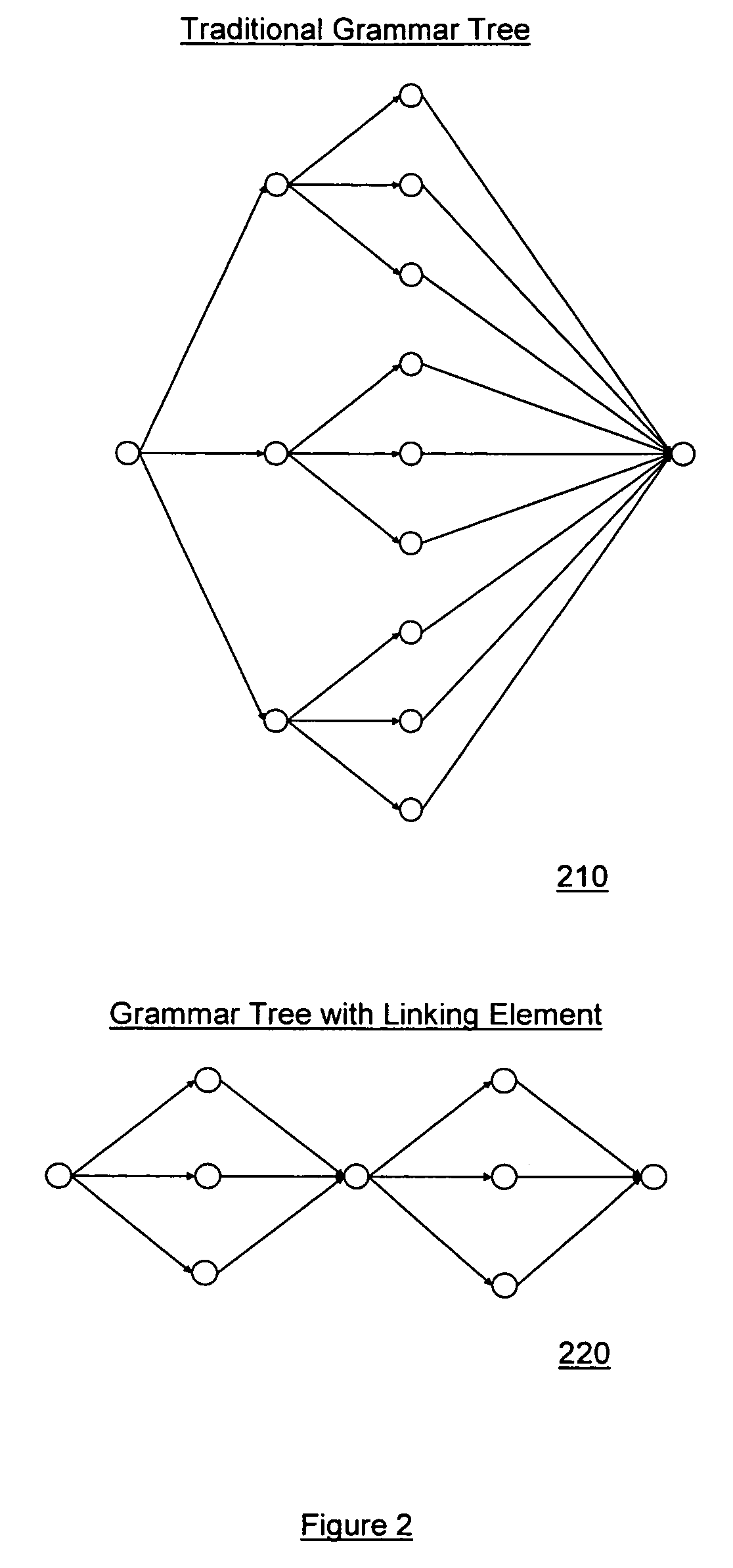

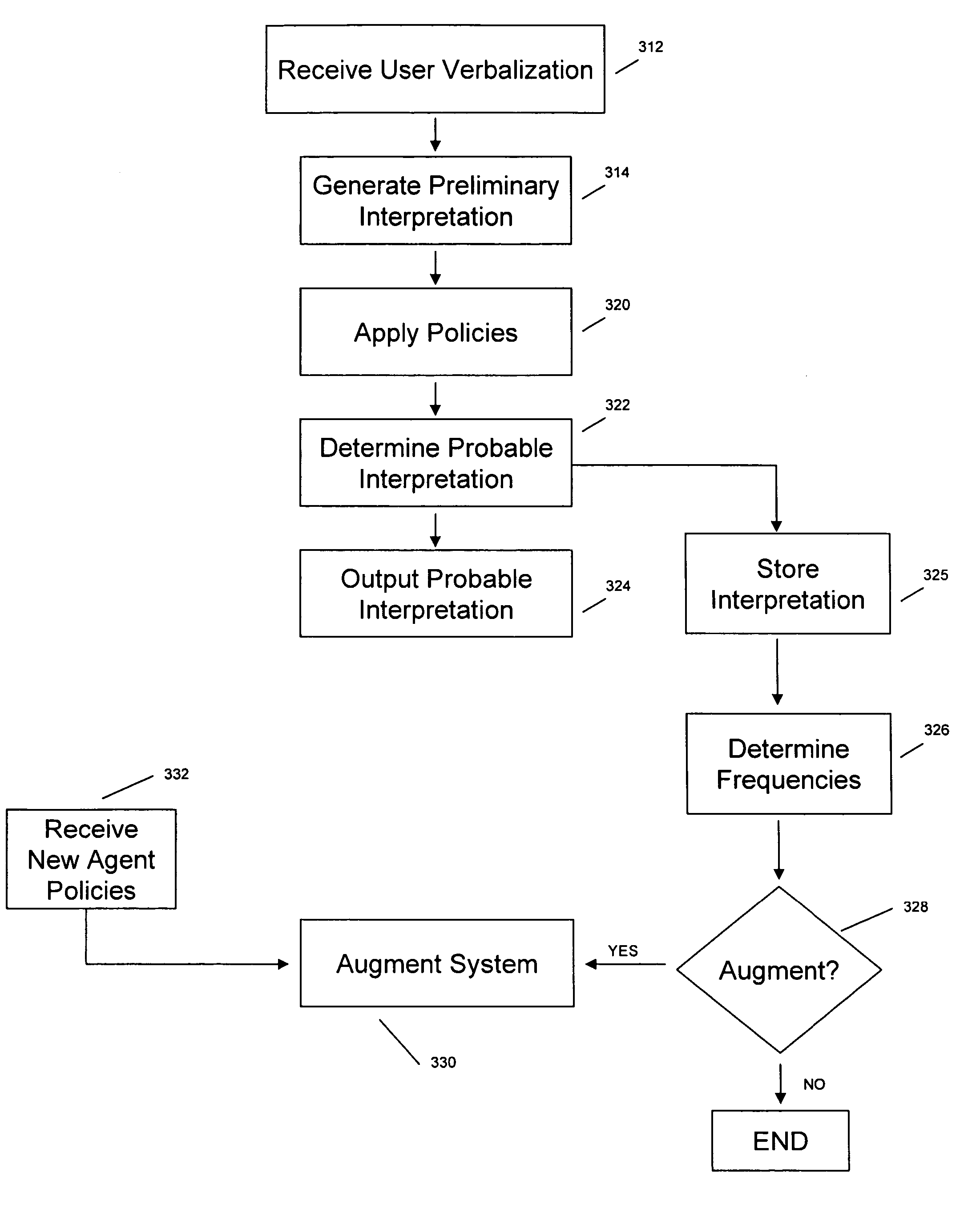

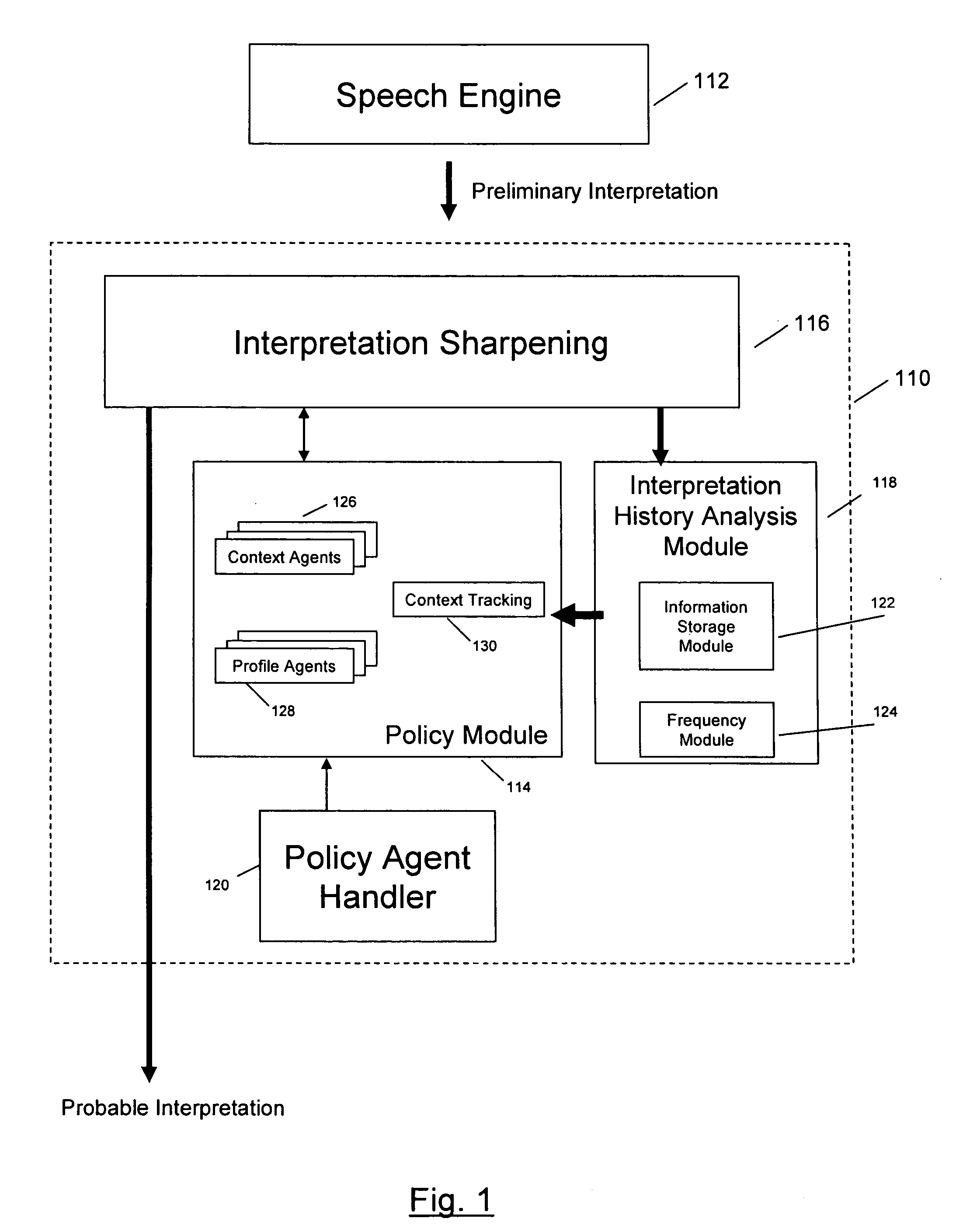

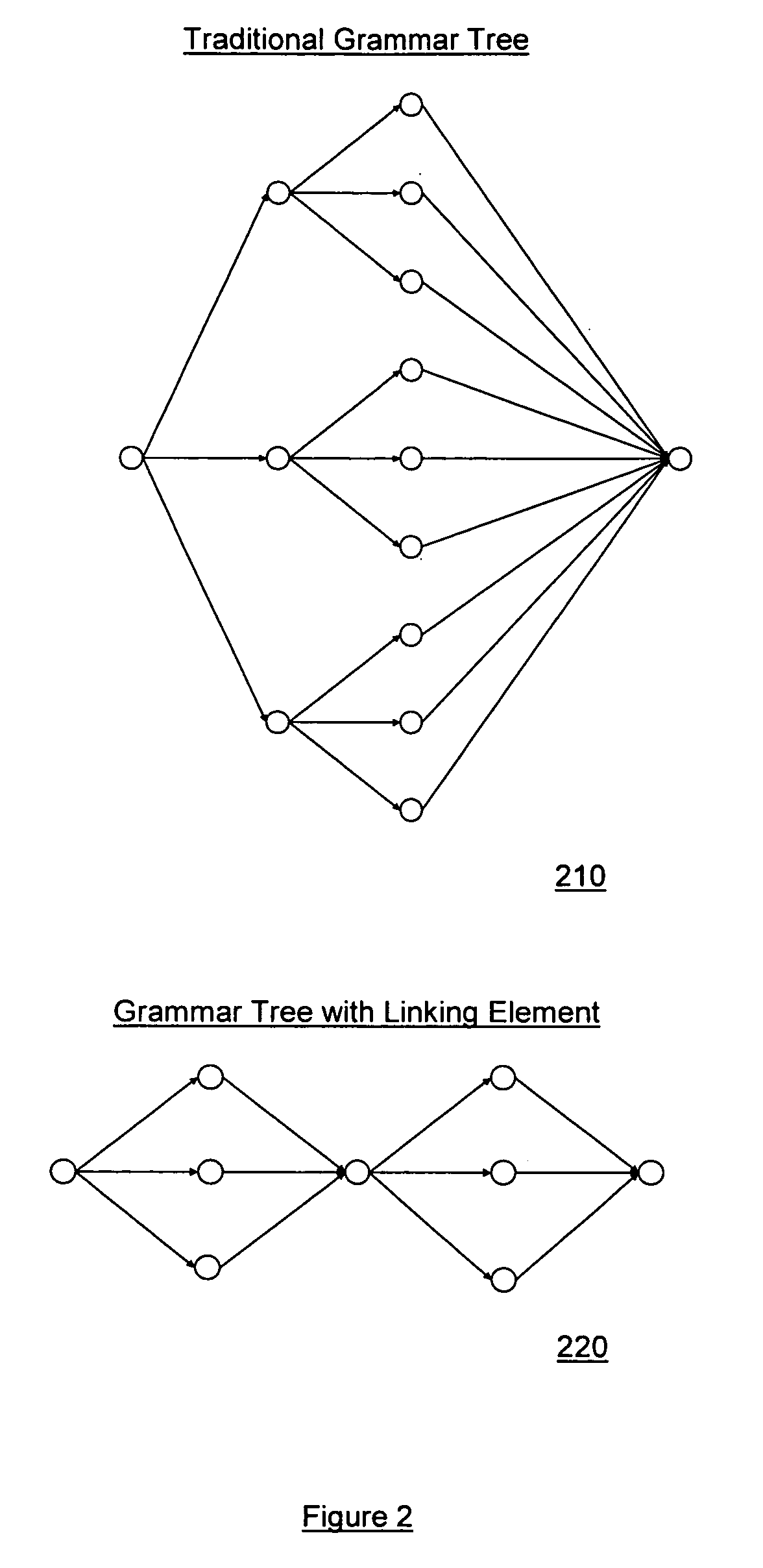

An enhanced system for speech interpretation is provided. The system may receive a user verbalization and generate one or more preliminary interpretations of it by identifying one or more phonemes in the verbalization. An acoustic grammar may be used to map the phonemes to syllables or words, and the acoustic grammar may include one or more linking elements to reduce the search space associated with the grammar. The preliminary interpretations may be subjected to various post-processing techniques to sharpen their accuracy. A heuristic model may assign weights to various parameters based on a context, a user profile, or other domain knowledge. A probable interpretation may be identified based on a confidence score for each of a set of candidate interpretations generated by the heuristic model. The model may be augmented or updated based on various information associated with the interpretation of the verbalization.

Owner:DIALECT LLC
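The confidence-scoring step can be illustrated with a minimal sketch. It assumes each candidate interpretation already carries per-parameter scores in [0, 1] (the parameter names such as `acoustic` and `context` are hypothetical) and that the heuristic model's context-dependent weights combine them as a weighted average; the abstract does not specify the actual scoring function.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    text: str
    # Hypothetical per-parameter scores in [0, 1], e.g. acoustic match,
    # fit with the current context, fit with the user profile.
    scores: dict[str, float]


def confidence(candidate: Candidate, weights: dict[str, float]) -> float:
    """Weighted average of the candidate's parameter scores (missing scores count as 0)."""
    total_weight = sum(weights.values())
    weighted = sum(w * candidate.scores.get(name, 0.0) for name, w in weights.items())
    return weighted / total_weight if total_weight else 0.0


def most_probable(candidates: list[Candidate], weights: dict[str, float]) -> Candidate:
    """Pick the candidate interpretation with the highest confidence score."""
    return max(candidates, key=lambda c: confidence(c, weights))
```

With weights `{"acoustic": 1.0, "context": 2.0}`, a candidate that fits the context well can outrank one with a slightly better acoustic match, which is the kind of context-driven sharpening the abstract describes.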

Generating large units of graphonemes with mutual information criterion for letter to sound conversion

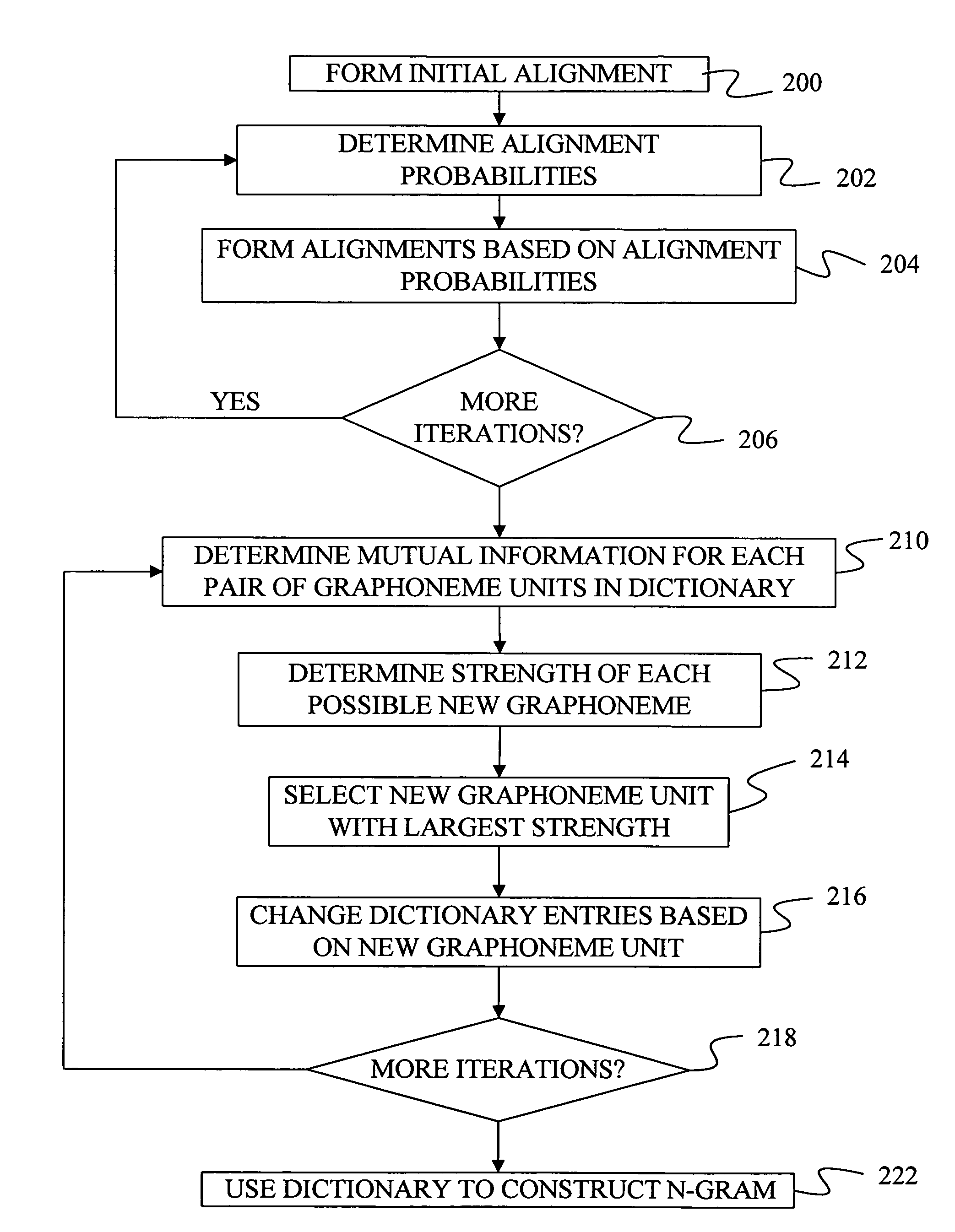

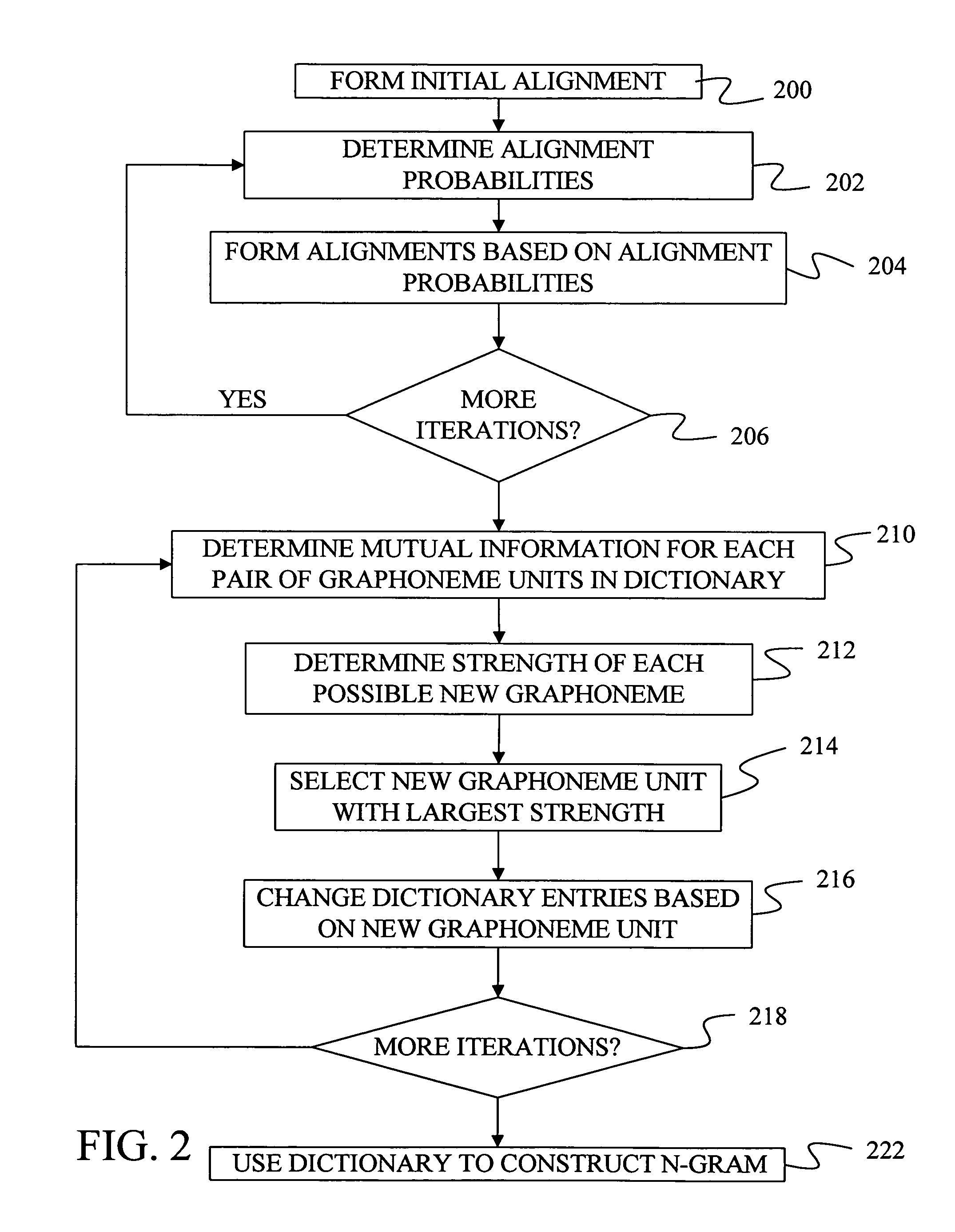

A method and apparatus are provided for segmenting words into component parts. Under the invention, mutual information scores for pairs of graphoneme units found in a set of words are determined. Each graphoneme unit includes at least one letter. The graphoneme units of one pair of graphoneme units are combined based on the mutual information score. This forms a new graphoneme unit. Under one aspect of the invention, a syllable n-gram model is trained based on words that have been segmented into syllables using mutual information. The syllable n-gram model is used to segment a phonetic representation of a new word into syllables. Similarly, an inventory of morphemes is formed using mutual information and a morpheme n-gram is trained that can be used to segment a new word into a sequence of morphemes.

Owner:MICROSOFT TECH LICENSING LLC
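One merge step of the mutual-information procedure might look like the sketch below. Assumptions: units are represented by their letter strings only (the phone side of each graphoneme is omitted for brevity), probabilities are estimated from corpus counts, and each adjacent pair is scored by its contribution p(x,y)·log(p(x,y)/(p(x)p(y))) to the mutual information. The patent's exact scoring formula is not given in the abstract.

```python
import math
from collections import Counter


def best_pair(words: list[list[str]]) -> tuple[str, str]:
    """Return the adjacent unit pair with the highest mutual-information score.

    Raises ValueError (from max) if no word has two or more units.
    """
    unit_counts = Counter(u for w in words for u in w)
    pair_counts = Counter(p for w in words for p in zip(w, w[1:]))
    n_units = sum(unit_counts.values())
    n_pairs = sum(pair_counts.values())

    def score(pair: tuple[str, str]) -> float:
        p_xy = pair_counts[pair] / n_pairs
        p_x = unit_counts[pair[0]] / n_units
        p_y = unit_counts[pair[1]] / n_units
        # This pair's contribution to the mutual information sum.
        return p_xy * math.log(p_xy / (p_x * p_y))

    return max(pair_counts, key=score)


def merge(words: list[list[str]], pair: tuple[str, str]) -> list[list[str]]:
    """Replace every occurrence of `pair` with a single combined unit."""
    merged_words = []
    for w in words:
        out, i = [], 0
        while i < len(w):
            if i + 1 < len(w) and (w[i], w[i + 1]) == pair:
                out.append(w[i] + w[i + 1])
                i += 2
            else:
                out.append(w[i])
                i += 1
        merged_words.append(out)
    return merged_words
```

Repeating `best_pair` and `merge` until a stopping criterion is met grows larger units. Weighting by p(x,y) keeps rare one-off pairs from dominating, which plain pointwise mutual information tends to do.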

Dynamic speech sharpening

Status: Active | Publication: US20070055525A1 | Tags: Enhancing automated speech interpretation; Improve accuracy; Speech recognition; Syllable; Context based

An enhanced system for speech interpretation is provided. The system may receive a user verbalization and generate one or more preliminary interpretations of it by identifying one or more phonemes in the verbalization. An acoustic grammar may be used to map the phonemes to syllables or words, and the acoustic grammar may include one or more linking elements to reduce the search space associated with the grammar. The preliminary interpretations may be subjected to various post-processing techniques to sharpen their accuracy. A heuristic model may assign weights to various parameters based on a context, a user profile, or other domain knowledge. A probable interpretation may be identified based on a confidence score for each of a set of candidate interpretations generated by the heuristic model. The model may be augmented or updated based on various information associated with the interpretation of the verbalization.

Owner:DIALECT LLC

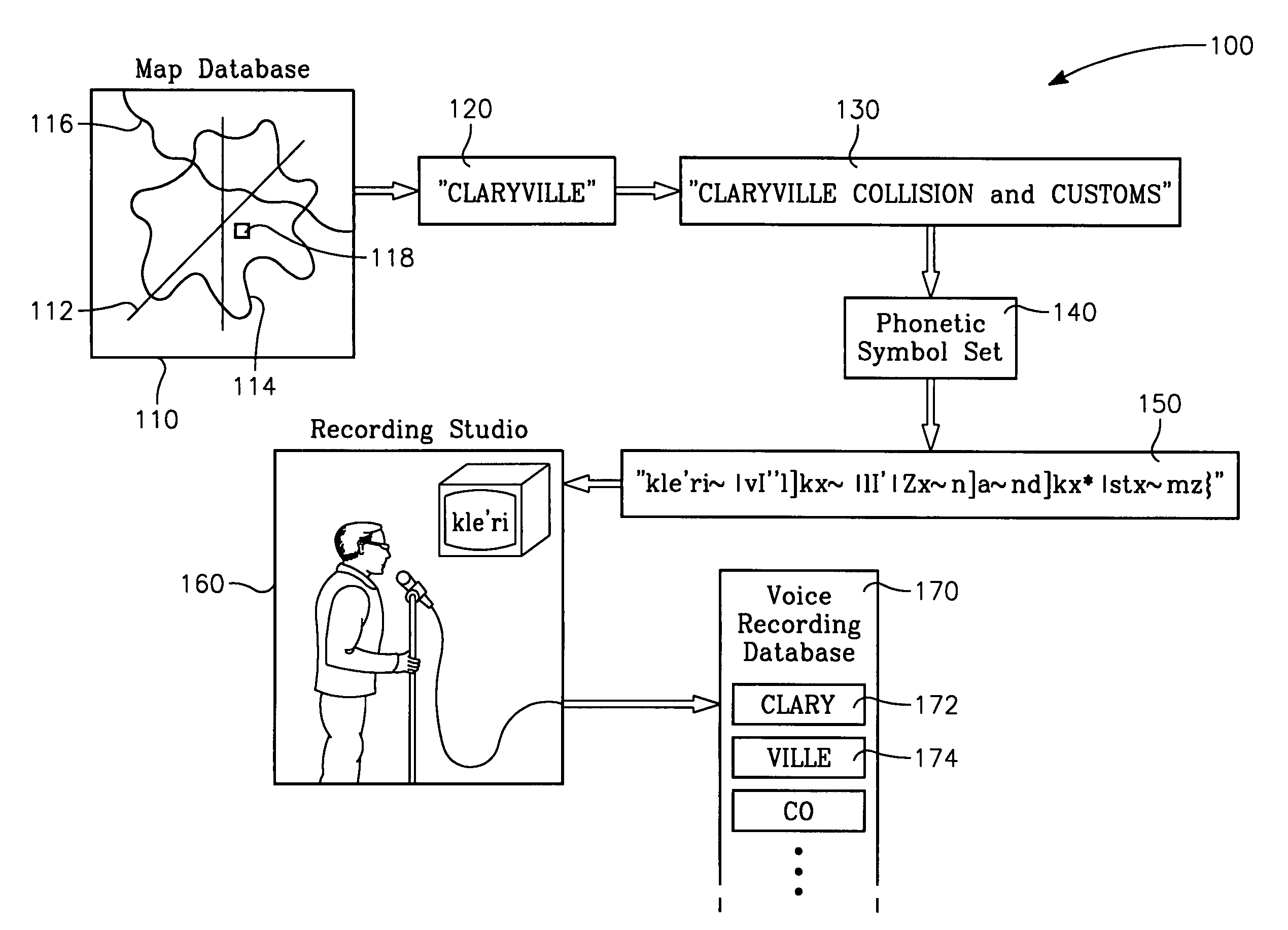

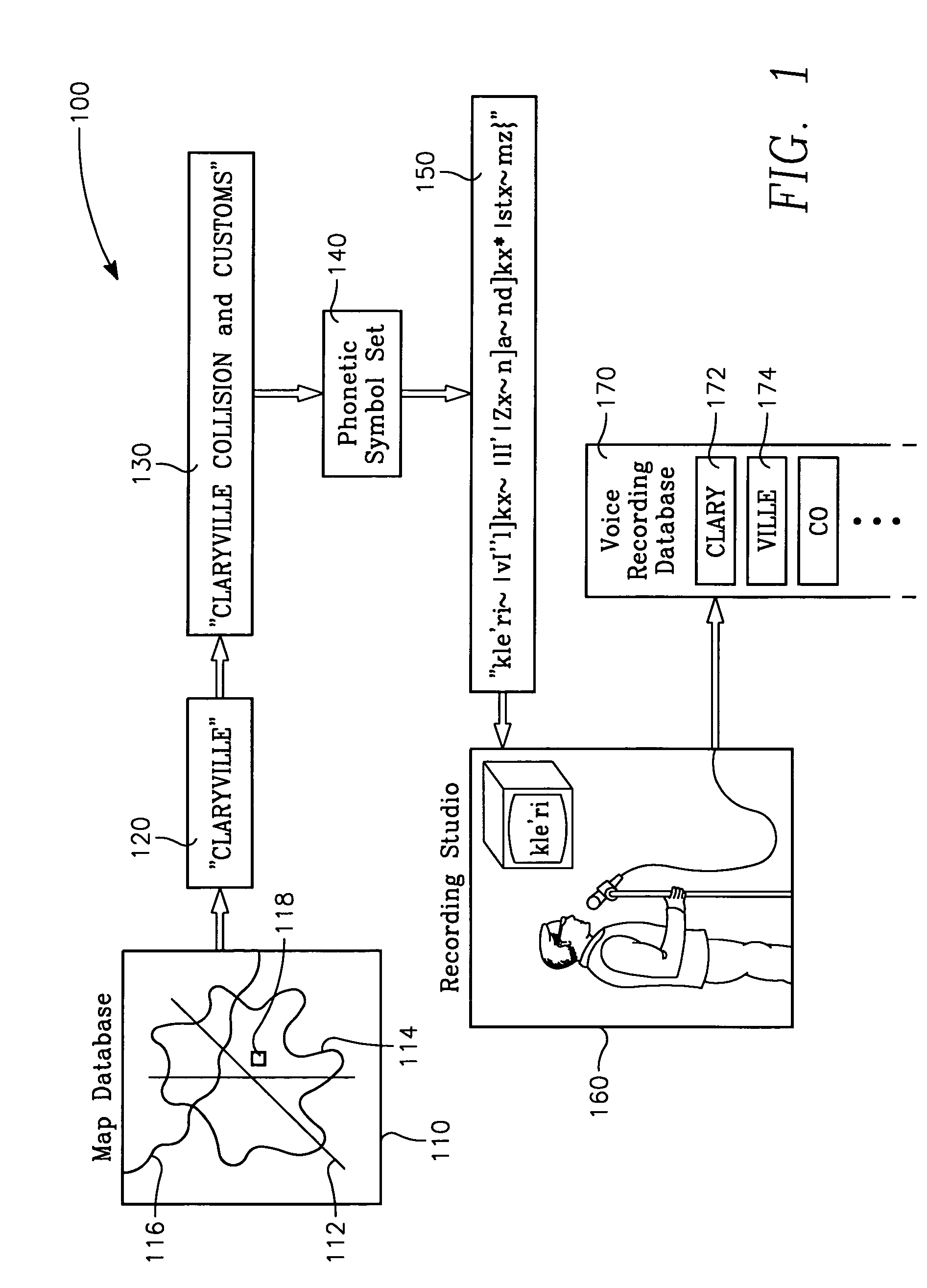

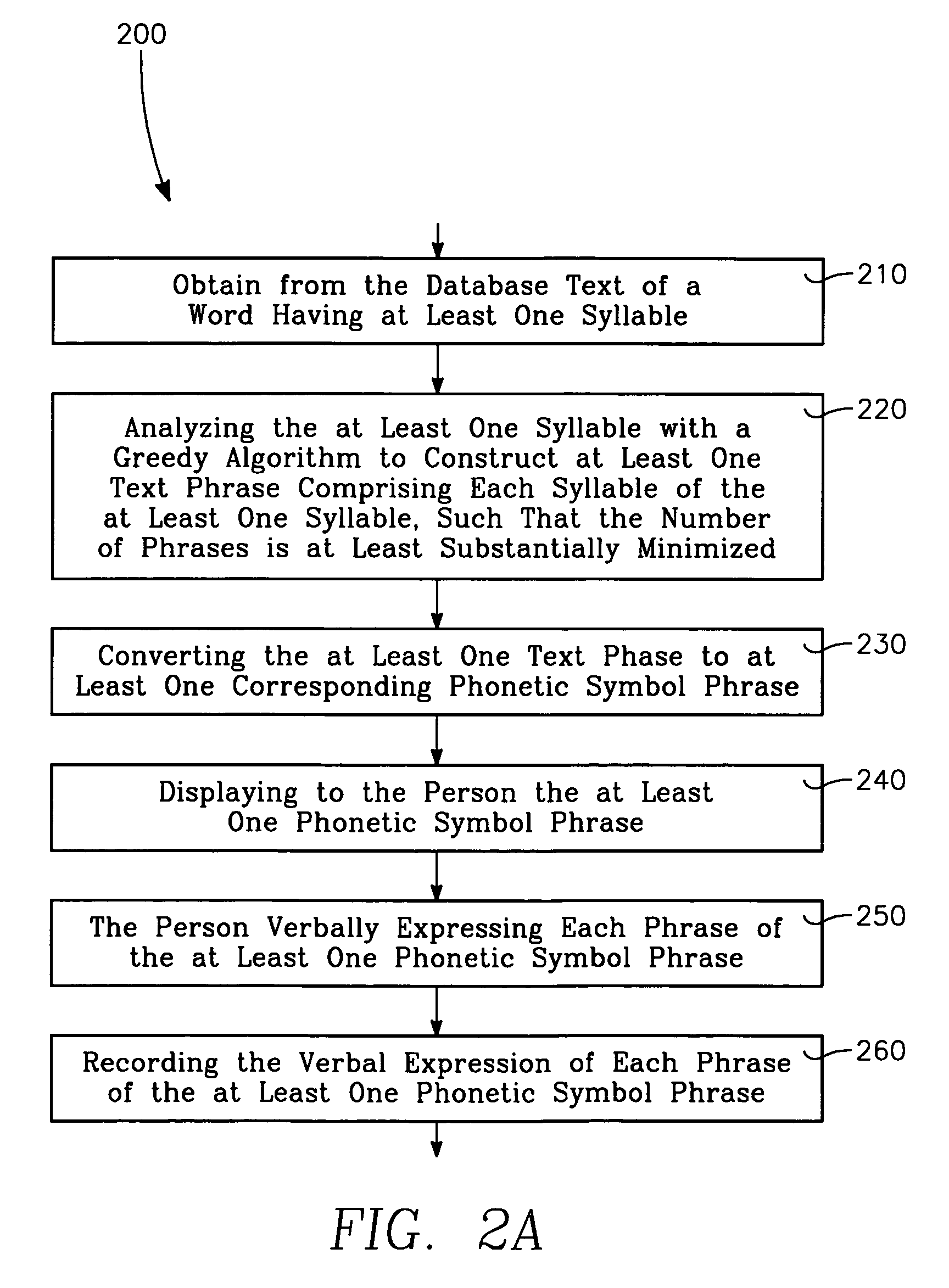

Voice recording tool for creating database used in text to speech synthesis system

A method records verbal expressions of a person for use in a vehicle navigation system. The vehicle navigation system has a database including a map and text describing street names and points of interest of the map. The method includes the steps of obtaining from the database text of a word having at least one syllable, analyzing the syllable with a greedy algorithm to construct at least one text phrase comprising each syllable, such that the number of phrases is substantially minimized, converting the text phrase to at least one corresponding phonetic symbol phrase, displaying to the person the phonetic symbol phrase, the person verbally expressing each phrase of the phonetic symbol phrase, and recording the verbal expression of each phrase of the phonetic symbol phrase.

Owner:ALPINE ELECTRONICS INC
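The "greedy algorithm ... such that the number of phrases is substantially minimized" reads like a classic greedy set cover. The sketch below assumes candidate phrases have already been annotated with the syllables they contain (the `candidates` mapping is hypothetical); how the patent builds its candidate phrases is not described in the abstract.

```python
def choose_phrases(required: set[str], candidates: dict[str, set[str]]) -> list[str]:
    """Greedy set cover: pick phrases until every required syllable is covered."""
    uncovered = set(required)
    chosen: list[str] = []
    while uncovered:
        # Phrase that covers the most syllables still lacking a recording.
        phrase = max(candidates, key=lambda p: len(candidates[p] & uncovered))
        gain = candidates[phrase] & uncovered
        if not gain:
            raise ValueError(f"cannot cover syllables: {sorted(uncovered)}")
        chosen.append(phrase)
        uncovered -= gain
    return chosen
```

Greedy set cover does not guarantee the true minimum, but its result is within a logarithmic factor of it, which fits the "substantially minimized" wording.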

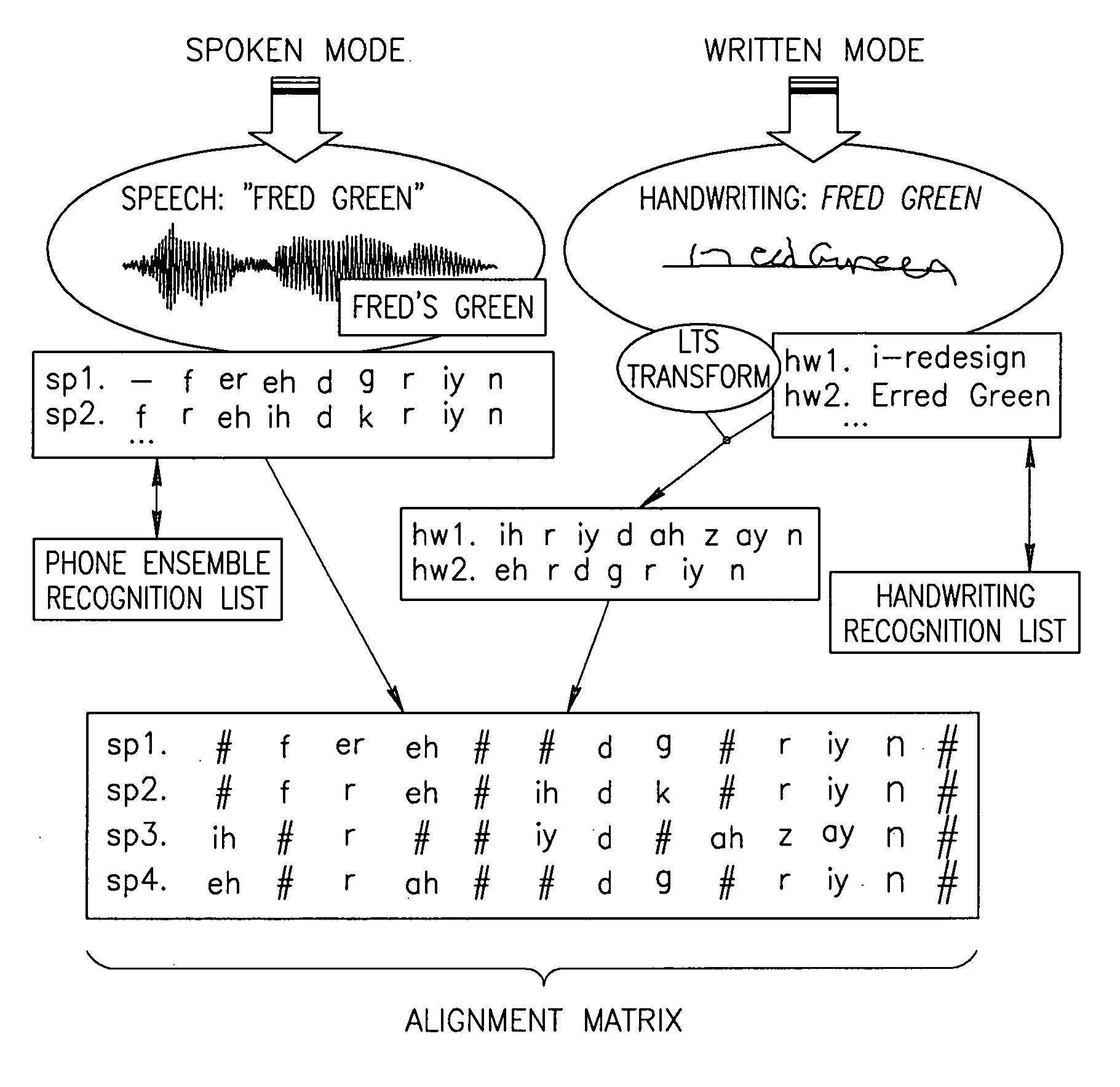

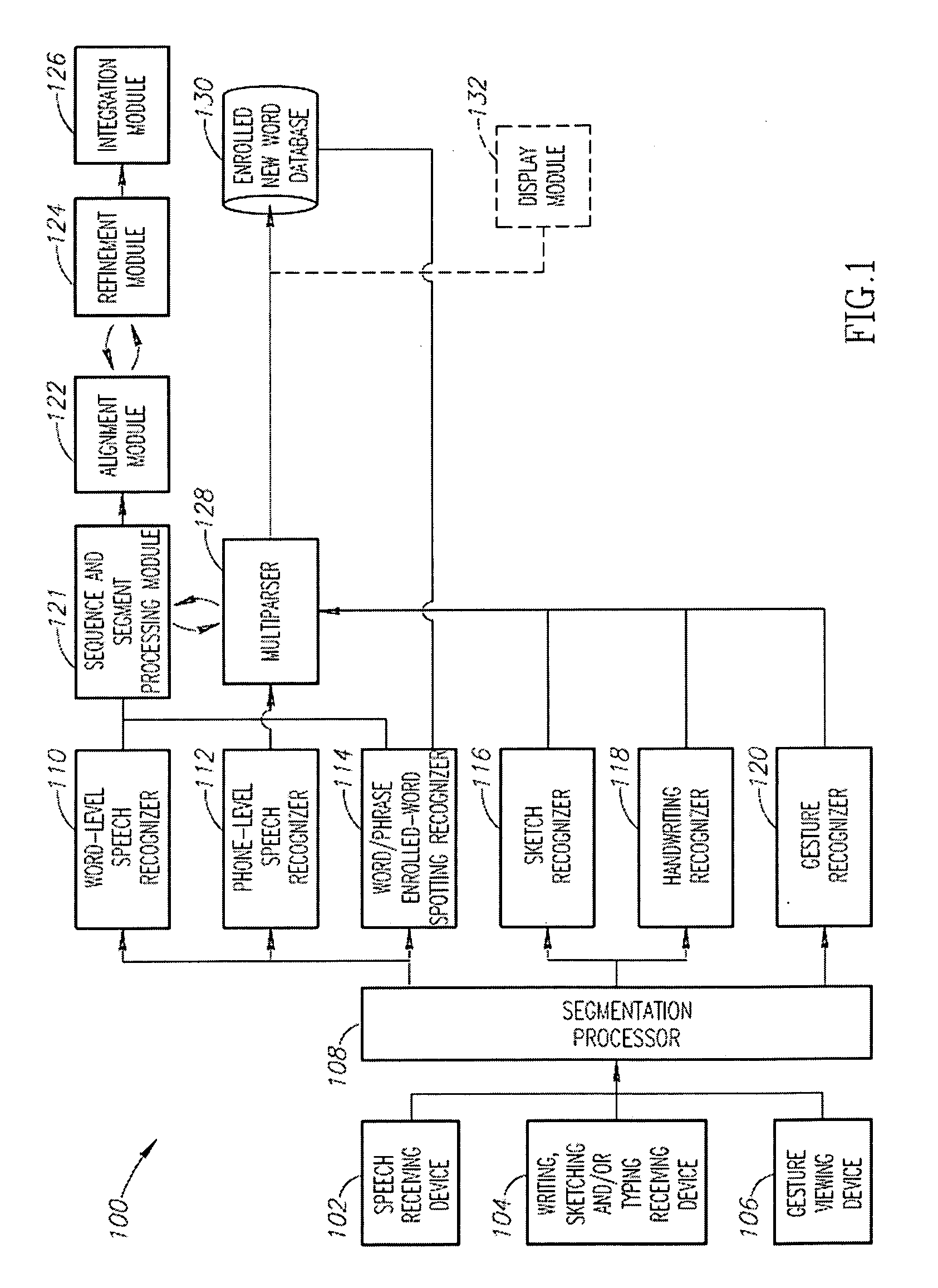

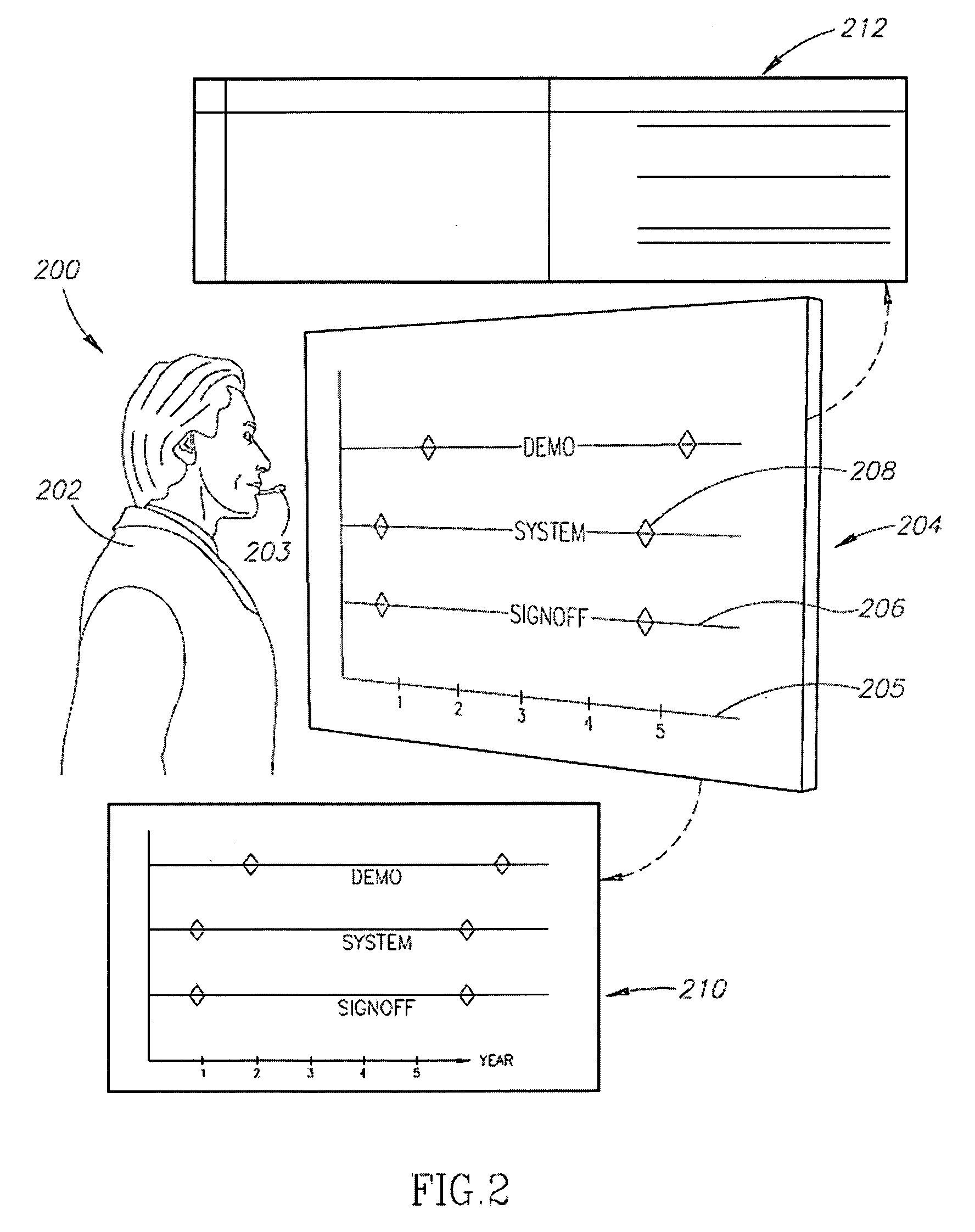

Systems and methods for implicitly interpreting semantically redundant communication modes

Owner:ADAPX INC

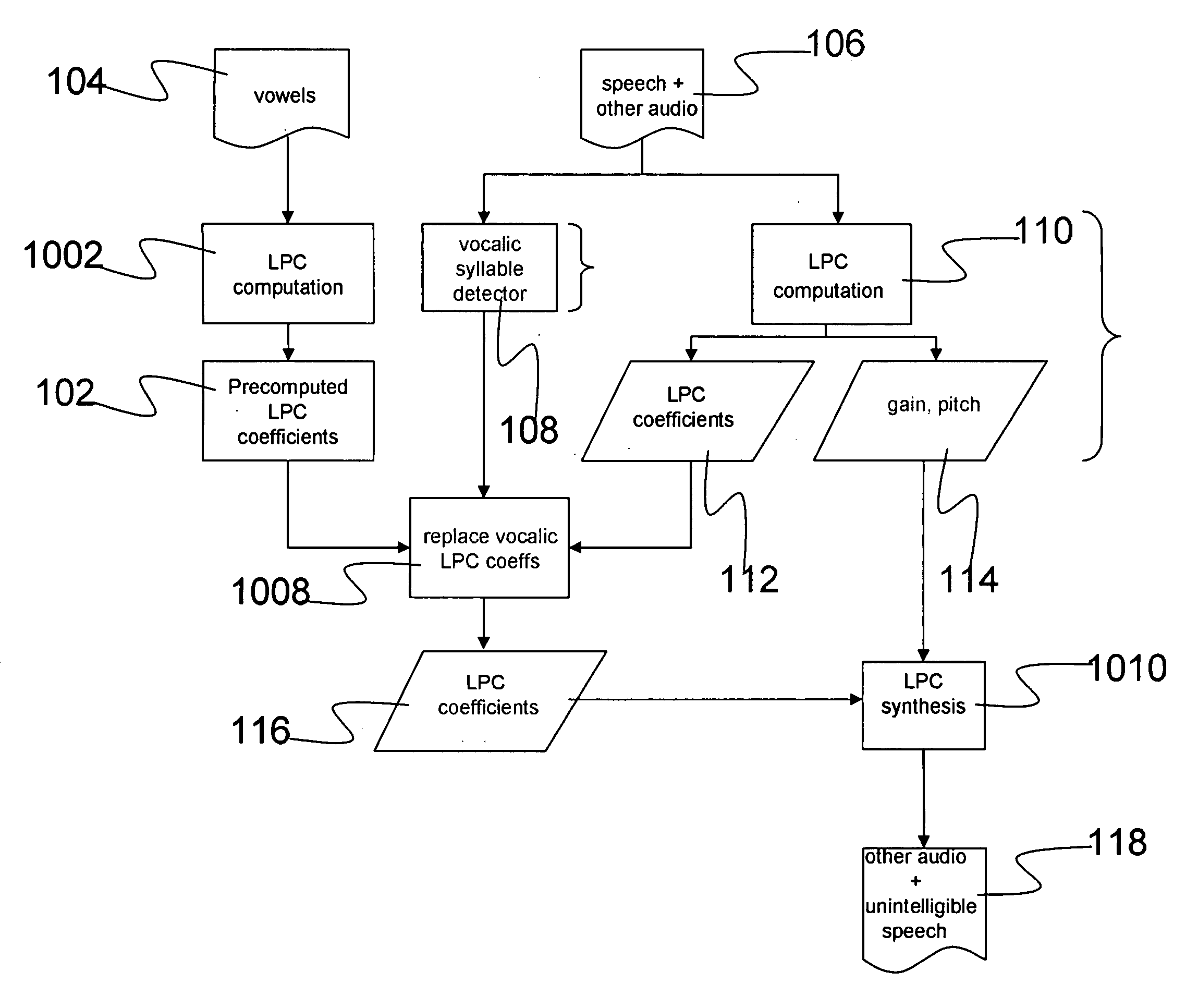

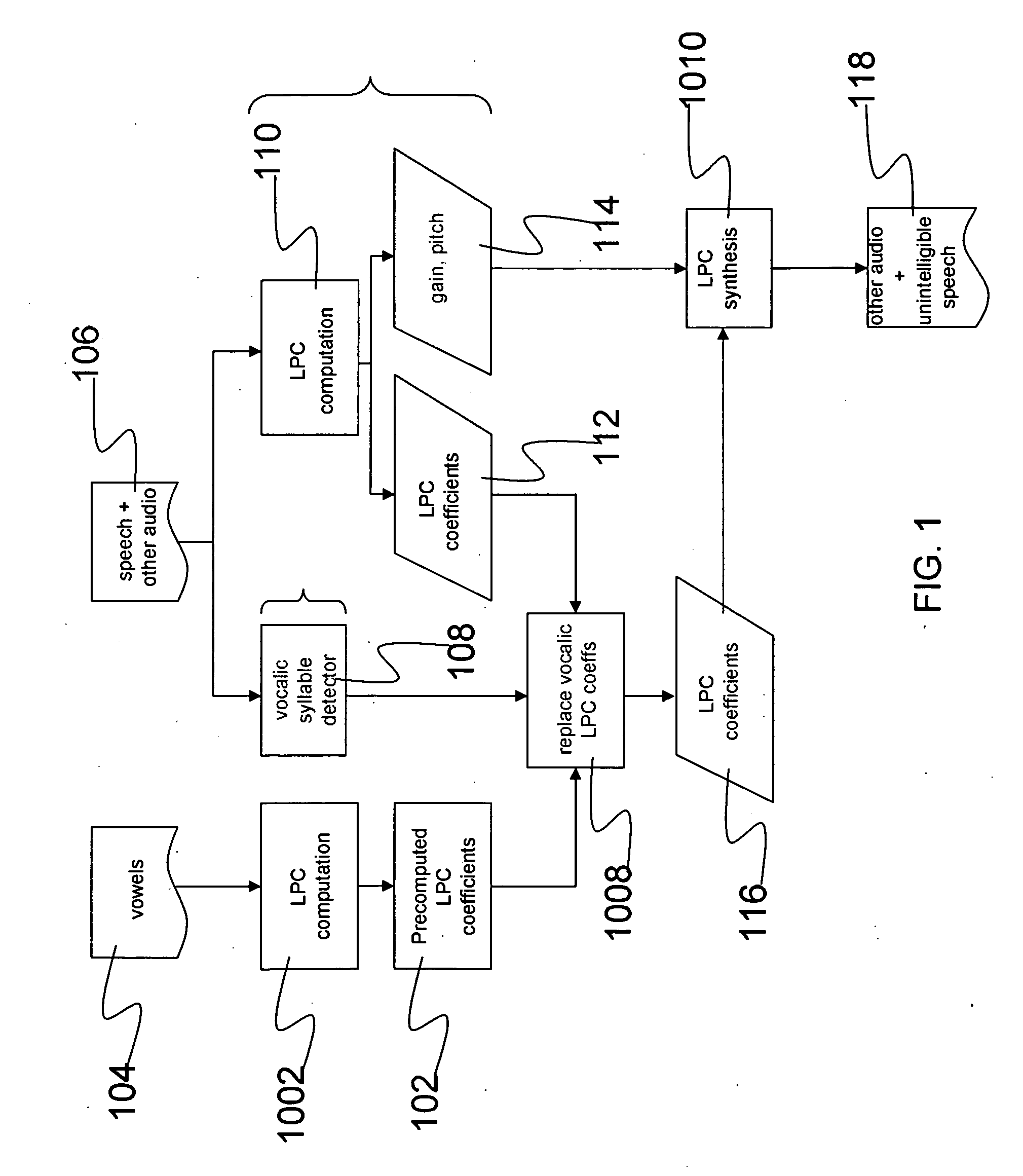

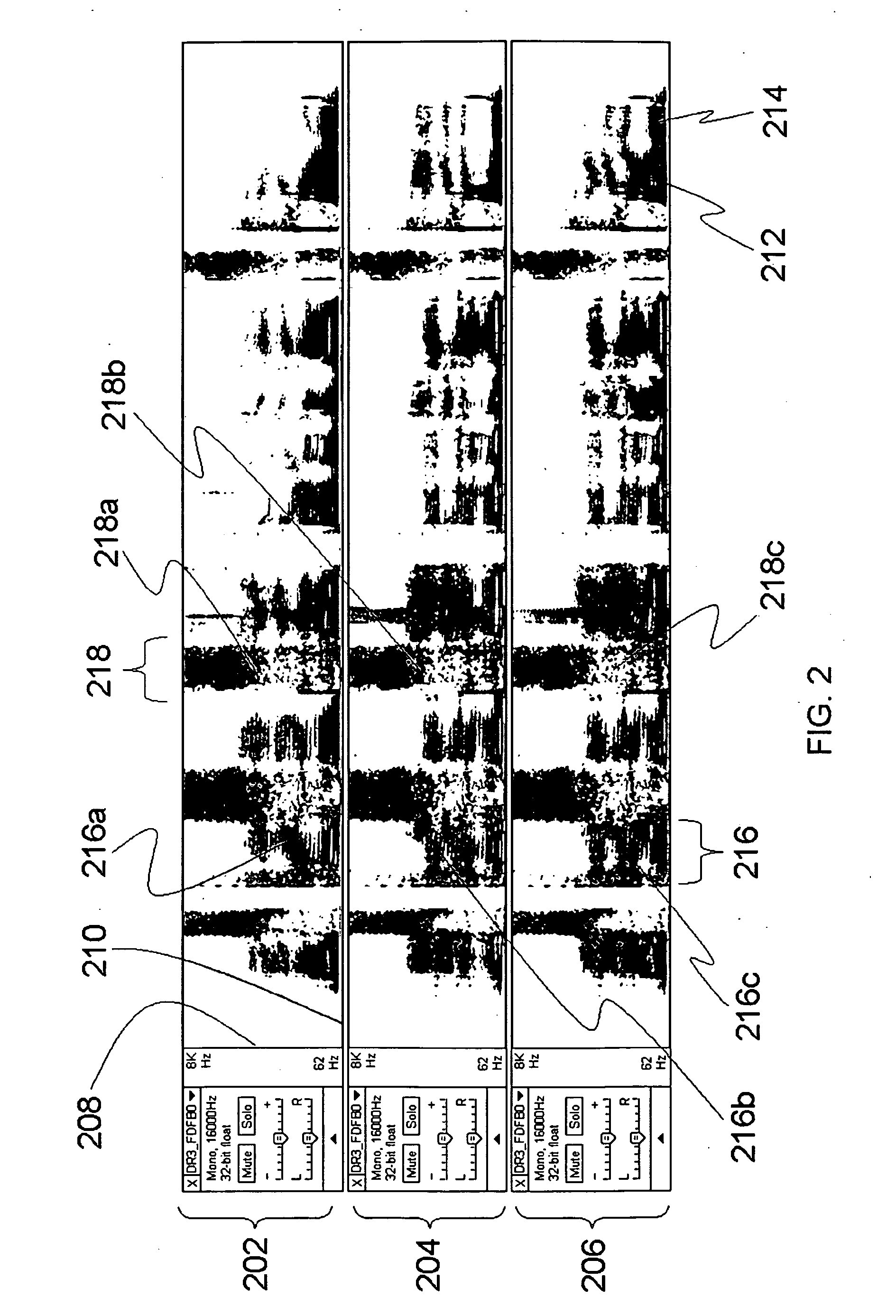

Systems and methods for reducing speech intelligibility while preserving environmental sounds

Status: Active | Publication: US20090306988A1 | Tags: Reduced speech intelligibility; Secret communication; Speech synthesis; Syllable; Relative energy

An audio privacy system reduces the intelligibility of speech in an audio signal while preserving prosodic information, such as pitch, relative energy and intonation so that a listener has the ability to recognize environmental sounds but not the speech itself. An audio signal is processed to separate non-vocalic information, such as pitch and relative energy of speech, from vocalic regions, after which syllables are identified within the vocalic regions. Representations of the vocalic regions are computed to produce a vocal tract transfer function and an excitation. The vocal tract transfer function for each syllable is then replaced with the vocal tract transfer function from another prerecorded vocalic sound. In one aspect, the identity of the replacement vocalic sound is independent of the identity of the syllable being replaced. A modified audio signal is then synthesized with the original prosodic information and the modified vocal tract transfer function to produce unintelligible speech that preserves the pitch and energy of the speech as well as environmental sounds.

Owner:FUJIFILM BUSINESS INNOVATION CORP

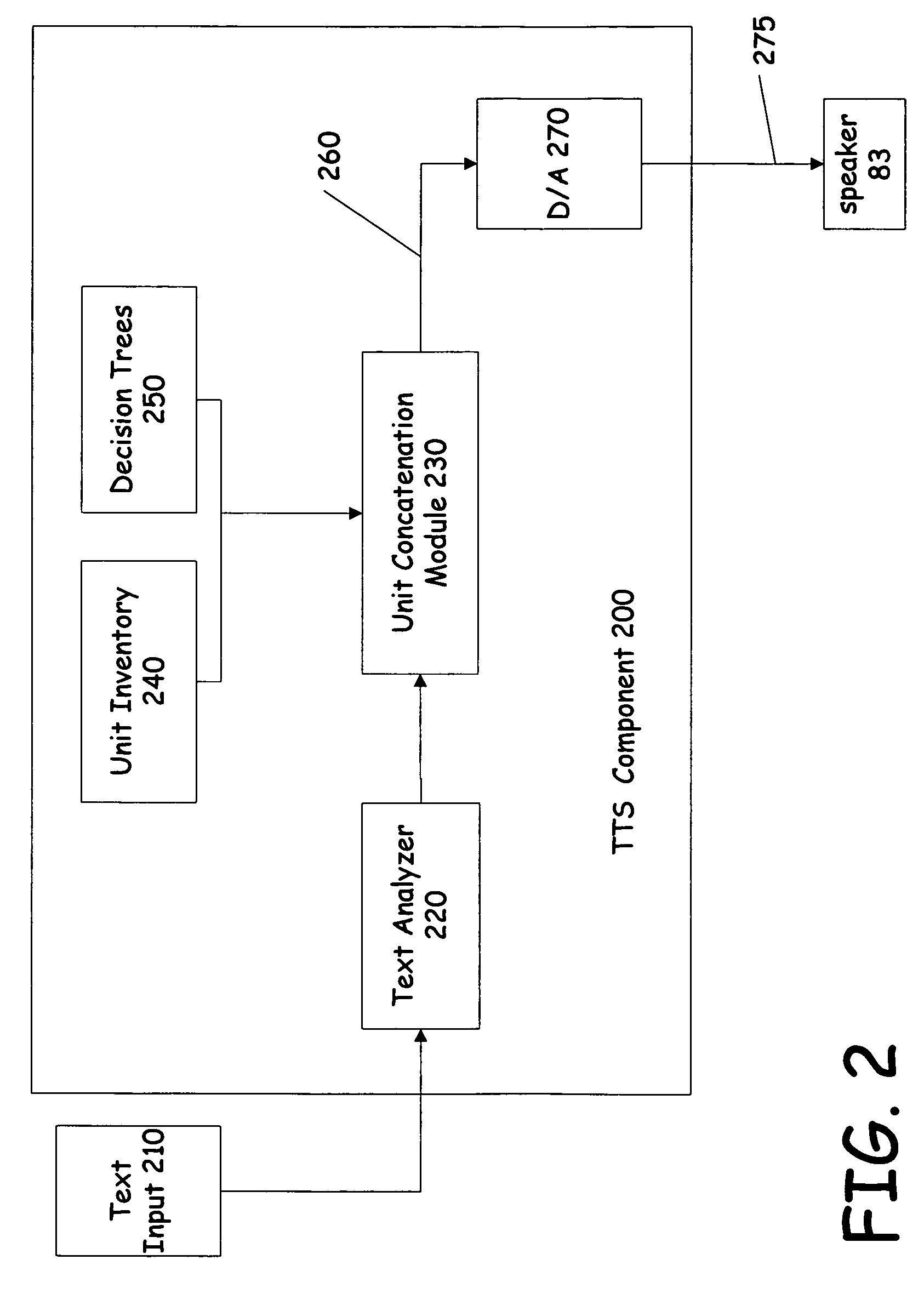

Defining atom units between phone and syllable for TTS systems

A method for identifying common multiphone units to add to a unit inventory for a text-to-speech generator is disclosed. The common multiphone units are units that are larger than a phone, but smaller than a syllable. The method slices each syllable into a plurality of slices. These slices are then sorted and the frequency of each slice is determined. Those slices whose frequencies exceed a threshold are added to the unit inventory. The remaining slices are decomposed according to a predetermined set of rules to determine if they contain slices that should be added to the unit inventory.

Owner:MICROSOFT TECH LICENSING LLC
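The slice-and-count step can be sketched as follows, assuming a syllable is a tuple of phones and that "slices" are all contiguous sub-sequences of at least two phones that are shorter than the whole syllable (so every slice sits strictly between a phone and a syllable). The patent's actual slicing scheme and its rule-based decomposition of the remaining slices are not given in the abstract and are omitted here.

```python
from collections import Counter


def slices(syllable: tuple[str, ...]) -> list[tuple[str, ...]]:
    """All contiguous multiphone slices shorter than the whole syllable."""
    n = len(syllable)
    return [syllable[i:j] for i in range(n) for j in range(i + 2, n + 1) if j - i < n]


def frequent_units(syllables: list[tuple[str, ...]], threshold: int) -> set[tuple[str, ...]]:
    """Slices whose frequency across the syllable list exceeds `threshold`."""
    counts = Counter(s for syl in syllables for s in slices(syl))
    return {s for s, c in counts.items() if c > threshold}
```

For example, in a corpus containing the syllables s-t-r, s-t-o, s-t-a and t-r-i, the slice s-t occurs three times and would be the only unit added with a threshold of 2.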

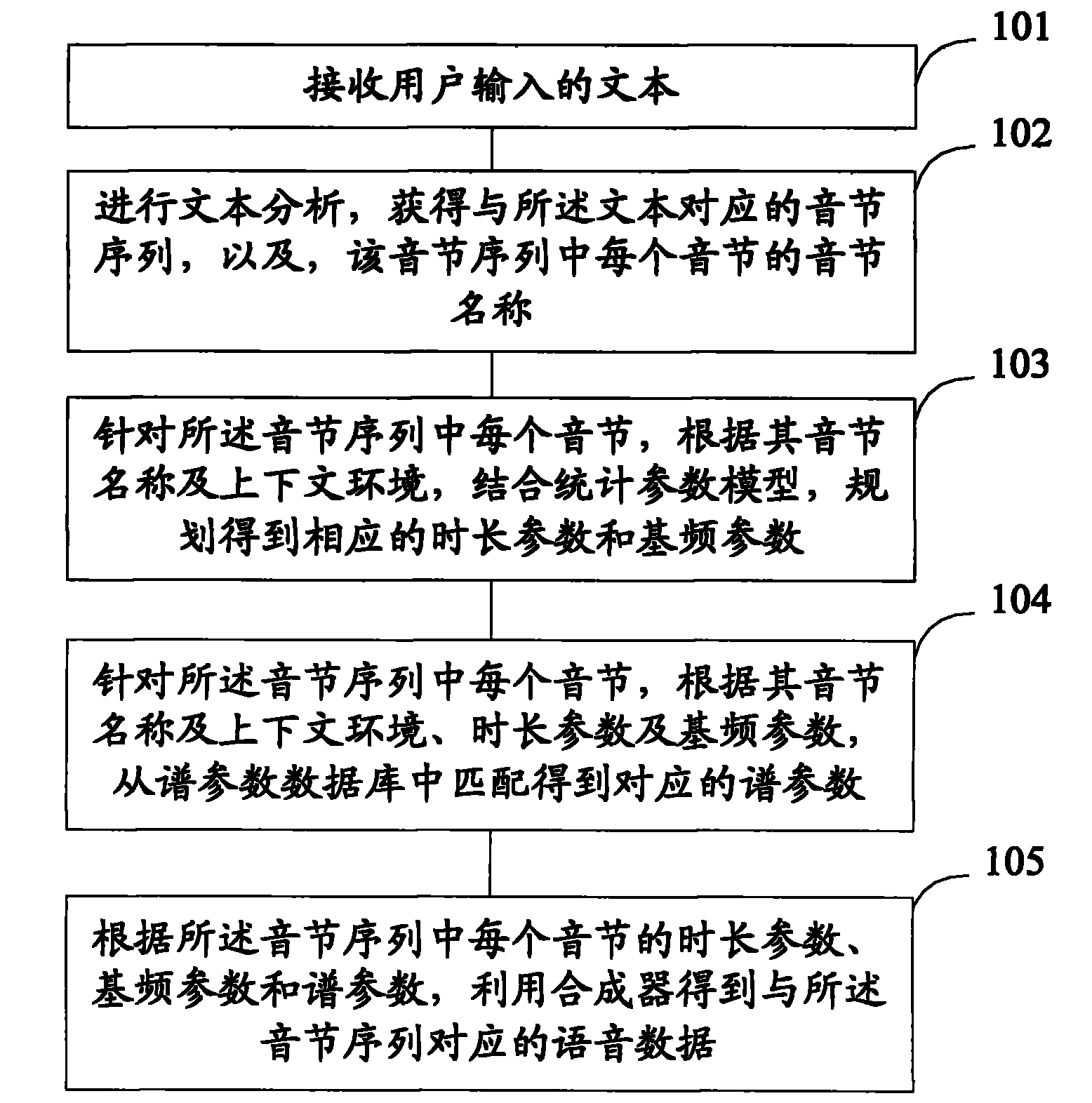

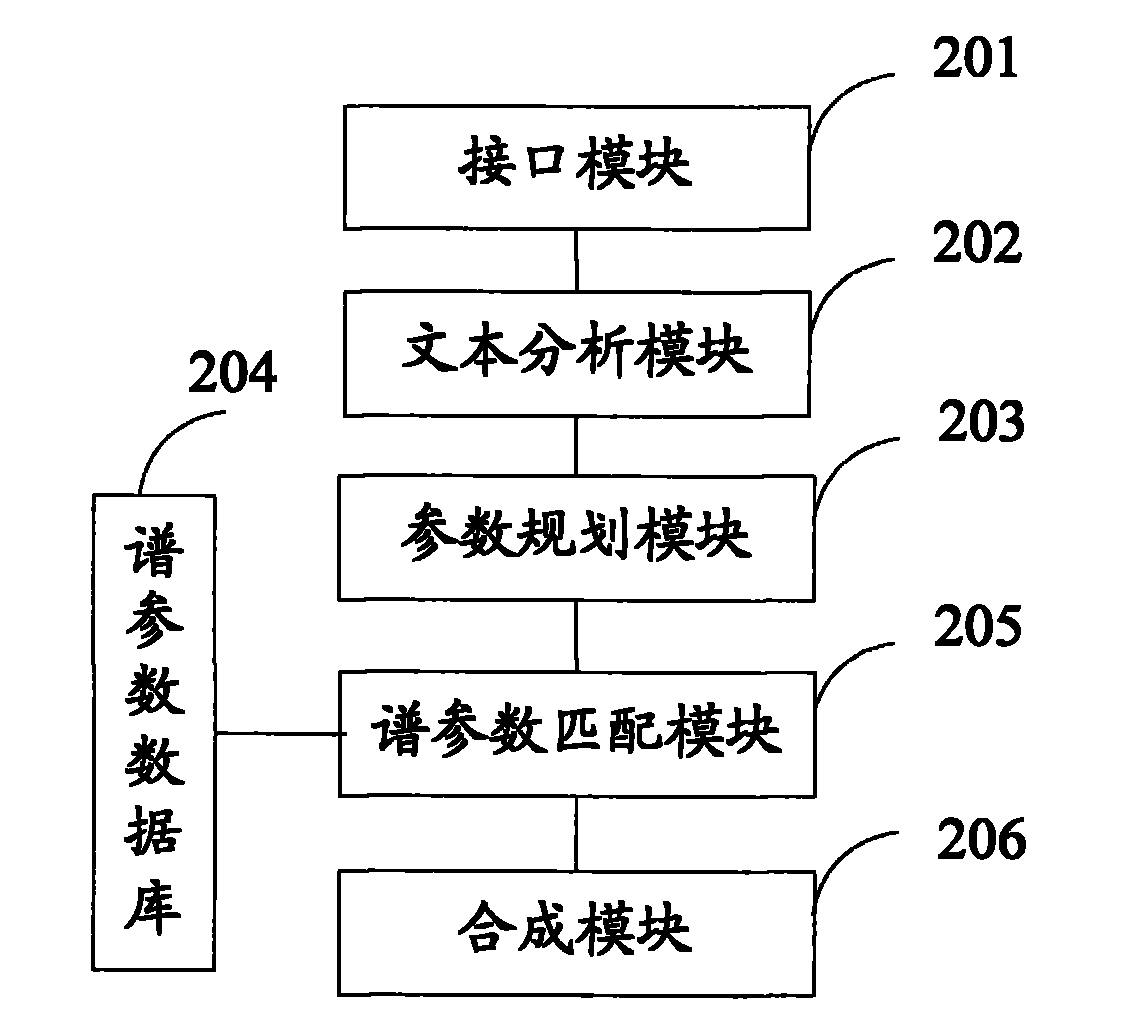

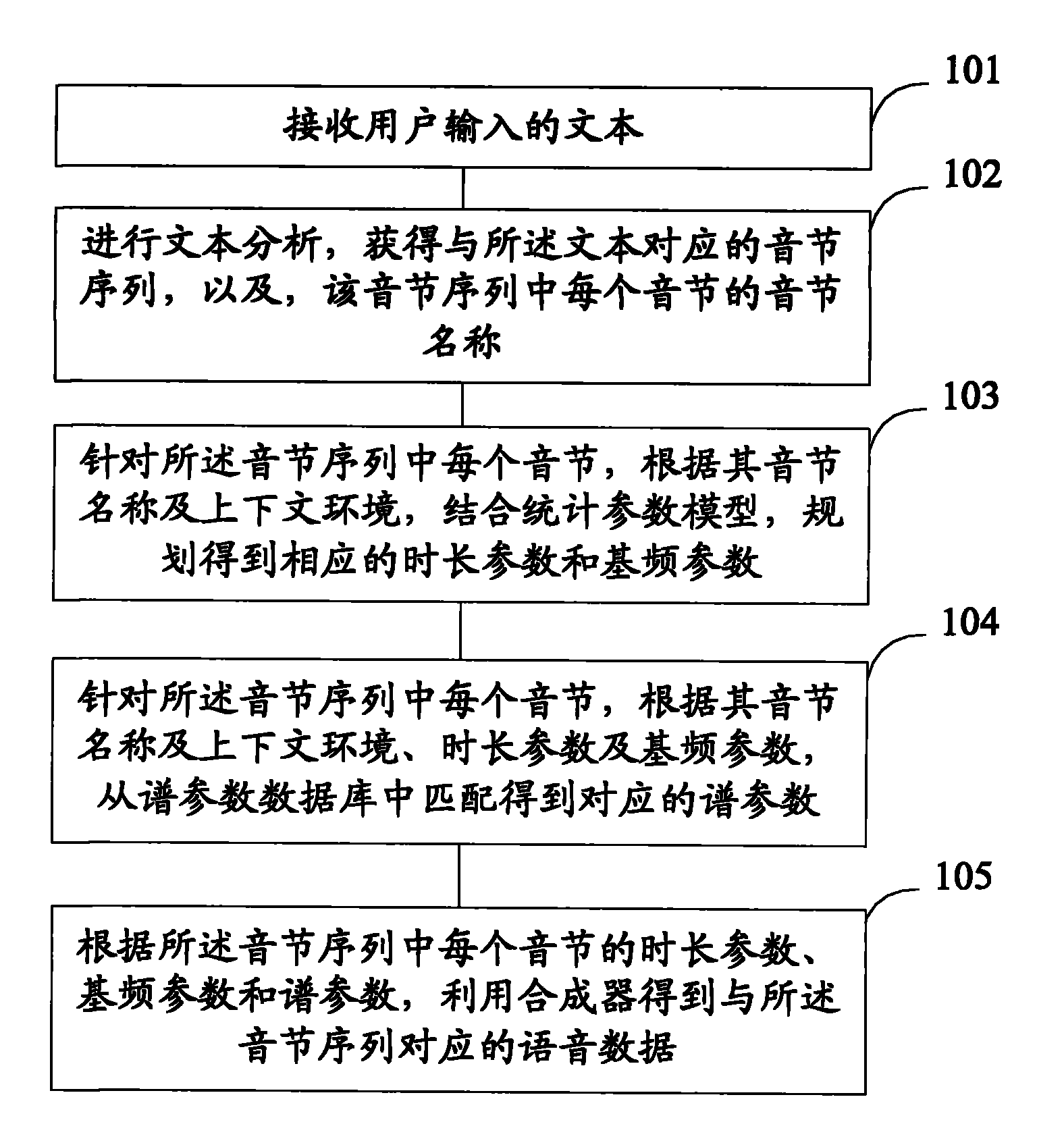

Speech synthesis method and system

Status: Inactive | Publication: CN101894547A | Tags: Save storage space; Full and round sound; Speech recognition; Speech synthesis; Syllable; Speech synthesis

The invention provides a speech synthesis method and a speech synthesis system. The method comprises: receiving text input by a user; performing text analysis to obtain the syllable sequence corresponding to the text and the syllable name of each syllable in the sequence; for each syllable in the sequence, planning and obtaining the corresponding duration parameter and fundamental frequency (F0) parameter using a statistical parameter model, according to the syllable name and its context; for each syllable in the sequence, obtaining the corresponding spectrum parameter by matching against a spectrum parameter database according to the syllable name, the context, the duration parameter, and the F0 parameter; and generating the speech data for the syllable sequence with a synthesizer according to the duration, F0, and spectrum parameters of each syllable. The method and system can be used in embedded devices, effectively reducing data storage requirements while achieving high sound quality.

Owner:BEIJING SINOVOICE TECH CO LTD

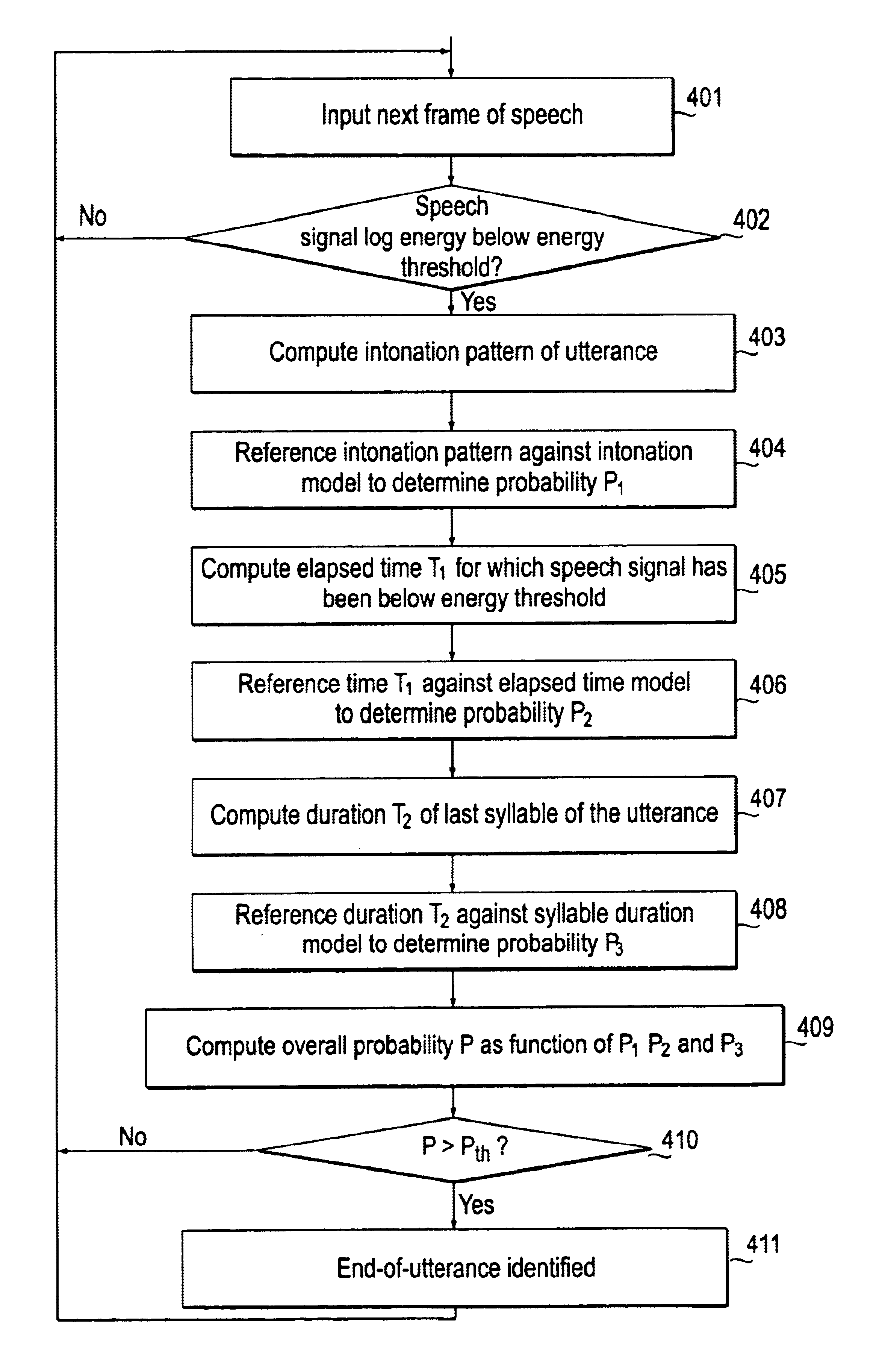

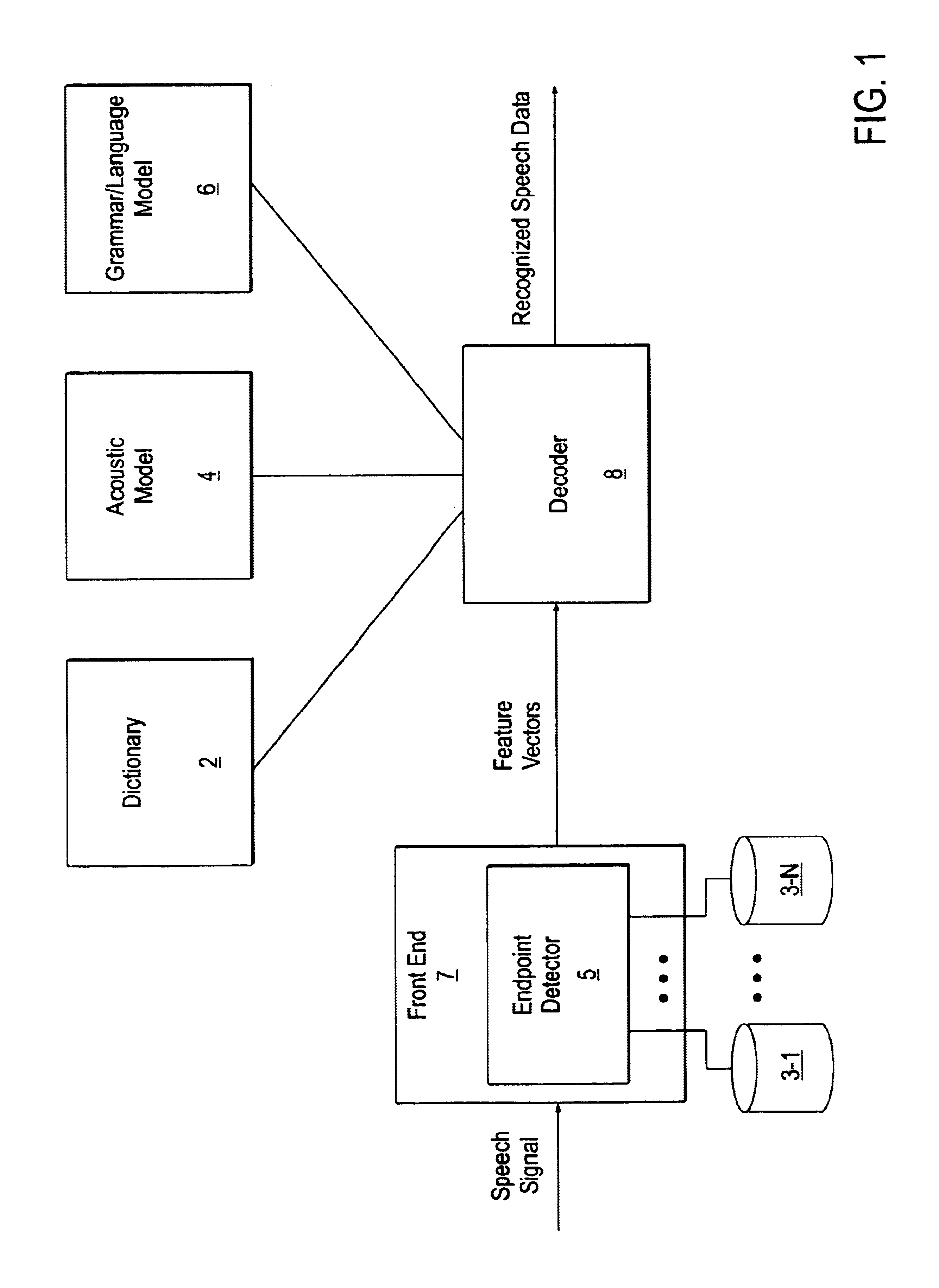

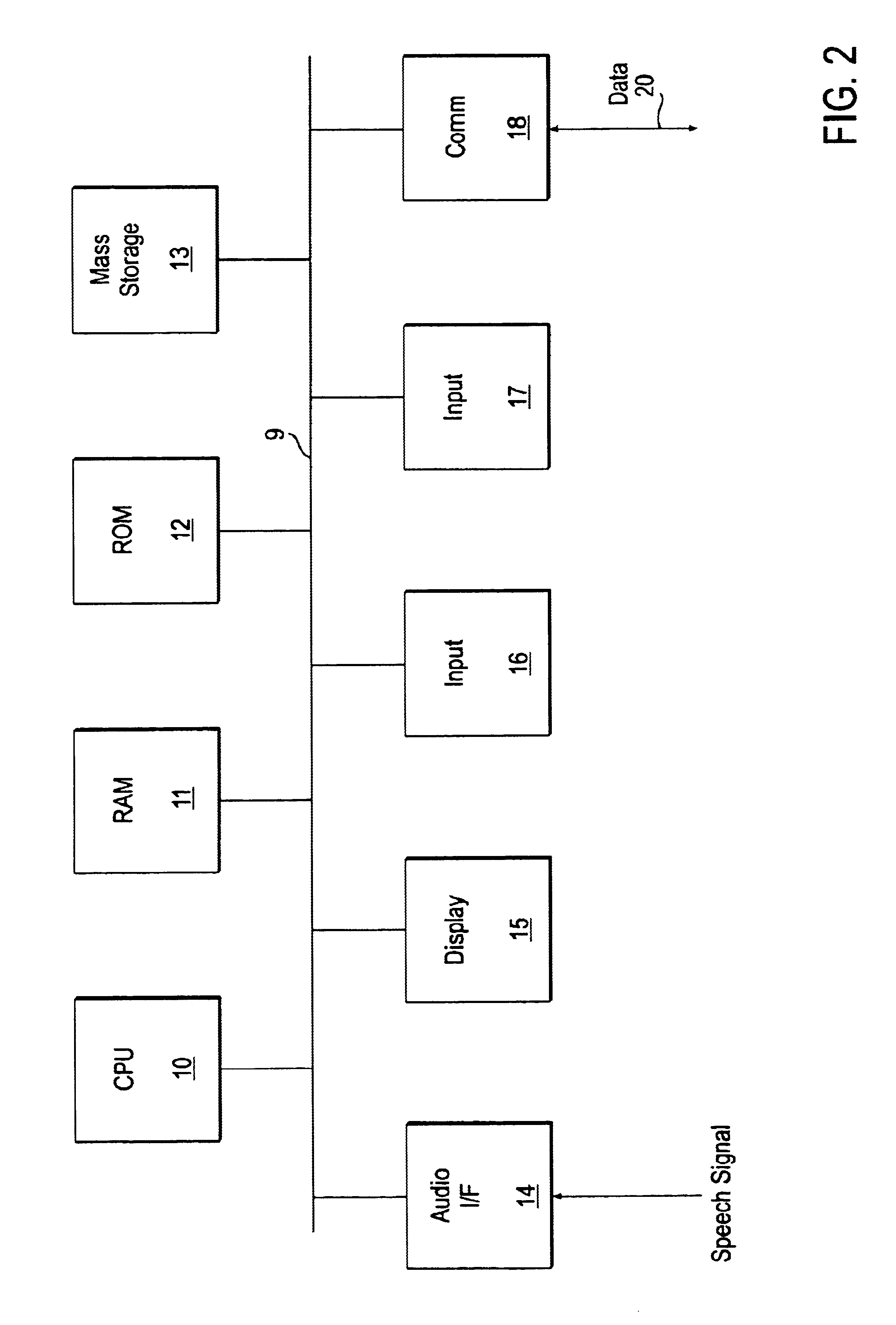

Prosody based endpoint detection

A method and apparatus are provided for performing prosody based endpoint detection of speech in a speech recognition system. Input speech represents an utterance, which has an intonation pattern. An end-of-utterance condition is identified based on prosodic parameters of the utterance, such as the intonation pattern and the duration of the final syllable of the utterance, as well as non-prosodic parameters, such as the log energy of the speech.

Owner:NUANCE COMM INC
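A toy decision rule combining the cues the abstract names (intonation pattern, duration of the final syllable, and log energy) is sketched below. The thresholds, the "falling pitch" test, and the way the cues are combined are all hypothetical choices for illustration, not the patented method.

```python
import math


def log_energy(frame: list[float]) -> float:
    """Log energy of one audio frame (a small floor avoids log(0))."""
    return math.log(sum(x * x for x in frame) + 1e-10)


def is_end_of_utterance(
    final_f0: list[float],        # pitch track (Hz) over the final syllable
    final_syllable_ms: float,     # duration of the final syllable
    mean_syllable_ms: float,      # speaker's average syllable duration
    trailing_frame: list[float],  # most recent audio frame
    energy_floor: float = -5.0,   # hypothetical silence threshold
    lengthening: float = 1.3,     # hypothetical final-lengthening ratio
) -> bool:
    falling = len(final_f0) >= 2 and final_f0[-1] < final_f0[0]
    lengthened = final_syllable_ms >= lengthening * mean_syllable_ms
    quiet = log_energy(trailing_frame) < energy_floor
    # Prosodic evidence (either cue) must agree with the non-prosodic energy cue.
    return quiet and (falling or lengthened)
```

Requiring the energy cue in every case keeps a mid-sentence pause with rising pitch from being mistaken for the end of the utterance.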

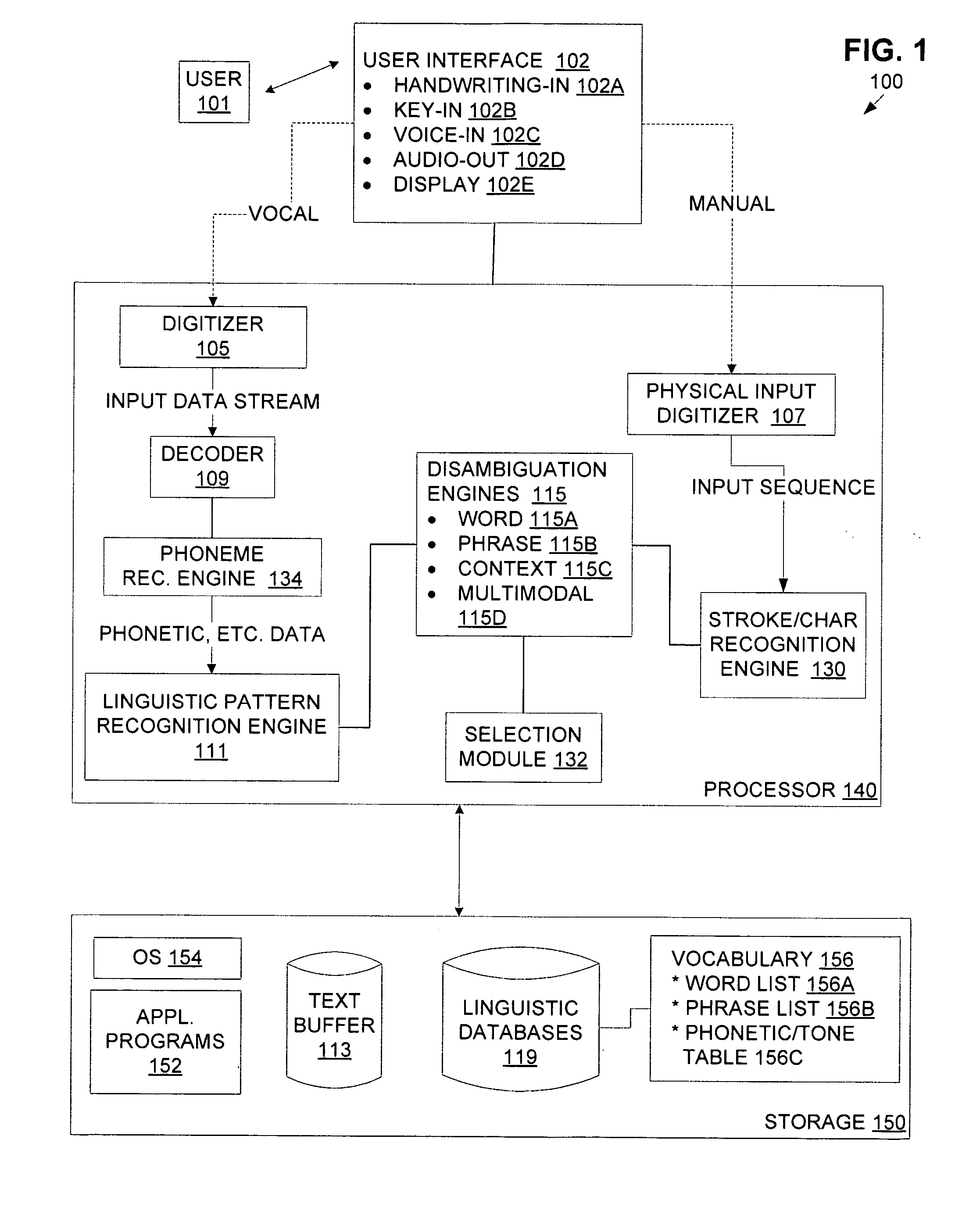

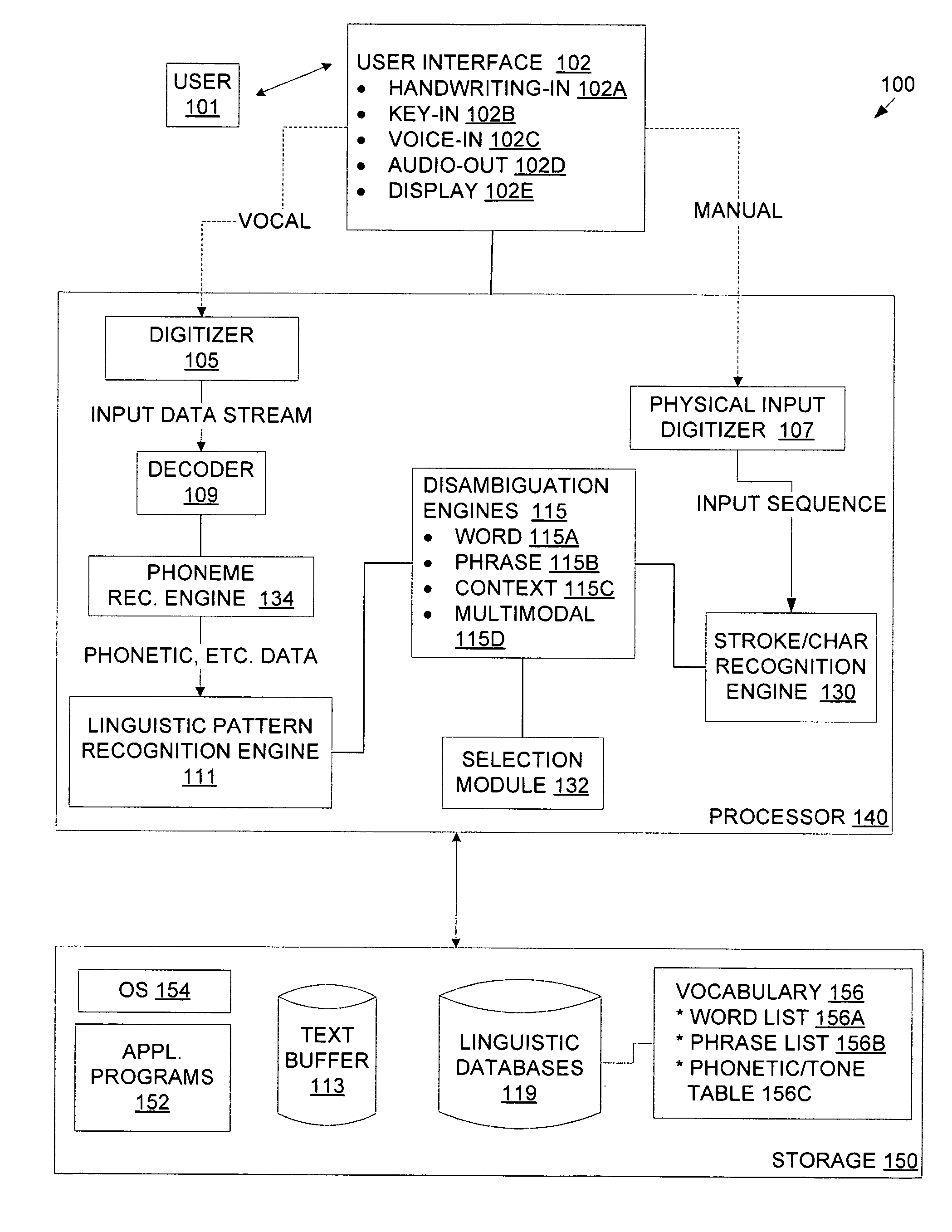

Method and apparatus utilizing voice input to resolve ambiguous manually entered text input

Status: Inactive | Publication: US20060190256A1 | Tags: Character and pattern recognition; Natural language data processing; Digital data; Syllable

From a text entry tool, a digital data processing device receives inherently ambiguous user input. Independent of any other user input, the device interprets the received user input against a vocabulary to yield candidates such as words (of which the user input forms the entire word or part such as a root, stem, syllable, affix), or phrases having the user input as one word. The device displays the candidates and applies speech recognition to spoken user input. If the recognized speech comprises one of the candidates, that candidate is selected. If the recognized speech forms an extension of a candidate, the extended candidate is selected. If the recognized speech comprises other input, various other actions are taken.

Owner:TEGIC COMM
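The selection logic in the abstract (an exact spoken match selects a candidate, a spoken extension of a candidate selects the extended word) can be sketched as below. The assumption that "extension" means the spoken word begins with the candidate string is ours; the "various other actions" for unmatched speech are represented by returning `None`.

```python
from typing import Optional


def resolve(candidates: list[str], recognized: str) -> Optional[str]:
    """Choose among displayed candidates using a recognized spoken word.

    An exact match selects that candidate; if the spoken word extends a
    candidate (e.g. the typed stem "walk" and the spoken "walking"), the
    extended word is selected. Anything else returns None so the caller
    can fall back to other handling.
    """
    spoken = recognized.strip().lower()
    for cand in candidates:
        if spoken == cand.lower():
            return cand
    for cand in candidates:
        if spoken.startswith(cand.lower()):
            return spoken
    return None
```

Checking exact matches before prefixes ensures that saying "walk" picks the candidate "walk" rather than treating it as an extension of some shorter candidate.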

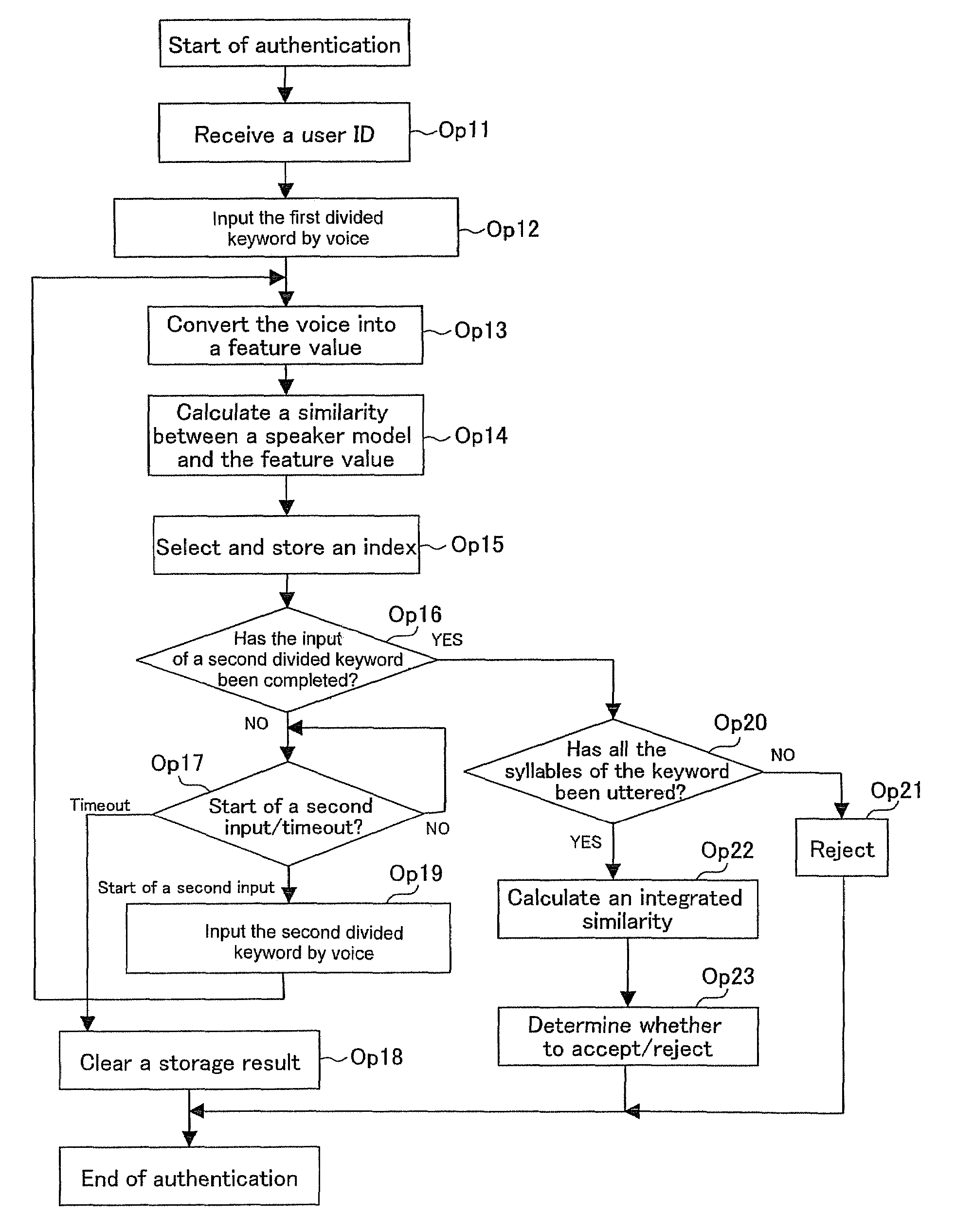

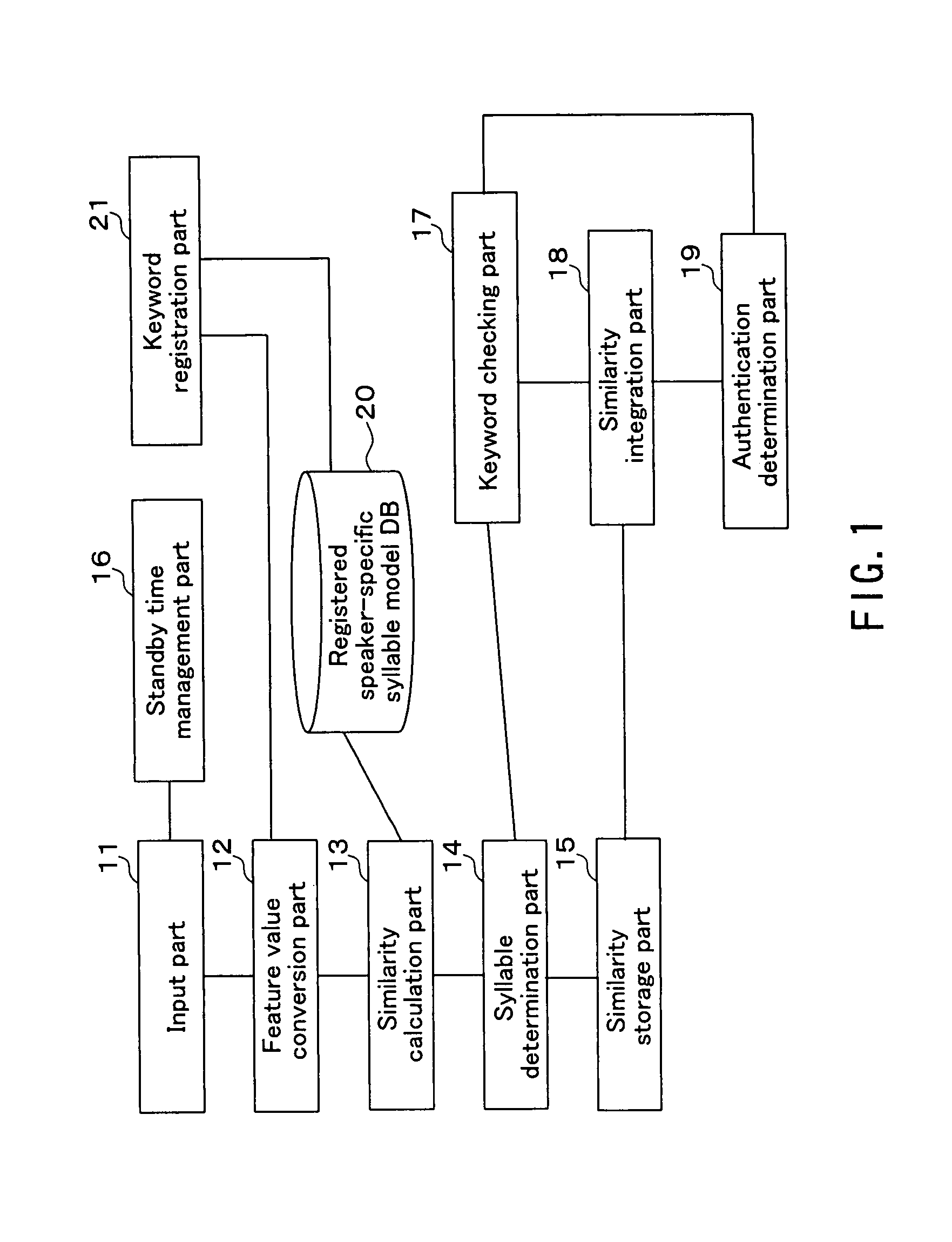

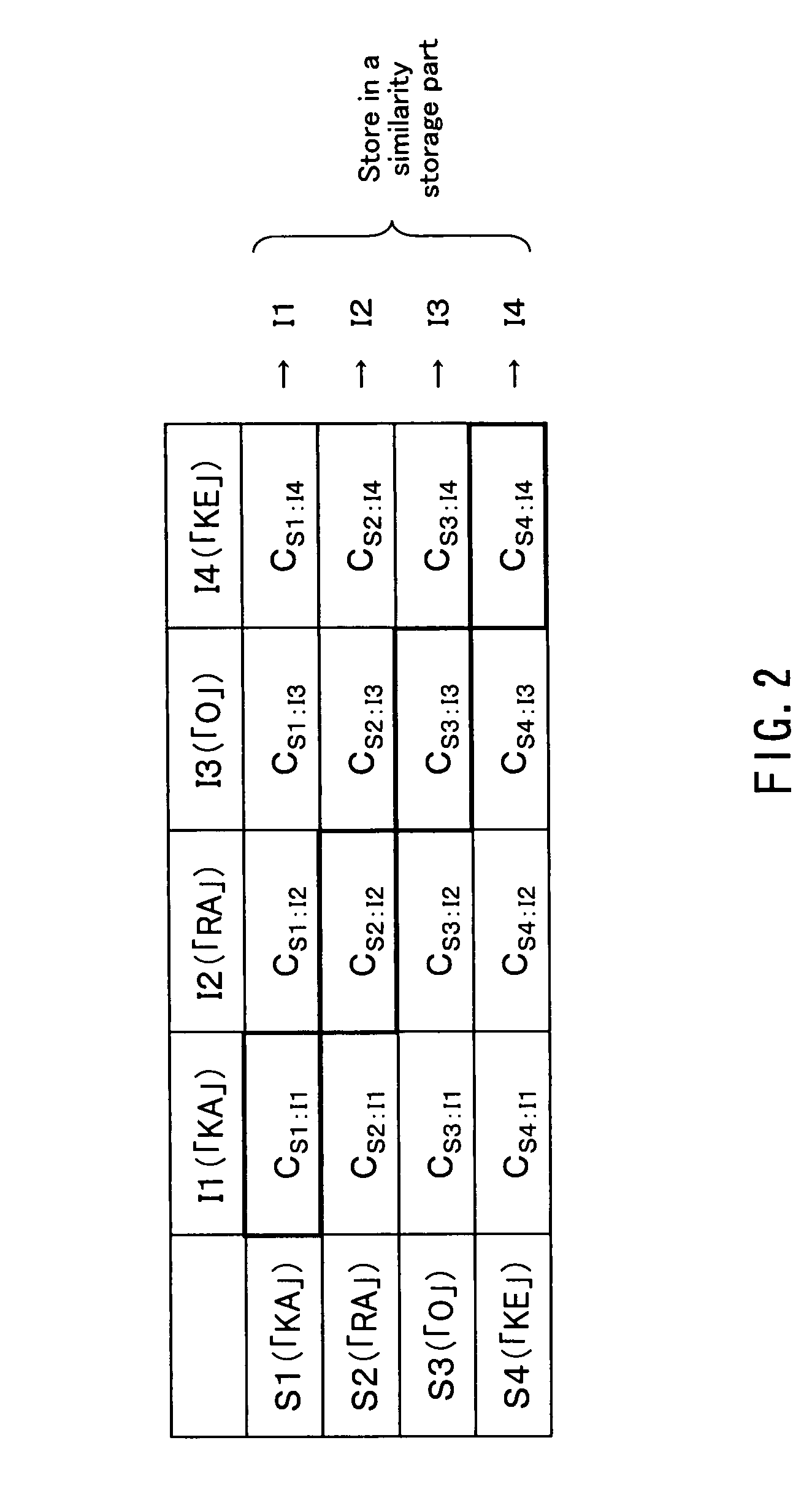

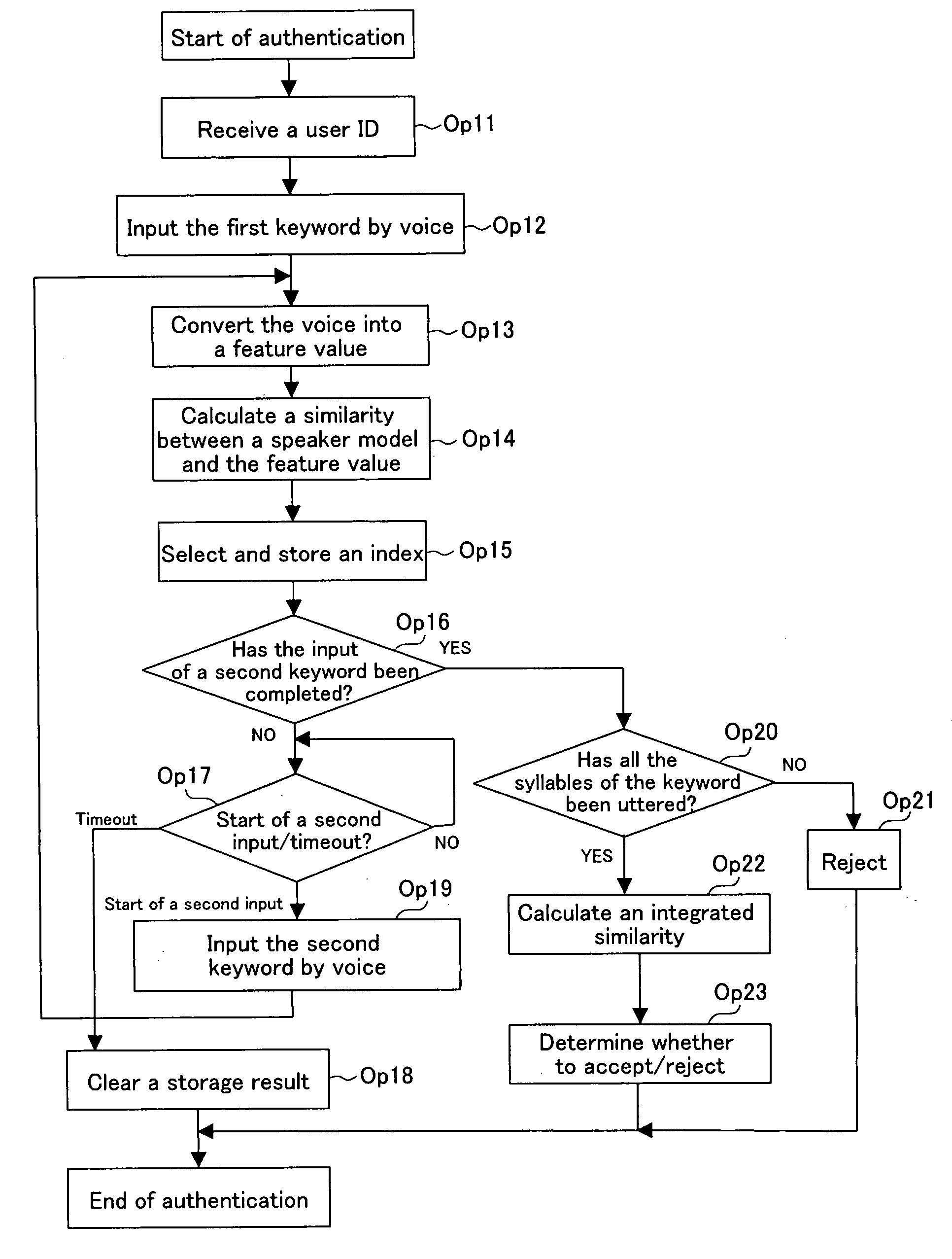

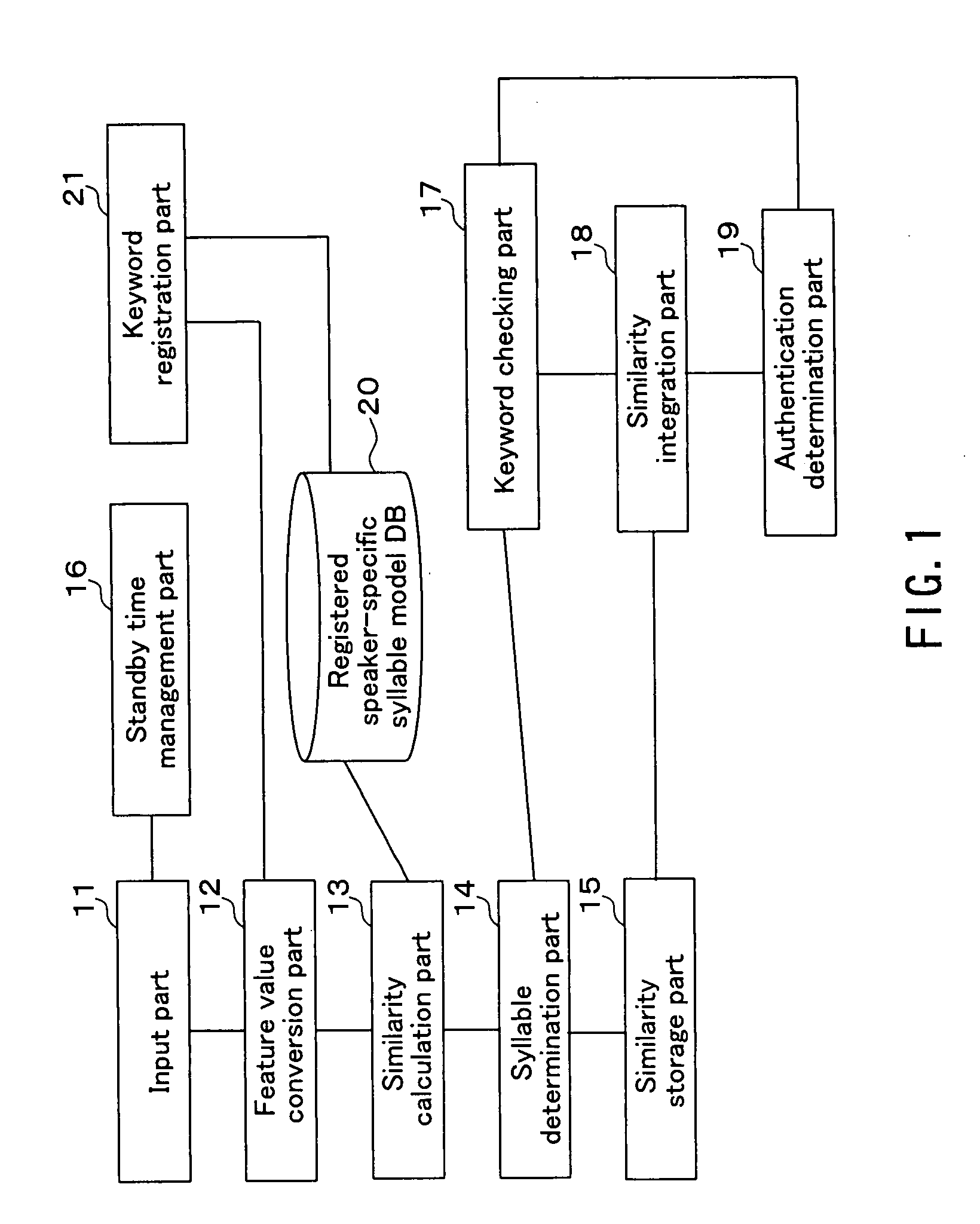

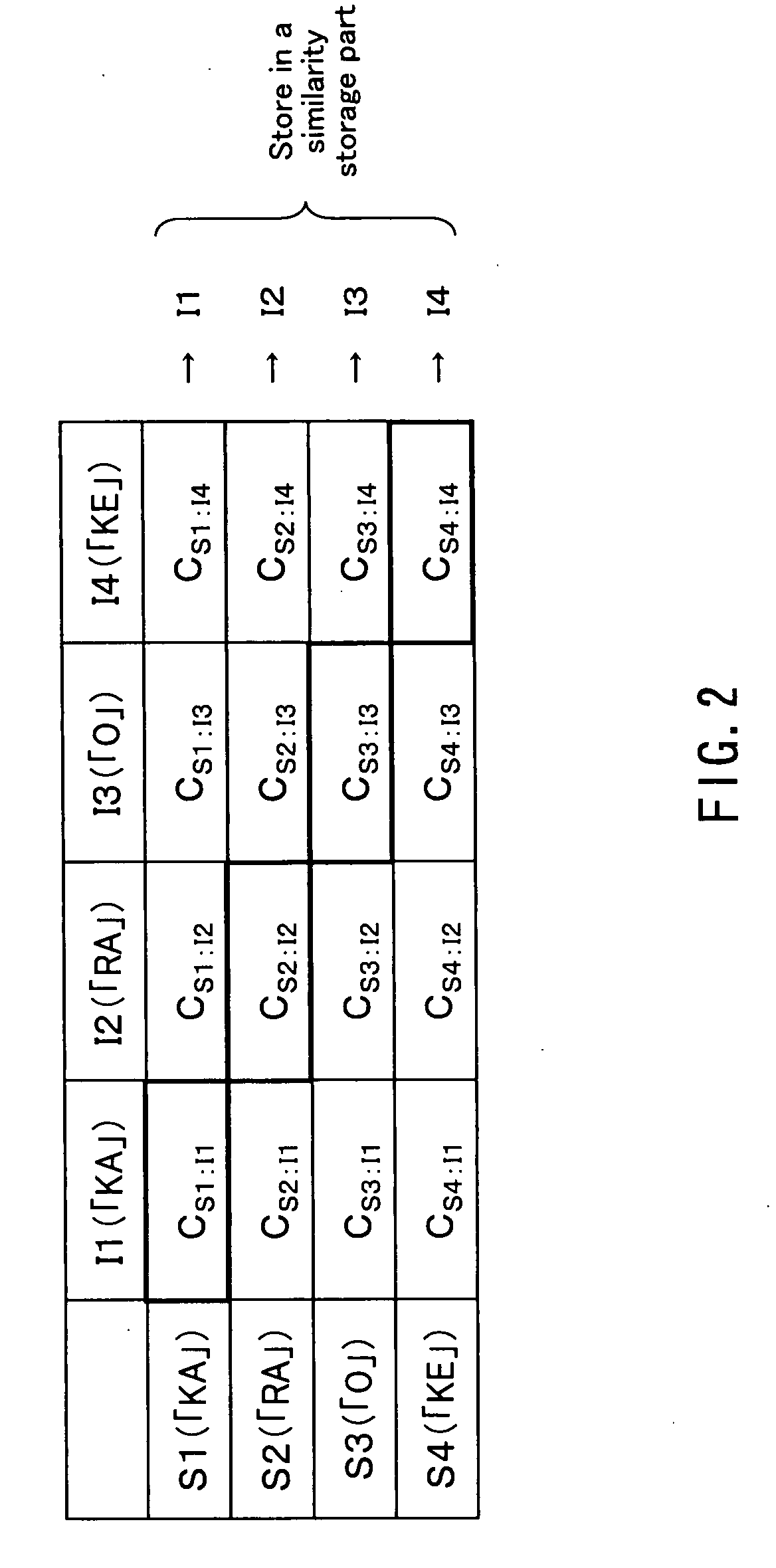

Voice authentication system

A text-dependent voice authentication system that authenticates a user by prompting the user to speak a keyword includes: an input part (11) that receives the keyword by voice divided into a plurality of portions, the smallest portion being one utterable unit, over a plurality of inputs separated by time intervals; a registered speaker-specific syllable model DB (20) that stores in advance the user's registered keyword as a speaker model created in utterable units; a feature value conversion part (12) that obtains, from each portion of the keyword received in a voice input by the input part (11), the feature value of the voice contained in that portion; a similarity calculation part (13) that computes the similarity between the feature value and the speaker model; a keyword checking part (17) that determines, based on the similarity obtained by the similarity calculation part, whether all the syllables or phonemes making up the entire registered keyword have been input by voice over the plurality of voice inputs; and an authentication determination part (19) that decides whether to accept or reject authentication based on the determination result of the keyword checking part and the similarity obtained by the similarity calculation part.

Owner:FUJITSU LTD
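The keyword-checking and accept/reject steps can be sketched as follows, assuming each partial voice input has already been scored per syllable against the registered speaker model (similarities in [0, 1]). Keeping the best score per syllable, averaging over the keyword, and the 0.7 threshold are hypothetical choices, not details from the abstract.

```python
def authenticate(
    registered_keyword: list[str],
    partial_inputs: list[dict[str, float]],
    threshold: float = 0.7,
) -> bool:
    """Accept only if every keyword syllable was spoken and matched well enough.

    Each element of `partial_inputs` maps the syllables heard in one voice
    input to their similarity against the registered speaker model.
    """
    best: dict[str, float] = {}
    for scores in partial_inputs:
        for syllable, sim in scores.items():
            best[syllable] = max(sim, best.get(syllable, 0.0))

    # Keyword check: all syllables of the registered keyword must be covered.
    if any(s not in best for s in registered_keyword):
        return False
    # Authentication decision: mean similarity over the keyword syllables.
    mean_sim = sum(best[s] for s in registered_keyword) / len(registered_keyword)
    return mean_sim >= threshold
```

Splitting the keyword across several inputs means no single recording contains the whole passphrase, while the coverage check still requires every part of it to be spoken eventually.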

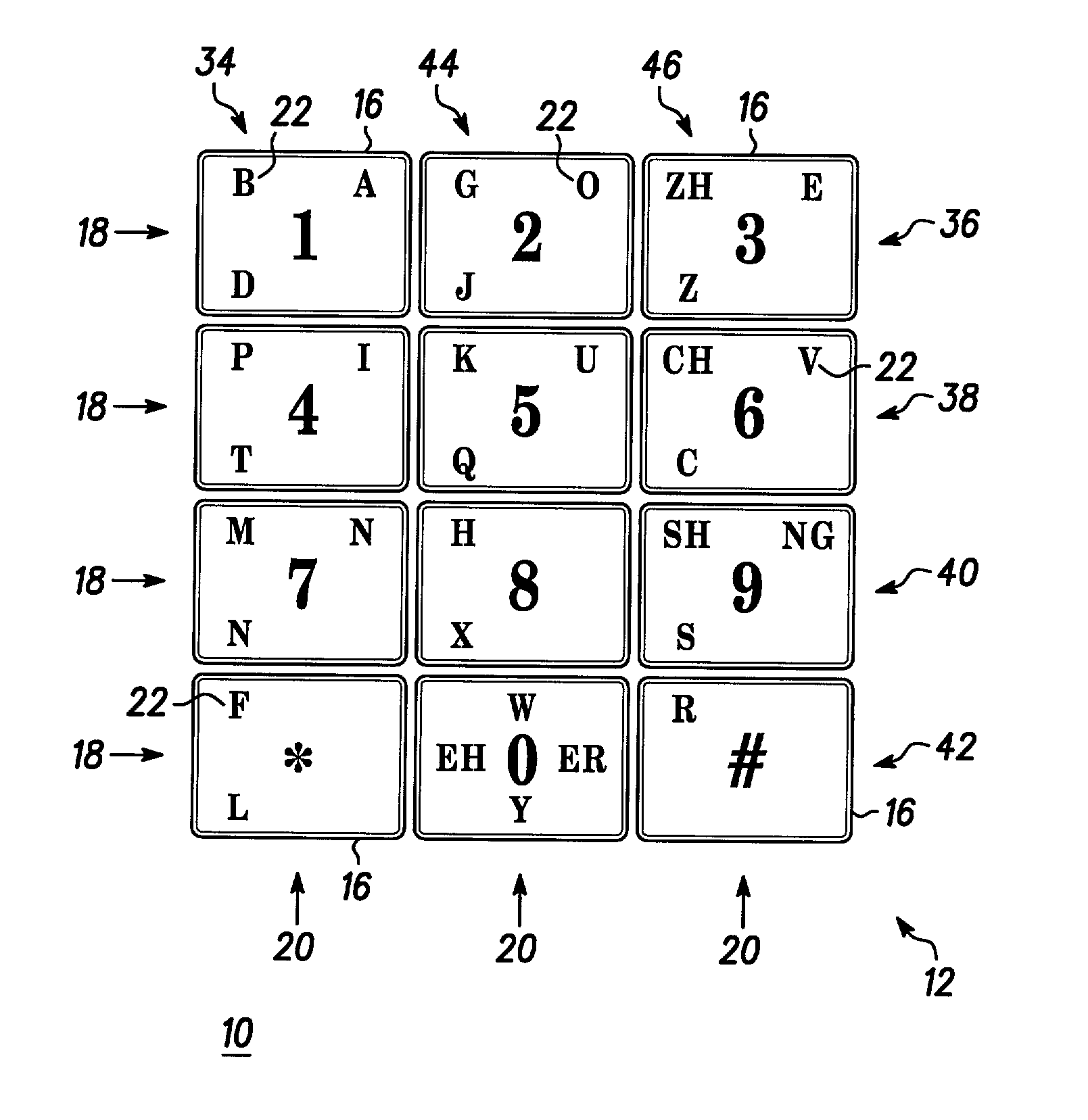

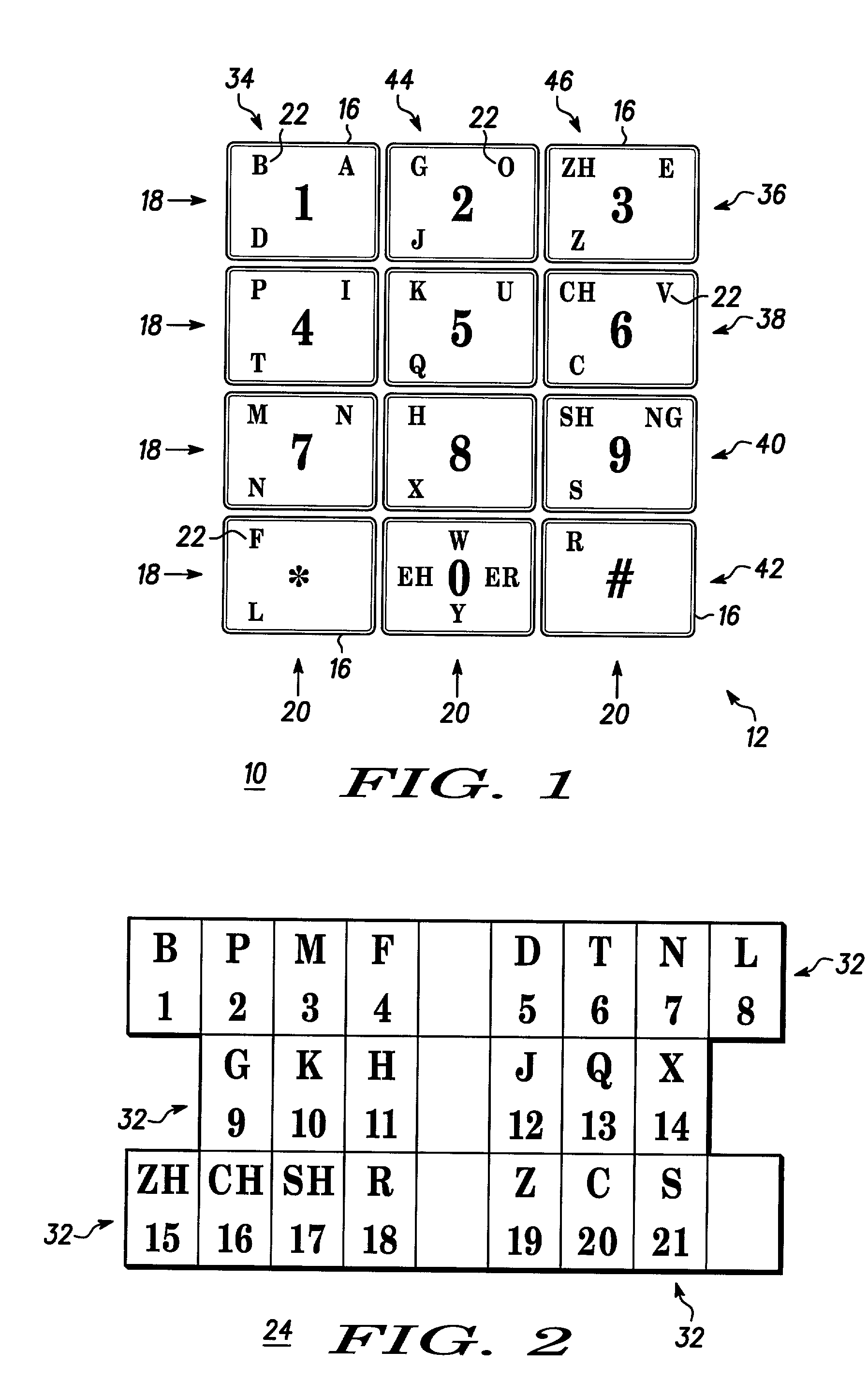

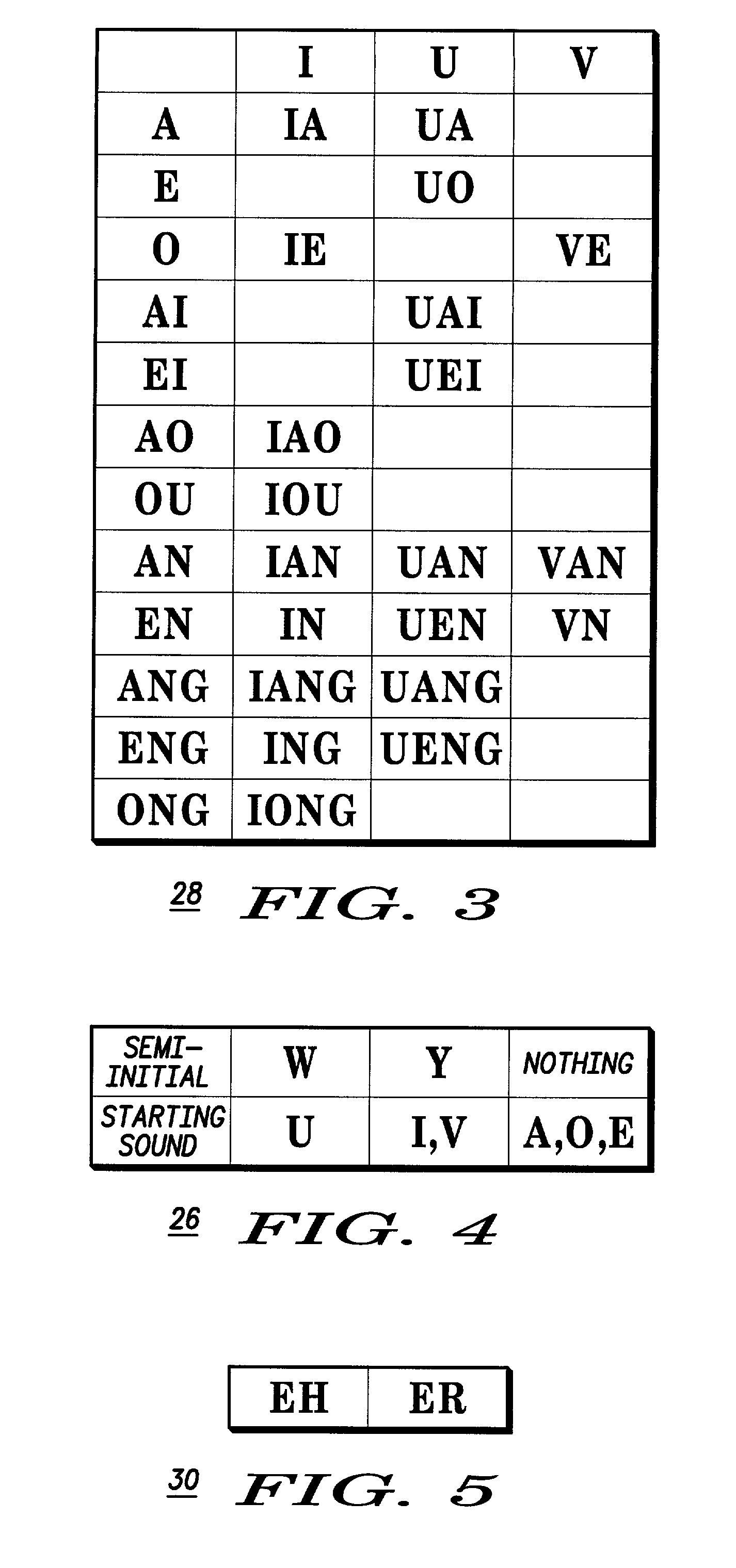

Keypad layout for alphabetic symbol input

Status: Inactive | Publication: US6982658B2 | Tags: Easy to use; Fast and accurate input; Input/output for user-computer interaction; Interconnection arrangements; Natural language processing; Syllable

Keypad layouts are provided that combine a regular or intuitive arrangement of the alphabetic symbols of a language written with letters of the Roman alphabet with an efficient distribution of those symbols that minimizes input ambiguity. More particularly, the symbols are those of the Pinyin alphabet, which has two main symbol groups (initials and finals) used to form Pinyin syllables. The various layouts use a column-based arrangement for the symbols of the initial group. The symbols of the final group are broken down into basic characters and distributed in a row-based arrangement.

Owner:CERENCE OPERATING CO
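The two symbol groups correspond to the Pinyin initials and finals. The sketch below splits a toneless Pinyin syllable into the two, using the standard list of initials with y and w treated as initials (an orthographic convention; some analyses count them as part of the final). It illustrates the grouping only and is not the patent's keypad layout.

```python
# Standard Pinyin initials; two-letter ones come first so "zh" wins over "z".
INITIALS = ["zh", "ch", "sh", "b", "p", "m", "f", "d", "t", "n", "l",
            "g", "k", "h", "j", "q", "x", "r", "z", "c", "s", "y", "w"]


def split_pinyin(syllable: str) -> tuple[str, str]:
    """Split a toneless Pinyin syllable into (initial, final)."""
    s = syllable.lower()
    for initial in INITIALS:
        if s.startswith(initial) and len(s) > len(initial):
            return initial, s[len(initial):]
    return "", s  # zero-initial syllables such as "an" or "er"
```

Because the initial set is small and fixed while finals combine a handful of basic vowel and nasal letters, it is plausible to lay the two groups out differently, as the abstract describes.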

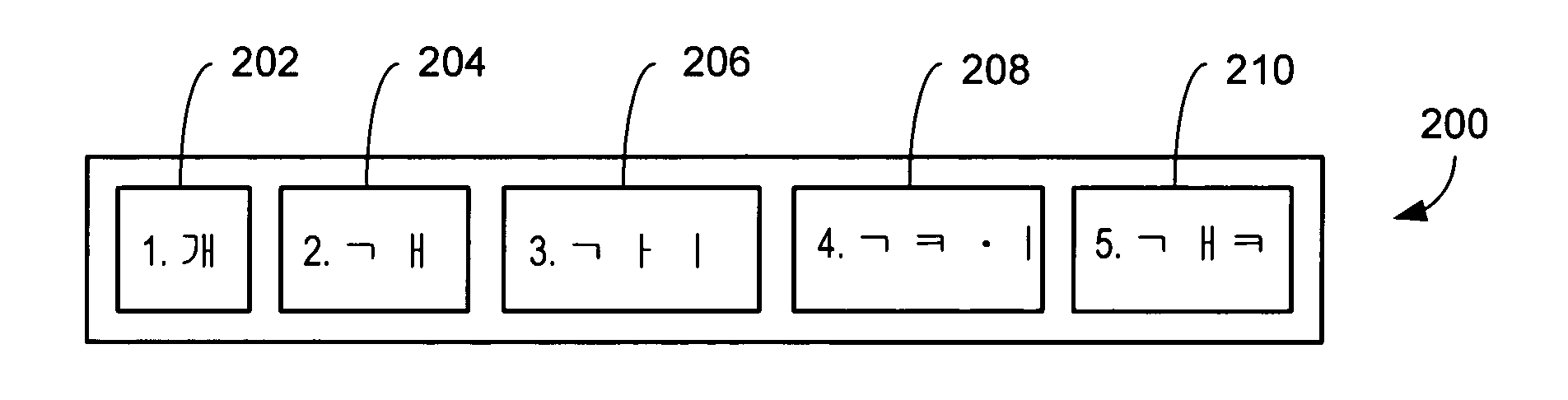

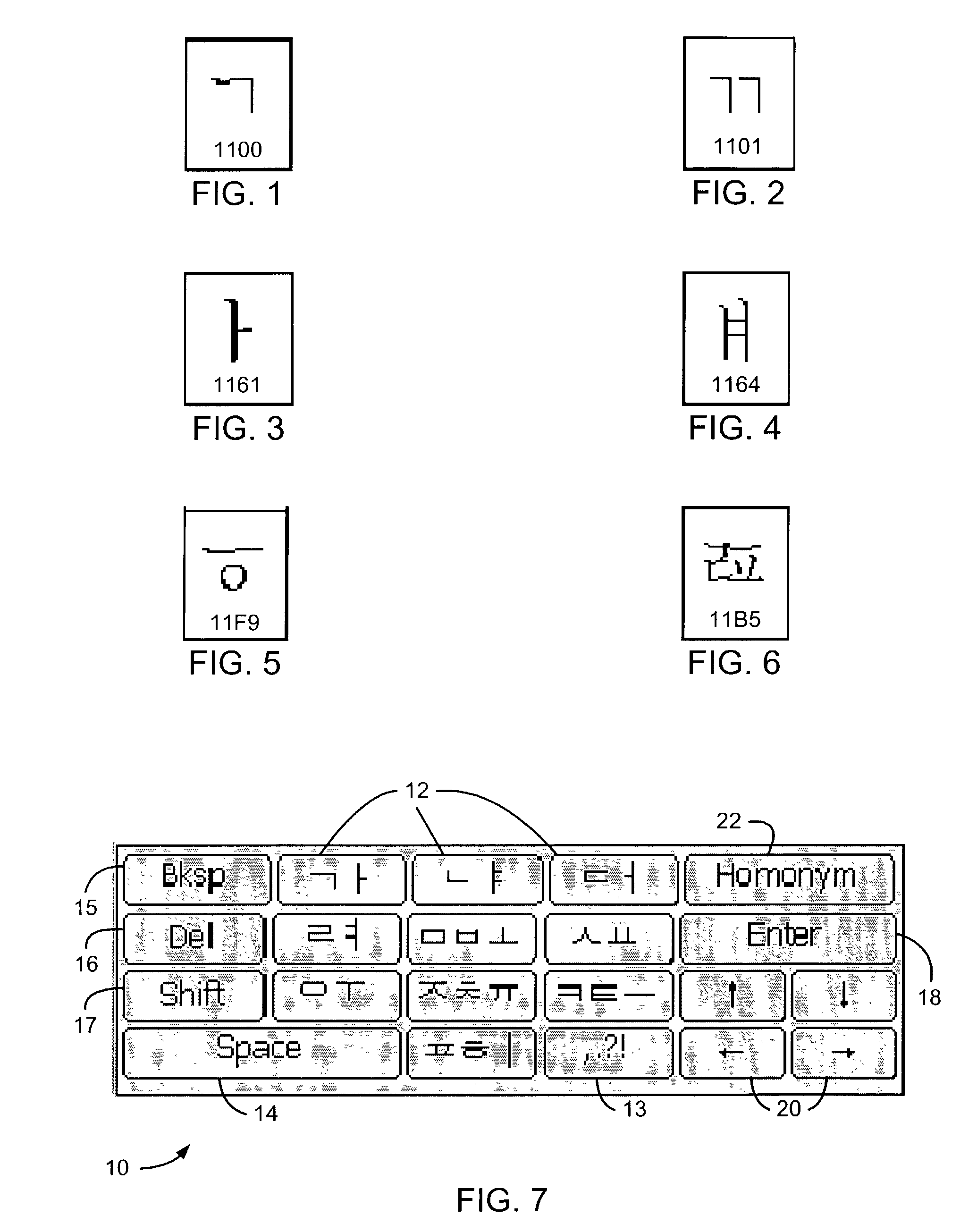

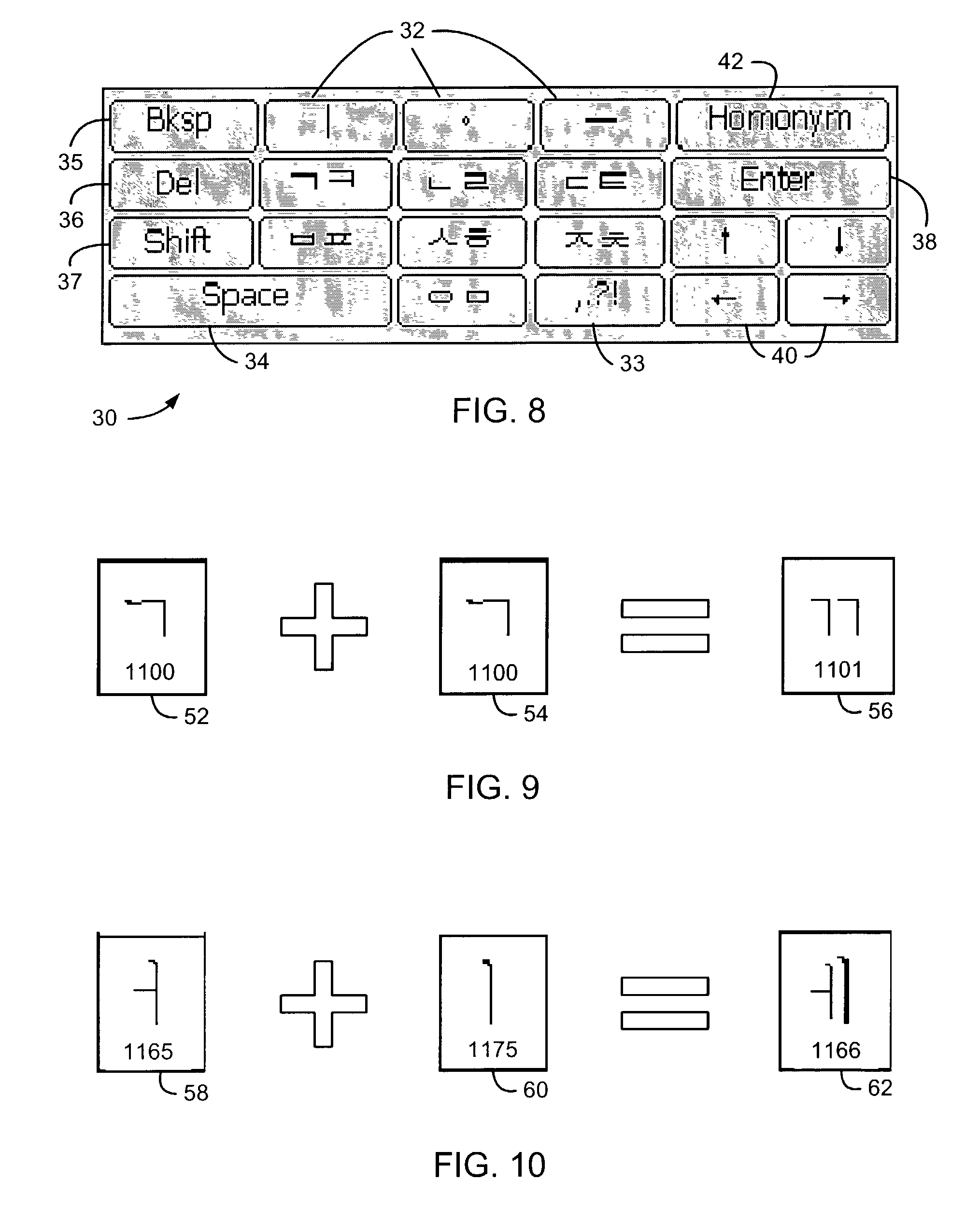

Apparatus and method for input of ideographic Korean syllables from reduced keyboard

Status: Active | Publication: US7061403B2 | Tags: Low priority; Input/output for user-computer interaction; Electronic switching; Syllable; Audiology

Systems and methods for input of text symbols into an electronic device comprising a reduced keyboard having keys representing a plurality of characters are disclosed. Possible symbol variants are identified based on character inputs received from the reduced keyboard. Each identified symbol variant is grouped into one of a plurality of groups of symbol variants, each group having an associated priority, according to a type of the symbol variant. Within at least one of the groups, the symbol variants are ranked in decreasing order of frequencies of use of the symbol variants. A list of symbol variants comprising the plurality of groups of symbol variants in order of decreasing priority is then displayed, and an input symbol is selected from the list of symbol variants. The symbol variants of the at least one of the groups of symbol variants are thereby sorted by both priority and frequency of use.

Owner:MALIKIE INNOVATIONS LTD
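The ordering described in the abstract (groups in decreasing priority, variants within a group in decreasing frequency of use) amounts to sorting on a two-part key. The `Variant` fields below are hypothetical, and for simplicity the sketch ranks by frequency within every group, whereas the abstract only requires it for at least one group.

```python
from dataclasses import dataclass


@dataclass
class Variant:
    symbol: str
    group_priority: int  # lower number = higher-priority group
    frequency: int       # usage count of this symbol variant


def ranked(variants: list[Variant]) -> list[str]:
    """Order by group priority, then by decreasing frequency within a group."""
    return [v.symbol for v in sorted(variants, key=lambda v: (v.group_priority, -v.frequency))]
```

For example, with 각 (priority 0, frequency 10), 갈 (0, 30), 가 (1, 50) and 간 (1, 90), the displayed list would be 갈, 각, 간, 가.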

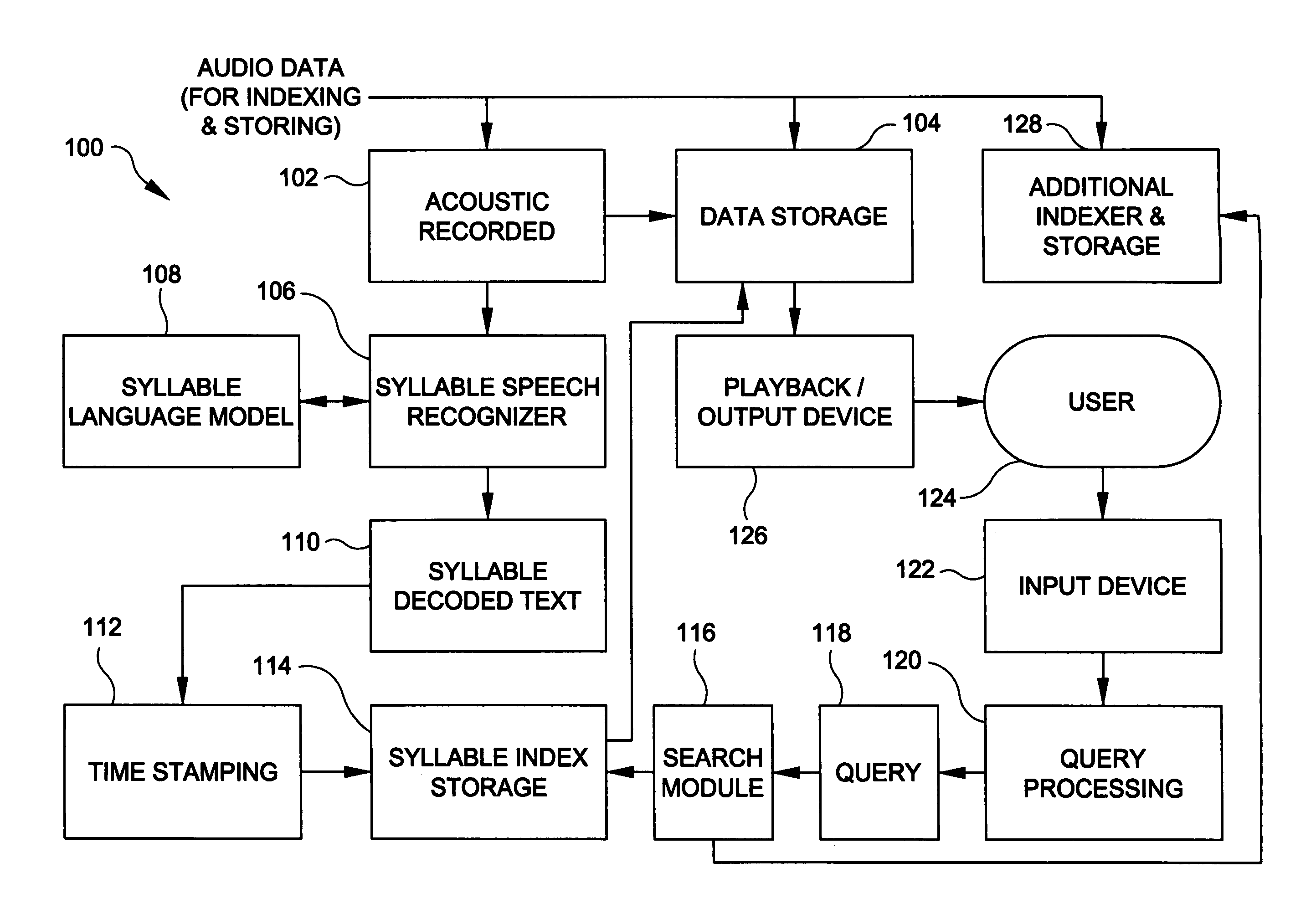

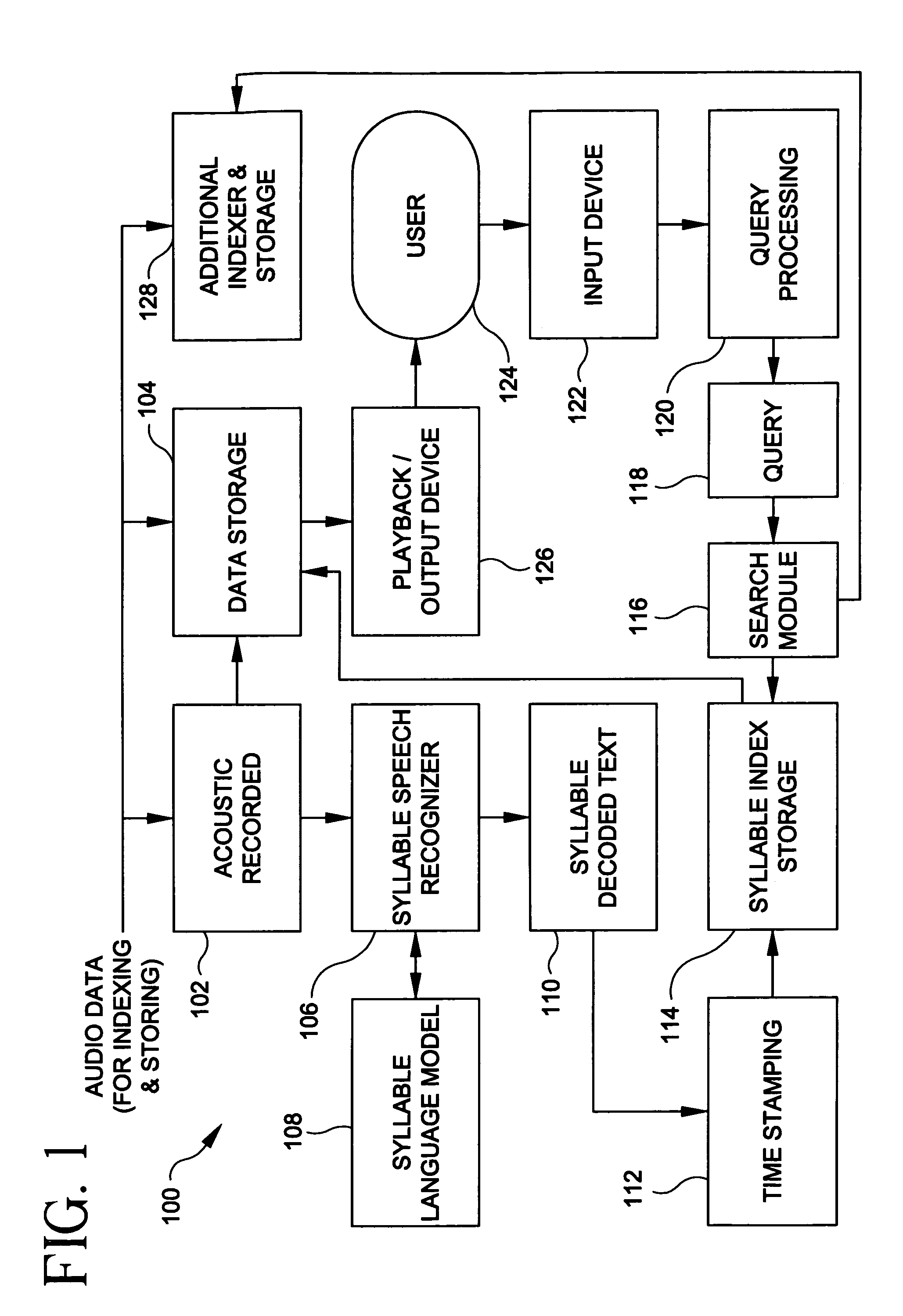

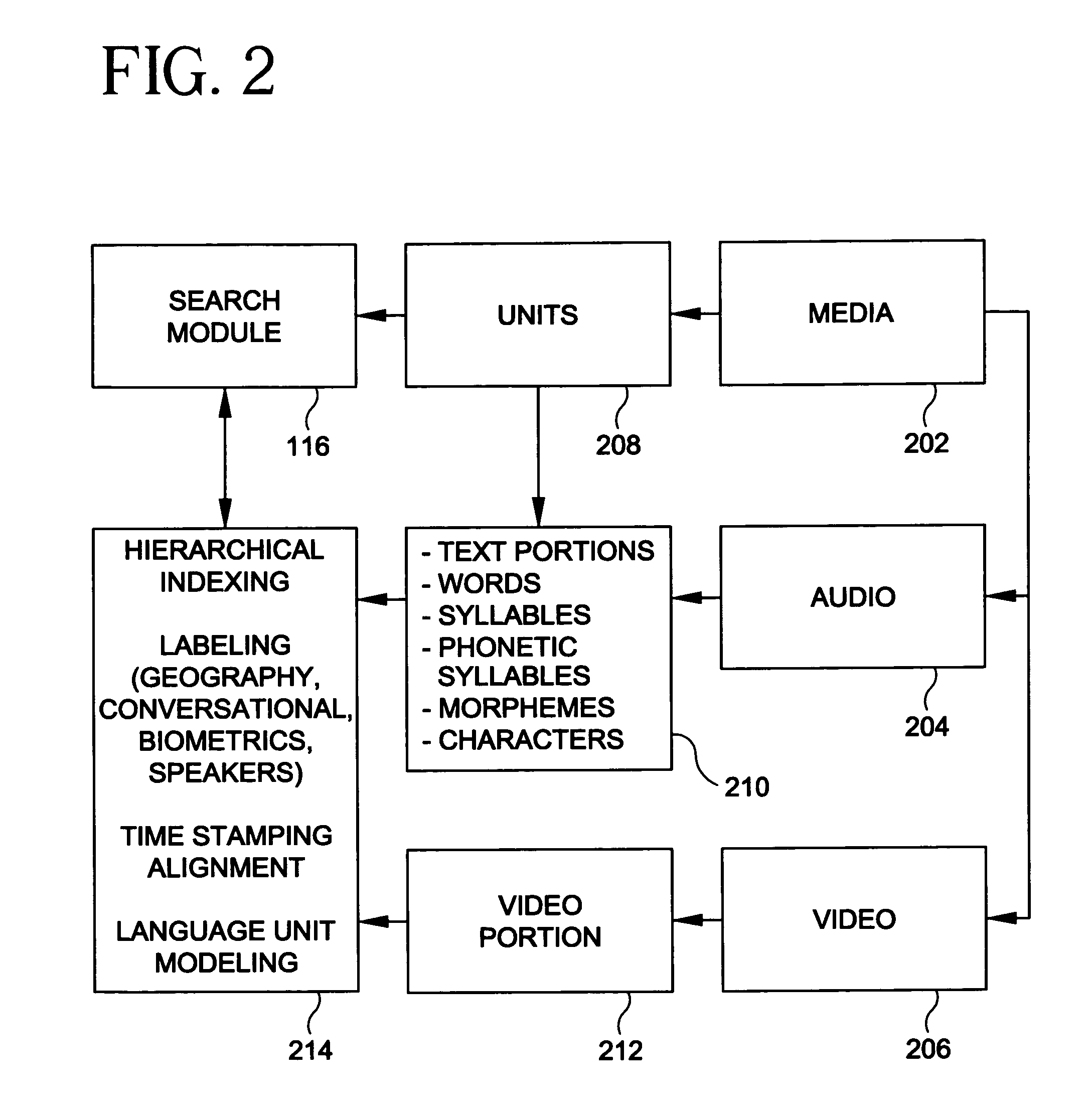

Methods and apparatus for semantic unit based automatic indexing and searching in data archive systems

Status: Inactive | Publication: US7177795B1 | Tags: Efficient data compression; Minimize and eliminate deficiency; Digital data information retrieval; Speech recognition; Syllable; Automatic indexing

An audio-based data indexing and retrieval system for processing audio-based data associated with a particular language, comprising: (i) memory for storing the audio-based data; (ii) a semantic unit based speech recognition system for generating a textual representation of the audio-based data, the textual representation being in the form of one or more semantic units corresponding to the audio-based data; (iii) an indexing and storage module, operatively coupled to the semantic unit based speech recognition system and the memory, for indexing the one or more semantic units and storing the one or more indexed semantic units; and (iv) a search engine, operatively coupled to the indexing and storage module and the memory, for searching the one or more indexed semantic units for a match with one or more semantic units associated with a user query, and for retrieving the stored audio based data based on the one or more indexed semantic units. The semantic unit may preferably be a syllable or morpheme. Further, the invention is particularly well suited for use with Asian and Slavic languages.

Owner:NUANCE COMM INC
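The indexing and search modules can be pictured as an inverted index keyed by syllable. This sketch assumes the speech recognizer has already produced a syllable sequence for each recording (the recording IDs are hypothetical) and uses AND semantics for multi-syllable queries, which is one design choice among several.

```python
from collections import defaultdict


class SyllableIndex:
    """Inverted index from syllables to the recordings whose transcripts contain them."""

    def __init__(self) -> None:
        self._postings: dict[str, set[str]] = defaultdict(set)

    def add(self, recording_id: str, syllables: list[str]) -> None:
        for syl in syllables:
            self._postings[syl].add(recording_id)

    def search(self, query_syllables: list[str]) -> set[str]:
        """Recordings containing every syllable of the query (AND semantics)."""
        if not query_syllables:
            return set()
        sets = [self._postings.get(s, set()) for s in query_syllables]
        return set.intersection(*sets)
```

Indexing syllables rather than whole words avoids needing a closed word vocabulary, which is one reason the abstract singles out highly inflected (Slavic) and character-based (Asian) languages.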

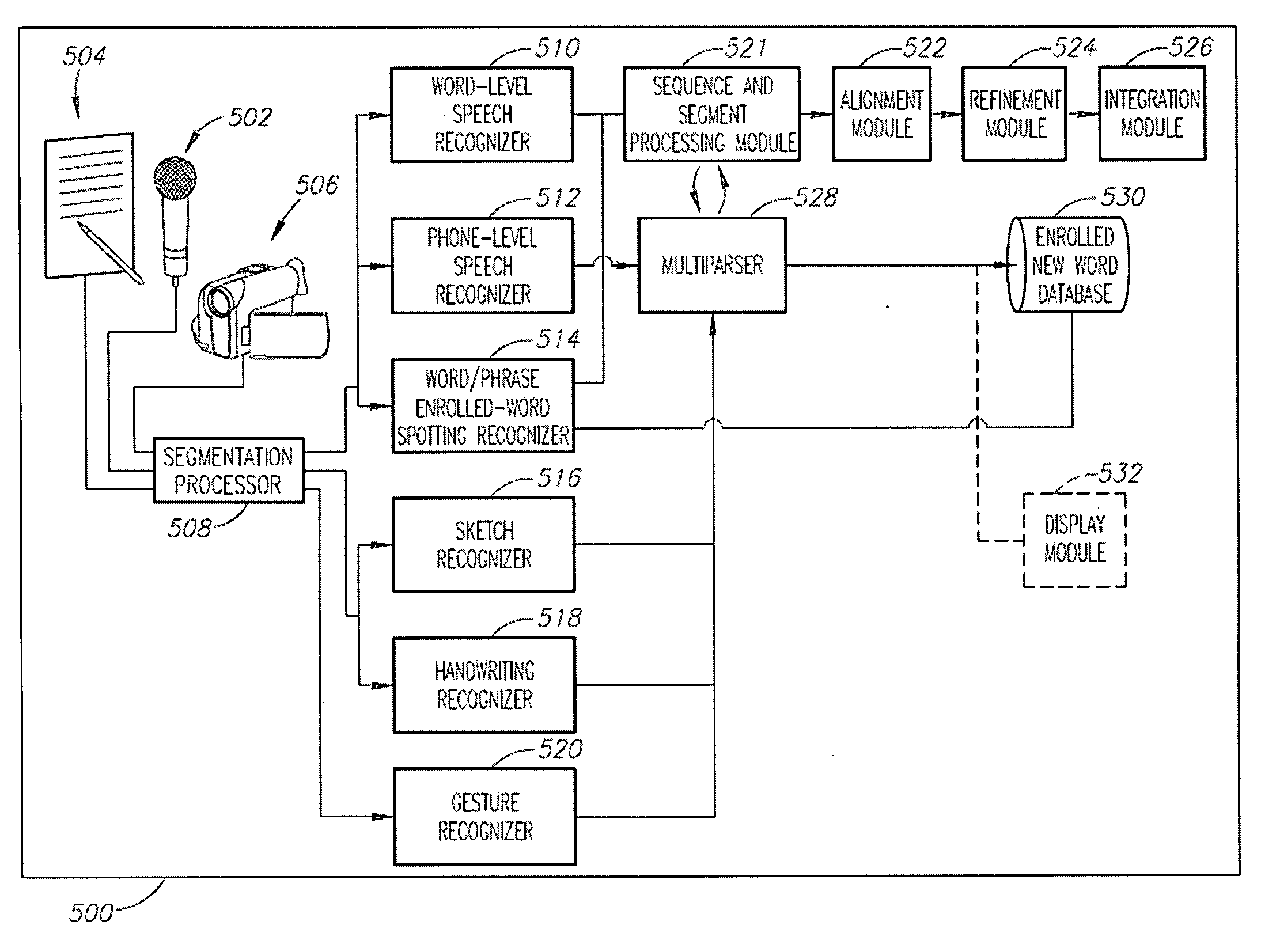

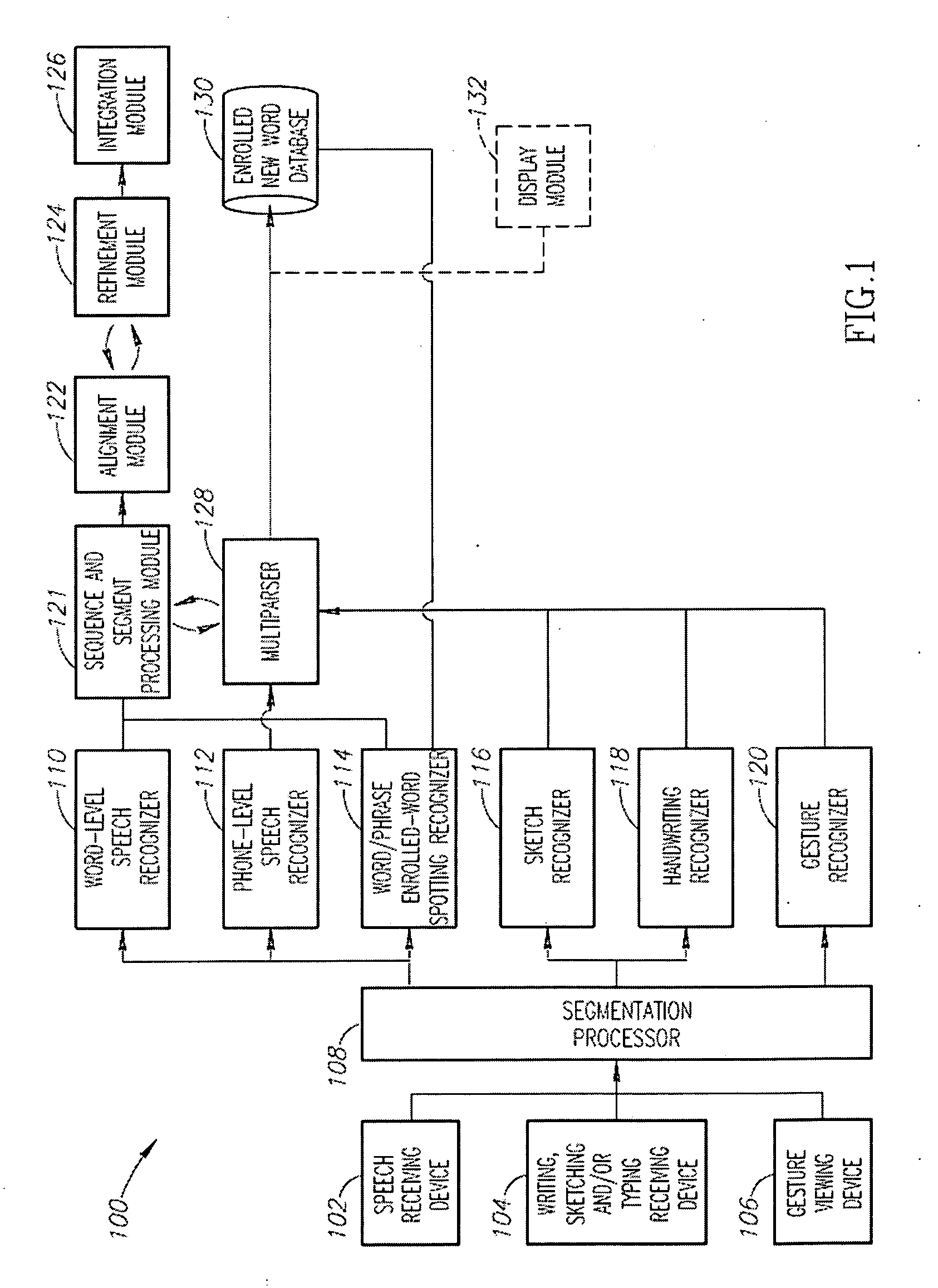

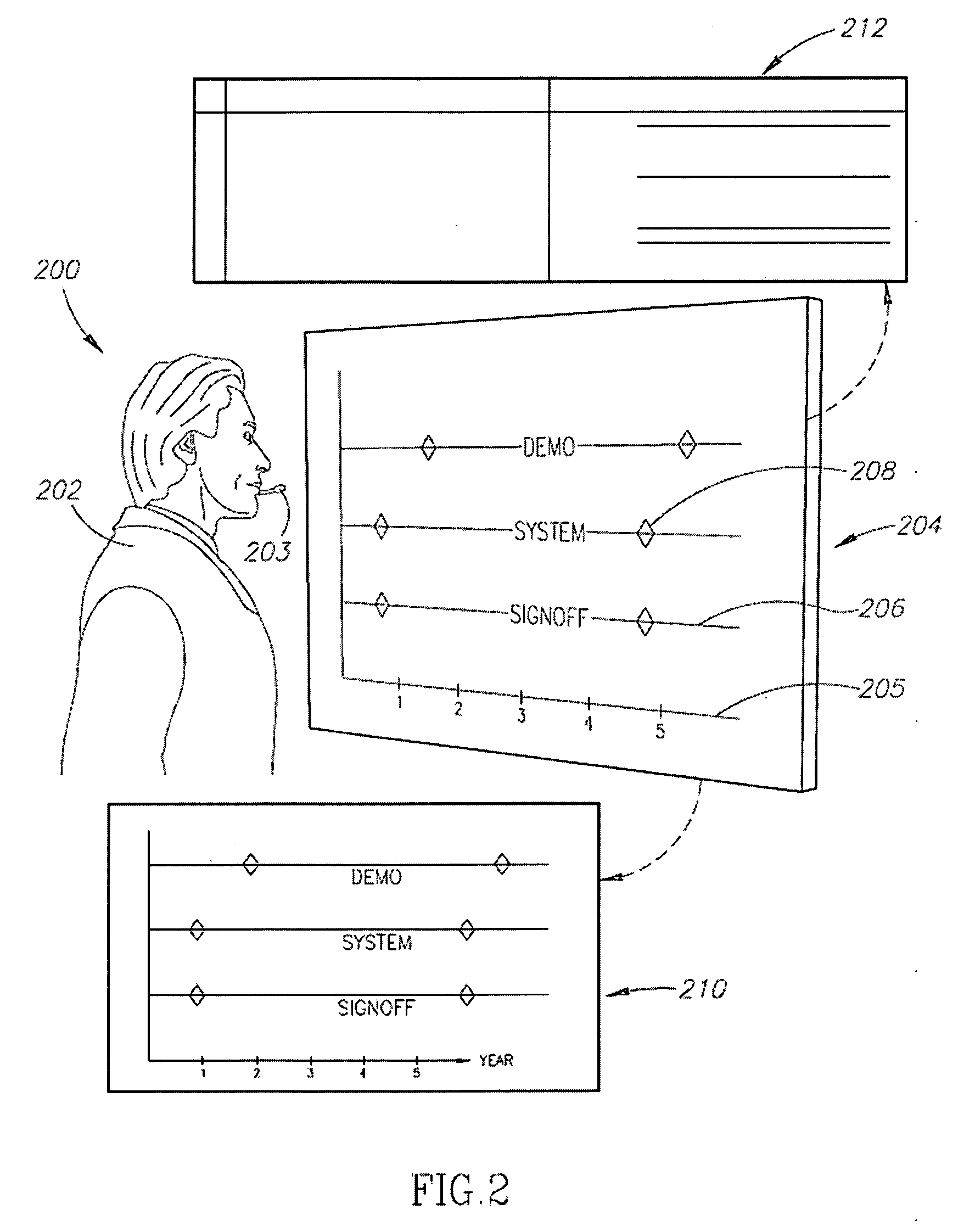

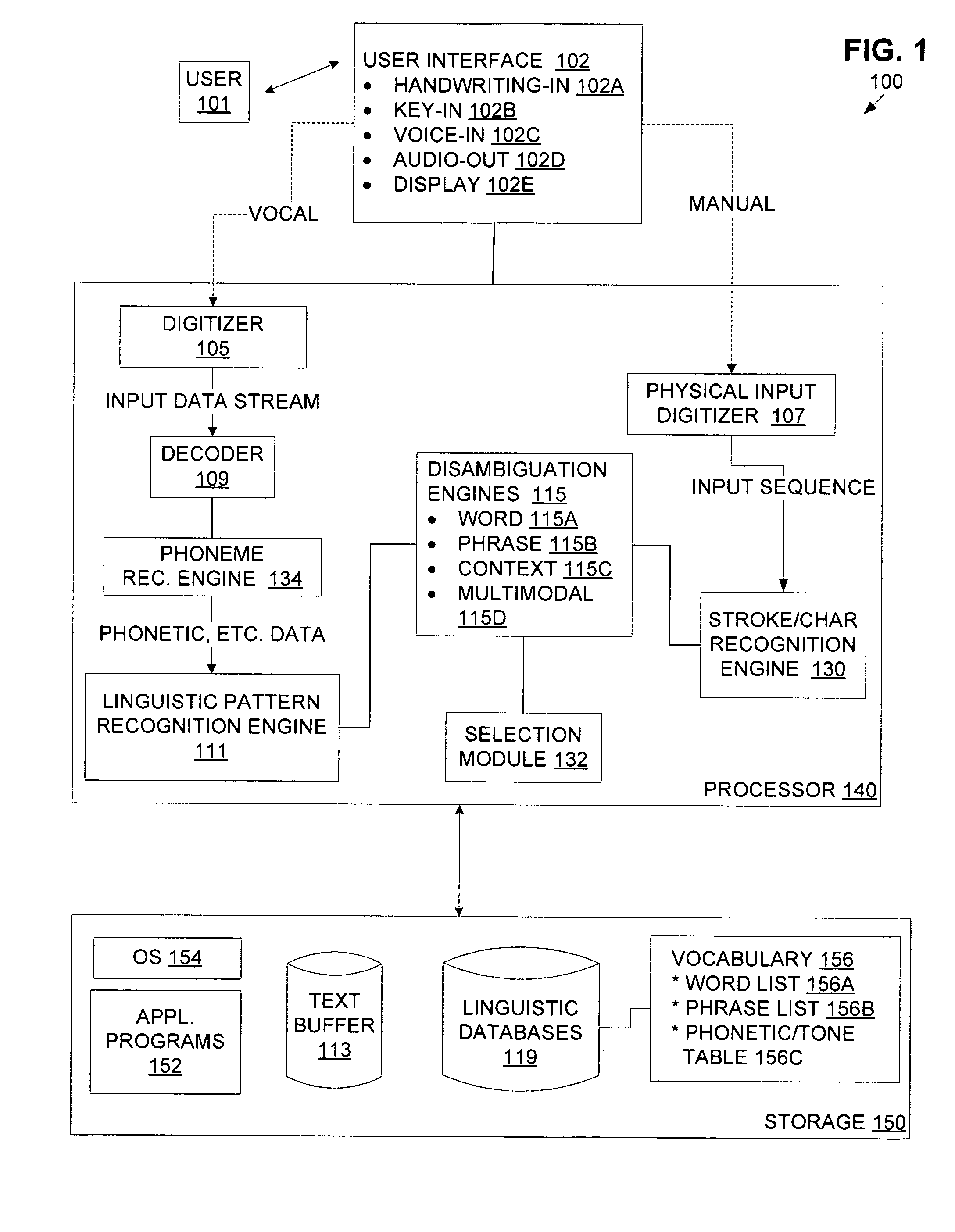

System and method for dynamic learning

New language constantly emerges from complex, collaborative human-human interactions such as meetings, for example when a presenter handwrites a new term on a whiteboard while saying it at the same time. The system and method described include devices for receiving various types of human communication activity (e.g., speech, writing, and gestures) presented in a multimodally redundant manner; processors and recognizers for segmenting or parsing and then recognizing selected sub-word units such as phonemes and syllables; and alignment, refinement, and integration modules to find a match, or at least an approximate match, to the one or more terms presented in the multimodally redundant manner. Once the system has performed a successful integration, one or more terms may be newly enrolled into the system's database, which lets the system continuously learn and provide associations for proper names, abbreviations, acronyms, symbols, and other forms of communicated language.

Owner:ADAPX INC

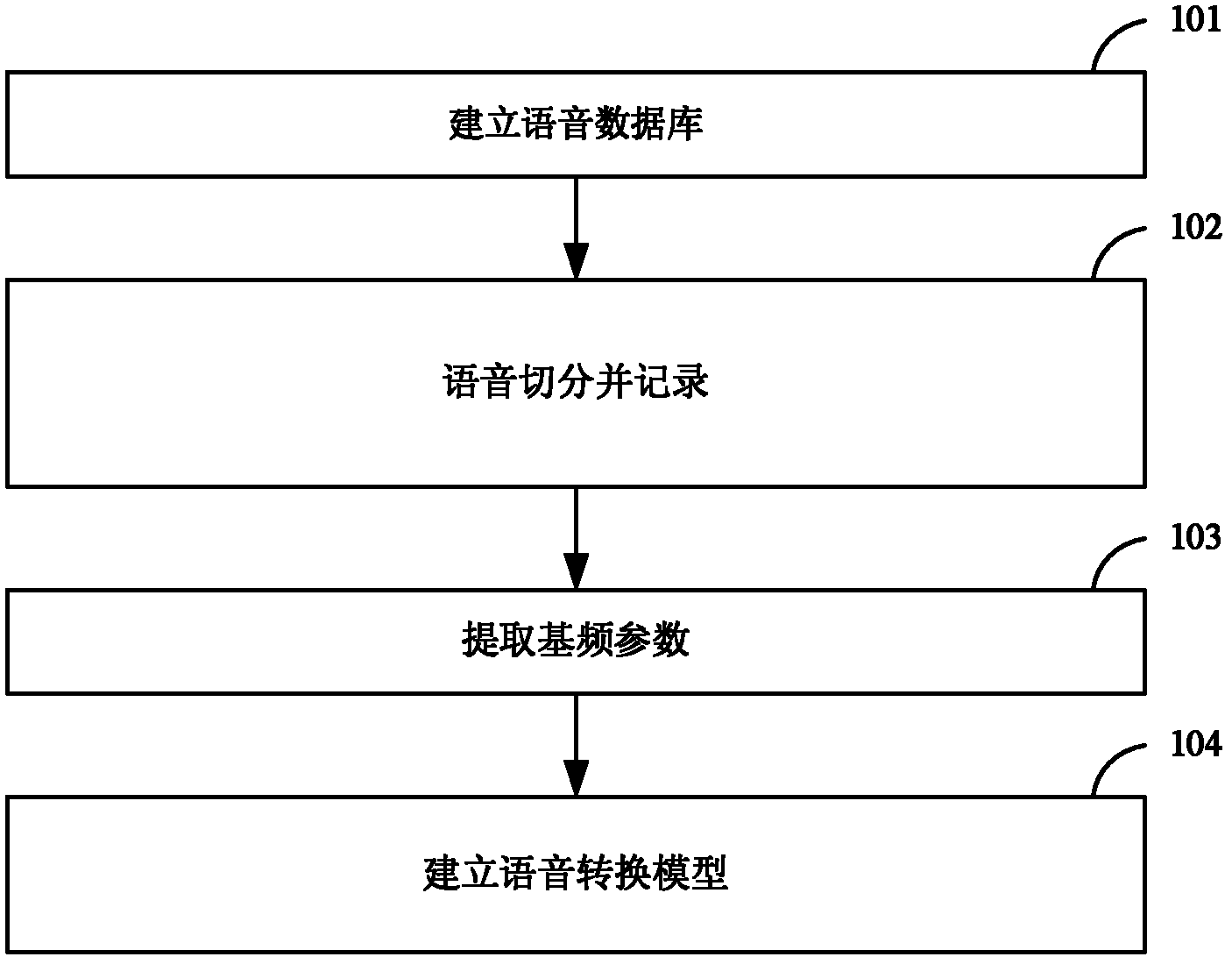

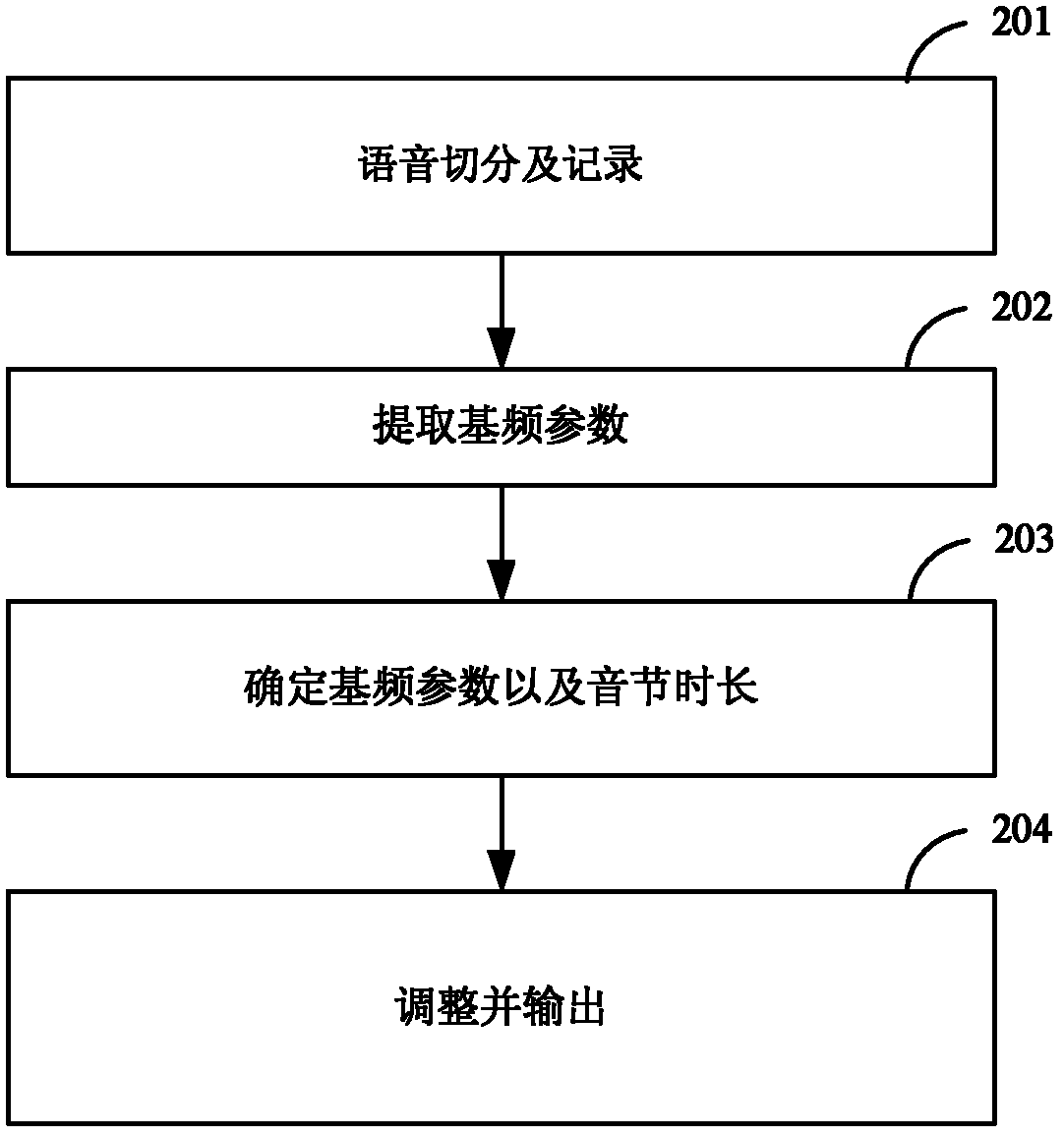

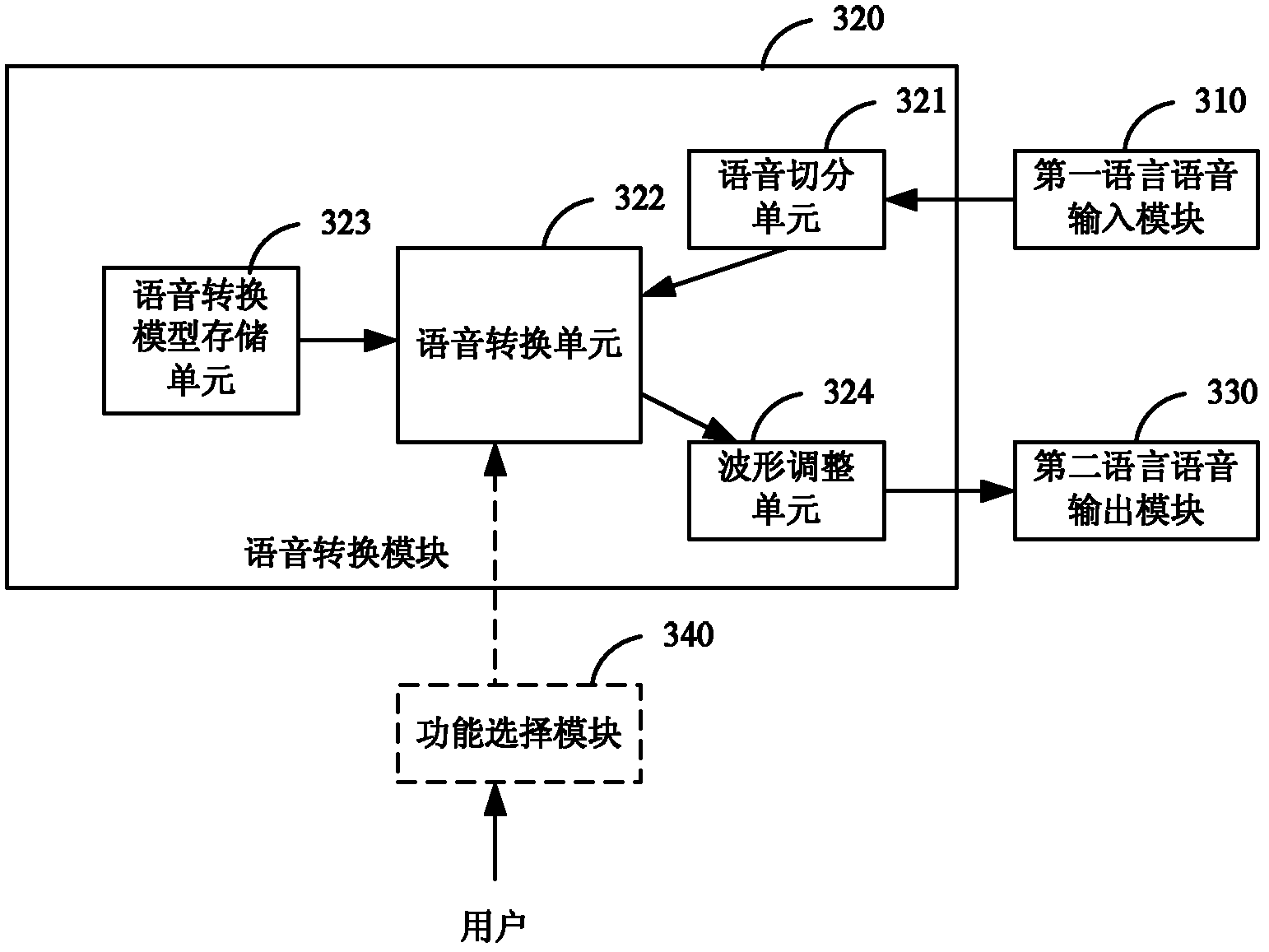

Method for building voice transformation model and method and system for voice transformation

The invention discloses a method for building a voice transformation model, and a method and device for transforming voice between a first language and a second language. The transformation method includes: segmenting the first-language speech to be transformed to obtain at least one first-language syllable; recording the duration parameter of each first-language syllable obtained by segmentation; extracting the fundamental frequency (F0) parameter of each first-language syllable; determining the F0 parameter and syllable duration of each corresponding second-language syllable from the F0 parameter and duration parameter of each first-language syllable; and adjusting the waveform of each corresponding first-language syllable according to the F0 parameter and syllable duration of the second-language syllable, to obtain and output the waveform of each second-language syllable. When the method is used for voice transformation, the voice quality of the input voice and of the transformed output voice is essentially consistent, and the transformation can be performed in real time.

Owner:SIEMENS AG

Method and apparatus utilizing voice input to resolve ambiguous manually entered text input

Status: Inactive | Publication: US7720682B2 | Tags: Character and pattern recognition; Natural language data processing; Digital data; Syllable

From a text entry tool, a digital data processing device receives inherently ambiguous user input. Independent of any other user input, the device interprets the received user input against a vocabulary to yield candidates such as words (of which the user input forms the entire word or part such as a root, stem, syllable, affix), or phrases having the user input as one word. The device displays the candidates and applies speech recognition to spoken user input. If the recognized speech comprises one of the candidates, that candidate is selected. If the recognized speech forms an extension of a candidate, the extended candidate is selected. If the recognized speech comprises other input, various other actions are taken.

Owner:TEGIC COMM

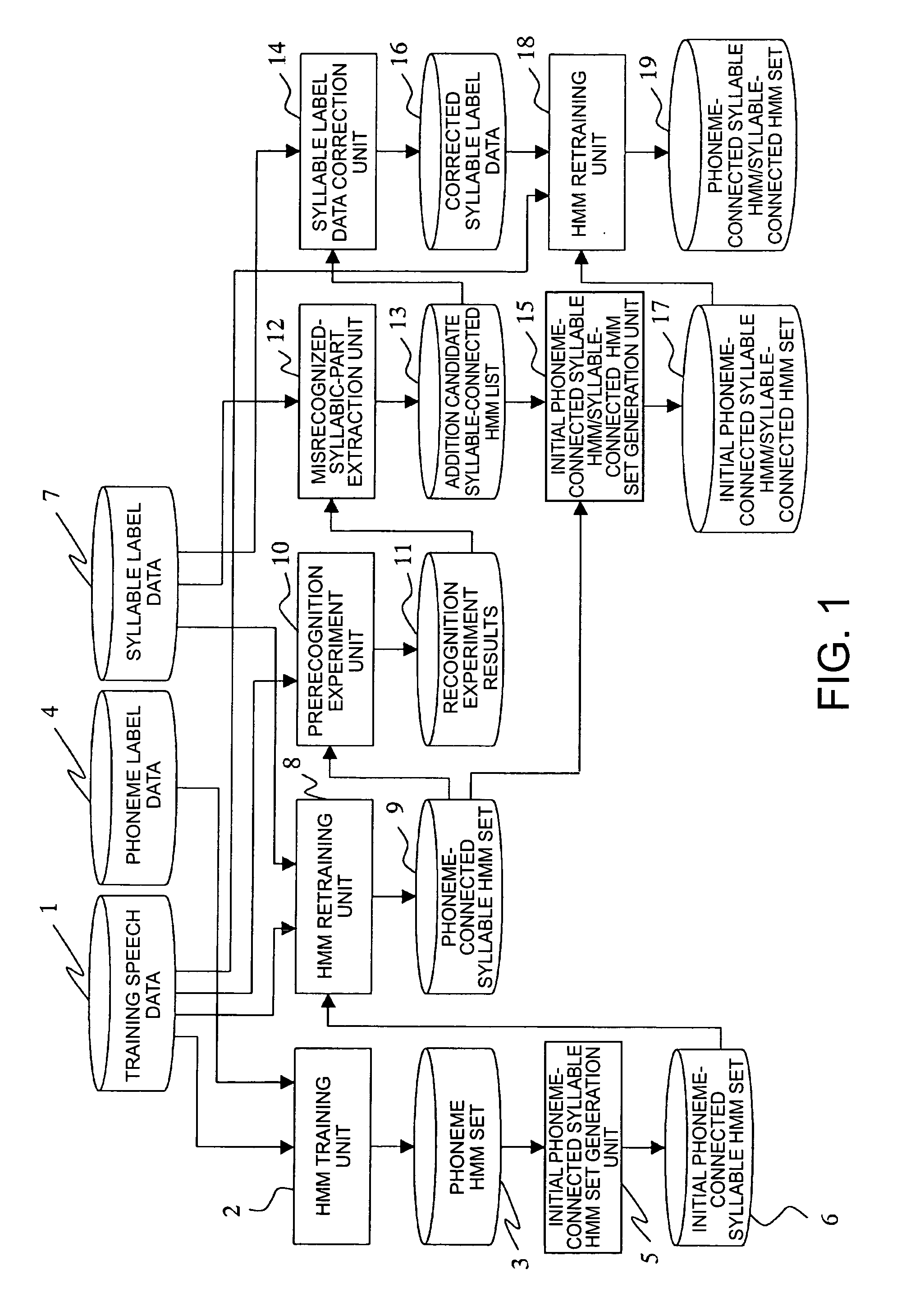

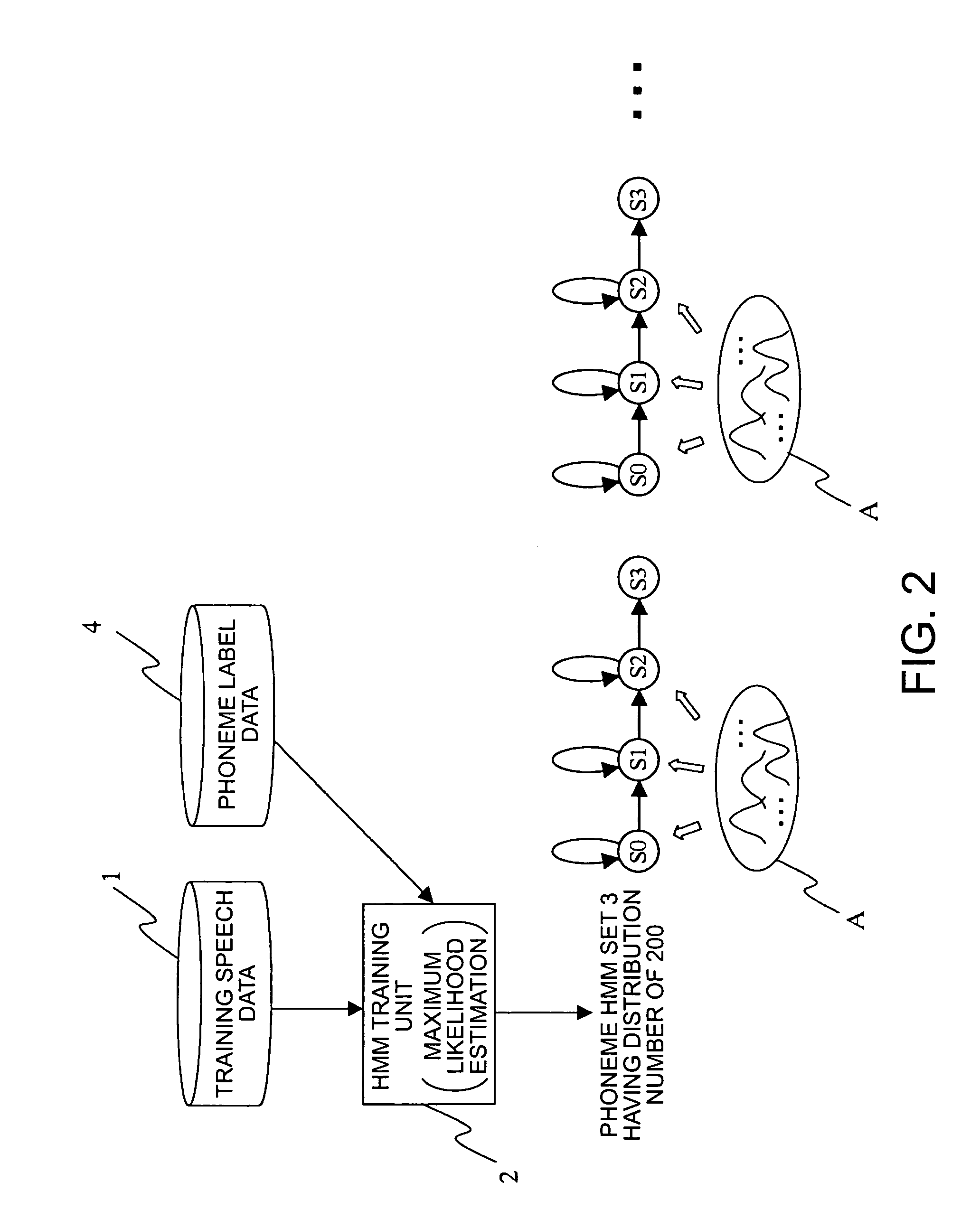

Acoustic model creation method as well as acoustic model creation apparatus and speech recognition apparatus

Status: Inactive | Publication: US7366669B2 | Tags: Satisfactory recognition performance; Low processibility; Speech recognition; Syllable; Speech identification

To provide an acoustic model that can absorb fluctuations of the phonemic environment over intervals longer than a syllable while keeping the number of acoustic model parameters small, a phoneme-connected syllable HMM / syllable-connected HMM set is generated as follows. A phoneme-connected syllable HMM set corresponding to individual syllables is generated by combining phoneme HMMs. A preliminary experiment is conducted using the phoneme-connected syllable HMM set and training speech data. Each misrecognized syllable and the syllable preceding it are checked using the results of the preliminary experiment and syllable label data. The combination of the correct syllable for the misrecognized syllable and the syllable preceding it is extracted as a syllable connection. A syllable-connected HMM corresponding to this syllable connection is added to the phoneme-connected syllable HMM set. The resulting phoneme-connected syllable HMM set is trained using the training speech data and the syllable label data.

Owner:SEIKO EPSON CORP
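The connection-extraction step can be sketched as below. It simplifies by assuming the reference (label) and recognized syllable sequences are already aligned one-to-one; real recognizer output would first be aligned, for example by edit distance. Each error contributes the pair (preceding correct syllable, correct syllable).

```python
def syllable_connections(reference: list[str], hypothesis: list[str]) -> set[tuple[str, str]]:
    """(preceding syllable, correct syllable) pairs at each misrecognition.

    Assumes both sequences are aligned to the same length (a simplification).
    Errors on the first syllable are skipped because it has no predecessor.
    """
    if len(reference) != len(hypothesis):
        raise ValueError("sequences must be aligned to the same length")
    connections = set()
    for i in range(1, len(reference)):
        if hypothesis[i] != reference[i]:
            connections.add((reference[i - 1], reference[i]))
    return connections
```

Each extracted pair would then receive its own syllable-connected HMM, so the model grows only where the preliminary experiment showed it was needed, which keeps the parameter count small.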

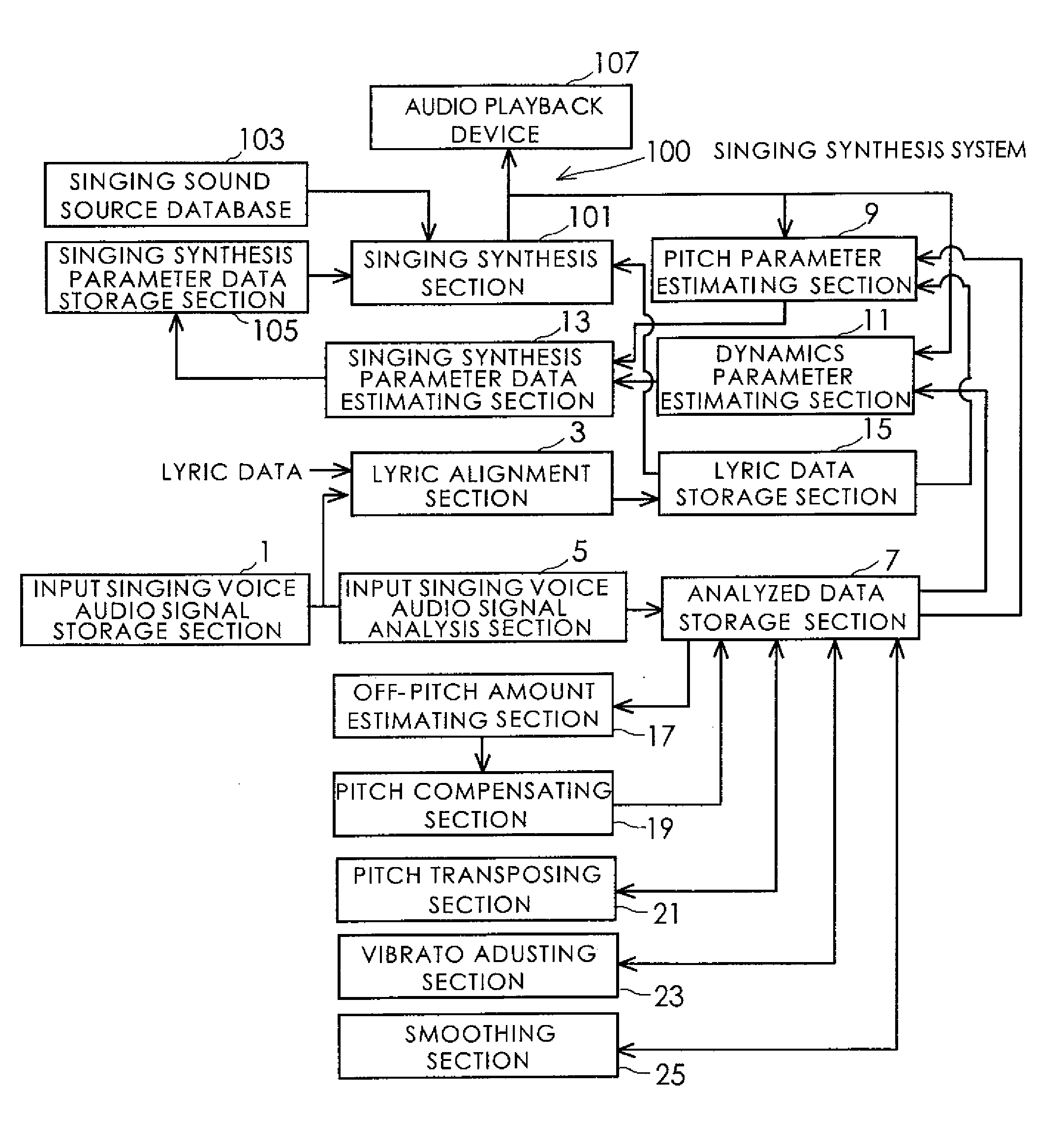

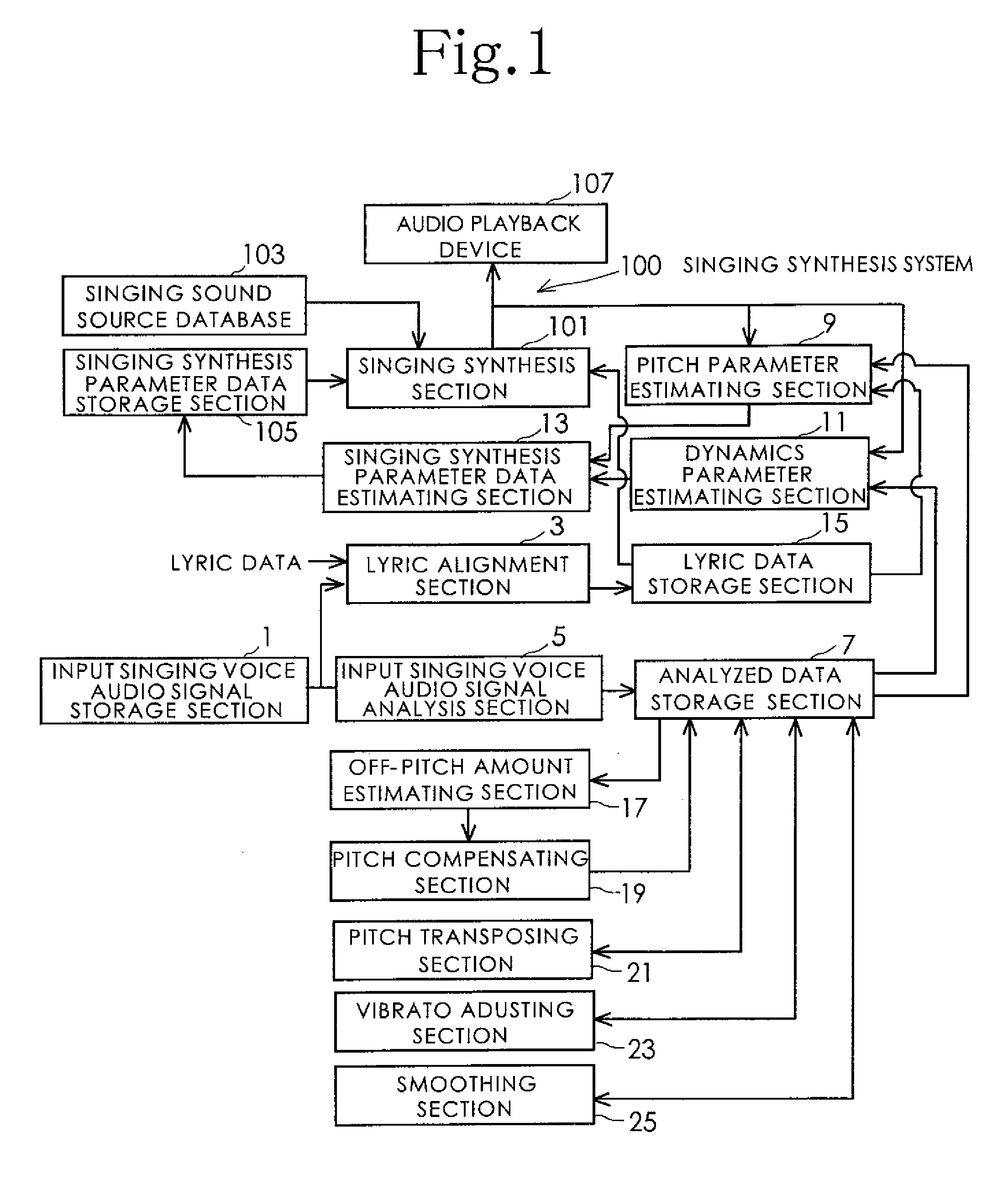

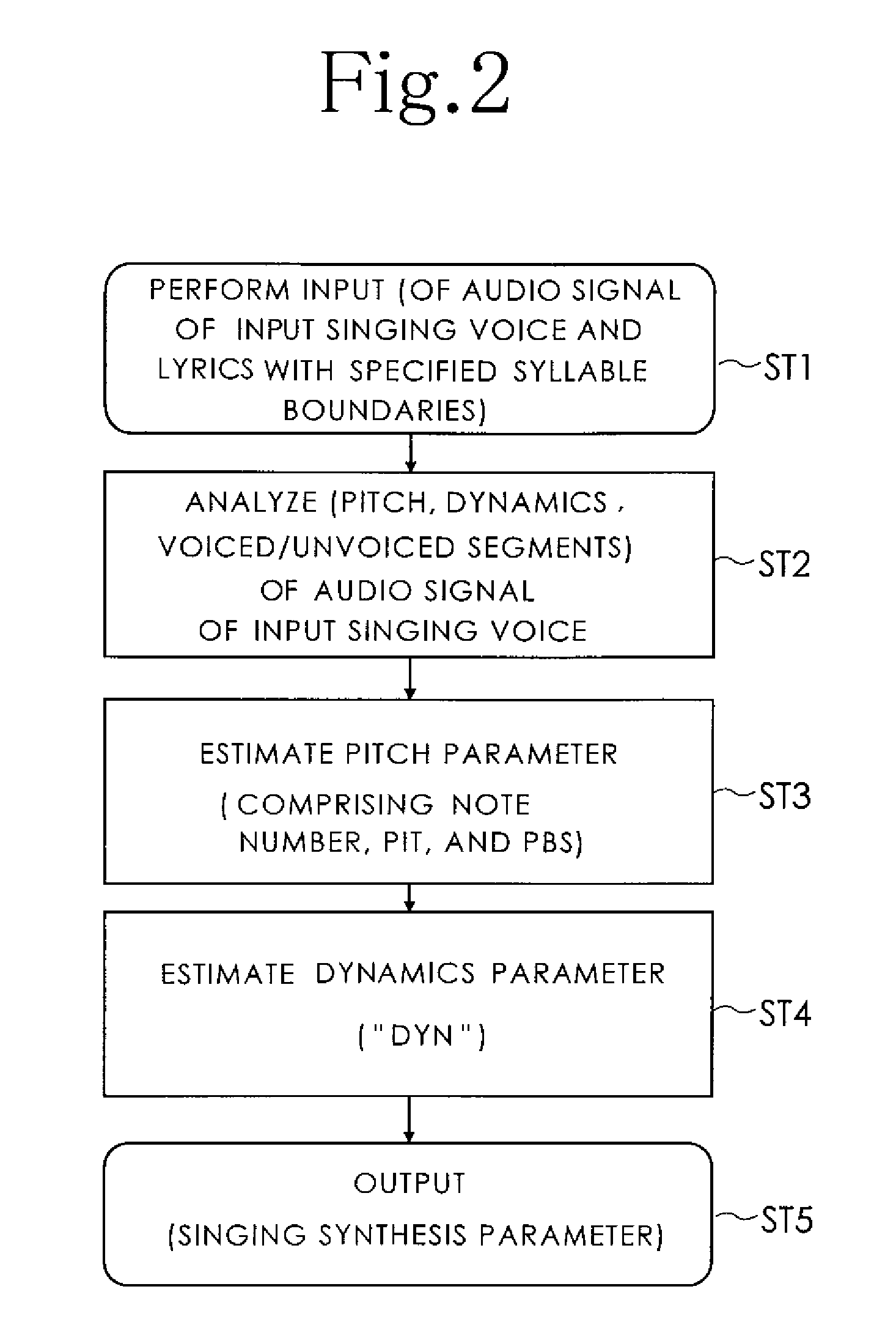

Singing synthesis parameter data estimation system

Status: Active | Publication: US20090306987A1 | Tags: Raise the possibility; Quality improvement; Electrophonic musical instruments; Speech synthesis; Syllable; Audio signal flow

There is provided a singing synthesis parameter data estimation system that automatically estimates, from an audio signal of an input singing voice, the singing synthesis parameter data used to automatically synthesize a human-like singing voice. A pitch parameter estimating section 9 estimates a pitch parameter that brings the pitch feature of the audio signal of the synthesized singing voice closer to the pitch feature of the audio signal of the input singing voice, based on at least both the pitch feature and lyric data with specified syllable boundaries of the input singing voice's audio signal. A dynamics parameter estimating section 11 converts the dynamics feature of the audio signal of the input singing voice into a value relative to the dynamics feature of the audio signal of the synthesized singing voice, and estimates a dynamics parameter that brings the dynamics feature of the synthesized singing voice closer to the relative-valued dynamics feature of the input singing voice.

Owner:NAT INST OF ADVANCED IND SCI & TECH

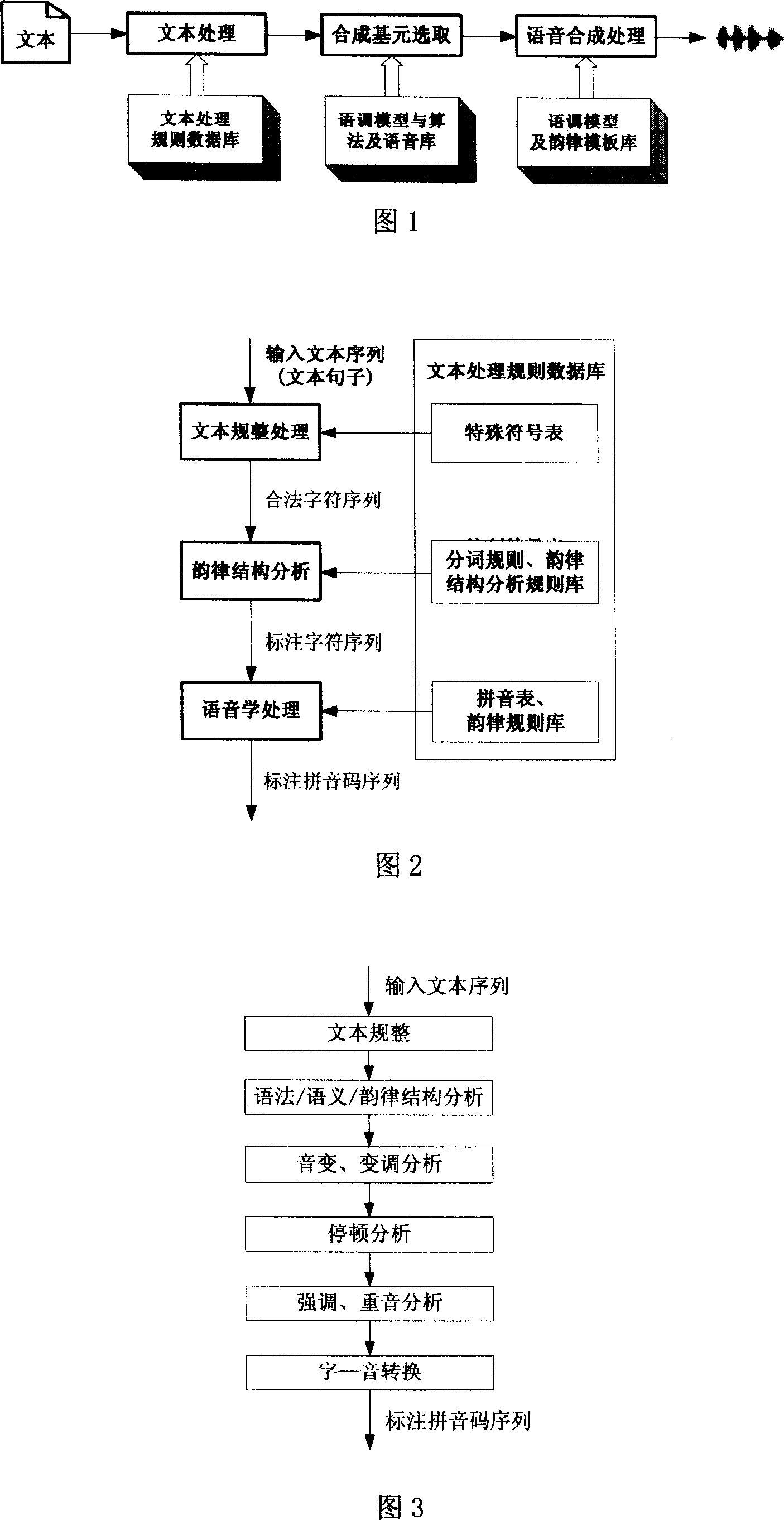

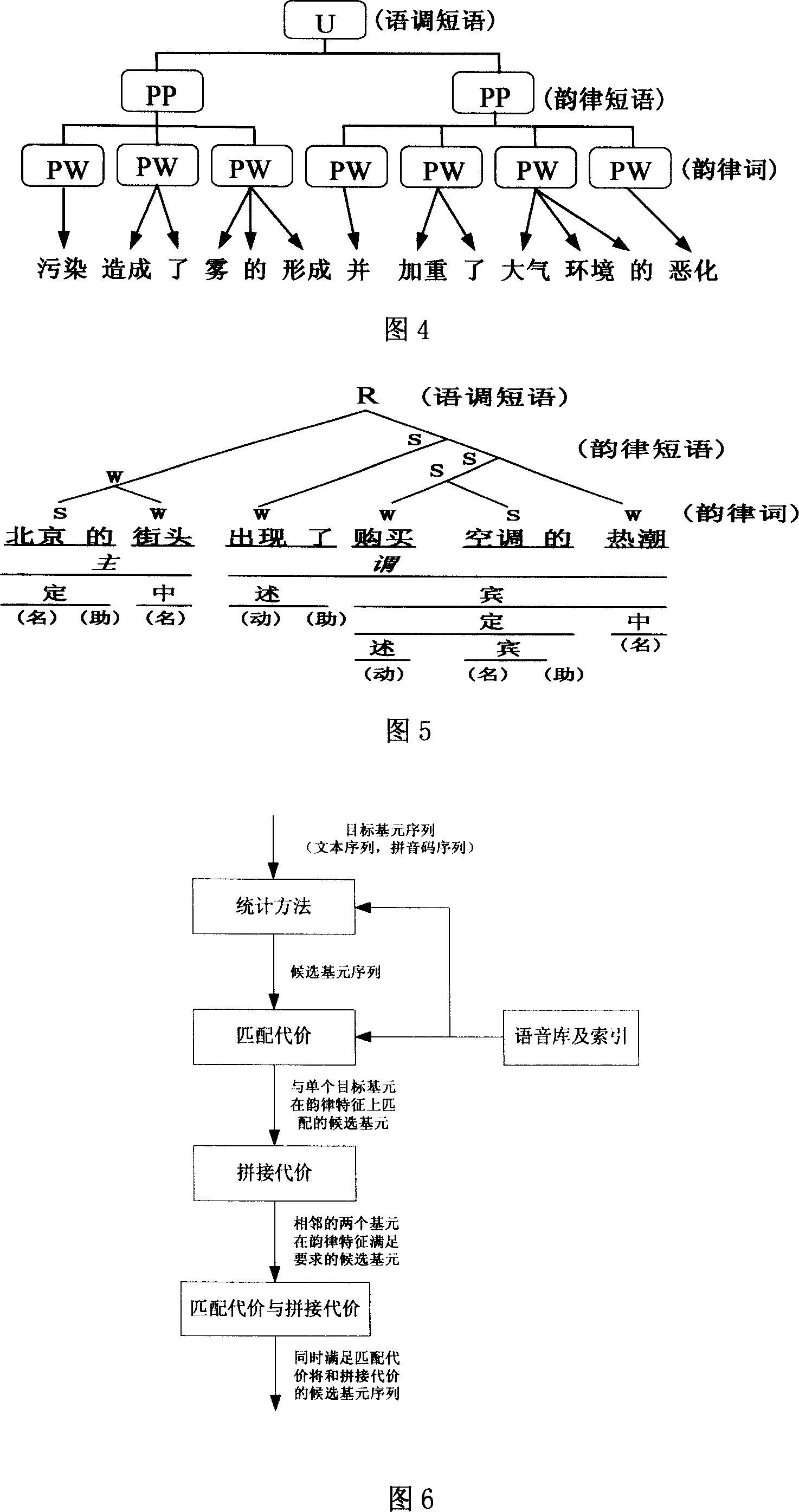

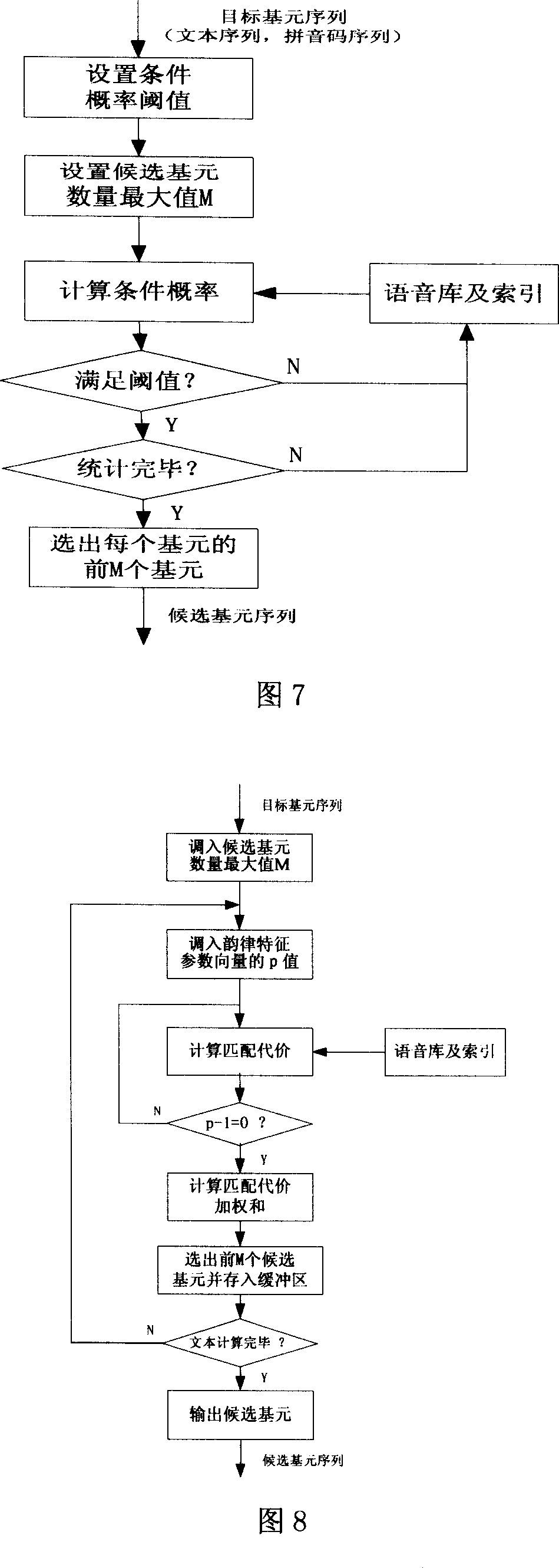

Speech synthetic method based on rhythm character

A method for synthesizing speech based on prosodic features includes: a text processing procedure made up of a text normalization step, a prosodic structure analysis step, and a linguistic processing step; a synthesis unit selection procedure made up of a unit determination step, a matching step, a concatenation step, and an optimization and screening step; and a speech synthesis procedure made up of a step that generates the fundamental frequency contour of each phrase unit, a step that generates the fundamental frequency contour of each syllable unit, and an intonation superposition step.

Owner:HEILONGJIANG UNIV

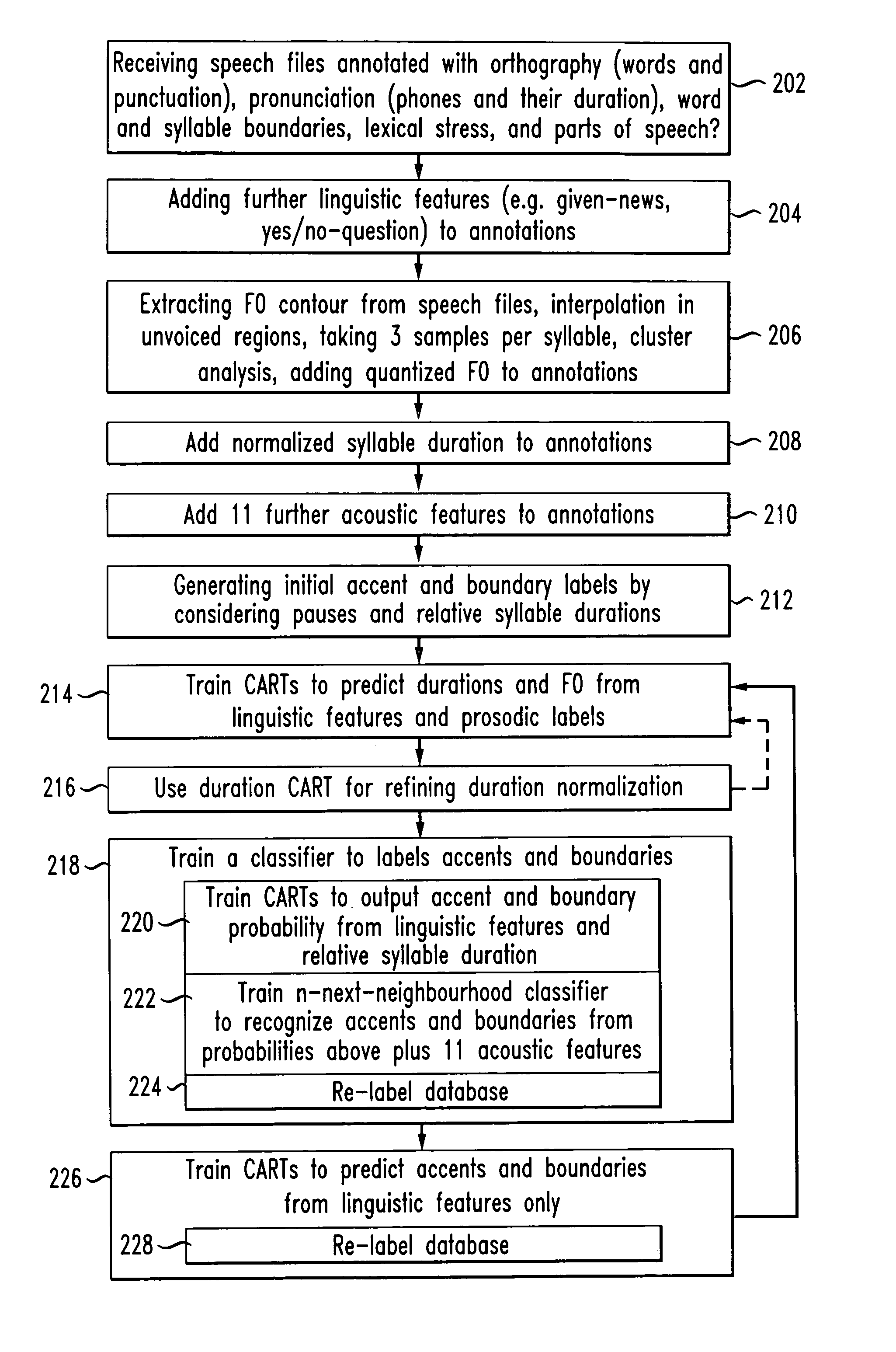

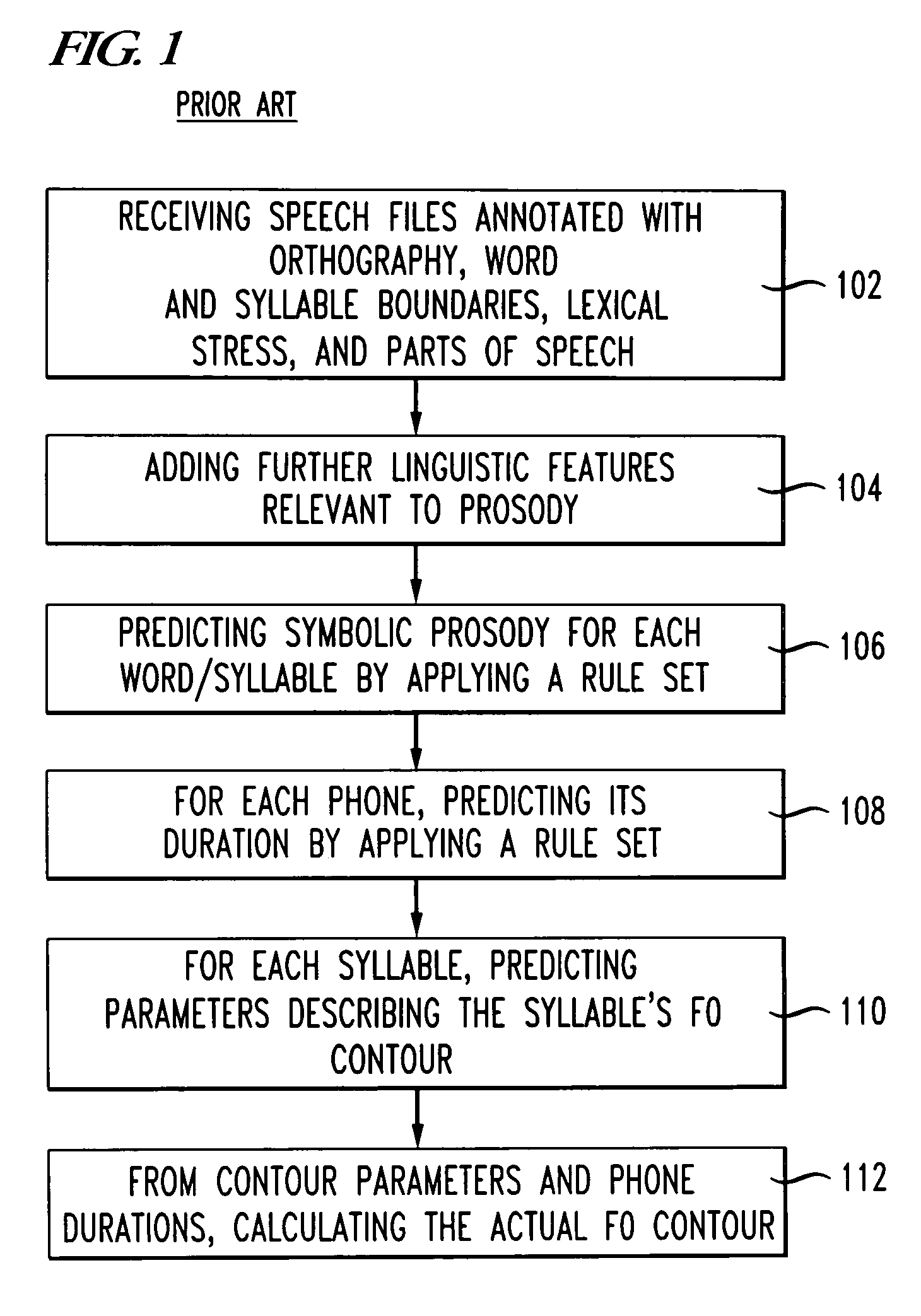

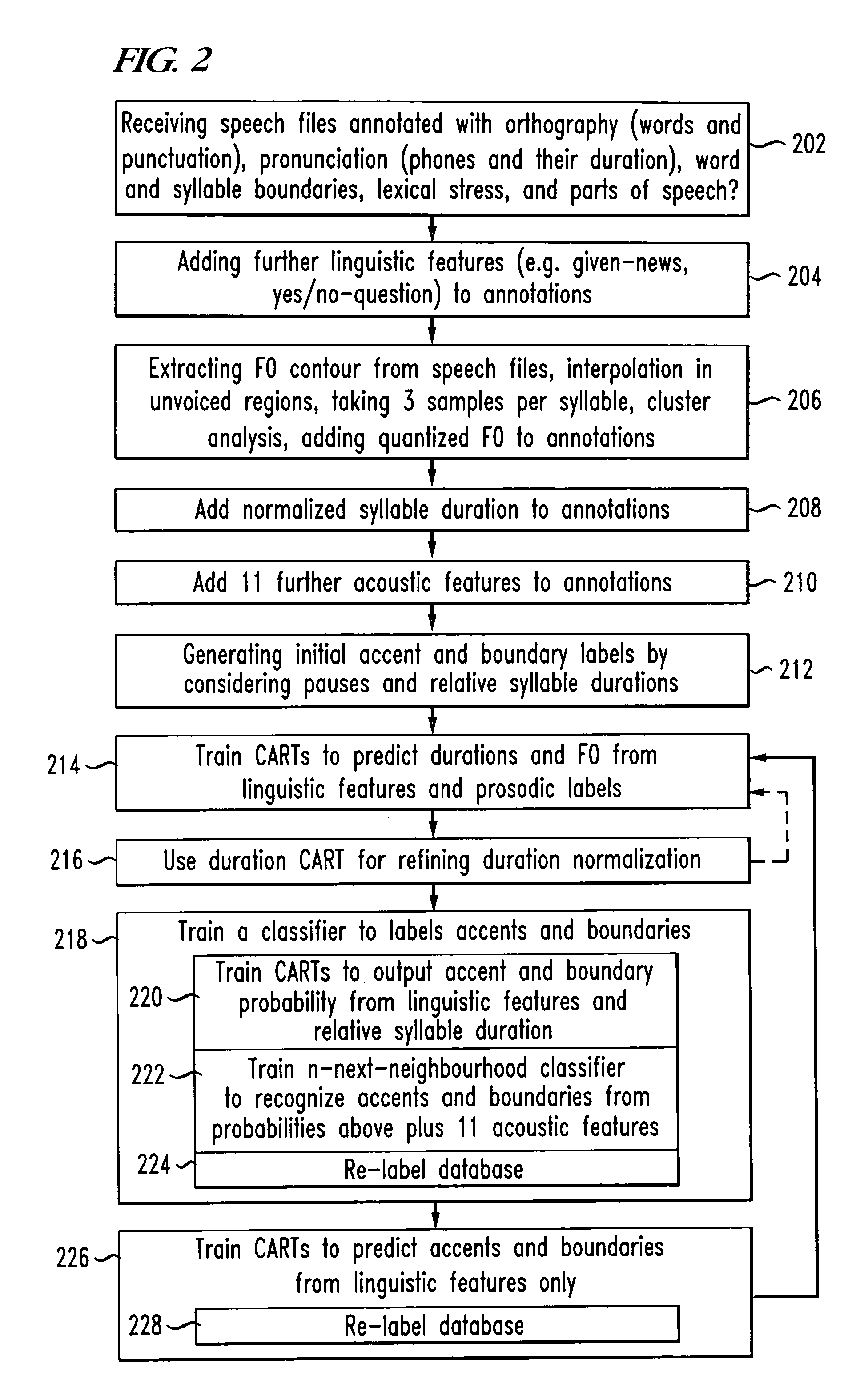

System and method for predicting prosodic parameters

A method for generating a prosody model that predicts prosodic parameters is disclosed. Upon receiving text annotated with acoustic features, the method comprises generating first classification and regression trees (CARTs) that predict durations and F0 from text by generating initial boundary labels by considering pauses, generating initial accent labels by applying a simple rule on text-derived features only, adding the predicted accent and boundary labels to feature vectors, and using the feature vectors to generate the first CARTs. The first CARTs are used to predict accent and boundary labels. Next, the first CARTs are used to generate second CARTs that predict durations and F0 from text and acoustic features by using lengthened accented syllables and phrase-final syllables, refining accent and boundary models simultaneously, comparing actual and predicted duration of a whole prosodic phrase to normalize speaking rate, and generating the second CARTs that predict the normalized speaking rate.

Owner:CERENCE OPERATING CO

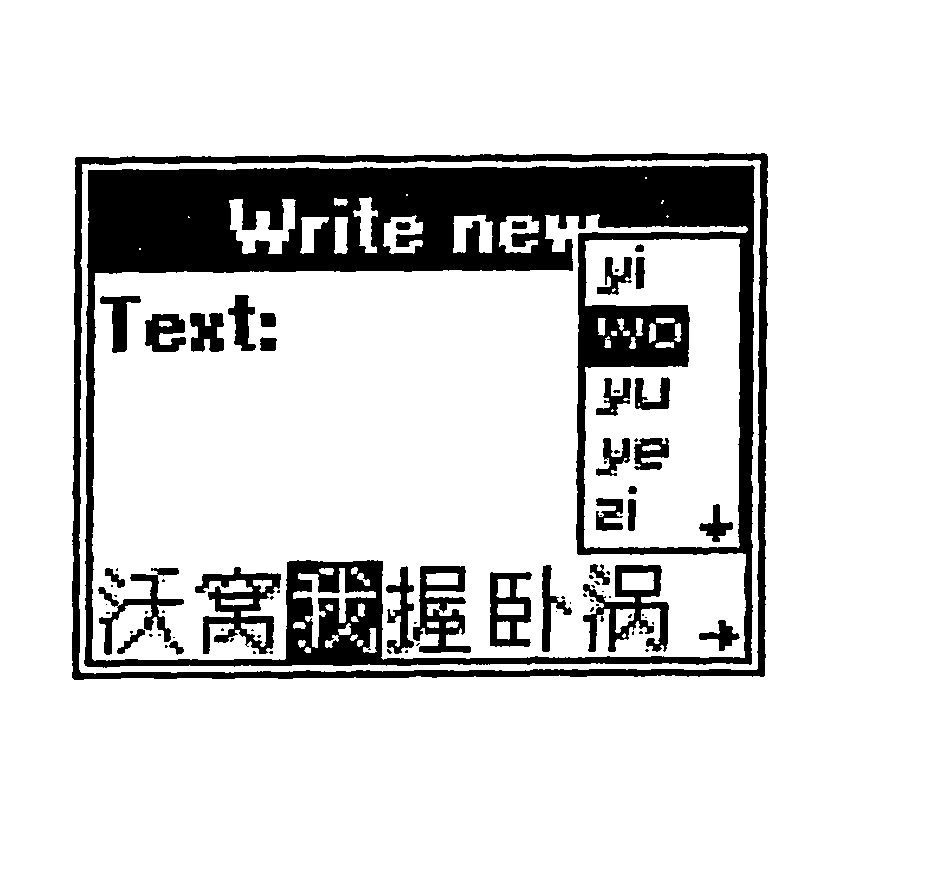

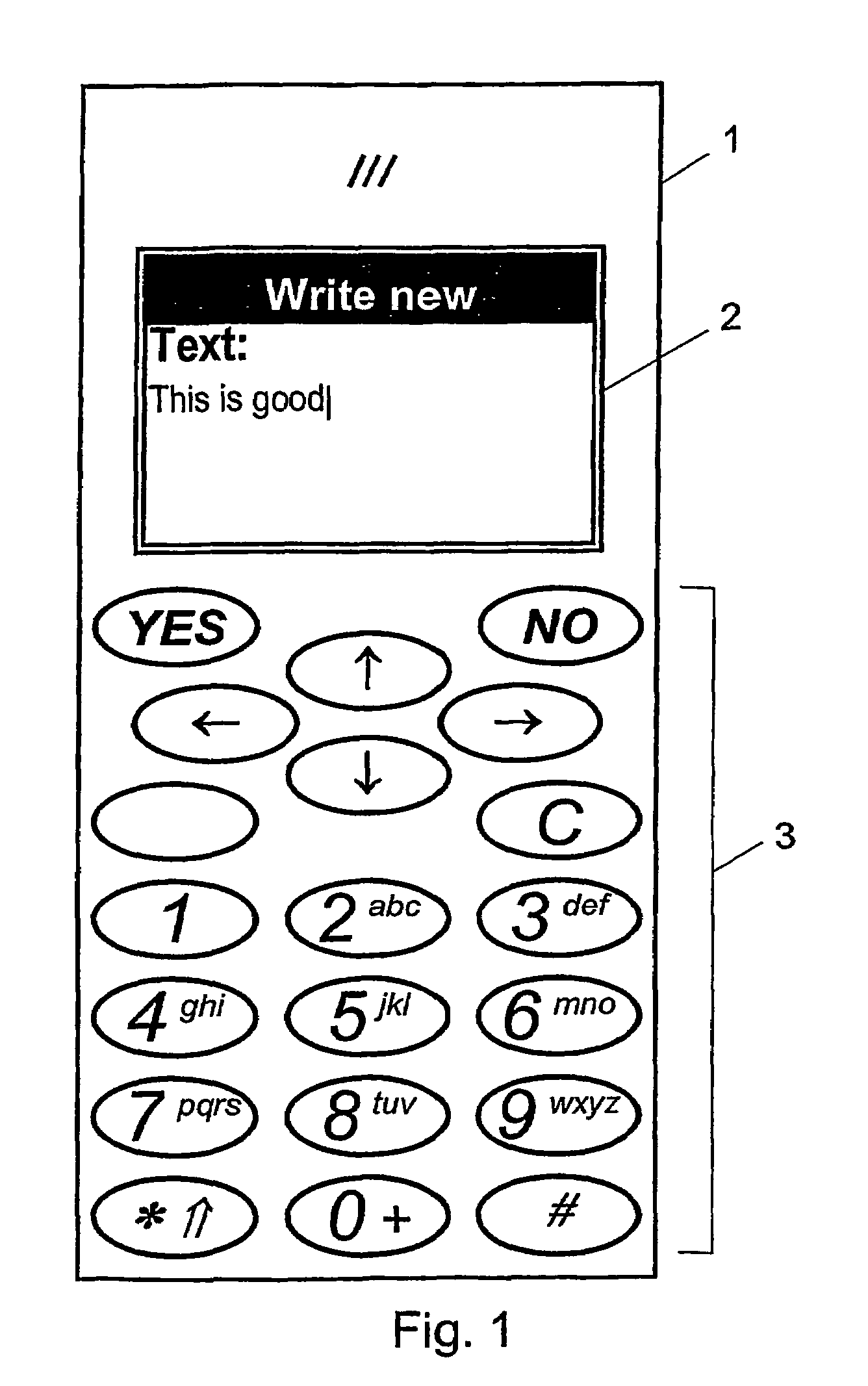

Entering text into an electronic communications device

Status: Inactive | Publication: US7385531B2 | Tags: Easy to get; Easy to browse; Input/output for user-computer interaction; Electronic switching; Graphics; Syllable

In a method of entering text into an electronic communications device by means of a keypad having a number of keys, each key representing a plurality of letters and / or phonetic symbols, entered text is displayed on a display on the device. Possible phonetic syllables corresponding to an activated key sequence are generated. These are compared with a stored vocabulary comprising syllables and corresponding characters occurring in a given language. Those stored syllables and corresponding characters that match the possible syllables are pre-selected; and a number of these are presented in a separate first graphical object arranged predominantly on the display. Characters corresponding to one of the syllables in the first object are simultaneously presented in a second graphical object. Thus, there is provided a way of entering text with characters having a phonetic representation by means of keys representing a plurality of letters or phonetic symbols, which may be easier to use even in the case where a phonetic syllable corresponds to several characters.

Owner:SONY ERICSSON MOBILE COMM AB
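Generating the possible syllables for an ambiguous key sequence and matching them against a stored vocabulary can be sketched as follows. The key-to-letter map follows the common phone keypad layout (2 = abc through 9 = wxyz; only digits 2 to 9 are handled), and the tiny Pinyin-to-character vocabulary is hypothetical.

```python
from itertools import product

# Letter groups of a standard phone keypad; digits 0 and 1 carry no letters here.
KEYS = {"2": "abc", "3": "def", "4": "ghi", "5": "jkl",
        "6": "mno", "7": "pqrs", "8": "tuv", "9": "wxyz"}


def matching_syllables(key_sequence: str, vocabulary: dict[str, list[str]]) -> dict[str, list[str]]:
    """Syllables (with their characters) that an ambiguous key sequence can spell."""
    spellings = ("".join(letters) for letters in product(*(KEYS[k] for k in key_sequence)))
    return {s: vocabulary[s] for s in spellings if s in vocabulary}
```

With a vocabulary containing "ma", "na" and "la", pressing 6 then 2 yields the syllables "ma" and "na" together with their characters, which corresponds to the pre-selected syllables in the first graphical object and the characters in the second. Enumerating every spelling grows exponentially with sequence length; a production implementation would more likely walk a trie of valid syllables.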

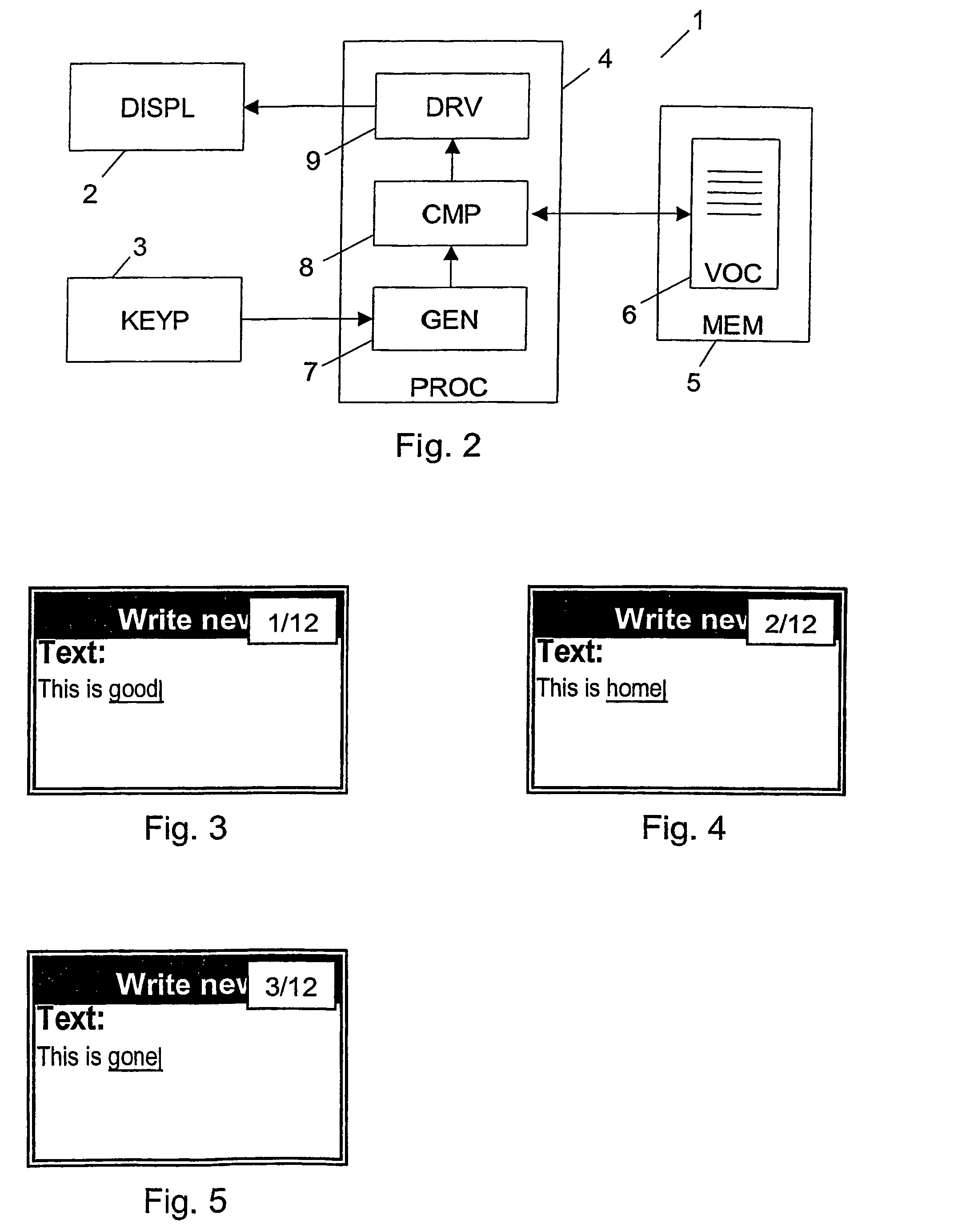

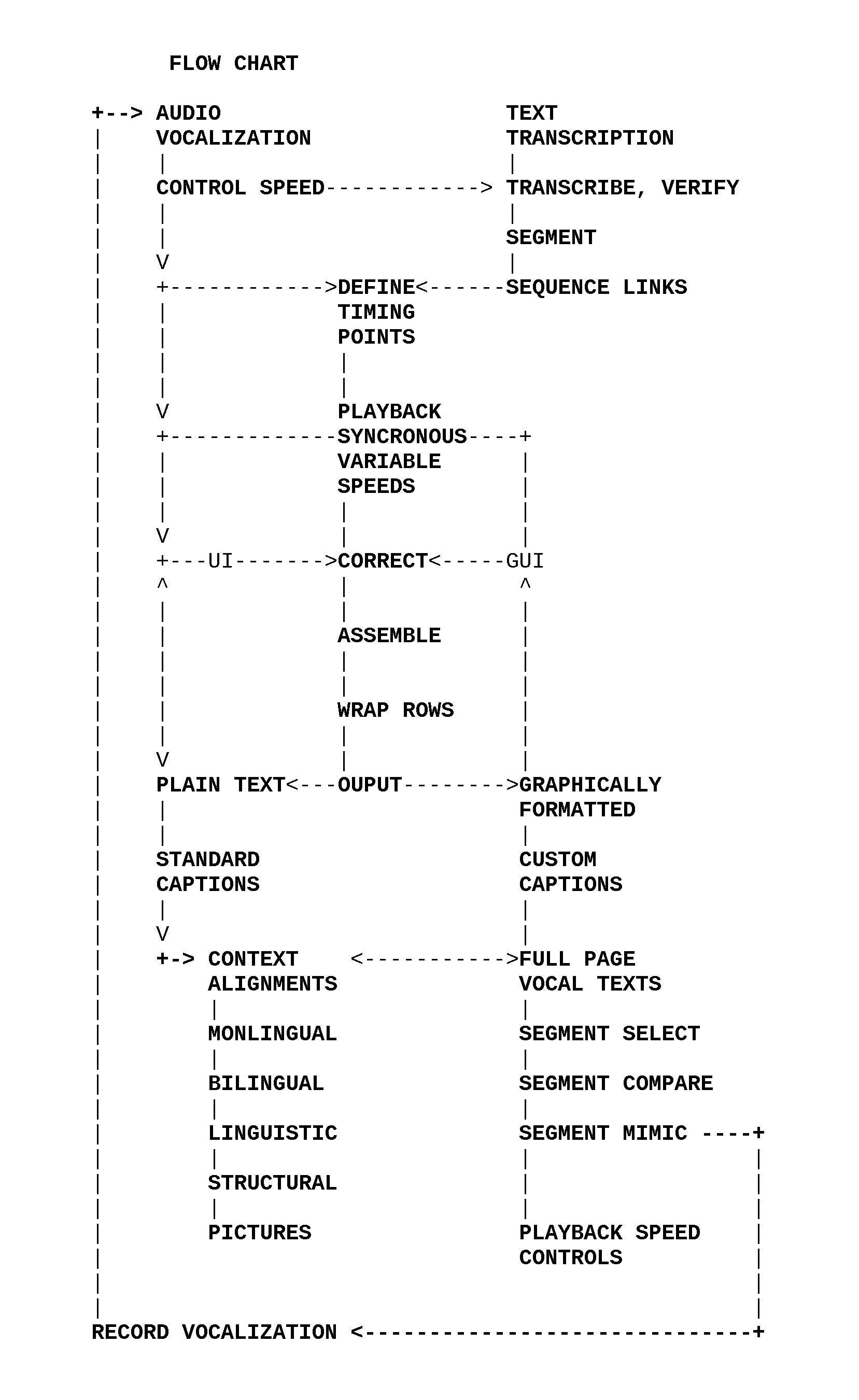

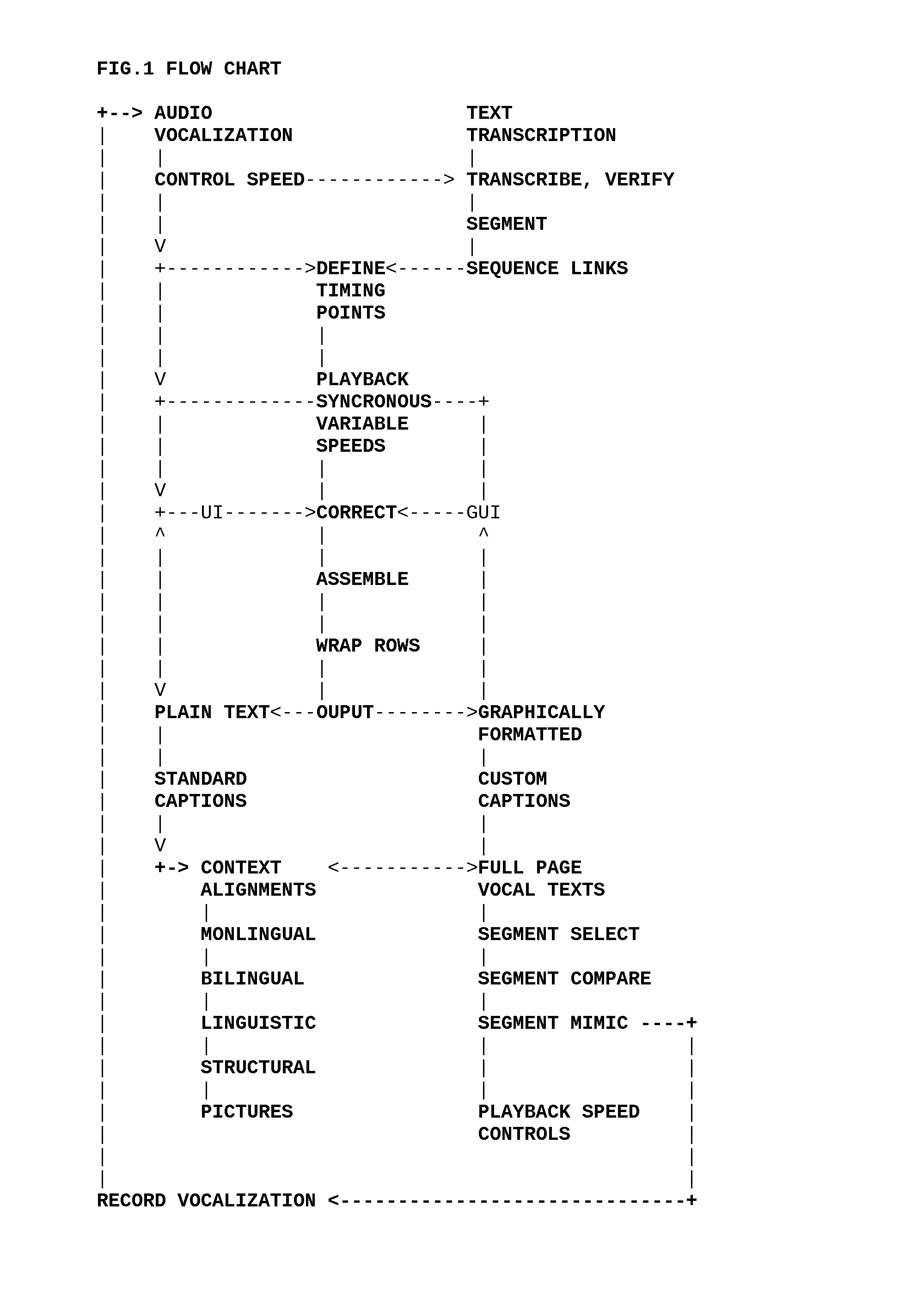

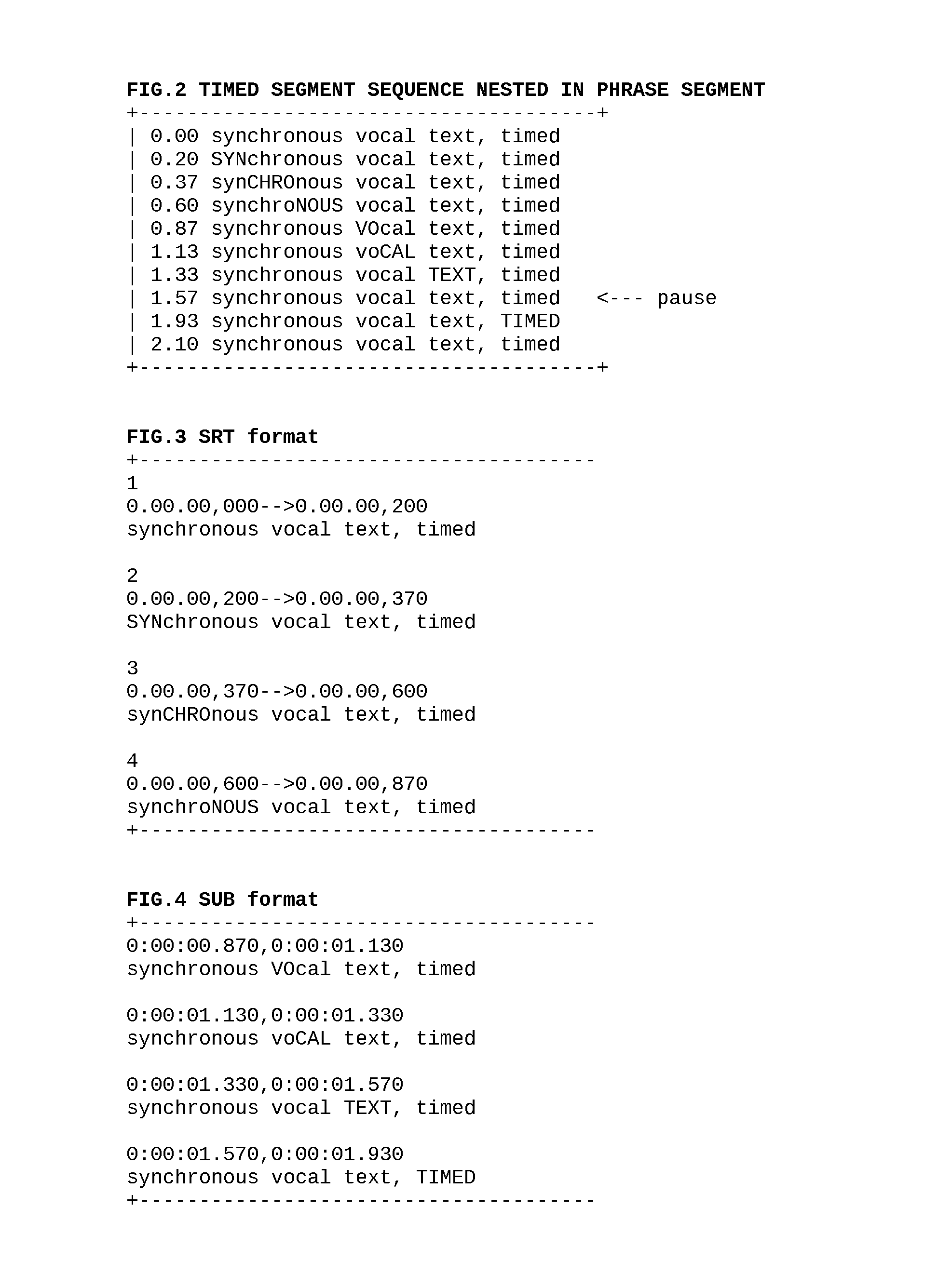

Synchronous Texts

Status: Inactive | Publication: US20140039871A1 | Tags: Enhanced touch control; Easy to control; Natural language translation; Special data processing applications; Personalization; Programming language

A method and apparatus to synchronize segments of text with timed vocalizations. Plain text captions present syllabic timings visually while their vocalization is heard. Captions in standard formats are optionally used. Synchronous playback speeds are controlled. Syllabic segments are aligned with timing points in a custom format. Verified constant timings are variably assembled into component segments. Outputs include styled custom caption and HTML presentations. Related texts are aligned with segments and controlled in plain text row sets. Translations, synonyms, structures, pictures and other context rows are aligned. Pictures in sets are aligned and linked in tiered sorting carousels. Alignment of row set contents is constant with variable display width wraps. Sorting enables users to rank aligned contexts where segments are used. Personalized contexts are compared with group sorted links. Variable means to express constant messages are compared. Vocal language is heard in sound, seen in pictures and animated text. The methods are used to learn language.

Owner:CRAWFORD RICHARD HENRY DANA

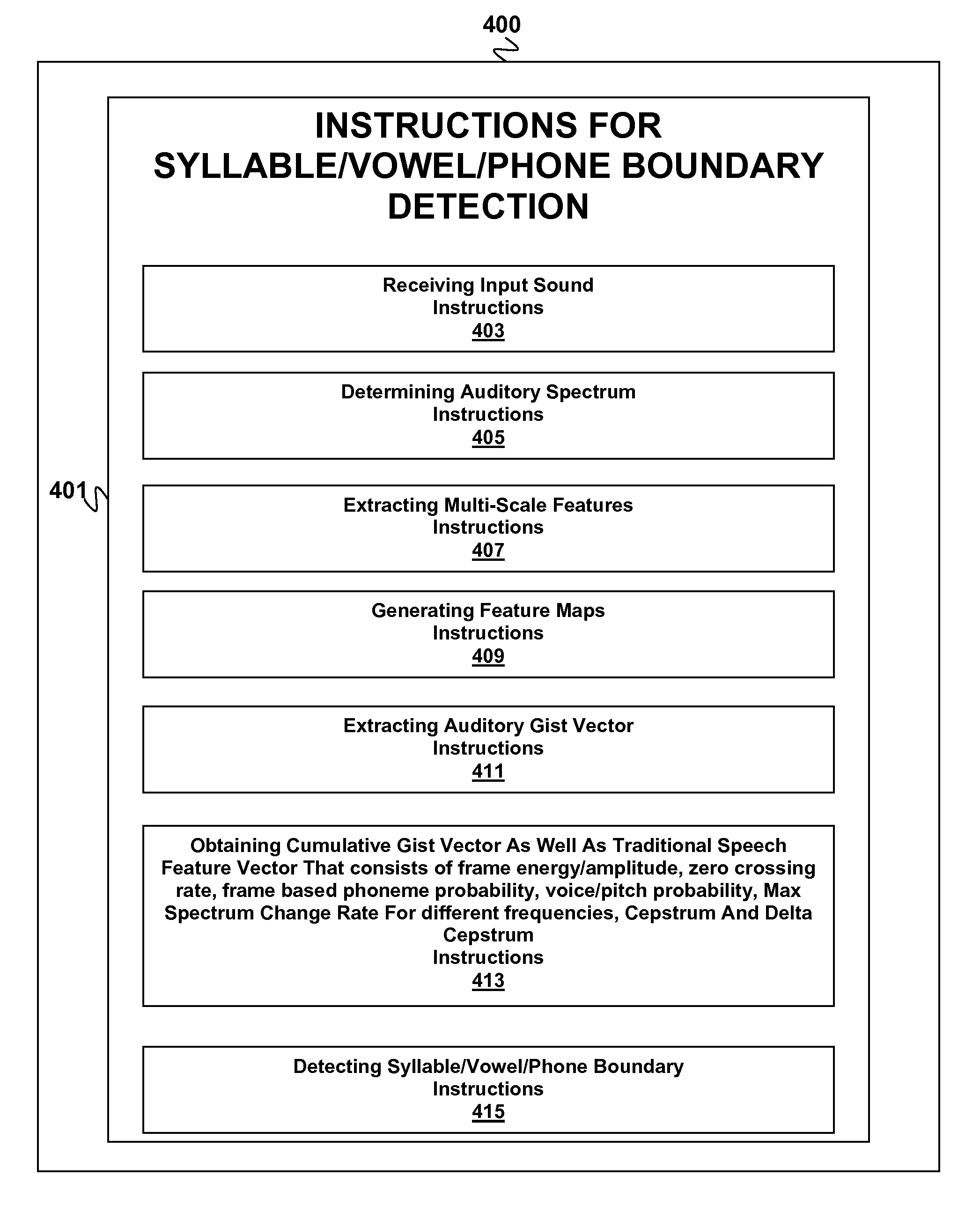

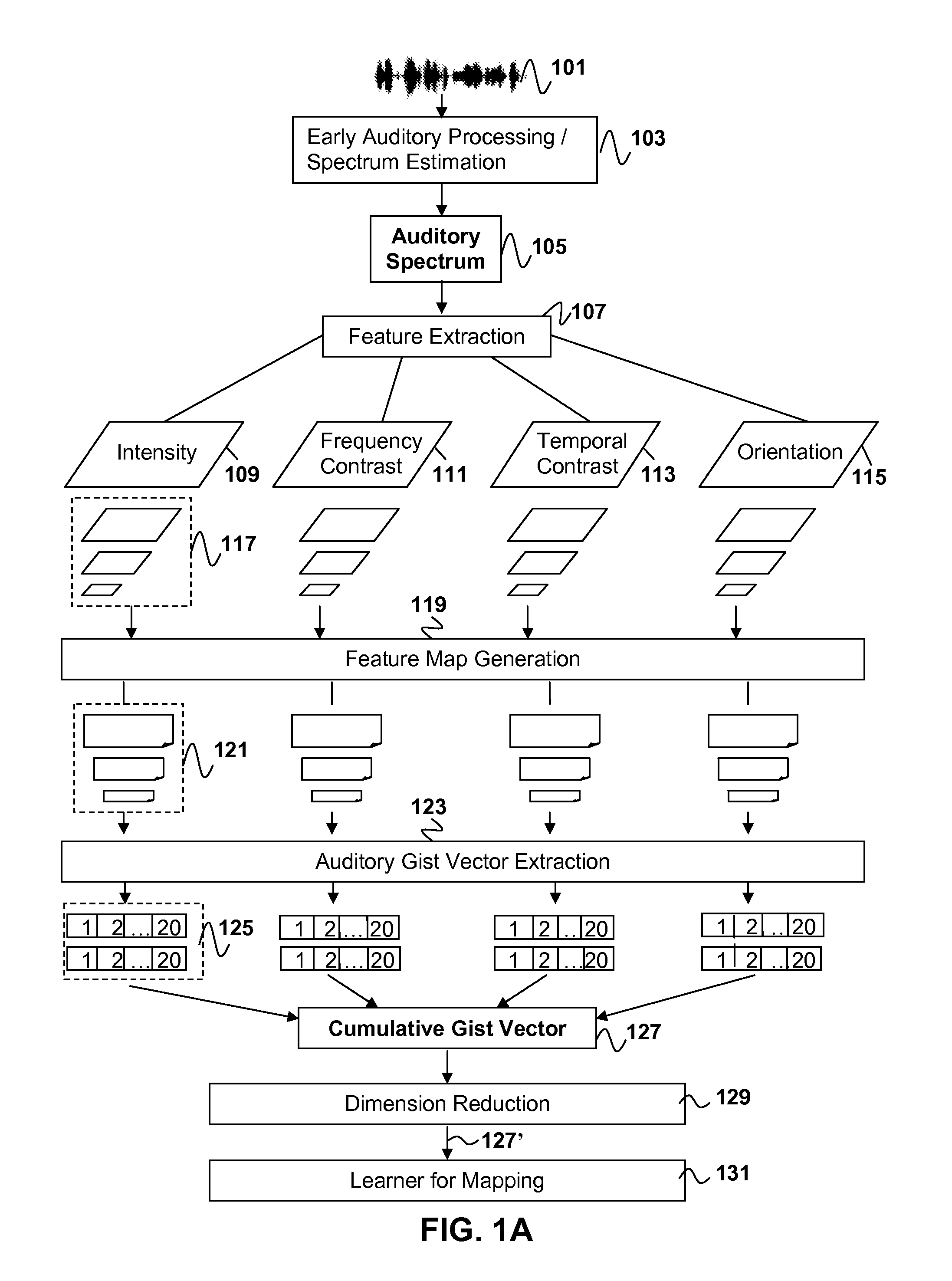

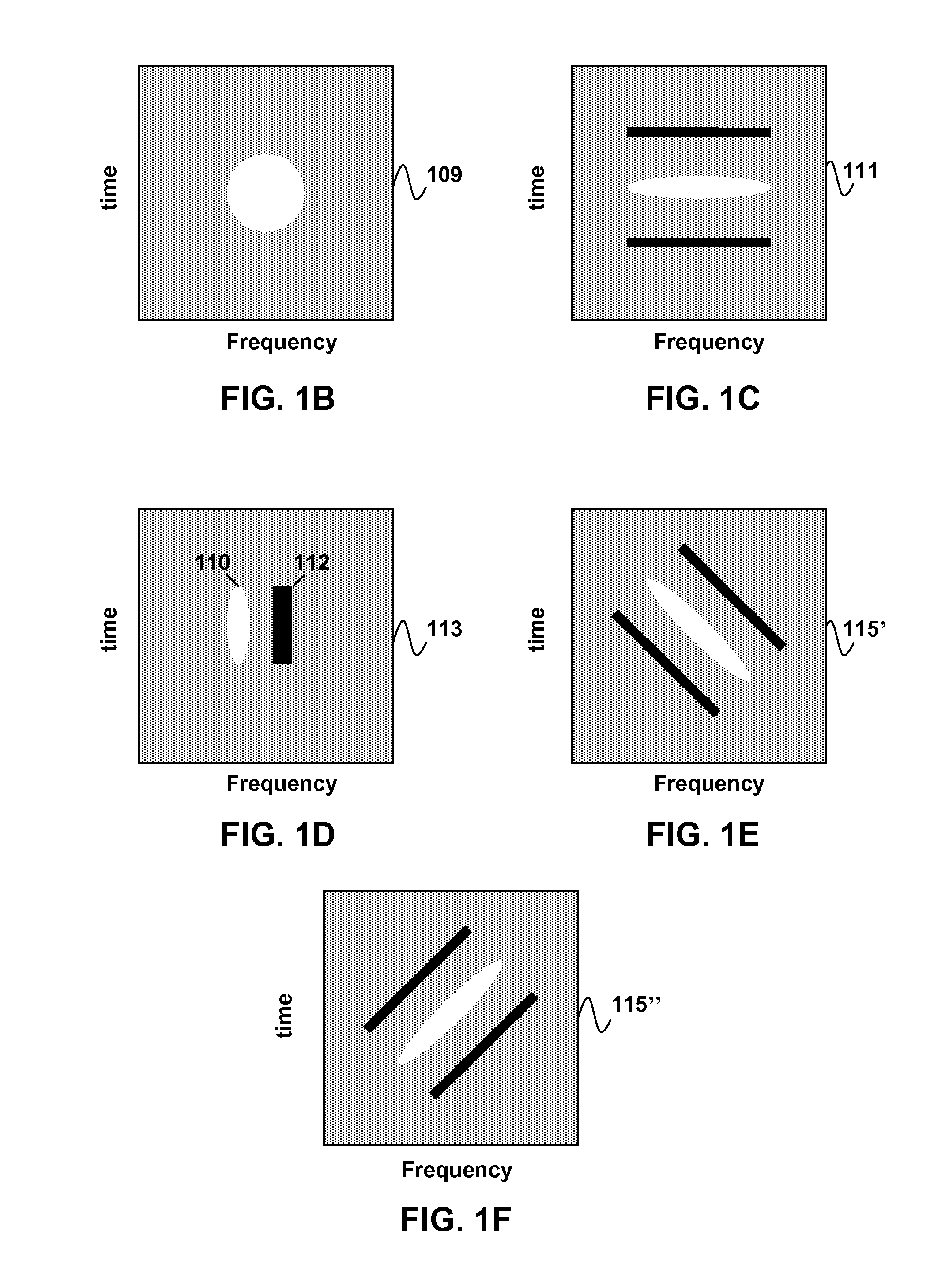

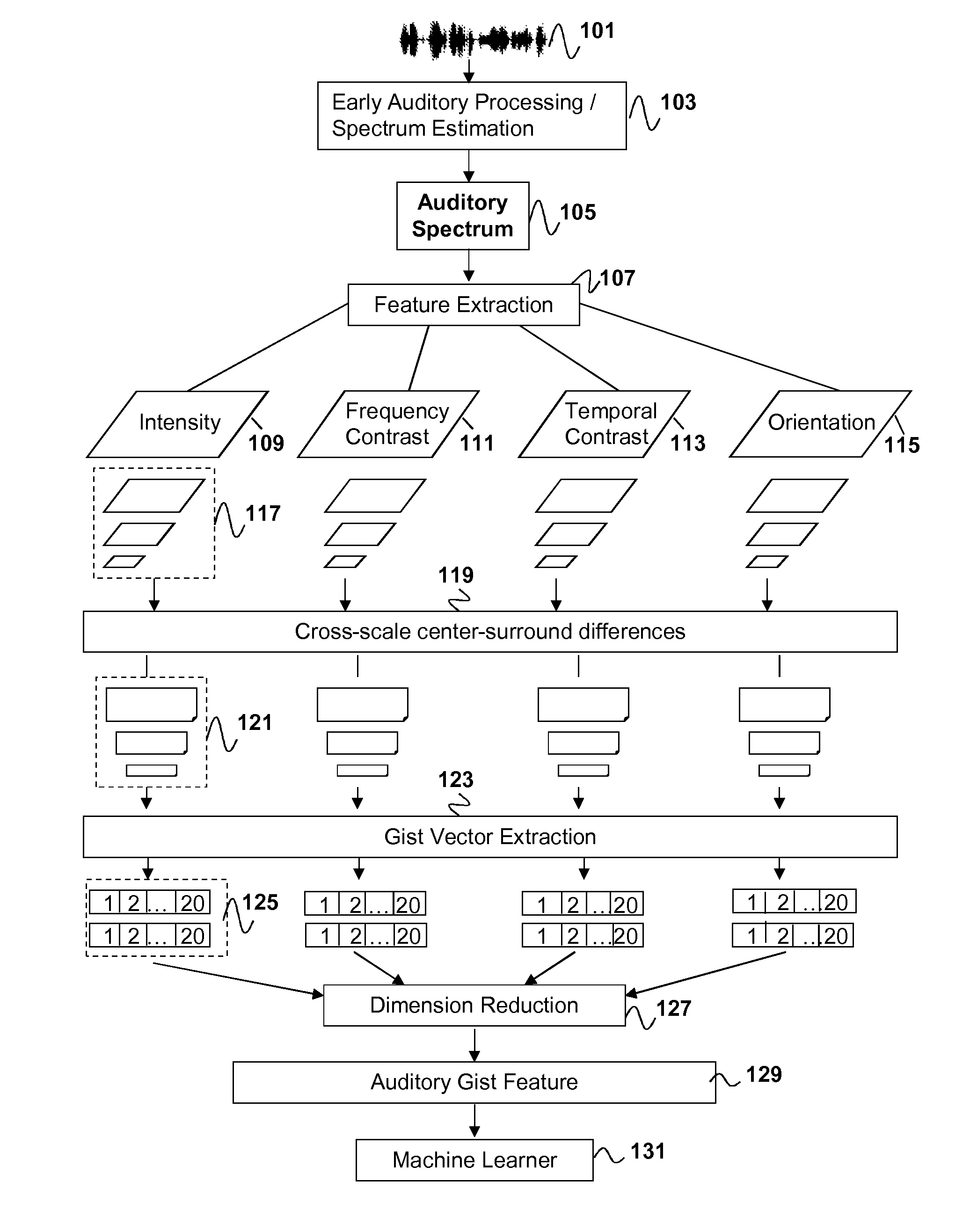

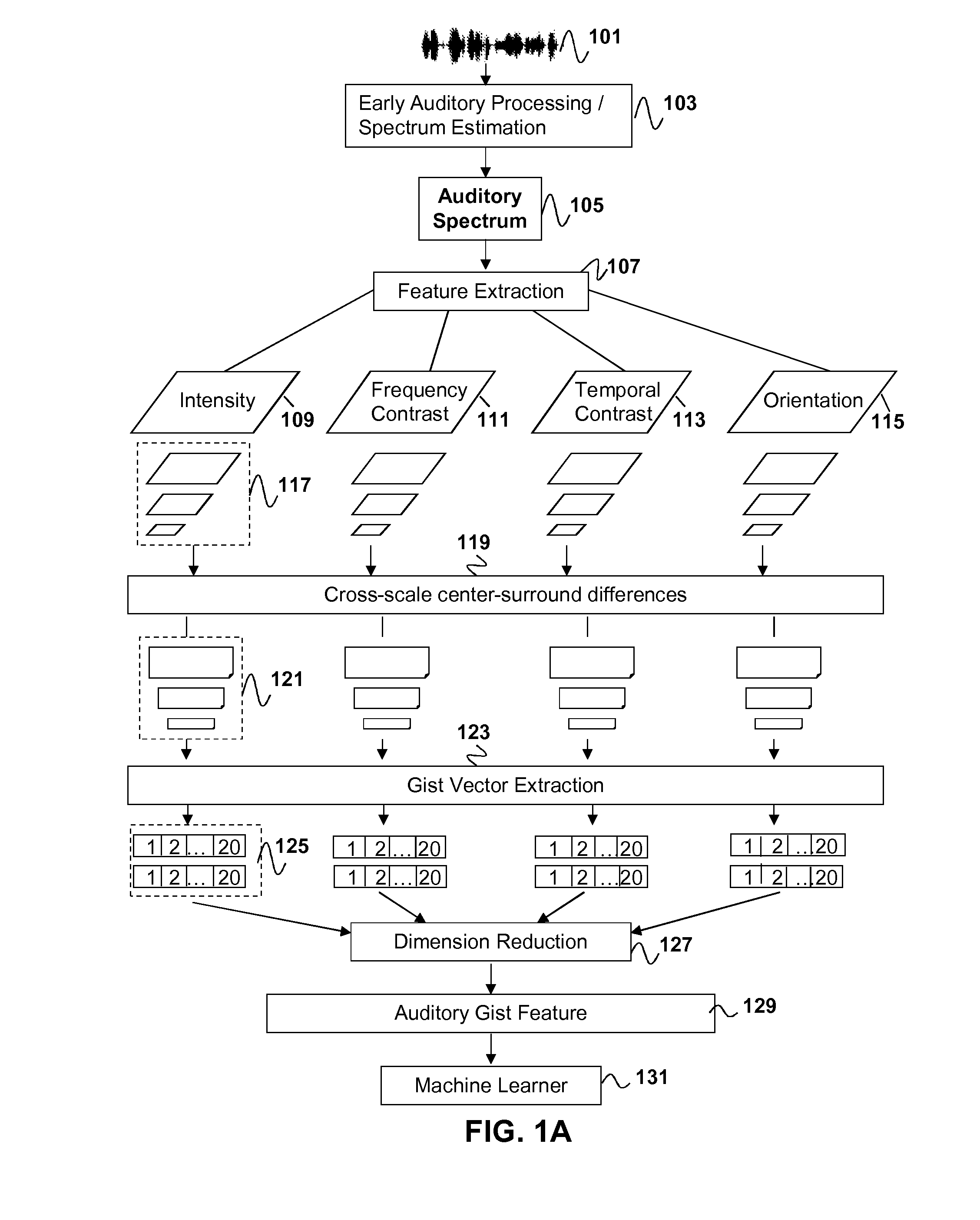

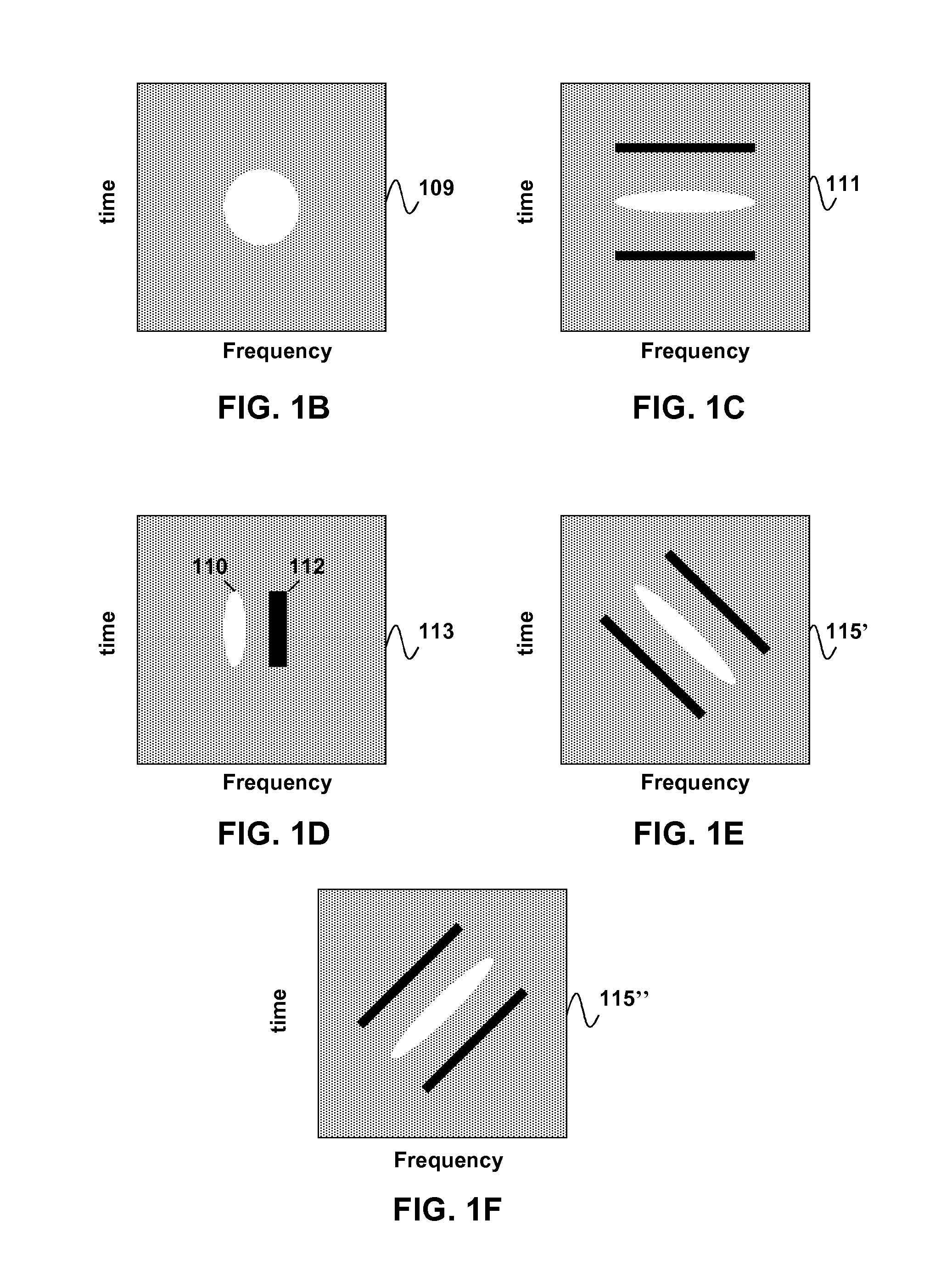

Speech syllable/vowel/phone boundary detection using auditory attention cues

In syllable or vowel or phone boundary detection during speech, an auditory spectrum may be determined for an input window of sound and one or more multi-scale features may be extracted from the auditory spectrum. Each multi-scale feature can be extracted using a separate two-dimensional spectro-temporal receptive filter. One or more feature maps corresponding to the one or more multi-scale features can be generated and an auditory gist vector can be extracted from each of the one or more feature maps. A cumulative gist vector may be obtained through augmentation of each auditory gist vector extracted from the one or more feature maps. One or more syllable or vowel or phone boundaries in the input window of sound can be detected by mapping the cumulative gist vector to one or more syllable or vowel or phone boundary characteristics using a machine learning algorithm.

Owner:SONY COMPUTER ENTERTAINMENT INC

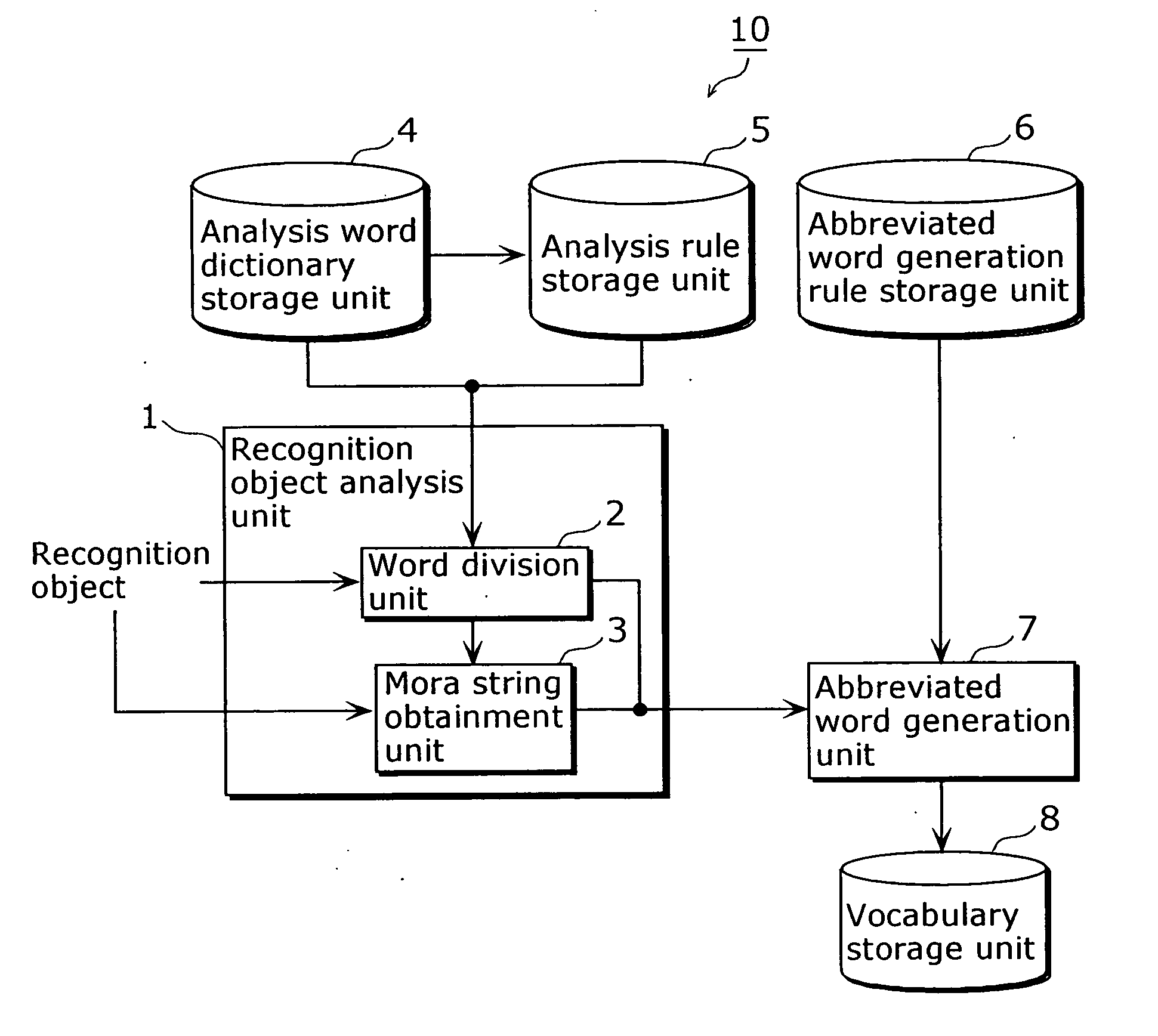

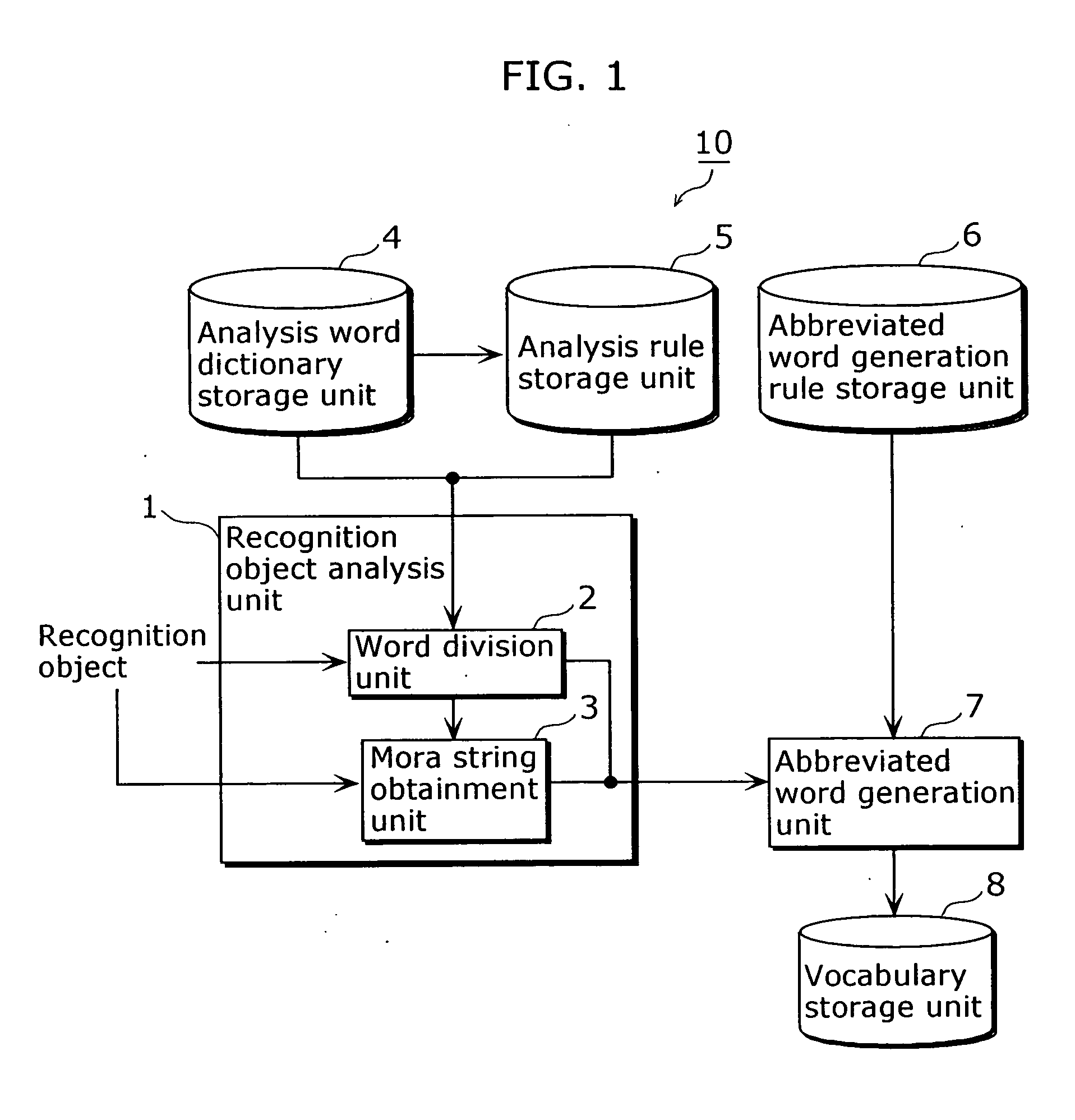

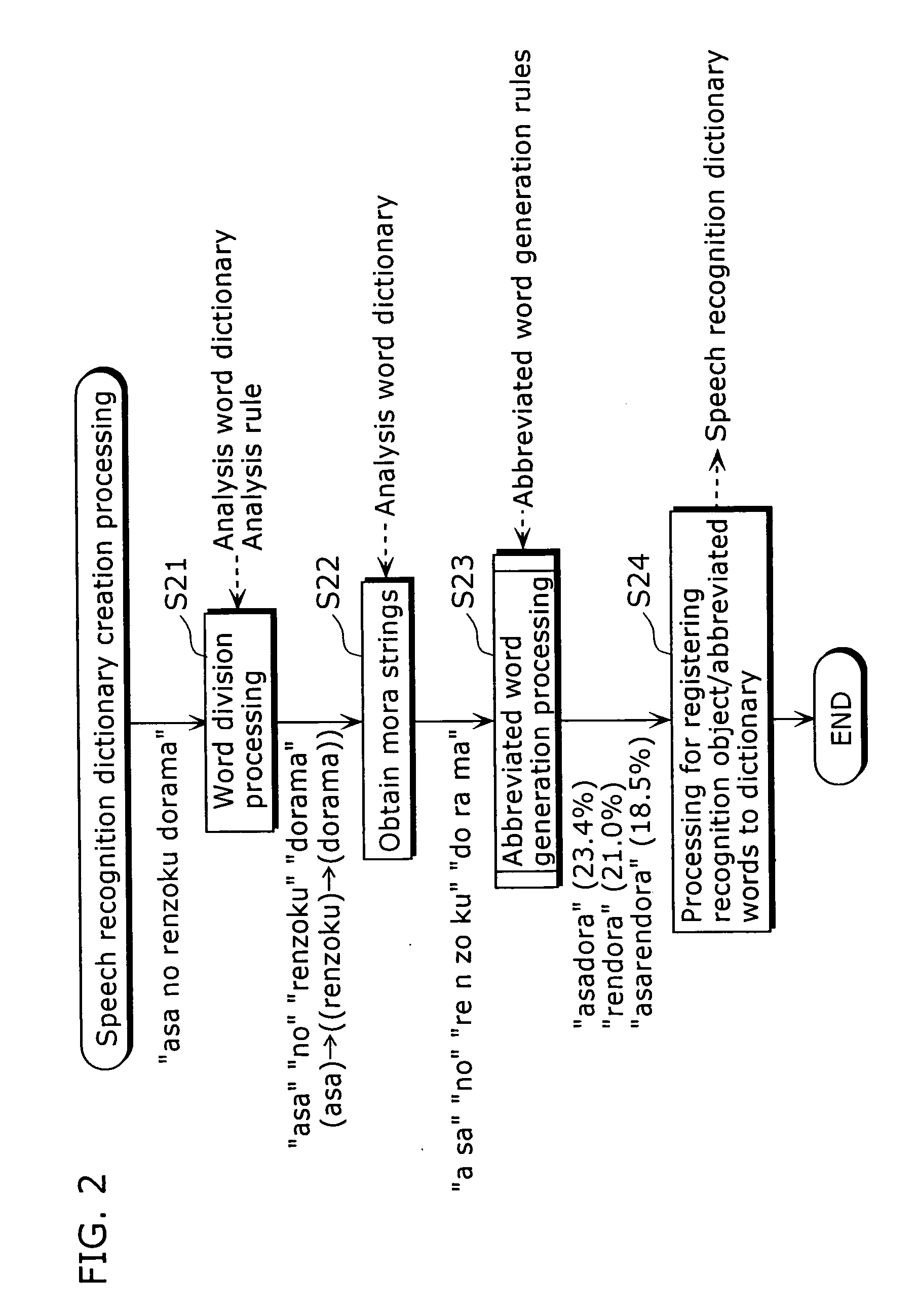

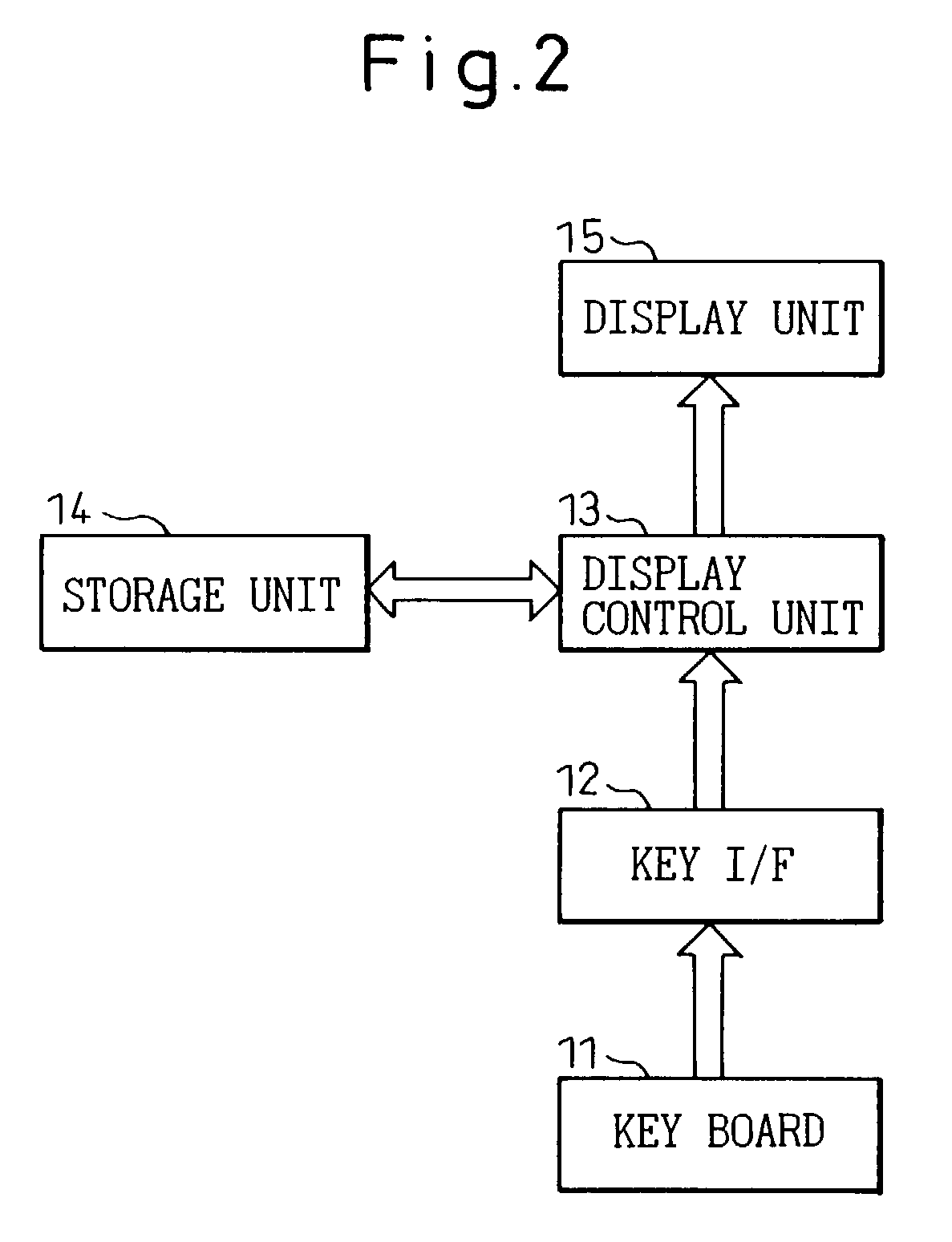

Speech recognition dictionary creation device and speech recognition device

Status: Inactive | Publication: US20060106604A1 | Tags: Improve recognition rate; Reduce the number; Speech recognition; Syllable; Paraphrase

A speech recognition dictionary creation device (10) that efficiently creates a speech recognition dictionary that enables even an abbreviated paraphrase of a word to be recognized with a high recognition rate, the device including: a word division unit (2) that divides a recognition object made up of one or more words into constituent words; a mora string obtainment unit (3) that generates mora strings of the respective constituent words based on the readings of the respective divided constituent words; an abbreviated word generation rule storage unit (6) that stores a generation rule for generating an abbreviated word using moras; an abbreviated word generation unit (7) that generates candidate abbreviated words, each made up of one or more moras, by extracting moras from the mora strings of the respective constituent words and concatenating the extracted moras, and that generates an abbreviated word by applying the abbreviated word generation rule to such candidates; and a vocabulary storage unit (8) that stores, as the speech recognition dictionary, the generated abbreviated word together with its recognition object.

Owner:PANASONIC CORP
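The candidate-generation step (extracting moras from each constituent word and concatenating them) can be sketched as below. The abstract does not state the generation rule, so this assumes a hypothetical rule of taking the first one or two moras of each word, a common Japanese abbreviation pattern (ポケット + モンスター → ポケモン). Mora strings are given as lists of kana, one mora per element.

```python
from itertools import product


def candidate_abbreviations(words_morae: list[list[str]], max_per_word: int = 2) -> list[str]:
    """Concatenate leading moras from each constituent word.

    Hypothetical rule: take the first 1..max_per_word moras of every
    constituent word, yielding one candidate per combination.
    """
    choices = [
        ["".join(morae[:k]) for k in range(1, min(max_per_word, len(morae)) + 1)]
        for morae in words_morae
    ]
    return ["".join(parts) for parts in product(*choices)]
```

Filtering these candidates further, for instance keeping only four-mora results, would play the role of the stored generation rule; registering the survivors alongside the full name lets the recognizer accept the abbreviated paraphrase.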

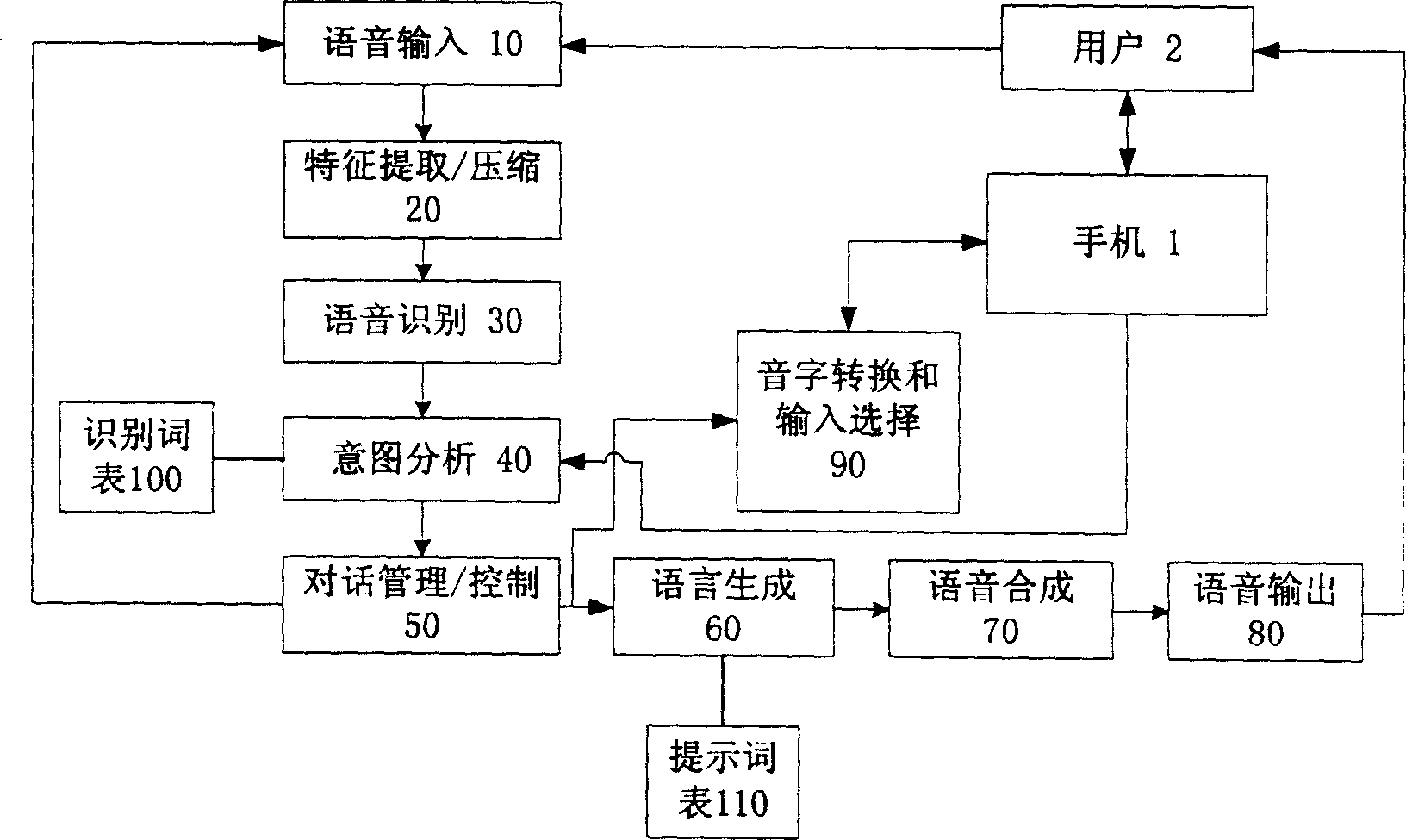

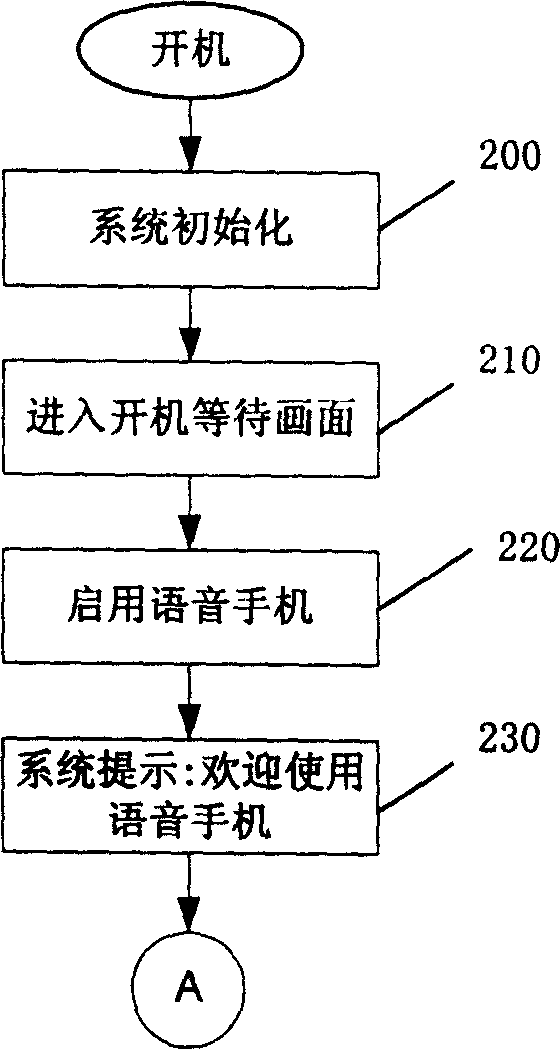

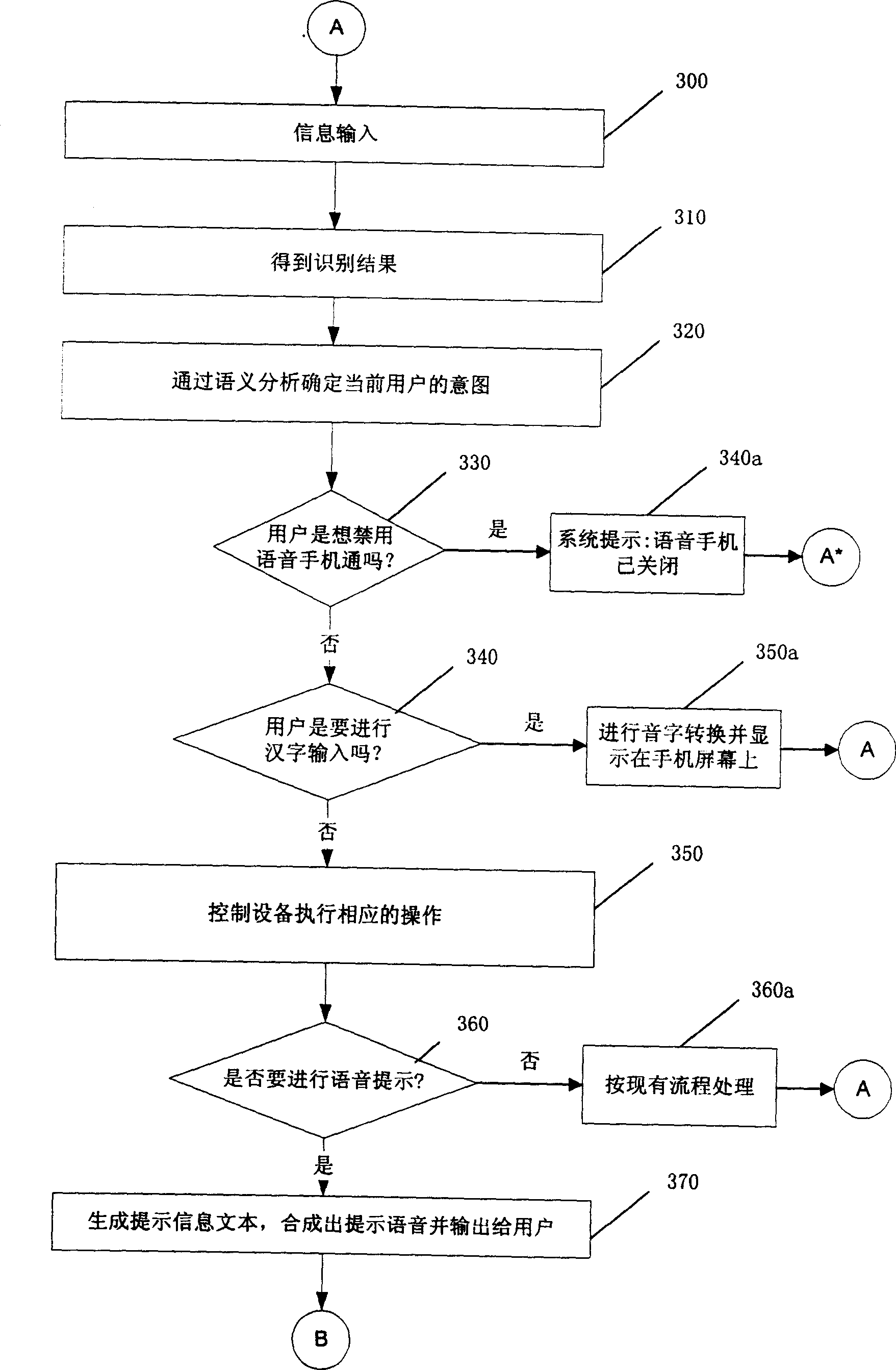

Portable digital mobile communication apparatus and voice control method and system thereof

Status: Active | Publication: CN1703923A | Tags: Flexible feedback; User friendly; Subscriber signalling identity devices; Radio/inductive link selection arrangements; Syllable; Operational system

The present invention discloses a portable digital mobile communication apparatus with a voice operation system, and a method for controlling voice operation. During speech recognition, the feature vector sequences of the speech are quantized and encoded; during decoding, the observation probability of each code on the search path is looked up directly from a probability table. With this invention, full-syllable speech recognition can be achieved on a mobile telephone without training, allowing Chinese characters to be entered by speech and providing full-syllable speech prompts. The system includes semantic analysis, dialogue management, and language generation modules, so it can also handle complex dialogue flows and feed flexible prompt messages back to the user. Users can also customize speech commands and prompt content.

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI +1

Voice authentication system

Status: Inactive | Publication: US20080172230A1 | Tags: Improve authentication accuracy; Improve accuracy; Speech recognition; Syllable; Speech verification

A text-dependent voice authentication system that authenticates a user by prompting the user to speak a keyword includes: an input part (11) that receives the keyword by voice divided into a plurality of portions, the smallest portion being one utterable unit, over a plurality of inputs separated by time intervals; a registered speaker-specific syllable model DB (20) that stores in advance the user's registered keyword as a speaker model created in utterable units; a feature value conversion part (12) that obtains, from the portion of the keyword received in the first voice input by the input part (11), the feature value of the voice contained in that portion; a similarity calculation part (13) that computes the similarity between the feature value and the speaker model; a keyword checking part (17) that determines, based on the similarity obtained by the similarity calculation part, whether all the syllables or phonemes making up the entire registered keyword have been input by voice over the plurality of voice inputs; and an authentication determination part (19) that decides whether to accept or reject authentication based on the determination result of the keyword checking part and the similarity obtained by the similarity calculation part.

Owner:FUJITSU LTD

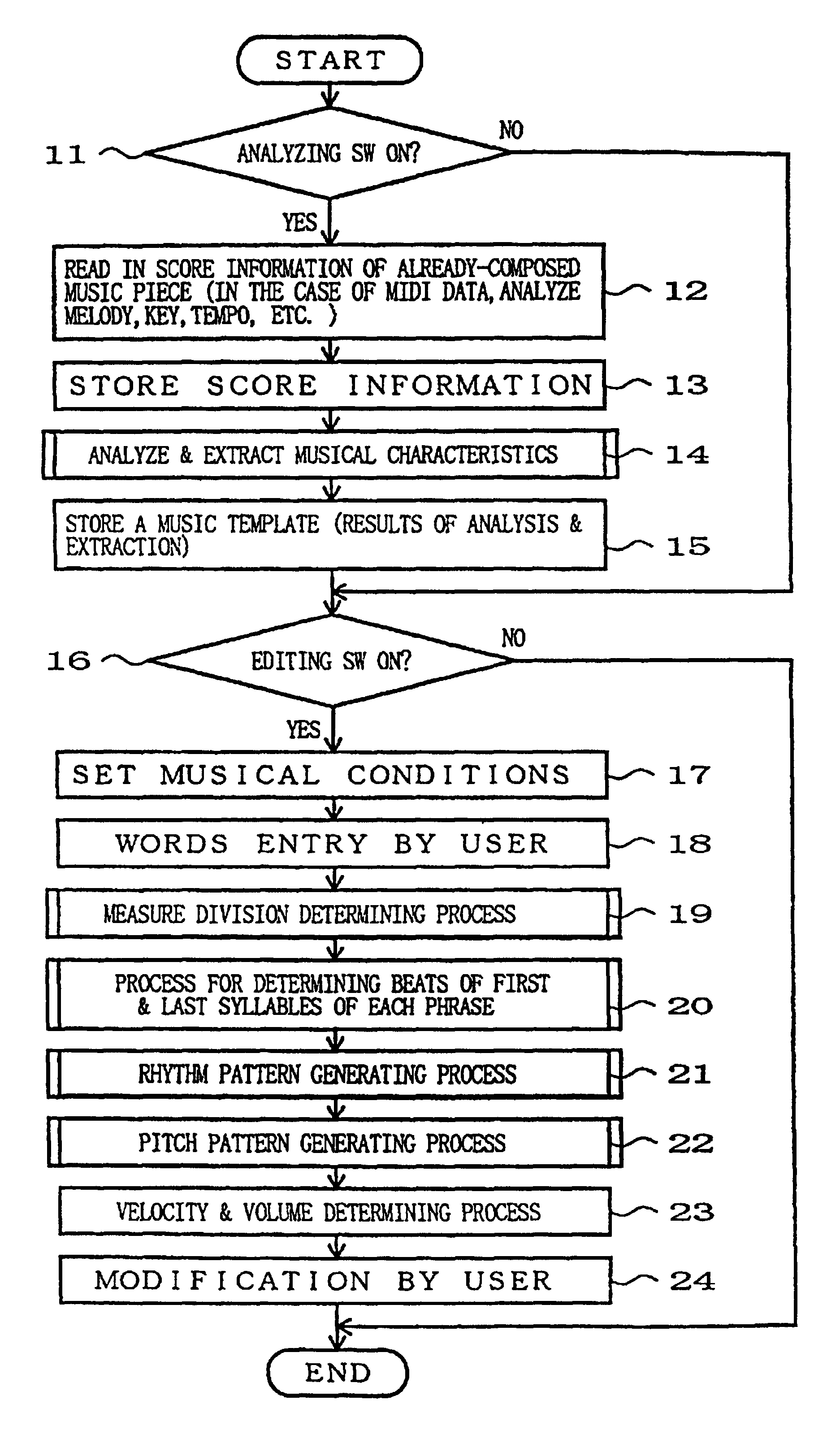

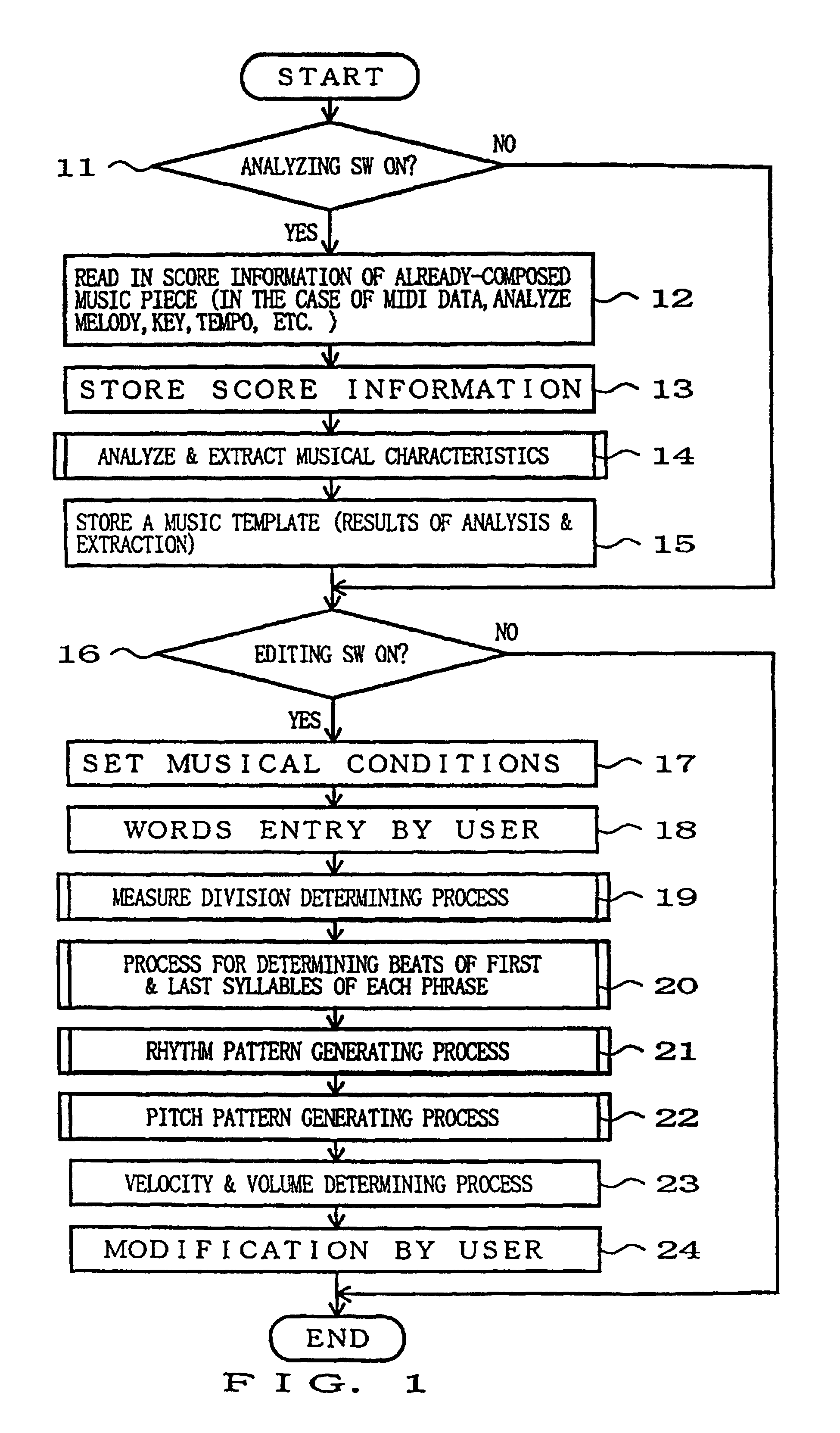

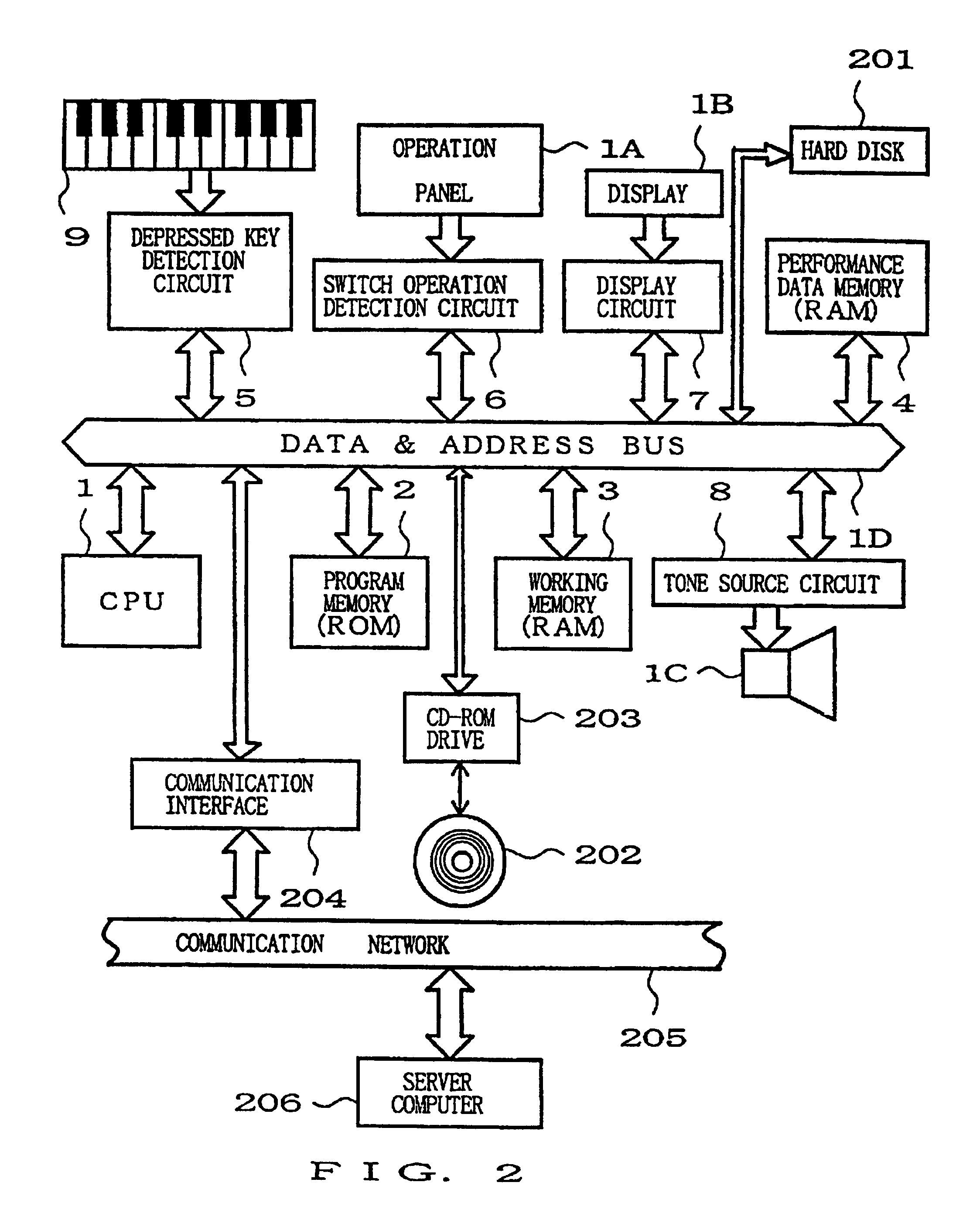

Method and device for automatic music composition employing music template information

For each section forming a music piece, music template information is supplied which includes at least information indicative of a pitch variation tendency in the section. A plurality of already-composed music pieces are preferably analyzed to store a plurality of pieces of such music template information so that any users can select from the stored pieces of music template information. For a music piece to be composed, the user enters information indicative of a tendency relating to the number of notes, such as syllable information of desired words or scat words, in each section of the music piece. On the basis of one of the pieces of music template information supplied and the user-entered information indicative of the number-of-notes tendency (syllable information), the length and pitch of notes in each section can be determined, which permits automatic generation of music piece data. If the music template information is used with no modification, an automatically composed music piece will considerably resemble its original music piece. The user can freely modify the music template information to be used, so that a music piece different from its corresponding original music piece can be created with the user's intention reflected thereon. The music template information can be easily modified by any users having poor knowledge of composition, and the present composing technique can be easily used by everyone.

Owner:YAMAHA CORP

Combining auditory attention cues with phoneme posterior scores for phone/vowel/syllable boundary detection

Phoneme boundaries may be determined from a signal corresponding to recorded audio by extracting auditory attention features from the signal and extracting phoneme posteriors from the signal. The auditory attention features and phoneme posteriors may then be combined to detect boundaries in the signal.

Owner:SONY COMPUTER ENTERTAINMENT INC

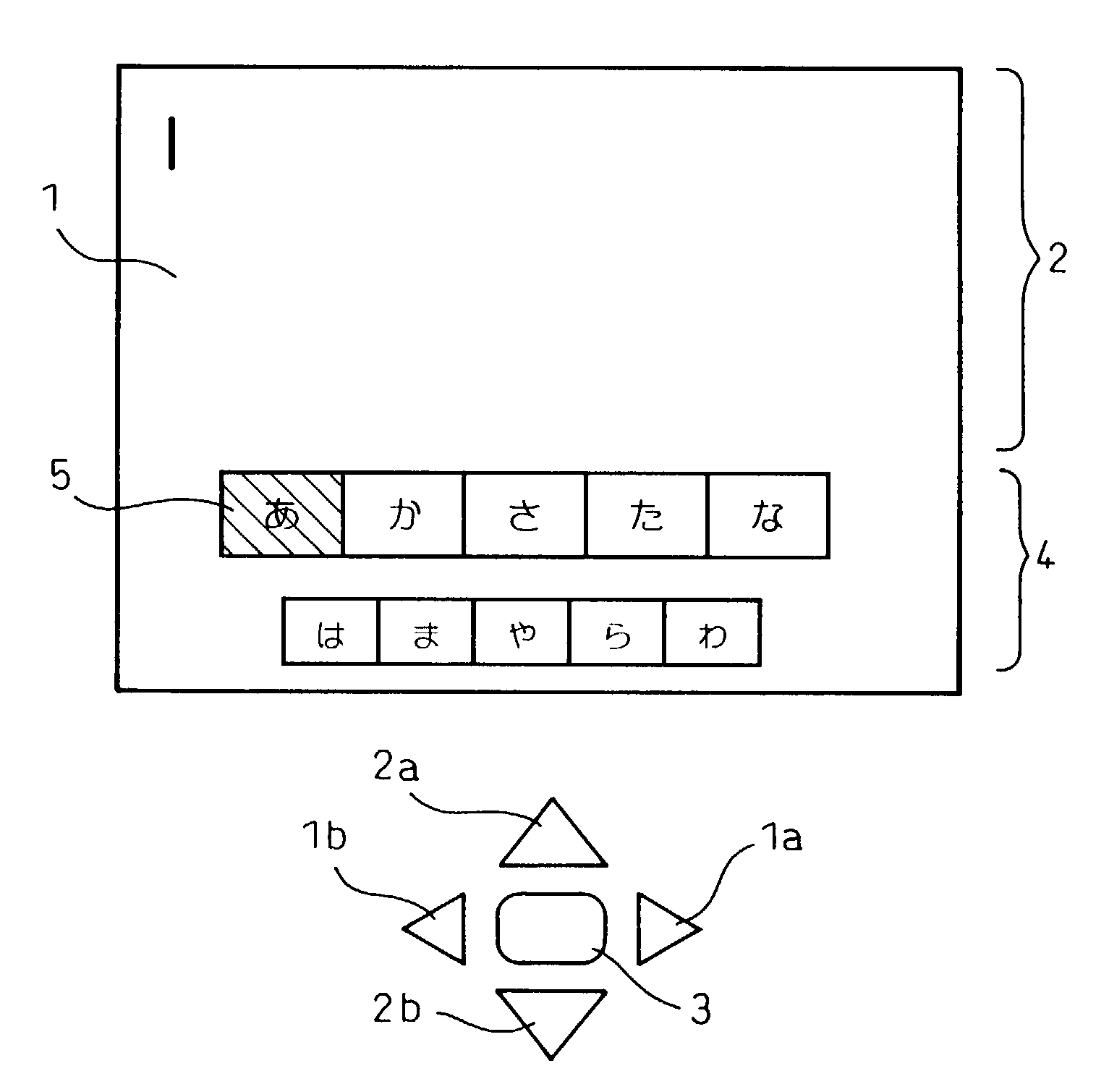

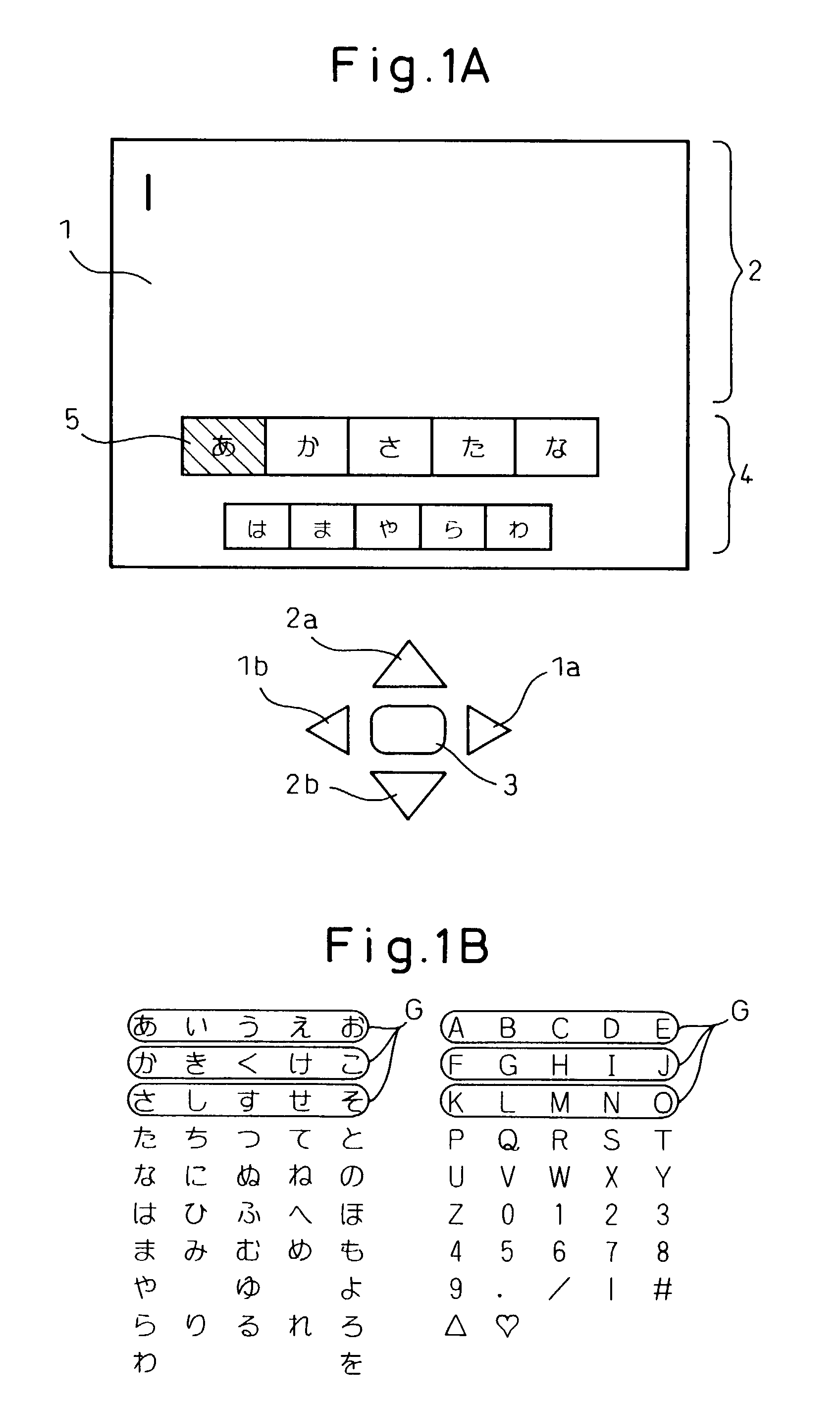

Character input device

Status: Inactive | Publication: US7530031B2 | Tags: Reduce exercise; Simple input and correction; Input/output for user-computer interaction; Telephone sets with user guidance/features; Syllable; Hiragana

A character input device that does not occupy the whole screen, offers good visibility, and enables characters to be input with minimal movement of the fingers and line of sight. For input of, for example, the Japanese hiragana 50-character phonetic syllabary, it displays an initial block of the group lead characters a (あ), ka (か), sa (さ), ta (た), na (な) on the first row of a character input region on the display screen and a block of the remaining group lead characters ha (は), ma (ま), ya (や), ra (ら), wa (わ) on the second row; allows the focus position to be moved between characters with the "left" and "right" keys (a (あ) → ka (か) → sa (さ) → ...); switches the focus to the block ha (は), ma (ま), ya (や), ra (ら), wa (わ) after the focus position passes na (な); allows switching between displayed characters by, for example, using the "down" key to move to the next character in the group (for example, a (あ) → i (い) → u (う) → ...) and the "up" key to move to the last character in the group (for example, a (あ) → o (お) → ...); and allows a character to be input with a "decision" key when the character to be input is in focus.

Owner:FUJITSU LTD +1

© 2025 PatSnap. All rights reserved.