342 results about "Speaker identification" patented technology

Who said that? teleconference speaker identification apparatus and method

Inactive | US7266189B1 | Unauthorised/fraudulent call prevention | Special service for subscribers | Identification device | Teleconference

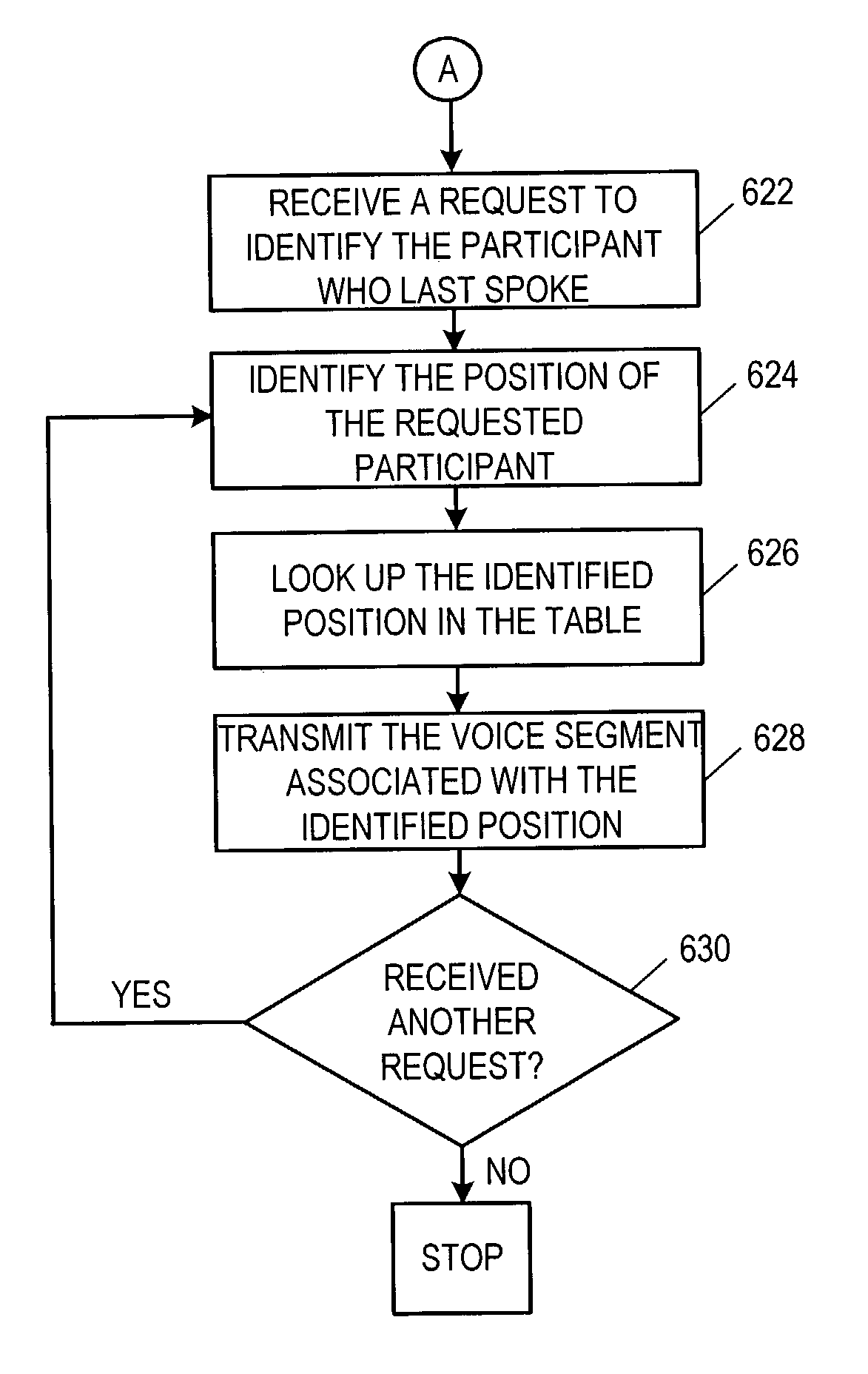

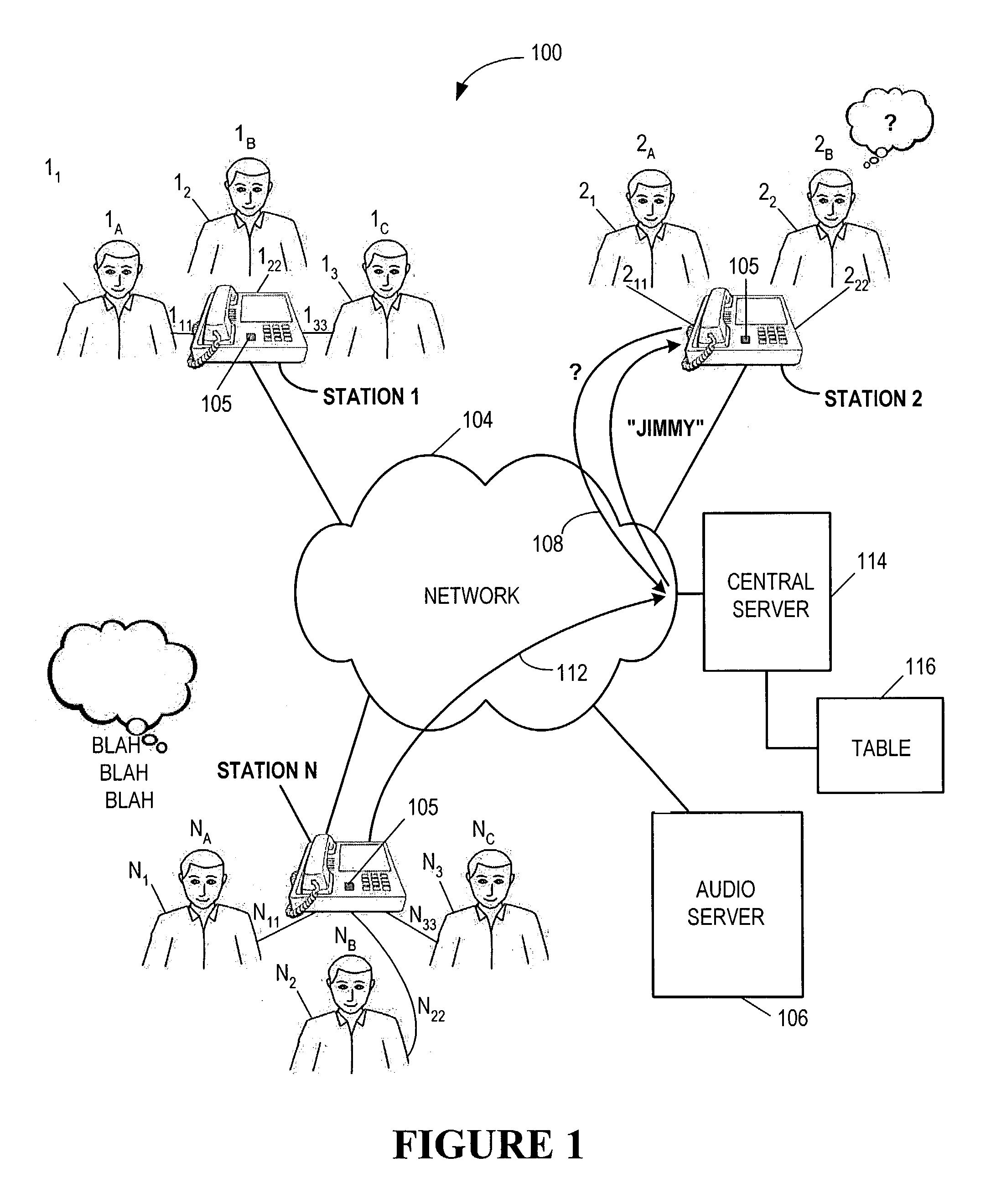

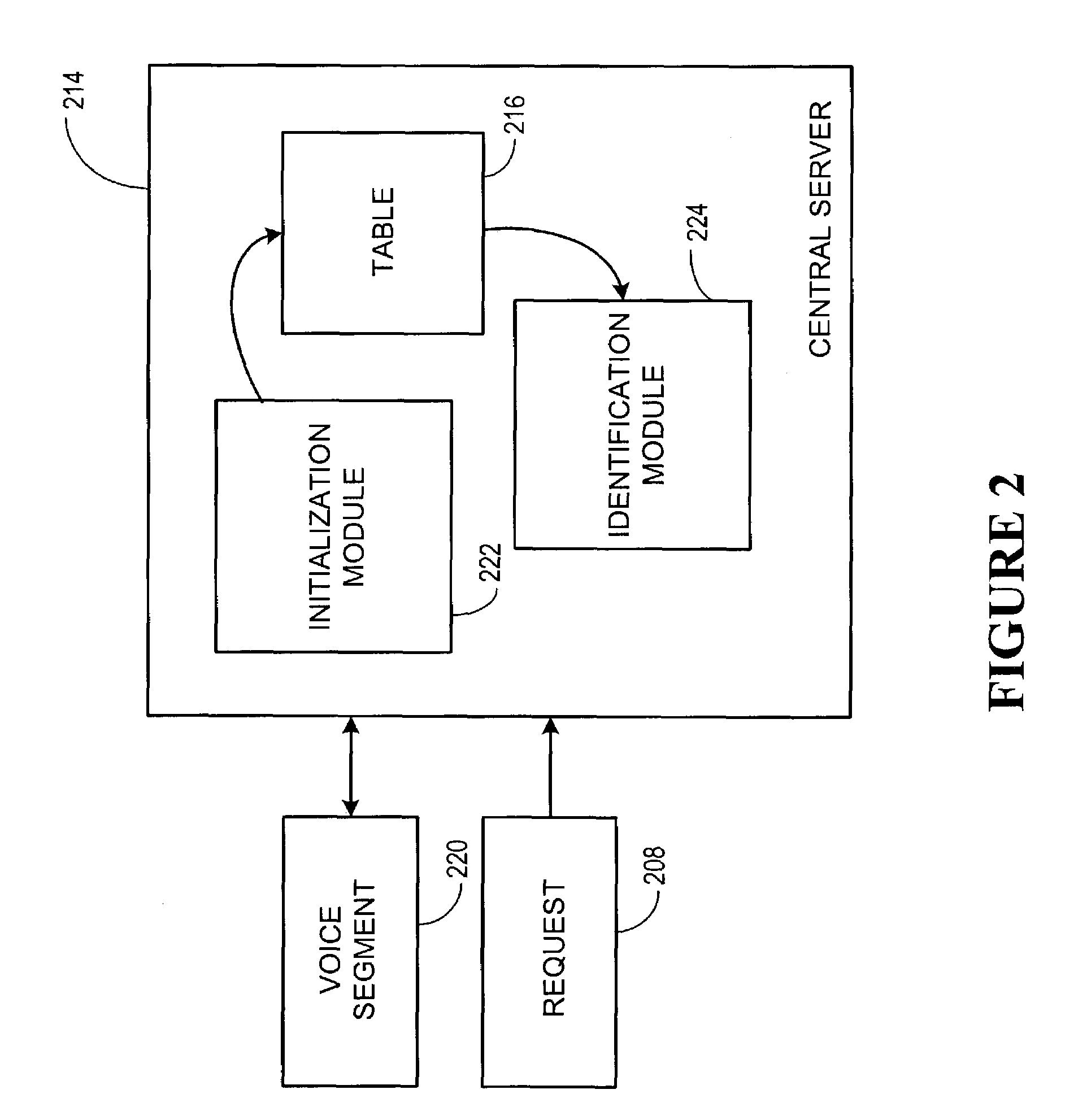

The invention relates to an apparatus and method for identifying teleconference participants. More particularly, the invention relates to a conference system that includes an initialization means for initializing a call between participants located in at least two remote stations and an identification means for identifying one of the participants in one remote station responsive to a request from another of the participants in another remote station. The initialization means comprises table means for creating a table associating each of the participants to a position in a particular remote station and including a recorded voice segment of each of the participants. The identification means uses the table to identify the participant last to speak by looking up the position of the last speaker on the table and playing back the recorded voice segment of the participant associated with that position.
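The table lookup described in this abstract can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented implementation: the names `Participant`, `StationTable`, `note_speech`, and the clip file names are hypothetical, and detecting which position spoke last is assumed to happen elsewhere.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class Participant:
    name: str
    voice_clip: str  # hypothetical path to the voice segment recorded at call setup


class StationTable:
    """Maps positions in one remote station to participants."""

    def __init__(self) -> None:
        self._by_position: Dict[int, Participant] = {}
        self._last_position: Optional[int] = None

    def register(self, position: int, participant: Participant) -> None:
        # Initialization: associate a participant with a position.
        self._by_position[position] = participant

    def note_speech(self, position: int) -> None:
        # Assumed to be called by whatever component detects the active position.
        self._last_position = position

    def identify_last_speaker(self) -> Optional[Participant]:
        # Identification: look up the last active position in the table.
        if self._last_position is None:
            return None
        return self._by_position.get(self._last_position)


table = StationTable()
table.register(1, Participant("Alice", "alice_intro.wav"))
table.register(2, Participant("Bob", "bob_intro.wav"))
table.note_speech(2)
last = table.identify_last_speaker()
# A real system would now play back last.voice_clip to the requesting participant.
```

Keeping the last active position separate from the table means a "who said that?" request is a single dictionary lookup.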

Owner:CISCO TECH INC

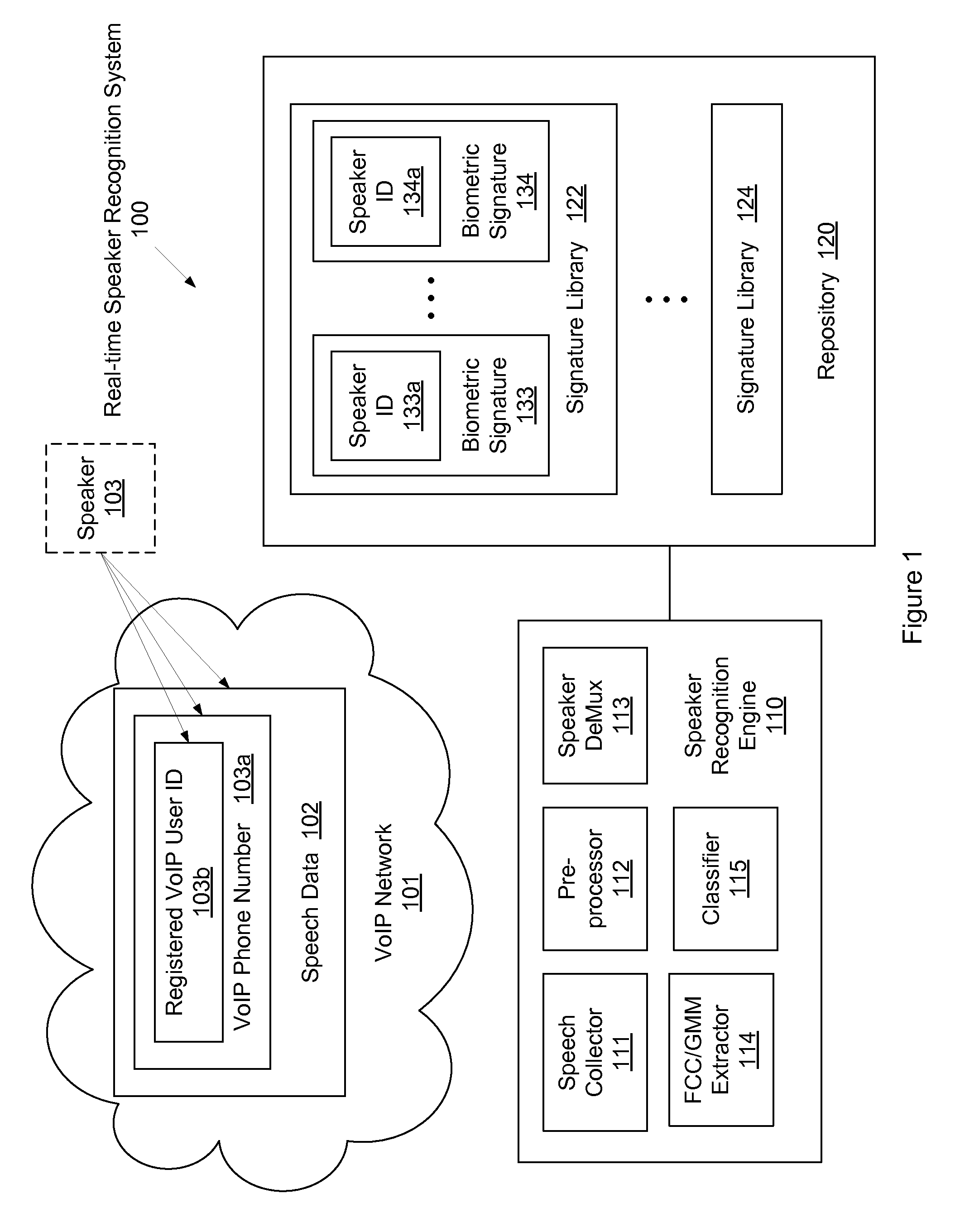

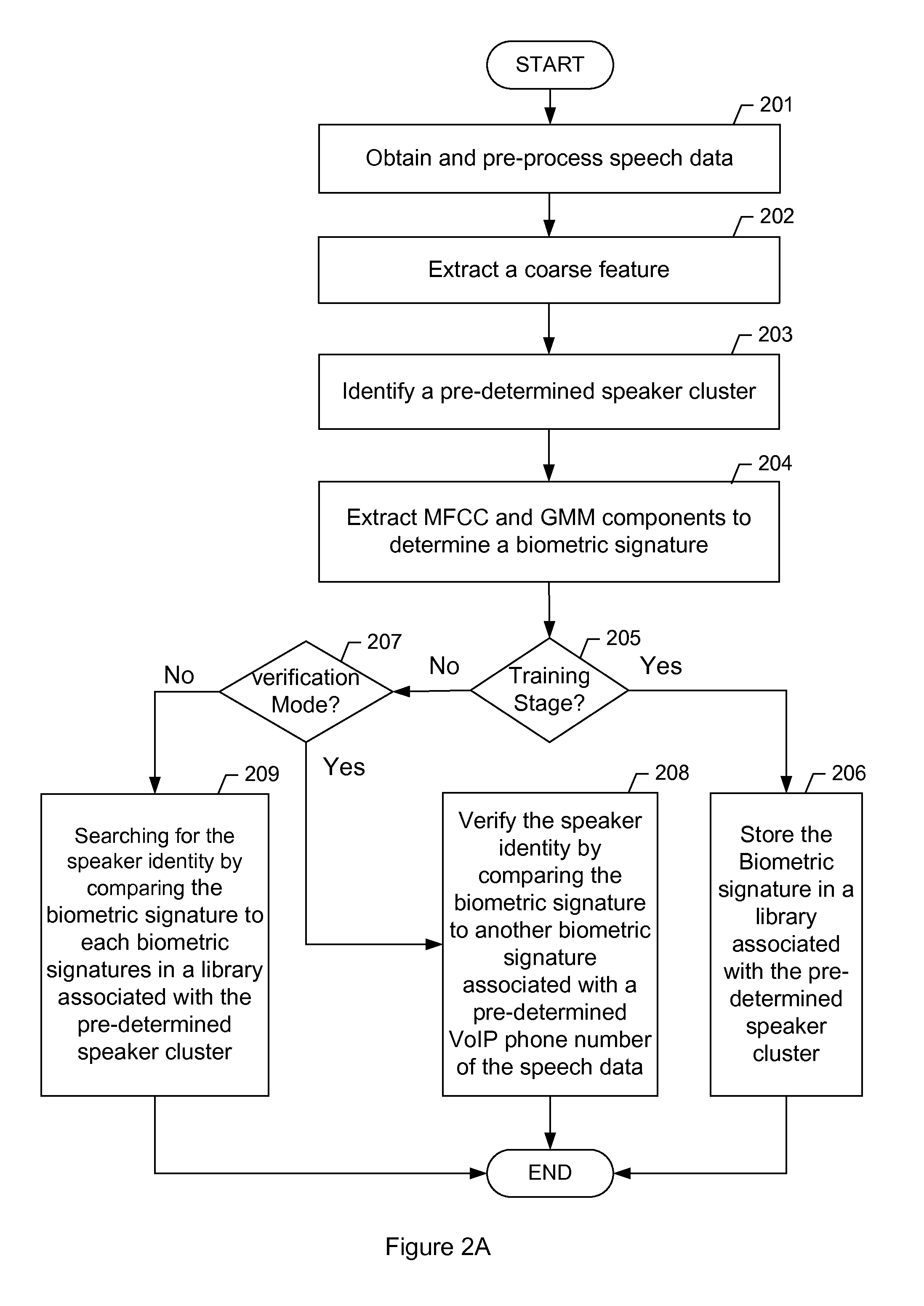

Hierarchical real-time speaker recognition for biometric VoIP verification and targeting

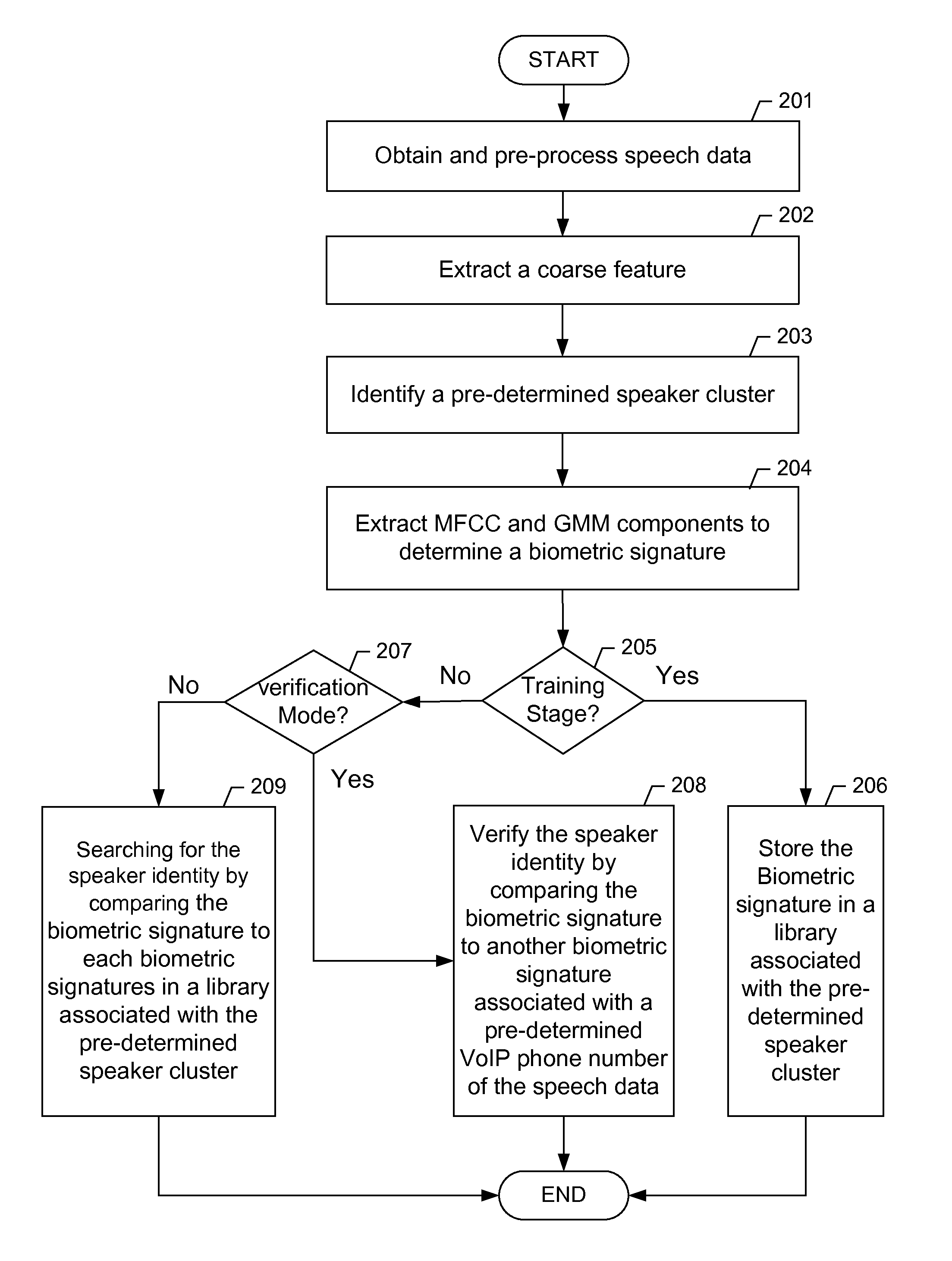

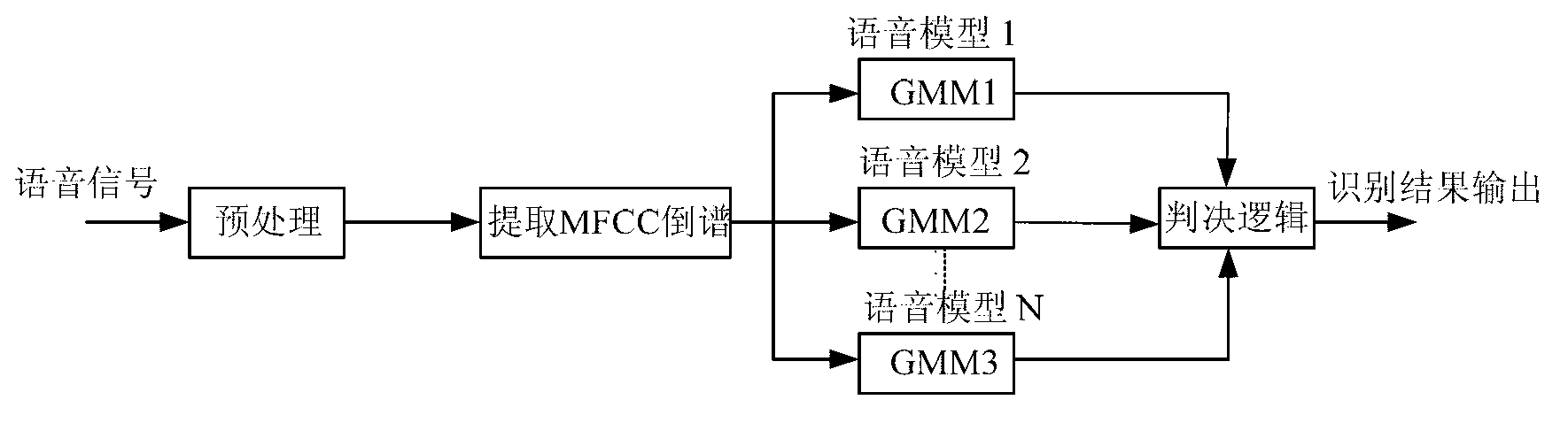

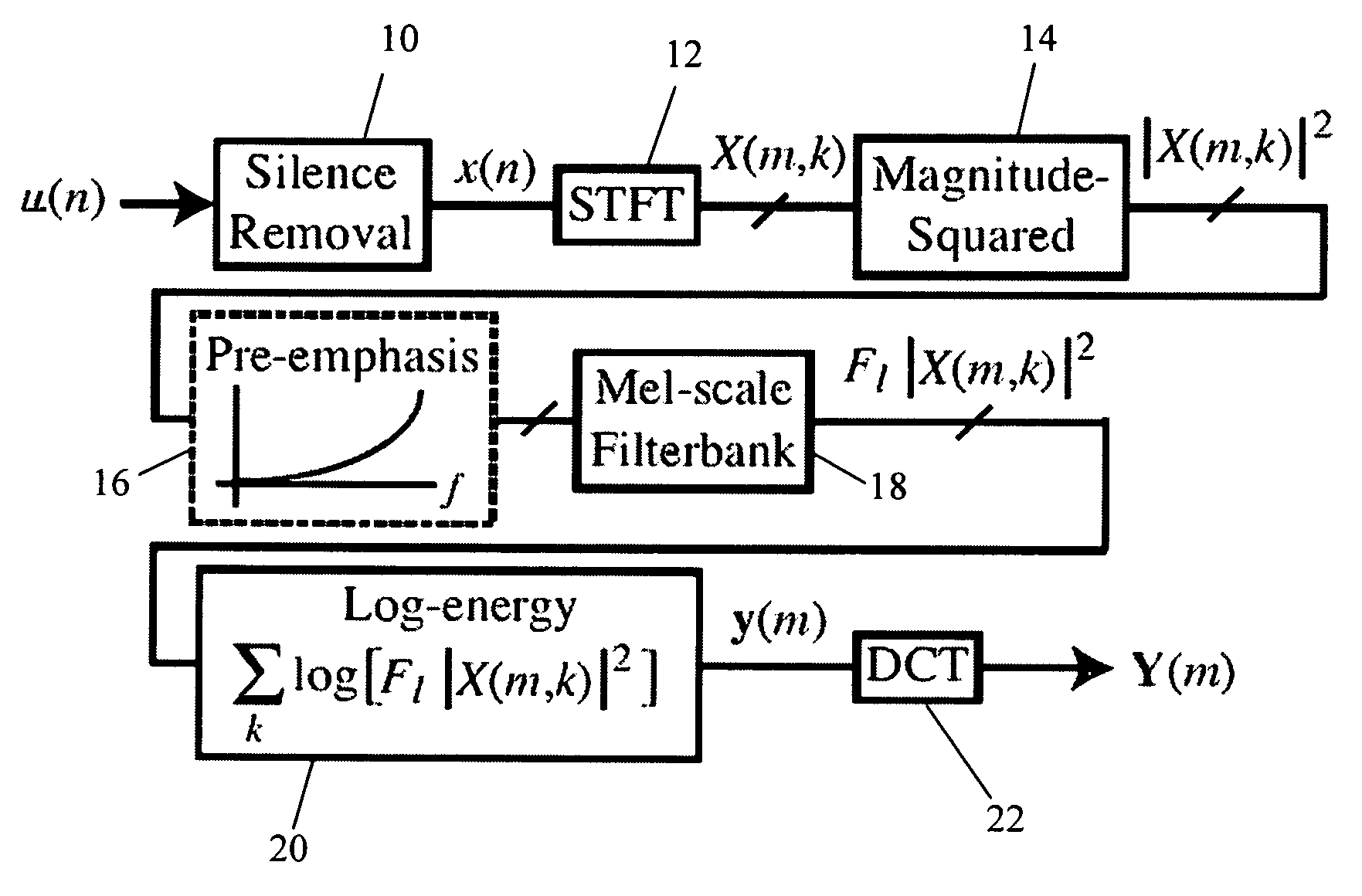

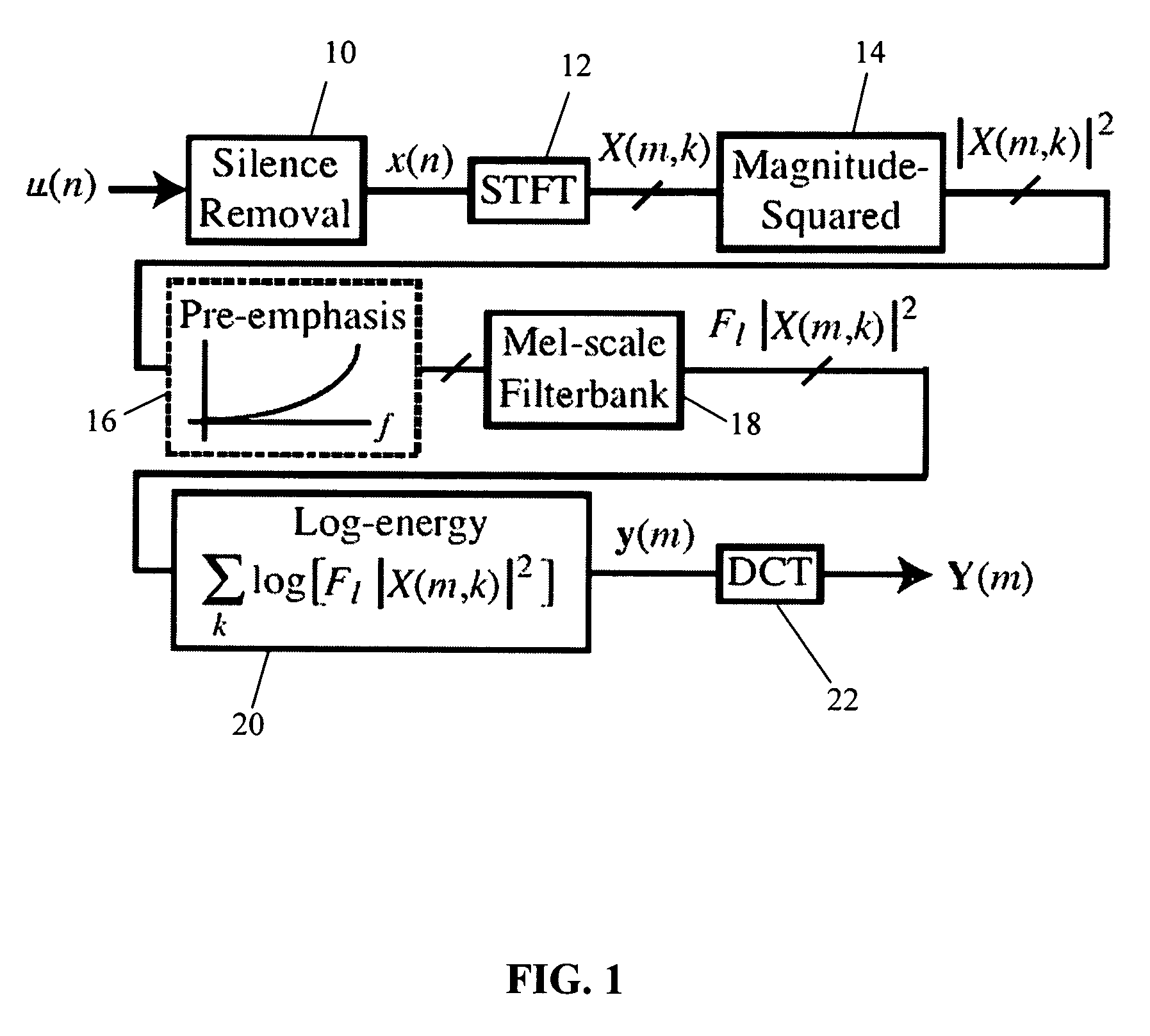

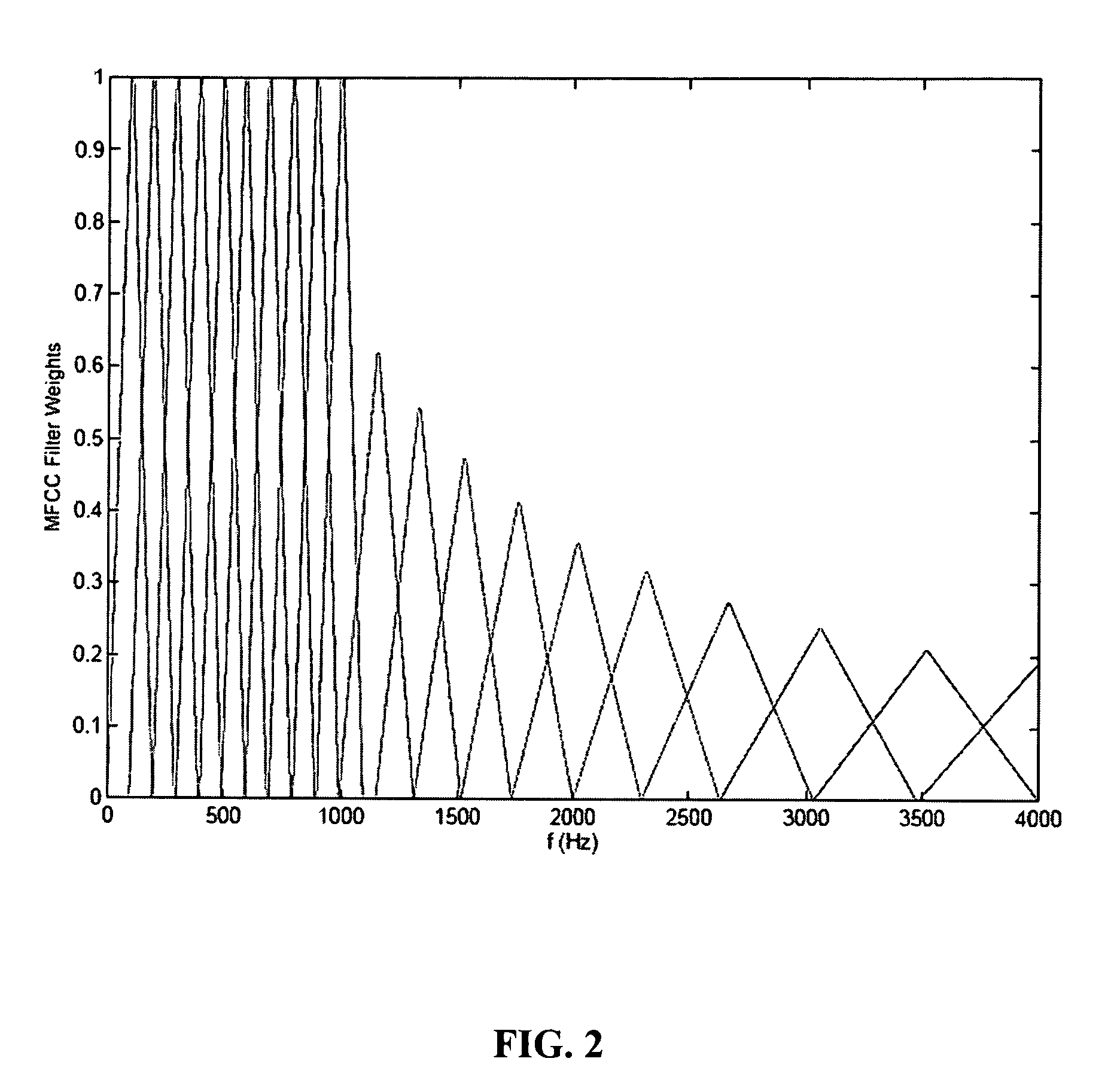

A method for real-time speaker recognition including obtaining speech data of a speaker, extracting, using a processor of a computer, a coarse feature of the speaker from the speech data, identifying the speaker as belonging to a pre-determined speaker cluster based on the coarse feature of the speaker, extracting, using the processor of the computer, a plurality of Mel-Frequency Cepstral Coefficients (MFCC) and a plurality of Gaussian Mixture Model (GMM) components from the speech data, determining a biometric signature of the speaker based on the plurality of MFCC and the plurality of GMM components, and determining in real time, using the processor of the computer, an identity of the speaker by comparing the biometric signature of the speaker to one of a plurality of biometric signature libraries associated with the pre-determined speaker cluster.
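The two-stage structure of this method (coarse clustering first, then a signature comparison within one cluster's library) can be sketched as below. The sketch makes loud simplifications: mean pitch is assumed as the coarse feature, the 165 Hz boundary is arbitrary, and a plain Euclidean distance between fixed-length vectors stands in for the MFCC/GMM-based biometric signature comparison. All names and numbers are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def euclidean(a: Vector, b: Vector) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def pick_cluster(mean_pitch_hz: float, boundary_hz: float = 165.0) -> str:
    # Coarse stage: assumed pitch-based split into two pre-determined clusters.
    return "low_pitch" if mean_pitch_hz < boundary_hz else "high_pitch"


def identify(
    mean_pitch_hz: float,
    signature: Vector,
    libraries: Dict[str, Dict[str, Vector]],
) -> Tuple[str, float]:
    # Fine stage: search only the signature library of the selected cluster.
    library = libraries[pick_cluster(mean_pitch_hz)]
    best = min(library, key=lambda name: euclidean(signature, library[name]))
    return best, euclidean(signature, library[best])


libraries = {
    "low_pitch": {"speaker_a": [1.0, 0.2, -0.5], "speaker_b": [0.1, 0.9, 0.3]},
    "high_pitch": {"speaker_c": [-0.7, 0.4, 0.8]},
}
name, dist = identify(120.0, [0.9, 0.25, -0.4], libraries)
```

Restricting the fine-grained search to one cluster's library reduces the number of comparisons per utterance, which is what makes the hierarchy attractive for real-time use.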

Owner:THE BOEING CO

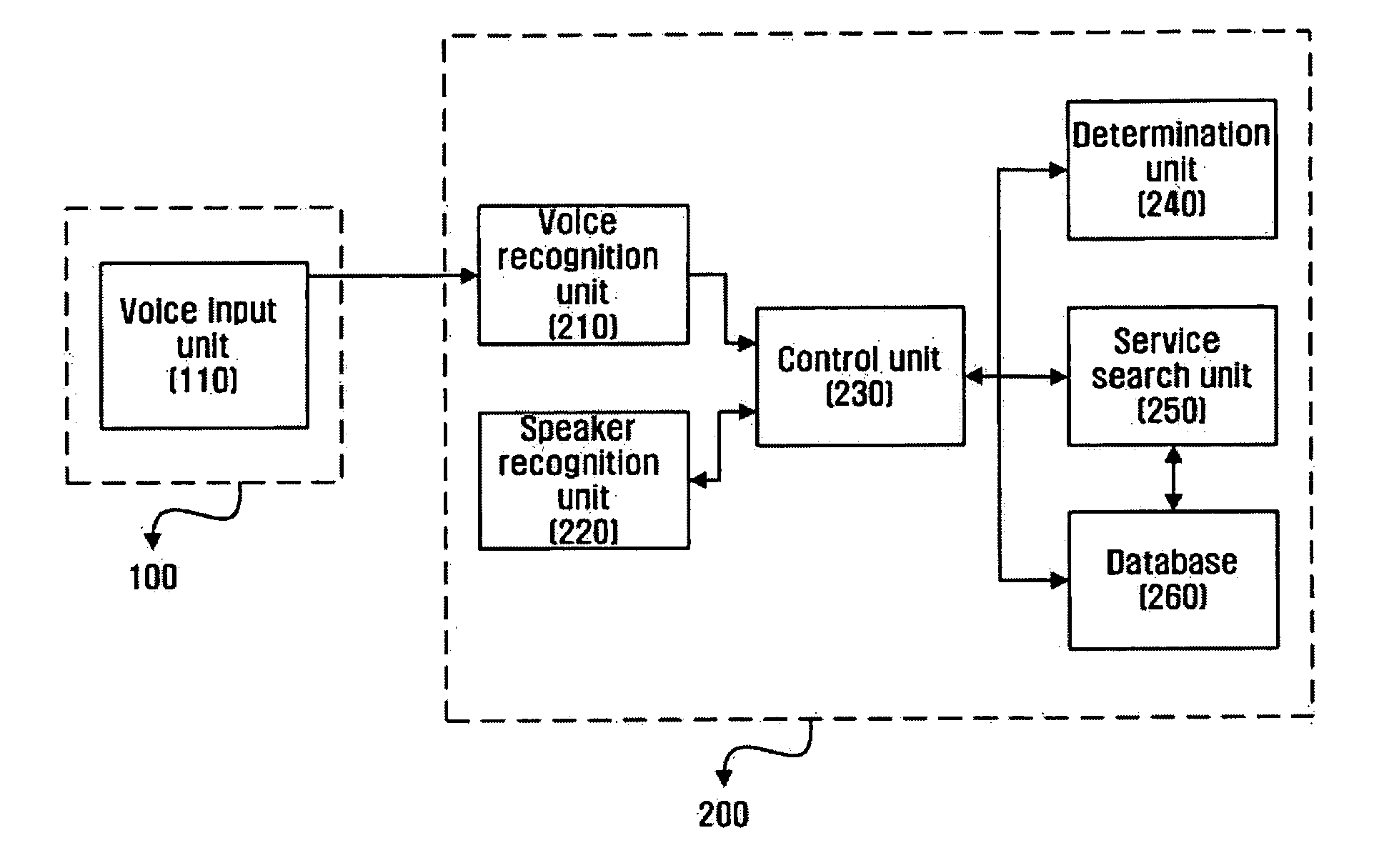

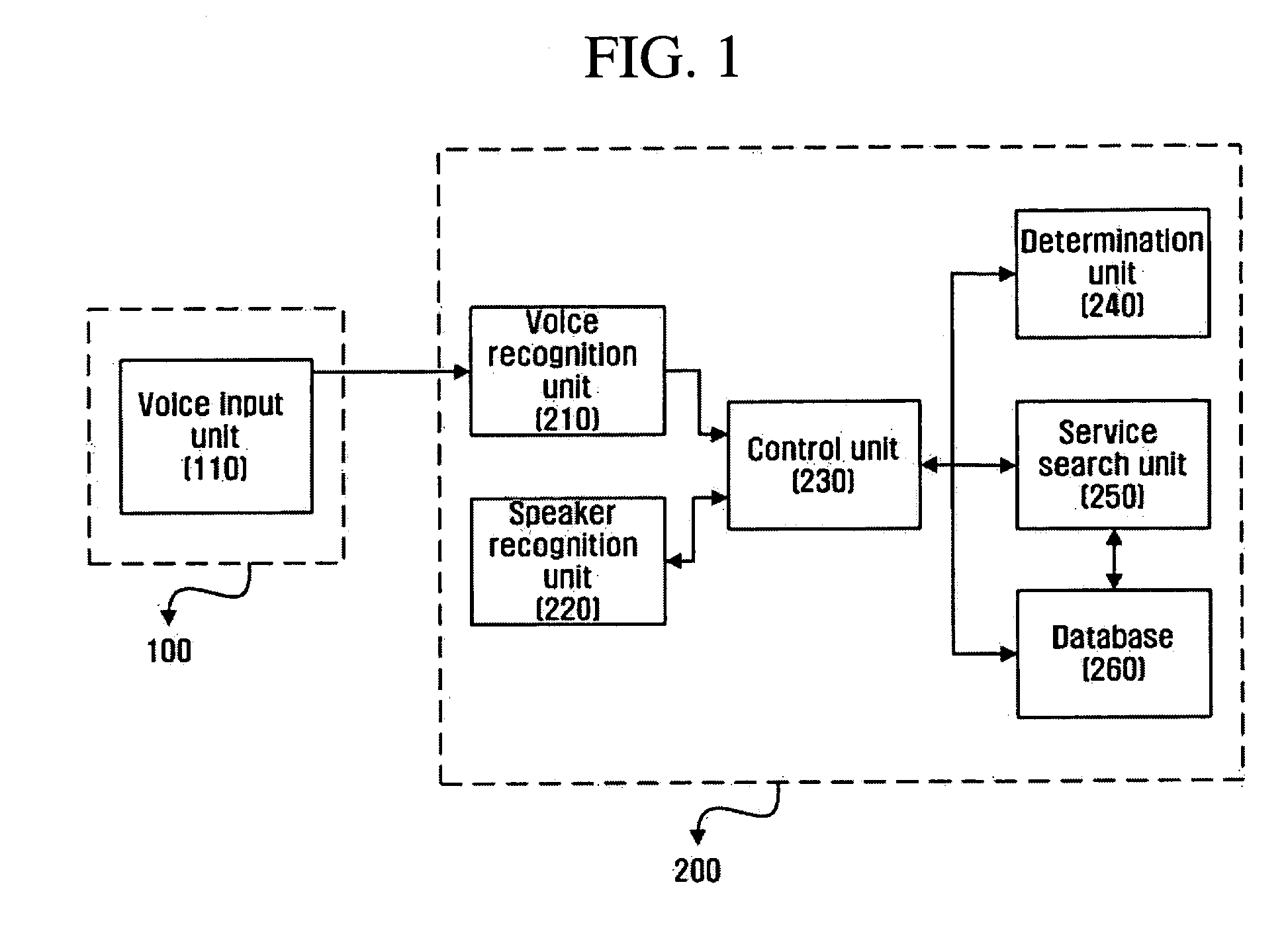

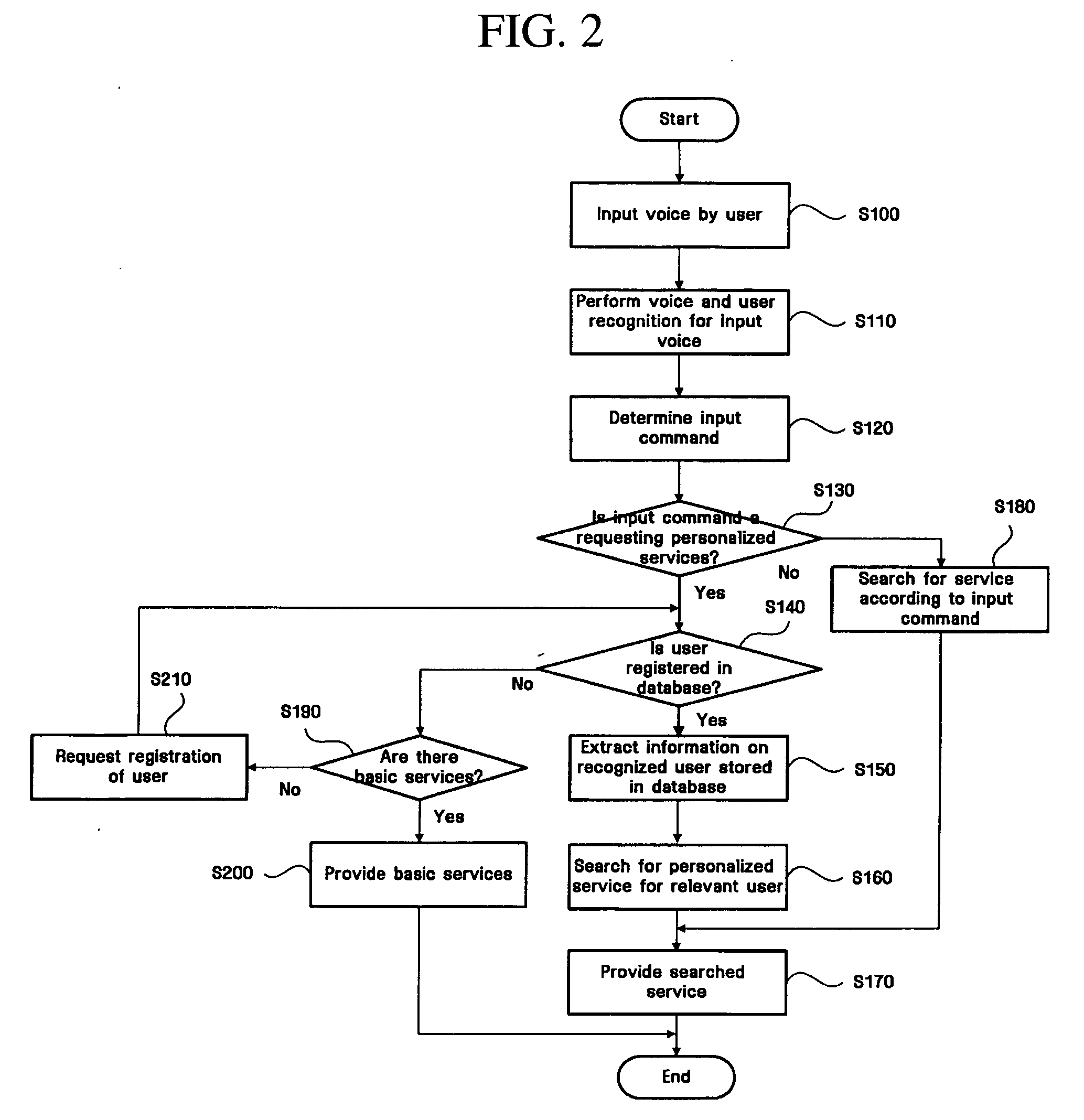

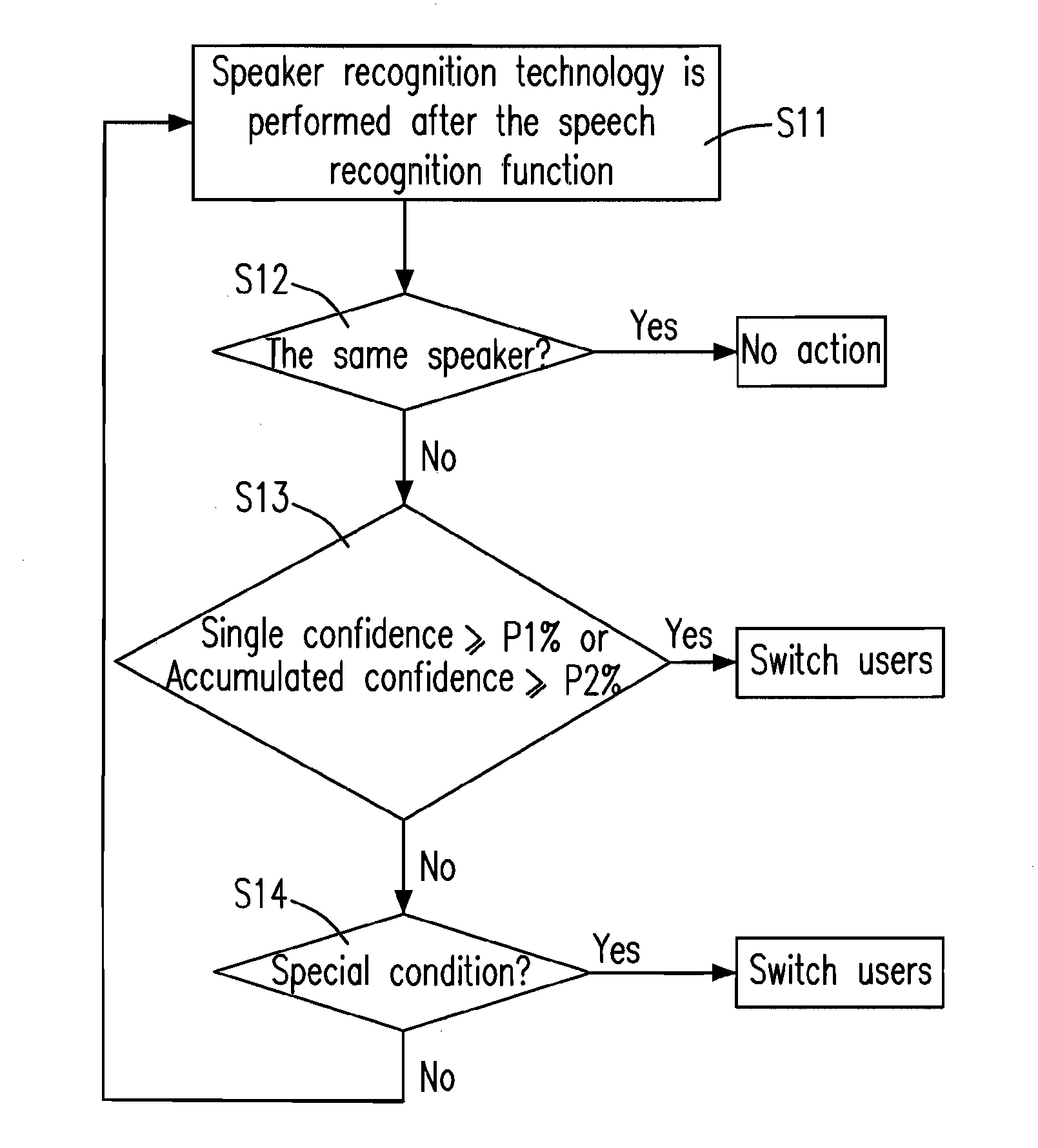

Audio/video apparatus and method for providing personalized services through voice and speaker recognition

Inactive | US20050049862A1 | Provide quickly | Telemetry/telecontrol selection arrangements | Speech recognition | Wireless microphone | Remote control

Disclosed is an audio/video apparatus for providing personalized services to a user through voice and speaker recognition, wherein when the user inputs his/her voice through a wireless microphone of a remote control, voice recognition and speaker recognition are performed on the input voice and a command corresponding to the input voice is determined, thereby providing personalized services to the user. Further disclosed is a method for providing personalized services through voice and speaker recognition, comprising the steps of inputting, by a user, his/her voice through a wireless microphone of a remote control; if the voice is input, recognizing the input voice and the speaker that has input the voice; determining a command based on the input voice; and providing a service according to the determination results.

Owner:SAMSUNG ELECTRONICS CO LTD

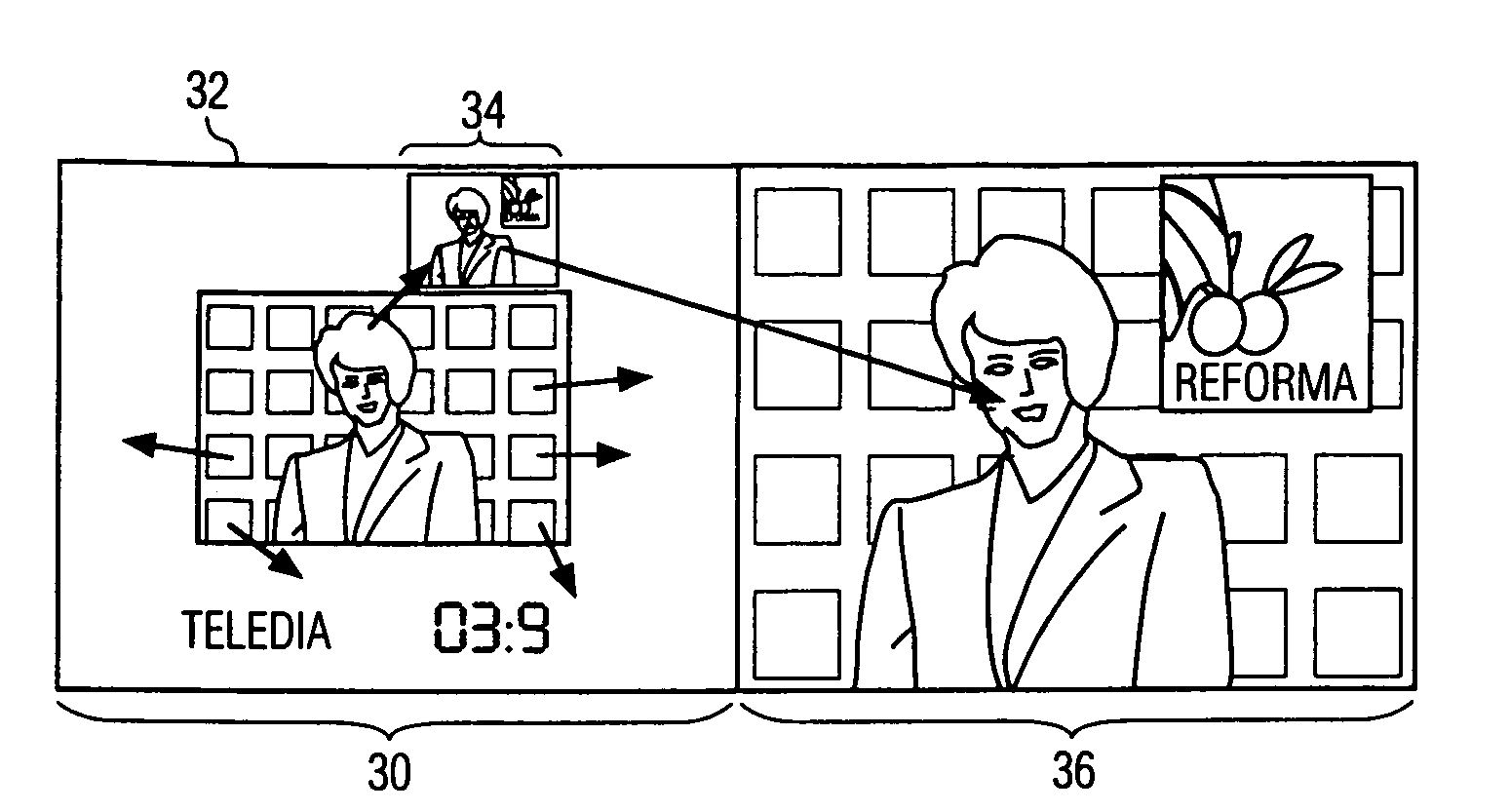

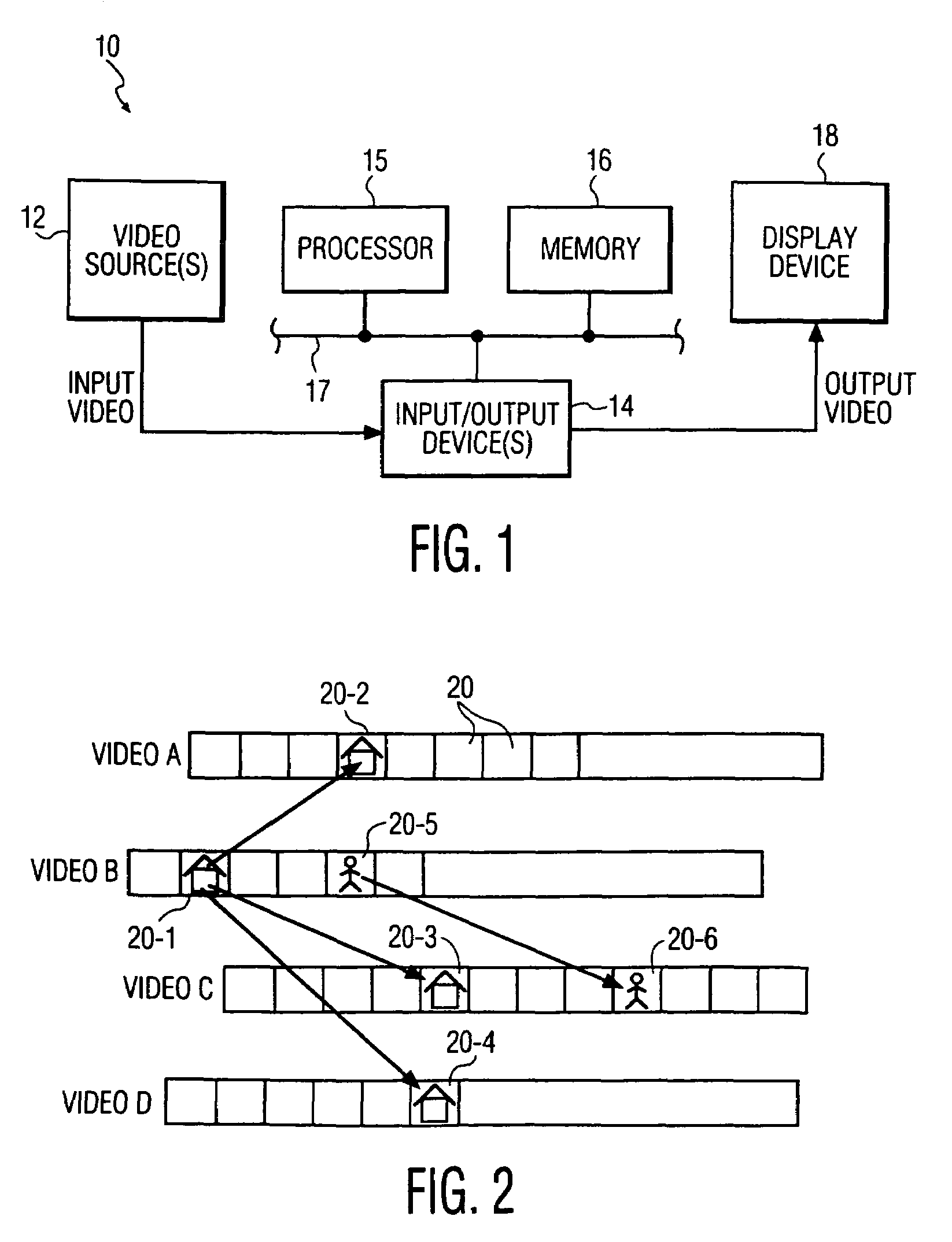

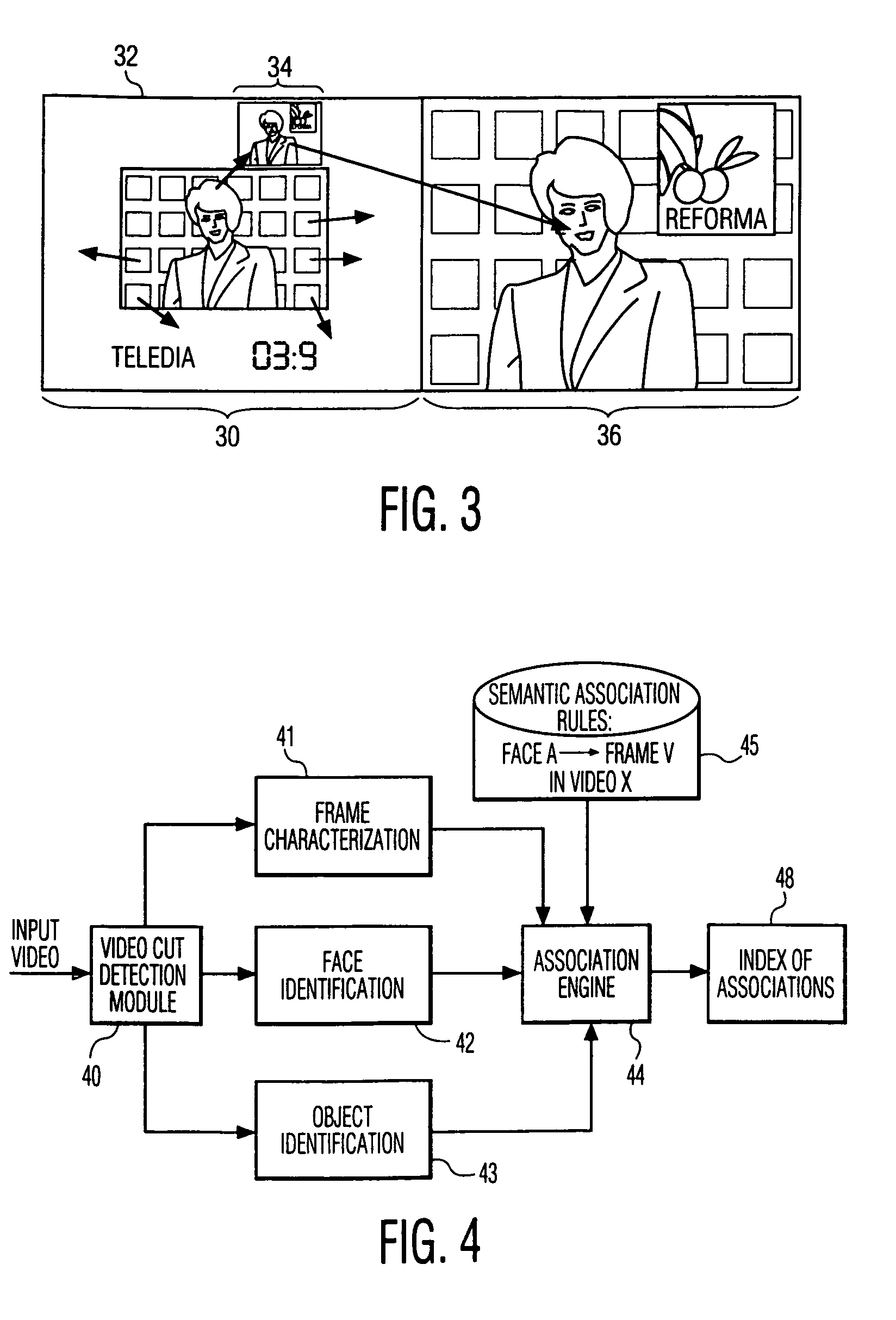

Method and apparatus for linking a video segment to another segment or information source

Inactive | US7356830B1 | Improve interactivity | Television system details | Pulse modulation television signal transmission | Video processing | Handling system

A given video segment is configured to include links to one or more other video segments or information sources. The given video segment is processed in a video processing system to determine an association between an object, entity, characterization or other feature of the segment and at least one additional information source containing the same feature. The association is then utilized to access information from the additional information source, such that the accessed information can be displayed to a user in conjunction with or in place of the original video segment. A set of associations for the video segment can be stored in a database or other memory of the processing system, or incorporated into the video segment itself, e.g., in a transport stream of the video segment. The additional information source may be, e.g., an additional video segment which includes the designated feature, or a source of audio, text or other information containing the designated feature. The feature may be a video feature extracted from a frame of the video segment, e.g., an identification of a particular face, scene, event or object in the frame, an audio feature such as a music signature extraction, a speaker identification, or a transcript extraction, or a textual feature. The invention allows a user to access information by clicking on or otherwise selecting an object or other feature in a displayed video segment, thereby facilitating the retrieval of information related to that segment.

Owner:KONINKLIJKE PHILIPS ELECTRONICS NV

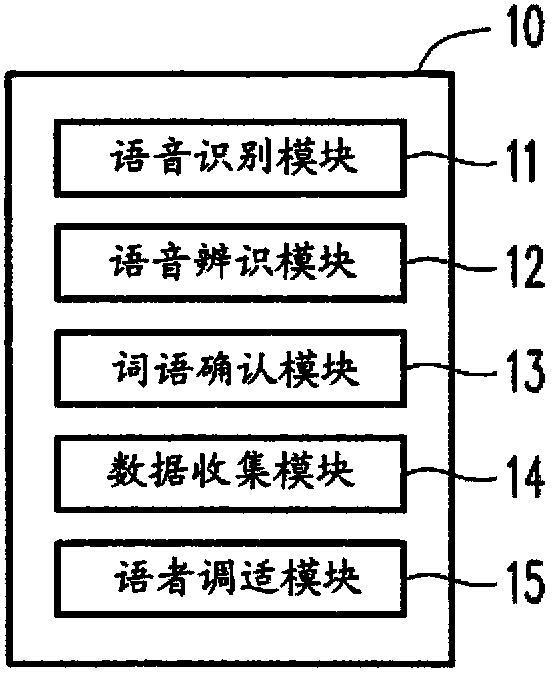

Voice recognition system

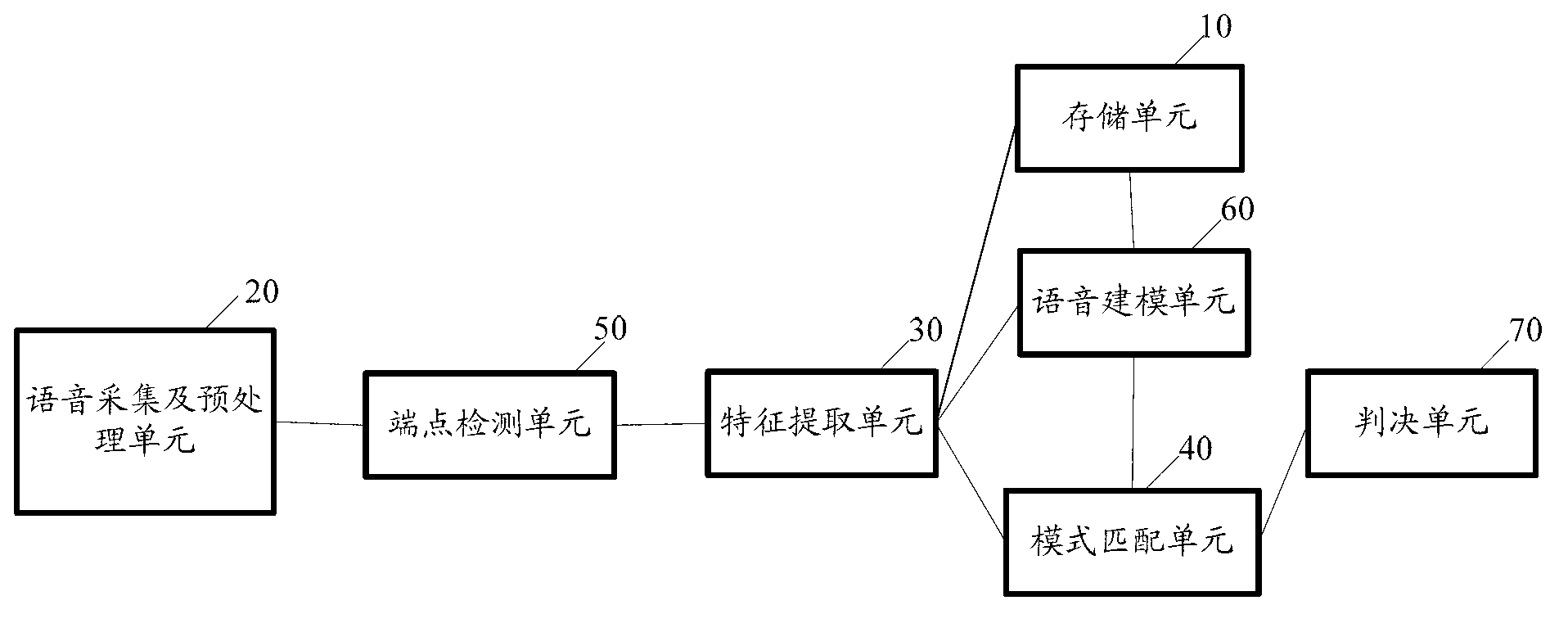

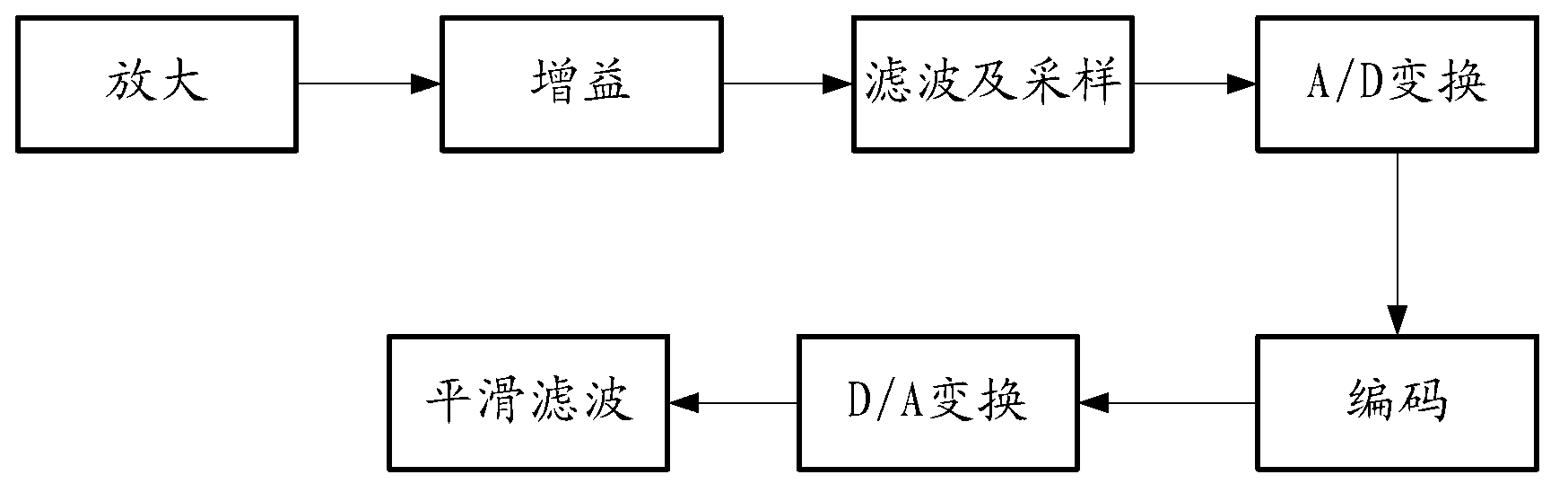

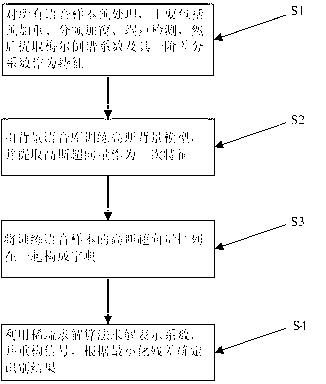

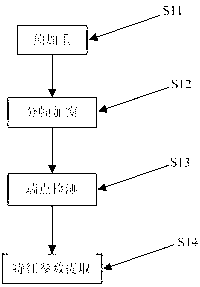

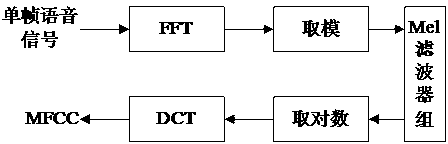

Active | CN103236260A | Improve detection reliability | Realize the recognition function | Speech recognition | Mel-frequency cepstrum | Pattern matching

The invention provides a voice recognition system comprising a storage unit, a voice acquisition and pre-processing unit, a feature extraction unit and a pattern matching unit, wherein the storage unit is used for storing at least one user's voice model; the voice acquisition and pre-processing unit is used for acquiring a to-be-identified voice signal, transforming the format of the to-be-identified voice signal and encoding the to-be-identified voice signal; the feature extraction unit is used for extracting voice feature parameters from the encoded to-be-identified voice signal; and the pattern matching unit is used for matching the extracted voice feature parameters with at least one voice model so that the user to which the to-be-identified voice signal belongs is identified. Based on the voice generation principle, voice features are analyzed, MFCC (mel-frequency cepstral coefficient) parameters are used, a speaker voice feature model is established, and speaker feature recognition algorithms are implemented. This improves speaker detection reliability and allows the speaker identification function to be realized on an electronic product.

Owner:BOE TECH GRP CO LTD +1

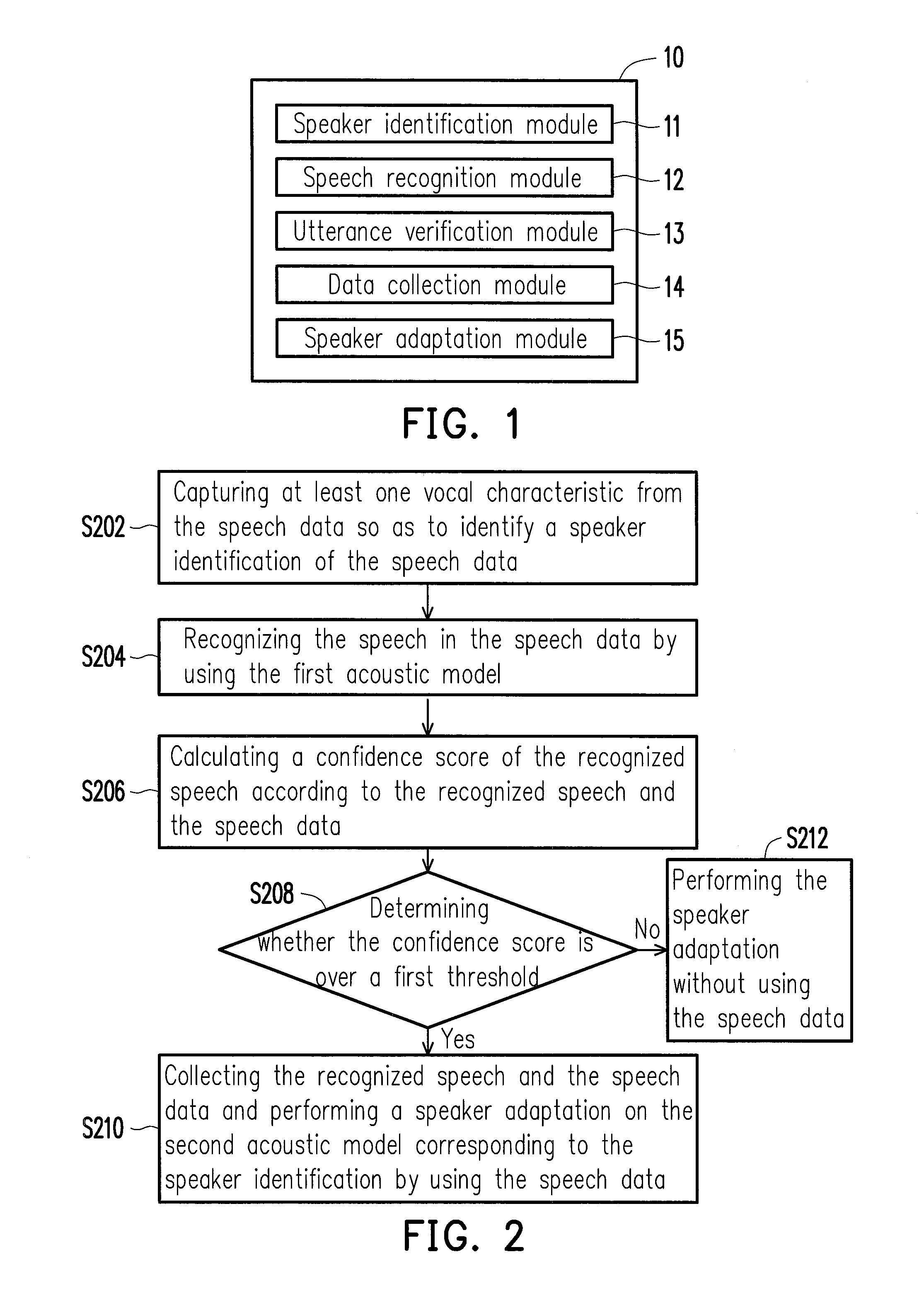

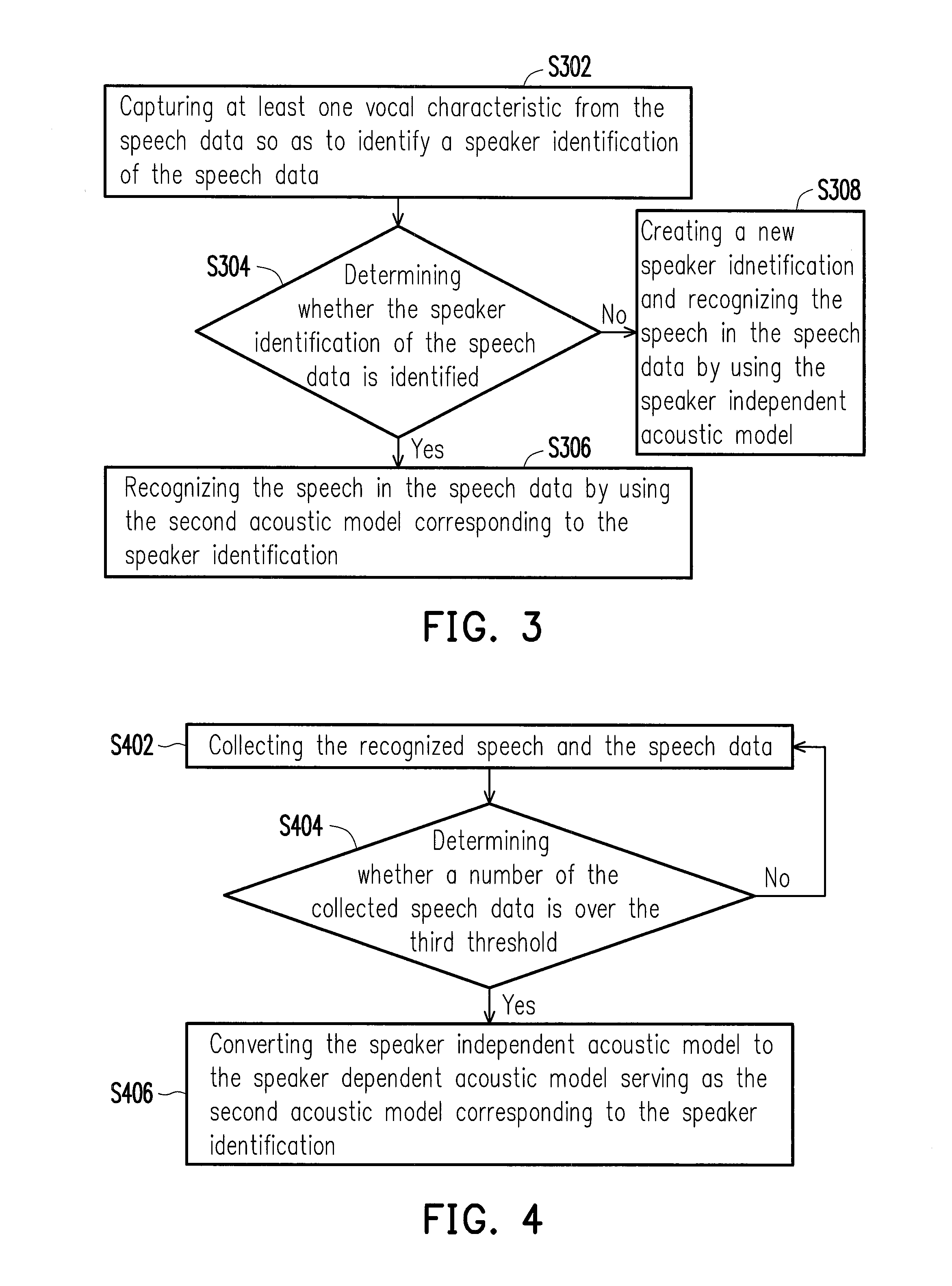

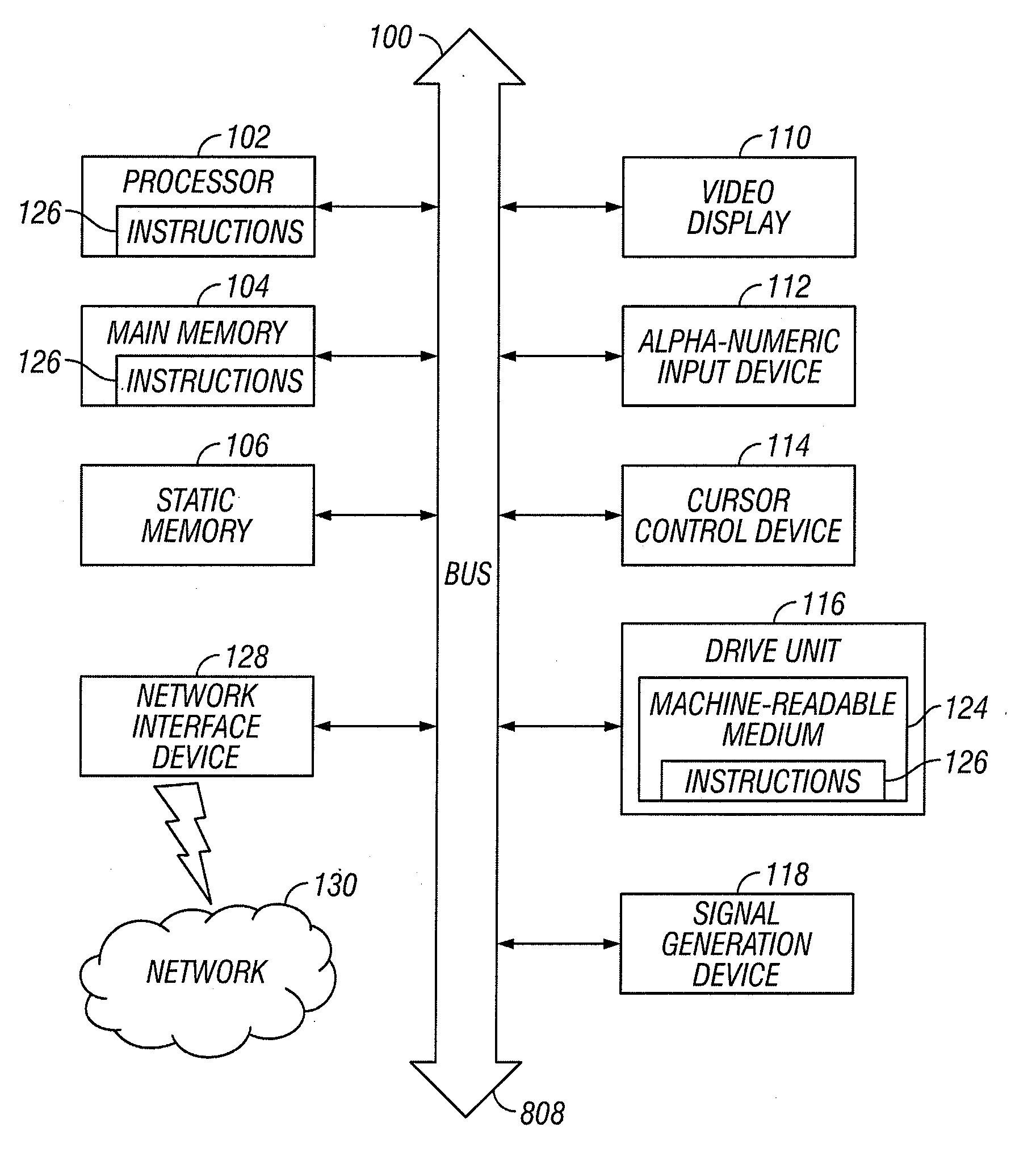

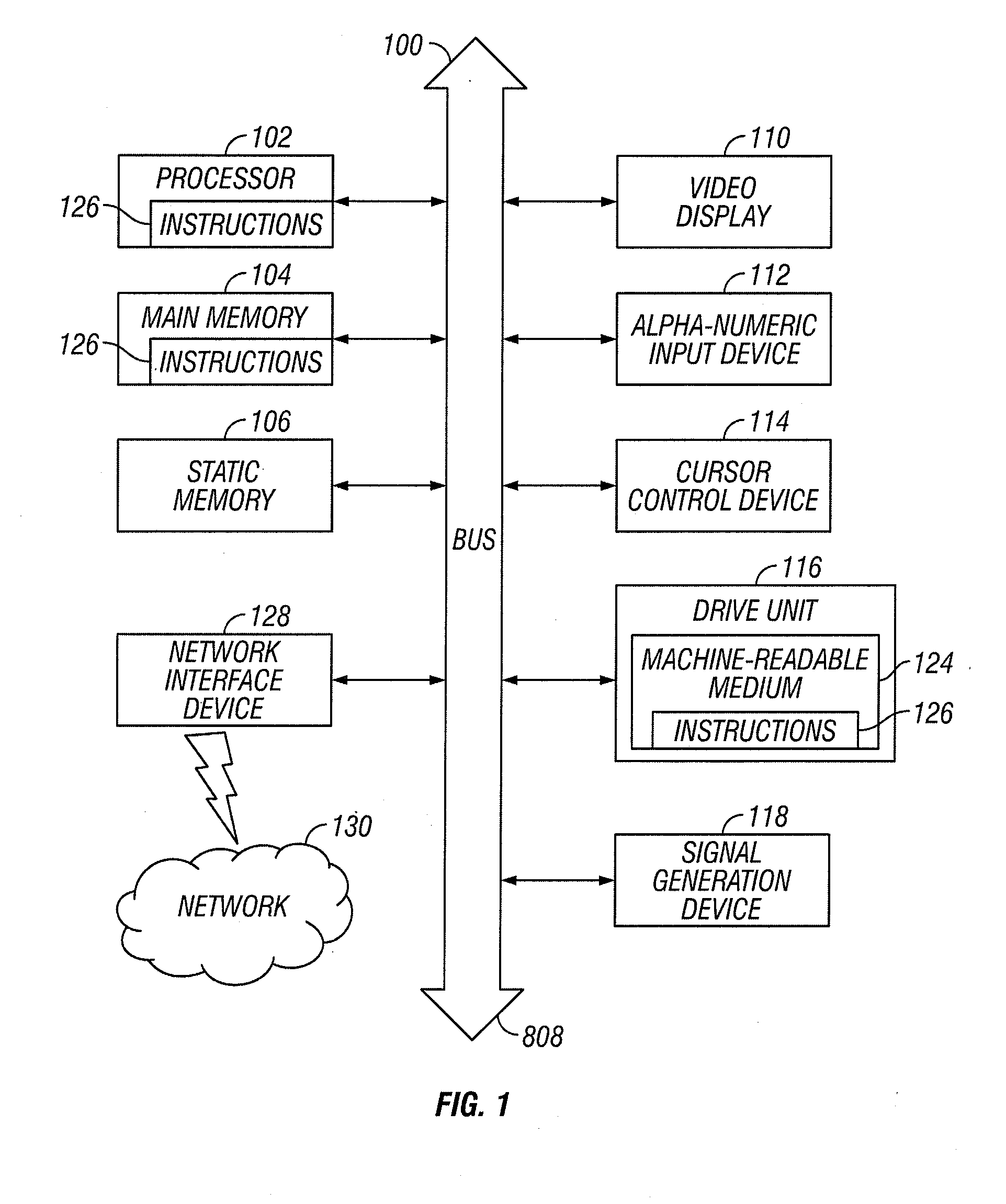

Method and system for speech recognition

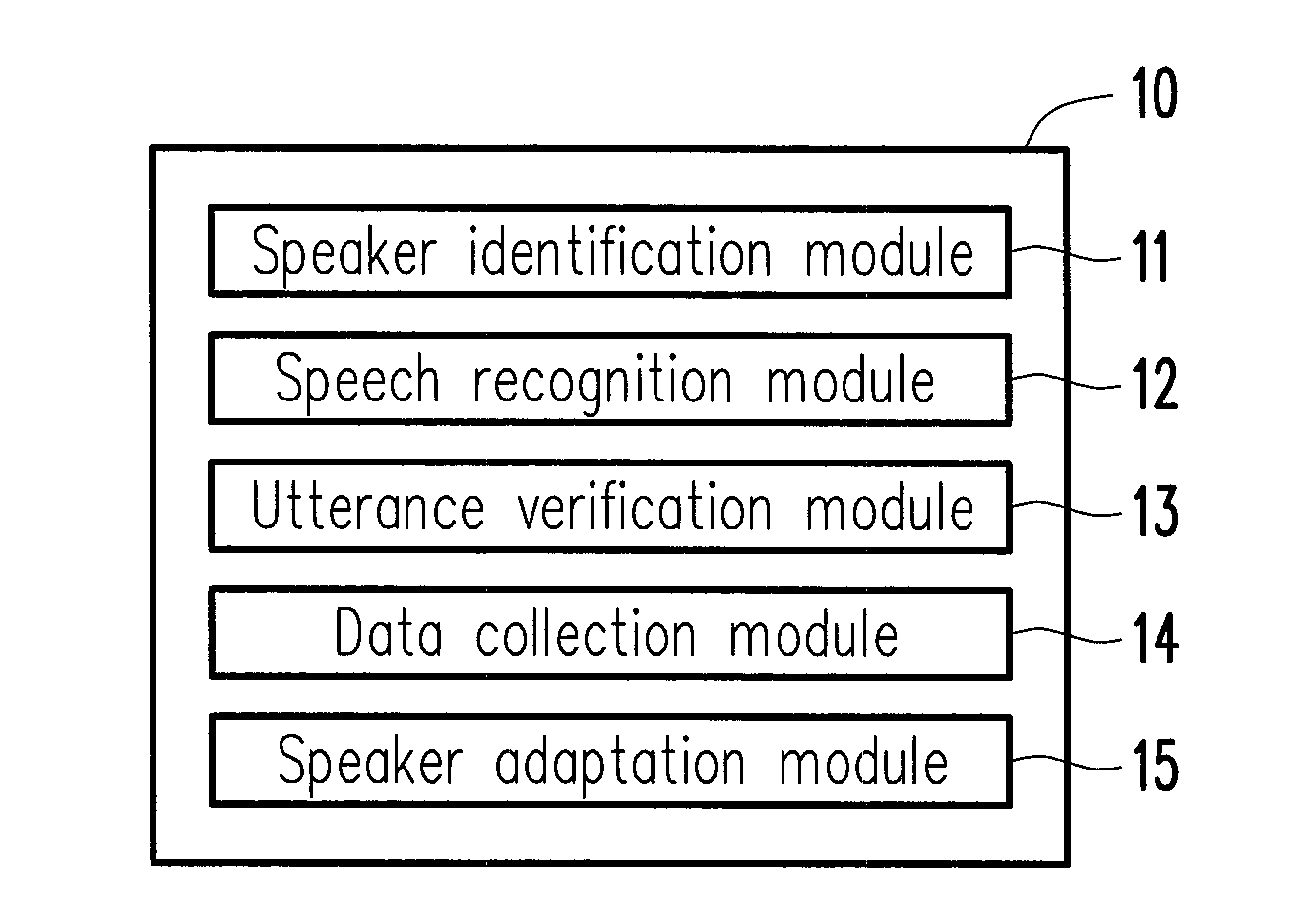

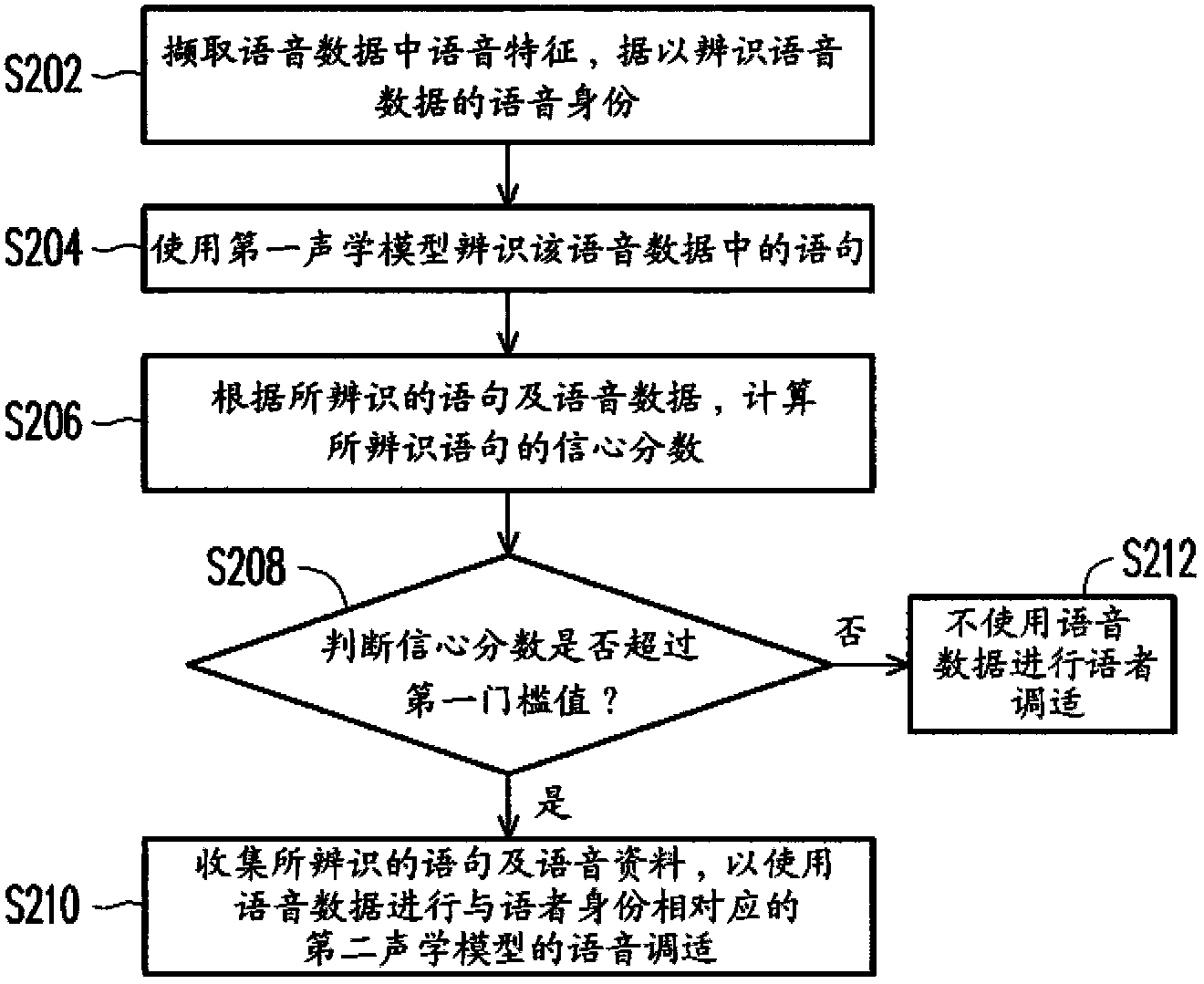

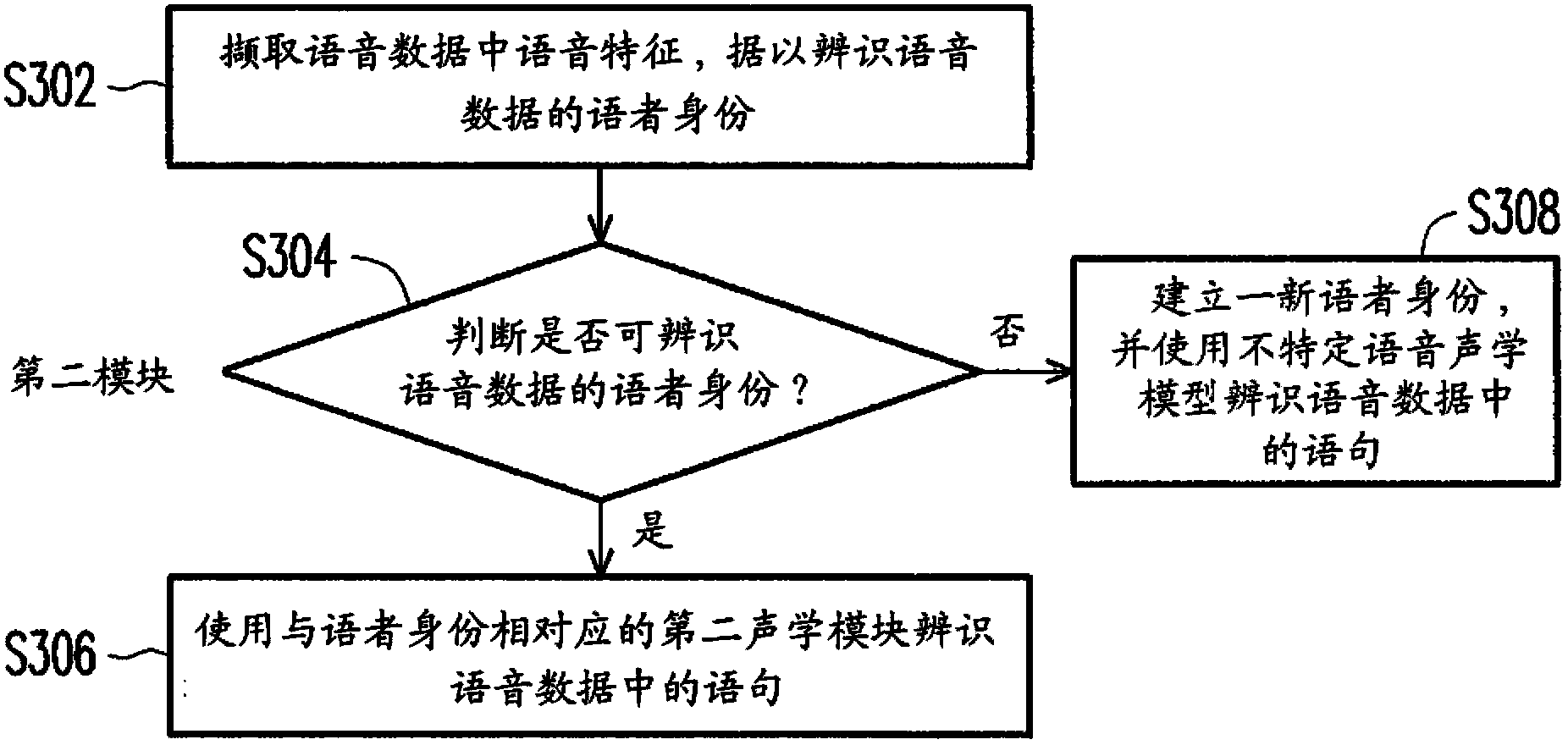

Inactive | US20130311184A1 | Improve speech recognition accuracy | Improve accuracy | Speech recognition | Speech identification | Acoustic model

A method and a system for speech recognition are provided. In the method, vocal characteristics are captured from speech data and used to identify a speaker identification of the speech data. Next, a first acoustic model is used to recognize a speech in the speech data. According to the recognized speech and the speech data, a confidence score of the speech recognition is calculated and it is determined whether the confidence score is over a threshold. If the confidence score is over the threshold, the recognized speech and the speech data are collected, and the collected speech data is used for performing a speaker adaptation on a second acoustic model corresponding to the speaker identification.
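The confidence gate in this abstract, which decides whether a recognized utterance is collected for speaker adaptation, can be sketched as below. The threshold value, the class name `AdaptationCollector`, and the example data are assumptions for illustration; the actual recognition and adaptation steps are outside the sketch.

```python
from collections import defaultdict
from typing import DefaultDict, List, Tuple

CONFIDENCE_THRESHOLD = 0.8  # assumed value; the abstract does not give one


class AdaptationCollector:
    """Collects (recognized text, speech data) pairs per speaker identification."""

    def __init__(self, threshold: float = CONFIDENCE_THRESHOLD) -> None:
        self.threshold = threshold
        self.pool: DefaultDict[str, List[Tuple[str, bytes]]] = defaultdict(list)

    def offer(
        self, speaker_id: str, recognized_text: str, speech_data: bytes, confidence: float
    ) -> bool:
        # Keep the utterance only if the confidence score is over the threshold.
        if confidence > self.threshold:
            self.pool[speaker_id].append((recognized_text, speech_data))
            return True
        return False


collector = AdaptationCollector()
kept = collector.offer("spk1", "turn on the light", b"\x00\x01", 0.93)
dropped = collector.offer("spk1", "turn of delight", b"\x02\x03", 0.41)
# collector.pool["spk1"] would later feed adaptation of that speaker's second acoustic model.
```

Gating on confidence keeps likely misrecognitions out of the adaptation data, so the speaker-specific model is not trained on wrong transcripts.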

Owner:ASUSTEK COMPUTER INC

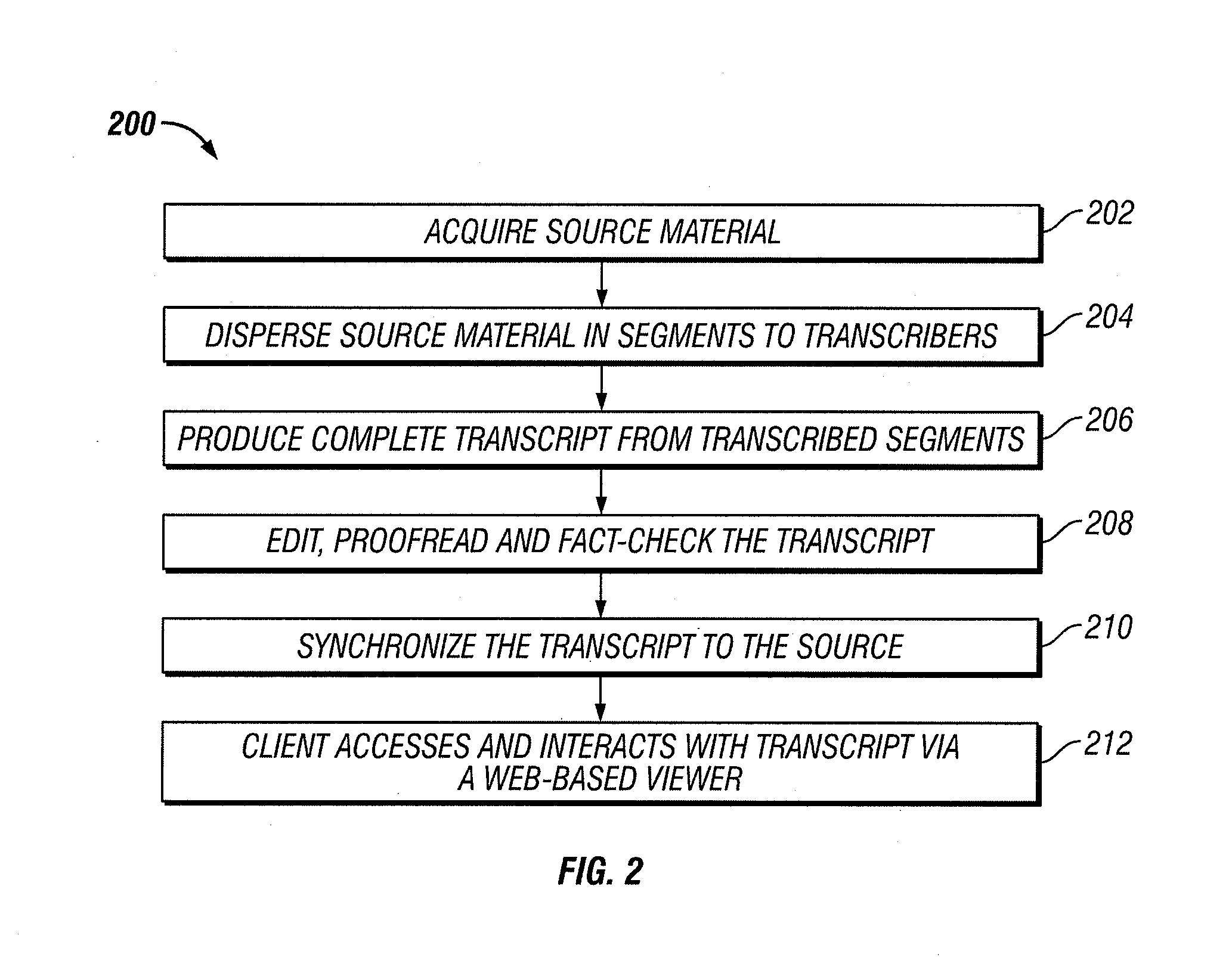

Method and system for rapid transcription

Active | US20080319744A1 | Eliminate time | Easy to handle | Text processing | Office automation | Video recording | Documentation

A method and system for producing and working with transcripts according to the invention eliminates the foregoing time inefficiencies. By dispersing a source recording to a transcription team in small segments, so that team members transcribe segments in parallel, a rapid transcription process delivers a fully edited transcript within minutes. Clients can view accurate, grammatically correct, proofread and fact-checked documents that shadow live proceedings by mere minutes. The rapid transcript includes time coding, speaker identification and summary. A viewer application allows a client to view a video recording side-by-side with a transcript. Clicking on a word in the transcript locates the corresponding recorded content; advancing a recording to a particular point locates and displays the corresponding spot in the transcript. The recording is viewed using common video features, and may be downloaded. The client can edit the transcript and insert comments. Any number of colleagues can view and edit simultaneously.

Owner:TIGERFISH

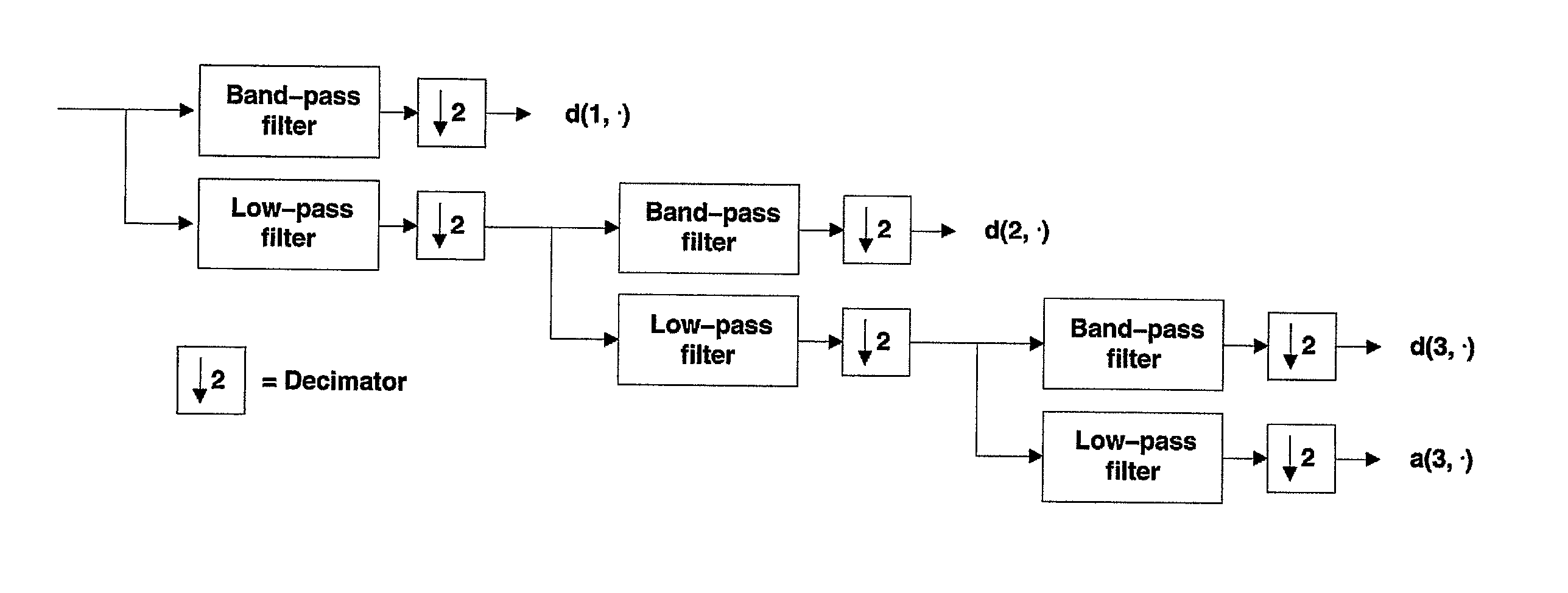

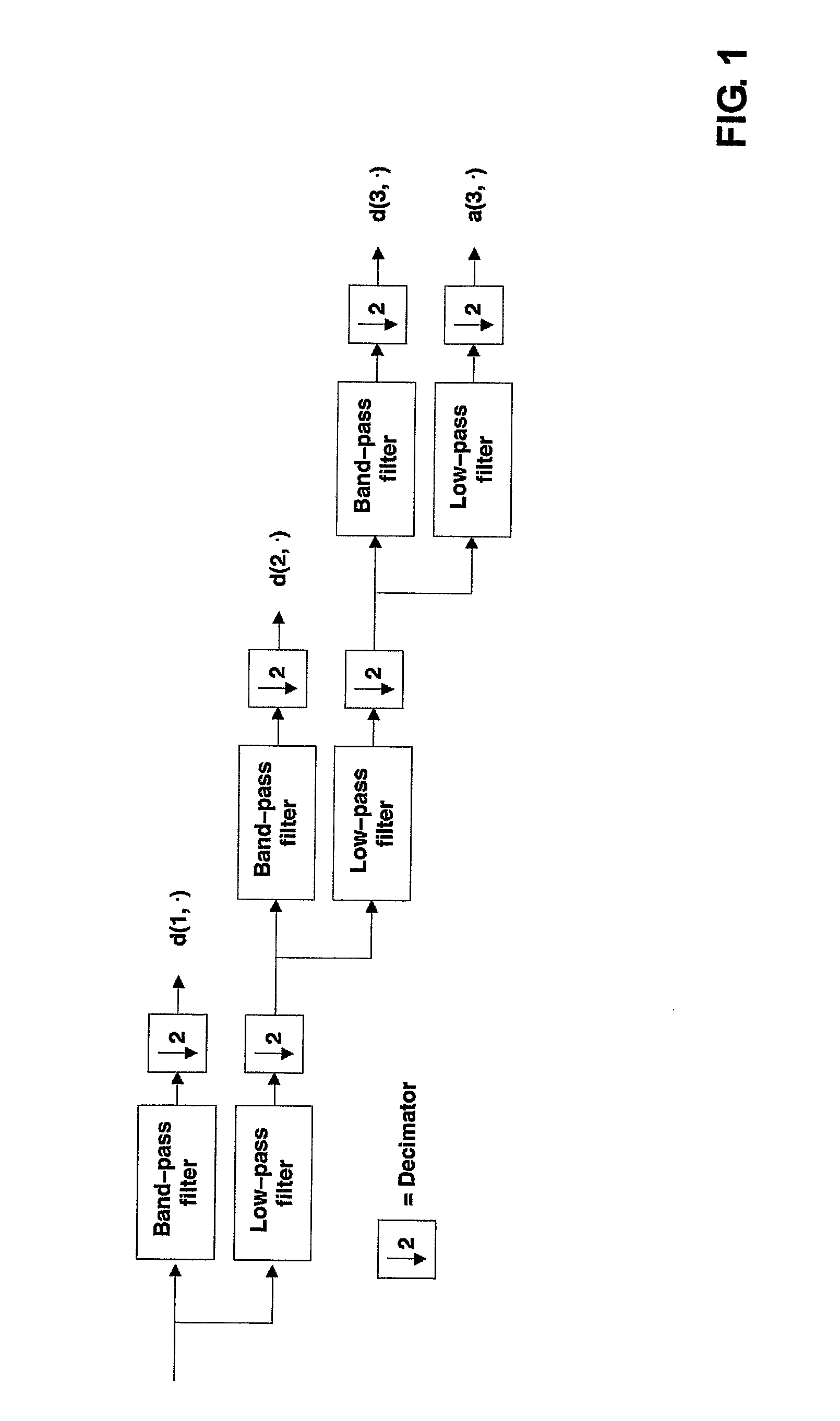

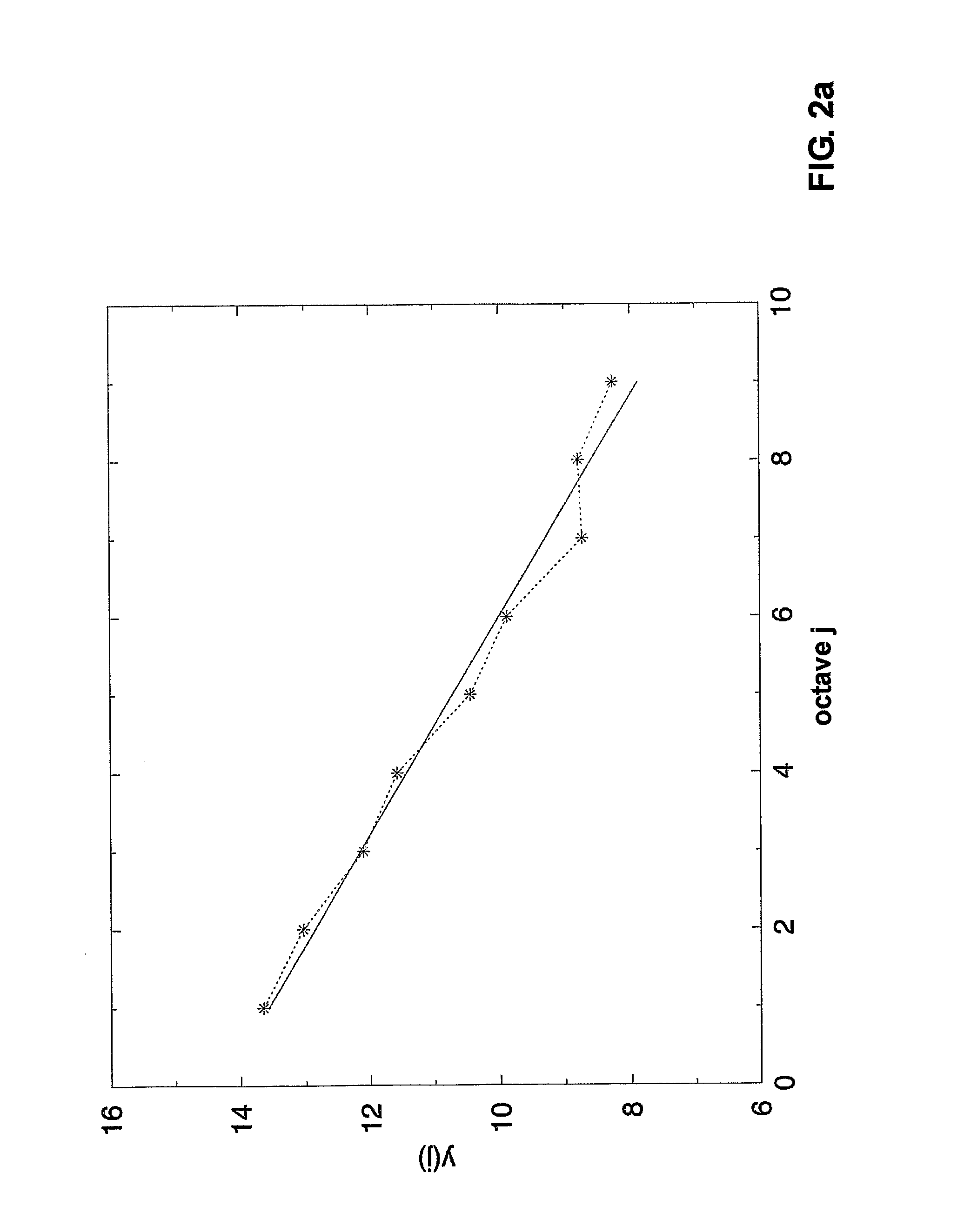

Method for Automatic Speaker Recognition

Inactive | US20070233484A1 | Improve accuracy | Reduce the amount of calculation | Speech recognition | Fractional Brownian motion | Time segment

A text-independent automatic speaker recognition (ASkR) system is proposed that employs a new speech feature and a new classifier. The statistical feature pH is a vector of Hurst parameters obtained by applying a wavelet-based multi-dimensional estimator (M dim wavelets) to the windowed short-time segments of speech. The proposed classifier for the speaker identification and verification tasks is based on the multi-dimensional fBm (fractional Brownian motion) model, denoted by M dim fBm. For a given sequence of input speech features, the speaker model is obtained from the sequence of vectors of H parameters, means and variances of these features.

Owner:COELHO ROSANGELO FERNANDES

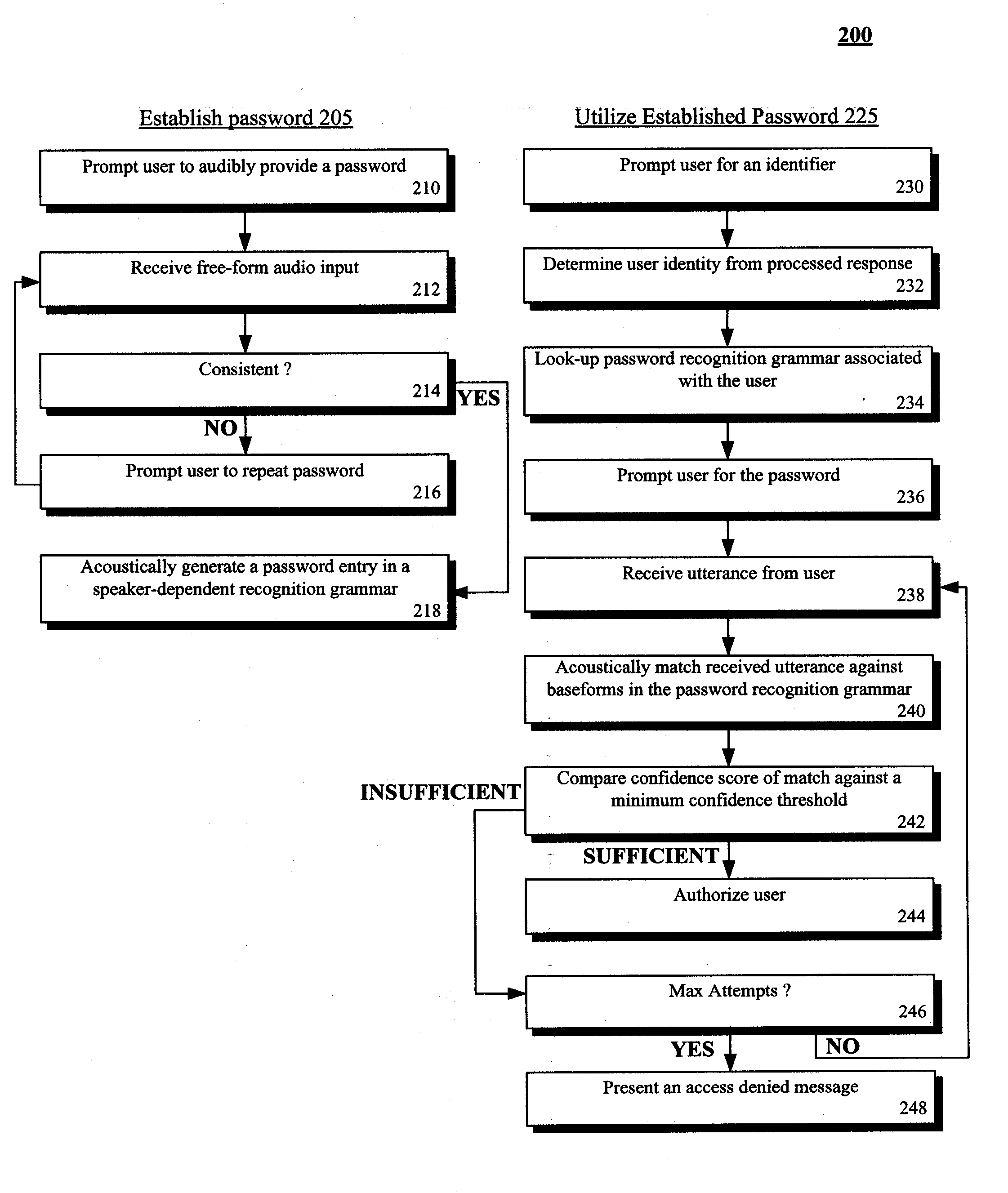

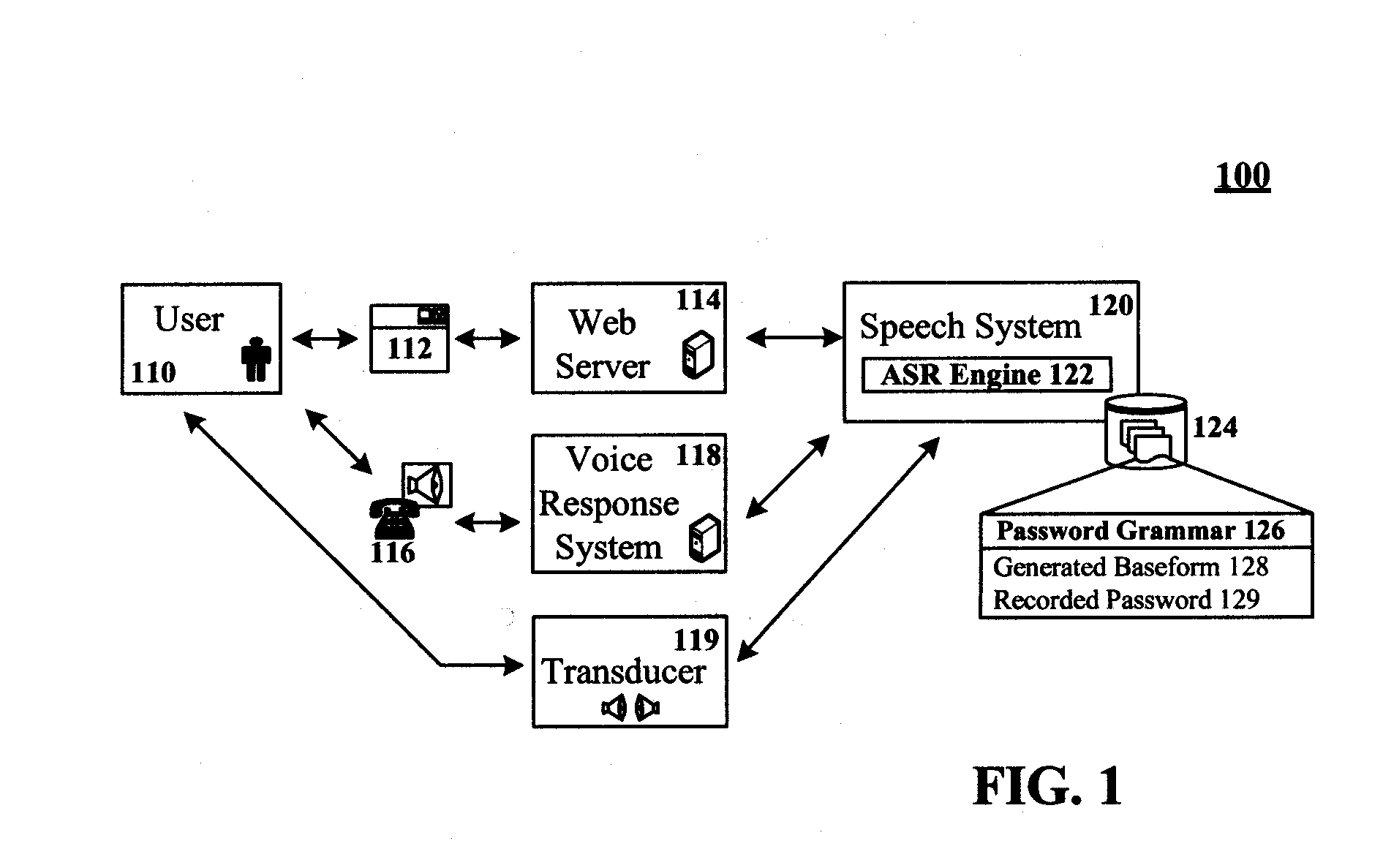

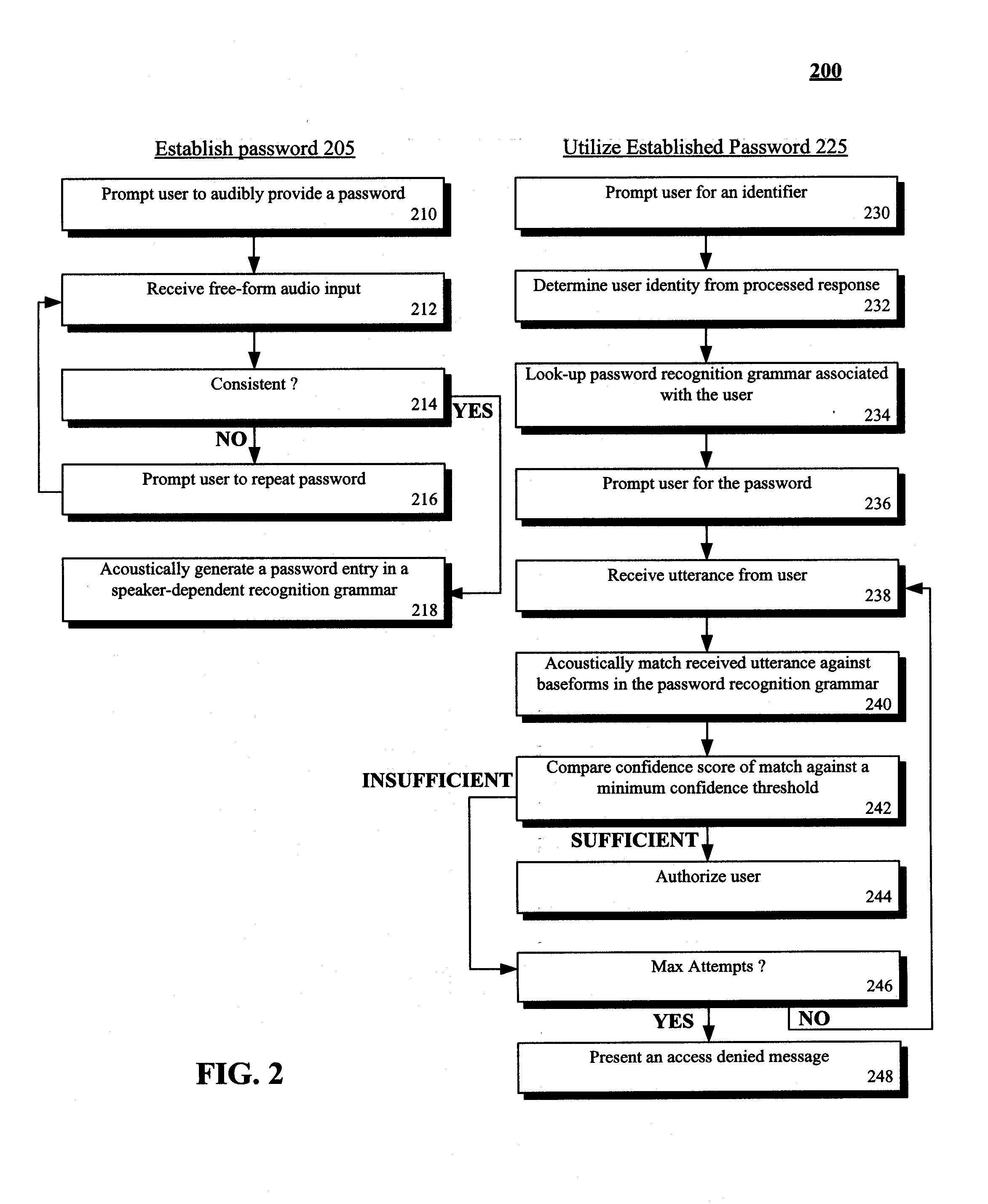

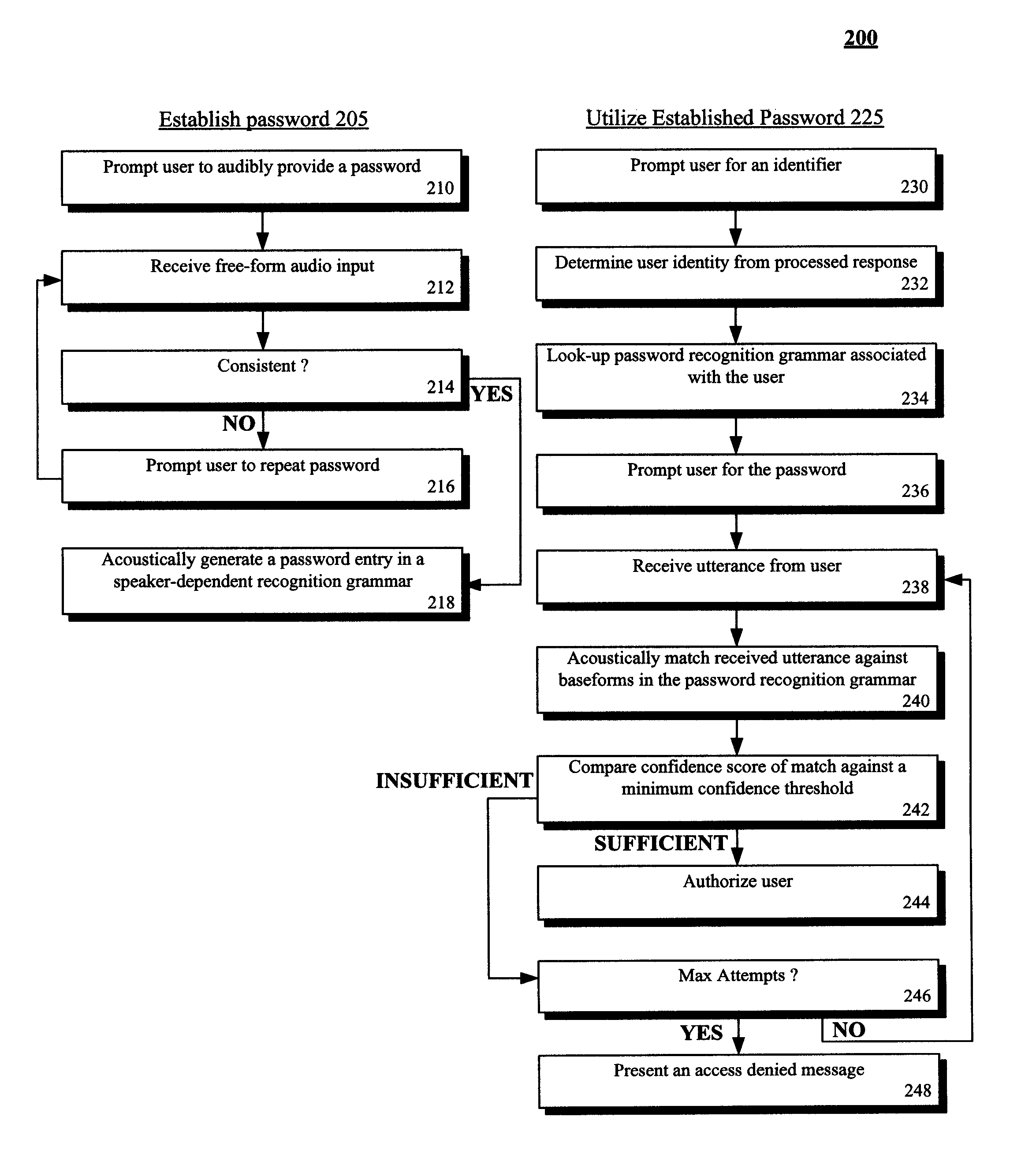

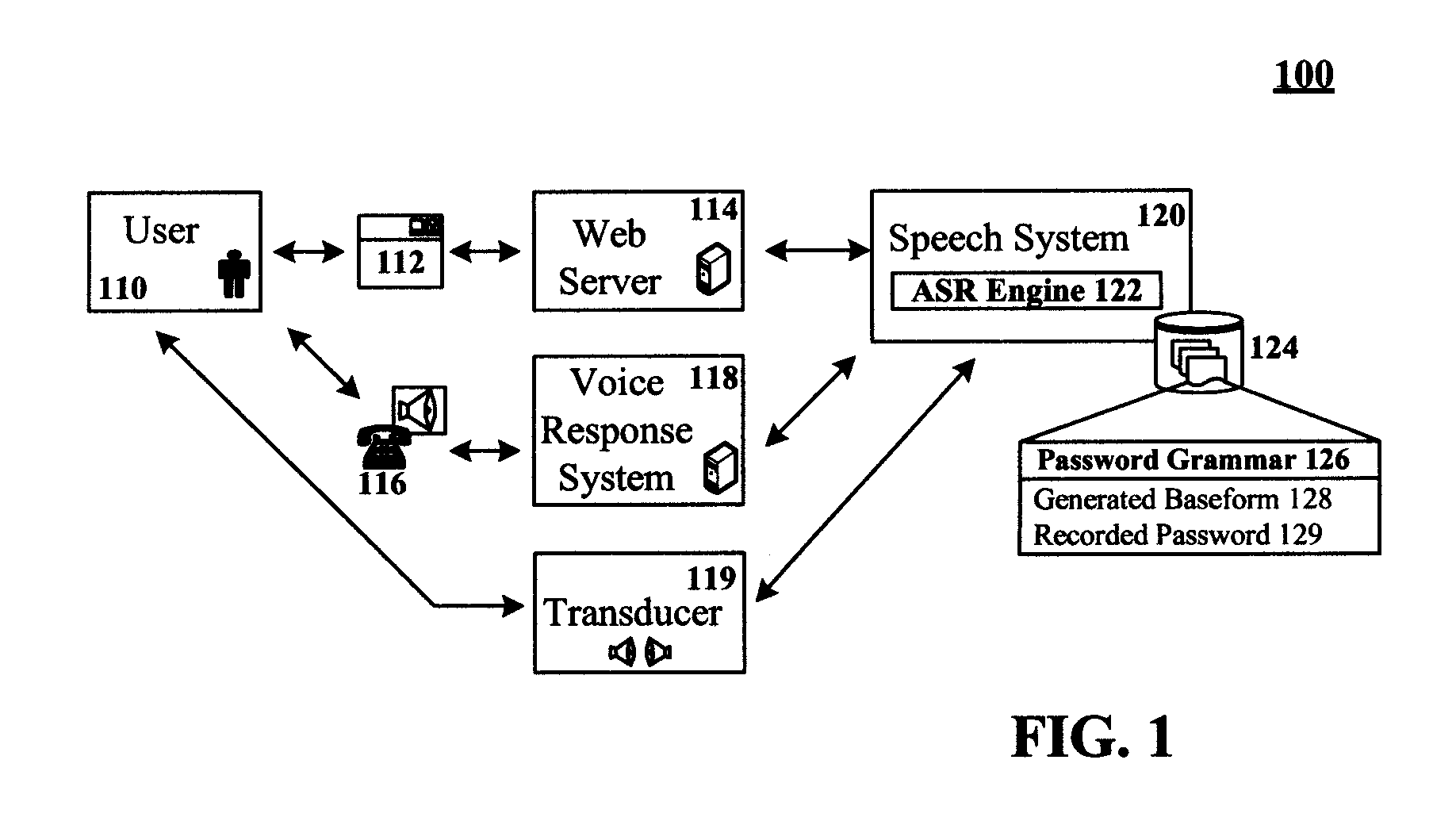

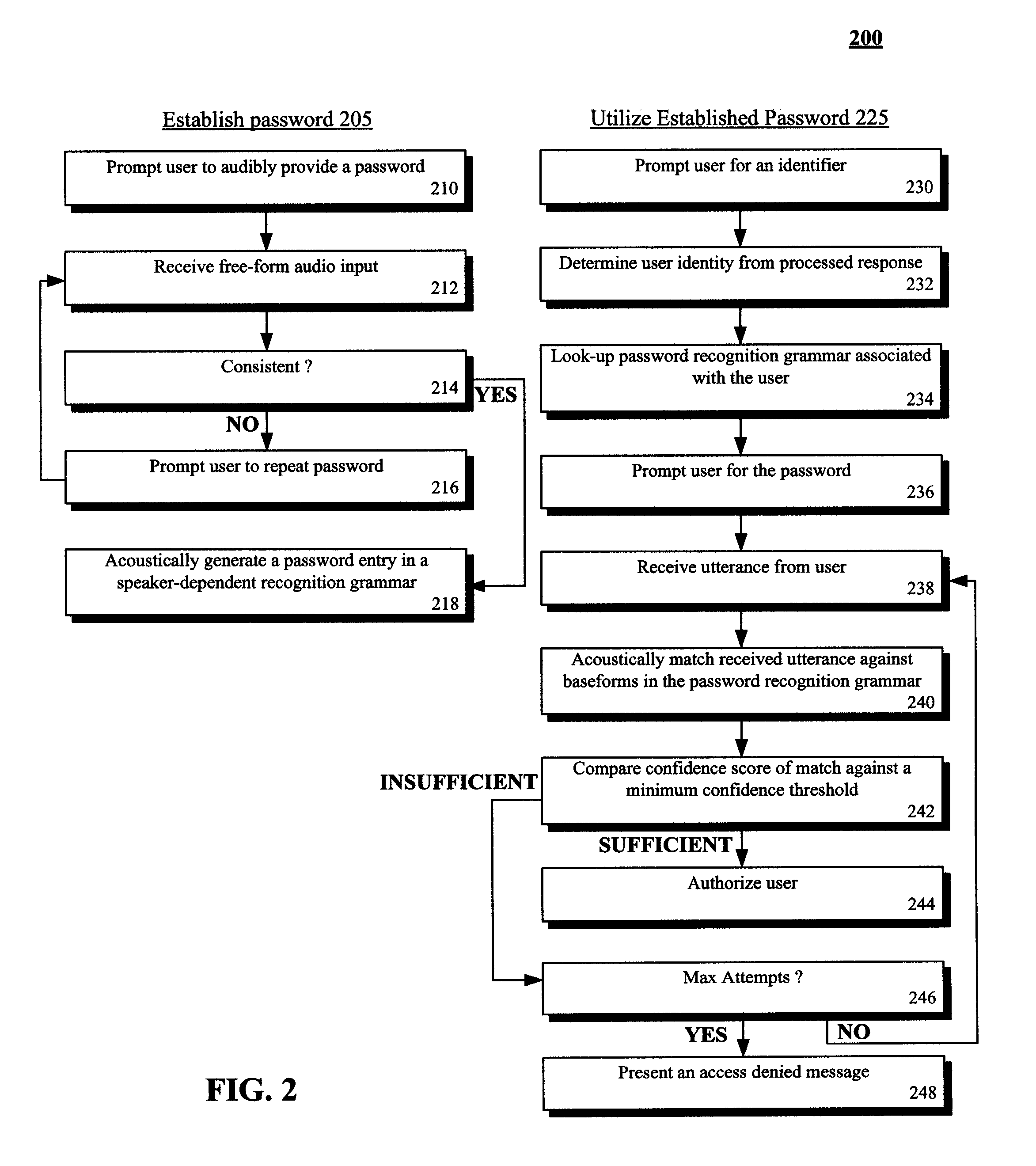

Spoken free-form passwords for light-weight speaker verification using standard speech recognition engines

The present invention discloses a system and a method for authenticating a user based upon a spoken password processed through a standard speech recognition engine lacking specialized speaker identification and verification (SIV) capabilities. It should be noted that the standard speech recognition grammar can be capable of acoustically generating speech recognition grammars in accordance with the cross referenced application indicated herein. The invention can prompt a user for a free-form password and can receive a user utterance in response. The utterance can be processed through a speech recognition engine (e.g., during a grammar enrollment operation) to generate an acoustic baseform. Future user utterances can be matched against the acoustic baseform. Results from the future matches can be used to determine whether to grant the user access to a secure resource.

Owner:NUANCE COMM INC

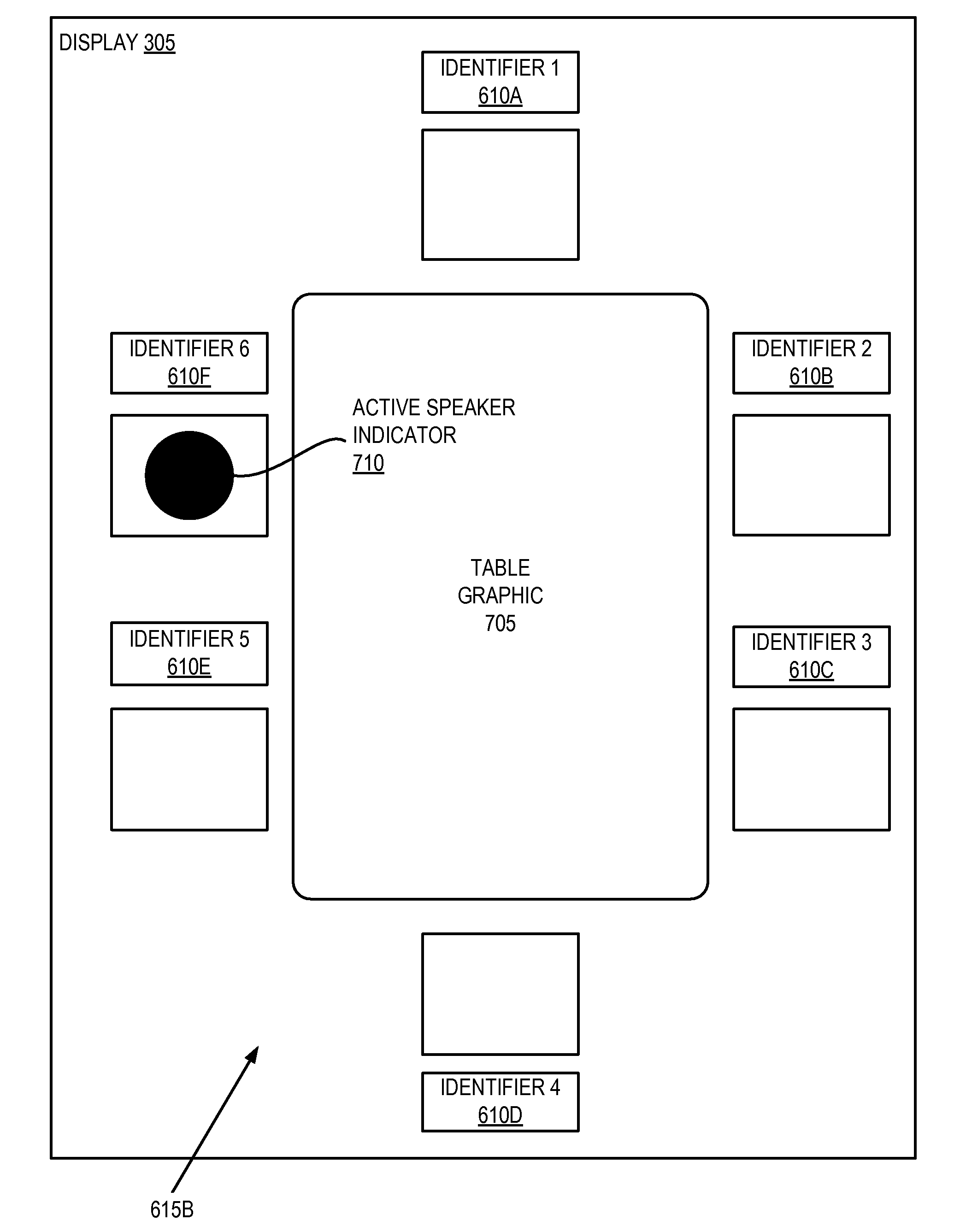

Speaker Identification and Representation For a Phone

Active | US20100020951A1 | Called number recording/indication | Speech recognition | Display device | Computer science

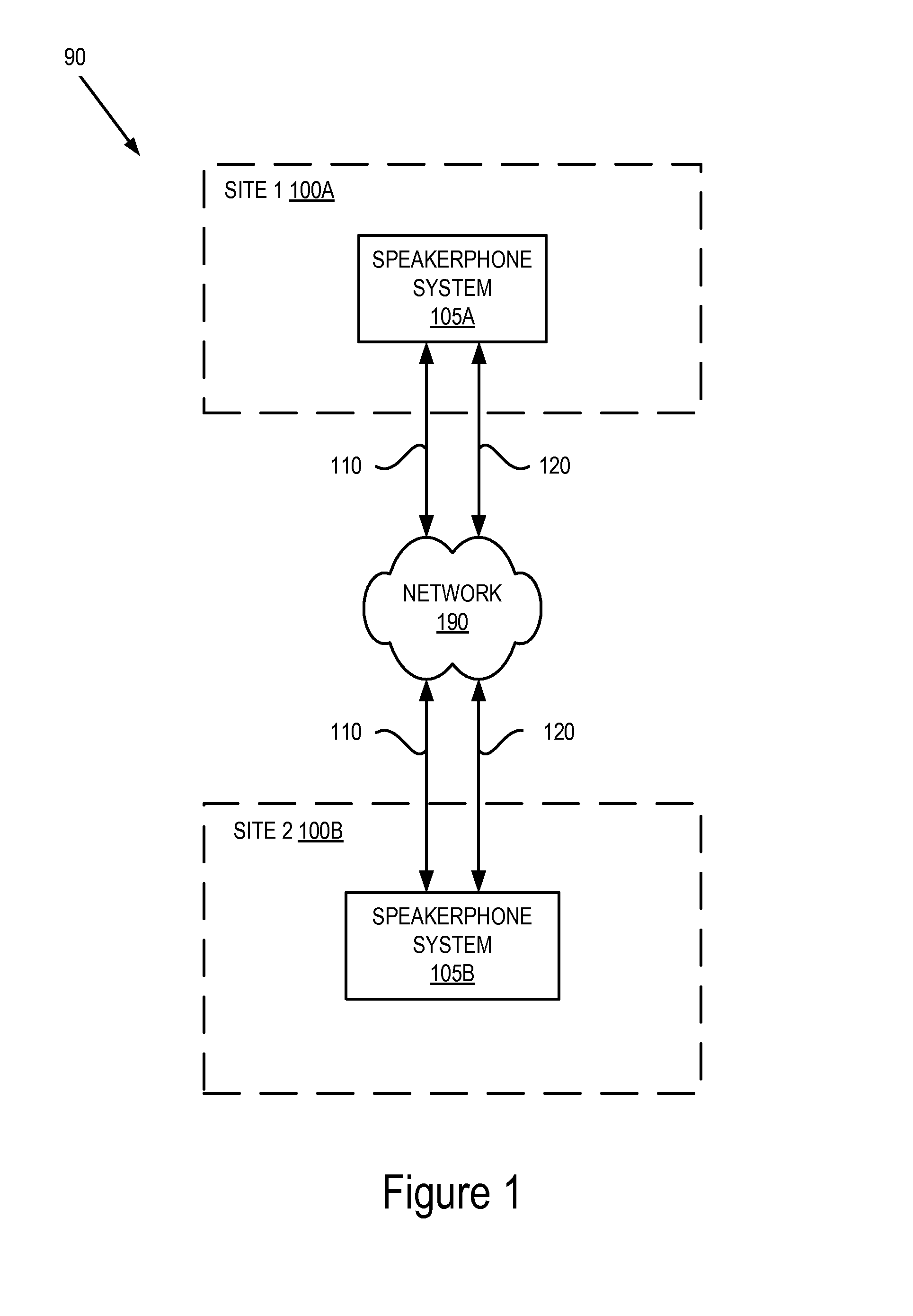

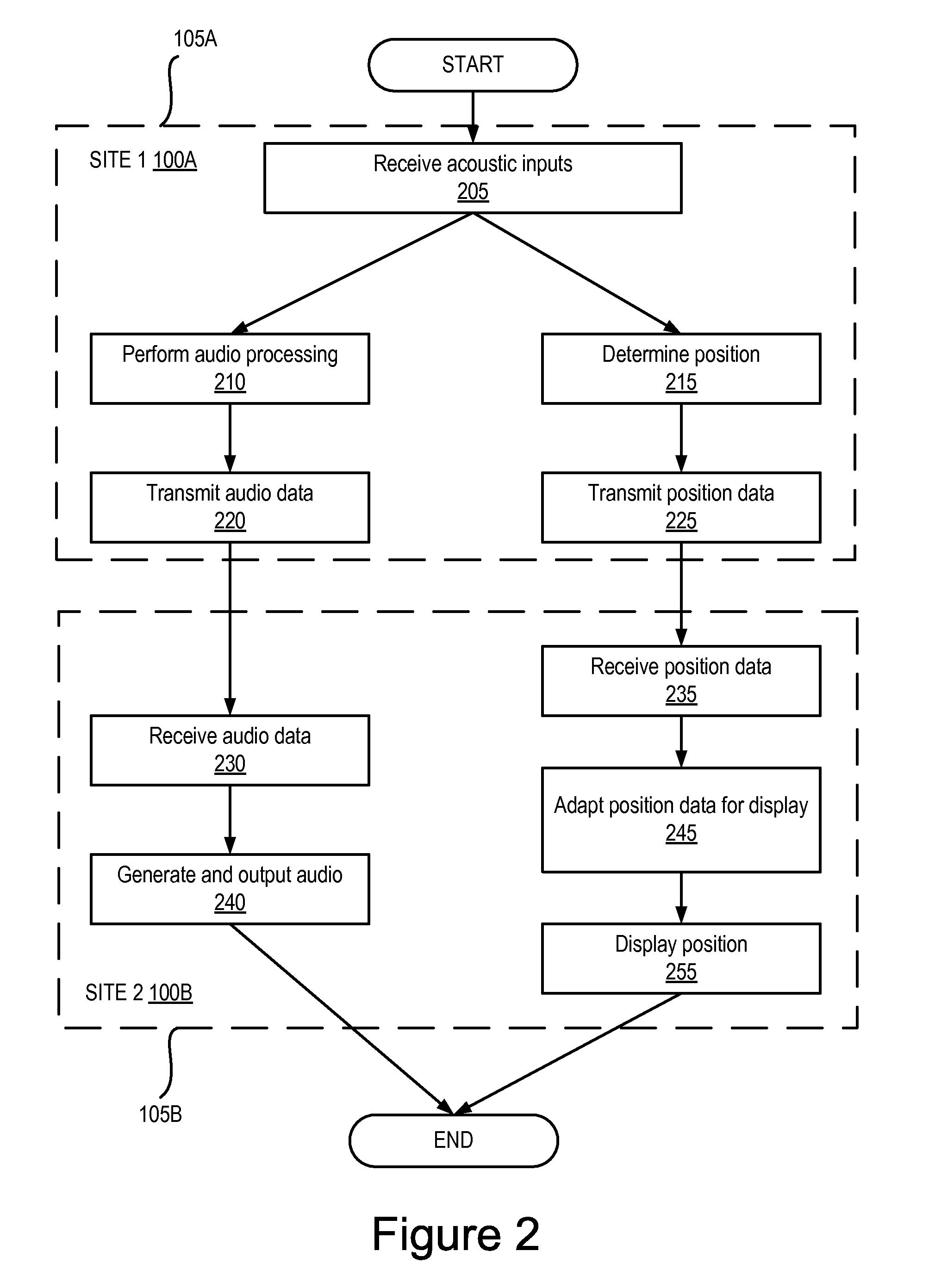

A system and method for determining a speaker's position and generating a display showing the position of the speaker. In one embodiment, the system comprises a first speakerphone system and a second speakerphone system communicatively coupled to send and receive data. Each speakerphone system comprises a display, an input device, a microphone array, a speaker, and a position processing module. The position processing module is coupled to receive acoustic signals from the microphone array. The position processing module uses these acoustic signals to determine a position of the speaker. The position information is then sent to the other speakerphone system for presentation on the display. In one embodiment, the position processing module comprises an auto-detection module, a position analysis module, a tracking module and an identity matching module for the detection of sound, the determination of position and the transmission of position information over the network. The position processing module also comprises a position display module and a position translation module for receiving position information from the network and generating a user interface for display. The present invention also includes a method for determining speaker position and presenting position information.

Owner:SHORETEL +1

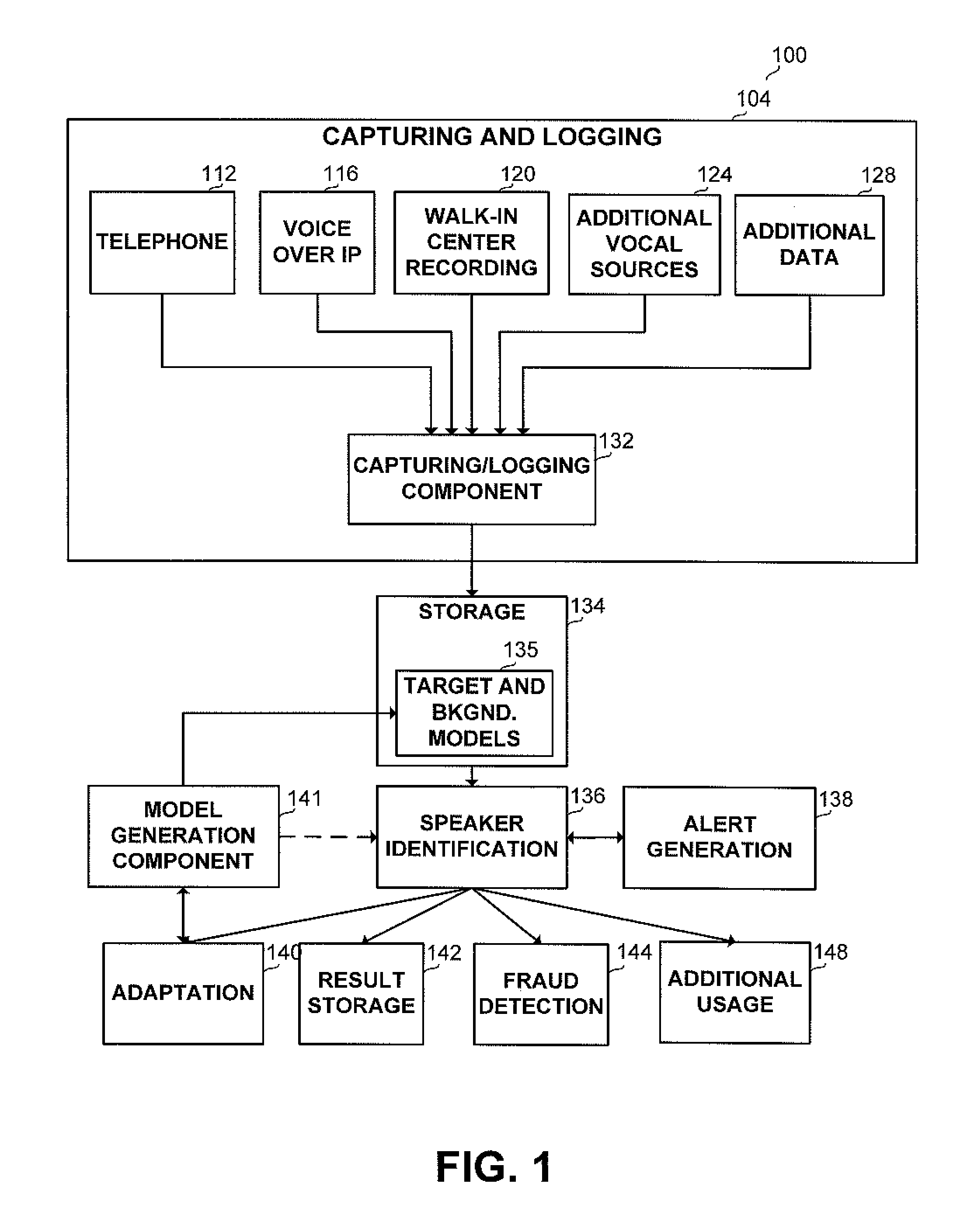

Method and apparatus for large population speaker identification in telephone interactions

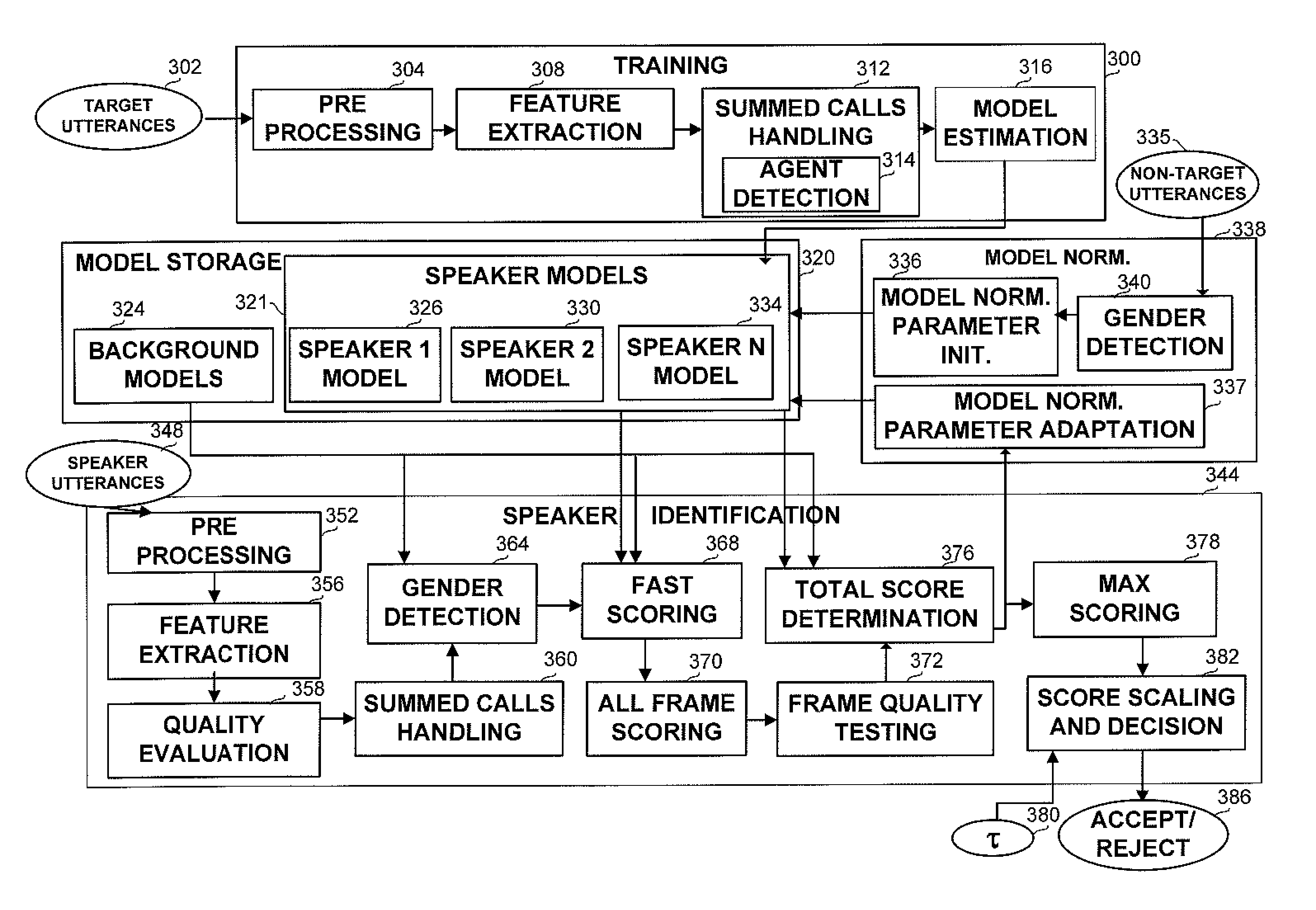

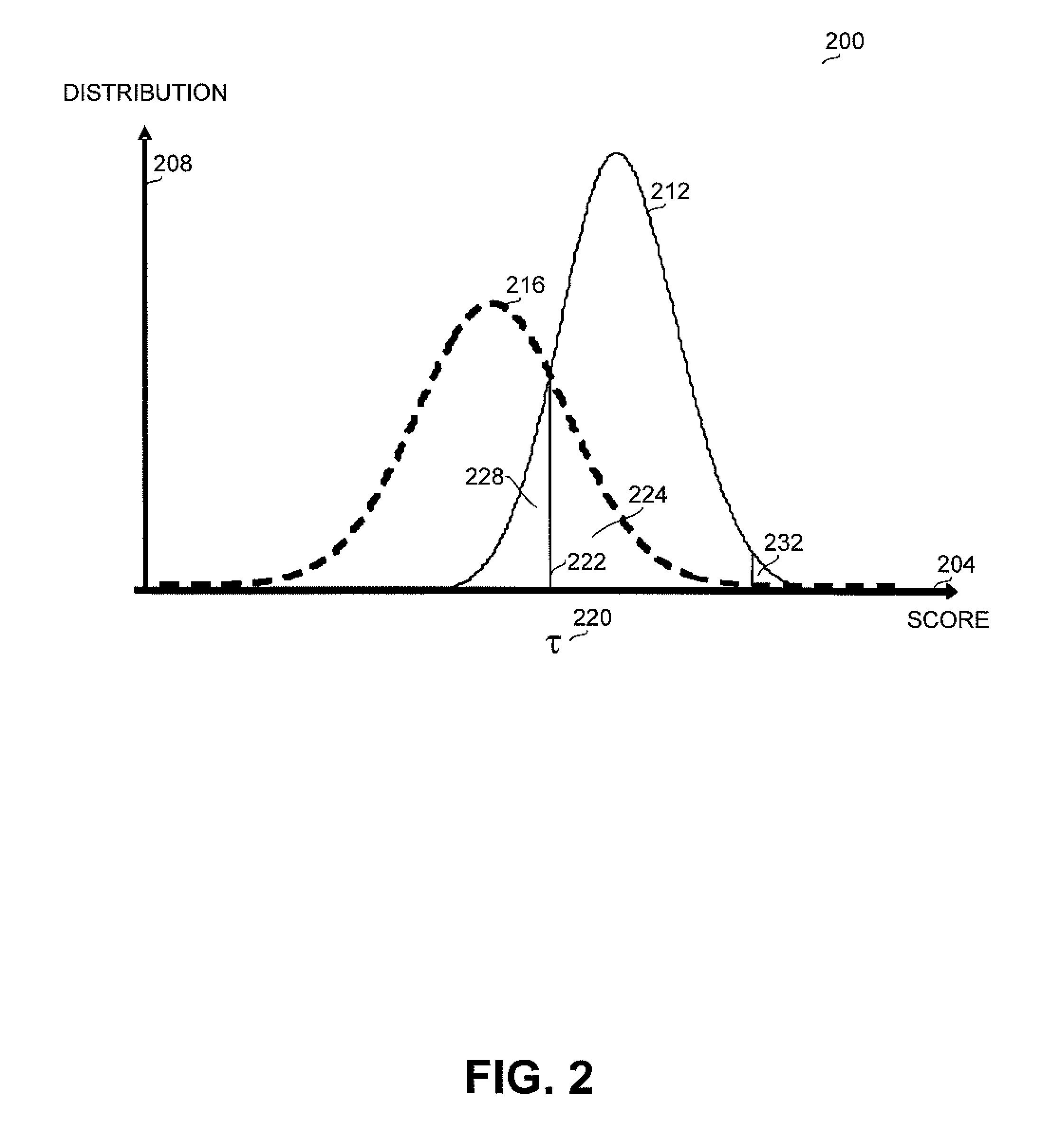

A method and apparatus for determining whether a speaker uttering an utterance belongs to a predetermined set comprising known speakers, wherein a training utterance is available for each known speaker. The method and apparatus test whether features extracted from the tested utterance provide a score exceeding a threshold when matched against one or more models constructed from voice samples of each known speaker. The method and system further provide optional enhancements such as determining, using, and updating model normalization parameters, a fast scoring algorithm, summed calls handling, or quality evaluation for the tested utterance.
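The threshold test in this abstract amounts to an open-set decision: accept the best-matching known speaker only if the score exceeds a threshold, otherwise treat the speaker as outside the set. A minimal sketch follows, assuming the per-speaker scores have already been computed by some model (the scoring itself, the function name, and the numbers are hypothetical).

```python
from typing import Dict, Optional


def open_set_decision(scores: Dict[str, float], threshold: float) -> Optional[str]:
    """Return the best-scoring known speaker, or None if no score exceeds the threshold."""
    if not scores:
        return None
    best = max(scores, key=lambda speaker: scores[speaker])
    return best if scores[best] > threshold else None


accepted = open_set_decision({"known_1": 0.35, "known_2": 0.82}, threshold=0.6)
rejected = open_set_decision({"known_1": 0.35, "known_2": 0.41}, threshold=0.6)
```

The optional normalization parameters mentioned in the abstract would adjust the scores before this comparison; they are not modeled here.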

Owner:NICE SYSTEMS

System and method for providing speaker identification in a conference call

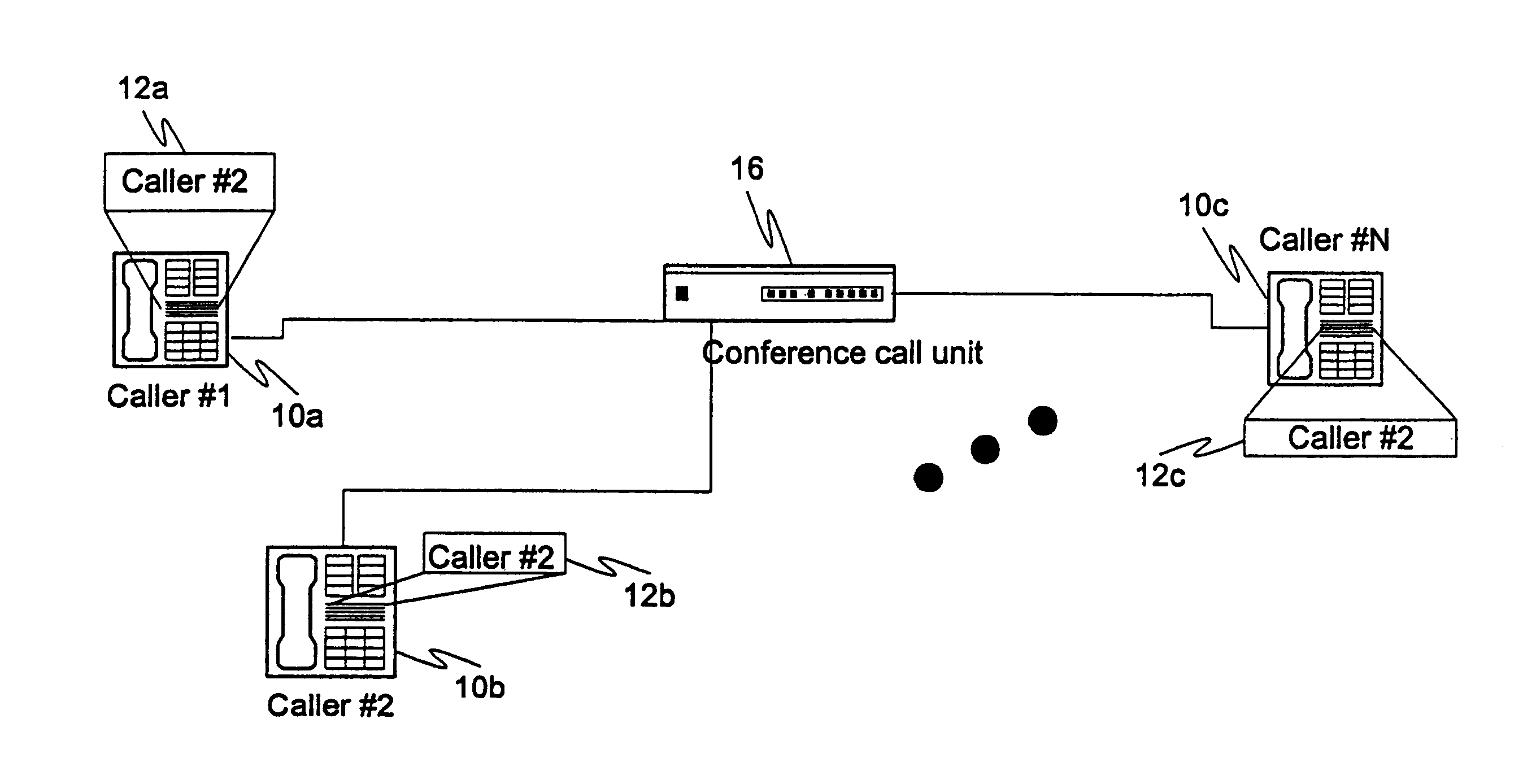

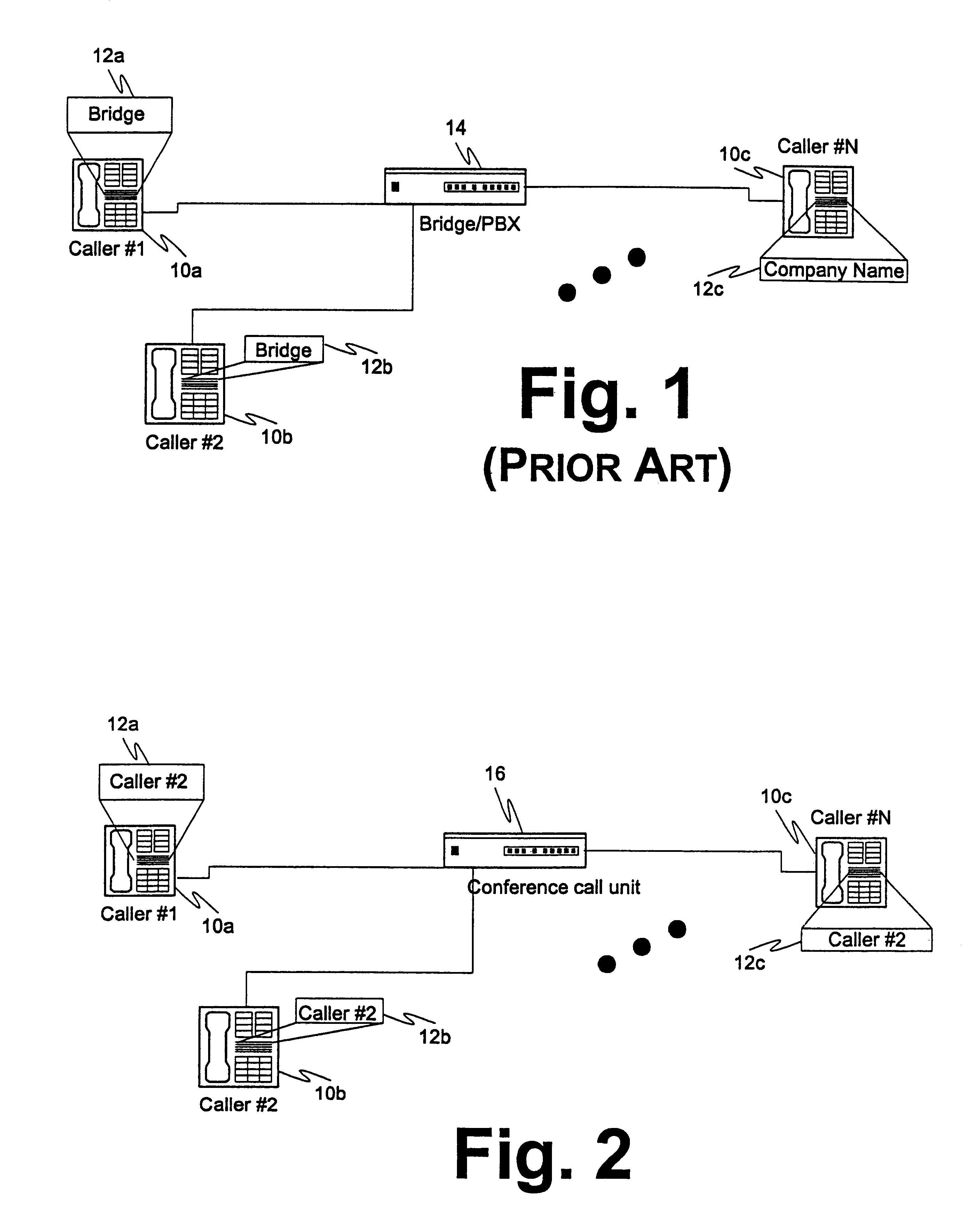

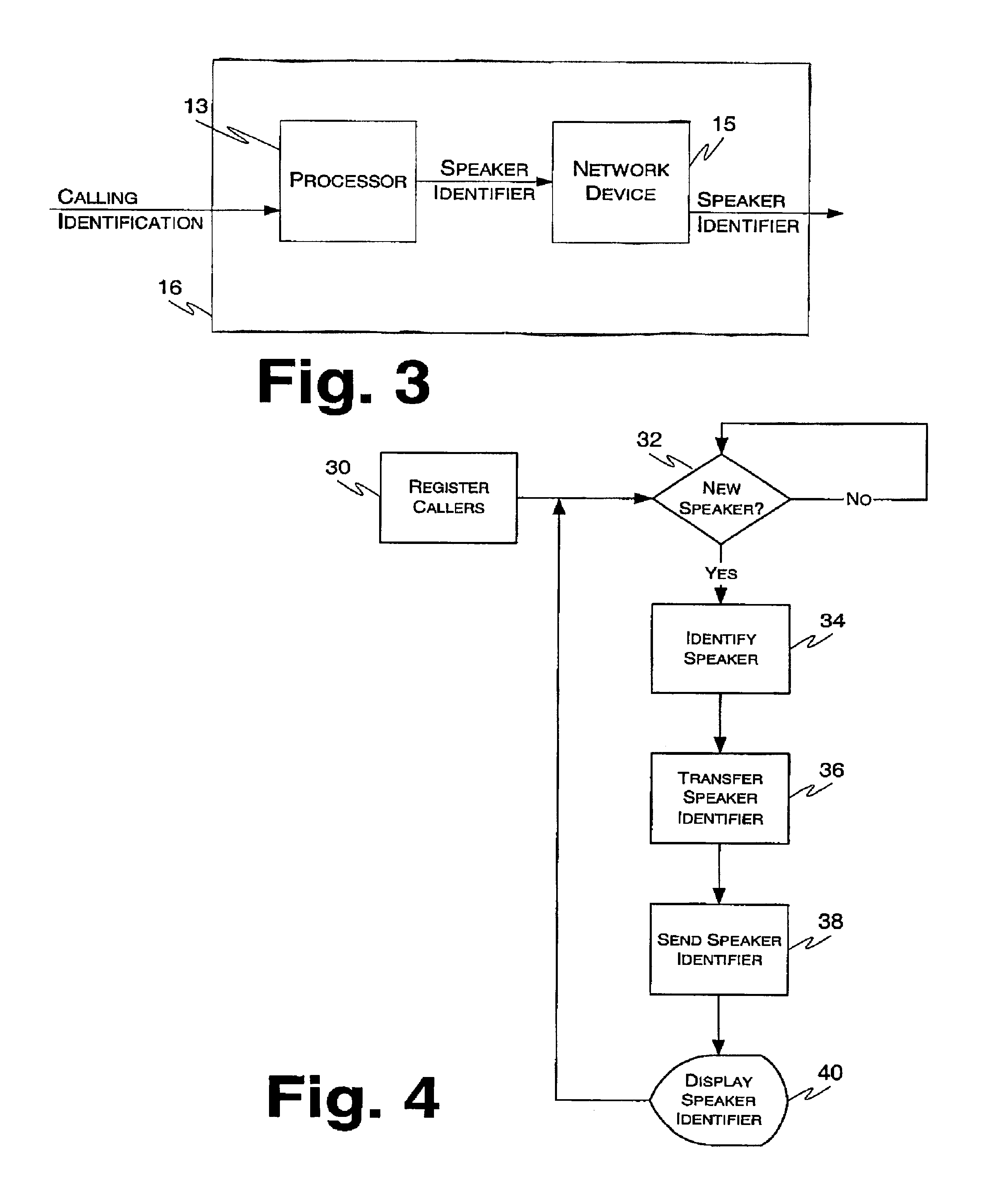

Inactive | US6826159B1 | Multiplex system selection arrangements | Special service provision for substation | Teleconference | Speaker identification

A system and method for speaker identification in a conference calling unit. The method identifies the speaker, locates the speaker identifier, transfers the speaker identifier to an outgoing path and sends the speaker identifier to endpoints participating in the conference call. If the endpoints have the capability of displaying caller identification, their displays will be updated dynamically with the speaker identifier in place of the caller identifier. The system includes a conference call unit. The unit has a processing unit operable to extract the caller identification of the line having the speaker and a network interface unit operable to send the caller identification to endpoints participating in the call as speaker identification.

Owner:CISCO TECH INC

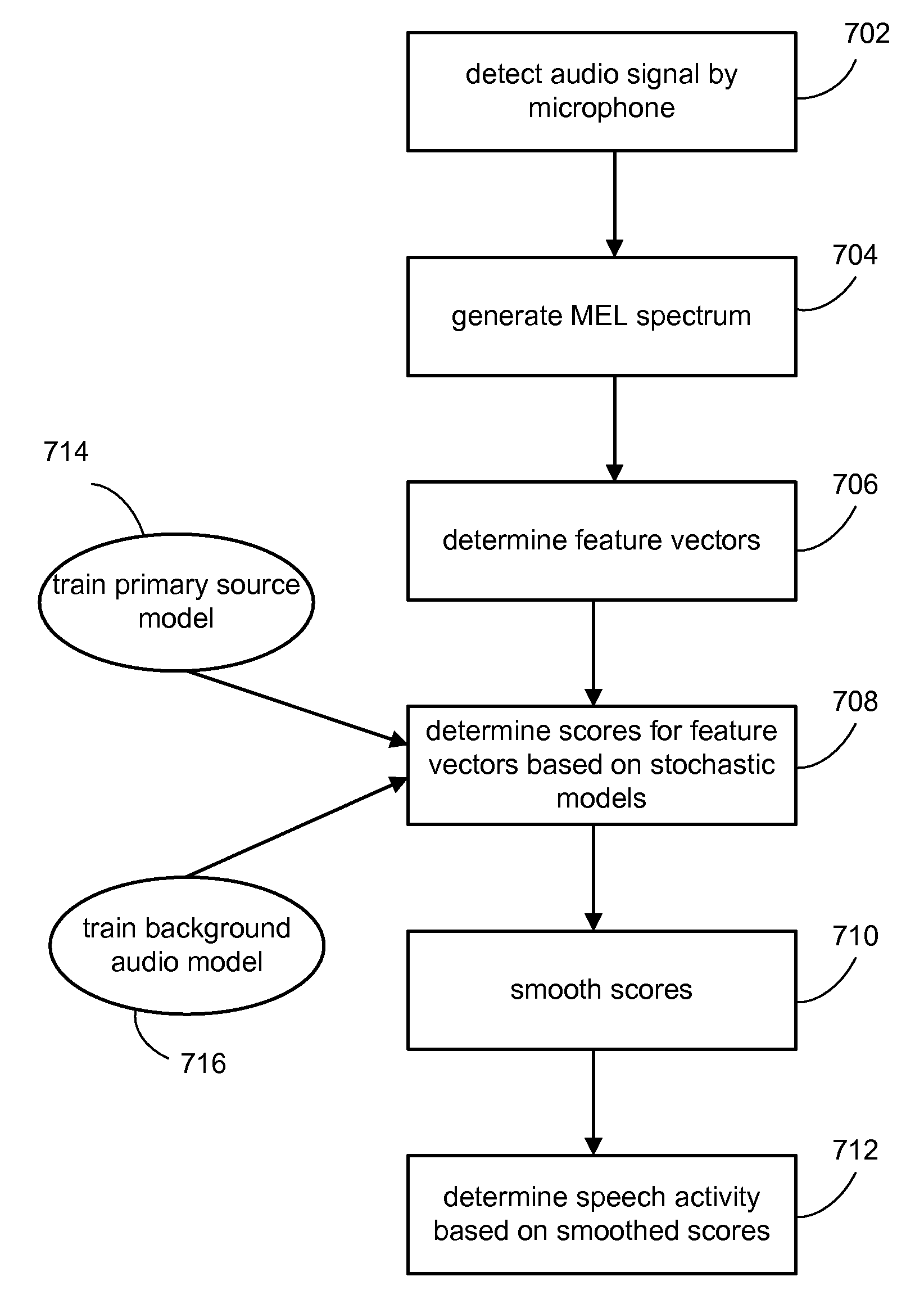

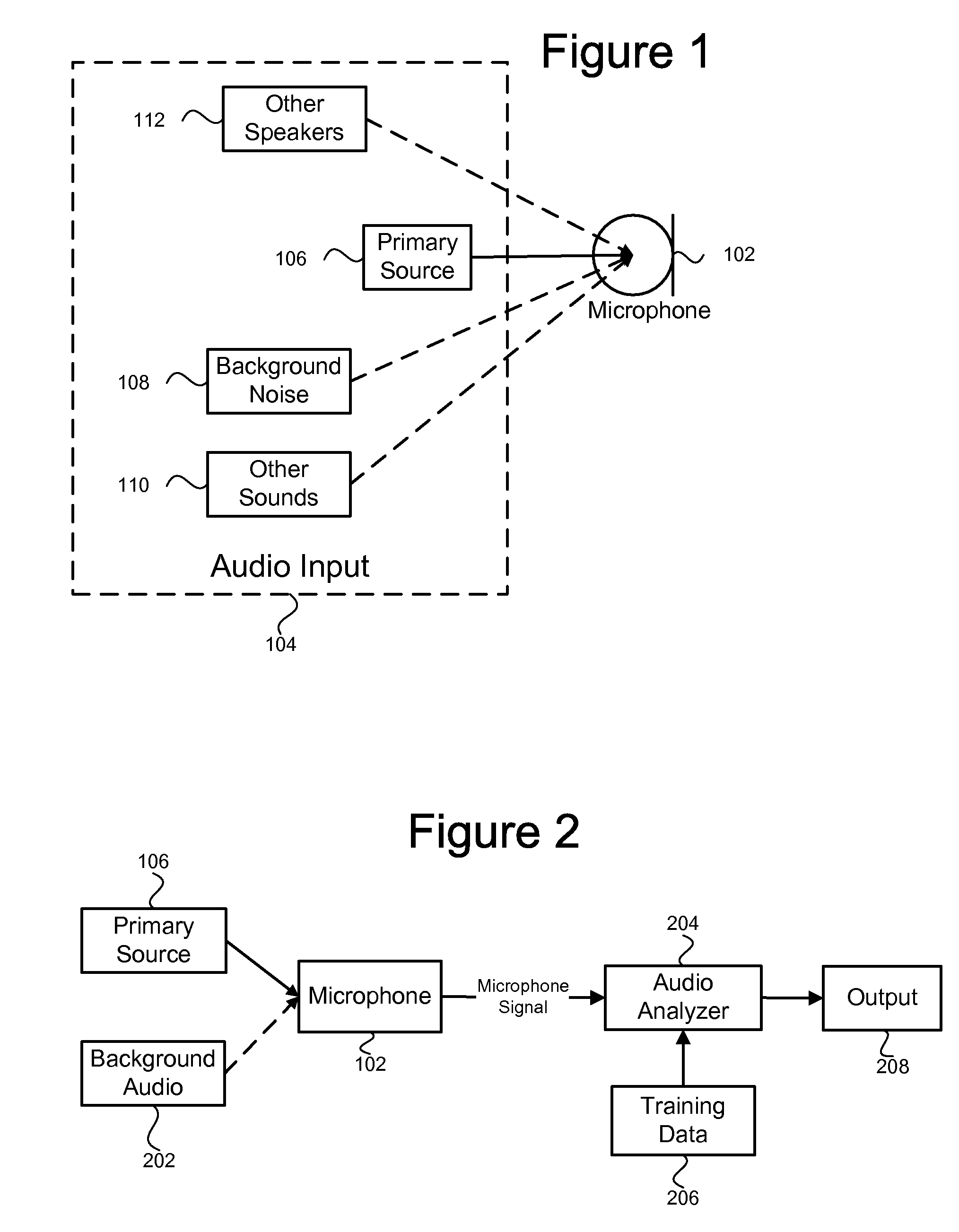

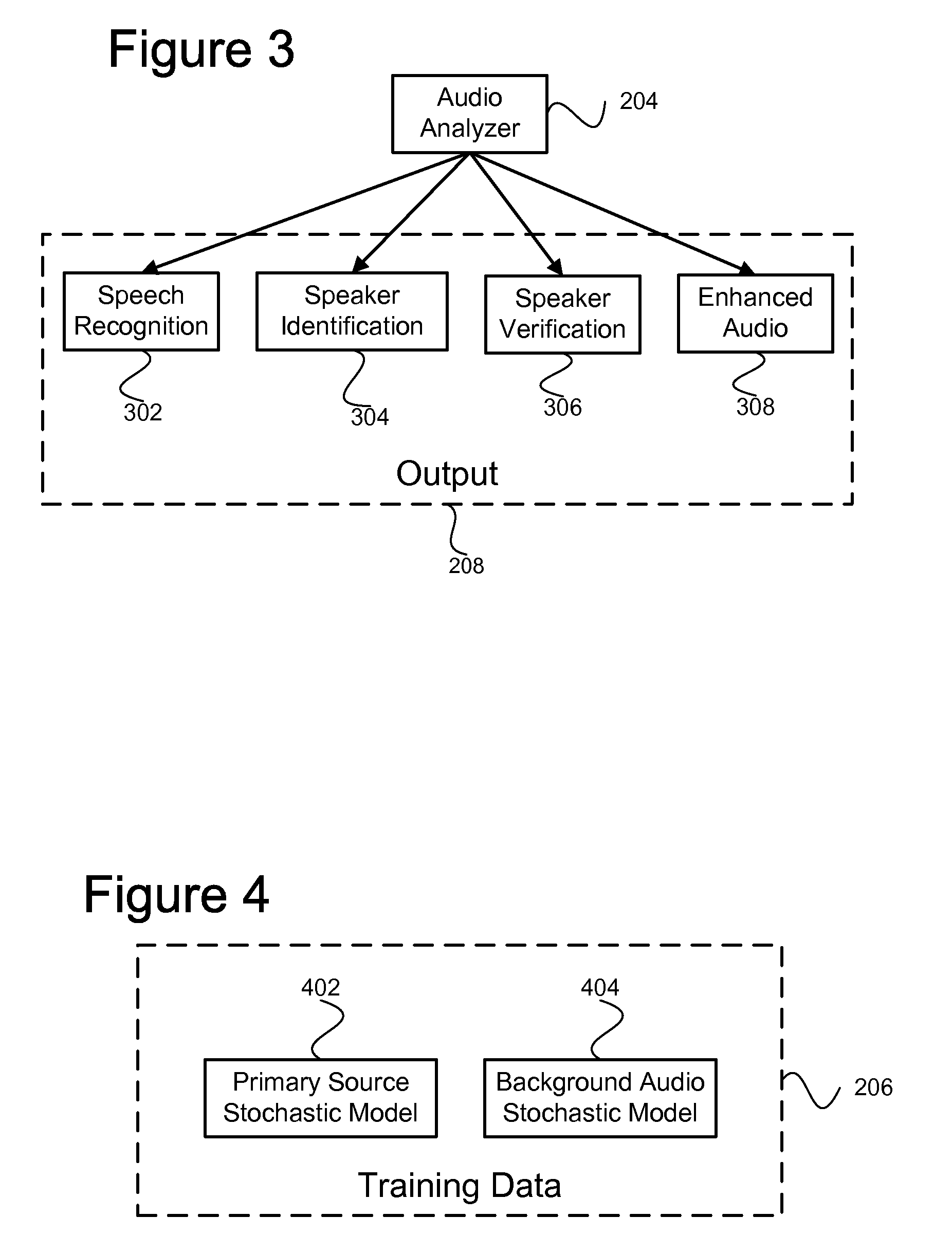

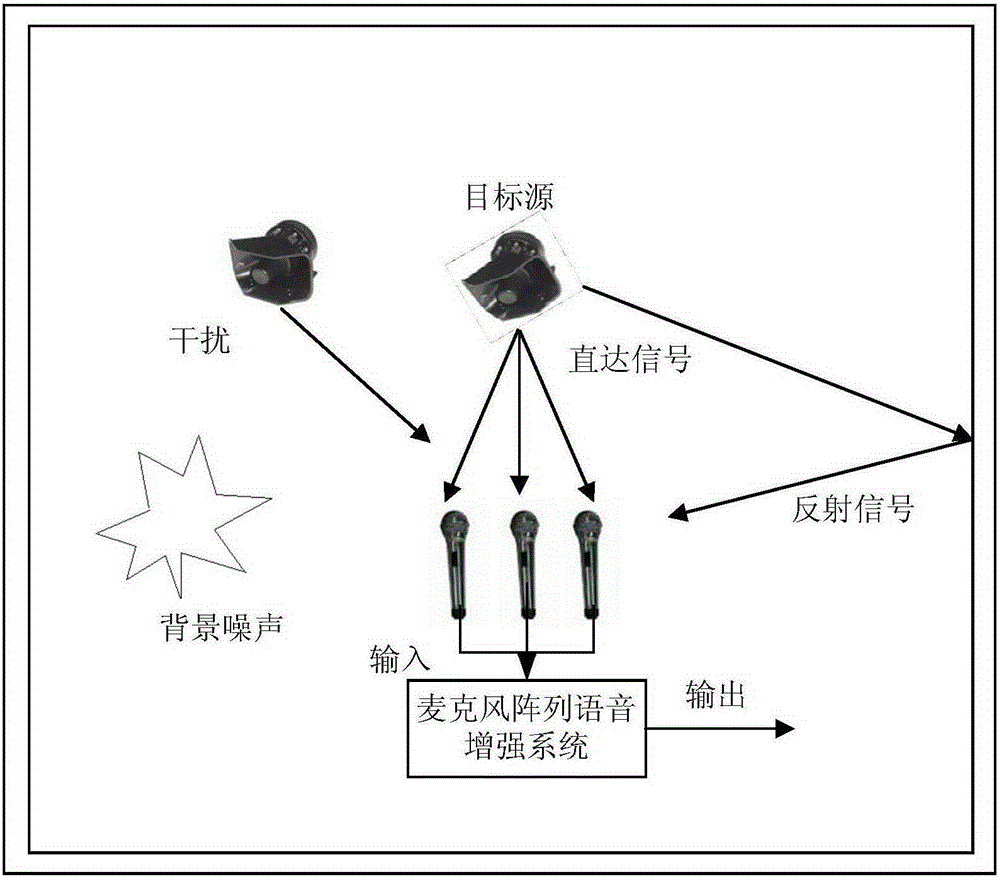

System for distinguishing desired audio signals from noise

Active | US20090228272A1 | Quality improvement | Enhance speech | Speech recognition | Transmission noise suppression | Signal quality | Audio signal flow

A system distinguishes a primary audio source and background noise to improve the quality of an audio signal. A speech signal from a microphone may be improved by identifying and dampening background noise to enhance speech. Stochastic models may be used to model speech and to model background noise. The models may determine which portions of the signal are speech and which portions are noise. The distinction may be used to improve the signal's quality, and for speaker identification or verification.

Owner:NUANCE COMM INC

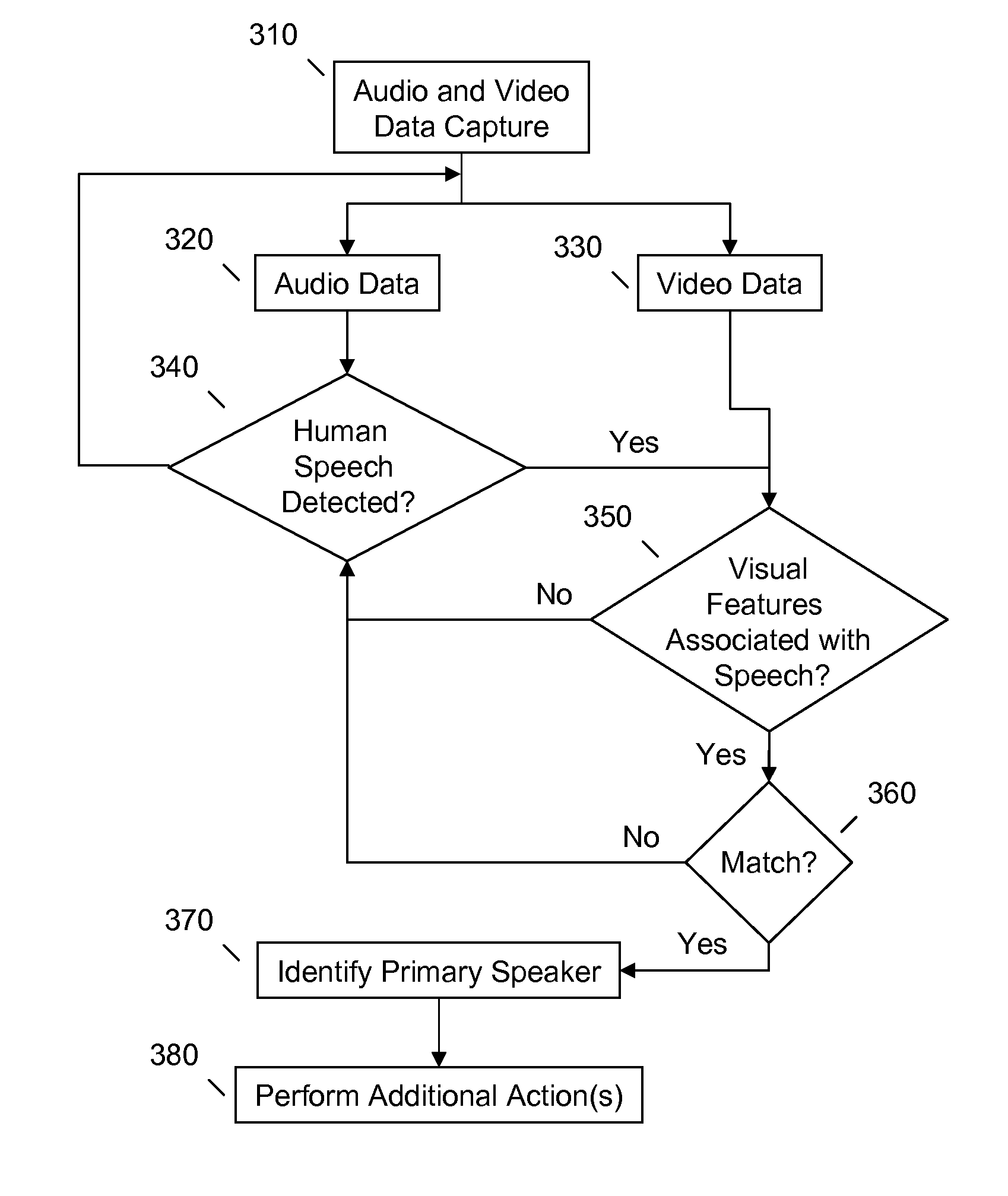

Primary speaker identification from audio and video data

An aspect provides a method, including: receiving image data from a visual sensor of an information handling device; receiving audio data from one or more microphones of the information handling device; identifying, using one or more processors, human speech in the audio data; identifying, using the one or more processors, a pattern of visual features in the image data associated with speaking; matching, using the one or more processors, the human speech in the audio data with the pattern of visual features in the image data associated with speaking; selecting, using the one or more processors, a primary speaker from among matched human speech; assigning control to the primary speaker; and performing one or more actions based on audio input of the primary speaker. Other aspects are described and claimed.

Owner:LENOVO (SINGAPORE) PTE LTD

Speaker identification in the presence of packet losses

Inactive | US7720012B1 | Multiplex system selection arrangements | Special service provision for substation | Packet loss | Loudspeaker

A system, method, and apparatus for identifying a speaker of an utterance, particularly when the utterance has portions of it missing due to packet losses. Different packet loss models are applied to each speaker's training data in order to improve accuracy, especially for small packet sizes.

Owner:ARROWHEAD CENT

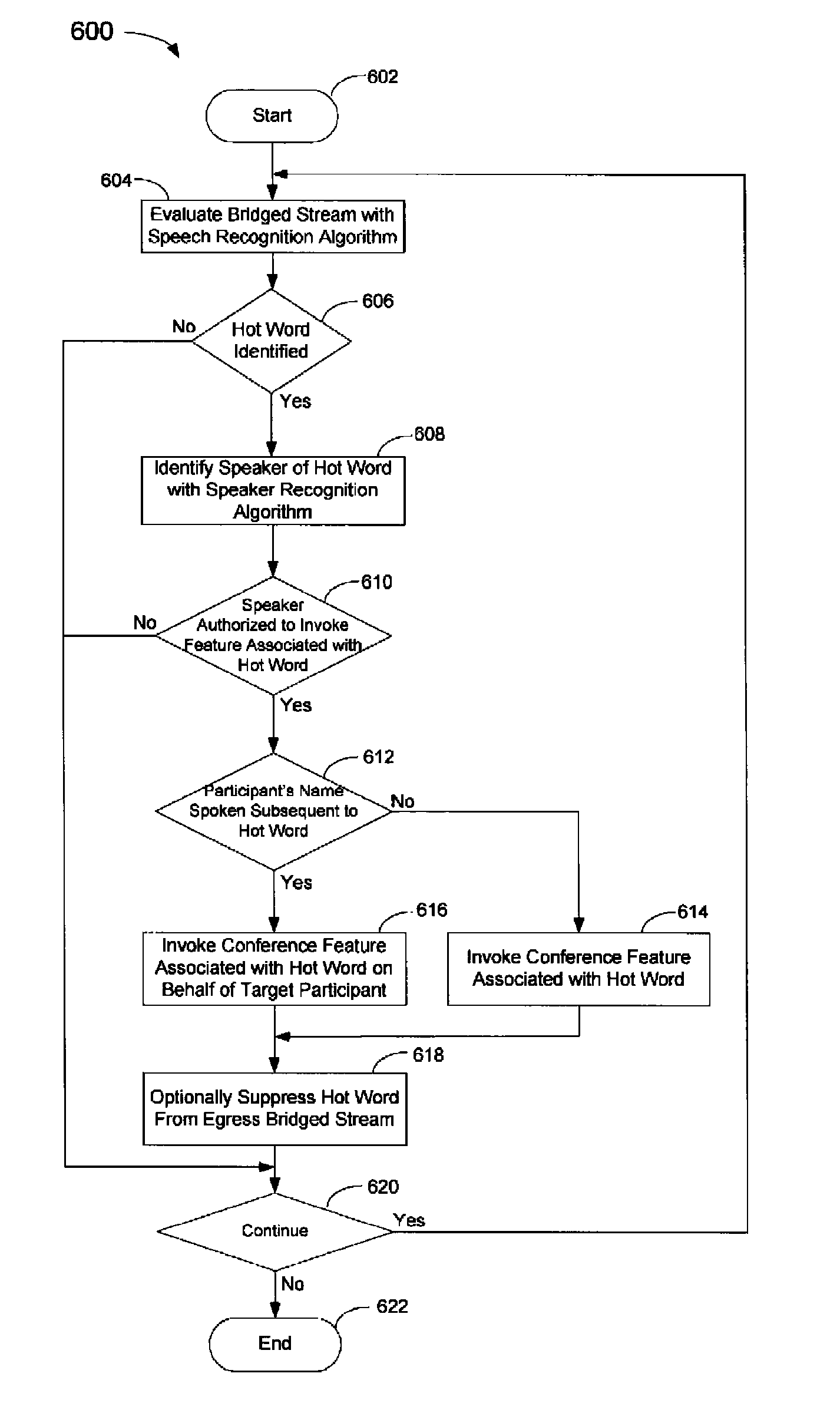

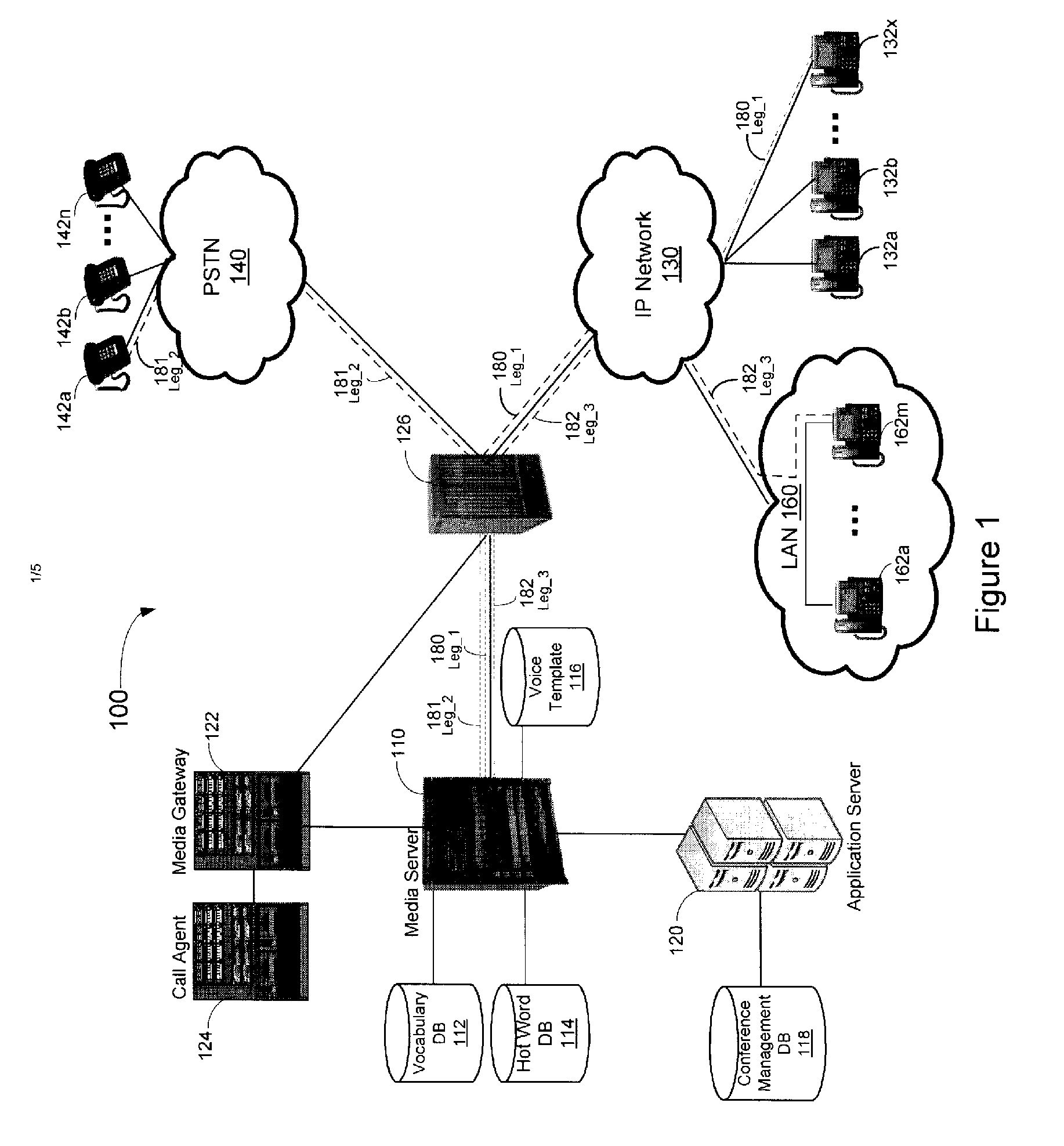

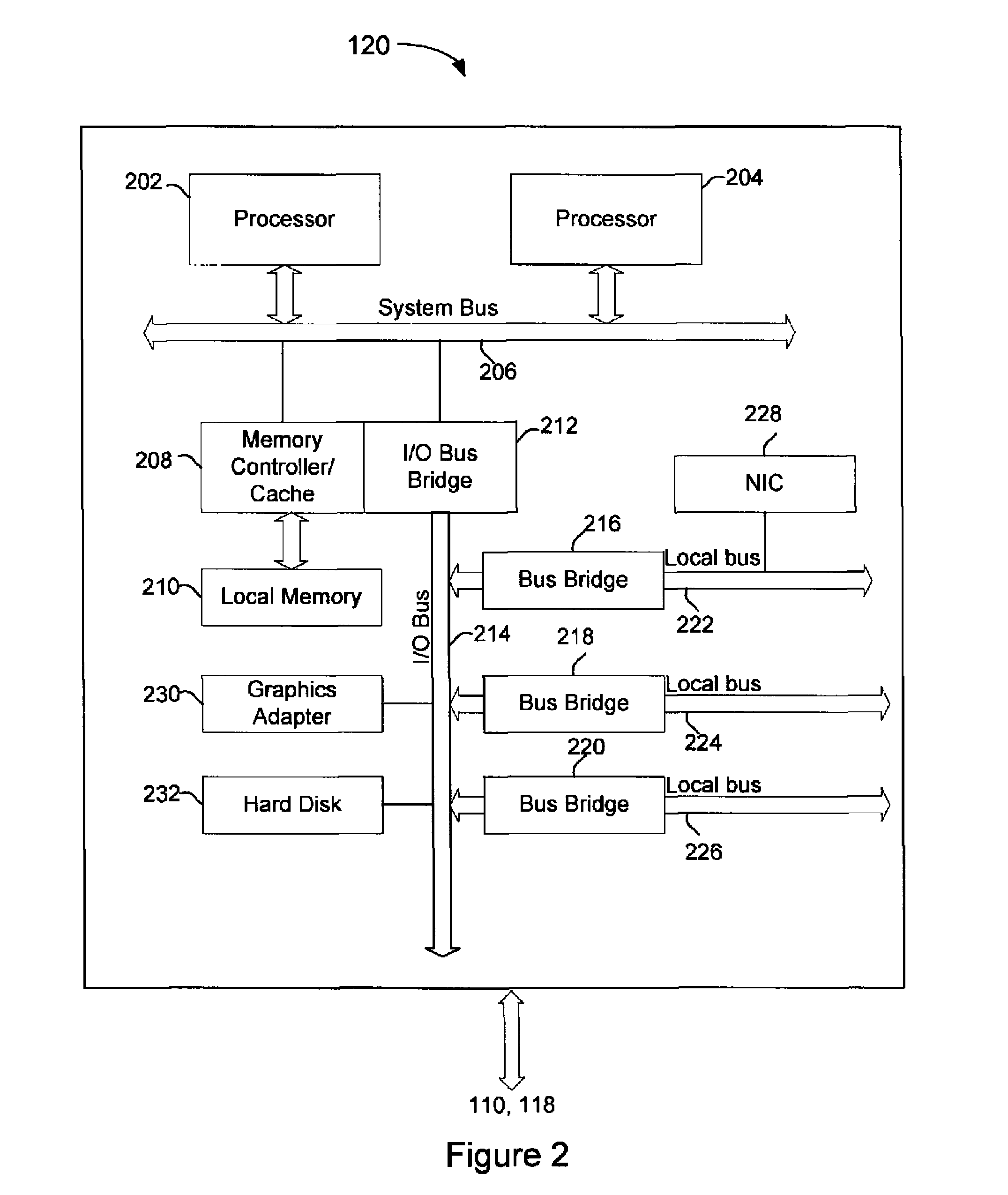

System, method, and computer-readable medium for verbal control of a conference call

Active | US8060366B1 | Multiplex system selection arrangements | Special service provision for substation | Conference control | Automatic speech

A system, method, and computer readable medium that facilitate verbal control of conference call features are provided. Automatic speech recognition functionality is deployed in a conferencing platform. Hot words are configured in the conference platform that may be identified in speech supplied to a conference call. Upon recognition of a hot word, a corresponding feature may be invoked. A speaker may be identified using speaker identification technologies. Identification of the speaker may be utilized to fulfill the speaker's request in response to recognition of a hot word and the speaker. Particular participants may be provided with conference control privileges that are not provided to other participants. Upon recognition of a hot word, the speaker may be identified to determine if the speaker is authorized to invoke the conference feature associated with the hot word.
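The hot-word flow in this abstract (recognize a hot word, identify the speaker, check whether that speaker may invoke the feature) can be sketched as below. The hot words, feature names, and privilege table are hypothetical, and the transcript and speaker identity are assumed to come from separate speech recognition and speaker identification components.

```python
from typing import Dict, Optional, Set

# Hypothetical configuration: hot-word phrase -> conference feature.
HOT_WORDS: Dict[str, str] = {"mute all": "mute_all", "start recording": "record"}
# Hypothetical privileges: speaker -> features that speaker may invoke.
PRIVILEGES: Dict[str, Set[str]] = {"moderator": {"mute_all", "record"}, "guest": set()}


def handle_utterance(transcript: str, speaker_id: str) -> Optional[str]:
    """Return the feature to invoke, or None if no hot word matched or the speaker lacks privilege."""
    text = transcript.lower()
    for phrase, feature in HOT_WORDS.items():
        if phrase in text:
            if feature in PRIVILEGES.get(speaker_id, set()):
                return feature
            return None  # hot word recognized, but speaker is not authorized
    return None


allowed = handle_utterance("Please mute all lines", "moderator")
denied = handle_utterance("mute all", "guest")
ignored = handle_utterance("hello everyone", "moderator")
```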

Owner:WEST TECH GRP LLC

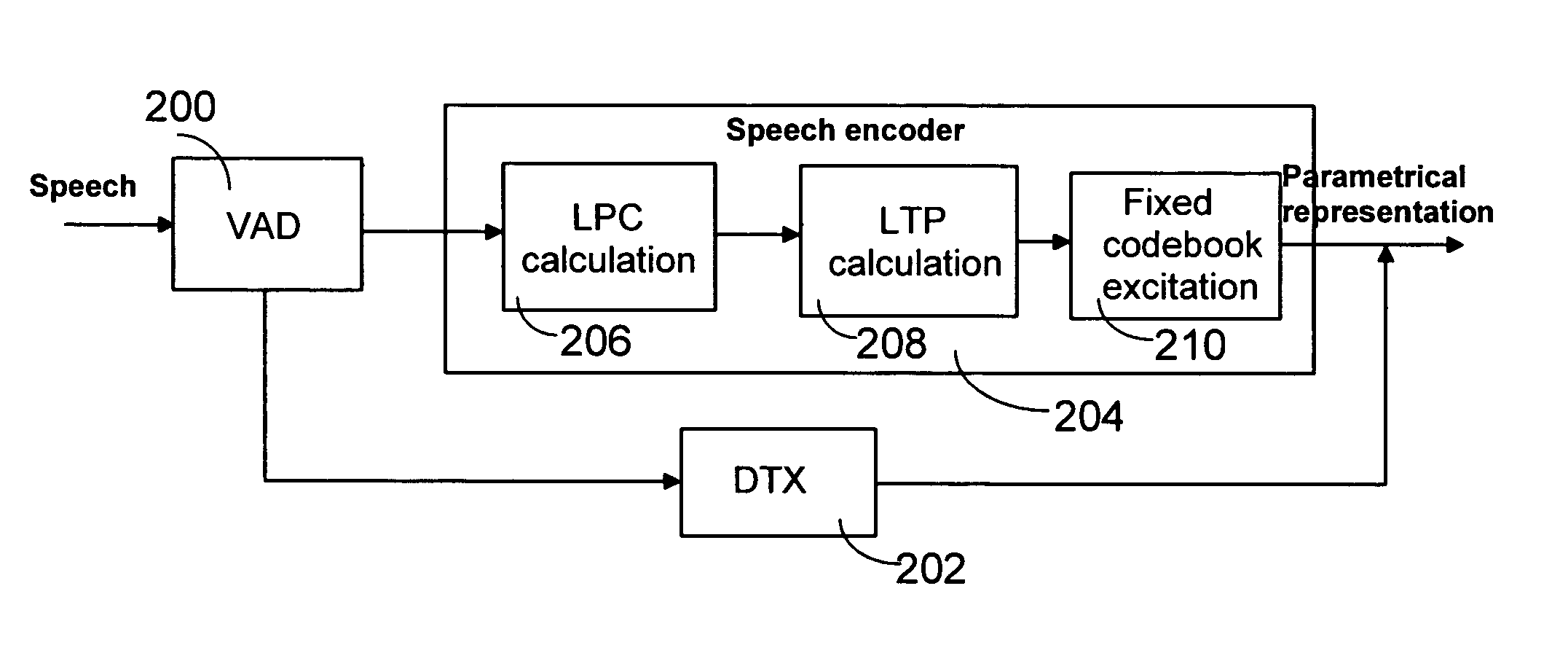

Spatialization arrangement for conference call

Inactive | US20070025538A1 | Reduce complexity | Speech analysis | Special service for subscribers | Conference call | Loudspeaker

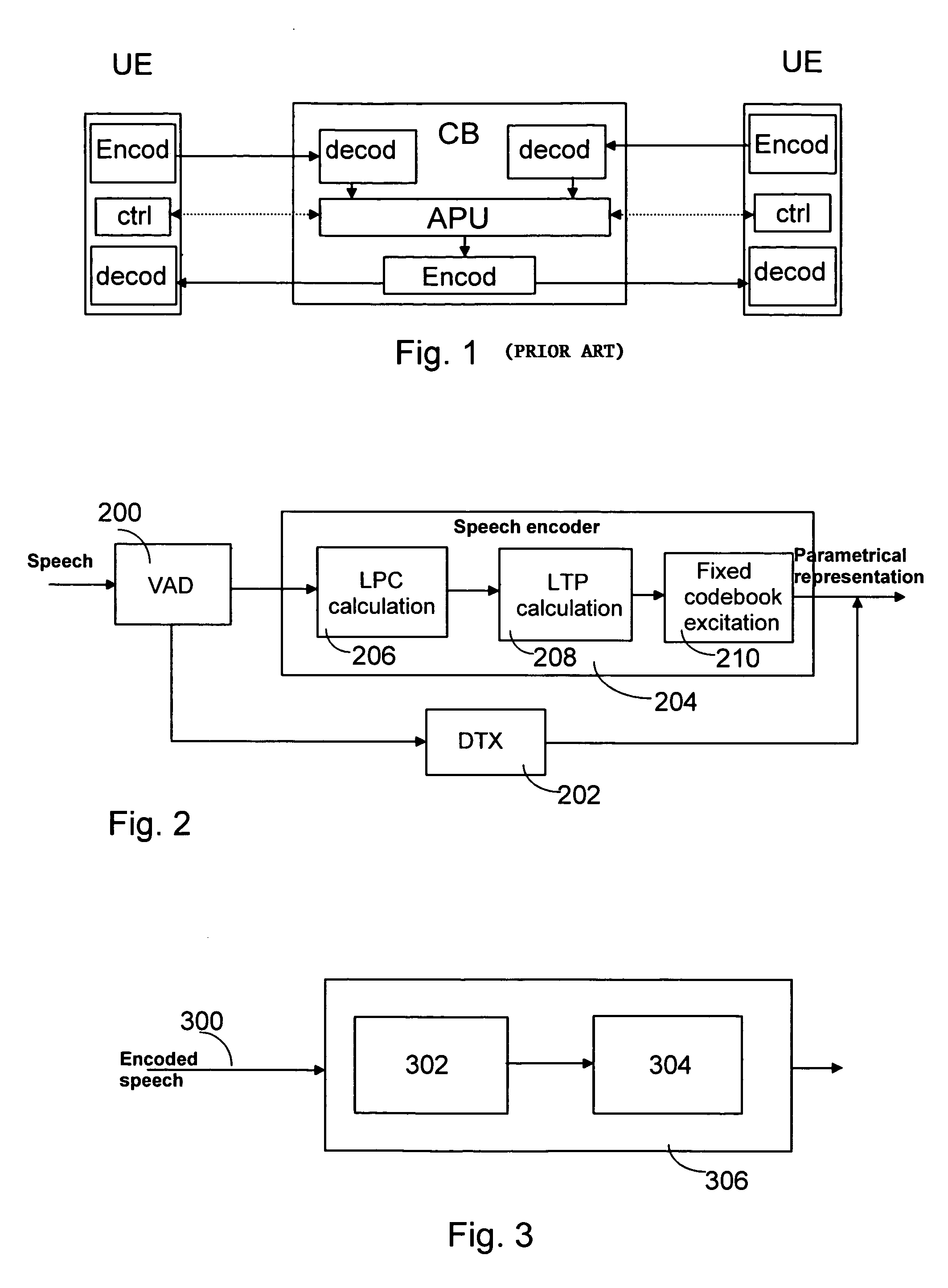

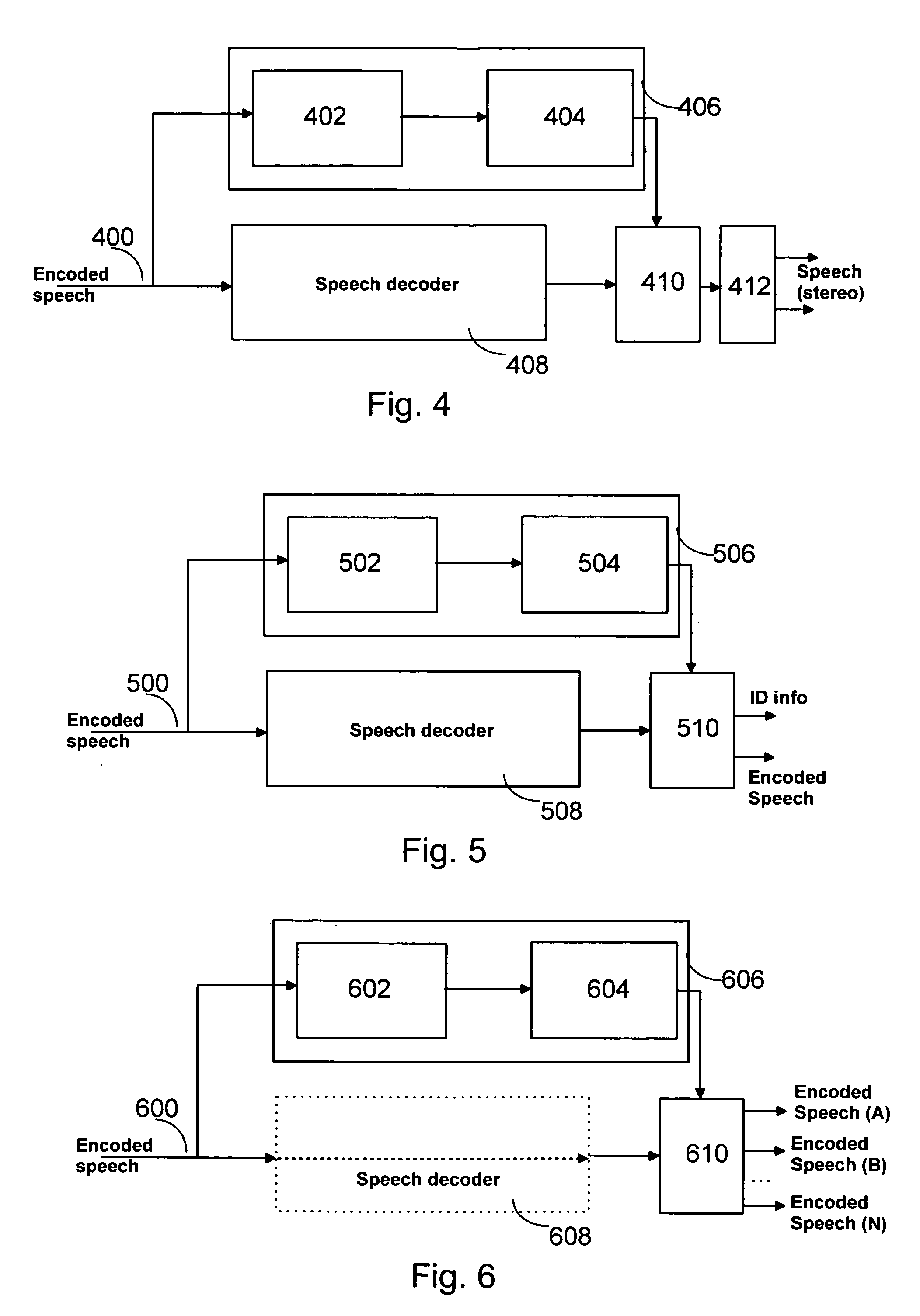

A method for distinguishing speakers in a conference call of a plurality of participants, in which method speech frames of the conference call are received in a receiving unit, which speech frames include encoded speech parameters. At least one parameter of the received speech frames is examined in an audio codec of the receiving unit, and the speech frames are classified to belong to one of the participants, the classification being carried out according to differences in the examined at least one speech parameter. These functions may be carried out in a speaker identification block, which is applicable in various positions of a teleconferencing processing chain. Finally, a spatialization effect is created in a terminal reproducing the audio signal according to notified differences by placing the participants at distinct positions in an acoustical space of the audio signal.

Owner:NOKIA CORP

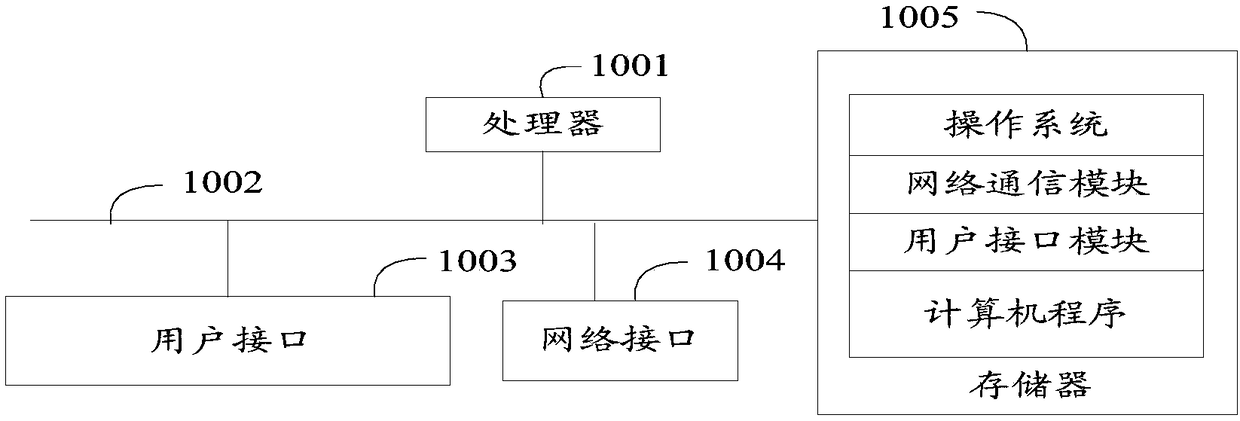

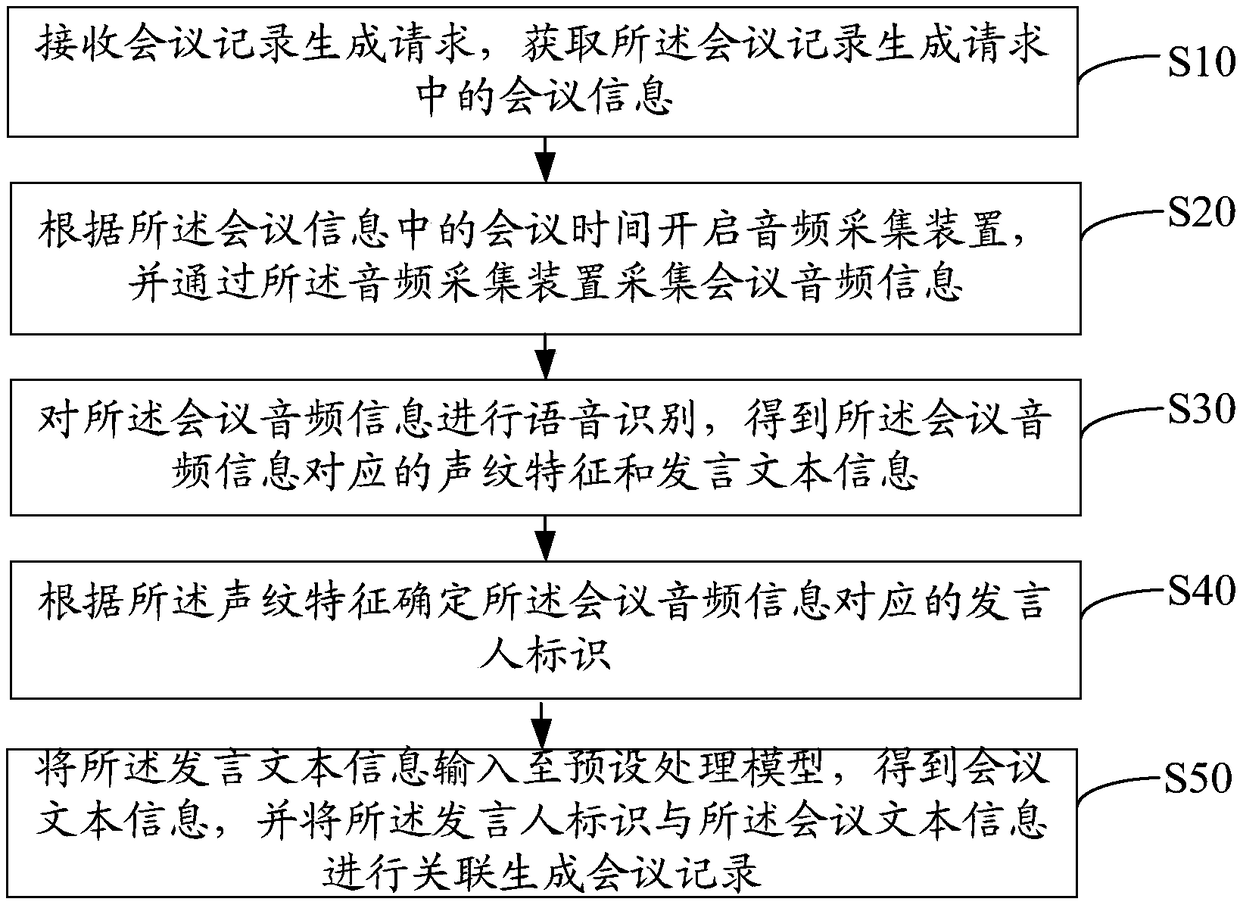

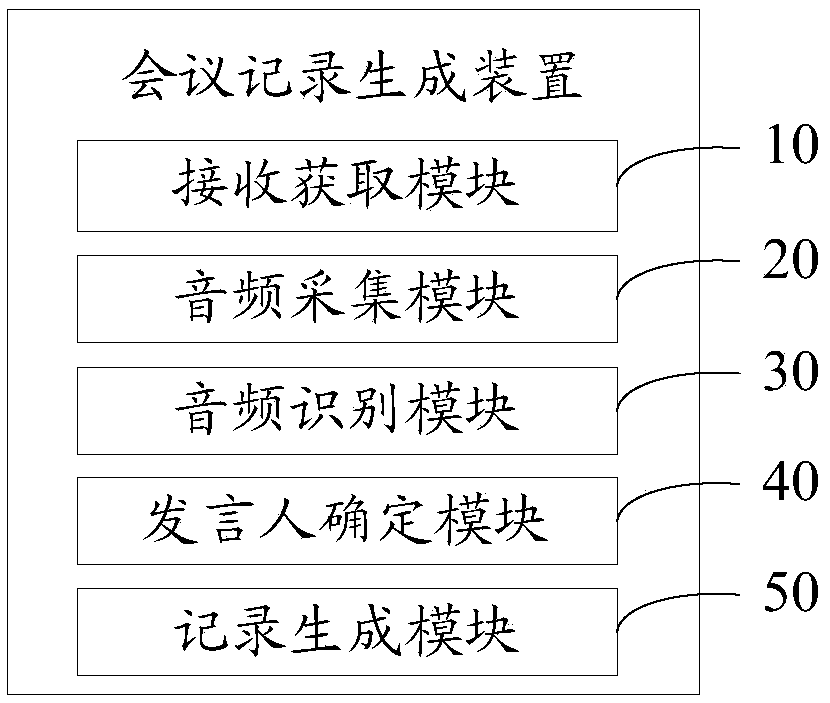

Meeting record generation method, device, apparatus, and computer storage medium

Pending | CN109388701A | Generate intelligence | Improve efficiency | Digital data information retrieval | Speech recognition | Speaker identification | Subvocal recognition

The invention discloses a meeting record generation method comprising the following steps: receiving a meeting record generation request and obtaining the meeting information in the request; collecting audio information of the meeting through an audio collecting device; performing speech recognition on the meeting audio information to obtain the voiceprint features and speech text information corresponding to it; determining a speaker identification corresponding to the meeting audio information according to the voiceprint features; and inputting the speech text information into a preset processing model to obtain meeting text information, then associating the speaker identification with the meeting text information to generate meeting records. The invention also discloses a meeting record generation device, an apparatus and a computer storage medium. In the invention, the speech text information obtained by speech recognition is input into a preset processing model obtained through machine learning training, and the meeting records are generated automatically, so that meeting record generation is more intelligent.

Owner:ONE CONNECT SMART TECH CO LTD SHENZHEN

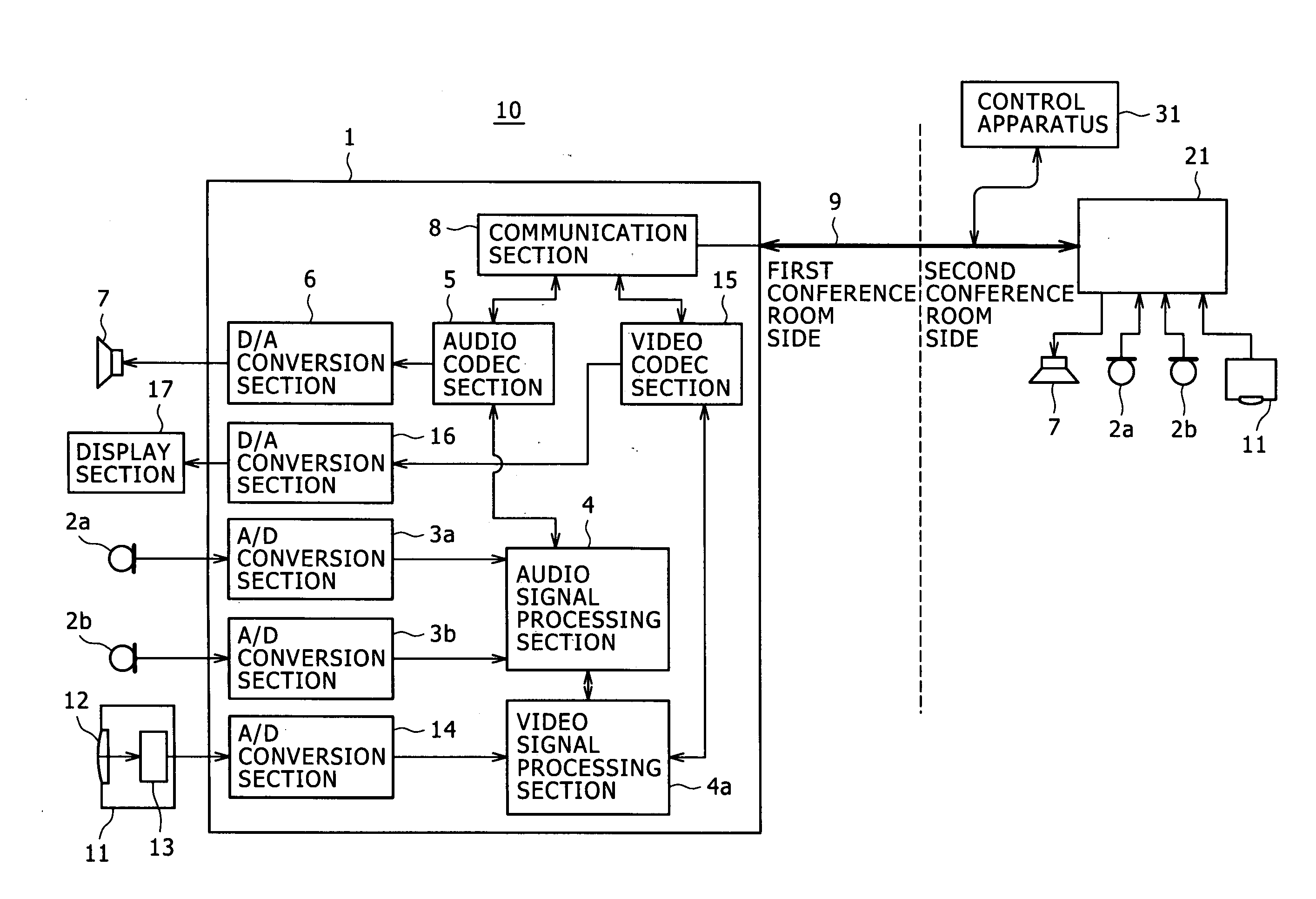

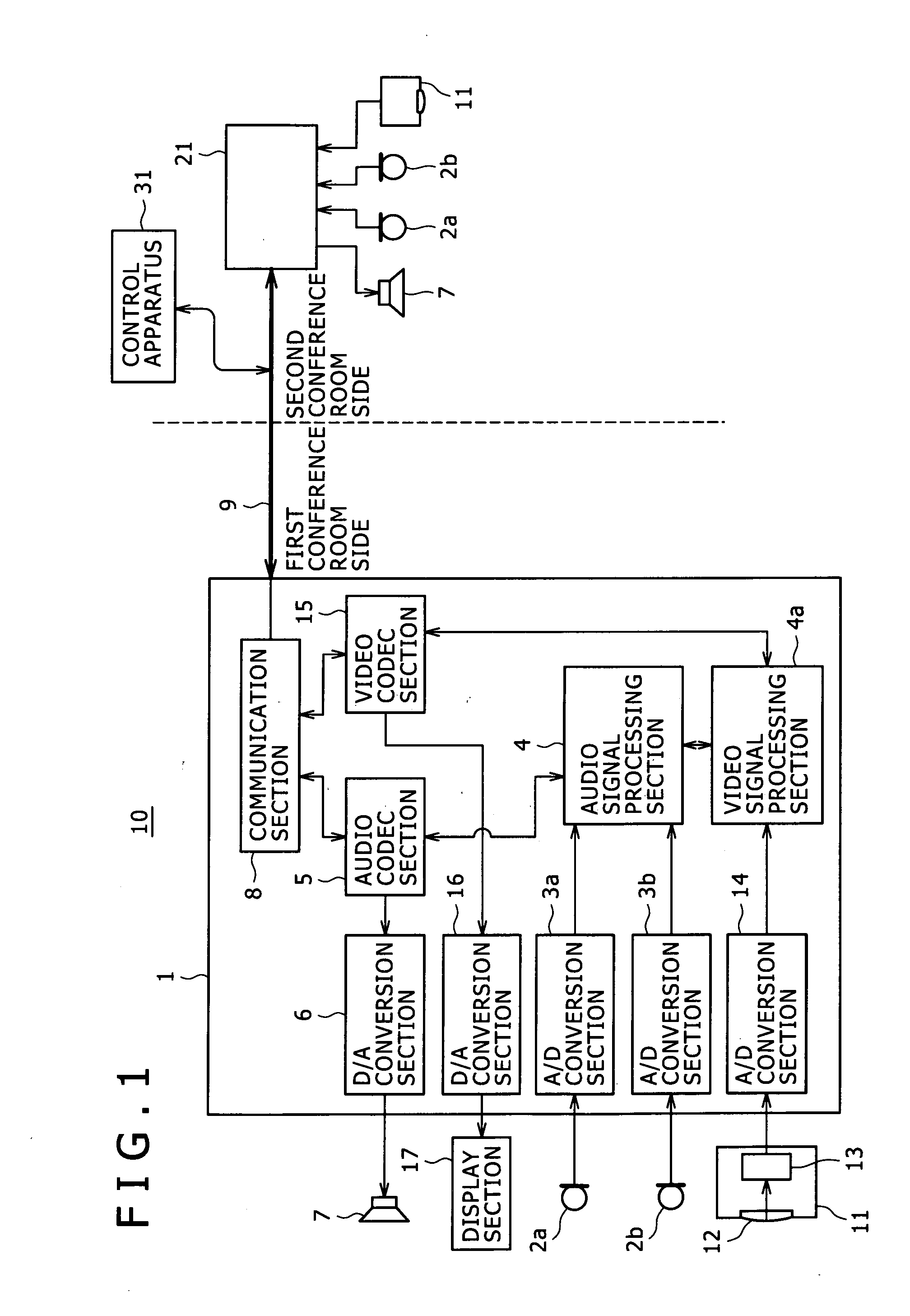

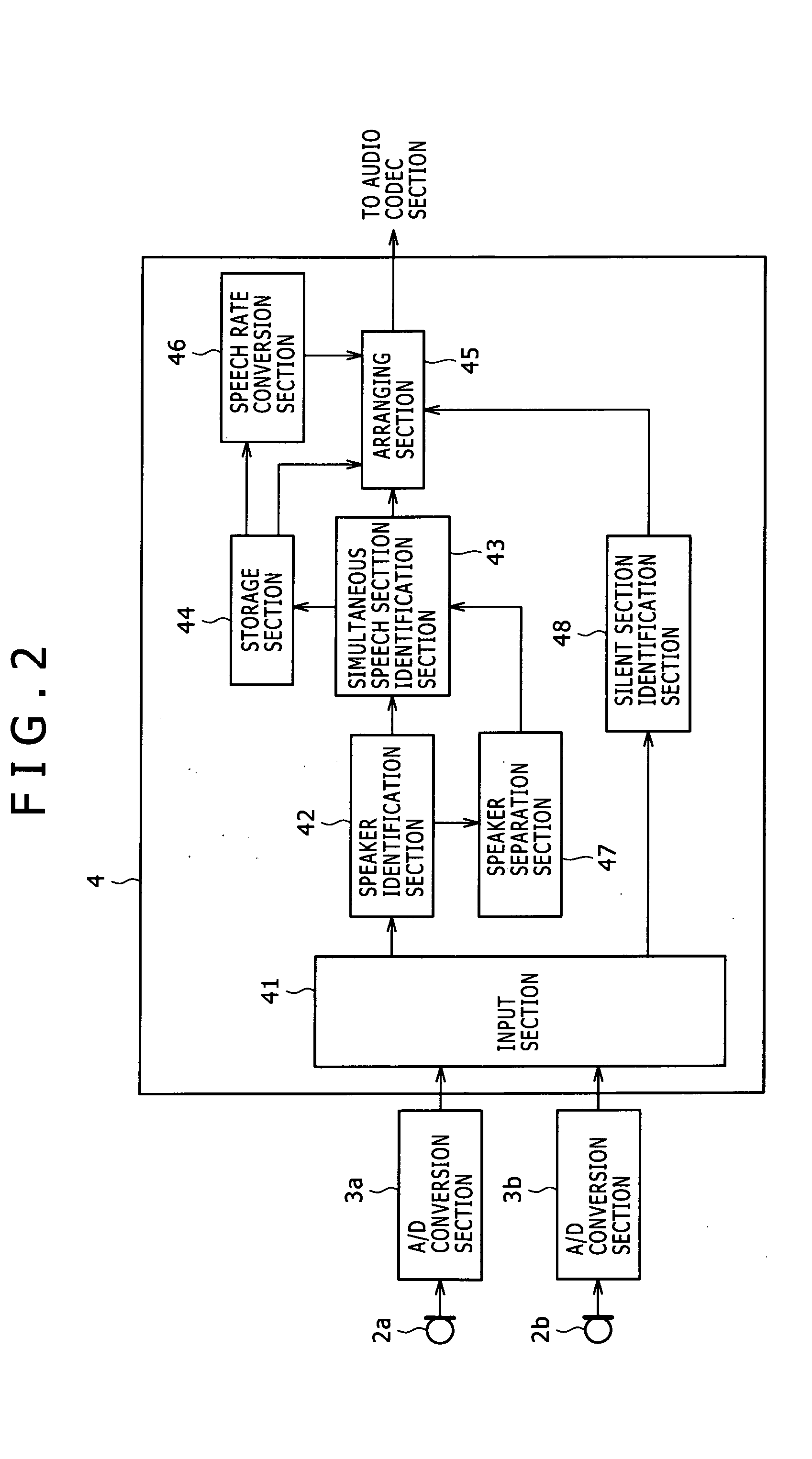

Audio processing apparatus, audio processing system, and audio processing program

Inactive | US20090150151A1 | Easy detection | Facilitates auditory lateralization | Speech recognition | Loudspeaker | Audio frequency

Disclosed herein is an audio processing apparatus for processing a plurality of pieces of audio data of sounds picked up by a plurality of microphones. The apparatus includes: a speaker identification section configured to identify a speaker based on the audio data; a simultaneous speech section identification section configured to, when at least first and second speakers have been identified, identify speech sections during which the first and second speakers have made speeches, and identify a section during which the first and second speakers have made the speeches at the same time as a simultaneous speech section; and an arranging section configured to separate audio data of the first speaker and audio data of the second speaker from the simultaneous speech section, and allow the audio data of the first speaker and the audio data of the second speaker to be outputted at mutually different timings.

Owner:SONY CORP

Sparse representation based short-voice speaker recognition method

Inactive | CN103345923A | Alleviate underrepresentation of personality traits | Dealing with mismatches | Speech analysis | Majorization minimization | Self adaptive

The invention discloses a sparse representation based short-voice speaker recognition method, which belongs to the technical field of voice signal processing and pattern recognition, and aims to solve the problem that existing methods have a low recognition rate when voice data is limited. The method mainly comprises the following steps: (1) preprocessing all voice samples, and then extracting Mel-frequency cepstral coefficients and their first-order difference coefficients as features; (2) training a Gaussian background model on a background voice library, and extracting Gaussian supervectors as secondary features; (3) arranging the Gaussian supervectors of the training voice samples together to form a dictionary; and (4) solving for the representation coefficients with a sparse solving algorithm, reconstructing the signals, and determining the recognition result according to the minimum residual error. According to the invention, the Gaussian supervectors obtained through adaptation greatly relieve the problem that a speaker's individual characteristics are insufficiently expressed when voice data is limited; and classifying by the reconstruction residuals of the sparse representation handles the speaker model mismatch caused by mismatched semantic information.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

Speech recognition method and speech recognition system

Provided is a speech recognition method and a speech recognition system. The speech recognition method includes the steps of capturing speech features in speech data, recognizing speaker identification of the speech data according to the speech features, then using a first acoustic model to recognize statements in the speech data, calculating confidence scores of the recognized statements according to the recognized statements and the speech data, and judging whether the confidence scores exceed a threshold value. When the confidence scores exceed the threshold value, the recognized statements and the speech data are collected so as to use the speech data to carry out speaker adaptation of a second acoustic model corresponding to the speaker identification.

Owner:ASUSTEK COMPUTER INC

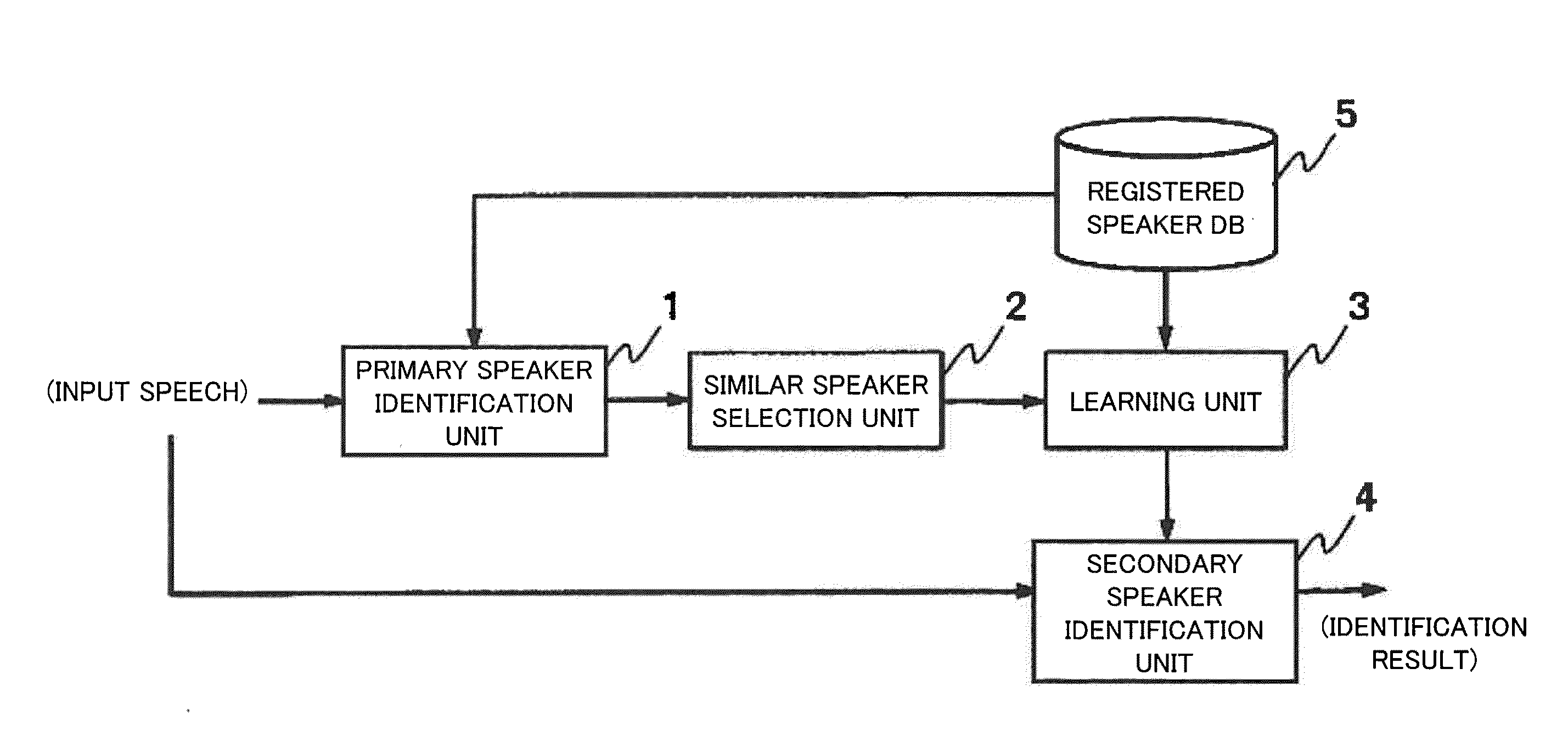

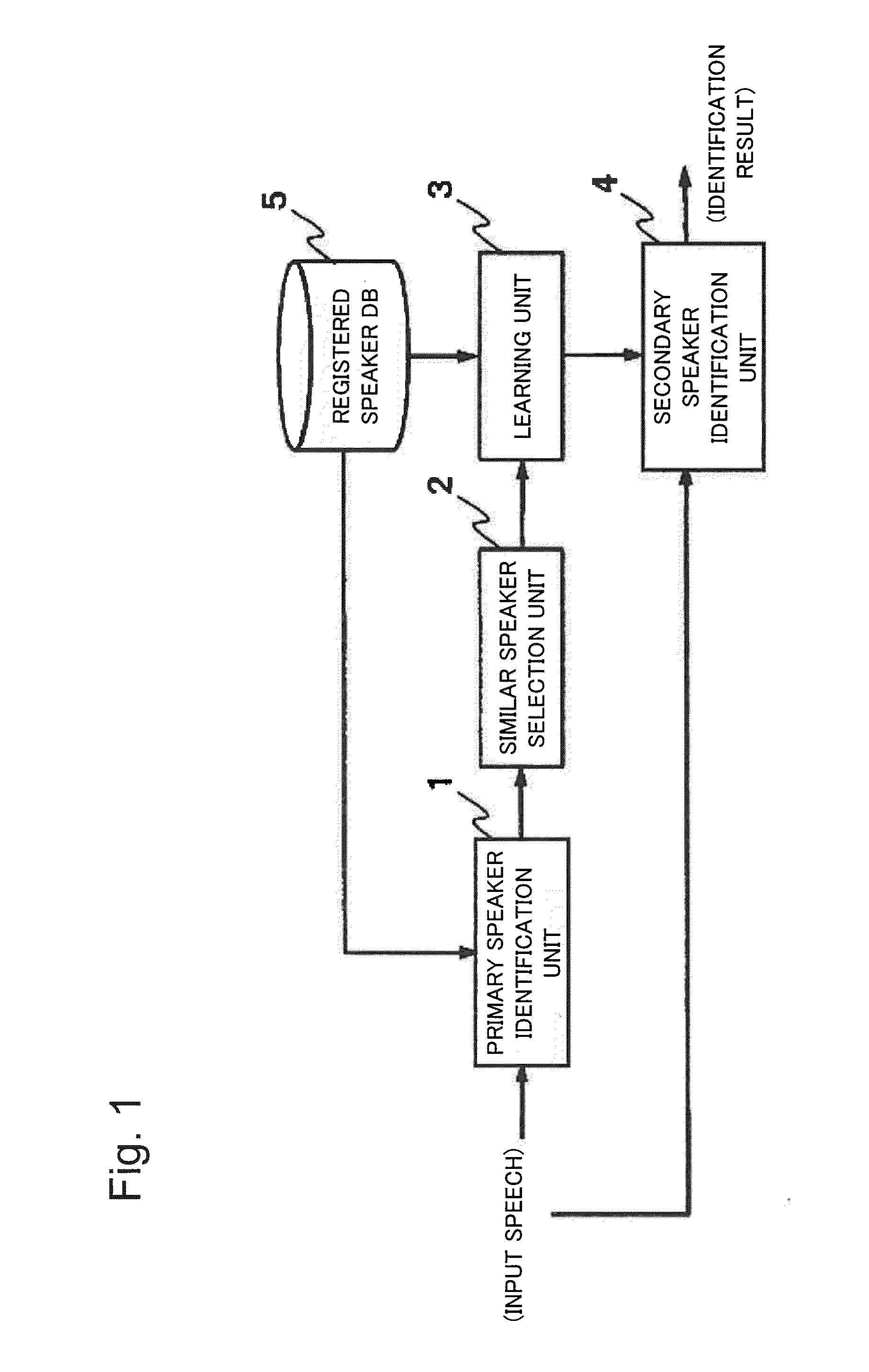

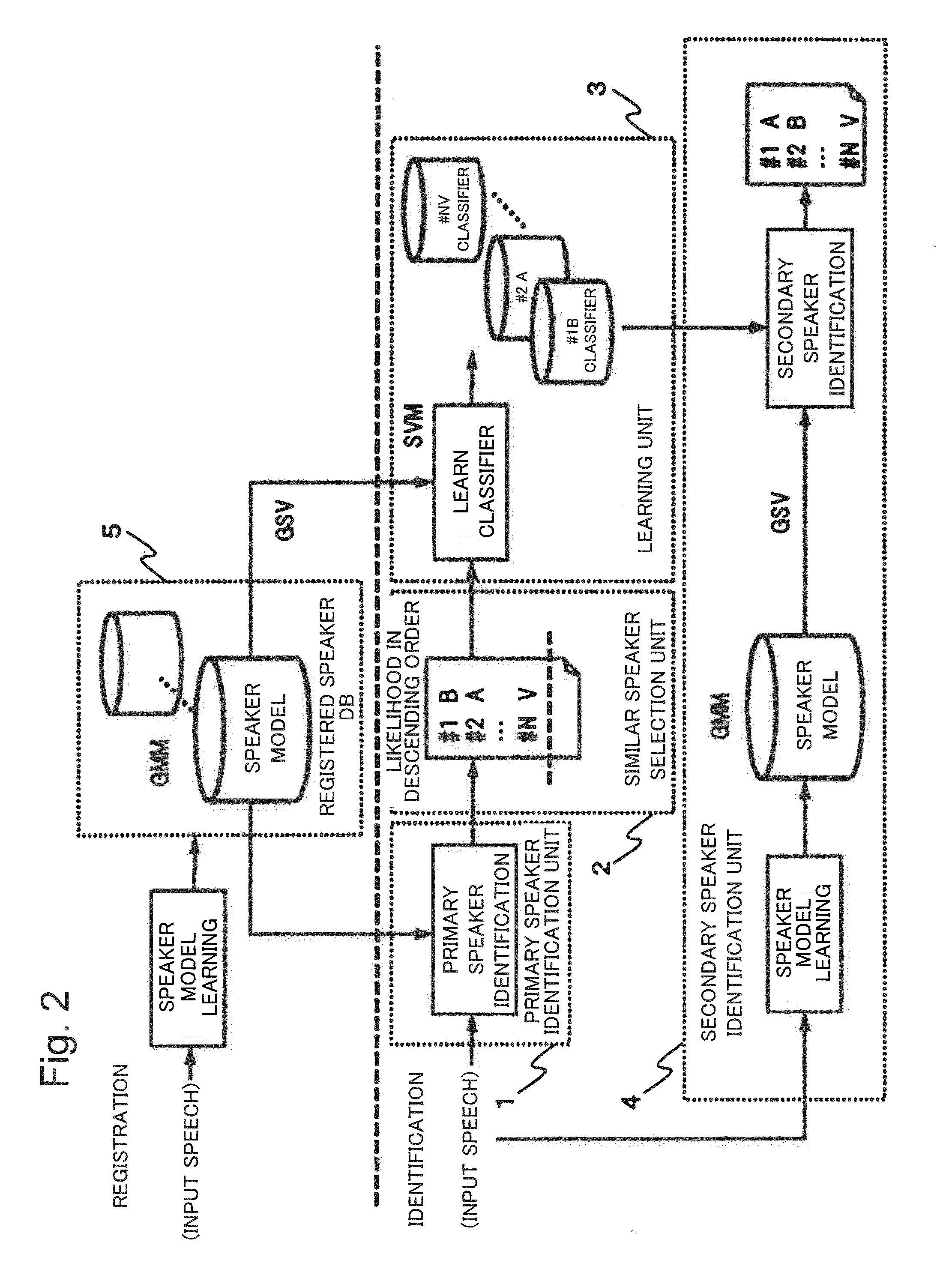

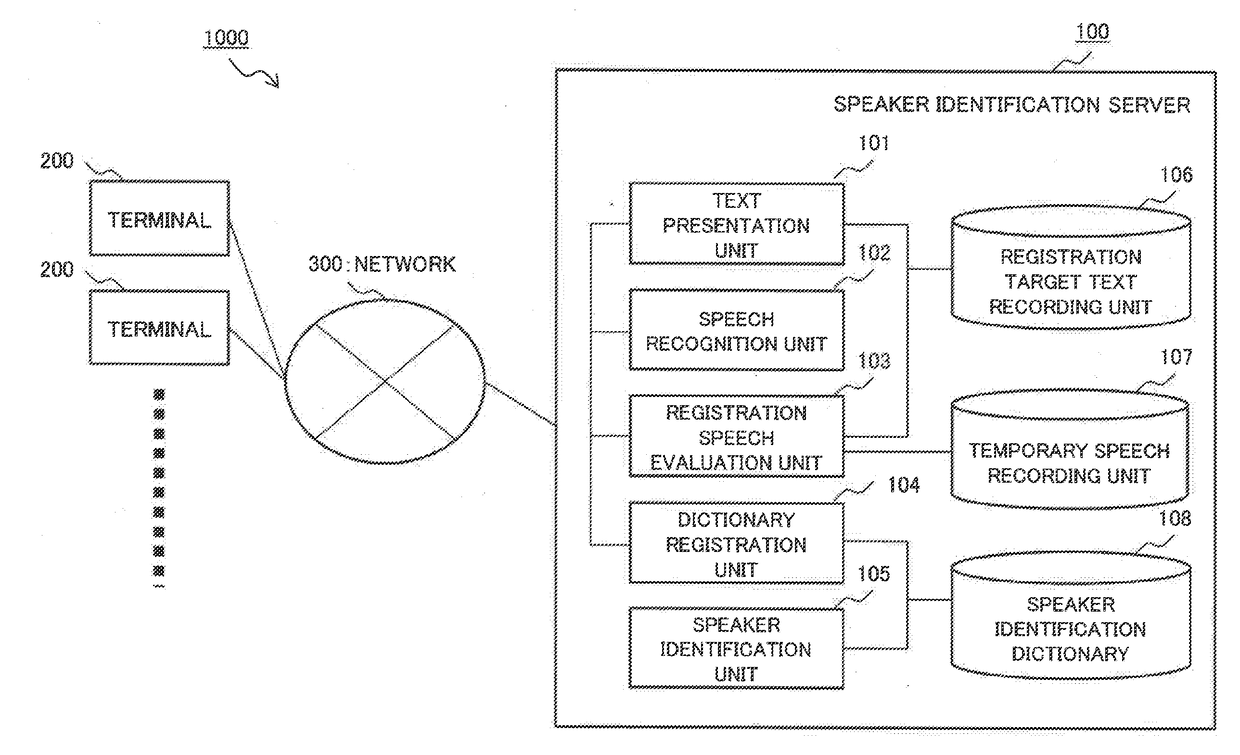

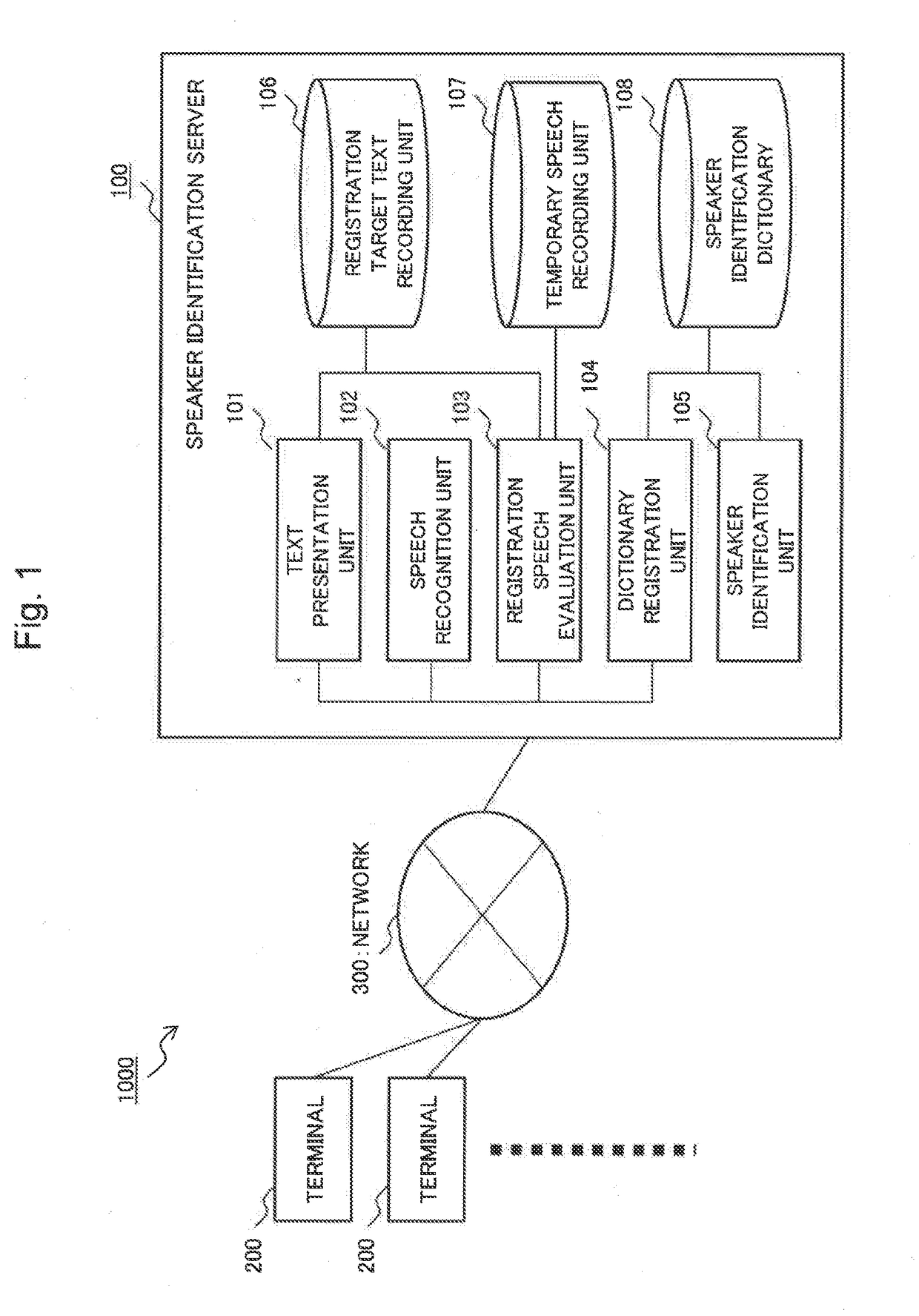

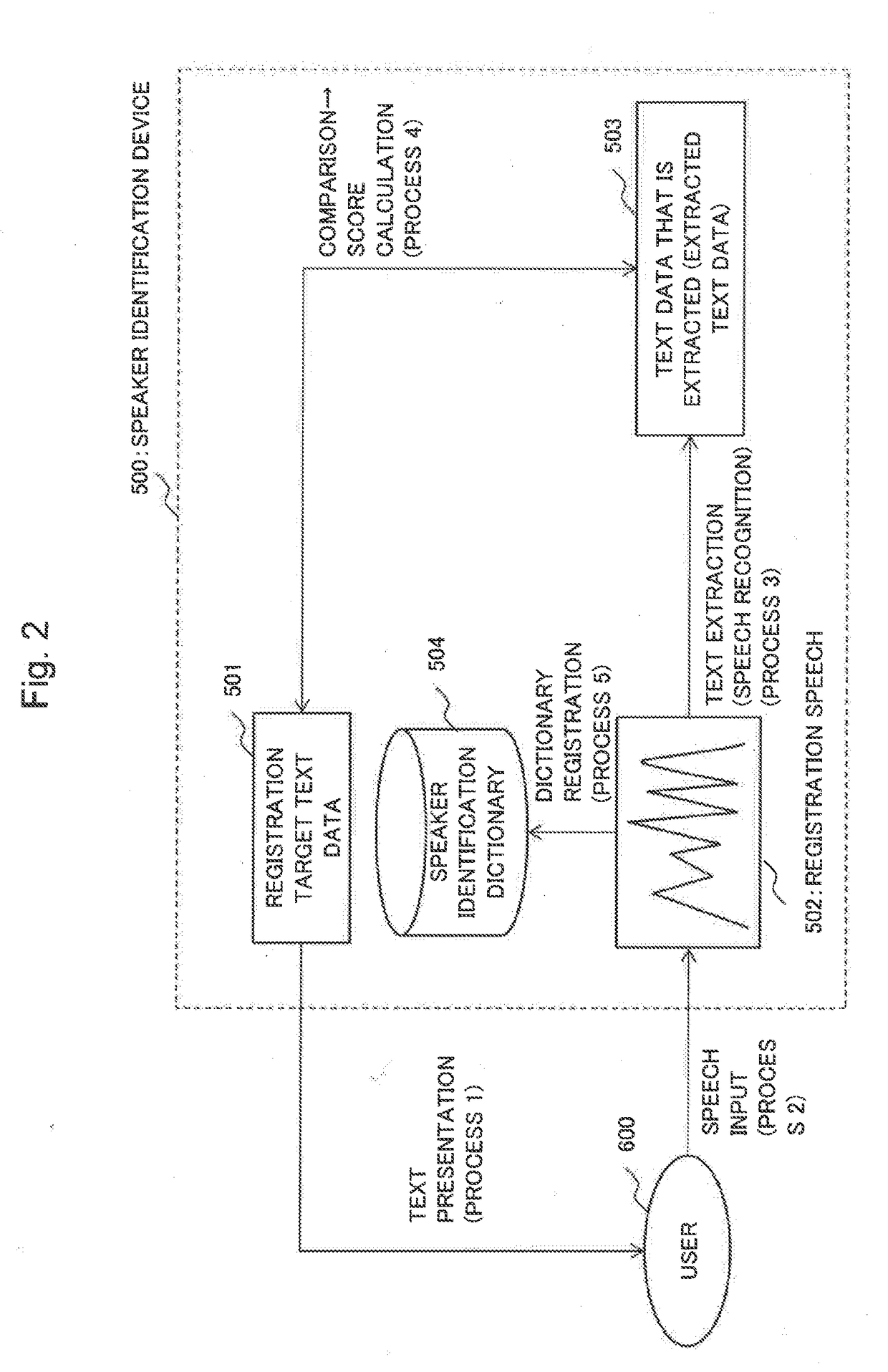

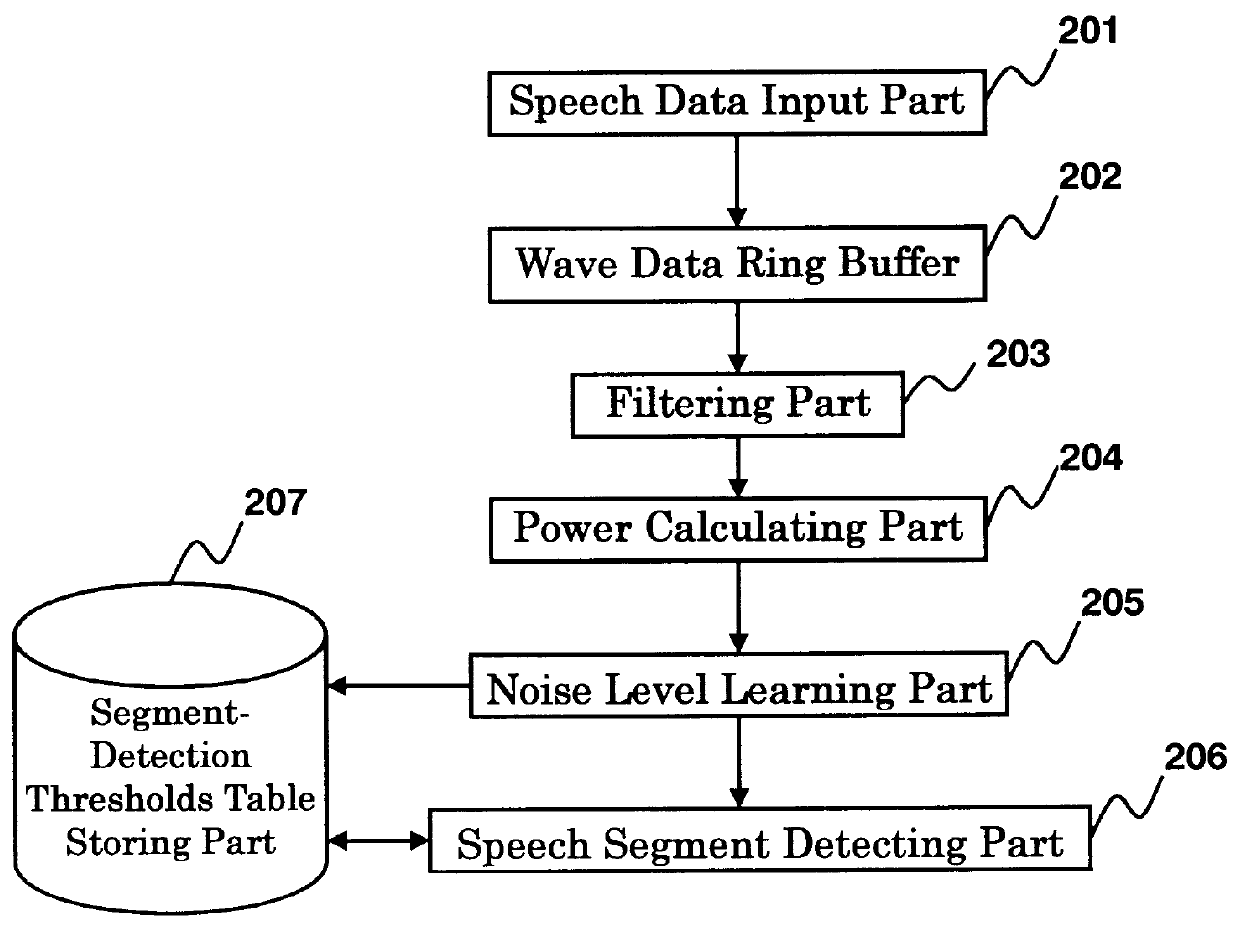

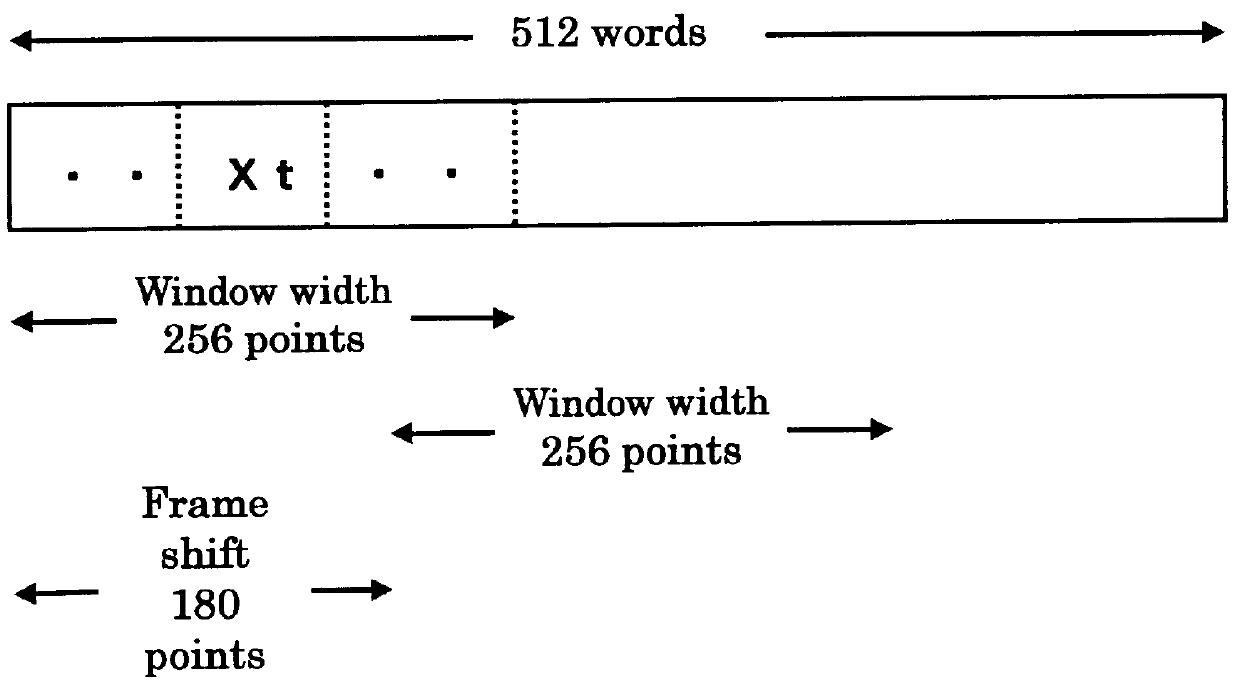

Speaker identification device, speaker identification method, and recording medium

Active | US20150356974A1 | Highly precise speaker identification | Accurate identification | Speech recognition | Learning unit | Loudspeaker

A speaker identification device includes: a primary speaker identification unit that computes, for each pre-stored registered speaker, a score that indicates the similarity between input speech and speech of the registered speakers; a similar speaker selection unit that selects a plurality of the registered speakers as similar speakers according to the height of the scores thereof; a learning unit that creates a classifier for each similar speaker by sorting the speech of a certain similar speaker among the similar speakers as a positive instance and the speech of the other similar speakers as negative instances; and a secondary speaker identification unit that computes, for each classifier, a score of the classifier with respect to the input speech, and outputs an identification result.

Owner:NEC CORP

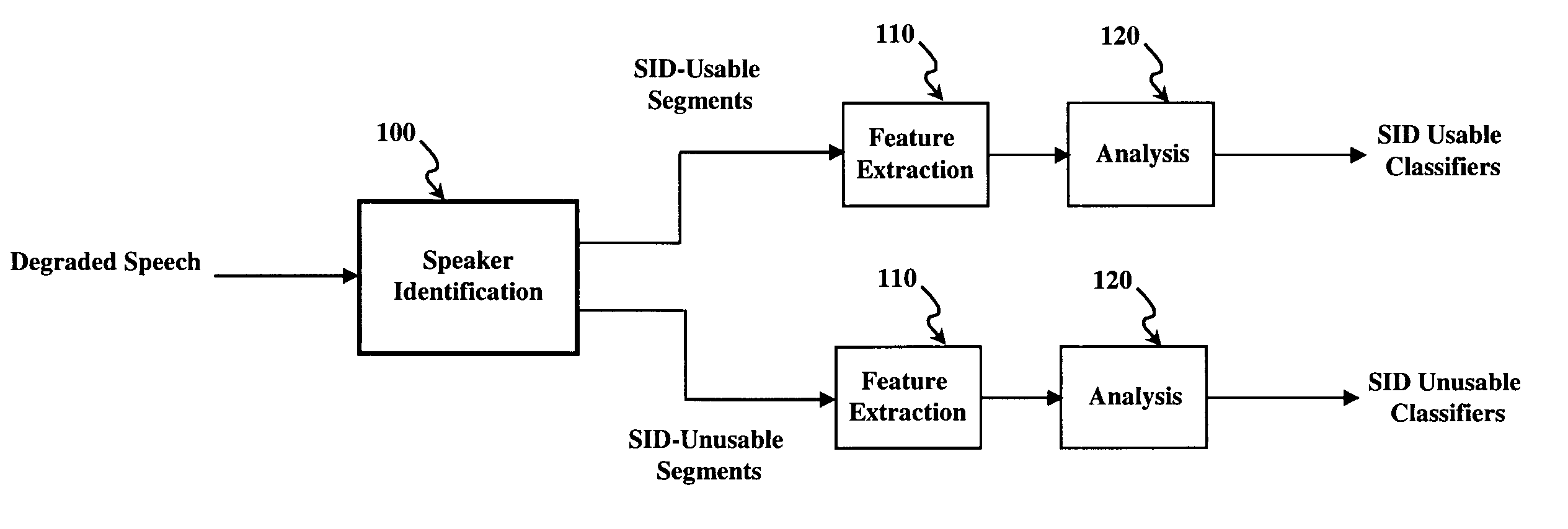

Method for improving speaker identification by determining usable speech

Inactive | US7177808B2 | Overcome limitations | Enhance a target speaker | Speech recognition | Dependability | Formant

Method for improving speaker identification by determining usable speech. Degraded speech is preprocessed in a speaker identification (SID) process to produce SID usable and SID unusable segments. Features are extracted and analyzed so as to produce a matrix of optimum classifiers for the detection of SID usable and SID unusable speech segments. Optimum classifiers possess a minimum distance from a speaker model. A decision tree based upon fixed thresholds indicates the presence of a speech feature in a given speech segment. Following preprocessing, degraded speech is measured in one or more time, frequency, cepstral or SID usable / unusable domains. The results of the measurements are multiplied by a weighting factor whose value is proportional to the reliability of the corresponding time, frequency, or cepstral measurements performed. The measurements are fused as information, and usable speech segments are extracted for further processing. Such further processing of co-channel speech may include speaker identification where a segment-by-segment decision is made on each usable speech segment to determine whether they correspond to speaker #1 or speaker #2. Further processing of co-channel speech may also include constructing the complete utterance of speaker #1 or speaker #2. Speech features such as pitch and formants may be extended back into the unusable segments to form a complete utterance from each speaker.
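The reliability-weighted fusion step in this abstract can be sketched as a weighted average of per-domain measurements. This is only an illustration under assumptions: the abstract says each measurement is multiplied by a reliability-proportional weight and the results are fused, but normalizing by the total weight and comparing against a fixed usability threshold are choices made here, and all names and numbers are hypothetical.

```python
from typing import Dict


def fuse(measurements: Dict[str, float], reliability: Dict[str, float]) -> float:
    """Weighted average of per-domain measurements (normalization is an assumption)."""
    total = sum(reliability[domain] for domain in measurements)
    if total == 0:
        raise ValueError("at least one domain must have non-zero reliability")
    weighted = sum(measurements[domain] * reliability[domain] for domain in measurements)
    return weighted / total


def is_usable(
    measurements: Dict[str, float], reliability: Dict[str, float], threshold: float = 0.5
) -> bool:
    # Assumed decision rule: a segment is usable if the fused value reaches the threshold.
    return fuse(measurements, reliability) >= threshold


segment = {"time": 0.9, "frequency": 0.6, "cepstral": 0.2}
weights = {"time": 1.0, "frequency": 2.0, "cepstral": 1.0}
fused = fuse(segment, weights)
usable = is_usable(segment, weights)
```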

Owner:THE UNITED STATES OF AMERICA AS REPRESENTED BY THE SEC OF THE AIR FORCE

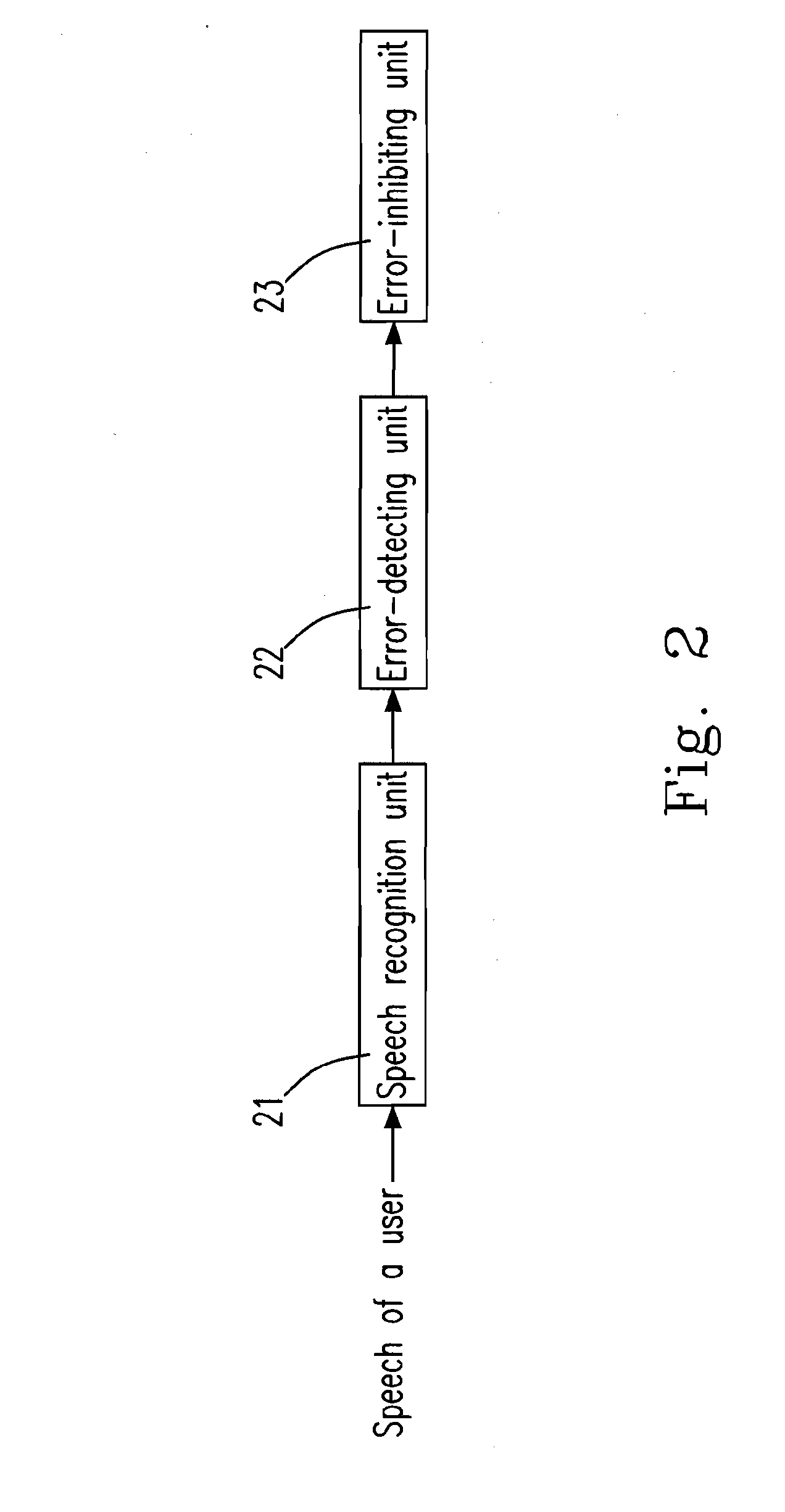

Speaker identification device and method for registering features of registered speech for identifying speaker

Inactive | US20170323644A1 | Stable and accurate identification | Suppress error | Speech analysis | Identification device | Speech input

[Problem] To suppress erroneous identification resulting from registration speech, and to identify the speaker stably and precisely. [Solving means] The speech recognition unit 102 extracts the text data corresponding to the registration speech as the extracted text data. The registration speech is speech input by a registration speaker reading aloud registration target text data, which is preliminarily set text data. The registration speech evaluation unit 103 calculates, for each registration speaker, a score representing the degree of similarity between the extracted text data and the registration target text data (the registration speech score). The dictionary registration unit 104 registers the feature value of the registration speech in the speaker identification dictionary 108, which holds the feature value of the registration speech for each registration speaker, according to the evaluation result by the registration speech evaluation unit 103.
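The registration check in this abstract can be sketched with a text-similarity score that gates whether a speaker's feature value enters the dictionary. The sketch assumes a word-level `difflib.SequenceMatcher` ratio as the similarity measure and a minimum score of 0.8; neither is stated in the abstract, and the function names and feature vectors are hypothetical.

```python
from difflib import SequenceMatcher
from typing import Dict, List


def registration_score(extracted_text: str, target_text: str) -> float:
    """Word-level similarity between recognized text and the text the speaker was asked to read."""
    extracted_words = extracted_text.lower().split()
    target_words = target_text.lower().split()
    return SequenceMatcher(None, extracted_words, target_words).ratio()


def maybe_register(
    dictionary: Dict[str, List[float]],
    speaker: str,
    feature: List[float],
    extracted_text: str,
    target_text: str,
    min_score: float = 0.8,  # assumed acceptance threshold
) -> bool:
    # Register the feature only if the registration speech matched the target text well enough.
    if registration_score(extracted_text, target_text) >= min_score:
        dictionary[speaker] = feature
        return True
    return False


target = "the quick brown fox jumps"
speaker_dictionary: Dict[str, List[float]] = {}
good = maybe_register(speaker_dictionary, "alice", [0.1, 0.2], "the quick brown fox jumps", target)
bad = maybe_register(speaker_dictionary, "bob", [0.3, 0.4], "a quick crown socks bumps", target)
```

Rejecting poorly matched registration speech keeps noisy or misread enrollments out of the dictionary, which is the source of erroneous identification the abstract aims to suppress.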

Owner:NEC CORP

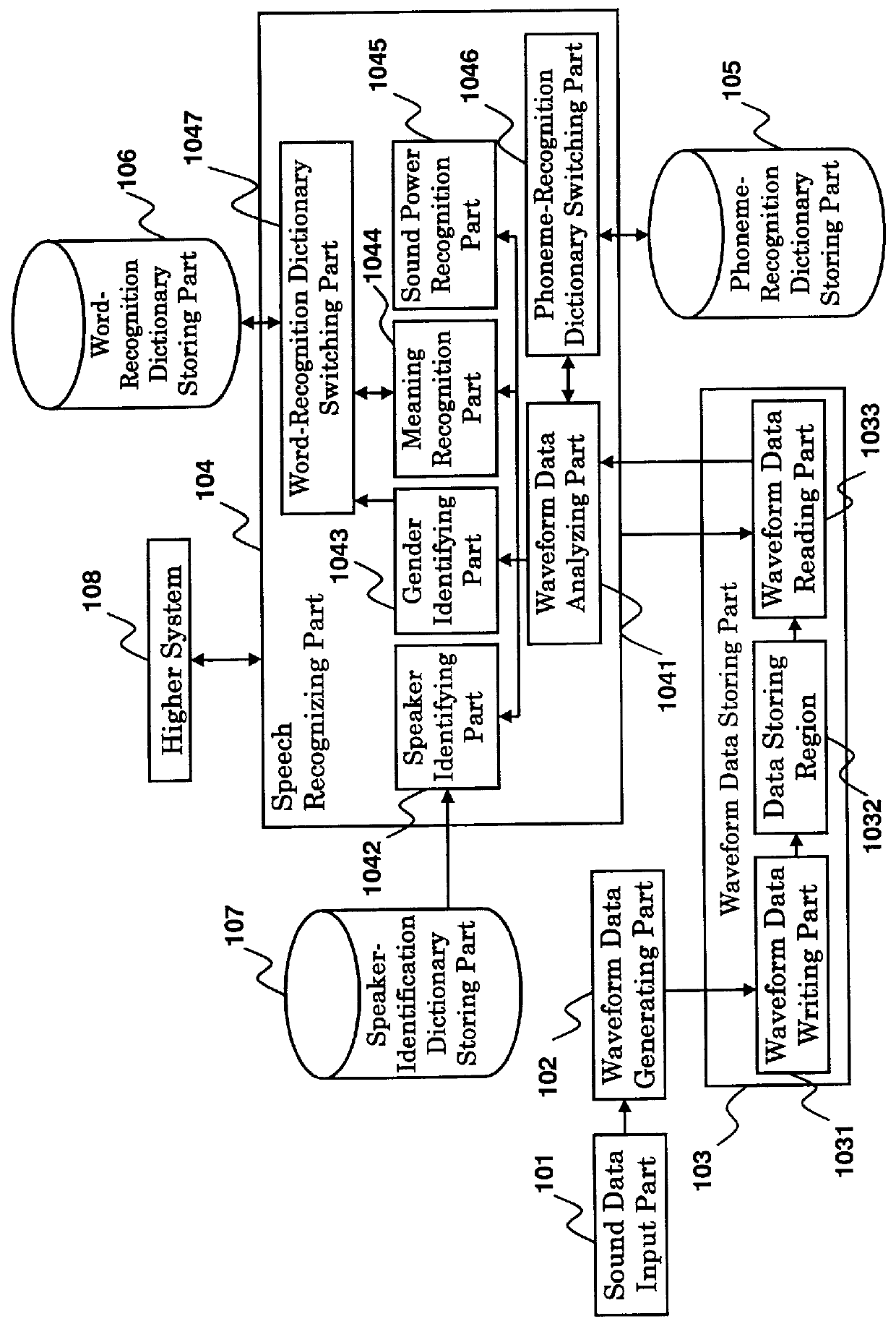

Speech recognizer using speaker categorization for automatic reevaluation of previously-recognized speech data

A speech recognizer includes storing means for storing speech data, and reevaluating means for reevaluating the speech data stored in the storing means in response to a request from a data processor utilizing the results of speaker categorization (the results of categorization with speaker categorization means such as a gender-dependent speech model or speaker identification). Thus, the present invention makes it possible to correct the speech data that has been wrongly recognized before.

Owner:FUJITSU LTD

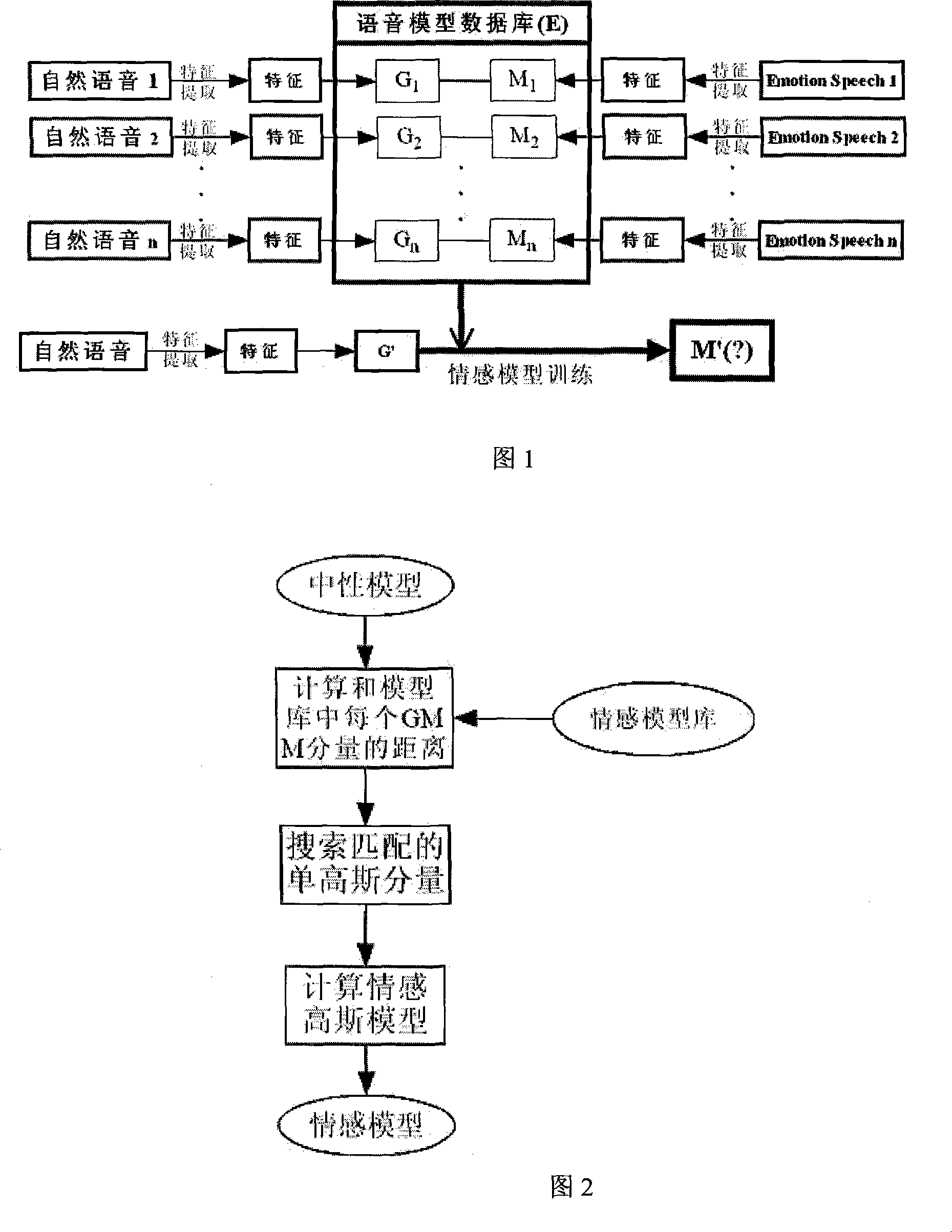

Method for recognizing speaker based on conversion of neutral and emotional voiceprint models

The invention relates to a speaker identification method based on neutral-to-emotional voiceprint model conversion. The steps comprise: (1) extracting voice features, by first preprocessing the audio (including sampling and quantization and zero-drift elimination) and then extracting MFCC cepstral features; (2) building an emotion model library, by training a Gaussian mixture model on each user's neutral speech to obtain a neutral model and then deriving an emotional voice model from it with a neutral-to-emotional model conversion algorithm; and (3) scoring the test speech to identify the speaker. The advantage of the invention is that the neutral-to-emotional model conversion algorithm raises the identification rate for emotional speech: the emotional voice model of each user is trained from that user's neutral voice model, which increases the identification rate of the system.

Owner:ZHEJIANG UNIV

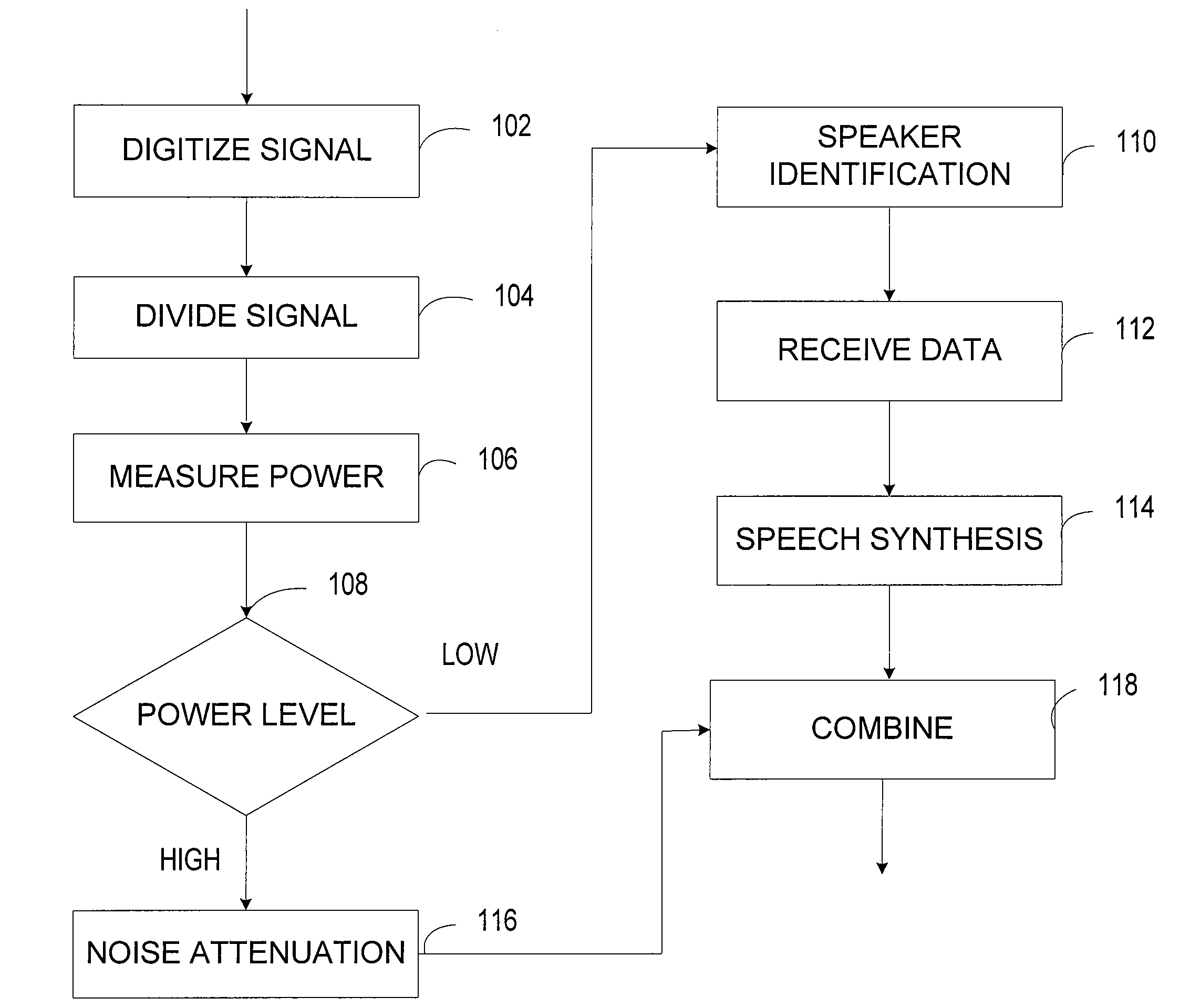

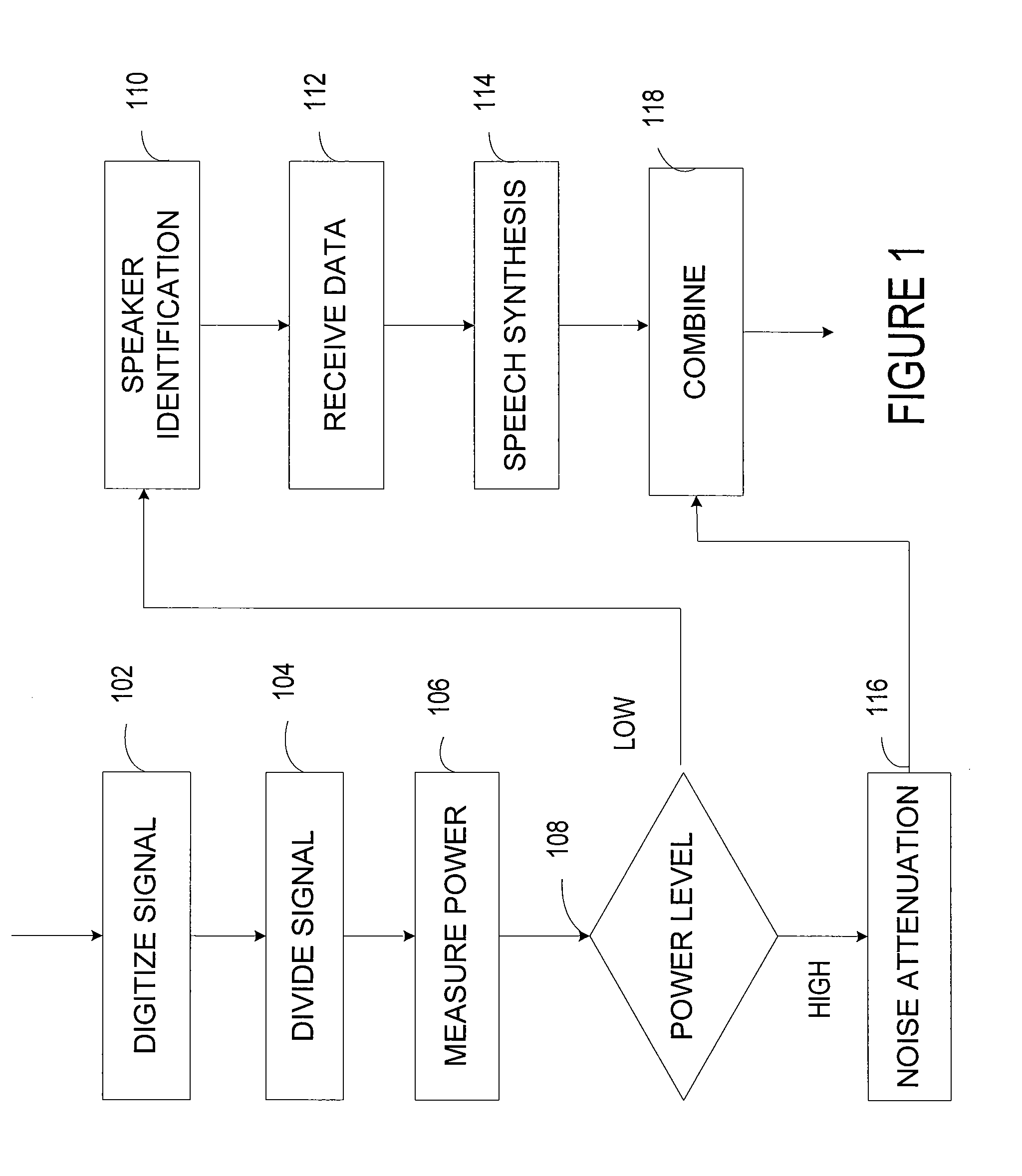

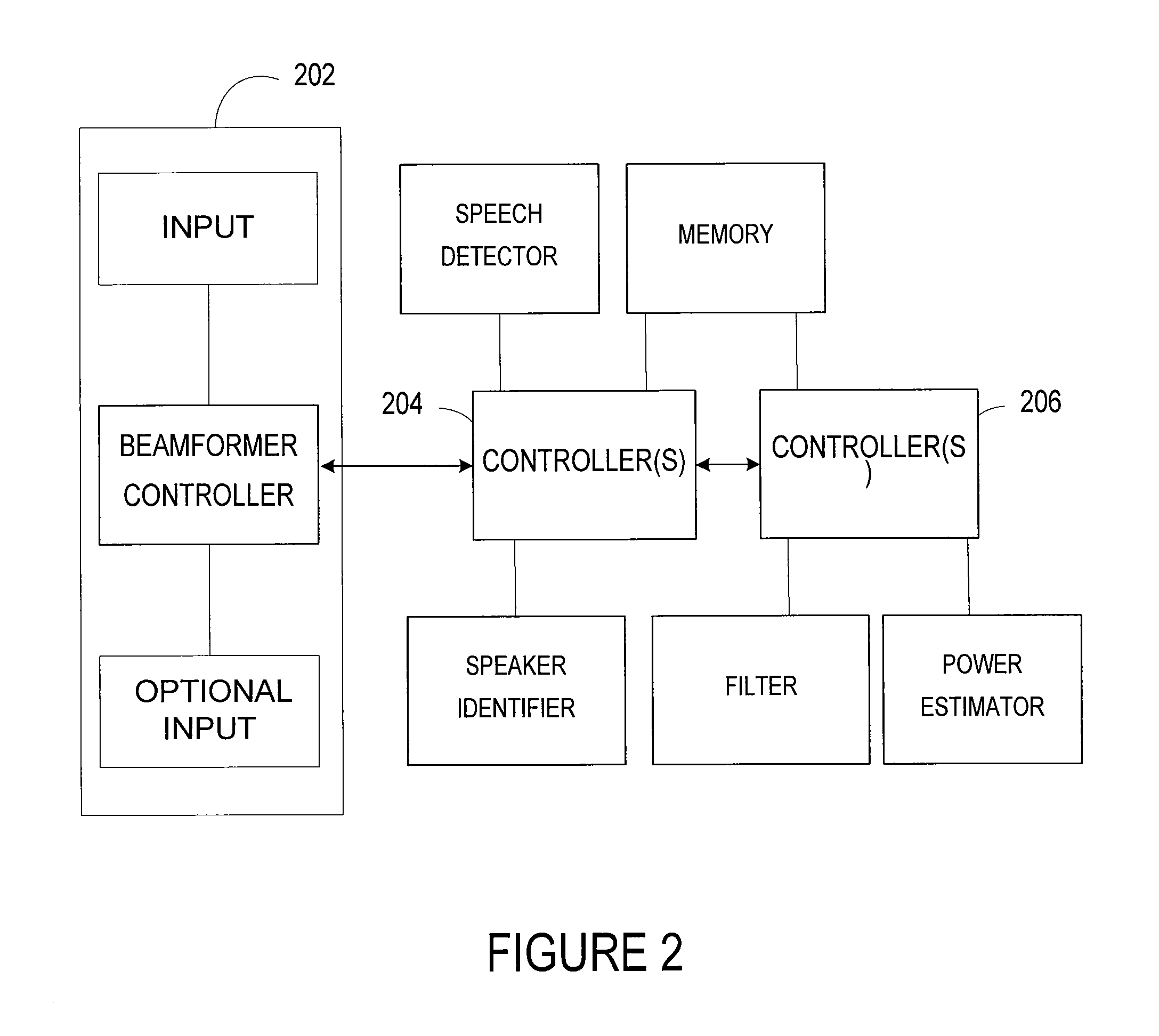

Partial speech reconstruction

Inactive | US20090119096A1 | Quality improvement | Microphones | Public address systems | Signal-to-noise ratio (imaging) | Speech reconstruction

A system enhances the quality of a digital speech signal that may include noise. The system identifies vocal expressions that correspond to the digital speech signal. A signal-to-noise ratio of the digital speech signal is measured before a portion of the digital speech signal is synthesized. The selected portion of the digital speech signal may have a signal-to-noise ratio below a predetermined level and the synthesis of the digital speech signal may be based on speaker identification.

Owner:NUANCE COMM INC

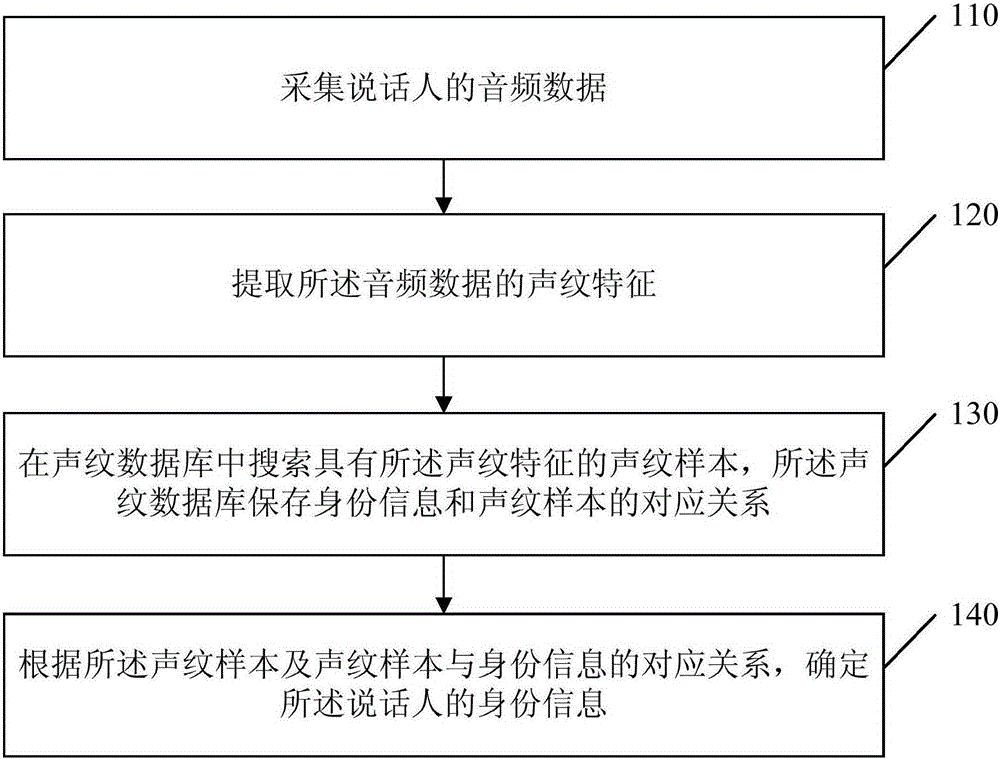

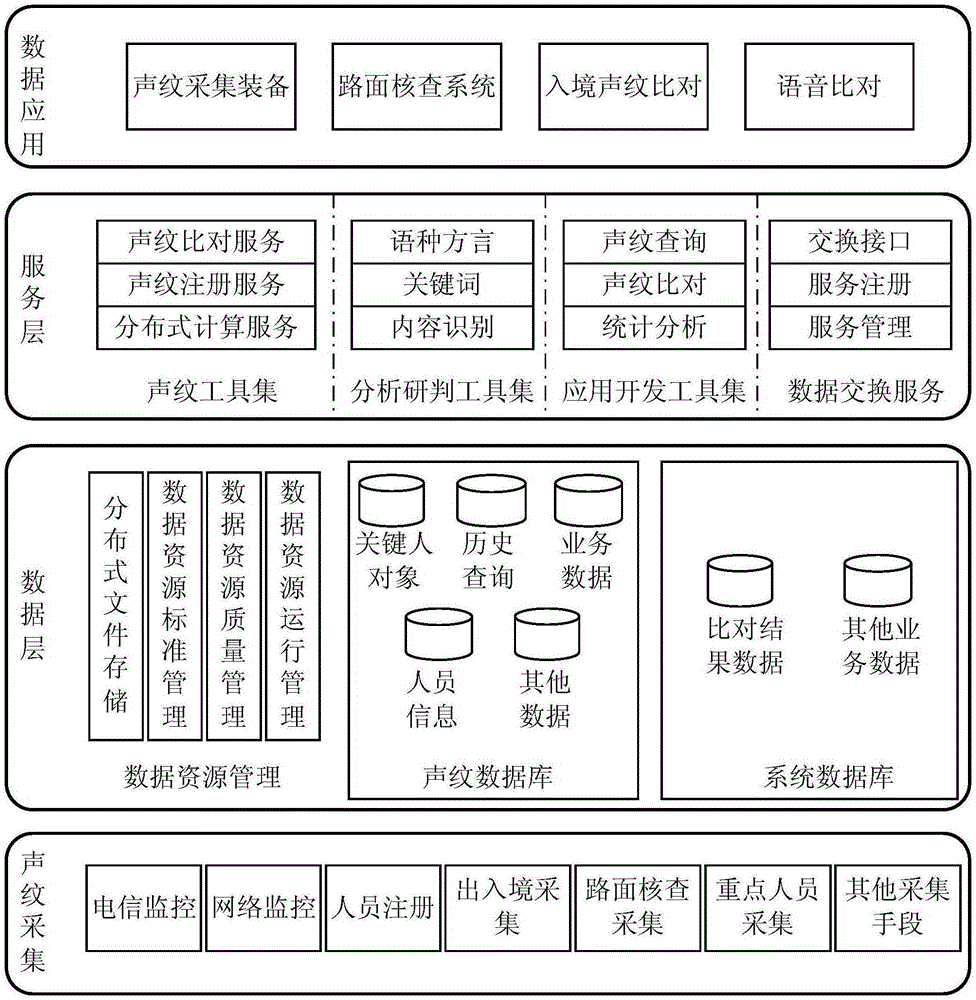

Speaker identification method and device

Inactive | CN105244031A | Improve the efficiency of voice monitoring | Public Safety Guarantee | Speech analysis | Speech sound | Audio frequency

The invention discloses a speaker identification method and device. The speaker identification method comprises the steps of collecting audio data of a speaker; extracting voiceprint features from the audio data; searching a voiceprint database for a voiceprint sample having those voiceprint features, wherein the voiceprint database stores the correspondence between identity information and voiceprint samples; and determining the identity information of the speaker according to the voiceprint sample and the correspondence between the voiceprint sample and the identity information. The invention improves the efficiency of voice monitoring and helps safeguard public safety.

Owner:RUN TECH CO LTD BEIJING

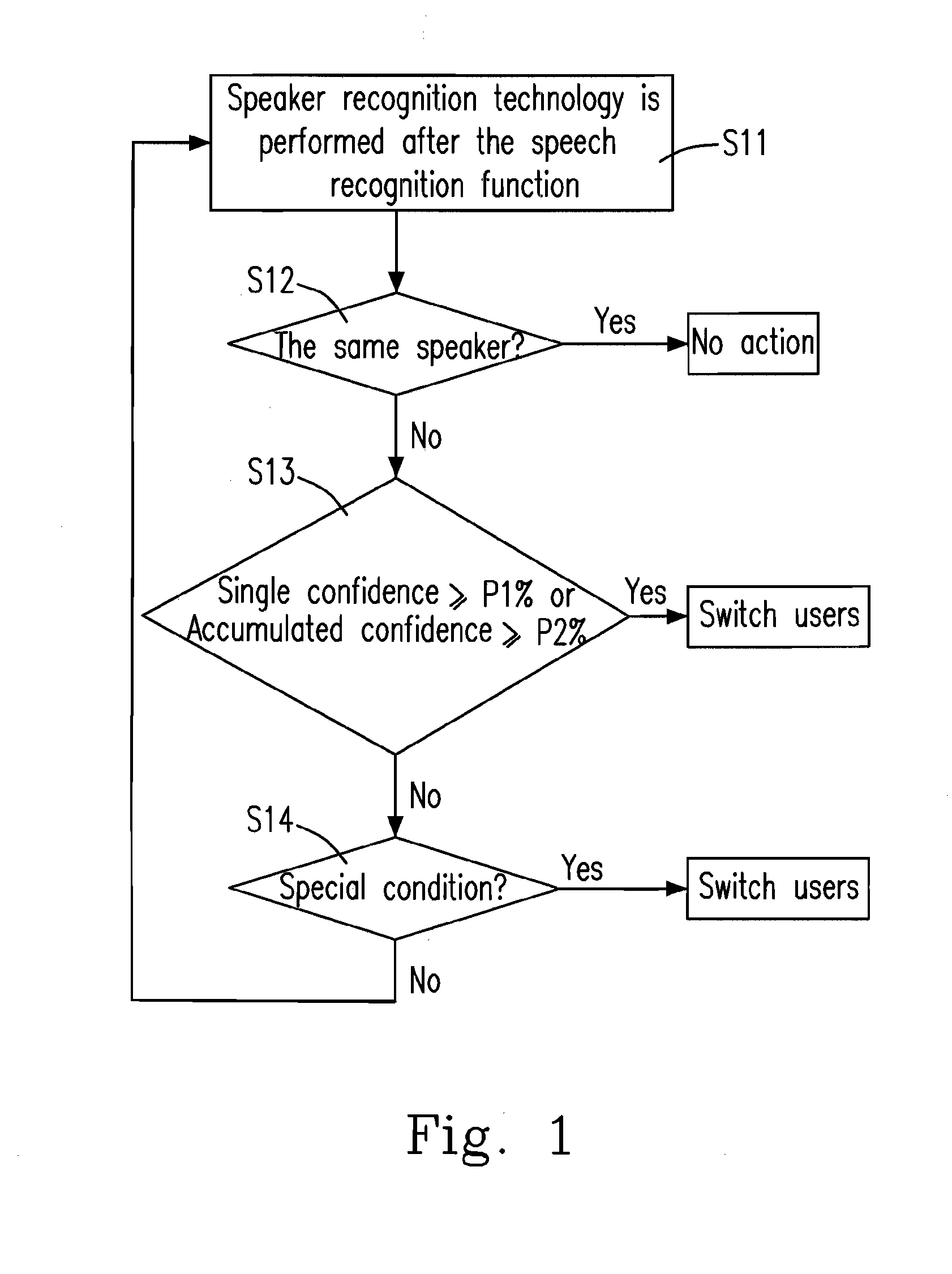

Speech recognition method and system with intelligent speaker identification and adaptation

Inactive | US20080147396A1 | Increase incidence | Reduce incidence | Speech recognition | Speech identification | Acoustic model

A speech recognition method is provided. The speech recognition method includes the steps of (a) receiving speech from a user; (b) recognizing the speech to generate a recognition result with a score; and (c) according to the score of the recognition result, performing one of the following steps: (c1) when the score is relatively high, refraining from adapting the acoustic model but using a utility rate of the speech to learn a new language and grammar probability model; (c2) when the score is relatively low, requesting confirmation from the user, further comprising: (c21) when the user confirms the recognition result, adapting the acoustic model to increase the occurrence probability of the speech and using the utility rate of the speech to learn the new language and grammar probability model; (c22) when the user rejects the recognition result, adapting the acoustic model to decrease the occurrence probability of the speech.
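The branching in steps (c1) through (c22) can be sketched as a small decision function. The single `high_threshold` separating "relatively high" from "relatively low" scores is an assumption, and the returned action names are hypothetical labels rather than calls into any real recognizer.

```python
from typing import List, Optional


def plan_adaptation(
    score: float, high_threshold: float, user_confirms: Optional[bool] = None
) -> List[str]:
    """Return the actions for one recognition result, following steps (c1) to (c22)."""
    if score >= high_threshold:
        # (c1) High score: skip acoustic adaptation, still update the language model.
        return ["update_language_model"]
    if user_confirms is None:
        # (c2) Low score: the user has to confirm or reject the result first.
        return ["ask_user_to_confirm"]
    if user_confirms:
        # (c21) Confirmed: raise the occurrence probability and update the language model.
        return ["adapt_acoustic_model_increase", "update_language_model"]
    # (c22) Rejected: lower the occurrence probability of the speech.
    return ["adapt_acoustic_model_decrease"]


high = plan_adaptation(0.95, high_threshold=0.8)
pending = plan_adaptation(0.40, high_threshold=0.8)
confirmed = plan_adaptation(0.40, high_threshold=0.8, user_confirms=True)
rejected = plan_adaptation(0.40, high_threshold=0.8, user_confirms=False)
```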

Owner:DELTA ELECTRONICS INC
