749 results about "Speech model" patented technology

Serial Processing Models. Serial models of speech production present the process as a series of sequential stages or modules, with earlier stages handling the large units (e.g., sentences and phrases) and later stages handling their smaller constituents (e.g., morphemes, syllables, phonemes, and distinctive features such as voicing).

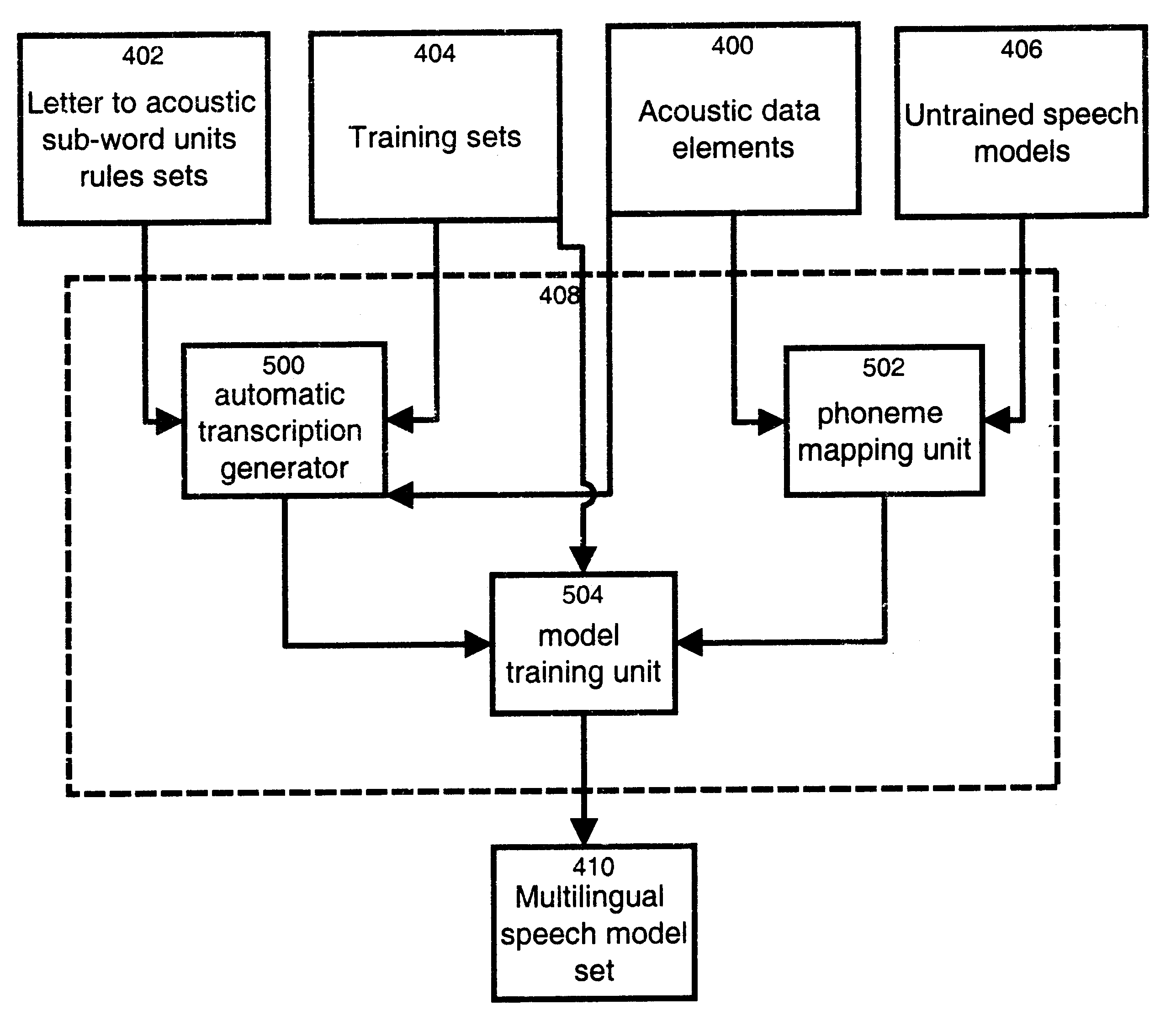

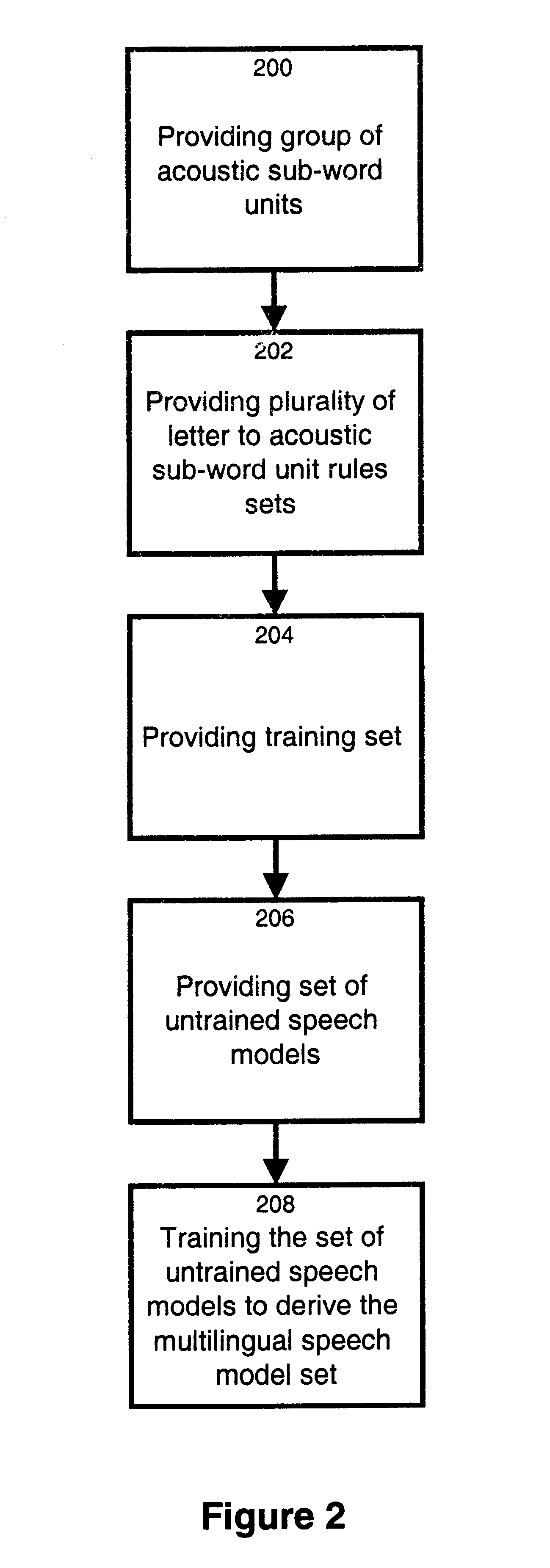

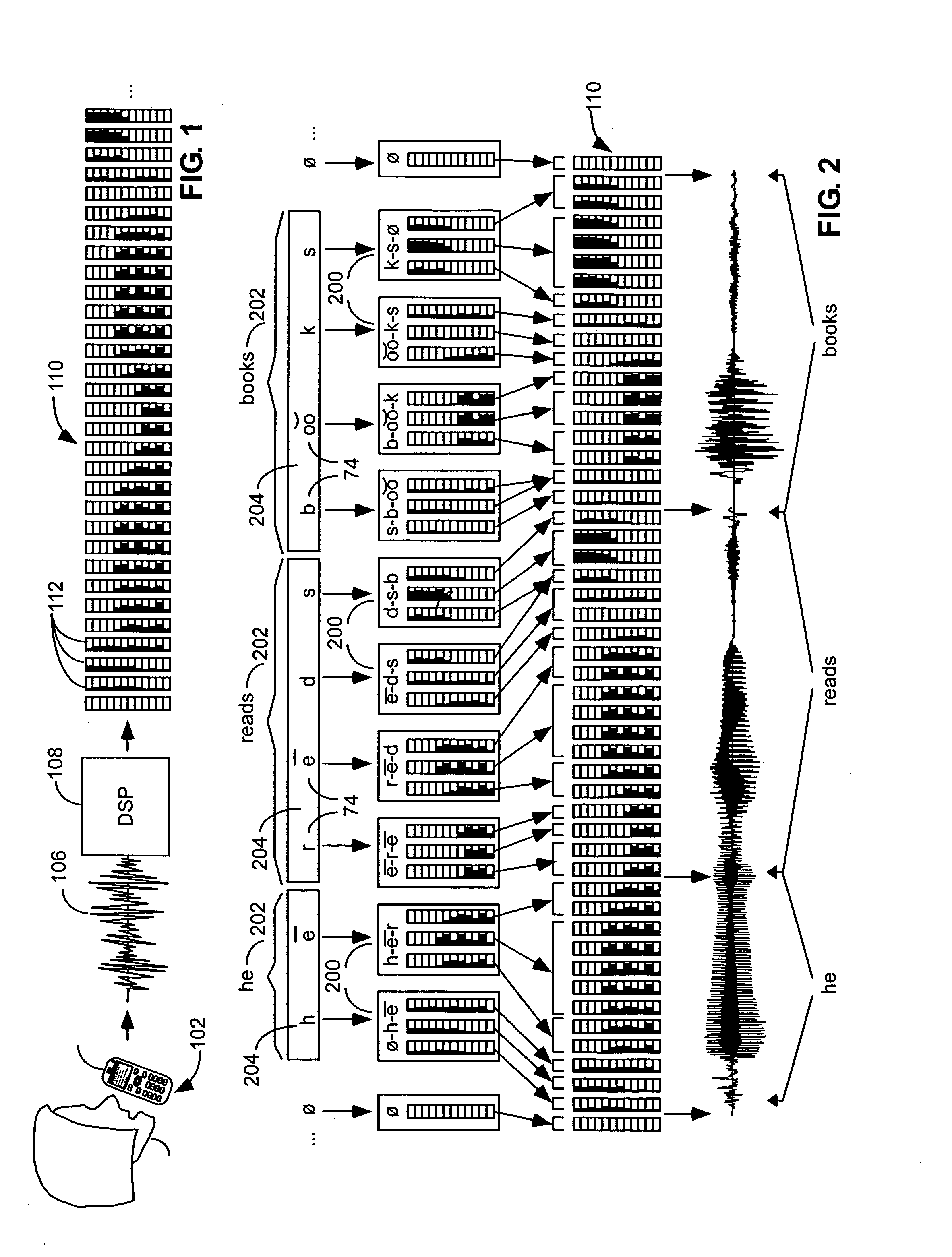

Method and apparatus for training a multilingual speech model set

Inactive | US6912499B1 | Reduce development costs | Shorten development time | Speech recognition | Special data processing applications | More language | Speech identification

The invention relates to a method and apparatus for training a multilingual speech model set. The multilingual speech model set generated is suitable for use by a speech recognition system for recognizing spoken utterances for at least two different languages. The invention allows using a single speech recognition unit with a single speech model set to perform speech recognition on utterances from two or more languages. The method and apparatus make use of a group of acoustic sub-word units comprised of a first subgroup of acoustic sub-word units associated with a first language and a second subgroup of acoustic sub-word units associated with a second language, where the first subgroup and the second subgroup share at least one common acoustic sub-word unit. The method and apparatus also make use of a plurality of letter to acoustic sub-word unit rules sets, each letter to acoustic sub-word unit rules set being associated with a different language. A set of untrained speech models is trained on the basis of a training set comprising speech tokens and their associated labels in combination with the group of acoustic sub-word units and the plurality of letter to acoustic sub-word unit rules sets. The invention also provides a computer readable storage medium comprising a program element for implementing the method for training a multilingual speech model set.

Owner:RPX CLEARINGHOUSE

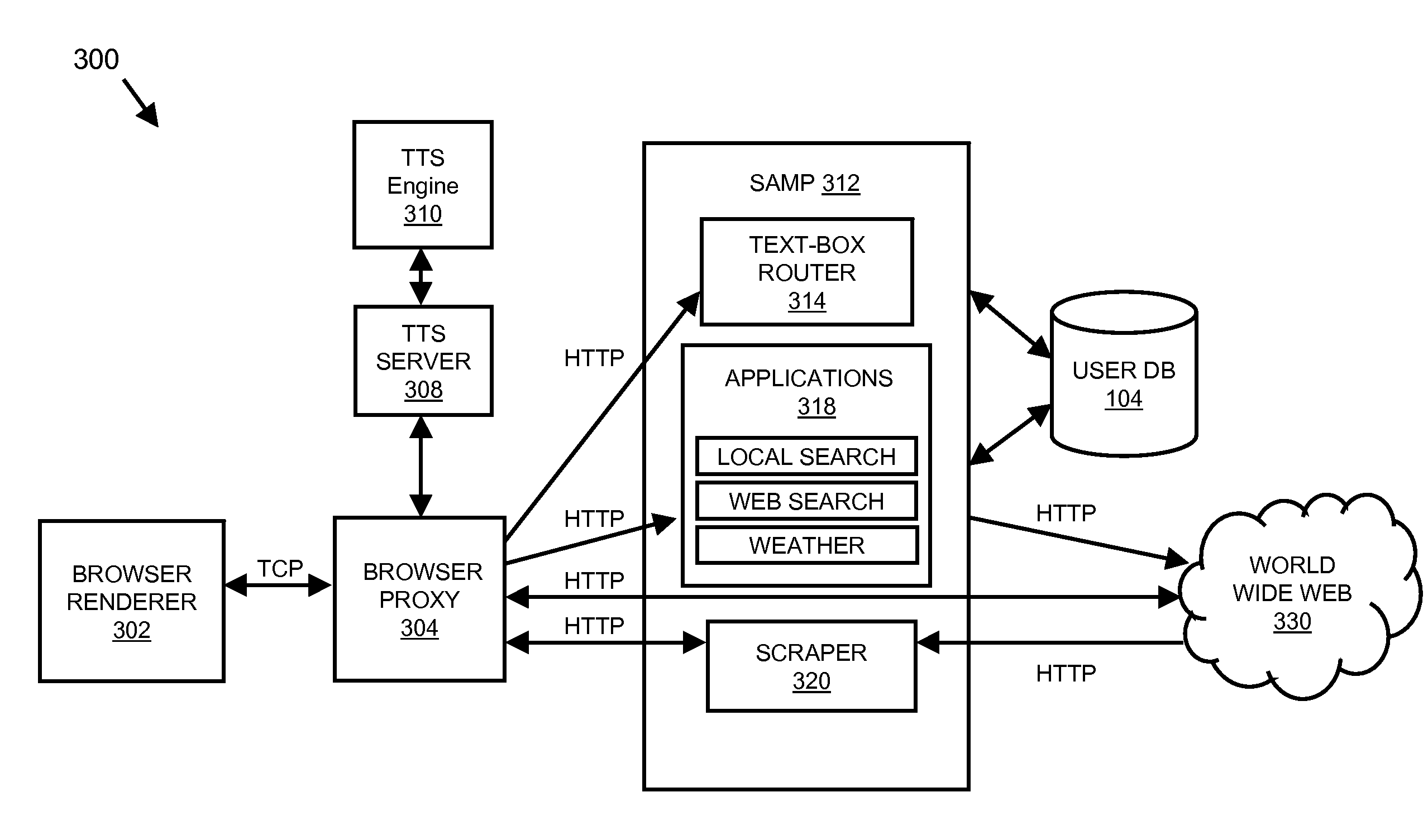

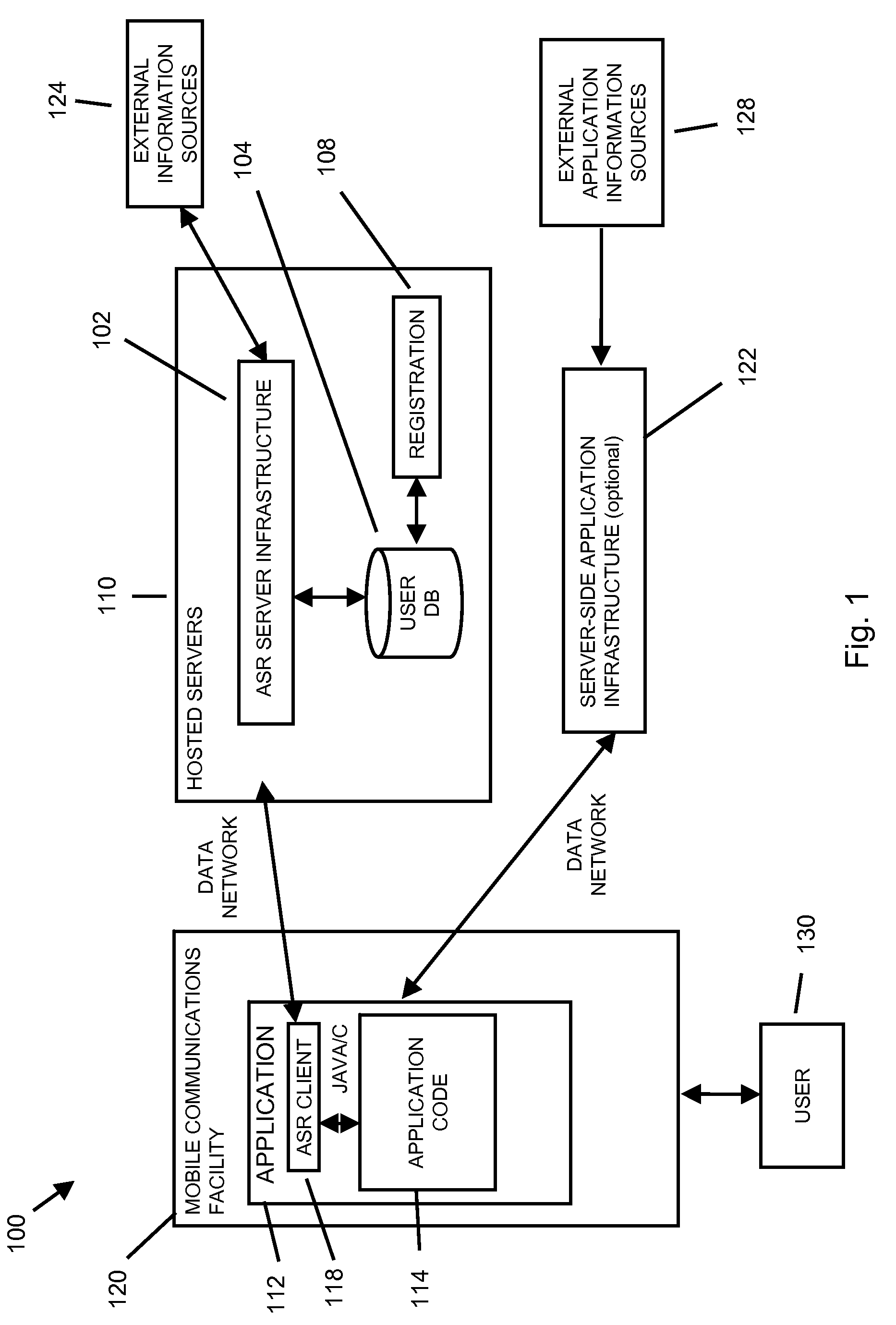

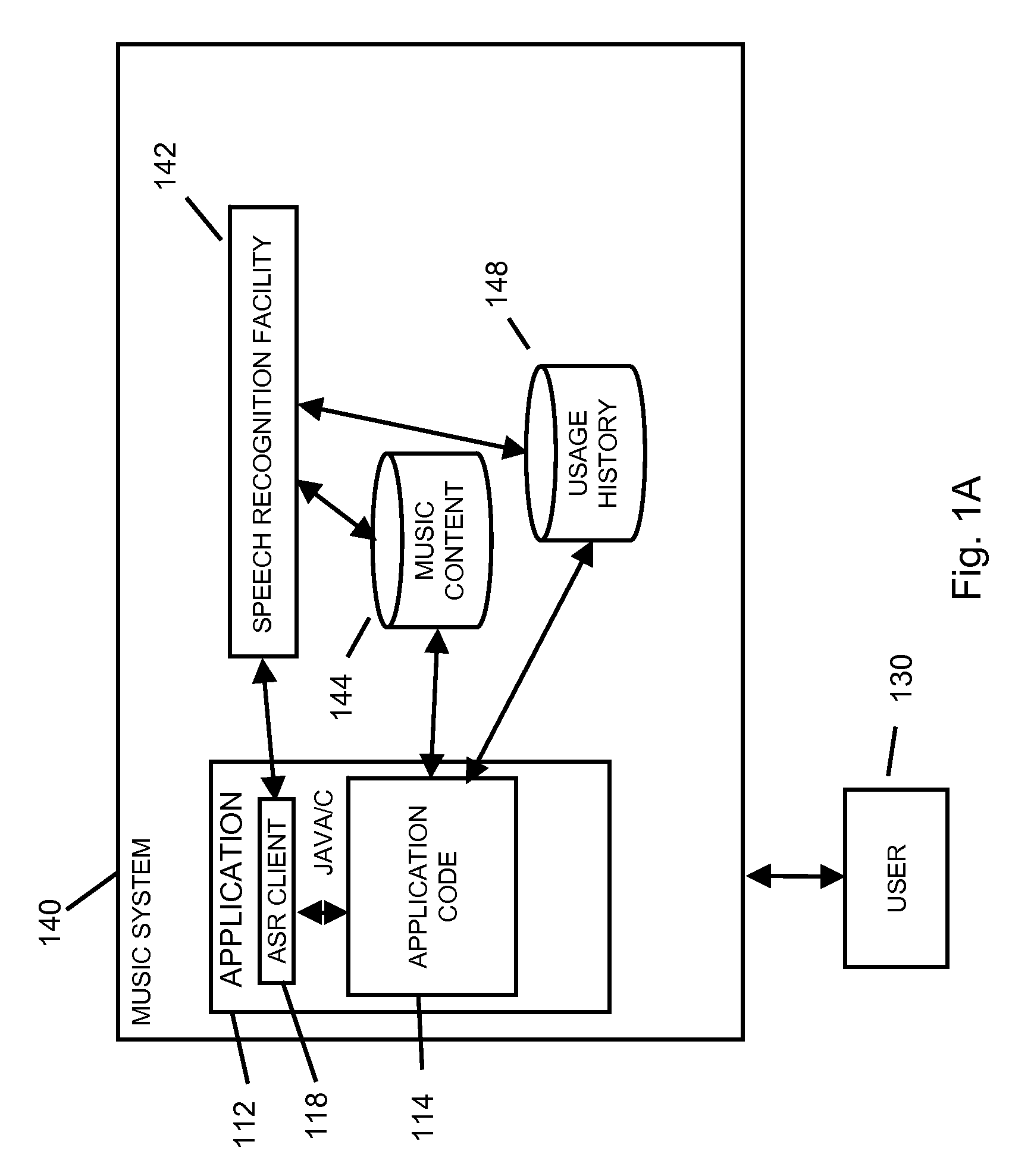

Using results of unstructured language model based speech recognition to control a system-level function of a mobile communications facility

Inactive | US8838457B2 | Task to perform | Improve performance | Speech recognition | Engineering | Human–computer interaction

A user may control a mobile communication facility through recognized speech provided to the mobile communication facility. Speech is recorded by a user using a capture facility resident on the mobile communication facility. A speech recognition facility generates results of the recorded speech using an unstructured language model based at least in part on information relating to the recording. A function of the operating system of the mobile communication facility is controlled based on the results.

Owner:NUANCE COMM INC +1

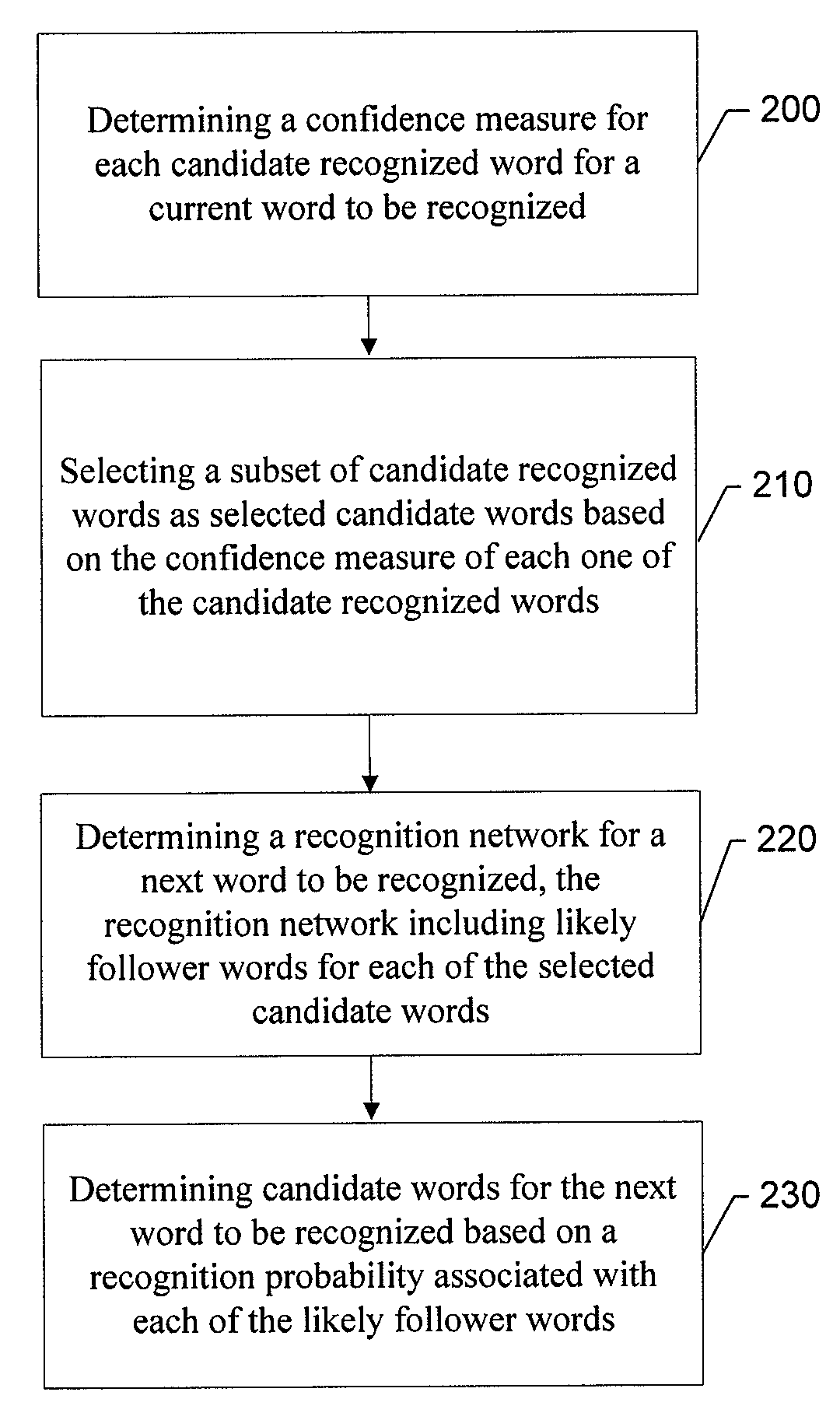

System, Method, Apparatus and Computer Program Product for Providing Dynamic Vocabulary Prediction for Speech Recognition

Inactive | US20080154600A1 | Improve speech processing | Accurate word recognition | Speech recognition | Natural language processing | Confidence measures

An apparatus for providing dynamic vocabulary prediction for setting up a speech recognition network of resource constrained portable devices may include a recognition network element. The recognition network element may be configured to determine a confidence measure for each candidate recognized word for a current word to be recognized. The recognition network element may also be configured to select a subset of candidate recognized words as selected candidate words based on the confidence measure of each one of the candidate recognized words, and determine a recognition network for a next word to be recognized, the recognition network including likely follower words for each of the selected candidate words, e.g., using a language model and highly frequently used words.

Owner:NOKIA CORP
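
The selection step described in this abstract can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the patented implementation: the function name `next_word_network`, the `top_k` and `followers_per_word` cut-offs, and the toy bigram follower counts are all hypothetical, and a bigram table stands in for whatever language model the apparatus actually uses.

```python
from collections import Counter


def next_word_network(candidates, bigram_followers, frequent_words,
                      top_k=2, followers_per_word=3):
    """Build a small vocabulary for recognizing the next word.

    candidates: dict mapping each candidate word to its confidence measure.
    bigram_followers: dict mapping a word to a Counter of follower counts
        (hypothetical stand-in for a language model).
    frequent_words: words that are always kept in the network.
    """
    # Keep only the top_k candidates by confidence measure.
    selected = sorted(candidates, key=candidates.get, reverse=True)[:top_k]

    # Start from the always-available frequent words, then add the most
    # likely followers of each selected candidate.
    network = set(frequent_words)
    for word in selected:
        followers = bigram_followers.get(word, Counter())
        network.update(w for w, _ in followers.most_common(followers_per_word))
    return selected, network


# Toy data, invented for illustration only.
bigrams = {
    "call": Counter({"home": 5, "mom": 3, "back": 2, "now": 1}),
    "tall": Counter({"tree": 4, "man": 1}),
    "ball": Counter({"game": 6}),
}
selected, network = next_word_network(
    {"call": 0.8, "tall": 0.6, "ball": 0.1}, bigrams, frequent_words={"the", "a"}
)
print(selected, sorted(network))
```

Because the low-confidence candidate "ball" is dropped, its follower "game" never enters the network, which is the point of the technique on a resource-constrained device: the next recognition pass searches a much smaller vocabulary.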

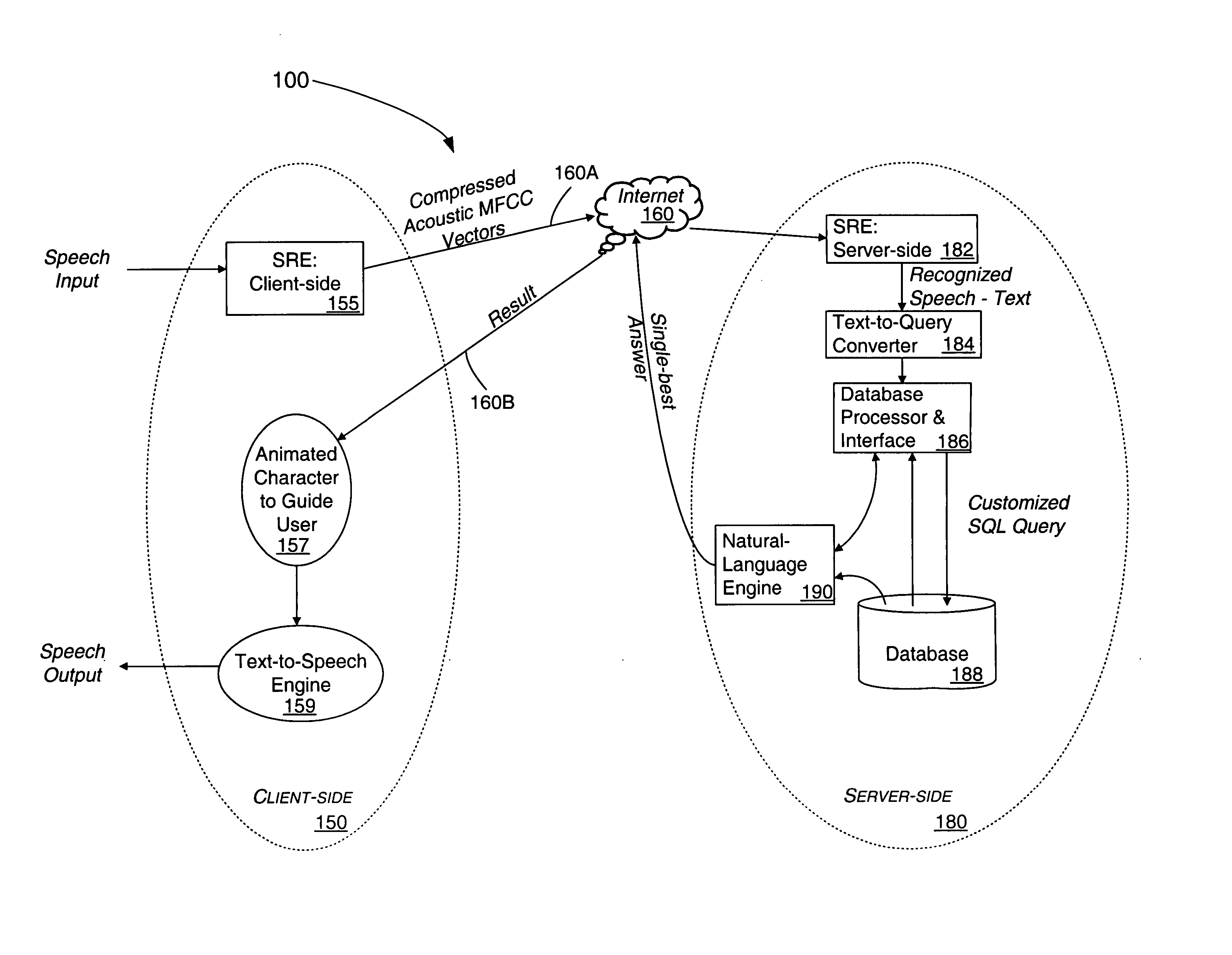

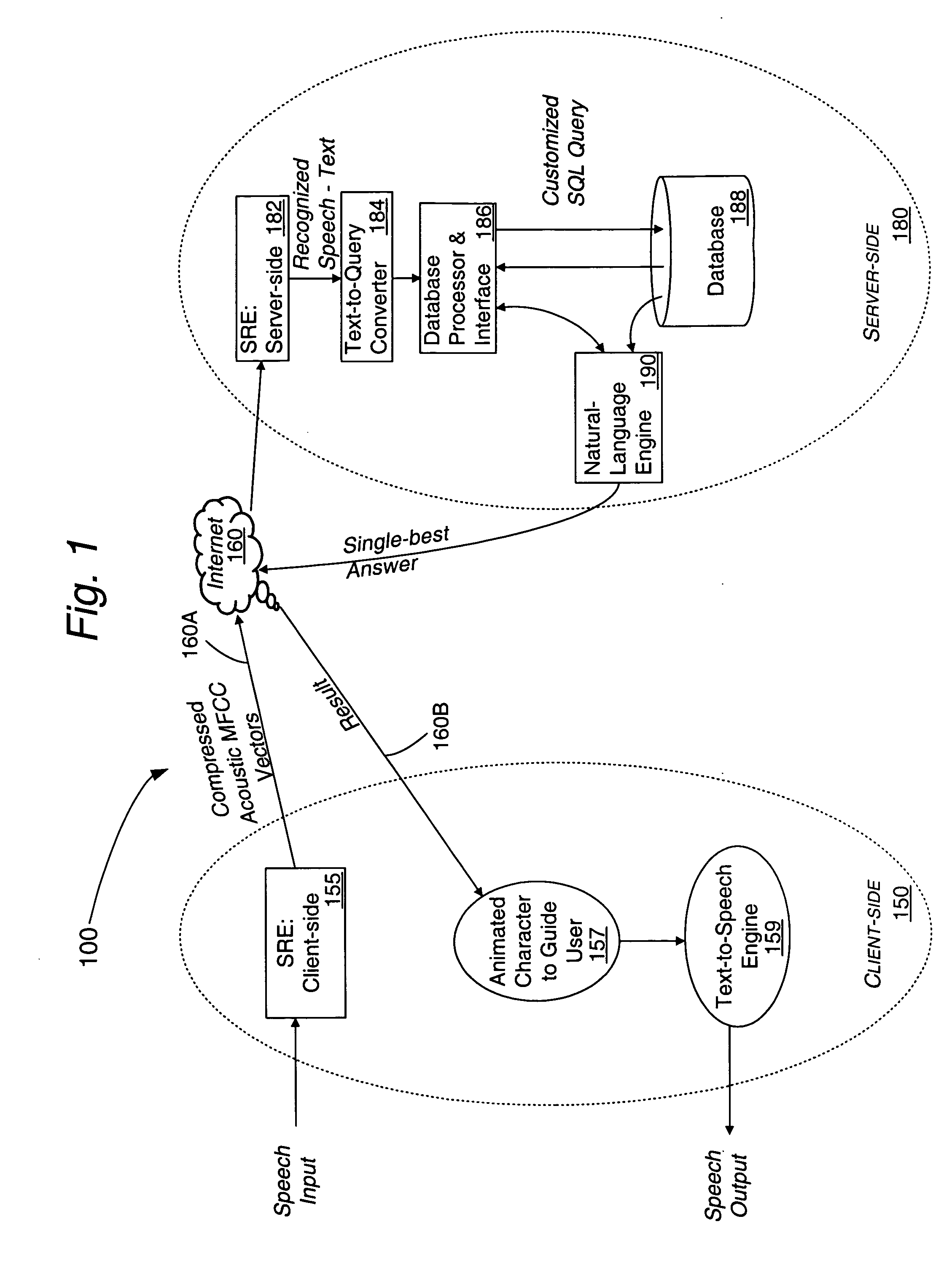

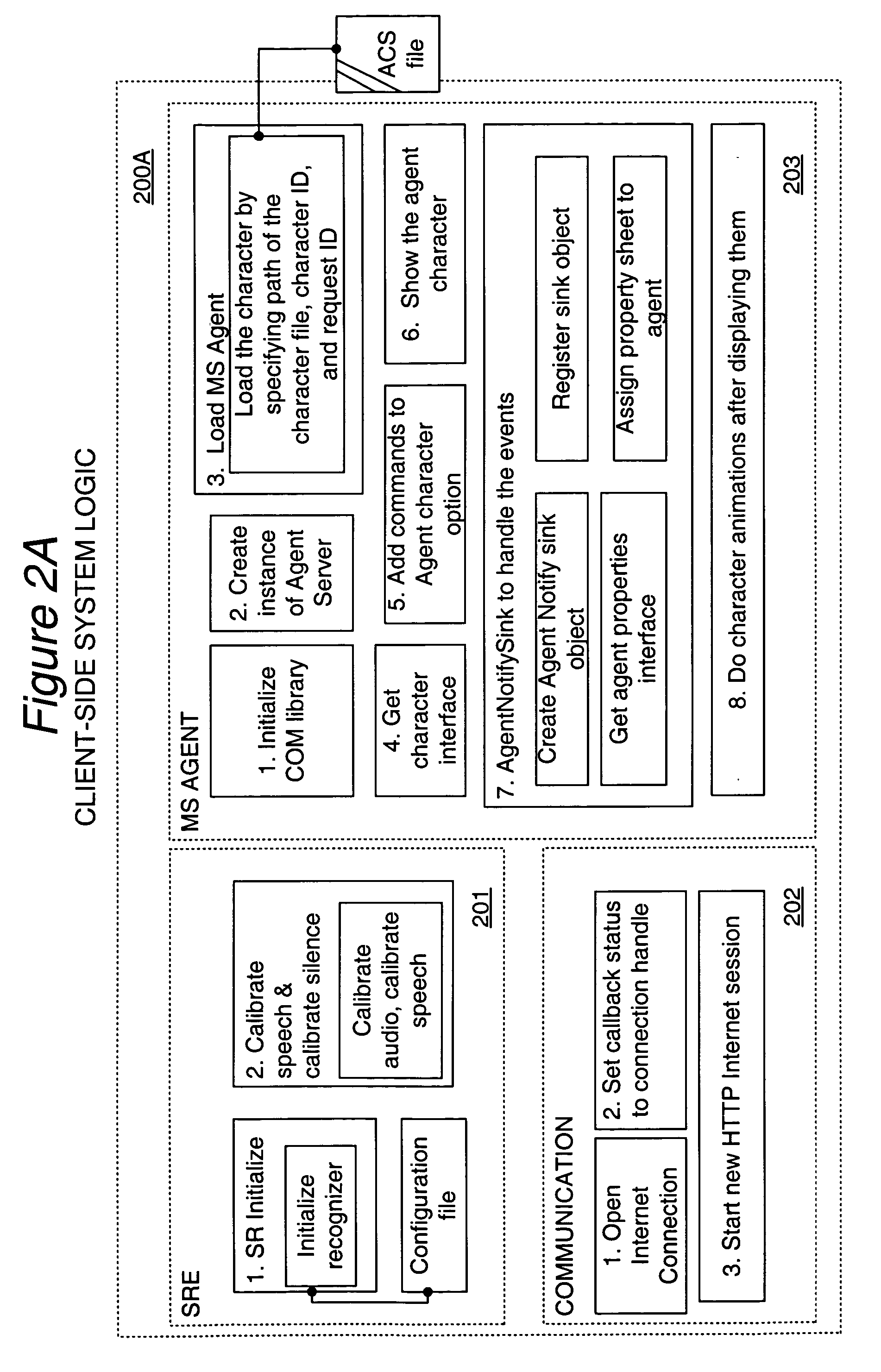

Multi-language speech recognition system

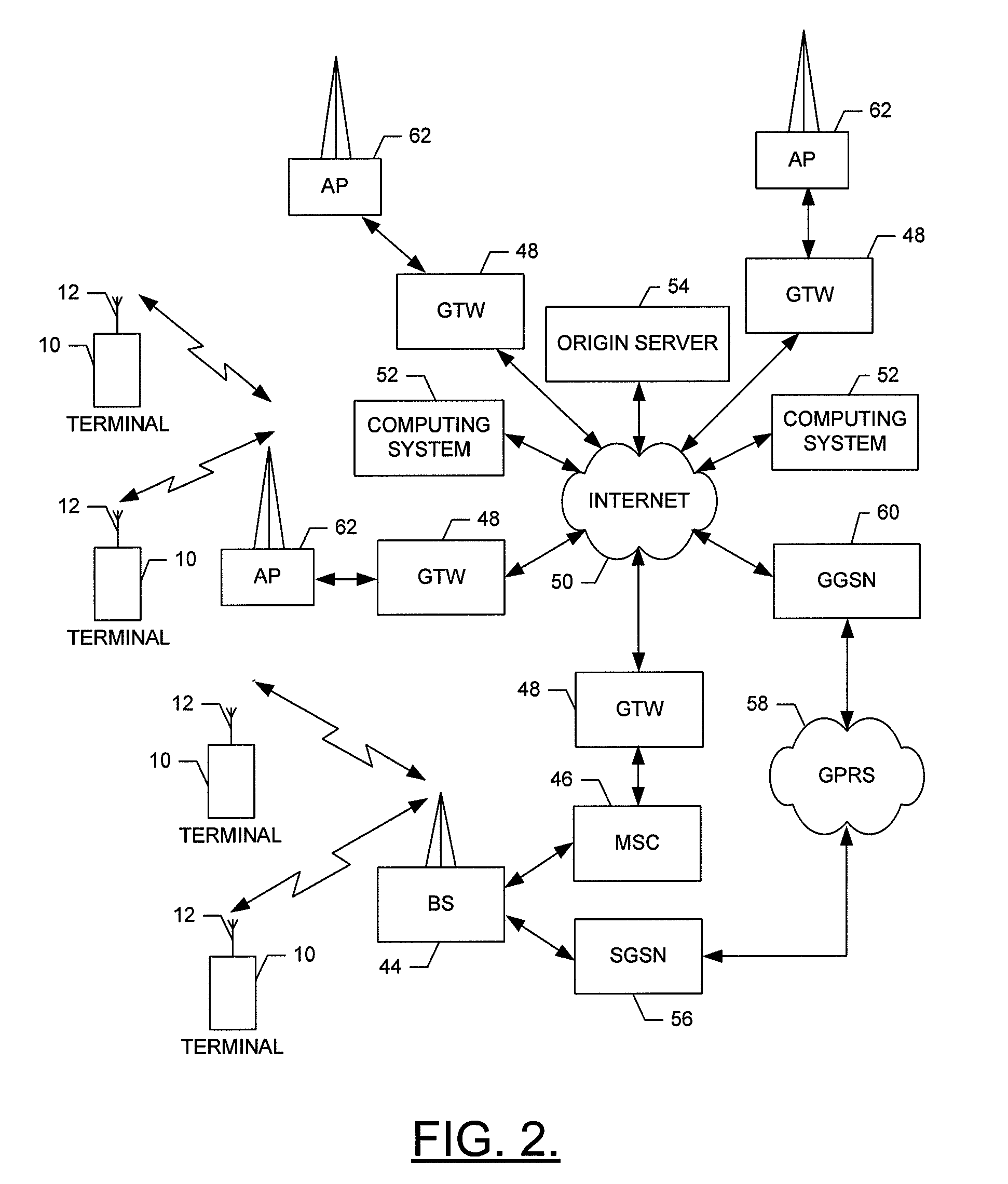

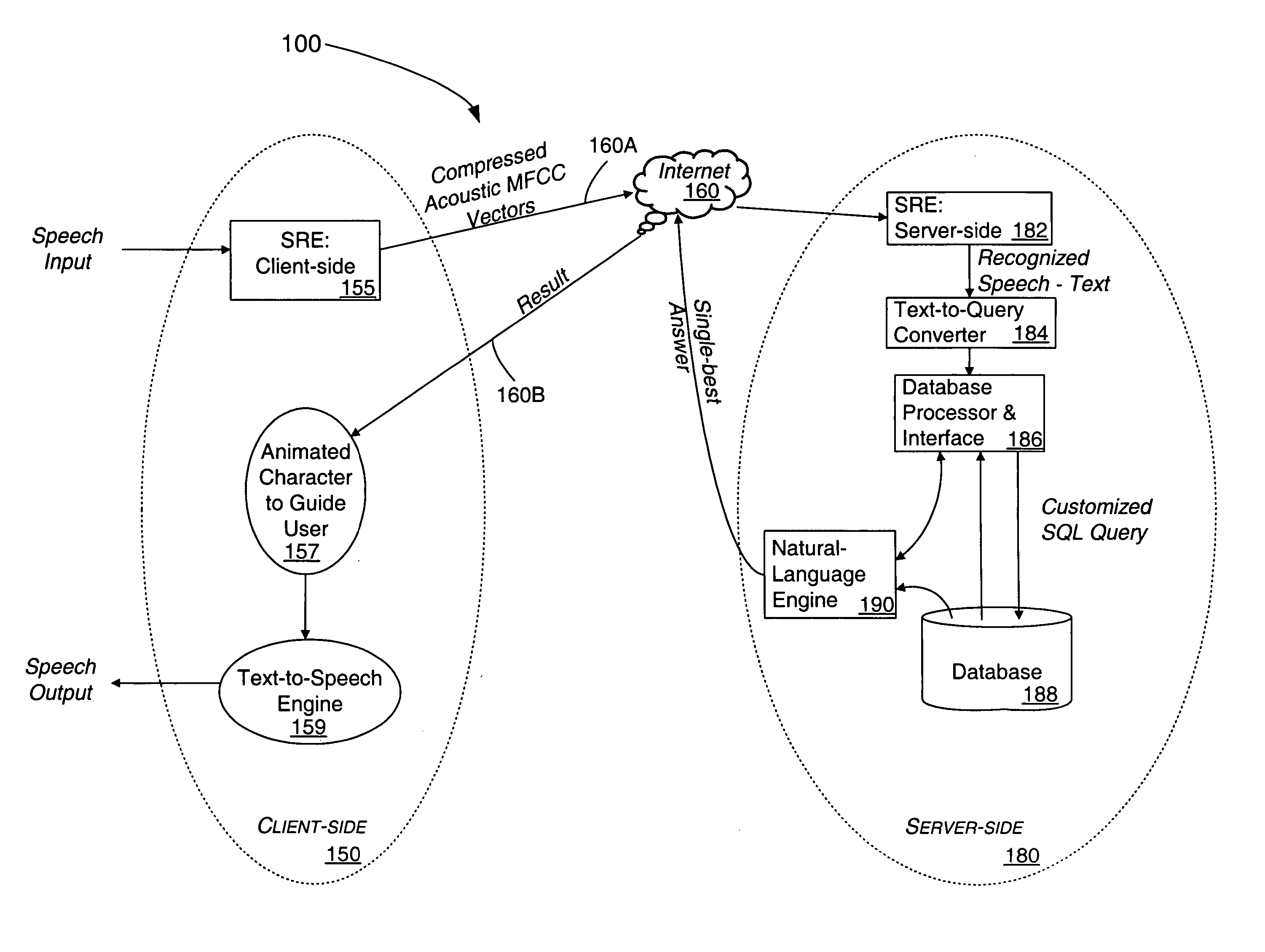

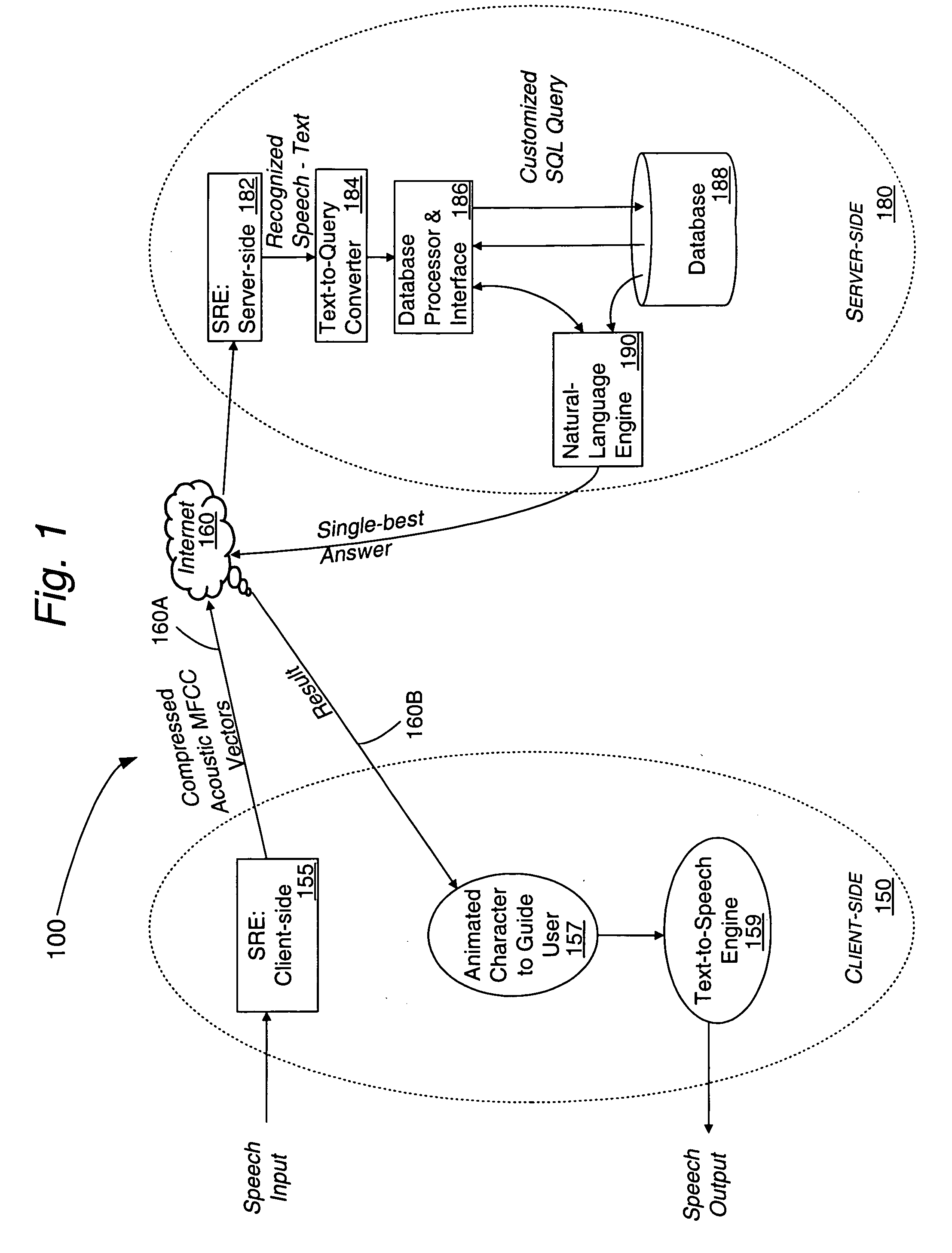

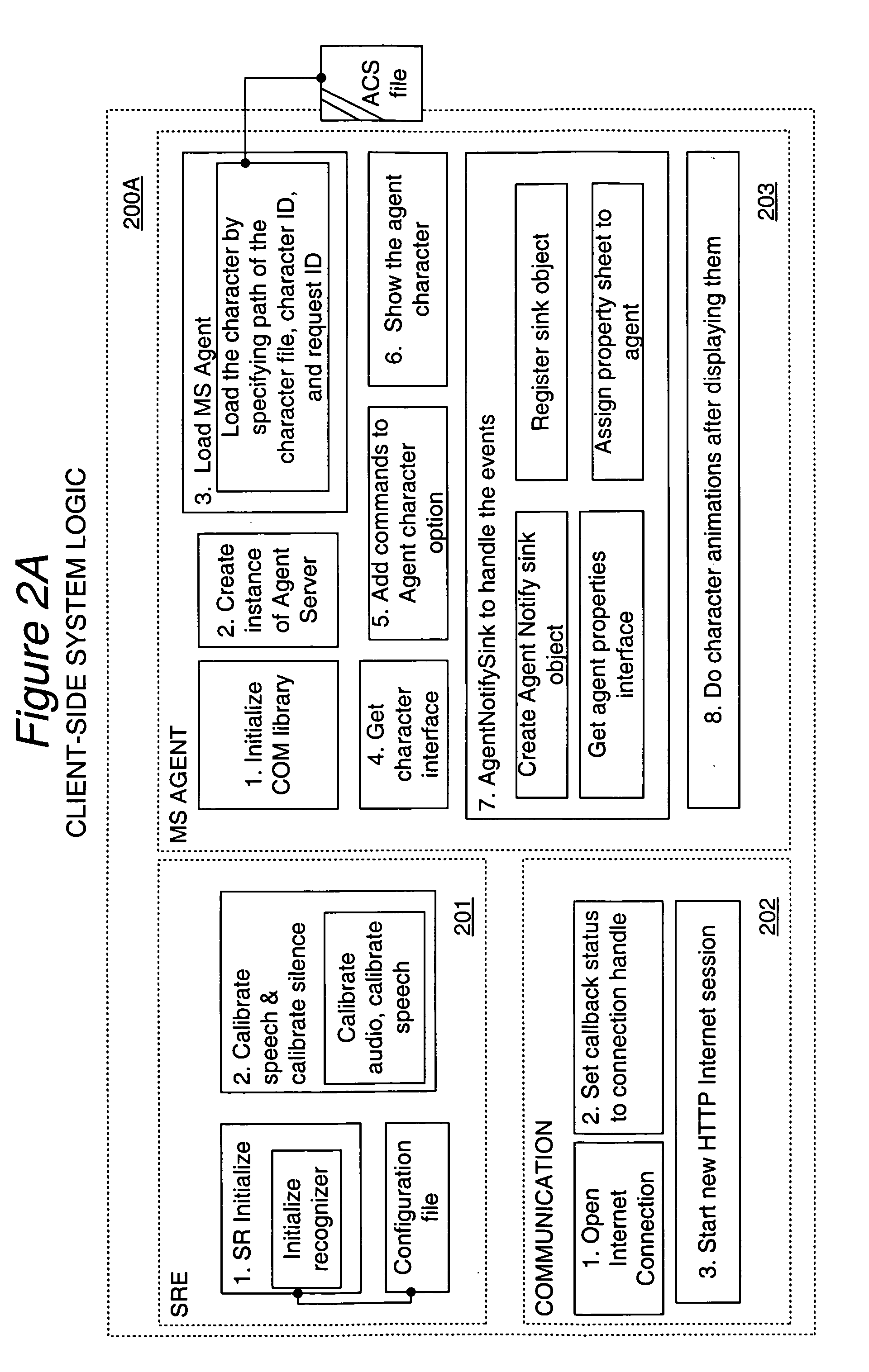

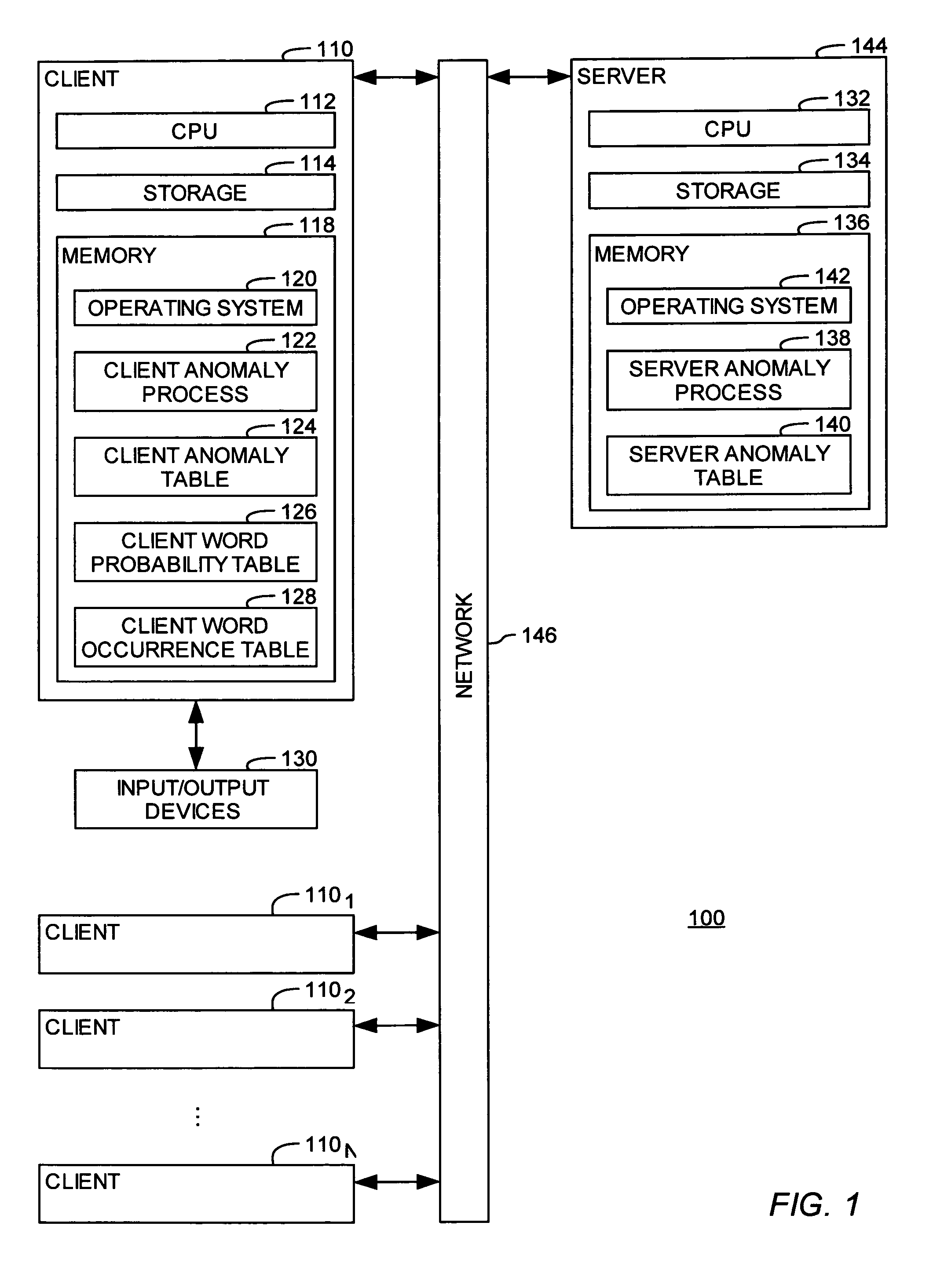

Inactive | US20050119897A1 | Flexibly and optimally distributed | Improve accuracy | Natural language translation | Digital data information retrieval | Multi language | Client-side

A speech recognition system includes distributed processing across a client and server for recognizing a spoken query by a user. A number of different speech models for different natural languages are used to support and detect a natural language spoken by a user. In some implementations an interactive electronic agent responds in the user's native language to facilitate a real-time, human-like dialogue.

Owner:NUANCE COMM INC

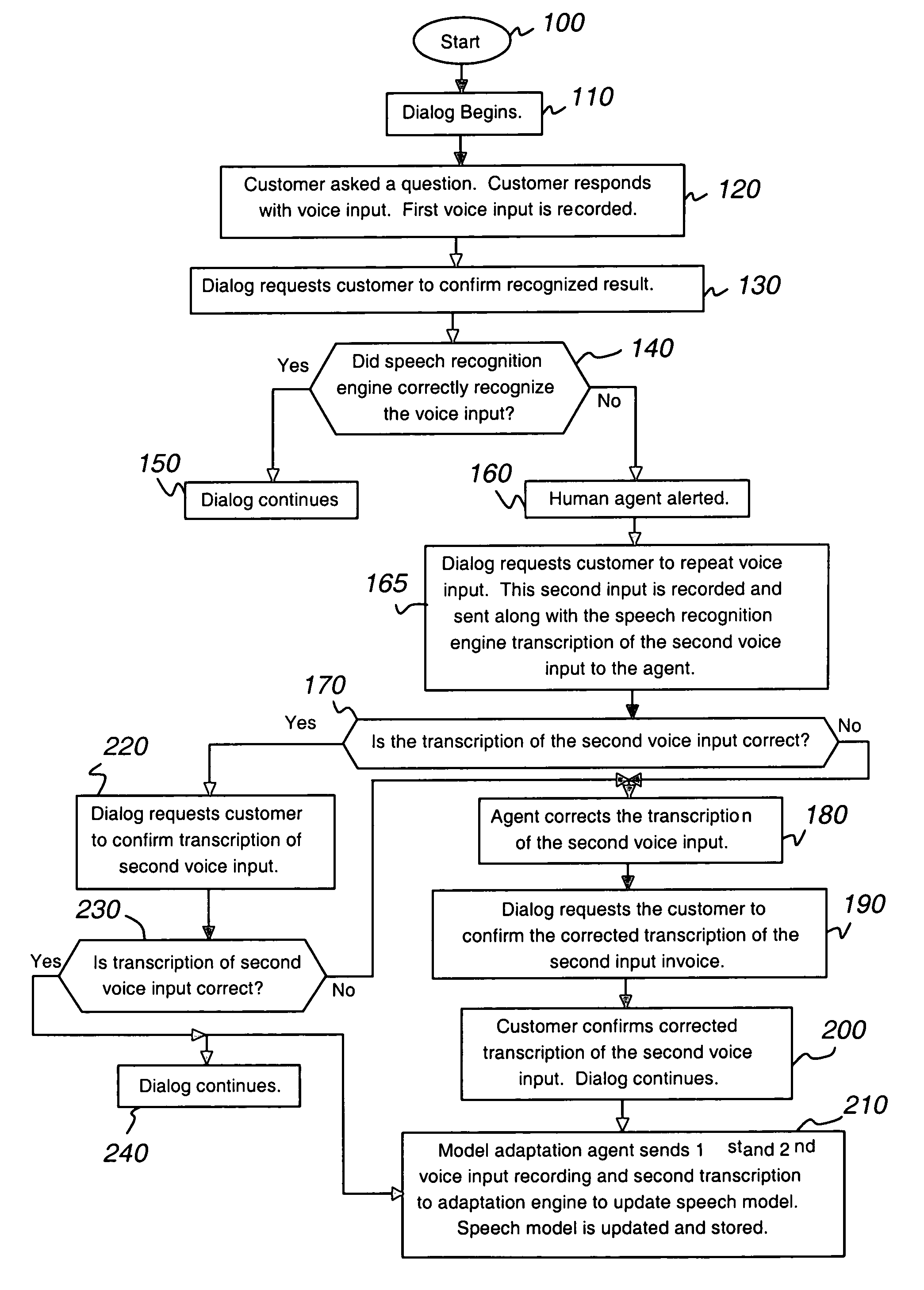

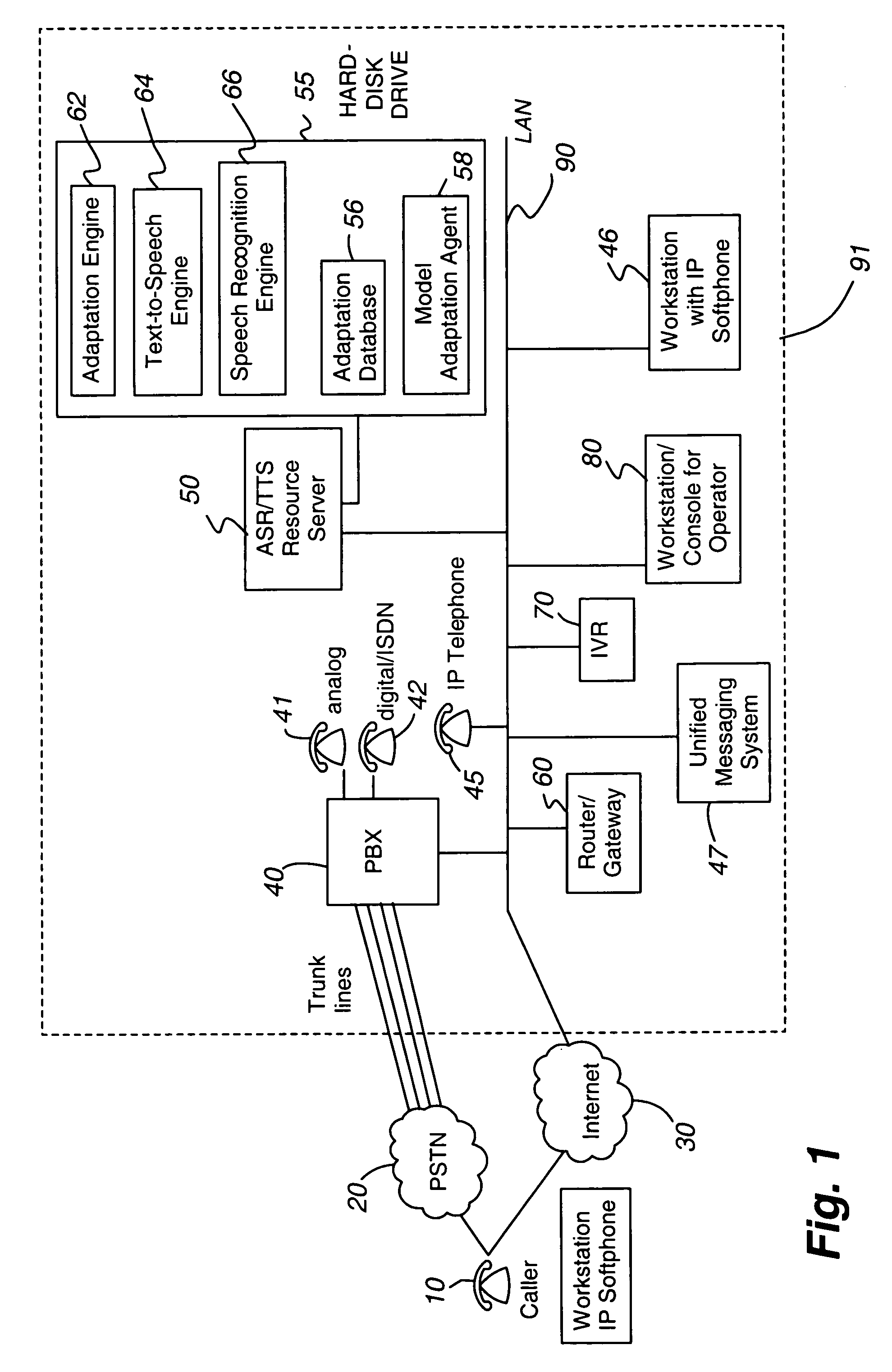

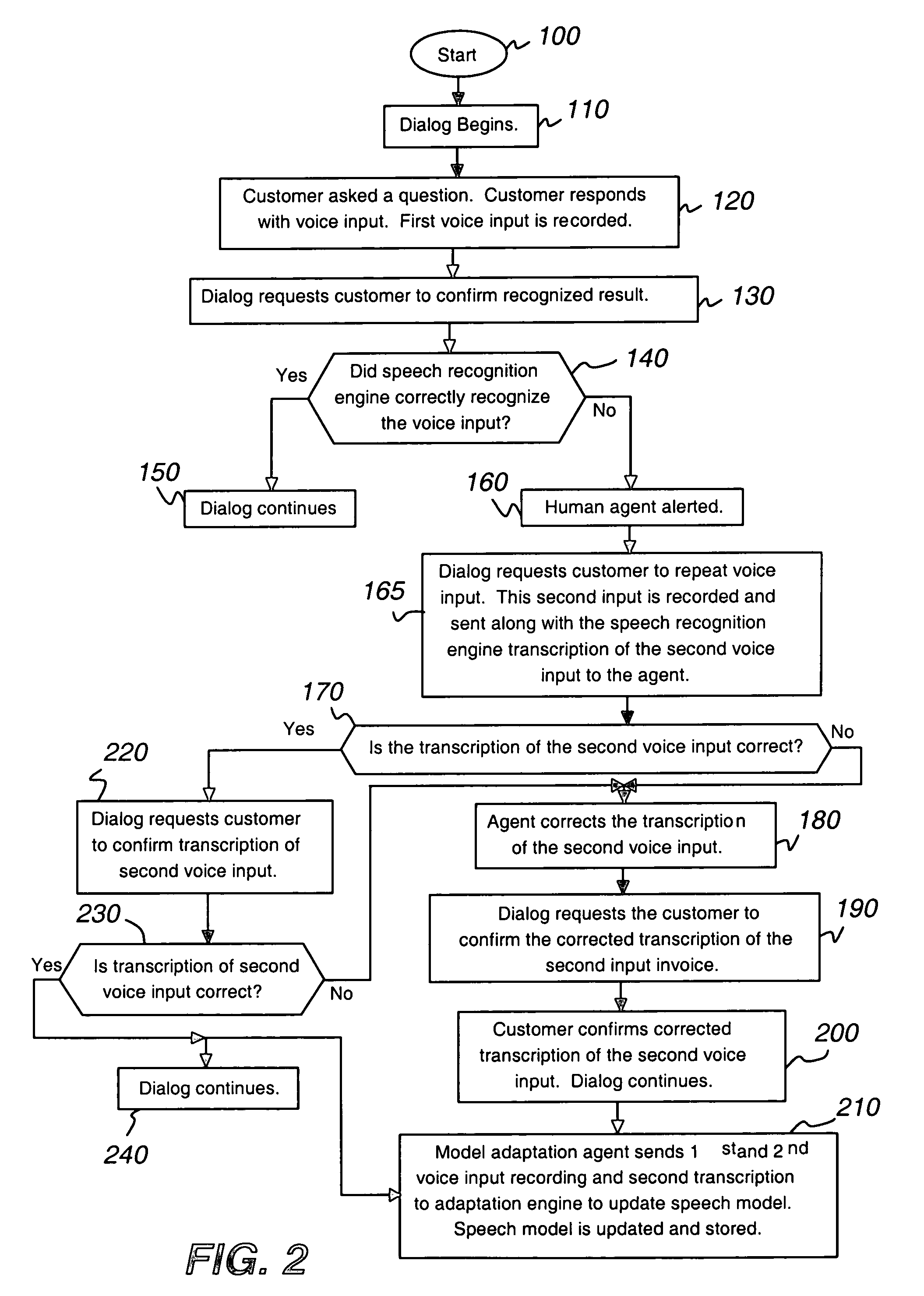

Transparent monitoring and intervention to improve automatic adaptation of speech models

Active | US7660715B1 | Improve speech recognition accuracy | Quality improvement | Speech recognition | Frustration | Mostly True

A system and method to improve the automatic adaptation of one or more speech models in automatic speech recognition systems. After a dialog begins, for example, the dialog asks the customer to provide spoken input and it is recorded. If the speech recognizer determines it may not have correctly transcribed the verbal response, i.e., voice input, the invention uses monitoring and, if necessary, intervention to guarantee that the next transcription of the verbal response is correct. The dialog asks the customer to repeat his verbal response, which is recorded, and a transcription of the input is sent to a human monitor, i.e., agent or operator. If the transcription of the spoken input is correct, the human does not intervene and the transcription remains unmodified. If the transcription of the verbal response is incorrect, the human intervenes and the transcription of the misrecognized word is corrected. In both cases, the dialog asks the customer to confirm the unmodified or corrected transcription. If the customer confirms the unmodified or newly corrected transcription, the dialog continues and the customer does not hang up in frustration because most times only one misrecognition occurred. Finally, the invention uses the first and second customer recording of the misrecognized word or utterance along with the corrected or unmodified transcription to automatically adapt one or more speech models, which improves the performance of the speech recognition system.

Owner:AVAYA INC

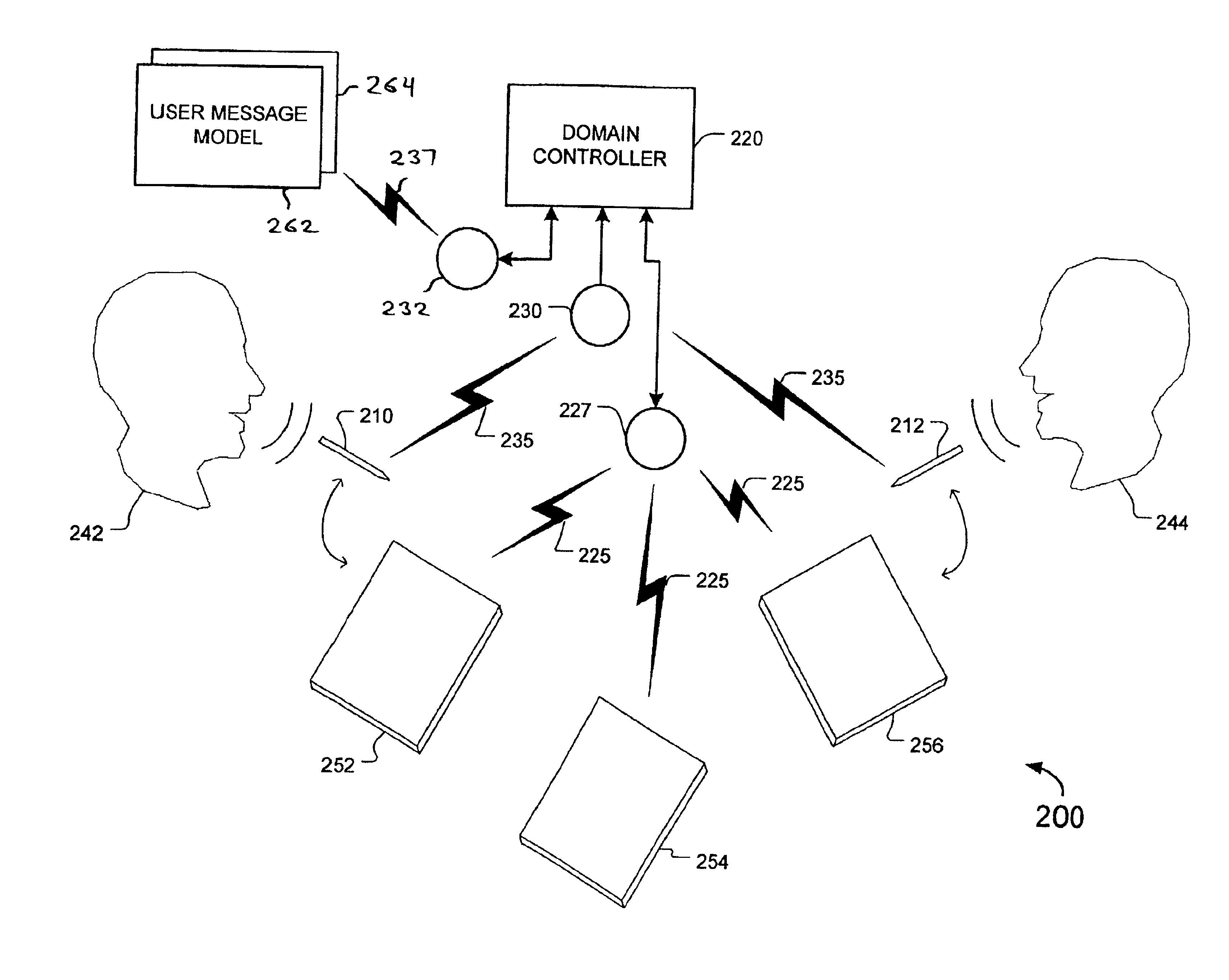

Message recognition using shared language model

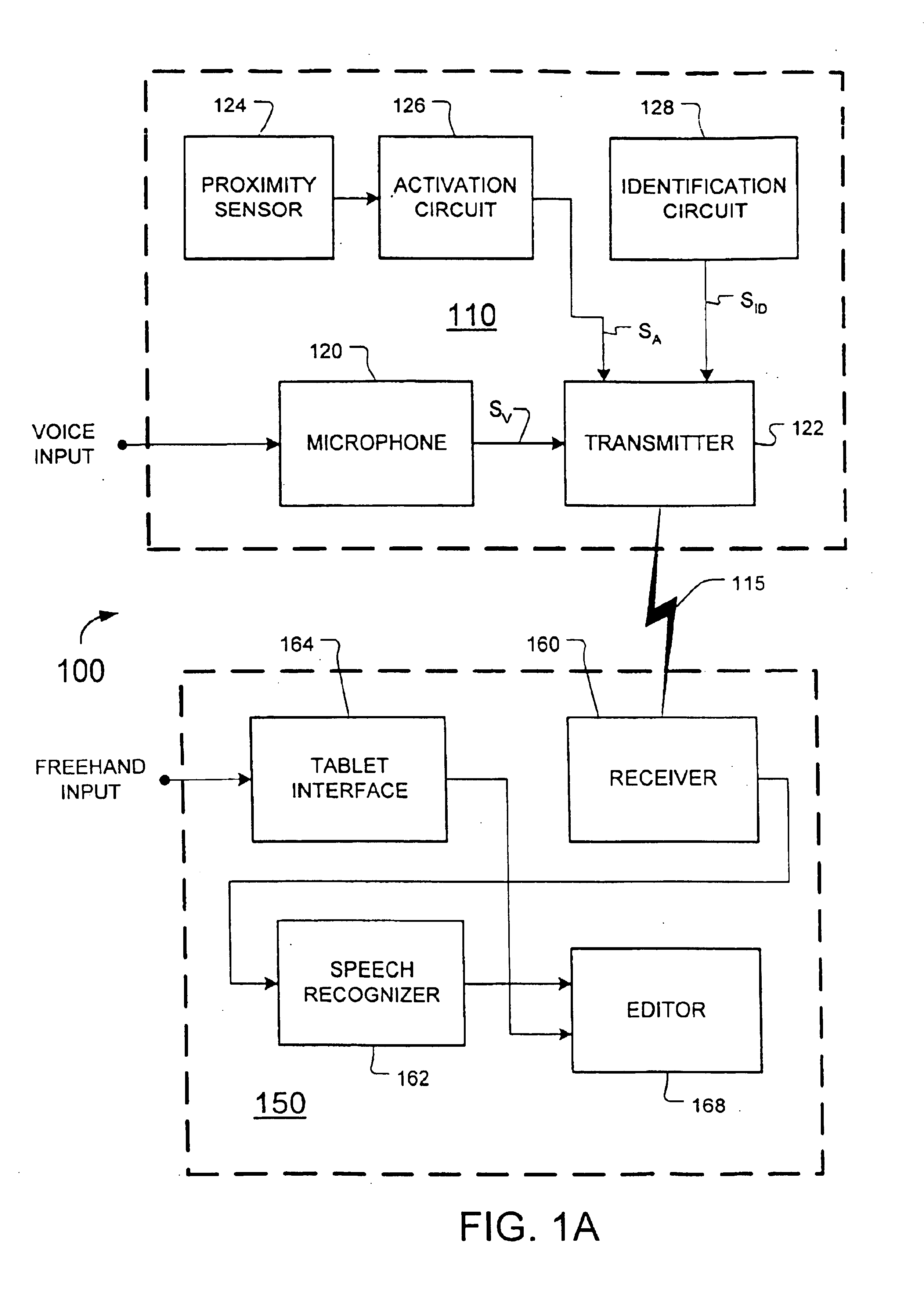

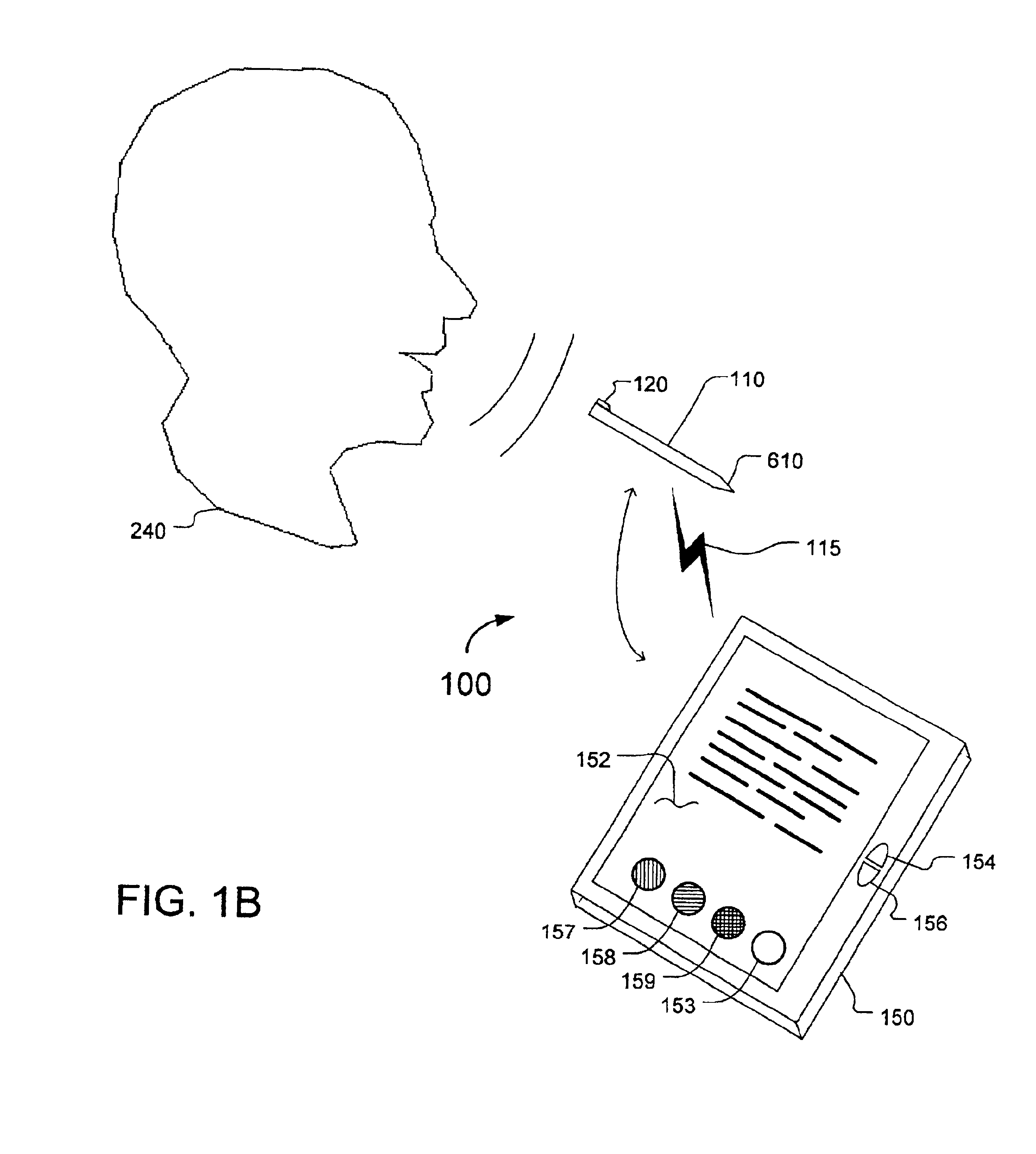

Inactive | US6904405B2 | Speech recognition | Input/output processes for data processing | Handwriting | Acoustic model

Certain disclosed methods and systems perform multiple different types of message recognition using a shared language model. Message recognition of a first type is performed responsive to a first type of message input (e.g., speech), to provide text data in accordance with both the shared language model and a first model specific to the first type of message recognition (e.g., an acoustic model). Message recognition of a second type is performed responsive to a second type of message input (e.g., handwriting), to provide text data in accordance with both the shared language model and a second model specific to the second type of message recognition (e.g., a model that determines basic units of handwriting conveyed by freehand input). Accuracy of both such message recognizers can be improved by user correction of misrecognition by either one of them. Numerous other methods and systems are also disclosed.

Owner:BUFFALO PATENTS LLC

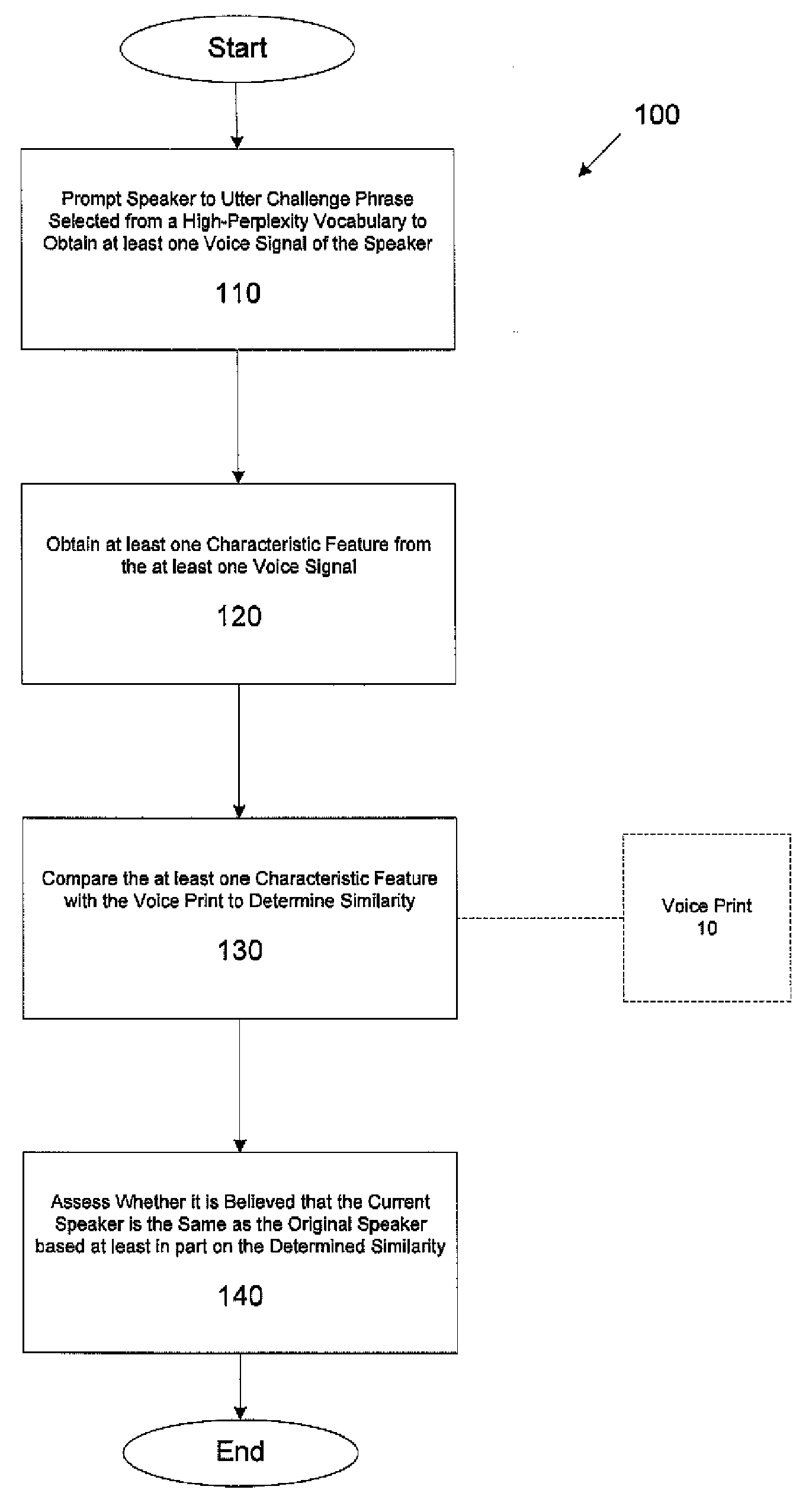

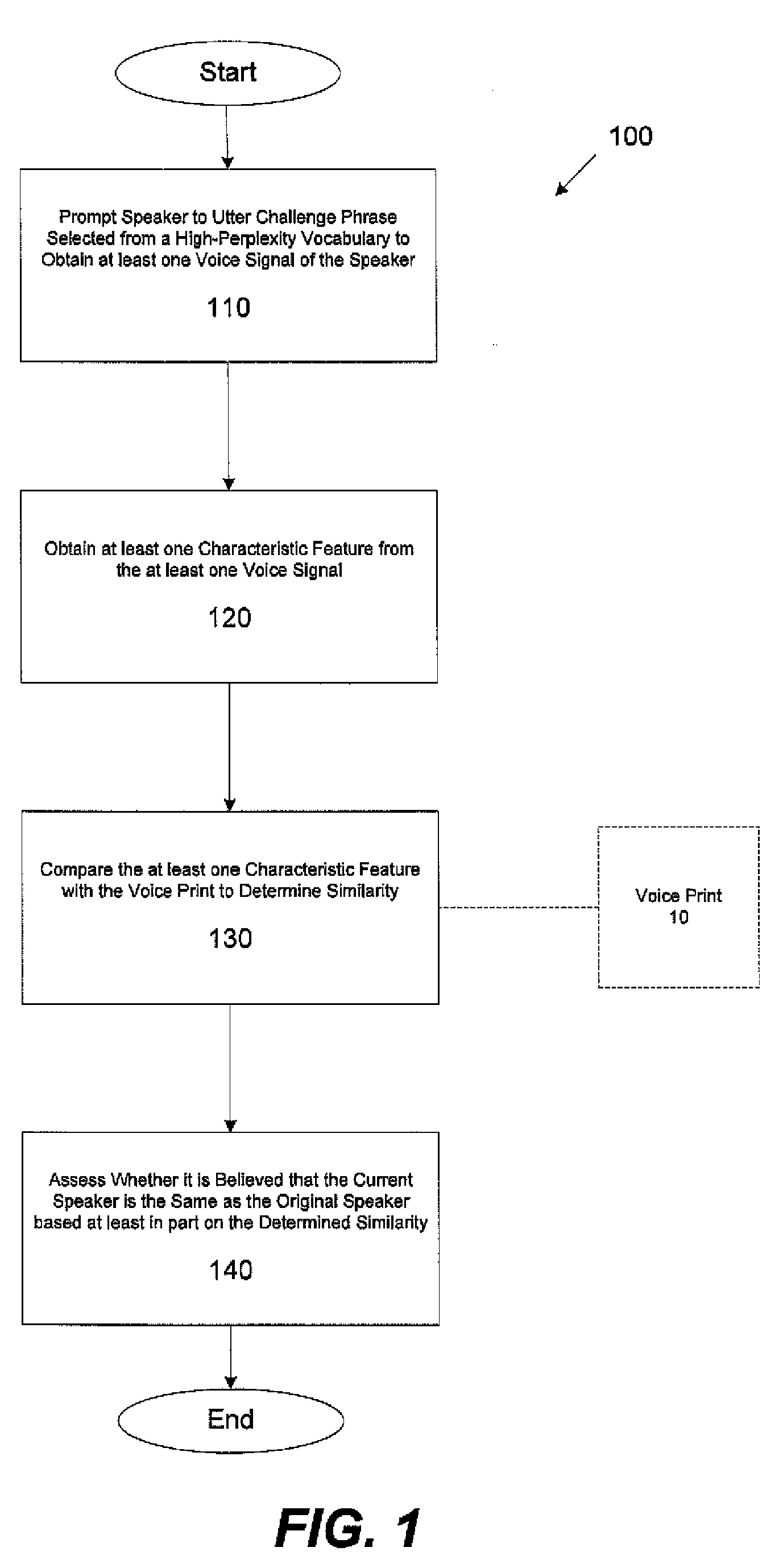

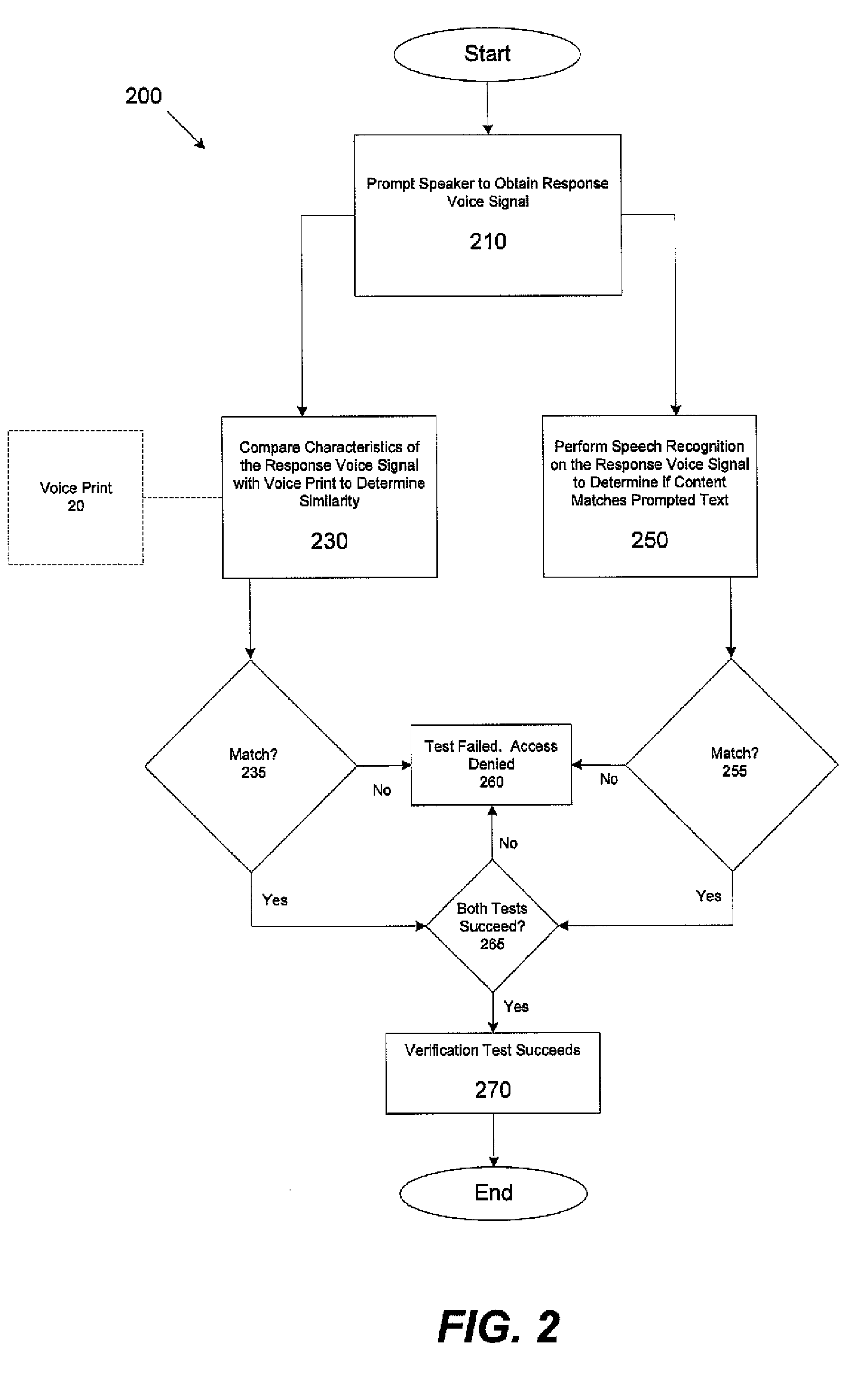

Speaker verification methods and apparatus

In one aspect, a method for determining a validity of an identity asserted by a speaker using a voice print that models speech of a user whose identity the speaker is asserting is provided. The method comprises acts of performing a first verification stage comprising acts of obtaining a first voice signal from the speaker uttering at least one first challenge utterance; and comparing at least one characteristic feature of the first voice signal with at least a portion of the voice print to assess whether the at least one characteristic feature of the first voice signal is similar enough to the at least a portion of the voice print to conclude that the first voice signal was obtained from an utterance by the user. The method further comprises performing a second verification stage if it is concluded in the first verification stage that the first voice signal was obtained from an utterance by the user, the second verification stage comprising acts of adapting at least one parameter of the voice print based, at least in part, on the first voice signal to obtain an adapted voice print, obtaining a second voice signal from the speaker uttering at least one second challenge utterance, and comparing at least one characteristic feature of the second voice signal with at least a portion of the adapted voice print to assess whether the at least one characteristic feature of the second voice signal is similar enough to the at least a portion of the adapted voice print to conclude that the second voice signal was obtained from an utterance by the user.

Owner:NUANCE COMM INC

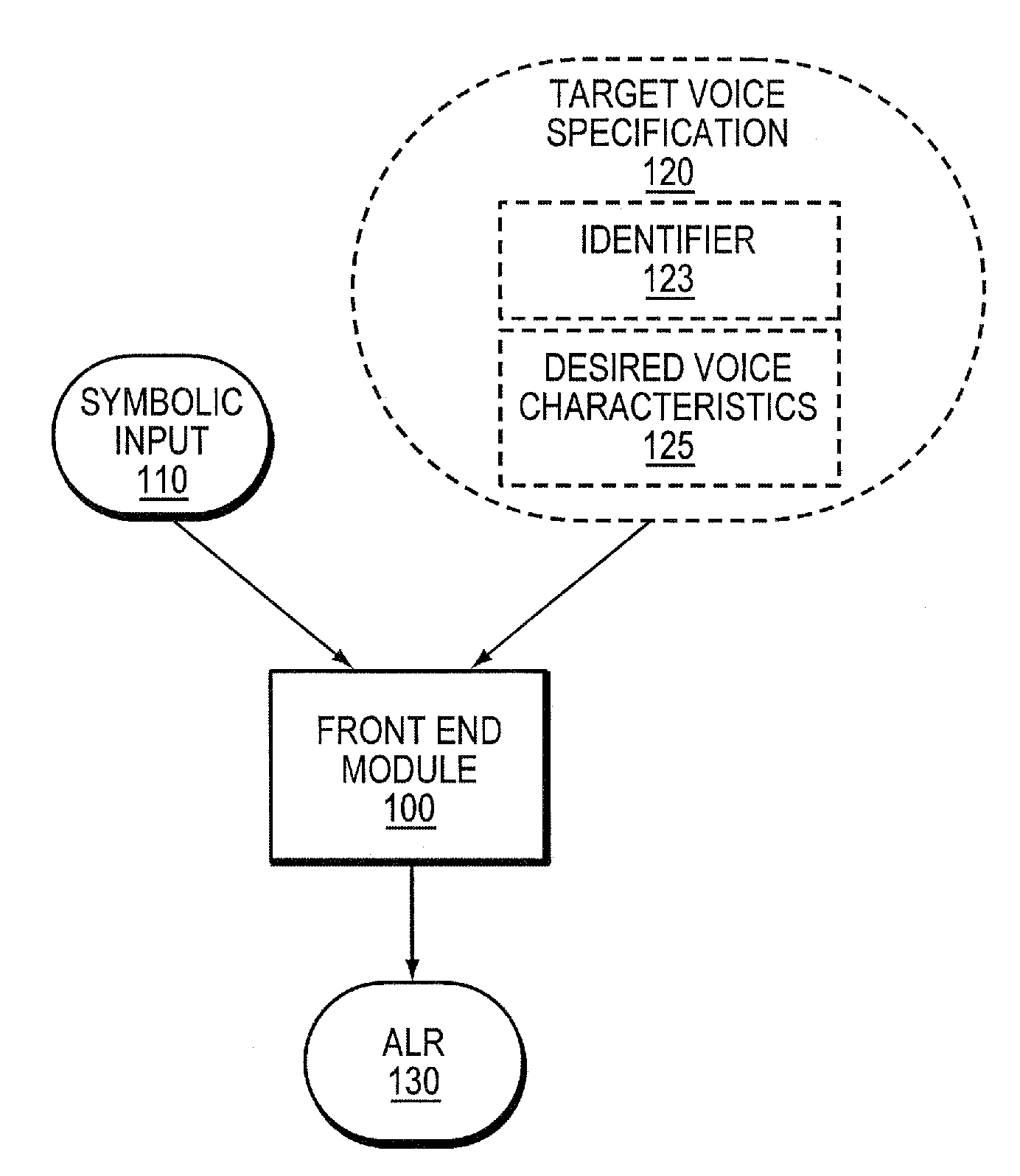

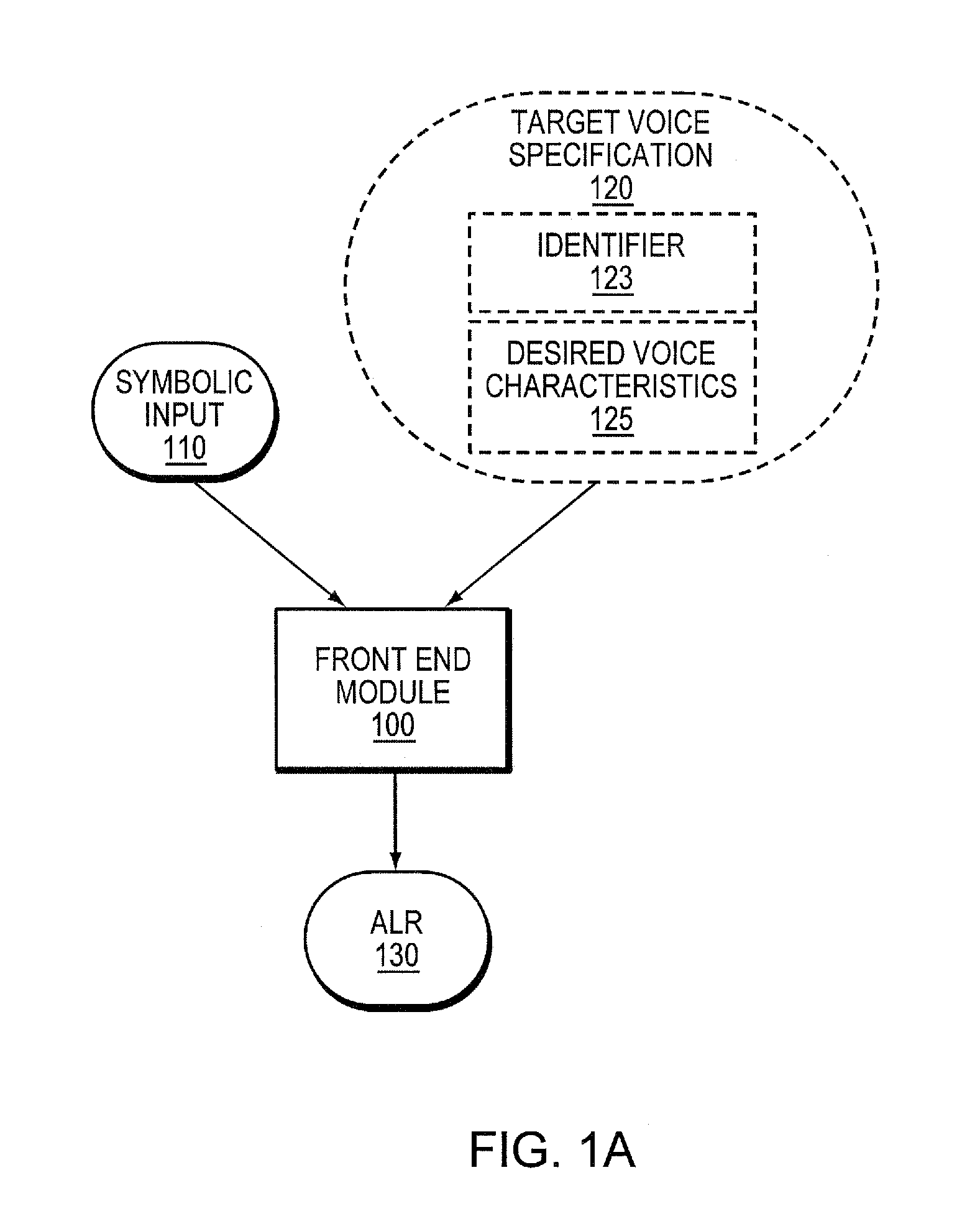

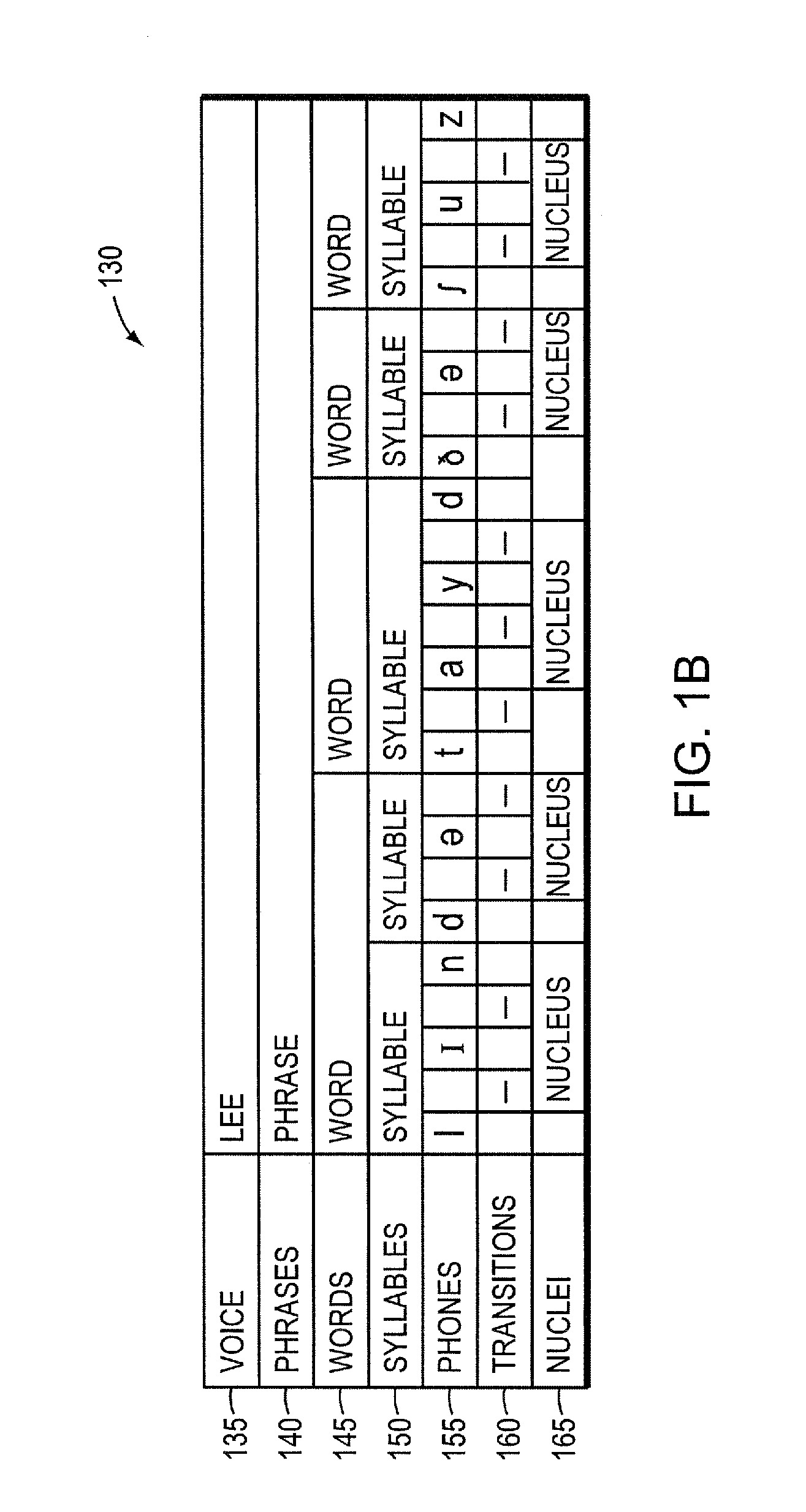

System and method for hybrid speech synthesis

Active | US20080270140A1 | Cost-efficiently | Solve the real problem | Speech synthesis | Speech corpus | Speech sound

A speech synthesis system receives symbolic input describing an utterance to be synthesized. In one embodiment, different portions of the utterance are constructed from different sources, one of which is a speech corpus recorded from a human speaker whose voice is to be modeled. The other sources may include other human speech corpora or speech produced using Rule-Based Speech Synthesis (RBSS). At least some portions of the utterance may be constructed by modifying prototype speech units to produce adapted speech units that are contextually appropriate for the utterance. The system concatenates the adapted speech units with the other speech units to produce a speech waveform. In another embodiment, a speech unit of a speech corpus recorded from a human speaker lacks transitions at one or both of its edges. A transition is synthesized using RBSS and concatenated with the speech unit in producing a speech waveform for the utterance.

Owner:NOVASPEECH

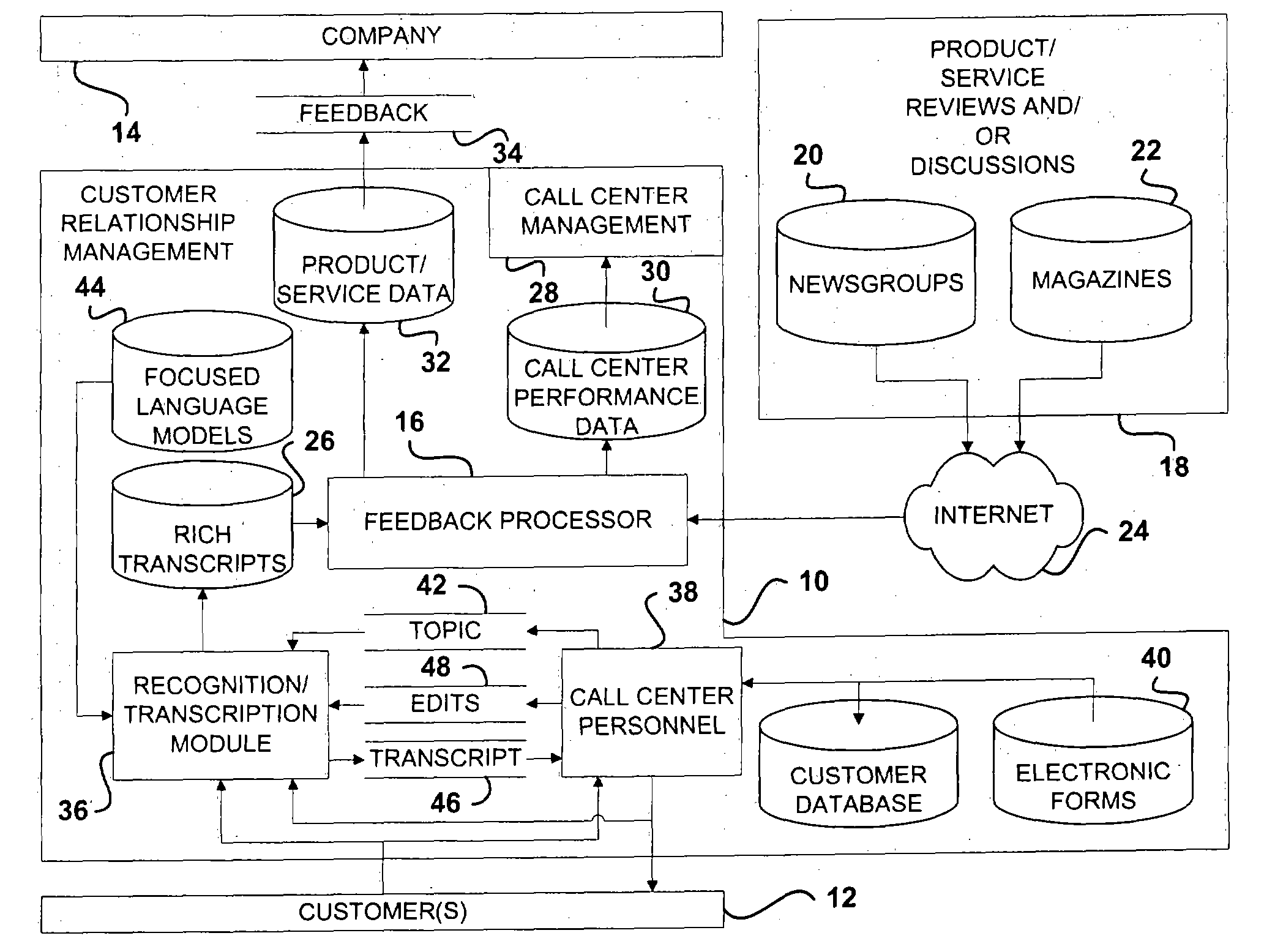

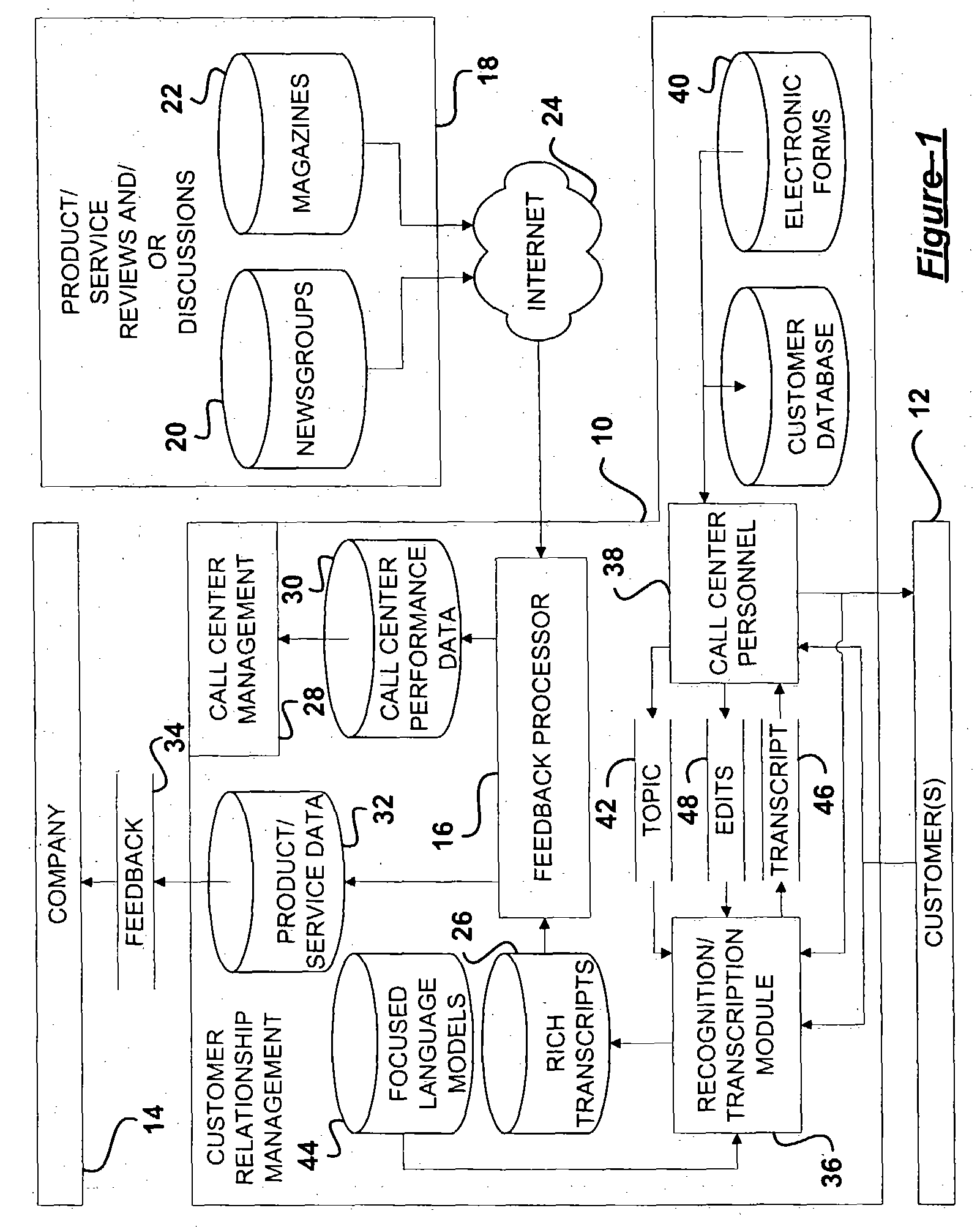

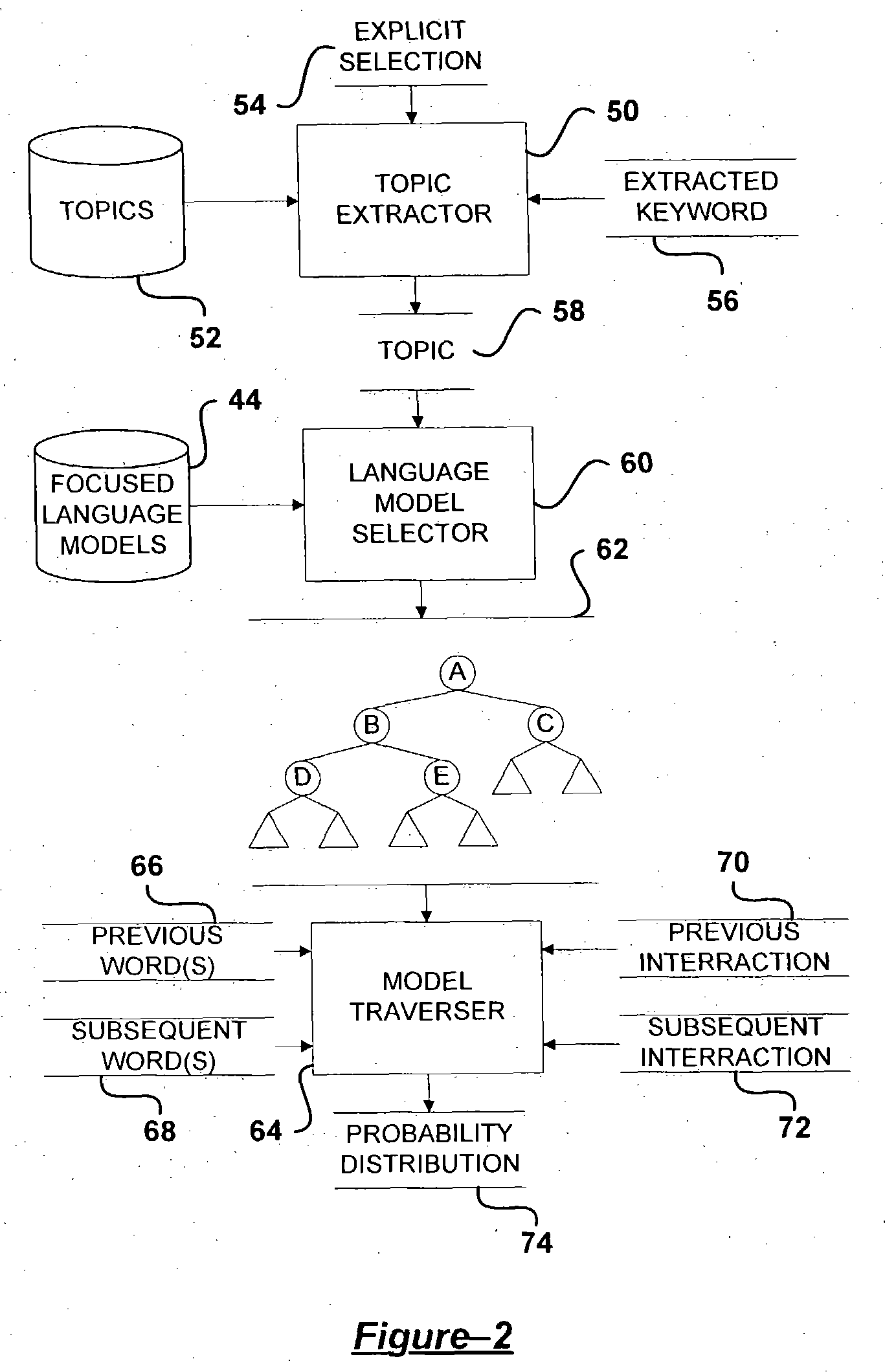

Speech data mining for call center management

Inactive | US20050010411A1 | Improving automatic recognition of speech | Easy to identify | Speech recognition | Quality of service | Frustration

A speech data mining system for use in generating a rich transcription having utility in call center management includes a speech differentiation module differentiating between speech of interacting speakers, and a speech recognition module improving automatic recognition of speech of one speaker based on interaction with another speaker employed as a reference speaker. A transcript generation module generates a rich transcript based on recognized speech of the speakers. Focused, interactive language models improve recognition of a customer on a low quality channel using context extracted from speech of a call center operator on a high quality channel with a speech model adapted to the operator. Mined speech data includes number of interaction turns, customer frustration phrases, operator polity, interruptions, and/or contexts extracted from speech recognition results, such as topics, complaints, solutions, and resolutions. Mined speech data is useful in call center and/or product or service quality management.

Owner:PANASONIC CORP

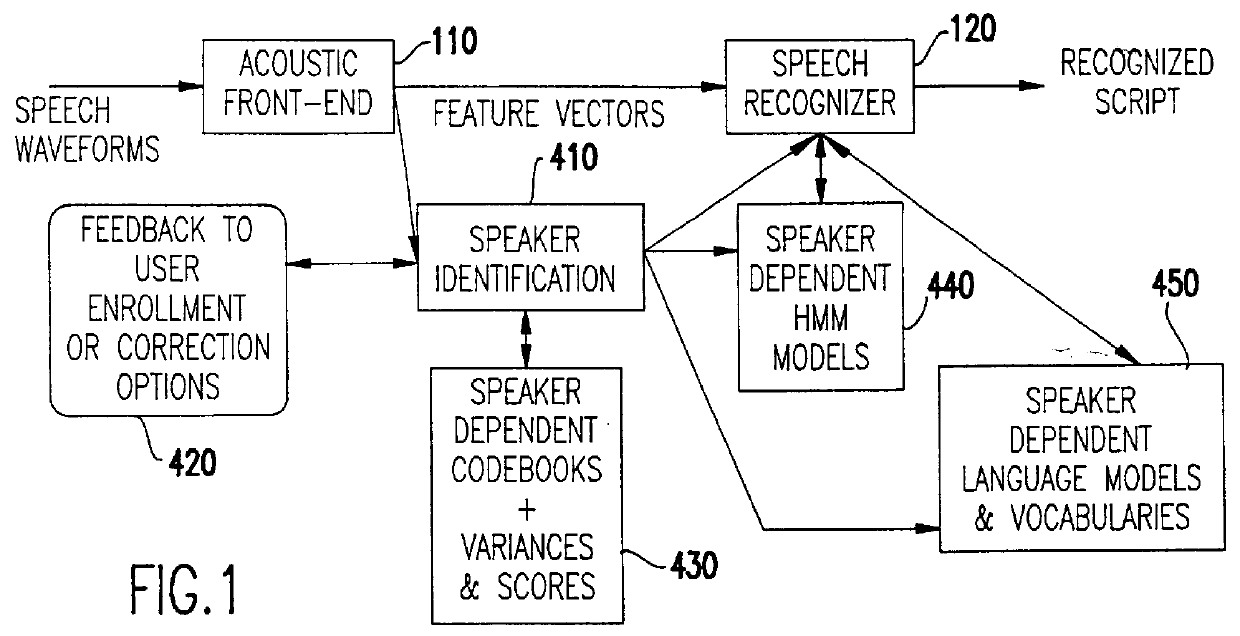

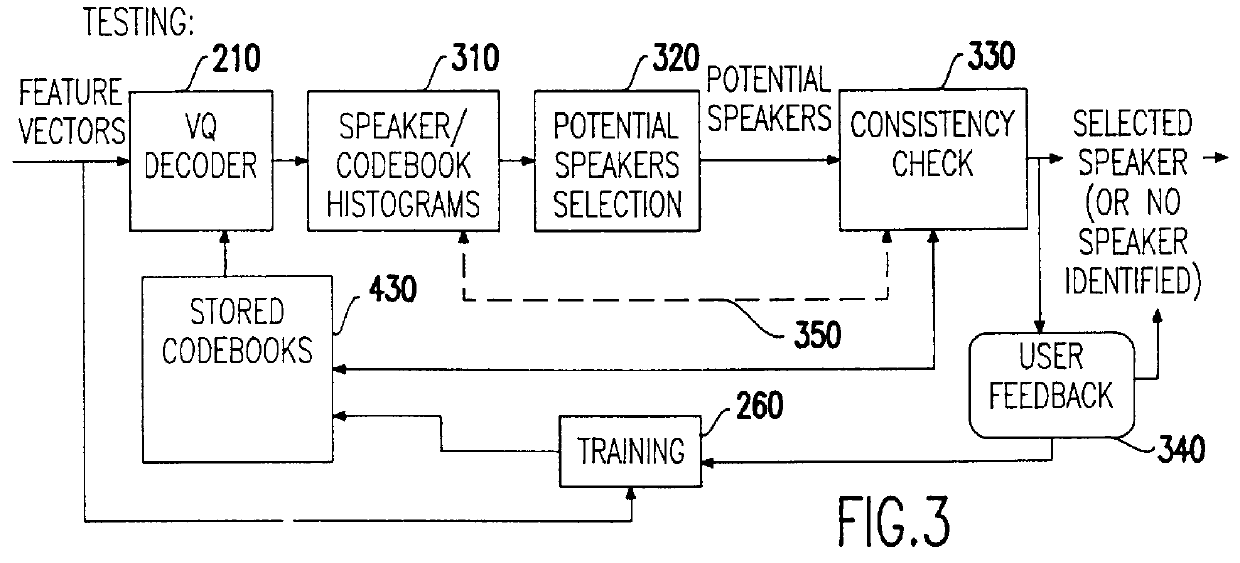

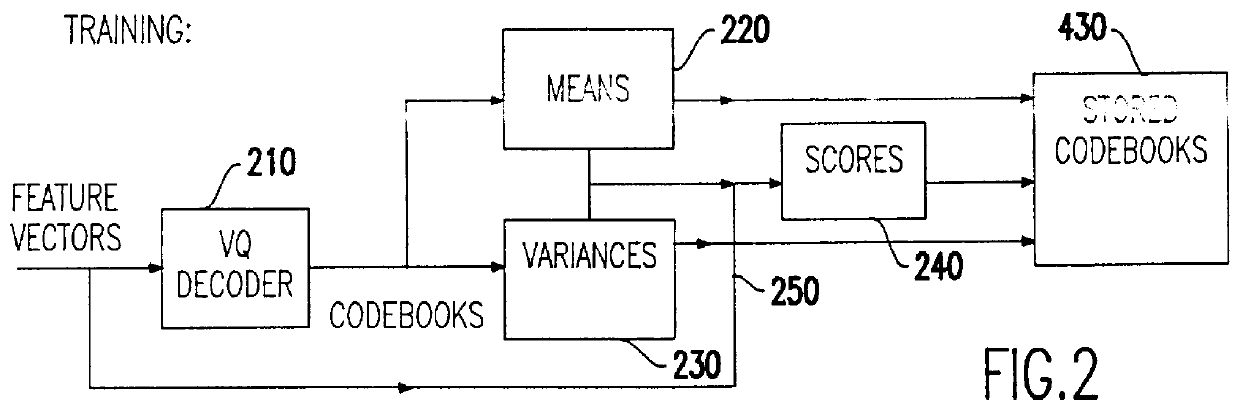

Speech recognition with attempted speaker recognition for speaker model prefetching or alternative speech modeling

Speaker recognition is attempted on input speech signals concurrently with provision of input speech signals to a speech recognition system. If a speaker is recognized, a speaker dependent model which has been trained on an enrolled speaker is supplied to the speech recognition system. If not recognized, then a speaker-independent recognition model is used or, alternatively, the new speaker is enrolled. Other speaker specific information such as a special language model, grammar, vocabulary, a dictionary, a list of names, a language and speaker dependent preferences can also be provided to improve the speech recognition function or even configure or customize the speech recognition system or the response of any system such as a computer or network controlled in response thereto. A consistency check in the form of a decision tree is preferably provided to accelerate the speaker recognition process and increase the accuracy thereof. Further training of a model and/or enrollment of additional speakers may be initiated upon completion of speaker recognition and/or adaptively upon each speaker utterance.

Owner:IBM CORP

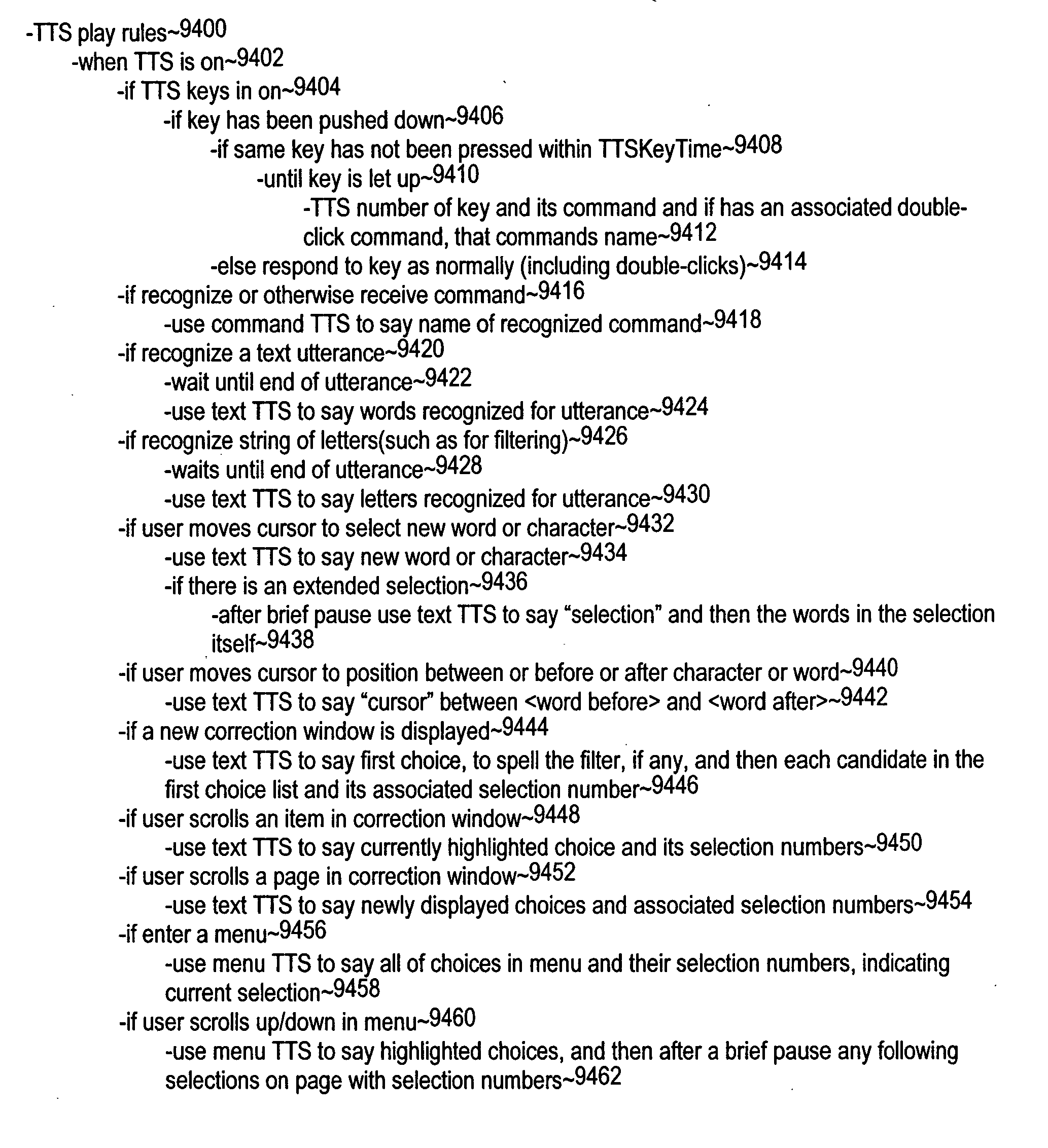

Combined speech recognition and text-to-speech generation

Text-to-speech (TTS) generation is used in conjunction with large vocabulary speech recognition to say words selected by the speech recognition. The software for performing the large vocabulary speech recognition can share speech modeling data with the TTS software. TTS or recorded audio can be used to automatically say both recognized text and the names of recognized commands after their recognition. The TTS can automatically repeat text recognized by the speech recognition after each of a succession of end of utterance detections. A user can move a cursor back or forward in recognized text, and the TTS can speak one or more words at the cursor location after each such move. The speech recognition can be used to produce a choice list of possible recognition candidates and the TTS can be used to provide spoken output of one or more of the candidates on the choice list.

Owner:CERENCE OPERATING CO

Corrective feedback loop for automated speech recognition

Active | US20090240488A1 | Simple method | Increase probability | Speech recognition | Special data processing applications | Automatic speech | Client-side

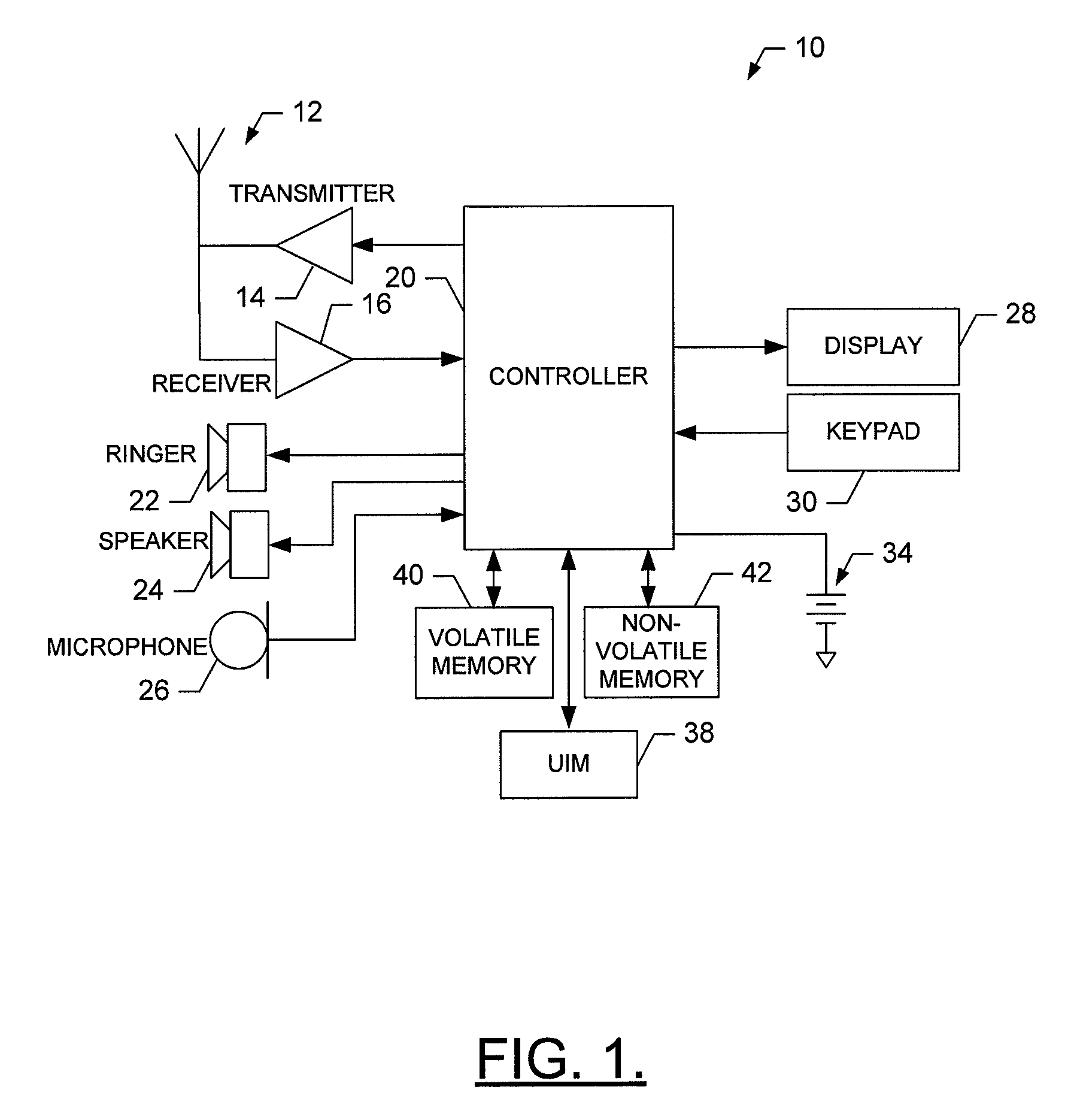

A method for facilitating the updating of a language model includes receiving, at a client device, via a microphone, an audio message corresponding to speech of a user; communicating the audio message to a first remote server; receiving, at the client device, a result, transcribed at the first remote server using an automatic speech recognition system (“ASR”), from the audio message; receiving, at the client device from the user, an affirmation of the result; storing, at the client device, the result in association with an identifier corresponding to the audio message; and communicating, to a second remote server, the stored result together with the identifier.

Owner:AMAZON TECH INC

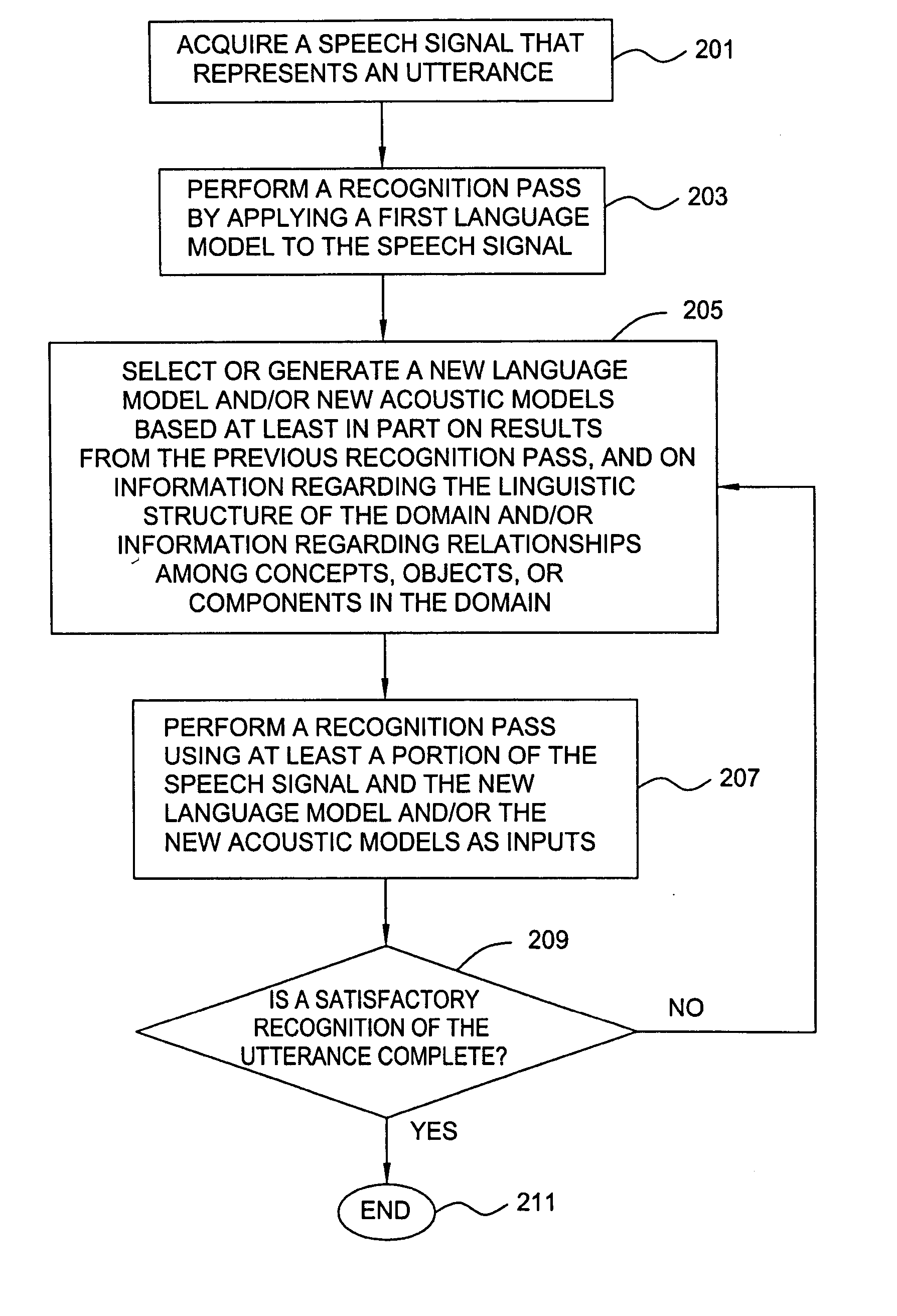

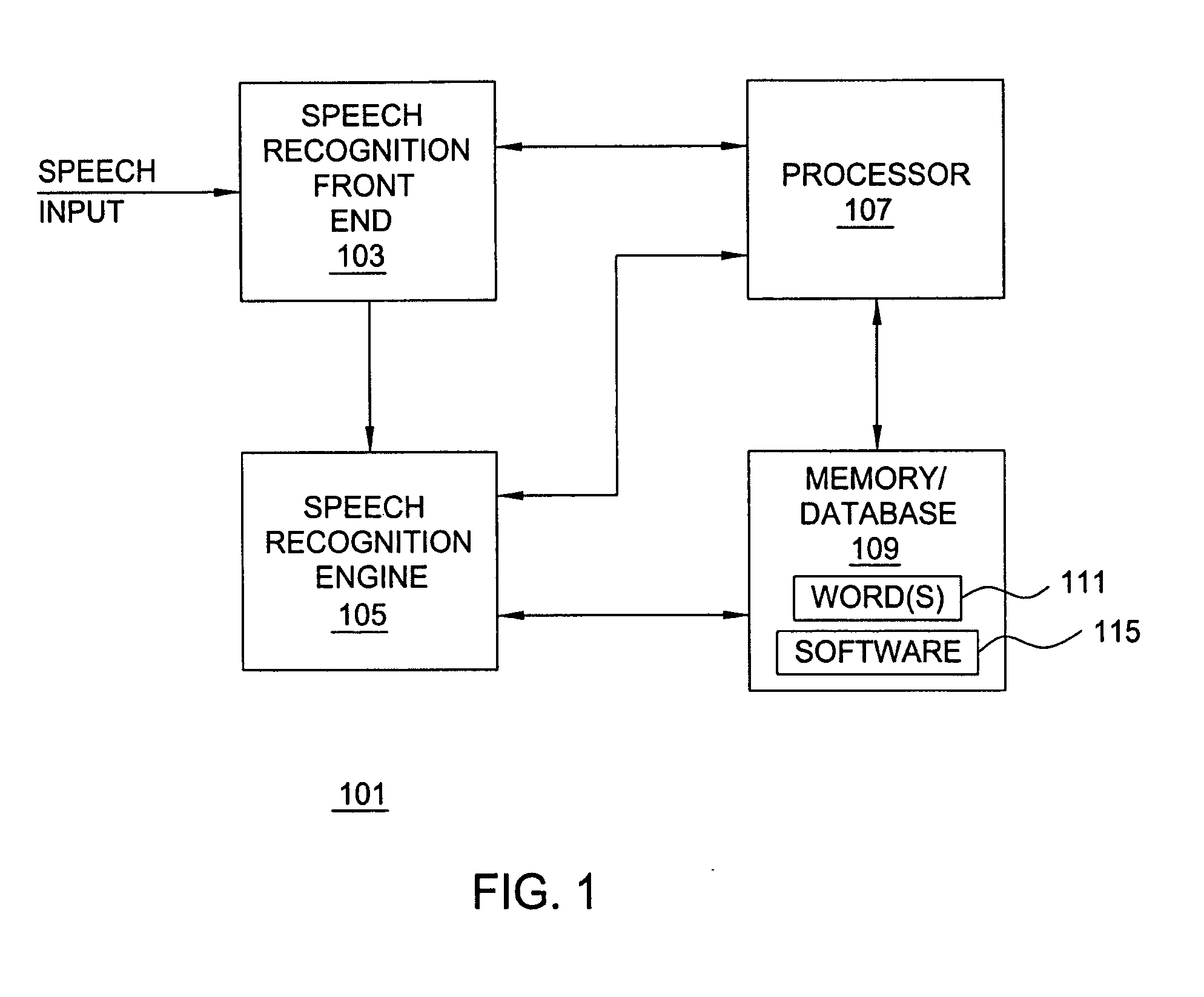

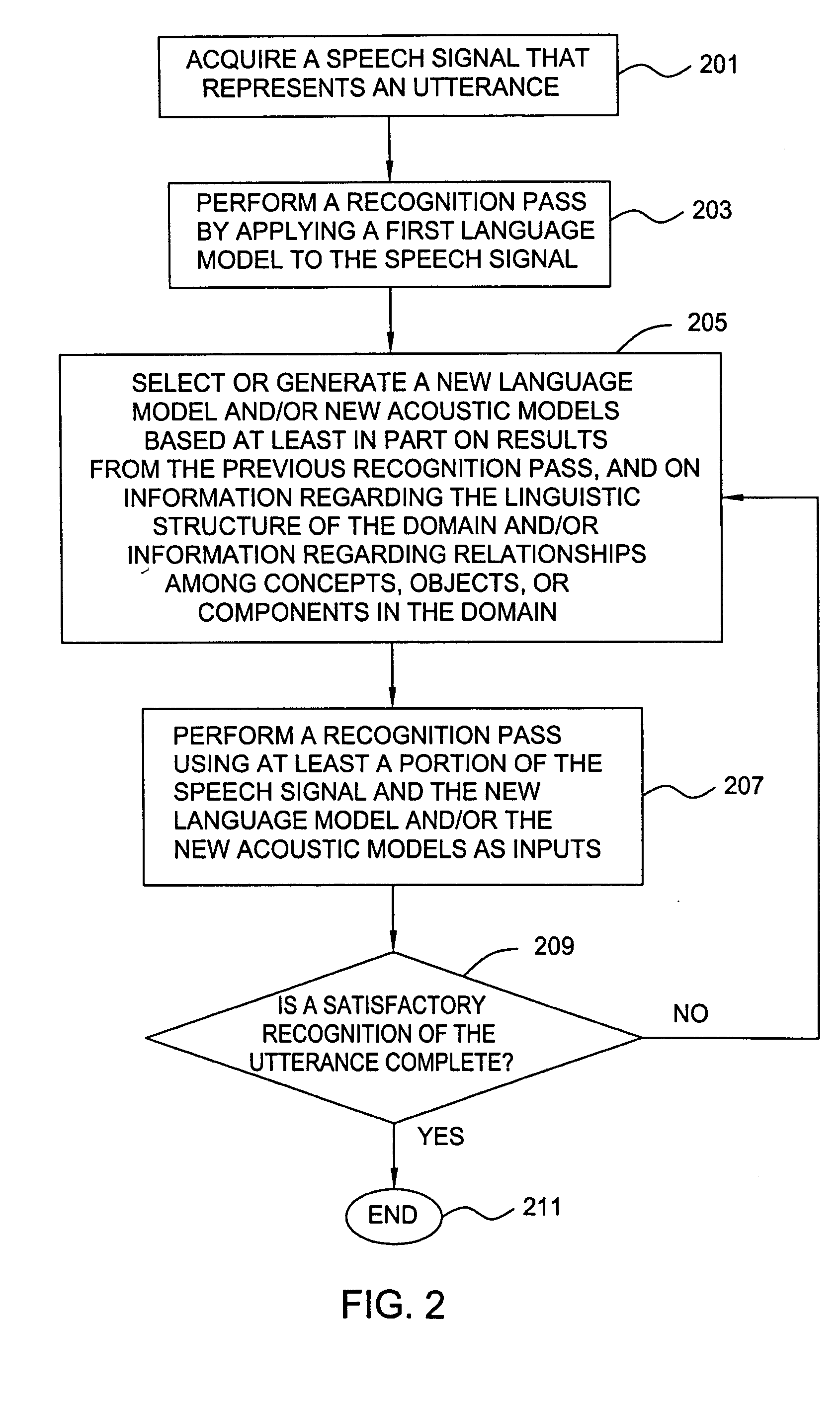

Method and apparatus for speech recognition using a dynamic vocabulary

A method and apparatus are provided for performing speech recognition using a dynamic vocabulary. Results from a preliminary speech recognition pass can be used to update or refine a language model in order to improve the accuracy of search results and to simplify subsequent recognition passes. This iterative process greatly reduces the number of alternative hypotheses produced during each speech recognition pass, as well as the time required to process subsequent passes, making the speech recognition process faster, more efficient and more accurate. The iterative process is characterized by the use of results from one or more data set queries, where the keys used to query the data set, as well as the queries themselves, are constructed in a manner that produces more effective language models for use in subsequent attempts at decoding a given speech signal.

Owner:NUANCE COMM INC

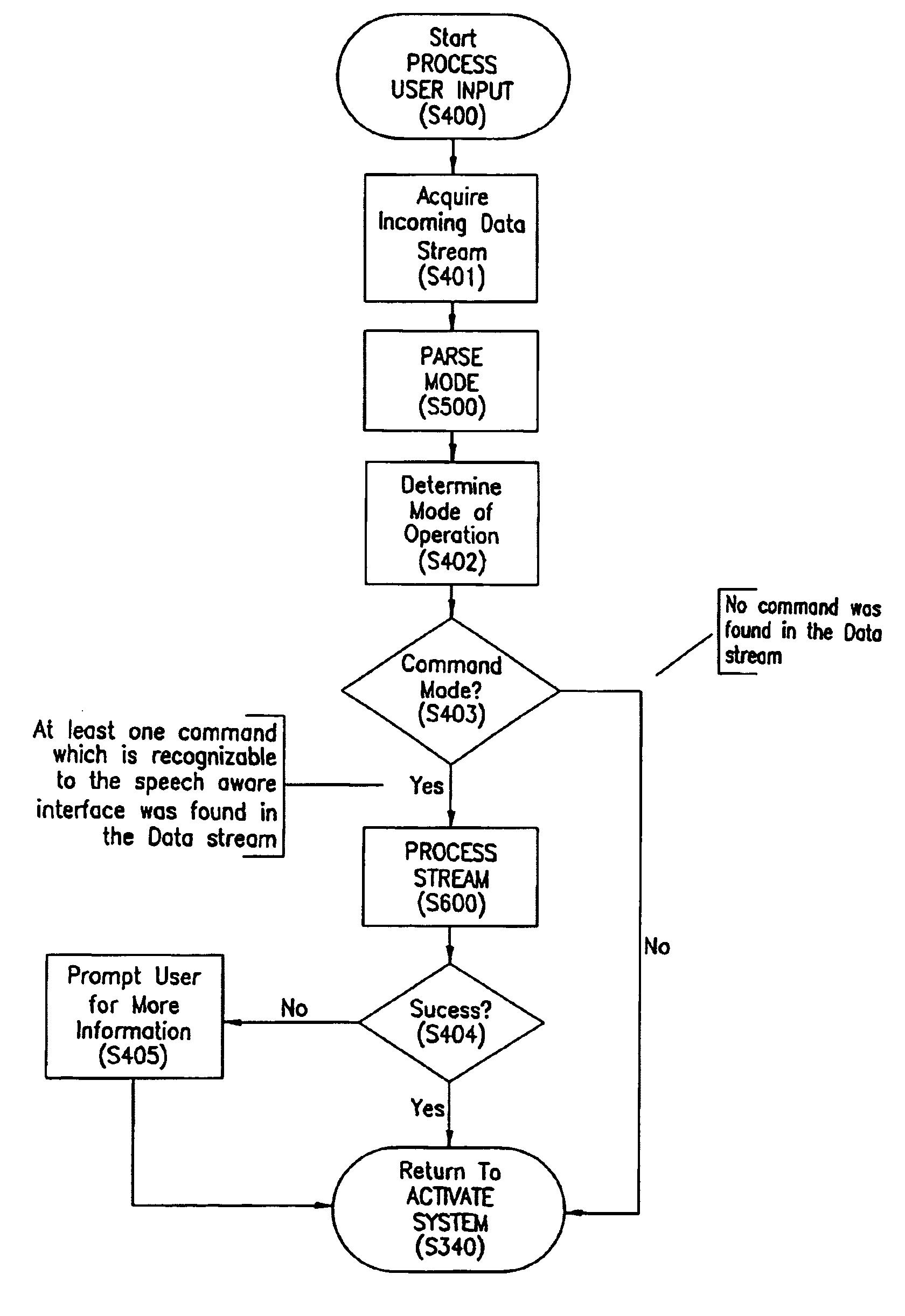

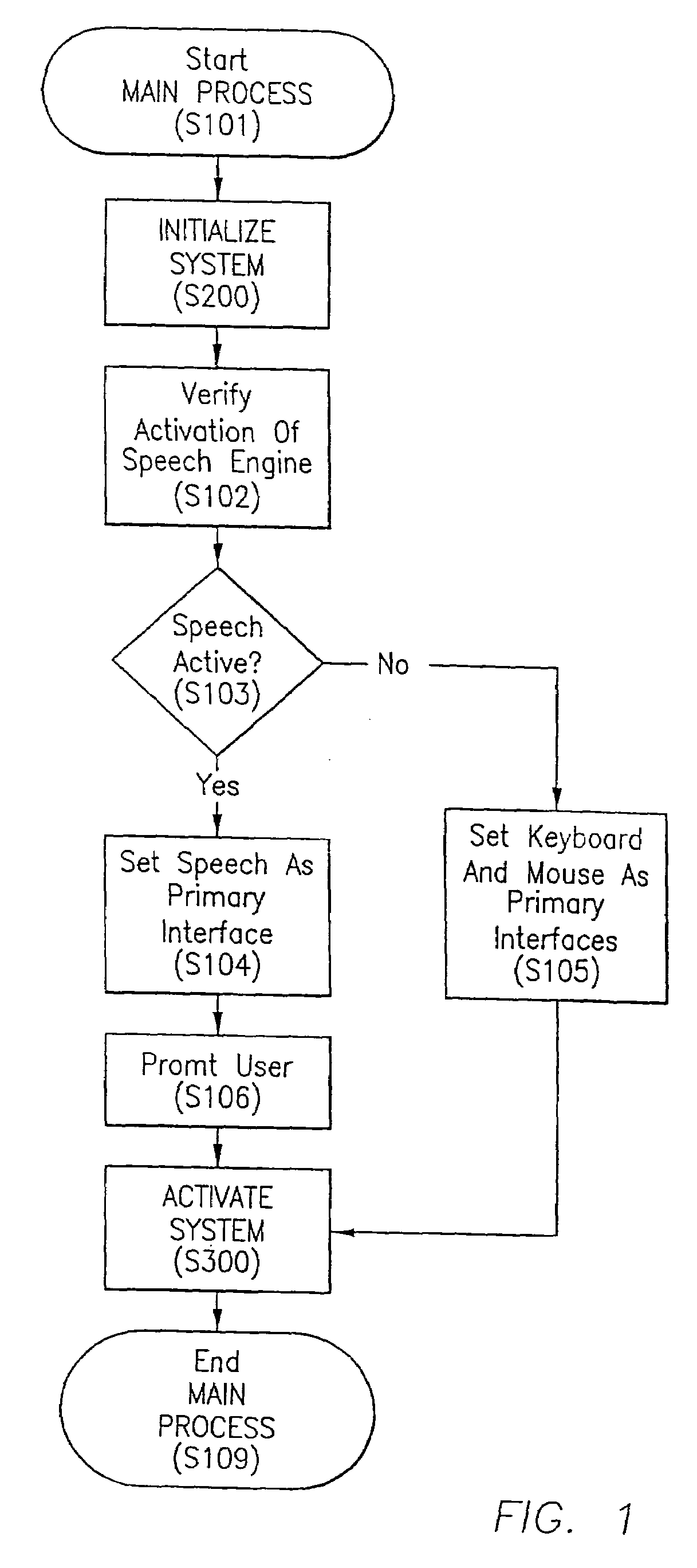

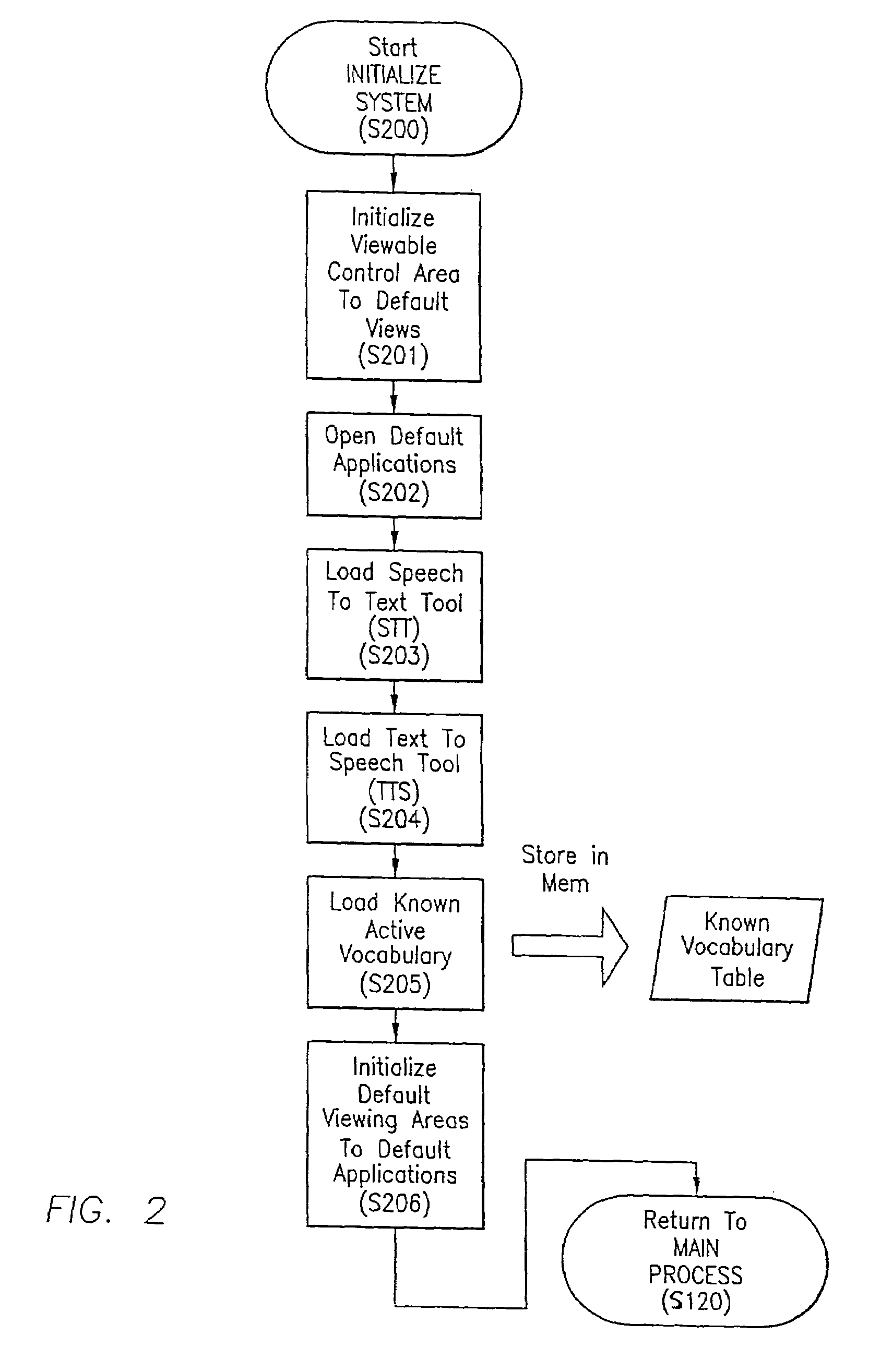

Method for integrating processes with a multi-faceted human centered interface

Inactive | US7188067B2 | Comfortable to user | Prevented from appearing | Speech recognition | Dividing attention | Hands free

According to the present invention, a method for integrating processes with a multi-faceted human centered interface is provided. The interface is facilitated to implement a hands free, voice driven environment to control processes and applications. A natural language model is used to parse voice initiated commands and data, and to route those voice initiated inputs to the required applications or processes. The use of an intelligent context based parser allows the system to intelligently determine what processes are required to complete a task which is initiated using natural language. A single window environment provides an interface which is comfortable to the user by preventing distracting windows from appearing. The single window has a plurality of facets which allow distinct viewing areas. Each facet has an independent process routing its outputs thereto. As other processes are activated, each facet can reshape itself to bring a new process into one of the viewing areas. All activated processes are executed simultaneously to provide true multitasking.

Owner:NUANCE COMM INC

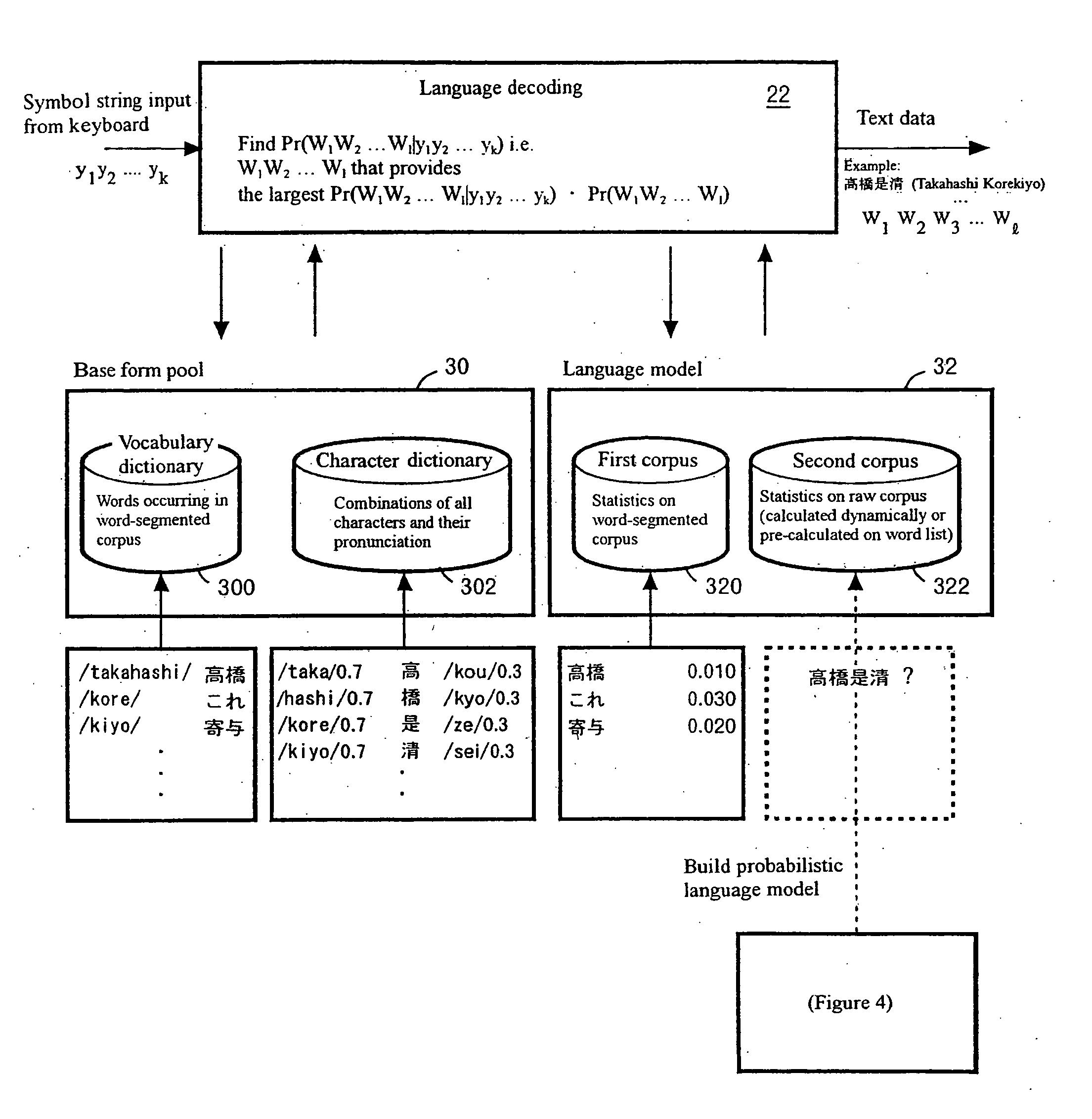

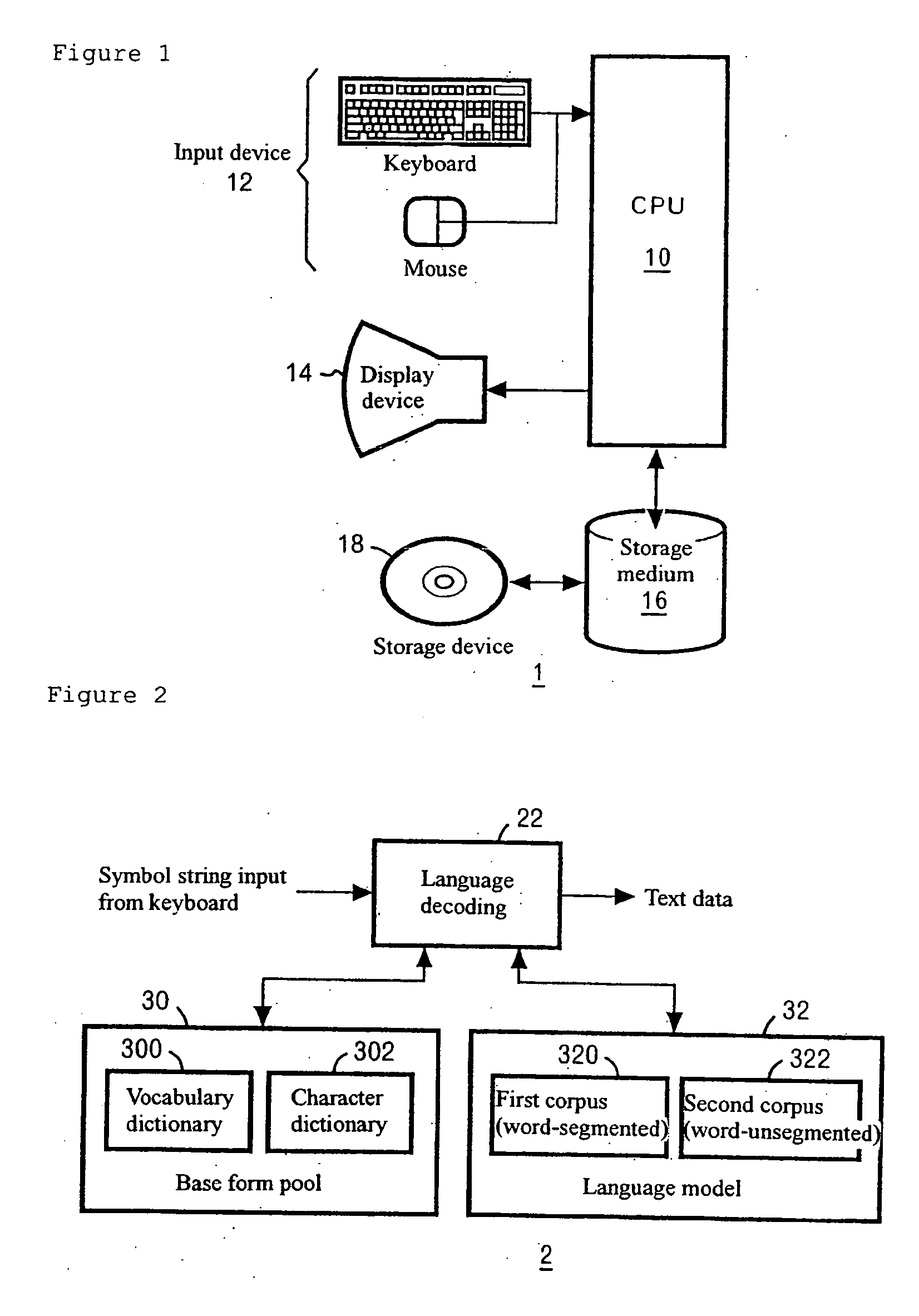

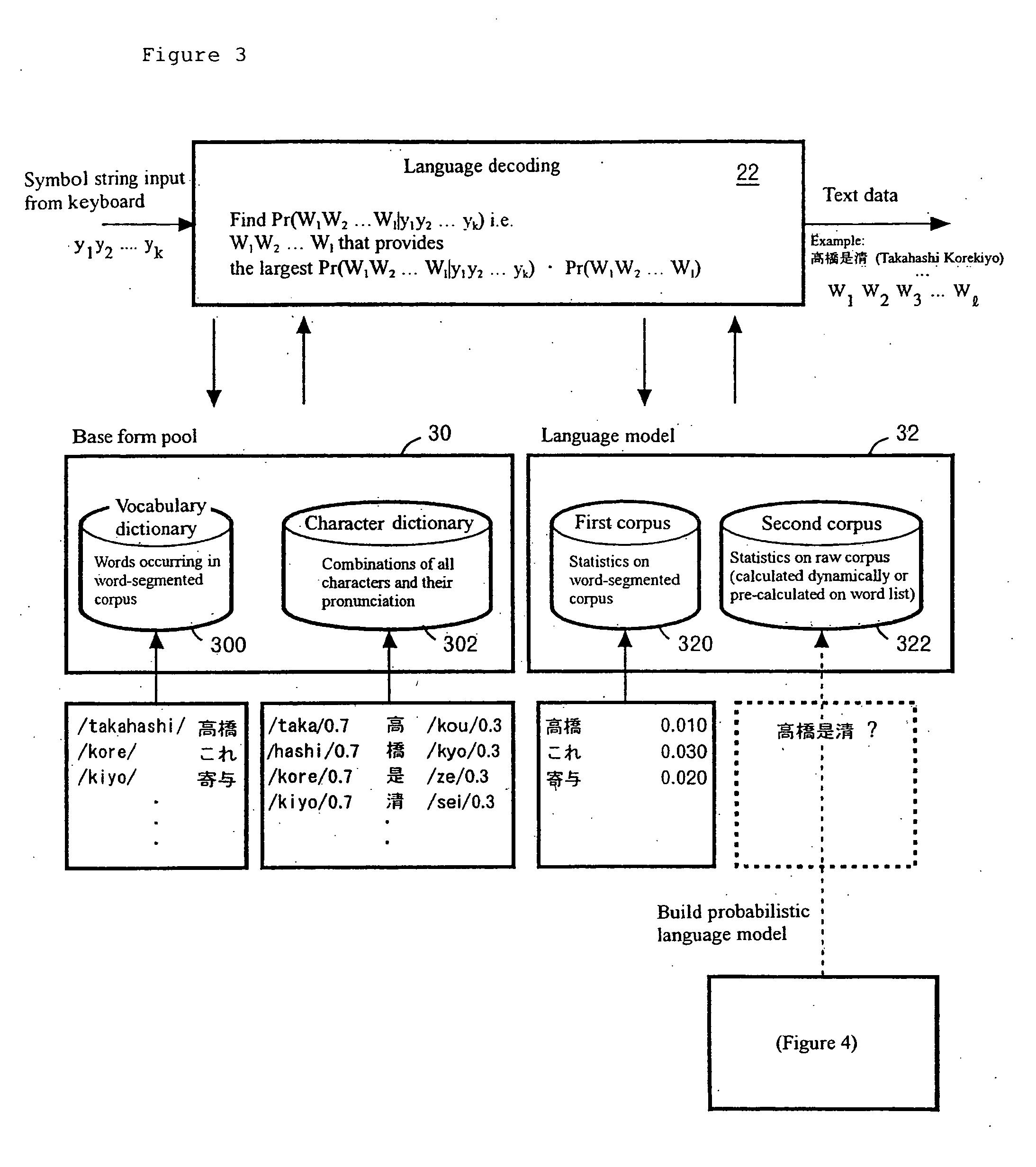

Word boundary probability estimating, probabilistic language model building, kana-kanji converting, and unknown word model building

Inactive | US20060015326A1 | Improve recognition accuracy | Improve capability | Natural language translation | Special data processing applications | Text corpus | Probabilistic estimation

A word n-gram probability is calculated with high accuracy in a situation where a first corpus, which is a relatively small corpus containing manually segmented word information, and a second corpus, which is a relatively large corpus, are given as a training corpus that is storage containing vast quantities of sample sentences. Vocabulary including contextual information is expanded from words occurring in the first corpus of relatively small size to words occurring in the second corpus of relatively large size by using a word n-gram probability estimated from an unknown word model and the raw corpus. The first corpus (word-segmented) is used for calculating n-grams and the probability that the boundary between two adjacent characters will be the boundary of two words (segmentation probability). The second corpus (word-unsegmented), in which probabilistic word boundaries are assigned based on information in the first corpus (word-segmented), is used for calculating word n-grams.

Owner:IBM CORP
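
One way to count words in an unsegmented corpus that carries probabilistic word boundaries is to compute an expected count. The abstract does not give a formula, so the sketch below rests on assumptions: boundaries are treated as independent, the start and end of the text count as certain boundaries, and the function name `expected_word_count` and the toy probabilities are hypothetical.

```python
def expected_word_count(text, boundary_prob, word):
    """Expected number of times `word` occurs as a whole word in `text`.

    boundary_prob[i] is the (assumed independent) probability that a word
    boundary falls between text[i] and text[i + 1].
    """
    n, m = len(text), len(word)
    total = 0.0
    for start in range(n - m + 1):
        if text[start:start + m] != word:
            continue
        end = start + m
        # A boundary must precede and follow the occurrence; the text
        # edges are treated as certain boundaries.
        left = 1.0 if start == 0 else boundary_prob[start - 1]
        right = 1.0 if end == n else boundary_prob[end - 1]
        # No boundary may fall inside the occurrence.
        inside = 1.0
        for k in range(start, end - 1):
            inside *= 1.0 - boundary_prob[k]
        total += left * inside * right
    return total


# Toy example: "ab" appears at positions 0 and 3 of "abcab".
count = expected_word_count("abcab", [0.25, 0.5, 1.0, 0.5], "ab")
print(count)  # 0.75 * 0.5 + 1.0 * 0.5 = 0.875
```

Fractional counts of this kind can then be fed into ordinary n-gram estimation in place of integer counts, which is how a large raw corpus can contribute vocabulary that the small hand-segmented corpus lacks.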

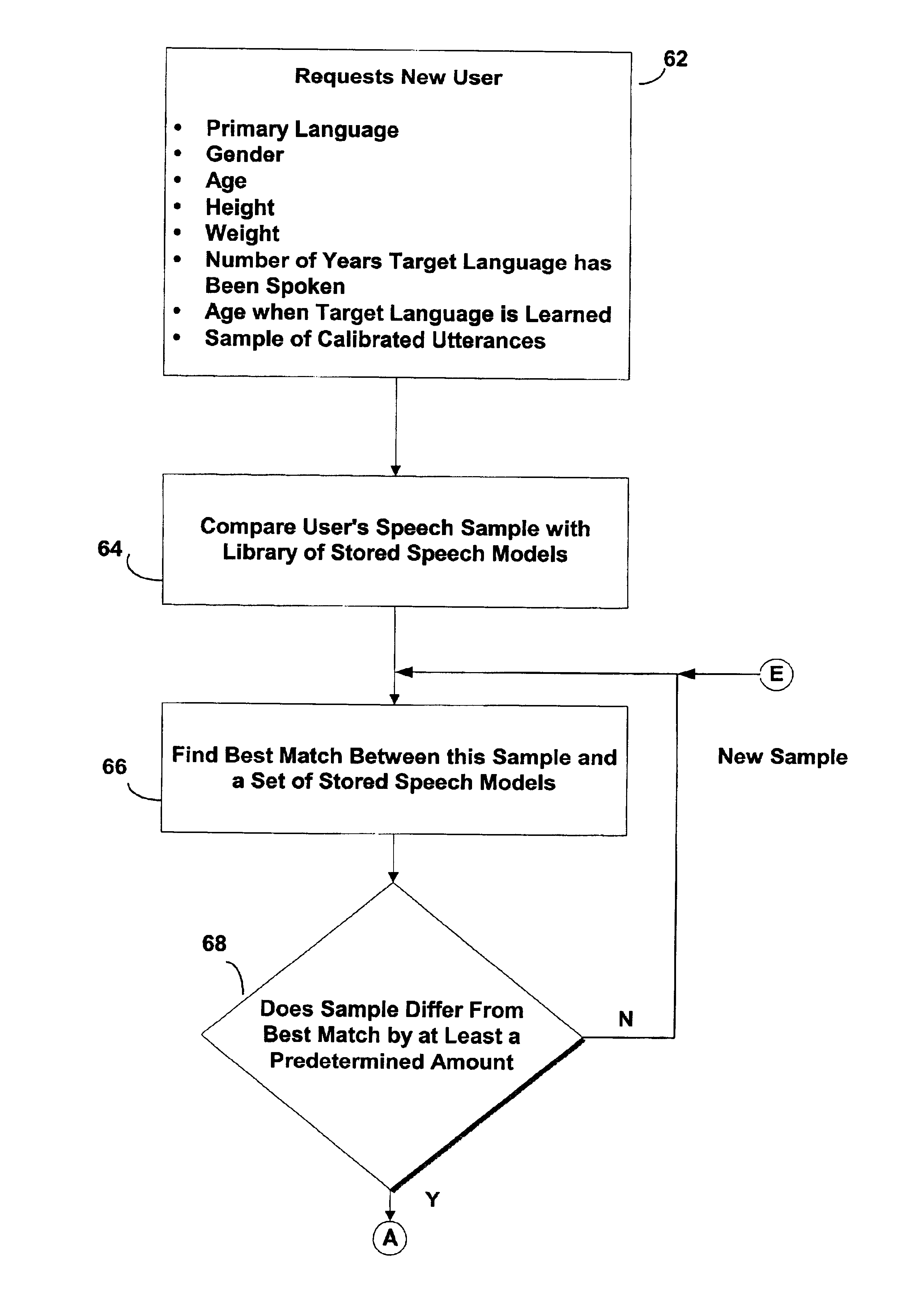

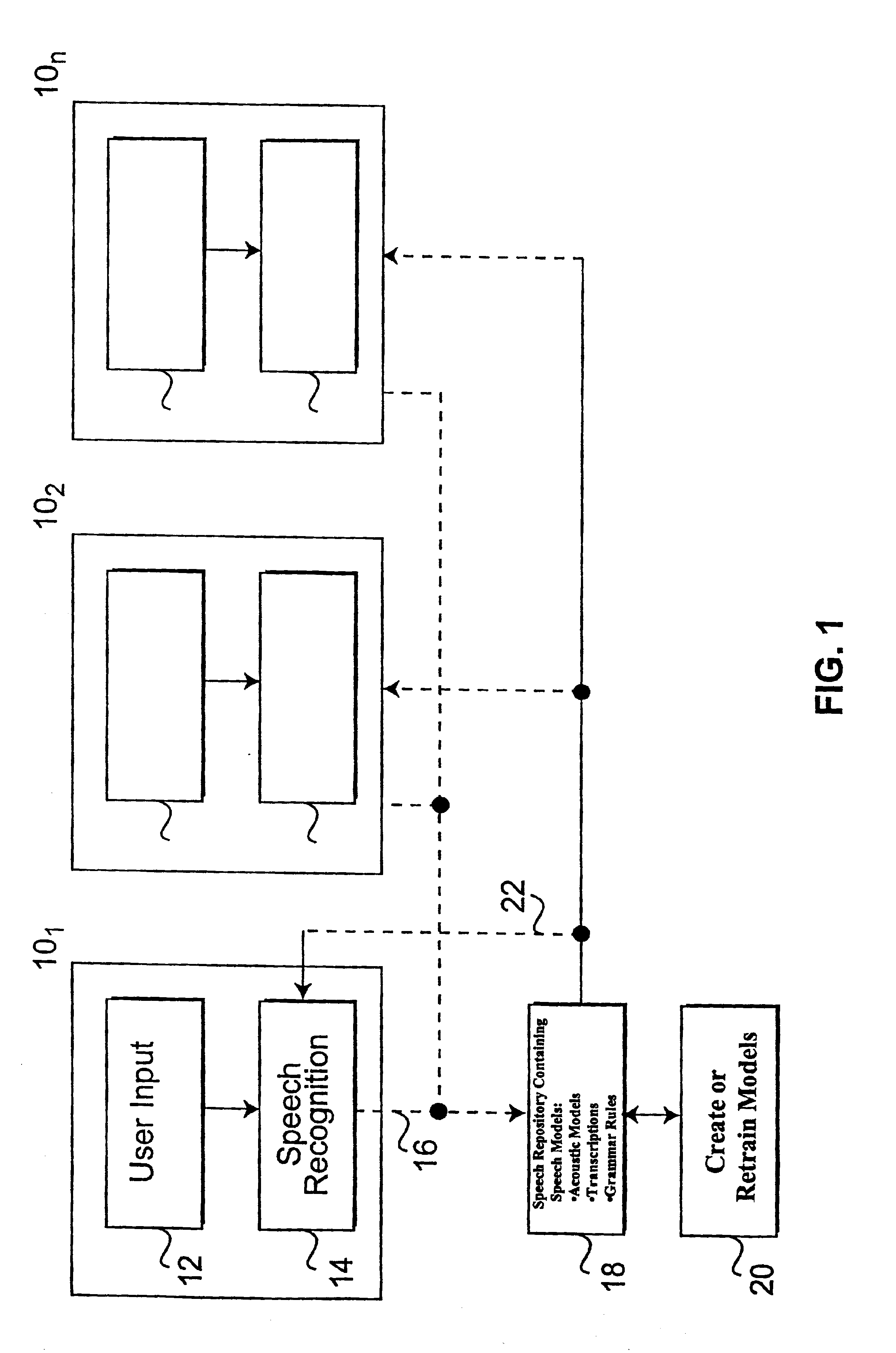

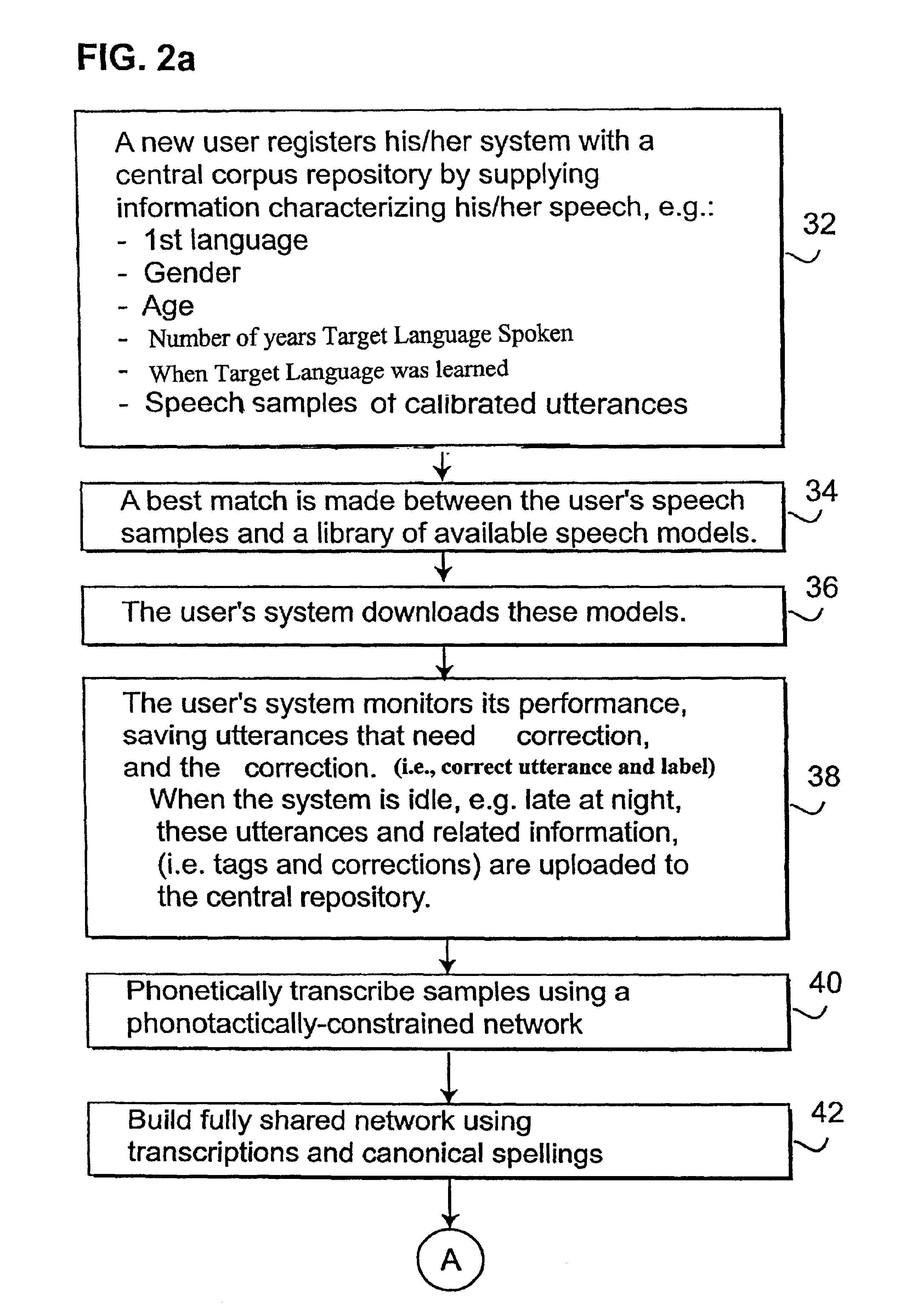

Retraining and updating speech models for speech recognition

Inactive | US6941264B2 | Efficiently and inexpensively adapting | Speech recognition | The Internet | Speech identification

A technique is provided for updating speech models for speech recognition by identifying, from a class of users, speech data for a predetermined set of utterances that differ from a set of stored speech models by at least a predetermined amount. The identified speech data for similar utterances from the class of users is collected and used to correct the set of stored speech models. As a result, the corrected speech models are a closer match to the utterances than were the set of stored speech models. The set of speech models is subsequently updated with the corrected speech models to provide improved speech recognition of utterances from the class of users. For example, the corrected speech models may be processed and stored at a central database and returned, via a suitable communications channel (e.g., the Internet), to individual user sites to update the speech recognition apparatus at those sites.

Owner:SONY CORP +1

Speech recognition system interactive agent

Inactive | US20050144004A1 | Flexibly and optimally distributed | Improve accuracy | Natural language translation | Data processing applications | Speech sound | Speech model

A speech recognition system includes distributed processing across a client and server for recognizing a spoken query by a user. A number of different speech models for different languages are used to support and detect a language spoken by a user. In some implementations an interactive electronic agent responds in the user's language to facilitate a real-time, human-like dialogue.

Owner:NUANCE COMM INC

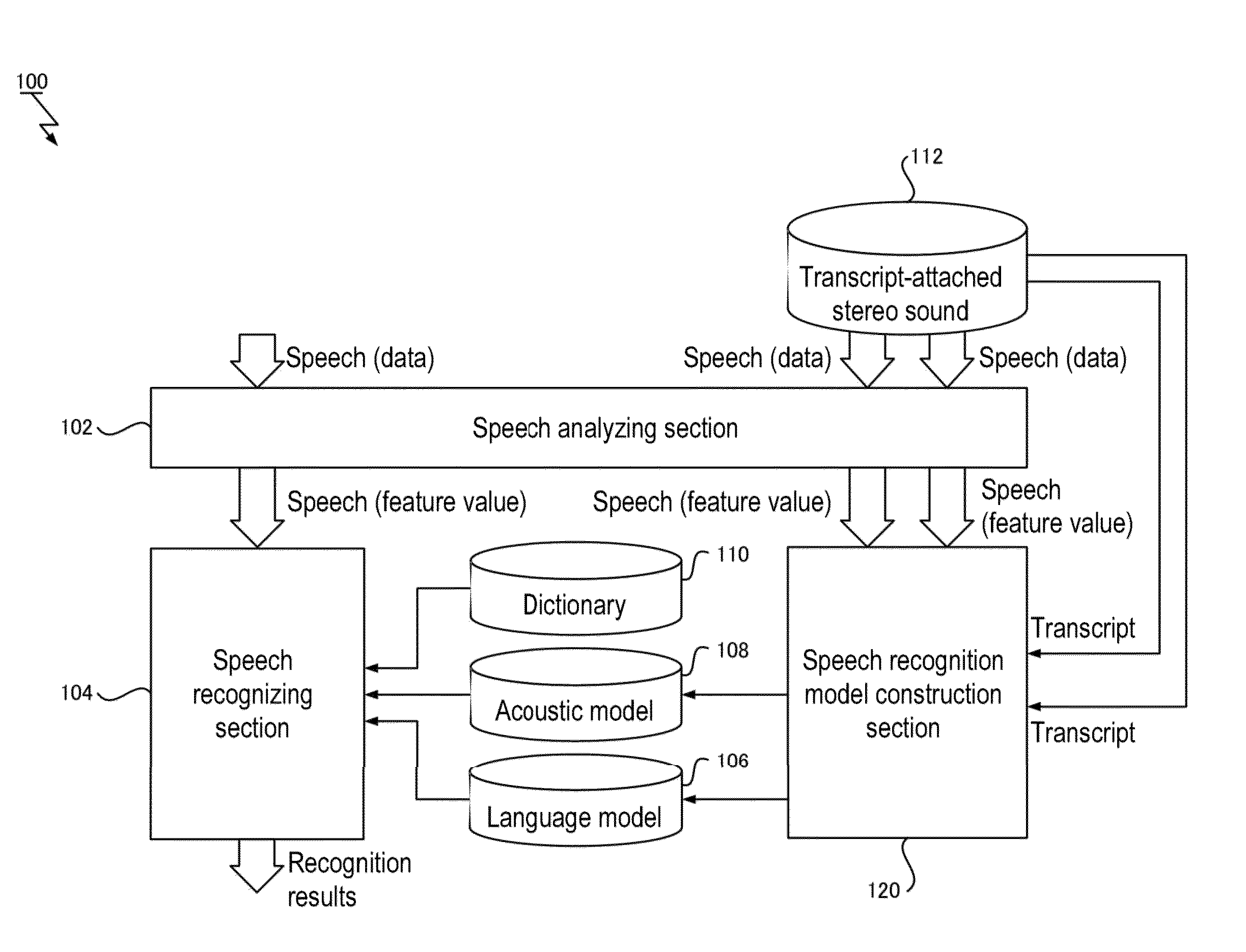

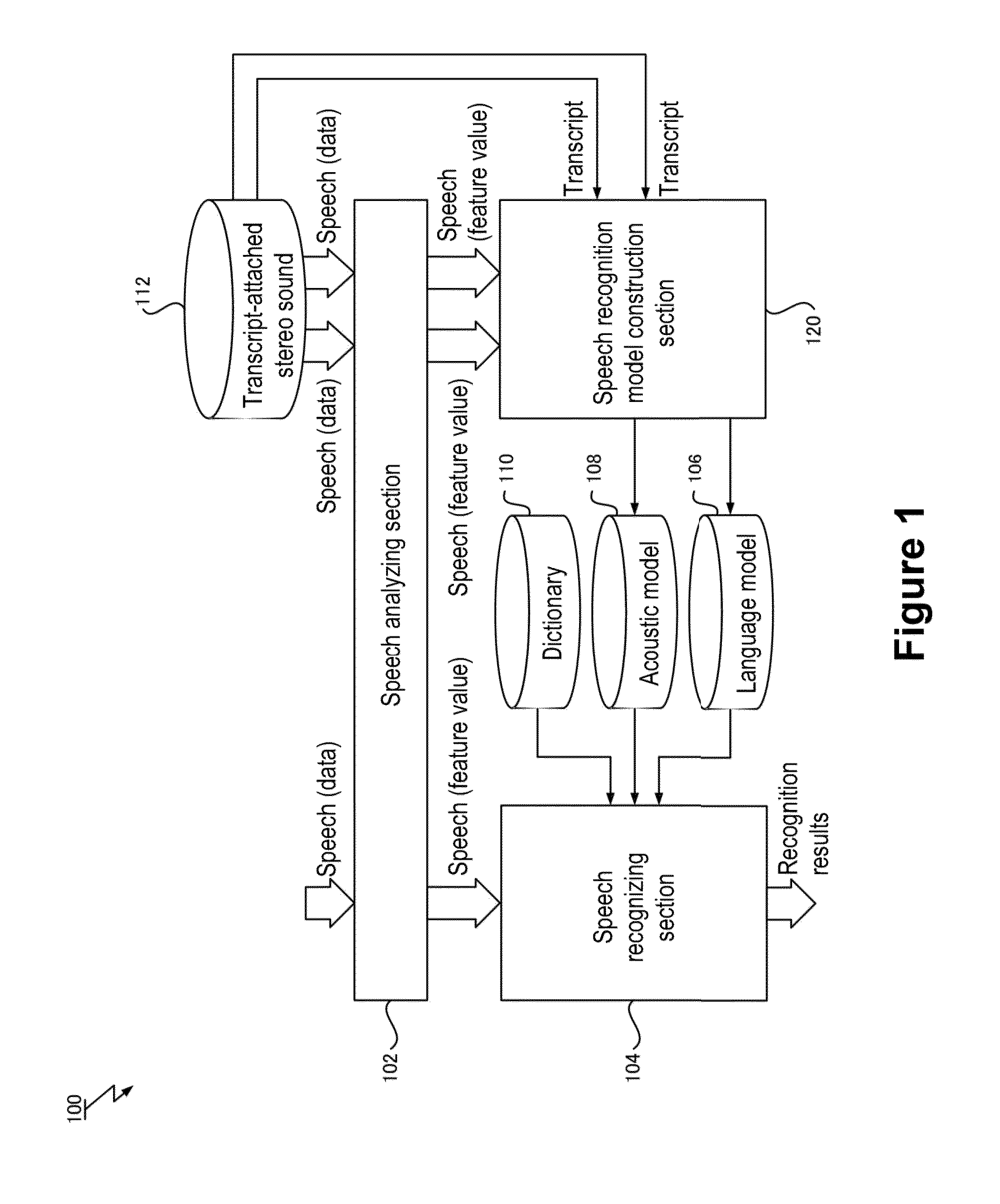

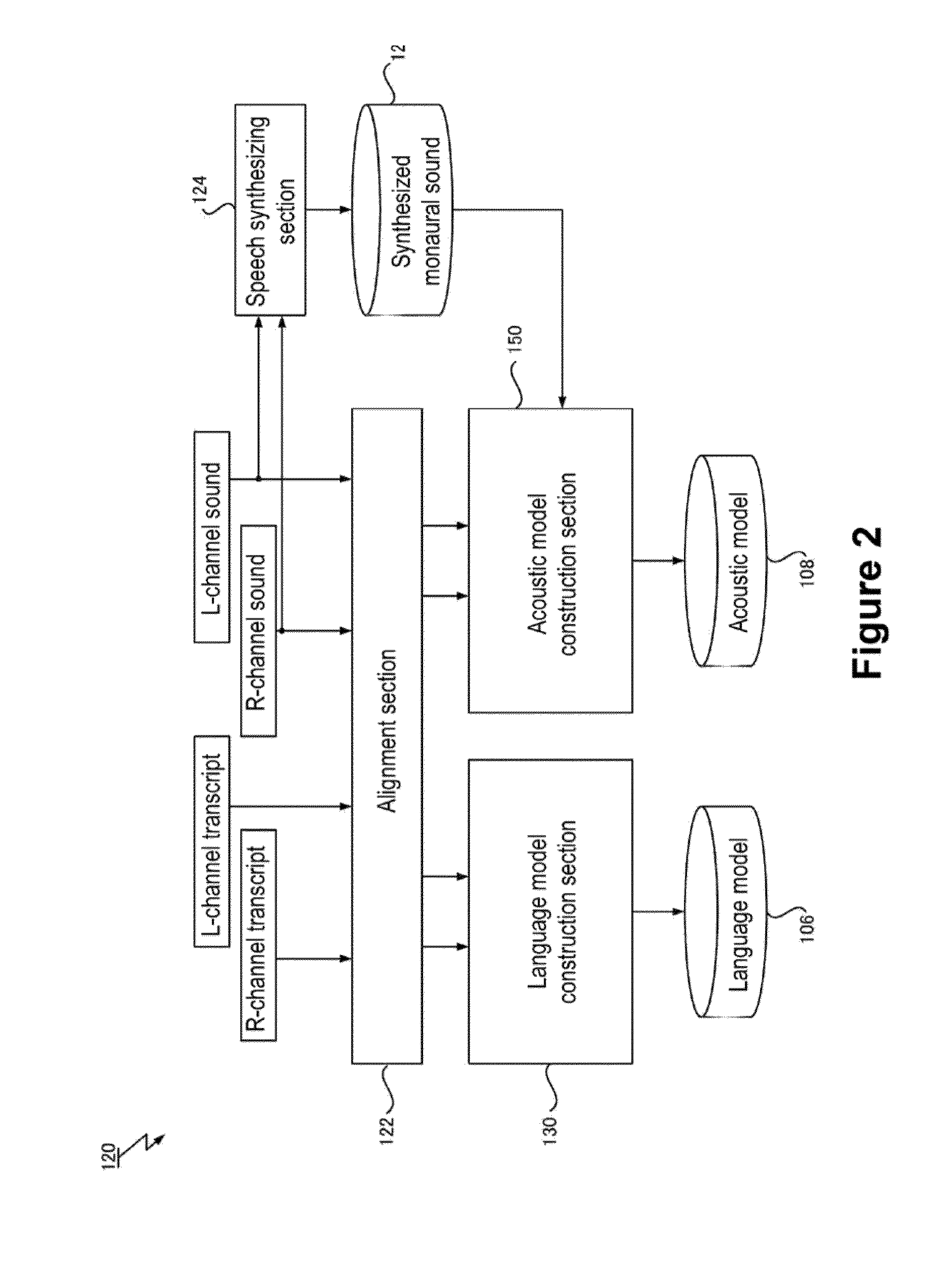

Speech Recognition Model Construction Method, Speech Recognition Method, Computer System, Speech Recognition Apparatus, Program, and Recording Medium

Active | US20160086599A1 | Efficient construction | Speech recognition | Speech synthesis | Computerized system | Acoustic model

A construction method for a speech recognition model, in which a computer system performs: a step of acquiring alignment between speech of each of a plurality of speakers and a transcript of the speaker; a step of joining transcripts of the respective ones of the plurality of speakers along a time axis, creating a transcript of speech of mixed speakers obtained from synthesized speech of the speakers, and replacing predetermined transcribed portions of the plurality of speakers overlapping on the time axis with a unit which represents a simultaneous speech segment; and a step of constructing at least one of an acoustic model and a language model which make up a speech recognition model, based on the transcript of the speech of the mixed speakers.

Owner:IBM CORP

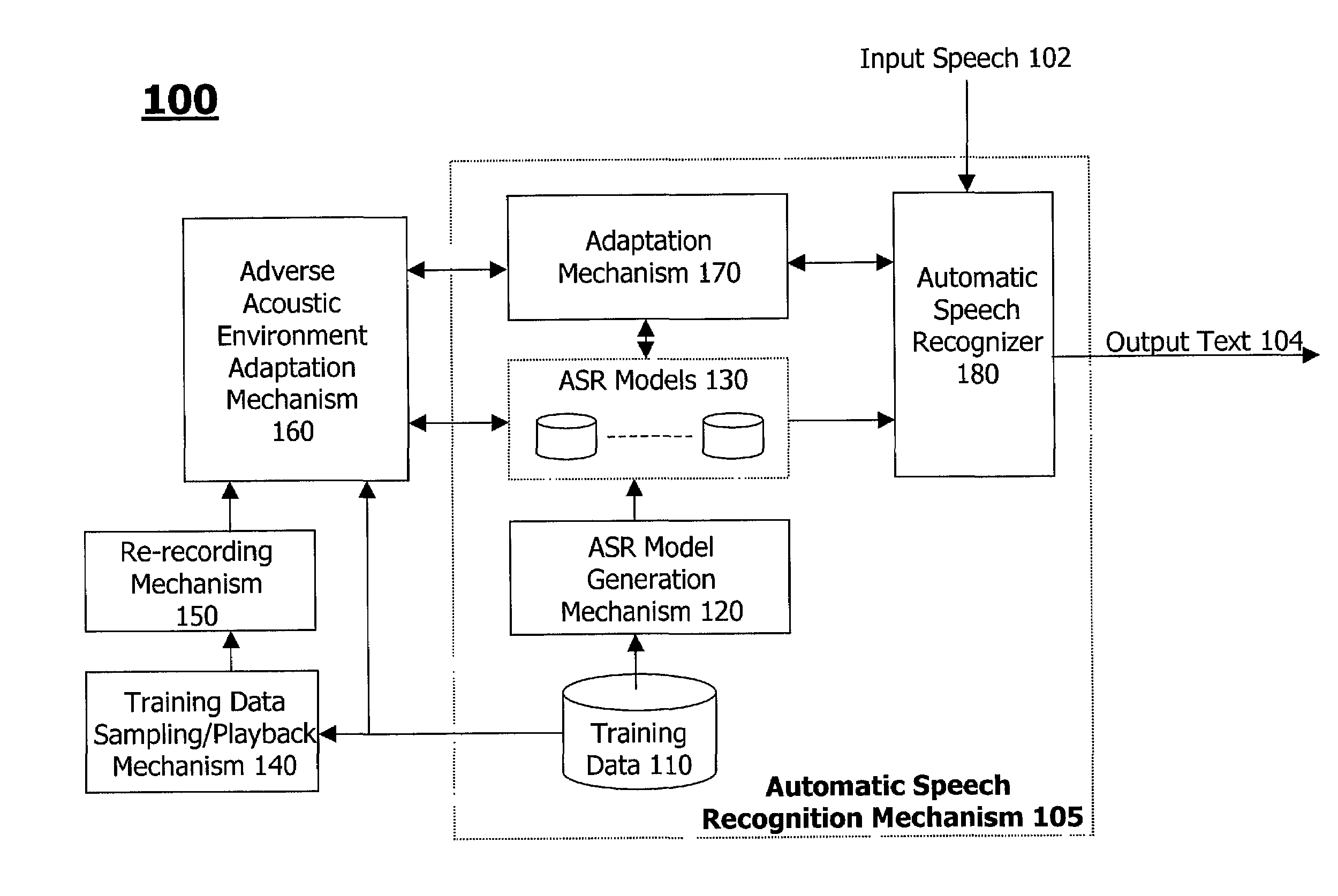

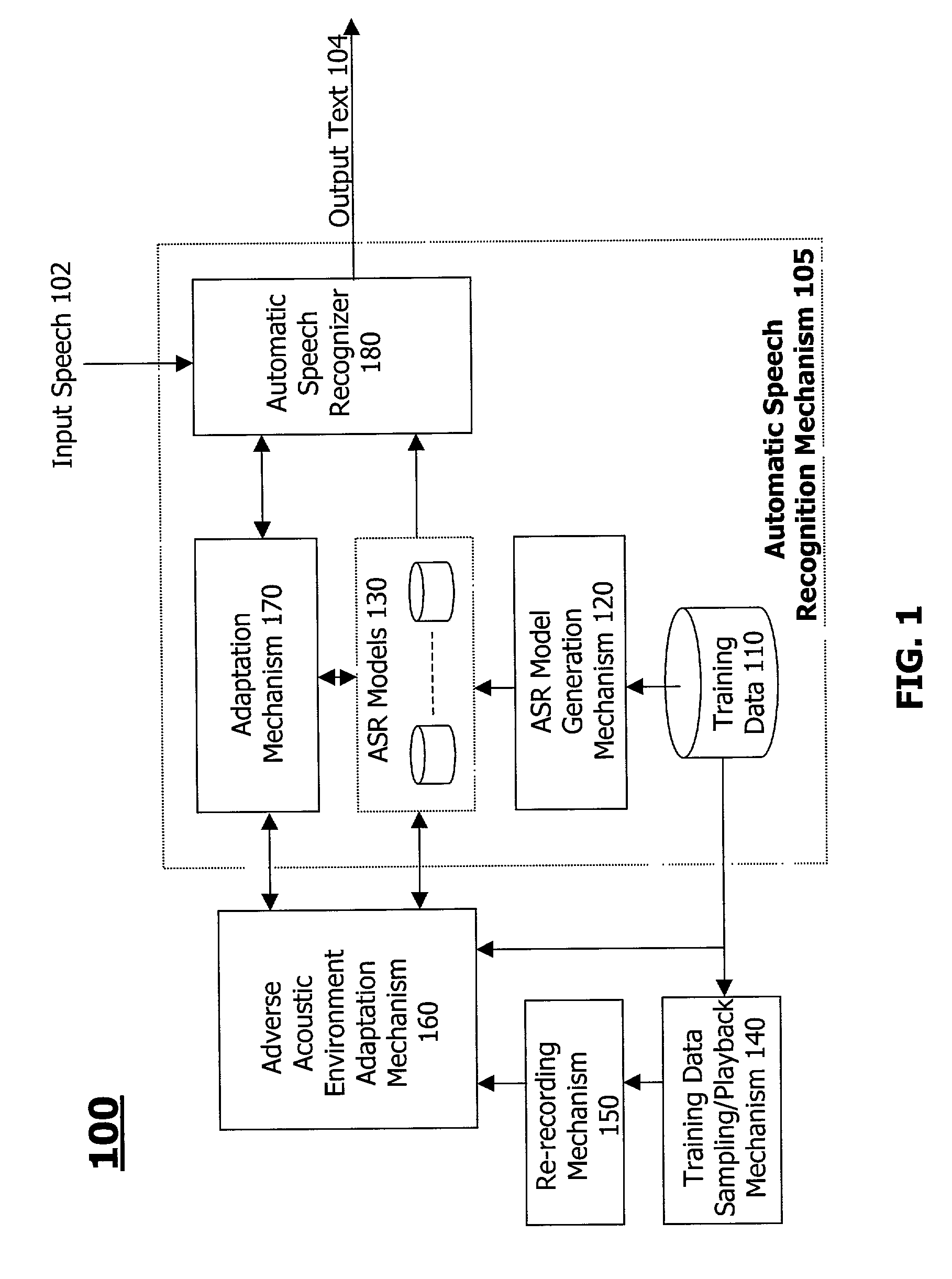

Adapting to adverse acoustic environment in speech processing using playback training data

An arrangement is provided for an automatic speech recognition mechanism to adapt to an adverse acoustic environment. Some of the original training data, collected from an original acoustic environment, is played back in an adverse acoustic environment. The playback data is recorded in the adverse acoustic environment to generate recorded playback data. An existing speech model is then adapted with respect to the adverse acoustic environment based on the recorded playback data and/or the original training data.

Owner:INTEL CORP

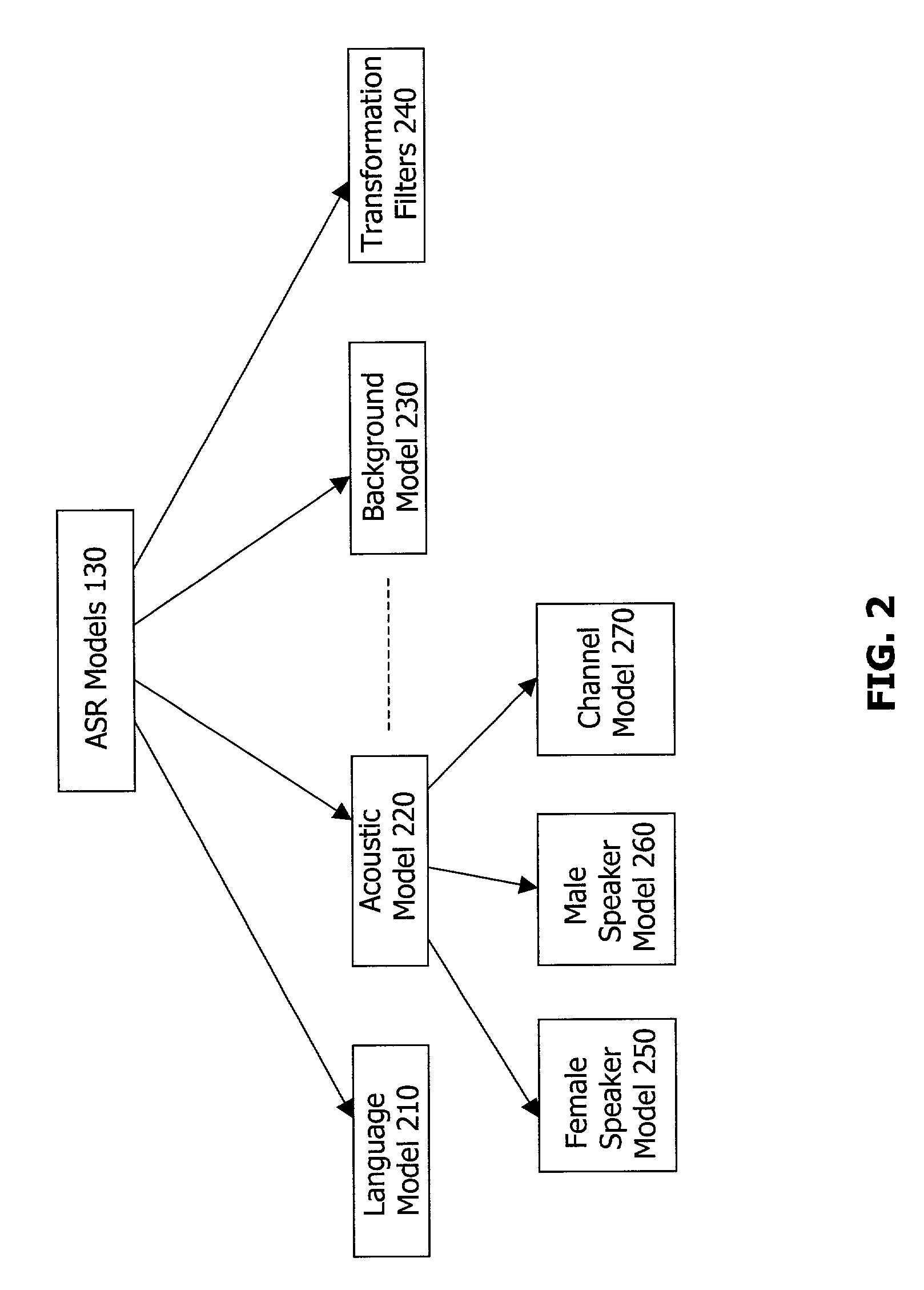

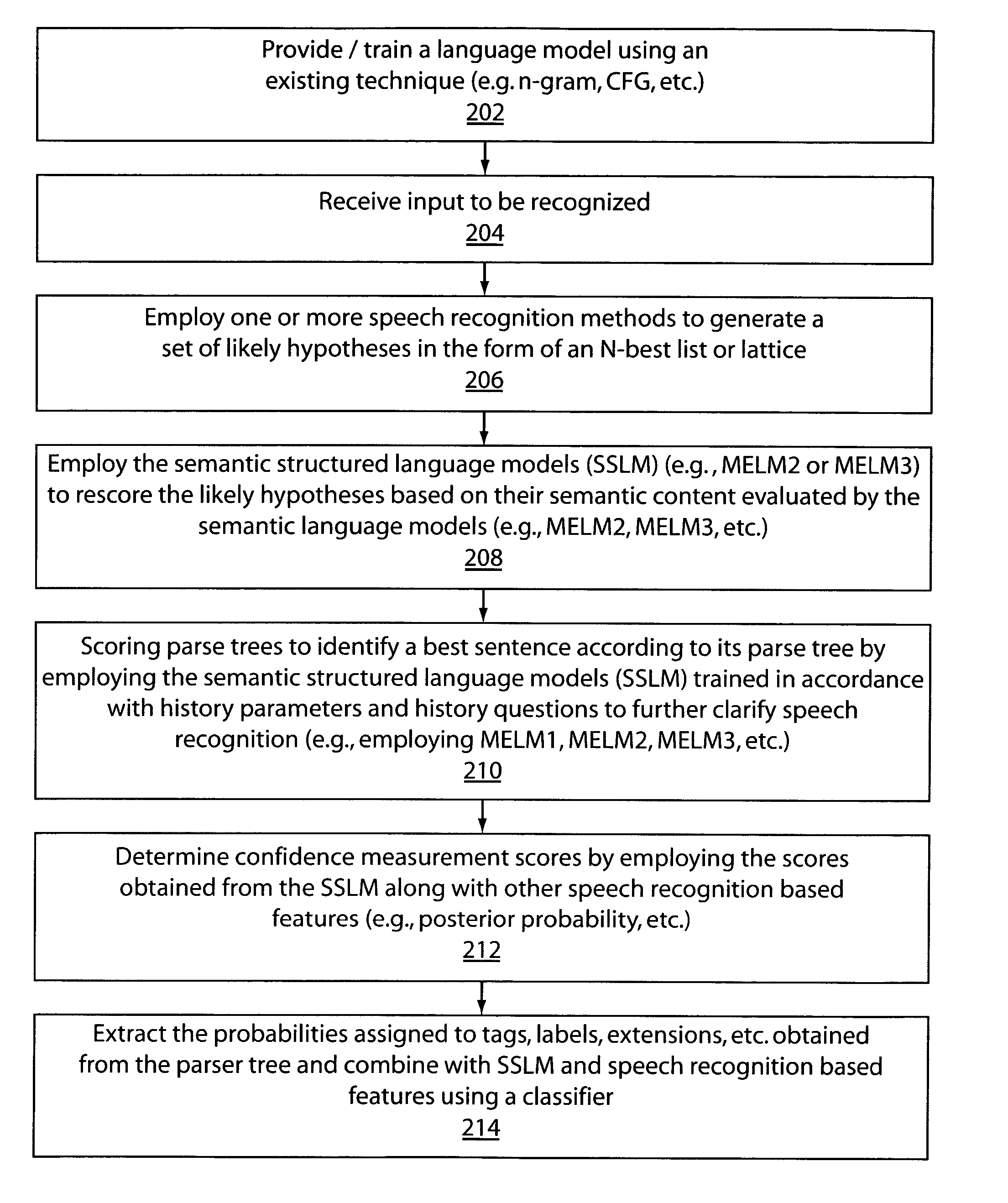

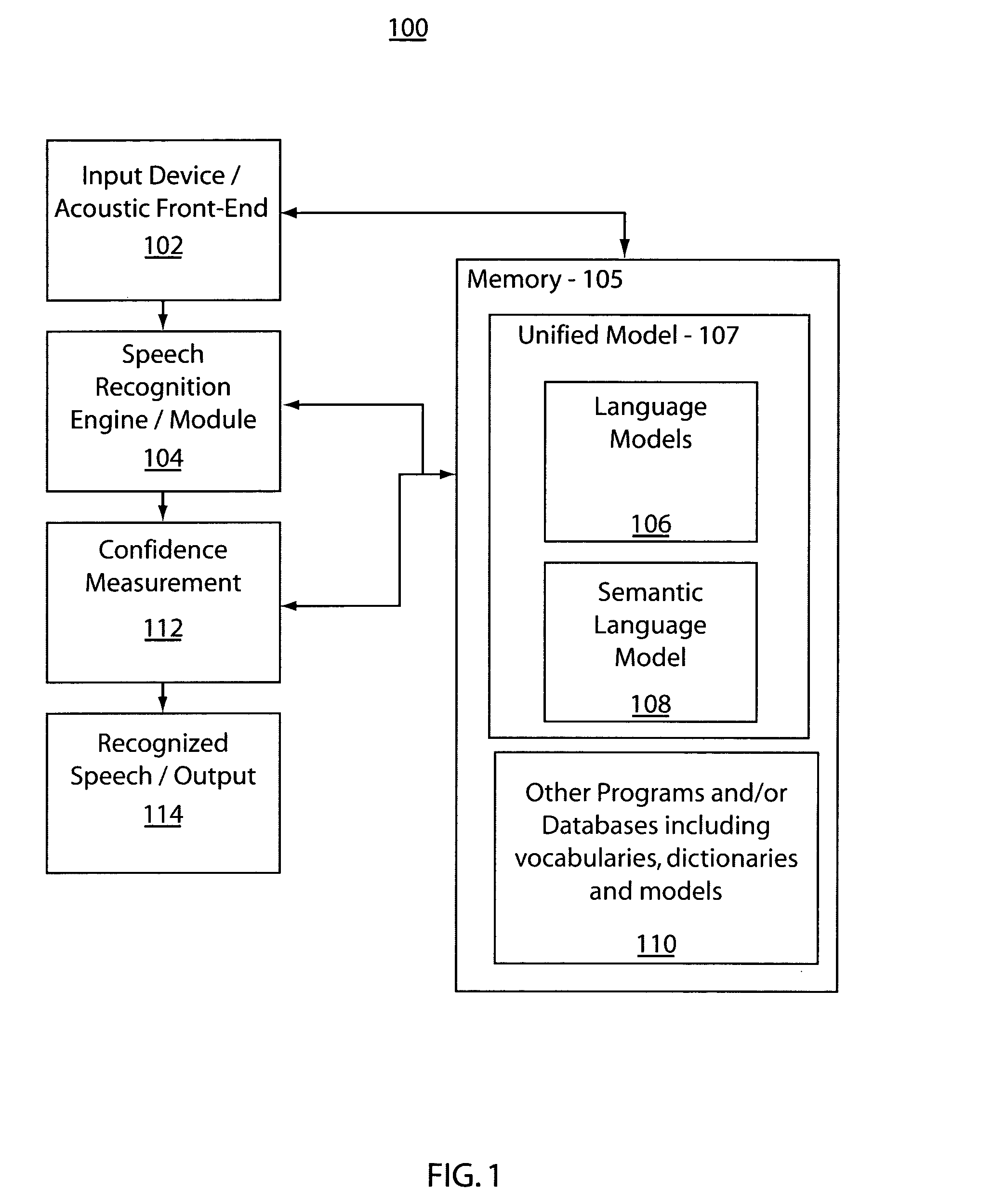

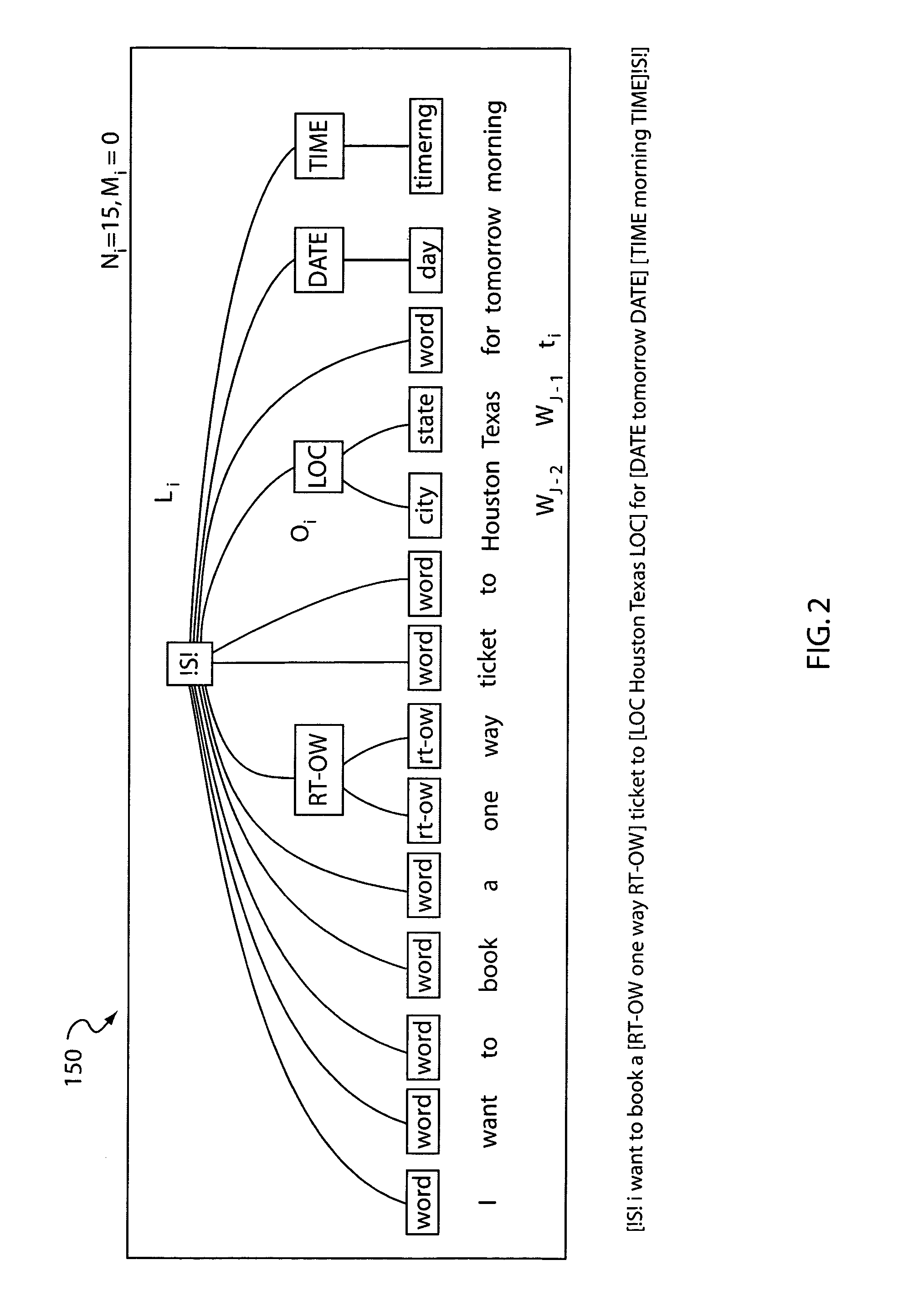

Semantic language modeling and confidence measurement

Active | US20050055209A1 | Speech recognition | Special data processing applications | Language modelling | Parse tree

A system and method for speech recognition includes generating a set of likely hypotheses in recognizing speech, rescoring the likely hypotheses by using semantic content by employing semantic structured language models, and scoring parse trees to identify a best sentence according to the sentence's parse tree by employing the semantic structured language models to clarify the recognized speech.

Owner:NUANCE COMM INC

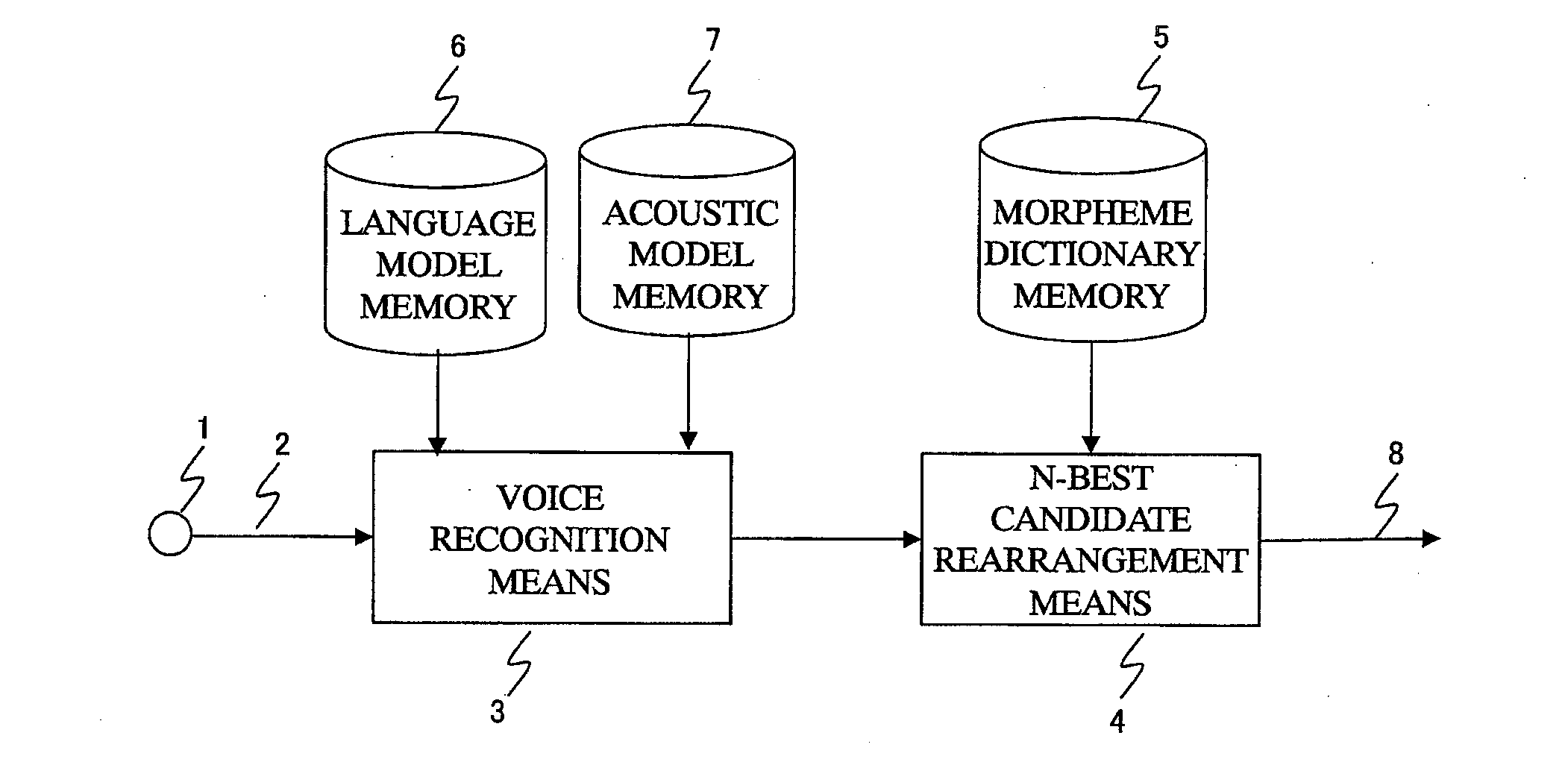

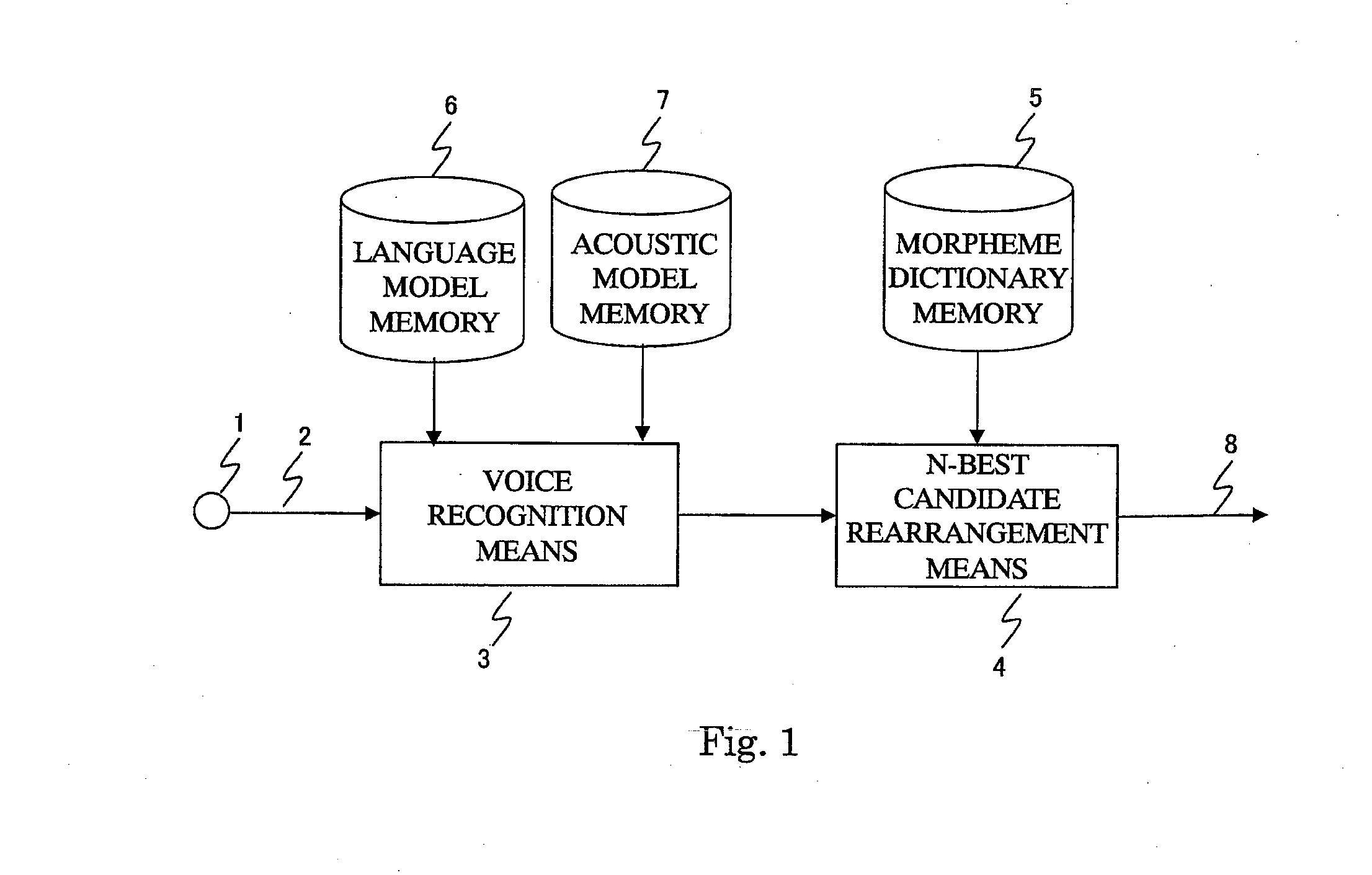

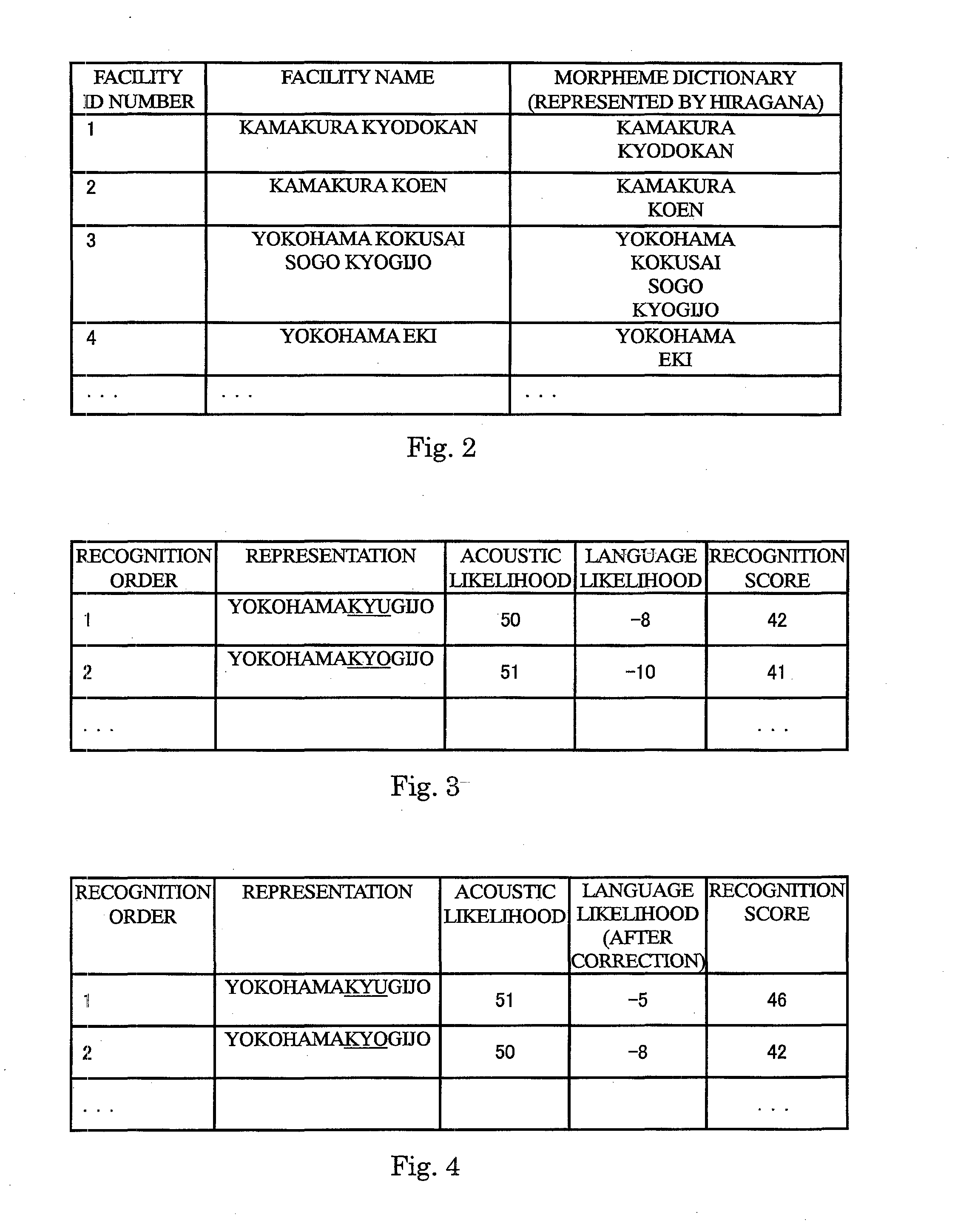

Voice recognition device

Inactive | US20120041756A1 | High precision | Reduce the amount required | Speech recognition | Special data processing applications | Pattern matching | Acoustic model

Voice recognition is realized by a pattern matching with a voice pattern model, and when a large number of paraphrased words are required for one facility, such as a name of a hotel or a tourist facility, the pattern matching needs to be performed with the voice pattern models of all the paraphrased words, resulting in an enormous amount of calculation. Further, it is difficult to generate all the paraphrased words, and a large amount of labor is required. A voice recognition device includes: voice recognition means for applying the voice recognition to an input voice by using a language model and an acoustic model, and outputting a predetermined number of recognition results each including a set of a recognition score and a text representation; and N-best candidate rearrangement means for: comparing the recognition result to a morpheme dictionary held in a morpheme dictionary memory; checking whether a representation of the recognition result can be expressed by any one of combinations of the morphemes of the morpheme dictionaries; correcting the recognition score when the representation can be expressed; and rearranging an order according to the corrected recognition score so as to acquire recognition results.

Owner:MITSUBISHI ELECTRIC CORP
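
The N-best rearrangement described in this abstract can be illustrated with a short sketch. It is not the device's actual algorithm: the abstract does not say how the score is corrected, so the fixed `bonus` added to the score is an assumption, as are the function names `can_segment` and `rerank`, the log-style scores, and the toy dictionary.

```python
def can_segment(text, morphemes):
    """Return True if `text` is a concatenation of dictionary morphemes."""
    # reachable[i] is True when text[:i] can be built from morphemes.
    reachable = [True] + [False] * len(text)
    for end in range(1, len(text) + 1):
        reachable[end] = any(
            reachable[start] and text[start:end] in morphemes
            for start in range(end)
        )
    return reachable[len(text)]


def rerank(nbest, morphemes, bonus=1.0):
    """Raise the score of results expressible by the dictionary, then re-sort.

    nbest: list of (recognition_score, text) pairs, higher score is better.
    bonus: hypothetical score correction; the source does not specify one.
    """
    rescored = [
        (score + bonus if can_segment(text, morphemes) else score, text)
        for score, text in nbest
    ]
    return sorted(rescored, key=lambda item: item[0], reverse=True)


# Toy example: the second hypothesis is the one the dictionary can express.
morphemes = {"yokohama", "kokusai", "stadium"}
nbest = [(-2.0, "yokohamastadion"), (-2.5, "yokohamastadium")]
ranked = rerank(nbest, morphemes)
print(ranked)
```

Checking segmentability against a morpheme dictionary avoids enumerating every paraphrase of a facility name in advance: any ordering or subset of the stored morphemes is accepted without a separate voice pattern model for each variant.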

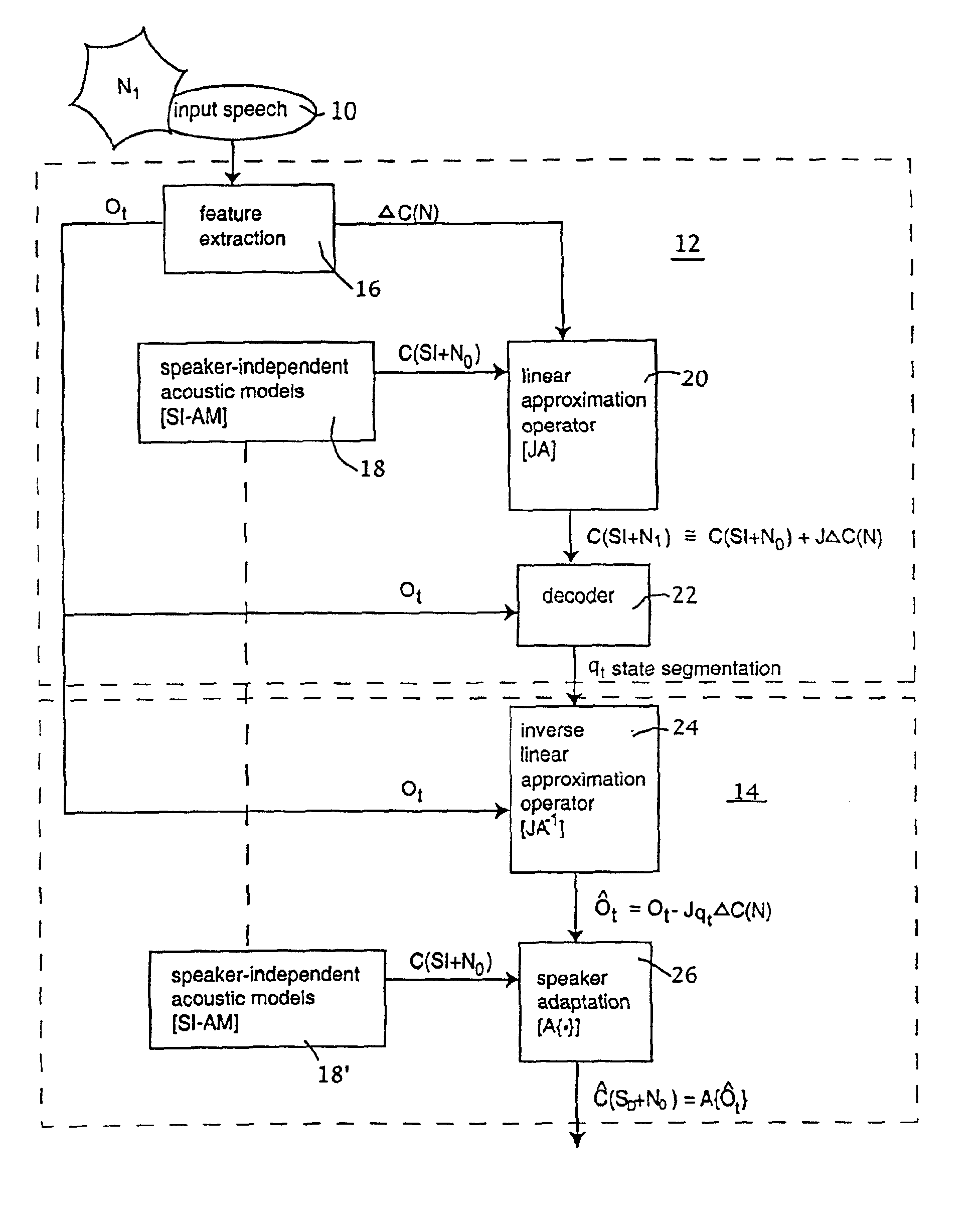

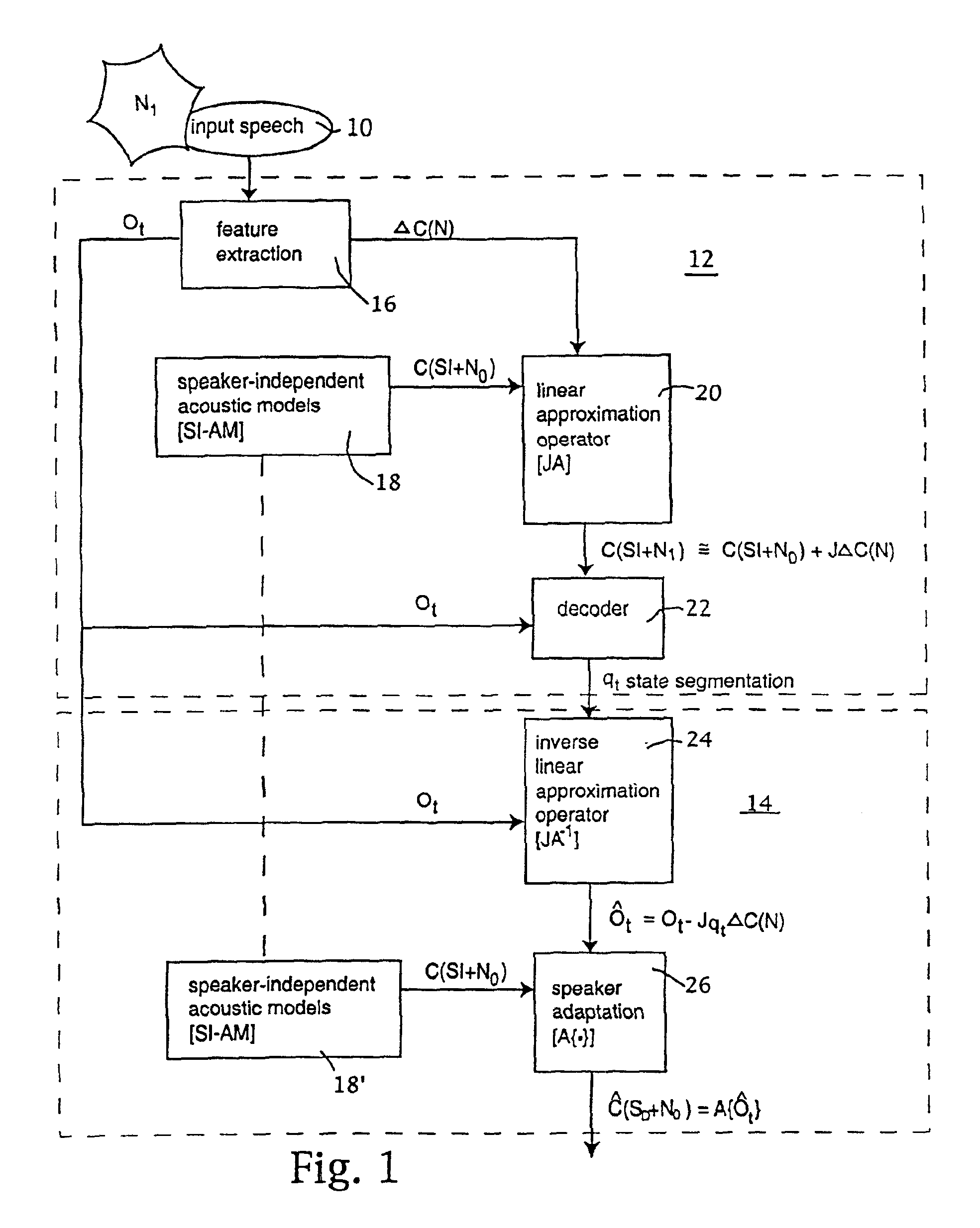

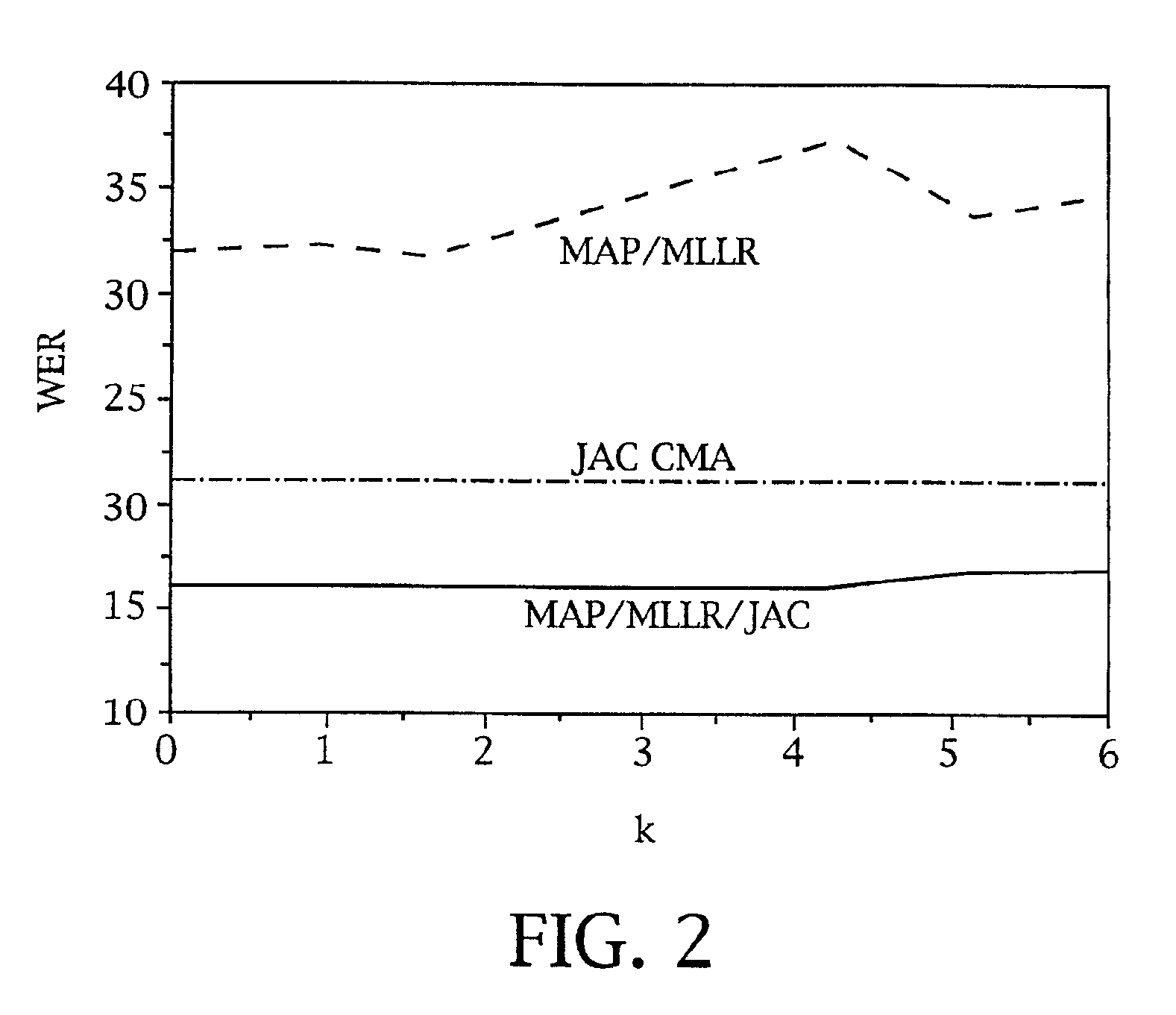

Speaker and environment adaptation based on linear separation of variability sources

Owner:SOVEREIGN PEAK VENTURES LLC

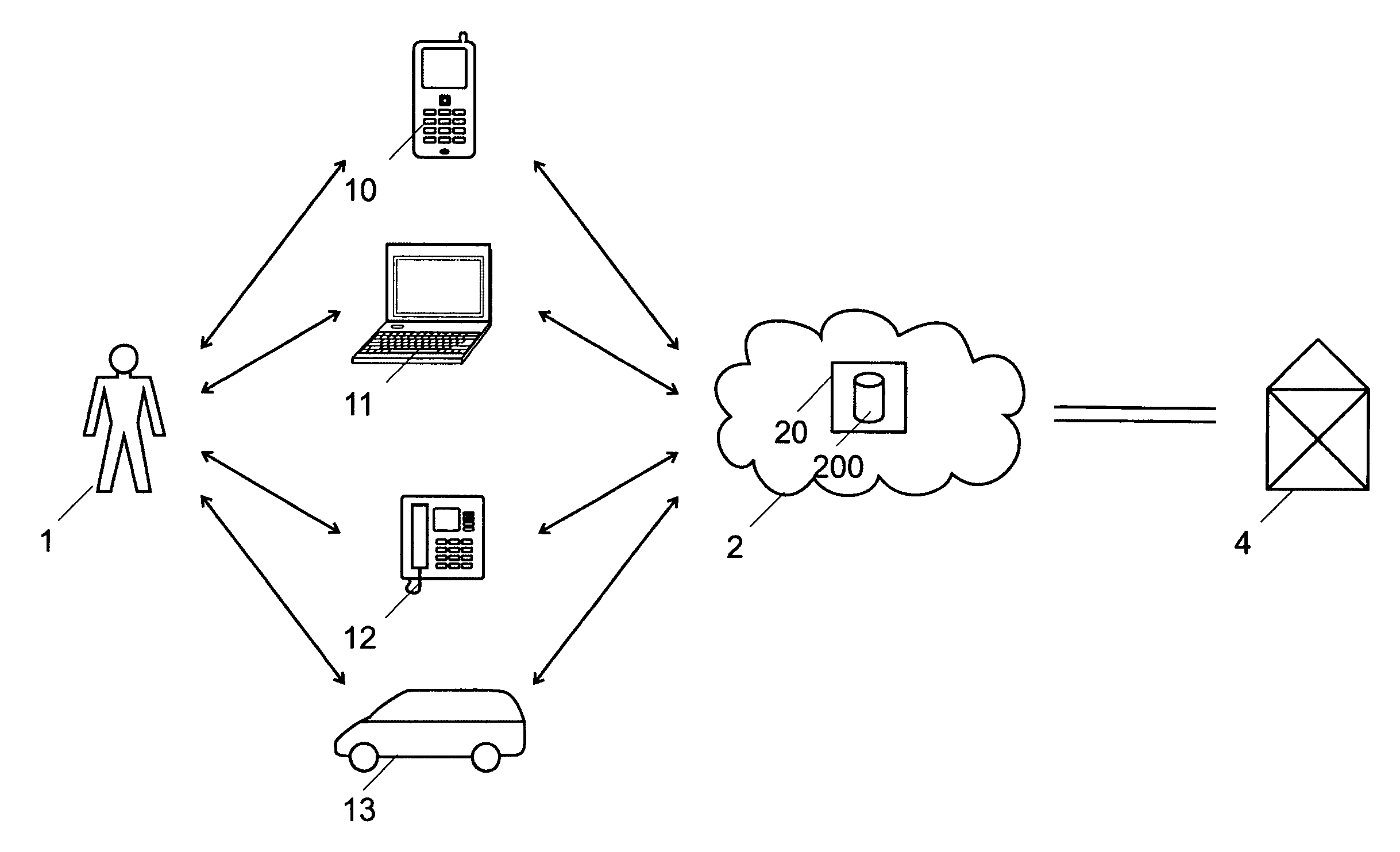

Method for personalization of a service

Active | US8005680B2 | Quality improvement | Enhanced authentication | Devices with voice recognition | Automatic call-answering/message-recording/conversation-recording | Service provision | Speech sound

Method for building a multimodal business channel between users, service providers and network operators. The service provided to the users is personalized with a user's profile derived from language and speech models delivered by a speech recognition system. The language and speech models are synchronized with user dependent language models stored in a central platform made accessible to various value added service providers. They may also be copied into various devices of the user. Natural language processing algorithms may be used for extracting topics from user's dialogues.

Owner:HUAWEI TECH CO LTD

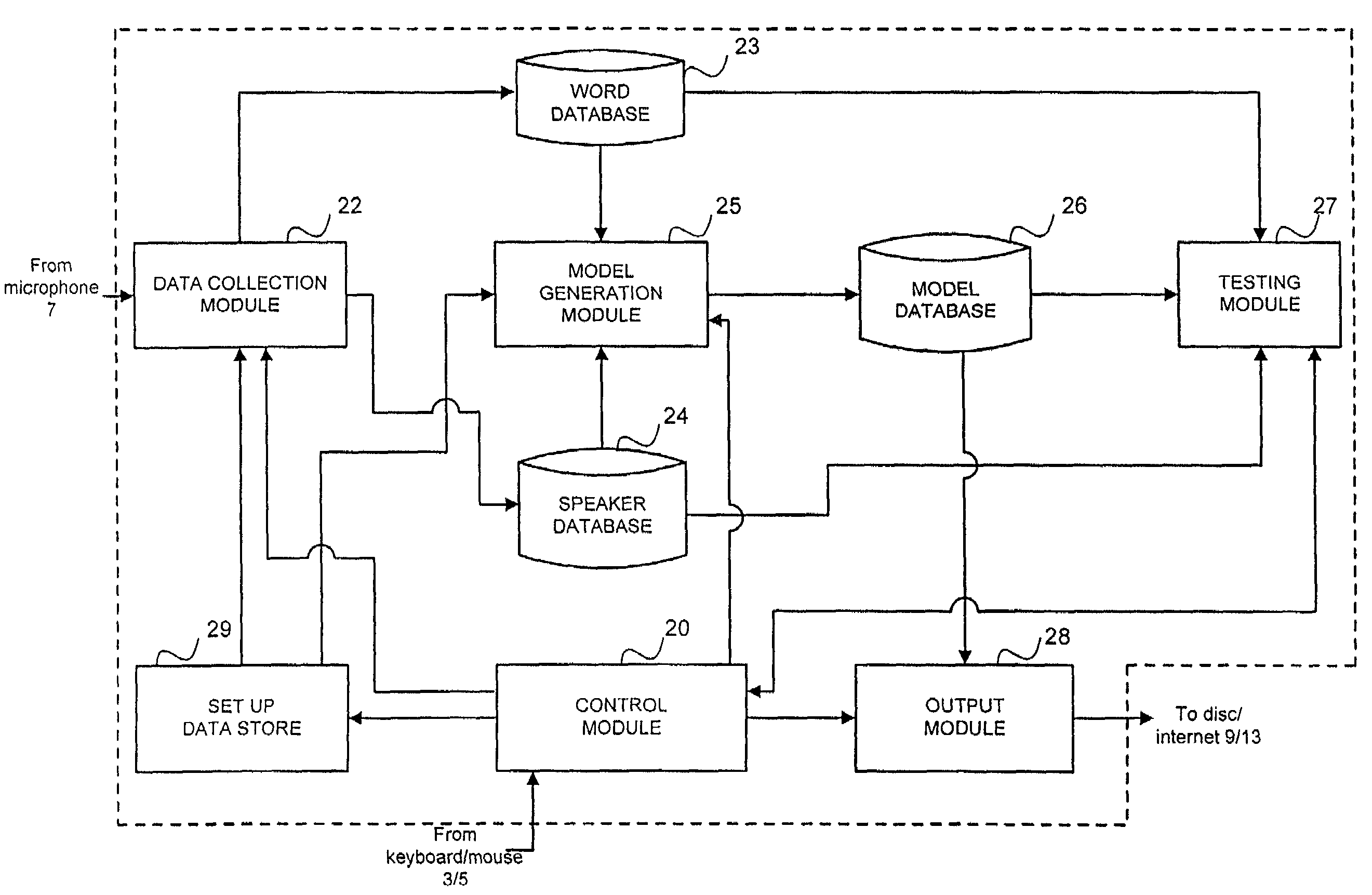

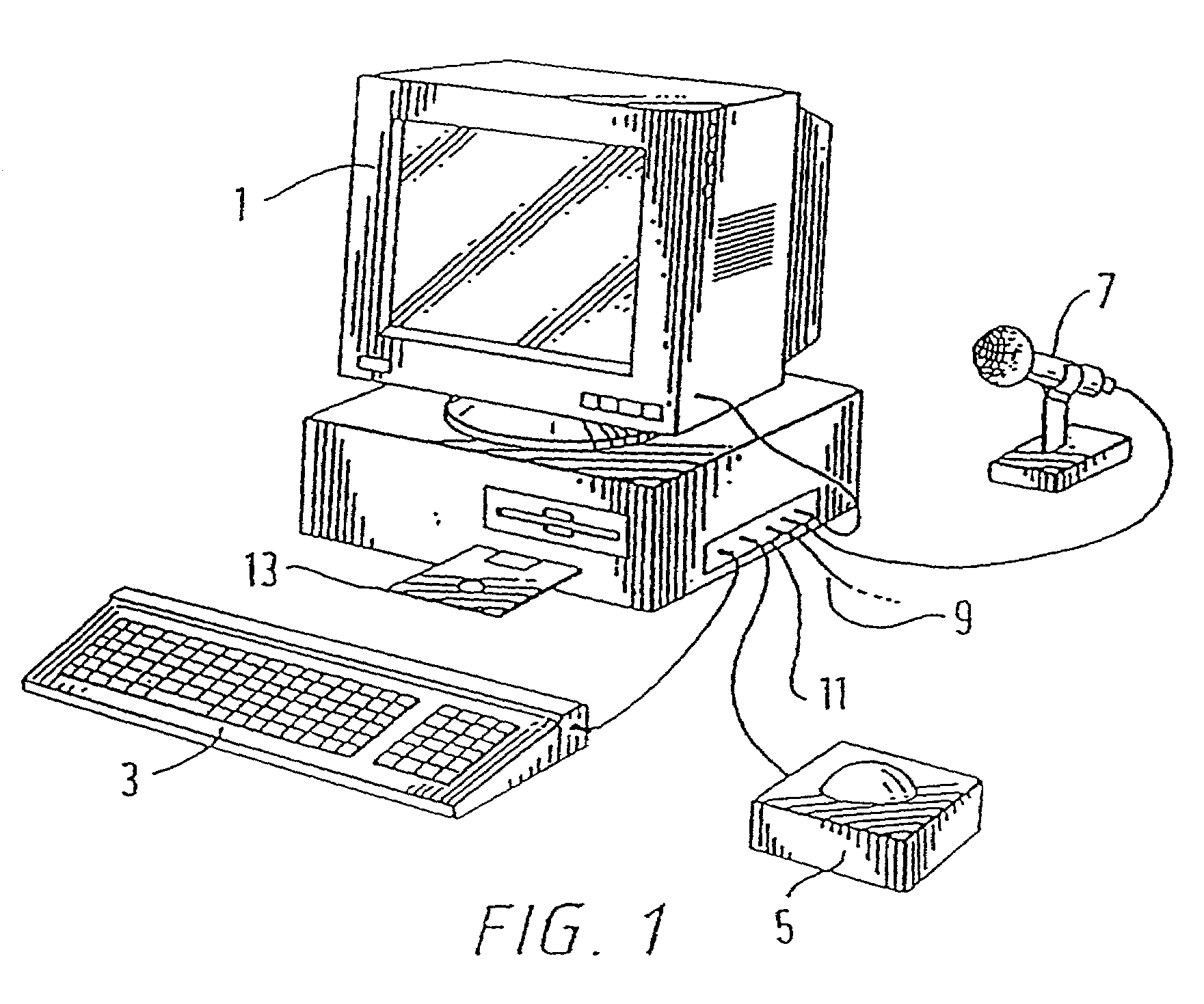

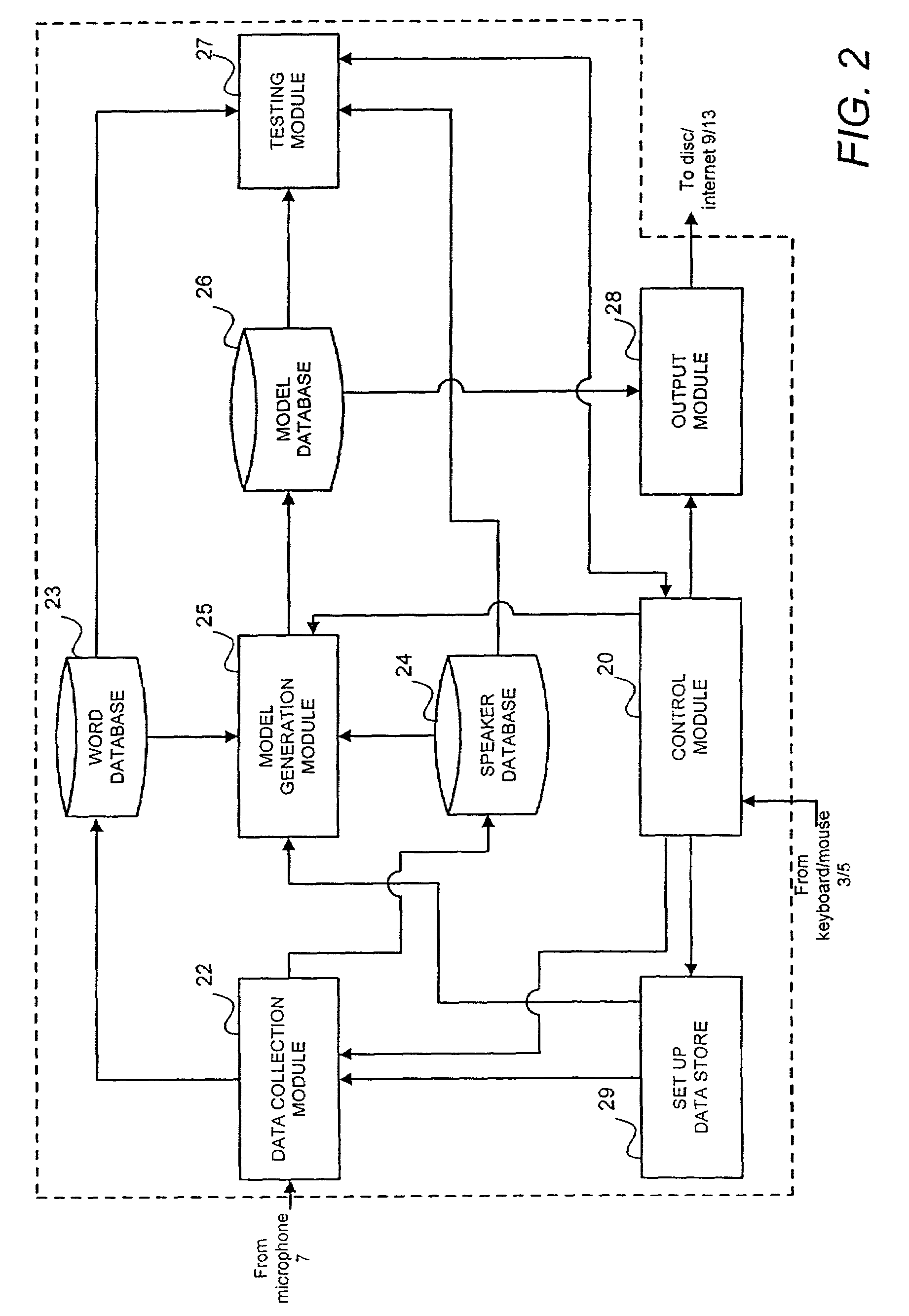

User interface for speech model generation and testing

A computer system is provided including a control module 20 and data collection module 22 which generate user interfaces enabling a user to identify a vocabulary and a number of speakers from whom utterances are to be obtained. The data collection module 22 then co-ordinates the collection of utterance data for the words in the vocabulary from these speakers and stores the data in a speaker database 24. When a satisfactory set of utterances has been collected, the utterances are passed to a model generation module 25 which generates a speech model using the utterances. The speech model is stored by the model generation module 25 in a model database 26. The generated model stored within the model database 26 can then be tested using a testing module 27 and other utterances stored within the speaker database 24. If the performance of the model is unsatisfactory, further or different utterances can be used to generate new models for storage within the model database 26. When a speech model is determined to be satisfactory, the control module 20 can invoke the output module 28 to output a copy of the model.

Owner:CANON EUROPA NV

Attribute-based word modeling

An attribute-based speech recognition system is described. A speech pre-processor receives input speech and produces a sequence of acoustic observations representative of the input speech. A database of context-dependent acoustic models characterizes a probability of a given sequence of sounds producing the sequence of acoustic observations. Each acoustic model includes phonetic attributes and suprasegmental non-phonetic attributes. A finite state language model characterizes a probability of a given sequence of words being spoken. A one-pass decoder compares the sequence of acoustic observations to the acoustic models and the language model, and outputs at least one word sequence representative of the input speech.

Owner:MULTIMODAL TECH INC

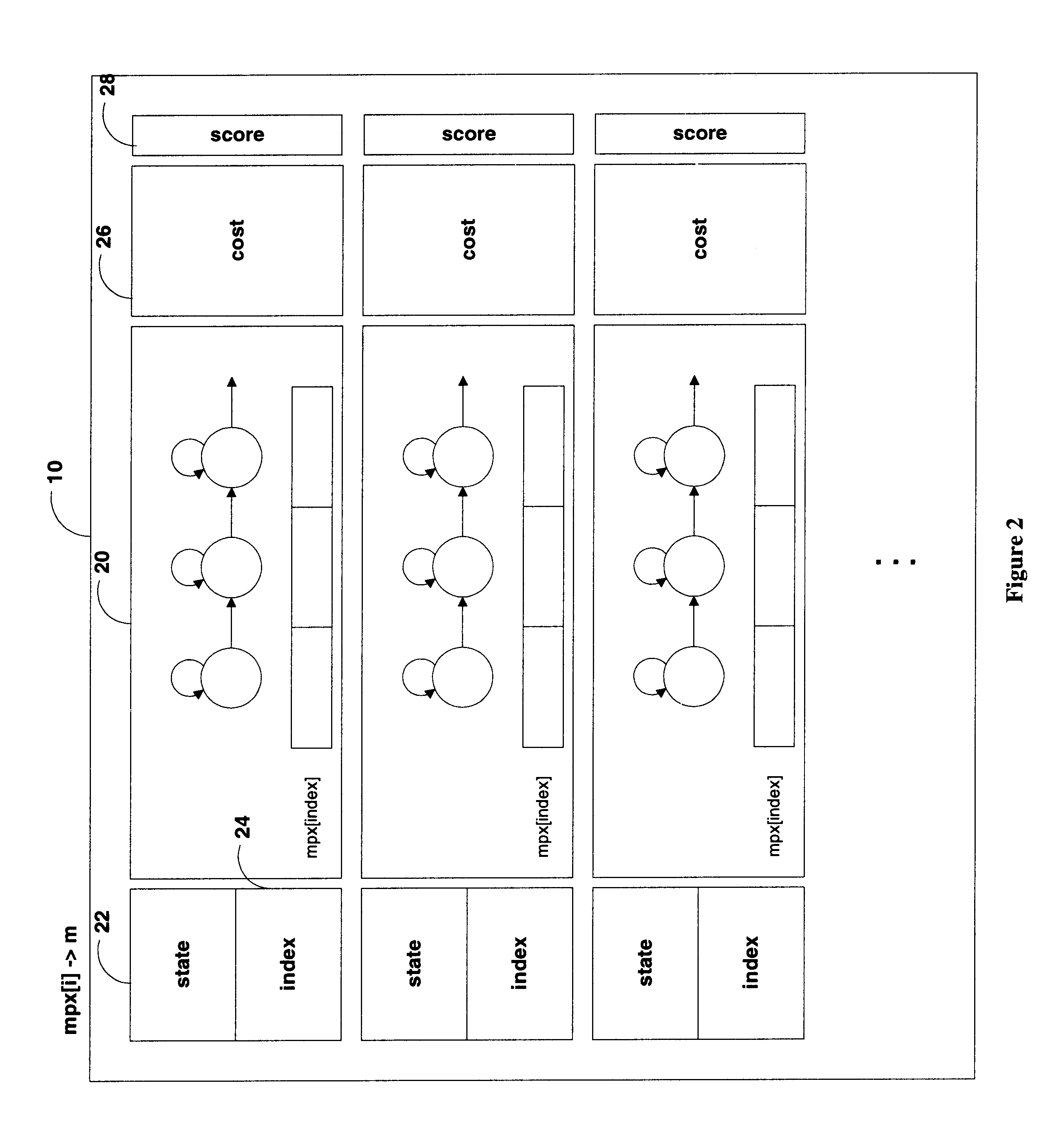

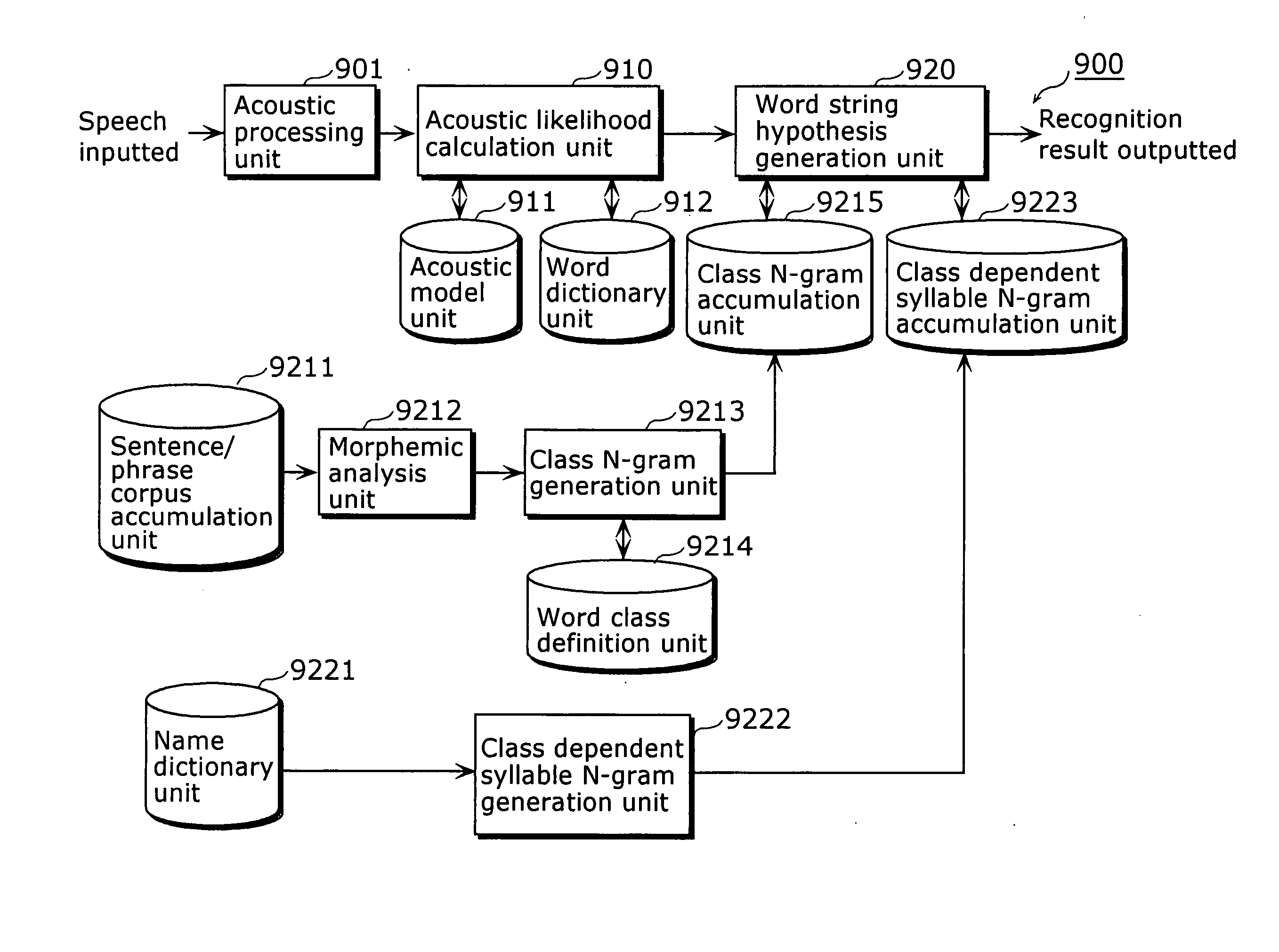

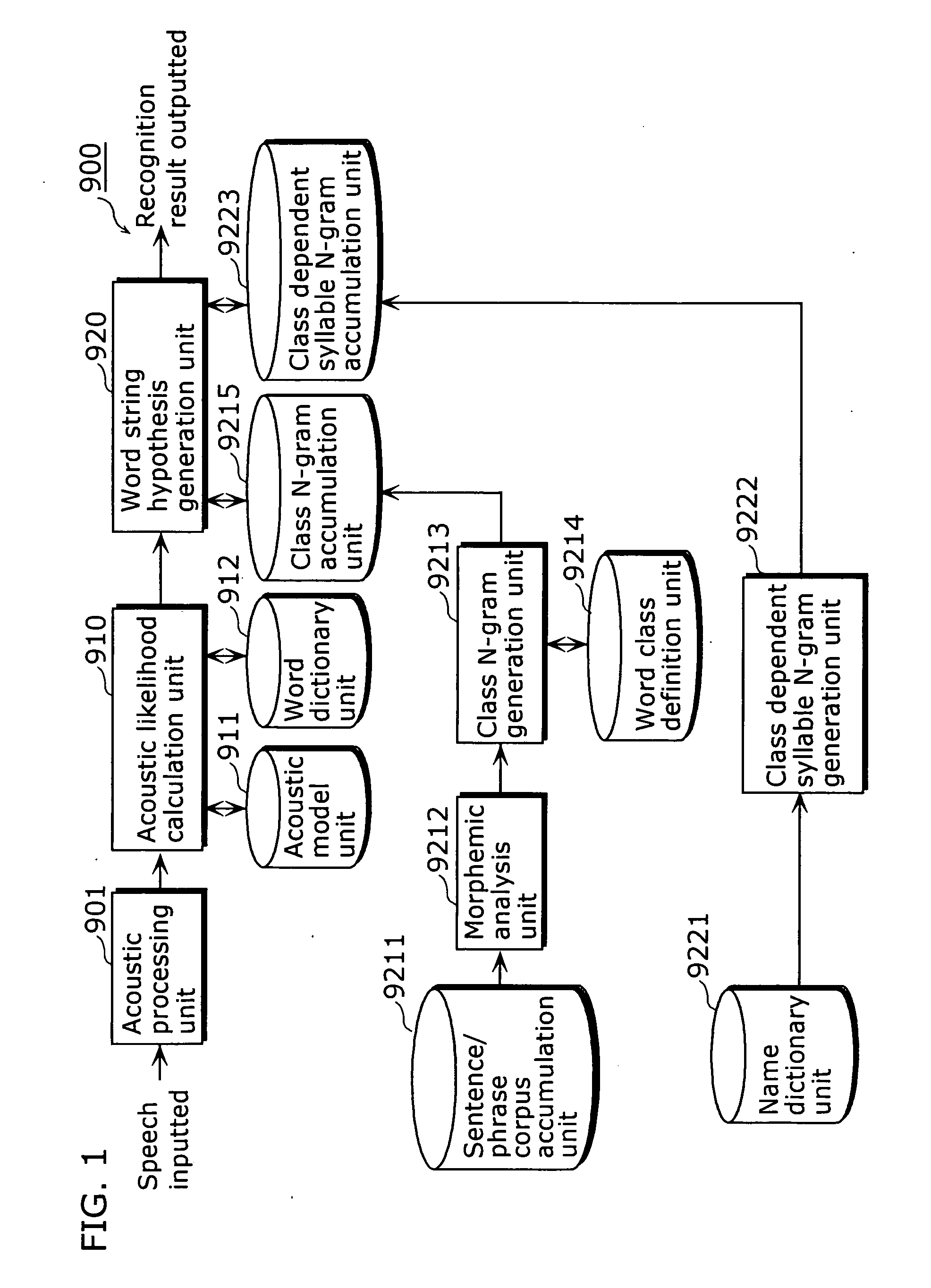

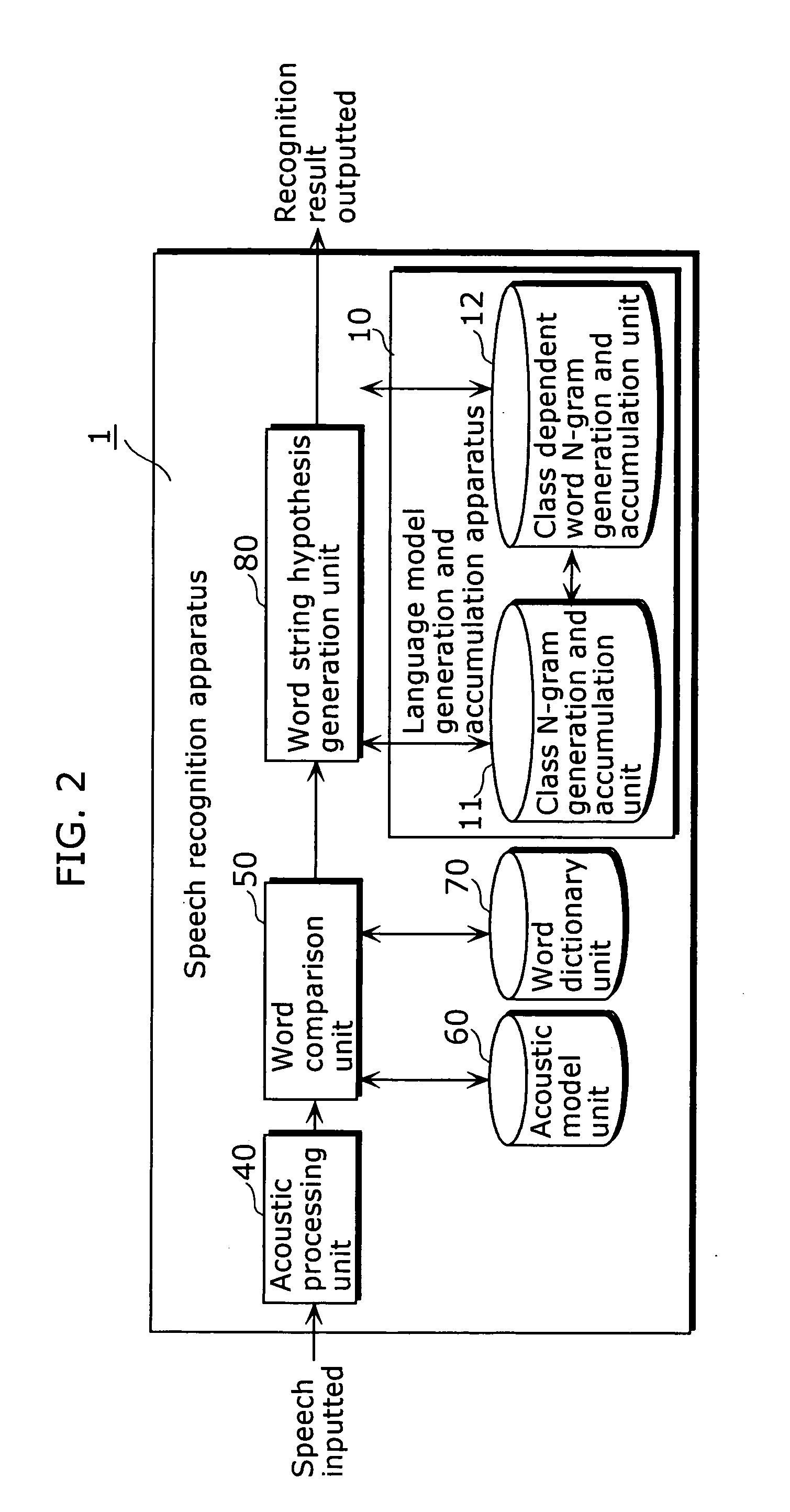

Language model generation and accumulation device, speech recognition device, language model creation method, and speech recognition method

Inactive | US20050256715A1 | Improve recognition accuracy | Has practical value | Natural language data processing | Speech recognition | Acoustics | Speech sound

A language model generation and accumulation apparatus (10) that generates and accumulates language models for speech recognition is comprised of: a higher-level N-gram generation and accumulation unit (11) that generates and accumulates a higher-level N-gram language model obtained by modeling each of a plurality of texts as a string of words including a word string class having a specific linguistic property; and a lower class dependent word N-gram generation and accumulation unit (12) that generates and accumulates a lower-level N-gram language model obtained by modeling a sequence of words included in each word string class.

Owner:PANASONIC CORP
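
The two-level structure in this abstract can be made concrete with a toy scoring function. The sketch assumes bigram models at both levels and simply multiplies the higher-level probability (over words and class tokens) by the lower-level probability of the words inside each class; the abstract does not state how the two models are combined, and the names `bigram_logprob`, `sentence_logprob`, the `<title>` class, and all probabilities are invented for illustration.

```python
import math


def bigram_logprob(tokens, bigram):
    """Sum of log P(token | previous token), padded with <s> and </s>."""
    padded = ["<s>", *tokens, "</s>"]
    return sum(math.log(bigram[(prev, cur)])
               for prev, cur in zip(padded, padded[1:]))


def sentence_logprob(units, upper, lower):
    """Score a sentence with a higher-level and a class-dependent model.

    units: list of plain words or (class_name, [words]) tuples.
    upper: higher-level bigram over words and class tokens.
    lower: dict mapping each class name to its own word bigram.
    """
    # Higher level: each word string class is treated as a single token.
    upper_tokens = [u if isinstance(u, str) else u[0] for u in units]
    total = bigram_logprob(upper_tokens, upper)
    # Lower level: model the word sequence inside each class.
    for unit in units:
        if not isinstance(unit, str):
            class_name, words = unit
            total += bigram_logprob(words, lower[class_name])
    return total


# Hypothetical probabilities for "play <title: star wars>".
upper = {("<s>", "play"): 0.5, ("play", "<title>"): 0.4, ("<title>", "</s>"): 0.5}
lower = {"<title>": {("<s>", "star"): 0.2, ("star", "wars"): 0.5, ("wars", "</s>"): 1.0}}

logp = sentence_logprob(["play", ("<title>", ["star", "wars"])], upper, lower)
print(math.exp(logp))  # about 0.1 * 0.1 = 0.01
```

Splitting the model this way lets the class-dependent model be retrained when, for example, new titles appear, without touching the higher-level sentence patterns.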

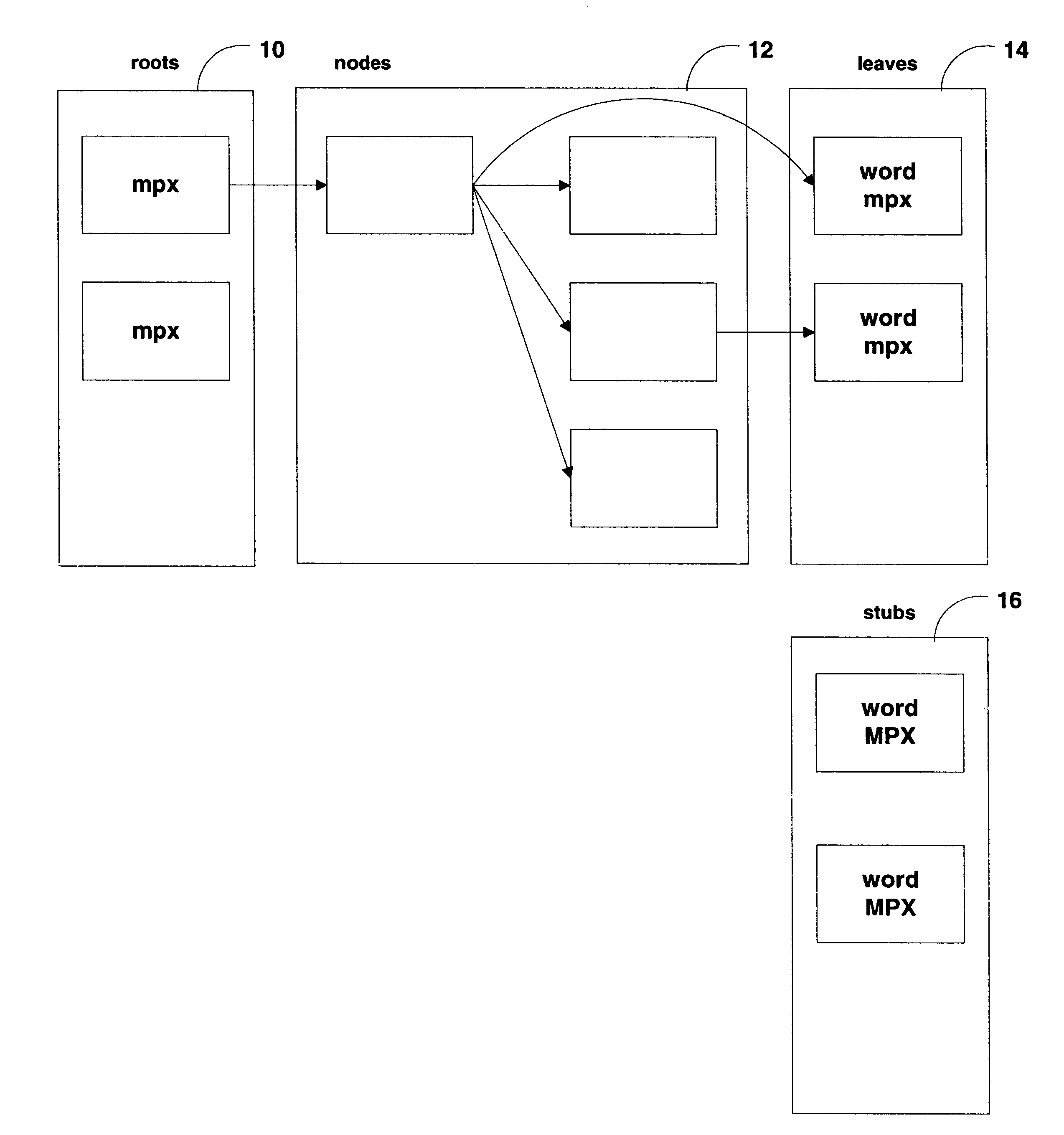

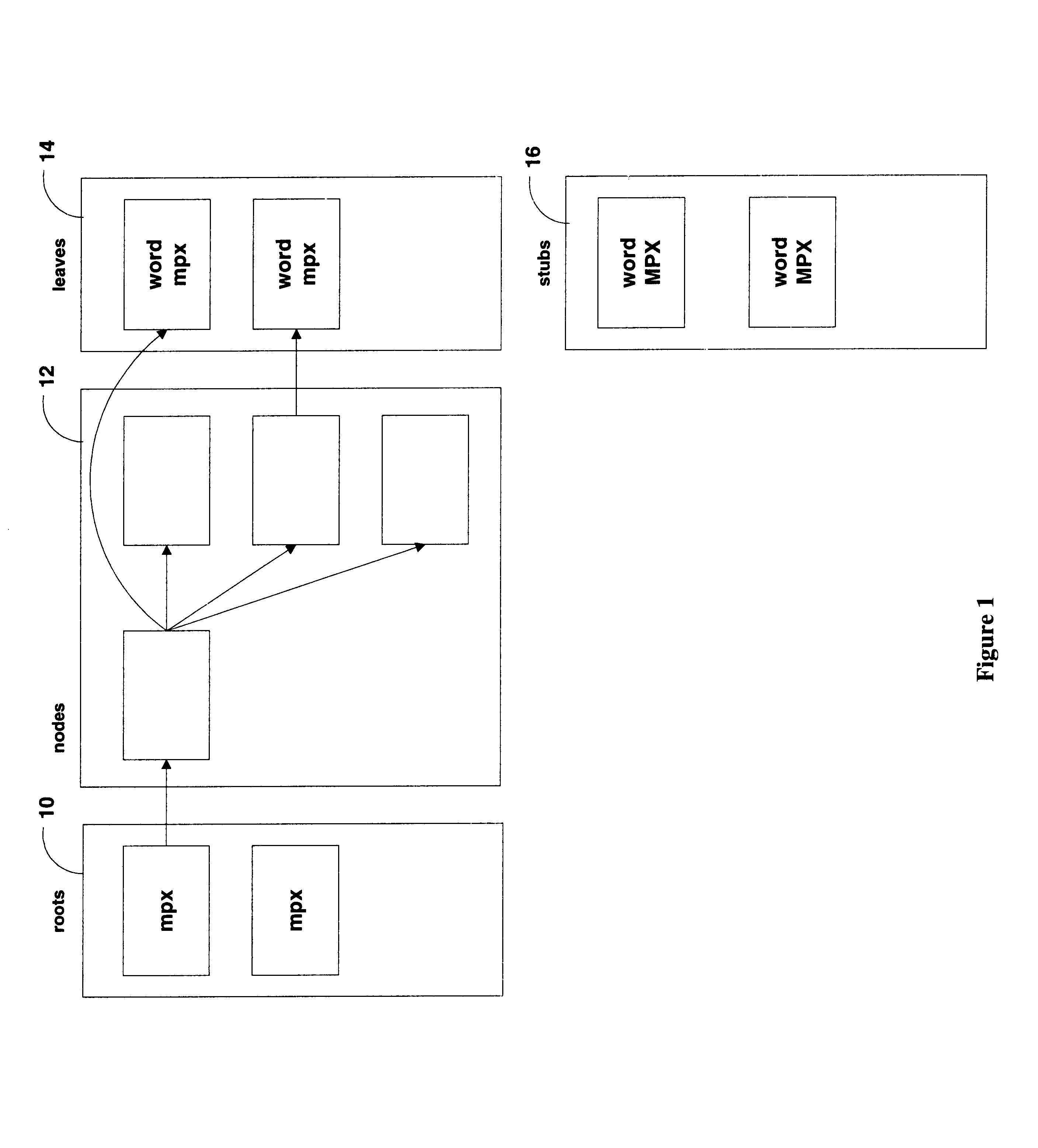

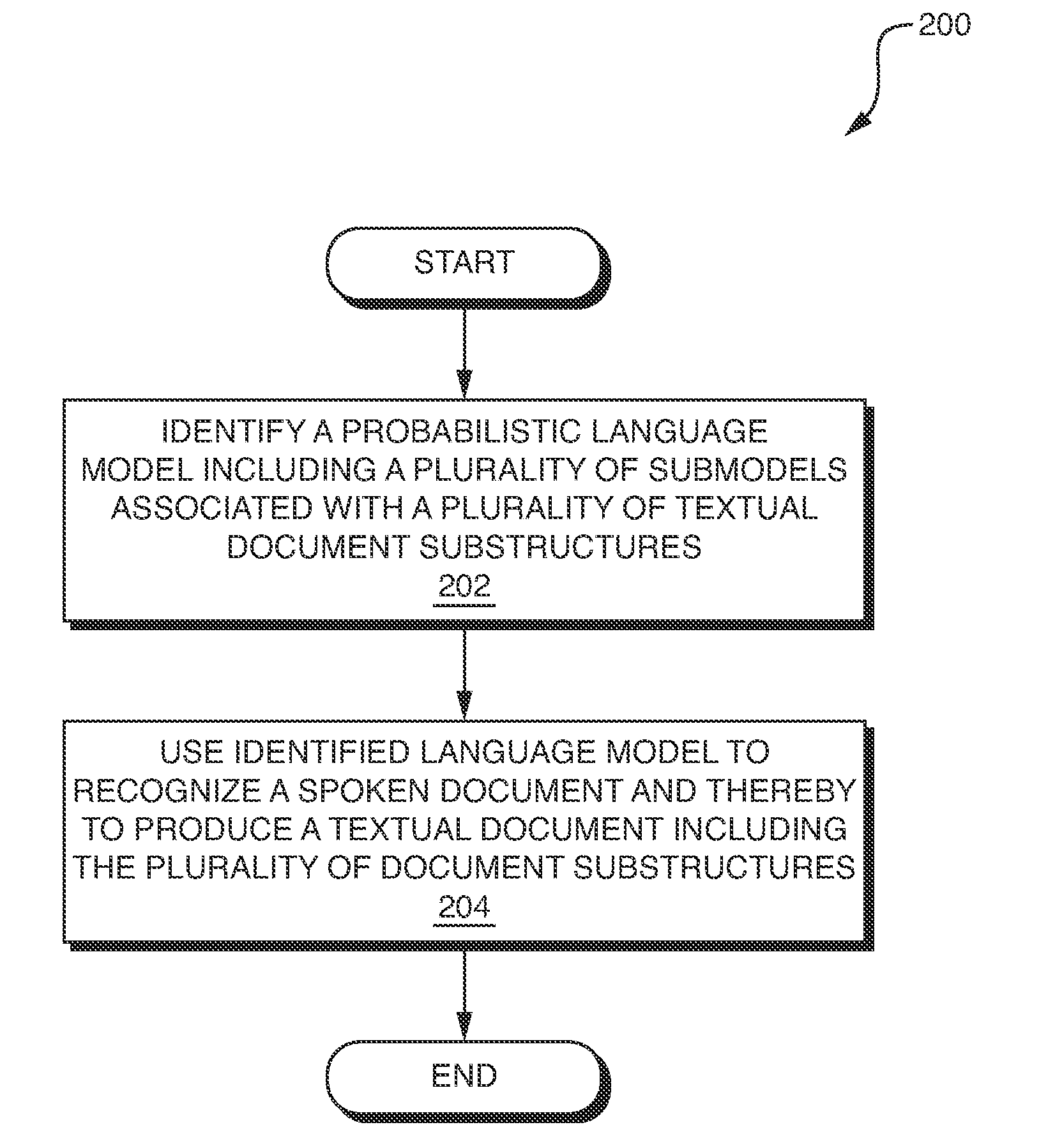

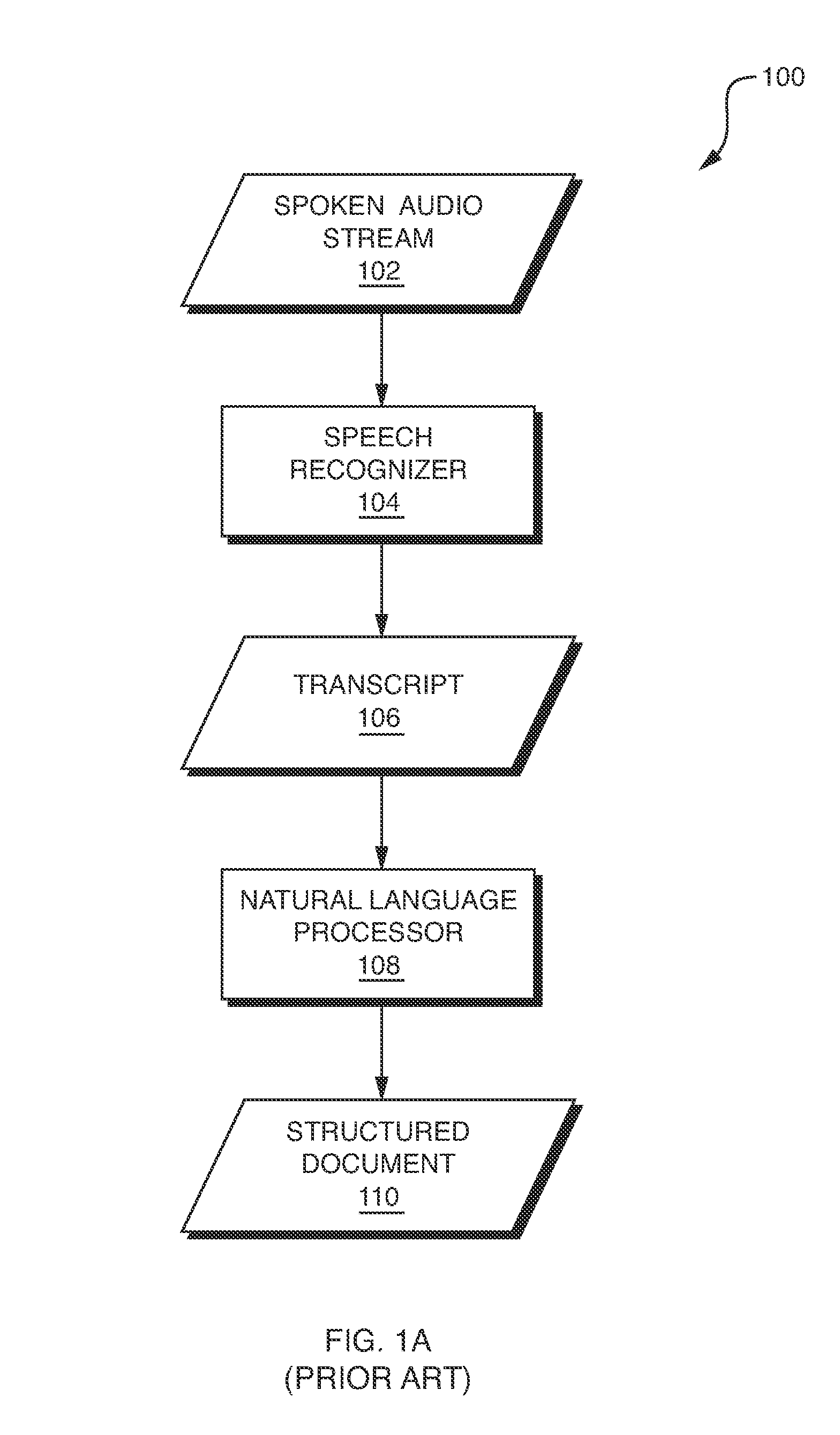

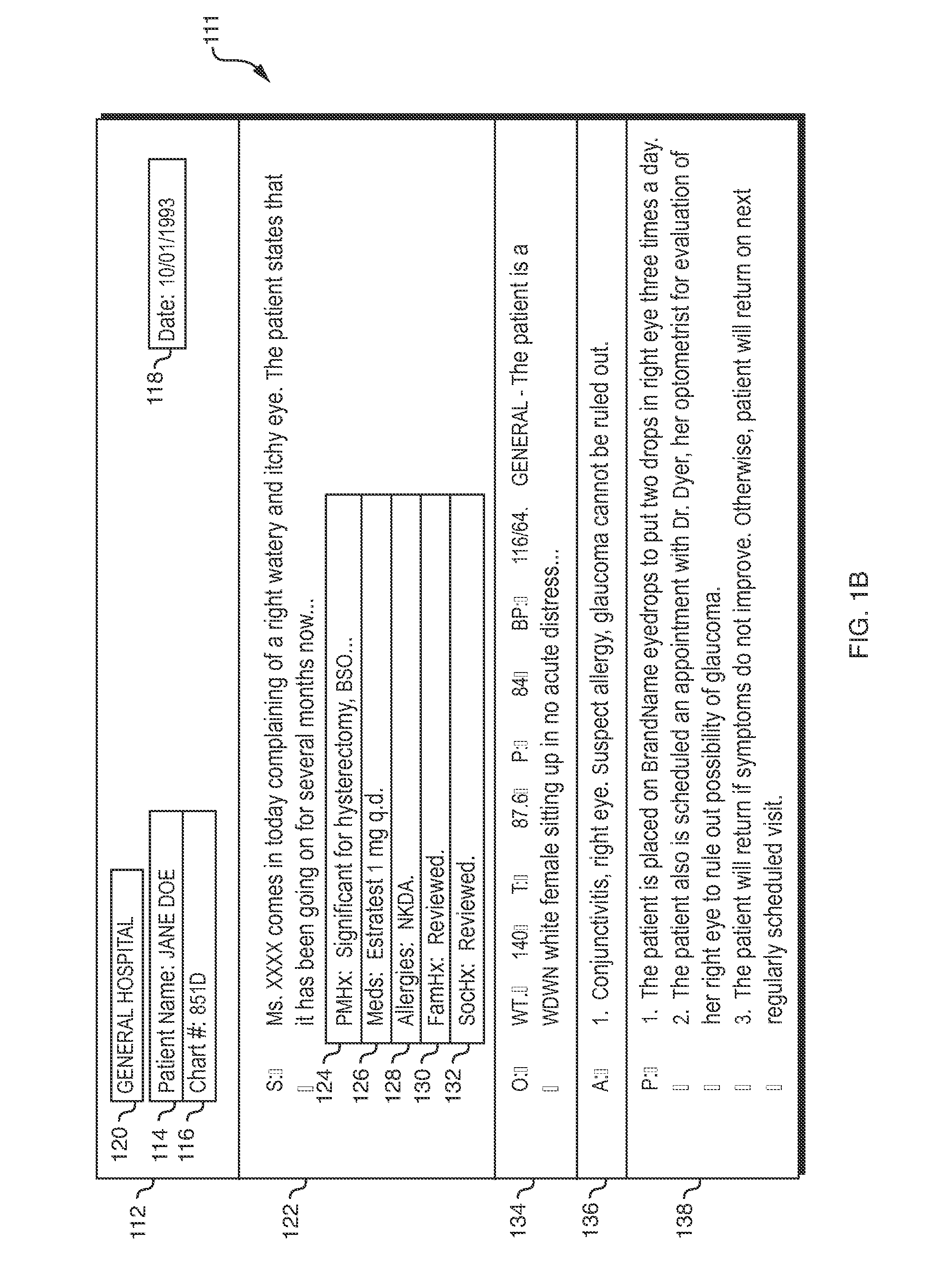

Automated Extraction of Semantic Content and Generation of a Structured Document from Speech

Techniques are disclosed for automatically generating structured documents based on speech, including identification of relevant concepts and their interpretation. In one embodiment, a structured document generator uses an integrated process to generate a structured textual document (such as a structured textual medical report) based on a spoken audio stream. The spoken audio stream may be recognized using a language model which includes a plurality of sub-models arranged in a hierarchical structure. Each of the sub-models may correspond to a concept that is expected to appear in the spoken audio stream. Different portions of the spoken audio stream may be recognized using different sub-models. The resulting structured textual document may have a hierarchical structure that corresponds to the hierarchical structure of the language sub-models that were used to generate the structured textual document.

Owner:MULTIMODAL TECH INC

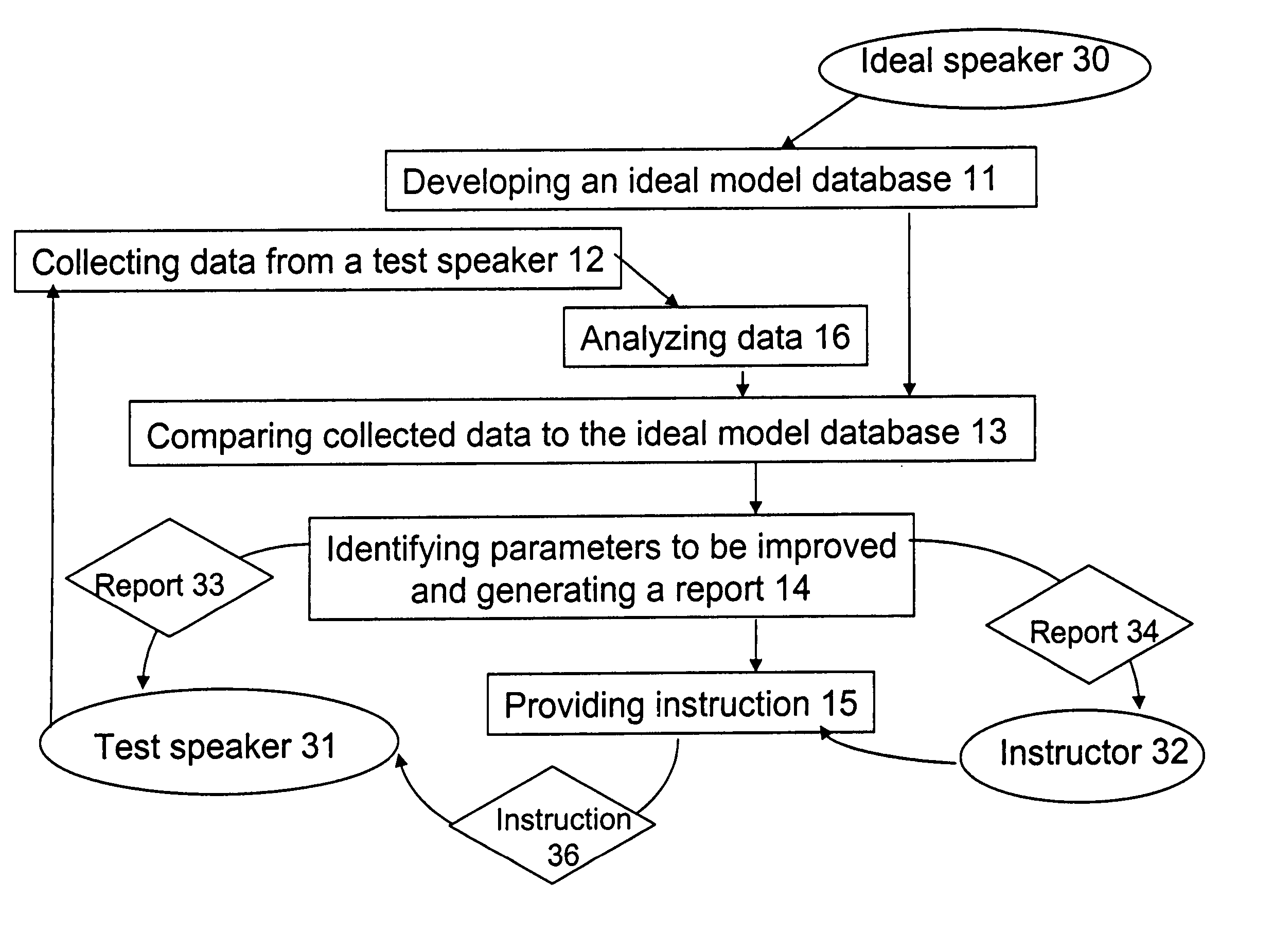

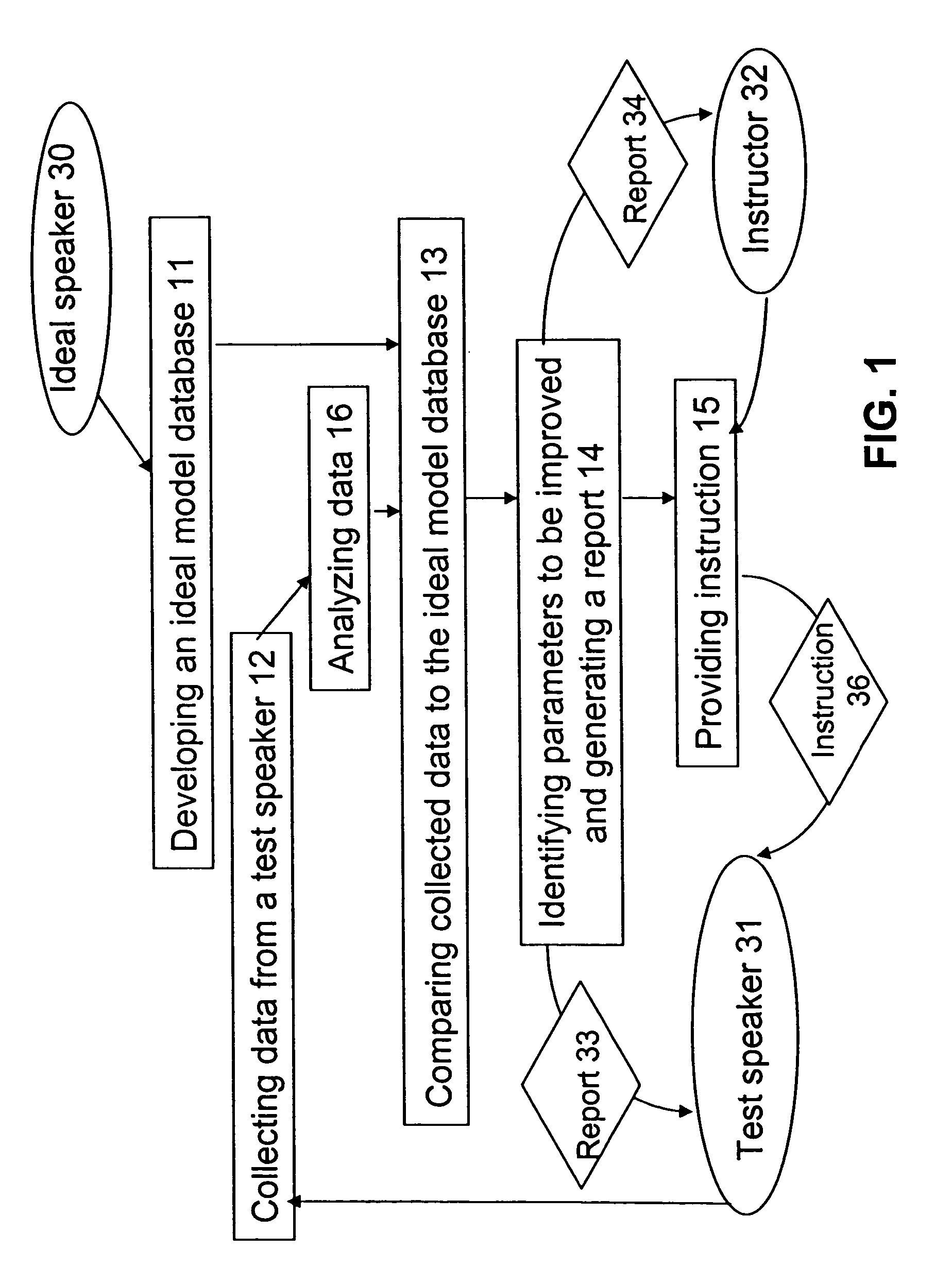

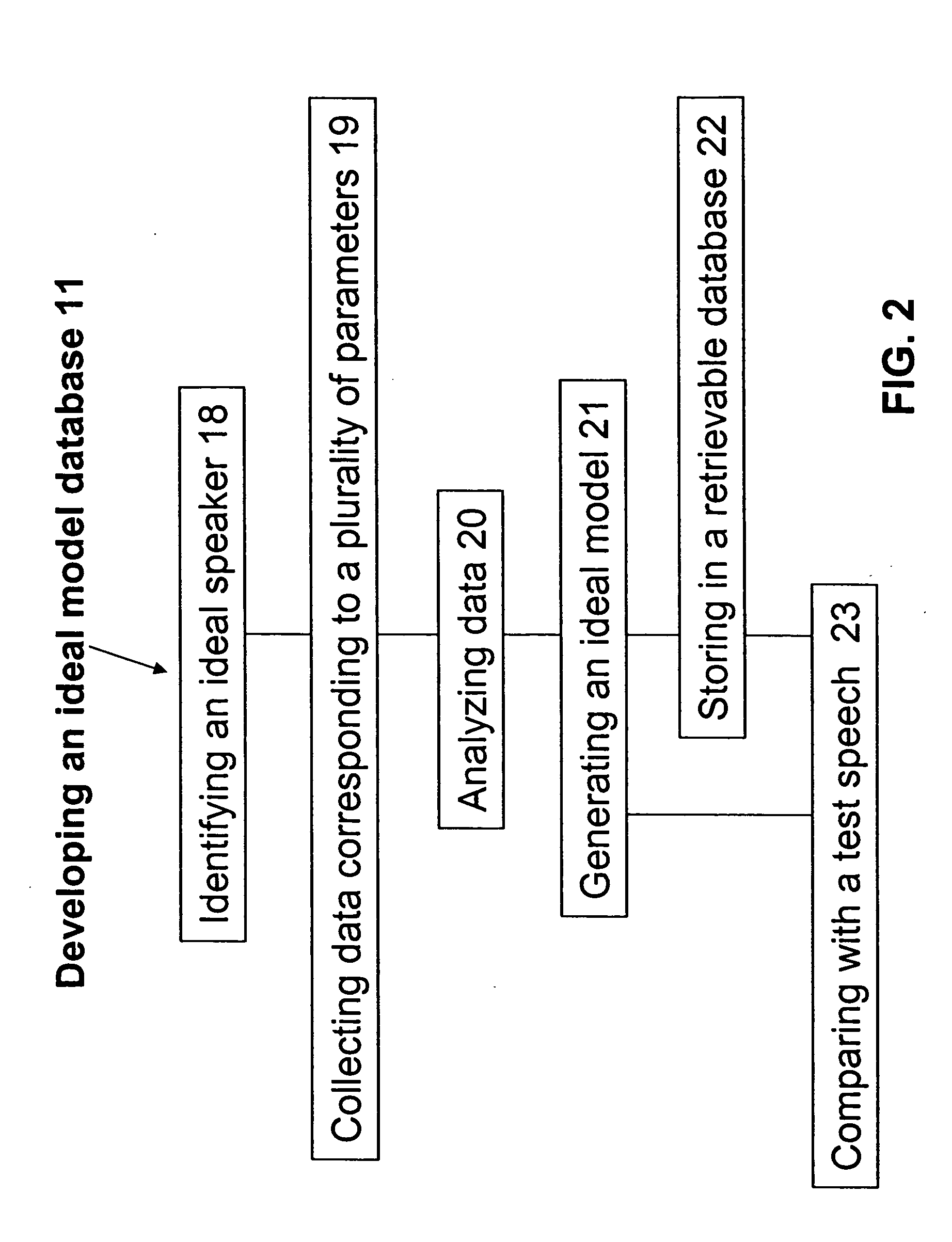

System and process for feedback speech instruction

Inactive | US20050119894A1 | Reduce delivery | Increase impact | Speech recognition | Volume variation | Pitch variation

The present invention involves methods and systems for providing feedback speech instruction. The method involves collecting data corresponding to a plurality of parameters associated with verbal and non-verbal expression of a speaker and analyzing the data based on an ideal model. The method also includes generating a report or an instruction responsive to the report, and delivering the report or the instruction to the speaker. The plurality of parameters associated with verbal and non-verbal expression includes pitch, volume, pitch variation, volume variation, frequency of variation of pitch, frequency of volume, rhythm, tone, and speech cadence. The system includes a device for collecting speech data from a speaker, a module with software or firmware enabling analysis of the collected data as compared to an ideal speech model, and an output device for delivering a report and/or instruction to the speaker.

Owner:UNIVERSITY OF INDIANAPOLIS

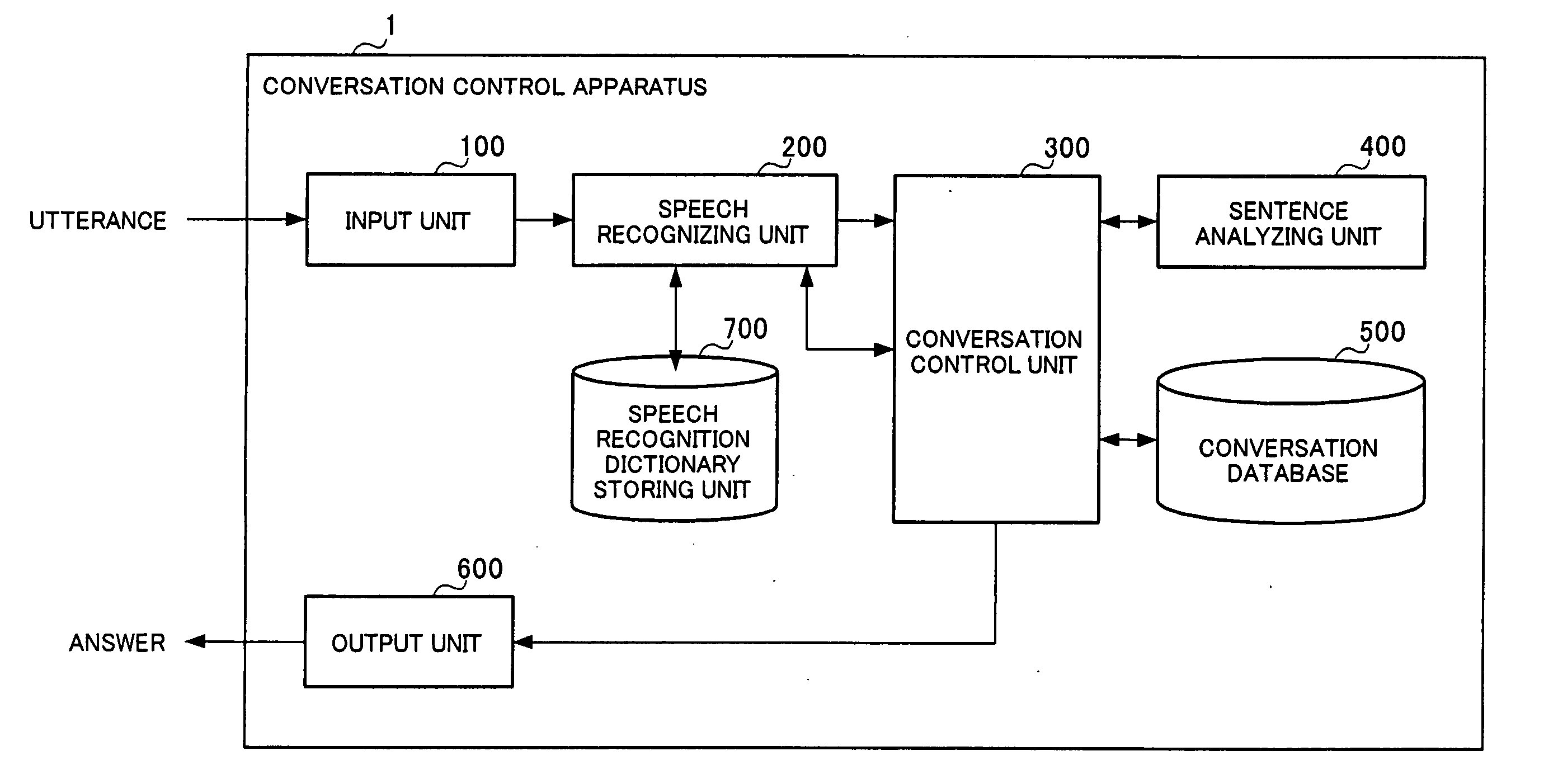

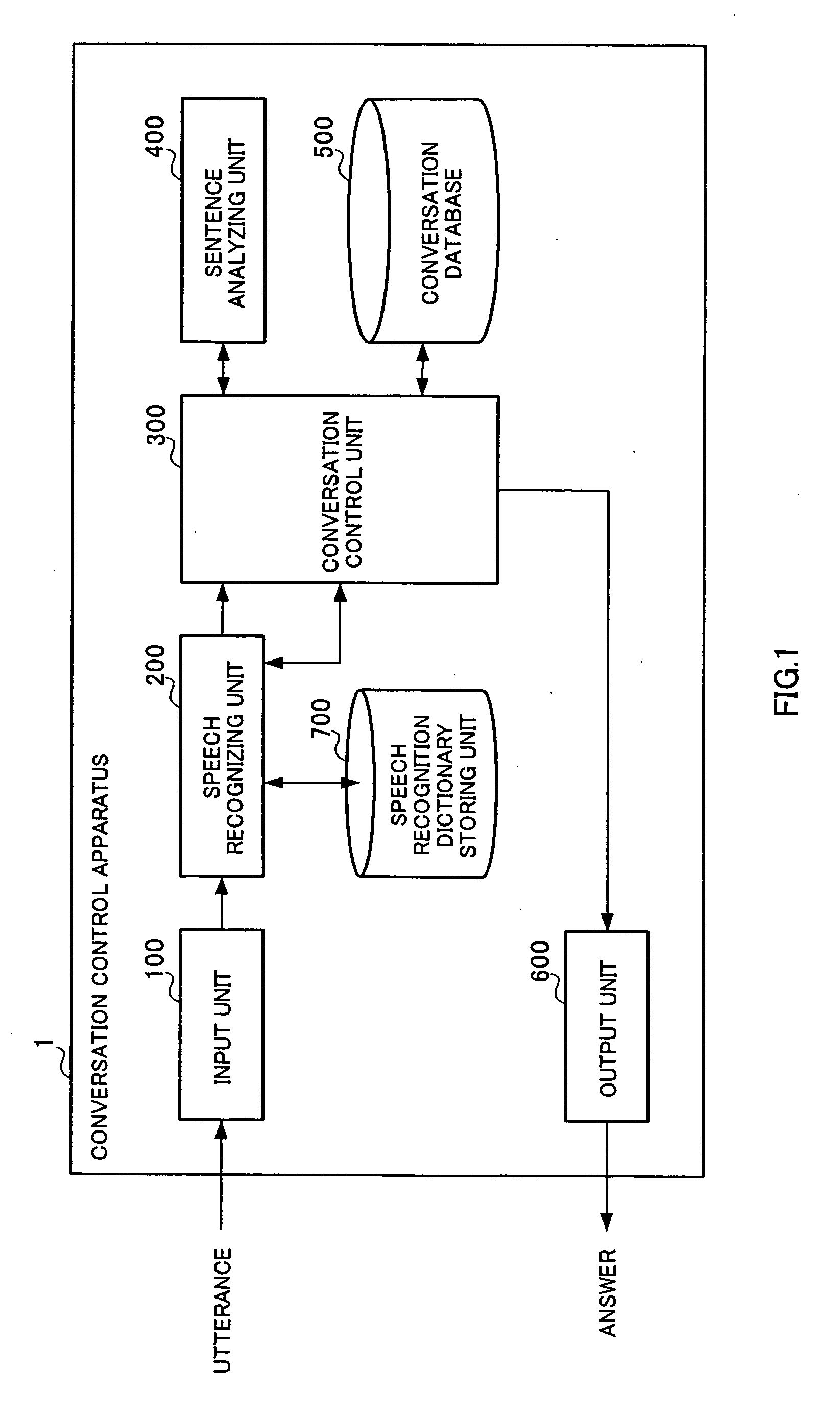

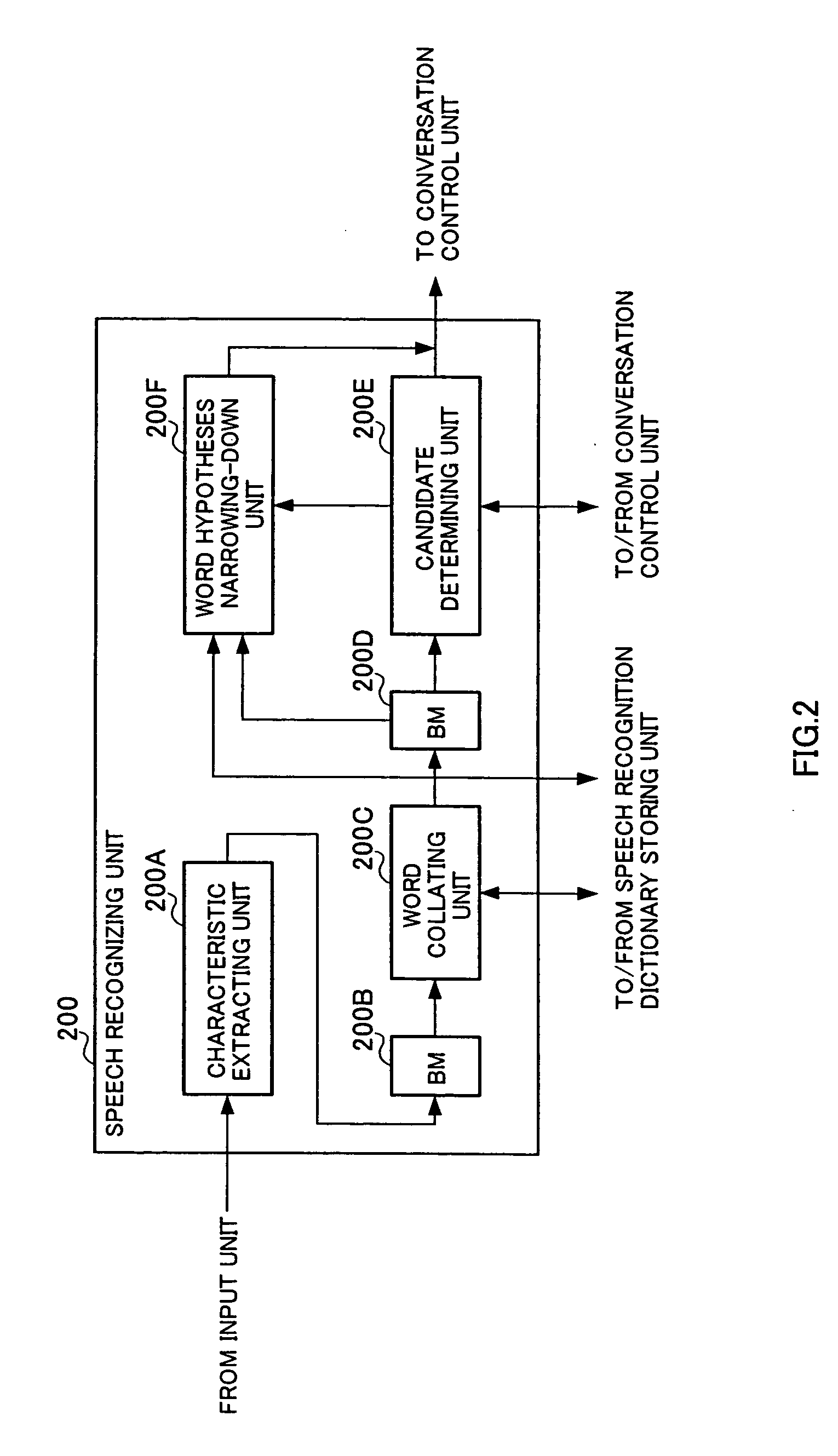

Speech recognition apparatus, speech recognition method, conversation control apparatus, conversation control method, and programs therefor

Active | US20050021331A1 | Improve recognition rate | Speech recognition | Special data processing applications | Session control | Computer science

An automatic conversation apparatus includes a speech recognizing unit receiving a speech signal and outputting characters/character string corresponding to the speech signal as a recognition result; a speech recognition dictionary storing unit storing a language model for determining candidates corresponding to the speech signal; a conversation database storing plural pieces of topic specifying information; a sentence analyzing unit analyzing the characters/character string outputted from the speech recognizing unit; and a conversation control unit storing a conversation history and acquiring an answer sentence based on an analysis result of the sentence analyzing unit. The speech recognizing unit includes a collating unit that outputs plural candidates based on the speech recognition dictionary storing unit; and a candidate determining unit that compares the plural candidates outputted from the collating unit with topic specifying information corresponding to the conversation history with reference to the conversation database and outputs one candidate based on the comparison.

Owner:MYCLERK LLC

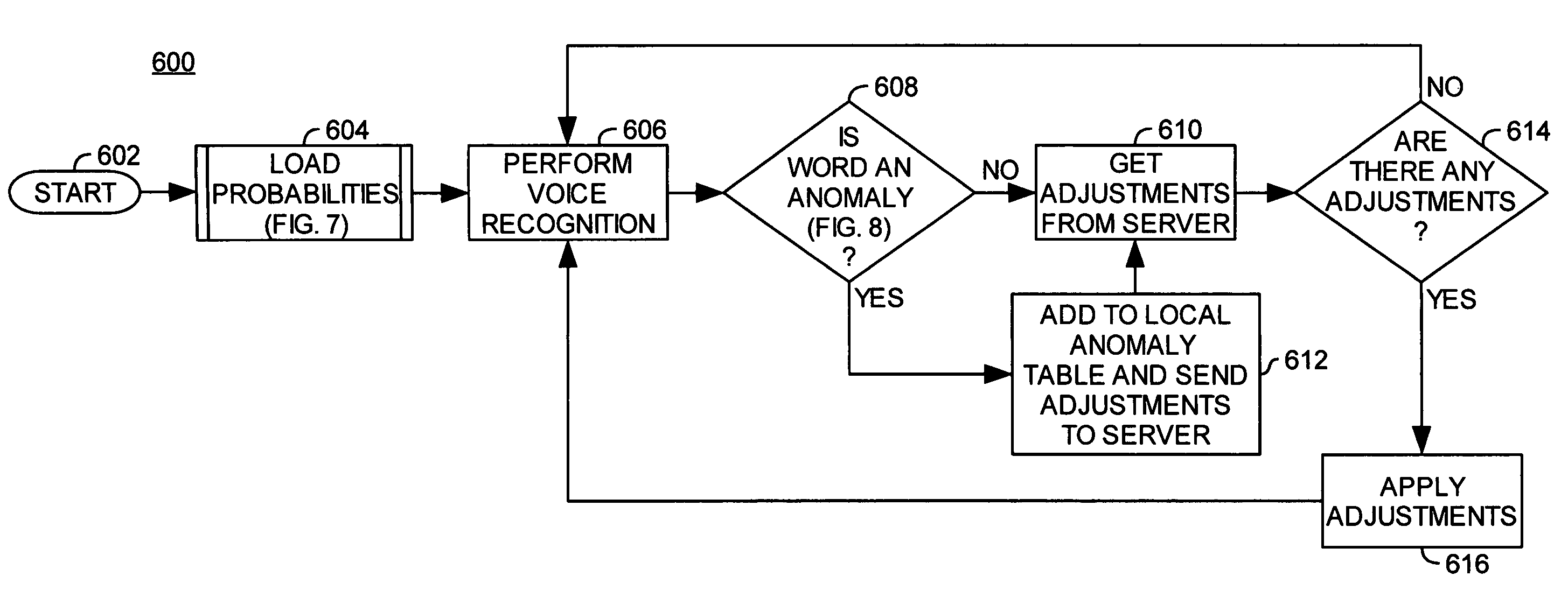

Voice language model adjustment based on user affinity

Inactive | US7590536B2 | Improve accuracy | Speech recognition | Data processing system | Facial recognition system

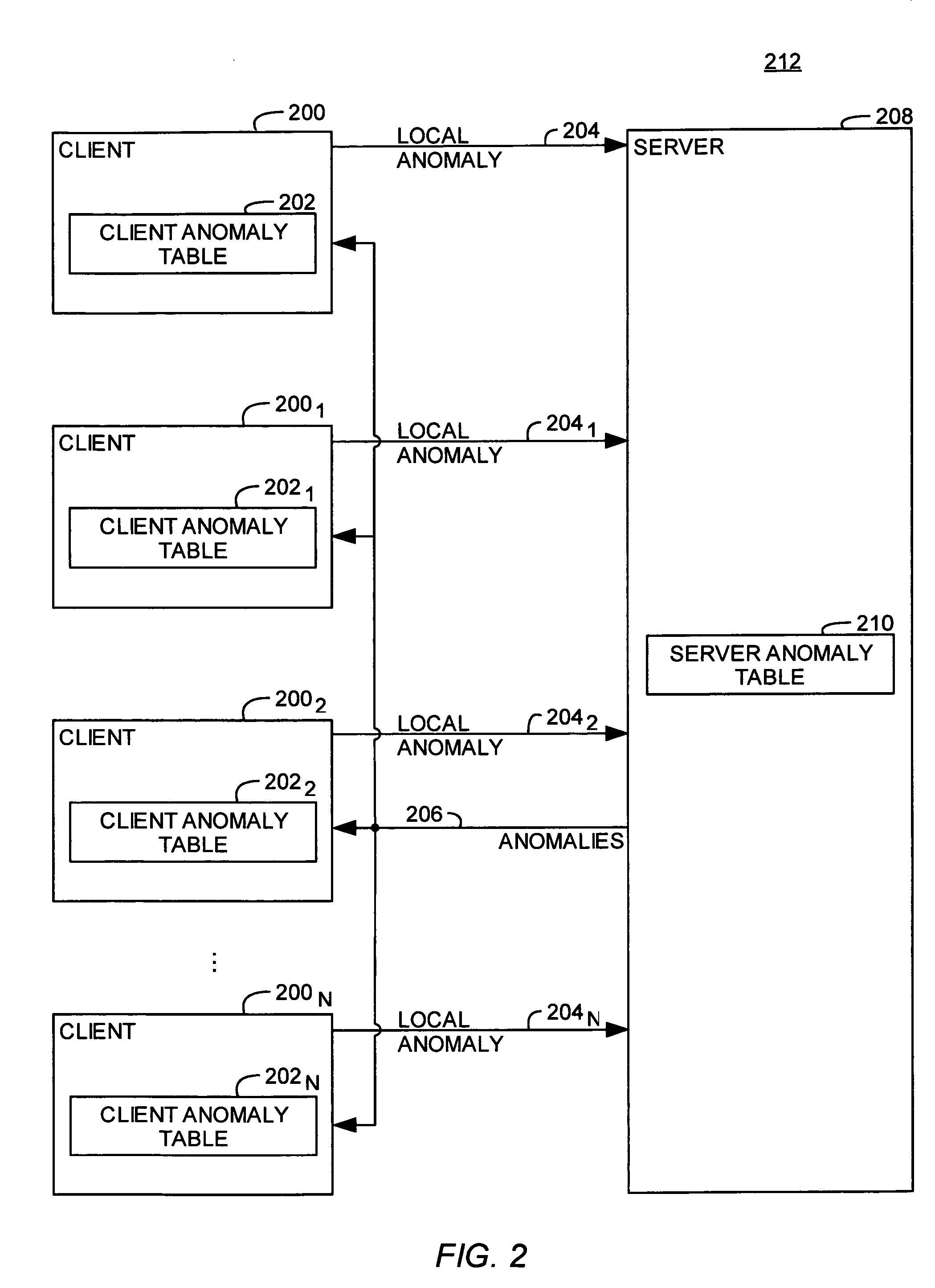

Methods, systems and computer readable medium for improving the accuracy of voice processing are provided. Embodiments of the present invention generally provide methods, systems and articles of manufacture for adjusting a language model within a voice recognition system. In one embodiment, changes are made to the language model by identifying a word-usage pattern that qualifies as an anomaly. In one embodiment, an anomaly occurs when the use of a given word (or phrase) differs from an expected probability for the word (or phrase), as predicted by a language model. Additionally, observed anomalies may be shared and applied by different users of the voice processing system, depending on an affinity in word-usage frequency between different users.

Owner:NUANCE COMM INC
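
The notion of a word-usage anomaly in this abstract can be sketched as a comparison between observed and expected frequencies. The abstract does not define how large the difference must be, so the `ratio` threshold, the `min_count` filter, the floor probability for unseen words, and the function name `find_anomalies` are all assumptions, and a unigram table stands in for the language model.

```python
from collections import Counter


def find_anomalies(observed_words, expected_prob, ratio=3.0, min_count=2,
                   floor=1e-6):
    """Flag words used far more often than the language model predicts.

    observed_words: list of words the user actually dictated.
    expected_prob: dict of unigram probabilities from the language model.
    ratio: hypothetical threshold on observed / expected frequency.
    """
    counts = Counter(observed_words)
    total = sum(counts.values())
    anomalies = {}
    for word, count in counts.items():
        if count < min_count:
            continue  # too little evidence to call it a pattern
        observed = count / total
        expected = expected_prob.get(word, floor)
        if observed / expected >= ratio:
            anomalies[word] = observed
    return anomalies


# Toy dictation history and invented model probabilities.
history = ["the", "kubernetes", "the", "kubernetes", "pod", "the", "a", "kubernetes"]
model = {"the": 0.3, "a": 0.1, "kubernetes": 0.001}
anomalies = find_anomalies(history, model)
print(anomalies)  # {'kubernetes': 0.375}
```

Anomalies found for one user could then be offered to other users whose word-usage frequencies are similar, which is the affinity-based sharing the abstract describes; that step is not shown here.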
