59 results about "Speaker adaptation" patented technology

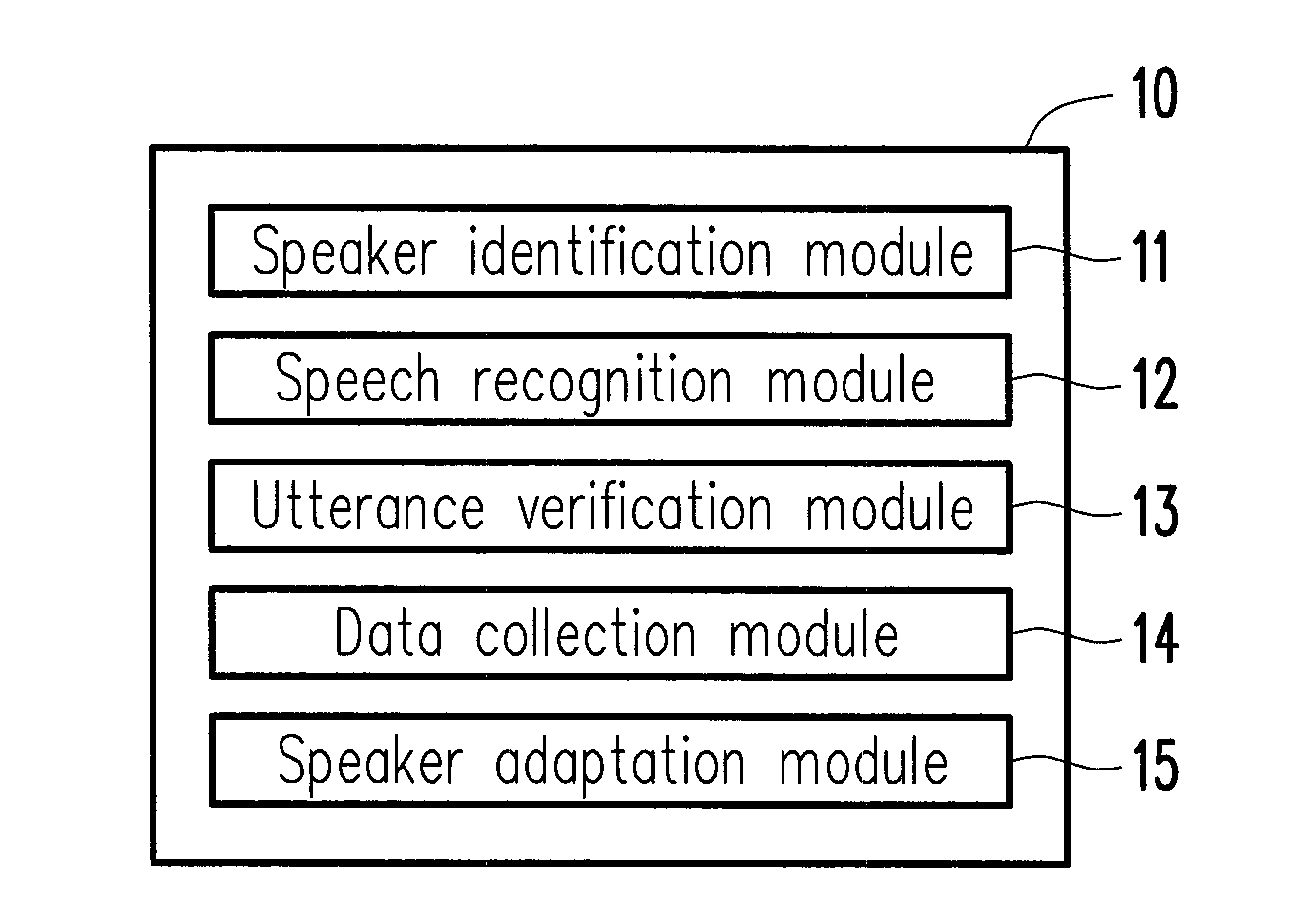

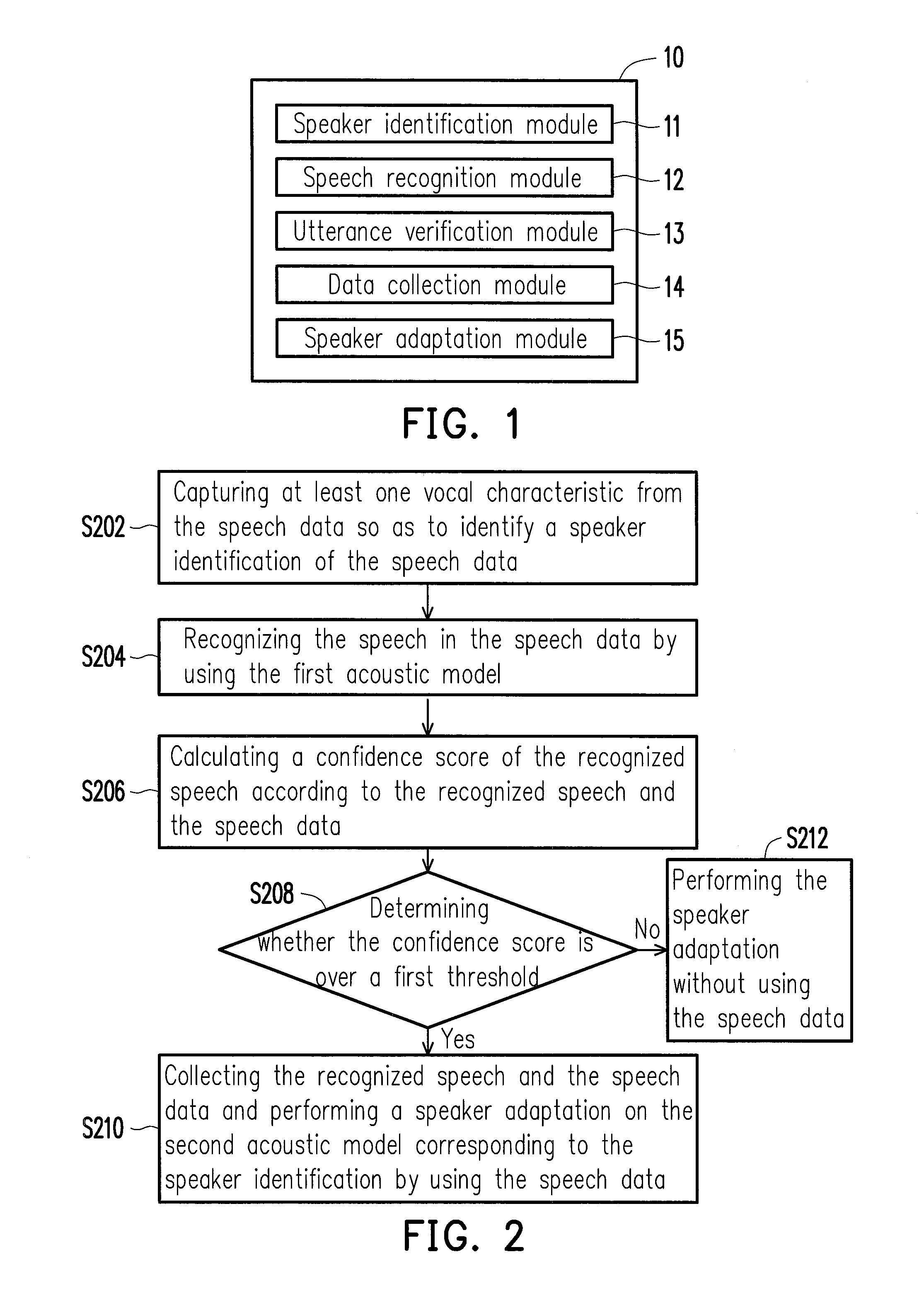

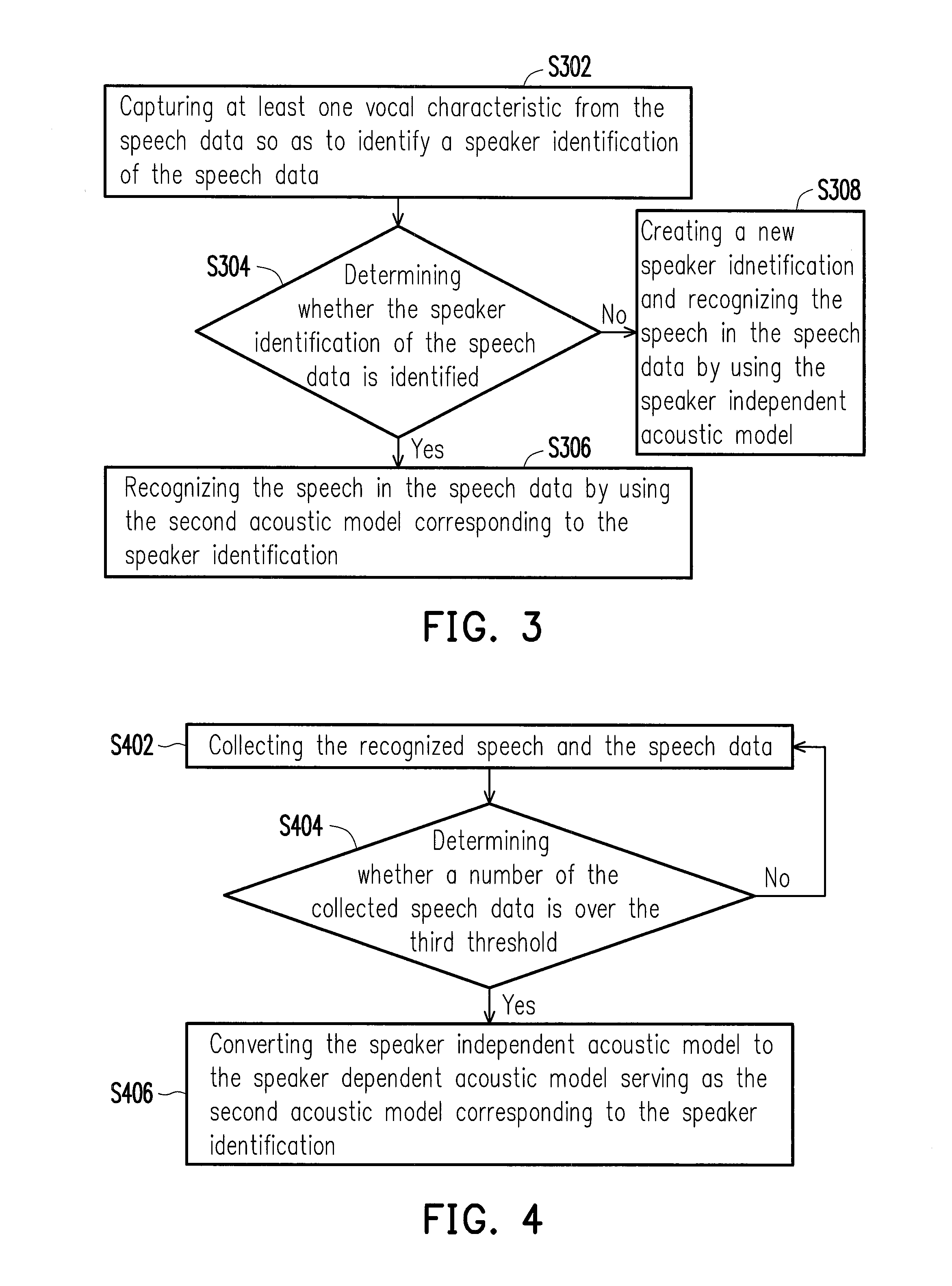

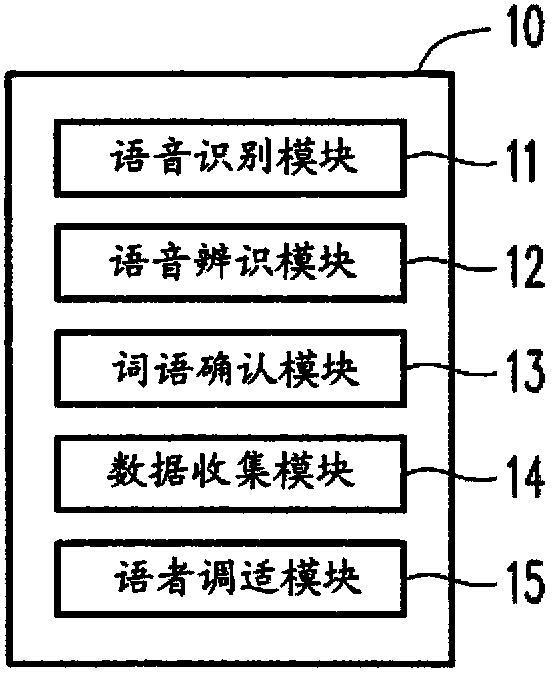

Method and system for speech recognition

Inactive | US20130311184A1 | Improve speech recognition accuracy | Improve accuracy | Speech recognition | Speech identification | Acoustic model

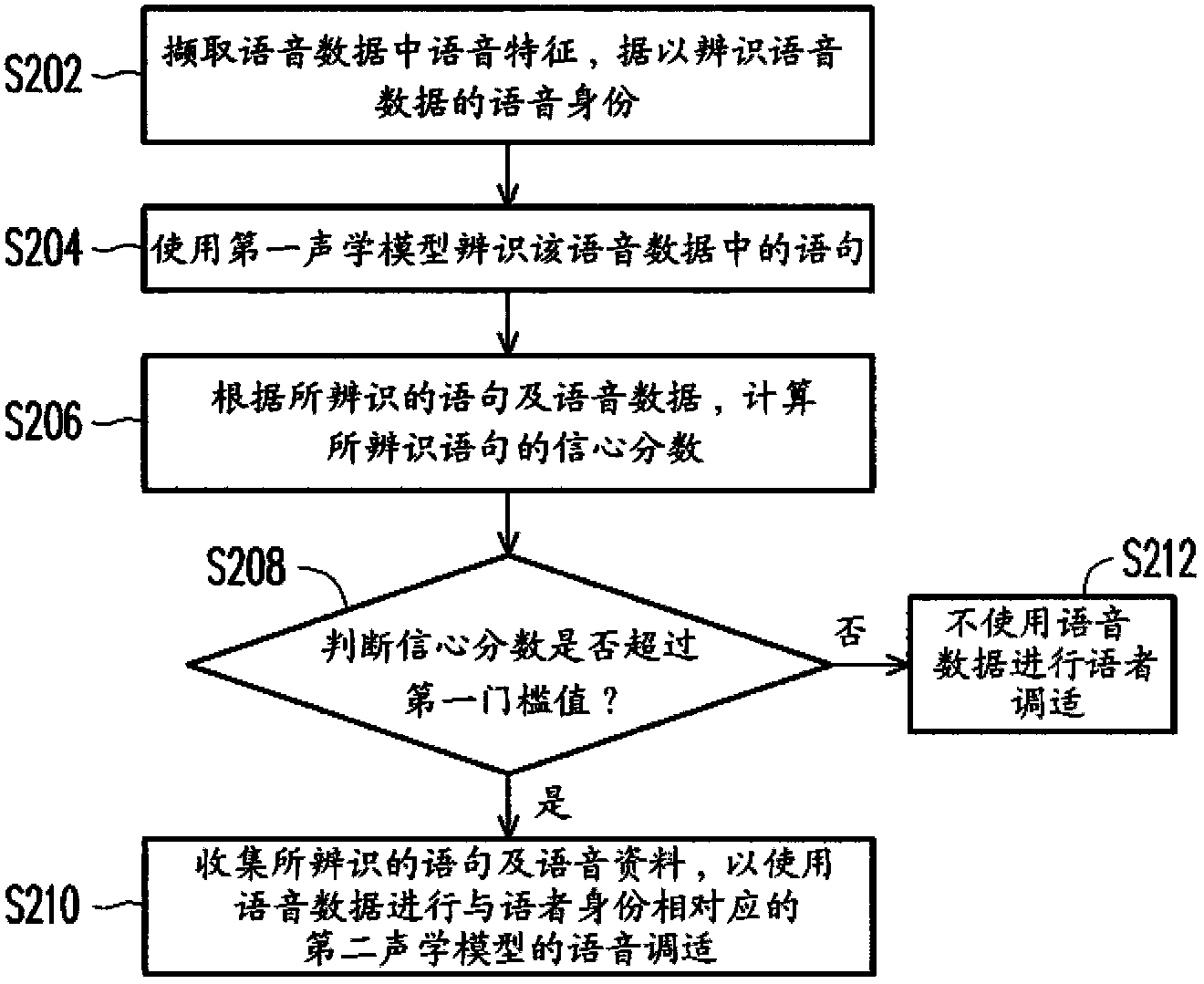

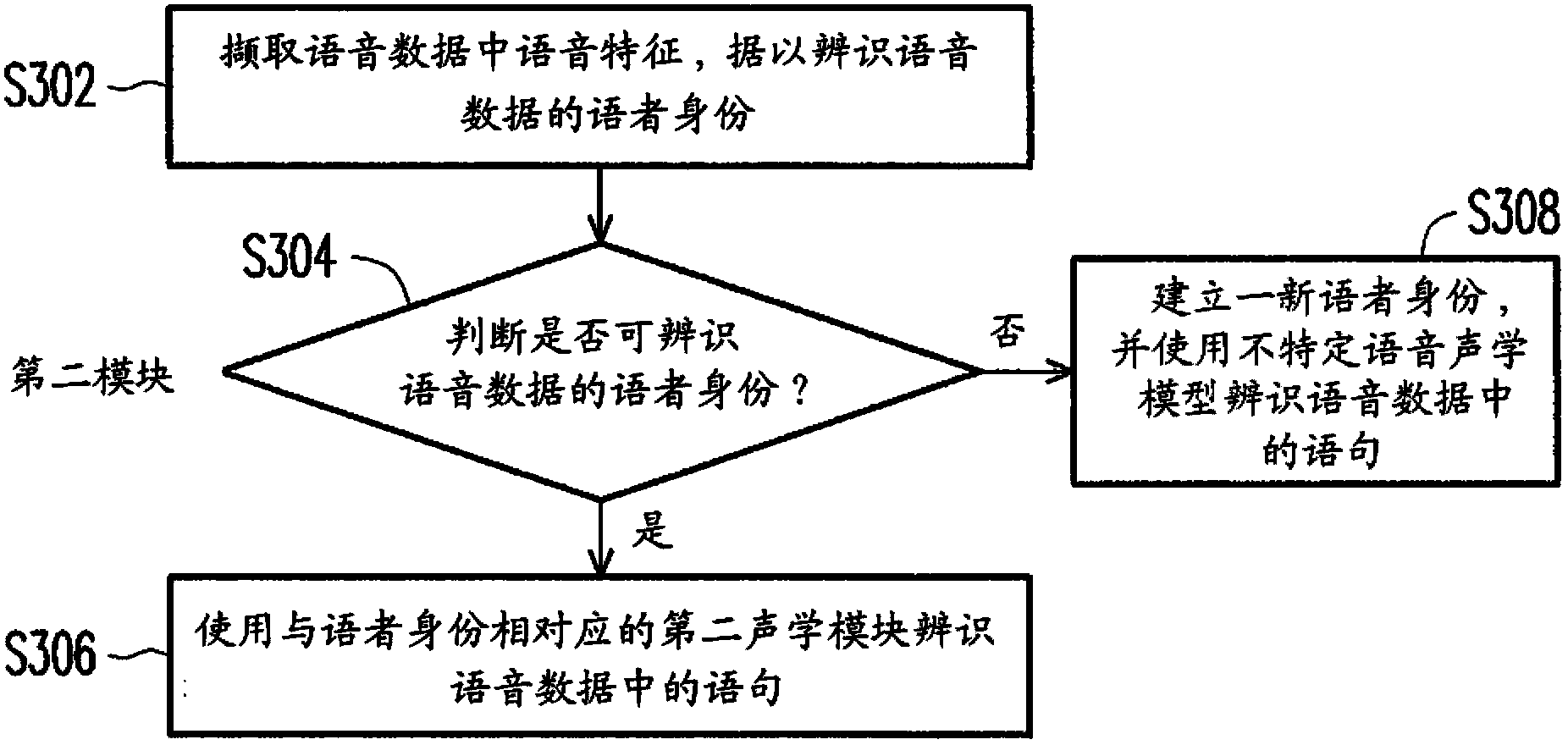

A method and a system for speech recognition are provided. In the method, vocal characteristics are captured from speech data and used to determine a speaker identification for the speech data. Next, a first acoustic model is used to recognize the speech in the speech data. A confidence score for the recognition is calculated from the recognized speech and the speech data, and it is determined whether the confidence score is over a threshold. If the confidence score is over the threshold, the recognized speech and the speech data are collected, and the collected speech data is used to perform speaker adaptation on a second acoustic model corresponding to the speaker identification.

Owner:ASUSTEK COMPUTER INC
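The confidence-gated collection step in the abstract above can be illustrated with a minimal Python sketch. The dictionary keys, the file names, and the `collect_for_adaptation` helper are hypothetical names chosen for illustration; the abstract does not specify how the confidence score is computed or how the adaptation itself is performed.

```python
from collections import defaultdict


def collect_for_adaptation(utterances, threshold):
    """Group confidently recognized utterances by speaker identification.

    Each utterance is a dict with the hypothetical keys
    'speaker_id', 'audio', 'text' and 'confidence'.
    """
    pools = defaultdict(list)
    for utt in utterances:
        # Only results whose confidence is over the threshold are kept
        # as adaptation data for that speaker's acoustic model.
        if utt["confidence"] > threshold:
            pools[utt["speaker_id"]].append((utt["audio"], utt["text"]))
    return dict(pools)


utterances = [
    {"speaker_id": "alice", "audio": "a1.wav", "text": "turn on the light", "confidence": 0.92},
    {"speaker_id": "alice", "audio": "a2.wav", "text": "turn of the night", "confidence": 0.41},
    {"speaker_id": "bob", "audio": "b1.wav", "text": "play music", "confidence": 0.88},
]
pools = collect_for_adaptation(utterances, threshold=0.8)
```

Here the low-confidence second utterance is discarded, so a likely misrecognition does not contaminate the data later used to adapt the speaker-specific model.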

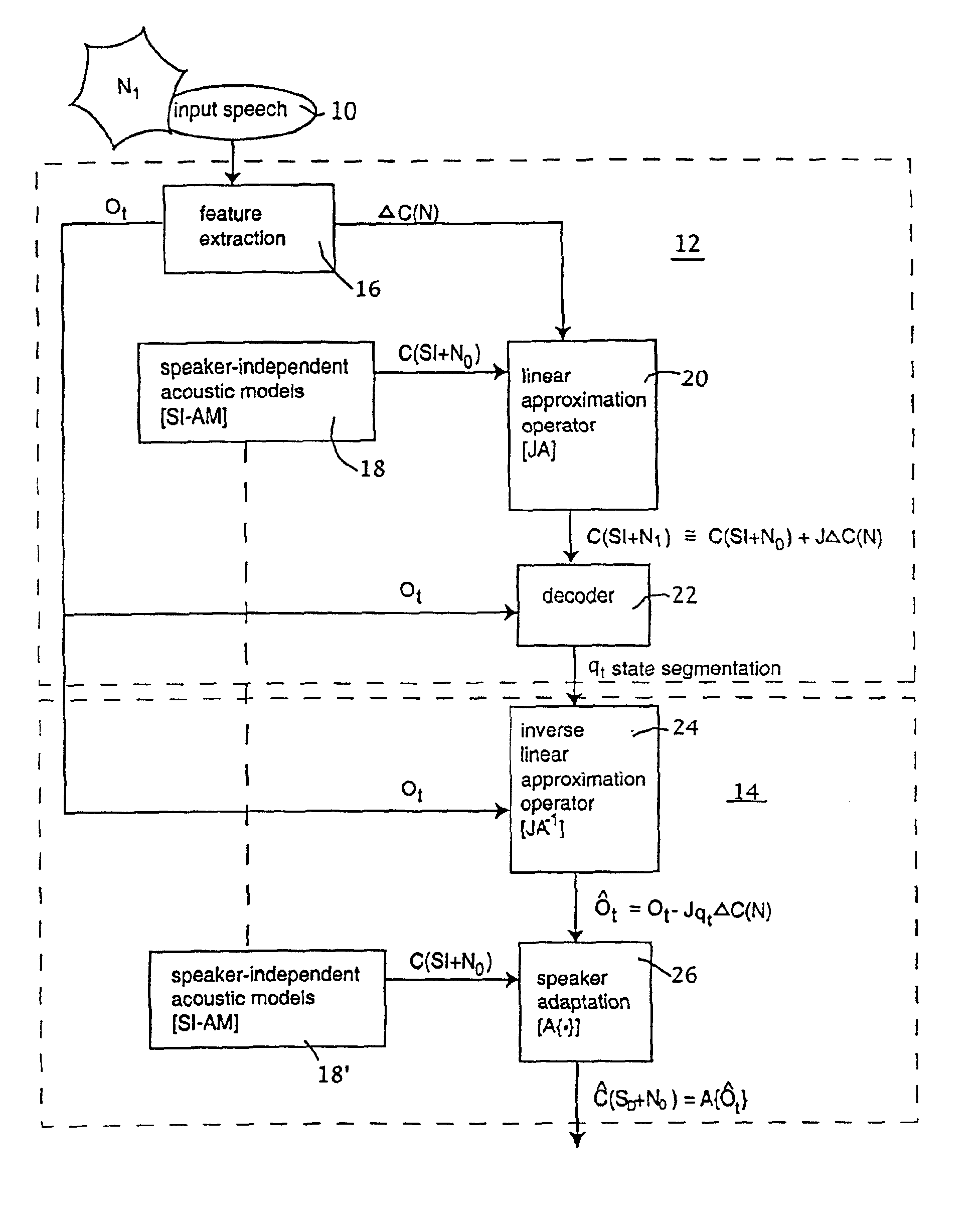

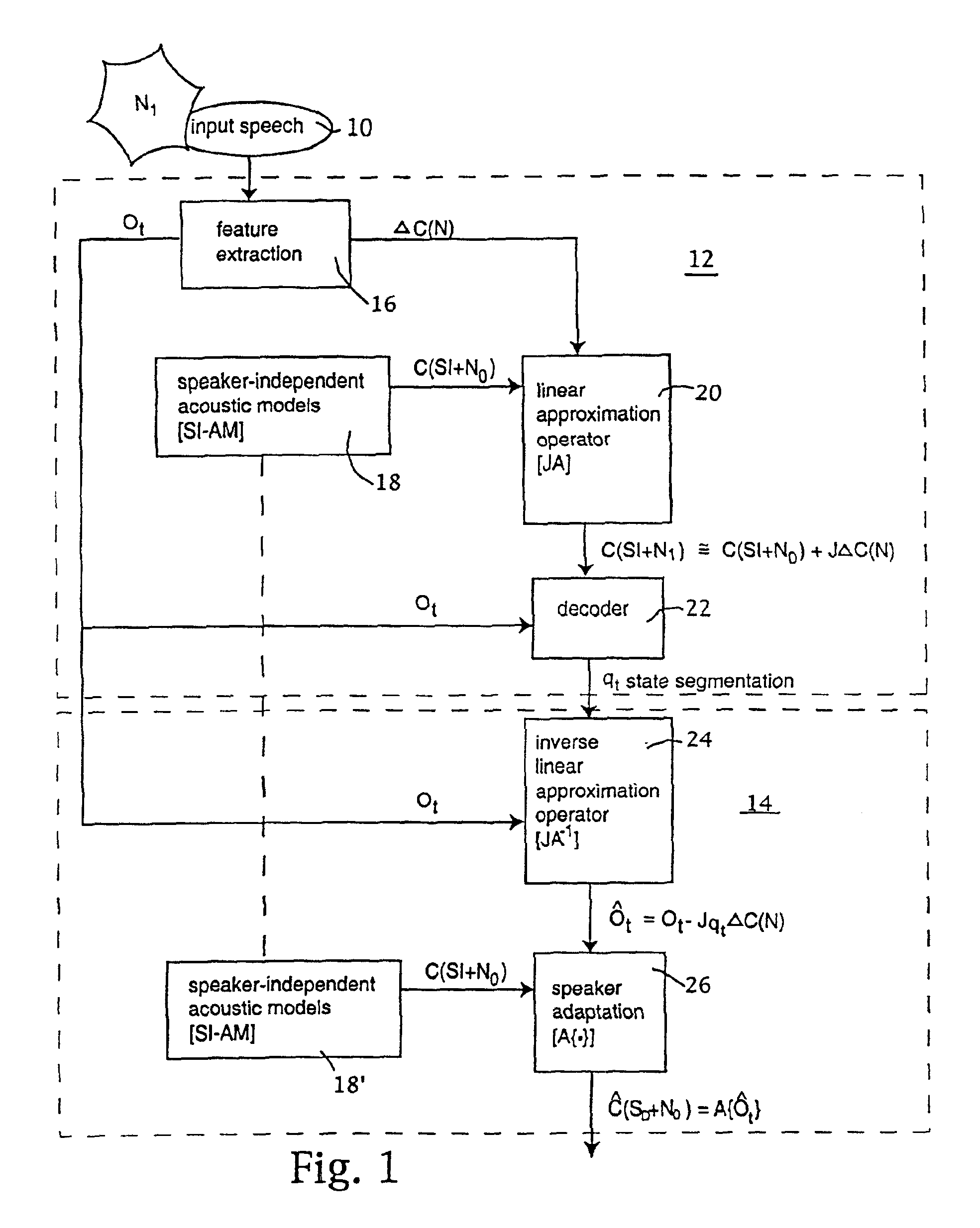

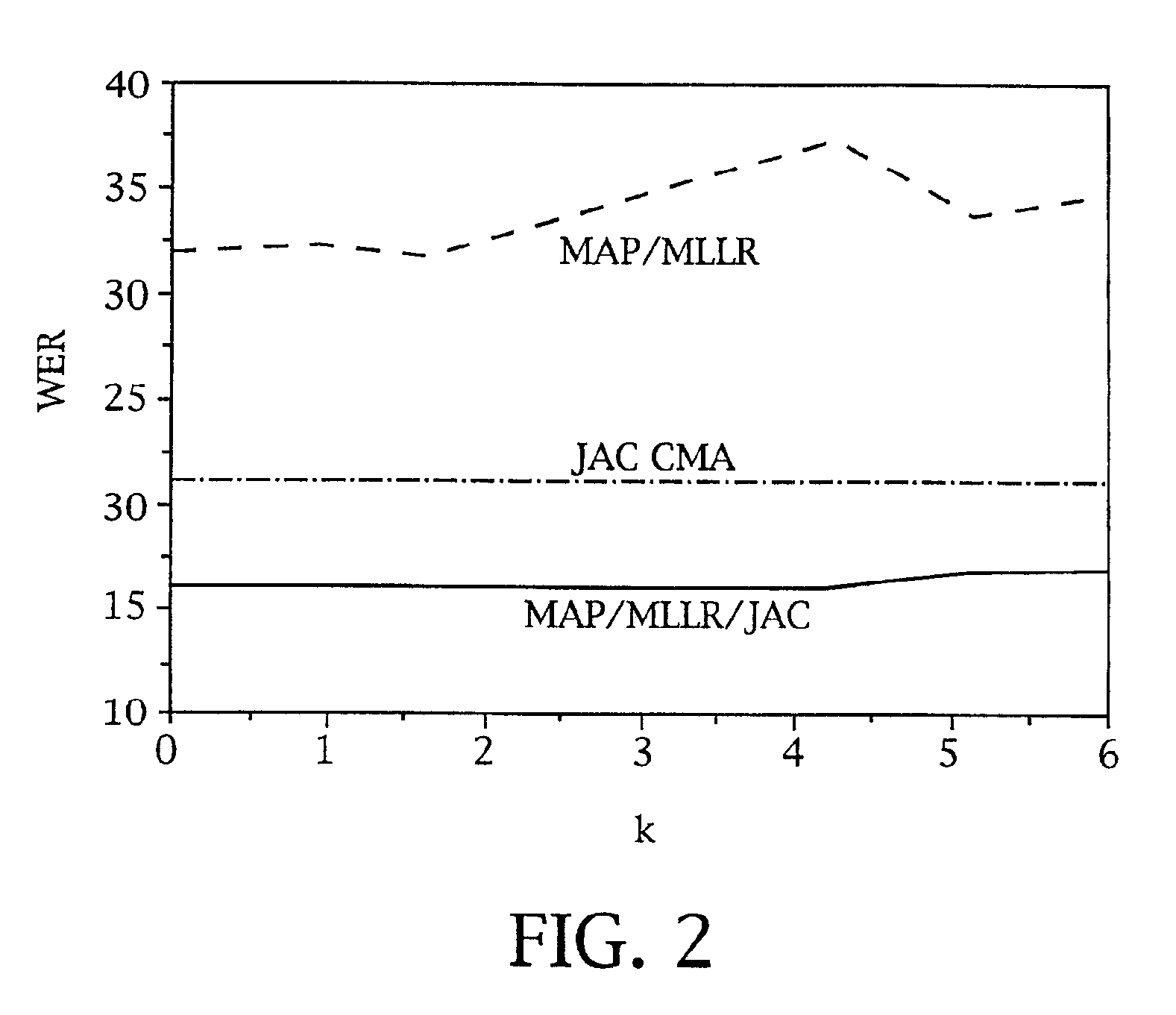

Speaker and environment adaptation based on linear separation of variability sources

Owner:SOVEREIGN PEAK VENTURES LLC

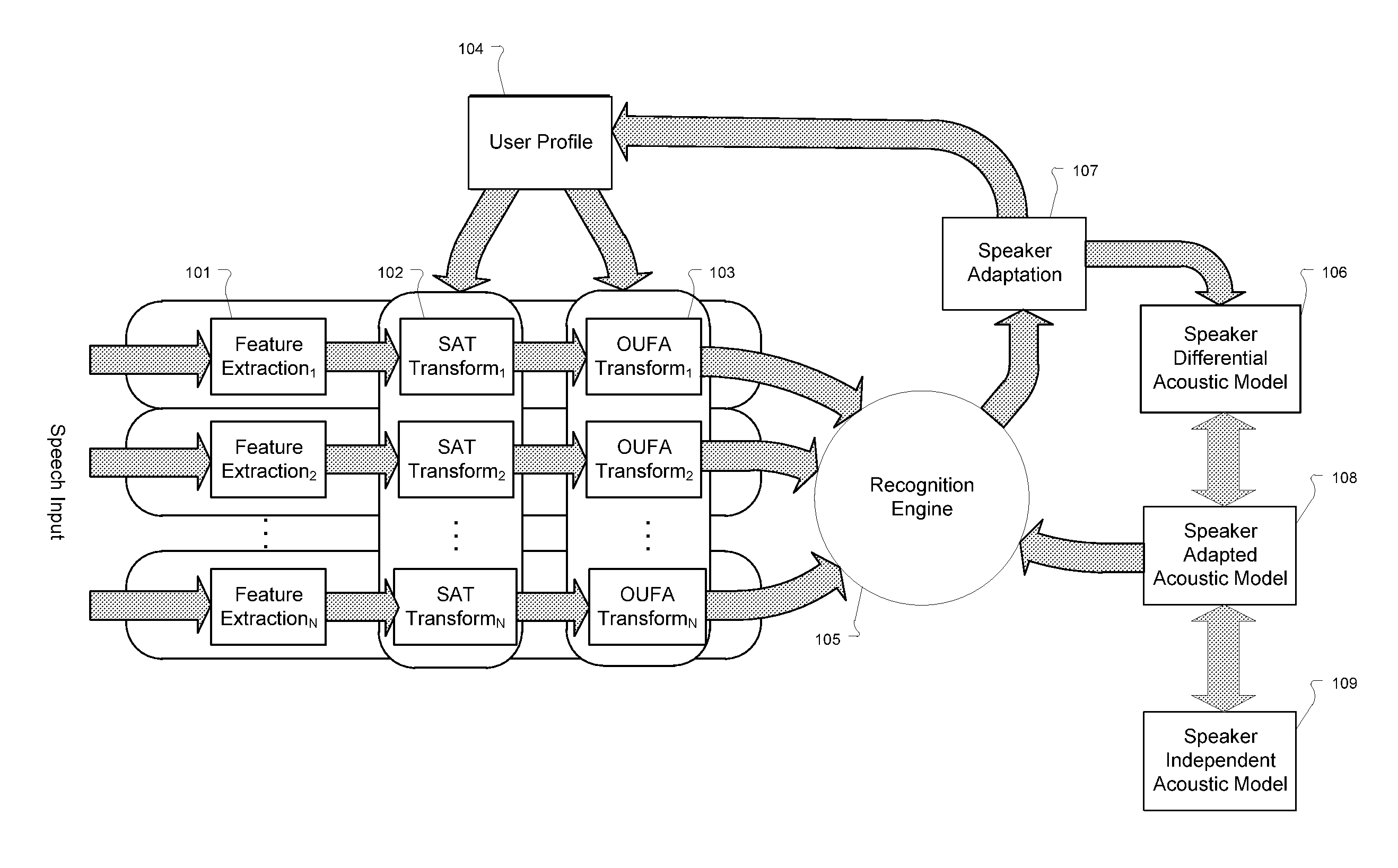

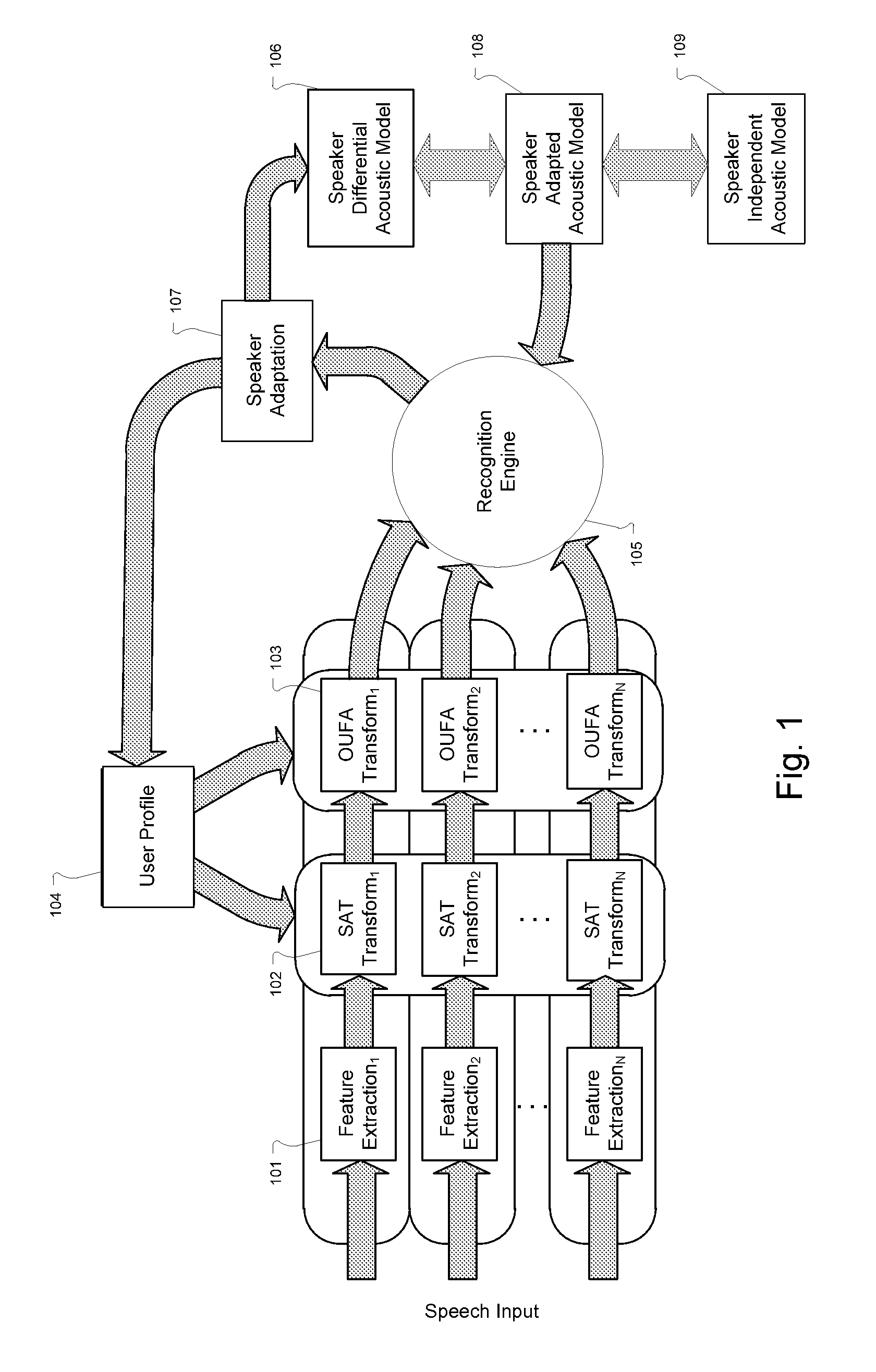

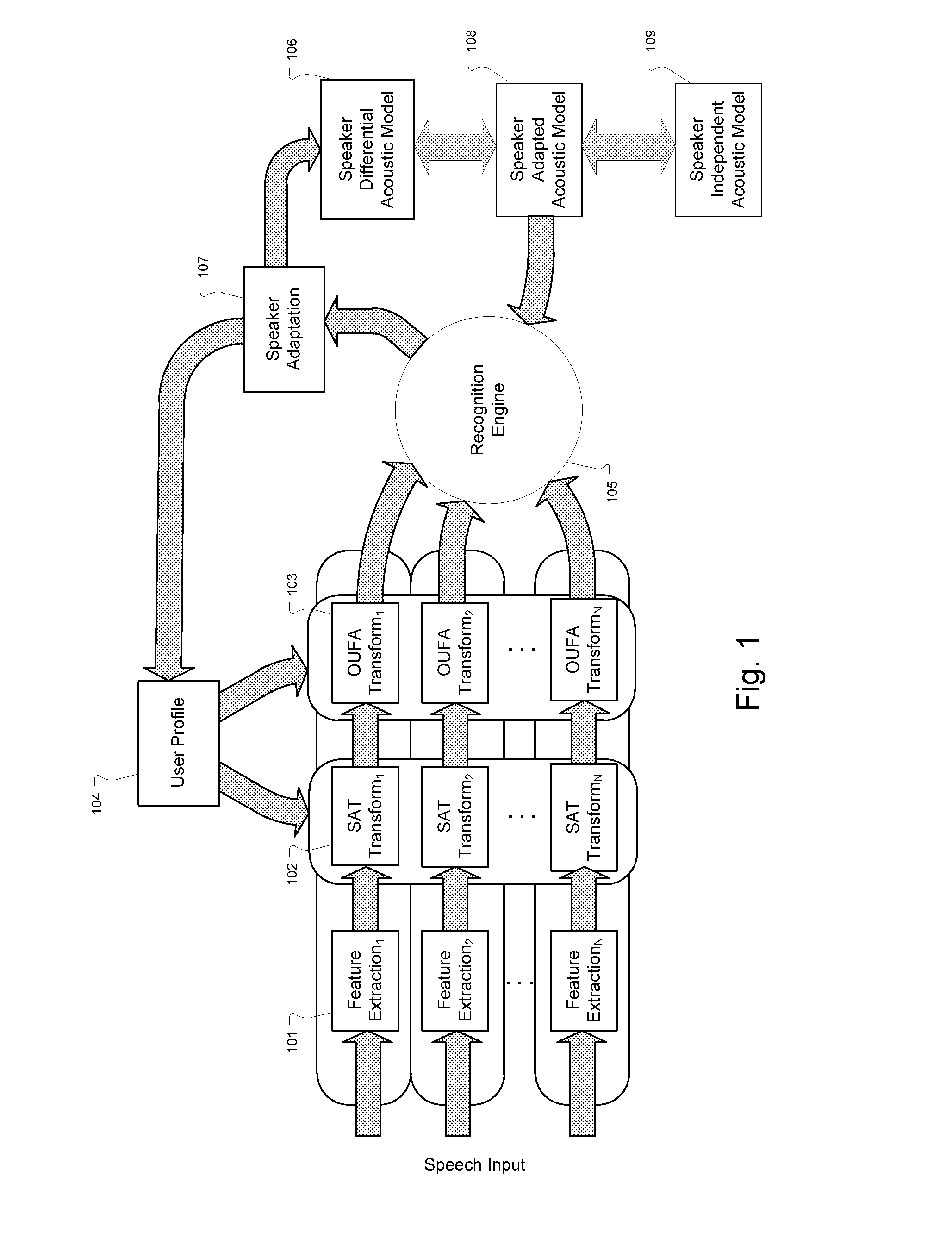

Differential acoustic model representation and linear transform-based adaptation for efficient user profile update techniques in automatic speech recognition

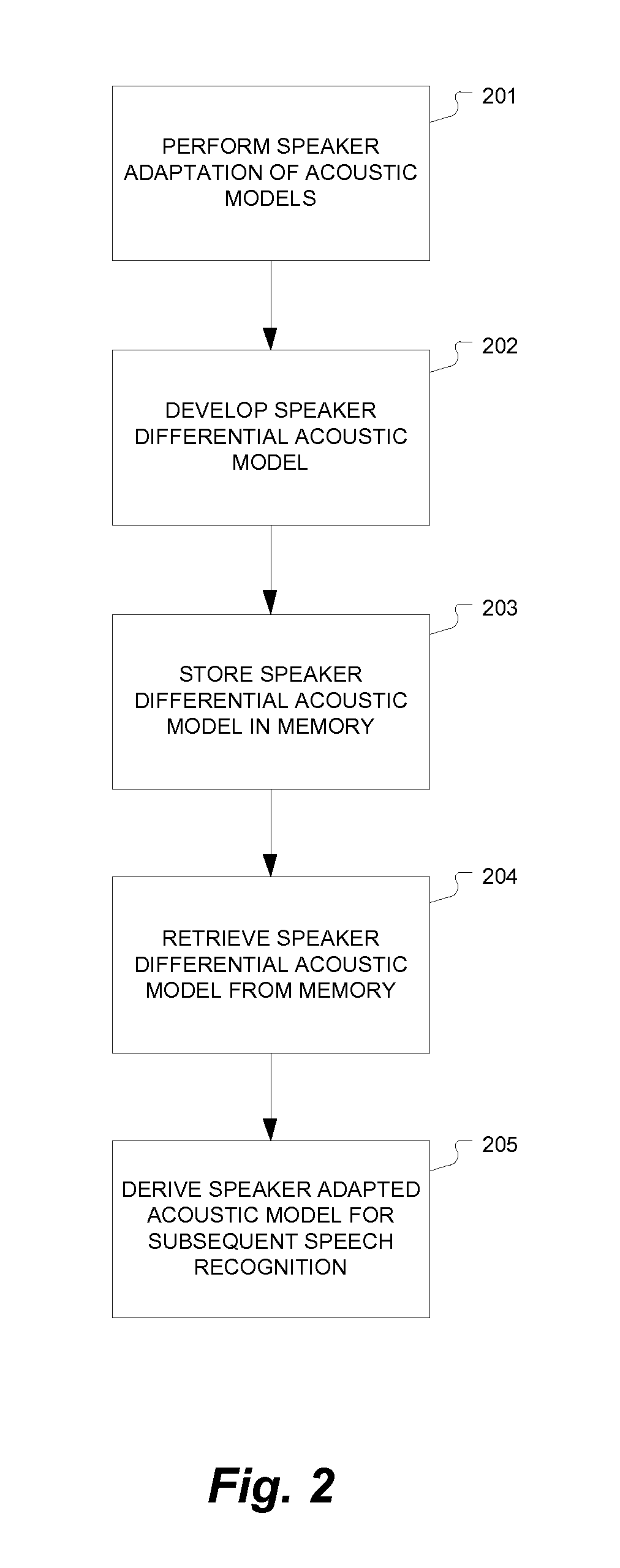

A computer-implemented method is described for speaker adaptation in automatic speech recognition. Speech recognition data from a particular speaker is used for adaptation of an initial speech recognition acoustic model to produce a speaker adapted acoustic model. A speaker dependent differential acoustic model is determined that represents differences between the initial speech recognition acoustic model and the speaker adapted acoustic model. In addition, an approach is also disclosed to estimate speaker-specific feature or model transforms over multiple sessions. This is achieved by updating the previously estimated transform using only adaptation statistics of the current session.

Owner:MICROSOFT TECH LICENSING LLC
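As a rough illustration of the differential representation described above, the sketch below stores only the parameters that adaptation actually changed and rebuilds the adapted model from the shared initial model plus that difference. The flat name-to-value dictionaries, the tolerance, and both function names are assumptions made for this example, not the representation used in the patent.

```python
def differential_model(initial, adapted, tol=1e-6):
    """Return only the parameter differences larger than tol."""
    return {
        name: adapted[name] - initial[name]
        for name in initial
        if abs(adapted[name] - initial[name]) > tol
    }


def apply_differential(initial, delta):
    """Rebuild the speaker-adapted model from the initial model and a delta."""
    return {name: value + delta.get(name, 0.0) for name, value in initial.items()}


initial = {"mean_0": 1.0, "mean_1": -0.5, "mean_2": 2.0}
adapted = {"mean_0": 1.25, "mean_1": -0.5, "mean_2": 1.5}

delta = differential_model(initial, adapted)   # unchanged 'mean_1' is not stored
restored = apply_differential(initial, delta)
```

Storing a per-speaker delta rather than a full model is one plausible way to keep user profiles small when many speakers share the same initial model.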

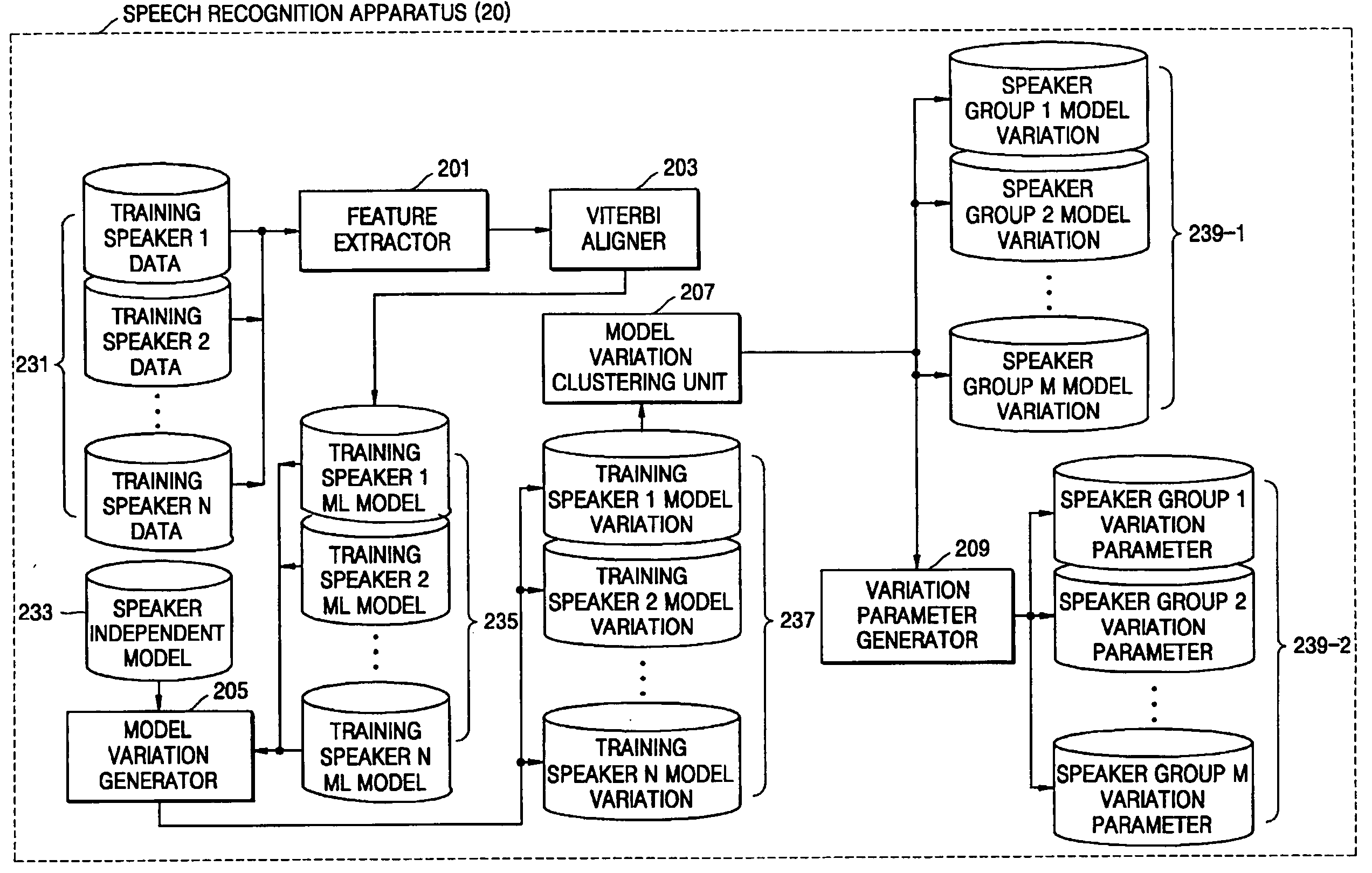

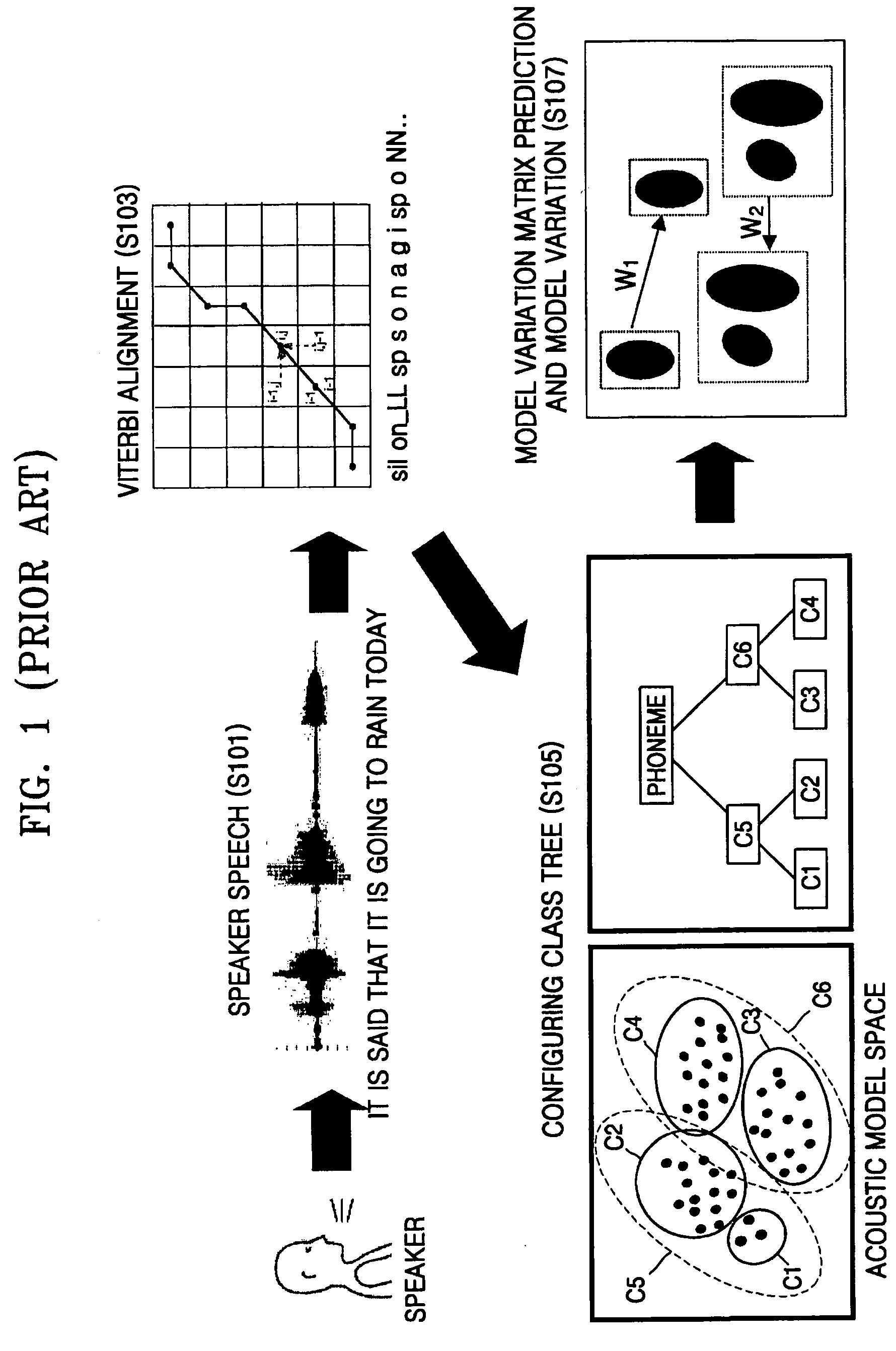

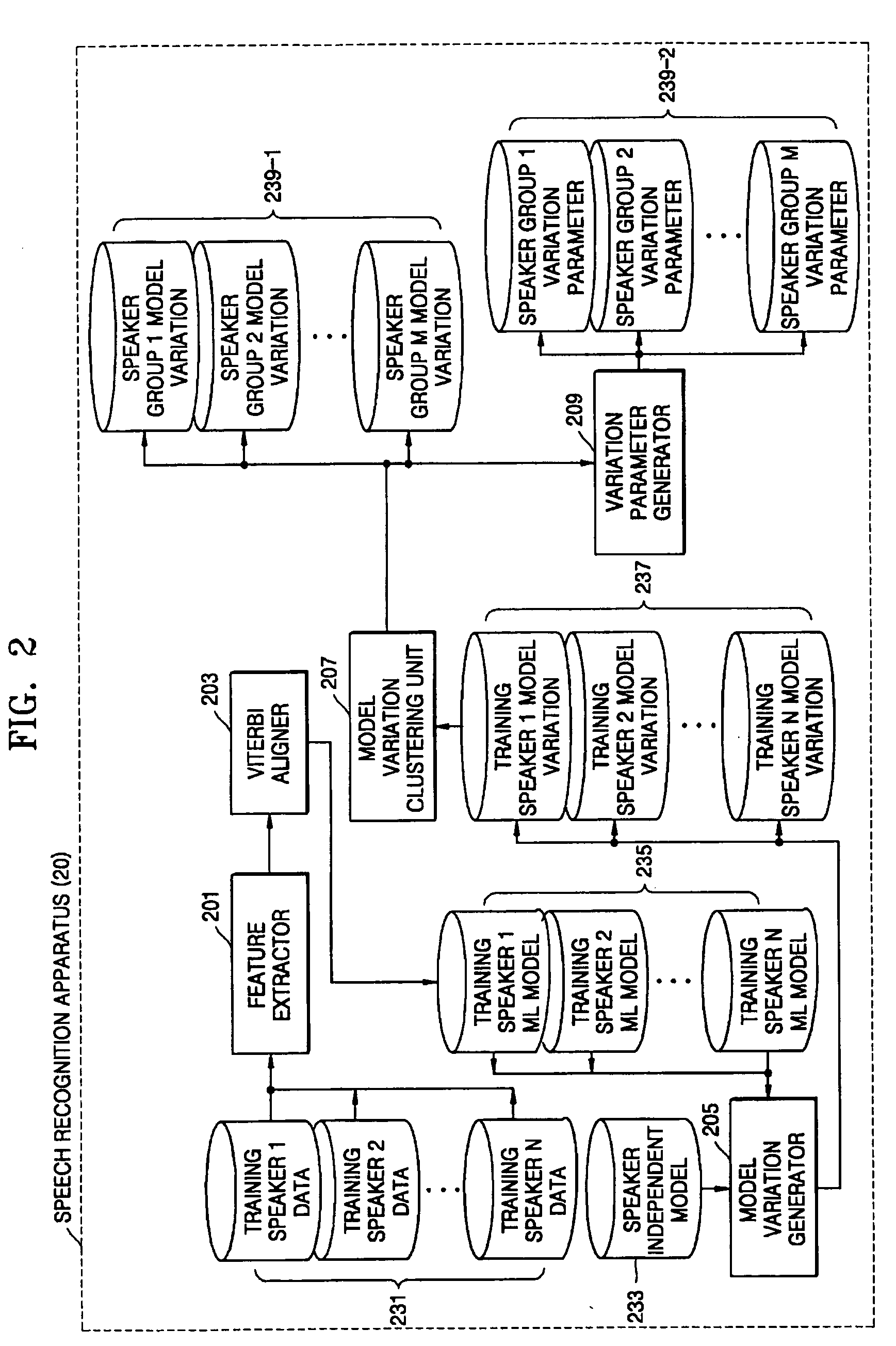

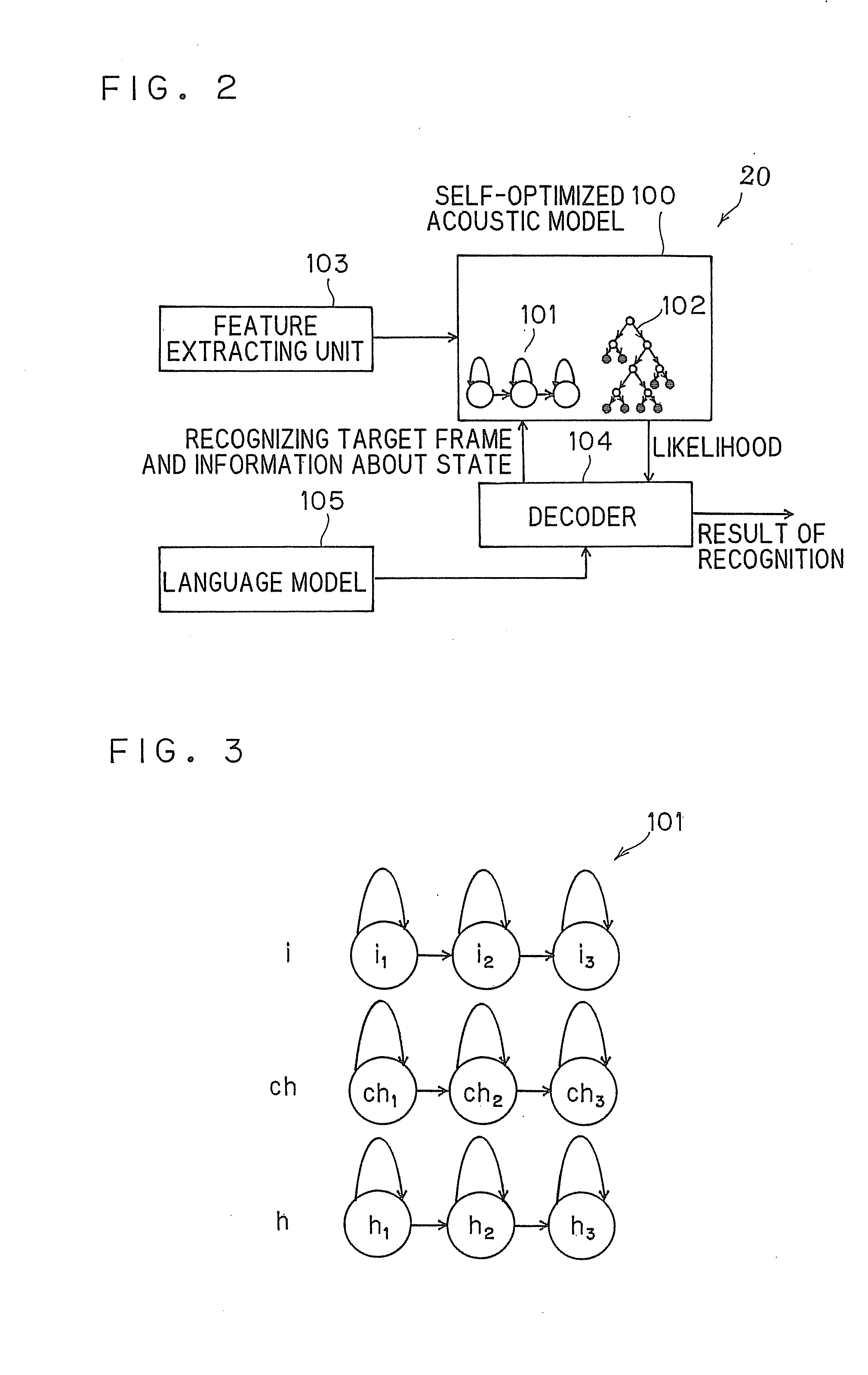

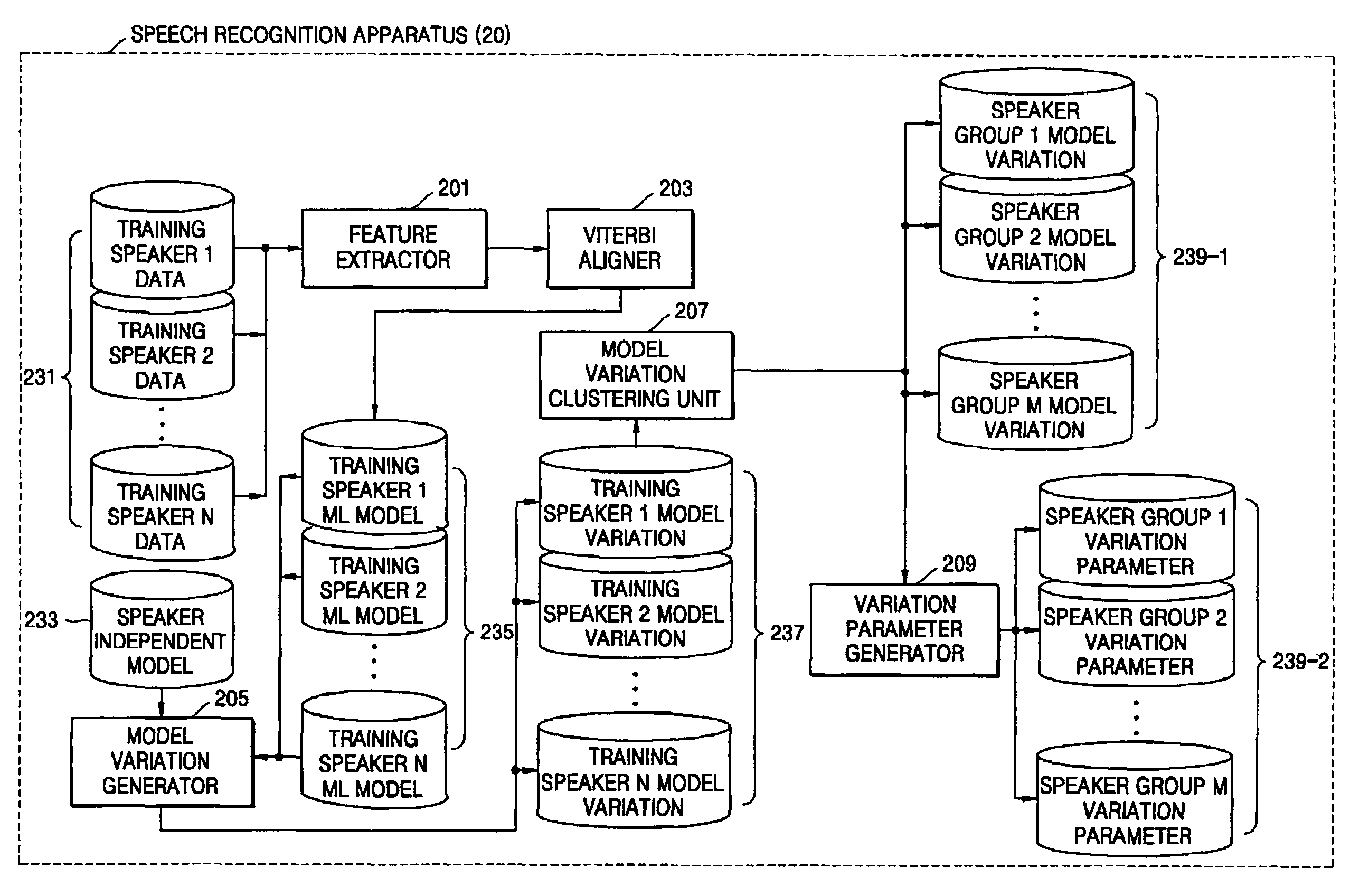

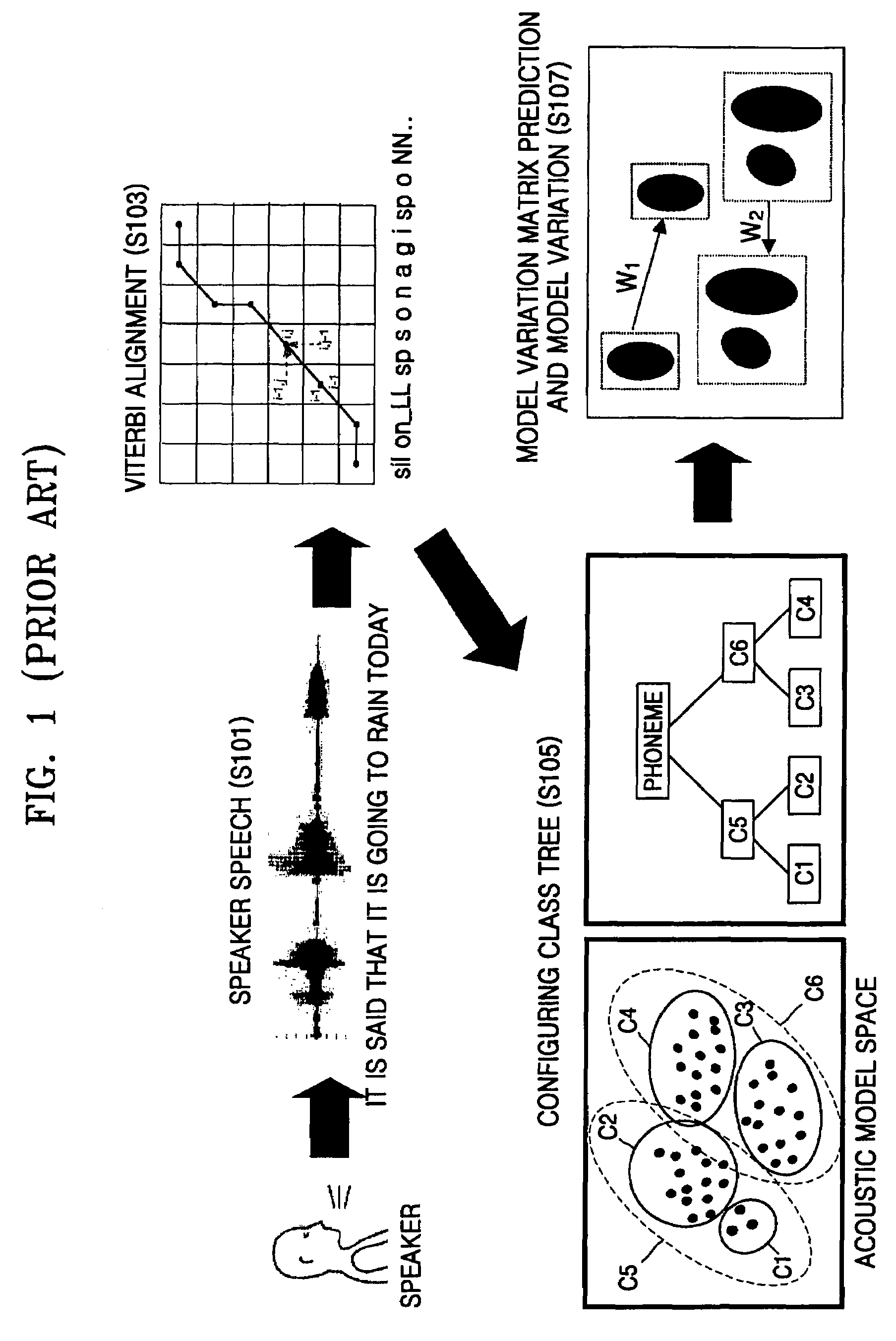

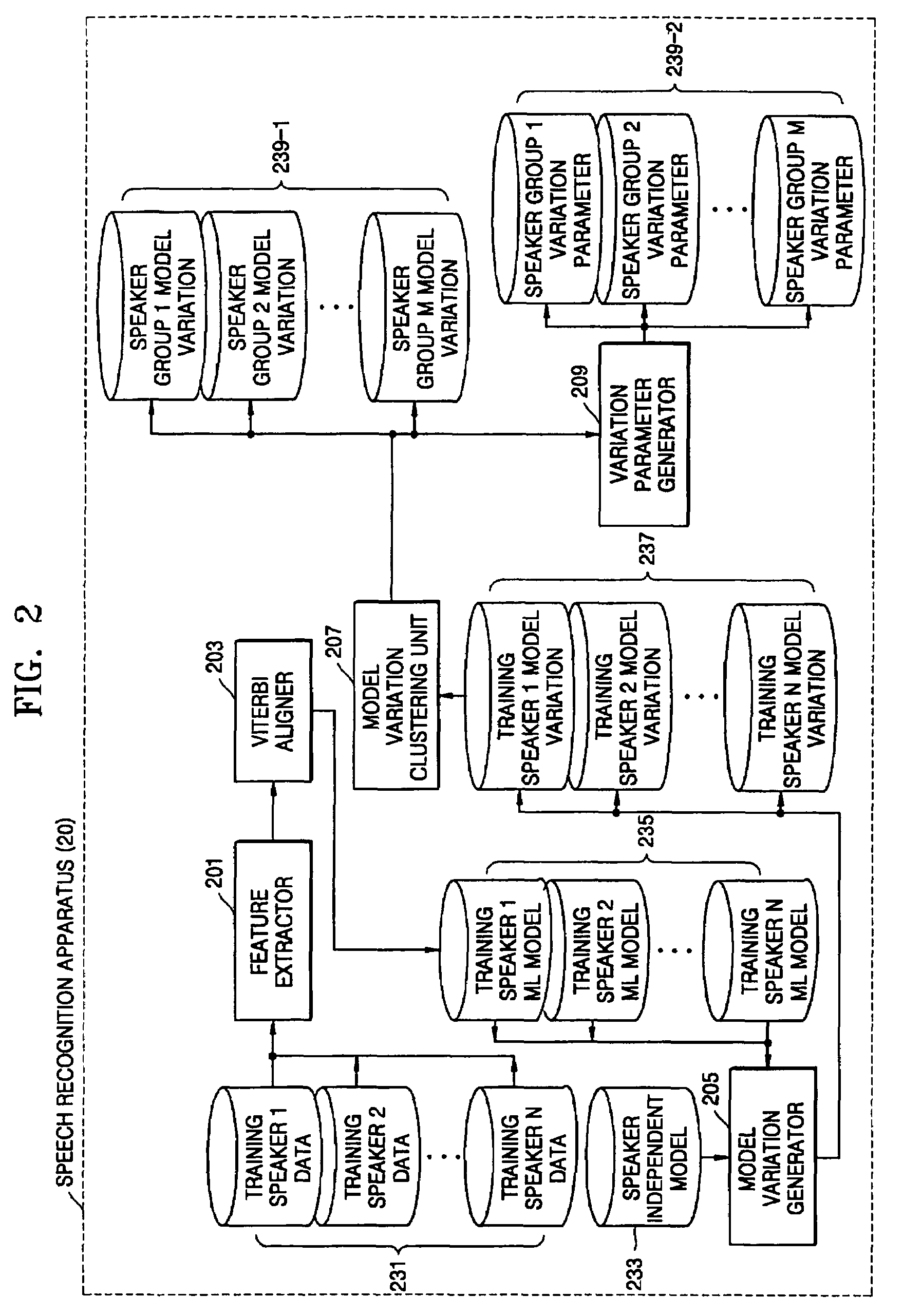

Speaker clustering and adaptation method based on the HMM model variation information and its apparatus for speech recognition

Inactive | US20050182626A1 | Improve performance | Alcoholic beverage preparation | Speech recognition | Speech identification | Loudspeaker

A speech recognition method and apparatus perform speaker clustering and speaker adaptation using average model variation information over speakers while analyzing the quantity variation amount and the directional variation amount. In the speaker clustering method, a speaker group model variation is generated based on the model variation between a speaker-independent model and a training speaker ML model. In the speaker adaptation method, the model is found for which the model variation between a test speaker ML model and the ML model of the speaker group to which the test speaker belongs is most similar to a training speaker group model variation, and speaker adaptation is performed on the found model. The model variations in both the speaker clustering and the speaker adaptation are calculated while analyzing both the quantity variation amount and the directional variation amount. The present invention may be applied to any speaker adaptation algorithm, including MLLR and MAP.

Owner:SAMSUNG ELECTRONICS CO LTD

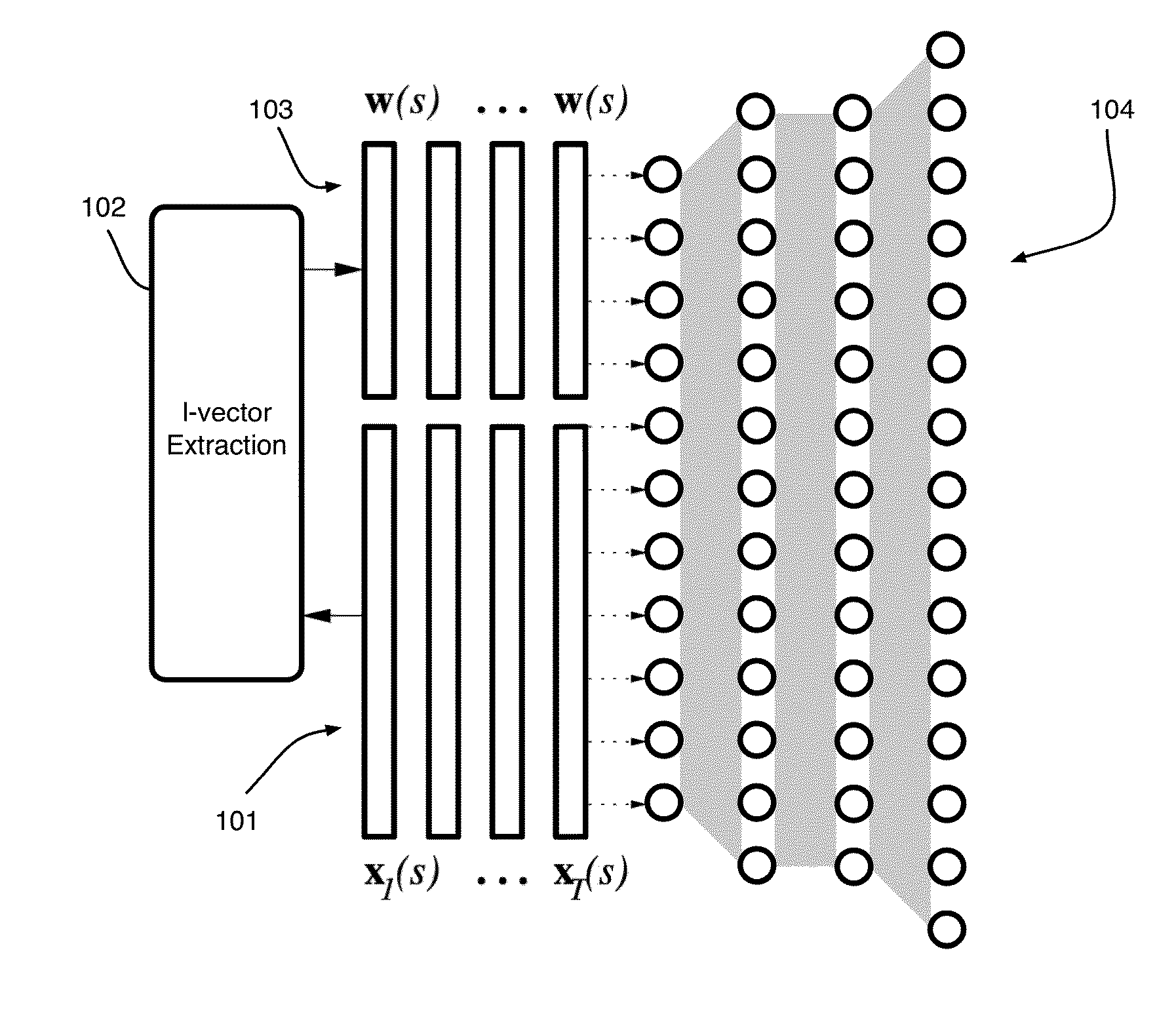

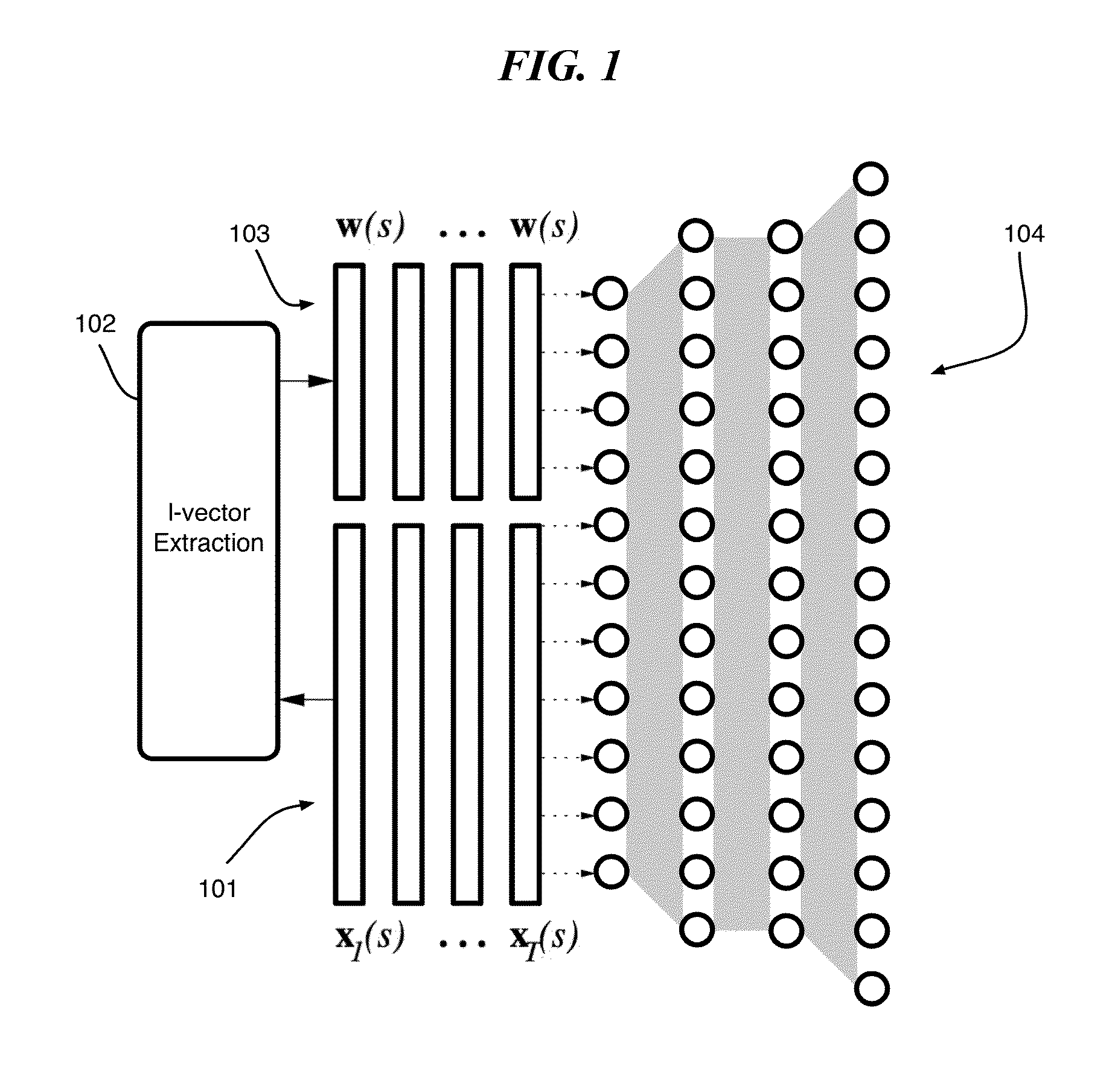

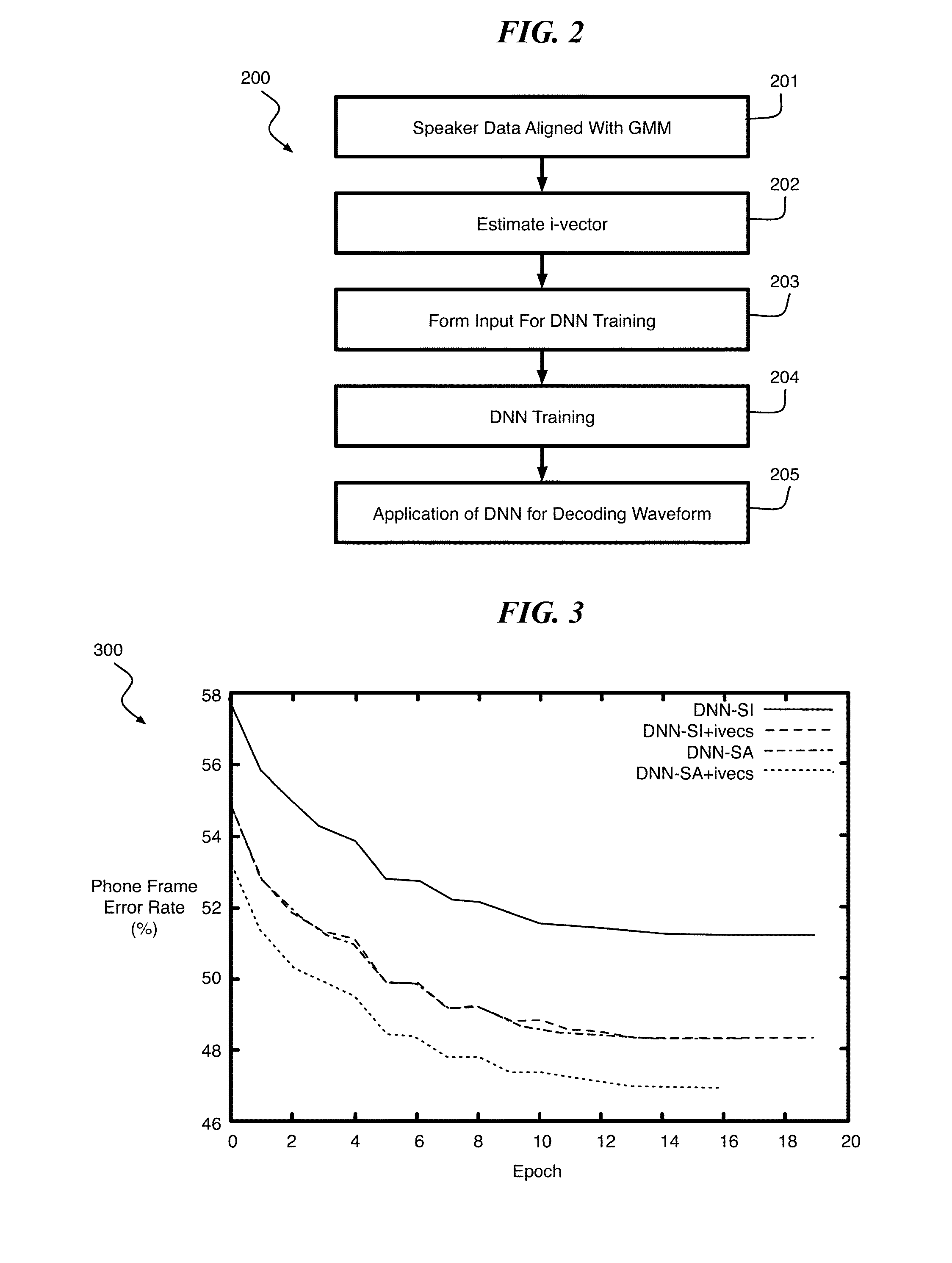

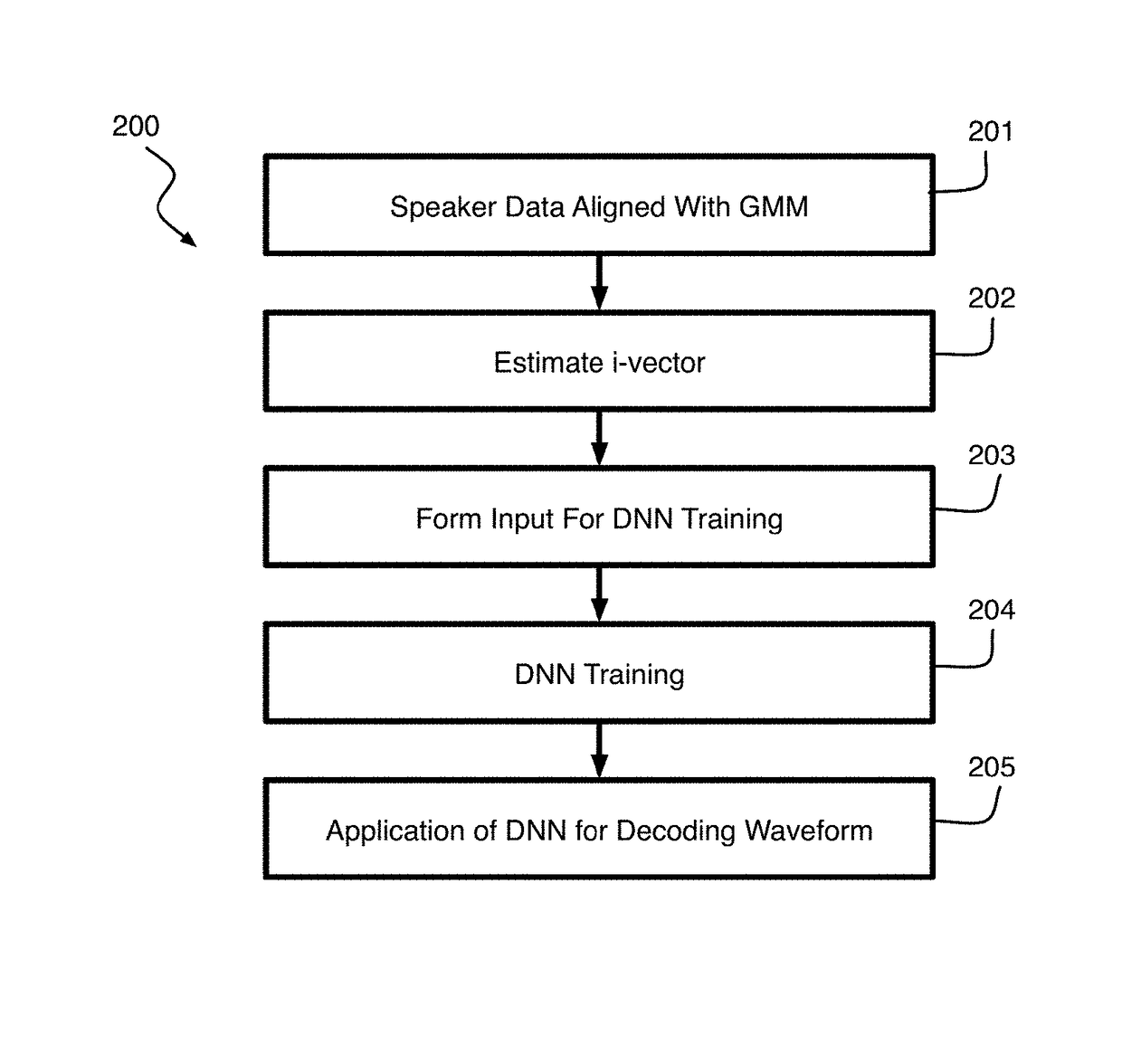

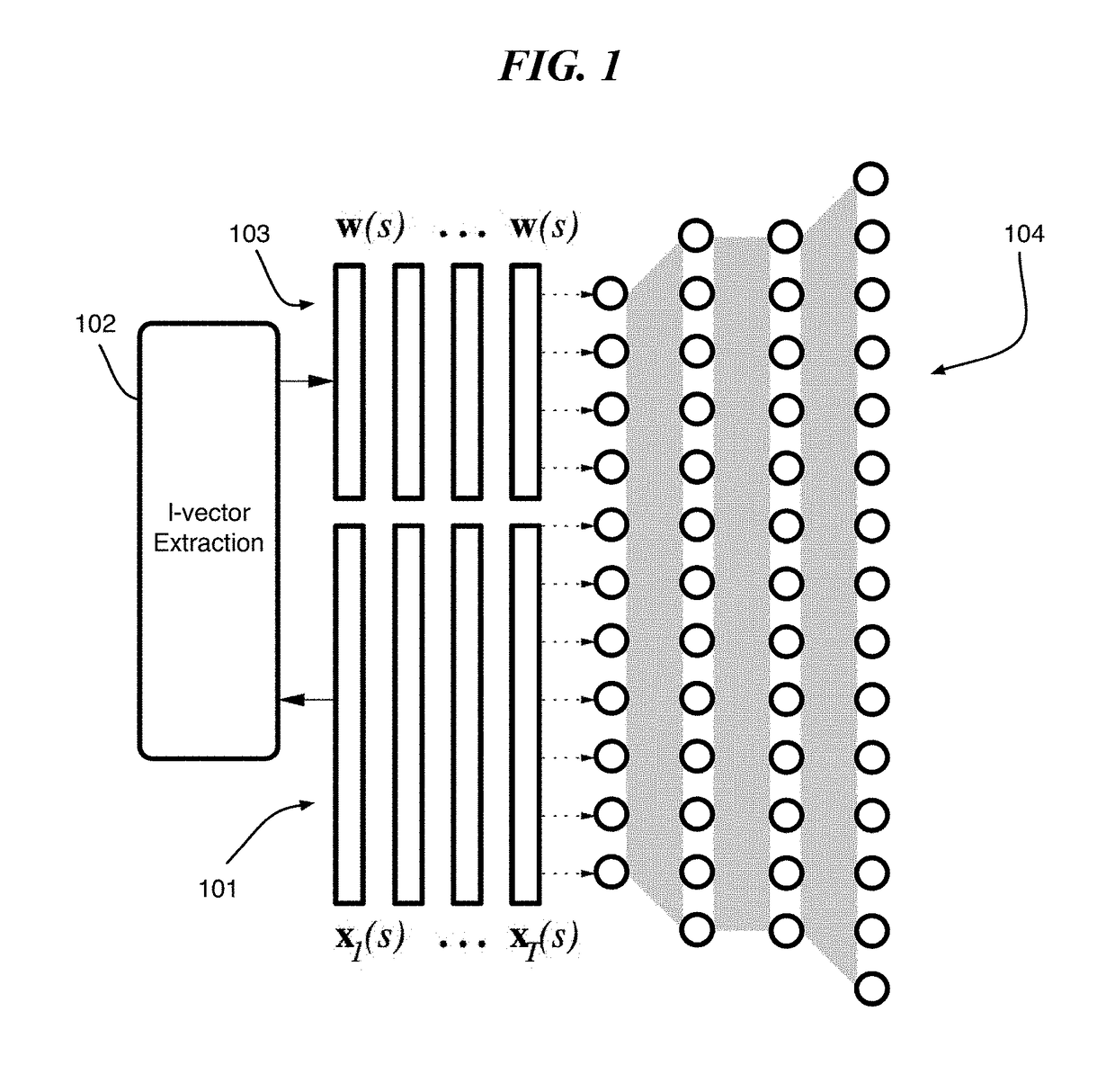

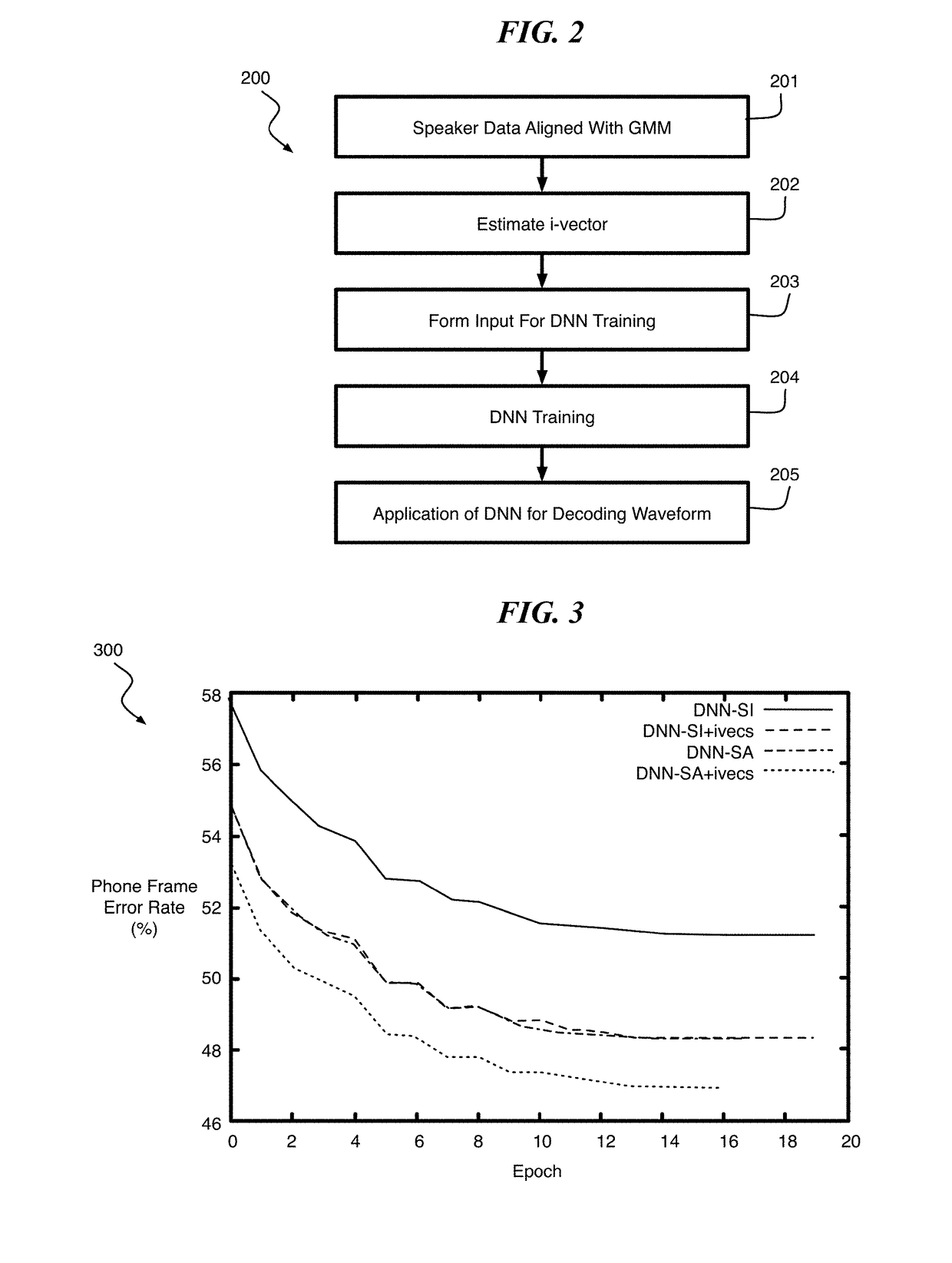

Speaker Adaptation of Neural Network Acoustic Models Using I-Vectors

A method includes providing a deep neural network acoustic model, receiving audio data including one or more utterances of a speaker, extracting a plurality of speech recognition features from the one or more utterances of the speaker, creating a speaker identity vector for the speaker based on the extracted speech recognition features, and adapting the deep neural network acoustic model for automatic speech recognition using the extracted speech recognition features and the speaker identity vector.

Owner:IBM CORP
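One common way to feed a speaker identity vector to a neural acoustic model is to append it to every frame of recognition features. The sketch below shows only that concatenation step; whether the patent combines the two inputs this way is an assumption, and the tiny feature and vector sizes are illustrative.

```python
def append_speaker_vector(frames, speaker_vector):
    """Append the same speaker identity vector to every feature frame."""
    return [list(frame) + list(speaker_vector) for frame in frames]


# Two frames of 3-dimensional features and a 2-dimensional speaker vector.
frames = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]]
ivector = [1.0, -1.0]

augmented = append_speaker_vector(frames, ivector)
```

Because the speaker vector is constant across the utterance, the network sees the same speaker information alongside each frame's time-varying features.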

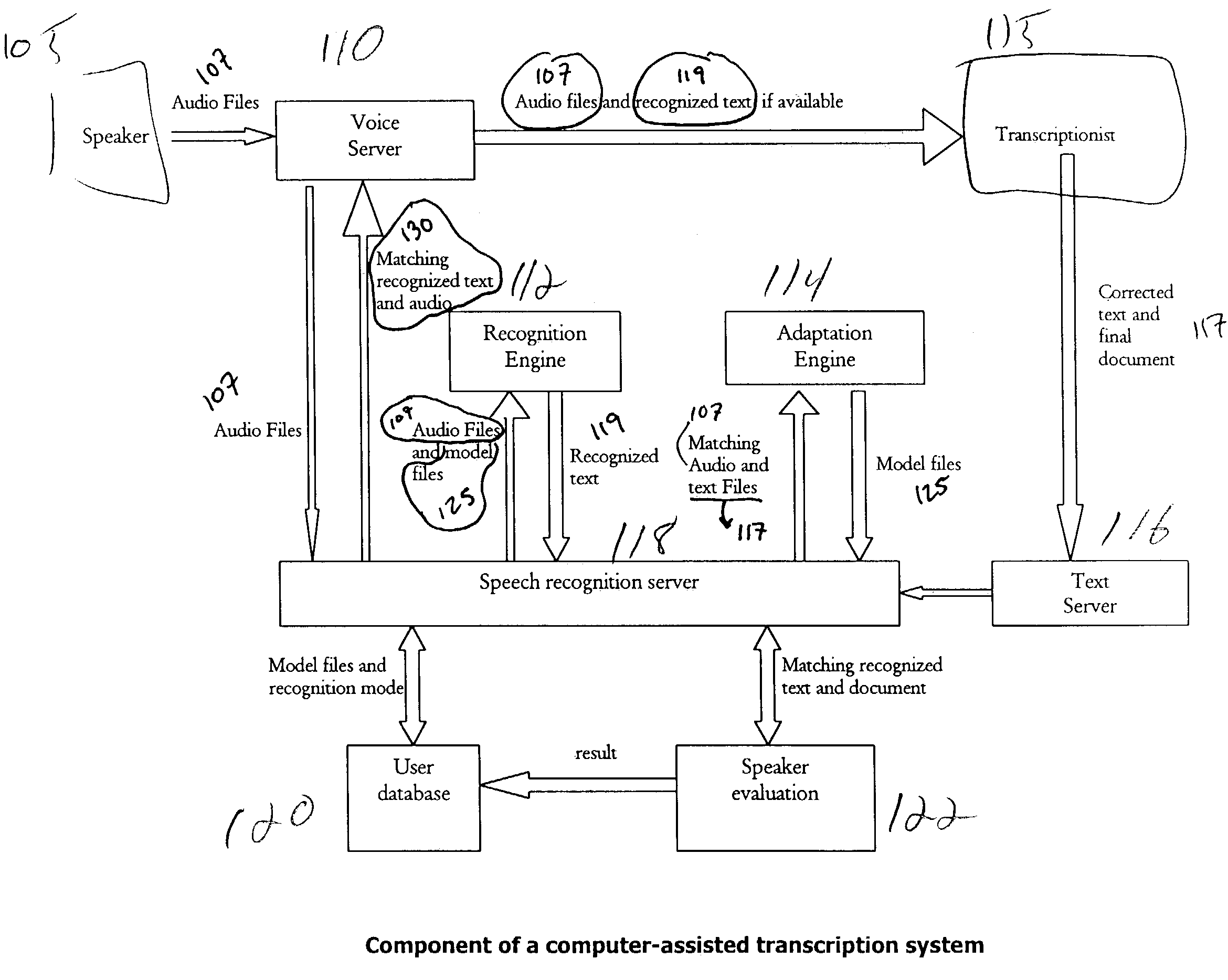

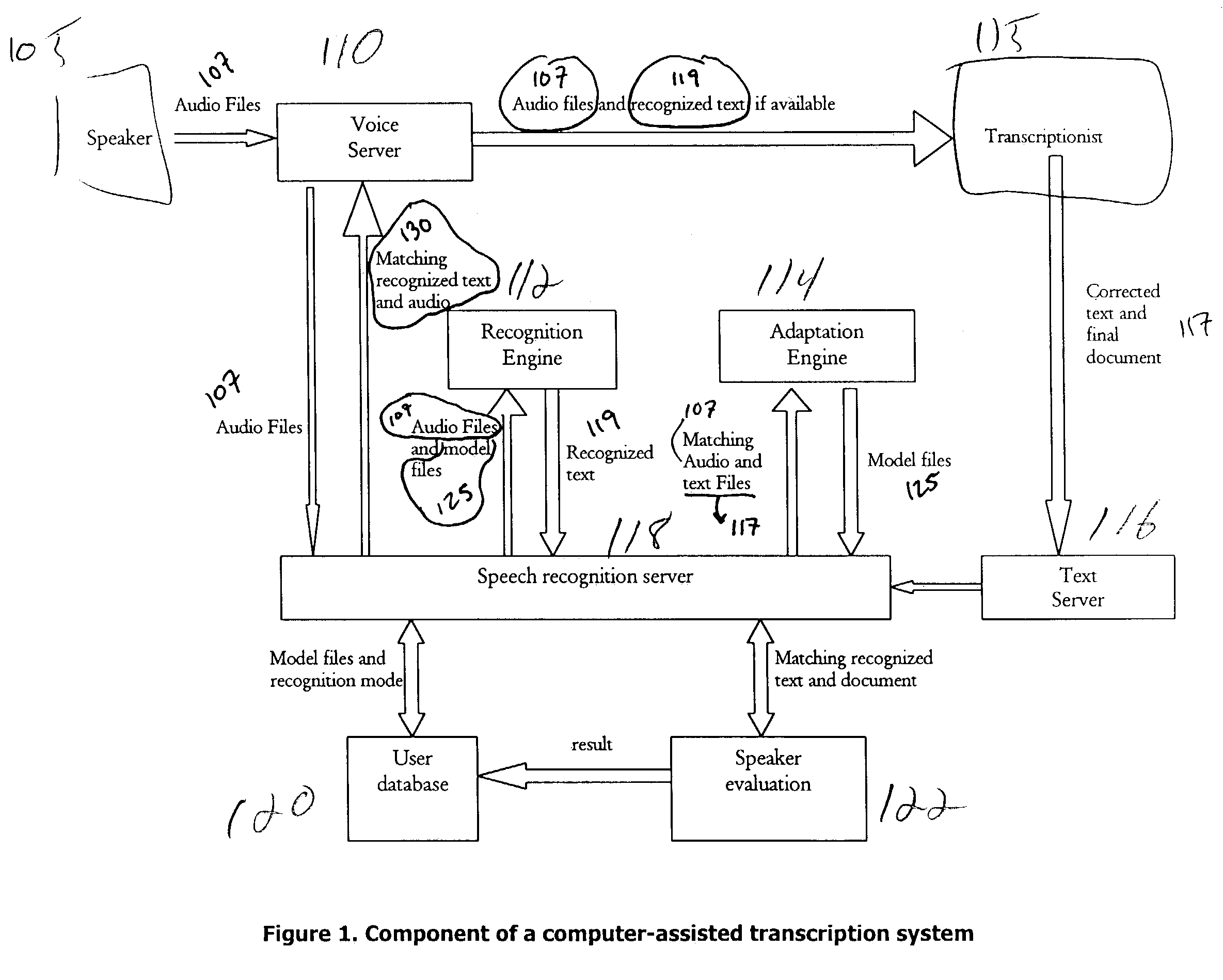

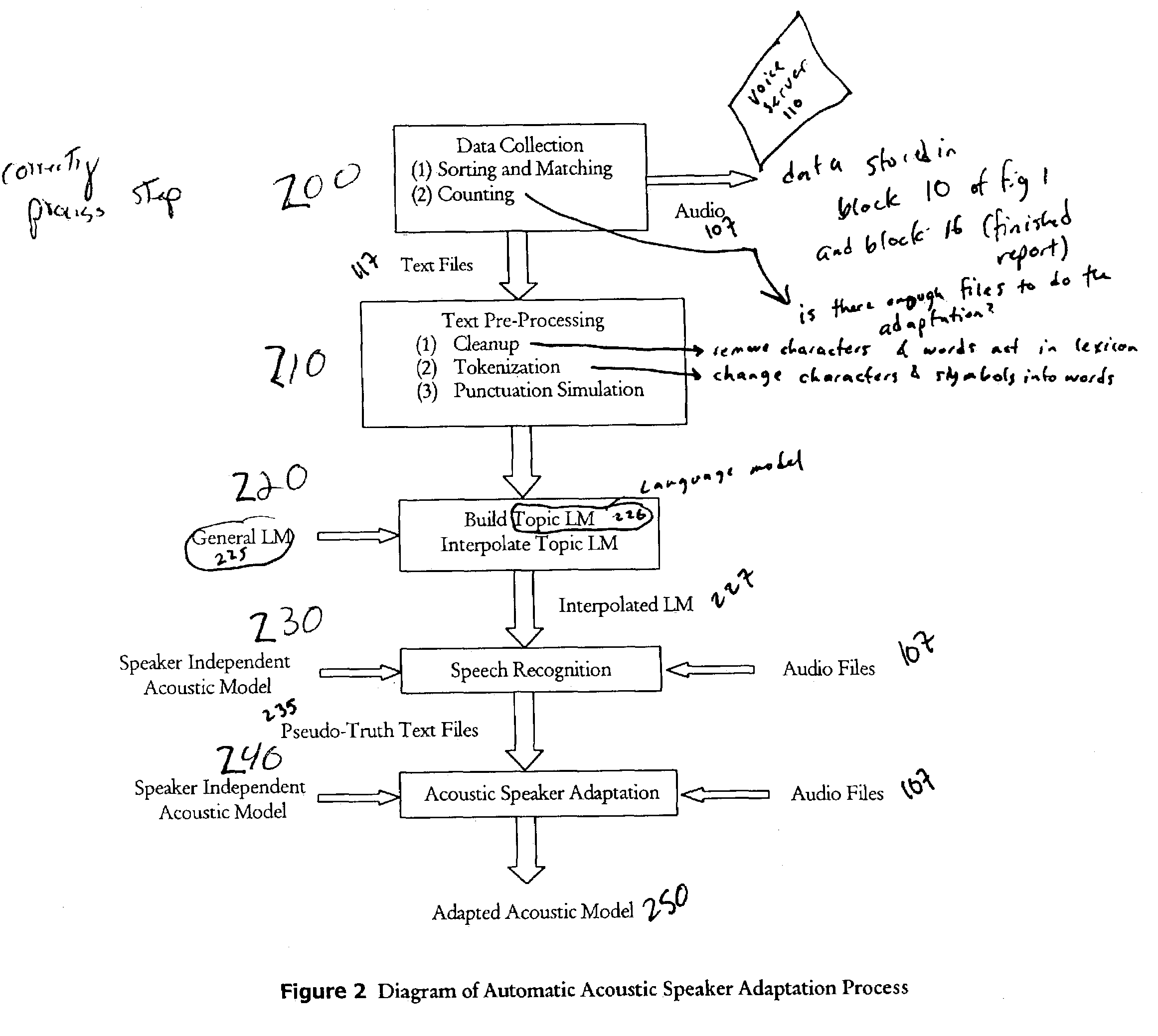

Systems and methods for automatic acoustic speaker adaptation in computer-assisted transcription systems

The invention is a system and method for automatic acoustic speaker adaptation in an automatic speech recognition assisted transcription system. Partial transcripts of audio files are generated by a transcriptionist. A topic language model is generated from the partial transcripts. The topic language model is interpolated with a general language model. Automatic speech recognition is performed on the audio files by a speech recognition engine using a speaker independent acoustic model and the interpolated language model to generate semi-literal transcripts of the audio files. The semi-literal transcripts are then used with the corresponding audio files to generate a speaker dependent acoustic model in an acoustic adaptation engine.

Owner:SCANSOFT +1
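The interpolation of a topic language model with a general language model mentioned above can be sketched with linear interpolation over unigram probabilities. Unigram dictionaries, the weight value, and the `interpolate` helper are simplifying assumptions; the abstract does not state the model order or the interpolation scheme.

```python
def interpolate(topic_lm, general_lm, weight):
    """Linear interpolation: P(w) = weight * P_topic(w) + (1 - weight) * P_general(w)."""
    vocab = set(topic_lm) | set(general_lm)
    return {
        w: weight * topic_lm.get(w, 0.0) + (1.0 - weight) * general_lm.get(w, 0.0)
        for w in vocab
    }


topic_lm = {"patient": 0.5, "dosage": 0.5}      # built from partial transcripts
general_lm = {"patient": 0.25, "the": 0.75}     # general-purpose model

mixed = interpolate(topic_lm, general_lm, weight=0.5)
```

As long as both inputs are proper distributions and the weight lies between 0 and 1, the mixture is again a proper distribution.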

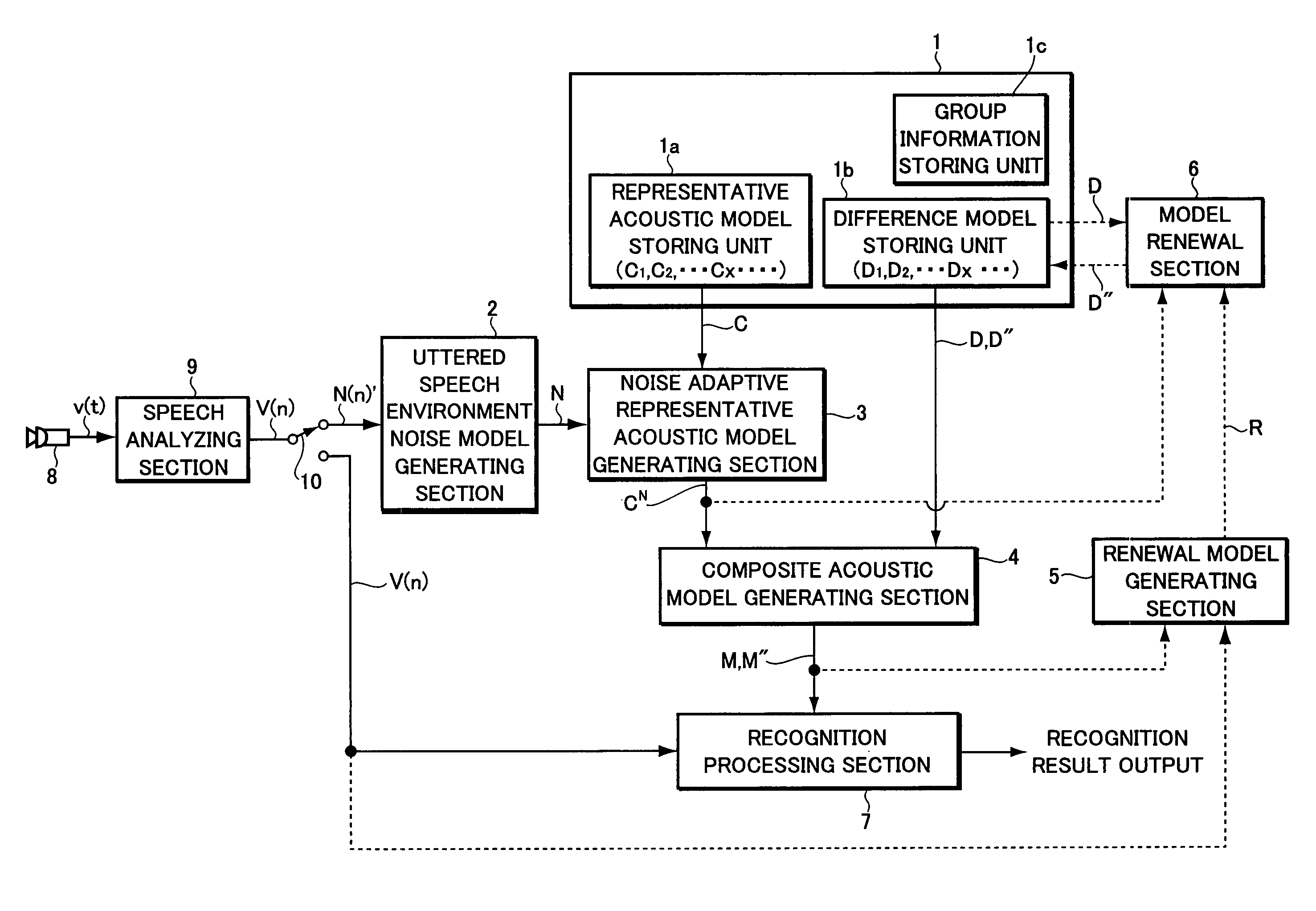

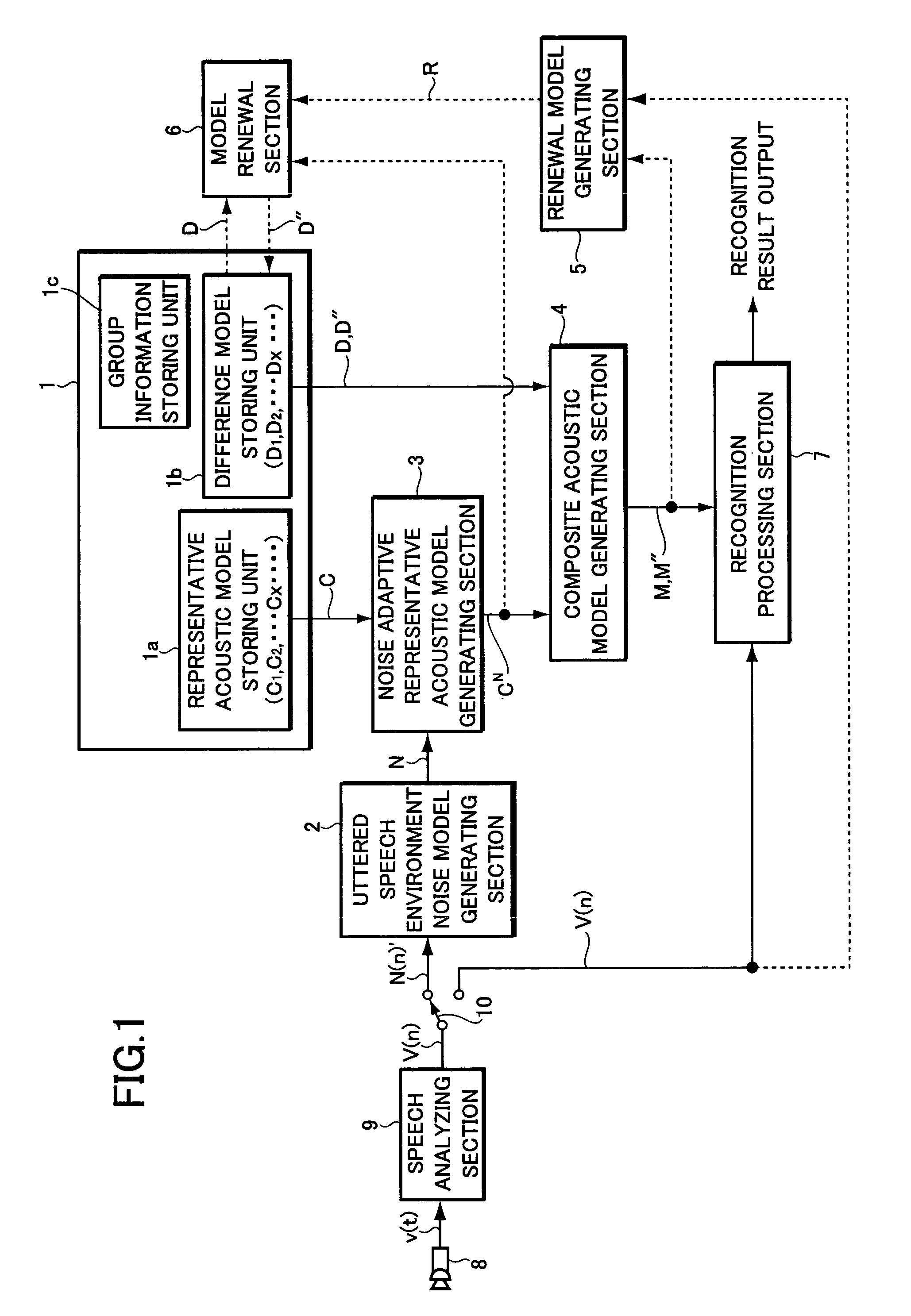

Apparatus and method for speech recognition

Before executing a speech recognition, a composite acoustic model adapted to noise is generated by composition of a noise adaptive representative acoustic model generated by noise-adaptation of each representative acoustic model and difference models stored in advance in a storing section, respectively. Then, the noise and speaker adaptive acoustic model is generated by executing speaker-adaptation to the composite acoustic model with the feature vector series of uttered speech. The renewal difference model is generated by the difference between the noise and speaker adaptive acoustic model and the noise adaptive representative acoustic model, to replace the difference model stored in the storing section therewith. The speech recognition is performed by comparing the feature vector series of the uttered speech to be recognized with the composite acoustic model adapted to noise and speaker generated by the composition of the noise adaptive representative acoustic model and the renewal difference model.

Owner:PIONEER CORP

Speech recognition system with an adaptive acoustic model

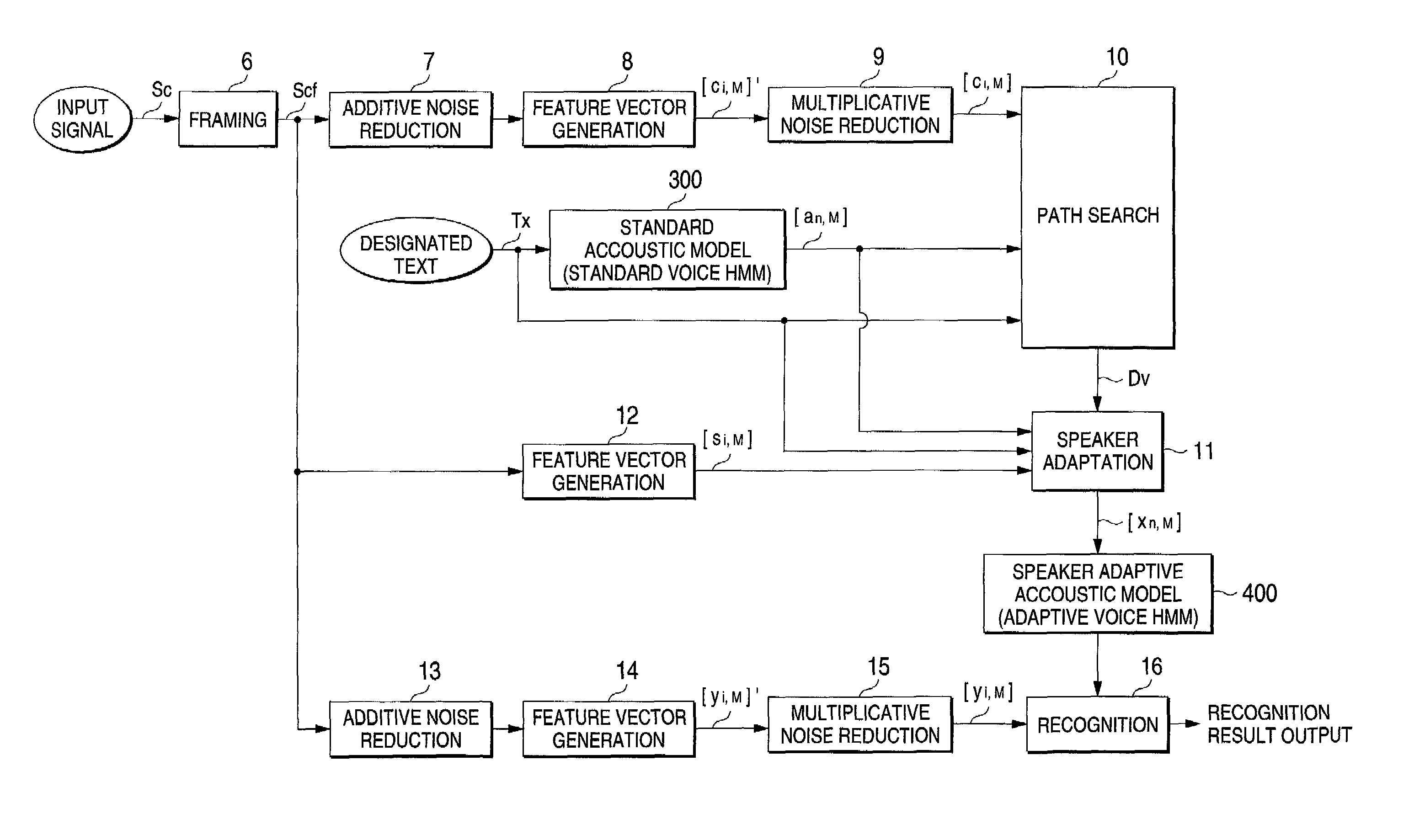

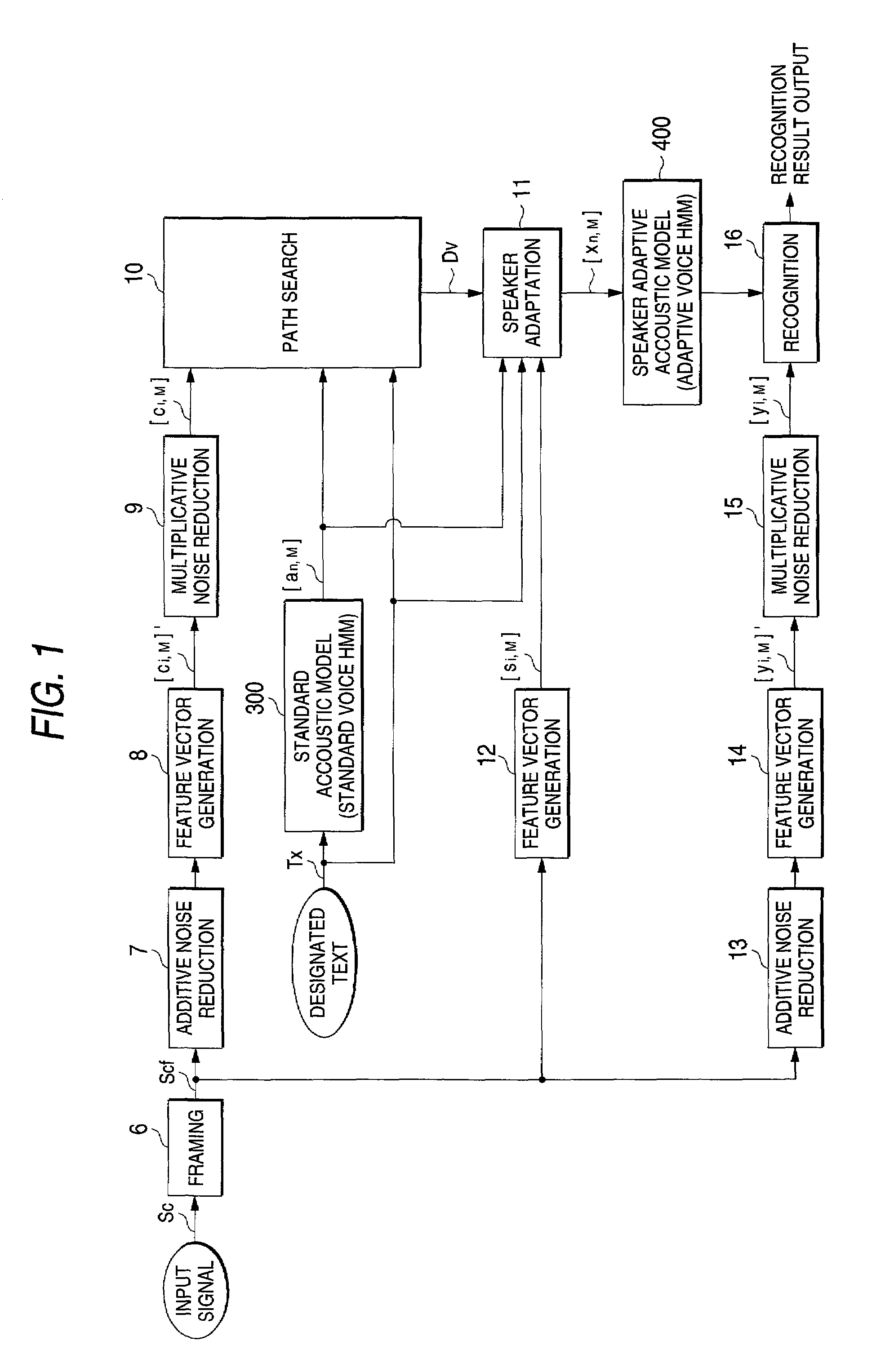

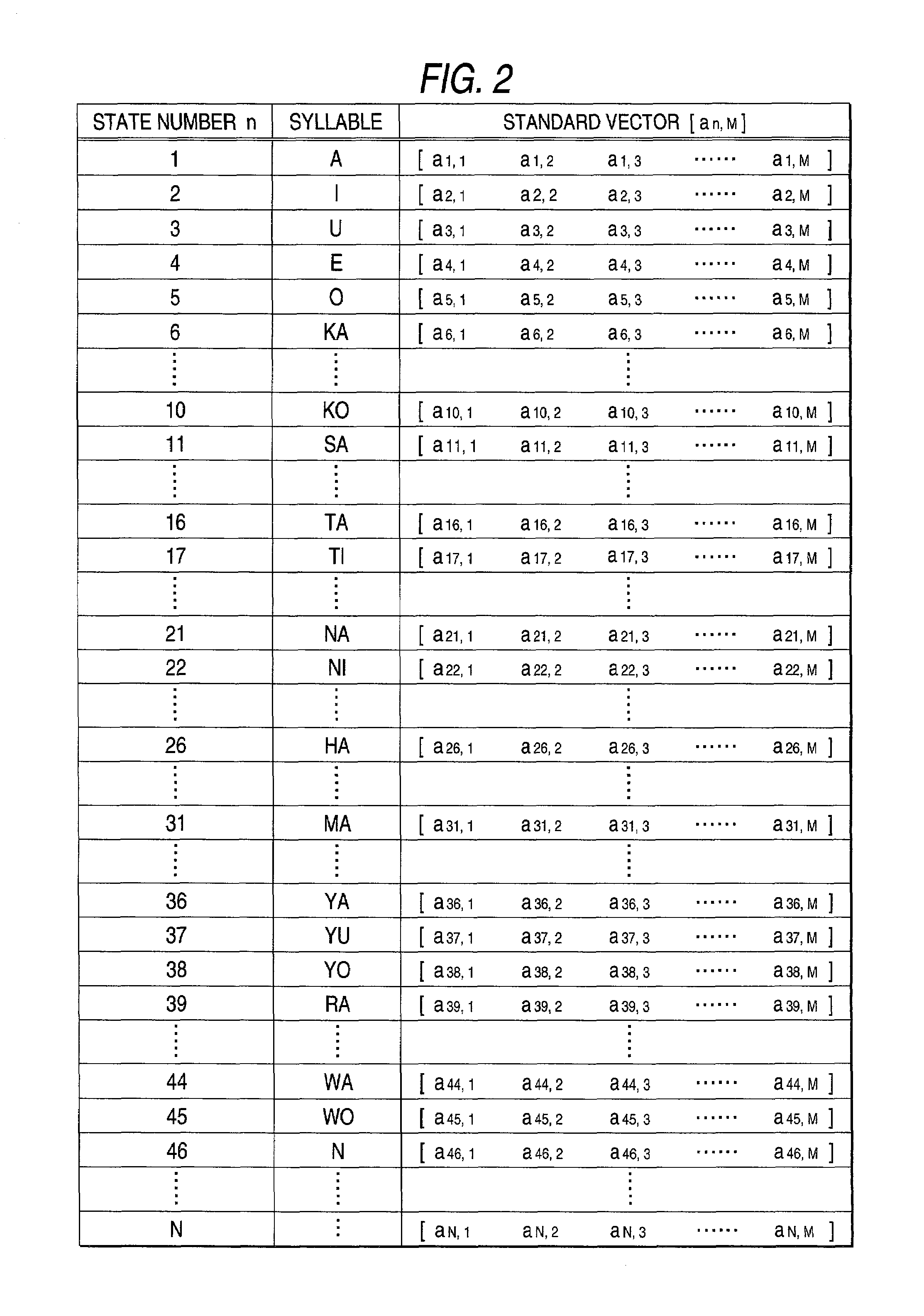

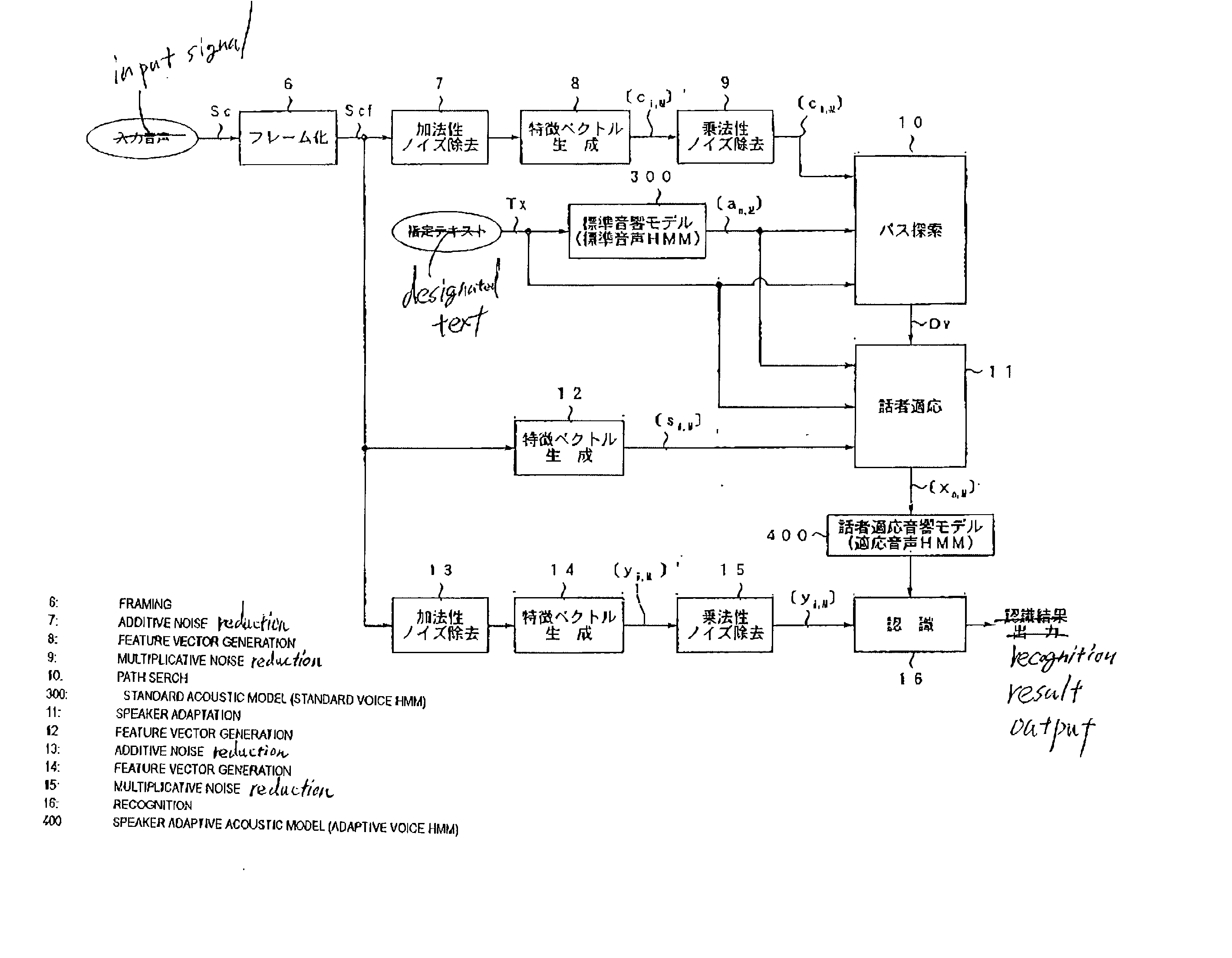

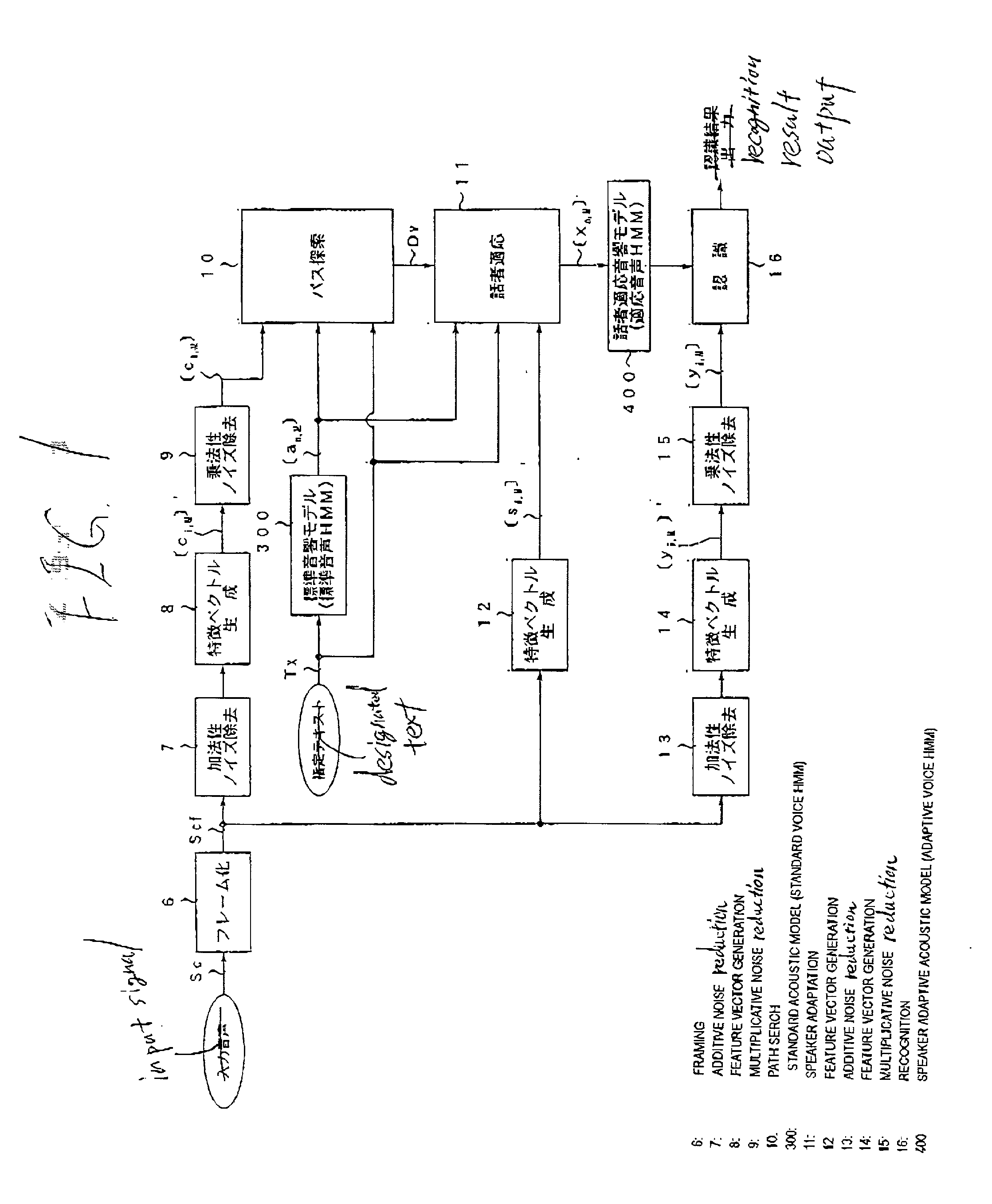

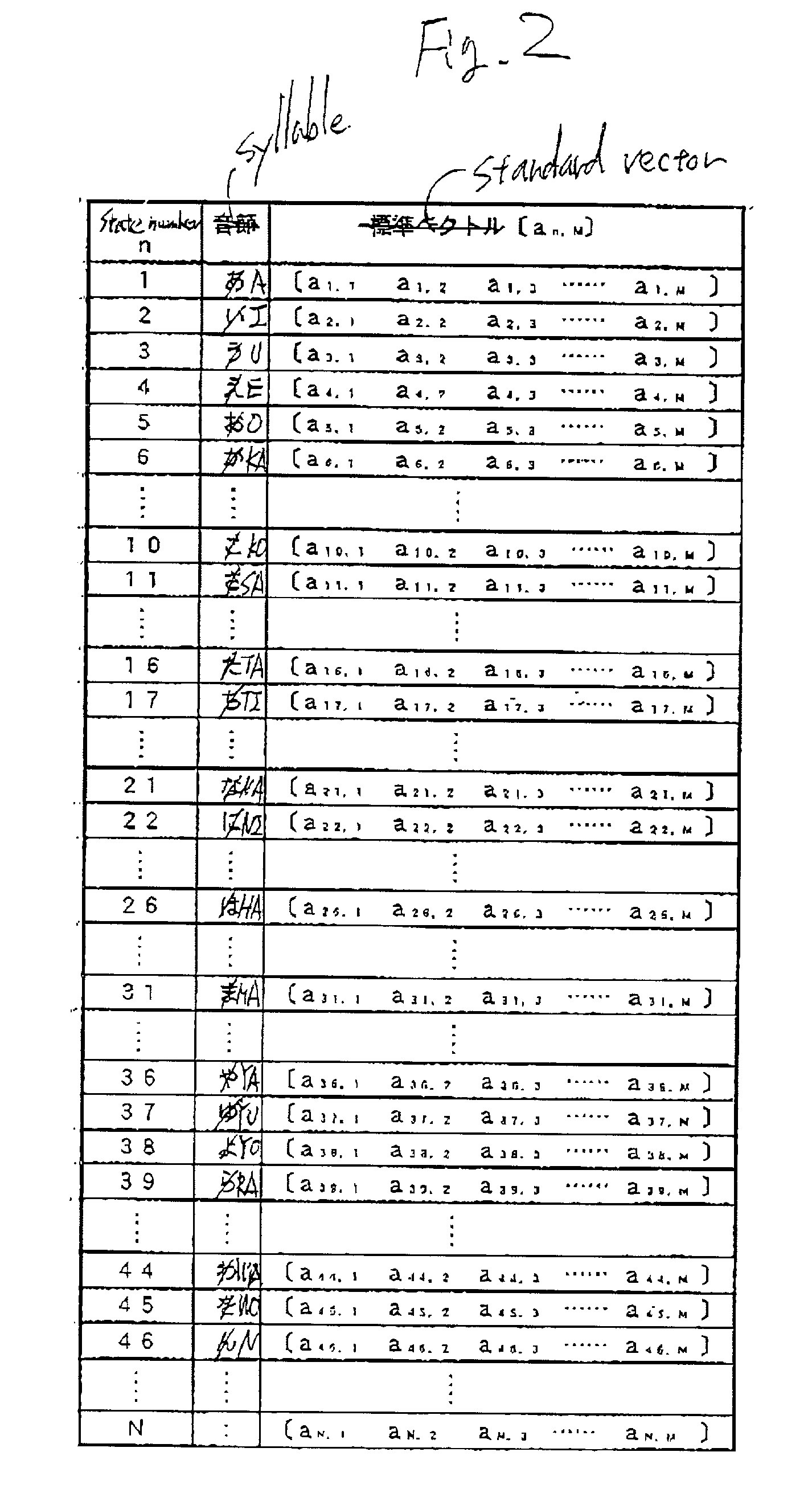

Inactive | US7065488B2 | Improve speech recognition performance | Reduce noise | Speech recognition | Feature vector | Acoustic model

At the time of the speaker adaptation, first feature vector generation sections (7, 8, 9) generate a feature vector series [Ci, M] from which the additive noise and multiplicative noise are removed. A second feature vector generation section (12) generates a feature vector series [Si, M] including the features of the additive noise and multiplicative noise. A path search section (10) conducts a path search by comparing the feature vector series [Ci, M] to the standard vector [an, M] of the standard voice HMM (300). When the speaker adaptation section (11) conducts correlation operation on an average feature vector [S^n, M] of the standard vector [an, M] corresponding to the path search result Dv and the feature vector series [Si, M], the adaptive vector [xn, M] is generated. The adaptive vector [xn, M] updates the feature vector of the speaker adaptive acoustic model (400) used for the speech recognition.

Owner:PIONEER CORP

Speech recognition method and speech recognition system

Provided is a speech recognition method and a speech recognition system. The speech recognition method includes the steps of capturing speech features in speech data, recognizing speaker identification of the speech data according to the speech features, then using a first acoustic model to recognize statements in the speech data, calculating confidence scores of the recognized statements according to the recognized statements and the speech data, and judging whether the confidence scores exceed a threshold value. When the confidence scores exceed the threshold value, the recognized statements and the speech data are collected so as to use the speech data to carry out speaker adaptation of a second acoustic model corresponding to the speaker identification.

Owner:ASUSTEK COMPUTER INC

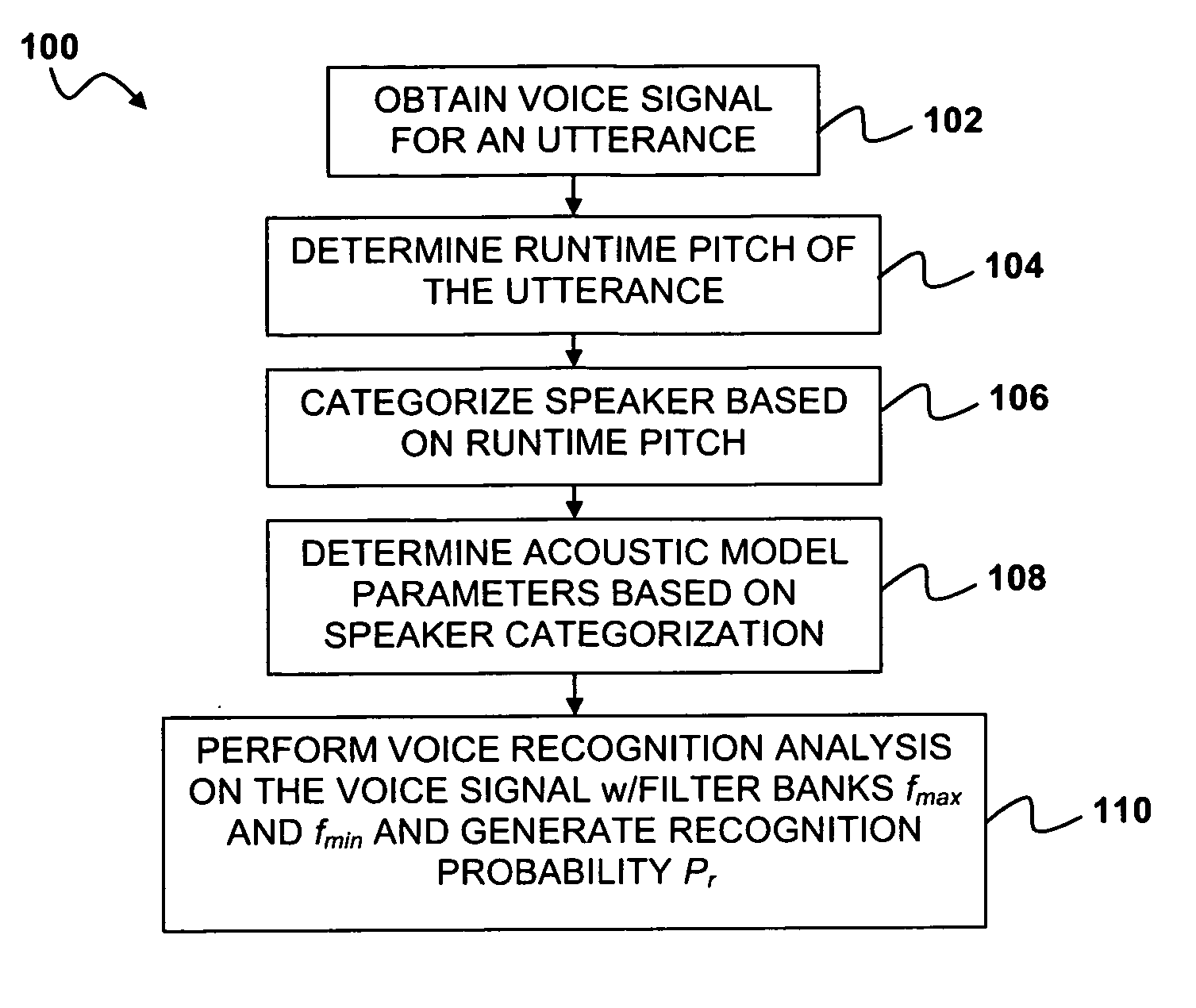

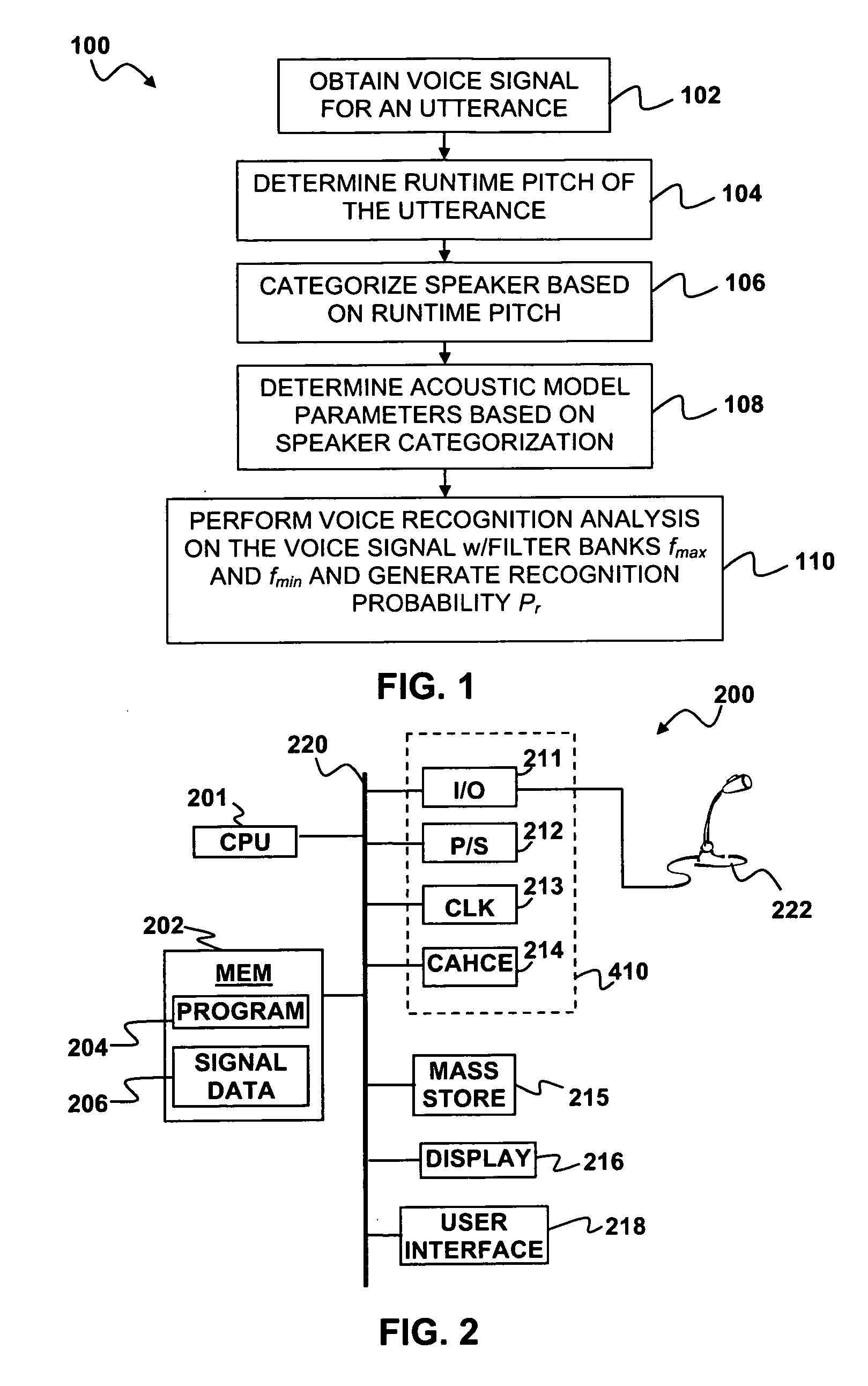

Voice recognition with speaker adaptation and registration with pitch

Voice recognition methods and systems are disclosed. A voice signal is obtained for an utterance of a speaker. A runtime pitch is determined from the voice signal for the utterance. The speaker is categorized based on the runtime pitch and one or more acoustic model parameters are adjusted based on a categorization of the speaker. The parameter adjustment may be performed at any instance of time during the recognition. A voice recognition analysis of the utterance is then performed based on the acoustic model.

Owner:SONY COMPUTER ENTERTAINMENT INC
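The categorize-then-adjust flow in the abstract above might look like the following sketch. The pitch boundaries, the category names, and the `frequency_warp` parameter are all hypothetical placeholders; the abstract does not say which acoustic model parameters are adjusted or how categories are defined.

```python
# Hypothetical pitch boundaries (Hz) and per-category settings, for illustration only.
PITCH_BOUNDARIES_HZ = (160.0, 250.0)
CATEGORY_PARAMS = {
    "low": {"frequency_warp": 1.1},
    "mid": {"frequency_warp": 1.0},
    "high": {"frequency_warp": 0.9},
}


def categorize_by_pitch(pitch_hz, boundaries=PITCH_BOUNDARIES_HZ):
    """Map a runtime pitch estimate to a coarse speaker category."""
    low, high = boundaries
    if pitch_hz < low:
        return "low"
    if pitch_hz < high:
        return "mid"
    return "high"


def adjust_model(model_params, pitch_hz):
    """Return a copy of the model parameters adjusted for the speaker category."""
    adjusted = dict(model_params)
    adjusted.update(CATEGORY_PARAMS[categorize_by_pitch(pitch_hz)])
    return adjusted


base_params = {"frequency_warp": 1.0, "num_filters": 40}
adjusted = adjust_model(base_params, pitch_hz=120.0)
```

Returning a copy keeps the base parameters untouched, so the adjustment can be recomputed at any point during recognition if the pitch estimate changes.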

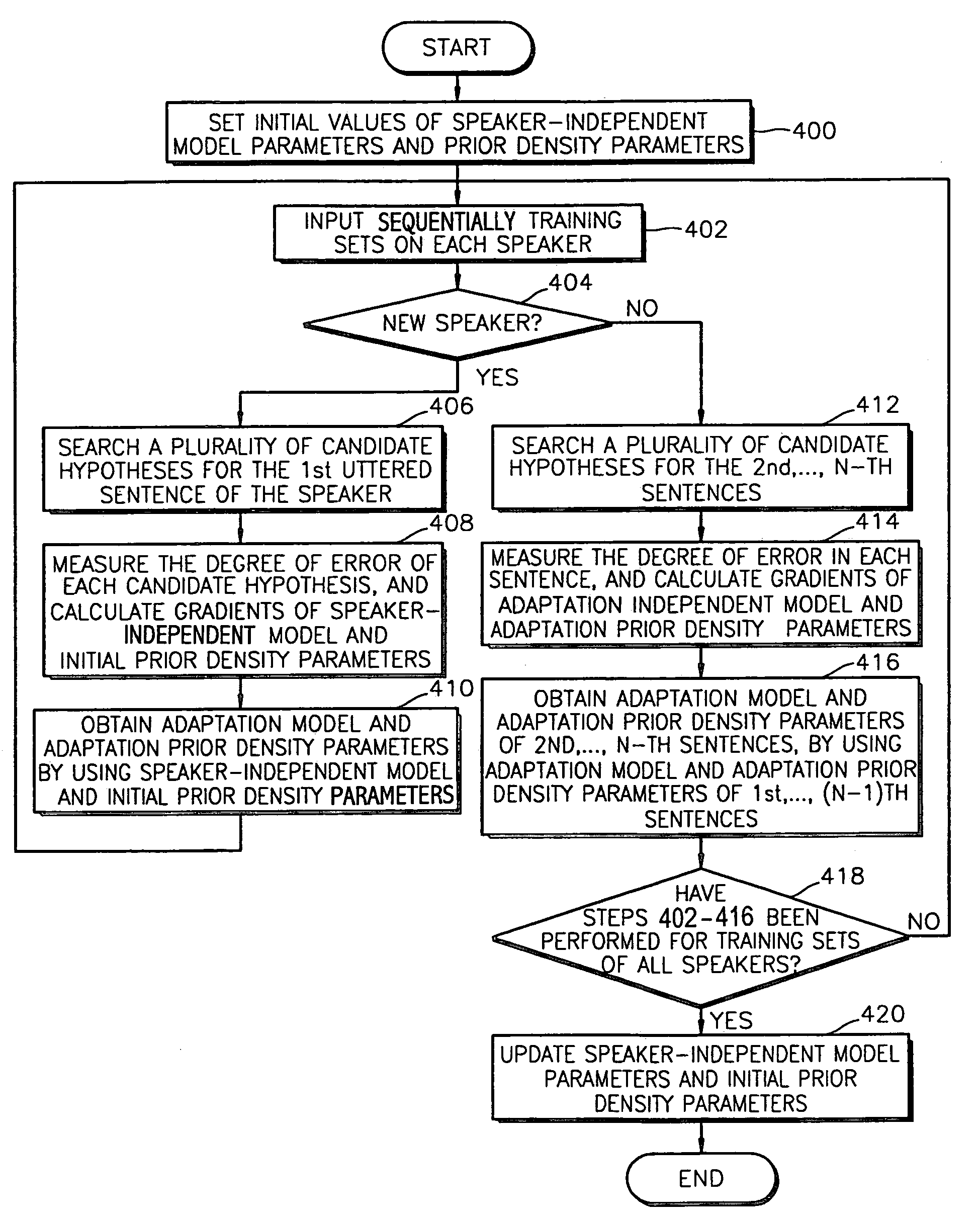

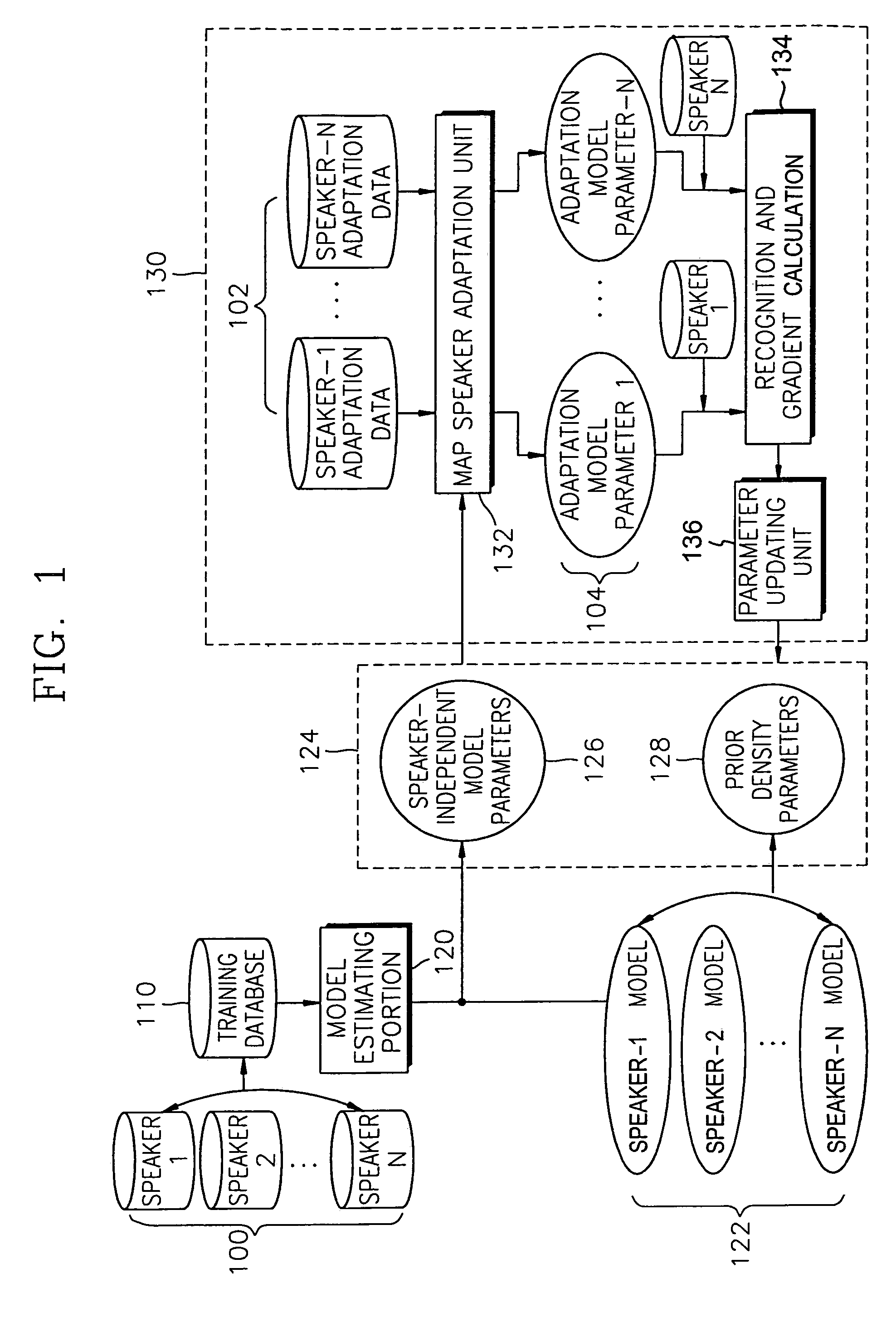

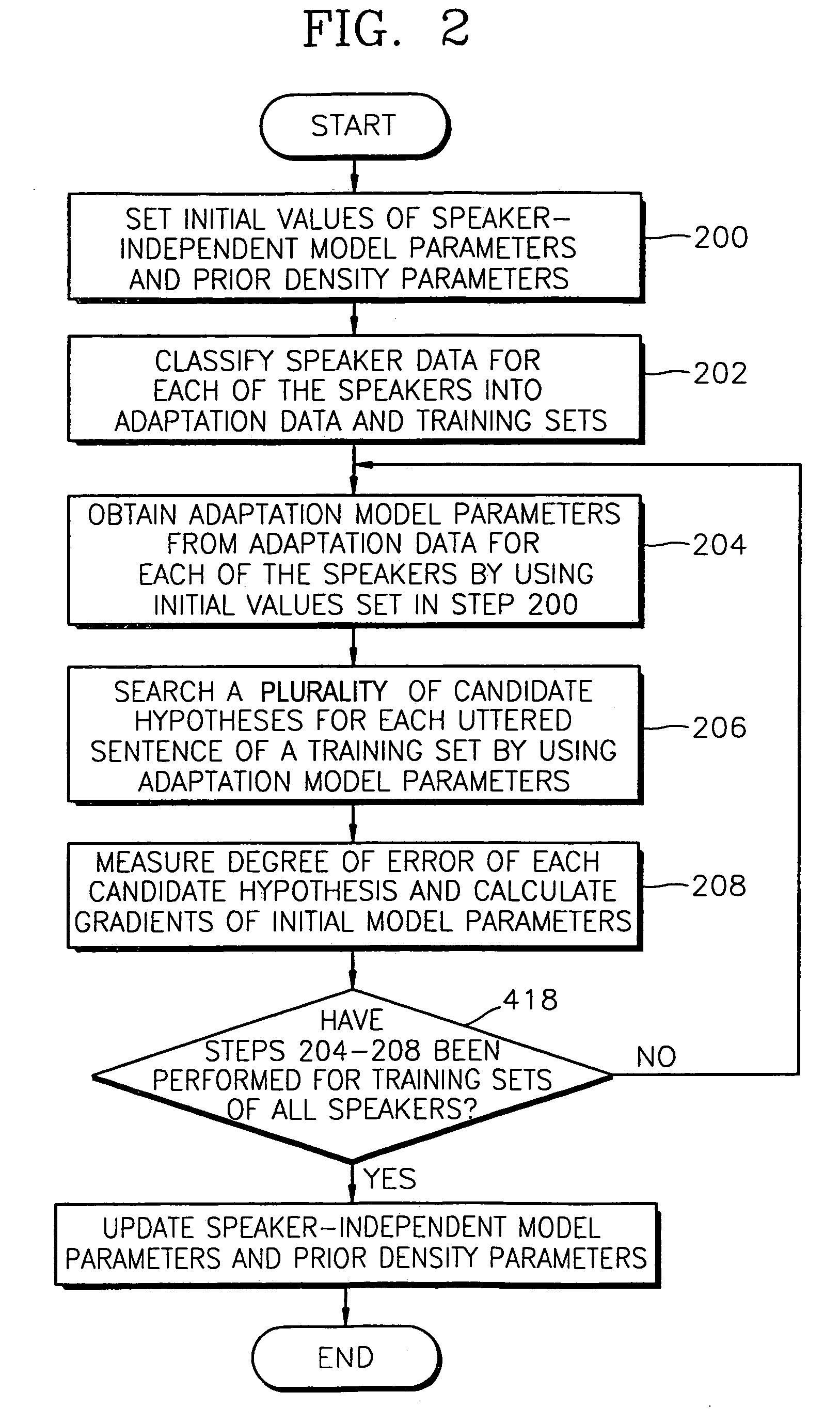

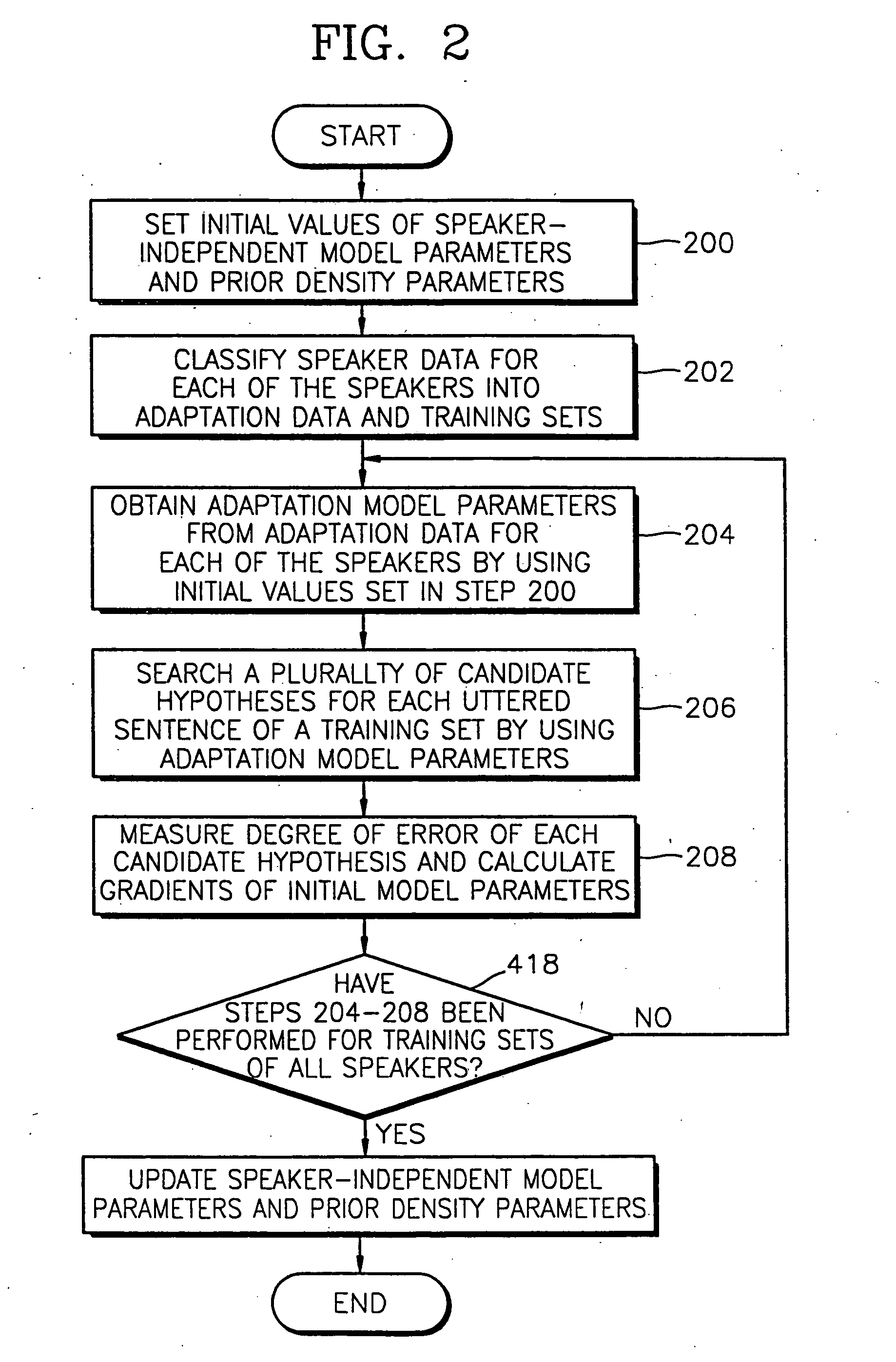

Method and apparatus for discriminative estimation of parameters in maximum a posteriori (MAP) speaker adaptation condition and voice recognition method and apparatus including these

Inactive | US7324941B2 | Classification errors on training sets are minimized | Error minimization | Speech recognition | Hypothesis | Speech identification

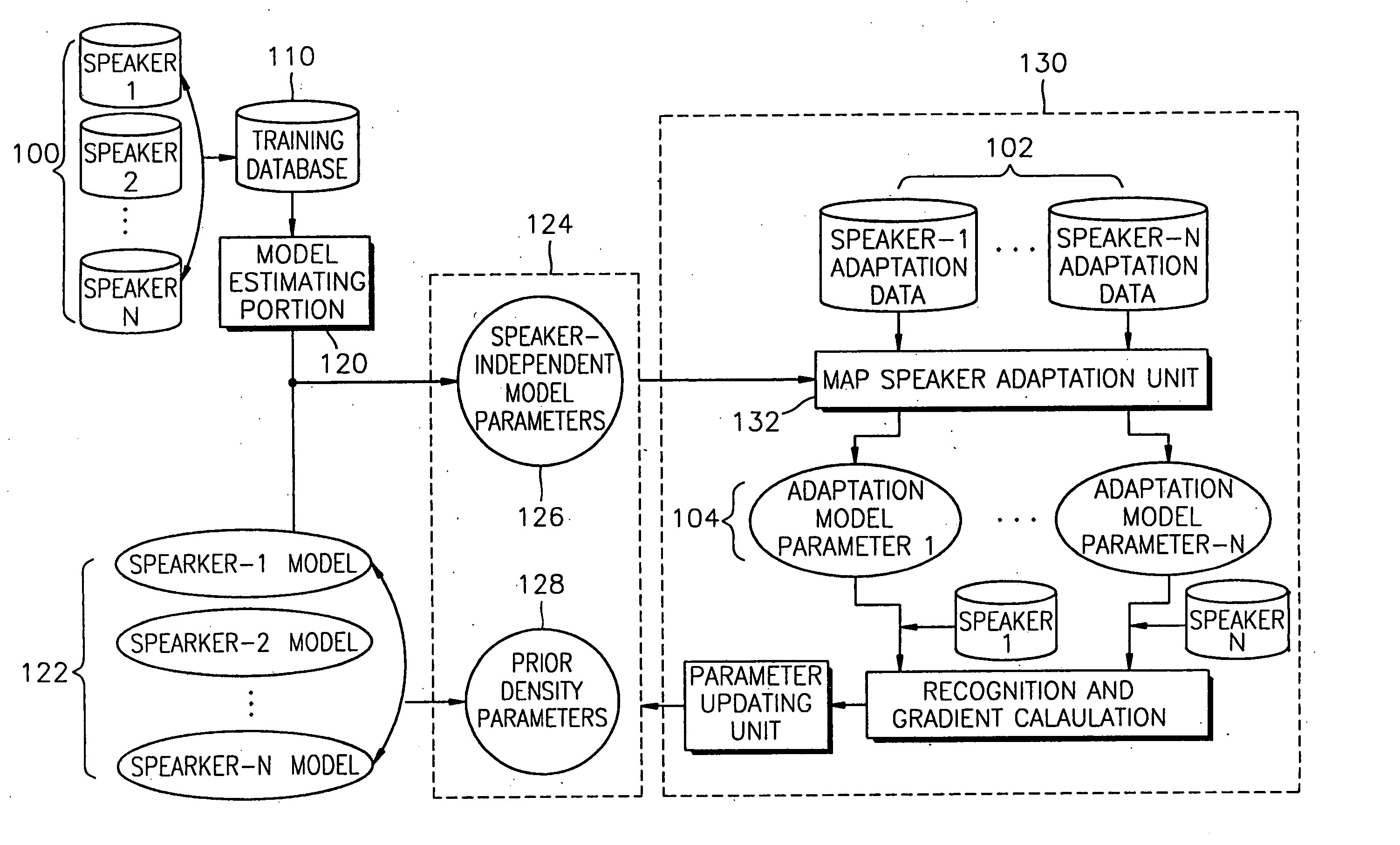

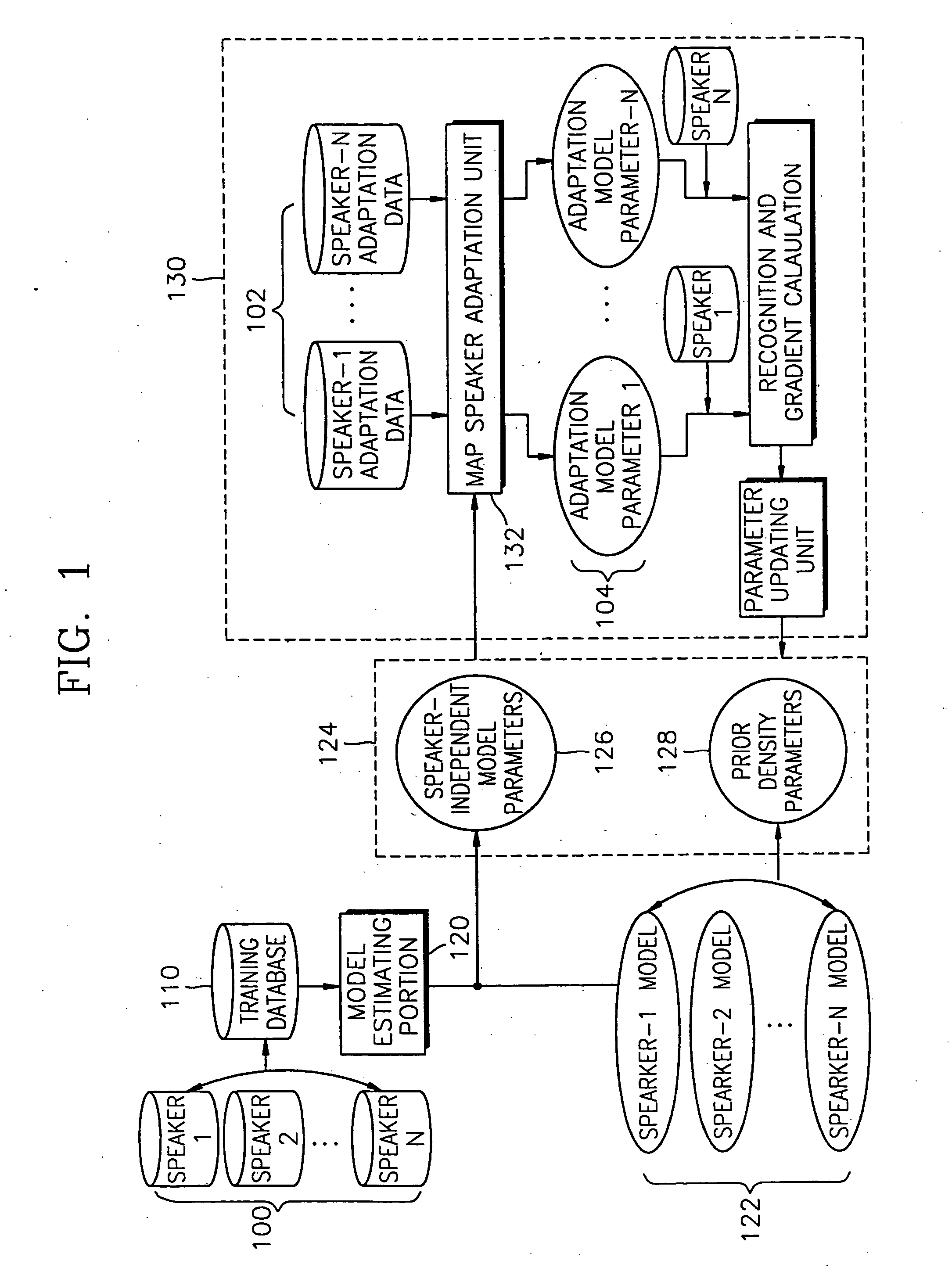

A method and apparatus for discriminative estimation of parameters in a maximum a posteriori (MAP) speaker adaptation condition, and a voice recognition apparatus having the apparatus and a voice recognition method using the method are provided. The method for discriminative estimation of parameters in a maximum a posteriori (MAP) speaker adaptation condition, in which at least speaker-independent model parameters and prior density parameters, which are standards in recognizing a speaker's voice, are obtained as the result of model training after fetching training sets on a plurality of speakers from a training database, has the steps of (a) classifying adaptation data among training sets for respective speakers; (b) obtaining model parameters adapted from adaptation data on each speaker by using the initial values of the parameters; (c) searching a plurality of candidate hypotheses on each uttered sentence of training sets by using the adapted model parameters, and calculating gradients of speaker-independent model parameters by measuring the degree of errors on each training sentence; and (d) when training sets of all speakers are adapted, updating parameters, which were set at the initial stage, based on the calculated gradients.

Owner:SAMSUNG ELECTRONICS CO LTD
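For context, the sketch below shows the textbook MAP update of a single Gaussian mean, which interpolates between a speaker-independent prior mean and the adaptation data. It is not the discriminative estimation procedure claimed in the patent; the scalar features, hard frame assignments, and the prior weight `tau` are simplifying assumptions.

```python
def map_adapt_mean(prior_mean, frames, tau):
    """Textbook MAP mean update: (tau * prior_mean + sum(frames)) / (tau + n).

    tau acts as the weight of the prior: a larger tau keeps the adapted mean
    closer to the speaker-independent value.
    """
    n = len(frames)
    return (tau * prior_mean + sum(frames)) / (tau + n)


# Four adaptation frames at 2.0 pull a prior mean of 0.0 halfway when tau = 4.
adapted_mean = map_adapt_mean(prior_mean=0.0, frames=[2.0, 2.0, 2.0, 2.0], tau=4.0)

# With no adaptation data the estimate falls back to the prior mean.
fallback_mean = map_adapt_mean(prior_mean=0.5, frames=[], tau=4.0)
```

The quality of the prior parameters therefore directly shapes the adapted model, which is why estimating them carefully matters when adaptation data is scarce.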

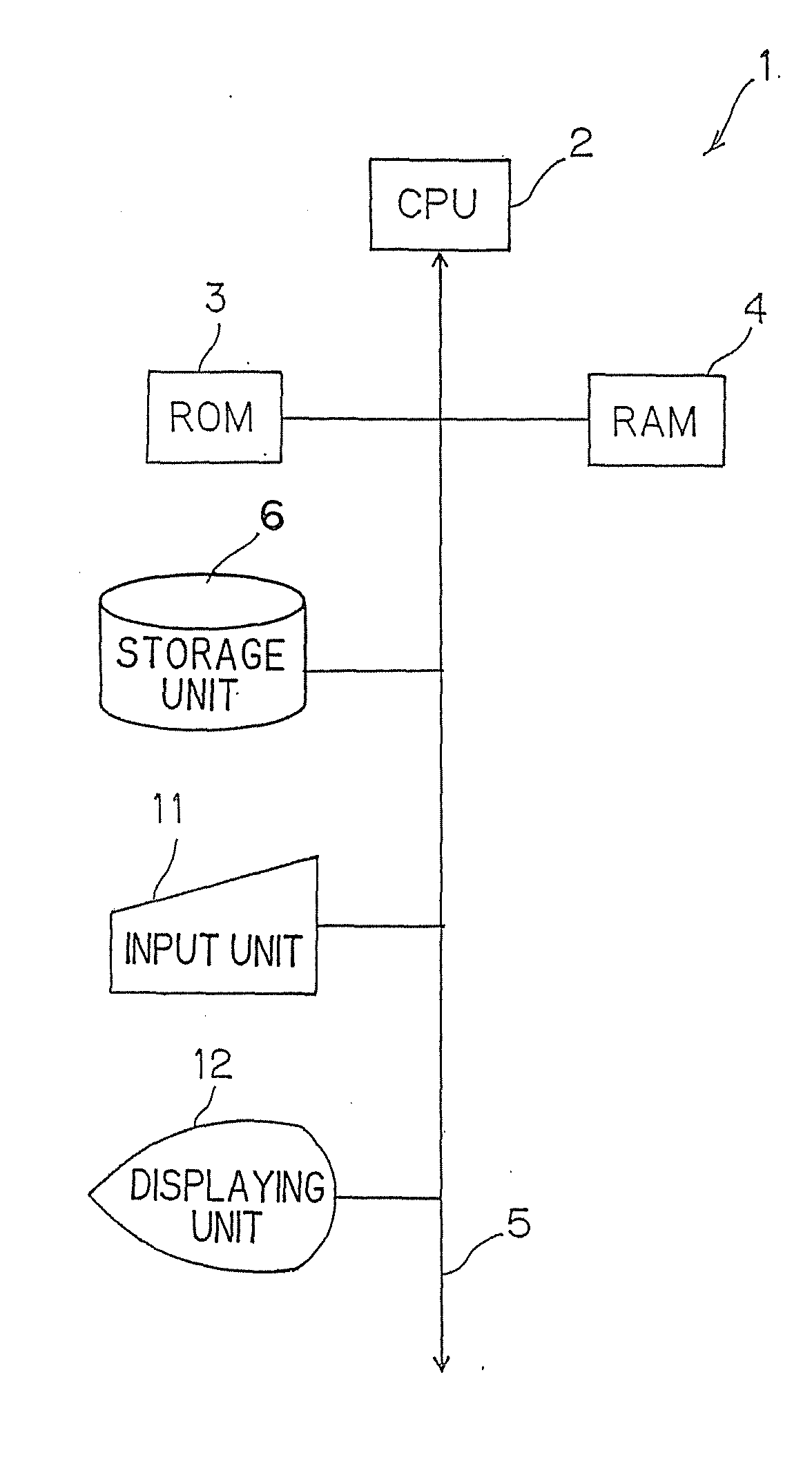

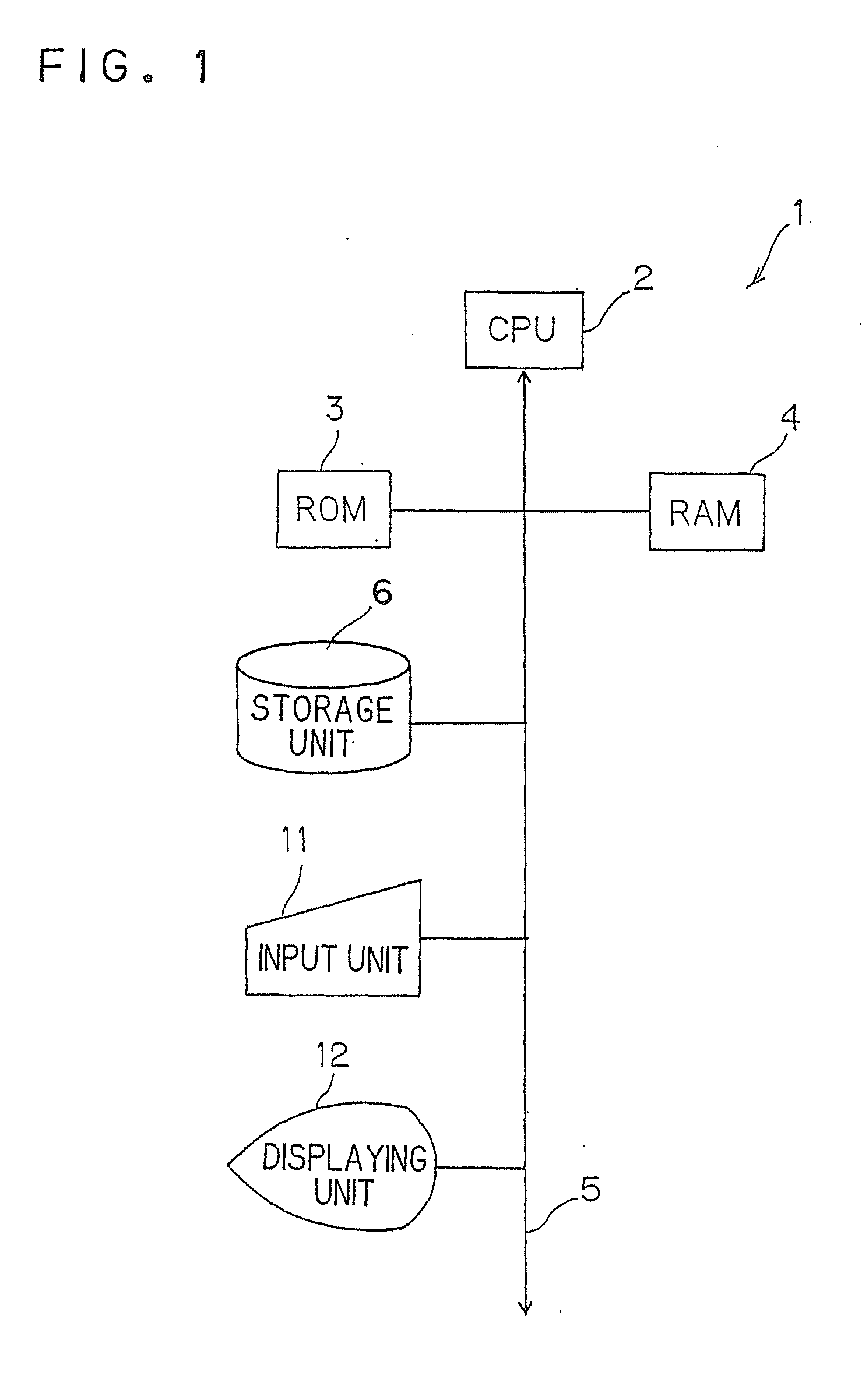

Speaker adaptation apparatus and program thereof

A speaker adaptation apparatus includes an acquiring unit configured to acquire an acoustic model including HMMs and decision trees for estimating what type of the phoneme or the word is included in a feature value used for speech recognition, the HMMs having a plurality of states on a phoneme-to-phoneme basis or a word-to-word basis, and the decision trees being configured to reply to questions relating to the feature value and output likelihoods in the respective states of the HMMs, and a speaker adaptation unit configured to adapt the decision trees to a speaker, the decision trees being adapted using speaker adaptation data vocalized by the speaker of an input speech.

Owner:KK TOSHIBA

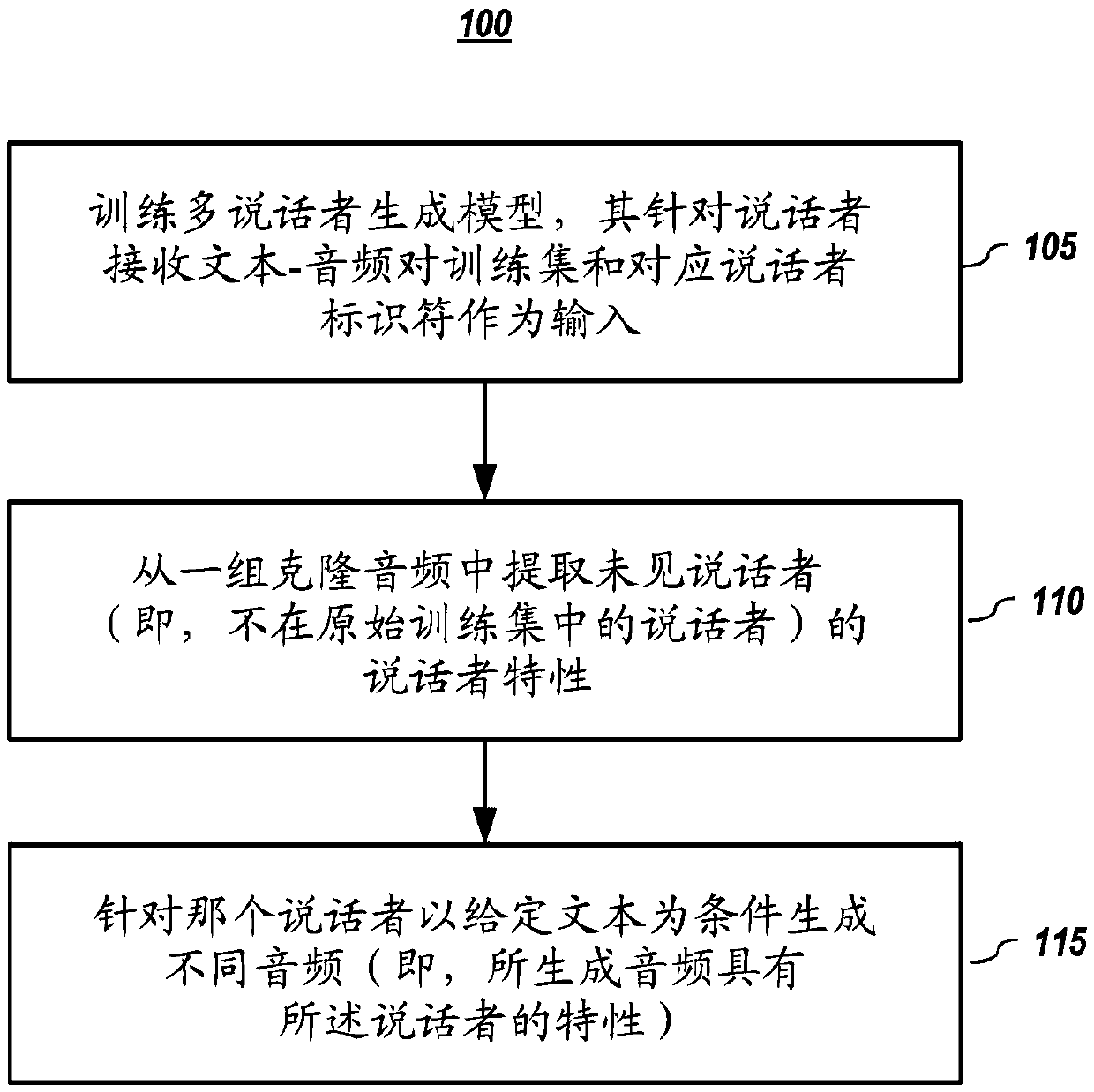

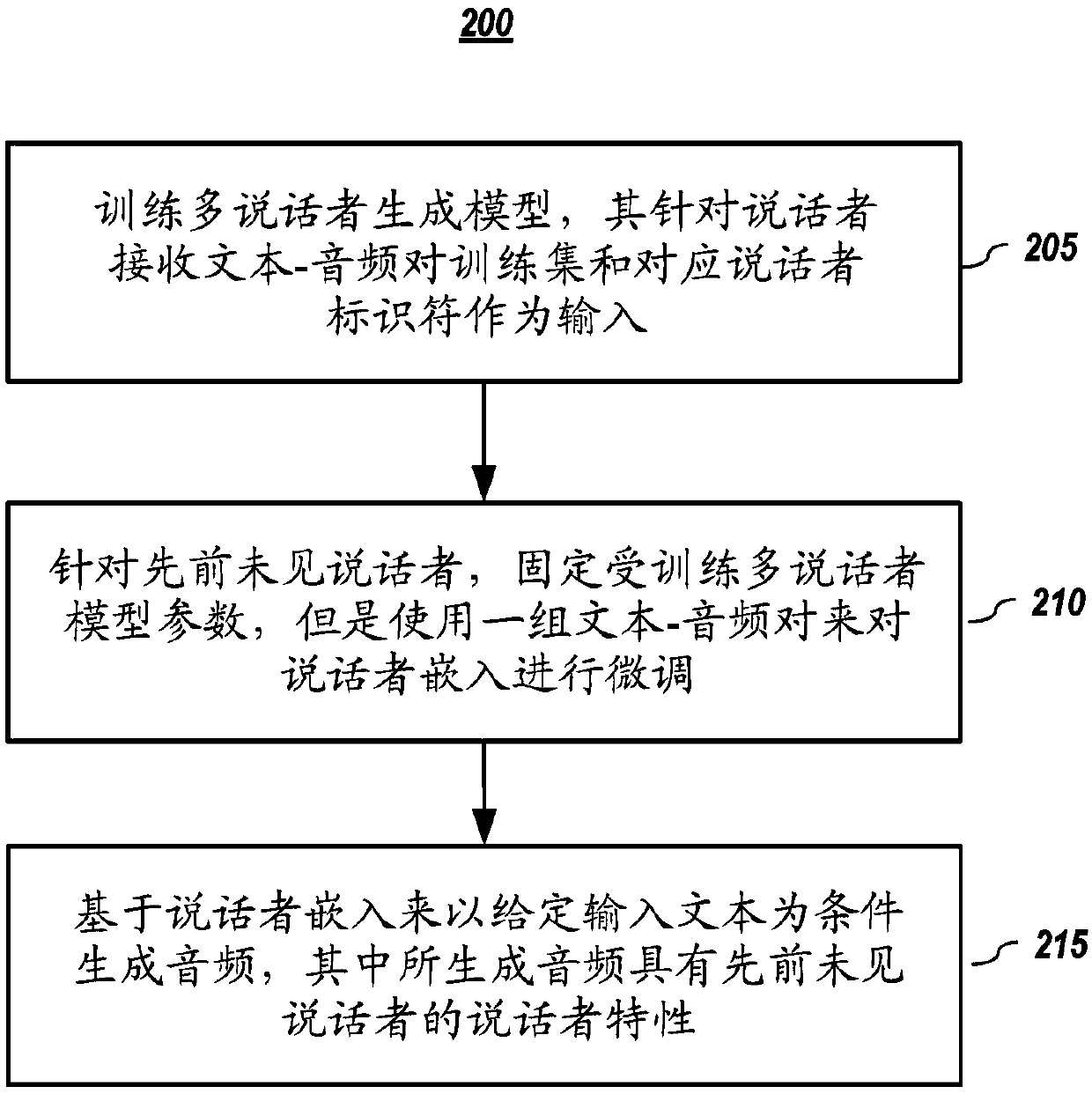

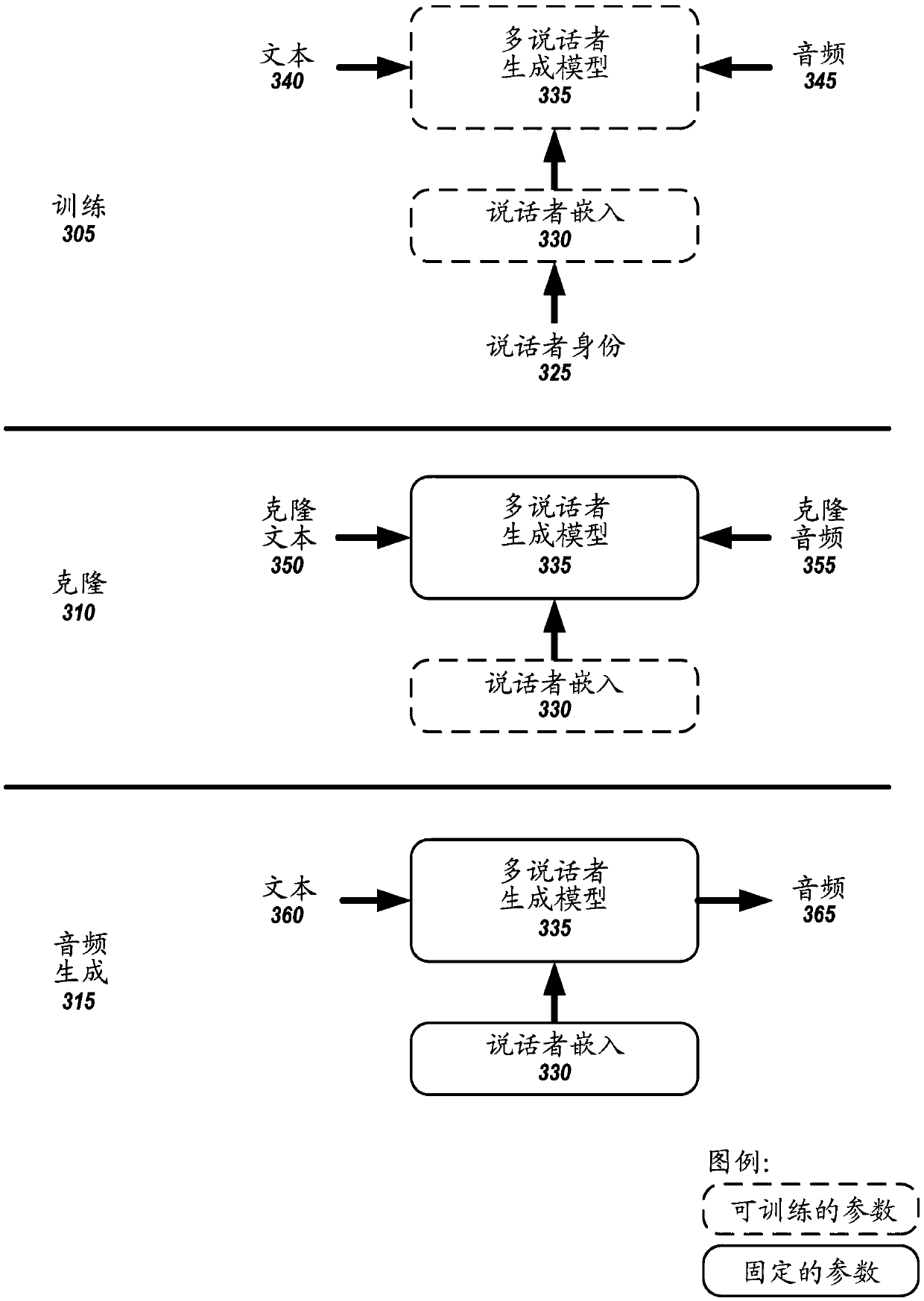

Systems and methods for neural voice cloning with a few samples

Voice cloning is a highly desired capability for personalized speech interfaces. Neural network-based speech synthesis has been shown to generate high quality speech for a large number of speakers. Neural voice cloning systems that take a few audio samples as input are presented herein. Two approaches, speaker adaptation and speaker encoding, are disclosed. Speaker adaptation embodiments are based on fine-tuning a multi-speaker generative model with a few cloning samples. Speaker encoding embodiments are based on training a separate model to directly infer a new speaker embedding from cloning audios, which is used in or with a multi-speaker generative model. Both approaches achieve good performance in terms of naturalness of the speech and its similarity to the original speaker, even with very few cloning audios.

Owner:BAIDU USA LLC

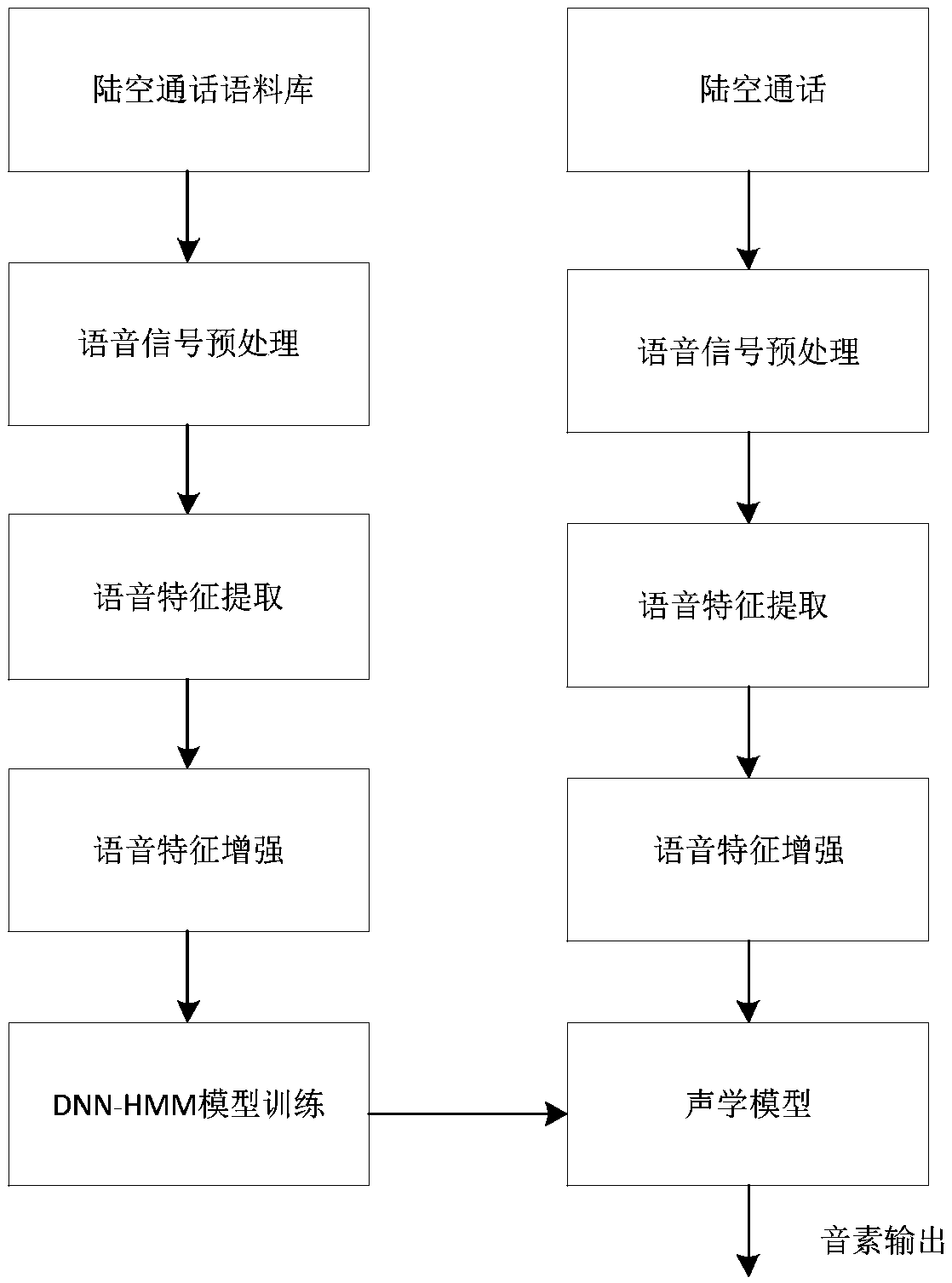

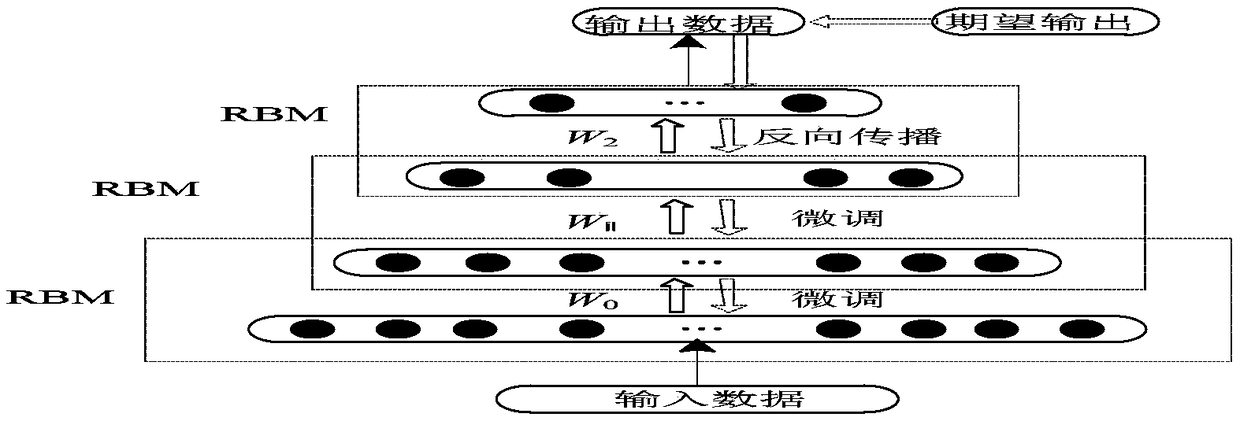

DNN (Deep Neural Network)-HMM (Hidden Markov Model)-based civil aviation radiotelephony communication acoustic model construction method

Inactive | CN109119072A | Reduce false recognition rate | Speech recognition | Phoneme recognition | Hidden Markov model

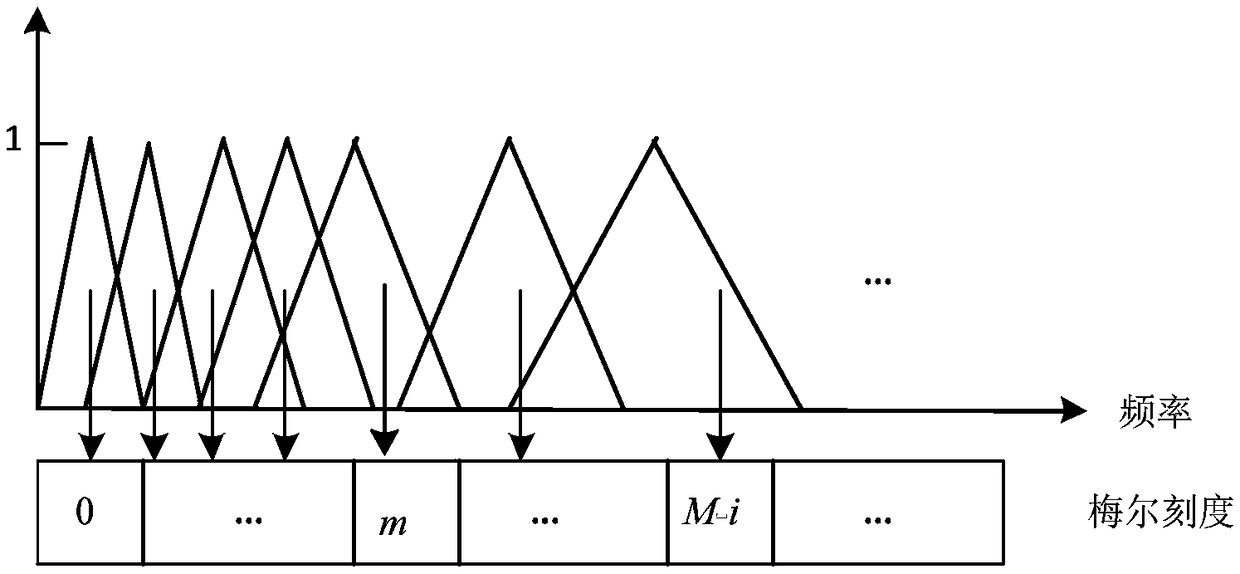

The invention relates to a DNN (Deep Neural Network)-HMM (Hidden Markov Model)-based civil aviation radiotelephony communication acoustic model construction method. The method includes the following steps: a Chinese radiotelephony communication corpus is set up; civil aviation radiotelephony communication speech signals are pre-processed; Fbank features are extracted from the civil aviation radiotelephony communication speech signals and are adopted as civil aviation radiotelephony communication speech features; linear discriminant analysis, feature-space maximum likelihood linear regression transformation and speaker adaptive training transformation processing are performed on the civil aviation radiotelephony communication speech features; and the processed speech features are utilized to build a DNN-HMM-based radiotelephony communication acoustic model. With the method of the invention, the Fbank and MFCC features of radiotelephony communication speech are extracted to train a DNN, so that a DNN-HMM acoustic model suitable for radiotelephony communication speech recognition can be obtained. Combined with a dictionary and a language model, the feature-enhanced DNN-HMM model can reduce the phoneme recognition error rate of radiotelephony communication speech to 5.62% on the constructed data.

Owner:CIVIL AVIATION UNIV OF CHINA

Speaker clustering and adaptation method based on the HMM model variation information and its apparatus for speech recognition

Inactive | US7590537B2 | Improve performance | Alcoholic beverage preparation | Speech recognition | Self adaptive | Speech sound

A speech recognition method and apparatus perform speaker clustering and speaker adaptation using average model variation information over speakers while analyzing the quantity variation amount and the directional variation amount. In the speaker clustering method, a speaker group model variation is generated based on the model variation between a speaker-independent model and a training speaker ML model. In the speaker adaptation method, the model is found for which the model variation between a test speaker ML model and the ML model of the speaker group to which the test speaker belongs is most similar to a training speaker group model variation, and speaker adaptation is performed on the found model. The model variations in both the speaker clustering and the speaker adaptation are calculated while analyzing both the quantity variation amount and the directional variation amount. The present invention may be applied to any speaker adaptation algorithm, including MLLR and MAP.

Owner:SAMSUNG ELECTRONICS CO LTD

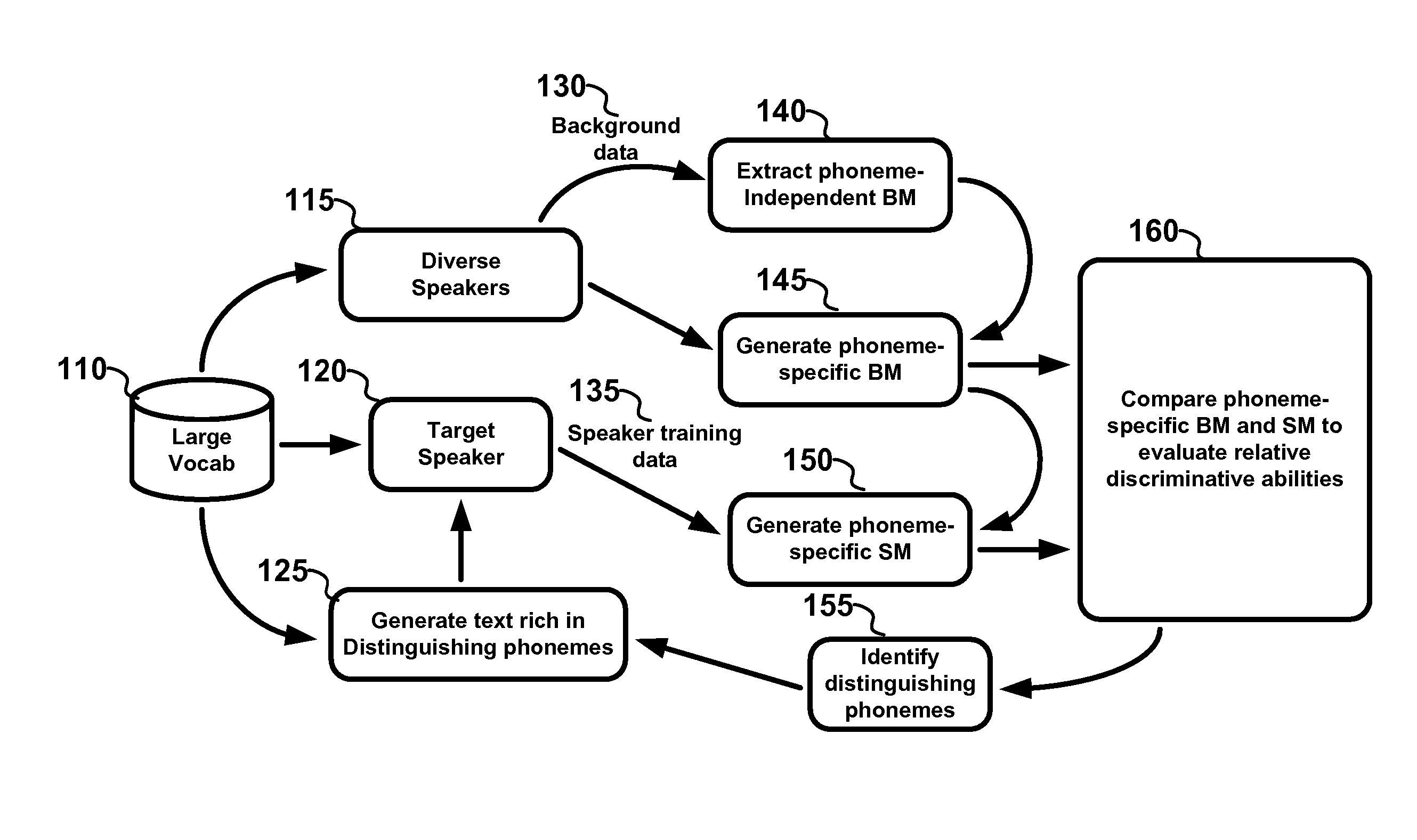

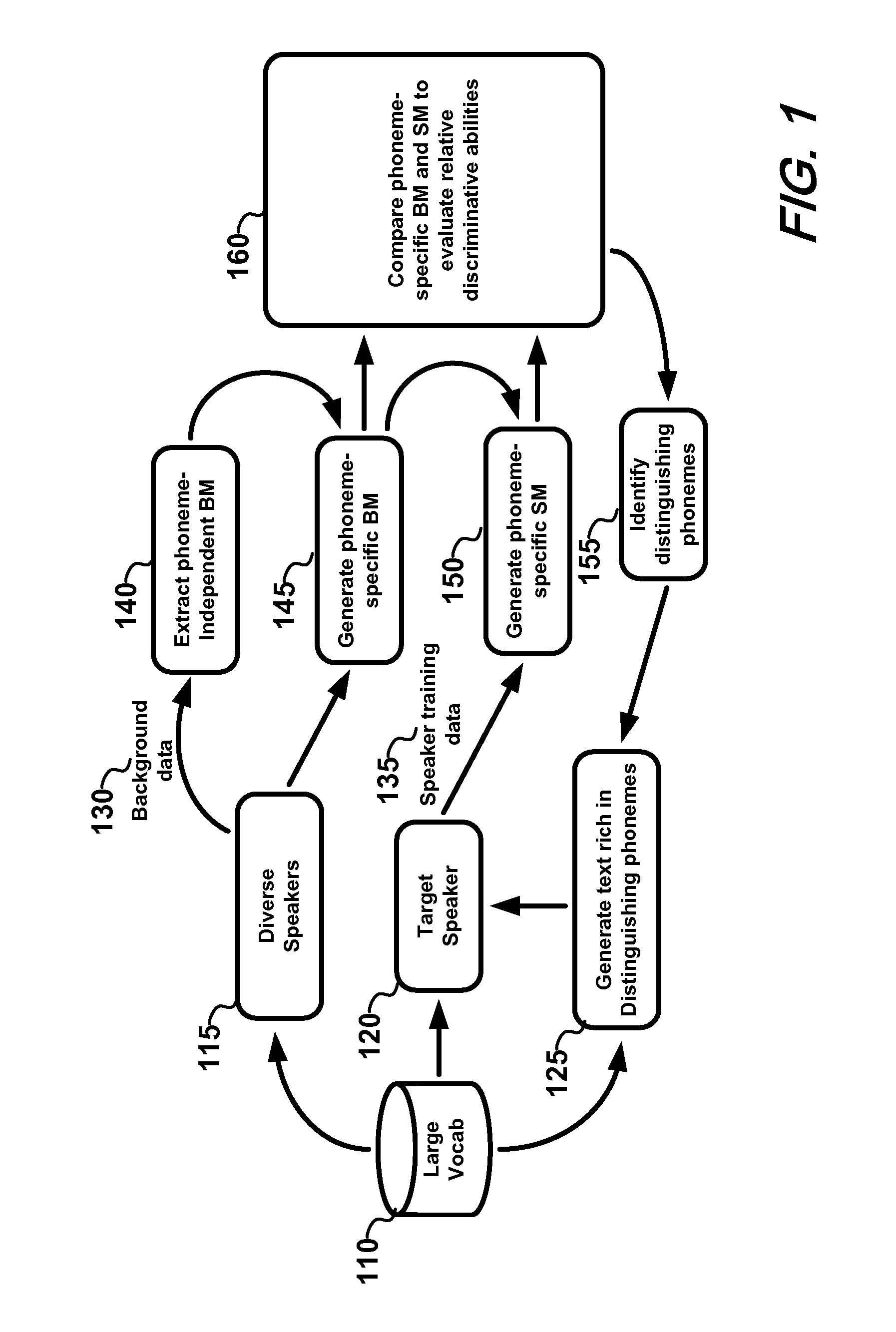

Voice-based multimodal speaker authentication using adaptive training and applications thereof

Inactive | US20080059176A1 | Improve authentication accuracy | Automatic call-answering/message-recording/conversation-recording | Speech recognition | Speech sound | Adaptive method

A voice-based multimodal speaker authentication method, and a telecommunications application thereof, employing a speaker-adaptive method for training phoneme-specific Gaussian mixture models. Applied to telecommunications services, the method may advantageously be implemented in contemporary wireless terminals.

Owner:NEC CORP

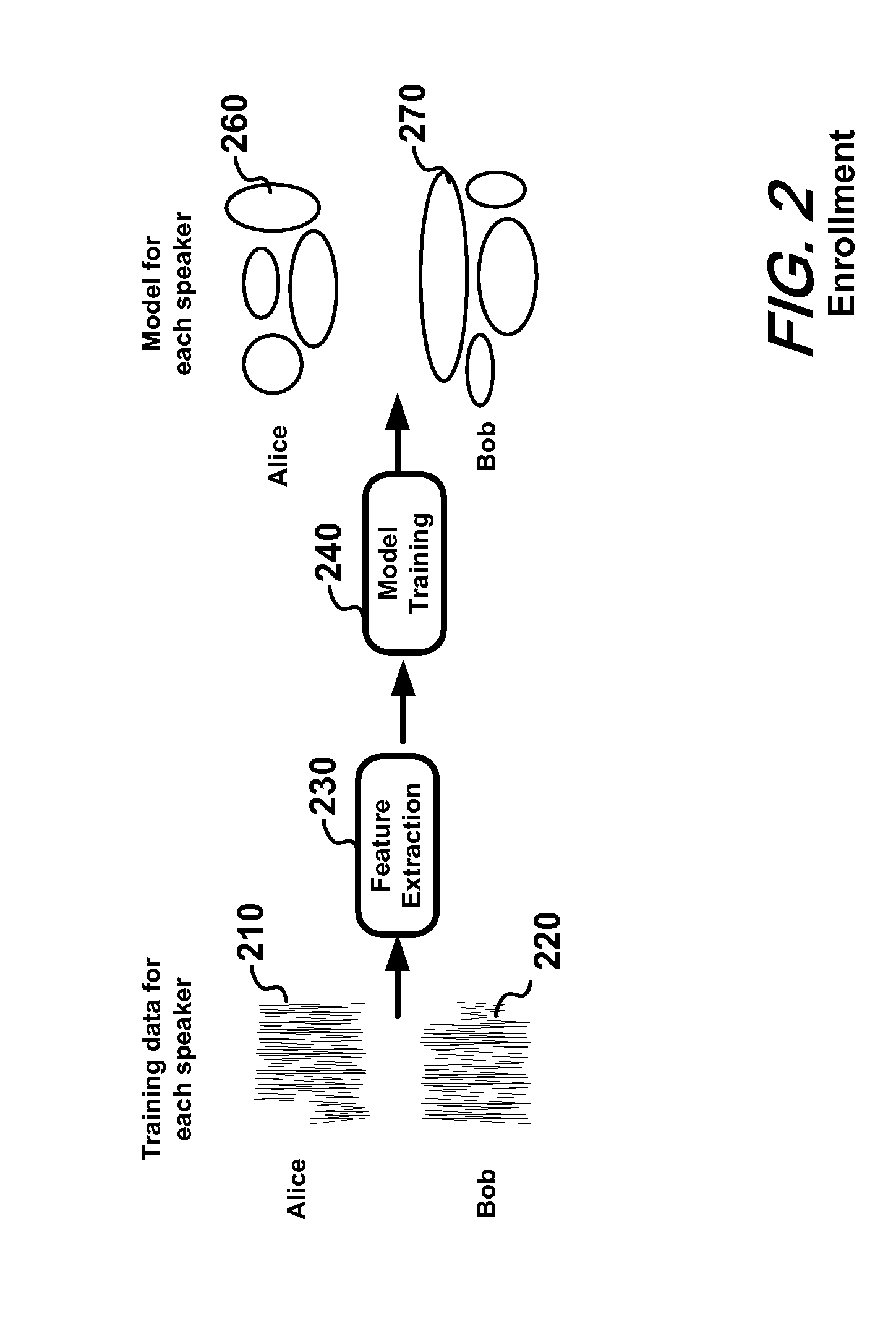

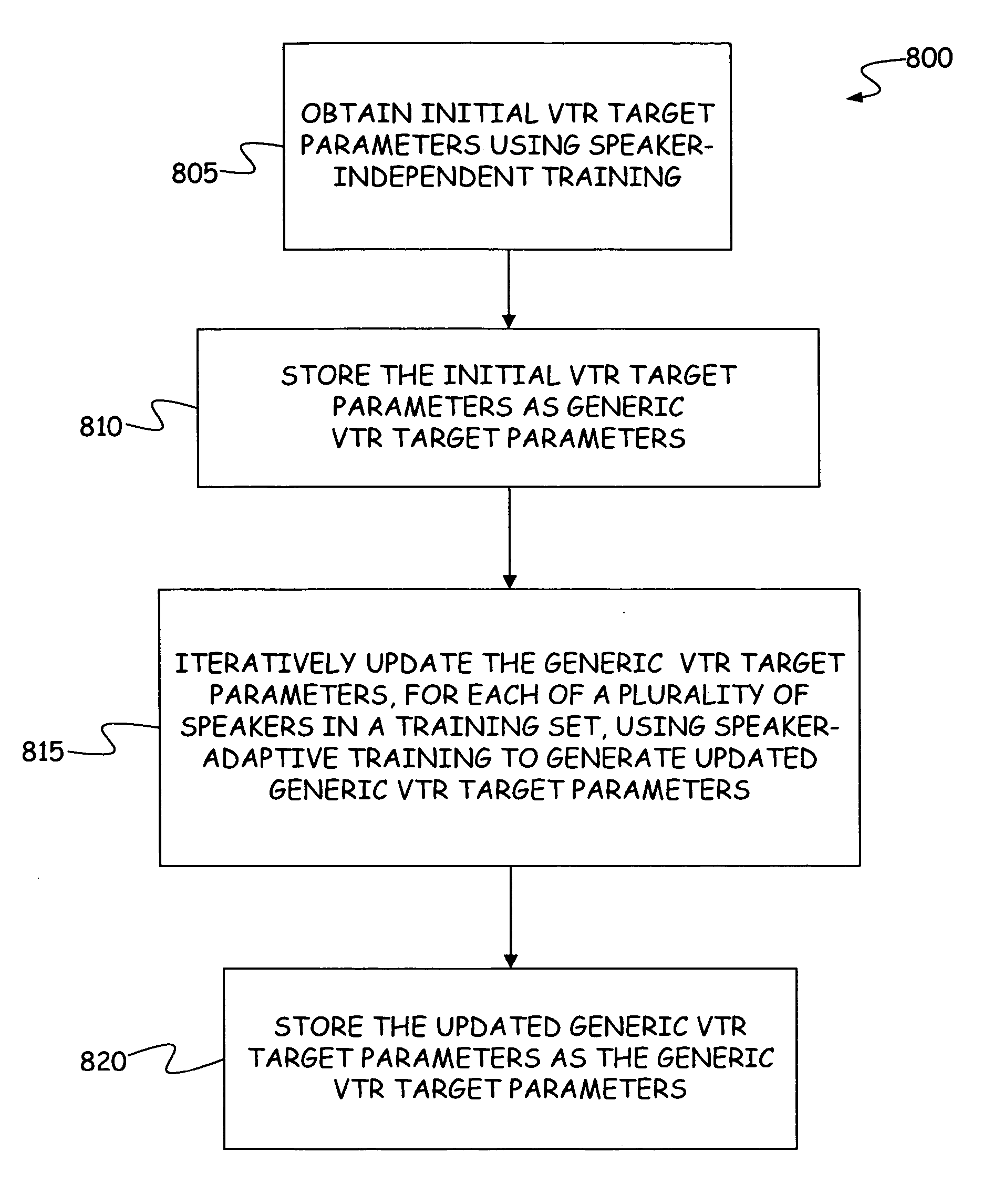

Speaker adaptive learning of resonance targets in a hidden trajectory model of speech coarticulation

A computer-implemented method is provided for training a hidden trajectory model, of a speech recognition system, which generates Vocal Tract Resonance (VTR) targets. The method includes obtaining generic VTR target parameters corresponding to a generic speaker used by a target selector to generate VTR target sequences. The generic VTR target parameters are scaled for a particular speaker using a speaker-dependent scaling factor for the particular speaker to generate speaker-adaptive VTR target parameters. This scaling is performed for both the training data and the test data, and for the training data, the scaling is performed iteratively with the process of obtaining the generic targets. The computation of the scaling factor makes use of the results of a VTR tracker. The speaker-adaptive VTR target parameters for the particular speaker are then stored in order to configure the hidden trajectory model to perform speech recognition for the particular speaker using the speaker-adaptive VTR target parameters.

Owner:MICROSOFT TECH LICENSING LLC
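The speaker-dependent scaling of generic VTR targets described above can be sketched as a single multiplicative factor applied to every target. Estimating that factor as the ratio of tracked resonance values to generic targets is an assumption made for this example; the abstract only says the computation uses the results of a VTR tracker. The phone label and frequency values are illustrative.

```python
def estimate_scale(tracked_hz, generic_hz):
    """Hypothetical estimator: ratio of tracked resonances to generic targets."""
    return sum(tracked_hz) / sum(generic_hz)


def scale_targets(generic_targets_hz, scale):
    """Scale every generic VTR target by the speaker-dependent factor."""
    return {
        phone: [freq * scale for freq in targets]
        for phone, targets in generic_targets_hz.items()
    }


generic_targets = {"aa": [700.0, 1200.0]}   # generic-speaker targets in Hz
tracked = [875.0, 1500.0]                   # values from a VTR tracker for one speaker

scale = estimate_scale(tracked, generic_targets["aa"])
speaker_targets = scale_targets(generic_targets, scale)
```

A single factor per speaker keeps the adaptation cheap: only one number has to be stored alongside the generic targets for each speaker.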

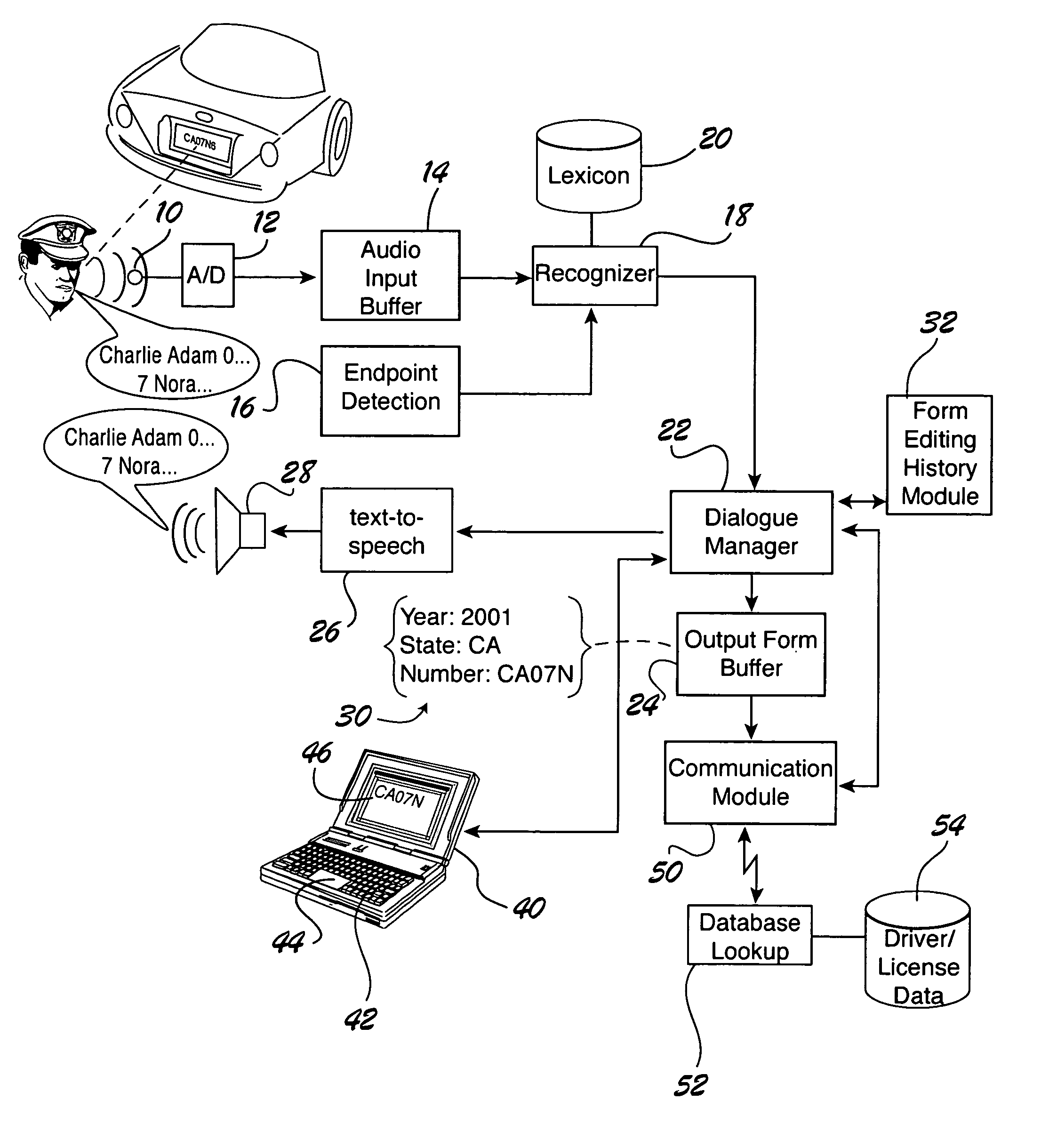

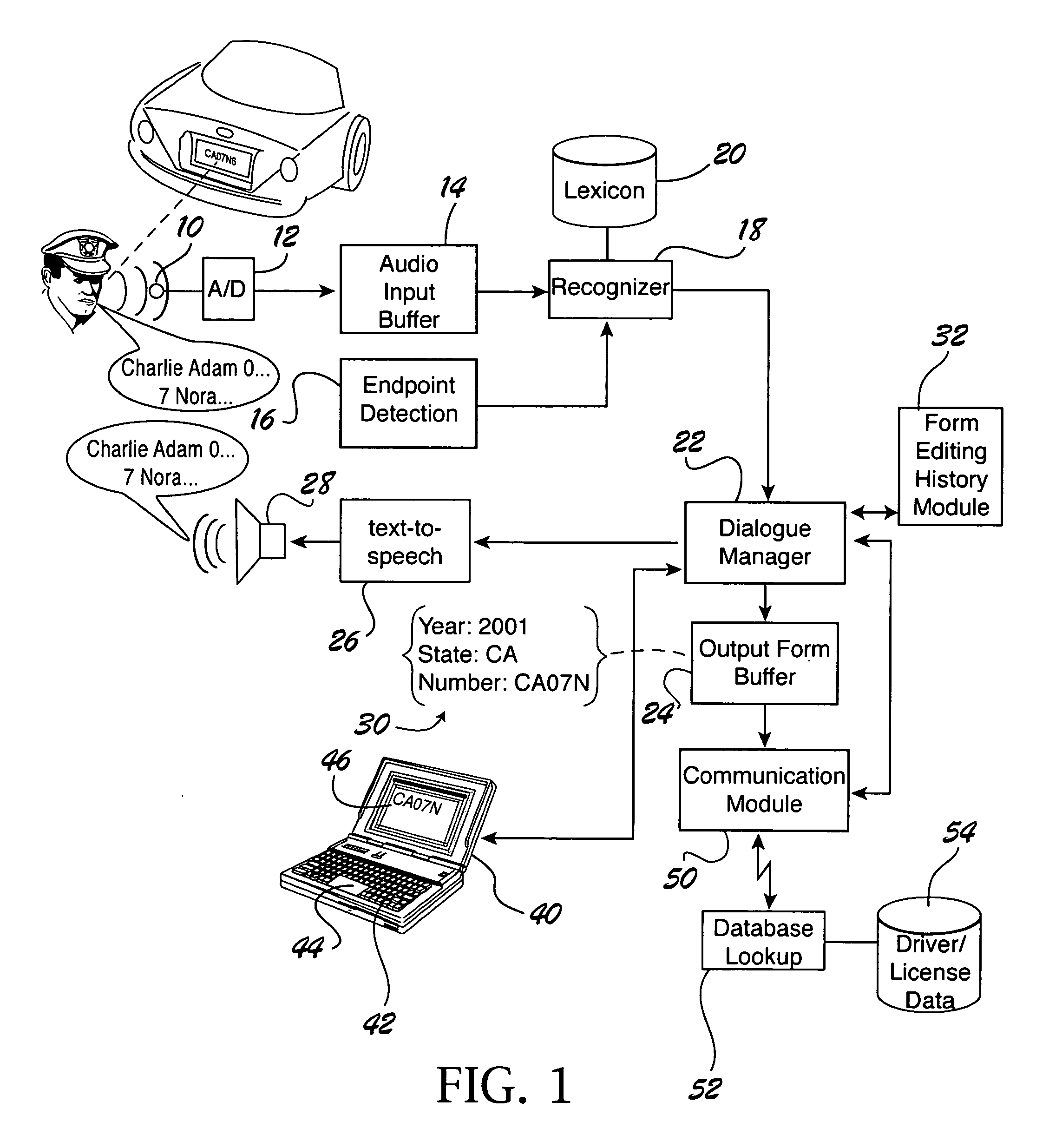

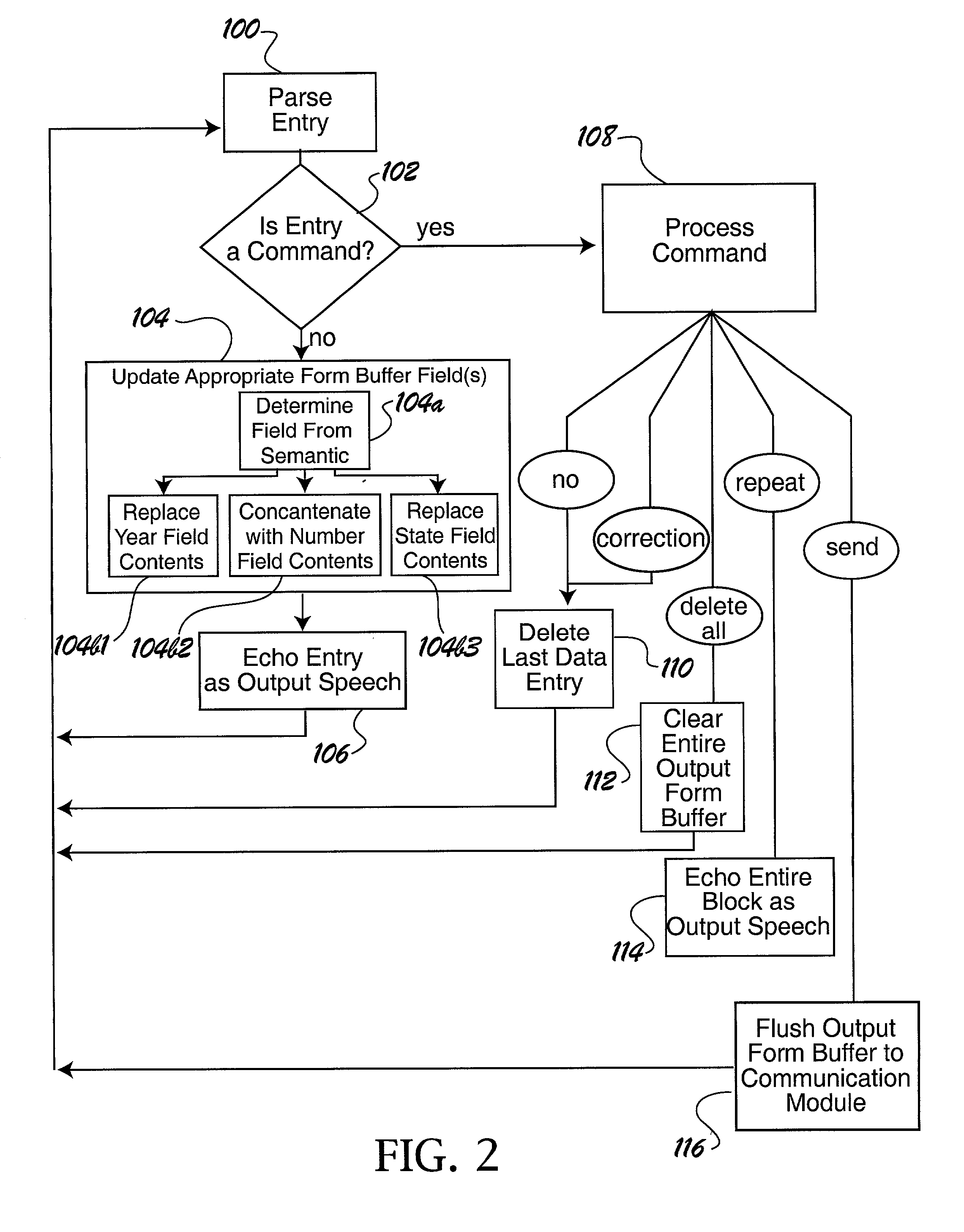

Method for efficient, safe and reliable data entry by voice under adverse conditions

Inactive | US6996528B2 | Efficient and robust form filling | Improve speech recognition accuracy | Speech recognition | Speech identification | Speech input

A method and apparatus for data entry by voice under adverse conditions is disclosed. More specifically it provides a way for efficient and robust form filling by voice. A form can typically contain one or several fields that must be filled in. The user communicates to a speech recognition system and word spotting is performed upon the utterance. The spotted words of an utterance form a phrase that can contain field-specific values and / or commands. Recognized values are echoed back to the speaker via a text-to-speech system. Unreliable or unsafe inputs for which the confidence measure is found to be low (e.g. ill-pronounced speech or noises) are rejected by the spotter. Speaker adaptation is furthermore performed transparently to improve speech recognition accuracy. Other input modalities can be additionally supported (e.g. keyboard and touch-screen). The system maintains a dialogue history to enable editing and correction operations on all active fields.

Owner:PANASONIC CORP

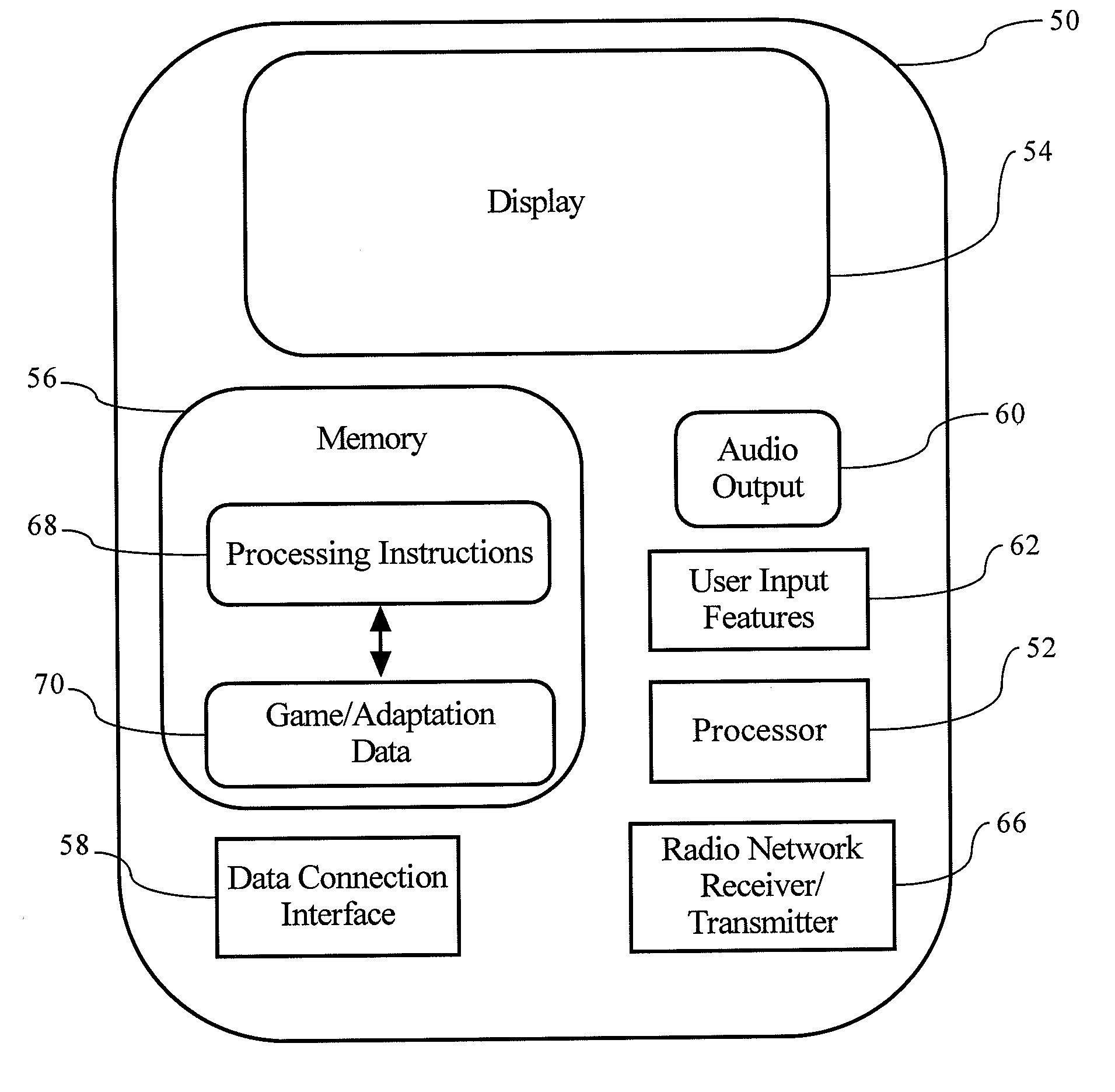

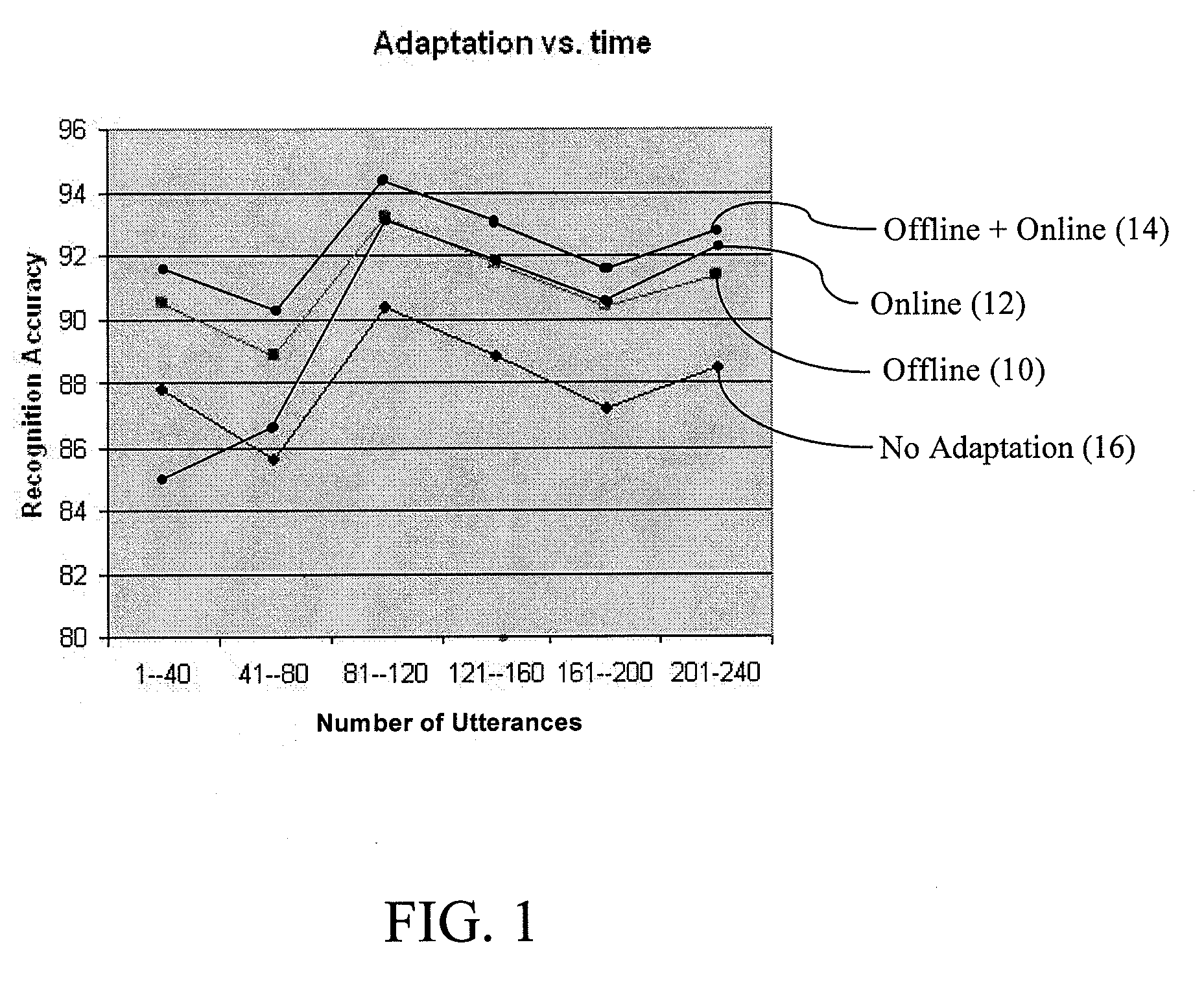

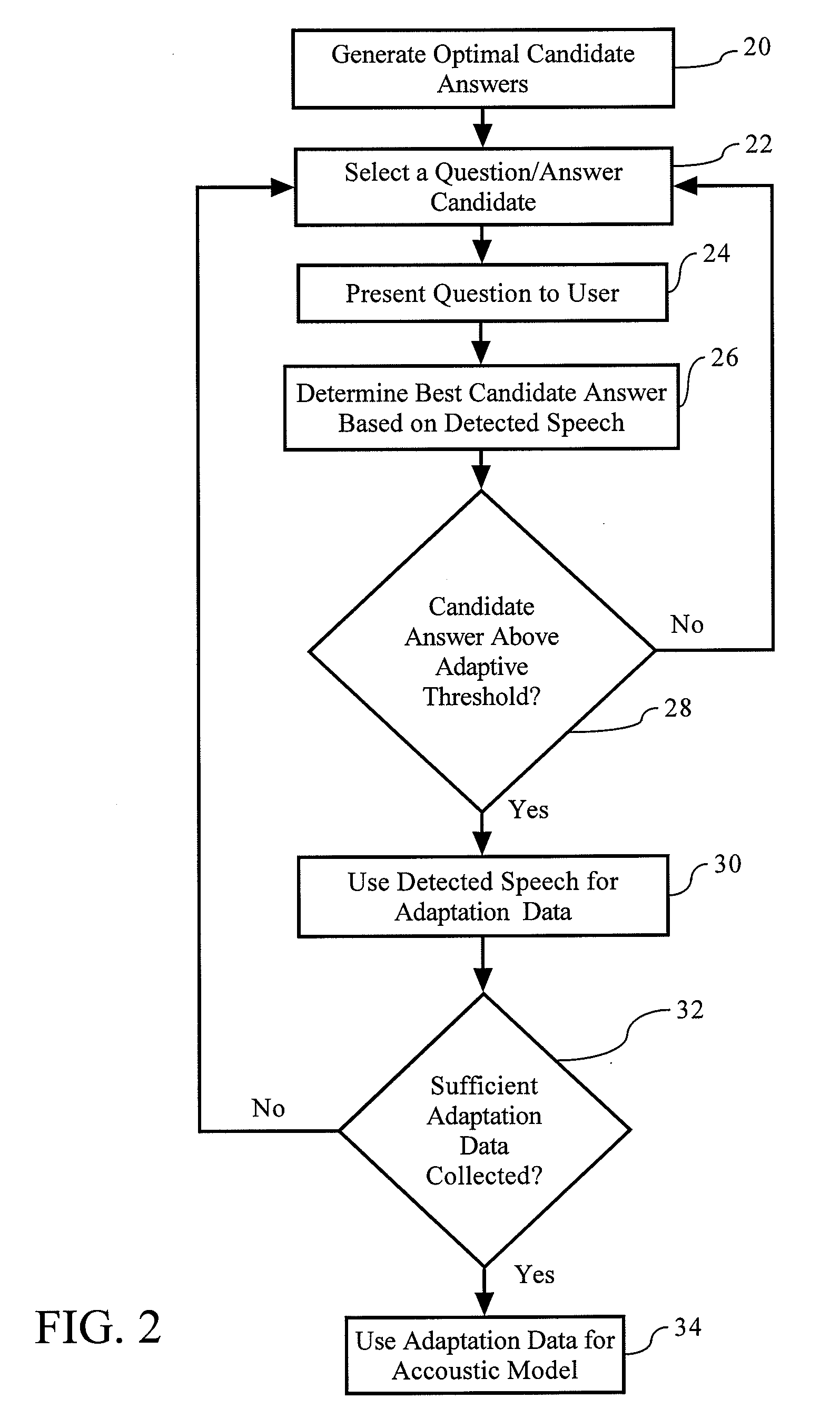

User friendly speaker adaptation for speech recognition

Inactive | US20100088097A1 | Easy to identify | Improve usability | Speech recognition | Acoustic model | Speech identification

Performance and user experience of a speech recognition application and system are improved by utilizing, for example, offline adaptation without tedious effort by the user. Interactions with a user may be in the form of a quiz, game, or other scenario wherein the user may implicitly provide vocal input for adaptation data. Queries with a plurality of candidate answers may be designed in an optimal and efficient way and presented to the user, wherein detected speech from the user is then matched to one of the candidate answers and may be used to adapt an acoustic model to the particular speaker for speech recognition.

Owner:NOKIA CORP

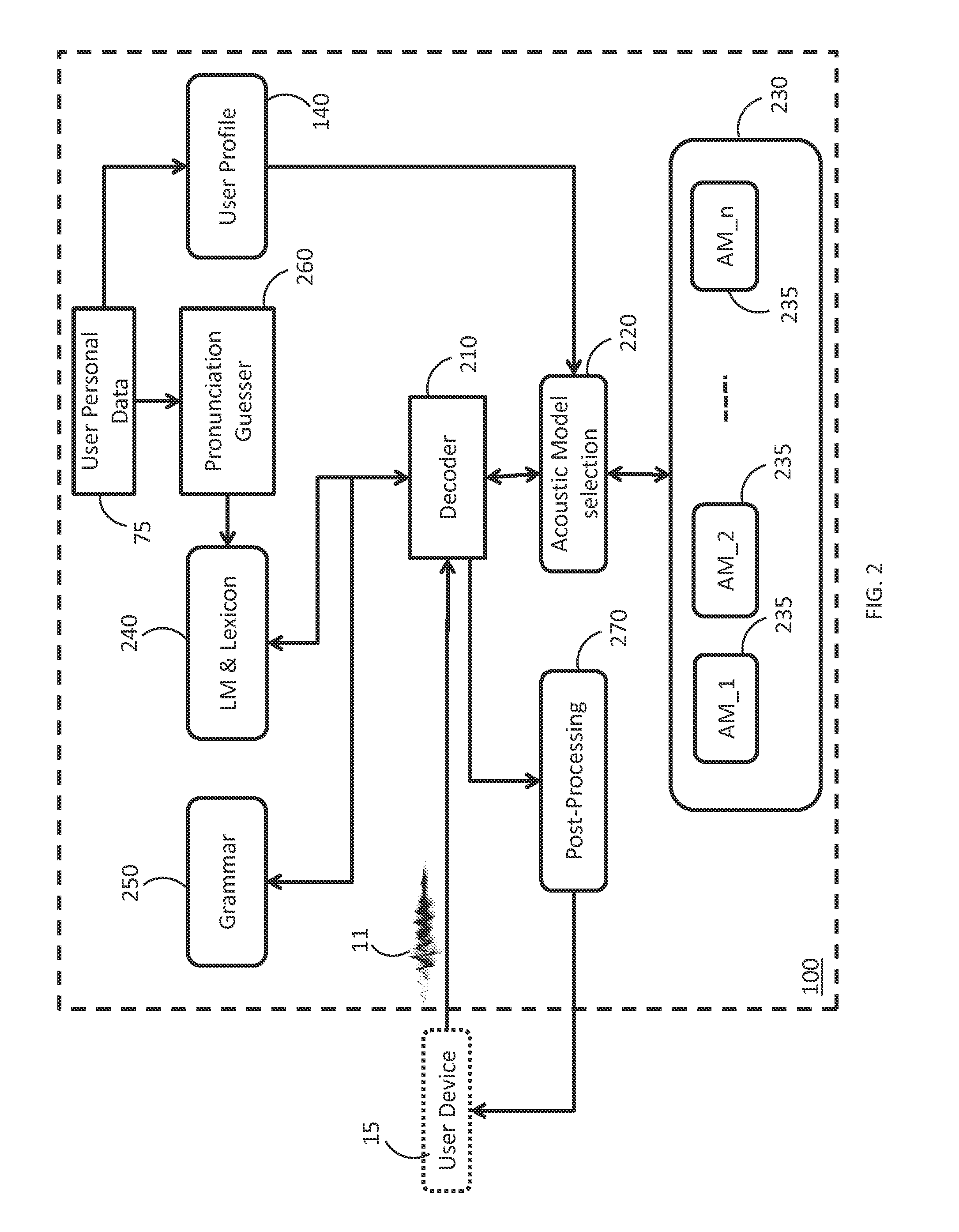

Method And Apparatus For Exploiting Language Skill Information In Automatic Speech Recognition

Typical speech recognition systems use speaker-specific speech data to apply speaker adaptation to models and parameters associated with the speech recognition system. Given that speaker-specific speech data may not be available to the speech recognition system, information indicative of language skills is employed in adapting configurations of a speech recognition system. According to at least one example embodiment, a method and corresponding apparatus for speech recognition comprise maintaining information indicative of language skills of users of the speech recognition system. A configuration of the speech recognition system for a user is determined based at least in part on corresponding information indicative of language skills of the user. Upon receiving speech data from the user, the determined configuration of the speech recognition system is employed in performing speech recognition.

Owner:NUANCE COMM INC

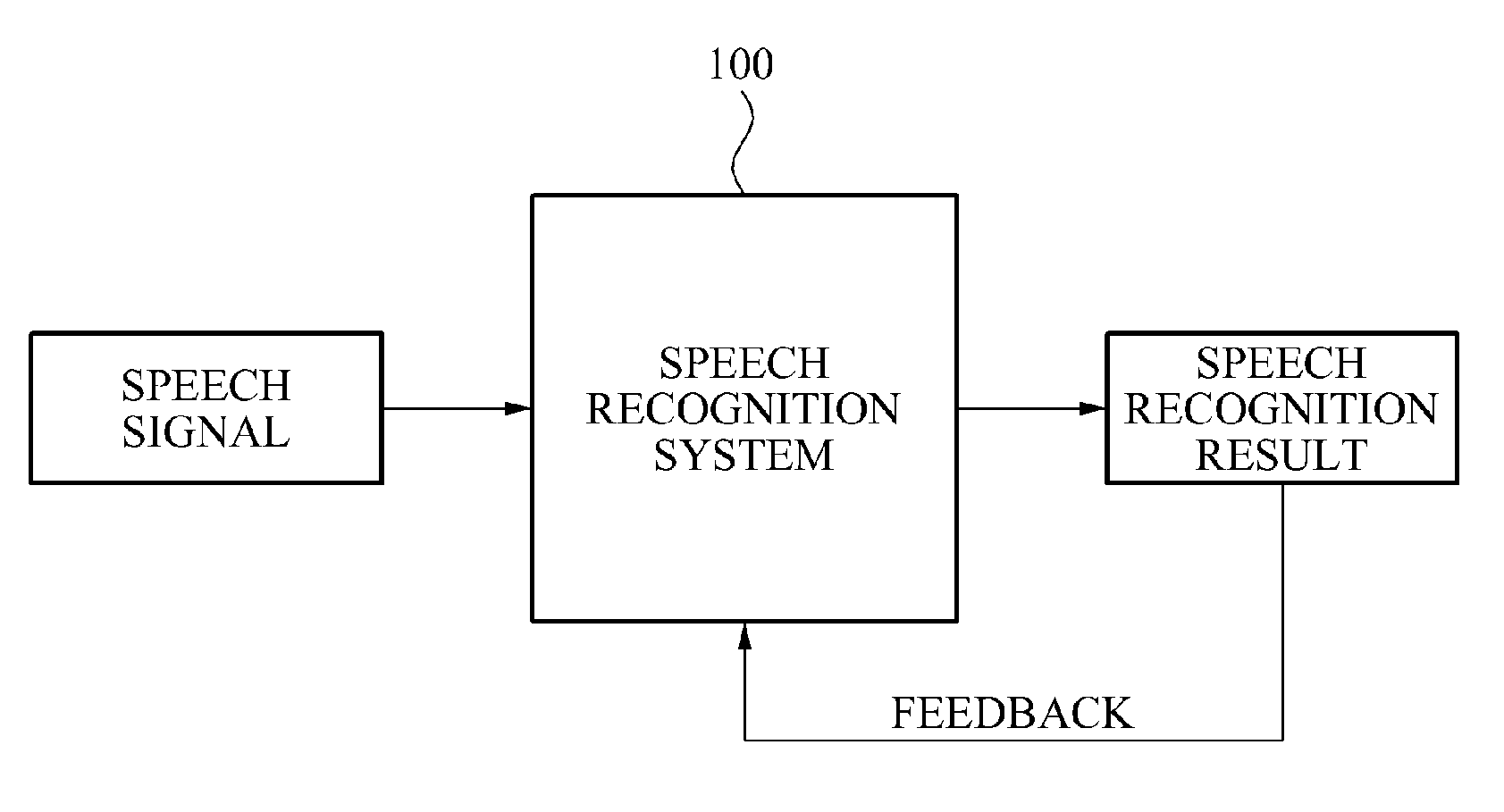

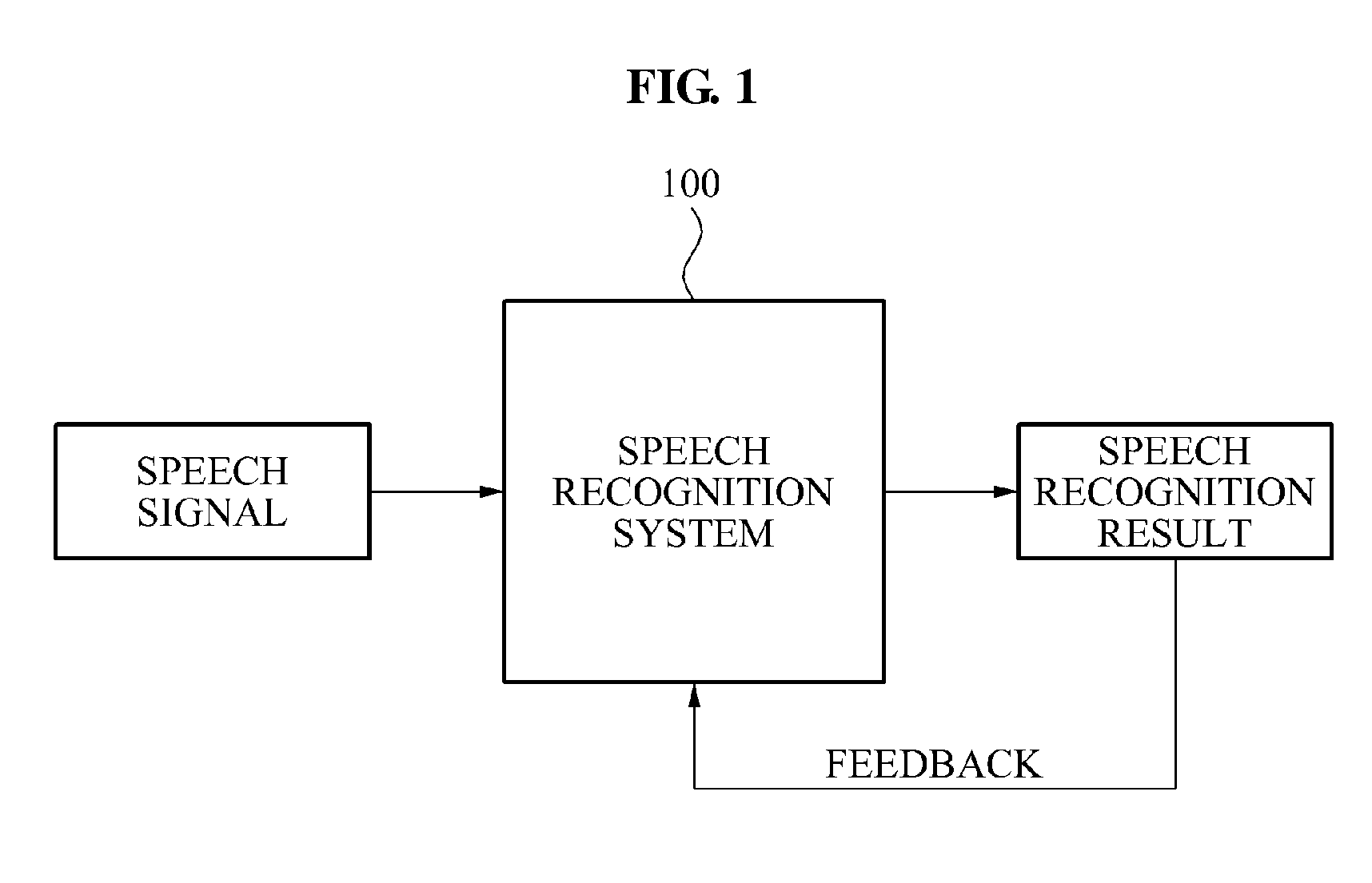

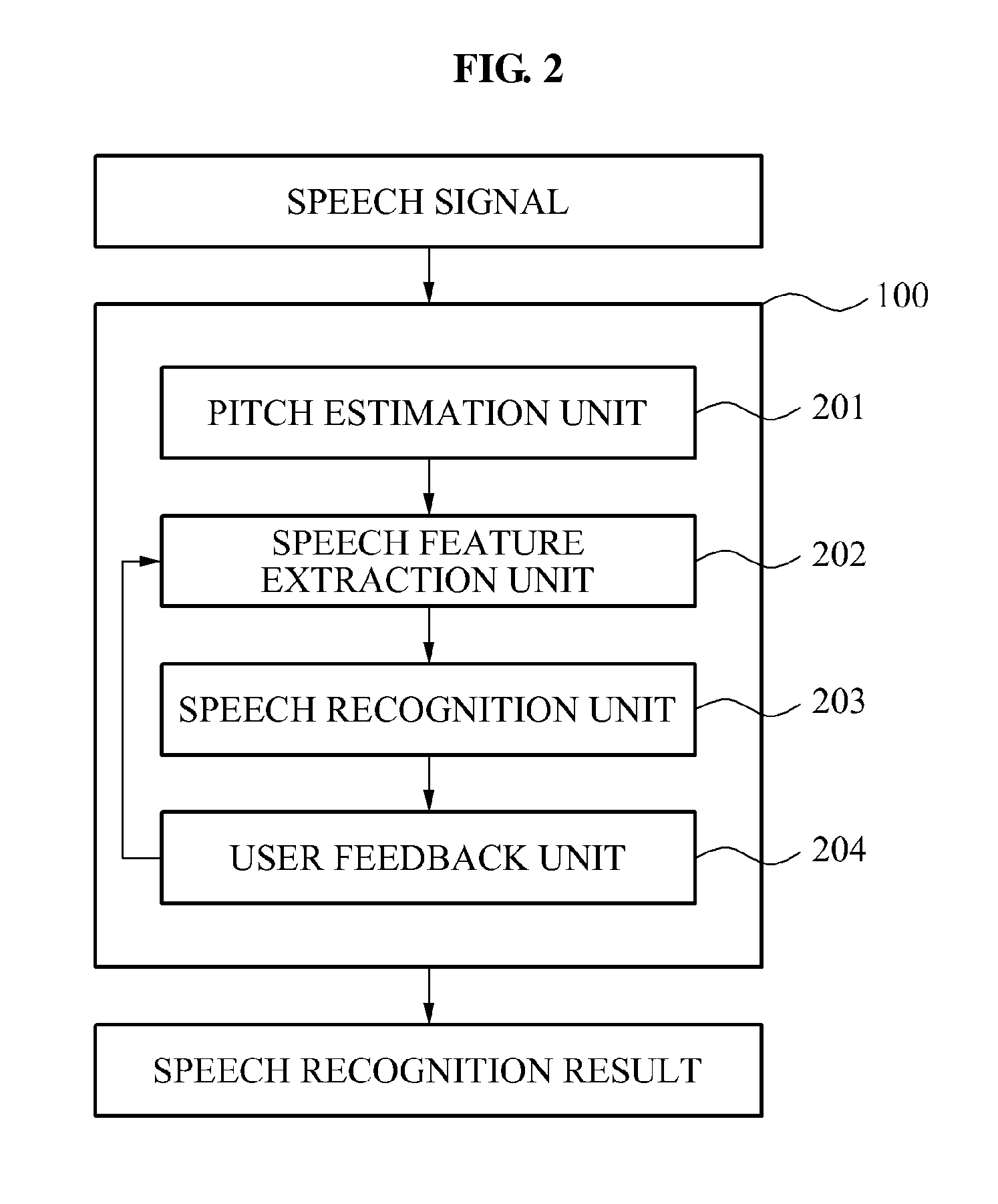

Real-time speaker-adaptive speech recognition apparatus and method

A speech recognition apparatus and method for real-time speaker adaptation are provided. The speech recognition apparatus may estimate a pitch of a speech section from an inputted speech signal, extract a speech feature for speech recognition based on the estimated pitch, and perform speech recognition with respect to the speech signal based on the speech feature. The speech recognition apparatus may be adaptively normalized depending on a speaker. Thus, the speech recognition apparatus may extract a speech feature for speech recognition, and may improve a performance of speech recognition based on the extracted speech feature.

Owner:SAMSUNG ELECTRONICS CO LTD

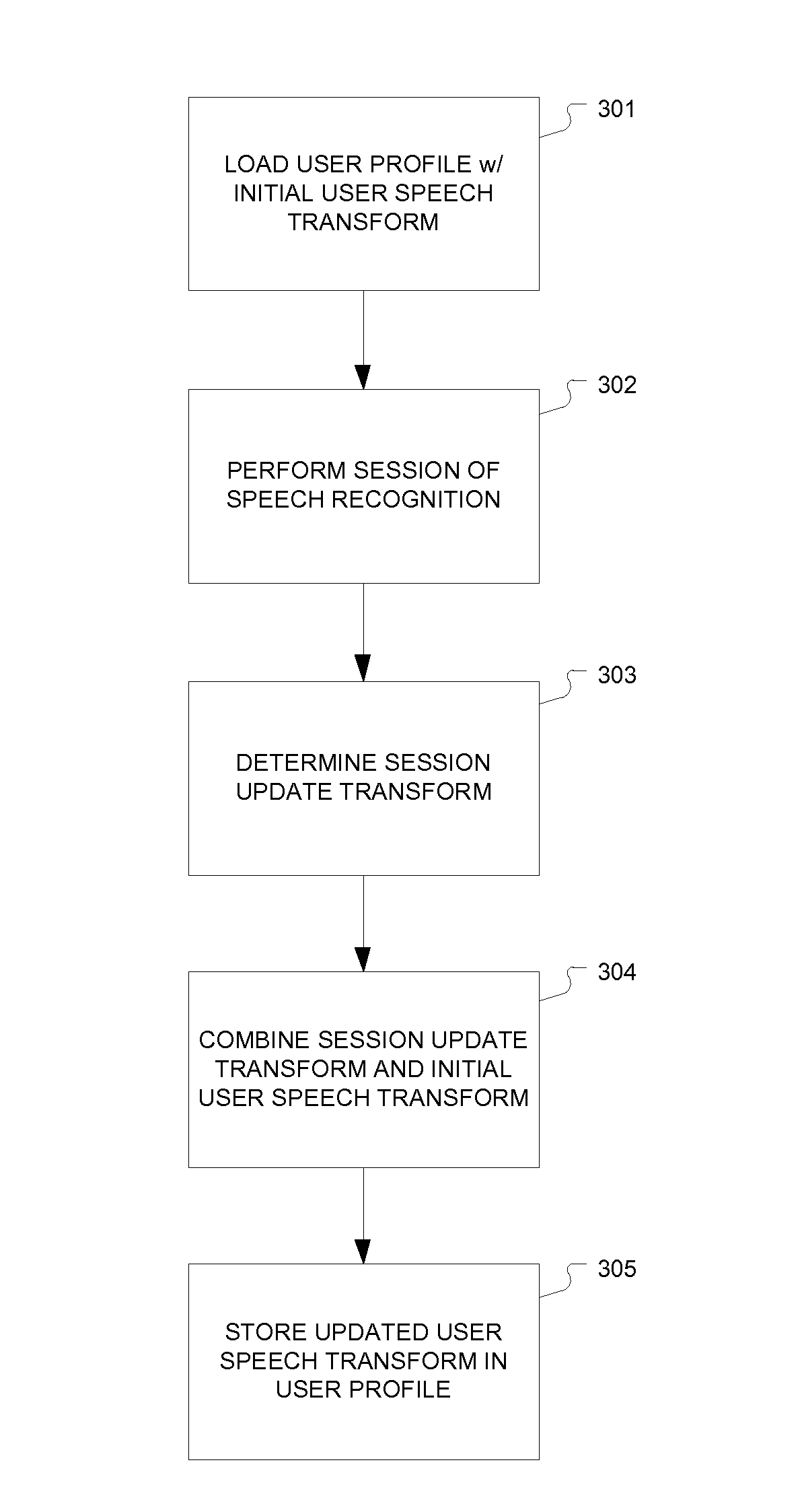

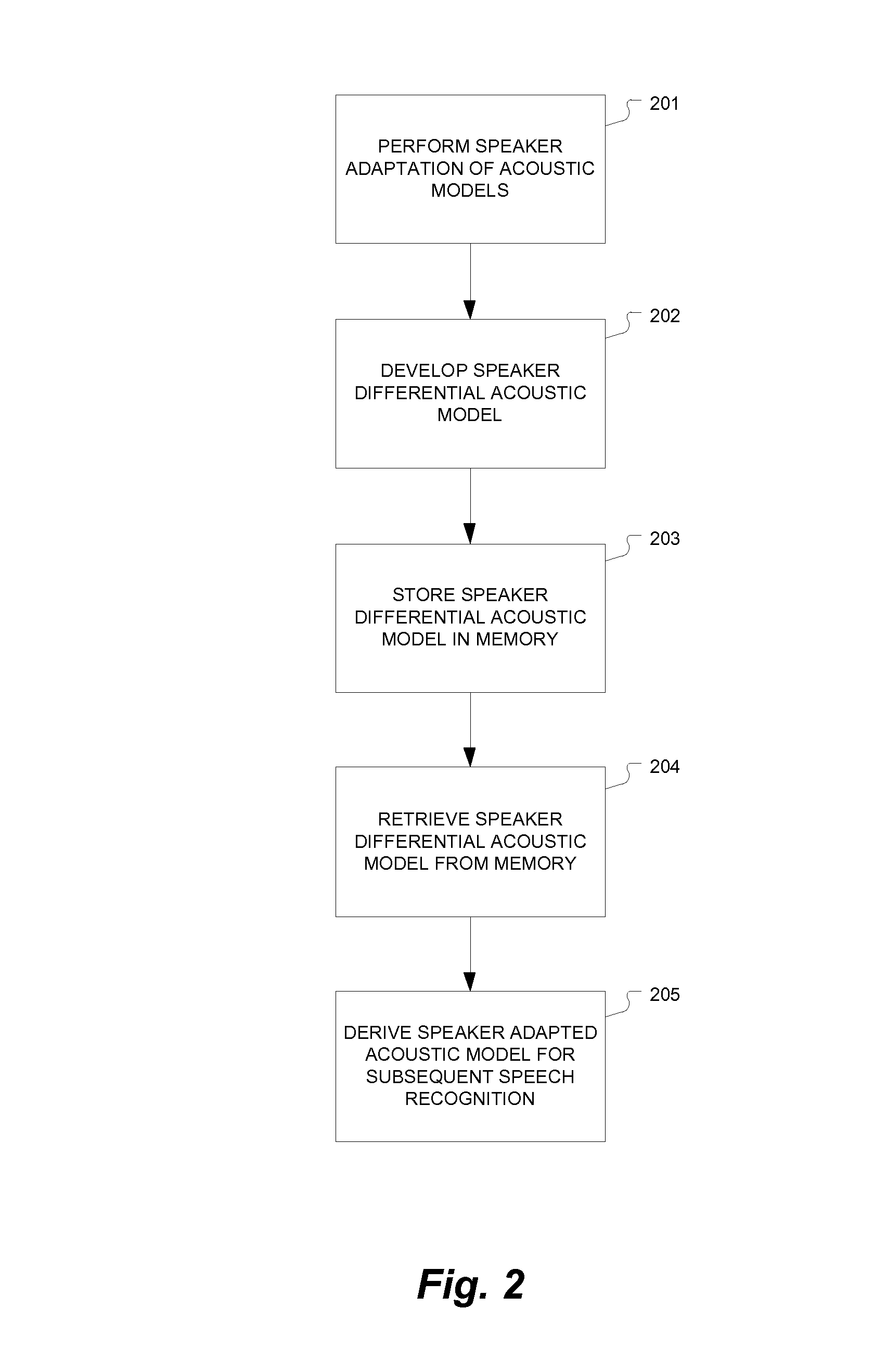

Differential acoustic model representation and linear transform-based adaptation for efficient user profile update techniques in automatic speech recognition

A computer-implemented method is described for speaker adaptation in automatic speech recognition. Speech recognition data from a particular speaker is used for adaptation of an initial speech recognition acoustic model to produce a speaker adapted acoustic model. A speaker dependent differential acoustic model is determined that represents differences between the initial speech recognition acoustic model and the speaker adapted acoustic model. In addition, an approach is also disclosed to estimate speaker-specific feature or model transforms over multiple sessions. This is achieved by updating the previously estimated transform using only adaptation statistics of the current session.

Owner:NUANCE COMM INC

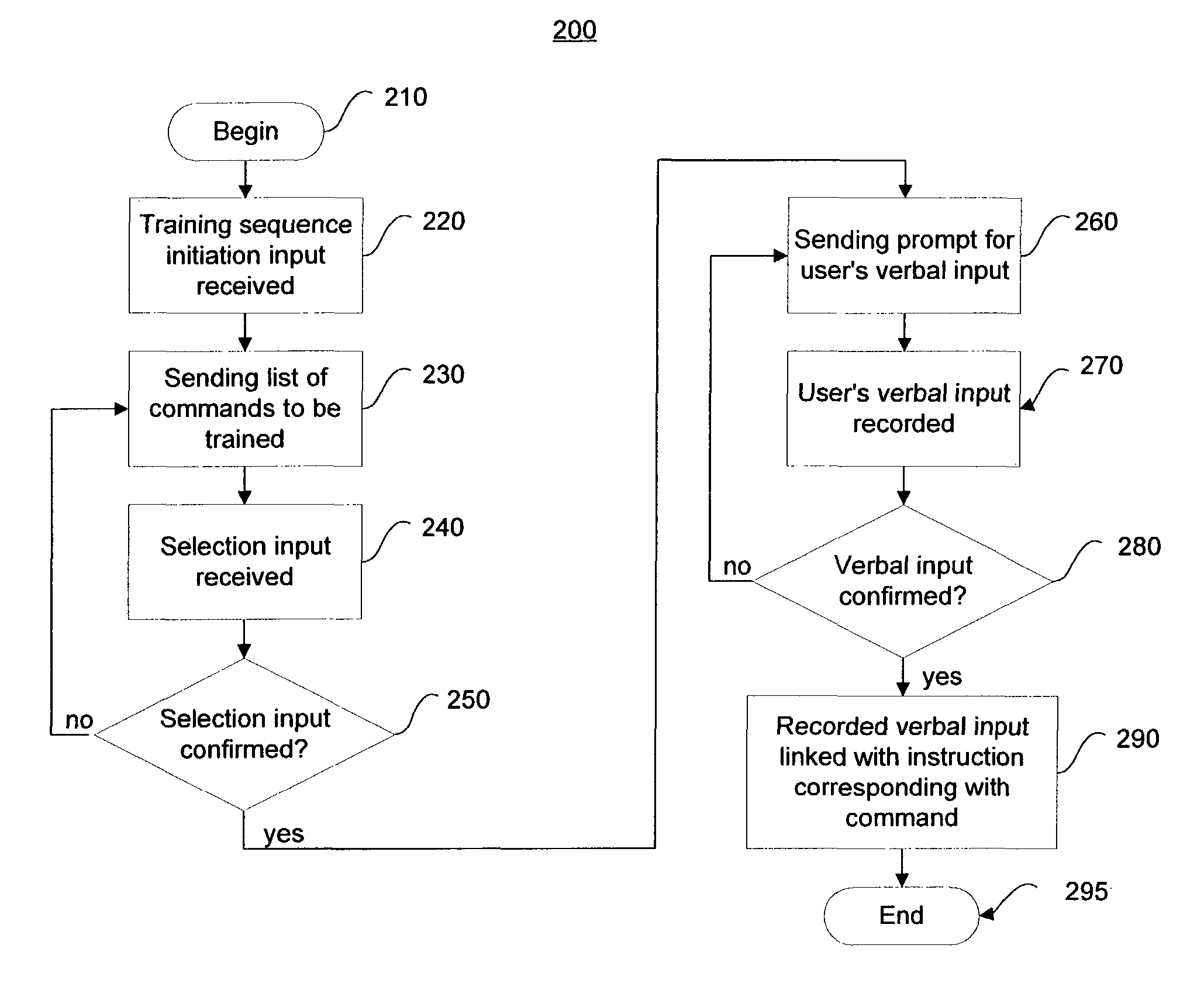

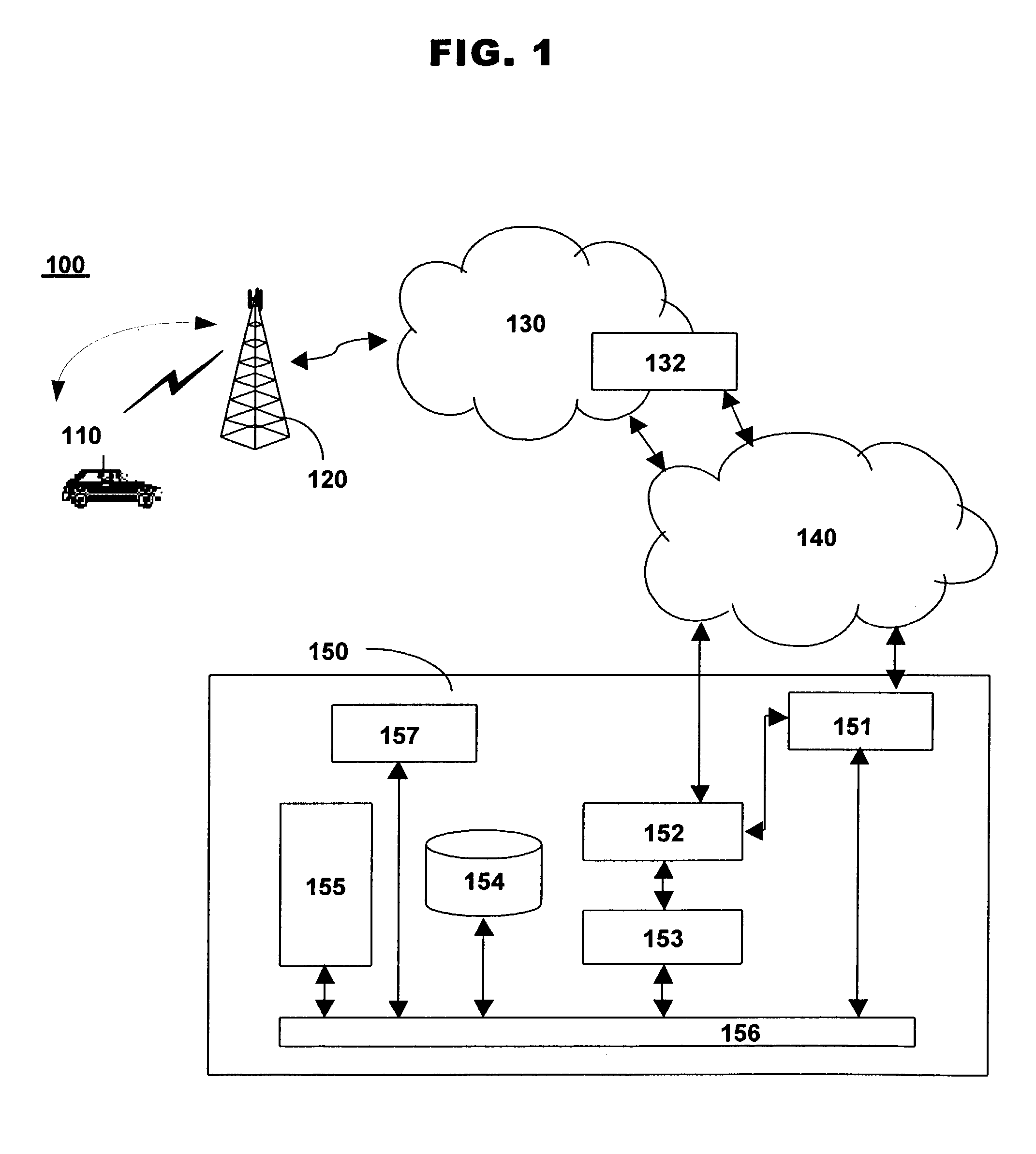

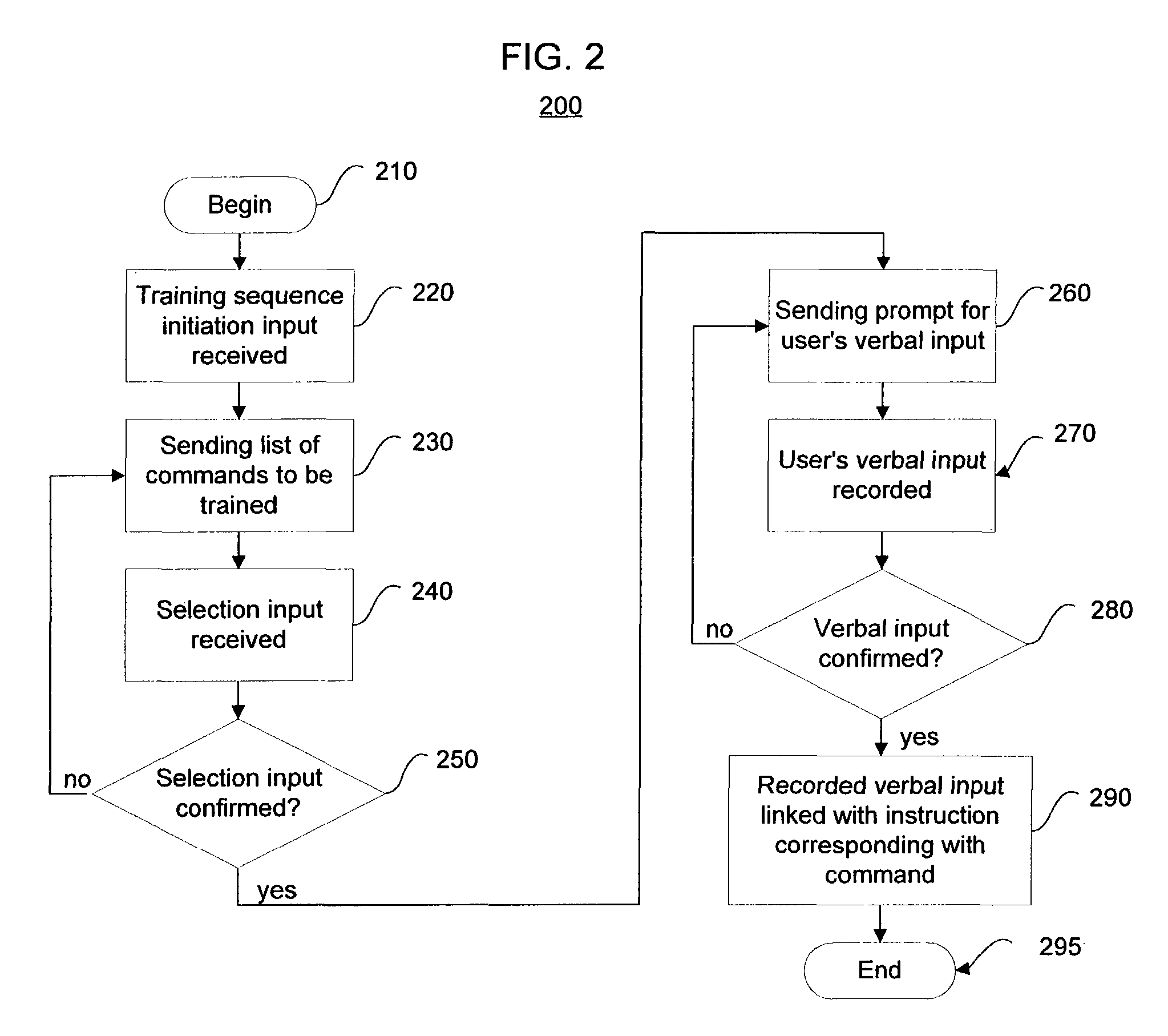

Context specific speaker adaptation user interface

Inactive | US7986974B2 | Two-way loud-speaking telephone systems | Substation speech amplifiers | Context specific | Speech sound

A method for providing a context specific speaker adaptation user interface is disclosed. The method allows a specific user of a voice activated system to train a voice recognition system to understand specific uttered commands by receiving a training sequence initiation input, sending at least one command to be trained, receiving a selection input, prompting a verbal input, recording the verbal input, and linking a voice input with an instruction corresponding with the command input.

Owner:GENERAL MOTORS LLC

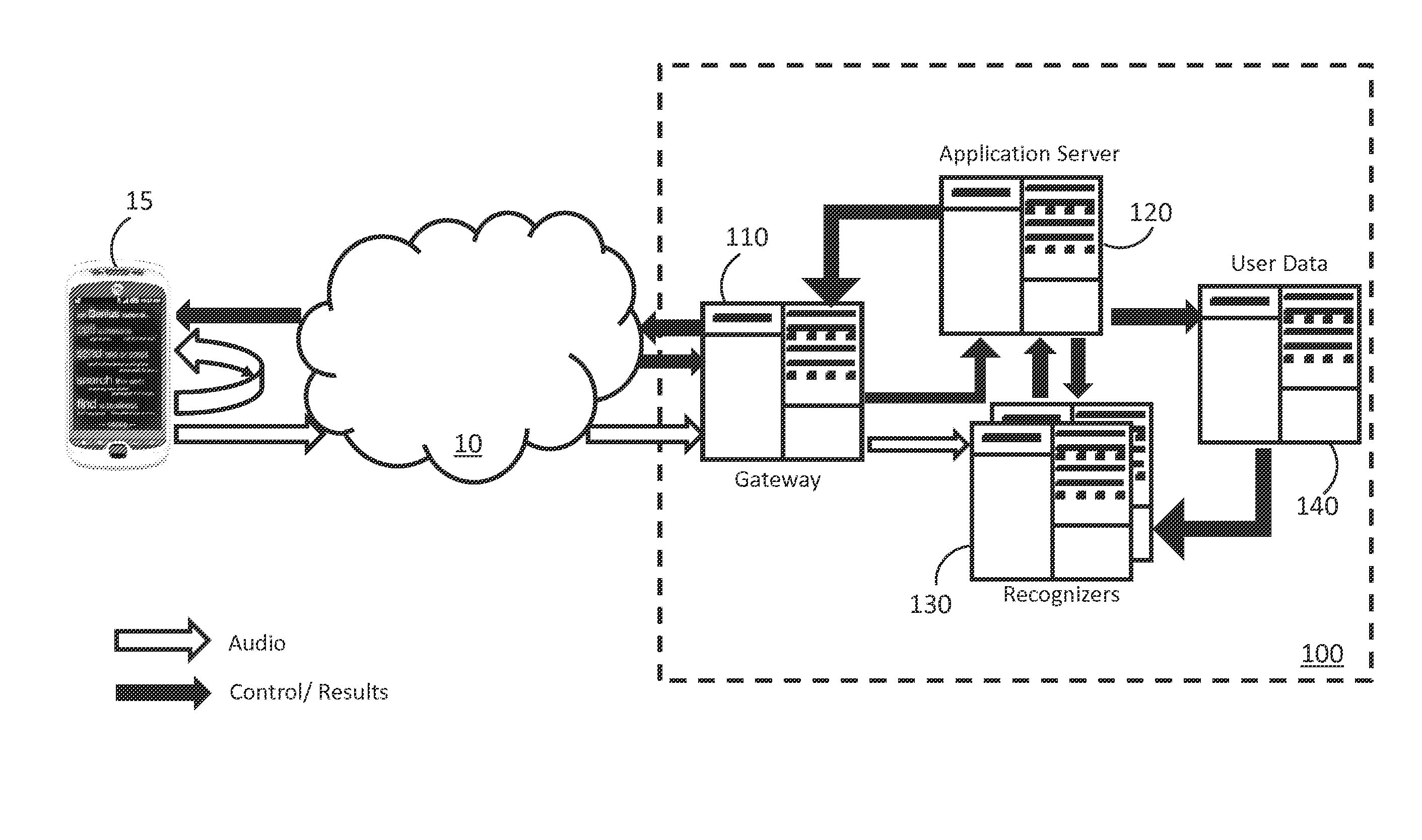

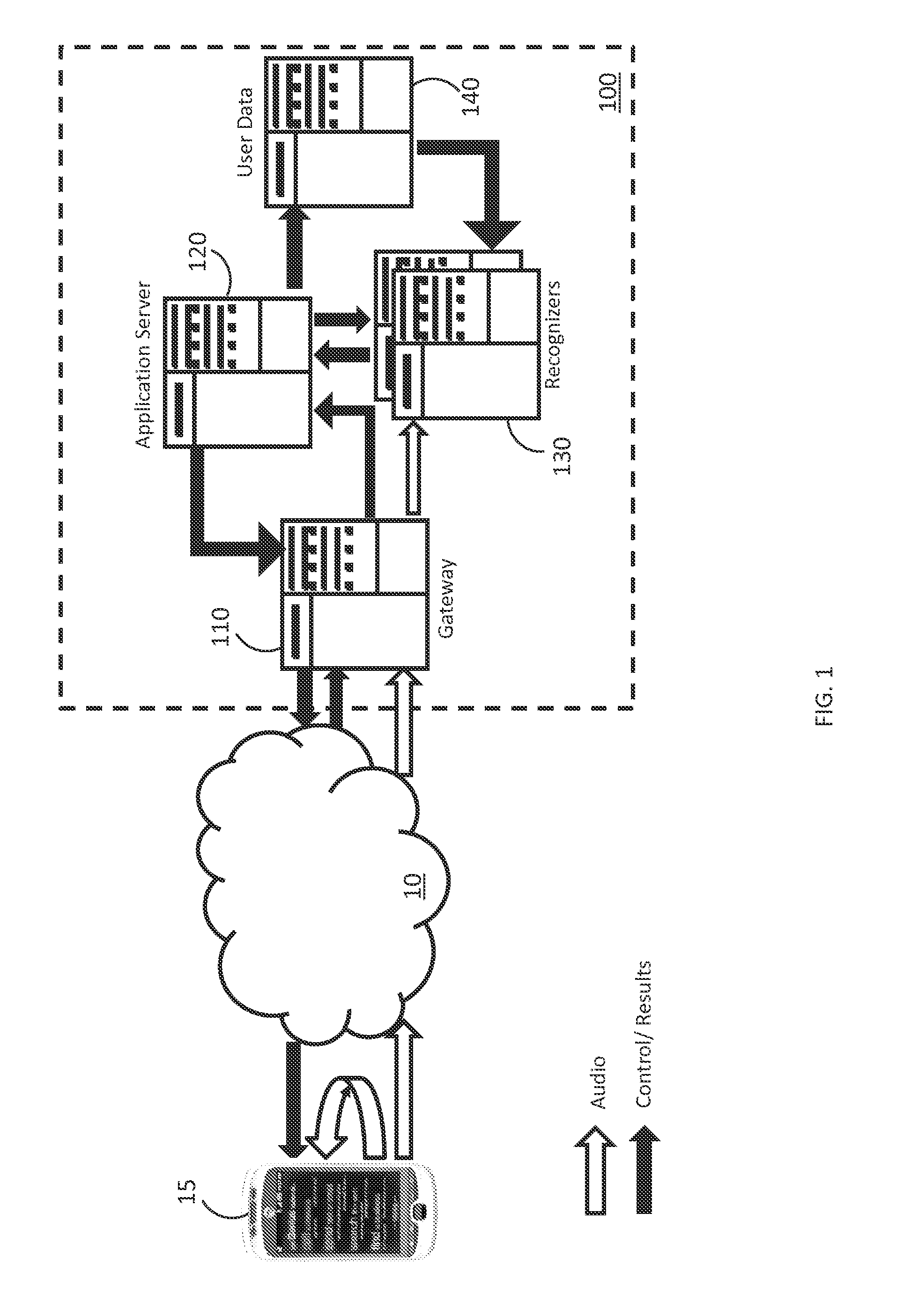

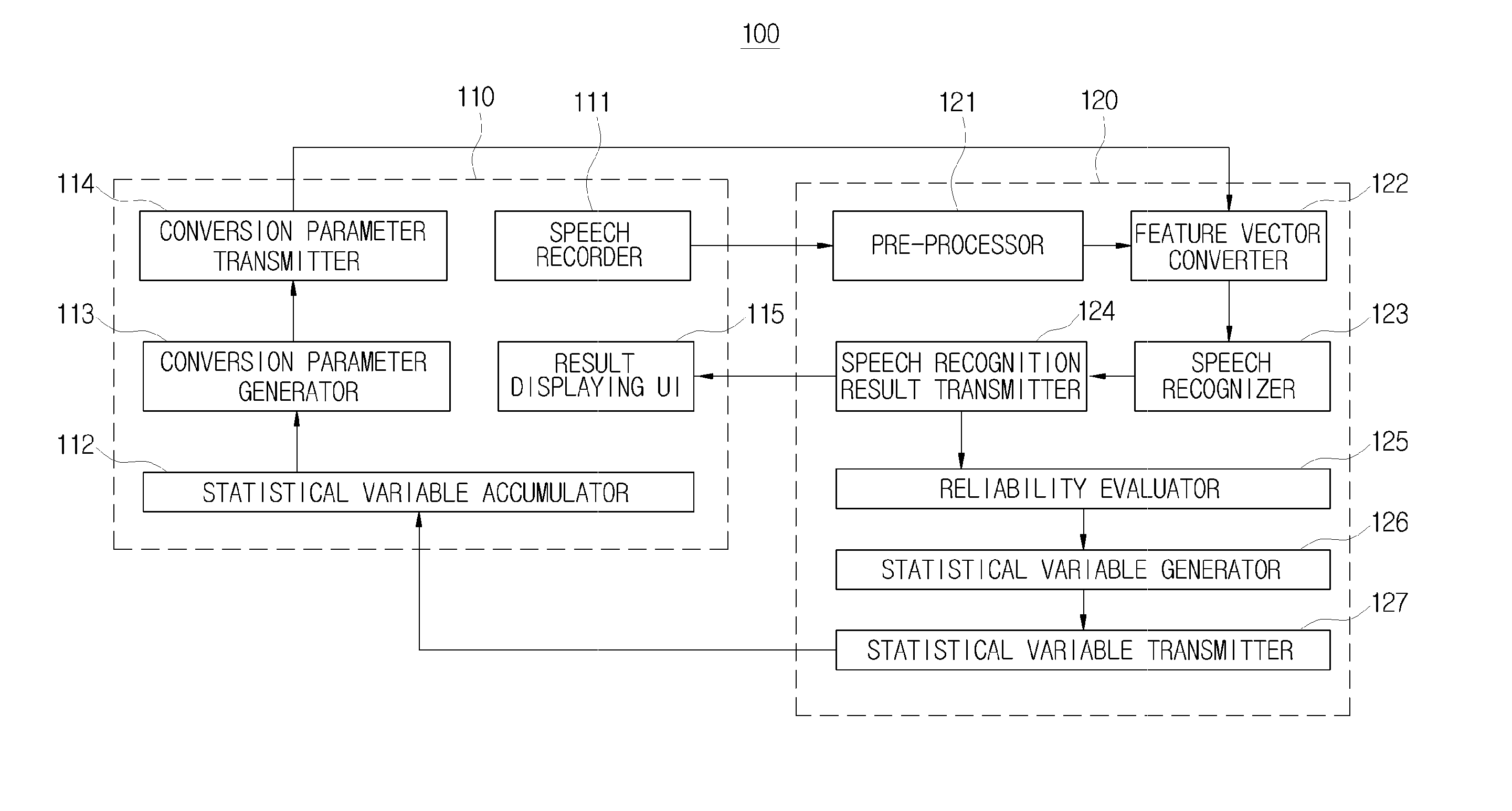

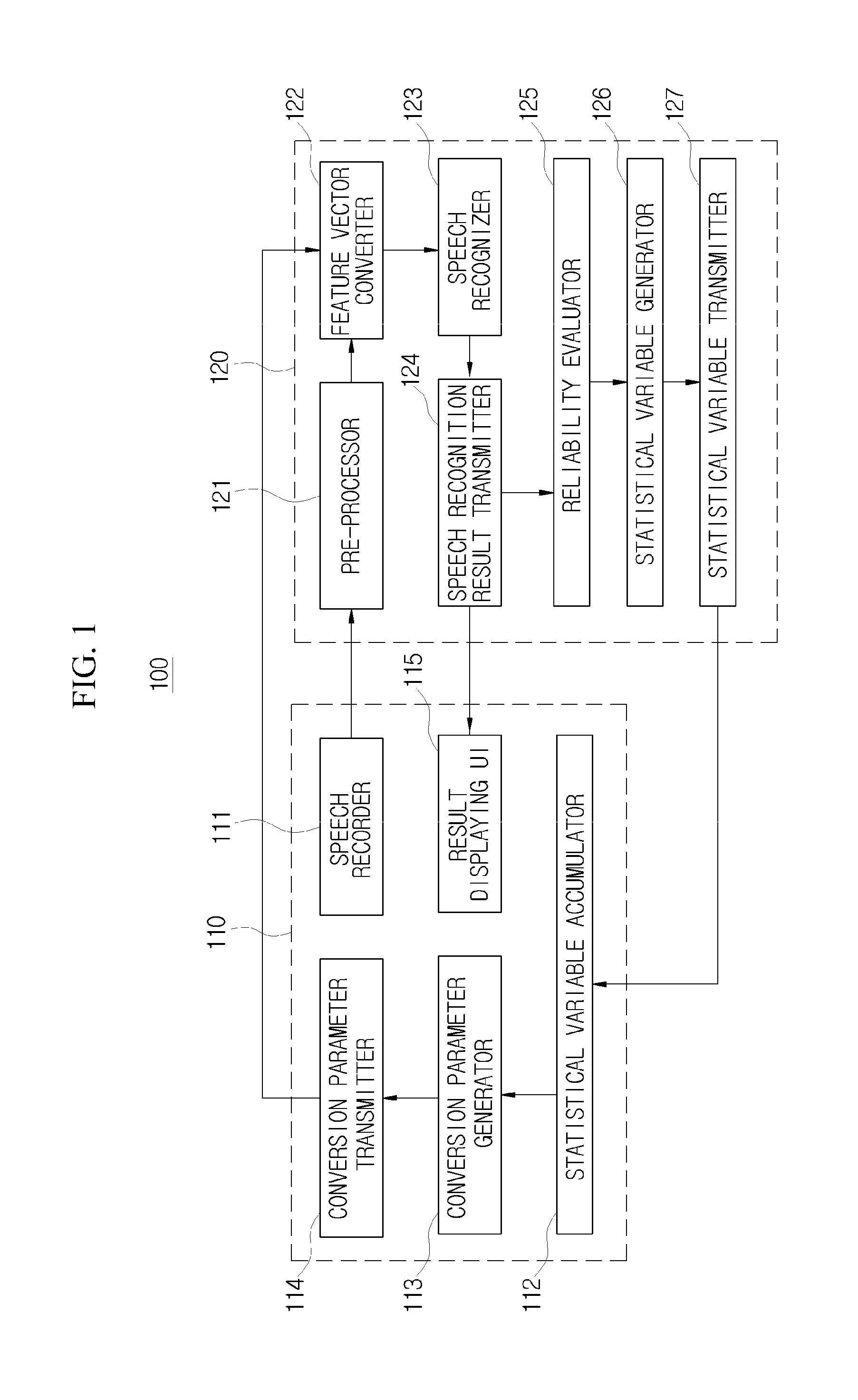

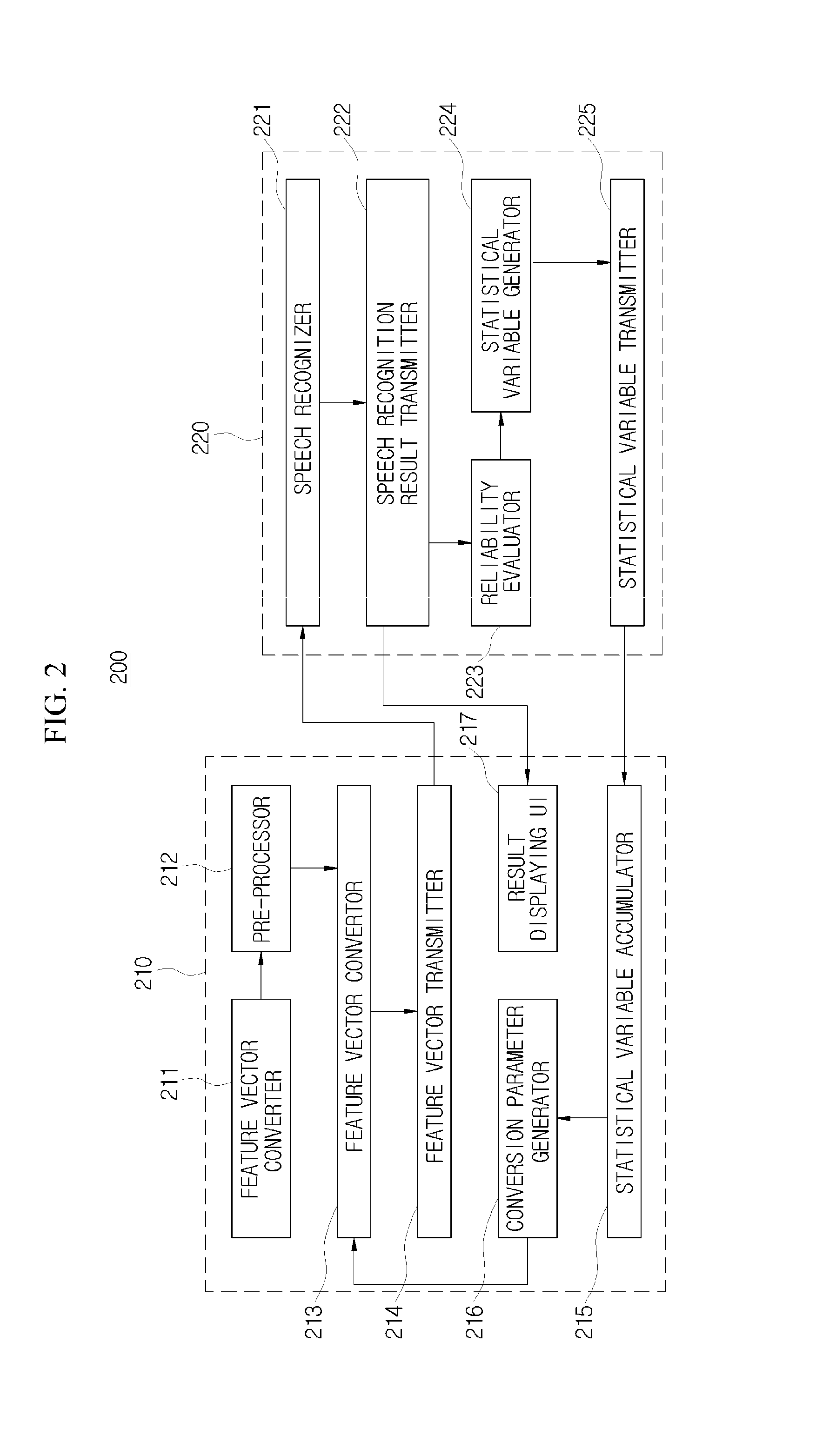

Terminal and server of speaker-adaptation speech-recognition system and method for operating the system

Provided are a terminal and server of a speaker-adaptation speech-recognition system and a method for operating the system. The terminal in the speaker-adaptation speech-recognition system includes a speech recorder which transmits speech data of a speaker to a speech-recognition server, a statistical variable accumulator which receives a statistical variable including acoustic statistical information about speech of the speaker from the speech-recognition server which recognizes the transmitted speech data, and accumulates the received statistical variable, a conversion parameter generator which generates a conversion parameter about the speech of the speaker using the accumulated statistical variable and transmits the generated conversion parameter to the speech-recognition server, and a result displaying user interface which receives and displays result data when the speech-recognition server recognizes the speech data of the speaker using the transmitted conversion parameter and transmits the recognized result data.

Owner:ELECTRONICS & TELECOMM RES INST
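The terminal-side roles named above (accumulating statistical variables returned by the server, then generating a conversion parameter from them) can be sketched as follows. The statistics fields and the choice of a simple mean offset as the conversion parameter are assumptions for illustration; the abstract does not define either.

```python
class StatisticsAccumulator:
    """Terminal-side accumulator for statistics returned by the server."""

    def __init__(self):
        self.frame_count = 0
        self.residual_sum = 0.0

    def accumulate(self, stats):
        """Add one utterance's statistics (hypothetical fields) to the running totals."""
        self.frame_count += stats["frame_count"]
        self.residual_sum += stats["residual_sum"]

    def conversion_parameter(self):
        """Hypothetical conversion parameter: mean feature offset for this speaker."""
        if self.frame_count == 0:
            return 0.0
        return self.residual_sum / self.frame_count


acc = StatisticsAccumulator()
acc.accumulate({"frame_count": 100, "residual_sum": 30.0})   # first utterance
acc.accumulate({"frame_count": 300, "residual_sum": 70.0})   # second utterance
bias = acc.conversion_parameter()
```

Keeping the running totals on the terminal means the server only needs the current conversion parameter to recognize that speaker's next utterance.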

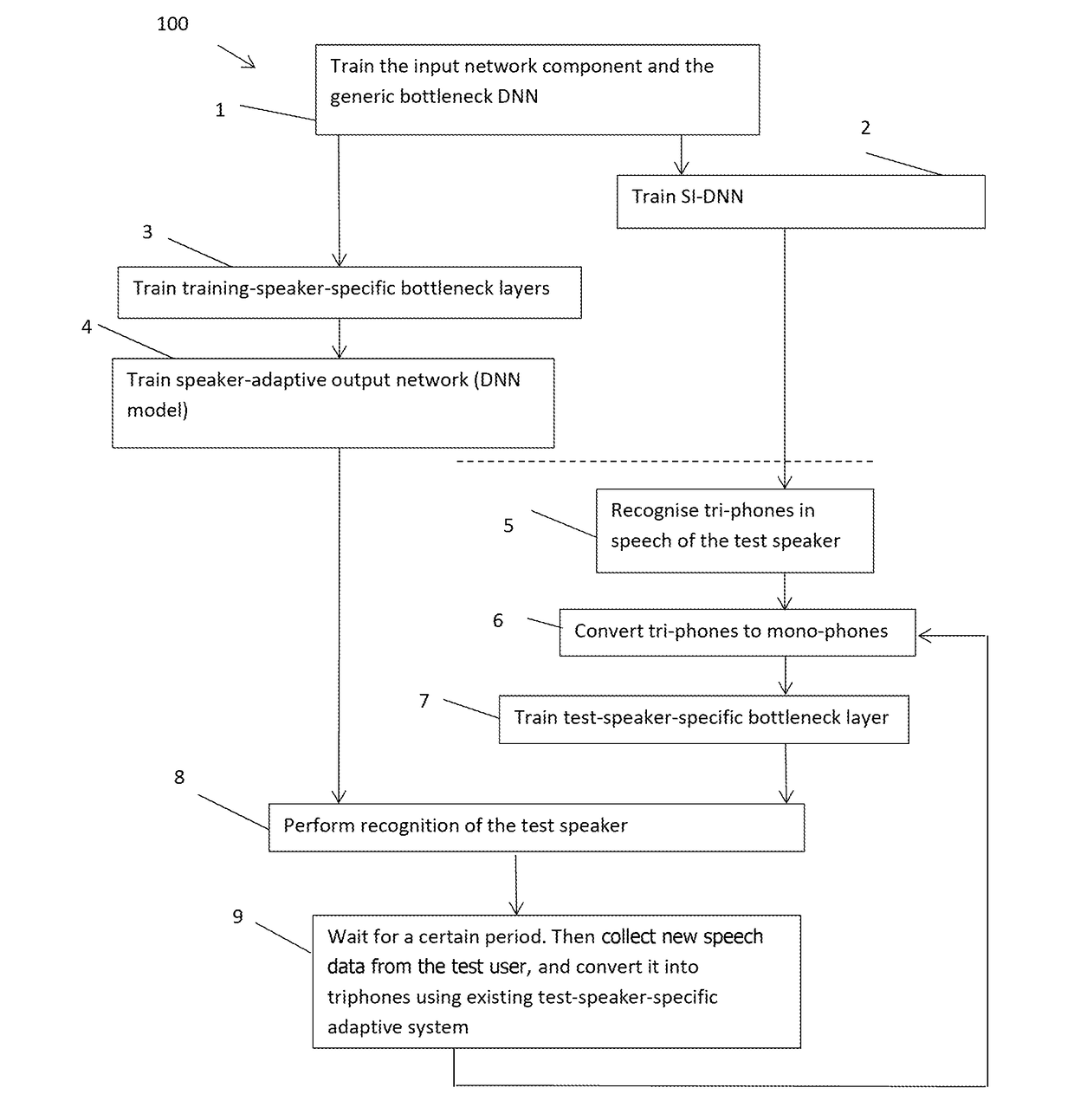

Speaker-adaptive speech recognition

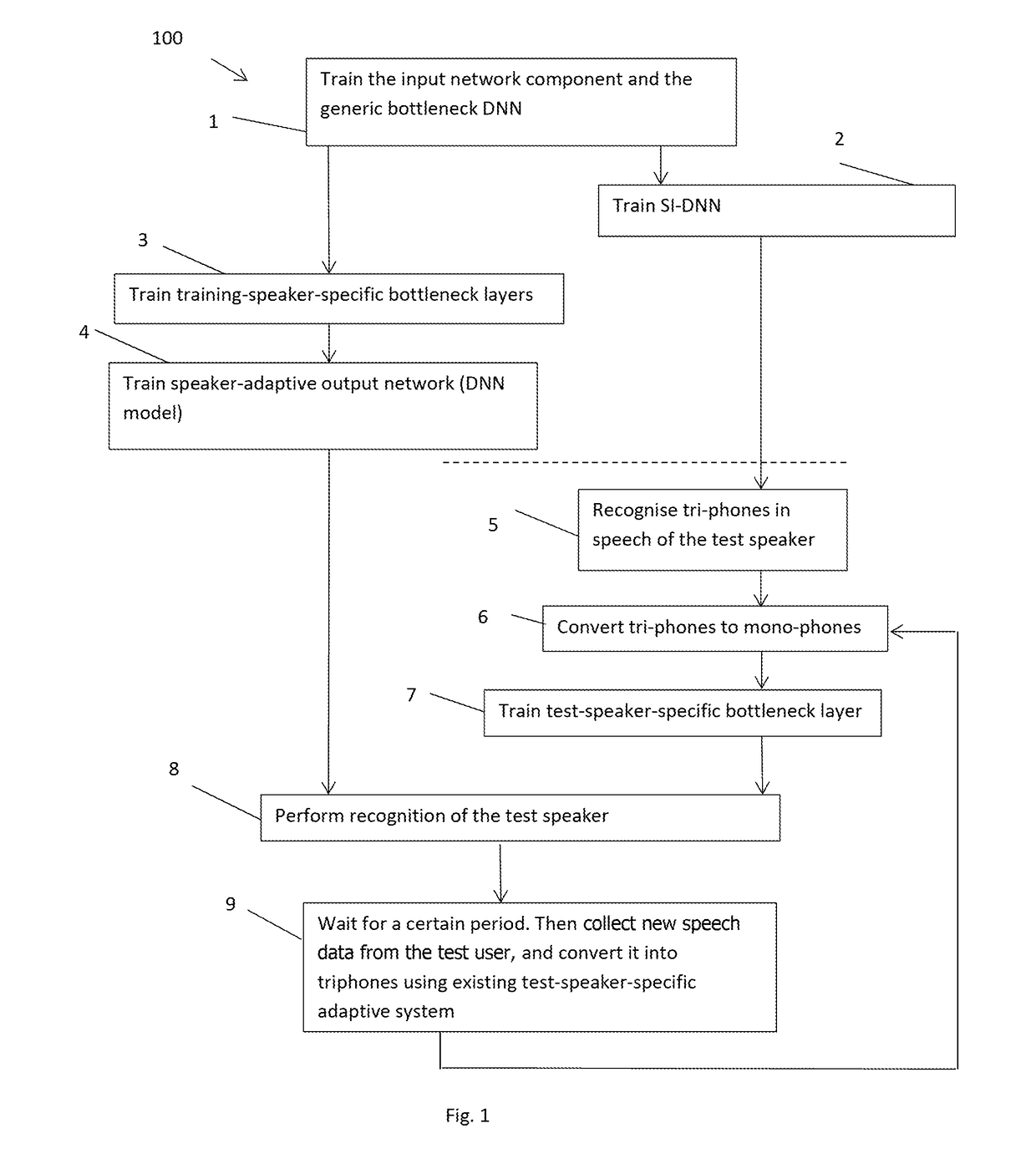

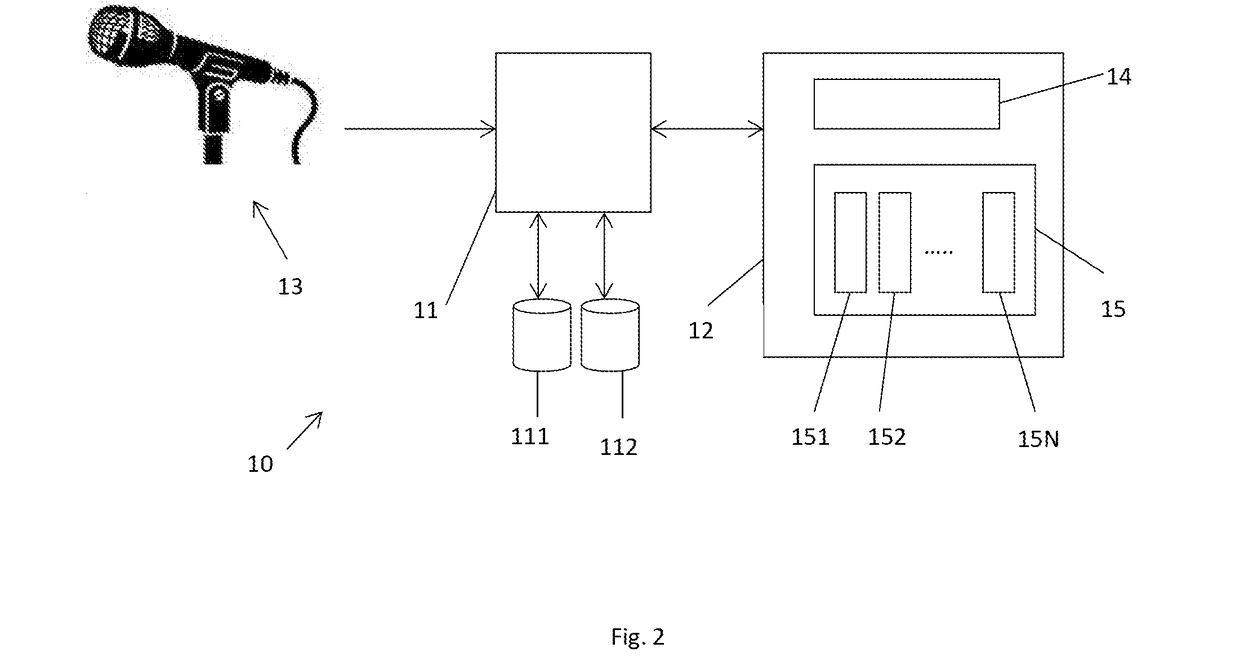

A method for generating a test-speaker-specific adaptive system for recognising sounds in speech spoken by a test speaker; the method employing: (i) training data comprising speech items spoken by the test speaker; and (ii) an input network component and a speaker adaptive output network, the input network component and speaker adaptive output network having been trained using training data from training speakers; the method comprising: (a) using the training data to train a test-speaker-specific adaptive model component of an adaptive model comprising the input network component, and the test-speaker-specific adaptive model component, and (b) providing the test-speaker-specific adaptive system comprising the input network component, the trained test-speaker-specific adaptive model component, and the speaker-adaptive output network.

Owner:KK TOSHIBA

Method and apparatus for discriminative estimation of parameters in maximum a posteriori (MAP) speaker adaptation condition and voice recognition method and apparatus including these

A method and apparatus for discriminative estimation of parameters in a maximum a posteriori (MAP) speaker adaptation condition, and a voice recognition apparatus having the apparatus and a voice recognition method using the method are provided. The method for discriminative estimation of parameters in a maximum a posteriori (MAP) speaker adaptation condition, in which at least speaker-independent model parameters and prior density parameters, which are standards in recognizing a speaker's voice, are obtained as the result of model training after fetching training sets on a plurality of speakers from a training database, has the steps of (a) classifying adaptation data among training sets for respective speakers; (b) obtaining model parameters adapted from adaptation data on each speaker by using the initial values of the parameters; (c) searching a plurality of candidate hypotheses on each uttered sentence of training sets by using the adapted model parameters, and calculating gradients of speaker-independent model parameters by measuring the degree of errors on each training sentence; and (d) when training sets of all speakers are adapted, updating parameters, which were set at the initial stage, based on the calculated gradients.

Owner:SAMSUNG ELECTRONICS CO LTD

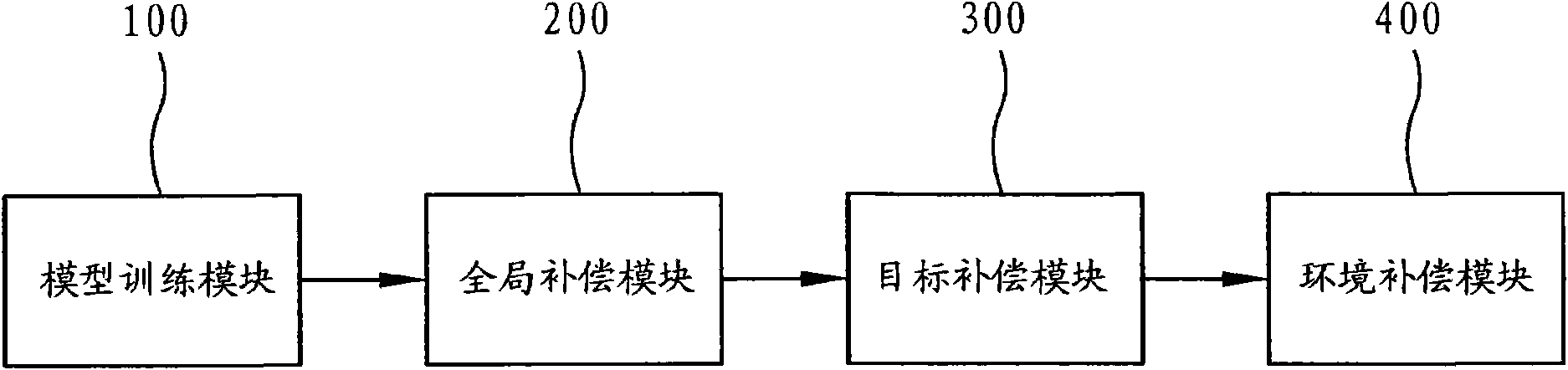

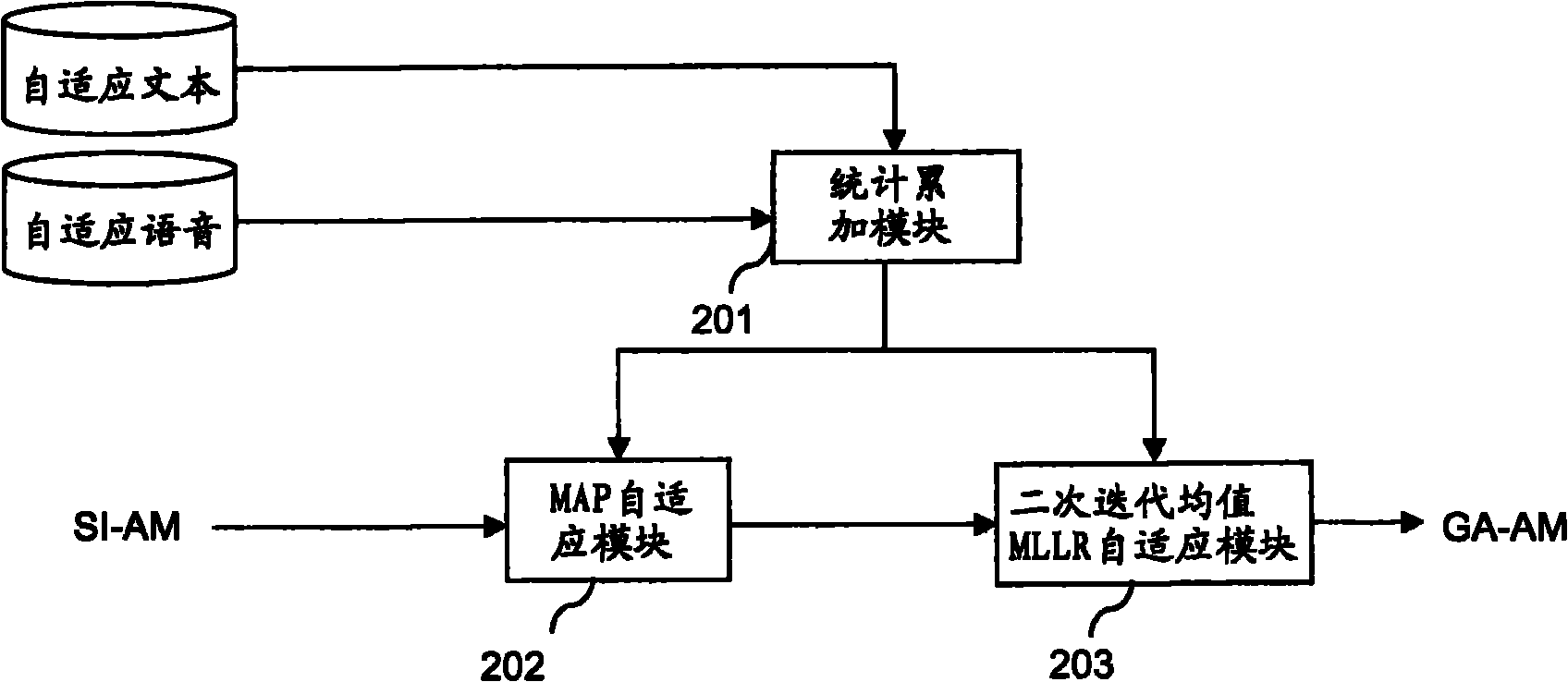

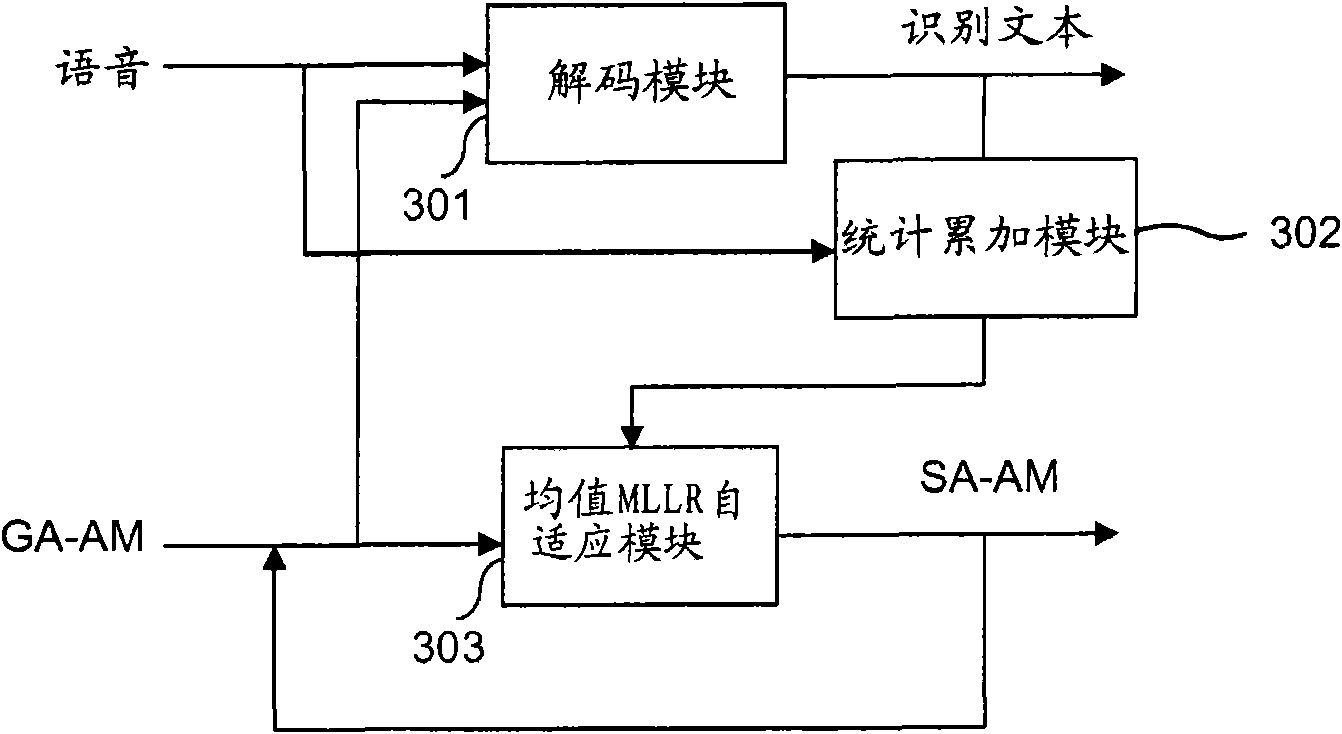

Compensation device and method for voice recognition equipment

The invention provides a compensation device and a compensation method for voice recognition equipment. The compensation device comprises a model training module, a global compensation module, a target compensation module and an environment compensation module, wherein the model training module trains a preset acoustic model by using an expectation maximization algorithm and outputs an acoustic model of an unspecific speaker; the global compensation module compensates a specific domain and a specific device which influence acoustic data by using the acoustic model and outputs a globally adaptive acoustic model; the target compensation module compensates change of a specific speaker by using the globally adaptive acoustic model and outputs an acoustic model adapting to the speaker; and the environment compensation module compensates change of a specific environment by using the acoustic model adapting to the speaker and outputs an acoustic model adapting to the environment.

Owner:SAMSUNG ELECTRONICS CO LTD +1

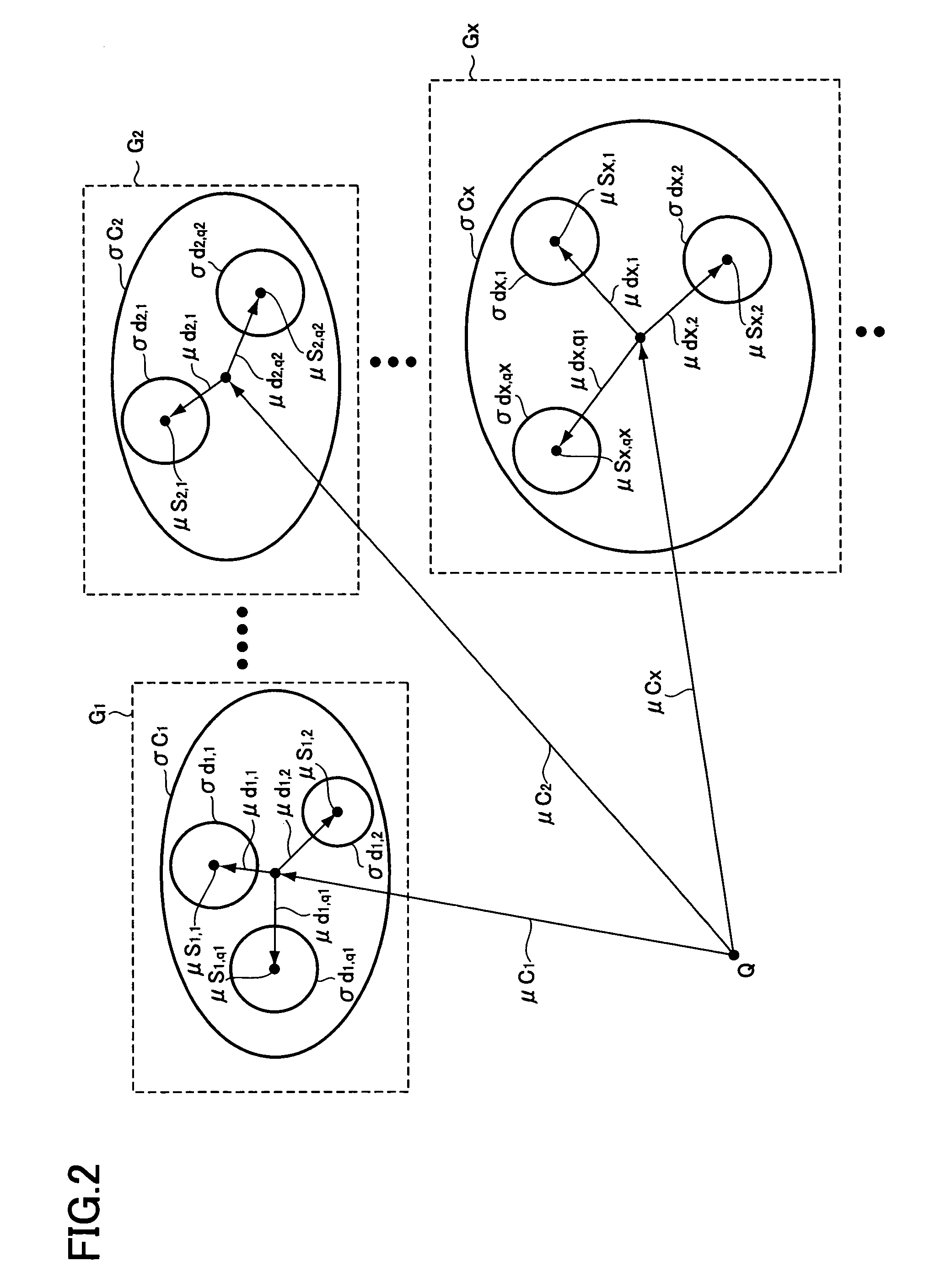

Voice recognition system

At the time of the speaker adaptation, first feature vector generation sections (7, 8, 9) generate a feature vector series [ci, M] from which the additive noise and multiplicative noise are removed. A second feature vector generation section (12) generates a feature vector series [si, M] including the features of the additive noise and multiplicative noise. A path search section (10) conducts a path search by comparing the feature vector series [ci, M] to the standard vector [an, M] of the standard voice HMM (300). When the speaker adaptation section (11) conducts correlation operation on an average feature vector [s^n, M] of the standard vector [an, M] corresponding to the path search result Dv and the feature vector series [si, M], the adaptive vector [xn, M] is generated. The adaptive vector [xn, M] updates the feature vector of the speaker adaptive acoustic model (400) used for the voice recognition.

Owner:PIONEER CORP

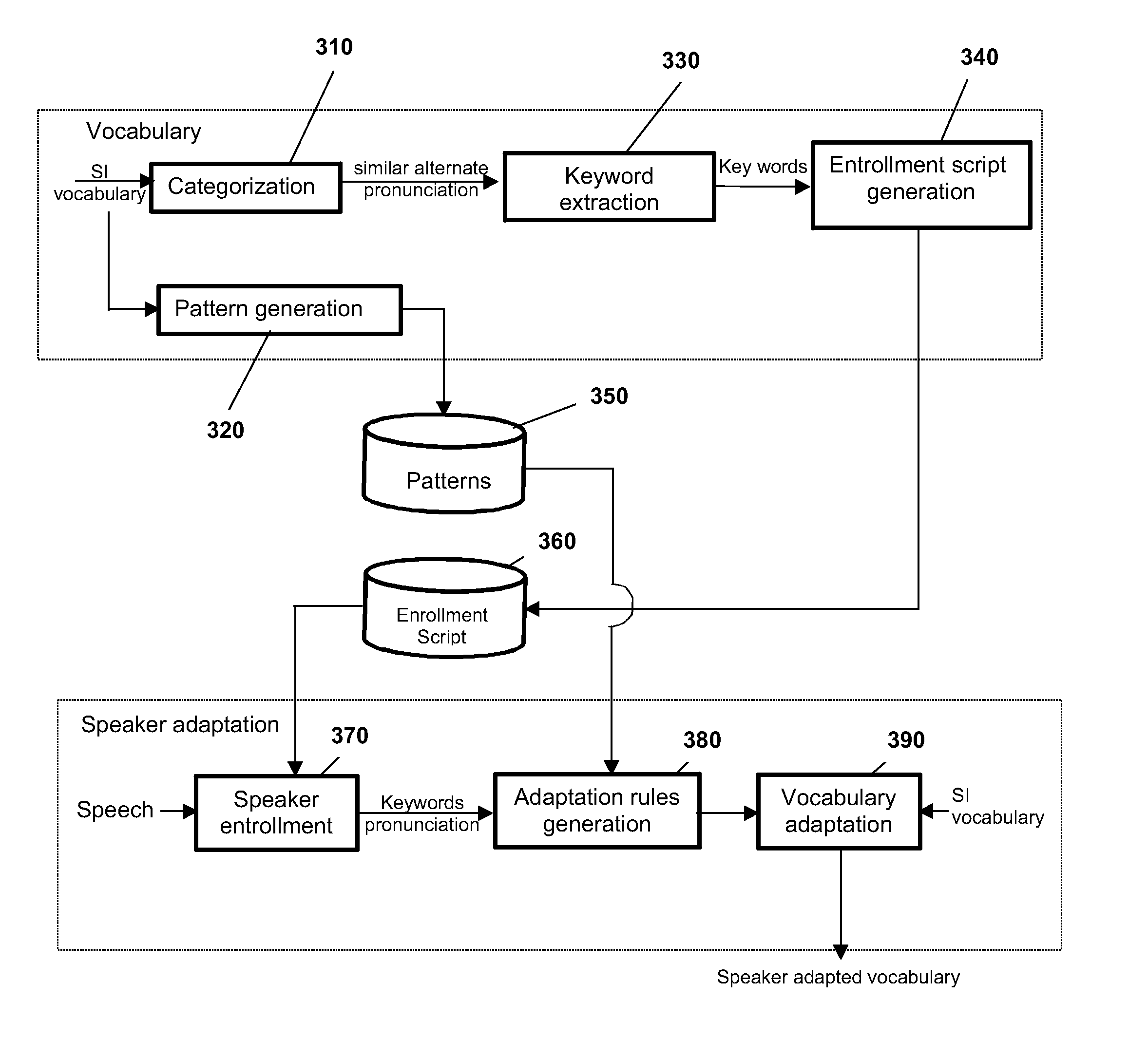

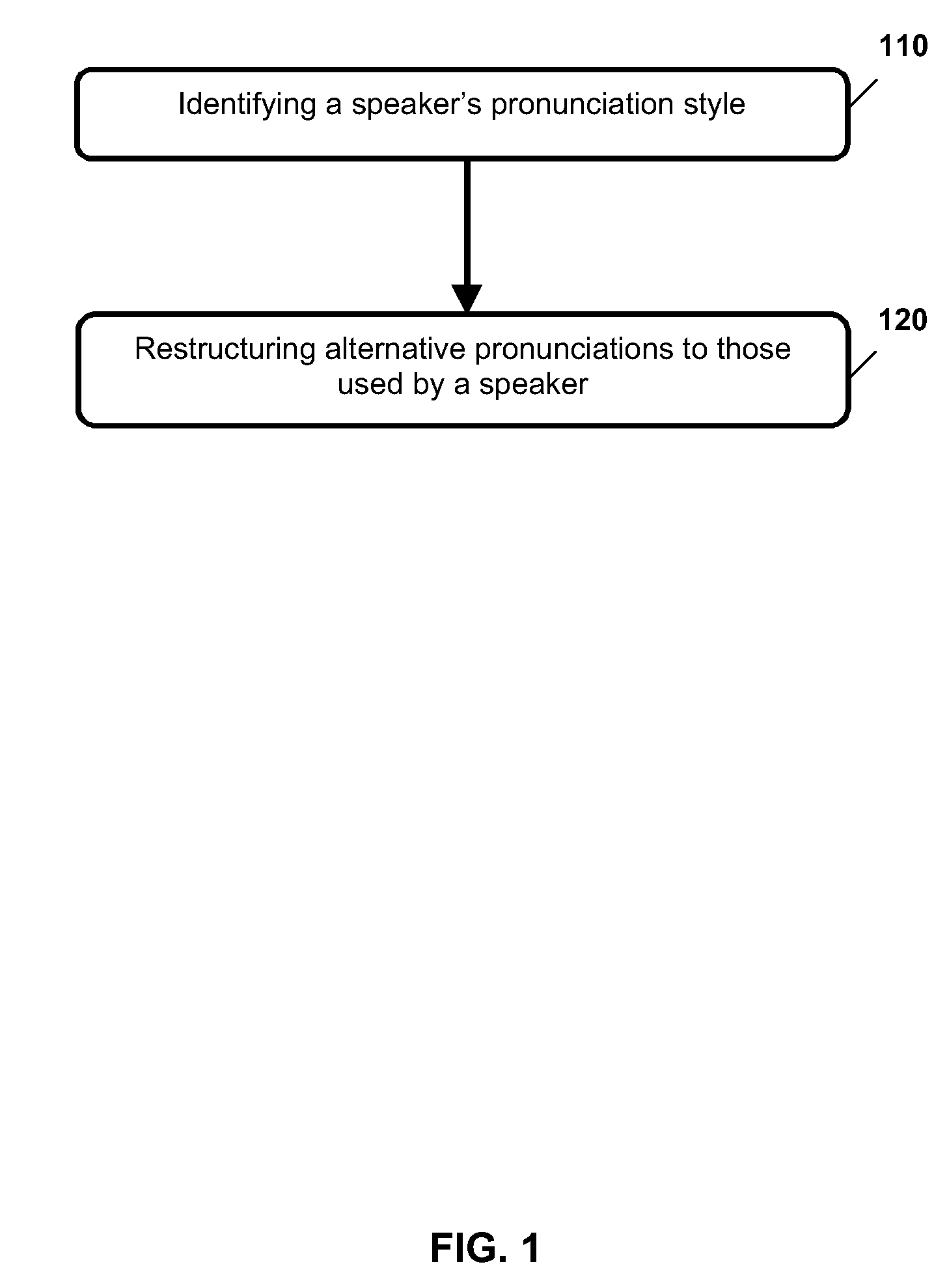

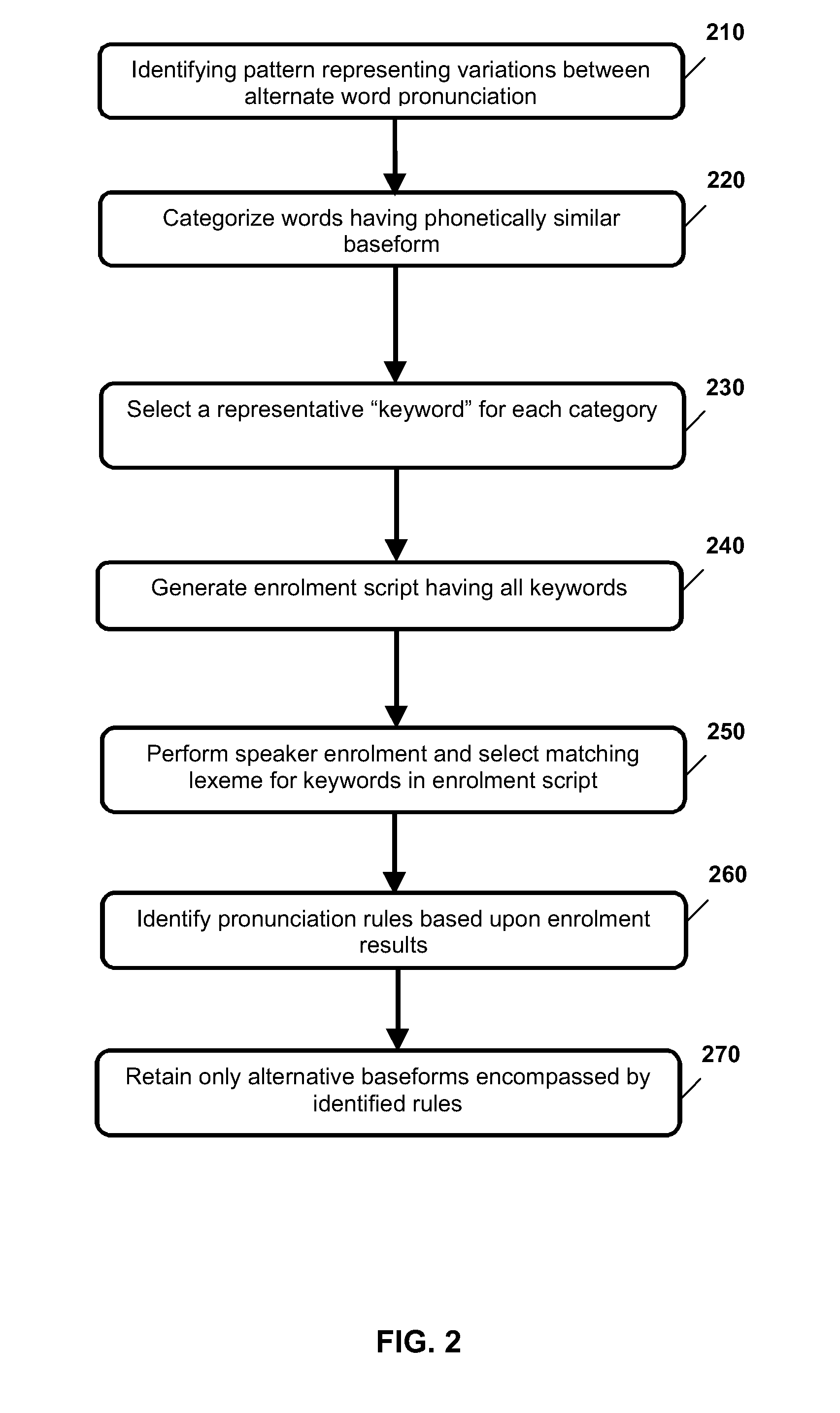

Speaker adaptation of vocabulary for speech recognition

A phonetic vocabulary for a speech recognition system is adapted to a particular speaker's pronunciation. A speaker can be attributed specific pronunciation styles, which can be identified from specific pronunciation examples. Consequently, a phonetic vocabulary can be reduced in size, which can improve recognition accuracy and recognition speed.

Owner:NUANCE COMM INC
