1,560 results for "Speech characteristics" patented technology

Speech characteristics are features of speech that, to varying degrees, may affect intelligibility. They include articulation, pronunciation, speech disfluency, speech pauses, speech pitch, and speech rate.

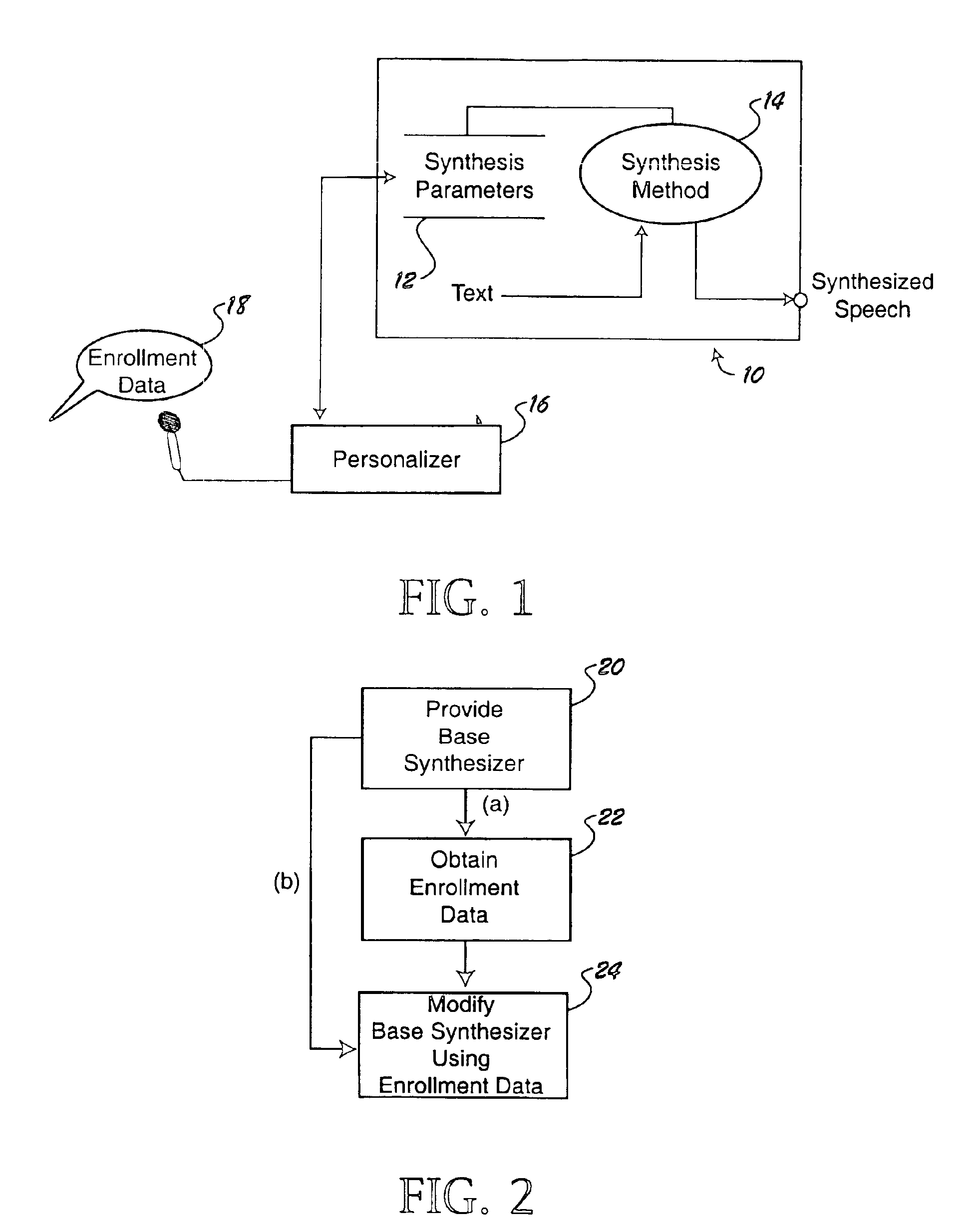

System and method for personalized text-to-voice synthesis

A communication device and method are provided for audibly outputting a received text message to a user, the text message being received from a sender. A text message to present audibly is received. An output voice to present the text message is retrieved, wherein the output voice is synthesized using predefined voice characteristic information to represent the sender's voice. The output voice is used to audibly present the text message to the user.

Owner:MALIKIE INNOVATIONS LTD

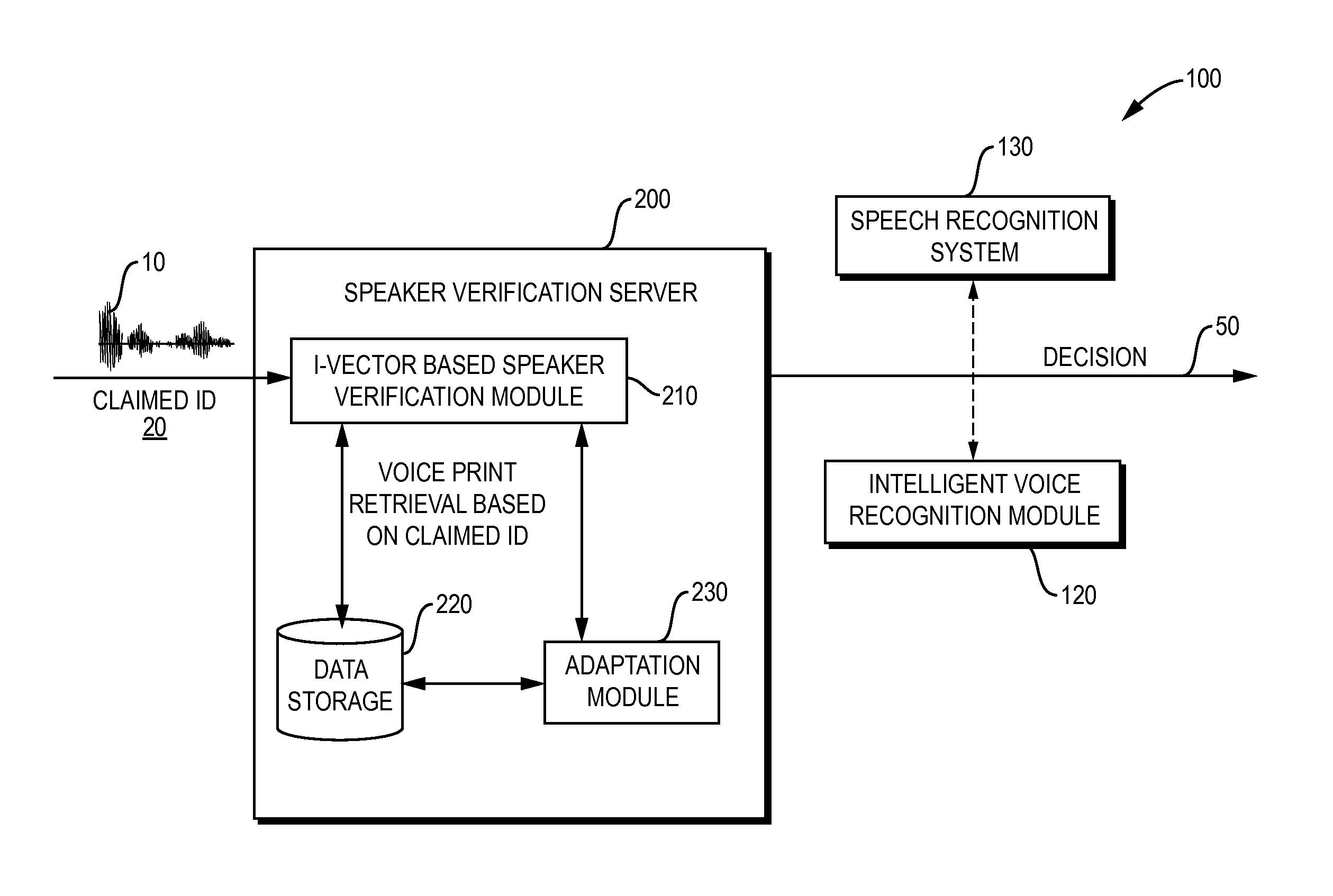

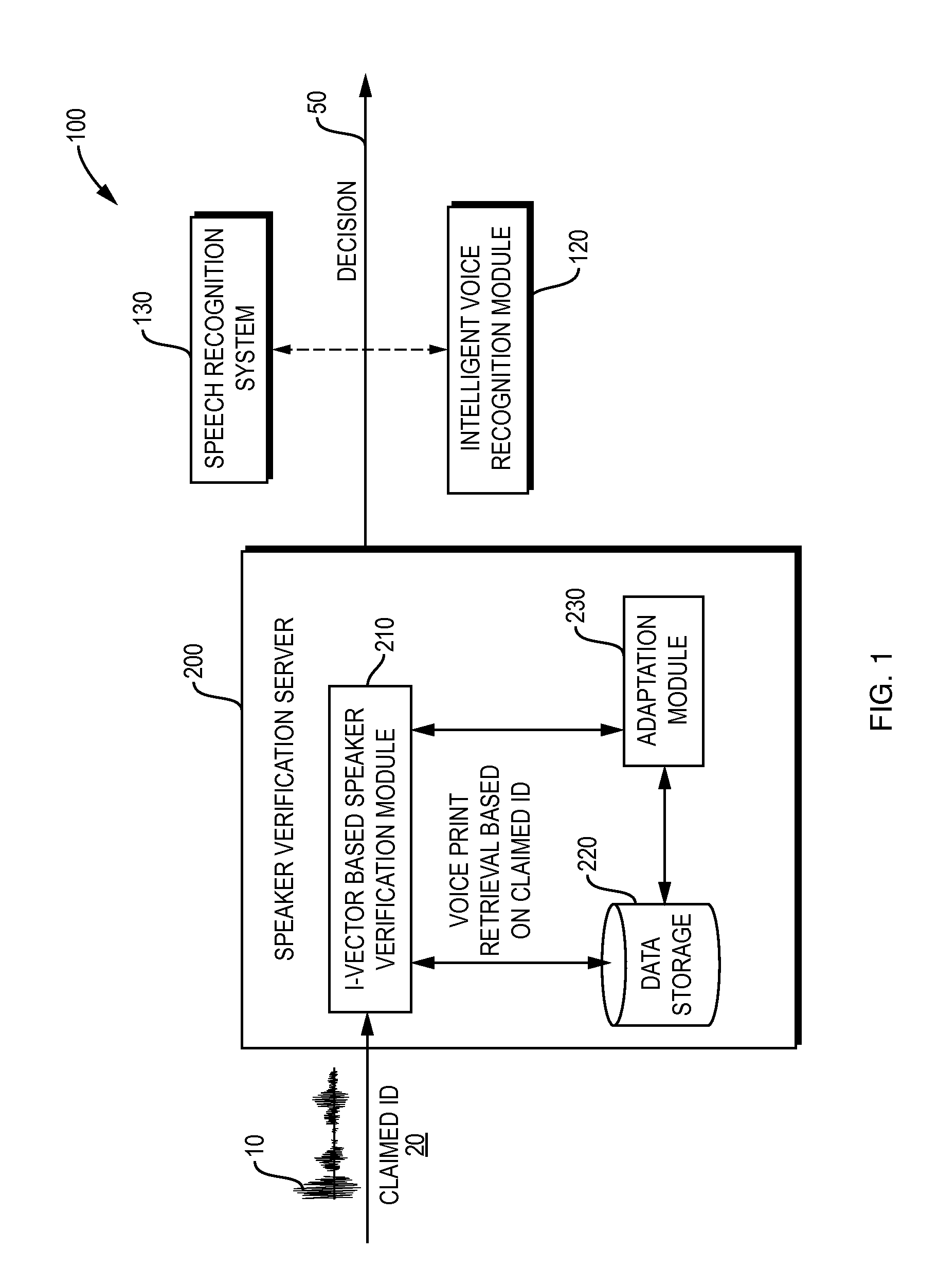

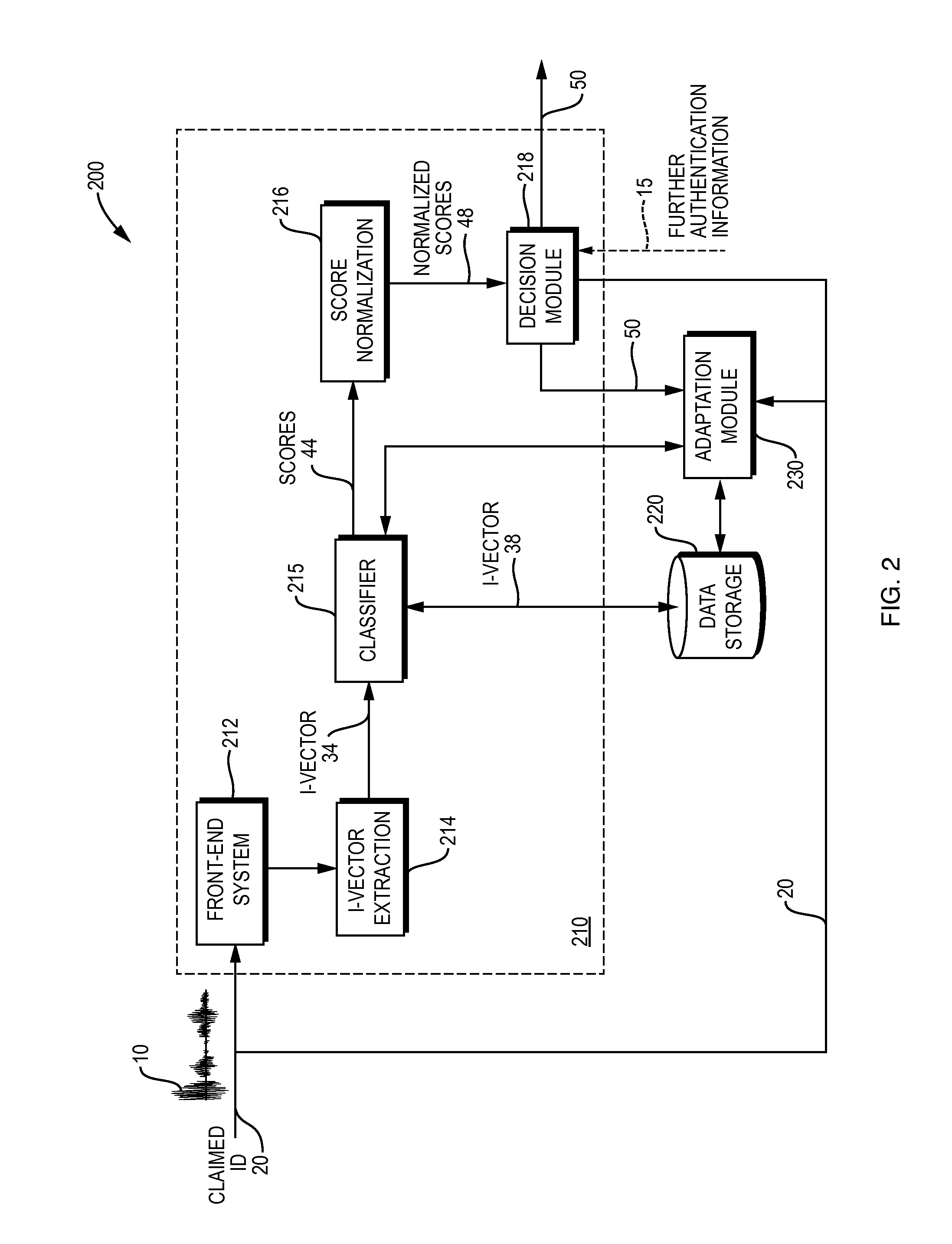

Method and Apparatus for Automated Speaker Parameters Adaptation in a Deployed Speaker Verification System

Typical speaker verification systems usually employ speakers' audio data collected during an enrollment phase when users enroll with the system and provide respective voice samples. Due to technical, business, or other constraints, the enrollment data may not be large enough or rich enough to encompass different inter-speaker and intra-speaker variations. According to at least one embodiment, a method and apparatus employing classifier adaptation based on field data in a deployed voice-based interactive system comprise: collecting representations of voice characteristics, in association with corresponding speakers, the representations being generated by the deployed voice-based interactive system; updating parameters of the classifier, used in speaker recognition, based on the representations collected; and employing the classifier, with the corresponding parameters updated, in performing speaker recognition.

Owner:NUANCE COMM INC

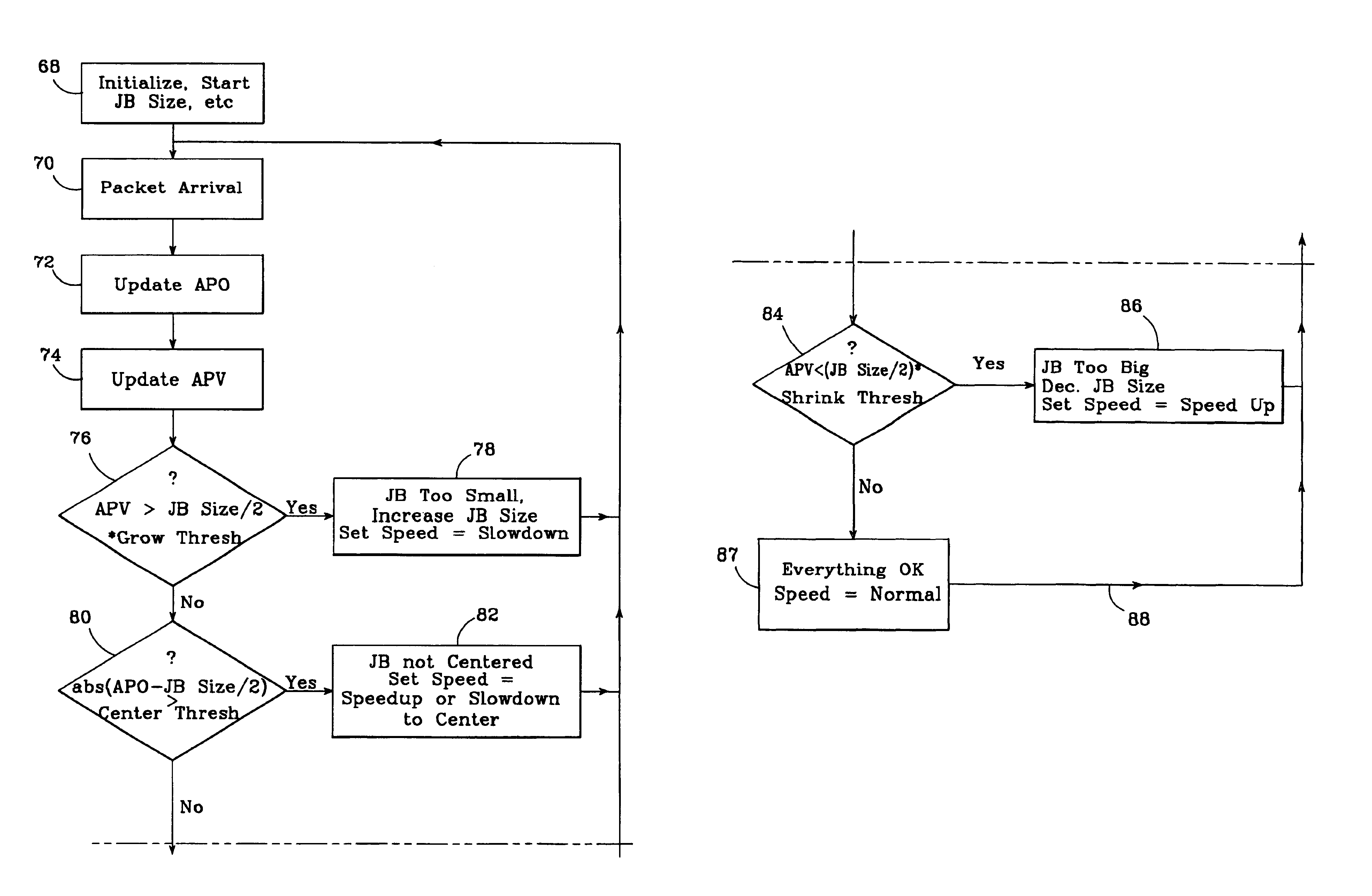

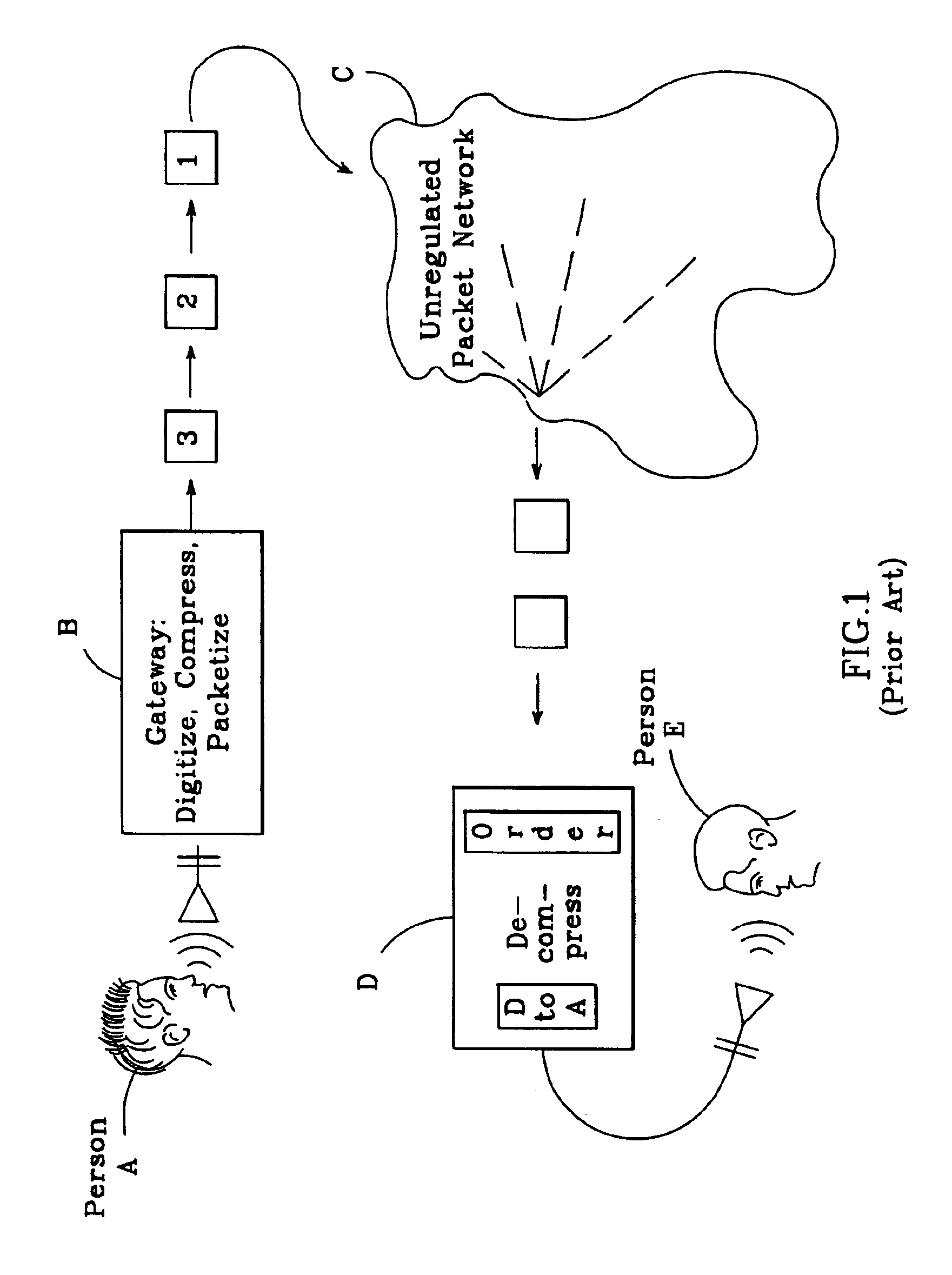

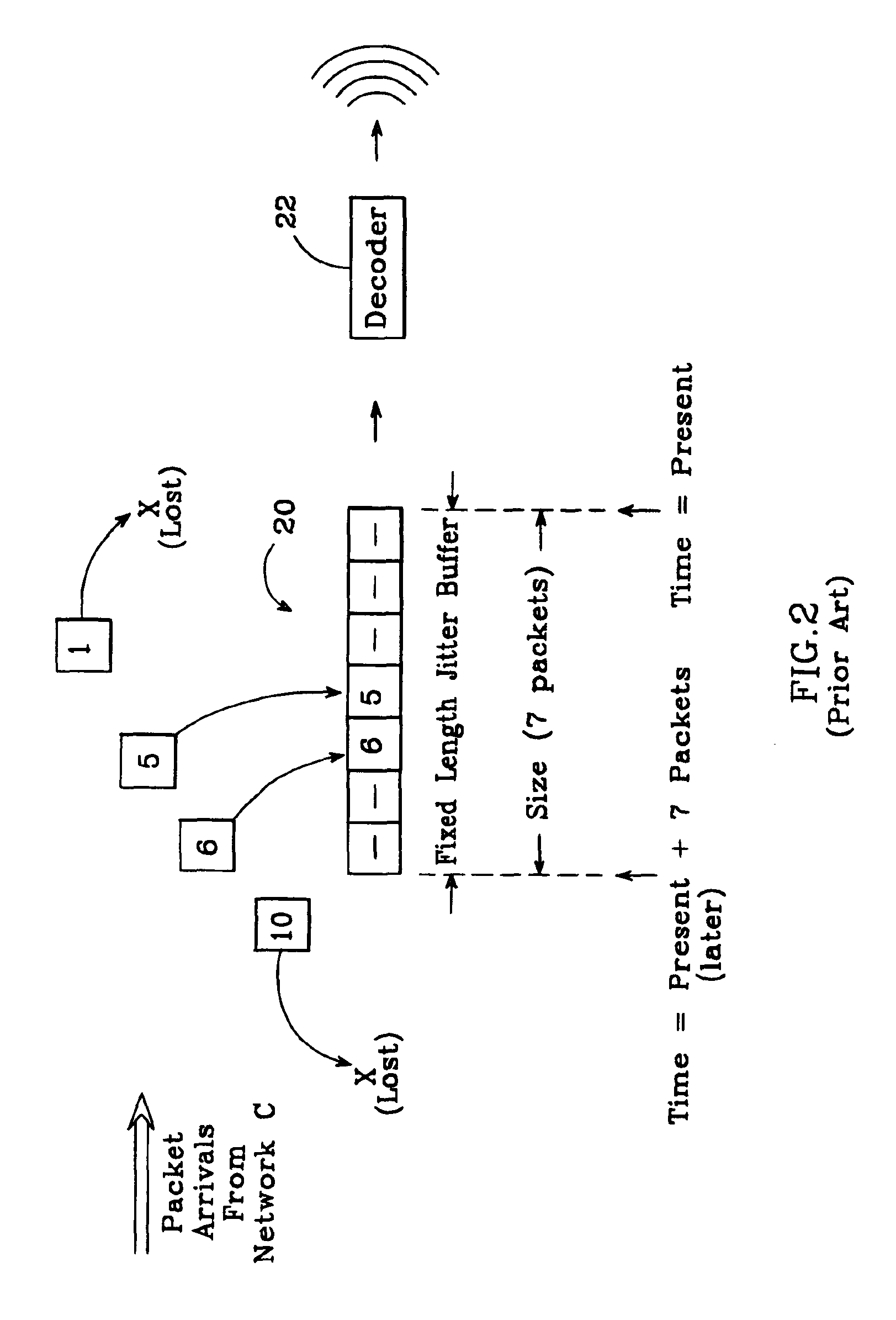

Adaptive jitter buffer for internet telephony

Status: Inactive | Publication: US6862298B1 | Tags: Improve audio quality; To overcome the large delay; Error prevention; Frequency-division multiplex details; Packet arrival; Control signal

In an improved system for receiving digital voice signals from a data network, a jitter buffer manager monitors packet arrival times, determines a time varying transit delay variation parameter and adaptively controls jitter buffer size in response to the variation parameter. A speed control module responds to a control signal from the jitter buffer manager by modifying the rate of data consumption from the jitter buffer, to compensate for changes in buffer size, preferably in a manner which maintains audio output with acceptable, natural human speech characteristics. Preferably, the manager also calculates average packet delay and controls the speed control module to adaptively align the jitter buffer's center with the average packet delay time.

Owner:GOOGLE LLC
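
As a rough illustration of the jitter-buffer idea above, the sketch below tracks transit-time variation and derives a target buffer depth plus a playout-rate control signal. The abstract gives no formulas, so the jitter estimator (the RFC 3550 interarrival-jitter filter), the safety factor `k`, the gain, and the 10% rate clamp are assumptions, and `JitterBufferManager` is a hypothetical name.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class JitterBufferManager:
    """Hypothetical jitter buffer manager; all times are in milliseconds.

    Assumption: the transit-delay variation parameter is the RFC 3550
    interarrival jitter estimate; the abstract does not specify a formula.
    """
    k: float = 4.0            # hypothetical safety margin, in units of jitter
    alpha: float = 1 / 16     # smoothing gain, as used in RFC 3550
    jitter: float = 0.0       # running transit-variation estimate
    mean_delay: float = 0.0   # running average packet delay
    prev_transit: Optional[float] = None

    def on_packet(self, send_ts: float, arrival_ts: float) -> None:
        """Update the delay statistics from one packet's timestamps."""
        transit = arrival_ts - send_ts
        if self.prev_transit is None:
            self.mean_delay = transit
        else:
            self.jitter += (abs(transit - self.prev_transit) - self.jitter) * self.alpha
            self.mean_delay += (transit - self.mean_delay) * self.alpha
        self.prev_transit = transit

    def target_depth(self) -> float:
        """Playout delay: average packet delay plus a jitter margin."""
        return self.mean_delay + self.k * self.jitter

    def playout_rate(self, current_depth: float, gain: float = 0.5) -> float:
        """Control signal for the speed module: above 1.0 drains an over-full
        buffer, below 1.0 lets it refill; clamped to +/-10% (an assumption)
        so that time-scaled speech still sounds natural."""
        target = self.target_depth()
        if target <= 0:
            return 1.0
        rate = 1.0 + gain * (current_depth - target) / target
        return max(0.9, min(1.1, rate))
```

The speed-control module itself (time-scale modification of the decoded audio) is not modelled here; it would consume data at the returned rate.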

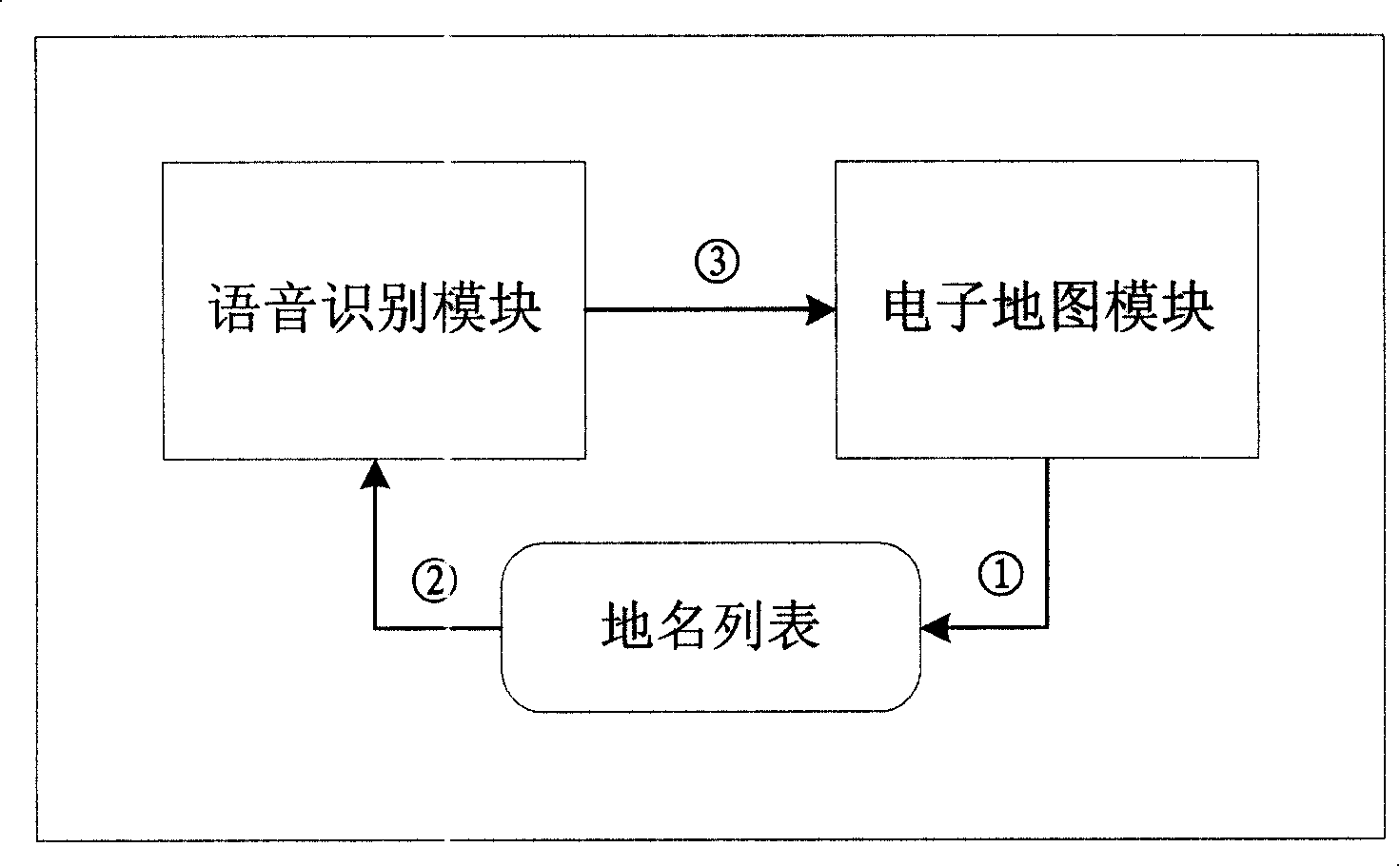

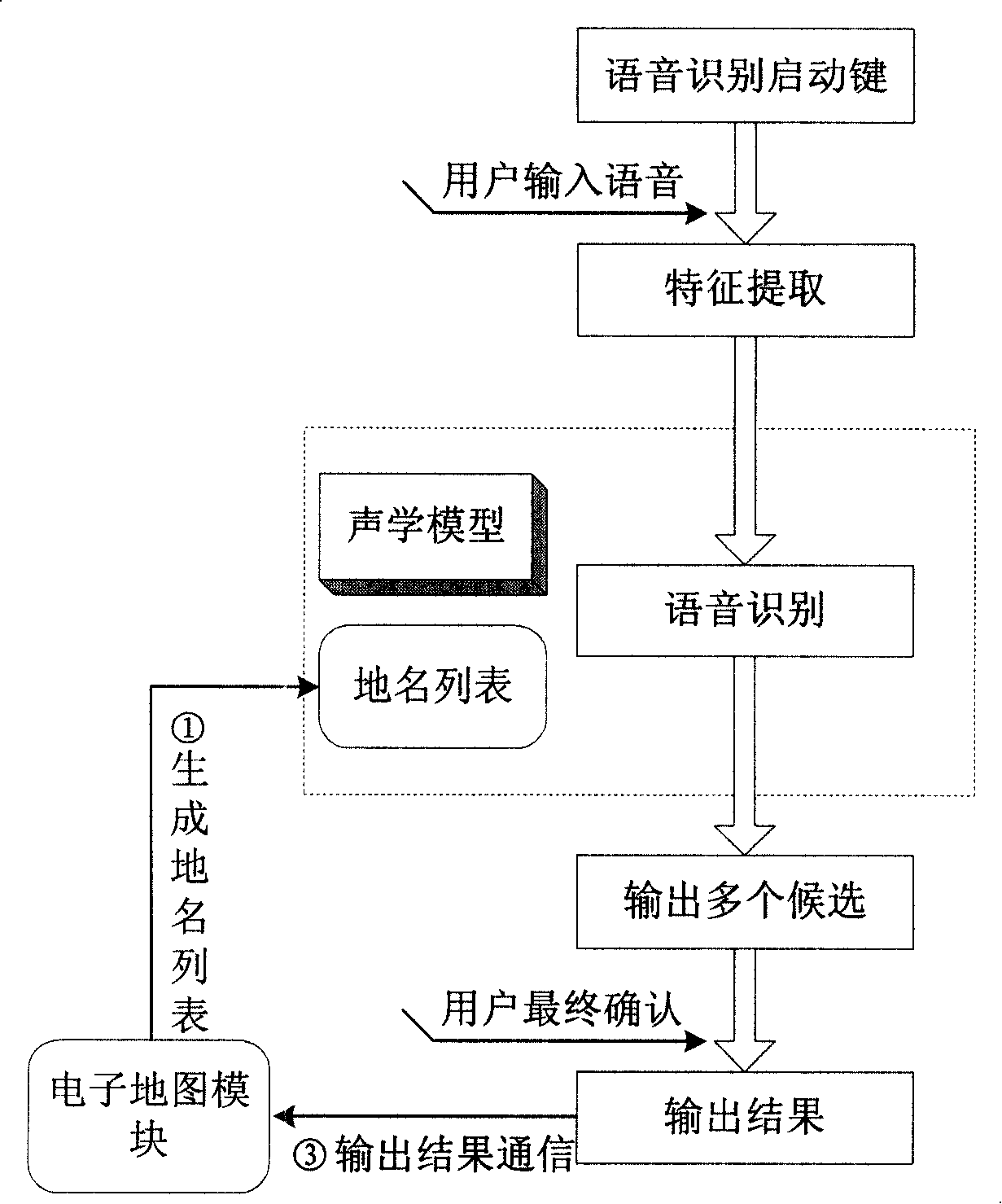

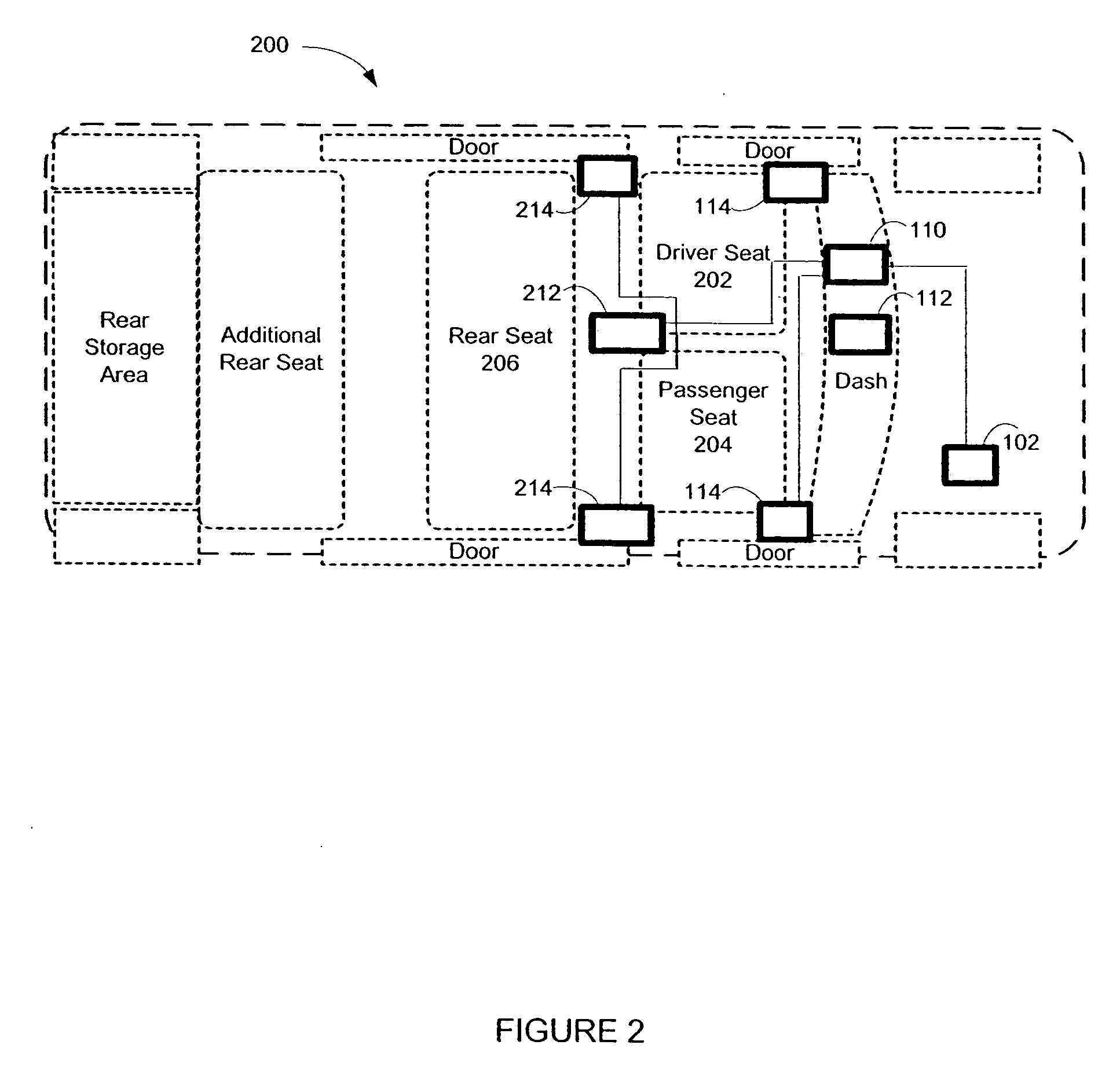

Voice controlled vehicle mounted GPS guidance system and method for realizing same

Status: Inactive | Publication: CN101162153A | Tags: Easy to operate; Ensure safe driving; Instruments for road network navigation; Navigational calculation instruments; Guidance system; Navigation system

The invention relates to a voice-controlled vehicle-mounted GPS navigation system and a method for realizing it, comprising the following steps: 1) an electronic map module compiles the place names in the map into a place-name list, which is sent to a speech recognition module as its recognition vocabulary; 2) the start key of the speech recognition module is switched on; 3) the speech recognition module receives input speech and extracts its features; 4) speech recognition is performed on the extracted features; 5) after recognition, several candidates are output for the user's final confirmation; 6) the result confirmed by the user is sent to the electronic map module, mapped to the corresponding map coordinates, and displayed on the screen. The invention simplifies operation of the GPS device, helps keep drivers safe, and facilitates the wider adoption of GPS.

Owner:丁玉国
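
The vocabulary and confirmation steps (1, 5 and 6) can be sketched as follows. A real system matches acoustics against the place-name grammar; here, as a simplifying assumption, a text string stands in for the recognizer output and Python's `difflib.get_close_matches` ranks the candidates. The place list and its approximate coordinates are illustrative only.

```python
import difflib
from typing import List, Tuple

# Hypothetical place-name list from the electronic map module (step 1),
# with approximate (latitude, longitude) values for illustration only.
PLACE_COORDS = {
    "Beijing": (39.90, 116.40),
    "Nanjing": (32.06, 118.80),
    "Shanghai": (31.23, 121.47),
}


def candidates(recognized: str, n: int = 3) -> List[str]:
    """Step 5: up to n place names closest to the recognizer output, best first."""
    return difflib.get_close_matches(recognized, list(PLACE_COORDS), n=n, cutoff=0.6)


def confirm(choice: str) -> Tuple[float, float]:
    """Step 6: map the place name the user confirmed to map coordinates."""
    return PLACE_COORDS[choice]


options = candidates("Bejing")   # a misrecognised "Beijing"
coords = confirm(options[0])     # assume the user picks the top candidate
```

Restricting matching to the map's own place names is what keeps the candidate list short enough for a driver to confirm quickly.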

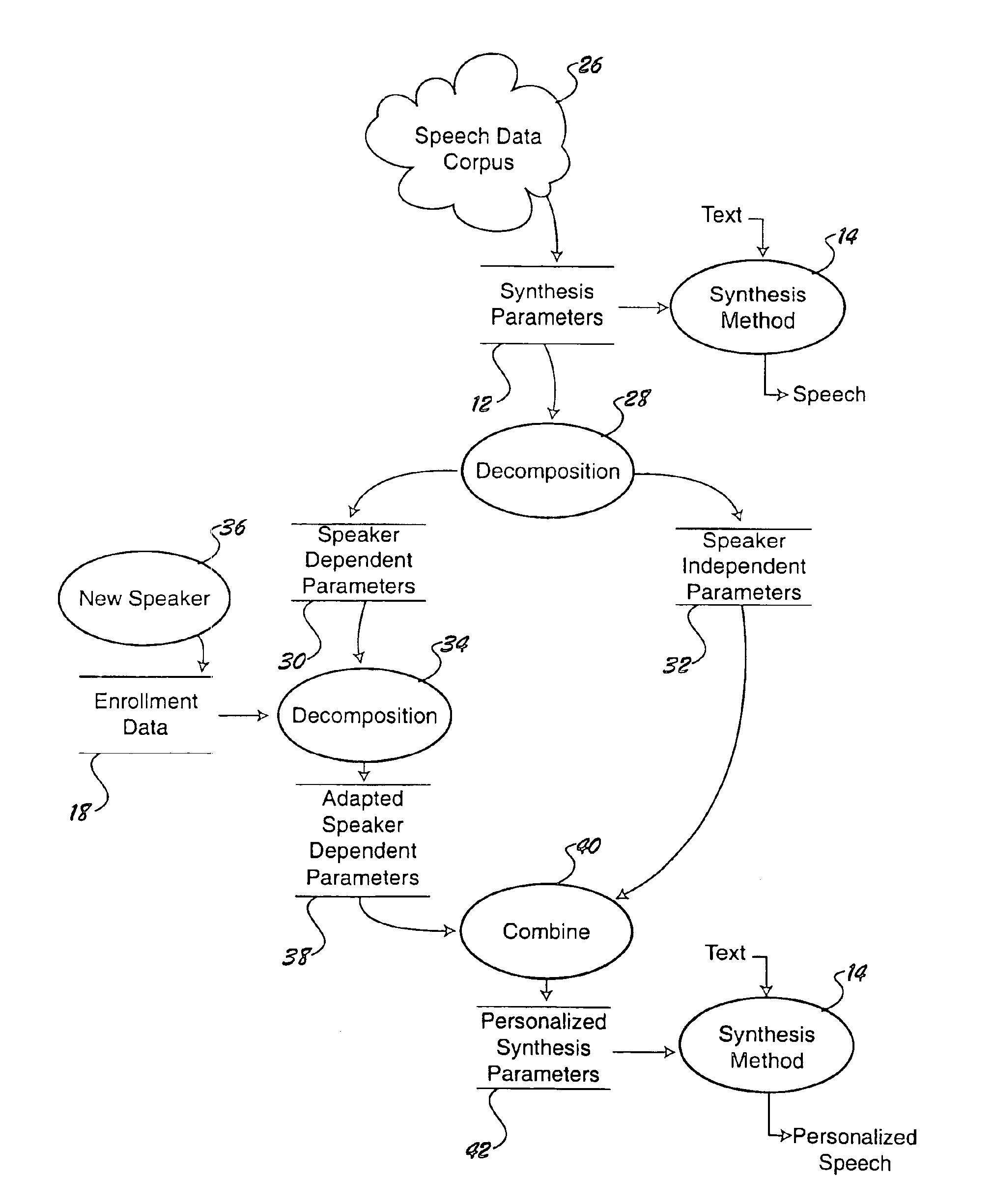

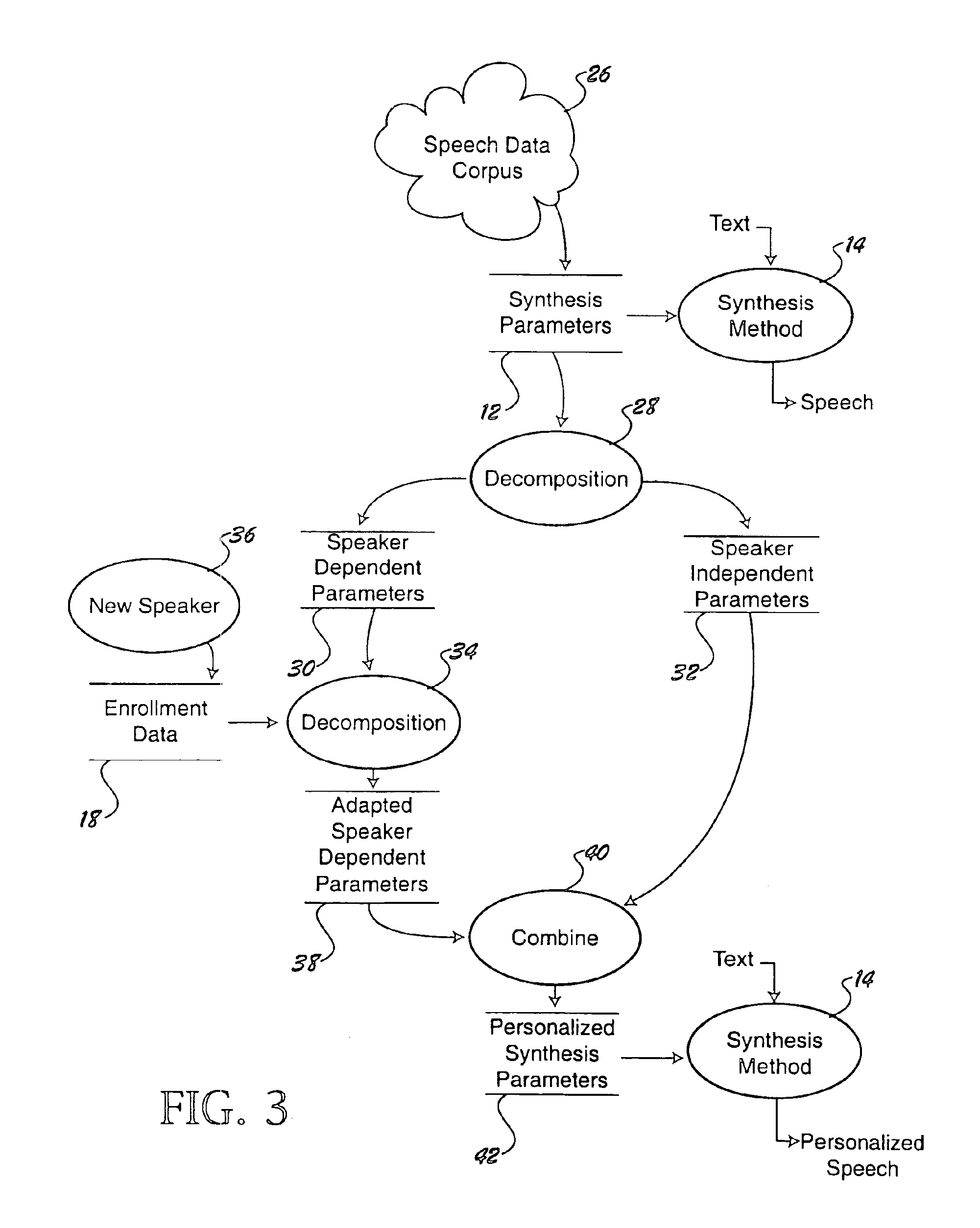

Voice personalization of speech synthesizer

Status: Inactive | Publication: US6970820B2 | Tags: Excellent personalization result; Minimal computing burden; Speech synthesis; Personalization; Independent parameter

The speech synthesizer is personalized to sound like, or mimic the speech characteristics of, an individual speaker. The individual speaker provides a quantity of enrollment data, which can be extracted from a short sample of speech, and the system modifies the base synthesis parameters to more closely resemble those of the new speaker. More specifically, the synthesis parameters may be decomposed into speaker-dependent parameters, such as context-independent parameters, and speaker-independent parameters, such as context-dependent parameters. The speaker-dependent parameters are adapted using enrollment data from the new speaker. After adaptation, the speaker-dependent parameters are combined with the speaker-independent parameters to provide a set of personalized synthesis parameters. To adapt the parameters with a small amount of enrollment data, an eigenspace is constructed and used to constrain the position of the new speaker, so that context-independent parameters not provided by the new speaker can be estimated.

Owner:SOVEREIGN PEAK VENTURES LLC

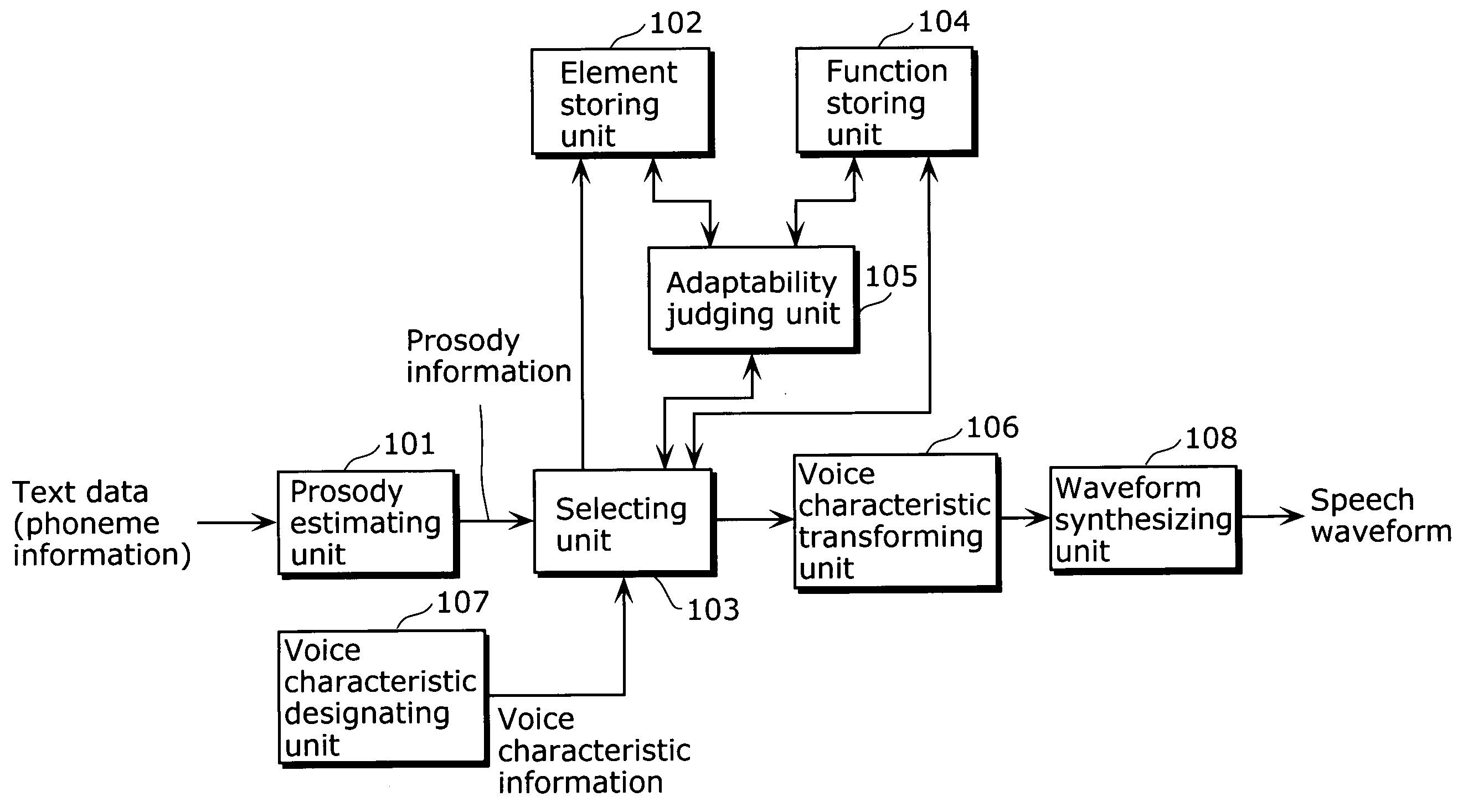

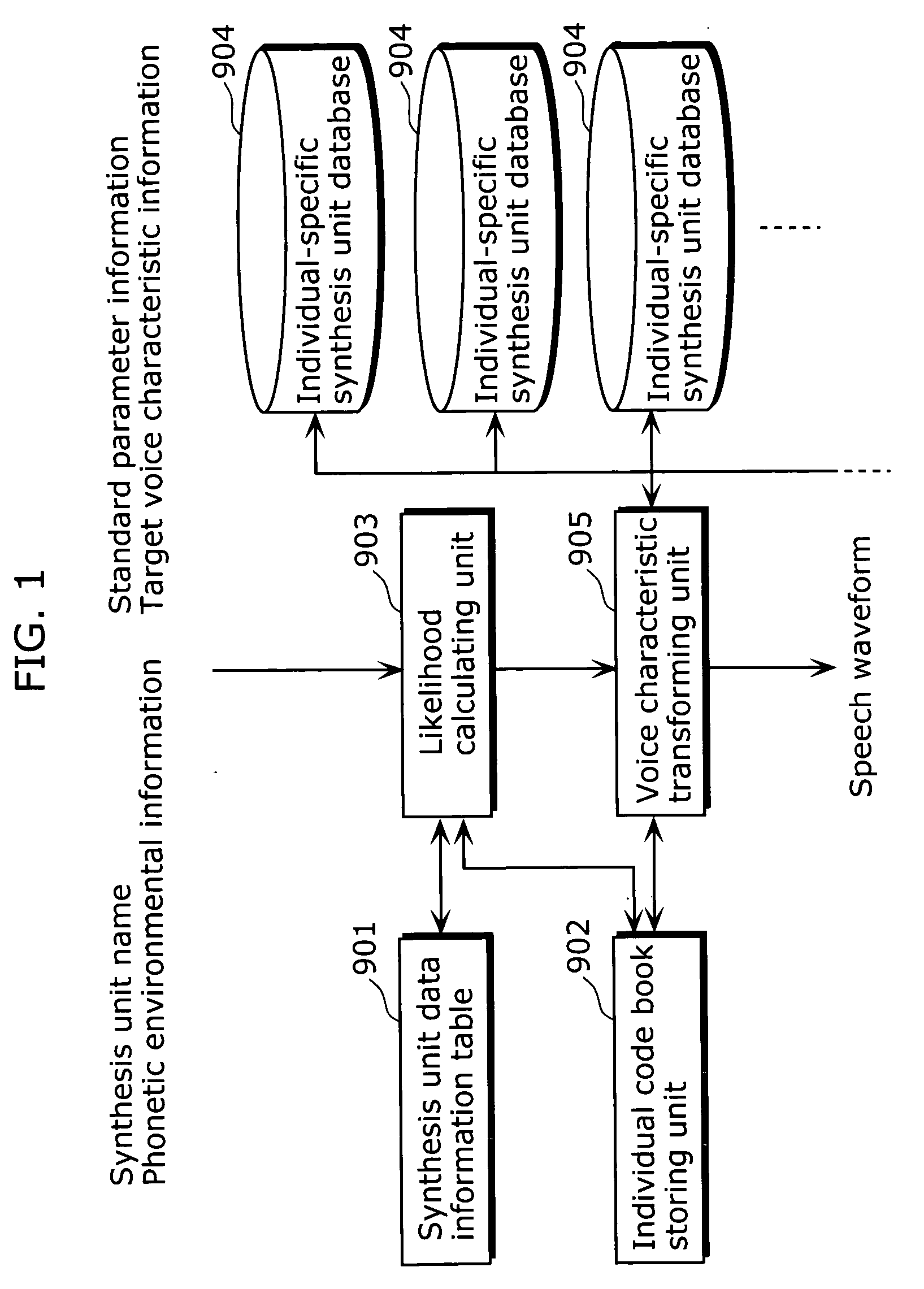

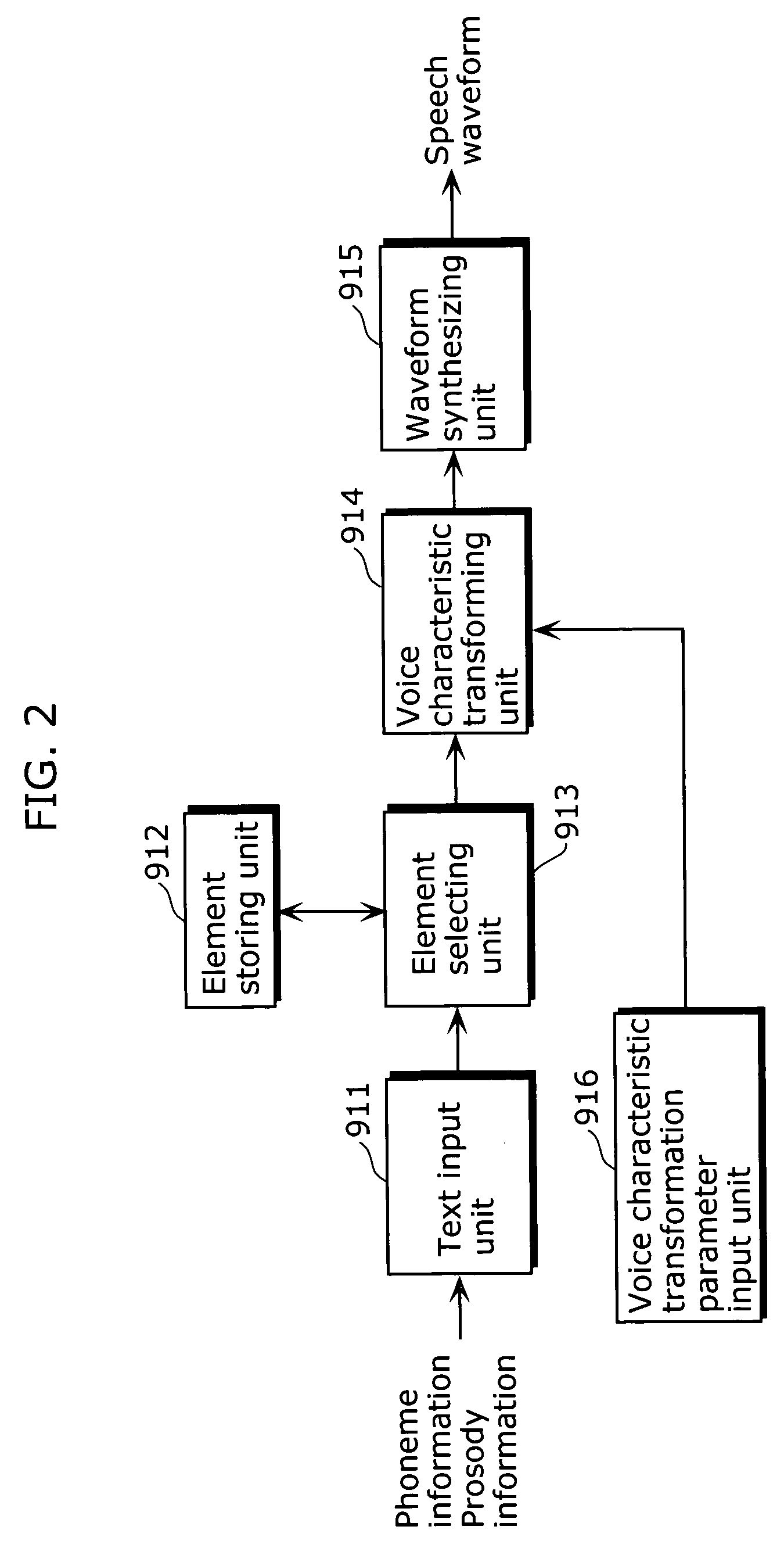

Speech synthesis apparatus and speech synthesis method

Status: Active | Publication: US20060136213A1 | Tags: Appropriately transformed; Quality improvement; Speech synthesis; Acoustics

A speech synthesis apparatus which can appropriately transform a voice characteristic of a speech is provided. The speech synthesis apparatus includes an element storing unit in which speech elements are stored, a function storing unit in which transformation functions are stored, an adaptability judging unit which derives a degree of similarity by comparing a speech element stored in the element storing unit with an acoustic characteristic of the speech element used for generating a transformation function stored in the function storing unit, and a selecting unit and voice characteristic transforming unit which transforms, for each speech element stored in the element storing unit, based on the degree of similarity derived by the adaptability judging unit, a voice characteristic of the speech element by applying one of the transformation functions stored in the function storing unit.

Owner:PANASONIC INTELLECTUAL PROPERTY CORP OF AMERICA
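
A minimal sketch of the adaptability judgment and selection described above, assuming each transformation function carries the acoustic feature vector of the speech element it was built from. The similarity measure (1 / (1 + Euclidean distance)), the two-dimensional formant-like features, and the simple scaling transforms are hypothetical choices, not taken from the patent.

```python
import math
from dataclasses import dataclass
from typing import Callable, List, Sequence

Vector = Sequence[float]


@dataclass
class TransformFunction:
    source_features: Vector                 # acoustics of the element it was built from
    apply: Callable[[Vector], List[float]]  # hypothetical voice-characteristic transform


def similarity(a: Vector, b: Vector) -> float:
    """Degree of similarity in (0, 1]; 1.0 means identical features."""
    return 1.0 / (1.0 + math.dist(a, b))


def transform_element(element: Vector, functions: List[TransformFunction]) -> List[float]:
    """Apply the transform whose source element is most similar to this element."""
    best = max(functions, key=lambda f: similarity(element, f.source_features))
    return best.apply(element)


# Usage with two hypothetical transforms over (F1, F2)-like features in Hz.
functions = [
    TransformFunction((500.0, 1500.0), lambda v: [x * 1.1 for x in v]),
    TransformFunction((300.0, 2300.0), lambda v: [x * 0.9 for x in v]),
]
result = transform_element((310.0, 2250.0), functions)
```

Choosing the transform per element, rather than applying one global transform, is what lets each speech element be transformed by the function trained on acoustically similar material.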

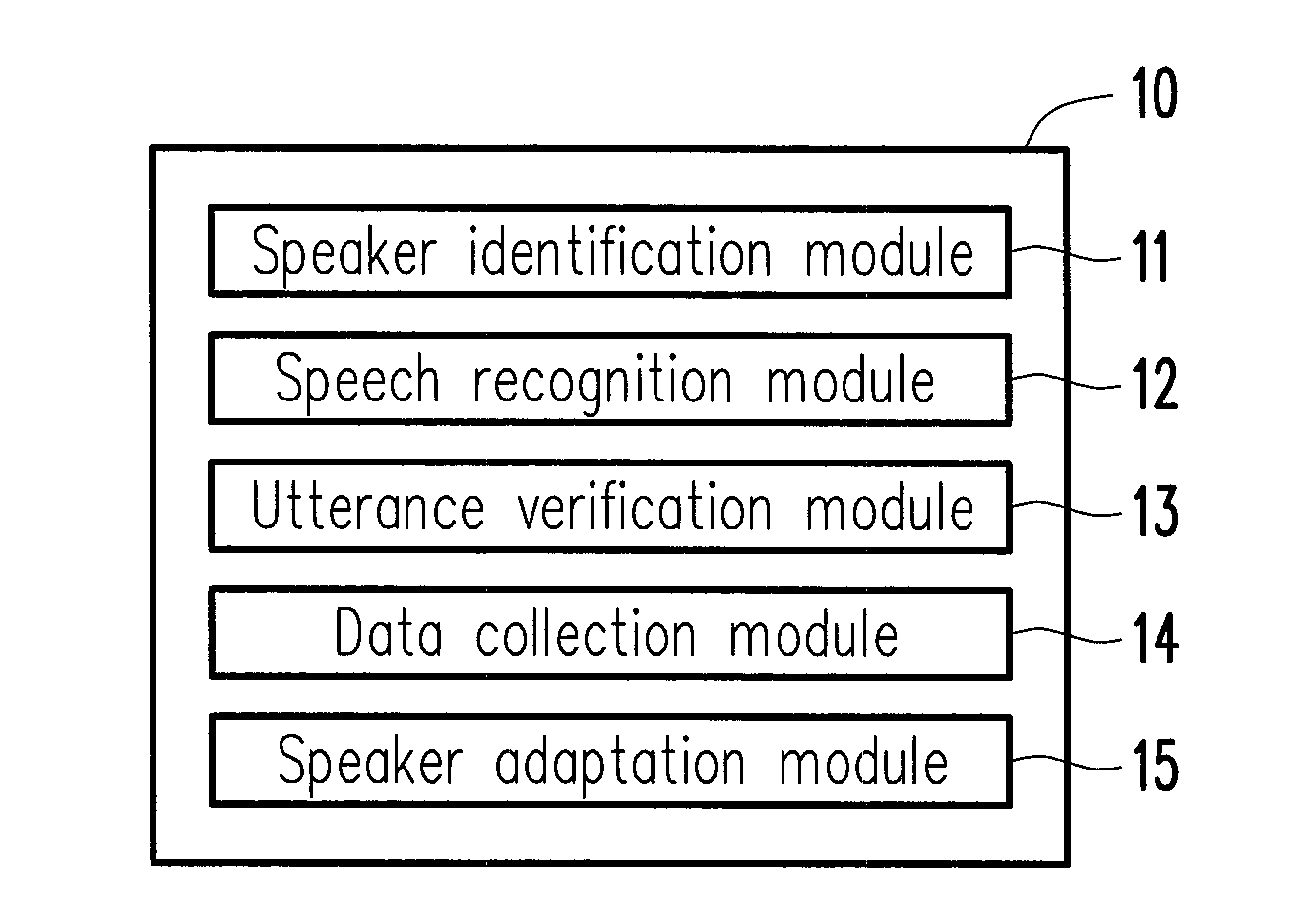

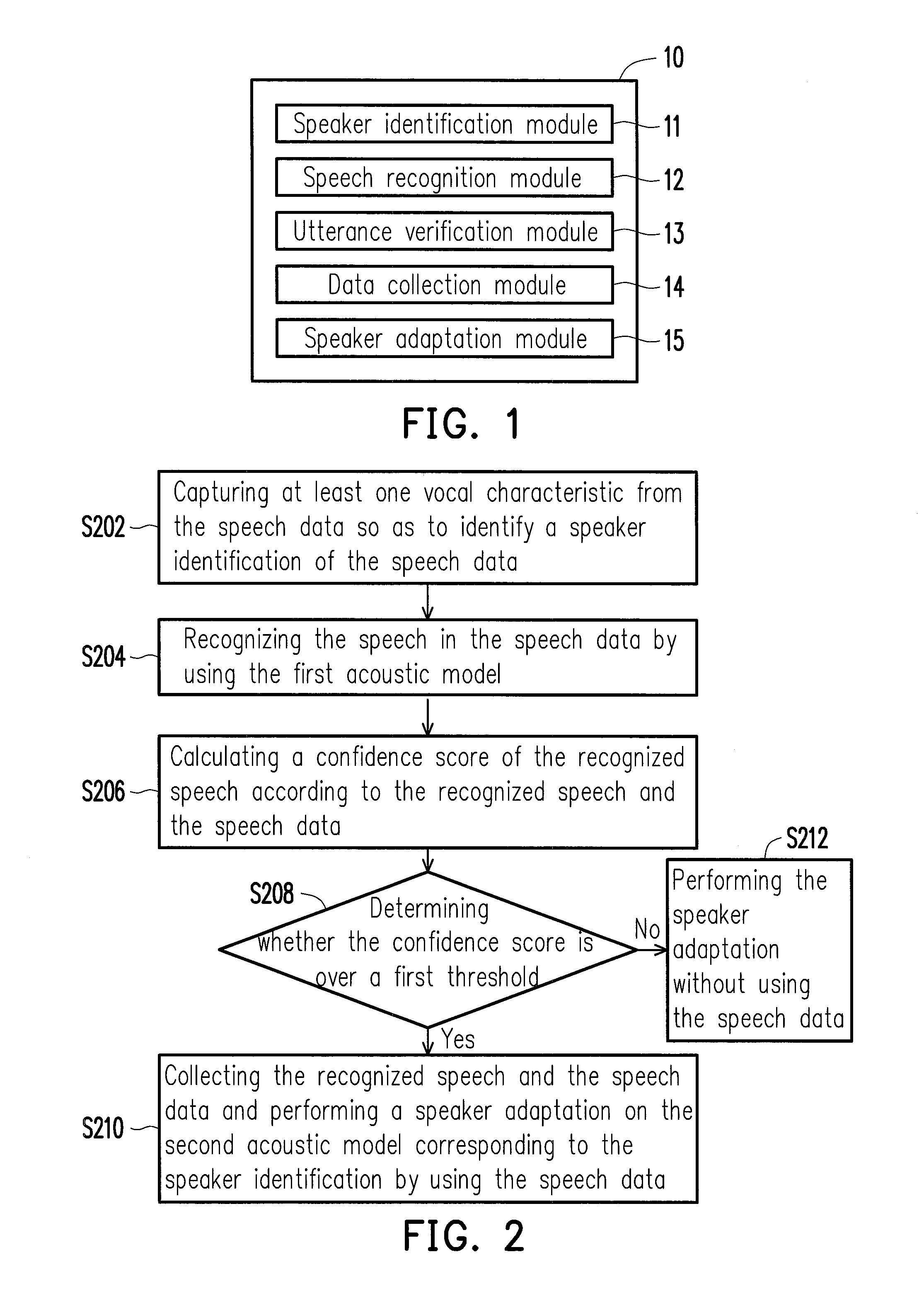

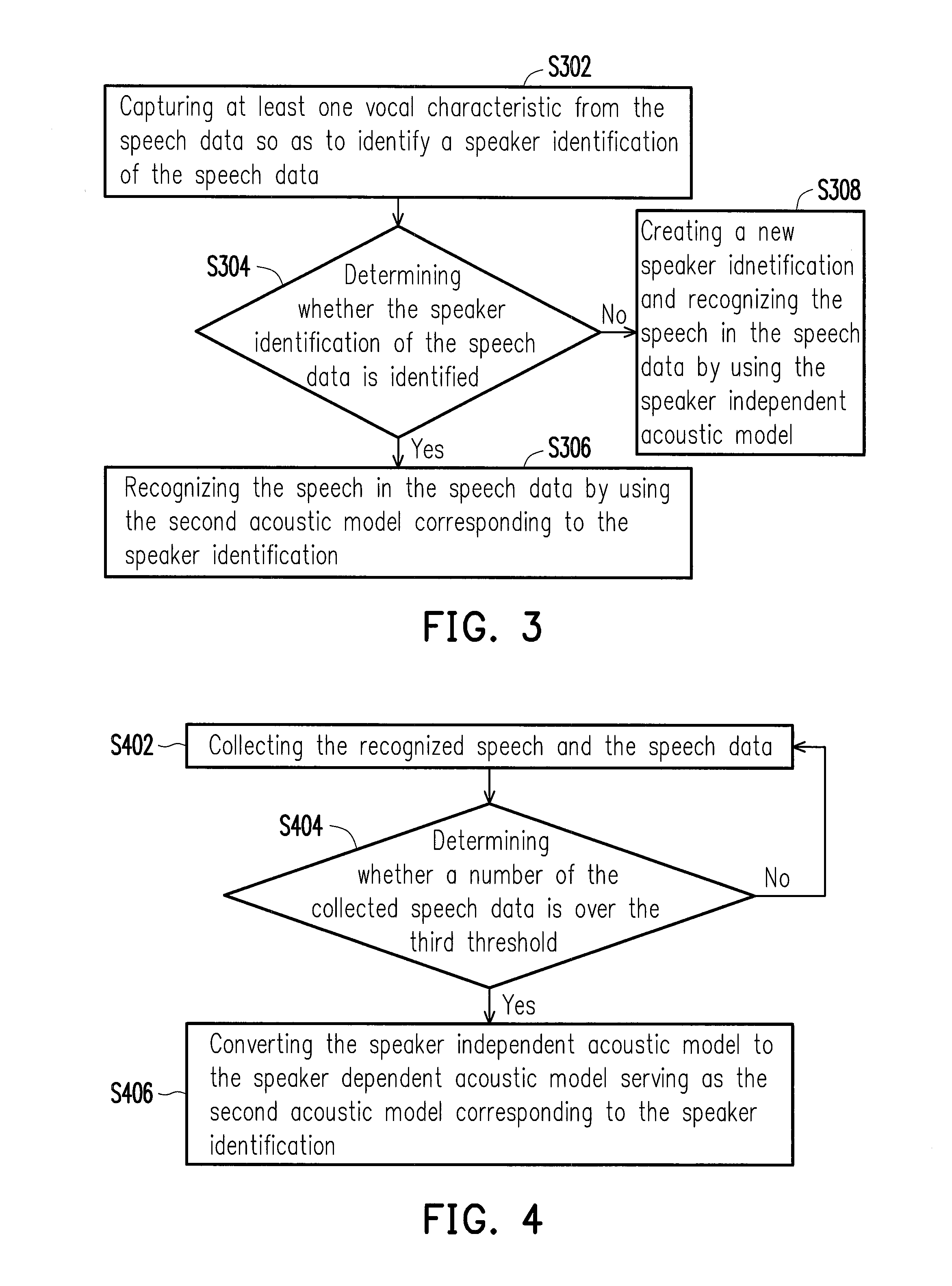

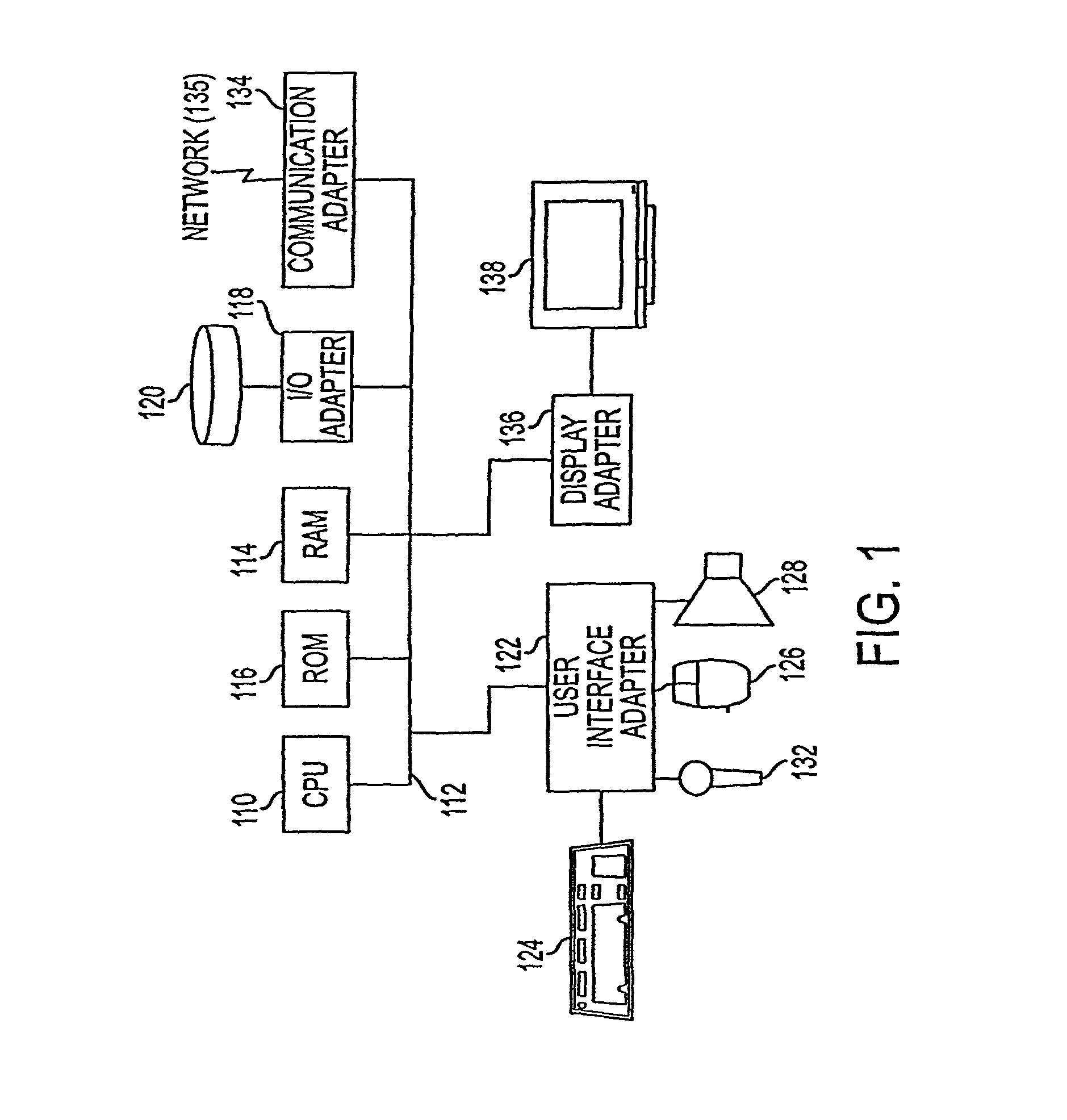

Method and system for speech recognition

Status: Inactive | Publication: US20130311184A1 | Tags: Improve speech recognition accuracy; Improve accuracy; Speech recognition; Speech identification; Acoustic model

A method and a system for speech recognition are provided. In the method, vocal characteristics are captured from speech data and used to identify the speaker of the speech data. Next, a first acoustic model is used to recognize speech in the speech data. Based on the recognized speech and the speech data, a confidence score for the recognition is calculated, and it is determined whether the confidence score exceeds a threshold. If it does, the recognized speech and the speech data are collected, and the collected speech data is used to perform speaker adaptation on a second acoustic model corresponding to the identified speaker.

Owner:ASUSTEK COMPUTER INC
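
The confidence-gated collection and speaker-adaptation steps might look like the sketch below. The threshold value, the per-speaker store, and the MAP-style mean update with relevance factor `TAU` are assumptions chosen for illustration; the abstract only says that the collected data is used for speaker adaptation.

```python
from collections import defaultdict

THRESHOLD = 0.8  # hypothetical confidence threshold
TAU = 10.0       # hypothetical MAP relevance factor (weight of the prior)

# Speaker id -> feature frames from confidently recognised utterances.
collected = defaultdict(list)


def maybe_collect(speaker_id, frames, confidence):
    """Store an utterance's frames only if recognition confidence exceeds the threshold."""
    if confidence > THRESHOLD:
        collected[speaker_id].extend(frames)
        return True
    return False


def adapt_mean(prior_mean, frames, tau=TAU):
    """MAP-style mean update: blend the speaker-independent prior mean with
    the sample mean of the collected frames; more data moves it further."""
    n = len(frames)
    if n == 0:
        return list(prior_mean)
    sums = [sum(f[d] for f in frames) for d in range(len(prior_mean))]
    return [(tau * m + s) / (tau + n) for m, s in zip(prior_mean, sums)]
```

Gating on confidence keeps misrecognised utterances, whose transcripts would be wrong, out of the adaptation data.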

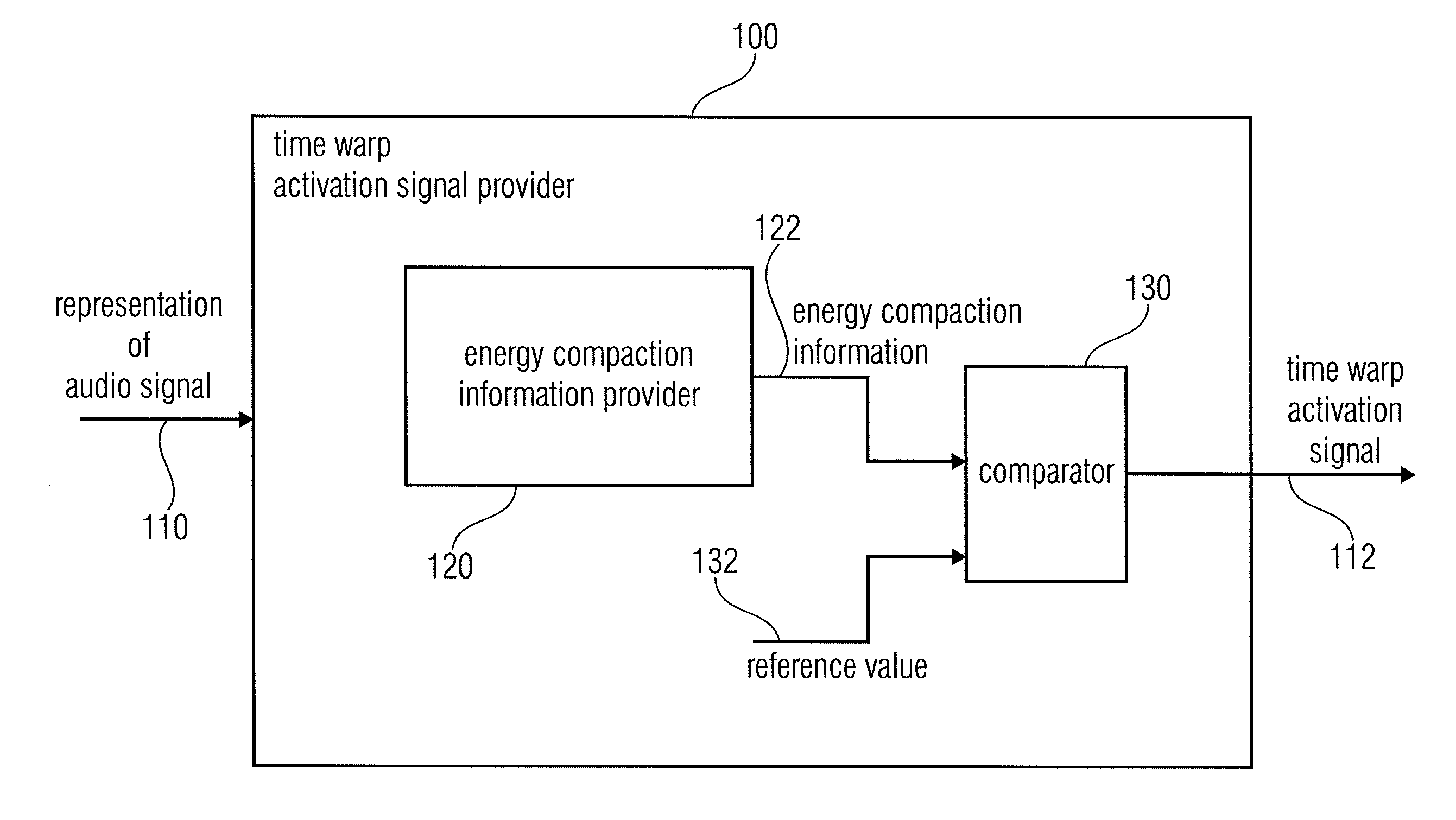

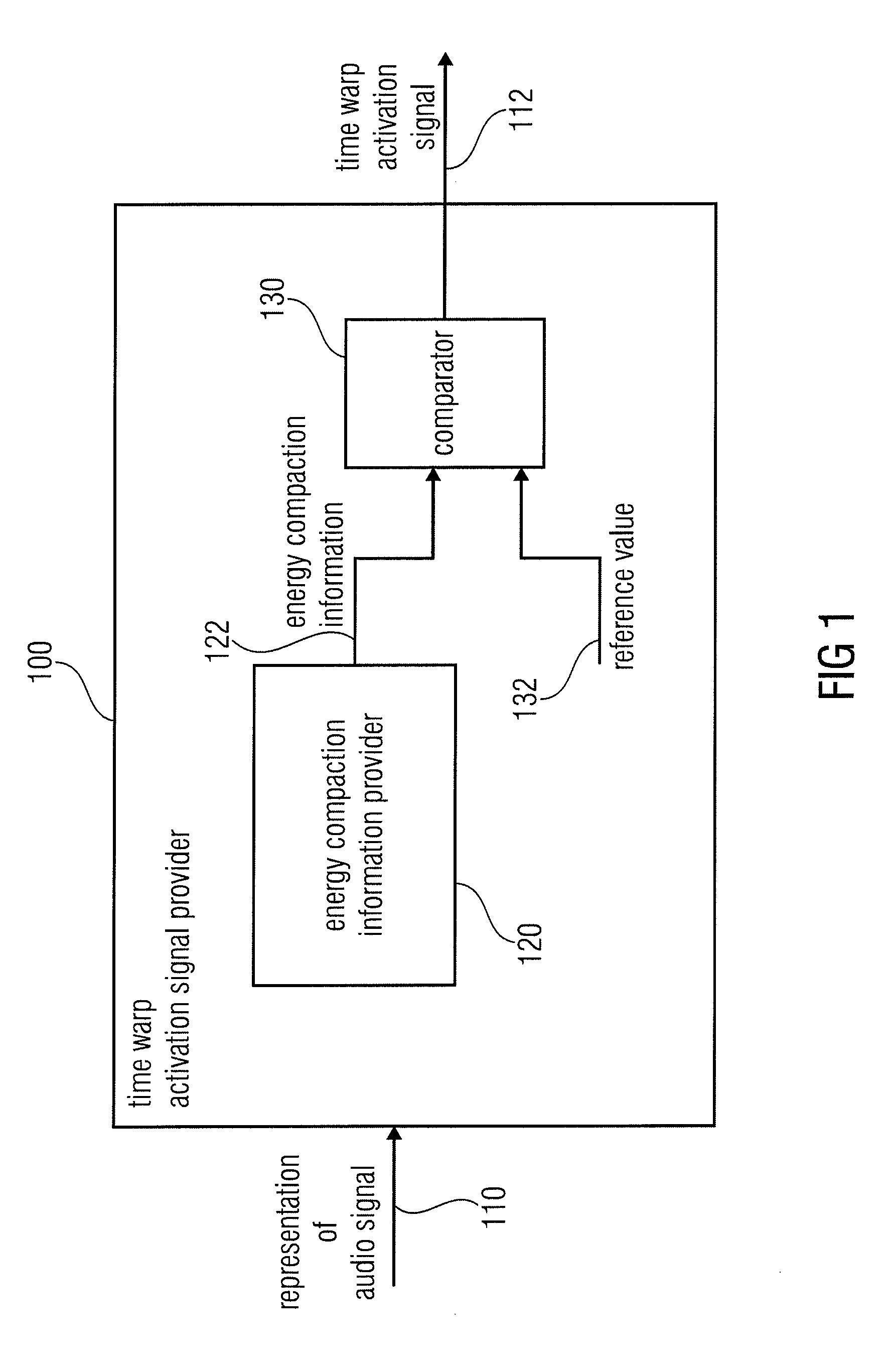

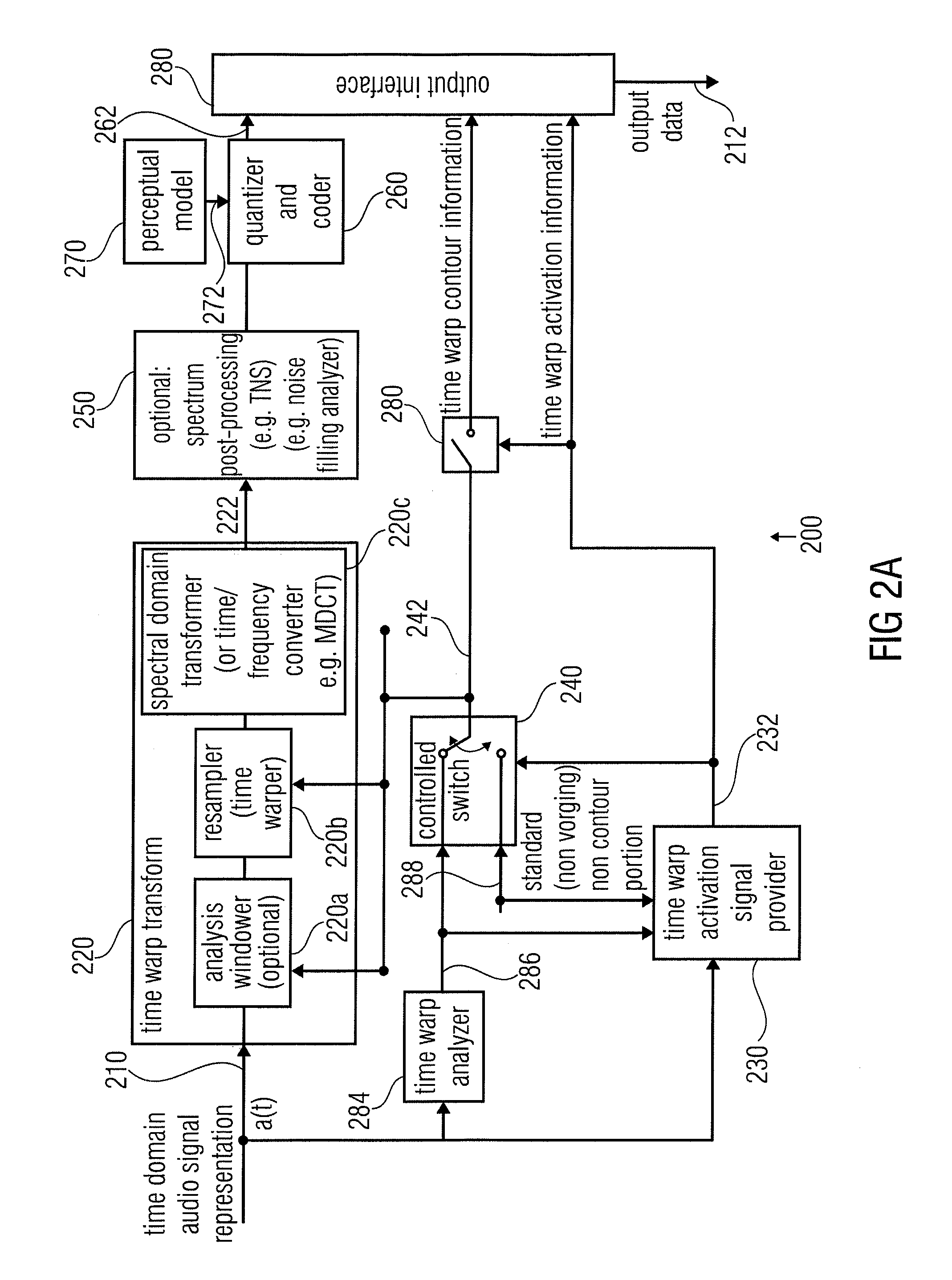

Time warp activation signal provider, audio signal encoder, method for providing a time warp activation signal, method for encoding an audio signal and computer programs

An audio encoder has a window function controller, a windower, a time warper with a final quality-check function, a time/frequency converter, a TNS stage, or a quantizer encoder. The window function controller, the time warper, the TNS stage, or an additional noise-filling analyzer is controlled by signal analysis results obtained from a time warp analyzer or a signal classifier. In addition, a decoder applies a noise-filling operation using a manipulated noise-filling estimate that depends on a harmonic or speech characteristic of the audio signal.

Owner:FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG EV

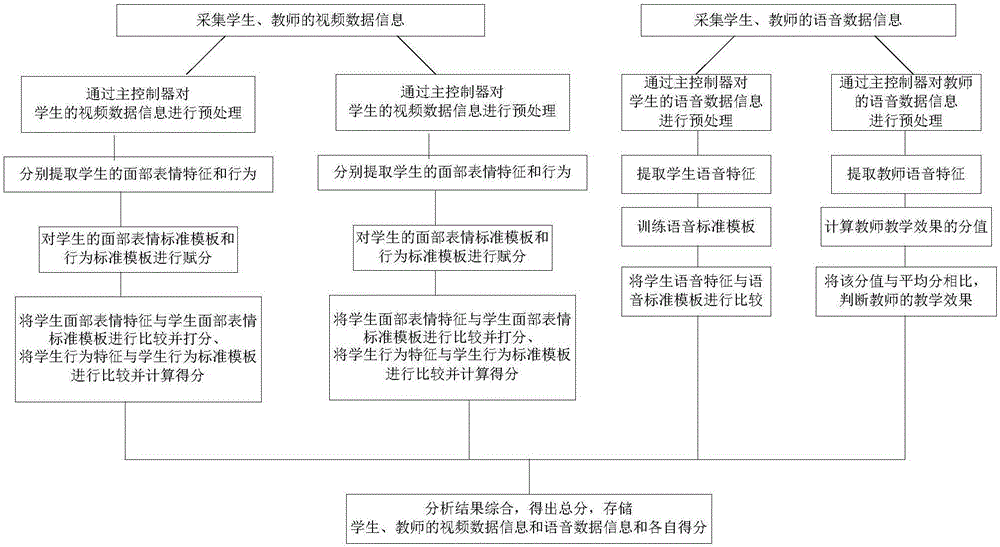

Classroom behavior monitoring system and method based on face and voice recognition

Status: Active | Publication: CN106851216A | Tags: Improve development; Improve learning effect; Speech analysis; Closed circuit television systems; Facial expression; Speech sound

Owner:SHANDONG NORMAL UNIV

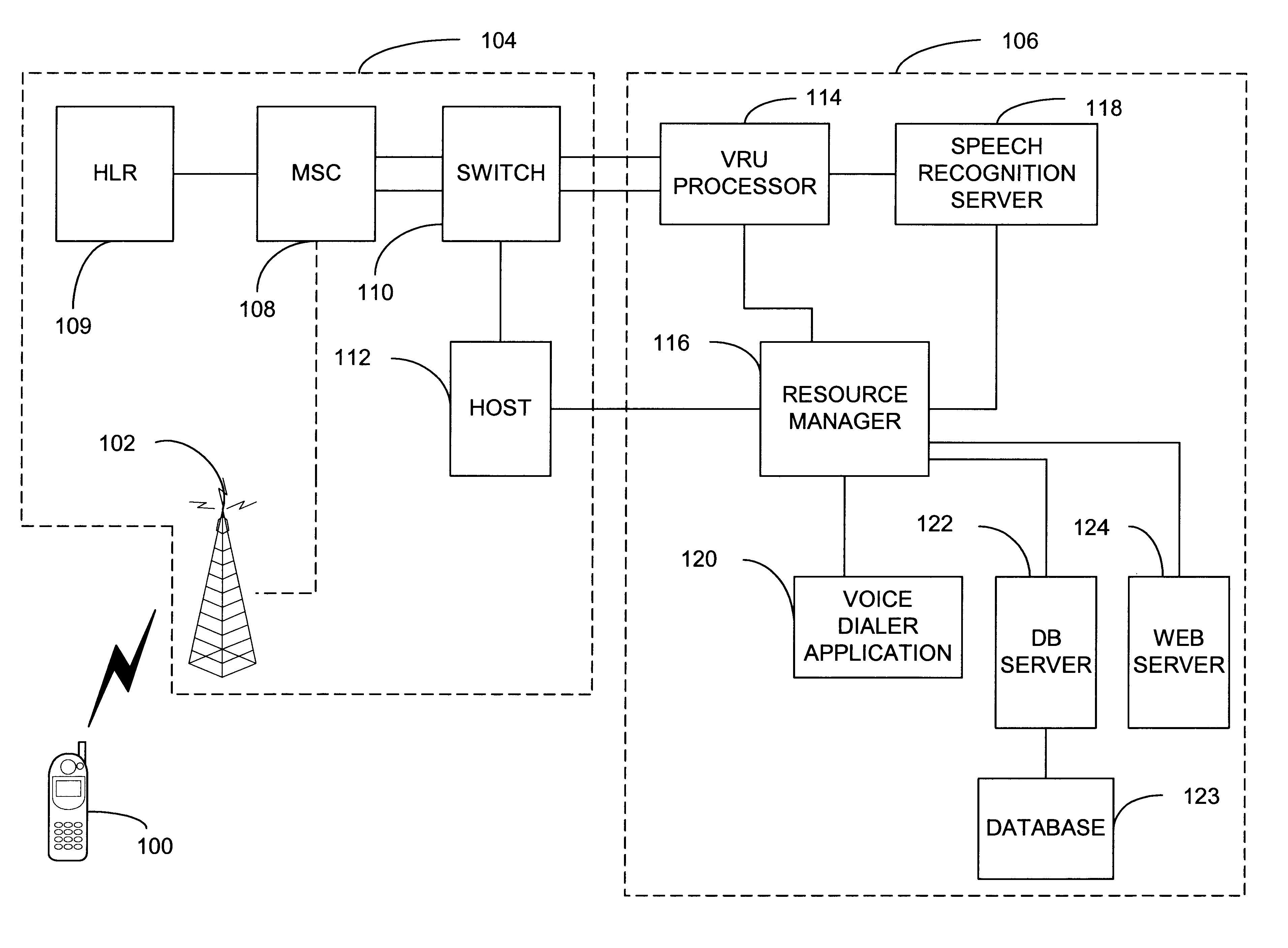

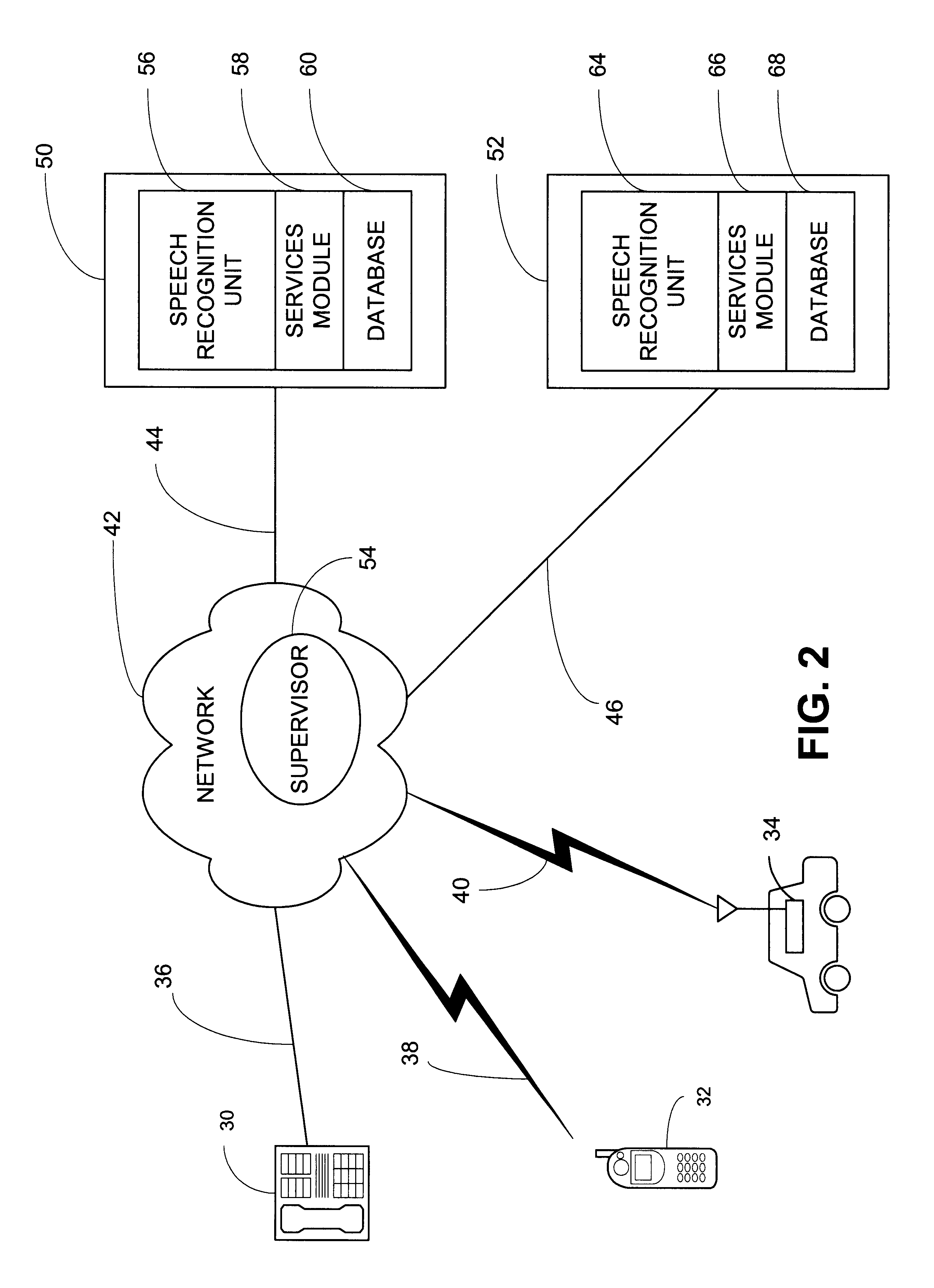

Speech recognition that adjusts automatically to input devices

A system and method for customizing the operating characteristics of a speech recognition system with characteristics of a user device. The user device transmits information representing the operating characteristics of itself to the speech recognition system. The speech recognition system determines the speech characteristics of the user device from this information. The speech recognition system obtains the speech characteristics relating to the device from a database and configures the speech recognition system with these characteristics.

Owner:SPRING SPECTRUM LP

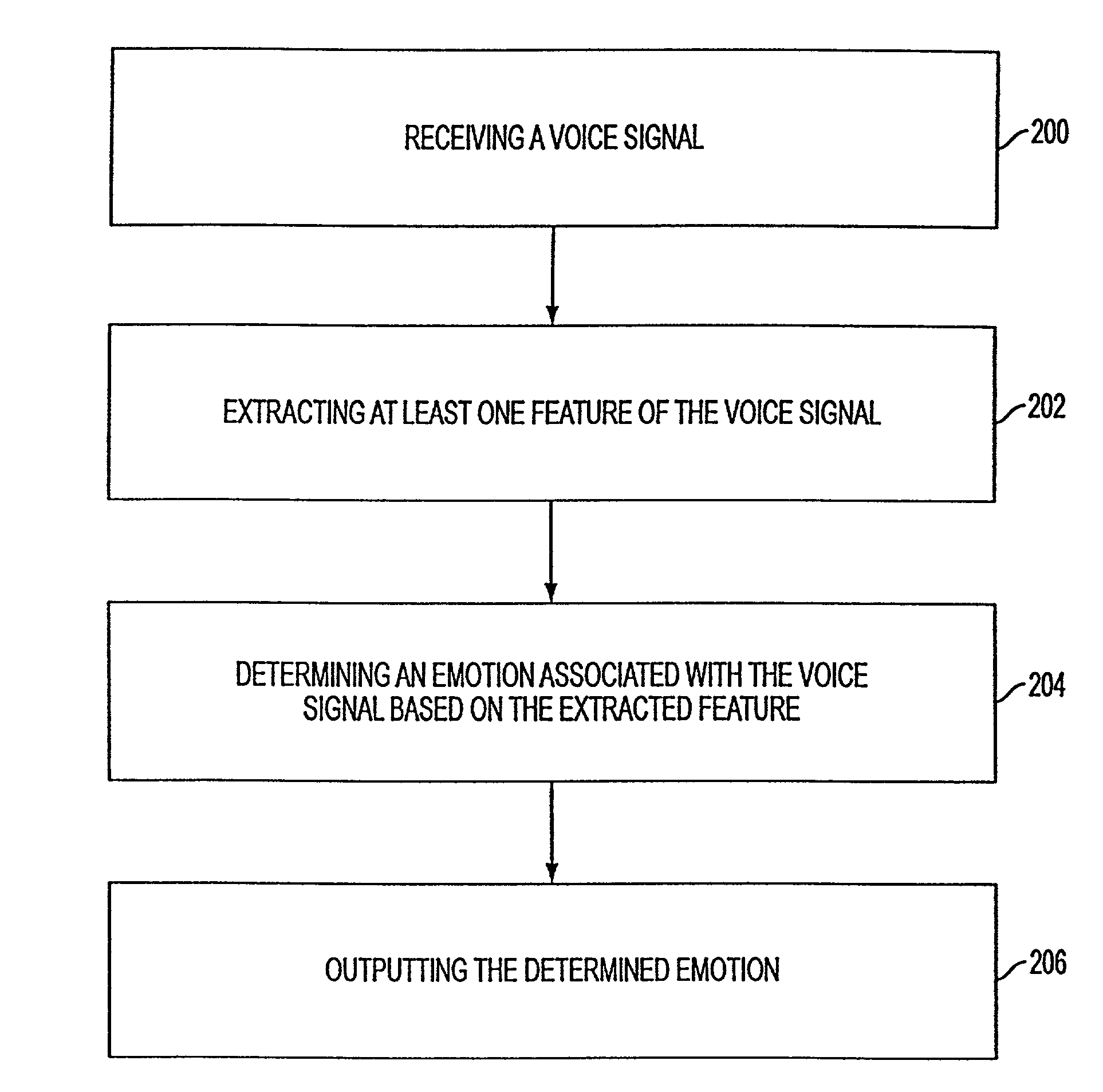

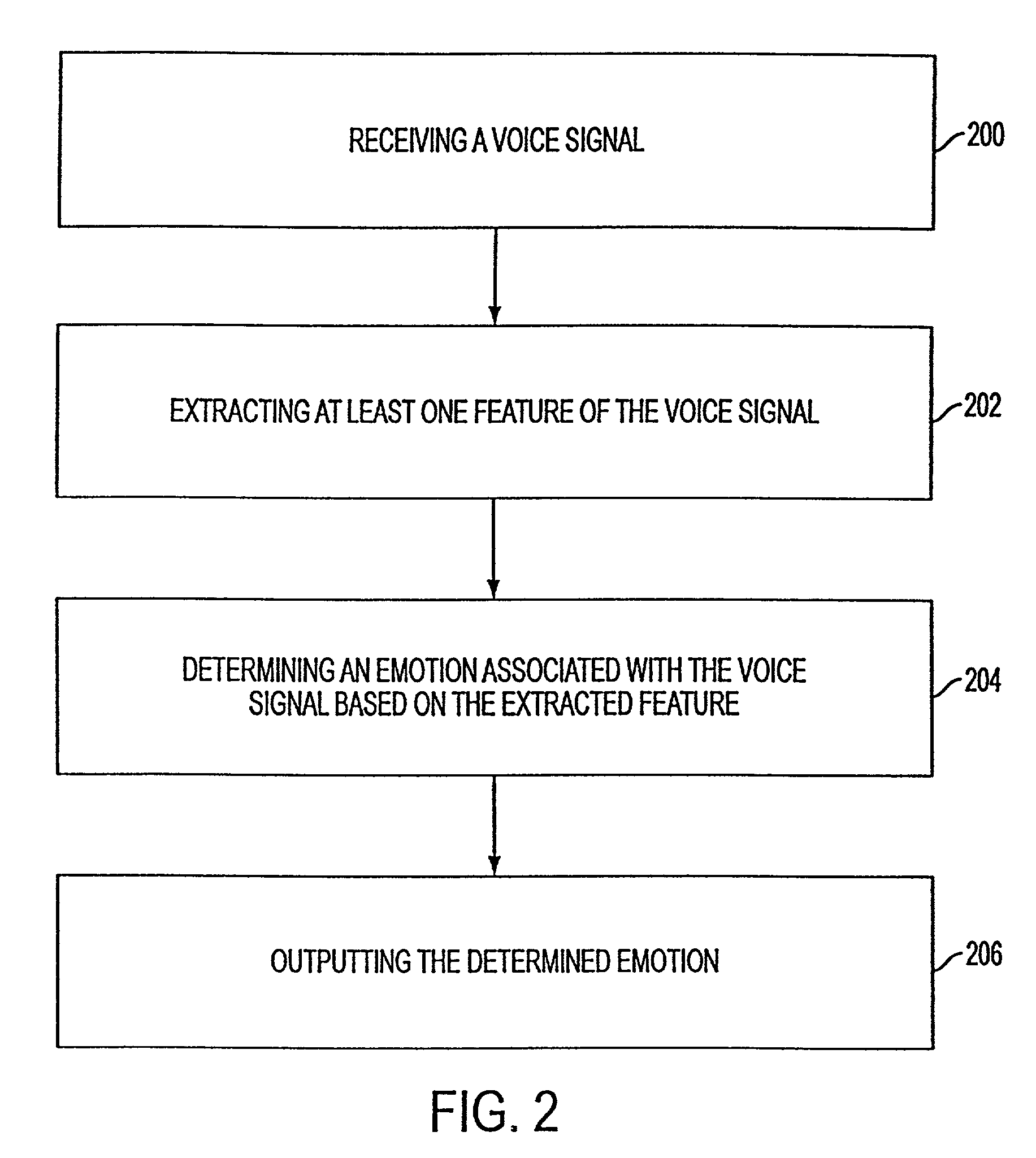

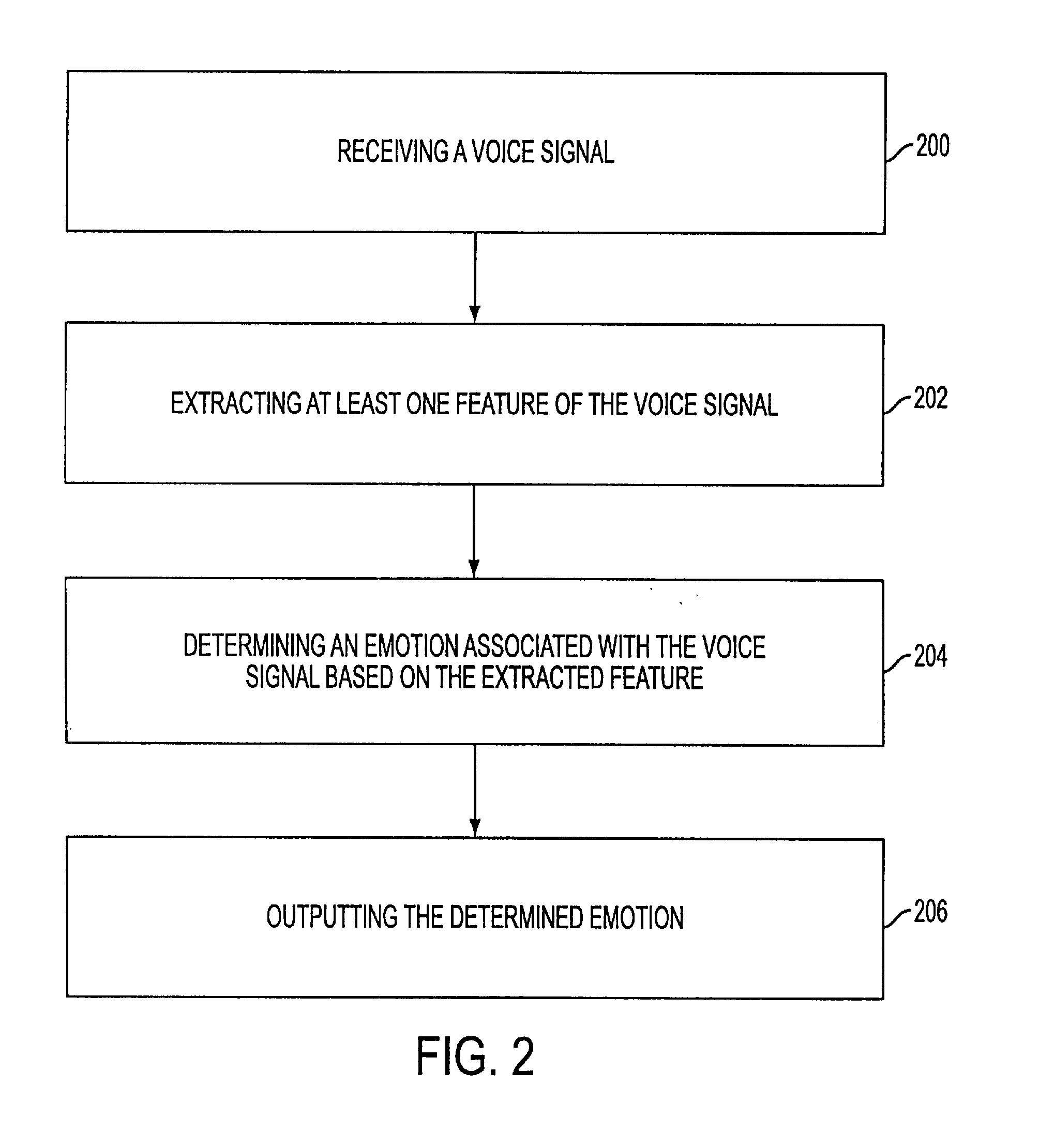

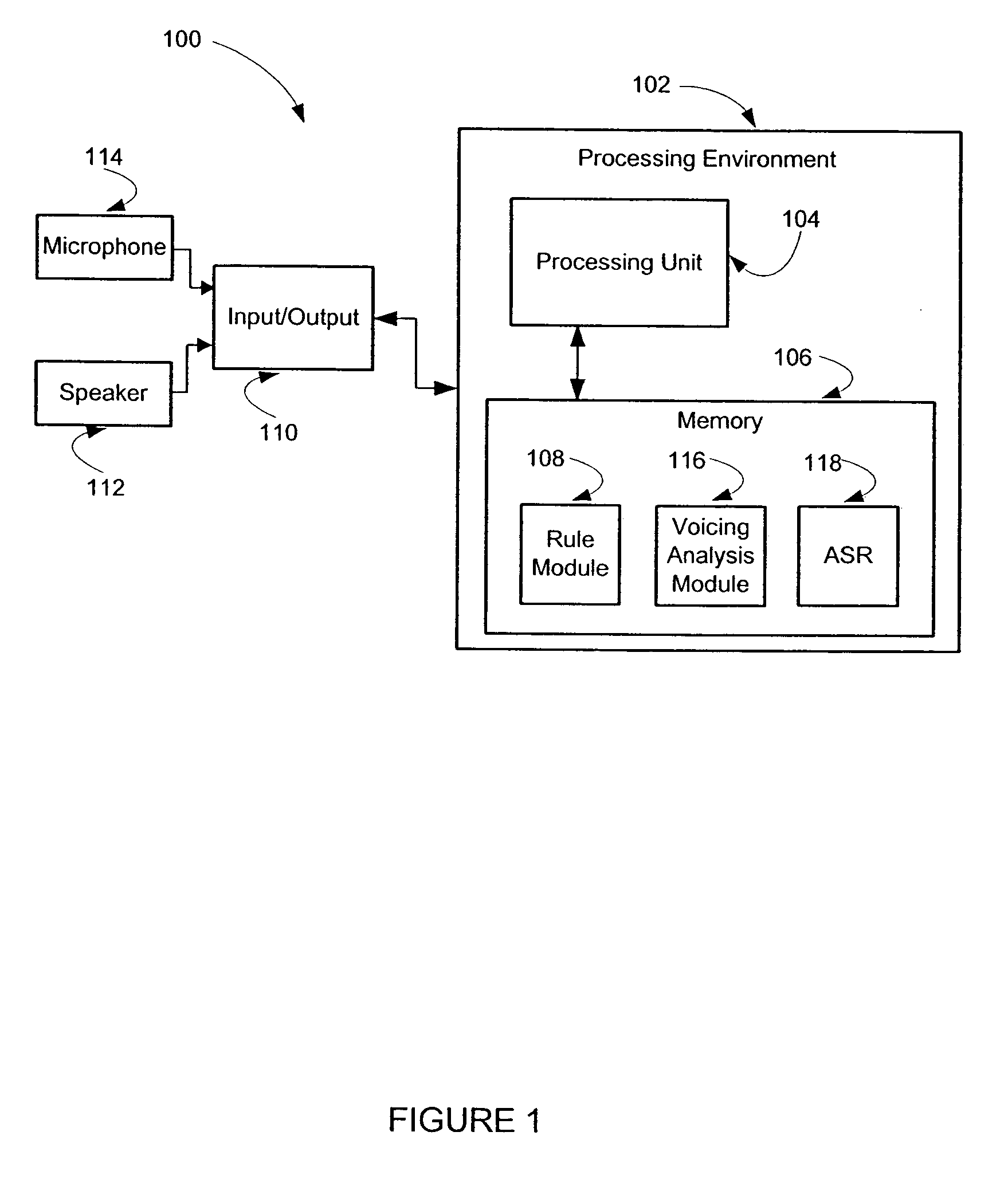

Detecting emotion in voice signals in a call center

A system, method and article of manufacture are provided for detecting emotion using statistics. First, a database is provided. The database has statistics including human associations of voice parameters with emotions. Next, a voice signal is received. At least one feature is extracted from the voice signal. Then the extracted voice feature is compared to the voice parameters in the database. An emotion is selected from the database based on the comparison of the extracted voice feature to the voice parameters and is then output.

Owner:ACCENTURE GLOBAL SERVICES LTD
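
A toy version of the database comparison step: each emotion is stored with typical voice parameters, and the emotion whose parameters lie nearest to the extracted feature vector is selected. The three parameters and every number in `EMOTION_DB` are hypothetical placeholders, not statistics from the patent, and nearest-neighbour matching is only one possible comparison rule.

```python
import math

# Hypothetical database: emotion -> (mean pitch in Hz, mean energy in dB,
# speaking rate in syllables/s). Placeholder values, not measured statistics.
EMOTION_DB = {
    "neutral": (120.0, 60.0, 4.0),
    "anger": (180.0, 72.0, 5.5),
    "sadness": (100.0, 52.0, 3.0),
}


def detect_emotion(features):
    """Return the emotion whose stored parameters are closest (Euclidean) to the features."""
    return min(EMOTION_DB, key=lambda emotion: math.dist(features, EMOTION_DB[emotion]))
```

In practice the features would be normalized (for example, z-scored per dimension) so that pitch in hertz does not dominate the distance.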

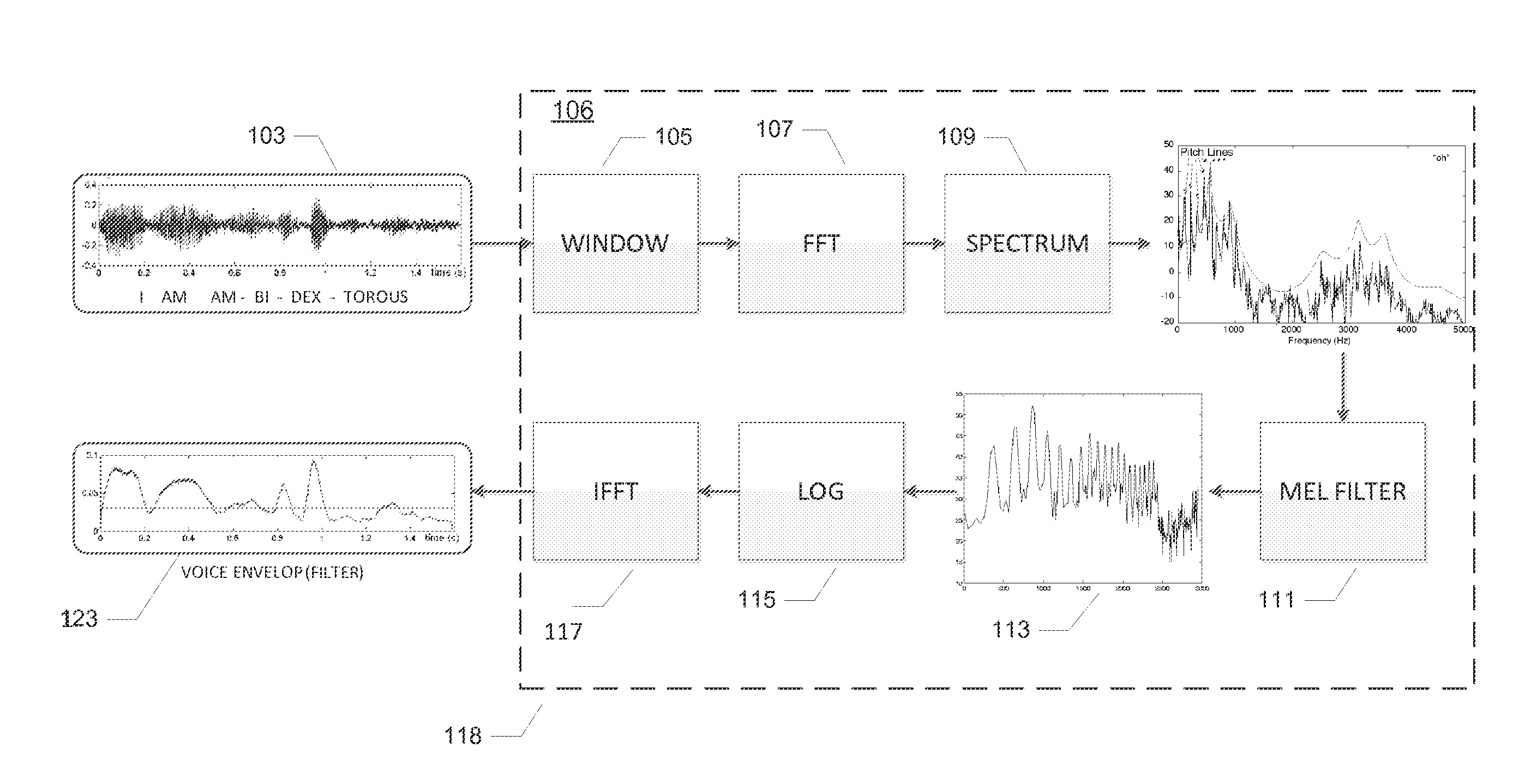

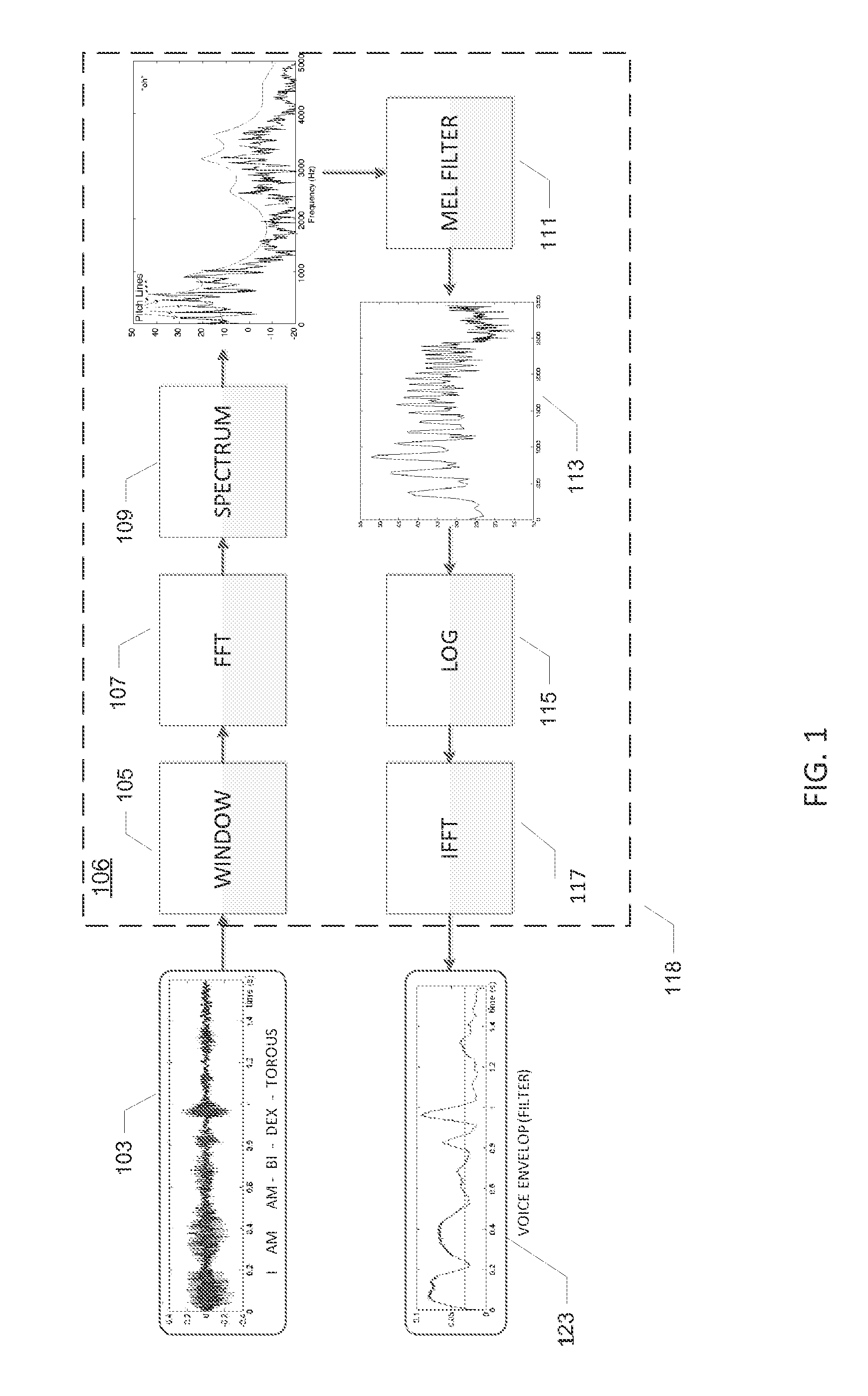

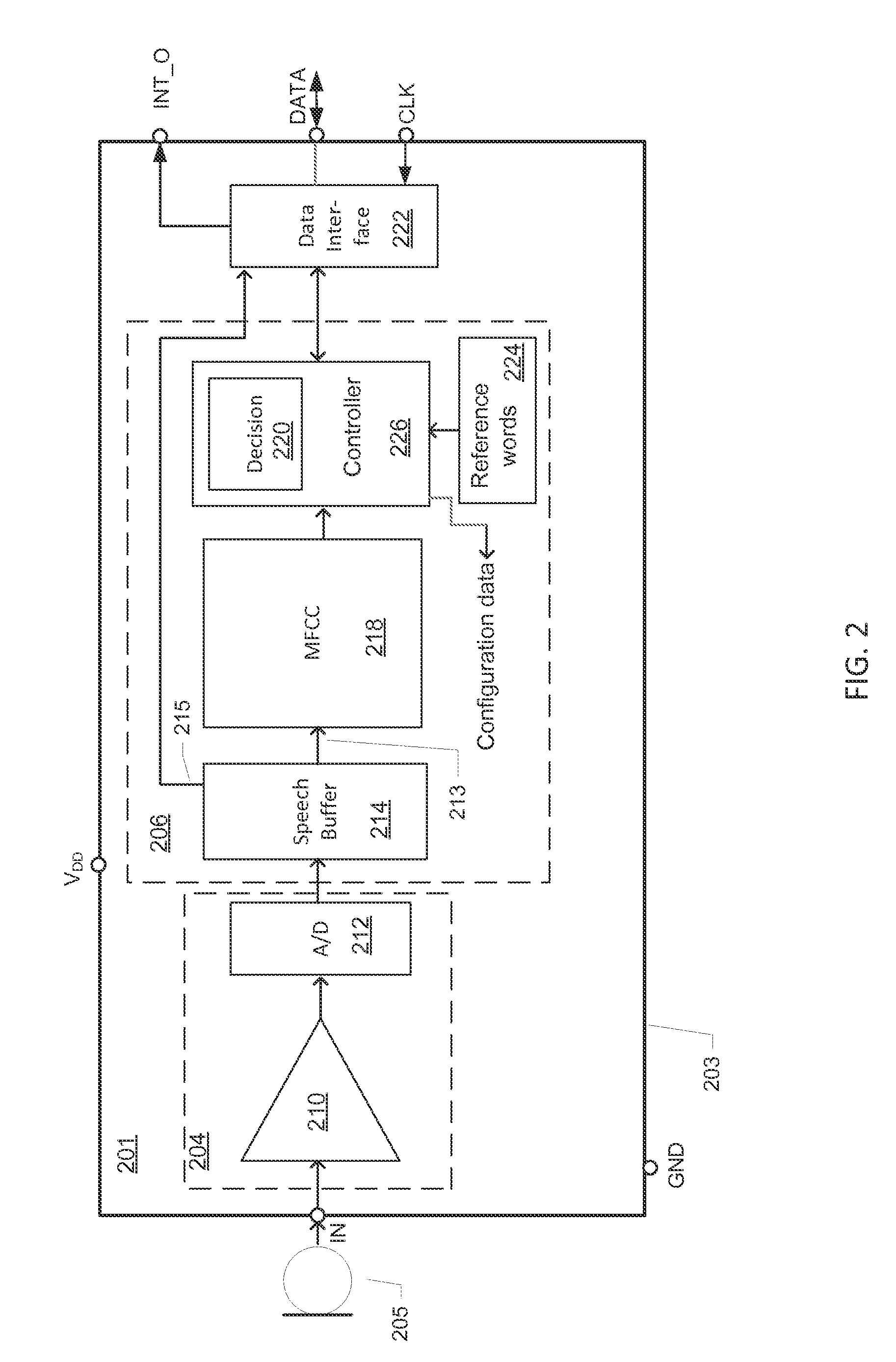

Microphone circuit assembly and system with speech recognition

Status: Active | Publication: US20140257813A1 | Tags: Reduce consumption; Reduce load; Hearing aids signal processing; Speech recognition; Analog-to-digital converter; Application processor

A microphone circuit assembly for an external application processor, such as a programmable Digital Signal Processor, may include a microphone preamplifier and analog-to-digital converter to generate microphone signal samples at a first predetermined sample rate. A speech feature extractor is configured for receipt and processing of predetermined blocks of the microphone signal samples to extract speech feature vectors representing speech features of the microphone signal samples. The microphone circuit assembly may include a speech vocabulary comprising a target word or target phrase of human speech encoded as a set of target feature vectors and a decision circuit is configured to compare the speech feature vectors generated by the speech feature extractor with the target feature vectors to detect the target speech word or phrase.

Owner:ANALOG DEVICES INT UNLTD
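
The decision circuit compares extracted feature vectors with stored target vectors. Because a spoken keyword rarely has the same duration as its template, one common way to make that comparison is dynamic time warping (DTW); the sketch below assumes DTW and a fixed threshold, neither of which is stated in the abstract.

```python
import math


def dtw_distance(seq_a, seq_b):
    """Length-normalised dynamic time warping distance between two
    sequences of feature vectors."""
    n, m = len(seq_a), len(seq_b)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(seq_a[i - 1], seq_b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m] / (n + m)


def detect_target(features, target, threshold):
    """Decision rule: report the target word when the warped distance is small."""
    return dtw_distance(features, target) < threshold


target = [(0.0,), (1.0,), (2.0,)]                  # hypothetical stored template
spoken = [(0.0,), (0.0,), (1.0,), (1.0,), (2.0,)]  # same word, spoken more slowly
print(detect_target(spoken, target, threshold=0.1))  # True
```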

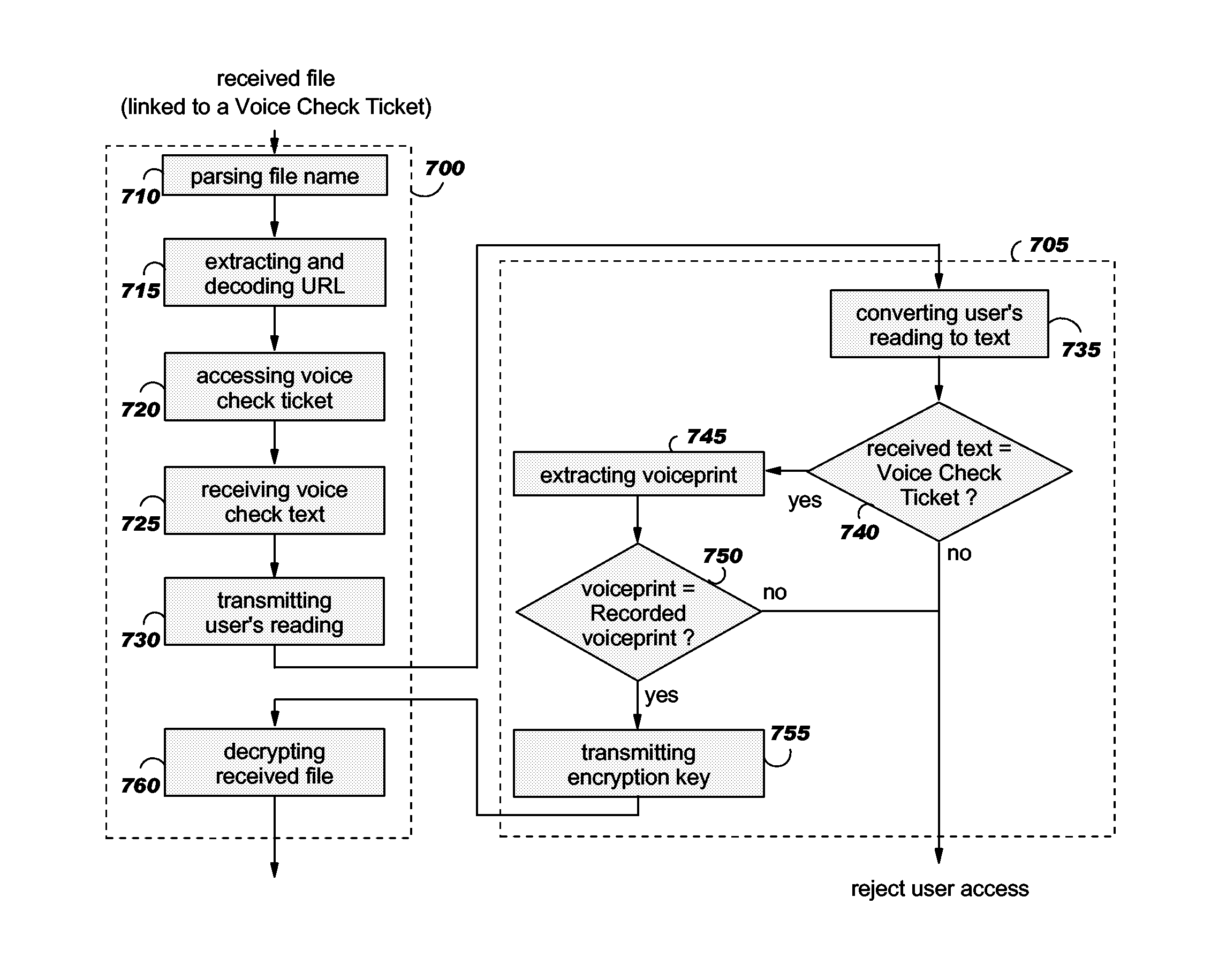

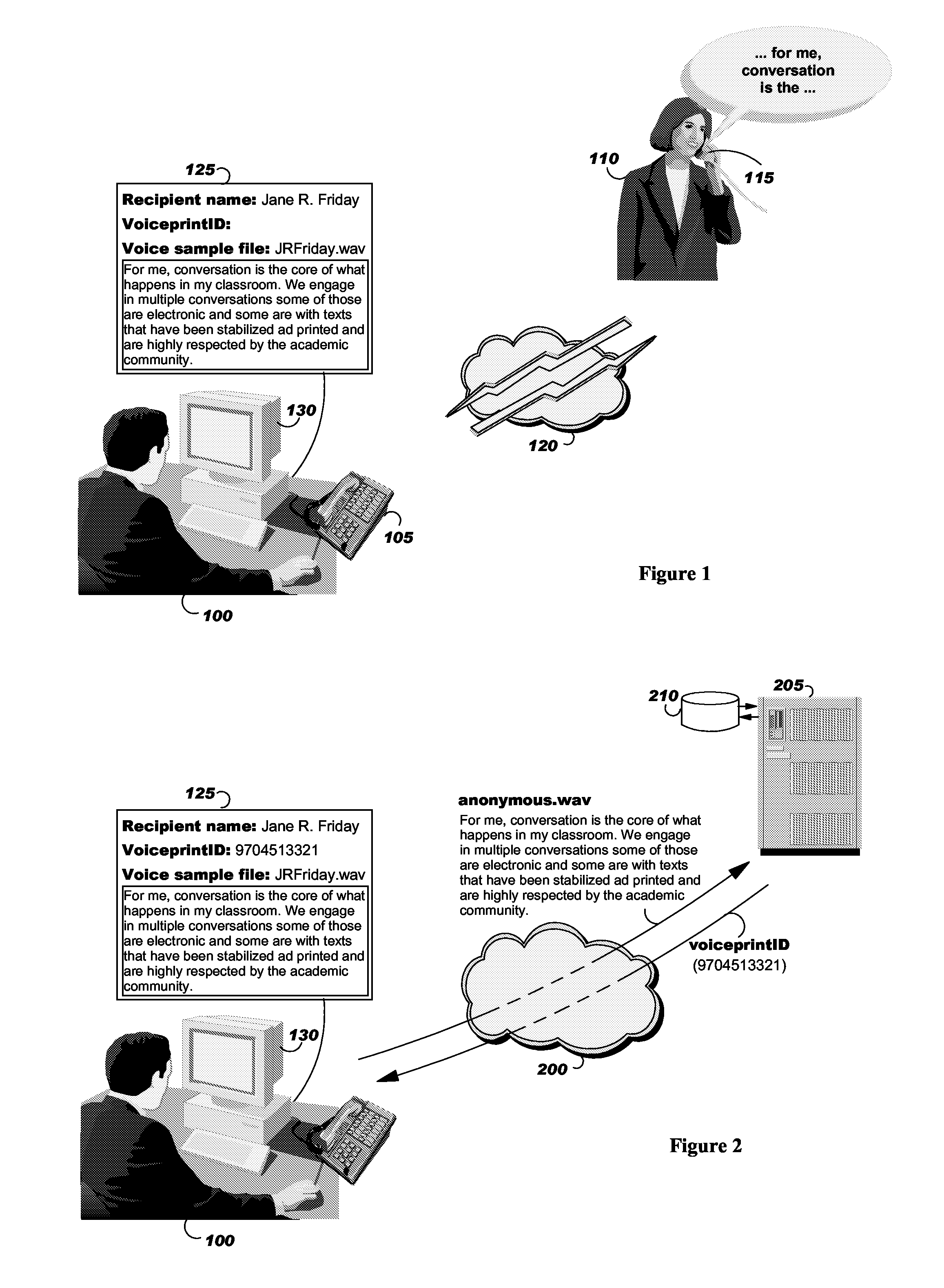

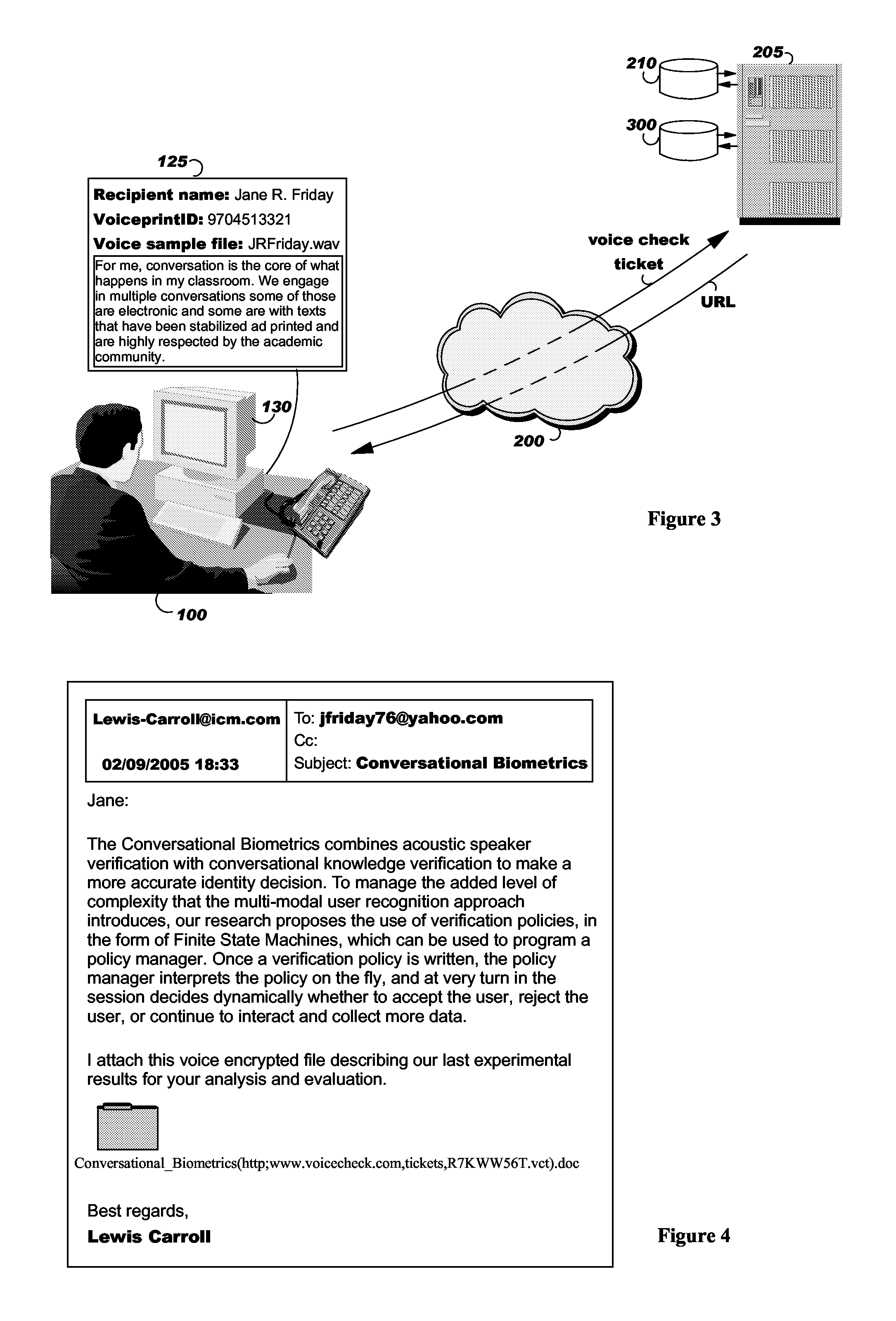

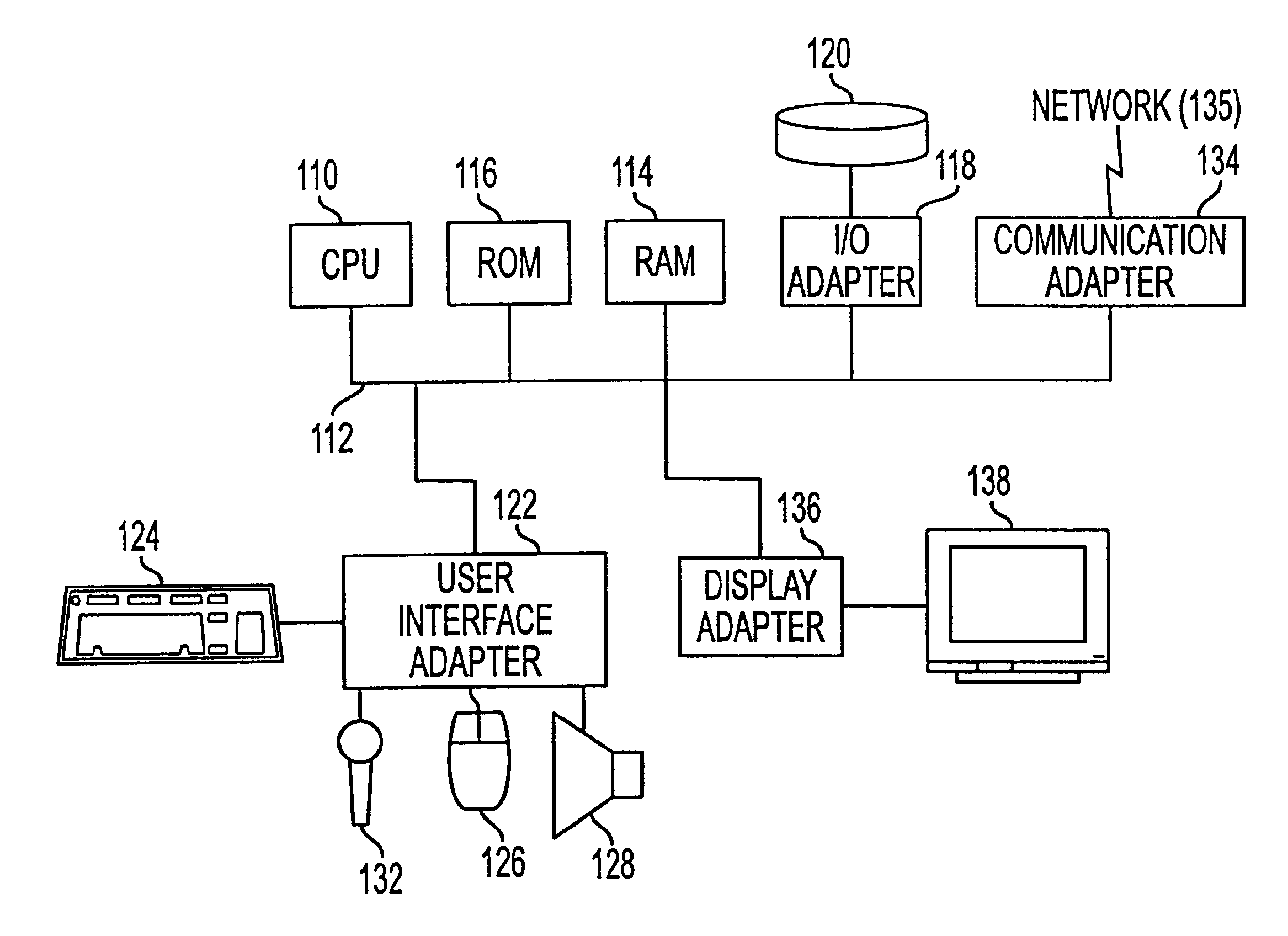

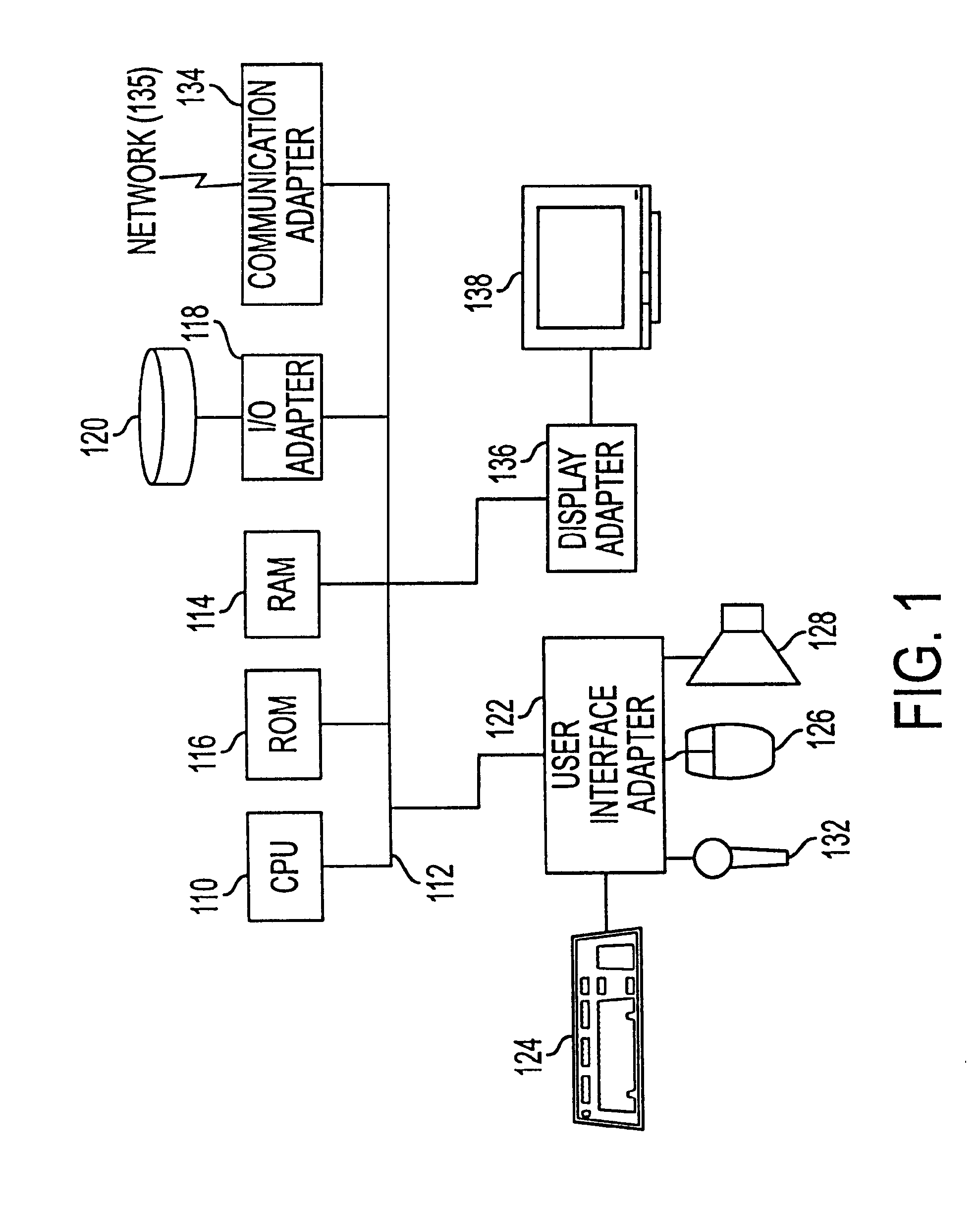

Systems and method for secure delivery of files to authorized recipients

Status: Active | Publication: US20100281254A1 | Tags: Encryption apparatus with shift registers/memories; User identity/authority verification; Uniform resource locator; Automatic speech

By asking the recipient of an encrypted file to read aloud a check text retrieved from a network server whose address, or URL, is encoded within the file name of the encrypted file, the system of the invention automatically verifies the identity of the recipient, confirms that the file has been received by the intended recipient, and then decrypts the file. The recipient's spoken utterances are processed by an automatic speech recognition component. The system determines whether the spoken text corresponds to the check text presented to the reader; if it does, the system applies an automatic speaker recognition algorithm to determine whether the person reciting the check text has voice characteristics matching those of the intended recipient, based on the intended recipient's previous voice enrollment with the system. When the system confirms the identity of the recipient, the decryption key is transmitted, and the encrypted file is automatically decrypted and displayed to the recipient. In a preferred embodiment, the system records and time-stamps the recipient's recitation of the check text, so that it can later be compared with the intended recipient's voice if the recipient repudiates receipt.

Owner:KYNDRYL INC

Detecting emotion in voice signals in a call center

A computer system monitors a conversation between an agent and a customer. The system extracts a voice signal from the conversation and analyzes the voice signal to detect a voice characteristic of the customer. The system identifies an emotion corresponding to the voice characteristic and initiates an action based on the emotion. The action may include communicating the emotion to an emergency response team, or communicating feedback to a manager of the agent, as examples.

Owner:ACCENTURE GLOBAL SERVICES LTD

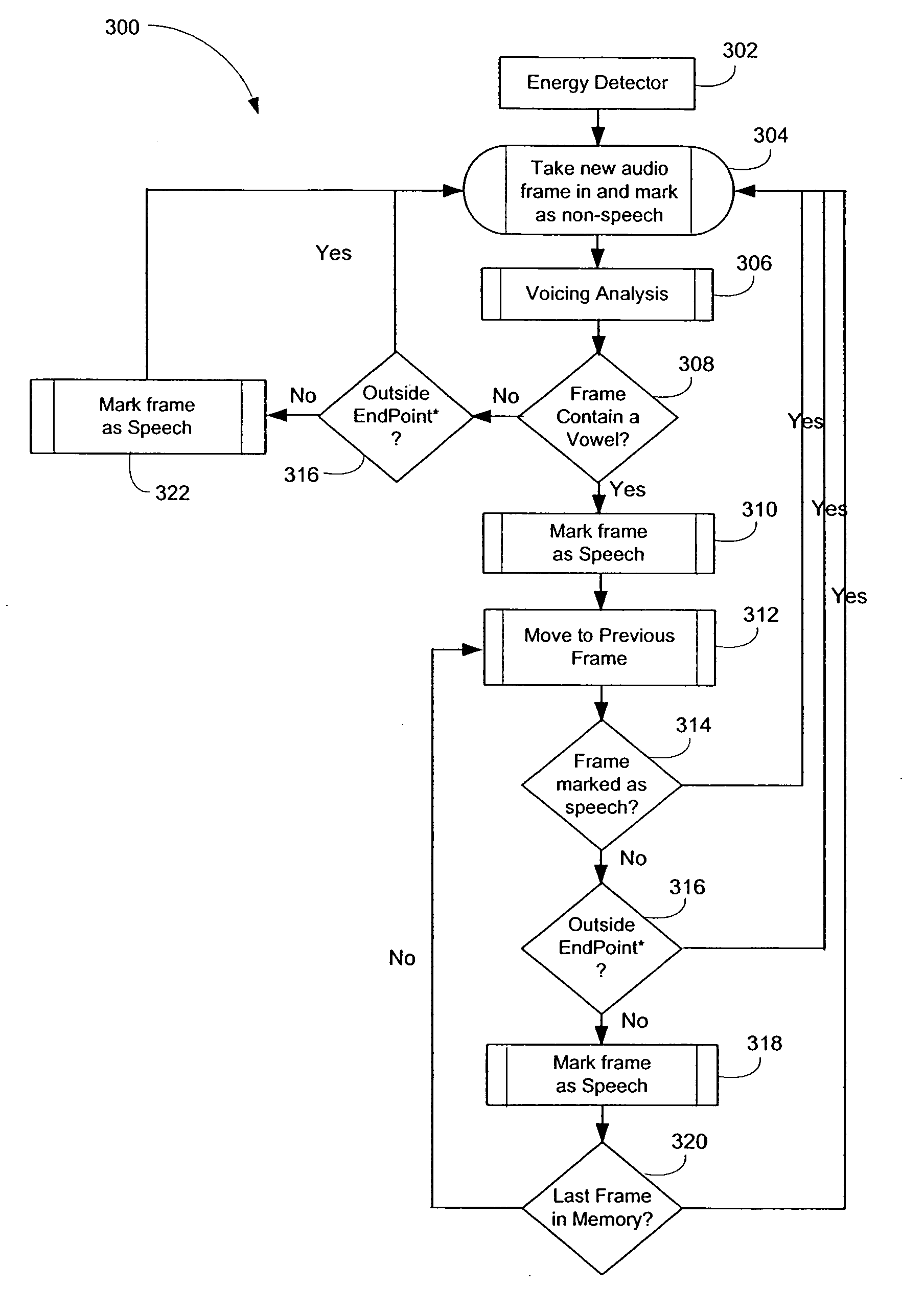

Speech end-pointer

A rule-based end-pointer isolates spoken utterances contained within an audio stream from background noise and non-speech transients. The rule-based end-pointer includes a plurality of rules to determine the beginning and / or end of a spoken utterance based on various speech characteristics. The rules may analyze an audio stream or a portion of an audio stream based upon an event, a combination of events, the duration of an event, or a duration relative to an event. The rules may be manually or dynamically customized depending upon factors that may include characteristics of the audio stream itself, an expected response contained within the audio stream, or environmental conditions.

Owner:BLACKBERRY LTD
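
A sketch of two duration-based rules of the kind described: an utterance begins only after several consecutive frames exceed an energy threshold, so short transients are ignored, and it ends after several consecutive frames fall below the threshold. The per-frame energy input, the threshold, and the frame counts are hypothetical parameters that a deployment would tune, as the abstract notes.

```python
def endpoint(frame_energies, threshold, start_frames=3, end_frames=5):
    """Return (first_speech_frame, last_speech_frame) of the first utterance,
    or None if no frame run is long enough to count as speech."""
    begin = None
    run = 0  # length of the current run of speech (or, after begin, silence) frames
    for i, e in enumerate(frame_energies):
        if begin is None:
            # Rule 1: start only after `start_frames` consecutive loud frames.
            run = run + 1 if e > threshold else 0
            if run == start_frames:
                begin = i - start_frames + 1
                run = 0
        else:
            # Rule 2: end after `end_frames` consecutive quiet frames (hangover).
            run = run + 1 if e <= threshold else 0
            if run == end_frames:
                return begin, i - end_frames
    if begin is not None:
        # Stream ended mid-utterance: drop any trailing quiet frames.
        return begin, len(frame_energies) - 1 - run
    return None


energies = [0, 9, 0, 0, 5, 5, 5, 5, 0, 0, 0, 0, 0, 0]
print(endpoint(energies, threshold=1))  # (4, 7)
```

The single loud frame at index 1 is rejected as a transient because it never reaches the three-frame start rule.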

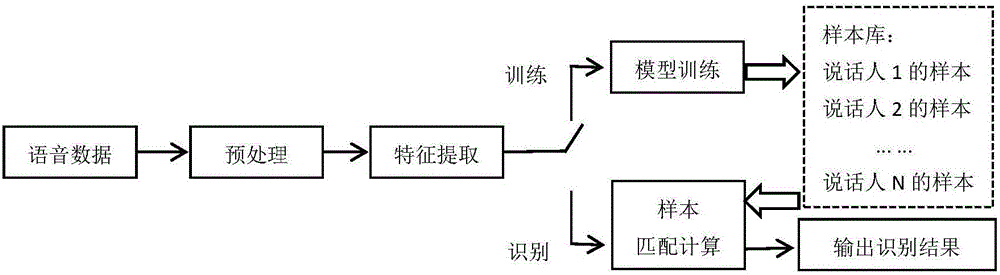

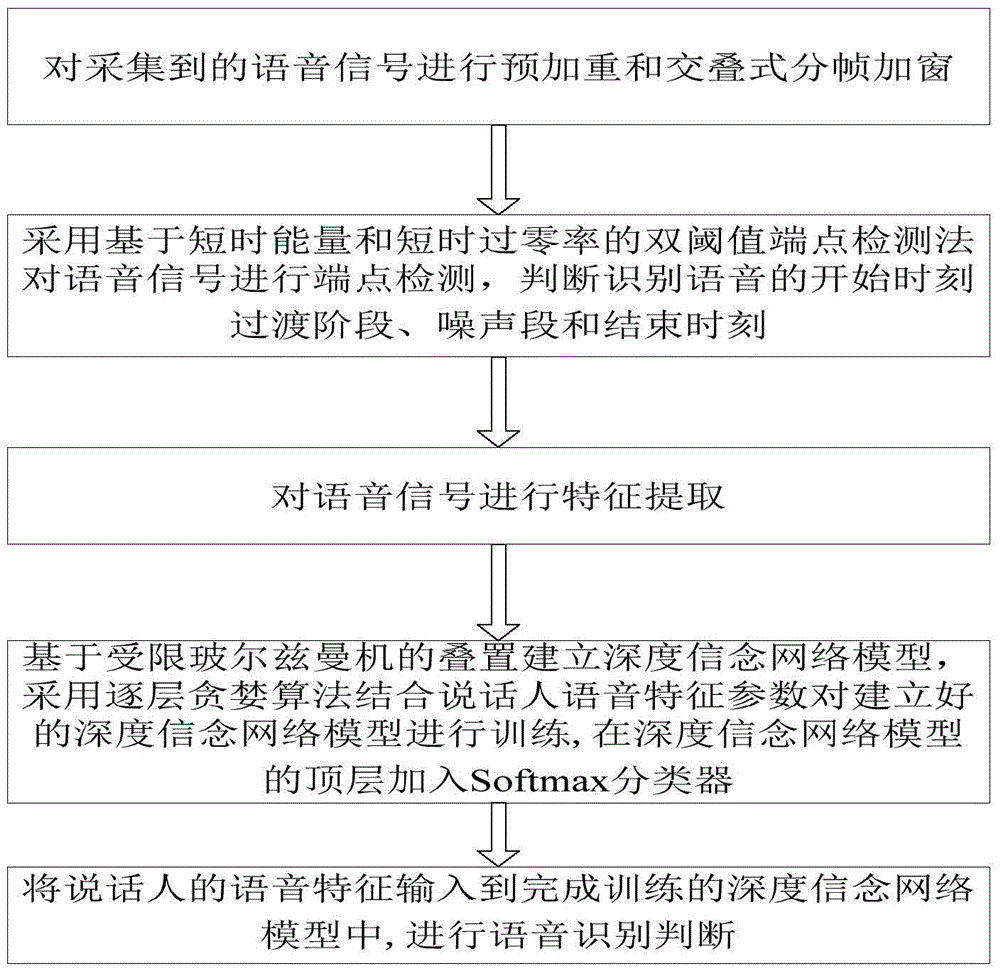

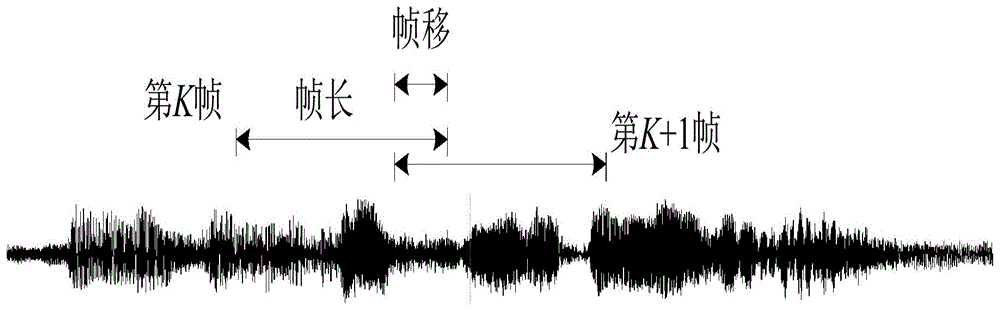

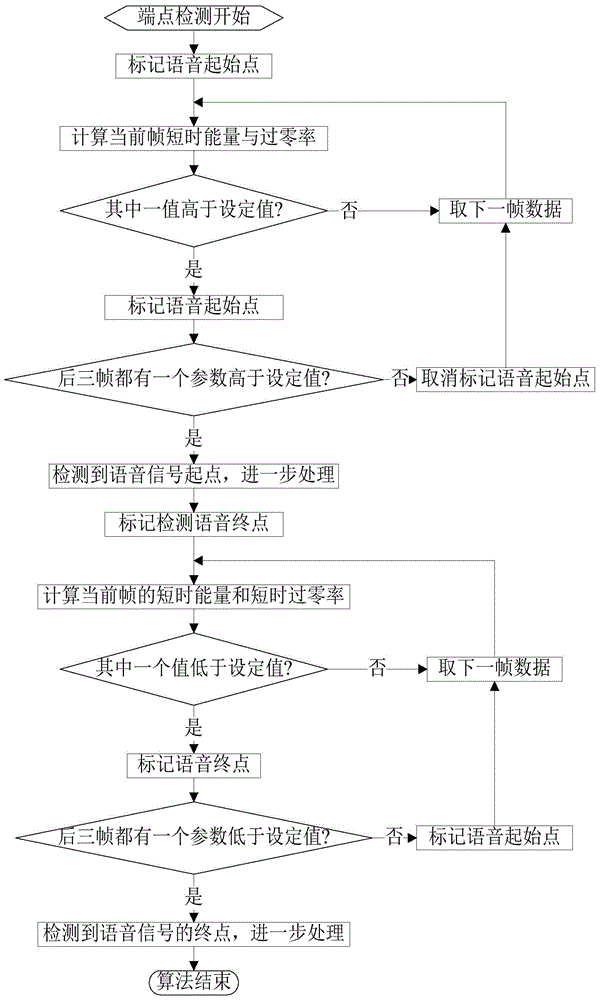

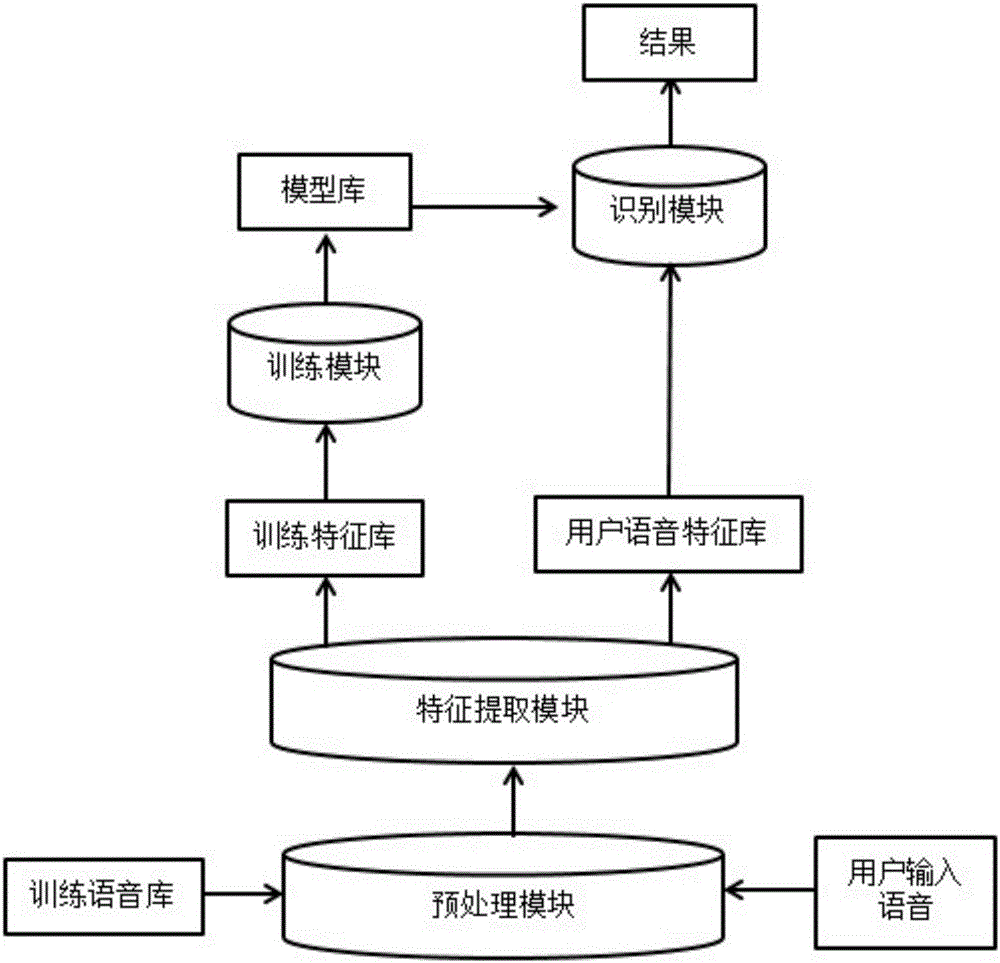

Speaker recognition method based on deep learning

Status: Active | Publication: CN104157290A | Tags: Improve recognition rate; Overcoming problems such as easy convergence to local minima; Speech analysis; Deep belief network; Restricted Boltzmann machine

The invention discloses a speaker recognition method based on deep learning. The method comprises the following steps: S1) applying pre-emphasis and overlapping framing with windowing to the collected voice signals; S2) performing endpoint detection on the collected voice signals using a dual-threshold method based on short-time energy and short-time zero-crossing rate, to identify the starting moment, transition stage, noise segments, and ending moment of the speech; S3) extracting features from the voice signals; S4) building a deep belief network model from a hierarchy of restricted Boltzmann machines, training it layer by layer with a greedy algorithm on the speakers' voice feature parameters, and adding a Softmax classifier as the top layer; and S5) feeding a speaker's voice features into the trained deep belief network model, computing the probability that they match each enrolled speaker, and selecting the speaker with the maximum probability as the recognition result.

Owner:DALIAN UNIV OF TECH
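
Steps S1 and S2 can be sketched with the standard signal-processing pieces they name: pre-emphasis, overlapping Hamming-windowed frames, short-time energy, short-time zero-crossing rate, and a dual-threshold search. The coefficient 0.97, the frame sizes, and the thresholds are illustrative assumptions, and the single-segment search is a simplification; the deep belief network of S4 is not shown.

```python
import math


def pre_emphasis(x, coeff=0.97):
    """S1: boost high frequencies, y[n] = x[n] - coeff * x[n-1]."""
    return [x[0]] + [x[n] - coeff * x[n - 1] for n in range(1, len(x))]


def frames(x, size, hop):
    """S1: overlapping frames, each multiplied by a Hamming window."""
    win = [0.54 - 0.46 * math.cos(2 * math.pi * k / (size - 1)) for k in range(size)]
    return [[x[s + k] * win[k] for k in range(size)]
            for s in range(0, len(x) - size + 1, hop)]


def short_time_energy(frame):
    return sum(v * v for v in frame)


def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs whose signs differ."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(frame) - 1)


def dual_threshold(energy, zcr, high, low, zcr_thresh):
    """S2 (single-segment simplification): frames above `high` are certainly
    speech; the segment is extended outward while energy stays above `low`
    or the zero-crossing rate exceeds `zcr_thresh` (quiet unvoiced sounds).
    Returns (first_frame, last_frame) or None."""
    above = [i for i, e in enumerate(energy) if e > high]
    if not above:
        return None
    start, end = above[0], above[-1]
    while start > 0 and (energy[start - 1] > low or zcr[start - 1] > zcr_thresh):
        start -= 1
    while end < len(energy) - 1 and (energy[end + 1] > low or zcr[end + 1] > zcr_thresh):
        end += 1
    return start, end


# Usage: 400 silent samples, an 800-sample tone, then 400 silent samples.
signal = [0.0] * 400 + [math.sin(2 * math.pi * 0.05 * n) for n in range(800)] + [0.0] * 400
fr = frames(pre_emphasis(signal), size=200, hop=100)
energy = [short_time_energy(f) for f in fr]
zcr = [zero_crossing_rate(f) for f in fr]
segment = dual_threshold(energy, zcr, high=1.0, low=0.01, zcr_thresh=0.5)
```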

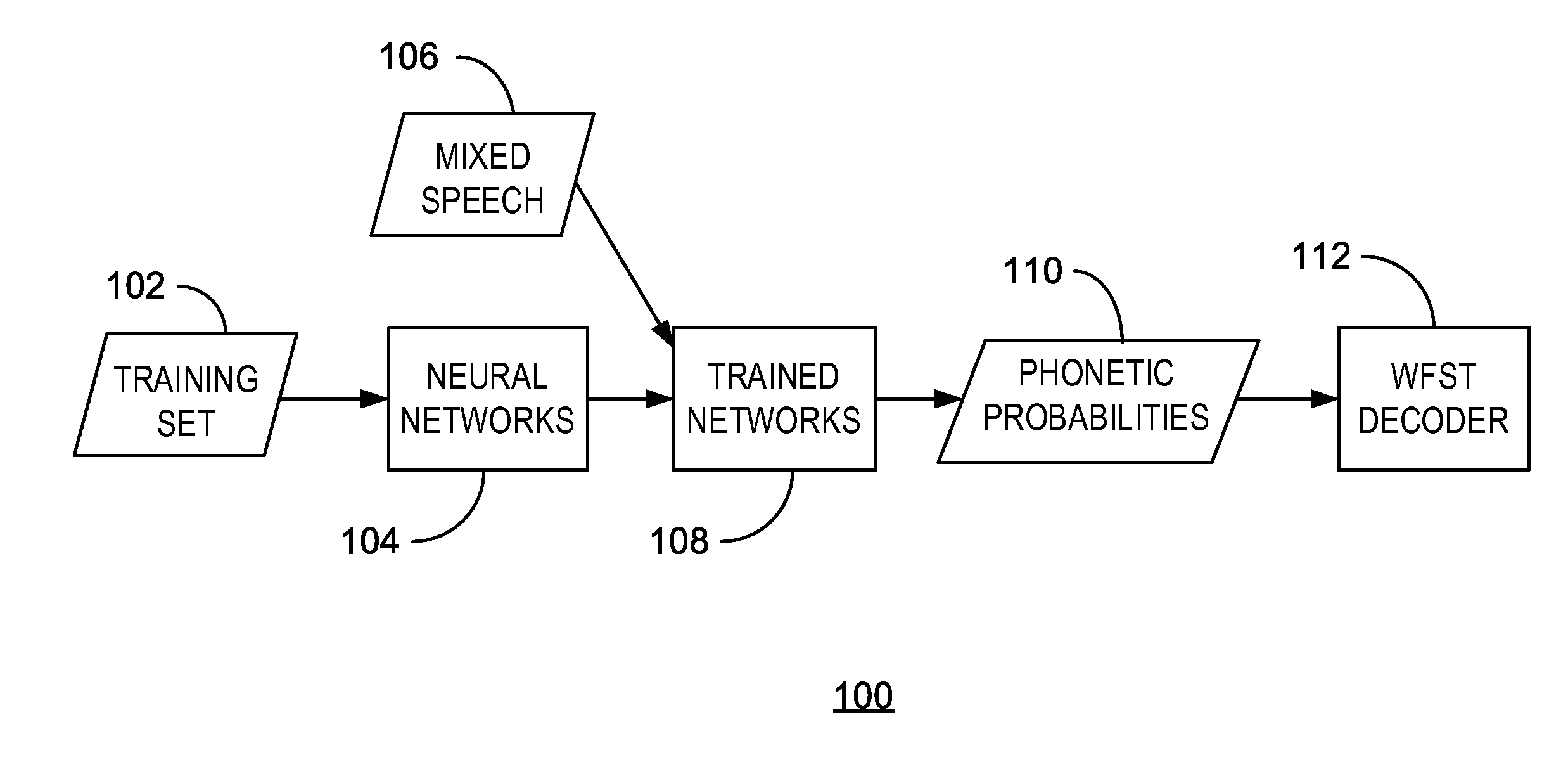

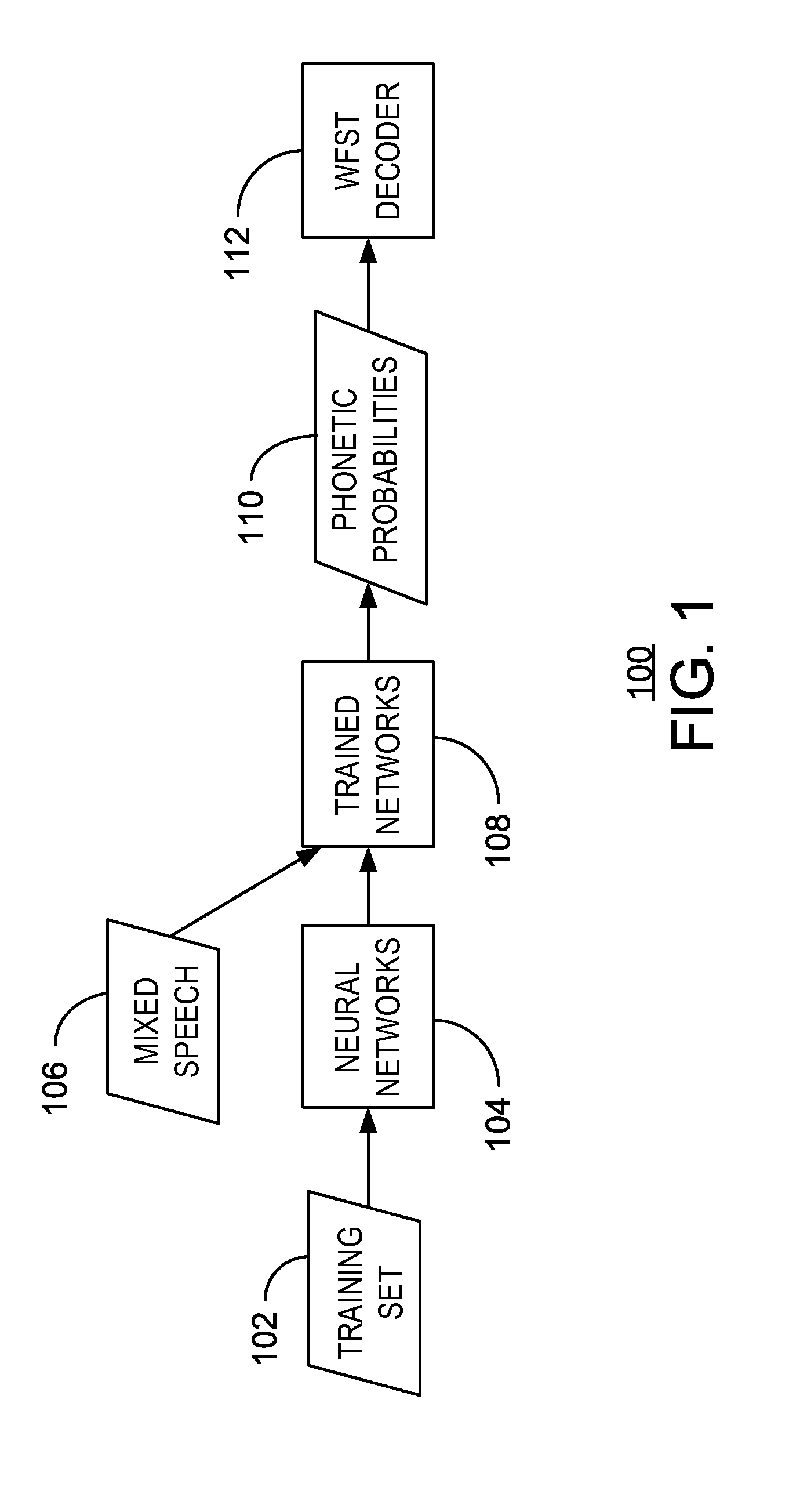

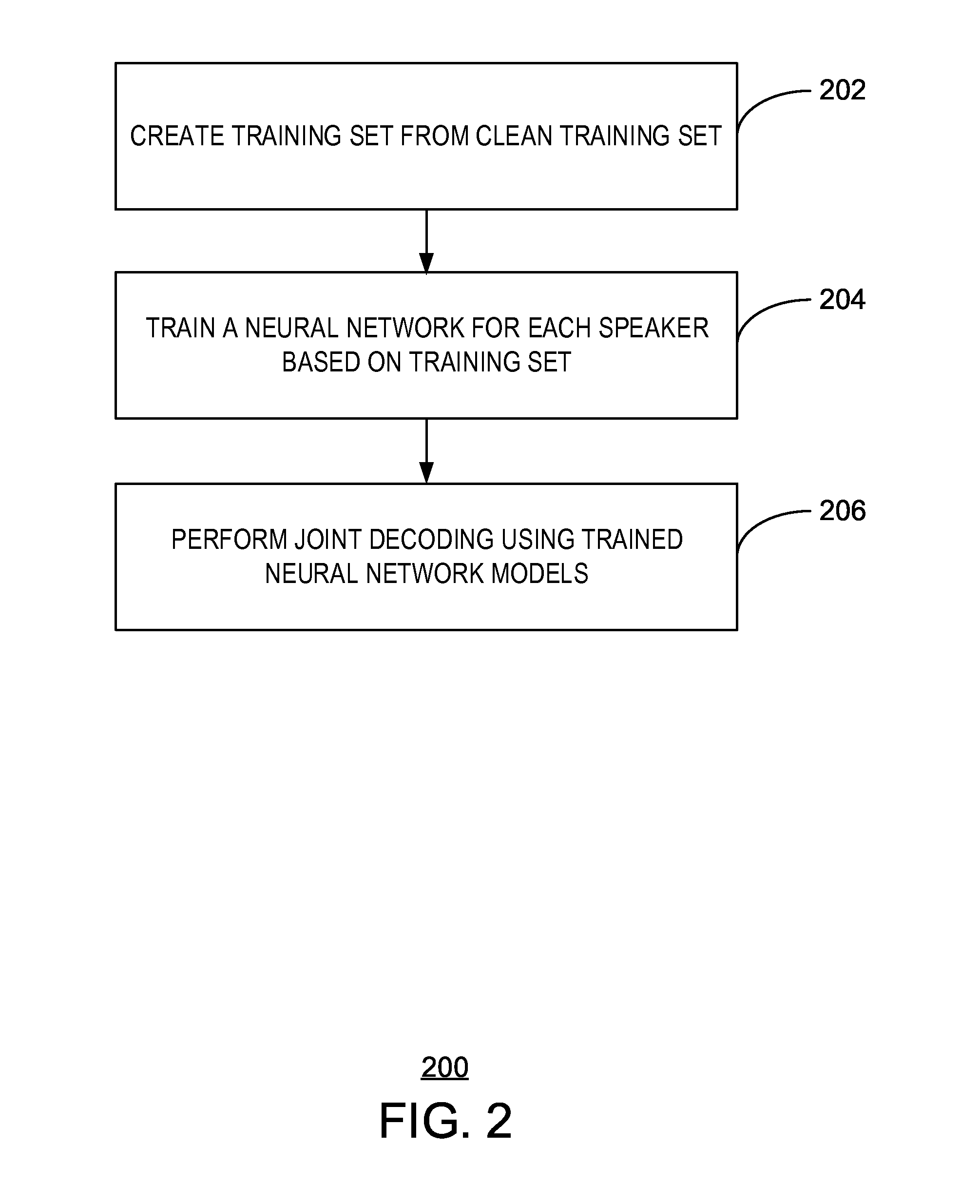

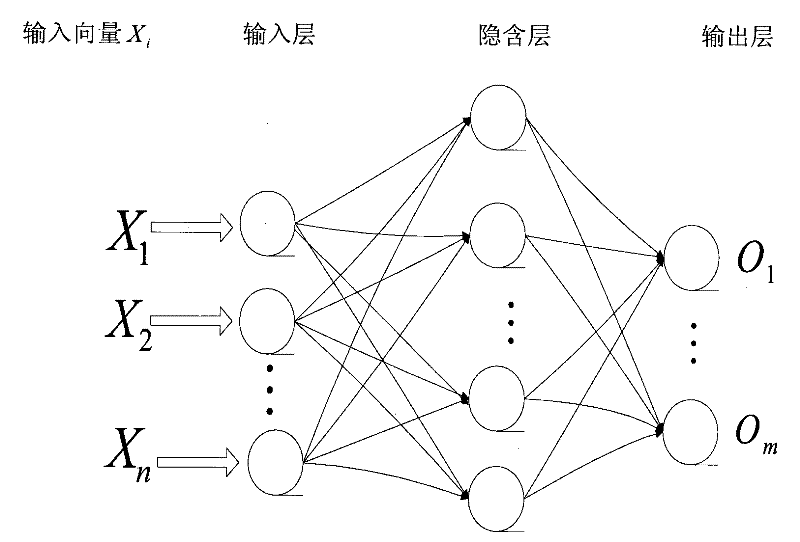

Mixed speech recognition

The claimed subject matter includes a system and method for recognizing mixed speech from a source. The method includes training a first neural network to recognize the speech signal spoken by the speaker with a higher level of a speech characteristic from a mixed speech sample. The method also includes training a second neural network to recognize the speech signal spoken by the speaker with a lower level of the speech characteristic from the mixed speech sample. Additionally, the method includes decoding the mixed speech sample with the first neural network and the second neural network by optimizing the joint likelihood of observing the two speech signals considering the probability that a specific frame is a switching point of the speech characteristic.

Owner:MICROSOFT TECH LICENSING LLC

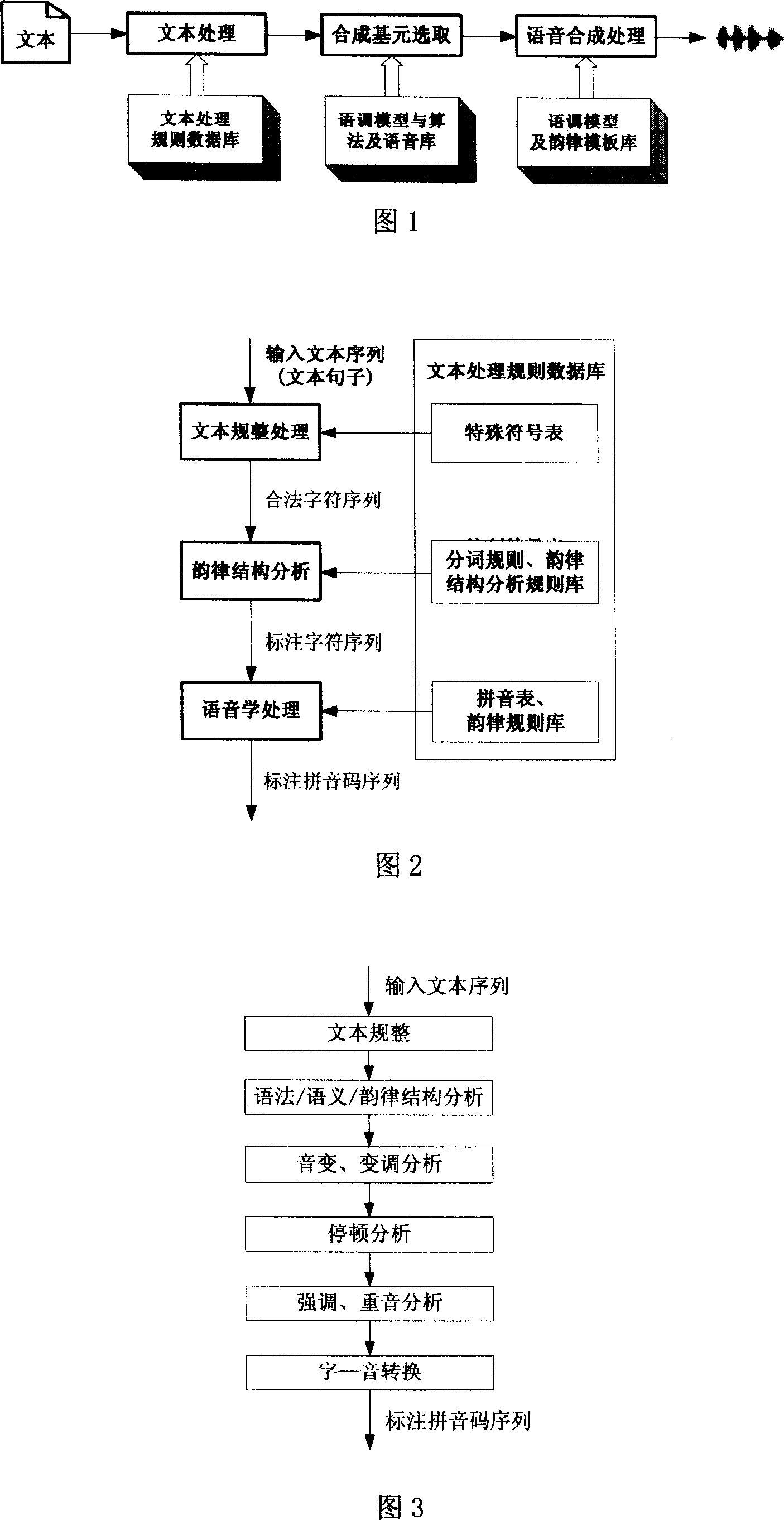

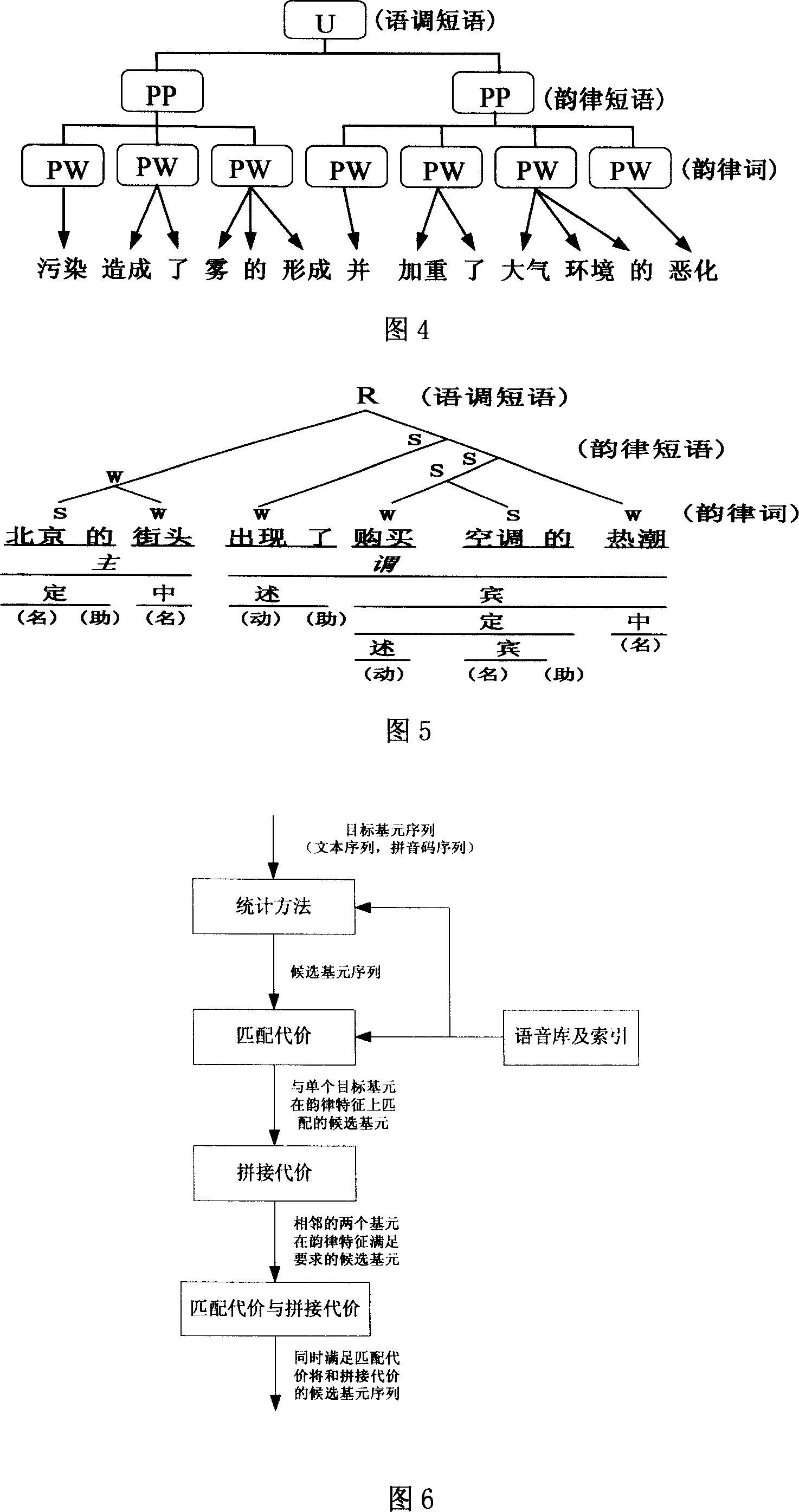

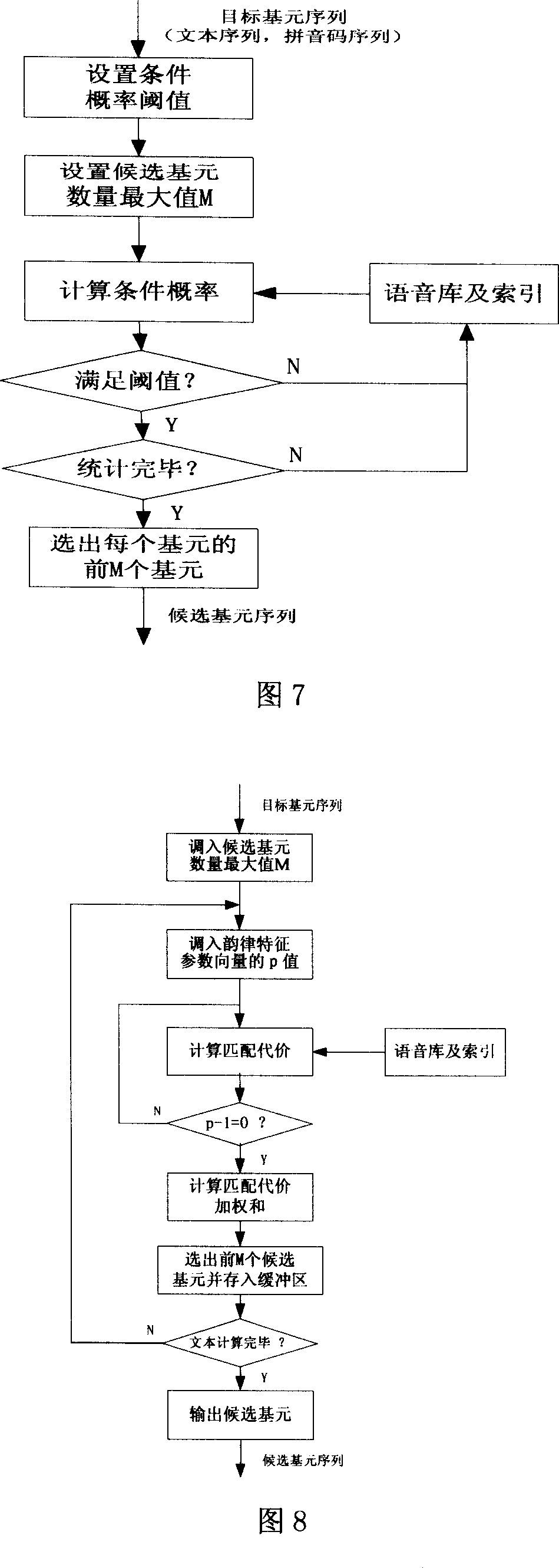

Speech synthesis method based on prosodic features

A method for synthesizing speech based on prosodic features comprises: a text processing procedure consisting of text normalization, prosodic structure analysis, and linguistic processing steps; a synthesis unit selection procedure consisting of unit determination, matching, splicing, and optimization and screening steps; and a speech synthesis procedure consisting of generating a phrase-level fundamental frequency (F0) contour, generating a syllable-level F0 contour, and superposing intonation.

Owner:HEILONGJIANG UNIV

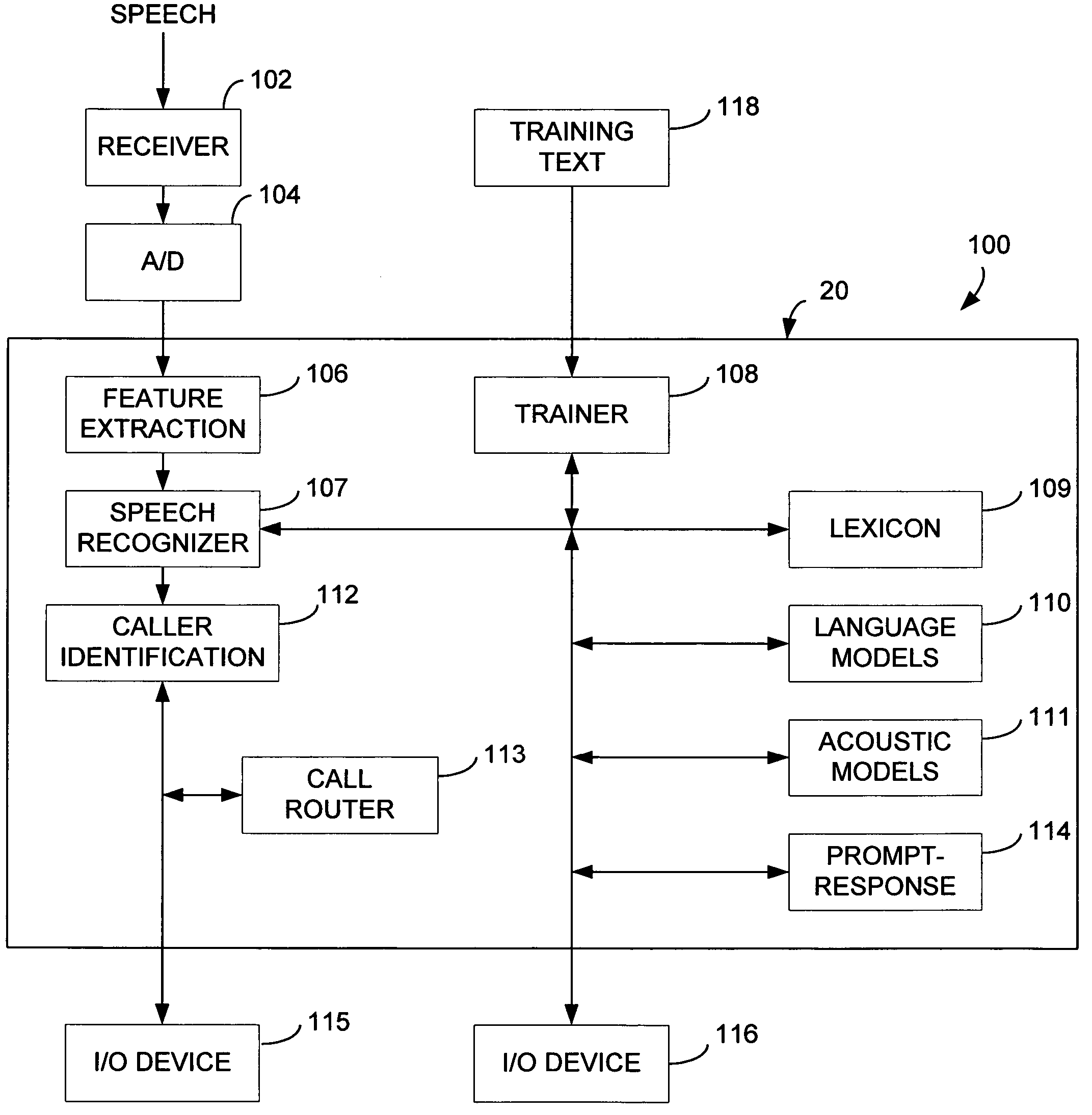

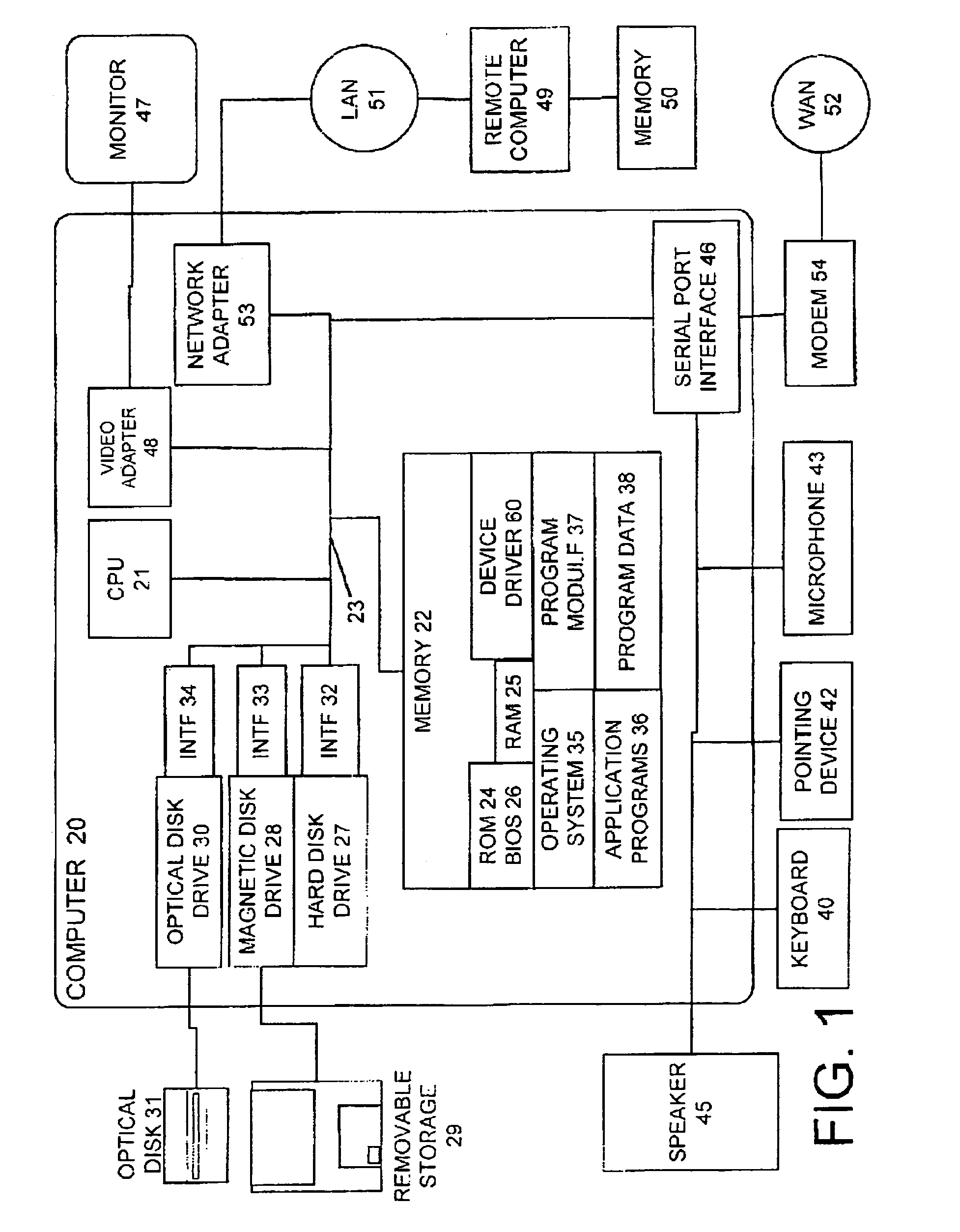

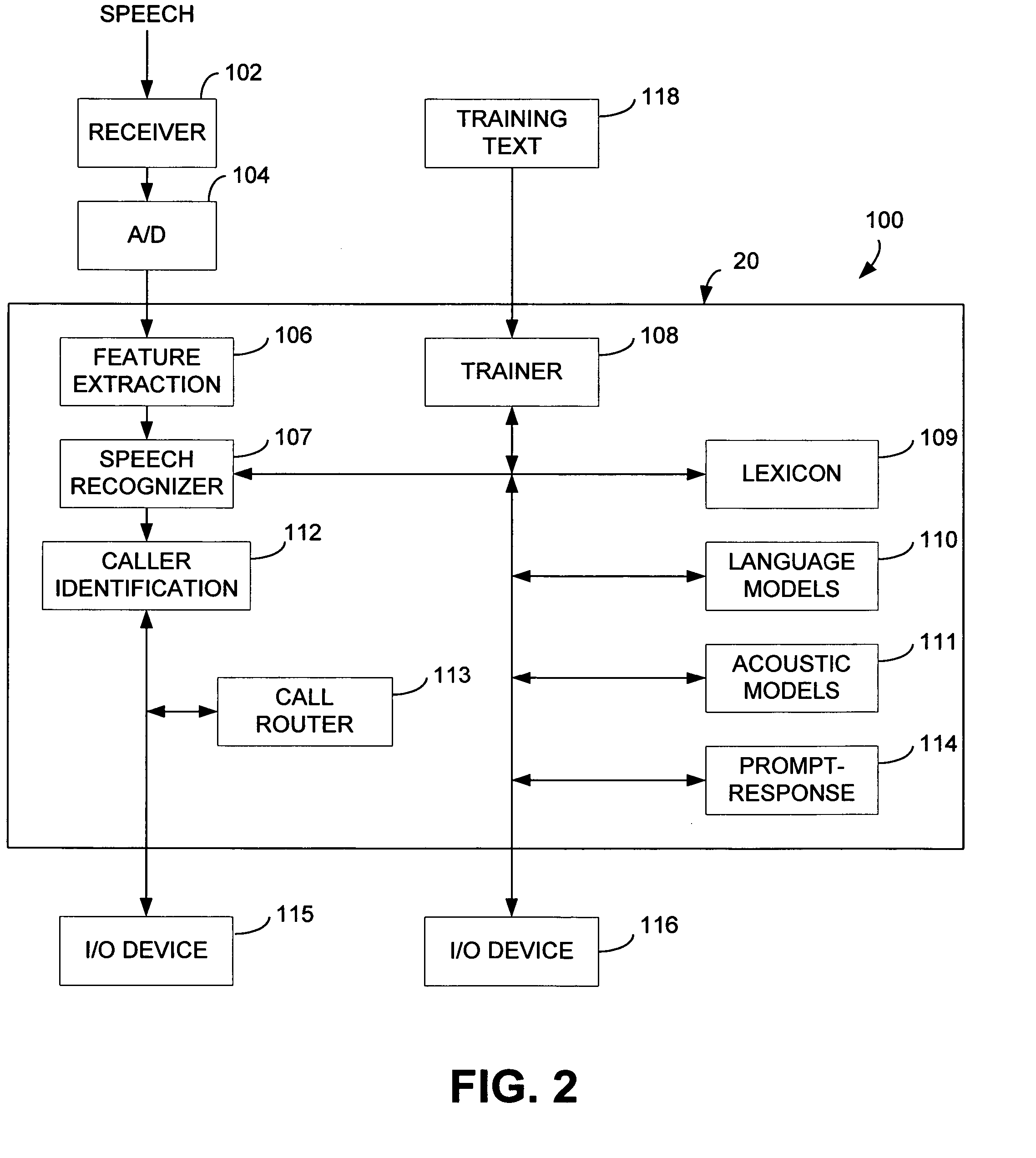

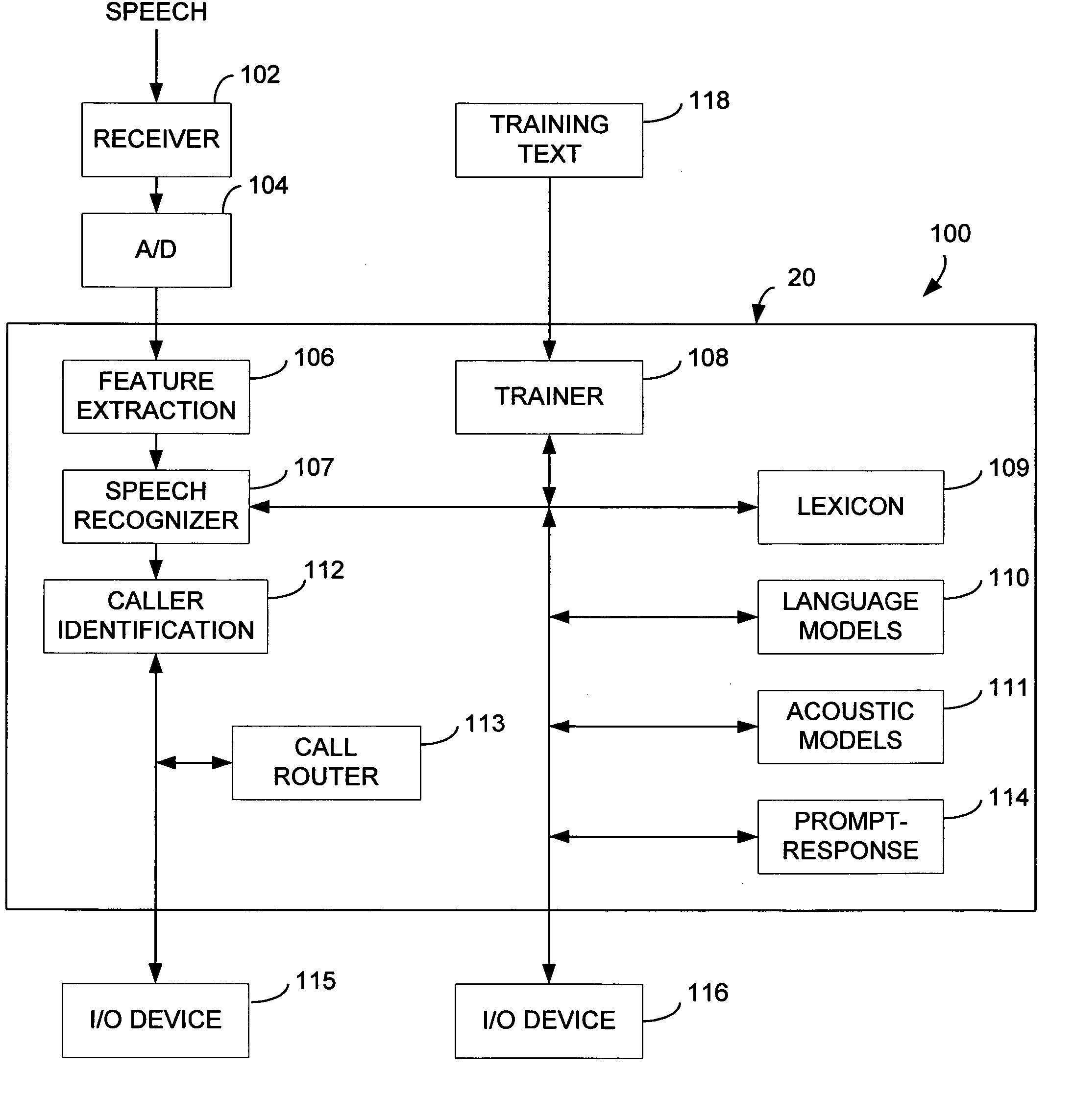

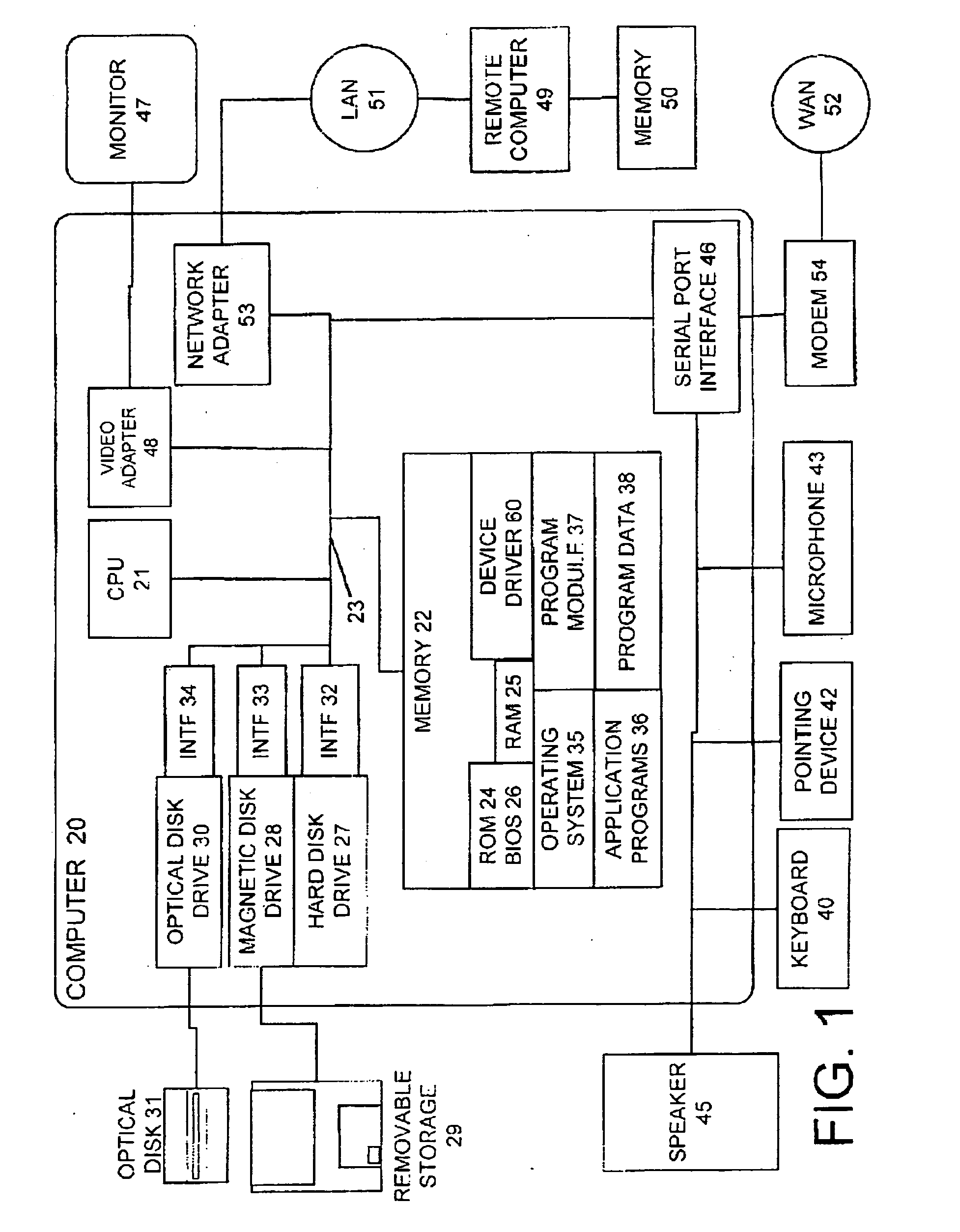

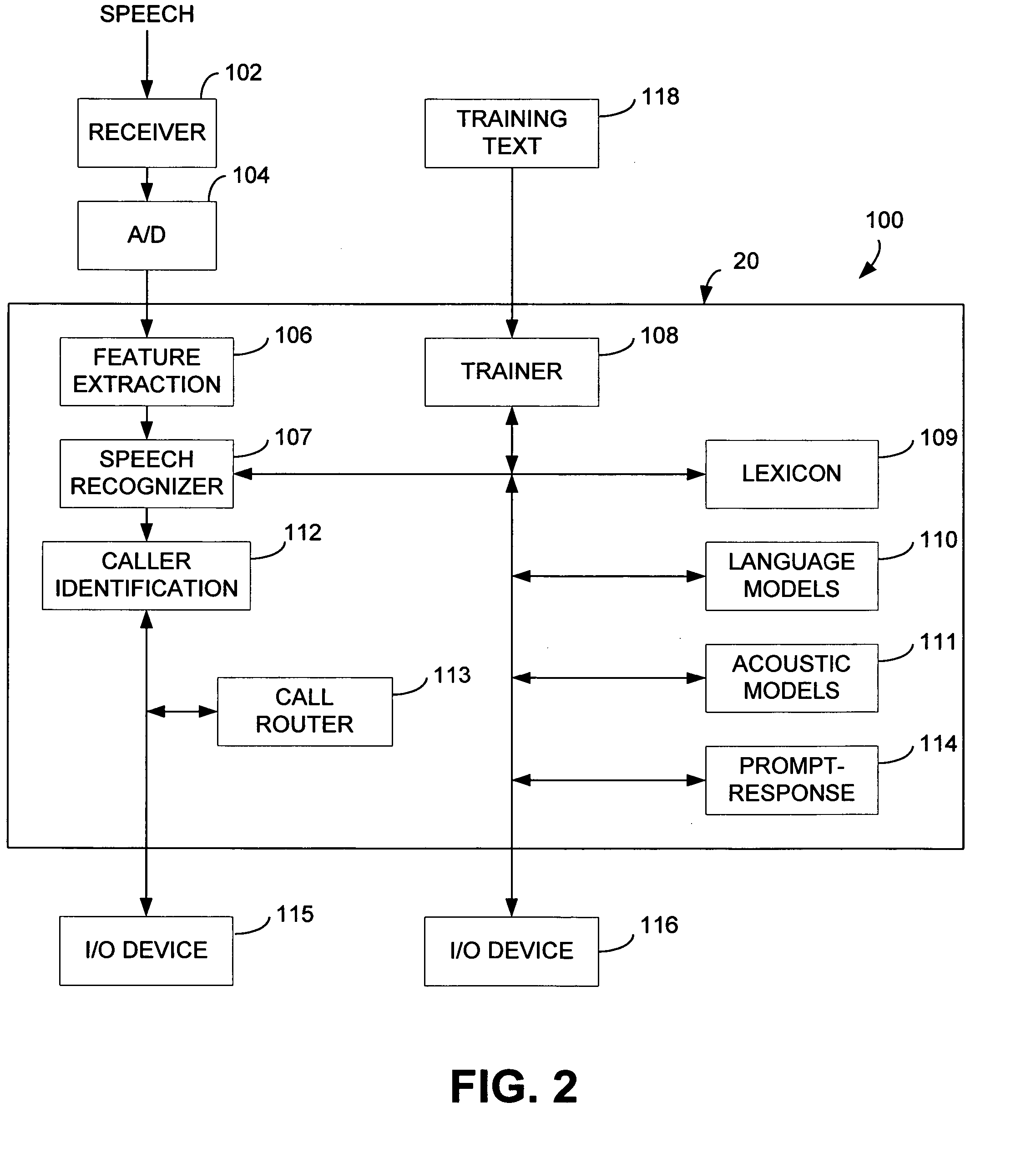

Automatic identification of telephone callers based on voice characteristics

Status: Active | Publication: US7231019B2 | Tags: Automatic call-answering/message-recording/conversation-recording; Special service for subscribers; Acoustic model; Speech sound

A method and apparatus are provided for identifying a caller of a call from the caller to a recipient. A voice input is received from the caller, and characteristics of the voice input are applied to a plurality of acoustic models, which include a generic acoustic model and acoustic models of any previously identified callers, to obtain a plurality of respective acoustic scores. The caller is identified as one of the previously identified callers or as a new caller based on the plurality of acoustic scores. If the caller is identified as a new caller, a new acoustic model is generated for the new caller, which is specific to the new caller.

Owner:MICROSOFT TECH LICENSING LLC
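
A simplified sketch of the scoring logic, assuming each acoustic model is a single diagonal Gaussian over feature vectors (real systems typically use richer models such as Gaussian mixtures). The caller is matched to the best-scoring known model unless the generic model scores higher, in which case a new caller-specific model is fitted. `GaussianModel`, `identify`, and the `caller-N` naming are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class GaussianModel:
    """Single diagonal-covariance Gaussian over feature vectors (a simplification)."""
    mean: List[float]
    var: List[float]

    def avg_log_likelihood(self, frames):
        """Acoustic score: mean per-frame log-likelihood of the frames."""
        total = 0.0
        for x in frames:
            for xi, m, v in zip(x, self.mean, self.var):
                total += -0.5 * (math.log(2 * math.pi * v) + (xi - m) ** 2 / v)
        return total / len(frames)


def fit(frames, var_floor=1e-3):
    """Estimate a caller-specific model from the caller's own frames."""
    n, dims = len(frames), len(frames[0])
    mean = [sum(f[d] for f in frames) / n for d in range(dims)]
    var = [max(var_floor, sum((f[d] - mean[d]) ** 2 for f in frames) / n) for d in range(dims)]
    return GaussianModel(mean, var)


def identify(frames, generic: GaussianModel, callers: Dict[str, GaussianModel]) -> str:
    """Return a known caller id, or register a new caller when the generic
    model outscores every caller-specific model."""
    generic_score = generic.avg_log_likelihood(frames)
    scores = {cid: model.avg_log_likelihood(frames) for cid, model in callers.items()}
    if scores:
        best = max(scores, key=scores.get)
        if scores[best] > generic_score:
            return best
    new_id = f"caller-{len(callers) + 1}"  # hypothetical naming scheme
    callers[new_id] = fit(frames)
    return new_id


generic = GaussianModel([0.0, 0.0], [10.0, 10.0])  # hypothetical generic model
callers = {}
first = [[1.0, 1.0], [1.2, 0.8], [0.9, 1.1]]
print(identify(first, generic, callers))  # caller-1 (no known callers yet)
print(identify(first, generic, callers))  # caller-1 (now recognised)
```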

Apparatus and method for determining speech signal

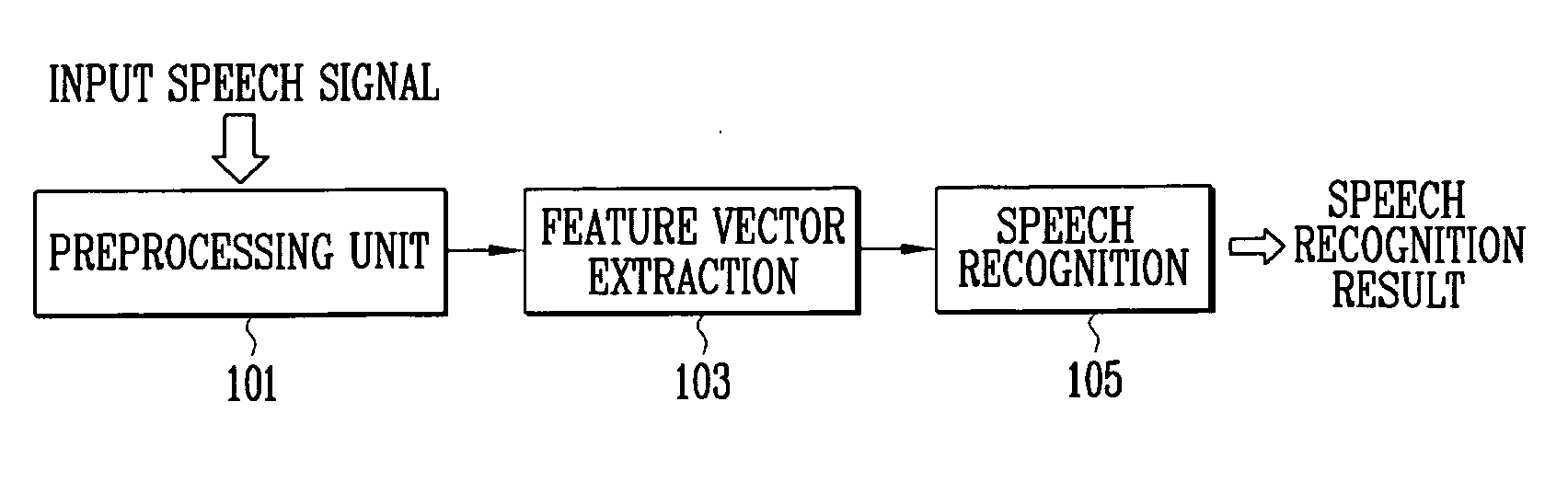

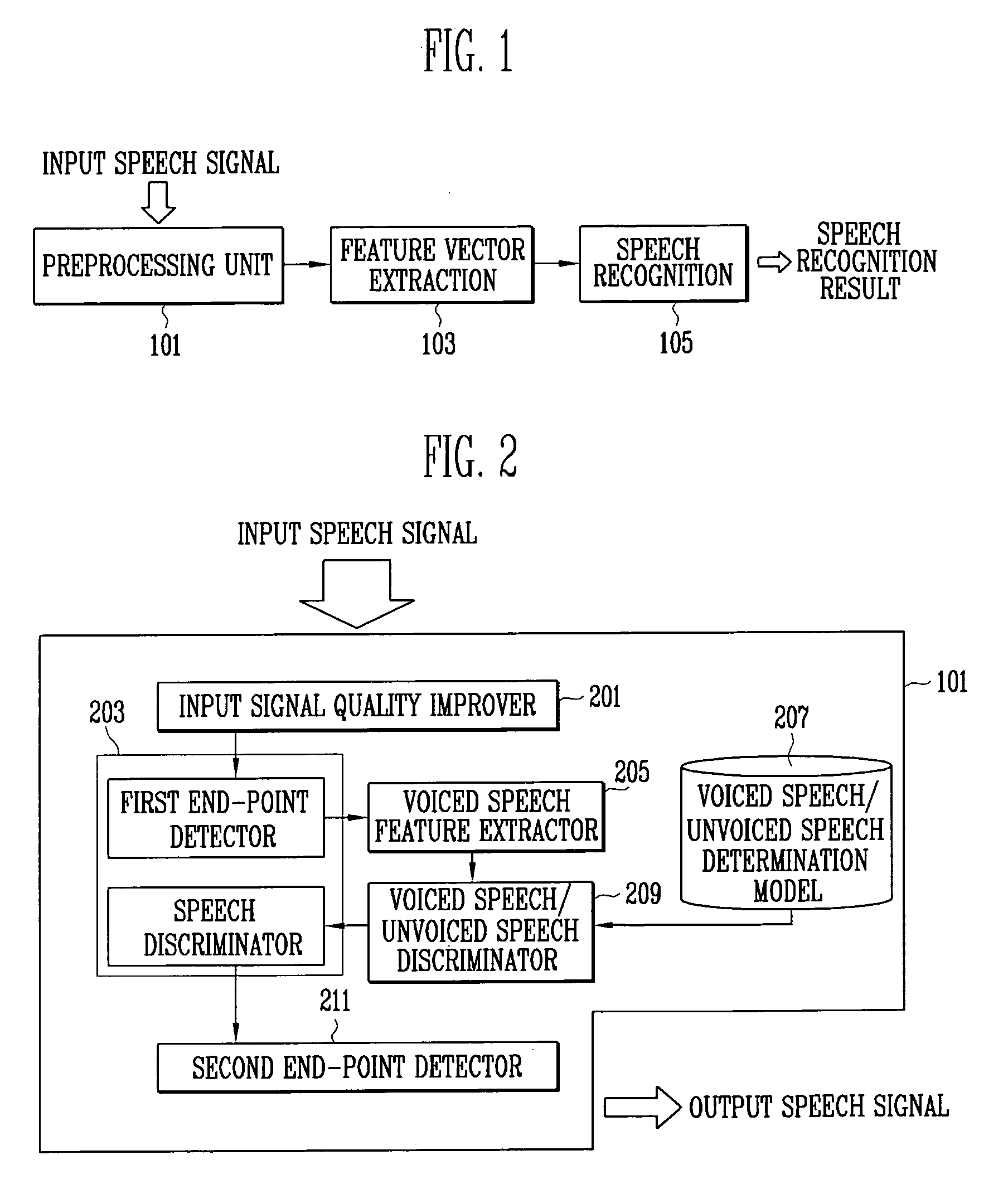

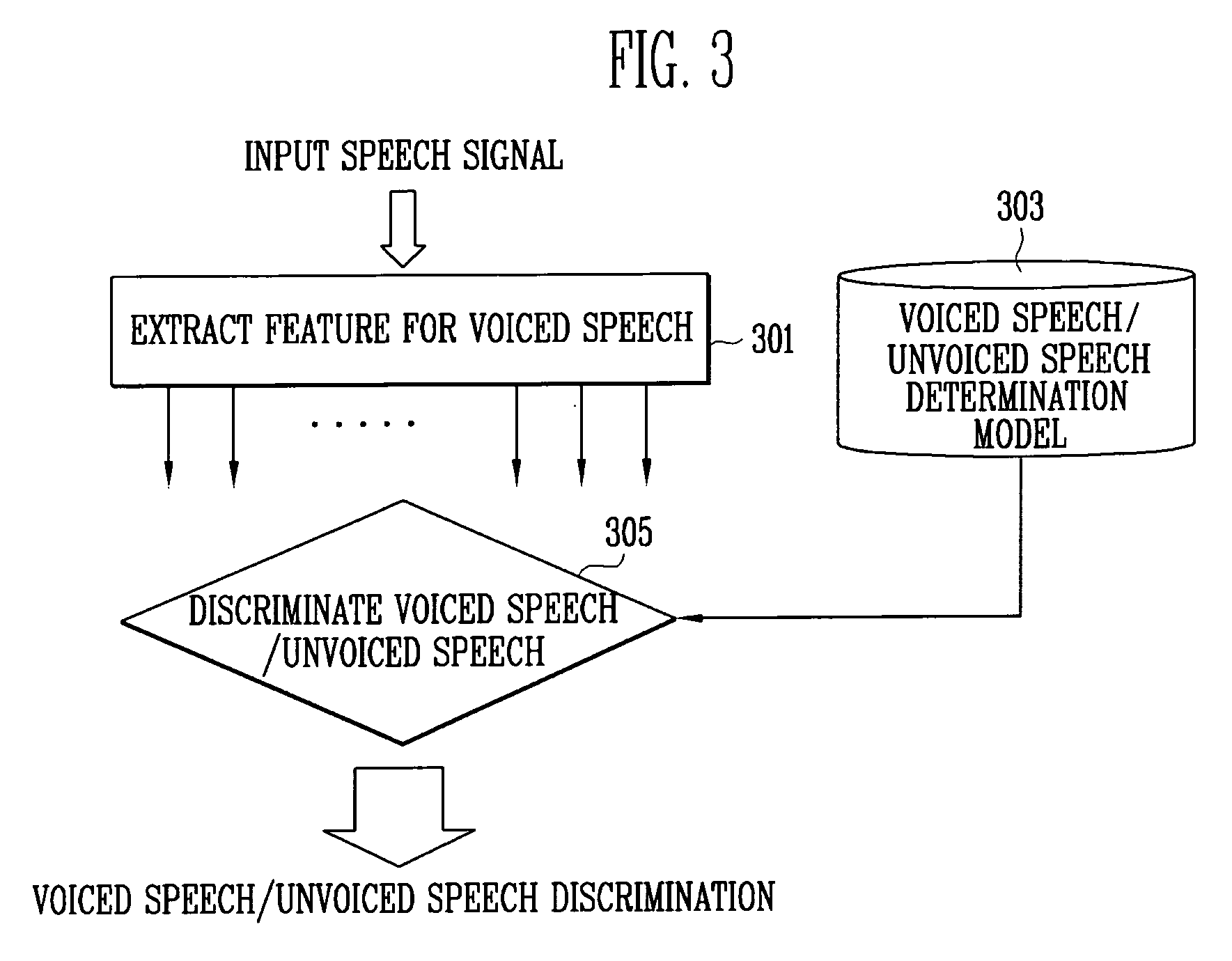

Status: Inactive | Publication: US20090076814A1 | Tags: Highly robust; Lower performance requirements; Speech recognition; Discriminator; Signal quality

Provided are a method and apparatus for discriminating a speech signal. The apparatus includes: an input signal quality improver that reduces additive noise in an externally received acoustic signal; a first start/end-point detector that receives the acoustic signal from the input signal quality improver and detects the end-points of a speech signal contained in it; a voiced-speech feature extractor that extracts voiced-speech features from the acoustic signal received from the first start/end-point detector; a voiced/unvoiced-speech discrimination model that stores voiced-speech model parameters serving as the discrimination reference for the extracted voiced-speech feature parameters; and a voiced/unvoiced-speech discriminator that identifies voiced-speech portions using the extracted voiced-speech features and the model parameters of the voiced/unvoiced-speech discrimination model.

Owner:ELECTRONICS & TELECOMM RES INST

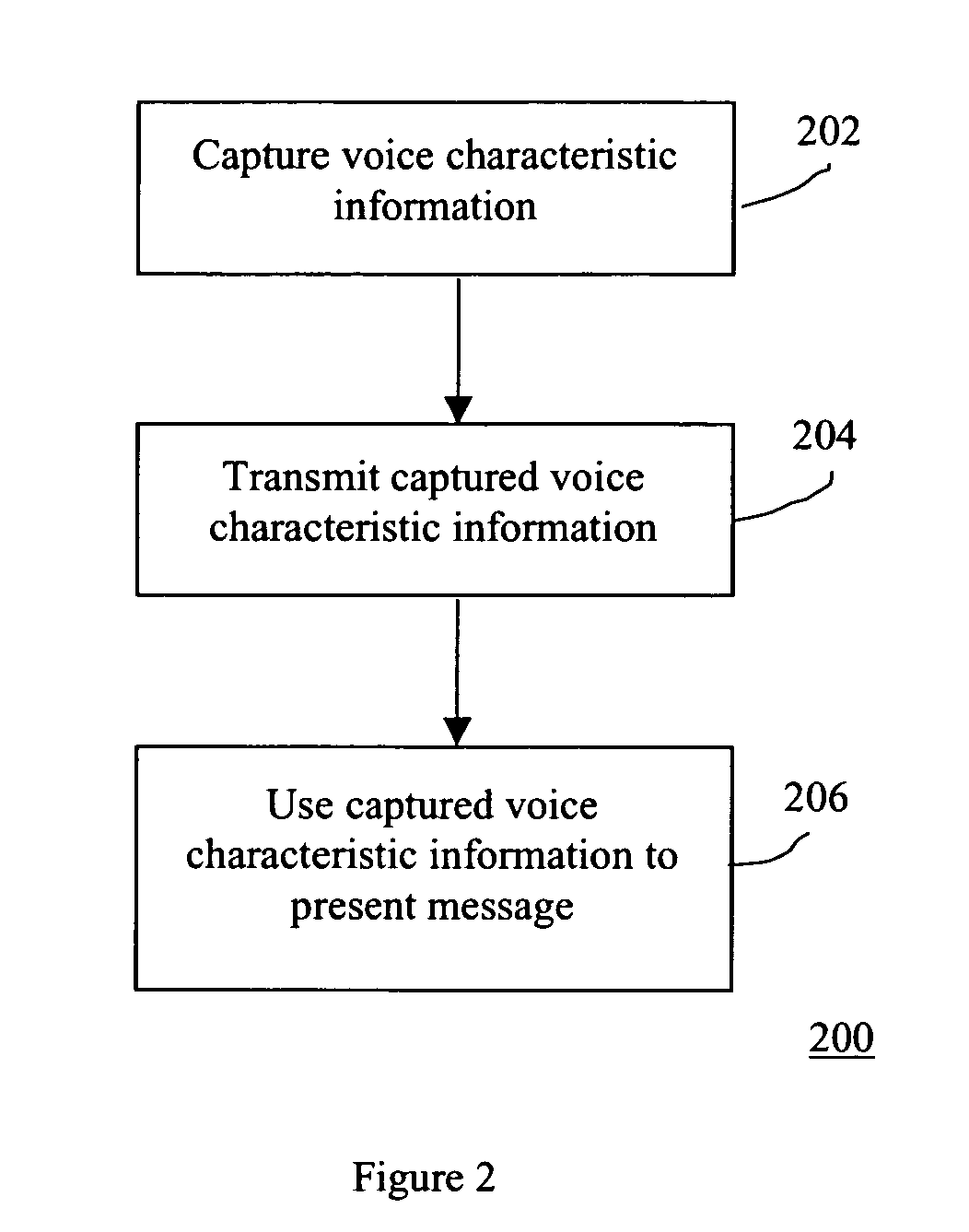

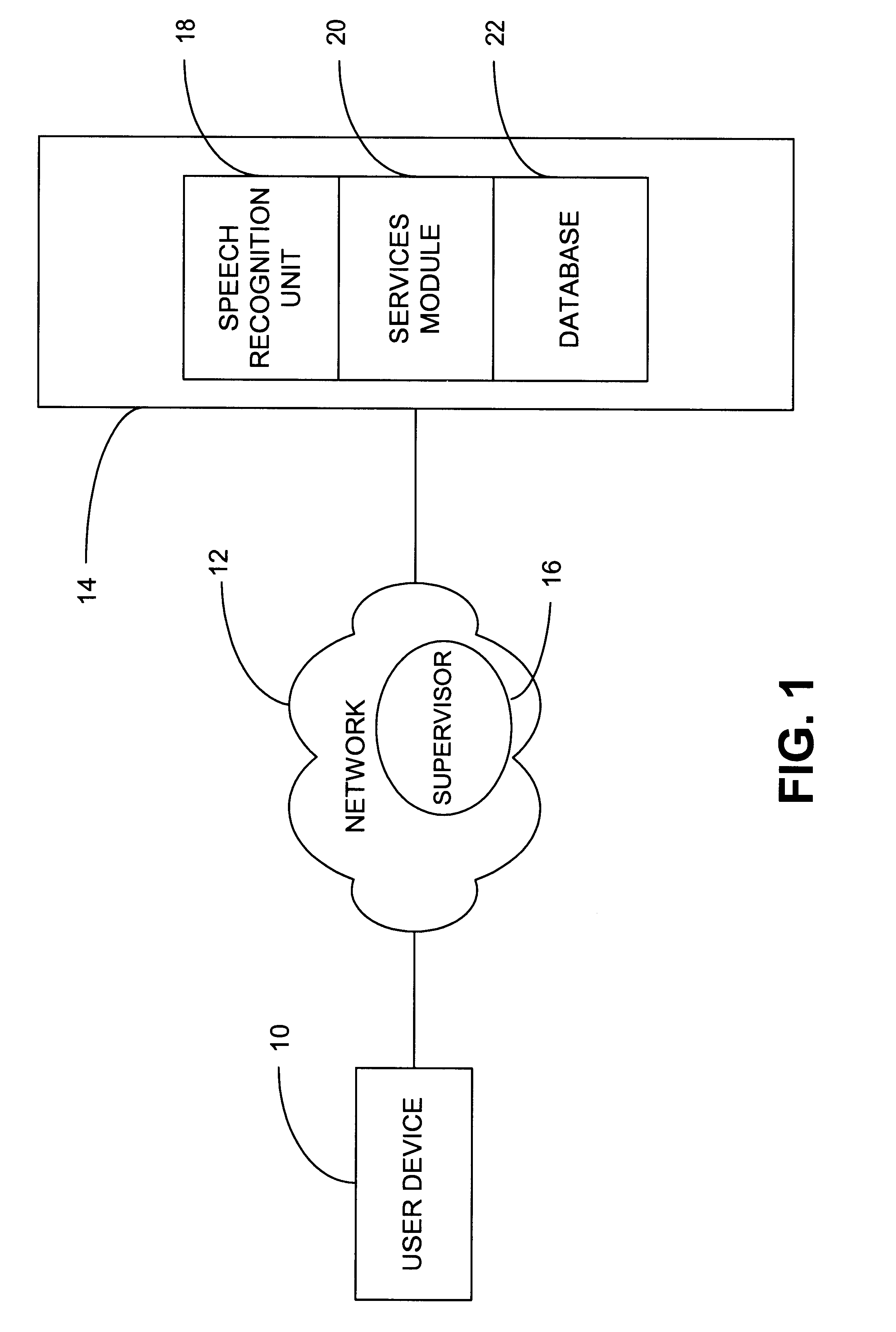

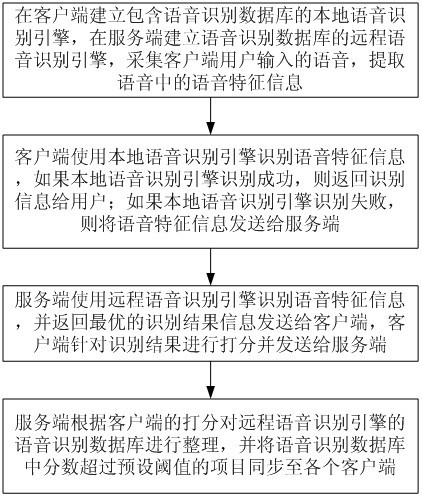

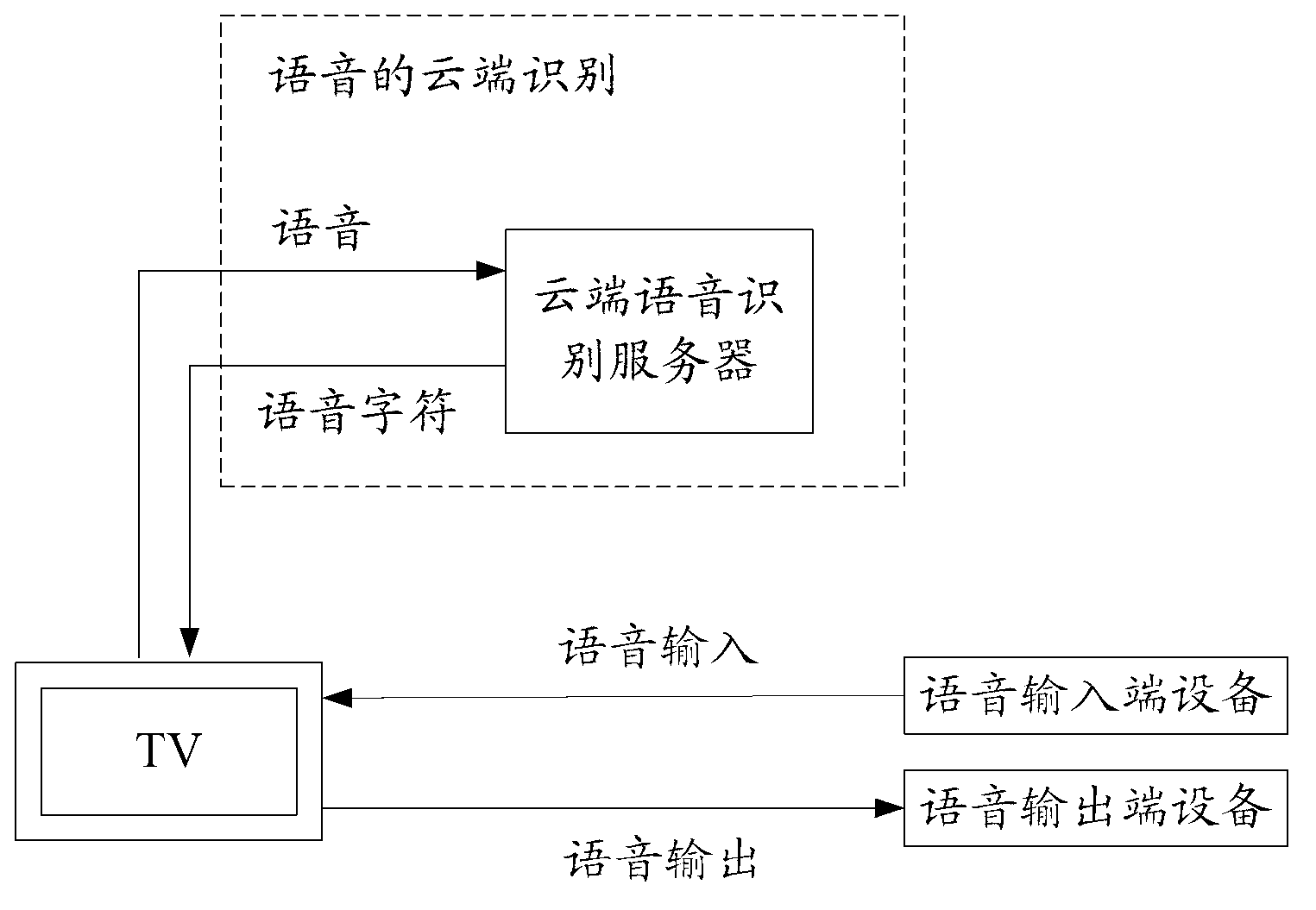

Interactive speech recognition method based on cloud network

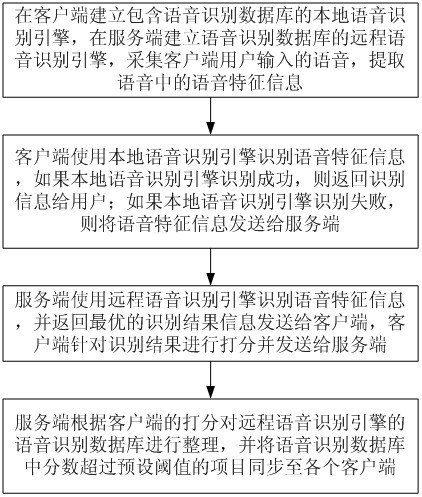

Status: Inactive | Publication: CN102496364A | Tags: Implement speech recognition; Improve speech recognition accuracy; Speech recognition; Transmission; Speech sound; Subvocal recognition

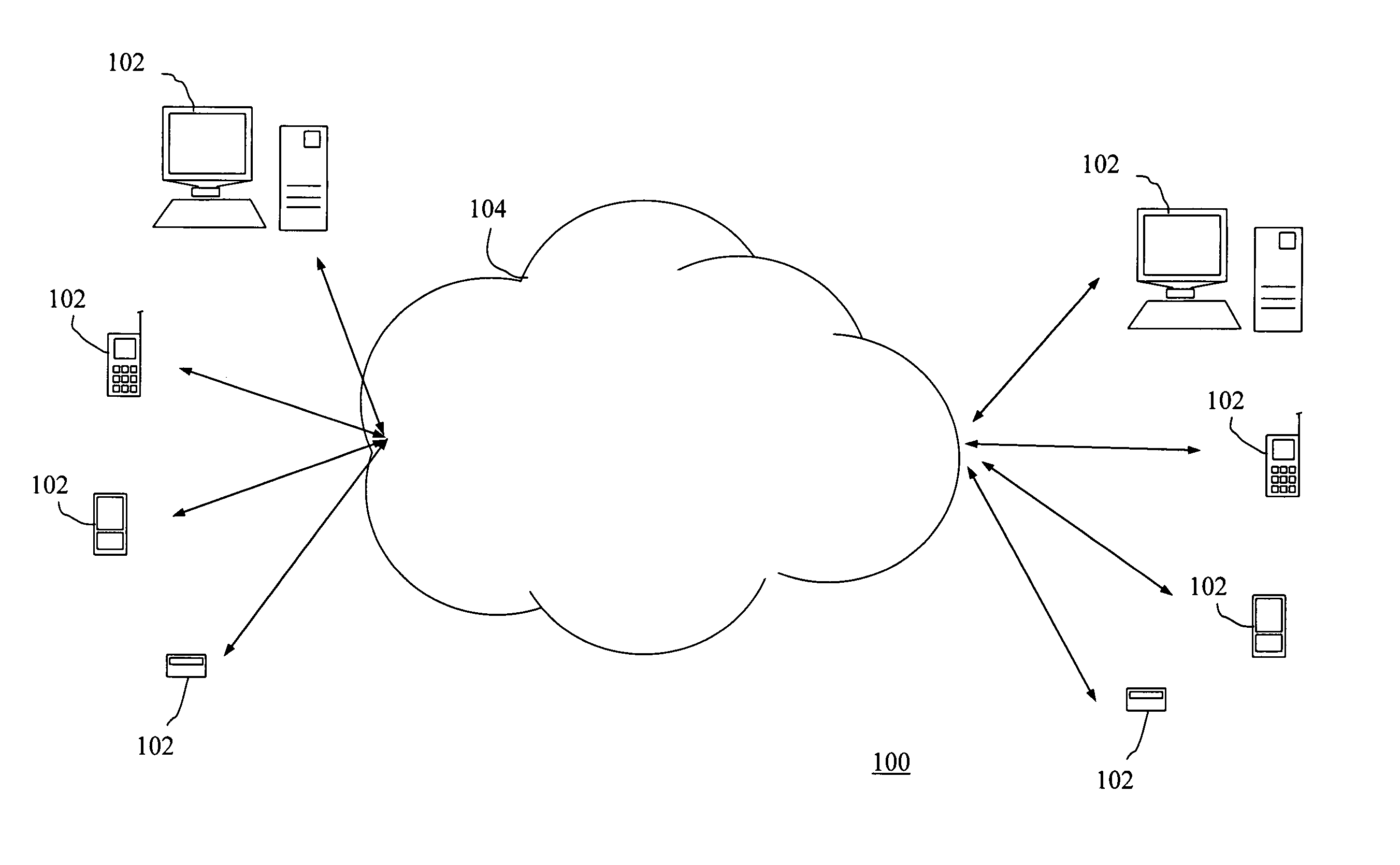

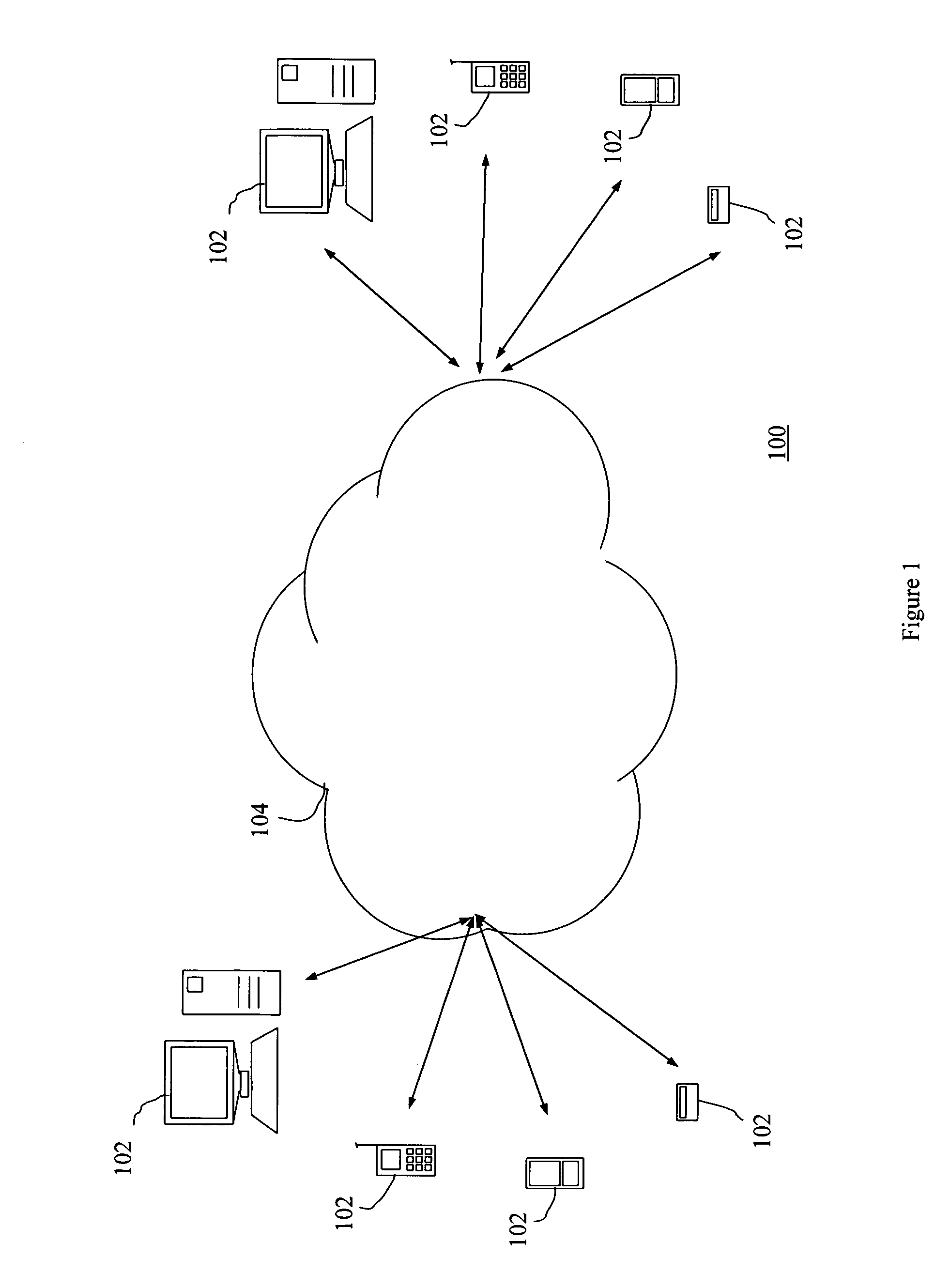

The invention discloses an interactive speech recognition method based on a cloud network. The method comprises the following steps: 1) a local speech recognition engine is set up on the client and a remote speech recognition engine on the server; client speech is collected and speech feature information is extracted; 2) the client attempts to recognize the speech feature information; if the local engine succeeds, the recognition result is returned to the user; otherwise, the speech feature information is sent to the server; 3) the server recognizes the speech feature information with the remote engine and returns the best recognition result to the client, which scores the result and sends the score back to the server; 4) the server updates the speech recognition database of the remote engine according to the clients' scores and synchronizes it to each client. The method offers good recognition performance, a self-learning capability, and simple, convenient use.

Owner:苏州奇可思信息科技有限公司

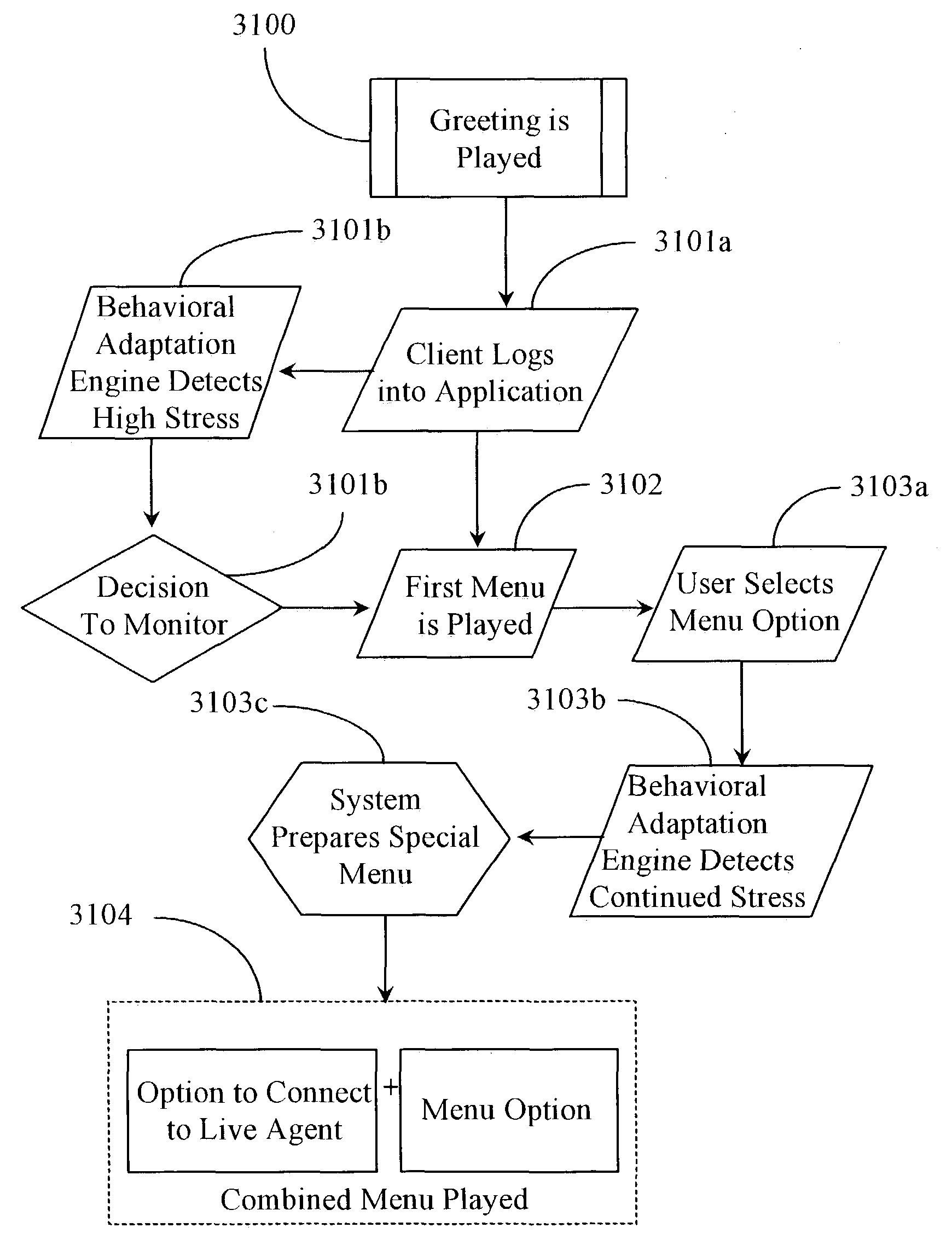

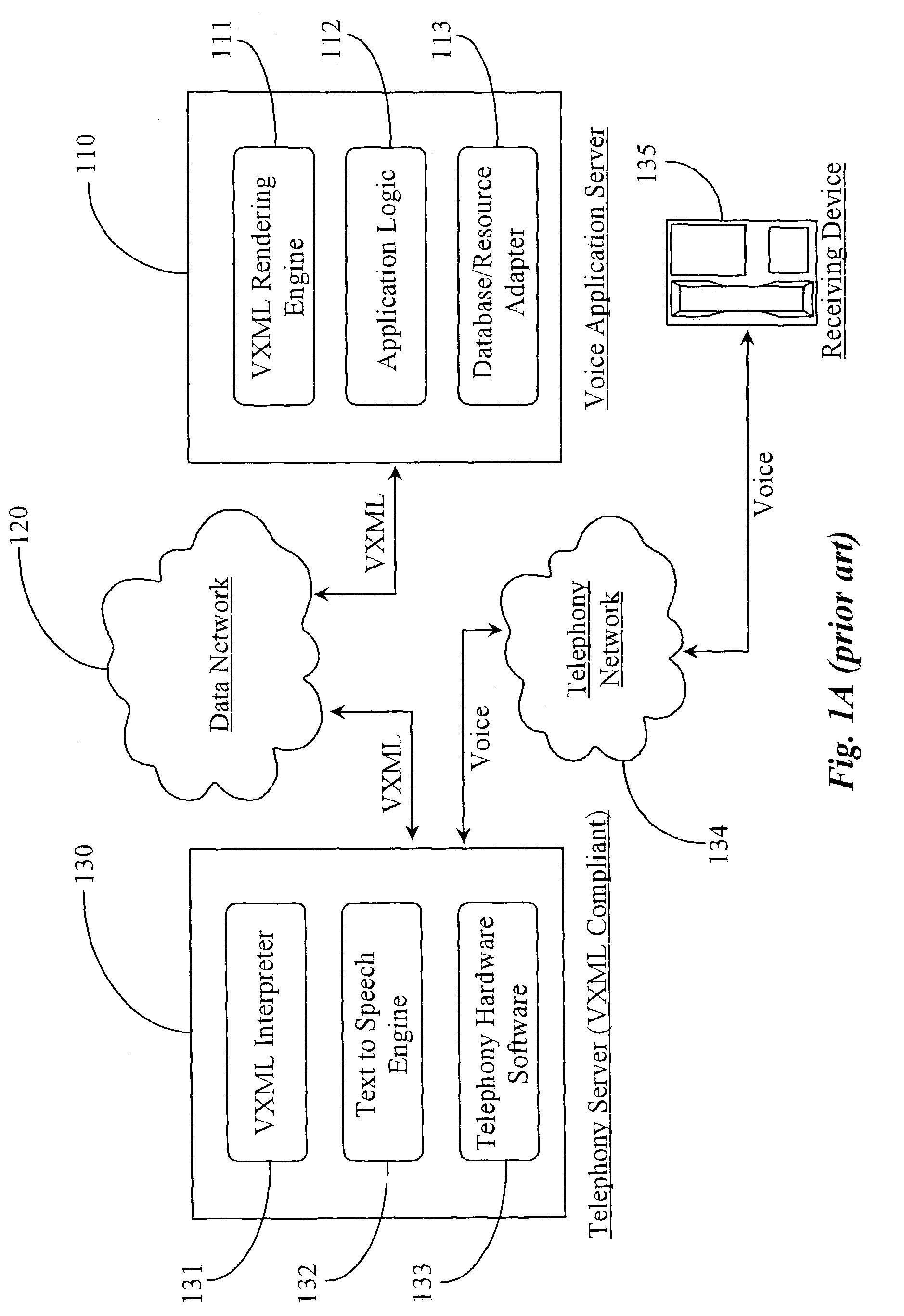

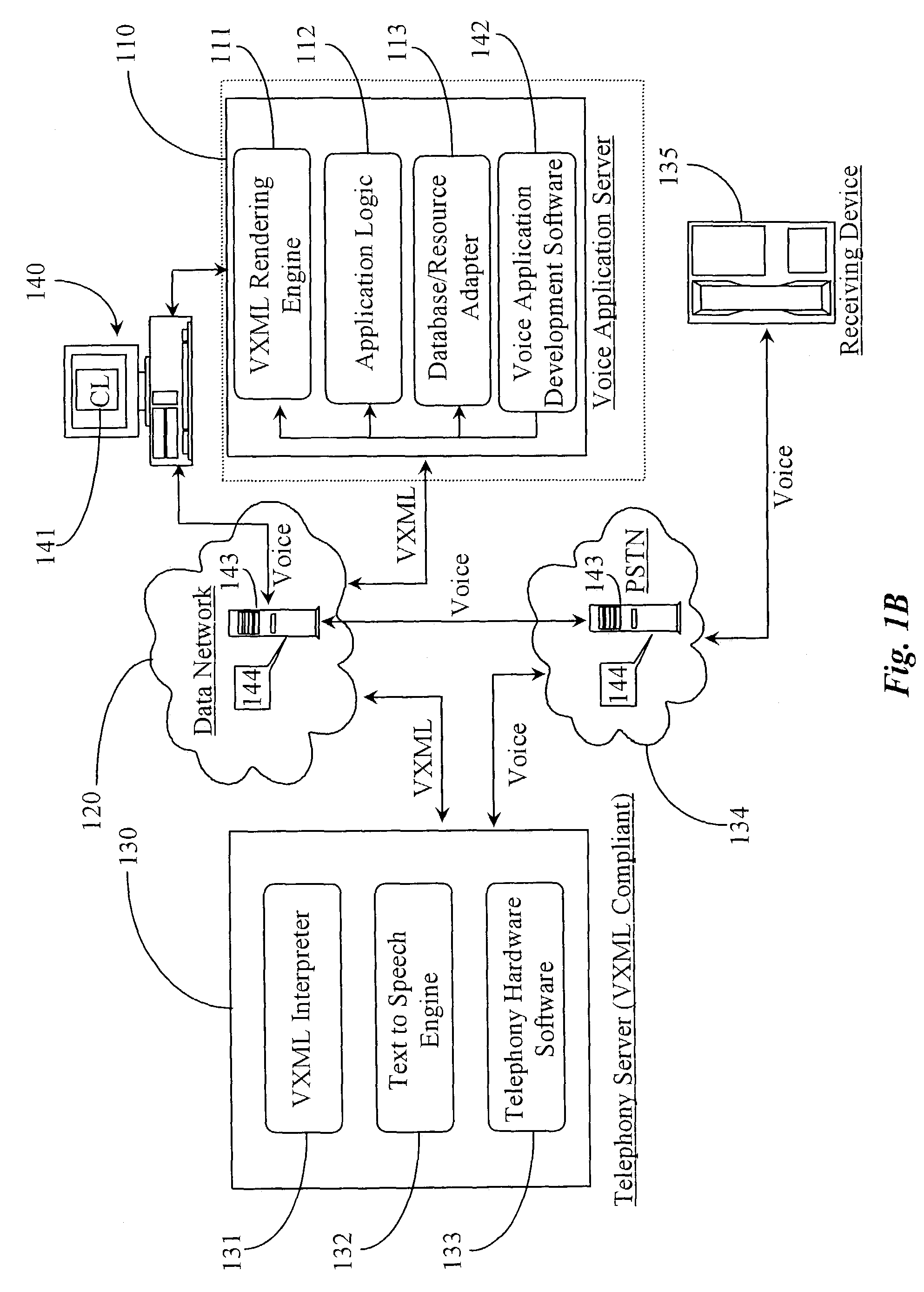

Behavioral adaptation engine for discerning behavioral characteristics of callers interacting with a VXML-compliant voice application

Status: Active | Publication: US7242752B2 | Tags: Narrow selection; Automatic call-answering/message-recording/conversation-recording; Automatic exchanges; Client data; Data system

A behavioral adaptation engine integrated with a voice application creation and deployment system has at least one data input port for receiving XML-based client interaction data including audio files attached to the data; at least one data port for sending data to and receiving data from external data systems and modules; a logic processing component including an XML reader, voice player, and analyzer for processing received data; and a decision logic component for processing result data against one or more constraints. The engine intercepts client data including dialog from client interaction with a served voice application in real time and processes the received data for behavioral patterns and if attached, voice characteristics of the audio files whereupon the engine according to the results and one or more valid constraints identifies one or a set of possible enterprise responses for return to the client during interaction.

Owner:HTC CORP

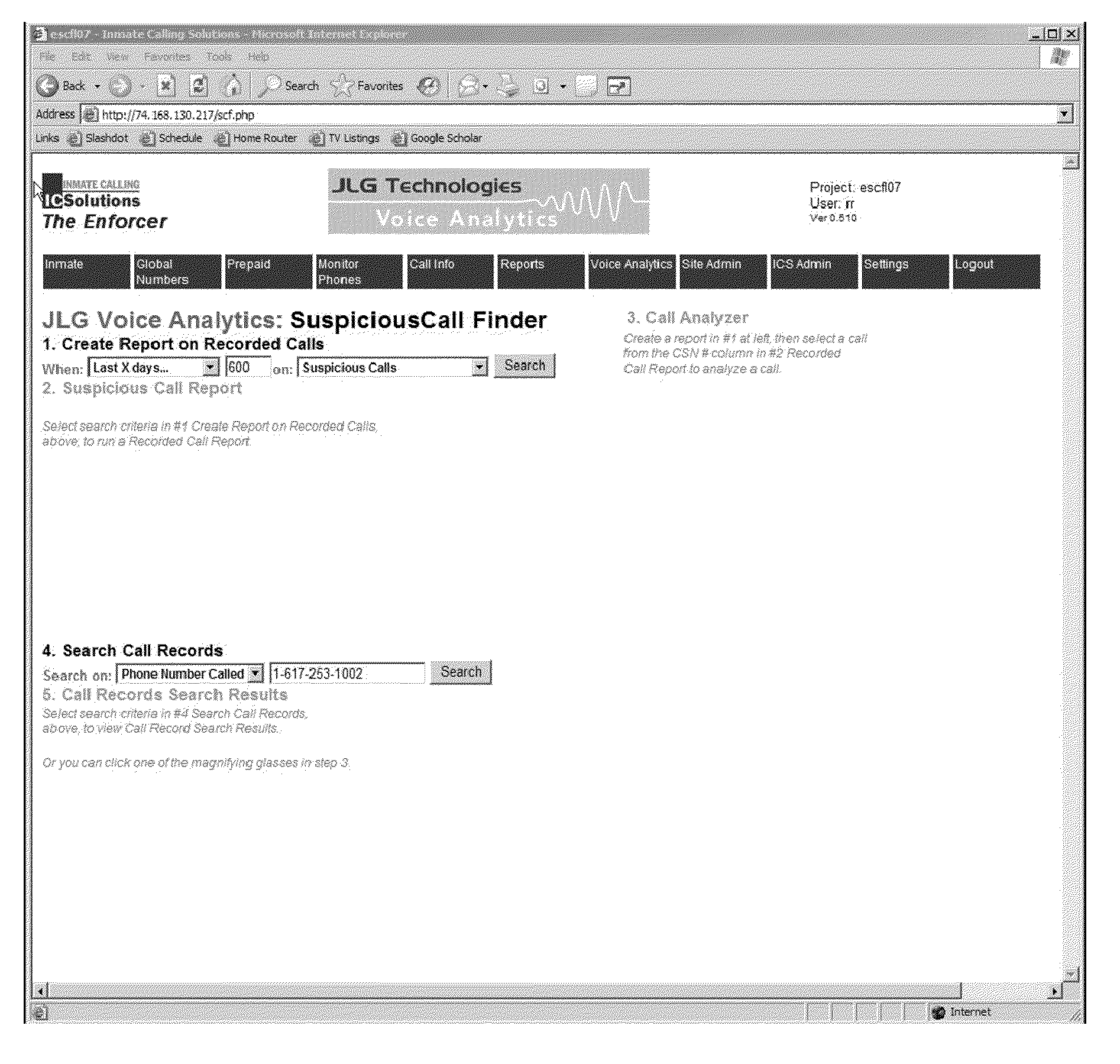

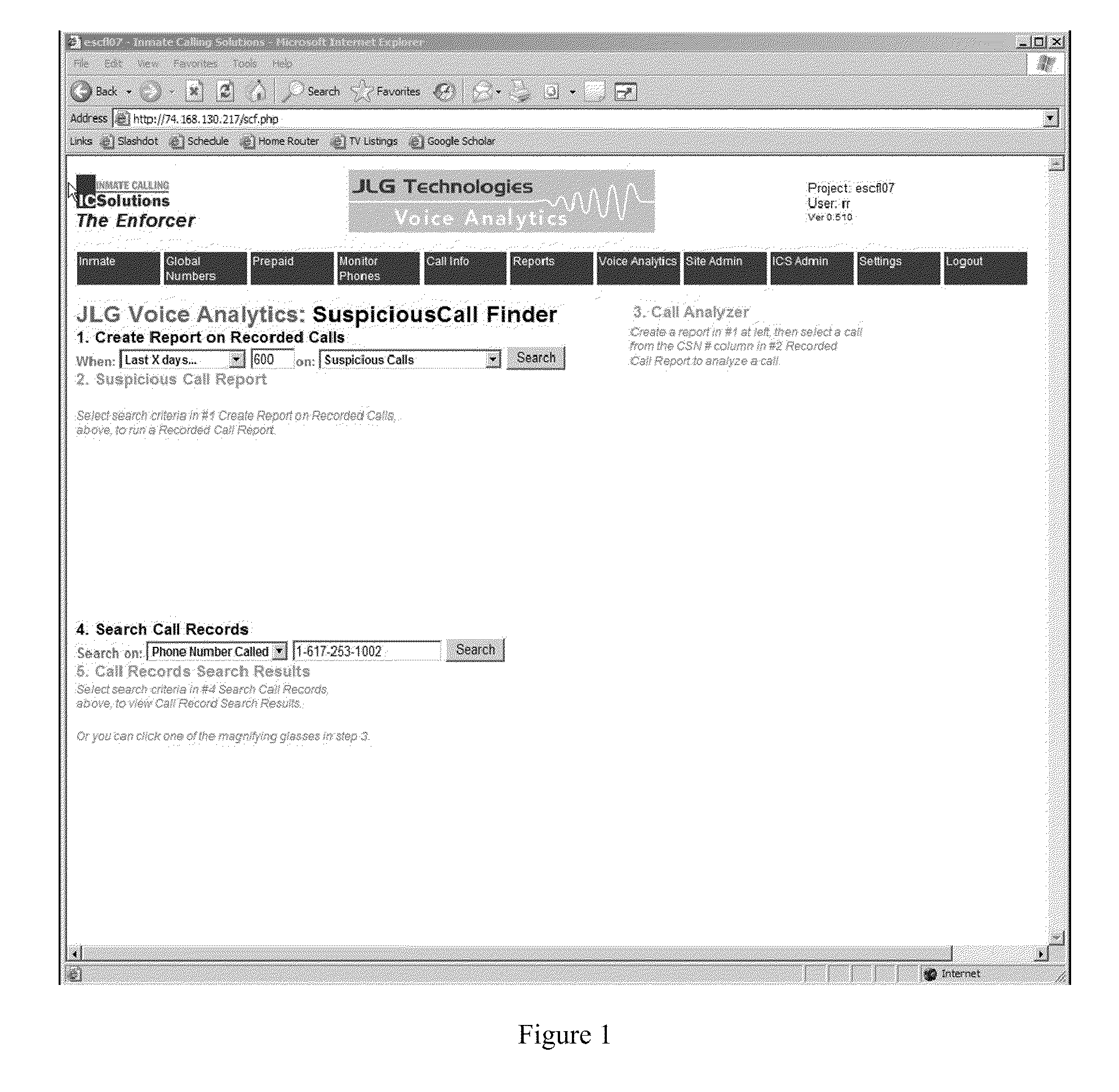

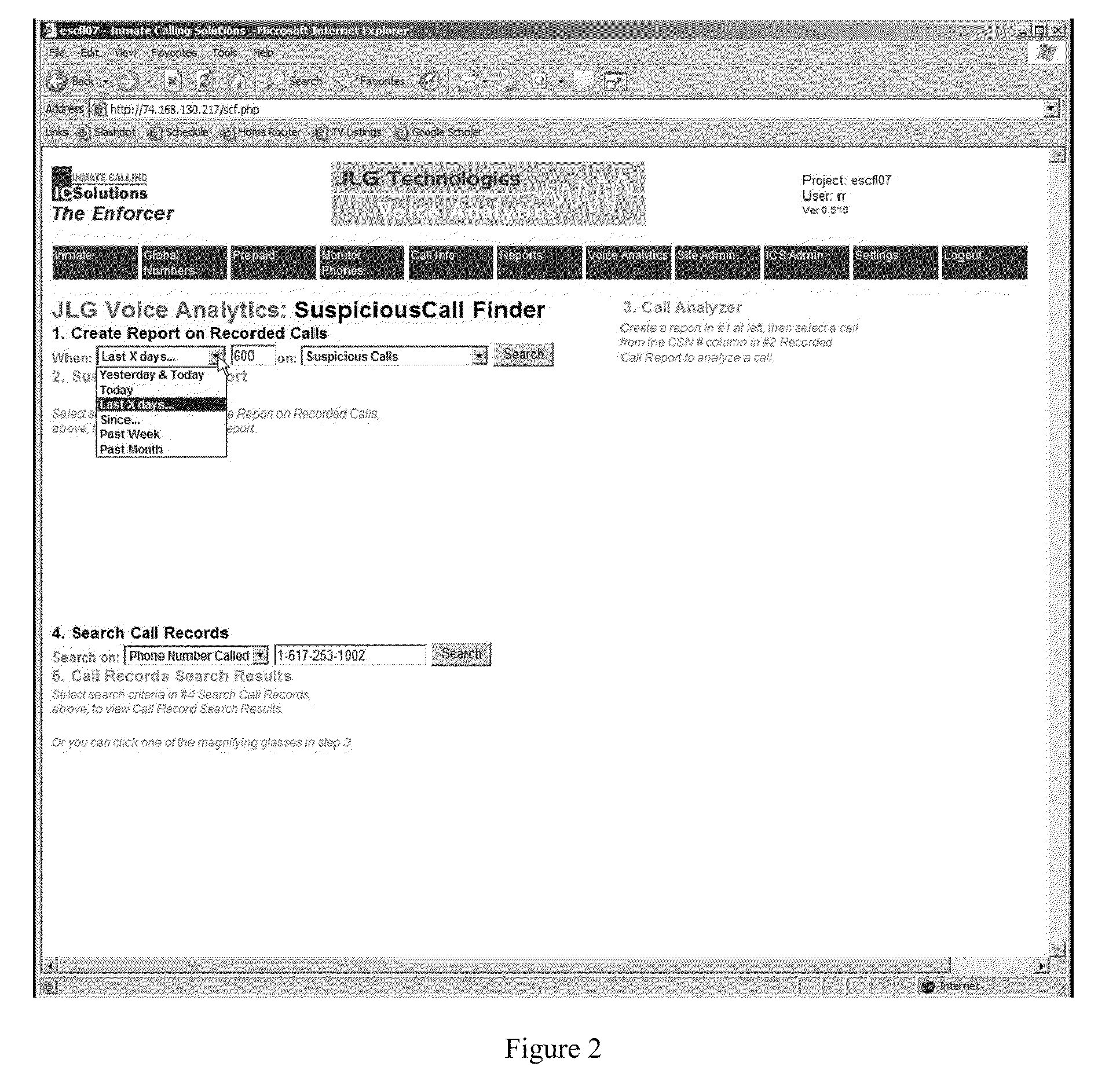

Multi-party conversation analyzer & logger

Status: Active | Publication: US20110082874A1 | Tags: Increasing customer retention; Frustration on part; Digital data information retrieval; Digital data processing details; Graphics; Identity theft

The multi-party conversation analyzer of the present invention allows users to search a database of recorded phone calls to find calls that fit user-defined criteria for "suspicious calls". Such criteria may include indications that a call included a 3-way call event, the presence of an unauthorized voice during the call, or the presence of the voice of an individual known to engage in identity theft. A segment of speech within a call may be graphically selected and a search for calls with similar voices rapidly initiated. Searches across the database for specified voices are sped up by first searching for calls that contain speech from cohort speakers with similar voice characteristics.

Owner:SECURUS TECH LLC
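
The two-stage cohort search can be illustrated with fixed-length voice vectors compared by cosine similarity: the query is first ranked against cohort centroids, and only calls inside the best cohorts are scored. The vector representation, the dictionary layout, and the `top_cohorts`/`top_calls` parameters are assumptions for illustration.

```python
import math


def cosine(a, b):
    """Cosine similarity between two voice vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def search(query, cohorts, top_cohorts=1, top_calls=3):
    """cohorts maps cohort_id -> (centroid, {call_id: voice_vector}).

    Stage 1 ranks cohort centroids against the query; stage 2 scores only
    the calls inside the best cohorts, skipping all other calls."""
    ranked = sorted(cohorts, key=lambda c: cosine(query, cohorts[c][0]), reverse=True)
    hits = [(cosine(query, vec), call_id)
            for cid in ranked[:top_cohorts]
            for call_id, vec in cohorts[cid][1].items()]
    hits.sort(reverse=True)
    return [call_id for _, call_id in hits[:top_calls]]


# Usage with two hypothetical cohorts of 2-D voice vectors.
cohorts = {
    "A": ((1.0, 0.0), {"call1": (0.9, 0.1), "call2": (1.0, 0.2)}),
    "B": ((0.0, 1.0), {"call3": (0.1, 1.0)}),
}
print(search((1.0, 0.05), cohorts))  # ['call1', 'call2']
```

The speed-up comes from stage 1: with many cohorts, most calls are never compared with the query at all.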

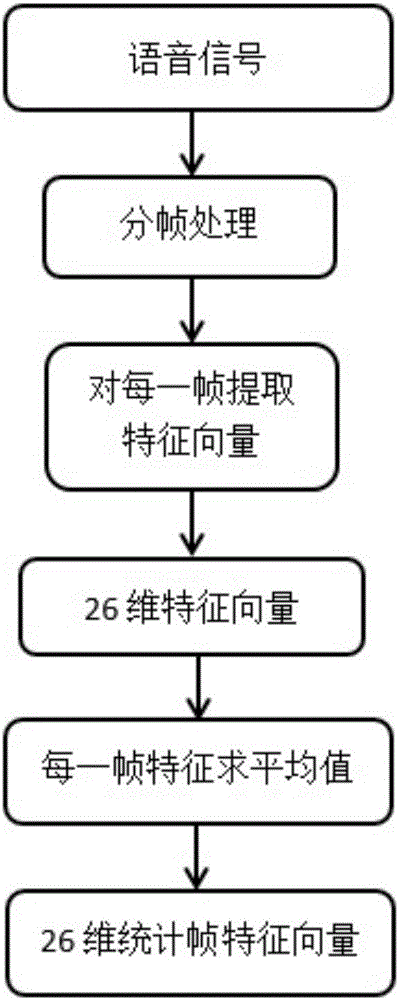

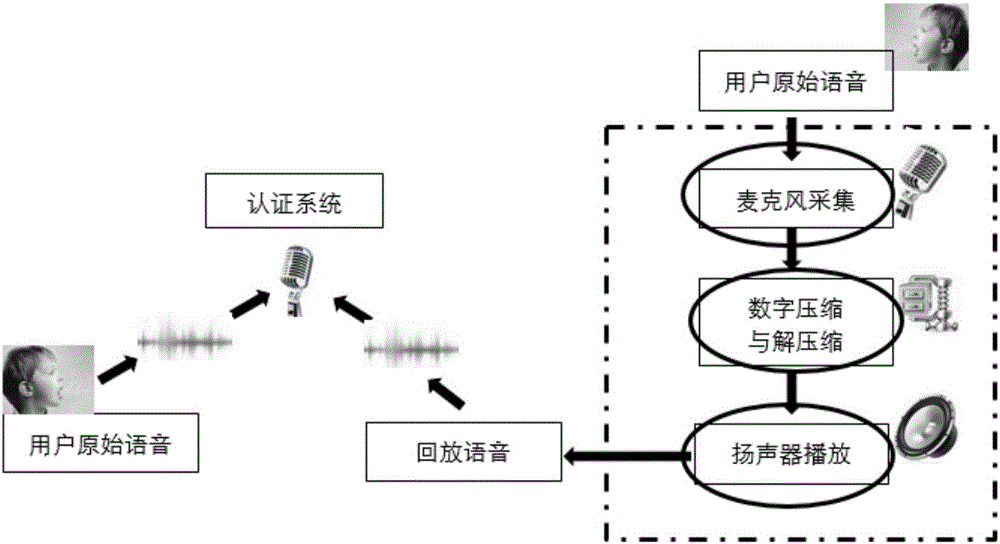

Replay attack detection method based on distortion features of speech signals introduced by loudspeaker

Status: Inactive | Publication: CN106297772A | Tags: Efficient detection; Speech recognition; Transmission; Nonlinear distortion; Feature vector

The invention discloses a replay attack detection method based on the distortion that a loudspeaker introduces into speech signals. The method includes the following steps: the speech signal under test is pre-processed, and its noisy speech frames are retained; features are extracted from each retained frame to obtain feature vectors based on linear and nonlinear distortion; the feature vectors of all these frames are averaged into a statistical feature vector, which serves as the feature model of the speech under test; feature vectors are extracted from training speech samples to obtain a training feature model, which is used to train an SVM (support vector machine) and build a speech model library; finally, SVM pattern matching between the feature model of the speech under test and the trained speech model library outputs a decision. The method enables real-time, effective detection of replayed speech.

Owner:WUHAN UNIV

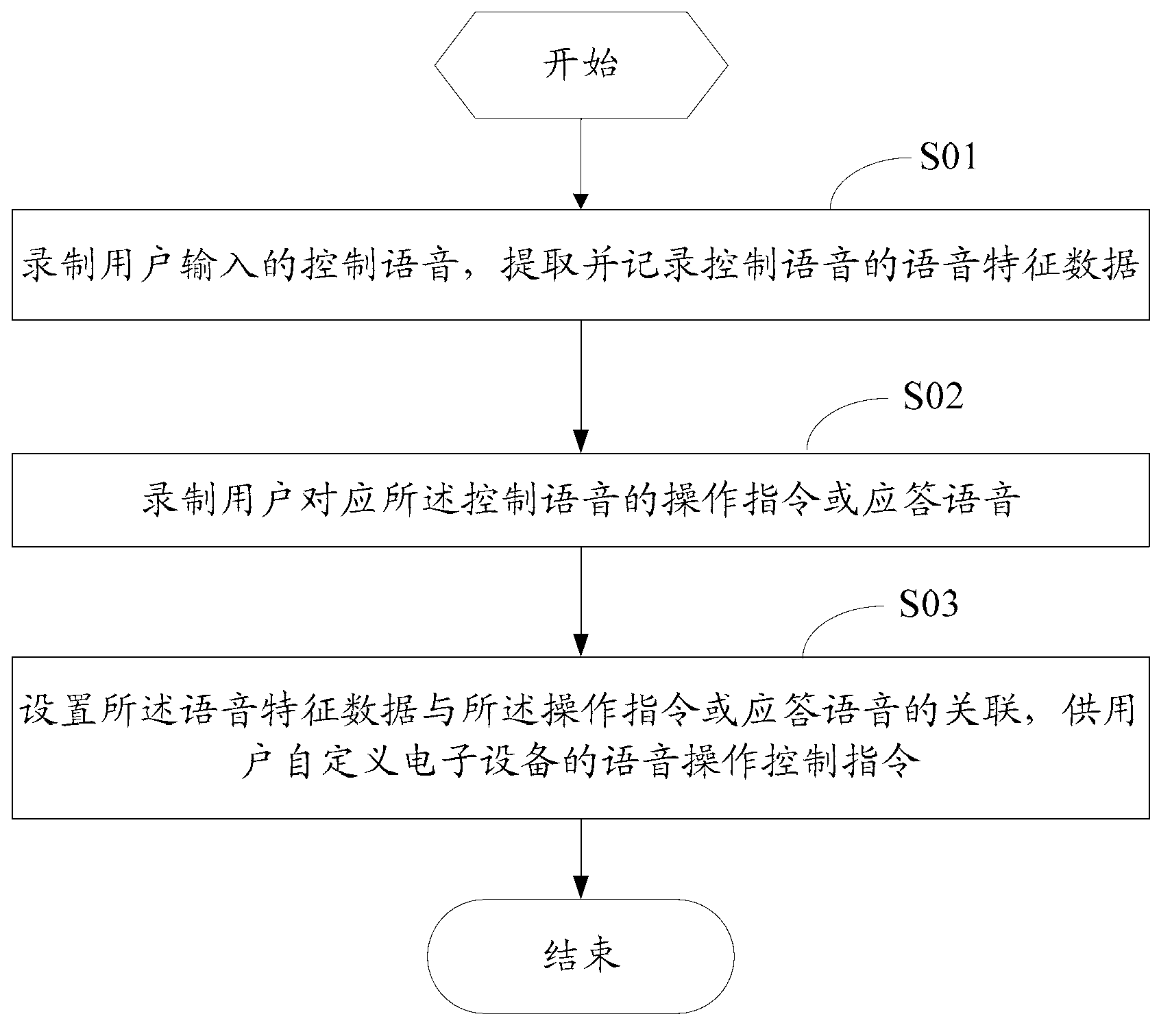

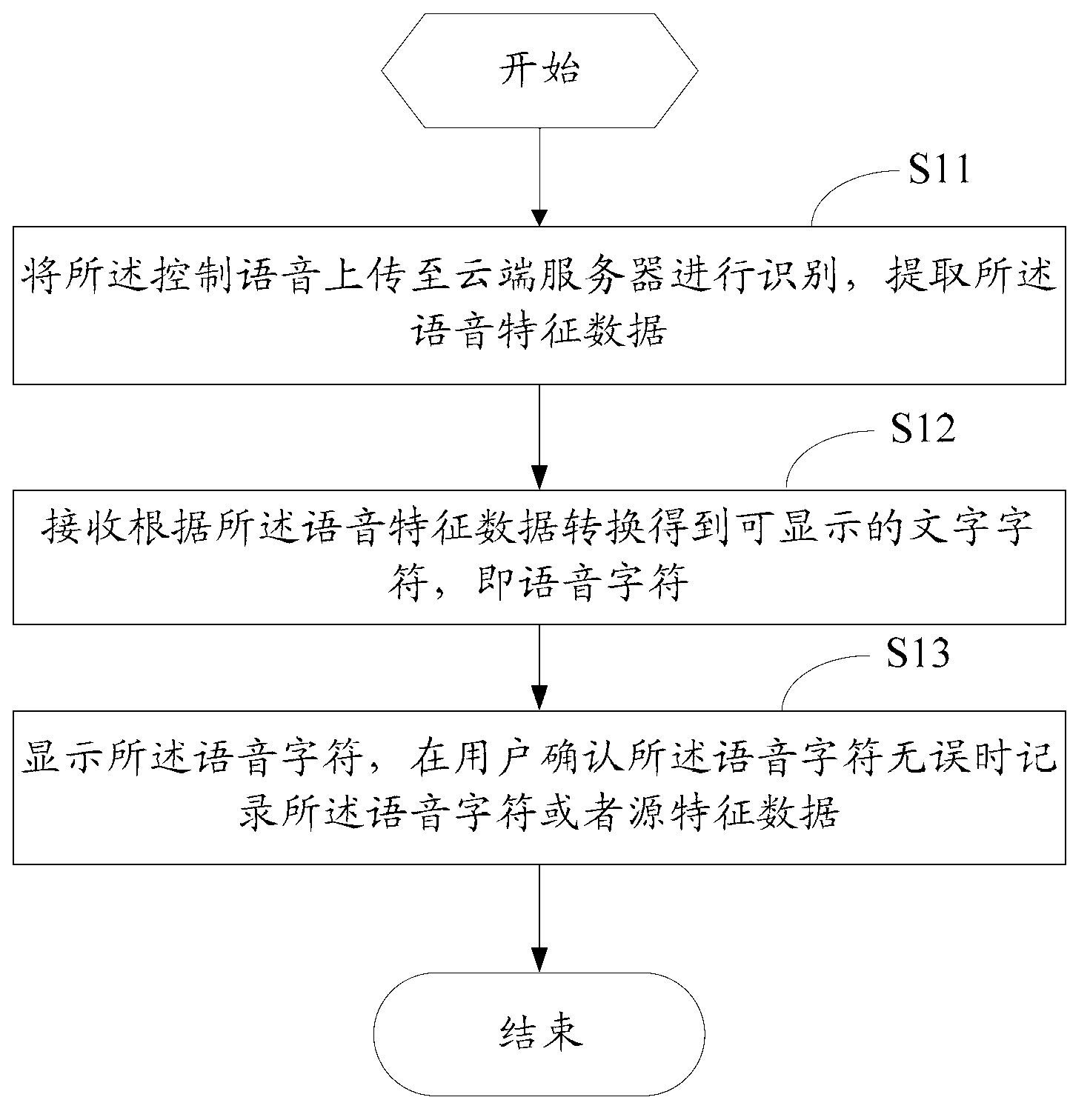

Voice control method and device as well as voice response method and device

Status: Active | Publication: CN102842306A | Tags: Improve experience; Improve TV performance; Speech recognition; Selective content distribution; Response method; User input

The invention discloses a voice control method comprising the following steps: recording a control voice input by a user; extracting and recording voice feature data from the control voice; recording the operation instruction or response voice that the user associates with the control voice; and associating the voice feature data with that operation instruction or response voice, so that the user can define voice control instructions for an electronic device. The invention further discloses a voice control device, as well as a voice response method and device for responding to the control voice. Because users can define their own voice commands and converse with the TV set, the TV gains a learning function, its performance is enhanced, and the user experience is improved.

Owner:SHENZHEN TCL NEW-TECH CO LTD

Automatic identification of telephone callers based on voice characteristics

Status: Active | Publication: US20050180547A1 | Tags: Automatic call-answering/message-recording/conversation-recording; Special service for subscribers; Acoustic model; Speech sound

A method and apparatus are provided for identifying a caller of a call from the caller to a recipient. A voice input is received from the caller, and characteristics of the voice input are applied to a plurality of acoustic models, which include a generic acoustic model and acoustic models of any previously identified callers, to obtain a plurality of respective acoustic scores. The caller is identified as one of the previously identified callers or as a new caller based on the plurality of acoustic scores. If the caller is identified as a new caller, a new acoustic model is generated for the new caller, which is specific to the new caller.

Owner:MICROSOFT TECH LICENSING LLC

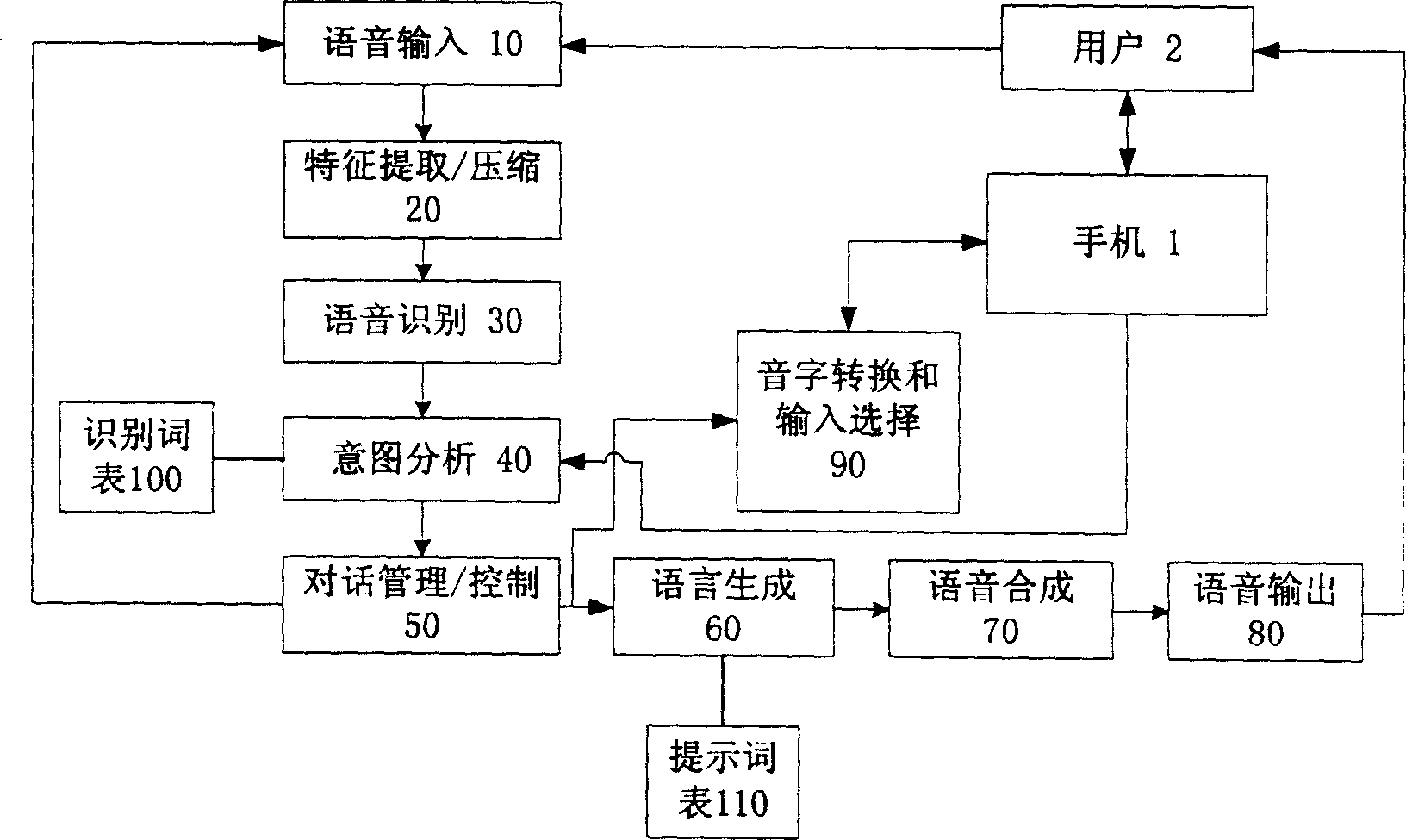

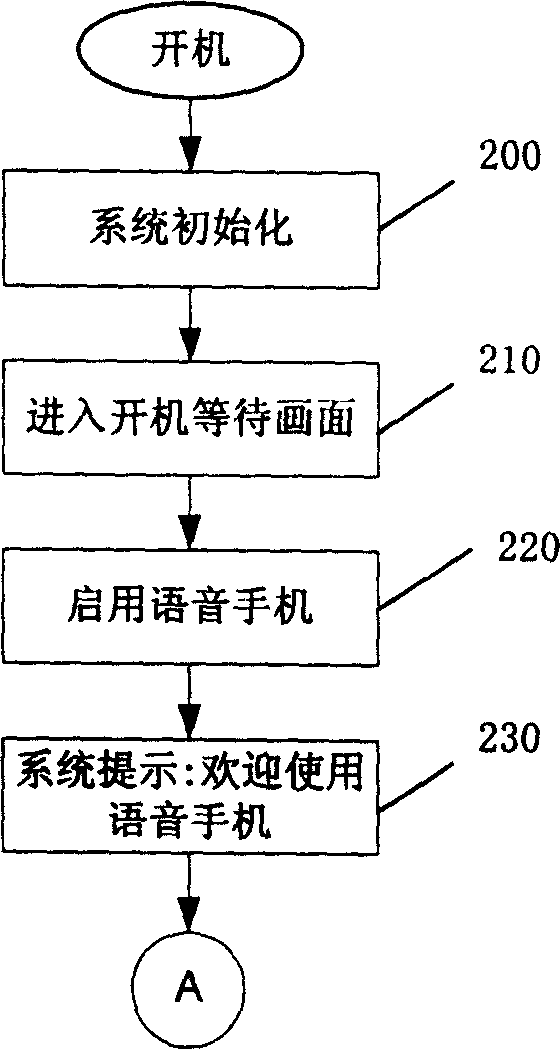

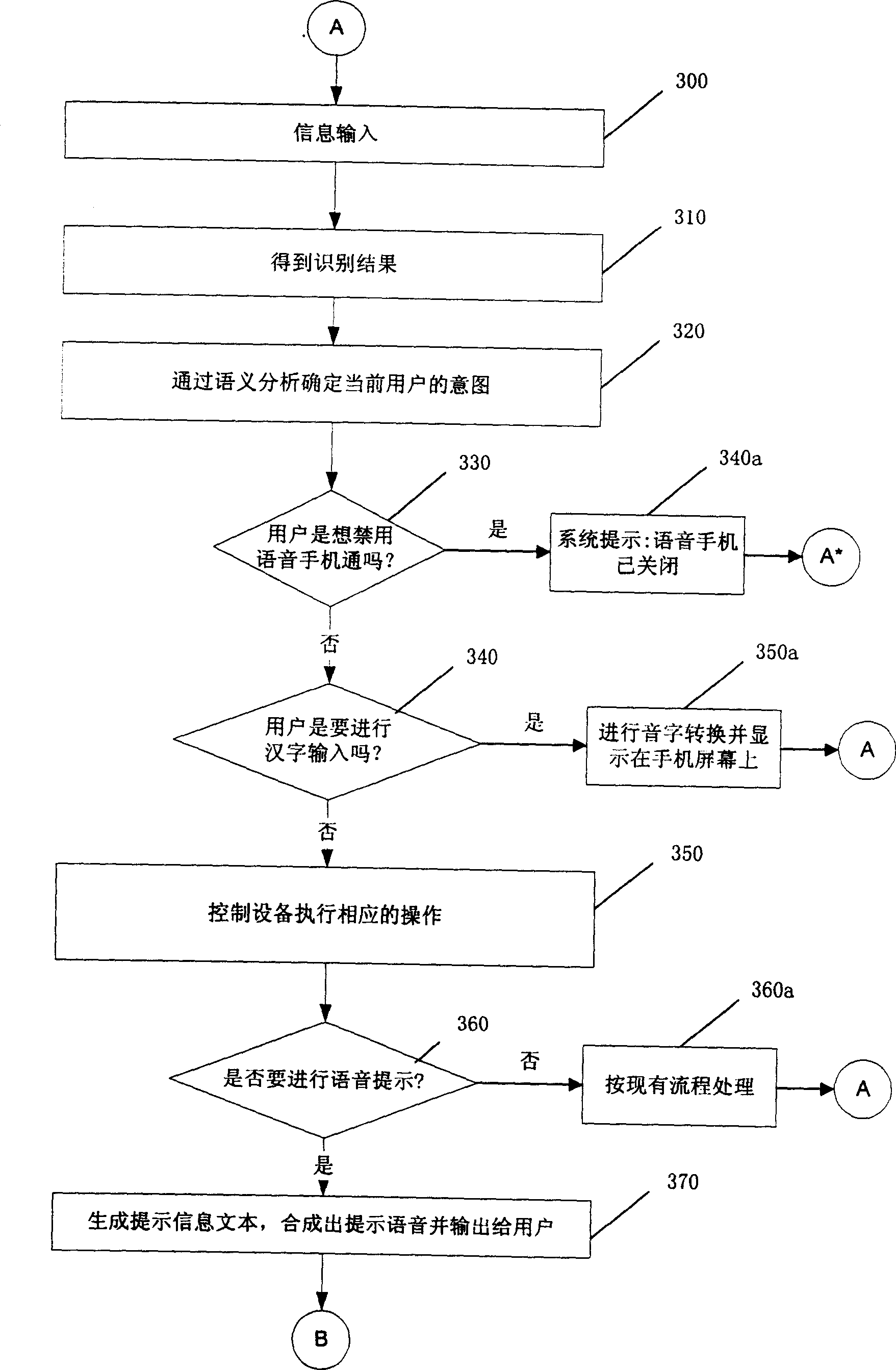

Portable digital mobile communication apparatus and voice control method and system thereof

Status: Active | Publication: CN1703923A | Tags: Flexible feedback; User friendly; Subscriber signalling identity devices; Radio/inductive link selection arrangements; Syllable; Operational system

The present invention discloses a portable digital mobile communication apparatus with a voice operation system, and a voice operation control method. During recognition, speech feature vector sequences are vector-quantized, and during decoding the observation probability of each code on the search path is looked up directly from a probability table. With the invention, full-syllable speech recognition can be performed on a mobile phone without training, enabling Chinese character input by speech and full-syllable voice prompts. The system comprises semantic analysis, dialogue management, and language generation modules, so it can handle complex dialogue flows and return flexible prompt messages to the user. Users can also customize voice commands and prompt content.

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI +1
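
The table-lookup idea can be sketched as discrete observation probabilities: each feature vector is replaced by the index of its nearest codeword, and a precomputed `log_obs_table[state][codeword]` supplies the score during search. The codebook, the table values, and the function names are hypothetical; the abstract does not describe the search algorithm itself.

```python
import math


def quantize(vec, codebook):
    """Index of the nearest codeword (squared Euclidean distance)."""
    return min(range(len(codebook)),
               key=lambda k: sum((v - c) ** 2 for v, c in zip(vec, codebook[k])))


def path_log_prob(frames, state_path, codebook, log_obs_table):
    """Score one alignment: each frame's observation log-probability is read
    straight from the table instead of evaluating a density function."""
    codes = [quantize(f, codebook) for f in frames]
    return sum(log_obs_table[s][c] for s, c in zip(state_path, codes))


# Usage with a toy two-codeword, two-state model (hypothetical values).
codebook = [(0.0, 0.0), (1.0, 1.0)]
log_obs_table = [[math.log(0.9), math.log(0.1)],
                 [math.log(0.2), math.log(0.8)]]
score = path_log_prob([(0.1, 0.0), (0.9, 1.1)], [0, 1], codebook, log_obs_table)
```

Replacing density evaluation with a table lookup is what makes this attractive on a handset with limited compute.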

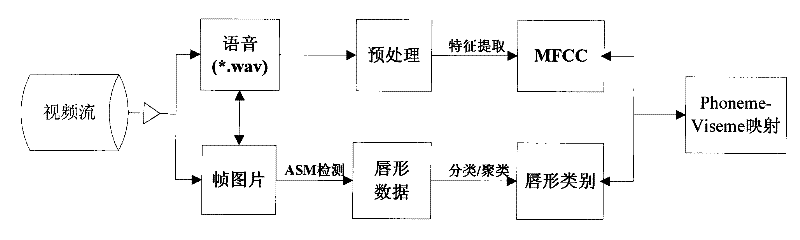

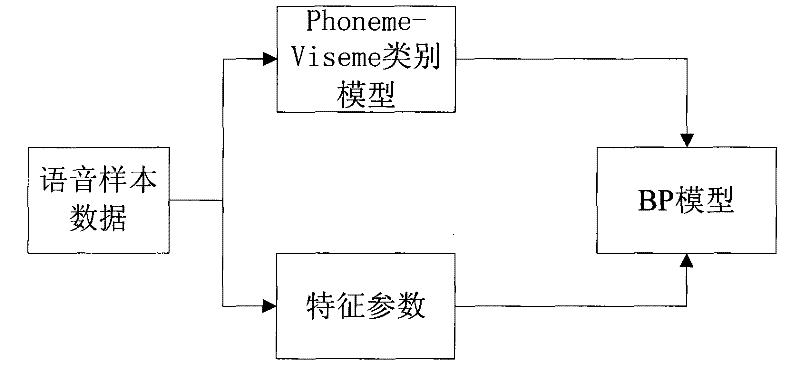

Method for voice-driven lip animation

Status: Inactive | Publication: CN101751692A | Tags: Improve exercise efficiency; Actionable; Animation; Speech recognition; Consonant vowel

The invention discloses a method for voice-driven lip animation comprising the following steps: classifying Chinese syllable structure into consonant-vowel units; collecting original video and audio data from several speakers; extracting the corresponding lip shapes and voice feature data; training a model on the extracted lip and voice feature information; and synthesizing lip motion sequences from input speech in real time using the trained model. The method overcomes shortcomings of the prior art, requires little computation, and is highly practical.

Owner:SICHUAN UNIV

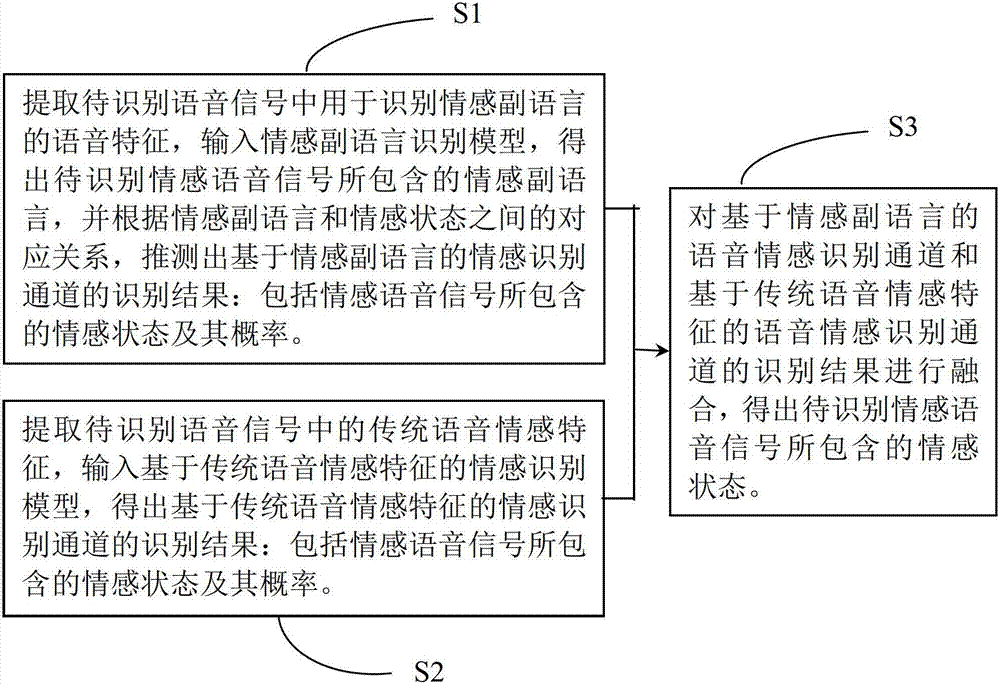

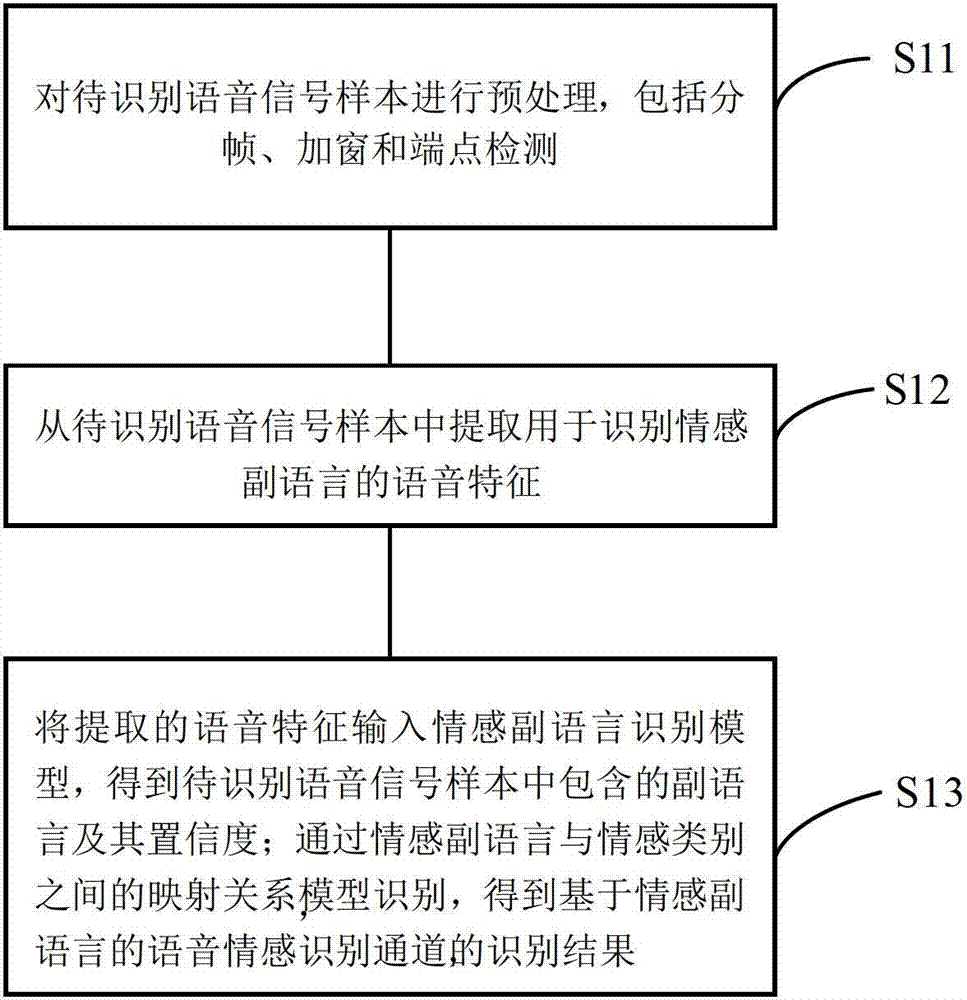

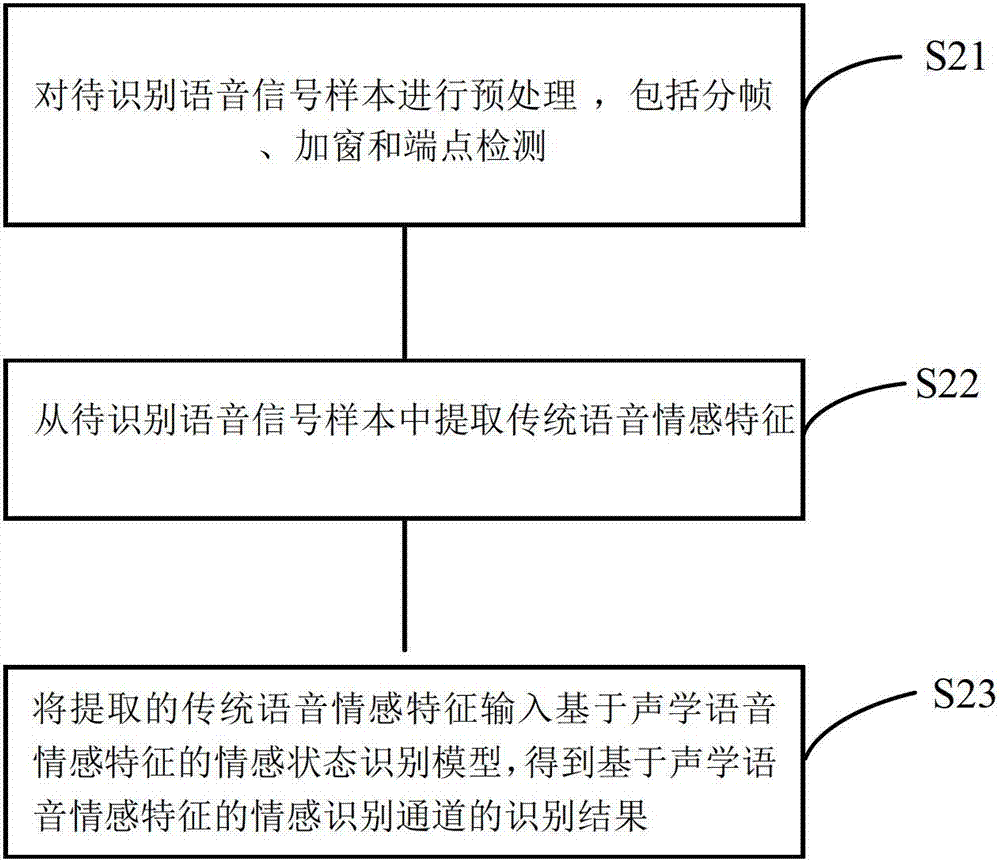

Speaker-independent speech emotion recognition method and system

Status: Active | Publication: CN102881284A | Tags: Overcoming the shortcoming of being easily disturbed by speaker changes; Improve robustness; Speech recognition; Speech sound; Vocal sound

The invention provides a speaker-independent speech emotion recognition method and system. The method extracts, from the speech signal to be recognized, phonetic features for recognizing emotional paralanguage as well as acoustic emotion features, and fuses the results of an emotion recognition channel based on emotional paralanguage with those of a channel based on acoustic emotion features to obtain the emotional state contained in the signal. Because a change of speaker has little influence on emotional paralanguage, the emotion information it carries can assist the acoustic emotion features in emotion recognition, improving the robustness and recognition rate of speech emotion recognition.

Owner:JIANGSU UNIV

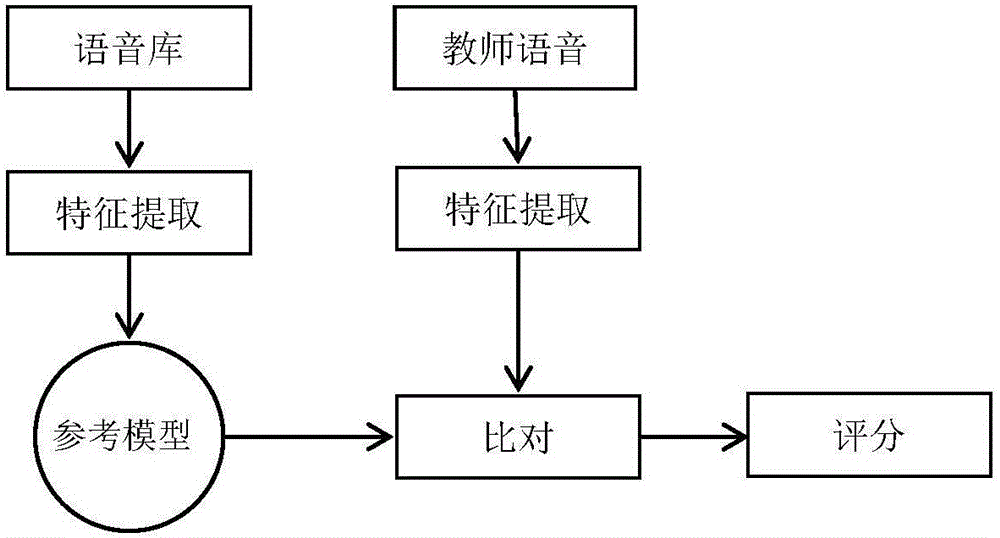

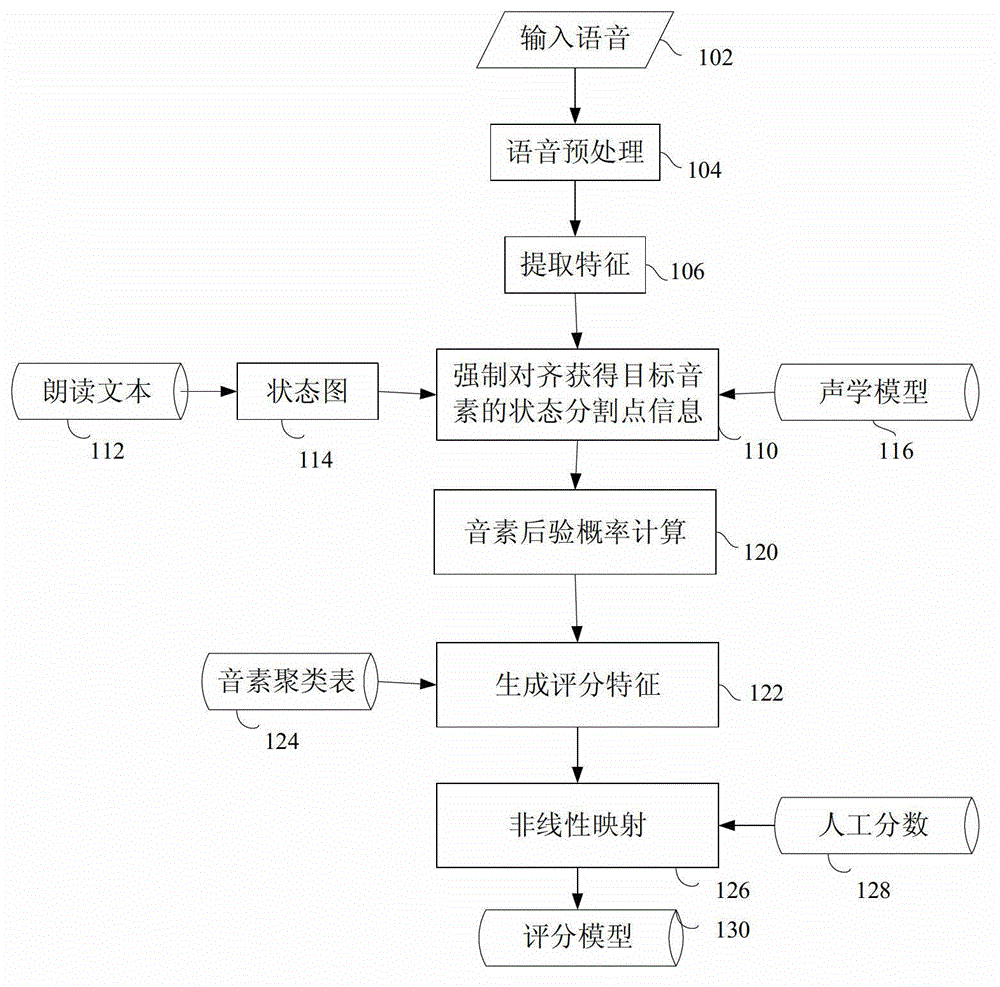

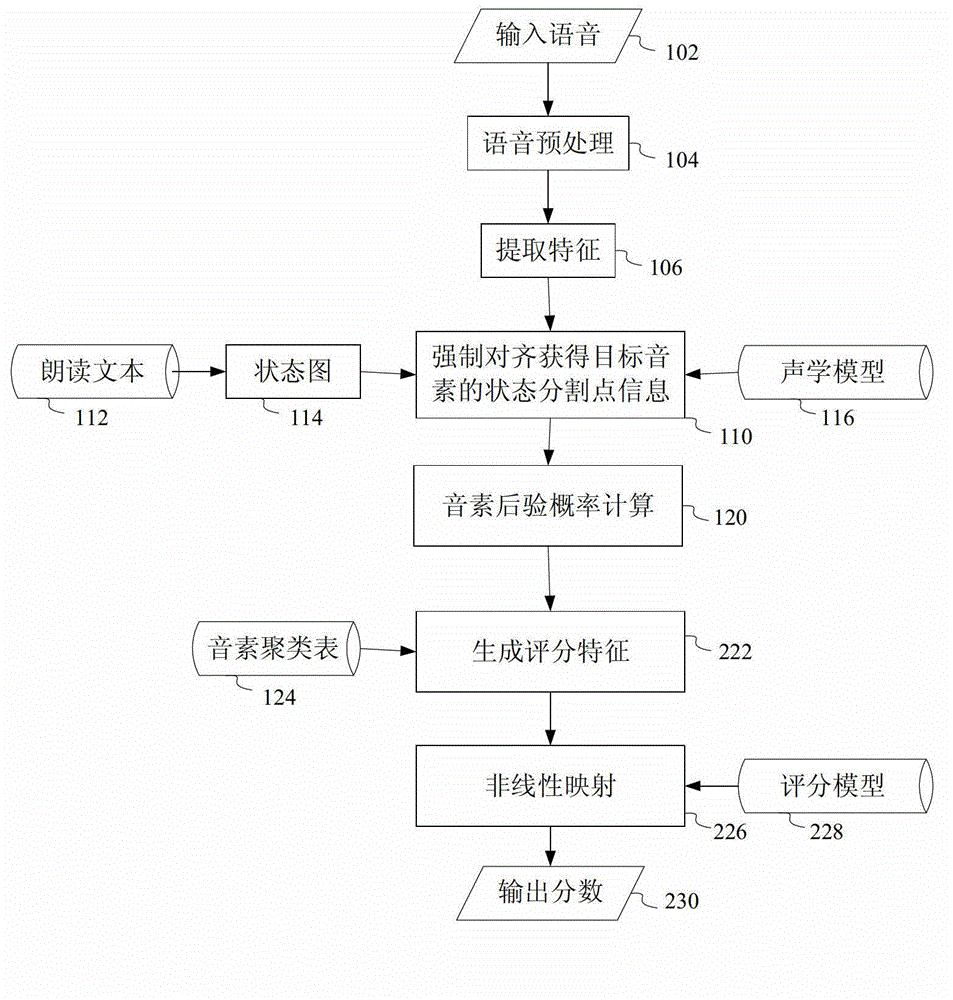

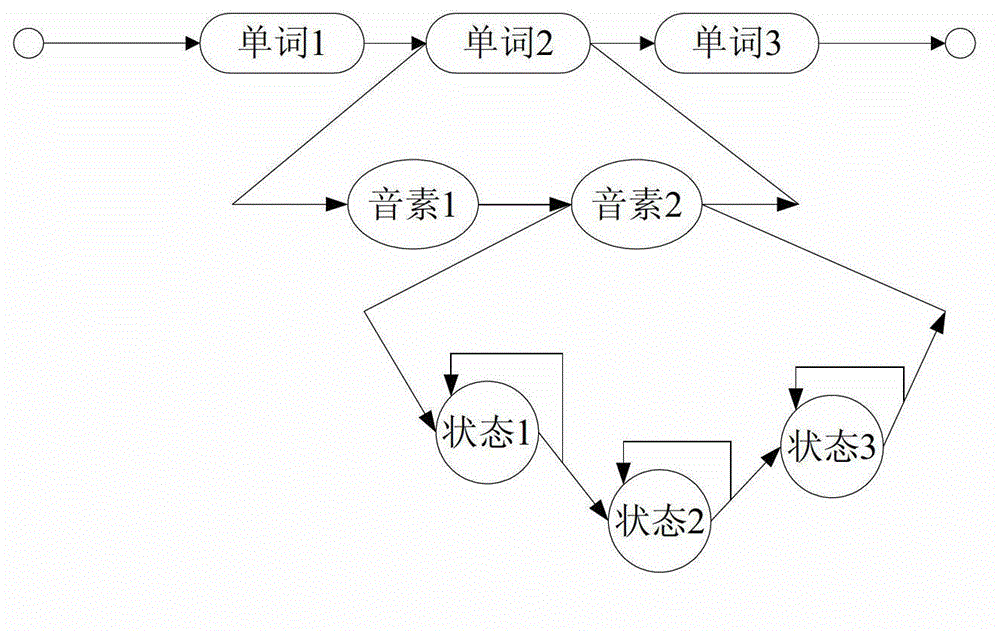

Automatic grading method and automatic grading equipment for read-aloud questions in spoken English tests

Status: Active | Publication: CN103065626A | Tags: Does not deviate from human scoring; Speech recognition; Teaching apparatus; Spoken language; Algorithm

The invention provides an automatic grading method and automatic grading equipment for read-aloud questions in a spoken English test. In the method, the input speech is preprocessed, including framing; phonetic features are extracted from the preprocessed speech; using a linear grammar network built from the reading text and an acoustic model, the phonetic feature vector sequence is force-aligned to obtain the boundaries of each phoneme; the posterior probability of each phoneme is calculated from these boundaries; multi-dimensional grading features are extracted from the phoneme posteriors; and a nonlinear regression model is trained by support vector regression on the grading features and manual scores, then used to grade spoken English reading. Because the grading model is trained on expert scoring data, the machine scores do not deviate statistically from manual scores, and the computer closely simulates expert grading.

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI +1
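
A sketch of the posterior and feature-extraction steps, assuming forced alignment has already produced phoneme segments and that an acoustic model supplies per-frame phoneme posteriors. The averaged log posterior used here resembles the common goodness-of-pronunciation (GOP) score, and the three utterance-level features are illustrative choices; the support vector regression stage would be trained separately (for example with scikit-learn's `SVR`) and is not shown.

```python
import math


def phone_gop(frame_posteriors, segments):
    """frame_posteriors: one {phone: posterior} dict per frame.
    segments: (phone, start, end) tuples from forced alignment, end exclusive.
    Returns the mean log posterior of the expected phone in each segment."""
    scores = []
    for phone, start, end in segments:
        logs = [math.log(max(frame_posteriors[t].get(phone, 0.0), 1e-10))
                for t in range(start, end)]
        scores.append(sum(logs) / len(logs))
    return scores


def grading_features(gop_scores, bad_threshold=math.log(0.5)):
    """Utterance-level features that a regression model could map to a grade."""
    n = len(gop_scores)
    return {
        "mean_gop": sum(gop_scores) / n,
        "min_gop": min(gop_scores),
        "bad_ratio": sum(1 for g in gop_scores if g < bad_threshold) / n,
    }


# Usage: two aligned phones, the second pronounced poorly (hypothetical posteriors).
posteriors = [{"a": 1.0}, {"a": 1.0}, {"a": 0.75, "b": 0.25}, {"b": 0.25}]
gop = phone_gop(posteriors, [("a", 0, 2), ("b", 2, 4)])
features = grading_features(gop)
```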

© 2025 PatSnap. All rights reserved.