132 results about "Mel-frequency cepstrum" patented technology

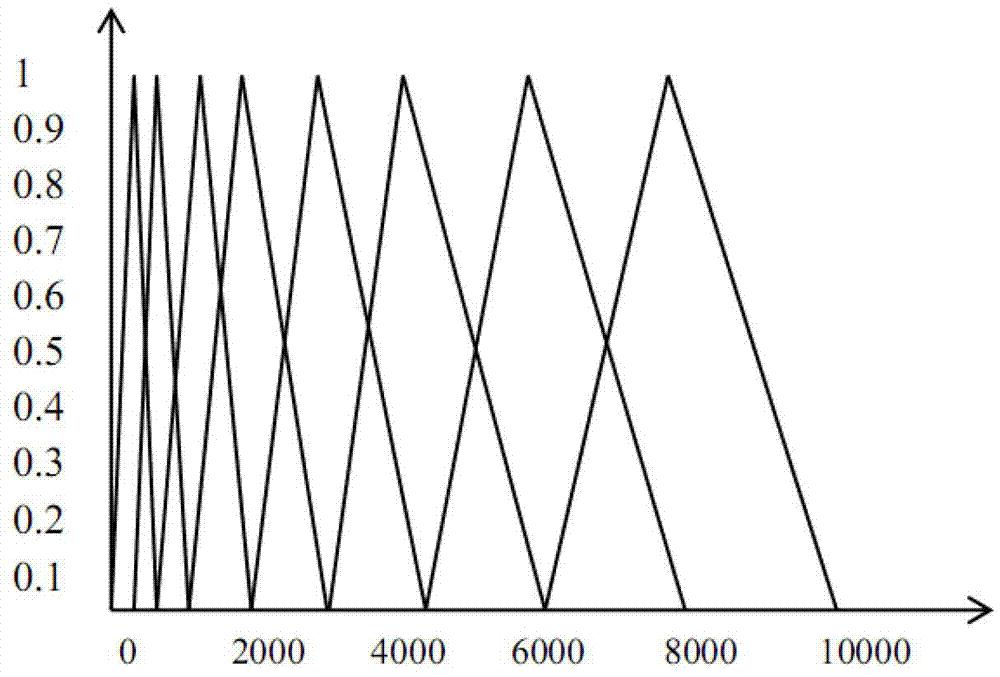

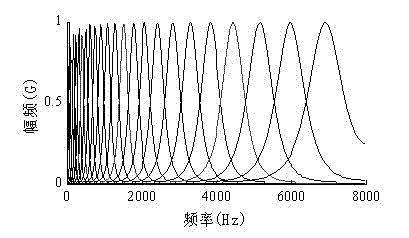

In sound processing, the mel-frequency cepstrum (MFC) is a representation of the short-term power spectrum of a sound, based on a linear cosine transform of a log power spectrum on a nonlinear mel scale of frequency.
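The definition above can be sketched for a single frame as follows. This is a minimal, standard-library-only illustration, not any patent's implementation: the mel formula (2595 and 700 constants), the triangular filter shape, the 20-filter bank and the choice to skip coefficient 0 are common conventions assumed here, and all function names are hypothetical.

```python
import cmath
import math


def hz_to_mel(f_hz):
    """One common mel-scale formula (several conventions exist)."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def power_spectrum(frame):
    """Naive DFT power spectrum for bins 0..N/2 (adequate for short frames)."""
    n = len(frame)
    spec = []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(frame))
        spec.append(abs(acc) ** 2 / n)
    return spec


def mel_filterbank(num_filters, n_fft, sample_rate):
    """Triangular filters whose edges are evenly spaced on the mel scale."""
    top = hz_to_mel(sample_rate / 2.0)
    edges_hz = [mel_to_hz(top * i / (num_filters + 1)) for i in range(num_filters + 2)]
    bins = [hz * n_fft / sample_rate for hz in edges_hz]  # fractional bin positions
    bank = []
    for m in range(1, num_filters + 1):
        lo, mid, hi = bins[m - 1], bins[m], bins[m + 1]
        row = []
        for k in range(n_fft // 2 + 1):
            if lo < k <= mid:
                row.append((k - lo) / (mid - lo))
            elif mid < k < hi:
                row.append((hi - k) / (hi - mid))
            else:
                row.append(0.0)
        bank.append(row)
    return bank


def mfcc_frame(frame, sample_rate, num_filters=20, num_coeffs=12):
    """Log mel-band energies followed by a DCT-II (cosine transform)."""
    spec = power_spectrum(frame)
    bank = mel_filterbank(num_filters, len(frame), sample_rate)
    log_energy = [math.log(sum(w * p for w, p in zip(row, spec)) + 1e-10) for row in bank]
    # Coefficients 1..num_coeffs; coefficient 0 (overall energy) is skipped here.
    return [
        sum(e * math.cos(math.pi * c * (m + 0.5) / num_filters) for m, e in enumerate(log_energy))
        for c in range(1, num_coeffs + 1)
    ]
```

A real front end would normally apply pre-emphasis and a window to each frame first and use an FFT instead of the naive DFT.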

Voice recognition system

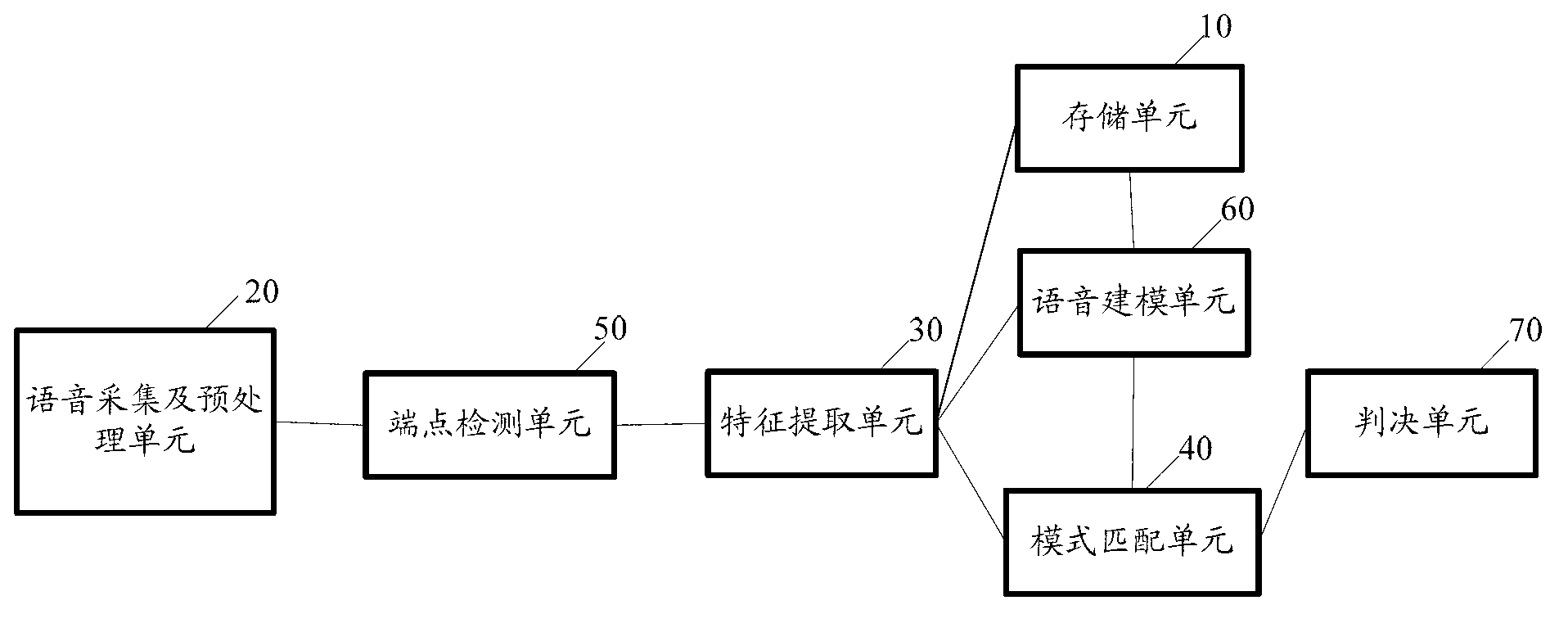

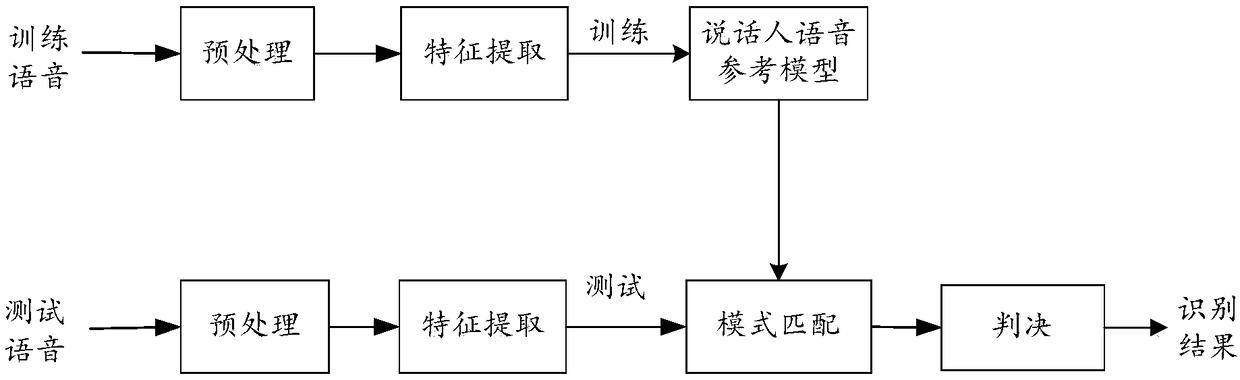

Active | CN103236260A | Improve detection reliability | Realize the recognition function | Speech recognition | Mel-frequency cepstrum | Pattern matching

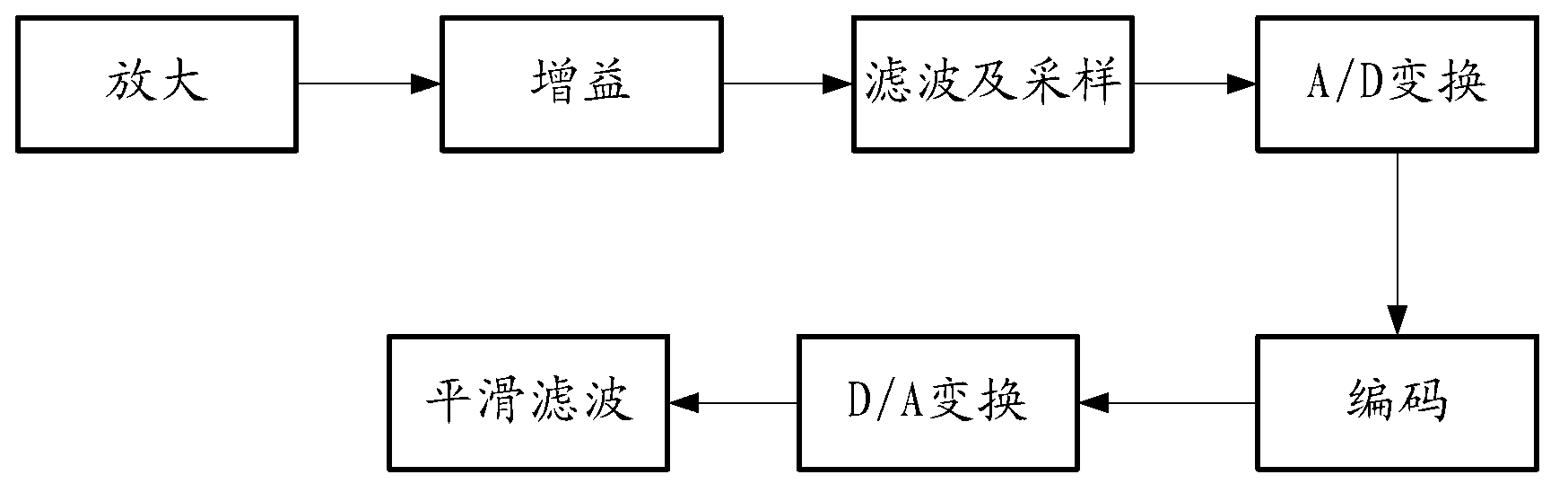

The invention provides a voice recognition system comprising a storage unit, a voice acquisition and pre-processing unit, a feature extraction unit and a pattern matching unit, wherein the storage unit is used for storing at least one user's voice model; the voice acquisition and pre-processing unit is used for acquiring a to-be-identified voice signal, transforming the format of the to-be-identified voice signal and encoding the to-be-identified voice signal; the feature extraction unit is used for extracting voice feature parameters from the encoded to-be-identified voice signal; and the pattern matching unit is used for matching the extracted voice feature parameters with at least one voice model so that the user to which the to-be-identified voice signal belongs is identified. Based on the voice generation principle, voice features are analyzed, MFCC (mel frequency cepstrum coefficient) parameters are used, a speaker voice feature model is established, and speaker feature recognition algorithms are achieved, and thus, the purpose to improve speaker detection reliability can be achieved, and the speaker identification function can be finally realized on an electronic product.

Owner:BOE TECH GRP CO LTD +1

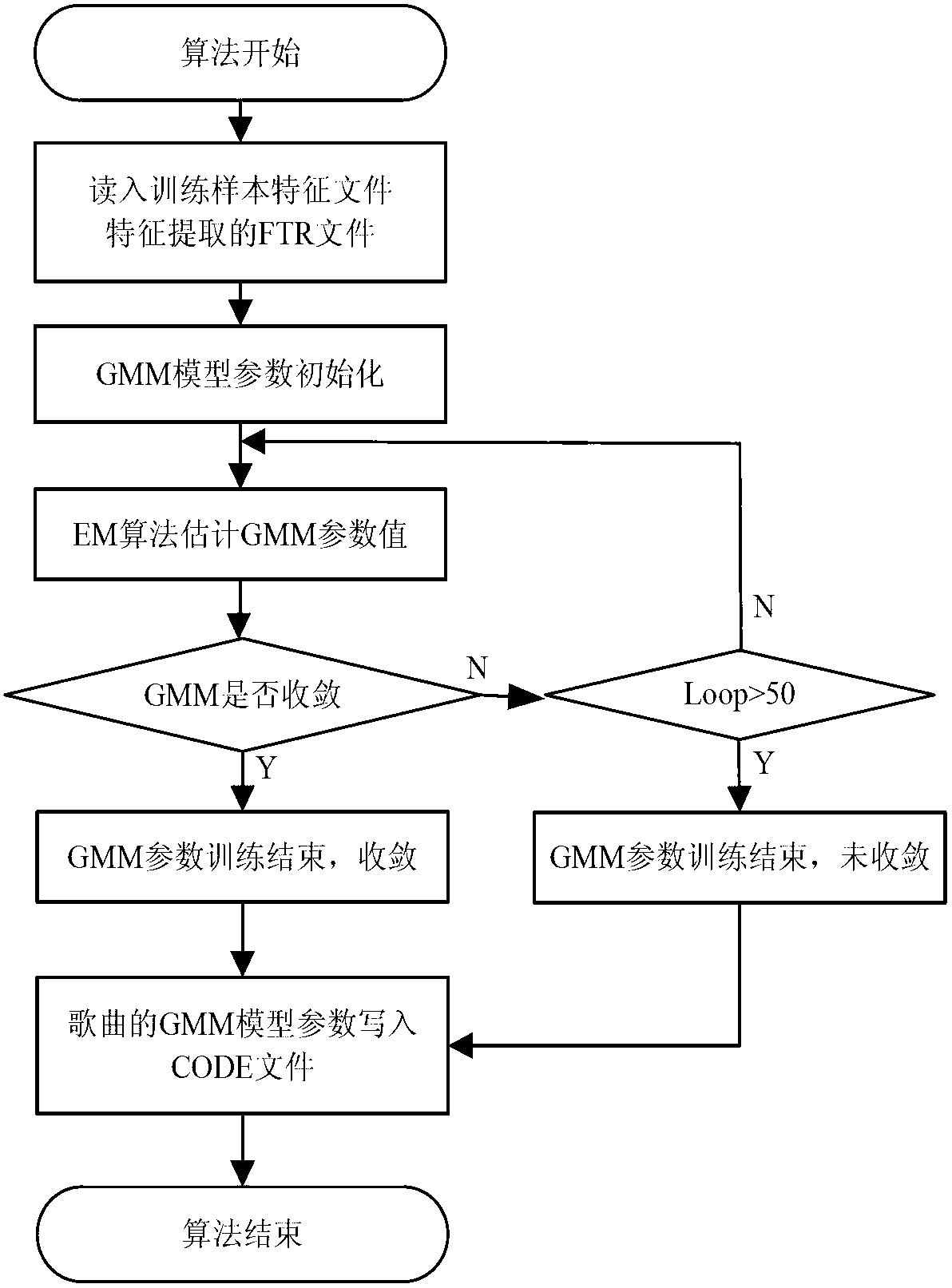

Voiceprint identification method based on Gauss mixing model and system thereof

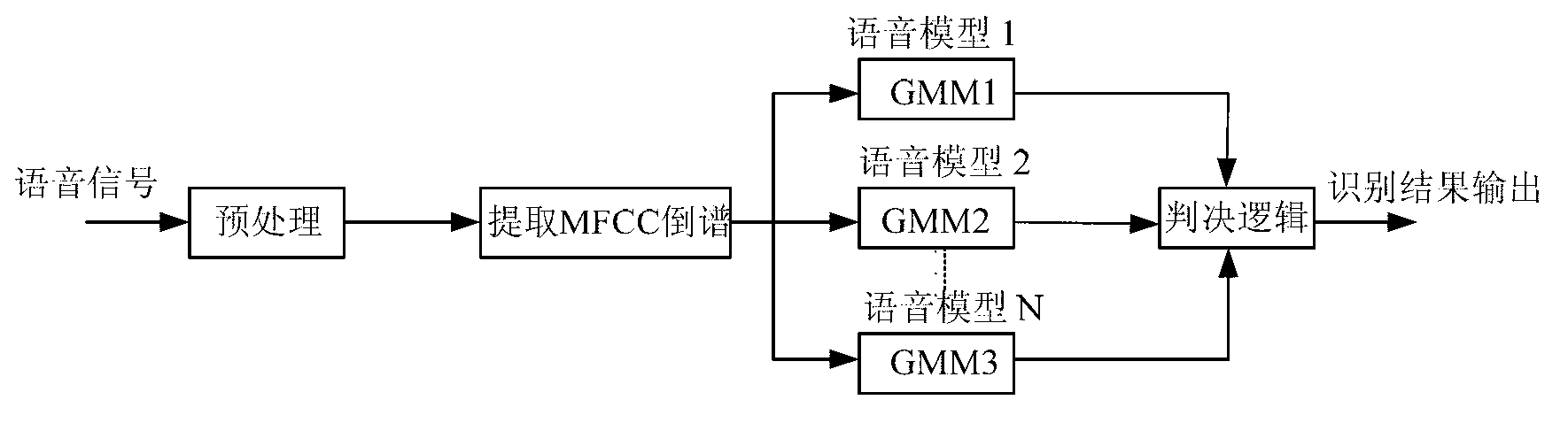

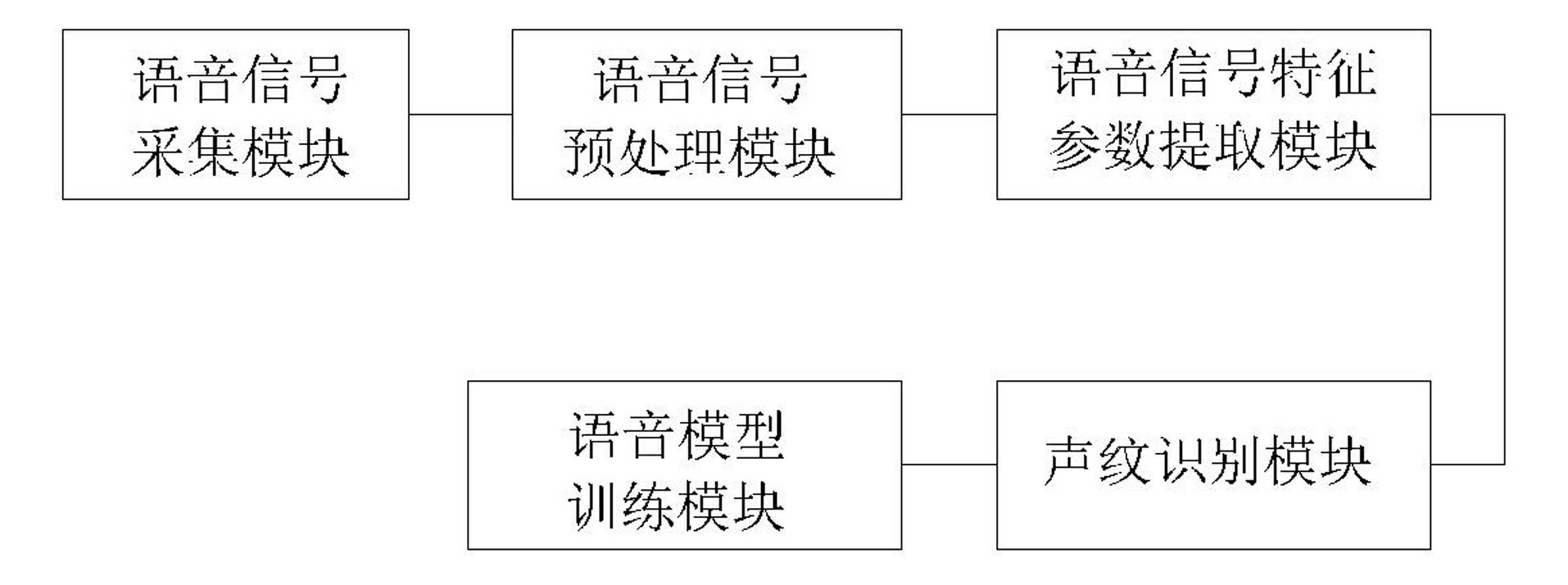

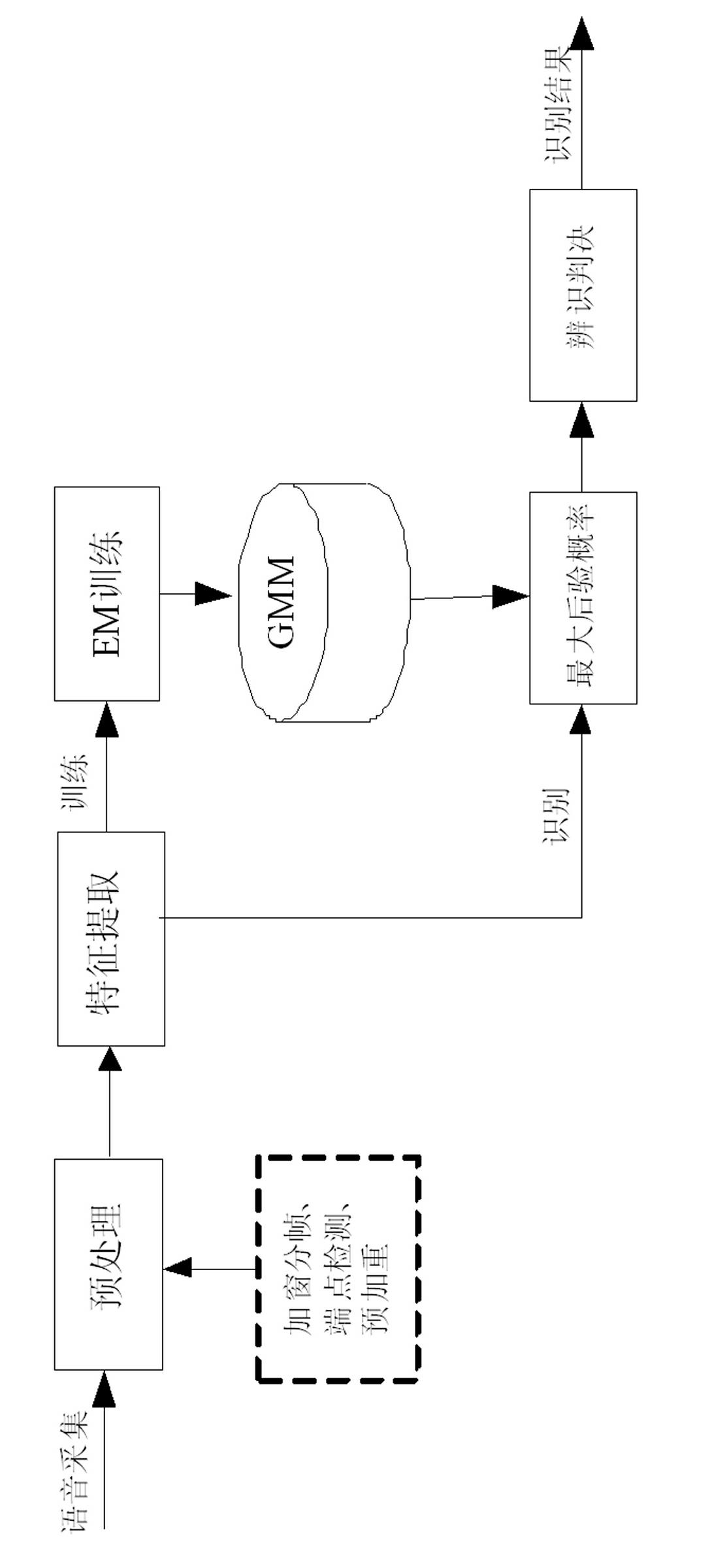

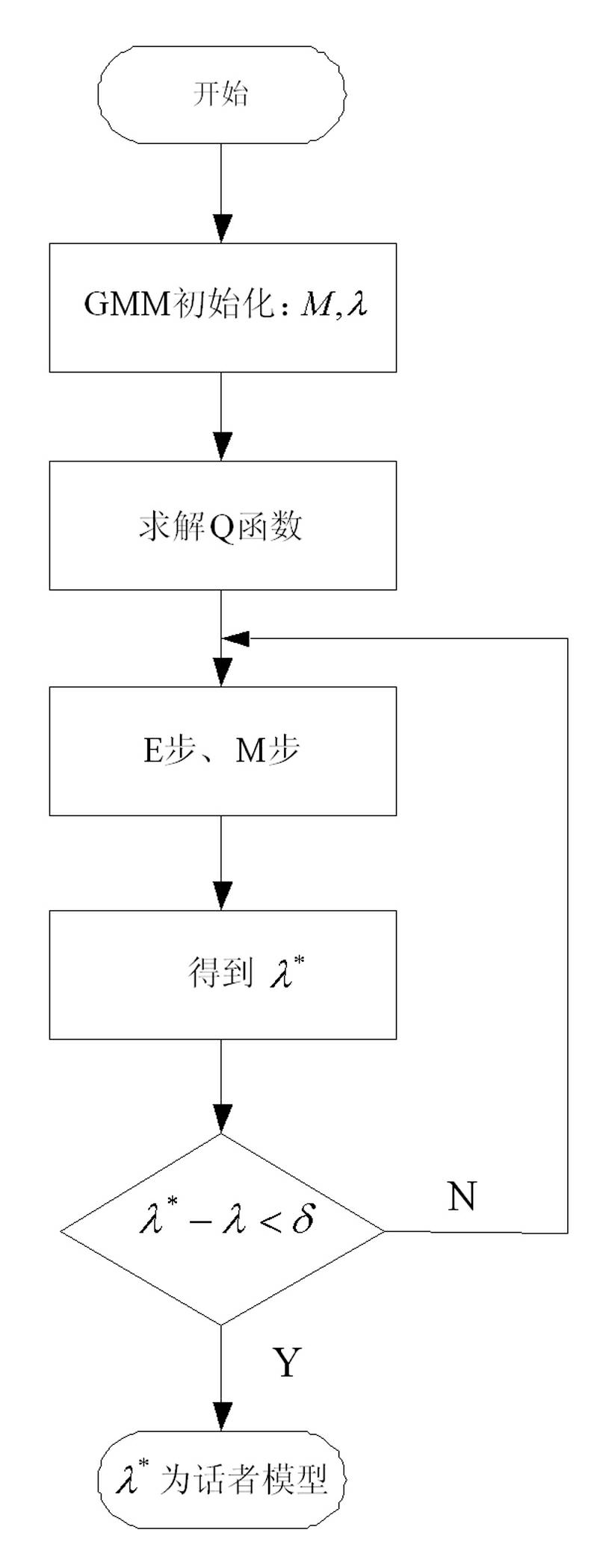

Inactive | CN102324232A | Good estimate | Easy to train | Speech recognition | Mel-frequency cepstrum | Normal density

The invention provides a voiceprint identification method based on a Gaussian mixture model (GMM, the "Gauss mixing model" of the title) and a system thereof. The method comprises the following steps: voice signal acquisition; voice signal preprocessing; voice signal feature extraction, employing Mel frequency cepstrum coefficients (MFCC), where the MFCC order is usually 12-16; model training, employing the EM algorithm to train a GMM on the feature parameters of each speaker, with the k-means algorithm selected to initialize the model parameters; and voiceprint identification, comparing the feature parameters of the collected voice signal to be identified with the established speaker voice models and deciding by the maximum a posteriori probability rule, so that the speaker whose model gives the feature vector X to be identified the maximum posterior probability is identified as the speaker. Because the method employs a Gaussian mixture model based on probability statistics, the distribution of a speaker's features in feature space is reflected well, the probability density function is a common one, the model parameters are easy to estimate and train, and the method has good identification performance and noise robustness.

Owner:LIAONING UNIVERSITY OF TECHNOLOGY
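The identification step of the entry above (choose the speaker model with the maximum posterior probability) can be sketched as below. This is an illustrative sketch under assumptions, not the patented method: it assumes diagonal covariances, equal speaker priors (so maximum posterior reduces to maximum likelihood), and models that were already trained elsewhere with EM. The function names and the model layout are hypothetical.

```python
import math


def log_gaussian_diag(x, mean, var):
    """Log density of a diagonal-covariance Gaussian at point x."""
    return sum(
        -0.5 * (math.log(2.0 * math.pi * v) + (xi - m) ** 2 / v)
        for xi, m, v in zip(x, mean, var)
    )


def gmm_log_likelihood(frames, gmm):
    """Sum of per-frame log-likelihoods; gmm is a list of (weight, mean, var)."""
    total = 0.0
    for x in frames:
        terms = [math.log(w) + log_gaussian_diag(x, m, v) for w, m, v in gmm]
        peak = max(terms)  # log-sum-exp for numerical stability
        total += peak + math.log(sum(math.exp(t - peak) for t in terms))
    return total


def identify_speaker(frames, speaker_models):
    """Return the best-scoring speaker name and all scores (equal priors assumed)."""
    scores = {name: gmm_log_likelihood(frames, gmm) for name, gmm in speaker_models.items()}
    return max(scores, key=scores.get), scores
```

Here `frames` would be the MFCC vectors of the utterance to be identified, and each entry of `speaker_models` one enrolled speaker's mixture.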

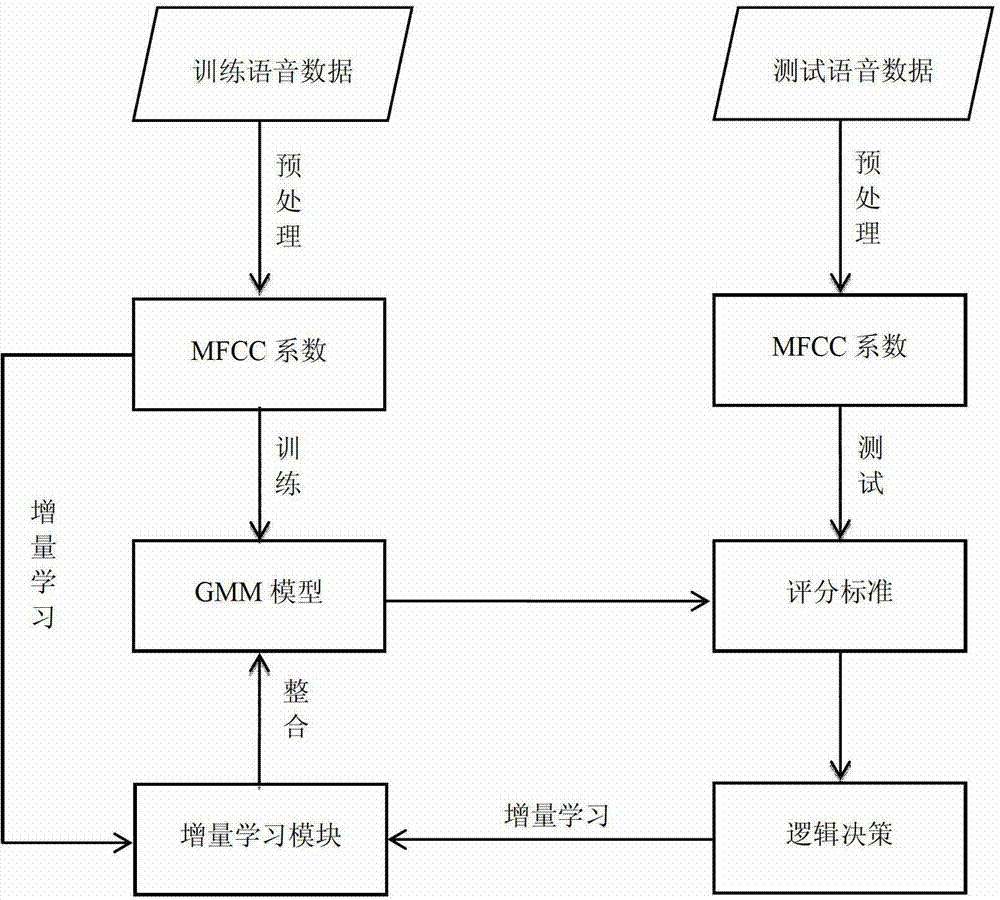

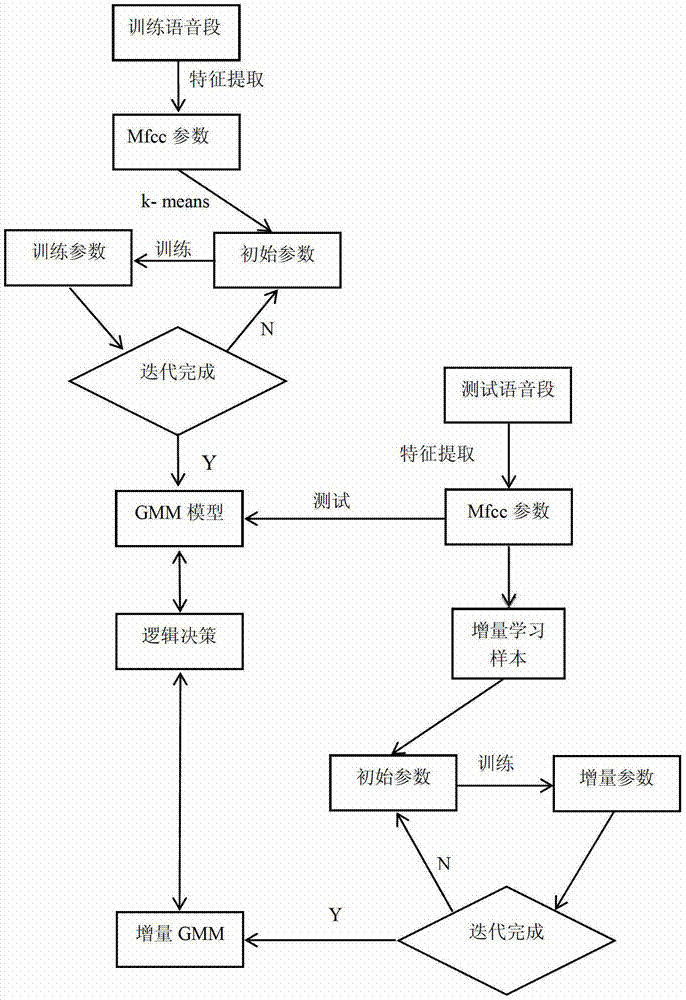

Voiceprint identification method

The invention discloses a voiceprint identification method. The voiceprint identification method comprises the following steps: 1, preprocessing the segmented speech data of each speaker in a training speech set to form a sample set corresponding to each speaker; 2, extracting Mel-frequency cepstrum coefficients from each sample in all sample sets; 3, selecting the sample sets one by one, randomly selecting the Mel-frequency cepstrum coefficients of some of the samples in each sample set, and training a Gaussian mixture model for that sample set; 4, performing incremental learning, one sample set at a time, on the samples that were not selected for training in step 3 together with the Gaussian mixture model of the corresponding sample set, to obtain all optimized Gaussian mixture models, and optimizing a model library by using all the optimized Gaussian mixture models; and 5, inputting and identifying test voice data, identifying the Gaussian mixture model of the sample set corresponding to the test voice data by using the model library optimized in step 4, and adding the test voice data to the sample set corresponding to the speaker.

Owner:NANJING UNIV

Method and system for voiceprint recognition based on vector quantization

Inactive | CN102509547A | Realize simulation | Easy to identify | Speech recognition | Mel-frequency cepstrum | Cluster algorithm

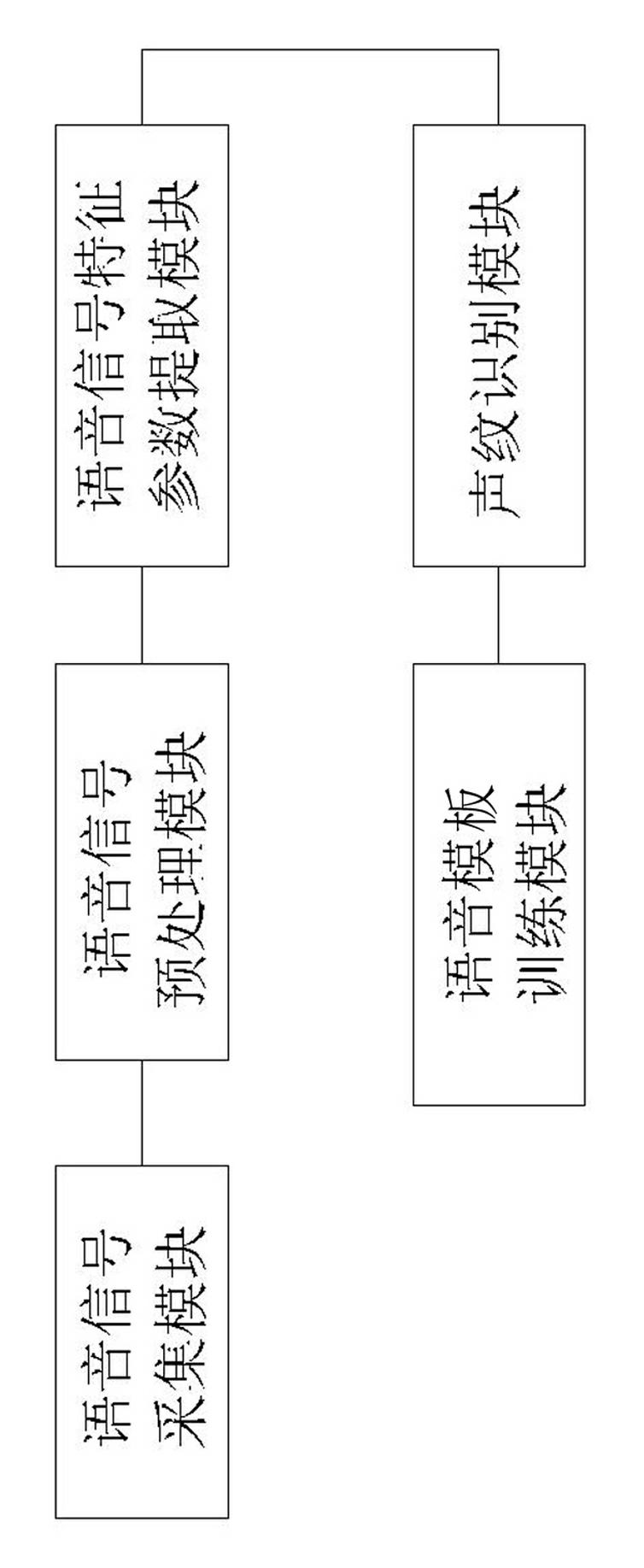

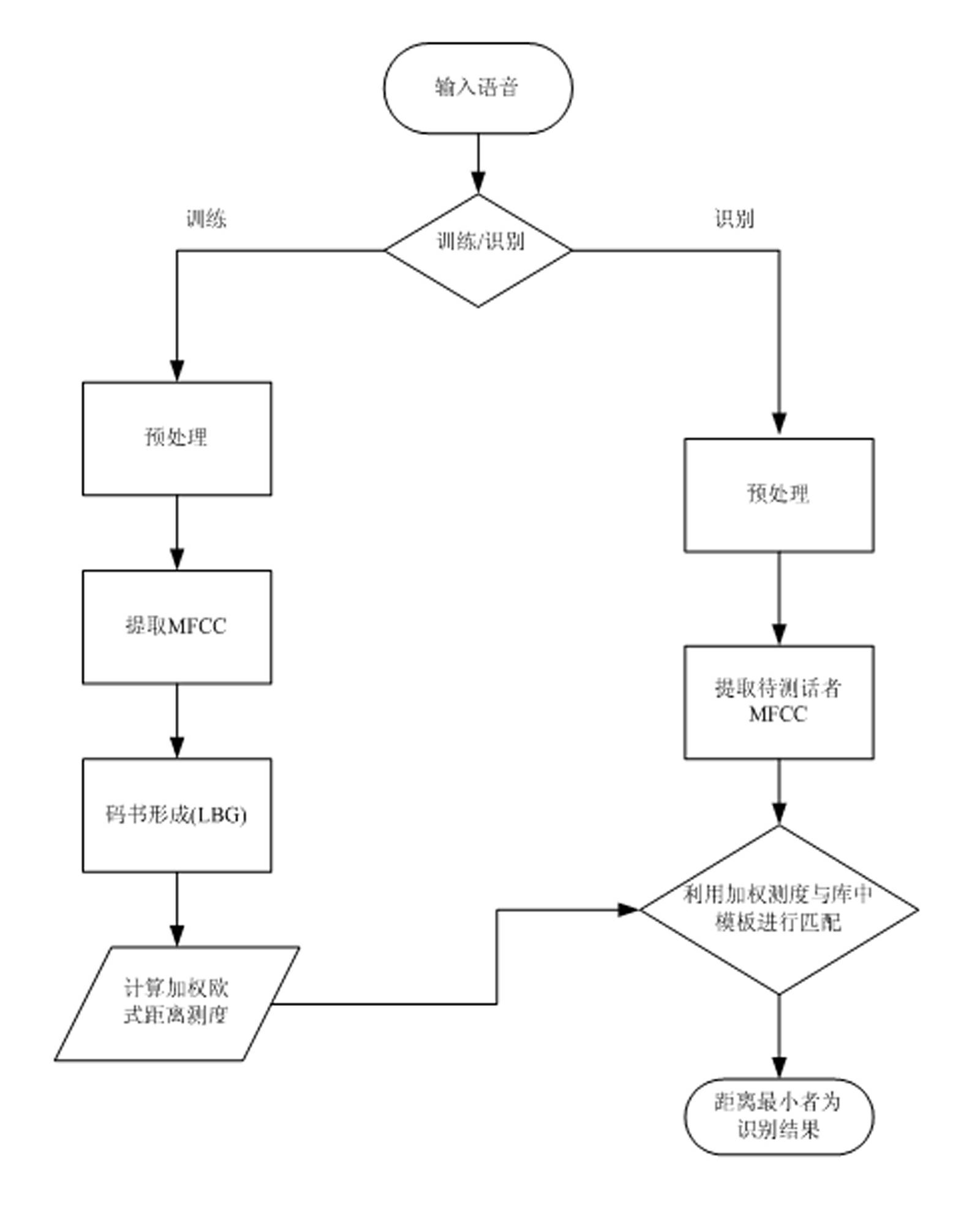

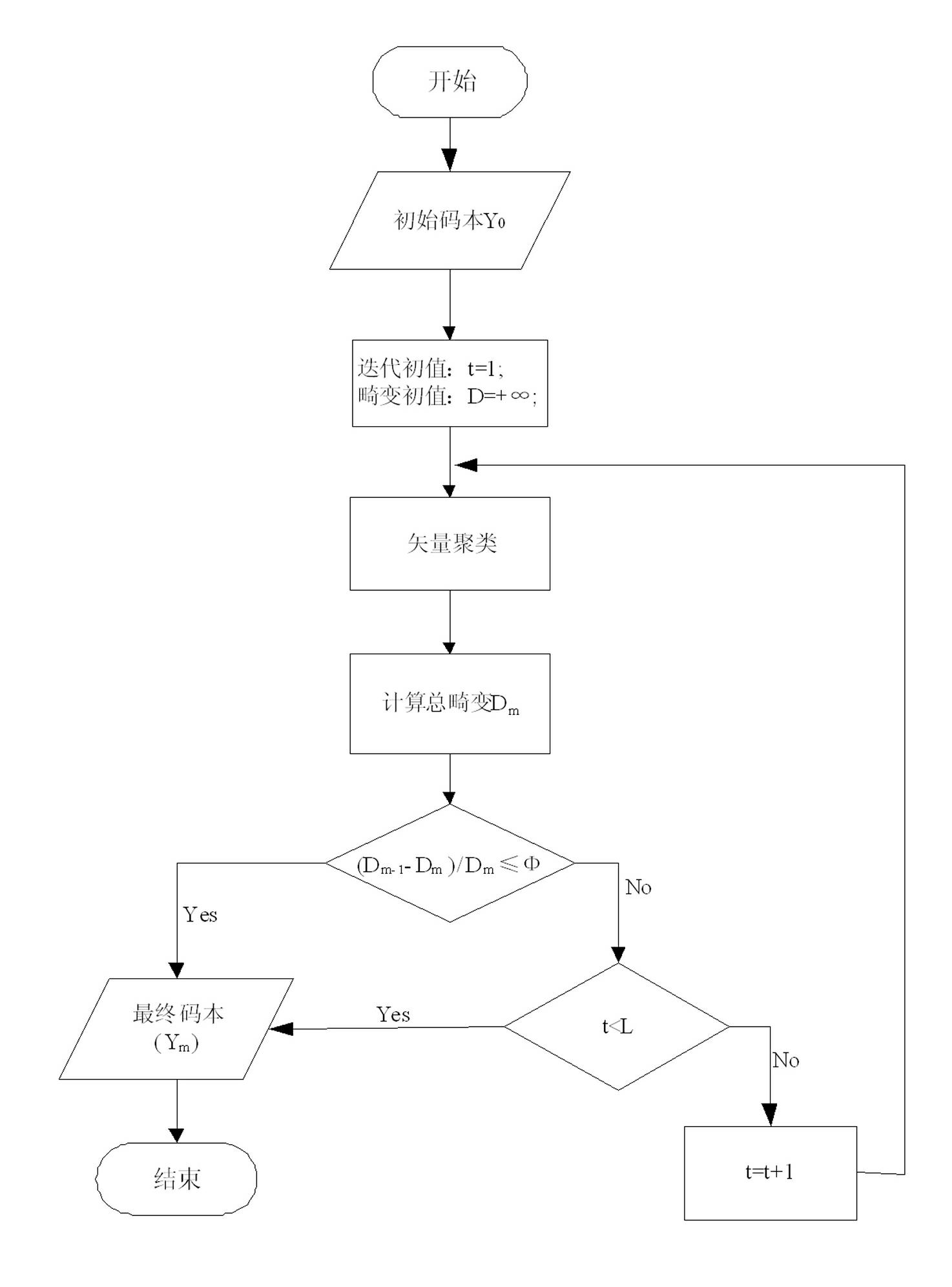

The invention discloses a method and a system for voiceprint recognition based on vector quantization, which have high recognition performance and noise immunity, are effective in recognition, require little modeling data, and are quick in judgment and low in complexity. The method includes the steps of: acquiring audio signals; preprocessing the audio signals; extracting audio signal characteristic parameters by using MFCC (mel-frequency cepstrum coefficient) parameters, wherein the order of the MFCC ranges from 12 to 16; template training, namely using the LBG (Linde, Buzo and Gray) clustering algorithm to set up a codebook for each speaker and storing the codebooks in an audio database to be used as the audio templates of the speakers; and voiceprint recognition, namely comparing the acquired characteristic parameters of the audio signals to be recognized with the speaker audio templates set up in the audio database and judging according to a weighted Euclidean distance measure: the speaker whose template gives the audio characteristic vector X to be recognized the minimum average distance measure is taken as the recognized speaker.

Owner:LIAONING UNIVERSITY OF TECHNOLOGY
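The matching rule in the entry above (minimum average weighted Euclidean distance to a speaker's codebook) might look like the sketch below. It assumes the codebooks already exist; LBG training is not shown. Squared distance is used for simplicity, and the per-dimension weights are passed in because the abstract does not say how they are chosen. All names are hypothetical.

```python
def weighted_sq_distance(x, y, weights):
    """Weighted squared Euclidean distance between two feature vectors."""
    return sum(w * (a - b) ** 2 for a, b, w in zip(x, y, weights))


def average_distortion(frames, codebook, weights):
    """Mean distance from each frame to its nearest codeword."""
    return sum(
        min(weighted_sq_distance(x, c, weights) for c in codebook) for x in frames
    ) / len(frames)


def recognize_by_codebook(frames, codebooks, weights):
    """Return the speaker whose codebook gives the smallest average distortion."""
    scores = {name: average_distortion(frames, cb, weights) for name, cb in codebooks.items()}
    return min(scores, key=scores.get)
```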

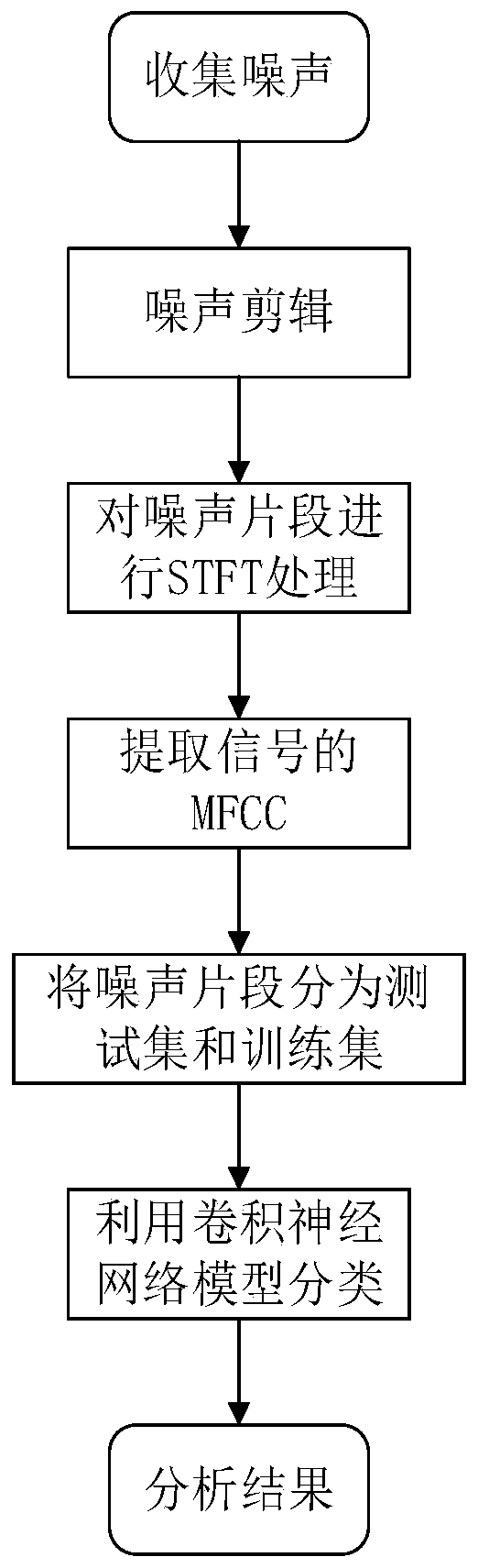

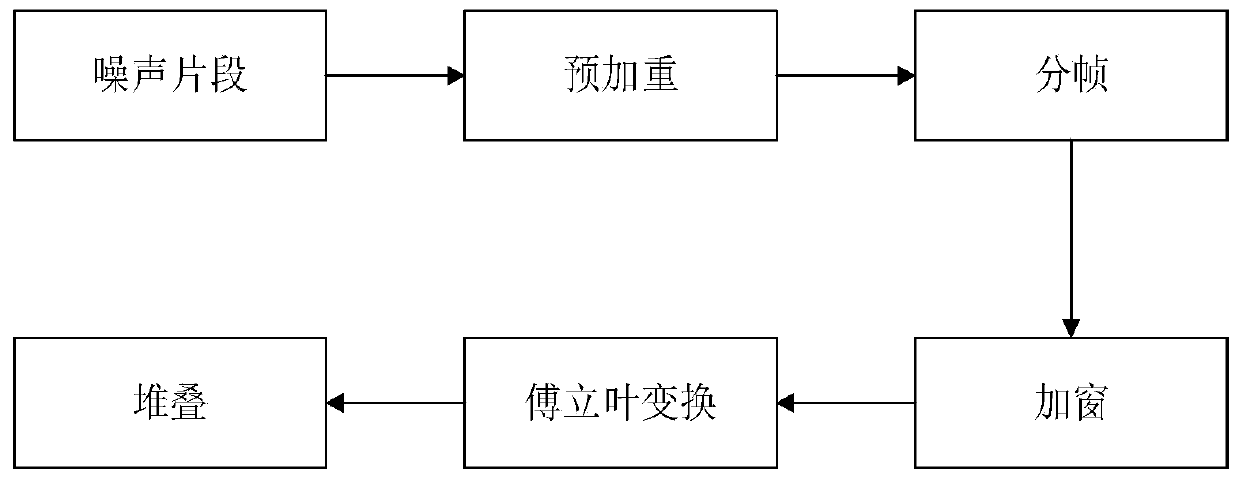

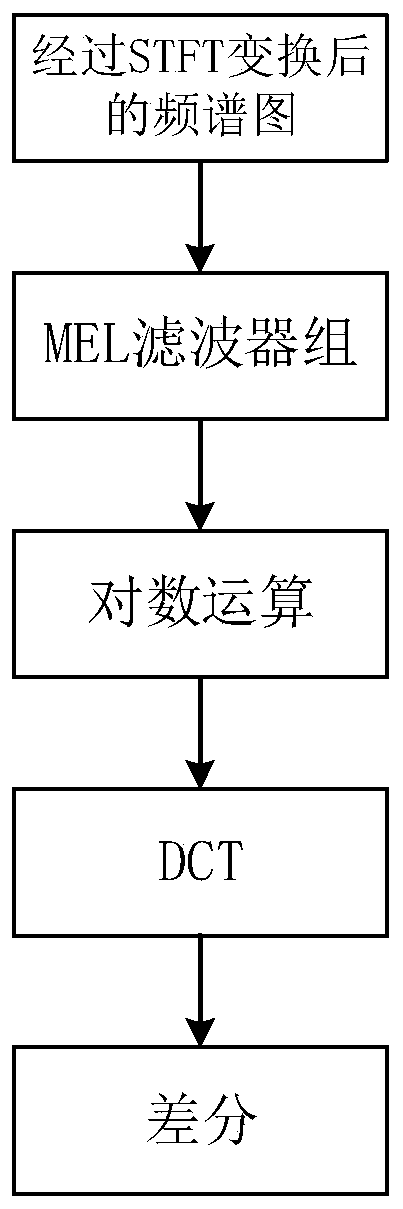

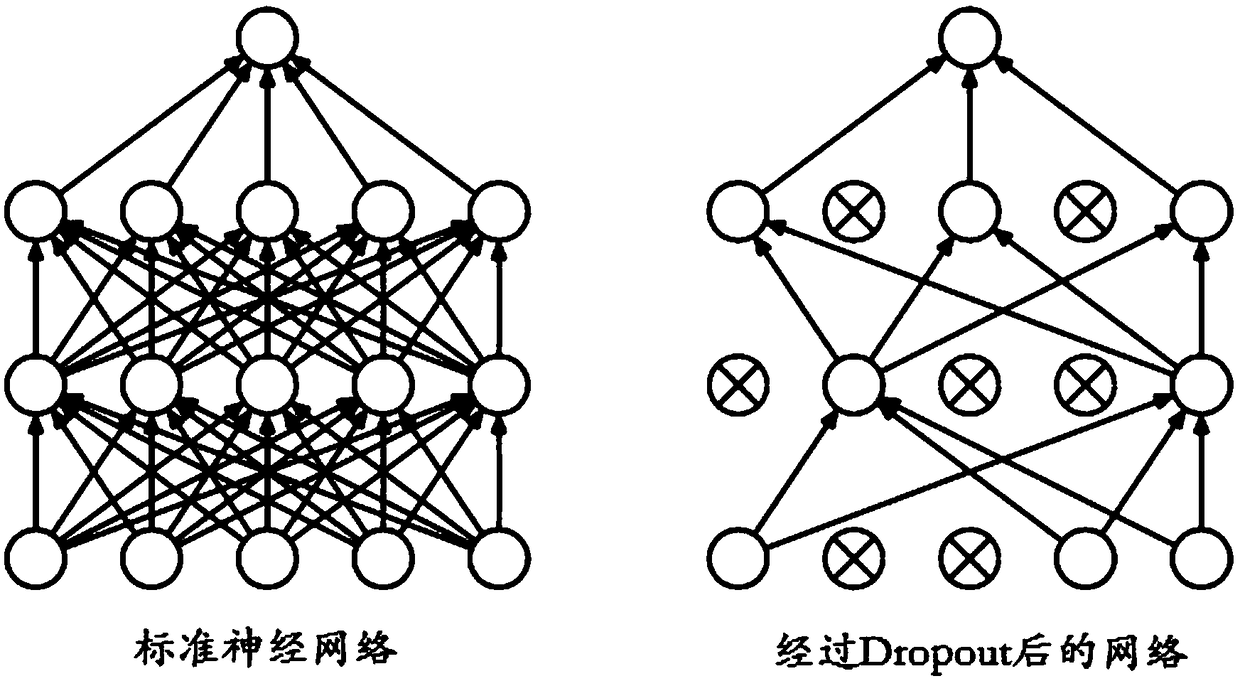

Environment noise identification classification method based on convolutional neural network

Inactive | CN109767785A | Universal | Solve problems that are easy to fall into the optimal solution | Speech analysis | Mel-frequency cepstrum | Environmental noise

The invention relates to an environment noise identification classification method based on a convolutional neural network. The method comprises the following steps: S1, extracting natural environment noise, and editing the natural environment noise into noise segments with a duration of 300 ms to 30 s and a sampling rate converted to 44.1 kHz; S2, carrying out a short-time Fourier transform on the noise segments, converting the one-dimensional time-domain signal into a two-dimensional time-frequency representation to obtain a spectrogram; S3, extracting the MFCC (Mel Frequency Cepstrum Coefficient) of the signal; S4, forming a training set from 80% of all the noise segments and a testing set from the remaining 20%; S5, carrying out noise classification with a convolutional neural network model; and S6, training the classification model on the training set and verifying the accuracy of the model on the testing set, so as to complete environment noise identification classification based on the convolutional neural network. According to the invention, sound segments are the input, sound feature information is extracted, the output is a classification result, and automatic extraction of the sound feature information can be implemented.

Owner:HEBEI UNIV OF TECH
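Step S4 of the entry above (an 80% / 20% split of the noise segments) can be sketched as below. The abstract does not say whether the split is random or stratified by class; a seeded random shuffle is assumed here, and `split_segments` is a hypothetical name.

```python
import random


def split_segments(segments, train_fraction=0.8, seed=0):
    """Shuffle the segments reproducibly and split them into train and test lists."""
    shuffled = list(segments)
    random.Random(seed).shuffle(shuffled)
    cut = int(round(len(shuffled) * train_fraction))
    return shuffled[:cut], shuffled[cut:]
```

With several noise classes, splitting each class separately would keep the class proportions equal in both sets.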

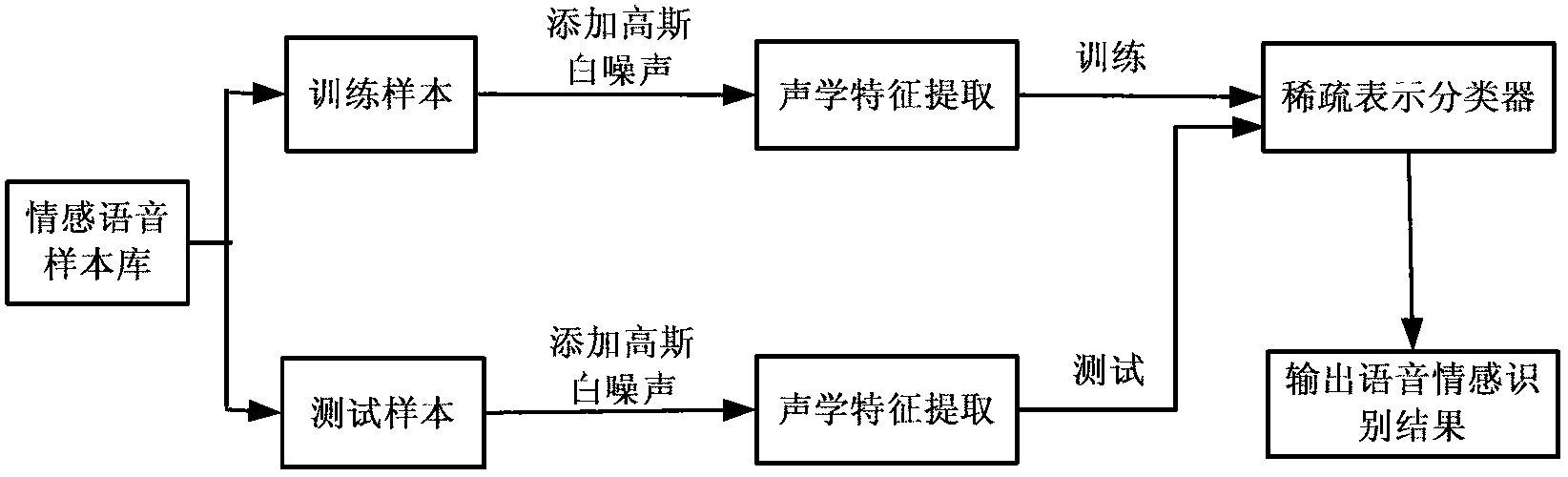

Robust speech emotion recognition method based on compressive sensing

Inactive | CN103021406A | Improve noise immunity | Speech recognition | Mel-frequency cepstrum | Sparse representation classifier

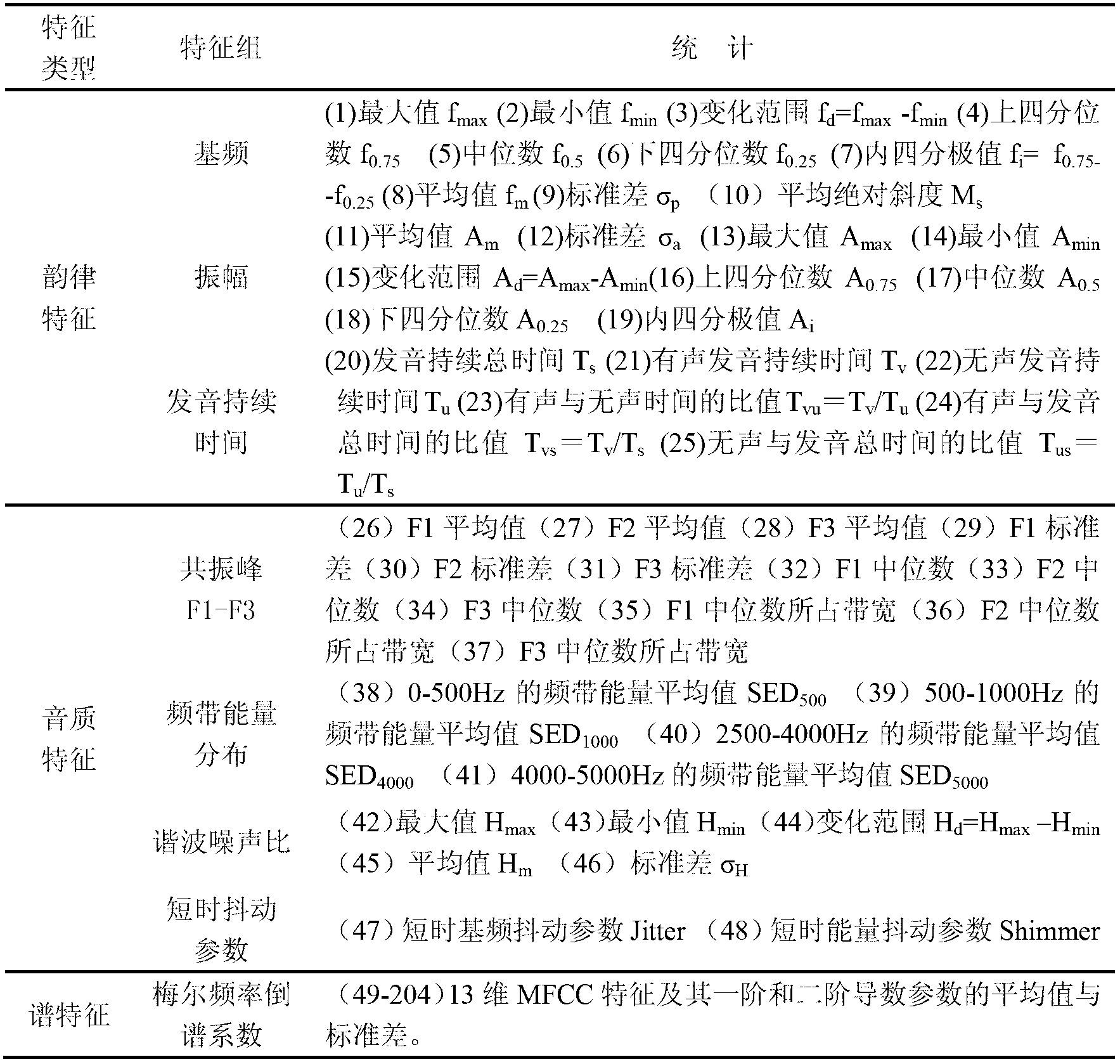

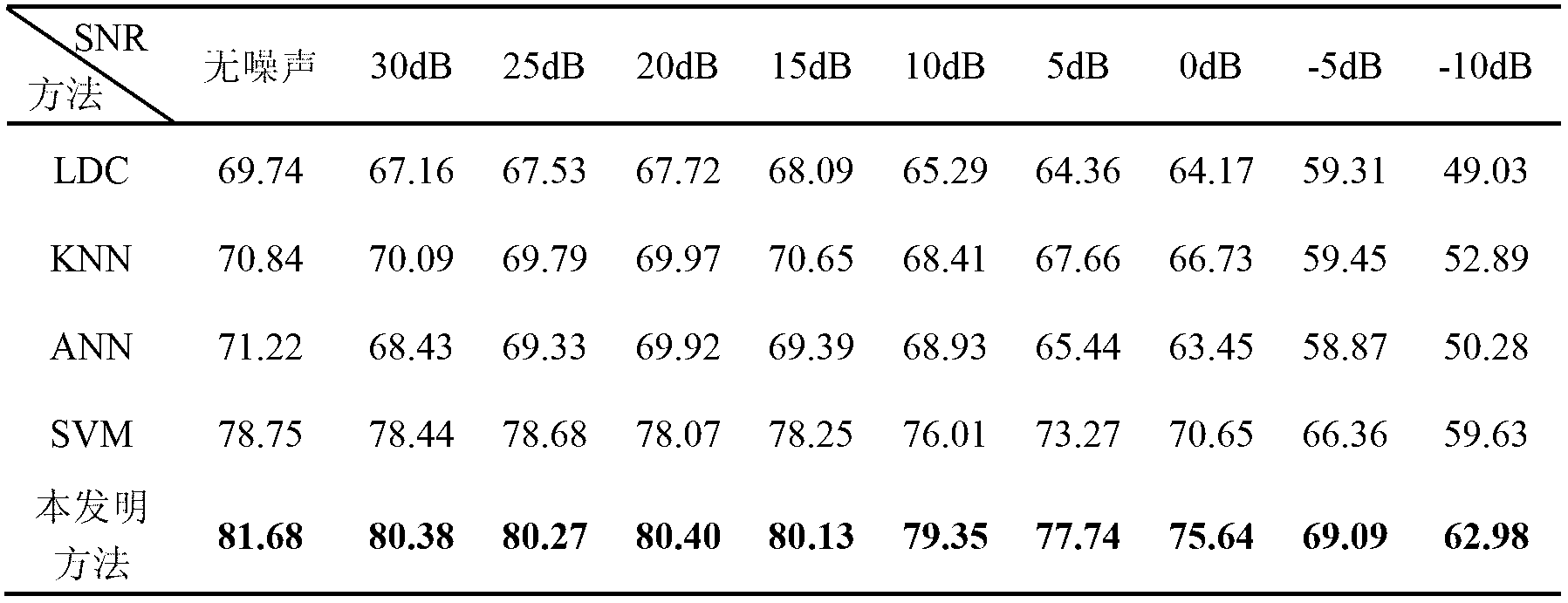

The invention discloses a robust speech emotion recognition method based on compressive sensing. The recognition method includes generating a noisy emotion speech sample, establishing an acoustic feature extraction module, constructing a sparse representation classifier model, and outputting a speech emotion recognition result. The robust speech emotion recognition method has the advantages that effects of noise on emotion speech in the natural environment are fully considered, and the robust speech emotion recognition method under the noise background is provided; validity of feature parameters in different types is fully considered, extraction of feature parameters is extended to the Mel frequency cepstrum coefficient (MFCC) from prosodic and tone features, and anti-noise effects of the feature parameters are further improved; and the high-performance robust speech emotion recognition method based on the compressive sensing theory is provided through the sparse representation distinguishing in the compressive sensing theory.

Owner:TAIZHOU UNIV +2

Abnormal voice detecting method based on time-domain and frequency-domain analysis

Active | CN102664006A | Increase flexibility | Improve noise immunity | Speech recognition | Time domain | Mel-frequency cepstrum

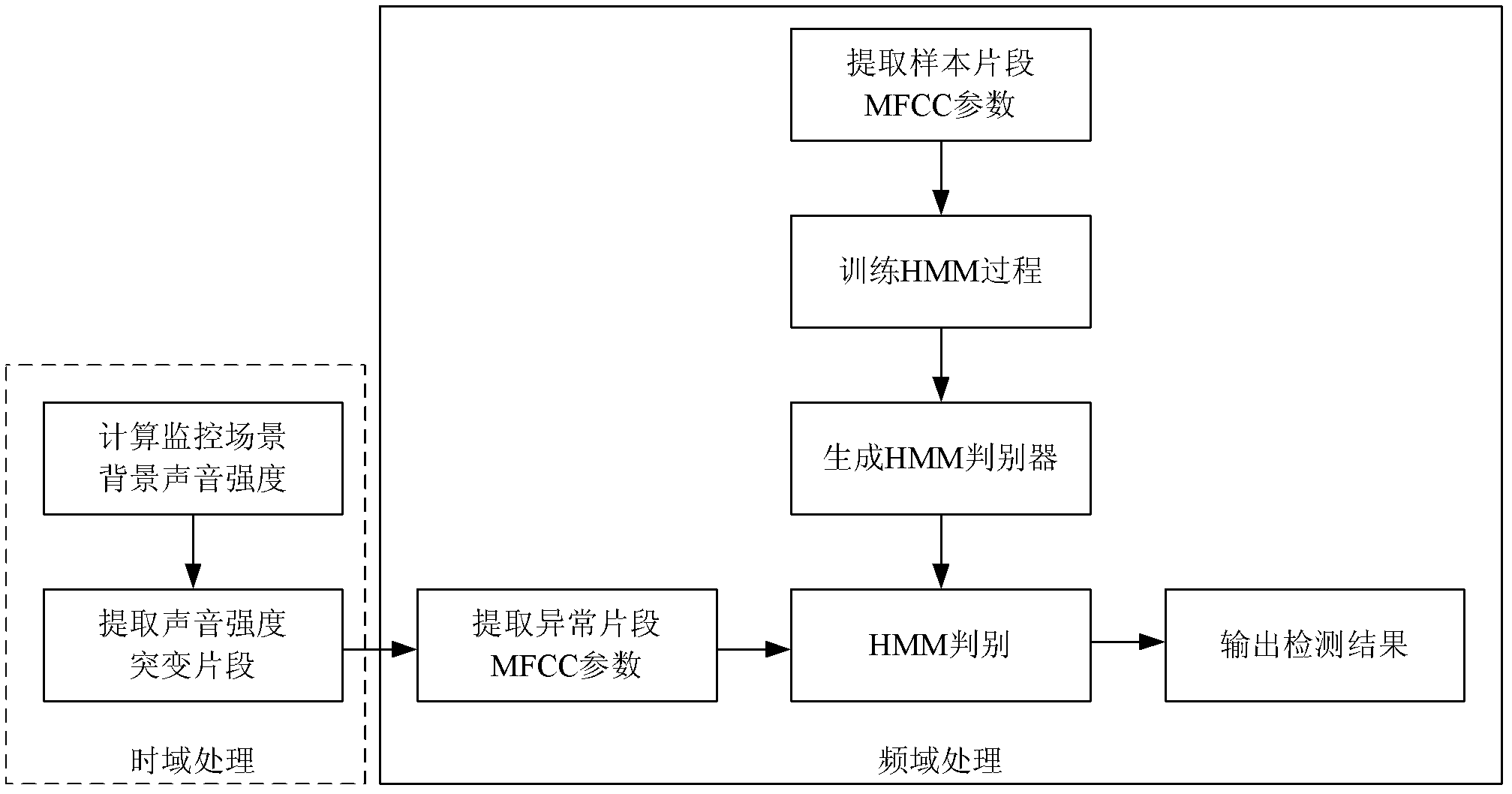

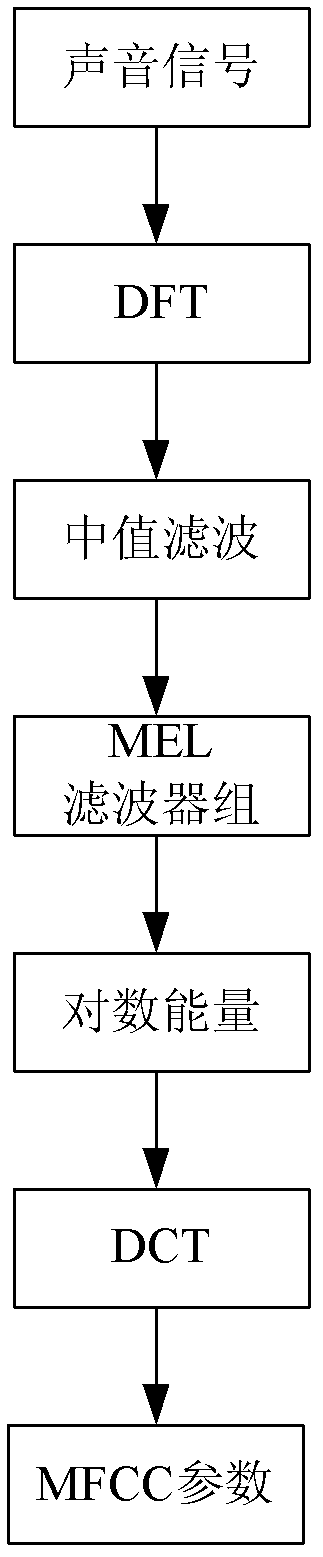

The invention relates to an abnormal voice detecting method based on time-domain and frequency-domain analysis. The method includes first computing the background sound intensity of a monitored scene, updated in real time, and detecting and extracting fragments in which the sound intensity changes suddenly; then extracting uniform-filter Mel frequency cepstrum coefficients of the suddenly changed fragments; and finally using the extracted Mel frequency cepstrum coefficients of the abnormal fragments as observation sequences, inputting them into a trained modified hidden Markov process model, and analyzing whether the abnormal fragments are abnormal voice according to the frequency characteristics of voice. Adding the hidden Markov process model improves time-sequence correlation. The method combines time-domain extraction of frames with suddenly changed energy and verification within the frequency domain, so that abnormal voice can be detected effectively, with good real-time performance, high noise resistance and good robustness.

Owner:NAT UNIV OF DEFENSE TECH
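The time-domain stage of the entry above (a background level updated in real time, plus detection of sudden intensity changes) could be sketched as follows. The abstract gives no update rule or threshold, so the exponential background update and the `ratio` and `alpha` parameters are assumptions, and the function name is hypothetical.

```python
def detect_sudden_changes(frame_energies, ratio=3.0, alpha=0.05):
    """Return indices of frames whose energy exceeds ratio times the running background.

    The background is only updated on frames that are not flagged, so a loud
    event does not raise its own threshold.
    """
    if not frame_energies:
        return []
    background = frame_energies[0]
    flagged = []
    for i, energy in enumerate(frame_energies):
        if energy > ratio * background:
            flagged.append(i)
        else:
            background = (1.0 - alpha) * background + alpha * energy
    return flagged
```

For example, `detect_sudden_changes([1, 1, 1, 1, 10, 12, 1, 1])` returns `[4, 5]`; MFCC extraction and the HMM check would then run only on those frames.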

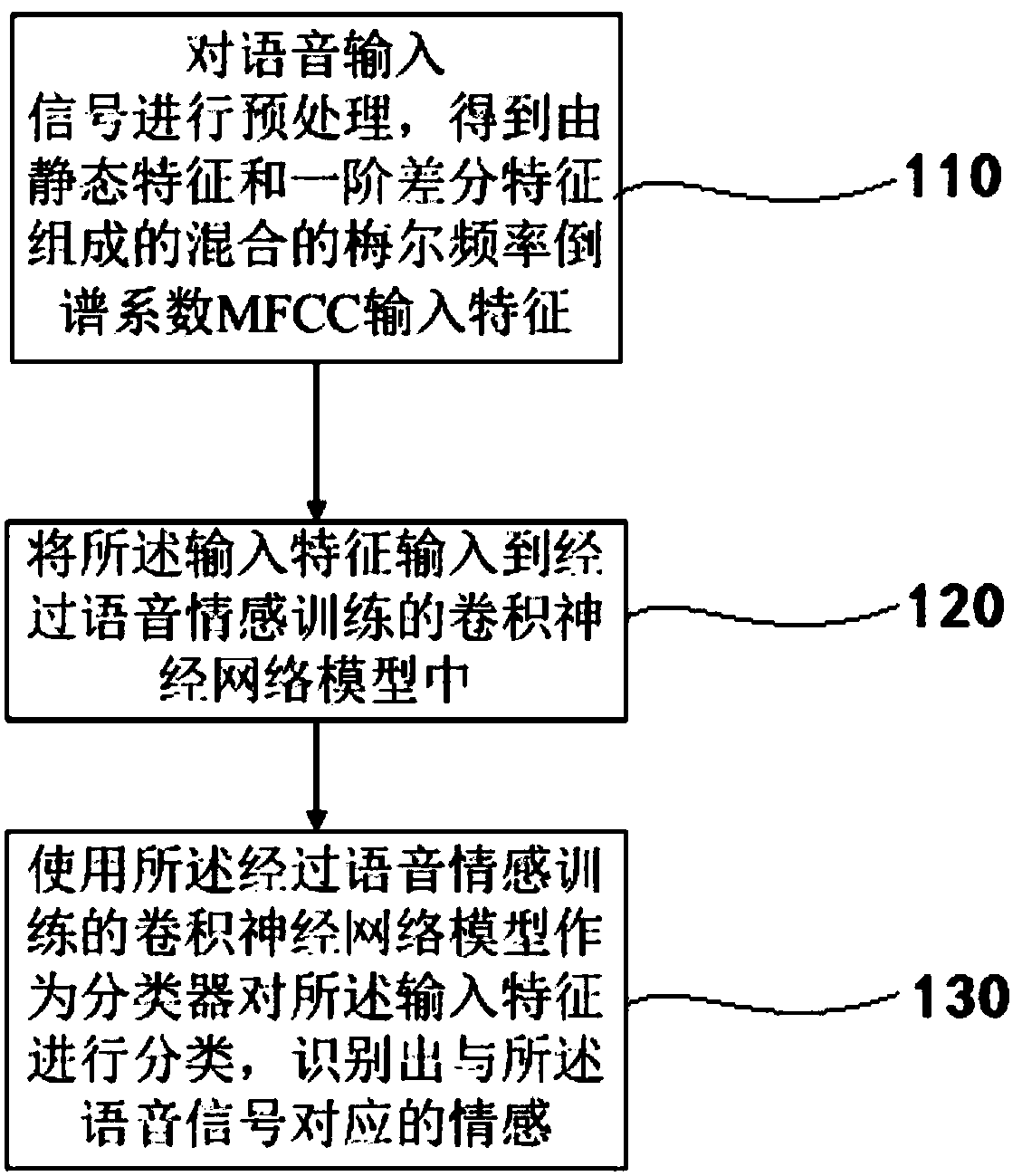

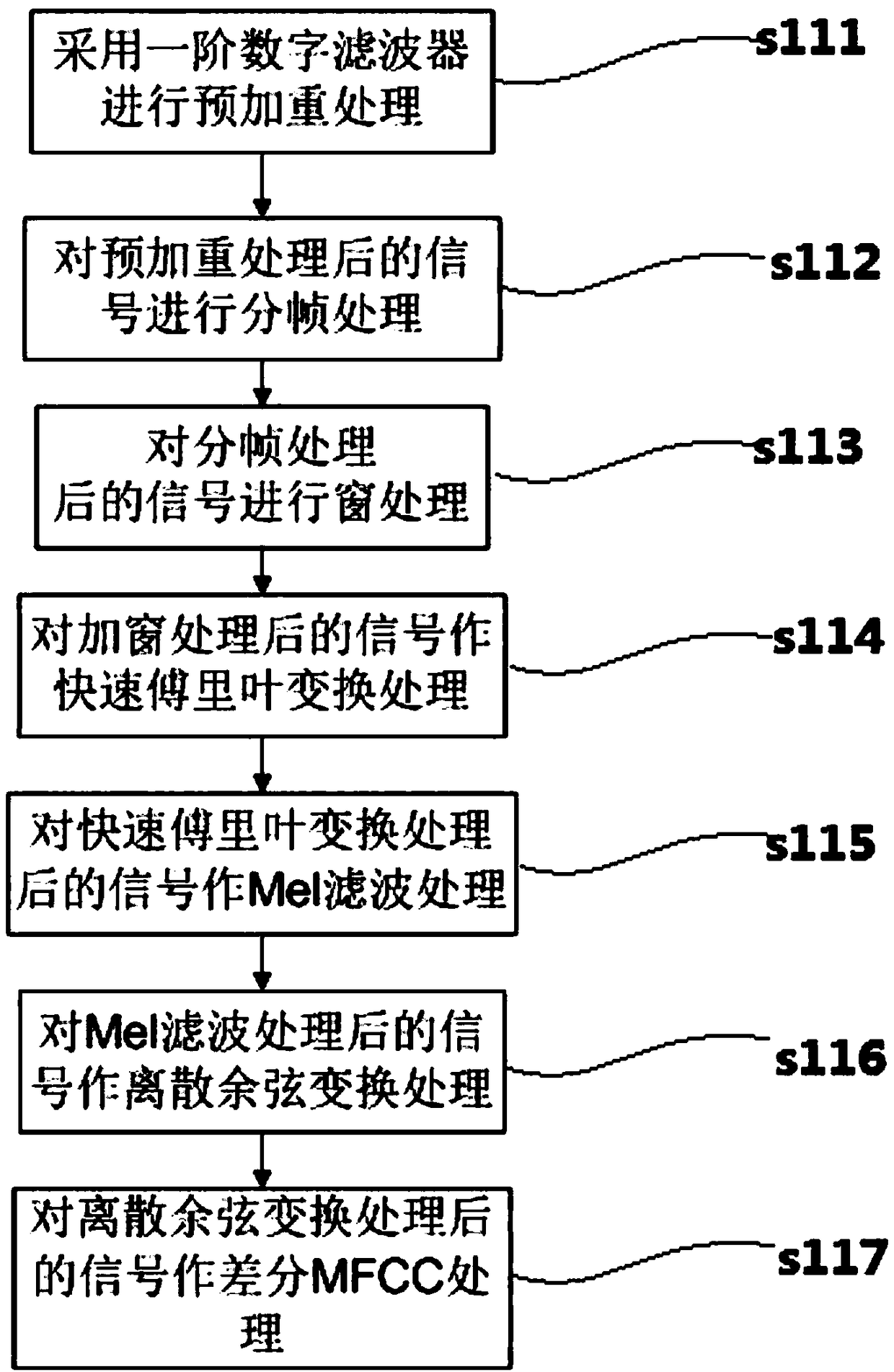

Voice signal-based emotion recognition method, emotion recognition device and computer equipment

Inactive | CN108550375A | Overcome the technical problem of low judgment accuracy | Improve generalization ability | Speech recognition | Mel-frequency cepstrum | Semantics

The invention relates to a voice signal-based emotion recognition method, an emotion recognition device and computer equipment. The method comprises the following steps: preprocessing a voice input signal and obtaining mixed Mel frequency cepstrum coefficient (MFCC) input features composed of static features and first-order differential features; inputting the input features into a convolutional neural network model that has been trained on speech emotion; and classifying the input features by using the trained convolutional neural network model as a classifier, thereby recognizing the emotion corresponding to the voice signal. Compared with other semantics-based and voice-based emotion recognition methods in the prior art, the method overcomes the technical problem of low recognition accuracy. Different voice emotions can be distinguished with satisfactory accuracy, and according to the experimental results the method has good generalization ability.

Owner:LUDONG UNIVERSITY
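The mixed input features described above (static MFCCs plus their first-order differences) can be sketched as below. The abstract does not give the difference formula; a simple central difference with edge frames repeated is assumed, and the names are hypothetical.

```python
def delta(features):
    """First-order difference per frame: (next - previous) / 2, edges clamped."""
    n = len(features)
    out = []
    for t in range(n):
        prev_frame = features[max(t - 1, 0)]
        next_frame = features[min(t + 1, n - 1)]
        out.append([(b - a) / 2.0 for a, b in zip(prev_frame, next_frame)])
    return out


def static_plus_delta(features):
    """Concatenate each static feature vector with its delta vector."""
    return [static + d for static, d in zip(features, delta(features))]
```

With 12 static coefficients per frame this yields 24-dimensional vectors, which could then be stacked over time as the network input.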

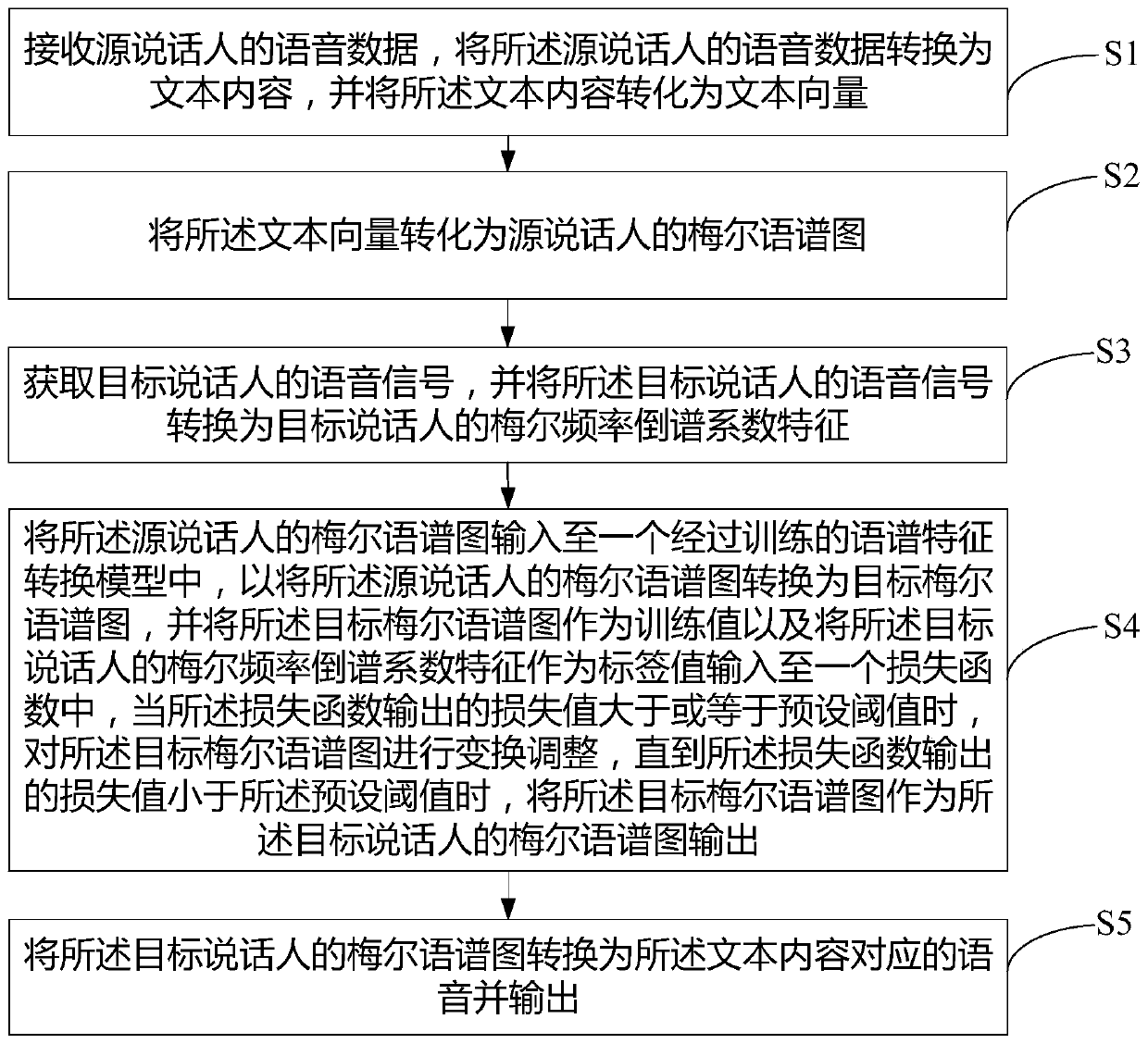

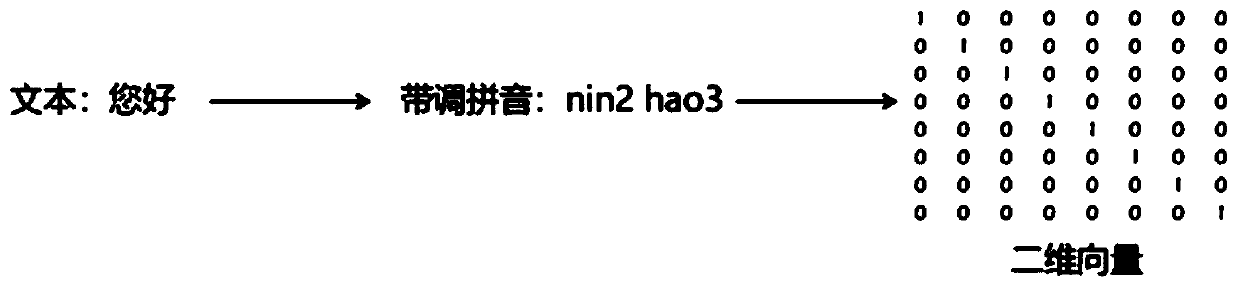

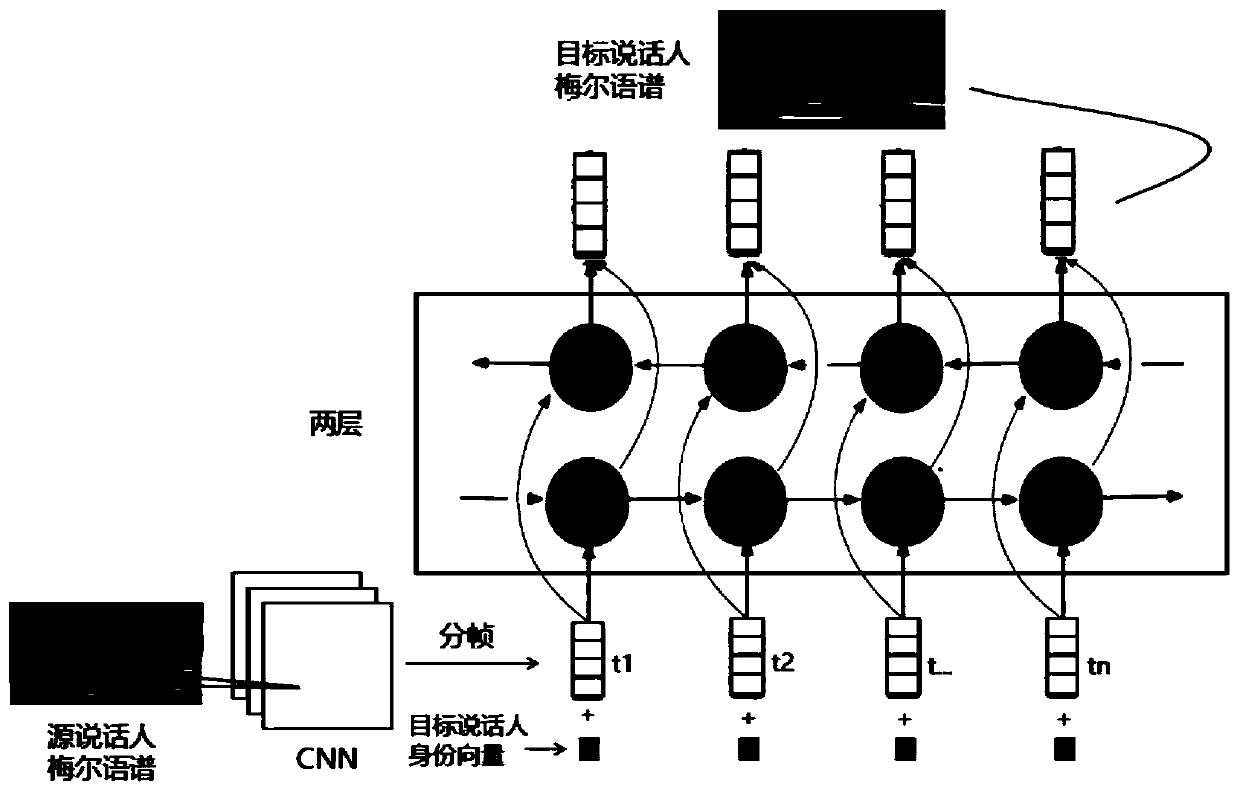

Speech synthesis method and device and computer readable storage medium

Pending | CN110136690A | Realize voice conversion | Speech recognition | Speech synthesis | Mel-frequency cepstrum | Speech synthesis

The invention relates to the technical field of artificial intelligence, and discloses a speech synthesis method. The method comprises the steps that speech data of a source speaker is converted into text content, and the text content is converted into a text vector; the text vector is converted into a Mel spectrogram of the source speaker; a speech signal of a target speaker is obtained and converted into Mel frequency cepstrum coefficient features of the target speaker; the Mel spectrogram of the source speaker and the Mel frequency cepstrum coefficient features of the target speaker are input into a trained spectral feature transformation model, and a Mel spectrogram of the target speaker is obtained; and the Mel spectrogram of the target speaker is converted into speech corresponding to the text content, and the speech is output. The invention further provides a speech synthesis device and a computer readable storage medium. Accordingly, tone shift of the speech synthesis system can be achieved.

Owner:PING AN TECH (SHENZHEN) CO LTD

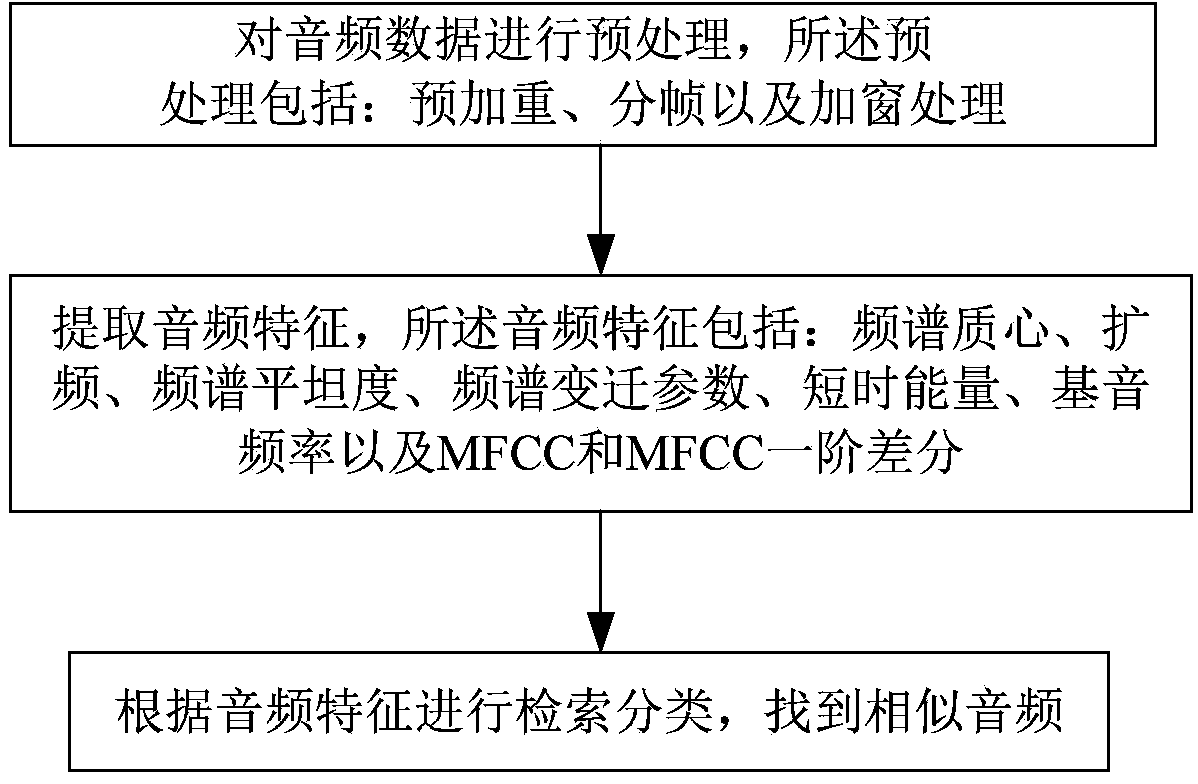

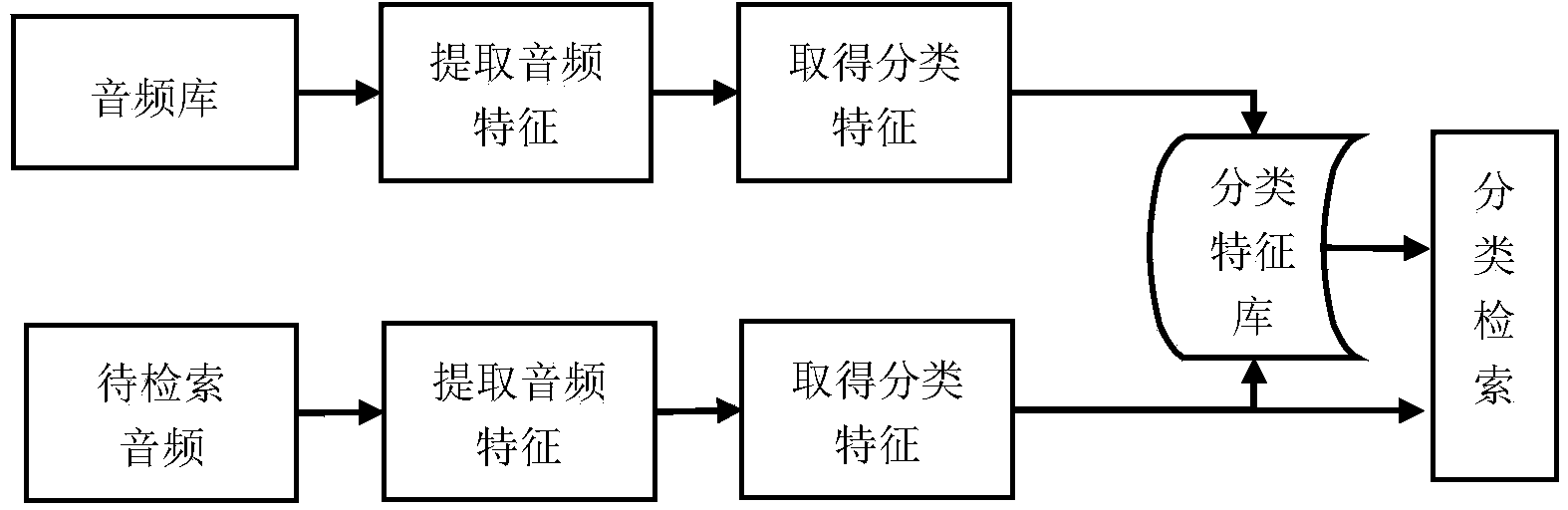

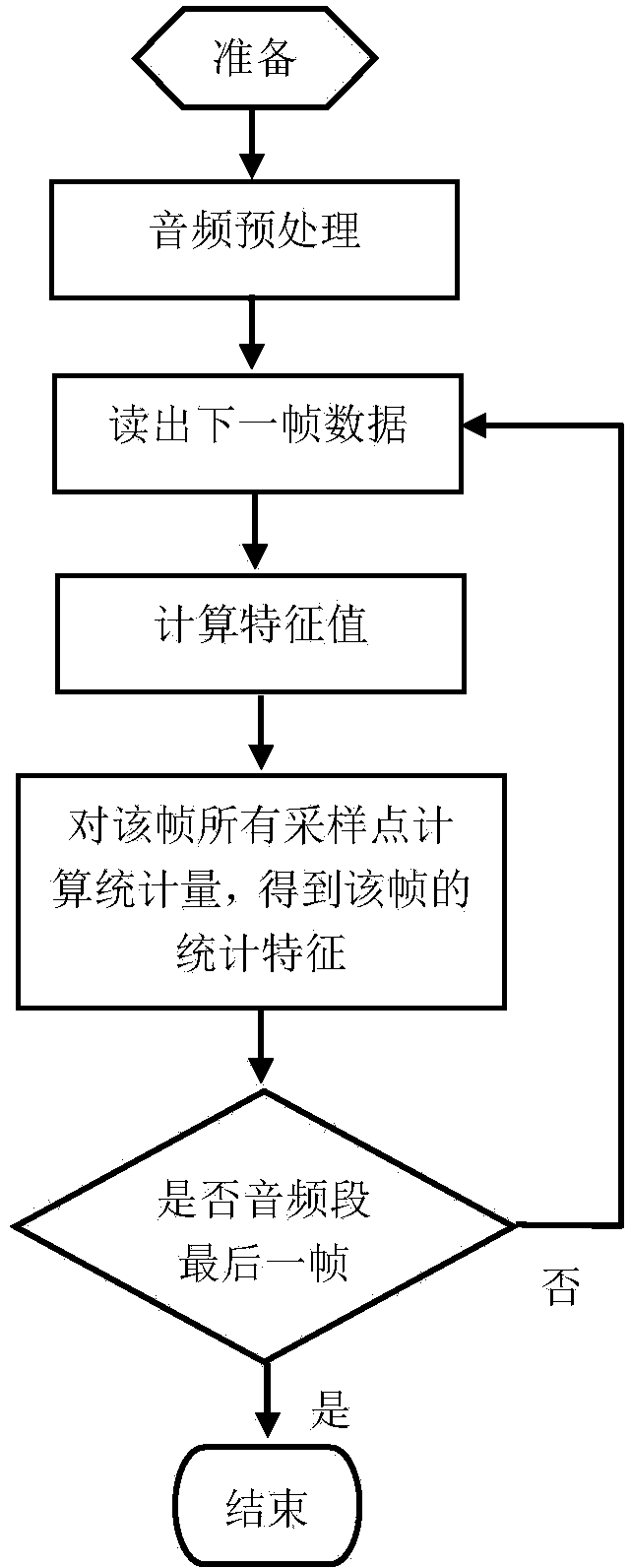

Method for classifying digital audio automatically

Active | CN103854646A | High precision | Improve retrieval efficiency | Speech recognition | Mel-frequency cepstrum | Frequency spectrum

The invention discloses a method for classifying digital audio automatically. The method comprises the following steps: preprocessing an audio signal by pre-emphasis, framing and windowing; extracting audio features comprising spectral centroid, spectral spread, spectral flatness, spectral transition parameters, short-time energy, fundamental frequency, Mel frequency cepstrum coefficients (MFCC) and first-order MFCC differences; and indexing and classifying according to the audio features to find similar audio. The invention solves the prior-art problem of errors caused by indexing audio with a single audio feature; moreover, the method has a simple computation process, is easy to apply in practice, and has high indexing efficiency.

Owner:UESTC COMSYS INFORMATION
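The preprocessing chain named in the entry above (pre-emphasis, framing, windowing) is commonly implemented along the lines below. The 0.97 pre-emphasis coefficient and the Hamming window are conventional choices assumed here, not values taken from the patent, and the function names are hypothetical.

```python
import math


def pre_emphasis(signal, coeff=0.97):
    """First-order high-pass filter: y[n] = x[n] - coeff * x[n-1]."""
    if not signal:
        return []
    return [signal[0]] + [signal[i] - coeff * signal[i - 1] for i in range(1, len(signal))]


def frame_signal(signal, frame_len, hop):
    """Split the signal into overlapping frames; a trailing partial frame is dropped."""
    return [signal[s:s + frame_len] for s in range(0, len(signal) - frame_len + 1, hop)]


def hamming(n):
    """Hamming window of length n."""
    if n == 1:
        return [1.0]
    return [0.54 - 0.46 * math.cos(2.0 * math.pi * i / (n - 1)) for i in range(n)]


def windowed_frames(signal, frame_len, hop):
    """Pre-emphasize, frame and window a signal in one pass."""
    window = hamming(frame_len)
    emphasized = pre_emphasis(signal)
    return [[x * w for x, w in zip(f, window)] for f in frame_signal(emphasized, frame_len, hop)]
```

Features such as short-time energy or MFCCs would then be computed per windowed frame.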

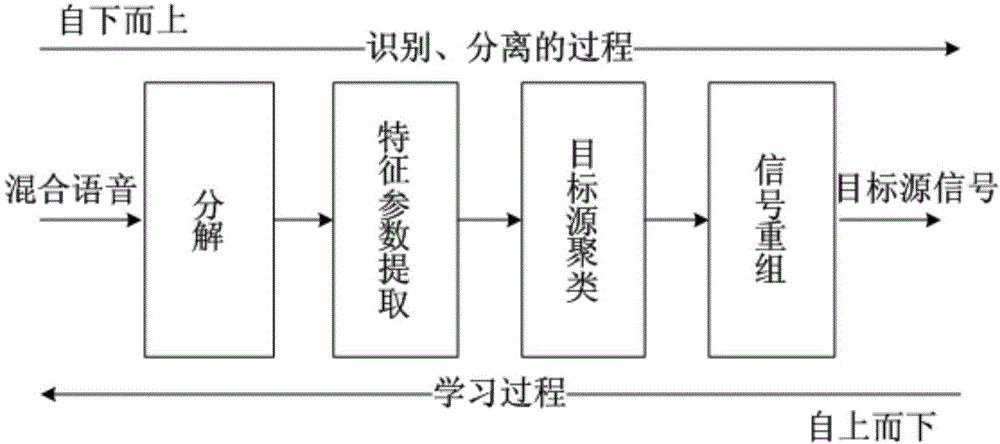

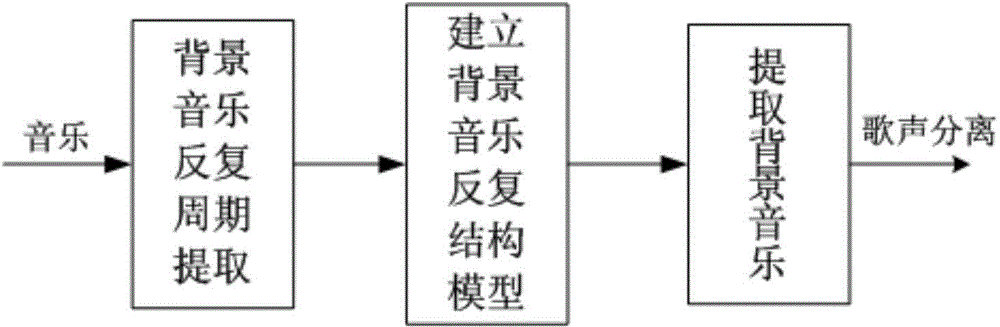

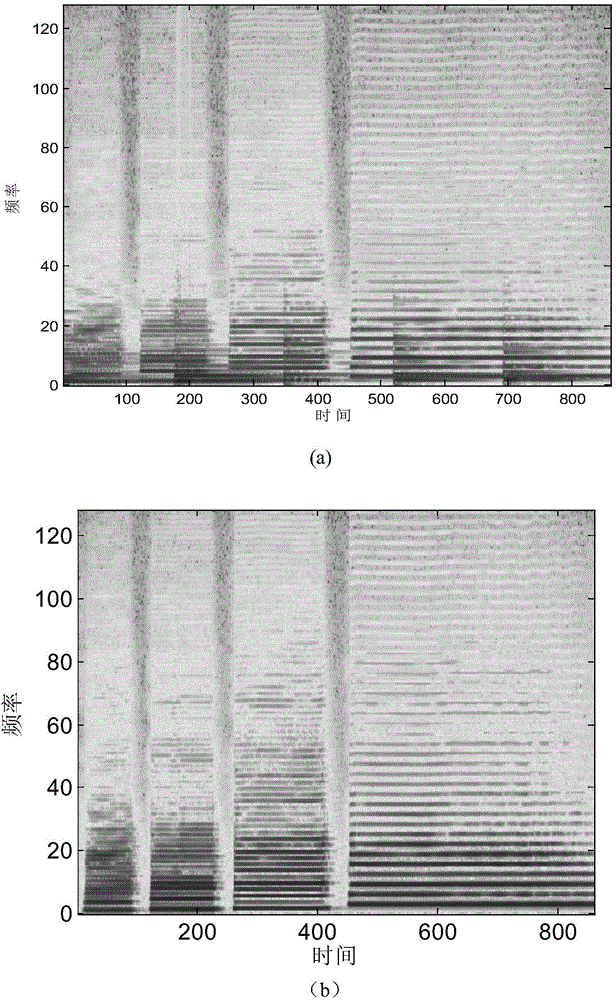

Music separation method of MFCC (Mel Frequency Cepstrum Coefficient)-multi-repetition model in combination with HPSS (Harmonic/Percussive Sound Separation)

Inactive | CN104616663A | Improvements to missing issues | Simplify complexity | Electrophonic musical instruments | Speech analysis | Mel-frequency cepstrum | Sound sources

The invention discloses a music separation method of an MFCC (Mel Frequency Cepstrum Coefficient)-multi-repetition model in combination with HPSS (Harmonic/Percussive Sound Separation), and relates to the technical field of signal processing. Considering that a gentle sound source is likely to be ignored and that music varies over time, the sound source type is analyzed through the harmonic/percussive sound separation (HPSS) method to separate out a harmonic source; MFCC characteristic parameters of the remaining sound sources are then extracted, and a similarity operation is performed on the sound sources to construct a similarity matrix, so as to establish a multi-repetition structural model of the sound source suitable for tune transformation; a mask matrix is thereby obtained, and finally the time-domain waveforms of the song and the background music are obtained through an ideal binary mask (IBM) and an inverse Fourier transform. The method can effectively separate different types of sound source signals, so the separation precision is improved; meanwhile the method has low complexity, high processing speed and higher stability, and has broad application prospects in fields such as singer retrieval, song retrieval, melody extraction and voice recognition against a musical instrument background.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

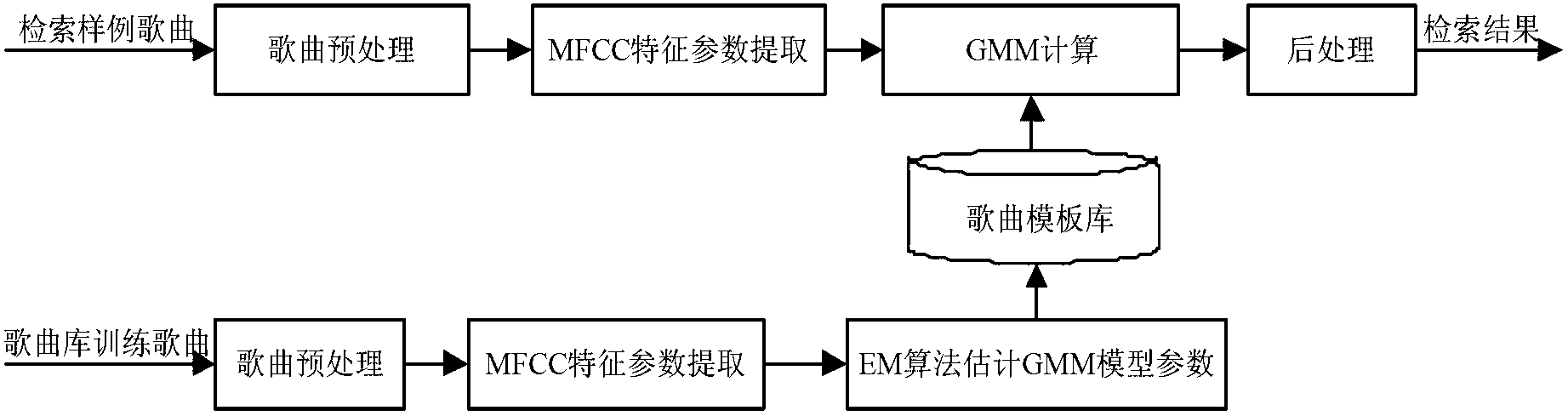

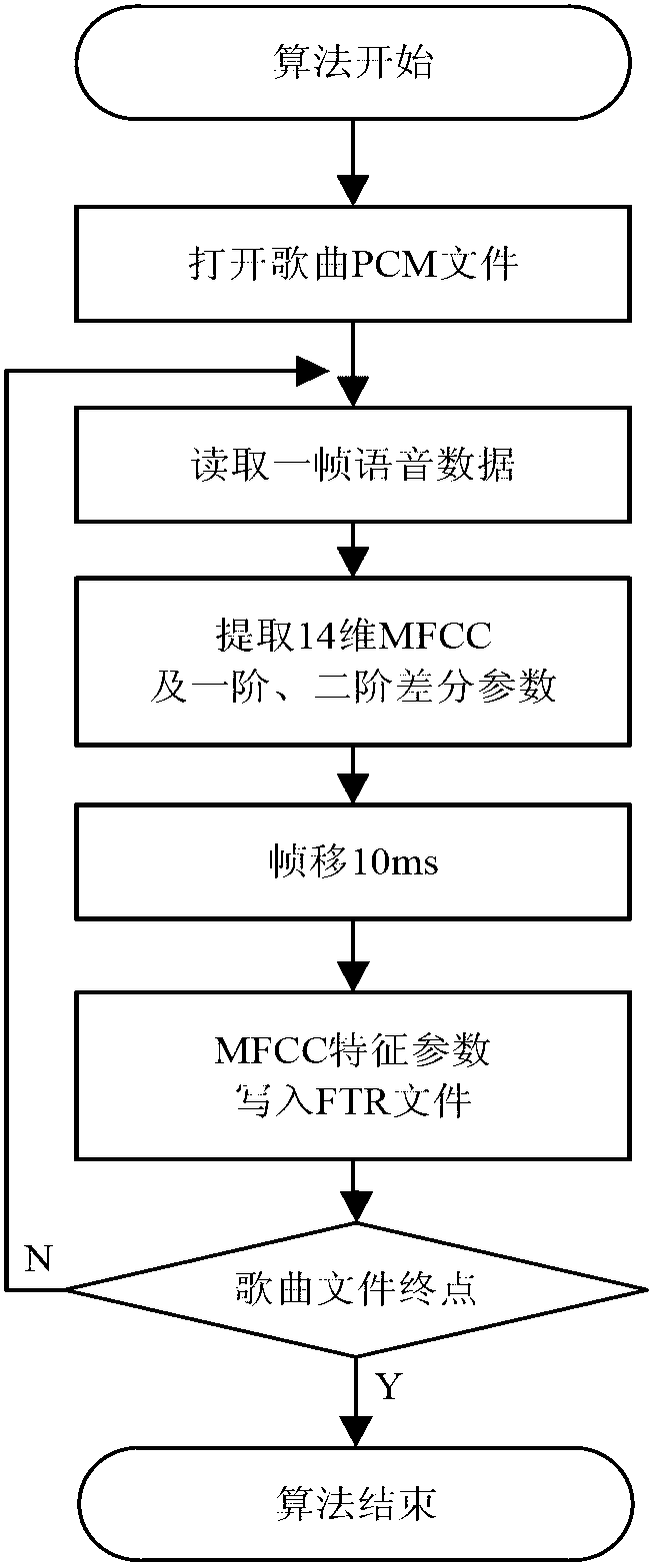

Tone-similarity-based song retrieval method

Inactive | CN103177722A | Achieve retrieval | High utility value | Speech recognition | Special data processing applications | Mel-frequency cepstrum | System stability

The invention relates to a tone-similarity-based song retrieval method, aims at content-based music retrieval technology and provides a method of personal song modeling, calculation, retrieval, and matching based on MFCC (Mel frequency cepstrum coefficient) and GMM (Gaussian mixture model) and implementation of the method by comprehensively utilizing features such as song background music tone and voice characteristics of singers. Experimental results show that a system using the method is fast in retrieval, high in stability and highly expansible. The method is especially suitable for retrieval of audios with high requirement on tone, such as instrumental music audio retrieval systems and multimedia audio management systems.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

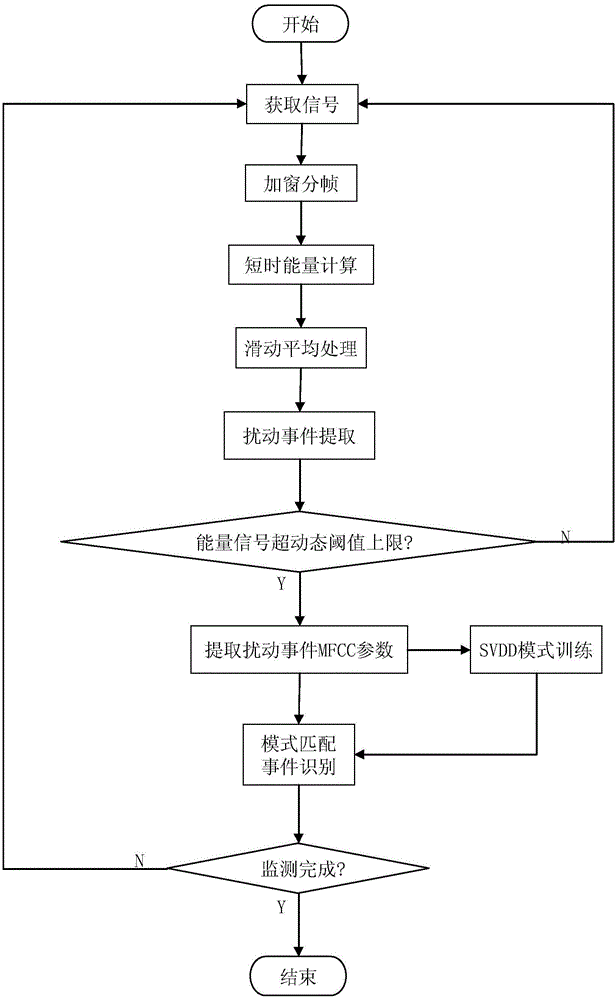

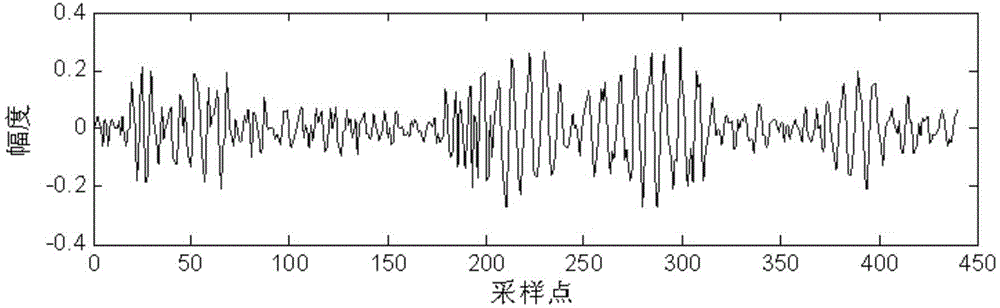

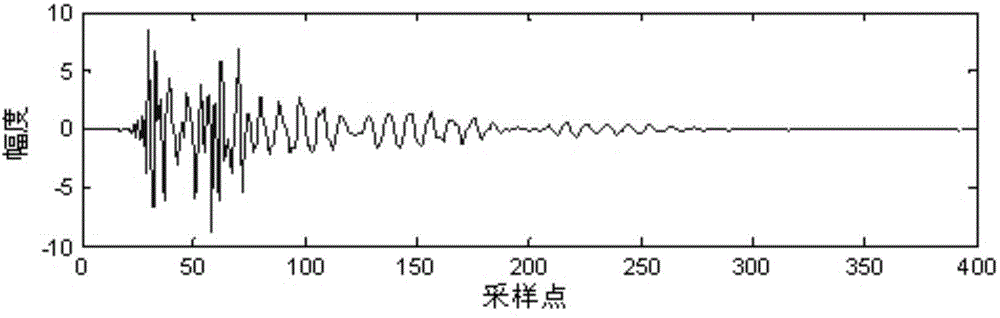

Method for identifying optical fibre sensing vibration signal

Active | CN105095624A | Improve accuracy | Improve recognition rate | Burglar alarm | Special data processing applications | Mel-frequency cepstrum | Moving average

The invention relates to a method for identifying an optical fibre sensing vibration signal. The method comprises the following specific steps: (1) acquiring the signal to obtain a discrete digital signal s(n); (2) windowing and framing to obtain the kth-frame windowed signal sk(n); (3) calculating the kth-frame energy signal e(k); (4) obtaining an energy signal e'(k) after moving-average processing; (5) extracting a disturbance event by comparing e'(k) with dynamic threshold values Th1 and Th2 and intercepting a continuous signal as the disturbance event signal, and determining that no disturbance event has happened if e'(k) does not exceed Th1; (6) computing the MFCC (Mel Frequency Cepstrum Coefficient) parameters of the disturbance event, obtaining feature sets for Y types of events, and establishing a pattern base of the Y types of disturbance events; (7) performing SVDD (Support Vector Data Description) training; and (8) matching the feature parameter set of an event to be detected against the SVDD training models in the pattern base, and judging which type the event belongs to or that it is an unknown event. The method improves the identification accuracy of the optical fibre sensing signal, reduces false reports, allows pattern training on a single disturbance event, and reduces the complexity of establishing the database.

Owner:NO 34 RES INST OF CHINA ELECTRONICS TECH GRP +2
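Steps (3) to (5) of the entry above can be sketched as below. The abstract does not explain how Th1 and Th2 interact or how they are adapted; this sketch assumes a hysteresis scheme (an event opens when the smoothed energy rises above Th1 and closes when it falls below Th2) with fixed thresholds supplied by the caller. All names are hypothetical.

```python
def frame_energy(signal, frame_len, hop):
    """Energy e(k) of each frame: the sum of squared samples."""
    return [
        sum(x * x for x in signal[s:s + frame_len])
        for s in range(0, len(signal) - frame_len + 1, hop)
    ]


def moving_average(values, width):
    """Causal moving average e'(k) over the last `width` values."""
    out = []
    for k in range(len(values)):
        window = values[max(0, k - width + 1):k + 1]
        out.append(sum(window) / len(window))
    return out


def extract_events(smoothed, th1, th2):
    """Return (start, end) frame index pairs, inclusive, using hysteresis thresholds."""
    events = []
    start = None
    for k, e in enumerate(smoothed):
        if start is None and e > th1:
            start = k
        elif start is not None and e < th2:
            events.append((start, k - 1))
            start = None
    if start is not None:
        events.append((start, len(smoothed) - 1))
    return events
```

If the smoothed energy never exceeds `th1`, the returned list is empty, which corresponds to the "no disturbance event" case in step (5).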

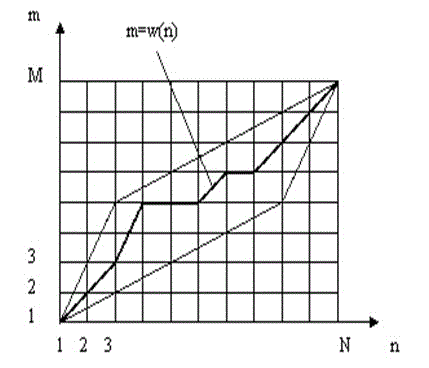

Isolated word speech recognition method based on HRSF and improved DTW algorithm

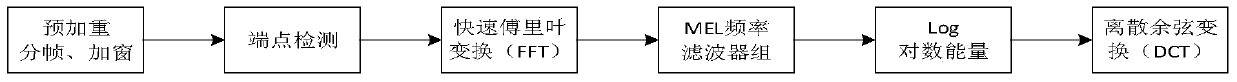

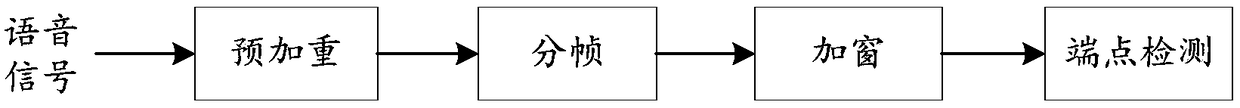

Inactive | CN102982803A | Easy to identify | Recognition speed is fast | Speech recognition | Mel-frequency cepstrum | Speech identification

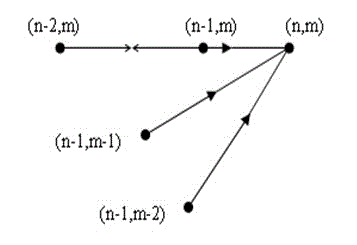

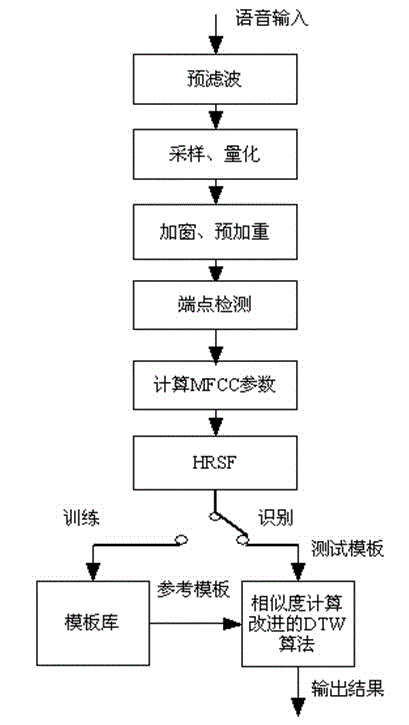

The invention discloses an isolated word speech recognition method based on an HRSF (Half Raised Sine Function) and an improved DTW (Dynamic Time Warping) algorithm. The isolated word speech recognition method comprises the following steps: (1) a received analog voice signal is preprocessed, where preprocessing comprises pre-filtering, sampling, quantization, pre-emphasis, windowing, short-time energy analysis, short-time average zero-crossing rate analysis and end-point detection; (2) a power spectrum X(n) of a frame signal is obtained by FFT (Fast Fourier Transform) and converted into a power spectrum on the Mel frequency scale; the MFCC (Mel Frequency Cepstrum Coefficient) parameters are calculated; after the first-order and second-order differences are calculated, the MFCC parameters are subjected to HRSF cepstrum raising (liftering); and (3) the improved DTW algorithm is adopted to match test templates with reference templates, and the reference template with the maximum matching score serves as the identification result. According to the isolated word speech recognition method, the identification of a single Chinese character is achieved through the improved DTW algorithm, and the identification rate and the identification speed for single Chinese characters are increased.

Owner:SOUTH CHINA NORMAL UNIVERSITY +1
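For the template-matching step of the entry above, the sketch below shows textbook dynamic time warping, not the patent's improved variant, whose details the abstract does not give. It works with distances, so the best template is the one with the smallest DTW distance (the counterpart of the "maximum matching score" in the abstract). The names are hypothetical.

```python
import math


def dtw_distance(a, b):
    """Classic DTW between two sequences of feature vectors (Euclidean local cost)."""
    inf = float("inf")
    n, m = len(a), len(b)
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = math.sqrt(sum((x - y) ** 2 for x, y in zip(a[i - 1], b[j - 1])))
            cost[i][j] = local + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def recognize_word(test, templates):
    """Return the label of the reference template closest to the test sequence."""
    return min(templates, key=lambda label: dtw_distance(test, templates[label]))
```

Because the warping path may repeat frames, a slowly spoken word can still match a shorter reference template.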

Hearing perception characteristic-based objective voice quality evaluation method

Inactive | CN102881289A | Improve relevance | Reduce complexity | Speech analysis | Mel-frequency cepstrum | Gammatone filter

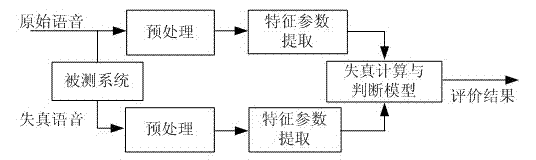

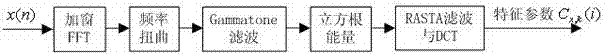

The invention discloses a hearing perception characteristic-based objective voice quality evaluation method which is simple and effective. Following psychoacoustic principles, a human auditory model and a non-linear compression transform are introduced into the extraction of MFCC (Mel frequency cepstrum coefficient) characteristic parameters. A Gammatone filter is adopted to simulate the cochlear basilar membrane, and the intensity-loudness perception characteristics of voice are simulated through a cube-root non-linear compression transform in the amplitude non-linear conversion step. With the new characteristic parameters, the method provides a voice quality evaluation that accords better with the hearing perception characteristics of the human ear. Compared with other methods, the correlation between objective and subjective evaluation results is effectively improved, the operation time is shorter and the complexity is lower, and the method has stronger adaptability, reliability and practicability. Evaluating voice quality by simulating the hearing perception characteristics of human ears thus provides a new solution for improving objective voice quality evaluation.

Owner:CHONGQING UNIV

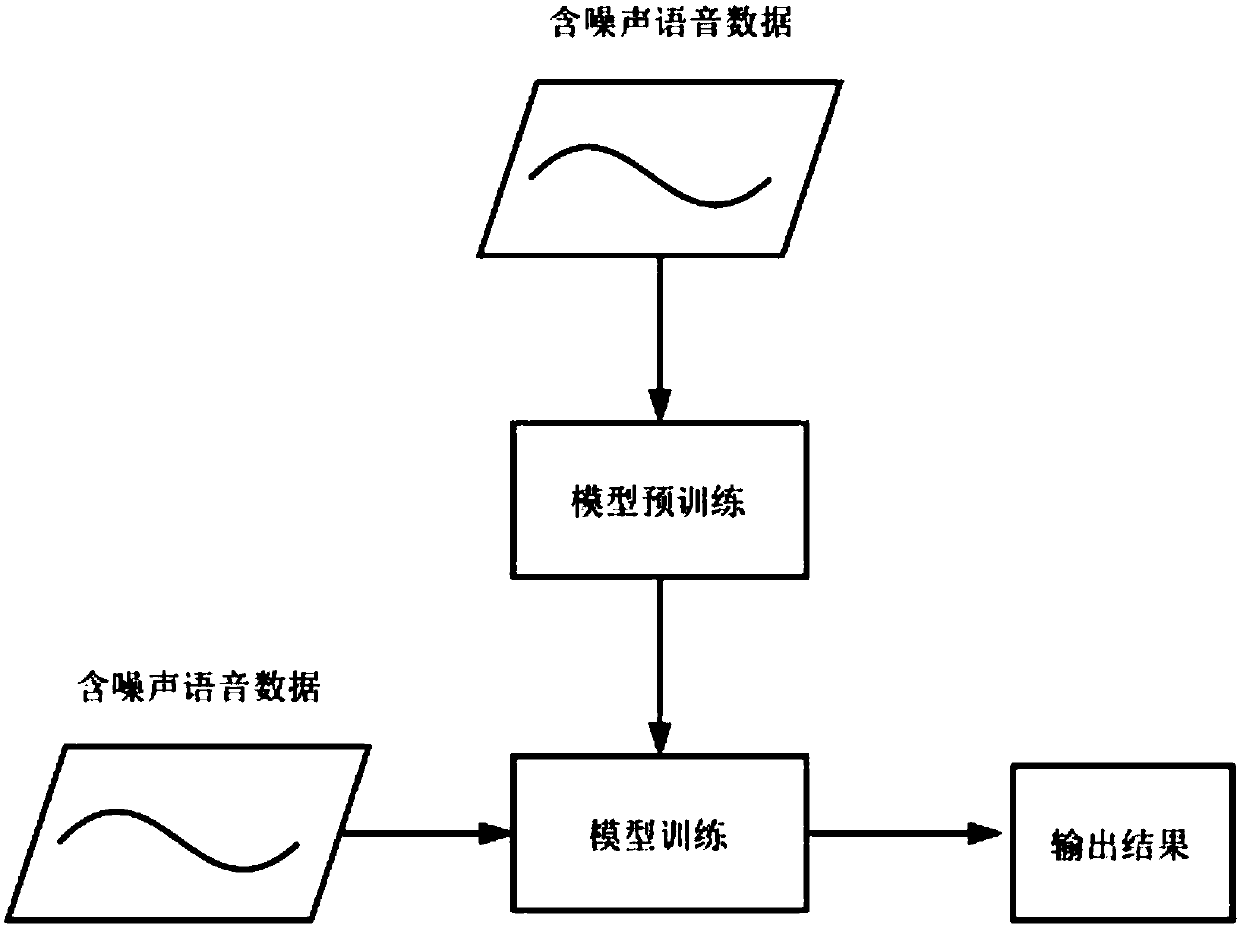

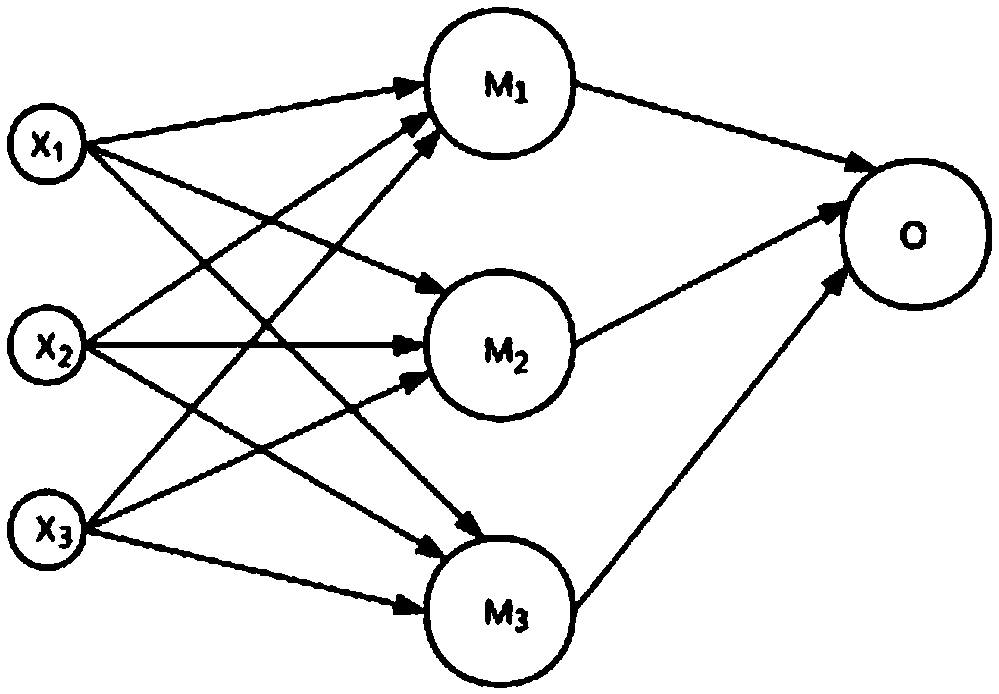

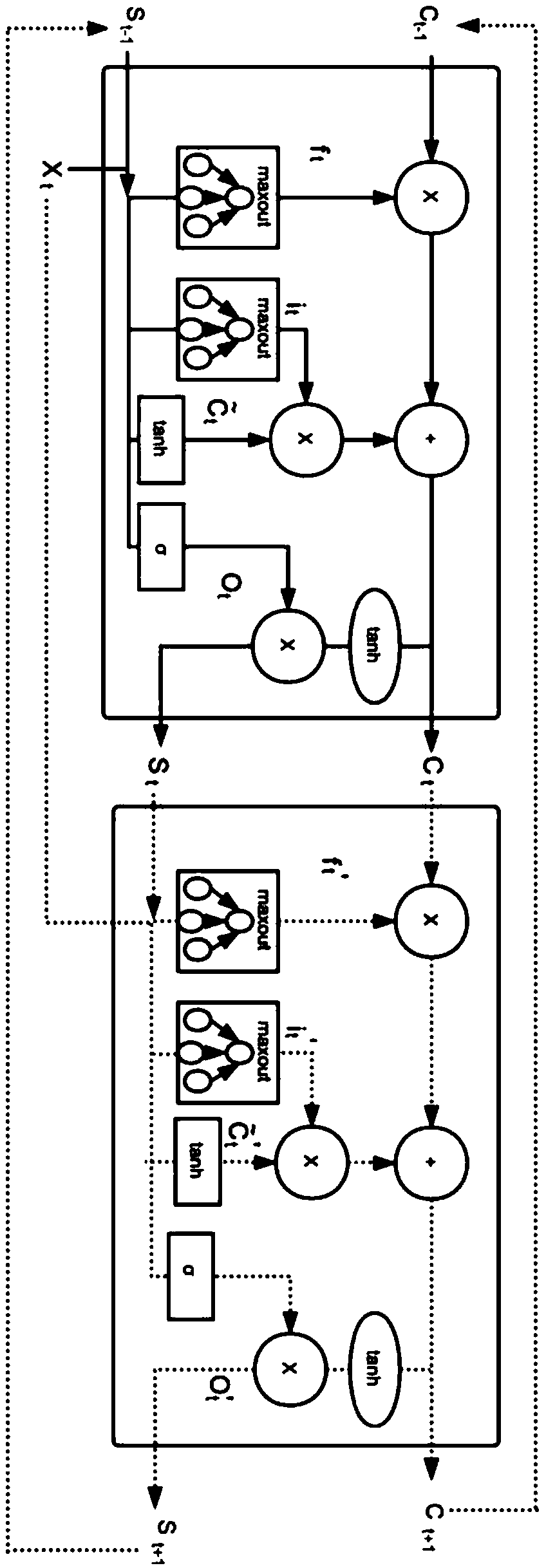

Speech recognition method based on model pre-training and bidirectional LSTM

The invention discloses a speech recognition method based on model pre-training and bidirectional LSTM and belongs to the field of deep learning and speech recognition. The method comprises the steps of: 1) inputting a to-be-processed speech signal; 2) preprocessing the to-be-processed speech signal; 3) extracting Mel-frequency cepstrum coefficients and dynamic differences to obtain speech features; 4) constructing a bidirectional LSTM structure; 5) optimizing the bidirectional LSTM by using a maxout function to obtain maxout-biLSTM; 6) performing model pre-training; and 7) training on the noise-containing speech signal with the pre-trained maxout-biLSTM to obtain a result. The method replaces the original activation function of the bidirectional LSTM with the maxout activation function and uses model pre-training to improve the robustness of the acoustic model in a noisy environment, and can be used for building and training a speech recognition model in a high-noise environment.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

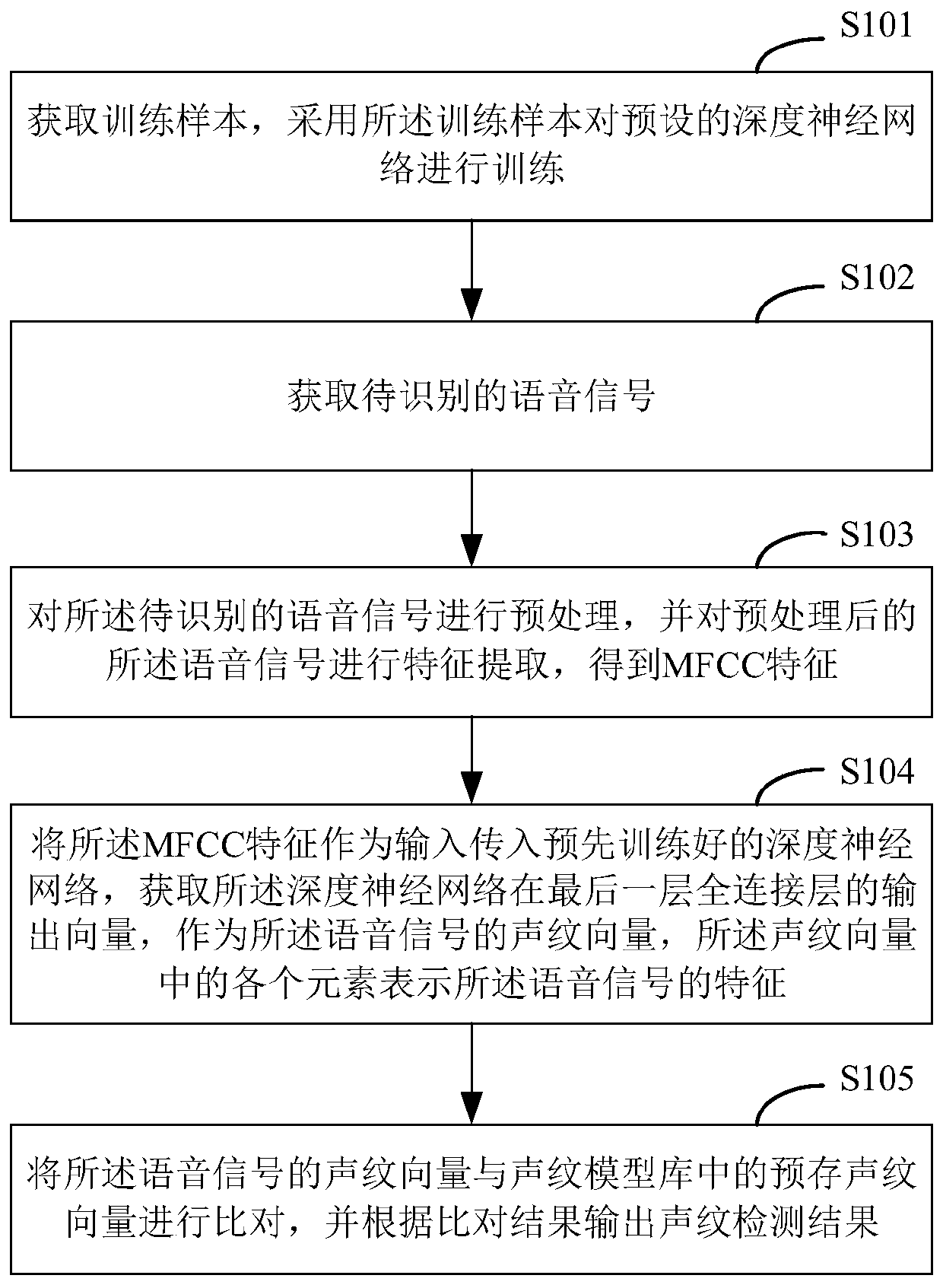

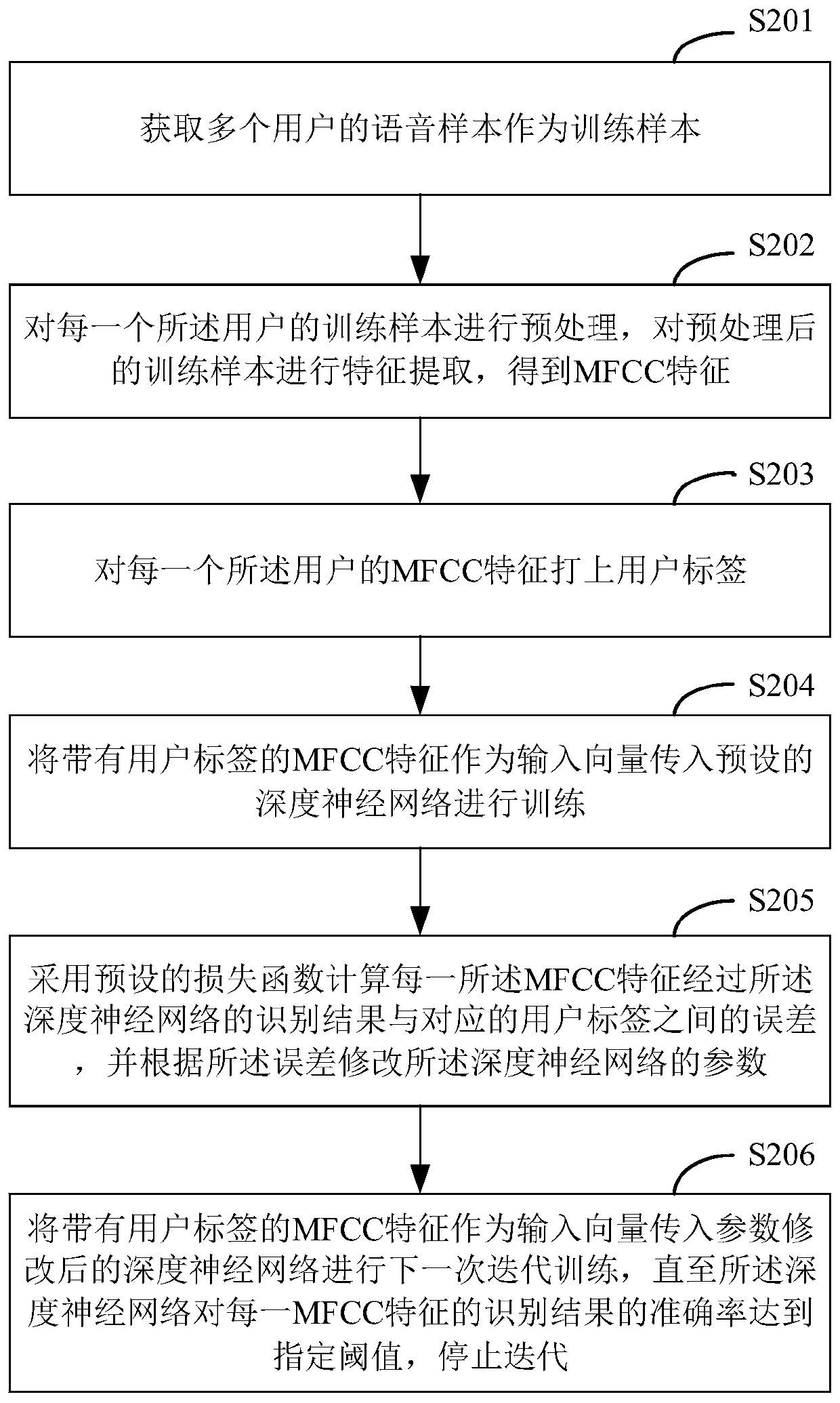

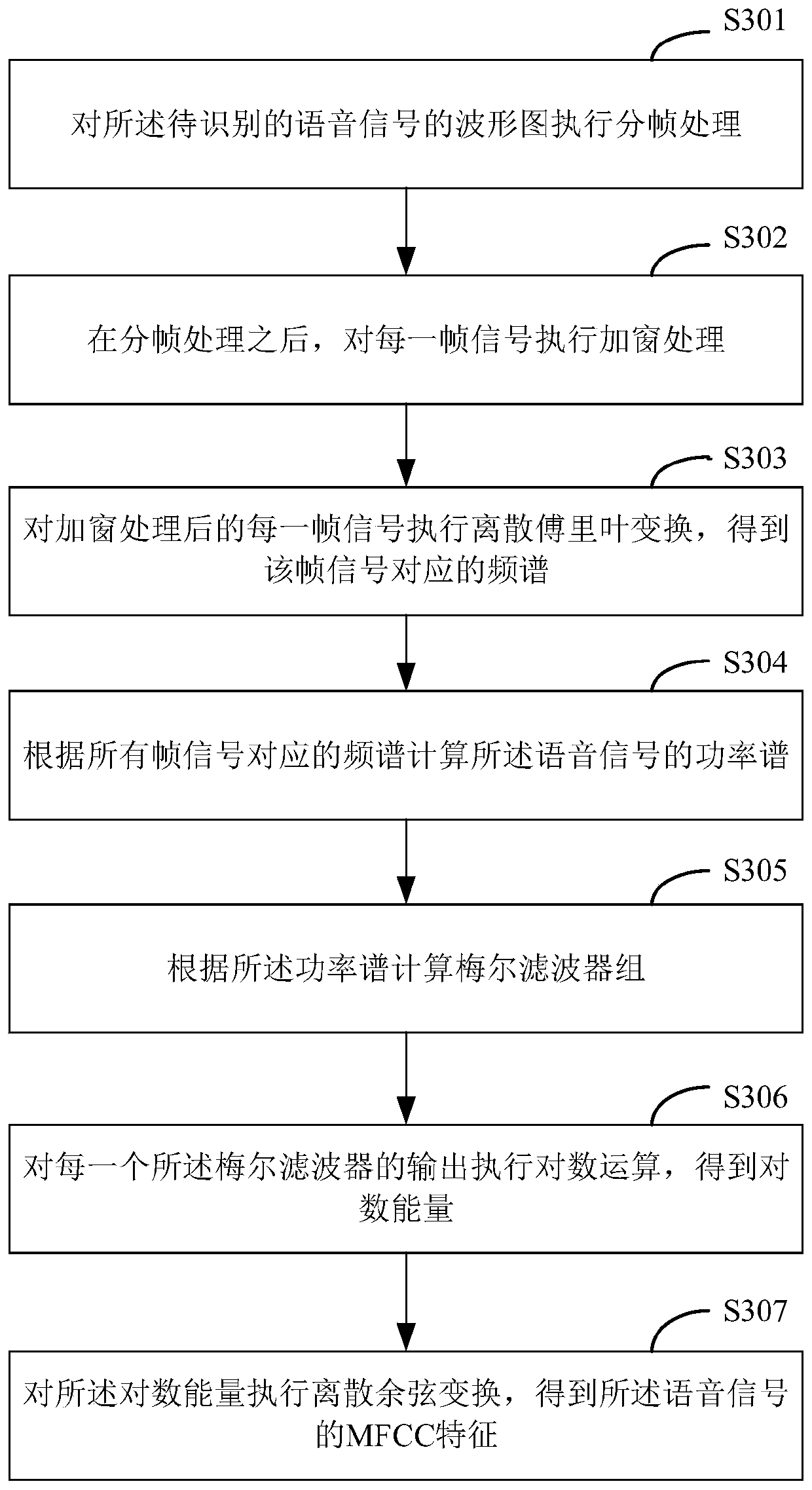

Voice print detection method, device and equipment and storage medium based on short texts

Pending | CN110010133A | Reduce the input vector | Speech recognition | Mel-frequency cepstrum | Information quantity

The invention discloses a voice print detection method, device and equipment and a storage medium based on short texts. The voice print detection method includes the steps of: training a preset deep neural network with training samples; acquiring speech signals to be recognized; preprocessing the speech signals to be recognized, and carrying out feature extraction on the preprocessed speech signals to obtain Mel frequency cepstrum coefficients; feeding the Mel frequency cepstrum coefficients to the pre-trained deep neural network as input vectors, obtaining the output vectors of the deep neural network at its last fully connected layer, and using the output vectors as the voice print vectors of the speech signals; and comparing the voice print vectors of the speech signals with pre-stored voice print vectors in a voice print model base, and outputting voice print detection results according to the comparison, wherein the training samples and the speech signals are both short texts. The voice print detection method solves the problems of long speech signals, large amounts of sample information and high computing-resource requirements in existing voice print detection methods.

Owner:PING AN TECH (SHENZHEN) CO LTD
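The final comparison step of the entry above (a voice print vector checked against pre-stored vectors) might be sketched as follows. The abstract does not name the similarity measure or a decision rule; cosine similarity and a fixed acceptance threshold are assumptions, and the names are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)


def best_match(query, enrolled, threshold=0.7):
    """Return (name, score) for the closest enrolled vector, or (None, score) if below threshold."""
    name = max(enrolled, key=lambda n: cosine_similarity(query, enrolled[n]))
    score = cosine_similarity(query, enrolled[name])
    return (name if score >= threshold else None), score
```

Here `query` stands for the network's last fully connected layer output and `enrolled` for the voice print model base.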

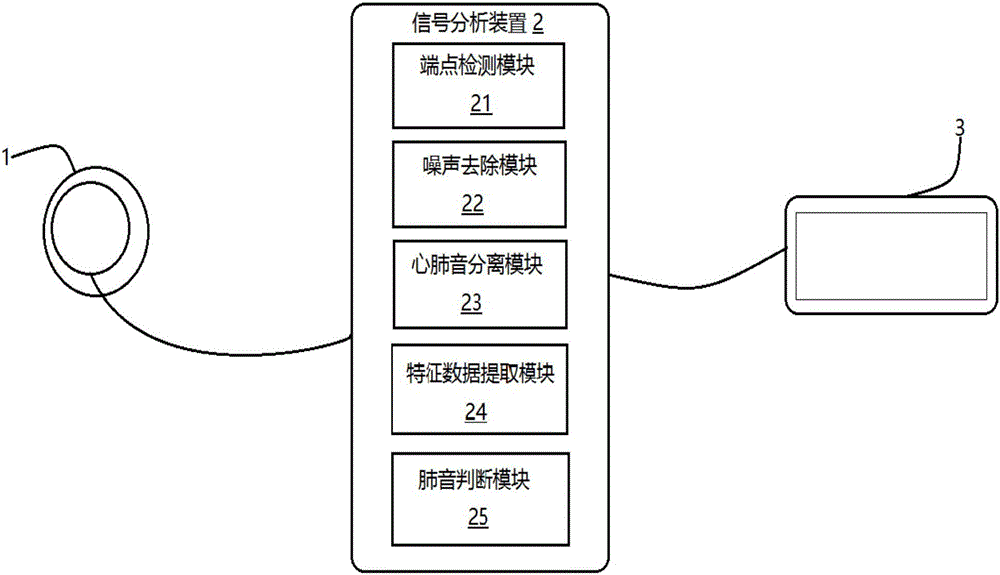

Digital stethoscope and method for filtering heart sounds and extracting lung sounds

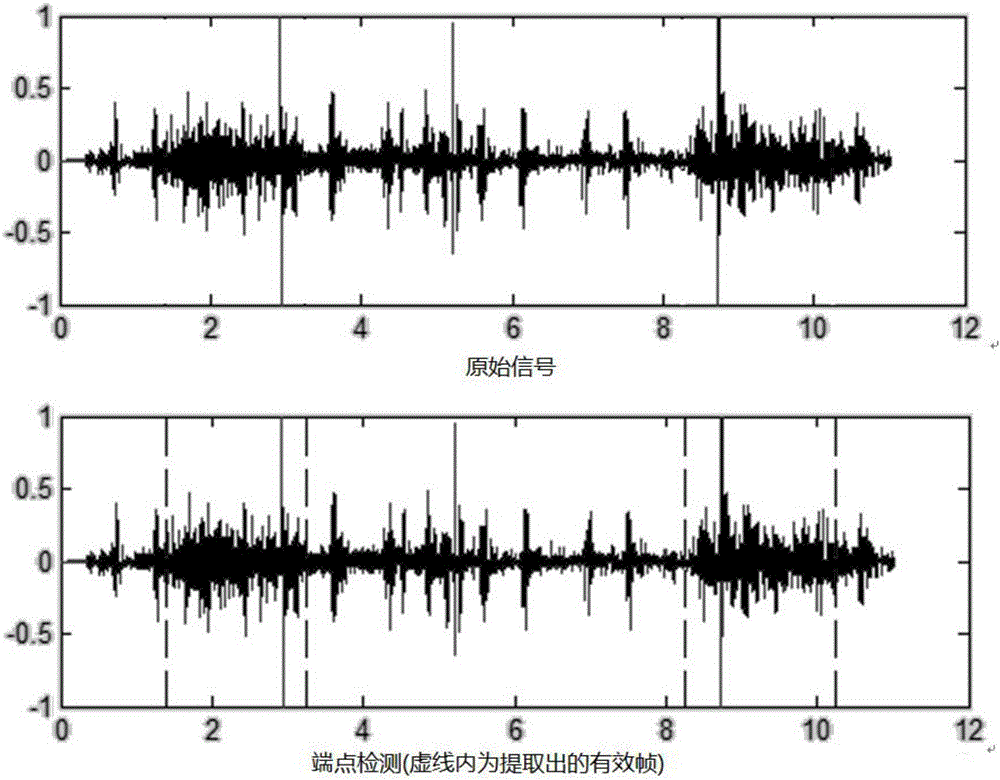

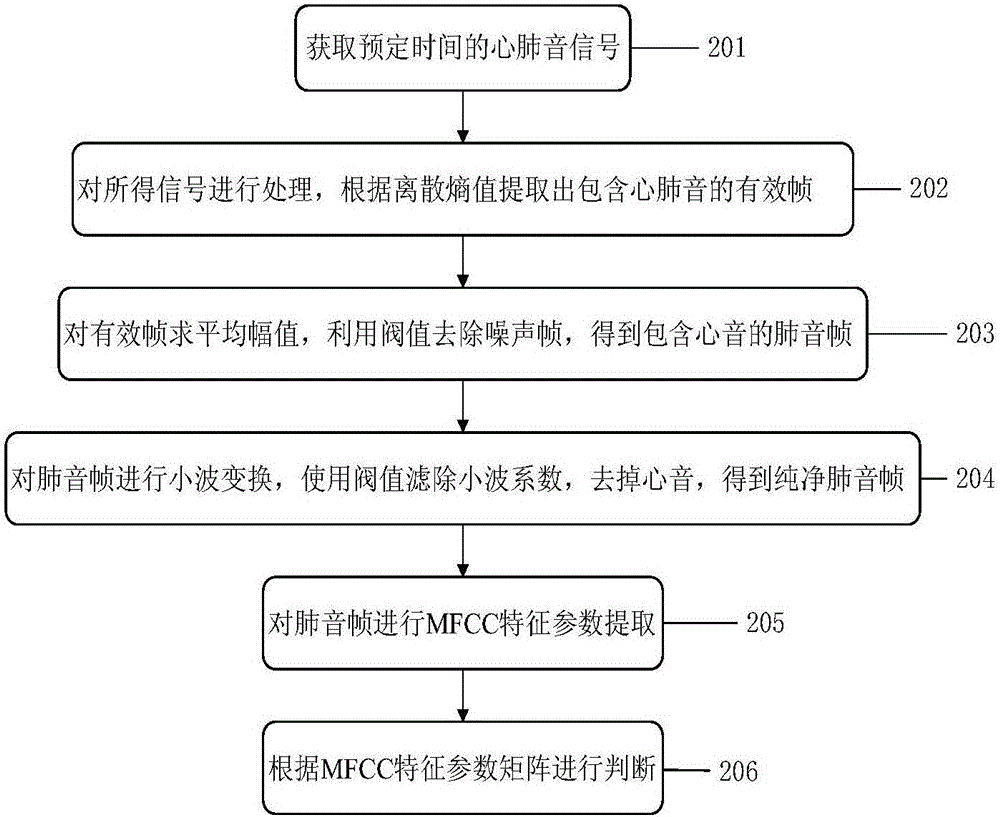

Inactive | CN106022258A | Achieve prediagnosis | Quick extraction | Stethoscope | Character and pattern recognition | Mel-frequency cepstrum | Medicine

The invention provides a digital stethoscope and a method for filtering heart sounds and extracting lung sounds. The method for filtering the heart sounds and extracting the lung sounds comprises the steps of: acquiring heart and lung sound signals in scheduled time; processing the obtained signals and obtaining heart and lung sounds-included valid frames according to a discrete entropy value; calculating an average amplitude value of the valid frames, and removing noise frames by using a threshold value to obtain heart sound-included lung sound frames; carrying out wavelet transform on the obtained lung sound frames, filtering a wavelet coefficient by using the threshold value, and filtering the heart sounds to obtain pure lung sound frames; carrying out MFCC (Mel Frequency Cepstrum Coefficient) characteristic parameter extraction on the lung sound frames; and judging the lung sound frames according to an obtained MFCC characteristic parameter matrix, judging whether the lung sound signals are normal or judging that the lungs are most likely to suffer from a certain or multiple respiratory diseases. Through the abovementioned method for filtering the heart sounds and extracting the lung sounds, the lung sound signals can be rapidly extracted from the acquired heart and lung sound signals and are then judged so as to pre-diagnose the respiratory diseases.

Owner:成都济森科技有限公司

Isolated digit speech recognition classification system and method combining principal component analysis (PCA) with restricted Boltzmann machine (RBM)

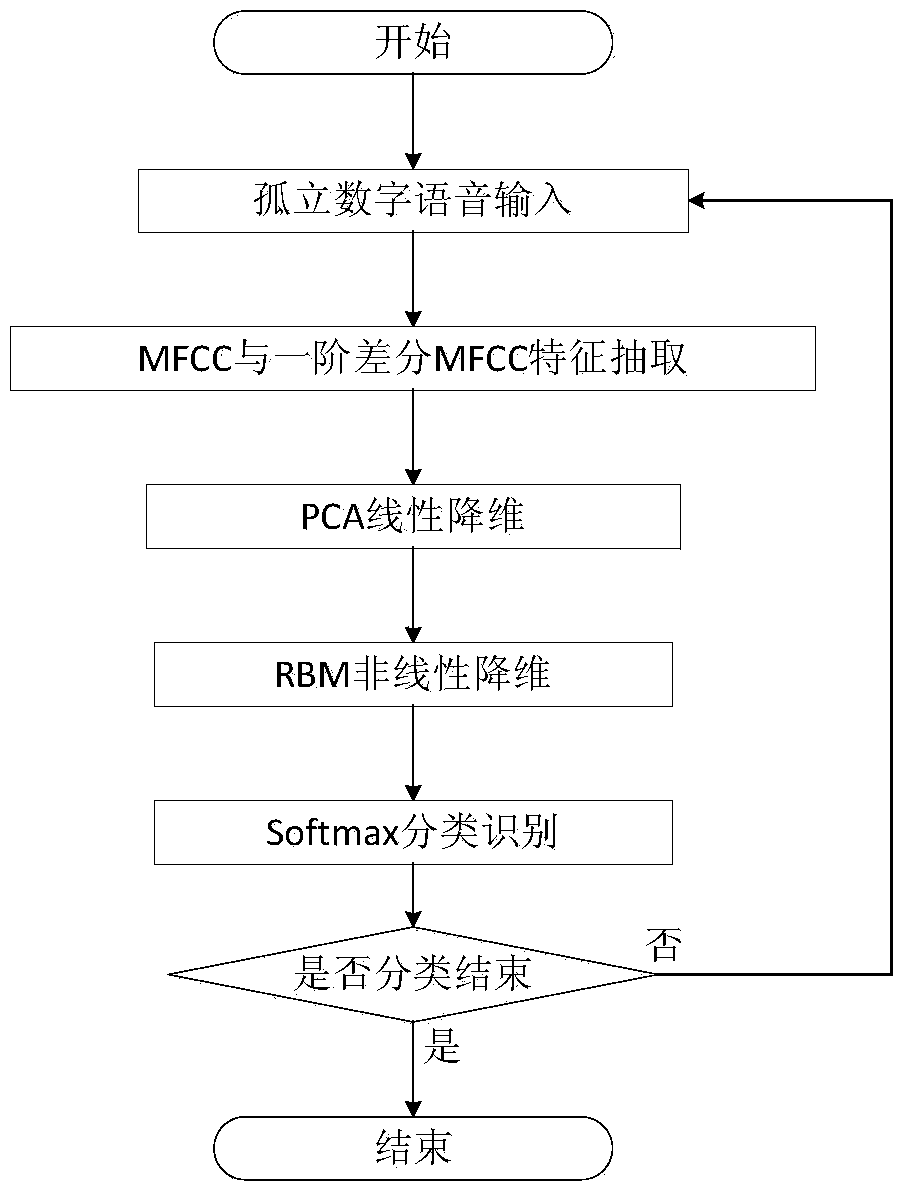

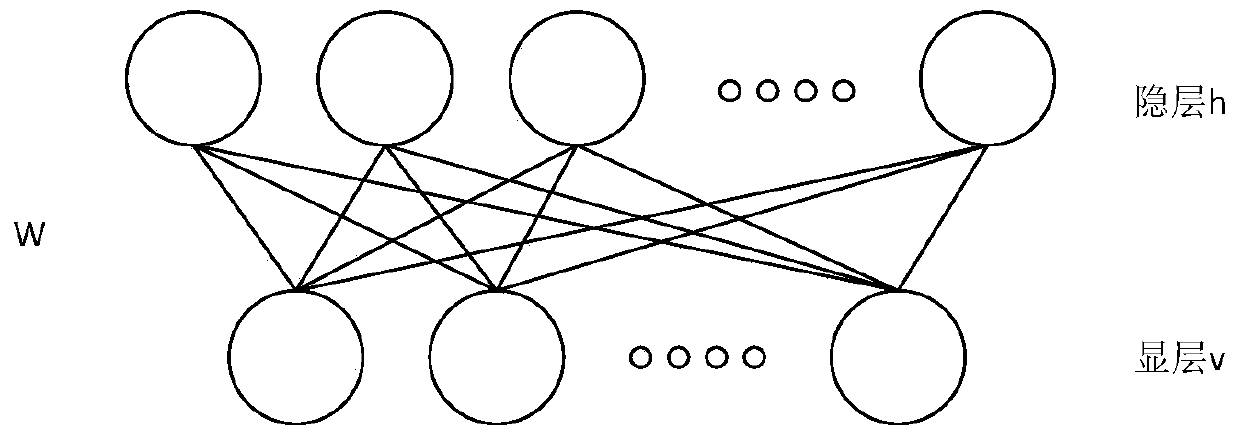

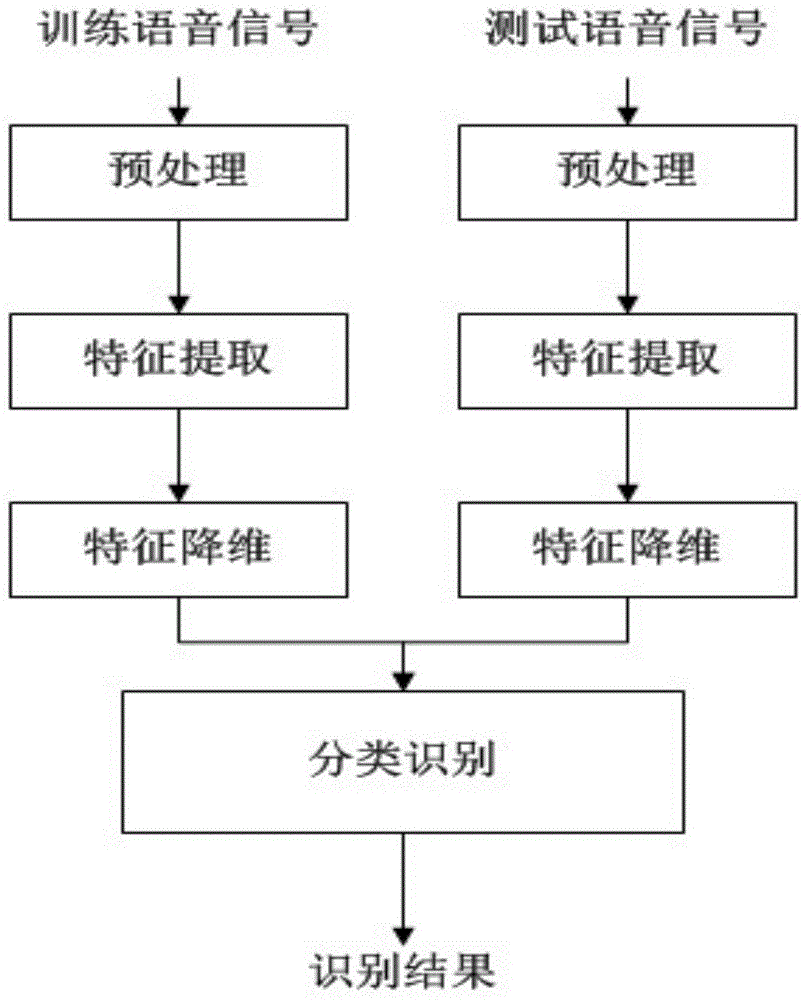

Inactive | CN105206270A | Improve classification accuracy | Reduce data volume | Speech recognition | Mel-frequency cepstrum | Nonlinear dimensionality reduction

The invention discloses an isolated digit speech recognition classification system and method combining principal component analysis (PCA) with a restricted Boltzmann machine (RBM). First, Mel frequency cepstrum coefficients (MFCC) are combined with first-order difference MFCCs to obtain a preliminary dynamic voice feature of an isolated digit; then, linear dimension reduction is carried out on the combined MFCC feature by use of PCA, and the dimensions of the newly obtained feature are unified; next, nonlinear dimension reduction is performed on the new feature by use of the RBM; and finally, recognition and classification of the digit voice feature after nonlinear dimension reduction are completed by use of a Softmax classifier. According to the invention, PCA linear dimension reduction, unification of the feature dimensions and RBM nonlinear dimension reduction are combined, such that the feature representation and classification capabilities of the model are greatly improved, the recognition accuracy for isolated digit voice is improved, and an efficient solution is provided for high-accuracy recognition of isolated digit voice.

Owner:CHANGAN UNIV

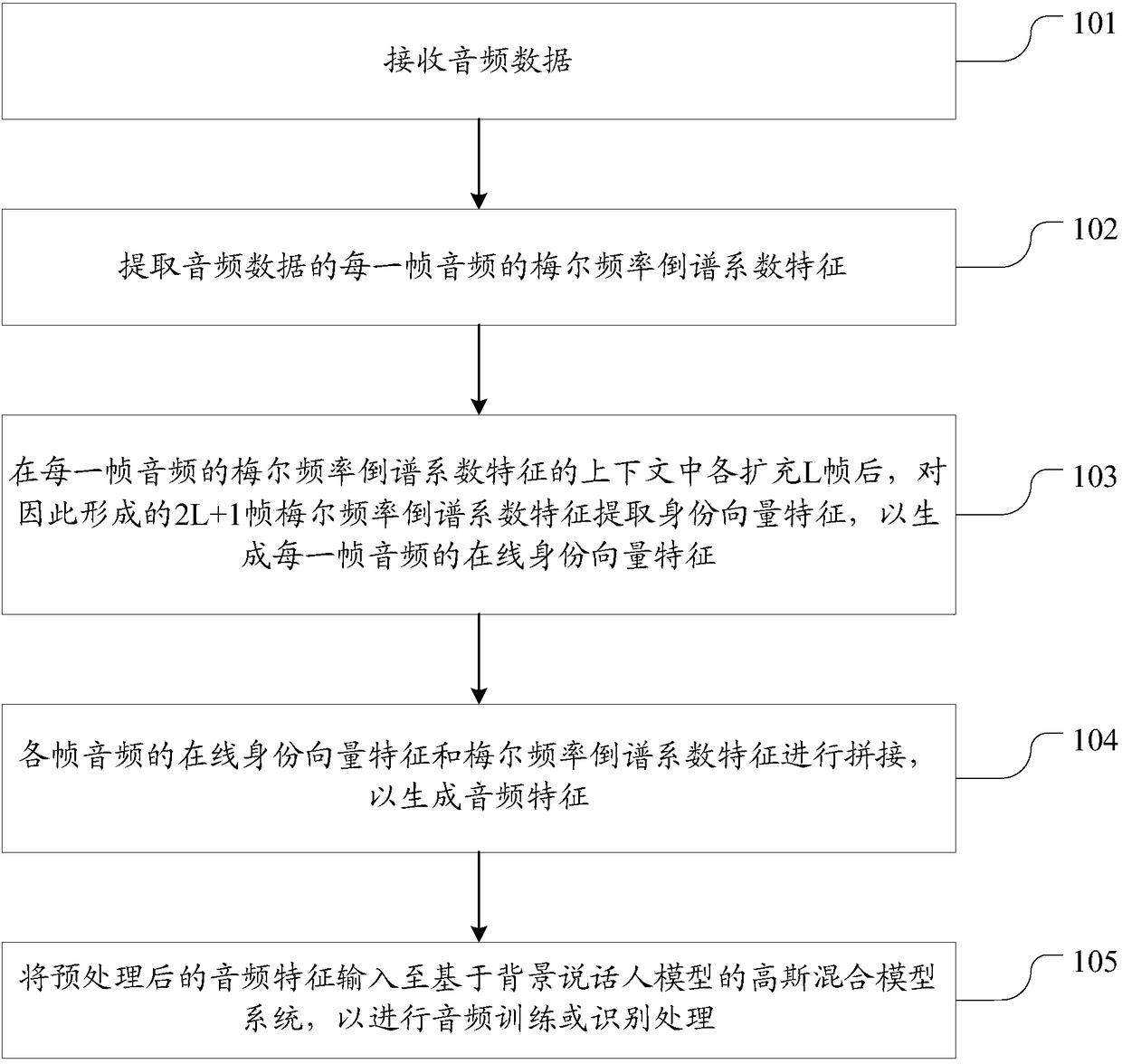

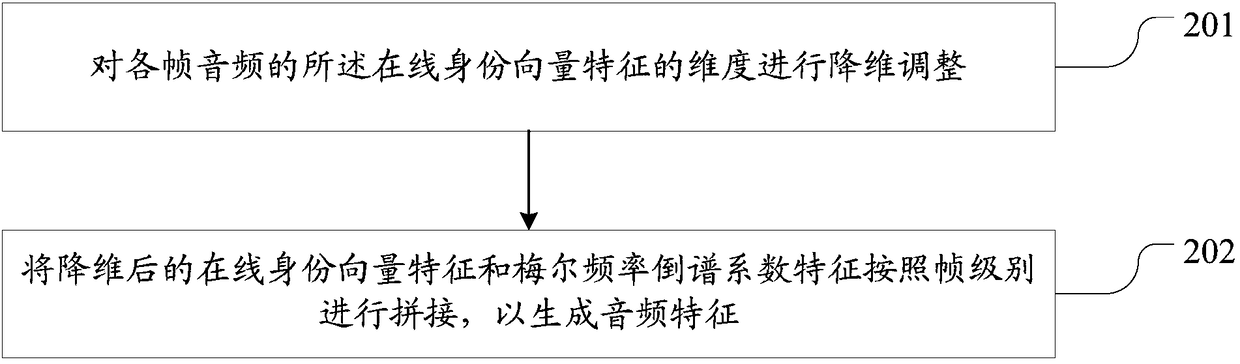

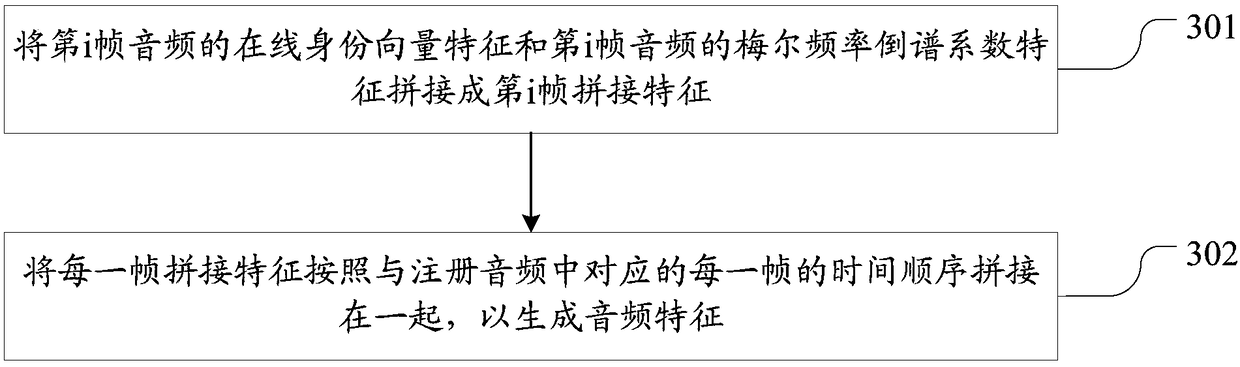

Audio training or recognizing method for intelligent dialogue voice platform and electronic equipment

The invention discloses an audio training or recognizing method and system for an intelligent dialogue voice platform and electronic equipment. The method comprises the steps of receiving audio data; extracting the identity vector features of the audio data and preprocessing the identity vector features, wherein the preprocessing comprises extracting the Mel Frequency Cepstrum Coefficients (MFCCs) of each frame of audio of the audio data, expanding the MFCC features of each frame by L frames of context on each side, and extracting identity vector features from the resulting 2L+1 frames of MFCC features so as to generate online identity vector features for each frame of audio; splicing the online identity vector features and the MFCC features of each frame of audio at the frame level so as to generate audio features; and inputting the preprocessed audio features into a Gaussian mixture model system based on a background speaker model so as to carry out audio training or recognition processing. The identity and the speaking content of the speaker can be matched at the same time, and the recognition rate is higher.

Owner:AISPEECH CO LTD
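The context expansion and frame-level splicing in the entry above can be sketched as below. Extraction of the identity vectors themselves is not shown; `append_per_frame` simply assumes one already-computed vector per frame. Repeating the edge frames at the utterance boundaries is also an assumption, and both function names are hypothetical.

```python
def splice_context(frames, L):
    """For each frame, concatenate frames t-L..t+L (2L+1 in total), clamping at the edges."""
    n = len(frames)
    out = []
    for t in range(n):
        window = []
        for k in range(t - L, t + L + 1):
            window.extend(frames[min(max(k, 0), n - 1)])
        out.append(window)
    return out


def append_per_frame(mfcc_frames, ivector_frames):
    """Concatenate each frame's MFCC vector with that frame's identity vector."""
    return [m + v for m, v in zip(mfcc_frames, ivector_frames)]
```

With 13 MFCCs per frame and L = 5, each spliced window holds 11 × 13 = 143 values.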

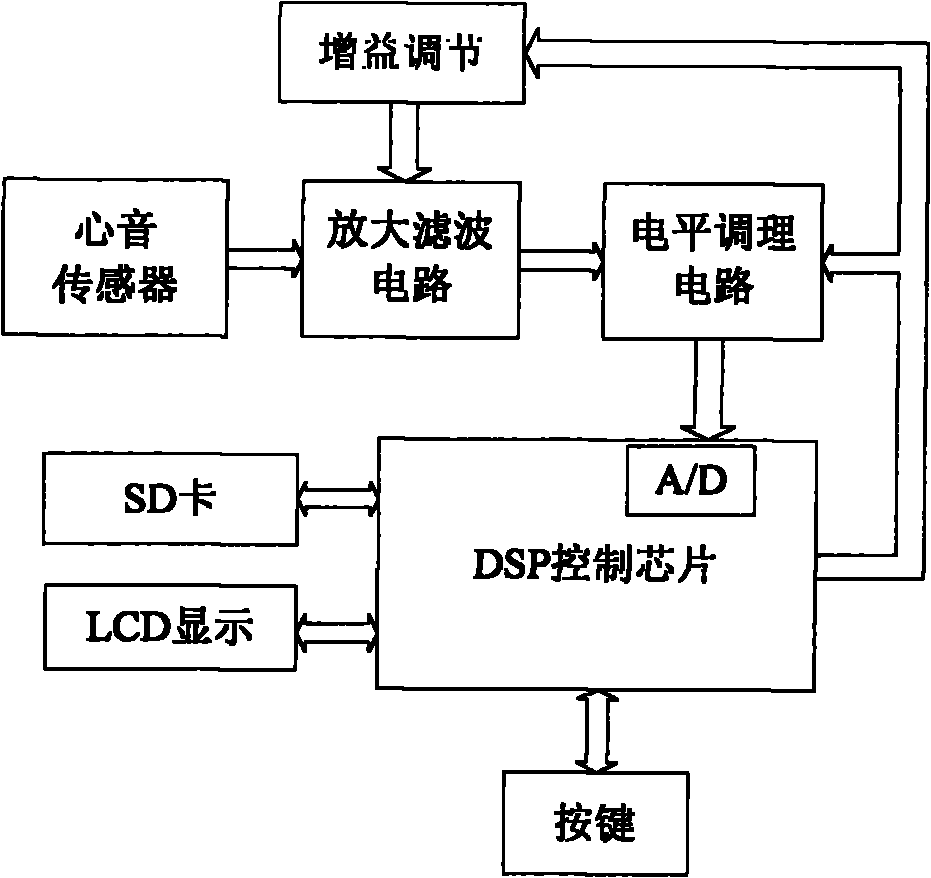

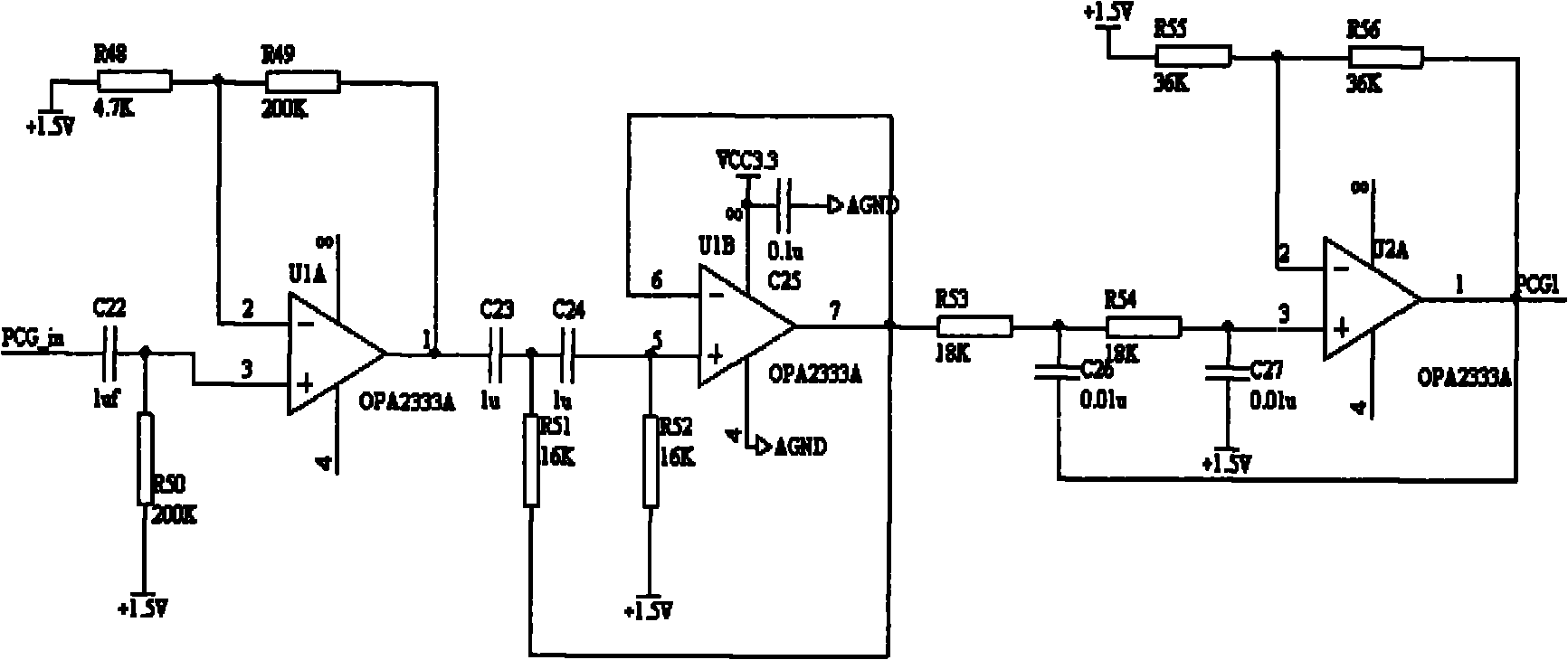

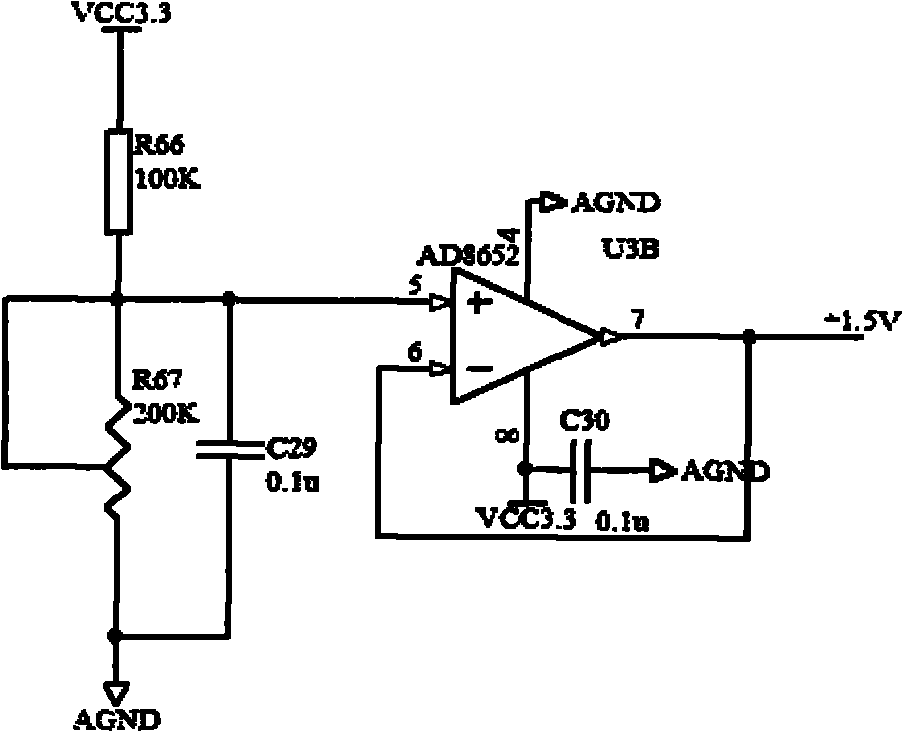

Classification and identification method and device for cardiechema signals

Inactive | CN101930734A | Few parameters | Simplified features | Ultrasonic/sonic/infrasonic diagnostics | Infrasonic diagnostics | Mel-frequency cepstrum | Processing core

The invention provides a classification and identification method for cardiechema signals and a classification and identification device for cardiechema signals by applying the method. In the method, the likelihood of GMM template features and Mel-frequency cepstrum coefficients of the cardiechema signals to be detected is used as the matching standard to perform classification and identification, so the method has the advantages of few extracted parameters, simplified processes of feature extraction and calculation, strong anti-interference capacity and high accuracy and efficiency of the classification and identification. The classification and identification device for the cardiechema signals is designed by taking the method as the total idea, adopts a DSP processor as a processing core, and has a simple, exquisite, light and convenient structure, can be carried on for measurement, is a low-cost and non-invasive cardiechema detection device, and is helpful for discovering and treating cardiac diseases in time.

Owner:CHONGQING UNIV

Method for distinguishing speakers based on protective kernel Fisher distinguishing method

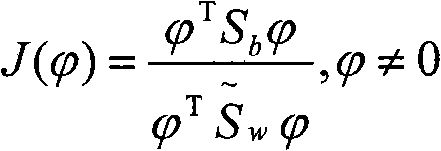

Inactive | CN101650944A | Identify fit | Intuitive and fast to build | Speech analysis | Character and pattern recognition | Mel-frequency cepstrum | Original data

The invention relates to a method for distinguishing speakers based on a protective kernel Fisher distinguishing method. The method comprises the following steps: (1) preprocessing voice signals; (2) extracting characteristic parameters: after framing and end-point detection of the voice signals, extracting Mel frequency cepstrum coefficients as the characteristic vectors of speakers; (3) creating a speaker distinguishing model; (4) calculating the optimal projection vectors of the model: using the optimal solution of the LWFD method to obtain an optimal projection vector group; and (5) distinguishing speakers: projecting the original data xi to yi belonging to R^r (1 ≤ r ≤ d) according to the optimal projection classification vector phi, wherein r is the cut dimensionality; the optimal projection classification dimensionality of the original c-class data space is c-1; then computing the central value of the data of each class after projection and normalizing it; after projecting the data to be classified onto the subspace and normalizing, calculating the Euclidean distance from the normalized projected data to the central point of each class in the subspace, and taking the nearest class as the distinguishing result. The invention has a high distinguishing rate, simple model construction and fast operation.

Owner:ZHEJIANG UNIV OF TECH
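Step (5) of the speaker distinguishing abstract above (project, normalize, pick the nearest class center) can be sketched as below. The projection vectors are assumed to be already computed by the Fisher step, which is not reproduced here; `basis`, `class_centers` and `nearest_class` are hypothetical names, and the toy basis simply keeps the first two coordinates.

```python
import math

def project(x, basis):
    """Project x (length d) onto r projection vectors (rows of basis)."""
    return [sum(b_i * x_i for b_i, x_i in zip(b, x)) for b in basis]

def normalize(v):
    """Scale v to unit Euclidean length (zero vectors are returned unchanged)."""
    n = math.sqrt(sum(c * c for c in v))
    return [c / n for c in v] if n > 0 else list(v)

def class_centers(samples_by_class, basis):
    """Normalized mean of the projected training data of each class."""
    centers = {}
    for label, samples in samples_by_class.items():
        projected = [project(s, basis) for s in samples]
        mean = [sum(col) / len(projected) for col in zip(*projected)]
        centers[label] = normalize(mean)
    return centers

def nearest_class(x, centers, basis):
    """Classify x by Euclidean distance to the class centers in the subspace."""
    y = normalize(project(x, basis))
    return min(centers, key=lambda label: math.dist(y, centers[label]))

# Toy data: d = 3, r = 2, two speakers (illustrative values only).
basis = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
train = {
    "A": [[1.0, 0.0, 5.0], [2.0, 0.2, -3.0]],
    "B": [[0.0, 1.0, 1.0], [0.1, 2.0, 0.0]],
}
centers = class_centers(train, basis)
print(nearest_class([3.0, 0.3, 9.0], centers, basis))
```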

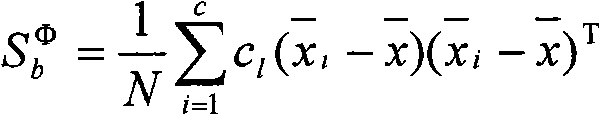

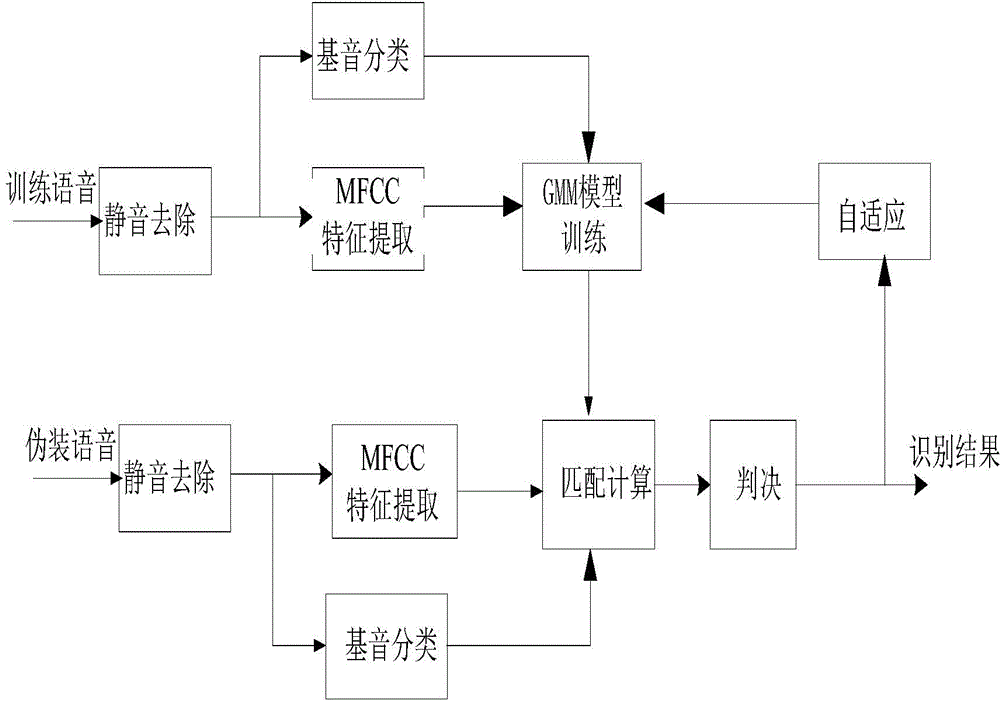

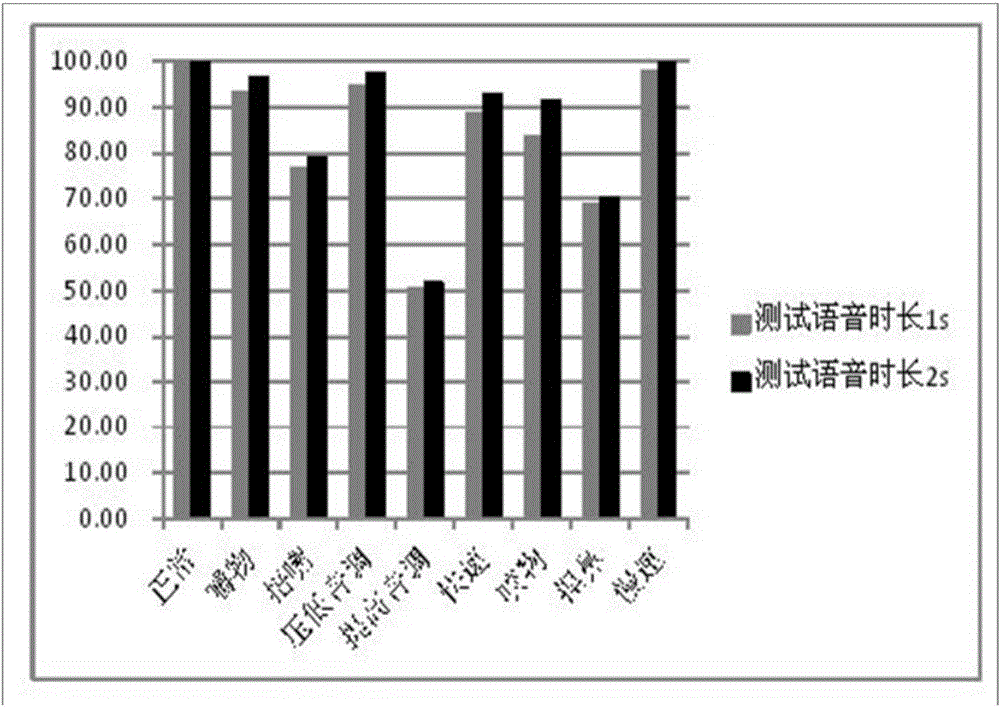

Speaker recognition method for deliberately pretended voices

The invention provides a speaker recognition method for deliberately pretended voices. First, a recording scheme is set up in an anechoic room without noise and reflection for eight kinds of deliberately pretended voices, namely tone raising, tone lowering, quick speaking, slow speaking, nose pinching, mouth covering, object biting (holding a pencil in the mouth) and chewing (chewing gum). Then, based on pitch period presorting, Mel frequency cepstrum coefficients and a Gaussian mixture model are used to recognize the speaker under pretended voices. Finally, self-adaptive group adjustment is adopted to achieve high-quality speaker recognition of pretended voices. The method can be applied to voice cases in which criminals cover up their identities through pretended voices.

Owner:NANJING UNIV OF POSTS & TELECOMM
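The abstract above does not say how the pitch period is measured or how the presorting groups are defined. As one possible illustration, the sketch below estimates the pitch period of a frame by autocorrelation (a swapped-in, commonly used technique) and assigns it to a group using assumed boundaries; `autocorr_pitch_period`, `presort_group` and the boundary values are hypothetical.

```python
import bisect
import math

def autocorr_pitch_period(frame, min_lag, max_lag):
    """Return the lag (in samples) with the largest autocorrelation value."""
    best_lag, best_val = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        r = sum(frame[n] * frame[n + lag] for n in range(len(frame) - lag))
        if r > best_val:
            best_lag, best_val = lag, r
    return best_lag

def presort_group(period, boundaries):
    """Index of the pitch-period bin; boundaries are assumed, sorted lag limits."""
    return bisect.bisect_right(boundaries, period)

# Toy frame: a 200 Hz sine sampled at 8000 Hz, so the true period is 40 samples.
sample_rate = 8000
frame = [math.sin(2 * math.pi * 200 * n / sample_rate) for n in range(400)]
period = autocorr_pitch_period(frame, min_lag=20, max_lag=160)
group = presort_group(period, boundaries=[30, 60])
print(period, group)
```

A presorting step of this kind would narrow the set of candidate speaker models before the MFCC and Gaussian mixture model scoring is run.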

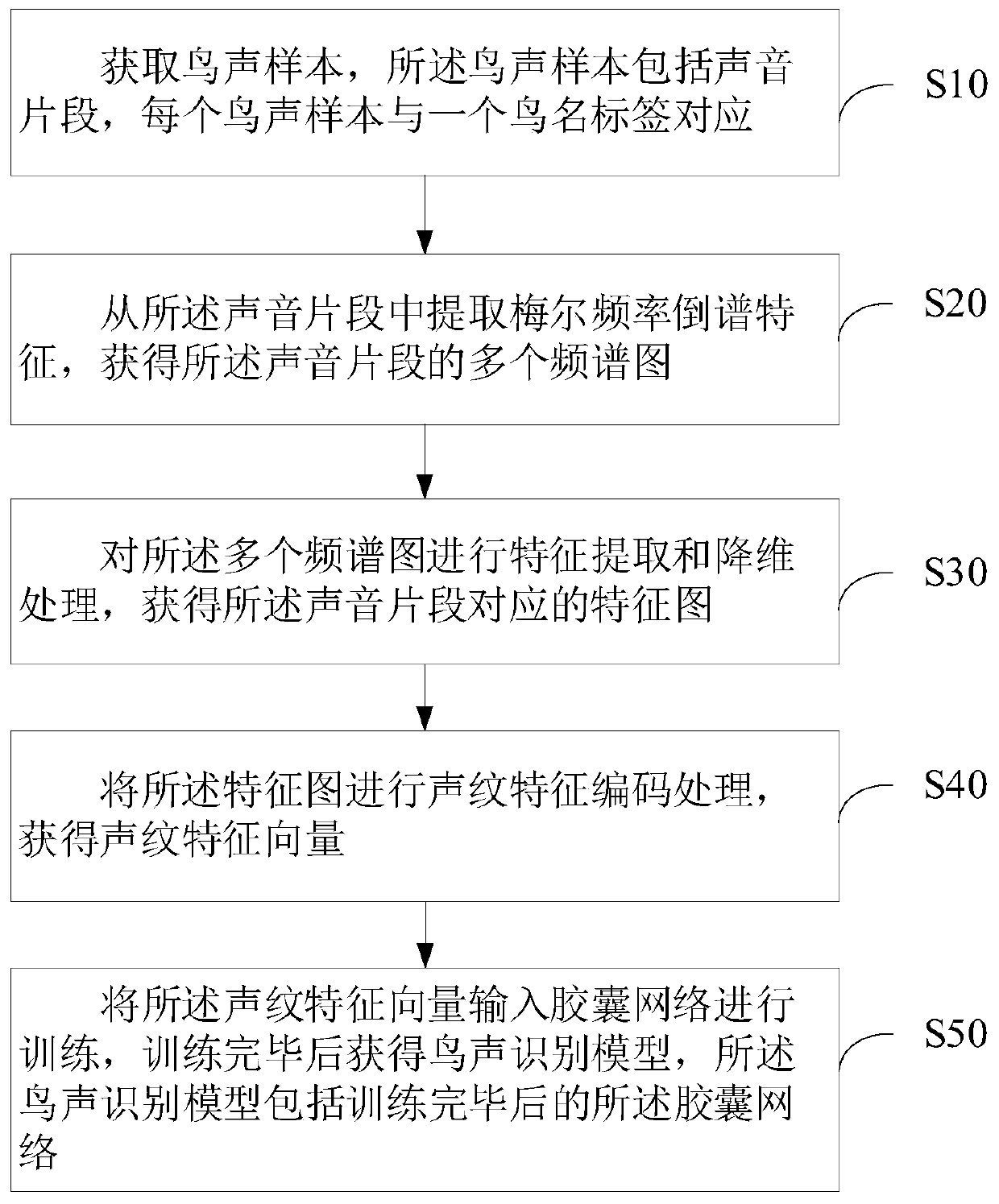

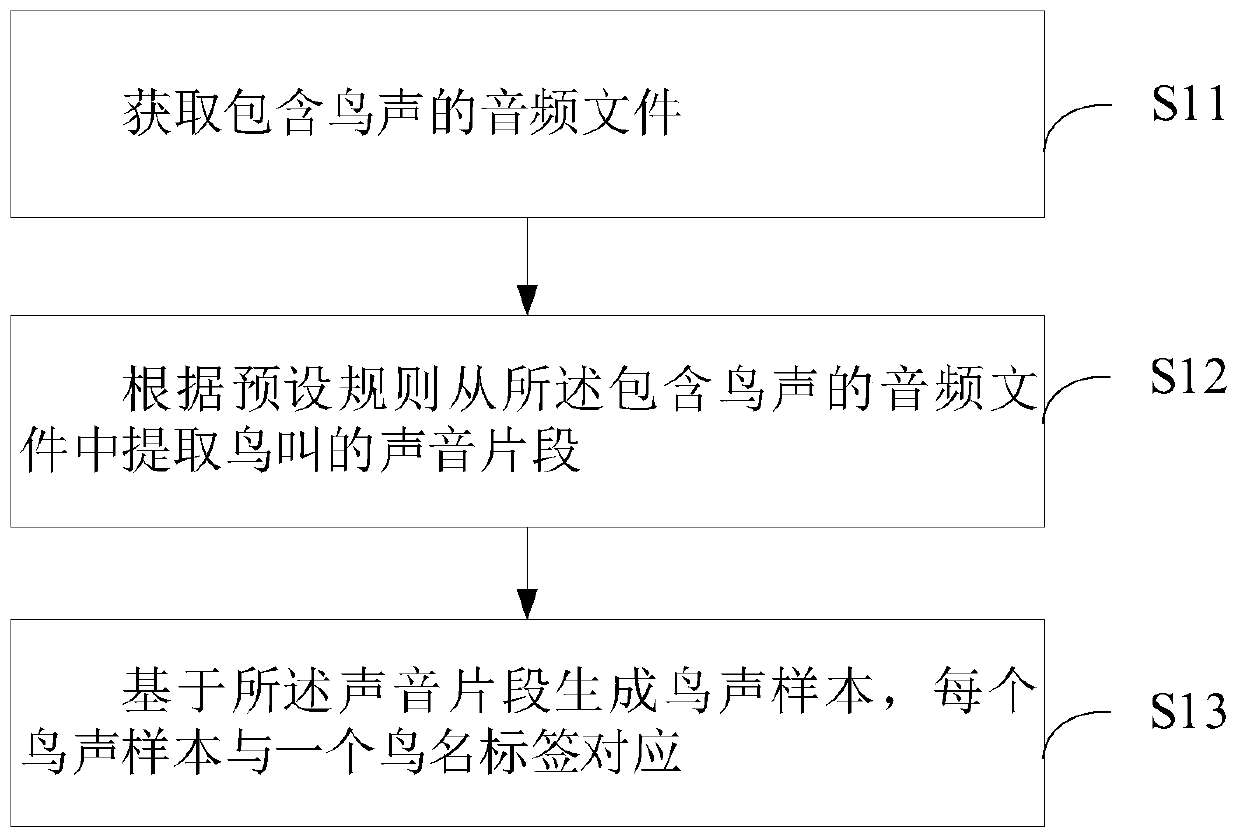

Construction method and device of bird sound recognition model, computer device and storage medium

ActiveCN110120224AEasy to trainSmall amount of calculationSpeech analysisMel-frequency cepstrumFeature vector

The invention relates to the field of sound recognition and discloses a construction method and device of a bird sound recognition model, a computer device and a storage medium. The construction method comprises: acquiring bird sound samples that include sound fragments, wherein each bird sound sample corresponds to one bird name tag; extracting Mel-frequency cepstrum features from the sound fragments to obtain multiple spectrums of the sound fragments; subjecting the spectrums to feature extraction and dimensionality reduction to obtain feature graphs corresponding to the sound fragments; subjecting the feature graphs to voiceprint feature encoding to obtain voiceprint feature vectors; and inputting the voiceprint feature vectors into a capsule network for training to acquire a bird sound recognition model that includes the trained capsule network. The bird sound recognition model acquired in this way is suitable for processing sound fragments containing bird sound and recognizing types of birds.

Owner:PING AN TECH (SHENZHEN) CO LTD

Mel frequency cepstrum coefficient (MFCC) underwater target feature extraction and recognition method

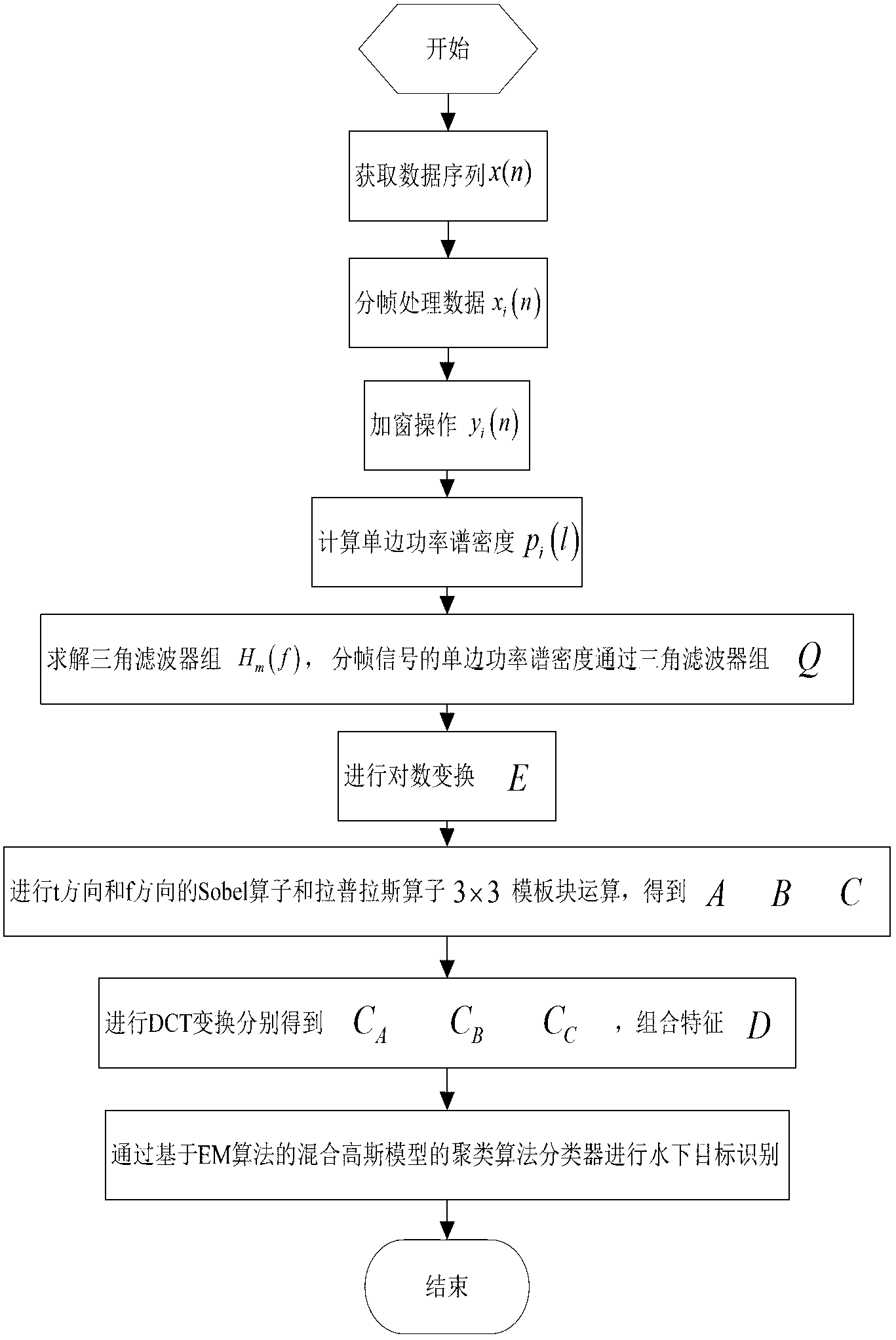

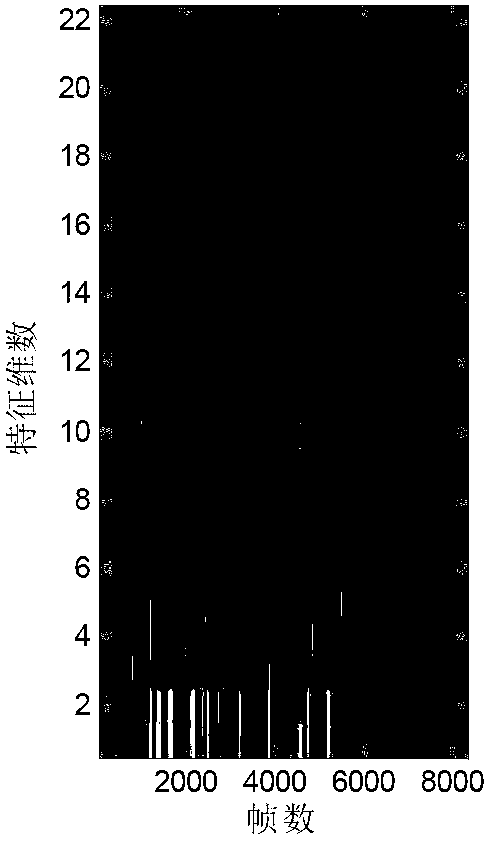

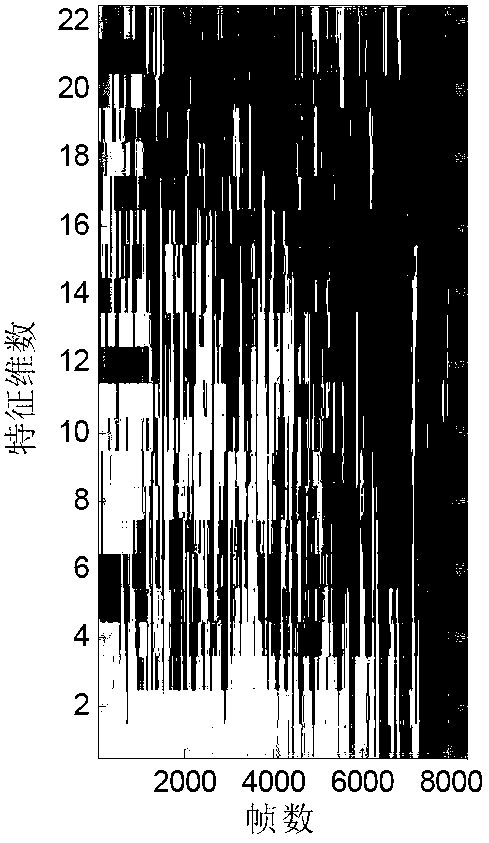

ActiveCN102799892AImprove recognition rateFeature refinementCharacter and pattern recognitionMel-frequency cepstrumFeature set

The invention discloses a Mel frequency cepstrum coefficient (MFCC) underwater target feature extraction and recognition method. The method comprises the following steps: 1) acquiring a data sequence x(n); 2) framing to obtain xi(n); 3) obtaining yi(n) through a windowing operation; 4) calculating the one-sided power spectrum density pi(l) of the framed and windowed signal; 5) solving the transfer function Hm(f) of a triangular filter bank, and obtaining Q by using the triangular filter bank; 6) performing logarithmic transformation to obtain E; 7) performing 3×3 template block operations of a Sobel operator and a Laplace operator in the t direction and the f direction respectively to obtain A, B and C; 8) performing a discrete cosine transform (DCT) on each to obtain the feature sets CA, CB and CC, and combining the features; and 9) performing underwater target identification by using a clustering classifier based on a Gaussian mixture model trained with the expectation-maximization (EM) algorithm. The method helps improve the recognition rate of underwater targets.

Owner:SOUTHEAST UNIV
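Steps 2) to 6) and 8) of the underwater target abstract above follow the conventional MFCC pipeline, which can be sketched as below. This is a minimal illustration under assumptions: it uses a Hamming window, a naive DFT, the common 2595·log10(1 + f/700) mel formula and toy frame sizes, none of which are stated in the abstract. The Sobel and Laplace template step 7) and the GMM classifier in step 9) are not reproduced.

```python
import math

def hz_to_mel(f):
    return 2595.0 * math.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def frame_signal(x, frame_len, hop):
    """Step 2: split x(n) into overlapping frames."""
    return [x[i:i + frame_len] for i in range(0, len(x) - frame_len + 1, hop)]

def power_spectrum(frame):
    """Steps 3-4: Hamming window, then one-sided power spectrum (naive DFT)."""
    n = len(frame)
    win = [0.54 - 0.46 * math.cos(2 * math.pi * k / (n - 1)) for k in range(n)]
    y = [s * w for s, w in zip(frame, win)]
    spec = []
    for l in range(n // 2 + 1):
        re = sum(y[k] * math.cos(2 * math.pi * l * k / n) for k in range(n))
        im = -sum(y[k] * math.sin(2 * math.pi * l * k / n) for k in range(n))
        spec.append((re * re + im * im) / n)
    return spec

def mel_filterbank(n_filters, n_fft, sample_rate):
    """Step 5: triangular filters whose edges are equally spaced in mel."""
    top = hz_to_mel(sample_rate / 2.0)
    edges_hz = [mel_to_hz(top * i / (n_filters + 1)) for i in range(n_filters + 2)]
    bins = [hz * n_fft / sample_rate for hz in edges_hz]  # fractional bin positions
    bank = []
    for m in range(1, n_filters + 1):
        lo, mid, hi = bins[m - 1], bins[m], bins[m + 1]
        row = []
        for l in range(n_fft // 2 + 1):
            if lo < l <= mid:
                row.append((l - lo) / (mid - lo))
            elif mid < l < hi:
                row.append((hi - l) / (hi - mid))
            else:
                row.append(0.0)
        bank.append(row)
    return bank

def dct2(values, n_coeffs):
    """Step 8: unnormalized DCT-II, keeping the first n_coeffs coefficients."""
    n = len(values)
    return [sum(v * math.cos(math.pi * c * (k + 0.5) / n) for k, v in enumerate(values))
            for c in range(n_coeffs)]

def mfcc(x, sample_rate, frame_len=64, hop=32, n_filters=12, n_coeffs=8):
    bank = mel_filterbank(n_filters, frame_len, sample_rate)
    feats = []
    for frame in frame_signal(x, frame_len, hop):
        p = power_spectrum(frame)
        energies = [sum(h * pl for h, pl in zip(row, p)) for row in bank]
        log_e = [math.log(e + 1e-10) for e in energies]  # step 6, guarded against log(0)
        feats.append(dct2(log_e, n_coeffs))
    return feats

# Toy input: a 440 Hz tone sampled at 8000 Hz.
sig = [math.sin(2 * math.pi * 440 * n / 8000) for n in range(256)]
feats = mfcc(sig, 8000)
print(len(feats), len(feats[0]))
```

In practice the naive DFT would be replaced by an FFT; it is used here only to keep the sketch free of external dependencies.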

Method and device for recognizing short speech speaker

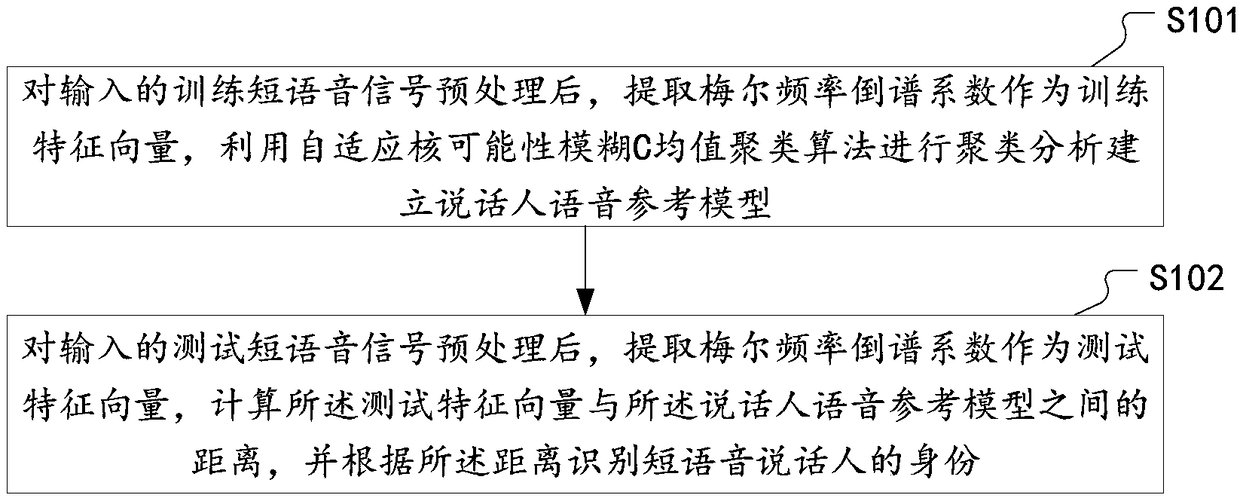

ActiveCN108281146AOvercome the defect of recognition performance degradationImprove recognition accuracySpeech analysisFeature vectorMel-frequency cepstrum

The invention discloses a method and device for recognizing a short speech speaker. The method comprises the following steps: after an input training short speech signal is preprocessed, Mel frequency cepstral coefficients are extracted as the training feature vector, and an adaptive kernel likelihood fuzzy C-means clustering algorithm is used for clustering analysis to establish a speaker speech reference model; after the input test short speech signal is preprocessed, Mel frequency cepstral coefficients are extracted as the test feature vector, the distance between the test feature vector and the speaker speech reference model is calculated, and the identity of the short speech speaker is identified according to the distance. In the method and device disclosed in the present embodiment, Mel frequency cepstral coefficients are extracted as features and clustered with the adaptive kernel likelihood fuzzy C-means clustering algorithm to establish the speaker speech reference model; after model matching is performed, the identity of the short speech speaker is recognized, recognition accuracy is improved, and practical application requirements are met.

Owner:GEER TECH CO LTD
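The clustering-then-distance scheme in the short speech abstract above can be illustrated with plain fuzzy C-means. This sketch deliberately uses the standard algorithm, not the adaptive kernel likelihood variant named in the abstract, whose details are not given; `fuzzy_c_means`, `model_distance`, the fuzzifier `m=2.0` and the toy data are assumptions.

```python
import math

def fuzzy_c_means(points, centers, m=2.0, iters=50):
    """Plain fuzzy C-means from given initial centers; returns (centers, memberships)."""
    u = []
    for _ in range(iters):
        # Membership update: u[k][i] is the degree to which point k belongs to center i.
        u = []
        for p in points:
            d = [max(math.dist(p, c), 1e-12) for c in centers]
            u.append([1.0 / sum((d[i] / d[j]) ** (2.0 / (m - 1.0)) for j in range(len(d)))
                      for i in range(len(d))])
        # Center update: membership-weighted mean of all points.
        new_centers = []
        for i in range(len(centers)):
            w = [u[k][i] ** m for k in range(len(points))]
            total = sum(w)
            new_centers.append([sum(wk * p[dim] for wk, p in zip(w, points)) / total
                                for dim in range(len(points[0]))])
        centers = new_centers
    return centers, u

def model_distance(frames, centers):
    """Mean distance from each test frame to its nearest cluster center."""
    return sum(min(math.dist(f, c) for c in centers) for f in frames) / len(frames)

# Toy 2-D "MFCC" training frames for one speaker (illustrative values only).
train = [[0.0, 0.0], [0.2, 0.1], [-0.1, 0.2], [5.0, 5.0], [5.1, 4.9], [4.8, 5.2]]
model_a, memberships = fuzzy_c_means(train, centers=[train[0], train[-1]])
model_b = [[10.0, 10.0], [15.0, 15.0]]  # assumed reference model of another speaker

test_frames = [[0.1, 0.1], [4.9, 5.0]]
scores = {"A": model_distance(test_frames, model_a), "B": model_distance(test_frames, model_b)}
print(min(scores, key=scores.get))
```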

Speech emotion recognition method based on fuzzy support vector machine

InactiveCN104091602AReduce redundancyReduce the impactSpeech recognitionMel-frequency cepstrumFuzzy support vector machine

The invention relates to a speech emotion recognition technology, in particular to a speech emotion recognition method based on a fuzzy support vector machine. The method comprises the following steps: input speech signals with emotions are pre-processed, where the pre-processing comprises pre-emphasis filtering, windowing and framing; Mel frequency cepstrum coefficients (MFCC) are extracted as the feature information of the processed speech signals; dimension reduction is carried out on the extracted MFCC by using kernel principal component analysis (KPCA); classification and recognition are carried out on the dimension-reduced MFCC feature information; and the recognition results are output. The classification and recognition are specifically carried out with an FSVM algorithm. The method has the advantages that KPCA reduces the dimension of the MFCC emotion features and removes redundant information, so the recognition effect is better than when the MFCC features are used directly, and the recognition efficiency and recognition speed are higher. The method is especially suitable for intelligent speech emotion recognition.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
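The pre-processing stage named in the speech emotion abstract above (pre-emphasis filtering, then windowing and framing) can be sketched as follows. The filter coefficient 0.97, the Hamming window and the frame sizes are common defaults assumed for illustration; the abstract does not specify them, and the function names are hypothetical.

```python
import math

def pre_emphasis(signal, alpha=0.97):
    """First-order high-pass filter: y[n] = x[n] - alpha * x[n-1]."""
    if not signal:
        return []
    return [signal[0]] + [signal[n] - alpha * signal[n - 1] for n in range(1, len(signal))]

def hamming_frames(signal, frame_len, hop):
    """Split the signal into overlapping frames and apply a Hamming window to each."""
    win = [0.54 - 0.46 * math.cos(2 * math.pi * k / (frame_len - 1)) for k in range(frame_len)]
    return [[s * w for s, w in zip(signal[i:i + frame_len], win)]
            for i in range(0, len(signal) - frame_len + 1, hop)]

# A constant signal is almost removed by pre-emphasis, which boosts high frequencies.
emphasized = pre_emphasis([1.0, 1.0, 1.0])
frames = hamming_frames([float(n) for n in range(10)], frame_len=4, hop=2)
print(emphasized, len(frames))
```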

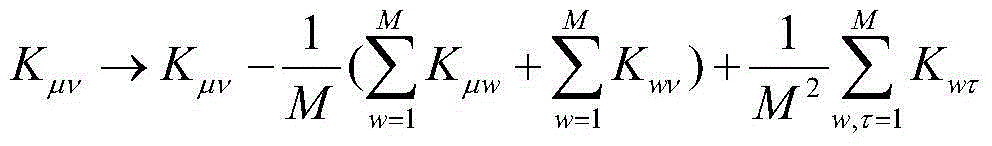

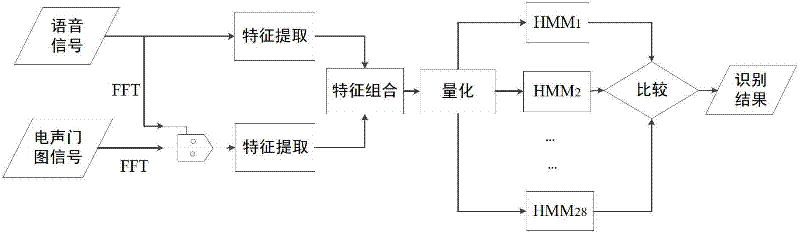

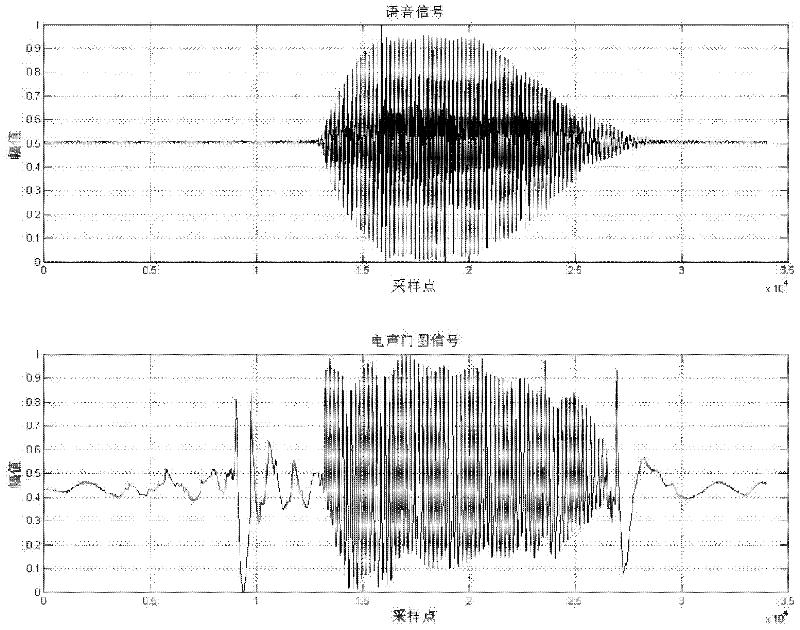

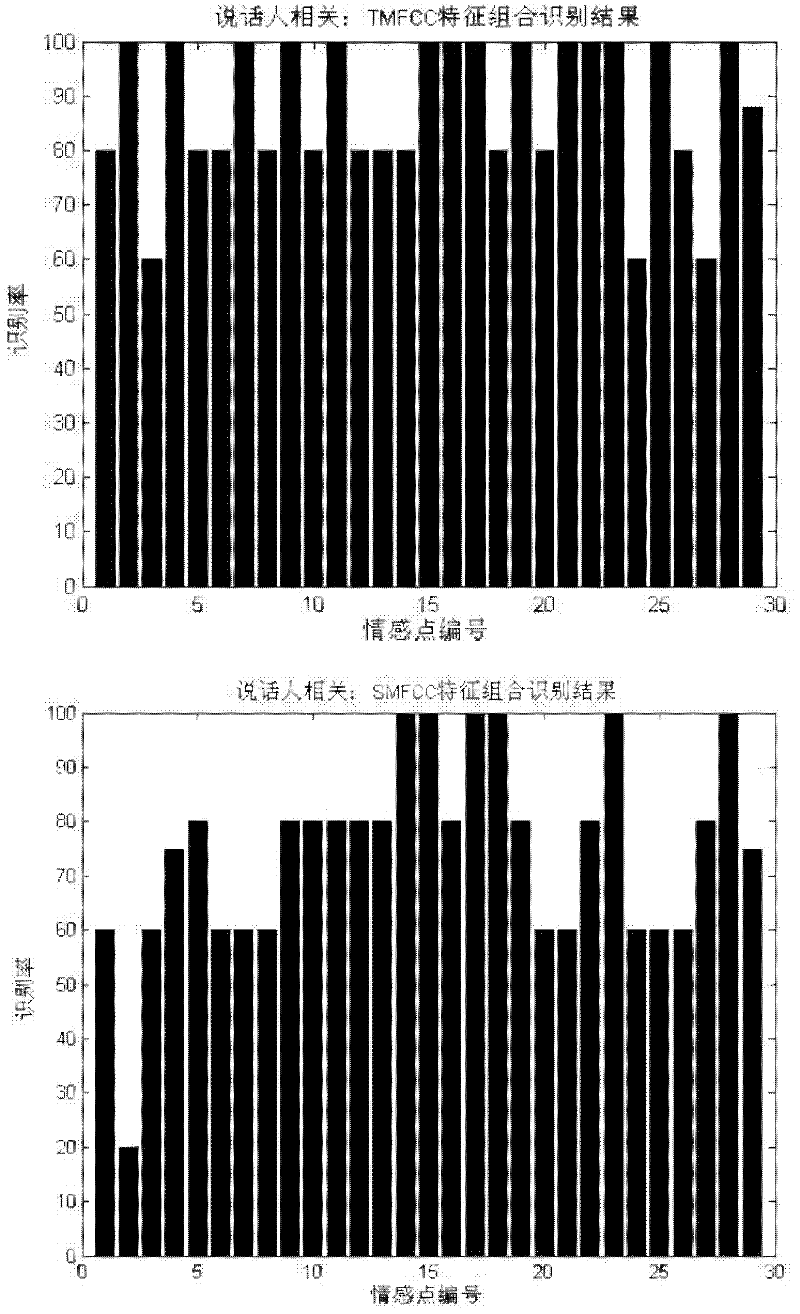

Method for recognizing emotion points of Chinese pronunciation based on sound-track modulating signals MFCC (Mel Frequency Cepstrum Coefficient)

InactiveCN102655003AImprove recognition rateSpeech recognitionMel-frequency cepstrumFast Fourier transform

The invention provides a method capable of increasing the average recognition rate of emotion points. The method comprises the following steps: making specifications for the emotion data of an electroglottography and voice database; collecting the electroglottography and voice emotion data; carrying out subjective evaluation on the collected data, and selecting one data subset as the study object; preprocessing the electroglottography signals and voice signals, and extracting short-time characteristics and corresponding statistical characteristics of the voice signals as well as the MEL frequency cepstrum coefficient SMFCC; carrying out a fast Fourier transform on the electroglottography signals and the voice signals, then dividing the electroglottography signals and the voice signals, and obtaining the MEL frequency cepstrum coefficient TMFCC after the division; and running experiments with different characteristic combinations, and computing the average recognition rate of 28 emotion points under each combination in both speaker-dependent and speaker-independent settings. The experimental results show that adopting TMFCC characteristics increases the average recognition rate of the emotion points.

Owner:BEIHANG UNIV

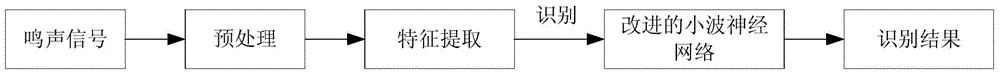

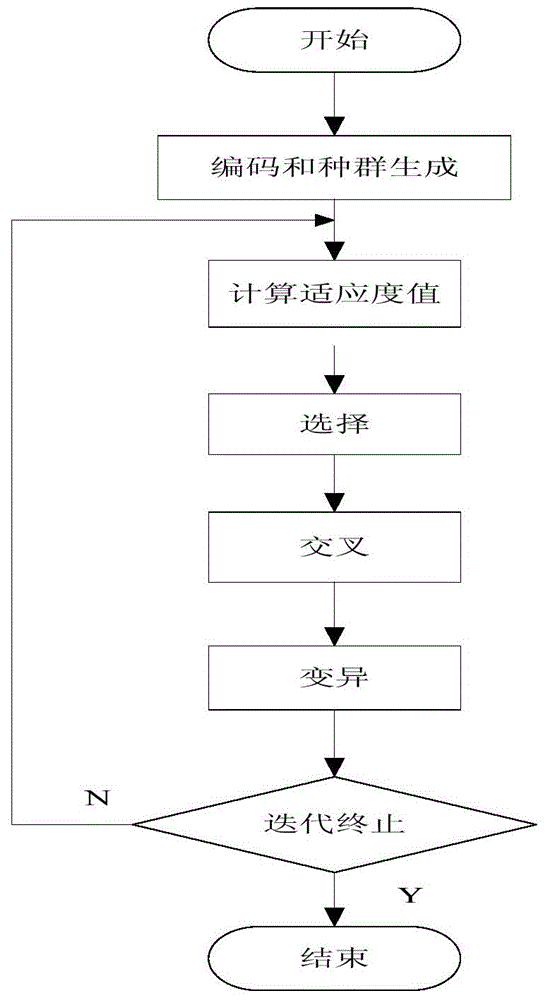

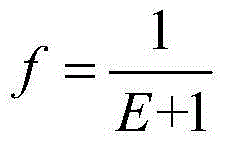

Nipponia nippon individual recognition method based on MFCC algorithm

InactiveCN104102923AEasy extractionImprove noise immunitySpeech analysisCharacter and pattern recognitionMel-frequency cepstrumFast Fourier transform

The invention discloses a Nipponia nippon individual recognition method based on an MFCC (Mel Frequency Cepstrum Coefficient) algorithm. The method comprises the following steps: (1) the collected Nipponia nippon warbling signals are preprocessed, including pre-emphasis, framing and windowing, and endpoint detection; (2) a power spectrum of the warbling signals is obtained through a fast Fourier transform, and after the power spectrum is smoothed, a combination of the MFCC, a Mid MFCC and an IMFCC (Inverse Mel Frequency Cepstrum Coefficient) is used to extract feature parameters; and (3) an improved wavelet neural network is trained on the feature parameters of the warbling signals and used for recognition. The method has good anti-noise performance, extracts feature parameters that better express the Nipponia nippon warbling features, and improves the recognition rate of the system.

Owner:XI'AN UNIVERSITY OF ARCHITECTURE AND TECHNOLOGY
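The difference between the MFCC and IMFCC filter banks mentioned in the abstract above lies in where the filters are concentrated. The sketch below computes mel-spaced center frequencies and one commonly described inverted layout, obtained by mirroring the mel centers about the middle of the band. Whether the patent builds its IMFCC (or its Mid MFCC, not shown) this way is an assumption, and the function names are hypothetical.

```python
import math

def hz_to_mel(f):
    return 2595.0 * math.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_centers(n_filters, f_max):
    """Center frequencies equally spaced on the mel scale (dense at low frequencies)."""
    top = hz_to_mel(f_max)
    return [mel_to_hz(top * i / (n_filters + 1)) for i in range(1, n_filters + 1)]

def inverted_mel_centers(n_filters, f_max):
    """Mirror the mel centers about f_max / 2 (dense at high frequencies)."""
    return [f_max - c for c in reversed(mel_centers(n_filters, f_max))]

mel = mel_centers(10, 4000.0)
inv = inverted_mel_centers(10, 4000.0)
print([round(c) for c in mel])
print([round(c) for c in inv])
```

Combining features from both layouts gives finer resolution at both ends of the spectrum than either filter bank alone.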

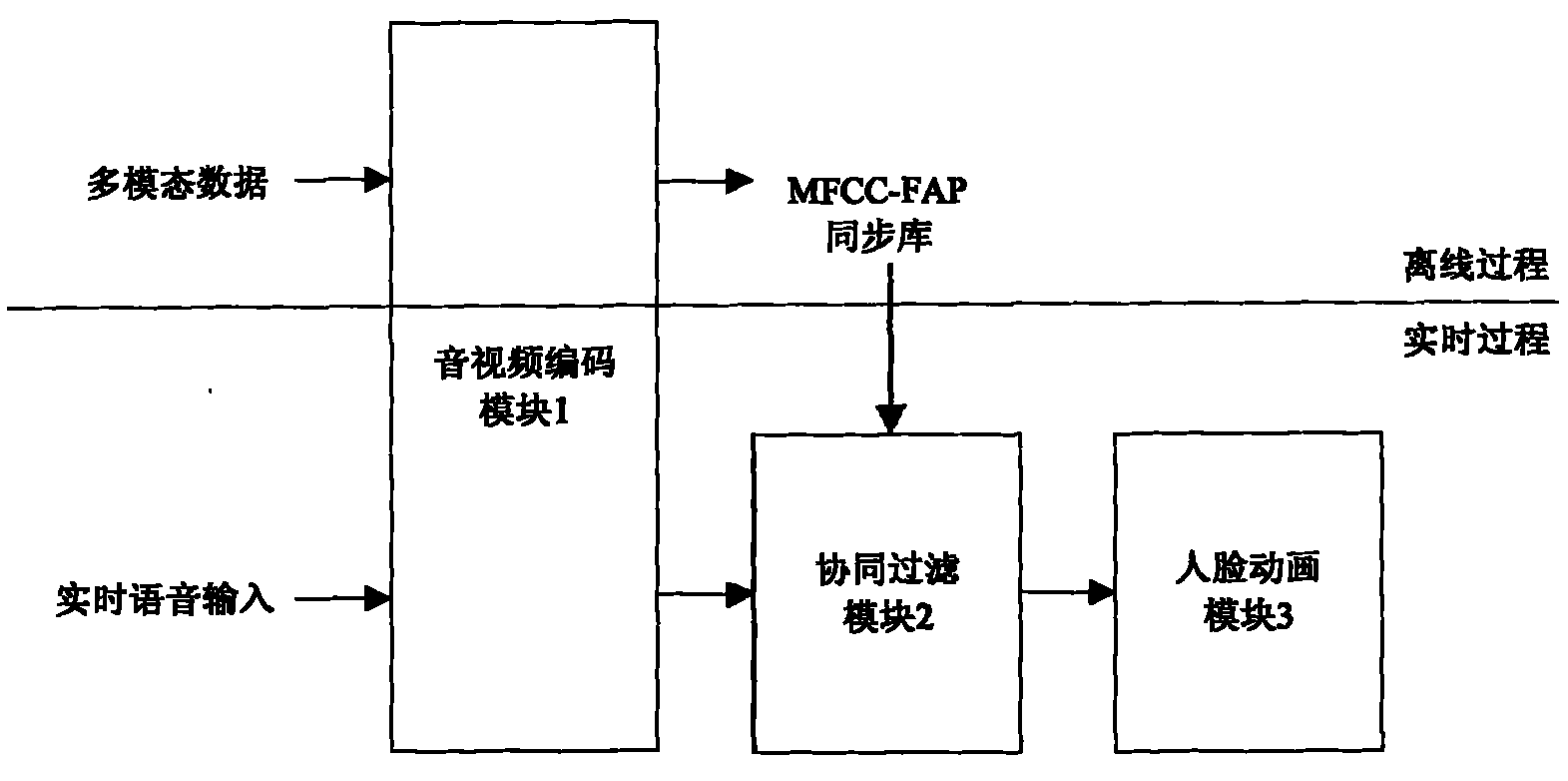

Collaborative filtering-based real-time voice-driven human face and lip synchronous animation system

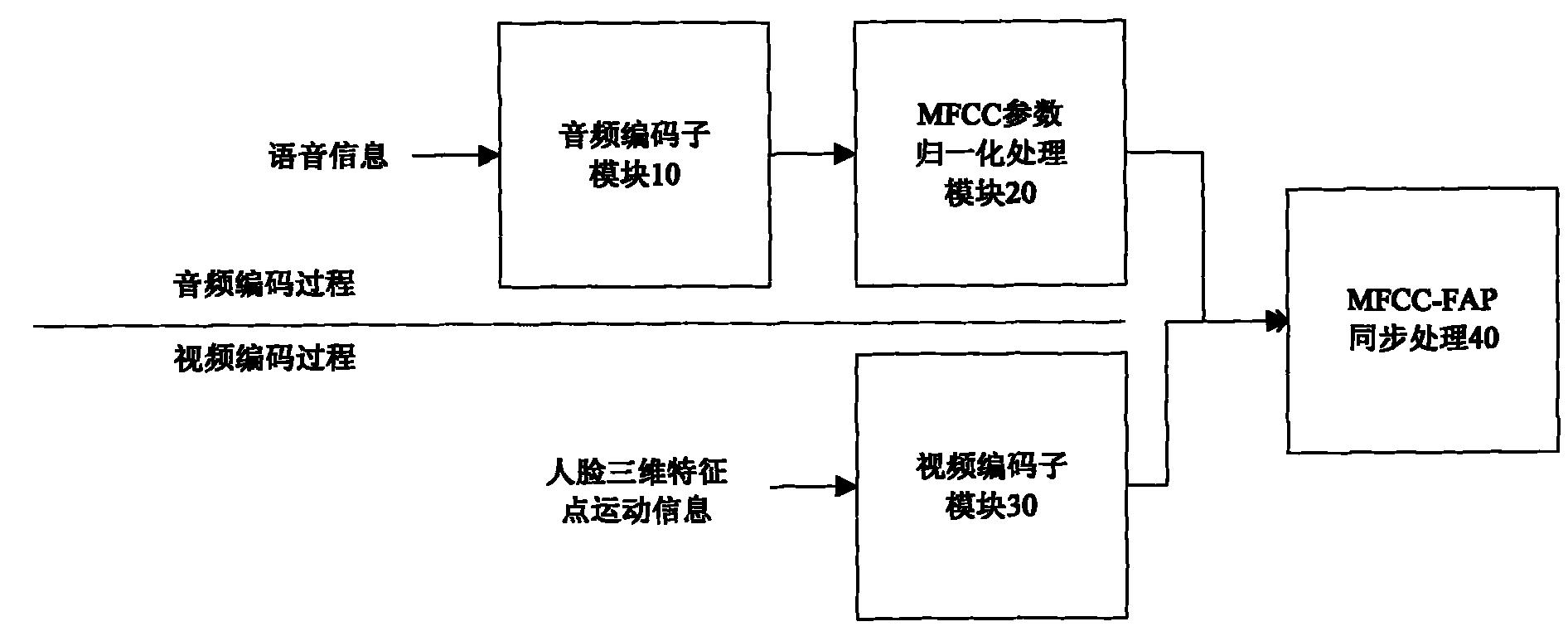

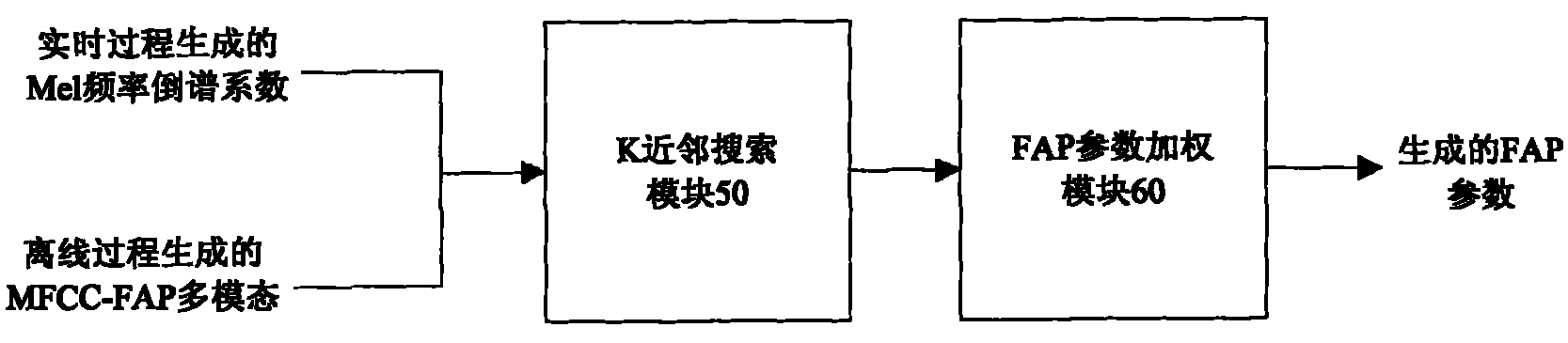

InactiveCN101930619AReduce complexityReduce decreaseTelevision systemsAnimationMel-frequency cepstrumAnimation system

The invention discloses a collaborative filtering-based real-time voice-driven human face and lip synchronous animation system. When voice is input in real time, a human head model produces lip animation synchronized with the input voice. The system comprises an audio/video coding module, a collaborative filtering module and an animation module. The audio/video coding module performs Mel frequency cepstrum parameter coding on the acquired voice and human face animation parameter coding in the Moving Picture Experts Group (MPEG-4) standard on the acquired three-dimensional human face characteristic point motion information, to obtain a multimodal synchronous library of Mel frequency cepstrum parameters and human face animation parameters; the collaborative filtering module uses collaborative filtering to solve for a human face animation parameter synchronous with the voice by combining the Mel frequency cepstrum parameter coding of the newly input voice with the multimodal synchronous library; and the animation module drives the human face model with the human face animation parameter to produce the animation. The system offers a better sense of reality, real-time performance and a wider range of application environments.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI


© 2025 PatSnap. All rights reserved.