Collaborative filtering-based real-time voice-driven human face and lip synchronous animation system

A collaborative filtering algorithm and real-time voice technology, applied in animation production, voice analysis, voice recognition, and related fields, address the slow speed and low recognition rate of voice recognition.


Embodiment Construction

[0022] The present invention is further described below with reference to the drawings and examples. The detailed description of each system component, taken together with the drawings, sets out the steps and processes for realizing the invention.

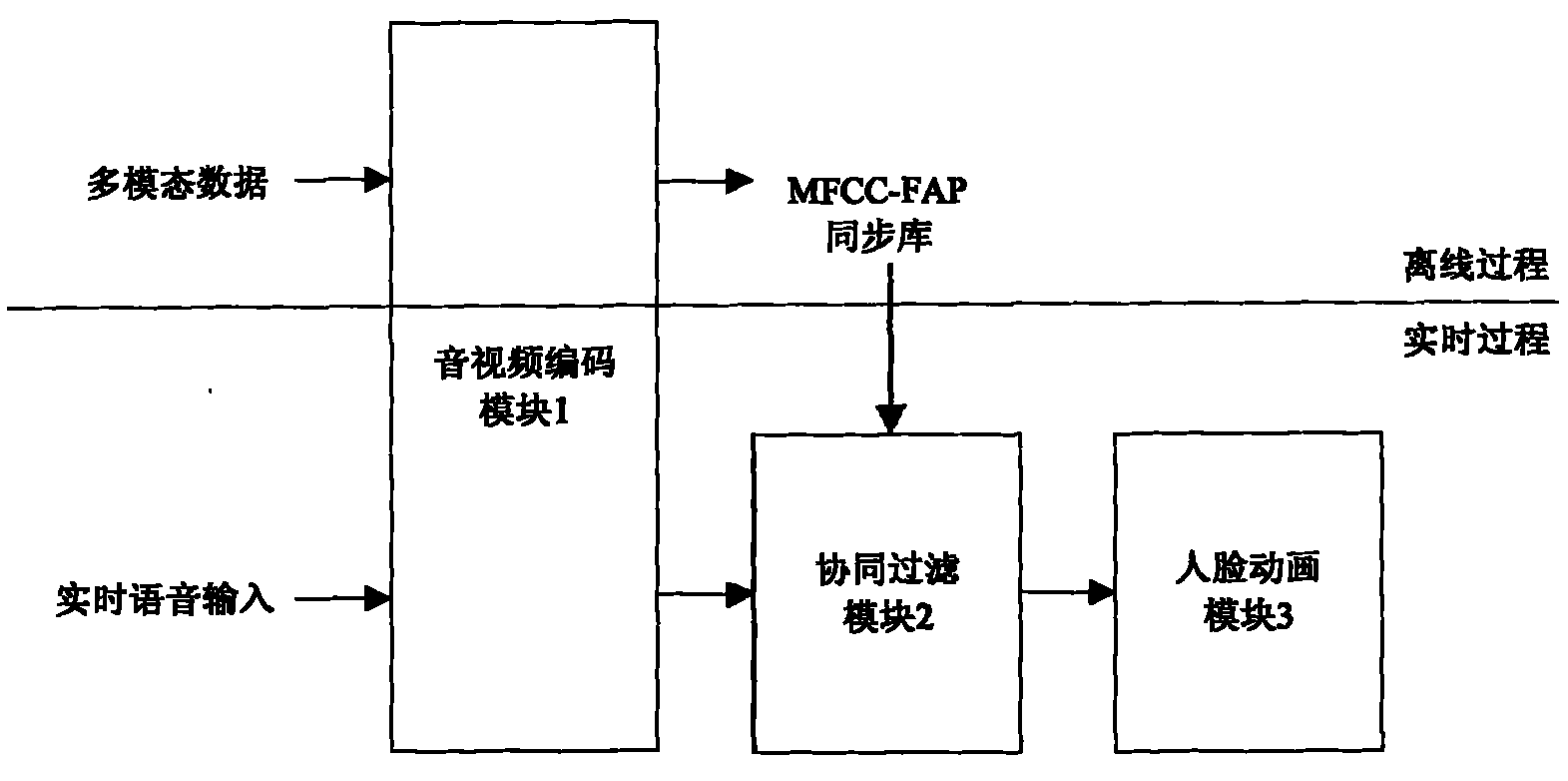

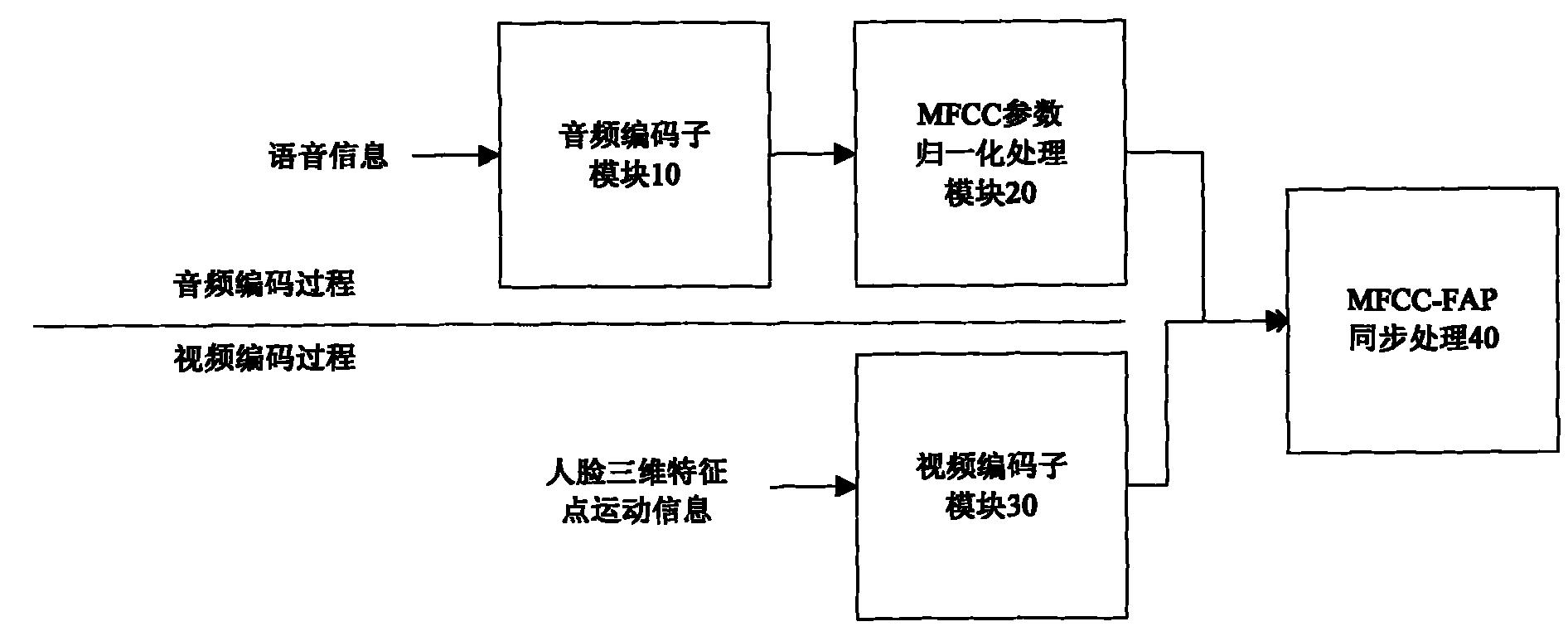

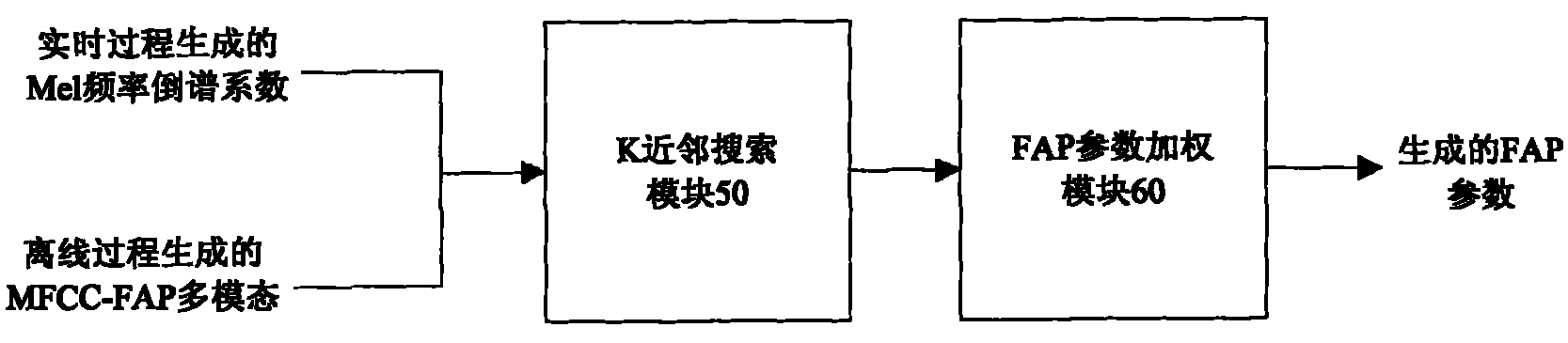

[0023] Figure 1 shows a schematic diagram of a real-time voice-driven human face and lip synchronization animation system based on a collaborative filtering algorithm. The system is written in C and can be compiled and run with Visual Studio on the Windows platform, or with the GNU Compiler Collection (GCC) on the Linux platform. In the preferred embodiment shown in Figure 1, the system is divided into three parts: an audio and video encoding module 1, a collaborative filtering module 2, and a facial animation module 3. Among them, the multi-modal data acquisition equipment is used to collect and record the s...
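The excerpt is truncated before it explains how the three modules exchange data, so the following C sketch only illustrates how such a three-stage pipeline might be chained. Every name in it (`Sample`, `encode_audio`, `predict_face`, `apply_to_face`, `FEAT_DIM`, `FACE_DIM`) is hypothetical, the audio features are placeholders, and the similarity-weighted average merely stands in for whatever collaborative filtering step the patent actually specifies.

```c
#include <assert.h>
#include <stddef.h>

#define FEAT_DIM 3  /* hypothetical: size of the audio feature vector */
#define FACE_DIM 2  /* hypothetical: e.g. mouth opening and mouth width */

/* Hypothetical stored record: audio features paired with face parameters. */
typedef struct {
    float audio[FEAT_DIM];
    float face[FACE_DIM];
} Sample;

/* Module 1 stand-in: reduce a PCM frame to mean magnitude, peak,
 * and zero-crossing rate (placeholder features, not from the patent). */
void encode_audio(const float *pcm, size_t n, float feat[FEAT_DIM])
{
    assert(pcm != NULL && n >= 2);
    float sum = 0.0f, peak = 0.0f;
    size_t crossings = 0;
    for (size_t i = 0; i < n; i++) {
        float mag = pcm[i] < 0.0f ? -pcm[i] : pcm[i];
        sum += mag;
        if (mag > peak) peak = mag;
        if (i > 0 && (pcm[i - 1] < 0.0f) != (pcm[i] < 0.0f)) crossings++;
    }
    feat[0] = sum / (float)n;
    feat[1] = peak;
    feat[2] = (float)crossings / (float)(n - 1);
}

/* Module 2 stand-in: average the stored face parameters, weighting each
 * record by its similarity 1 / (1 + squared distance) to the query. */
void predict_face(const Sample *db, size_t count,
                  const float feat[FEAT_DIM], float face[FACE_DIM])
{
    assert(db != NULL && count > 0);
    float total = 0.0f;
    for (size_t j = 0; j < FACE_DIM; j++) face[j] = 0.0f;
    for (size_t i = 0; i < count; i++) {
        float d2 = 0.0f;
        for (size_t k = 0; k < FEAT_DIM; k++) {
            float d = db[i].audio[k] - feat[k];
            d2 += d * d;
        }
        float w = 1.0f / (1.0f + d2);
        total += w;
        for (size_t j = 0; j < FACE_DIM; j++) face[j] += w * db[i].face[j];
    }
    for (size_t j = 0; j < FACE_DIM; j++) face[j] /= total;
}

/* Module 3 stand-in: clamp predicted parameters to [0, 1] before they
 * would be handed to a renderer (rendering itself is out of scope). */
void apply_to_face(const float face[FACE_DIM], float pose[FACE_DIM])
{
    for (size_t j = 0; j < FACE_DIM; j++) {
        float v = face[j];
        pose[j] = v < 0.0f ? 0.0f : (v > 1.0f ? 1.0f : v);
    }
}
```

A caller would run the three functions in order for each incoming audio frame: `encode_audio` on the PCM samples, `predict_face` against the stored records, then `apply_to_face` on the result. Keeping the stages as separate functions mirrors the three-module split described above and uses only standard C, so it should build with either Visual Studio or GCC.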
