127 results about "Animation system" patented technology

The Computer Animation Production System (CAPS) was a digital ink-and-paint system used in animated feature films, and the first such system at a major studio. It was designed to replace the expensive process of transferring animated drawings to cels with India ink or xerographic technology and painting the reverse sides of the cels with gouache paint.

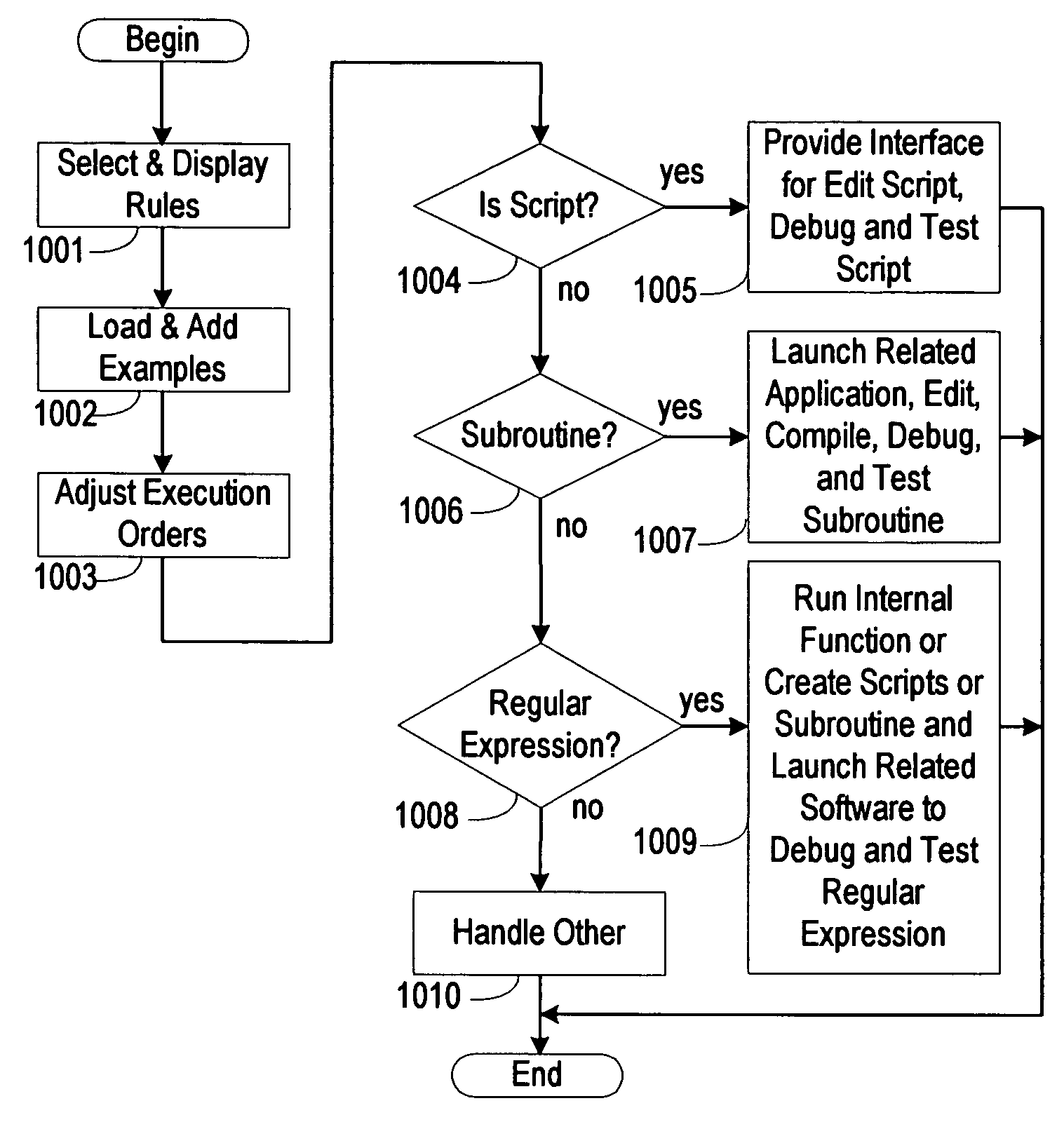

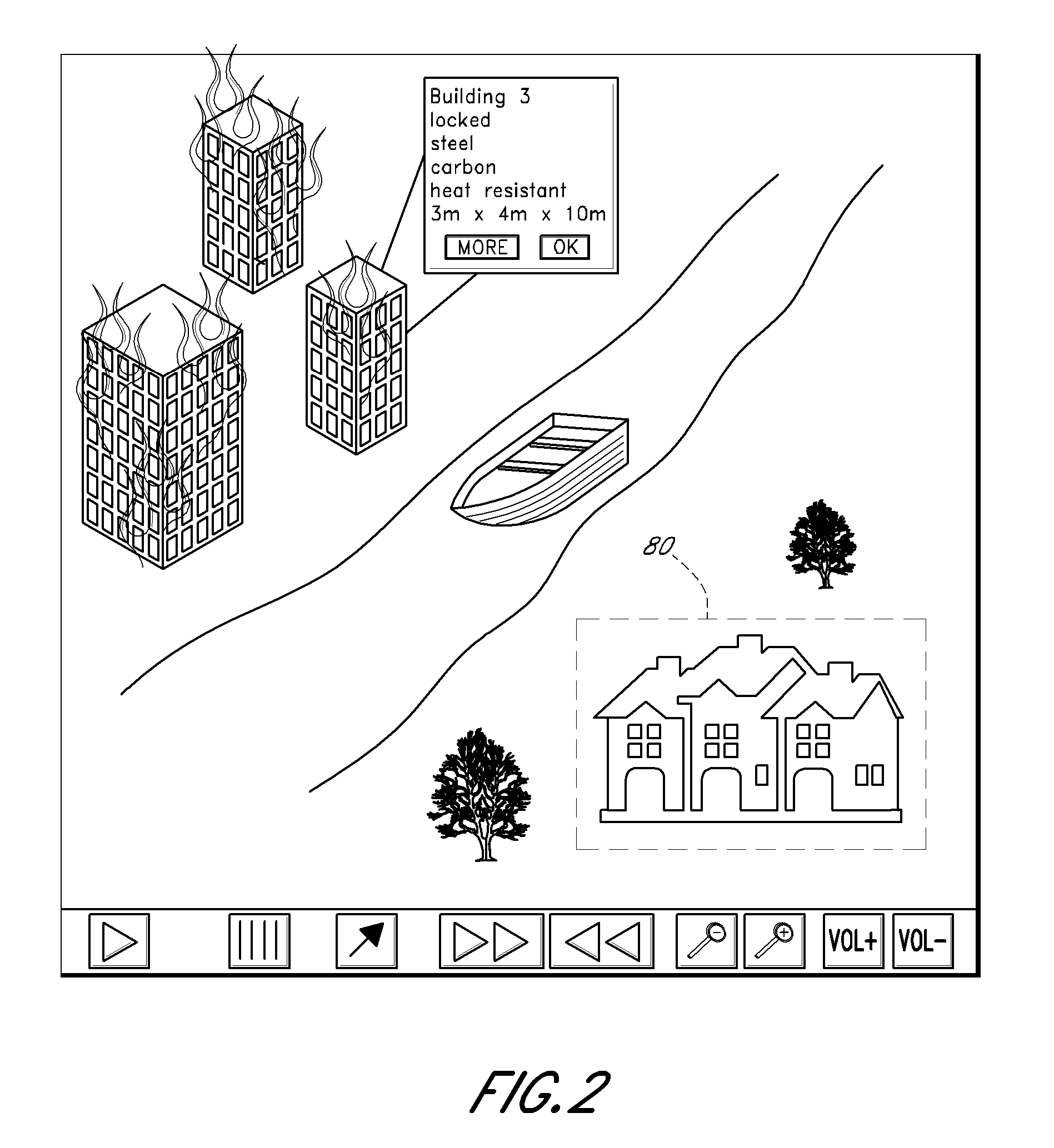

Document animation system

Inactive | US7412389B2 | Tags: Enhance vocabulary quickly, Subtle differences among words, Animation, Speech synthesis, Documentation procedure

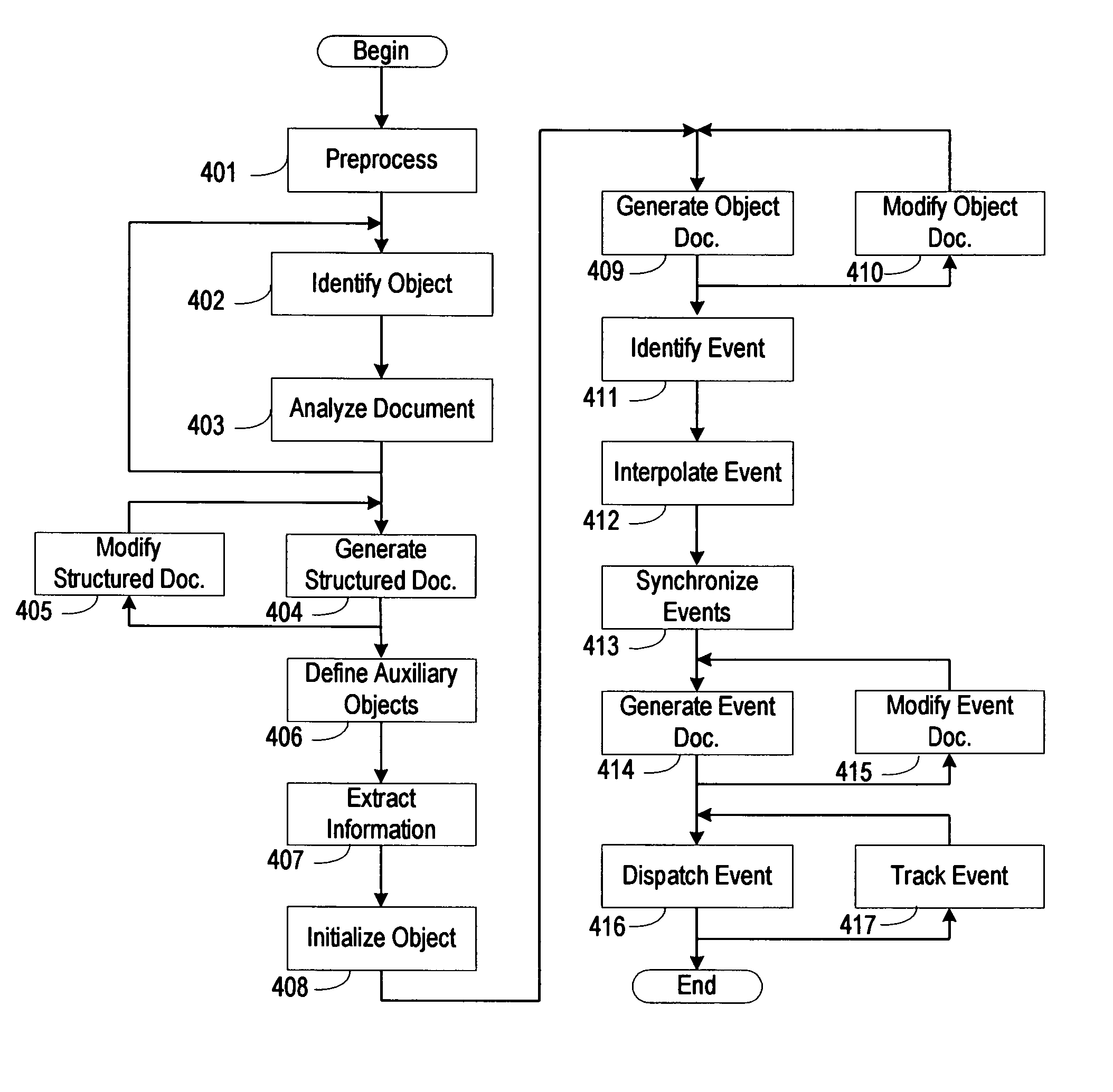

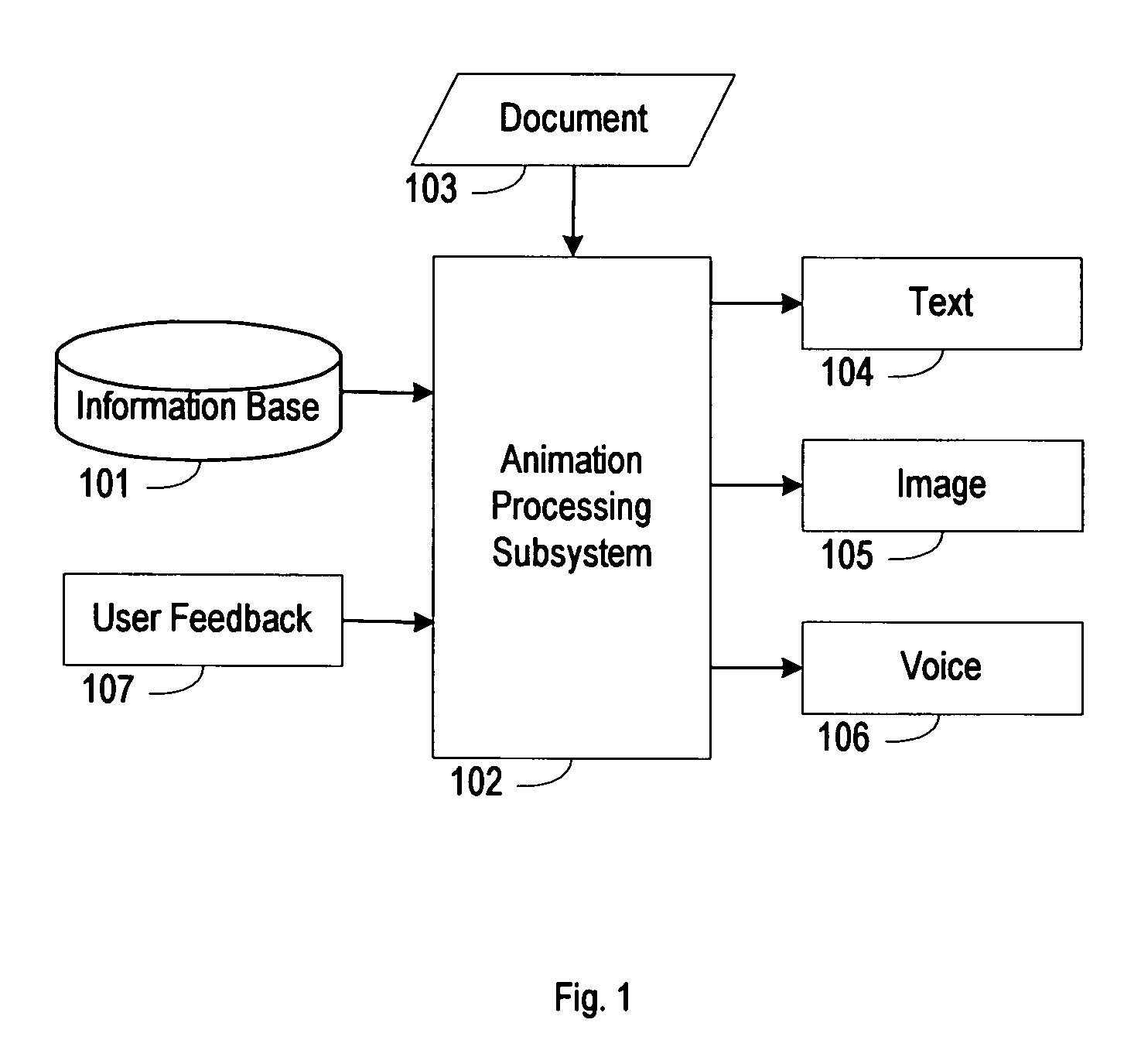

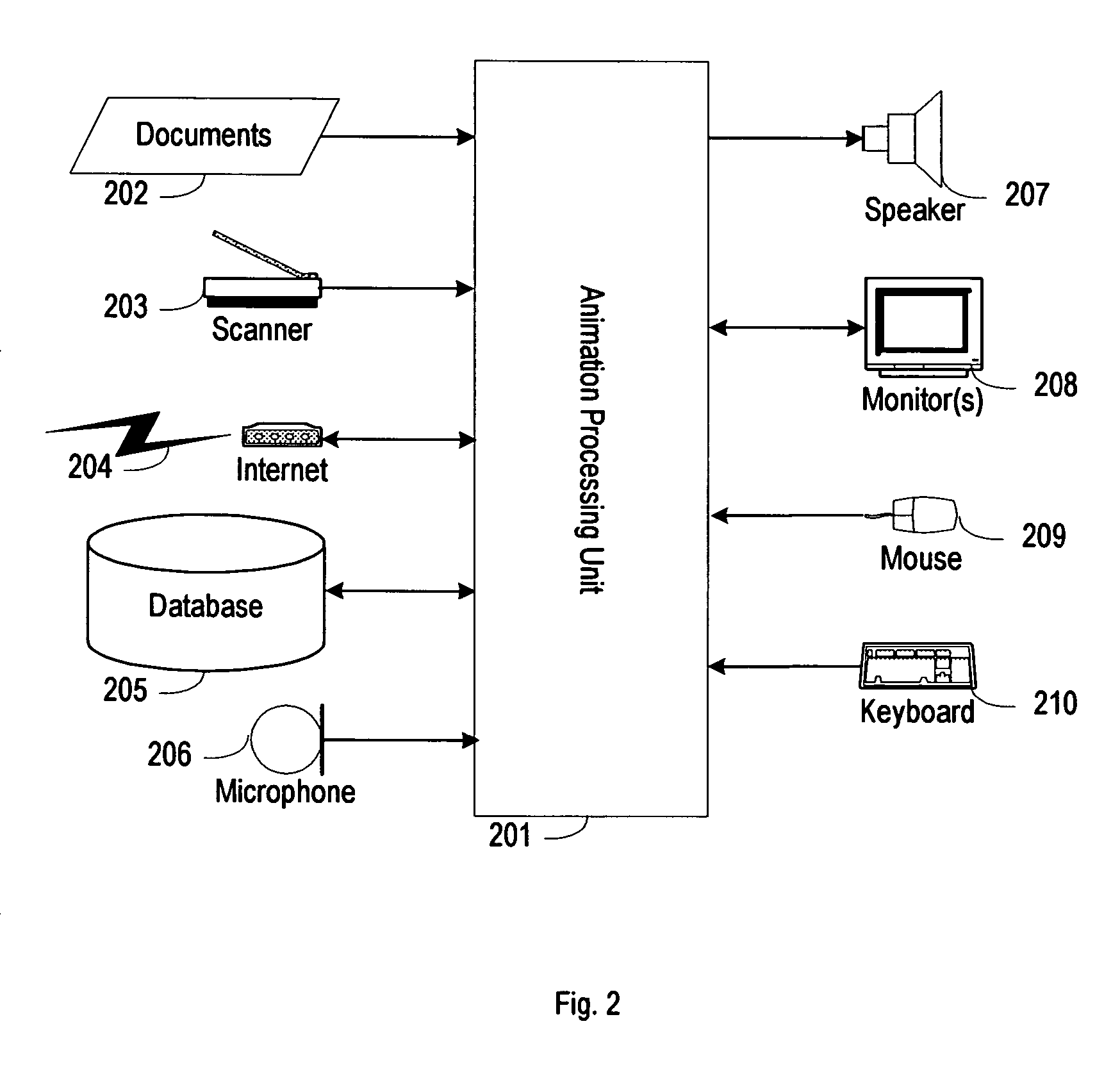

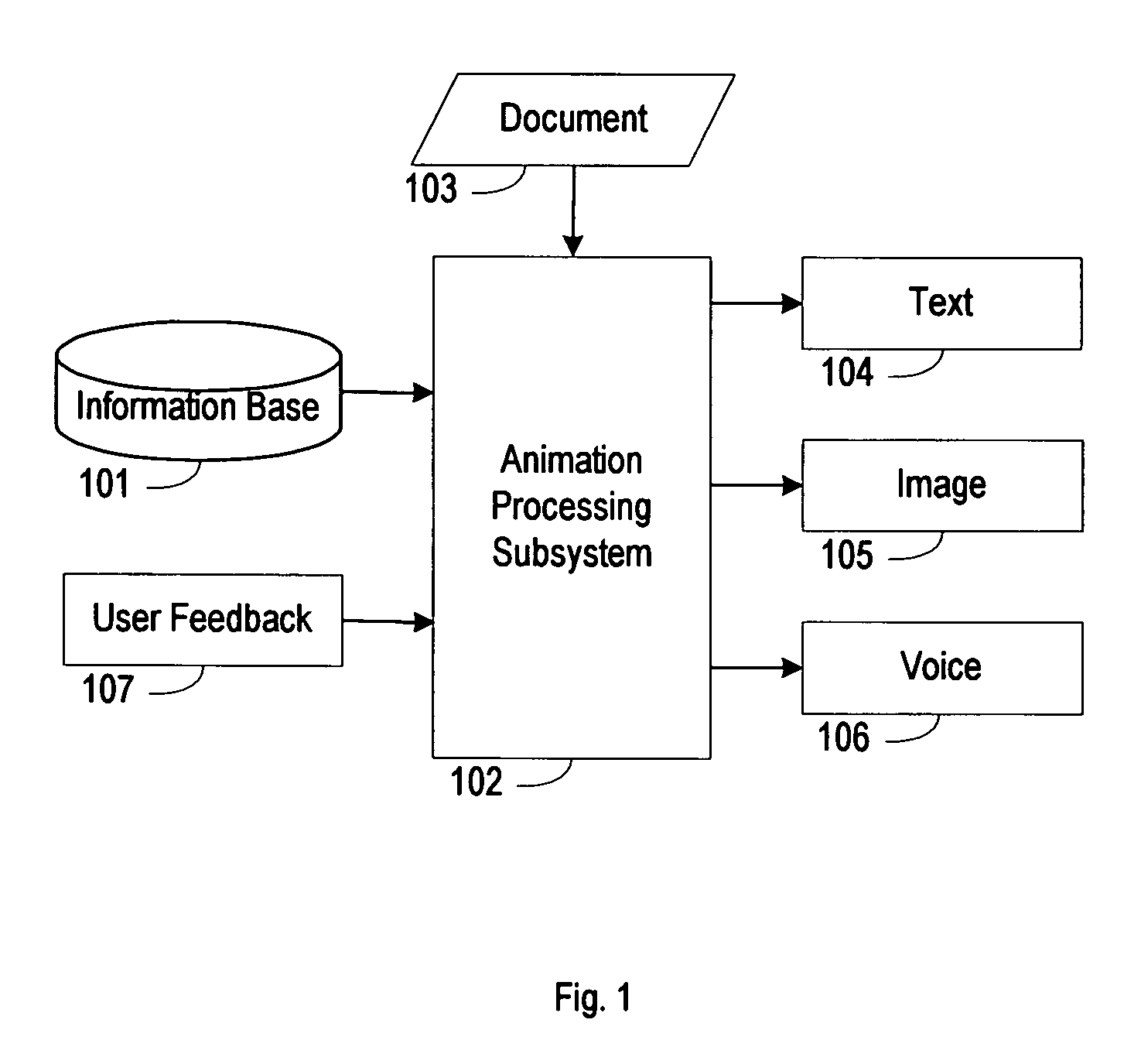

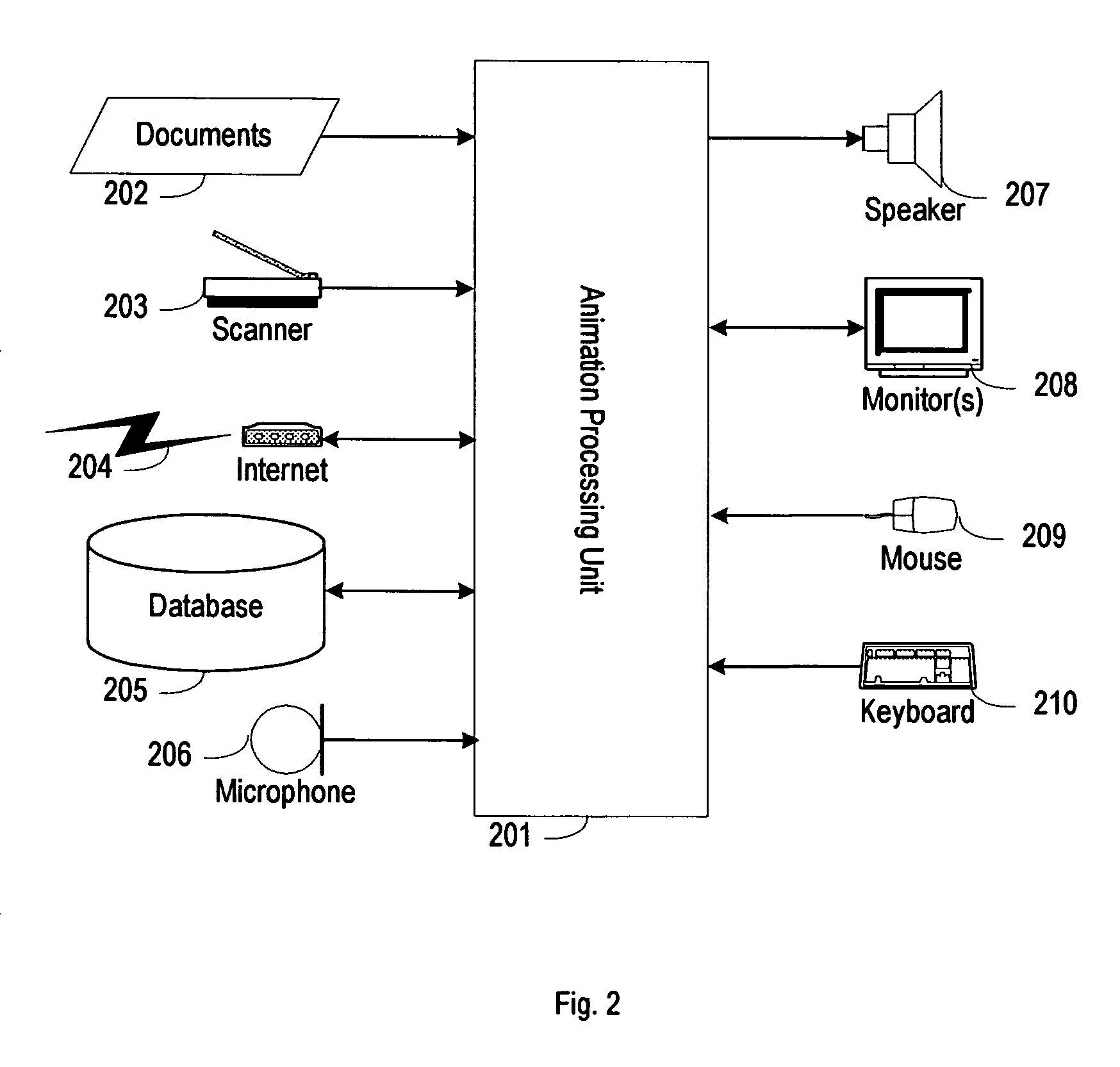

An animating system converts a text-based document into a sequence of animated pictures to help a user understand it better and faster. First, the system provides interfaces for a user to build various object models, specify default rules for these object models, and construct the references for meanings and actions. Second, the system analyzes the document, extracts desired information, identifies various objects, and organizes the information. Then the system creates objects from the corresponding object models and provides interfaces to modify default values and default rules and to define specific values and specific rules. Further, the system identifies the meanings of words and phrases. Furthermore, the system identifies, interpolates, synchronizes, and dispatches events. Finally, the system provides an interface for the user to track events and particular objects.

Owner:YANG GEORGE L

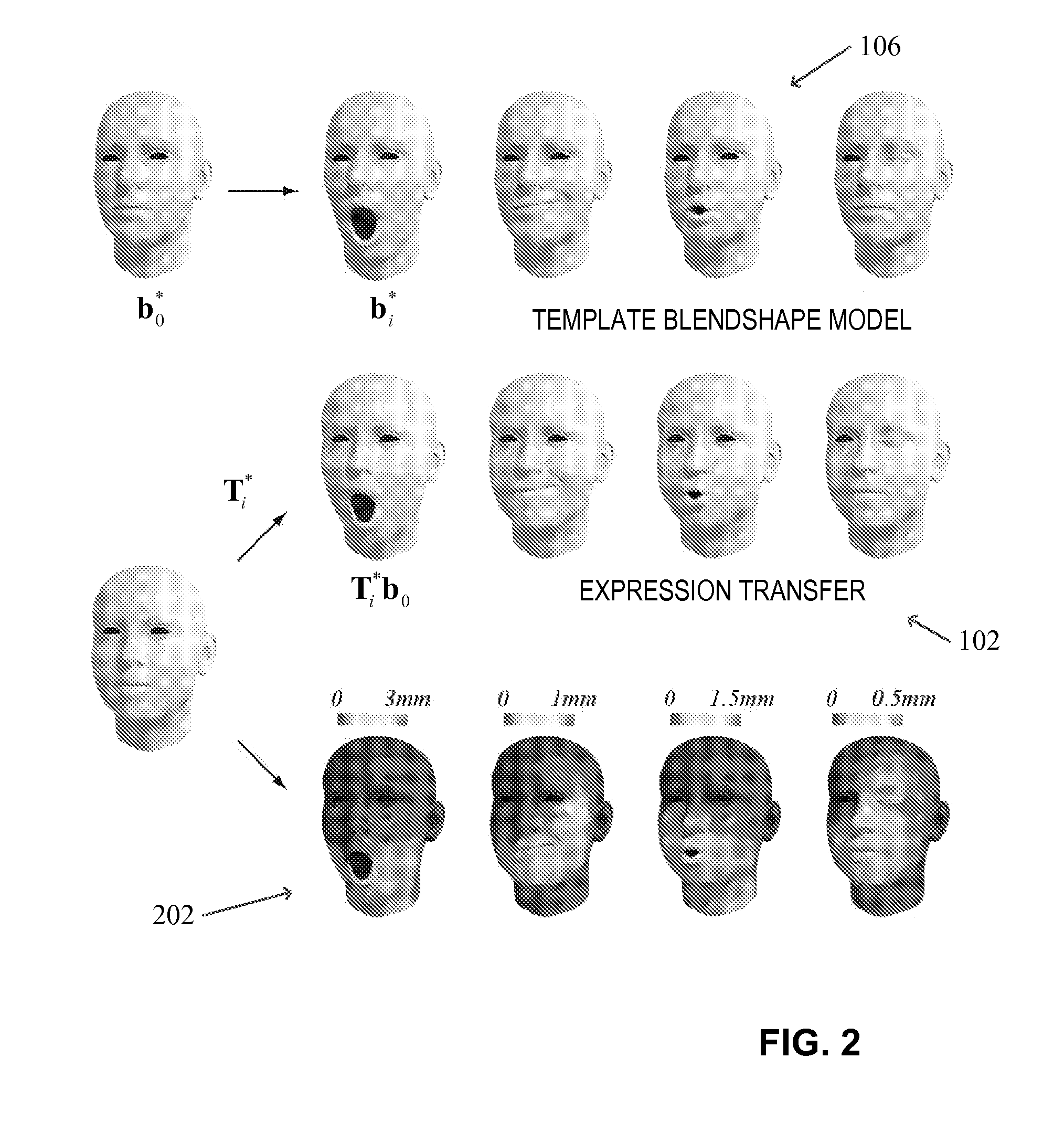

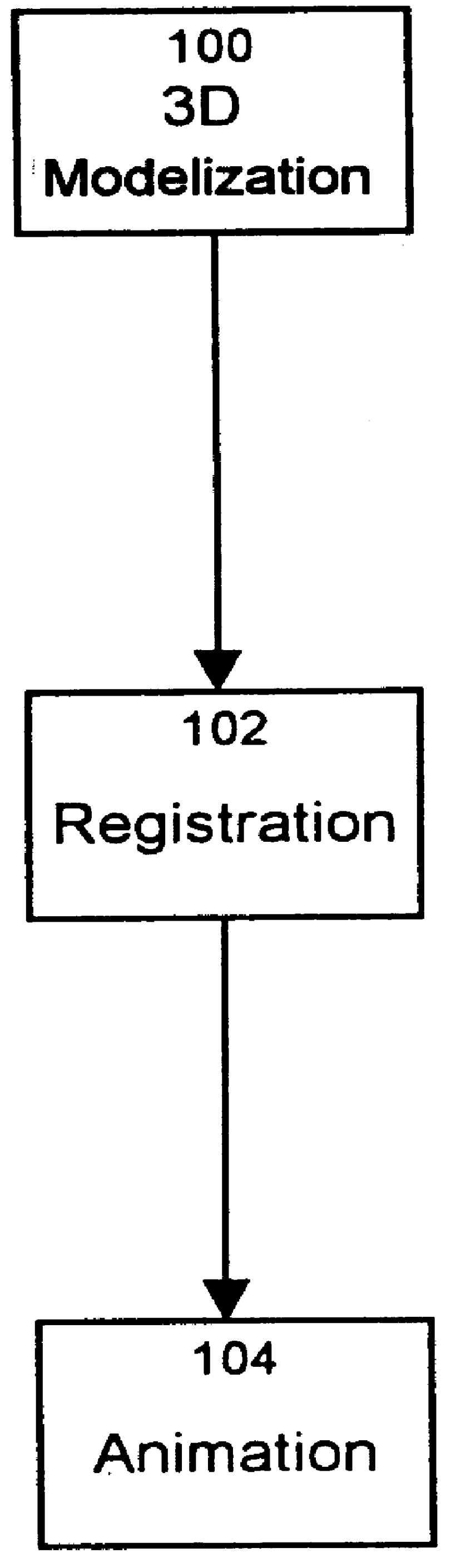

Online modeling for real-time facial animation

Active | US9378576B2 | Tags: Shorten the track, Improve tracking performance, Image analysis, Character and pattern recognition, Graphics, Pattern recognition

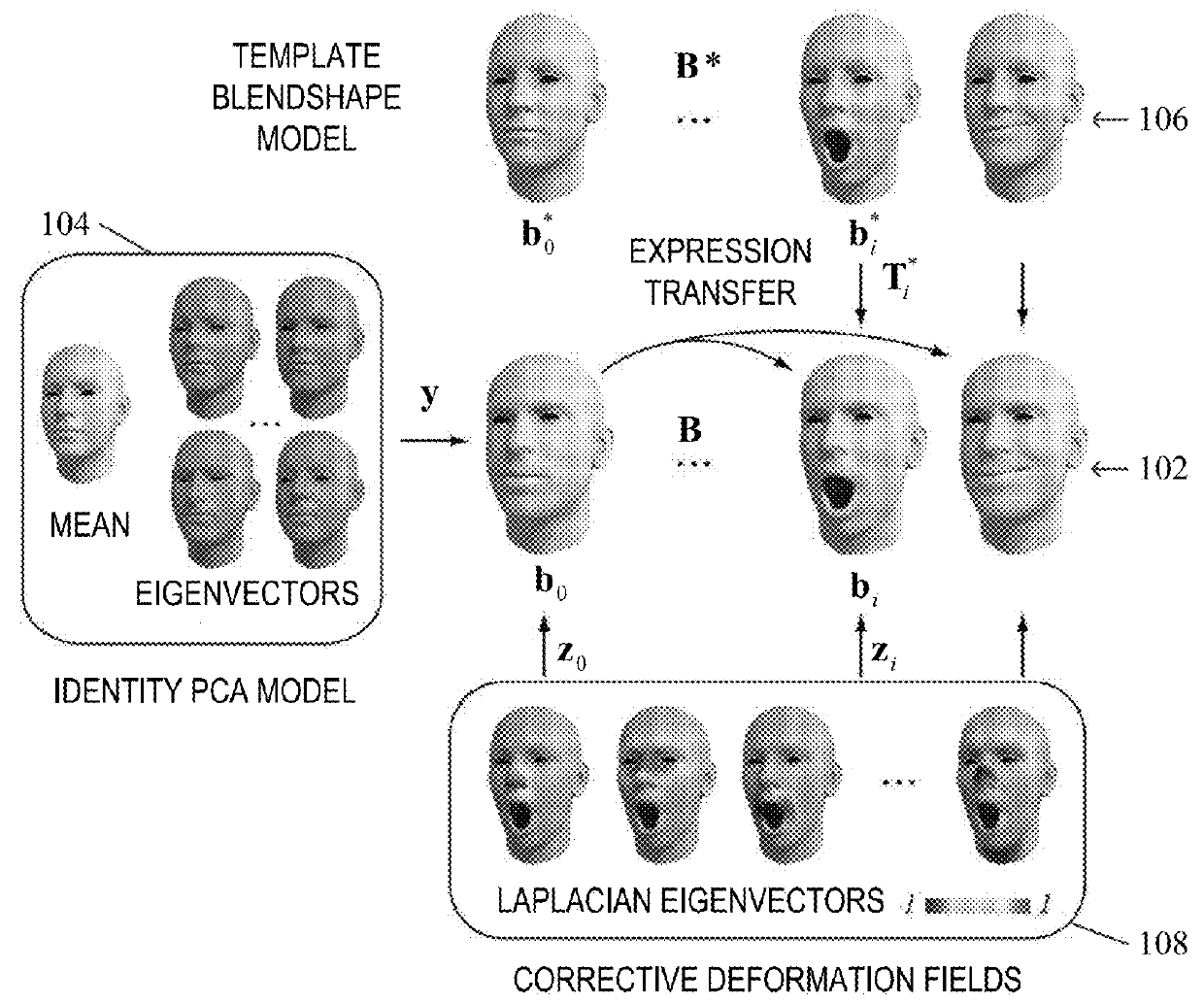

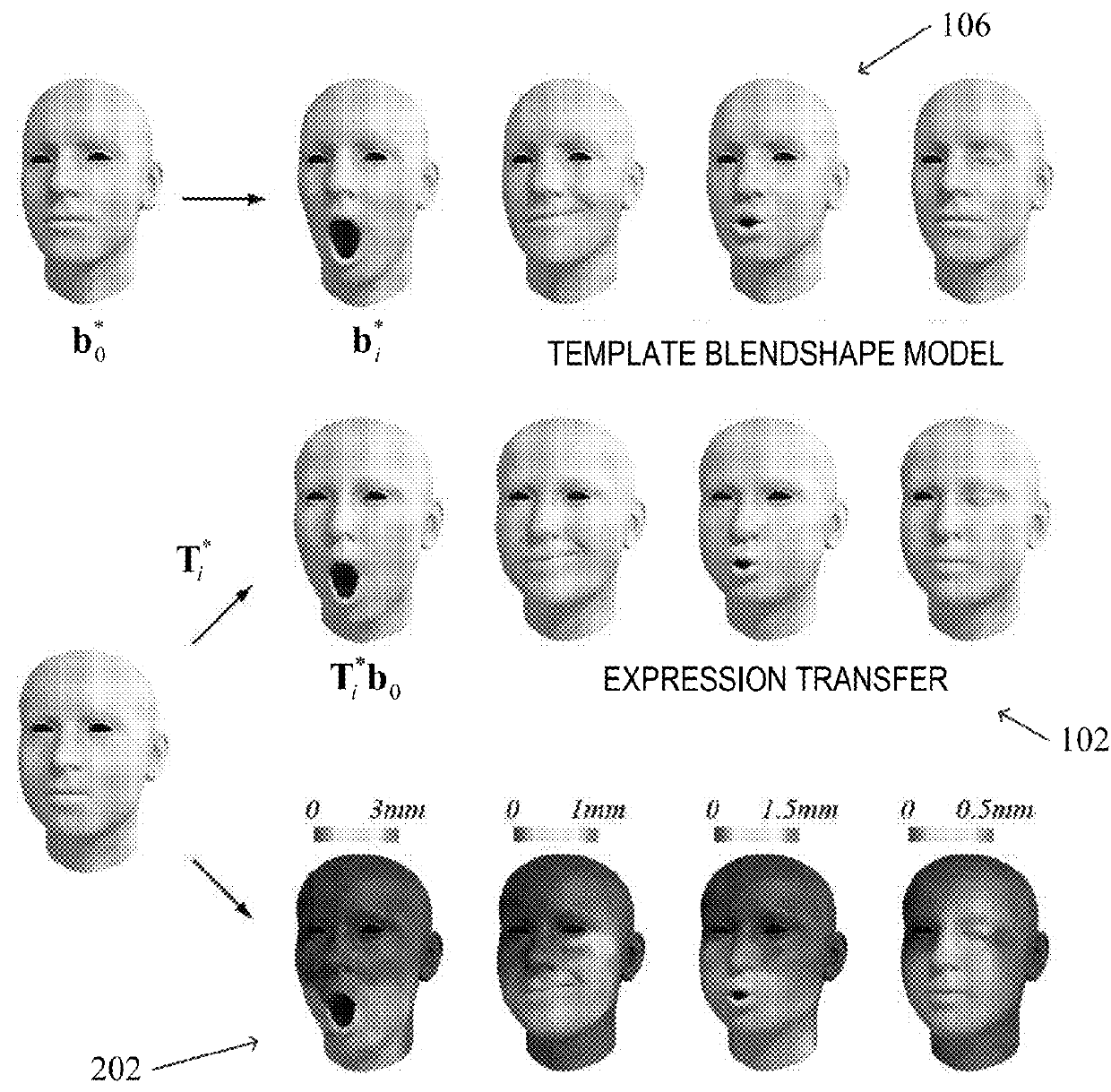

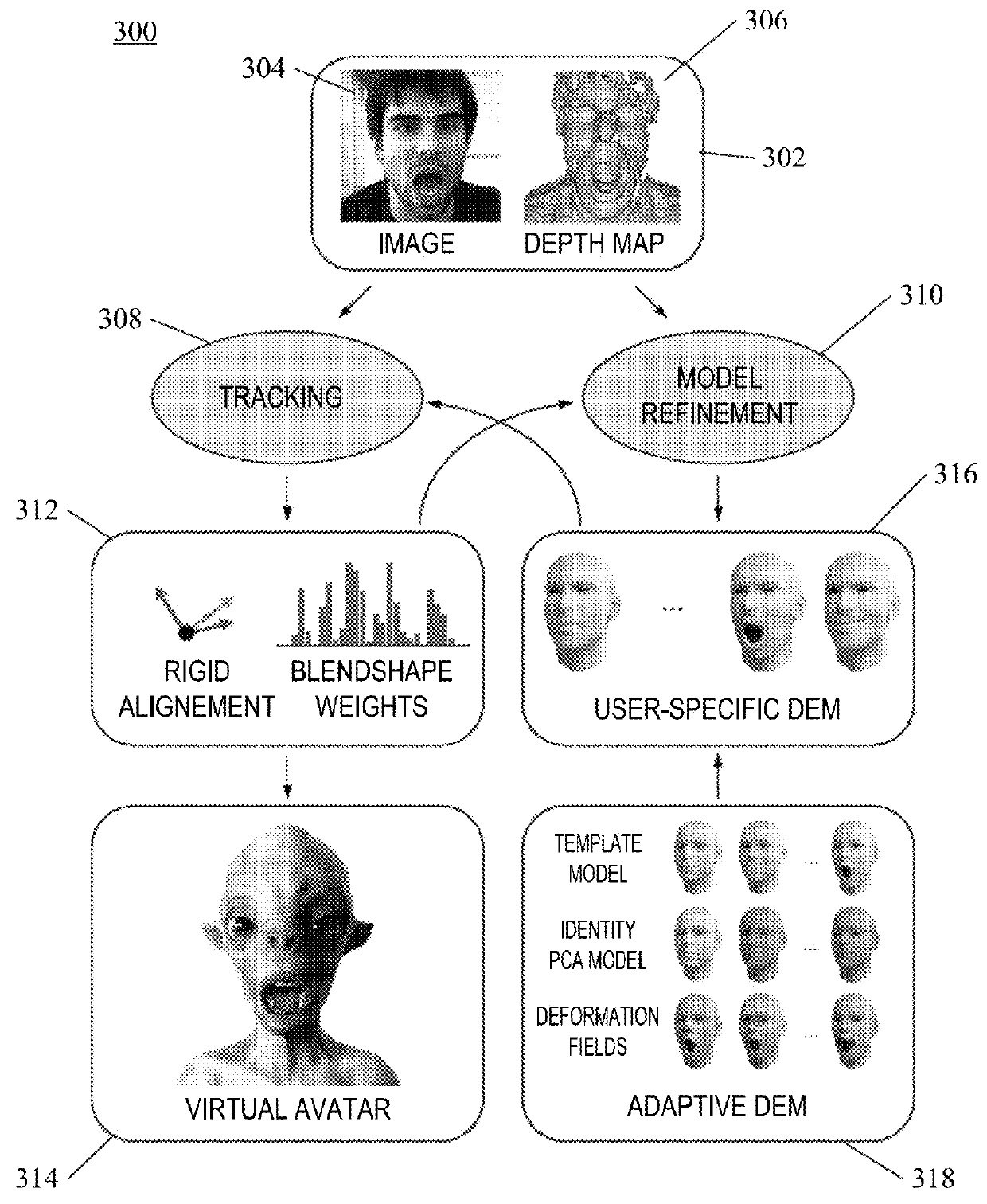

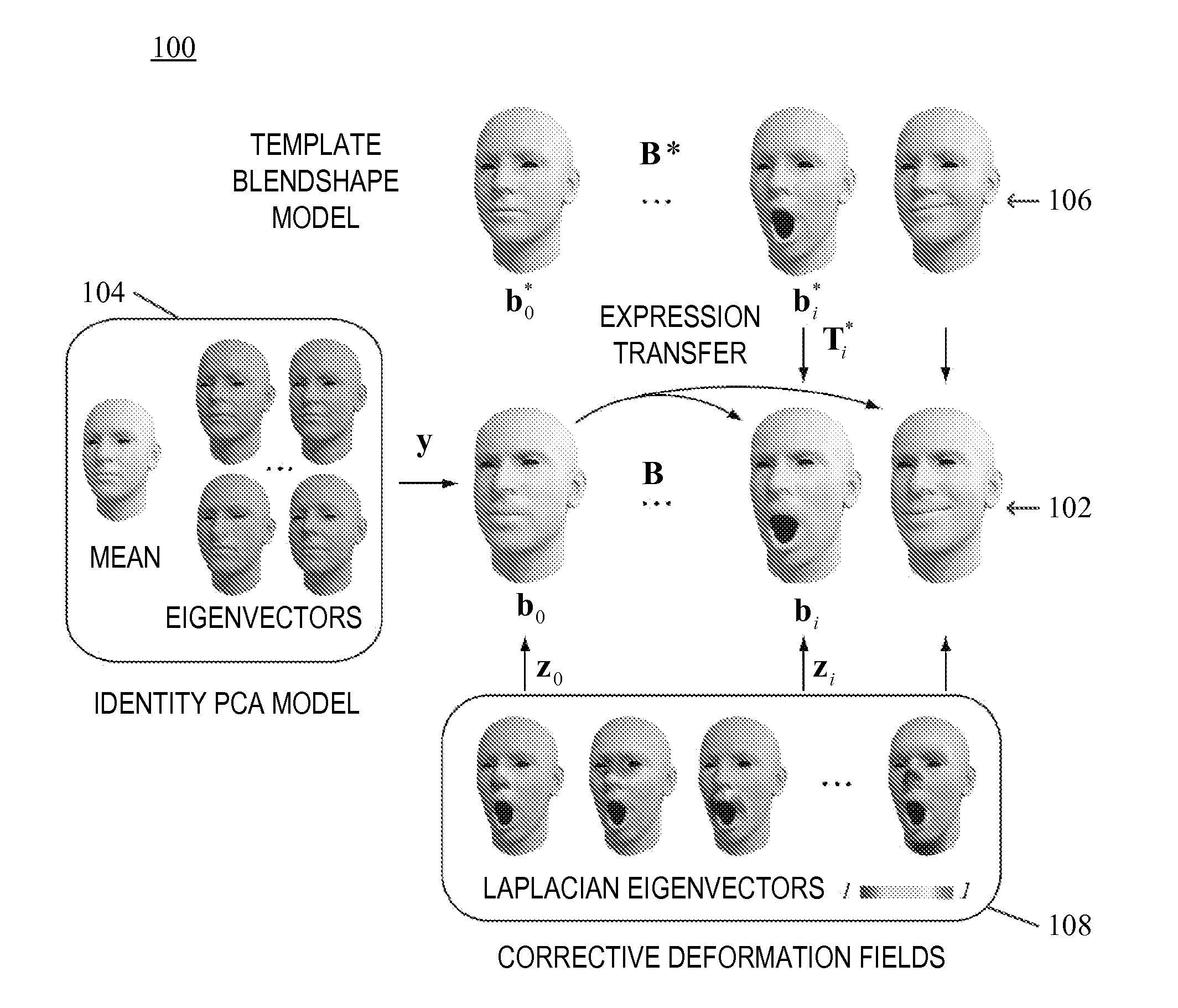

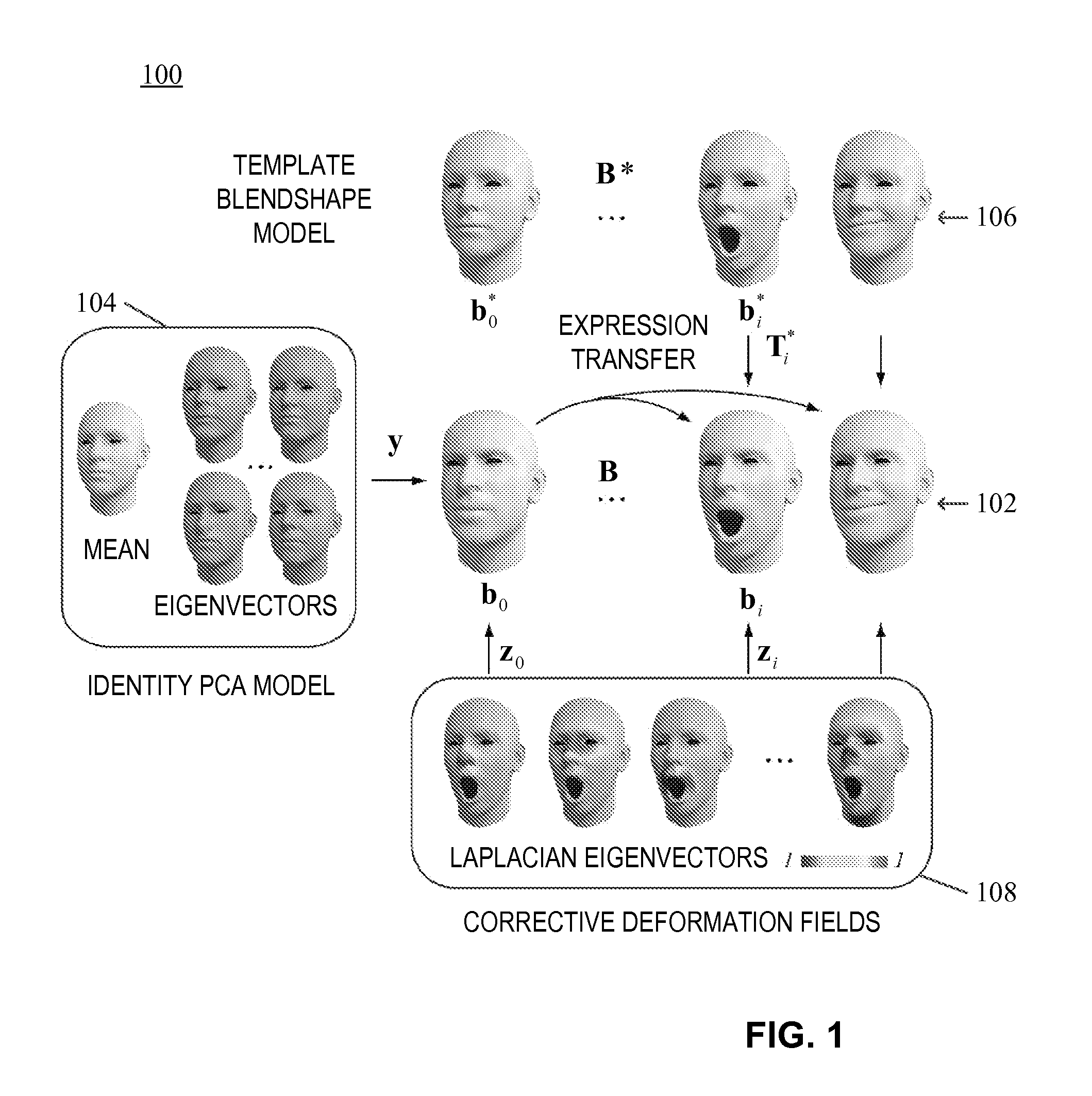

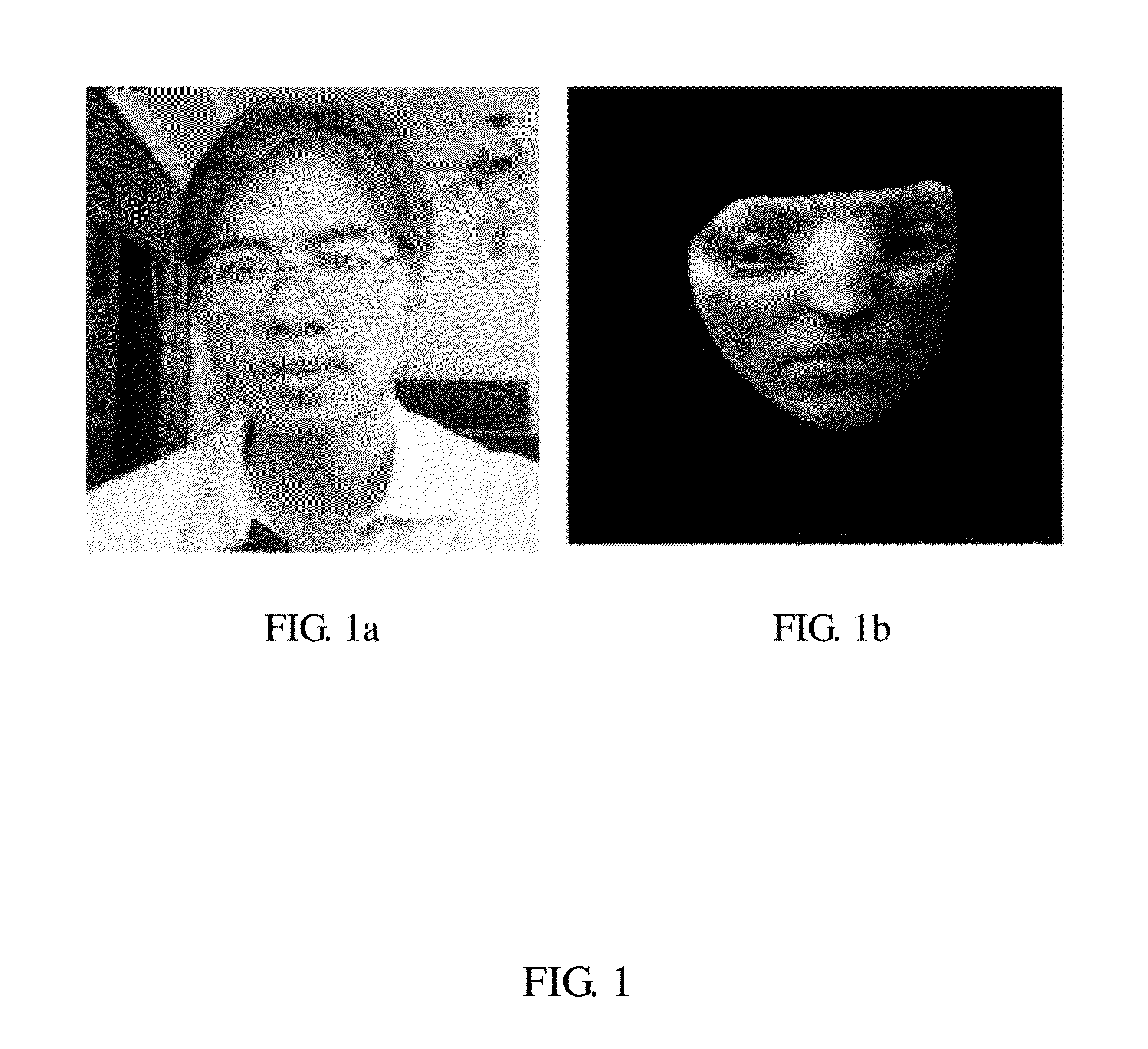

Embodiments relate to a method for real-time facial animation, and a processing device for real-time facial animation. The method includes providing a dynamic expression model, receiving tracking data corresponding to a facial expression of a user, estimating tracking parameters based on the dynamic expression model and the tracking data, and refining the dynamic expression model based on the tracking data and estimated tracking parameters. The method may further include generating a graphical representation corresponding to the facial expression of the user based on the tracking parameters. Embodiments pertain to a real-time facial animation system.

Owner:APPLE INC

Online modeling for real-time facial animation

Active | US20140362091A1 | Tags: Improve tracking performance, Shorten the track, Image analysis, Character and pattern recognition, Graphics, Pattern recognition

Embodiments relate to a method for real-time facial animation, and a processing device for real-time facial animation. The method includes providing a dynamic expression model, receiving tracking data corresponding to a facial expression of a user, estimating tracking parameters based on the dynamic expression model and the tracking data, and refining the dynamic expression model based on the tracking data and estimated tracking parameters. The method may further include generating a graphical representation corresponding to the facial expression of the user based on the tracking parameters. Embodiments pertain to a real-time facial animation system.

Owner:APPLE INC

Method and apparatus for providing real-time animation utilizing a database of postures

Inactive | US6163322A | Tags: Reduce computing time, Input/output for user-computer interaction, Animation, Physical Marking

A method and apparatus for animating a synthetic body part. The 3D-animation system and method use a database of basic postures. In a first step, for each frame, a linear combination of the basic postures from a database of basic postures is obtained by minimizing the Euclidean distance between the displacement of critical points. The displacement information is supplied externally, and typically can be obtained by observing the displacement of physical markers placed on a moving physical body part in the real world. For instance, the synthetic body part may be an expression of a human face and the displacement data are obtained by observing physical markers placed on the face of an actor. The linear combination of the postures in the database of postures is then used to construct the desired posture. Postures are constructed for each time frame and are then displayed consecutively to provide animation. A computer readable storage medium containing a program element to direct a processor of a computer to implement the animation process is also provided.

Owner:TAARNA STUDIOS
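
The per-frame step in US6163322A (choose weights for the basic postures so that their combined critical-point displacements best match the observed marker displacements, then blend the postures with those weights) is an ordinary linear least-squares problem. The following is a minimal sketch, assuming each posture and each displacement set is flattened into a list of floats; `posture_weights`, `blend_postures`, and `solve_linear` are hypothetical names, not taken from the patent, and the normal equations are solved directly for brevity.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def solve_linear(a: Matrix, b: Vector) -> Vector:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("basic postures are linearly dependent")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def posture_weights(basis_disp: List[Vector], observed: Vector) -> Vector:
    """Weights w minimising ||sum_k w[k] * basis_disp[k] - observed||^2.

    basis_disp[k] is the flattened critical-point displacement of basic
    posture k; observed is the marker displacement for one frame.
    """
    k = len(basis_disp)
    # Normal equations (B^T B) w = B^T d, where B's columns are basis_disp[k].
    gram = [[sum(p * q for p, q in zip(basis_disp[i], basis_disp[j]))
             for j in range(k)] for i in range(k)]
    rhs = [sum(p * q for p, q in zip(basis_disp[i], observed)) for i in range(k)]
    return solve_linear(gram, rhs)


def blend_postures(postures: List[Vector], weights: Vector) -> Vector:
    """Construct the frame's posture as the weighted sum of basic postures."""
    return [sum(w * p[i] for w, p in zip(weights, postures))
            for i in range(len(postures[0]))]
```

With displacement bases `[[1, 0, 0], [0, 1, 0]]` and an observed displacement `[0.5, 0.25, 0.0]`, `posture_weights` returns `[0.5, 0.25]`. Solving the normal equations is the simplest route but can be ill-conditioned when basic postures are nearly alike; a QR- or SVD-based solver is the more robust choice in practice.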

Reactive animation

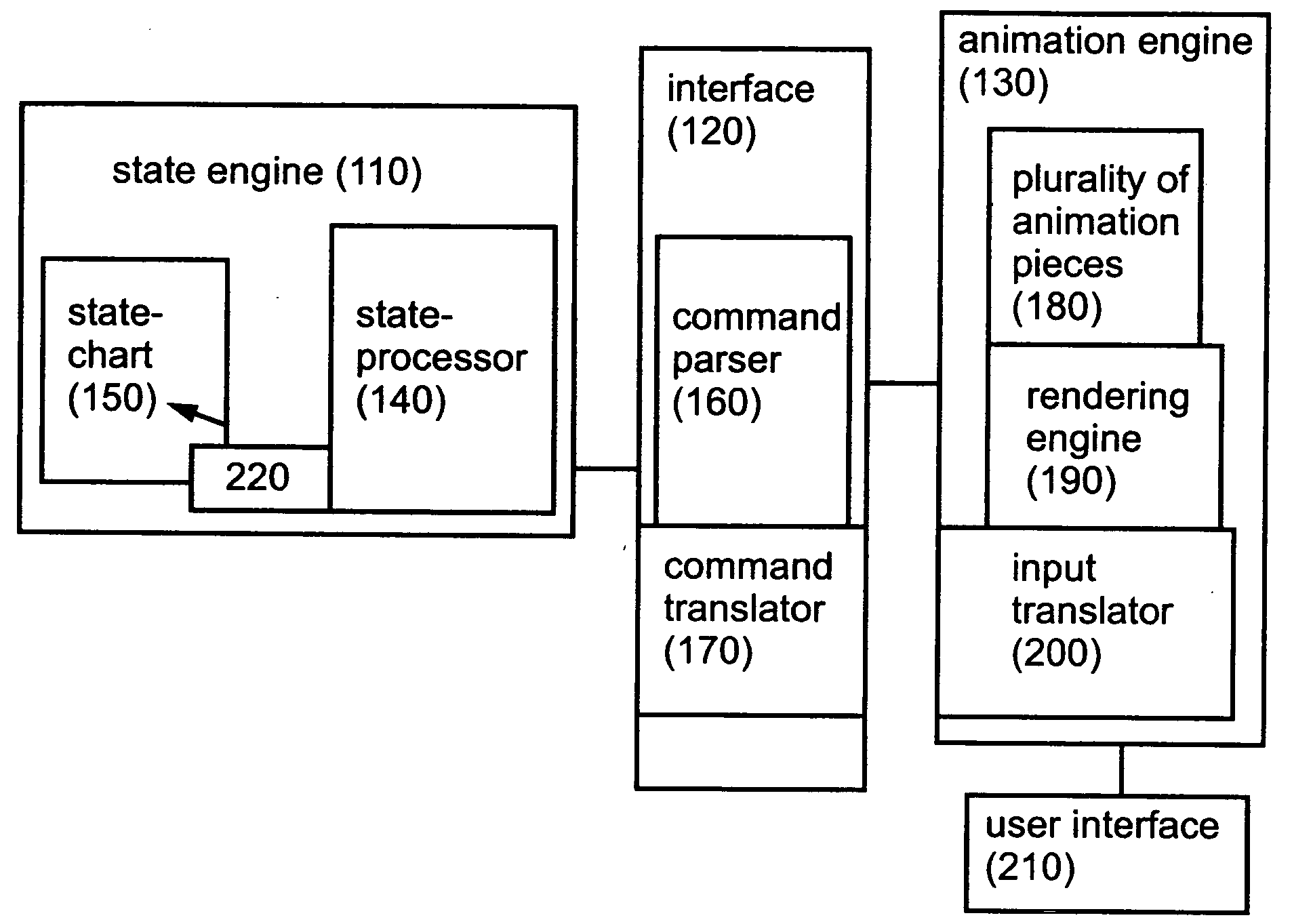

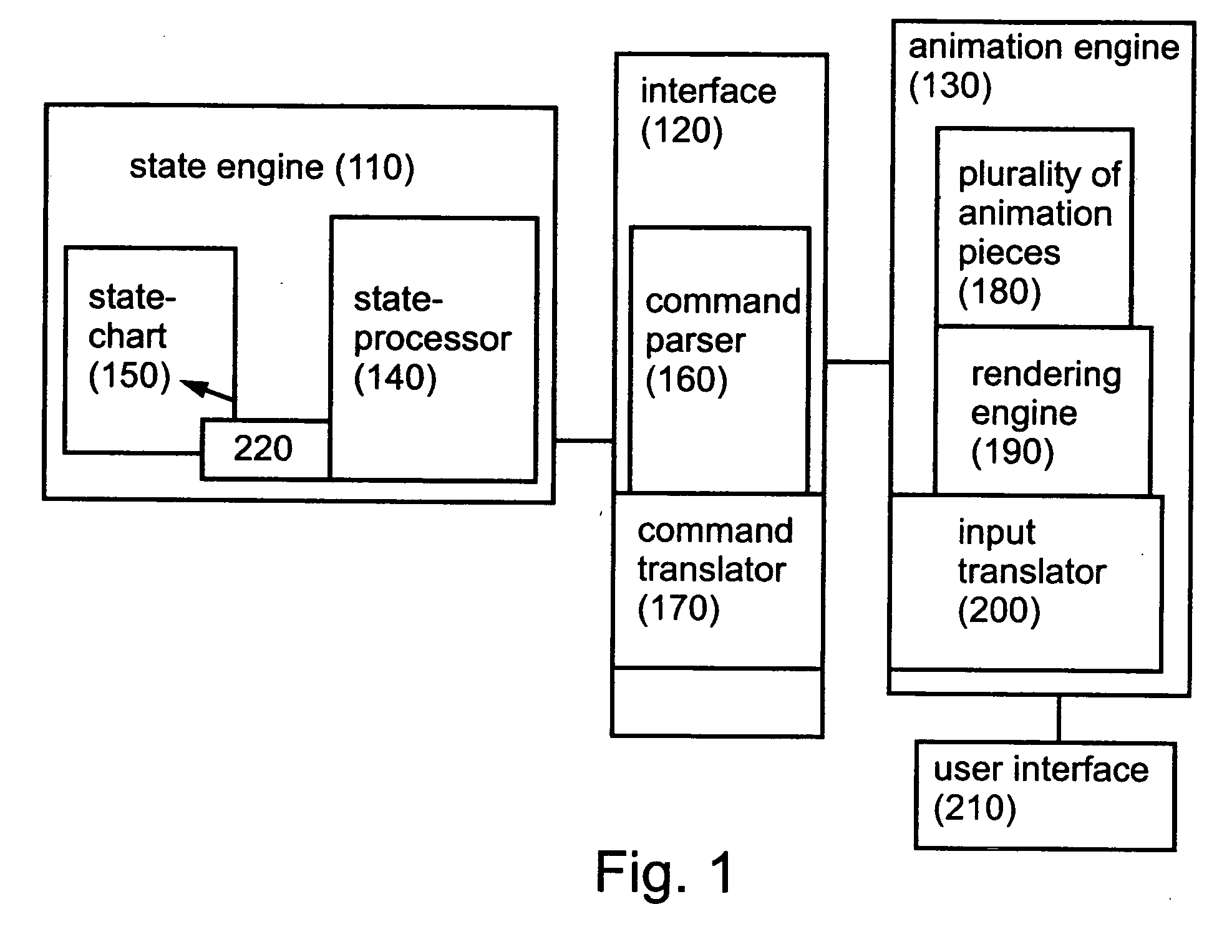

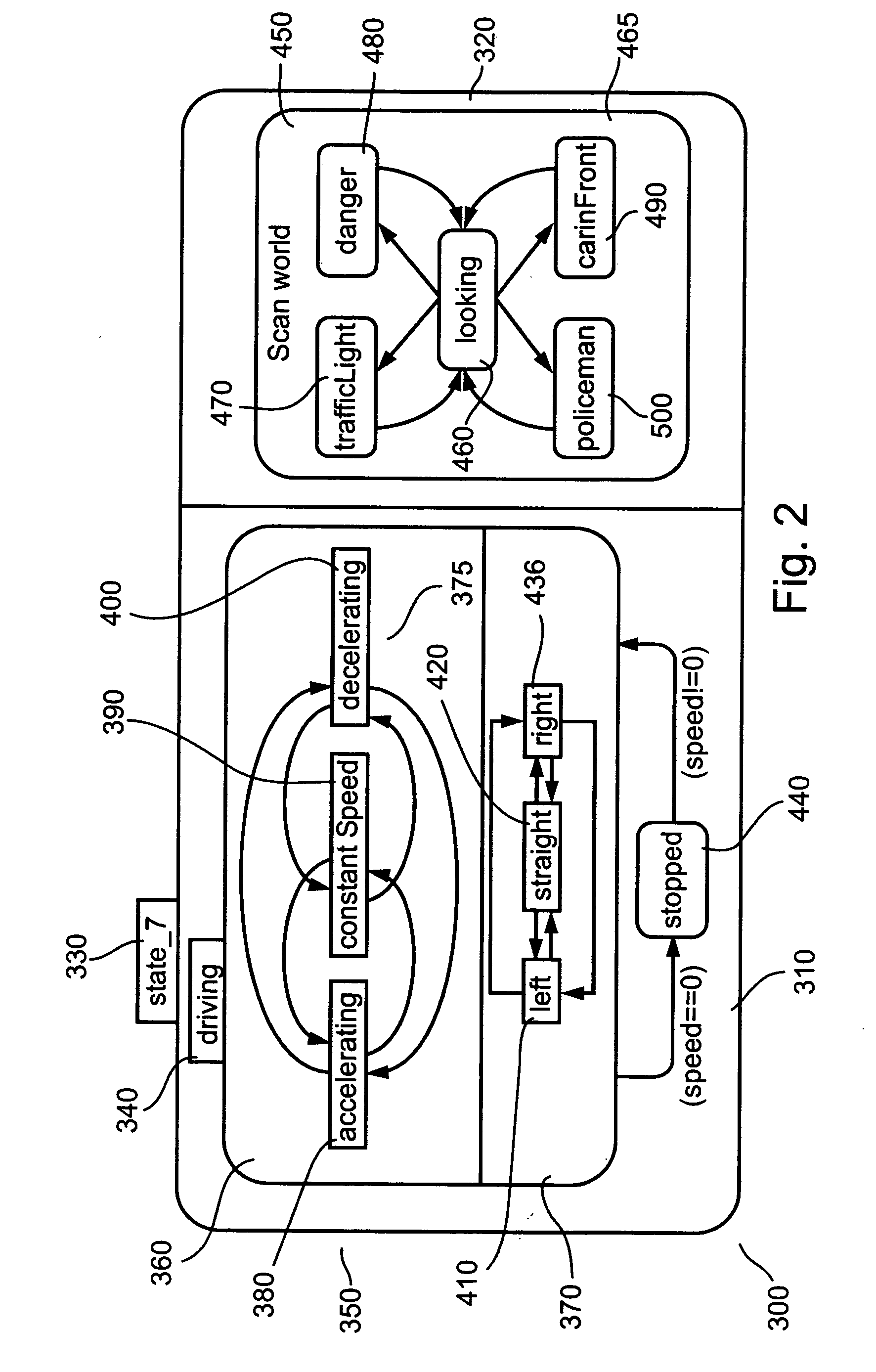

Inactive | US20060221081A1 | Tags: Easy to use, Improve representation, Animation, 3D-image rendering, Graphics

Owner:YEDA RES & DEV CO LTD

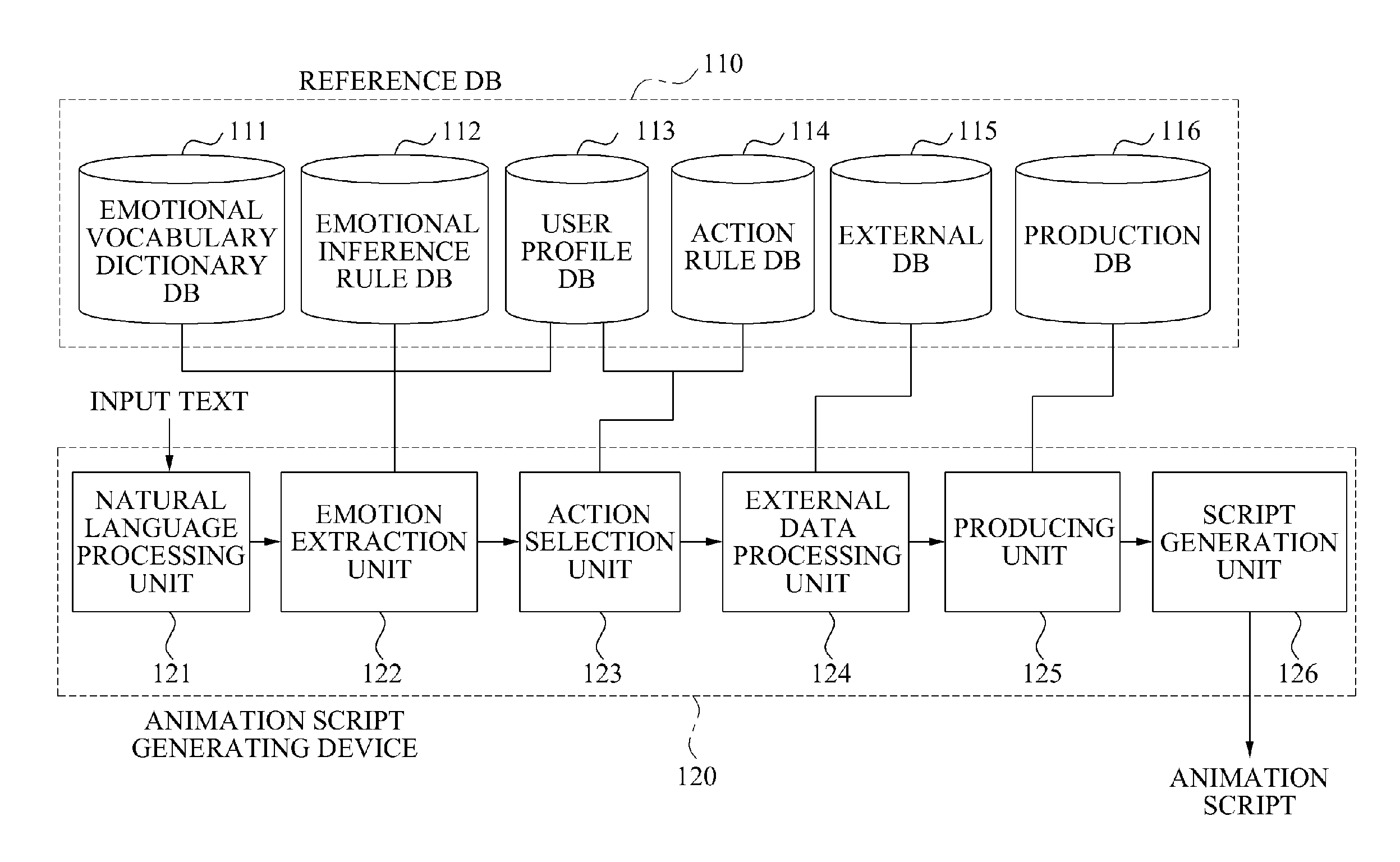

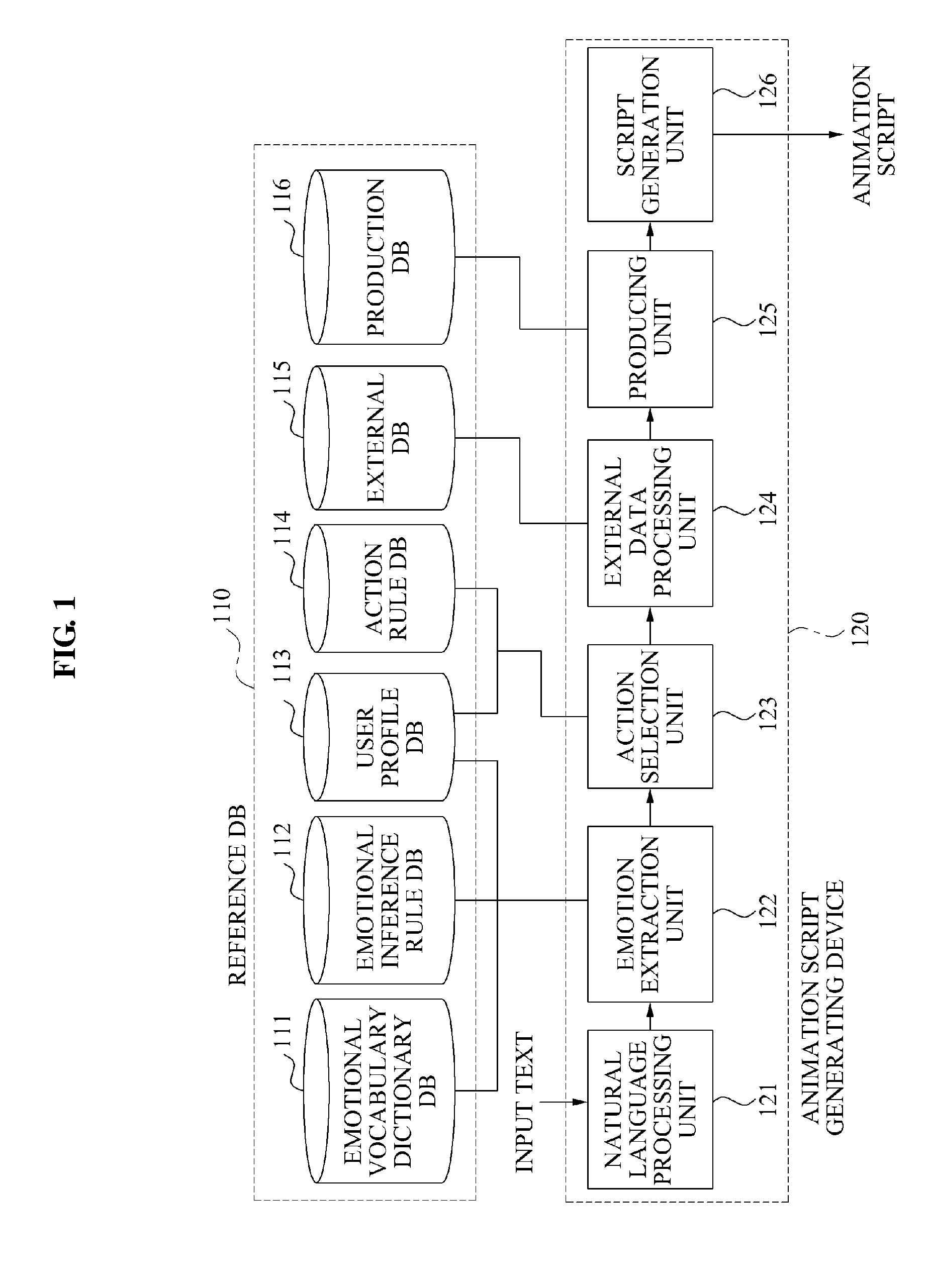

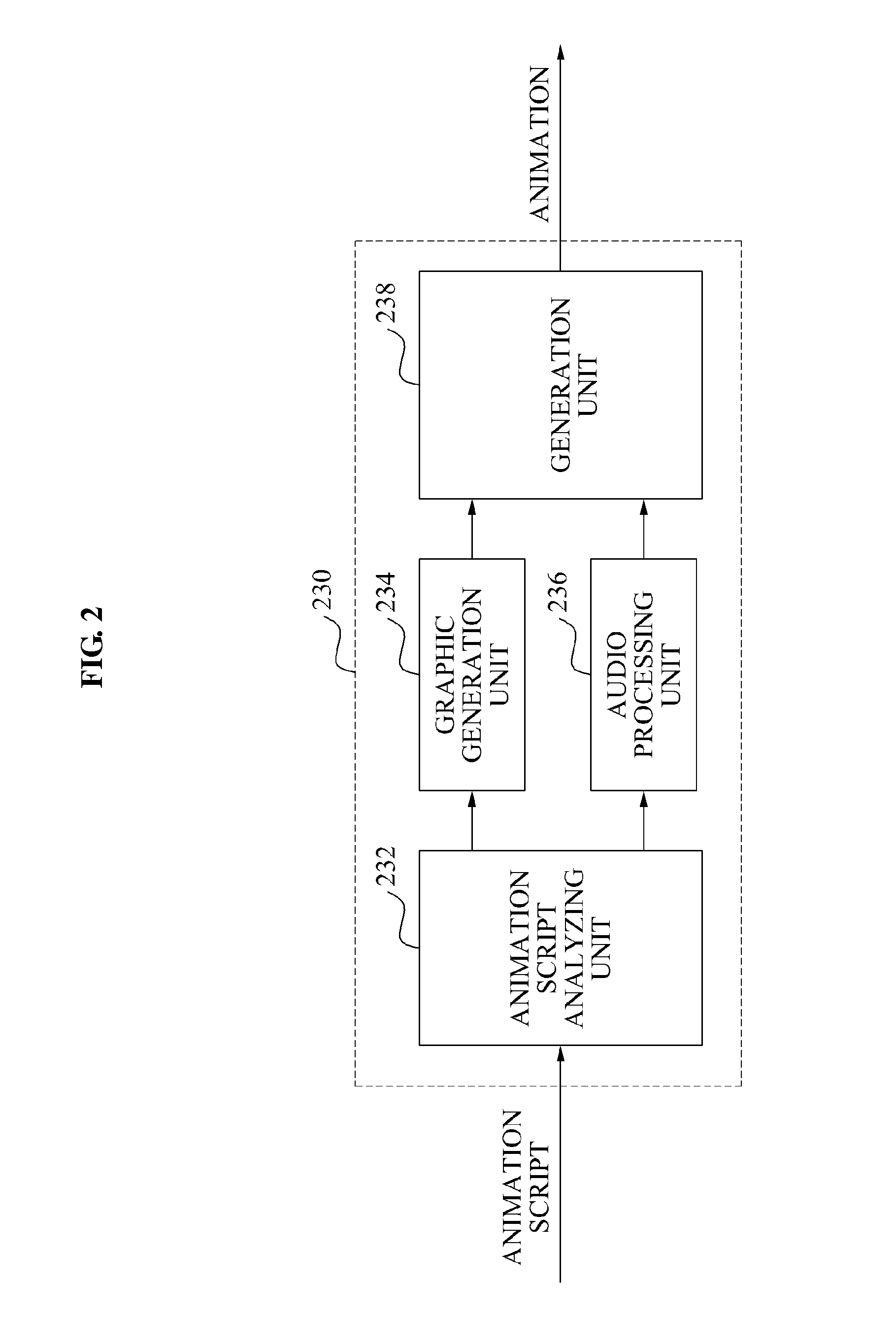

Animation system and methods for generating animation based on text-based data and user information

Animation devices and a method that may output text-based data as an animation are provided. The device may be a terminal, such as a mobile phone, a computer, and the like. The animation device may extract one or more emotions corresponding to a result obtained by analyzing text-based data. The emotion may be based on user relationship information managed by a user of the device. The device may select an action corresponding to the emotion from a reference database, and combine the text-based data with the emotion and action to generate an animation script. The device may generate a graphic in which a character is moved based on the action information, the emotion information, and the text-based data.

Owner:SAMSUNG ELECTRONICS CO LTD

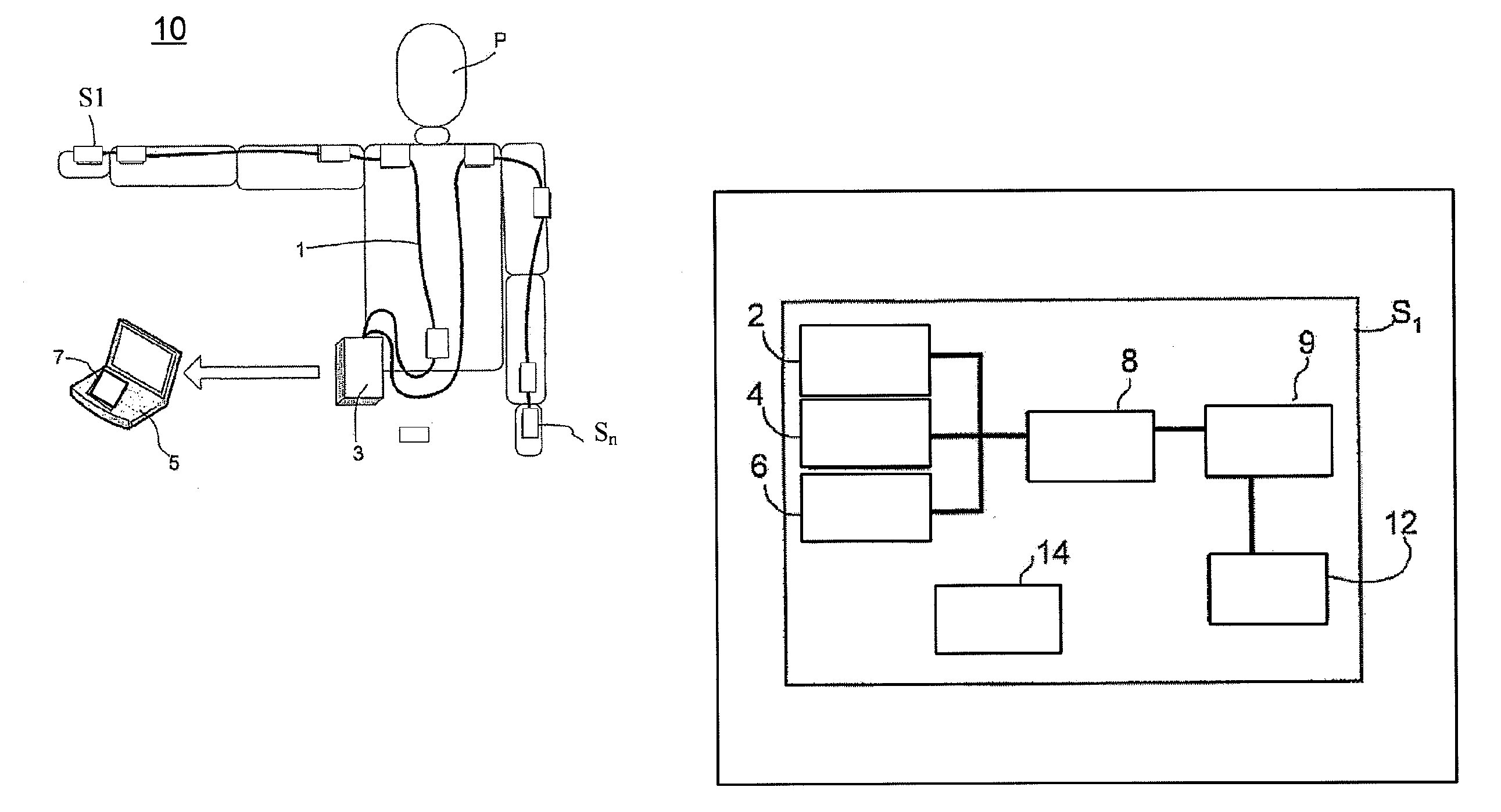

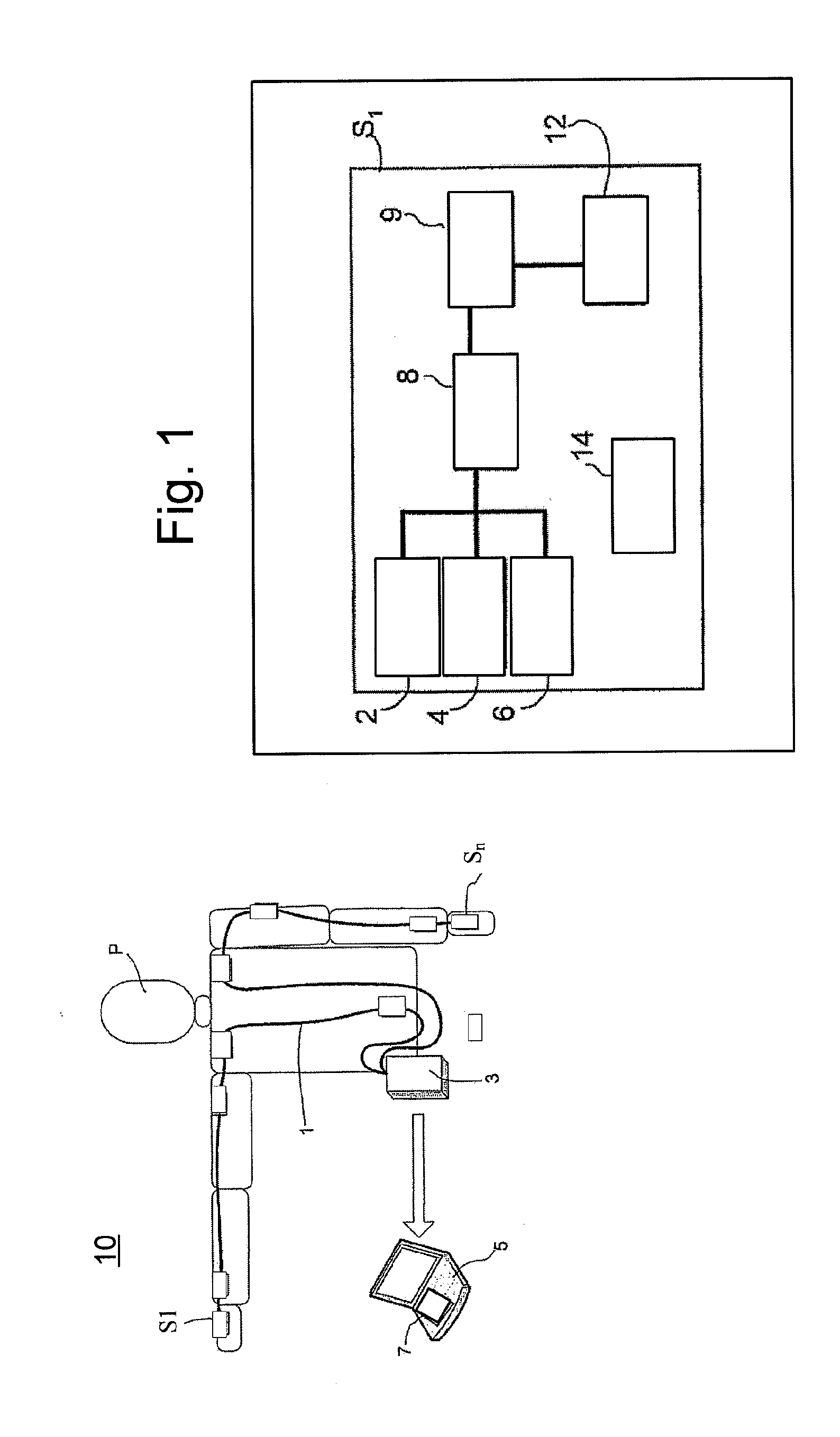

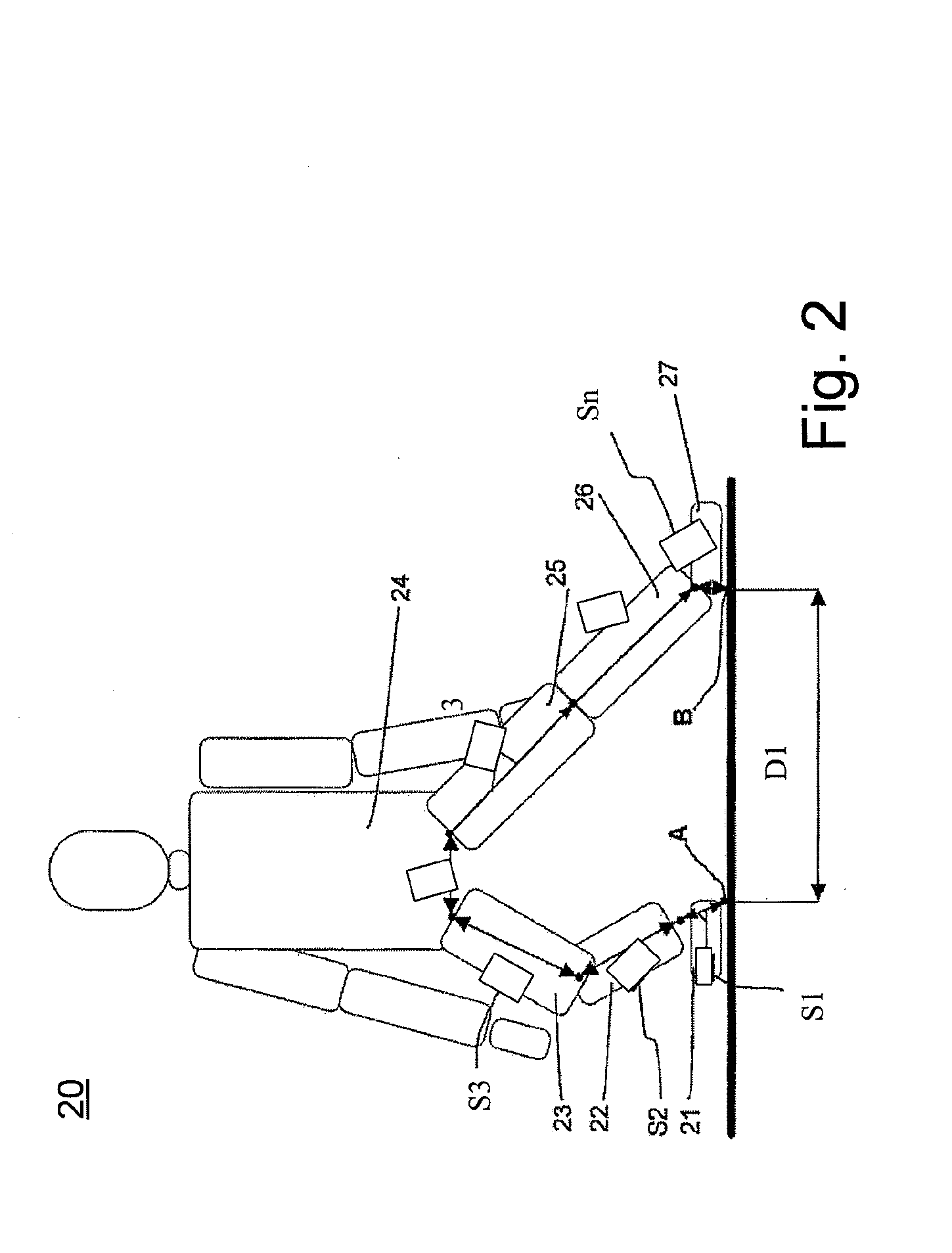

System and a Method for Motion Tracking Using a Calibration Unit

Active | US20080262772A1 | Tags: Improve accuracy, Simple procedure, Programme control, Programme-controlled manipulator, Animation, Three-dimensional space

The invention relates to a motion tracking system (10) for tracking a movement of an object (P) in a three-dimensional space, the object being composed of object portions that have individual dimensions and mutual proportions and are sequentially interconnected by joints. The system comprises orientation measurement units (S1, S3, . . . SN) for measuring data related to at least the orientation of the object portions, wherein the orientation measurement units are arranged in positional and orientational relationships with respective object portions and have at least orientational parameters; a processor (3, 5) for receiving data from the orientation measurement units, the processor comprising a module for deriving orientation and/or position information of the object portions using the received data; and a calibration unit (7) arranged to calculate calibration values based on received data and pre-determined constraints, for determining at least the mutual proportions of the object portions and the orientational parameters of the orientation measurement units based on received data, pre-determined constraints, and additional input data. The invention further relates to a method for tracking a movement of an object, a medical rehabilitation system, and an animation system.

Owner:XSENS HLDG BV
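
Once each segment's orientation is known and the calibration unit has fixed the segment dimensions, segment positions follow by chaining the segments joint to joint. The following is a minimal planar sketch, assuming each measurement unit reports an absolute heading for its segment and that `lengths` holds the calibrated segment lengths; the function name and the 2D simplification are illustrative, not the patented method.

```python
import math
from typing import List, Tuple


def chain_positions(
    root: Tuple[float, float],
    orientations_rad: List[float],
    lengths: List[float],
) -> List[Tuple[float, float]]:
    """Return joint positions for a planar chain of segments.

    orientations_rad[i] is the absolute orientation of segment i (as a sensor
    on that segment might report it); lengths[i] is its calibrated length.
    """
    if len(orientations_rad) != len(lengths):
        raise ValueError("need one length per segment")
    x, y = root
    points = [(x, y)]
    for theta, length in zip(orientations_rad, lengths):
        # Segments are sequentially interconnected by joints: each one starts
        # where the previous one ended and extends along its own heading.
        x += length * math.cos(theta)
        y += length * math.sin(theta)
        points.append((x, y))
    return points
```

A 3D version would replace the heading angles with per-segment rotation matrices or quaternions; the chaining logic stays the same.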

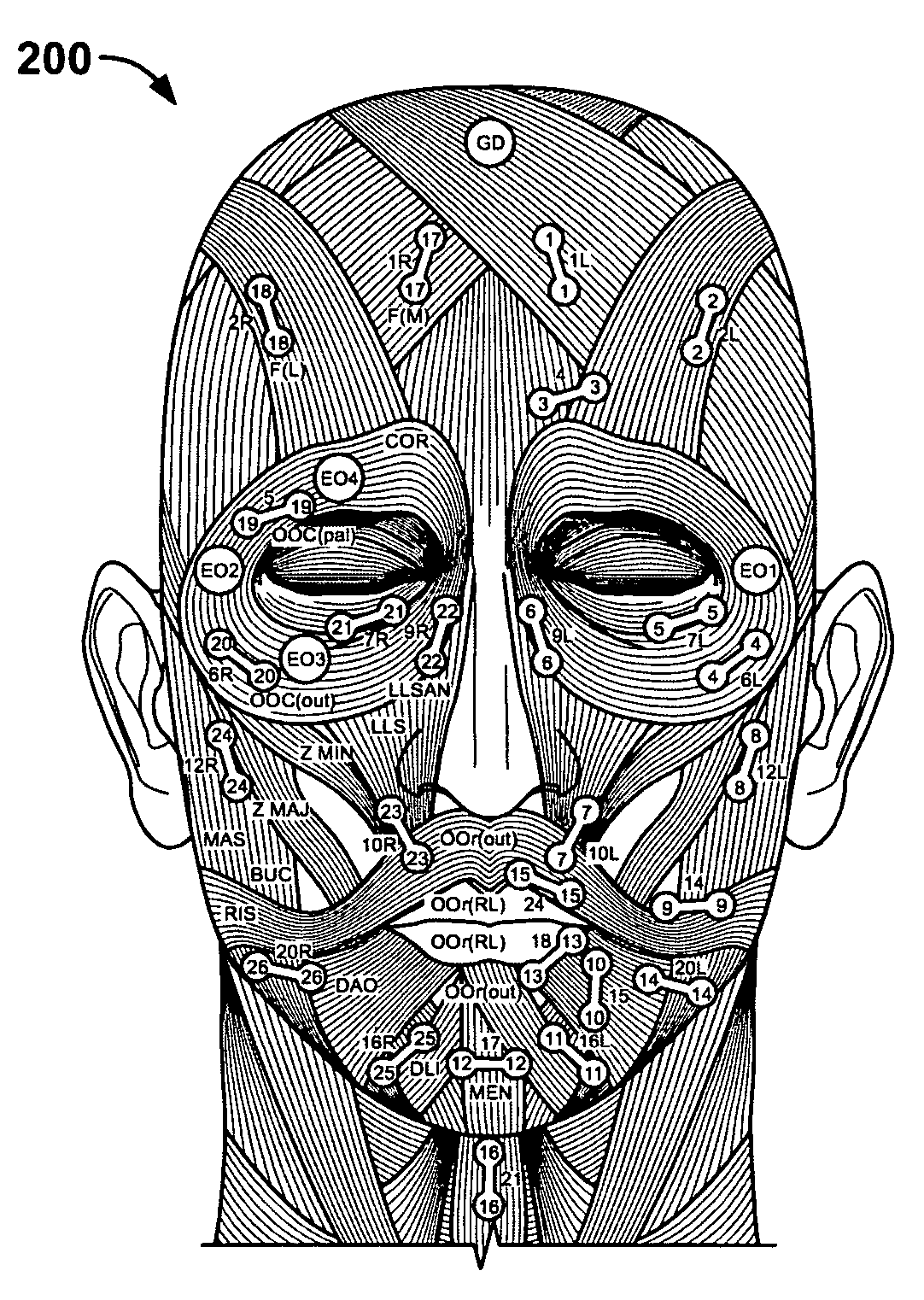

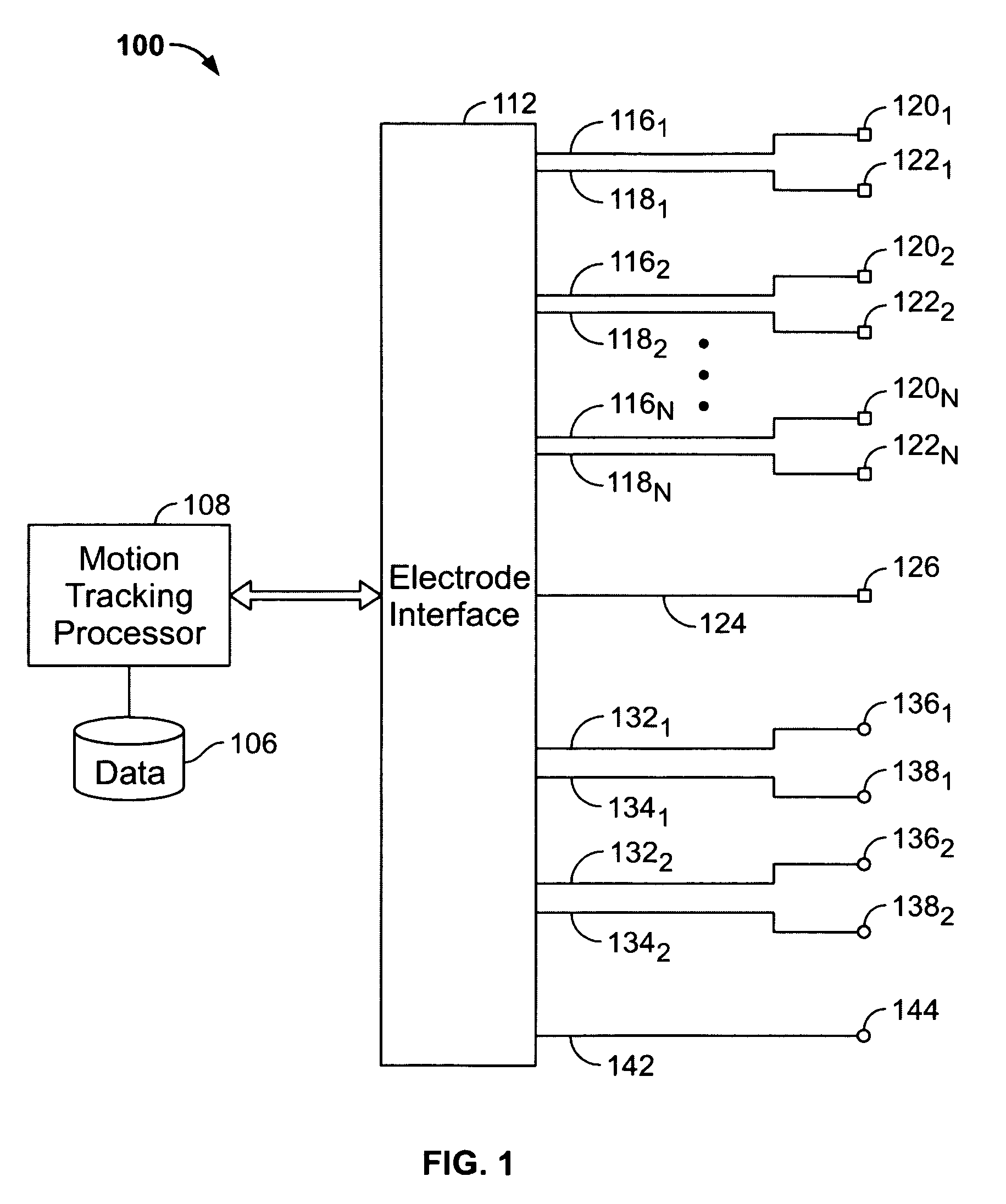

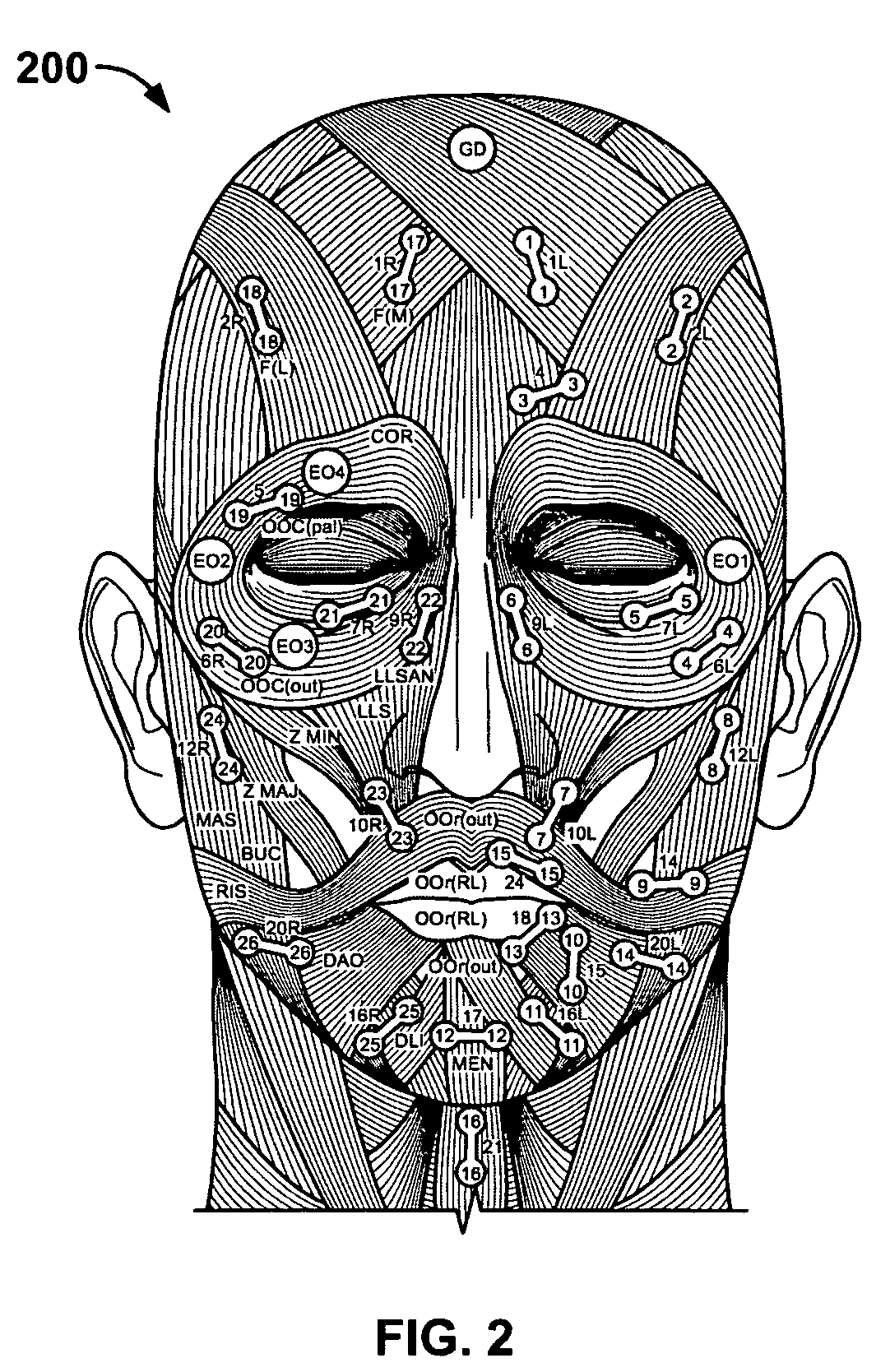

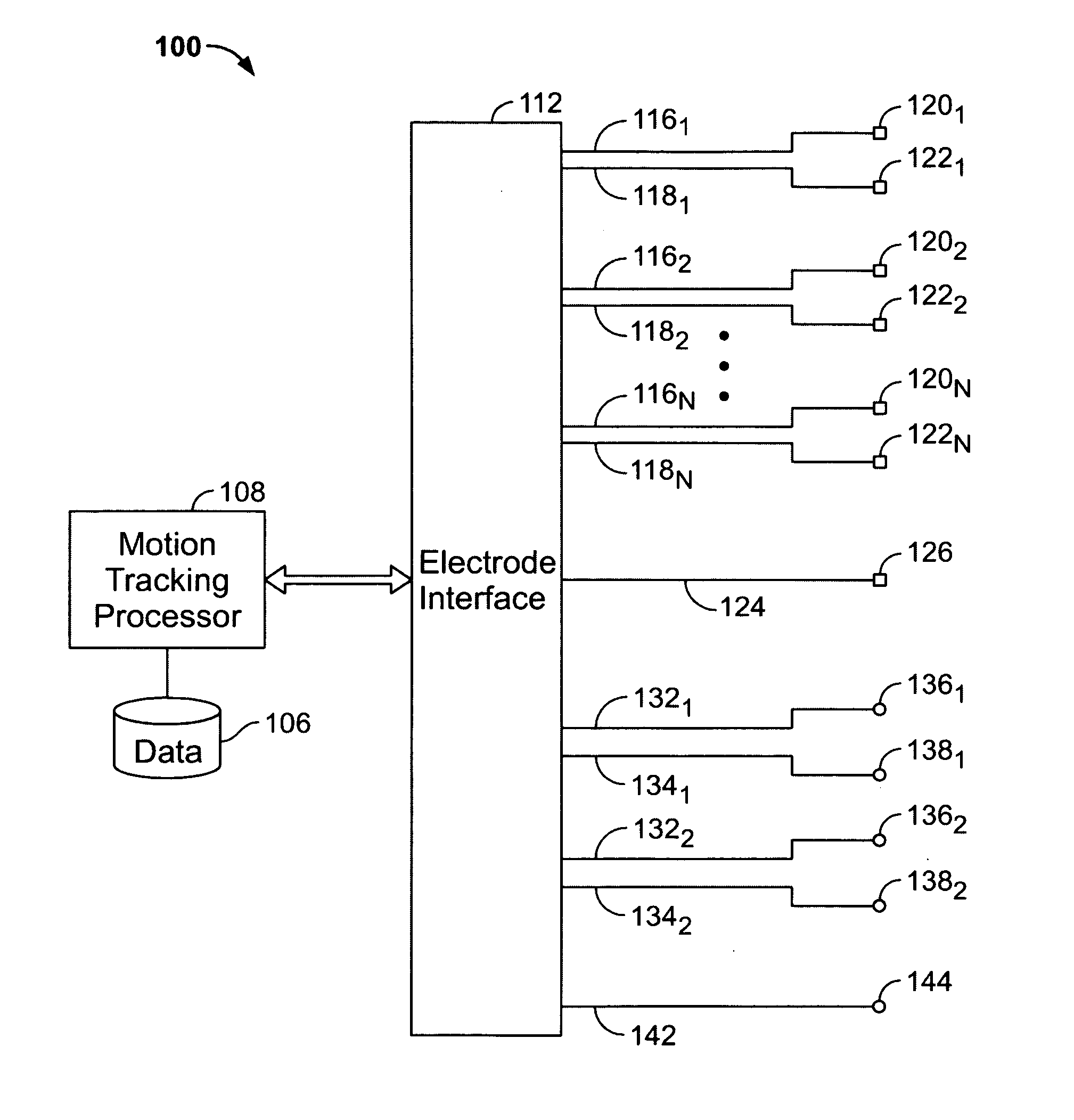

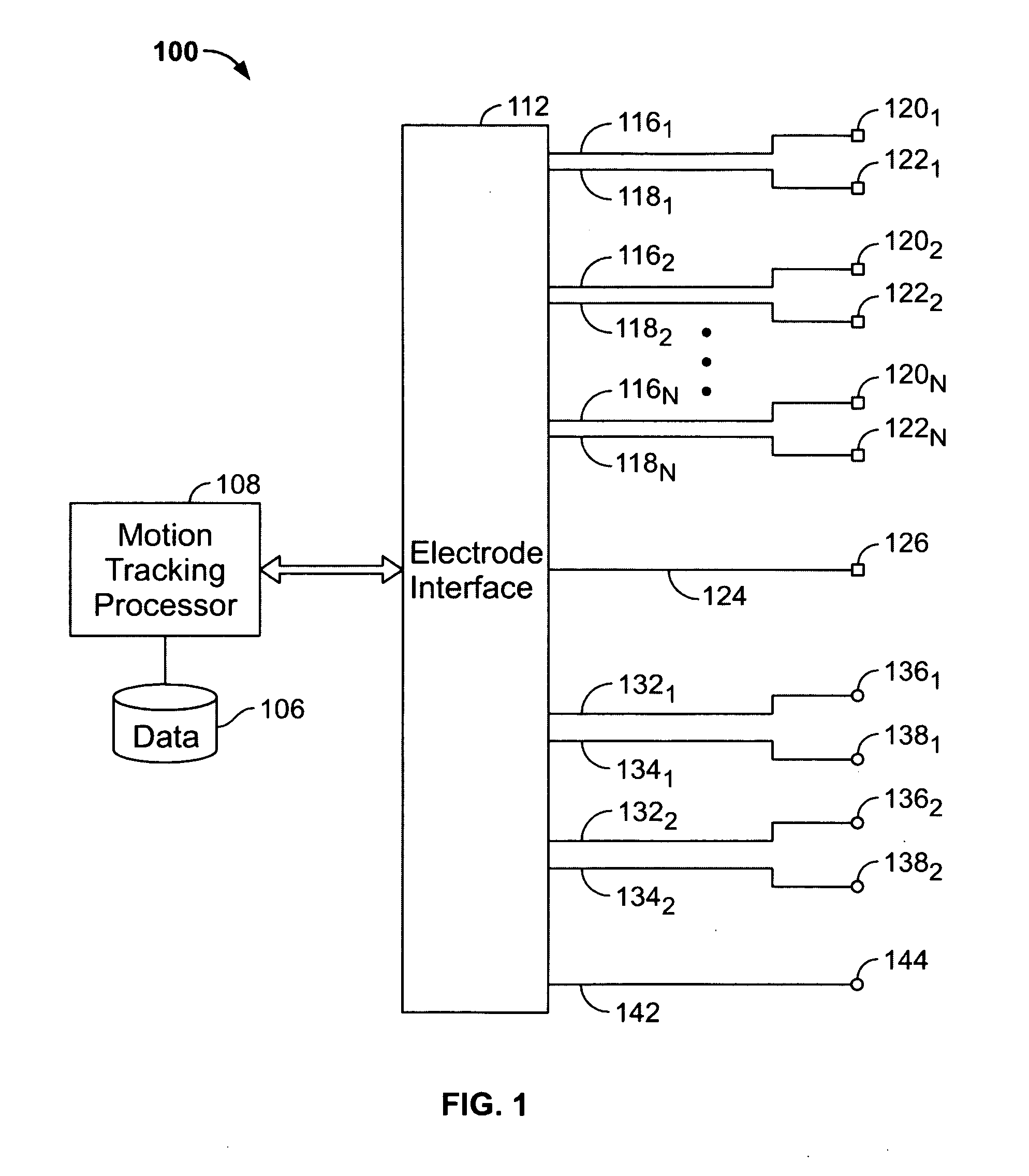

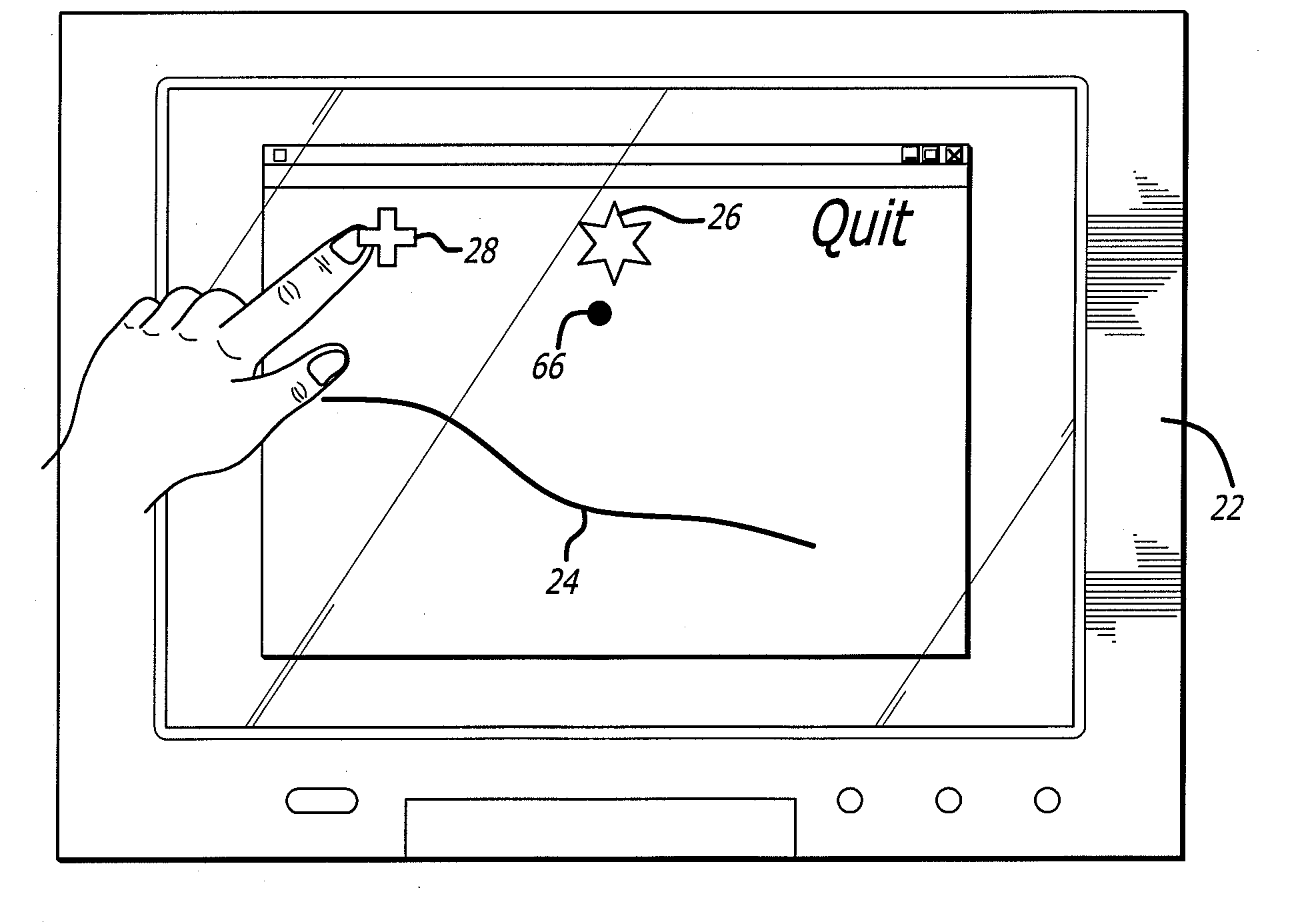

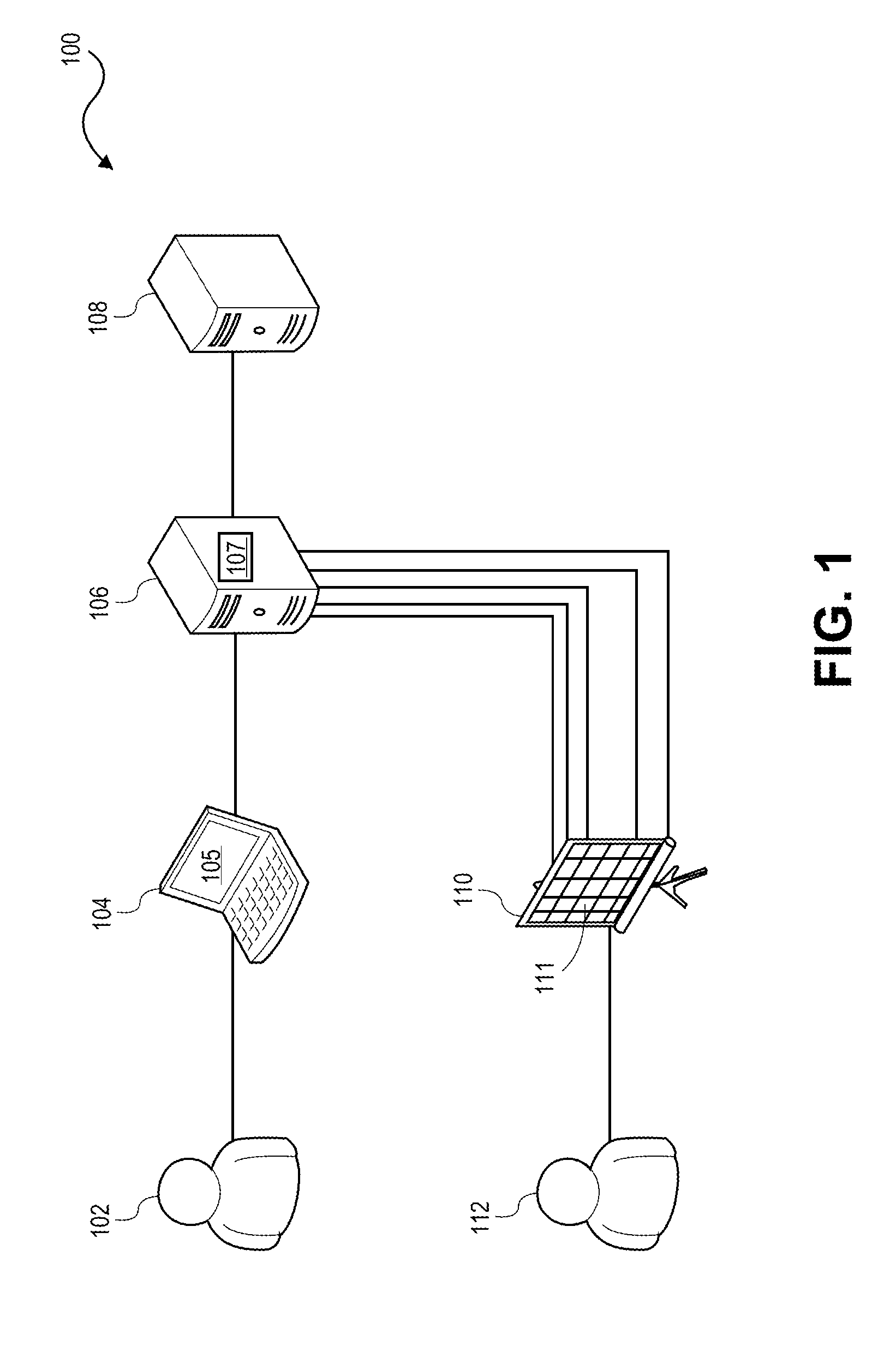

System and method for tracking facial muscle and eye motion for computer graphics animation

A motion tracking system enables faithful capture of subtle facial and eye motion using a surface electromyography (EMG) detection method to detect muscle movements and an electrooculogram (EOG) detection method to detect eye movements. An embodiment of the motion tracking animation system comprises a plurality of pairs of EOG electrodes adapted to be affixed to the skin surface of the performer at locations adjacent to the performer's eyes. The EOG data comprises electrical signals corresponding to eye movements of a performer during a performance. Programming instructions further provide processing of the EOG data and mapping of processed EOG data onto an animated character. As a result, the animated character will exhibit the same muscle and eye movements as the performer.

Owner:SONY CORP +1

System and method for tracking facial muscle and eye motion for computer graphics animation

A motion tracking system enables faithful capture of subtle facial and eye motion using a surface electromyography (EMG) detection method to detect muscle movements and an electrooculogram (EOG) detection method to detect eye movements. Signals corresponding to the detected muscle and eye movements are used to control an animated character to exhibit the same movements performed by a performer. An embodiment of the motion tracking animation system comprises a plurality of pairs of EMG electrodes adapted to be affixed to a skin surface of a performer at plural locations corresponding to respective muscles, and a processor operatively coupled to the plurality of pairs of EMG electrodes. The processor includes programming instructions to perform the functions of acquiring EMG data from the plurality of pairs of EMG electrodes. The EMG data comprises electrical signals corresponding to muscle movements of the performer during a performance. The programming instructions further include processing the EMG data to provide a digital model of the muscle movements, and mapping the digital model onto an animated character. In another embodiment of the invention, a plurality of pairs of EOG electrodes are adapted to be affixed to the skin surface of the performer at locations adjacent to the performer's eyes. The processor is operatively coupled to the plurality of pairs of EOG electrodes and further includes programming instructions to perform the functions of acquiring EOG data from the plurality of pairs of EOG electrodes. The EOG data comprises electrical signals corresponding to eye movements of the performer during a performance. The programming instructions further provide processing of the EOG data and mapping of the processed EOG data onto the animated character. As a result, the animated character will exhibit the same muscle and eye movements as the performer.

Owner:SONY CORP +1
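
A common way to turn raw surface-EMG voltages into a control signal is to rectify the signal and smooth it into an amplitude envelope, which can then drive an animation control. The sketch below shows that generic pipeline, assuming a single electrode pair sampled into a list of floats; the moving-average window, the `max_activation` normalizer, and the mapping onto one blend weight are hypothetical choices, not details from the Sony patents.

```python
from typing import List


def emg_envelope(samples: List[float], window: int) -> List[float]:
    """Rectify the signal around its mean, then smooth it with a moving average."""
    if window < 1:
        raise ValueError("window must be >= 1")
    if not samples:
        return []
    mean = sum(samples) / len(samples)
    rectified = [abs(s - mean) for s in samples]  # full-wave rectification
    out = []
    running = 0.0
    for i, v in enumerate(rectified):
        running += v
        if i >= window:
            running -= rectified[i - window]  # drop the sample leaving the window
        out.append(running / min(i + 1, window))
    return out


def to_blend_weight(envelope: List[float], max_activation: float) -> List[float]:
    """Hypothetical mapping of envelope values to [0, 1] weights for one facial control."""
    return [min(max(e / max_activation, 0.0), 1.0) for e in envelope]
```

In a full system each electrode pair would feed its own envelope, and the mapping onto the character rig would be calibrated per performer.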

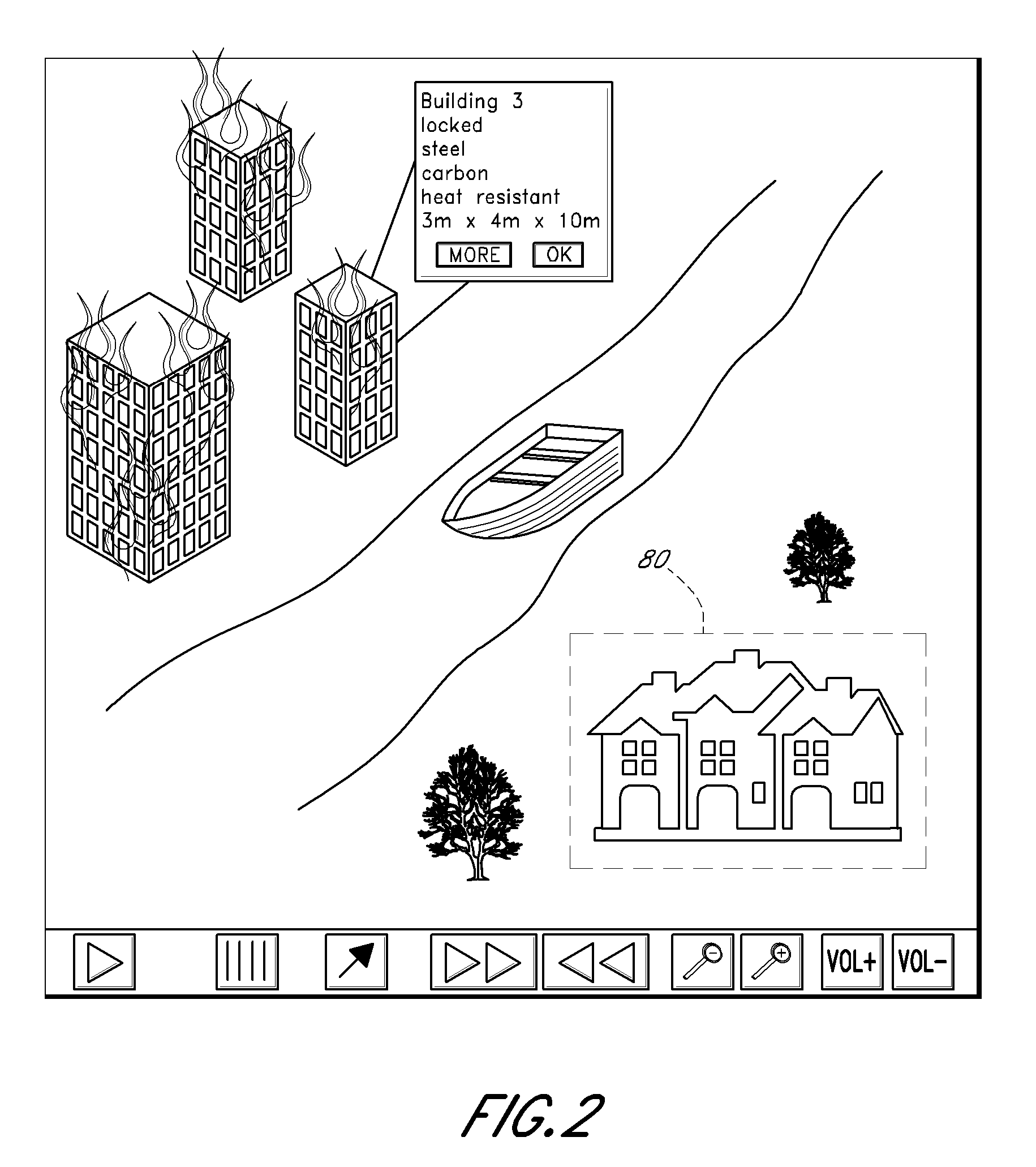

Document animation system

Inactive | US20060197764A1 | Tags: Expand their vocabulary, Enhance vocabulary quickly, Animation, Speech synthesis, Paper document

An animating system converts a text-based document into a sequence of animated pictures to help a user understand it better and faster. First, the system provides interfaces for a user to build various object models, specify default rules for these object models, and construct the references for meanings and actions. Second, the system analyzes the document, extracts desired information, identifies various objects, and organizes the information. Then the system creates objects from the corresponding object models and provides interfaces to modify default values and default rules and to define specific values and specific rules. Further, the system identifies the meanings of words and phrases. Furthermore, the system identifies, interpolates, synchronizes, and dispatches events. Finally, the system provides an interface for the user to track events and particular objects.

Owner:YANG GEORGE L

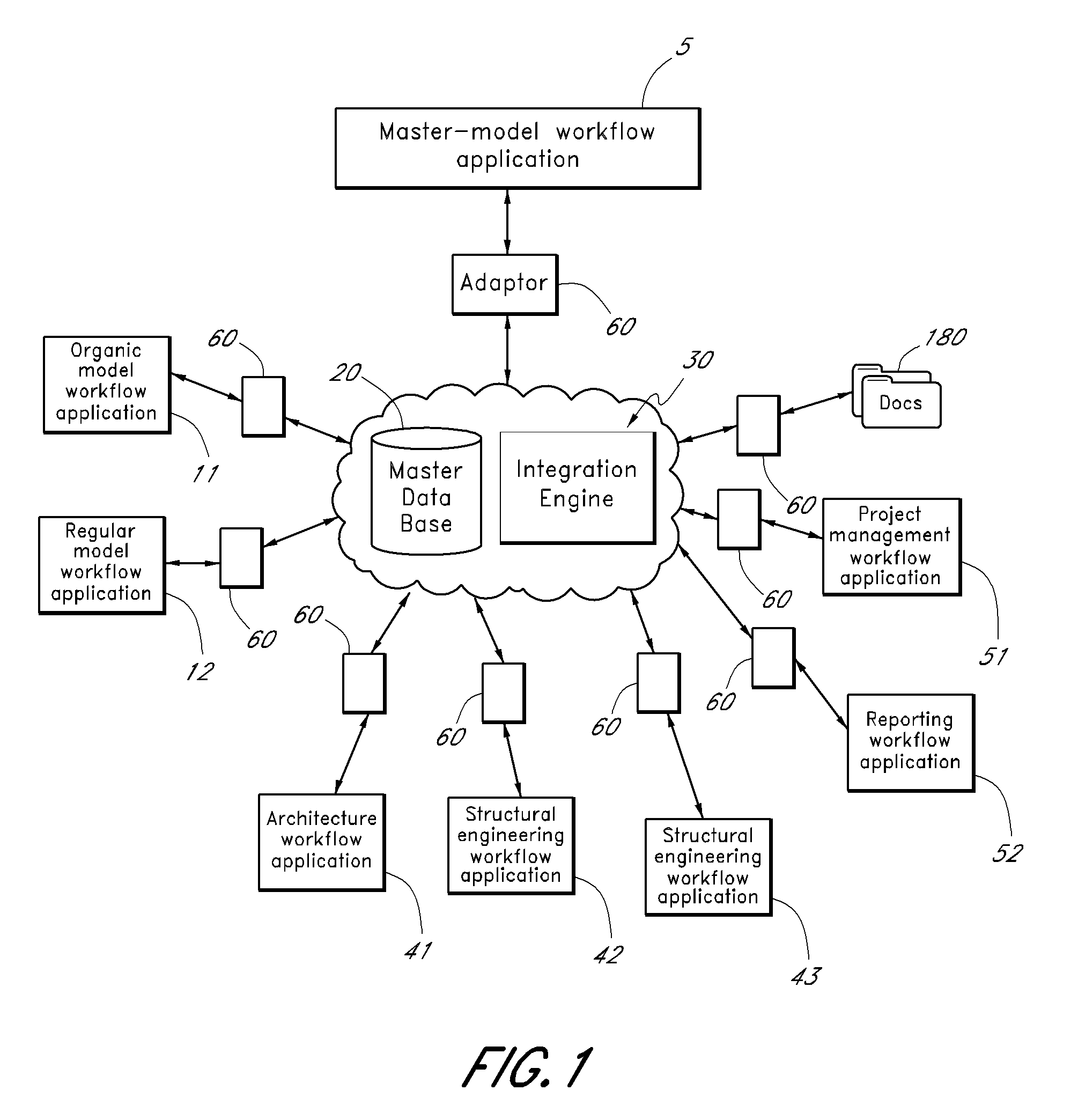

Dimensioned modeling system

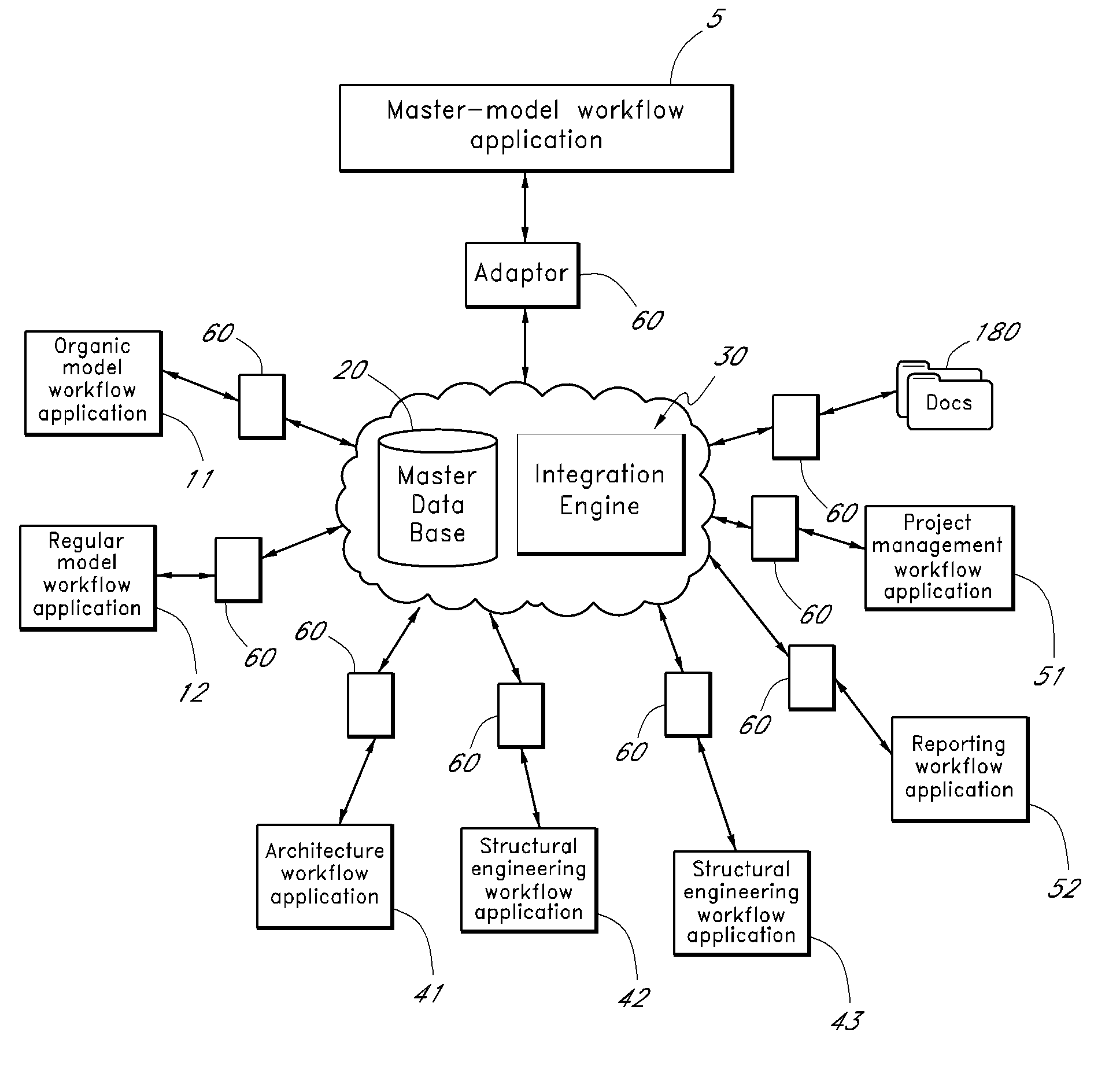

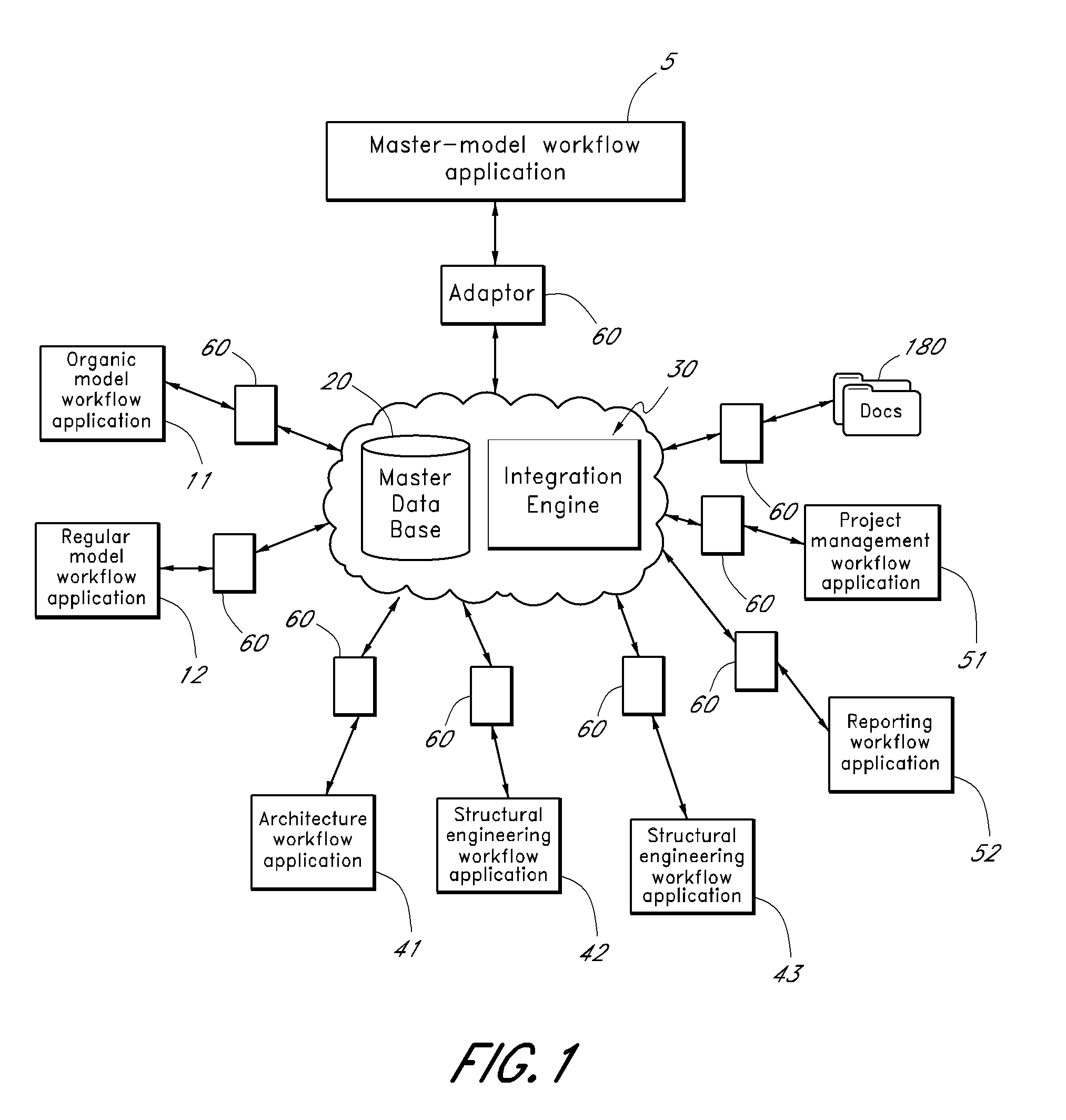

Inactive | US20100241477A1 | Tags: Maintain consistency, Provide functionality, Geometric CAD, Resources, Third party, Information sharing

The disclosed inventions and embodiments relate to the propagation of information among workflow applications used by a design project such as a construction design project and the creation and use of dimensioned and animated models for such projects. The workflow applications may be extended to enable participation in the information sharing, or the system may provide functionality external to the tools that facilitates the participation of the tools. Information from various sources, including the workflow applications and third party sources, can be represented and modeled in an animation system. Sometimes the propagation is enabled in part by a store of information that also enables reuse and reporting. Information used and generated by the various workflow applications is kept consistent among the different workflow applications and among the different representations of that information.

Owner:SCENARIO DESIGN

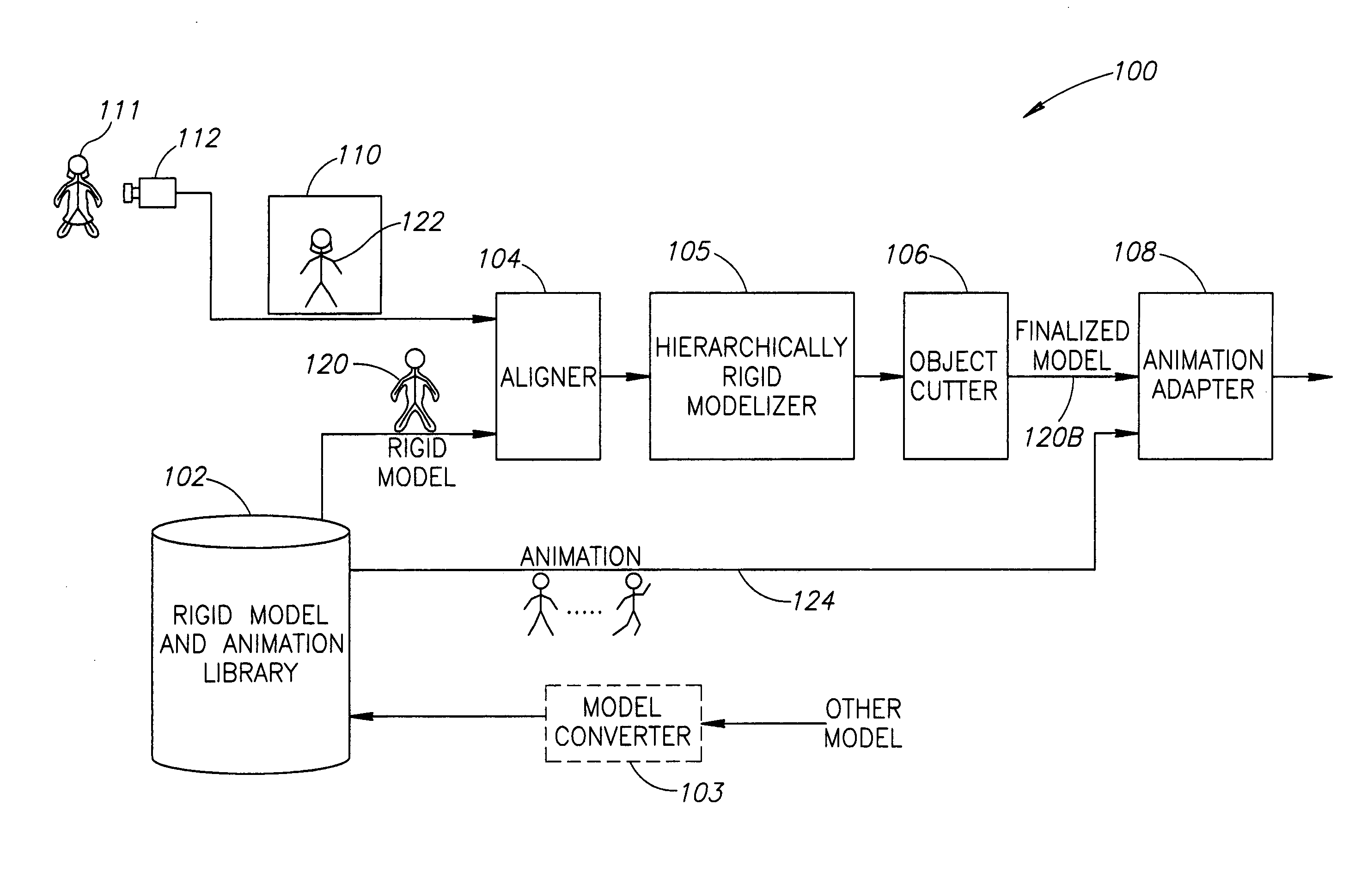

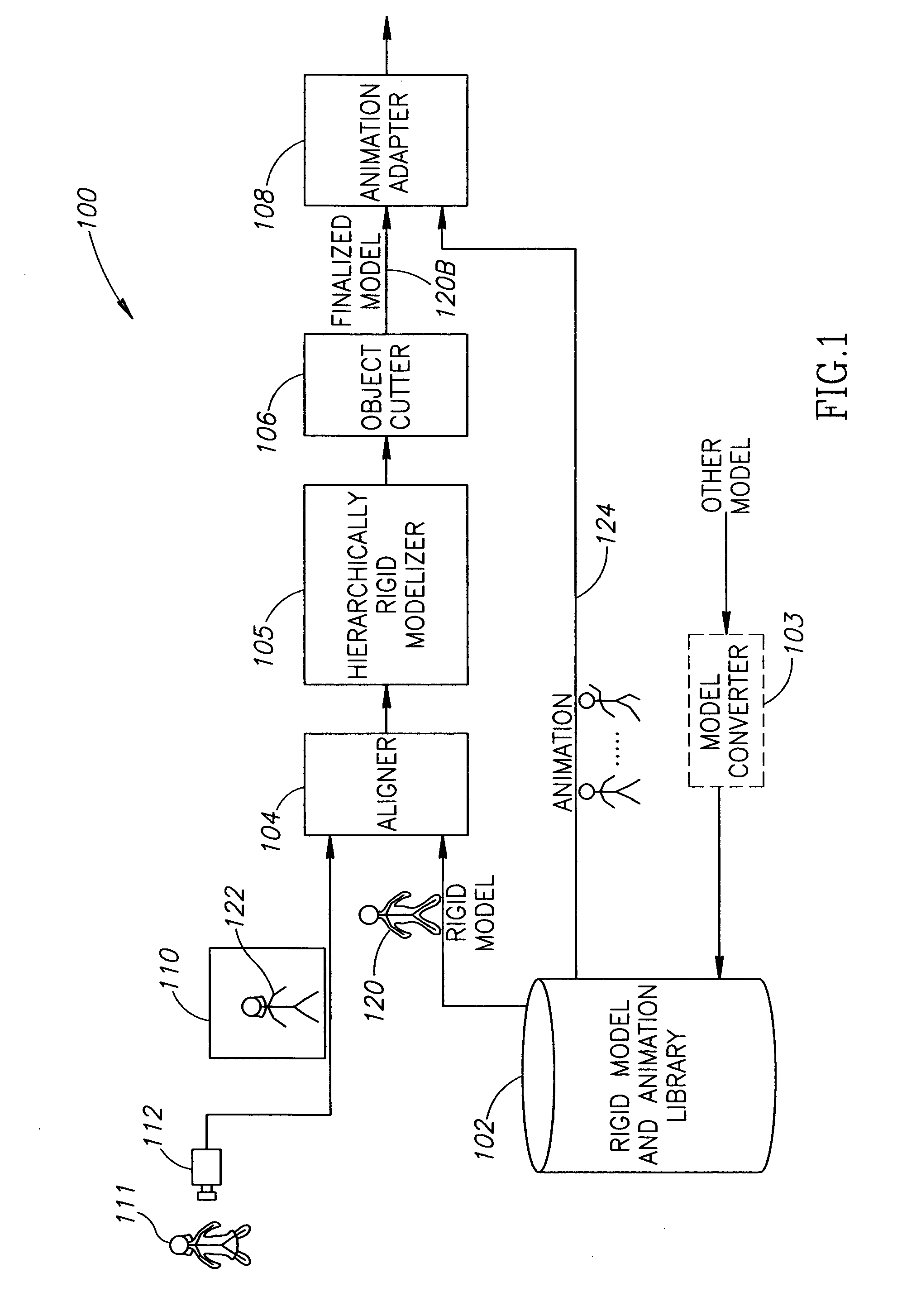

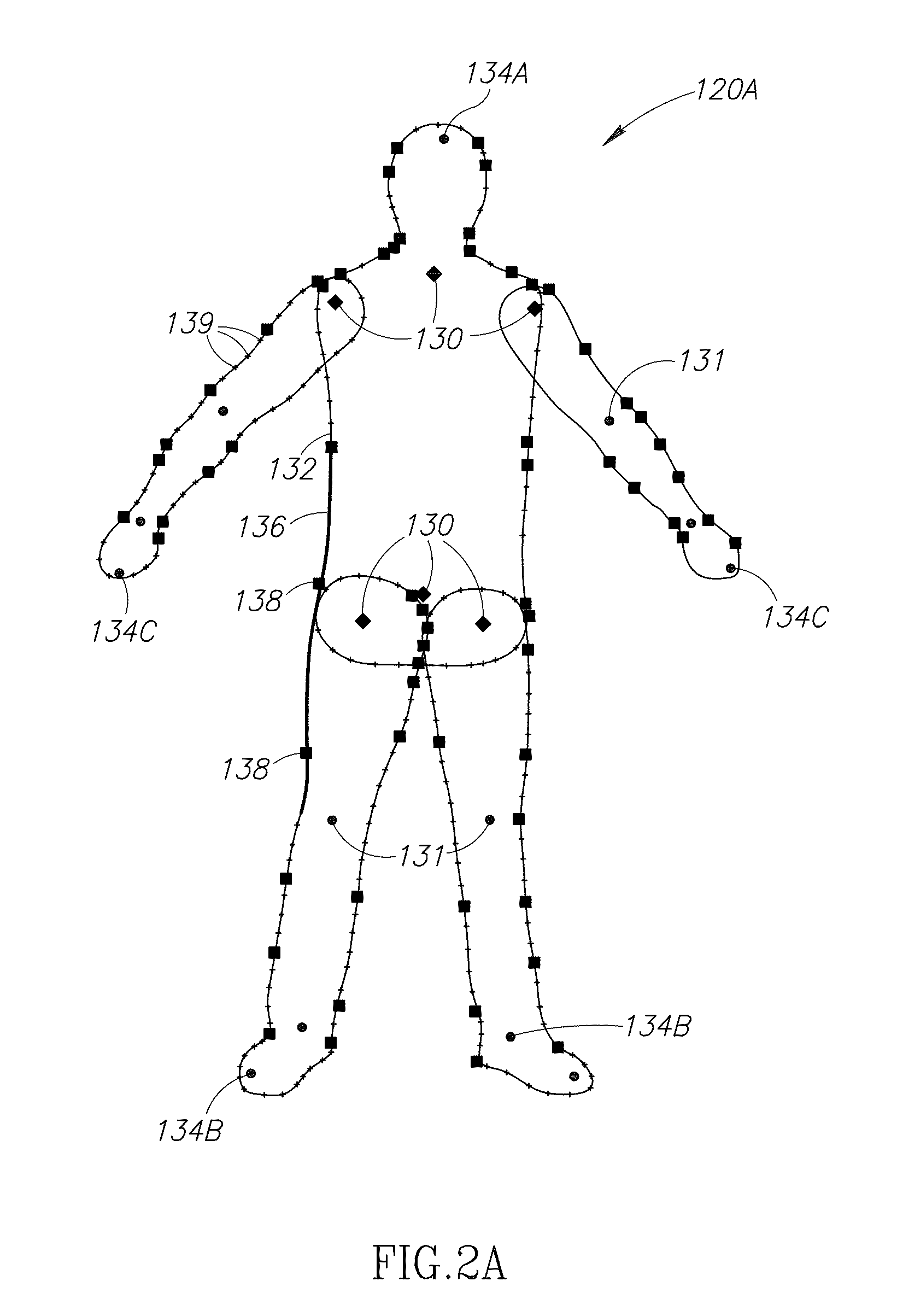

Modelization of objects in images

A system includes an aligner to align an initial position of an at least partially kinematically parameterized model with an object in an image, and a modelizer to adjust parameters of the model to match the model to contours of the object, given the initial alignment. An animation system includes a modelizer to hierarchically match a hierarchically rigid model to an object in an image, and a cutter to cut said object from said image and to associate it with said model. A method for animation includes hierarchically matching a hierarchically rigid model to an object in an image, and cutting said object from said image to associate it with said model.

Owner:YEDA RES & DEV CO LTD

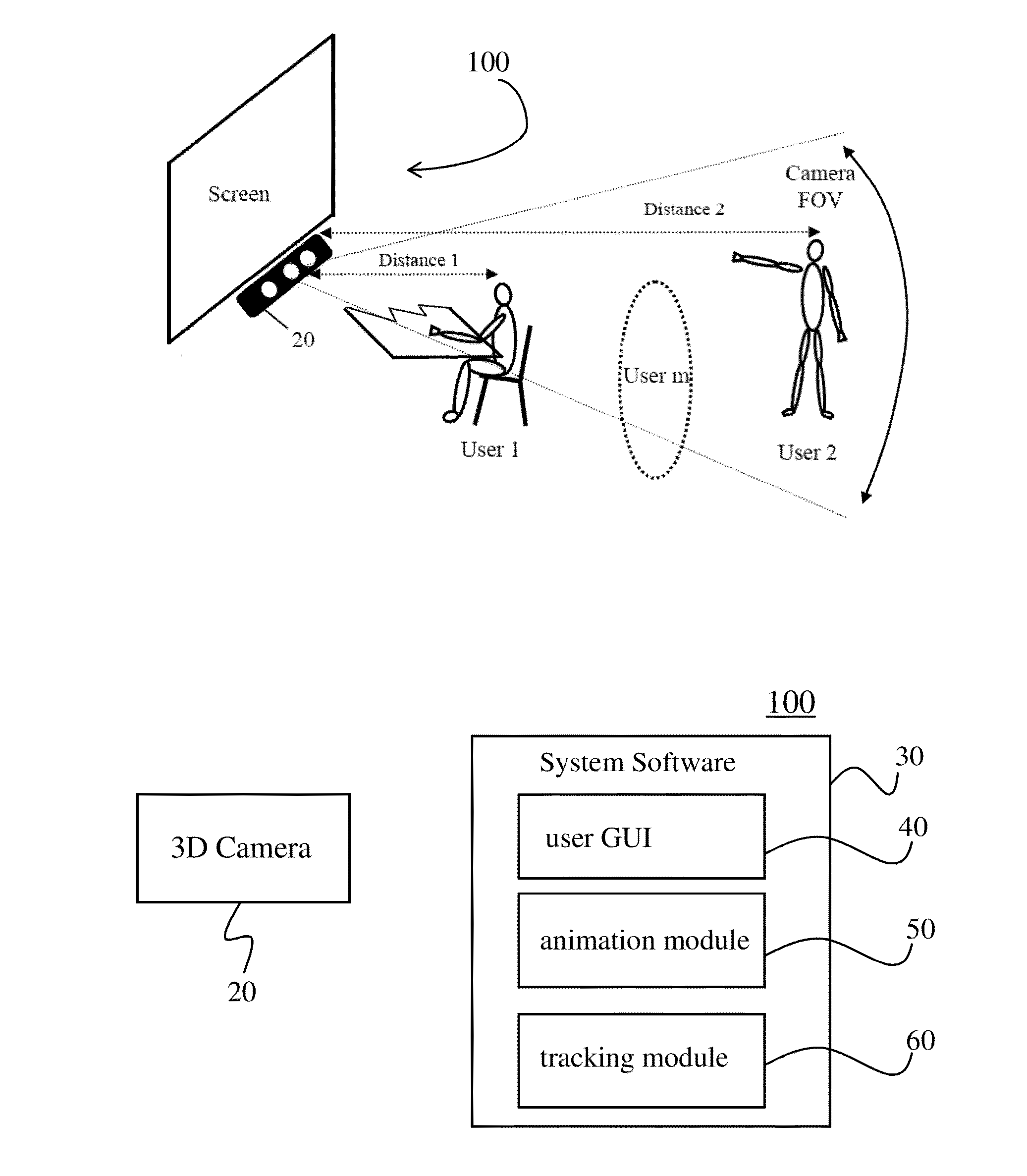

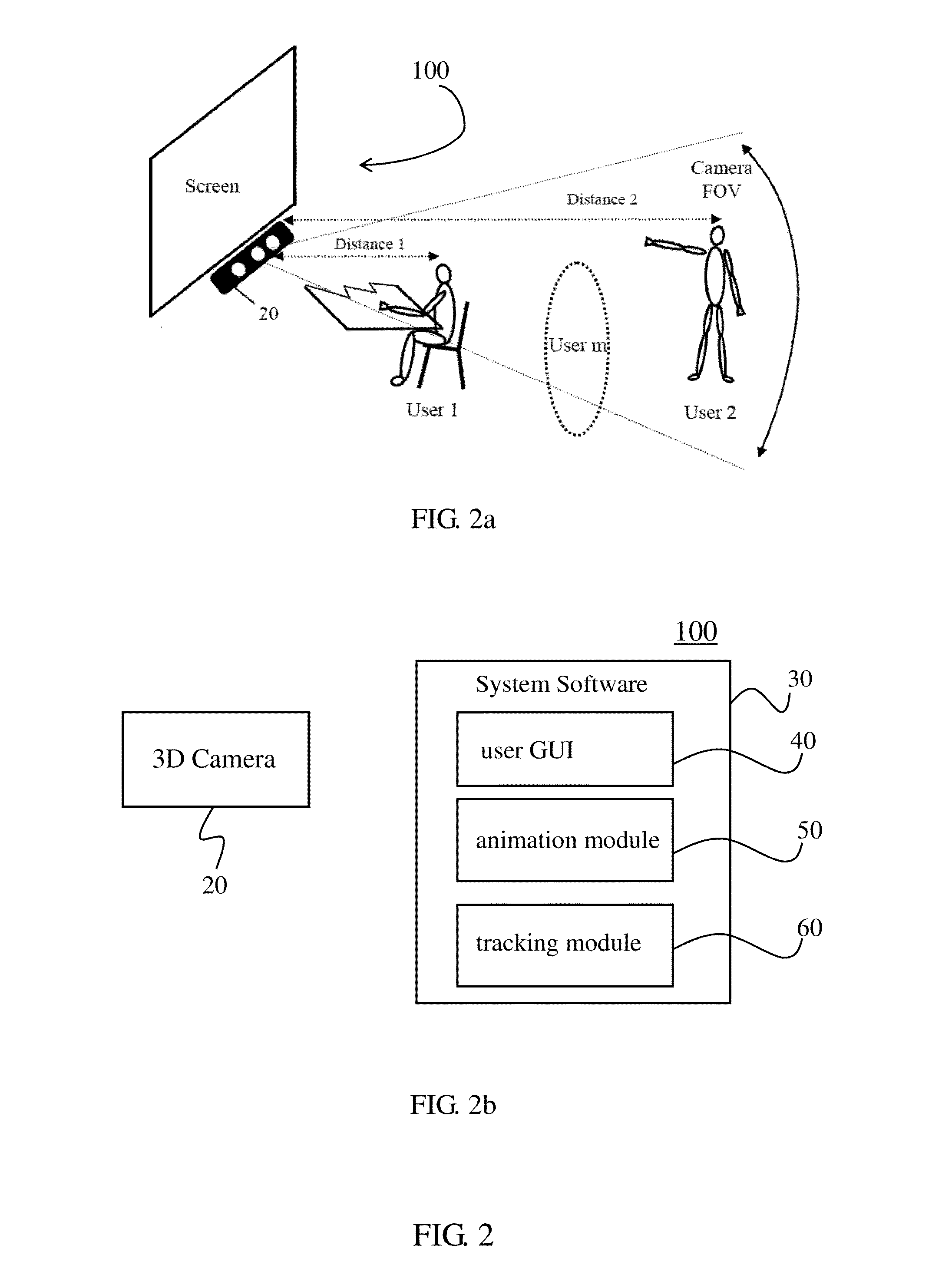

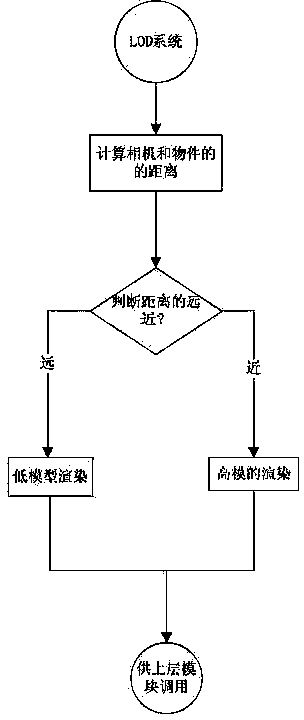

Human body and facial animation systems with 3D camera and method thereof

Inactive | US20130100140A1 | Tags: Animation, Details involving graphical user interface, Human body, Image resolution

An animation system integrating face and body tracking for puppet and avatar animation by using a 3D camera is provided. The 3D camera human body and facial animation system includes a 3D camera having an image sensor and a depth sensor with the same fixed focal length and image resolution, an equal FOV, and aligned image centers. The system software of the animation system provides on-line tracking and off-line learning functions. An object detection algorithm for the on-line tracking function includes detecting an object and assessing its distance; depending on that distance, the object can be identified as a face, body, or face/hand so as to perform face tracking, body detection, or ‘face and hand gesture’ detection procedures. The animation system can also have a zoom lens, which includes an image sensor with an adjustable focal length f′ and a depth sensor with a fixed focal length f.

Owner:ULSEE
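
The distance-based dispatch in the on-line tracking function can be sketched as a simple threshold test. This is a minimal sketch under stated assumptions: the abstract gives neither the distance bands nor which band maps to which procedure, so the thresholds below and the ordering (nearest: face only; middle: face and hands; farthest: full body) are hypothetical.

```python
from enum import Enum


class TrackingMode(Enum):
    FACE = "face tracking"
    FACE_AND_HAND = "face and hand gesture detection"
    BODY = "body detection"


# Hypothetical distance bands in metres; the patent abstract gives no values.
FACE_MAX_M = 0.8
FACE_AND_HAND_MAX_M = 1.8


def select_mode(distance_m: float) -> TrackingMode:
    """Choose a tracking procedure from the depth sensor's distance estimate."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    if distance_m <= FACE_MAX_M:
        return TrackingMode.FACE  # assumed: only the face fills the frame
    if distance_m <= FACE_AND_HAND_MAX_M:
        return TrackingMode.FACE_AND_HAND  # assumed: face plus raised hands in view
    return TrackingMode.BODY  # assumed: whole body in view
```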

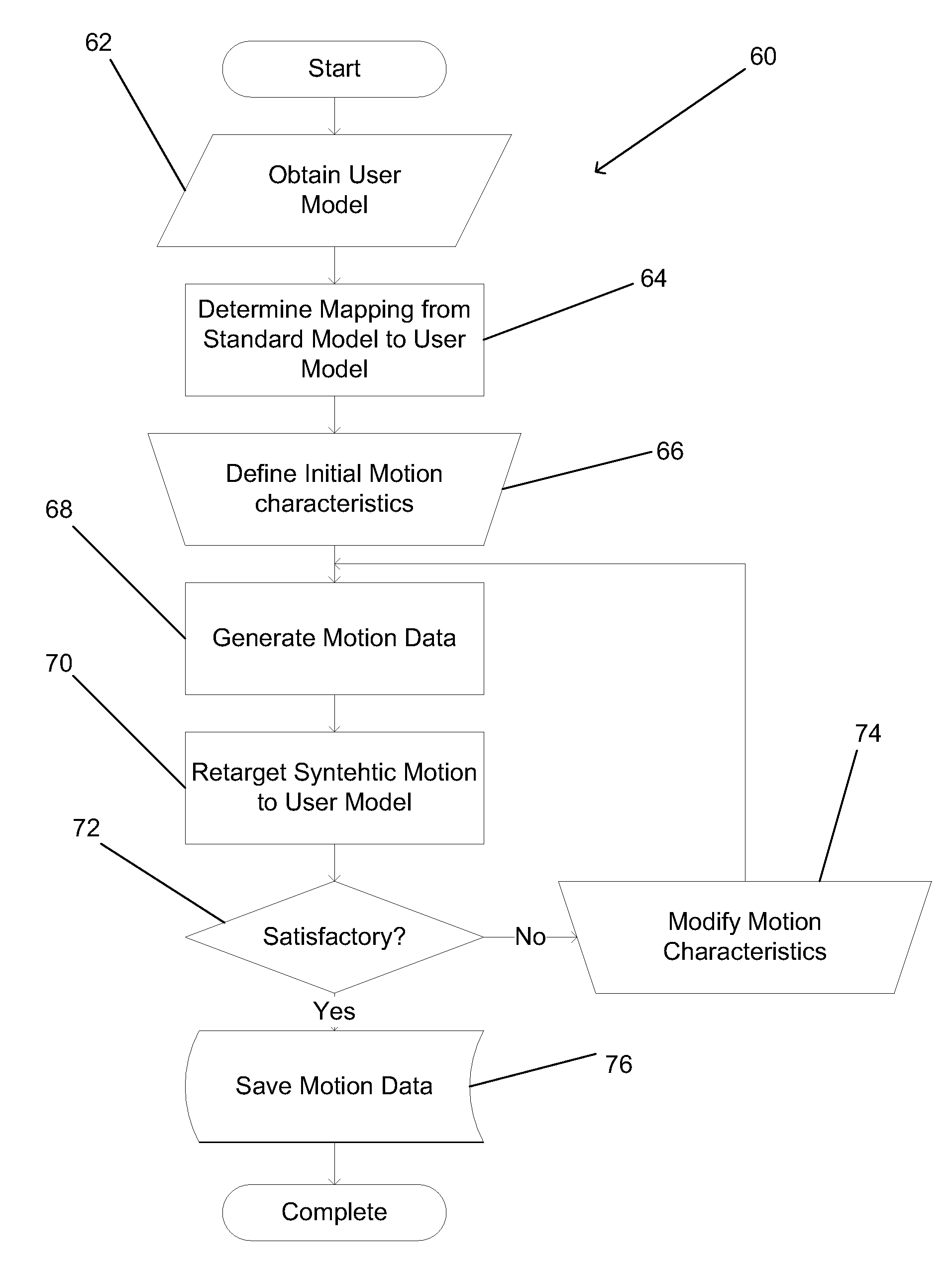

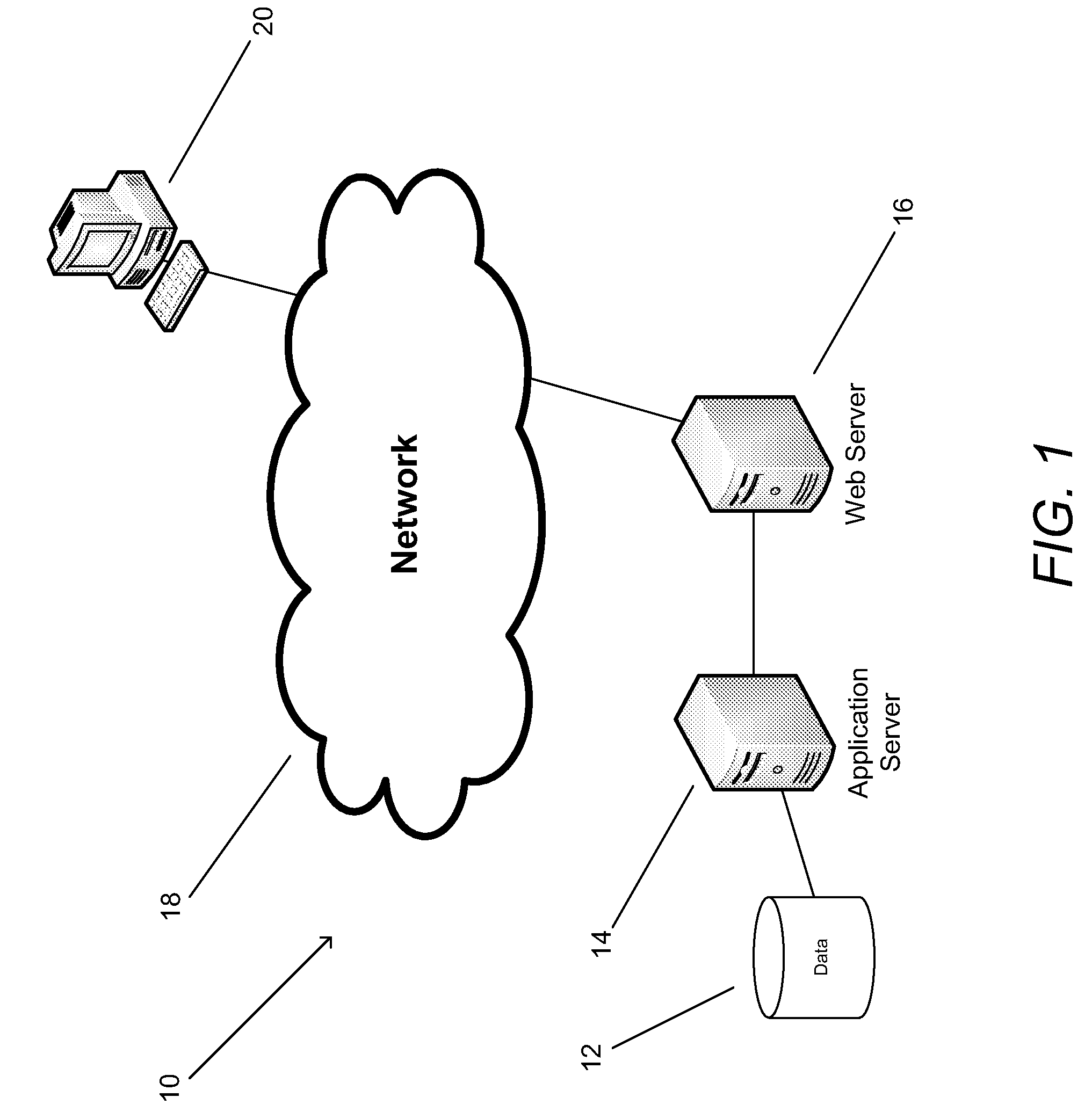

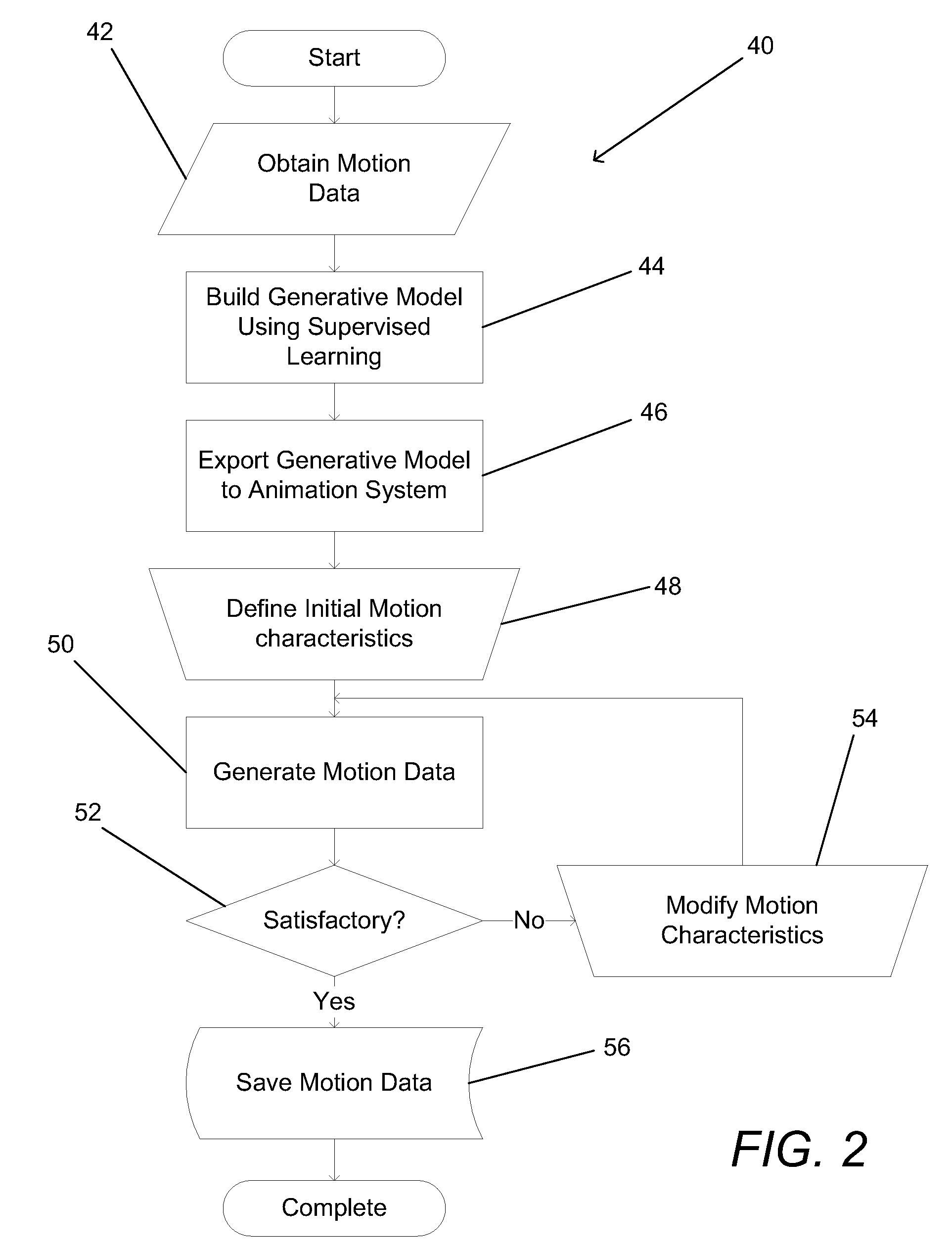

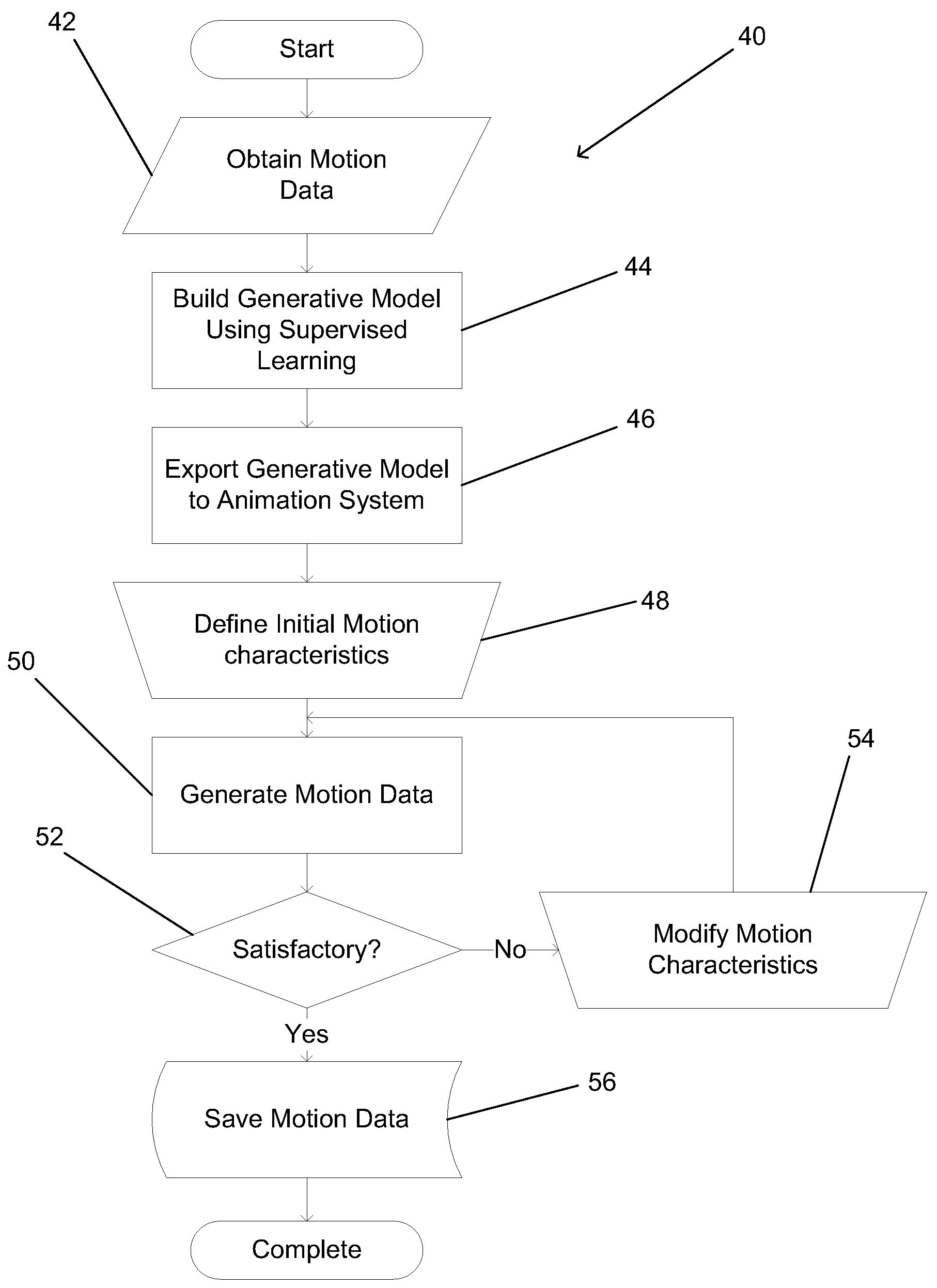

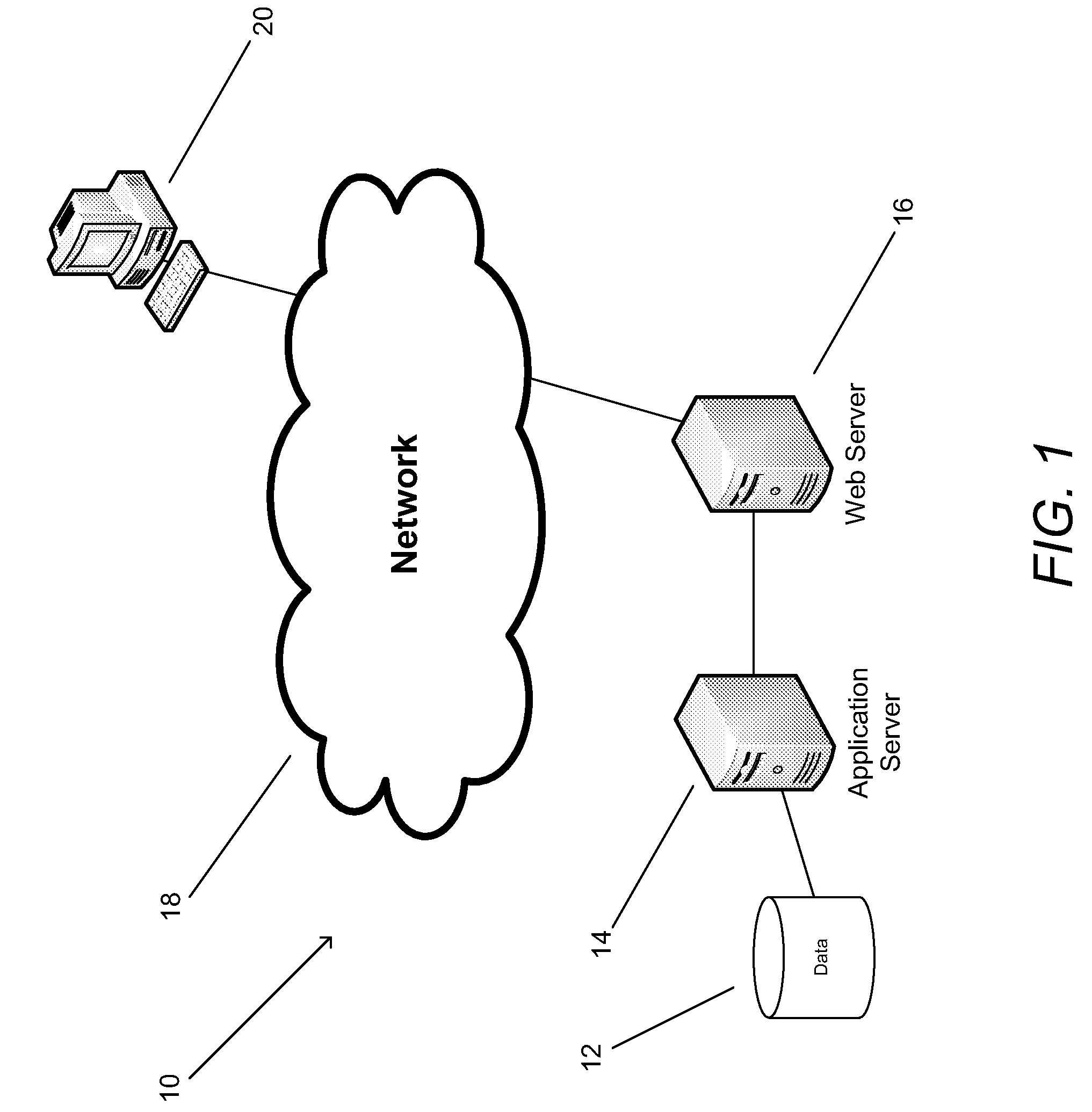

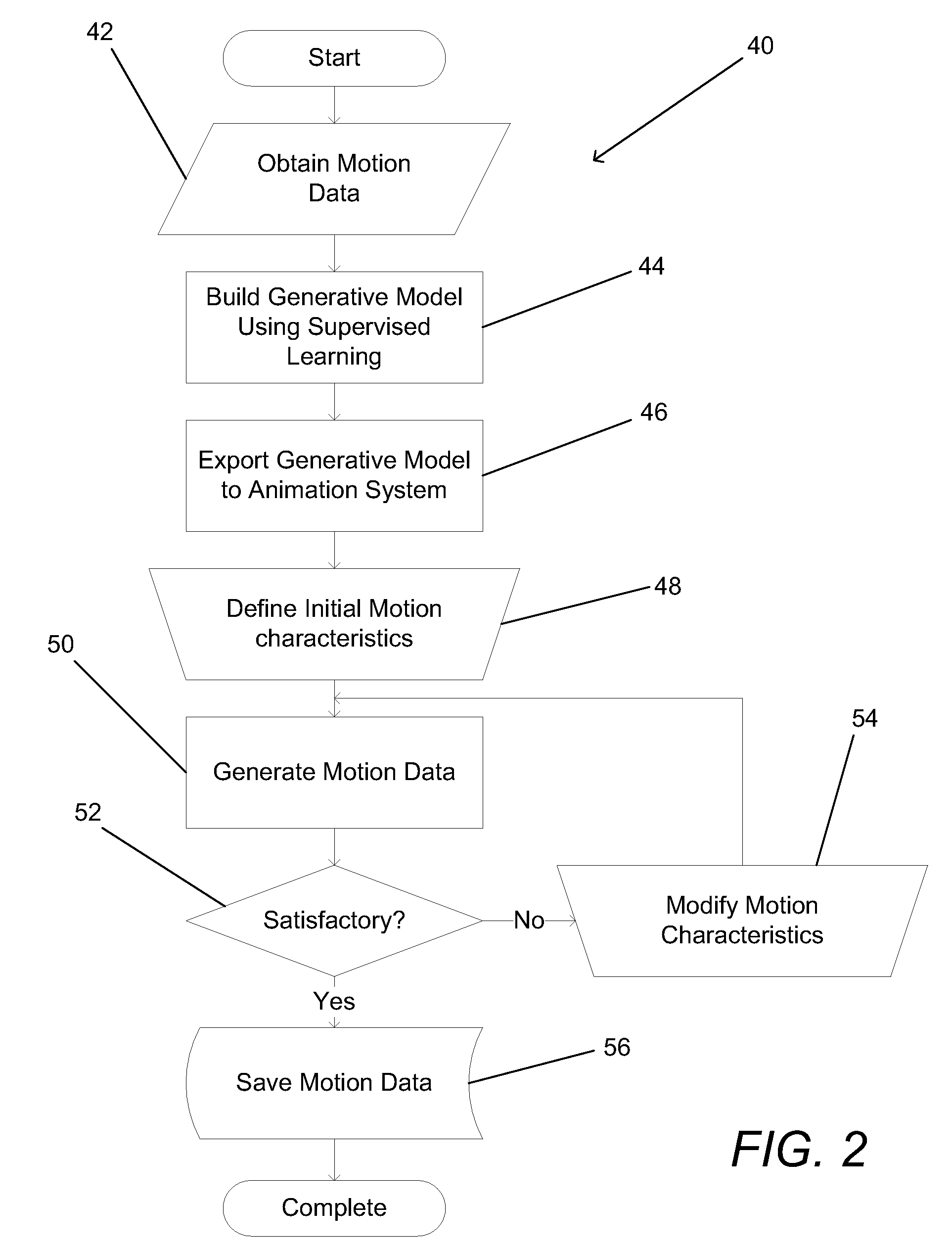

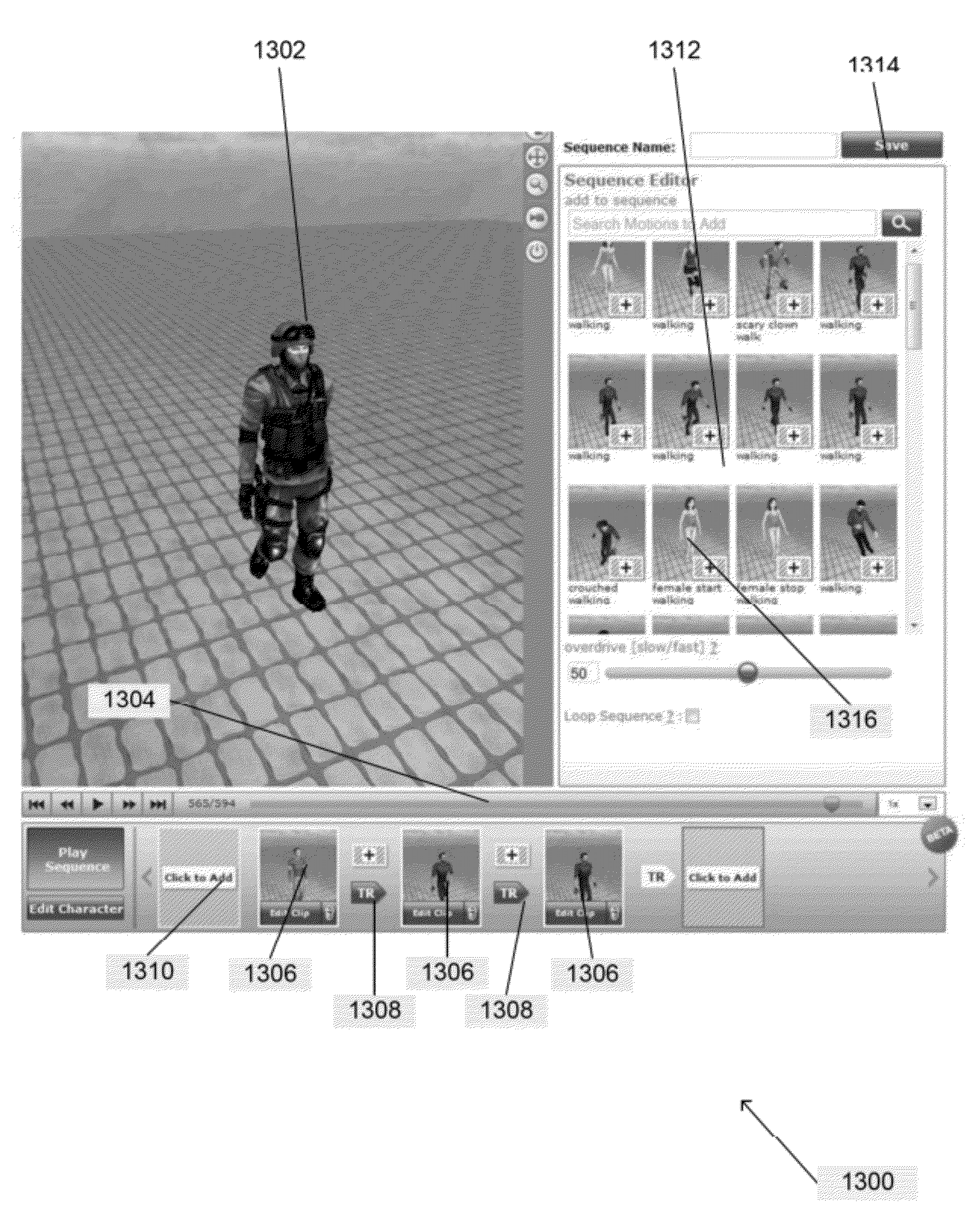

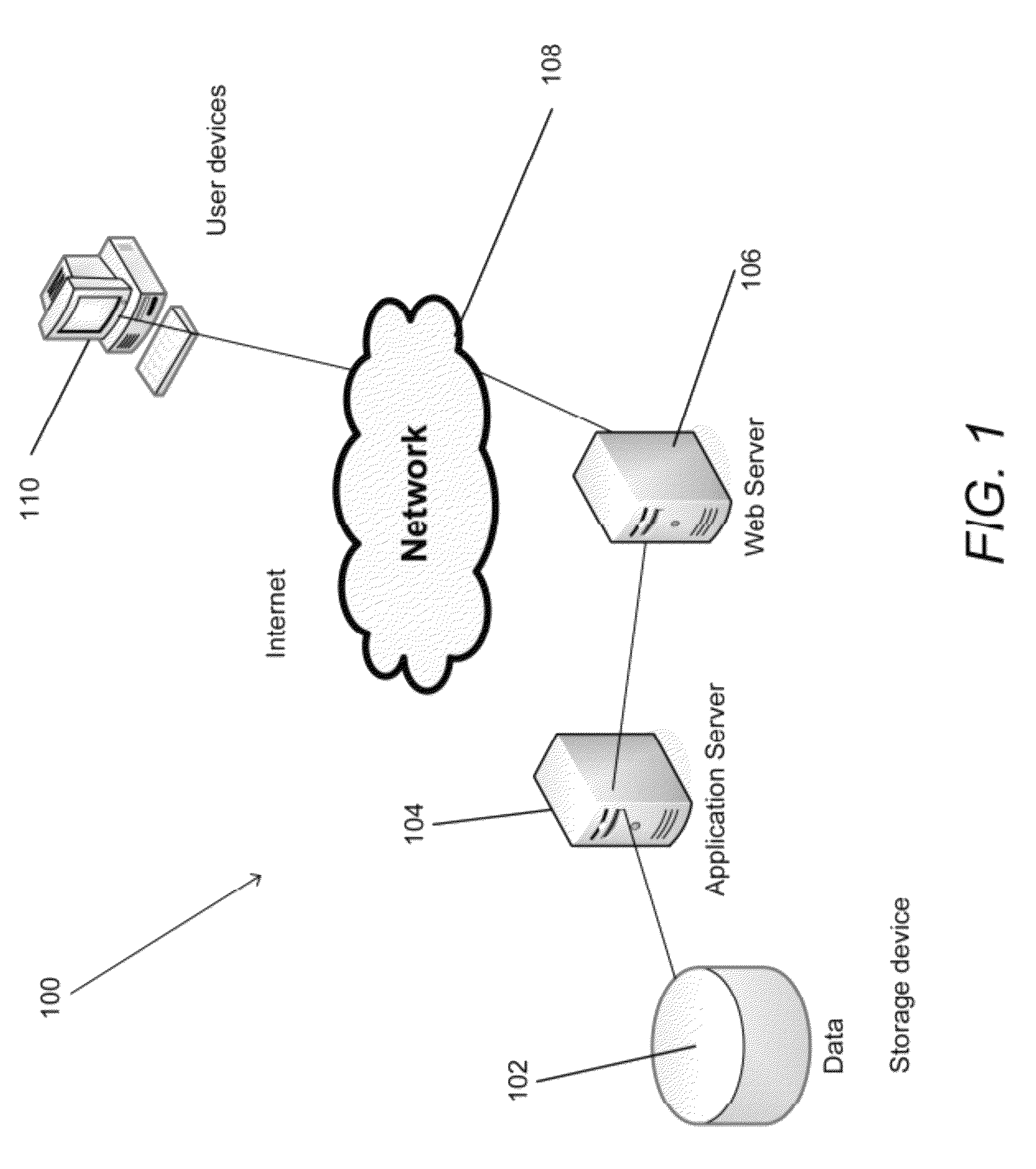

Interactive design, synthesis and delivery of 3D character motion data through the web

Active | US8704832B2 | Tags: Reduce in quantity, Image data processing details, Animation, Interactive design

Systems and methods are described for animating 3D characters using synthetic motion data generated by generative models in response to a high level description of a desired sequence of motion provided by an animator. An animation system is accessible via a server system that utilizes the ability of generative models to generate synthetic motion data across a continuum to enable multiple animators to effectively reuse the same set of previously recorded motion capture data to produce a wide variety of desired animation sequences. An animator can upload a custom model of a 3D character and the synthetic motion data generated by the generative model is retargeted to animate the custom 3D character.

Owner:ADOBE SYST INC

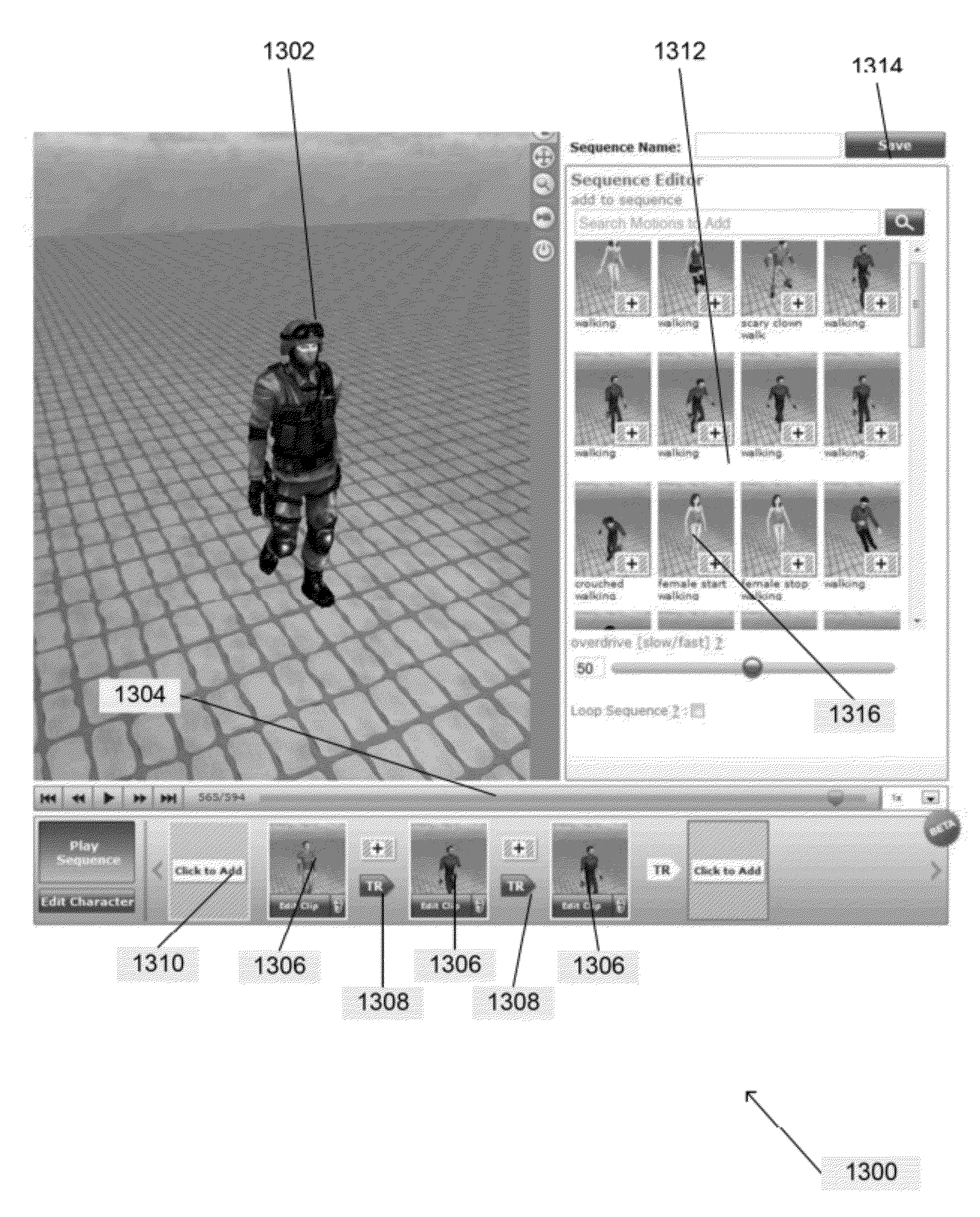

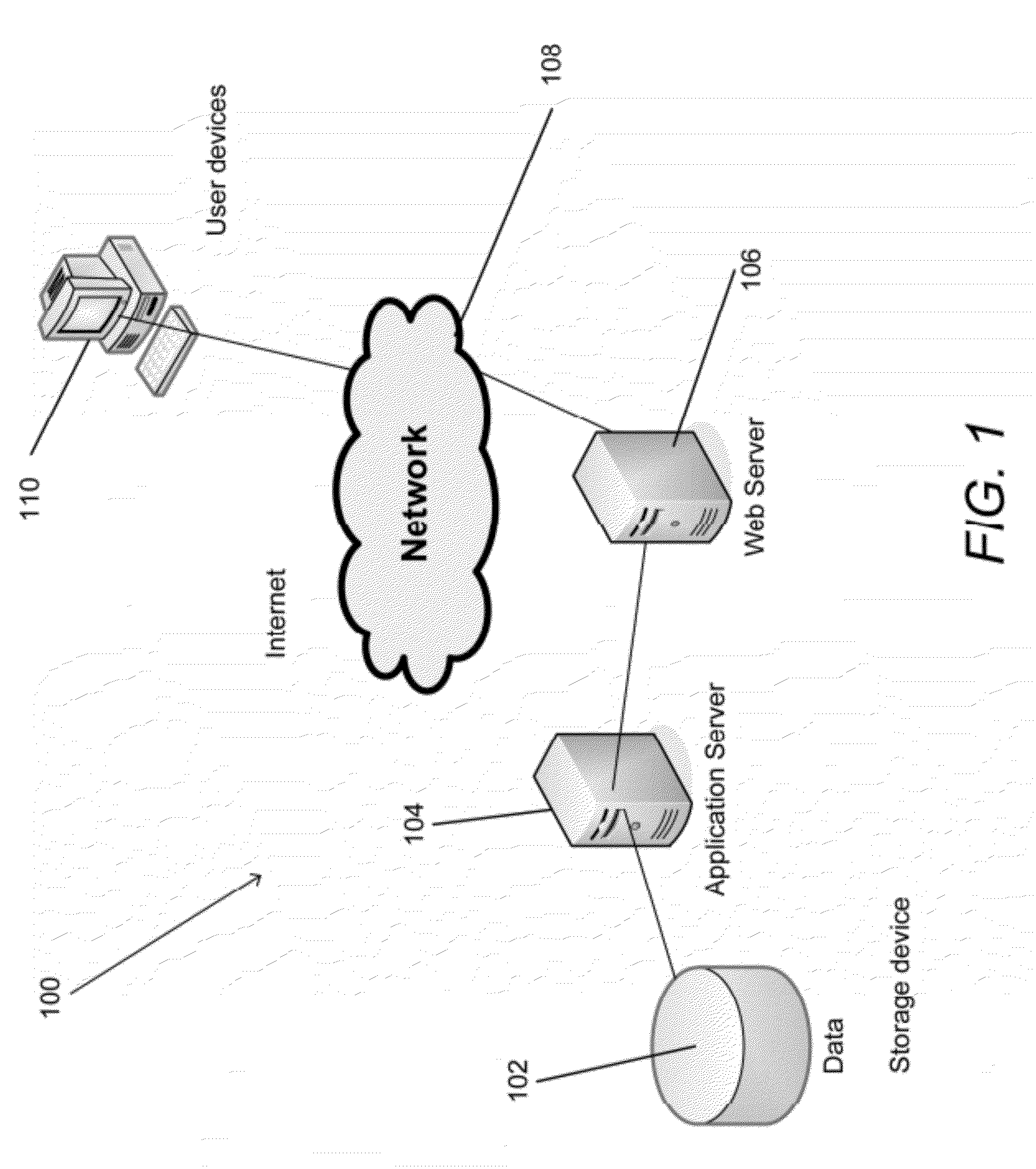

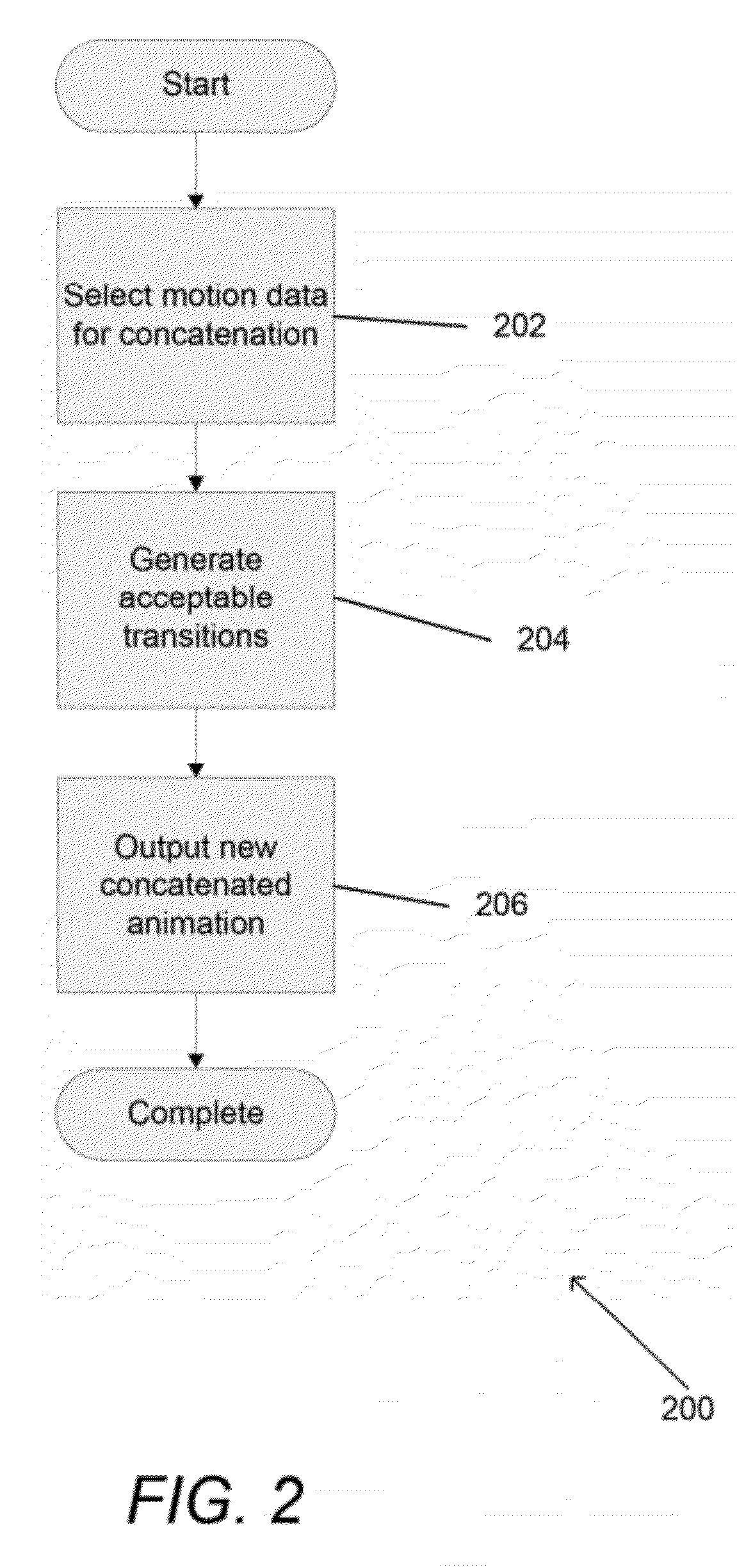

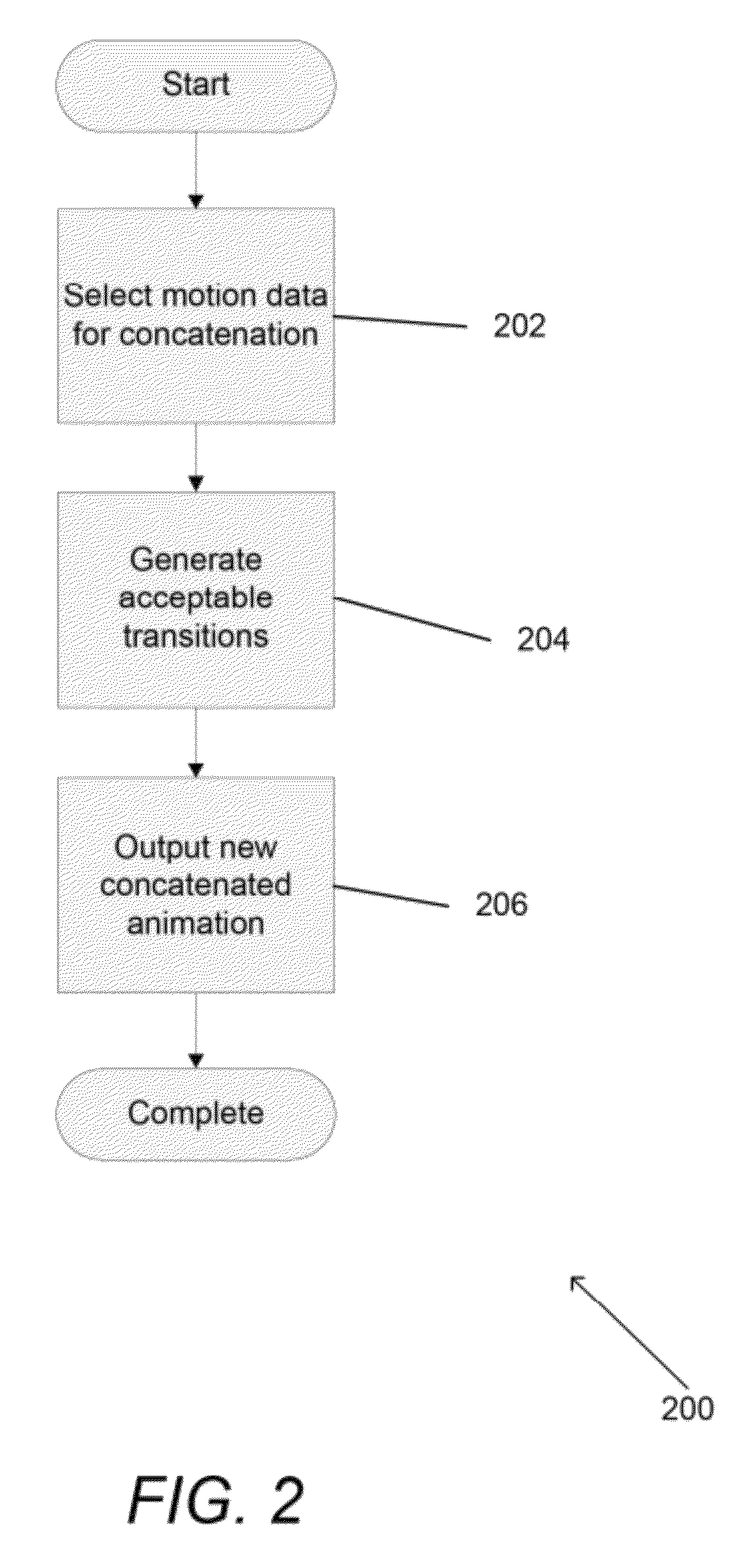

Real-time automatic concatenation of 3D animation sequences

Systems and methods for generating and concatenating 3D character animations are described including systems in which recommendations are made by the animation system concerning motions that smoothly transition when concatenated. One embodiment includes a server system connected to a communication network and configured to communicate with a user device that is also connected to the communication network. In addition, the server system is configured to generate a user interface that is accessible via the communication network, the server system is configured to receive high level descriptions of desired sequences of motion via the user interface, the server system is configured to generate synthetic motion data based on the high level descriptions and to concatenate the synthetic motion data, the server system is configured to stream the concatenated synthetic motion data to a rendering engine on the user device, and the user device is configured to render a 3D character animated using the streamed synthetic motion data.

Owner:ADOBE INC
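
One simple way to make transition recommendations of the kind the abstract describes is to rank candidate clips by how close their first pose is to the last pose of the current clip, and then blend the seam when concatenating. The sketch below assumes poses are flat lists of joint values and uses a linear cross-fade; the ranking criterion and the function names are illustrative assumptions, not the method claimed by Adobe, and production systems usually blend rotations with quaternion slerp rather than linearly.

```python
import math
from typing import Dict, List, Sequence, Tuple

Pose = Sequence[float]  # one frame: a flat list of joint values


def pose_distance(a: Pose, b: Pose) -> float:
    """Euclidean distance between two poses."""
    return math.dist(a, b)


def recommend_next(current_end: Pose, candidates: Dict[str, List[Pose]],
                   top_k: int = 3) -> List[Tuple[str, float]]:
    """Rank candidate clips by how closely their first frame matches current_end."""
    scored = [(name, pose_distance(current_end, frames[0]))
              for name, frames in candidates.items() if frames]
    scored.sort(key=lambda item: item[1])
    return scored[:top_k]


def concatenate(a: List[List[float]], b: List[List[float]],
                overlap: int) -> List[List[float]]:
    """Join clip a to clip b, linearly cross-fading `overlap` frames at the seam."""
    overlap = min(overlap, len(a), len(b))
    if overlap <= 0:
        return a + b
    blended = []
    for i in range(overlap):
        t = (i + 1) / (overlap + 1)  # blend weight moves from clip a toward clip b
        fa, fb = a[len(a) - overlap + i], b[i]
        blended.append([(1 - t) * x + t * y for x, y in zip(fa, fb)])
    return a[:len(a) - overlap] + blended + b[overlap:]
```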

Interactive design, synthesis and delivery of 3D character motion data through the web

Active | US20100073361A1 | Tags: Reduce in quantity, Image data processing details, Animation, Interactive design, Interaction design

Systems and methods are described for animating 3D characters using synthetic motion data generated by generative models in response to a high level description of a desired sequence of motion provided by an animator. In a number of embodiments, an animation system is accessible via a server system that utilizes the ability of generative models to generate synthetic motion data across a continuum to enable multiple animators to effectively reuse the same set of previously recorded motion capture data to produce a wide variety of desired animation sequences. In several embodiments, an animator can upload a custom model of a 3D character and the synthetic motion data generated by the generative model is retargeted to animate the custom 3D character. One embodiment of the invention includes a server system configured to communicate with a database containing motion data including repeated sequences of motion, where the differences between the repeated sequences of motion are described using at least one high level characteristic. In addition, the server system is connected to a communication network, the server system is configured to train a generative model using the motion data, the server system is configured to generate a user interface that is accessible via the communication network, the server system is configured to receive a high level description of a desired sequence of motion via the user interface, the server system is configured to use the generative model to generate synthetic motion data based on the high level description of the desired sequence of motion, and wherein the server system is configured to transmit a stream via the communication network including information that can be used to display a 3D character animated using the synthetic motion data.

Owner:ADOBE INC

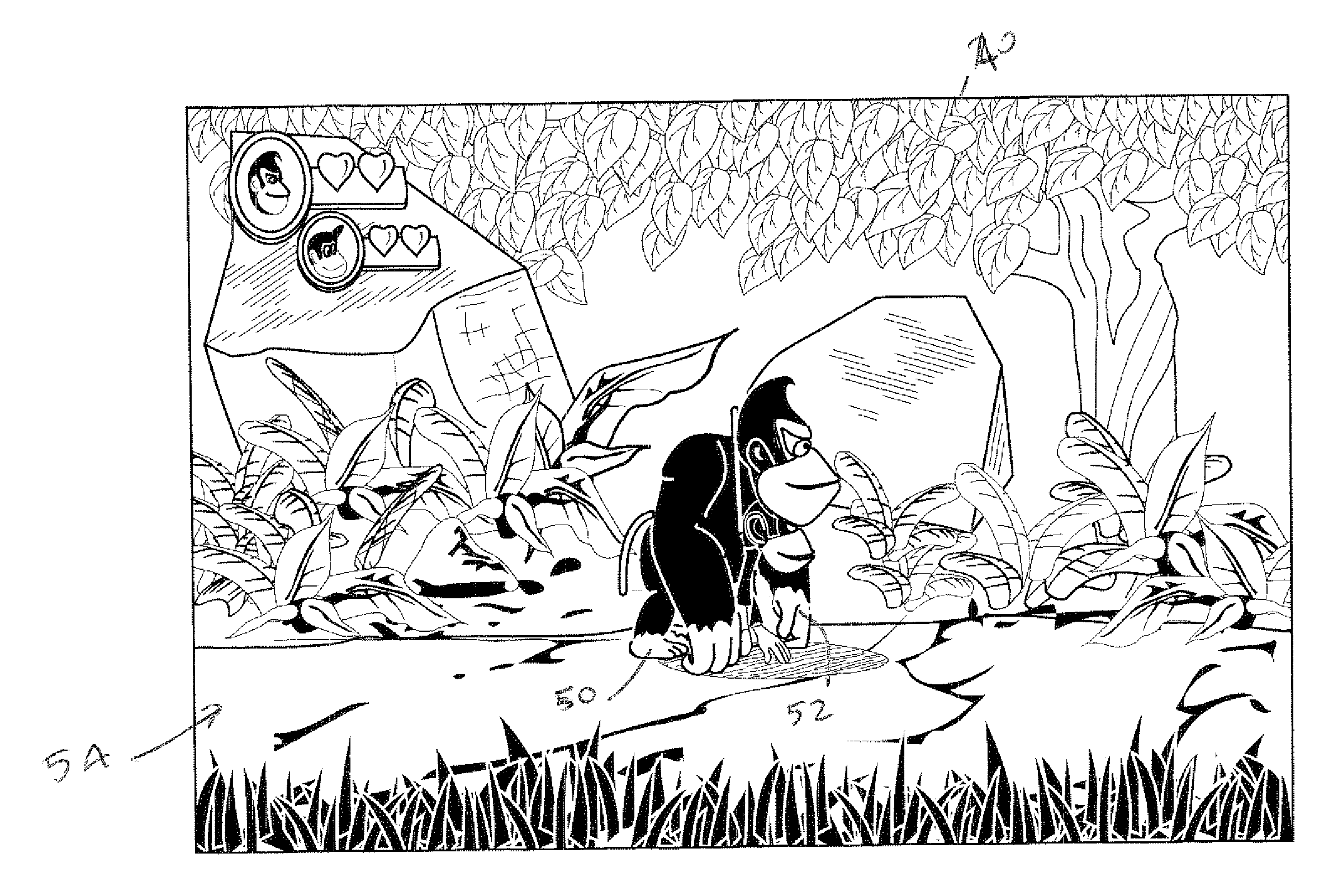

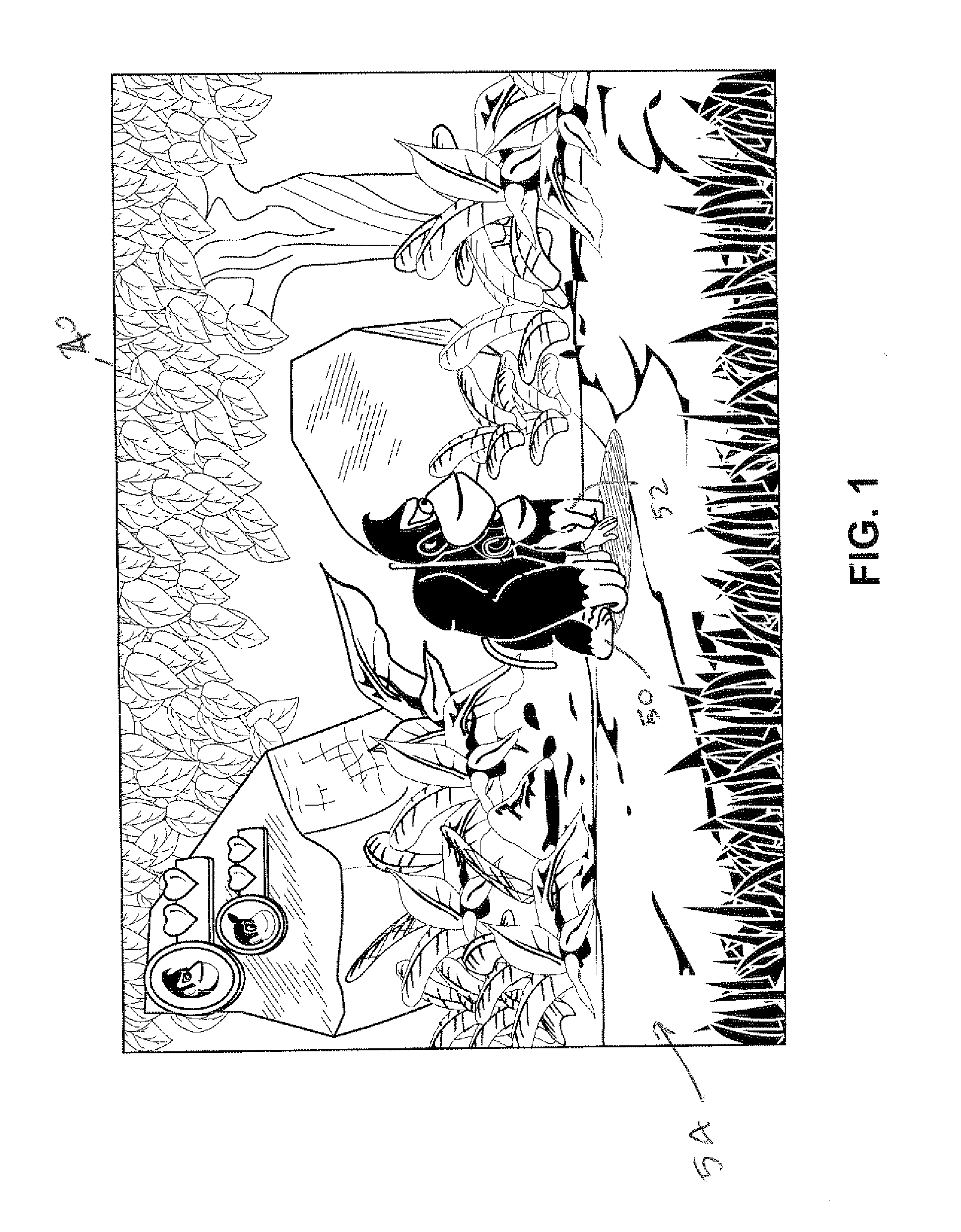

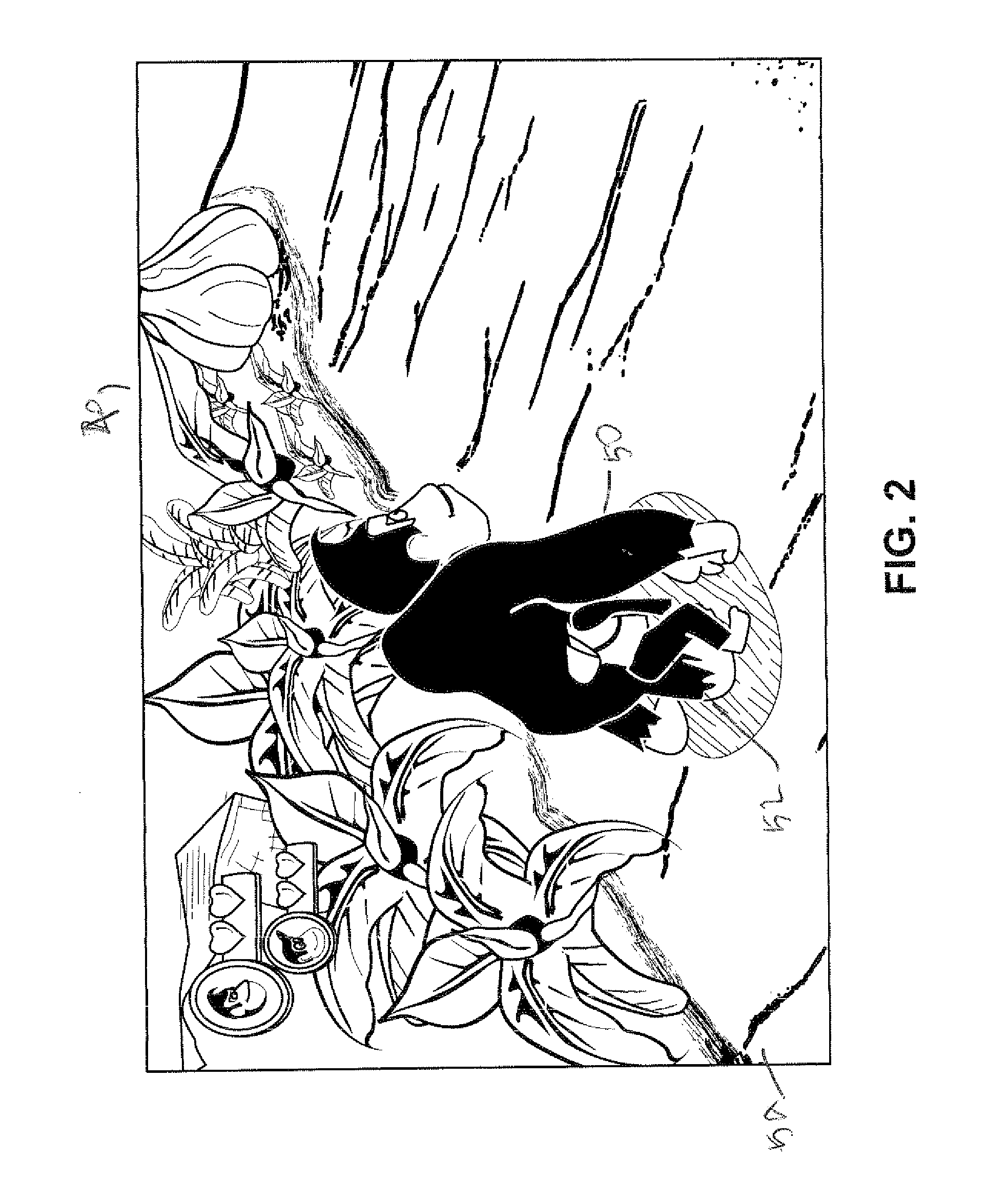

2D imposters for simplifying processing of plural animation objects in computer graphics generation

Active | US20110306417A1 | Tags: Reduce process complexity, Reduce memory load, Animation, Video games, Graphics, Viewpoints

The technology herein involves use of 2D imposters to achieve seemingly 3D effects with high efficiency where plural objects such as animated characters move together, such as when one character follows or carries another character. A common 2D imposter or animated sprite is used to image and animate the plural objects in 2D. When the plural objects are separated in space, each object can be represented using its respective 3D model. However, when the plural objects contact one another, occupy at least part of the same space, or are very close to one another (e.g., as would arise when the plural objects are moving together in tandem), the animation system switches from using plural respective 3D models to using a common 2D model to represent the plural objects. In some implementations, such use of a common 2D model can be restricted to situations where the user's viewpoint can be restricted to be at least approximately perpendicular to the plane of the 2D model, or the 2D surface on which the combined image is texture mapped can be oriented in response to the current virtual camera position.

Owner:NINTENDO CO LTD
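
The switching rule and the camera-facing option can be sketched as follows, assuming objects are represented by world-space positions, a y-up coordinate system, and a sprite quad whose default normal points along +z. The merge distance and the function names are hypothetical; the abstract does not state how closeness is measured.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical merge distance in world units; the abstract says only "very close".
MERGE_DISTANCE = 1.0


def choose_representation(a: Vec3, b: Vec3, merge_distance: float = MERGE_DISTANCE) -> str:
    """Return '2d-imposter' when the two objects are close enough to share one sprite."""
    return "2d-imposter" if math.dist(a, b) <= merge_distance else "3d-models"


def imposter_center(a: Vec3, b: Vec3) -> Vec3:
    """Place the shared imposter midway between the two objects."""
    return tuple((p + q) / 2 for p, q in zip(a, b))


def imposter_yaw(center: Vec3, camera: Vec3) -> float:
    """Yaw about the vertical (y) axis that turns a quad facing +z toward the camera."""
    return math.atan2(camera[0] - center[0], camera[2] - center[2])
```

Rotating only about the vertical axis keeps the sprite upright while still facing the virtual camera, which is the usual compromise for character imposters.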

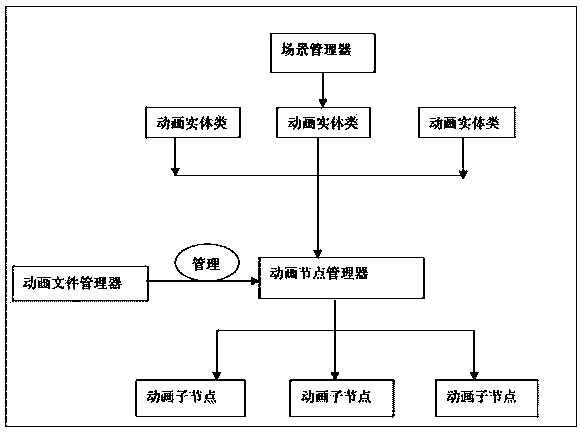

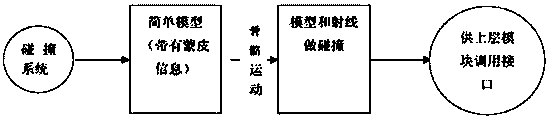

Three-dimensional game animation system

The invention discloses a three-dimensional game animation system comprising an upper-layer function module called by program developers, an upper-layer editing module called by art developers, and a bottom-layer management module and a bottom-layer function module, both of which are called by bottom-layer program developers for development and maintenance. The modules are connected through standard interfaces, so game animation can be created simply by understanding the calling rules of each module's interface. Because the system contains all four modules, its functionality is complete; because the modules are independent of one another and connected through standard interfaces, developers can create game animation without understanding the detailed internals of the animation system. The three-dimensional game animation system is therefore easy to use and to extend.

Owner:WUXI FANTIAN INFORMATION TECH

Real-time automatic concatenation of 3D animation sequences

Systems and methods for generating and concatenating 3D character animations are described including systems in which recommendations are made by the animation system concerning motions that smoothly transition when concatenated. One embodiment includes a server system connected to a communication network and configured to communicate with a user device that is also connected to the communication network. In addition, the server system is configured to generate a user interface that is accessible via the communication network, the server system is configured to receive high level descriptions of desired sequences of motion via the user interface, the server system is configured to generate synthetic motion data based on the high level descriptions and to concatenate the synthetic motion data, the server system is configured to stream the concatenated synthetic motion data to a rendering engine on the user device, and the user device is configured to render a 3D character animated using the streamed synthetic motion data.

Owner:ADOBE SYST INC

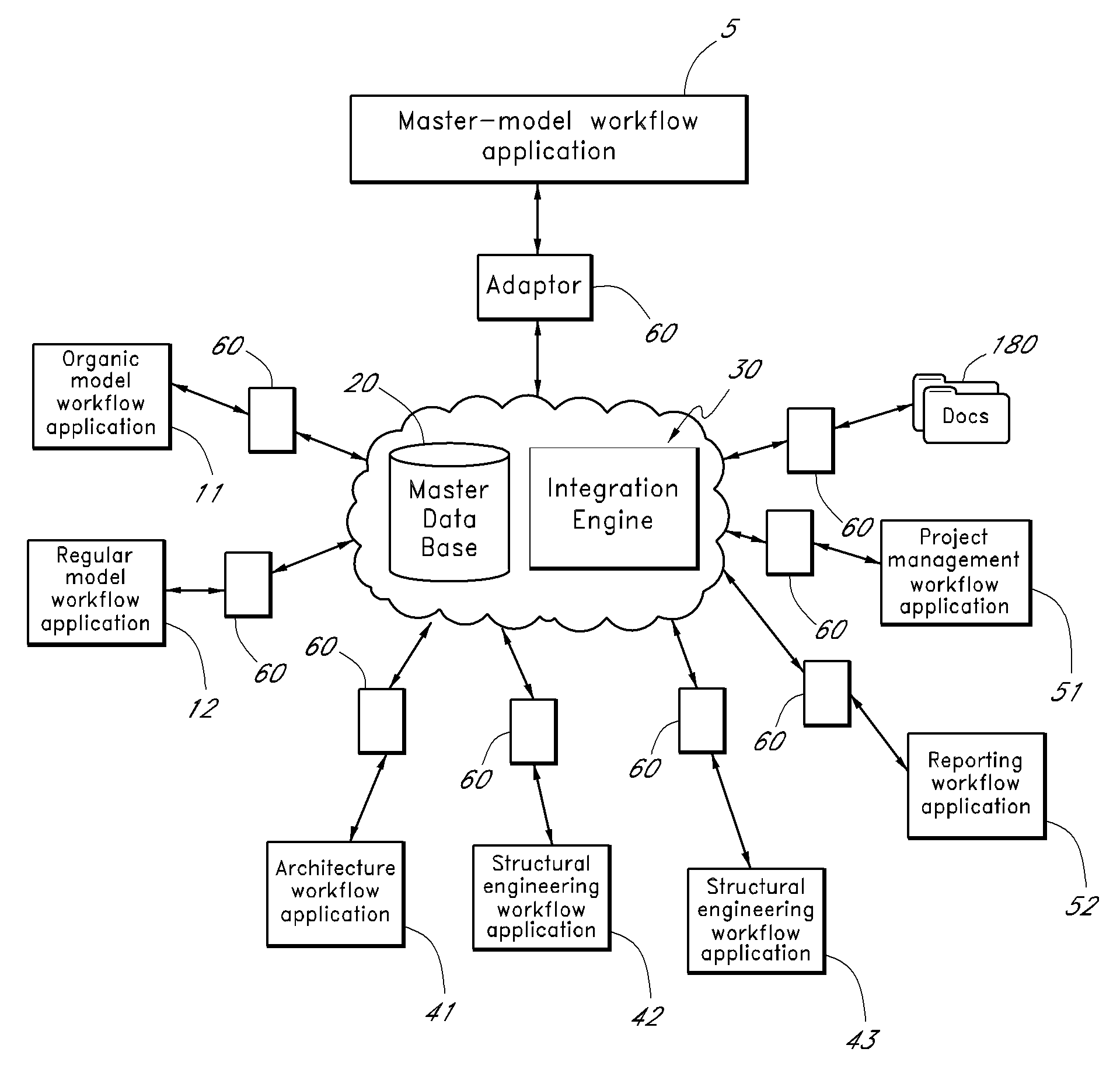

Integration system supporting dimensioned modeling system

Inactive | US20100241471A1 | Tags: Maintain consistency, Provide functionality, Geometric CAD, Resources, Third party, Information sharing

The disclosed inventions and embodiments relate to the propagation of information among workflow applications used by a design project such as a construction design project and the creation and use of dimensioned and animated models for such projects. The workflow applications may be extended to enable participation in the information sharing, or the system may provide functionality external to the tools that facilitates the participation of the tools. Information from various sources, including the workflow applications and third party sources, can be represented and modeled in an animation system. Sometimes the propagation is enabled in part by a store of information that also enables reuse and reporting. Information used and generated by the various workflow applications is kept consistent among the different workflow applications and among the different representations of that information.

Owner:SCENARIO DESIGN

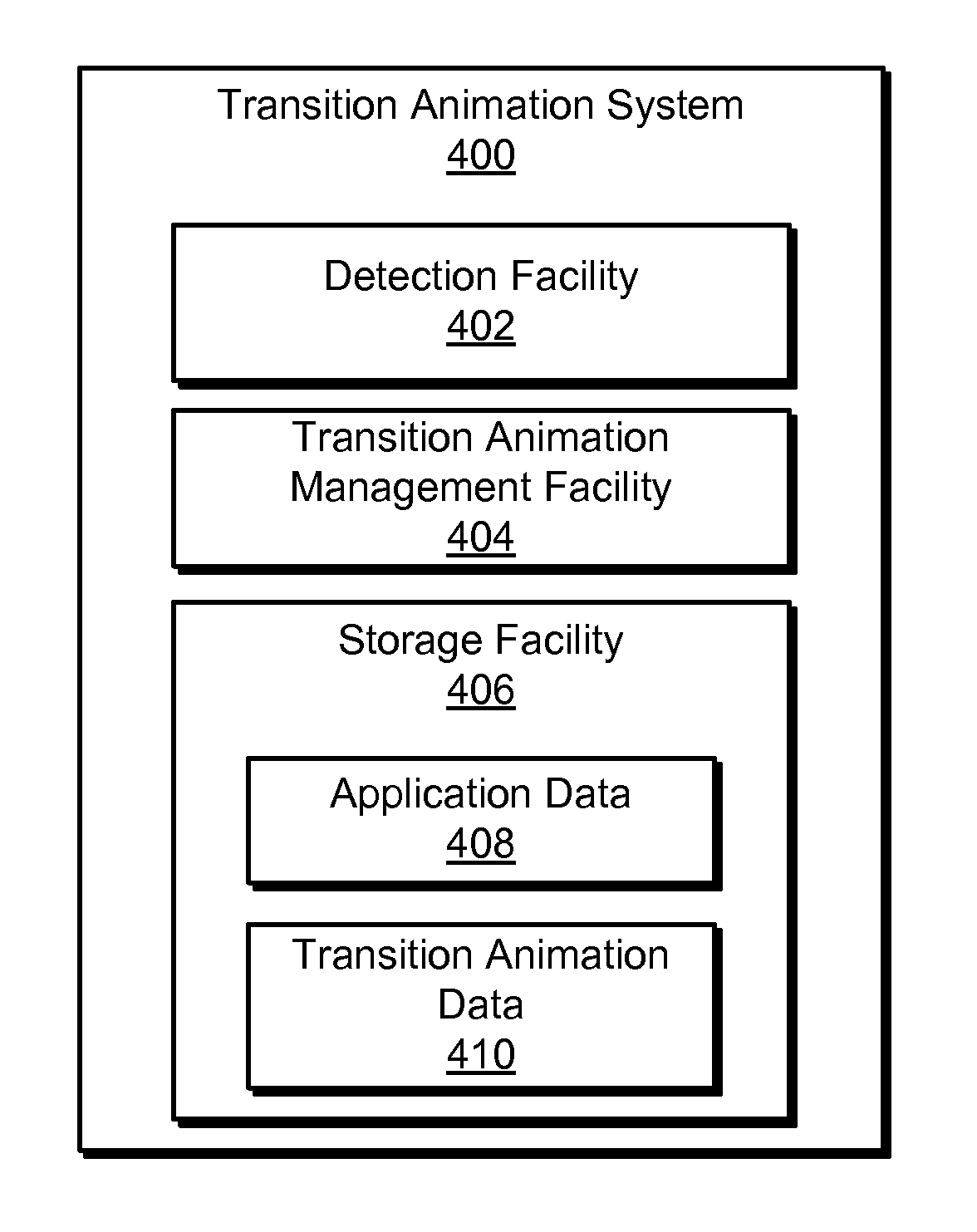

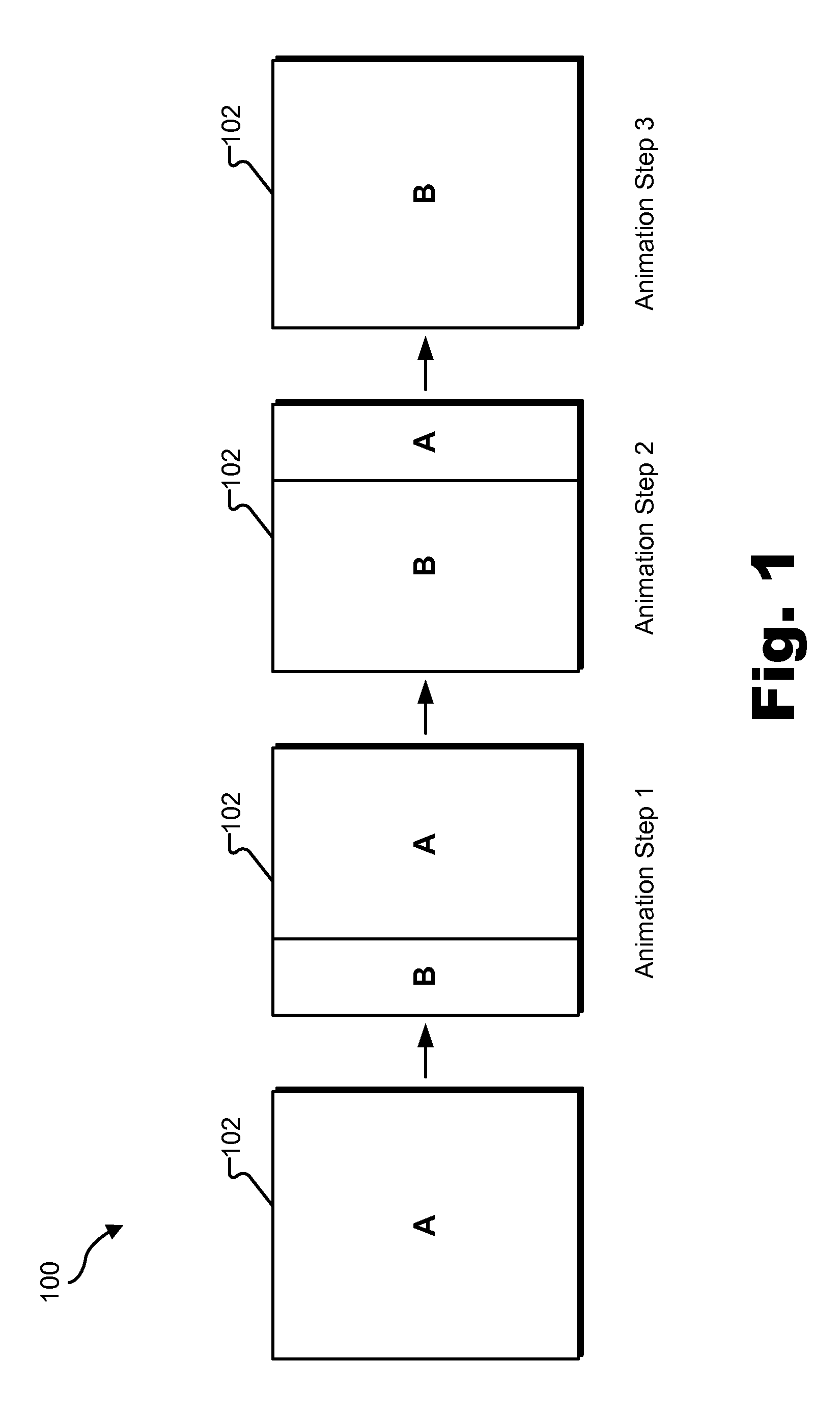

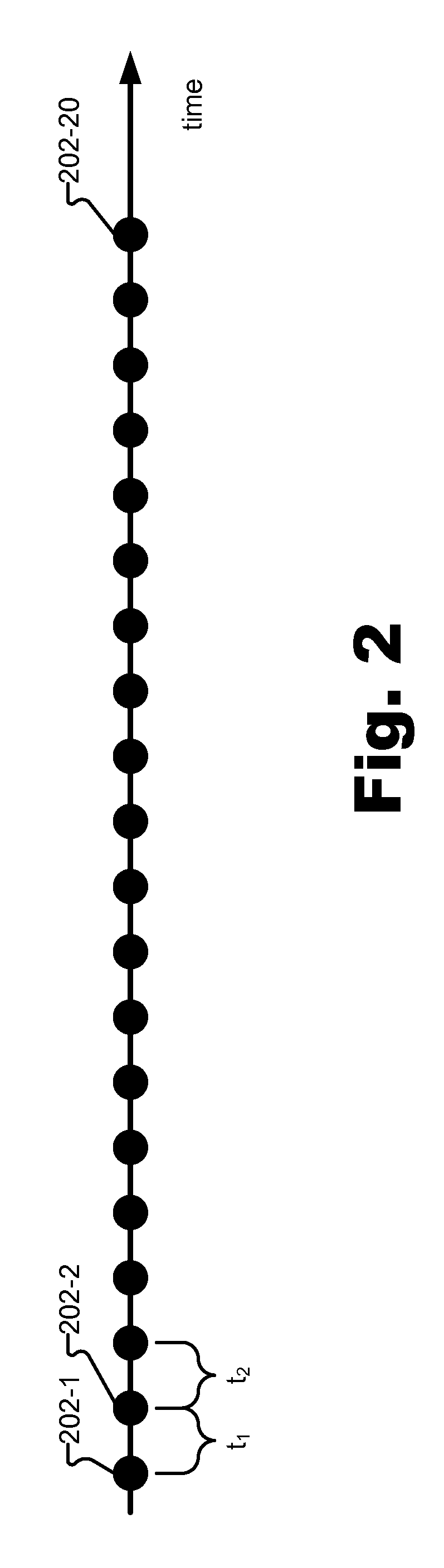

Transition Animation Methods and Systems

An exemplary method includes a transition animation system detecting a screen size of a display screen associated with a computing device executing an application, automatically generating, based on the detected screen size, a plurality of animation step values each corresponding to a different animation step included in a plurality of animation steps that are to be involved in an animation of a transition of a user interface associated with the application into the display screen, and directing the computing device to perform the plurality of animation steps in accordance with the generated animation step values. Corresponding methods and systems are also disclosed.

Owner:VERIZON PATENT & LICENSING INC
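
As an illustration of deriving step values from the detected screen size, the sketch below computes the remaining horizontal offset of a panel sliding in from the screen edge after each step. The ease-out cubic curve, the pixel units, and the function name are assumptions for illustration; the abstract does not say what the step values represent.

```python
from typing import List


def animation_step_values(screen_width_px: int, steps: int) -> List[int]:
    """Remaining x-offset (pixels) of an incoming panel after each animation step.

    The offset shrinks along an ease-out cubic curve, so larger screens get
    proportionally larger per-step moves and every animation ends at offset 0.
    """
    if screen_width_px <= 0 or steps < 1:
        raise ValueError("screen width and step count must be positive")
    values = []
    for i in range(1, steps + 1):
        t = i / steps
        remaining = (1 - t) ** 3  # equals 1 - easeOutCubic(t)
        values.append(round(screen_width_px * remaining))
    return values
```

For example, `animation_step_values(1000, 4)` returns `[422, 125, 16, 0]`, and a 2000-pixel screen gives `[844, 250, 31, 0]`, so the same number of steps covers a wider screen with larger moves.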

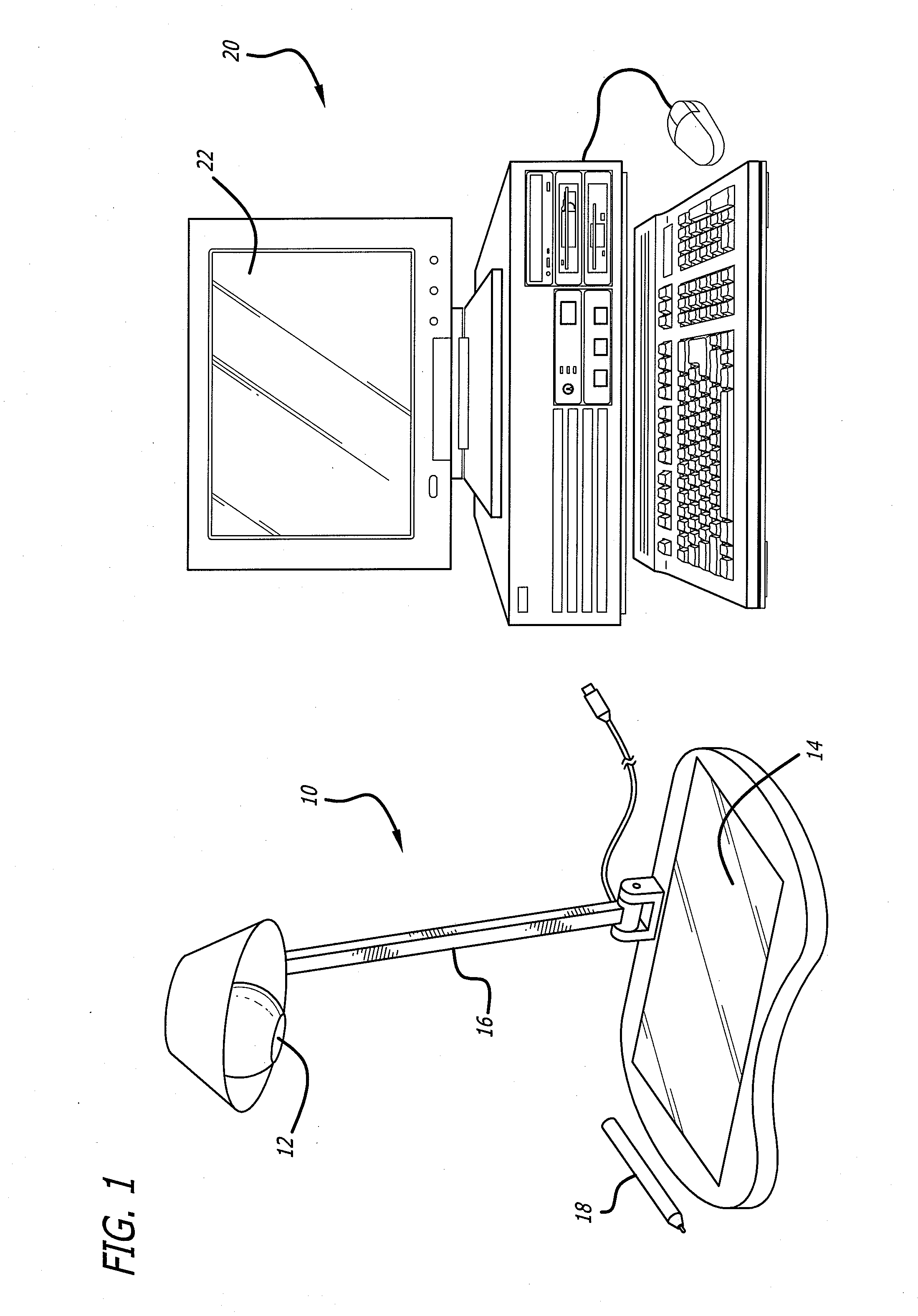

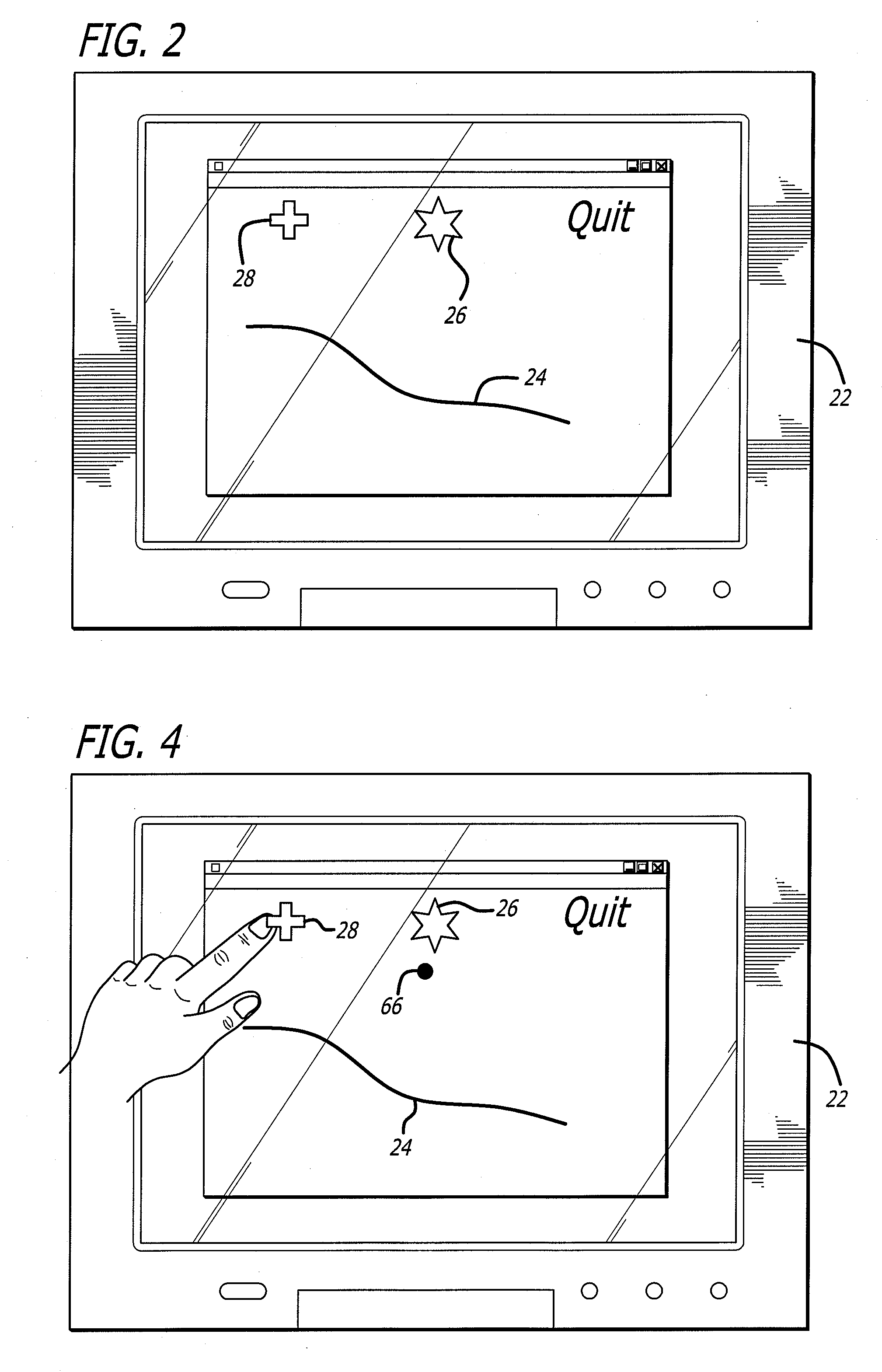

Electronic image identification and animation system

Inactive | US20090174656A1 | Tags: 2D-image generation, Character and pattern recognition, Graphics, Electronic systems

An electronic system includes a working surface and a camera that can capture a plurality of images on the working surface. The system also includes a control station that is coupled to the camera and has a monitor that can display the captured images. The monitor displays a moving graphical image having a characteristic that is a function of a user input on the working surface. By way of example, the graphical image may be a character created from markings formed on the working surface by the user. The system can then “animate” the character by causing graphical character movement.

Owner:RUDELL DESIGN

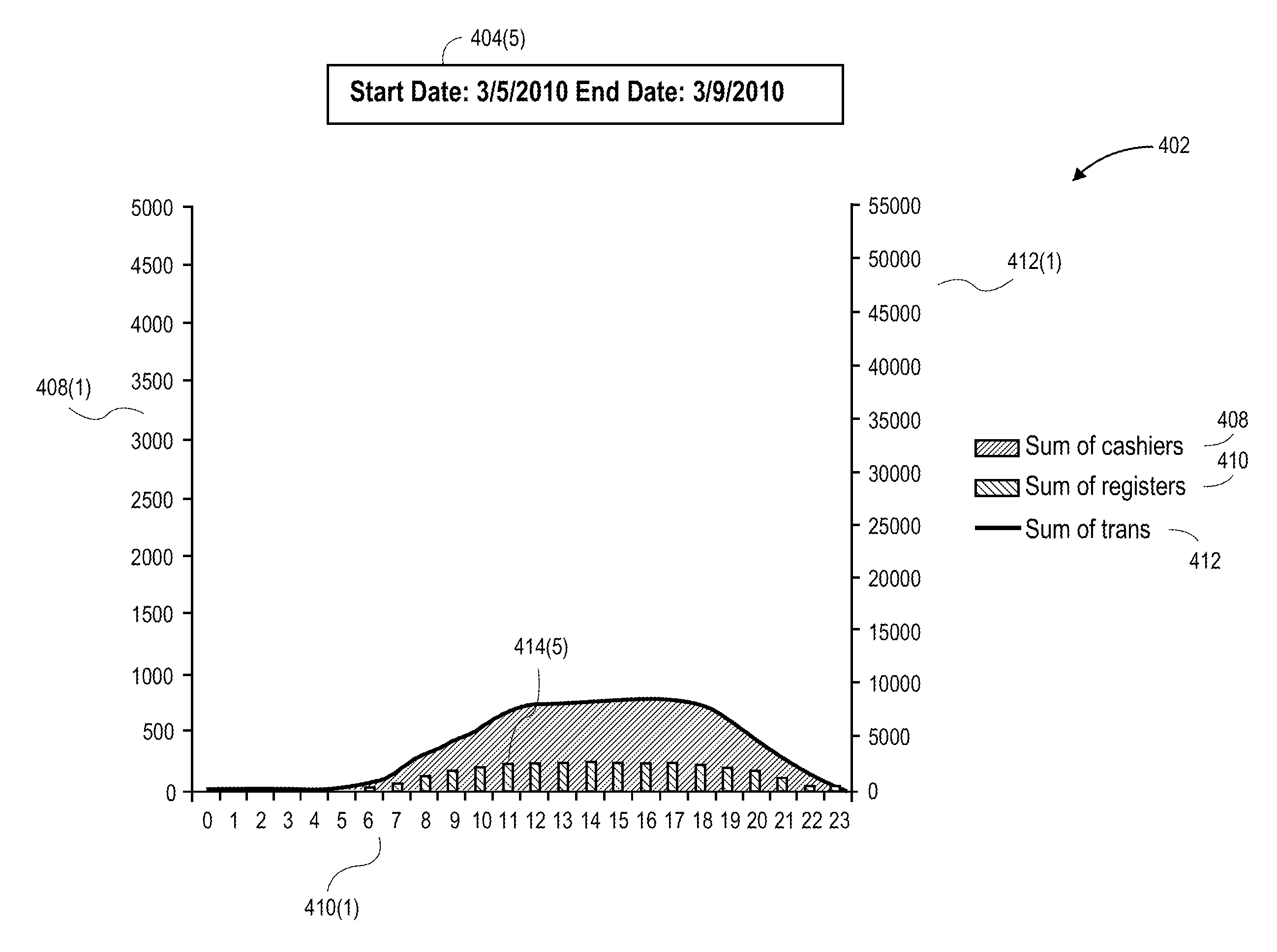

Data Analytics Animation System and Method

Inactive | US20150113460A1 | Tags: Execution for user interfaces, Special data processing applications, Data set, Animation

Owner:WALMART APOLLO LLC

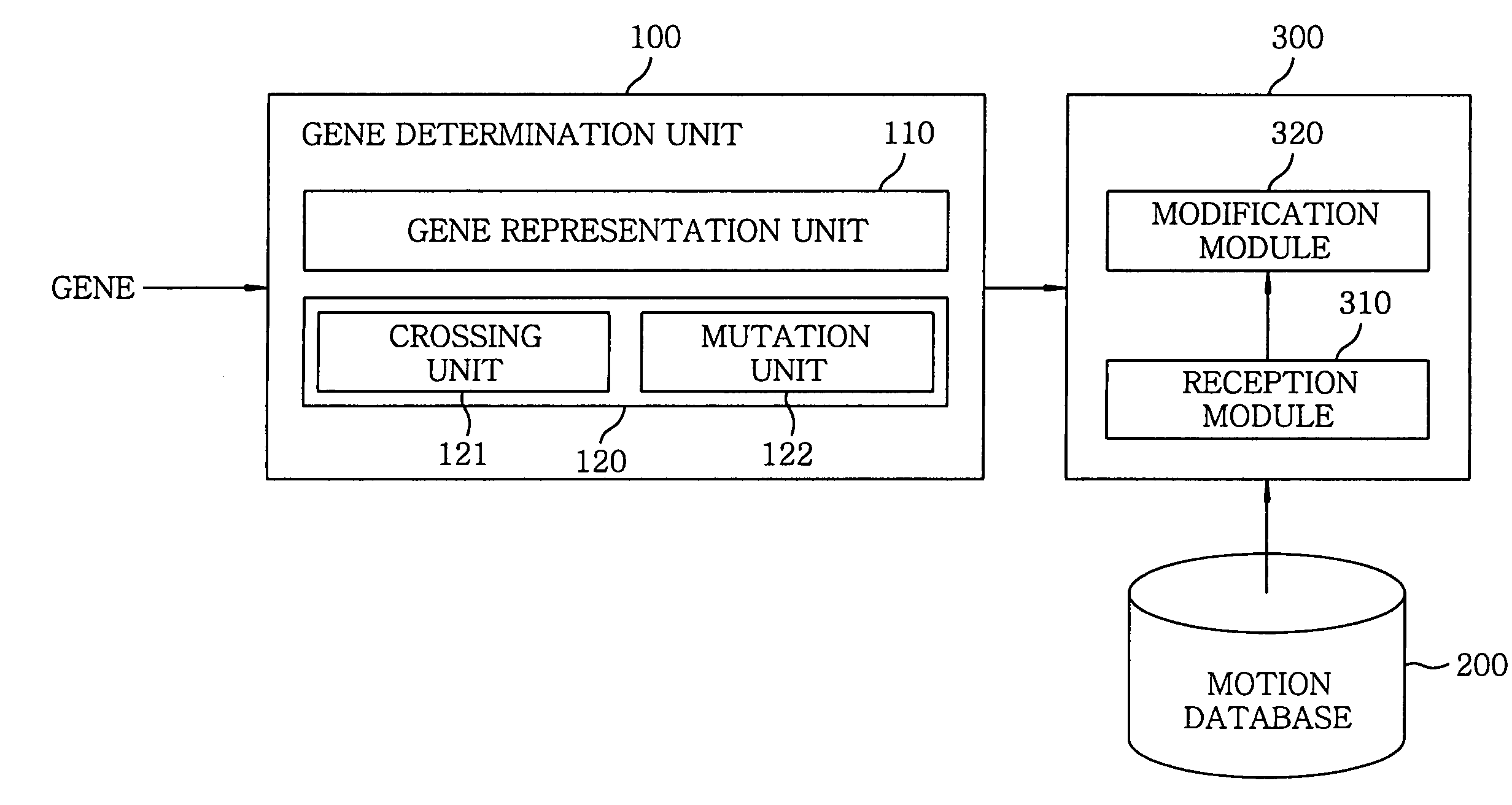

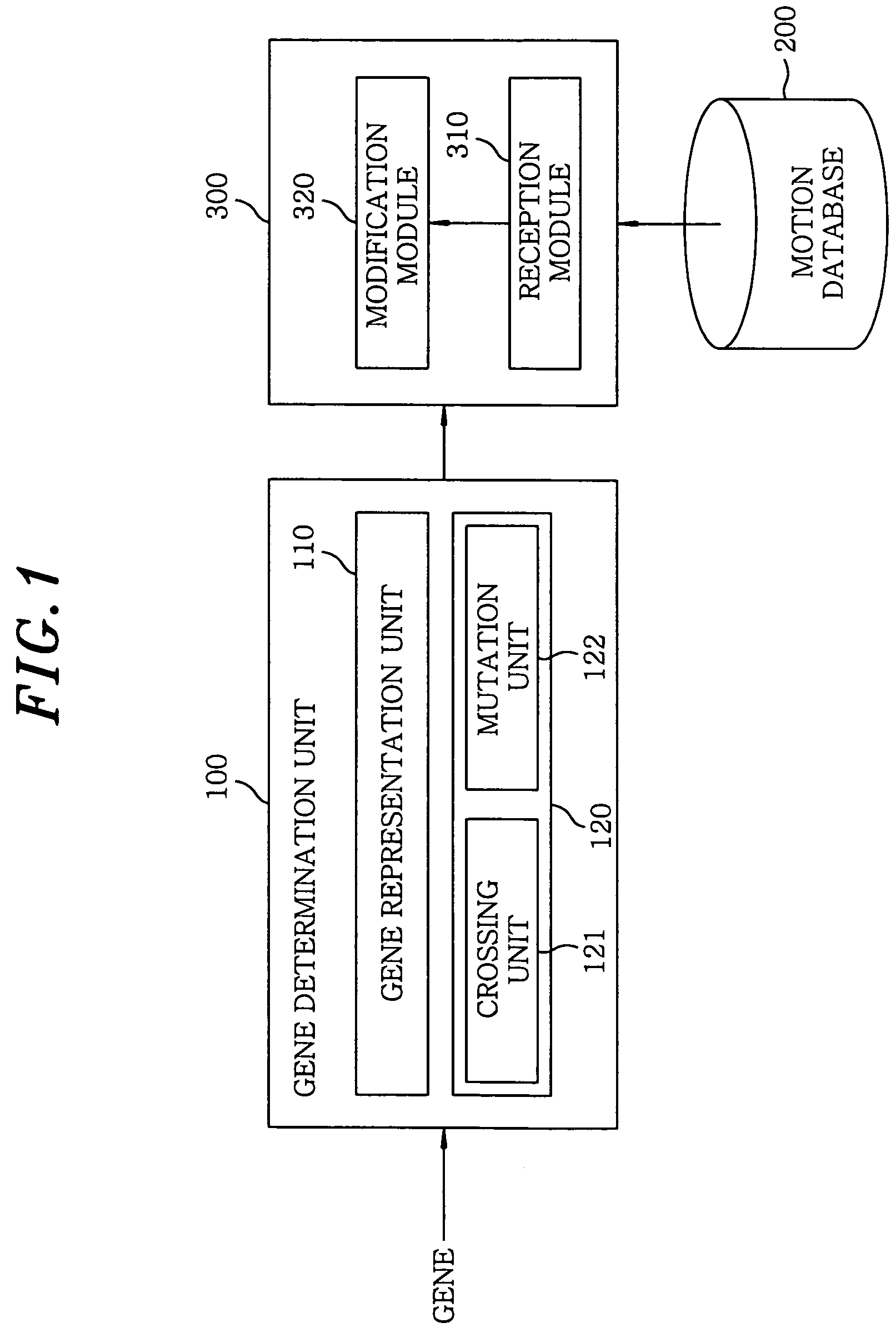

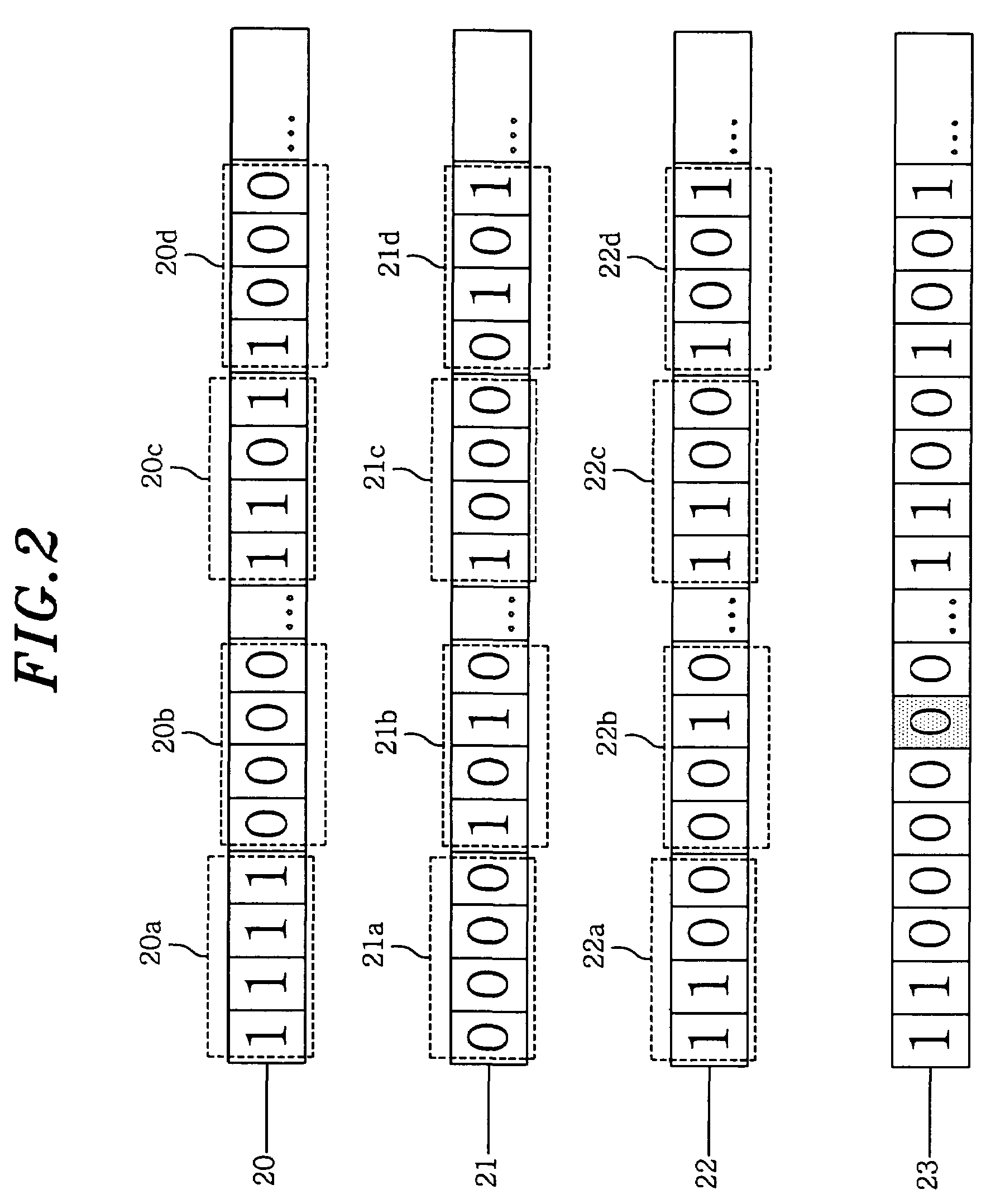

Three-dimensional animation system and method using evolutionary computation

A three-dimensional animation system using evolutionary computation includes a gene determination unit and a motion generation unit. The gene determination unit calculates modified gene information by receiving at least one gene and modifying the genes evolutionarily. The motion generation unit receives motion data and modifies the motion data based on the modified gene information. A three-dimensional animation method is also disclosed.

Owner:ELECTRONICS & TELECOMM RES INST
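
The abstract does not specify the gene encoding or the evolutionary operators, so the sketch below makes its own assumptions: one gene per joint channel acting as an amplitude scale, uniform crossover plus Gaussian mutation as the evolutionary modification, and motion modification by scaling each channel's deviation from its mean. It illustrates the split between a gene determination step and a motion generation step described in the abstract, not ETRI's actual algorithm; `evolve_genes` and `apply_genes` are hypothetical names.

```python
import random
from typing import List, Sequence

Genes = List[float]
Motion = List[List[float]]  # frames x joint channels


def evolve_genes(parent_a: Sequence[float], parent_b: Sequence[float],
                 rng: random.Random, sigma: float = 0.1,
                 lo: float = 0.5, hi: float = 1.5) -> Genes:
    """Gene determination (assumed): uniform crossover, Gaussian mutation, clamp."""
    child = []
    for ga, gb in zip(parent_a, parent_b):
        g = ga if rng.random() < 0.5 else gb  # inherit from either parent
        g += rng.gauss(0.0, sigma)            # mutate
        child.append(min(max(g, lo), hi))     # keep within a plausible range
    return child


def apply_genes(motion: Motion, genes: Sequence[float]) -> Motion:
    """Motion generation (assumed): scale each channel's deviation from its mean.

    Assumes one gene per channel, i.e. len(genes) == len(frame) for every frame.
    """
    n = len(motion)
    means = [sum(frame[j] for frame in motion) / n for j in range(len(genes))]
    return [[means[j] + genes[j] * (frame[j] - means[j]) for j in range(len(genes))]
            for frame in motion]
```

A gene above 1 exaggerates a joint's motion and a gene below 1 damps it, so repeated evolution yields stylistic variants of the same base motion.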

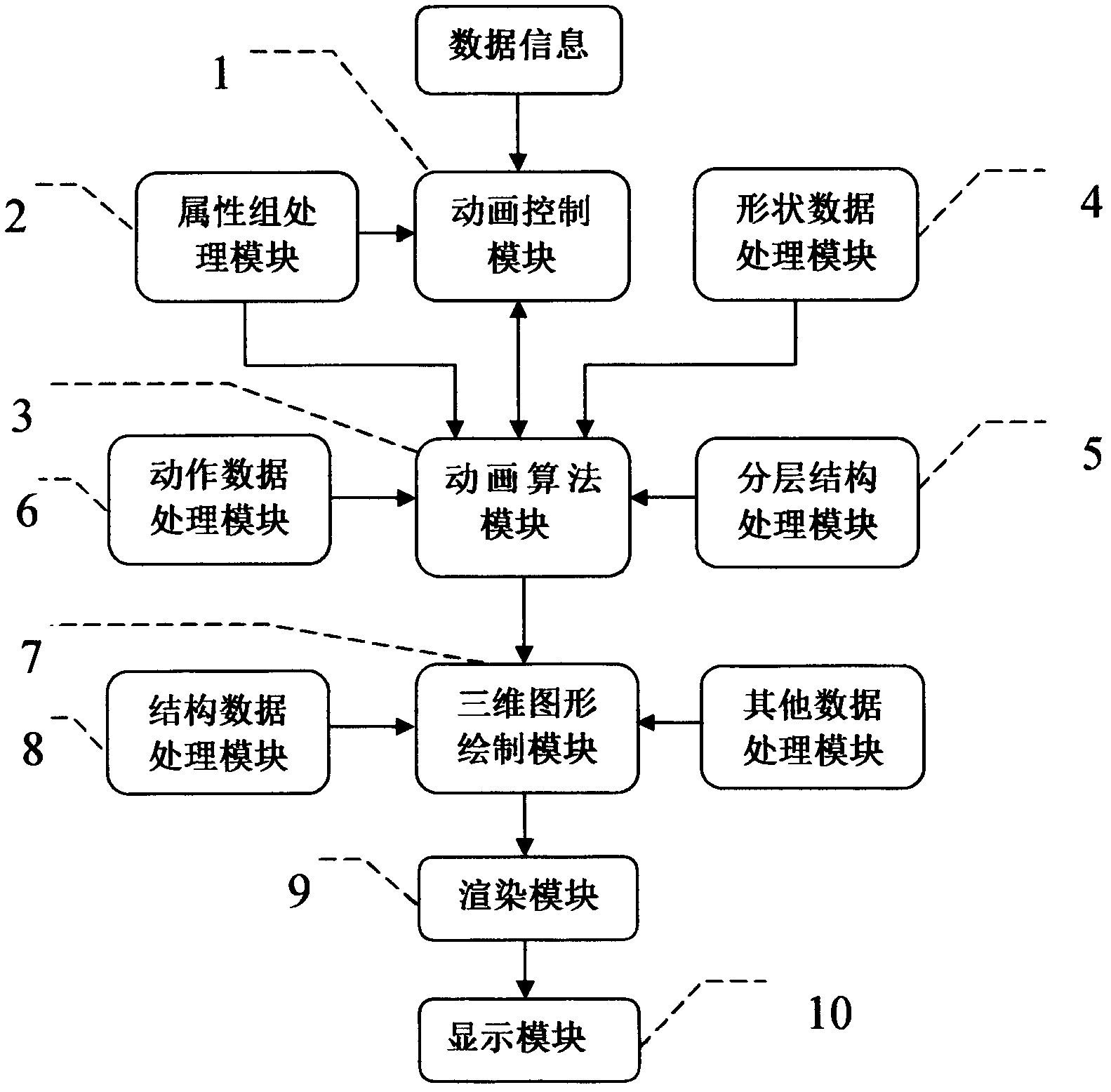

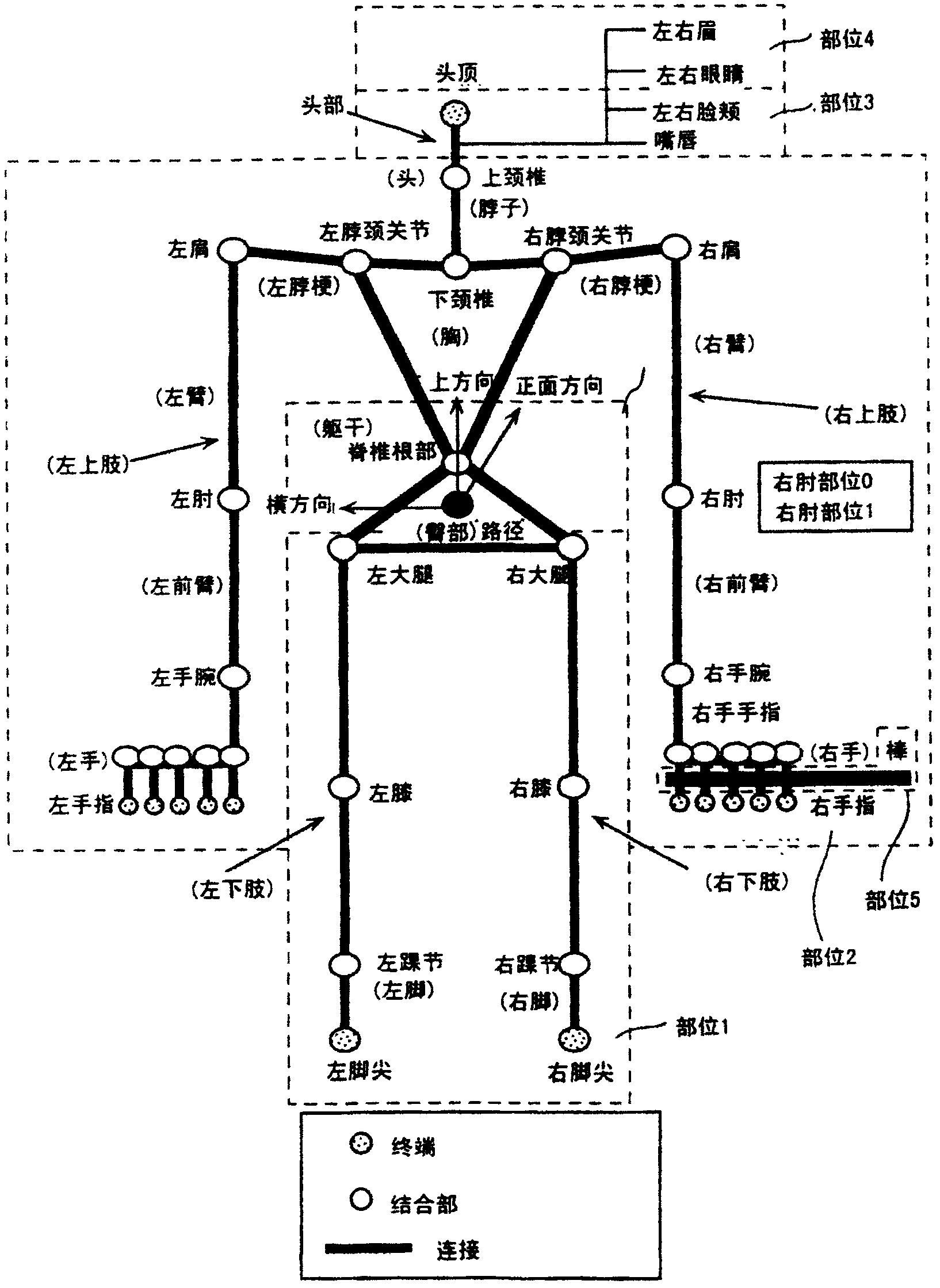

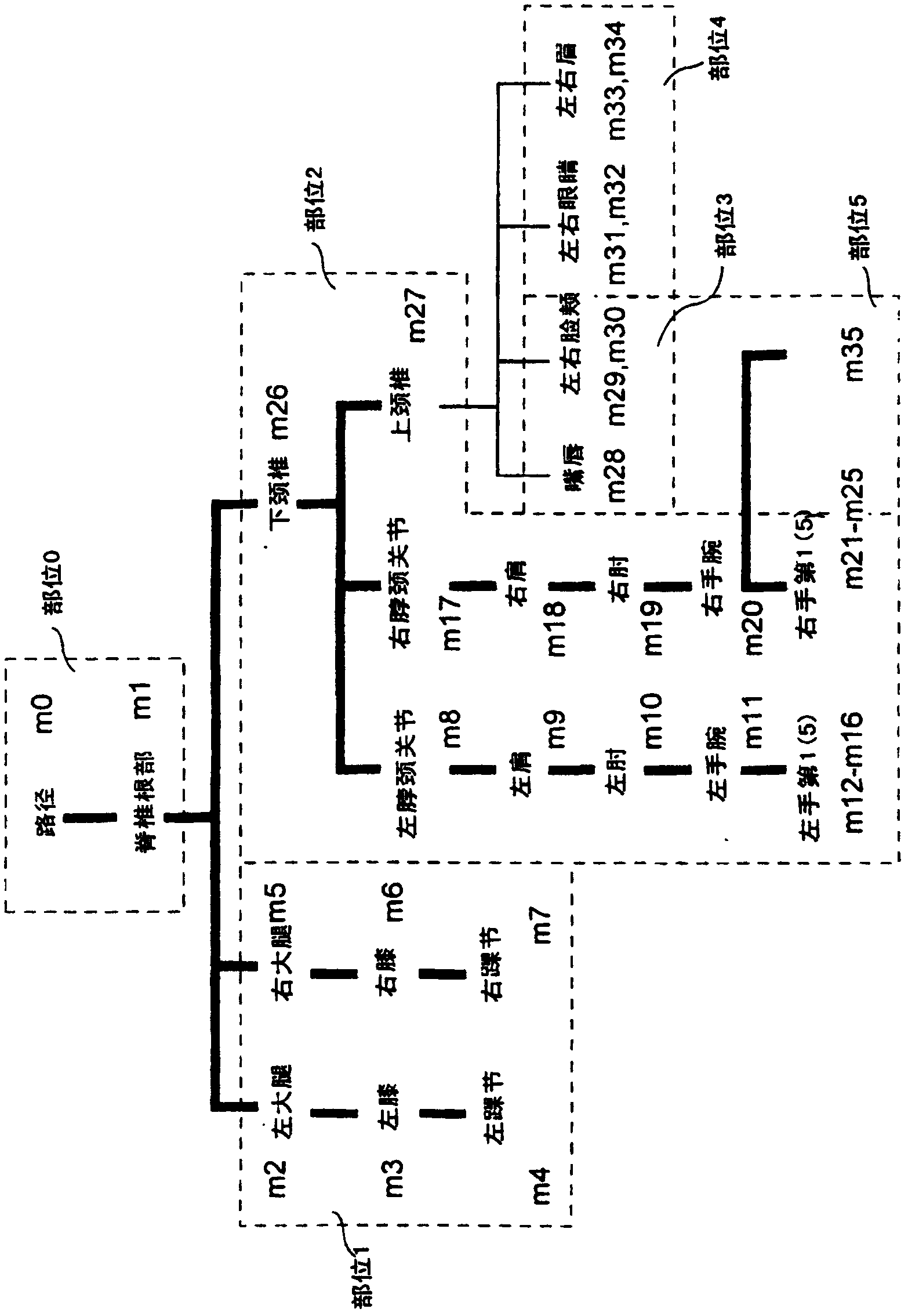

Three-dimensional animation system of computer and animation method

Inactive | CN102999934A | Tags: Increase diversity, Promote generation, Animation, Three dimensional graphics

The invention discloses a three-dimensional animation system for a computer. The system works with little data, is efficient and diverse, and reduces data redundancy. It is characterized in that an animation control module 1 specifies shape data, hierarchical data, attribute groups, and state information; an animation algorithm module 3 acquires the shape data from a shape data processing module 5, the hierarchical data from a hierarchical processing module 4, the attributes from an attribute group processing module 2, and the action data recorded in the state information from an action data processing module 6. The action data acquired for each attribute-group number are transformed into shape data according to the hierarchical data; a three-dimensional graph drawing module 7 then performs three-dimensional drawing on the transformed shape data to generate images, which are rendered by a rendering module 9 and displayed by a display module 10.

Owner:SHANGHAI WEITA DIGITAL TECH

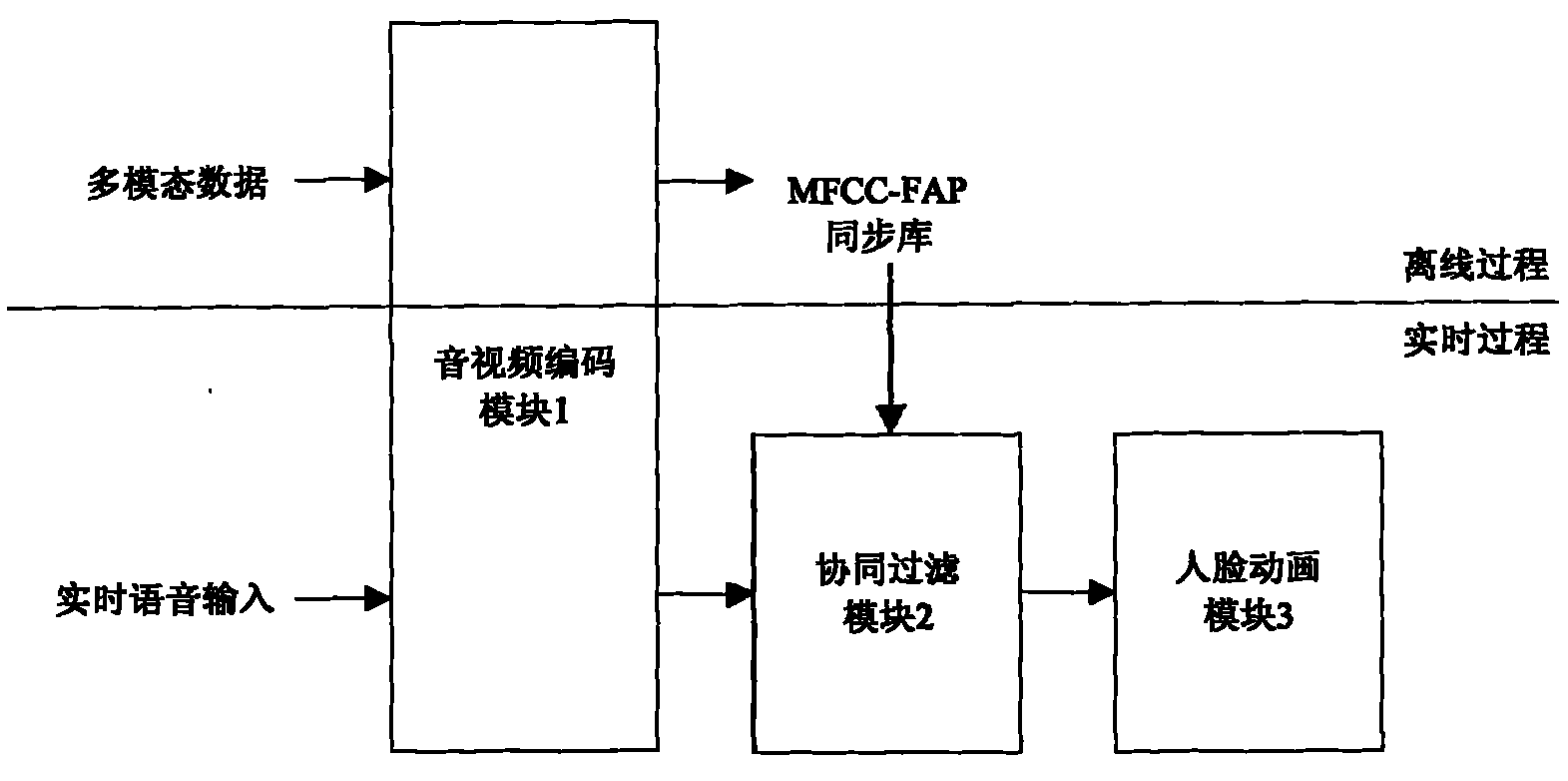

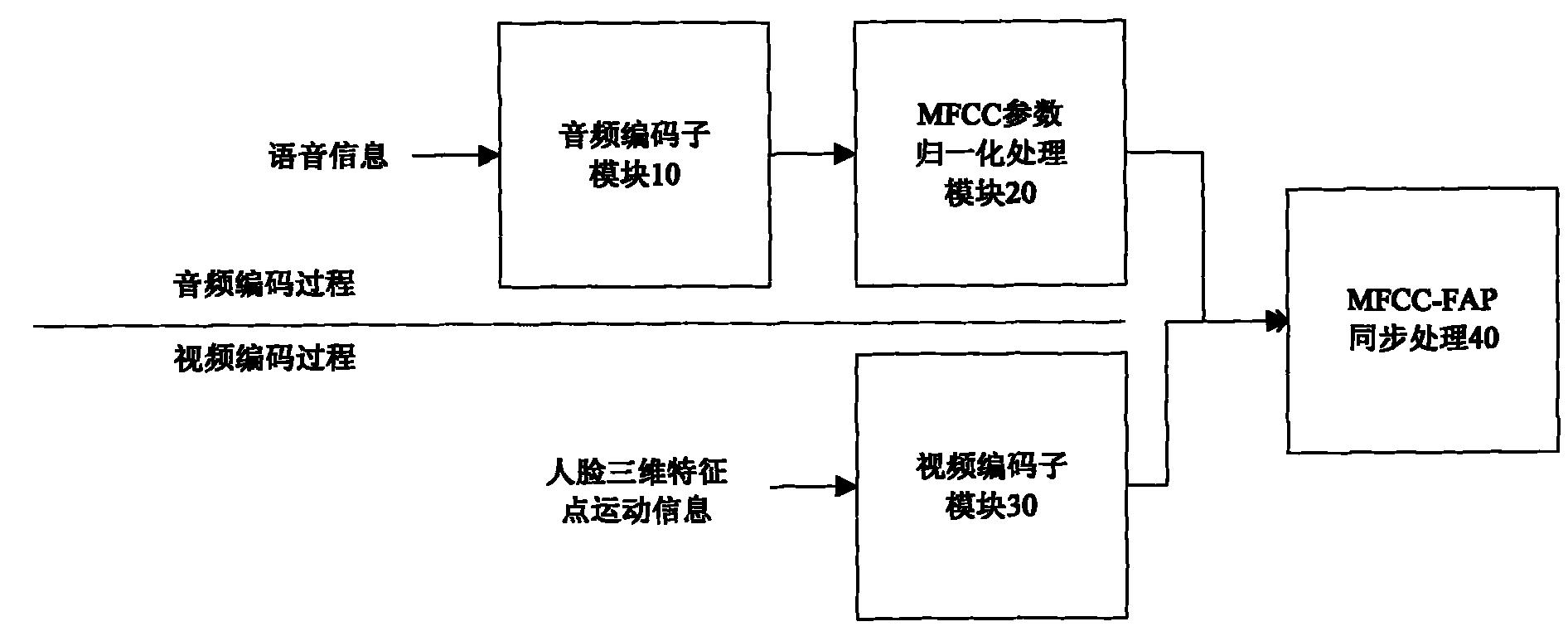

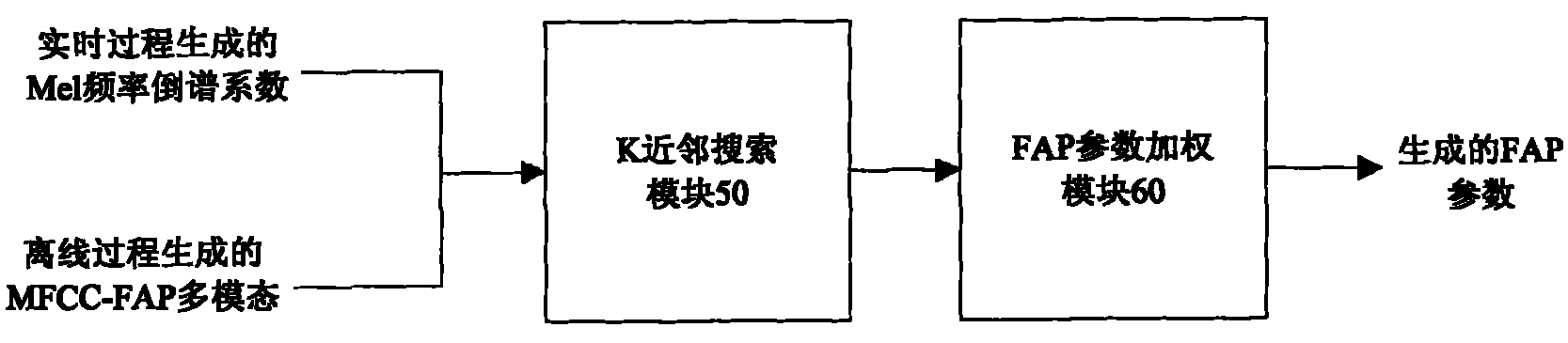

Collaborative filtering-based real-time voice-driven human face and lip synchronous animation system

Inactive | CN101930619A | Tags: Reduce complexity, Reduce decrease, Television systems, Animation, Mel-frequency cepstrum, Animation system

The invention discloses a collaborative filtering-based, real-time, voice-driven facial and lip-synchronous animation system. As voice is input in real time, a human head model produces lip animation synchronized with that voice. The system comprises an audio/video coding module, a collaborative filtering module, and an animation module. The audio/video coding module applies Mel-frequency cepstrum parameter coding to the acquired voice and Moving Picture Experts Group (MPEG-4) facial animation parameter coding to the acquired three-dimensional facial feature-point motion, producing a multimodal synchronized library of Mel-frequency cepstrum parameters and facial animation parameters. The collaborative filtering module combines the Mel-frequency cepstrum parameter coding of newly input voice with this library and, through collaborative filtering, solves for facial animation parameters synchronized with the voice. The animation module then animates the face model by driving it with those facial animation parameters. The system offers greater realism, real-time performance, and a wider range of application environments.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI
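
The collaborative filtering step maps a new voice frame to facial animation parameters (FAPs) by consulting the synchronized MFCC/FAP library. The sketch below substitutes a simple nearest-neighbour scheme (an inverse-distance-weighted average of the FAPs belonging to the k library frames whose MFCCs are closest to the query), which is one common form of memory-based collaborative filtering; the patent's exact weighting is not given in the abstract, and MFCC extraction and MPEG-4 FAP coding are assumed to have been done already. `predict_fap` is a hypothetical name.

```python
import math
from typing import List, Sequence, Tuple

# One library entry: (MFCC vector for an audio frame, synchronised FAP vector)
Entry = Tuple[Sequence[float], Sequence[float]]


def predict_fap(mfcc: Sequence[float], library: List[Entry], k: int = 3,
                eps: float = 1e-9) -> List[float]:
    """Estimate FAPs for one audio frame from its k most similar library frames."""
    if not library or k < 1:
        raise ValueError("need a non-empty library and k >= 1")
    # Rank library frames by Euclidean distance in MFCC space.
    ranked = sorted(library, key=lambda entry: math.dist(mfcc, entry[0]))[:k]
    # Closer frames get larger weights; eps avoids division by zero on exact matches.
    weights = [1.0 / (math.dist(mfcc, m) + eps) for m, _ in ranked]
    total = sum(weights)
    n_params = len(ranked[0][1])
    return [sum(w * faps[d] for w, (_, faps) in zip(weights, ranked)) / total
            for d in range(n_params)]
```

Running this per audio frame yields a FAP sequence that can drive the head model directly; a real-time system would typically also smooth the output over time.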

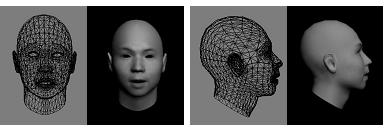

Method for realizing three-dimensional facial animation based on layered modeling and multi-body driving

Inactive | CN102157010A | Tags: Fine forehead wrinkle effect, Flexible driving of three-dimensional rotary motion, Animation, ModelSim

The invention relates to a method for realizing three-dimensional facial animation based on layered modeling and multi-body driving. In the method, a layered facial model is constructed and each rigid or flexible body is driven independently according to its characteristics, so as to simulate more realistic and subtle expressions and to synthesize the driving effects of the eyes and mouth. In an embodiment of the invention, the three-dimensional facial animation can be obtained through simulation experiments using only a frontal photograph and a profile photograph of the model's face, taken with the face naturally relaxed, both eyes looking ahead, and the head upright (perpendicular to the ground). The method serves as a key link in synthesizing a virtual human and has significant practical value for human-machine interaction and for virtual-human voice animation systems.

Owner:SHANGHAI UNIV

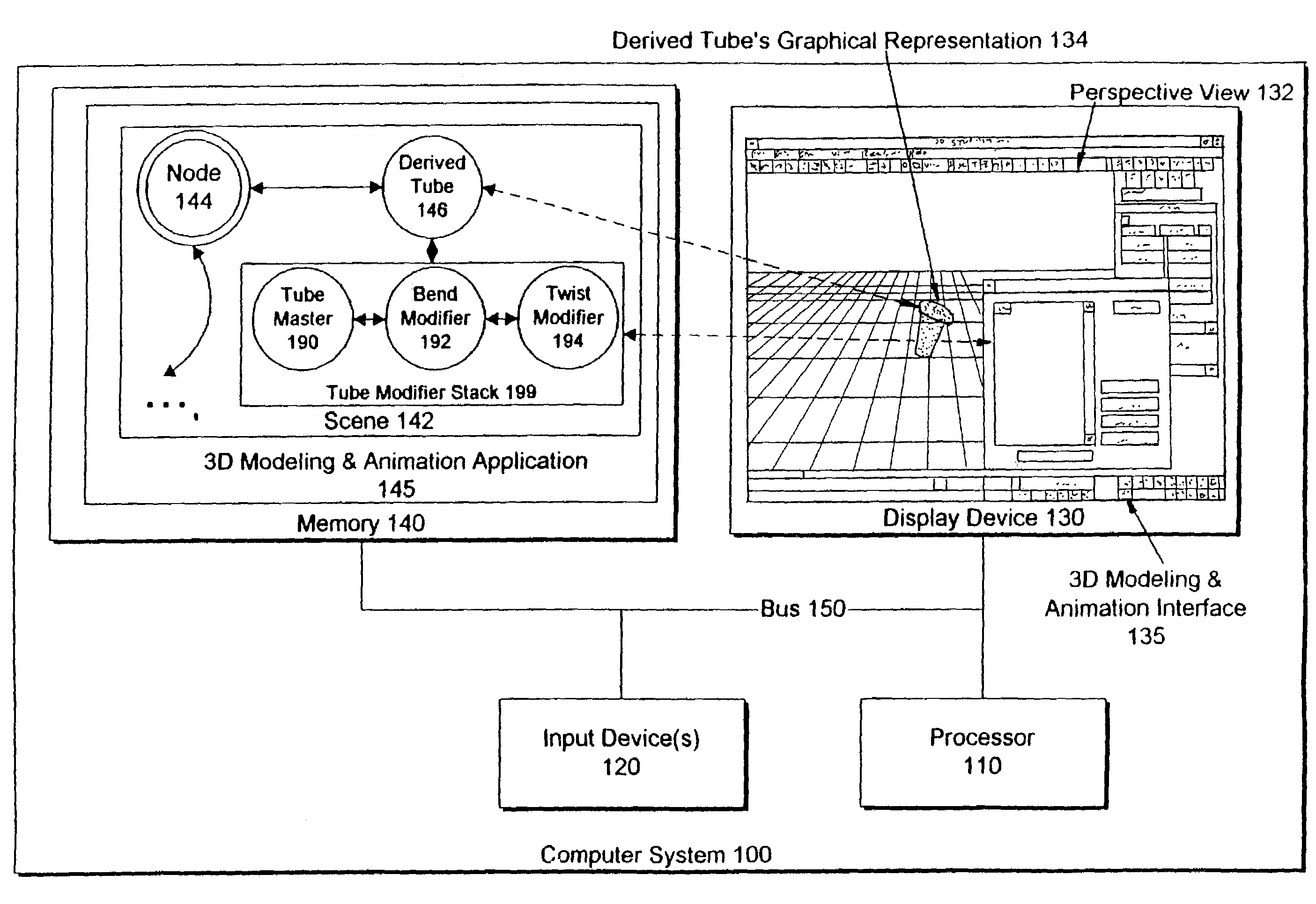

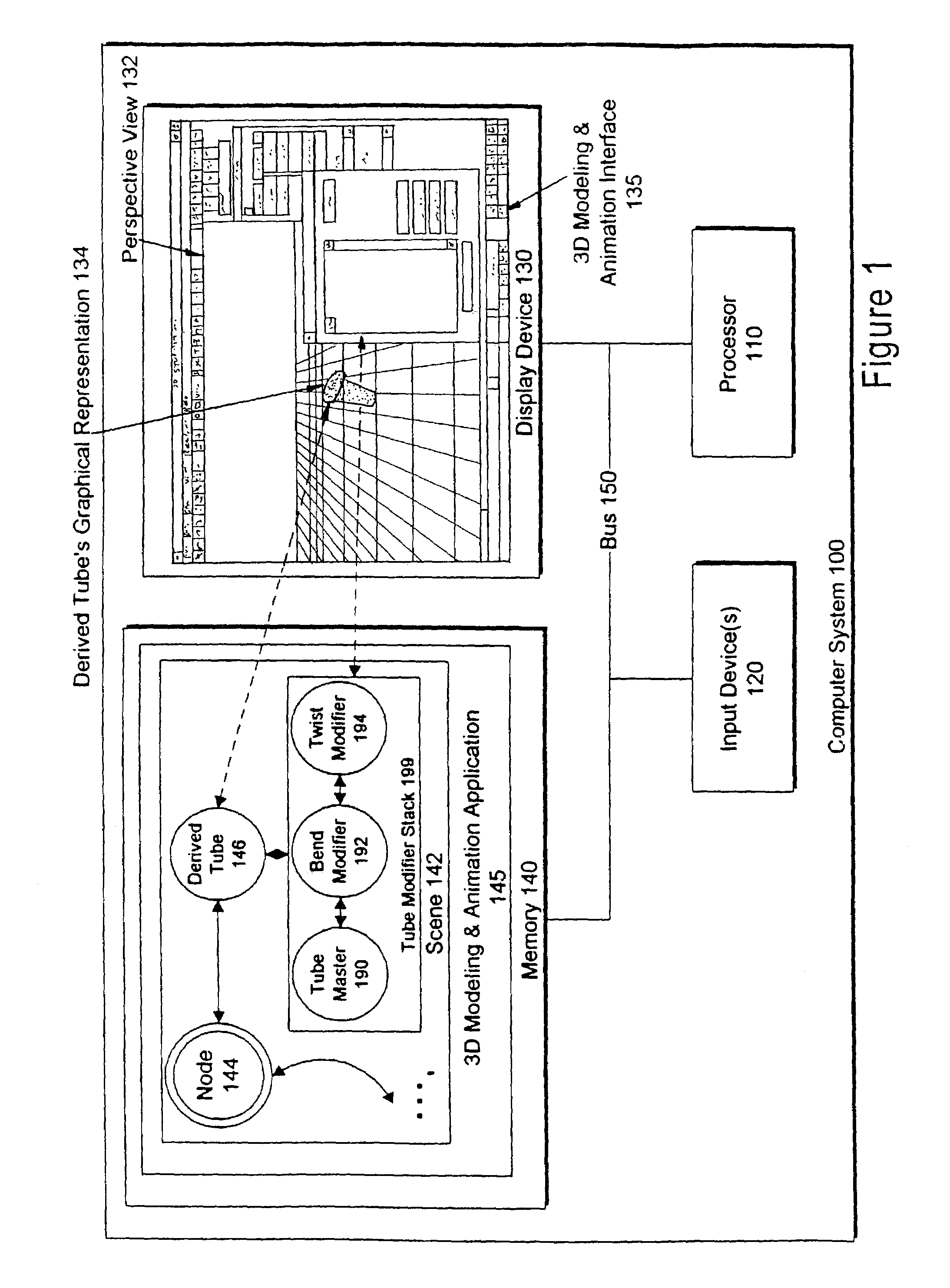

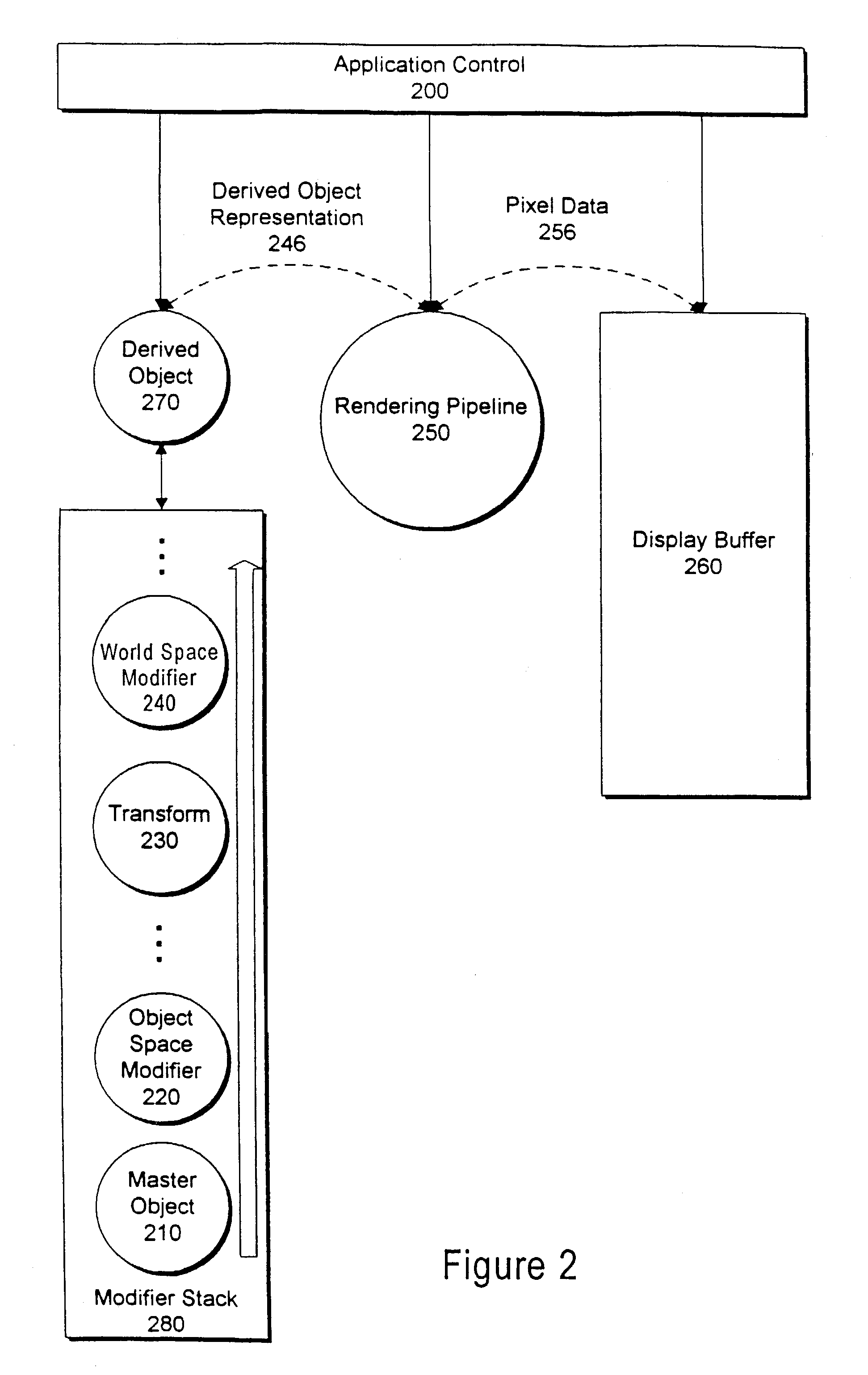

Three dimensional modeling and animation system using master objects and modifiers

Inactive | US7233326B1 | Tags: Easy to share, Good flexibility, Cathode-ray tube indicators, Animation, Algorithm

A three dimensional (3D) modeling system for generating a 3D representation of a modeled object on a display device of a computer system. The modeled object is represented by an initial definition of an object and a set of modifiers. Each modifier modifies some portion of the definition of an object that may result in a change in appearance of the object when rendered. The modifiers are ordered so that the first modifier modifies some portion of the initial definition of the object and produces a modified definition. The next modifier modifies the results of the previous modifier. The results of the last modifier are then used in rendering processes to generate the 3D representation. Each modifier is associated with a three dimensional representation so that the user can more easily visualize the effect of the modifier.

Owner:AUTODESK INC
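
The ordered modifier stack is essentially function composition over an immutable master definition: each modifier takes the previous result and returns a new definition, and the last result is what gets rendered. A minimal sketch follows, assuming an object defined only by its vertex positions; `ObjectDefinition`, `scale`, `translate`, and `evaluate_stack` are hypothetical names, not Autodesk APIs.

```python
from dataclasses import dataclass, replace
from functools import reduce
from typing import Callable, Sequence, Tuple

Point = Tuple[float, float, float]


@dataclass(frozen=True)
class ObjectDefinition:
    """Initial (master) definition of a modeled object: just its vertices here."""
    vertices: Tuple[Point, ...]


Modifier = Callable[[ObjectDefinition], ObjectDefinition]


def scale(factor: float) -> Modifier:
    def apply(obj: ObjectDefinition) -> ObjectDefinition:
        return replace(obj, vertices=tuple(
            (x * factor, y * factor, z * factor) for x, y, z in obj.vertices))
    return apply


def translate(dx: float, dy: float, dz: float) -> Modifier:
    def apply(obj: ObjectDefinition) -> ObjectDefinition:
        return replace(obj, vertices=tuple(
            (x + dx, y + dy, z + dz) for x, y, z in obj.vertices))
    return apply


def evaluate_stack(master: ObjectDefinition,
                   modifiers: Sequence[Modifier]) -> ObjectDefinition:
    """Apply modifiers in order; each one sees the previous modifier's output."""
    return reduce(lambda obj, mod: mod(obj), modifiers, master)
```

Reordering the stack changes the result (scaling then translating differs from translating then scaling), and the master definition is never mutated, which is what lets a modifier be edited or removed and the stack re-evaluated.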

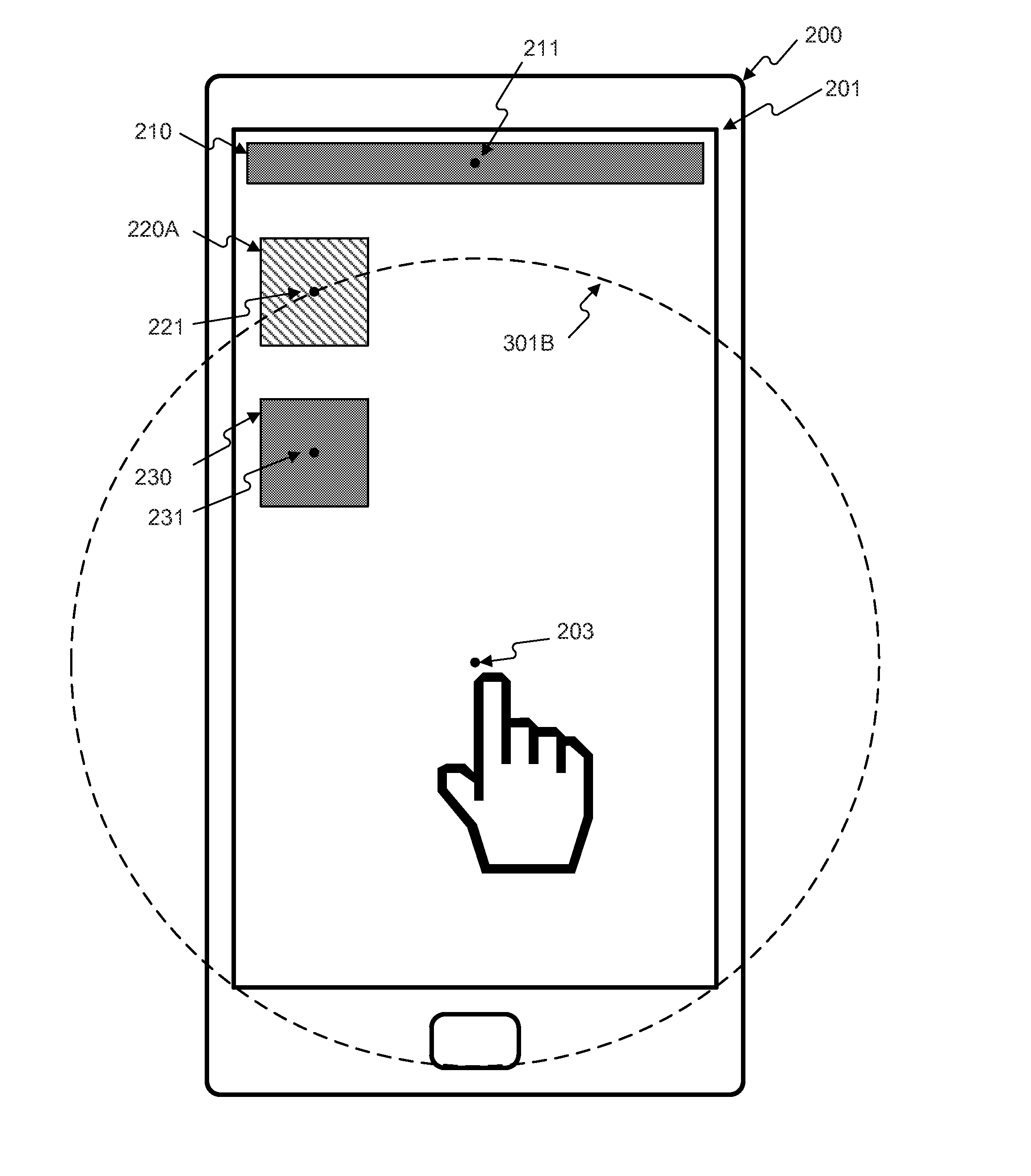

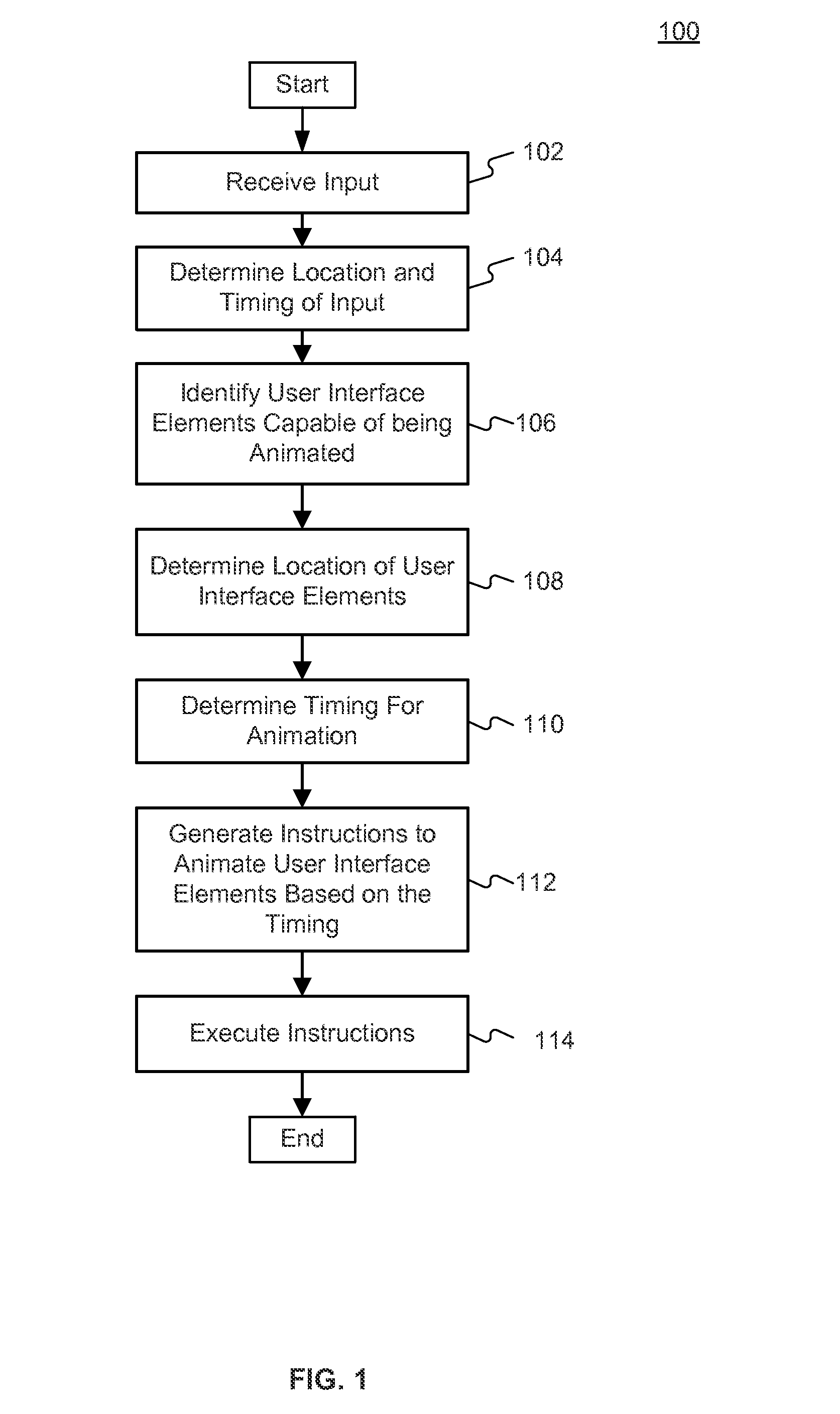

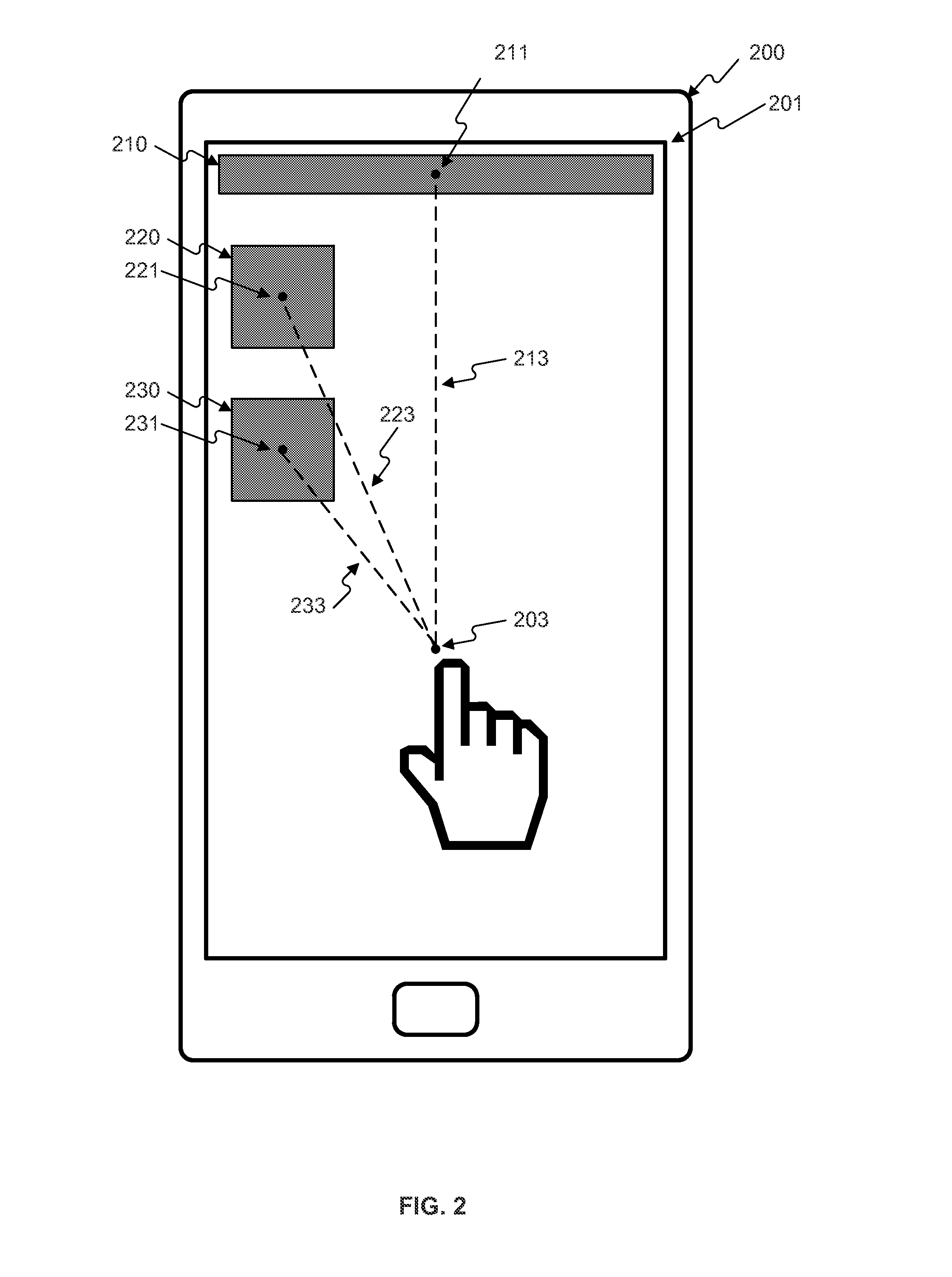

Computerized systems and methods for cascading user interface element animations

Inactive | US20150370447A1 | Tags: Animation, Input/output processes for data processing, Computerized system

Systems, methods, and computer-readable media are provided for generating a cascaded animation in a user interface. In accordance with one implementation, a method is provided that includes operations performed by at least one processor, including determining coordinates and an initial time for an input to the user interface. The method may also include identifying at least one user interface element capable of being animated. Additionally, the method may determine coordinates for the at least one user interface element corresponding to the spatial location of the at least one user interface element in the user interface. The method also may include calculating a target time based on the initial time and distance between the coordinates of the input and the coordinates of the at least one user interface element. The method may generate a command to animate the display of the at least one user interface element when the target time is reached.

Owner:GOOGLE LLC
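
The target-time calculation can be sketched as a delay proportional to each element's distance from the input point, which produces a ripple spreading outward from the touch. The linear `ms_per_px` rate and the function names below are assumptions; the abstract says only that the target time is calculated from the initial time and the distance.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def target_times(input_xy: Point, input_time_ms: float,
                 elements: Dict[str, Point], ms_per_px: float = 0.5) -> Dict[str, float]:
    """Target start time for each element: input time plus a distance-based delay."""
    return {name: input_time_ms + math.dist(input_xy, xy) * ms_per_px
            for name, xy in elements.items()}


def due_elements(targets: Dict[str, float], now_ms: float) -> List[str]:
    """Elements whose target time has been reached, earliest (nearest) first."""
    return sorted((n for n, t in targets.items() if t <= now_ms),
                  key=targets.__getitem__)
```

A render loop would call `due_elements` each frame and issue the animate command for any element that has not yet started.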

Auxiliary production system for 3Dmax animation

Inactive | CN101271593A | Tags: Reduce difficulty, Improve efficiency, Animation, 3D-image rendering, Management tool

The invention provides a 3Dmax aided animation system. The 3Dmax three-dimensional animation system itself does not provide a management tool, so an animator must not only create images but also manage assets. To address this problem, the invention provides a 3Dmax aided animation system comprising: an interface module connected to the 3Dmax system to transfer data between the 3Dmax system and the aided unit; a display module that provides a user interface and receives the user's operation commands; and an expression binding management module that tabulates the skeletons and muscles at different positions of an animated character, produces the character's expressions according to the skeletons or muscles selected by the user, and generates continuous motion by invoking the expressions of a task. The 3Dmax aided animation system provides a friendly interface that integrates the functions needed for animation, which reduces the difficulty of animation work for the animator and improves efficiency.

Owner:石家庄市深度动画科技有限公司

© 2025 PatSnap. All rights reserved.