Method for recognizing emotion points of Chinese pronunciation based on sound-track modulating signals MFCC (Mel Frequency Cepstrum Coefficient)

A Chinese speech emotion recognition technology, applied in the information field, that addresses the low average recognition rate of emotional points.

Embodiment Construction

[0048] The technical solution of the present invention is further explained below with reference to the drawings.

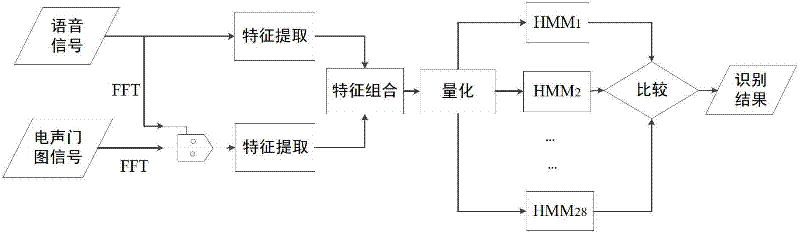

[0049] Figure 1 is a flow chart of using electroglottograph (EGG) signals and speech signals for feature extraction, model training, and emotional point recognition. The process is divided into two main parts: the acquisition of Chinese speech emotional points and the recognition of Chinese speech emotional points.

[0050] 1. Acquisition of Chinese speech emotional points. The steps are as follows:

[0051] Step 1. Define the recording specifications for the emotional speech database. The specific rules are as follows:

[0052] (1) Speakers: 10 people (5 male and 5 female), aged 20 to 25, with a bachelor's degree.

[0053] (2) Speech content: 28 interjections are selected as emotional points, and each emotional point is recorded 3 times during the experiment.

[0054] (3) Emotion classification: angry, happy, sad, surprised, fearful, ...
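The recording rules above can be sketched as a small configuration object, which makes the size of the recording plan easy to check. This is only an illustration: the class name `RecordingSpec`, its field names, and the speaker IDs such as `M01` are hypothetical and do not come from the patent. It assumes every speaker records every emotional point 3 times. Emotion categories are deliberately left out of the count, because the category list is truncated in the source and it is not stated whether each emotional point is recorded once per emotion.

```python
from dataclasses import dataclass
from typing import Iterator, List, Tuple


@dataclass(frozen=True)
class RecordingSpec:
    """Recording rules (1) and (2); all names here are illustrative."""
    male_speakers: int = 5
    female_speakers: int = 5
    min_age: int = 20
    max_age: int = 25
    emotion_points: int = 28  # interjections used as emotional points
    repetitions: int = 3      # takes per emotional point

    @property
    def speakers(self) -> int:
        return self.male_speakers + self.female_speakers

    @property
    def takes_per_speaker(self) -> int:
        # Assumption: every speaker records every emotional point.
        return self.emotion_points * self.repetitions

    def speaker_ids(self) -> List[str]:
        # Hypothetical ID scheme: M01..M05 followed by F01..F05.
        males = [f"M{i:02d}" for i in range(1, self.male_speakers + 1)]
        females = [f"F{i:02d}" for i in range(1, self.female_speakers + 1)]
        return males + females

    def recording_plan(self) -> Iterator[Tuple[str, int, int]]:
        # Yields (speaker_id, emotional_point_index, take_index), 1-based.
        for speaker in self.speaker_ids():
            for point in range(1, self.emotion_points + 1):
                for take in range(1, self.repetitions + 1):
                    yield speaker, point, take


spec = RecordingSpec()
plan = list(spec.recording_plan())
print(spec.speakers, spec.takes_per_speaker, len(plan))  # prints: 10 84 840
```

Under these assumptions the plan contains 840 takes in total. If each emotional point is in fact recorded separately for each emotion category, the total would scale with the number of categories, which the truncated list does not reveal.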
