955 results about how to "Improve classification effect" (patented technology)

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

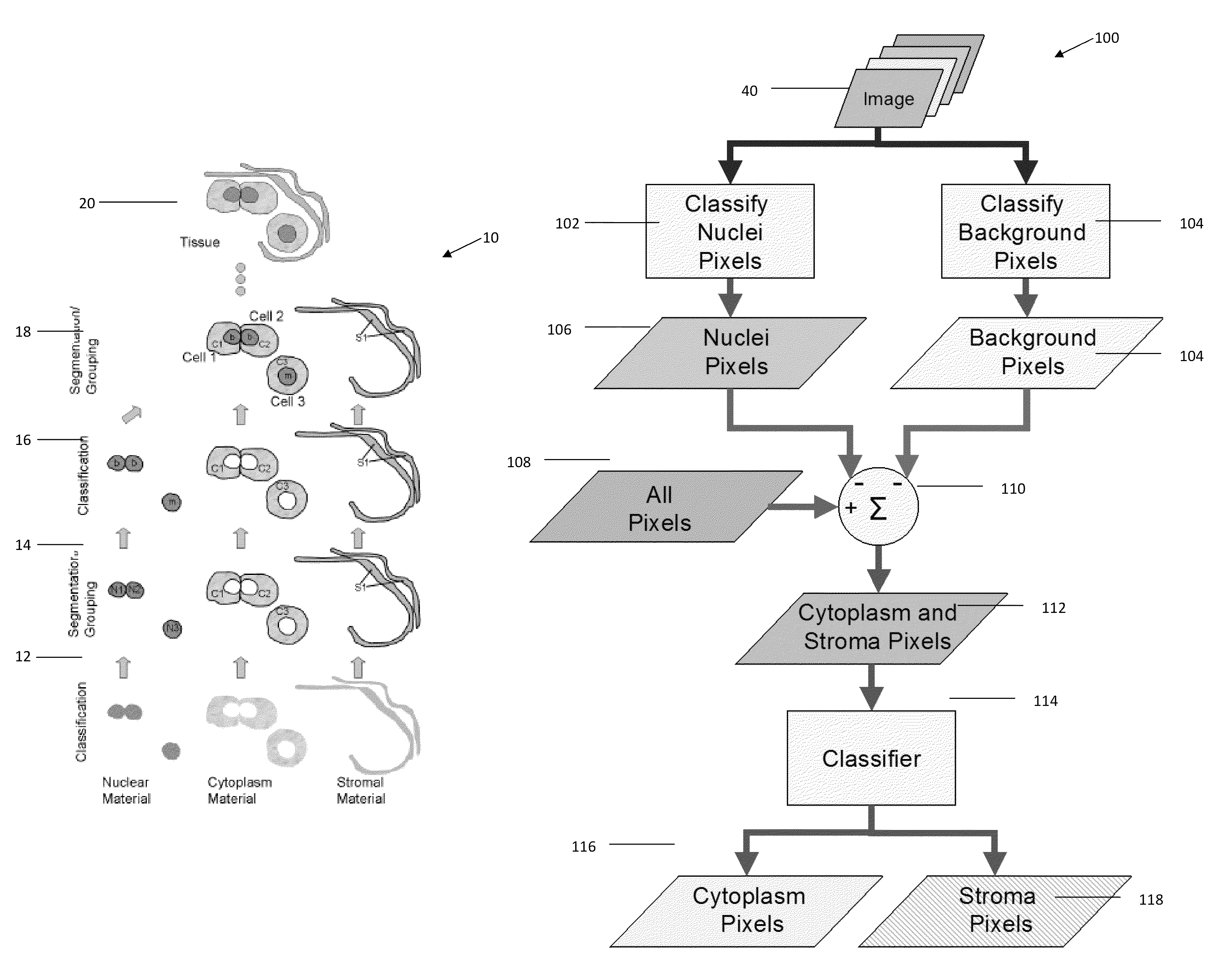

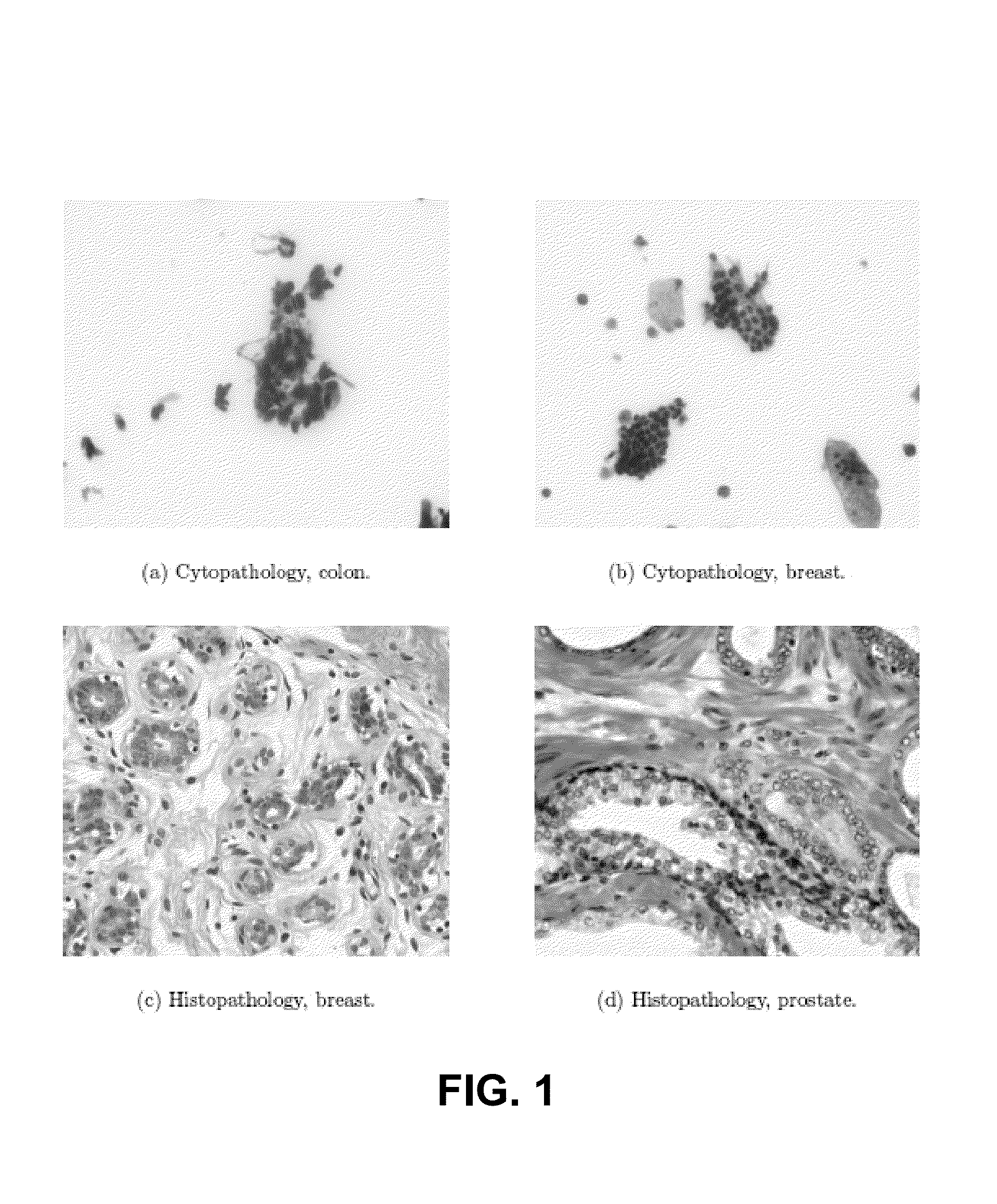

Object and spatial level quantitative image analysis

Inactive · US20100111396A1 · Tags: Improve classification effect; Improve classification performance; Image enhancement; Image analysis; Graphics; Graphical user interface

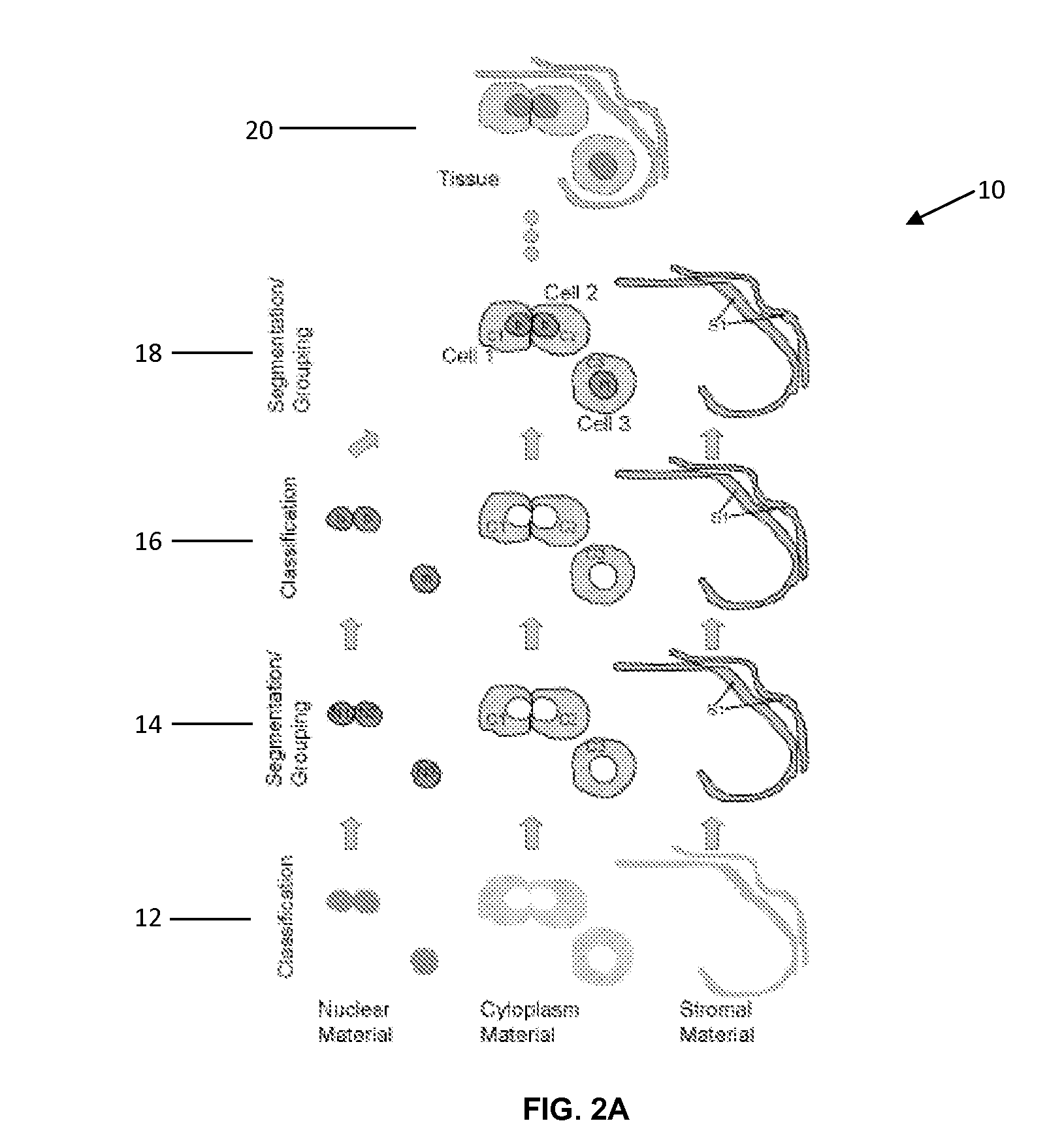

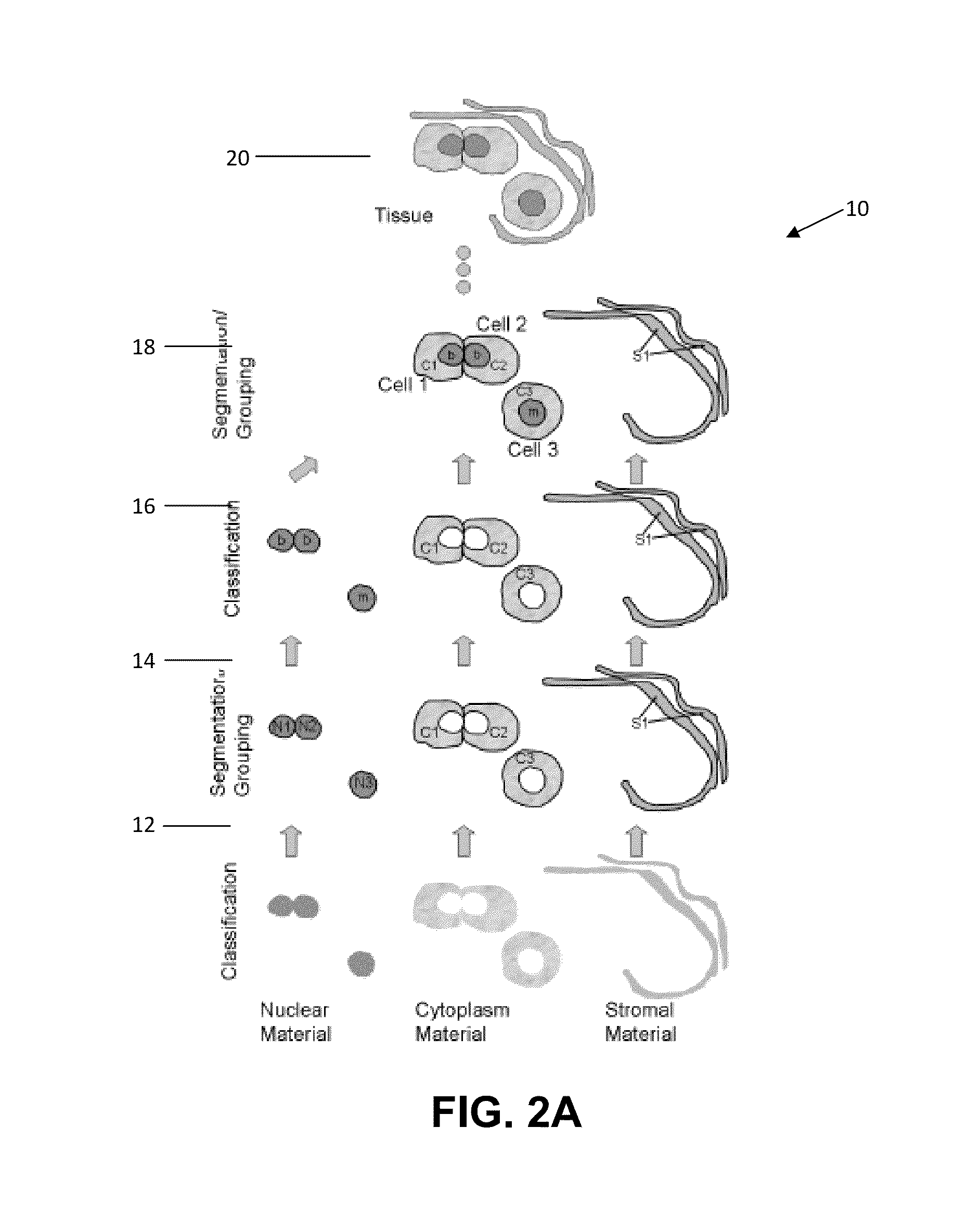

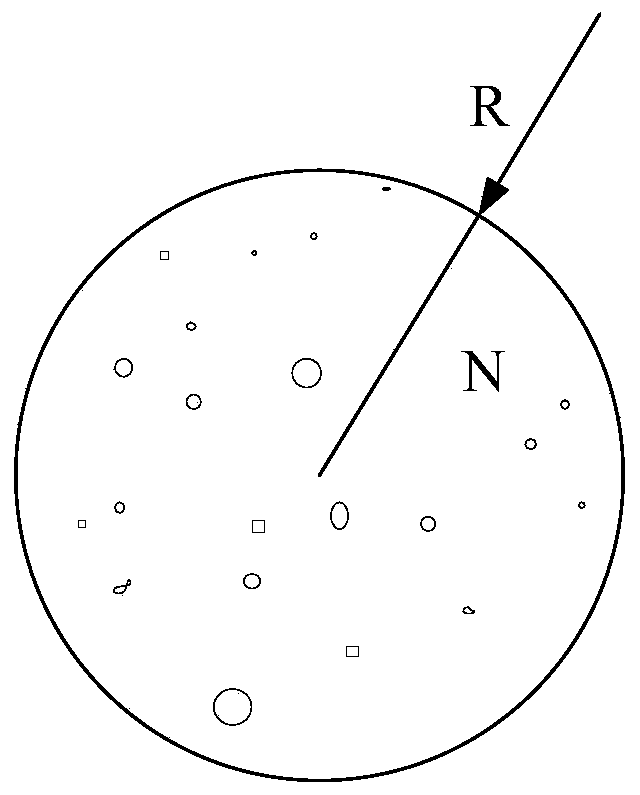

Quantitative object-level and spatial-arrangement-level analysis of tissue is described, using expert (pathologist) input to guide the classification process. A two-step method for imaging tissue is disclosed. First, one or more biological materials in the tissue (e.g. nuclei, cytoplasm, and stroma) are classified into one or more identified classes on a pixel-by-pixel basis. Second, the identified classes are segmented so that one or more sets of identified pixels are agglomerated into segmented regions. Typically, the biological materials comprise nuclear, cytoplasmic, and stromal material. After classification, the method also lets a user mark up the image to re-classify these materials. The markup is done through a graphical user interface that edits designated regions in the image.

Owner:TRIAD NAT SECURITY LLC
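
The second step, agglomerating classified pixels into segmented regions, can be illustrated with a minimal connected-component sketch. The sketch makes several assumptions that are not details from the patent:

- the pixel-by-pixel classifier has already produced a 2-D grid of class labels;
- regions are formed from 4-connected pixels that share a label;
- the function name `agglomerate` and the label strings are illustrative only.

```python
from collections import deque


def agglomerate(class_map):
    """Group 4-connected pixels with the same class label into regions.

    class_map: list of rows, each a list of class labels (e.g. "nuc", "cyt", "str").
    Returns a list of (label, [(row, col), ...]) tuples, one per region,
    in row-major order of each region's first pixel.
    """
    rows, cols = len(class_map), len(class_map[0])
    seen = [[False] * cols for _ in range(rows)]
    regions = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c]:
                continue
            label = class_map[r][c]
            pixels = []
            queue = deque([(r, c)])
            seen[r][c] = True
            # Breadth-first flood fill over same-label 4-neighbours.
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx] and class_map[ny][nx] == label):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            regions.append((label, pixels))
    return regions


# Hypothetical 3x3 pixel-level classification result.
demo = [
    ["nuc", "nuc", "str"],
    ["cyt", "nuc", "str"],
    ["cyt", "cyt", "nuc"],
]
for label, pixels in agglomerate(demo):
    print(label, len(pixels))
```

In the demo there are two `nuc` groups that do not touch, and they end up as separate regions. This grouping is what makes region-level analysis possible after the pixel-level step.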

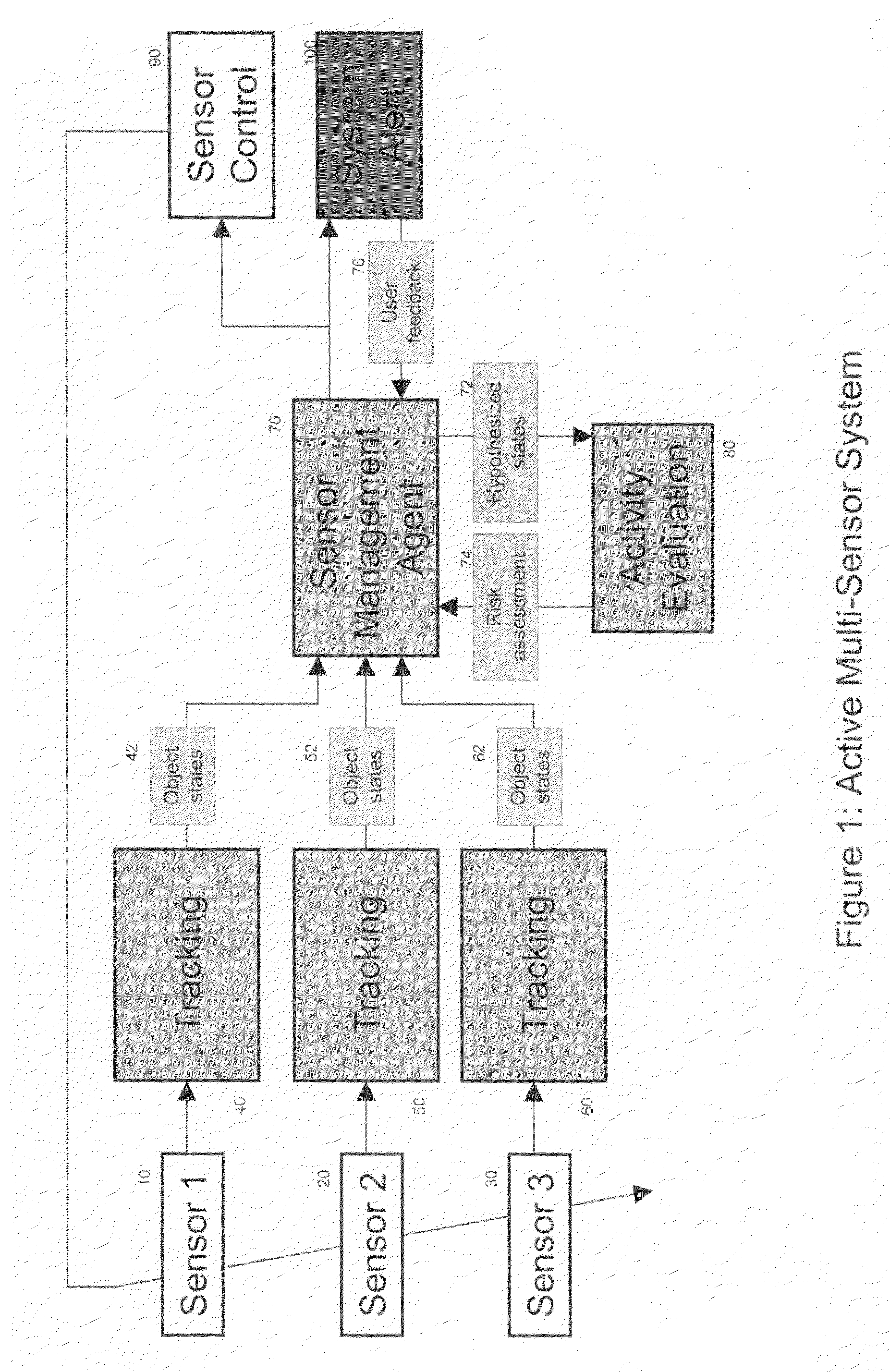

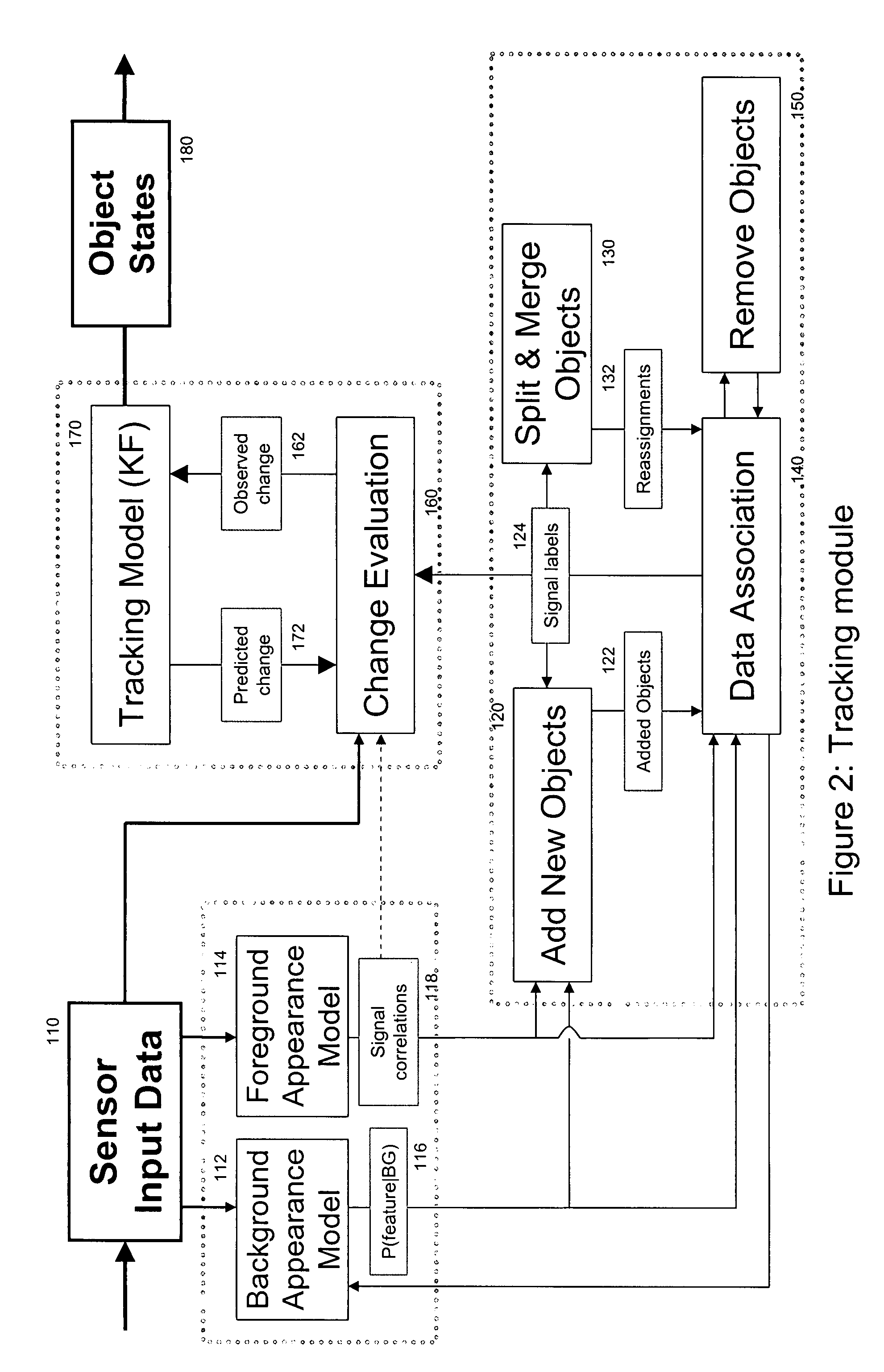

Sensor exploration and management through adaptive sensing framework

Inactive · US20080243439A1 · Tags: Improve system performance; Optimal performance; Image enhancement; Image analysis; Policy decision; Relative cost

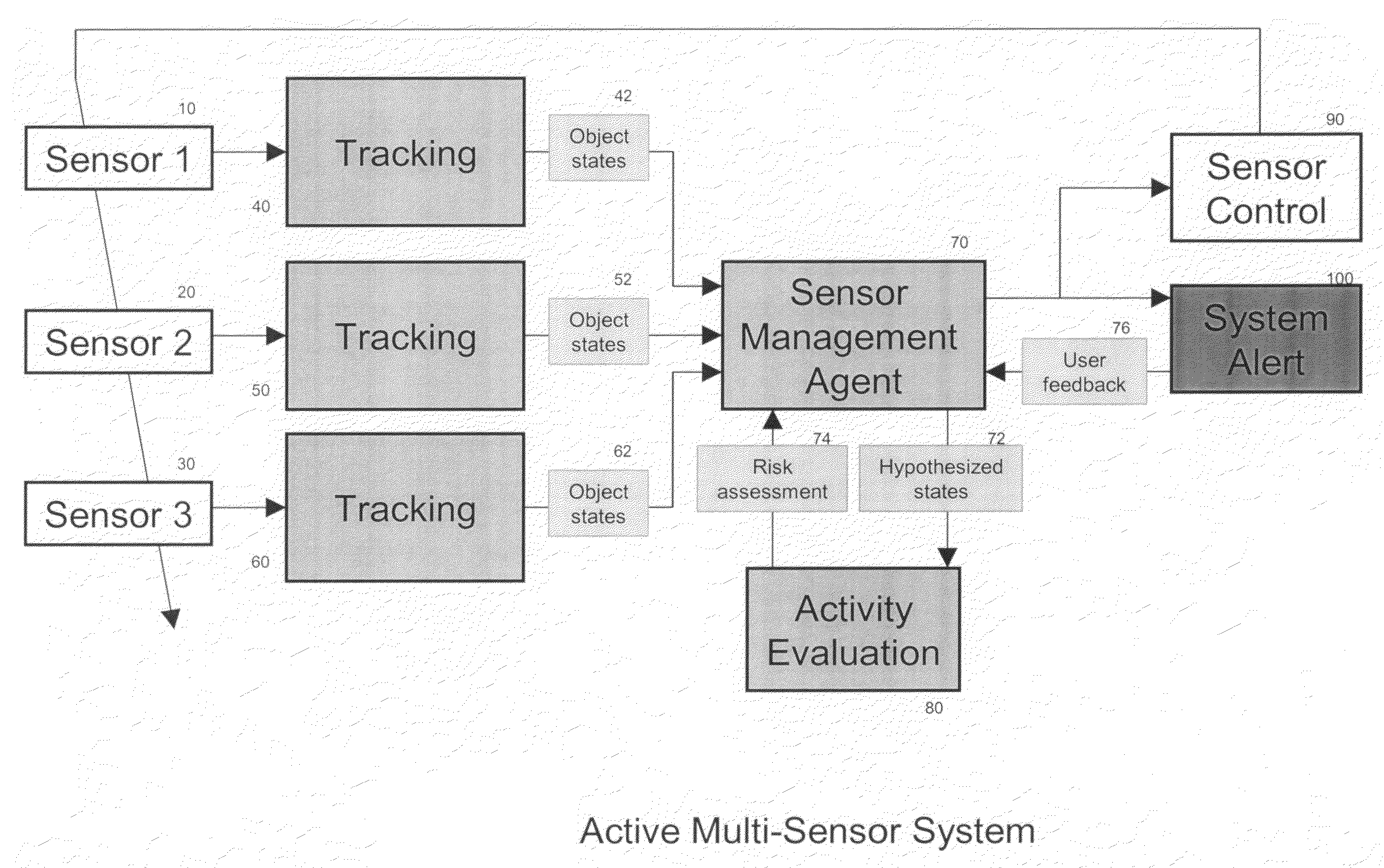

The identification and tracking of objects in captured sensor data relies on statistical modeling methods. These methods sift through large data sets and identify items of interest to users of the system. Statistical modeling methods such as Hidden Markov Models, combined with particle analysis and Bayesian statistical analysis, produce items of interest, identify them as objects, and present them to users for identification feedback. A training component is integrated that is based on the relative cost of sampling sensors for additional parameters. With it, the system can formulate and present policy decisions on which objects should be tracked. This improves continuous data collection and the tracking of identified objects within the sensor data set.

Owner:SIGNAL INNOVATIONS GRP
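
As one illustration of the statistical modeling named above, the HMM forward recursion computes the filtered probability of each hidden state given the observations so far. A hidden state could be, for example, whether an object of interest is present. This is a generic textbook sketch, not the patented system. The two-state model and all of its probabilities are made-up assumptions.

```python
def hmm_forward(prior, transition, emission, observations):
    """Return the filtered state distribution after each observation.

    prior[i]          : P(state_0 = i)
    transition[i][j]  : P(state_t = j | state_{t-1} = i)
    emission[i][o]    : P(observation = o | state = i)
    """
    n = len(prior)
    belief = list(prior)
    history = []
    for t, obs in enumerate(observations):
        if t > 0:
            # Predict: propagate the previous belief through the transition model.
            belief = [sum(belief[i] * transition[i][j] for i in range(n)) for j in range(n)]
        # Update: weight by the observation likelihood, then normalize to sum to 1.
        unnorm = [belief[j] * emission[j][obs] for j in range(n)]
        total = sum(unnorm)
        belief = [p / total for p in unnorm]
        history.append(belief)
    return history


# Hypothetical two-state model: 0 = background, 1 = object of interest.
PRIOR = [0.5, 0.5]
TRANSITION = [[0.9, 0.1], [0.1, 0.9]]
EMISSION = [[0.8, 0.2], [0.3, 0.7]]  # columns: weak return (0), strong return (1)
history = hmm_forward(PRIOR, TRANSITION, EMISSION, [1, 1, 1])
for step, belief in enumerate(history):
    print(step, round(belief[1], 3))
```

Repeated strong returns push the belief in the "object" state upward. A downstream policy could use this belief to decide whether sampling more sensor data is worth its cost.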

Combinational pixel-by-pixel and object-level classifying, segmenting, and agglomerating in performing quantitative image analysis that distinguishes between healthy non-cancerous and cancerous cell nuclei and delineates nuclear, cytoplasm, and stromal material objects from stained biological tissue materials

Inactive · US8488863B2 · Tags: Improve classification effect; Improve classification performance; Image enhancement; Image analysis; Staining; Cytoplasm

Owner:TRIAD NAT SECURITY LLC

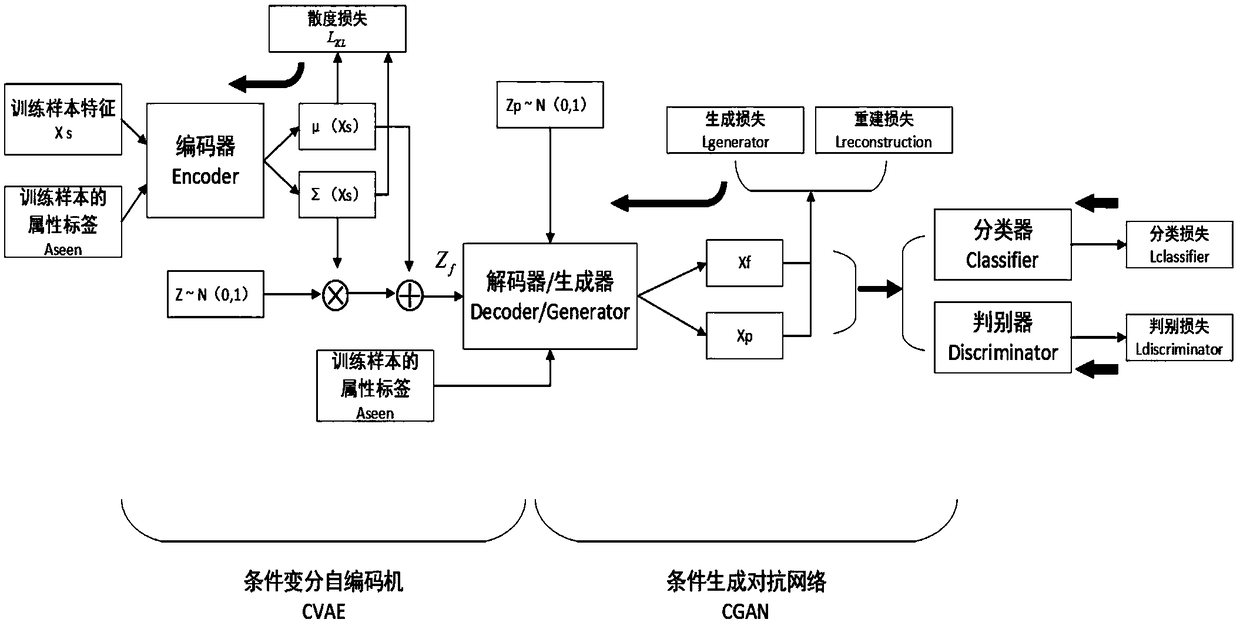

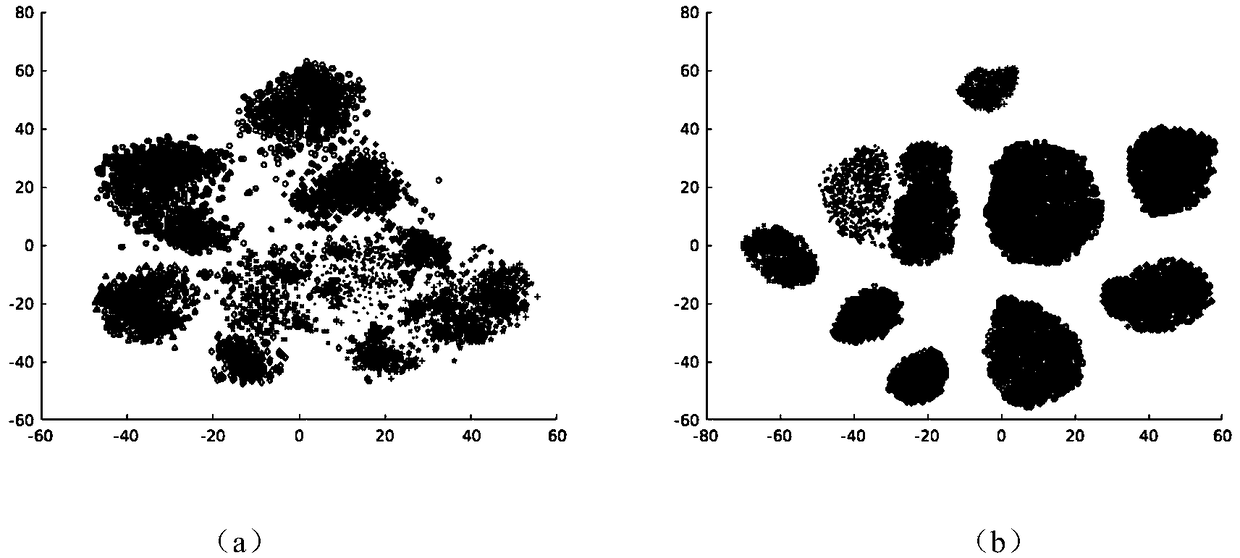

Zero sample image classification method based on combination of variational autoencoder and adversarial network

Active · CN108875818A · Tags: Implement classification; Make up for the problem of missing training samples of unknown categories; Character and pattern recognition; Physical realisation; Classification methods; Sample image

The invention discloses a zero-sample (zero-shot) image classification method that combines a variational autoencoder with an adversarial network.

- During model training, samples of known categories are input, and the category mapping of the training-set samples serves as a guiding condition.
- The network parameters are optimized by back-propagation through five loss functions: reconstruction loss, generation loss, discrimination loss, divergence loss, and classification loss.
- Pseudo-samples of the unknown categories are then generated under the guidance of those categories' mappings.
- A classifier trained on these pseudo-samples is tested on real samples of the unknown categories.

Because the category mapping guides the generation of high-quality samples that are useful for image classification, the lack of training samples for unknown categories in the zero-sample setting is resolved. Zero-sample learning is thereby converted into conventional supervised learning. Classification accuracy improves in conventional zero-sample learning and improves markedly in generalized zero-sample learning. The method also offers an efficient way of generating samples to raise zero-sample classification accuracy.

Owner:XI AN JIAOTONG UNIV

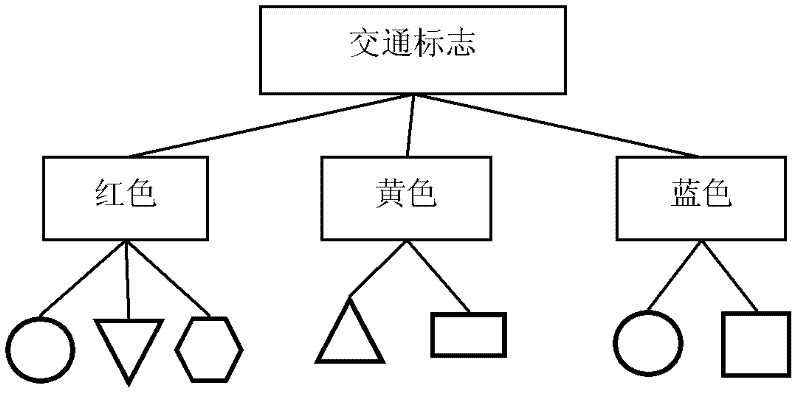

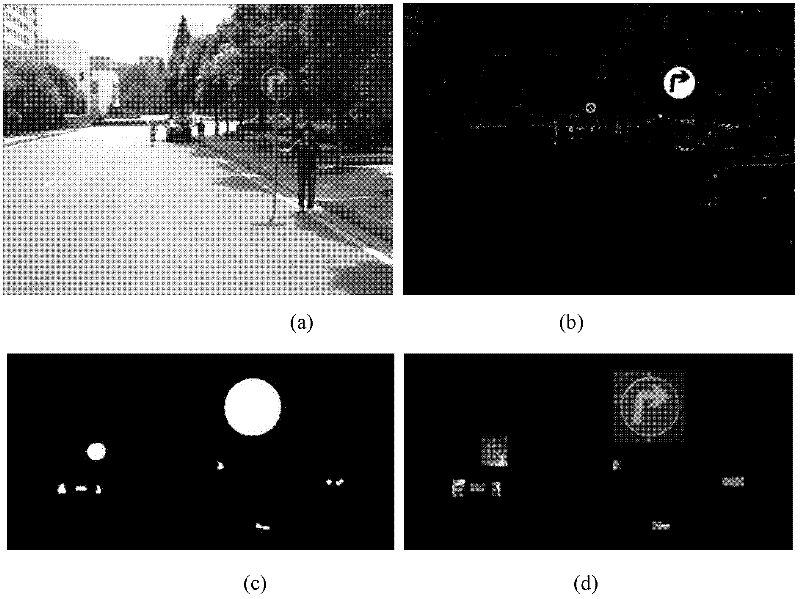

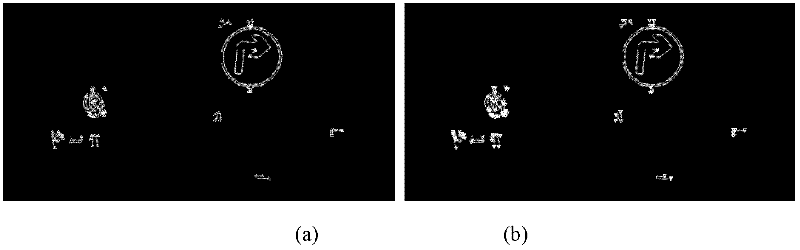

Method for recognizing road traffic sign for unmanned vehicle

Inactive · CN102542260A · Tags: Fast extraction; Fast matching; Detection of traffic movement; Character and pattern recognition; Classification methods; Near neighbor

The invention discloses a method for recognizing road traffic signs for an unmanned vehicle, comprising the following steps:

1. Modify the RGB (red, green, blue) pixel values of the image to enhance the characteristic color regions of traffic signs, then segment the image with a threshold.
2. Perform edge detection and edge linking on the grayscale image to reconstruct the region of interest.
3. Extract a labeled map of the region of interest as its shape feature, classify the region's shape with a nearest-neighbor classifier, and discard non-traffic-sign regions.
4. Convert the traffic-sign region of interest to grayscale and normalize it. Apply a dual-tree complex wavelet transform to form a feature vector, and reduce its dimension with two-dimensional independent component analysis. Feed the feature vector into a support vector machine with a radial basis function (RBF) kernel to determine the type of traffic sign in the region.

With this method, the various types of traffic signs in an unmanned vehicle's driving environment can be detected and recognized stably and efficiently.

Owner:CENT SOUTH UNIV
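
Step (3) classifies each candidate region's shape with a nearest-neighbor rule. Below is a minimal 1-NN sketch using Euclidean distance. The abstract does not specify the shape feature beyond a labeled map of the region, so the following are hypothetical placeholders:

- the three-element shape descriptors;
- the template values;
- the "non-sign" class.

```python
import math


def nearest_neighbor(sample, templates):
    """Return the label of the template closest to `sample` (Euclidean distance).

    templates: list of (feature_vector, label) pairs.
    """
    best_label, best_dist = None, math.inf
    for features, label in templates:
        dist = math.dist(sample, features)
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label


# Hypothetical shape descriptors: (circularity, rectangularity, triangularity).
TEMPLATES = [
    ((0.95, 0.60, 0.30), "circle"),
    ((0.70, 0.95, 0.40), "rectangle"),
    ((0.55, 0.50, 0.90), "triangle"),
    ((0.20, 0.30, 0.20), "non-sign"),
]

print(nearest_neighbor((0.90, 0.62, 0.35), TEMPLATES))  # circle
```

Regions whose nearest template is "non-sign" would be discarded before the wavelet/SVM stage in step (4).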

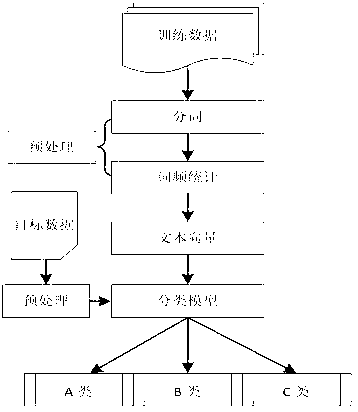

Valueless image removing method based on deep convolutional neural networks

Inactive · CN104200224A · Tags: Improve classification effect; Not easy to misjudge; Image enhancement; Character and pattern recognition; Computer science; Encoder

The invention relates to a method for removing valueless images based on deep convolutional neural networks. The method has three stages:

1. The image sample set undergoes whitening preprocessing, and a sparse autoencoder is pre-trained to obtain initial values for the deep convolutional network parameters.
2. A multilayer deep convolutional neural network is built, and its parameters are optimized layer by layer.
3. The multi-class problem is solved with a trained multi-class softmax model, and valueless images are removed.

Because the sparse autoencoder learns image features automatically, the method achieves higher classification accuracy. The multilayer network builds on these learned features and is optimized layer by layer, so the features of each layer are combinations of the previous layer's features. The multi-class softmax model is then trained to judge the images, which enables valueless images to be removed.

Owner:NORTHWESTERN POLYTECHNICAL UNIV
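
The final stage feeds the learned features to a multi-class softmax model. Below is a sketch of the softmax function, which turns class scores into probabilities. The class names and scores are hypothetical. Subtracting the maximum score is a standard numerical-stability trick, not something the abstract specifies.

```python
import math


def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max so exp() cannot overflow
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


CLASSES = ["valuable", "blurred", "blank", "duplicate"]  # hypothetical labels
probs = softmax([2.0, 0.5, -1.0, 0.1])
predicted = CLASSES[probs.index(max(probs))]
print(predicted, [round(p, 3) for p in probs])
```

An image whose most probable class is one of the valueless categories would then be removed.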

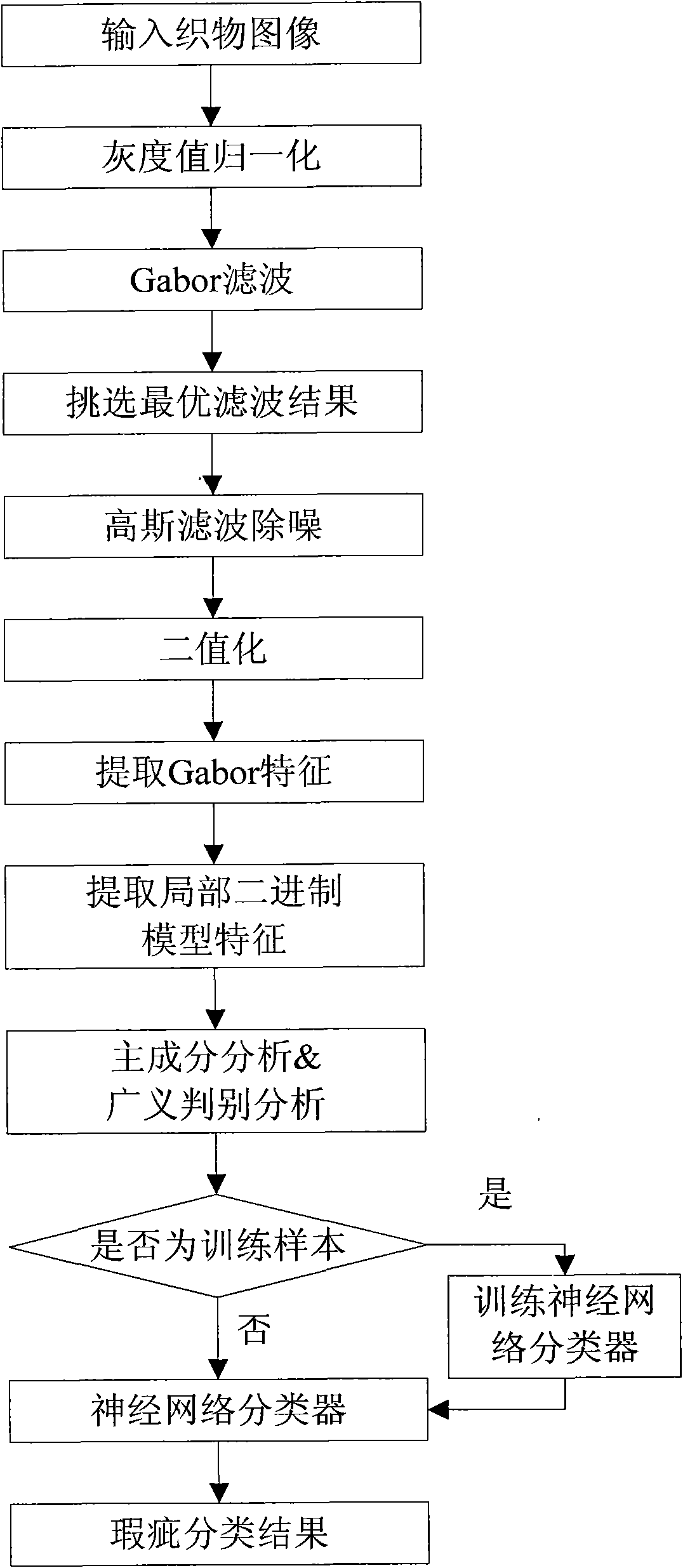

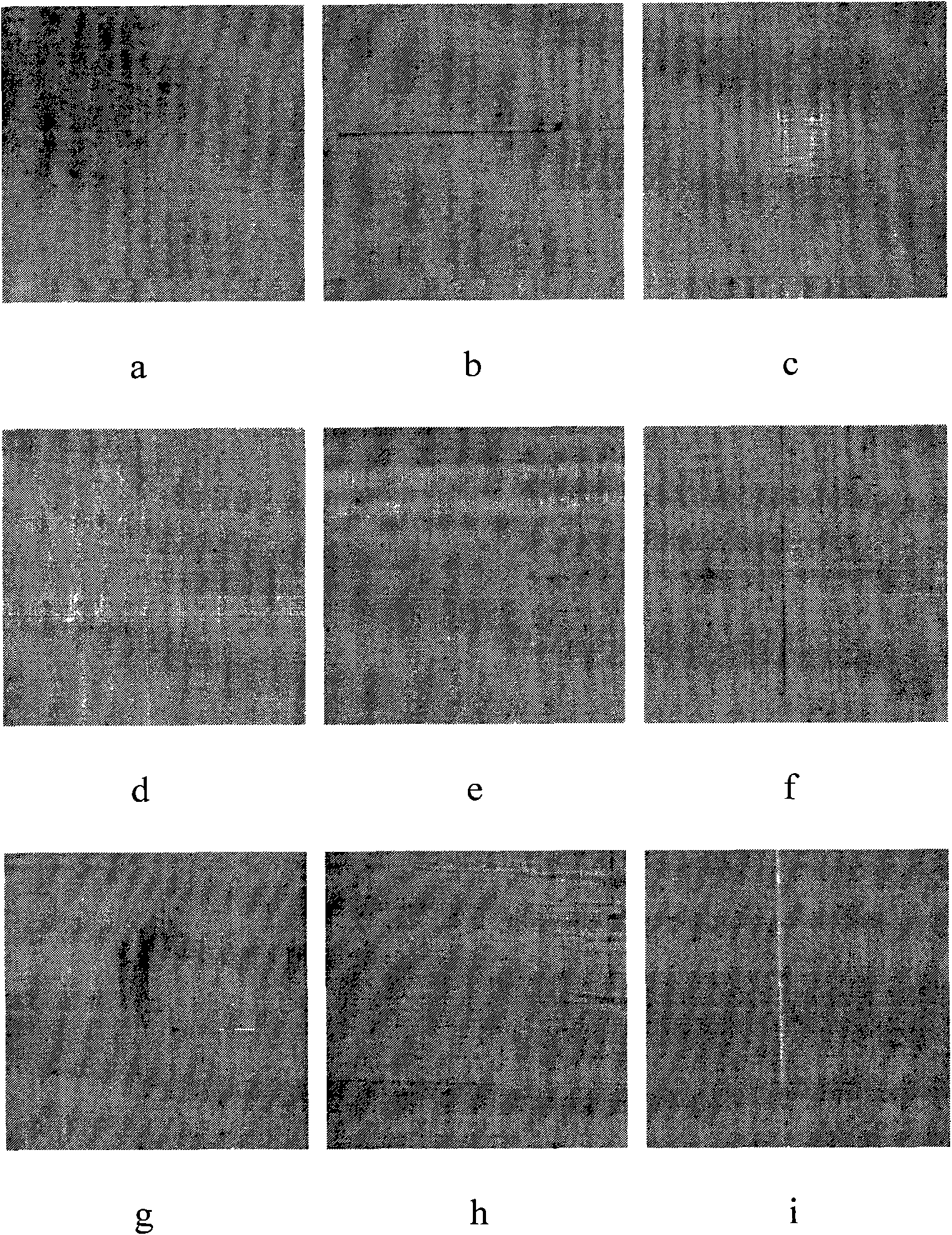

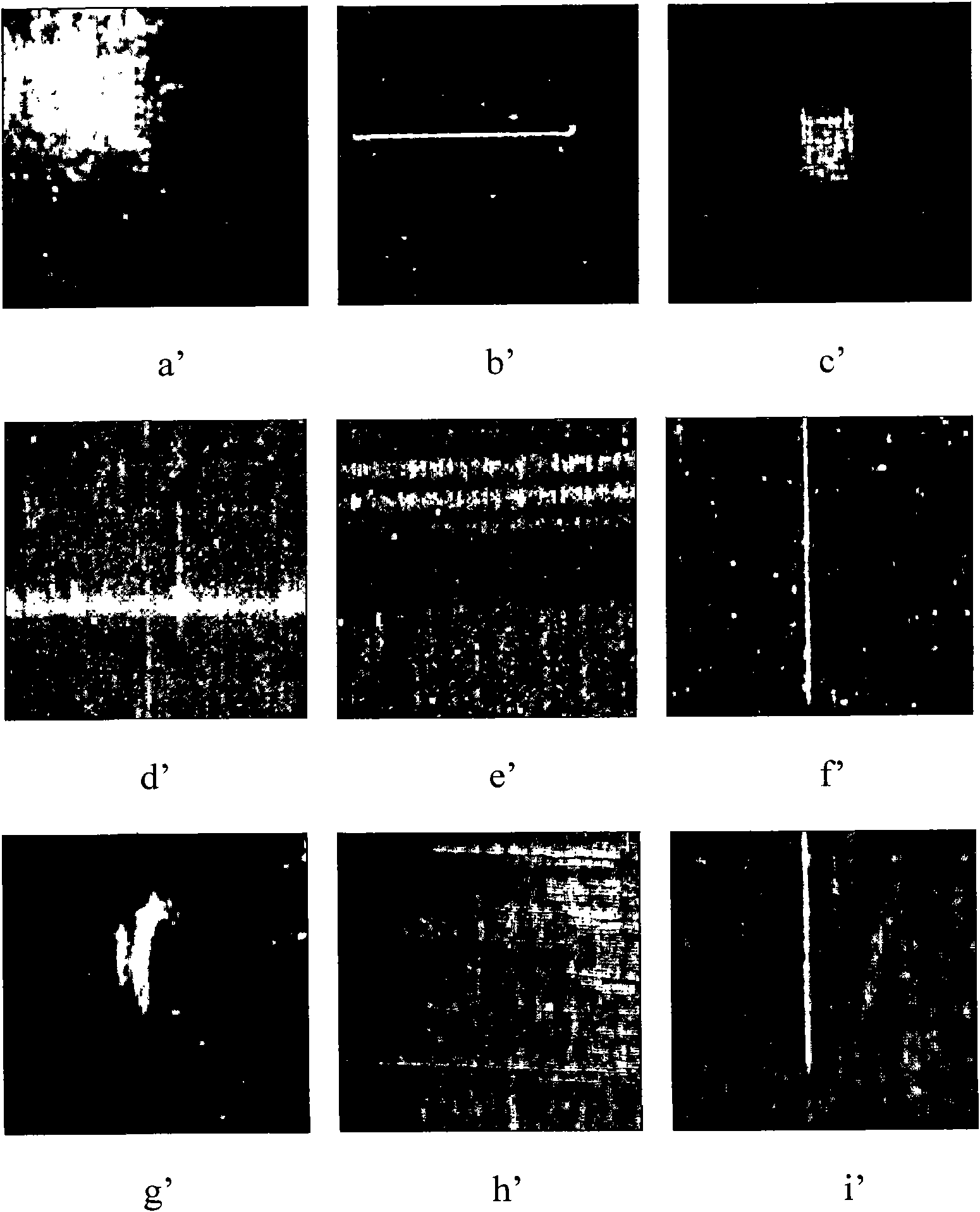

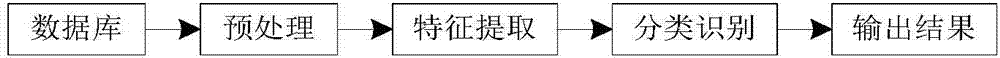

Method for detecting and classifying fabric defects

Inactive · CN101866427A · Tags: Precise positioning; Fully reflect the difference of flaws; Character and pattern recognition; Textile mill; Principal component analysis

The invention discloses a method for detecting and classifying fabric defects. Its main aim is to automate that detection and classification. The method comprises the following steps:

1. Filter the image of the defective fabric with a bank of Gabor filters, select the optimal filtering result, and binarize it using a reference image, which locates the defects in the image.
2. At the defect locations, extract a composite feature made of a Gabor feature and a local binary pattern (LBP) feature.
3. Preprocess the composite feature with principal component analysis and generalized discriminant analysis.
4. Train a neural network classifier with the preprocessed defect features.
5. Classify the fabric defects with the trained classifier.

The method locates defects accurately, achieves high classification accuracy, and can be used to detect and classify fabric defects in a textile mill.

Owner:XIDIAN UNIV
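
The defect descriptor combines Gabor responses with a local binary pattern (LBP) feature. Below is a sketch of the basic 8-neighbor LBP code, one common variant, plus a 256-bin histogram over an image. The following are assumptions, since the abstract does not specify the LBP variant:

- the clockwise neighbor ordering;
- the ">= center" comparison;
- the use of plain nested lists for images.

```python
def lbp_code(patch):
    """Compute the basic 8-neighbor LBP code of the center pixel of a 3x3 patch.

    Each neighbor that is >= the center contributes a 1 bit. Neighbors are
    read clockwise starting at the top-left corner (an assumed convention).
    """
    center = patch[1][1]
    offsets = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0), (1, 0)]
    code = 0
    for bit, (r, c) in enumerate(offsets):
        if patch[r][c] >= center:
            code |= 1 << bit
    return code


def lbp_histogram(image):
    """256-bin histogram of LBP codes over all interior pixels of a 2-D image."""
    hist = [0] * 256
    for r in range(1, len(image) - 1):
        for c in range(1, len(image[0]) - 1):
            patch = [row[c - 1:c + 2] for row in image[r - 1:r + 2]]
            hist[lbp_code(patch)] += 1
    return hist


print(lbp_code([[5, 1, 1], [1, 3, 1], [1, 1, 1]]))  # 1
```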

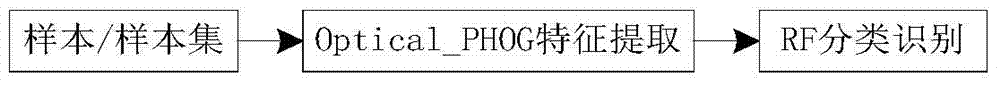

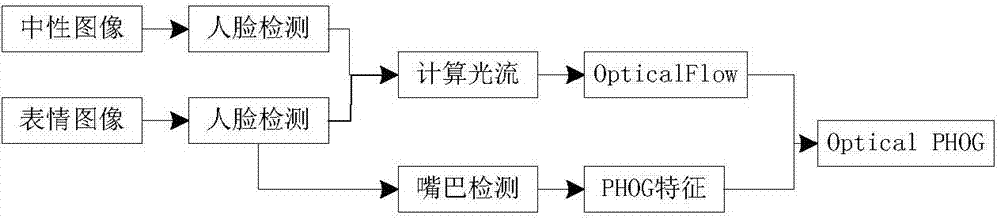

System and method for smiling face recognition in video sequence

Inactive · CN104504365A · Tags: Improve classification effect; Solve the problem of low resources; Character and pattern recognition; Face detection; Feature vector

The invention discloses a system and method for recognizing smiling faces in a video sequence. The system has three modules:

- A preprocessing module. Through video capture, face detection, and mouth detection, it obtains a face image region from which optical-flow features or PHOG (pyramid histogram of oriented gradients) features can be extracted directly.
- A feature extraction module. It uses an Optical-PHOG algorithm to extract smiling-face features, obtaining the information most useful for smile recognition.
- A classification and recognition module. It uses a random forest algorithm, whose criteria for separating the smiling and non-smiling classes are learned from the feature vectors of many training samples produced by the feature extraction module.

The feature vector of an image to be recognized is then compared or matched against the classifier to decide whether the image belongs to the smiling or non-smiling class. In this way, the system and method improve the accuracy of smiling-face recognition in video sequences.

Owner:WINGTECH COMM

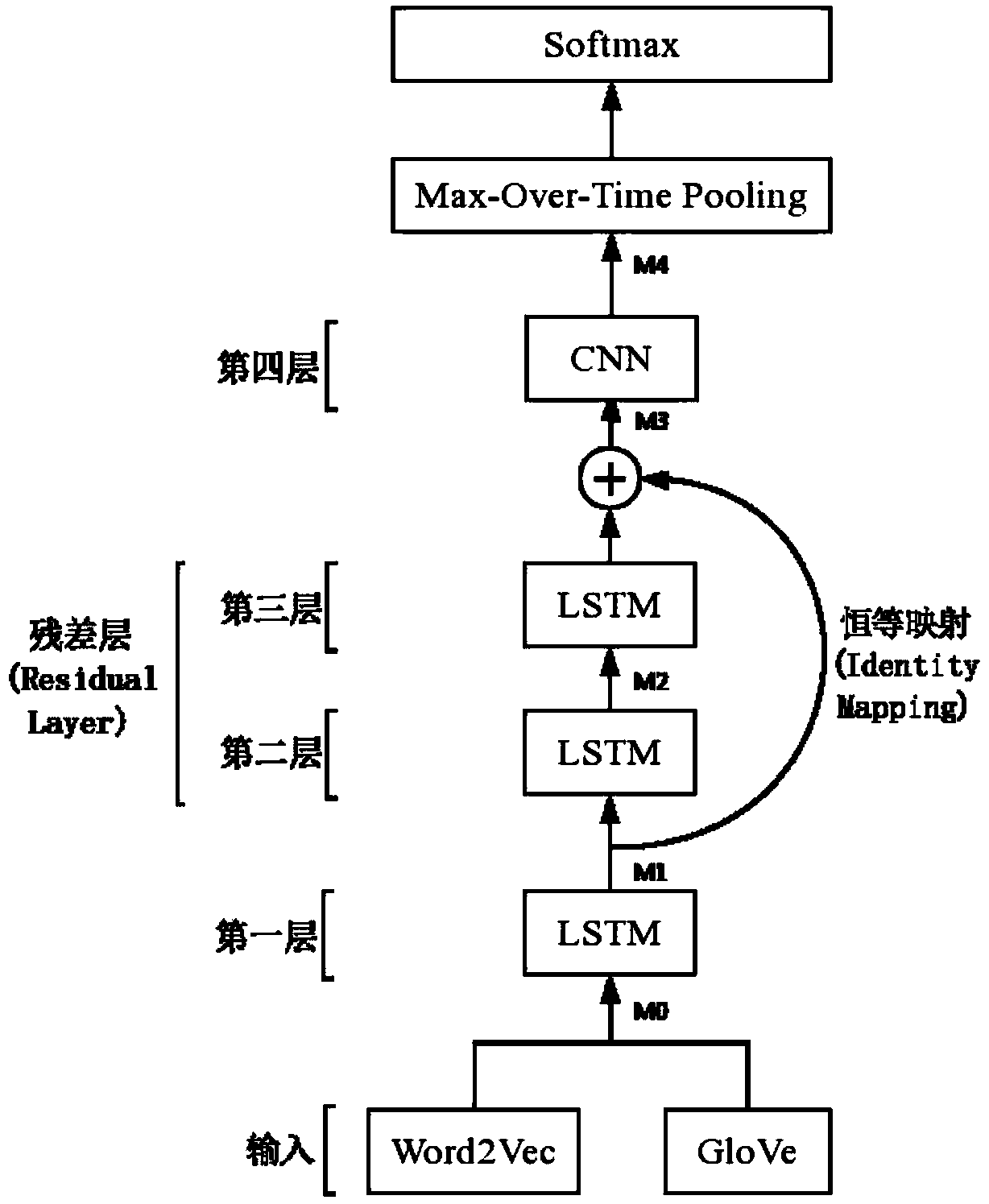

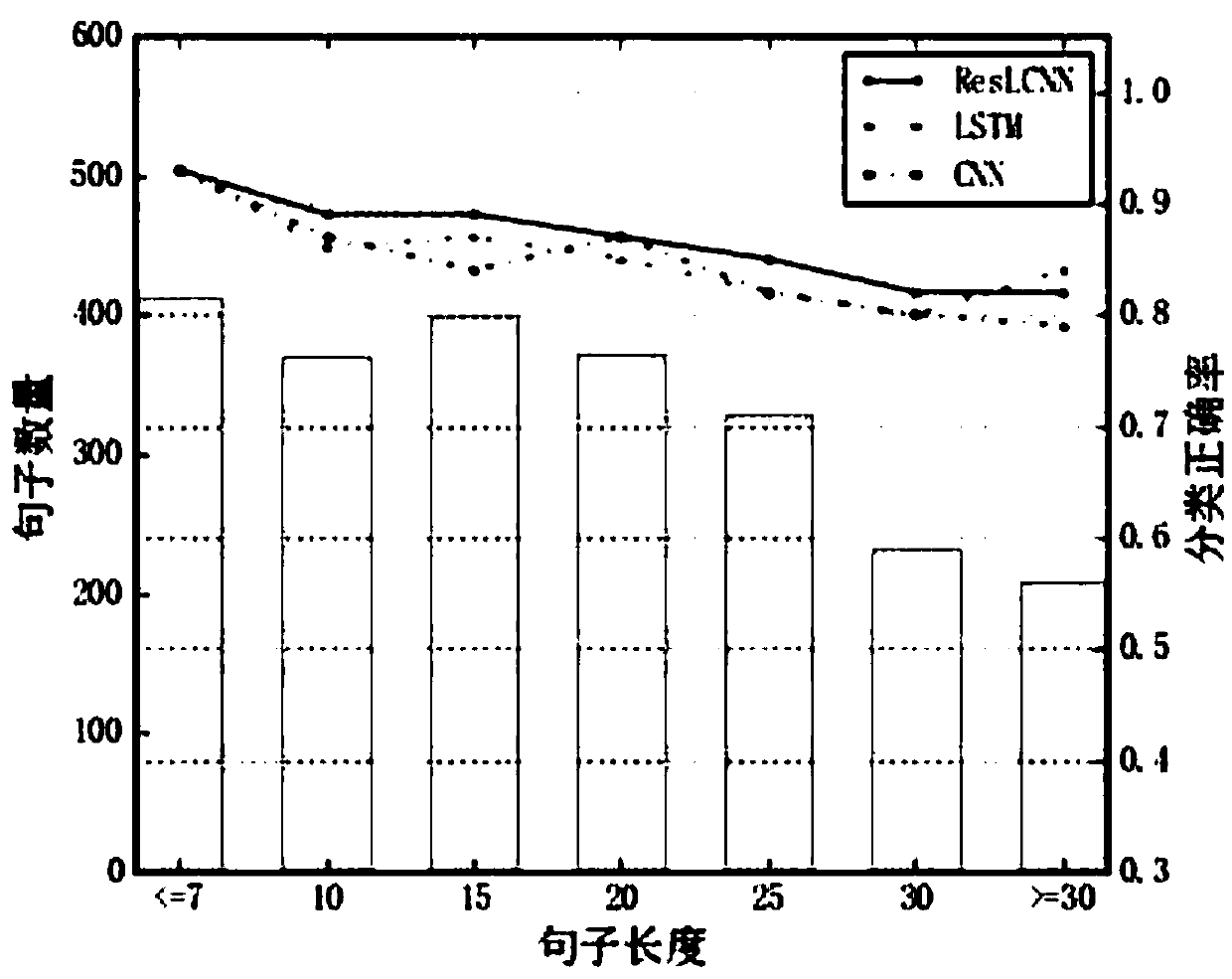

ResLCNN model-based short text classification method

Inactive · CN107562784A · Tags: Improve classification effect; Neural architectures; Special data processing applications; Text mining; Text categorization

The invention discloses a short text classification method based on a ResLCNN model. It relates to text mining and deep learning, and in particular to a deep learning model for short text classification. The method combines the characteristics of the long short-term memory (LSTM) network and the convolutional neural network (CNN) to build the ResLCNN deep text classification model. The model comprises three LSTM layers and one CNN layer. Borrowing from residual network theory, an identity mapping is added between the first LSTM layer and the CNN layer to form a residual layer, which alleviates the vanishing-gradient problem in deep models. The model effectively combines two strengths: the LSTM's ability to capture long-distance dependencies in text sequences, and the CNN's ability to extract local sentence features through convolution. This improves short text classification.

Owner:TONGJI UNIV

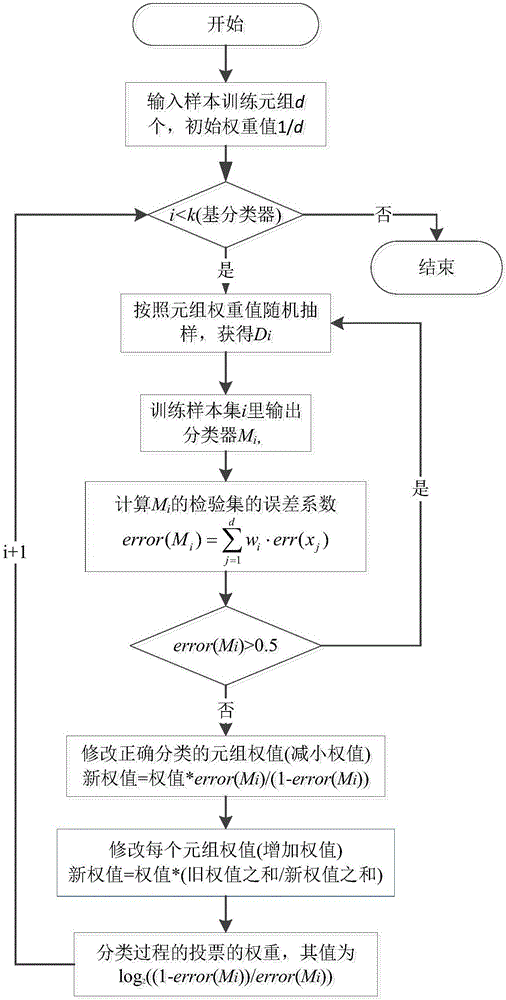

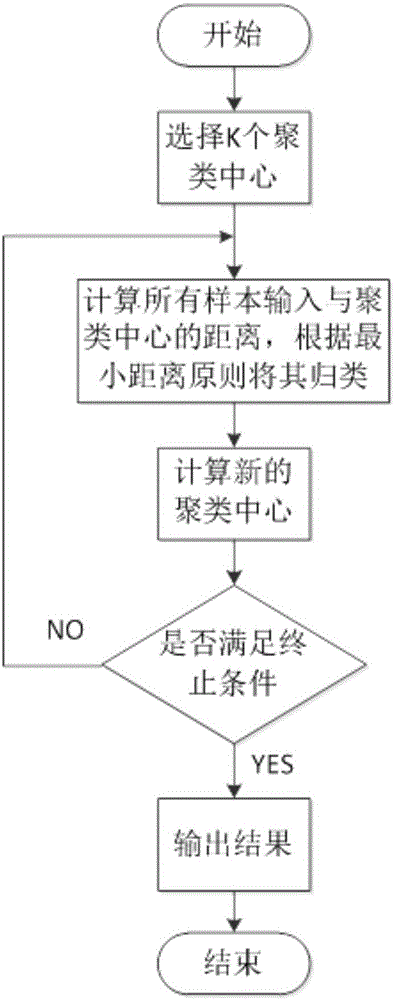

Optimized classification method and optimized classification device based on random forest algorithm

Inactive · CN105844300A · Tags: Improve robustness; Increase weight; Character and pattern recognition; Algorithm; Classification methods

The invention relates to an optimized classification method and device based on the random forest algorithm. The method comprises three steps:

1. Divide the given sample data into k mutually independent training subsets. Build a different decision tree on each subset, with each tree selecting different decision attributes, to form the base classifiers, and combine them into a random forest.
2. In each base classifier, assign a preset weight to each set. Feed the data to be classified into the random forest built in step 1, and adjust the weights by comparing the classification result with the prediction result. If a set's predicted classification does not match the actual result, its weight is increased; if it matches, its weight is reduced.
3. Classify the data according to the adjusted weight of each set, repeating until the classification result matches the prediction result.

Owner:HENAN NORMAL UNIV
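
Below is a sketch of the weight-adjustment rule in the second step. It follows the abstract literally: a set's weight rises when its prediction disagrees with the actual label and falls when it agrees. The multiplicative step size `rate`, the weighted majority vote used for the final decision, and the "spam"/"ham" labels are all hypothetical assumptions.

```python
from collections import defaultdict


def adjust_weights(weights, predictions, actual, rate=0.1):
    """Update one weight per base classifier (sub-training set).

    As stated in the abstract: increase the weight when the prediction does
    not match the actual label, decrease it when it does. `rate` is assumed.
    """
    return [
        w * (1 + rate) if pred != actual else w * (1 - rate)
        for w, pred in zip(weights, predictions)
    ]


def weighted_vote(weights, predictions):
    """Return the label with the largest total weight among base classifiers."""
    totals = defaultdict(float)
    for w, pred in zip(weights, predictions):
        totals[pred] += w
    return max(totals, key=totals.get)


weights = [1.0, 1.0, 1.0]          # preset weights for k = 3 subsets
preds = ["spam", "ham", "spam"]    # hypothetical base-classifier outputs
weights = adjust_weights(weights, preds, actual="spam")
print(weights, weighted_vote(weights, preds))
```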

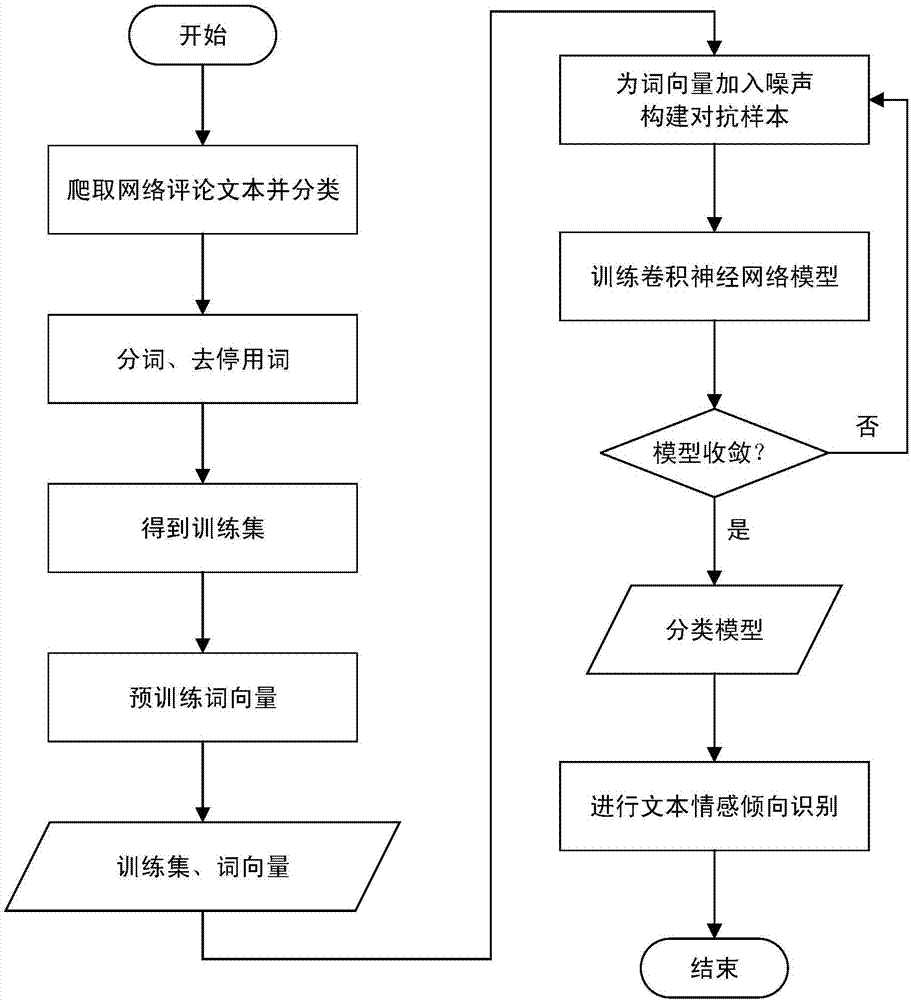

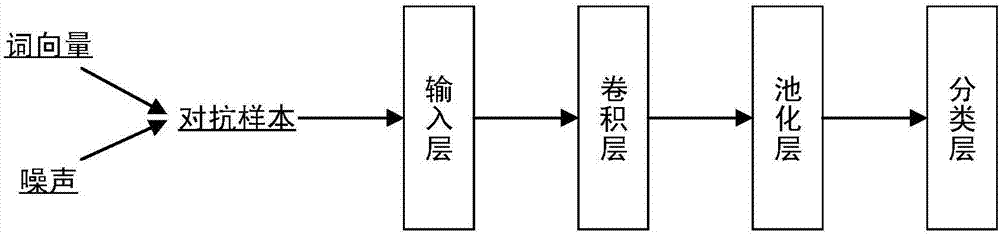

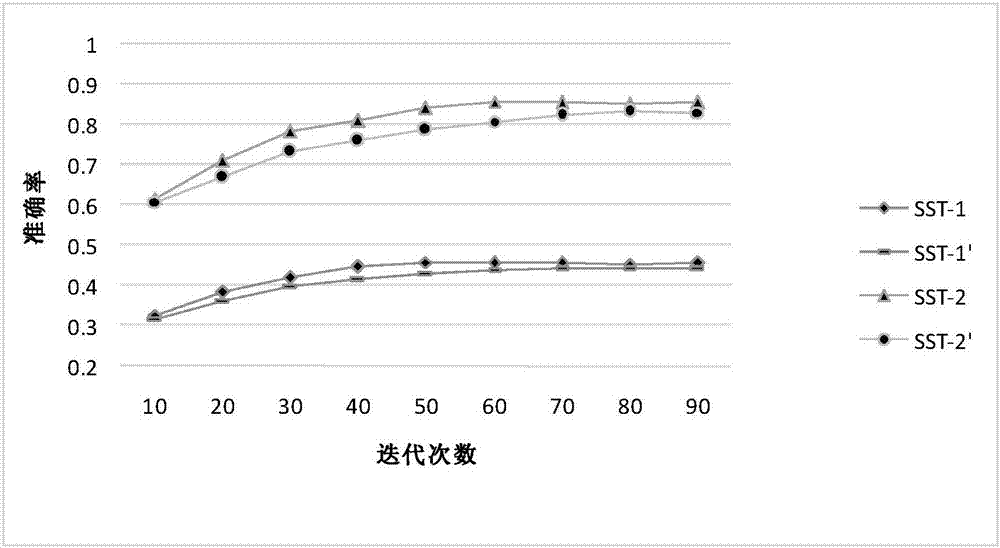

Identification method of emotional tendency of network comment texts and convolutional neural network model

Active · CN107025284A · Tags: Quality improvement; Expressive ability; Character and pattern recognition; Natural language data processing; Stochastic gradient descent; Data set

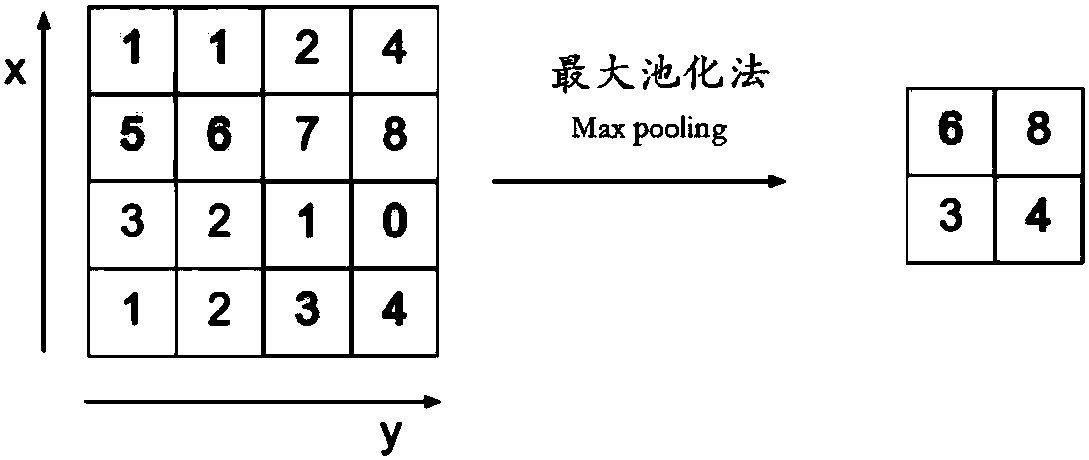

The invention discloses a method for identifying the emotional tendency (sentiment) of online comment texts, together with a convolutional neural network model for doing so. The method comprises the following steps:

1. Crawled online comment texts form a data set.
2. Word segmentation and text preprocessing are performed.
3. Word vectors are trained for all preprocessed words.
4. The convolutional neural network model is constructed and trained on a training set drawn from the data set, with network parameters updated by back-propagation.
5. In each training step, noise is added to the word vectors at the input layer to construct adversarial samples. Adversarial training is performed, and network parameters are updated with stochastic gradient descent.
6. After repeated iterations, the resulting classification model identifies the emotional tendency of the comment texts.

The convolutional neural network model used in the method comprises an input layer, a convolutional layer, a pooling layer, and a classification layer. Adversarial samples can be classified correctly, and identification accuracy is improved.

Owner:CENT SOUTH UNIV
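
One common way to build such adversarial samples is the fast gradient method: the embedding is perturbed in the direction of the loss gradient, scaled to norm ε. The sketch below assumes that method. The abstract says only that noise is added to the word vectors. So that the gradient can be written by hand, the sketch also assumes:

- a single logistic unit in place of the patent's CNN;
- a hypothetical `epsilon`;
- hypothetical weights and a hypothetical 3-dimensional embedding.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def loss(x, w, b, y):
    """Binary cross-entropy of a logistic unit on input embedding x."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def adversarial_embedding(x, w, b, y, epsilon=0.5):
    """Return x + r, where r = epsilon * g / ||g|| and g = d(loss)/dx."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    grad = [(p - y) * wi for wi in w]  # gradient of the BCE loss w.r.t. the input
    norm = math.sqrt(sum(g * g for g in grad)) or 1.0  # avoid division by zero
    return [xi + epsilon * g / norm for xi, g in zip(x, grad)]


w, b = [0.8, -0.4, 0.3], 0.1     # hypothetical trained weights
x, y = [0.5, 0.2, -0.1], 1       # hypothetical word vector, positive label
x_adv = adversarial_embedding(x, w, b, y)
print(loss(x, w, b, y), loss(x_adv, w, b, y))
```

In adversarial training, the model would also be trained on `x_adv` with the original label, which encourages robustness to small perturbations of the word vectors.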

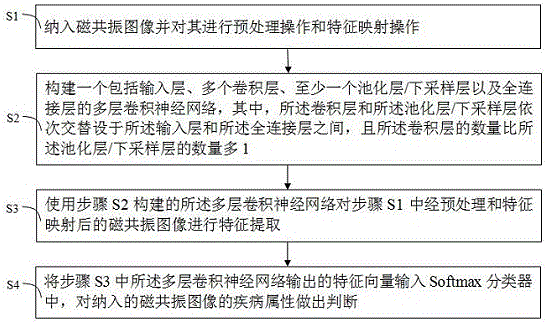

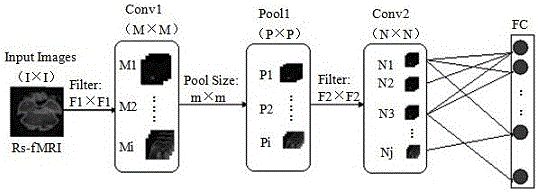

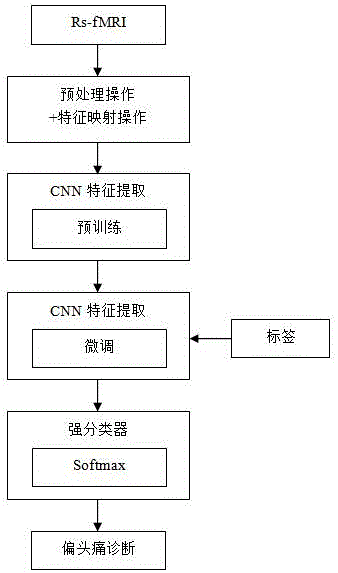

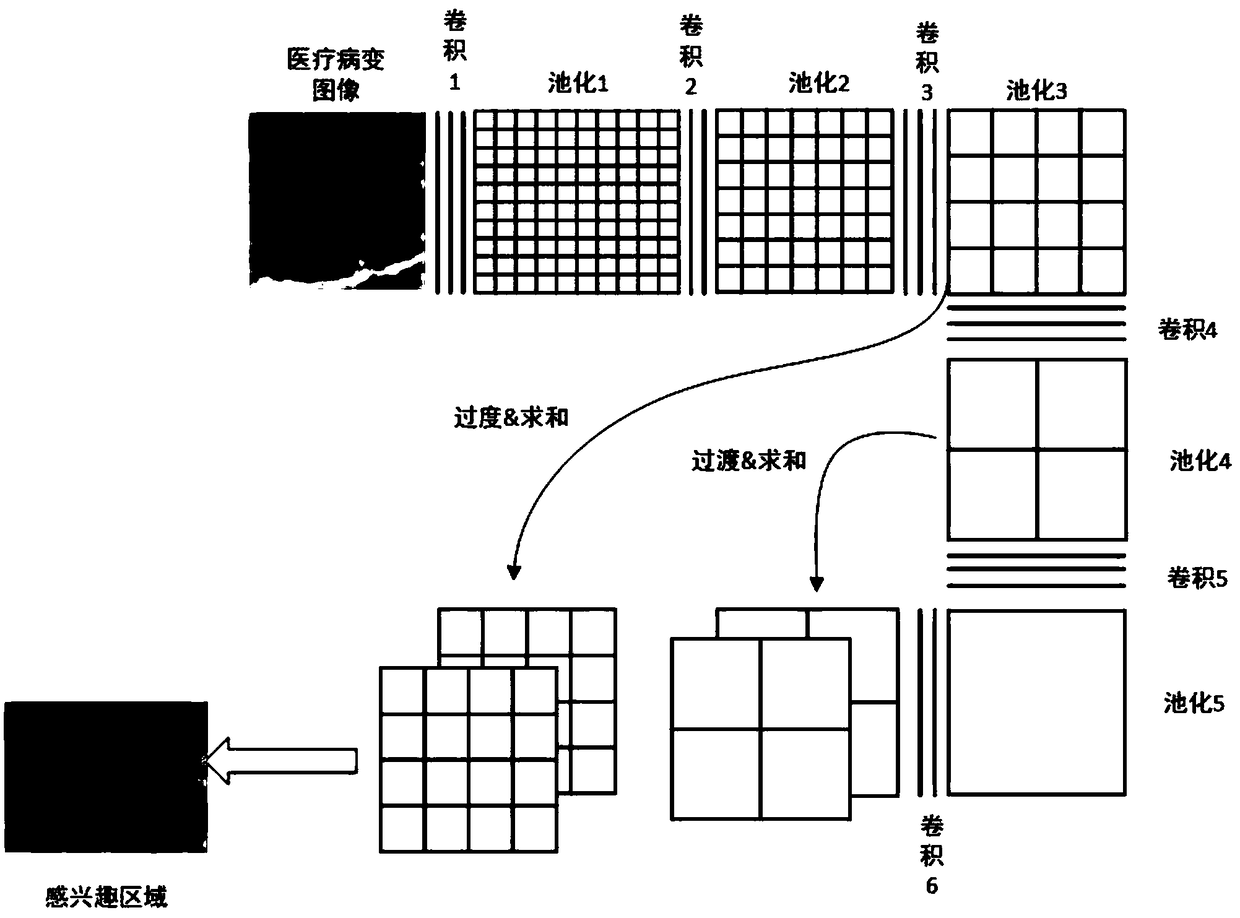

Magnetic resonance image feature extraction and classification method based on deep learning

Inactive · CN106096616A · Tags: Improve classification effect; Accurate classification effect; Character and pattern recognition; Disease; Classification methods

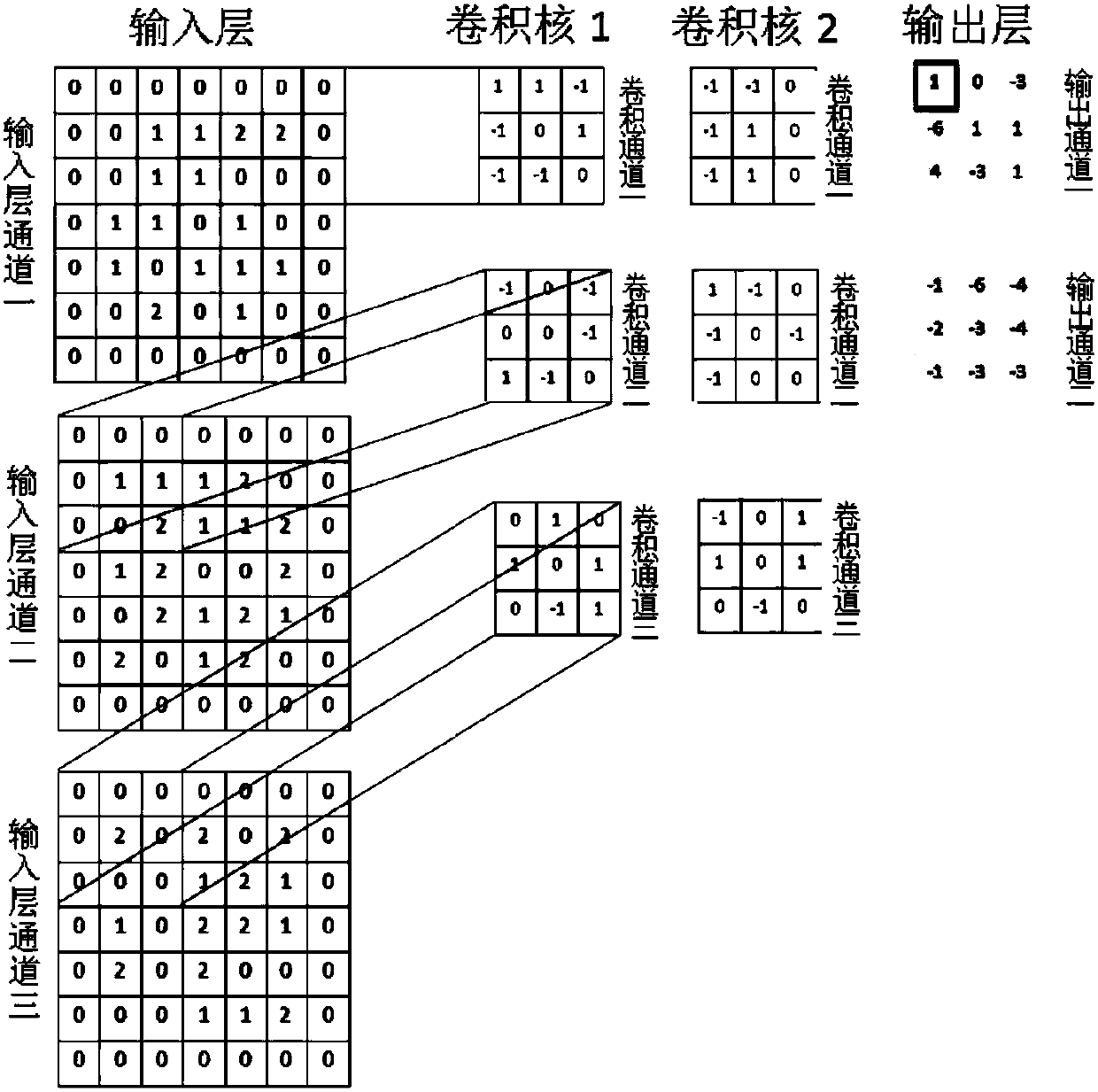

The invention provides a magnetic resonance image feature extraction and classification method based on deep learning, comprising:

- S1: acquire a magnetic resonance image and perform preprocessing and feature mapping on it.
- S2: construct a multilayer convolutional neural network made of an input layer, several convolutional layers, at least one pooling (downsampling) layer, and a fully connected layer. The convolutional and pooling layers alternate between the input layer and the fully connected layer, and there is one more convolutional layer than pooling layers.
- S3: extract features of the magnetic resonance image with the network constructed in S2.
- S4: feed the feature vectors output by S3 into a Softmax classifier to determine the disease attribute of the image.

Through the nonlinear mapping of the multilayer convolutional neural network, the method automatically obtains highly discriminative features or feature combinations. The network structure can be continually optimized to achieve better classification.

Owner:WEST CHINA HOSPITAL SICHUAN UNIV
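
The layer arrangement in S2 can be expressed as a small builder. In that arrangement, convolutional and pooling layers alternate between the input and the fully connected layer, with one more convolutional layer than pooling layers, and the Softmax classifier of S4 comes last. This is only a structural sketch. The layer names are placeholders, and the abstract implies no layer sizes or weights.

```python
def build_layout(num_pool):
    """Return the layer sequence described in S2/S4 for `num_pool` pooling layers."""
    if num_pool < 1:
        raise ValueError("the abstract requires at least one pooling layer")
    layers = ["input"]
    for _ in range(num_pool):
        layers += ["conv", "pool"]
    # One extra convolutional layer, then the fully connected layer and Softmax.
    layers += ["conv", "fully_connected", "softmax"]
    return layers


print(build_layout(2))
# ['input', 'conv', 'pool', 'conv', 'pool', 'conv', 'fully_connected', 'softmax']
```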

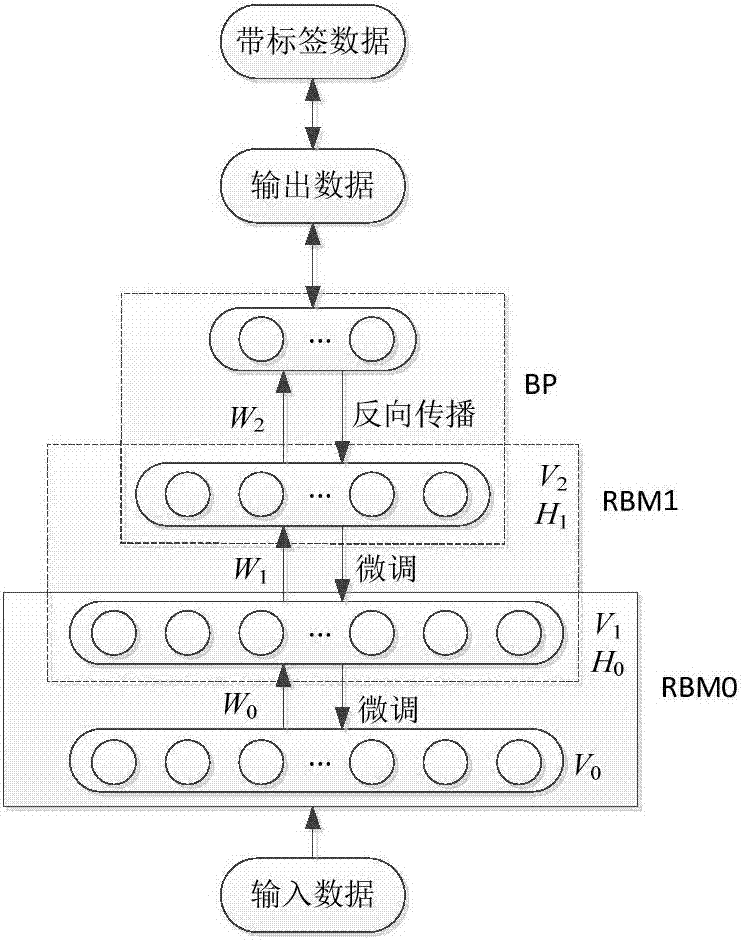

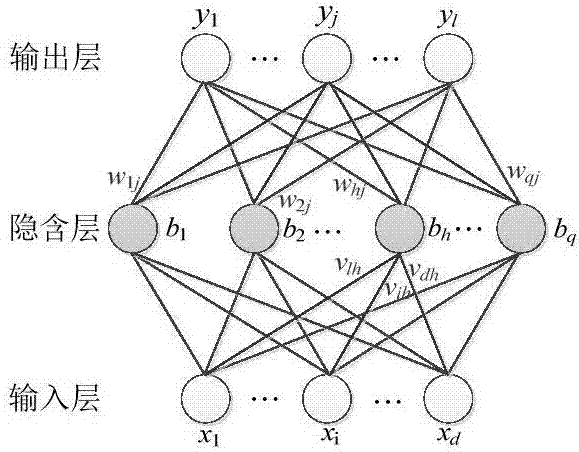

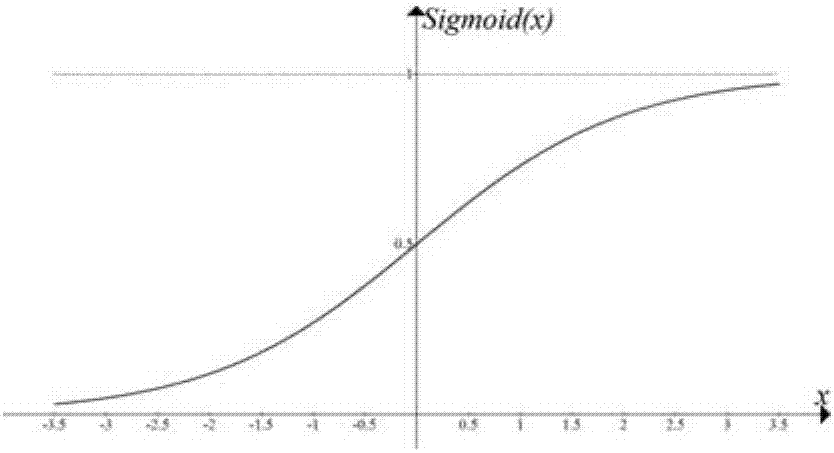

Feature extraction and state recognition of one-dimensional physiological signal based on deep learning

Active · CN107256393A · Tags: To solve the classification accuracy is not high; Optimize network structure; Character and pattern recognition; Neural architectures; Signal classification; Analysis models

The present invention discloses a feature extraction and state recognition method for one-dimensional physiological signals based on deep learning. The method establishes a deep belief network (DBN) model for feature extraction and state recognition of a one-dimensional physiological signal. The DBN is trained with a "pre-training + fine-tuning" process:

1. In the pre-training stage, the first restricted Boltzmann machine (RBM) is trained. The output nodes of the trained first RBM serve as the input of the second RBM, which is then trained, and so on.
2. After all RBMs are trained, the whole network is fine-tuned with the back-propagation (BP) algorithm.
3. The feature vector output by the DBN is fed into a Softmax classifier to determine the state of the individual from whom the one-dimensional physiological signal was recorded.

Conventional one-dimensional physiological signal classification requires input features to be selected manually, which keeps classification accuracy low. The method addresses this problem: the nonlinear mapping of the deep belief network automatically obtains highly separable features or feature combinations for classification. Continued optimization of the network structure yields better classification.

Owner:SICHUAN UNIV

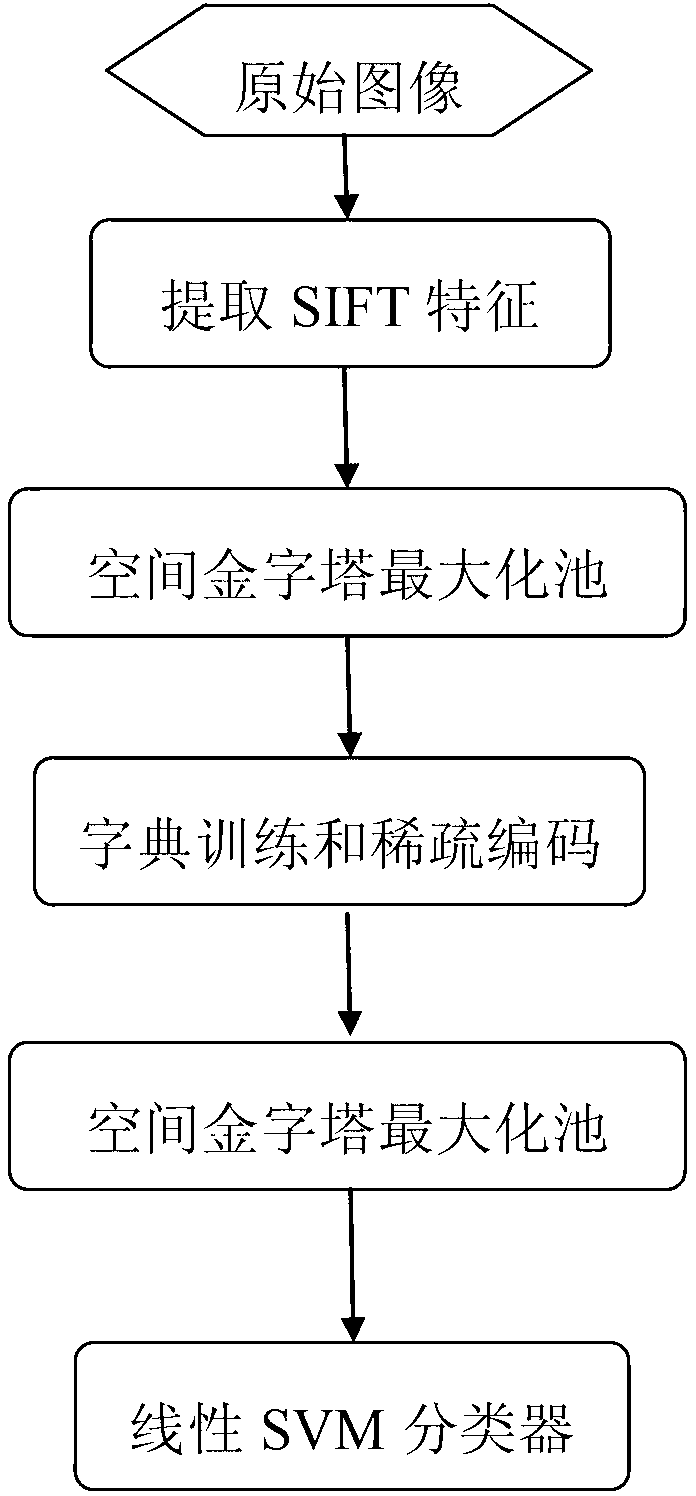

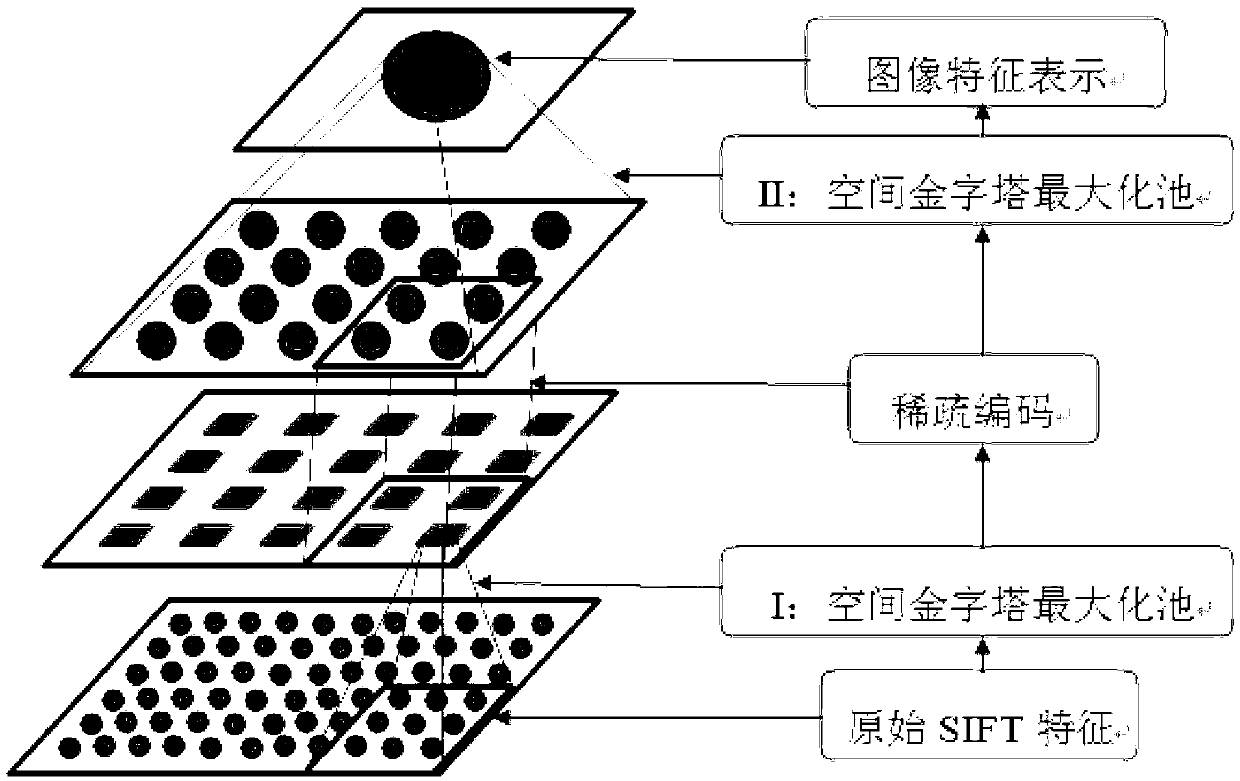

Image classification method based on hierarchical SIFT (scale-invariant feature transform) features and sparse coding

Inactive · CN103020647A · Tags: Reduce the dimensionality of SIFT features; High simulation; Character and pattern recognition; Singular value decomposition; Data set

The invention discloses an image classification method based on hierarchical SIFT (scale-invariant feature transform) features and sparse coding. The implementation steps are:

1. Extract 512-dimensional SIFT features from each image in the data set, using 32×32-pixel blocks with an 8-pixel step.
2. Apply spatial max pooling to the SIFT features of each image block to obtain a 168-dimensional vector y.
3. Randomly select blocks from all 32×32 image blocks in the data set and train a dictionary D with the K-SVD (K-singular value decomposition) method.
4. Compute the sparse representation of the vectors y of all blocks in each image over the dictionary D.
5. Apply the pooling of step 2 to all sparse representations of each image to obtain a feature representation of the whole image.
6. Feed the image feature representations into a linear SVM (support vector machine) classifier to obtain the classification results.

The method captures local structural information in images, removes redundancy in low-level image features, and can be used for target identification.

Owner:XIDIAN UNIV
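
Steps (2) and (5) both use max pooling to turn a set of per-block vectors into one vector. Below is a sketch of element-wise max pooling over sparse codes. Taking the absolute value of each coefficient is a common convention when pooling sparse codes. It is an assumption here, because the abstract says only "max pooling". The codes and the 4-atom dictionary are hypothetical.

```python
def max_pool(codes):
    """Element-wise max pooling of a list of equal-length code vectors.

    Uses the absolute value of each coefficient (an assumed convention for
    sparse codes; the abstract only says "max pooling").
    """
    if not codes:
        raise ValueError("need at least one code vector")
    return [max(abs(v) for v in column) for column in zip(*codes)]


# Hypothetical sparse codes of three blocks over a 4-atom dictionary D.
codes = [
    [0.0, 0.7, 0.0, -0.2],
    [0.5, 0.0, 0.0, 0.0],
    [0.0, -0.9, 0.1, 0.0],
]
print(max_pool(codes))  # [0.5, 0.9, 0.1, 0.2]
```

The pooled vector has the dictionary's length regardless of how many blocks an image contains. This fixed length is what makes it usable as input to a linear SVM.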

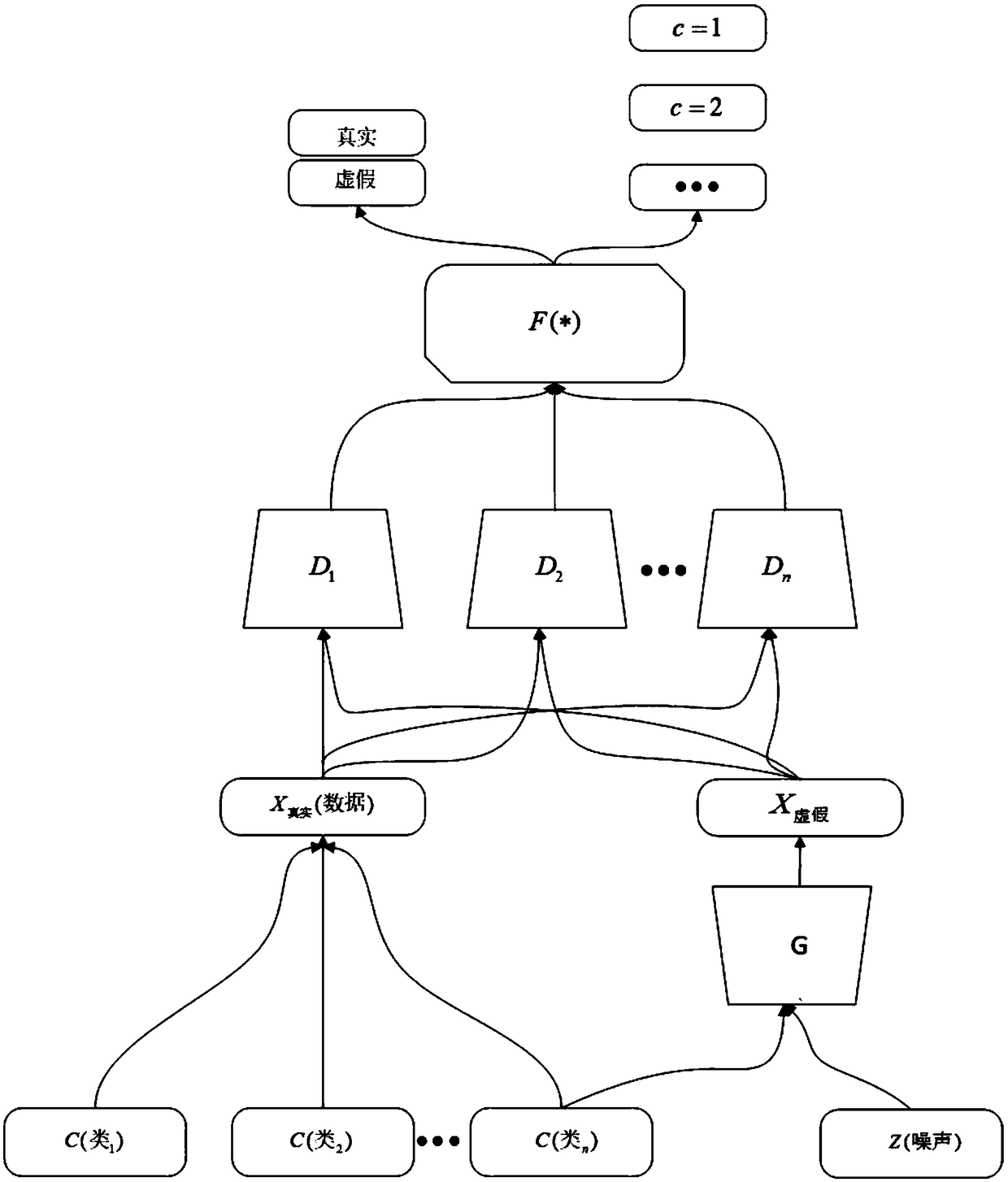

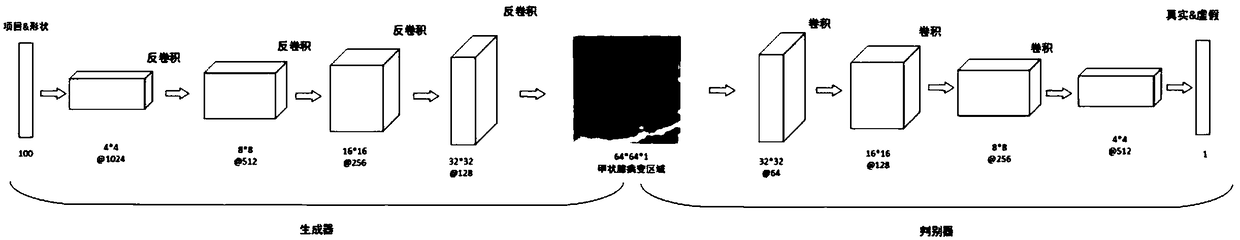

Medical image synthesis and classification method based on a conditional multi-judgment generative adversarial network

Active · CN109493308A · Tags: Multiple variability; Improve accuracy; Image enhancement; Image analysis; Data set; Classification methods

The invention discloses a medical image synthesis and classification method based on a conditional multi-discriminator generative adversarial network. The method comprises the following steps:

1. Segment the lesion area in a computed tomography (CT) image and extract the lesion regions of interest (ROIs).
2. Preprocess the lesion ROIs extracted in step 1.
3. Design a Conditional Multi-Discriminant Generative Adversarial Network (CMDGAN) architecture and train it on the images from step 2 to obtain a generative model.
4. Use the generative model from step 3 to perform synthetic data augmentation on the extracted lesion ROIs.
5. Design and train a multi-scale residual network (Multiscale ResNet).

The method can generate a high-quality synthetic medical image data set, and the classification network achieves relatively high accuracy on test images. As a result, better auxiliary diagnosis can be provided to medical workers.

Owner:JILIN UNIV

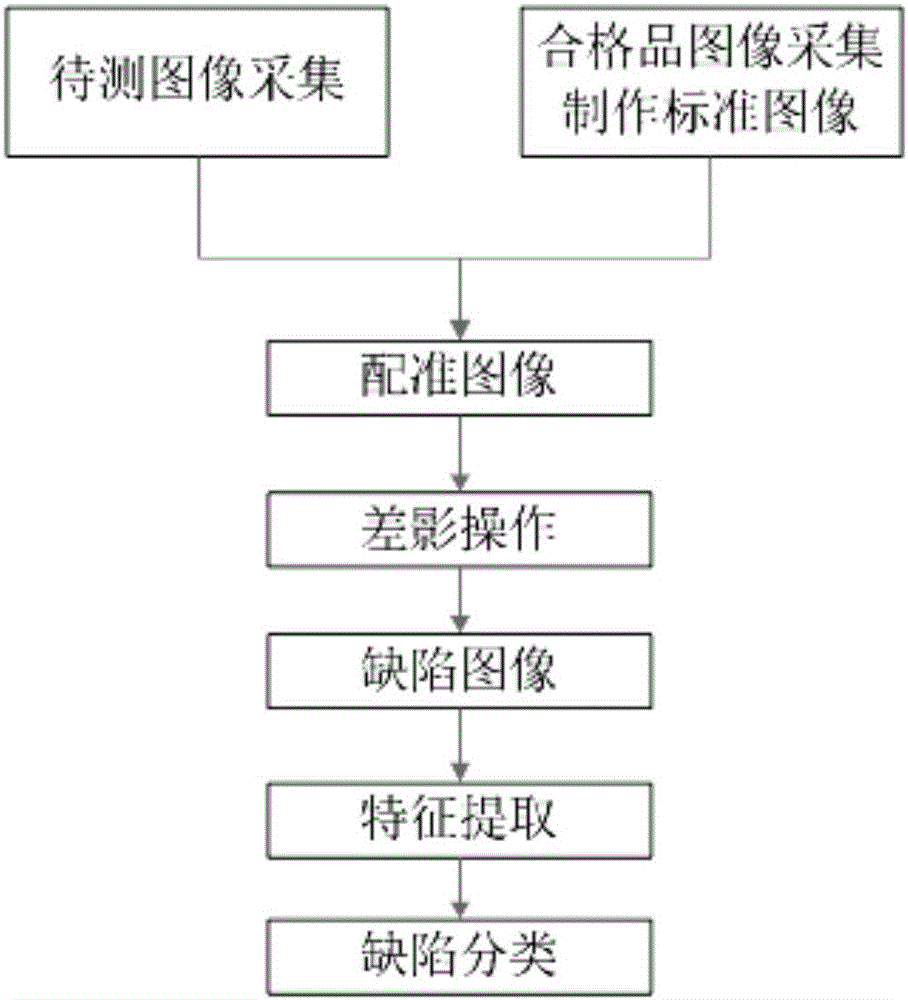

Product packaging surface defect detection and classification method based on machine vision

Inactive · CN106204618A · Tags: Avoid human interference; Avoid training; Image enhancement; Image analysis; Color image; Feature extraction

The invention discloses a machine-vision-based method for detecting and classifying surface defects on product packaging. The method comprises the following steps:

1. Acquire a high-definition color image of defect-free product packaging to serve as the standard image. Then photograph the packaging under inspection in real time with a camera, acquiring its high-definition color image online as the image to be inspected.
2. Match the image to be inspected against the standard image using the SURF algorithm.
3. Compute the difference image of the two images matched in step 2 to obtain a defect image.
4. Extract geometric and color features from the defect image.
5. Classify the packaging surface defects with an RBF (radial basis function) neural network.

Because a machine vision system performs defect detection and classification automatically, human interference is avoided and labor costs fall greatly. The large hidden costs of training and managing human inspectors are also avoided.

Owner:NANJING WENCAI SCI & TECH
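
Steps 3 and 4 can be sketched on grayscale images as follows: an absolute difference thresholded into a defect mask, plus a bounding box as one simple geometric feature. The sketch assumes the two images are already registered (step 2) and have the same size. The threshold value and the tiny synthetic images are hypothetical, and the color features of step 4 are not shown.

```python
def defect_mask(test_img, standard_img, threshold=30):
    """Absolute difference of two aligned grayscale images, thresholded to 0/1.

    Assumes the images were already registered and have the same size.
    `threshold` is a hypothetical tuning value.
    """
    return [
        [1 if abs(t - s) > threshold else 0 for t, s in zip(t_row, s_row)]
        for t_row, s_row in zip(test_img, standard_img)
    ]


def bounding_box(mask):
    """Return (top, left, bottom, right) of the nonzero pixels, or None."""
    points = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not points:
        return None
    rows = [r for r, _ in points]
    cols = [c for _, c in points]
    return min(rows), min(cols), max(rows), max(cols)


standard = [[200] * 5 for _ in range(4)]
test = [row[:] for row in standard]
test[1][2] = 90    # hypothetical scratch
test[2][3] = 120
mask = defect_mask(test, standard)
print(bounding_box(mask))  # (1, 2, 2, 3)
```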

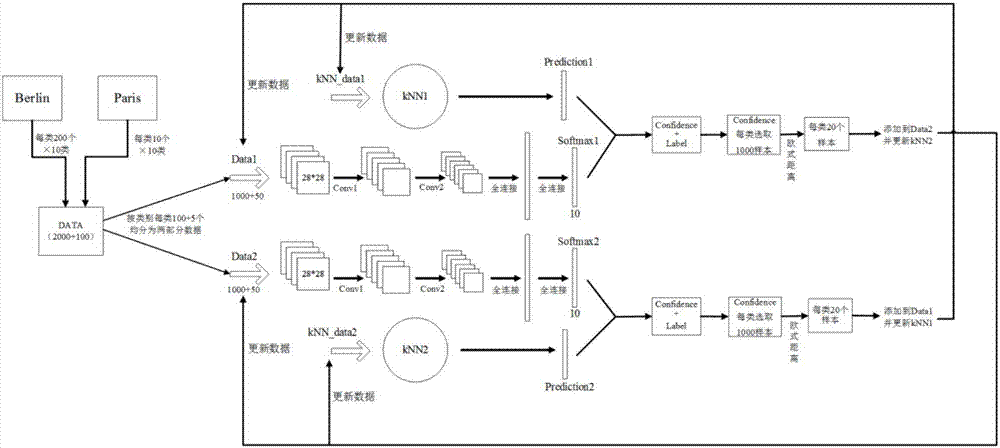

Multispectral remote sensing image terrain classification method based on deep and semi-supervised transfer learning

Inactive · CN107451616A · Tags: High feature accuracy; Good classification effect; Character and pattern recognition; Neural architectures; Training data sets; Near neighbor

The invention discloses a multispectral remote sensing image terrain classification method based on deep and semi-supervised transfer learning. The procedure is as follows:

1. A training data set and kNN data are extracted according to the ground truth.
2. The training data set is divided into two parts, which are trained separately.
3. The multispectral image to be classified is input, and two classification result images are obtained from the two CNN models.
4. Two kNN (k-nearest-neighbor) images are constructed from the training samples.
5. Test data are extracted using the two classification result images and classified with the kNN algorithm, and the classification result images are updated.
6. The co-training samples and the kNN training samples are updated.
7. The two co-training CNNs are retrained. The trained models then classify the labeled points of the test data set, yielding the classes of part of the test pixels, which are compared with the true class labels.

Introducing the k-nearest-neighbor algorithm and sample similarity keeps co-training from deviating and improves classification accuracy when training samples are insufficient. The method can therefore be used for target recognition.

Owner:XIDIAN UNIV
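
One plausible reading of how kNN keeps co-training from deviating is an agreement filter. A pixel's CNN-predicted label is accepted as a new training sample only if a kNN vote over labeled samples agrees with it. This filter is an interpretation, not the documented procedure. The 2-D spectral features, class names, `k = 3`, and CNN outputs are all hypothetical.

```python
import math
from collections import Counter


def knn_label(point, labeled, k=3):
    """Majority label among the k labeled samples nearest to `point`."""
    nearest = sorted(labeled, key=lambda item: math.dist(point, item[0]))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def confident_pseudo_labels(unlabeled, cnn_labels, labeled, k=3):
    """Keep only pixels whose CNN label agrees with the kNN label.

    Hypothetical agreement filter: pixels passing it would be added to the
    co-training sample pool; the rest stay unlabeled.
    """
    return [
        (x, y) for x, y in zip(unlabeled, cnn_labels)
        if knn_label(x, labeled, k) == y
    ]


LABELED = [((0.1, 0.2), "water"), ((0.2, 0.1), "water"), ((0.15, 0.15), "water"),
           ((0.8, 0.9), "urban"), ((0.9, 0.8), "urban"), ((0.85, 0.85), "urban")]
pixels = [(0.12, 0.18), (0.88, 0.82), (0.2, 0.2)]
cnn_out = ["water", "urban", "urban"]  # hypothetical CNN predictions
print(confident_pseudo_labels(pixels, cnn_out, LABELED))
```

The third pixel is rejected because its neighbors say "water" while the CNN says "urban". Filtering of this kind limits how far errors can propagate between the two co-trained networks.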

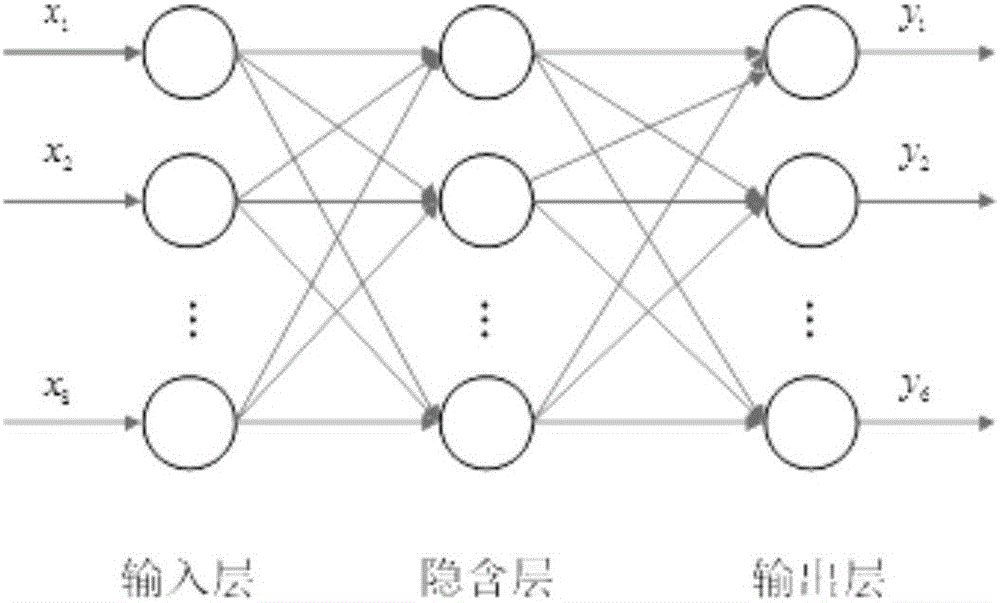

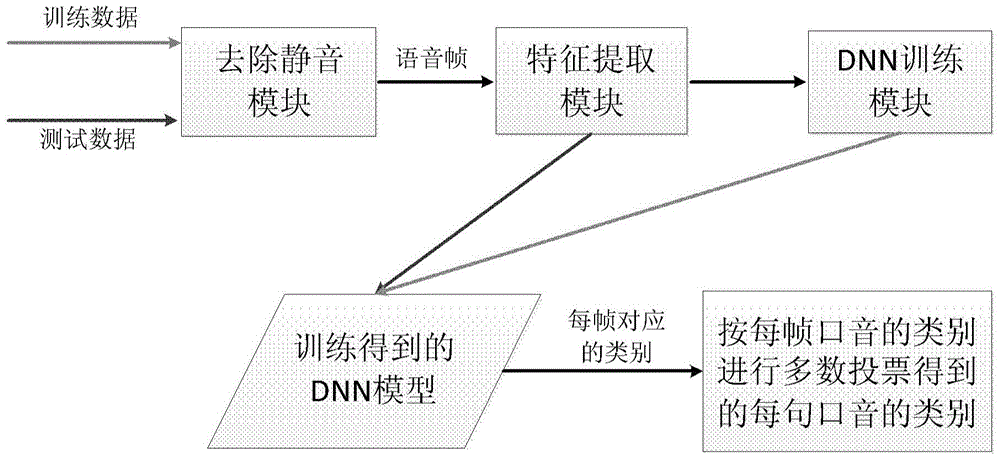

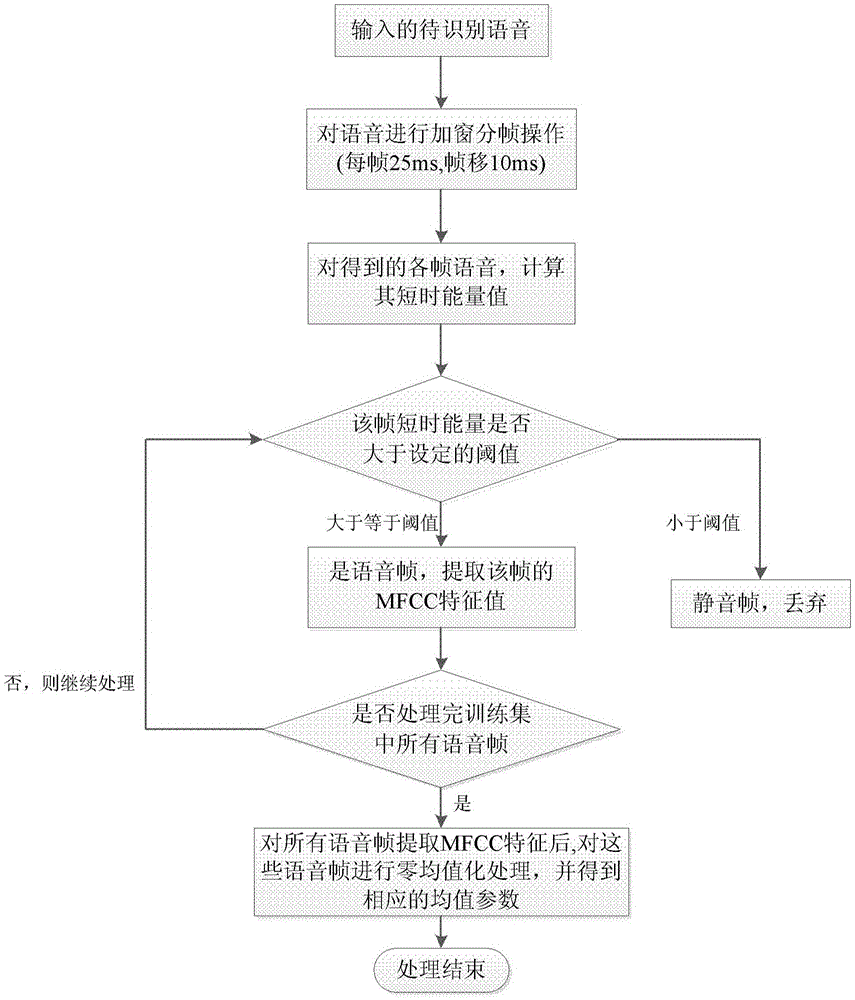

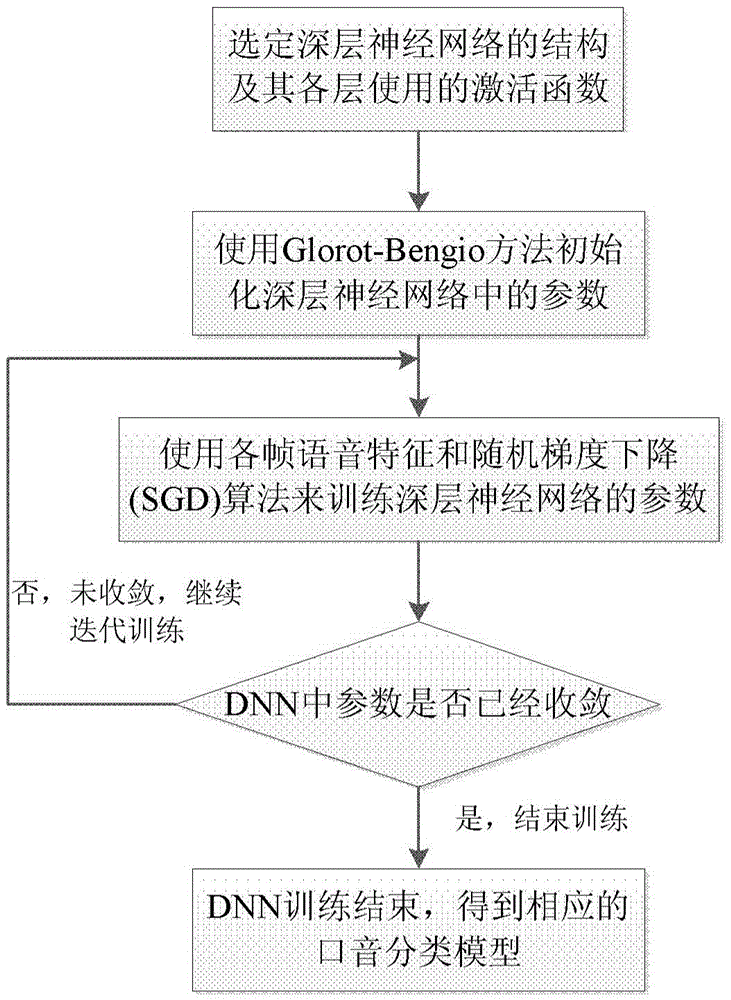

Deep-learning-technology-based automatic accent classification method and apparatus

Inactive · CN105632501A · Tags: Improve performance; Improve classification effect; Speech recognition; Hidden layer; Feature extraction

The invention discloses an automatic accent classification method and apparatus based on deep learning. The method comprises:

1. Removing silence from all accented speech in the training set and extracting mel-frequency cepstral coefficient (MFCC) features.
2. Training, from the extracted MFCC features, a deep neural network for each accent to describe that accent's acoustic characteristics. Each deep neural network is a feed-forward artificial neural network with at least two hidden layers.
3. For every frame of the speech to be identified, computing its probability score under each accent's deep neural network and assigning the frame the accent label with the largest score.
4. Taking a majority vote over the frame labels of the speech to be identified to obtain its accent class.

The invention makes effective use of context information and therefore classifies better than traditional shallow models.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI +2
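
The last two steps take an argmax per frame and then a majority vote over frames. The sketch below assumes the per-accent scores from the trained networks have already been collected into one score vector per frame. The accent names and scores are hypothetical. Ties go to the label that first reaches the top count, which is how `Counter.most_common` orders equal counts.

```python
from collections import Counter


def classify_utterance(frame_scores, labels):
    """Frame-level argmax followed by utterance-level majority voting.

    frame_scores: one list of per-accent scores (e.g. DNN posteriors) per frame.
    labels: accent names, in the same order as the scores.
    """
    frame_labels = [labels[max(range(len(s)), key=s.__getitem__)] for s in frame_scores]
    return Counter(frame_labels).most_common(1)[0][0]


ACCENTS = ["north", "south", "west"]  # hypothetical accent classes
scores = [
    [0.6, 0.3, 0.1],
    [0.2, 0.7, 0.1],
    [0.5, 0.4, 0.1],
    [0.4, 0.1, 0.5],
    [0.7, 0.2, 0.1],
]
print(classify_utterance(scores, ACCENTS))  # north
```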

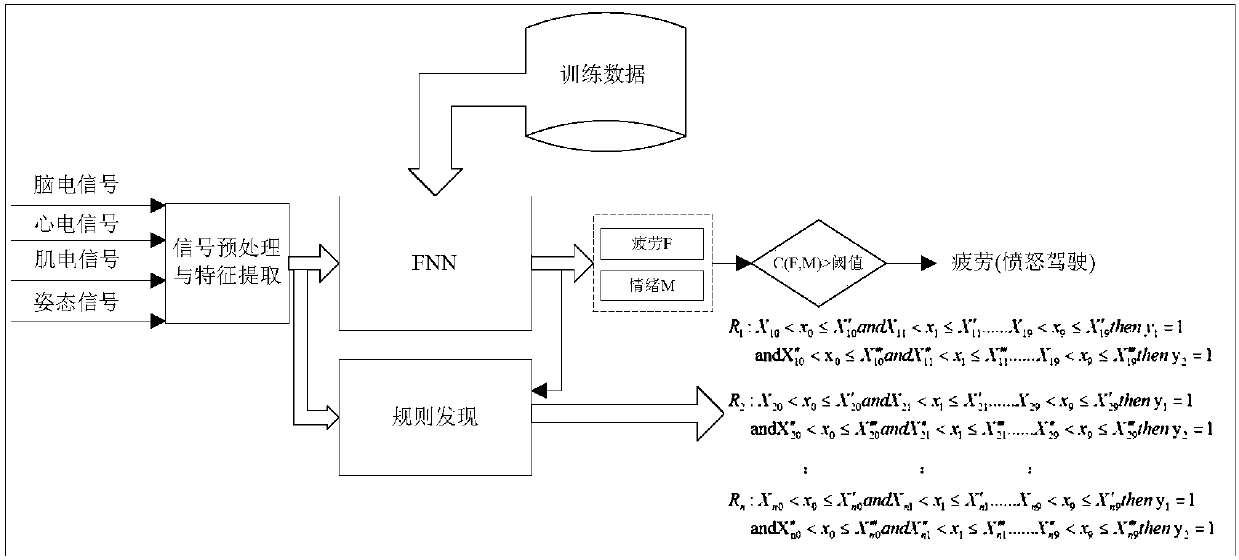

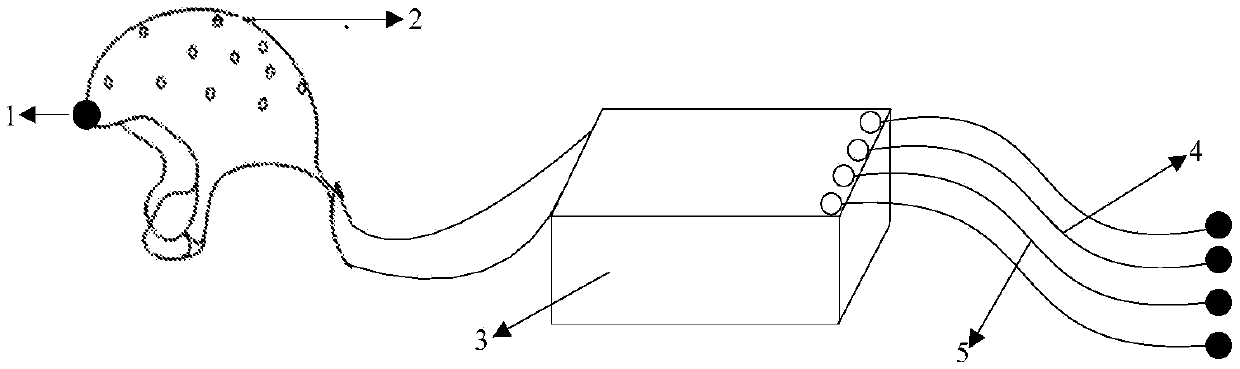

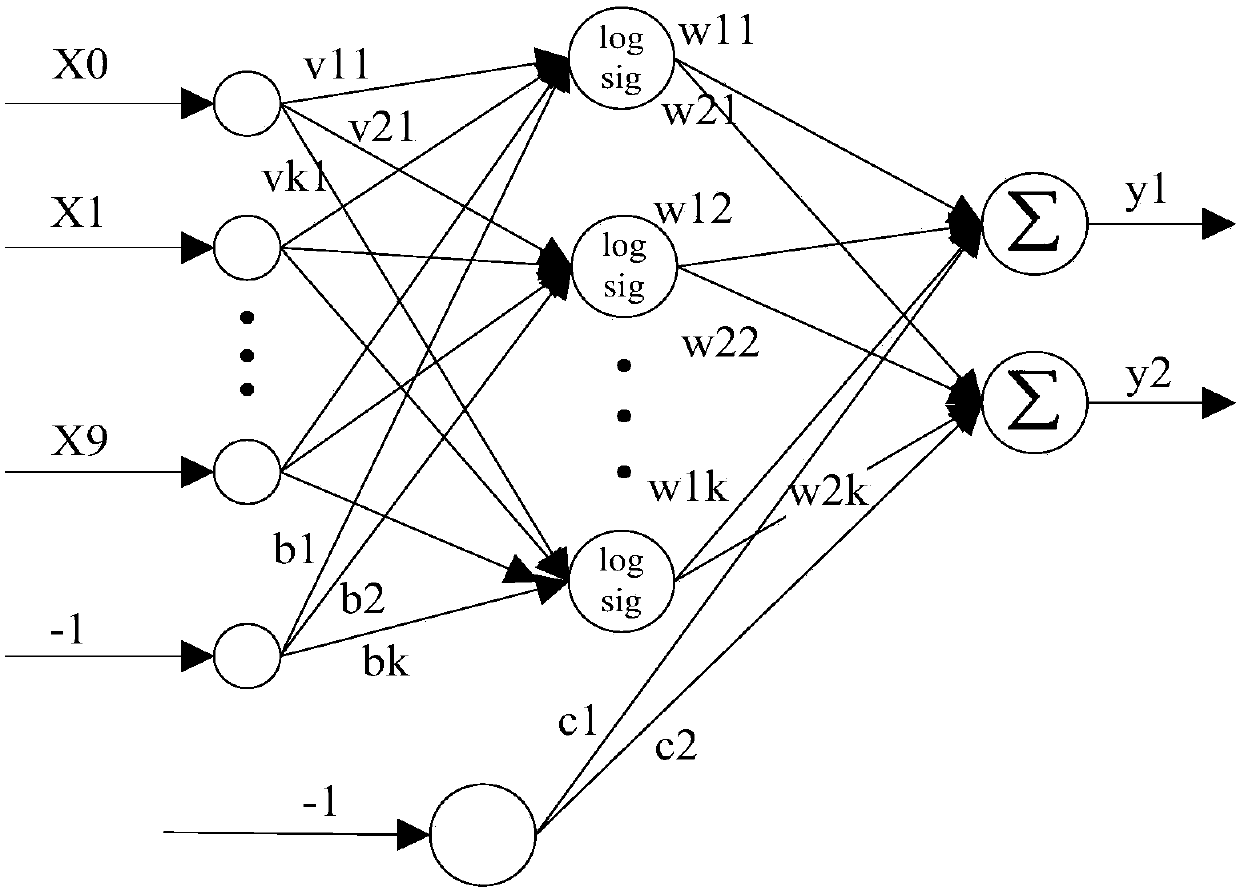

Driver fatigue and emotion evaluation method based on multi-source physiological information

Inactive · CN107822623A · Tags: Emphasis on comprehensiveness; Improve classification effect; Diagnostic signal processing; Sensors; Emotion assessment; Feature extraction

The invention discloses a method for evaluating driver fatigue and emotion based on multi-source physiological information. The method comprises the following steps:

1. Simultaneously collect the driver's EEG, ECG, EMG, and attitude signals.
2. Preprocess the physiological signals and extract features.
3. Build a fuzzy neural network evaluation model to evaluate driver fatigue and emotion.
4. Based on the evaluation model, use genetic algorithms to keep learning the driver's evaluation indices, extract rules and methods for evaluating driver fatigue and emotion, and improve evaluation accuracy.

The method emphasizes comprehensive decision information and an advanced classification method. It greatly improves the accuracy of driver fatigue and emotion evaluation and reduces the probability of traffic accidents.

Owner:YANSHAN UNIV

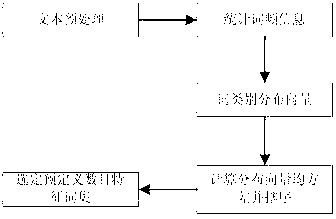

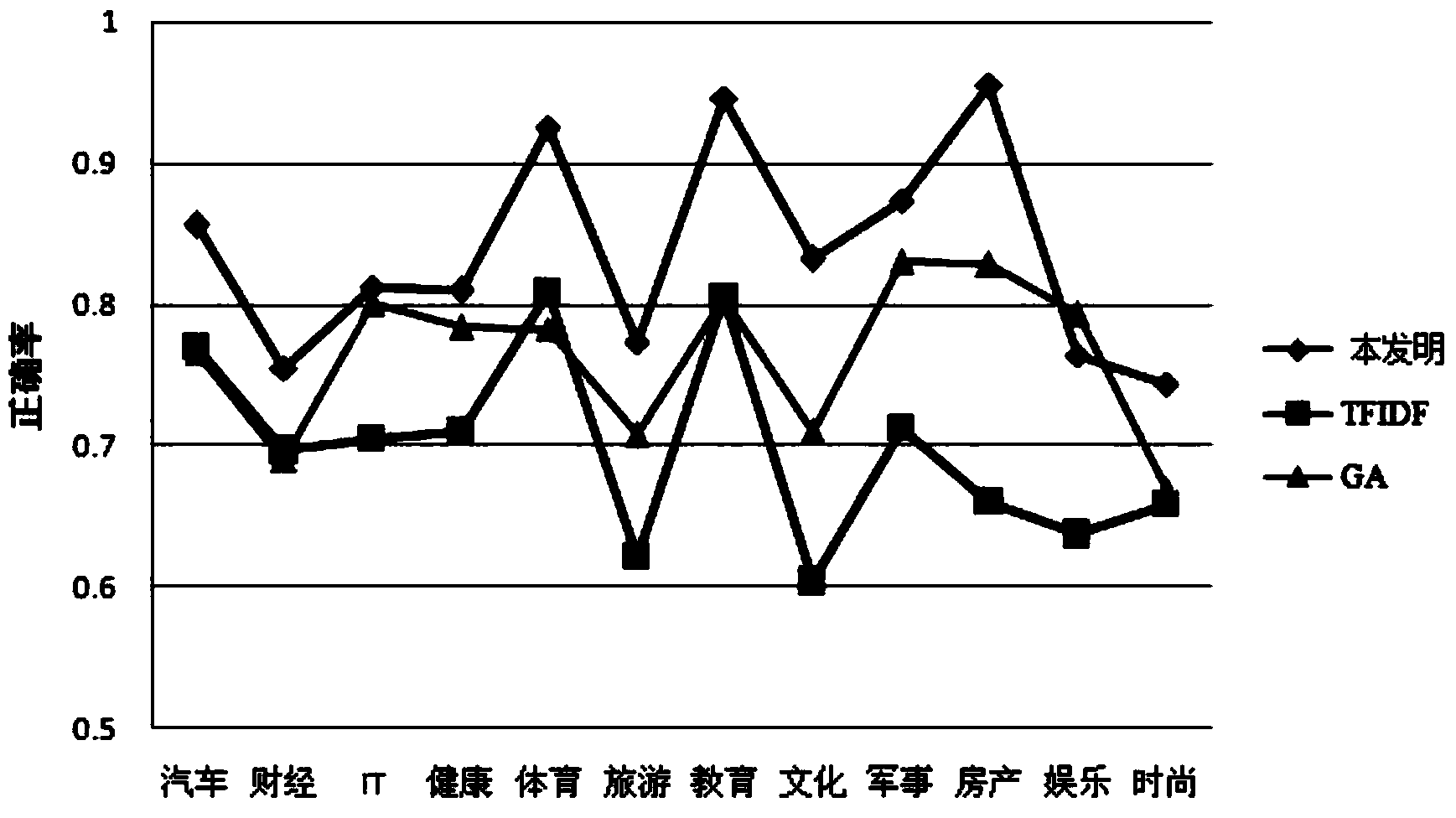

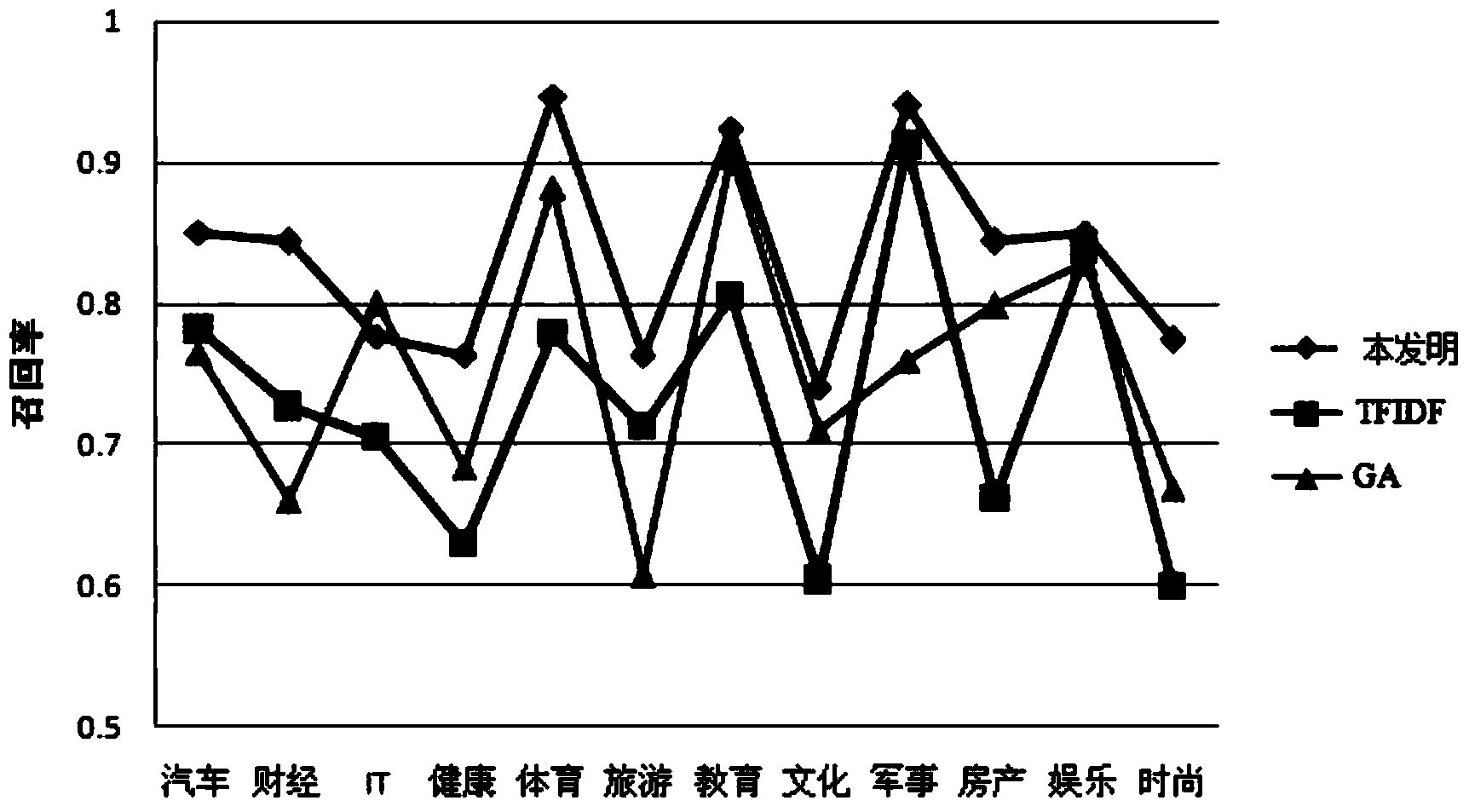

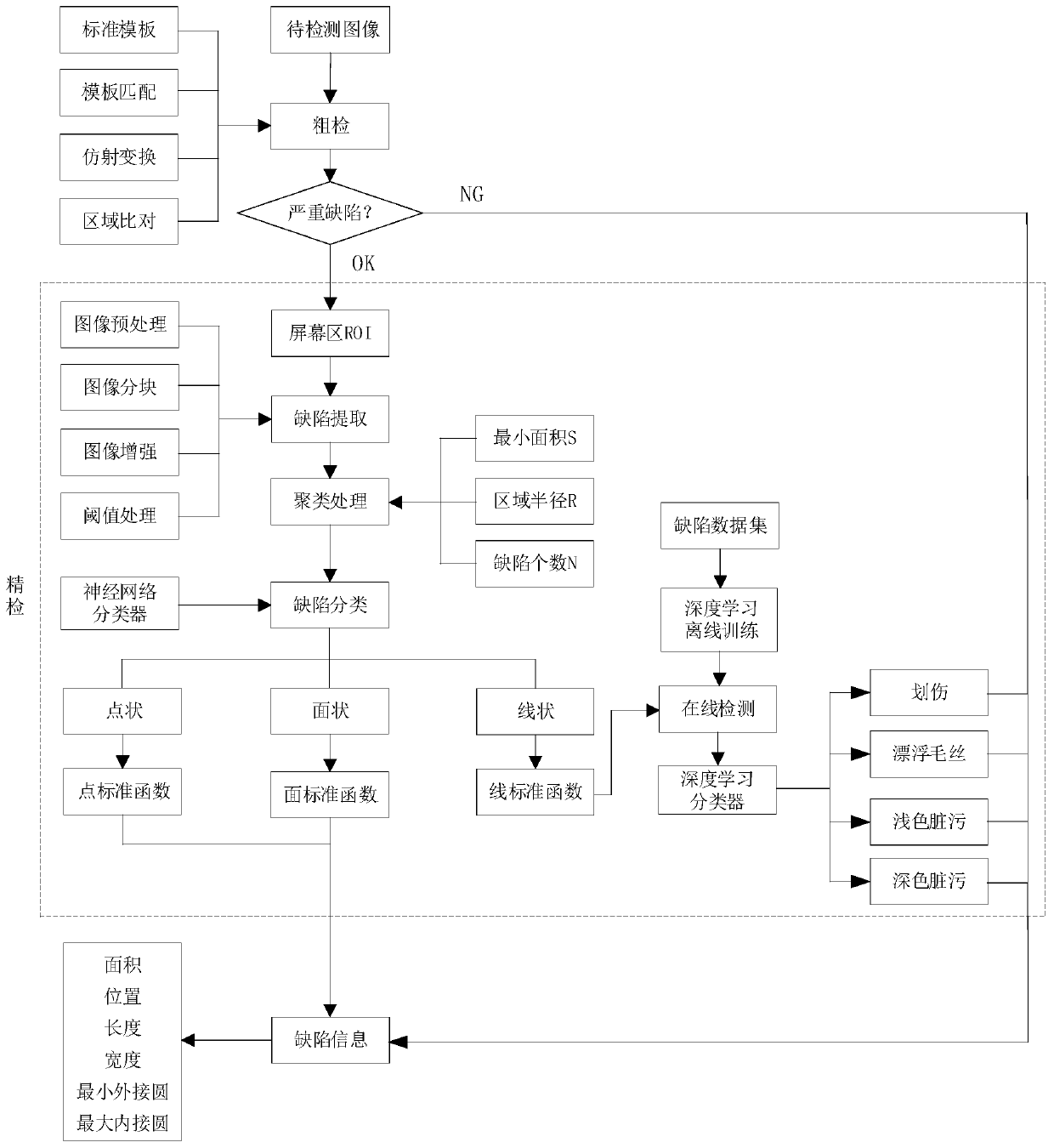

Text feature extraction method based on categorical distribution probability

Inactive · CN103294817A · Tags: Reduce running time; Improve processing efficiency; Special data processing applications; Acquired characteristic; Feature set

The invention discloses a text feature extraction method based on categorical distribution probability. It selects text feature words by estimating how differently each word in the texts to be categorized is distributed across categories. The mean squared error of each word's probability distribution over the categories is computed from the word's category word-frequency probabilities. A certain number of words with the highest mean squared error values then form the final feature set. In practice, this feature set supplies the feature words of the text categorization task and is used to build a vector space model. A designated classifier is trained on that model to obtain the final category model, which then categorizes the texts. The method measures each word's category distribution accurately with probability statistics and estimates its category value via the mean squared error, so it selects text features accurately. For text categorization, it clearly improves results on both balanced and imbalanced corpora.

Owner:EAST CHINA NORMAL UNIV
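
The selection rule can be sketched as follows: score each word by the mean squared deviation of its per-category probability from the uniform mean 1/C, then keep the top-scoring words. The abstract does not define "category word-frequency probability" precisely. This sketch assumes it is the word's within-category relative frequency, renormalized across categories. The counts and category sizes are hypothetical.

```python
def select_features(term_freq, category_sizes, top_k):
    """Rank words by the mean squared deviation of their category distribution.

    term_freq[word][c]  : count of `word` in category c
    category_sizes[c]   : total word count of category c
    Assumption: a word's per-category probability is its within-category relative
    frequency, renormalized across categories so the values sum to 1.
    """
    scores = {}
    for word, counts in term_freq.items():
        rel = [n / size for n, size in zip(counts, category_sizes)]
        total = sum(rel)
        if total == 0:
            continue
        probs = [r / total for r in rel]
        mean = 1.0 / len(probs)  # mean of a probability vector over C categories
        scores[word] = sum((p - mean) ** 2 for p in probs) / len(probs)
    return sorted(scores, key=scores.get, reverse=True)[:top_k]


TF = {  # hypothetical counts for two categories: sports, finance
    "goal": [40, 2],
    "market": [3, 50],
    "the": [100, 100],
}
print(select_features(TF, category_sizes=[1000, 1000], top_k=2))  # ['goal', 'market']
```

A word spread evenly over all categories, such as "the", scores zero and is never selected. Normalizing by category size keeps large categories from dominating, which is relevant to the imbalanced-corpus case mentioned above.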

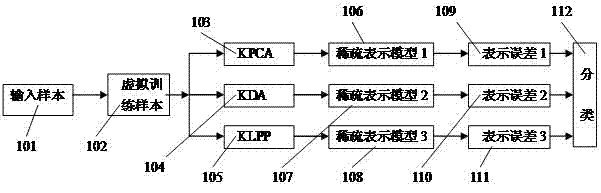

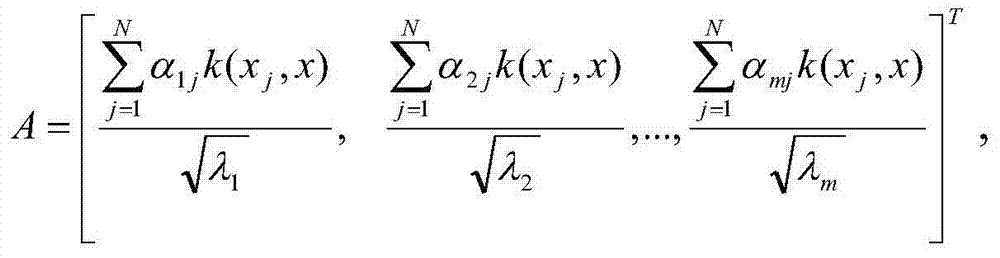

Multiple-sparse-representation face recognition method for solving small sample size problem

Inactive · CN104268593A · Tags: Improve robustness; Improve classification effect; Character and pattern recognition; Kernel principal component analysis; Small sample

Provided is a multiple-sparse-representation face recognition method for solving the small sample size problem. The method tackles this problem in two ways:

- The given original training samples are used to generate "virtual samples", increasing the number of training samples.
- On top of the virtual samples, three nonlinear feature extraction methods are used to extract features of the samples: kernel principal component analysis, kernel discriminant analysis, and kernel locality preserving projection.

This yields three feature modes, and a sparse representation model is established for each, so every sample has three sparse representation models. Classification is finally performed according to the representation results. Concretely, virtual faces are produced through mirror symmetry, and L1-norm-based multiple sparse representation models are then built and used for classification. Compared with other classification methods, the method is robust and classifies well. It is especially suitable for classification tasks with high data dimensionality and few training samples.

Owner:EAST CHINA JIAOTONG UNIVERSITY
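
The virtual-sample step can be sketched as a simple left-right flip of each training face, with the original label kept. Two points are assumptions: that "mirror symmetry" means a full horizontal flip, and that images are represented as lists of pixel rows. The tiny "face" is hypothetical.

```python
def mirror_virtual_samples(faces):
    """Augment a training set with horizontally mirrored copies of each face.

    faces: list of (image, label); each image is a list of pixel rows.
    Returns the originals followed by their mirror images, labels unchanged.
    """
    mirrored = [([row[::-1] for row in img], label) for img, label in faces]
    return faces + mirrored


train = [([[1, 2, 3], [4, 5, 6]], "alice")]  # hypothetical 2x3 "face"
augmented = mirror_virtual_samples(train)
print(len(augmented), augmented[1])  # 2 ([[3, 2, 1], [6, 5, 4]], 'alice')
```

Each person's sample count doubles before the kernel feature extraction and sparse representation stages.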

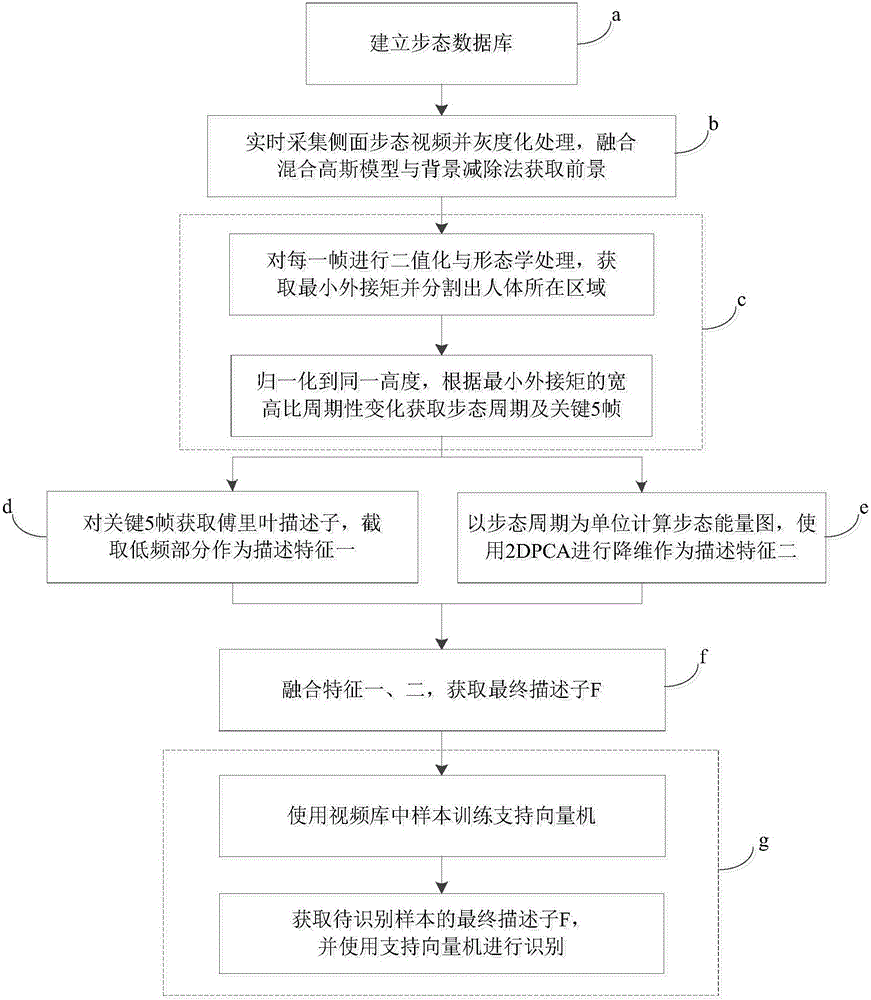

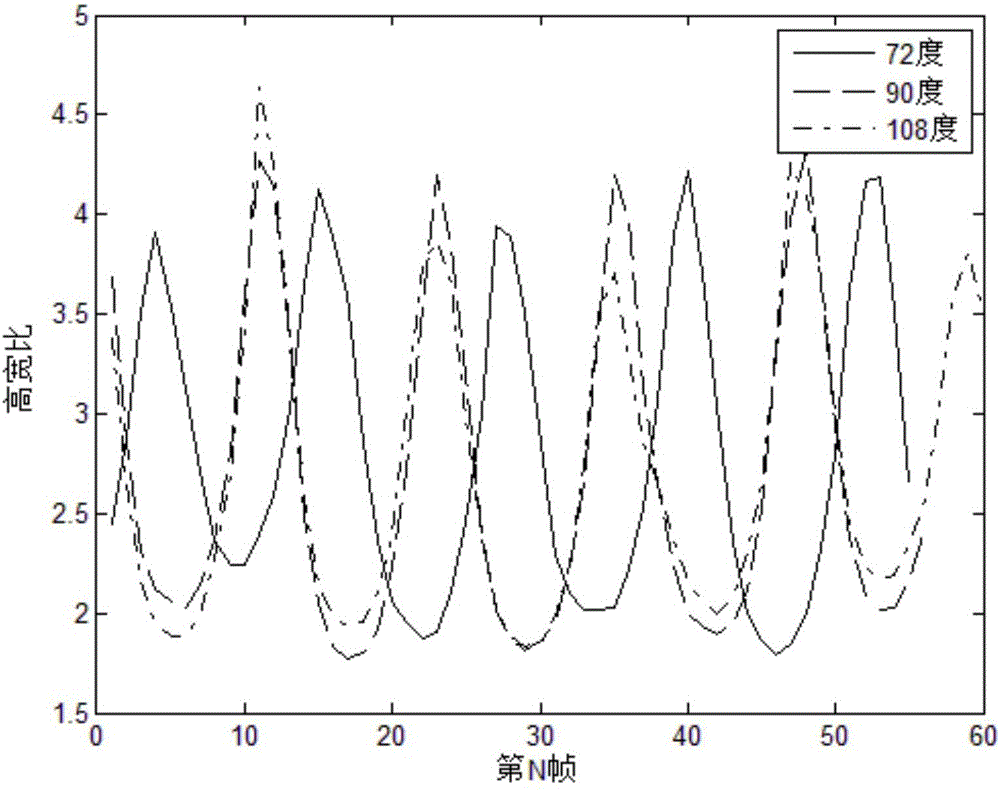

Fourier descriptor and gait energy image fusion feature-based gait identification method

Inactive | CN106529499A | Update Mechanism Improvements | Accurate modeling | Character and pattern recognition | Human behavior | Human body

The invention relates to a gait identification method based on fused Fourier descriptor and gait energy image features. The method comprises the following steps: converting each single frame to grayscale, updating the background in real time with a Gaussian mixture model, and obtaining the foreground by background subtraction; binarizing and morphologically processing each frame, obtaining the minimum enclosing rectangle of the moving human body, normalizing it to a common height, and determining the gait cycle and five key frames from the cyclic variation of the rectangle's height-to-width ratio; extracting the low-frequency part of the Fourier descriptors of the five key frames as features I; centering and averaging all frames in the cycle to obtain a gait energy image, and reducing its dimensionality with principal component analysis to obtain features II; and fusing features I and II and performing identification with a support vector machine. The method can determine whether the current human behavior is abnormal. The Gaussian mixture model models the background accurately with relatively good real-time performance, and the fused feature is highly representative and robust, which effectively increases the recognition rate for abnormal gait.

Owner:WUHAN UNIV OF TECH
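The two feature families fused by the method can be sketched as follows, assuming the silhouettes have already been extracted, height-normalized and aligned over one gait cycle. The descriptor keeps the first few non-DC DFT magnitudes of the contour, normalized by |F1|; that normalization is a common choice we assume here. The PCA and SVM stages are not shown, and the function names are hypothetical.

```python
import cmath
import math

def fourier_descriptor(contour, n_low=8):
    """Low-frequency Fourier descriptor of a closed contour given as [(x, y), ...]."""
    z = [complex(x, y) for x, y in contour]  # boundary as a complex signal
    n = len(z)
    n_low = min(n_low, n - 1)
    # Plain DFT of the first n_low + 1 frequencies; O(n * n_low) is fine for small contours.
    coeffs = [sum(z[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
              for k in range(n_low + 1)]
    # Skip F0 (it only carries translation), keep magnitudes (rotation and
    # start-point invariance), and divide by |F1| (scale invariance).
    scale = abs(coeffs[1]) or 1.0
    return [abs(c) / scale for c in coeffs[1:]]

def gait_energy_image(silhouettes):
    """Pixel-wise mean of aligned binary silhouettes (lists of 0/1 rows) over one cycle."""
    frames = len(silhouettes)
    height, width = len(silhouettes[0]), len(silhouettes[0][0])
    return [[sum(s[r][c] for s in silhouettes) / frames for c in range(width)]
            for r in range(height)]
```

Because the descriptor is invariant to translation and scale, the same body contour yields the same features regardless of where, or how large, the walker appears in the frame.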

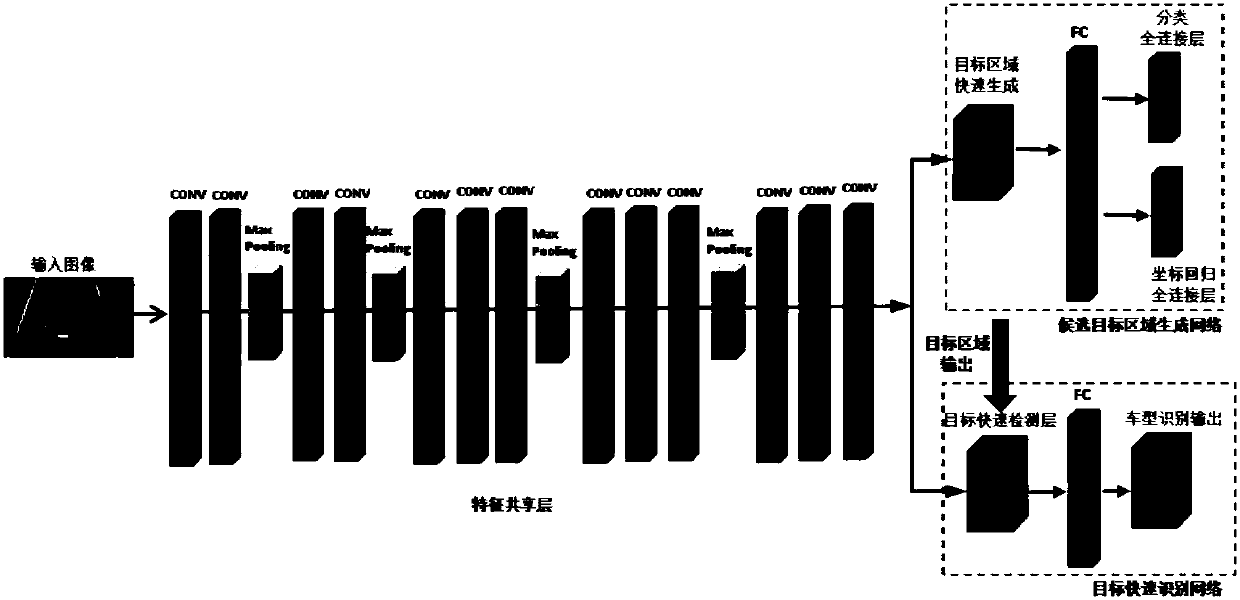

Vehicle type identification method and system based on deep neural network

Inactive | CN107871126A | Avoid double counting | Meet the needs of real-time identification | Character and pattern recognition | Neural learning methods | Feature extraction | High dimensional

To make vehicle localization efficient enough for real-time vehicle type identification, the present invention provides a vehicle type identification method and system based on a deep neural network. The method integrates candidate target extraction and target identification into one network, using an end-to-end detection/identification approach that combines feature extraction, target localization and target detection in a single network. Candidate targets are no longer extracted from the original image; instead, they are extracted from a very small high-dimensional feature map using reference points with multi-dimensional coverage. At the same time, the feature extraction layers share the parameters of a deep convolutional network, so features are not computed repeatedly. As a result, identification efficiency is greatly improved: the system reaches 20 fps in practice, a single server can process 2 million images per day, and the real-time requirement for vehicle type identification is met.

Owner:XIAN XIANGXUN TECH
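The reference-point idea above, in which candidate boxes are laid out over the small feature map instead of the original image, resembles the anchor boxes used by region proposal networks. The sketch below assumes that interpretation; the stride, scales and aspect ratios are hypothetical values, not taken from the patent.

```python
import math

def generate_anchors(feat_h, feat_w, stride, scales=(64, 128), ratios=(0.5, 1.0, 2.0)):
    """Reference boxes (x1, y1, x2, y2) in image pixels, centered on every feature-map cell.

    stride: image pixels per feature-map cell (hypothetical; depends on the backbone).
    """
    anchors = []
    for i in range(feat_h):
        for j in range(feat_w):
            # Center of this feature-map cell, mapped back to image coordinates.
            cx, cy = (j + 0.5) * stride, (i + 0.5) * stride
            for s in scales:
                for r in ratios:
                    # Area stays s*s while height/width equals r.
                    w, h = s / math.sqrt(r), s * math.sqrt(r)
                    anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors
```

Because every anchor is scored from the same shared feature map, the convolutional features are computed once per image rather than once per candidate, which is where the claimed speed-up comes from.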

Traveling vehicle vision detection method combining laser point cloud data

Active | CN110175576A | Avoid the problem of difficult access to spatial geometric information | Realize 3D detection | Image enhancement | Image analysis | Histogram of oriented gradients | Vehicle detection

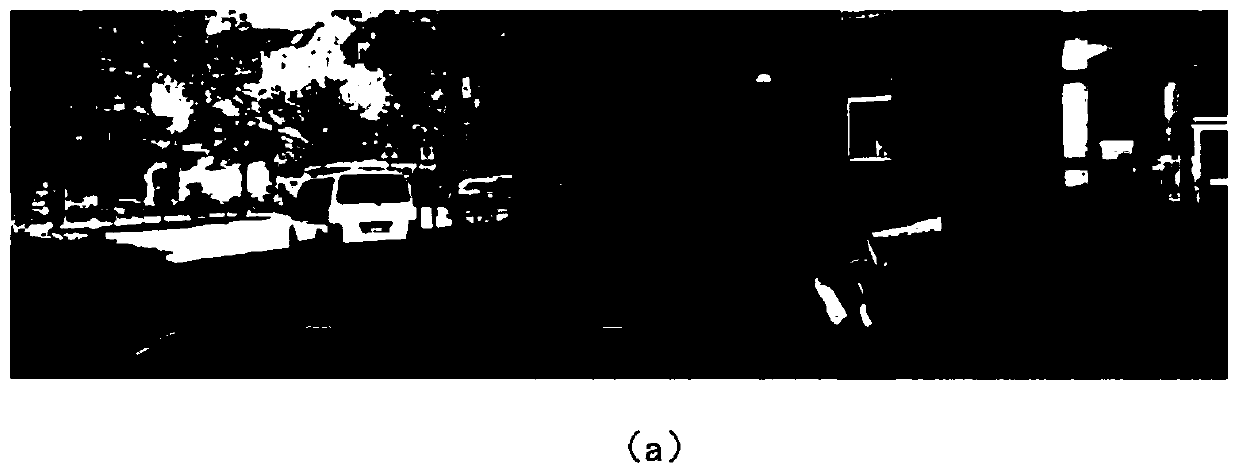

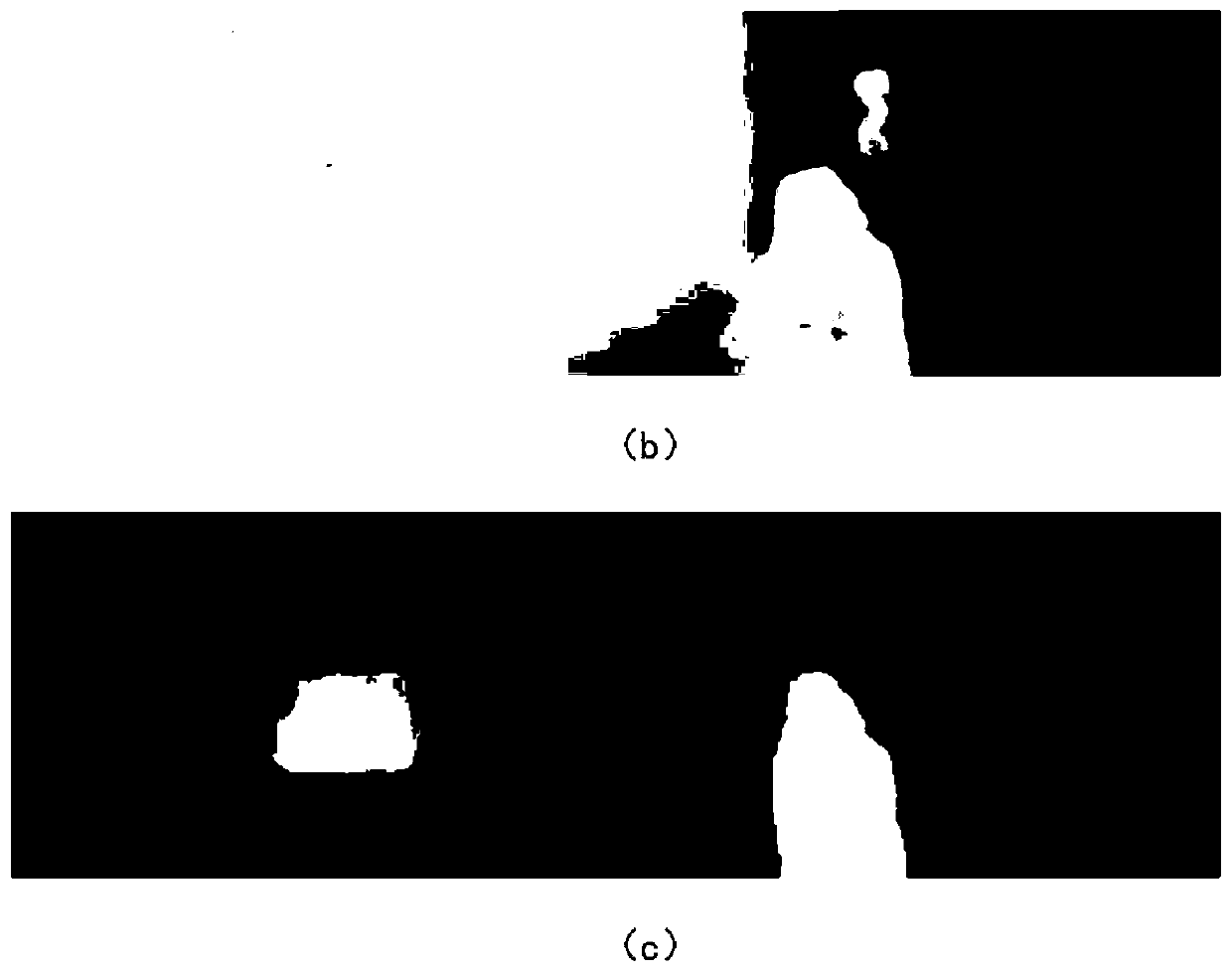

The invention discloses a traveling vehicle vision detection method combining laser point cloud data. It belongs to the field of unmanned driving and addresses problems of prior-art vehicle detection that is built around a lidar. The method comprises the following steps: first, the lidar and the camera are jointly calibrated and then time-aligned; an optical-flow grayscale map is computed between every two adjacent frames of the calibrated video, and motion segmentation on this map yields motion regions, i.e. candidate regions; for each frame, the time-aligned point cloud is searched within the conical (frustum) space corresponding to the candidate region for the points belonging to the vehicle, giving a three-dimensional bounding box of the moving object; a histogram of oriented gradients (HOG) feature is extracted from each frame within the candidate region; features are extracted from the point cloud inside the three-dimensional bounding box; and the obtained features are fused at the feature level using a genetic algorithm, after which the fused motion regions are classified to obtain the final traveling vehicle detection result. The method is used for visual detection of traveling vehicles.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
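The frustum search in the method above can be sketched as: project each lidar point into the image with the calibrated 3x4 projection matrix, keep the points that land inside a candidate region, and take their axis-aligned extent as the 3-D box. This assumes the joint calibration has been folded into a single matrix P = K[R|t] that maps lidar coordinates to pixels, and it ignores ground-point removal; the names are hypothetical.

```python
def project(P, point):
    """Project a lidar point with a 3x4 matrix P (assumed K[R|t] from joint calibration).
    Returns (u, v, depth), or None for points behind the camera."""
    x, y, z = point
    u, v, w = (P[r][0] * x + P[r][1] * y + P[r][2] * z + P[r][3] for r in range(3))
    if w <= 0:
        return None
    return u / w, v / w, w

def points_in_frustum(points, P, box):
    """Keep the points whose projection falls inside the 2-D candidate box (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = box
    inside = []
    for p in points:
        proj = project(P, p)
        if proj is not None and x1 <= proj[0] <= x2 and y1 <= proj[1] <= y2:
            inside.append(p)
    return inside

def bounding_box_3d(points):
    """Axis-aligned 3-D box ((min x, min y, min z), (max x, max y, max z))."""
    xs, ys, zs = zip(*points)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))
```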

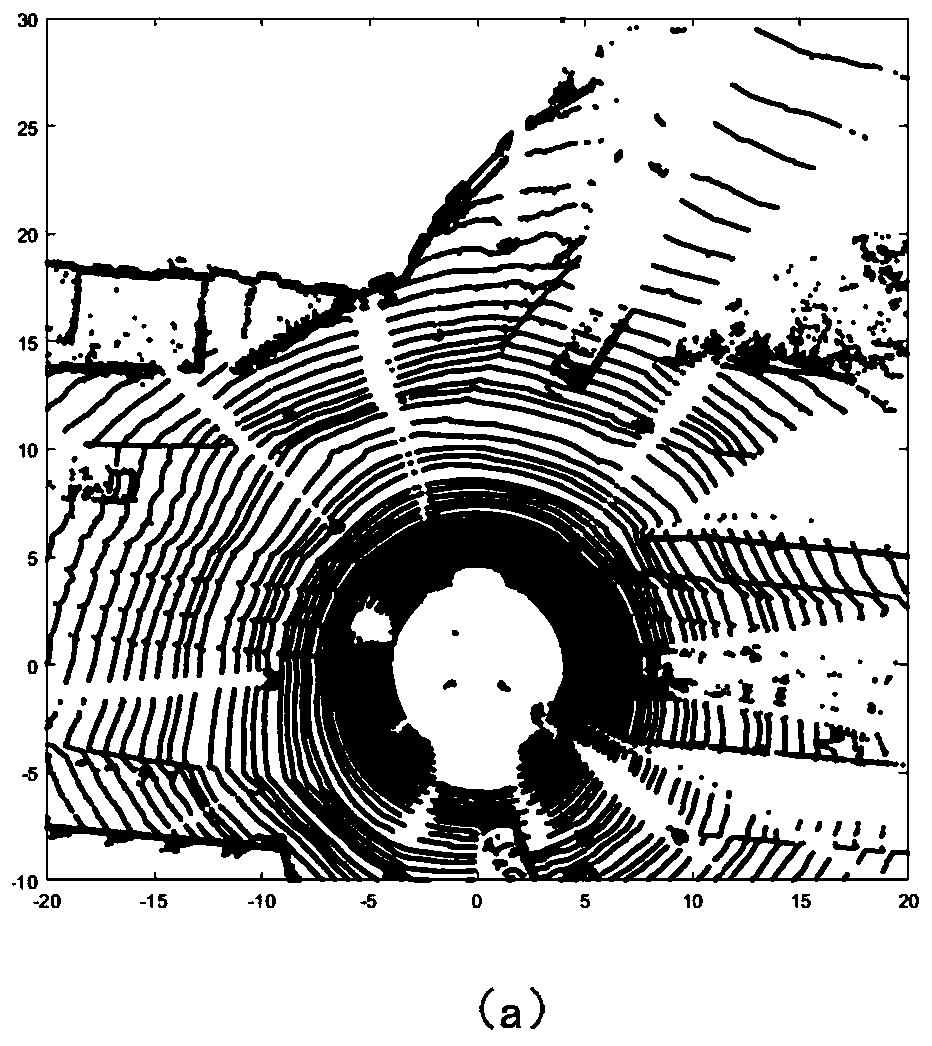

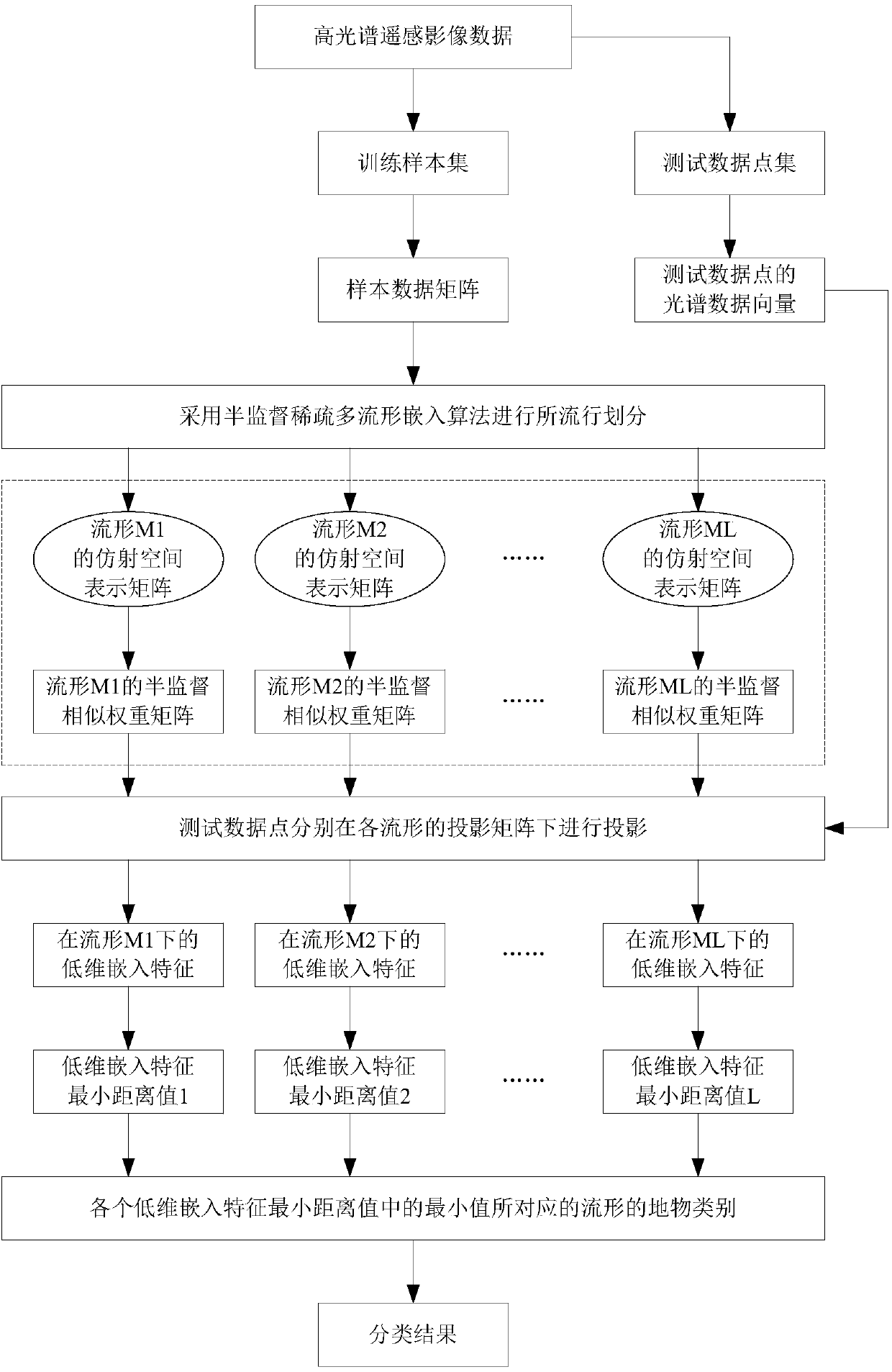

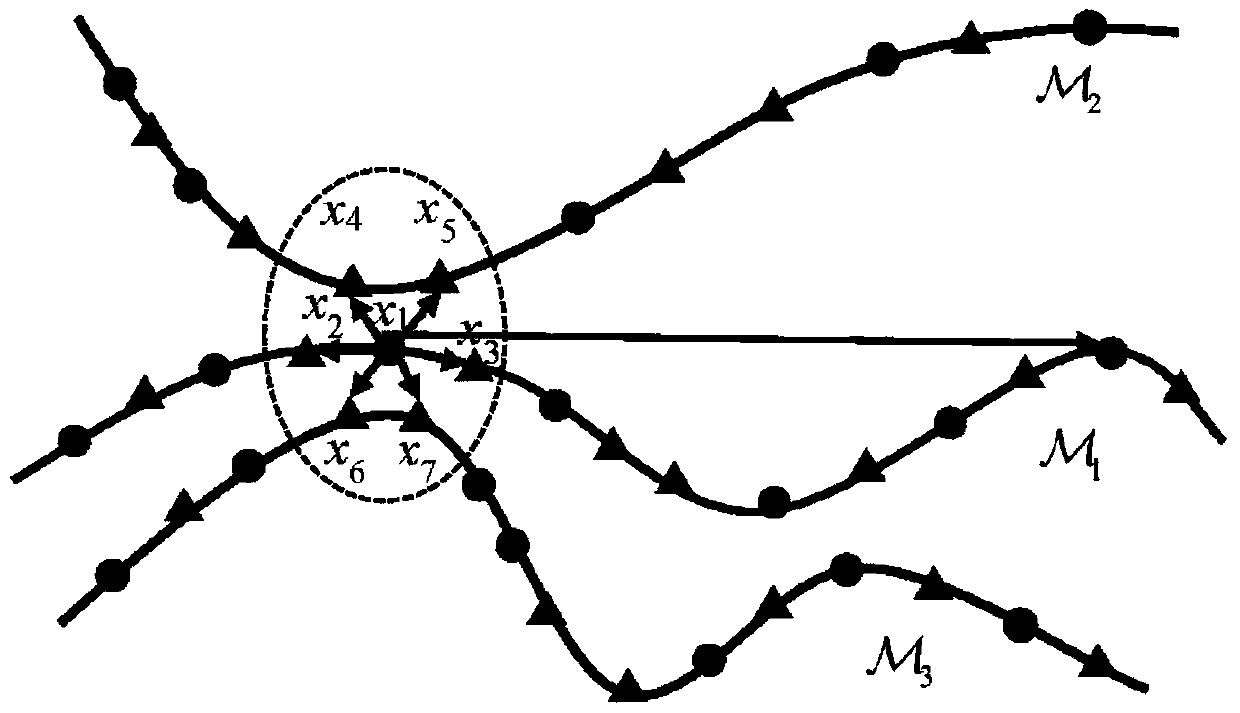

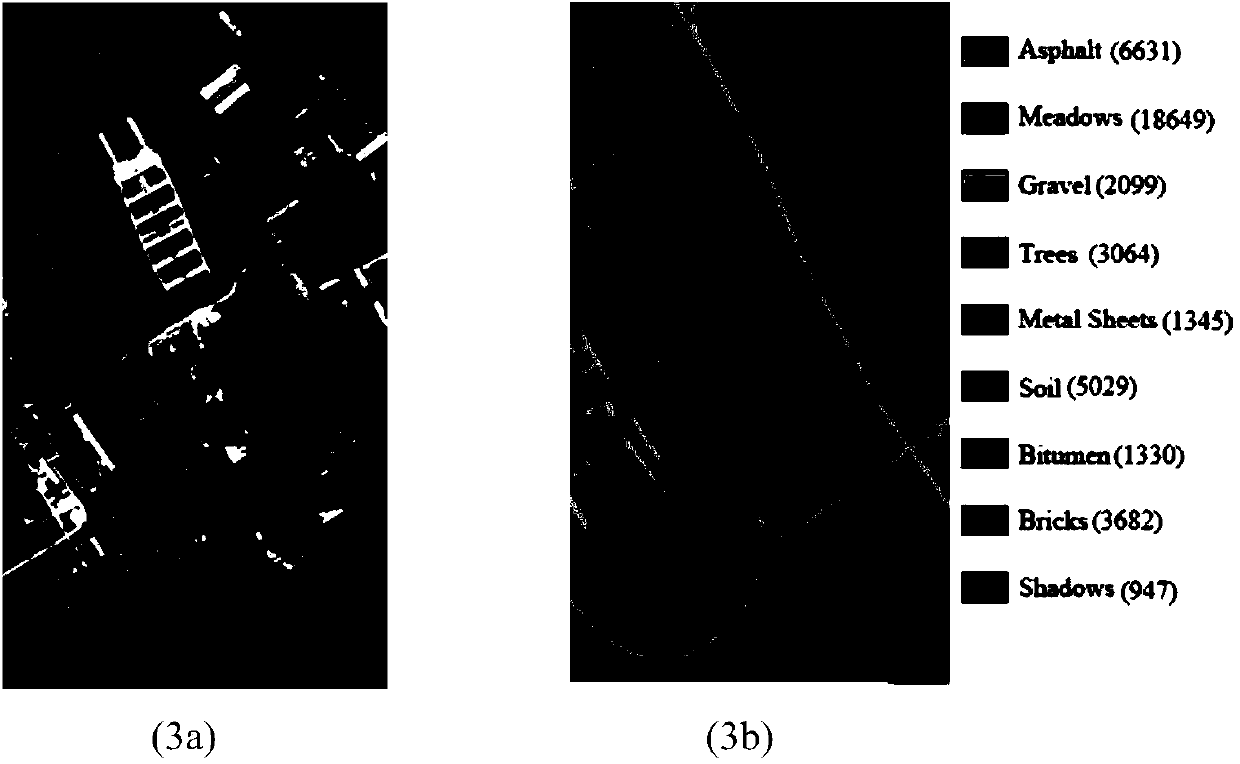

Sparse self-adaptive semi-supervised manifold learning hyperspectral image classification method

Inactive | CN104751191A | Dimension reduction | Increase weight | Character and pattern recognition | Data point | Self adaptive

The invention provides a sparse self-adaptive semi-supervised manifold learning hyperspectral image classification method, consisting of a semi-supervised sparse manifold learning dimension reduction algorithm and a nearest manifold classification algorithm. By labeling a small number of data points in the sample and learning jointly with part of the unlabeled data points, the method uncovers the intrinsic attributes and manifold structure of high-dimensional data and extracts low-dimensional embedded features with better discriminative power. This improves the classification results and the accuracy of land-cover classification in hyperspectral remote sensing images, and it effectively addresses both the out-of-sample learning problem of the sparse manifold clustering and embedding algorithm and the difficulty of labeling data for remote sensing image classification. Experiments on the PaviaU data set show that the method achieves better classification results than common identification methods in the prior art.

Owner:CHONGQING UNIV
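The nearest-manifold decision rule can be illustrated on features that have already been embedded. As a stand-in (our assumption, not the patent's exact algorithm), each class's local manifold around a query is approximated by the affine hull of its k nearest labeled samples, using LLE-style reconstruction weights, and the query is assigned to the class with the smallest reconstruction residual. The semi-supervised sparse dimension reduction step is not shown, and the names are hypothetical.

```python
import math

def solve(a, b):
    """Gaussian elimination with partial pivoting for a small dense system a x = b."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def local_residual(y, neighbors, reg=1e-3):
    """Distance from y to the affine hull of its neighbors, via LLE-style weights."""
    diffs = [[yi - xi for yi, xi in zip(y, x)] for x in neighbors]
    k = len(neighbors)
    gram = [[sum(p * q for p, q in zip(diffs[i], diffs[j])) for j in range(k)]
            for i in range(k)]
    trace = sum(gram[i][i] for i in range(k)) or 1.0
    for i in range(k):
        gram[i][i] += reg * trace  # regularize in case the neighbors are degenerate
    w = solve(gram, [1.0] * k)
    total = sum(w)
    w = [wi / total for wi in w]  # weights sum to one (affine combination)
    recon = [sum(wi * x[d] for wi, x in zip(w, neighbors)) for d in range(len(y))]
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(y, recon)))

def nearest_manifold_classify(y, labeled, k=3):
    """labeled: dict mapping class -> list of embedded feature vectors."""
    best, best_res = None, float("inf")
    for label, samples in sorted(labeled.items()):
        nearest = sorted(samples, key=lambda x: math.dist(x, y))[:k]
        res = local_residual(y, nearest)
        if res < best_res:
            best, best_res = label, res
    return best
```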

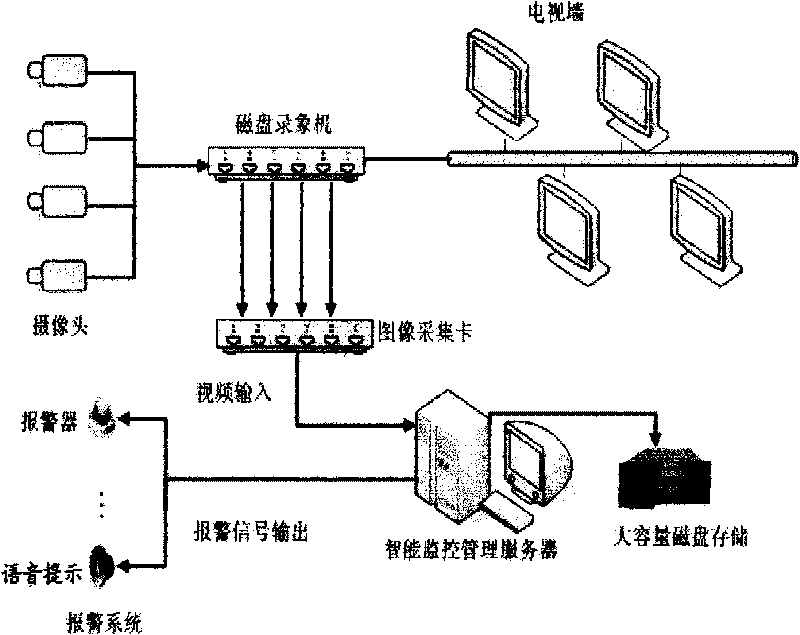

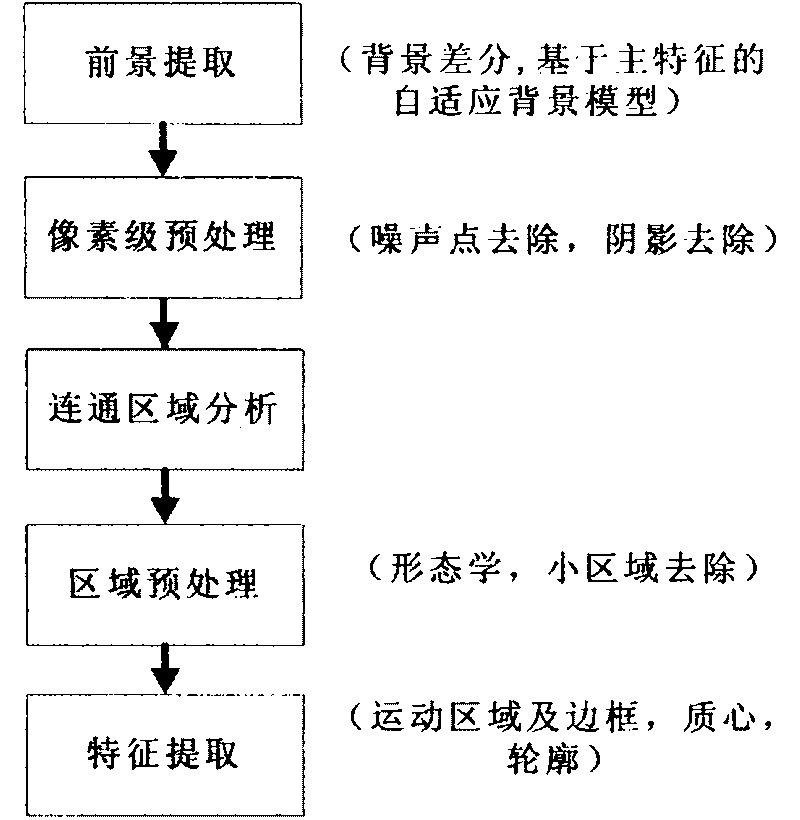

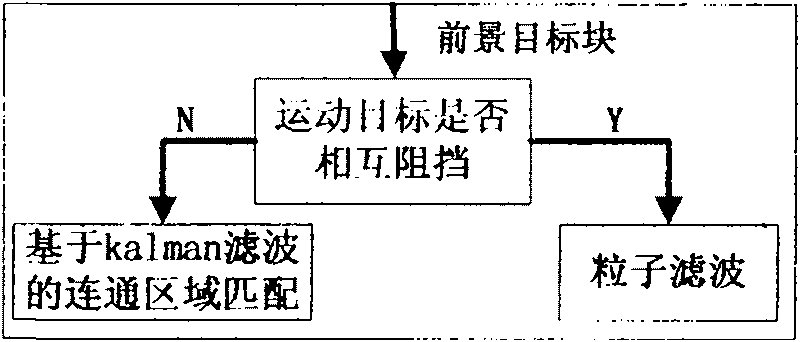

Method for detecting, tracking and identifying object abandoning/stealing event

Inactive | CN101727672A | Full motion goal state | Accurate motion target state | Image analysis | Character and pattern recognition | Morphological processing | Inter frame

The invention discloses a method for detecting, tracking and identifying object abandoning/stealing events, comprising the following steps: 1) moving object detection: an adaptive background model is established, the moving object is extracted by background differencing, and morphological and shadow processing are applied to obtain a more complete and accurate moving object state; 2) moving object tracking: inter-frame matching of the moving object is achieved through a recursive process of estimating, observing and correcting its state, and the object's trajectory is tracked; and 3) event identification: the object abandoning/stealing event is clearly defined, and its occurrence is judged from the characteristics and trajectory of the moving object; if an event occurs, abandoning is distinguished from stealing, evidence pictures are captured for the identified event, and an audible alarm is raised.

Owner:YUNNAN ZHENGZHUO INFORMATION TECH
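The "estimate, observe, correct" recursion in step 2 has the shape of a Kalman filter. The sketch below assumes a constant-velocity model applied independently to each image axis, plus a running-average background for step 1; the noise levels, learning rate and threshold are hypothetical, and shadow removal and the event rules of step 3 are not shown.

```python
class AxisKalman:
    """Constant-velocity Kalman filter for one image axis; state = (position, velocity).
    The noise values q and r are hypothetical defaults."""

    def __init__(self, pos, q=1.0, r=4.0):
        self.x = [float(pos), 0.0]           # state estimate
        self.p = [[10.0, 0.0], [0.0, 10.0]]  # state covariance
        self.q, self.r = q, r                # process / measurement noise variances

    def predict(self, dt=1.0):
        """Estimate: x = F x, P = F P F^T + q*I with F = [[1, dt], [0, 1]]."""
        pos, vel = self.x
        self.x = [pos + dt * vel, vel]
        (a, b), (c, d) = self.p
        self.p = [[a + dt * (b + c) + dt * dt * d + self.q, b + dt * d],
                  [c + dt * d, d + self.q]]
        return self.x[0]

    def correct(self, measured_pos):
        """Observe and correct with a position measurement (H = [1, 0])."""
        (a, b), (c, d) = self.p
        s = a + self.r              # innovation variance
        k0, k1 = a / s, c / s       # Kalman gain
        innovation = measured_pos - self.x[0]
        self.x = [self.x[0] + k0 * innovation, self.x[1] + k1 * innovation]
        self.p = [[(1 - k0) * a, (1 - k0) * b],
                  [c - k1 * a, d - k1 * b]]
        return self.x[0]

def update_background(bg, frame, alpha=0.05):
    """Adaptive background: exponential running average of grayscale frames."""
    return [[(1 - alpha) * b + alpha * f for b, f in zip(brow, frow)]
            for brow, frow in zip(bg, frame)]

def foreground_mask(bg, frame, thresh=25):
    """Background difference: 1 where the frame departs from the background by more than thresh."""
    return [[1 if abs(f - b) > thresh else 0 for b, f in zip(brow, frow)]
            for brow, frow in zip(bg, frame)]
```

A tracker would keep one filter per axis for each object, call `predict()` every frame, and call `correct()` with the centroid of the matched foreground blob.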

Webpage text classification method based on feature selection

Inactive | CN103810264A | Improve accuracy | Improve classification effect | Special data processing applications | Feature vector | Data set

Provided is a webpage text classification method based on feature selection. First, a data set formed by a large number of webpages is divided into a training set and a testing set. Second, different weights are assigned to HTML tags according to how well the information in each tag field expresses the webpage content, and the weight of each feature word in each training webpage (the product of its normalized word frequency and its inverse document frequency) is calculated. Based on these weights, and combining the intra-class distribution law with the inter-class deviation, the feature vector of every training webpage is calculated, and from these the feature vector of each class in the training set. Finally, the word frequencies of the feature words in each testing webpage are calculated, the similarity between the webpage to be classified and each class in the training set is computed, and the class with the maximum similarity is taken as the class of the webpage, giving the classification result.

Owner:XIAN UNIV OF TECH
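A simplified sketch of tag-weighted TF-IDF and maximum-similarity classification follows. The tag weights, max-normalized term frequency, smoothed IDF, cosine similarity and plain mean class vectors are all assumptions; in particular, the patent's combination of intra-class distribution and inter-class deviation is replaced here by a simple class centroid. Pages are dicts mapping an HTML tag to its words, and all names are hypothetical.

```python
import math
from collections import Counter, defaultdict

# Hypothetical tag weights: words in <title> count more than words in <body>.
TAG_WEIGHTS = {"title": 3.0, "h1": 2.0, "body": 1.0}

def weighted_tf(page):
    """Tag-weighted term frequency, normalized by the largest value on the page."""
    tf = Counter()
    for tag, words in page.items():
        for w in words:
            tf[w] += TAG_WEIGHTS.get(tag, 1.0)
    top = max(tf.values())
    return {w: c / top for w, c in tf.items()}

def idf(pages):
    """Smoothed inverse document frequency (words present in every page keep weight 1)."""
    n = len(pages)
    df = Counter(w for p in pages for w in {x for ws in p.values() for x in ws})
    return {w: math.log(n / d) + 1.0 for w, d in df.items()}

def vectorize(page, idf_table):
    # Words never seen in training get an IDF of 0 and so drop out.
    return {w: f * idf_table.get(w, 0.0) for w, f in weighted_tf(page).items()}

def cosine(u, v):
    dot = sum(x * v.get(w, 0.0) for w, x in u.items())
    nu = math.sqrt(sum(x * x for x in u.values()))
    nv = math.sqrt(sum(x * x for x in v.values()))
    return dot / (nu * nv) if nu and nv else 0.0

def train(pages, labels):
    """Return the IDF table and one mean feature vector (centroid) per class."""
    table = idf(pages)
    sums, counts = defaultdict(Counter), Counter()
    for page, lab in zip(pages, labels):
        sums[lab].update(vectorize(page, table))
        counts[lab] += 1
    centroids = {lab: {w: s / counts[lab] for w, s in vec.items()} for lab, vec in sums.items()}
    return table, centroids

def classify(page, table, centroids):
    """Assign the class whose vector is most similar to the page."""
    v = vectorize(page, table)
    return max(sorted(centroids), key=lambda lab: cosine(v, centroids[lab]))
```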

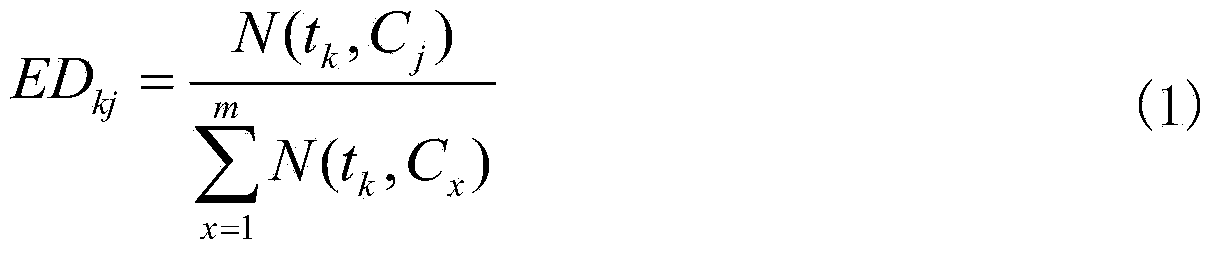

Mobile phone glass cover plate window area defect detection method based on machine vision

Pending | CN110570393A | Improve versatility | Algorithm detection efficiency is high | Image enhancement | Image analysis | Neural network classifier | Mobile phone

The invention discloses a machine-vision-based method for detecting defects in the window area of mobile phone glass cover plates. The method comprises the following steps: 1, acquiring a mobile phone screen image; 2, roughly locating the mobile phone screen area; 3, extracting defects from the screen image with a threshold segmentation algorithm; 4, connecting the scattered points in dense point-cluster areas with a clustering algorithm; 5, classifying the defects with a neural network classifier; 6, extracting the area, length and radius of each defect region and comparing them against the detection standard; 7, re-classifying the defects with a deep learning classifier; and 8, counting the information and number of each defect type. The algorithm follows a coarse-to-fine detection principle: it can rapidly and accurately extract defects such as pits, scratches, dirt and broken filaments in the screen areas of many different types of mobile phone glass cover plates, and the detection precision can be adjusted to the detection standards of different product window areas.

Owner:SOUTH CHINA UNIV OF TECH
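Steps 3, 4 and 6 of the method above (threshold segmentation, clustering of scattered points, and measuring area, length and radius) can be sketched without any imaging library. Assumptions: defects are darker than the threshold, clustering is single-linkage with a fixed pixel radius, length is the bounding-box diagonal, and radius is the equivalent-circle radius. The classifiers of steps 5 and 7 are not shown, and the names are hypothetical.

```python
import math

def threshold(image, t):
    """Defect candidates: (row, col) of pixels darker than t (assumes dark defects)."""
    return [(r, c) for r, row in enumerate(image) for c, v in enumerate(row) if v < t]

def cluster_points(points, radius):
    """Single-linkage clustering: points within `radius` of each other join one defect."""
    parent = list(range(len(points)))

    def find(i):
        # Union-find root lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            if math.dist(points[i], points[j]) <= radius:
                parent[find(i)] = find(j)
    groups = {}
    for i, p in enumerate(points):
        groups.setdefault(find(i), []).append(p)
    return list(groups.values())

def measure(cluster):
    """Area (pixel count), length (bounding-box diagonal) and equivalent-circle radius."""
    rows = [r for r, _ in cluster]
    cols = [c for _, c in cluster]
    length = math.hypot(max(rows) - min(rows) + 1, max(cols) - min(cols) + 1)
    area = len(cluster)
    return {"area": area, "length": length, "radius": math.sqrt(area / math.pi)}
```

Each measured defect would then be compared against the product's own detection standard (for example a maximum allowed area), which is how the precision can be tuned per product.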

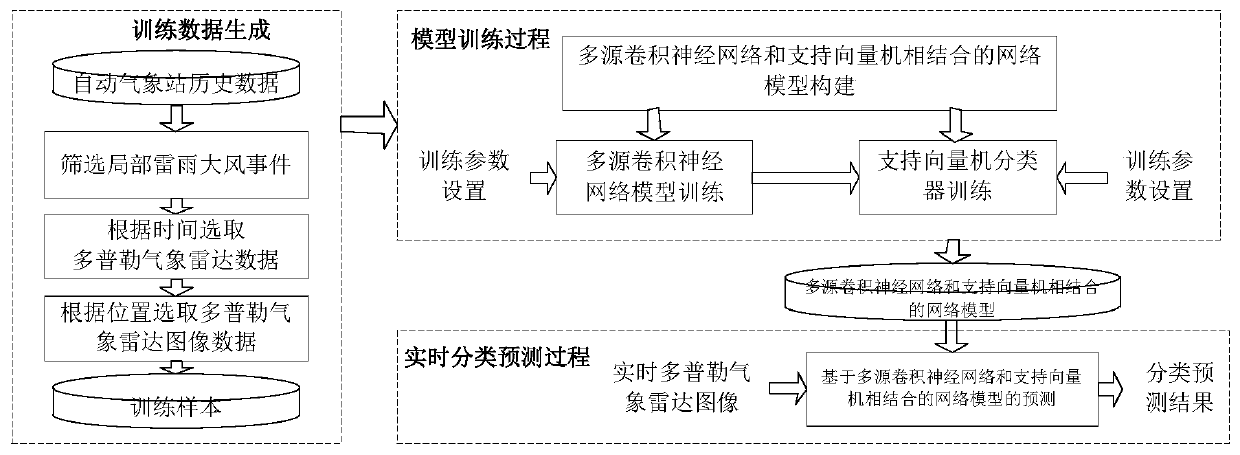

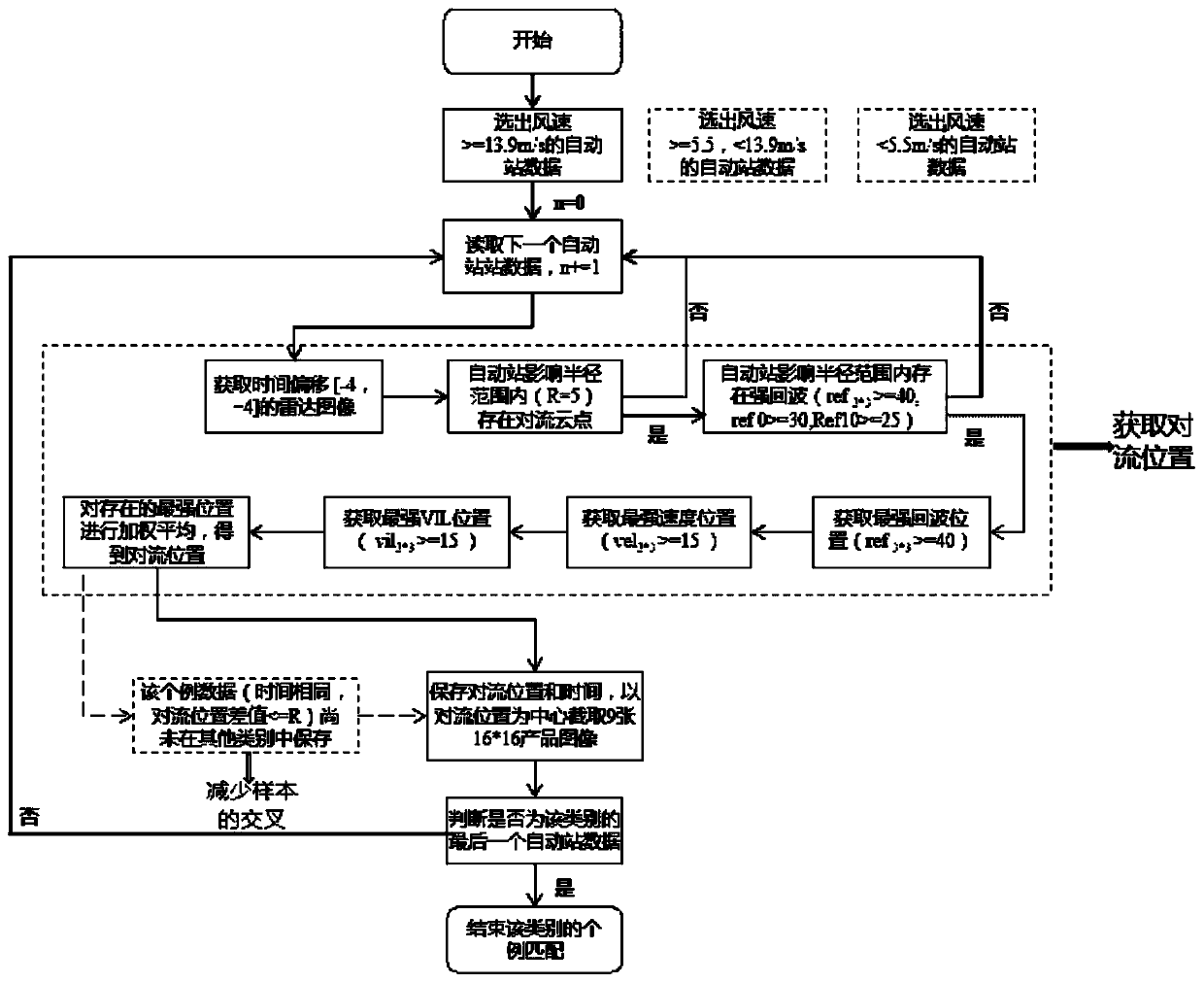

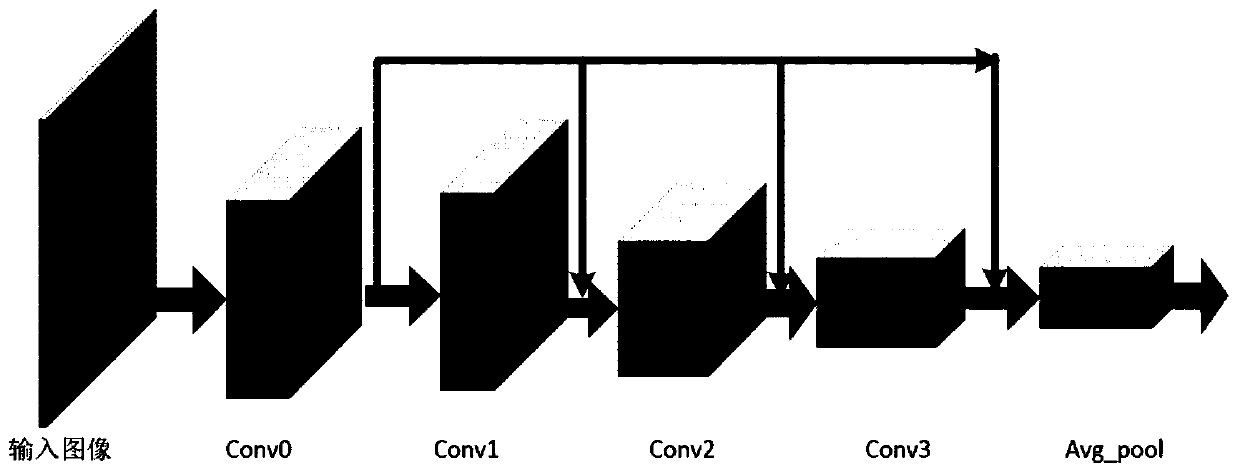

Thunderstorm strong wind grade prediction classification method based on multi-source convolutional neural network

Active | CN110197218A | Fully integrated | Classification prediction effect is good | Character and pattern recognition | Neural architectures | Support vector machine | Feature extraction

The invention discloses a thunderstorm strong wind grade prediction and classification method based on a multi-source convolutional neural network. A multi-source convolutional neural network model extracts features from the various data images obtained by Doppler weather radar, so more meteorological information can be fused and the extraction of discriminative features is improved. The method is combined with support vector machine classification, and the model trained on a small-to-medium meteorological training set achieves very good thunderstorm strong wind grade prediction and classification.

Owner:绍兴达道生涯教育信息咨询有限公司 (Shaoxing Dadao Career Education Information Consulting Co., Ltd.) +1
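The pipeline shape described above (per-source convolutional branches, feature-level concatenation, then an SVM) can be sketched in plain Python. Each branch here is a single fixed kernel with ReLU and pooling standing in for a trained CNN, and the SVM is a binary linear one trained with the Pegasos sub-gradient method; both are our simplifications, and a multi-grade forecast would need, for example, one-vs-rest classifiers. The names are hypothetical.

```python
import random

def conv_relu_pool(image, kernel):
    """Stand-in CNN branch: valid 2-D correlation, ReLU, global average and max pooling."""
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for i in range(len(image) - kh + 1):
        for j in range(len(image[0]) - kw + 1):
            s = sum(kernel[a][b] * image[i + a][j + b] for a in range(kh) for b in range(kw))
            out.append(max(s, 0.0))  # ReLU
    return [sum(out) / len(out), max(out)]

def fuse(sources, kernels):
    """Feature-level fusion: concatenate the pooled features of every radar product."""
    features = []
    for image, kernel in zip(sources, kernels):
        features.extend(conv_relu_pool(image, kernel))
    return features

def train_linear_svm(xs, ys, lam=0.01, epochs=200, seed=0):
    """Pegasos: stochastic sub-gradient descent on the regularized hinge loss.
    ys must be -1 or +1; a constant 1.0 feature is appended to act as the bias."""
    rng = random.Random(seed)
    data = [list(x) + [1.0] for x in xs]
    w = [0.0] * len(data[0])
    t = 0
    for _ in range(epochs):
        for i in rng.sample(range(len(data)), len(data)):
            t += 1
            eta = 1.0 / (lam * t)
            margin = ys[i] * sum(wj * xj for wj, xj in zip(w, data[i]))
            w = [(1.0 - eta * lam) * wj for wj in w]  # shrink (regularization step)
            if margin < 1.0:                          # hinge loss is active
                w = [wj + eta * ys[i] * xj for wj, xj in zip(w, data[i])]
    return w

def predict(w, x):
    score = sum(wj * xj for wj, xj in zip(w, list(x) + [1.0]))
    return 1 if score >= 0 else -1
```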

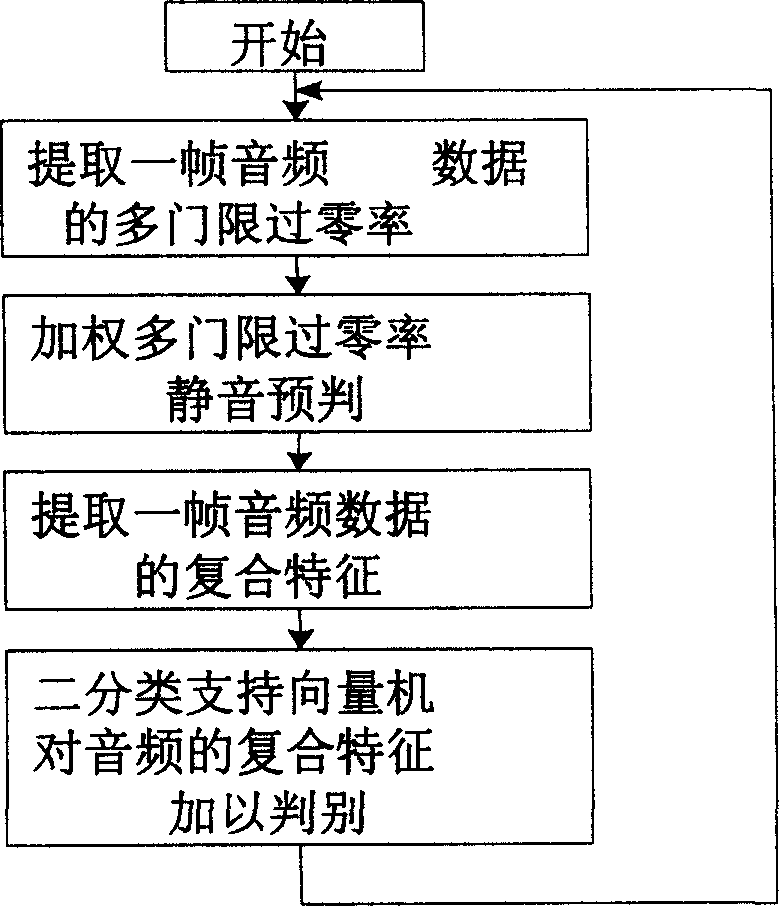

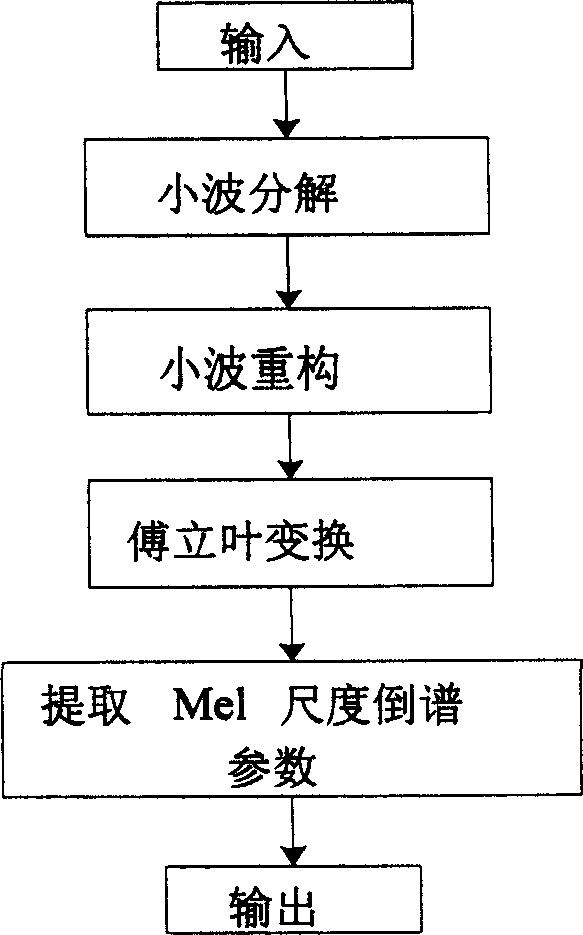

Silence detection method based on speech feature discrimination

Inactive | CN1835073A | Improve classification effect | Improve robustness | Speech analysis | Support vector machine | Frequency spectrum

The invention discloses a silence detection method based on speech feature discrimination. First, the multi-threshold zero-crossing rate of each audio frame is extracted, and the weighted multi-threshold zero-crossing rate is used to pre-identify obvious silence. Next, a composite feature of the audio frame is extracted, comprising the zero-crossing rate, the short-time energy, and Mel-frequency cepstral coefficients based on a variable-resolution spectrum. A binary support vector machine then classifies each frame's composite feature into one of two classes: normal speech or silence. The invention raises the success rate of silence detection, can recognize some special speech sounds, and can be widely applied to network voice calls, especially voice chat and video conferencing.

Owner:NANJING UNIV
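The first two stages of the method above can be sketched as follows. The exact definition of the multi-threshold zero-crossing rate, as well as the thresholds, weights and cutoff, are assumptions: a crossing is counted only when the signal swings from above +t to below -t (or back), ignoring samples inside the band. The Mel cepstral coefficients and the SVM stage are omitted, and the names are hypothetical.

```python
import math

def crossing_rate(frame, t):
    """Threshold crossing rate: sign changes between values above +t and below -t,
    with samples inside [-t, t] ignored (hysteresis)."""
    count, state = 0, 0
    for x in frame:
        if x > t:
            s = 1
        elif x < -t:
            s = -1
        else:
            continue
        if state and s != state:
            count += 1
        state = s
    return count / (len(frame) - 1)

def short_time_energy(frame):
    return sum(x * x for x in frame) / len(frame)

def composite_features(frame, thresholds=(0.0, 0.02, 0.05)):
    """Multi-threshold crossing rates plus short-time energy (MFCCs omitted here)."""
    return [crossing_rate(frame, t) for t in thresholds] + [short_time_energy(frame)]

def obvious_silence(frame, thresholds=(0.0, 0.02, 0.05), weights=(0.0, 0.5, 0.5), cutoff=0.01):
    """Stage-1 pre-check (hypothetical weights and cutoff): flag a frame as obvious
    silence when its weighted crossing rate at the higher thresholds is near zero."""
    rates = [crossing_rate(frame, t) for t in thresholds]
    return sum(w * r for w, r in zip(weights, rates)) < cutoff
```

Low-level noise crosses zero constantly but never swings past the higher thresholds, which is why weighting the higher-threshold rates separates it from voiced speech before the SVM sees the frame.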


© 2025 PatSnap. All rights reserved.