36 results about "Emotion assessment" patented technology

System and method for sentiment-based text classification and relevancy ranking

Active | US20100262454A1 | Digital data information retrieval | Digital data processing details | Emotion assessment | Sentiment score

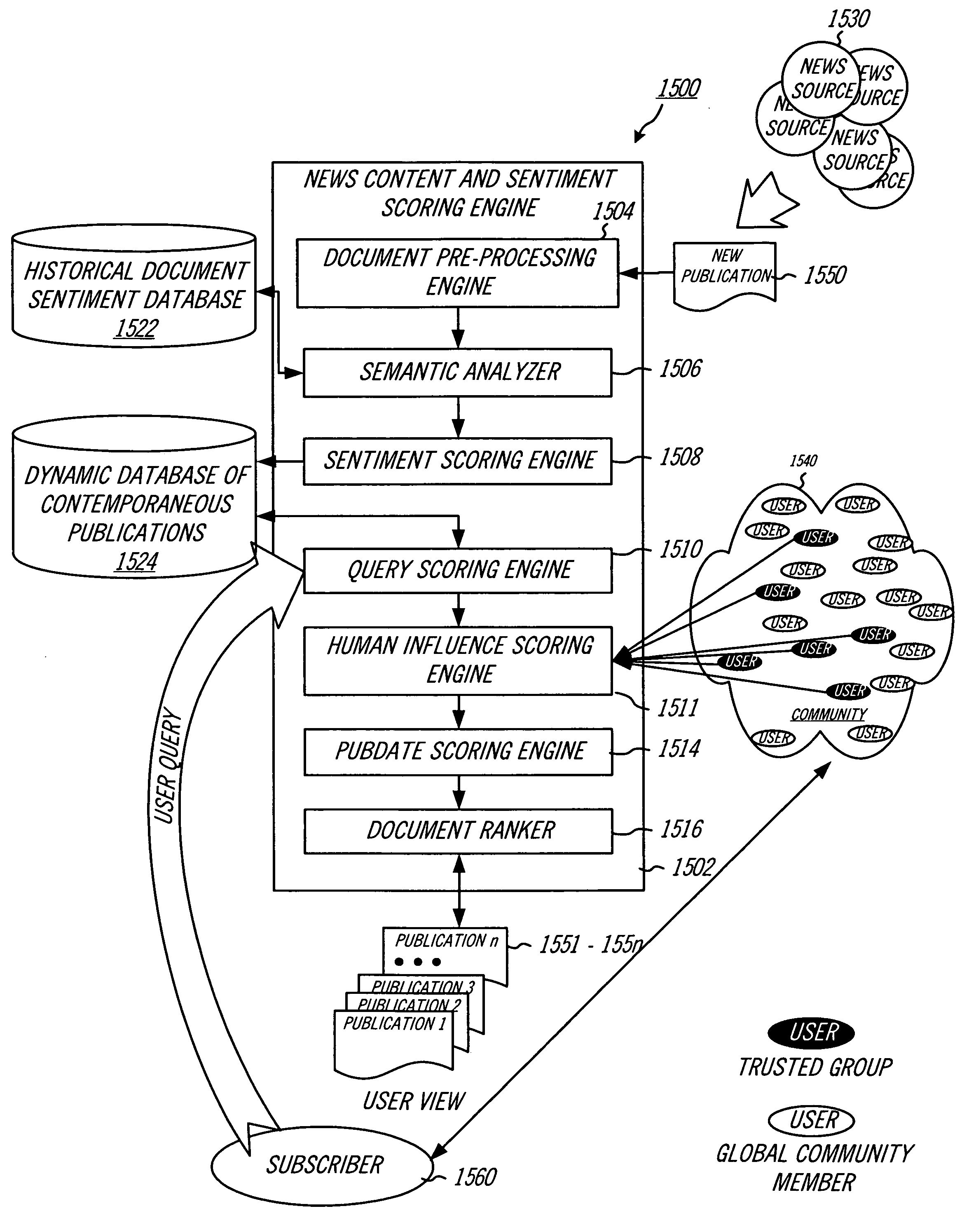

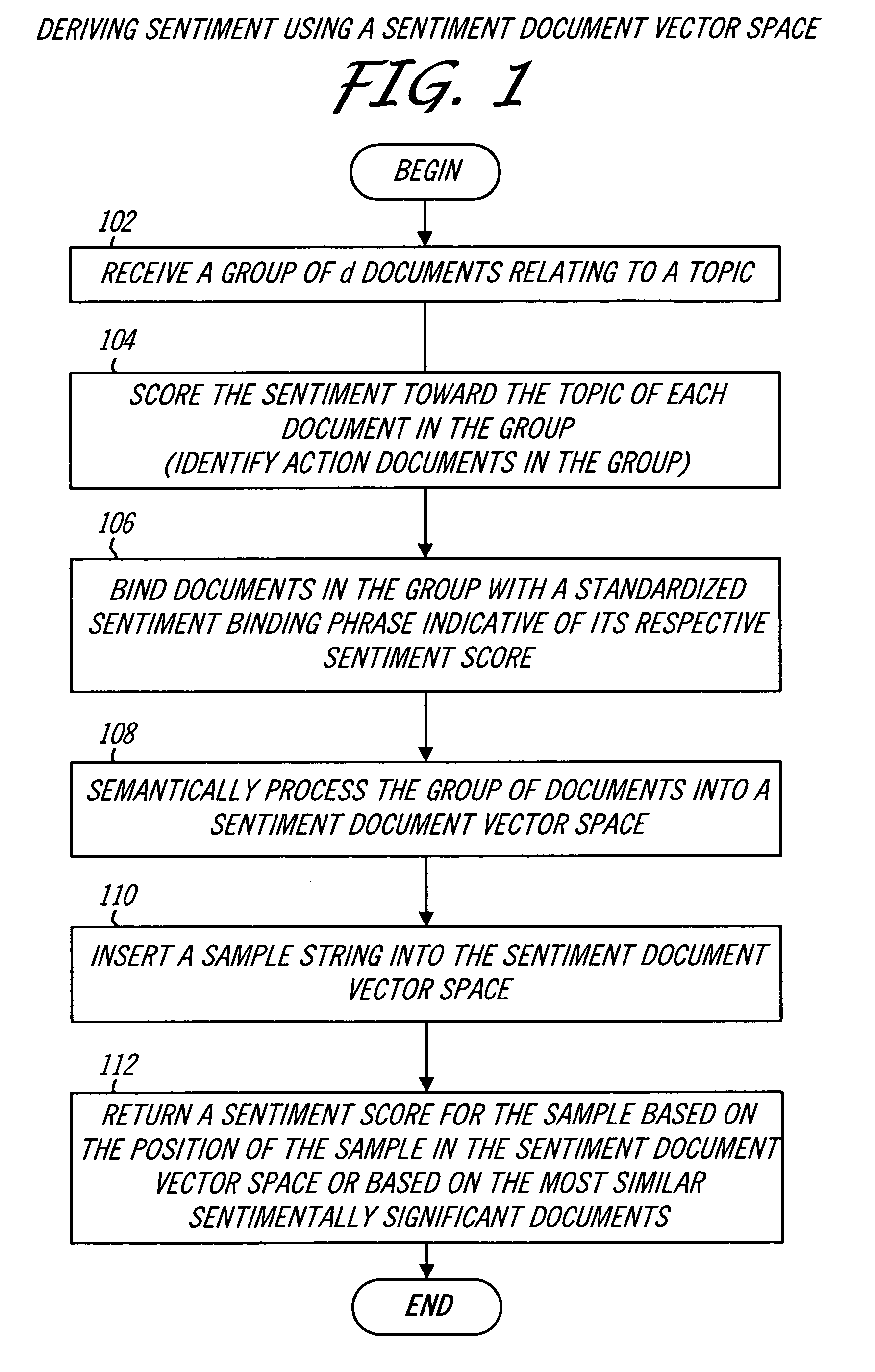

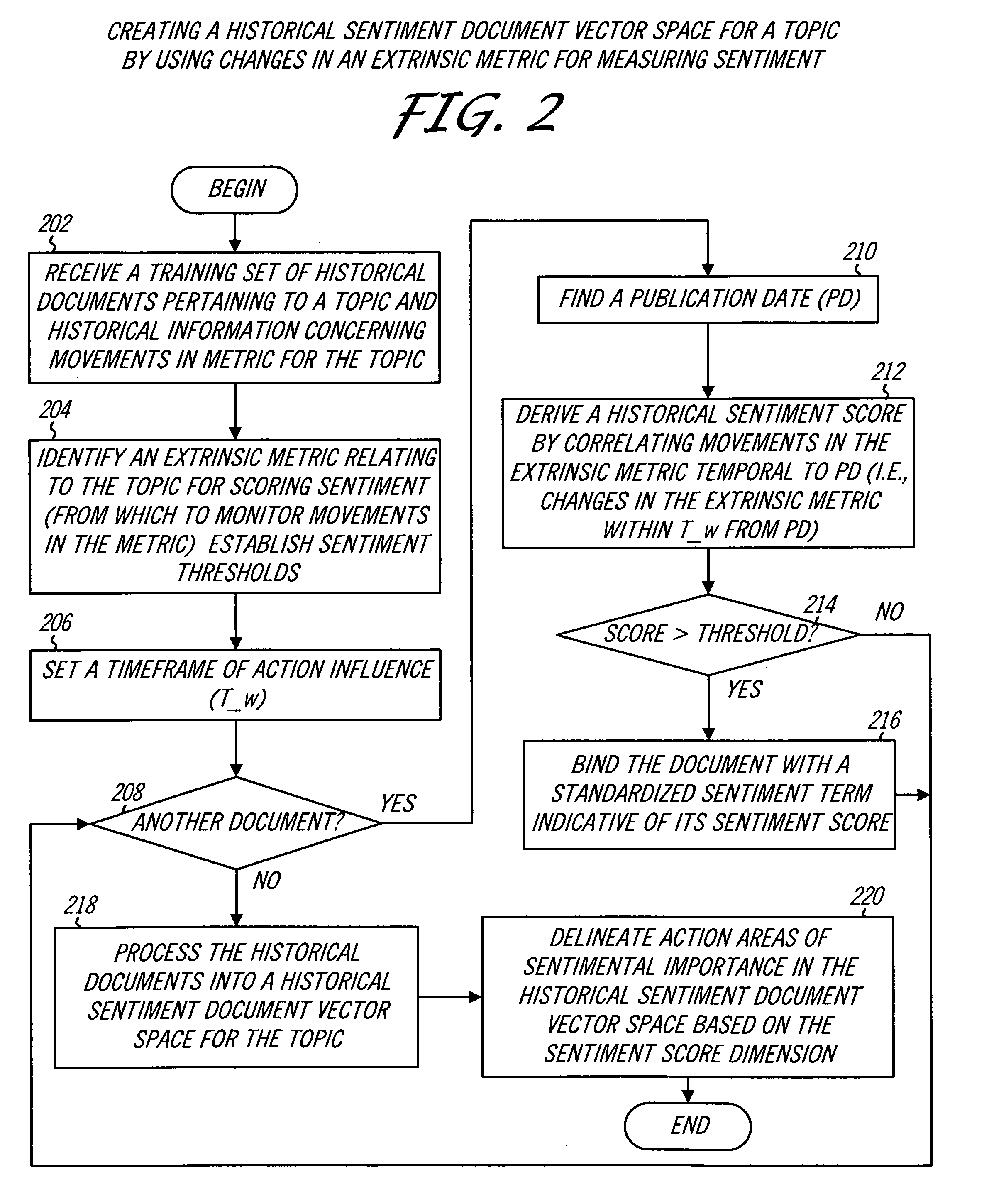

The sentimental significance of a group of historical documents related to a topic is assessed with respect to change in an extrinsic metric for the topic. A unique sentiment binding label is added to the content of action documents that are determined to have sentimental significance, and the group of documents is inserted into a historical document sentiment vector space for the topic. Action areas in the vector space are defined from the locations of action documents, and a singular sentiment vector may be created that describes the cumulative action area. Newly published documents are sentiment-scored by semantically comparing them to documents in the space and/or to the singular sentiment vector. The sentiment scores for the newly published documents are supplemented by human sentiment assessment of the documents, and a sentiment time decay factor is applied to the supplemented sentiment score of each newly published document. User queries are received, and a set of sentiment-ranked documents with the highest age-adjusted sentiment scores is returned.

Owner:MARKETCHORUS
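
The final ranking step in the abstract above (a time decay factor applied to each supplemented sentiment score, then returning the documents with the highest age-adjusted scores) can be illustrated with a minimal sketch. The abstract does not state the form of the decay, so the exponential half-life used here is an assumption, and the names `ScoredDoc`, `age_adjusted`, `rank`, and `half_life_days` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ScoredDoc:
    doc_id: str
    sentiment: float  # sentiment score, assumed already supplemented by human assessment
    age_days: float   # time since publication


def age_adjusted(doc: ScoredDoc, half_life_days: float = 7.0) -> float:
    """Apply an exponential time decay (assumed form): the score halves every half_life_days."""
    return doc.sentiment * 0.5 ** (doc.age_days / half_life_days)


def rank(docs, top_k: int = 10, half_life_days: float = 7.0):
    """Return up to top_k documents ordered by age-adjusted sentiment, highest first."""
    return sorted(docs, key=lambda d: age_adjusted(d, half_life_days), reverse=True)[:top_k]
```

With a seven-day half-life, a 14-day-old document scored 0.9 decays to 0.225 and therefore ranks below a fresh document scored 0.5.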

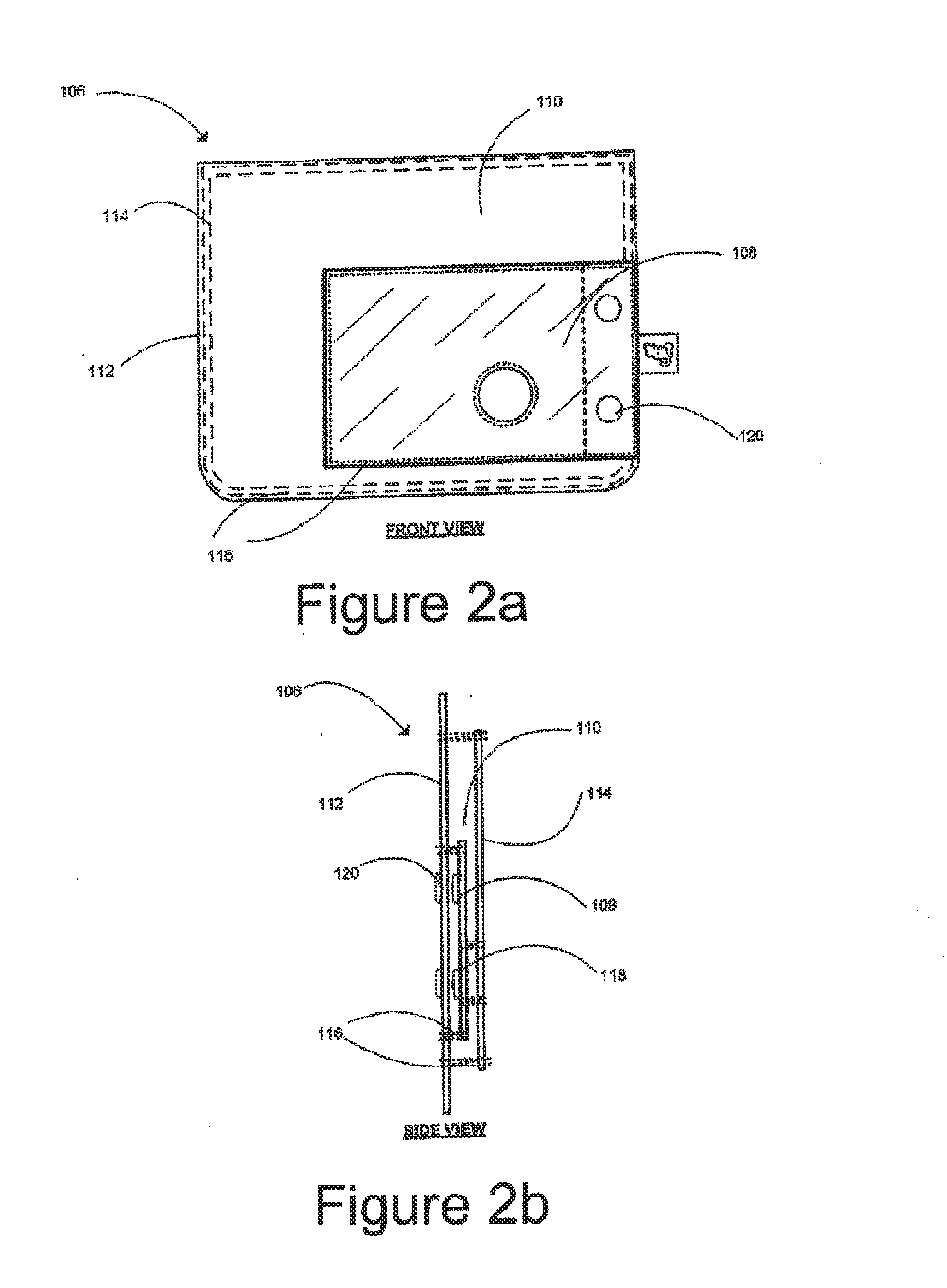

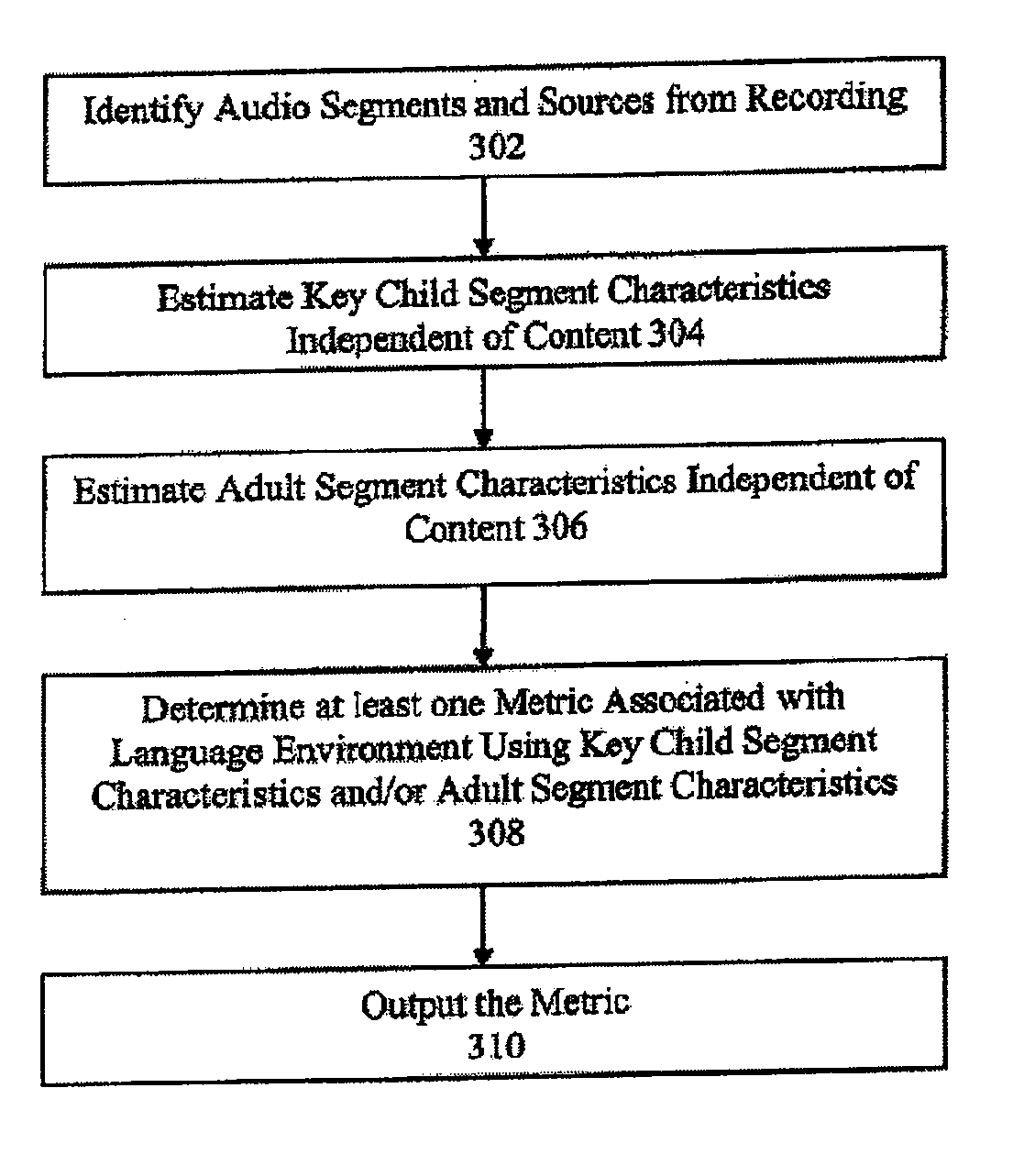

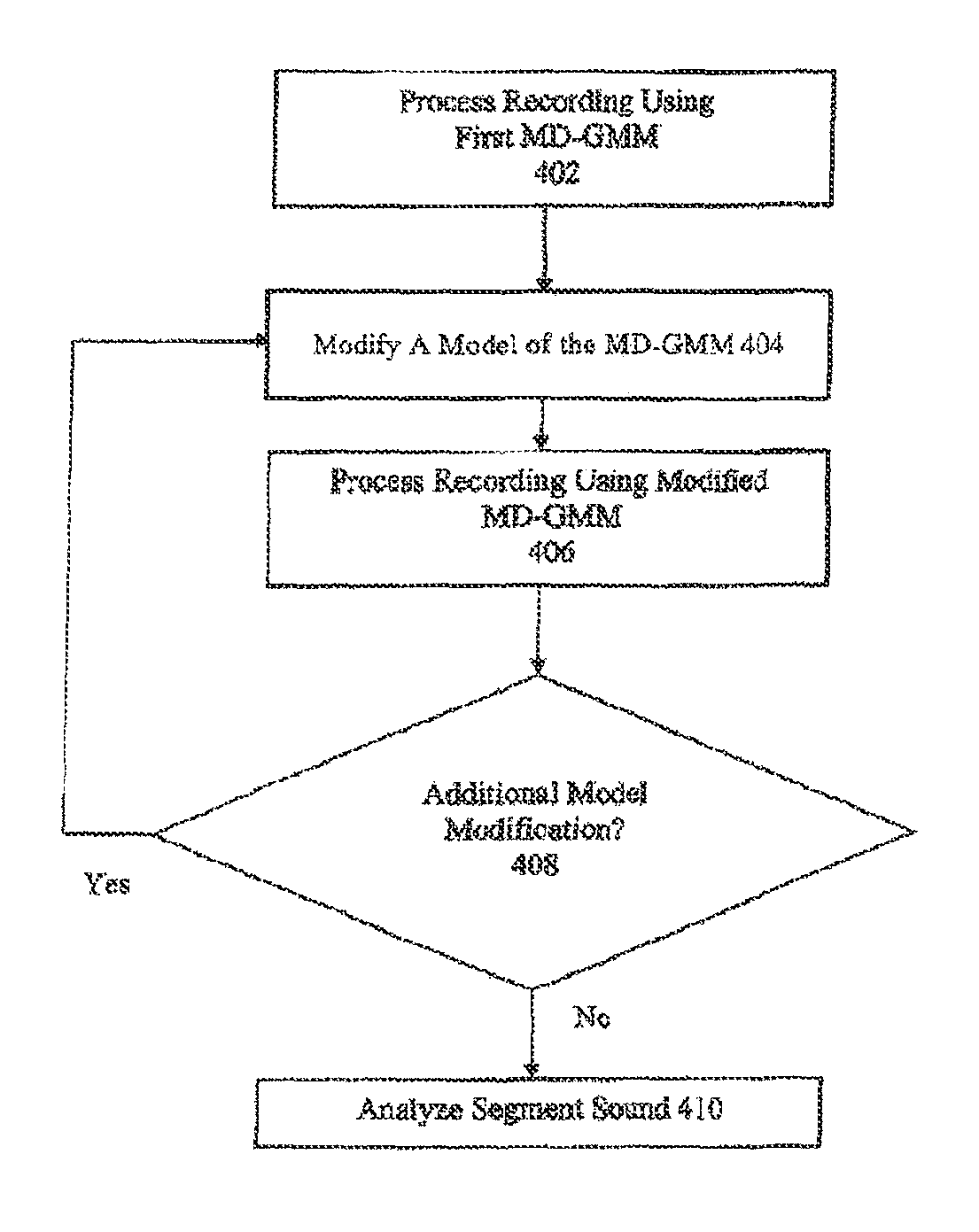

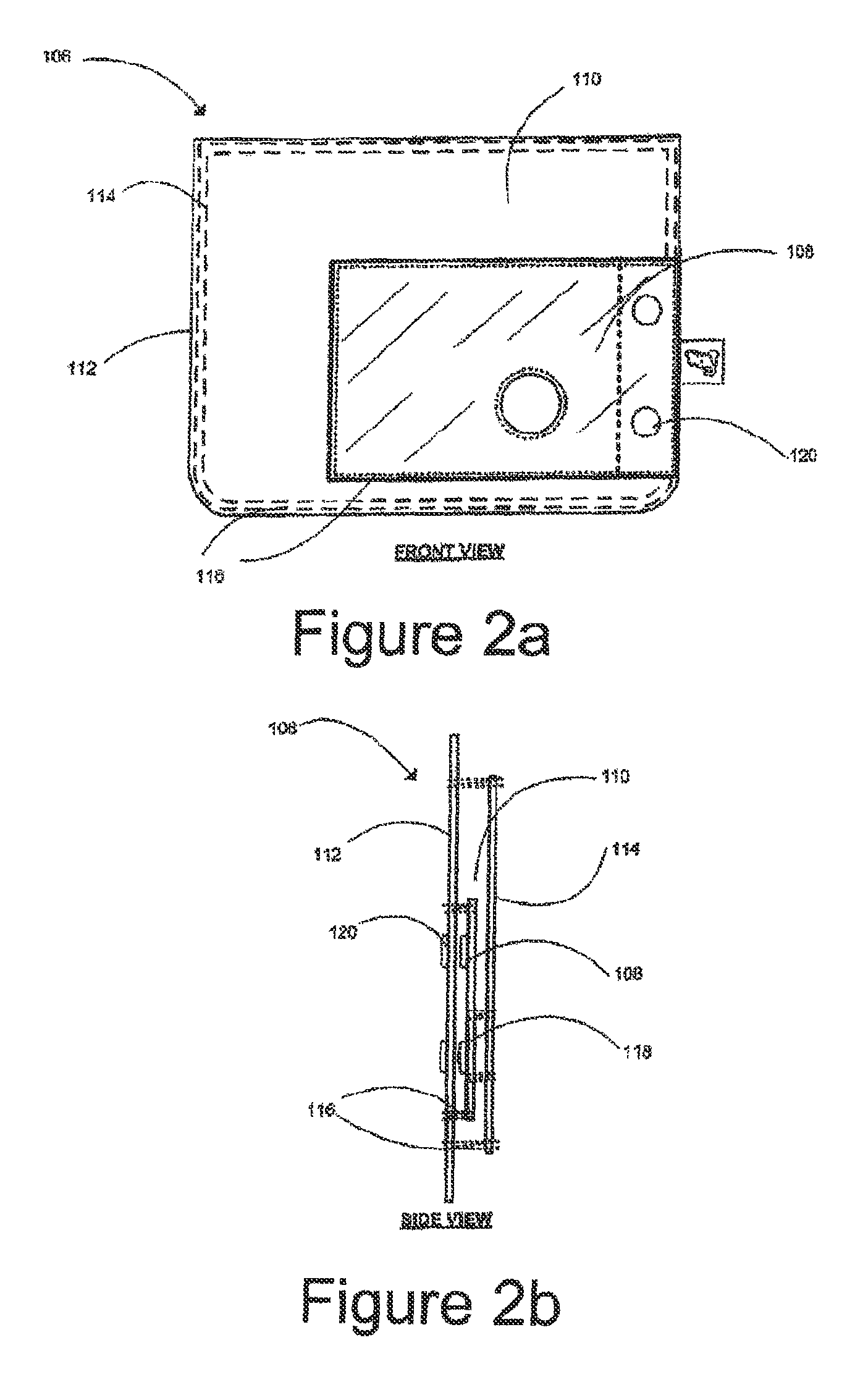

System and method for expressive language, developmental disorder, and emotion assessment

Active | US20140255887A1 | Fast and cost-effective manner | Easy to optimize | Medical automated diagnosis | Diagnostic recording/measuring | Emotion assessment | Developmental disorder

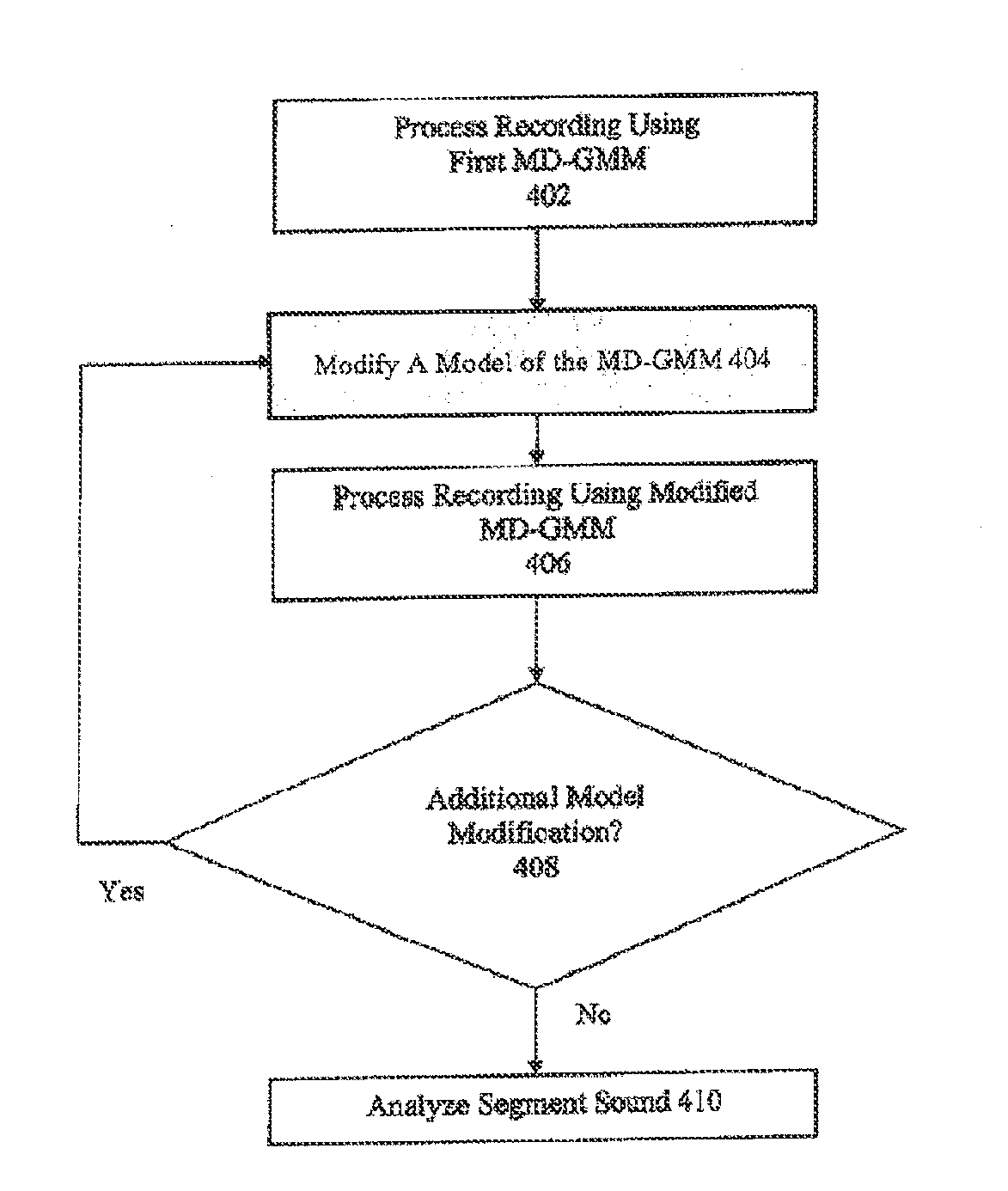

In one embodiment, a method for detecting autism in a natural language environment using a microphone, sound recorder, and a computer programmed with software for the specialized purpose of processing recordings captured by the microphone and sound recorder combination, the computer programmed to execute the method, includes segmenting an audio signal captured by the microphone and sound recorder combination using the computer programmed for the specialized purpose into a plurality of recording segments. The method further includes determining which of the plurality of recording segments correspond to a key child. The method further includes determining which of the plurality of recording segments that correspond to the key child are classified as key child recordings. Additionally, the method includes extracting phone-based features of the key child recordings; comparing the phone-based features of the key child recordings to known phone-based features for children; and determining a likelihood of autism based on the comparing.

Owner:LENA FOUNDATION

System and method for expressive language, developmental disorder, and emotion assessment

Active | US20090208913A1 | Fast and cost-effective manner | Easy to optimize | Speech analysis | Medical automated diagnosis | Emotion assessment | Developmental disorder

In one embodiment, a method for detecting autism in a natural language environment using a microphone, sound recorder, and a computer programmed with software for the specialized purpose of processing recordings captured by the microphone and sound recorder combination, the computer programmed to execute the method, includes segmenting an audio signal captured by the microphone and sound recorder combination using the computer programmed for the specialized purpose into a plurality of recording segments. The method further includes determining which of the plurality of recording segments correspond to a key child. The method further includes determining which of the plurality of recording segments that correspond to the key child are classified as key child recordings. Additionally, the method includes extracting phone-based features of the key child recordings; comparing the phone-based features of the key child recordings to known phone-based features for children; and determining a likelihood of autism based on the comparing.

Owner:LENA FOUNDATION

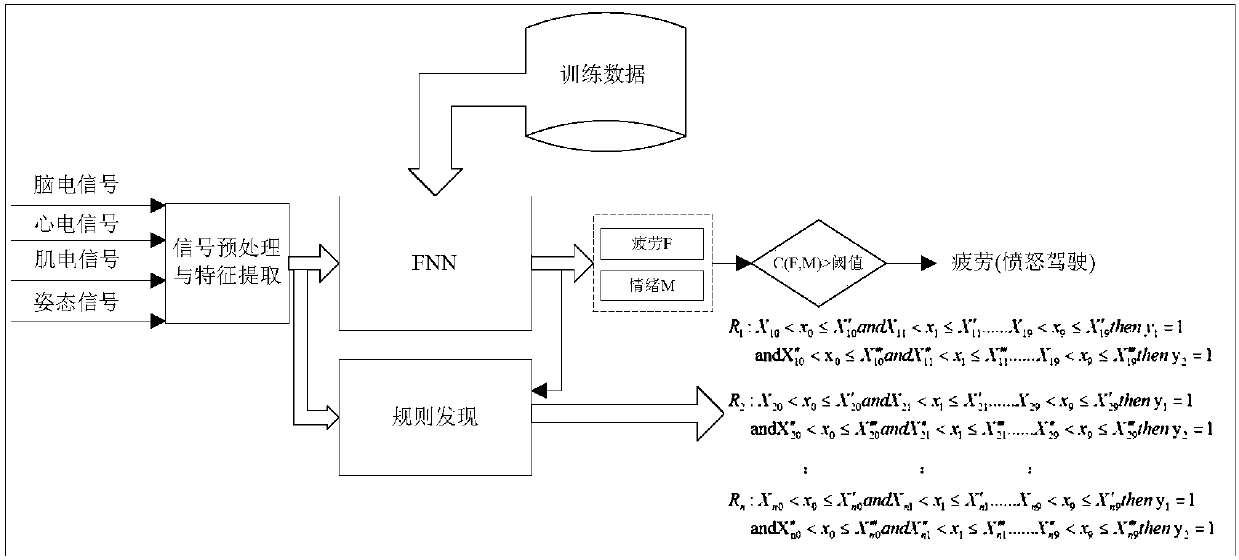

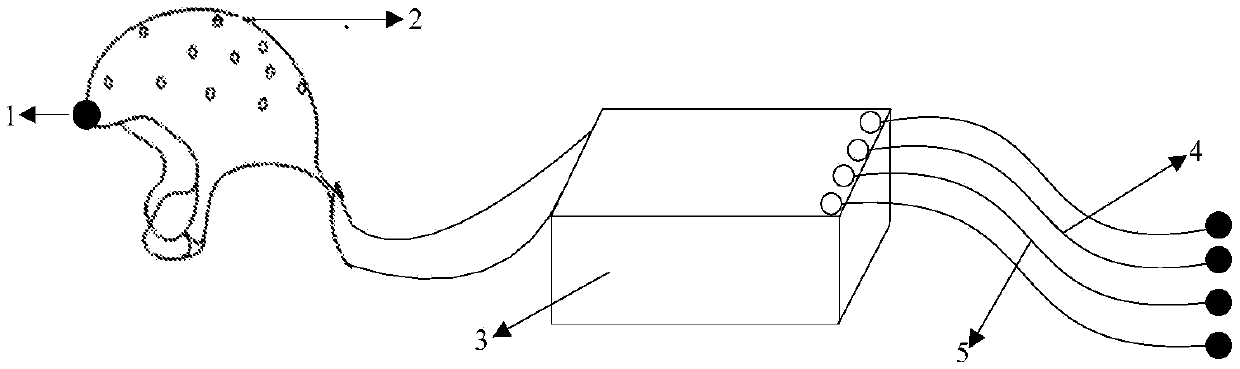

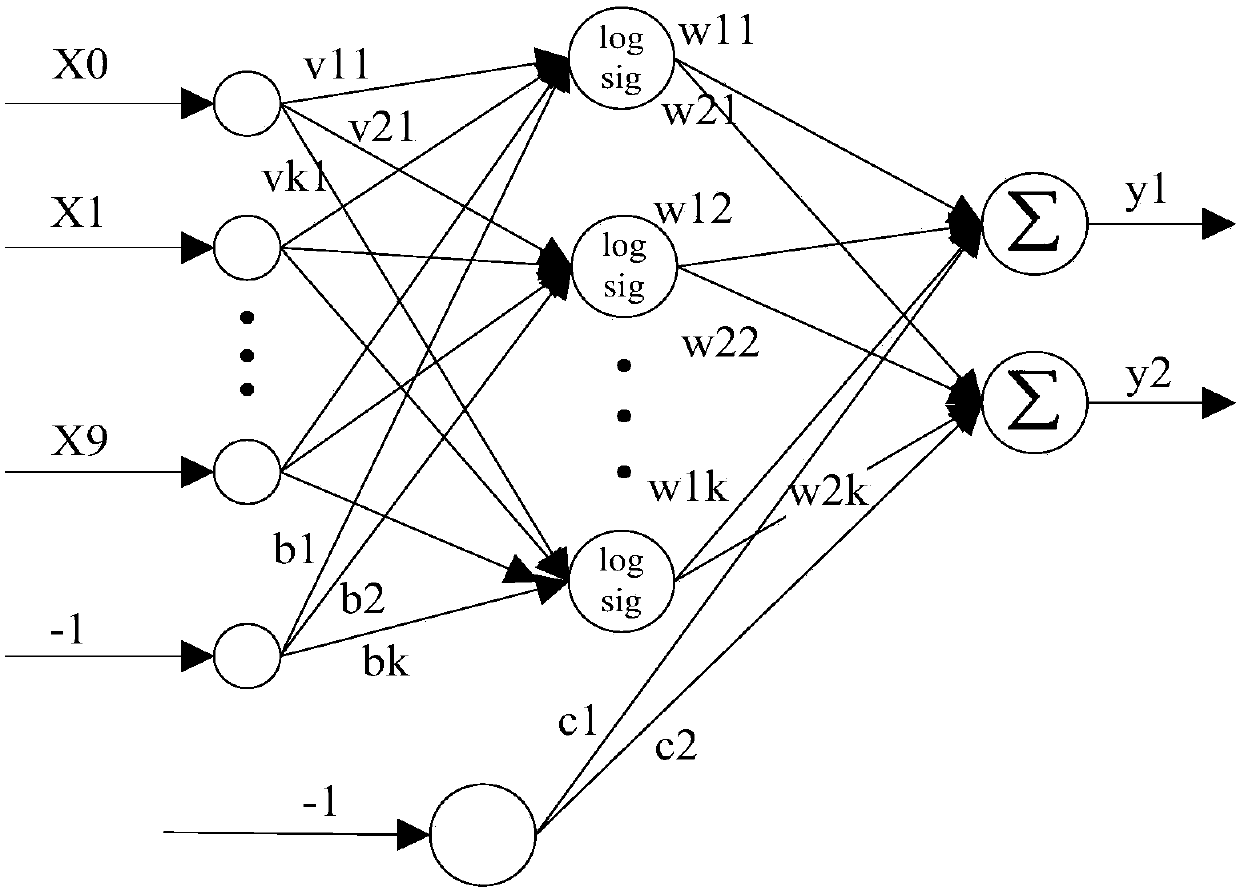

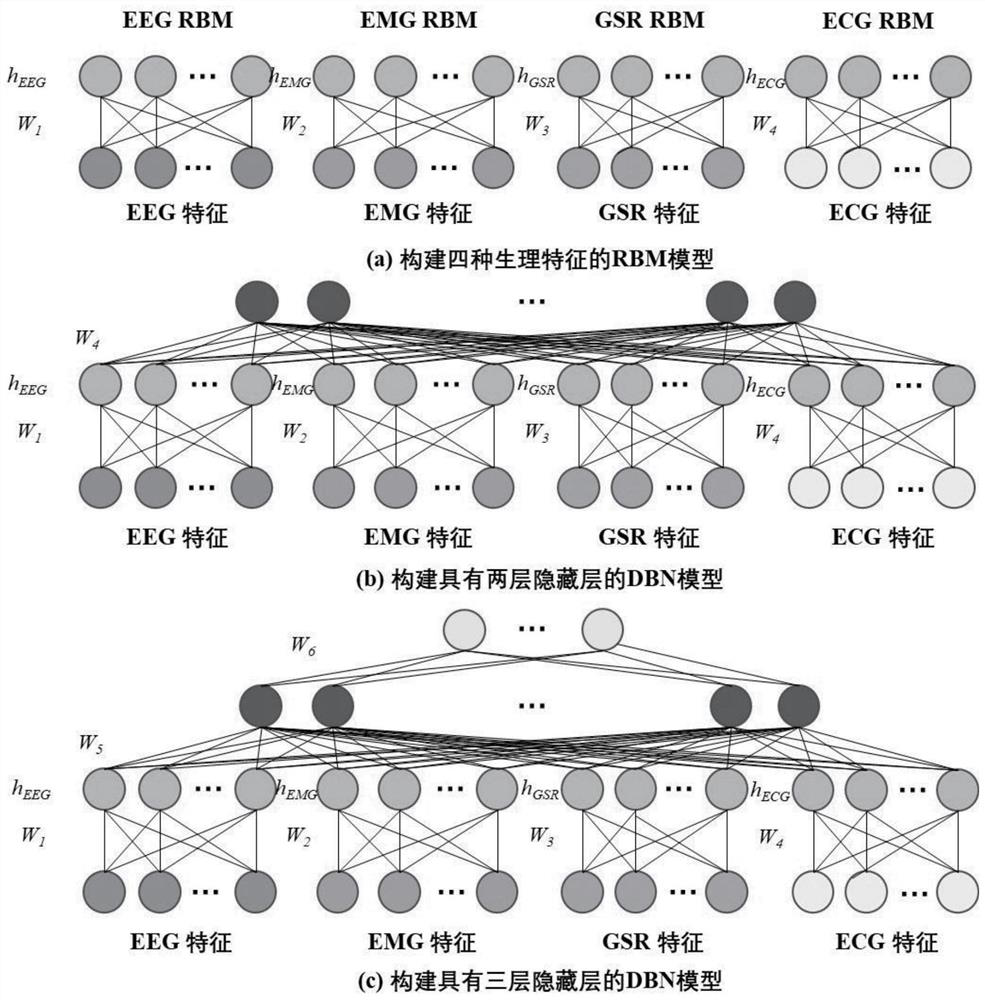

Driver fatigue and emotion evaluation method based on multi-source physiological information

Inactive | CN107822623A | Emphasis on comprehensiveness | Improve classification effect | Diagnostic signal processing | Sensors | Emotion assessment | Feature extraction

The invention discloses a driver fatigue and emotion evaluation method based on multi-source physiological information. The method comprises the following steps: 1, simultaneously collecting a driver's EEG, ECG, EMG and attitude signals; 2, performing preprocessing and feature extraction on the physiological signals; 3, building a fuzzy neural network evaluation model to achieve driver fatigue and emotion evaluation; and 4, based on the evaluation model, using genetic algorithms for continuously learning the driver's evaluation index, extracting rules and methods of driver fatigue and emotion evaluation, and improving the evaluation accuracy. The method emphasizes the comprehensiveness of decision information and the advanced nature of a classification method, greatly improves the accuracy of driver fatigue and emotion evaluation, and reduces the probability of occurrence of traffic accidents.

Owner:YANSHAN UNIV

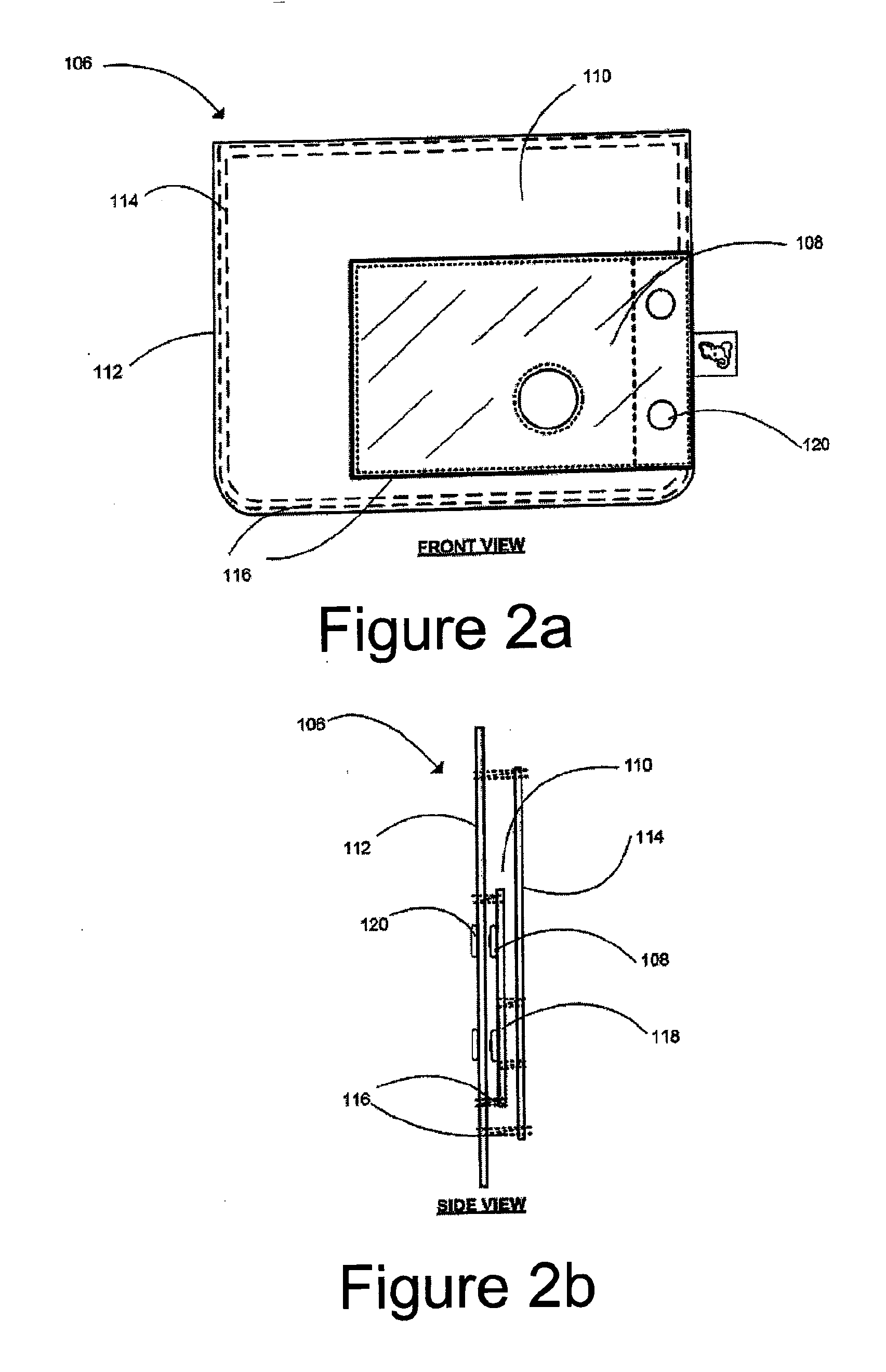

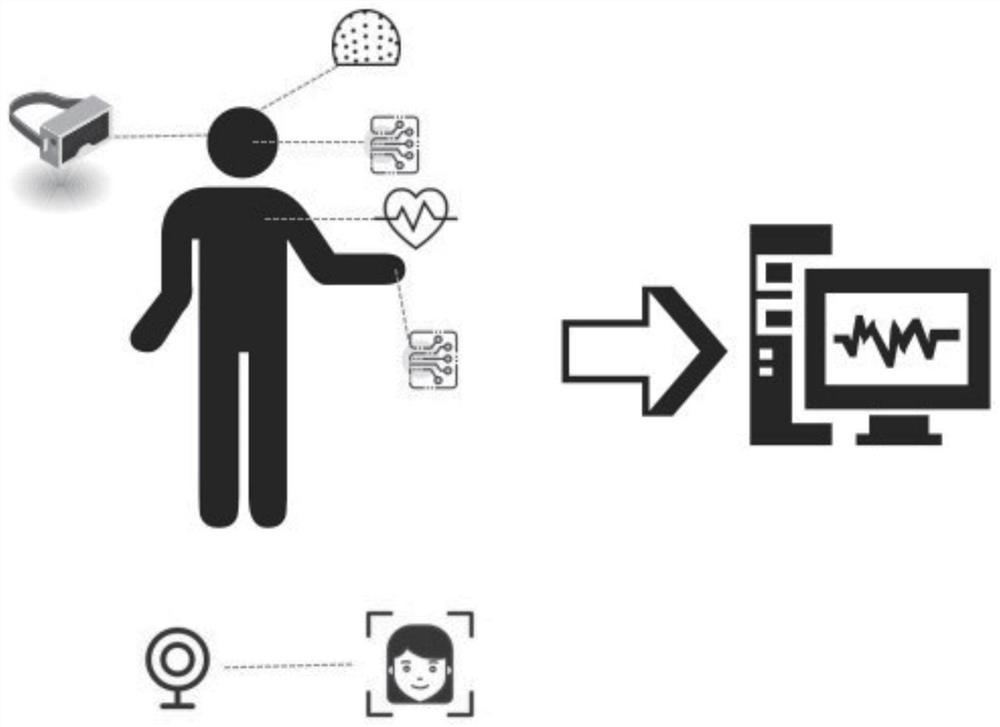

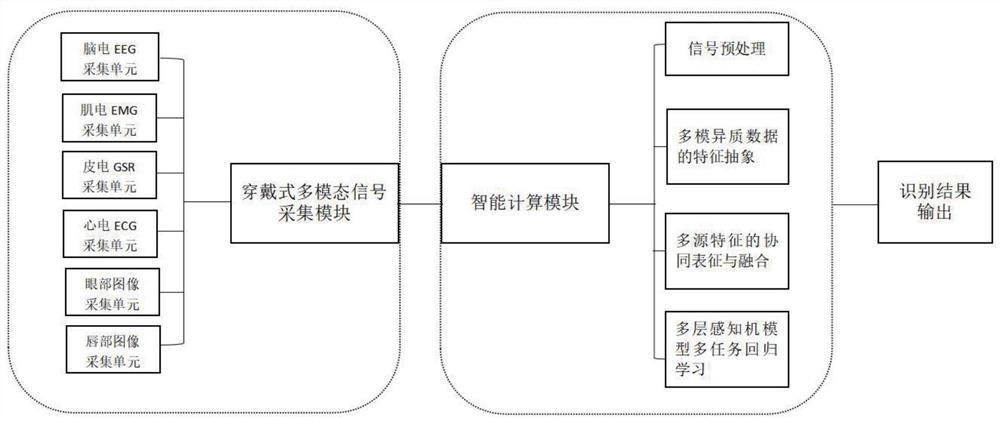

Wearable multi-mode emotional state monitoring device

Pending | CN112120716A | Realize multi-angle real-time monitoring | Emotional state monitoring | Input/output for user-computer interaction | Sensors | Emotion assessment | Medicine

The invention discloses a device based on wearable multi-mode emotion monitoring. The device comprises VR glasses, a wearable multi-mode signal acquisition module and an intelligent calculation module, wherein the VR glasses are used for establishing an emotion induction scene of an intelligent interactive real social scene; the wearable multi-mode signal acquisition module acquires multi-mode physiological information of electroencephalogram, myoelectricity, electrocardio, dermatoelectricity, eye images and mouth images from the head, the face, the chest and the wrists of a wearer; and the intelligent calculation module is used for preprocessing multi-dimensional signals, performing feature abstraction on the multi-mode heterogeneous data, performing cooperative representation and fusion on multi-source features, performing multi-task regression learning by using a multi-layer perceptron model, and finally, performing multi-dimensional emotion judgment and result output. According to the invention, the problems of no quantitative analysis, no test equipment and the like in traditional emotion evaluation are solved, and a reliable experimental paradigm, a mechanism theory and an equipment environment are provided for evaluating and monitoring the multi-dimensional emotion.

Owner:NAT INNOVATION INST OF DEFENSE TECH PLA ACAD OF MILITARY SCI +1

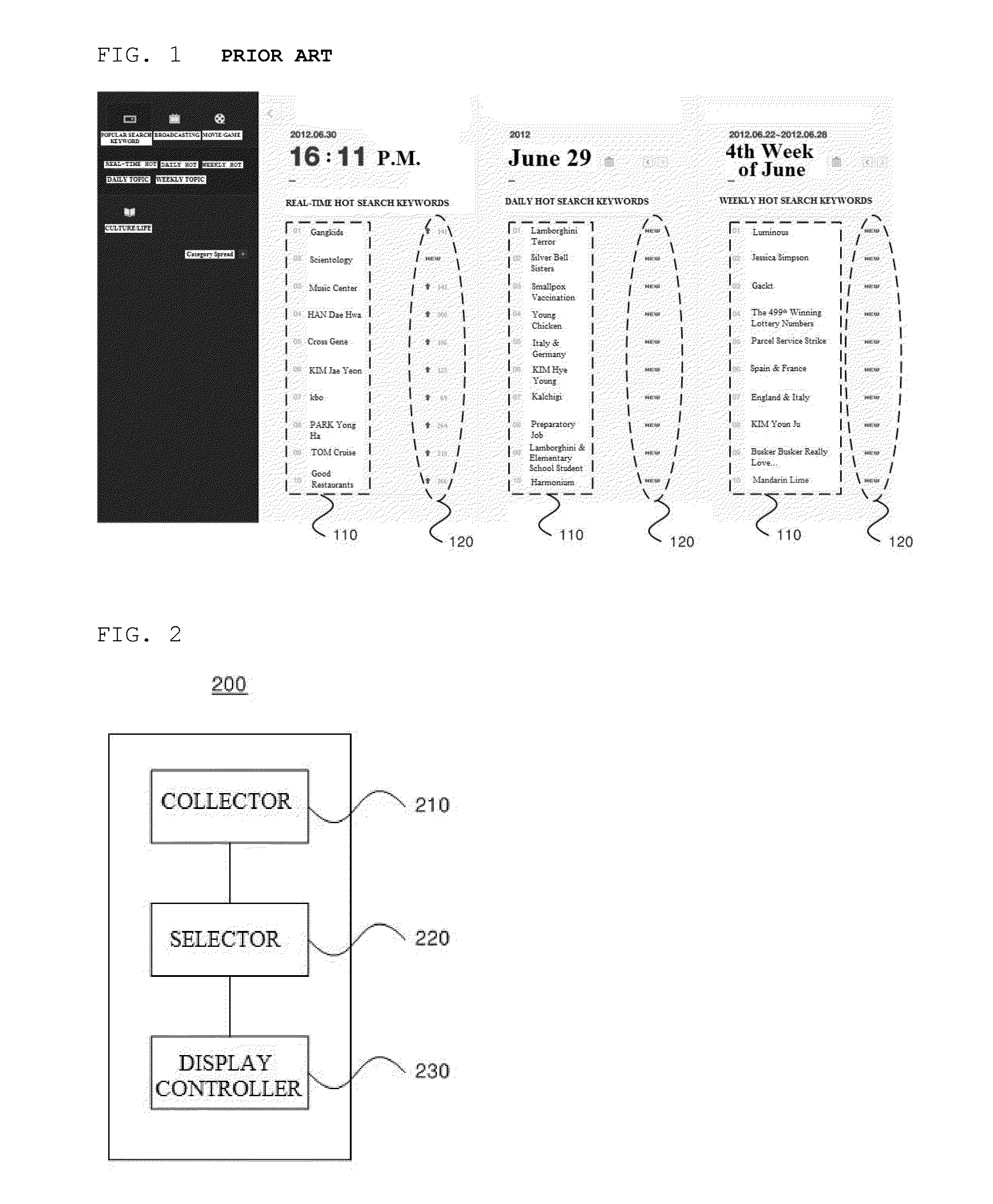

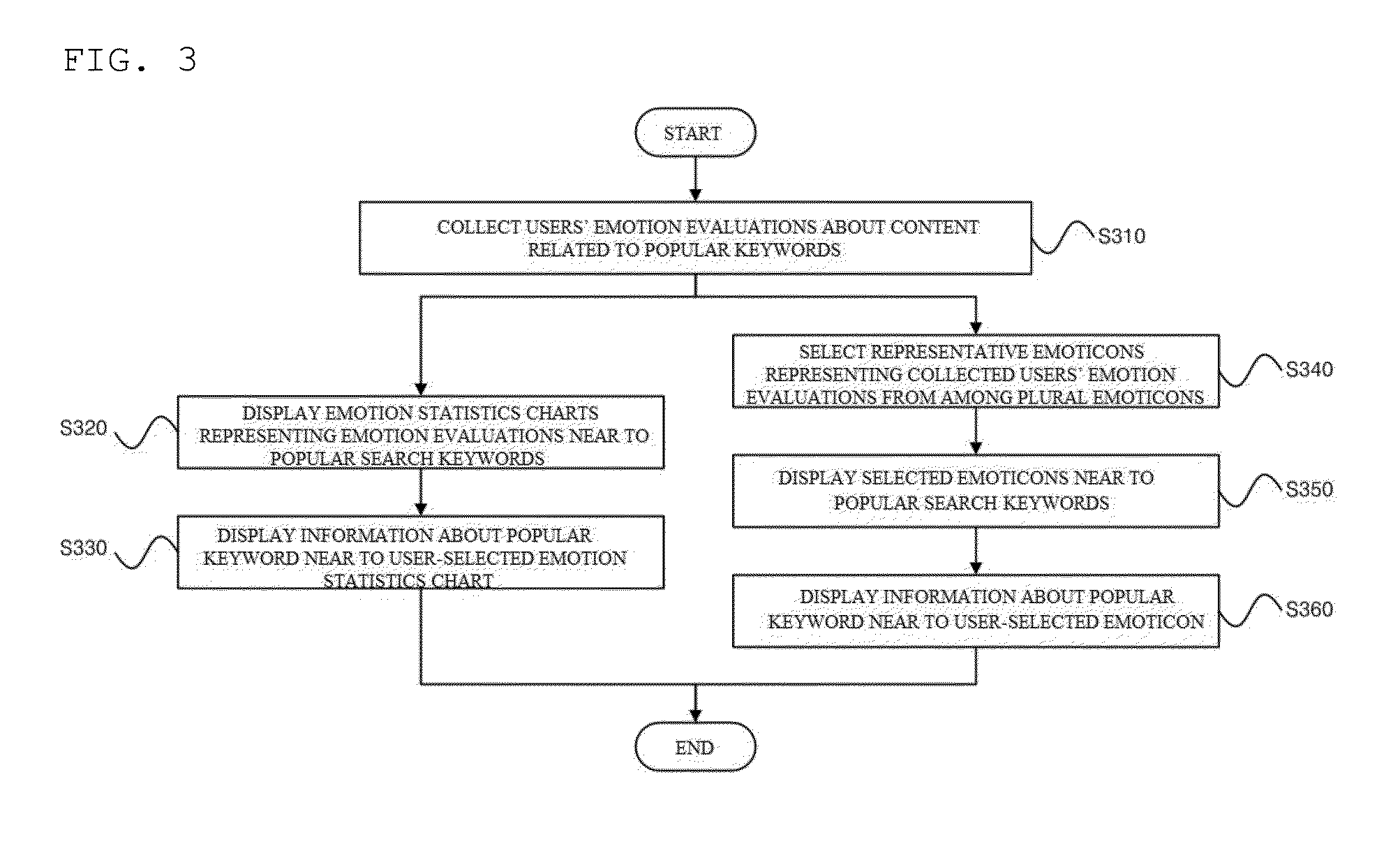

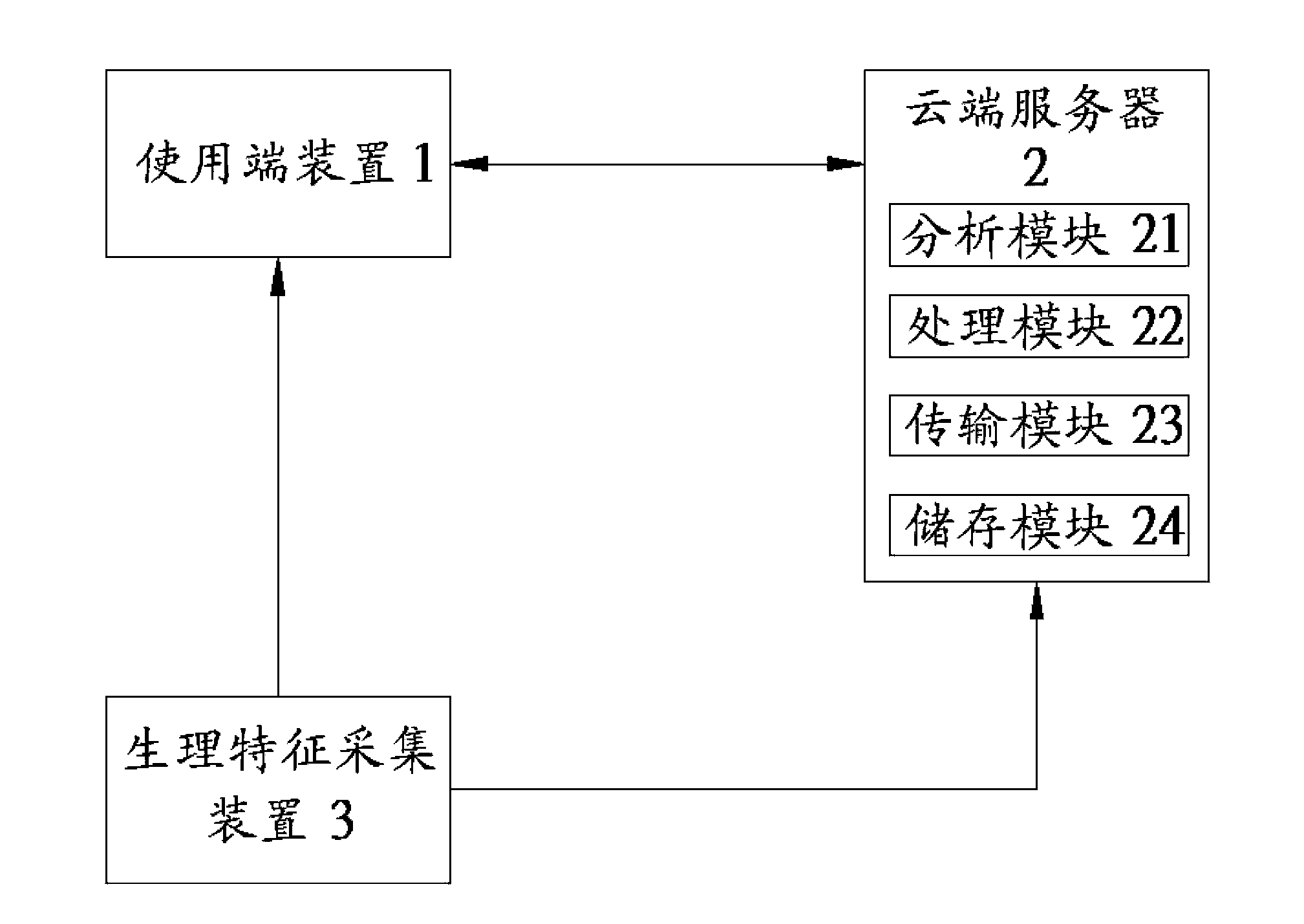

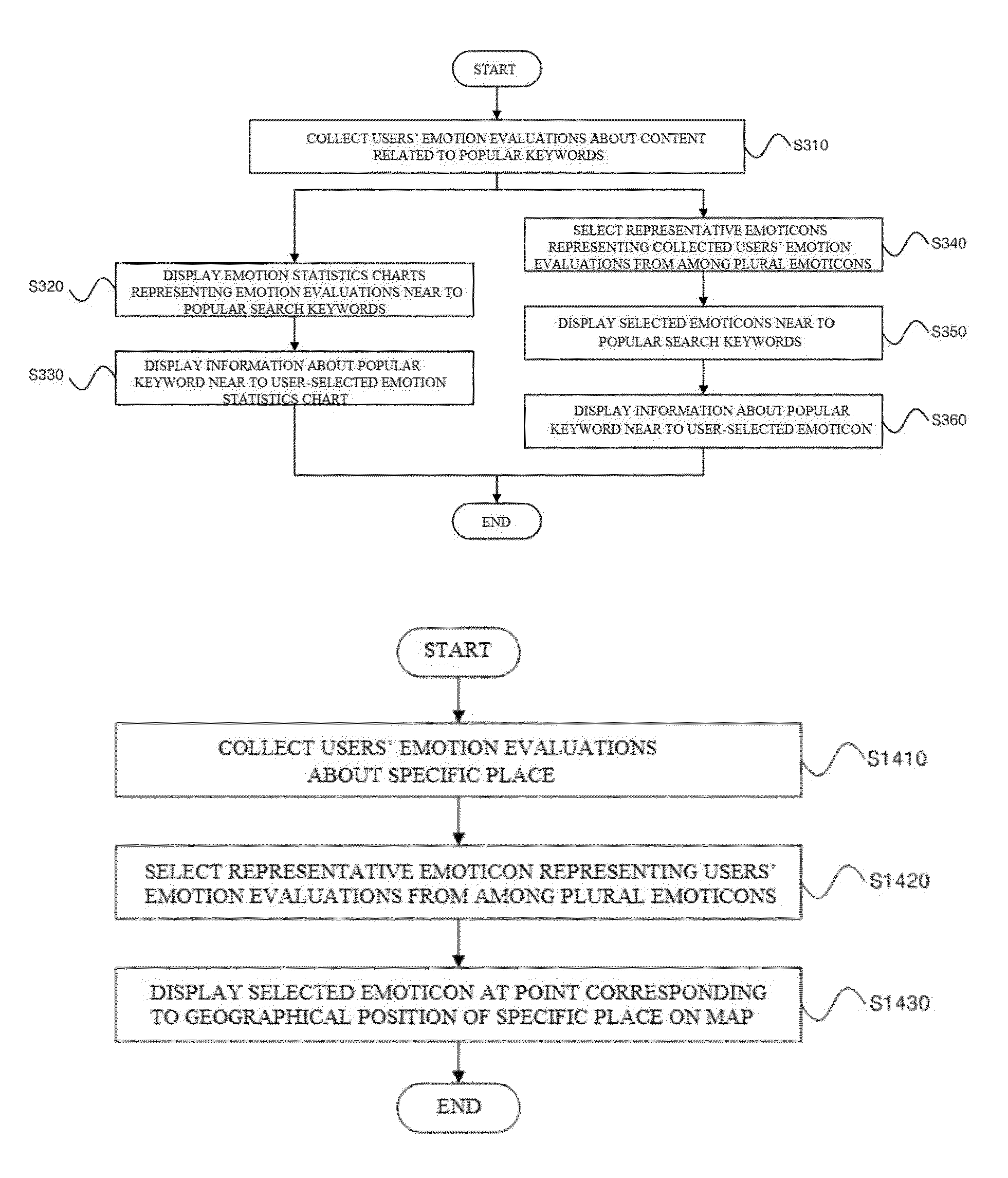

Method and apparatus for providing emotion expression service using emotion expression identifier

Disclosed are a method and apparatus for providing an emotion expression service using an emotion expression identifier. The method includes collecting emotion evaluations of users about content related to a word or a phrase, the emotion evaluations being performed by the users after the users view the content, and displaying an emotion expression identifier representing the collected emotion evaluations of the users in the vicinity of the word or the phrase. The method and apparatus enable a user to intuitively identify emotion expressions of other users (netizens) in relation to a word or phrase on a Web page such as a portal site.

Owner:KIM JONG PHIL +1

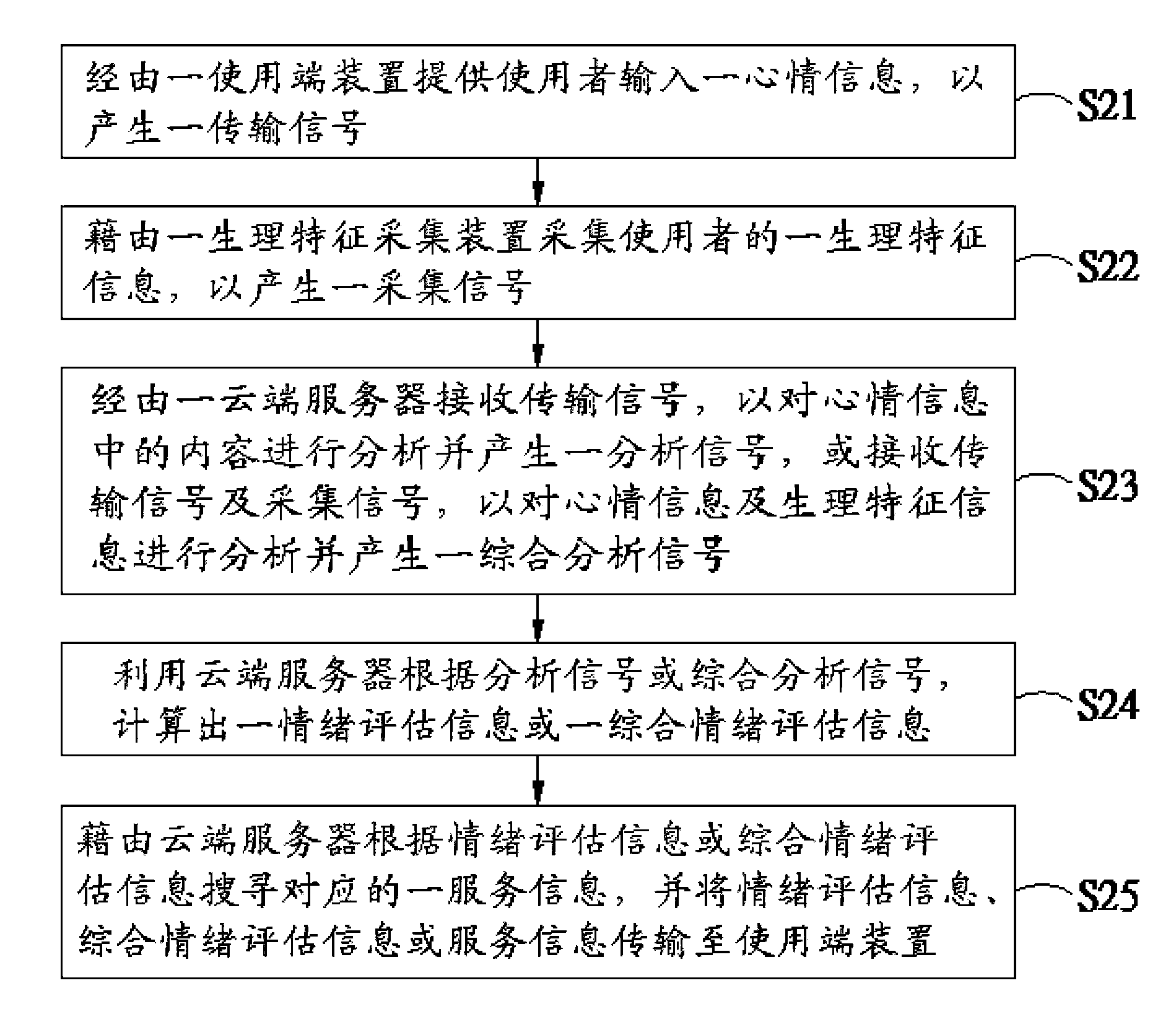

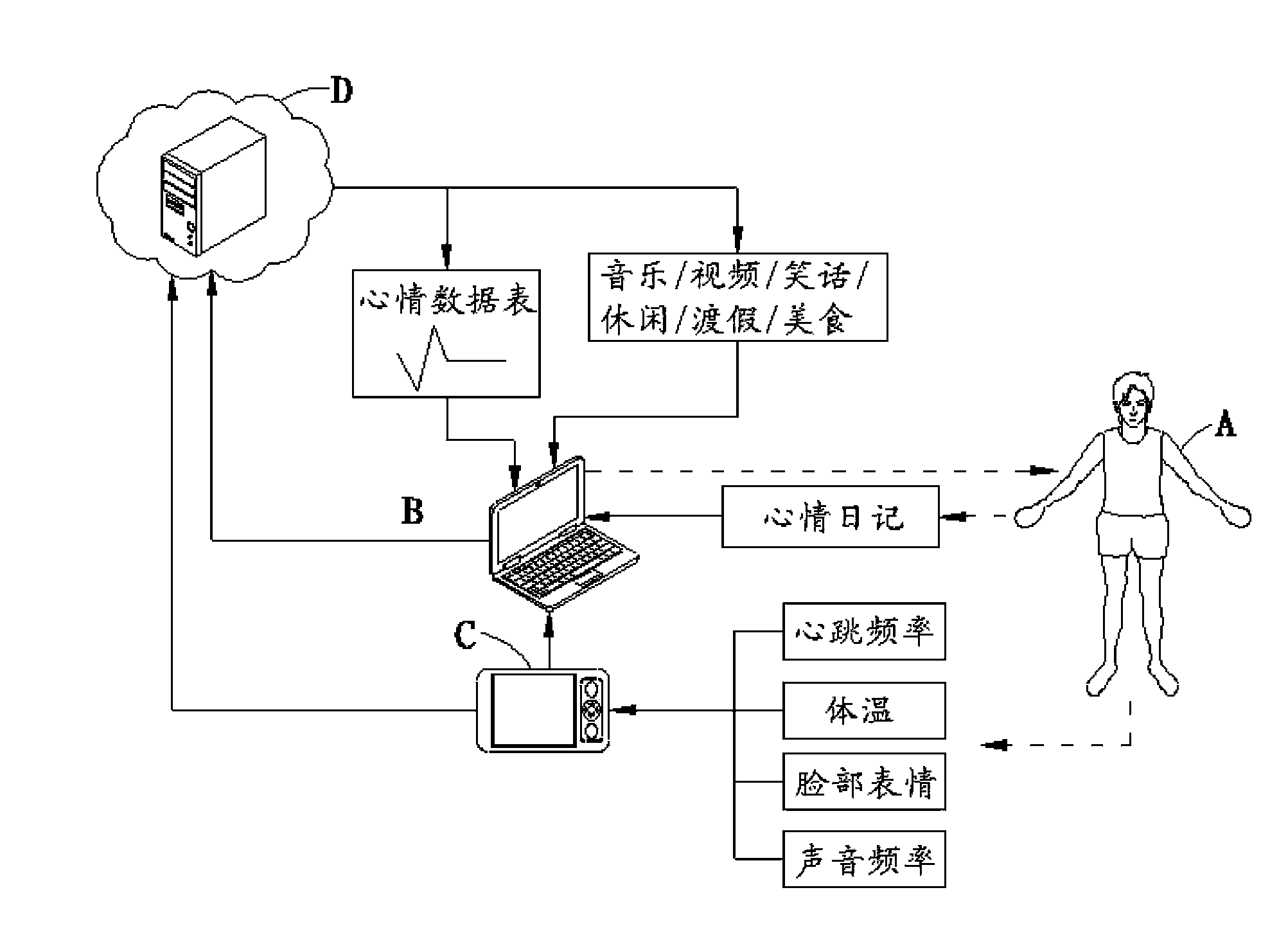

Emotion assessment service system and emotion assessment service method

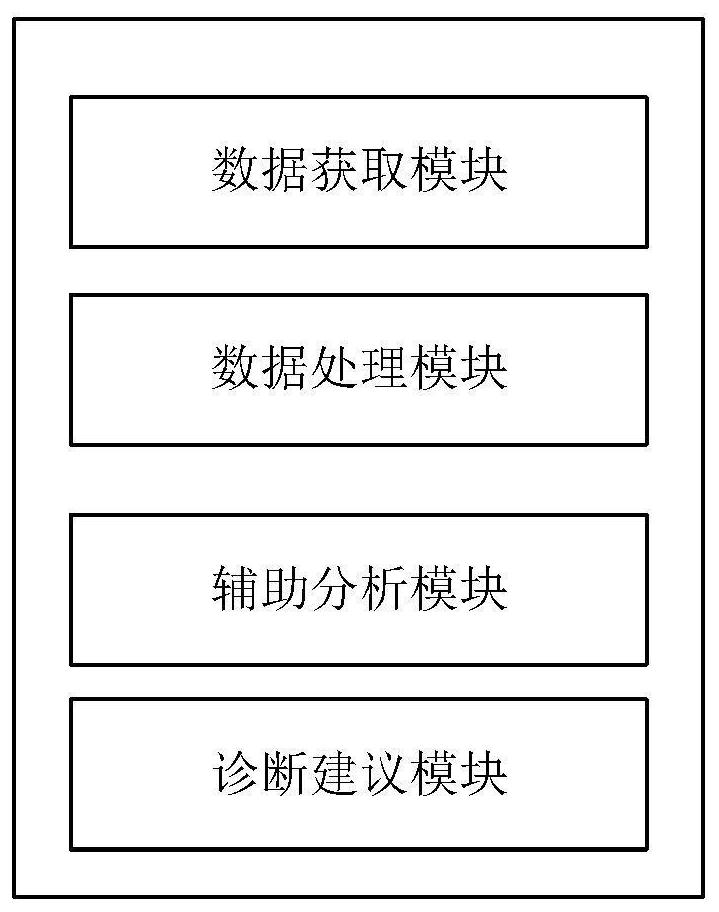

Inactive | CN103565445A | Clear emotional state | Change bad mood | Psychotechnic devices | Emotion assessment | Client-side

The invention discloses an emotion assessment service system and an emotion assessment service method. The emotion assessment service system comprises a client side device and a cloud server. The client side device is provided for a user to input a piece of mood information so as to generate a transmission signal. The cloud server is connected with the client side device, and comprises an analysis module, a processing module and a transmission module. The analysis module is used for receiving the transmission signal so as to analyze content in the mood information and generate an analysis signal. The processing module is used for receiving the analysis signal so as to work out a piece of emotion assessment information which corresponds to the mood information, and generate a processing signal. The transmission module is used for receiving the processing signal so as to transmit the emotion assessment information to the client side device.

Owner:INVENTECSHANGHAI TECH +3

Simulation test assessment system for customer service posts

Inactive | CN107368948A | Affects fairness and justice | Reduce labor costs | Resources | Test efficiency | Emotion assessment

The invention discloses a simulation test assessment system for customer service posts. The system includes a knowledge base internally provided with types of customer service posts, a corresponding dialogue scene being preset for each type of customer service post, each dialogue scene being provided with corresponding necessary keywords for the post; a dialogue module which launches questions and interaction to assessed personnel according to preset dialogue scenes and obtains dialogue content of the assessed personnel; a semantic emotion assessment module which assesses an emotion value of the dialogue content of the assessed personnel to obtain an emotion assessment result; and a keyword assessment module which performs keyword extraction on the dialogue content of the assessed personnel and matches the extracted dialogue keywords with the preset necessary keywords for posts to obtain a keyword assessment result. Thus automatic assessment is realized, a large amount of labor cost is saved, test efficiency is greatly improved, and the subjective bias that can arise in manual assessment and compromise the fairness of an assessment result is avoided.

Owner:XIAMEN KUAISHANGTONG TECH CORP LTD
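
The keyword assessment module described in the abstract above matches keywords extracted from the dialogue against the necessary keywords preset for a post. A minimal sketch of that matching step follows. The abstract does not say how the result is scored, so the coverage ratio is an assumption, and `keyword_assessment` and its return fields are hypothetical names.

```python
def keyword_assessment(dialogue_keywords, required_keywords):
    """Match extracted dialogue keywords against the preset necessary keywords.

    Matching is case-insensitive and exact; the coverage ratio is an assumed
    scoring rule, not one taken from the patent.
    """
    required = {k.lower() for k in required_keywords}
    found = {k.lower() for k in dialogue_keywords} & required
    coverage = len(found) / len(required) if required else 1.0
    return {
        "matched": sorted(found),
        "missing": sorted(required - found),
        "coverage": coverage,
    }
```

For example, a dialogue that yields the keywords `["Refund", "hello"]` against the required set `["refund", "apology"]` matches one of two required keywords, giving a coverage of 0.5.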

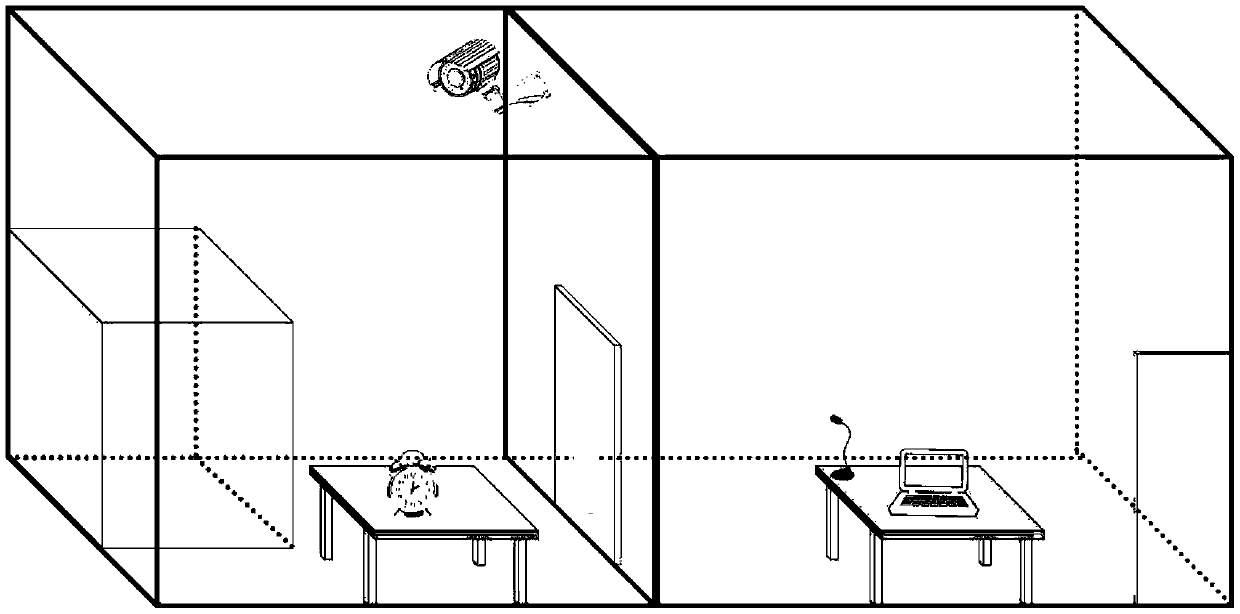

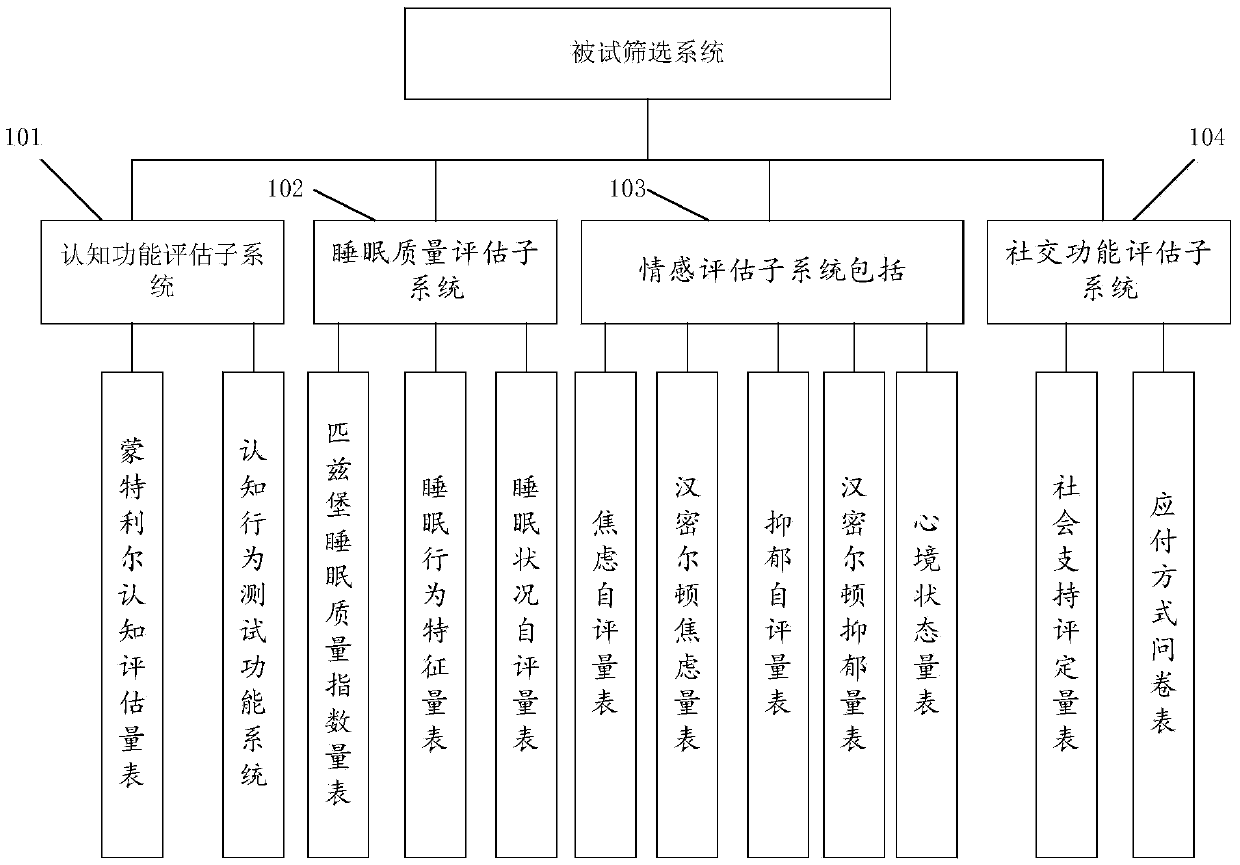

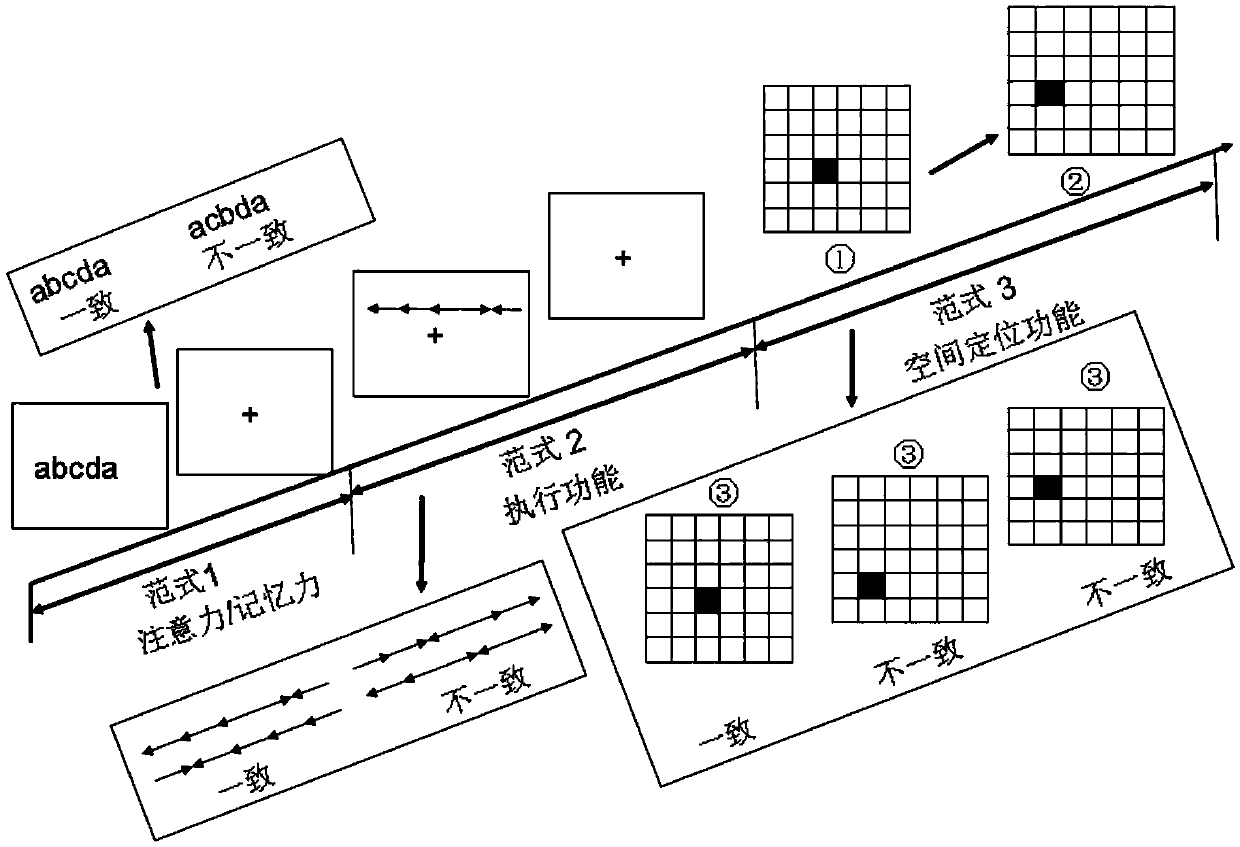

Sleep deprivation model system with simulation narrow and small spaces for pilots

Inactive | CN105361856A | A true reflection of sleep deprivation | The detection data is accurate | Sensors | Psychotechnic devices | Emotion assessment | Clinical psychology

The invention provides a sleep deprivation model system with simulation narrow and small spaces for pilots. The sleep deprivation model system comprises the closed spaces and a tested screening system. The tested screening system is arranged in the closed spaces and comprises a cognitive function assessment subsystem, a sleep quality assessment subsystem, an emotion assessment subsystem, a social function assessment subsystem and a sleep detection device; the cognitive function assessment subsystem is used for assessing cognitive behavior and cognitive functions of testees; the sleep quality assessment subsystem is used for assessing sleep behavior of the testees; the emotion assessment subsystem is used for assessing emotion indexes of the testees; the social function assessment subsystem is used for assessing social parameters of the testees; the sleep detection device is used for detecting sleep parameters of the testees. According to the technical scheme, the sleep deprivation model system has the advantage of accurate detection data.

Owner:SHENZHEN INST OF ADVANCED TECH CHINESE ACAD OF SCI

Simulated scene-based career assessment system and method

Inactive | CN108364133A | Objective knowledge | Understand clearly | Office automation | Resources | Career assessment | Emotion assessment

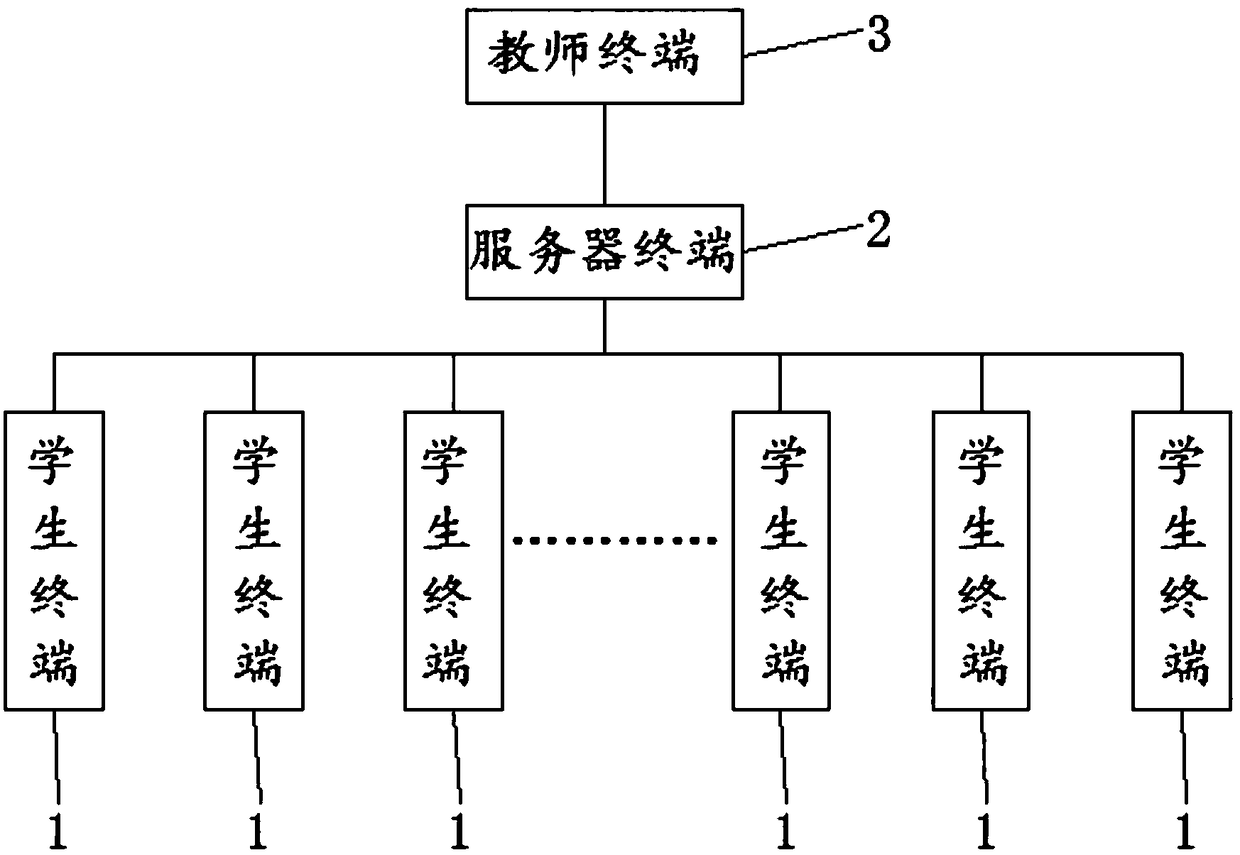

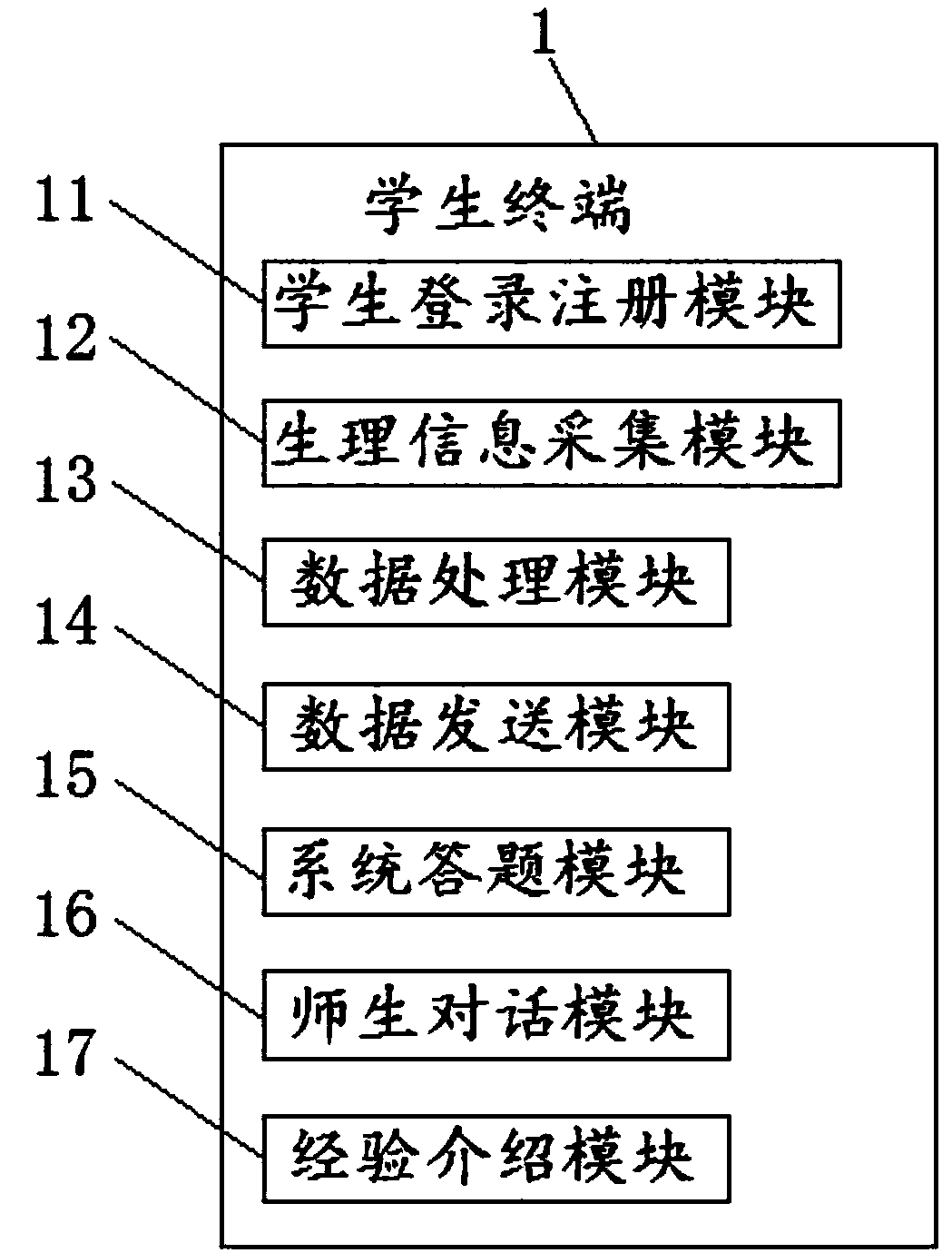

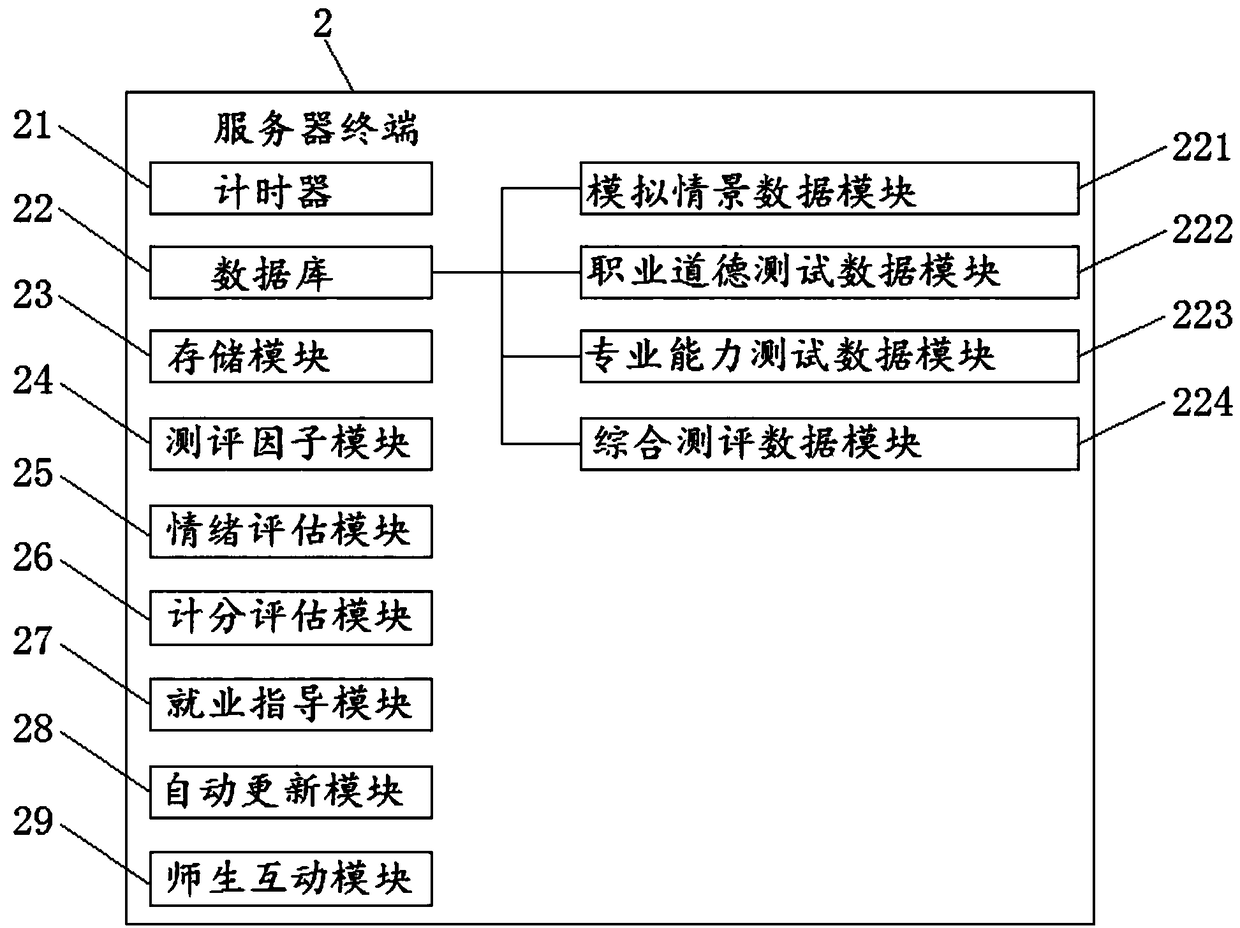

The invention provides a simulated scene-based career assessment system and method, and belongs to the technical field of employment guidance for college students. The system comprises a student terminal, a server terminal and a teacher terminal, wherein the server terminal comprises a timer, a database, a storage module, an assessment factor module, an emotion assessment module, a scoring assessment module, an employment guidance module, an automatic updating module and a teacher-student interaction module; the teacher terminal comprises a teacher login and register module, a data calling module, a questioning module and an evaluation module; and the student terminal comprises a student login and register module, a physiological information acquisition module, a data processing module, a data transmission module, a system answering module, a teacher-student conversation module and an experience introduction module. Students can carry out assessment through the system, and the system can generate evaluation reports, so that the students can carry out self-recognition more objectively and clearly, so as to find proper employment positions, improve the recruitment quality, reduce the separation rate and reduce the double loss of employment units and employees.

Owner:SHANGQIU MEDICAL COLLEGE

Psychological career ability assessment system

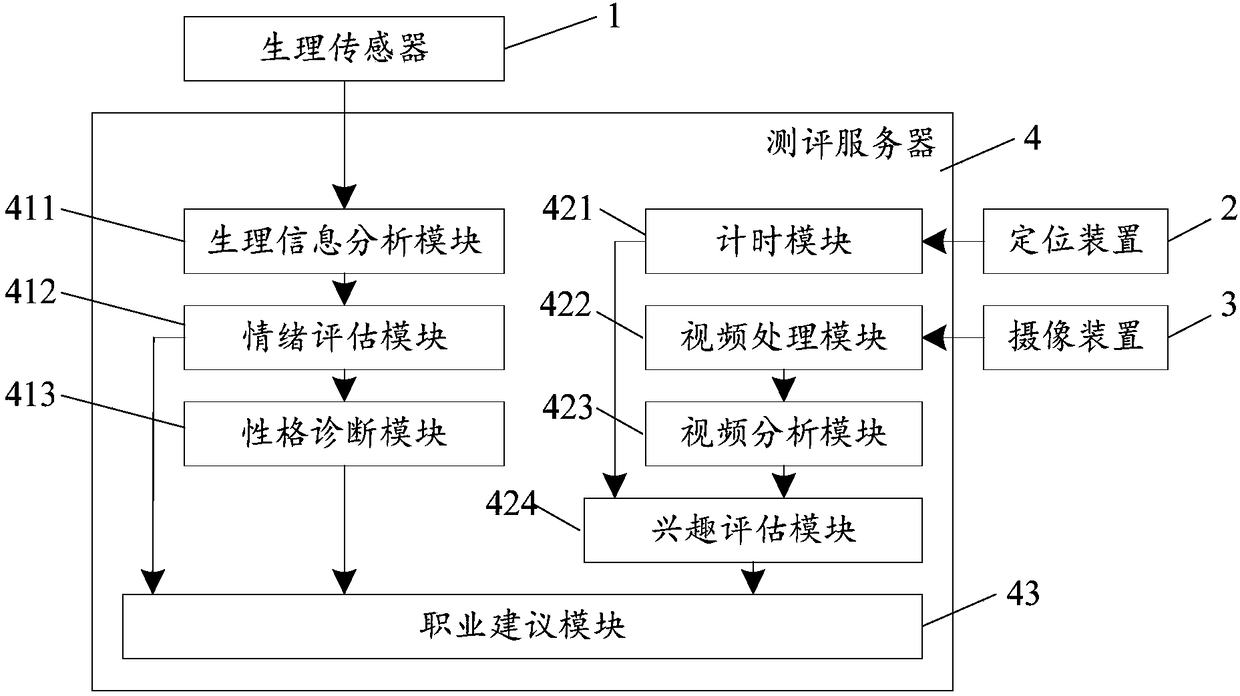

Active | CN108171437A | Precise positioning | Respiratory organ evaluation | Sensors | Emotion assessment | Information analysis

The invention discloses a psychological career ability assessment system, and relates to the field of computers. The system comprises a film and television projection apparatus, a physiological sensor, an exhibition room, a locating apparatus, multiple camera apparatuses and an assessment server, wherein the assessment server comprises a physiological information analysis module, an emotion assessment module, a character diagnosis module, a timing module, a video processing module, a video analysis module, an interest assessment module and a career suggestion module. According to the system, emotions and character conditions of a tester are combined with interest types and interest levels of exhibition works, and career suggestions of the tester are given psychologically, namely, suitable employment types and career abilities (career fitness) of college students are accurately and reliably assessed from the root, so that the tester can further know himself or herself, accurately locate his or her own work and find suitable work during graduation.

Owner:JILIN TEACHERS INST OF ENG & TECH

System and method for expressive language, developmental disorder, and emotion assessment

In one embodiment, a method for detecting autism in a natural language environment using a microphone, sound recorder, and a computer programmed with software for the specialized purpose of processing recordings captured by the microphone and sound recorder combination, the computer programmed to execute the method, includes segmenting an audio signal captured by the microphone and sound recorder combination using the computer programmed for the specialized purpose into a plurality of recording segments. The method further includes determining which of the plurality of recording segments correspond to a key child. The method further includes determining which of the plurality of recording segments that correspond to the key child are classified as key child recordings. Additionally, the method includes extracting phone-based features of the key child recordings; comparing the phone-based features of the key child recordings to known phone-based features for children; and determining a likelihood of autism based on the comparing.

Owner:LENA FOUNDATION

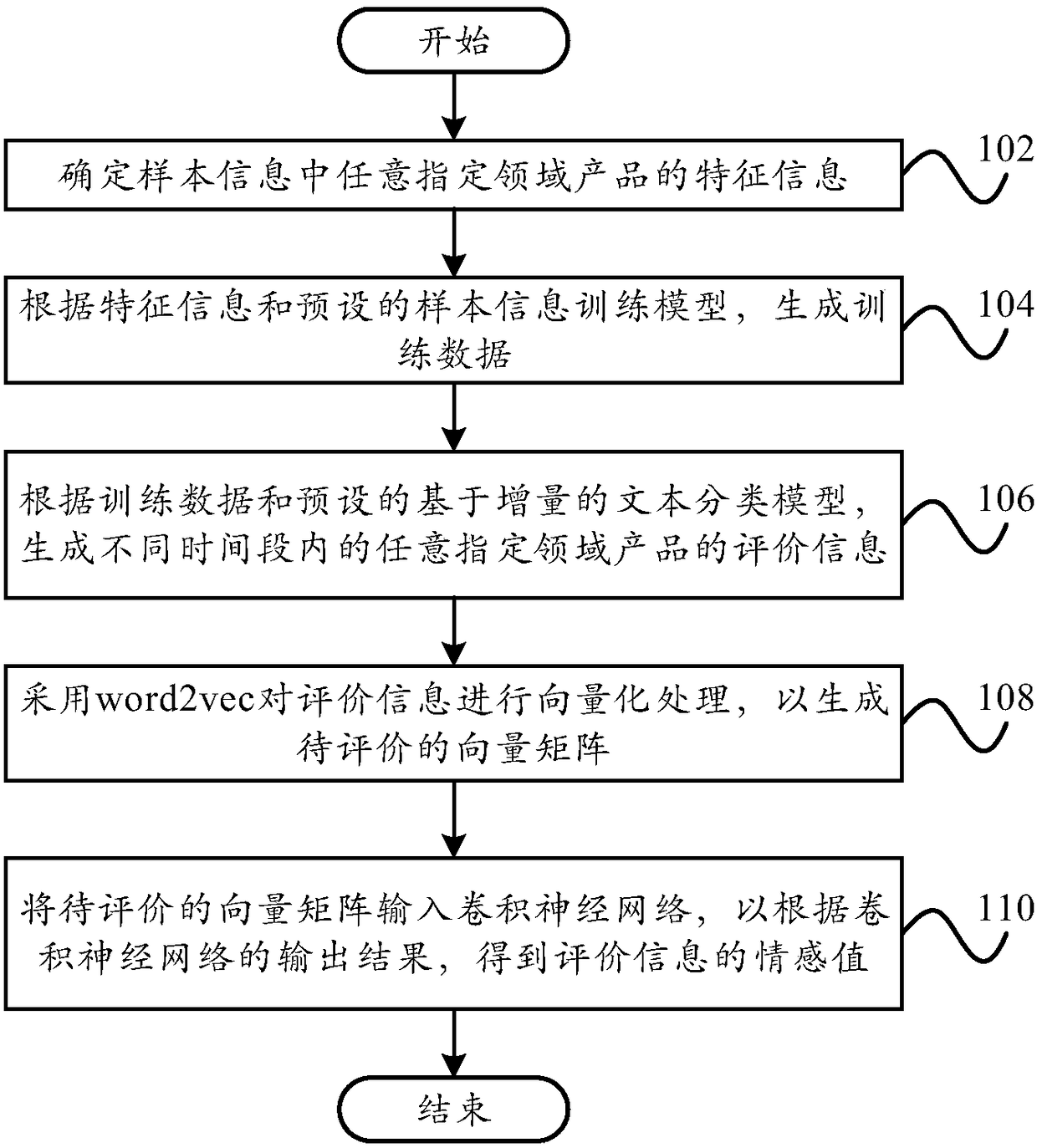

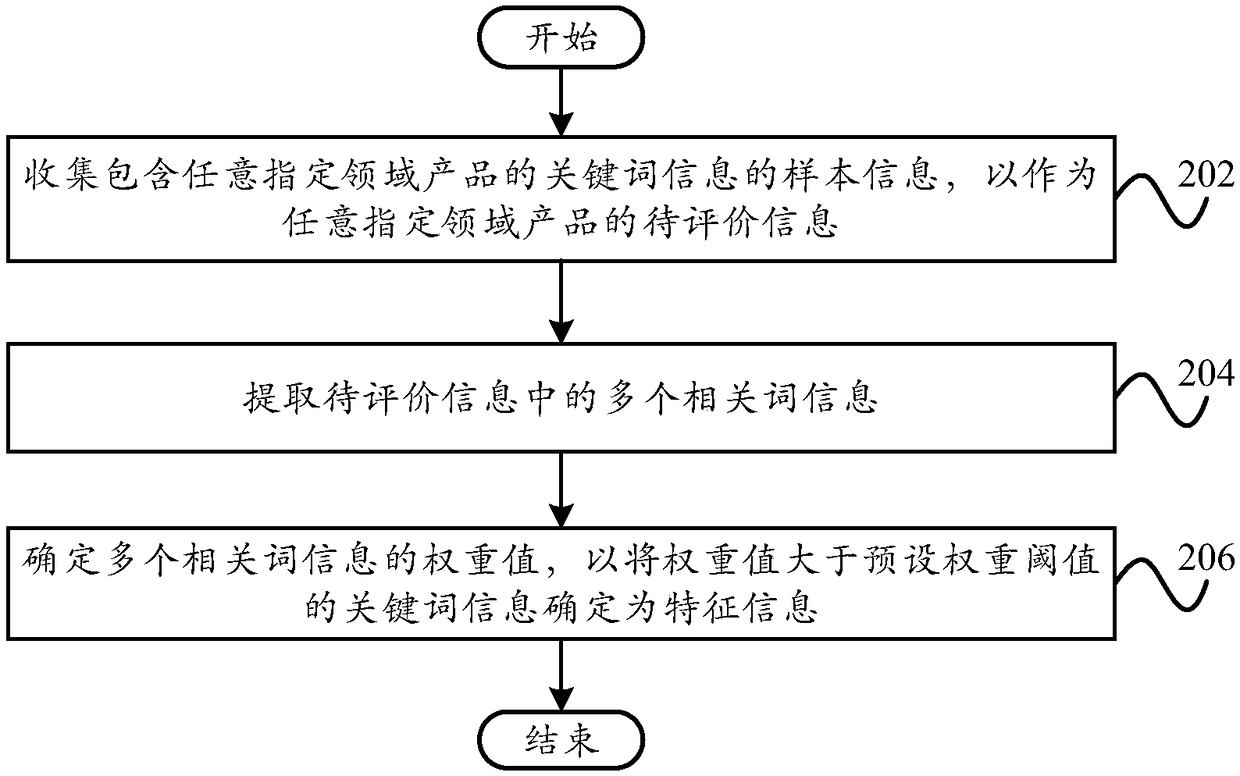

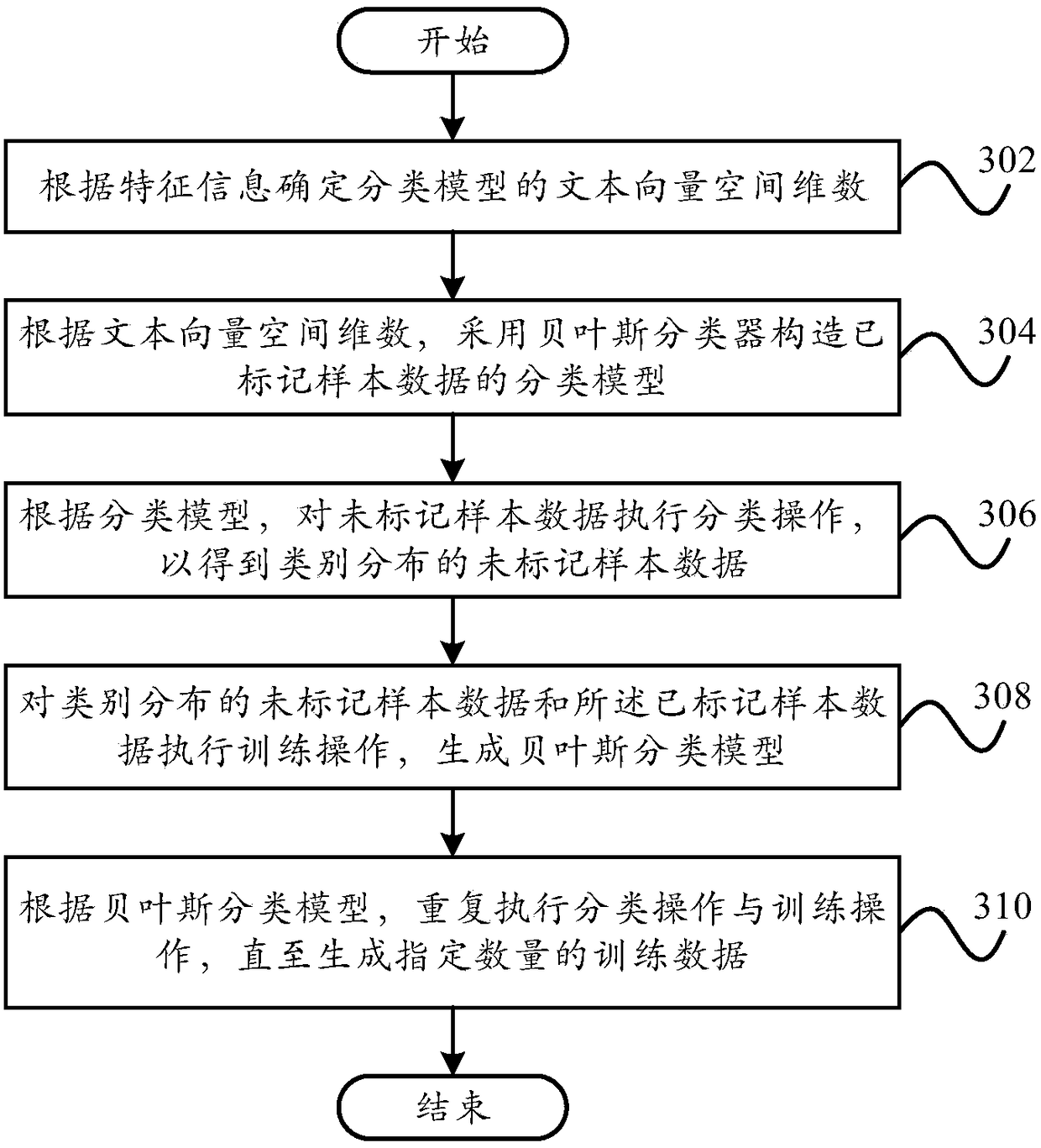

Emotion assessment method and device based on mass sample data

The invention provides an emotion assessment method and an emotion assessment device based on mass sample data. The emotion assessment method based on the mass sample data comprises the steps of determining feature information of products in any appointed field in sample information; generating training data according to the feature information and a preset sample information training model; generating assessment information of the products in any appointed field within different time slots according to the training data and a preset increment-based text classification model; using word2vec to perform vectorization treatment on assessment information to generate a vector matrix to be assessed; inputting the vector matrix to be assessed into a convolutional neural network, and thus acquiring an emotion value of the assessment information according to an output result of the convolutional neural network. According to the technical scheme of the method and device provided by the invention, the accuracy and effectiveness of the user for acquiring the emotion value of the assessment information for the products in the specific field are improved, and according to the acquired assessment analysis results of the different products, the user can better select the product or make the more reasonable product marketing method.

Owner:NEW FOUNDER HLDG DEV LLC +1

Method and apparatus for providing emotion expression service using emotion expression identifier

Active | US9401097B2 | Digital data processing details | Special data processing applications | Emotion assessment | Web page

Owner:KIM JONG PHIL +1

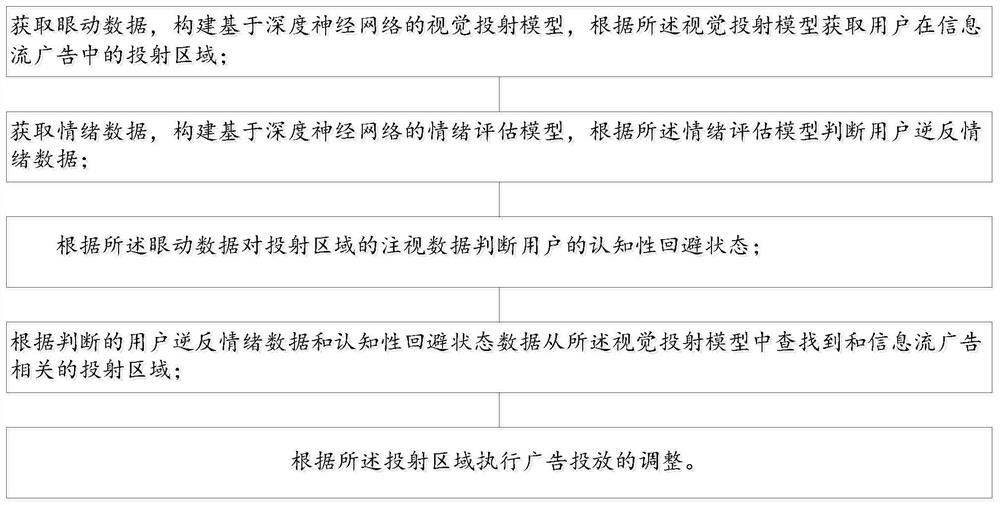

Advertisement evaluation method and system based on eye movement tracking and emotional state

Pending | CN114648354A | Reduce negative experience | Spotting Cognitive Avoidant States | Input/output for user-computer interaction | Sensors | Emotion assessment | Computer vision

The invention discloses an advertisement evaluation method and system based on eye movement tracking and an emotional state, and the method comprises the following steps: obtaining eye movement data, constructing a visual projection model based on a deep neural network, and obtaining a projection region of a user in an information flow advertisement according to the visual projection model; acquiring emotion data, constructing an emotion evaluation model based on a deep neural network, and judging user inverse emotion data according to the emotion evaluation model; searching a projection area related to the information flow advertisement from the visual projection model according to the judged user inverse emotion data; and adjusting advertisement putting according to the projection area. In combination with the eye movement data and the emotional state data, cognitive avoidance and psychological retrograde states possibly existing in the advertisement browsing process of the user can be recognized, and advertisement content putting adjustment is further performed according to the detected cognitive avoidance and psychological retrograde states.

Owner:SHANGHAI INTERNATIONAL STUDIES UNIVERSITY

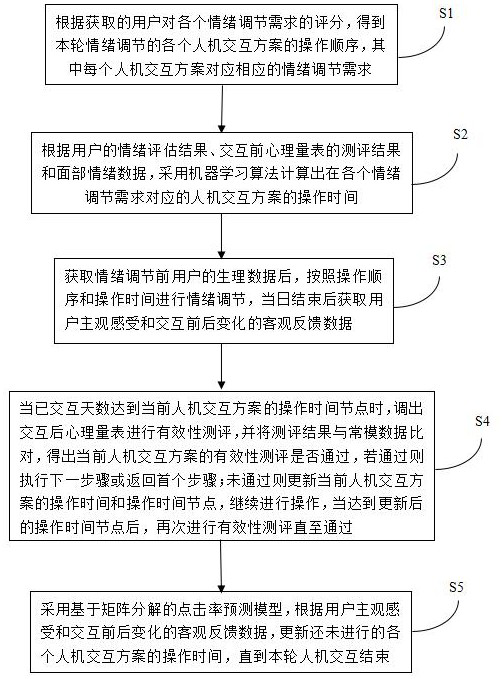

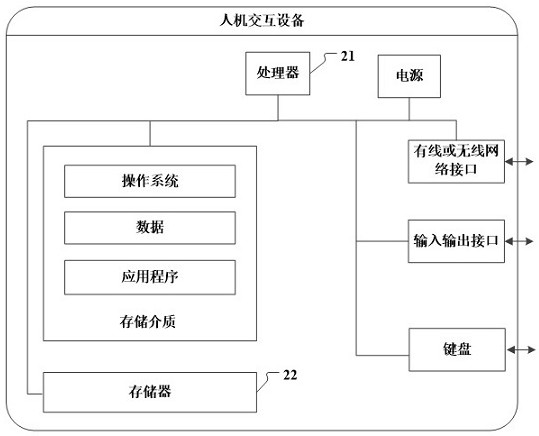

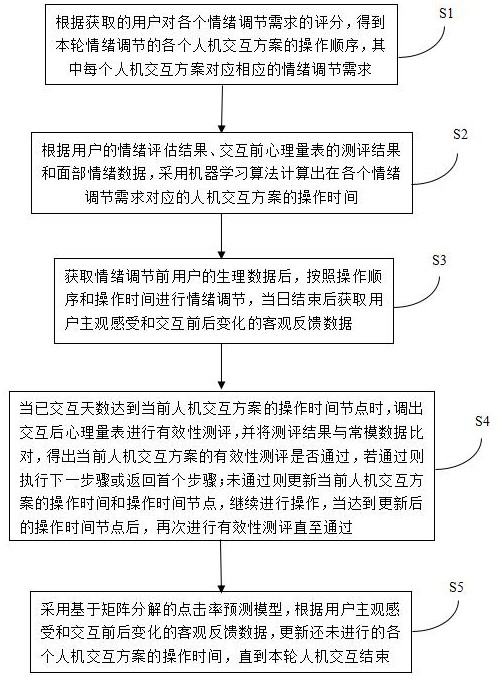

Human-computer interaction method and device for emotion regulation

Active | CN113687744A | Rich and varied interactive content | Improve emotional experience | Character and pattern recognition | Mental therapies | Evaluation result | Emotion assessment

The invention discloses a man-machine interaction method and device for emotion regulation. The method comprises the following steps: calculating operation time of a human-computer interaction scheme according to an emotion evaluation result of a user, an evaluation result of a psychological scale before interaction and facial emotion data; performing emotion regulation after acquiring physiological data of the user before interaction, and acquiring objective feedback data of subjective feelings of the user and changes before and after interaction after interaction on that day is completed; when the number of interacted days reaches the operation time node of the current man-machine interaction scheme, calling out the post-interaction psychological scale for effectiveness evaluation, comparing an evaluation result with norm data, and if the evaluation result passes, executing the next step or returning to the first step; otherwise, updating the operation time and the operation time node of the current man-machine interaction scheme, and continuing man-machine interaction until the evaluation result passes; and according to subjective and objective feedback data of the user, updating the operation time of each remaining man-machine interaction scheme until the man-machine interaction of the round is finished.

Owner:BEIJING WISPIRIT TECH CO LTD

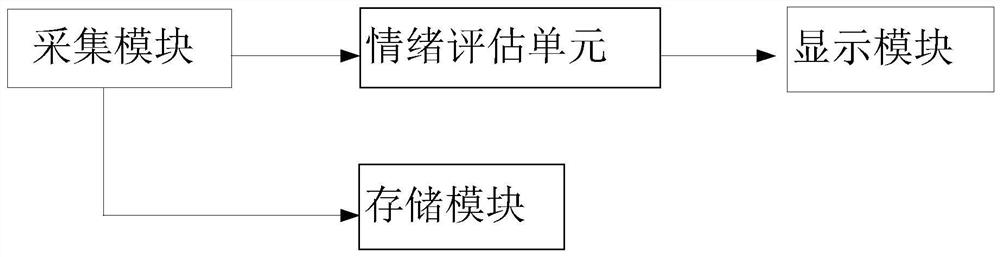

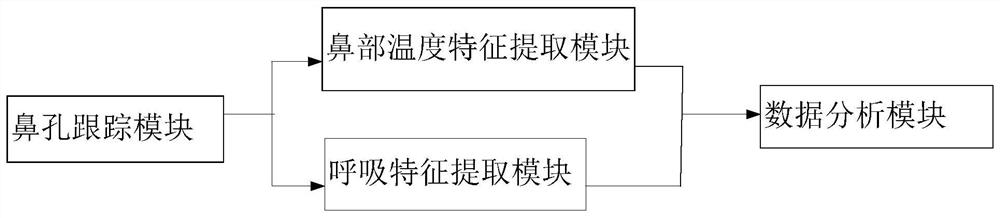

Tension evaluation system for counters

Pending | CN111956243A | Will not increase the psychological burden | Improve the accuracy of sentiment analysis | Diagnostic signal processing | Respiratory organ evaluation | Physical medicine and rehabilitation | Emotion assessment

The invention discloses a tension evaluation system for counters. Thermal imaging processing and application are included. The system comprises an acquisition module, an emotion evaluation unit, a display module and a storage module; the acquisition module is hidden on a counter surface to collect facial information of a detected person; the emotion evaluation unit receives the facial information, transmitted by the acquisition module, of the detected person, analyzes breathing frequency of the detected person and judges the emotional tension degree of the detected person in combination with temperature distribution of the nose; the display module receives information, transmitted by the emotion evaluation unit, of the emotional tension degree of the detected person, and displays the emotional tension degree of the detected person in the form of percentile score; and the storage module stores data of the facial information, transmitted by the acquisition module, of the detected person, and gives the emotional tension degree by the percentile score. A detection person is reminded to conduct more detailed examination or cross-examination on the detected person with tension, and a basis is provided for finding suspects with illegal intentions.

Owner:DALIAN UNIV OF TECH

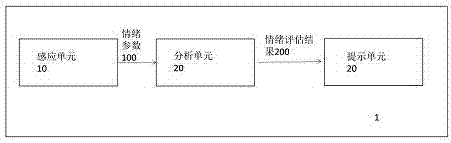

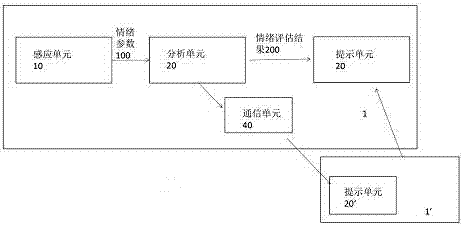

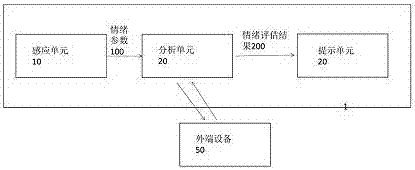

Emotion prompt equipment

Pending | CN107024996A | Input/output for user-computer interaction | Graph reading | Emotion assessment | Speech recognition

The invention provides emotion prompt equipment which is characterized by comprising an induction unit configured to induce emotions to obtain emotion parameters, an analysis unit configured to analyze the emotion parameters to obtain an emotion assessment result, and a prompt unit configured to prompt the emotion assessment result. According to the emotion prompt equipment, the emotions of a user can be induced and presented in an intuitive way, so as to prompt the user.

Owner:WENZHOU YUNHANG INFOMATION TECH LTD

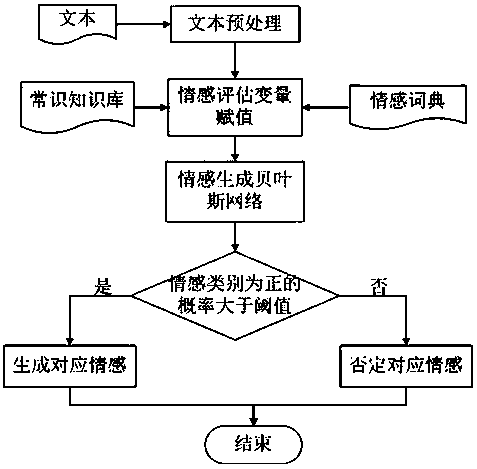

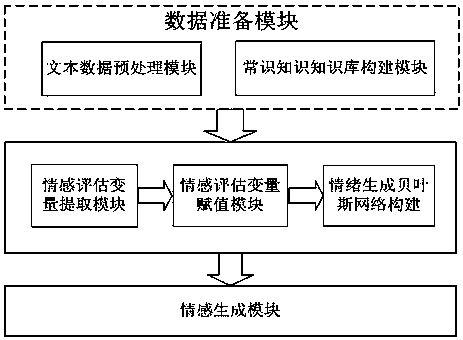

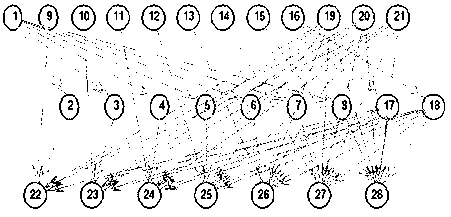

Probabilistic reasoning and emotion cognition-based text fine-grained emotion generation method

Active | CN108549633A | Efficient capture | Resolve incompleteness | Natural language data processing | Special data processing applications | Pattern recognition | Data set

The invention relates to a probabilistic reasoning and emotion cognition-based text fine-grained emotion generation method. The method comprises the following steps of 1: preparing a text data set required by a training method; 2: processing the text data set; 3: extracting an emotion assessment variable used for constructing a Bayesian network; 4: according to characteristics of a network text, adding an expression symbol and word frequency-based emotion assessment variable; 5: constructing an emotion knowledge base; 6: constructing a commonsense knowledge base; 7: performing value assignment on the emotion assessment variable; 8: performing emotion learning to generate a network structure of the Bayesian network; 9: performing parameter learning; and 10: finishing construction work of the emotion generation method. The problem that hidden emotions are ignored in other emotion generation methods is solved by utilizing an emotion cognition method; the emotion generation probability is calculated by utilizing the Bayesian network; the emotion type probabilities are compared; and one or multiple emotions comprised in the text are generated.

Owner:福昕鲲鹏(北京)信息科技有限公司

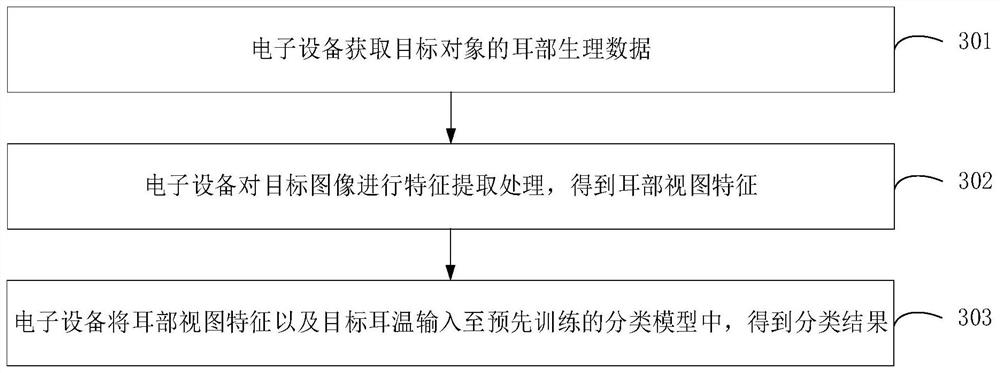

Emotion evaluation method and device, electronic equipment and computer readable storage medium

Active | CN113017634A | Realize evaluation | Increase flexibility | Otoscopes | Surgery | Feature extraction | Emotion assessment

The invention discloses an emotion evaluation method and device, electronic equipment and a computer readable storage medium. The method comprises the following steps: acquiring ear physiological data of a target object, wherein the ear physiological data comprises a target ear temperature and a target image of in-ear tissues; performing feature extraction processing on the target image to obtain ear view features; and inputting the ear view features and the target ear temperature into a pre-trained classification model, and obtaining a classification result, wherein the classification result is used for representing the emotion deviation of the target object. According to the technical scheme provided by the embodiment of the invention, the flexibility of emotion evaluation can be improved.

Owner:GUANGDONG OPPO MOBILE TELECOMM CORP LTD

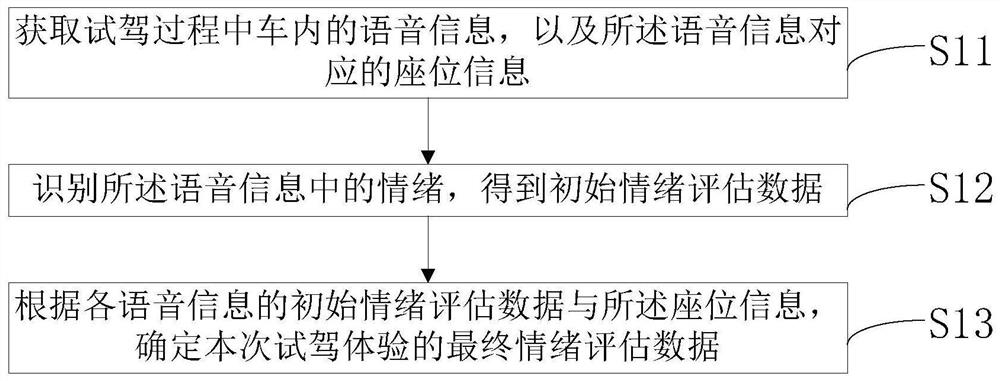

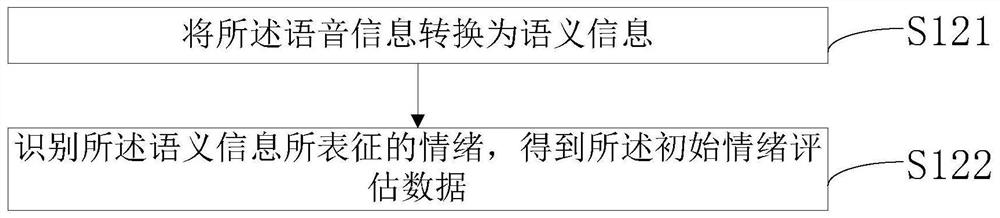

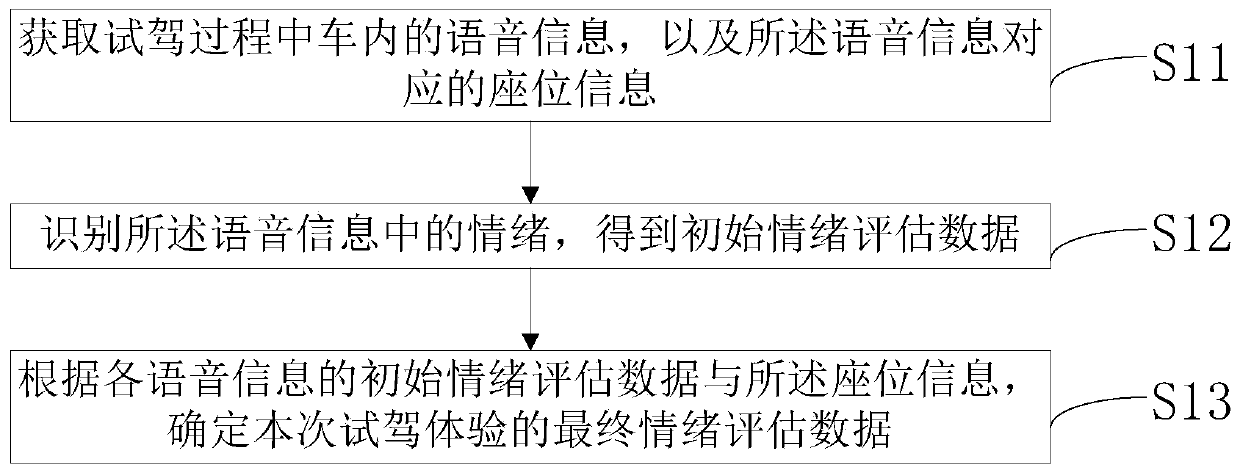

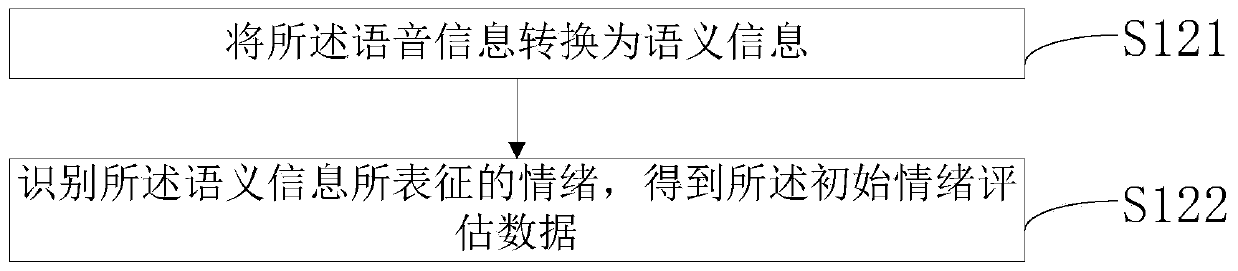

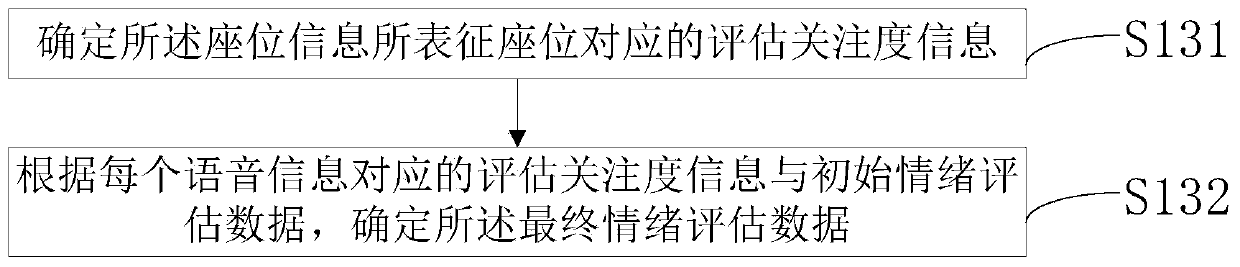

Data processing method, device, equipment and storage medium for evaluating test drive experience

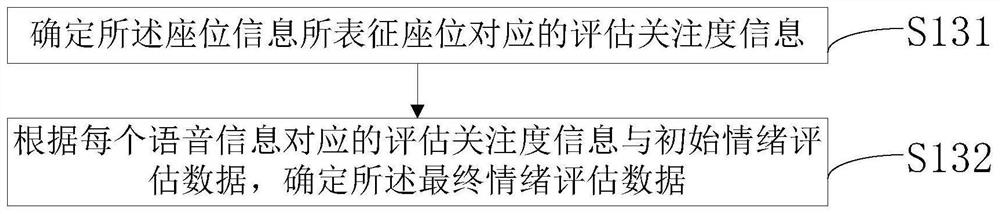

Active | CN110797050B | Guaranteed accuracy | Accurately reflect the experience effect | Speech analysis | Evaluation result | Emotion assessment

The present invention provides a data processing method, device, equipment, and storage medium for evaluating test drive experience. The method includes: acquiring voice information in the car during the test drive, and seat information corresponding to the voice information, the seat information being used to characterize the seat of the person who generated the voice information; identifying the emotion in the voice information to obtain initial emotional evaluation data; and, according to the initial emotional evaluation data of each piece of voice information and the seat information, determining the final emotional assessment data for this test drive experience. In the present invention, since the accuracy of the evaluation result is not affected by people's ability to remember and understand, and is not easily affected by the ability to express, the accuracy of the evaluation can be guaranteed to a certain extent. The present invention also fully considers the specific conditions of people in different seats, so as to more accurately reflect the overall experience effect when multiple people participate in the test drive.

Owner:上海能塔智能科技有限公司

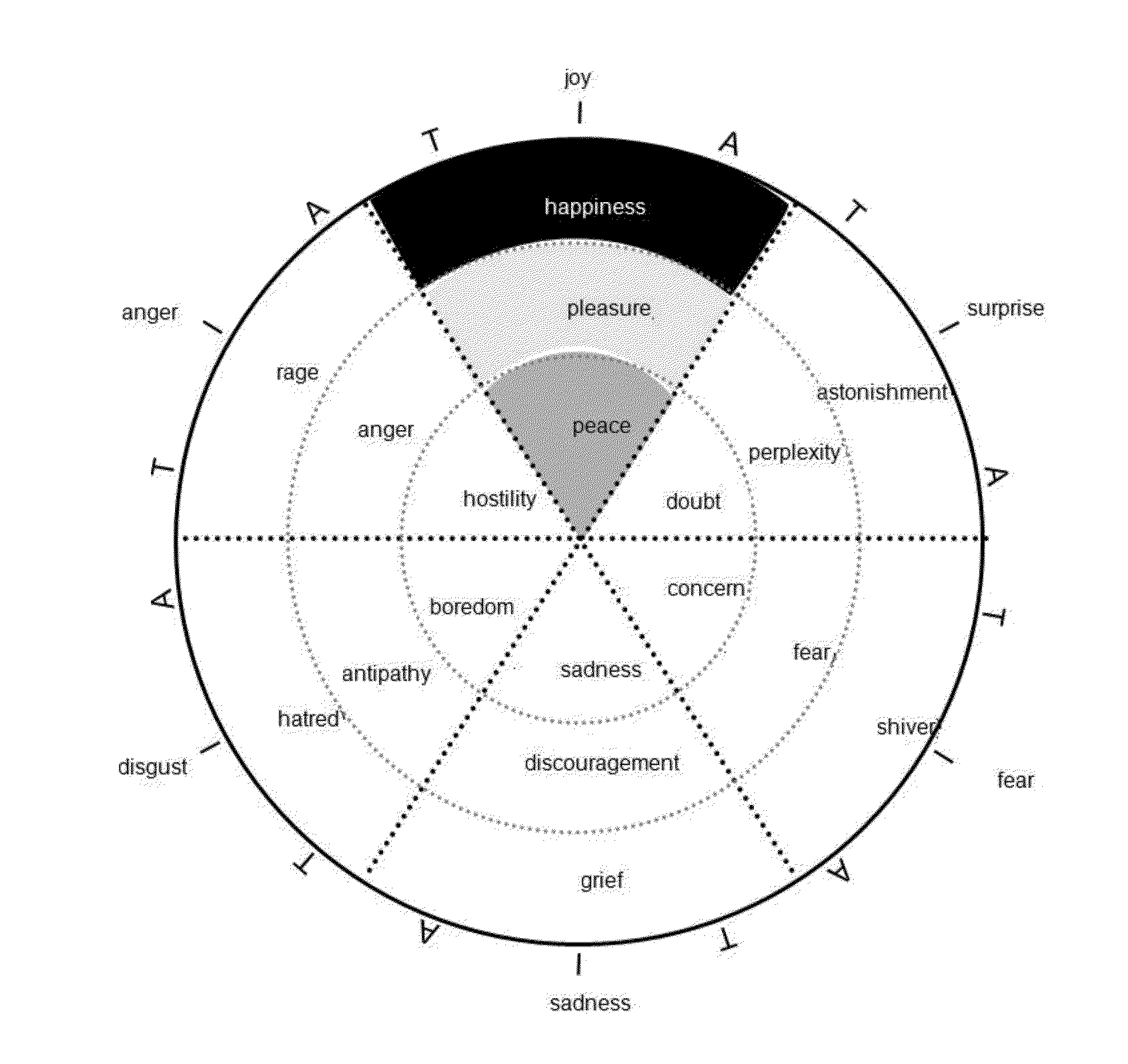

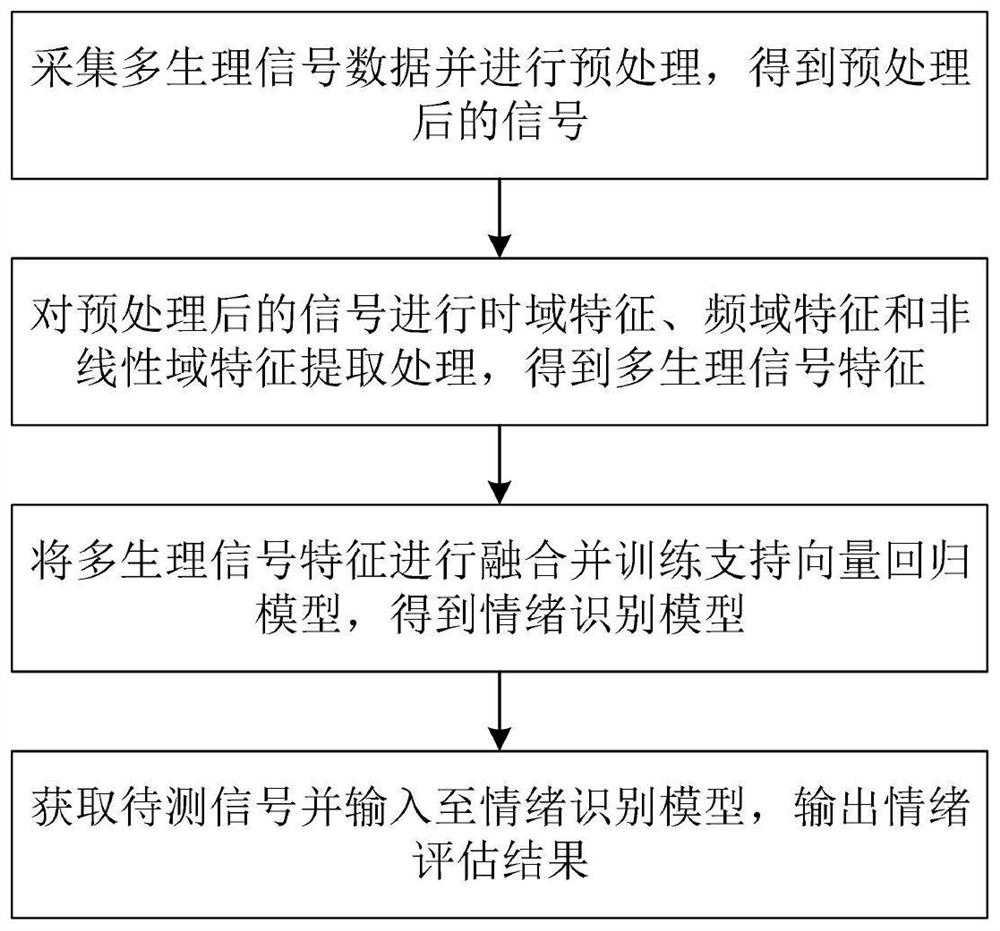

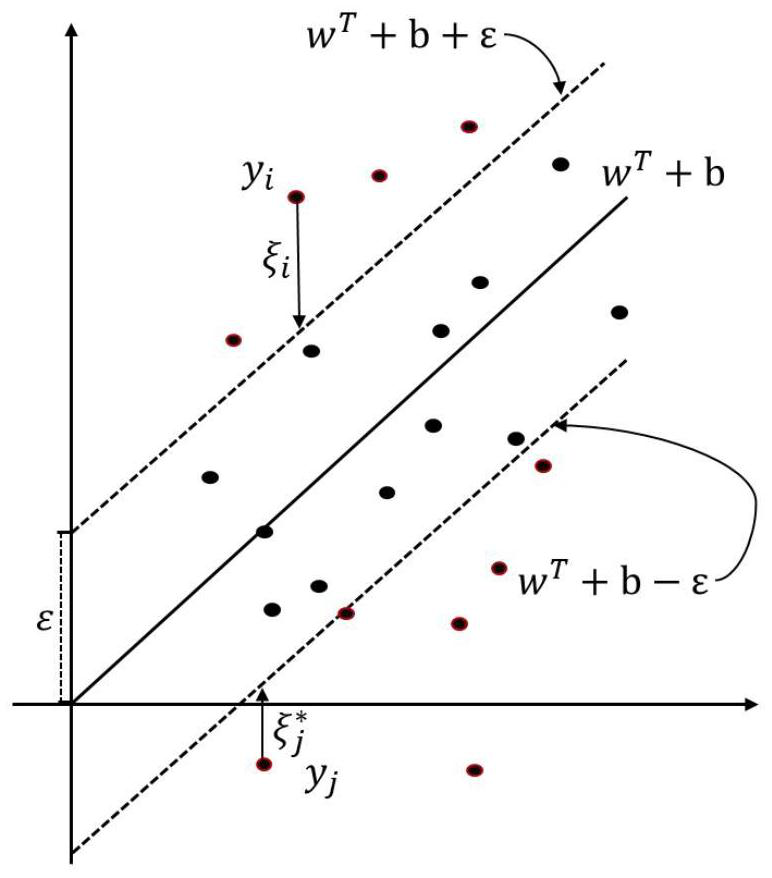

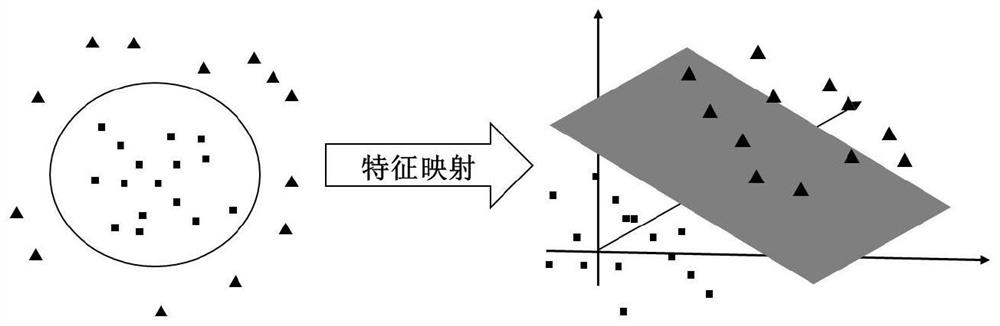

Multi-physiological signal emotion quantitative evaluation method based on two-dimensional continuous model

Pending | CN114403877A | Solving Nonlinear Mapping Relationship Problems | Improve fitting ability | Sensors | Psychotechnic devices | Time domain | Evaluation result

The invention discloses a multi-physiological signal emotion quantitative evaluation method based on a two-dimensional continuous model, and the method comprises the steps: collecting and preprocessing multi-physiological signal data, and obtaining a preprocessed signal; performing time domain feature, frequency domain feature and nonlinear domain feature extraction processing on the preprocessed signals to obtain multiple physiological signal features; fusing the multiple physiological signal features and training a support vector regression model to obtain an emotion recognition model; and obtaining a to-be-detected signal, inputting the to-be-detected signal to the emotion recognition model, and outputting an emotion evaluation result. By using the method and the system, the emotion can be objectively and accurately analyzed by using the physiological signal, and the method and the system have certain universality. The multi-physiological-signal emotion quantitative evaluation method based on the two-dimensional continuous model can be widely applied to the field of predictive analysis.

Owner:SUN YAT SEN UNIV

Data processing method and device for evaluating test driving experience, facility and storage medium

Active | CN110797050A | Guaranteed accuracy | Accurately reflect the experience effect | Speech analysis | Emotion assessment | Engineering

The invention provides a data processing method and a data processing device for evaluating test driving experience, a facility and a storage medium. The method comprises the following steps: acquiring voice information in a vehicle during the test driving process, and seat information corresponding to the voice information, wherein the seat information is used for representing a seat where a person generating the voice information is located; recognizing the emotion in the voice information, thus obtaining initial emotion evaluating data; and according to the initial emotion evaluating data of the voice information and the seat information, determining final emotion evaluating data of the test driving experience. In the technical scheme, the accuracy of the evaluating result cannot be influenced by the memory ability and comprehension ability of the person, and also is seldom influenced by the expression ability, and thus the evaluating accuracy can be guaranteed to a certain extent. Meanwhile, the specific circumstances of people on the different seats are also sufficiently considered, and thus the integral experience effect under the condition that multiple persons participate in test driving is more accurately reflected.

Owner:上海能塔智能科技有限公司
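
The last step of the abstract above combines the initial emotion evaluation of each piece of voice information with the seat it came from. One way this could work is a seat-weighted average, sketched below. The abstract does not specify the combination rule, so the weighting scheme, the seat names, the weight values, and the function name `final_emotion_score` are all assumptions made for illustration.

```python
# Hypothetical seat weights; the patent abstract does not give any values.
SEAT_WEIGHTS = {"driver": 0.6, "front_passenger": 0.25, "rear": 0.15}


def final_emotion_score(utterances, seat_weights=SEAT_WEIGHTS):
    """Combine per-utterance emotion scores into one score for the test drive.

    utterances: iterable of (seat, initial_emotion_score) pairs.
    Scores are averaged per seat, then combined as a weighted average over
    the seats that actually spoke (assumed rule).
    """
    per_seat = {}
    for seat, score in utterances:
        per_seat.setdefault(seat, []).append(score)
    if not per_seat:
        raise ValueError("no utterances to evaluate")
    total_weight = sum(seat_weights[seat] for seat in per_seat)
    weighted = sum(
        seat_weights[seat] * (sum(scores) / len(scores))
        for seat, scores in per_seat.items()
    )
    return weighted / total_weight
```

Normalizing by the weights of the seats that spoke keeps the result on the same scale as the input scores even when some seats are empty or silent.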

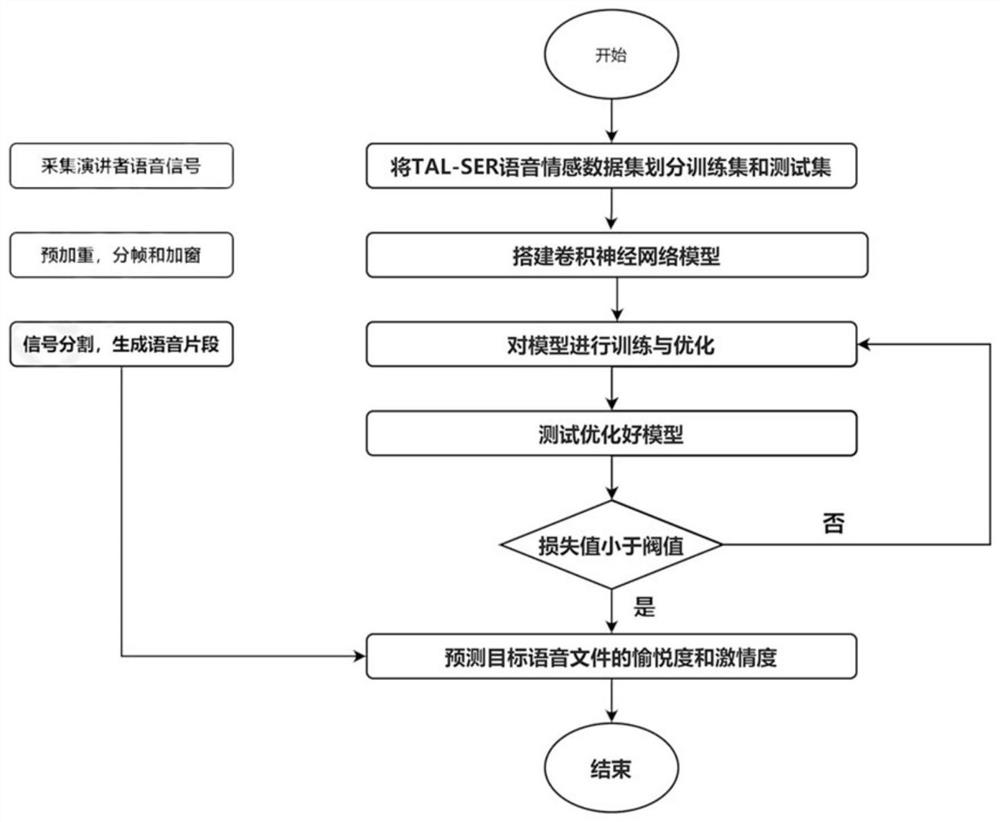

Language emotion recognition method for emotion evaluation

Pending | CN114299995A | Ease of evaluation | Assessing good language emotion recognition | Speech recognition | Emotion assessment | Intense emotion

The invention discloses a language emotion recognition method for emotion evaluation, and belongs to the technical field of voice signal intelligent processing. The method comprises the following steps: pre-recording dialogue content to generate source audio, preprocessing the source audio, and storing to obtain an emotion database; dividing the emotion database into a training set and a test set; building a voice emotion recognition model based on the emotion database; the speech emotion recognition model predicts an emotion database through the pleasure degree and the passion degree; obtaining speech content of a speaker and preprocessing the speech content to generate a corresponding audio file; segmenting the audio file into a plurality of target audio files by taking the training set voice duration as a segmentation parameter; the target voice file is used as an input voice emotion recognition model, the emotion of a speaker is evaluated and analyzed based on the voice emotion recognition model, and the test set acts on the training set to optimize the voice emotion recognition model. The emotion change of the language of the speaker is accurately mastered, and the problem that the emotion change is neglected when only the audio is recognized is avoided.

Owner:UNIV OF SHANGHAI FOR SCI & TECH

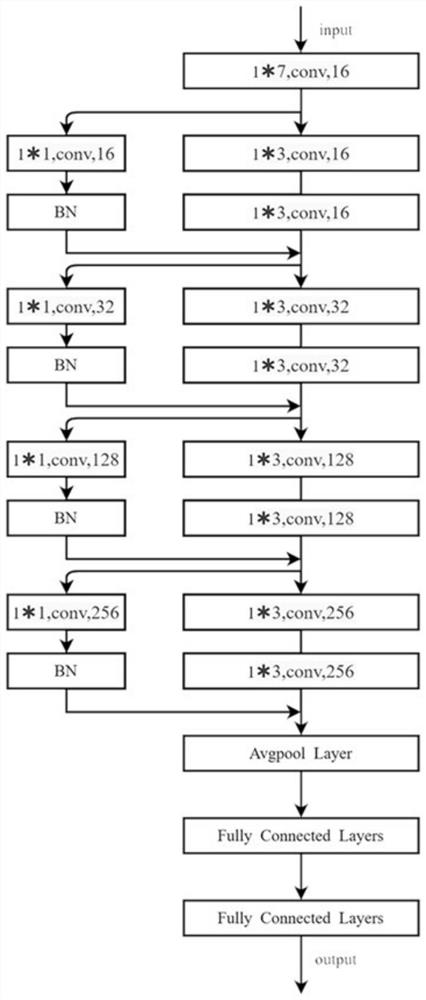

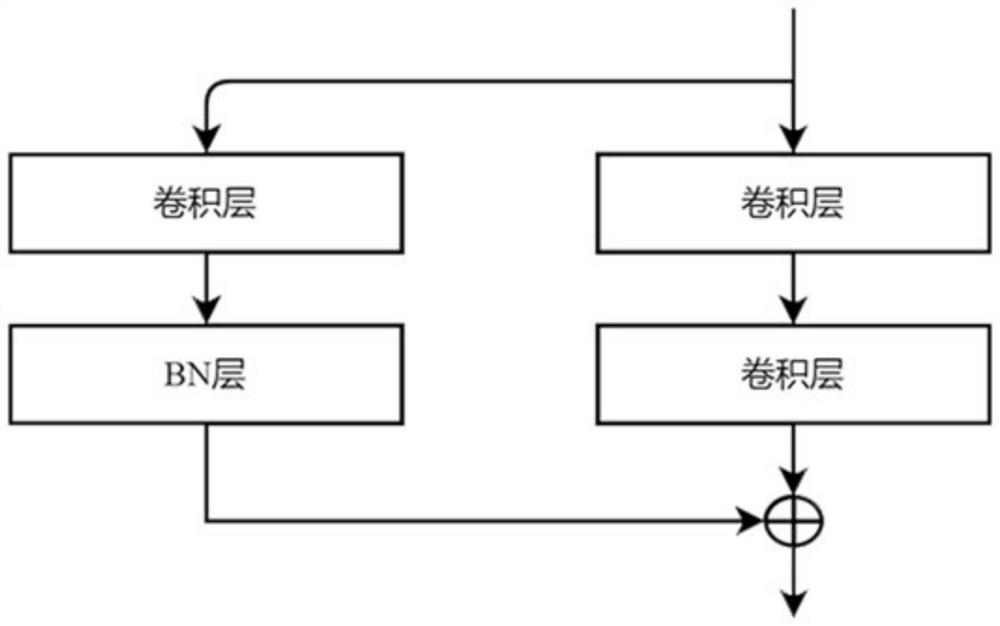

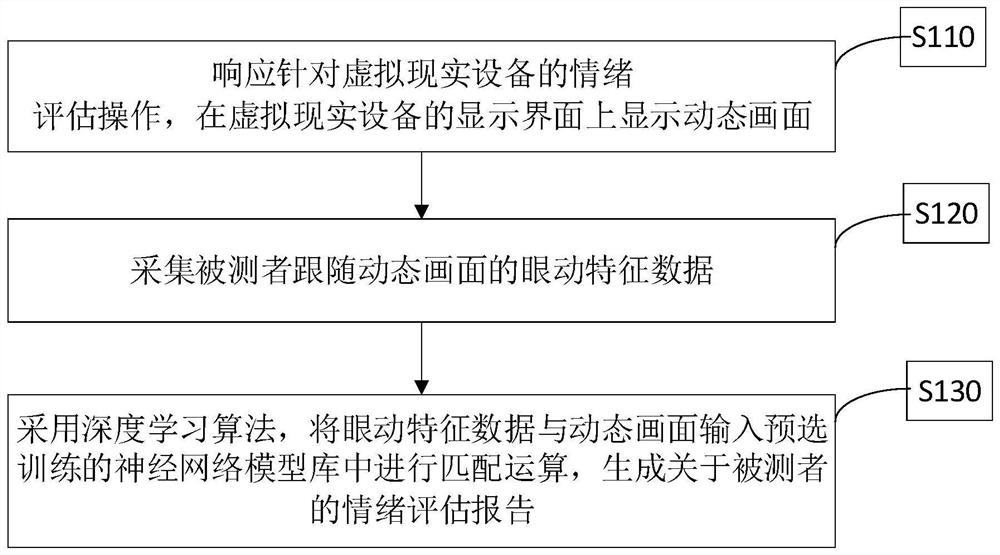

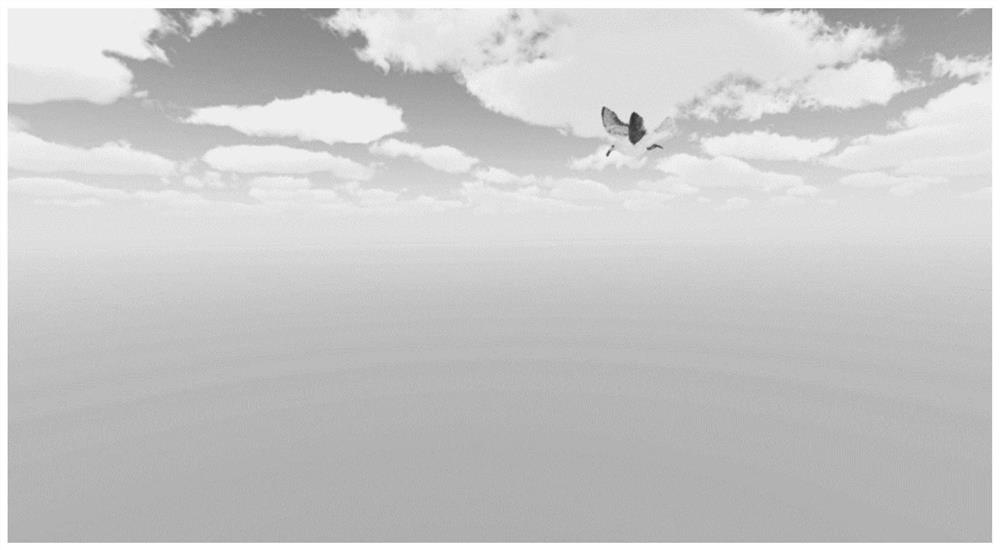

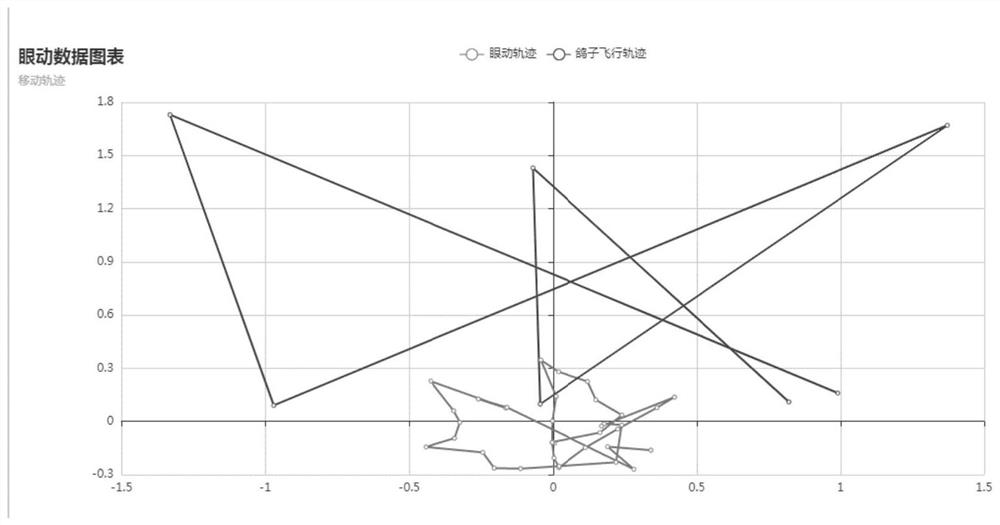

Emotion evaluation method and device based on virtual reality and eye movement information

PendingCN113827238AImprove accuracyInput/output for user-computer interactionCharacter and pattern recognitionEmotion assessmentFeature data

The invention is applicable to the technical field of medical instruments, and provides an emotion evaluation method and device based on virtual reality and eye movement information, as well as virtual reality equipment. The method comprises the steps of responding to an emotion evaluation operation on the virtual reality equipment by displaying a dynamic picture on its display interface; collecting eye movement feature data of a tested person following the dynamic picture; and inputting the eye movement feature data and the dynamic picture into a pre-selected library of trained neural network models for a matching operation using a deep learning algorithm, and generating a corresponding emotion evaluation report. Using virtual reality and eye movement technology, the eye movement feature data of an individual are collected in advance, the features of the eye tracking trajectory are analyzed, and the differences between the eye movement trajectories of depressed and non-depressed individuals are explored. Because the degree of a patient's depression is highly correlated with the patient's eye movement features, emotion is evaluated by tracking the eye movement trajectory, which effectively improves the accuracy of emotion recognition.

Owner:SUZHOU ZHONGKE ADVANCED TECH RES INST CO LTD

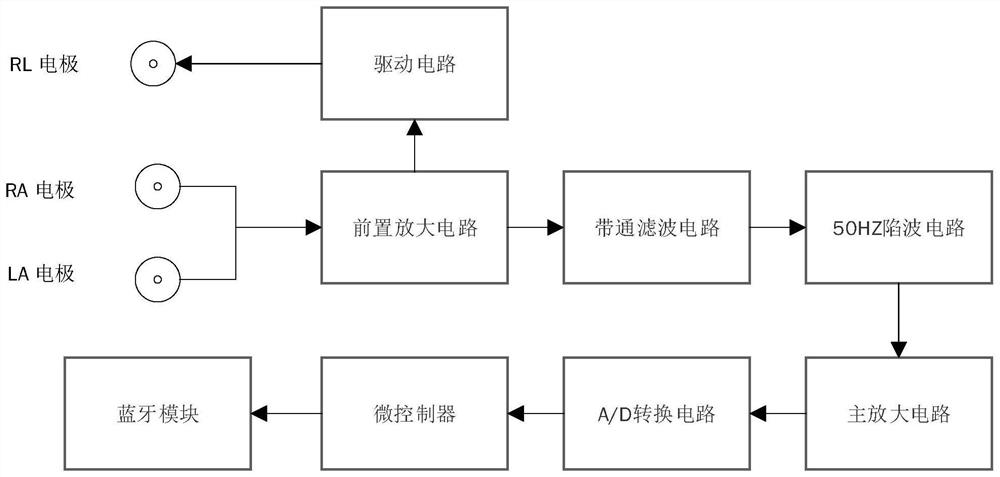

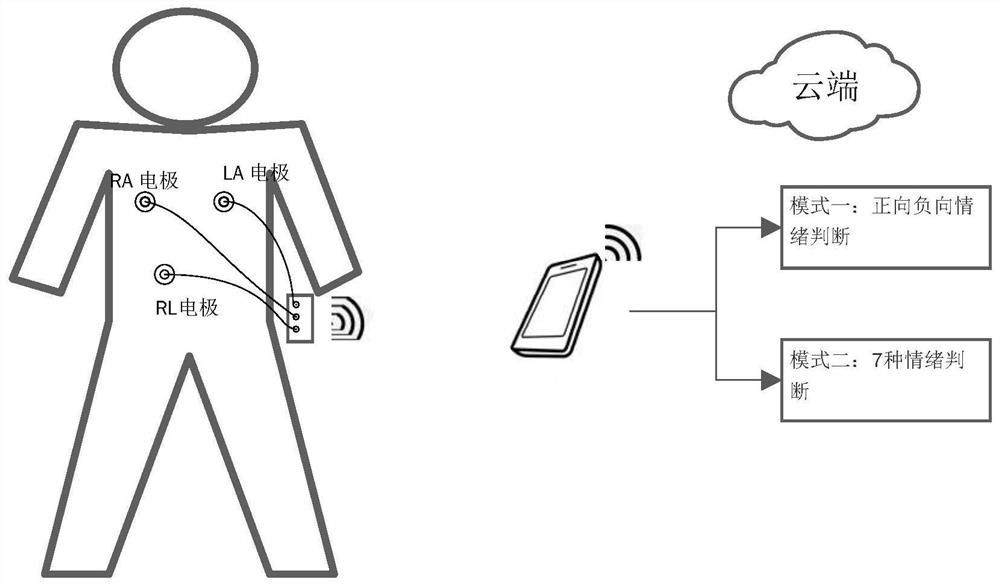

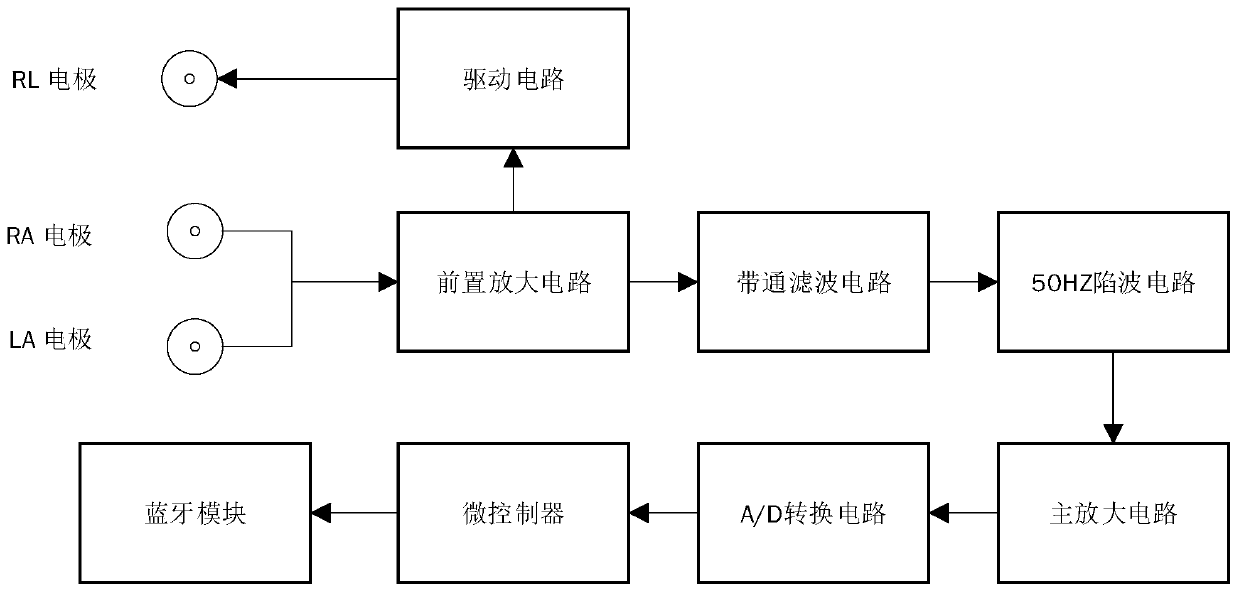

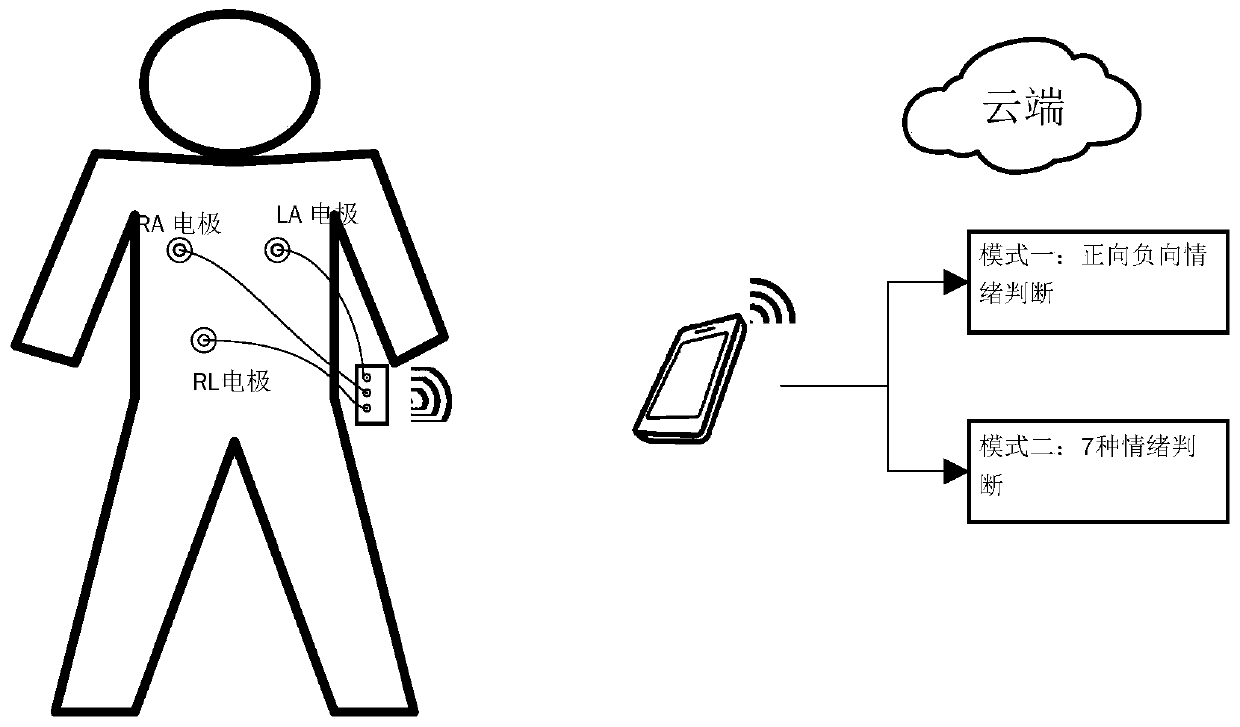

Emotion assessment method and device based on wearable ECG monitoring

ActiveCN110353704BImplement emotional assessmentSimple, convenient and accurate emotion recognitionSensorsPsychotechnic devicesEcg signalEmotion assessment

Owner:SOUTHEAST UNIV

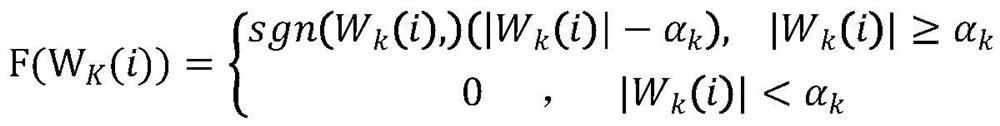

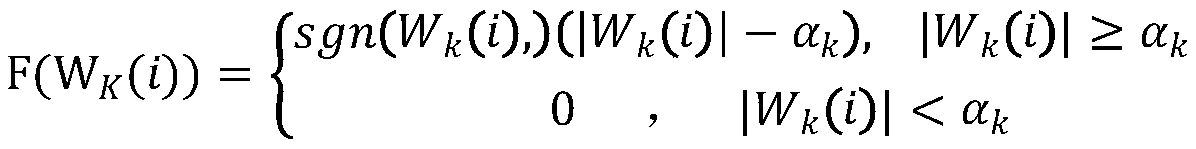

Emotion assessment method and device based on wearable electrocardio monitoring

ActiveCN110353704AImplement emotional assessmentSimple, convenient and accurate emotion recognitionSensorsPsychotechnic devicesEcg signalWavelet denoising

The invention provides an automatic emotion assessment method and device based on wearable electrocardiogram (ECG) monitoring. In the method, an ECG signal is collected by wearable equipment and transmitted to a mobile phone terminal, which forwards the received signal to a cloud server. At the cloud server, the received ECG signal is subjected to wavelet denoising, the heart rate variability (HRV) features of the signal are extracted, the data are normalized, and a model is established for assessing the emotion type; the cloud server then transmits the resulting emotion type back to the mobile phone terminal for real-time display. The method and device allow simple, convenient and accurate emotion recognition based on the ECG signal, thereby achieving emotion assessment, and finally output one of two emotion types.
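The feature-extraction and normalization steps can be illustrated with standard time-domain HRV statistics. The sketch below makes several assumptions: it starts from RR intervals (in milliseconds) that have already been detected from a denoised signal, it picks mean RR, SDNN and RMSSD as example features, and it uses min-max scaling. The abstract names none of these choices, and the function names are hypothetical.

```python
import math
from statistics import mean, stdev


def hrv_features(rr_ms):
    """Compute common time-domain HRV features from RR intervals in ms."""
    if len(rr_ms) < 2:
        raise ValueError("need at least two RR intervals")

    # Successive differences between neighbouring RR intervals.
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return {
        "mean_rr": mean(rr_ms),
        "sdnn": stdev(rr_ms),  # sample standard deviation of the intervals
        "rmssd": math.sqrt(mean([d * d for d in diffs])),
    }


def min_max_normalize(values):
    """Scale values to [0, 1]; a constant input maps to all zeros."""
    low, high = min(values), max(values)
    if high == low:
        return [0.0 for _ in values]
    return [(v - low) / (high - low) for v in values]
```

In practice the normalization would be applied per feature across recordings before the features are passed to the classification model.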

Owner:SOUTHEAST UNIV

Method and equipment for judging emotions by combining HRV signals and facial expressions

PendingCN111772648AAccurate evaluationQuick checkSensorsPsychotechnic devicesPattern recognitionEmotion assessment

The invention relates to a method and equipment for judging emotions by combining HRV signals and facial expressions. Before use, emotions are classified, and an HRV signal database and a facial expression database are established, each with its emotion intervals. In use, real-time HRV signal data are acquired and compared against the database to judge the emotion, and real-time facial expression data are collected and compared to judge the emotion. When the two judgments are consistent, the emotion is output; otherwise the output is deemed invalid, and HRV signal data and facial expression data are re-collected until the two judgments agree, at which point the emotion is output. Because emotions are output based on the combination of two indicators, the evaluation is more accurate, and deliberately suppressed or false emotions are avoided.
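The agreement rule in this abstract can be sketched as a small retry loop. This is an illustration under assumptions: `read_hrv_emotion` and `read_face_emotion` are hypothetical callables that each return an emotion label from one fresh measurement, and `max_attempts` is an added safeguard against looping forever, which the abstract does not mention.

```python
def judge_emotion(read_hrv_emotion, read_face_emotion, max_attempts=5):
    """Return an emotion label only when both indicators agree.

    Both arguments are hypothetical callables that take a new measurement
    and return a label. If the labels differ, the result is treated as
    invalid and both signals are collected again.
    """
    for _ in range(max_attempts):
        hrv_label = read_hrv_emotion()
        face_label = read_face_emotion()
        if hrv_label == face_label:
            return hrv_label
    # No agreement within the attempt budget (an assumption of this sketch).
    return None
```

Requiring agreement trades some availability for precision: a reading is delayed or dropped rather than reported from a single indicator.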

Owner:南京七岩电子科技有限公司

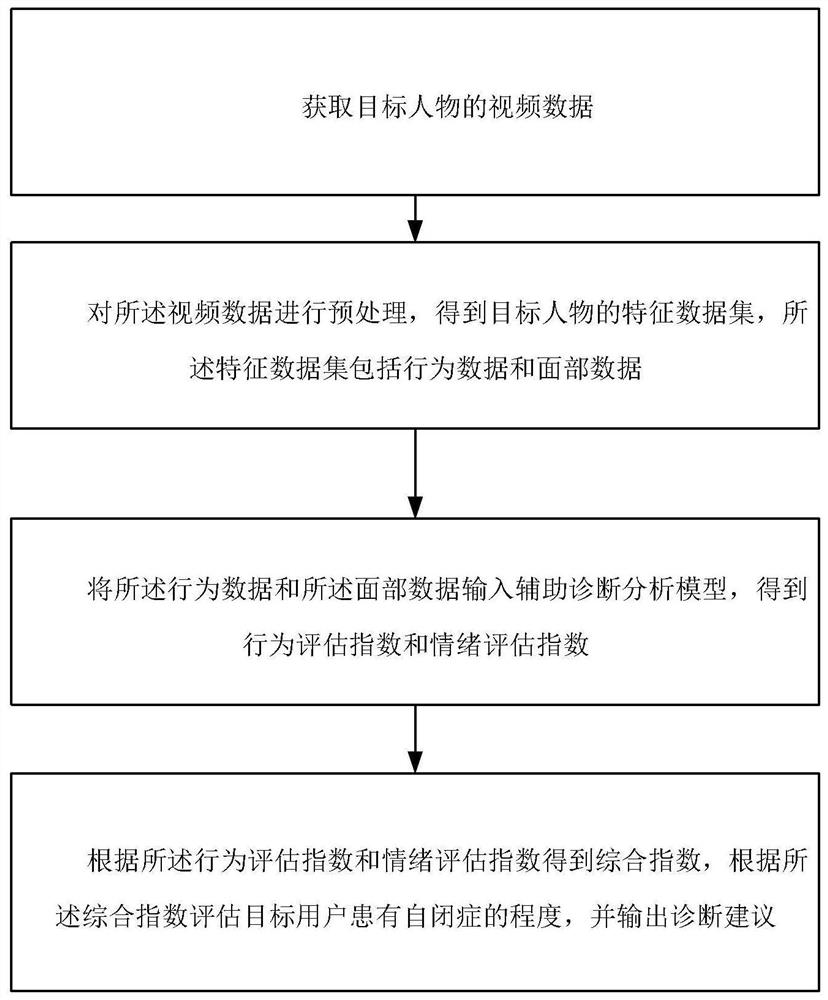

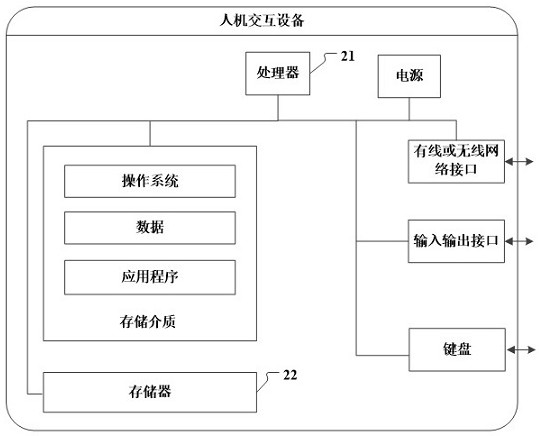

Autism auxiliary diagnosis method, system, equipment and medium

PendingCN114469091AImprove diagnostic efficiencyImprove diagnostic accuracySensorsPsychotechnic devicesInfantile autismEmotion assessment

The invention discloses an autism auxiliary diagnosis method, system, device and medium, and relates to the technical field of autism diagnosis. The method comprises the steps of: obtaining video data of a target person; preprocessing the video data to obtain a feature data set of the target person, the feature data set including behavior data and face data; inputting the behavior data and the face data into an auxiliary diagnosis analysis model to obtain a behavior evaluation index and an emotion evaluation index; and obtaining a comprehensive index from the behavior evaluation index and the emotion evaluation index, evaluating the degree of infantile autism of the target person according to the comprehensive index, and outputting a diagnosis suggestion. The method introduces the behavior evaluation index, the emotion evaluation index and the comprehensive index while considering abnormalities in the behavior and facial expressions of autism patients, enabling rapid and accurate diagnosis of autism, and has wide application prospects.

Owner:杭州行熠科技有限公司

A human-computer interaction device for emotion regulation

ActiveCN113687744BRich and varied interactive contentImprove emotional experienceCharacter and pattern recognitionMental therapiesEvaluation resultEmotion assessment

The invention discloses a human-computer interaction method and equipment for emotion regulation. The method includes the following steps: calculating the operation time of the human-computer interaction scheme according to the user's emotion evaluation results, the results of the psychological scale completed before the interaction, and facial emotion data; after each day's interaction, obtaining feedback data on the user's subjective feelings and objective data on changes before and after the interaction; when the number of interaction days reaches the operation time node of the current human-computer interaction scheme, presenting the post-interaction psychological scale for an effectiveness evaluation and comparing the evaluation results with normative data; if the evaluation is passed, executing the next step or returning to the first step, and otherwise updating the operation time and operation time node of the current scheme and continuing the interaction until the evaluation is passed; and updating the operation time of the remaining human-computer interaction schemes according to the user's subjective and objective feedback data, until this round of human-computer interaction ends.

Owner:BEIJING WISPIRIT TECH CO LTD
