Emotion evaluation method and device based on virtual reality and eye movement information

The technology combines virtual reality and eye movement information and is applied in the field of medical devices. It addresses the low accuracy of emotion recognition and improves that accuracy.

Examples

Embodiment 1

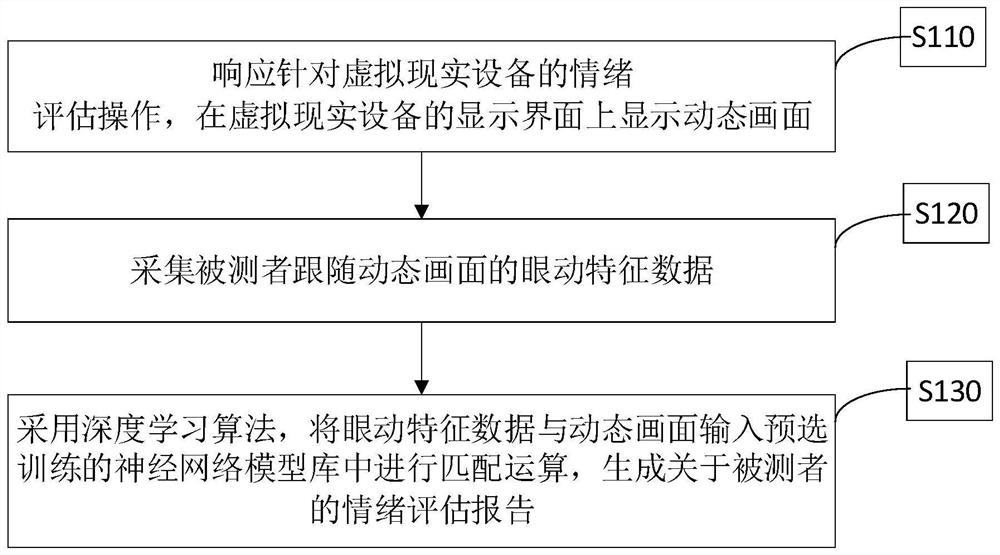

[0038] Figure 1 is an implementation flow chart of the emotion assessment method based on virtual reality and eye movement information according to Embodiment 1. The method is applicable to a specific virtual reality device equipped with a processor, which collects the eye movement characteristic data of the subject in order to evaluate the subject's emotion accurately. For ease of description, only the parts related to the embodiments of the present invention are shown, and the details are as follows:

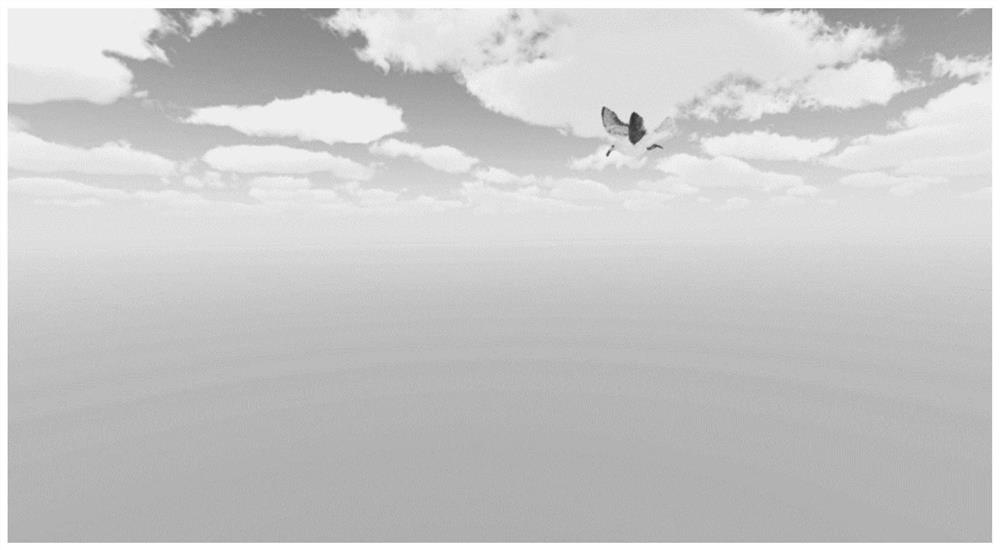

[0039] Step S110, in response to an emotion assessment operation on the virtual reality device, display a dynamic picture on the display interface of the virtual reality device.

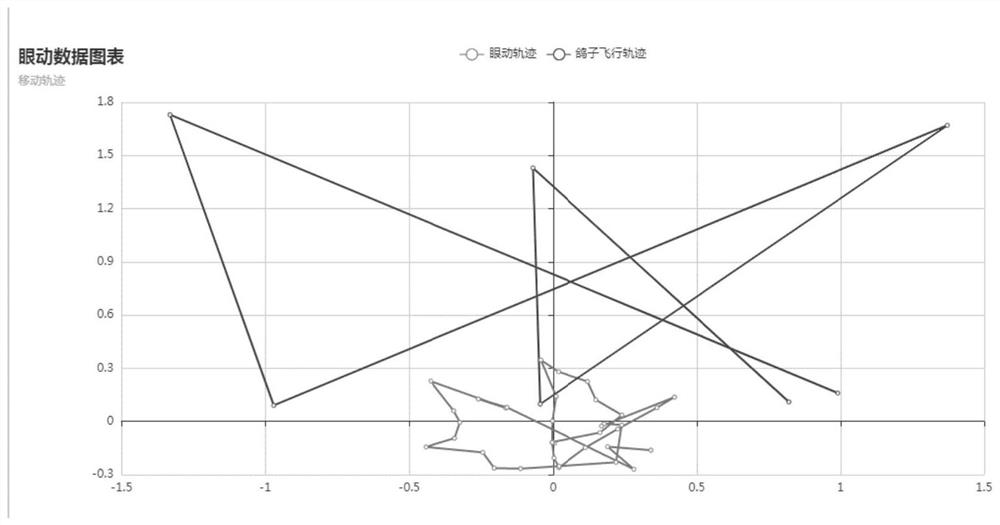

[0040] Step S120, collect eye movement characteristic data of the subject following the dynamic picture.

[0041] Step S130, using a deep learning algorithm to input th...
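As a rough illustration of the kind of eye movement characteristic data that step S120 might produce, the sketch below derives a few summary features from raw gaze samples. The source does not say which features are collected, so everything here is an assumption: the `(time, x, y)` sample format, the function name `extract_eye_features`, the feature names, and the velocity threshold used to label an interval as a fixation (a simple velocity-threshold rule, not necessarily what the patent uses).

```python
import math
from typing import Dict, List, Tuple

# Hypothetical sample format: (time in seconds, horizontal gaze, vertical gaze).
GazeSample = Tuple[float, float, float]


def extract_eye_features(
    samples: List[GazeSample], velocity_threshold: float = 30.0
) -> Dict[str, float]:
    """Summarize gaze samples into a few illustrative features.

    Intervals whose gaze velocity is below `velocity_threshold` are counted
    as fixation-like; the threshold value is an assumption, not from the source.
    """
    if len(samples) < 2:
        raise ValueError("need at least two gaze samples")

    velocities = []
    for (t0, x0, y0), (t1, x1, y1) in zip(samples, samples[1:]):
        dt = t1 - t0
        if dt <= 0:
            continue  # skip duplicated or out-of-order timestamps
        velocities.append(math.hypot(x1 - x0, y1 - y0) / dt)

    if not velocities:
        raise ValueError("timestamps must be strictly increasing")

    fixation_like = sum(1 for v in velocities if v < velocity_threshold)
    return {
        "mean_velocity": sum(velocities) / len(velocities),
        "peak_velocity": max(velocities),
        "fixation_ratio": fixation_like / len(velocities),
    }
```

A feature dictionary of this kind could then be passed, together with an identifier for the dynamic picture, to whatever model performs the evaluation in step S130.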

Embodiment 2

[0059] As shown in Figure 4, Embodiment 2 of the present invention provides an emotion assessment device based on virtual reality and eye movement information, which can perform all or part of the steps of any of the above-mentioned emotion assessment methods based on virtual reality and eye movement information. The device includes:

[0060] A dynamic picture display device 1, used to display the dynamic picture on the display interface of the virtual reality device in response to the emotion assessment operation on the virtual reality device;

[0061] An eye movement characteristic data acquisition device 2, used to collect eye movement characteristic data 3 of the subject following the dynamic picture;

[0062] The matching operation device 4 is used to use a deep learning algorithm to input the eye movement feature data and the dynamic picture into the pre-selected training neural network model library for matching operation, and generate an emotional e...
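The division of the device into a display part, an acquisition part, and a matching part can be sketched as three small components wired together. This is only a structural illustration under stated assumptions: the class names, the `run` method, the gaze sample format, and the `model` callable are all hypothetical, and the trained neural network is replaced by a stub because the source does not describe its architecture.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

# Hypothetical sample format: (time in seconds, horizontal gaze, vertical gaze).
GazeSample = Tuple[float, float, float]


@dataclass
class DynamicPictureDisplay:
    picture_id: str

    def show(self) -> str:
        # A real device would render the picture on the headset display;
        # this stub only reports which picture was shown.
        return self.picture_id


@dataclass
class EyeMovementCollector:
    source: Callable[[], Sequence[GazeSample]]  # stand-in for an eye tracker

    def collect(self) -> List[GazeSample]:
        return list(self.source())


@dataclass
class MatchingModule:
    # Stand-in for the trained model: takes gaze data and the picture shown,
    # returns an emotion evaluation label.
    model: Callable[[List[GazeSample], str], str]

    def evaluate(self, samples: List[GazeSample], picture_id: str) -> str:
        return self.model(samples, picture_id)


@dataclass
class EmotionAssessmentDevice:
    display: DynamicPictureDisplay
    collector: EyeMovementCollector
    matcher: MatchingModule

    def run(self) -> str:
        picture_id = self.display.show()       # show the dynamic picture
        samples = self.collector.collect()     # collect eye movement data
        return self.matcher.evaluate(samples, picture_id)  # evaluate
```

Keeping the model behind a plain callable means the matching component can be exercised with a stub during testing and swapped for a real trained network later without changing the other two components.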

Embodiment 3

[0064] Embodiment 3 of the present invention provides a virtual reality device, such as virtual reality glasses, which can perform all or part of the steps of any one of the aforementioned emotion assessment methods based on virtual reality and eye movement information. The virtual reality device includes:

[0065] at least one processor; and

[0066] a memory communicatively coupled to the at least one processor; wherein,

[0067] the memory stores instructions executable by the at least one processor, and the instructions, when executed by the at least one processor, enable the at least one processor to execute the method described in any of the above exemplary embodiments, which will not be described in detail here.

[0068] In this embodiment, a storage medium is also provided, which is a computer-readable storage medium, for example, a transitory or non-transitory computer-readable storage medium including instructions. The storage medium, for example, includes a memory of instruc...
