731 results about "Emotion identification" patented technology

Emotion recognition apparatus

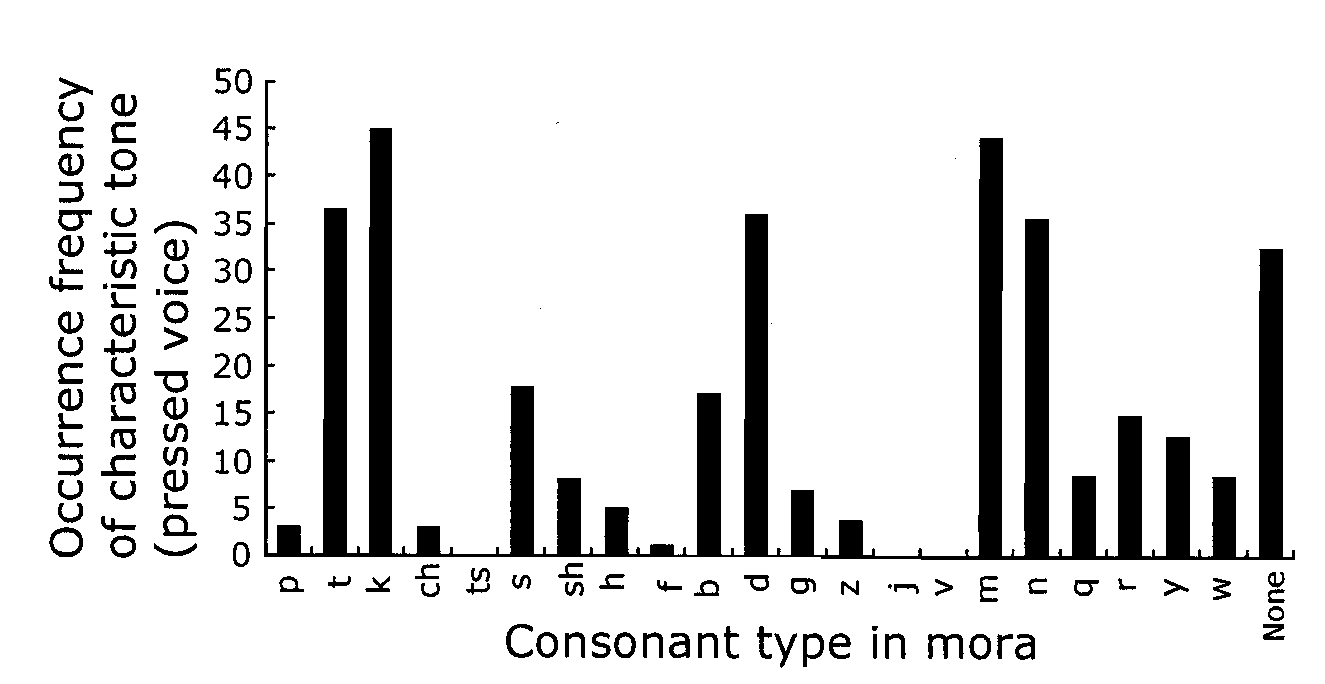

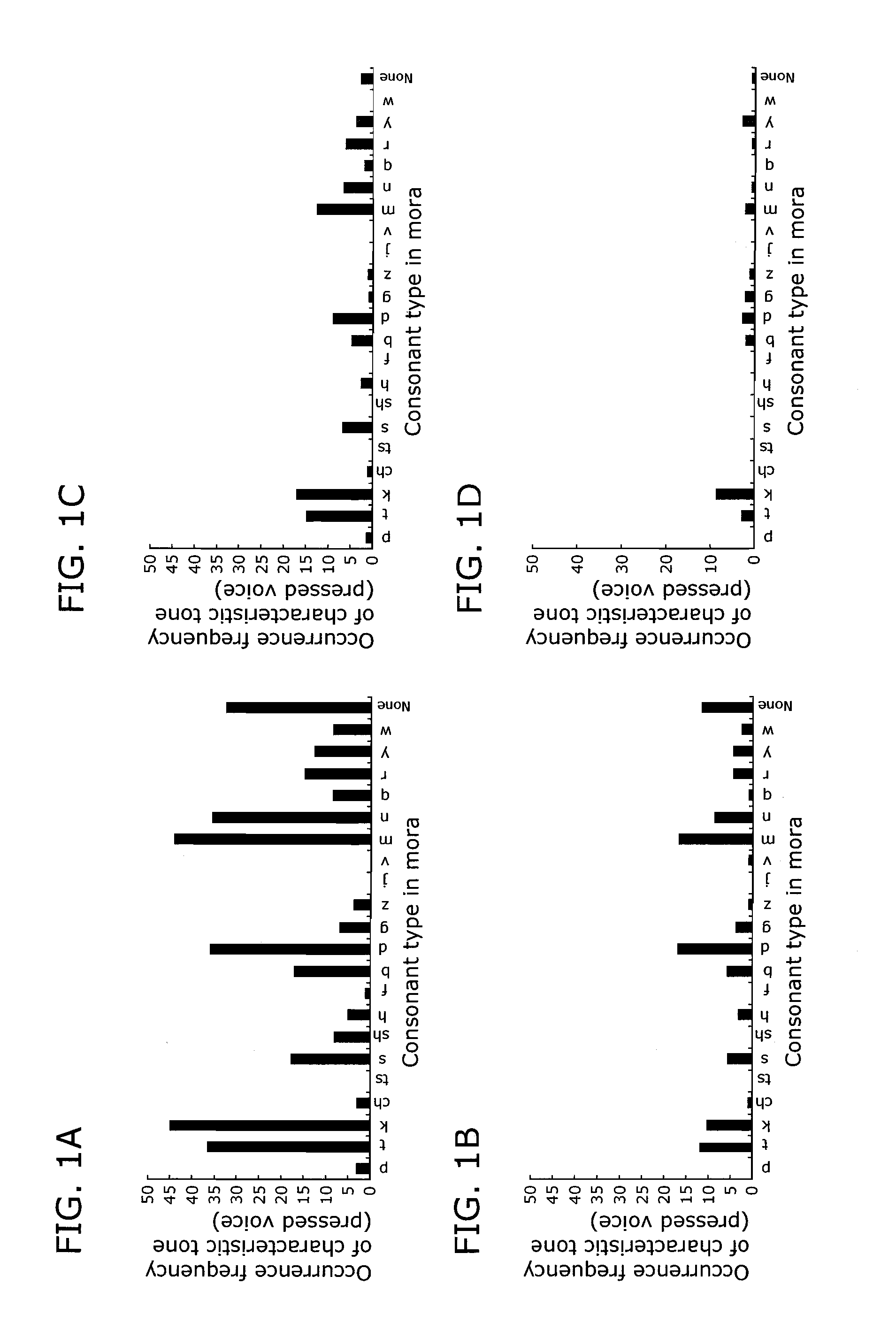

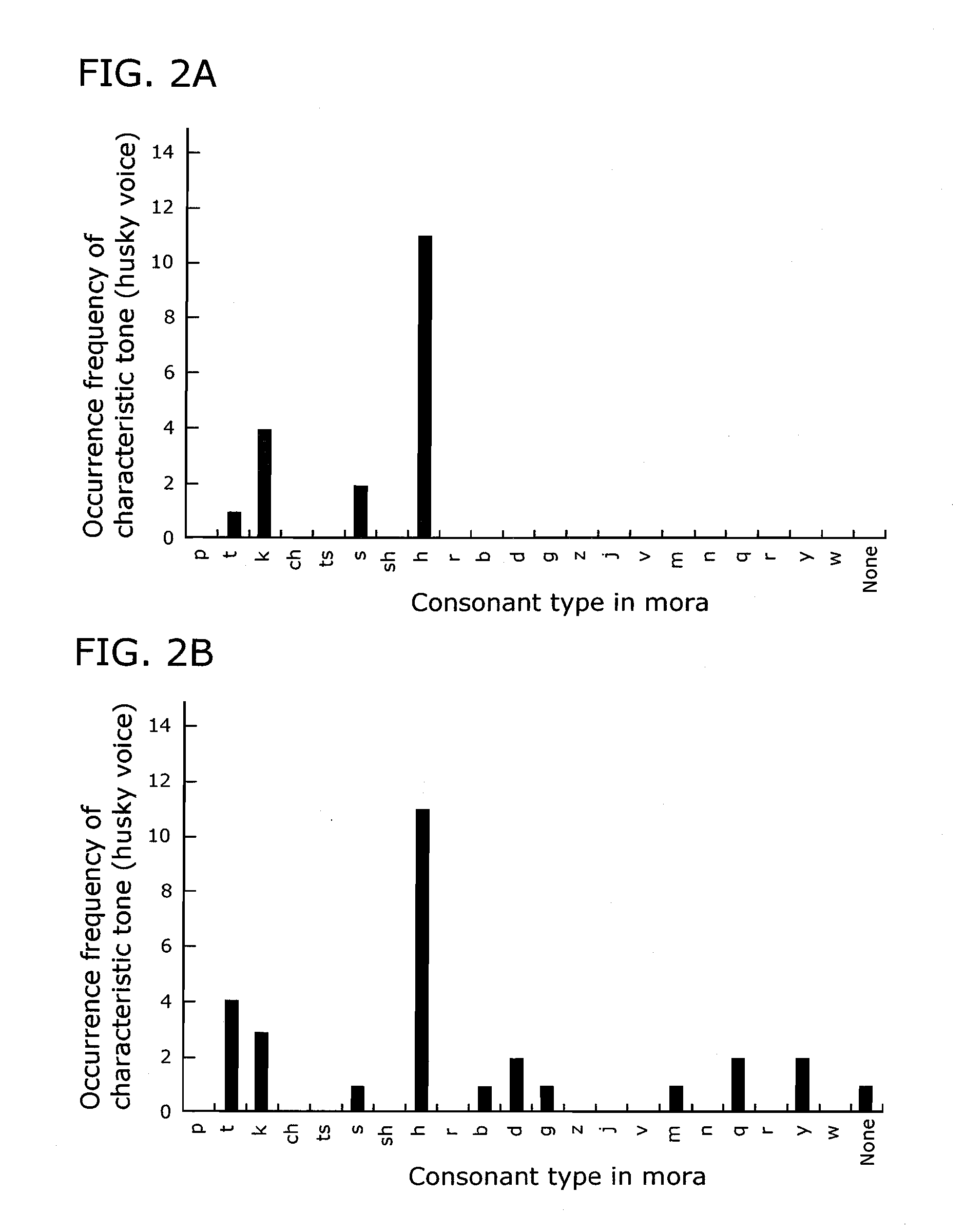

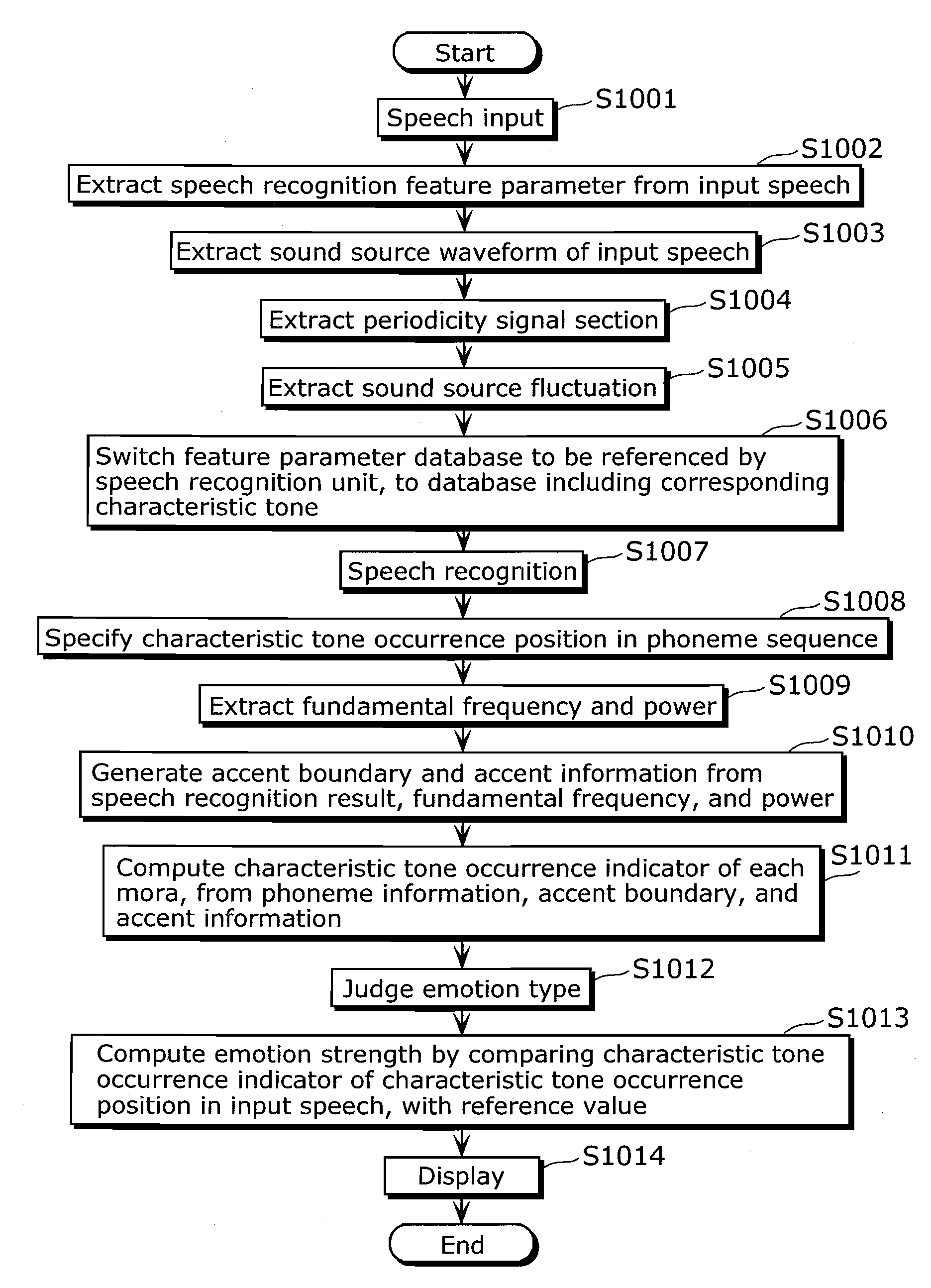

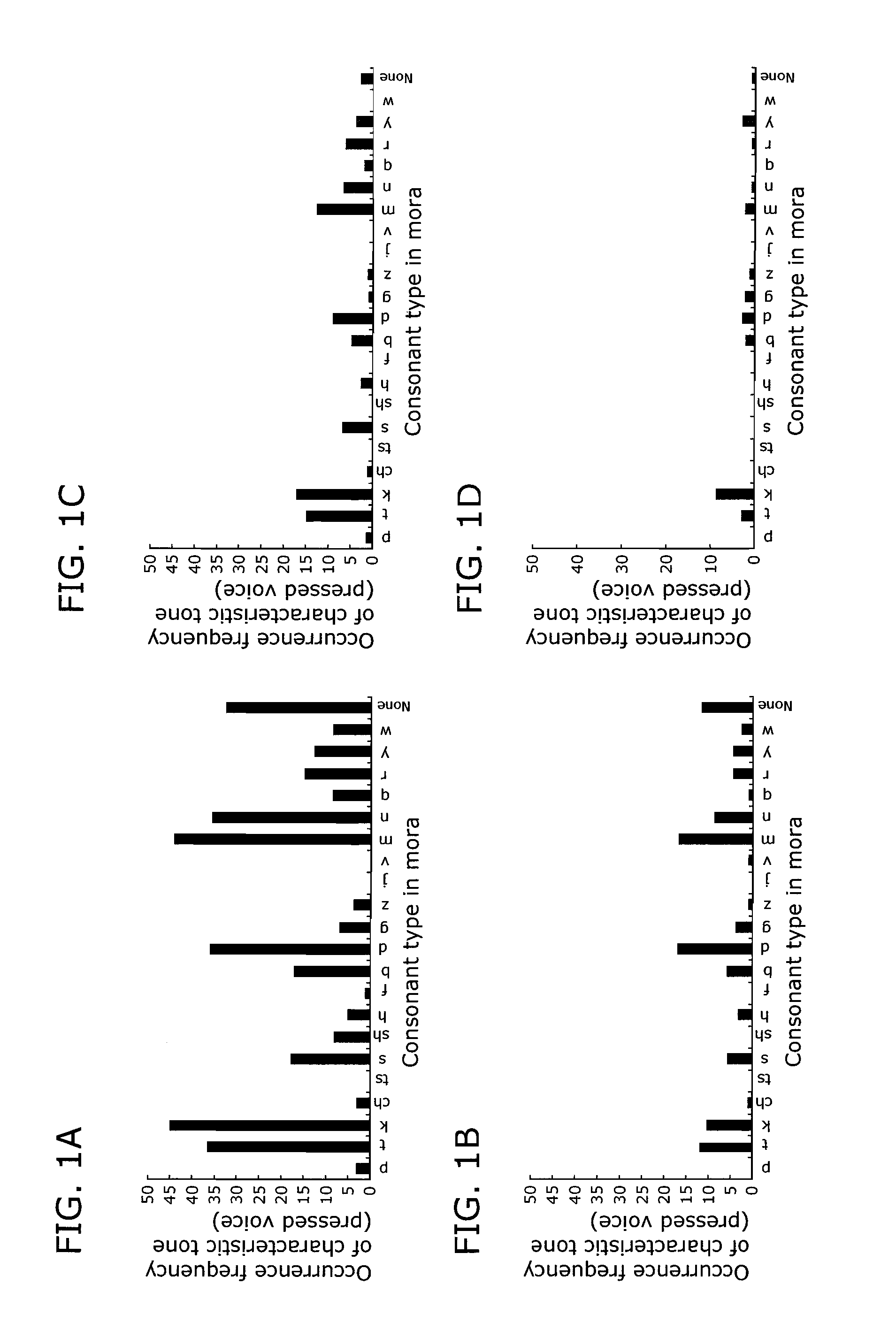

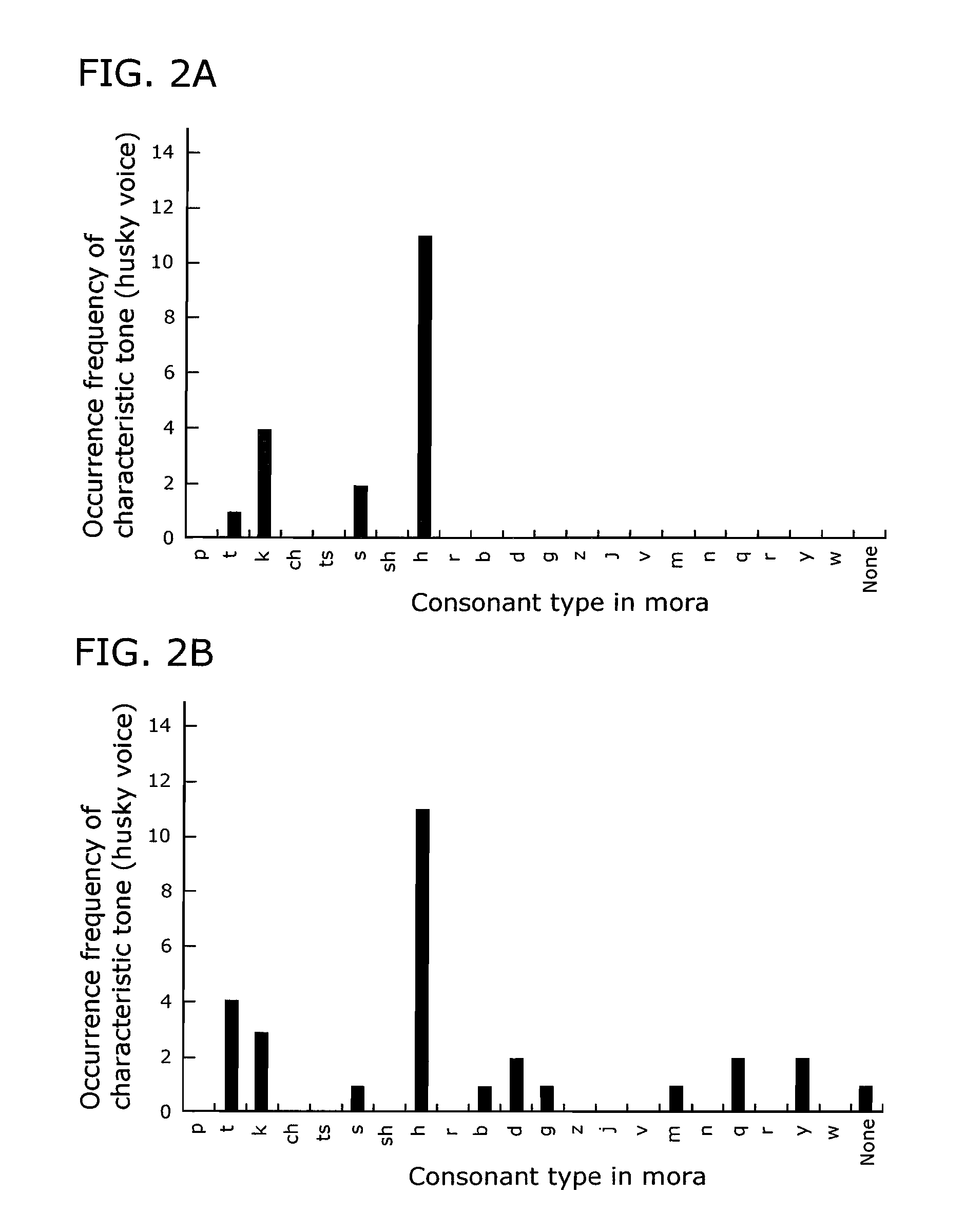

An emotion recognition apparatus is capable of performing accurate and stable speech-based emotion recognition, irrespective of individual, regional, and language differences in prosodic information. The apparatus recognizes the emotion of a speaker from input speech and includes: a speech recognition unit (106), which recognizes the types of phonemes included in the input speech; a characteristic tone detection unit (104), which detects, in the input speech, a characteristic tone related to a specific emotion; a characteristic tone occurrence indicator computation unit (111), which computes, for each phoneme, a characteristic tone occurrence indicator related to the occurrence frequency of the characteristic tone, based on the phoneme types recognized by the speech recognition unit (106); and an emotion judgment unit (113), which judges the speaker's emotion at a phoneme where the characteristic tone occurs in the input speech, based on the characteristic tone occurrence indicator computed by the characteristic tone occurrence indicator computation unit (111).

Owner:PANASONIC INTELLECTUAL PROPERTY CORP OF AMERICA

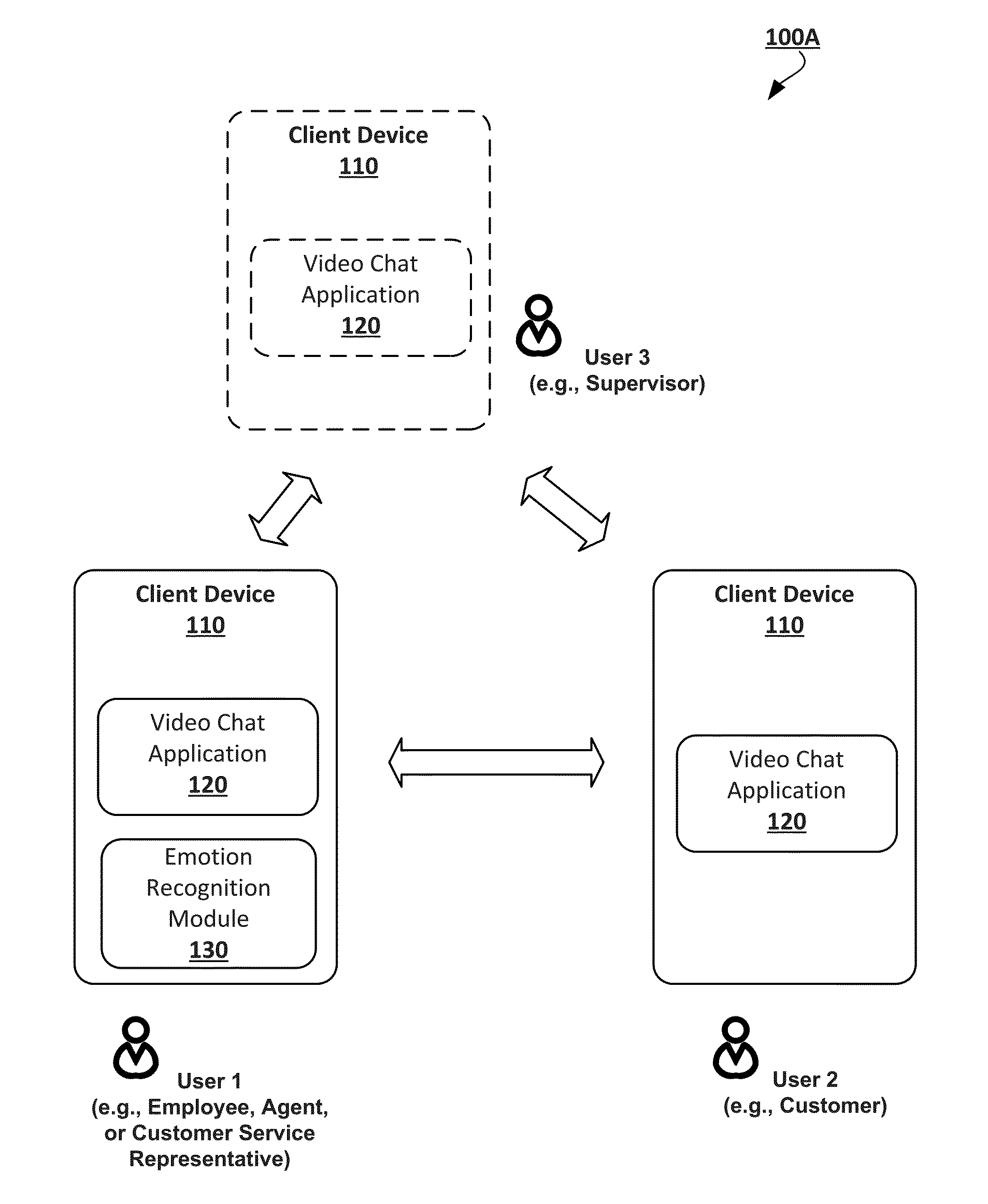

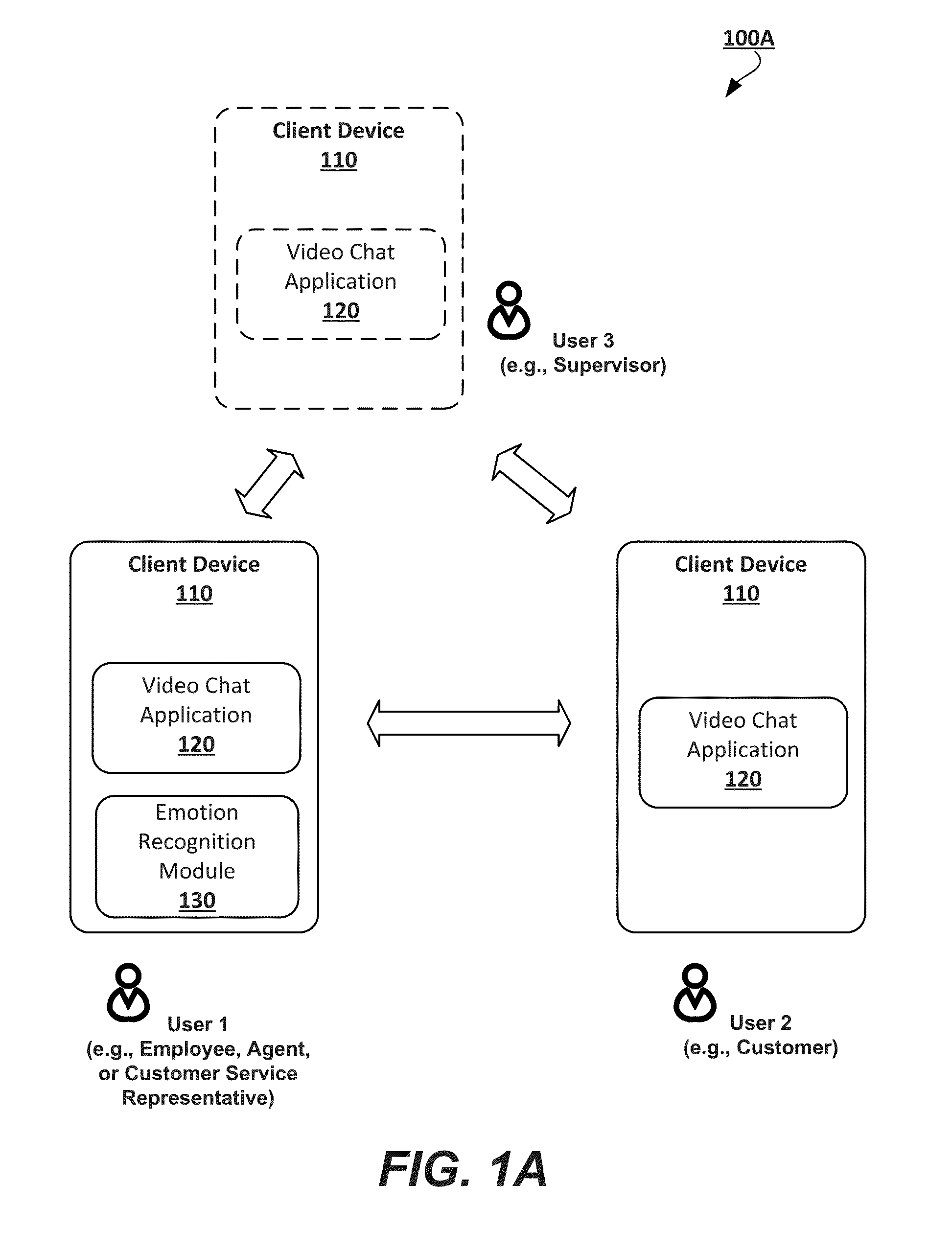

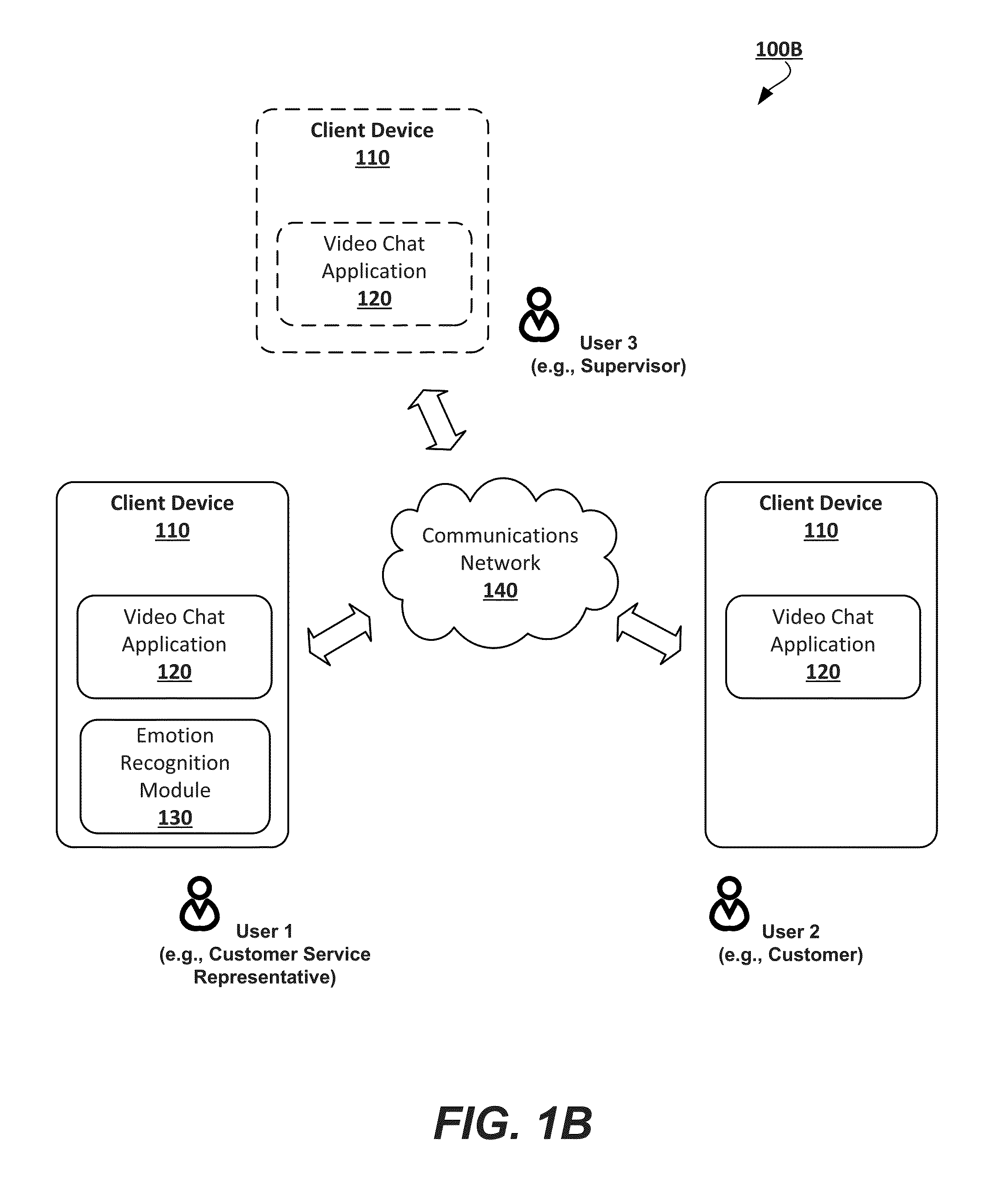

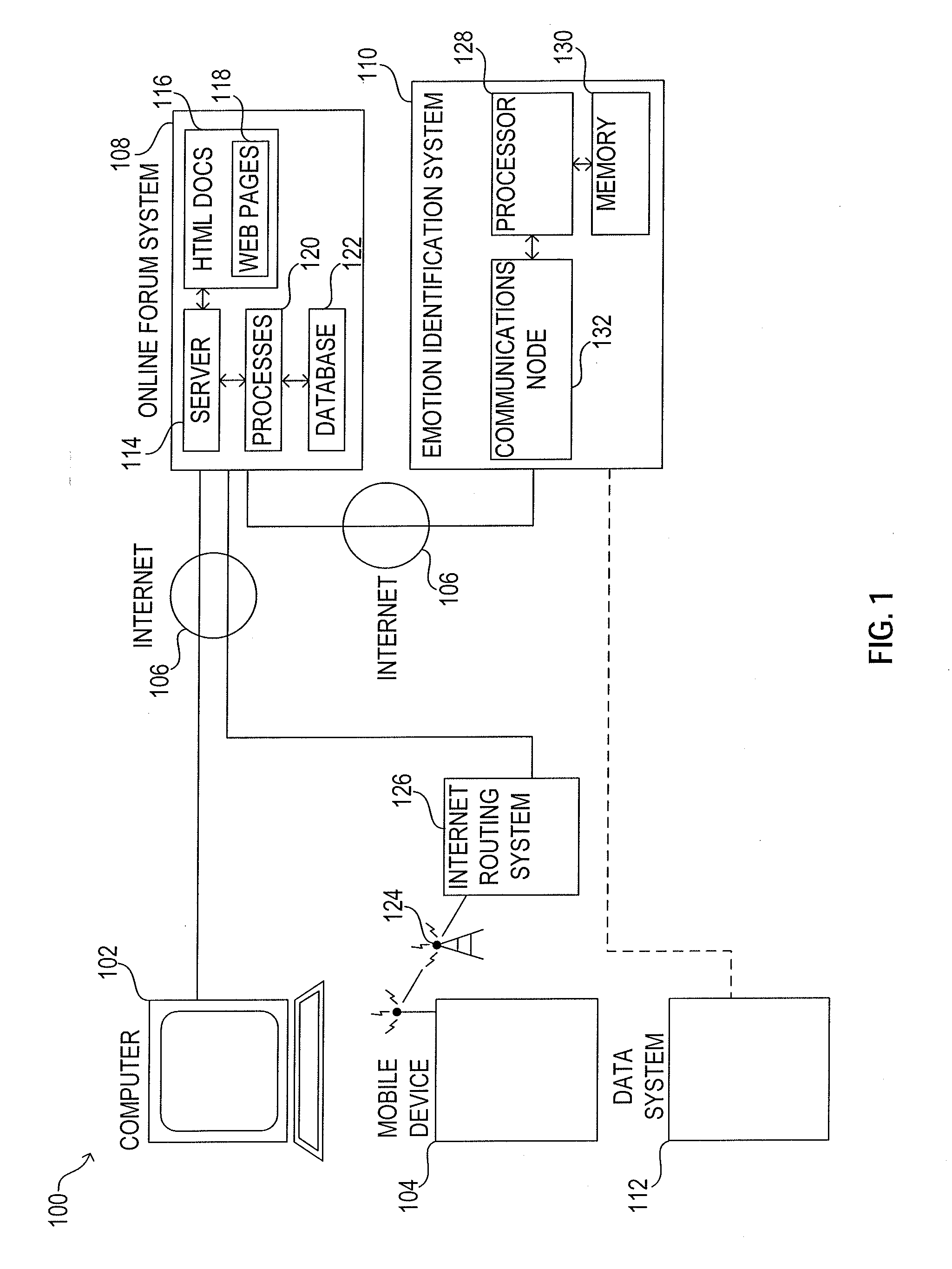

Emotion recognition in video conferencing

Methods and systems for videoconferencing include recognition of emotions of one videoconference participant, such as a customer. This ultimately enables another participant, such as a service provider or supervisor, to handle angry, annoyed, or distressed customers. One example method includes the steps of receiving a video that includes a sequence of images; detecting at least one object of interest (e.g., a face); locating feature reference points of the at least one object of interest; aligning a virtual face mesh to the at least one object of interest based on the feature reference points; finding, over the sequence of images, at least one deformation of the virtual face mesh that reflects facial mimics; determining that the at least one deformation refers to a facial emotion selected from a plurality of reference facial emotions; and generating a communication bearing data associated with the facial emotion.

Owner:SNAP INC
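
The matching step in the abstract above (assigning a mesh deformation to one of several reference facial emotions) can be illustrated with a minimal nearest-template sketch. It assumes the deformation is summarized as per-vertex 2-D displacements between a neutral mesh and the current aligned mesh, and that each reference emotion is a hypothetical template vector of the same length. The abstract does not say how the comparison is actually made, and it tracks deformation over a sequence of images rather than the single frame used here.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]


def mesh_deformation(neutral: Sequence[Point], current: Sequence[Point]) -> List[float]:
    """Flatten per-vertex displacements (dx, dy) of an aligned face mesh into one vector."""
    if len(neutral) != len(current):
        raise ValueError("meshes must have the same number of vertices")
    vector: List[float] = []
    for (x0, y0), (x1, y1) in zip(neutral, current):
        vector.extend((x1 - x0, y1 - y0))
    return vector


def closest_reference_emotion(deformation: Sequence[float],
                              references: Dict[str, Sequence[float]]) -> str:
    """Return the reference emotion whose (hypothetical) template is nearest in Euclidean distance."""
    return min(references, key=lambda name: math.dist(deformation, references[name]))


# Toy three-vertex mesh: the first two vertices shift by +0.1 in y.
neutral = [(0.0, 0.0), (1.0, 0.0), (0.5, 1.0)]
frame = [(0.0, 0.1), (1.0, 0.1), (0.5, 1.0)]
references = {  # hypothetical templates, one per reference emotion
    "neutral": [0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
    "smile": [0.0, 0.1, 0.0, 0.1, 0.0, 0.0],
    "frown": [0.0, -0.1, 0.0, -0.1, 0.0, 0.0],
}
print(closest_reference_emotion(mesh_deformation(neutral, frame), references))  # smile
```

A nearest-template rule is the simplest stand-in; a trained classifier over deformation vectors would slot into the same place.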

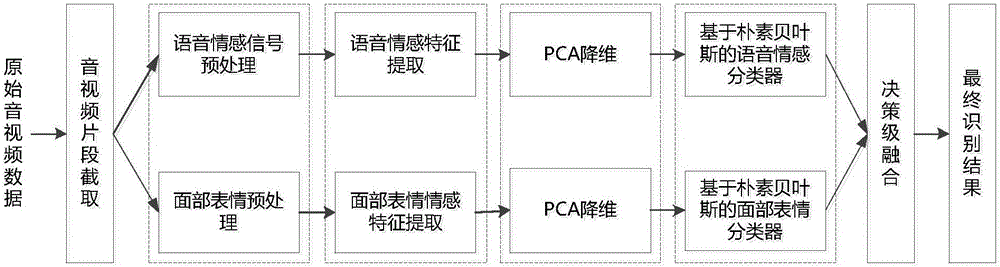

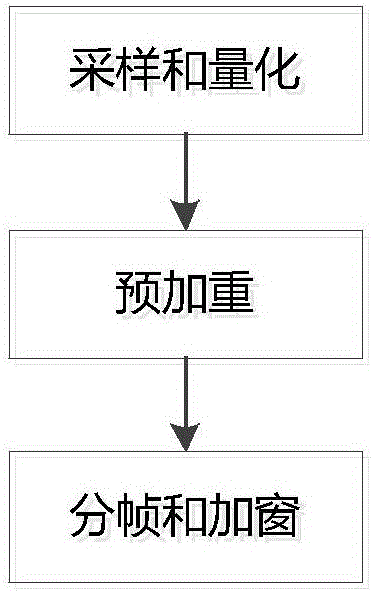

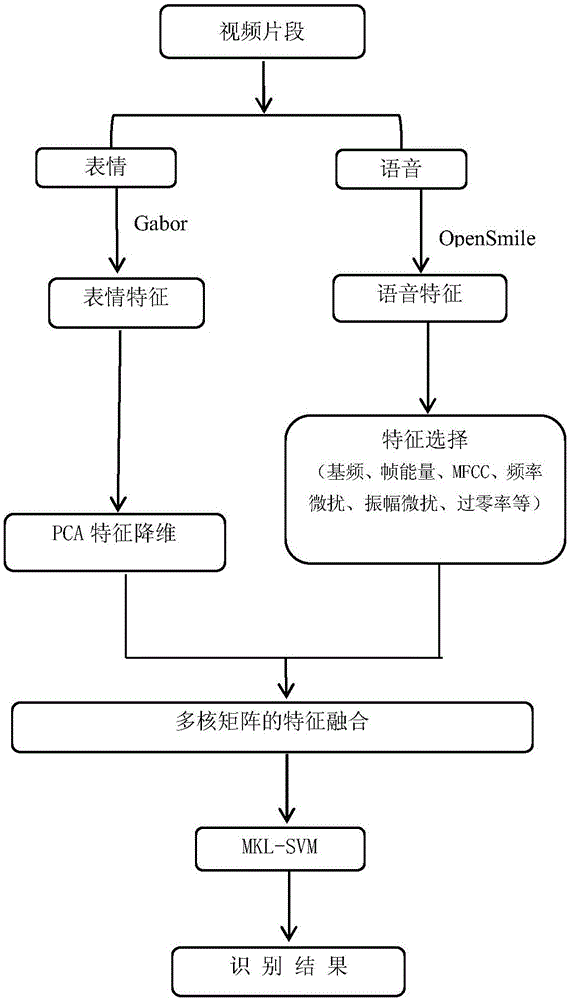

Voice-and-facial-expression-based identification method and system for dual-modal emotion fusion

Status: Active · Publication: CN105976809A · Tags: Improve accuracy, Improve reliability, Speech recognition, Corresponding conditional, Dimensionality reduction

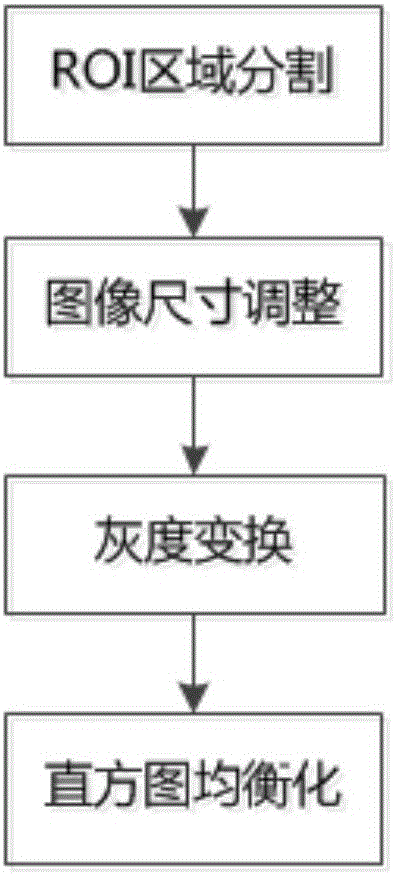

The invention relates to a voice-and-facial-expression-based identification method for dual-modal emotion fusion. The method comprises: S1, obtaining audio data and video data of the object to be identified; S2, extracting a facial expression image from the video data and segmenting it into an eye region, a nose region, and a mouth region; S3, extracting a facial expression feature from each of the three regional images; S4, applying PCA to the voice emotion features and the facial expression features for dimensionality reduction; and S5, performing naive Bayes emotion classification on the samples of both modalities and performing decision fusion on the resulting conditional probabilities to obtain the final emotion identification result. Because the voice emotion features and the facial expression features are fused by decision fusion, accurate data are available for the subsequent conditional probability calculation, so the emotional state of the detected object can be obtained precisely and the accuracy and reliability of emotion identification are improved.

Owner:CHINA UNIV OF GEOSCIENCES (WUHAN)
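
Step S5 above trains a naive Bayes classifier per modality and fuses their conditional probabilities. The sketch below shows one way this could look, assuming Gaussian class-conditional densities, both modalities sharing the same emotion labels, and a product rule (the sum of both log-likelihoods plus one log prior) as the fusion. The abstract does not specify the fusion rule, and the PCA step S4 is omitted for brevity.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


class GaussianNaiveBayes:
    """Minimal Gaussian naive Bayes: one mean and variance per (class, feature)."""

    def fit(self, X: Sequence[Sequence[float]], y: Sequence[str]) -> "GaussianNaiveBayes":
        groups: Dict[str, List[Sequence[float]]] = defaultdict(list)
        for row, label in zip(X, y):
            groups[label].append(row)
        self.priors = {c: len(rows) / len(y) for c, rows in groups.items()}
        self.stats: Dict[str, Tuple[List[float], List[float]]] = {}
        for c, rows in groups.items():
            columns = list(zip(*rows))
            means = [sum(col) / len(col) for col in columns]
            # A small variance floor avoids division by zero for constant features.
            variances = [sum((v - m) ** 2 for v in col) / len(col) + 1e-6
                         for col, m in zip(columns, means)]
            self.stats[c] = (means, variances)
        return self

    def log_likelihood(self, x: Sequence[float]) -> Dict[str, float]:
        """log P(x | c) for every class c, treating features as independent."""
        return {
            c: sum(-0.5 * math.log(2 * math.pi * v) - (xi - m) ** 2 / (2 * v)
                   for xi, m, v in zip(x, means, variances))
            for c, (means, variances) in self.stats.items()
        }


def fuse_decisions(voice: GaussianNaiveBayes, face: GaussianNaiveBayes,
                   x_voice: Sequence[float], x_face: Sequence[float]) -> str:
    """Product-rule fusion: argmax_c log P(c) + log P(x_voice | c) + log P(x_face | c)."""
    lv, lf = voice.log_likelihood(x_voice), face.log_likelihood(x_face)
    scores = {c: math.log(p) + lv[c] + lf[c] for c, p in voice.priors.items()}
    return max(scores, key=scores.get)


# Toy, hypothetical features: one voice feature and two facial features per sample.
labels = ["calm", "calm", "angry", "angry"]
voice = GaussianNaiveBayes().fit([[0.1], [0.2], [0.9], [1.0]], labels)
face = GaussianNaiveBayes().fit([[0.0, 0.1], [0.1, 0.0], [1.0, 0.9], [0.9, 1.0]], labels)
print(fuse_decisions(voice, face, [0.95], [0.95, 0.95]))  # angry
```

The product rule treats the two modalities as conditionally independent given the emotion; other decision-fusion rules (sum, max, weighted) would replace only `fuse_decisions`.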

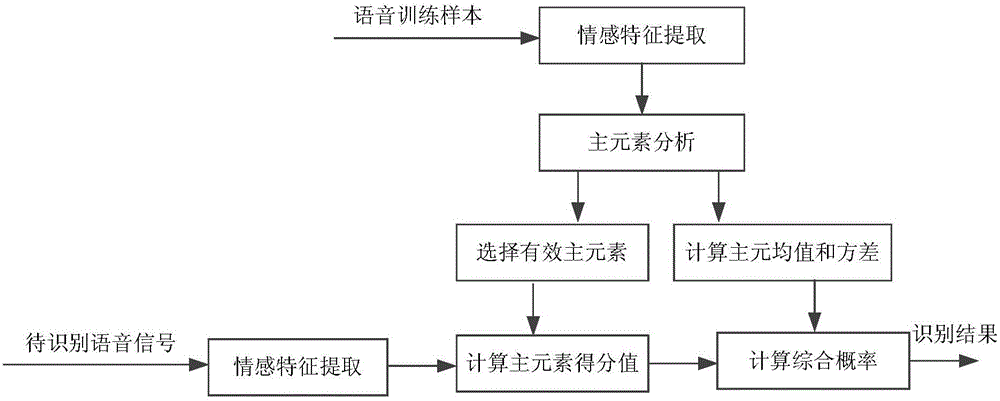

Extraction and modeling method for Chinese speech emotion information

Status: Inactive · Publication: CN101261832A · Tags: Avoid missing, Solve quality problems, Speech recognition, Emotion identification, Database Specification

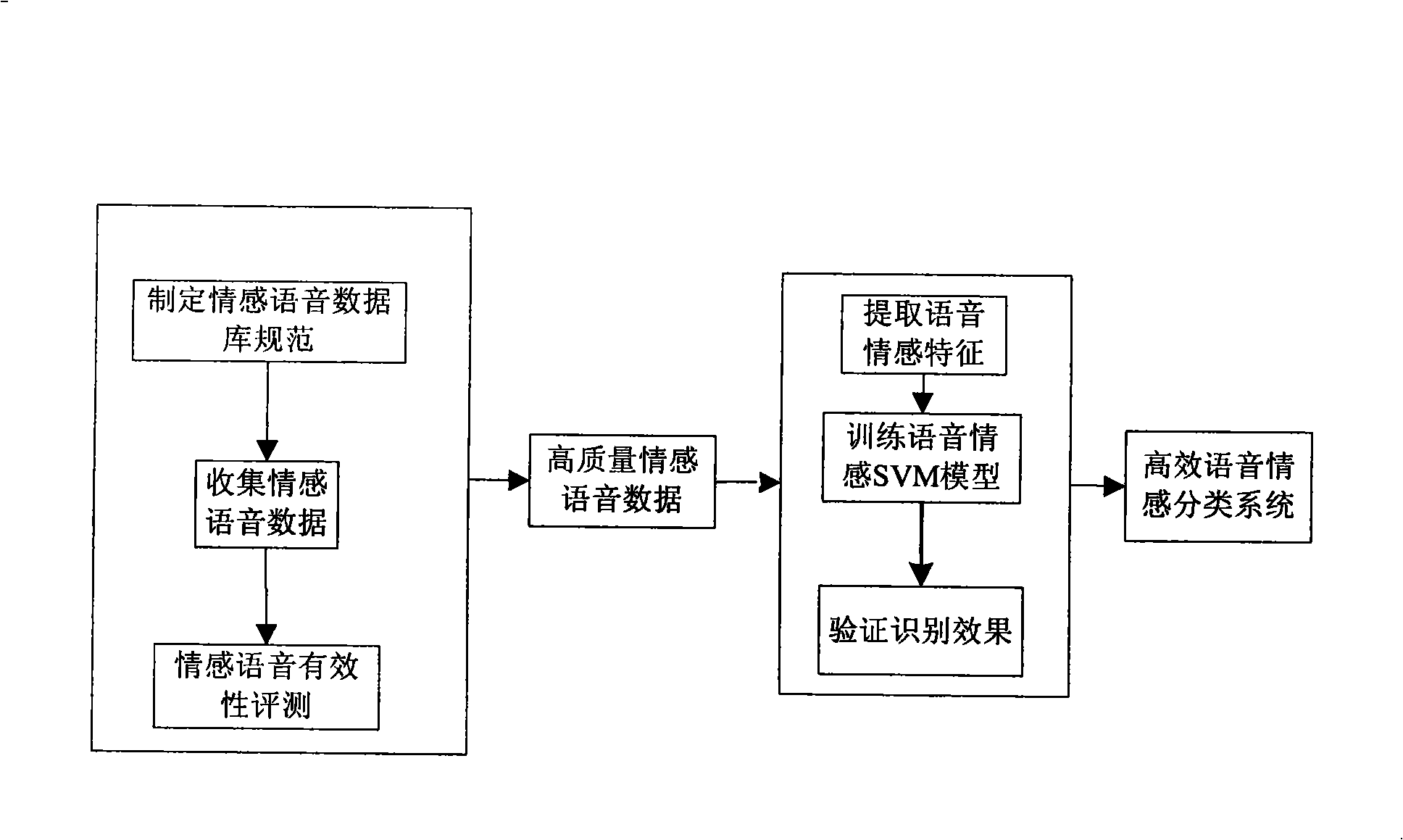

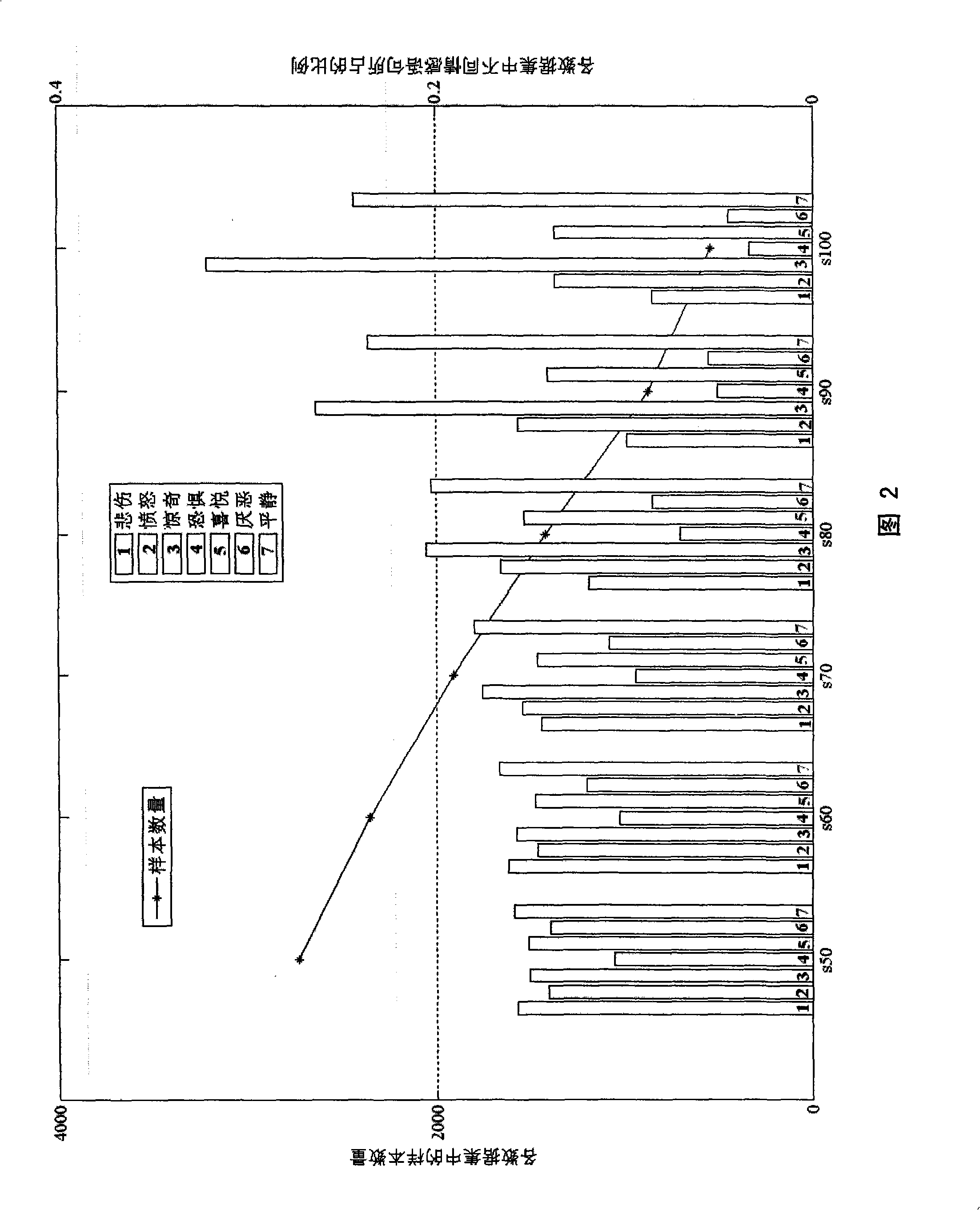

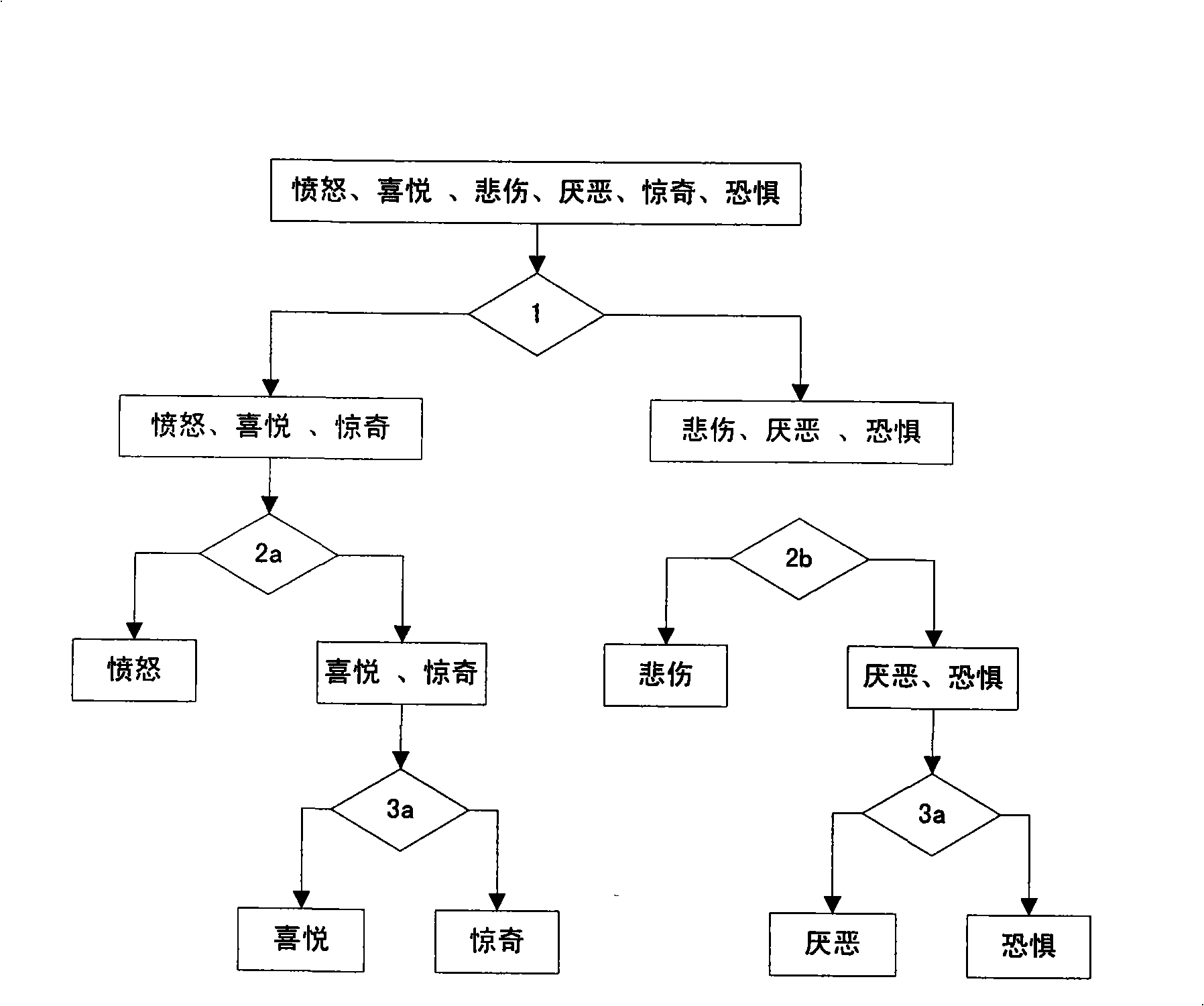

The invention provides a method for extracting and modeling emotional information in Chinese speech. The extraction method is as follows: formulate a specification for an emotional speech database, covering the speaker specification, the recording script design specification, the audio file naming specification, and so on; collect emotional speech data; and evaluate the validity of the emotional speech, i.e., at least ten evaluators other than the speaker carry out a subjective listening evaluation experiment on the emotional speech data. The modeling method is as follows: extract the emotional features of the speech and define and distinguish the feature combination for each emotion type; use the different feature combinations to train the SVM models of a multilevel speech emotion recognition system; and verify the identification performance of the classification models, i.e., verify the speaker-independent classification performance of the multilevel speech emotion classification models using leave-one-out cross-validation. The method addresses the problems that domestic emotional speech databases cover few emotion types and are very limited in number; at the same time, it realizes an efficient speech emotion identification system.

Owner:BEIHANG UNIV
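
The speaker-independent verification described above can be sketched as a leave-one-speaker-out split: each fold holds out every utterance of one speaker and trains on the rest. This illustrates that reading of the abstract's leave-one-out method; `fit_predict` is a hypothetical callback standing in for training and applying the multilevel SVM models, which are not shown.

```python
from typing import Callable, Iterator, List, Sequence, Tuple

# Hypothetical callback: given train and test indices, return predicted labels for the test indices.
FitPredict = Callable[[List[int], List[int]], List[str]]


def leave_one_speaker_out(speakers: Sequence[str]) -> Iterator[Tuple[str, List[int], List[int]]]:
    """Yield (held_out_speaker, train_indices, test_indices), one fold per speaker."""
    for held_out in sorted(set(speakers)):
        train = [i for i, s in enumerate(speakers) if s != held_out]
        test = [i for i, s in enumerate(speakers) if s == held_out]
        yield held_out, train, test


def mean_fold_accuracy(labels: Sequence[str], speakers: Sequence[str],
                       fit_predict: FitPredict) -> float:
    """Average per-fold accuracy, so every speaker counts equally."""
    accuracies = []
    for _, train, test in leave_one_speaker_out(speakers):
        predictions = fit_predict(train, test)
        correct = sum(p == labels[i] for p, i in zip(predictions, test))
        accuracies.append(correct / len(test))
    return sum(accuracies) / len(accuracies)


speakers = ["A", "A", "B", "C", "C"]
for fold in leave_one_speaker_out(speakers):
    print(fold)
# ('A', [2, 3, 4], [0, 1])
# ('B', [0, 1, 3, 4], [2])
# ('C', [0, 1, 2], [3, 4])
```

Splitting by speaker rather than by utterance keeps every test speaker unseen during training, which is what makes the reported accuracy speaker-independent.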

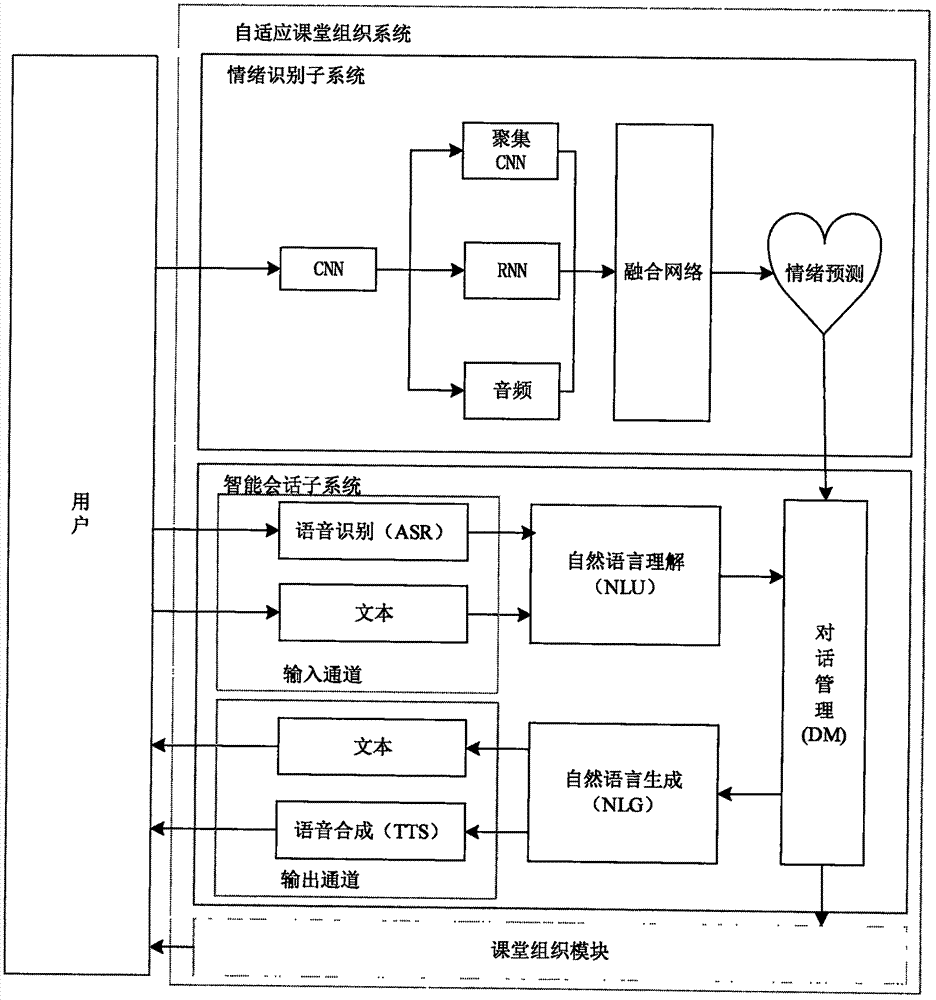

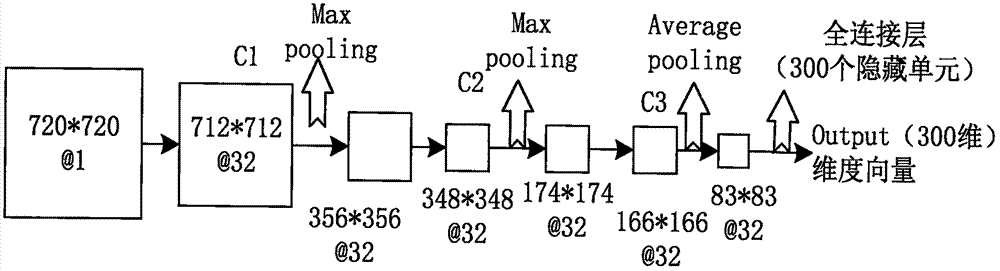

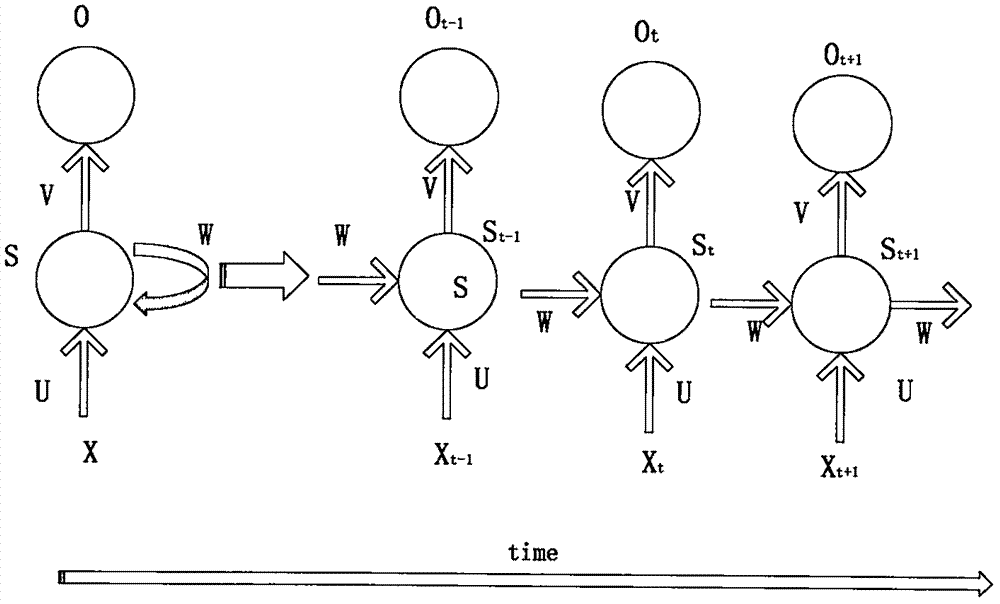

Man-machine interaction method and system for online education based on artificial intelligence

Status: Pending · Publication: CN107958433A · Tags: Solve the problem of poor learning effect, Data processing applications, Speech recognition, Personalization, Online learning

The invention discloses a man-machine interaction method and system for online education based on artificial intelligence, relating to digital visual and acoustic technology in the field of electronic information. The system comprises an emotion recognition subsystem, which recognizes the emotions of the audience, and an intelligent session subsystem. The two subsystems are combined with an online education system so that personalized teaching content can be better presented to the audience. The system aims to make man-machine interaction in online education more vivid. The emotion recognition subsystem judges the learning state of a user from the user's facial expression while the user watches a video, and the intelligent session subsystem then carries out machine Q&A interaction. The emotion recognition subsystem classifies the audience's emotions into seven types: anger, disgust, fear, sadness, surprise, neutrality, and happiness. The intelligent session subsystem adjusts the course content according to the recognized emotion and carries out machine Q&A interaction, so that the teacher-student interaction and feedback of a conventional class can be reproduced online and the online class becomes more personalized.

Owner:JILIN UNIV

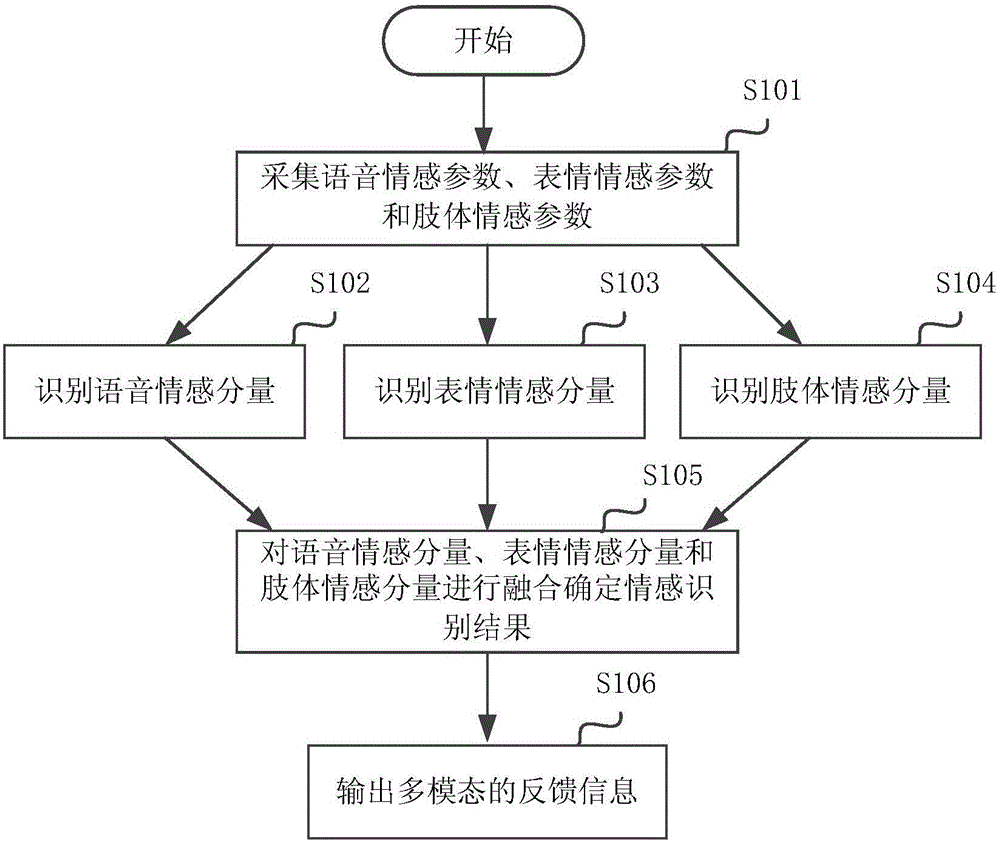

Man-machine interaction method and device based on emotion system, and man-machine interaction system

Status: Inactive · Publication: CN105739688A · Tags: Strong interaction, Interactive nature, Input/output for user-computer interaction, Graph reading, Pattern recognition, Emotion identification

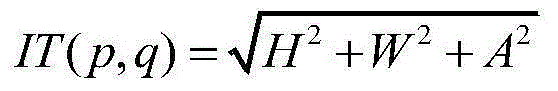

The invention discloses a man-machine interaction method and device based on an emotion system, and a man-machine interaction system. The method comprises the following steps: collecting voice emotion parameters, expression emotion parameters, and body emotion parameters; calculating a candidate voice emotion from the voice emotion parameters and selecting, from preset voice emotions, the one closest to the candidate as the voice emotion component; calculating a candidate expression emotion from the expression emotion parameters and selecting, from preset expression emotions, the one closest to the candidate as the expression emotion component; calculating a candidate body emotion from the body emotion parameters and selecting, from preset body emotions, the one closest to the candidate as the body emotion component; fusing the voice, expression, and body emotion components to determine an emotion identification result; and outputting multi-modal feedback information specific to the emotion identification result. The method, device, and system make the man-machine interaction process smoother and more natural.

Owner:BEIJING GUANGNIAN WUXIAN SCI & TECH
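
The nearest-preset selection and fusion steps above can be sketched as follows. Everything concrete here is an assumption for illustration: each modality's candidate emotion is taken to be a point in a hypothetical (valence, arousal) plane, the preset emotions and their coordinates are invented, and fusion is a majority vote that falls back to snapping the mean of the three components when all three disagree. The abstract does not state how the components are fused.

```python
import math
from collections import Counter
from typing import Dict, Tuple

Vec = Tuple[float, float]  # hypothetical (valence, arousal) coordinates

# Hypothetical preset emotions shared by all three modalities.
PRESETS: Dict[str, Vec] = {
    "happy": (0.8, 0.6),
    "angry": (-0.7, 0.8),
    "sad": (-0.6, -0.5),
    "calm": (0.5, -0.4),
}


def nearest_preset(candidate: Vec, presets: Dict[str, Vec] = PRESETS) -> str:
    """Select the preset emotion closest to the candidate emotion."""
    return min(presets, key=lambda name: math.dist(candidate, presets[name]))


def fuse_components(voice: Vec, expression: Vec, body: Vec,
                    presets: Dict[str, Vec] = PRESETS) -> str:
    """Majority vote over the three components; snap their mean if all three differ."""
    components = [nearest_preset(c, presets) for c in (voice, expression, body)]
    label, votes = Counter(components).most_common(1)[0]
    if votes >= 2:
        return label
    mean = (sum(presets[c][0] for c in components) / 3,
            sum(presets[c][1] for c in components) / 3)
    return nearest_preset(mean, presets)


print(fuse_components((0.7, 0.5), (0.9, 0.7), (-0.6, -0.4)))  # happy
```

Snapping each modality to a preset first, as the abstract describes, makes the components directly comparable labels, which is what allows a simple vote.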

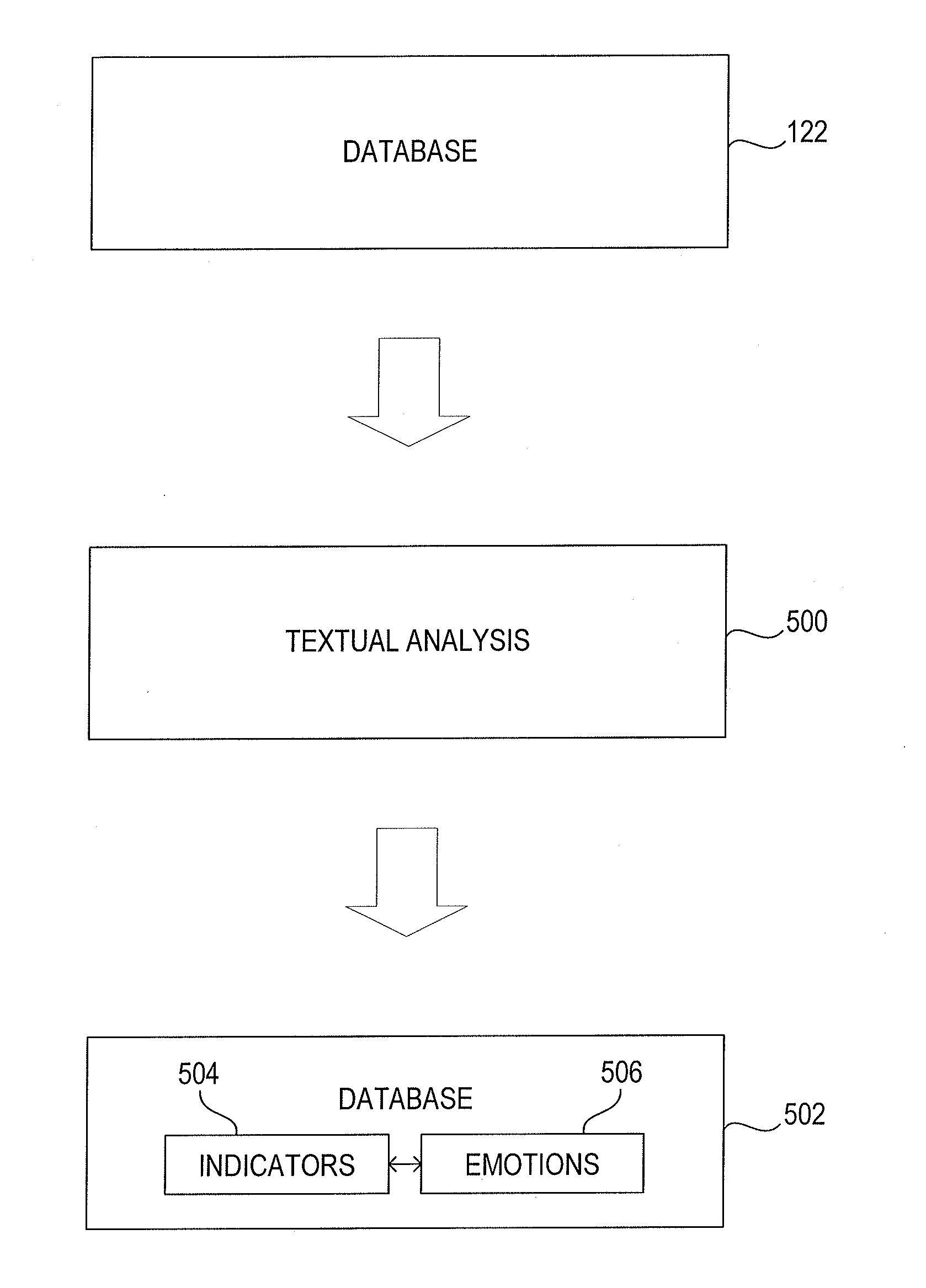

Emotion identification system and method

Status: Inactive · Publication: US20140095150A1 · Tags: Natural language translation, Semantic analysis, Granularity, Emotion identification

A system and method for identifying emotion in text that connotes authentic human expression, and training an engine that produces emotional analysis at various levels of granularity and numerical distribution across a set of emotions at each level of granularity. The method may include producing a chart of data transmissions referenced against time, comparing filtered data transmissions to a database, and selecting a database based on a demographic class of an author.

Owner:KANJOYA

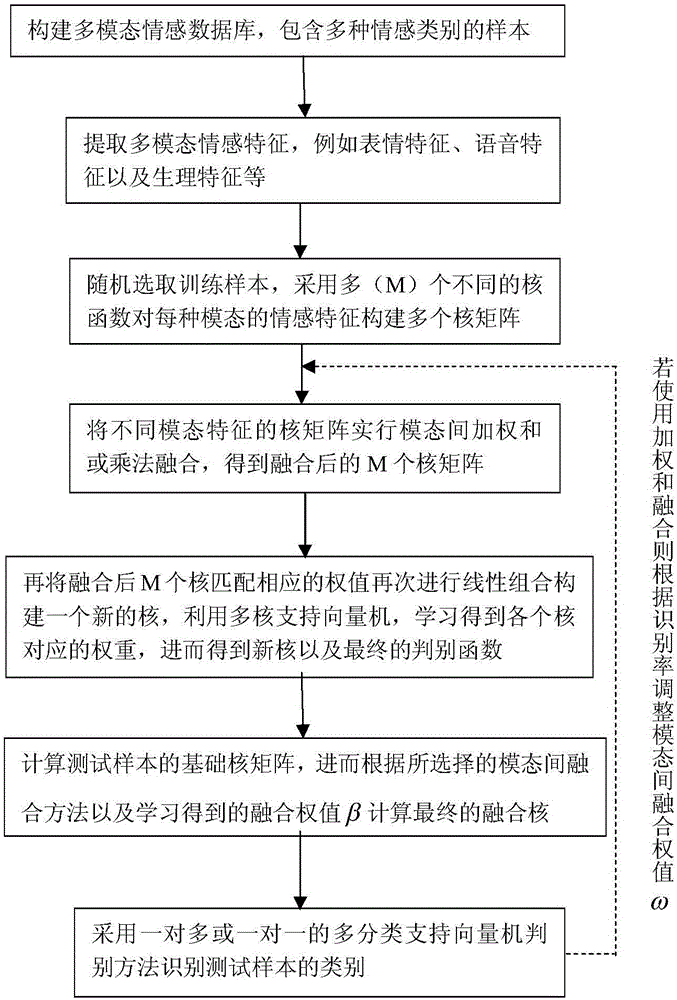

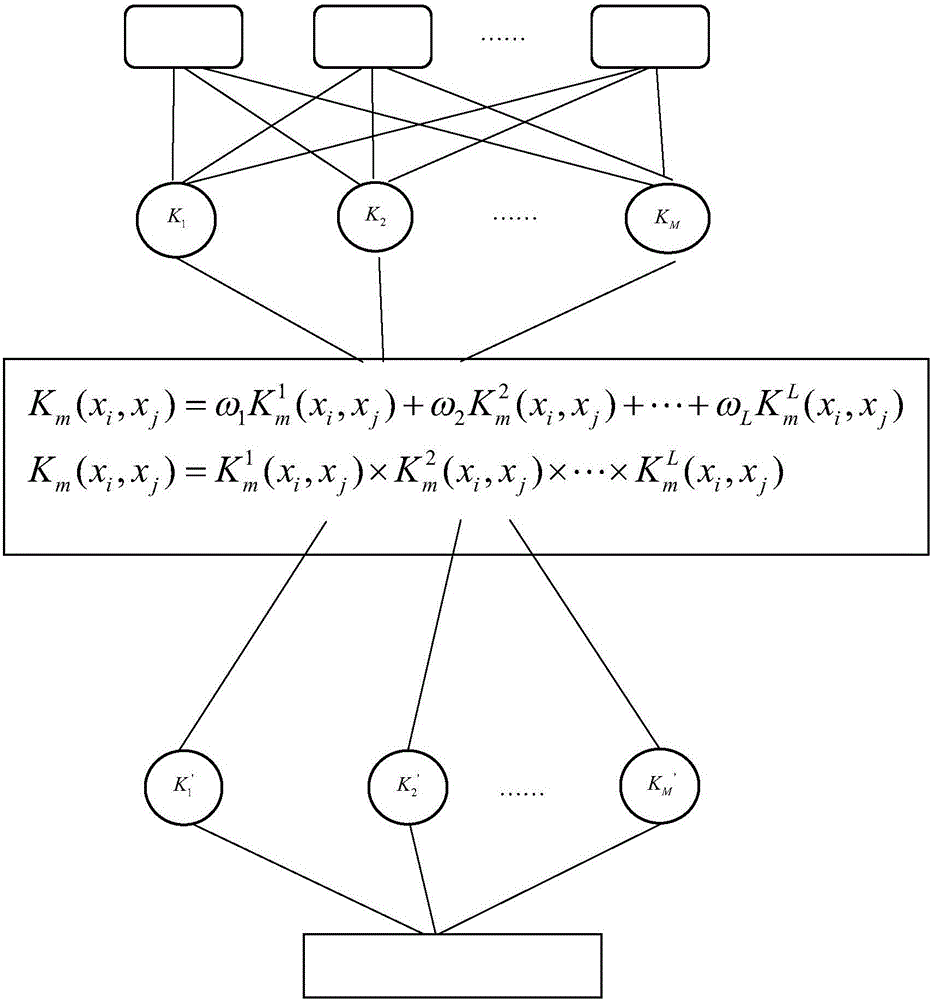

Multi-kernel-learning-based multi-mode emotion identification method

Status: Active · Publication: CN106250855A · Tags: Easy to identify, Good effect, Character and pattern recognition, Learning based, Matrix group

The invention discloses a multi-kernel-learning-based multi-modal emotion identification method. Emotion features, such as expression features, voice features, and physiological features, are extracted from the sample data of each modality in a multi-modal emotion database; several different kernel matrices are constructed for each modality; the kernel matrix groups of the different modalities are fused to obtain a fused multi-modal emotion feature; and a multi-kernel support vector machine is used as the classifier for training and identification. Basic emotions such as anger, disgust, fear, happiness, sadness, and surprise can therefore be identified effectively.

Owner:NANJING UNIV OF POSTS & TELECOMM
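
The kernel-construction and fusion steps above can be illustrated with a small pure-Python sketch: build a linear and an RBF kernel matrix for each modality, then fuse them as a weighted sum. The specific kernels and the fixed weights are assumptions; a multi-kernel learning method would instead learn the weights jointly with the SVM. The fused matrix could then be passed to an SVM that accepts precomputed kernels (for example scikit-learn's `SVC(kernel="precomputed")`), which is not shown.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def linear_kernel(X: Sequence[Sequence[float]]) -> Matrix:
    """K[i][j] = <x_i, x_j>."""
    return [[sum(a * b for a, b in zip(xi, xj)) for xj in X] for xi in X]


def rbf_kernel(X: Sequence[Sequence[float]], gamma: float) -> Matrix:
    """K[i][j] = exp(-gamma * ||x_i - x_j||^2)."""
    return [[math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(xi, xj))) for xj in X]
            for xi in X]


def combine_kernels(kernels: Sequence[Matrix], weights: Sequence[float]) -> Matrix:
    """Weighted sum sum_m w_m * K_m; nonnegative weights keep the result a valid kernel."""
    if len(kernels) != len(weights) or any(w < 0 for w in weights):
        raise ValueError("need one nonnegative weight per kernel")
    n = len(kernels[0])
    return [[sum(w * K[i][j] for w, K in zip(weights, kernels)) for j in range(n)]
            for i in range(n)]


# Toy data: the same two samples described by two modalities (hypothetical features).
face_features = [[0.0, 0.0], [1.0, 0.0]]
voice_features = [[0.2], [0.4]]
kernels = [linear_kernel(face_features), rbf_kernel(face_features, gamma=1.0),
           linear_kernel(voice_features), rbf_kernel(voice_features, gamma=1.0)]
fused = combine_kernels(kernels, [0.25, 0.25, 0.25, 0.25])
```

Fusing at the kernel level lets each modality keep its own notion of similarity while the classifier still sees a single Gram matrix.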

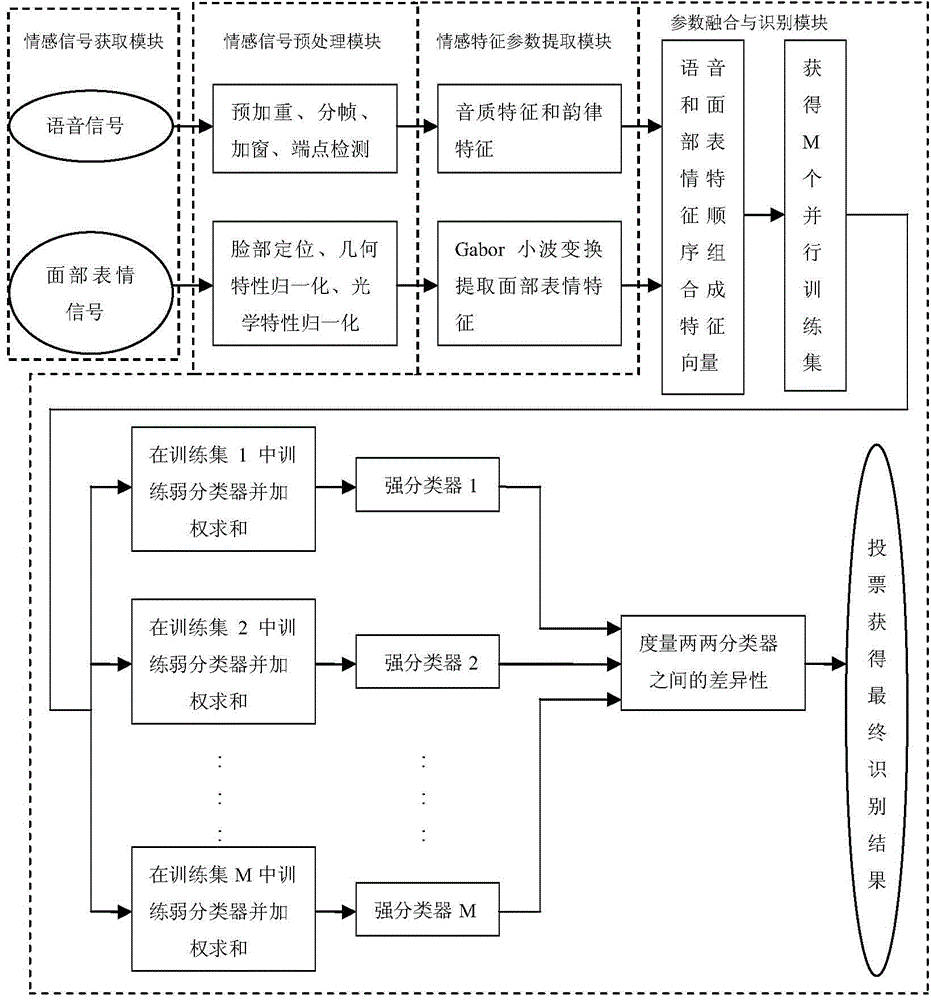

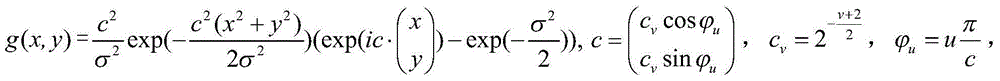

Serial-parallel combined multi-mode emotion information fusion and identification method

Status: Inactive · Publication: CN104835507A · Tags: Overcome limitations, Improve accuracy, Character and pattern recognition, Speech recognition, Feature vector, Adaboost algorithm

The present invention discloses a serial-parallel combined multi-modal emotion information fusion and identification method in the field of emotion identification technology. The method mainly comprises obtaining an emotion signal, preprocessing the emotion signal, extracting emotion feature parameters, and fusing and identifying the feature parameters. First, the extracted voice signal and facial expression signal feature parameters are fused to obtain a serial feature vector set; then M parallel training sample sets are obtained by sampling with replacement, and sub-classifiers are trained with the AdaBoost algorithm; next, the difference between every two sub-classifiers is measured with a dual-error (double-fault) difference selection strategy; finally, the selected sub-classifiers vote according to the majority-vote principle to obtain the final identification result, identifying five basic human emotions: pleasure, anger, surprise, sadness, and fear. The method fully exploits the advantages of both decision-level fusion and feature-level fusion and makes the overall emotion information fusion process closer to human emotion identification, thereby improving emotion identification accuracy.

Owner:BOHAI UNIV
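
The parallel stage described above (resampling with replacement, pairwise difference screening, and majority voting) can be sketched as below. The sketch assumes the dual-error difference is the double-fault measure, i.e. the fraction of samples both classifiers get wrong, and uses a hypothetical greedy selection that starts from the most accurate sub-classifier. AdaBoost training of the sub-classifiers is not shown, so their predictions are supplied directly.

```python
import random
from collections import Counter
from typing import List, Sequence


def bootstrap_indices(n: int, m: int, seed: int = 0) -> List[List[int]]:
    """M training sets, each drawing n sample indices with replacement."""
    rng = random.Random(seed)
    return [[rng.randrange(n) for _ in range(n)] for _ in range(m)]


def double_fault(pred_a: Sequence[str], pred_b: Sequence[str], truth: Sequence[str]) -> float:
    """Fraction of samples misclassified by both classifiers (lower means more diverse)."""
    both_wrong = sum(a != t and b != t for a, b, t in zip(pred_a, pred_b, truth))
    return both_wrong / len(truth)


def select_diverse(preds: Sequence[Sequence[str]], truth: Sequence[str], k: int) -> List[int]:
    """Greedy pick: most accurate first, then lowest mean double-fault with those chosen."""
    accuracy = [sum(p == t for p, t in zip(row, truth)) / len(truth) for row in preds]
    chosen = [max(range(len(preds)), key=accuracy.__getitem__)]
    while len(chosen) < min(k, len(preds)):
        remaining = [i for i in range(len(preds)) if i not in chosen]
        chosen.append(min(remaining, key=lambda i: sum(
            double_fault(preds[i], preds[j], truth) for j in chosen) / len(chosen)))
    return chosen


def majority_vote(votes: Sequence[str]) -> str:
    """Most frequent label; ties go to the label seen first."""
    return Counter(votes).most_common(1)[0][0]


# Hypothetical validation labels and sub-classifier predictions.
truth = ["a", "b", "a", "b"]
preds = [["a", "b", "a", "a"], ["a", "b", "a", "a"], ["b", "b", "a", "b"]]
print(select_diverse(preds, truth, 2))  # [0, 2]
```

Classifier 1 is skipped because it fails on exactly the same sample as classifier 0, so adding it would contribute nothing to the vote.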

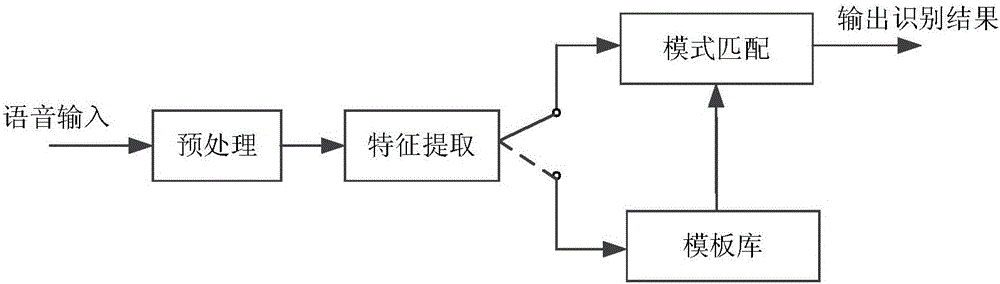

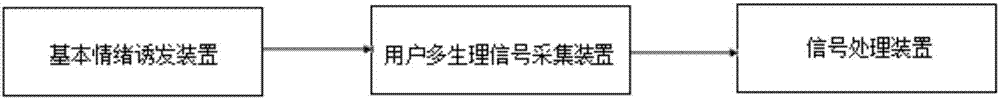

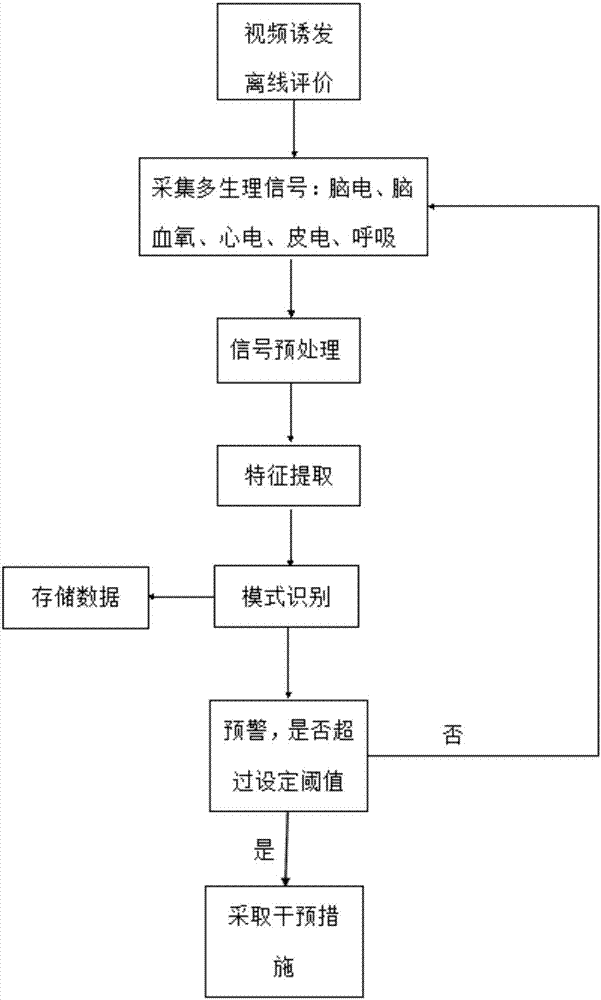

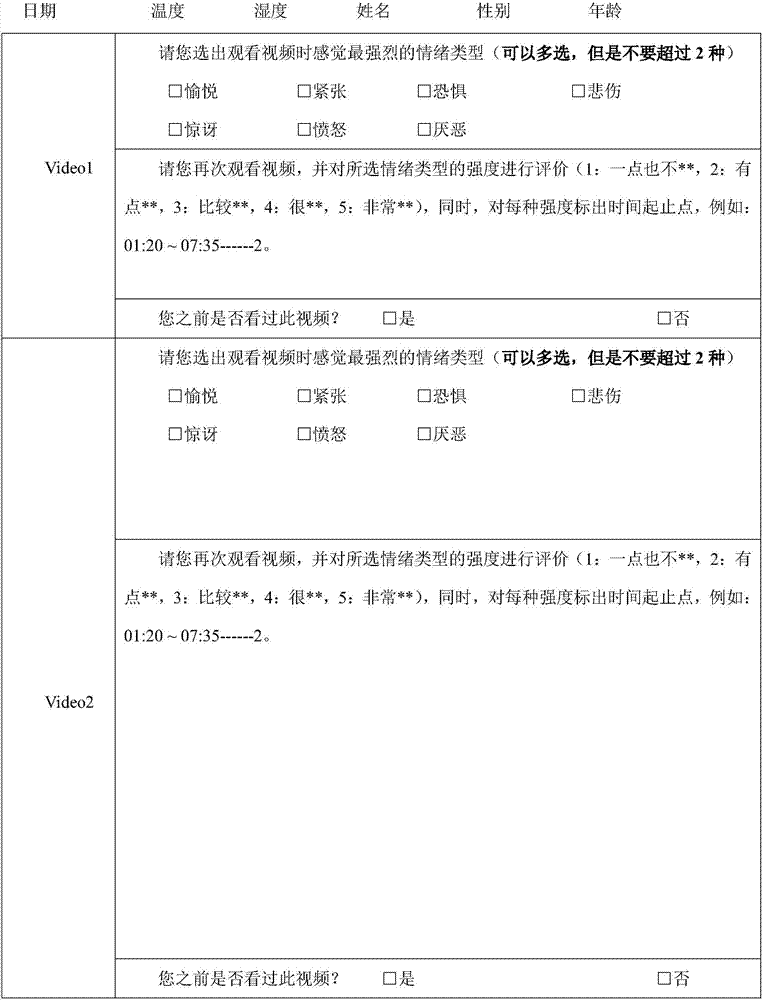

Nervous emotion intensity identification system and information processing method based on multiple physiological parameters

Status: Inactive · Publication: CN107007291A · Tags: Help selection, Help with training, Respiratory organ evaluation, Sensors, Information processing, Electricity

The invention relates to the field of emotion recognition, in particular to a nervous-emotion intensity identification system and an information processing method based on multiple physiological parameters. The information processing method includes offline training and online monitoring. Offline training includes inducing nervous emotions in users, collecting the users' multiple physiological signals, and processing the signals. Signal processing includes preprocessing, feature extraction, and pattern recognition. Preprocessing includes suppressing power-line interference in the EEG signals with an adaptive filter, removing power-line interference from the ECG, respiration, and electrodermal signals with a band-pass filter after amplification, and extracting the valid data segments with an information processing tool. The system and method collect central nervous signals and autonomic nervous signals that reflect the state of the human nervous system; build a classification model for groups or individuals through offline training in order to recognize and detect the intensity of users' nervous emotions in real time; issue warnings when nervous emotions are excessively intense; and store the users' emotional physiological signals throughout the whole process.

Owner:TIANJIN UNIV

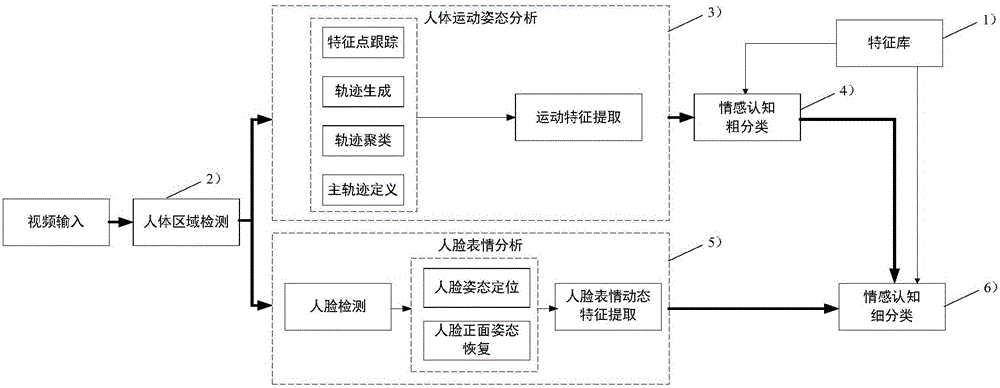

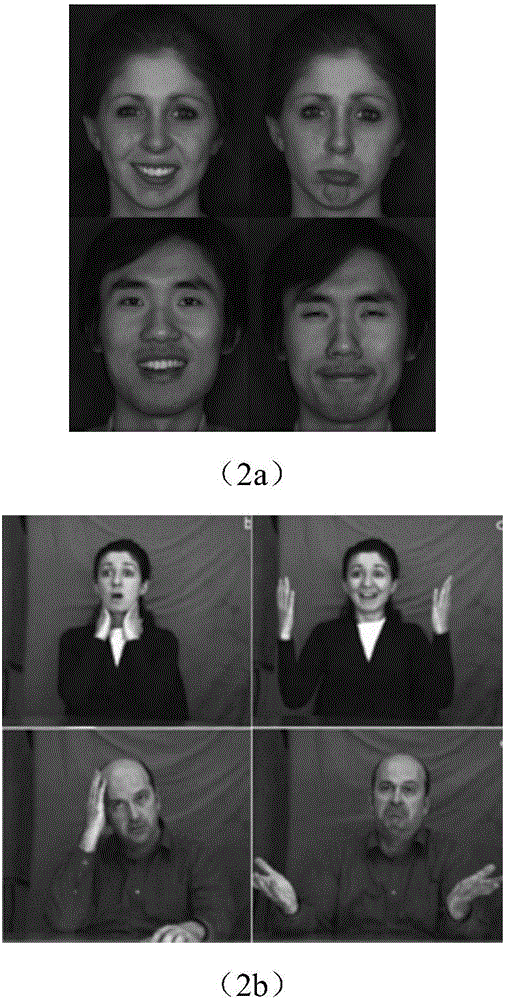

Human natural state emotion identification method based on double-mode combination of expression and behavior

Status: Active · Publication: CN106295568A · Tags: Improve recognition accuracy, Improve efficiency, Acquiring/recognising facial features, Feature extraction, Cognition

The invention relates to a human natural-state emotion identification method based on the dual-modal combination of expression and behavior. The method comprises the following steps: S1, establishing an emotion cognition architecture with two classification levels; S2, performing human body region detection on natural-posture human body images from the video input; S3, extracting feature points from the image of the torso sub-region, obtaining feature point motion trajectories from the feature points in image frames at different times, obtaining by clustering the main motion trajectory that reflects human behavior, and extracting a torso motion feature from the main motion trajectory; S4, obtaining a coarse emotion cognition classification result from the torso motion feature; S5, extracting facial expression features from the image of the face sub-region; and S6, outputting the fine emotion cognition classification result corresponding to the retrieved facial expression feature. Compared with the prior art, the method offers high identification precision, a wide application range, and ease of implementation.

Owner:SHANGHAI UNIVERSITY OF ELECTRIC POWER

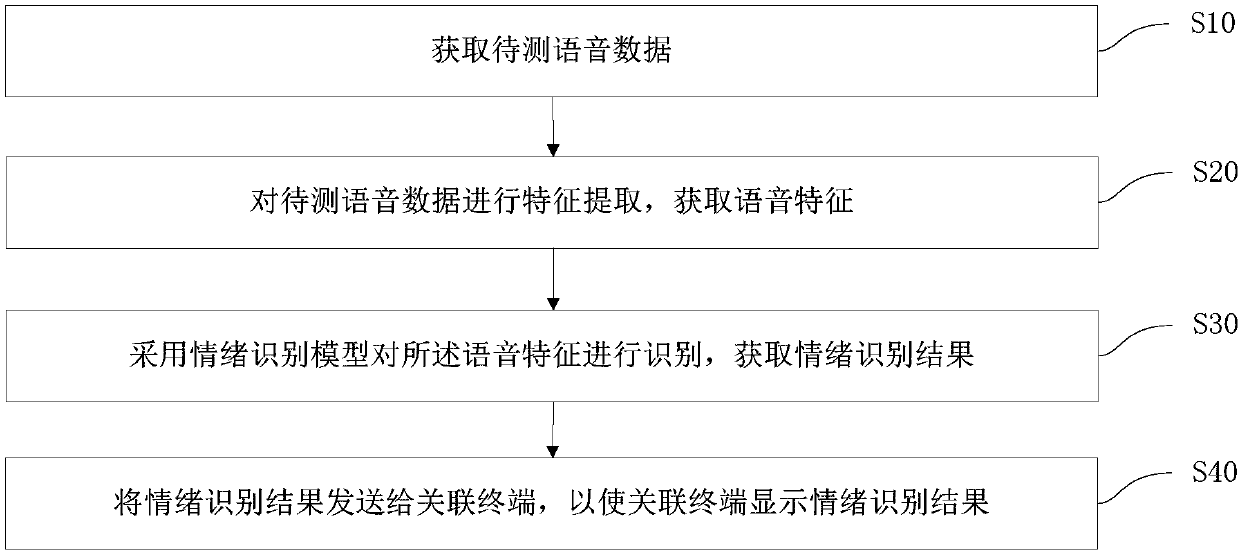

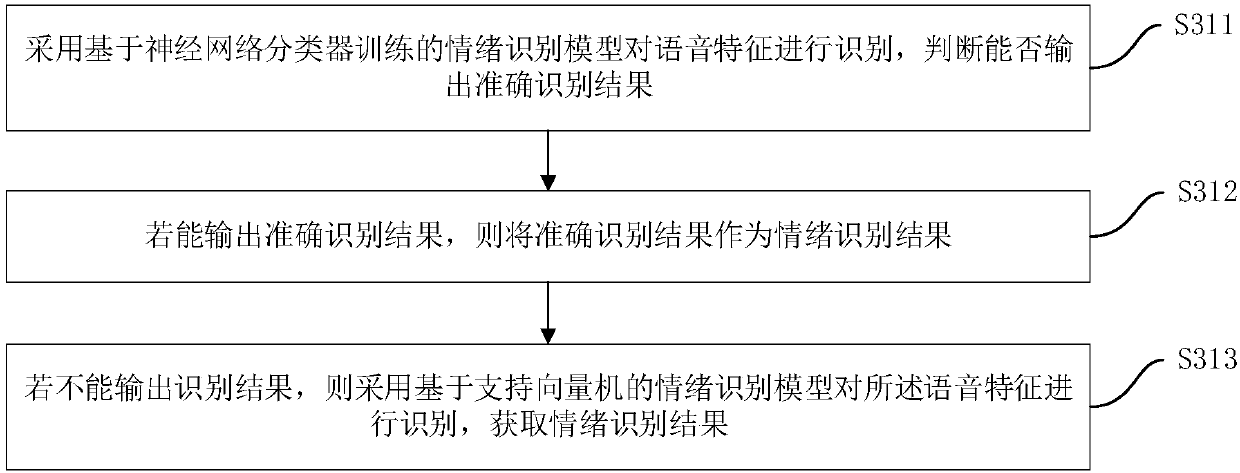

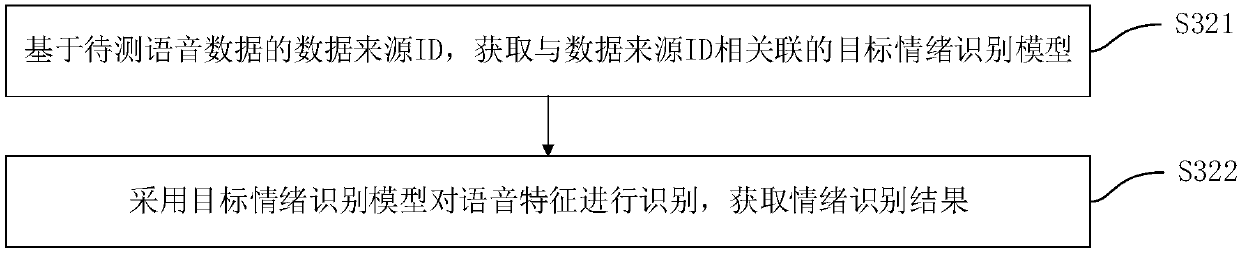

Emotion identification-based voice quality inspection method and device, apparatus and storage medium

Status: Active · Publication: CN107705807A · Tags: Timely and comprehensive sampling inspection, Realize intelligent identification, Speech analysis, Special service for subscribers, Computer terminal, Emotion identification

The present invention discloses an emotion identification-based voice quality inspection method and device, an apparatus, and a storage medium. The method comprises: obtaining the voice data to be tested; sending the voice data to a voice emotion identification platform for emotion identification; and sending the emotion identification result to an associated terminal so that the terminal displays it. The method identifies emotions efficiently and at low labor cost.

Owner:PING AN TECH (SHENZHEN) CO LTD

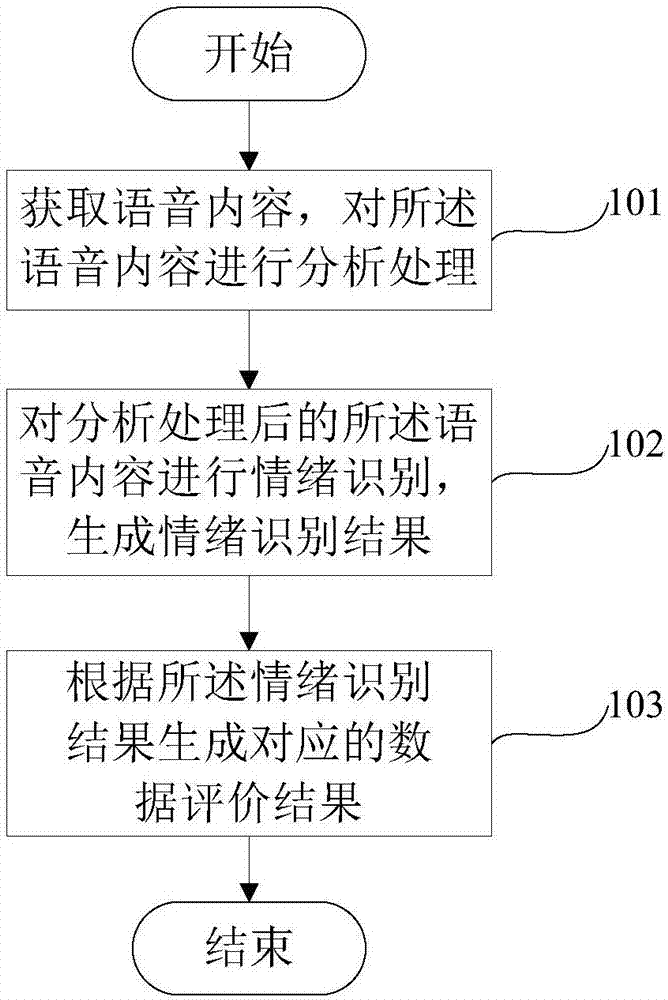

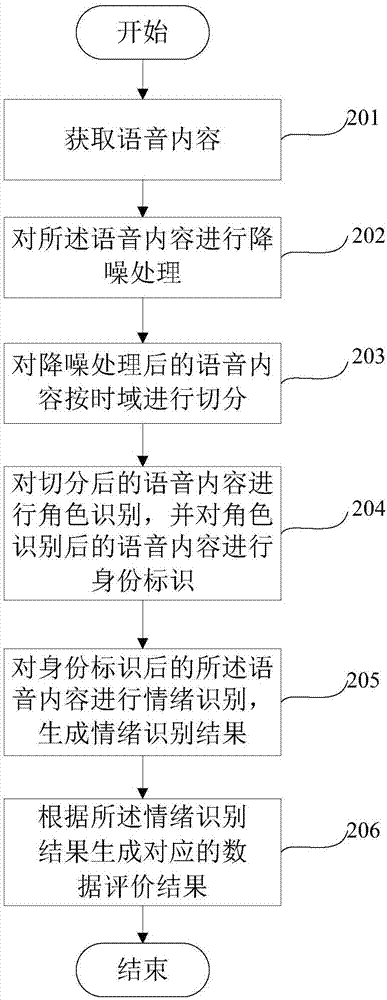

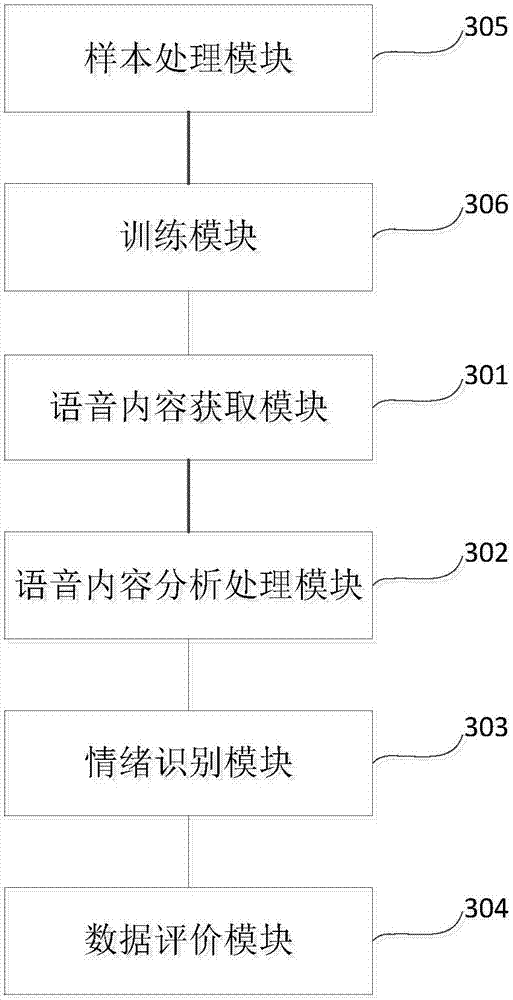

Method and device for data evaluation according to speech content

Status: Active · Publication: CN107452405A · Tags: Emotional control, Objective evaluation, Speech analysis, Special service for subscribers, Evaluation result, Emotion identification

Embodiments of the invention provide a method and device for data evaluation according to speech content, in the field of data processing technology. The method comprises: obtaining speech content and analyzing it; performing emotion identification on the analyzed speech content to generate an emotion identification result; and generating a corresponding data evaluation result from the emotion identification result. The embodiments evaluate the service quality of customer service from the perspective of emotion, so that the actual service quality is reflected objectively and truthfully.

Owner:BEIJING YIZHEN XUESI EDUCATION TECH CO LTD

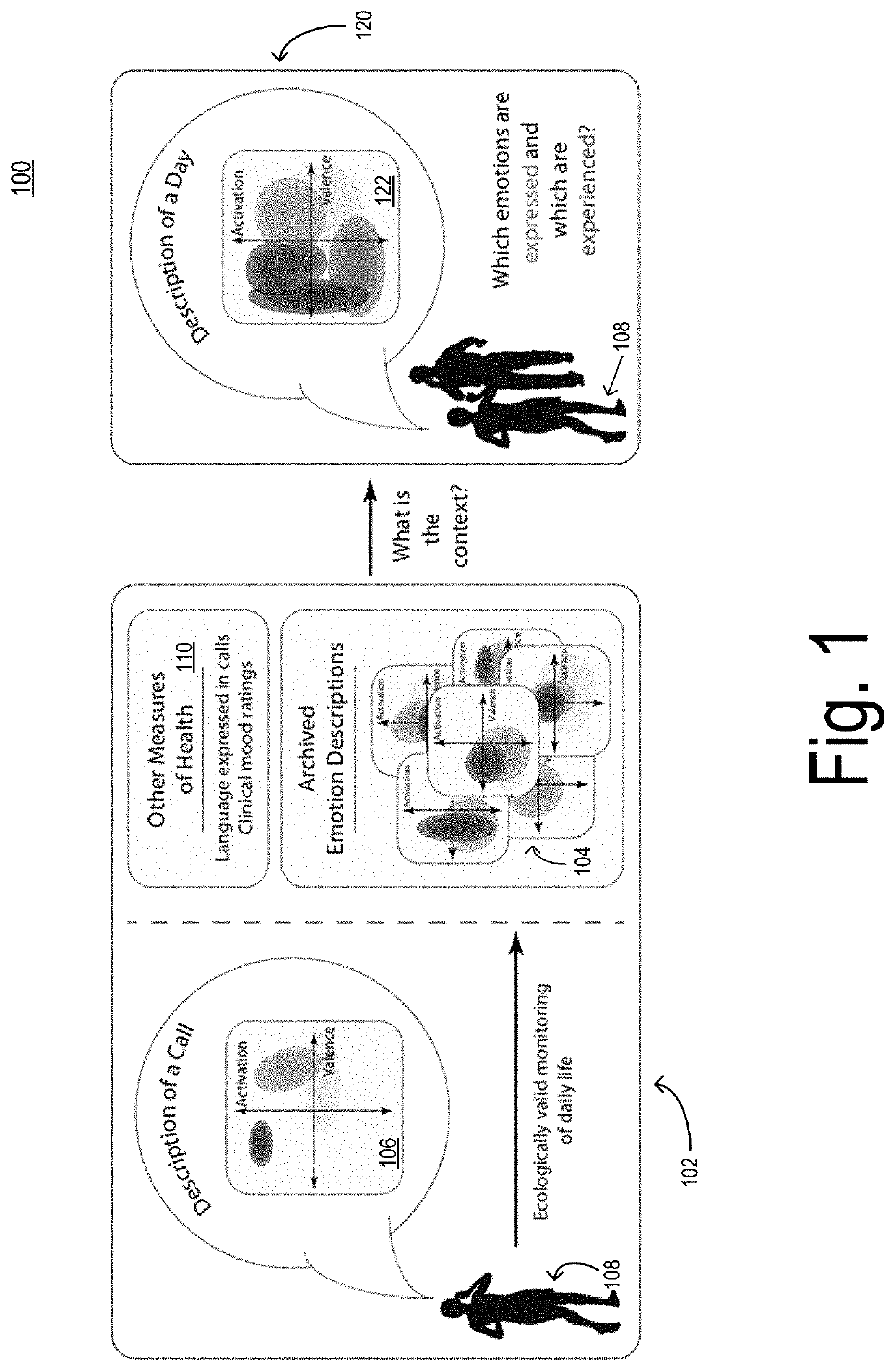

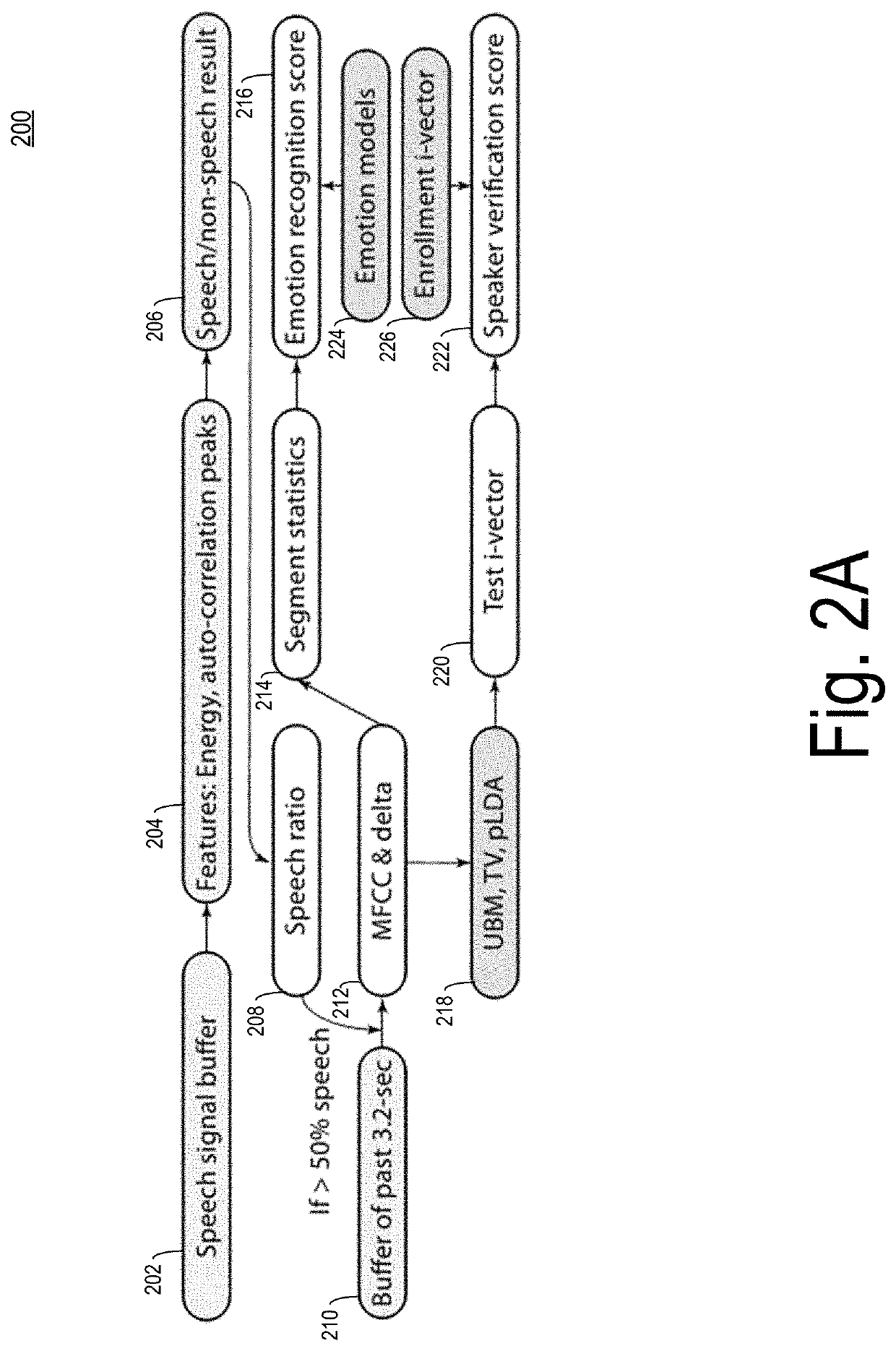

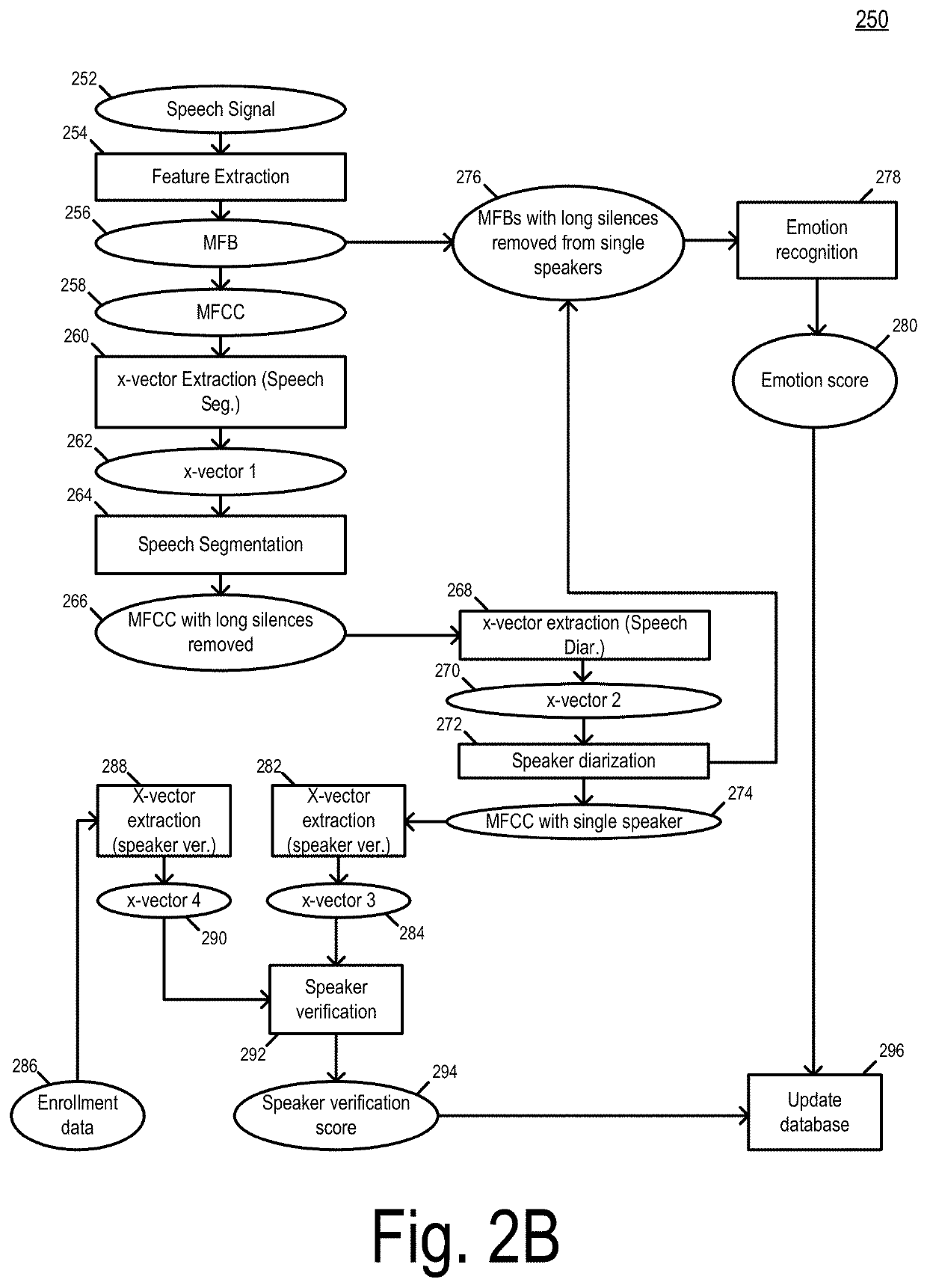

Automatic speech-based longitudinal emotion and mood recognition for mental health treatment

A method of predicting a mood state of a user may include recording an audio sample via a microphone of the user's mobile computing device based on the occurrence of an event, extracting a set of acoustic features from the audio sample, generating one or more emotion values by analyzing the set of acoustic features with a trained machine learning model, and determining the mood state of the user based on the one or more emotion values. In some embodiments, the audio sample may be ambient audio recorded periodically and/or call data of the user recorded during clinical or personal calls.

Owner:RGT UNIV OF MICHIGAN
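
One way to turn per-recording emotion values into a longitudinal mood estimate, as the abstract describes at a high level, is to smooth them over time. The sketch below assumes each recording yields a single valence score in [-1, 1] and uses an exponential moving average with a hypothetical smoothing factor, hypothetical thresholds, and hypothetical mood labels; the abstract does not specify how emotion values are aggregated into a mood state.

```python
from typing import Iterable, List


def smoothed_mood(valences: Iterable[float], alpha: float = 0.3) -> List[float]:
    """Exponential moving average of per-recording valence scores."""
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    smoothed: List[float] = []
    for v in valences:
        smoothed.append(v if not smoothed else alpha * v + (1 - alpha) * smoothed[-1])
    return smoothed


def mood_label(score: float, low: float = -0.3, high: float = 0.3) -> str:
    """Map a smoothed score to a coarse mood state (thresholds are hypothetical)."""
    if score <= low:
        return "low"
    if score >= high:
        return "elevated"
    return "neutral"


scores = smoothed_mood([0.6, 0.4, -0.8, -0.9], alpha=0.5)
print([mood_label(s) for s in scores])  # ['elevated', 'elevated', 'neutral', 'low']
```

Smoothing reflects the difference between a momentary emotion and a mood: a single negative recording moves the estimate only part of the way, while a run of them pushes it across the threshold.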

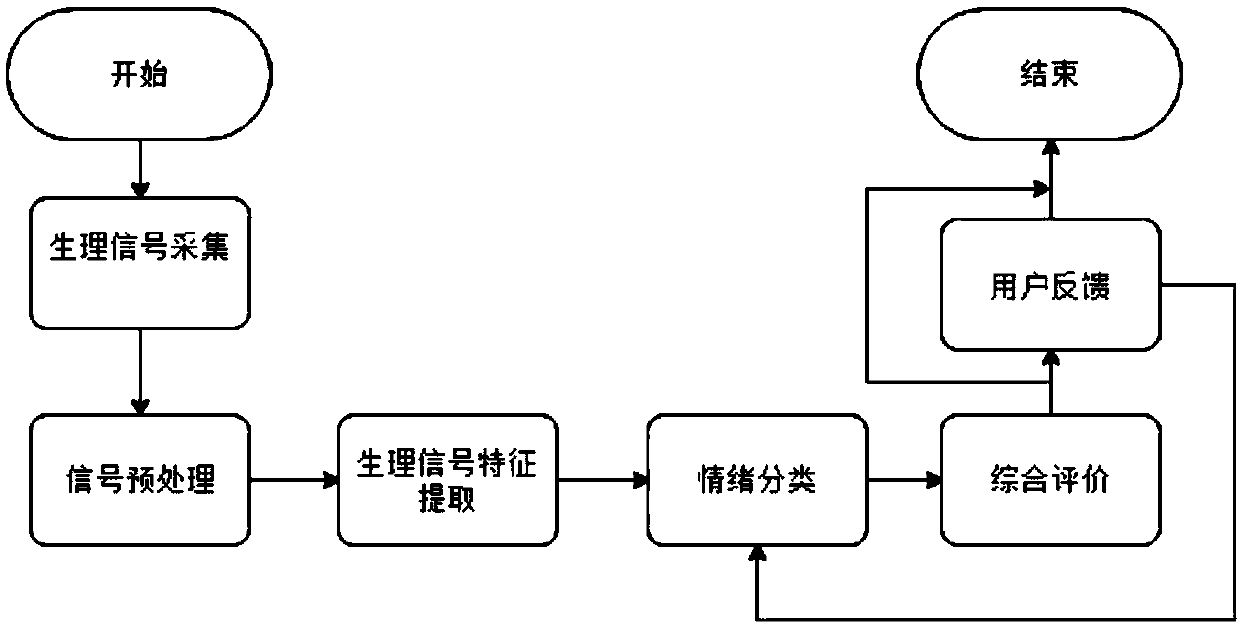

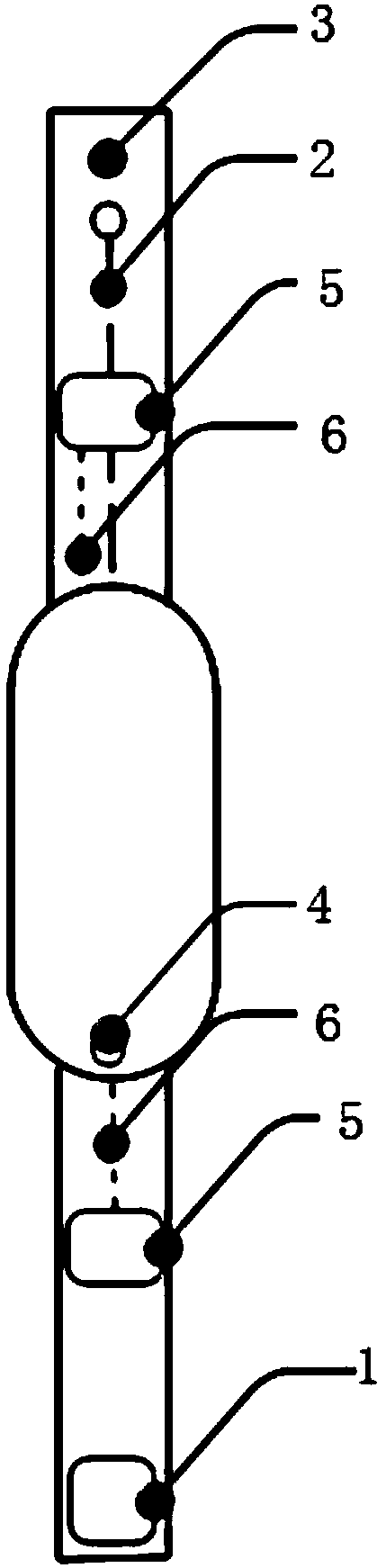

Emotion recognition system and method based on wearable bracelet

Status: Active · Publication: CN109620262A · Tags: Increase awareness, Improve management ability, Sensors, Psychotechnic devices, Electricity, Feature extraction

The invention provides an emotion recognition system and method based on a wearable bracelet. The system comprises a physiological signal acquisition module, a physiological signal preprocessing module, a physiological signal feature extraction module, an emotion classification module, and a comprehensive evaluation module. The acquisition module acquires three kinds of physiological data from the wearer: electrocardiogram (ECG), heart rate, and electrodermal activity. The preprocessing module segments and denoises the three kinds of physiological data and passes them to the feature extraction module, which extracts features from each of them. The emotion classification module performs emotion recognition on each of the three kinds of physiological data and outputs three emotional states. The comprehensive evaluation module applies a weight-based voting decision rule to the three emotional states to determine the current emotional state label of the bracelet wearer, yielding the recognition result. The system improves the wearer's ability to recognize and manage their own emotions, helping them maintain a healthier psychological state.

Owner:SOUTH CHINA UNIV OF TECH
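
The weight-based voting decision rule above can be sketched as follows: each physiological channel's classifier votes for one emotional state with a per-channel weight, and the state with the largest total weight wins. The channel names, state names, and weights are hypothetical; the abstract does not say how the weights are chosen.

```python
from collections import defaultdict
from typing import Dict, Mapping


def weighted_vote(predictions: Mapping[str, str], weights: Mapping[str, float]) -> str:
    """Sum each channel's weight onto the state it predicts and return the heaviest state."""
    totals: Dict[str, float] = defaultdict(float)
    for channel, state in predictions.items():
        totals[state] += weights[channel]
    return max(totals, key=totals.get)


predictions = {"ecg": "calm", "heart_rate": "stressed", "eda": "stressed"}
print(weighted_vote(predictions, {"ecg": 0.4, "heart_rate": 0.25, "eda": 0.35}))  # stressed
print(weighted_vote(predictions, {"ecg": 0.7, "heart_rate": 0.15, "eda": 0.15}))  # calm
```

The second call shows why weights matter: a channel trusted strongly enough can outvote the other two, which a plain majority vote would never allow.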

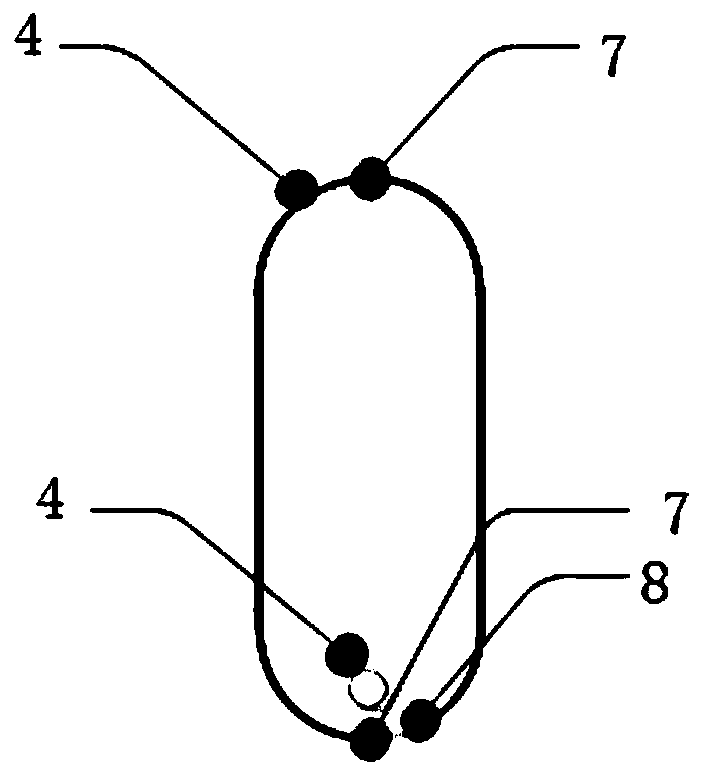

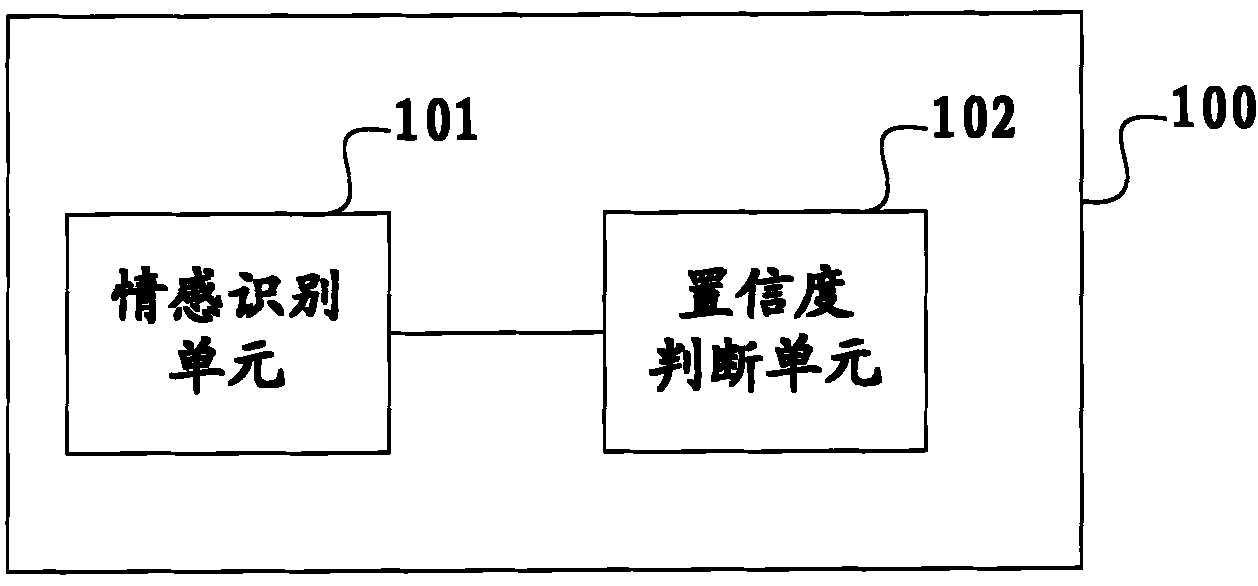

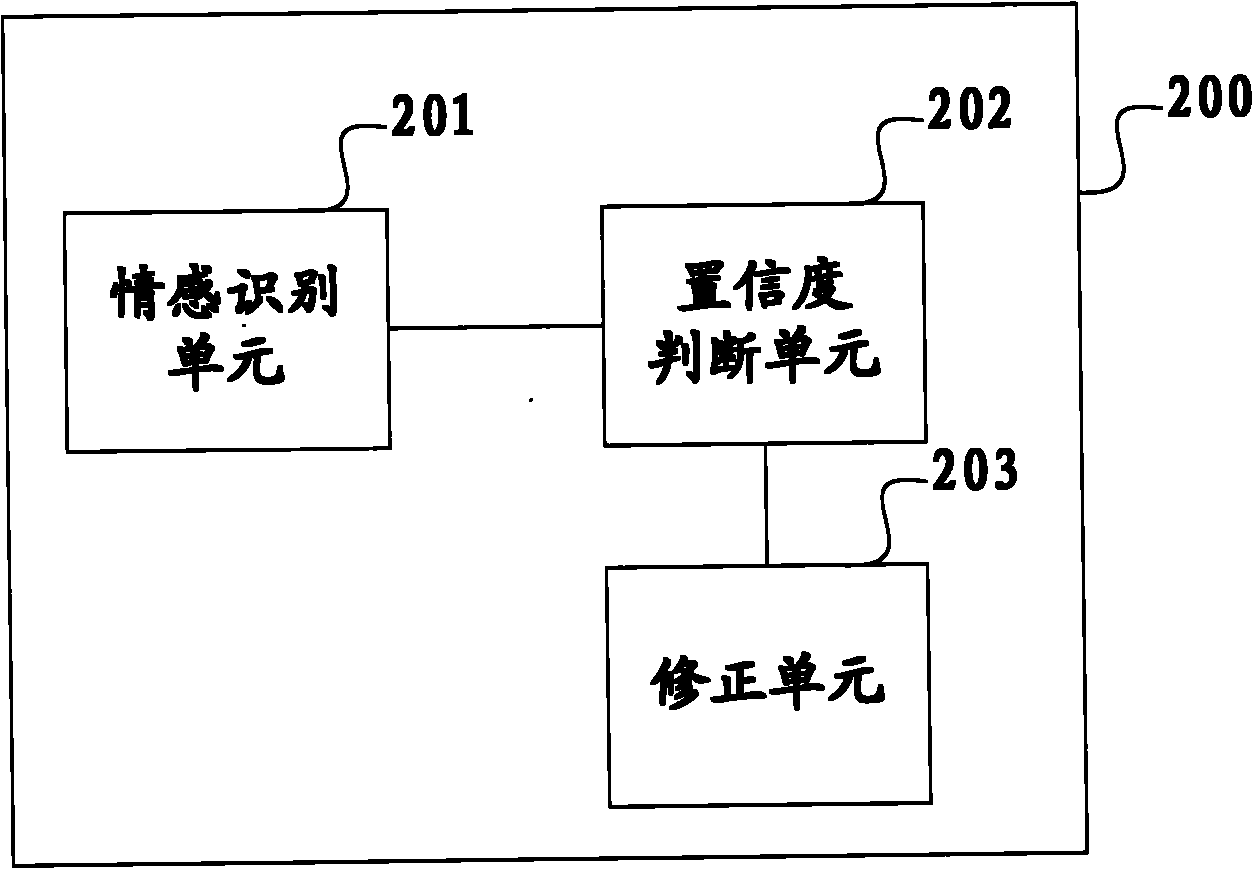

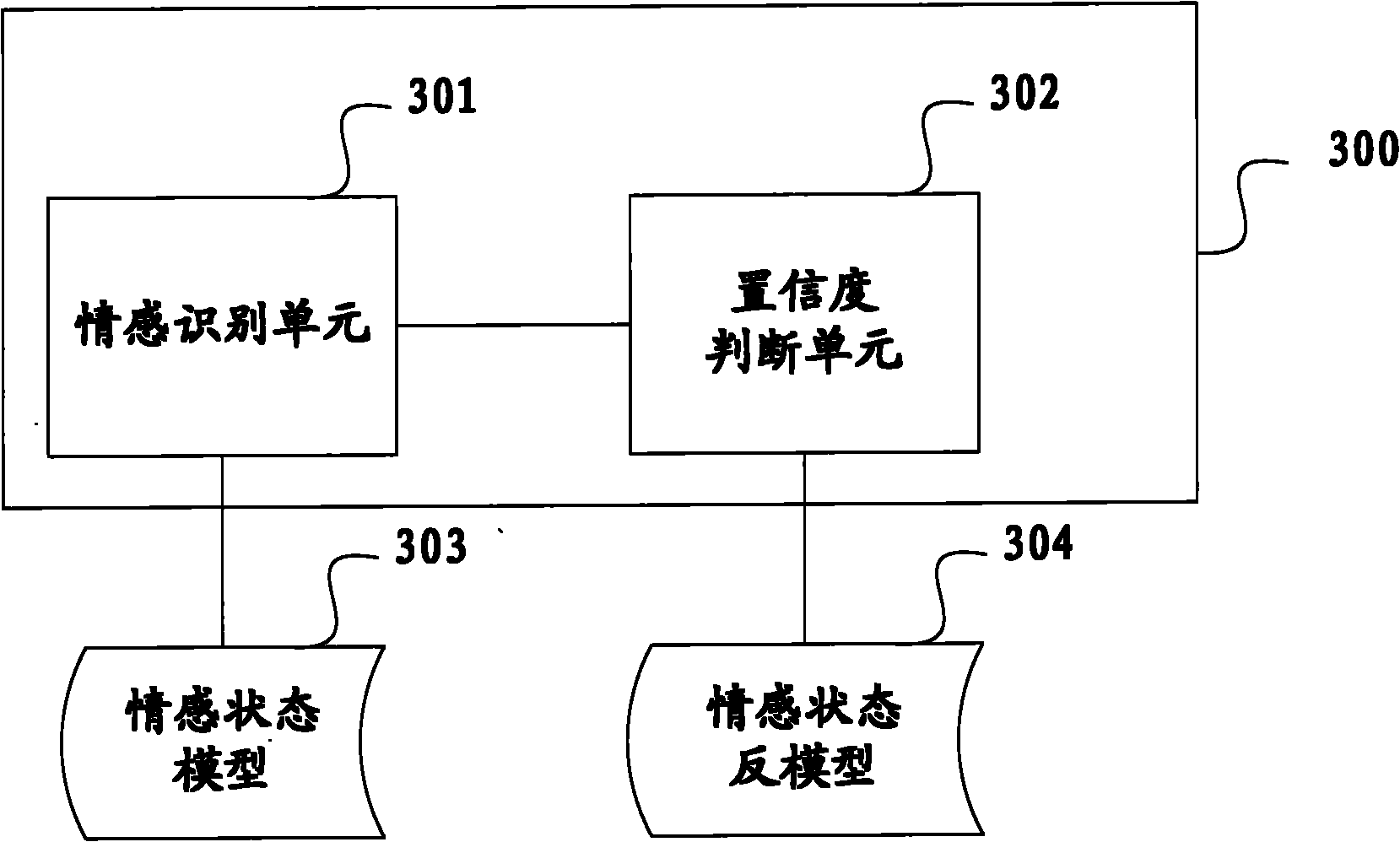

Voice emotion identification equipment and method

The invention provides voice emotion identification equipment and a voice emotion identification method. The equipment comprises an emotion identification unit and a confidence judgment unit. The emotion identification unit identifies the current emotional state of the speaker's voice as a preliminary emotional state. The confidence judgment unit calculates the confidence of the preliminary emotional state and uses it to judge whether the preliminary state is credible; if so, the preliminary state is determined as the final emotional state and output. Because a confidence judgment is performed on the identification result and the final emotional state is determined from that judgment, the accuracy of voice emotion state identification can be improved.

Owner:FUJITSU LTD
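
The confidence judgment above can be sketched as a margin test on the classifier's per-emotion probabilities: the preliminary state is accepted only if its probability exceeds the runner-up's by at least a threshold. Using the top-two margin as the confidence measure, and the threshold value, are assumptions; the abstract does not define how confidence is calculated.

```python
from typing import Mapping, Optional


def confident_emotion(probabilities: Mapping[str, float], threshold: float = 0.2) -> Optional[str]:
    """Return the top emotion if its margin over the runner-up reaches the threshold, else None."""
    ranked = sorted(probabilities.values(), reverse=True)
    margin = ranked[0] - (ranked[1] if len(ranked) > 1 else 0.0)
    if margin < threshold:
        return None  # preliminary state judged not credible
    return max(probabilities, key=probabilities.get)


print(confident_emotion({"angry": 0.7, "neutral": 0.2, "happy": 0.1}))    # angry
print(confident_emotion({"angry": 0.45, "neutral": 0.4, "happy": 0.15}))  # None
```

Returning `None` leaves the caller to decide what happens to a rejected preliminary state, for example waiting for more speech before outputting a final state.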

Emotion recognition apparatus

An emotion recognition apparatus performs accurate and stable speech-based emotion recognition, irrespective of individual, regional, and language differences in prosodic information. The apparatus includes: a speech recognition unit, which recognizes the types of phonemes included in the input speech; a characteristic tone detection unit, which detects, in the input speech, a characteristic tone related to a specific emotion; a characteristic tone occurrence indicator computation unit, which computes, for each phoneme, a characteristic tone occurrence indicator related to the occurrence frequency of the characteristic tone, based on the phoneme types recognized by the speech recognition unit; and an emotion judgment unit, which judges the speaker's emotion at a phoneme where the characteristic tone occurs in the input speech, based on the characteristic tone occurrence indicator computed by the characteristic tone occurrence indicator computation unit.

Owner:PANASONIC INTELLECTUAL PROPERTY CORP OF AMERICA

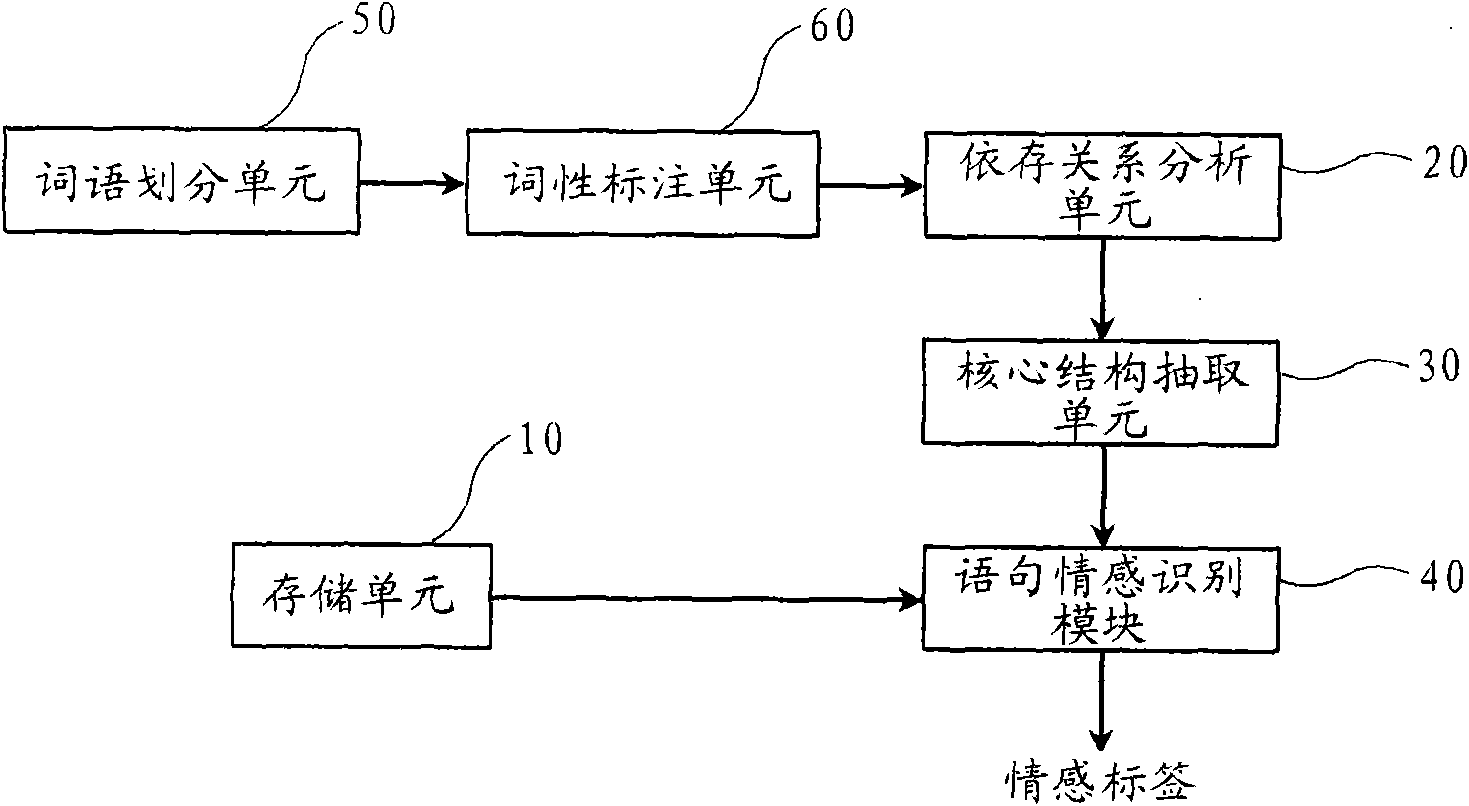

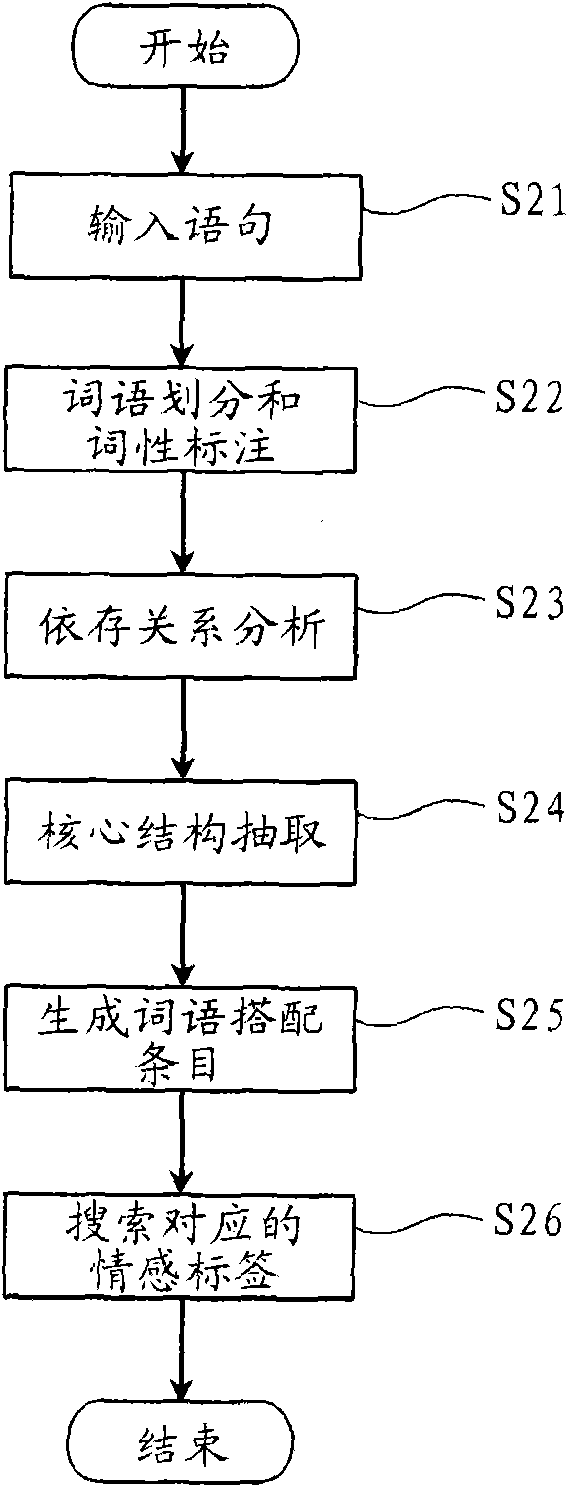

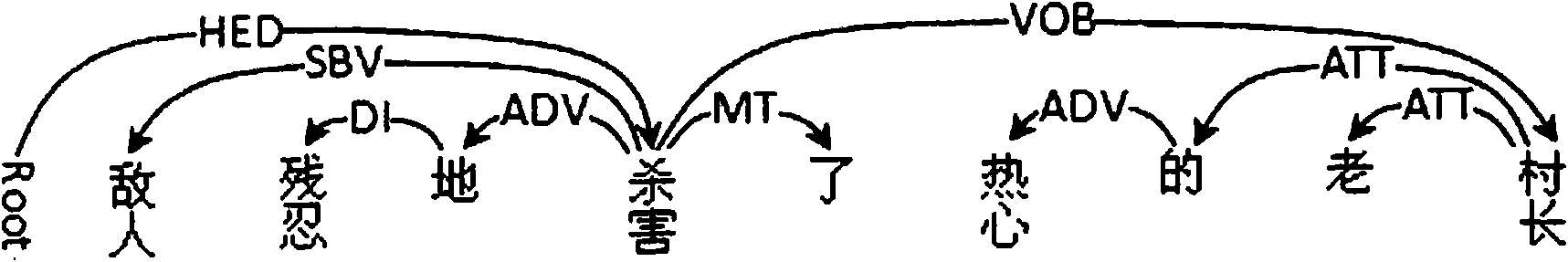

Device and method for identifying statement emotion based on dependency relation

The invention provides a device and a method for identifying statement emotion based on dependency relations. The device comprises a storage unit, a dependency relation analysis unit, a core structure extraction unit, and a statement emotion identification unit. The storage unit stores an emotion model library in which each word collocation entry obtained in advance from a corpus is mapped to a corresponding emotion tag. The dependency relation analysis unit analyzes the dependency relation of each word in an input statement based on the word's part of speech. The core structure extraction unit extracts the core structure of the input statement according to the analyzed dependency relations. The statement emotion identification unit generates the word collocation entries of the core structure from the extracted core structure and the analyzed dependency relations, and then looks up the emotion tags corresponding to the generated word collocation entries in the emotion model library.

Owner:SAMSUNG ELECTRONICS CHINA R&D CENT +1
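
The core-structure extraction and library lookup described above can be sketched with a hand-written parse. The token format, the set of core relation labels, and the library contents are hypothetical; a real system would obtain the parse from a dependency parser, and the abstract does not define which relations make up the core structure.

```python
from typing import Dict, List, NamedTuple, Tuple


class Token(NamedTuple):
    idx: int    # 1-based position in the statement
    word: str
    head: int   # idx of the governing word; 0 marks the root
    rel: str    # dependency relation label


# Hypothetical choice of relations that make up the core structure.
CORE_RELATIONS = {"nsubj", "dobj", "advmod", "neg"}


def core_collocations(tokens: List[Token]) -> List[Tuple[str, str]]:
    """Pair the root word with each of its core dependents as (head word, dependent word)."""
    root = next(t for t in tokens if t.head == 0)
    return [(root.word, t.word) for t in tokens
            if t.head == root.idx and t.rel in CORE_RELATIONS]


def statement_emotions(tokens: List[Token], library: Dict[Tuple[str, str], str]) -> List[str]:
    """Look up the emotion tag of every core collocation found in the library."""
    return [library[c] for c in core_collocations(tokens) if c in library]


sentence = [Token(1, "I", 3, "nsubj"), Token(2, "really", 3, "advmod"),
            Token(3, "love", 0, "root"), Token(4, "this", 5, "det"),
            Token(5, "phone", 3, "dobj")]
library = {("love", "phone"): "like"}  # hypothetical emotion model library entry
print(core_collocations(sentence))  # [('love', 'I'), ('love', 'really'), ('love', 'phone')]
print(statement_emotions(sentence, library))  # ['like']
```

Restricting lookup to the core structure means peripheral words such as the determiner "this" never generate collocations, which keeps the library small.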

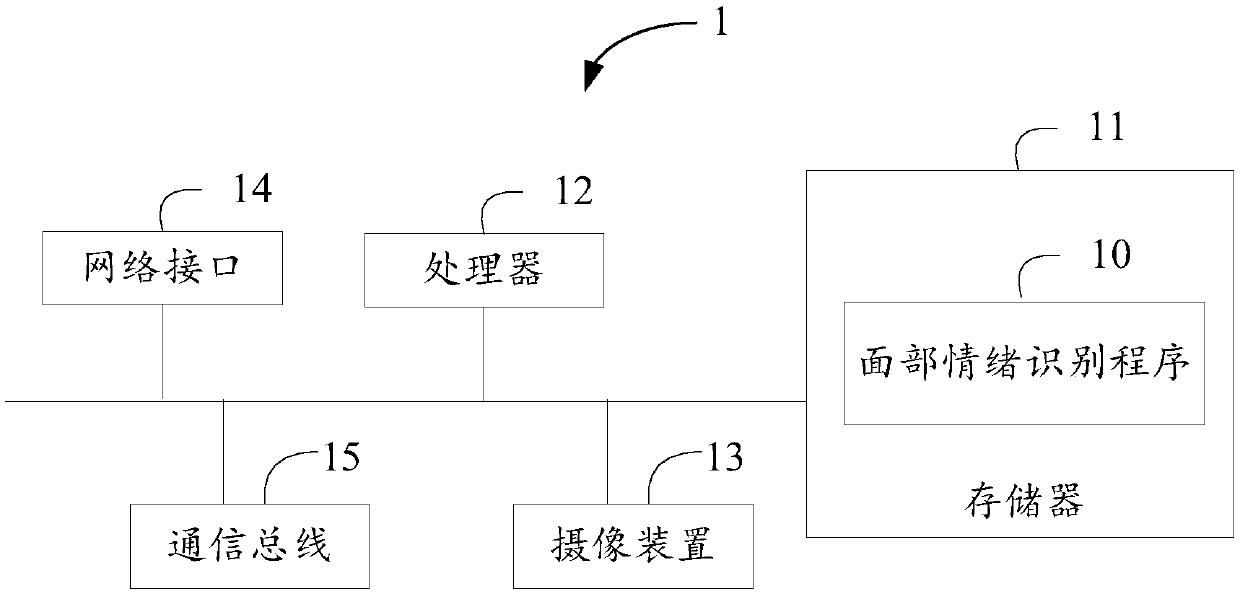

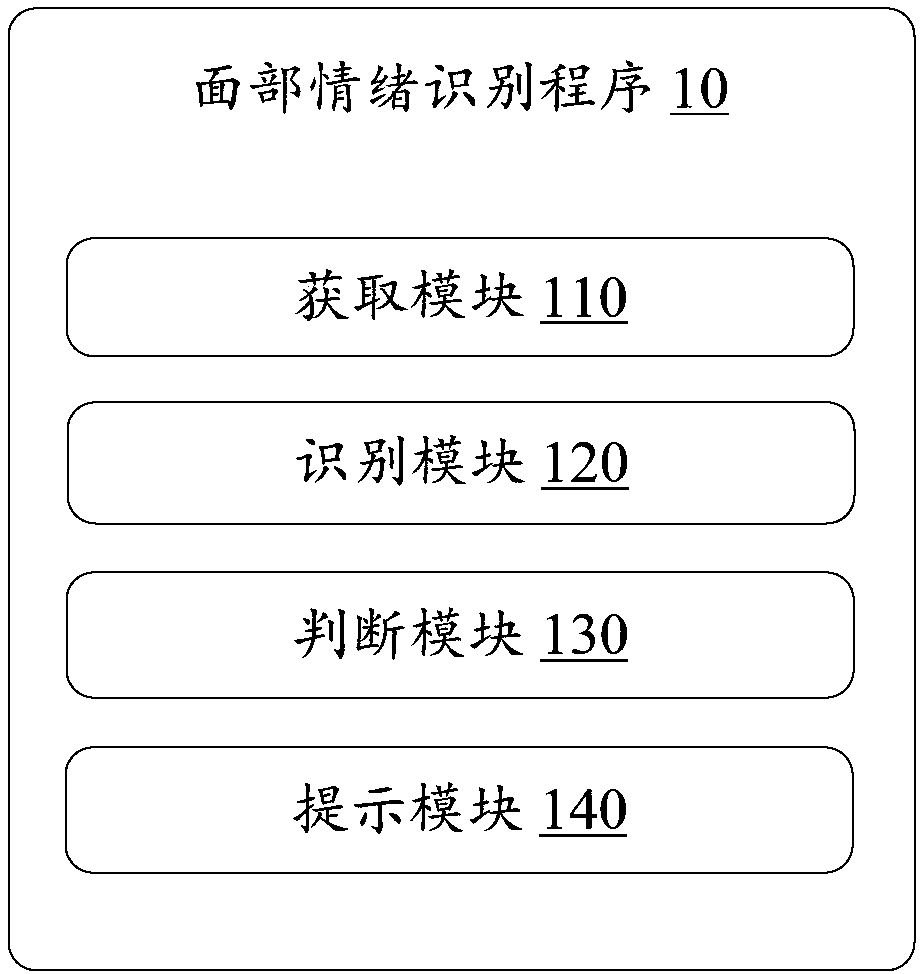

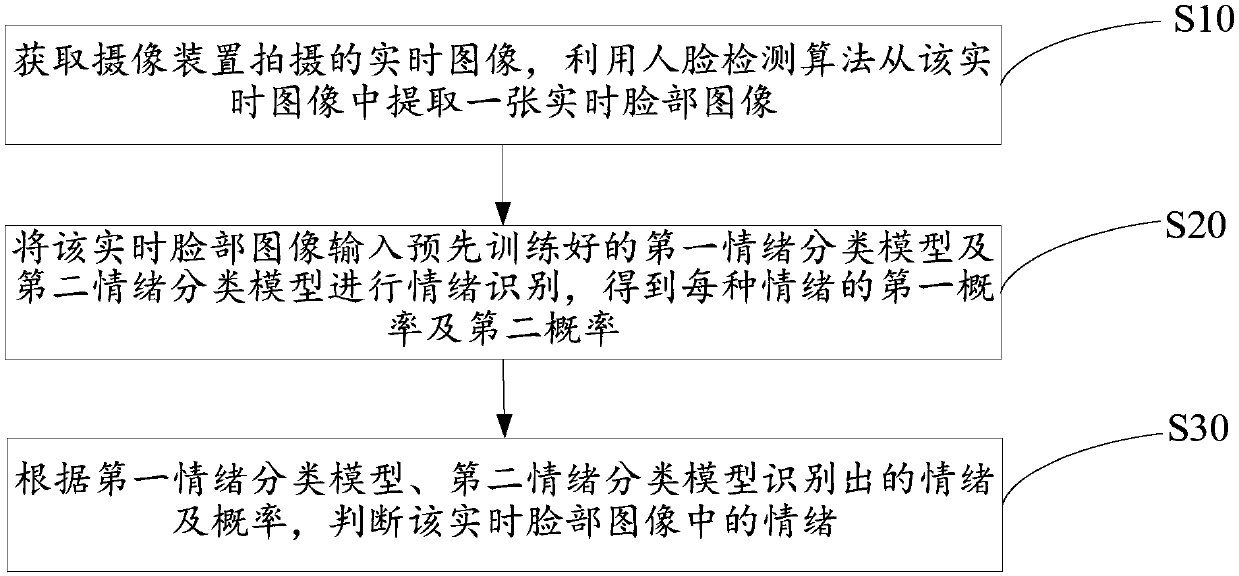

Facial emotion identification method, apparatus and storage medium

Status: Inactive · Publication: CN107633203A · Tags: Improve accuracy, Character and pattern recognition, Pattern recognition, Emotion identification

The invention discloses a facial emotion identification method comprising the following steps: acquiring a real-time image captured by a camera apparatus and extracting a real-time face image from it using a face identification algorithm; inputting the real-time face image into a pre-trained first emotion classification model and a pre-trained second emotion classification model for emotion identification, obtaining a first probability and a second probability for each emotion; and determining the emotion of the real-time face image based on the emotions and probabilities identified by the first and second emotion classification models. By combining the outputs of the two models, the method identifies the emotion of the face in the real-time image with increased accuracy. The invention also discloses an electronic apparatus and a computer-readable storage medium.

Owner:PING AN TECH (SHENZHEN) CO LTD
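
The abstract above determines the emotion from two models' per-emotion probabilities but does not say how they are combined. A weighted average followed by taking the highest-scoring emotion is one simple possibility, sketched here with a hypothetical equal weighting and toy probabilities.

```python
from typing import Dict, Mapping, Tuple


def combine_two_models(first: Mapping[str, float], second: Mapping[str, float],
                       weight: float = 0.5) -> Tuple[str, Dict[str, float]]:
    """Weighted average of two models' per-emotion probabilities; returns (best emotion, scores)."""
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must be in [0, 1]")
    emotions = sorted(set(first) | set(second))
    # An emotion missing from one model's output is treated as probability 0 there.
    scores = {e: weight * first.get(e, 0.0) + (1 - weight) * second.get(e, 0.0) for e in emotions}
    return max(scores, key=scores.get), scores


best, scores = combine_two_models({"happy": 0.6, "sad": 0.4}, {"happy": 0.2, "sad": 0.8})
print(best)  # sad
```

Averaging lets a confident second model overturn a lukewarm first model, which is the main benefit of combining two classifiers rather than picking one.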

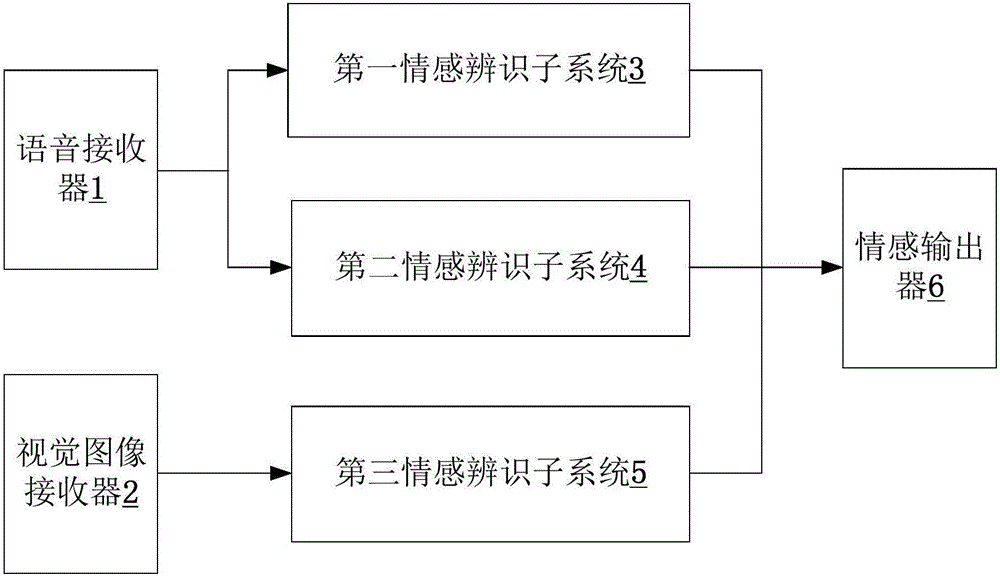

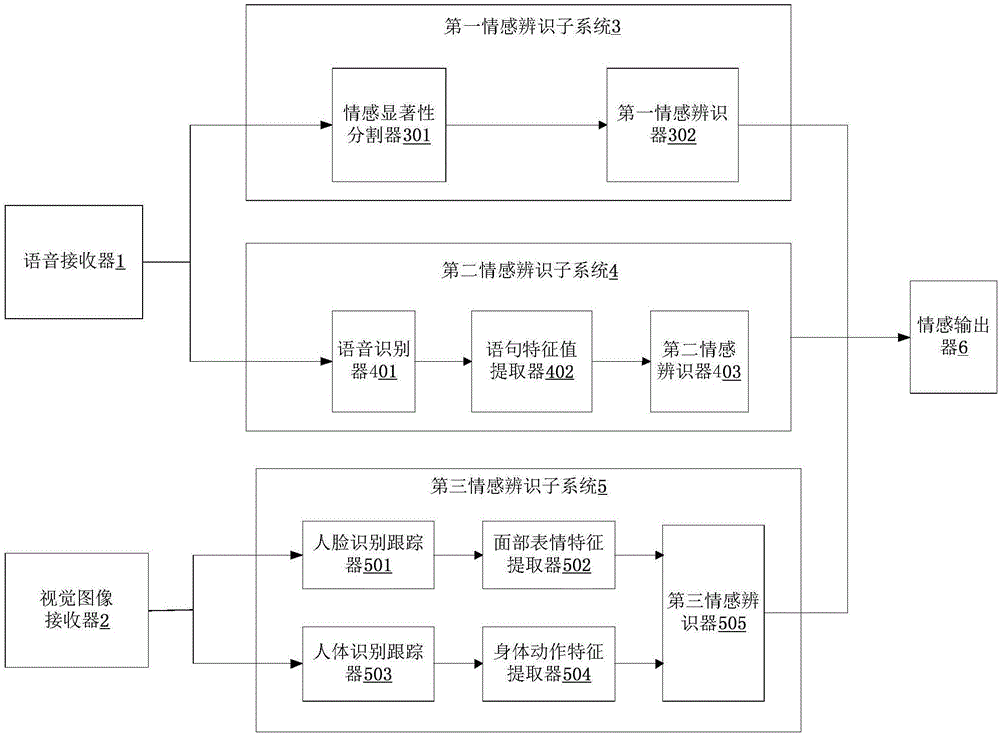

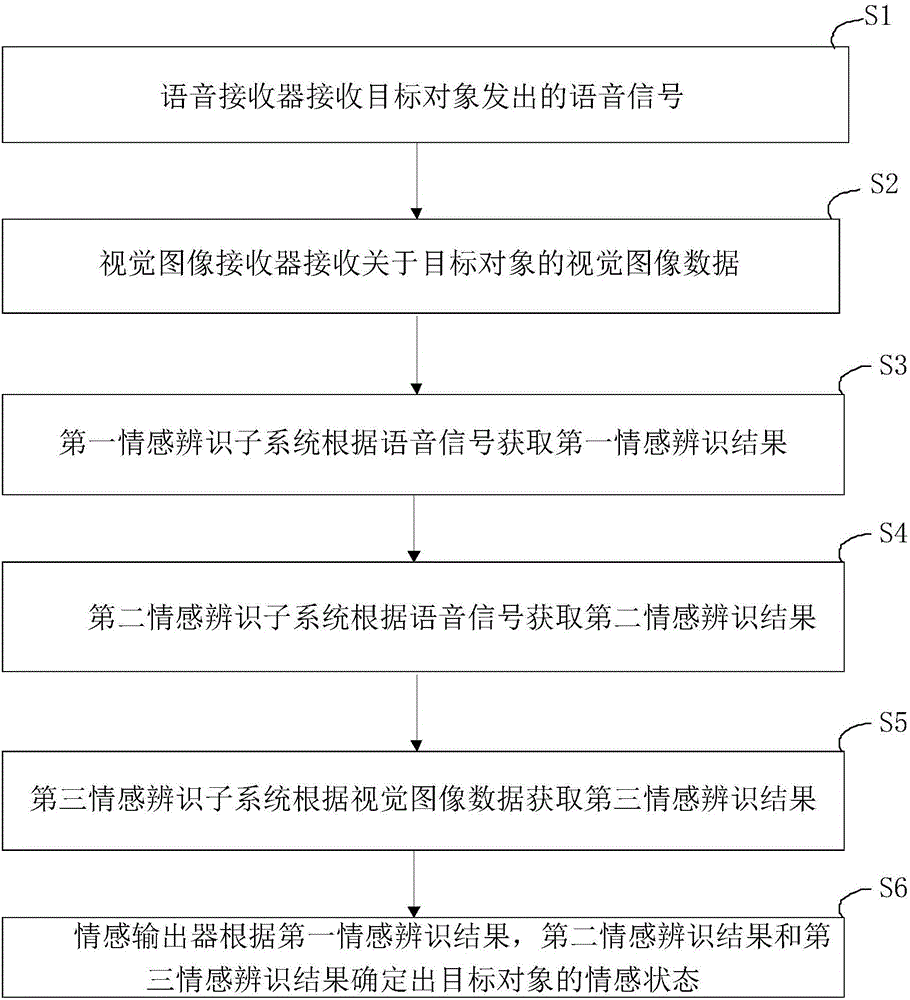

Multi-modal emotion identification system and method

Status: Active · Publication: CN106503646A · Tags: Accurate emotion recognition, Natural language data processing, Speech recognition, Pattern recognition, Output device

The invention provides a multi-modal emotion identification system and method. The system comprises a voice receiver, a first emotion identification subsystem, a second emotion identification subsystem, a vision image receiver, a third emotion identification subsystem, and an emotion output device. The voice receiver receives voice signals emitted by a target object; the vision image receiver receives visual image data of the target object; the first and second emotion identification subsystems obtain a first and a second emotion identification result, respectively, from the voice signals; the third emotion identification subsystem obtains a third emotion identification result from the visual image data; and the emotion output device determines the emotional state of the target object from the first, second, and third emotion identification results.

Owner:EMOTIBOT TECH LTD

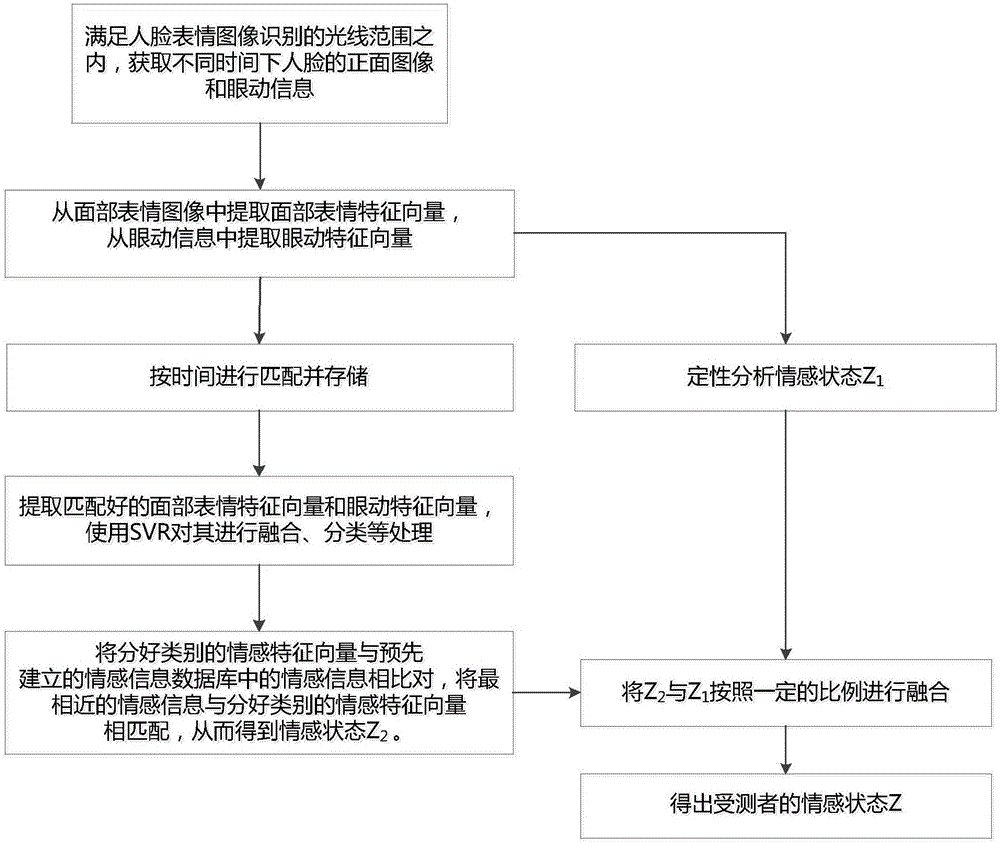

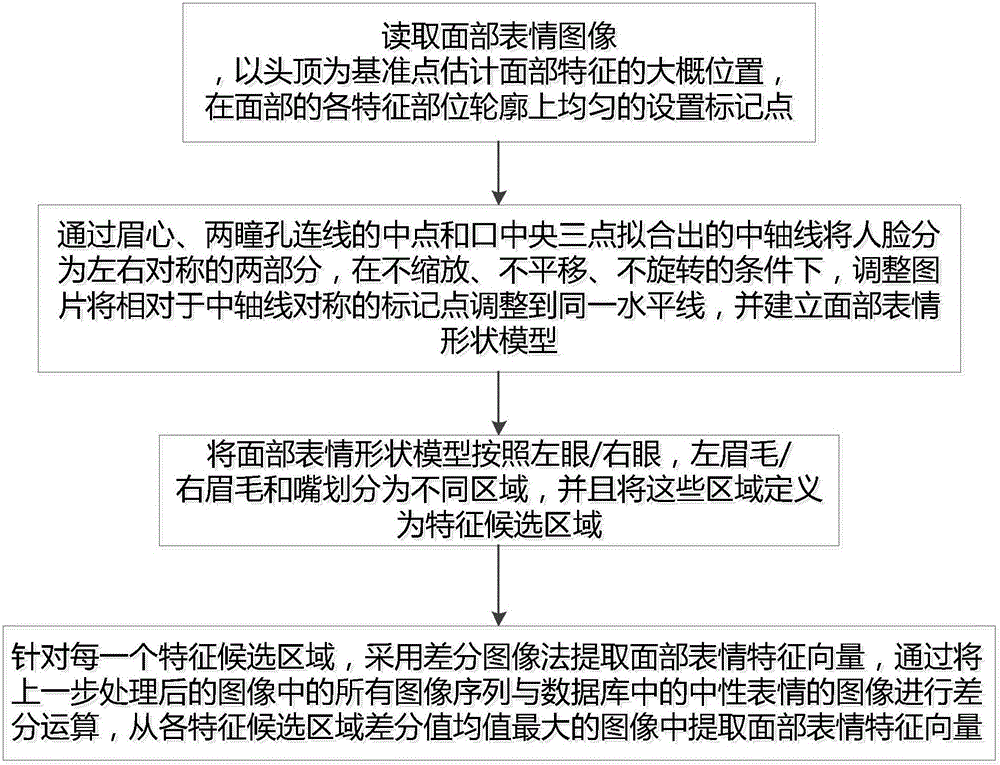

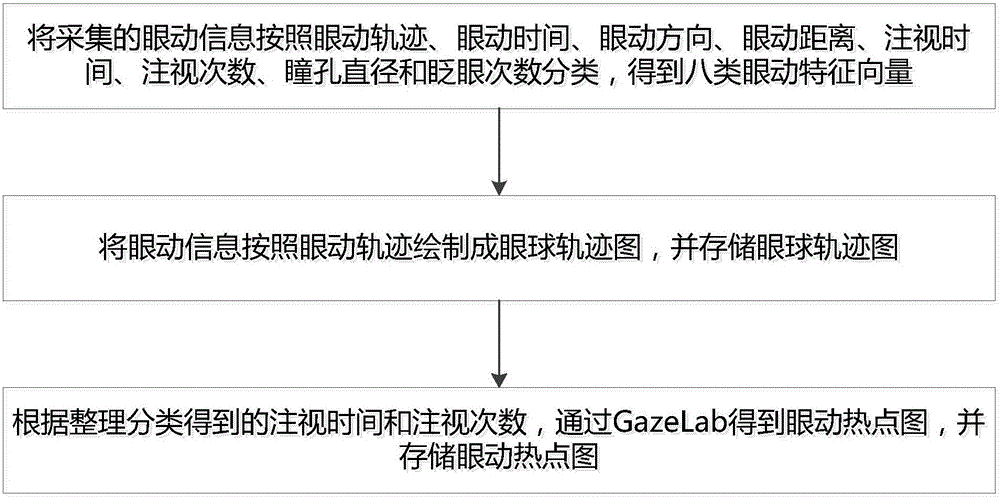

Dual-mode emotion identification method and system based on facial expression and eyeball movement

Status: Active · Publication: CN105868694A · Tags: Improve accuracy, Improve reliability, Acquiring/recognising facial features, Feature vector, Dual mode

The present invention relates to a dual-mode emotion identification method and system based on facial expression and eyeball movement. The method comprises an acquisition step, a facial expression feature vector extraction step, an eyeball movement feature vector extraction step, a qualitative emotional state analysis step, a time-matching and storage step, a fusion and classification step, and an emotional information comparison step. The method and system dynamically and accurately extract and analyze the facial expression information of the subject and establish the correlation between facial expression and emotion; acquire rich eye movement information accurately and efficiently by tracking with an eye tracker and analyze the subject's emotional state from the perspective of eyeball movement; and process the facial expression feature vector and the eyeball movement feature vector with an SVR, so that the emotional state of the subject is obtained more accurately, improving the accuracy and reliability of emotion identification.

Owner:CHINA UNIV OF GEOSCIENCES (WUHAN)

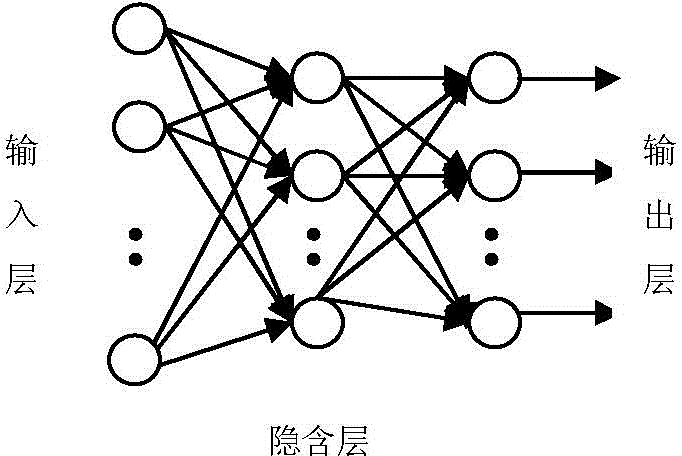

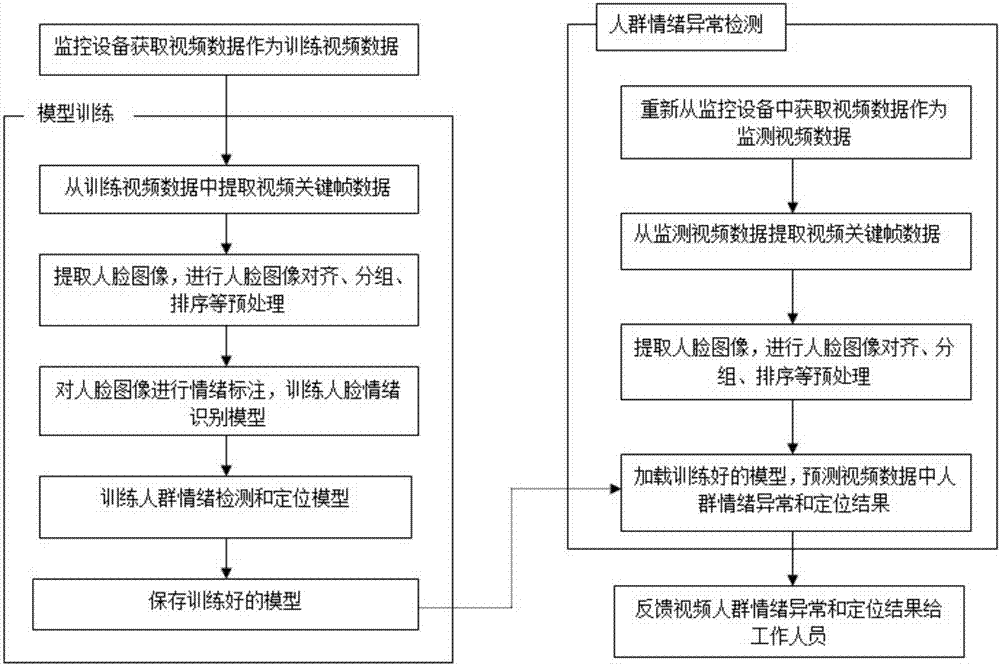

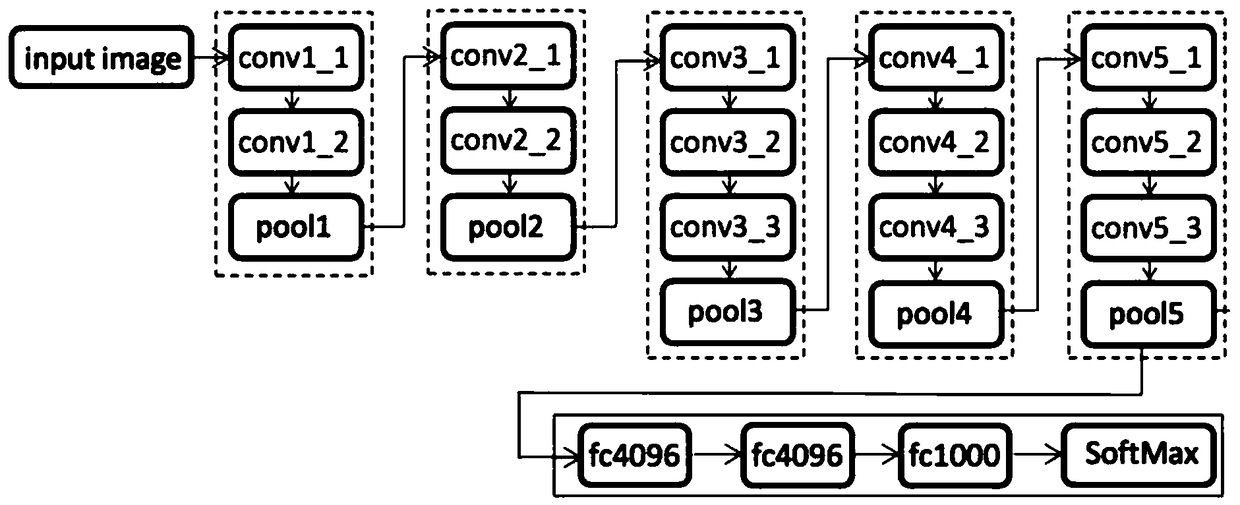

Crowd emotion anomaly detection and positioning method based on deep neural network

Status: Active · Publication: CN107169426A · Tags: Avoid separate execution, Reasonable design, Biological neural network models, Character and pattern recognition, Data ingestion, Anomaly detection

The invention relates to a crowd emotion anomaly detection and positioning method based on a deep neural network. Video data are obtained through a monitoring device, video key frames are extracted from the video data, and each face image is obtained from the key frames. The face images are preprocessed by alignment, grouping, and sorting, and then input into a trained face emotion identification model based on a convolutional neural network. A trained crowd emotion detection and positioning model then produces the crowd emotion anomaly detection and positioning results for the monitored video, which are fed back to the staff operating the monitoring device. The method is reasonably designed: the model captures the relationship between crowd emotion anomalies and crowd anomalies, avoiding the detection limitations that arise from tying crowd anomalies to specific anomalous events; moreover, because the model is a hybrid deep neural network, the efficiency of video crowd emotion anomaly detection and positioning is further improved.

Owner:GUANGDONG UNIV OF TECH

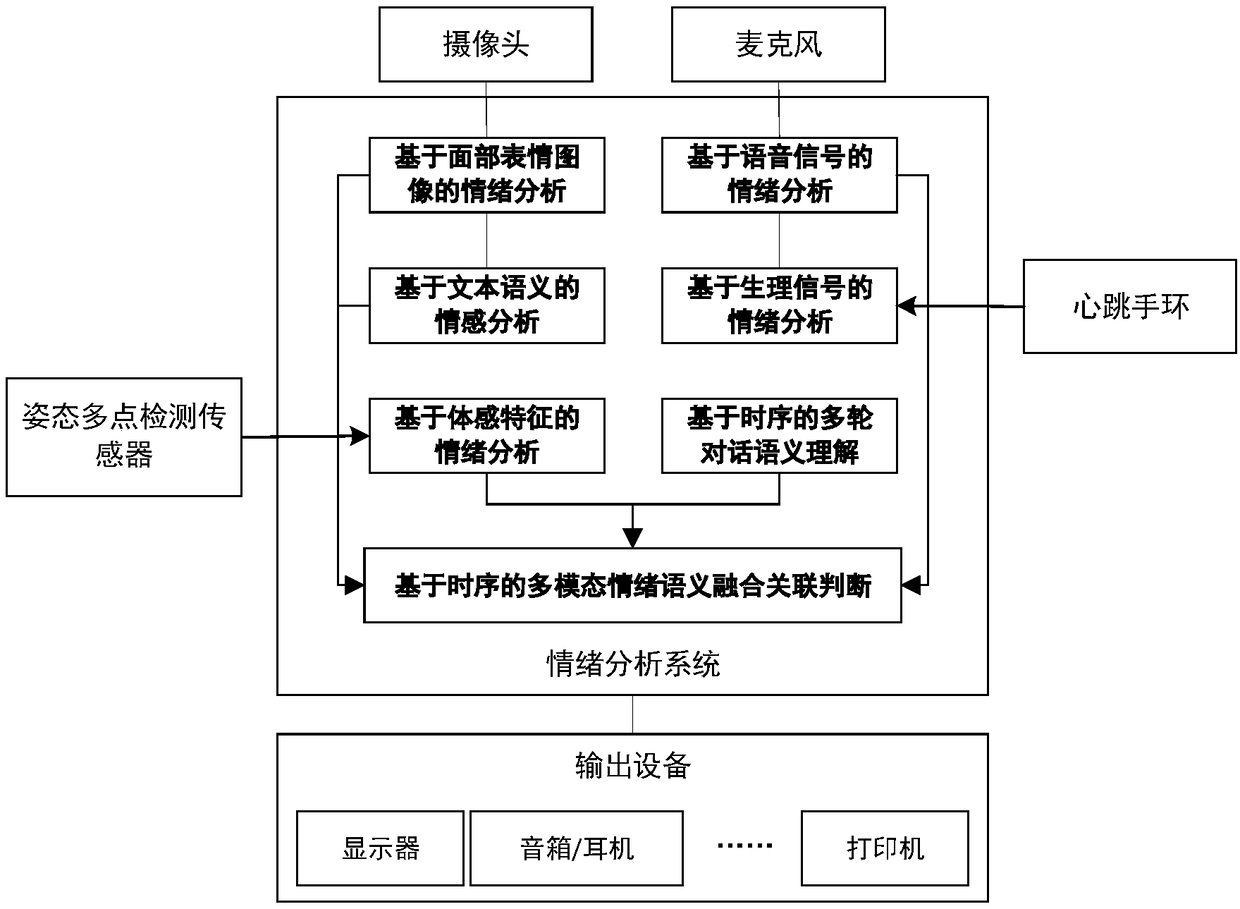

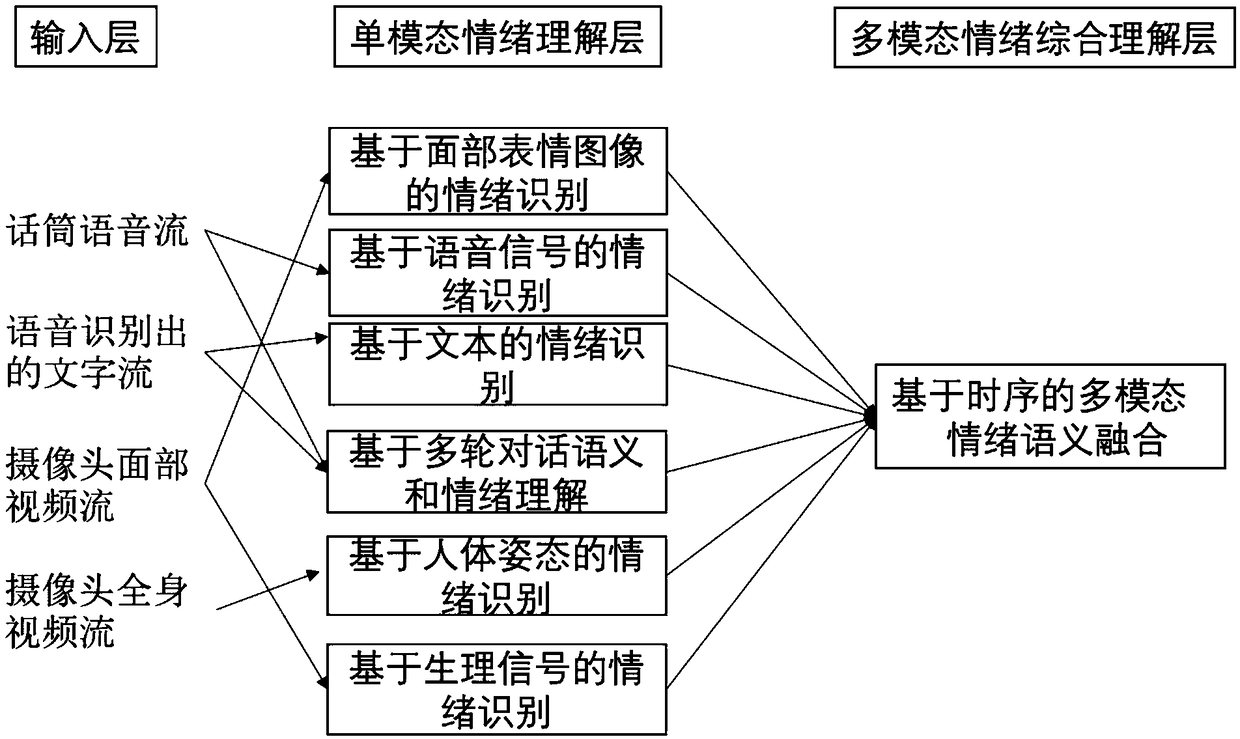

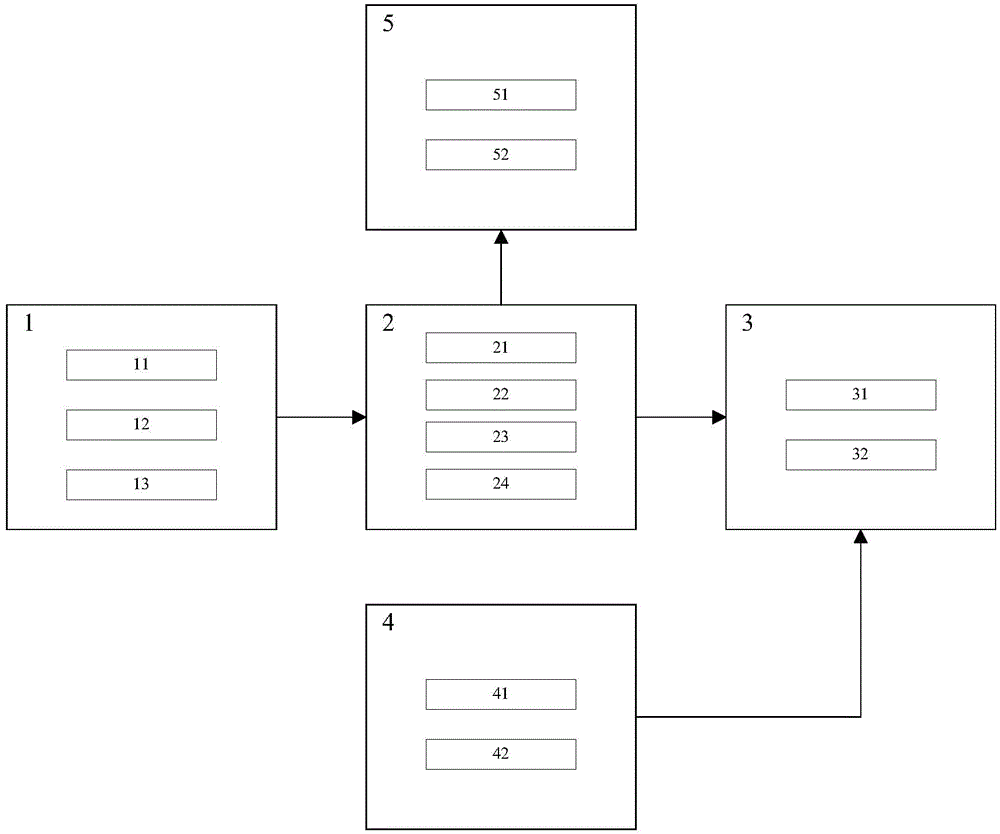

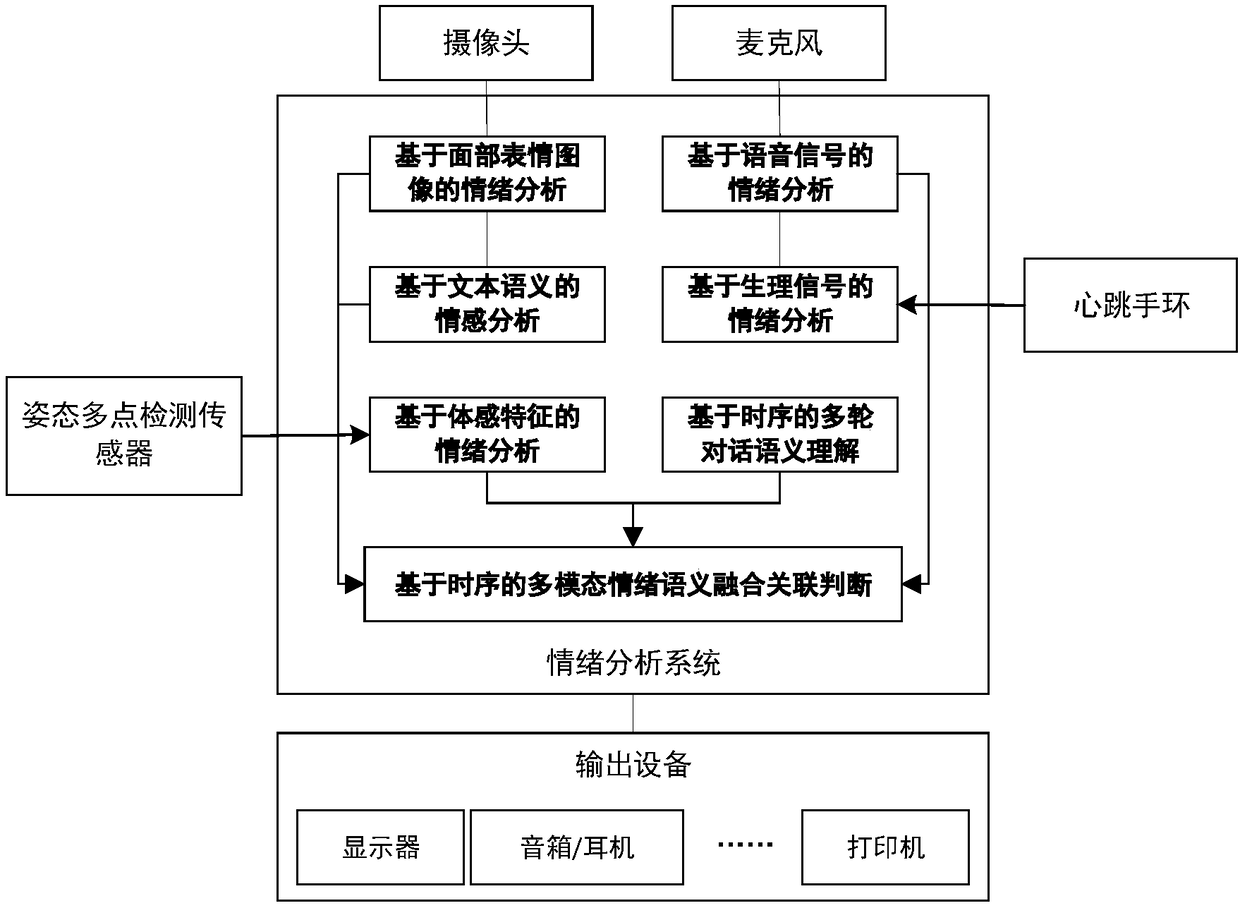

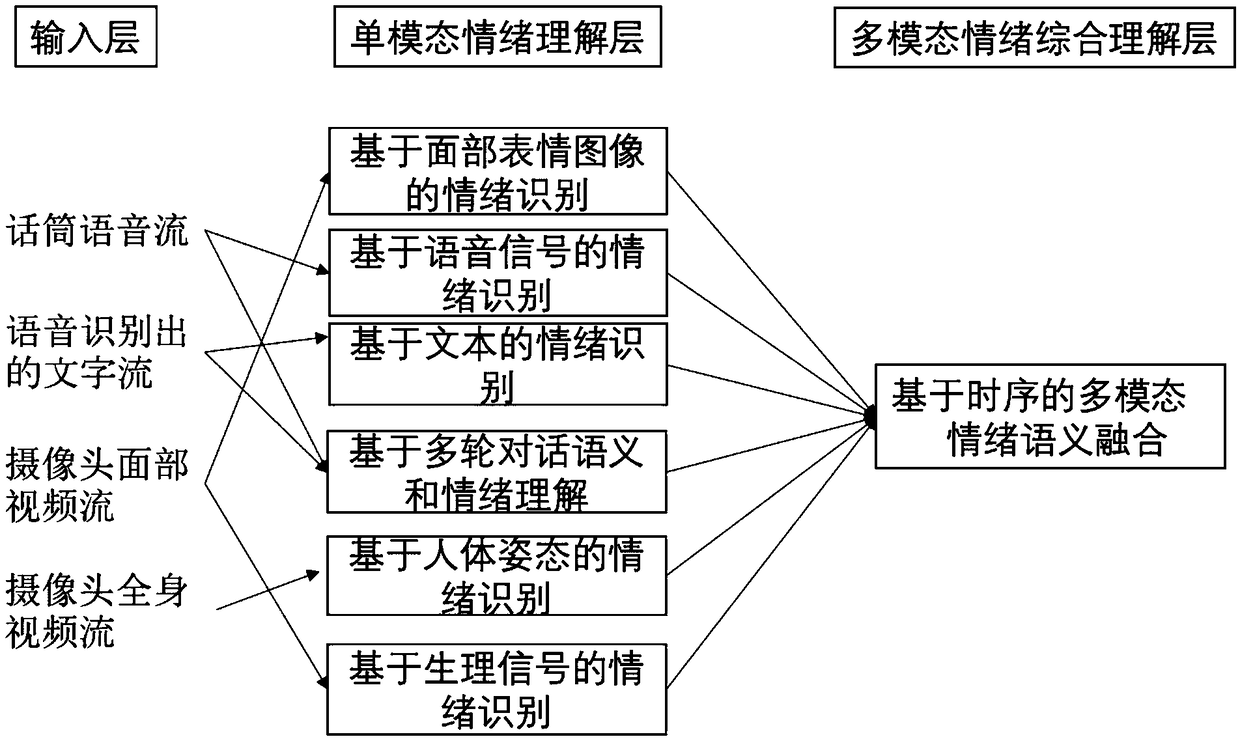

Multi-round dialogue semantic comprehension subsystem based on multi-modal emotion identification system

Status: Active · Publication: CN108877801A · Tags: Low cost, Improve ease of use, Speech recognition, Acquiring/recognising facial features, Data acquisition, Output device

The present invention discloses a multi-round dialogue semantic comprehension subsystem based on a multi-modal emotion identification system. The system comprises a data collection device, an output device, and an emotion analysis software system that comprehensively analyzes and infers from the data obtained by the data collection device and outputs the result to the output device; the emotion analysis software system includes the multi-round dialogue semantic comprehension subsystem. The subsystem integrates emotion identification across five single modalities and uses a deep neural network to encode the single-modality information and judge it jointly through deep association and comprehension, which greatly improves accuracy. The subsystem is suitable for most general inquiry-and-interaction application scenarios.

Owner:南京云思创智信息科技有限公司
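
The patent fuses the five modalities with a deep neural network and the abstract does not name them. As a simpler, clearly different baseline, the sketch below shows weighted late fusion: each modality contributes a probability distribution over emotions, and the fused distribution is their weighted average. The modality names, label set, and weights are hypothetical.

```python
from typing import Dict, List

EMOTIONS = ["neutral", "happy", "sad", "angry"]  # hypothetical label set


def late_fusion(modal_probs: Dict[str, List[float]], weights: Dict[str, float]) -> List[float]:
    """Weighted average of per-modality emotion distributions (renormalized by total weight)."""
    fused = [0.0] * len(EMOTIONS)
    total_w = 0.0
    for modality, probs in modal_probs.items():
        w = weights.get(modality, 0.0)  # modalities without a weight are ignored
        total_w += w
        for i, p in enumerate(probs):
            fused[i] += w * p
    if total_w == 0:
        raise ValueError("at least one modality needs a positive weight")
    return [v / total_w for v in fused]


def predict(modal_probs: Dict[str, List[float]], weights: Dict[str, float]) -> str:
    """Return the emotion label with the highest fused probability."""
    fused = late_fusion(modal_probs, weights)
    return EMOTIONS[max(range(len(fused)), key=fused.__getitem__)]


# Hypothetical five modalities with equal weights.
example = {
    "face": [0.1, 0.7, 0.1, 0.1],
    "voice": [0.2, 0.5, 0.2, 0.1],
    "text": [0.6, 0.2, 0.1, 0.1],
    "body": [0.25, 0.25, 0.25, 0.25],
    "physio": [0.3, 0.4, 0.2, 0.1],
}
```

A learned fusion network can model interactions between modalities that a fixed weighted average cannot; the average is shown only because it is easy to inspect.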

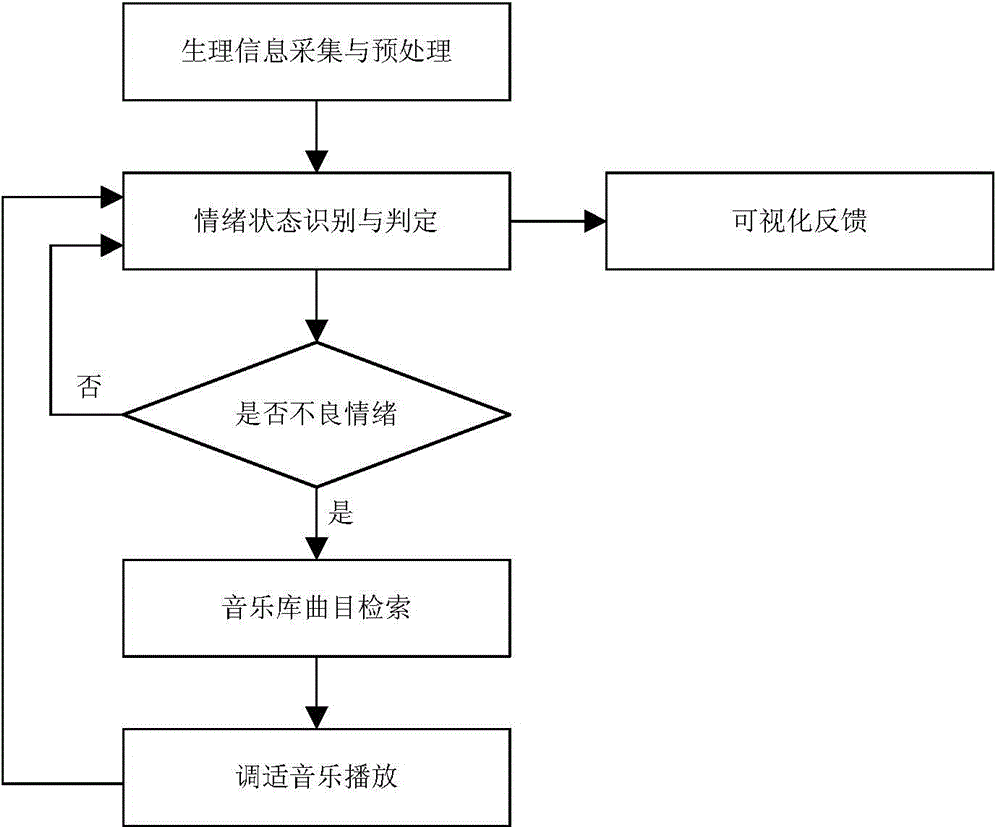

Device and method for adjusting driver emotional state through different affective characteristic music

Inactive · CN104548309A · Adjust emotional state · Emotional state judgment is accurate · Diagnostic recording/measuring · Sensors · Driver/operator · Current driver

The invention discloses a device for adjusting a driver's emotional state with music of different affective characteristics. The device comprises a music playing unit, a driver physiological information acquisition unit, and a control unit. The physiological information acquisition unit detects the driver's heart rate, skin temperature, and skin resistance. From this information, the control unit uses an emotion identification algorithm to determine the driver's current emotional state and an emotion decision algorithm to judge whether that state is conducive to safe driving. The music playing unit searches for and plays music tracks suited to the driver's current emotional state. The invention further discloses a corresponding method for adjusting a driver's emotional state with affective music. The driver's emotional state is monitored in real time from physiological information and adjusted by automatically selecting matching affective music from a music library, thereby improving driving behavior.

Owner:ZHEJIANG UNIV OF TECH
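
The abstract does not disclose the emotion identification or decision algorithms. A minimal sketch of the control loop, assuming a threshold-based rule in their place: count how many of the three signals (heart rate, skin temperature, skin resistance) deviate from a resting baseline in the high-arousal direction, then map the resulting state to a music category. The baseline values, thresholds, states, and playlist categories are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Physio:
    heart_rate: float       # beats per minute
    skin_temp: float        # degrees Celsius
    skin_resistance: float  # kilohms


# Hypothetical resting baseline; a real system would calibrate this per driver.
BASELINE = Physio(heart_rate=70.0, skin_temp=33.0, skin_resistance=200.0)

# Hypothetical mapping from detected state to a music category (None = keep current music).
PLAYLIST = {"agitated": "soothing", "drowsy": "upbeat", "calm": None}


def classify_state(s: Physio, base: Physio = BASELINE) -> str:
    """Toy rule: count signals that deviate toward high arousal (bools add as 0/1)."""
    votes = 0
    votes += s.heart_rate > base.heart_rate * 1.2            # elevated heart rate
    votes += s.skin_resistance < base.skin_resistance * 0.7  # lower resistance (sweating)
    votes += s.skin_temp < base.skin_temp - 1.0              # peripheral cooling
    if votes >= 2:
        return "agitated"
    if s.heart_rate < base.heart_rate * 0.85:
        return "drowsy"
    return "calm"


def choose_music(s: Physio) -> Tuple[str, Optional[str]]:
    """Return the detected state and the music category to play."""
    state = classify_state(s)
    return state, PLAYLIST[state]
```

Requiring two of three signals to agree is one simple way to reduce false alarms from a single noisy sensor; the patent's actual decision logic may differ.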

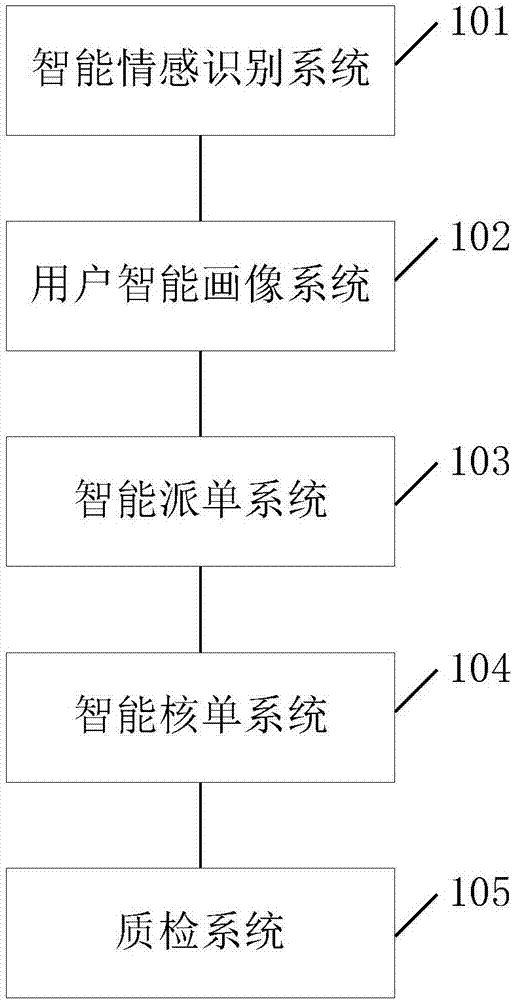

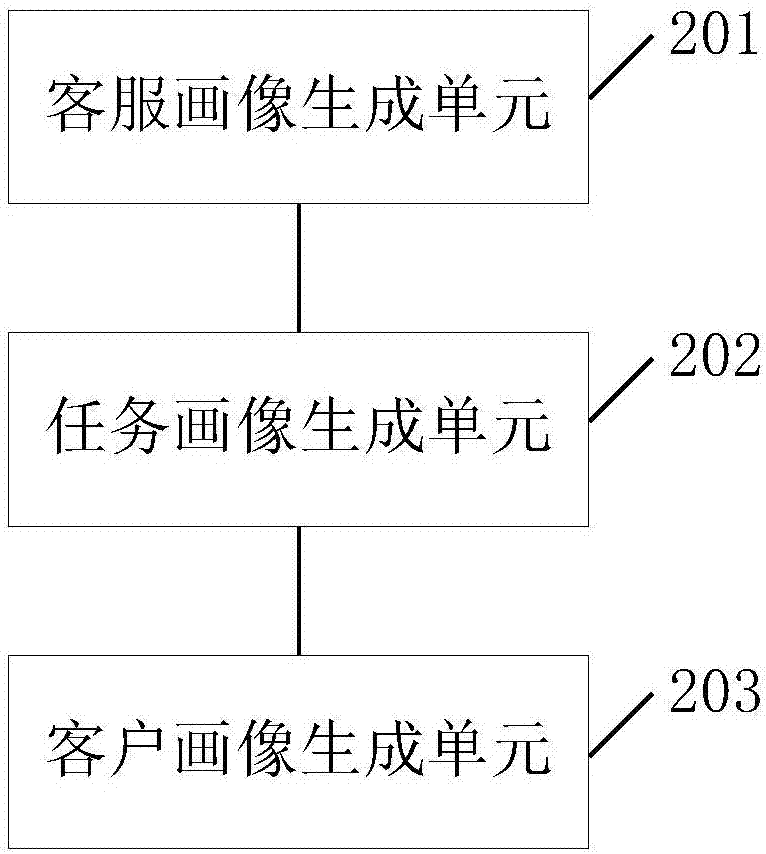

Telemarketing customer service system based on artificial intelligence and business operation mode

Inactive · CN107330706A · Realize precision marketing · Realize intelligent dispatch · Buying/selling/leasing transactions · Speech recognition · Precision marketing · Intelligence analysis

The invention provides a telemarketing customer service system based on artificial intelligence, together with a business operation mode. From the records of each customer service agent and each historical client on the platform, an emotion identification system generates a personality model for every agent and every historical client. Artificial intelligence analysis then performs a multidimensional analysis of every agent, every task, and every client to generate an intelligent portrait of each. The agent, task, and client portraits are matched according to a preset rule to generate orders, achieving precise marketing among agents, tasks, and clients and thus providing personalized service to clients. After an order is completed, it is intelligently verified using a speech recognition system and a preset verification strategy, enabling real-time quality inspection and real-time billing. As a result, staff workload is effectively reduced, verification time is shortened, and quality-inspection accuracy is improved.

Owner:SHANGHAI HANGDONG TECH CO LTD
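
The "preset rule" for matching portraits is not specified in the abstract. A minimal sketch, assuming each portrait is a numeric feature vector and that the rule is "pair the most similar agent and client first": score every agent-client pair by cosine similarity and assign greedily, respecting a per-agent capacity. The vector dimensions, capacity, and function names are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def match(
    agents: Dict[str, Vector], clients: Dict[str, Vector], capacity: int = 1
) -> List[Tuple[str, str]]:
    """Greedy matching: repeatedly take the most similar remaining (agent, client) pair."""
    pairs = sorted(
        ((cosine(a_vec, c_vec), a, c)
         for a, a_vec in agents.items()
         for c, c_vec in clients.items()),
        reverse=True,
    )
    load = {a: 0 for a in agents}  # orders already assigned to each agent
    assigned = set()               # clients that already have an agent
    orders = []
    for _, a, c in pairs:
        if c in assigned or load[a] >= capacity:
            continue
        orders.append((a, c))
        assigned.add(c)
        load[a] += 1
    return orders


# Hypothetical 3-dimensional portraits, e.g. (patience, assertiveness, technical depth).
agents = {"A1": [0.9, 0.1, 0.2], "A2": [0.1, 0.9, 0.8]}
clients = {"C1": [0.2, 0.8, 0.9], "C2": [0.8, 0.2, 0.1]}
```

Greedy assignment is simple and fast but not globally optimal; an assignment solver such as the Hungarian algorithm would maximize total similarity instead.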

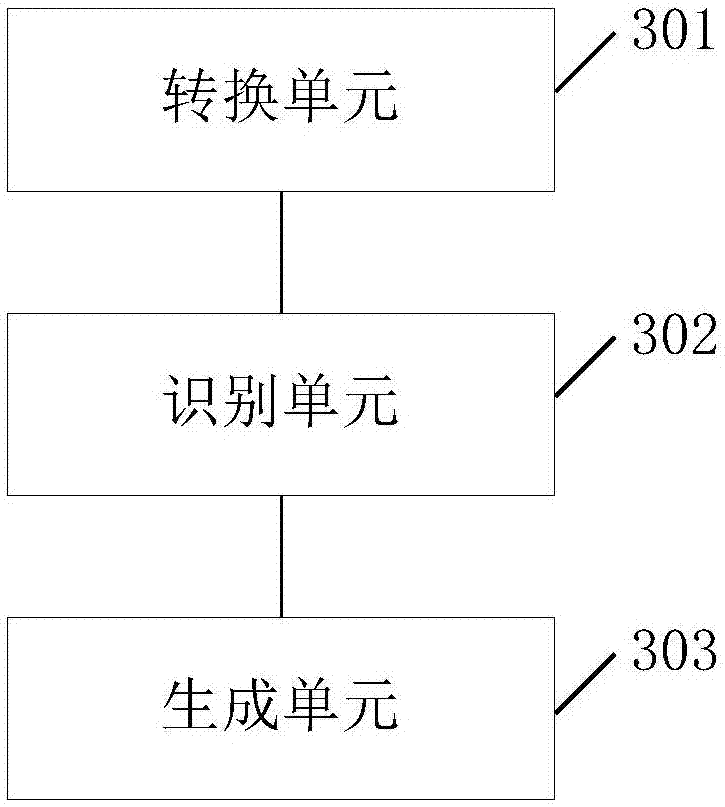

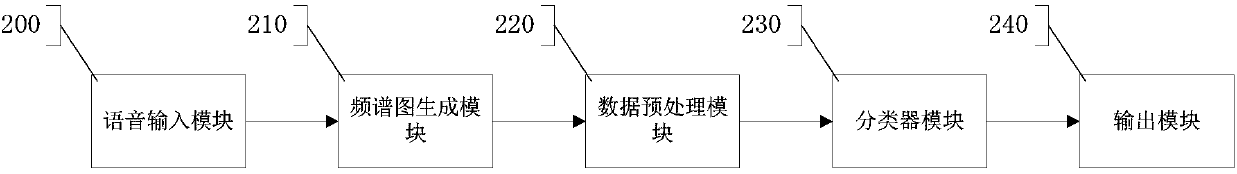

Voice signal analysis sub-system based on multi-modal emotion identification system

Active · CN108899050A · Low cost · Improve ease of use · Speech recognition · Neural architectures · Analysis Reason · Data acquisition

The invention discloses a voice signal analysis subsystem based on a multi-modal emotion identification system. The subsystem comprises a data acquisition device and an output device, and is characterized in that it further comprises an emotion analysis software system, which comprehensively analyzes and reasons over the data obtained by the data acquisition device and finally outputs a result to the output device; the emotion analysis software system includes a voice-signal emotion identification subsystem. The invention ingeniously provides emotion identification based on five single modalities and, creatively, uses a deep neural network to make a comprehensive judgment over the single modalities after they have been encoded by neural networks and deeply associated and understood, which greatly improves accuracy. The subsystem is suitable for most general inquiry-interaction application scenarios.

Owner:南京云思创智信息科技有限公司
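
The abstract does not say which acoustic features the voice-signal subsystem uses. As an assumption, the sketch below computes two common low-level descriptors, short-time energy and zero-crossing rate, per frame and summarizes them per utterance; such statistics are a typical kind of input to an emotion classifier. Frame length, hop, and function names are hypothetical choices.

```python
import math
from typing import Dict, List


def frames(signal: List[float], frame_len: int, hop: int) -> List[List[float]]:
    """Split a signal into overlapping frames (trailing partial frame dropped)."""
    return [signal[i:i + frame_len] for i in range(0, len(signal) - frame_len + 1, hop)]


def short_time_energy(frame: List[float]) -> float:
    return sum(x * x for x in frame) / len(frame)


def zero_crossing_rate(frame: List[float]) -> float:
    """Fraction of adjacent sample pairs whose signs differ."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(frame) - 1)


def _mean(v: List[float]) -> float:
    return sum(v) / len(v)


def _std(v: List[float]) -> float:
    m = _mean(v)
    return math.sqrt(sum((x - m) ** 2 for x in v) / len(v))


def utterance_features(signal: List[float], sr: int = 16000,
                       frame_ms: int = 25, hop_ms: int = 10) -> Dict[str, float]:
    """Utterance-level statistics of frame energy and zero-crossing rate."""
    fs = frames(signal, sr * frame_ms // 1000, sr * hop_ms // 1000)
    if not fs:
        raise ValueError("signal shorter than one frame")
    energy = [short_time_energy(f) for f in fs]
    zcr = [zero_crossing_rate(f) for f in fs]
    return {
        "energy_mean": _mean(energy), "energy_std": _std(energy),
        "zcr_mean": _mean(zcr), "zcr_std": _std(zcr),
    }
```

For a 1 kHz tone at 16 kHz the zero-crossing rate is about 2000 / 16000 = 0.125 per sample, which makes a quick sanity check.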

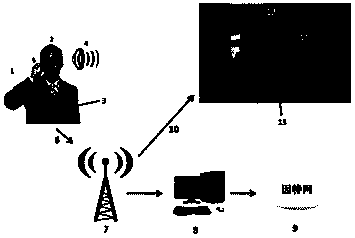

Speech emotion identification method applied to mobile terminal

Inactive · CN104036776A · Strong novelty · Easy to use · Substation equipment · Speech recognition · Part of speech · Data acquisition

The invention provides a method for extracting speech emotion information. Speech data is acquired or transmitted by a mobile phone, computer, or voice recorder through a data acquisition or communication process, and the speaker's emotions are identified with both speaker-independent and speaker-dependent methods. The speaker-independent method consists of two parts, speech database recording and speech emotion modeling: the recorded speech database, which comprises at least one emotional speech database, serves as the reference for training an emotion identifier, and the speech emotion modeling part builds a speech emotion model that acts as the emotion identifier. The speaker-dependent method identifies emotions in a speech signal by statistically adjusting internal parameters, and its accuracy can reach 80%. The method can also identify other, more complex emotions by using a set of special parameters that describe basic emotions.

Owner:毛峡 +1
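
The abstract gives no model details, so the following is only an illustration of the speaker-independent versus speaker-dependent distinction, assuming a nearest-centroid classifier as the emotion model. The speaker-independent model is trained on a pooled emotional speech database; the speaker-dependent step adjusts statistics for one speaker by removing that speaker's mean feature offset before classification. The two features (mean pitch in Hz, mean energy) and all values are hypothetical.

```python
from typing import Dict, List, Tuple

Vec = List[float]


def mean_vec(vs: List[Vec]) -> Vec:
    """Component-wise mean of a list of equal-length vectors."""
    return [sum(col) / len(vs) for col in zip(*vs)]


def train_centroids(data: List[Tuple[Vec, str]]) -> Dict[str, Vec]:
    """Speaker-independent model: one centroid per emotion over the whole database."""
    by_label: Dict[str, List[Vec]] = {}
    for x, y in data:
        by_label.setdefault(y, []).append(x)
    return {y: mean_vec(xs) for y, xs in by_label.items()}


def classify(x: Vec, centroids: Dict[str, Vec]) -> str:
    """Return the emotion whose centroid is closest in squared Euclidean distance."""
    def dist2(c: Vec) -> float:
        return sum((a - b) ** 2 for a, b in zip(x, c))
    return min(centroids, key=lambda y: dist2(centroids[y]))


def adapt_to_speaker(x: Vec, speaker_mean: Vec, global_mean: Vec) -> Vec:
    """Speaker-dependent step: shift features from the speaker's mean to the database mean."""
    return [a - s + g for a, s, g in zip(x, speaker_mean, global_mean)]


# Hypothetical database of (mean pitch in Hz, mean energy) per utterance.
db = [([120.0, 0.20], "neutral"), ([130.0, 0.25], "neutral"),
      ([200.0, 0.80], "angry"), ([210.0, 0.90], "angry")]
```

In this toy data a naturally high-pitched speaker's neutral utterance is misread as angry until the speaker offset is removed. A real system would also standardize the features, since raw pitch dominates the distance here.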

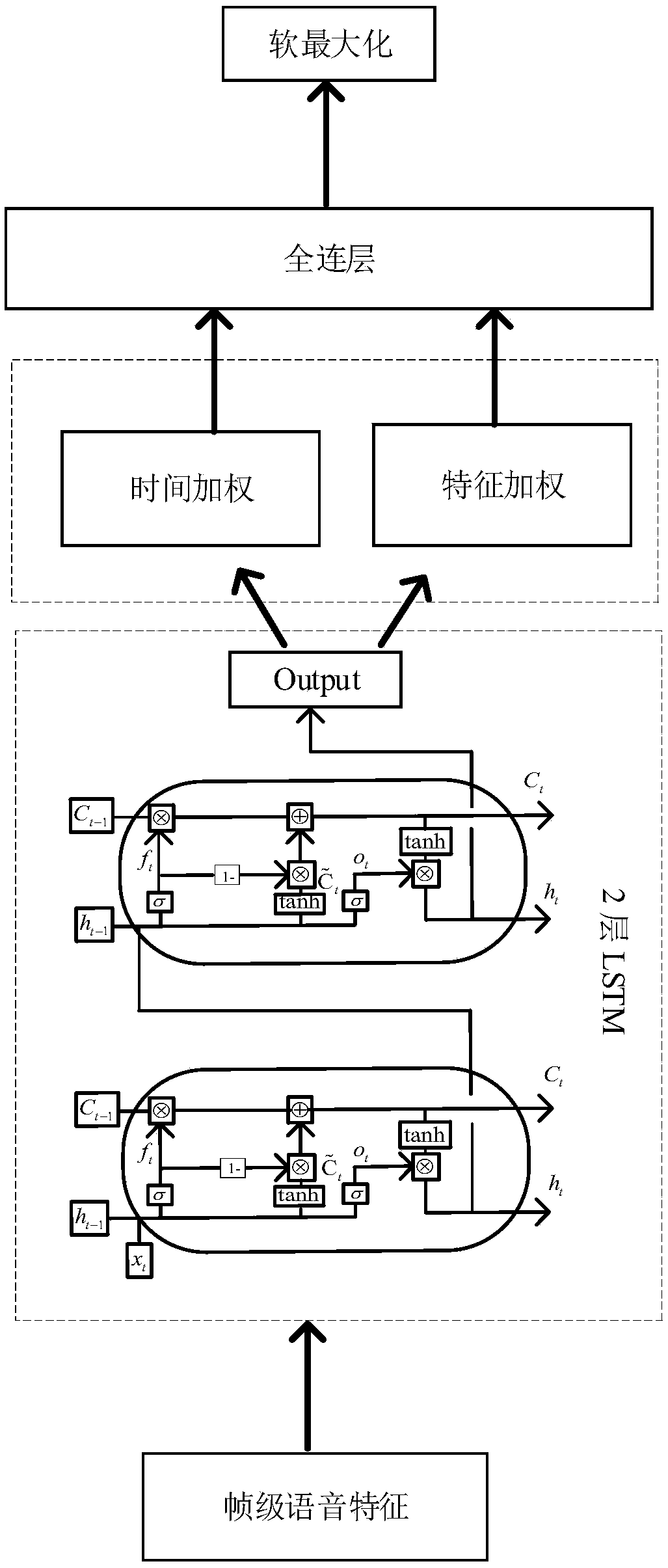

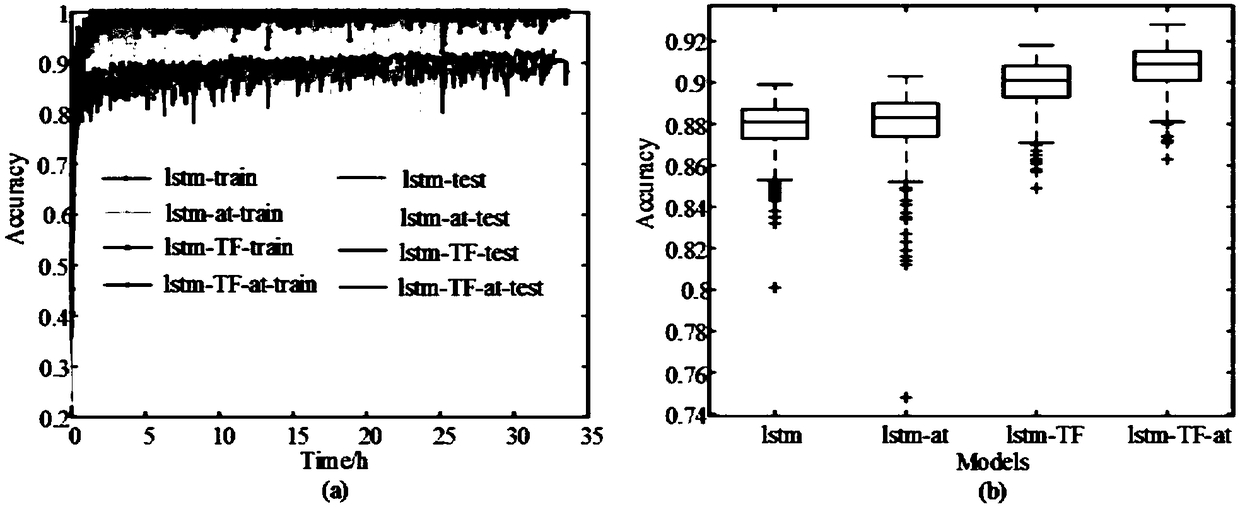

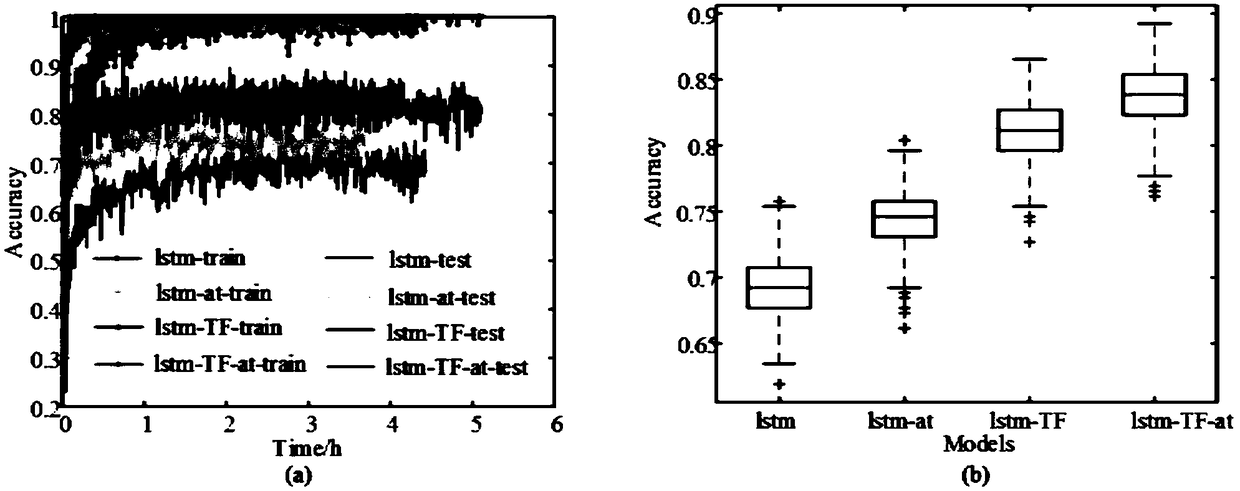

Attention mechanism based voice emotion identification method

Active · CN109285562A · Improve performance · Reduce operational complexity · Internal combustion piston engines · Speech recognition · Network model · Emotion identification

The invention discloses an attention-mechanism-based voice emotion identification method comprising the following steps: speech features carrying sequential information are extracted from the raw voice data; an LSTM model capable of processing variable-length data is built; the forget-gate computation in the LSTM model is optimized with the attention mechanism; the output of the optimized LSTM model is weighted by attention in both the time and feature dimensions; a fully connected layer and a softmax layer are added to the LSTM model to form a complete emotion identification network model; and the network model is trained and its identification performance evaluated. The method improves voice emotion identification performance, is ingenious and novel, and has good application prospects.

Owner:SOUTHEAST UNIV
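
The sketch below illustrates only the last stages described above: attention weighting over the time dimension of LSTM outputs, followed by a fully connected layer and softmax. It assumes the LSTM hidden states are already computed; it does not implement the LSTM itself, the patent's modified forget gate, or feature-dimension attention. The scoring vector `w` and the layer weights are hypothetical stand-ins for learned parameters.

```python
import math
from typing import List

Vec = List[float]


def softmax(xs: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(hidden: List[Vec], w: Vec) -> Vec:
    """Score each time step with vector w, softmax over time, return the weighted sum."""
    scores = [sum(h_i * w_i for h_i, w_i in zip(h, w)) for h in hidden]
    alphas = softmax(scores)  # one weight per time step, summing to 1
    dim = len(hidden[0])
    return [sum(a * h[d] for a, h in zip(alphas, hidden)) for d in range(dim)]


def emotion_probs(hidden: List[Vec], w: Vec, W_fc: List[Vec], b_fc: List[float]) -> List[float]:
    """Attention pooling -> fully connected layer -> softmax over emotion classes."""
    pooled = attention_pool(hidden, w)
    logits = [sum(wi * p for wi, p in zip(row, pooled)) + b for row, b in zip(W_fc, b_fc)]
    return softmax(logits)
```

Because the softmax runs over time steps, utterances of any length pool to a fixed-size vector, which is what lets a variable-length LSTM output feed a fixed-size fully connected layer.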

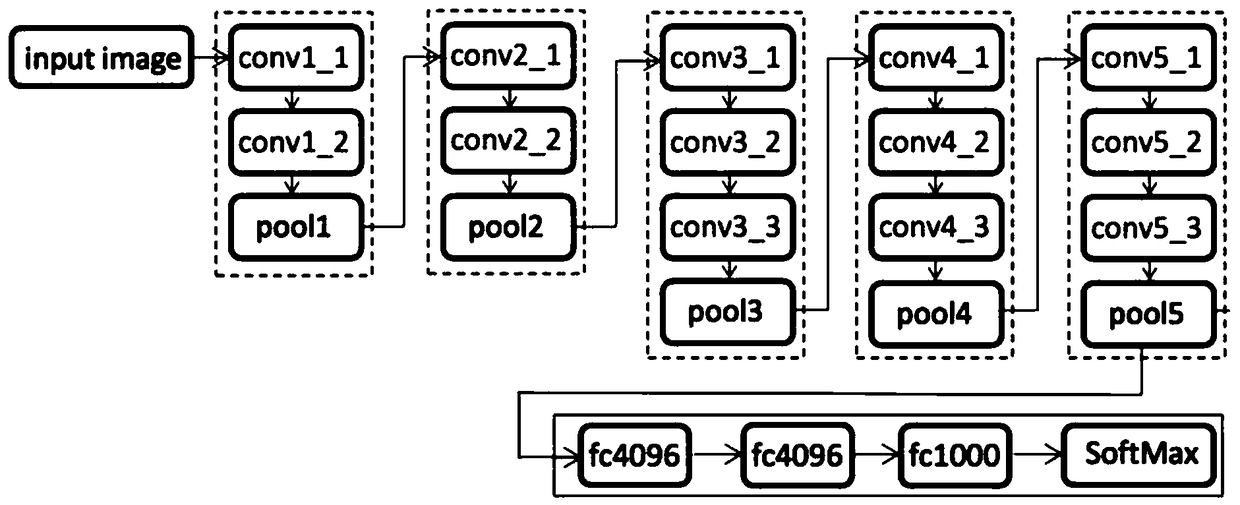

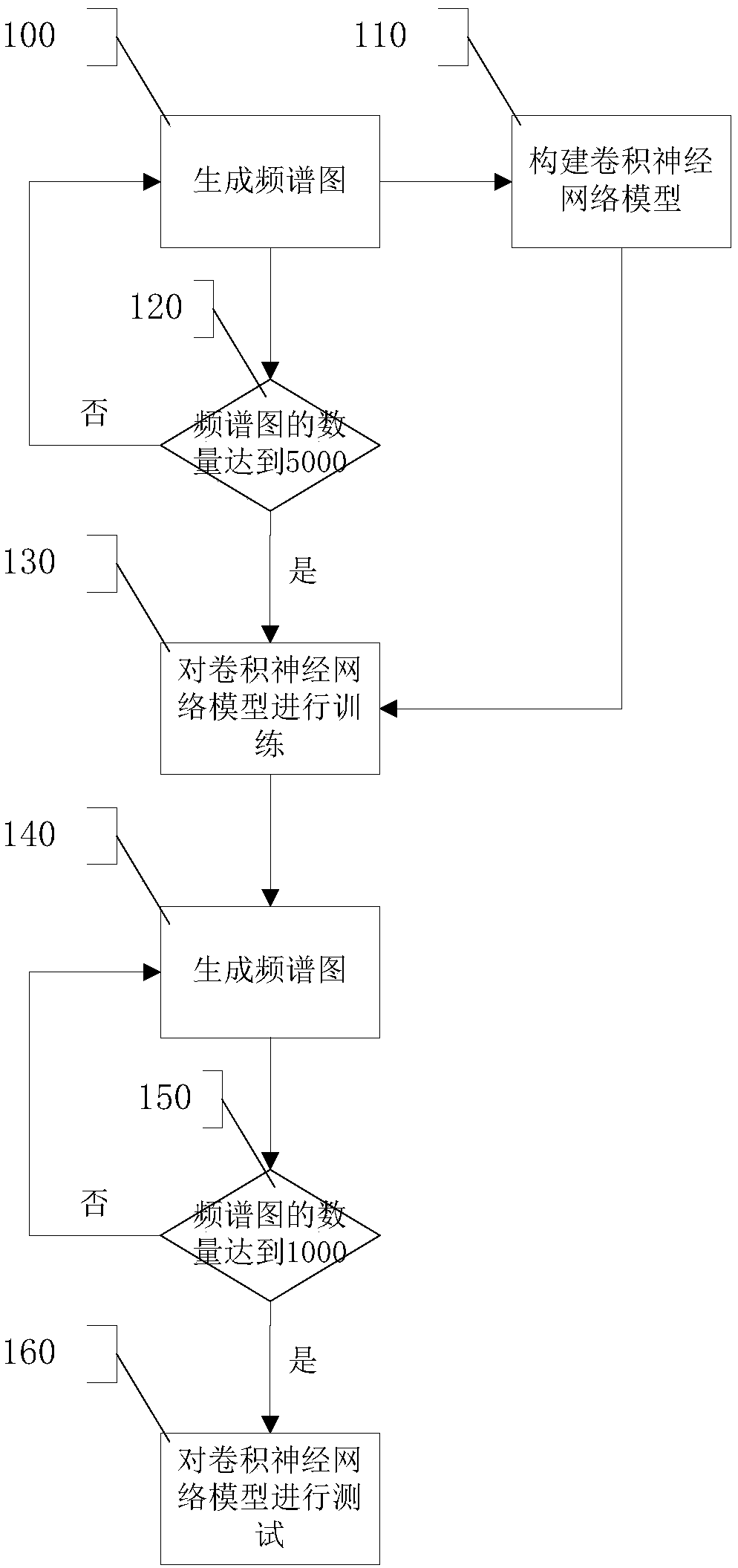

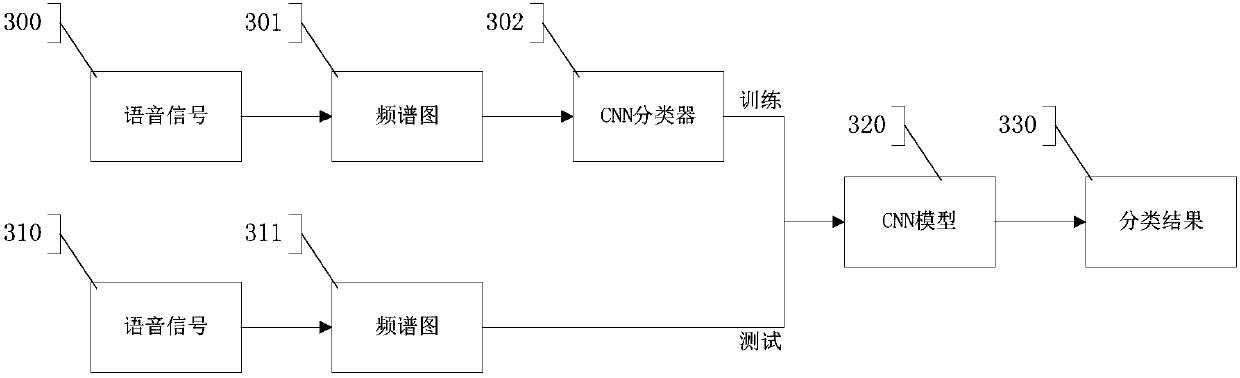

Method of using spectrograms and deep convolution neural network (CNN) to identify voice emotion

Inactive · CN107705806A · Easy to learn · Improve speech recognition performance · Speech analysis · Frequency spectrum · Network model

The present invention provides a method of identifying voice emotion using spectrograms and a deep convolutional neural network. The method comprises the following steps: generating spectrograms from the voice signals; constructing a deep convolutional neural network model; training and optimizing the model with a large number of spectrograms as input; and testing and further optimizing the trained model. By converting voice signals into images and processing them with a CNN, this new voice emotion identification method effectively improves identification capability.

Owner:BEIJING UNION UNIVERSITY
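
The first step above, turning a voice signal into a spectrogram image, can be sketched as a short-time Fourier transform: split the signal into overlapping frames, apply a window, and take the log magnitude of each frame's spectrum. The Hann window, frame length, hop, and log scaling are assumptions (the abstract gives no parameters), and a naive DFT is used only to keep the example dependency-free; practical code would use an FFT such as `numpy.fft.rfft`.

```python
import cmath
import math
from typing import List


def hann(n: int) -> List[float]:
    """Symmetric Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def log_spectrogram(signal: List[float], frame_len: int = 256, hop: int = 128,
                    eps: float = 1e-10) -> List[List[float]]:
    """Return a (num_frames x frame_len//2 + 1) matrix of log-magnitude DFT values."""
    window = hann(frame_len)
    spec = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        frame = [s * w for s, w in zip(signal[start:start + frame_len], window)]
        row = []
        for k in range(frame_len // 2 + 1):  # non-negative frequency bins only
            acc = sum(x * cmath.exp(-2j * math.pi * k * n / frame_len)
                      for n, x in enumerate(frame))
            row.append(math.log(abs(acc) + eps))  # eps avoids log(0) on silent bins
        spec.append(row)
    return spec
```

Each row is one time slice and each column one frequency bin, so the matrix can be treated as a single-channel image and passed to a CNN.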

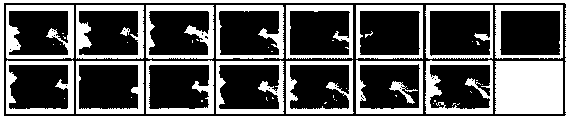

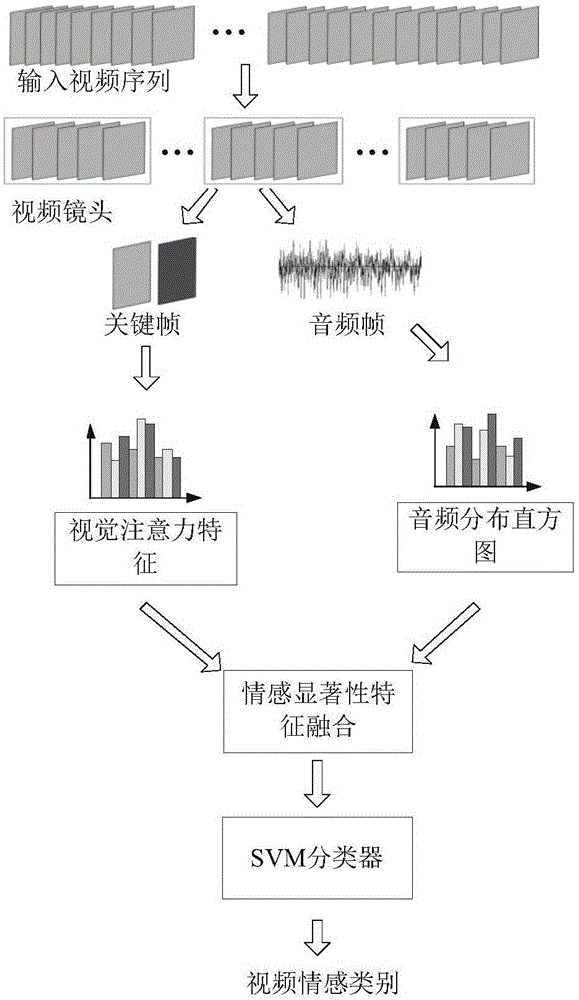

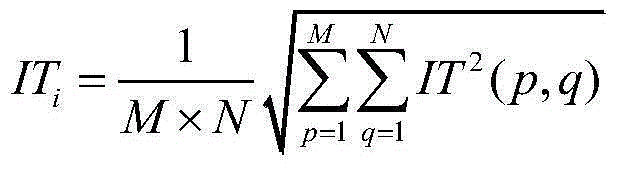

Video emotion identification method based on emotion significant feature integration

Active · CN105138991A · Discriminating · Easy to implement · Character and pattern recognition · Integration algorithm · Vision based

The invention discloses a video emotion identification method based on the integration of emotionally salient features. A training video set is acquired, and each video is segmented into shots. An emotion key frame is selected for each shot, and the audio feature and the visual emotion feature of each shot in the training video set are extracted. The audio feature is based on a bag-of-words model and forms an emotion distribution histogram feature; the visual emotion feature is based on a visual dictionary and forms an emotion attention feature. The emotion attention feature and the emotion distribution histogram feature are integrated top-down to form a video feature with emotional saliency. The video features of the training set are fed into an SVM classifier for training to obtain the parameters of the trained model, which is then used to predict the emotion category of a test video. The proposed integration algorithm is simple to implement, relies on a mature and reliable classifier, predicts quickly, and can efficiently complete the video emotion identification process.

Owner:SHANDONG INST OF BUSINESS & TECH
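
The bag-of-words step above can be sketched as vector quantization: assign each local descriptor to its nearest codeword and count assignments into a normalized histogram. The sketch then combines audio and visual histograms by weighted concatenation, a simpler stand-in for the patent's top-down integration, and leaves out SVM training (for example with an off-the-shelf SVM library). The codebooks, descriptors, and `audio_weight` are hypothetical.

```python
from typing import List

Vec = List[float]


def nearest(codebook: List[Vec], x: Vec) -> int:
    """Index of the codeword closest to x in squared Euclidean distance."""
    return min(range(len(codebook)),
               key=lambda k: sum((a - b) ** 2 for a, b in zip(x, codebook[k])))


def bow_histogram(descriptors: List[Vec], codebook: List[Vec]) -> List[float]:
    """Quantize each descriptor to its nearest codeword; return an L1-normalized histogram."""
    hist = [0.0] * len(codebook)
    for d in descriptors:
        hist[nearest(codebook, d)] += 1
    total = sum(hist)
    return [h / total for h in hist] if total else hist


def shot_feature(audio_desc: List[Vec], audio_codebook: List[Vec],
                 visual_desc: List[Vec], visual_codebook: List[Vec],
                 audio_weight: float = 0.5) -> List[float]:
    """Concatenate weighted audio and visual histograms into one shot-level feature."""
    a = bow_histogram(audio_desc, audio_codebook)
    v = bow_histogram(visual_desc, visual_codebook)
    return [audio_weight * x for x in a] + [(1 - audio_weight) * x for x in v]
```

The resulting fixed-length vector per shot is the kind of input an SVM expects; the patent's top-down integration presumably lets one modality guide the other, which plain concatenation does not.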

© 2025 PatSnap. All rights reserved.