108 results about How to "Interactive nature" patented technology

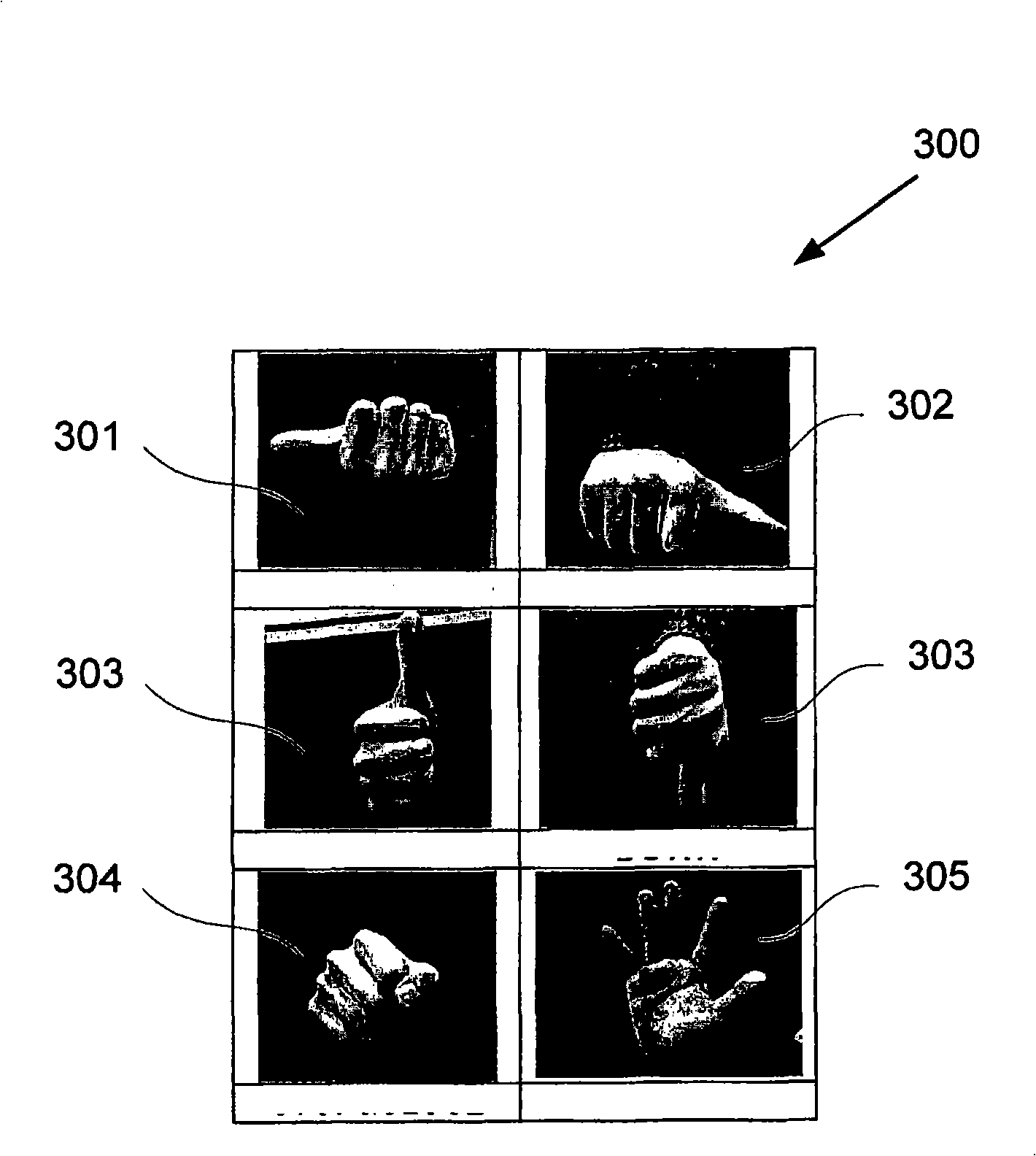

Man-machine interactive system and real-time gesture tracking processing method for same

Inactive; CN102426480A; Real-time hand detection; Real-time tracking; Input/output for user-computer interaction; Character and pattern recognition; Vision algorithms; Man machine

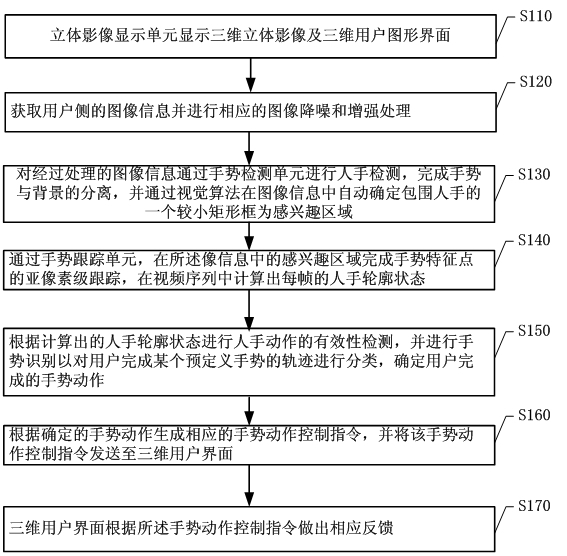

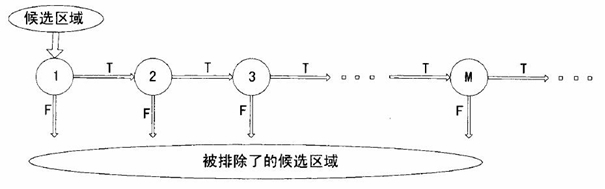

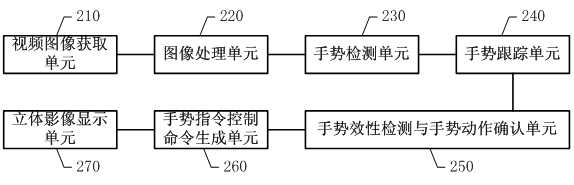

The invention discloses a man-machine interactive system and a real-time gesture tracking processing method for the same. The method comprises the following steps of: obtaining image information of user side, executing gesture detection by a gesture detection unit to finish separation of a gesture and a background, and automatically determining a smaller rectangular frame which surrounds the hand as a region of interest in the image information through a vision algorithm; calculating the hand profile state of each frame in a video sequence by a gesture tracking unit; executing validity check on hand actions according to the calculated hand profile state, and determining the gesture action finished by the user; and generating a corresponding gesture action control instruction according to the determined gesture action, and making corresponding feedbacks by a three-dimensional user interface according to the gesture action control instruction. In the system and the method, all or part of gesture actions of the user are sensed so as to finish accurate tracking on the gesture, thus, a real-time robust solution is provided for an effective gesture man-machine interface port based on a common vision sensor.

Owner:KONKA GROUP

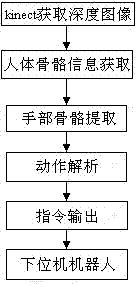

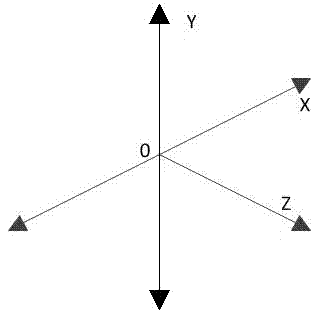

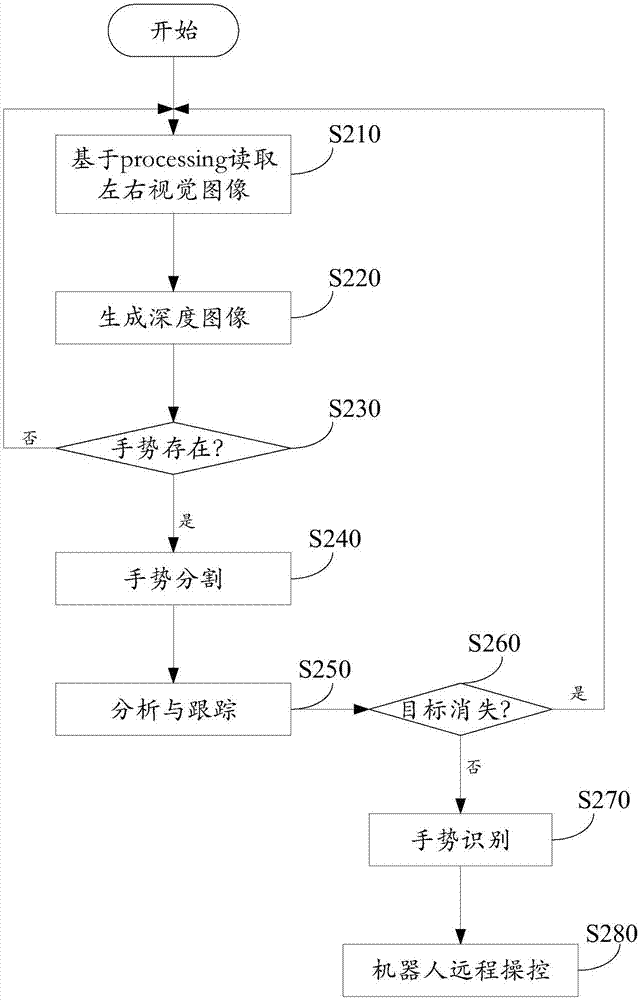

Man-computer interaction method for intelligent human skeleton tracking control robot on basis of kinect

Inactive; CN103399637A; Interactive nature; Input/output for user-computer interaction; Graph reading; Human body; Depth of field

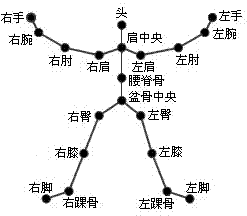

The invention provides a man-computer interaction method for an intelligent human skeleton tracking control robot on the basis of kinect. The man-computer interaction method includes the steps of detecting actions of an operator through a 3D depth sensor, obtaining data frames, converting the data frames into an image, splitting objects and background environments similar to a human body in the image, obtaining depth-of-field data, extracting human skeleton information, identifying different positions of the human body, building a 3D coordinate of joints of the human body, identifying rotation information of skeleton joints of the two hands of the human body, identifying which hand of the human body is triggered according to catching of changes of angles of the different skeleton joints, analyzing different action characteristics of the operator, using corresponding characters as control instructions which are sent to a robot of a lower computer, receiving and processing the characters through an AVR single-chip microcomputer master controller, controlling the robot of the lower computer to carry out corresponding actions, and achieving man-computer interaction of the intelligent human skeleton tracking control robot on the basis of the kinect. According to the method, restraints of traditional external equipment on man-computer interaction are eliminated, and natural man-computer interaction is achieved.

Owner:NORTHWEST NORMAL UNIVERSITY

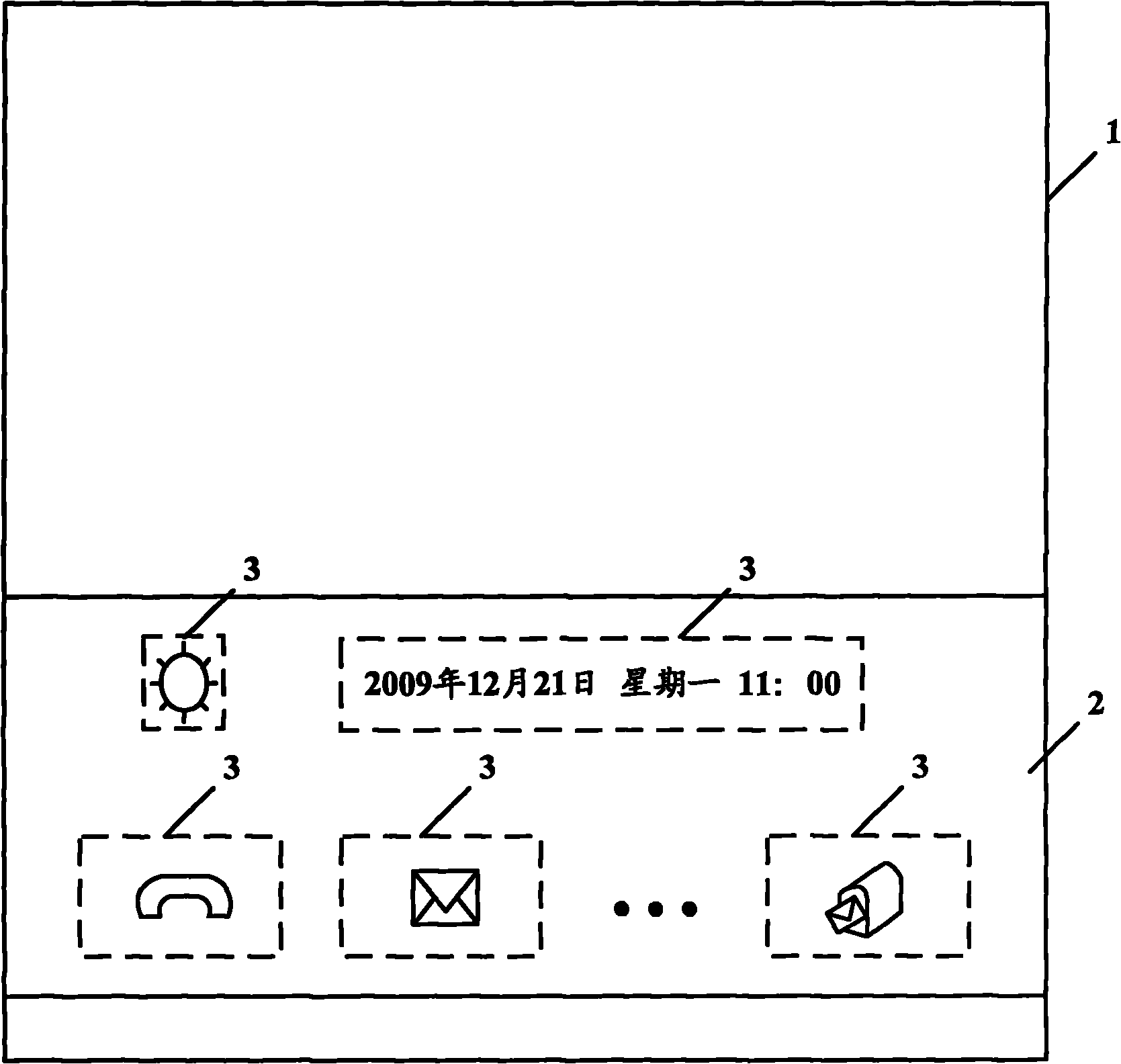

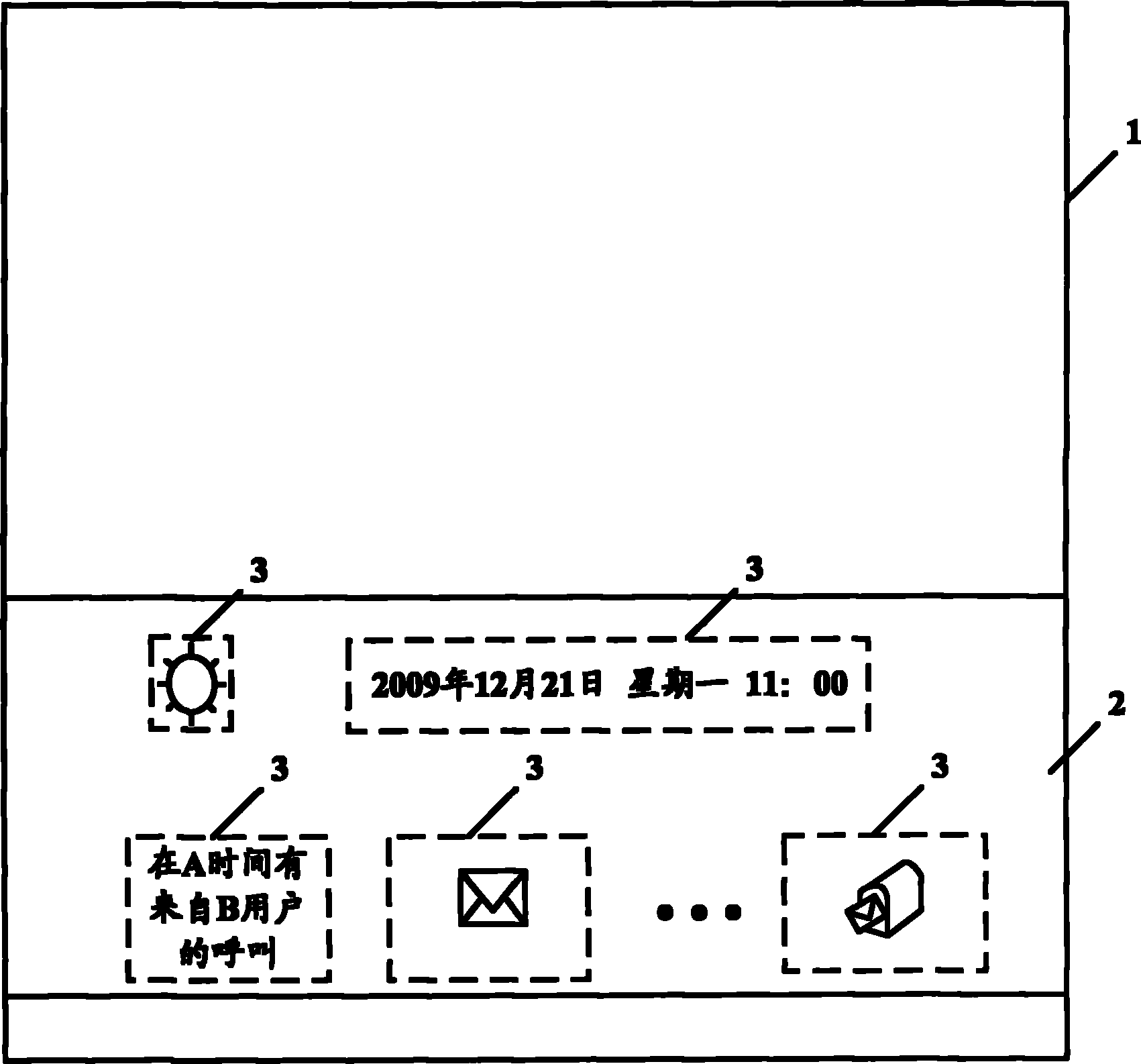

Portable electronic equipment provided with touch screen and control method thereof

Active; CN102109945A; Informative; Reduce power consumption; Input/output processes for data processing; Touchscreen; Embedded system

The invention provides portable electronic equipment provided with a touch screen and a control method thereof. The portable electronic equipment includes a first display processing module and an unlocking control module, wherein the first display processing module is used for displaying an unlocking strip at a first area of the touch screen when the portable electronic equipment stays in the locked state; and the unlocking control module is used for releasing the locking operation after the unlocking strip is drawn by a pointer. The invention enables a user to acquire information in the locked state, and meanwhile, in terms of the terminal, the terminal is not required to change states for providing information to the user, so that the power consumption of the terminal can be lowered.

Owner:LENOVO (BEIJING) LTD

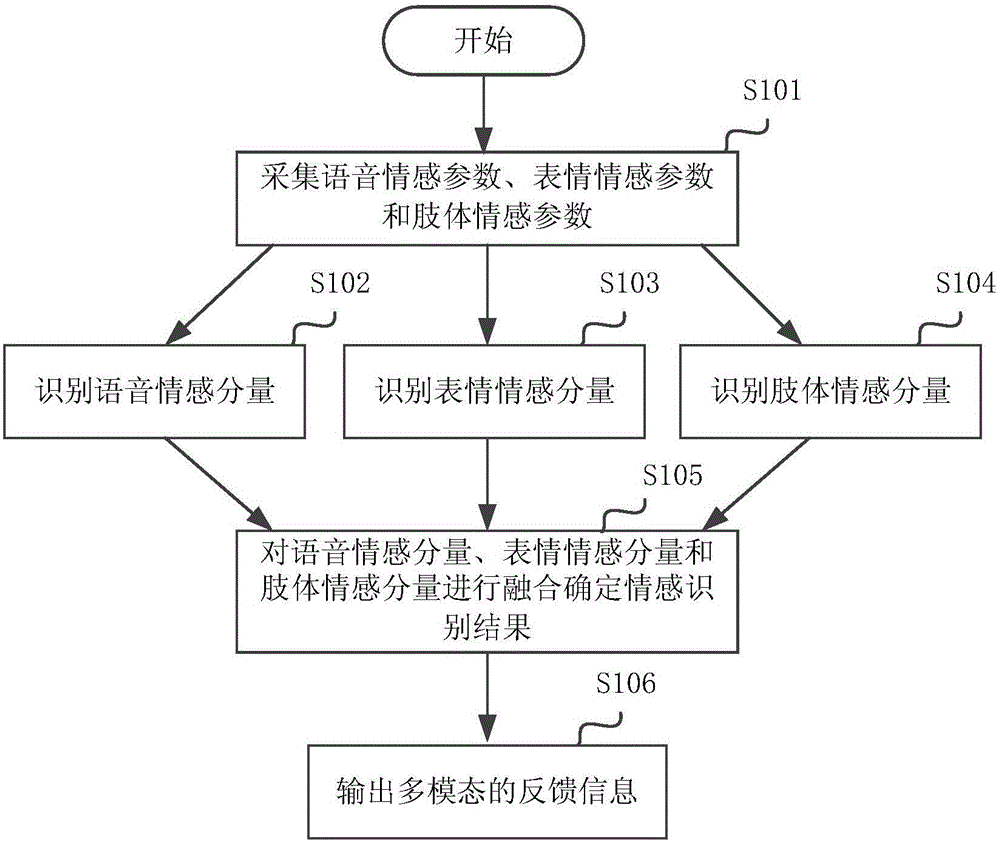

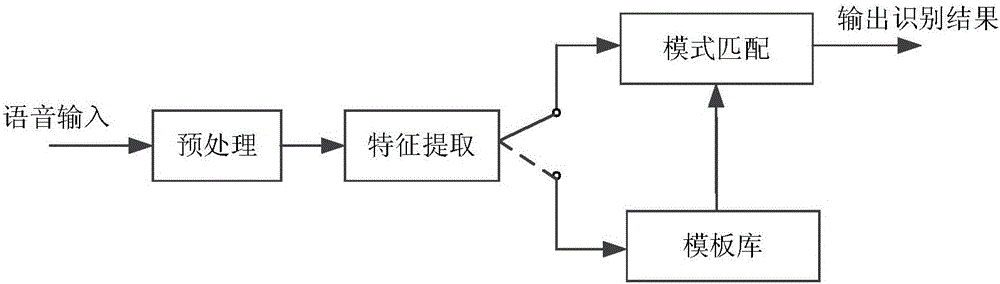

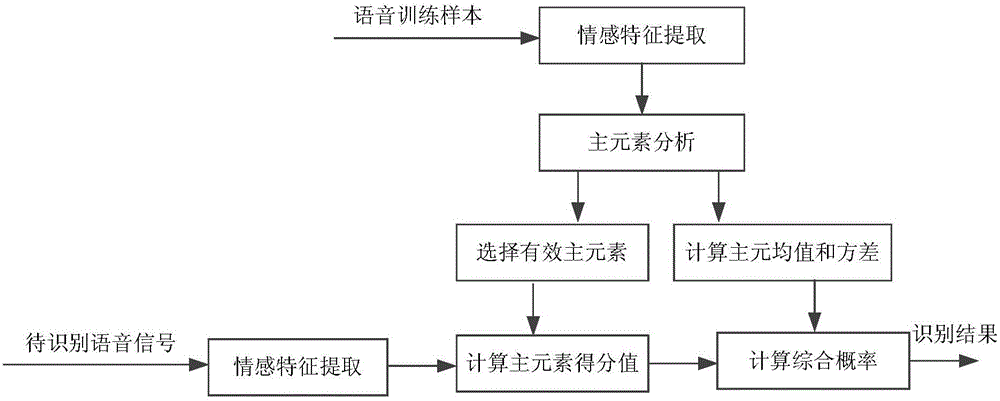

Man-machine interaction method and device based on emotion system, and man-machine interaction system

Inactive; CN105739688A; Strong interaction; Interactive nature; Input/output for user-computer interaction; Graph reading; Pattern recognition; Emotion identification

The invention discloses a man-machine interaction method and device based on an emotion system, and a man-machine interaction system. The method comprises following steps of collecting voice emotion parameters, expression emotion parameters and body emotion parameters; calculating to obtain a to-be-determined voice emotion according to the voice emotion parameters; selecting a voice emotion most proximate to the to-be-determined voice emotion from preset voice emotions as a voice emotion component; calculating to obtain a to-be-determined expression emotion according to the expression emotion parameters; selecting an expression emotion most proximate to the to-be-determined expression emotion from preset expression emotions as an expression emotion component; calculating to obtain a to-be-determined body emotion according to the body emotion parameters; selecting a body emotion most proximate to the to-be-determined body emotion from preset body emotions as a body emotion component; fusing the voice emotion component, the expression emotion component and the body emotion component, thus determining an emotion identification result; and outputting multi-mode feedback information specific to the emotion identification result. According to the method, the device and the system, the man-machine interaction process is more smooth and natural.

Owner:BEIJING GUANGNIAN WUXIAN SCI & TECH
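
The select-then-fuse steps in the abstract above can be sketched as follows. This is only an illustration under stated assumptions: the abstract does not say how emotions are represented or how the three components are fused, so the (valence, arousal) points in `PRESETS`, the Euclidean distance, and the majority-vote rule are all hypothetical choices.

```python
import math
from collections import Counter

# Hypothetical preset emotions as (valence, arousal) points.
# The abstract does not specify the representation.
PRESETS = {
    "happy": (0.8, 0.6),
    "sad": (-0.7, -0.4),
    "angry": (-0.6, 0.8),
    "calm": (0.4, -0.6),
}


def nearest_preset(candidate):
    """Return the preset emotion closest to the candidate (Euclidean distance)."""
    return min(PRESETS, key=lambda name: math.dist(PRESETS[name], candidate))


def fuse(voice, expression, body):
    """Pick one component per modality, then fuse them.

    Assumed fusion rule: majority vote, falling back to the voice
    component when all three modalities disagree.
    """
    components = [nearest_preset(voice), nearest_preset(expression), nearest_preset(body)]
    label, count = Counter(components).most_common(1)[0]
    return label if count > 1 else components[0]
```

For example, `fuse((0.7, 0.5), (0.9, 0.7), (-0.6, -0.5))` selects "happy" for voice and expression and "sad" for body, so the vote yields "happy".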

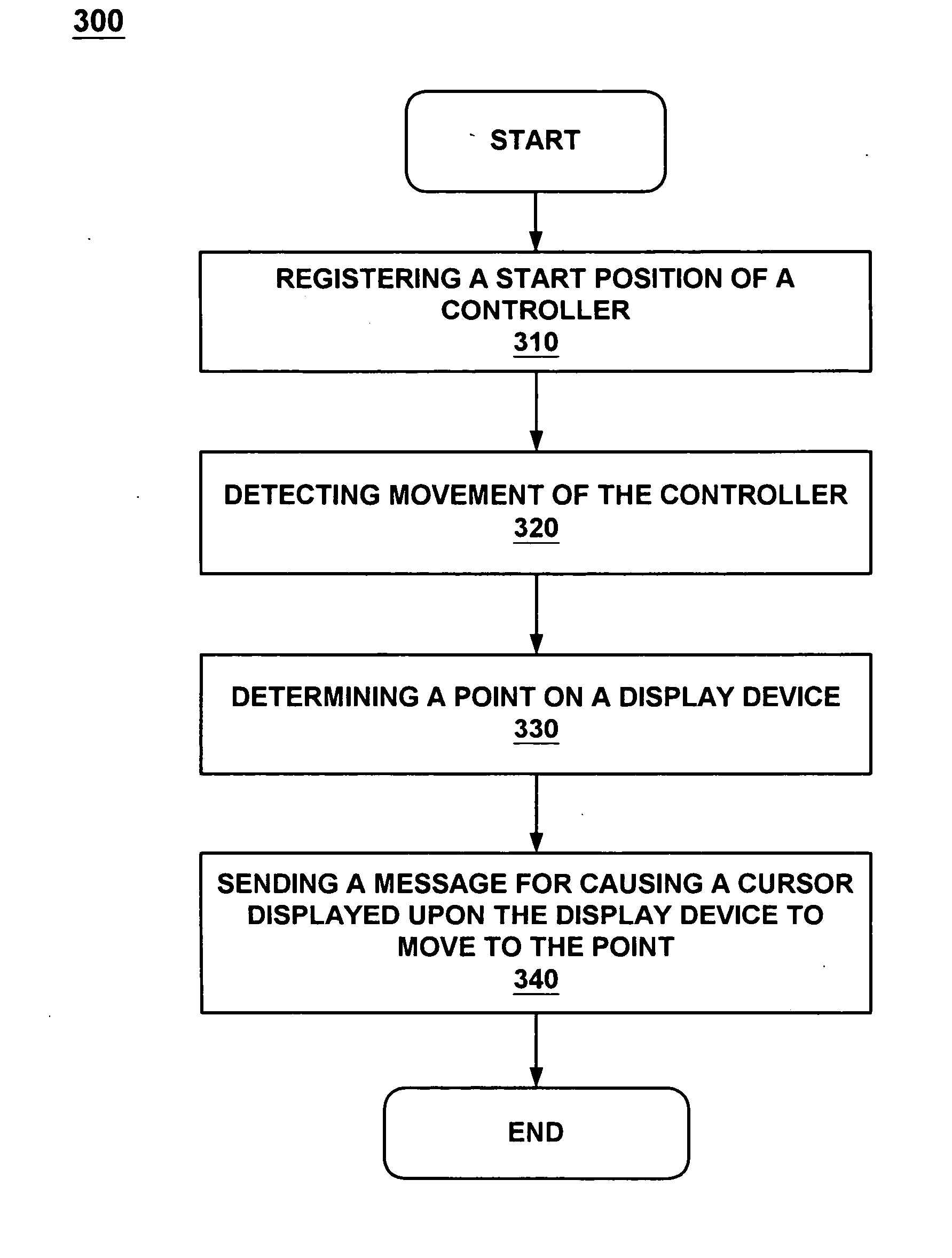

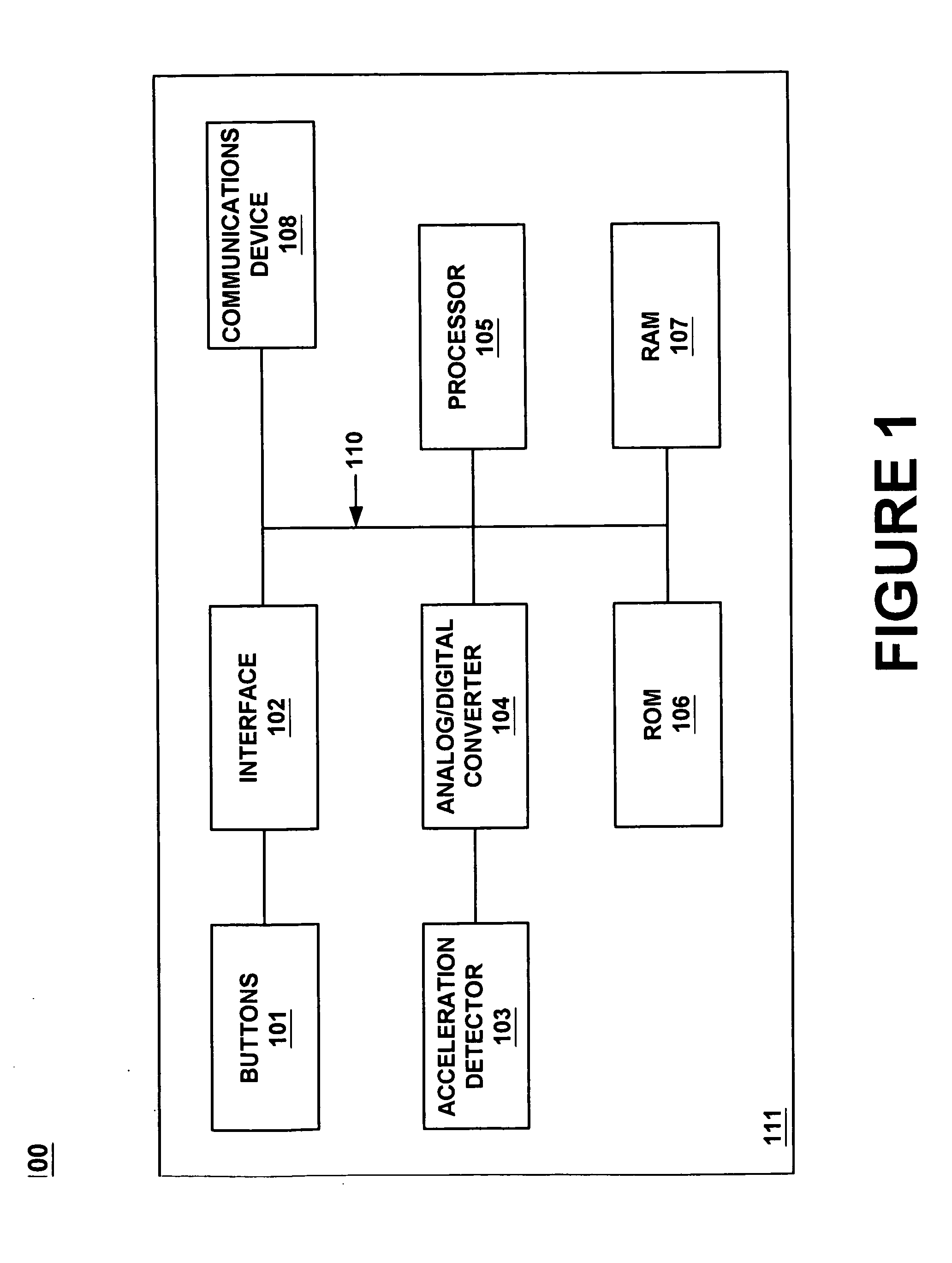

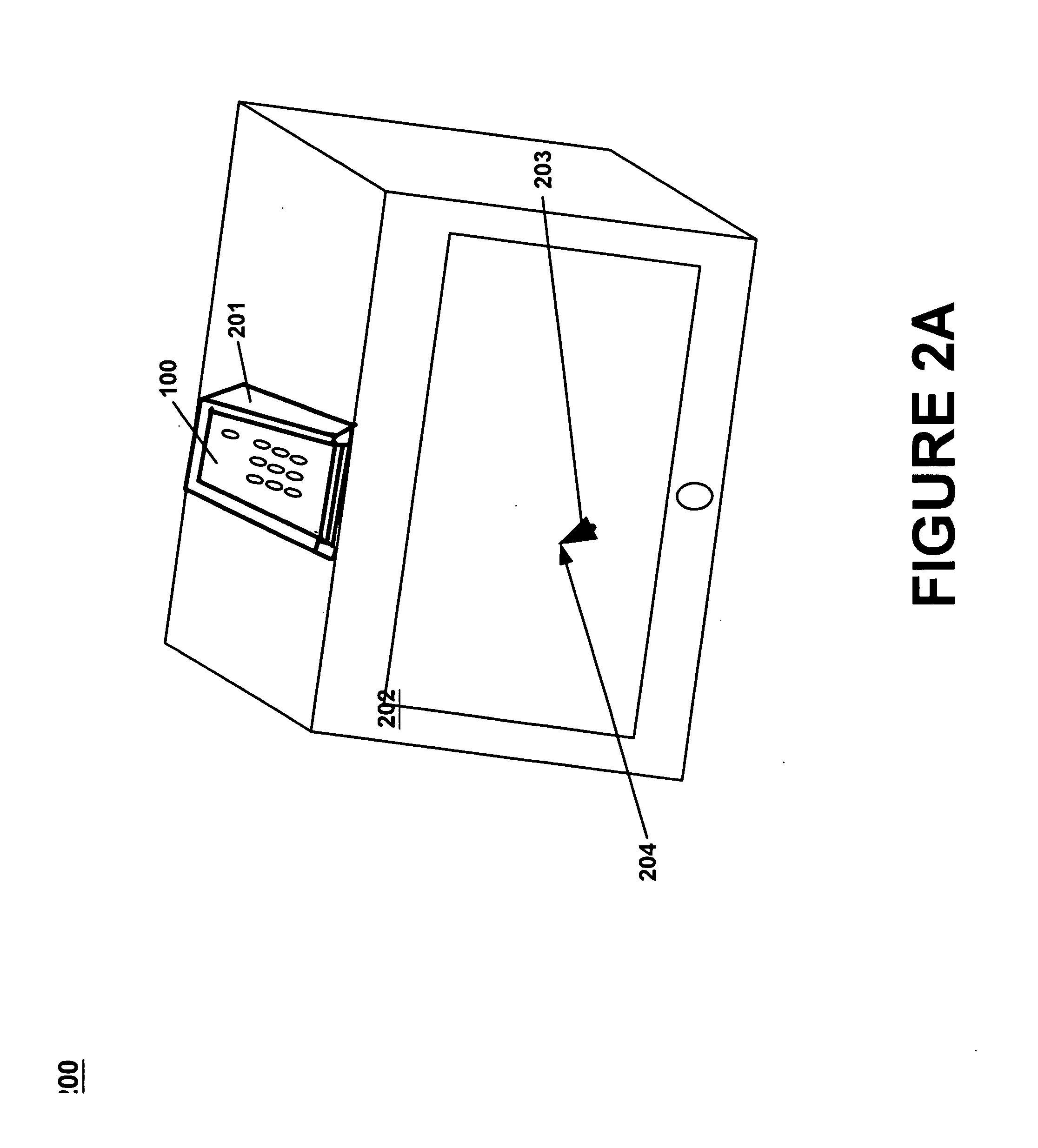

Method and system for controlling a display device

Inactive; US20060139327A1; Interactive nature; Indoor games; Cathode-ray tube indicators; Display device; Engineering

Embodiments of the present invention are directed to a method and system for controlling a display device. In one embodiment, a start position is registered which defines the first position and orientation of a remote controller relative to the position of the display device. When movement of the remote controller is detected, a second position and orientation of the remote controller is determined. A point on the display device is determined where a mathematical line extending from the front edge of the remote controller along its longitudinal axis intersects the front plane of the display device. Finally, a message is sent for causing a cursor displayed on the display device to be moved to the point where the mathematical line intersects the front plane of the display device.

Owner:SATURN LICENSING LLC
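
The pointing step in the abstract above is, geometrically, a ray-plane intersection. The sketch below assumes a coordinate frame in which the front plane of the display is z = 0 and the controller sits at z > 0; the function name and the frame are illustrative assumptions, not taken from the patent.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def cursor_point(position: Vec3, direction: Vec3) -> Optional[Tuple[float, float]]:
    """Intersect the controller's pointing ray with the display plane z = 0.

    position:  controller front edge in display coordinates (assumed frame).
    direction: vector along the controller's longitudinal axis.
    Returns (x, y) on the plane, or None if the ray never reaches it.
    """
    px, py, pz = position
    dx, dy, dz = direction
    if dz == 0:
        return None  # ray runs parallel to the display plane
    t = -pz / dz     # ray parameter where z becomes 0
    if t < 0:
        return None  # controller points away from the display
    return (px + t * dx, py + t * dy)
```

A controller 2 units in front of the screen and pointing along (0.5, 0, -1) hits the plane at (1.0, 0.0); a real system would then map that point to pixel coordinates before moving the cursor.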

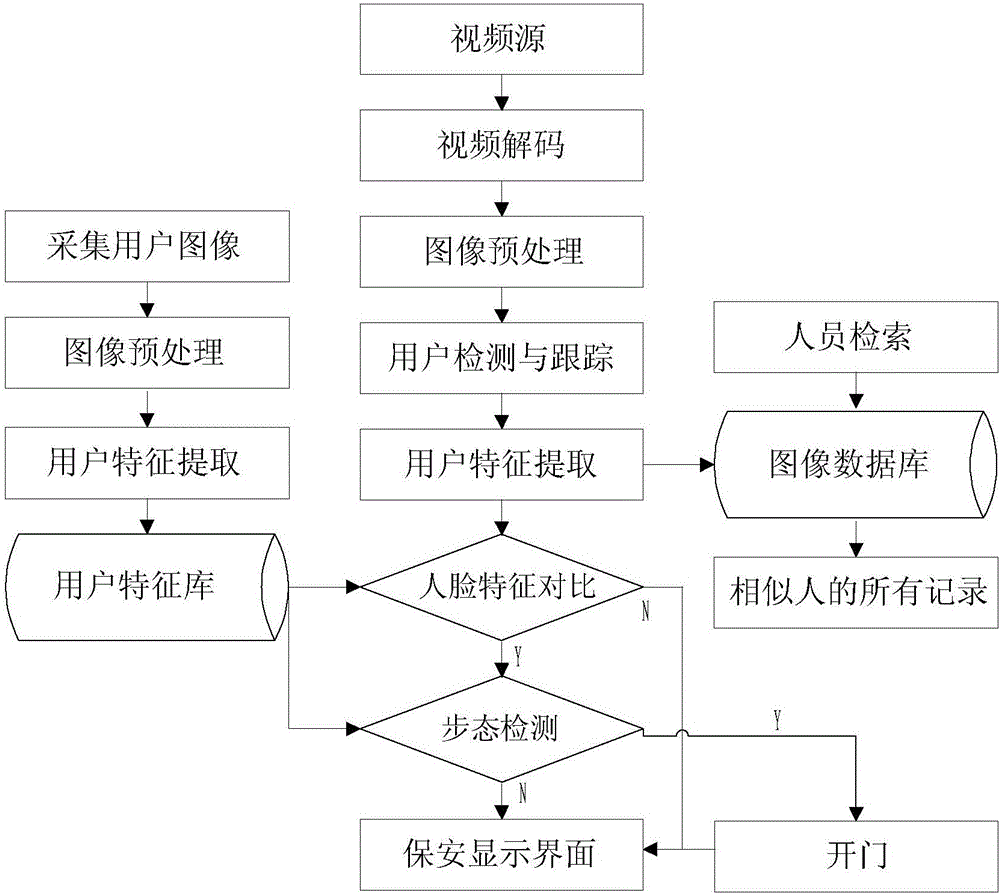

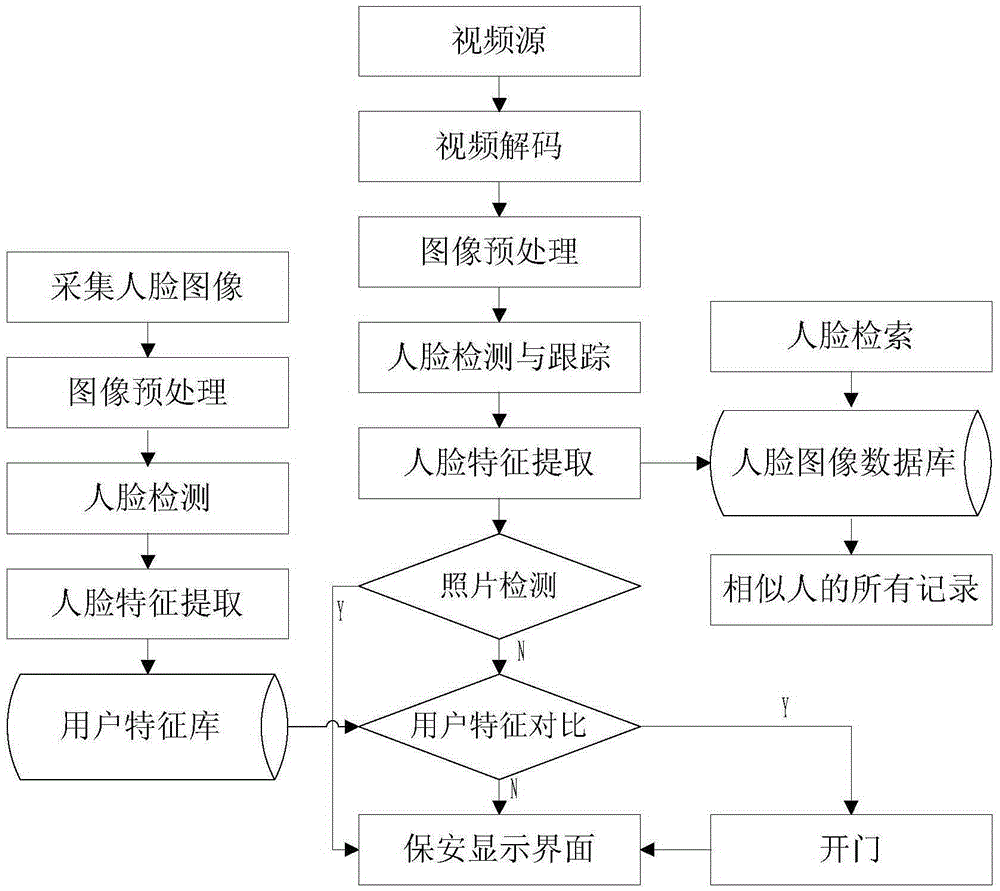

Security protection management method and system based on face features and gait features

Inactive; CN105787440A; Interactive nature; Individual entry/exit registers; Spoof detection; Contact type; Gait

The invention provides a security protection management method and system based on face features and gait features. The method comprises establishing a user feature database, extracting image data of video frames, extracting user features, determining valid users and invalid users, and the like. On the basis of face identification, the authenticity and the validness of the users are further verified through gaits, and accuracy of identity identification can be provided. The system is realized based on the method and comprises a user feature database model, a video frame image data extraction module, a user feature extraction module, a valid user determining module, a valid user verification module and the like. Through non-contact type face identification and gait identification, convenience is brought to security protection management.

Owner:SHENZHEN SENSETIME TECH CO LTD

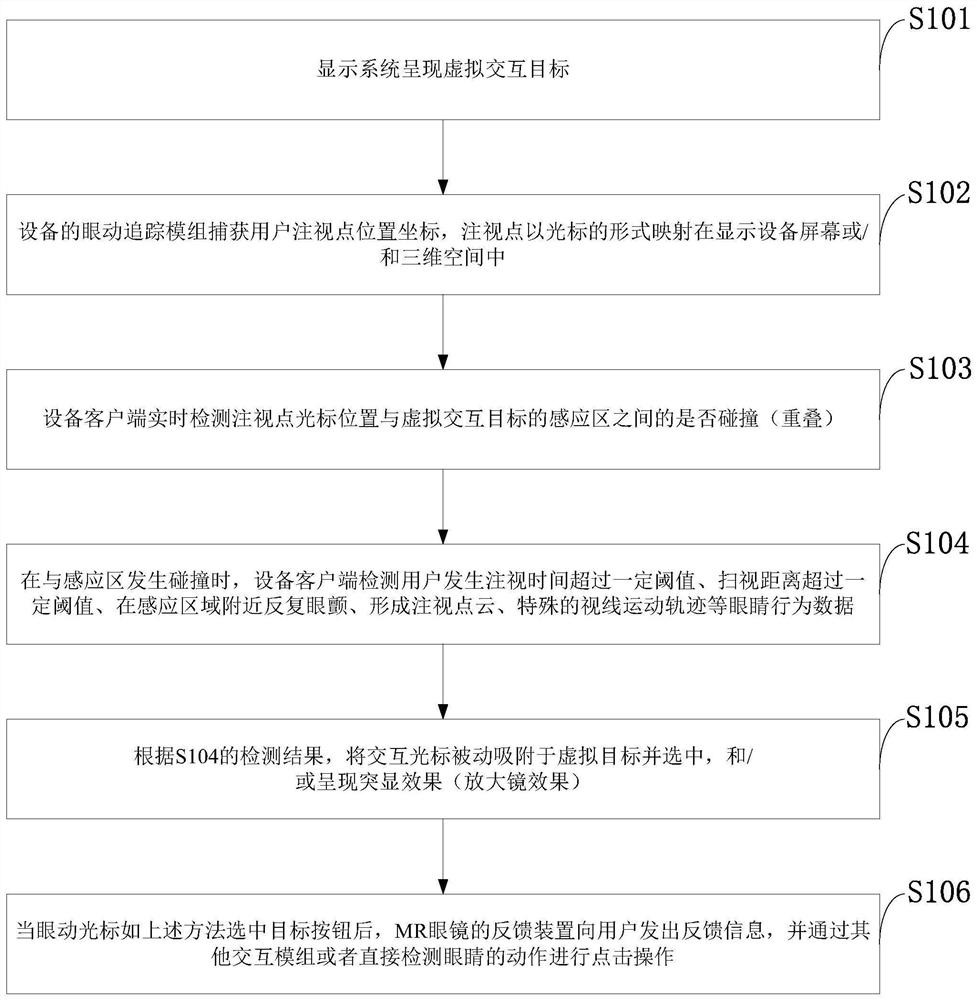

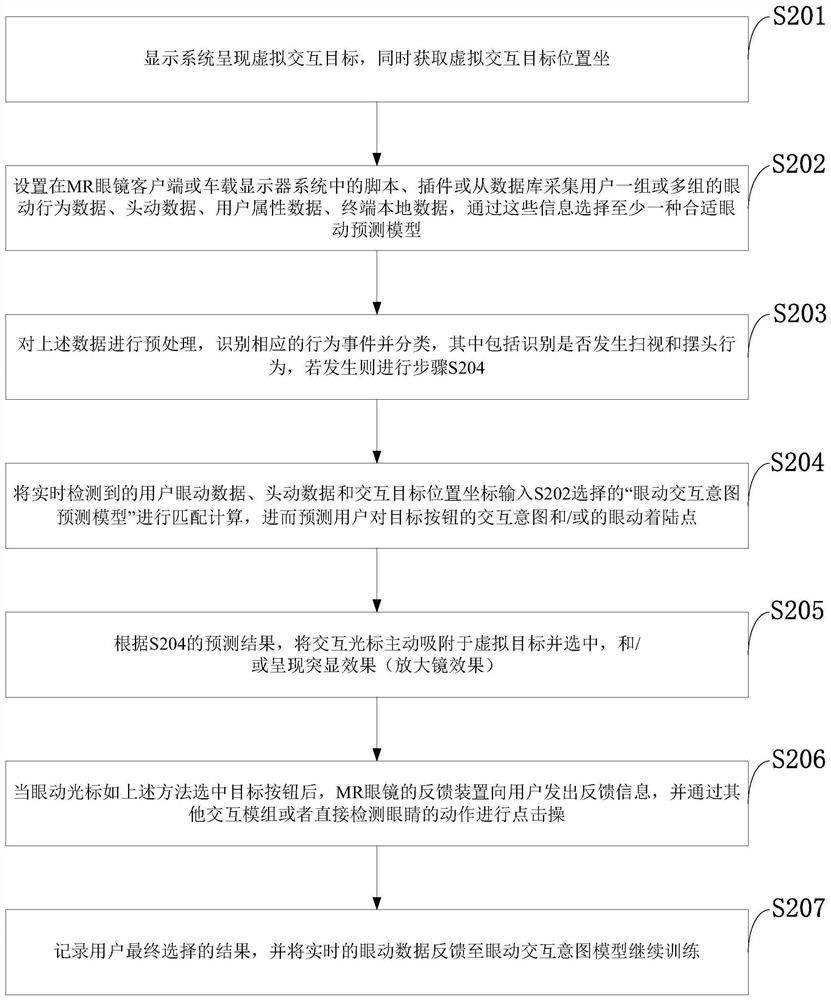

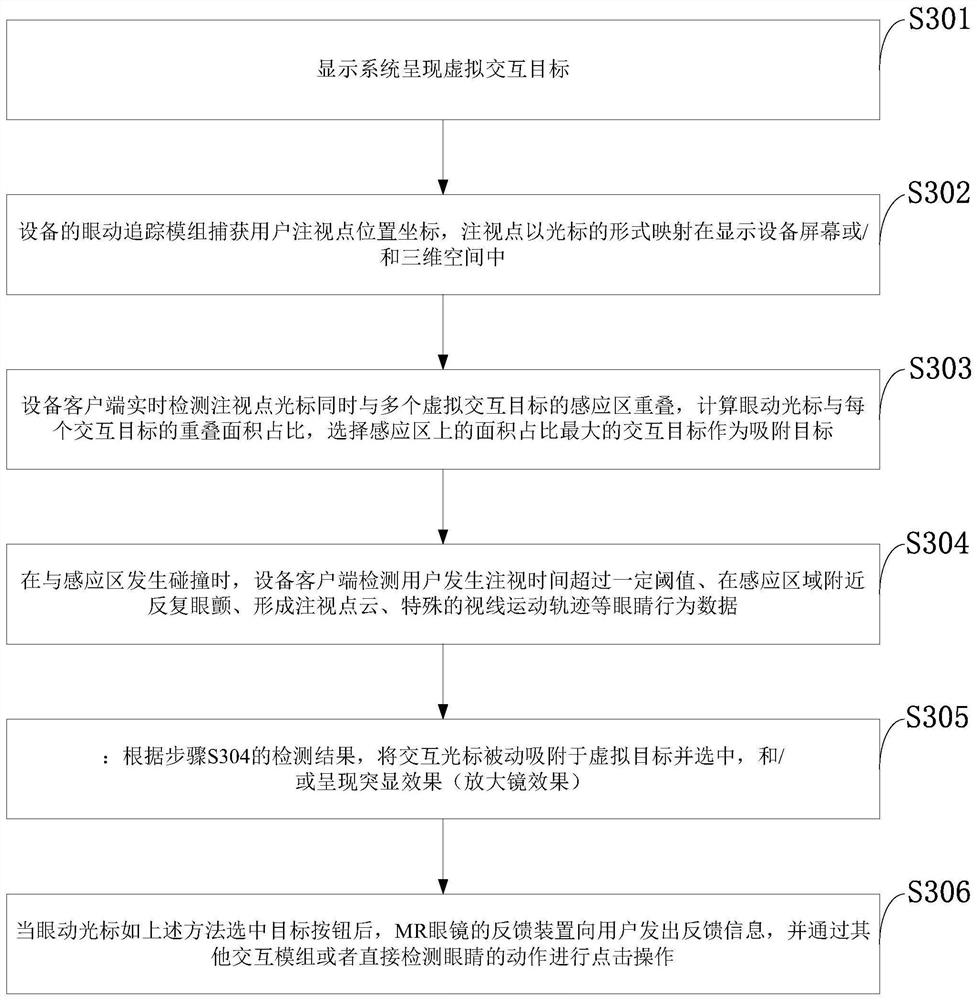

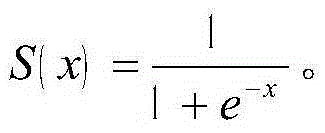

Eye movement interaction method, system and device based on eye movement tracking technology

Active; CN111949131A; Solve the problem of not being able to accurately locate the target; Improve operational efficiency; Input/output for user-computer interaction; Sound input/output; Optometry; Eye tracking on the ISS

The invention belongs to the technical field of eye movement tracking, and discloses an eye movement interaction method, system and device based on eye movement tracking technology. The method comprises the steps of: setting a passive adsorption gaze cursor or an eye movement interaction intention of a sensing area and predicting an active adsorption gaze cursor to select a target; setting corresponding sensing areas, namely effective clicking areas, for different targets; when the cursor makes contact with or covers the sensing area of a certain target, detecting whether eye movement behaviors such as eye tremor exist and, at the same time, whether the glancing distance exceeds a threshold value; and then adsorbing or highlighting the target object. A machine learning algorithm is adopted to train on the eye movement behavior data of the user: the data is filtered, processed and analyzed, an eye movement behavior rule is trained, and a subjective consciousness eye movement interaction intention model of the user is obtained. Through the method, the stability and accuracy in the eye movement interaction process are improved, and the user experience of eye movement interaction is improved.

Owner:陈涛

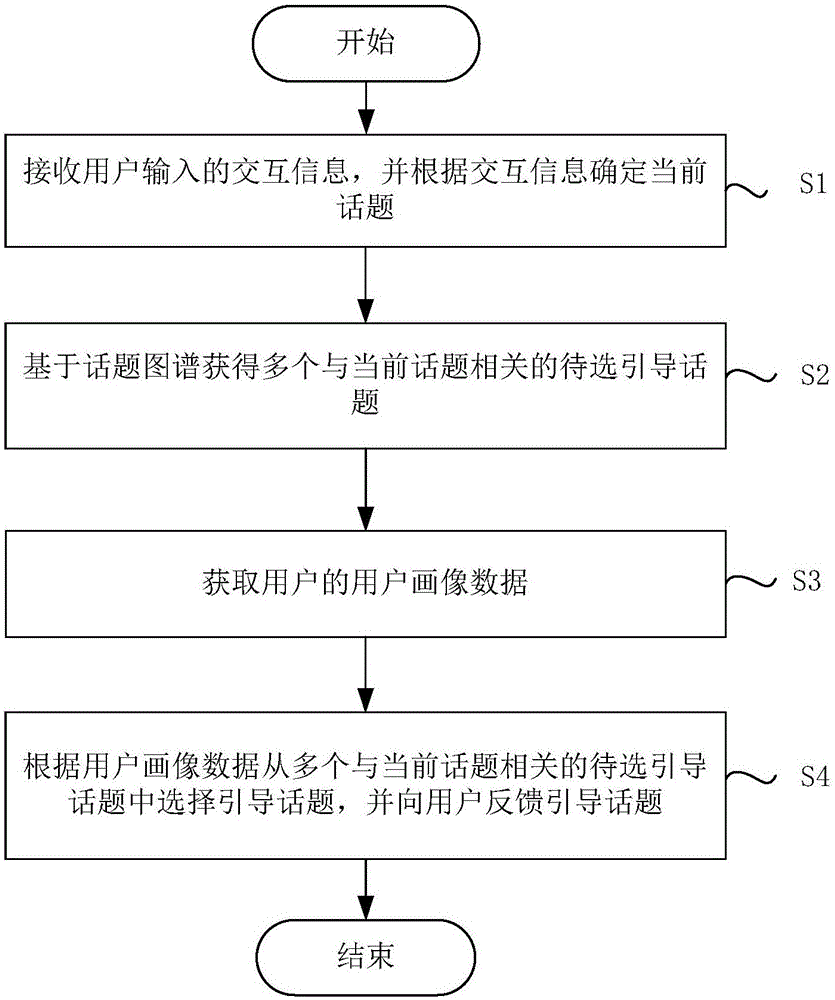

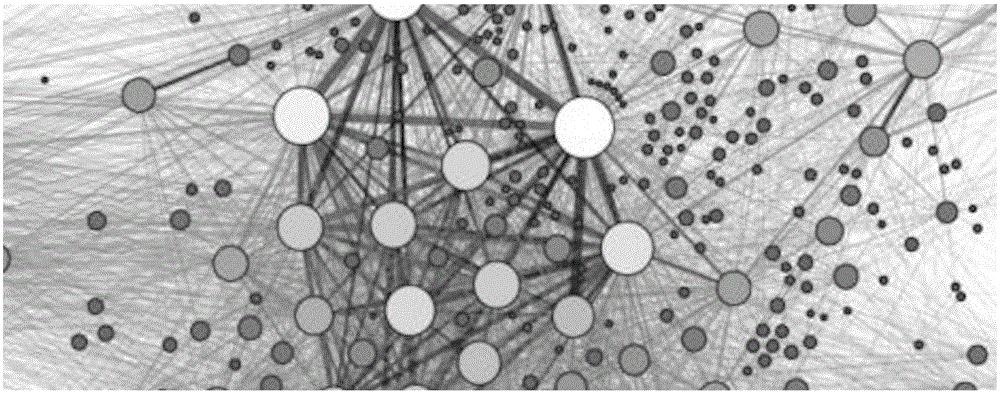

Human-computer interaction guiding method and device based on artificial intelligence

Inactive; CN105138671A; Increased persistence; Smooth human-computer interaction; Natural language data processing; Special data processing applications; Sustainability; Imaging data

The invention discloses a human-computer interaction guiding method and device based on artificial intelligence. The method comprises the following steps that S1, interaction information input by a user is received, and a current topic is determined according to the interaction information; S2, multiple to-be-chosen guiding topics which are related to the current topic are obtained on the basis of a topic graph; S3, user image data of the user are obtained; S4, the guiding topic is chosen from the multiple to-be-chosen guiding topics which are related to the current topic according to the user image data and fed back to the user. According to the human-computer interaction guiding method and device based on the artificial intelligence, the current topic is determined by receiving the interaction information input by the user, the multiple to-be-chosen guiding topics which are related to the current topic are obtained on the basis of the topic graph, and then the guiding topic is chosen from the multiple to-be-chosen guiding topics which are related to the current topic by combining the user image data and fed back to the user, so that the sustainability of human-computer interaction is improved, and the human-computer interaction is more fluent and natural.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

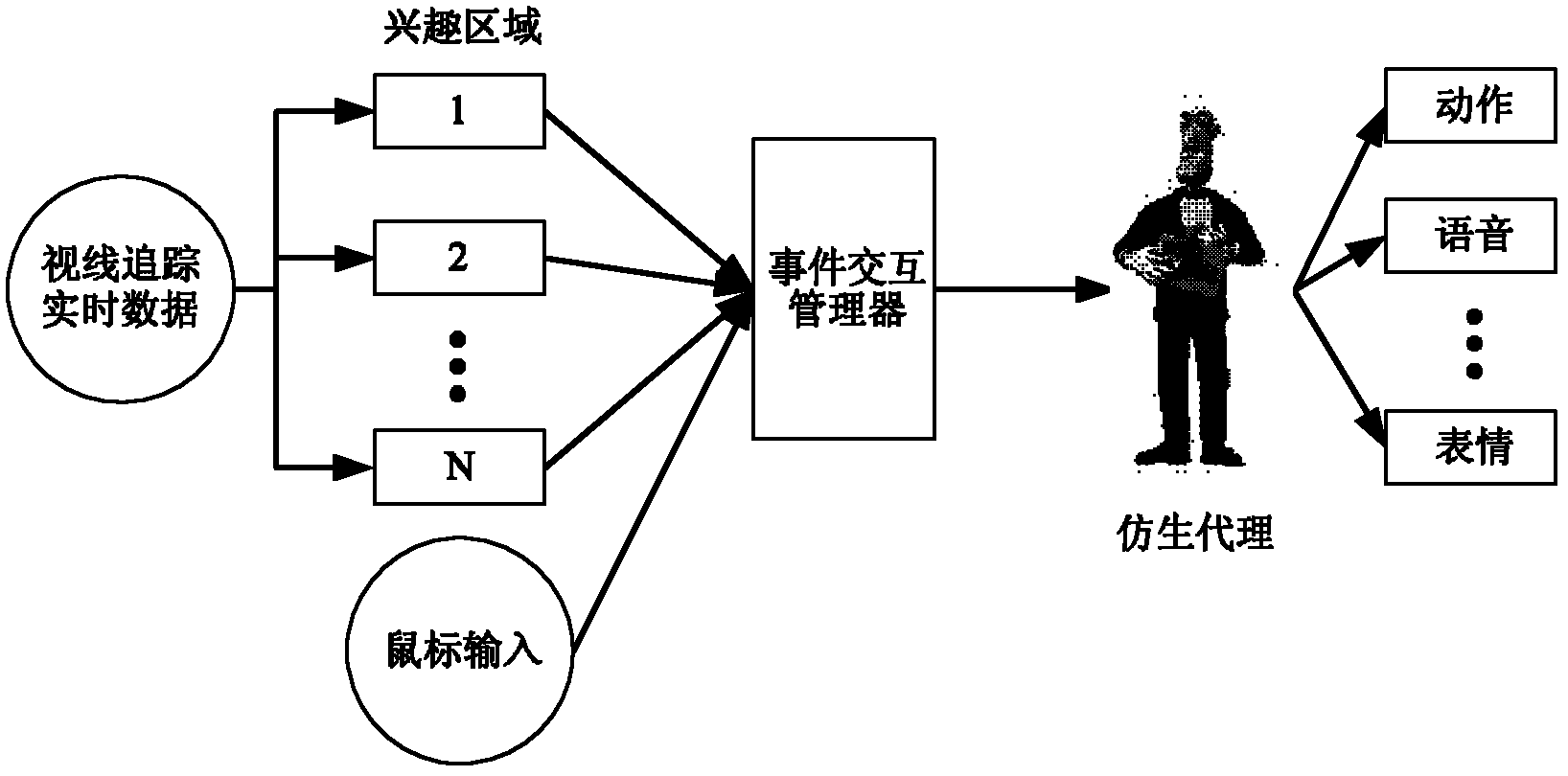

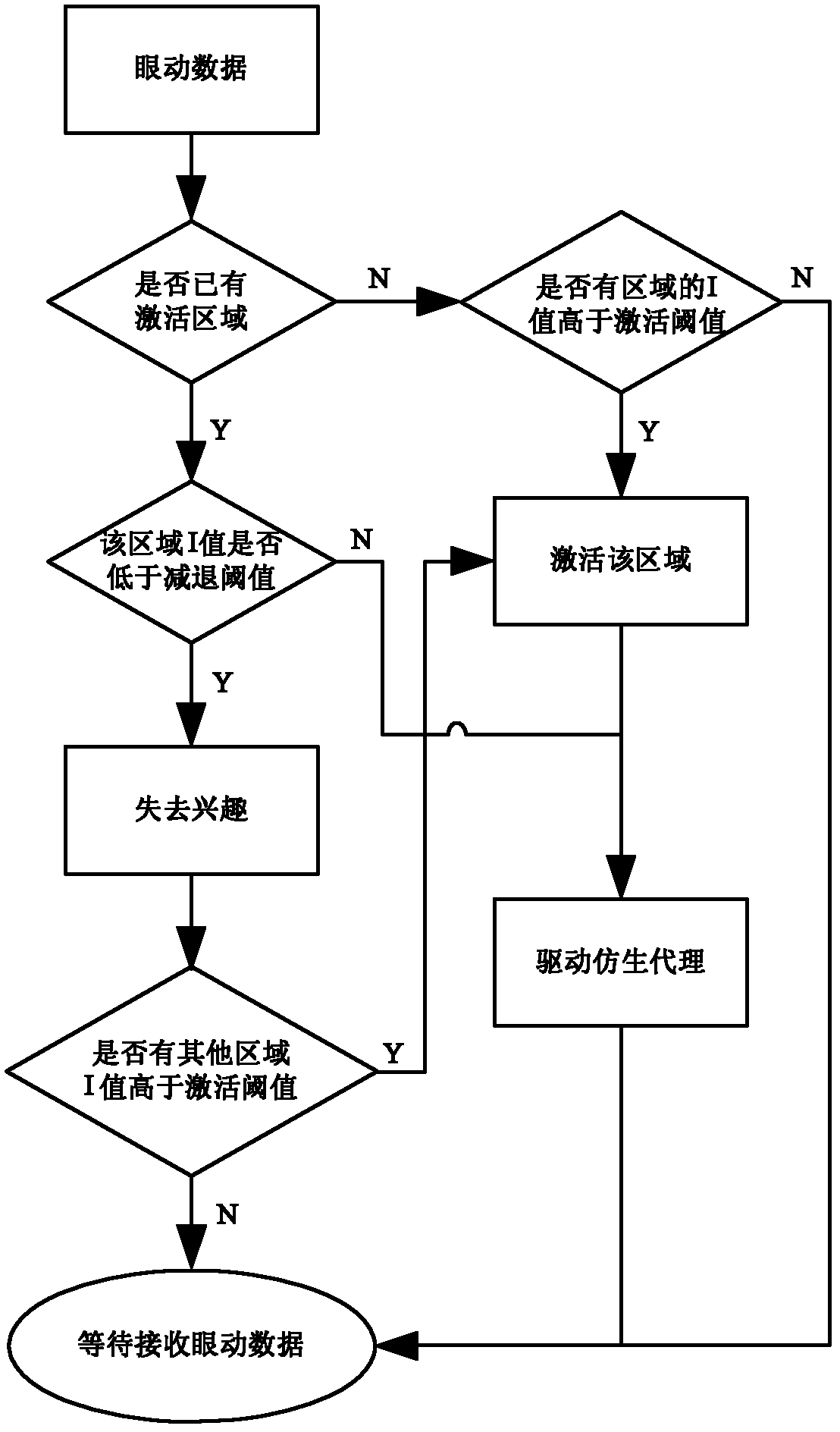

Man-machine interaction method based on analysis of interest regions by bionic agent and vision tracking

Inactive; CN102221881A; Real-time analysis; Interactive nature; Input/output for user-computer interaction; Graph reading; Region analysis; Focal position

The invention relates to a man-machine interaction method based on analysis of interest regions by bionic agent and vision tracking, comprising the steps: (1) a designer carries out user analysis and designs interest regions which can be possible to cause the attention of a user according to the user analysis result; (2) an event interaction manager receives and analyzes data generated by an eye tracker in real time, and calculates the focal positions of the eyeballs of the user on a screen; (3) the event interaction manager analyzes the interest regions causing the attention of the user according to the obtained focal positions of the eyeballs of the user on the screen; and (4) the event interaction manager takes the analyzed result of the interest regions causing attention of the user as a non-contact instruction to control the expressions, actions and voices of the bionic agent on the man-machine interaction method so as to carry out intelligent feedback on the user and further realize natural and harmonious man-machine interaction. A man-machine interaction system which is established according to the invention based on analysis of interest regions by bionic agent and vision tracking, comprises (1) the eye tracker; (2) a man-machine interaction interface; (3) the event interaction manager; and (4) the bionic agent.

Owner:BEIHANG UNIV
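
Step (3) of the abstract above, deciding which interest region the user is looking at, can be sketched as a point-in-rectangle test. The rectangular `Region` type and the first-match rule are assumptions made for illustration; the abstract does not describe the shape of the regions or how overlaps are resolved.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Region:
    """A designer-defined interest region in screen pixels (assumed rectangular)."""
    name: str
    left: float
    top: float
    width: float
    height: float

    def contains(self, x: float, y: float) -> bool:
        return (self.left <= x < self.left + self.width
                and self.top <= y < self.top + self.height)


def attended_region(regions: Iterable[Region], x: float, y: float) -> Optional[str]:
    """Return the name of the first region containing the gaze point, if any."""
    for region in regions:
        if region.contains(x, y):
            return region.name
    return None
```

The returned name could then serve as the non-contact instruction that drives the bionic agent's feedback.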

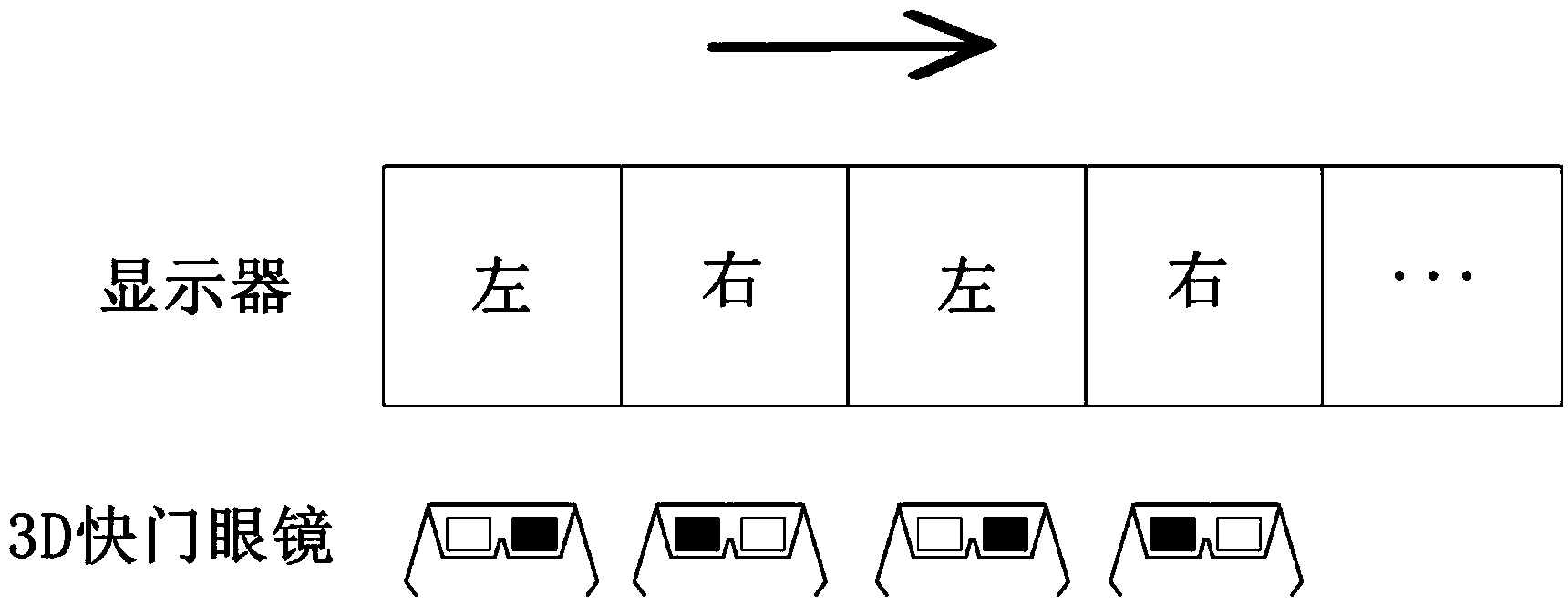

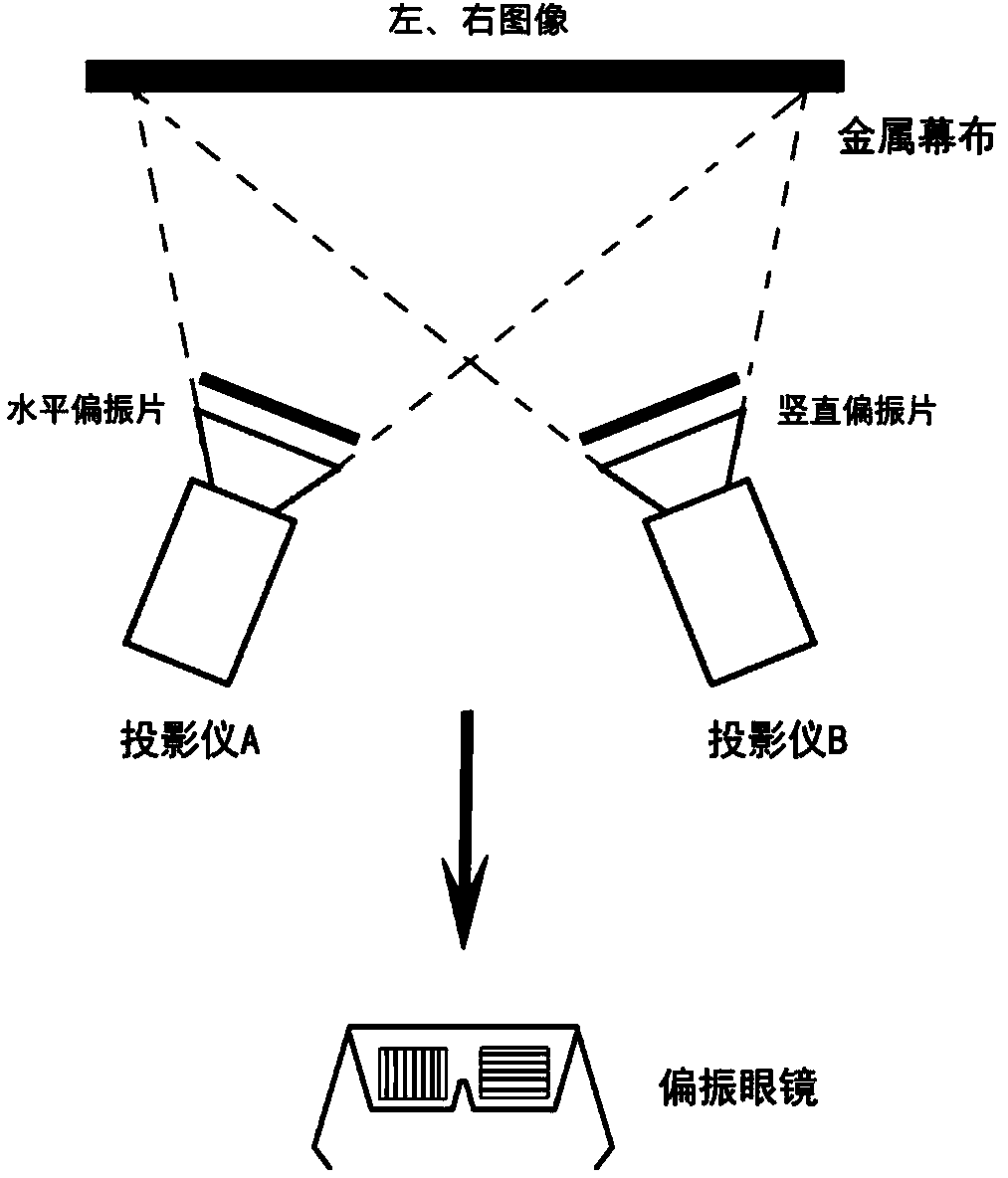

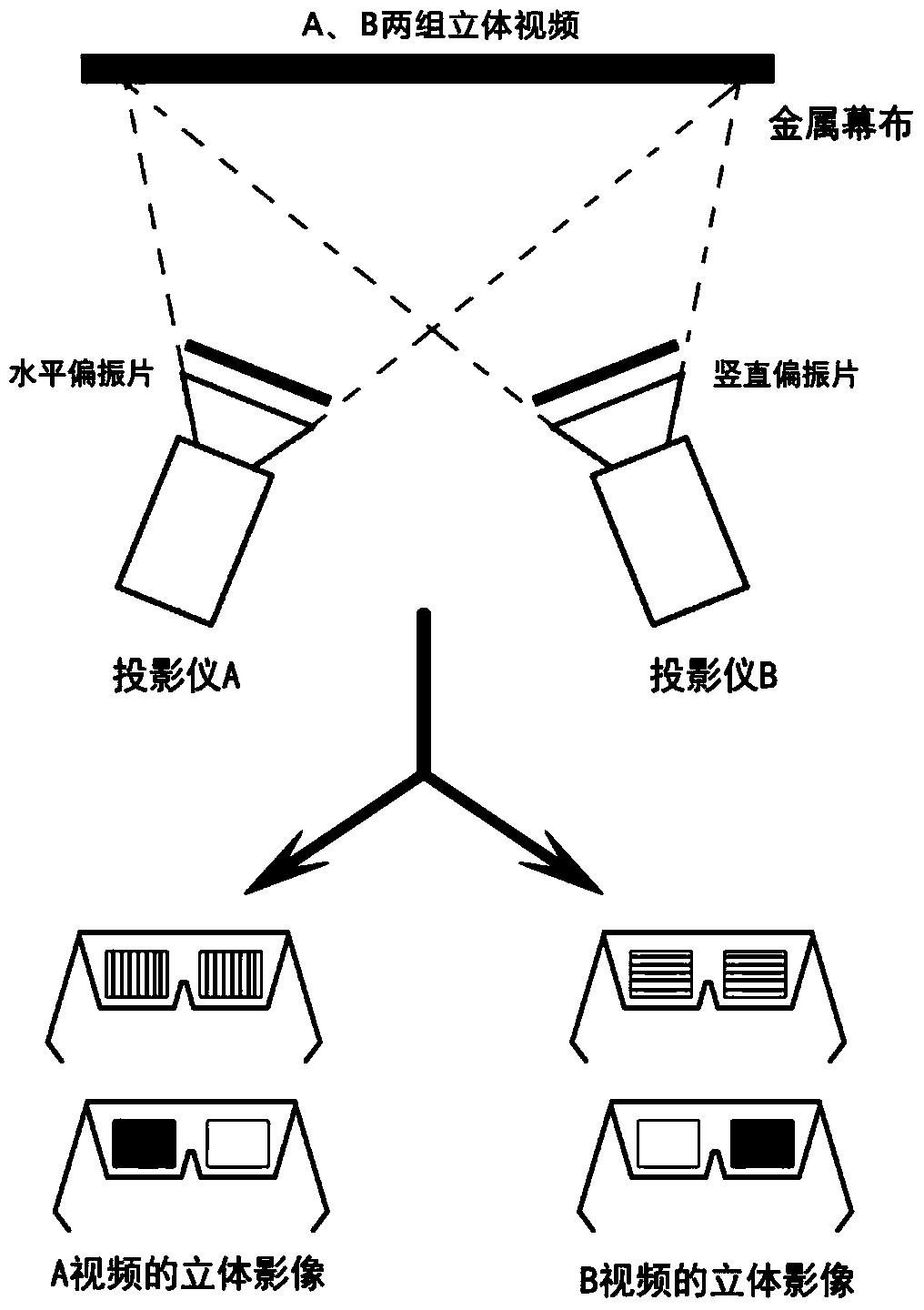

Virtual simulation system and method of antagonistic event with net based on three-dimensional multi-image display

Inactive; CN104394400A; Expand the scope of activities; Real interaction; Video games; Stereoscopic systems; Network connection; Display device

The invention discloses a virtual simulation system and method of an antagonistic event with a net based on three-dimensional multi-image display. The system comprises a network connection module, a game logic module, an interaction control module, a physical engine module, a three-dimensional rendering module and a dual-image projection display module, wherein the network connection module comprises a server sub-module and a client sub-module and is used for network communication and data transmission; the game logic module is used for storing game rules, controlling the play of character animation and performing mapping by position; the interaction control module is used for controlling corresponding game characters in a virtual tennis game scene to move and shooting three-dimensional images of different sight points; the physical engine module is used for efficiently and vividly simulating the physical effects of a tennis ball, such as rebound and collision through a physical engine and enabling the game scene to be relatively real and vivid. According to the system, the three-dimensional multi-image display operation can be performed on a same display screen, and the same game scene can be rendered in real time based on different sight angles.

Owner:SHANDONG UNIV

Security management method and system based on face identification

Active; CN105354902A; Interactive nature; Character and pattern recognition; Individual entry/exit registers; Feature extraction; Imaging data

The invention provides a security management method and system based on face identification. The method comprises steps: a user characteristic database is established, image data of video frames are extracted, face characteristics are detected and extracted, whether photograph cheat exists is determined and whether the user is a valid user is determined. Face identification is achieved through deep learning, and the face identification accuracy can be provided. The system is implemented based on the method and comprises a user characteristic database module, a video frame image data extraction module, a face characteristic extraction module, a photograph cheating determination module, a valid user determination module and the like. Through accurate face identification, convenience is provided for security management, photograph cheating is avoided effectively and personnel tracking is achieved.

Owner:SHENZHEN SENSETIME TECH CO LTD

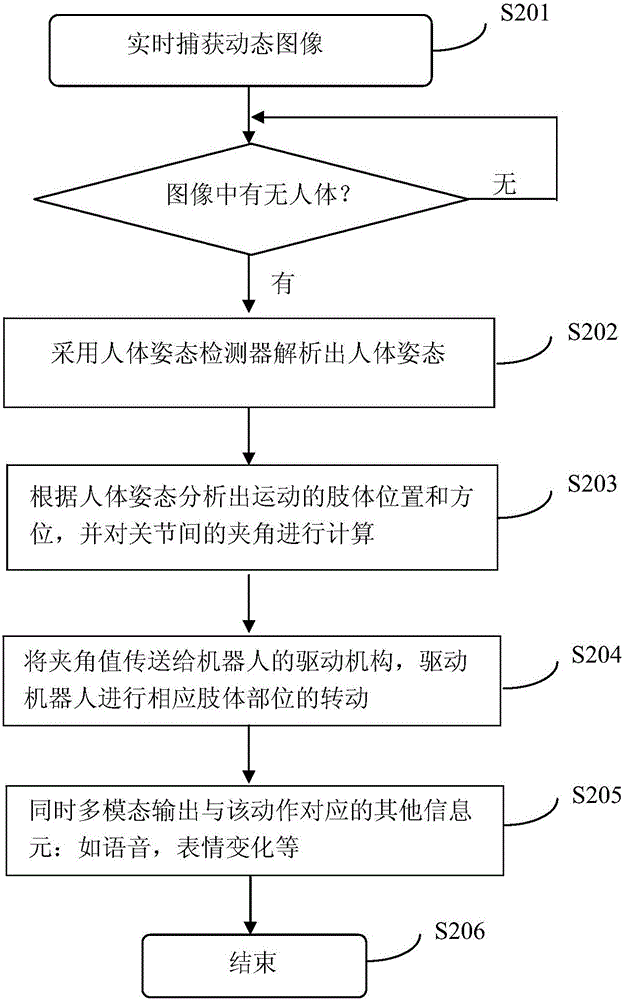

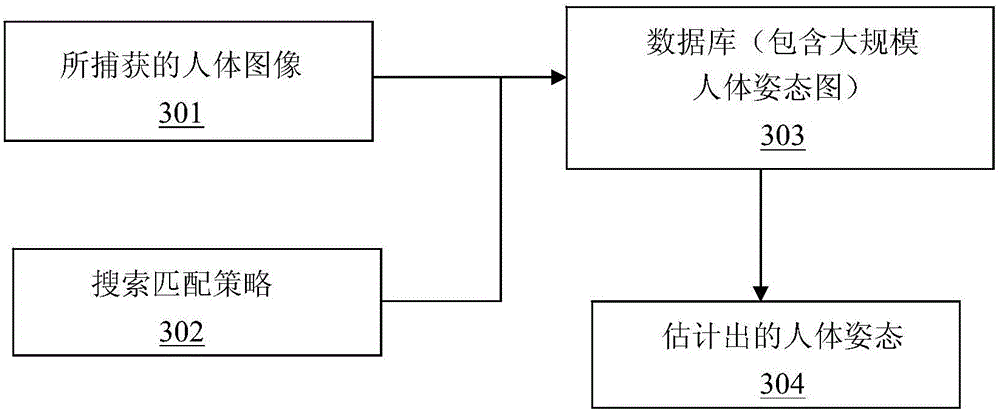

Method and system for data processing for robot action expression learning

Active; CN105825268A; Interactive nature; Various forms of communication; Artificial life; Memory bank; Human–computer interaction

The invention provides a data processing method for robot action expression learning. The method comprises the following steps: a series of actions made by a target in a period of time is captured and recorded; information sets correlated with the series of actions are recognized and recorded synchronously, wherein the information sets are composed of information elements; the recorded actions and the correlated information sets are sorted and are stored in a memory bank of the robot according to a corresponding relationship; when the robot receives an action output instruction, an information set matched with the expressed content in the information sets stored in the memory bank is called to perform an action corresponding to the information set, and a human action expression is simulated. Action expressions are correlated with other information related to language expressions, after simulation training, the robot can perform diverse output, the communication forms are rich and more humane, and the intelligent degree is enhanced more greatly.

Owner:BEIJING GUANGNIAN WUXIAN SCI & TECH

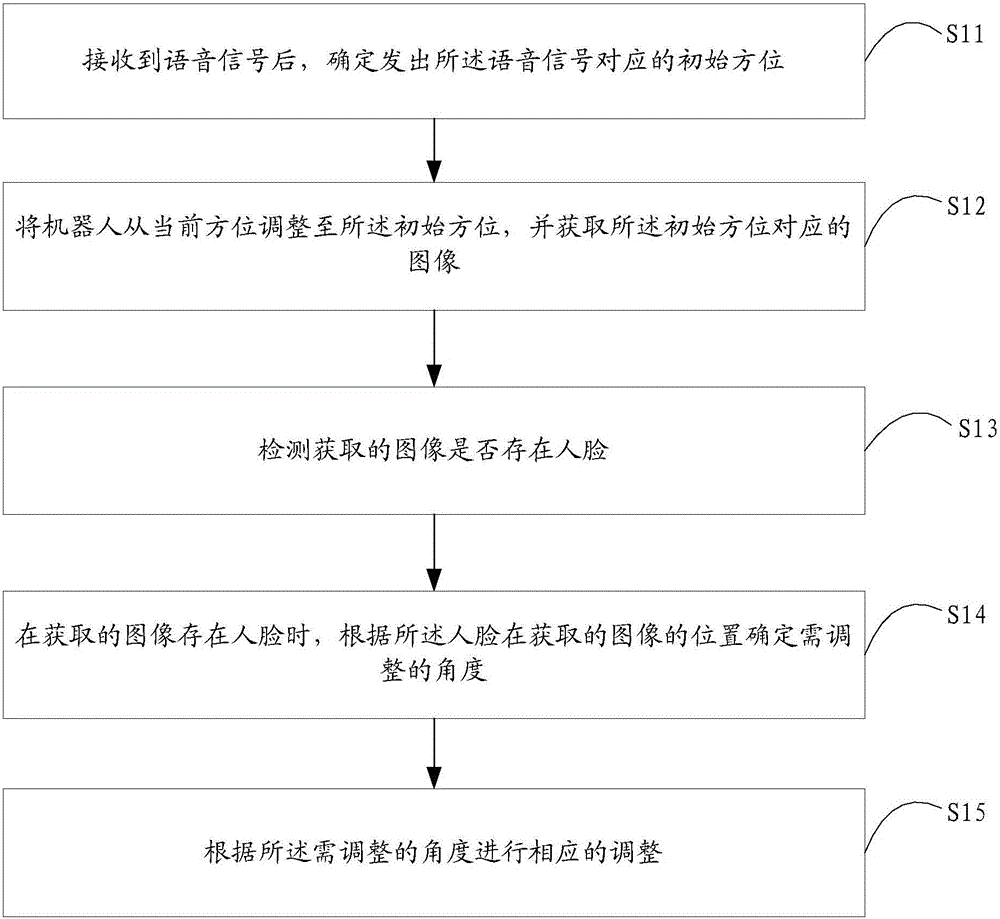

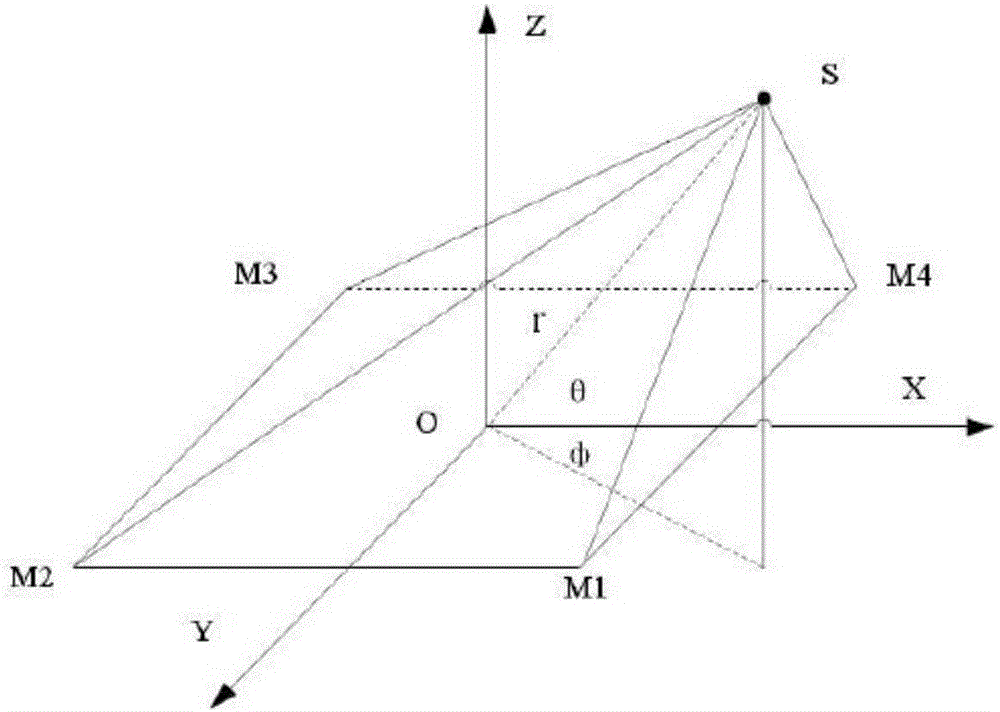

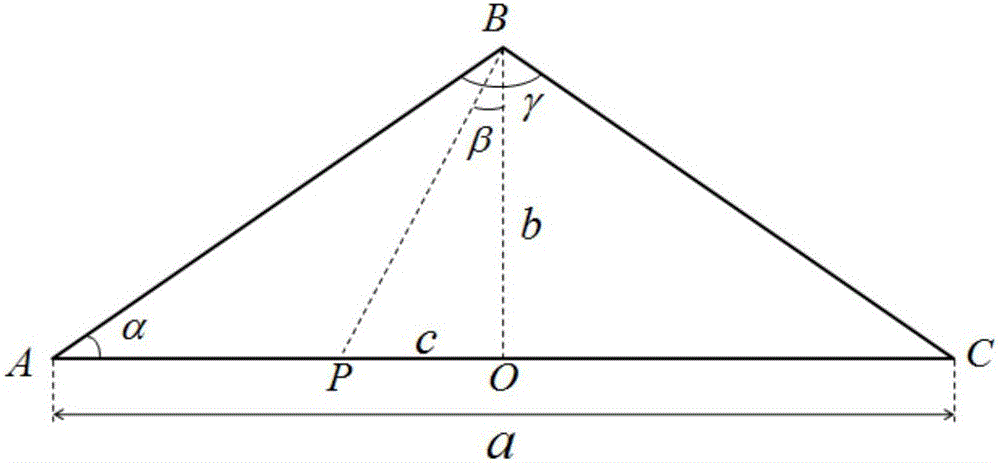

Interaction direction adjusting method and device of robot

Inactive; CN106203259A; Improve intelligence; Realistic interaction process; Image enhancement; Image analysis; Computer vision; Human–robot interaction

The invention is applicable to the field of human-computer interaction, and provides an interaction direction adjusting method and device of a robot. The method comprises: after receiving a speech signal, determining a corresponding initial orientation from where the speech signal comes; adjusting the robot from the current orientation to the initial orientation, and acquiring a corresponding image of the initial orientation; detecting whether or not a human face exists in the acquired image; when a human face exists in the acquired image, determining an angle that needs to be adjusted according to the position where the human face is located in the acquired image; and performing corresponding adjustment according to the angle that needs to be adjusted. By means of the method, the positioning location and direction acquired after adjustment are more accurate.

Owner:SHENZHEN SANBOT INNOVATION INTELLIGENT CO LTD
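
The last step of the abstract above, turning the face position in the image into an adjustment angle, can be sketched with a pinhole-camera model. The abstract does not give the formula, so the model, the function name, and the horizontal field-of-view parameter are assumptions.

```python
import math


def yaw_correction_deg(face_center_x: float, image_width: int, hfov_deg: float) -> float:
    """Angle (degrees) the robot should turn so the face is centered.

    Assumes a pinhole camera with horizontal field of view hfov_deg.
    Positive values mean the face is to the right of the image center.
    """
    half_width = image_width / 2.0
    # Focal length in pixels derived from the field of view.
    focal_px = half_width / math.tan(math.radians(hfov_deg) / 2.0)
    return math.degrees(math.atan((face_center_x - half_width) / focal_px))
```

With a 640-pixel-wide image and a 60 degree field of view, a face at the right edge gives a correction of about 30 degrees, and a face at the center gives 0.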

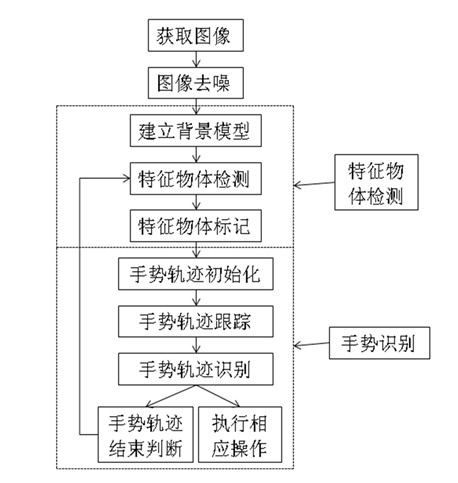

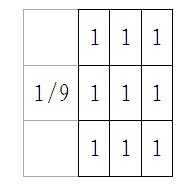

Human-computer interaction method based on machine vision

Active; CN102096471A; Interactive nature; Easy and accurate extraction; Input/output for user-computer interaction; Character and pattern recognition; Image denoising; Machine instruction

The invention relates to the technical field related to machine vision, particularly to a human-computer interaction method based on machine vision, comprising the following steps: an image obtaining step for continuously acquiring image data through an image acquisition device to update data cache; an image denoising step for reading the data from the data cache, filtering the read data and removing random noise introduced into the image; a feature object extraction step for detecting a feature object in the denoised image, and marking the detected feature object with a distinct mark; and a gesture identification step for analyzing the motion trail of the feature object extracted by the feature object extraction step, and executing corresponding machine instructions according to the result of analysis on the motion trail. The human-computer interaction method based on the machine vision provides a complete system solution, so that human can interact with the machine in real time, application limit of the prior touch technology is solved, and the human-machine interaction can be more natural.

Owner:阜宁县科技创业园有限公司

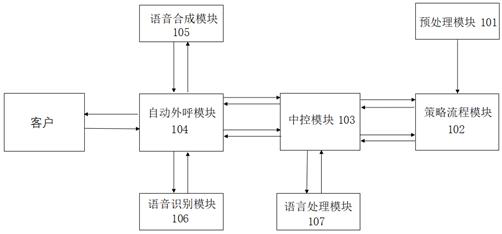

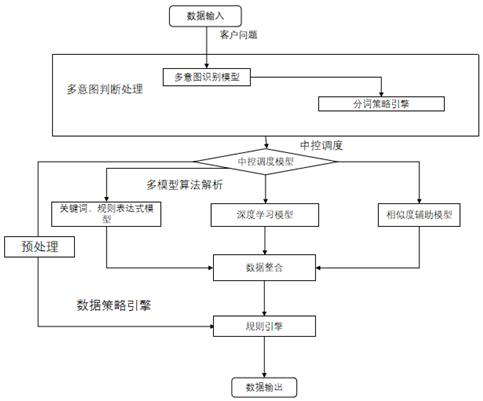

Intelligent voice interaction system and method

Active; CN111653262A; Improve accuracy; Improve the level of; Automatic exchanges; Speech recognition; Computational model; Real-time computing

The invention discloses an intelligent voice interaction system and method. The system comprises a preprocessing module, a strategy flow module, a central control module, an automatic call-out module, a voice synthesis module, a voice recognition module and a language processing module, wherein the central control module is internally provided with a central control scheduling module for scheduling the strategy flow module, the automatic call-out module and the language processing module. The method comprises the steps of 1-12. According to the method, integrated scheduling of multiple algorithms can be realized, multiple algorithm models are scheduled according to set rules for calculation, and an optimal solution is obtained by integrating calculation results, so that the limitation of blind spot calculation of a single algorithm model is solved, and a complementary effect is achieved; complex answers such as multiple questions, multiple intentions and the like are processed; and the central control scheduling module performs preliminary preprocessing before the text is sent to the question calculation model, decomposes questions with multiple intentions into multiple parts through the multi-intention splitting calculation model, then sends the multiple parts to the question calculation model, integrates answer results after obtaining multiple answers, and feeds back the answer results to the client.

Owner:上海荣数信息技术有限公司

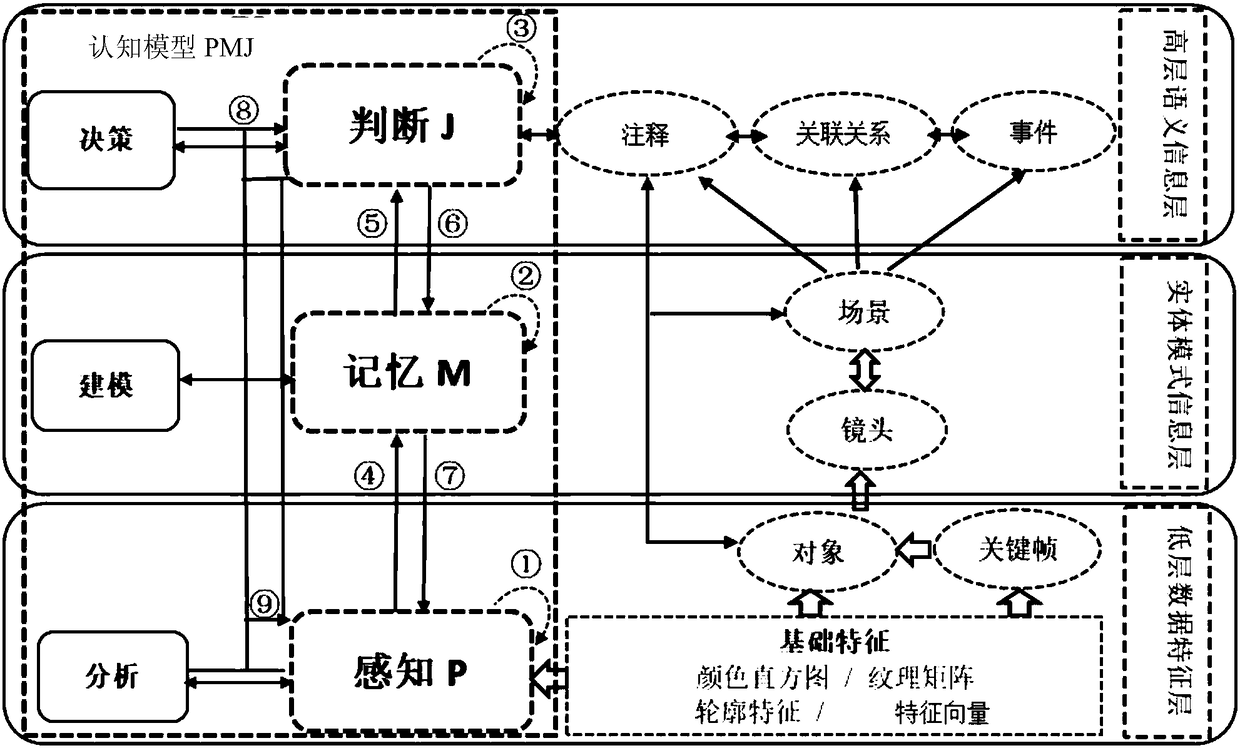

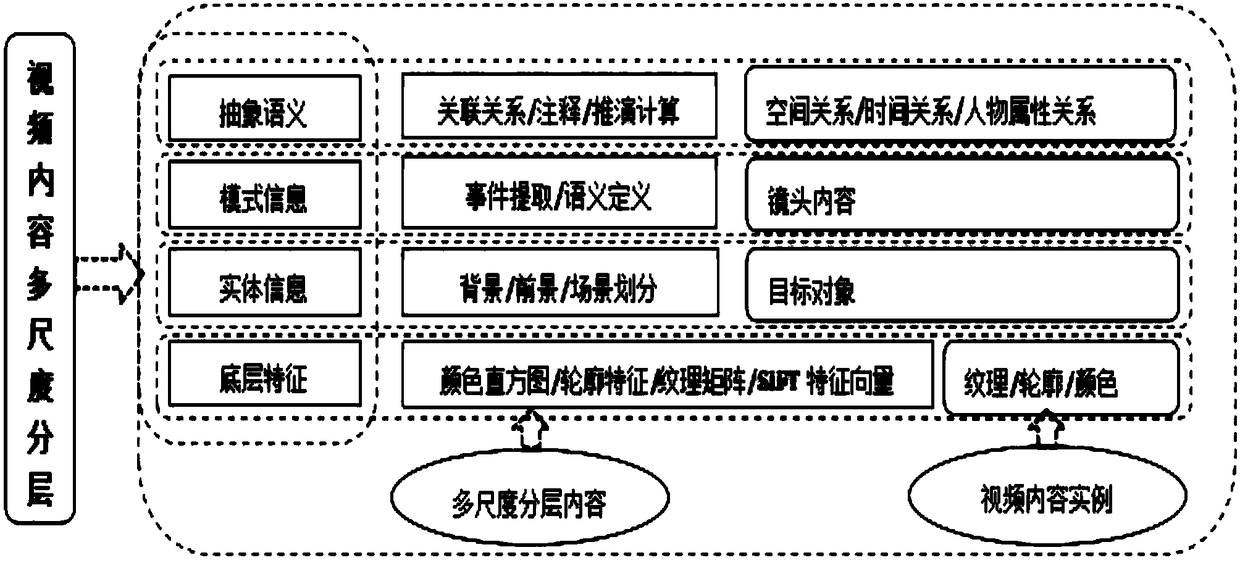

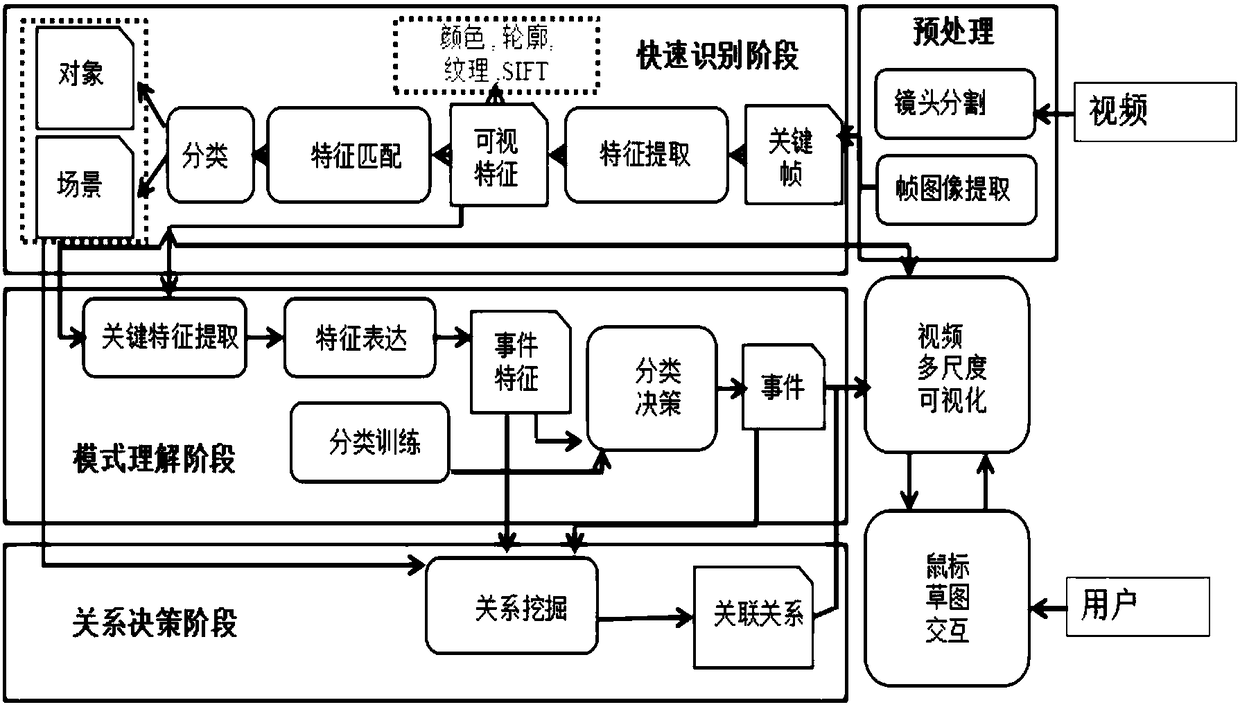

Video multi-scale visualization method and interaction method

Inactive; CN108459785A; Direct interaction; Interactive nature; Image enhancement; Image analysis; Semantics; Information representation

The invention discloses a video multi-scale visualization method and a video multi-scale interaction method. The video multi-scale interaction method comprises the steps of establishing a user cognition model, which is oriented to a video content structure, of a target video; extracting a foreground object and a background scene in the target video and an image frame of the foreground object; obtaining a moving target and a corresponding trajectory thereof; calculating an appearance density of the moving target according to a time shaft-based moving target appearance amount and a corresponding time mapping relation; extracting a key frame from processed target video data, and performing annotation on moving target information in the key frame; performing multi-scale division on a processed moving target identification result and trajectory data of the moving target to generate a multi-scale video information representation structure; and introducing a sketch interactive gesture to an interactive interface of the multi-scale video information representation structure in combination with corresponding semantics of a mouse interactive operation based on an interactive operation mode of a user in an interactive process, and operating the target video on the interactive interface through the sketch interactive gesture.

Owner:INST OF SOFTWARE - CHINESE ACAD OF SCI

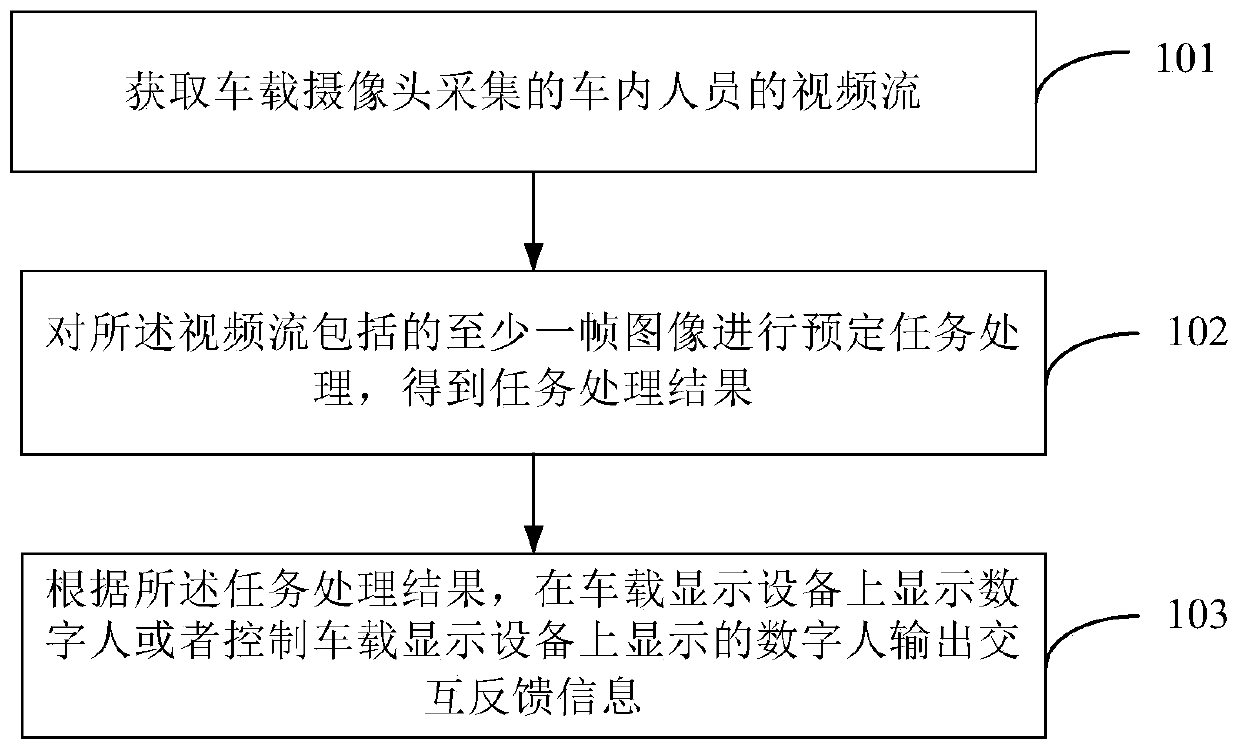

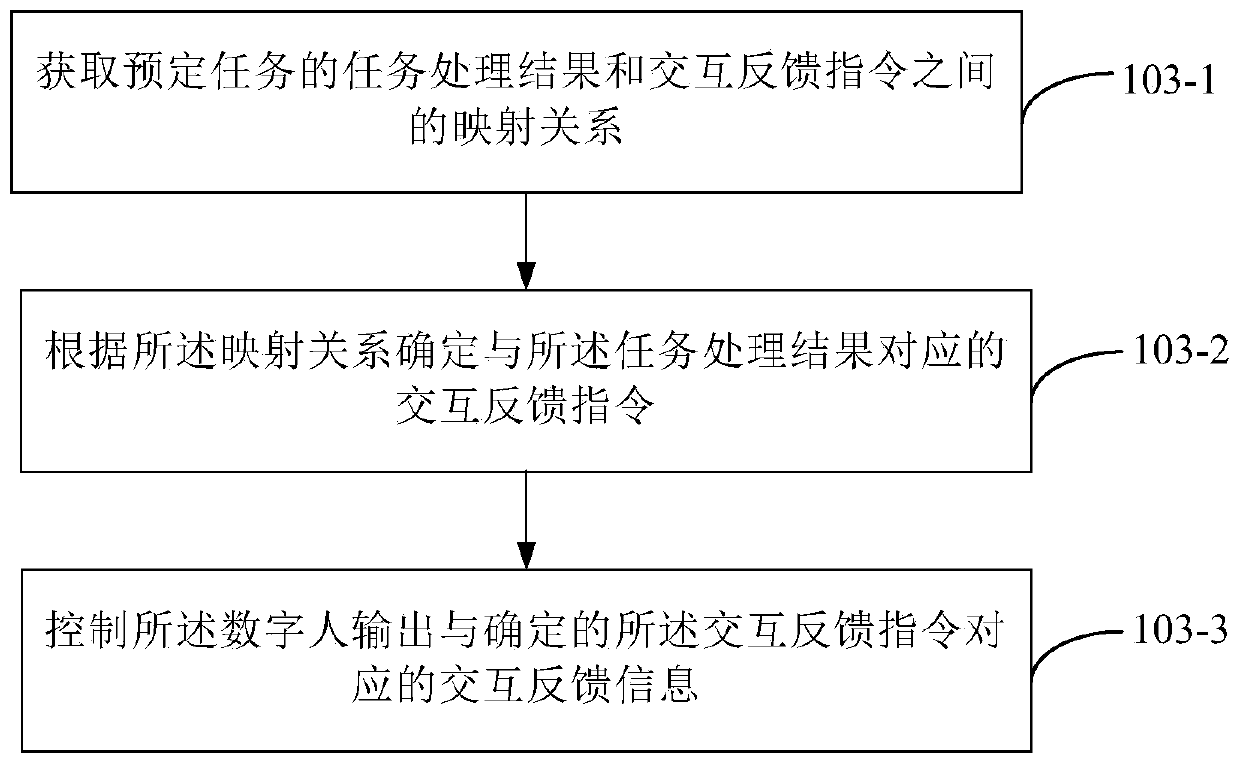

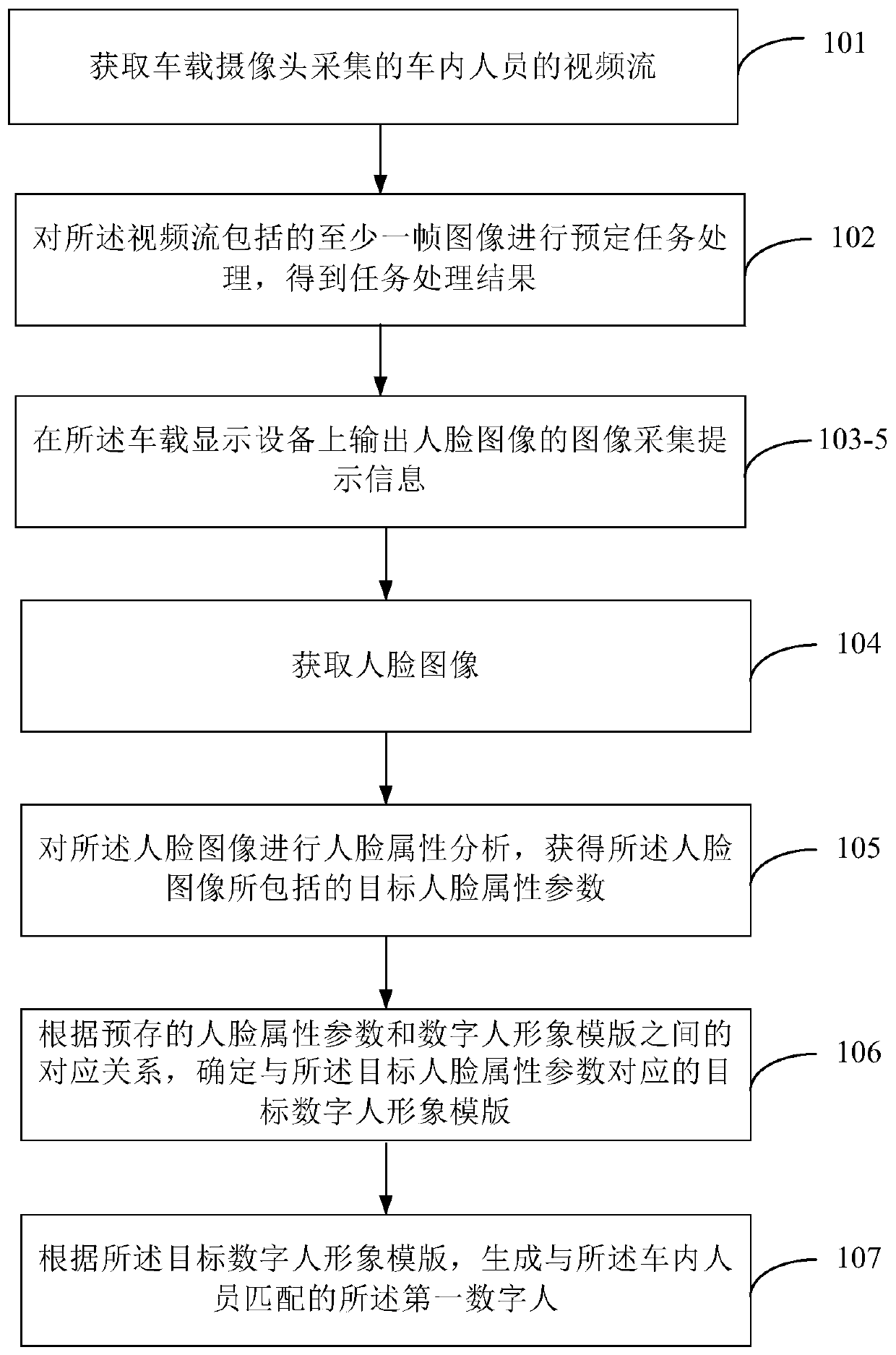

Interaction method and device based on vehicle-mounted digital human, and storage medium

Pending; CN110728256A; Interactive nature; Interactive warmth; Input/output for user-computer interaction; Digital data information retrieval; Computer graphics (images); In vehicle

The invention provides an interaction method and device based on a vehicle-mounted digital person, and the method comprises the steps: obtaining a video stream, collected by a vehicle-mounted camera, of a person in a vehicle; performing predetermined task processing on at least one frame of image included in the video stream to obtain a task processing result; and according to the task processing result, displaying a digital person on the vehicle-mounted display equipment or controlling the digital person displayed on the vehicle-mounted display equipment to output interactive feedback information.

Owner:SHANGHAI SENSETIME INTELLIGENT TECH CO LTD

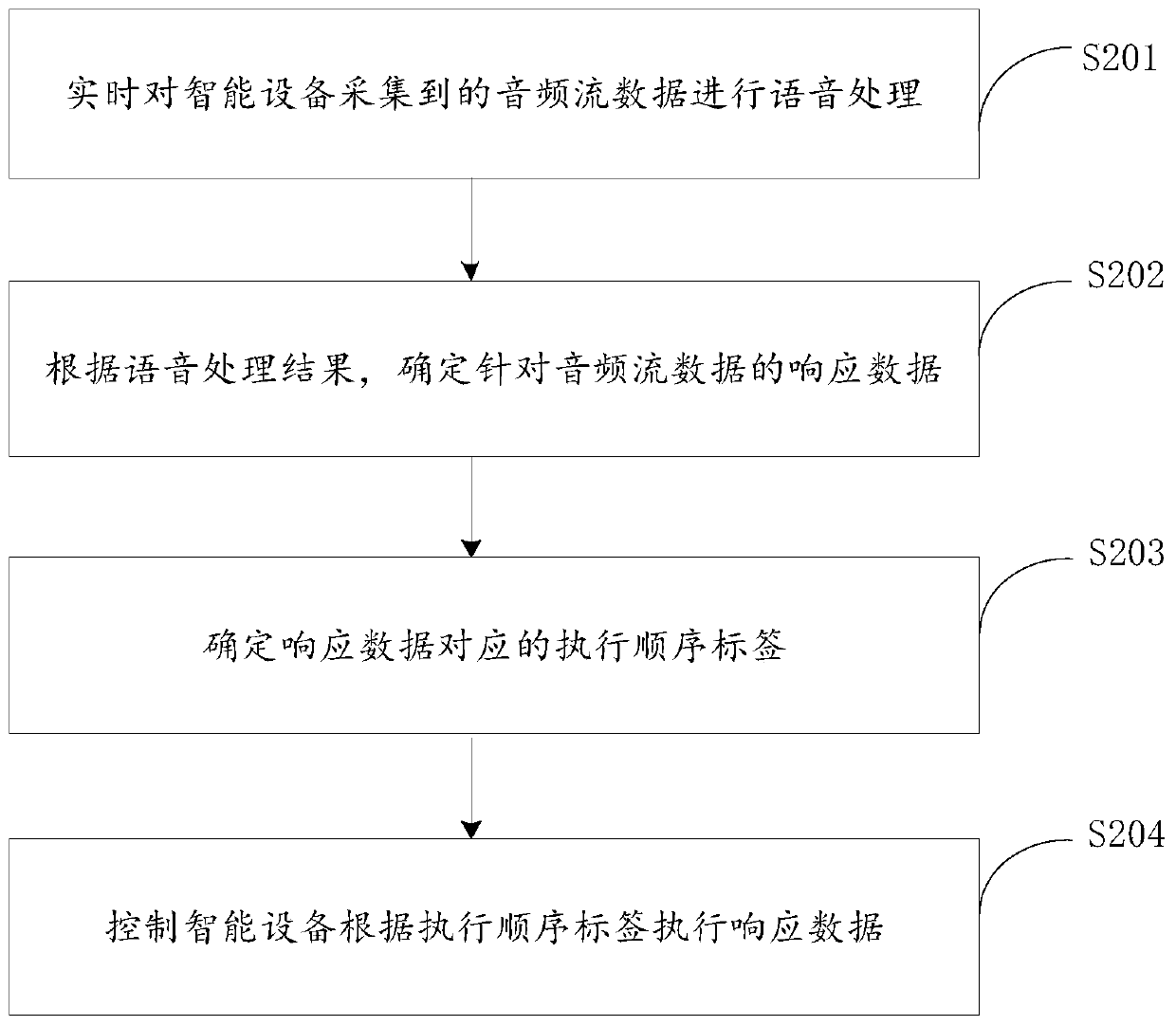

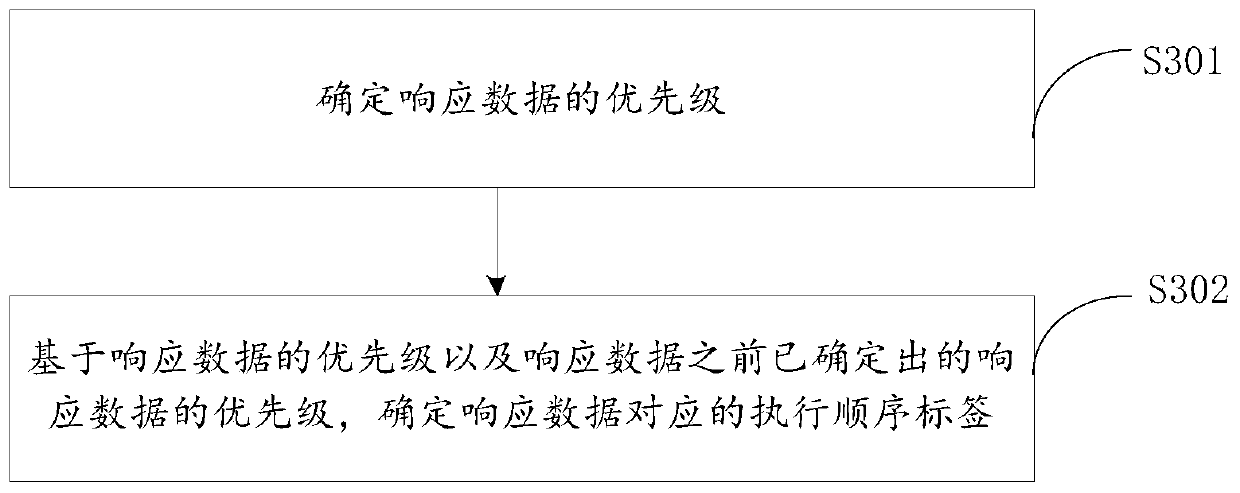

Output control method and device for man-machine conversation, electronic equipment and storage medium

Inactive; CN110299152A; Flexible control; Interactive nature; Semantic analysis; Speech analysis; Streaming data; Control manner

The invention relates to information in the technical field of artificial intelligence, and discloses an output control method and device for man-machine conversation, electronic equipment and a storage medium. The method includes the steps that real-time speech processing of audio stream data collected by intelligent equipment is conducted; based on speech processing results, response data to the audio stream data are determined; execution sequence labels corresponding to the response data are determined; and the intelligent device is controlled to execute the response data according to the execution sequence labels. According to the technical scheme, the control way executing the response data is more flexible, thus the intelligent equipment can execute the response data in a way that is close to human-nature interaction, and thus the man-machine interaction process is more natural.

Owner:BEIJING ORION STAR TECH CO LTD

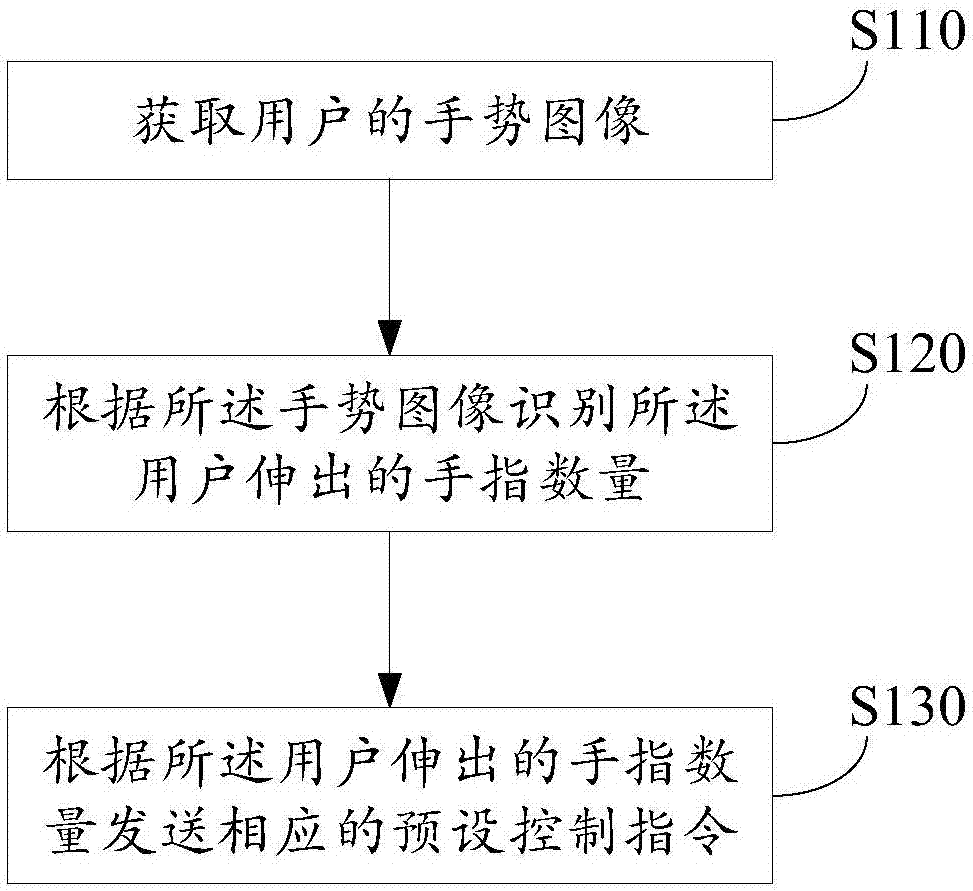

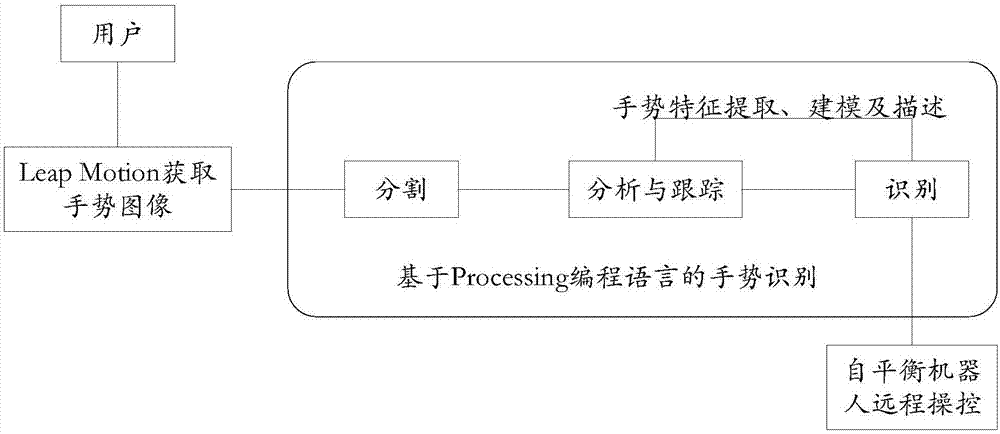

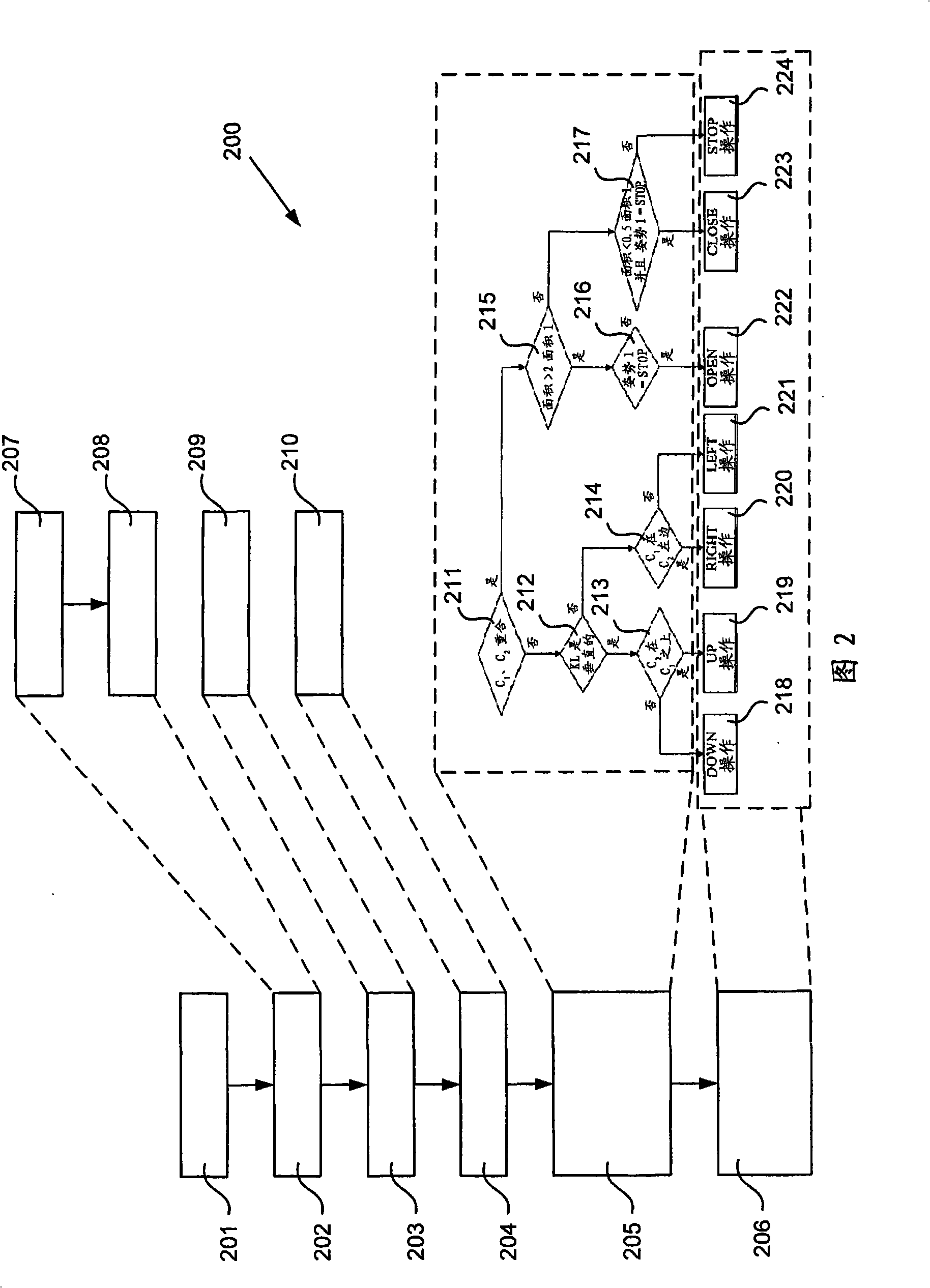

Man-machine interaction method, device and system based on gesture recognition

Inactive; CN107357428A; Interactive nature; Input/output for user-computer interaction; Character and pattern recognition; Body movement; Human system interaction

The invention provides a man-machine interaction method, device and system based on gesture recognition. The man-machine interaction method based on gesture recognition comprises the steps that a gesture image of a user is acquired; the number of fingers stretched by the user is recognized according to the gesture image; and a corresponding preset control instruction is sent according to the number of the fingers stretched by the user. According to the scheme, the user can control a machine more naturally and conveniently through simple body movement.

Owner:BOE TECH GRP CO LTD

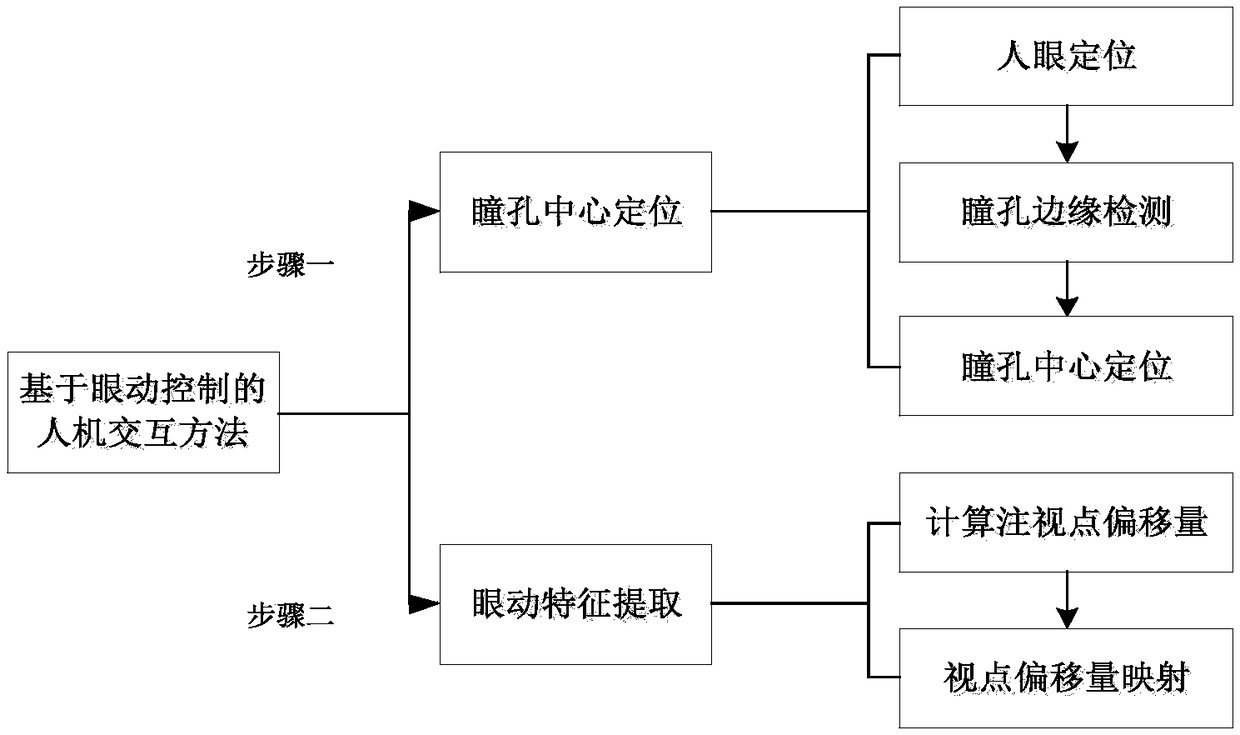

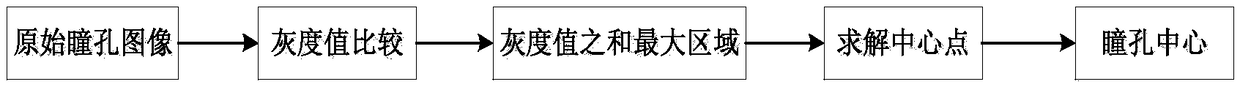

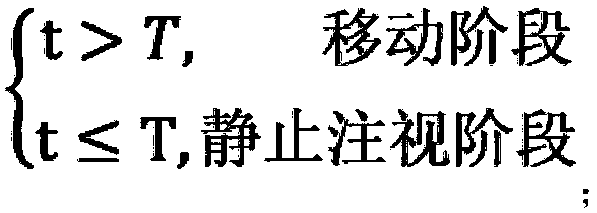

Human-computer interaction method based on eye movement control

Active; CN108595008A; Reduce energy consumption; Effective interaction; Input/output for user-computer interaction; Acquiring/recognising eyes; Fixation point; Vision based

The invention discloses a human-computer interaction method based on eye movement control. The method comprises the following steps of human eye pupil positioning and eye movement characteristic extraction. The step of pupil center positioning based on grey information comprises the following stages that: 1: human eye positioning; 2: pupil edge detection; 3: pupil center positioning. The step of eye movement characteristic information extraction based on visual point movement comprises the following steps that: calculating a fixation point deviation and visual point deviation mapping; on the basis of the displacement difference of two obtained adjacent frames of images, enabling eyes to move back and forth to calibrate points calibrated by coordinates on the screen, and utilizing a least square curve fitting algorithm to solve a mapping function; and after eye movement characteristic information is obtained, on the basis of the obtained eye movement control displacement and angle information, carrying out a corresponding system message response. By use of the human-computer interaction method based on the eye movement control, the energy consumption of the user can be reduced, the user can be assisted in carrying out direct control, and efficient and natural interaction is realized.

Owner:BEIJING INST OF COMP TECH & APPL
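
The calibration step in the abstract above solves a mapping function by least-squares fitting. As a minimal sketch, assume the simplest possible mapping, a straight line from a pupil offset to a screen coordinate along one axis; the abstract does not state the form of the fitted curve, so the linear model and the function name are assumptions.

```python
from typing import Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit of y = a * x + b.

    xs: pupil offsets measured while the user looks at calibration points.
    ys: known screen coordinates of those calibration points.
    Returns (a, b).
    """
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two paired samples")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("pupil offsets must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx
    return a, mean_y - a * mean_x
```

After calibration, a new pupil offset `x` maps to the screen coordinate `a * x + b`; the same fit would be repeated for the vertical axis.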

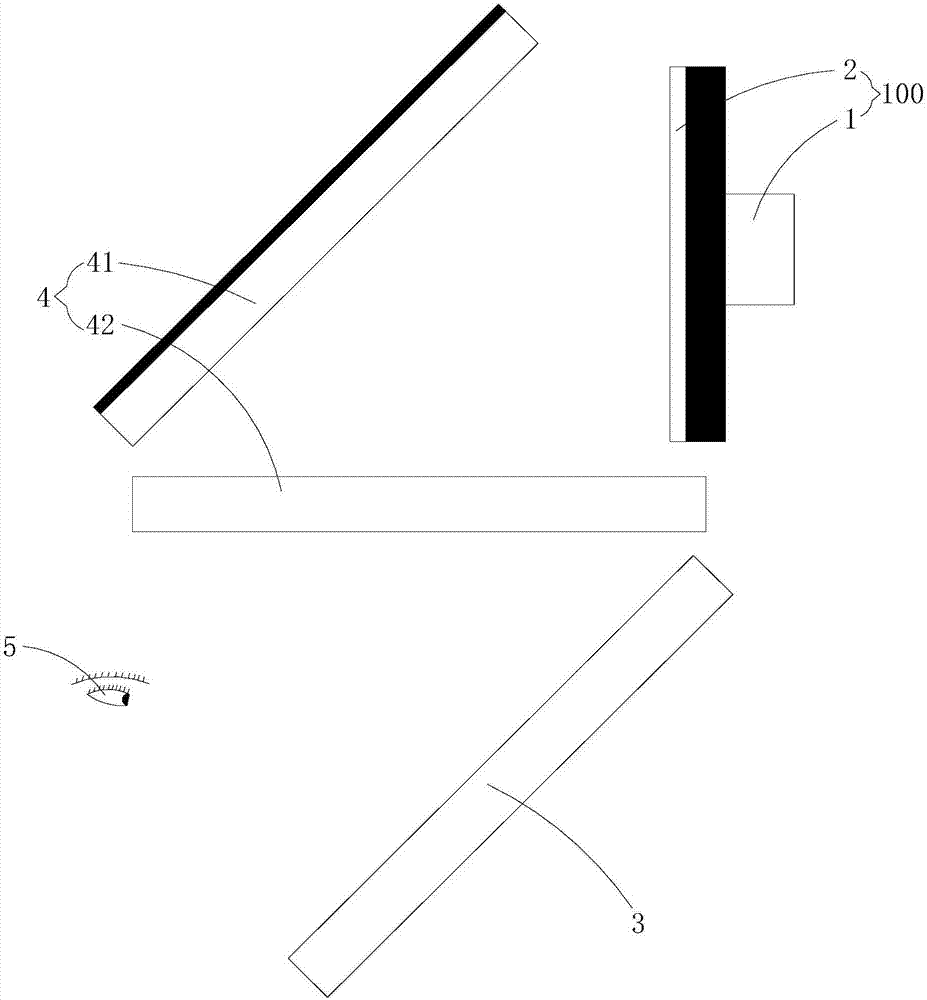

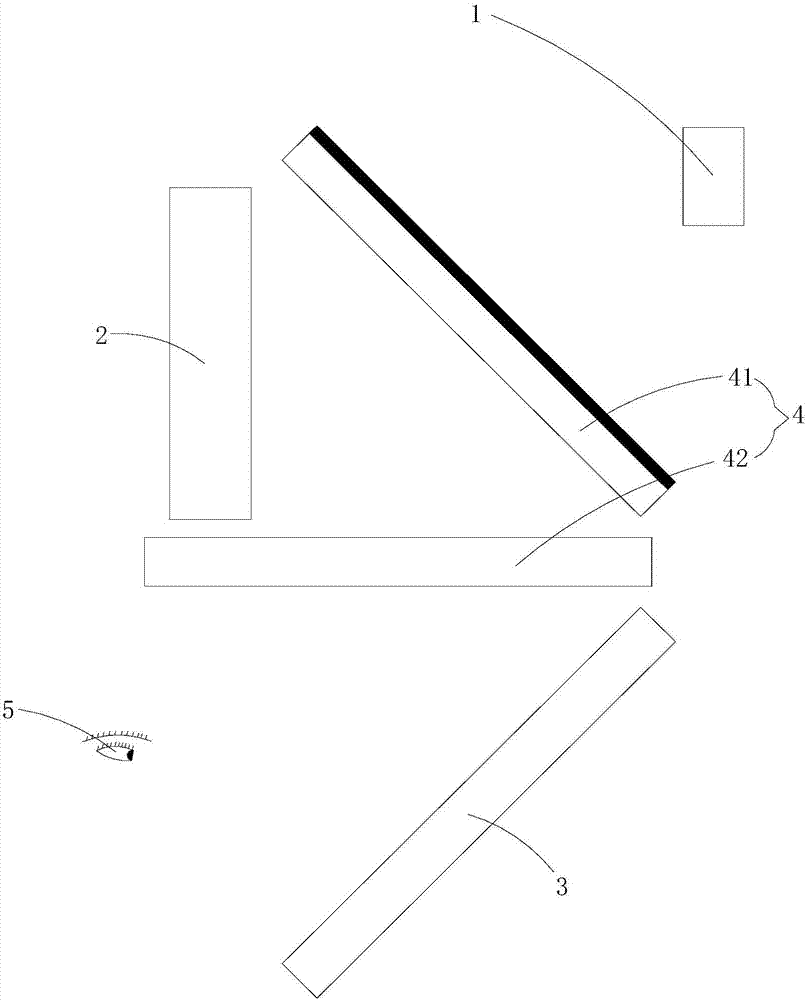

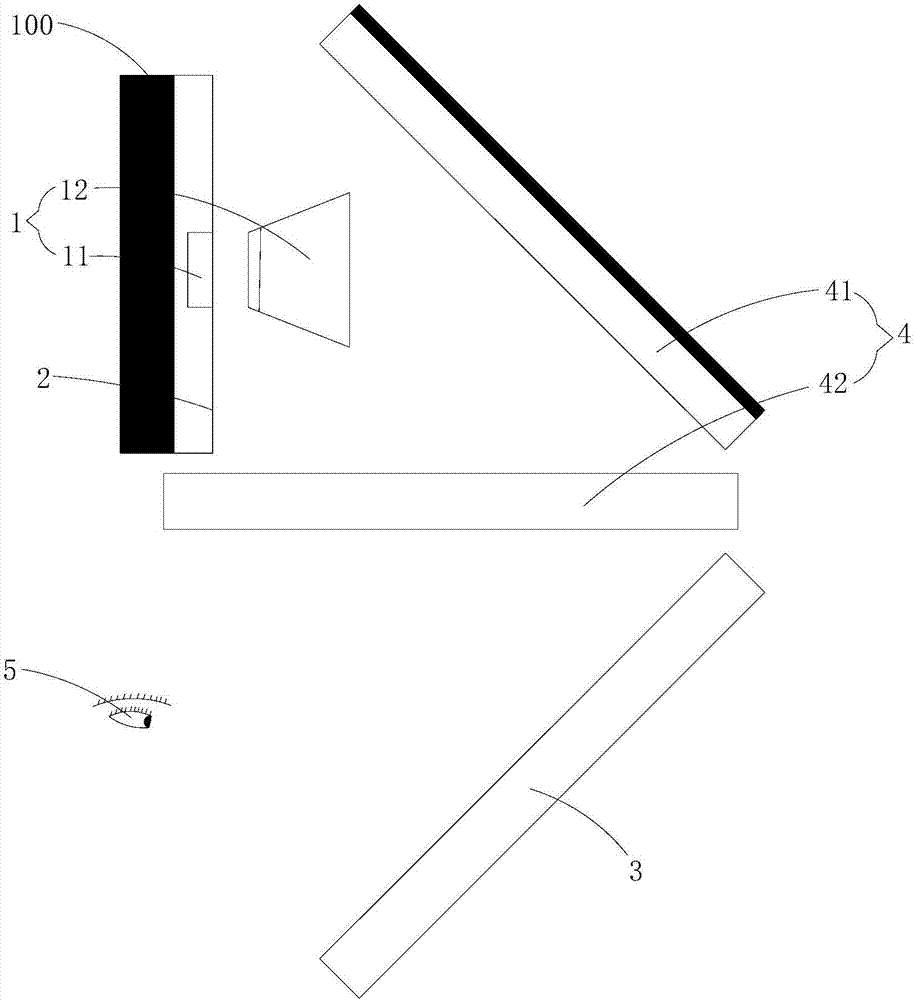

Augmented reality device

Pending; CN106919262A; Replace at any time; Relieve pressure; Input/output for user-computer interaction; Graph reading; Data connection; Computer graphics (images)

The invention is suitable to the AR technical field and discloses an augmented reality device. The device comprises a camera which is used for shooting external real environment; an image display screen which is used for displaying virtual features; a semi-reflective-semi-permeable mirror which is used for users to watch an integrated scene with their eyes by superimposing virtual features and external real environment and the semi-reflective-semi-permeable mirror has a single-view structure for double eyes; an optical path transition assembly which is used for projecting the virtual features displayed on the image display screen onto the semi-reflective-semi-permeable mirror; a data processor which is connected with the camera and the image display screen in a data connection mode and is used for identifying, positioning and operating image pickup information of the camera and controlling the image display screen and changing display information according to the operation results so as to realize spatial interaction between users and virtual features. The optical path of the invention is the single-view system for double eyes so that there is no need to split screen for calculation and the operation amount and energy consumption are saved; the device has strong compatibility and the spatial interaction between users and virtual features is realized and users experience is increased.

Owner:广州数娱信息科技有限公司

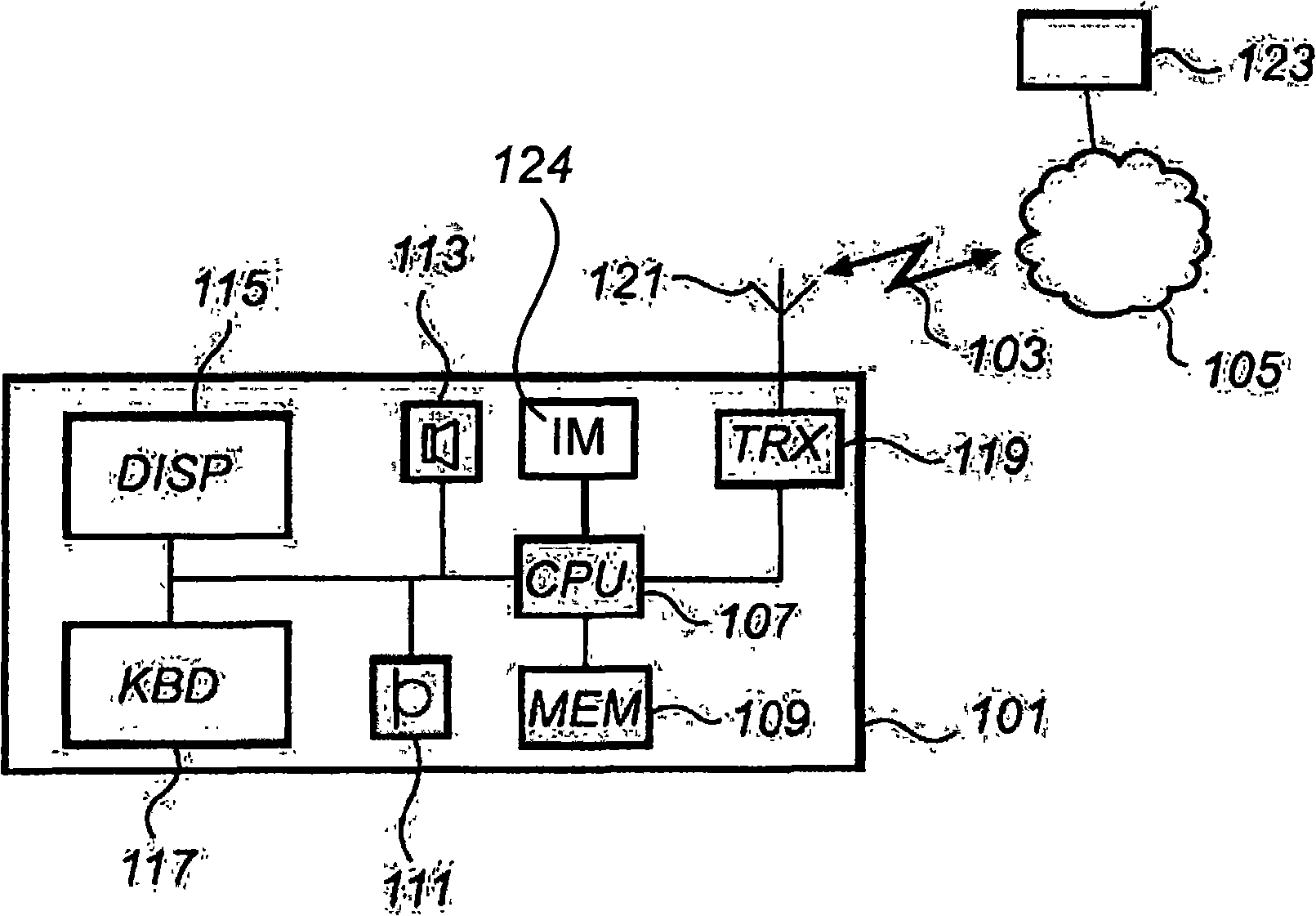

Improved user interface

Active; CN101517515A; Interactive nature; Input/output for user-computer interaction; Character and pattern recognition; Computer science; User interface

Owner:NOKIA TECHNOLOGIES OY

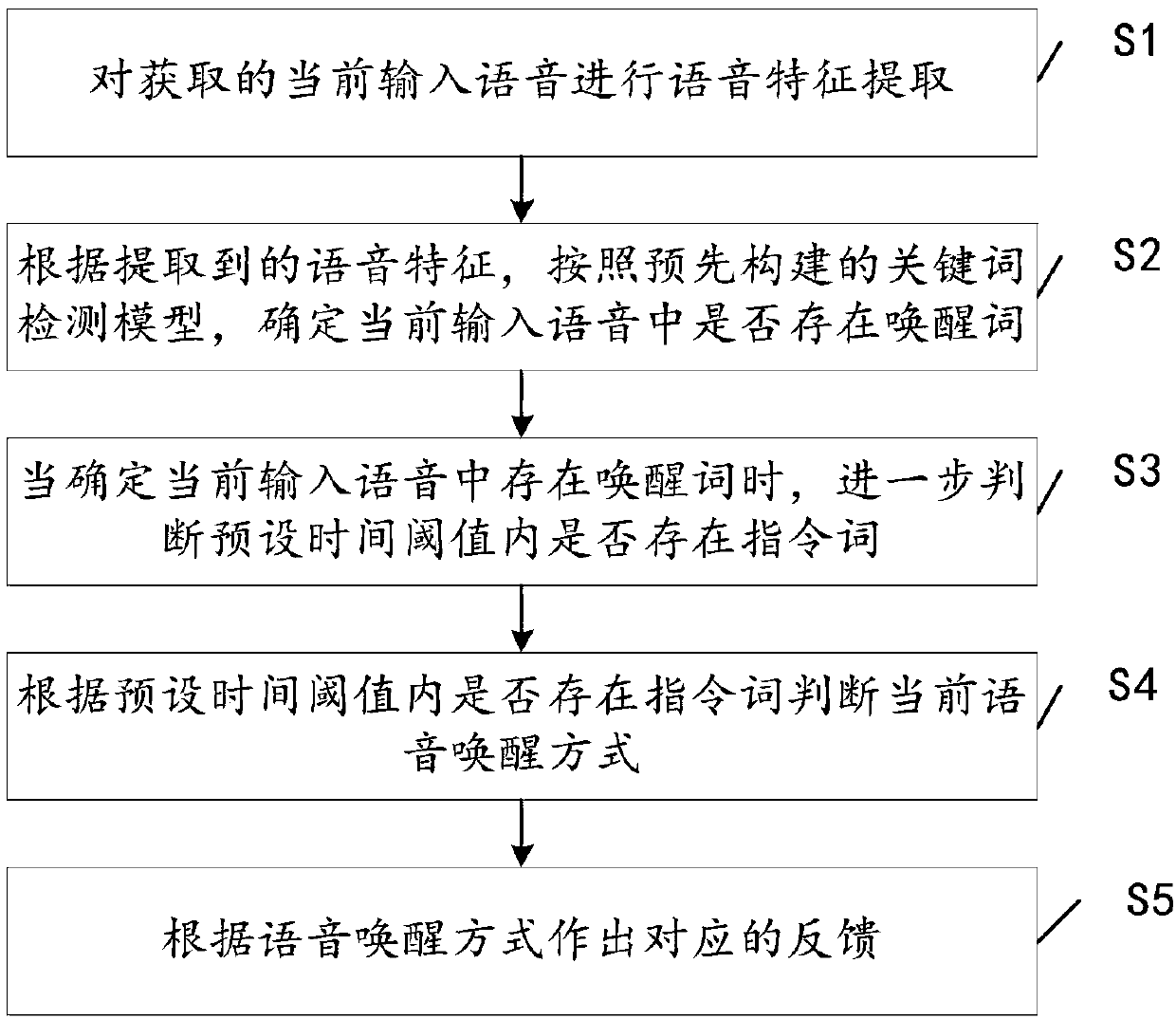

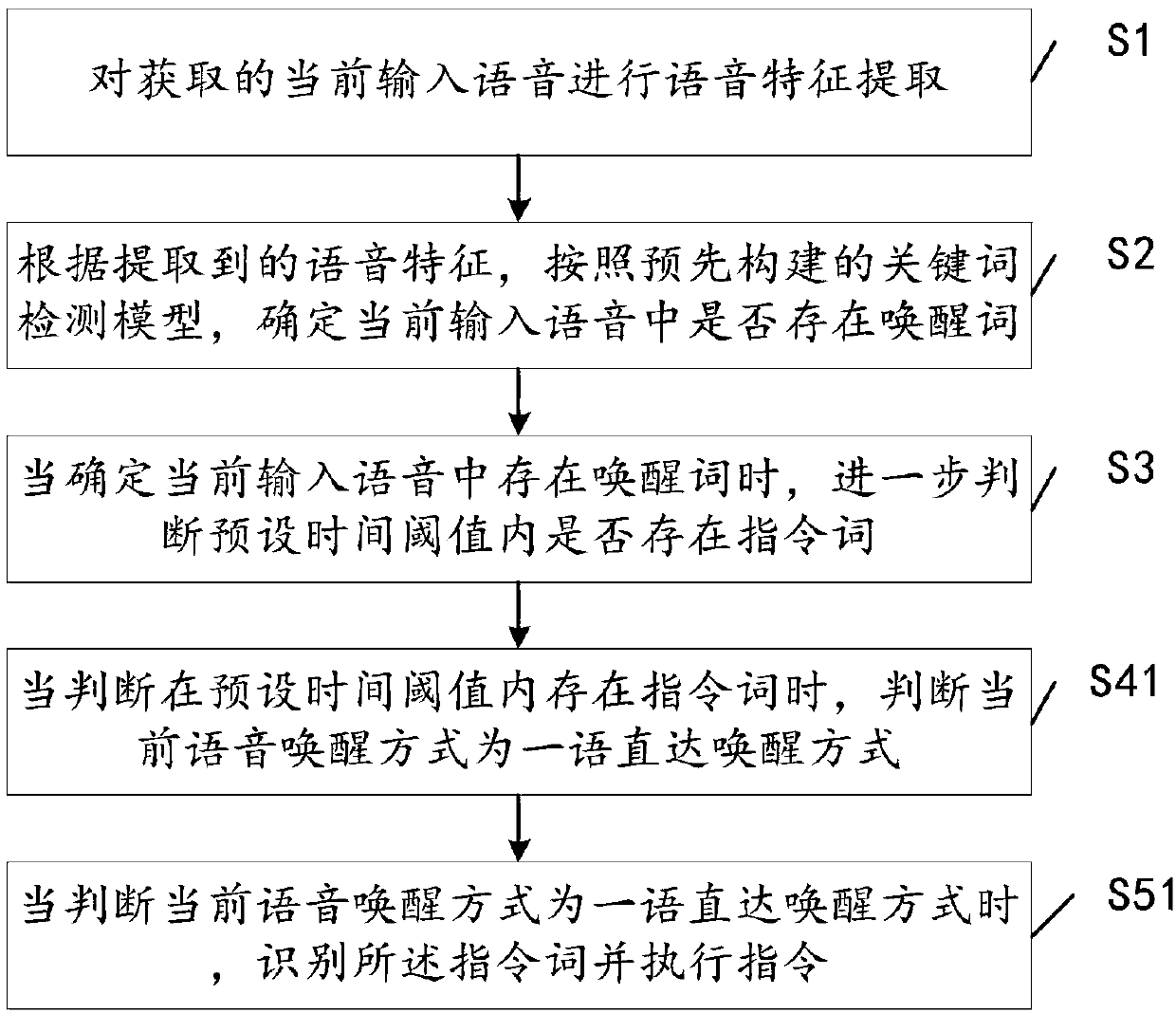

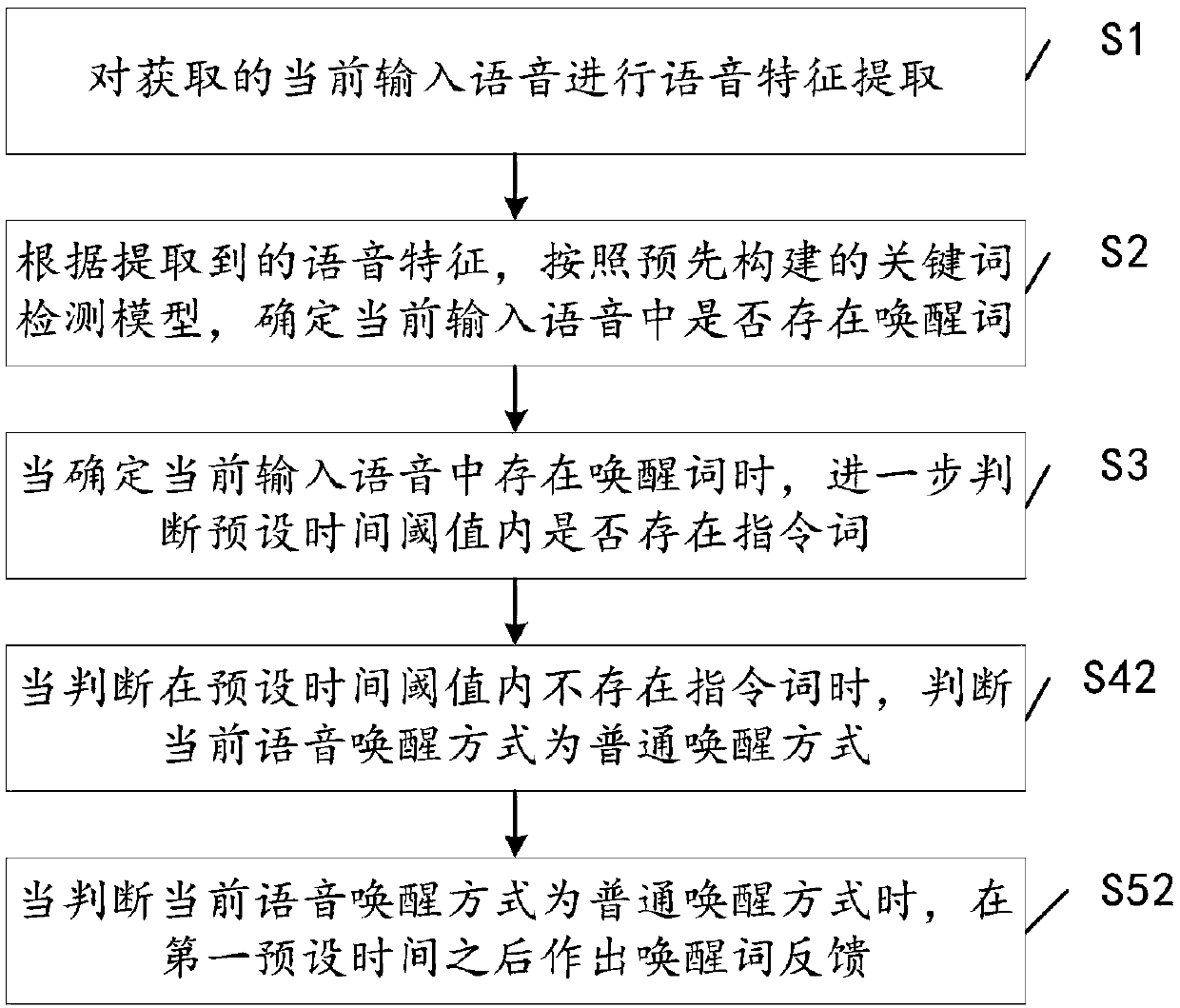

Voice wakeup method and device

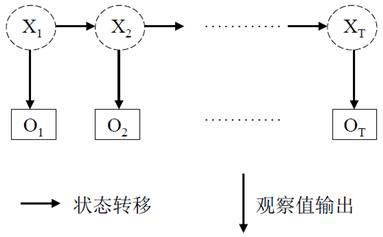

The invention belongs to the field of voice wakeup, and discloses a voice wakeup method and device. The voice wakeup method comprises the steps that obtained currently input voice is subjected to voice characteristic extraction; according to the extracted voice characteristics, whether or not wakeup words exist in the currently input voice is determined according to a keyword detection model built in advance, wherein keywords in the keyword detection model at least comprise preset wakeup words; when it is determined that the wakeup words exist in the currently input voice, whether or not instruction words exist within a preset time threshold value is further judged; the keywords in the keyword detection model at least comprise preset instruction words; the current voice wakeup mode is judged according to whether or not the instruction words exist within the preset time threshold value; corresponding feedback is given according to the voice wakeup mode, and a user interacts with a voice system more naturally.

Owner:GUANGDONG XIAOTIANCAI TECH CO LTD
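
The mode decision in the abstract above can be sketched as follows, assuming a keyword spotter has already produced time-stamped detections. The word lists, the mode names, and the 2-second default window are hypothetical; the abstract specifies none of them.

```python
from typing import List, Tuple

WAKE_WORDS = {"hello device"}                # hypothetical wake-up words
INSTRUCTION_WORDS = {"play music", "stop"}   # hypothetical instruction words


def wakeup_mode(detections: List[Tuple[float, str]], window_s: float = 2.0) -> str:
    """Classify the wake-up mode from (time in seconds, keyword) detections.

    detections must be sorted by time. Returns one of:
    "no_wake", "wake_only", "wake_with_instruction".
    """
    for i, (t_wake, word) in enumerate(detections):
        if word not in WAKE_WORDS:
            continue
        # Look for an instruction word inside the preset time window.
        for t_cmd, cmd in detections[i + 1:]:
            if cmd in INSTRUCTION_WORDS and t_cmd - t_wake <= window_s:
                return "wake_with_instruction"
        return "wake_only"
    return "no_wake"
```

A system built this way might answer "wake_only" with a prompt and execute the instruction directly for "wake_with_instruction", which matches the mode-dependent feedback the abstract describes.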

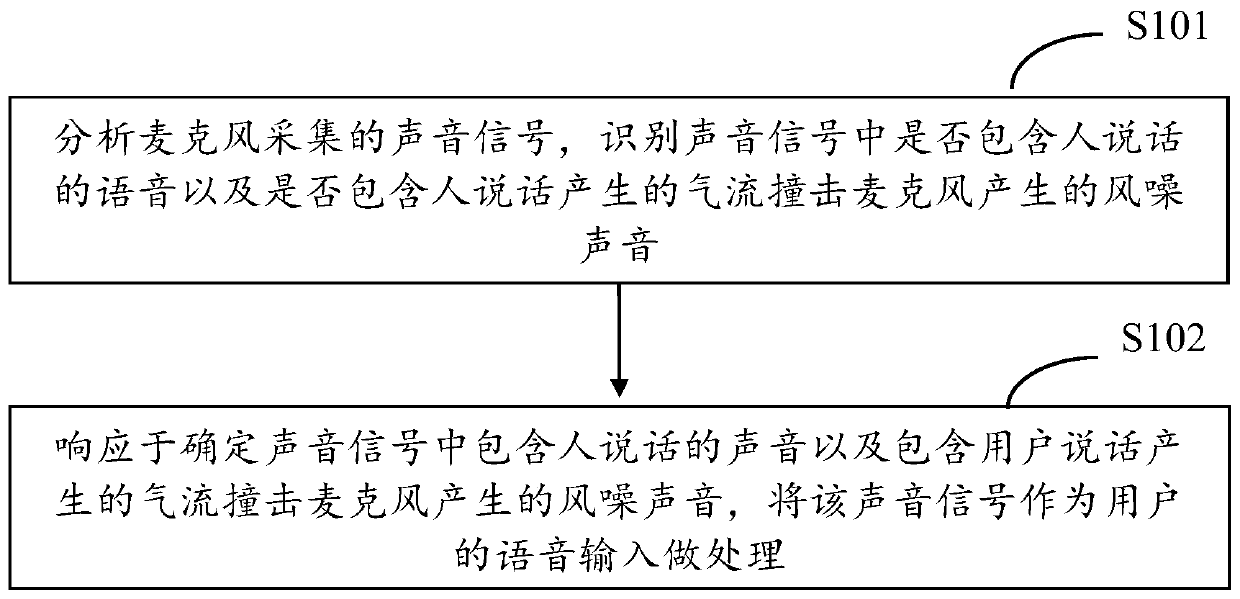

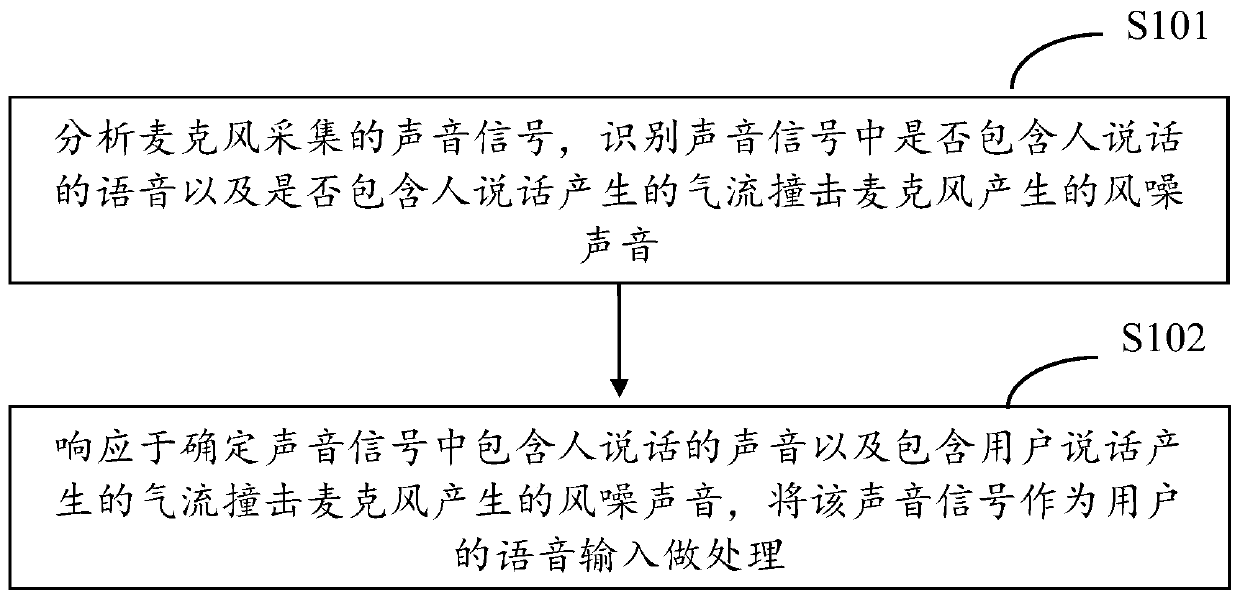

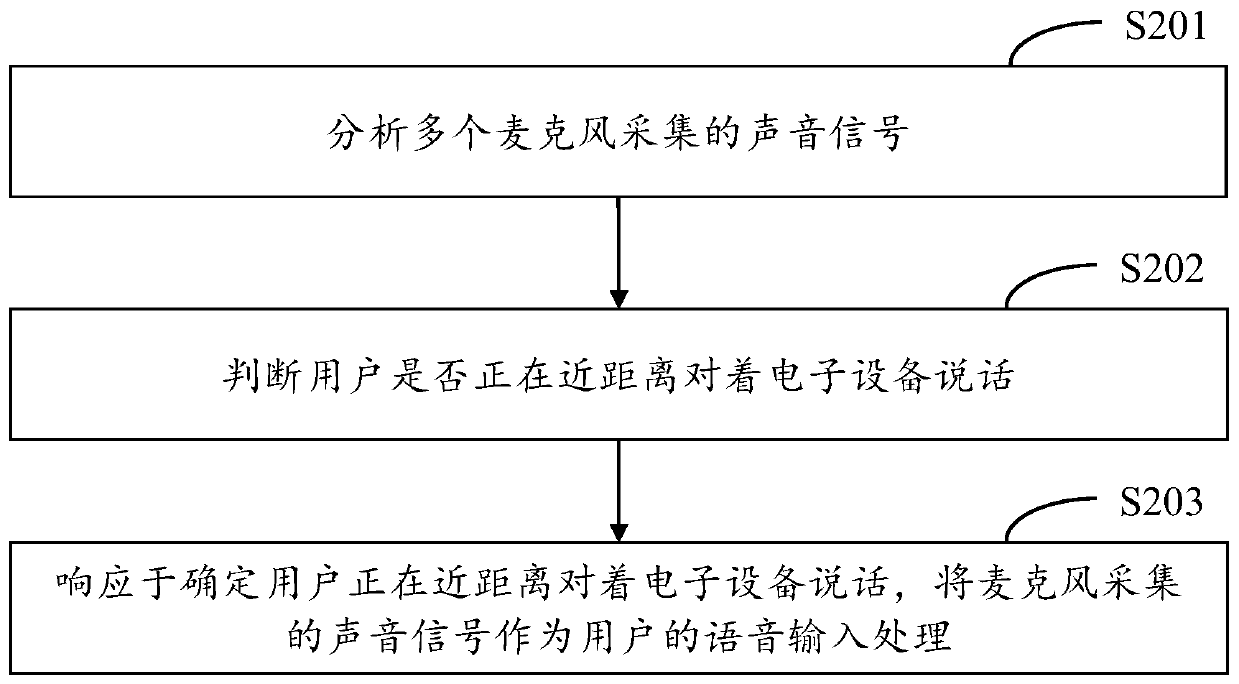

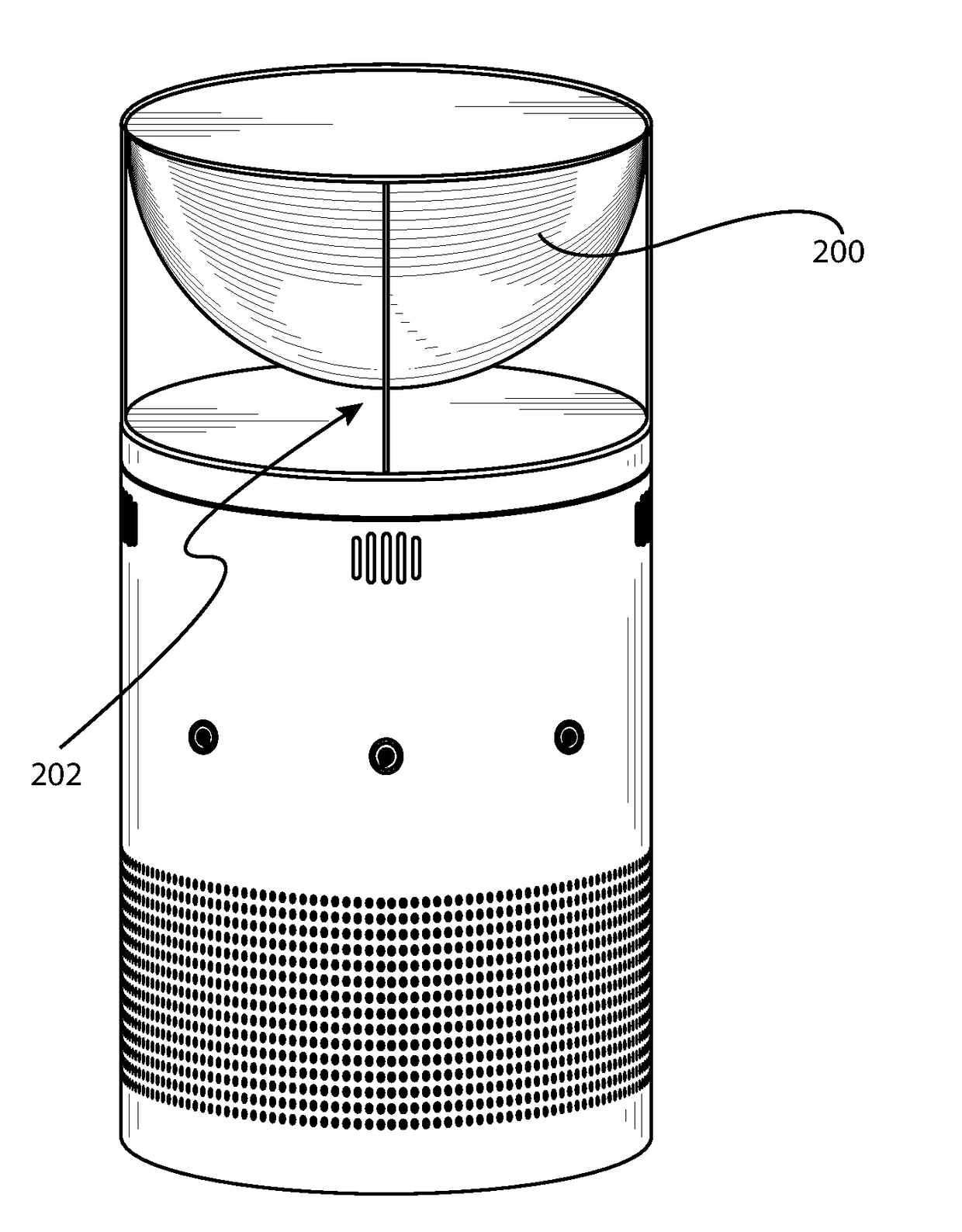

Voice interaction awakening electronic device based on microphone signal, method and medium

Active; CN110097875A; Interactive nature; Improve efficiency; Speech recognition; High level techniques; Microphone signal; Speech input

The invention provides an intelligent electronic device with a built-in microphone. The intelligent electronic portable device performs voice input-based interaction with a user through the following operations: processing a sound signal captured by the microphone to judge whether a voice signal exists in the sound signal or not; further judging whether the distance between the intelligent electronic device and the mouth of the user is less than a preset threshold value or not based on the sound signal collected by the microphone in response to the confirmation that the voice signal exists in the sound signal; and, in response to determining that the distance between the electronic device and the mouth of the user is less than the predetermined threshold, processing the sound signal acquired by the microphone as voice input. The interaction method is suitable for voice input when the user carries the intelligent electronic device, the operation is natural and simple, the voice input steps are simplified, the interaction burden and difficulty are reduced, and the interaction is more natural.

Owner:TSINGHUA UNIV
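
The two-stage check described in the abstract above (voice present, then device close to the mouth) might be sketched as below. The abstract does not say how voice or distance is derived from the sound signal, so both are passed in as hypothetical callables (`has_voice`, `mouth_distance_cm`), and the 5 cm default threshold is an assumption chosen only for illustration.

```python
from typing import Callable, List, Optional


def gate_voice_input(
    samples: List[float],
    has_voice: Callable[[List[float]], bool],            # hypothetical voice detector
    mouth_distance_cm: Callable[[List[float]], float],   # hypothetical distance estimator
    max_distance_cm: float = 5.0,                        # assumed "preset threshold"
) -> Optional[List[float]]:
    """Return the samples to treat as voice input, or None to ignore them."""
    # Stage 1: ignore the signal entirely if it contains no voice.
    if not has_voice(samples):
        return None

    # Stage 2: accept the voice only if the device is close to the mouth.
    if mouth_distance_cm(samples) >= max_distance_cm:
        return None

    # Both checks passed: hand the signal on as voice input, no wake word needed.
    return samples
```

Keeping the two estimators as parameters keeps the gating logic separate from whatever signal processing a real device would use.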

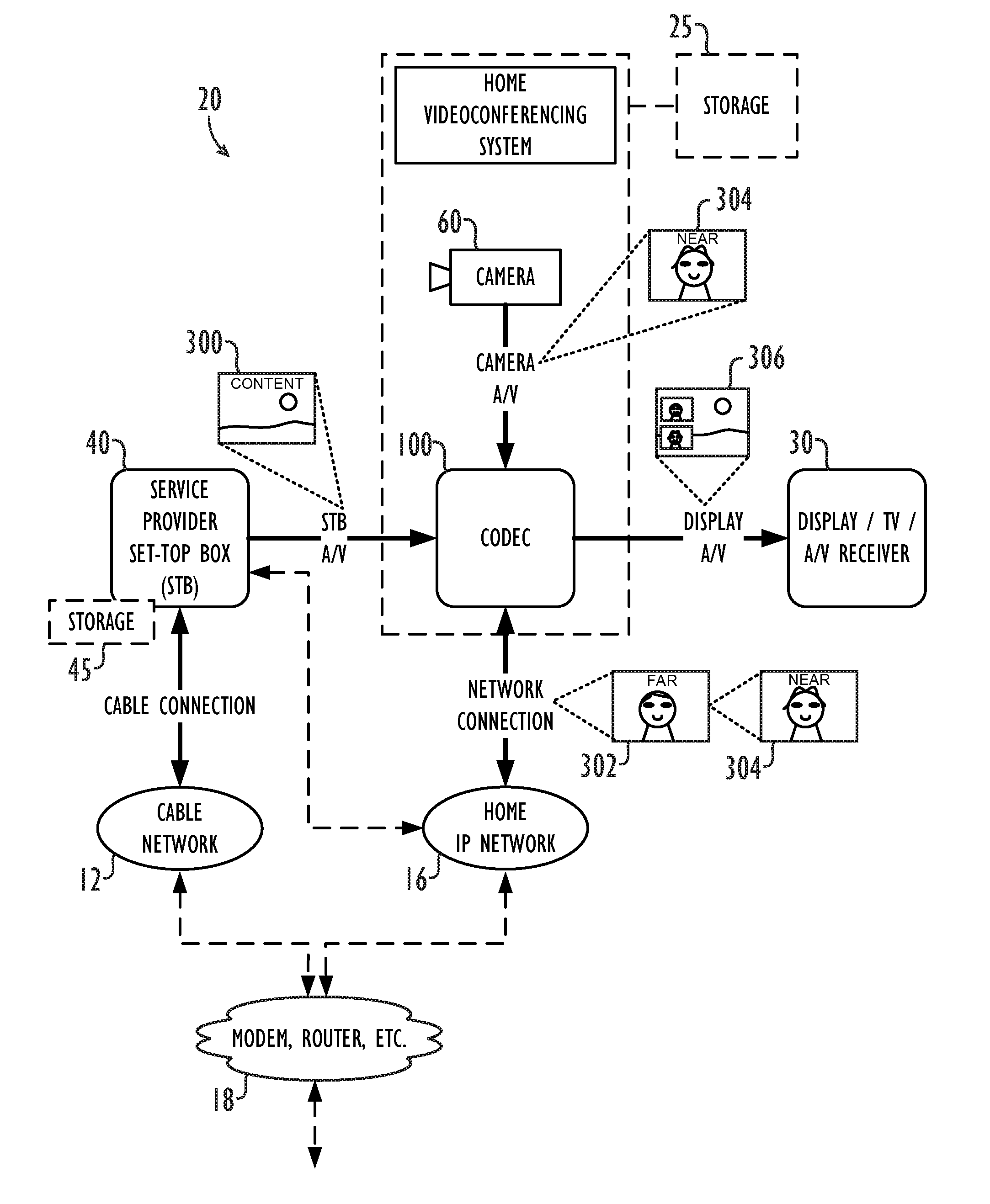

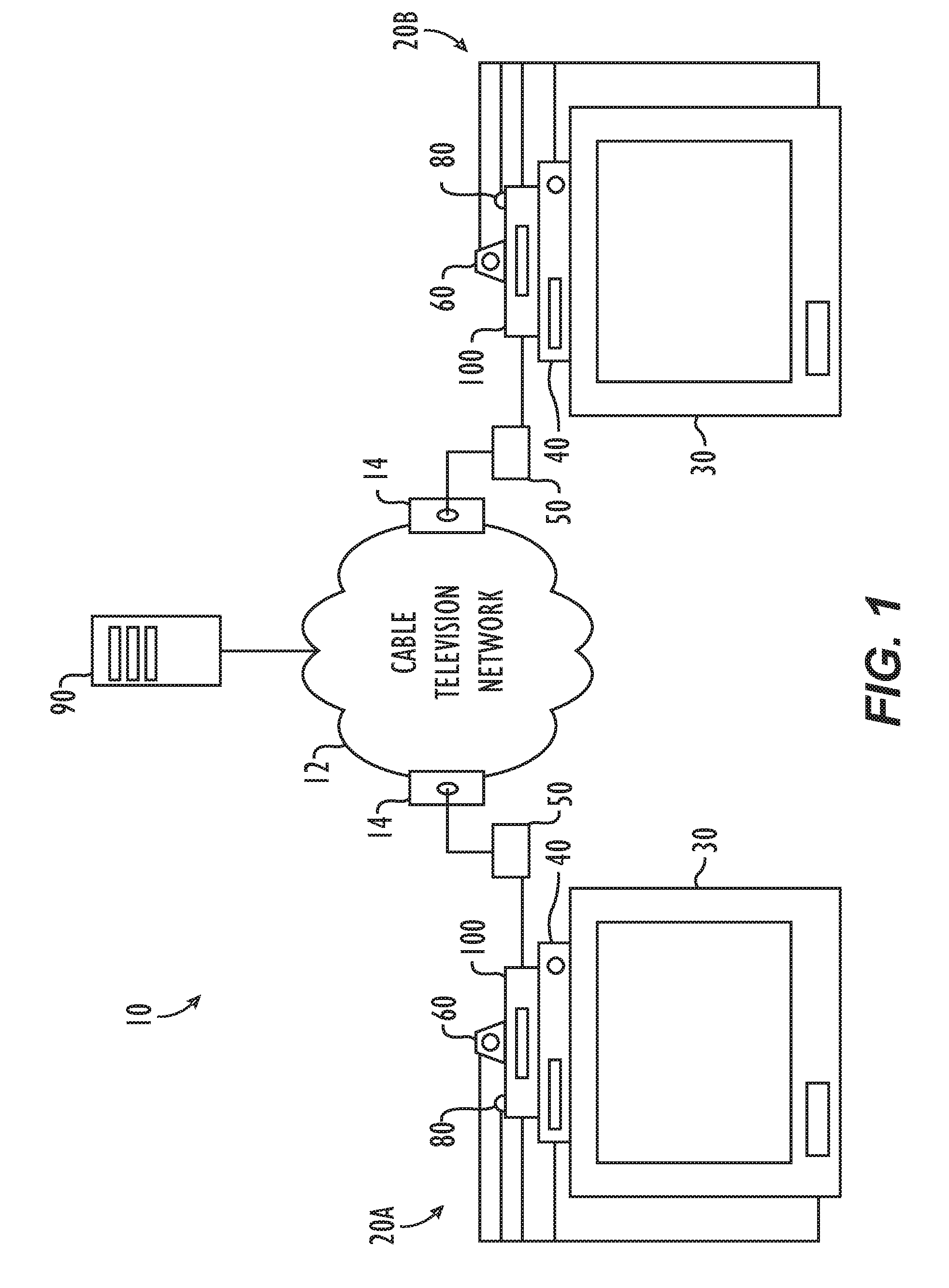

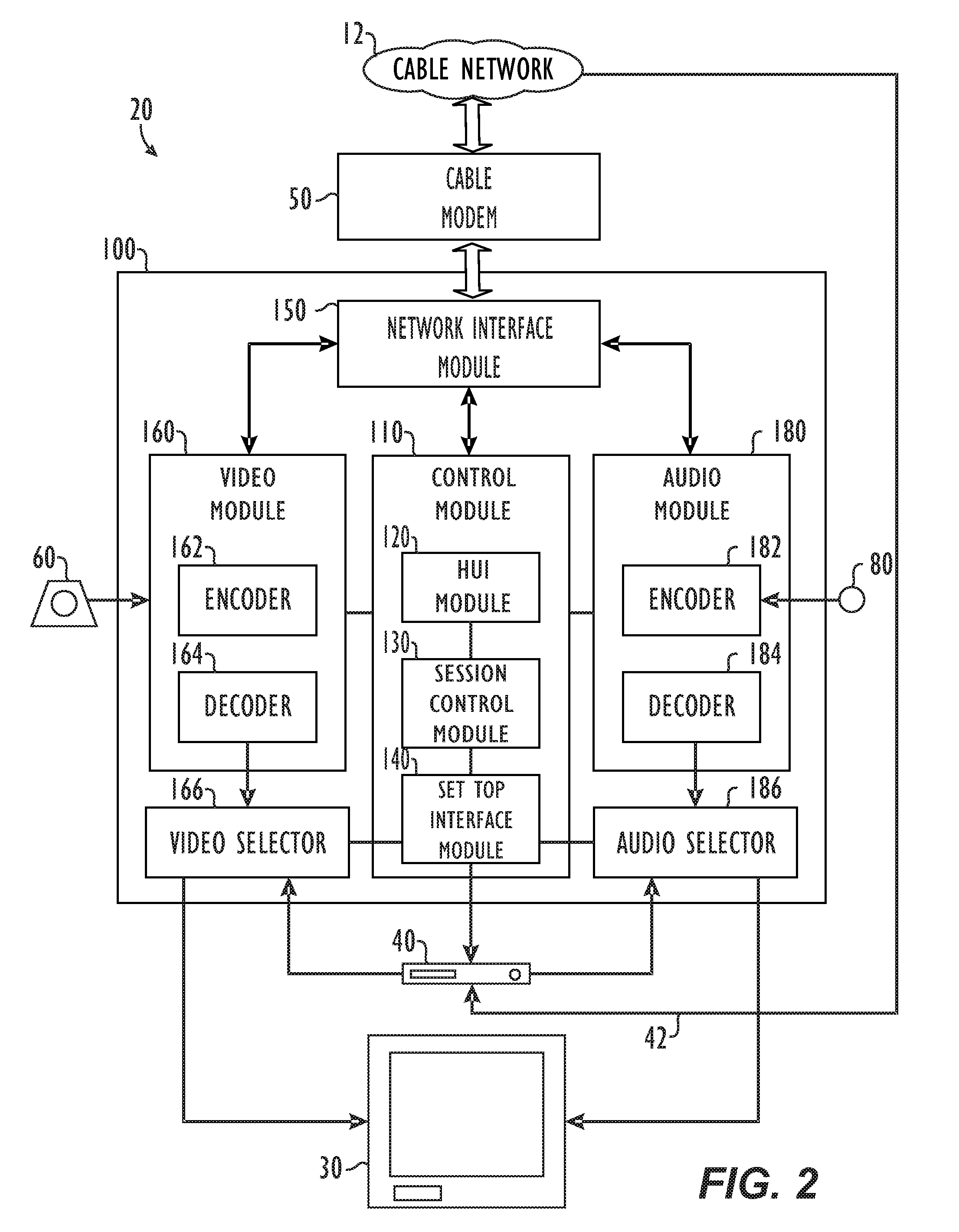

Home videoconferencing system

Active | US8619953B2 | Firmly connected | High-quality audio processing | Television conference systems | Telephonic communication | Modem device | Display device

A home videoconferencing system interfaces with traditional set-top boxes and typical home A/V equipment. The system includes a camera, a microphone, and a codec module. The module can couple to a modem connected to a television network and can couple to a display and a set-top box. Alternatively, the module can connect to a network for exchanging videoconference data and can connect between the set-top box and the display. The set-top box can connect to the television network on its own.

Owner:HEWLETT PACKARD DEV CO LP

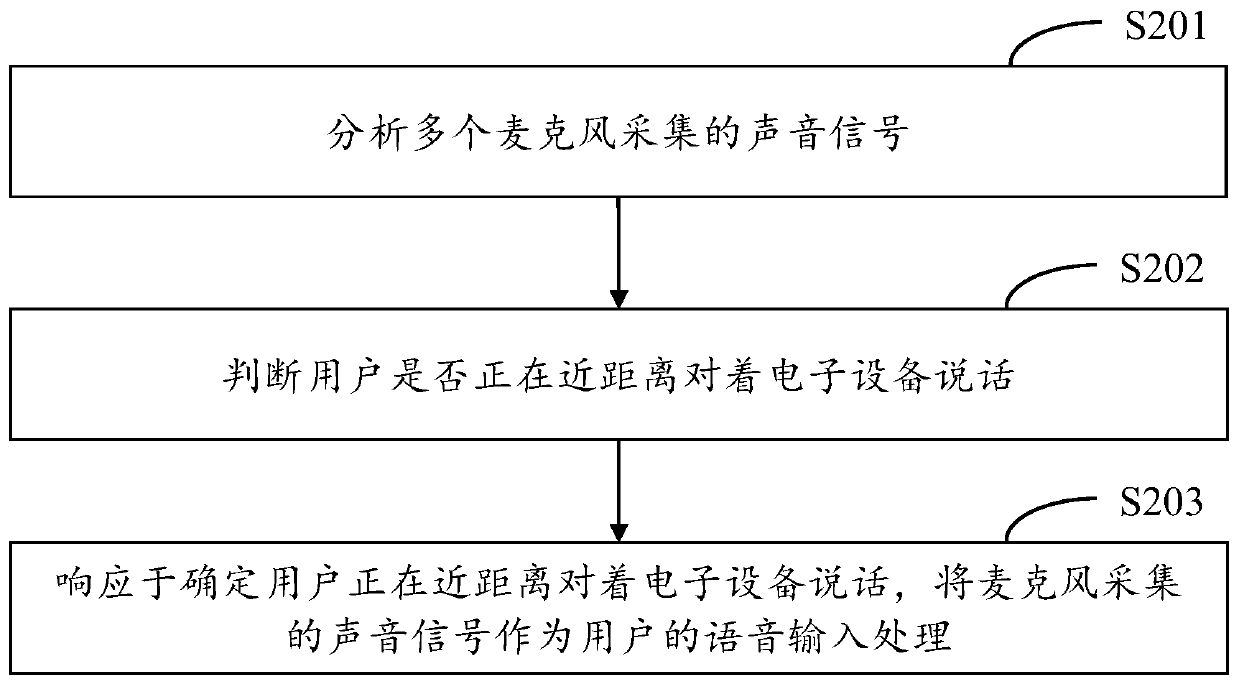

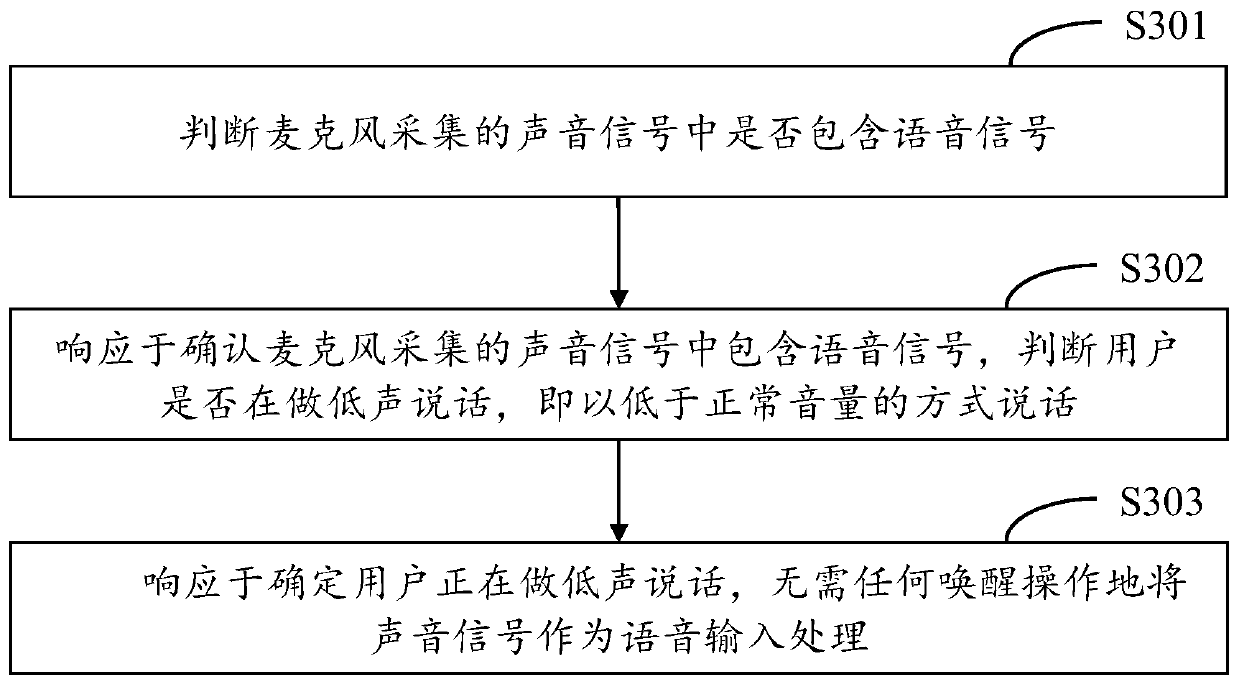

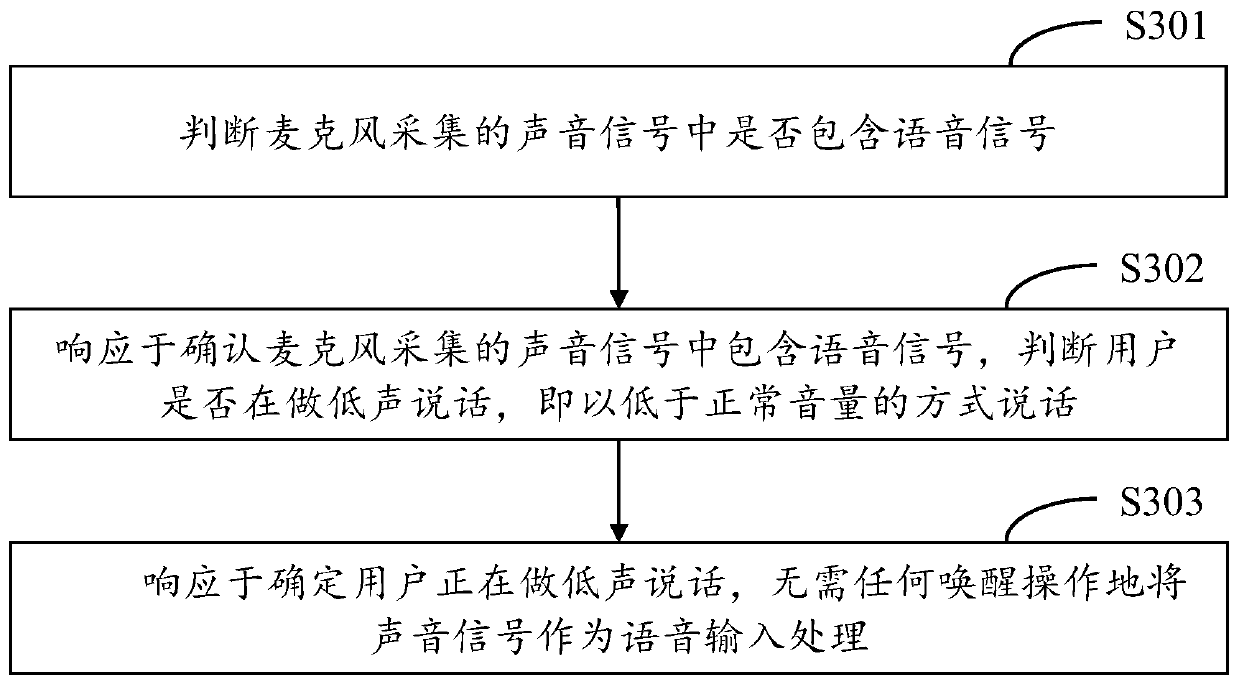

Voice interaction wake-up electronic equipment and method based on microphone signal and medium

Pending | CN110111776A | Interactive nature | Improve efficiency | Speech recognition | Microphone signal | Speech input

The invention provides electronic equipment provided with a microphone. The electronic equipment has a memory and a central processing unit, and computer-executable commands are stored in the memory. When the commands are executed by the central processing unit, the following operations are performed: judging whether a sound signal collected by the microphone comprises a voice signal; in response to determining that the sound signal comprises a voice signal, judging whether the user is speaking in a low voice, that is, at a volume lower than normal; and, in response to determining that the user is speaking in a low voice, processing the sound signal as voice input without a wake-up operation. The interaction method is suitable for voice input when the user carries intelligent electronic equipment. The operations are natural and simple, the steps of voice input are simplified, and the interaction burden and difficulty are reduced, so that interaction is more natural.

Owner:TSINGHUA UNIV
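
As a rough illustration of the low-voice branch described above, the sketch below classifies a frame by its RMS level. Using signal level alone for both voice detection and low-voice detection is a simplification assumed here, not the method claimed in the patent, and the two dBFS thresholds and the label names are hypothetical.

```python
import math
from typing import Sequence


def rms_dbfs(samples: Sequence[float]) -> float:
    """RMS level in dB relative to full scale (samples assumed in [-1.0, 1.0])."""
    if not samples:
        return float("-inf")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20.0 * math.log10(rms) if rms > 0 else float("-inf")


def classify_frame(
    samples: Sequence[float],
    voice_floor_db: float = -50.0,        # assumed: below this, treat as no voice
    low_voice_ceiling_db: float = -30.0,  # assumed: below this, treat as low voice
) -> str:
    """Return 'no_voice', 'low_voice_input', or 'needs_wakeup'."""
    level = rms_dbfs(samples)
    if level < voice_floor_db:
        return "no_voice"
    if level < low_voice_ceiling_db:
        # Low voice: accept directly as input, skipping the wake-up operation.
        return "low_voice_input"
    # Normal volume: fall back to the usual wake-up path.
    return "needs_wakeup"
```

A real system would likely rely on spectral cues rather than level alone, since a distant loud speaker and a nearby quiet one can produce similar levels.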

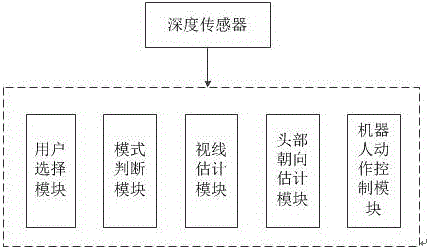

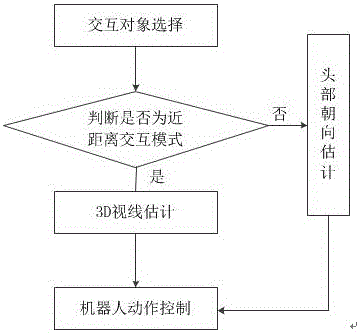

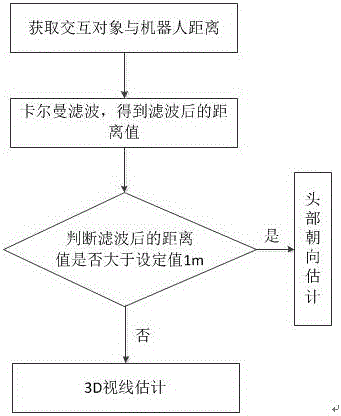

Far-near distance man-machine interactive system based on 3D sight estimation and far-near distance man-machine interactive method based on 3D sight estimation

Inactive | CN105759973A | Improve effectiveness | Improve stability | Input/output for user-computer interaction | Acquiring/recognising eyes | Far distance | Man machine

The invention discloses a far-near distance man-machine interactive system based on 3D sight estimation and a far-near distance man-machine interactive method based on 3D sight estimation. The system comprises a depth sensor, a user selection module, a mode judgment module, a sight estimation module, a head orientation estimation module and a robot action control module. The method comprises the following steps: (S1) interaction object selection; (S2) interaction mode judgment; (S3) 3D sight estimation; (S4) head orientation estimation; and (S5) robot action control. According to the system and the method, the man-machine interaction is divided into a far distance mode and a near distance mode according to the actual distance between people and a robot, and the action of the robot is controlled by virtue of the two modes, so that the validity and stability of the man-machine interaction are improved.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
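
The mode split described above can be illustrated with a short sketch. The abstract states only that interaction is divided into far and near modes by the distance between person and robot. The pairing used here (3D sight estimation in near mode, head orientation in far mode) and the 1.5 m limit are assumptions made for the example, and all function names are hypothetical.

```python
from typing import Tuple

Vector = Tuple[float, float, float]


def interaction_mode(distance_m: float, near_limit_m: float = 1.5) -> str:
    """Choose the mode from the depth-sensor distance (limit is assumed)."""
    return "near" if distance_m <= near_limit_m else "far"


def attention_direction(
    distance_m: float,
    gaze: Vector,  # output of a 3D sight estimation module
    head: Vector,  # output of a head orientation estimation module
    near_limit_m: float = 1.5,
) -> Tuple[str, Vector]:
    """Return (mode, direction) for the robot action control module."""
    mode = interaction_mode(distance_m, near_limit_m)
    # Assumed pairing: fine-grained gaze when close, coarser head pose when far.
    return (mode, gaze if mode == "near" else head)
```

For example, `attention_direction(0.8, gaze, head)` would pass the gaze vector to the robot controller, while `attention_direction(3.0, gaze, head)` would pass the head orientation.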

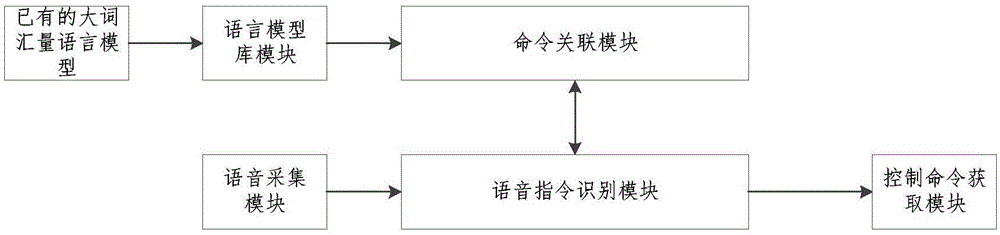

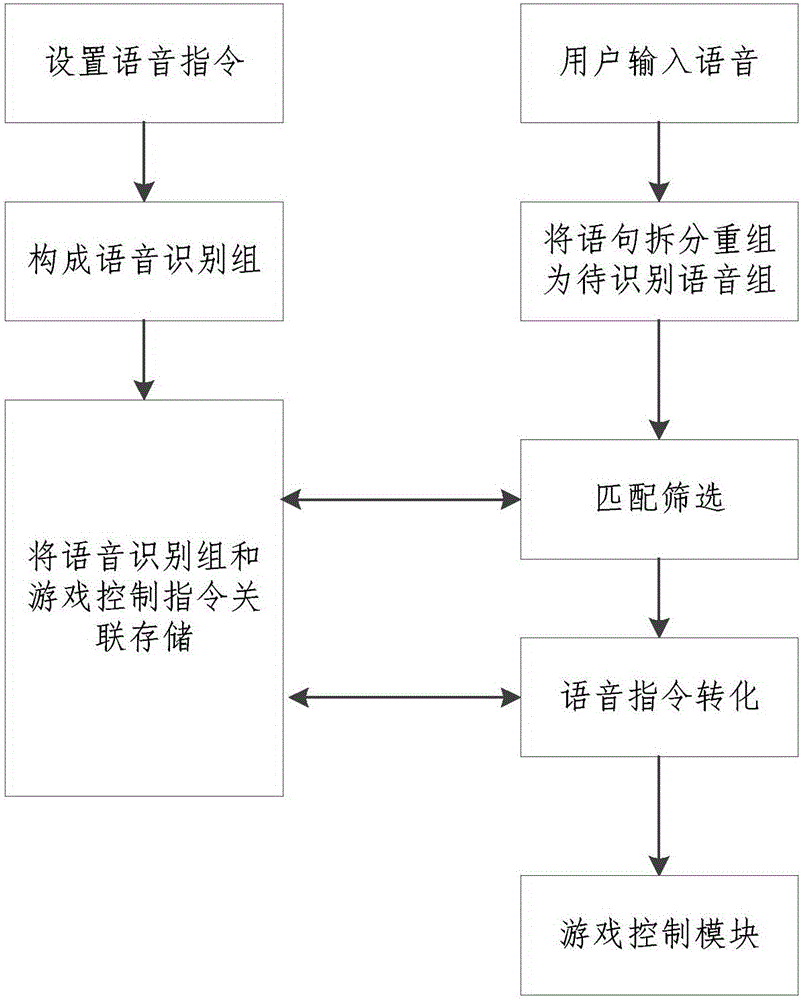

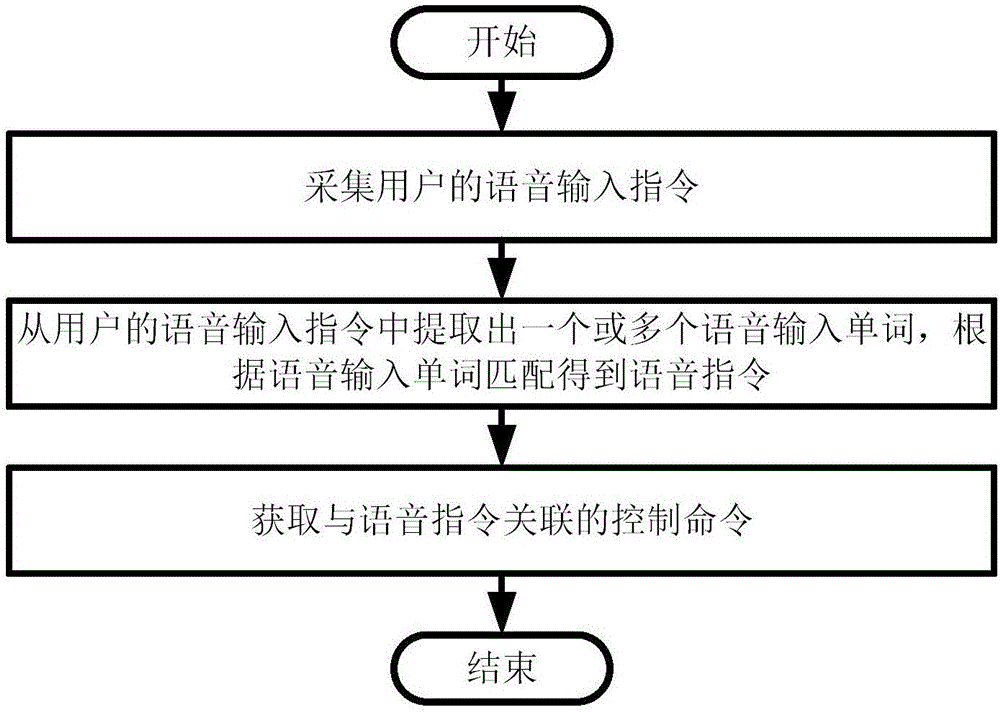

Application voice control method and system suitable for virtual reality environment

Inactive | CN106512393A | Make up for and circumvent the situation where command input methods are extremely limited | Small scale | Sound input/output | Speech recognition | Speech sound | Computer science

The invention provides an application voice control method and system suitable for a virtual reality environment. The method includes: a voice acquisition step of acquiring a voice input command of a user; a voice command recognition step of extracting one or more voice input words from the voice input command of the user and matching them to obtain a voice command; and a control command acquisition step of acquiring the control command associated with the voice command. The method and system avoid the limitation on command input caused by the lack of hardware input equipment (such as a mouse and a keyboard) in a virtual reality game environment; the speed at which a voice command returns a result is greatly improved; the user controls the input time instead of the input being monitored in real time; and interference from players' unintended talk and outside sounds is avoided.

Owner:上海异界信息科技有限公司
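
The three steps in the abstract above (acquire words, match a voice command, look up its control command) might look like the sketch below. The command table, the control command identifiers, and the "all words present, longest phrase wins" matching rule are assumptions introduced for illustration. Speech-to-text is assumed to have already produced the utterance string.

```python
from typing import Dict, Optional, Tuple

# Hypothetical table: voice command phrase -> associated control command.
COMMANDS: Dict[str, str] = {
    "open map": "UI_OPEN_MAP",
    "pause": "GAME_PAUSE",
    "open": "UI_OPEN_MENU",
}


def recognise_command(
    utterance: str, table: Dict[str, str]
) -> Optional[Tuple[str, str]]:
    """Return (voice_command, control_command), or None if nothing matches."""
    # Voice command recognition step: extract the voice input words.
    words = set(utterance.lower().split())

    best: Optional[Tuple[str, str]] = None
    for phrase, control in table.items():
        needed = phrase.split()
        # A phrase matches when every one of its words was spoken.
        if all(w in words for w in needed):
            # Prefer the most specific (longest) matching phrase.
            if best is None or len(needed) > len(best[0].split()):
                best = (phrase, control)

    # Control command acquisition step: the pair carries the associated command.
    return best
```

Returning `None` for unmatched speech is one simple way to ignore unintended talk, since only utterances containing a known command phrase trigger anything.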

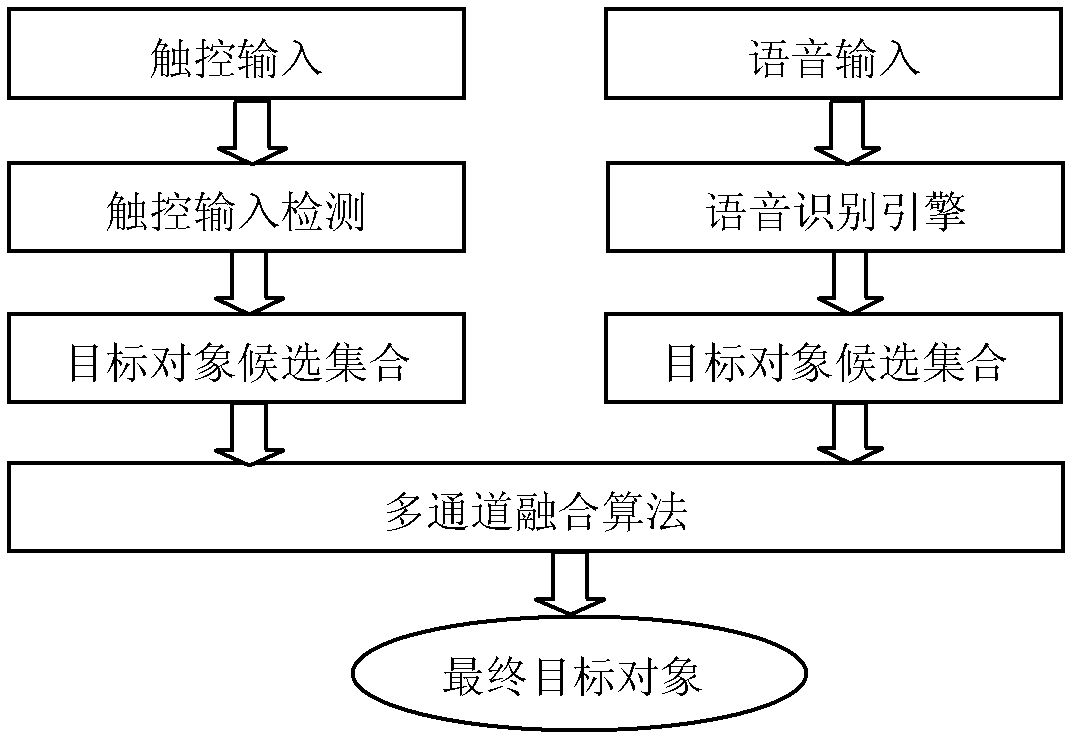

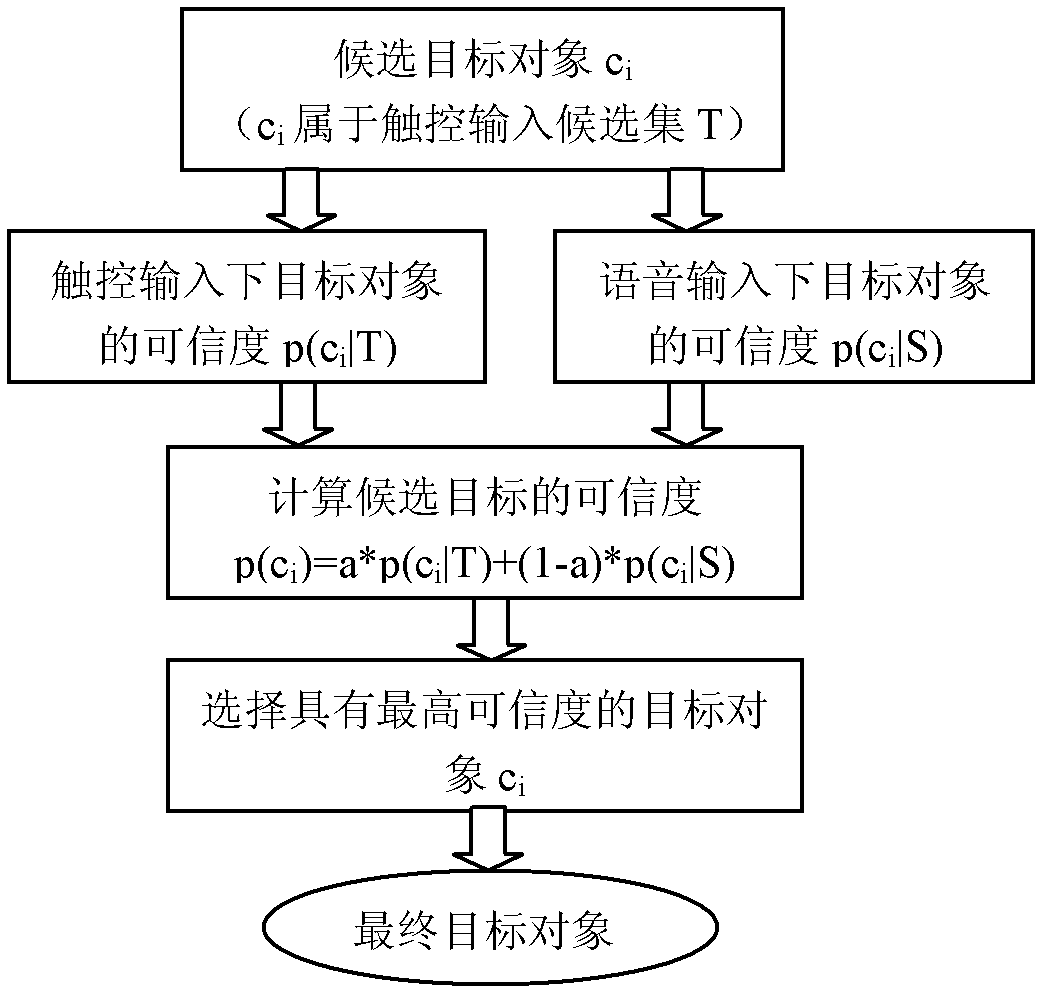

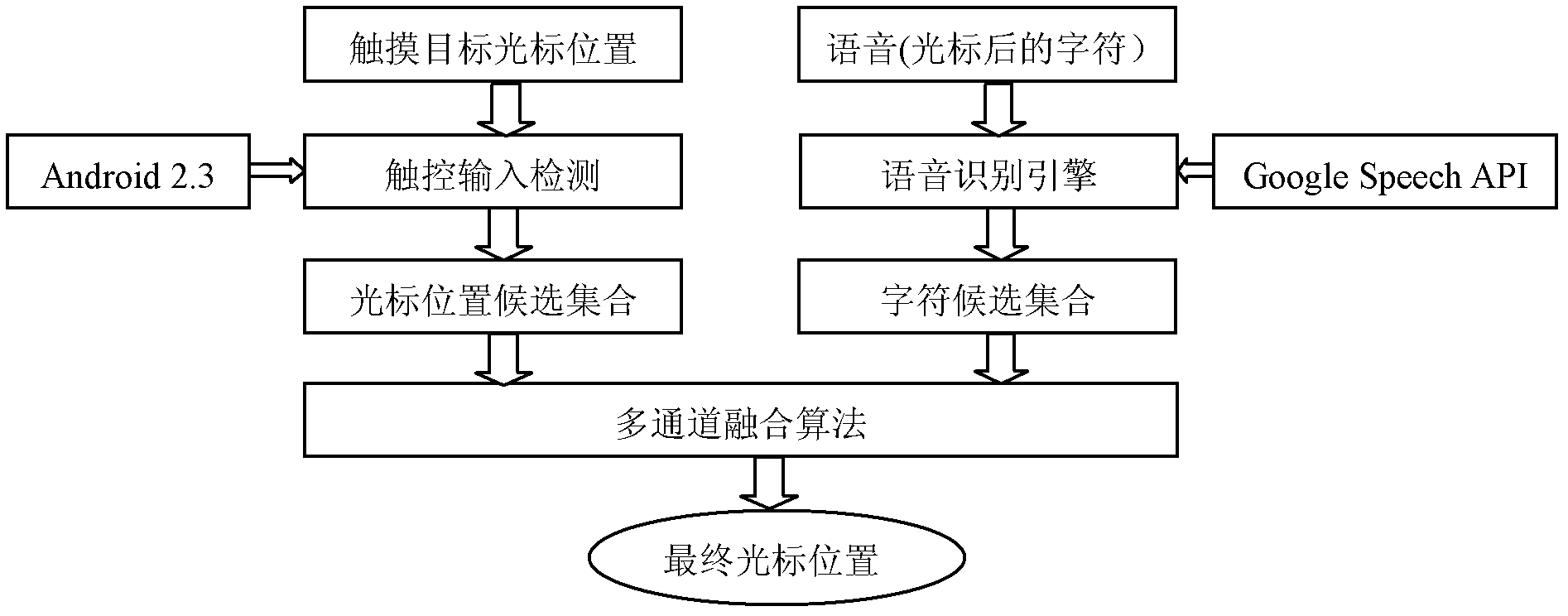

Multi-channel accurate target positioning method for touch equipment

Active | CN102426483A | Improve efficiency | Improve the efficiency of targeting | Input/output for user-computer interaction | Graph reading | Speech sound | Natural interaction

The invention belongs to the field of human-machine interaction and particularly relates to a multi-channel accurate target positioning method for touch equipment. The method uses touch input and voice input together to position the target: the touch input provides one candidate set of possible target objects, the voice input provides another, and a multi-channel fusion algorithm resolves the two candidate sets to a single accurate target object. The method supports two natural input channels, touch and voice, and their corresponding natural interaction manners; it positions targets more accurately and increases the efficiency of positioning-related tasks on touch equipment.

Owner:INST OF SOFTWARE - CHINESE ACAD OF SCI
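
The abstract above does not specify the multi-channel fusion algorithm, so the sketch below uses one simple stand-in: each channel yields a candidate set with confidence scores, and the fused target is the object present in both sets with the highest product of scores. The object names, the scores, and the product rule are all assumptions for illustration.

```python
from typing import Dict, Optional


def fuse_candidates(
    touch: Dict[str, float],  # touch channel: object id -> confidence
    voice: Dict[str, float],  # voice channel: object id -> confidence
) -> Optional[str]:
    """Return the object id both channels agree on best, or None."""
    # Only objects proposed by both channels are considered.
    common = touch.keys() & voice.keys()
    if not common:
        return None
    # Sort first so ties are broken deterministically by object id.
    return max(sorted(common), key=lambda obj: touch[obj] * voice[obj])
```

For instance, an imprecise tap near two adjacent buttons combined with the spoken word "send" would resolve to the send button, because it is the only candidate that scores well in both channels.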

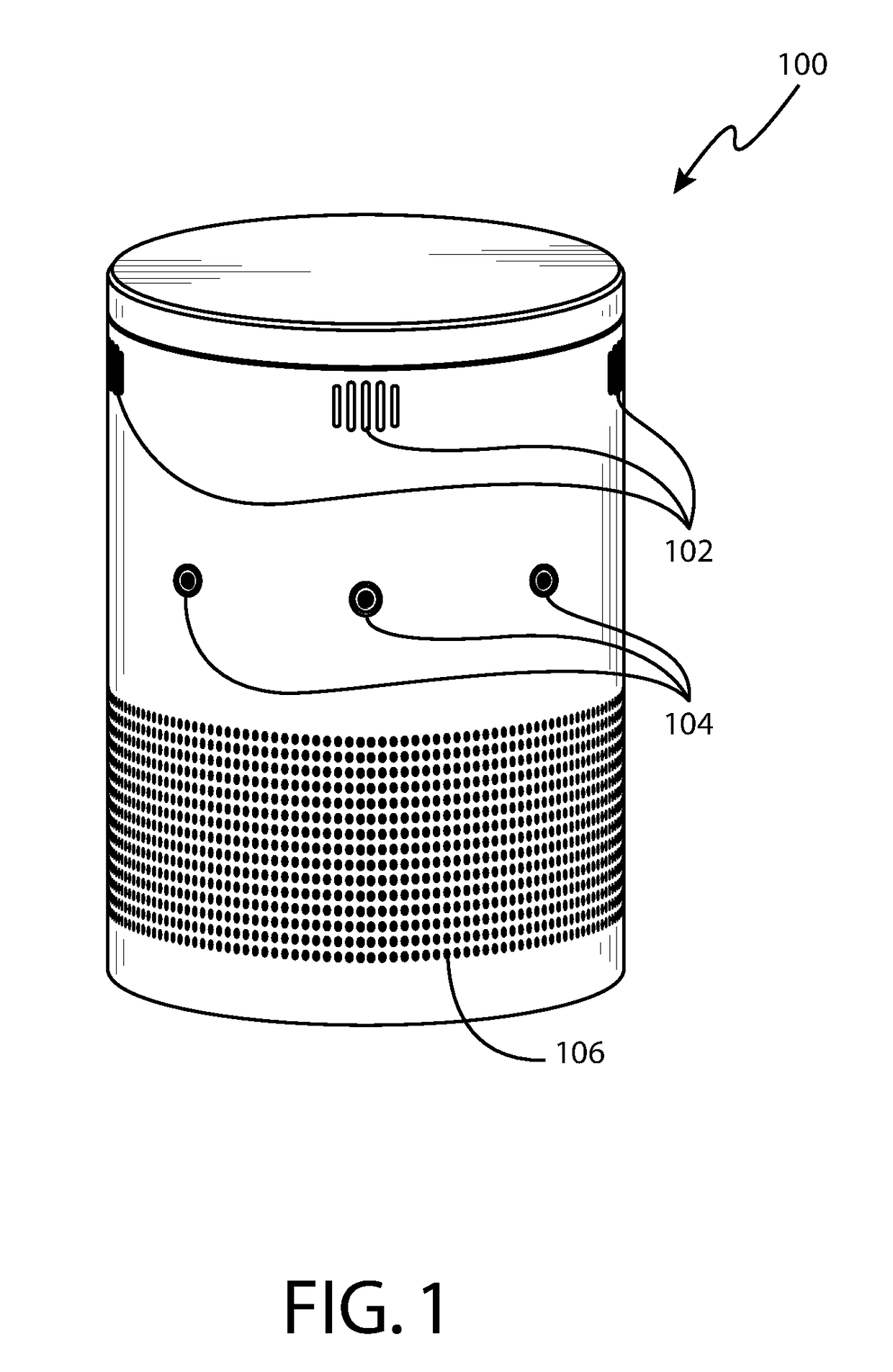

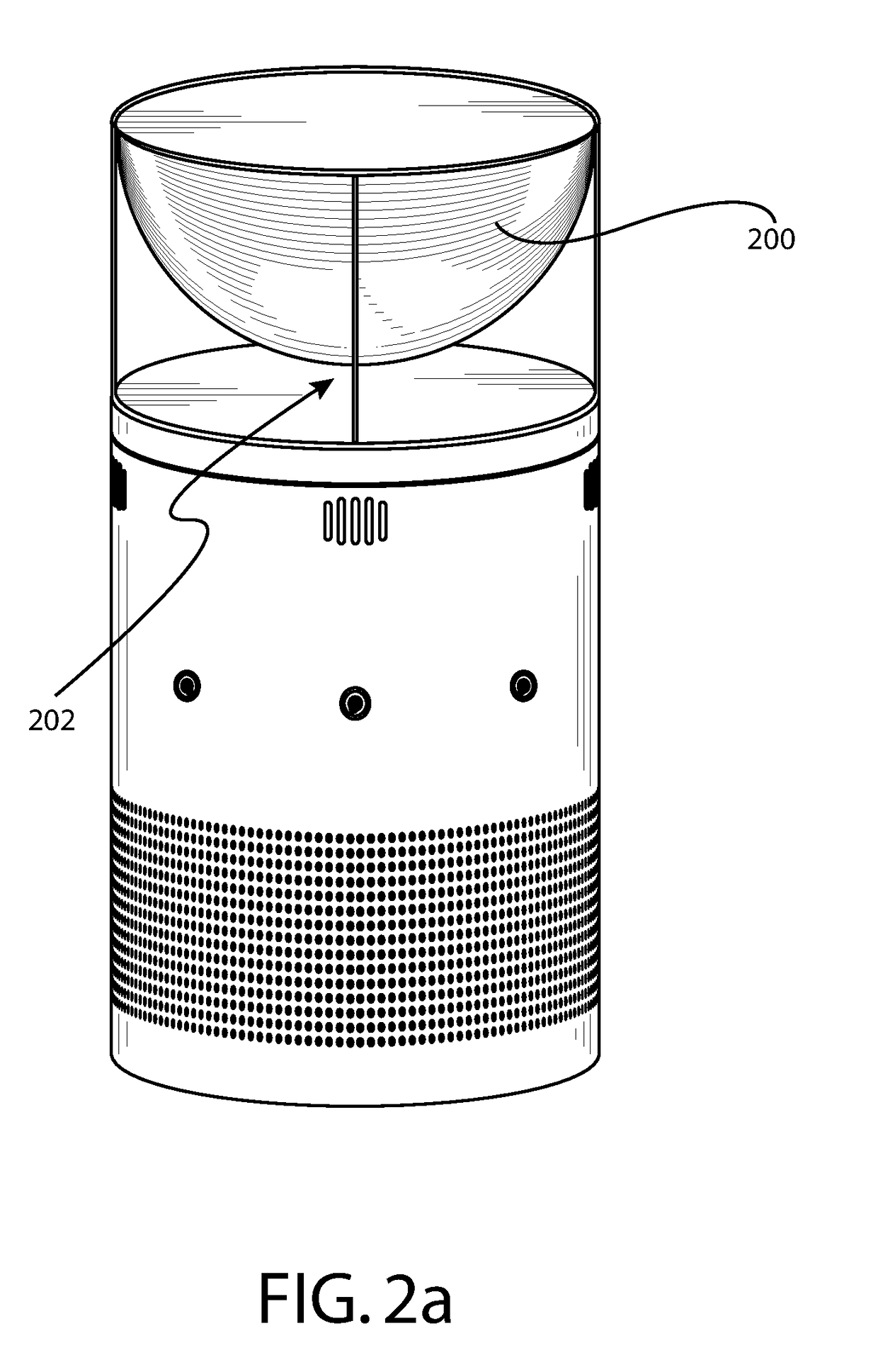

Method and apparatus for interaction with an intelligent personal assistant

Owner:ECOLINK INTELLIGENT TECH


© 2025 PatSnap. All rights reserved.