146 results about "Natural interaction" patented technology

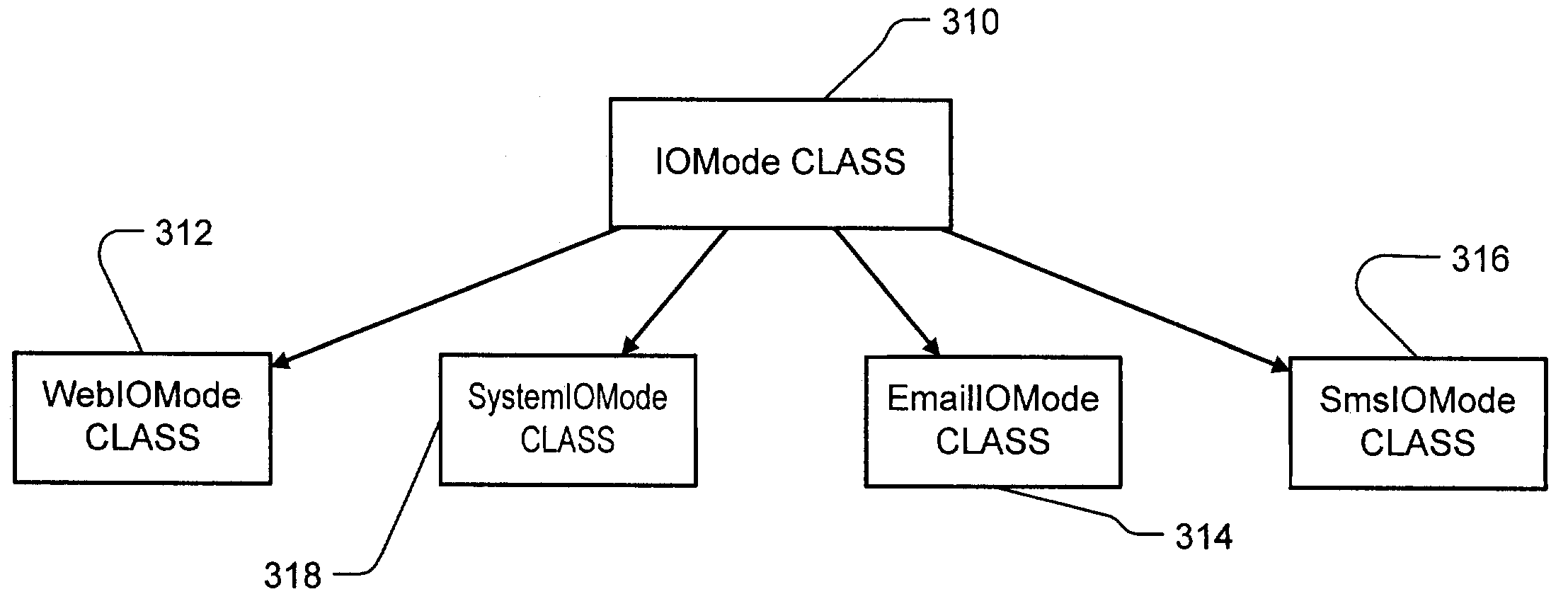

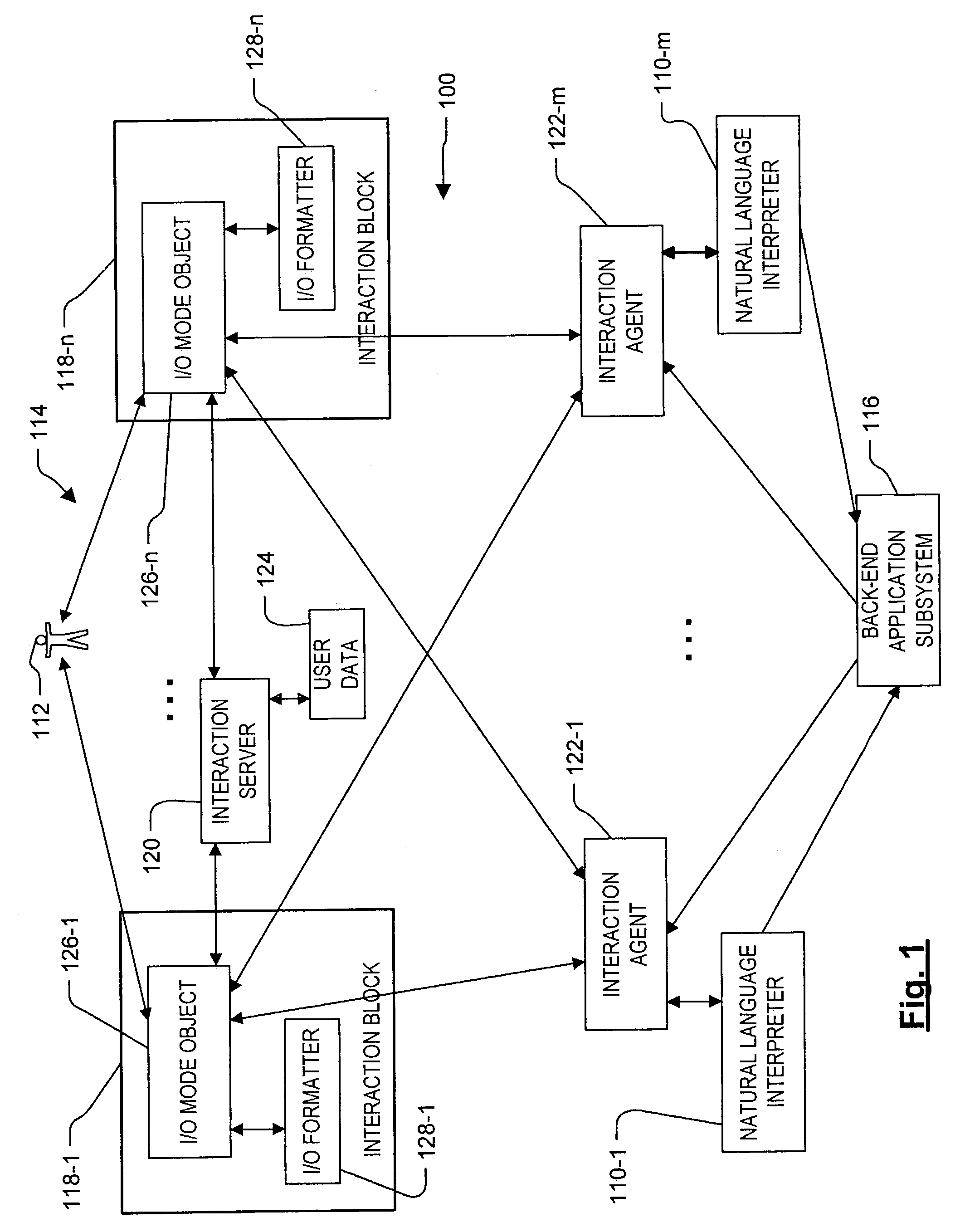

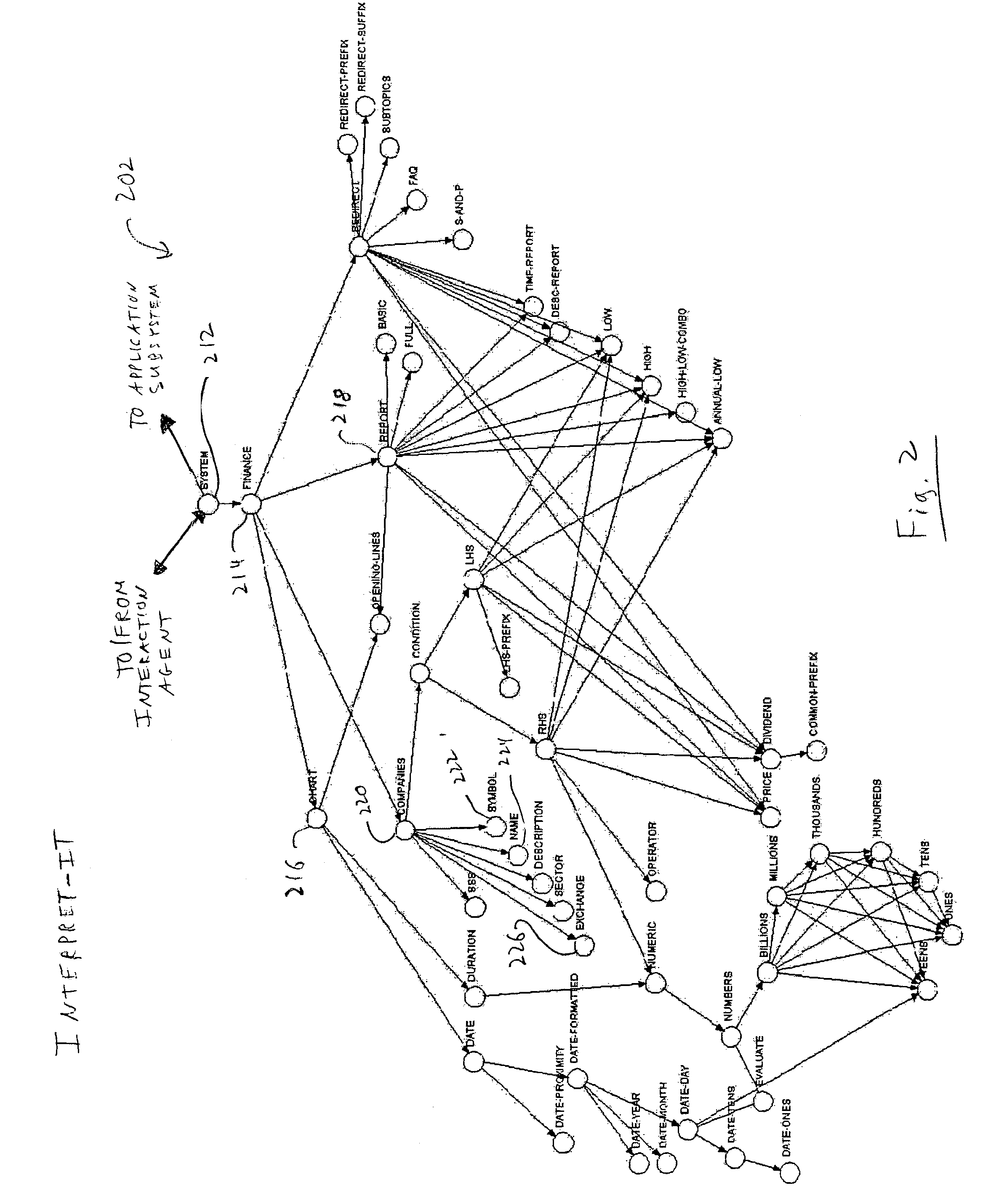

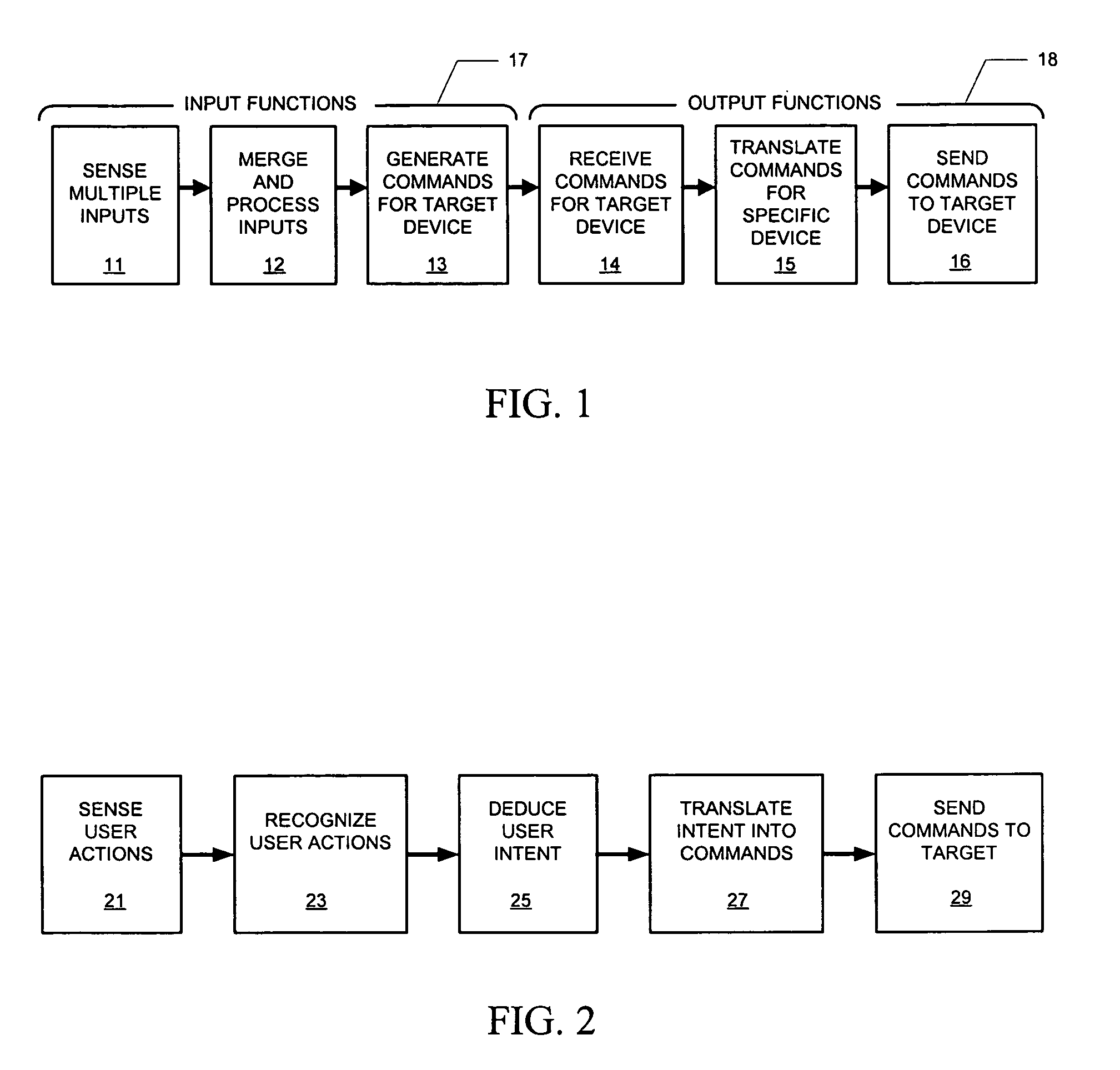

Front-end device independence for natural interaction platform

Status: Active | Publication: US7302394B1 | Tags: Improve productivity, Shortening user learning curve, Speech recognition, Special data processing applications, User input, Natural interaction

Roughly described, a natural language interpretation system that provides commands to a back-end application in response to user input is modified to separate out user interaction functions into a user interaction subsystem. The user interaction subsystem can include an interaction block that is specific to each particular I/O agency, and which converts user input received from that agency into an agency-independent form for providing to the natural language interpretation system. The user interaction subsystem also can take results from the back-end application and clarification requests and other dialoguing from the natural language interpretation system, both in device-independent form, and convert them for forwarding to the particular I/O agency.

Owner:IANYWHERE SOLUTIONS
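The split between agency-specific interaction blocks and an agency-independent core can be illustrated with a minimal Python sketch. Everything in it is hypothetical: `AgencyIndependentInput`, `SmsBlock`, `WebFormBlock` and the stub `interpret` function are illustrative names, not part of the patent or of any real API.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass
class AgencyIndependentInput:
    """Hypothetical device-independent request passed to the interpreter."""
    text: str
    source: str


class InteractionBlock(Protocol):
    """Hypothetical interface: one implementation per I/O agency."""

    def to_independent(self, raw: object) -> AgencyIndependentInput: ...

    def from_independent(self, message: str) -> object: ...


class SmsBlock:
    def to_independent(self, raw: object) -> AgencyIndependentInput:
        # SMS input is already text; only whitespace is normalized.
        return AgencyIndependentInput(" ".join(str(raw).split()), "sms")

    def from_independent(self, message: str) -> object:
        # Replies are truncated to one 160-character message.
        return message[:160]


class WebFormBlock:
    def to_independent(self, raw: object) -> AgencyIndependentInput:
        # Web input is assumed to be a dict of form fields with a "query" key.
        if not isinstance(raw, dict):
            raise TypeError("web input must be a dict of form fields")
        return AgencyIndependentInput(str(raw.get("query", "")).strip(), "web")

    def from_independent(self, message: str) -> object:
        return {"html": f"<p>{message}</p>"}


def interpret(request: AgencyIndependentInput) -> str:
    """Stub standing in for the natural language interpretation system."""
    return f"command for: {request.text.lower()}"


def handle(block: InteractionBlock, raw: object) -> object:
    """Route raw agency input through the device-independent core and back."""
    return block.from_independent(interpret(block.to_independent(raw)))
```

In this arrangement, supporting a new front end means writing one more block; `interpret` itself never sees agency-specific data.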

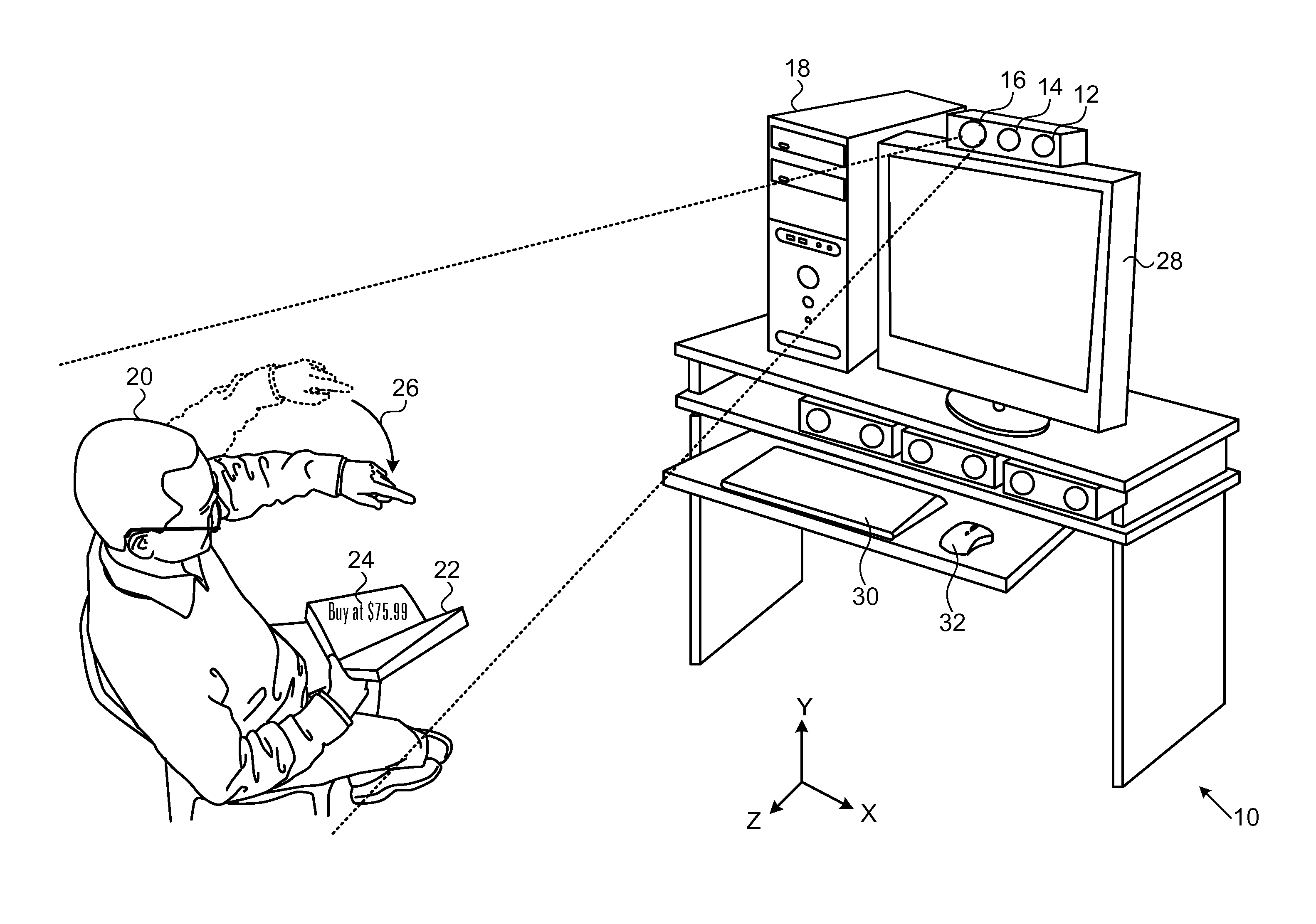

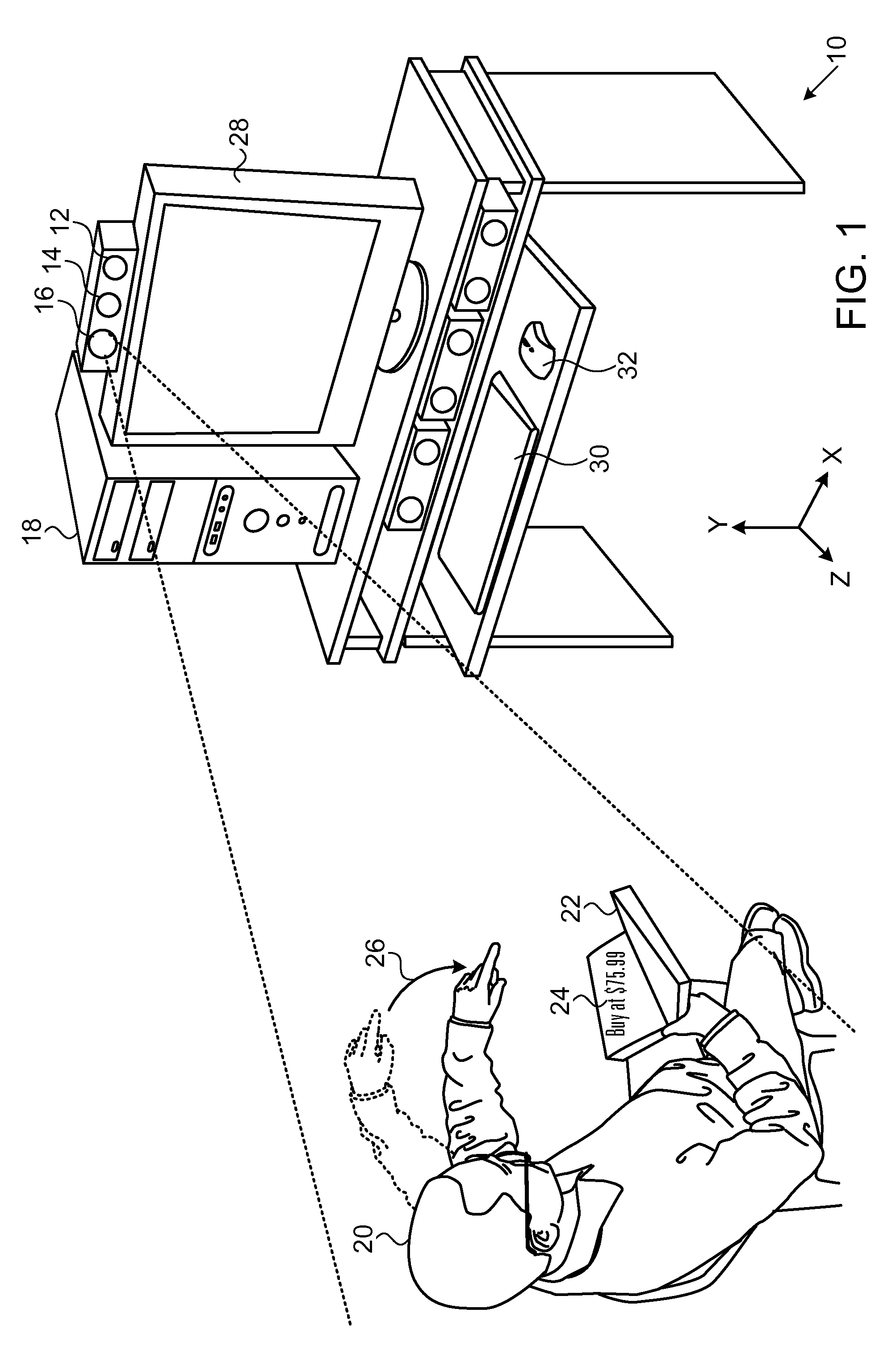

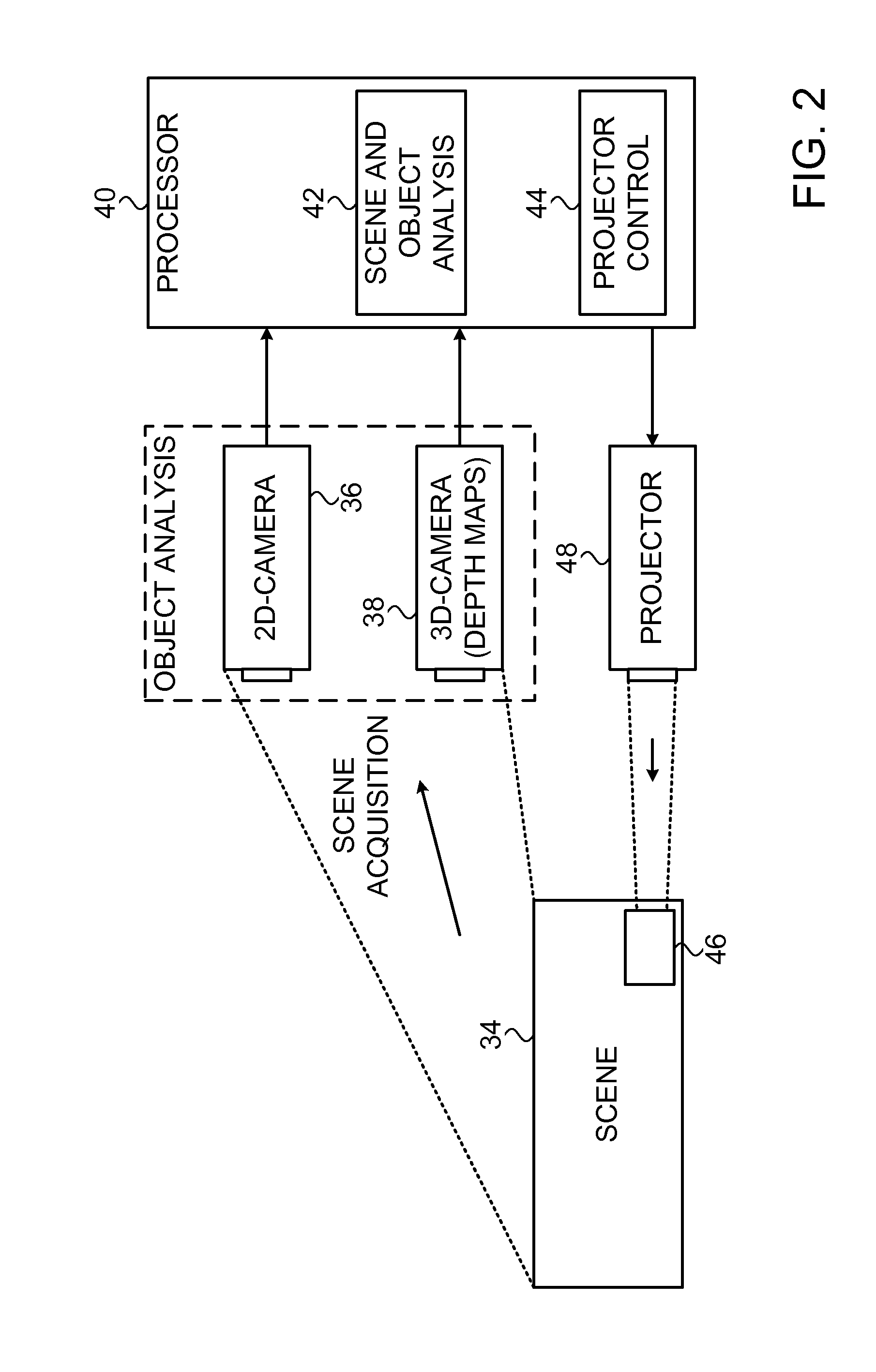

Interactive Reality Augmentation for Natural Interaction

Status: Active | Publication: US20130107021A1 | Tags: Reduce intensity, Image data processing, Stereoscopic systems, Natural interaction, Depth map

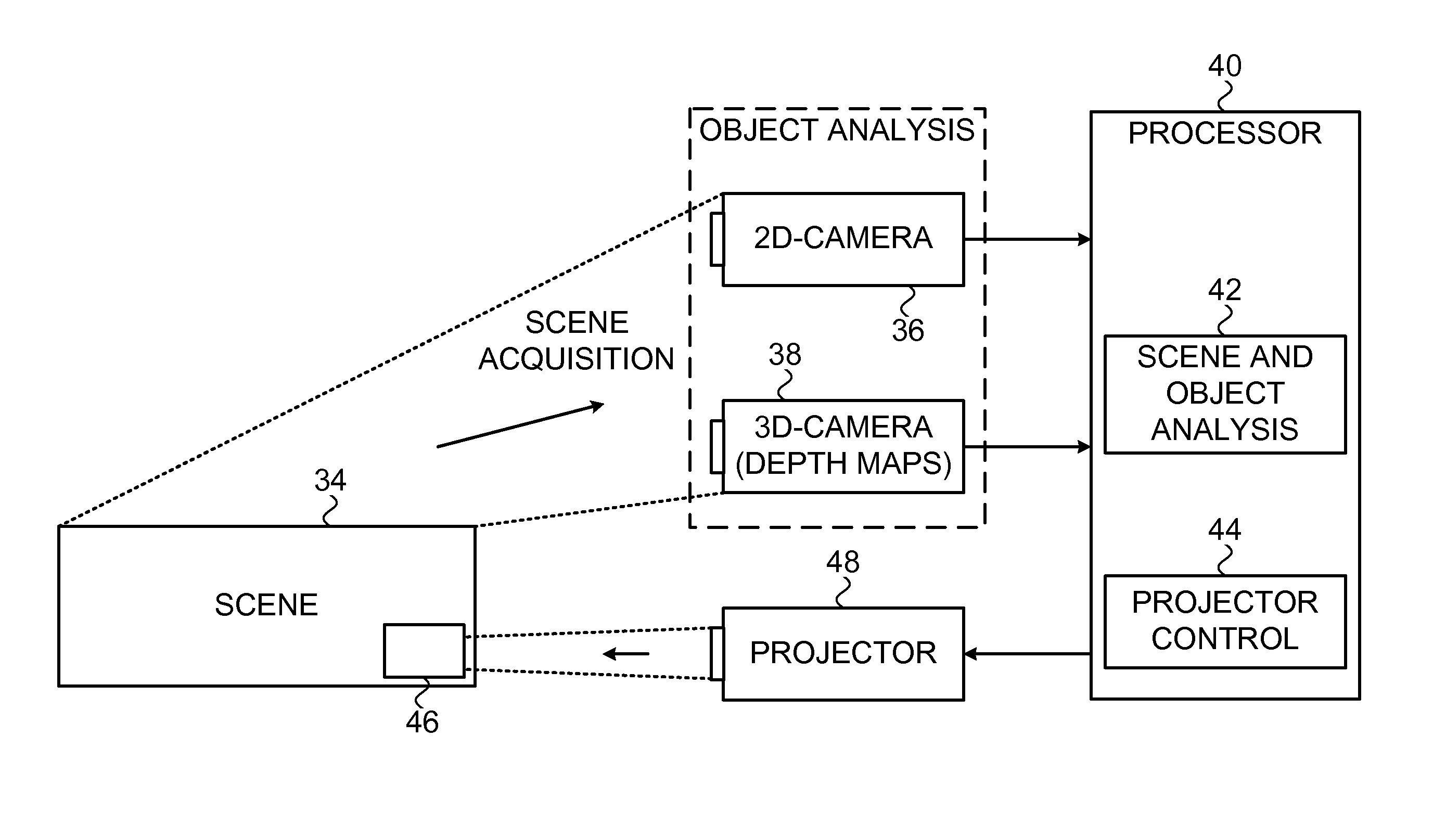

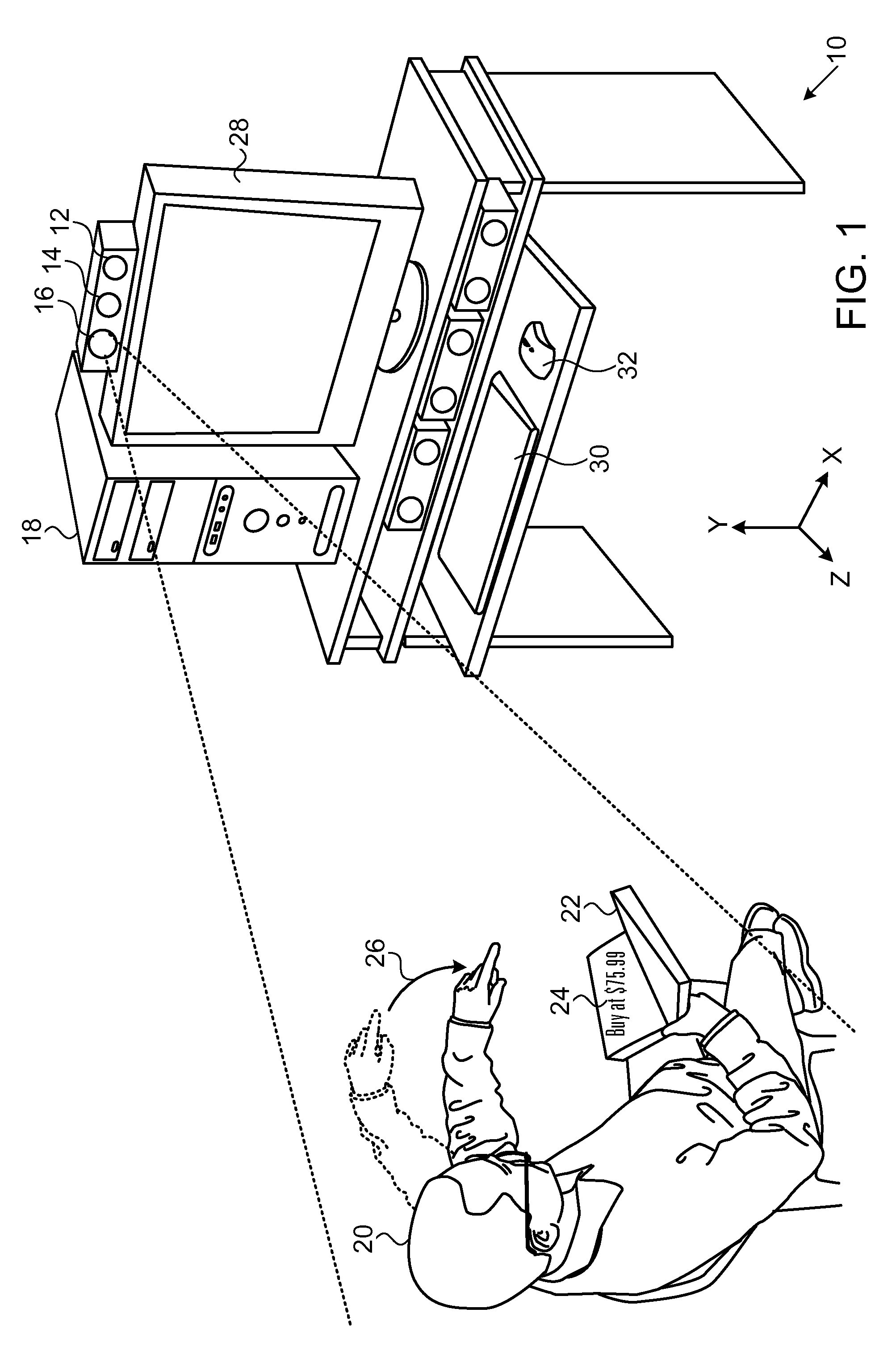

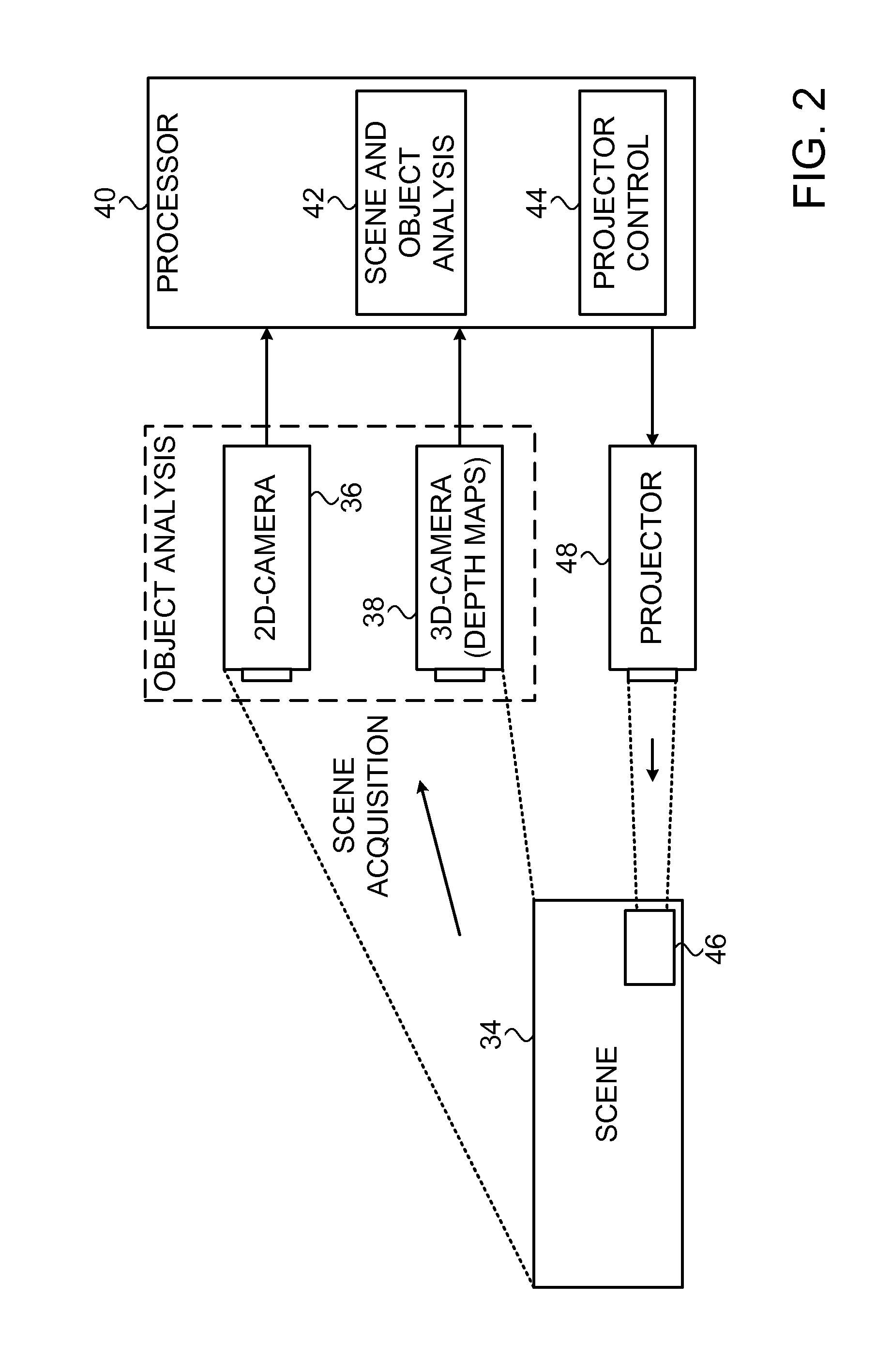

Embodiments of the invention provide apparatus and methods for interactive reality augmentation, including a 2-dimensional camera (36) and a 3-dimensional camera (38), associated depth projector and content projector (48), and a processor (40) linked to the 3-dimensional camera and the 2-dimensional camera. A depth map of the scene is produced using an output of the 3-dimensional camera, and coordinated with a 2-dimensional image captured by the 2-dimensional camera to identify a 3-dimensional object in the scene that meets predetermined criteria for projection of images thereon. The content projector projects a content image onto the 3-dimensional object responsively to instructions of the processor, which can be mediated by automatic recognition of user gestures.

Owner:APPLE INC
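As a much simplified illustration of picking a projection target from a depth map, the sketch below thresholds depth values and returns the bounding box of the in-range pixels. The criteria used here (a depth window plus a minimum pixel count) are assumptions for illustration; the patent's actual criteria, its coordination with the 2-D image, and any connected-component analysis are not reproduced.

```python
from typing import Optional, Sequence, Tuple

BBox = Tuple[int, int, int, int]  # (row_min, col_min, row_max, col_max)


def find_projection_target(
    depth: Sequence[Sequence[float]],
    near: float,
    far: float,
    min_pixels: int,
) -> Optional[BBox]:
    """Return the bounding box of pixels whose depth lies in [near, far].

    Hypothetical criteria: enough in-range pixels to host a projected image.
    A depth of zero is treated as "no measurement" and ignored.
    """
    hits = [
        (r, c)
        for r, row in enumerate(depth)
        for c, z in enumerate(row)
        if z > 0 and near <= z <= far
    ]
    if len(hits) < min_pixels:
        return None  # no surface large enough in the chosen depth window
    rows = [r for r, _ in hits]
    cols = [c for _, c in hits]
    return (min(rows), min(cols), max(rows), max(cols))
```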

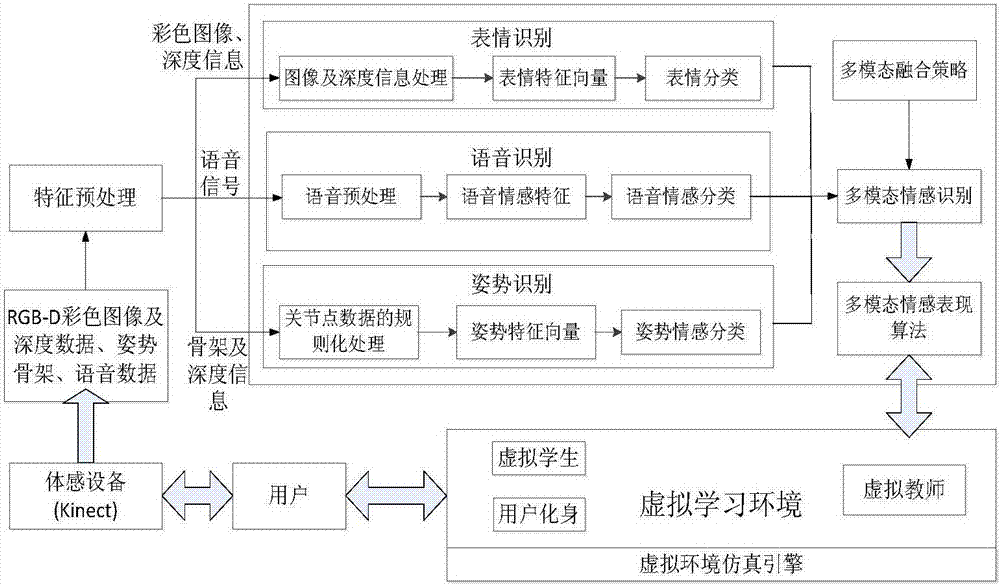

Virtual learning environment natural interaction method based on multimode emotion recognition

Status: Inactive | Publication: CN106919251A | Tags: Accuracy, Improve practicality, Input/output for user-computer interaction, Speech recognition, Human body, Color image

The invention provides a natural interaction method for virtual learning environments based on multimodal emotion recognition. Expression, posture and voice information representing a student's learning state are acquired, and multimodal emotion features are constructed from color images, depth information, voice signals and skeleton information. Face detection, preprocessing and feature extraction are performed on the color and depth images, and a support vector machine (SVM) is combined with AdaBoost to classify facial expressions. The voice emotion information is preprocessed, emotion features are extracted, and a hidden Markov model (HMM) recognizes the voice emotion. The skeleton information is regularized to obtain human posture representation vectors, and a multi-class SVM classifies posture emotion. Finally, a product-rule fusion algorithm combines the three emotion recognition results at the decision level, and the expression, voice and posture of a virtual agent are generated according to the fused result.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
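Decision-level fusion of per-modality emotion scores can be sketched with the product rule, which multiplies each emotion's scores across modalities and renormalizes. Reading the abstract's fusion rule as the product rule is an assumption, and the score dictionaries below are illustrative inputs, not outputs of the SVM, AdaBoost or HMM classifiers.

```python
from math import prod
from typing import Dict, List

Scores = Dict[str, float]  # emotion label -> per-modality probability


def product_rule_fusion(modalities: List[Scores]) -> Scores:
    """Multiply per-label scores across modalities, then renormalize to sum to 1."""
    labels = set(modalities[0])
    for scores in modalities[1:]:
        labels &= set(scores)  # only labels every modality can score
    fused = {label: prod(s[label] for s in modalities) for label in labels}
    total = sum(fused.values())
    if total == 0:
        raise ValueError("all fused scores are zero")
    return {label: value / total for label, value in fused.items()}


def decide(modalities: List[Scores]) -> str:
    """Pick the emotion with the highest fused score."""
    fused = product_rule_fusion(modalities)
    return max(fused, key=fused.__getitem__)
```

A property of the product rule is that a near-zero score from any single modality strongly suppresses that label, which is why it is usually applied to calibrated probabilities.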

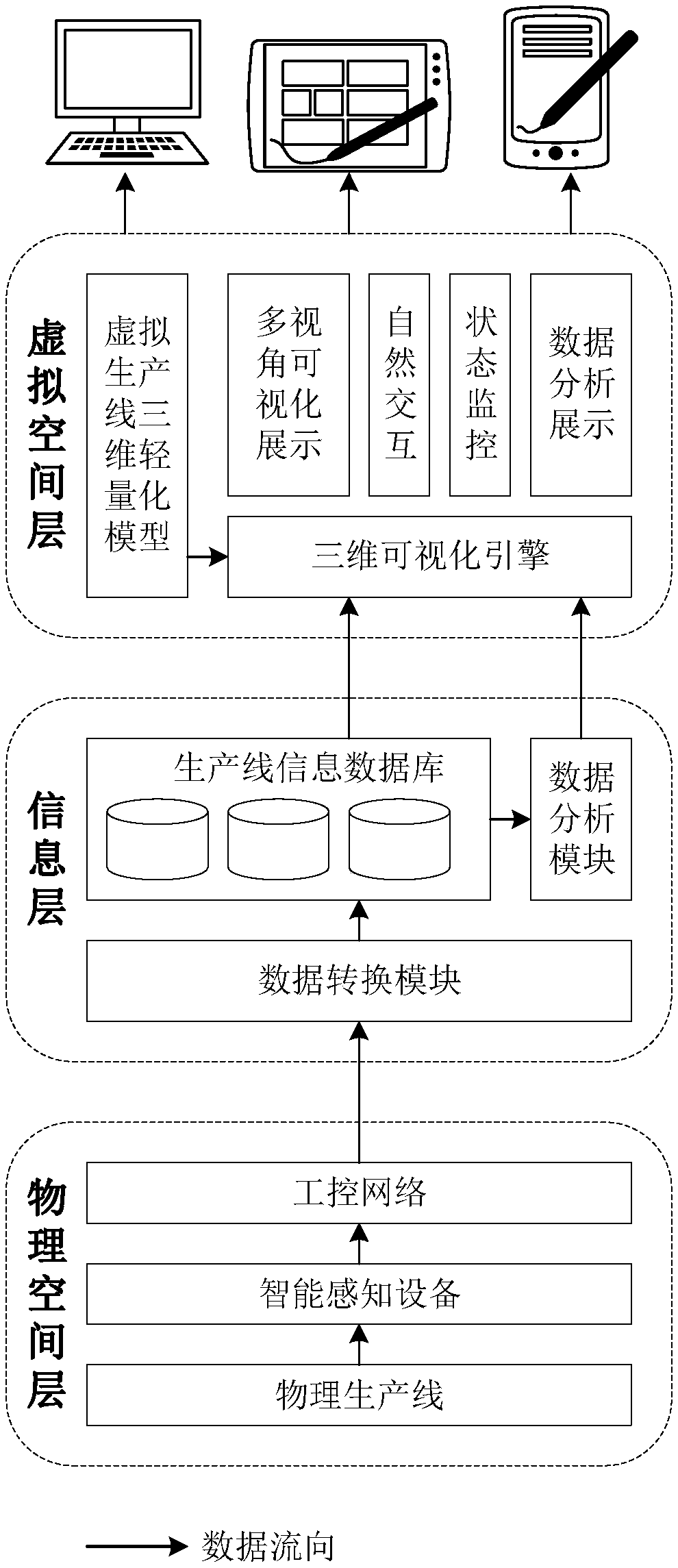

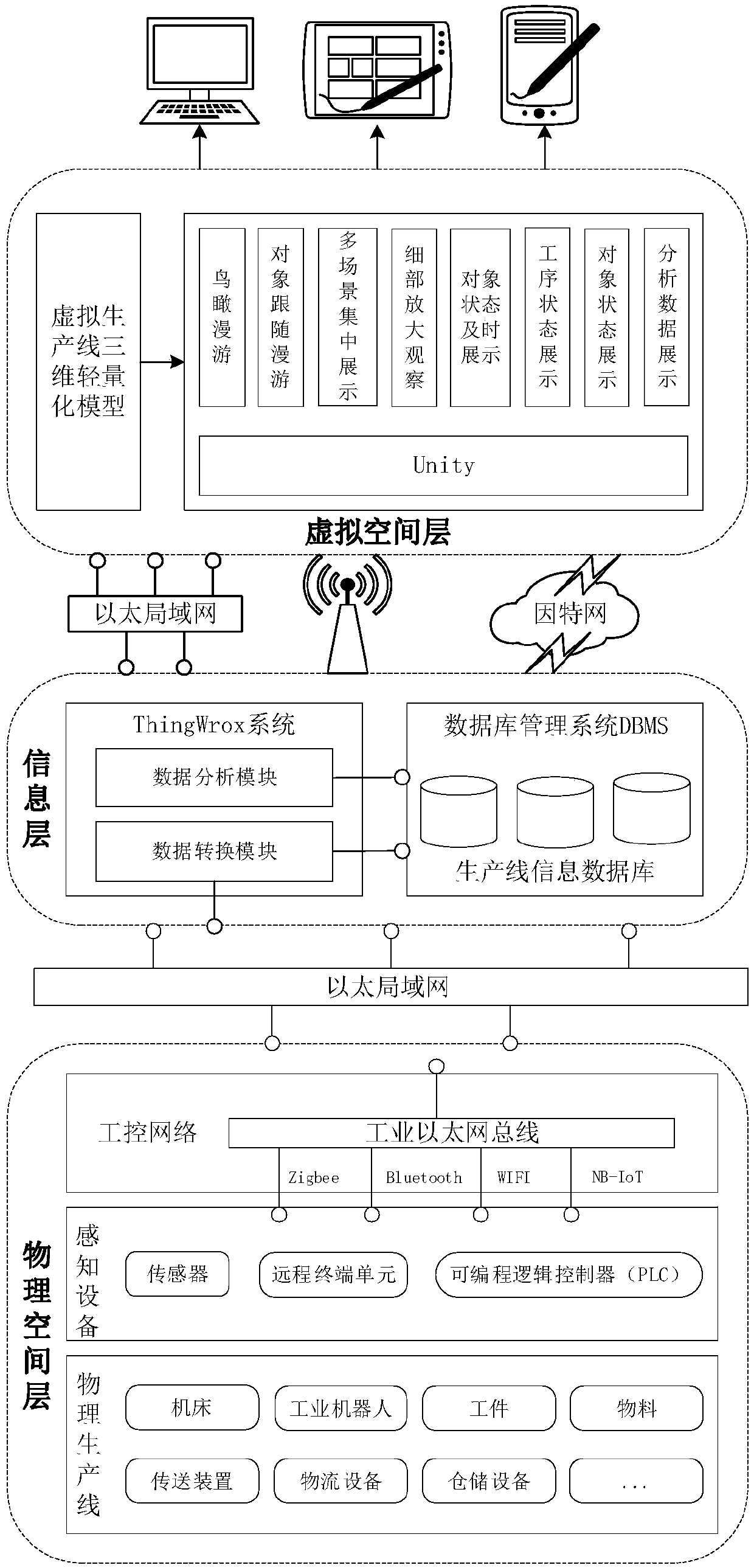

Digital twin system of an intelligent production line

Status: Inactive | Publication: CN109613895A | Tags: Implement twin mirroring, Data processing applications, Total factory control, Time information, Information layer

The invention relates to a digital twin system for an intelligent production line, comprising a physical space layer, an information layer and a virtual space layer. The physical space layer consists of the physical production line, intelligent sensing devices and an industrial control network. The information layer includes a data conversion module, a data analysis module and a production line information database. The virtual space layer adapts to a variety of platforms and environments, including personal computers and handheld devices. Driven by the production line information database of the information layer, it uses a 3D visual engine to generate a virtual production line consistent with the physical one through online real-time and offline non-real-time rendering, and provides multi-view visual display, natural interaction, state monitoring and other functions. The intelligent sensing devices collect real-time state information from the physical production line, and this information drives the 3D visual engine to render a virtual production line model consistent with the physical line, thereby realizing a twin mirror image between the virtual and physical production lines.

Owner:CHINA ELECTRONIC TECH GRP CORP NO 38 RES INST
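The data flow from the physical layer through the information layer to the virtual layer can be sketched as follows. `StationState`, `InformationLayer` and `VirtualLine` are hypothetical names; a real system would render the mirrored state with a 3D engine rather than keep it in a dictionary.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class StationState:
    """Hypothetical sensed state of one station on the physical line."""
    station_id: str
    running: bool
    temperature_c: float


class InformationLayer:
    """Stand-in for the production line information database."""

    def __init__(self) -> None:
        self.latest: Dict[str, StationState] = {}

    def ingest(self, readings: List[StationState]) -> None:
        # Conversion and analysis would happen here; only the newest reading is kept.
        for reading in readings:
            self.latest[reading.station_id] = reading


class VirtualLine:
    """Stand-in for the virtual space layer that a 3D engine would render."""

    def __init__(self) -> None:
        self.model: Dict[str, StationState] = {}

    def sync(self, info: InformationLayer) -> List[str]:
        """Mirror changed stations from the database; return their ids, sorted."""
        changed = [sid for sid, state in info.latest.items() if self.model.get(sid) != state]
        for sid in changed:
            self.model[sid] = info.latest[sid]
        return sorted(changed)
```

Returning only the changed ids lets a renderer redraw just the stations whose state moved, instead of the whole line.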

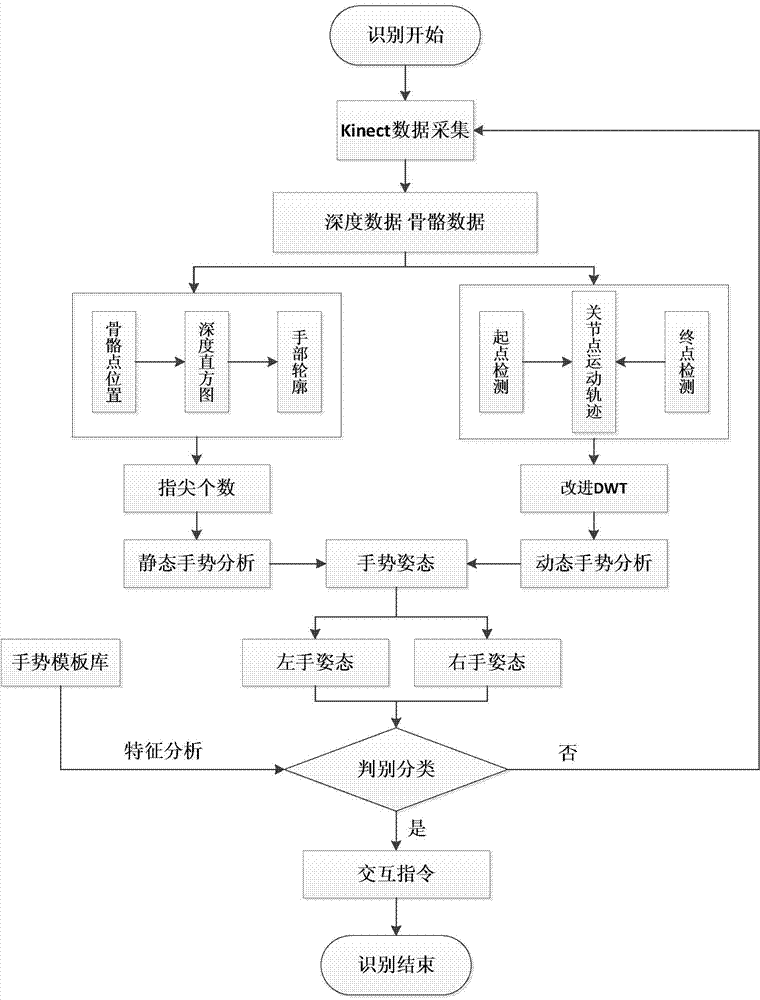

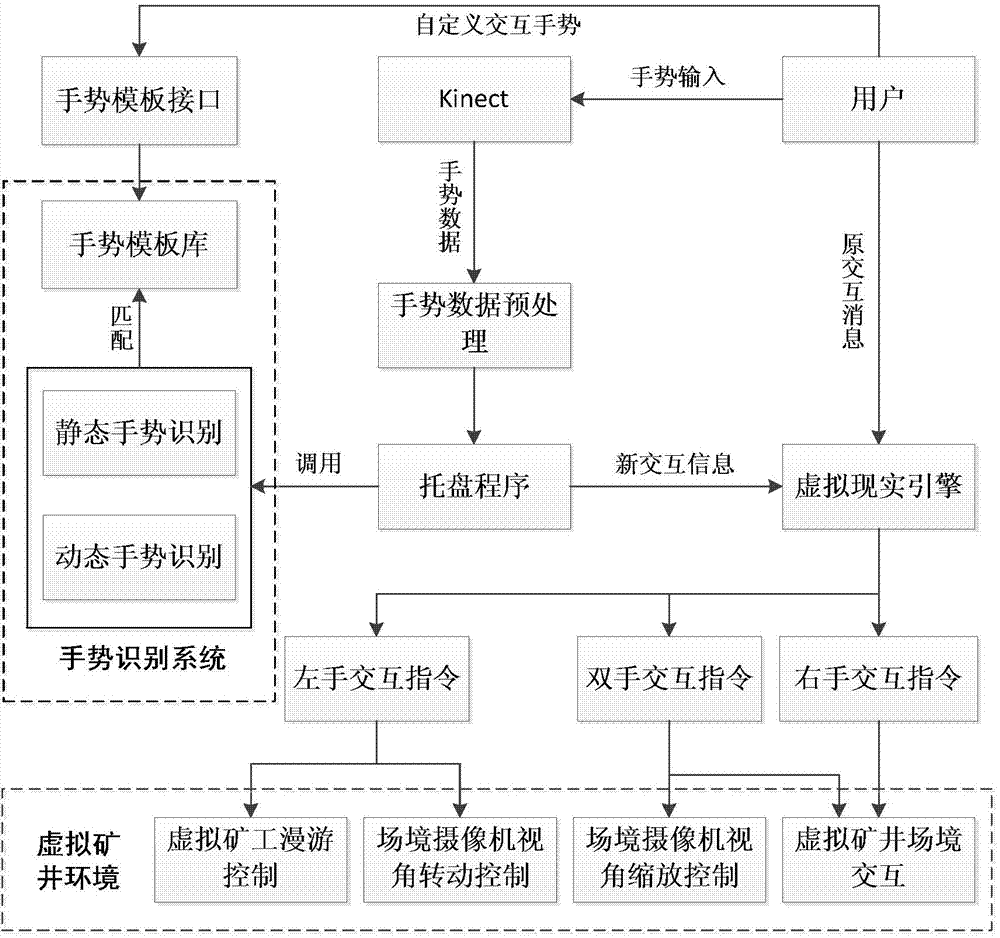

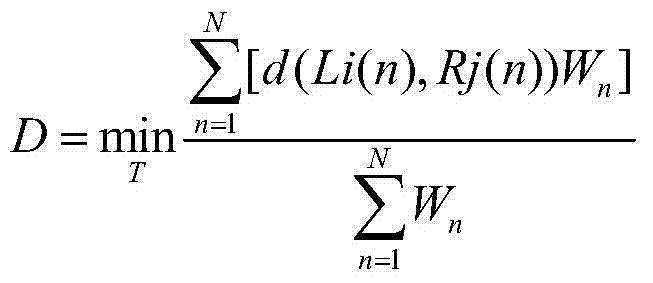

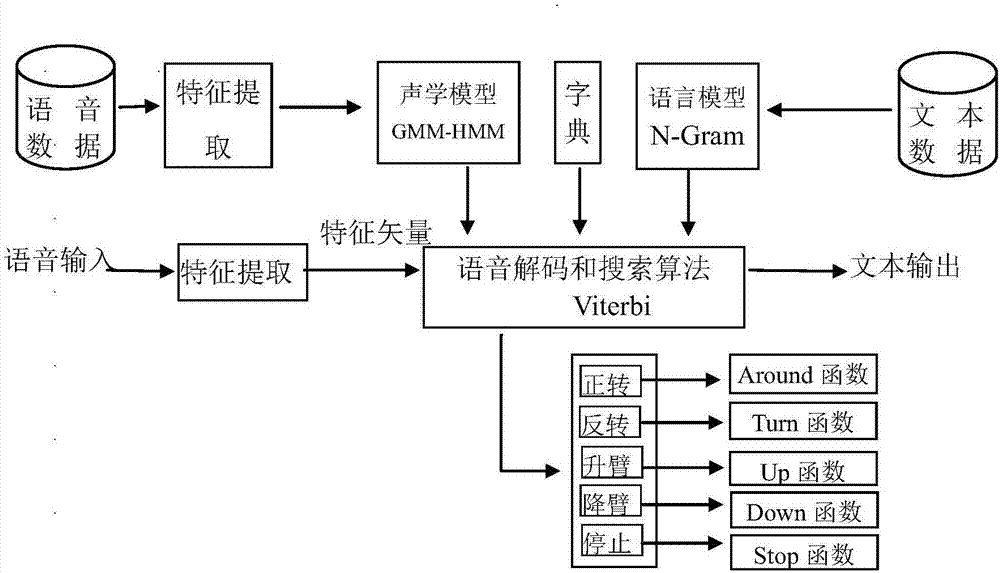

Somatosensory-based natural interaction method for virtual mine

Status: Active | Publication: CN104750397A | Tags: Improve natural efficiency, Improve immersion, Character and pattern recognition, Input/output processes for data processing, Somatosensory system, Interaction technology

The invention discloses a somatosensory-based natural interaction method for a virtual mine. A Kinect is used to acquire the user's gesture signals, depth information and skeleton joint information, and the gesture images, depth information and skeleton information are smoothed by filtering. Gesture images are segmented using a depth histogram, an eight-neighborhood contour tracking algorithm finds the gesture contour, and static gestures are recognized. Dynamic gestures are recognized from the skeleton information by feature matching with an improved dynamic time warping algorithm. The gesture recognition result triggers the corresponding Win32 instruction messages, which are sent to a virtual reality engine and mapped to the native keyboard and mouse operations of the virtual mine natural interaction system, realizing somatosensory interaction control of the virtual mine. The method can improve the naturalness and efficiency of human-machine interaction, enhance the immersion and appeal of the virtual mine, and help popularize virtual reality and somatosensory interaction technology in coal mining and other fields.

Owner:重庆雅利通实业有限公司 (Chongqing Yalitong Industrial Co., Ltd.)
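Dynamic gesture matching of this kind is commonly built on dynamic time warping (DTW). The sketch below is plain DTW with Euclidean point distance and nearest-template classification; it is not the improved variant the patent refers to, and the joint trajectories and template names are hypothetical.

```python
import math
from typing import Dict, Sequence, Tuple

Point = Tuple[float, float, float]  # e.g. one tracked skeleton joint per frame


def dtw_distance(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Classic DTW: minimal cumulative distance over all monotone alignments."""
    n, m = len(a), len(b)
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(a[i - 1], b[j - 1])
            # Extend the cheapest of: insertion, deletion, or match.
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def classify(trajectory: Sequence[Point], templates: Dict[str, Sequence[Point]]) -> str:
    """Return the name of the template with the smallest DTW distance."""
    return min(templates, key=lambda name: dtw_distance(trajectory, templates[name]))
```

DTW tolerates gestures performed at different speeds, since a slow observation can align several of its frames to one template frame.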

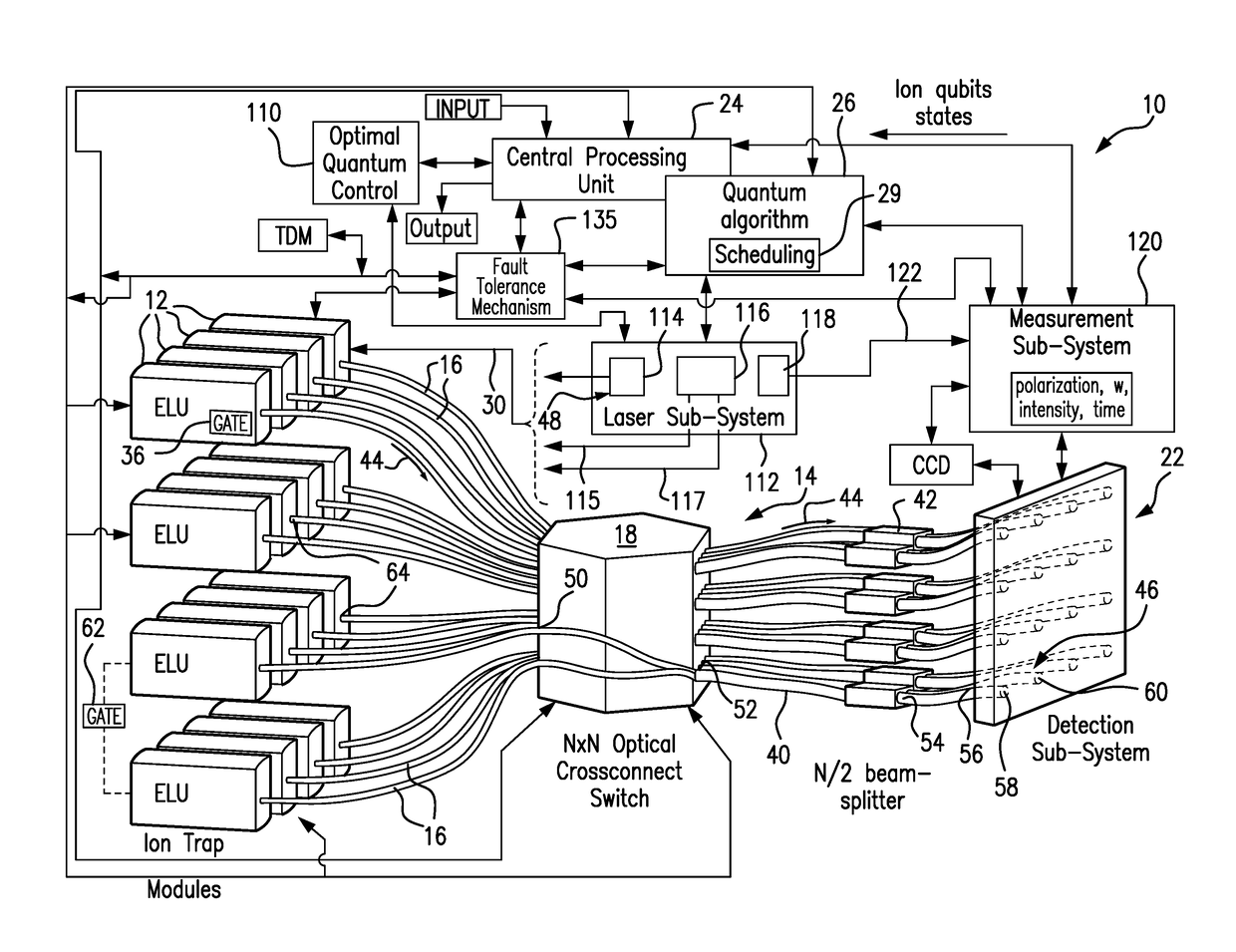

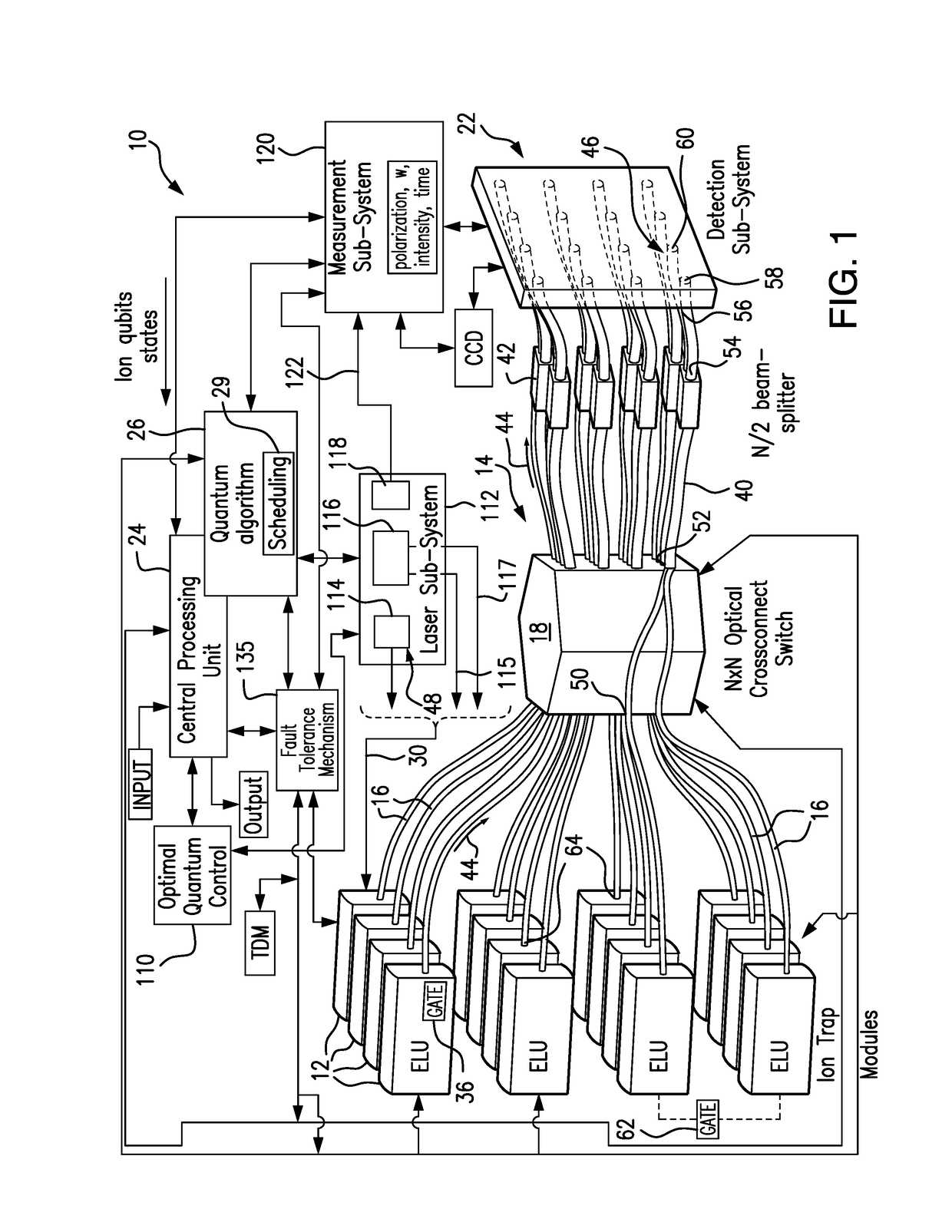

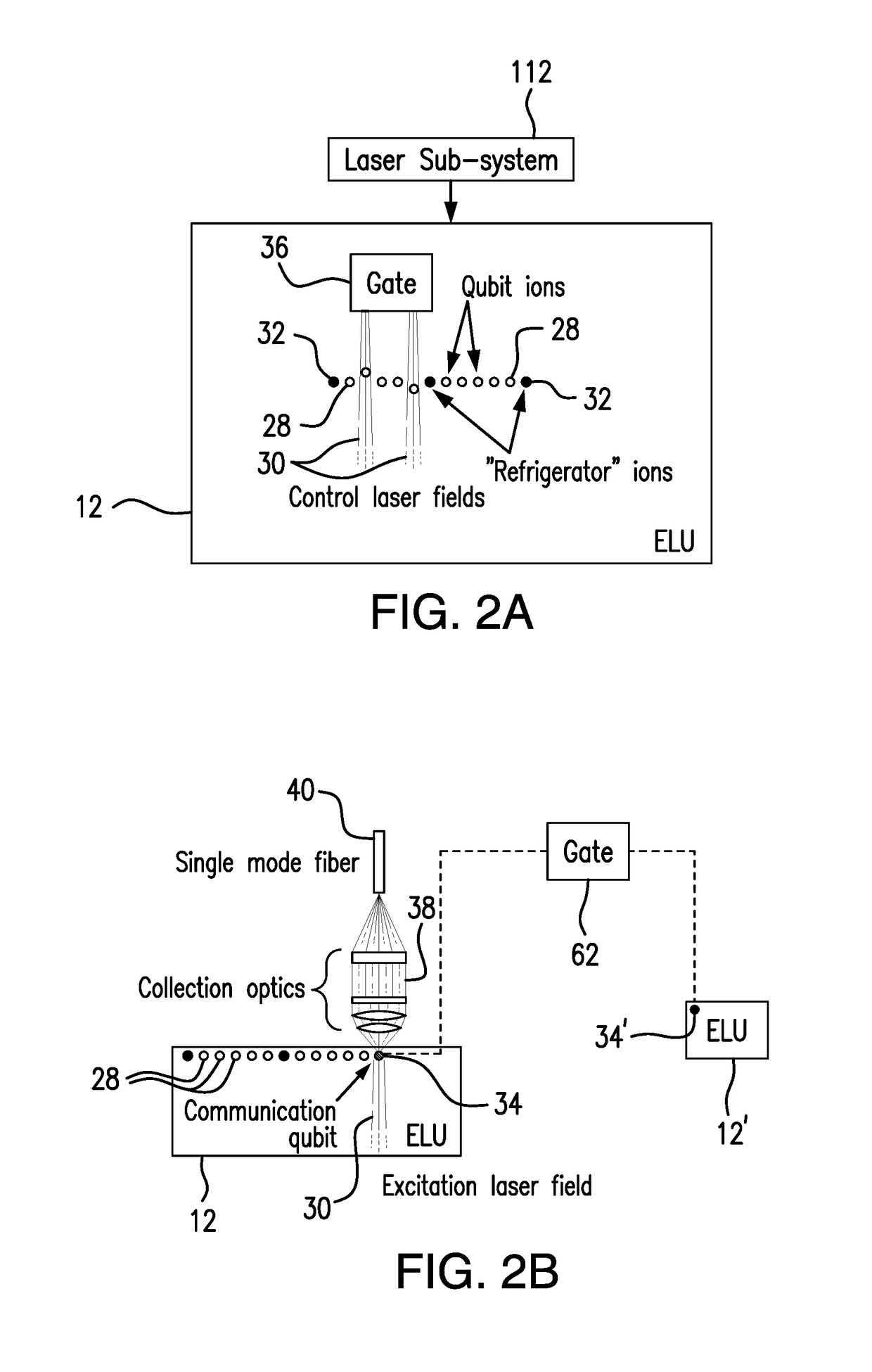

Fault-tolerant scalable modular quantum computer architecture with an enhanced control of multi-mode couplings between trapped ion qubits

Status: Active | Publication: US20180114138A1 | Tags: Effective and reliable, Quantum computers, General purpose stored program computer, Photonics, Large distance

A modular quantum computer architecture is developed with a hierarchy of interactions that can scale to very large numbers of qubits. Local entangling quantum gates between qubit memories within a single modular register are accomplished using natural interactions between the qubits, and entanglement between separate modular registers is completed via a probabilistic photonic interface between qubits in different registers, even over large distances. This architecture is suitable for implementing complex quantum circuits using the flexible connectivity provided by a reconfigurable photonic interconnect network. The architecture is made fault-tolerant, which is a prerequisite for scalability. Optimal quantum control of multimode couplings between qubits is achieved by individually addressing the qubits with segmented optical pulses to suppress crosstalk in each register, enabling high-fidelity gates that can be scaled to larger qubit registers for quantum computation and simulation.

Owner:DUKE UNIV +2

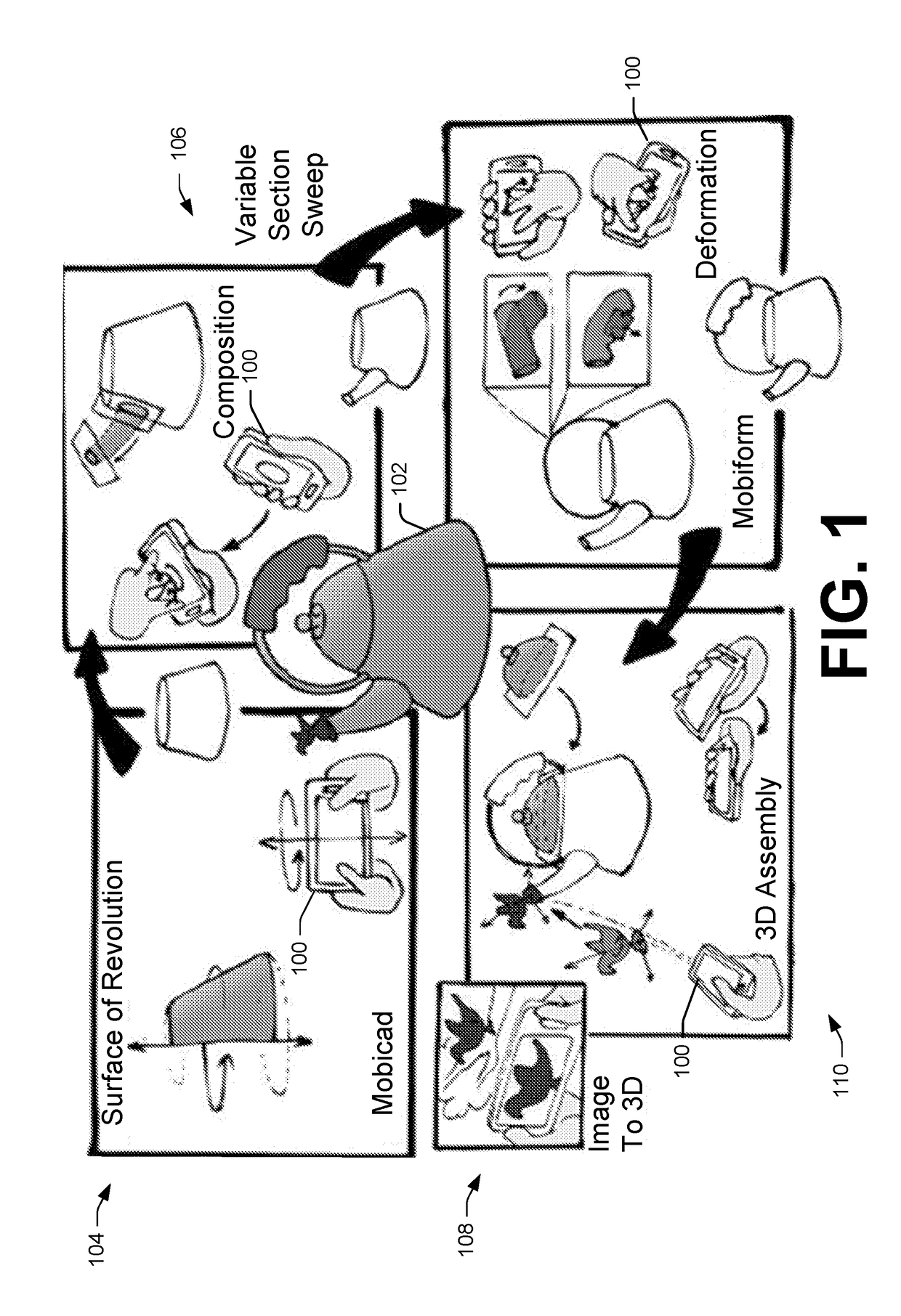

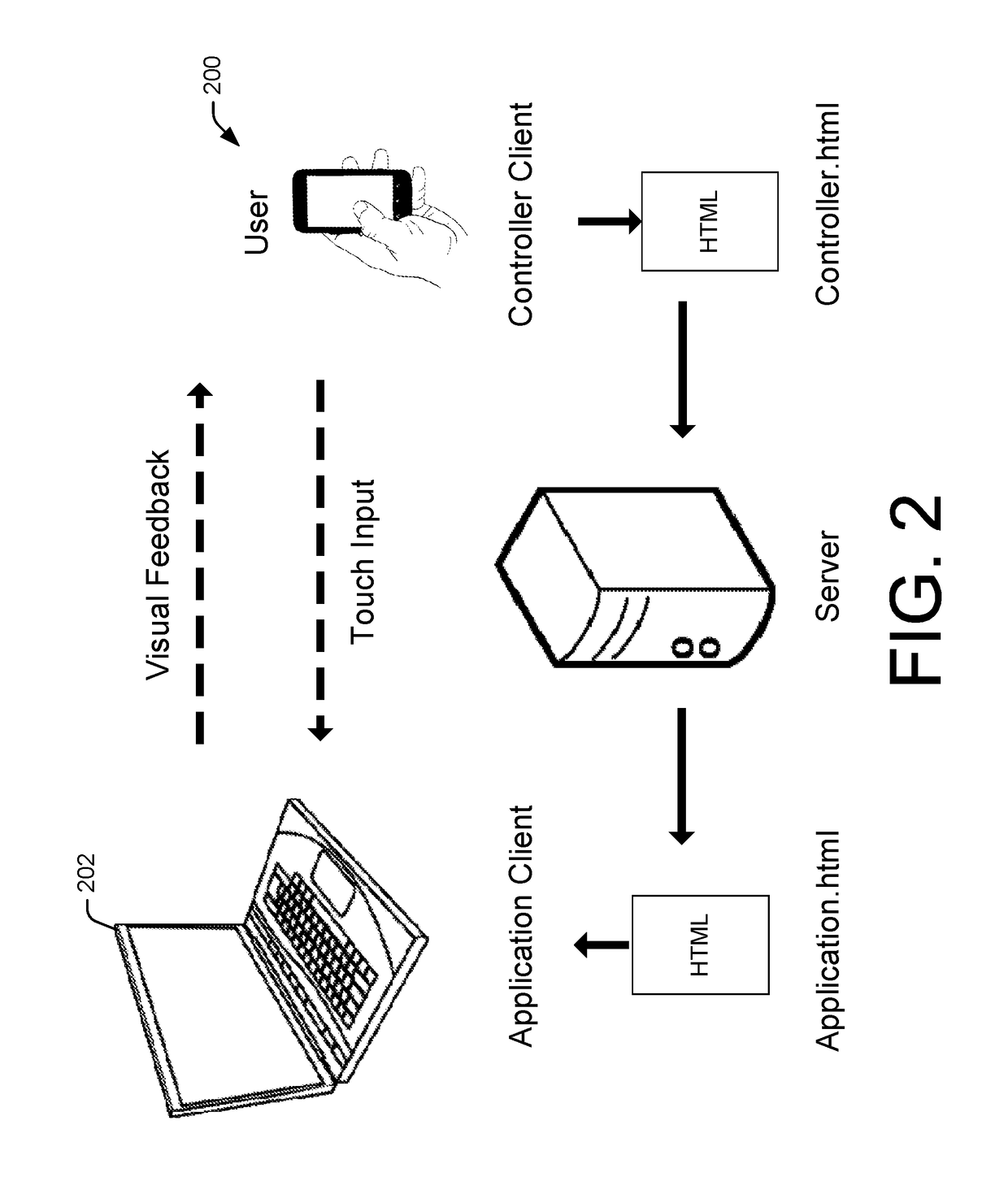

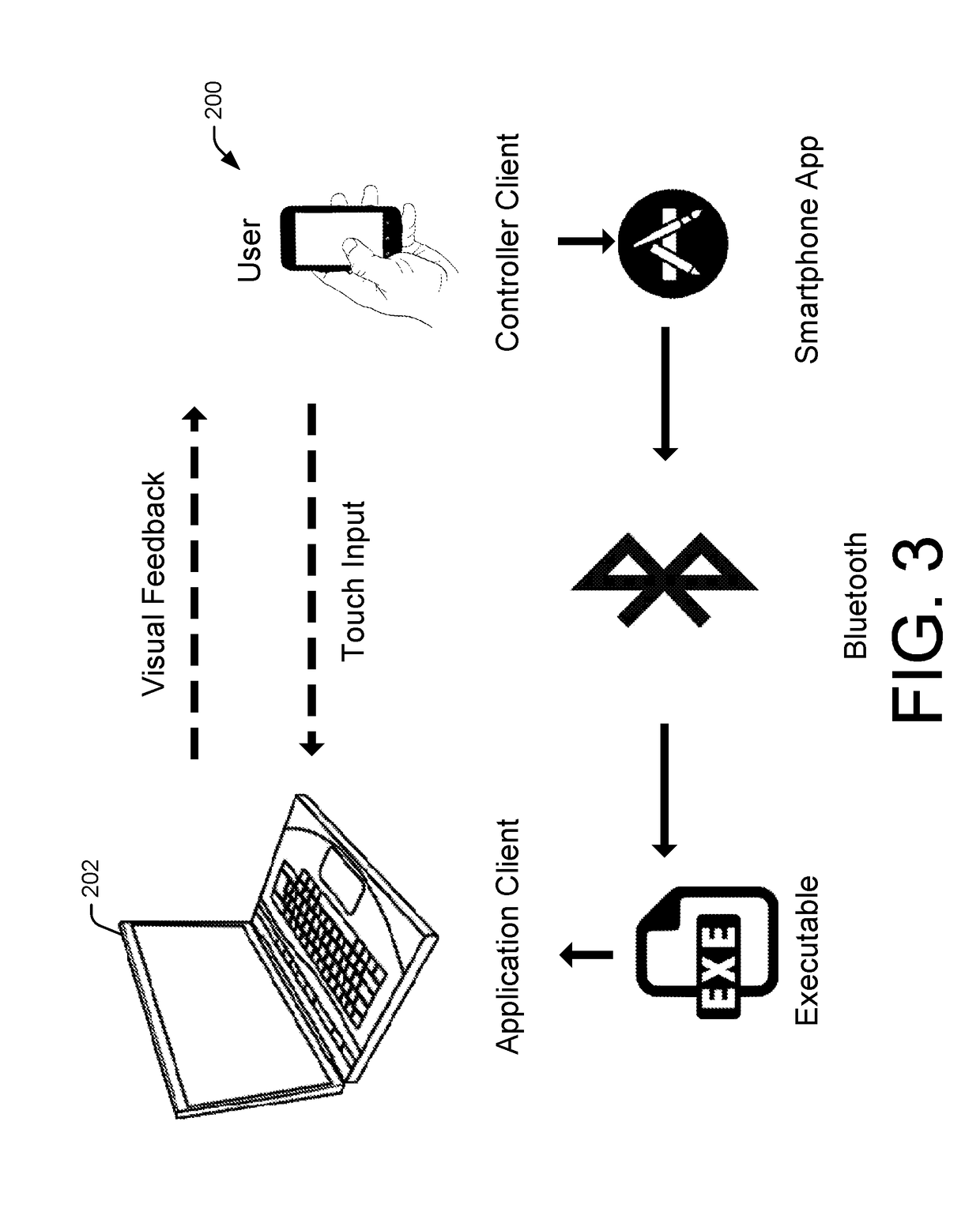

Manipulating 3D virtual objects using hand-held controllers

Status: Active | Publication: US20190034076A1 | Tags: Image data processing, Input/output processes for data processing, 3d shapes, Accelerometer

Some examples provide a set of frameworks, processes and methods aimed at enabling the expression and exploration of free-form and parametric 3D shape designs through natural interactions with a hand-held mobile device acting as a controller for 3D virtual objects. A reference plane in a virtual space, generated from the location of the mobile device, may be used to select a 3D virtual object intersected by the reference plane. Positioning of the mobile device may also be used to control a pointer in the virtual space. In one example, the orientation of the mobile device may be detected by an accelerometer or gyroscope. In another example, the position of the mobile device may be detected by a position sensor.

Owner:PURDUE RES FOUND INC
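Selecting the objects intersected by a reference plane reduces to a signed-distance test. This minimal sketch assumes each virtual object is approximated by a bounding sphere and that the plane is given by a point (for example, the device position) and a normal vector (for example, derived from the device orientation); both representations are assumptions, not details from the source.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec = Tuple[float, float, float]


@dataclass(frozen=True)
class VirtualObject:
    name: str
    center: Vec
    radius: float  # bounding-sphere radius (a simplifying assumption)


def objects_cut_by_plane(point: Vec, normal: Vec, objects: List[VirtualObject]) -> List[str]:
    """Names of objects whose bounding sphere intersects the plane through `point`."""
    length = sum(n * n for n in normal) ** 0.5
    if length == 0:
        raise ValueError("plane normal must be non-zero")
    hits = []
    for obj in objects:
        # Signed distance from the sphere centre to the plane.
        distance = sum((c - p) * n for c, p, n in zip(obj.center, point, normal)) / length
        if abs(distance) <= obj.radius:
            hits.append(obj.name)
    return hits
```

Normalizing by the normal's length means the device orientation can be passed in any scale.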

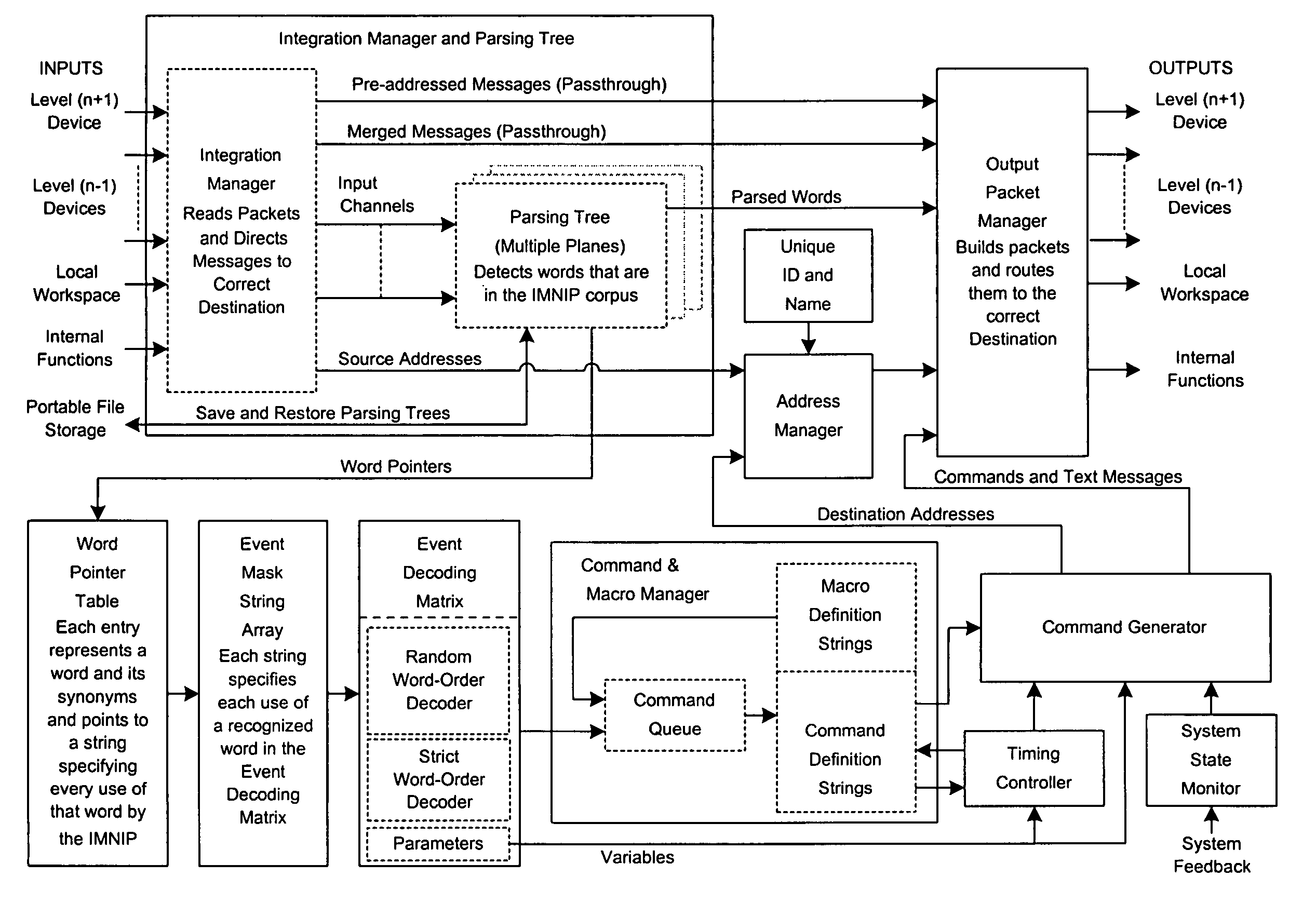

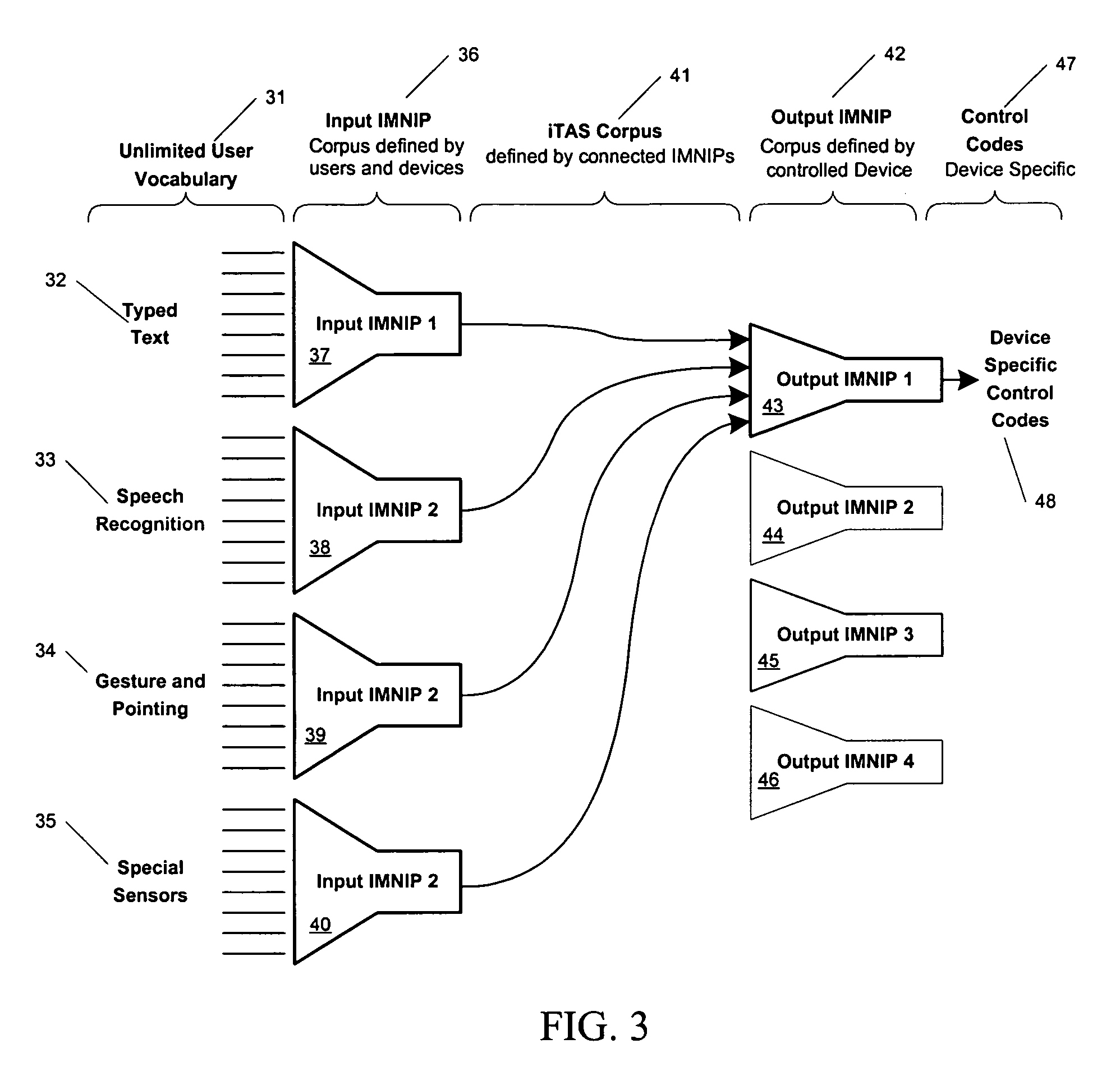

Integration manager and natural interaction processor

Status: Inactive | Publication: US7480619B1 | Tags: Quick control, Devices with voice recognition, Substation equipment, Medicine, Engineering

A natural interaction command decoder implemented on small embedded microprocessors. Strings of text from multiple input sources are merged to create commands. Words that are meaningful within the current system context are linked to all dependent output events, while words that have no meaning within the current system context are automatically discarded.

Owner:THE BOARD OF TRUSTEES OF THE LELAND STANFORD JUNIOR UNIV
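The context-filtered decoding described above can be illustrated with a small Python sketch. The `CONTEXT` table that maps meaningful words to their dependent output events is hypothetical, and the event strings are placeholders.

```python
from typing import Dict, Iterable, List

# Hypothetical context table: words meaningful in the current system state,
# each linked to the output events that depend on it.
CONTEXT: Dict[str, List[str]] = {
    "lights": ["select:lights"],
    "on": ["set:on"],
    "off": ["set:off"],
    "dim": ["set:dim"],
}


def decode(sources: Iterable[str], context: Dict[str, List[str]]) -> List[str]:
    """Merge text strings from several input sources into a list of output events."""
    events: List[str] = []
    for text in sources:  # sources are merged in arrival order
        for word in text.lower().split():
            word = word.strip(".,!?;:")
            if word in context:
                events.extend(context[word])
            # Words with no meaning in this context are discarded.
    return events
```

Swapping in a different `context` table when the system state changes is what lets the same word mean different things, or nothing, at different times.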

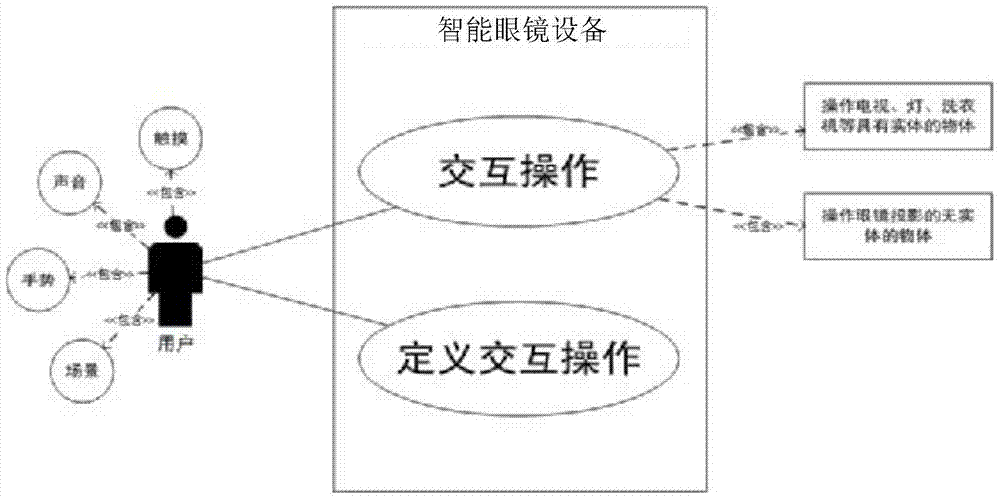

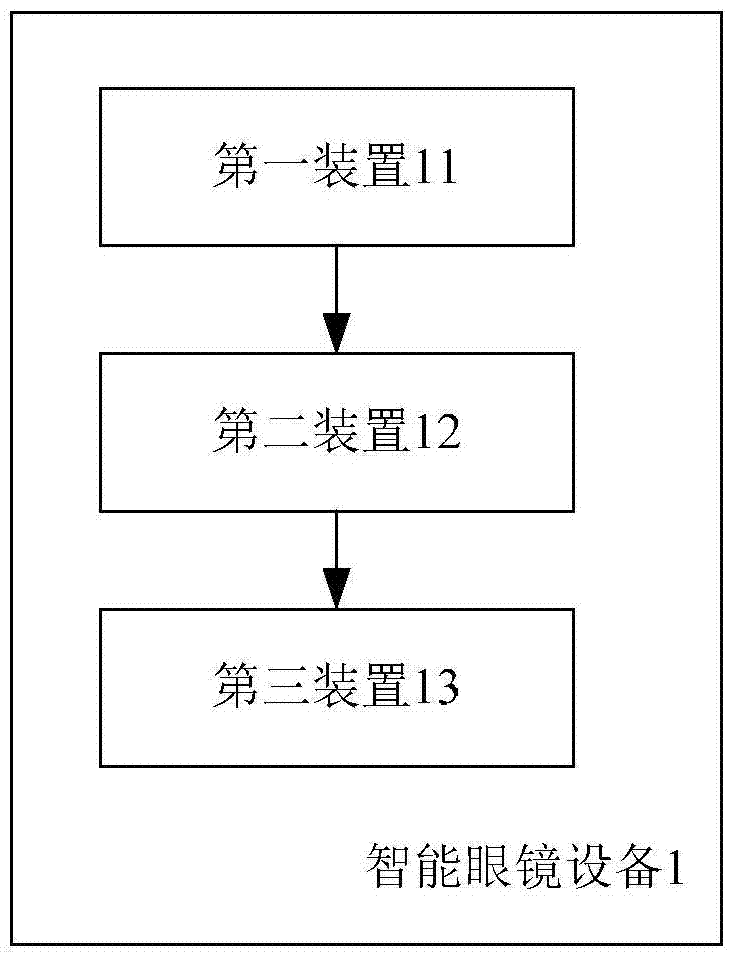

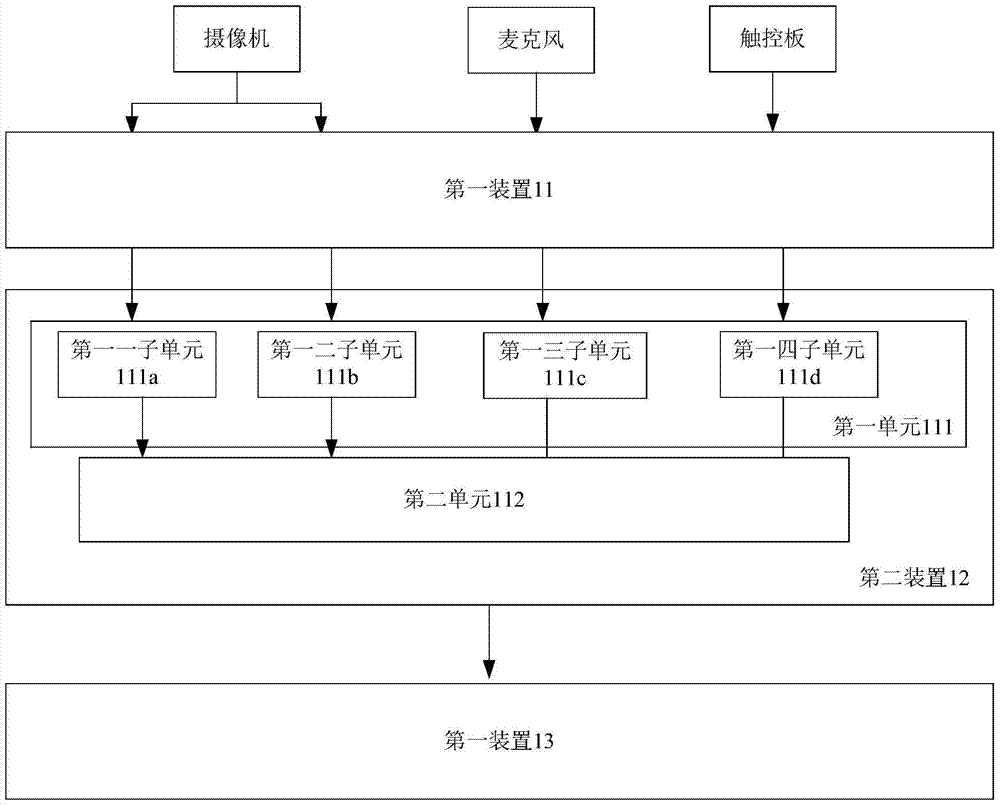

Multimodal input-based interactive method and device

Status: Active | Publication: CN106997236A | Tags: Improve interactive experience, Increase flexibility, Input/output for user-computer interaction, Character and pattern recognition, Object based, Computer module

The invention provides smart glasses and a method for interaction based on multimodal input, bringing interaction closer to the way users interact naturally. The method comprises: obtaining multiple pieces of input information from at least one of multiple input modules; performing comprehensive logical analysis on the input information to generate an operation command, whose operation elements include at least an operation object, an operation action and an operation parameter; and executing the corresponding operation on the operation object based on the operation command. Because input information from multiple channels is obtained through the input modules and analyzed together to determine the operation object, the operation action and its parameter, the information is fused in real time, user interaction comes closer to natural-language interaction, and the user's interactive experience is improved.

Owner:HISCENE INFORMATION TECH CO LTD
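Merging partial inputs from several channels into one operation command with an object, an action and a parameter can be sketched as slot filling. The modality names, the `PRIORITY` order (later channels override earlier ones for the same slot) and the slot keys are assumptions for illustration, not the patent's logic.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Hypothetical precedence: later modalities override earlier ones for the same slot.
PRIORITY = ("gaze", "gesture", "voice")
REQUIRED = ("object", "action", "parameter")


@dataclass(frozen=True)
class OperationCommand:
    obj: str
    action: str
    parameter: str


def fuse(inputs: Dict[str, Dict[str, str]]) -> Optional[OperationCommand]:
    """Merge partial slot values from each modality into one operation command.

    Returns None until every required operation element has been supplied.
    """
    slots: Dict[str, str] = {}
    for modality in PRIORITY:
        slots.update(inputs.get(modality, {}))
    if any(key not in slots for key in REQUIRED):
        return None
    return OperationCommand(slots["object"], slots["action"], slots["parameter"])
```

For example, gaze can supply the object while a spoken phrase supplies the action and its parameter.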

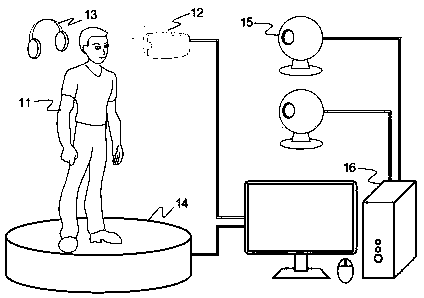

Mine virtual reality training system based on immersion type input and output equipment

Status: Inactive | Publication: CN105374251A | Tags: Improve immersion, Improve interactive experience, Cosmonautic condition simulations, Simulators, Virtual training, Simulation

The invention discloses a mine virtual reality training system based on immersive input and output equipment. With the system, a trainee is fully immersed in the virtual scene and interacts with the virtual training scene through natural actions such as gestures, walking and running to complete a preset training program. The system consists of output equipment such as a head-mounted display, input equipment such as an omnidirectional treadmill and a motion capture device, a computer, and matching training software. The head-mounted display provides complete visual immersion, while the omnidirectional treadmill and the motion capture device map the trainee's real movements onto a virtual object in the virtual scene, so that the system is operated through natural interaction. Compared with traditional training methods, the system lets trainees immerse themselves in a realistic virtual mine environment anywhere and gain the same training experience as on site, ultimately achieving results comparable to on-site training.

Owner:CHINA UNIV OF MINING & TECH (BEIJING)

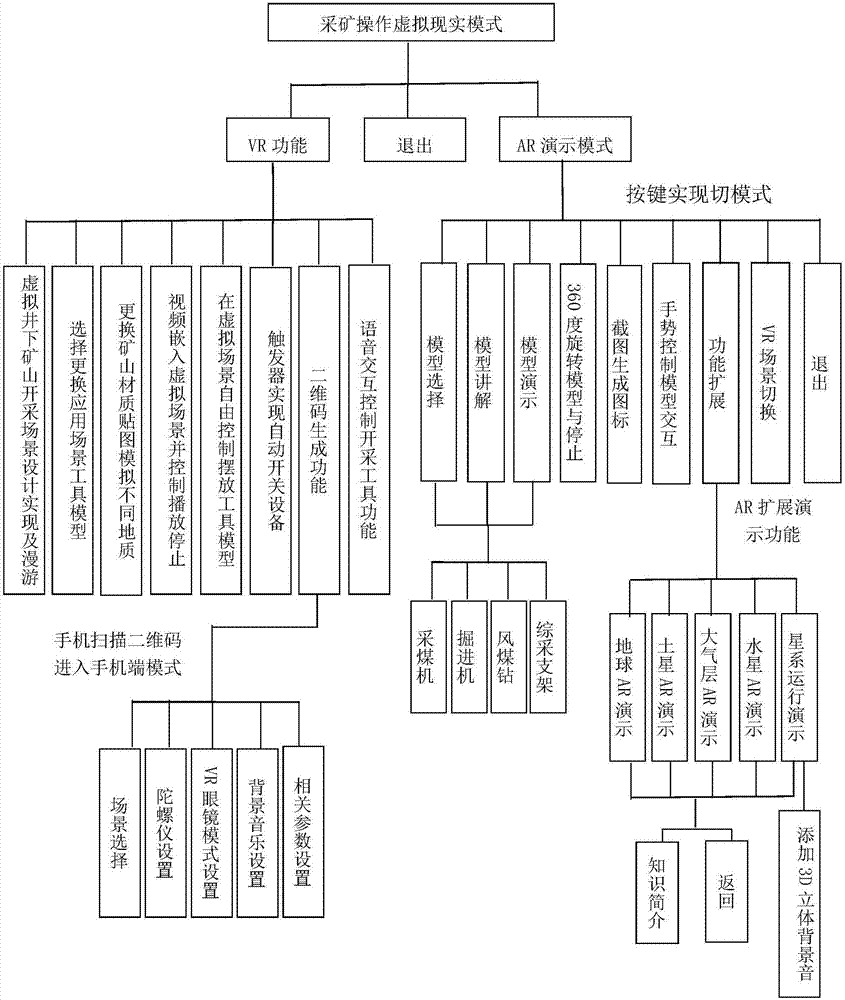

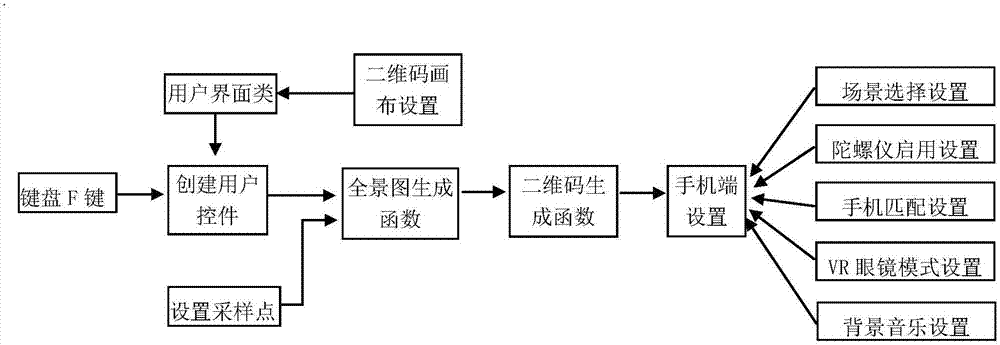

Mining operation multi-operation realization method based on virtual reality and augmented reality

Status: Active | Publication: CN107515674A | Tags: Reasonable design, Good effect, Input/output for user-computer interaction, Speech recognition, Skill sets, Computer science

The invention discloses a method for realizing multiple mining operations based on virtual reality and augmented reality, in the technical field of virtual and augmented reality. The method has two modes: virtual reality and augmented reality. In the virtual reality scene, materials can be selected and replaced, the scene can be explored, models can be moved and placed freely, videos can be embedded, two-dimensional codes can be generated, and triggers enable natural interaction, voice interaction and the like. In the augmented reality scene, models can be selected, voice can be played, the operating dynamics of a model can be demonstrated, and stopping the model's rotation, screen capture and function expansion can be controlled. In both modes, voice control, gesture control and keyboard and mouse control are available. The method is intended for virtual simulation of mining operations; it can be used to train mine workers and mining engineering students, reducing training costs and improving workers' skills, and it provides an advanced, fast means of guiding production, construction and scientific research.

Owner:SHANDONG UNIV OF SCI & TECH

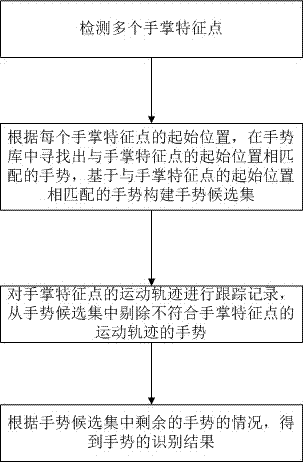

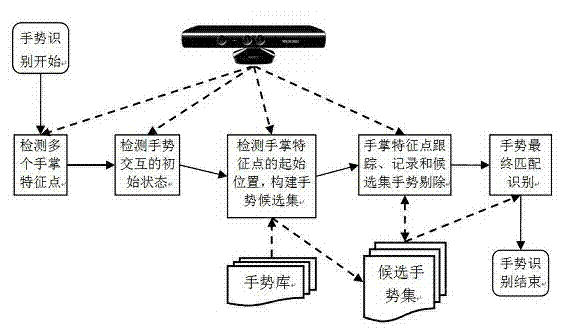

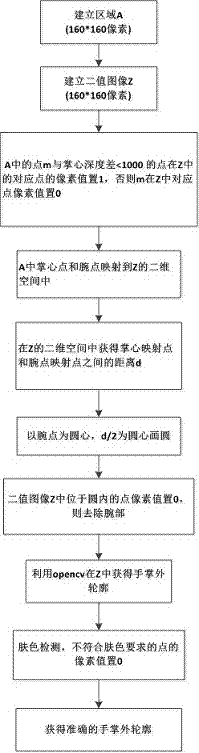

Three-dimensional gesture recognizing method based on Kinect depth image

Status: Inactive | Publication: CN103941866A | Tags: Rich interaction, Input/output for user-computer interaction, Graph reading, Human–robot interaction, Human system interaction

The invention discloses a three-dimensional gesture recognition method based on a Kinect depth image, comprising the following steps: (1) detecting multiple palm feature points; (2) according to the starting positions of the palm feature points, finding the gestures in a gesture library that match those starting positions and constructing a gesture candidate set from them; (3) tracking and recording the motion trajectories of the palm feature points and rejecting from the candidate set any gestures inconsistent with those trajectories; (4) obtaining the gesture recognition result from the gestures remaining in the candidate set. The method achieves natural interaction with a computer, enriches human-computer interaction modes, and can be widely applied to computer game control, virtual reality, digital education and similar fields.

Owner:HOHAI UNIV CHANGZHOU
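Steps (2) to (4) can be sketched as building a candidate set from the starting position and then pruning it as the trajectory unfolds. This is a simplified illustration: it tracks a single palm point, and the `GestureTemplate` fields, tolerance and cosine threshold are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]


@dataclass(frozen=True)
class GestureTemplate:
    """Hypothetical library entry for a single tracked palm point."""
    name: str
    start: Point      # expected starting position
    direction: Point  # expected motion direction, as a unit vector


def recognize(
    track: Sequence[Point],
    library: List[GestureTemplate],
    start_tol: float = 0.1,
    min_cos: float = 0.9,
) -> Optional[str]:
    """Return the single surviving gesture name, or None if zero or several remain."""
    if len(track) < 2:
        return None
    # Step 2: build the candidate set from the starting position.
    candidates = [g for g in library if math.dist(track[0], g.start) <= start_tol]
    # Step 3: reject candidates whose direction disagrees with any tracked step.
    for prev, cur in zip(track, track[1:]):
        step = tuple(c - p for p, c in zip(prev, cur))
        length = math.hypot(*step)
        if length == 0:
            continue  # no movement in this step
        candidates = [
            g for g in candidates
            if sum(s * d for s, d in zip(step, g.direction)) / length >= min_cos
        ]
    # Step 4: accept the result only when it is unambiguous.
    return candidates[0].name if len(candidates) == 1 else None
```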

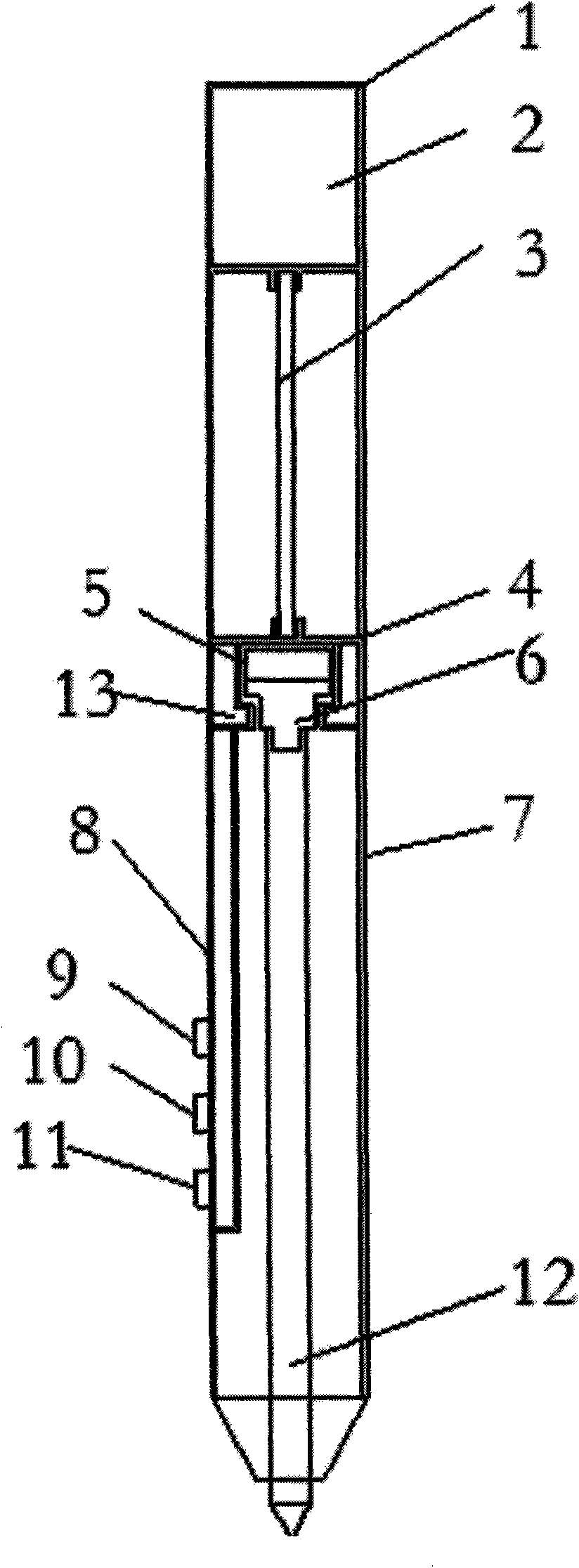

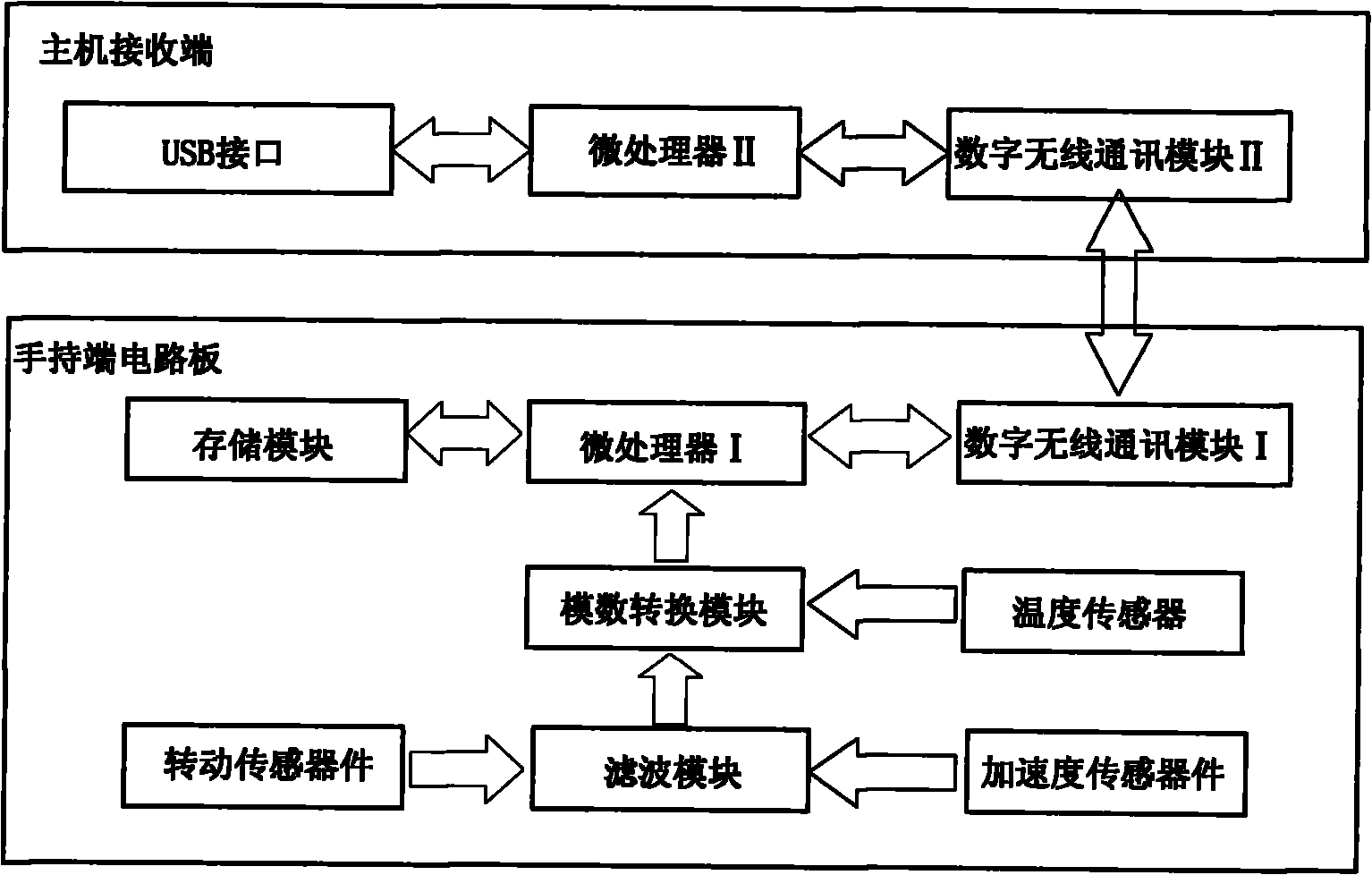

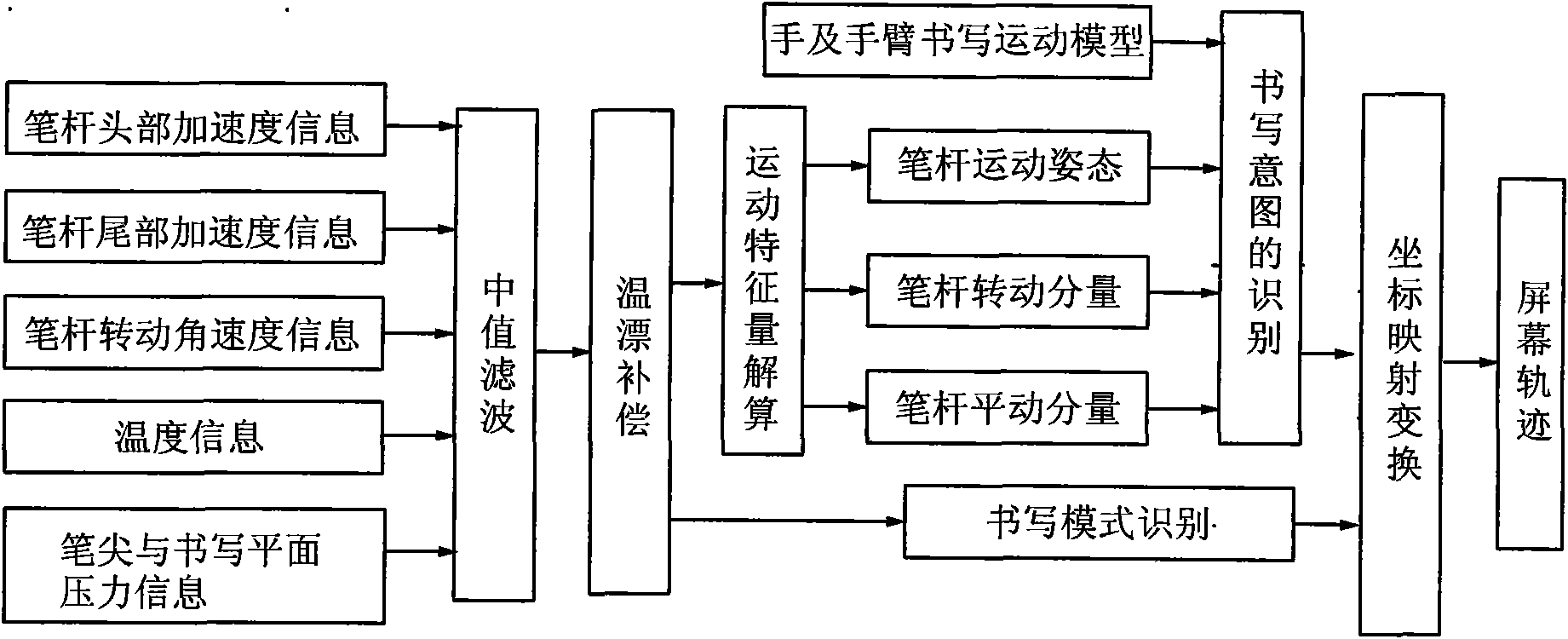

Natural interaction pen capable of automatically inputting written contents and handwriting detection method

Status: Inactive | Publication: CN101872259A | Tags: Easy to detect, Realize function, Input/output processes for data processing, Handwriting, Angular velocity

The invention discloses a natural interaction pen that automatically inputs written content, and a handwriting detection method. Based on a model of human writing motion, sensors in the pen body measure the angular velocity and acceleration of the hand and arm during writing. The output voltage signals are low-pass filtered and analog-to-digital converted, then sent to microprocessor I, which computes writing motion characteristic quantities, recognizes the writing intention and maps them to handwriting information of the written content. The output of microprocessor I is transmitted by wireless data communication module I; a receiving end at the host computer receives these signals, processes them with microprocessor II and passes them to the host over a USB interface. The pen needs no handwriting tablet, works independently, better matches people's writing habits, and is highly portable and universal.

Owner:PLA SECOND ARTILLERY ENGINEERING UNIVERSITY
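Part of this signal chain can be approximated in software by a first-order low-pass filter followed by numerical integration of acceleration into displacement. This is a hypothetical sketch: the smoothing factor, the sample interval and the single-axis signal are assumptions, and the patent performs its low-pass filtering on the analog signals before conversion.

```python
from typing import List, Sequence


def low_pass(samples: Sequence[float], alpha: float) -> List[float]:
    """First-order IIR low-pass filter: y[n] = y[n-1] + alpha * (x[n] - y[n-1])."""
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    if not samples:
        return []
    out = [samples[0]]  # start from the first sample to avoid a start-up transient
    for x in samples[1:]:
        out.append(out[-1] + alpha * (x - out[-1]))
    return out


def integrate_displacement(accel: Sequence[float], dt: float) -> List[float]:
    """Double-integrate acceleration into displacement (semi-implicit Euler)."""
    velocity, position = 0.0, 0.0
    positions = []
    for a in accel:
        velocity += a * dt
        position += velocity * dt
        positions.append(position)
    return positions
```

Plain double integration drifts quickly with real sensor noise, so this is illustrative only; it is not the patent's method for computing writing motion characteristic quantities.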

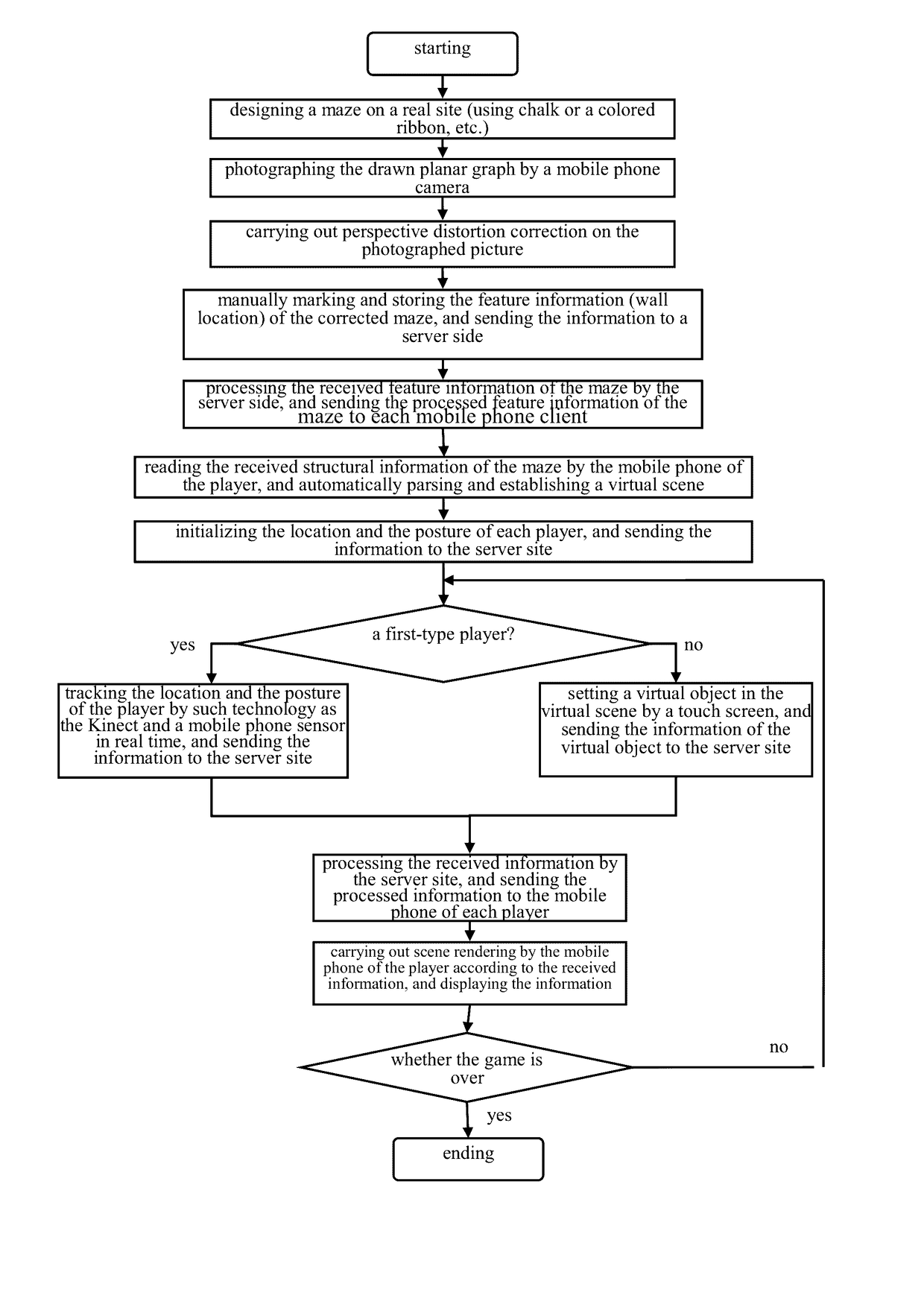

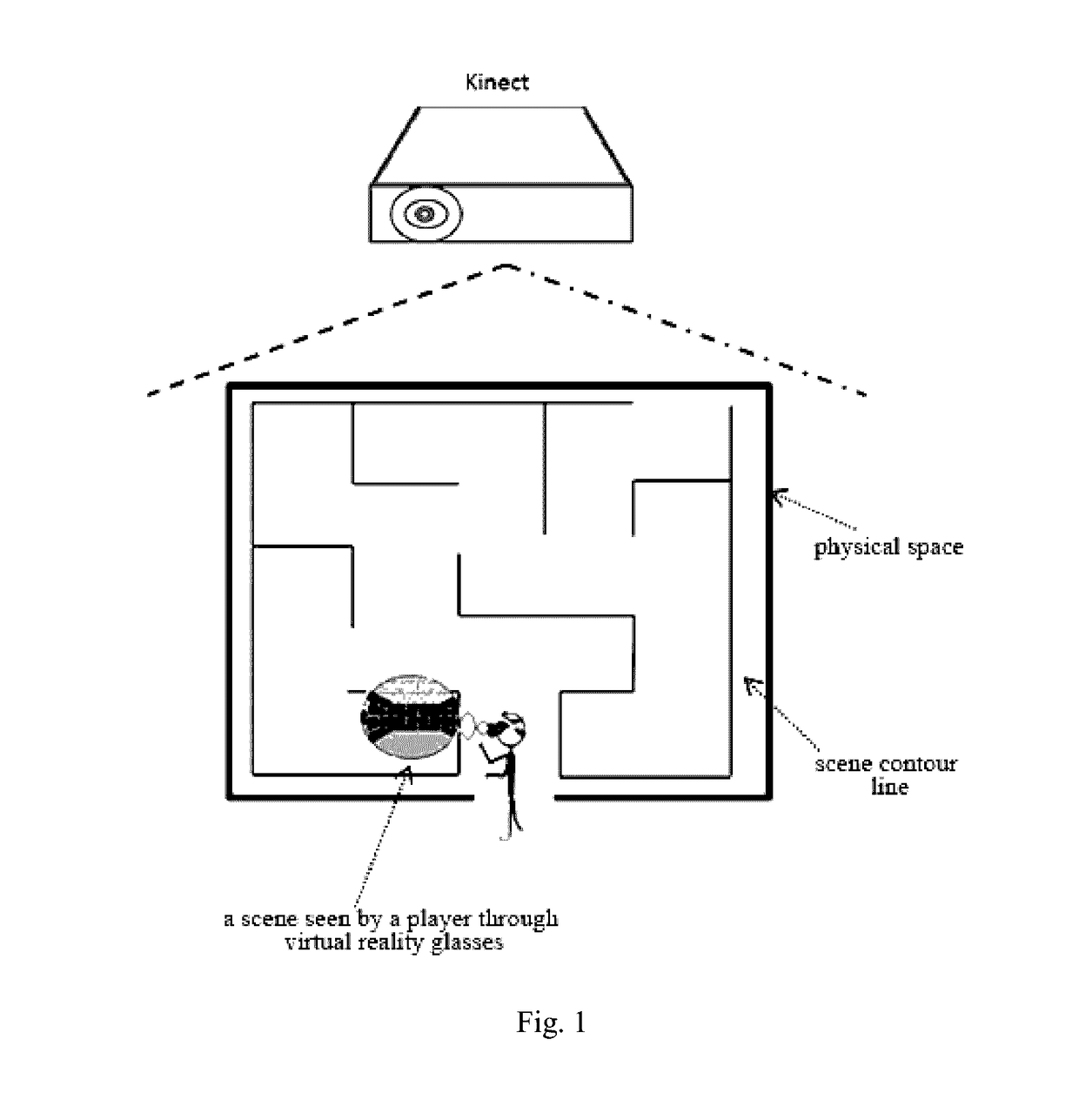

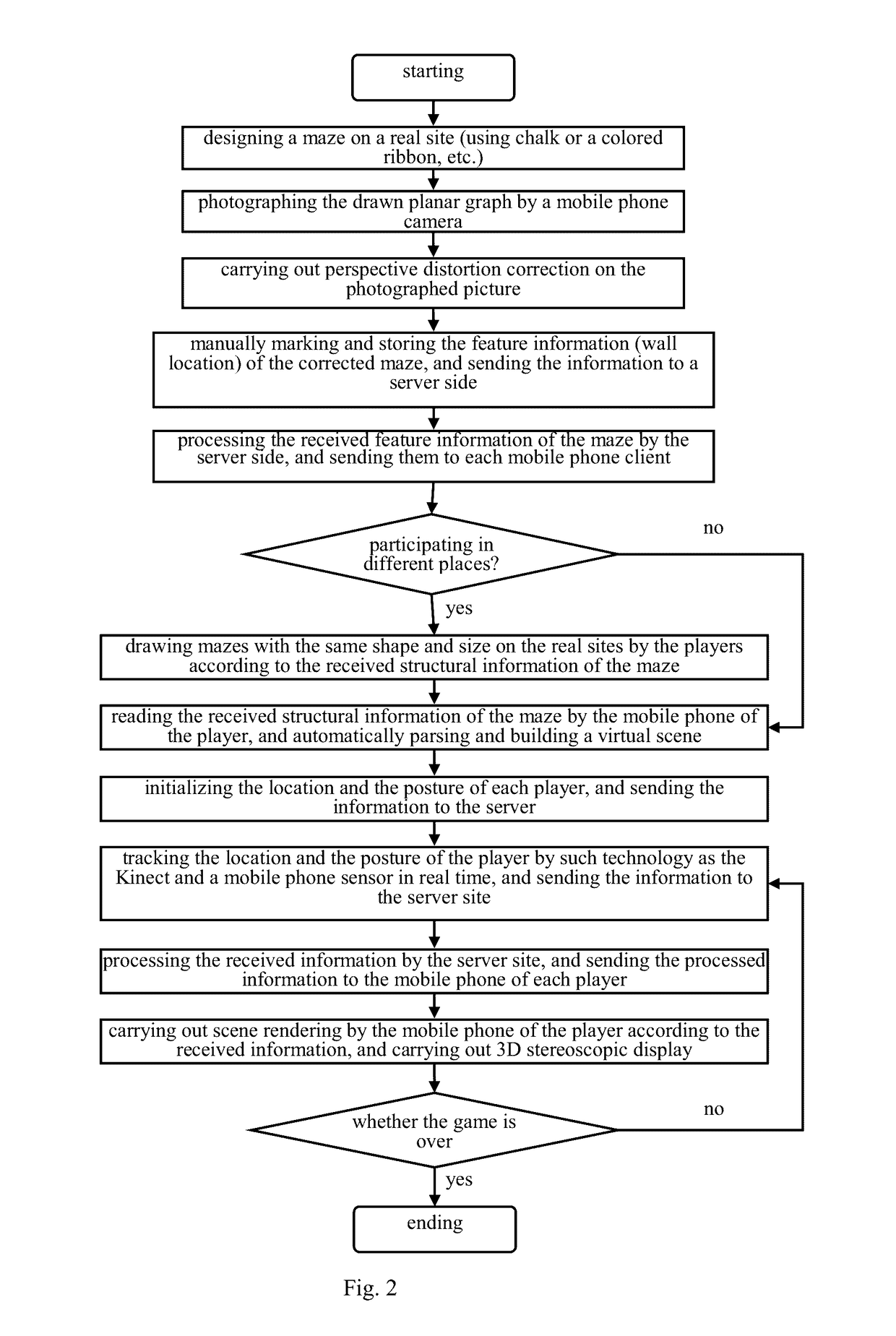

New pattern and method of virtual reality system based on mobile devices

Status: Active | Publication: US20170116788A1 | Tags: Quick build, Input/output for user-computer interaction, Drawing from basic elements, Methods of virtual reality, Physical space

A new pattern and method for a virtual reality system based on mobile devices, which may allow a player to design a virtual scene structure in a physical space and quickly generate the corresponding 3D virtual scene with a mobile phone. Real rotation of the player's head is captured by the acceleration sensor of the mobile phone mounted in a head-mounted virtual reality device, giving the player an immersive experience, and the player's real postures are tracked and identified by a motion sensing device so that the player can control movement in, and interact naturally with, the virtual scenes. The system needs only a certain physical space and a simple virtual reality device to provide an immersive user experience, and it offers both a single-player mode and a multi-player mode, the latter including a collaborative mode and a versus mode.

Owner:SHANDONG UNIV

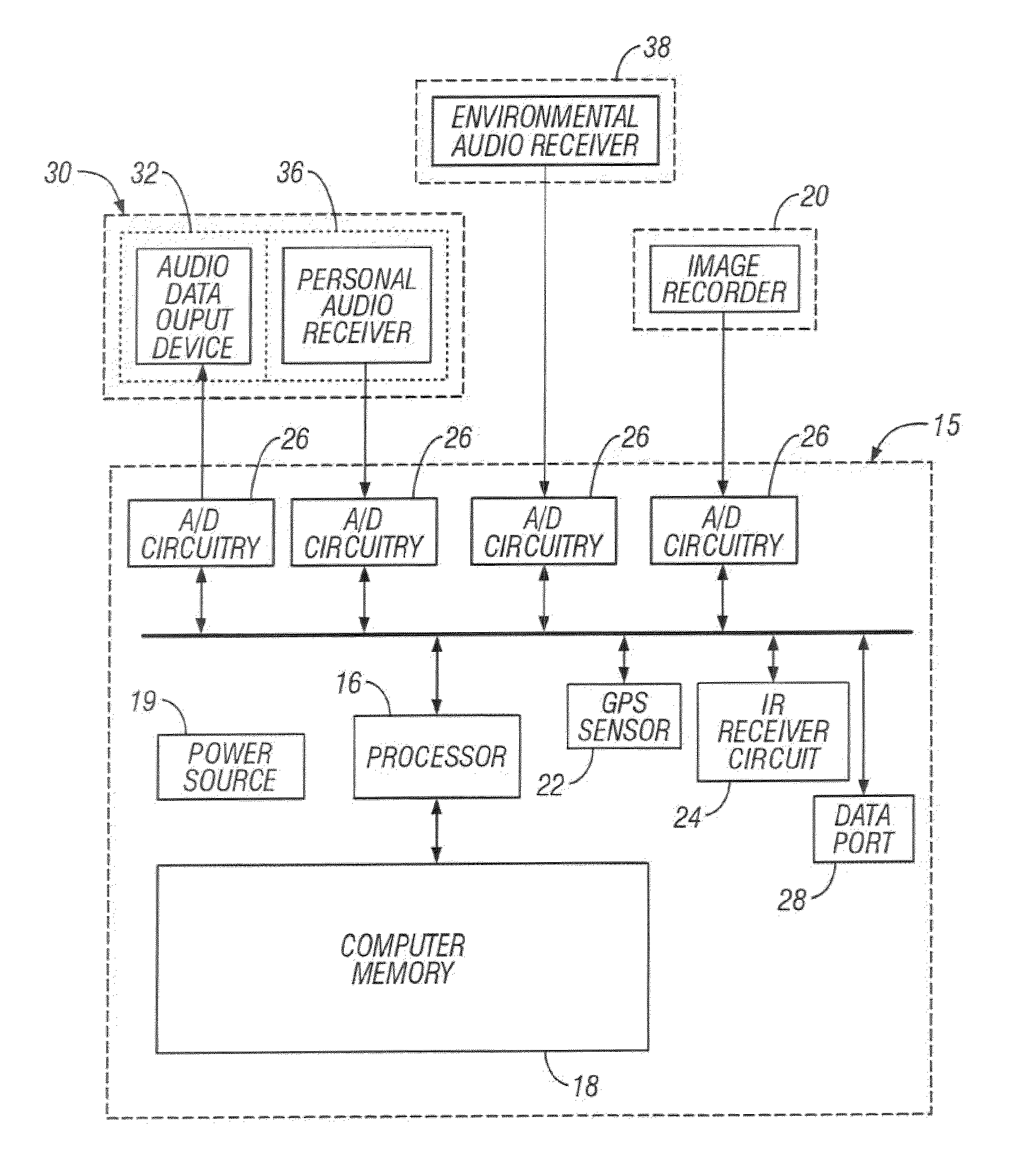

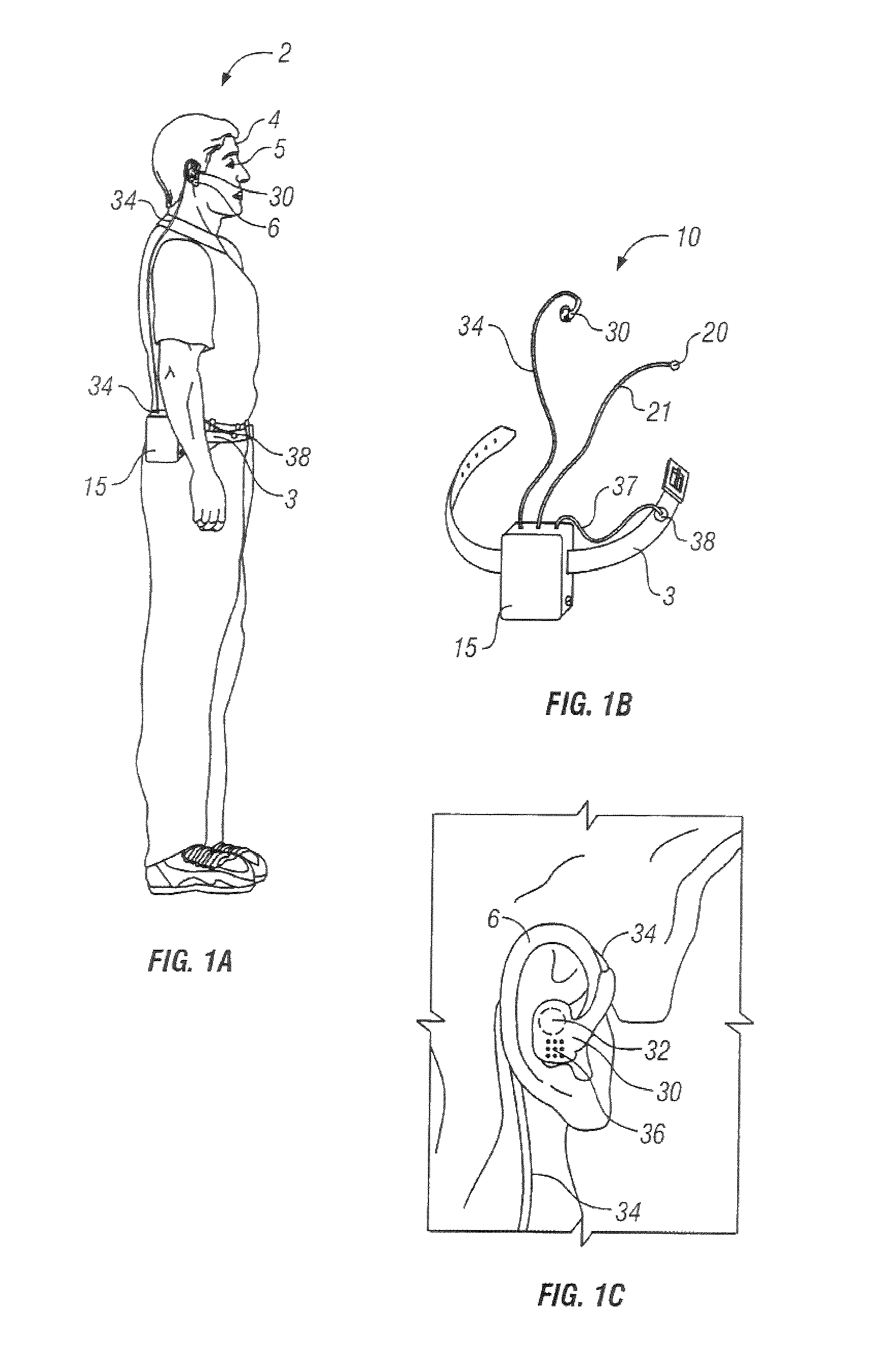

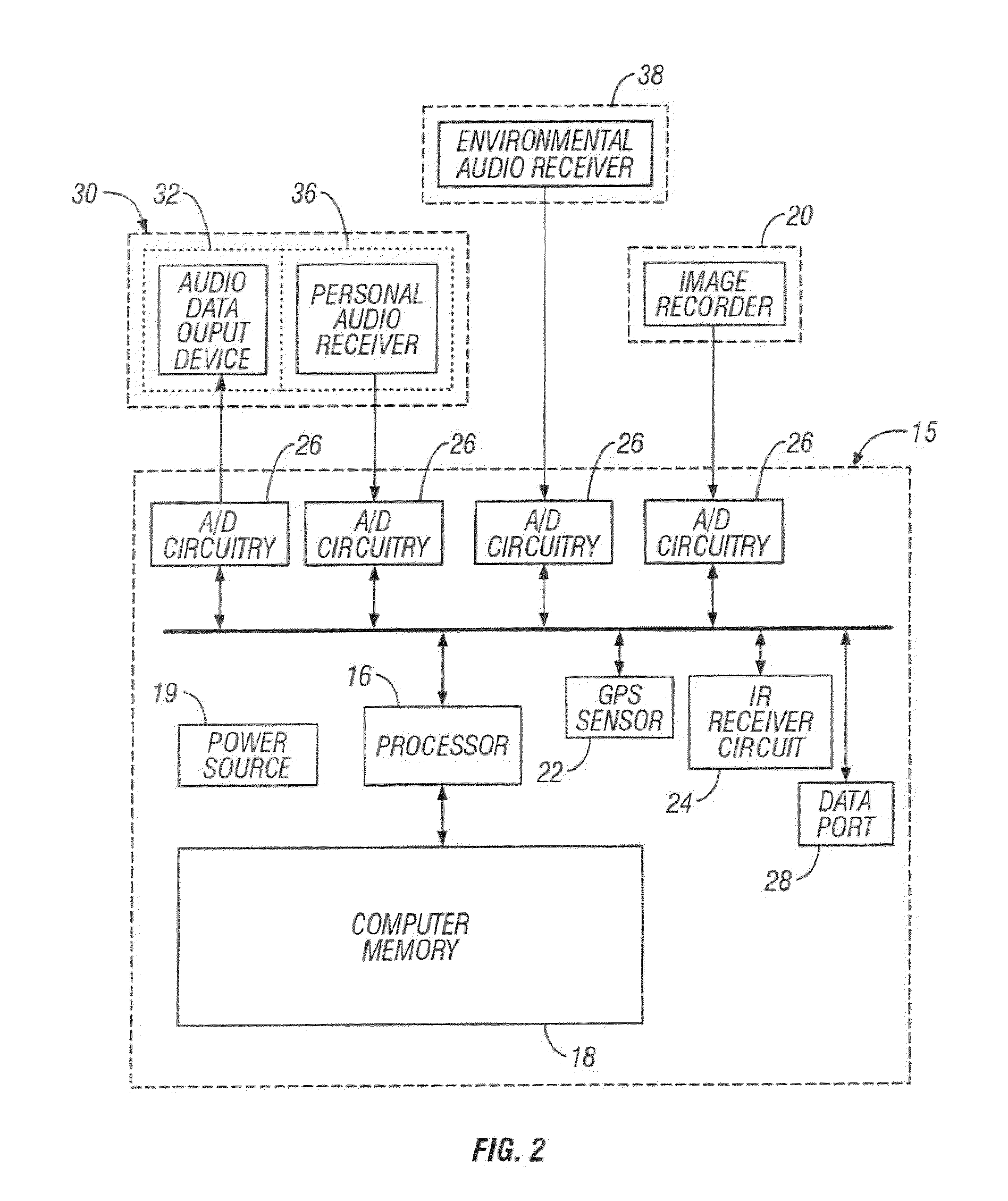

Wearable computer system and modes of operating the system

Status: Inactive | Publication: US7966189B2 | Tags: More natural in appearance, Function increase, Substation speech amplifiers, Natural language data processing, Operational system, Output device

A wearable computer system has a user interface with at least an audio-only mode of operating, and that is natural in appearance and facilitates natural interactions with the system and the user's surroundings. The wearable computer system may retrieve information from the user's voice or surroundings using a passive user interface. The audio-only user interface for the wearable computer system may include two audio receivers and a single output device, such as a speaker, that provides audio data directly to the user. The two audio receivers may be miniature microphones that collaborate to input audio signals from the user's surroundings while also accurately inputting voice commands from the user. Additionally, the user may enter natural voice commands to the wearable computer system in a manner that blends in with the natural phrases and terminology spoken by the user.

Owner:ACCENTURE GLOBAL SERVICES LTD

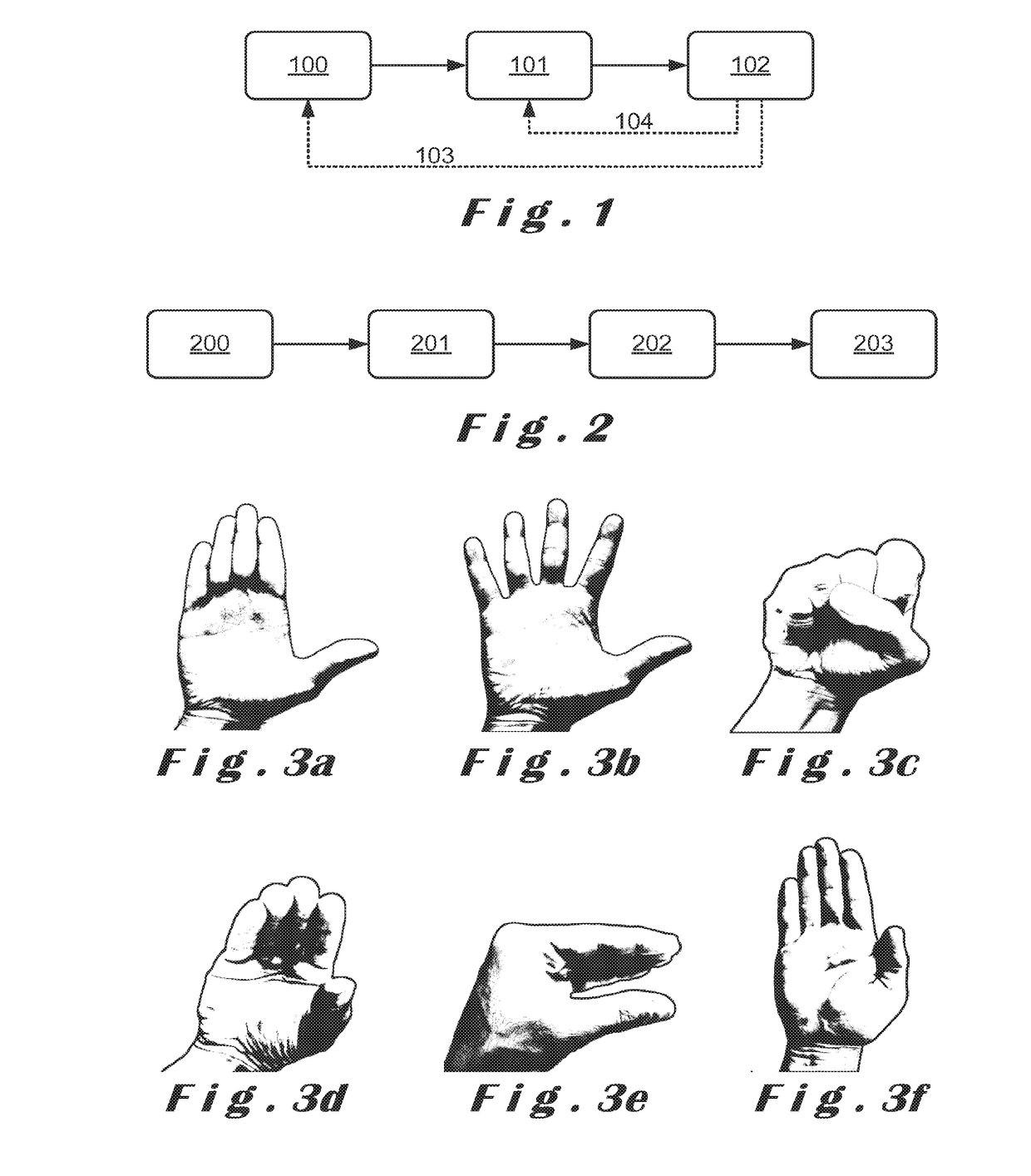

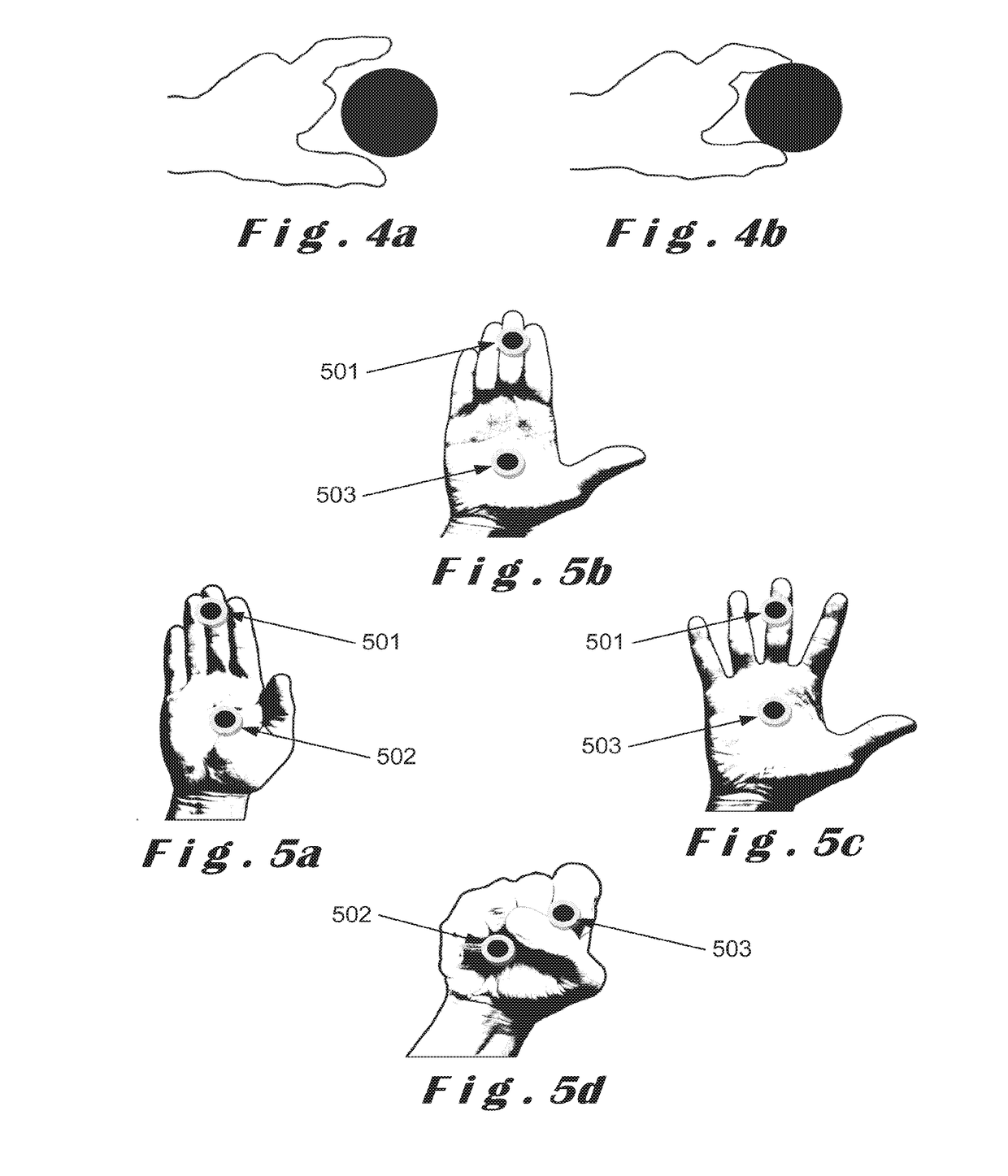

Human-to-Computer Natural Three-Dimensional Hand Gesture Based Navigation Method

Status: Active | Publication: US20160124513A1 | Tags: Improve robustness, Input/output for user-computer interaction, Character and pattern recognition, Graphics, Graphical user interface

Described herein is a method for enabling human-to-computer three-dimensional hand gesture-based natural interactions. Using depth images provided by a range-finding imaging system, the method efficiently and robustly detects a particular sequence of natural gestures, including a beginning (start) and an ending (stop) gesture of a predetermined type, which delimit the period during which a control (interaction) gesture operates in an environment where the user moves their hands freely. The invention is particularly, though not exclusively, concerned with detecting, without false positives or delay, intentionally performed natural gestures that follow a starting fingertip- or hand-tip-based natural gesture, so as to provide efficient and robust navigation, zooming and scrolling interactions within a graphical user interface until the ending fingertip- or hand-tip-based natural gesture is detected.

Owner:SONY DEPTHSENSING SOLUTIONS SA NV
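The start/stop delimiting of the control period can be sketched as a two-state machine over an already recognized gesture stream. The gesture labels and the `GestureDelimiter` class are hypothetical; the patent's depth-based detection of the start and stop gestures is not modeled here.

```python
from typing import Iterable, List


class GestureDelimiter:
    """Forward control gestures only between a start gesture and a stop gesture.

    Gesture labels are hypothetical; upstream recognition is assumed.
    """

    def __init__(self, start: str = "start", stop: str = "stop") -> None:
        self.start, self.stop = start, stop
        self.active = False

    def feed(self, gesture: str) -> bool:
        """Update state with one gesture; return True if it should be forwarded."""
        if not self.active:
            self.active = gesture == self.start
            return False  # anything before the start gesture is ignored
        if gesture == self.stop:
            self.active = False
            return False
        return True


def control_gestures(stream: Iterable[str]) -> List[str]:
    """Filter a gesture stream down to the gestures inside start/stop windows."""
    delimiter = GestureDelimiter()
    return [g for g in stream if delimiter.feed(g)]
```

Ignoring everything outside the window is what suppresses false positives from hands that are merely moving freely.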

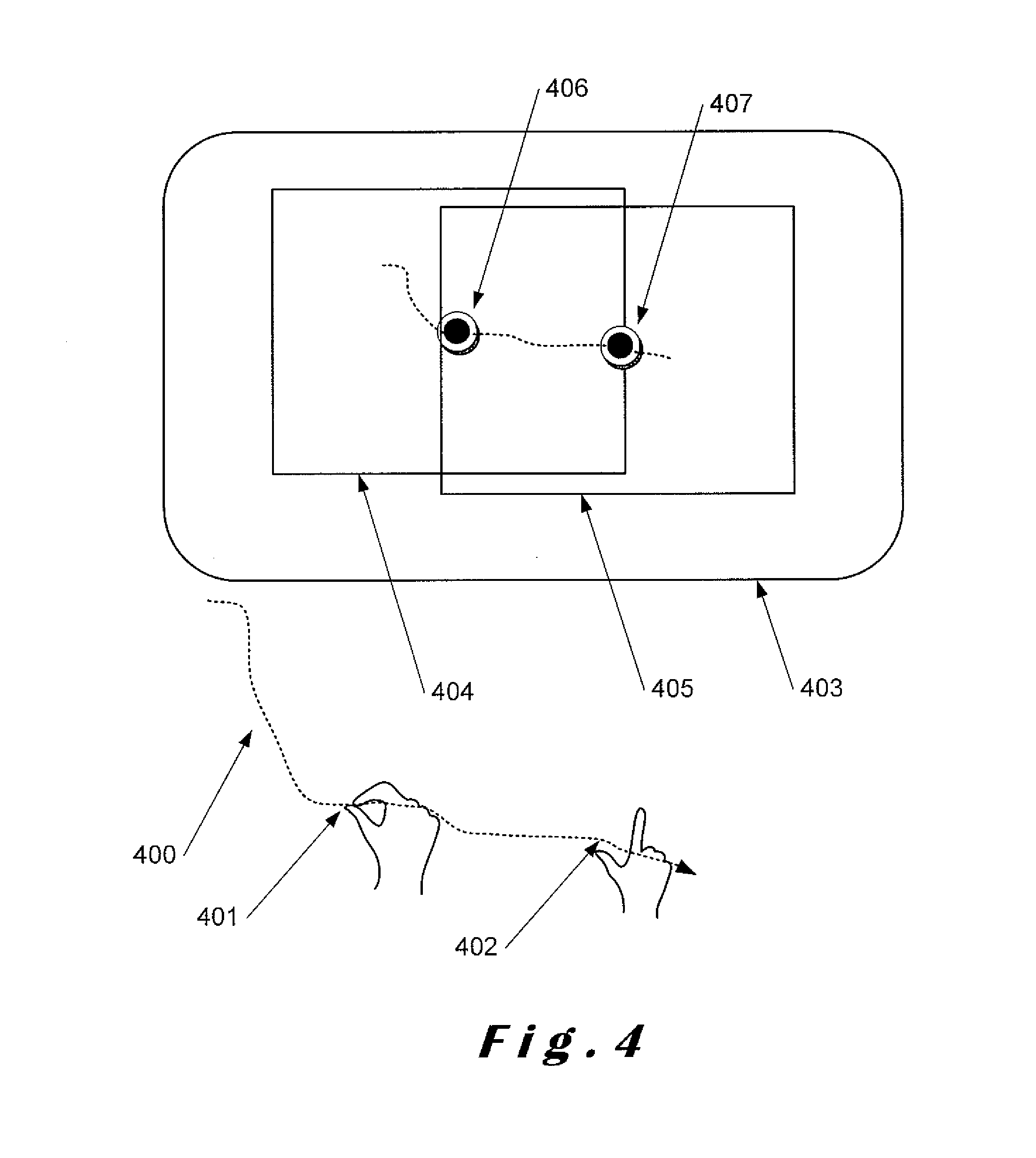

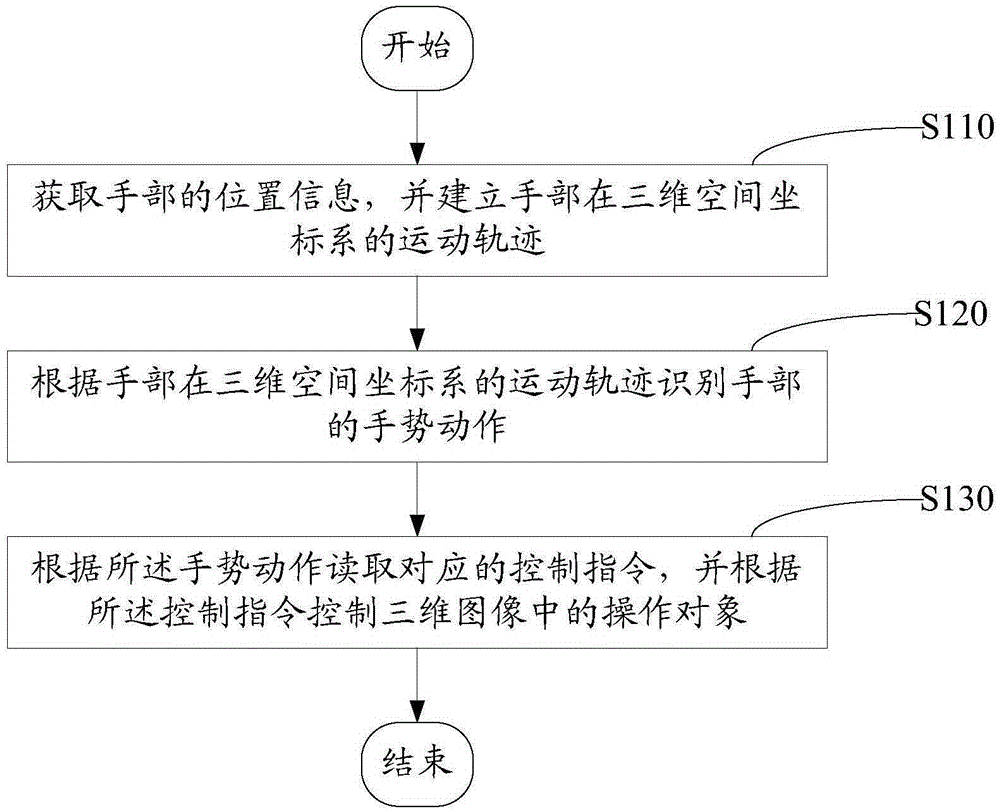

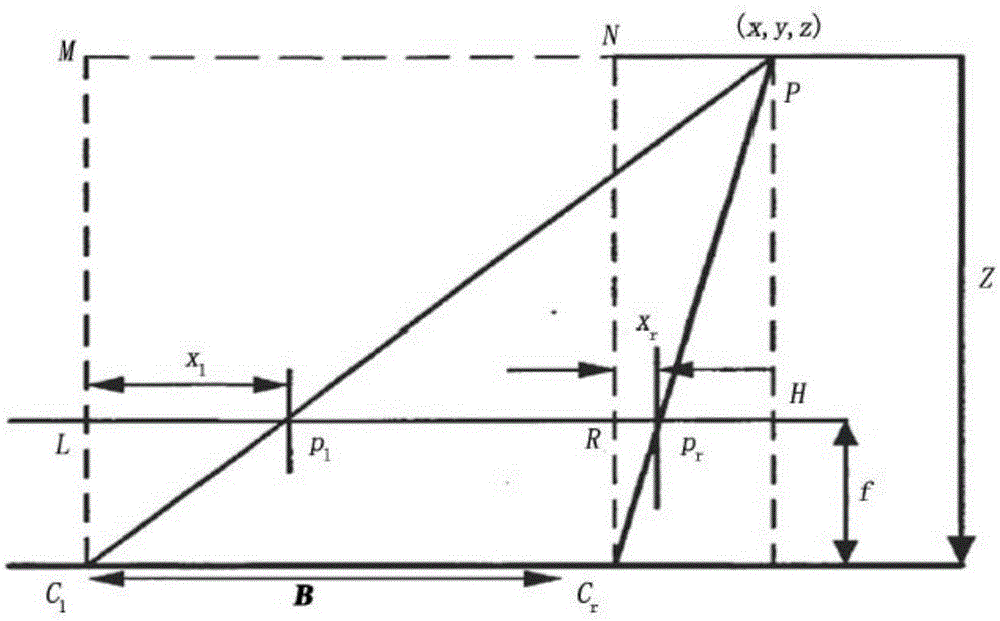

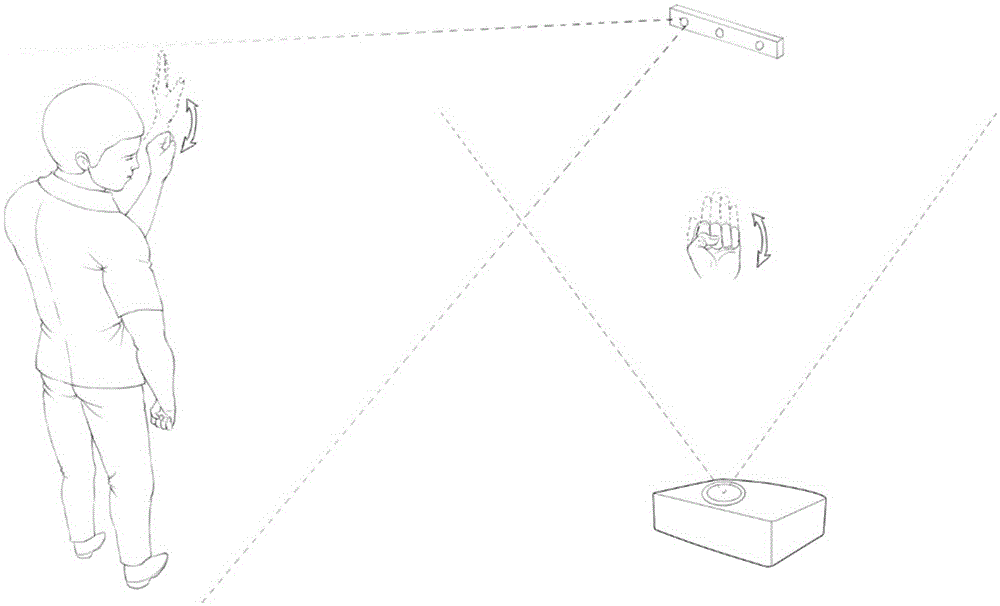

Gesture manipulation method and system based on three-dimensional display

Status: Active | Publication: CN105353873A | Tags: Realize natural interaction, Input/output for user-computer interaction, Character and pattern recognition, Three-dimensional space, Natural interaction

The present invention relates to a gesture manipulation method based on three-dimensional display. The method comprises: acquiring position information of a hand; establishing a motion trajectory of the hand in a three-dimensional coordinate system; recognizing a gesture motion of the hand from the motion trajectory; reading the corresponding control instruction for the gesture motion; and controlling an operation object in a three-dimensional image according to the control instruction. By recognizing a user's gesture motion, the operation object is controlled to execute the corresponding operation, so natural human-computer interaction can be realized without touching the display screen. A gesture manipulation system and apparatus based on three-dimensional display are also provided.

Owner:SHENZHEN ORBBEC CO LTD
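The trajectory-to-instruction chain can be sketched by classifying the net hand displacement along its dominant axis and looking the result up in an instruction table. The gesture names, the `INSTRUCTIONS` table, the axis convention and the minimum-travel threshold are all hypothetical.

```python
from typing import Dict, Optional, Sequence, Tuple

Point = Tuple[float, float, float]

# Hypothetical gesture-to-instruction table.
INSTRUCTIONS: Dict[str, str] = {
    "swipe_left": "rotate_left",
    "swipe_right": "rotate_right",
    "swipe_up": "scale_up",
    "swipe_down": "scale_down",
    "push": "select",
    "pull": "release",
}

# (negative direction, positive direction) gesture names per axis;
# z is assumed to grow away from the sensor, so moving toward it is a push.
AXIS_GESTURES = {0: ("swipe_left", "swipe_right"), 1: ("swipe_down", "swipe_up"), 2: ("push", "pull")}


def gesture_to_instruction(trajectory: Sequence[Point], min_travel: float = 0.1) -> Optional[str]:
    """Classify net hand motion along its dominant axis and look up an instruction."""
    if len(trajectory) < 2:
        return None
    delta = [end - start for start, end in zip(trajectory[0], trajectory[-1])]
    axis = max(range(3), key=lambda i: abs(delta[i]))
    if abs(delta[axis]) < min_travel:
        return None  # too little movement to count as a gesture
    gesture = AXIS_GESTURES[axis][1 if delta[axis] > 0 else 0]
    return INSTRUCTIONS[gesture]
```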

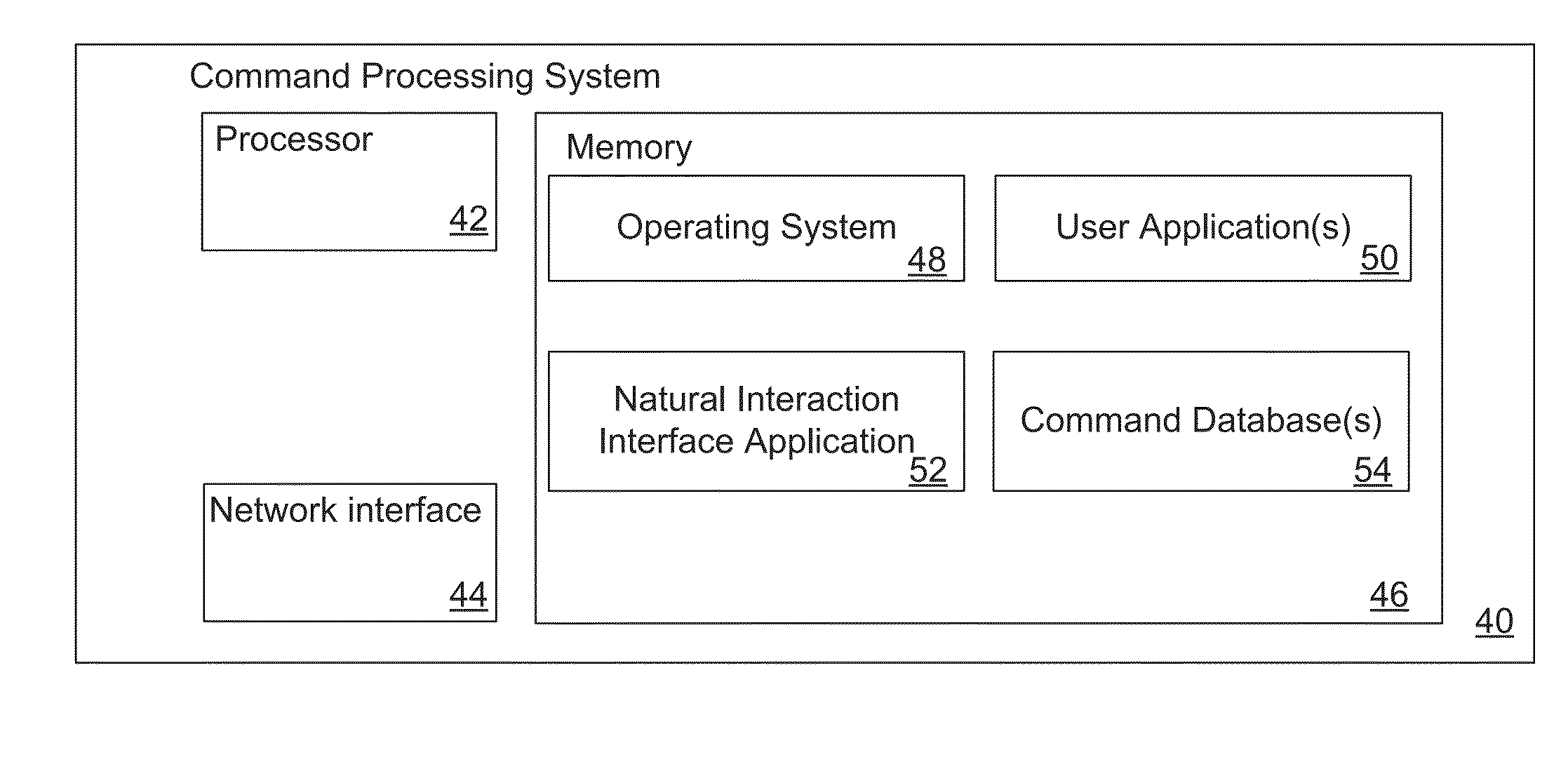

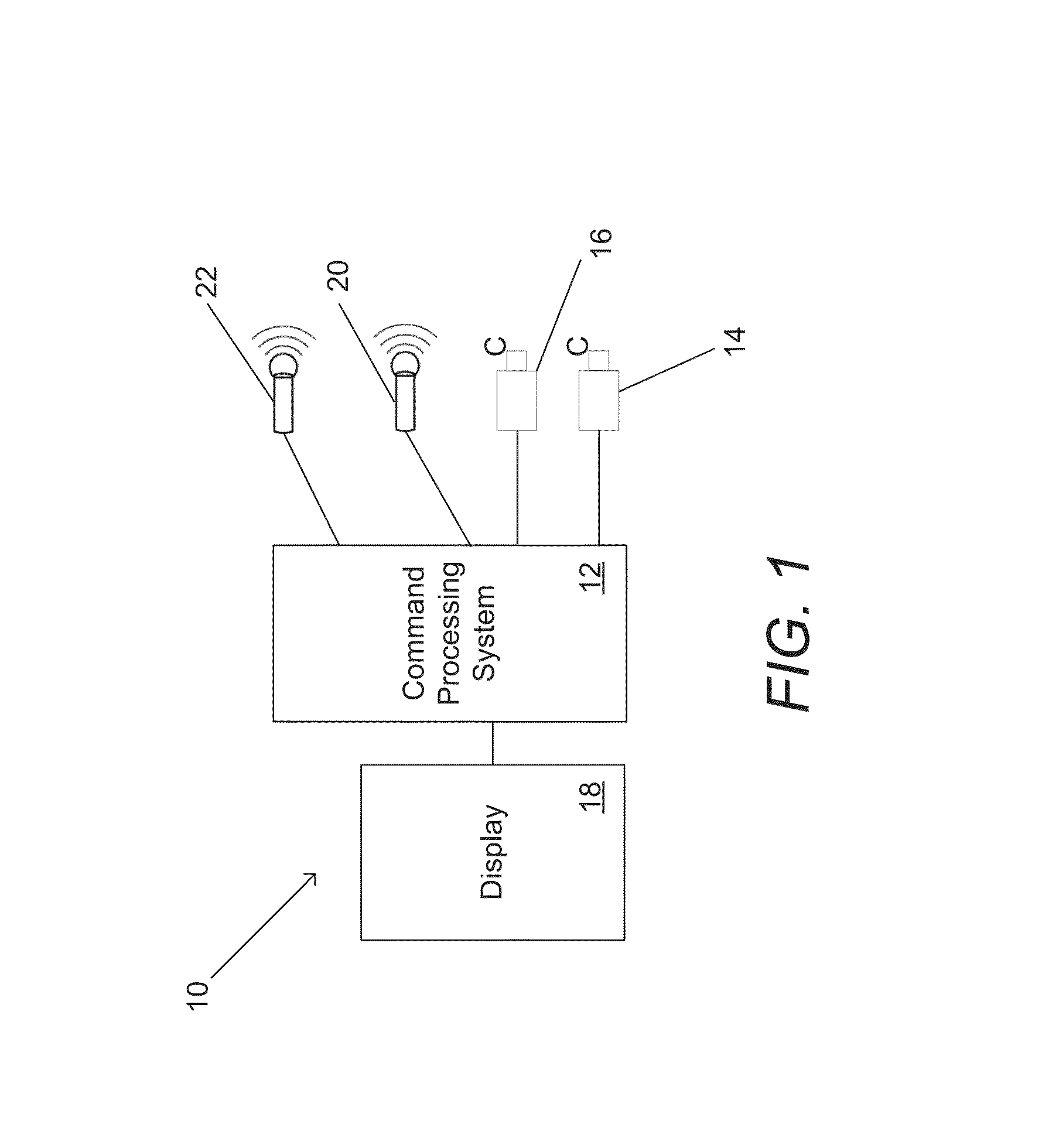

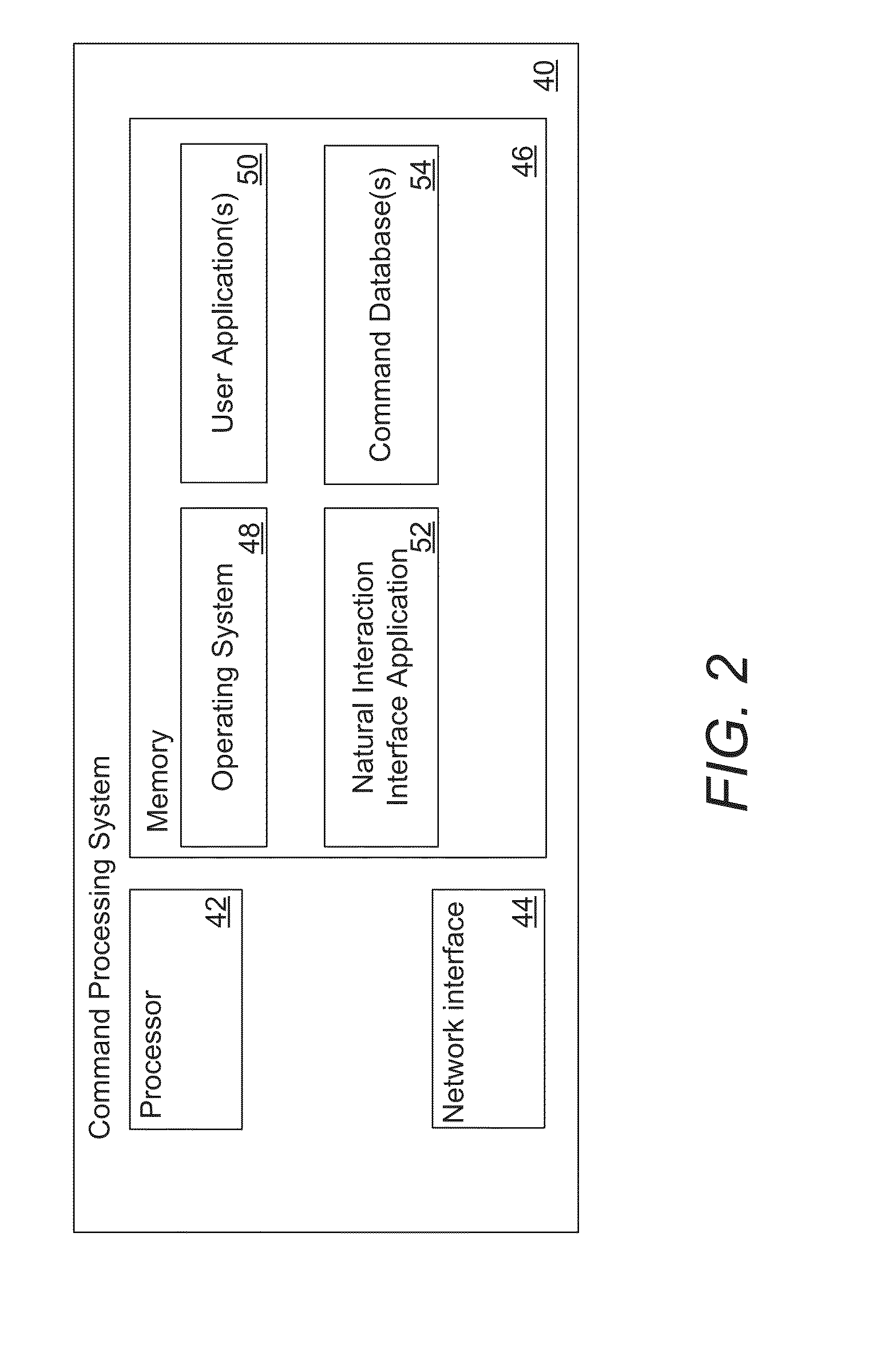

Systems and methods for natural interaction with operating systems and application graphical user interfaces using gestural and vocal input

Status: Inactive | Publication: US20140173440A1 | Tags: Input/output for user-computer interaction, Sound input/output, Graphics, Operational system

Systems and methods for natural interaction with graphical user interfaces using gestural and vocal input in accordance with embodiments of the invention are disclosed. In one embodiment, a method for interpreting a command sequence that includes a gesture and a voice cue to issue an application command includes receiving image data, receiving an audio signal, selecting an application command from a command dictionary based upon a gesture identified using the image data, a voice cue identified using the audio signal, and metadata describing combinations of a gesture and a voice cue that form a command sequence corresponding to an application command, retrieving a list of processes running on an operating system, selecting at least one process based upon the selected application command and the metadata, where the metadata also includes information identifying at least one process targeted by the application command, and issuing an application command to the selected process.

Owner:AQUIFI
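The lookup-then-dispatch flow can be sketched with a command dictionary keyed by (gesture, voice cue) pairs, where each entry's metadata names the process it targets. `CommandMeta`, the `COMMANDS` entries and the `(pid, name)` process list are hypothetical stand-ins; querying real operating-system processes is left out.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class CommandMeta:
    """Hypothetical metadata: the command and the process it targets."""
    command: str
    target_process: str


# Hypothetical command dictionary keyed by (gesture, voice cue).
COMMANDS: Dict[Tuple[str, str], CommandMeta] = {
    ("point", "open"): CommandMeta("open_item", "file_manager"),
    ("swipe_left", "next"): CommandMeta("next_slide", "presenter"),
}


def dispatch(
    gesture: str, voice_cue: str, running: List[Tuple[int, str]]
) -> Optional[Tuple[int, str]]:
    """Return (pid, command) for the first running process the command targets.

    `running` stands in for the operating system's process list as (pid, name) pairs.
    """
    meta = COMMANDS.get((gesture, voice_cue))
    if meta is None:
        return None  # this gesture/voice combination is not a command sequence
    for pid, name in running:
        if name == meta.target_process:
            return pid, meta.command
    return None  # the targeted application is not running
```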

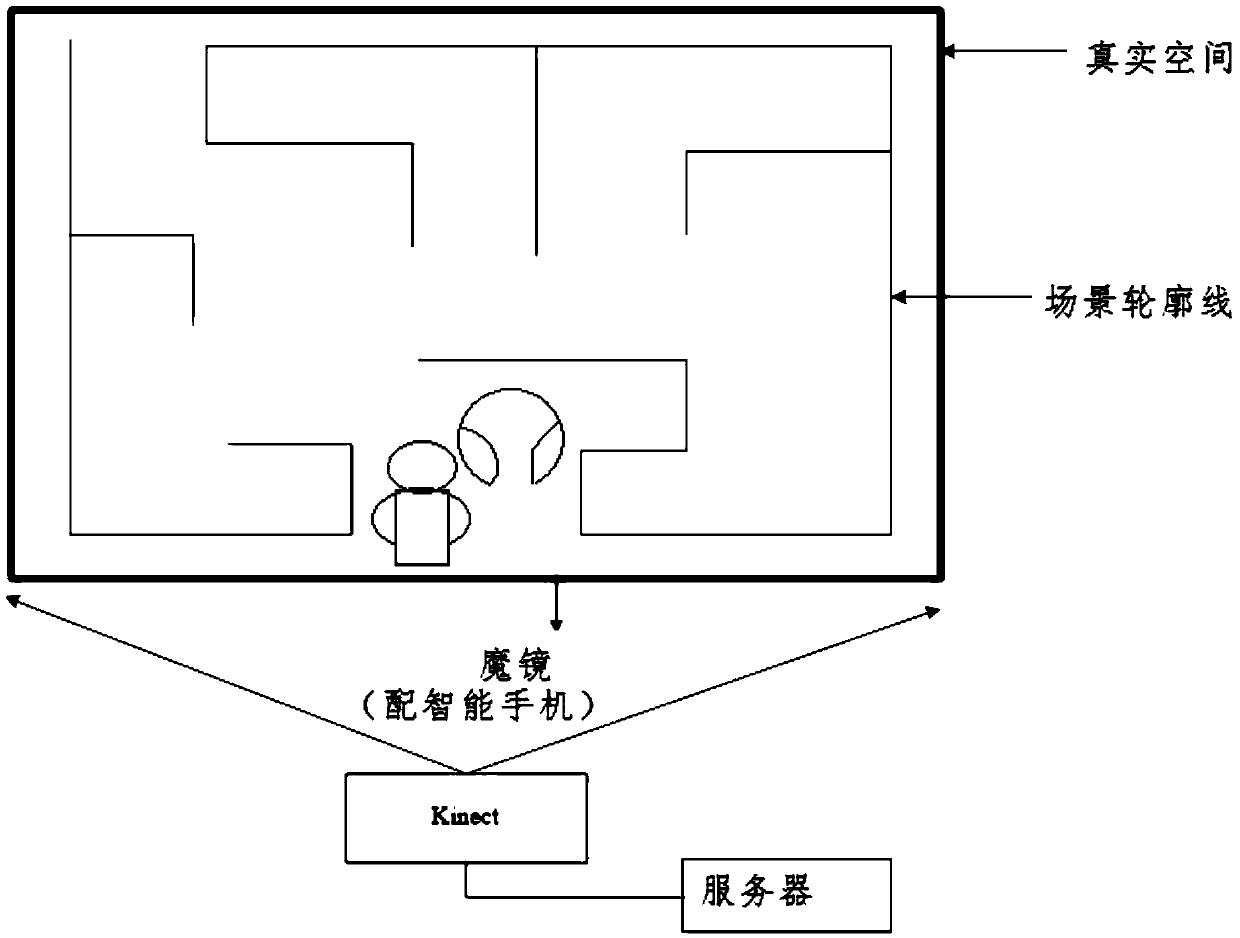

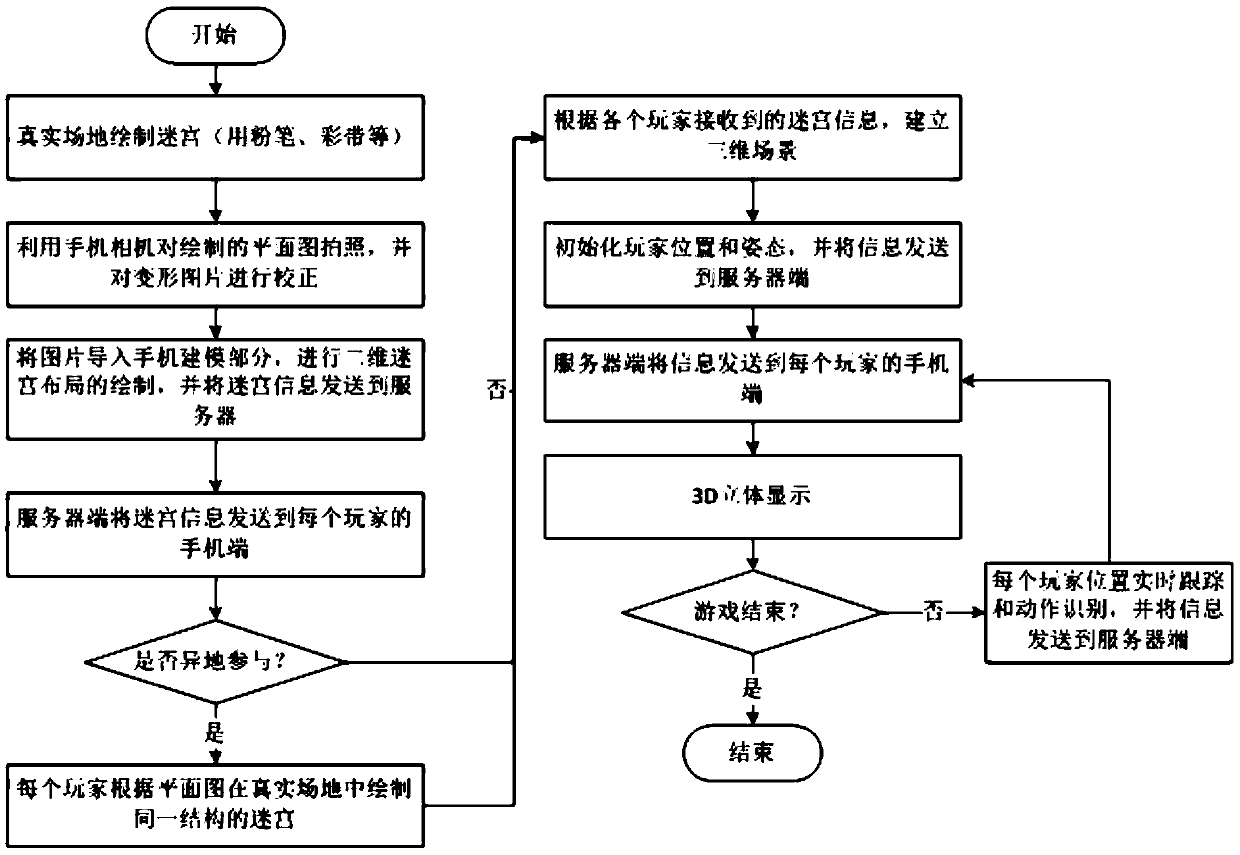

Hybrid implementation game system based on pervasive computing, and method thereof

Status: Active | Publication: CN105373224A | Tags: Easy to use, Easy to operate, Input/output for user-computer interaction, Video games, Physical space, Mixed reality

The invention discloses a hybrid implementation game system based on pervasive computing, and a corresponding method. The system lets a player design a maze layout anytime and anywhere in any free space and draw a planar maze diagram with a drawing tool; the corresponding three-dimensional virtual scene is then built quickly from a photo taken with a mobile phone. With virtual reality glasses, the player enjoys an immersive experience driven by real head rotation, and a motion sensing device tracks and identifies the player's real posture so that the player can control movement in, and interact naturally with, the virtual scene. The system needs no special physical space or expensive virtual reality devices, and users need no training to play anytime and anywhere.

Owner:SHANDONG UNIV

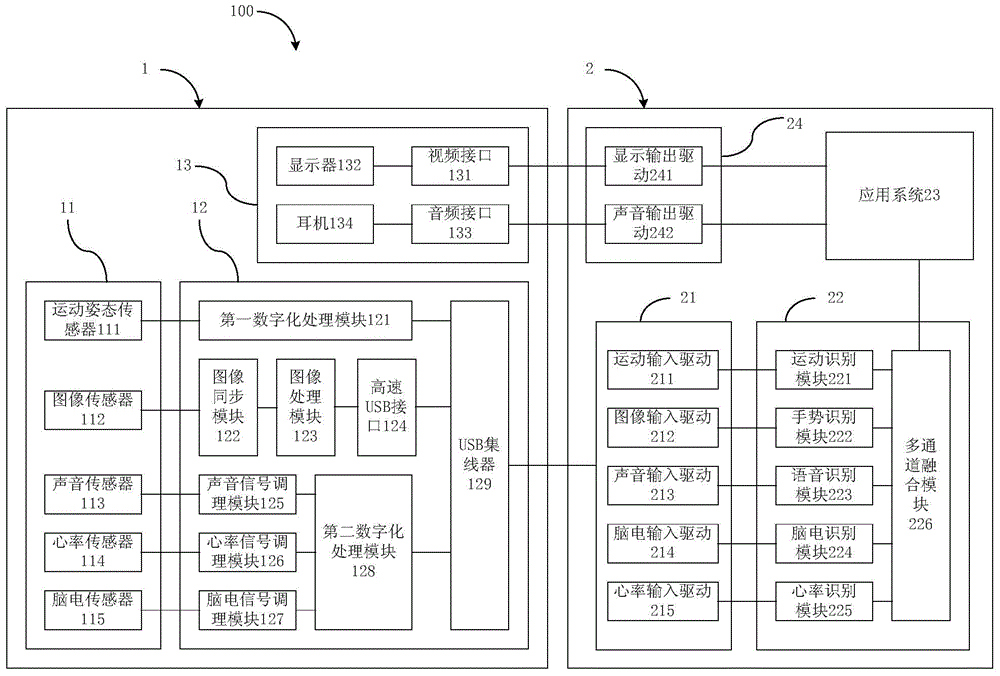

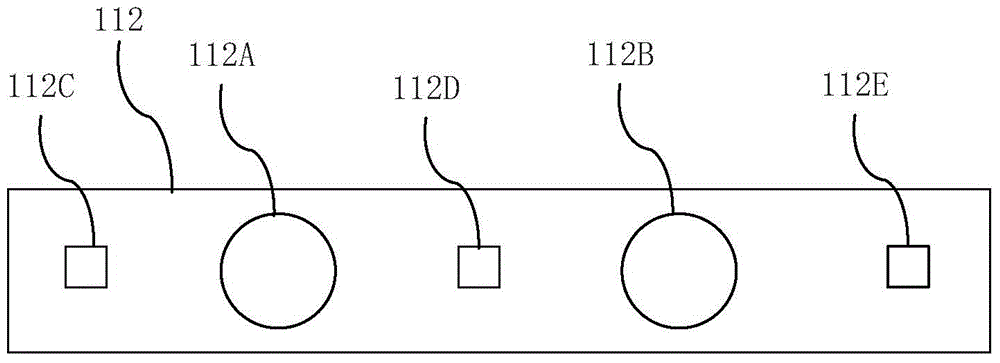

Head-wearable type multi-channel interaction system and multi-channel interaction method

Status: Inactive | Publication: CN104615243A | Tags: Realize health care, Achieving real-time fusion, Input/output for user-computer interaction, Graph reading, Head movements, Interaction systems

The invention discloses a head-wearable multi-channel interaction system and a multi-channel interaction method. The system comprises a head-wearable interaction device and a data processing system. The head-wearable interaction device comprises a sensor assembly, a signal processing device, an output device and a body. The data processing system comprises an input drive module, a multi-channel processing unit, an application system and an output drive module. The system and method fuse multiple natural interaction modes in real time, such as gestures, voice, head movement postures, heart rate and brain electrical activity, and integrate immersive three-dimensional (3D) display and voice, improving the interaction experience of virtual reality and augmented reality and enabling care for the wearer's health based on physiological sensing.

Owner:INLIFE HANDNET CO LTD

Interactive reality augmentation for natural interaction

Status: Active | Publication: US9158375B2 | Tags: Reduce intensity, Input/output for user-computer interaction, Image data processing, Natural interaction, Depth map

Embodiments of the invention provide apparatus and methods for interactive reality augmentation, including a 2-dimensional camera (36) and a 3-dimensional camera (38), associated depth projector and content projector (48), and a processor (40) linked to the 3-dimensional camera and the 2-dimensional camera. A depth map of the scene is produced using an output of the 3-dimensional camera, and coordinated with a 2-dimensional image captured by the 2-dimensional camera to identify a 3-dimensional object in the scene that meets predetermined criteria for projection of images thereon. The content projector projects a content image onto the 3-dimensional object responsively to instructions of the processor, which can be mediated by automatic recognition of user gestures.

Owner:APPLE INC

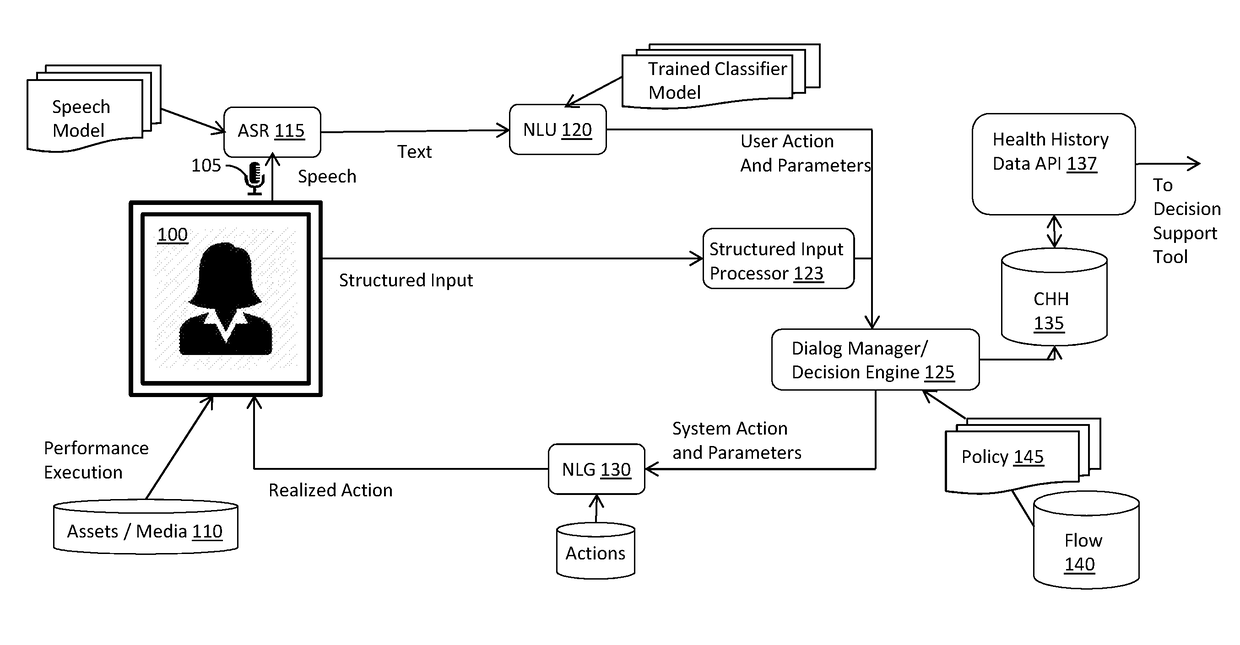

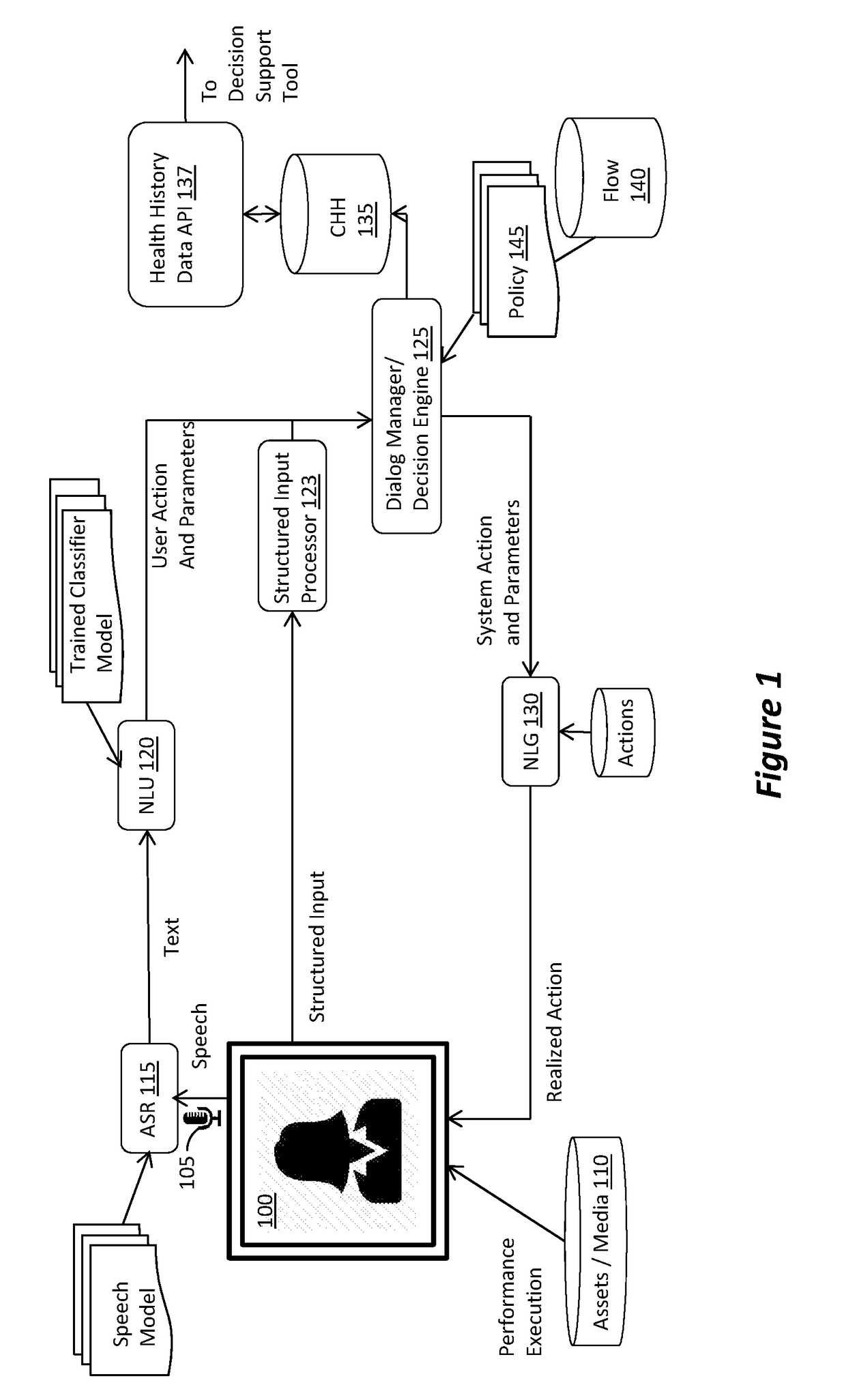

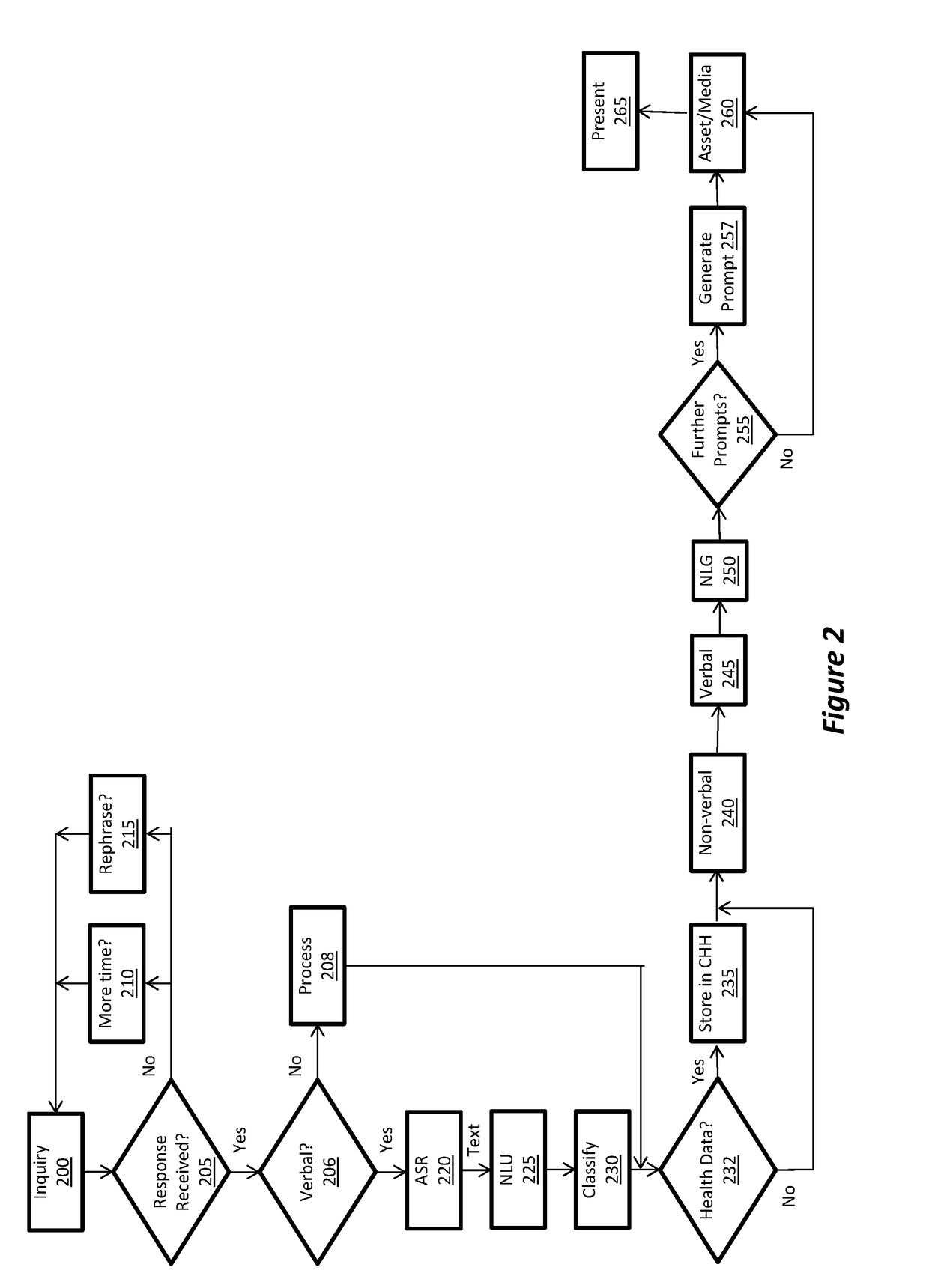

Method and system for patients data collection and analysis

Status: Active | Publication: US20170300648A1 | Tags: Increase genetic variant interpretation, Improve medical diagnosis, Medical communication, Health-index calculation, Animation, Information Harvesting

A conversational and embodied Virtual Assistant (VA) with Decision Support (DS) capabilities that can simulate and improve upon information gathering sessions between clinicians, researchers, and patients. The system incorporates a conversational and embodied VA and a DS and deploys natural interaction enabled by natural language processing, automatic speech recognition, and an animation framework capable of rendering character animation performances through generated verbal and nonverbal behaviors, all supplemented by on-screen prompts.

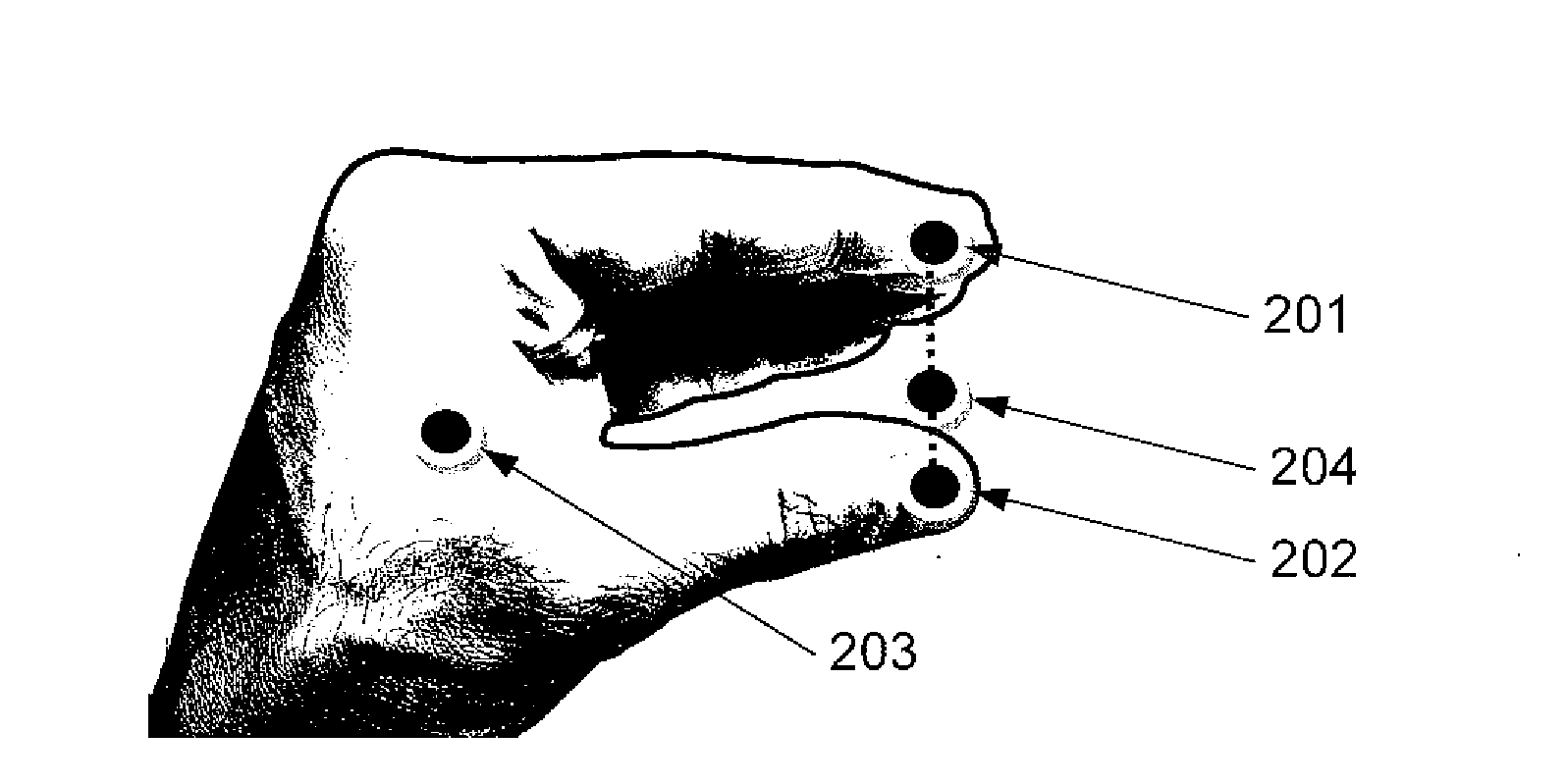

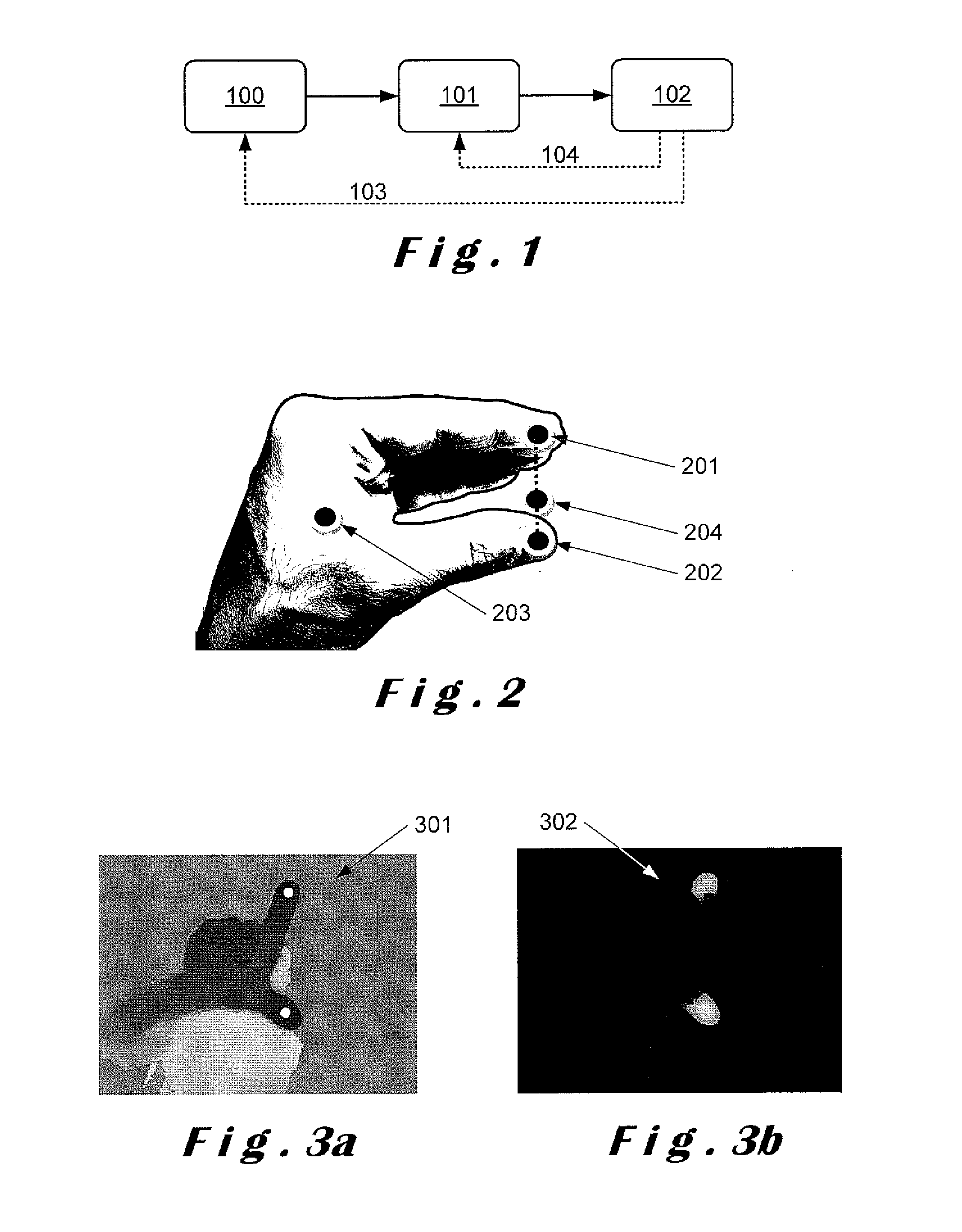

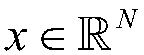

Method and system for human-to-computer gesture based simultaneous interactions using singular points of interest on a hand

Status: Active | Publication: US20170097687A1 | Tags: Robust efficient determination, Input/output for user-computer interaction, Character and pattern recognition, Computer vision, Biological activation

Described herein is a method for enabling human-to-computer three-dimensional hand gesture-based natural interactions from depth images provided by a range-finding imaging system. The method recognizes simultaneous gestures by detecting, tracking and analyzing singular points of interest on a single hand of a user, and provides contextual feedback information to the user. The singular points of interest of the hand include hand tip(s), fingertip(s), the palm centre and the centre of mass of the hand, and are used to define at least one representation of a pointer. The point(s) of interest are tracked over time and analyzed to determine sequential and/or simultaneous “pointing” and “activation” gestures performed by a single hand.

Owner:SONY DEPTHSENSING SOLUTIONS SA NV
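Two of the listed points of interest can be approximated from a segmented 3-D hand point cloud: the centre of mass as the mean point, and a hand tip as the point farthest from it. These heuristics, and the `is_pointing` extension threshold, are assumptions for illustration rather than the patent's detection method.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def centre_of_mass(points: Sequence[Point]) -> Point:
    """Mean position of the hand's 3-D points."""
    if not points:
        raise ValueError("no hand points")
    n = len(points)
    return (
        sum(p[0] for p in points) / n,
        sum(p[1] for p in points) / n,
        sum(p[2] for p in points) / n,
    )


def hand_tip(points: Sequence[Point]) -> Point:
    """Approximate the hand tip as the point farthest from the centre of mass."""
    com = centre_of_mass(points)
    return max(points, key=lambda p: math.dist(p, com))


def is_pointing(points: Sequence[Point], min_extension: float) -> bool:
    """Hypothetical pointing test: the tip extends far enough from the centre of mass."""
    return math.dist(hand_tip(points), centre_of_mass(points)) >= min_extension
```

Tracking these points frame by frame would give the time series from which pointing and activation gestures are then determined.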

Robot perception and understanding method based on man-machine collaboration

Status: Active | Publication: CN107150347A | Tags: Quick understanding, Improve online awareness, Manipulator, Man machine, Pattern perception

The invention provides a robot perception and understanding method based on human-machine collaboration, in which an operator helps a robot perceive and understand its environment. The method comprises three steps: first, natural interaction through audio-visual perception; second, target description and understanding; and third, task teaching and learning. By drawing on human intelligence and using the most natural and efficient human interaction modes for human-machine communication and assistance, the method improves the flexibility, intelligence and adaptability of robot perception and understanding.

Owner:GUANGZHOU LONGEST SCI & TECH

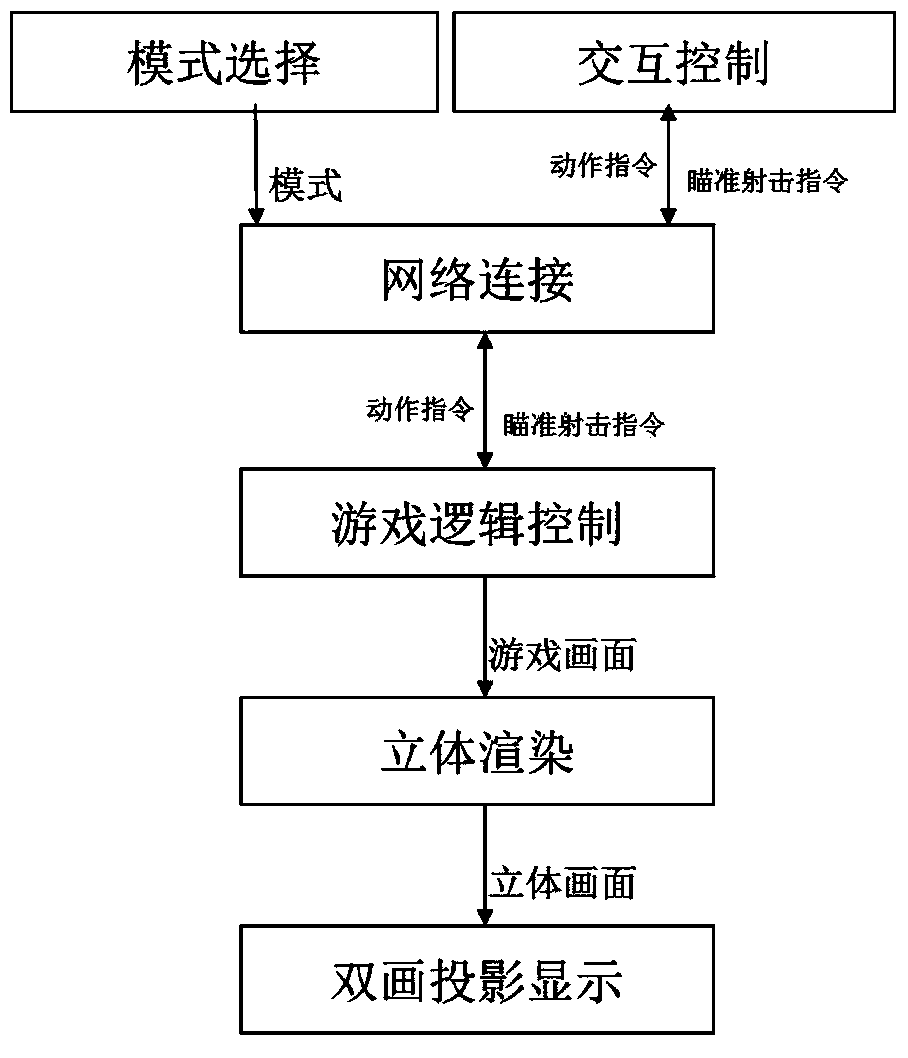

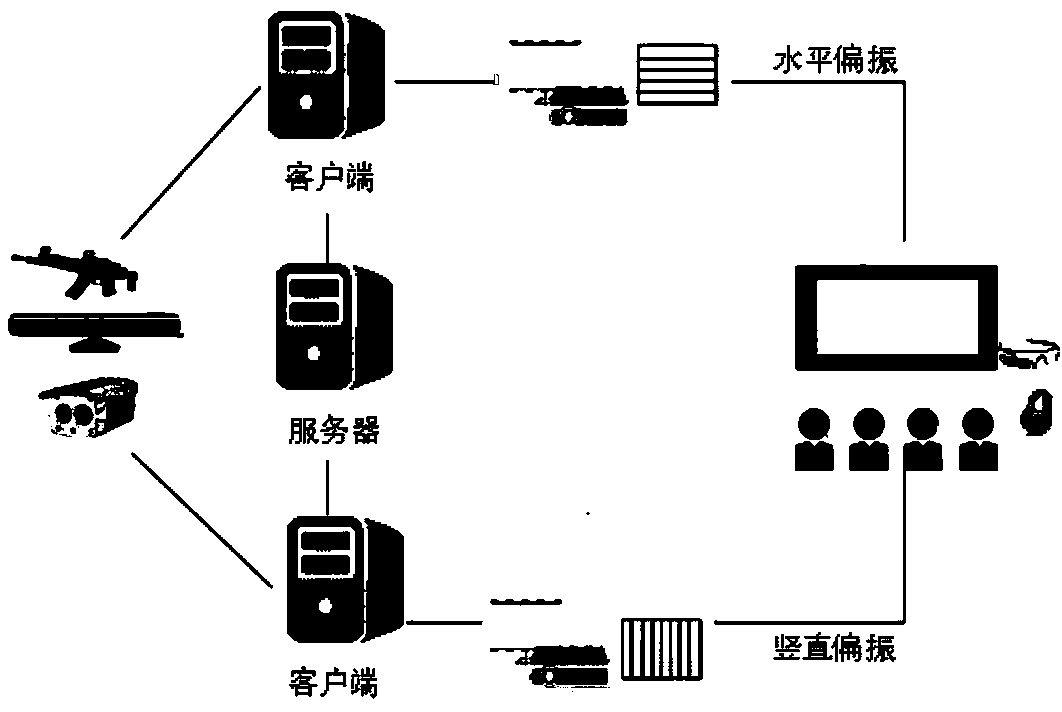

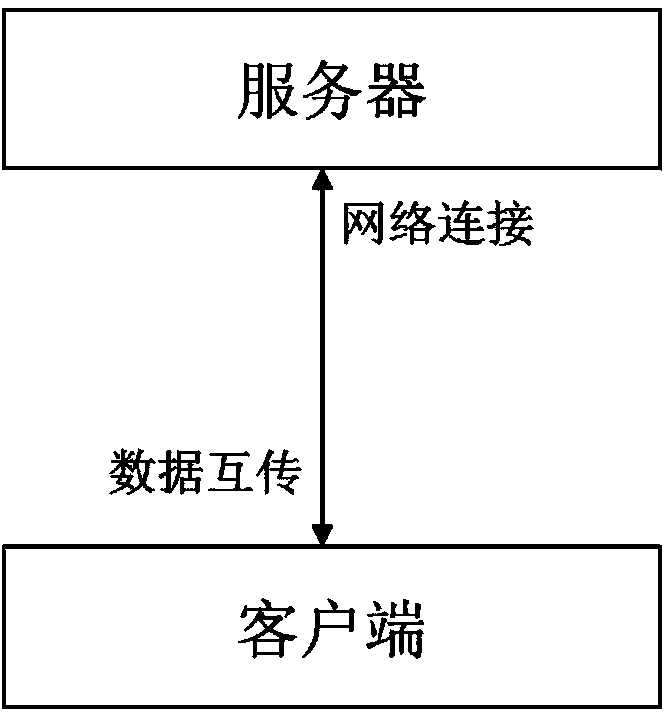

Multi-picture display-based virtual shooting cinema system and method

Status: Inactive | Publication: CN104258566A | Tags: Real game experience, Natural gaming experience, Video games, Stereoscopic systems, Network connection, Projection screen

The invention discloses a virtual shooting cinema system and method based on multi-picture display. The system is simple, convenient, easy to operate and strongly immersive. Through natural interaction modes such as everyday body postures and shooting-game actions, the user plays a shooting game by interacting with the story through body posture and a replica gun, acting as the virtual protagonist who controls how the game develops. No additional learning or training is needed: the user interacts with the story by walking forward, walking backward, shooting and so on. The system renders three-dimensional game pictures in real time and supports two-player cooperative play over a network connection. Using a light-splitting dual-picture principle, one large projection screen can show two independent, complete game pictures to two users, giving them a stronger and more natural immersive experience and reinforcing the entertainment, interest and sense of reality of the game in a themed cinema.

Owner:SHANDONG UNIV

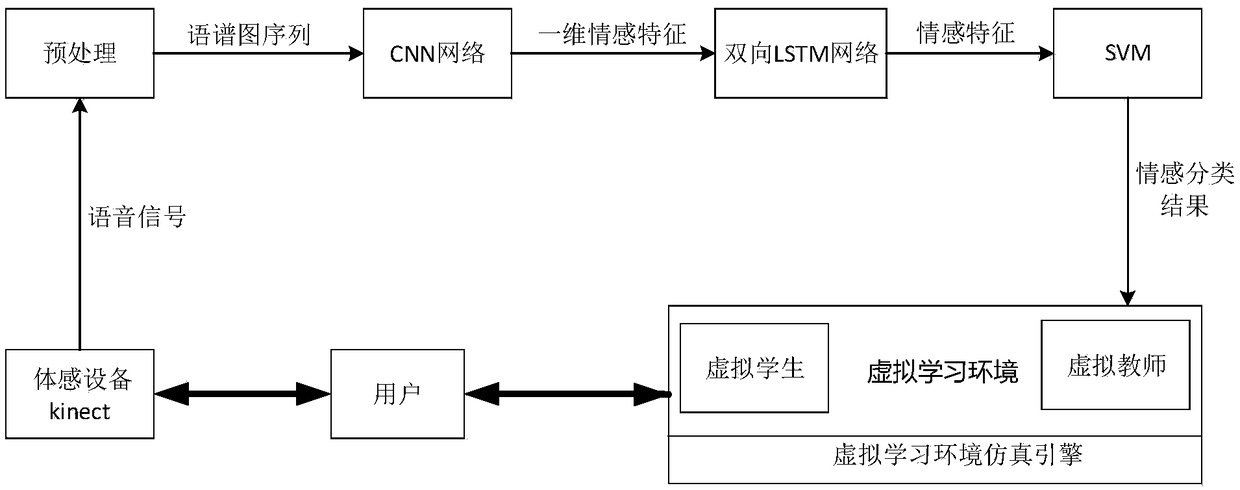

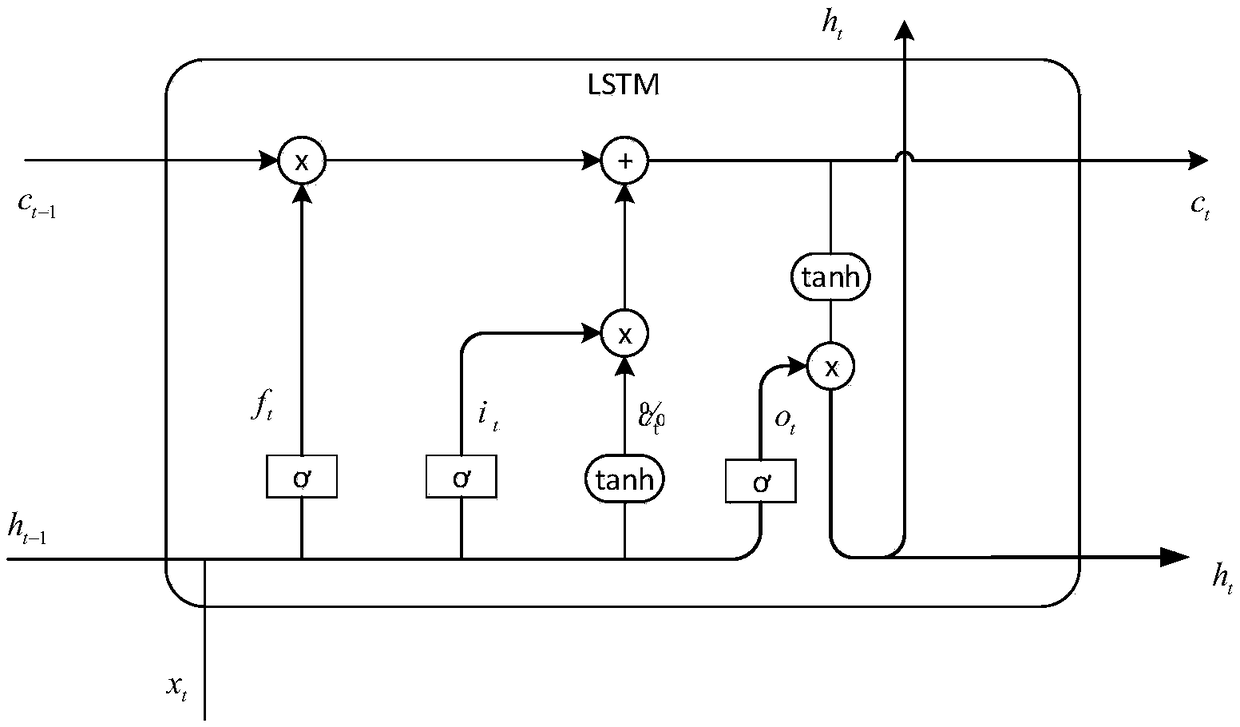

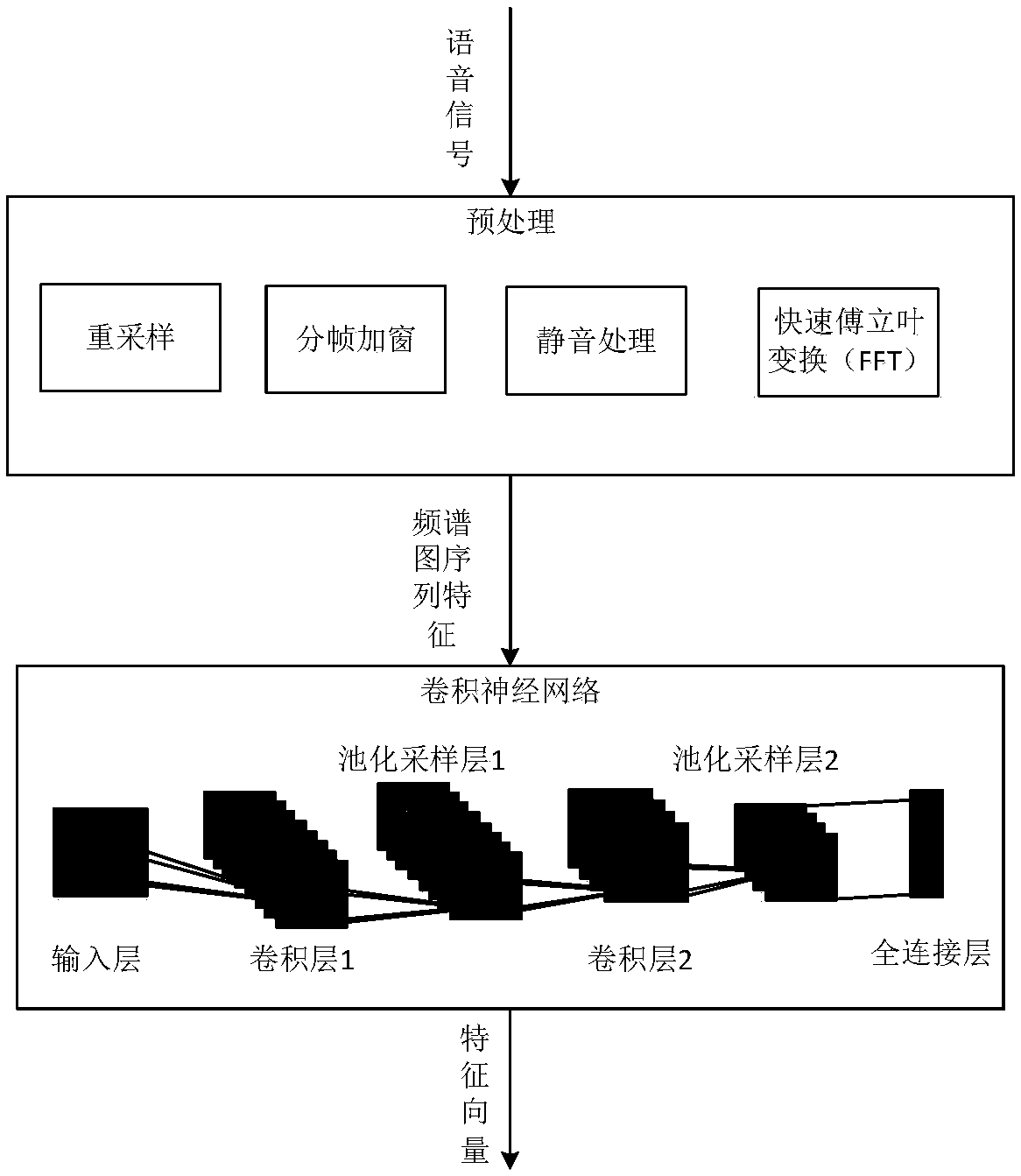

A natural interaction method for a virtual learning environment based on speech emotion recognition

Inactive | CN109146066A | Reduce false positive rate, Enhanced Representational Capabilities, Speech analysis, Neural architectures, Frequency spectrum, Neural network

The invention relates to a natural interaction method for a virtual learning environment based on speech emotion recognition, in the field of deep learning. The method comprises the following steps: 1, collecting the speech signals of students (users) through a Kinect, then resampling, framing and windowing, and silence removal to obtain short-time single-frame signals; 2, applying a fast Fourier transform to each frame to obtain frequency-domain data, computing its power spectrum, and applying a Mel filter bank to obtain a Mel spectrogram; 3, feeding the Mel spectrogram features into a convolutional neural network for convolution and pooling, and passing the matrix vectors of the last downsampling layer to a fully connected layer to form an output feature vector; 4, compressing the output features and feeding them into a bidirectional long short-term memory (BiLSTM) network; 5, feeding the resulting features into a support vector machine, which outputs a classification result; 6, feeding the classification result back to the virtual learning system for interaction in the virtual learning environment. The invention prompts learners to adjust their learning state and improves the practicality of the virtual learning environment.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
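Steps 1 and 2 of the speech emotion recognition method above (framing, windowing, FFT, power spectrum, Mel filter bank) follow the standard log-Mel feature pipeline. The following is a minimal, dependency-free Python sketch of that pipeline, not the patent's implementation: the frame length, hop size, FFT size, number of Mel filters, the Hamming window, the `2595 * log10(1 + f / 700)` Mel formula and the energy-threshold silence check are all assumptions, resampling is omitted, and a naive DFT stands in for the FFT to keep it self-contained.

```python
import cmath
import math


def frame_signal(x, frame_len, hop):
    """Split x into overlapping frames of frame_len samples, hop samples apart."""
    return [x[s:s + frame_len] for s in range(0, len(x) - frame_len + 1, hop)]


def hamming(n):
    """Hamming window of length n (n > 1)."""
    return [0.54 - 0.46 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def power_spectrum(frame, n_fft):
    """|X[k]|^2 / n_fft for k = 0..n_fft//2, via a naive DFT (an FFT in practice)."""
    padded = list(frame) + [0.0] * (n_fft - len(frame))
    spec = []
    for k in range(n_fft // 2 + 1):
        acc = sum(v * cmath.exp(-2j * math.pi * k * n / n_fft)
                  for n, v in enumerate(padded))
        spec.append(abs(acc) ** 2 / n_fft)
    return spec


def hz_to_mel(f):
    return 2595.0 * math.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10 ** (m / 2595.0) - 1.0)


def mel_filterbank(n_filters, n_fft, sr):
    """Triangular filters spaced evenly on the Mel scale, one weight per FFT bin."""
    low, high = hz_to_mel(0.0), hz_to_mel(sr / 2)
    mels = [low + (high - low) * i / (n_filters + 1) for i in range(n_filters + 2)]
    bins = [int(math.floor((n_fft + 1) * mel_to_hz(m) / sr)) for m in mels]
    bank = []
    for m in range(1, n_filters + 1):
        left, centre, right = bins[m - 1], bins[m], bins[m + 1]
        row = [0.0] * (n_fft // 2 + 1)
        for k in range(left, centre):          # rising edge
            row[k] = (k - left) / max(centre - left, 1)
        for k in range(centre, right):         # falling edge, peak 1.0 at centre
            row[k] = (right - k) / max(right - centre, 1)
        bank.append(row)
    return bank


def log_mel_spectrogram(x, sr, frame_len=200, hop=80, n_fft=256,
                        n_filters=20, silence_threshold=1e-6):
    """Frames -> Hamming window -> power spectrum -> Mel energies -> log.
    Frames with mean-square energy below silence_threshold are dropped
    (an assumed stand-in for the patent's silence-removal step)."""
    window = hamming(frame_len)
    bank = mel_filterbank(n_filters, n_fft, sr)
    rows = []
    for frame in frame_signal(x, frame_len, hop):
        if sum(v * v for v in frame) / frame_len < silence_threshold:
            continue  # treat low-energy frames as silence
        p = power_spectrum([v * w for v, w in zip(frame, window)], n_fft)
        energies = [sum(f * pk for f, pk in zip(row, p)) for row in bank]
        rows.append([math.log(e + 1e-10) for e in energies])
    return rows
```

For a 0.1 s, 8 kHz test tone (800 samples), the defaults yield 8 frames of 20 log-Mel energies each; in the patent's pipeline a matrix like this would then be passed to the CNN in step 3.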

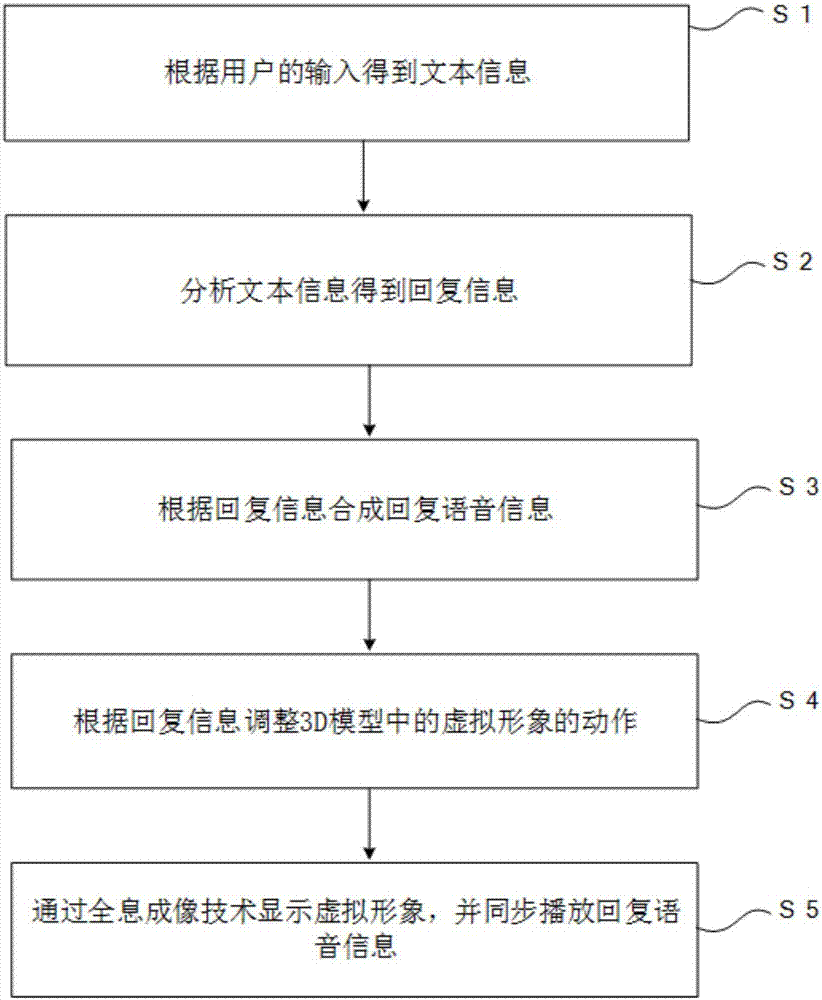

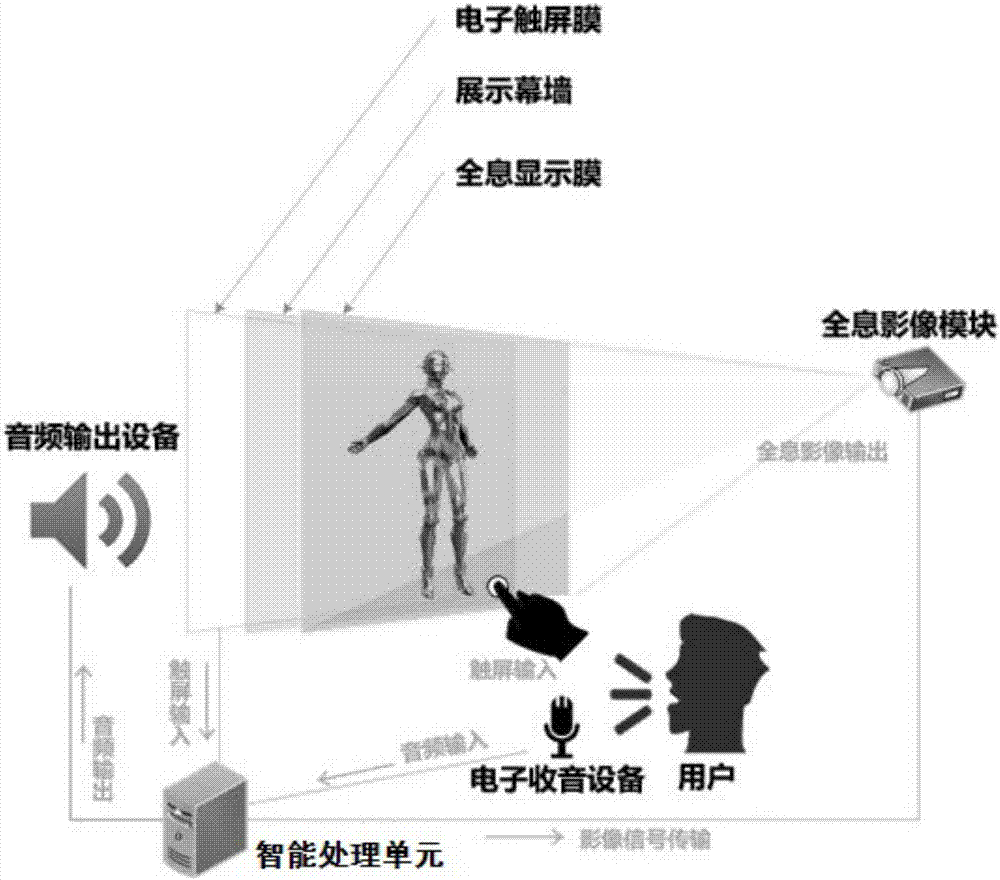

Artificial intelligence interaction method and artificial intelligence interaction system

Inactive | CN107247750A | Interactive nature, Rich interactive information, Speech recognition, Transmission, Interaction systems, Artificial general intelligence

The invention relates to the technical field of artificial intelligence, specifically to an artificial intelligence interaction method and system. The method includes: acquiring text information from user input; analyzing the text information to obtain reply information; synthesizing reply voice information from the reply information; adjusting the actions of a virtual character in a 3D (three-dimensional) model according to the reply information; and displaying the virtual character using holographic imaging while synchronously playing the reply voice information. In an embodiment, the method and system provide users with feedback in multiple modalities, such as vision and hearing, which enables natural interaction with rich interaction information.

Owner:深圳千尘计算机技术有限公司 (Shenzhen Qianchen Computer Technology Co., Ltd.)
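The five steps of the artificial intelligence interaction method above can be outlined as one pipeline in which the synthesized voice and the avatar's actions are both derived from the same reply, which is what allows the hologram and the audio to be presented together. This is a hypothetical Python sketch: `interact`, `Turn`, and the four injected callables (speech-to-text, dialogue analysis, speech synthesis, action selection) are illustrative placeholders, not components named in the patent.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Turn:
    """Everything the display side needs for one reply (hypothetical structure)."""
    reply: str
    audio: bytes
    actions: List[str]


def interact(user_input: str,
             to_text: Callable[[str], str],
             analyze: Callable[[str], str],
             synthesize: Callable[[str], bytes],
             choose_actions: Callable[[str], List[str]]) -> Turn:
    text = to_text(user_input)        # 1. acquire text from the user's input
    reply = analyze(text)             # 2. analyze the text to obtain reply information
    audio = synthesize(reply)         # 3. synthesize reply voice from the reply
    actions = choose_actions(reply)   # 4. pick 3D avatar actions from the same reply
    # 5. the caller shows the holographic avatar performing `actions`
    #    while playing `audio`, so both stay tied to one reply
    return Turn(reply=reply, audio=audio, actions=actions)


# Toy stubs standing in for real recognition, dialogue and TTS engines.
turn = interact(
    "  hello  ",
    to_text=str.strip,
    analyze=lambda t: "Hi there!" if t == "hello" else "Sorry?",
    synthesize=lambda r: r.encode("utf-8"),
    choose_actions=lambda r: ["wave"] if r.endswith("!") else ["shrug"],
)
```

Injecting the stages as callables keeps the orchestration independent of any particular speech or rendering engine; with the toy stubs, `turn.actions` is `["wave"]`.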

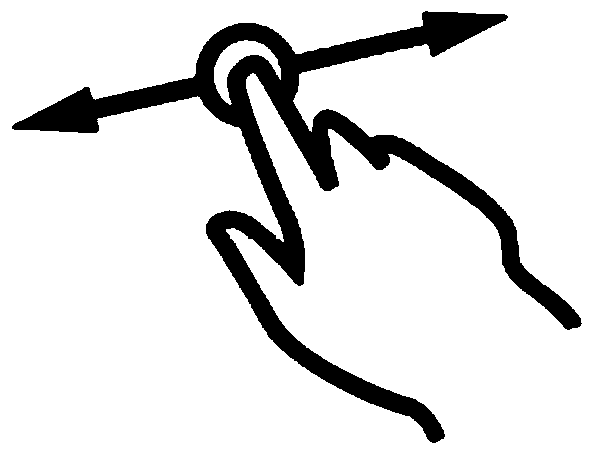

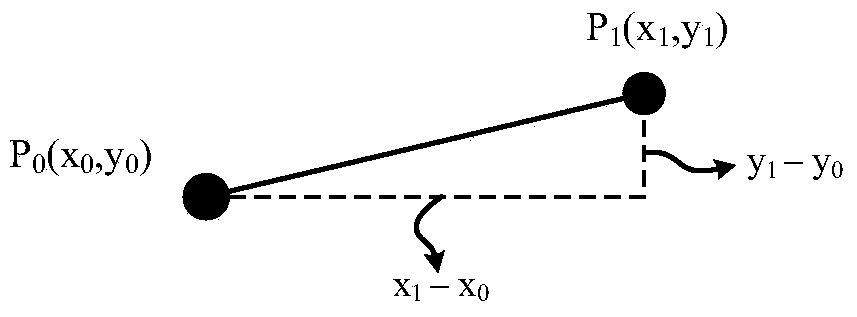

Three-dimensional model gesture touch browsing interaction method based on mobile terminal

Inactive | CN103942053A | Improve portability, Implement natural interaction patterns, Specific program execution arrangements, Input/output processes for data processing, Graphics, Computer terminal

The invention provides a three-dimensional model gesture touch browsing interaction method based on a mobile terminal. The method comprises fourteen steps. The OpenGL ES (OpenGL for Embedded Systems) 3D graphics API is used to render a lightweight three-dimensional model on the mobile terminal (the lightweighting and rendering methods themselves are not covered by the invention), and a gesture recognition method provides a novel human-computer interaction mode. This overcomes the shortcomings of traditional desktop-based 3D model browsing, improves portability, and makes the method applicable to more scenarios. It achieves natural interaction between the user's fingers and the 3D model that is more flexible, more intuitive and more efficient than interaction through devices such as a mouse and keyboard.

Owner:BEIHANG UNIV
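The abstract above does not list its fourteen steps, but one common way to map touch gestures onto a 3D model view is: a one-finger drag orbits the model, a two-finger pinch scales it, and a two-finger twist rolls it. The Python sketch below shows that mapping under those assumptions; the function name `gesture_to_transform`, the `deg_per_px` sensitivity and the returned keys are hypothetical, and a renderer would apply the resulting values to its OpenGL ES model-view matrix.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def gesture_to_transform(prev: List[Point], curr: List[Point],
                         deg_per_px: float = 0.5) -> Dict[str, float]:
    """Map one touch update (previous vs current finger positions) to a view change.
    One finger: drag -> yaw/pitch in degrees. Two fingers: pinch -> scale factor,
    twist -> roll in degrees. Any other finger count yields no change."""
    if len(prev) == 1 and len(curr) == 1:
        dx = curr[0][0] - prev[0][0]
        dy = curr[0][1] - prev[0][1]   # screen y grows downward
        return {"yaw": dx * deg_per_px, "pitch": dy * deg_per_px}
    if len(prev) == 2 and len(curr) == 2:
        (a0, b0), (a1, b1) = prev, curr
        d0, d1 = math.dist(a0, b0), math.dist(a1, b1)
        ang0 = math.atan2(b0[1] - a0[1], b0[0] - a0[0])
        ang1 = math.atan2(b1[1] - a1[1], b1[0] - a1[0])
        roll = math.degrees(ang1 - ang0)
        roll = (roll + 180.0) % 360.0 - 180.0   # wrap into [-180, 180)
        scale = d1 / d0 if d0 > 0 else 1.0      # ratio of finger spacing
        return {"scale": scale, "roll": roll}
    return {}


# Pinch-out: finger spacing doubles, no twist.
print(gesture_to_transform([(0, 0), (10, 0)], [(0, 0), (20, 0)]))
```

The pinch-out example prints `{'scale': 2.0, 'roll': 0.0}`. Working with incremental updates keeps each gesture stateless; accumulating them into a model-view matrix is left to the renderer.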

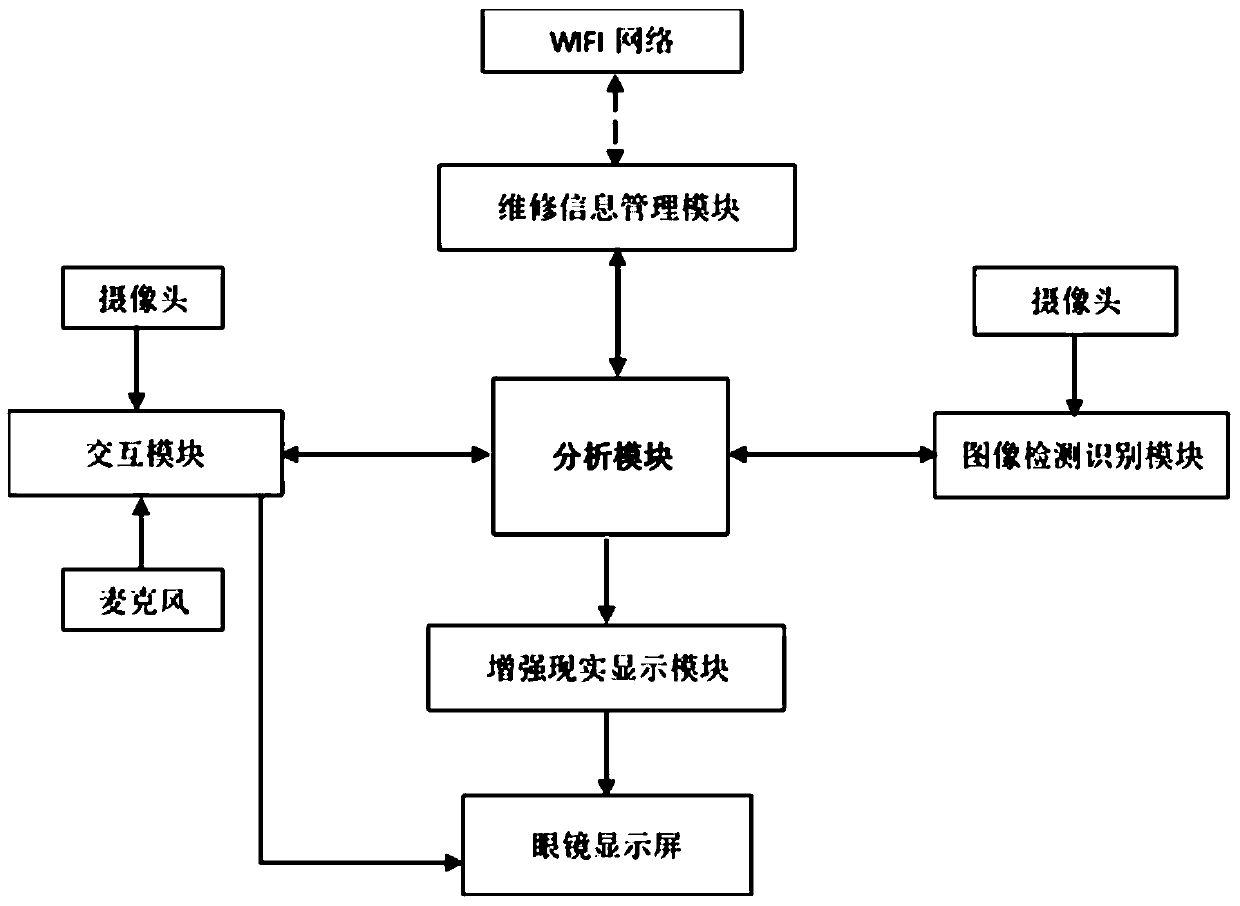

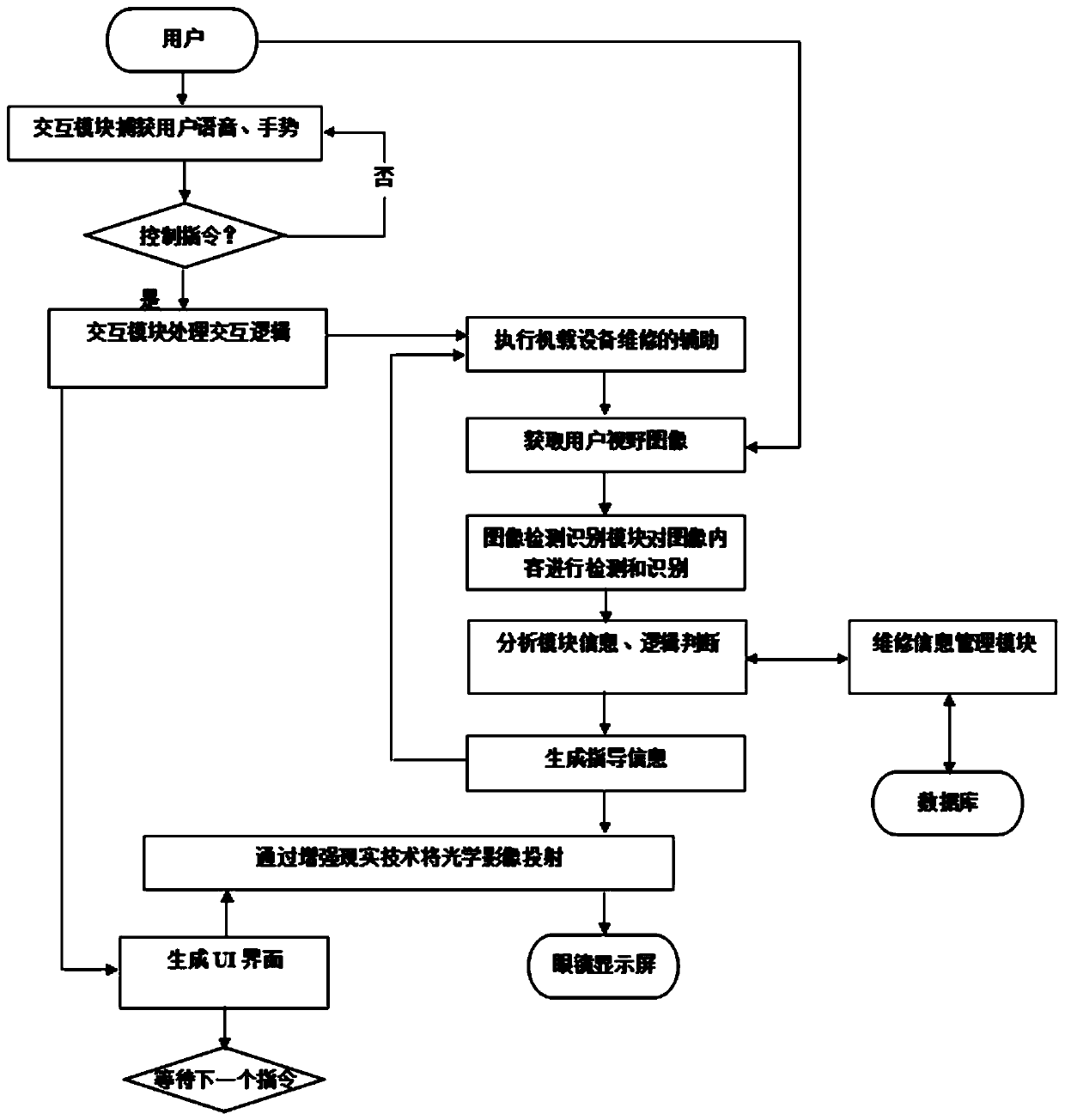

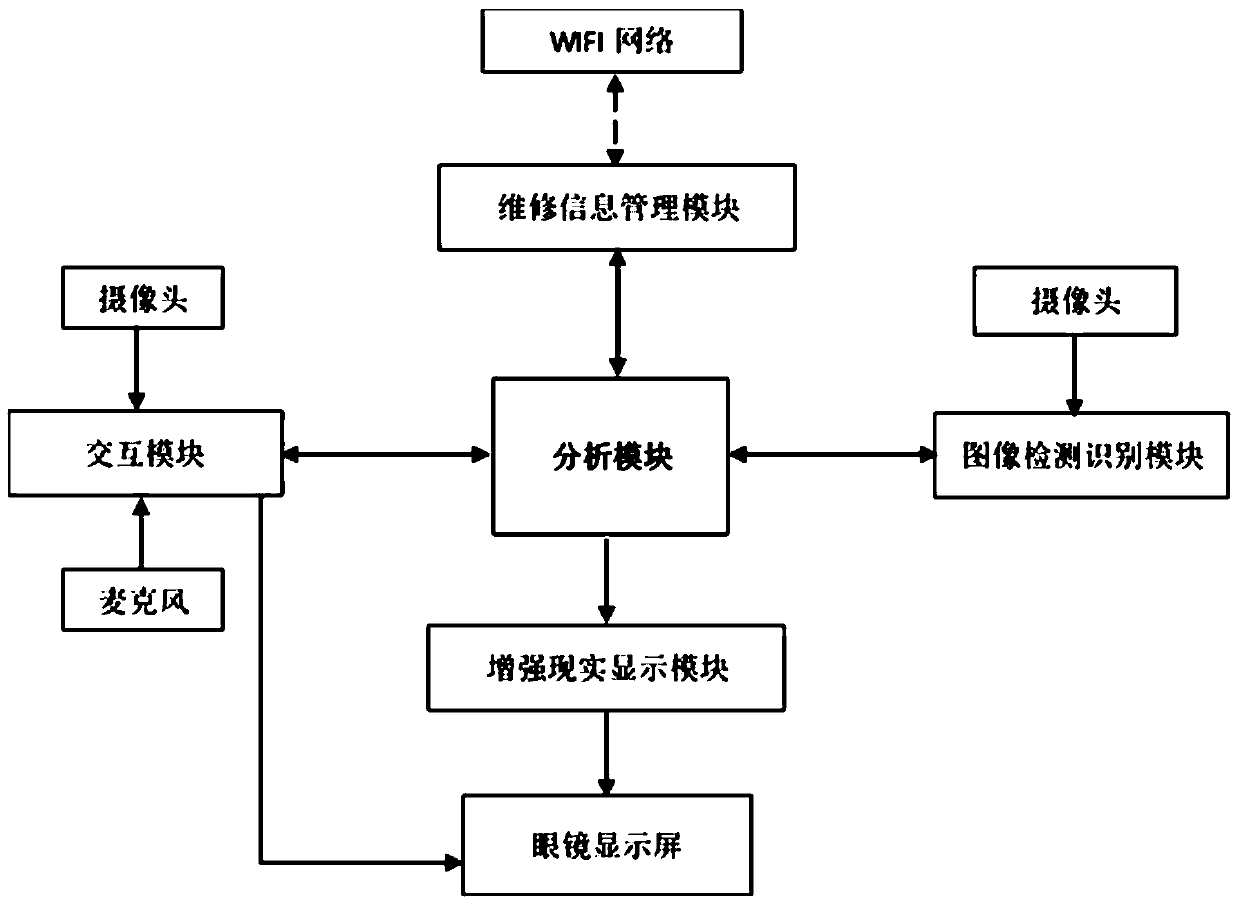

An intelligent maintenance auxiliary system and method for airborne equipment

Inactive | CN109919331A | Reduce maintenance difficulty, Reduce human error, Input/output for user-computer interaction, Image analysis, 3D image, Database server

The invention discloses an intelligent maintenance auxiliary system for airborne equipment. The system comprises an interaction module, an image detection and recognition module, a maintenance information management module, an analysis module and an augmented reality display module. The interaction module obtains user input, projects the UI and handles interaction logic; the image detection and recognition module detects characters and specific patterns in images and recognizes their content; the analysis module performs logical judgment and generates guidance text or 3D images; the maintenance information management module sends search requests to the cloud database server; and the augmented reality display module locates the target position in the real environment within the user's field of view and overlays the guidance information at that position using augmented reality. By combining augmented reality with intelligent maintenance assistance, the system helps and guides maintenance personnel to maintain airborne equipment through natural interaction, improving the operability and learnability of maintenance work.

Owner:SOUTH CHINA UNIV OF TECH
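For the augmented reality display module above, overlaying guidance at a target position requires mapping the target's 3D location into the user's view. A minimal sketch, assuming a pinhole camera model with known intrinsics (`fx`, `fy`, `cx`, `cy`) and a target point already expressed in camera coordinates, is shown below; the abstract does not specify its positioning method, and the function name and parameters are hypothetical.

```python
from typing import Optional, Tuple


def project_to_view(point_cam: Tuple[float, float, float],
                    fx: float, fy: float, cx: float, cy: float,
                    width: int, height: int) -> Optional[Tuple[float, float]]:
    """Project a 3D point in camera coordinates (x right, y down, z forward)
    to pixel coordinates with a pinhole model. Returns None when the point is
    behind the camera or lands outside the image, i.e. no overlay is drawn."""
    x, y, z = point_cam
    if z <= 0:
        return None  # behind (or on) the camera plane
    u = fx * x / z + cx
    v = fy * y / z + cy
    if 0 <= u < width and 0 <= v < height:
        return (u, v)
    return None
```

For example, `project_to_view((0.25, 0.125, 1.0), 800, 800, 320, 240, 640, 480)` returns `(520.0, 340.0)`, the pixel at which a guidance label would be anchored; a real headset would also need the device pose to bring world-space targets into camera coordinates.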

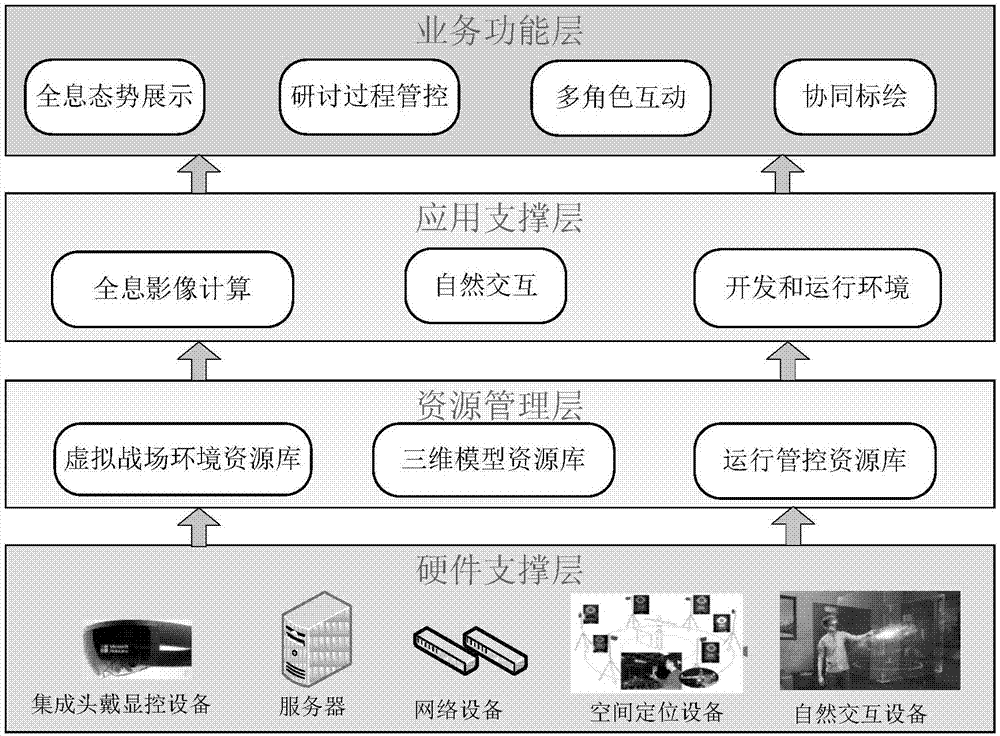

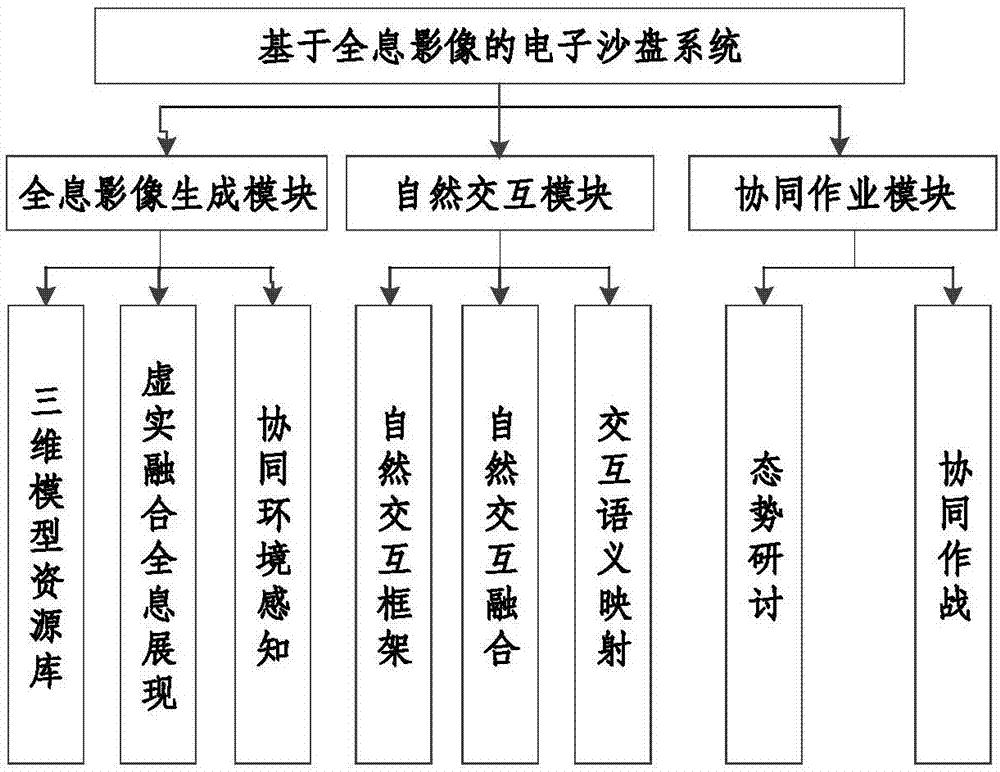

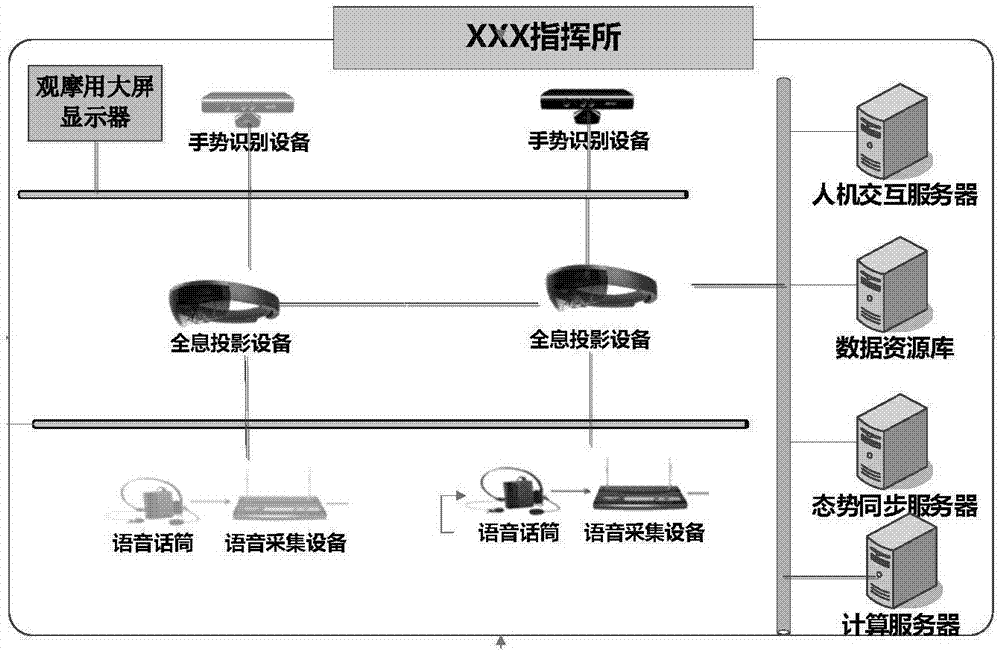

HoloLens-based command post cooperative work electronic sand table system

Active | CN107479705A | Realize holographic three-dimensional realistic display, Realize coordinated command, Input/output for user-computer interaction, Educational models, Systems management, Data access

The invention discloses a HoloLens-based electronic sand table system for cooperative work in a command post. The system comprises a hardware support layer, a resource management layer, an application support layer and a service function layer. The hardware support layer collects the commander's interaction information through the system hardware and passes it to the resource management layer; the resource management layer manages the system's resource data and provides system management, resource control and data access interfaces to the application support layer; the application support layer performs holographic image computation and provides the application development and runtime environment for the service function layer; and the service function layer implements holographic situation display, multi-role combatant interaction, natural interaction, cooperative plotting, discussion process control and holographic image generation. The system supports holographic display of the entire electronic sand table scene and of individual military elements; commanders perform situation plotting in the cooperative holographic sand table environment, and role interaction among commanders within the command post is supported. Environment and situation information is collected to support role interaction with combatants.

Owner:THE 28TH RES INST OF CHINA ELECTRONICS TECH GROUP CORP

© 2025 PatSnap. All rights reserved.