912 results about "Interaction function" patented technology

Interaction() function computes a factor which represents the interaction of the given factors. The result of interaction is always unordered.
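As a rough illustration of what "a factor which represents the interaction of the given factors" means, here is a minimal Python sketch. It is not the implementation of any particular library; the helper name `interaction`, the `sep` parameter and the dot separator are assumptions made for this example.

```python
from itertools import product


def interaction(*factors, sep="."):
    """Combine parallel factor columns into one factor (hypothetical helper)."""
    lengths = {len(f) for f in factors}
    if len(lengths) != 1:
        raise ValueError("all factors must have the same length")
    # One combined label per observation.
    values = [sep.join(str(v) for v in row) for row in zip(*factors)]
    # Candidate levels: every combination of the per-factor levels.
    per_factor_levels = [sorted(set(f)) for f in factors]
    levels = [sep.join(str(v) for v in combo)
              for combo in product(*per_factor_levels)]
    return values, levels


values, levels = interaction(["a", "b", "a"], ["x", "x", "y"])
```

The combined levels are plain labels with no ordering attached, which matches the statement that the result is unordered.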

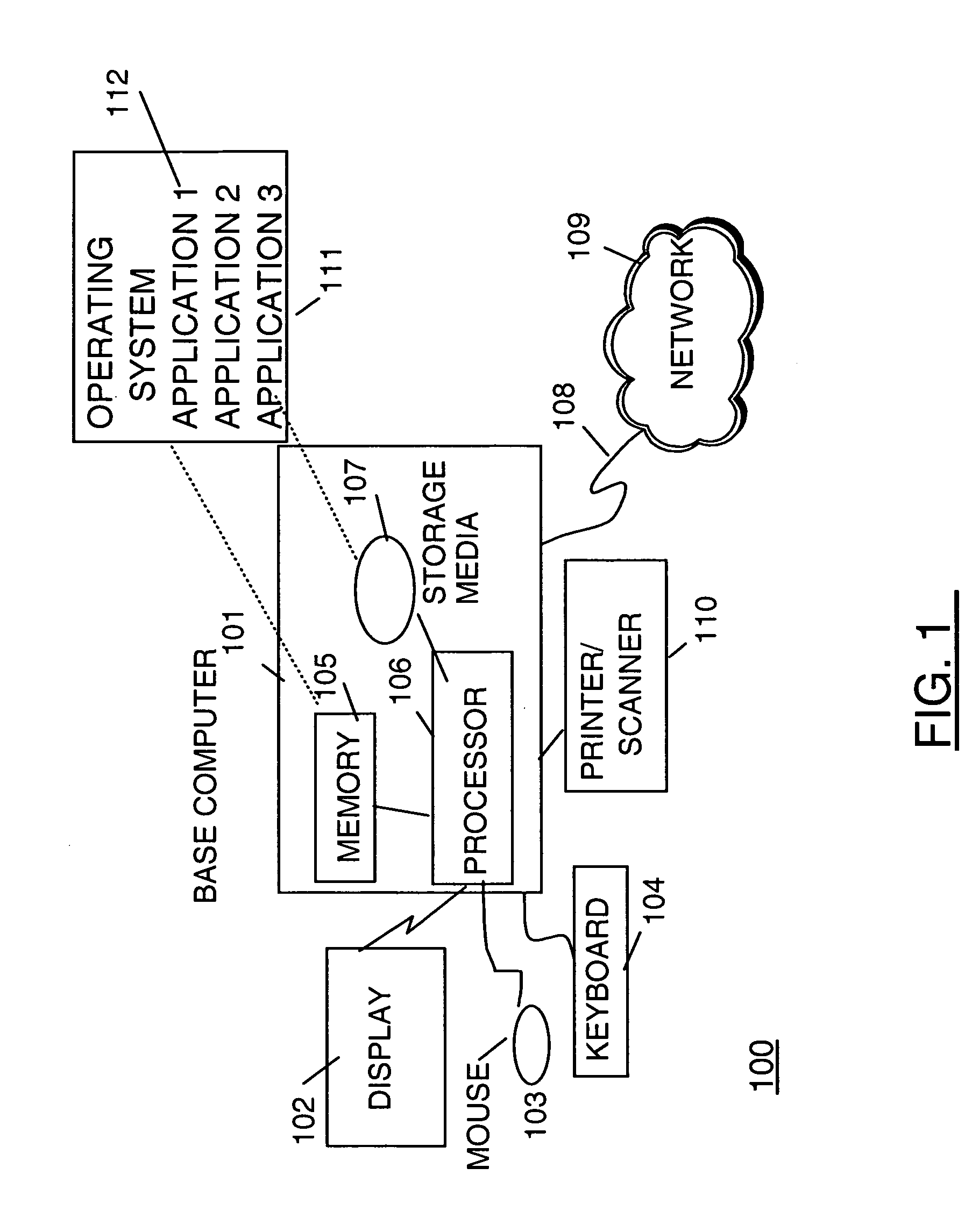

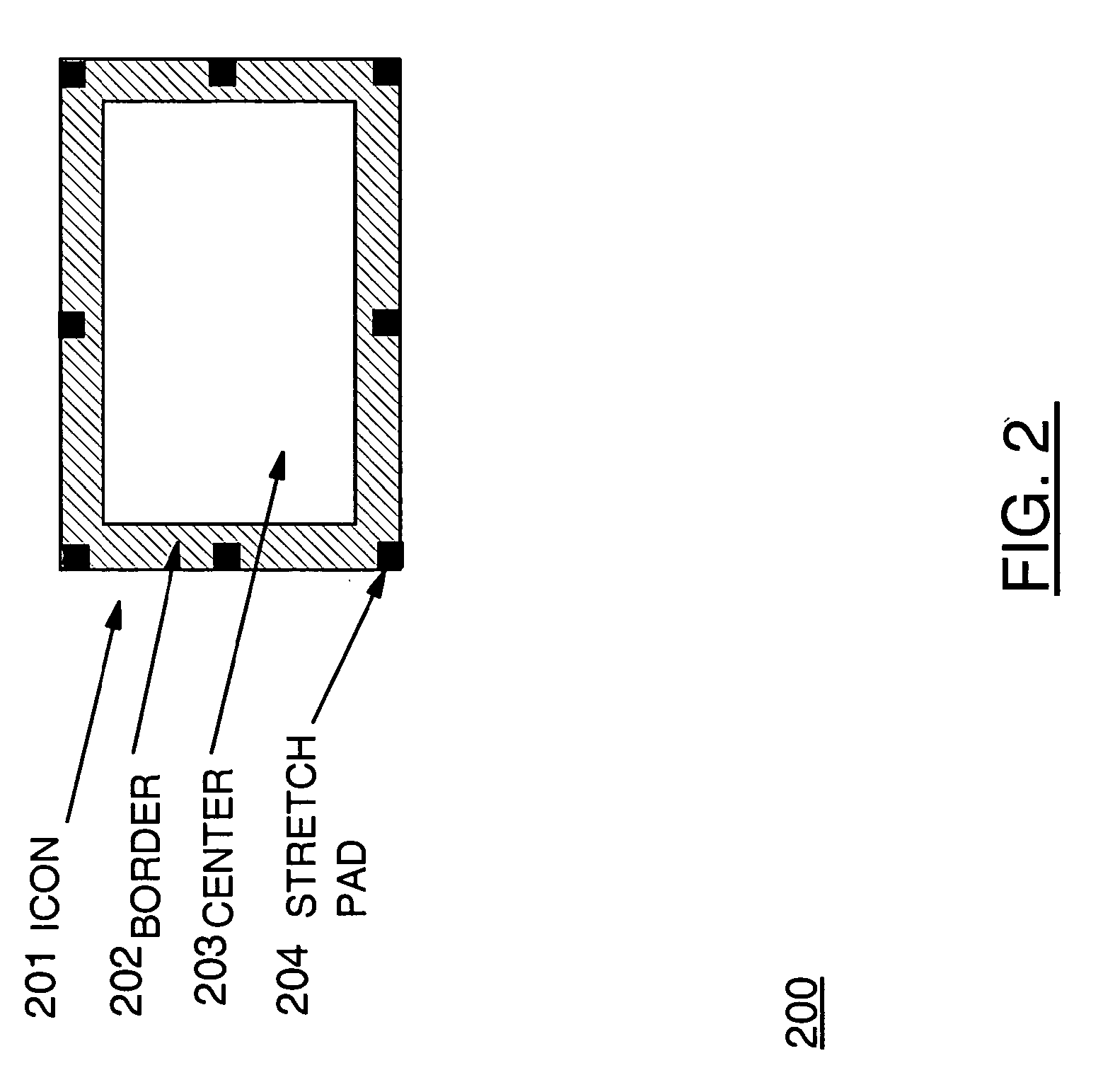

Front-end device independence for natural interaction platform

Active | US7302394B1 | Improve productivity | Shortening user learning curve | Speech recognition | Special data processing applications | User input | Natural interaction

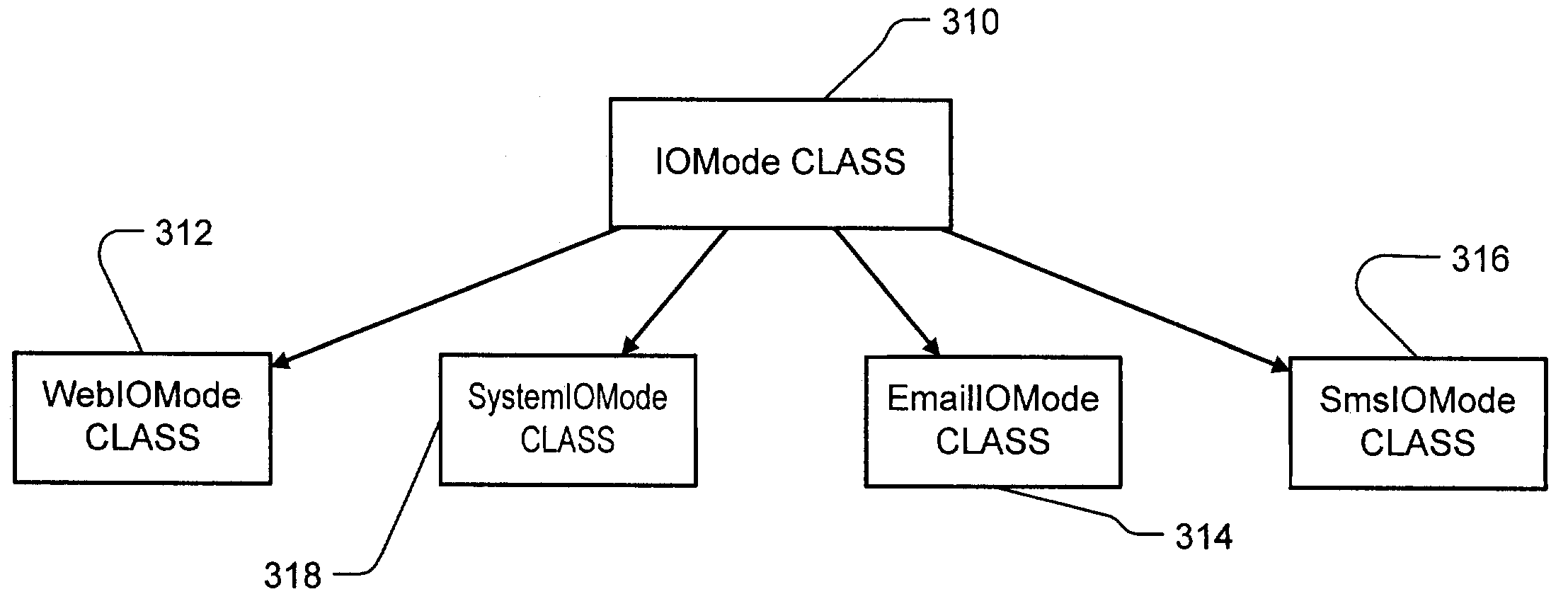

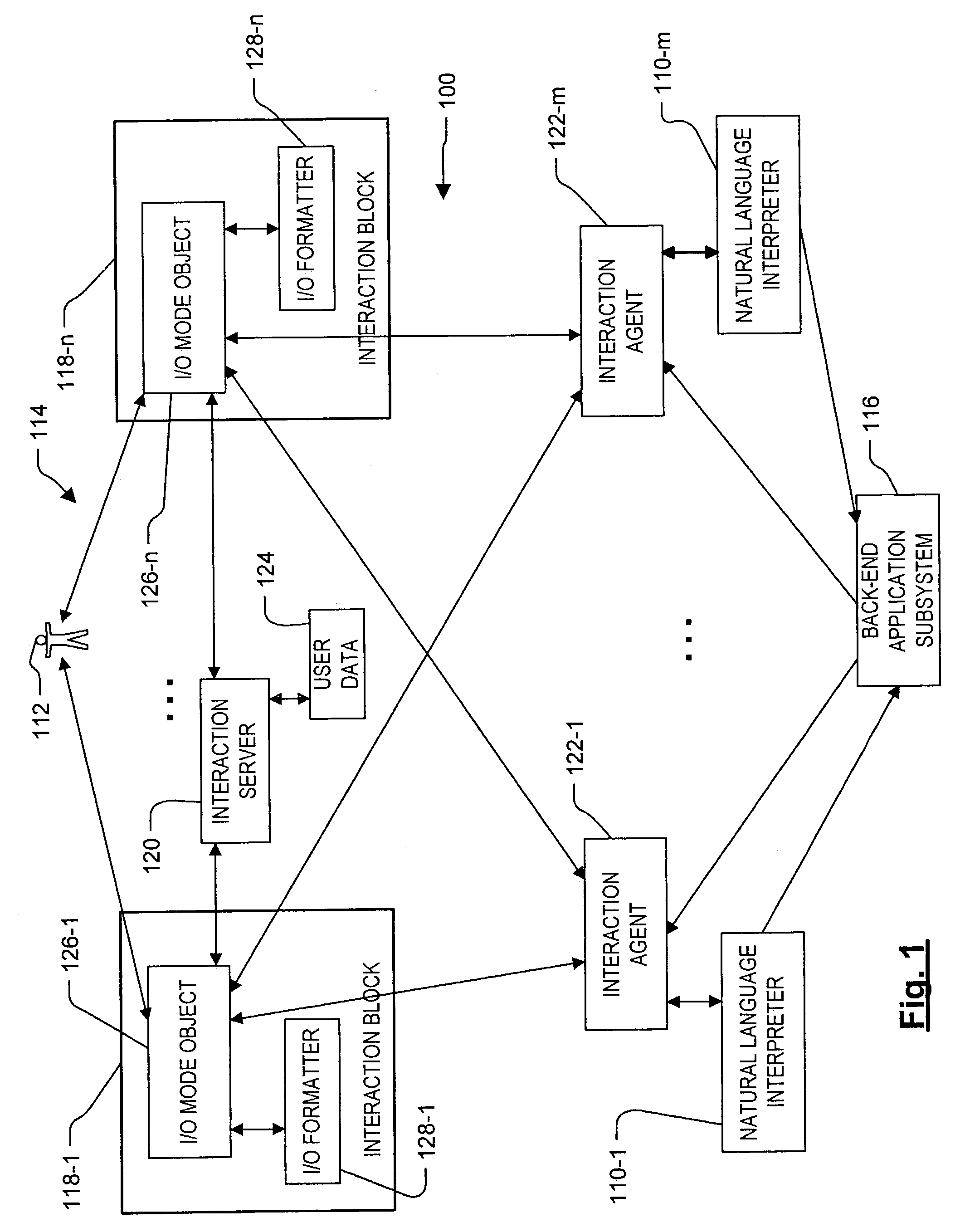

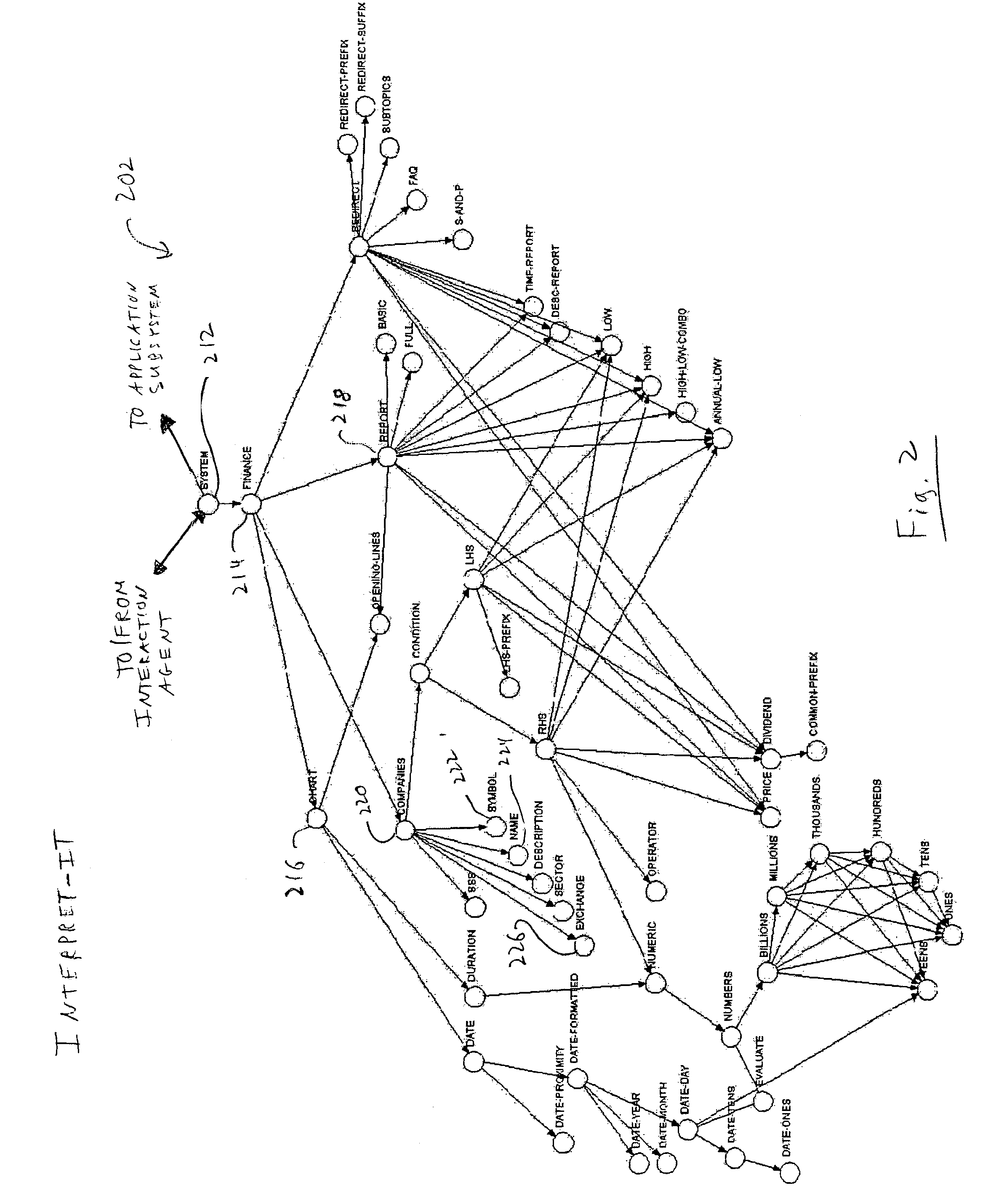

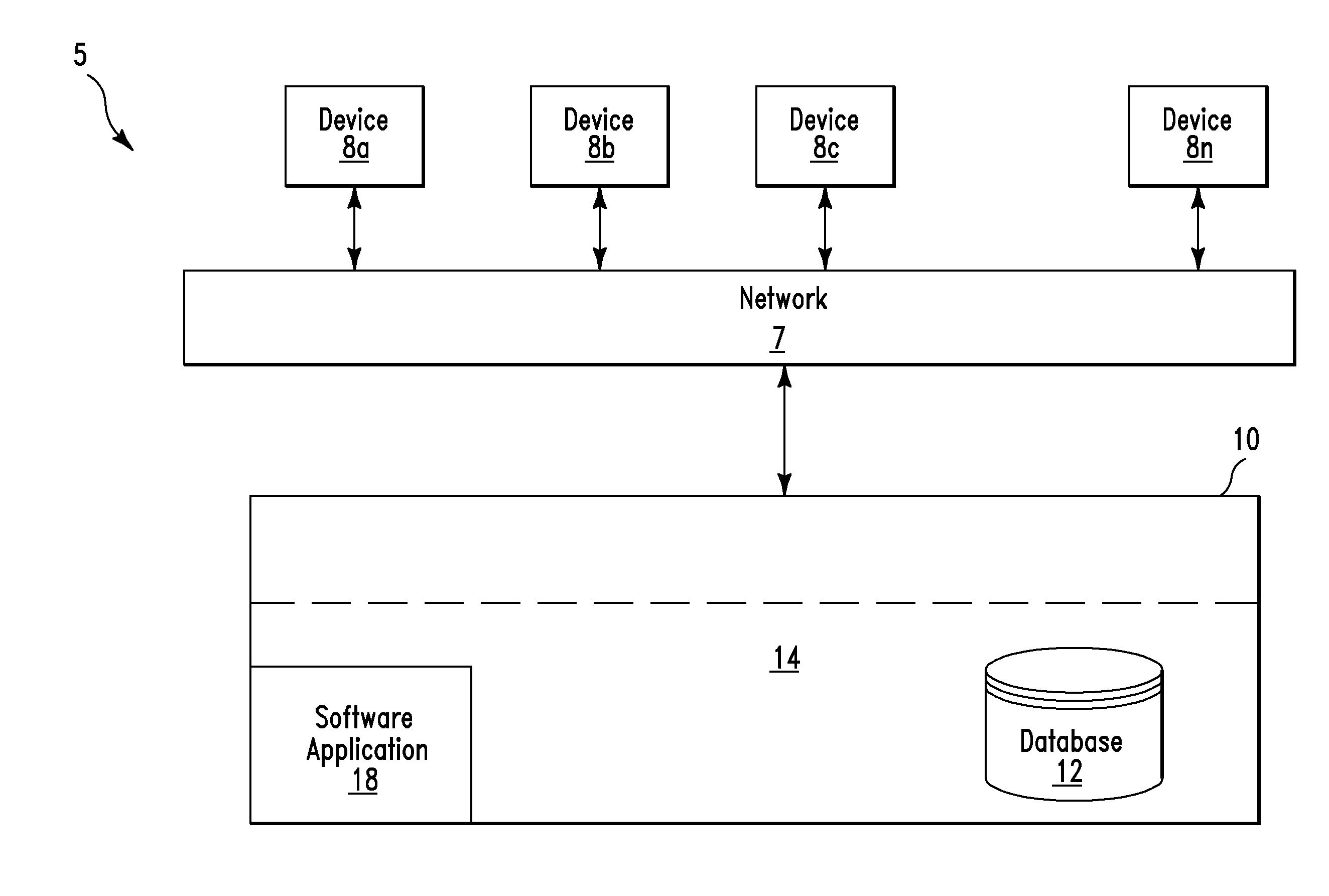

Roughly described, a natural language interpretation system that provides commands to a back-end application in response to user input is modified to separate out user interaction functions into a user interaction subsystem. The user interaction subsystem can include an interaction block that is specific to each particular I/O agency, and which converts user input received from that agency into an agency-independent form for providing to the natural language interpretation system. The user interaction subsystem also can take results from the back-end application and clarification requests and other dialoguing from the natural language interpretation system, both in device-independent form, and convert them for forwarding to the particular I/O agency.

Owner:IANYWHERE SOLUTIONS

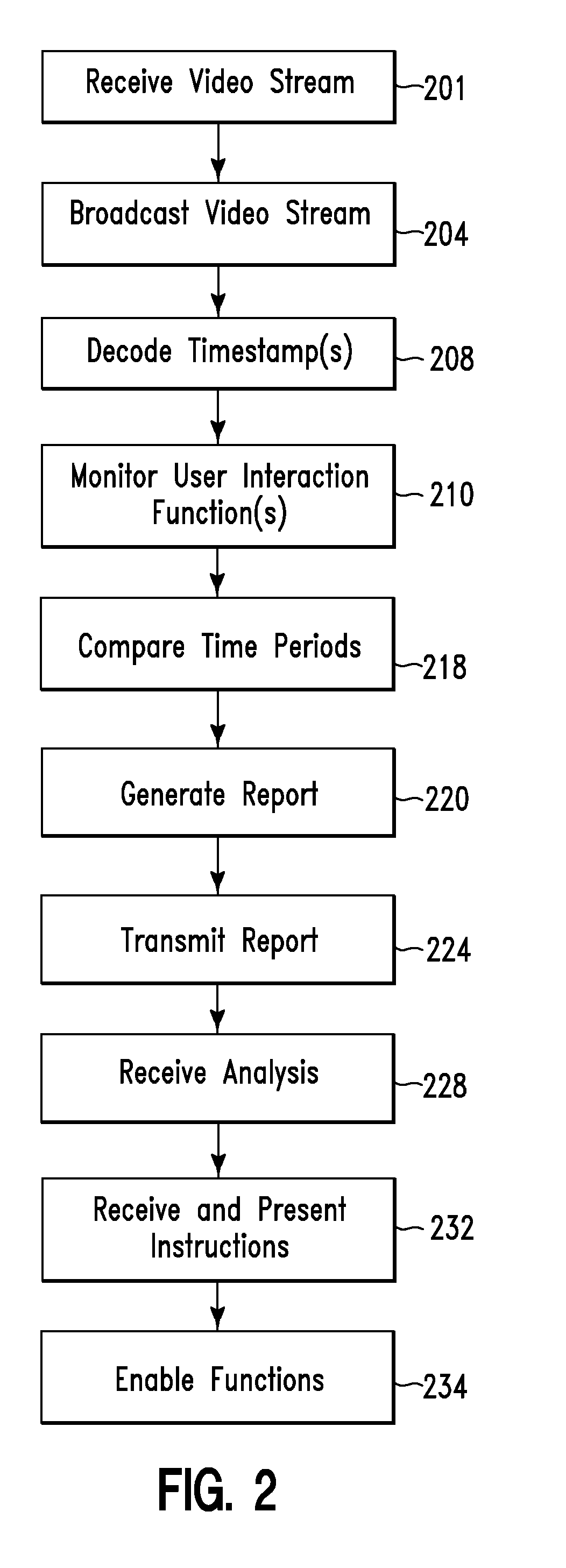

Video stream measurement method and system

Inactive | US20110131596A1 | Broadcast transmission systems | Analogue secrecy/subscription systems | System monitor | Computing systems

A video stream measurement method and system. The method includes receiving, by a computing system, a video stream comprising a plurality of timestamps located at specified intervals of the video stream. The computing system broadcasts the video stream for a user and decodes a first timestamp broadcast during a first time period. The computing system monitors a user interaction function performed by the user and associated with the video stream. The computing system generates and transmits a report comprising a description associated with the user interaction function. The computing system receives an analysis associated with the report.

Owner:IBM CORP

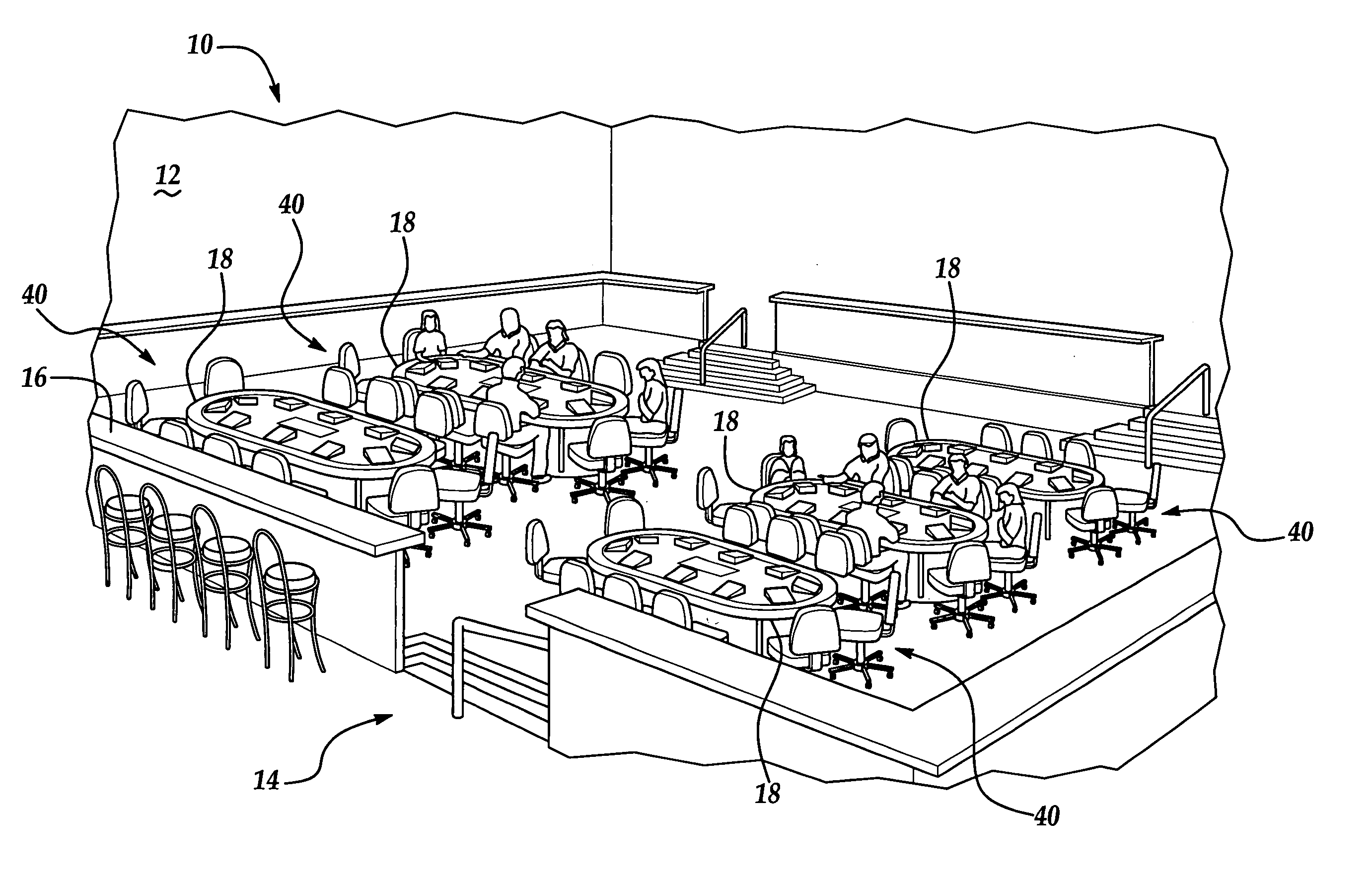

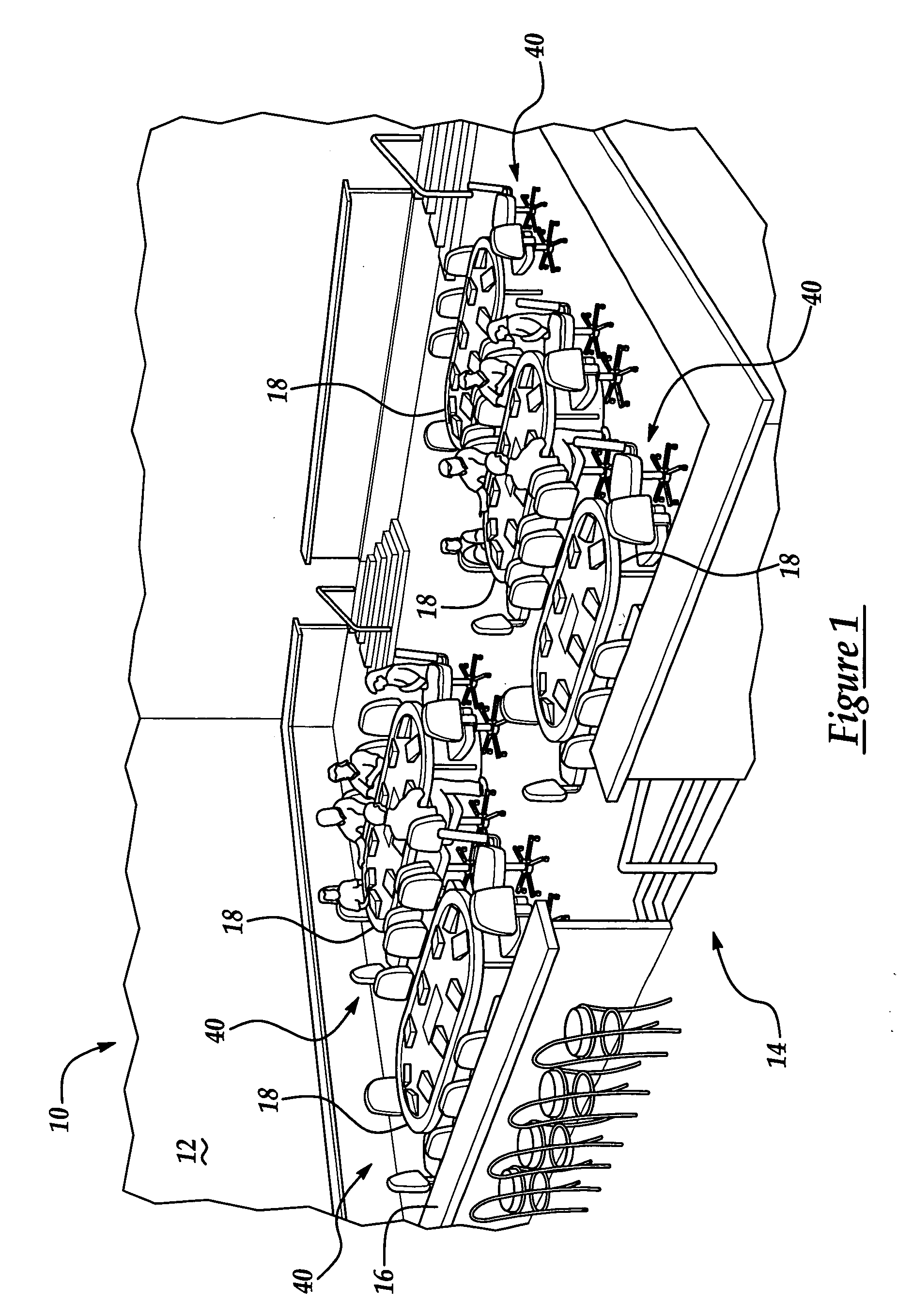

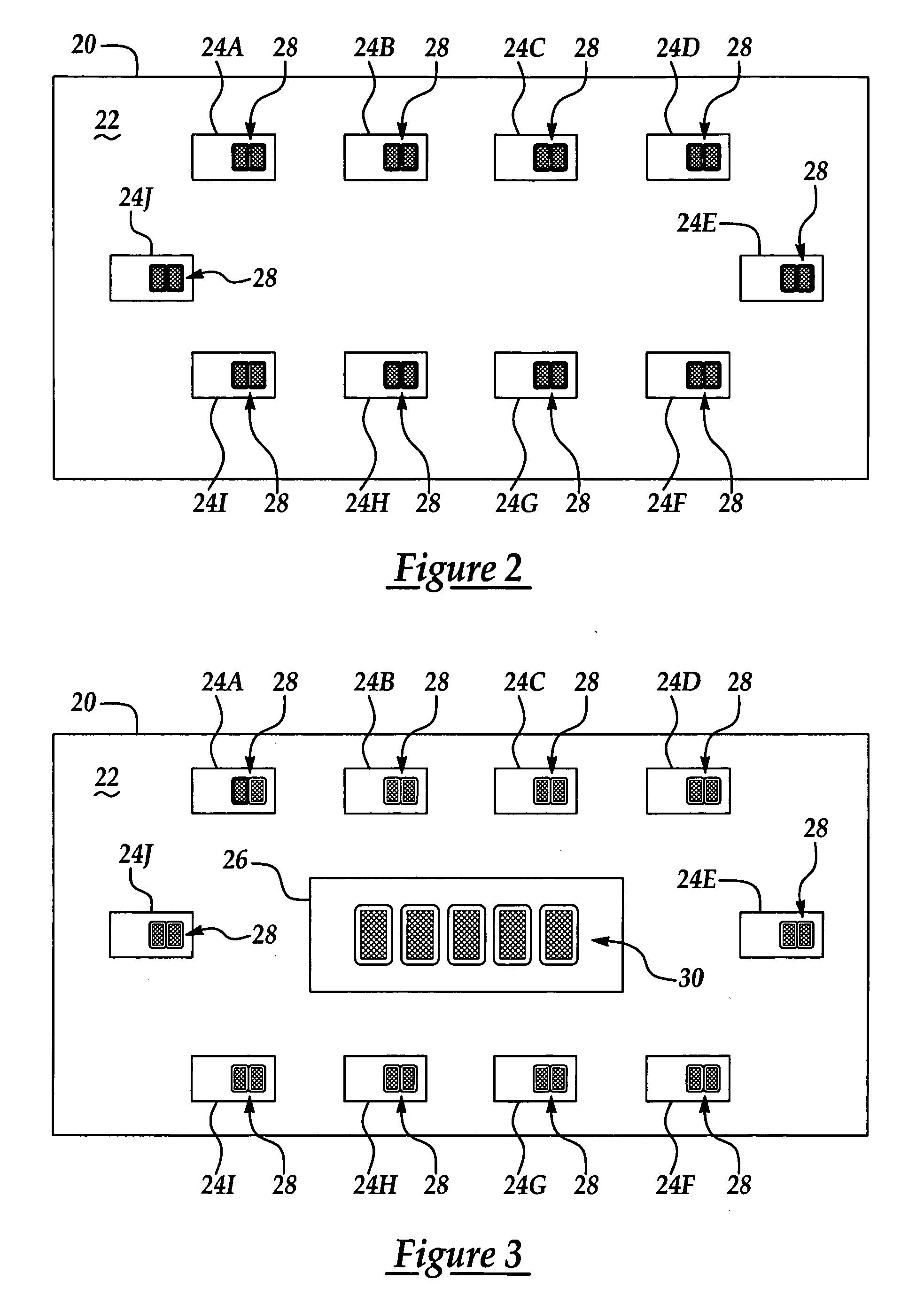

Electronic player interaction area with player customer interaction features

Inactive | US20060058085A1 | Apparatus for meter-controlled dispensing | Resources | Engineering | Interaction function

A system and method for providing an electronic poker game includes a table having a table top with a central display area and a plurality of electronic player interaction areas (EPIAs) located around the table top. Each EPIA is designed to provide a player interface for interaction with one of the players. A dealer button and a button indicating a turn of the player in a next round are defined in each EPIA. A game computer is coupled to the plurality of EPIAs for administering the electronic poker game and for indicating a rake amount on the EPIA and the central display area, determining a winner from among the players, awarding a pot to the winner, and the like.

Owner:POKERTEK

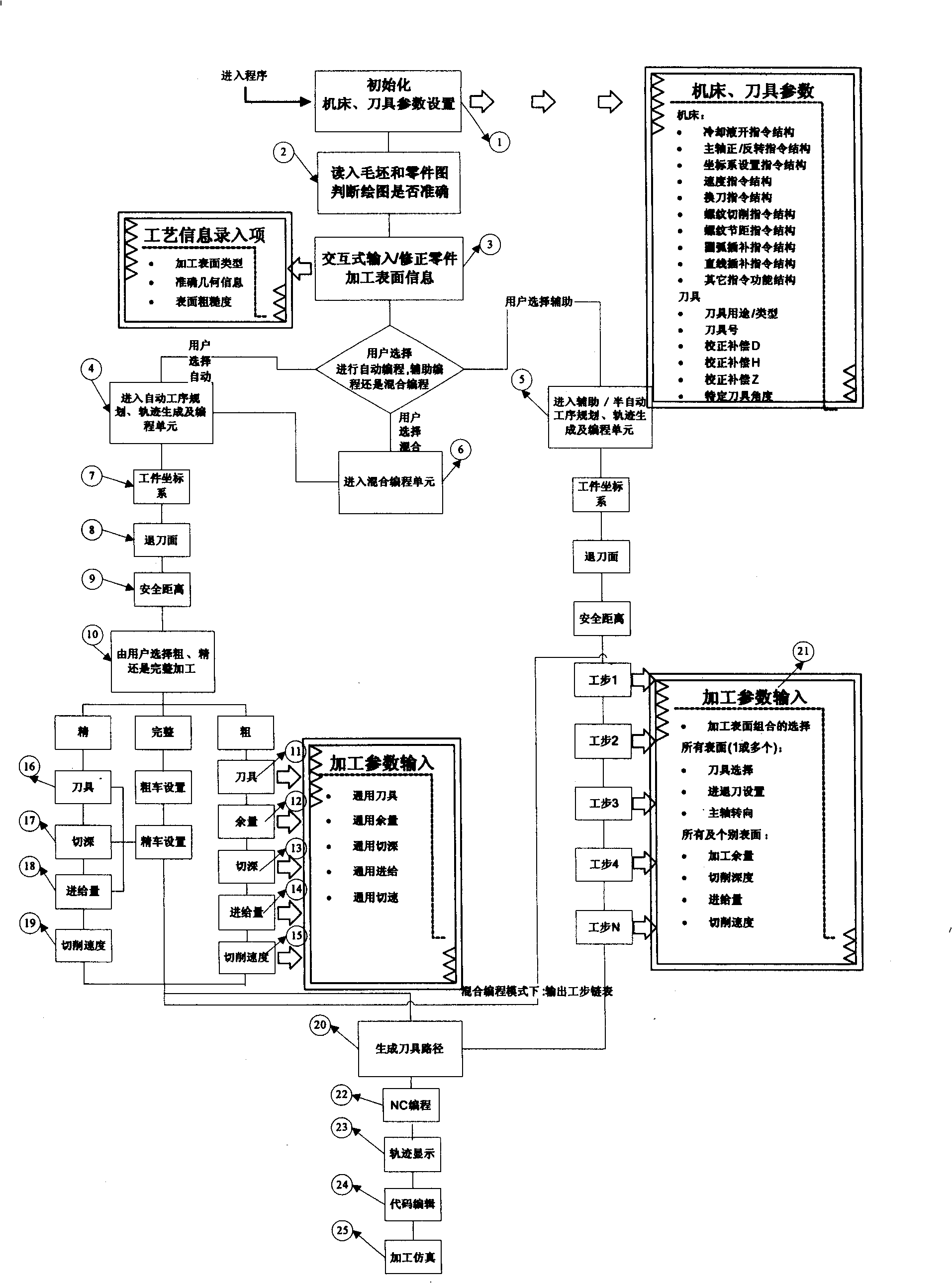

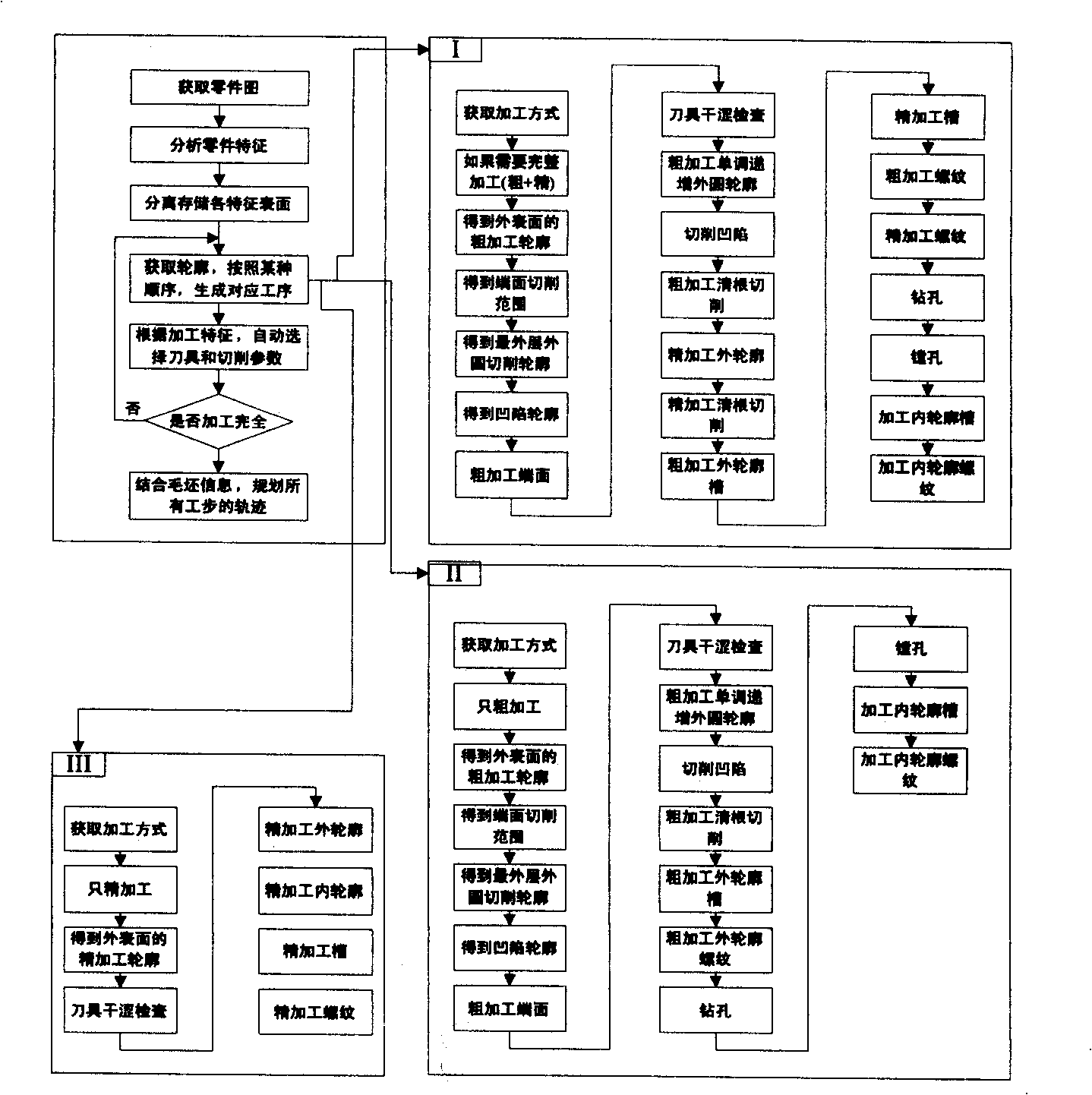

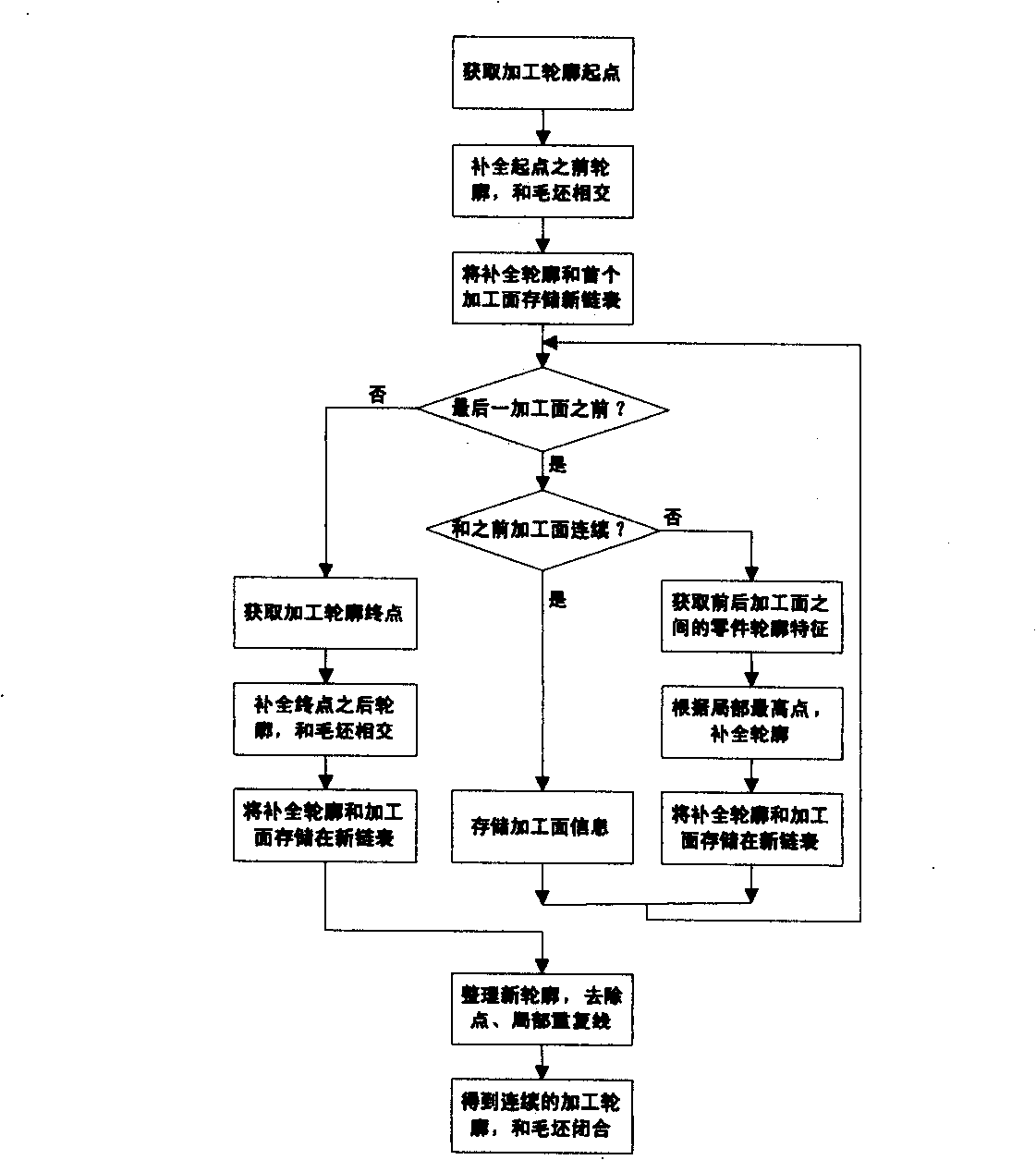

Imaging interactive numerical control turning automatic programming method and system

The invention provides an automatic graphical-interaction numerical control (NC) turning programming method and system for improving programming efficiency and NC code quality and for promoting rapid product process realization (RPPR) and integrated product process development (IPPD). The method comprises the steps of: reading in the blank and part drawings, removing redundant information, and judging whether the graphics are exact; giving the graphics a real-time interaction function so that the information of the part processing surface, including processing surface type, exact geometrical information and surface roughness, can be corrected; and determining which system function modules to execute, by automatic programming, auxiliary programming or mixed programming, according to the selection of the user. The system comprises a CAD data reading-in module connected to an automatic programming module, an auxiliary programming module and a mixed programming module, each of which is connected to an automatic track layout module, which is in turn connected to an NC code generation module.

Owner:TSINGHUA UNIV

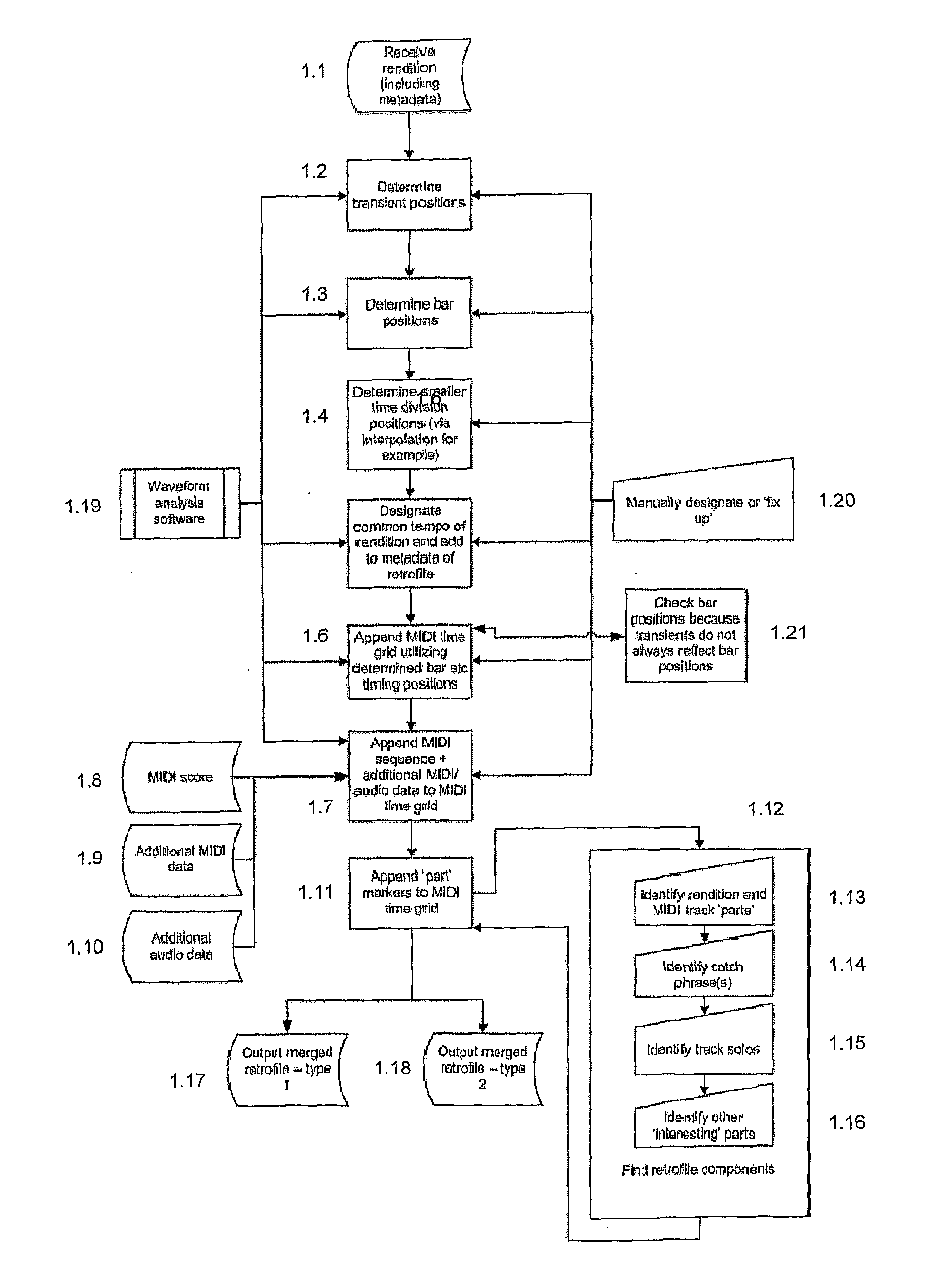

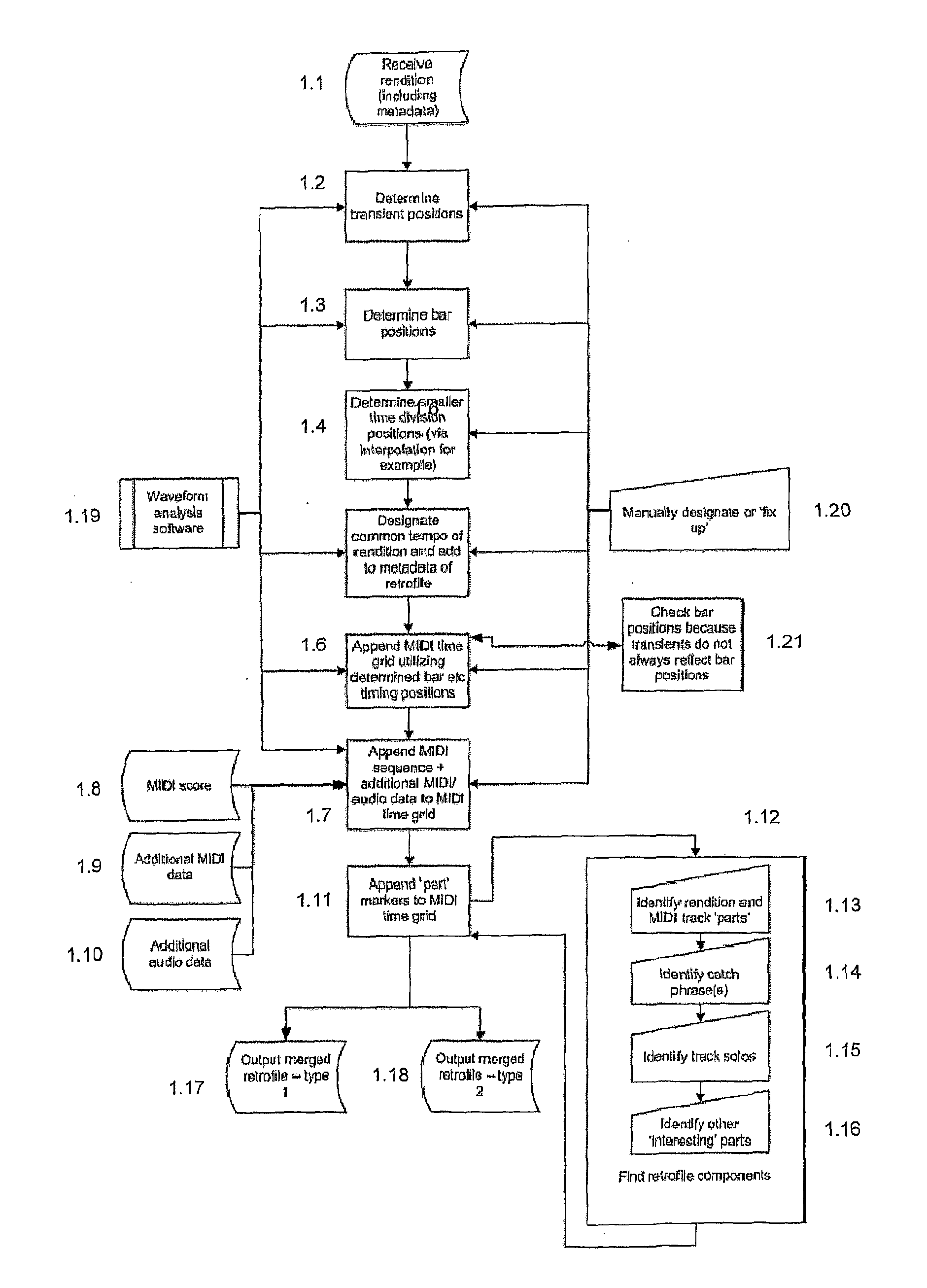

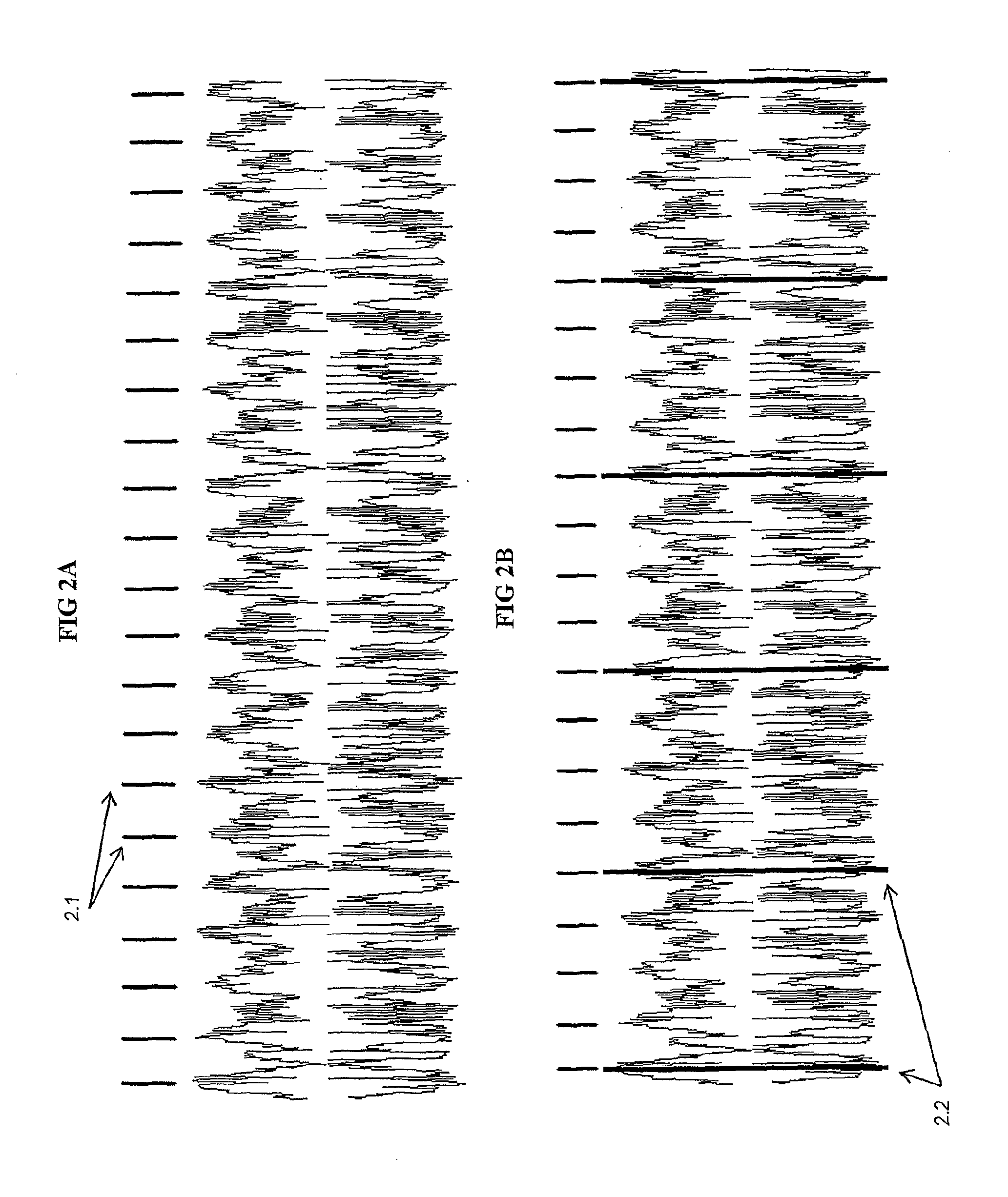

File creation process, file format and file playback apparatus enabling advanced audio interaction and collaboration capabilities

A file creation process, file format and playback device are provided that enable an interactive and, if desired, collaborative music playback experience for the user(s) by combining or retro-fitting an ‘original song’ with a MIDI time grid, the MIDI score of the song and other data in a synchronized fashion. The invention enables a music interaction platform that requires a small amount of time to learn and very little skill, knowledge or talent to use and is designed to bring ‘mixing music’ to the average person. The premier capability that the file format provides is the capability for any two bars, multiples of bars or pre-designated ‘parts’ from any two songs to be mixed in both tempo and bar-by-bar synchronization in a non-linear drag and drop fashion (and therefore almost instantaneously). The file format provides many further interaction capabilities, however, such as remixing MIDI tracks from the original song back in with the song. In the preferable embodiment the playback means is a software application on a handheld portable device which utilizes a multitouch-screen user interface, such as an iPhone. A single user can musically interact with the device and associated ‘original songs’ etc. or interactively collaborate with other users in like fashion, either whilst in the same room or over the Internet. The advanced interactive functionality the file format enables, in combination with the unique features of the iPhone (as a playback device) such as the multitouch-screen and accelerometer, enables further intuitive and enhanced music interaction capabilities. The object of the invention is to make music interaction (mixing, for example) a regular activity for the average person.

Owner:ODWYER SEAN PATRICK

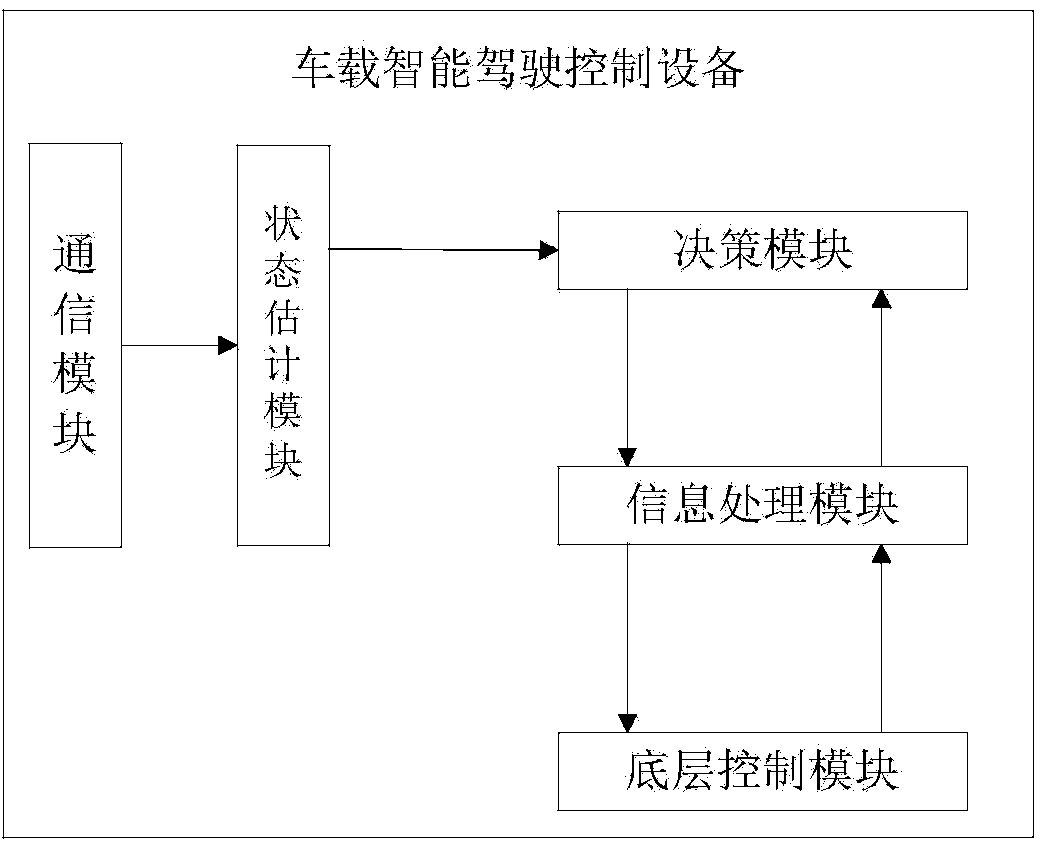

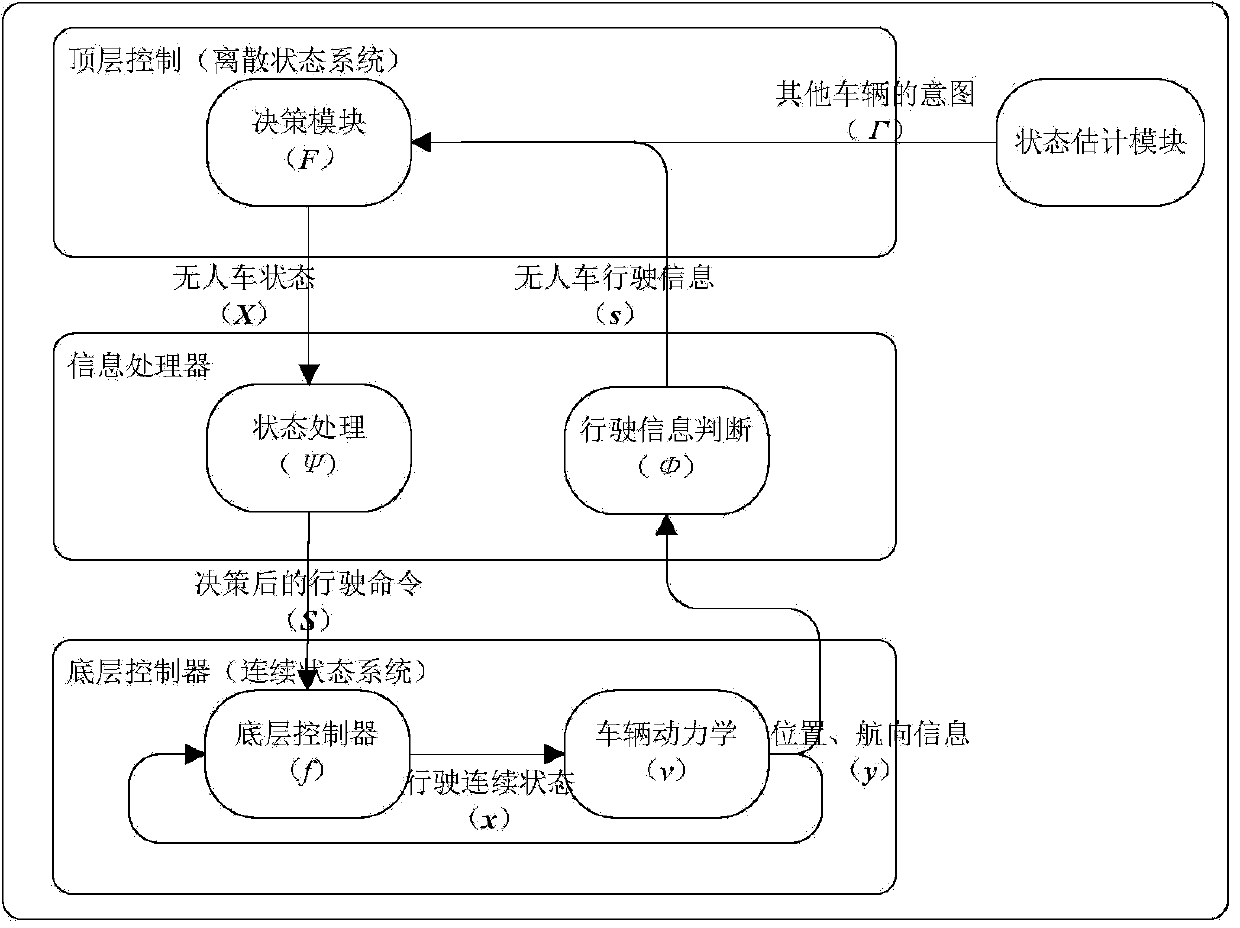

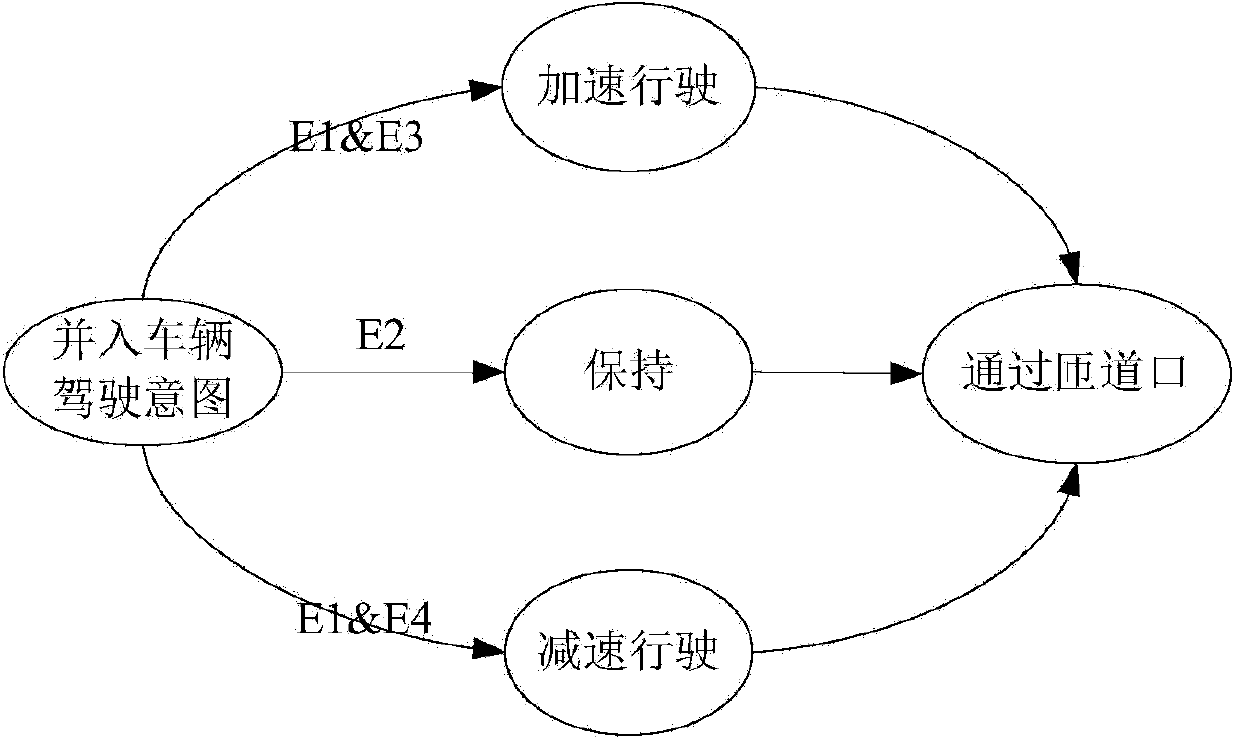

Pilotless automobile control system with social behavior interaction function

The invention relates to a pilotless automobile control system and method with the social behavior interaction function. The pilotless automobile control system analyzes the driving intention of other vehicles, and has control over the traveling state of the automobile with the pilotless automobile control system according to the driving intention of other vehicles. According to the pilotless automobile control system and method with the social behavior interaction function, social behavior interaction can be carried out according to the driving intention of other vehicles, and therefore traveling safety of the pilotless automobile is improved.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

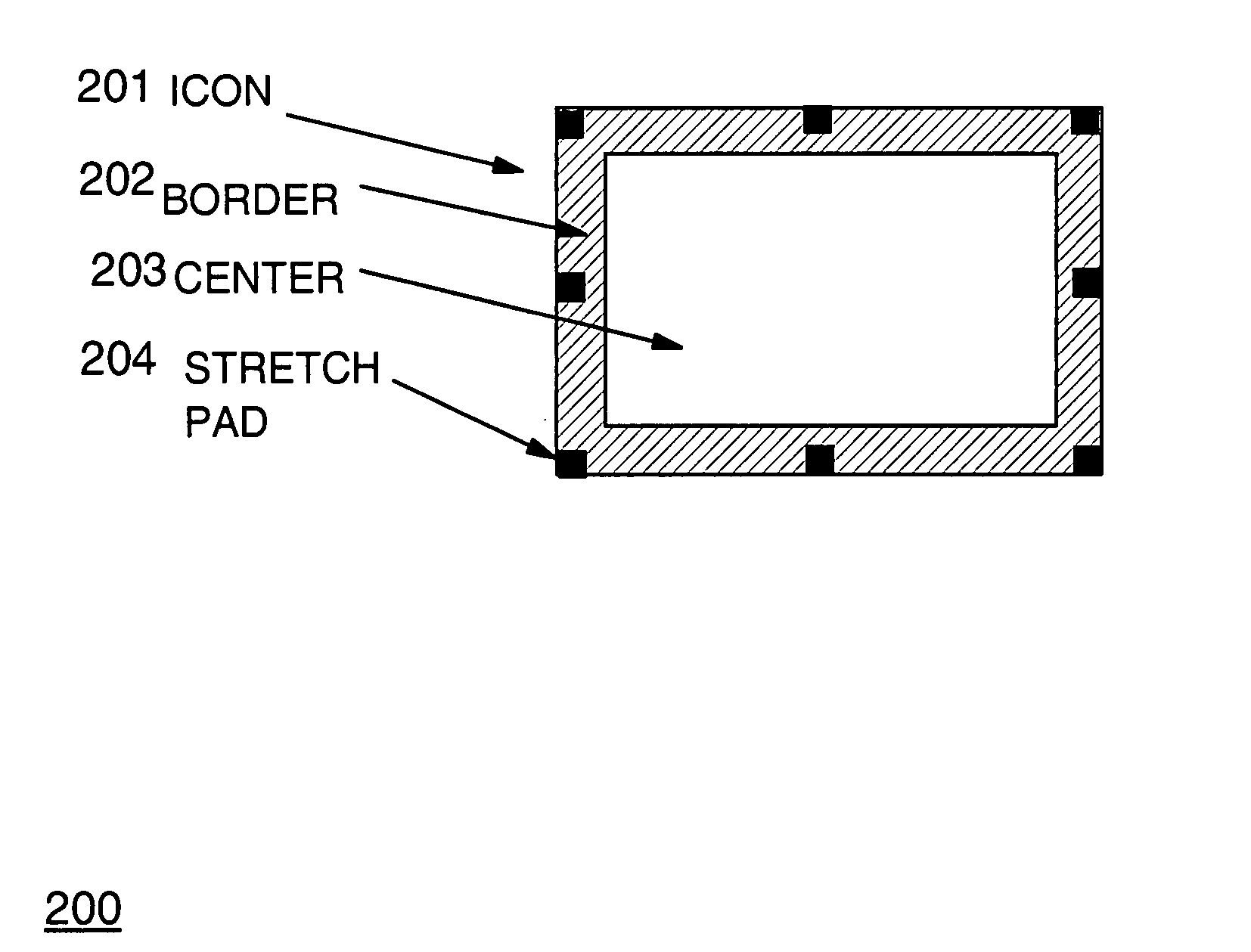

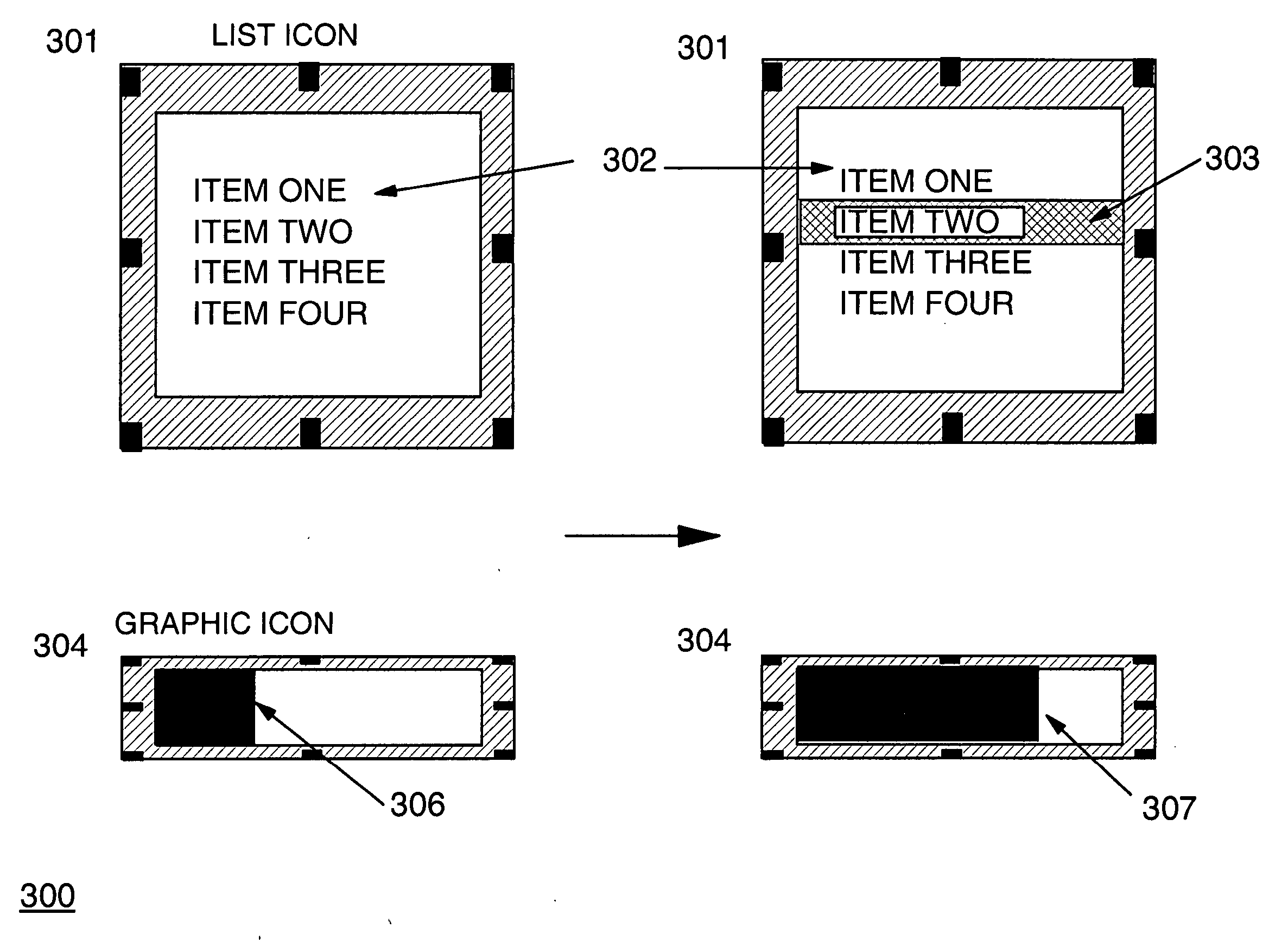

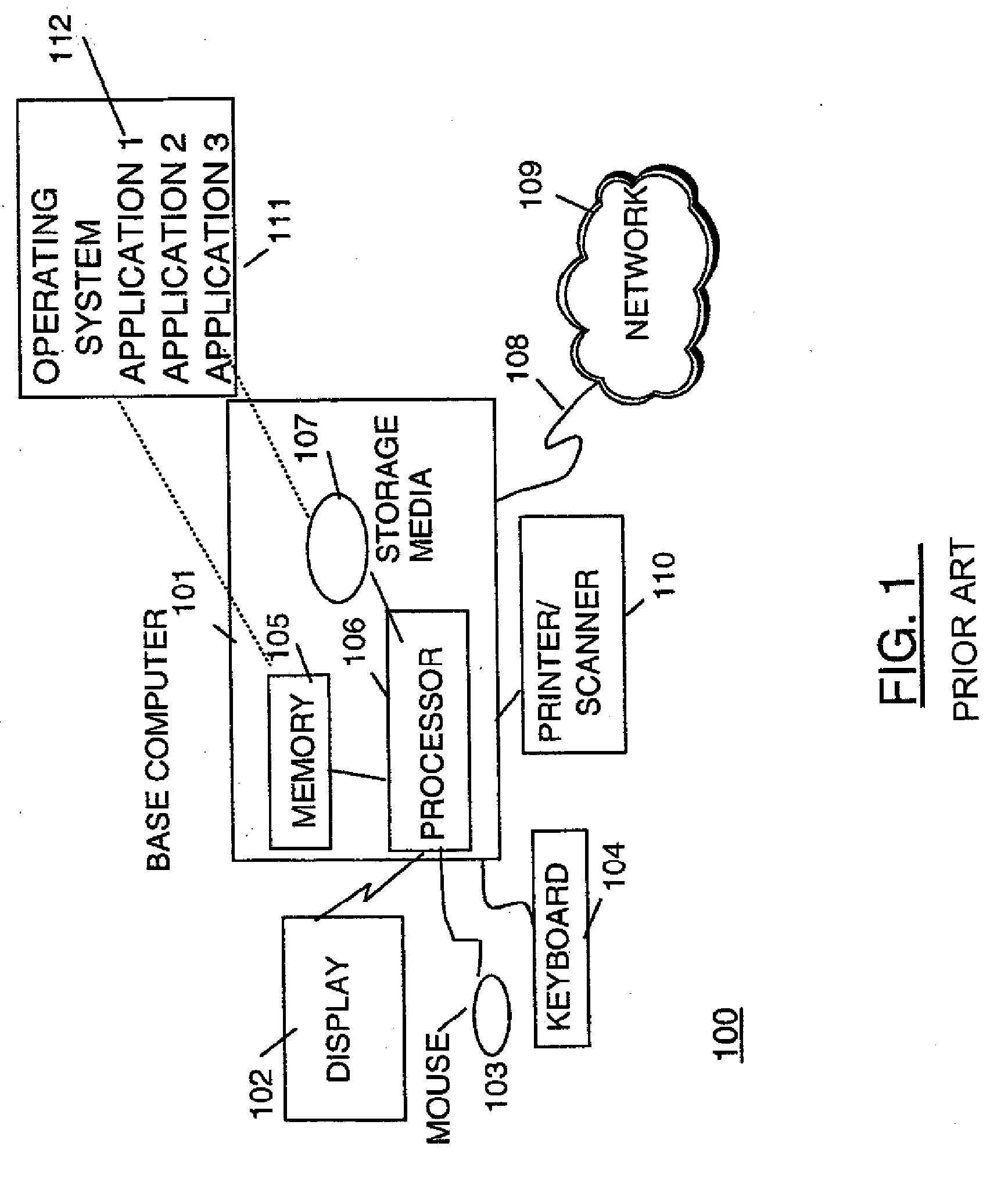

Modeless interaction with GUI widget applications

Inactive | US20050172239A1 | Program control | Special data processing applications | Graphics | Human–computer interaction

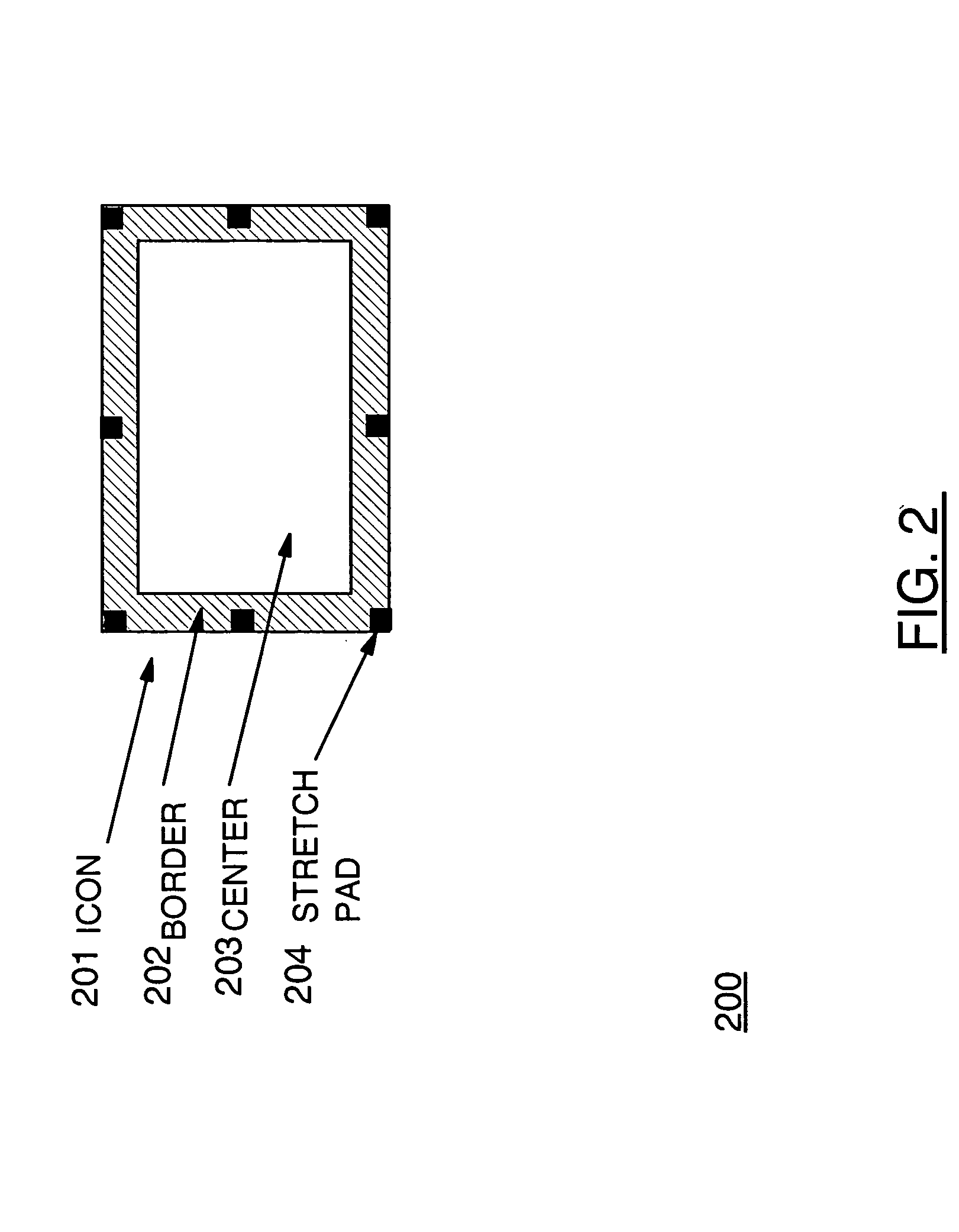

A user displayed interactive GUI widget (icon) provides two or more regions. A first region provides widget manipulation function for editing the widget, editing including moving or sizing functions. A second region provides widget interaction function for user interaction with the widget including interaction lists or interaction graphics. The regions may comprise one or more icon border regions and one or more icon internal regions.

Owner:IBM CORP

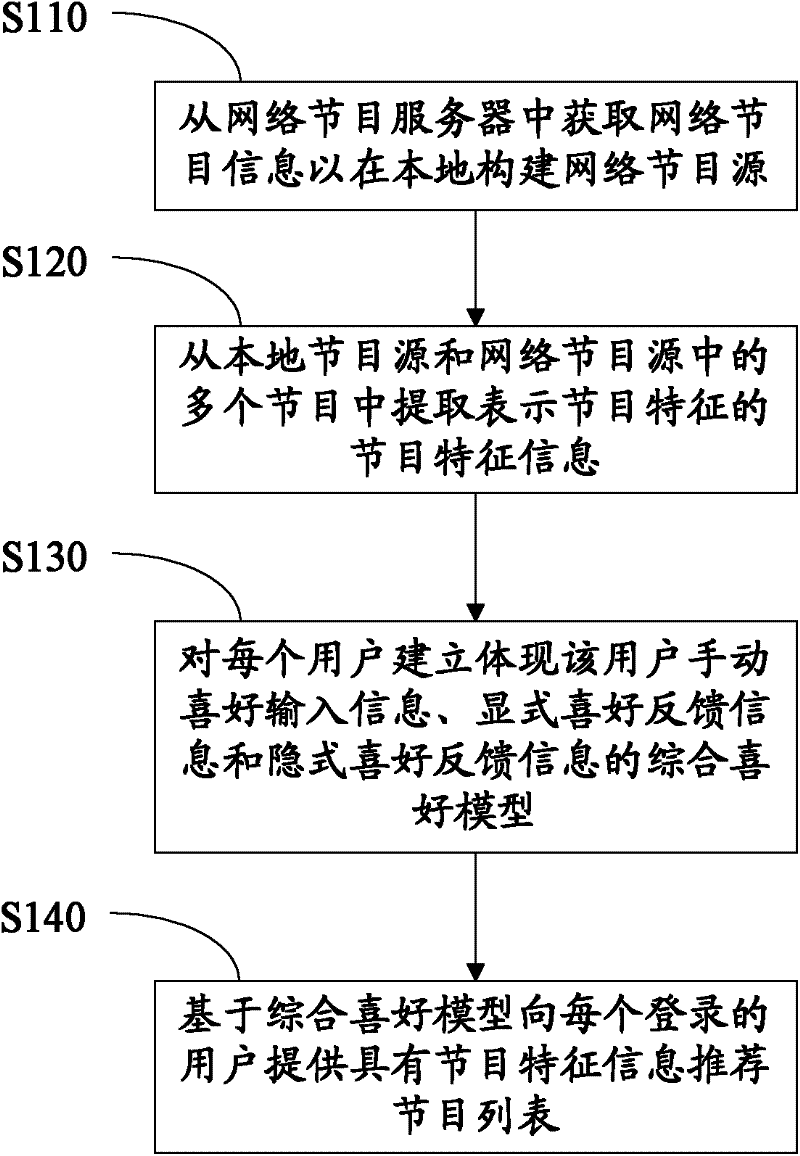

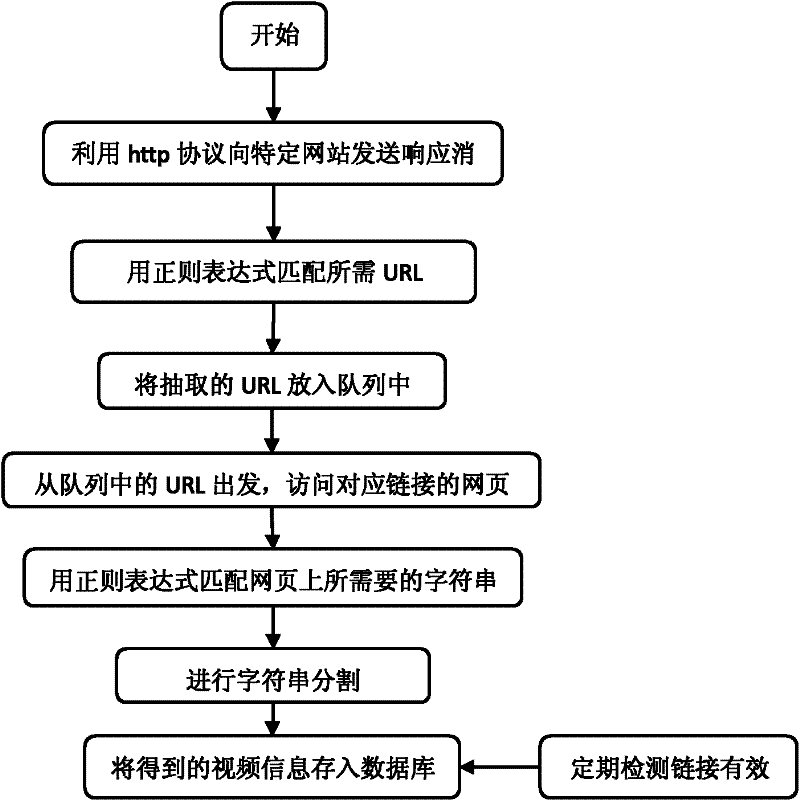

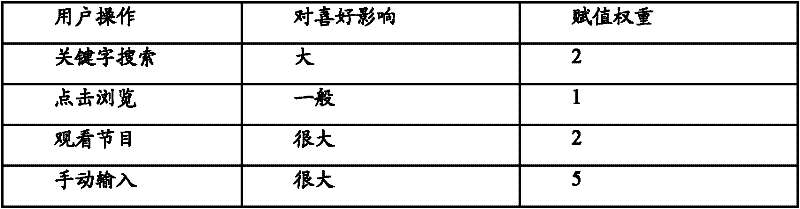

Network program aggregation and recommendation system and network program aggregation and recommendation method

Inactive | CN102523511A | Improve fully | Easy to identify | Selective content distribution | Software engineering | Interaction function

The invention discloses a network program aggregation and recommendation system and a network program aggregation and recommendation method. The network program aggregation and recommendation method comprises the following steps of: constructing a network program source based on network program information in a network program server; extracting program characteristic information showing characteristics of programs from a plurality of programs from a local program source and the network program source; establishing, for the user, a comprehensive preference model capable of displaying the user's manual preference input information, explicit preference feedback information and implicit preference information; and showing a recommended program list with the program characteristic information to the user based on the comprehensive preference model. According to the method and the system, the program source can be expanded, the user can conveniently identify the programs in which the user is interested, the favorite programs can be preferentially presented, the information selection is more humanized, and the intelligence and interaction functions are further improved.

Owner:COMMUNICATION UNIVERSITY OF CHINA

Modeless interaction with GUI widget applications

A user displayed interactive GUI widget (icon) provides two or more regions. A first region provides widget manipulation function for editing the widget, editing including moving or sizing functions. A second region provides widget interaction function for user interaction with the widget including interaction lists or interaction graphics. The regions may comprise one or more icon border regions and one or more icon internal regions.

Owner:INT BUSINESS MASCH CORP

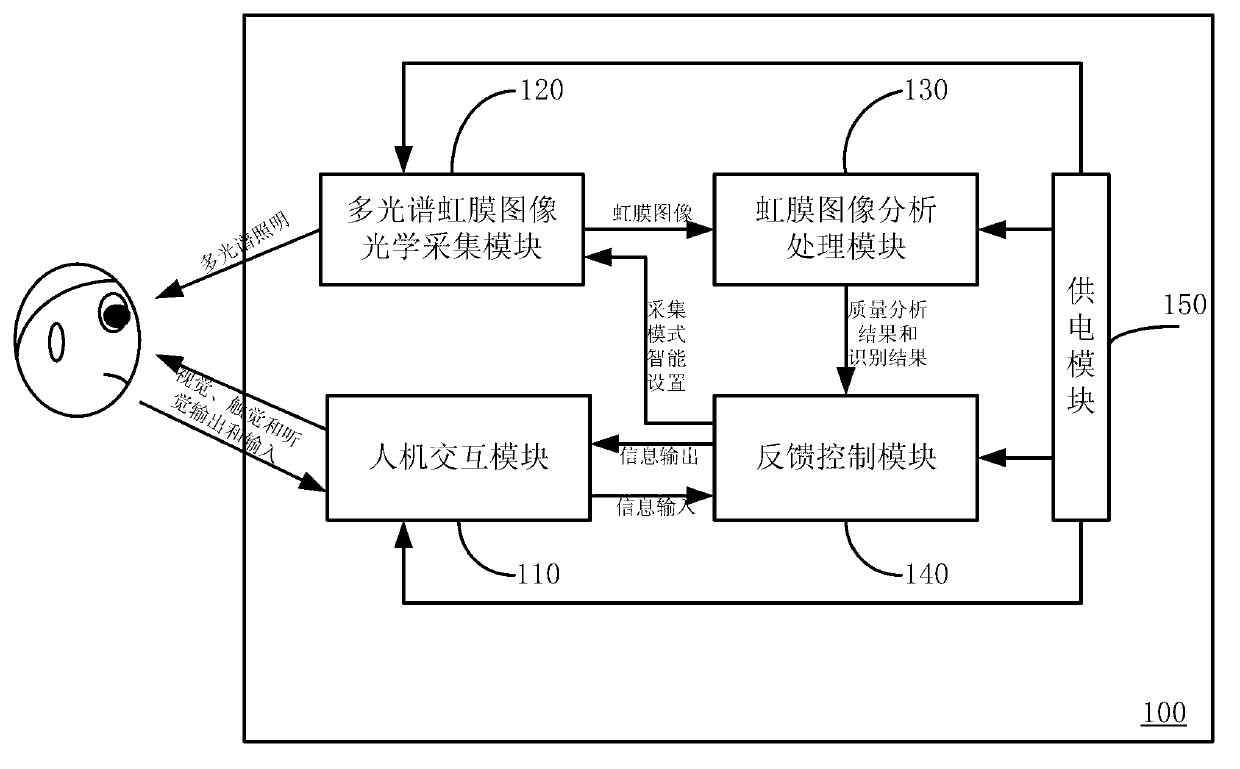

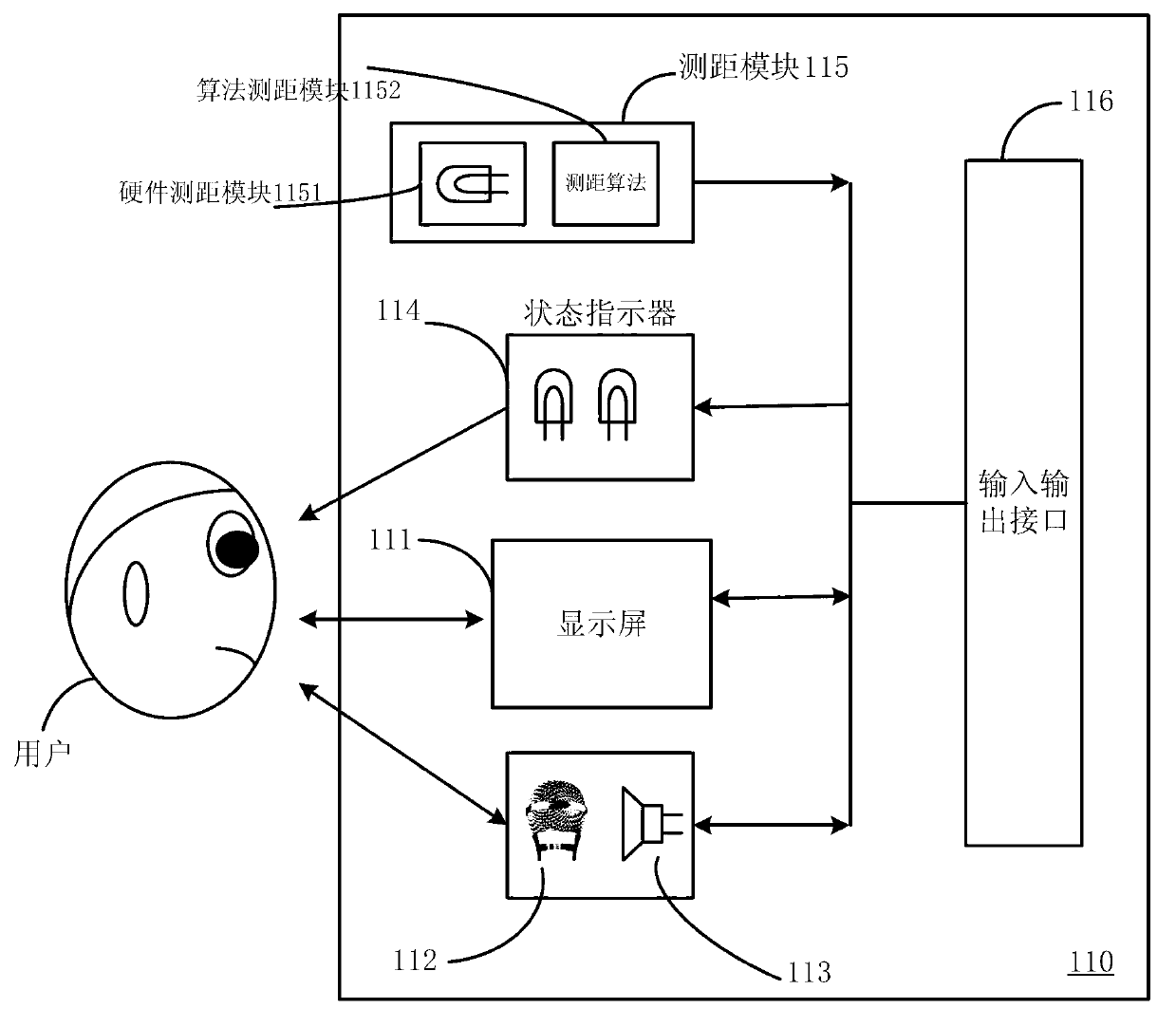

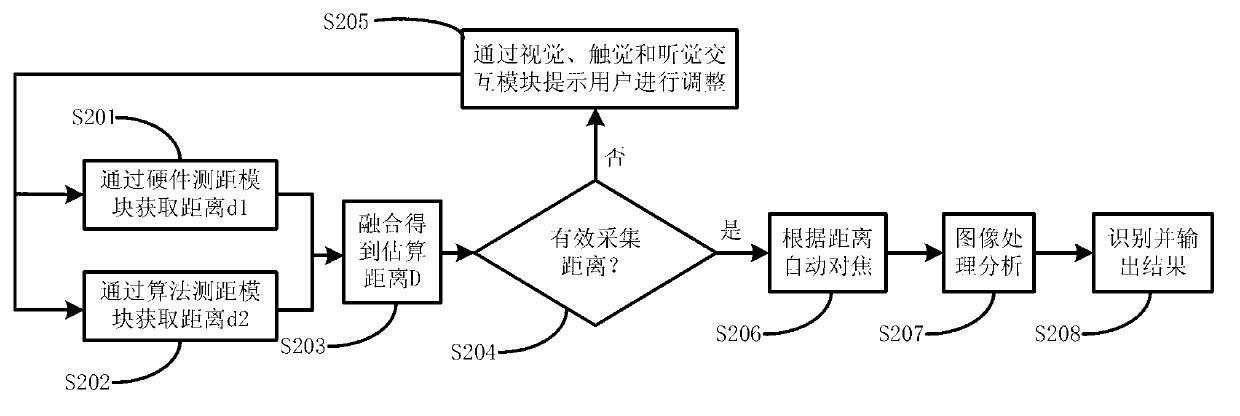

Mobile terminal iris recognition device with human-computer interaction mechanism and method

Active | CN103106401A | Miniaturization | Mobile improvements | Input/output for user-computer interaction | Acquiring/recognising eyes | Computer module | Miniaturization

The invention provides a mobile terminal iris recognition device with a human-computer interaction mechanism. The device comprises a human-computer interaction module, a multi-spectral iris image optical gathering module, an iris image analysis and processing module, a feedback control module and a power supply module, wherein the human-computer interaction module is used for enabling a user to configure the mobile terminal iris recognition device in the process of iris image gathering and processing and, in combination with the device, realizing a human-computer interaction function; the multi-spectral iris image optical gathering module is used for gathering an iris image of the user; the feedback control module is used for feeding back processed results of the iris image analysis and processing module to the multi-spectral iris image optical gathering module, accordingly adjusting imaging parameters of the multi-spectral iris image optical gathering module, and feeding back processed results from the iris image analysis and processing module to the feedback control module of the human-computer interaction module. According to the mobile terminal iris recognition device with the human-computer interaction mechanism and the method, miniaturization, mobility, usability and the like of the iris recognition device can be improved.

Owner:BEIJING IRISKING

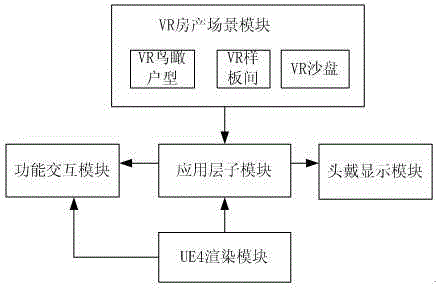

VR real estate display and interaction system based on UE4 engine

Inactive | CN106251185A | Reduce construction costs | Improve practicality | Customer communications | Interaction systems | Computer module

The invention discloses a VR real estate display and interaction system based on a UE4 engine. The system comprises a VR real estate scene module used for visually displaying a VR sand table, a VR bird's-eye house type and a VR sample room for enabling the user experience and the user activity; a function interaction module used for realizing user interaction functions, wherein the user interaction functions are composed of shifting roles, marking a scale, picking an object, switching on/off a lamp, opening/closing a door and switching on/off a television; a UE4 rendering module used for constructing the VR real estate scene module and realizing the functions of the function interaction module; a head-mounted display module applied to VR equipment for users to eventually experience and perceive a virtual world; and an application layer submodule used for calling the VR real estate scene module, the function interaction module, the UE4 rendering module and the head-mounted display module. Based on the above system, the defect of the high construction cost of a real estate developer in the prior art can be overcome. Meanwhile, a novel marketing mode is provided. Moreover, the practicability and the interest of user experience are enhanced.

Owner:四川见山科技有限责任公司

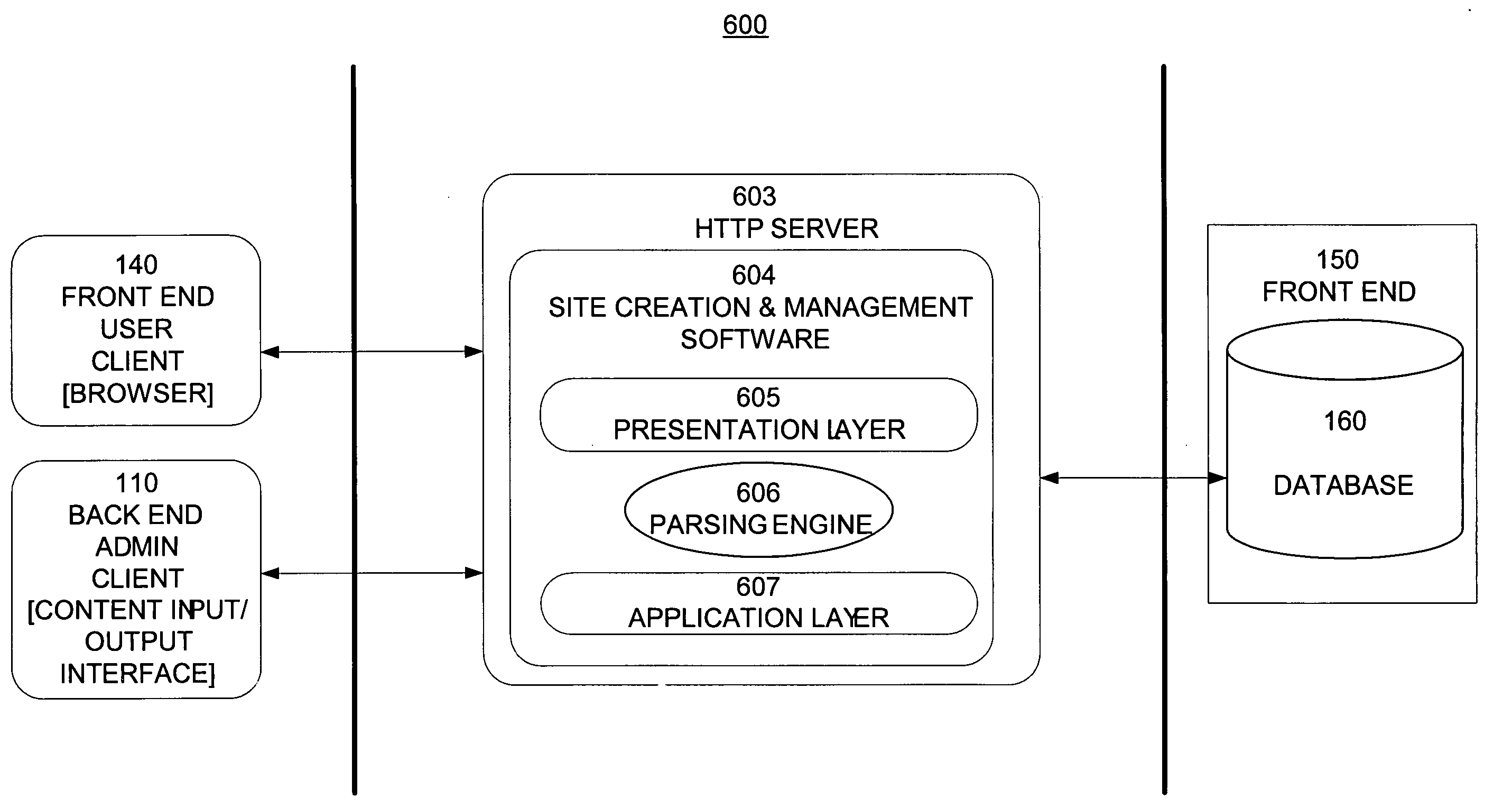

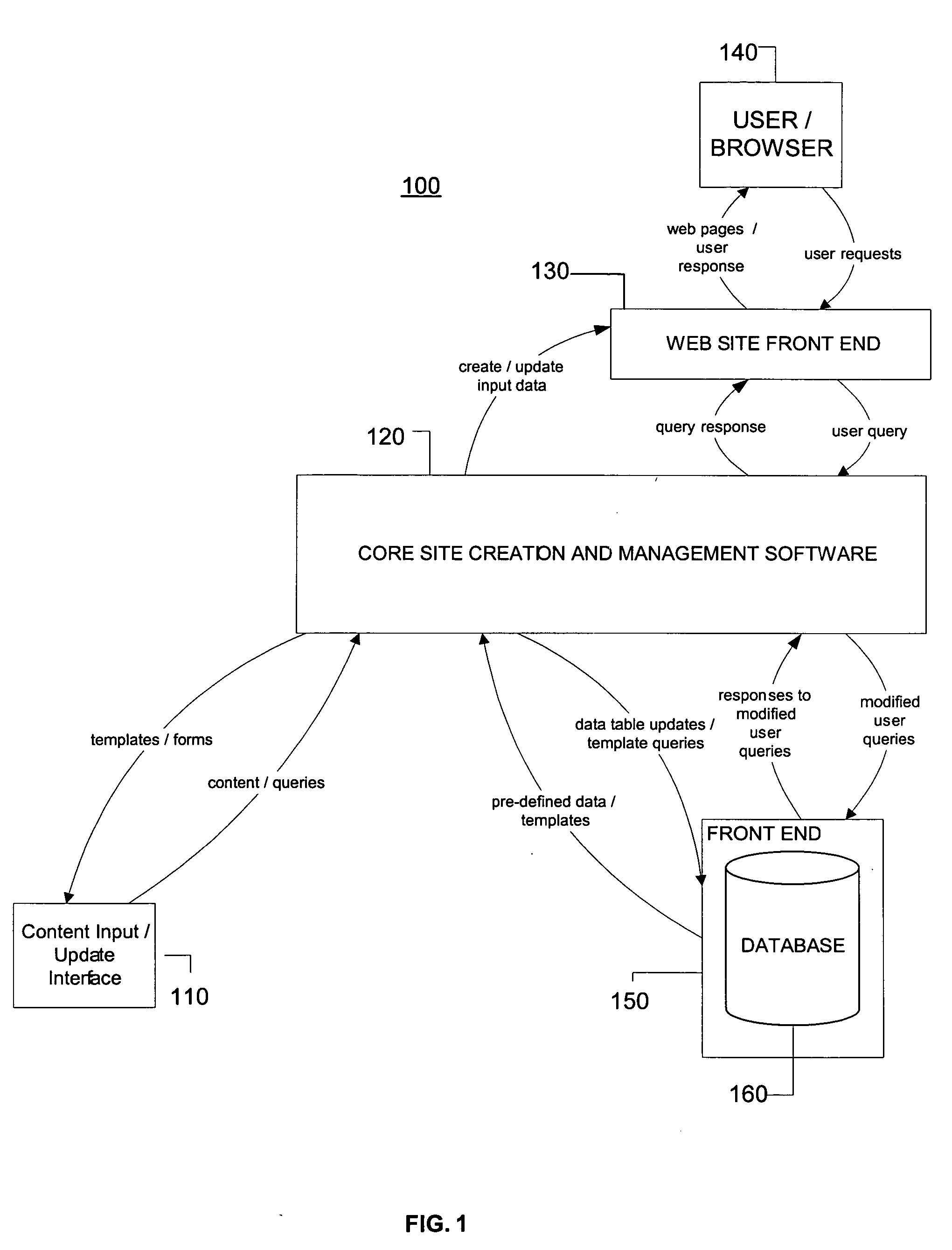

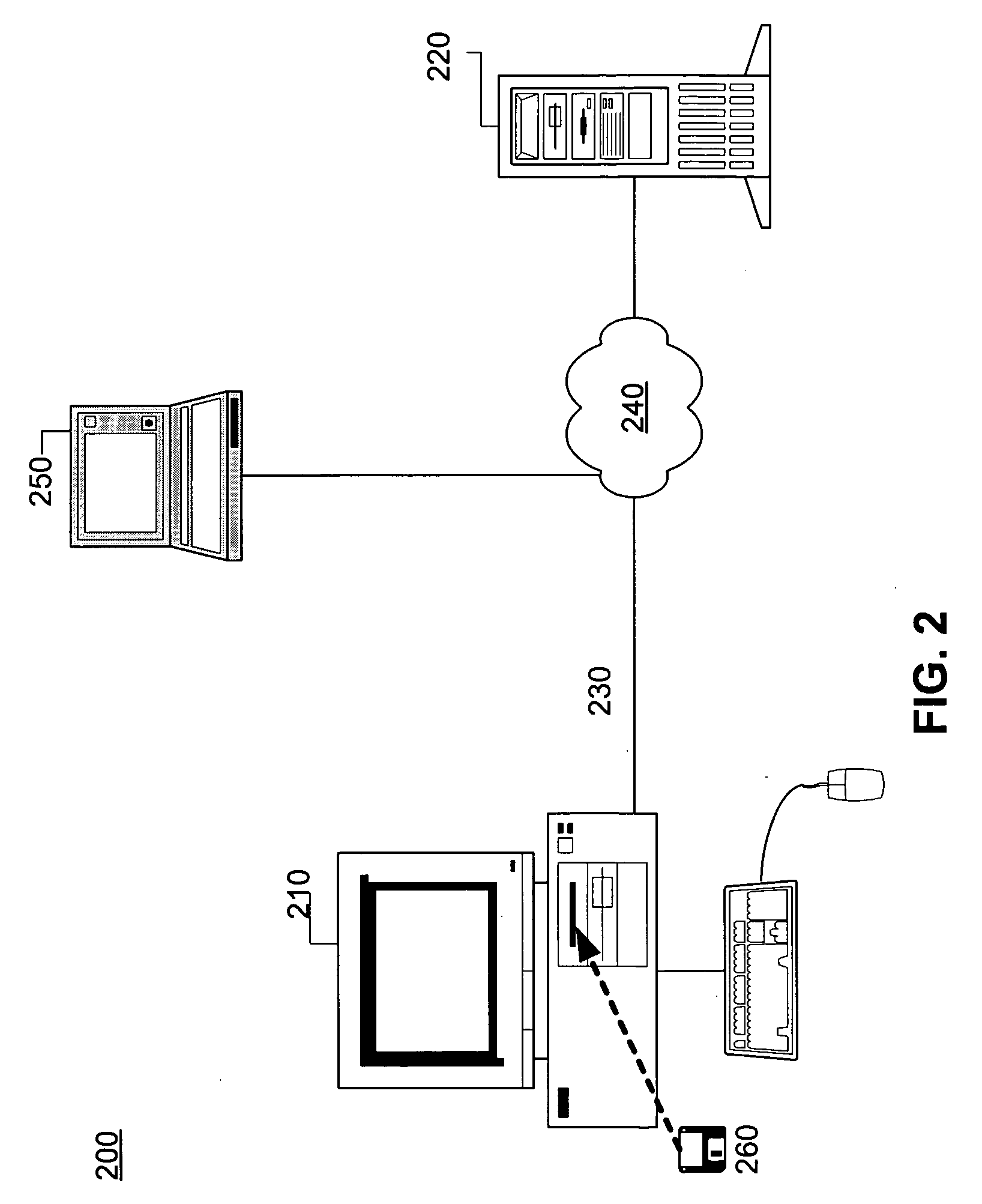

Creation and management of common interest community web sites

Inactive | US20050273702A1 | Website content management | Special data processing applications | Web site | Content creation

Systems and methods consistent with the present invention integrate content creation, content management, content display and user interaction functions for web sites. In some site management methods consistent with the present invention content may be submitted and is processed to associate the submitted content with pre-existing related content accessible through the web site. In some embodiments consistent with the present invention submissions may be prioritized for display at prominent locations on the web site.

Owner:ARTS COUNCIL SILICON VALLEY

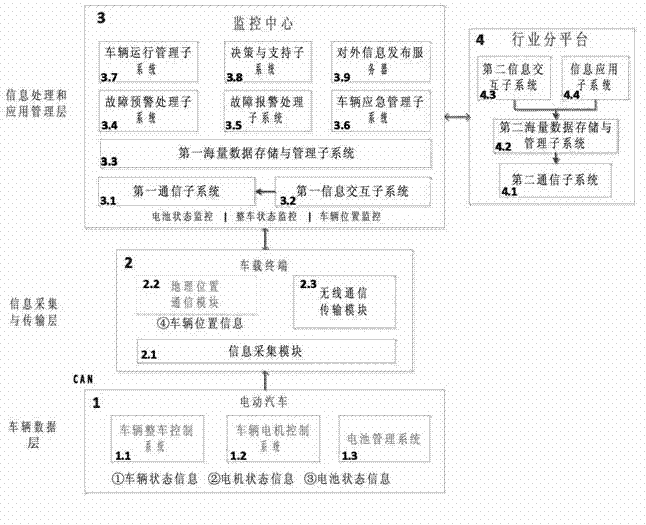

Method and system for remotely monitoring electric vehicle

The invention provides a method and a system for remotely monitoring an electric vehicle so as to solve the problems that the safety use of batteries can be ensured, the service life of the batteries can be improved and the cost of the batteries can be reduced. The method comprises the steps of comprehensively monitoring the state of a complete vehicle, a battery state (particularly a single battery state) and vehicle position information in real time during vehicle operating. According to the system, the information interaction function between different monitoring platforms can be realized and the information fusion and processing of mass data can be finished; the remote services such as fault diagnosis, fault early warning, emergency management and the like can be provided for the electric vehicle by the system; and meanwhile, the scheduling and the navigation service for electric energy supply of the electric vehicle, the energy peak scheduling information service and the vehicle route scheduling informationization service can be further provided by the system.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

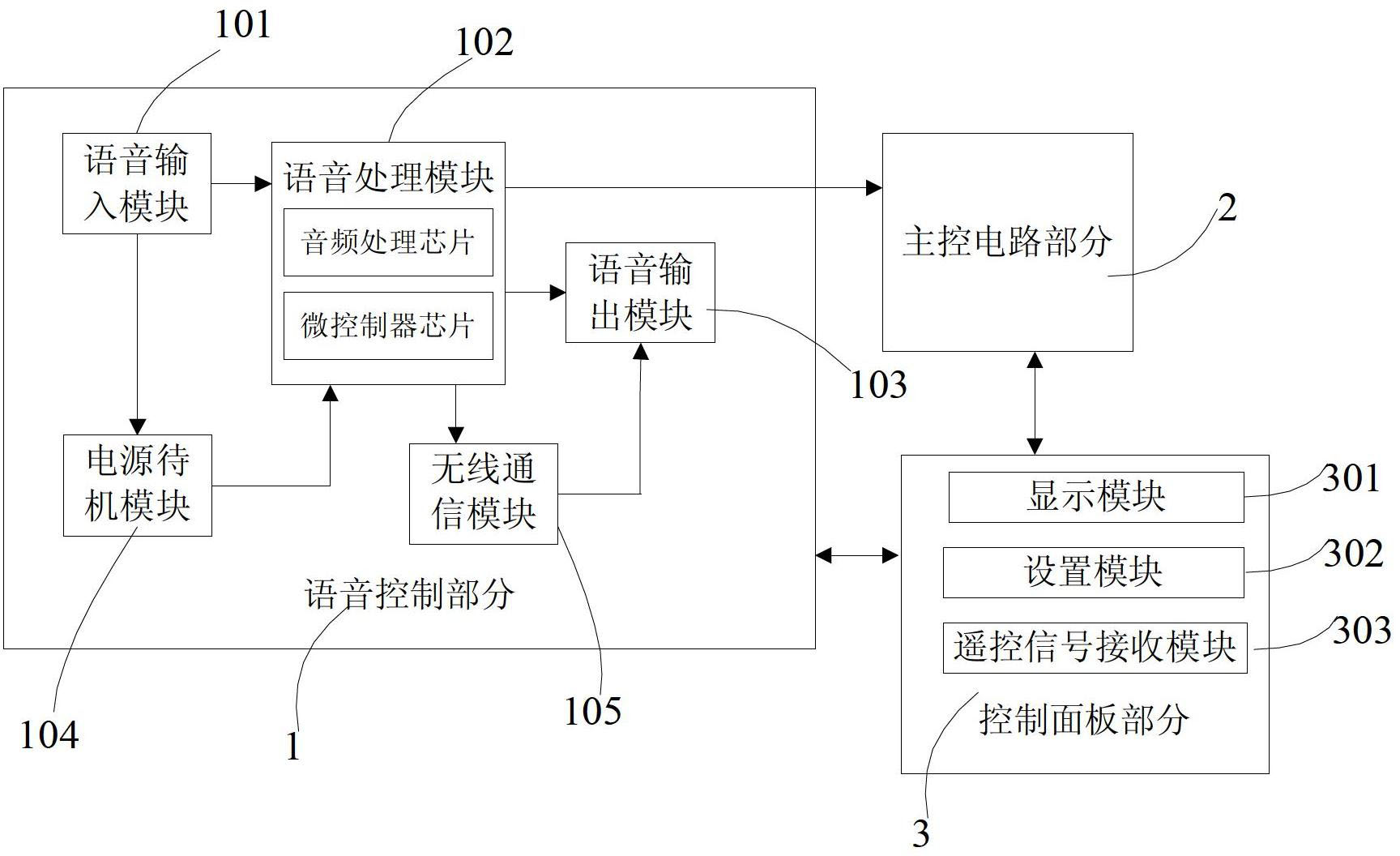

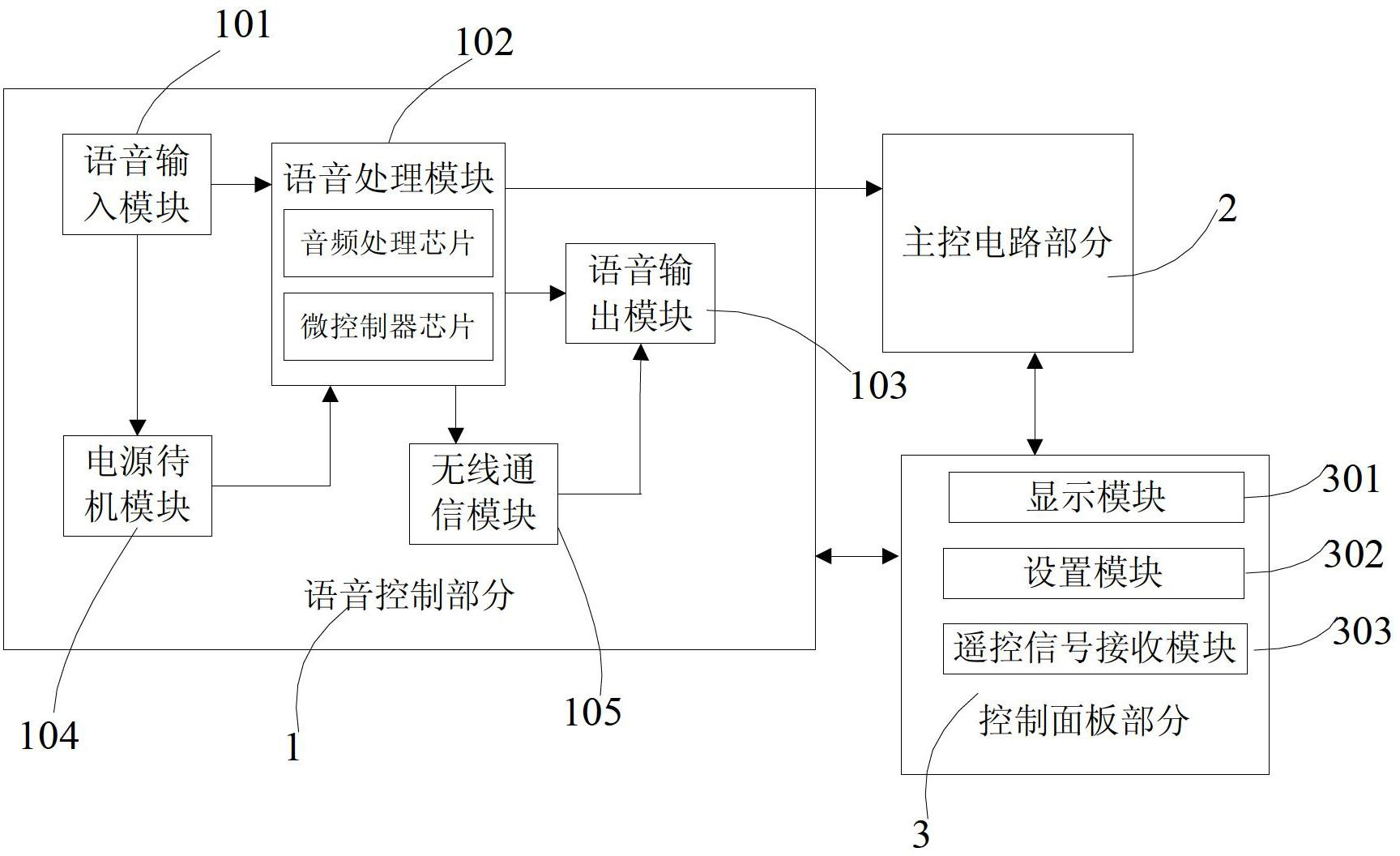

Voice-controlled air conditioner with voice interaction function

Active | CN102692068A | Improve experience | Easy to control | Space heating and ventilation safety systems | Lighting and heating apparatus | Personalization | Engineering

The invention discloses a voice-controlled air conditioner with a voice interaction function. The air conditioner is controlled directly by voice command information given out by a user, and the user is confirmed by the voice command information fed back in the voice control process of the air conditioner, thus realizing voice interaction between the user and the air conditioner. With the technical scheme, the air conditioner can be controlled completely without a remote controller, thus being convenient to operate, and simultaneously having flexibility in voice interaction mode, being capable of meeting personalized requirements of different users, and improving the experience of users.

Owner:HAIER GRP CORP +1

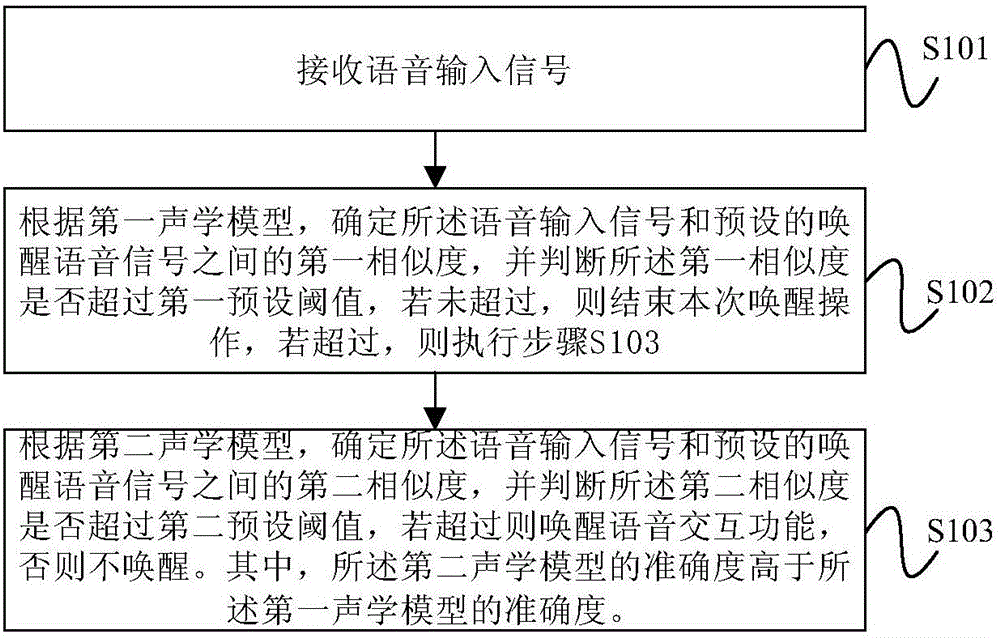

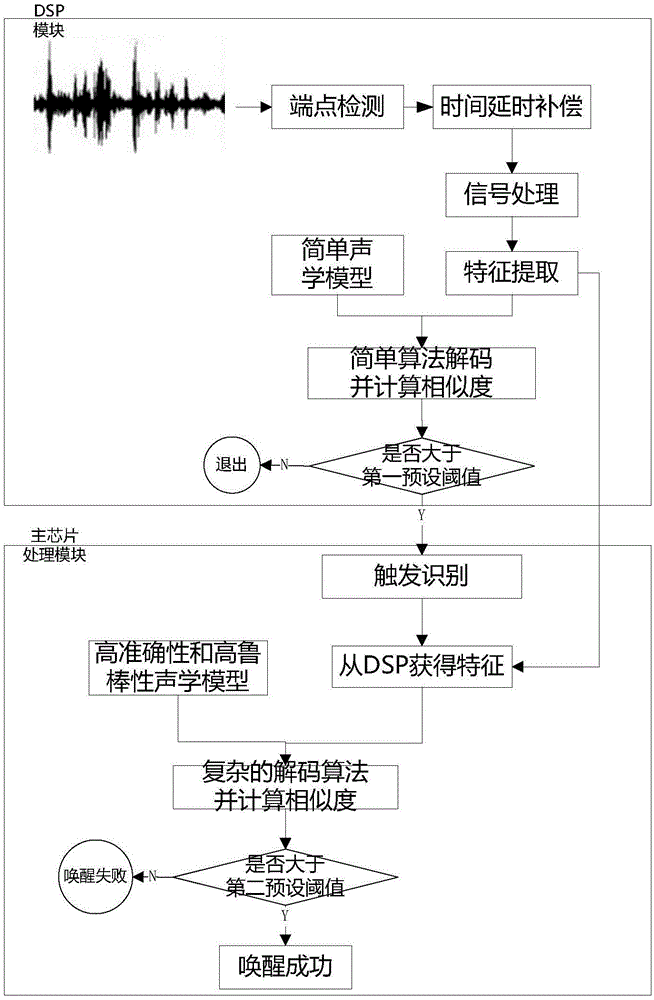

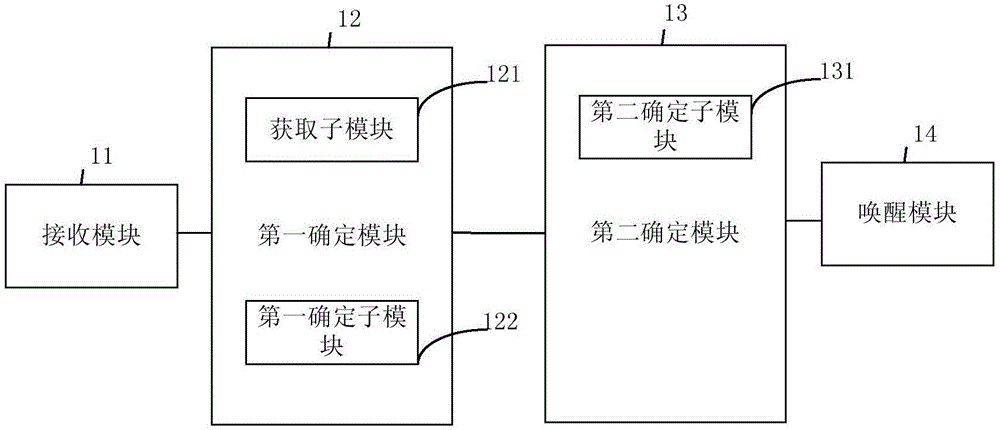

Voice wakeup method and voice interaction device

The present invention provides a voice wake-up method and a voice interaction device. The method includes the following steps: voice input signals are received; a first similarity between the voice input signals and preset wake-up voice signals is determined according to a first acoustic model, and whether the first similarity exceeds a first preset threshold value is judged; if the first similarity exceeds the first preset threshold value, a second similarity between the voice input signals and the preset wake-up voice signals is determined according to a second acoustic model, and whether the second similarity exceeds a second preset threshold value is judged; and if the second similarity exceeds the second preset threshold value, a voice interaction function is awakened, wherein the accuracy of the second acoustic model is higher than the accuracy of the first acoustic model. The voice wake-up method and the voice interaction device provided by the embodiment of the invention have the advantages of low power consumption and a low false wake-up rate.
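The two-threshold check described in this abstract can be sketched as a simple cascade. This is only an illustration under stated assumptions: the names `should_wake`, `fast_model` and `accurate_model` are hypothetical, and the two acoustic models are stood in for by plain callables that return a similarity score.

```python
from typing import Callable, Sequence

# A scorer maps an input signal to a similarity score (stand-in for an acoustic model).
Scorer = Callable[[Sequence[float]], float]


def should_wake(signal: Sequence[float],
                fast_model: Scorer,
                accurate_model: Scorer,
                first_threshold: float,
                second_threshold: float) -> bool:
    """Return True only if both stages exceed their thresholds."""
    first_similarity = fast_model(signal)
    if first_similarity <= first_threshold:
        # The first stage rejects, so the more accurate model is never run.
        return False
    second_similarity = accurate_model(signal)
    return second_similarity > second_threshold


# Toy scorers (assumptions for the demo): mean and peak of the signal.
calls = {"accurate": 0}


def toy_fast(signal):
    return sum(signal) / len(signal)


def toy_accurate(signal):
    calls["accurate"] += 1
    return max(signal)


rejected = should_wake([0.1, 0.2], toy_fast, toy_accurate, 0.5, 0.8)
accepted = should_wake([0.7, 0.9], toy_fast, toy_accurate, 0.5, 0.8)
```

Running the second model only after the first threshold is passed is one plausible reading of how such a cascade keeps power consumption low; the abstract itself does not spell out the mechanism.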

Owner:HISENSE

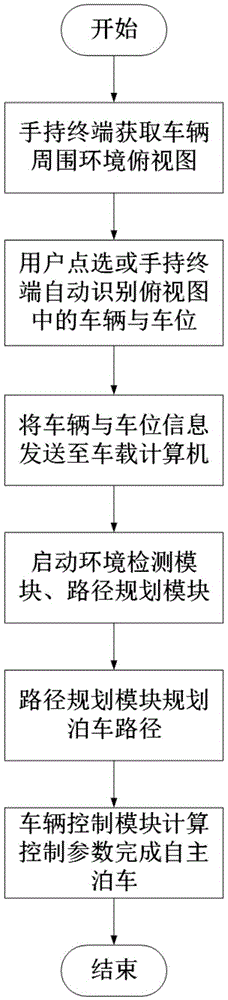

Intelligent man-car interaction parking method based on hand-held terminal

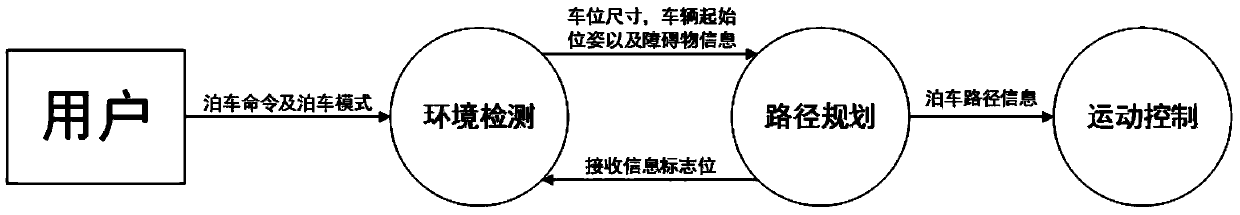

Active | CN105539430A | Realize human-vehicle interaction function | Good self parking | External condition input parameters | Hand held | Interaction function

The invention discloses an intelligent man-car interaction parking method based on a hand-held terminal. According to the intelligent man-car interaction parking method, the man-car interaction function is achieved through a hand-held terminal clicking mode or a hand-held terminal automatic identification mode, and a car can acquire the relative position relation of a parking stall specified by a user and the car to achieve the man-car interaction function. According to the intelligent man-car interaction parking method, a parking path is reasonably planned, a plurality of alternative parking positions are selected, and a plurality of driving routes are planned; accordingly, autonomous parking can be better achieved. In addition, the intelligent parking system enables the user to visually learn the surrounding environment information of the car from a vertical view of the car's surroundings acquired by the hand-held terminal: the car and the parking stall are clicked by the user or automatically identified in that view, and the car thereby acquires the positions of the car and the parking stall in the view.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

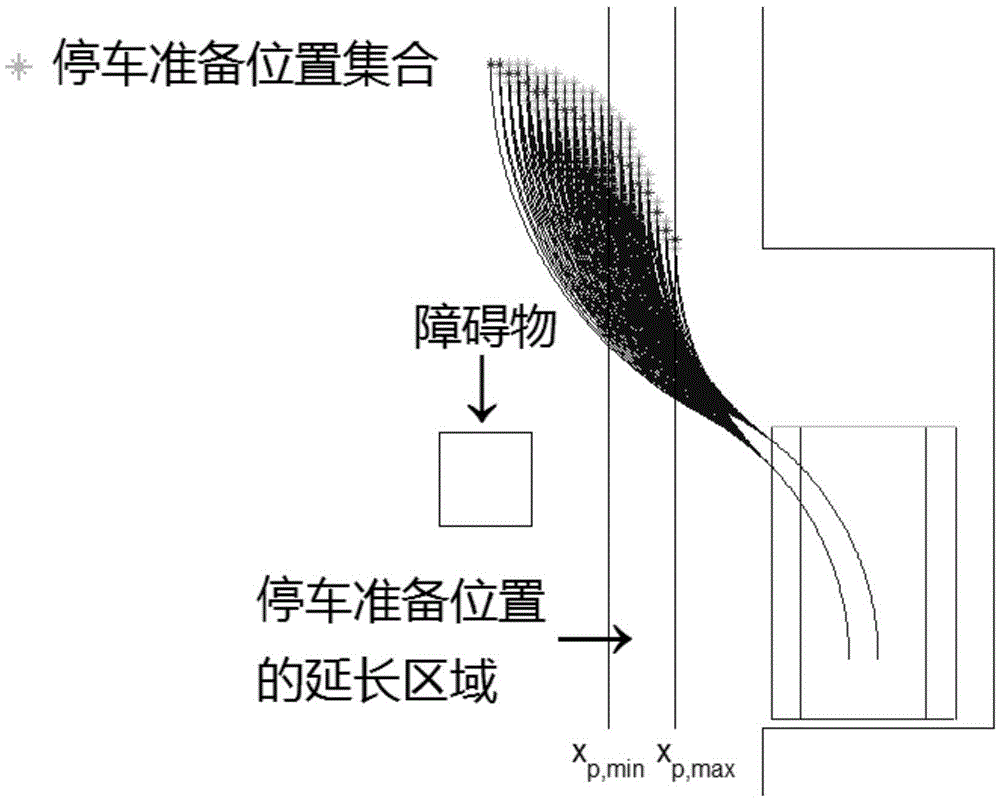

Intelligent parking system with man-vehicle interaction function

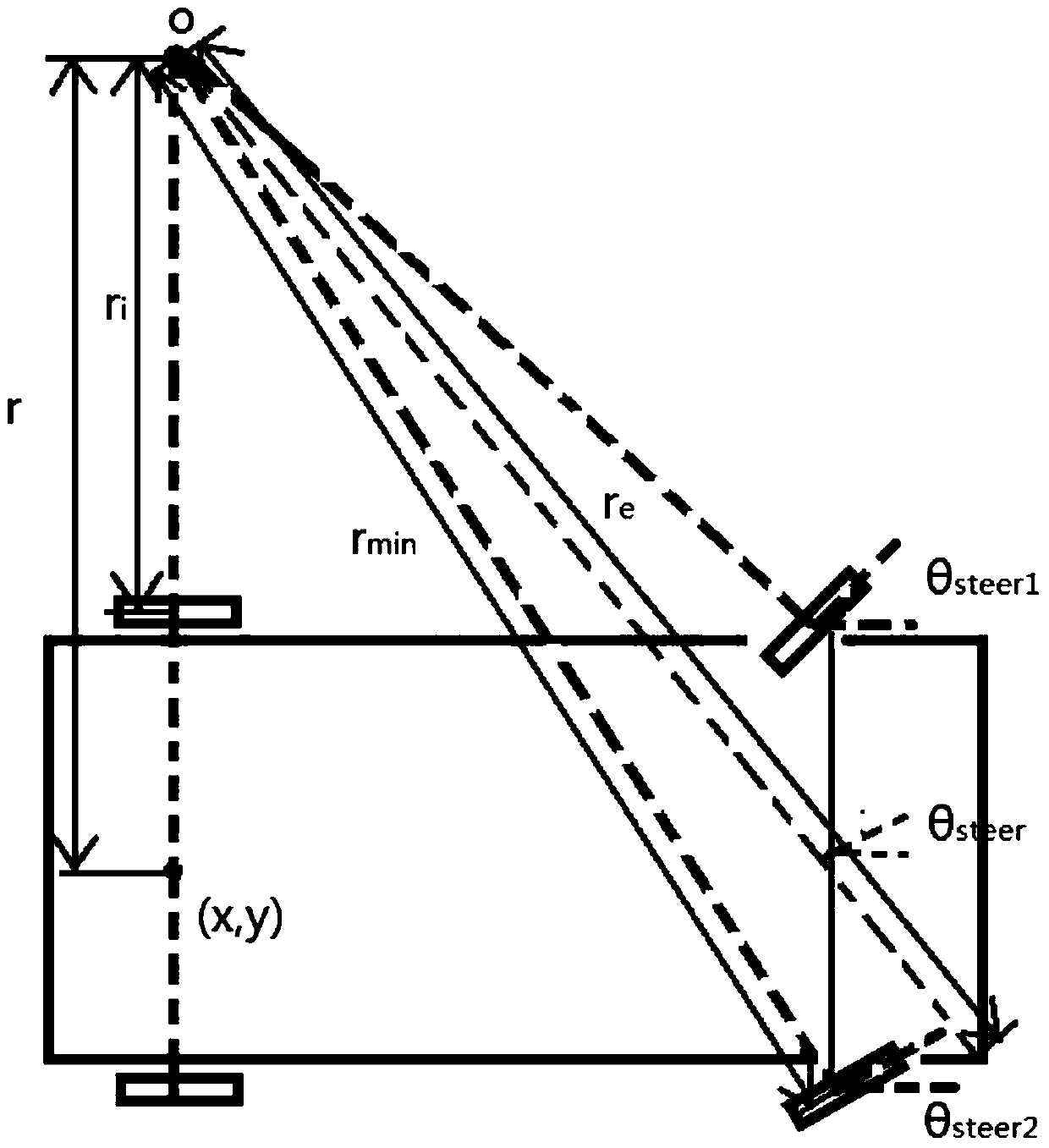

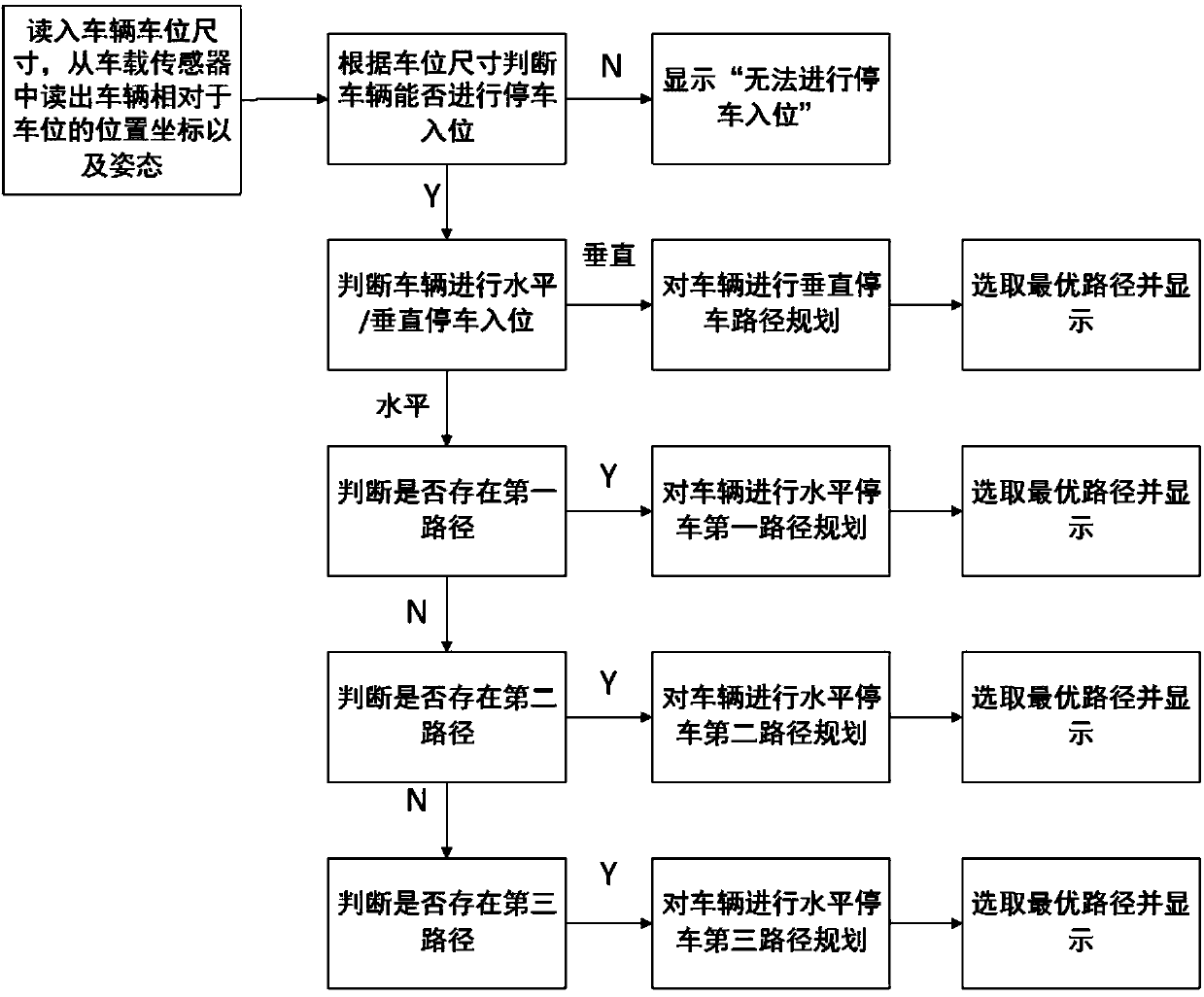

Active | CN104627175A | Intelligent judgment of vertical/horizontal parking | Steering parts | Steering wheel | Computer module

The invention discloses an intelligent parking system with the man-vehicle interaction function. The system comprises an environment detection module, a path programming module and a motion control module. The environment detection module detects the vehicle size and the stall size and transmits the vehicle size and the stall size to the path programming module. The path programming module includes a determining stage, an initialization stage, a free programming stage, a final programming stage and an optimum determining stage. The path programming module programs a plurality of parking paths according to the stall size, and then selects the optimal parking path according to the optimum determining rule. The motion control module calculates vehicle control parameters according to the optimal parking path to finish automatic parking. In various parking environments, the parking system can generate the parking paths, and program the path for the rotating process of wheels, so that a vehicle does not need to be stopped in the steering wheel rotating process, and the continuity of the advancing process of the vehicle is ensured.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

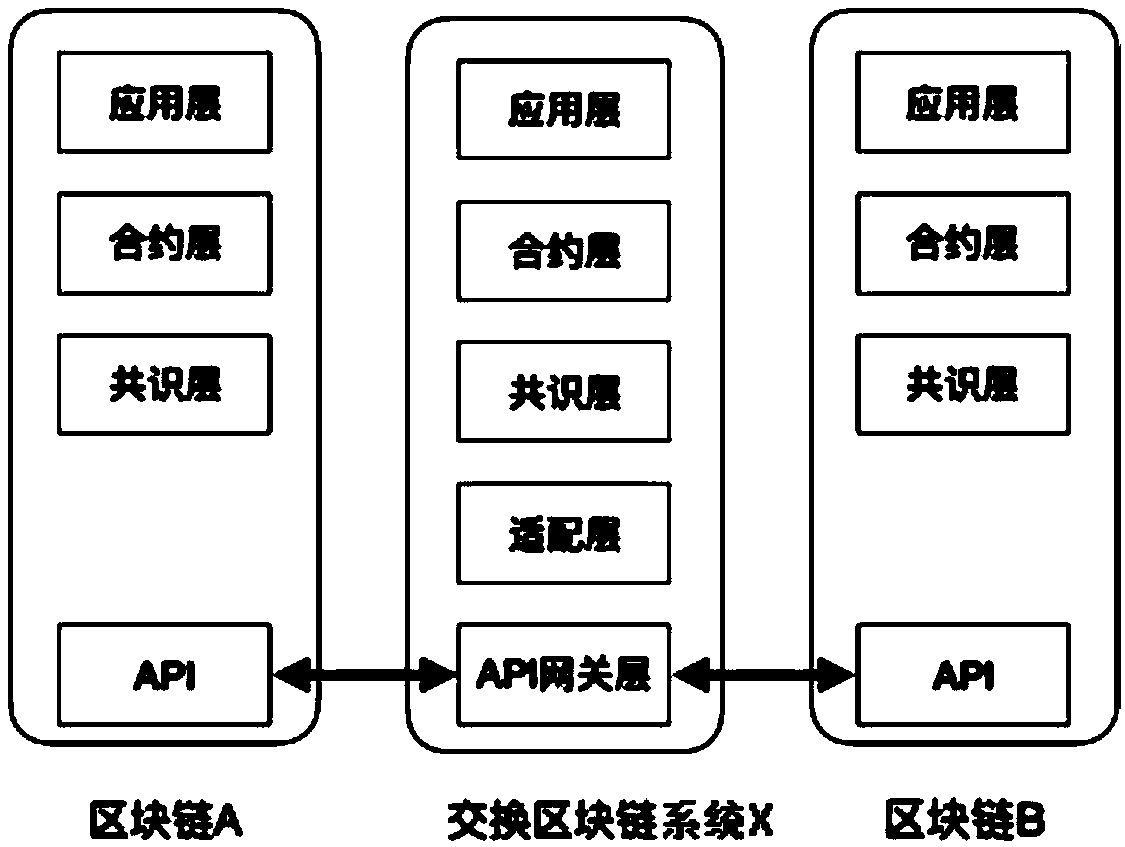

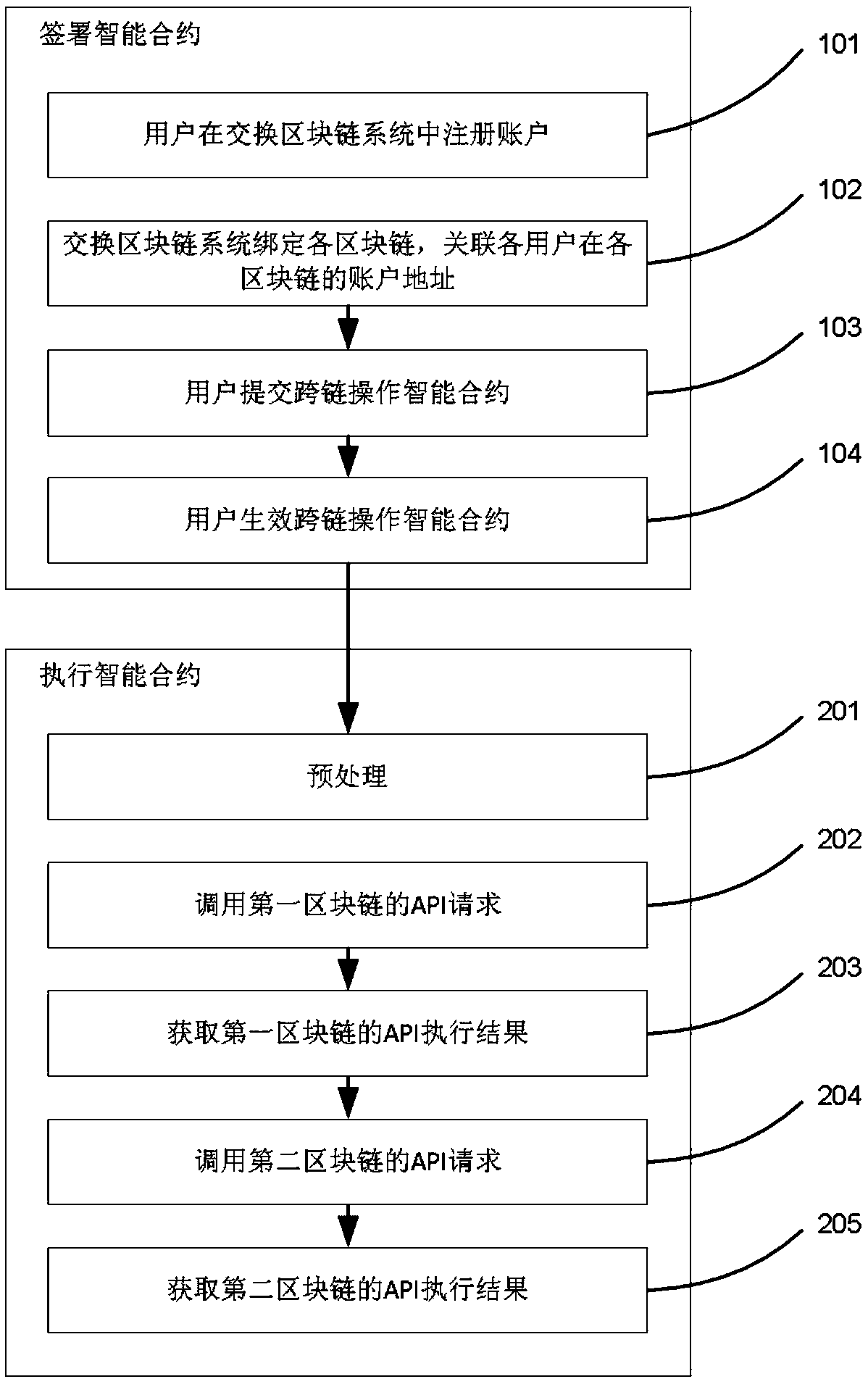

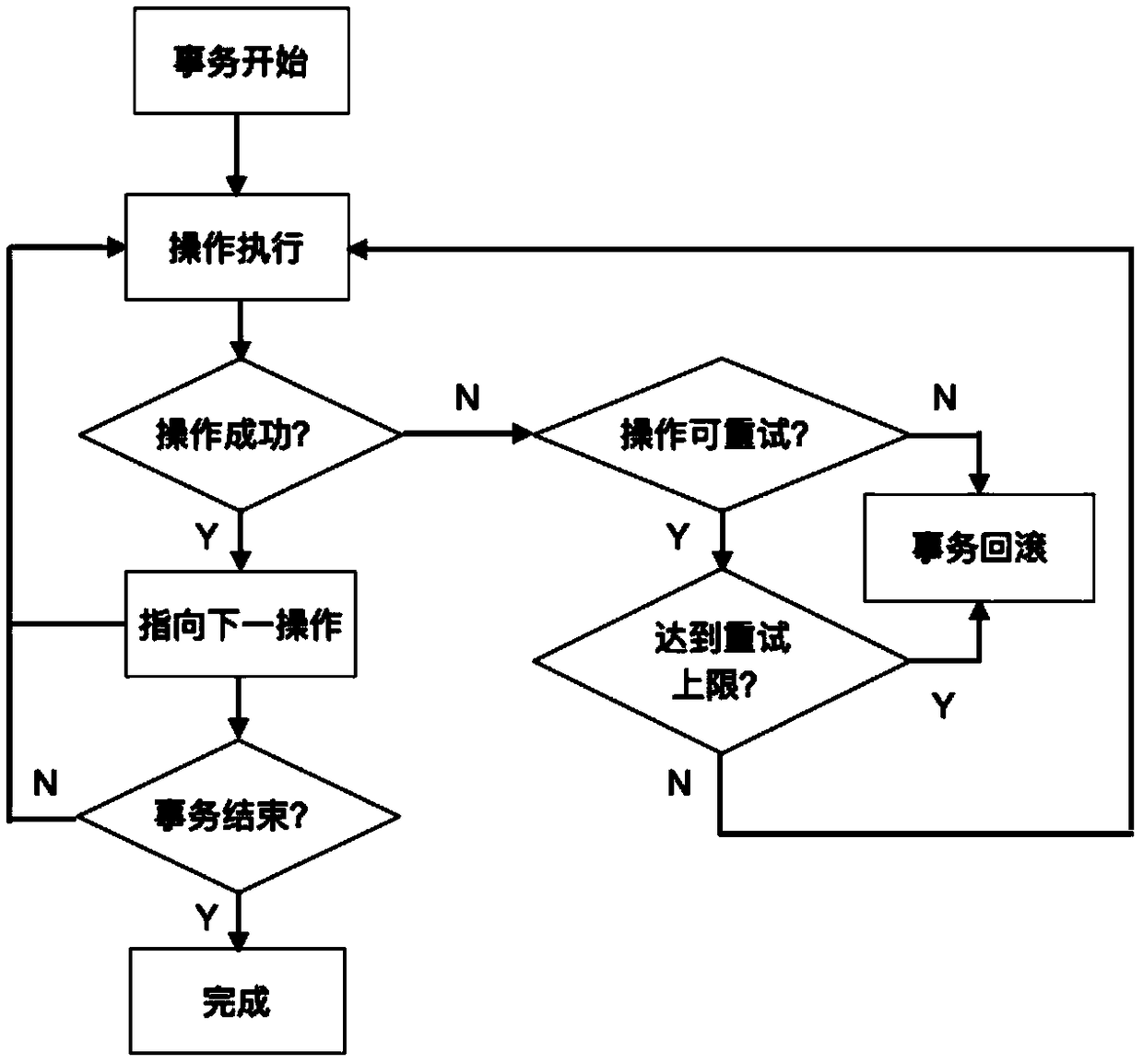

Exchange block chain system and corresponding universal block chain interoperation method and network

The invention discloses an exchange block chain system and a corresponding universal block chain interoperation method and network and belongs to the technical field of block chains. The exchange block chain system comprises an application layer module, a contract layer module, a consensus layer module and an adaptation layer module. The universal block chain interoperation method comprises the steps of deploying a cross-chain operation smart contract; and performing the cross-chain operation smart contract. The universal block chain interoperation network comprises the exchange block chain system and block chains for interoperation. According to the exchange block chain system and the corresponding universal block chain interoperation method and network provided by the invention, various limitations of the existing cross-chain system in aspects such as target chain number, interaction function, homogeneity and heterogeneity, and centralization are addressed. A cross-chain intercommunication scheme for a comprehensive system is provided.

Owner:浙江华信区块链科技服务有限公司

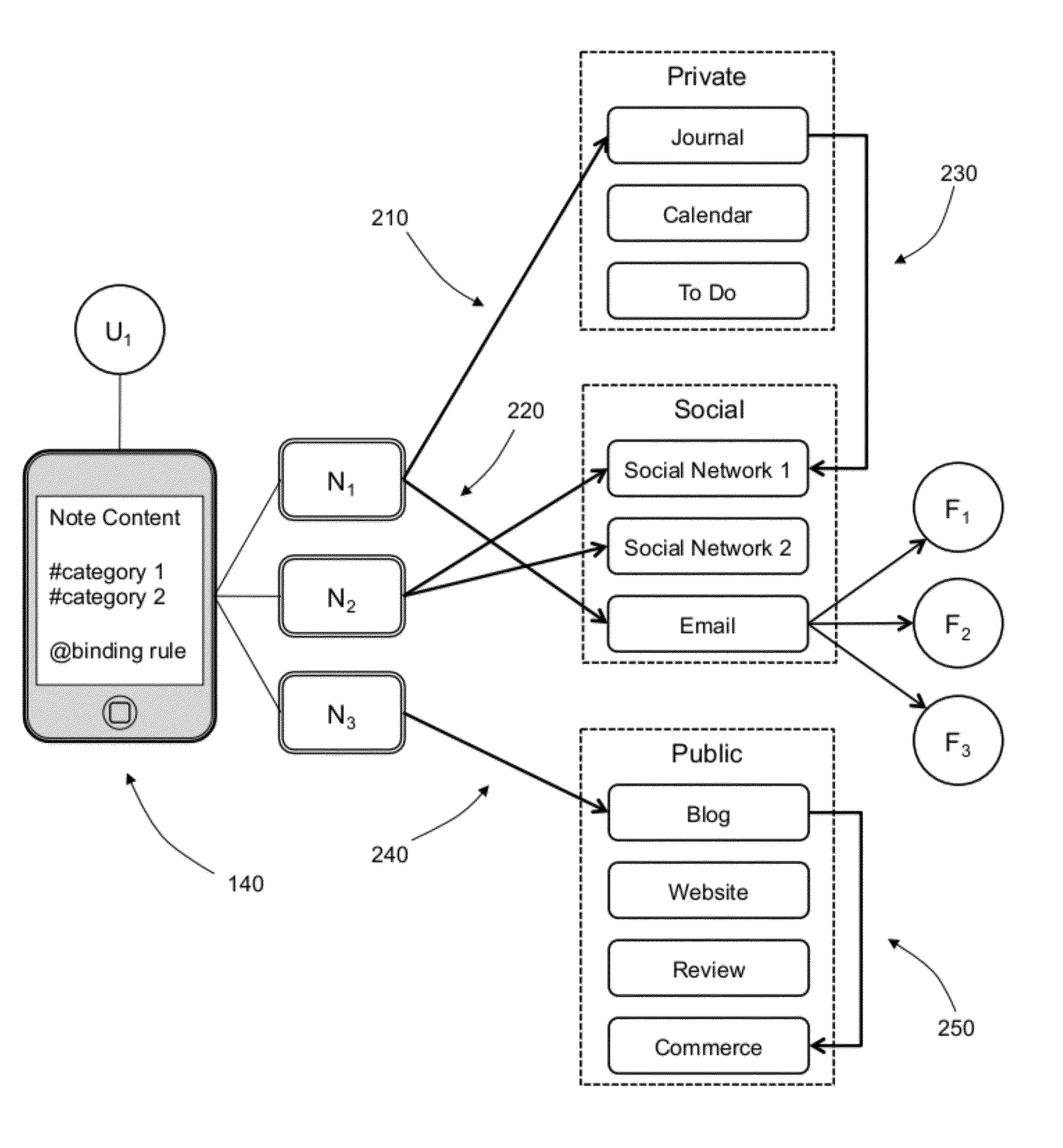

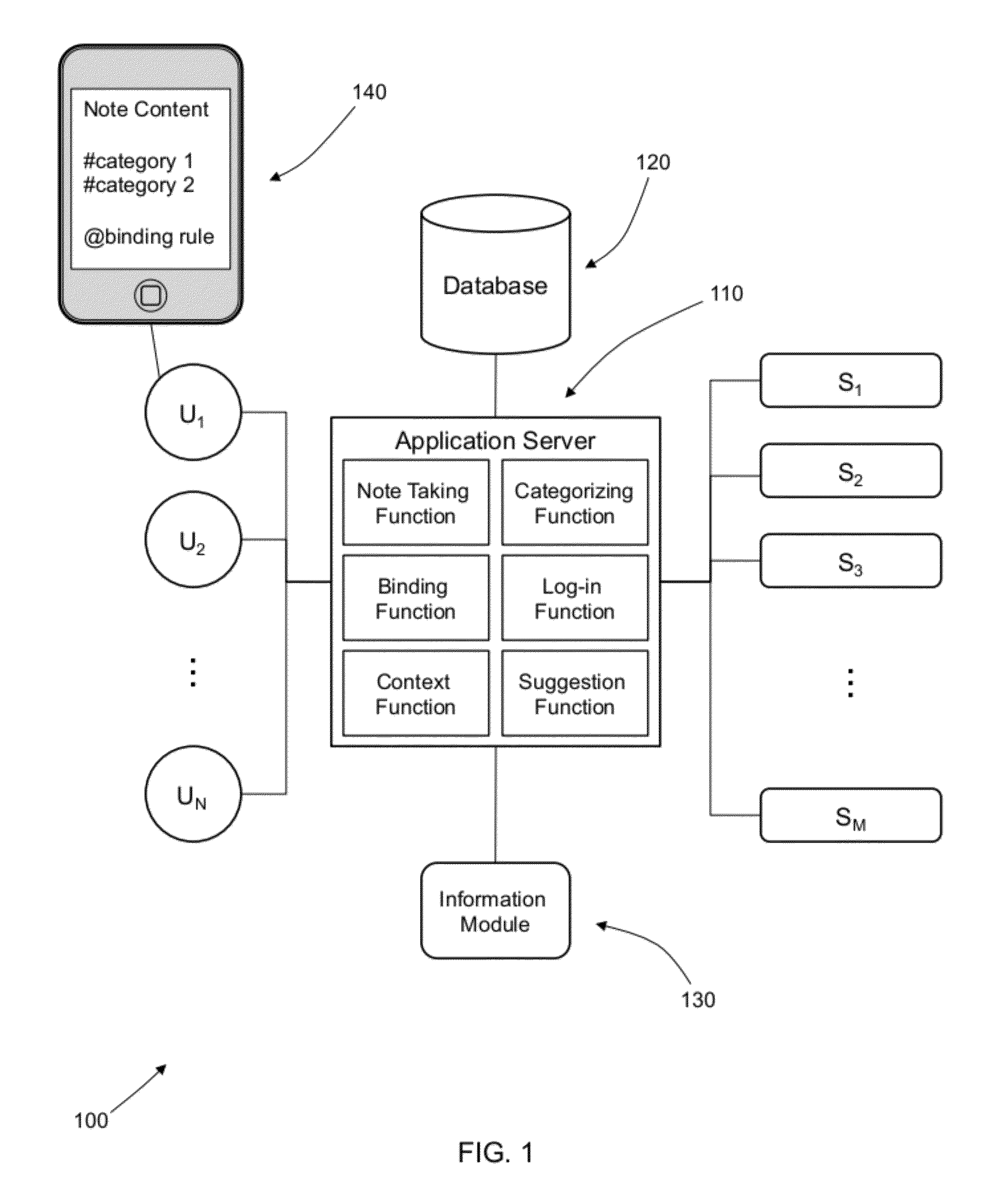

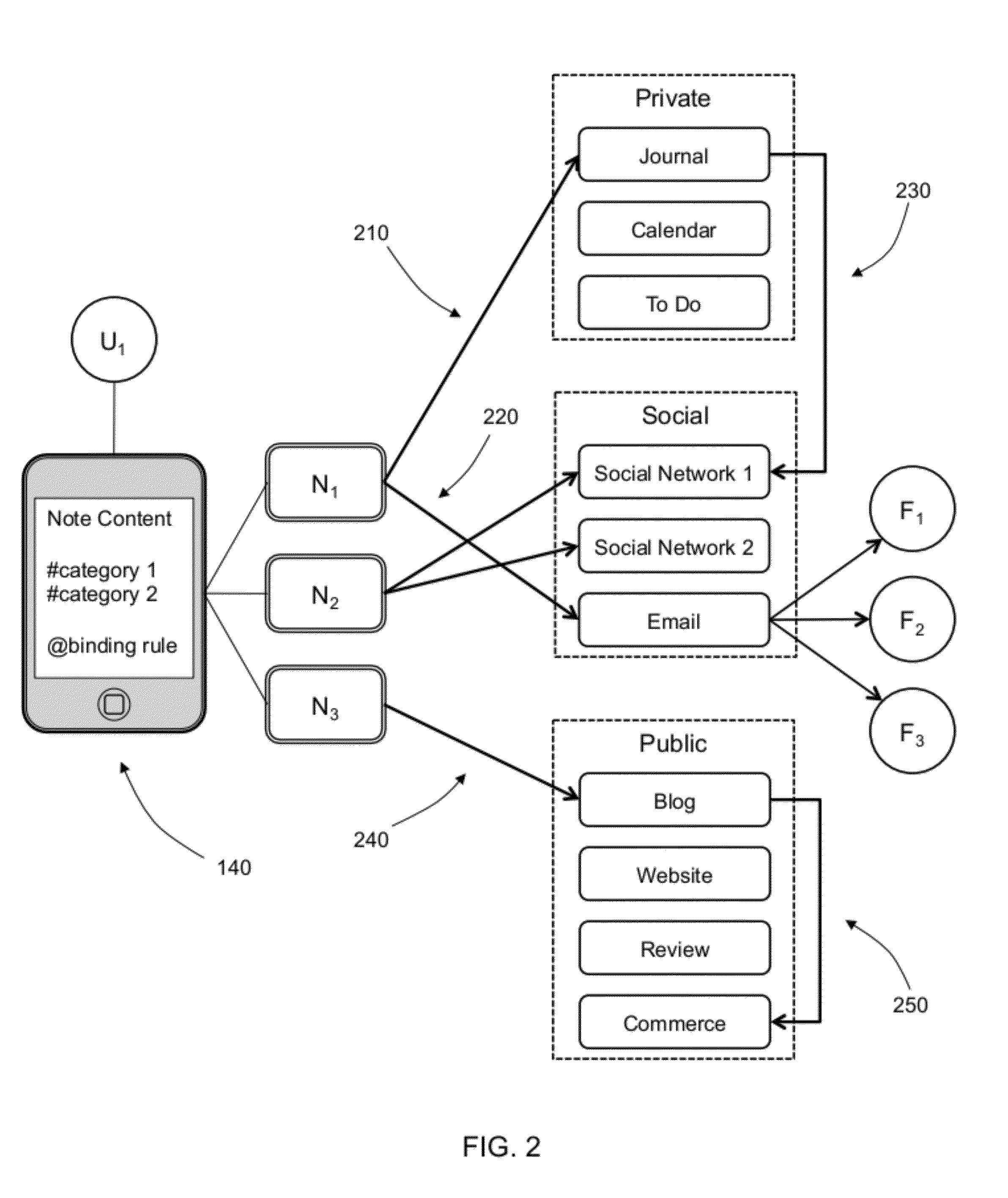

Mobile Content Capture and Discovery System based on Augmented User Identity

Active | US20120110458A1 | Input/output for user-computer interaction | Database distribution/replication | The Internet | Mobile content

A system and a method are provided for collecting information and discovering the information based on an augmented user identity. A note creation function allows a user of a computer device to create notes. A note interaction function allows the user to interact with notes and/or Cloud information available via the Internet. An annotation function annotates content to the notes, which could be context traits and/or user identity traits. User identity traits are augmented through information obtained from the creation process and/or the interaction process with notes or Cloud information. Using a discovery function, notes can be discovered for a user from a note database/store using aspects of the context traits and/or the augmented user identity traits.

Owner:APPLE INC

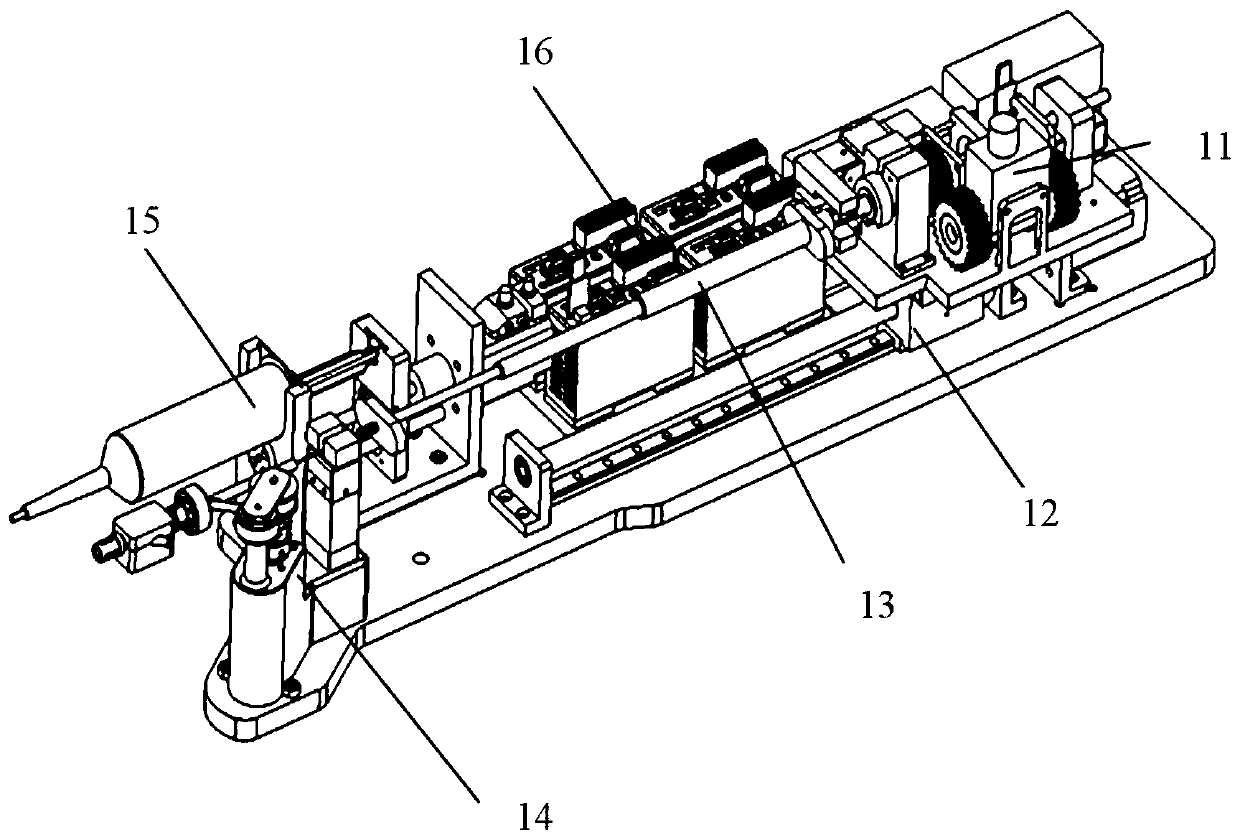

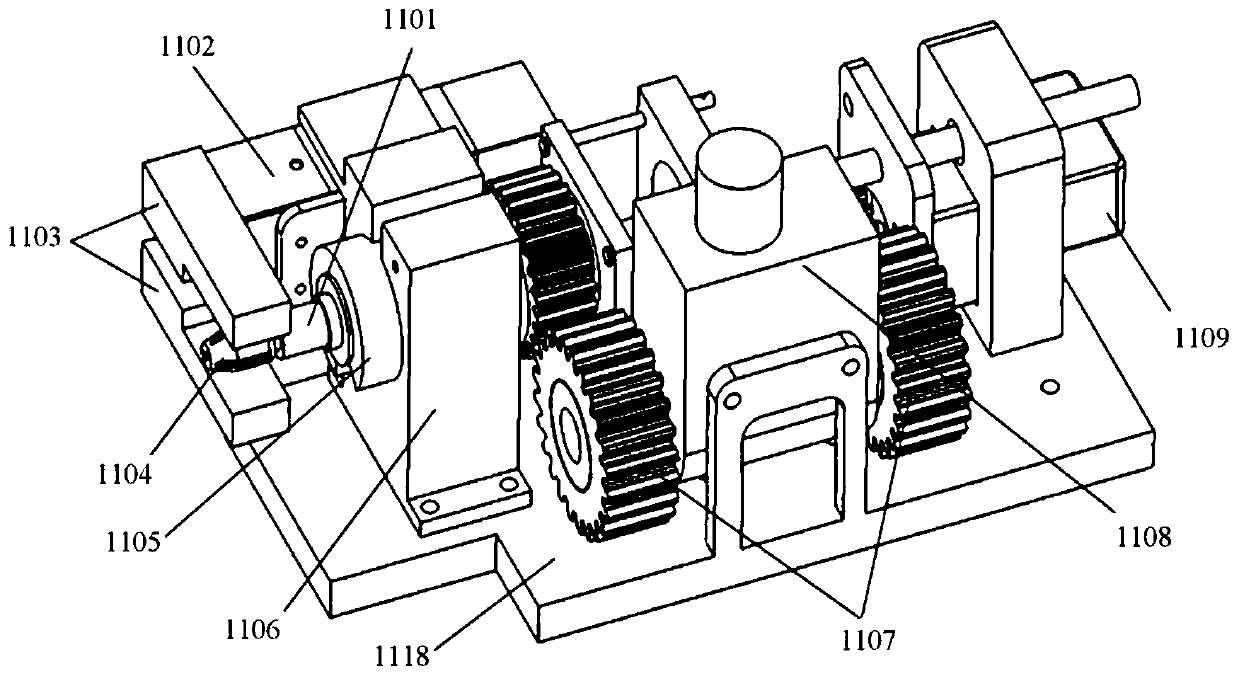

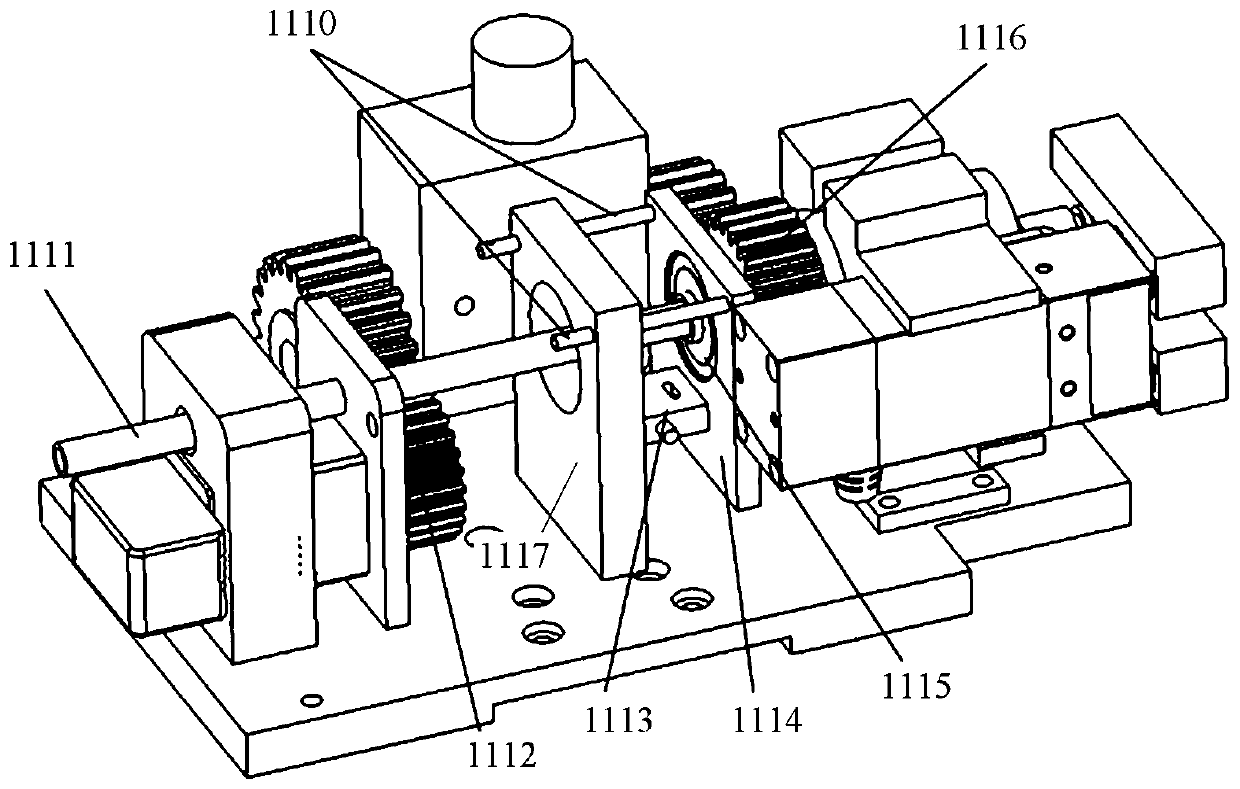

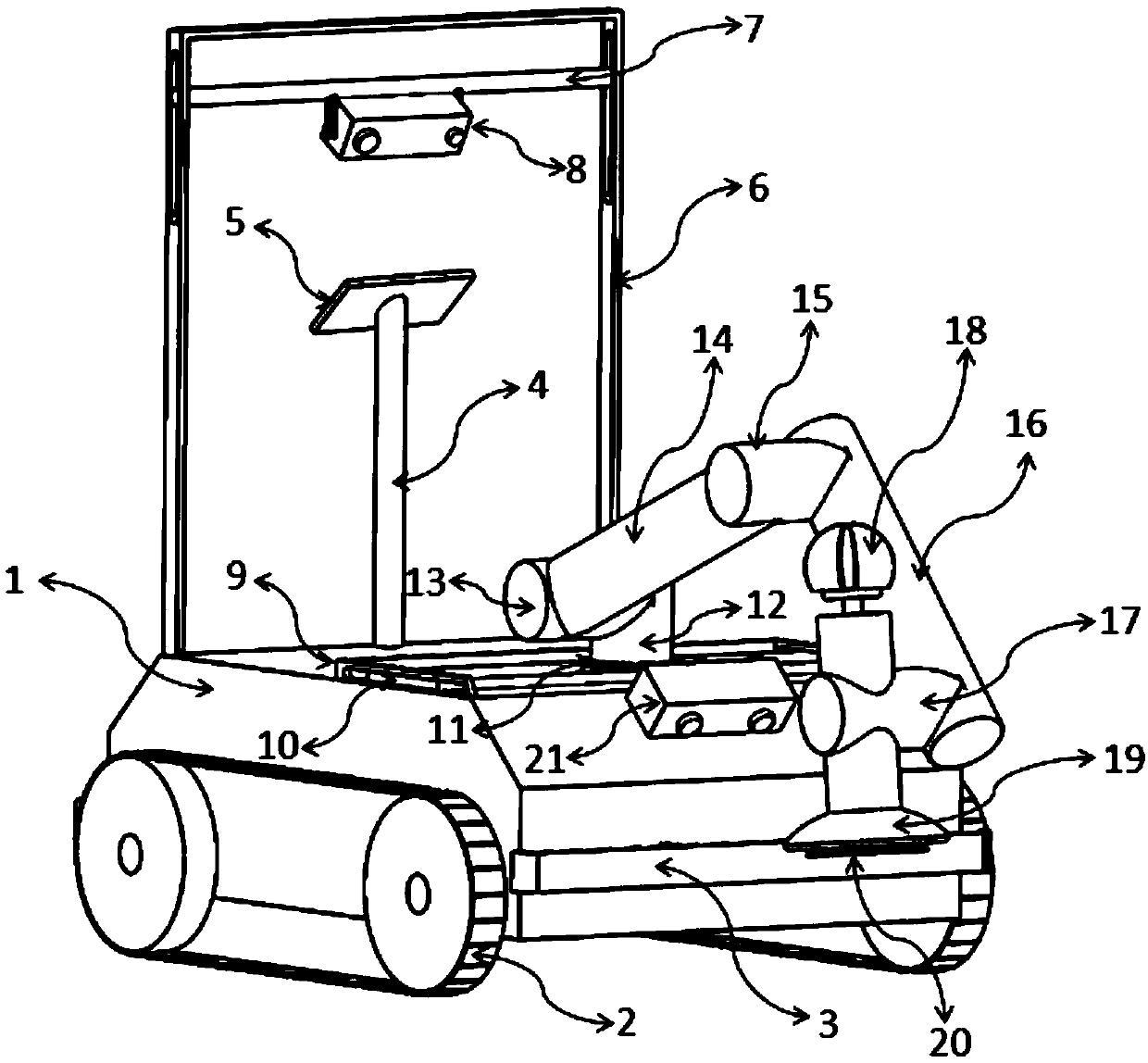

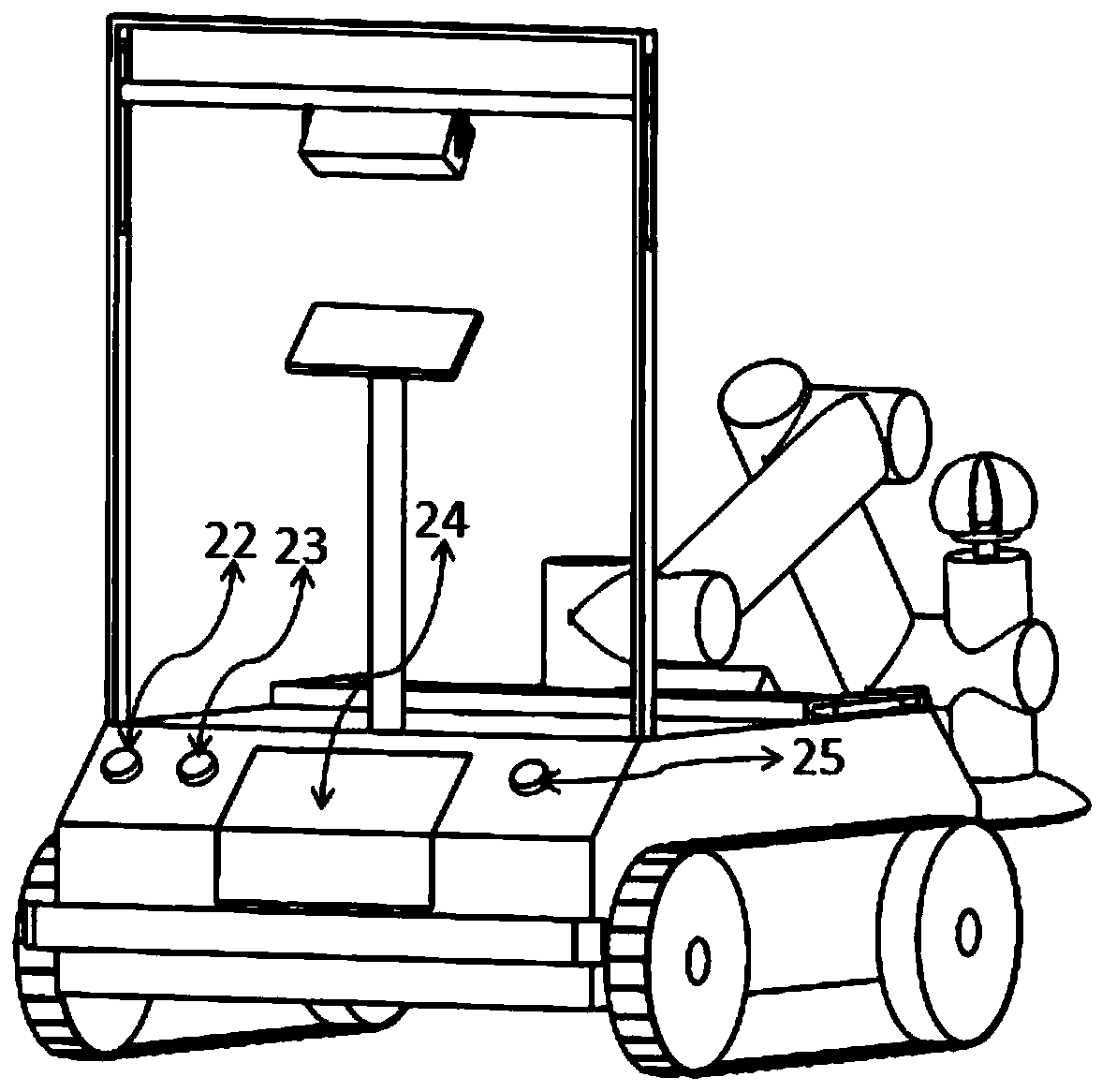

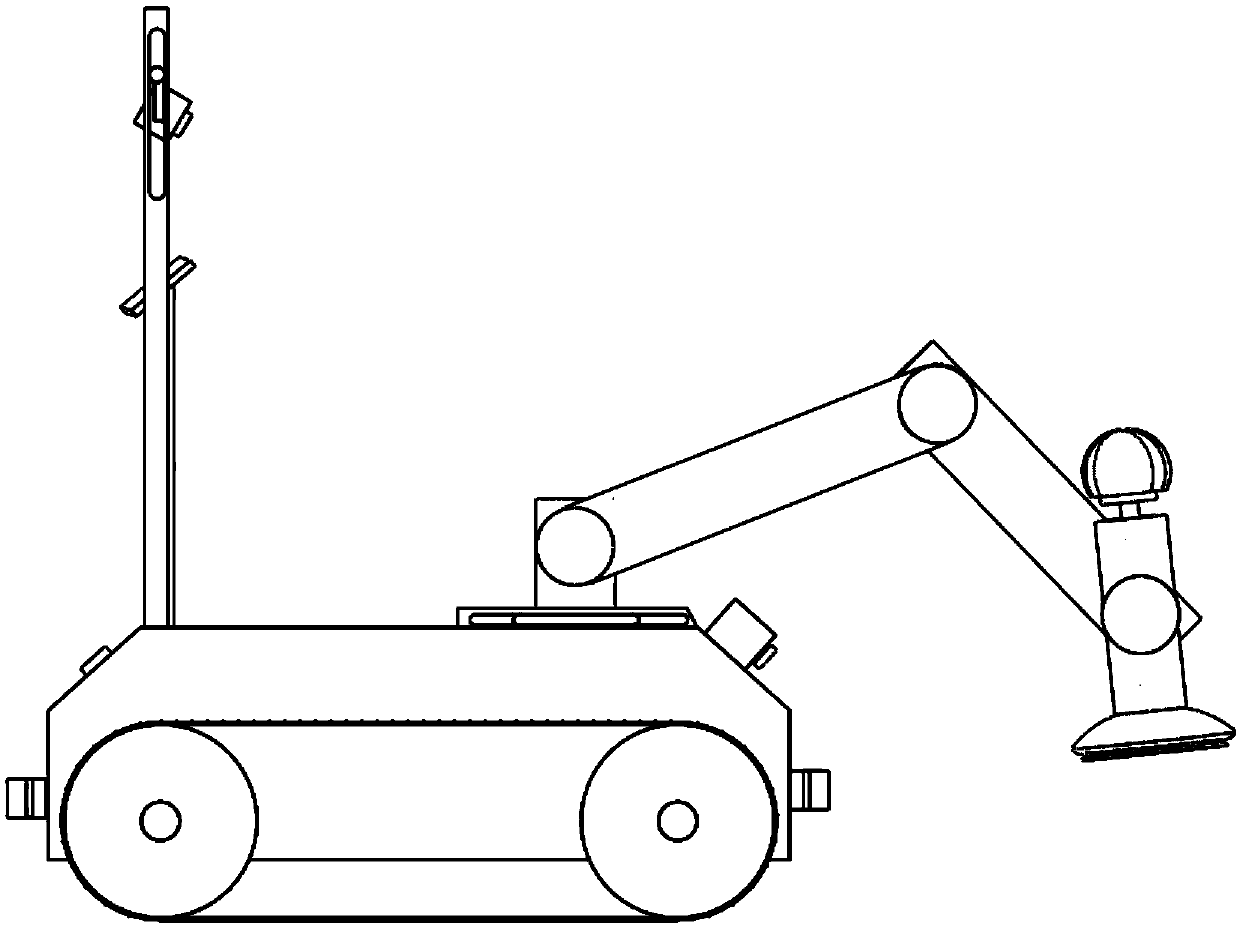

Vascular interventional surgery robot and device

Active | CN110200700A | Improve security | Play a monitoring role | Medical devices | Catheter | Engineering | Axial force

The invention provides a vascular interventional surgery robot and a vascular interventional device. The axial force and torque acting on a guide wire can be truly measured by a guide wire rotation and force feedback integrated module of the robot, and a hardware basis is laid for developing a human-computer interaction function. The robot is additionally provided with a detachable outer shell to meet the needs of surgical disinfection and ensure clinical safety and hygiene requirements. At the same time, the vascular interventional device is additionally provided with a four-degree-of-freedom mechanical arm structure on the basis of the vascular interventional robot, the height, position and stable support of the guide wire propulsion module are adjusted according to operation requirements, and wheels are arranged below a mechanical arm to facilitate the overall transportation and movement of the vascular interventional surgery robot. In addition, in the vascular interventional surgery robot and device, the size of the propulsion mechanism module is reduced as much as possible, saving production materials and occupied space.

Owner:SHENZHEN INST OF ADVANCED TECH CHINESE ACAD OF SCI

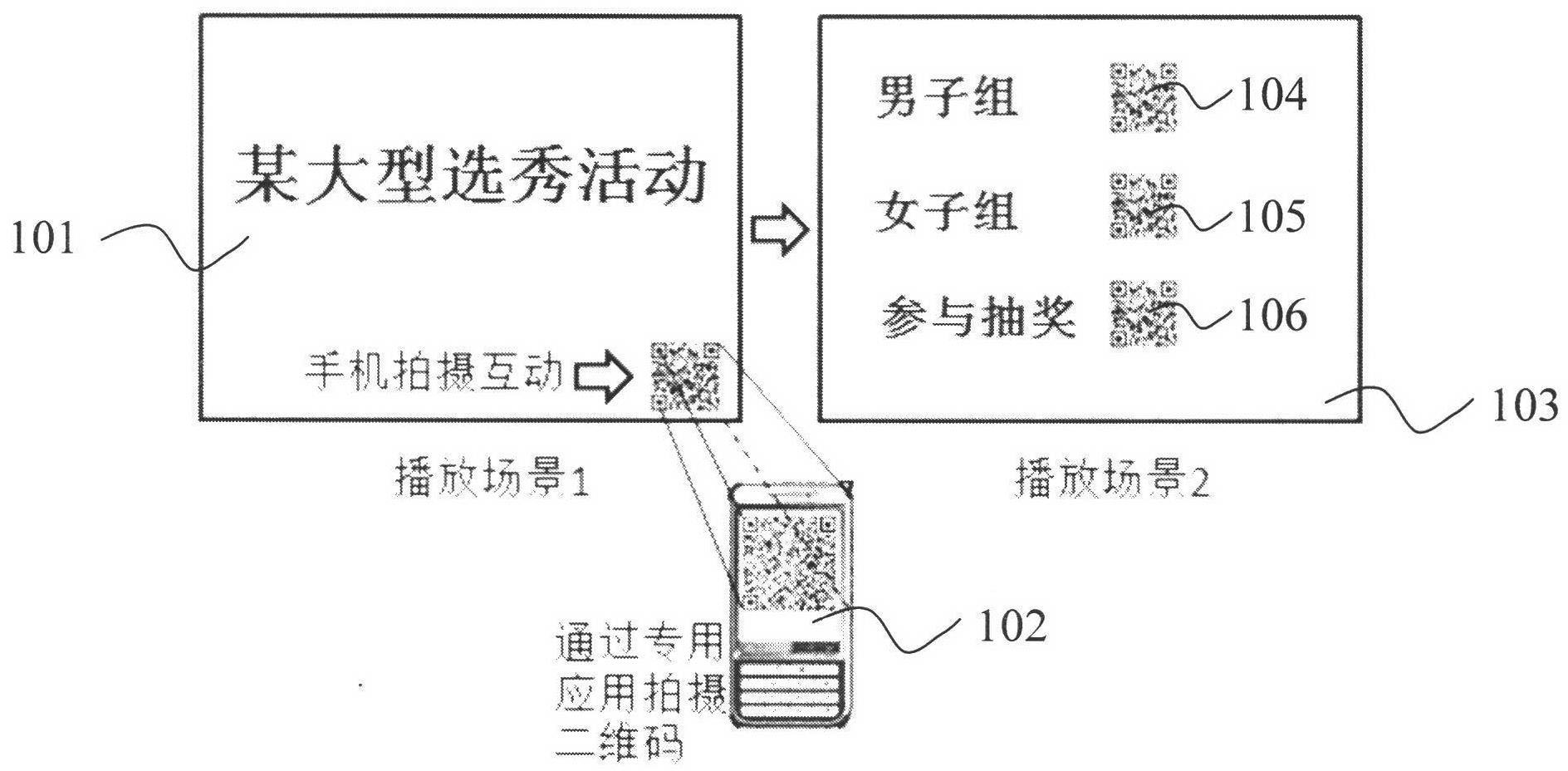

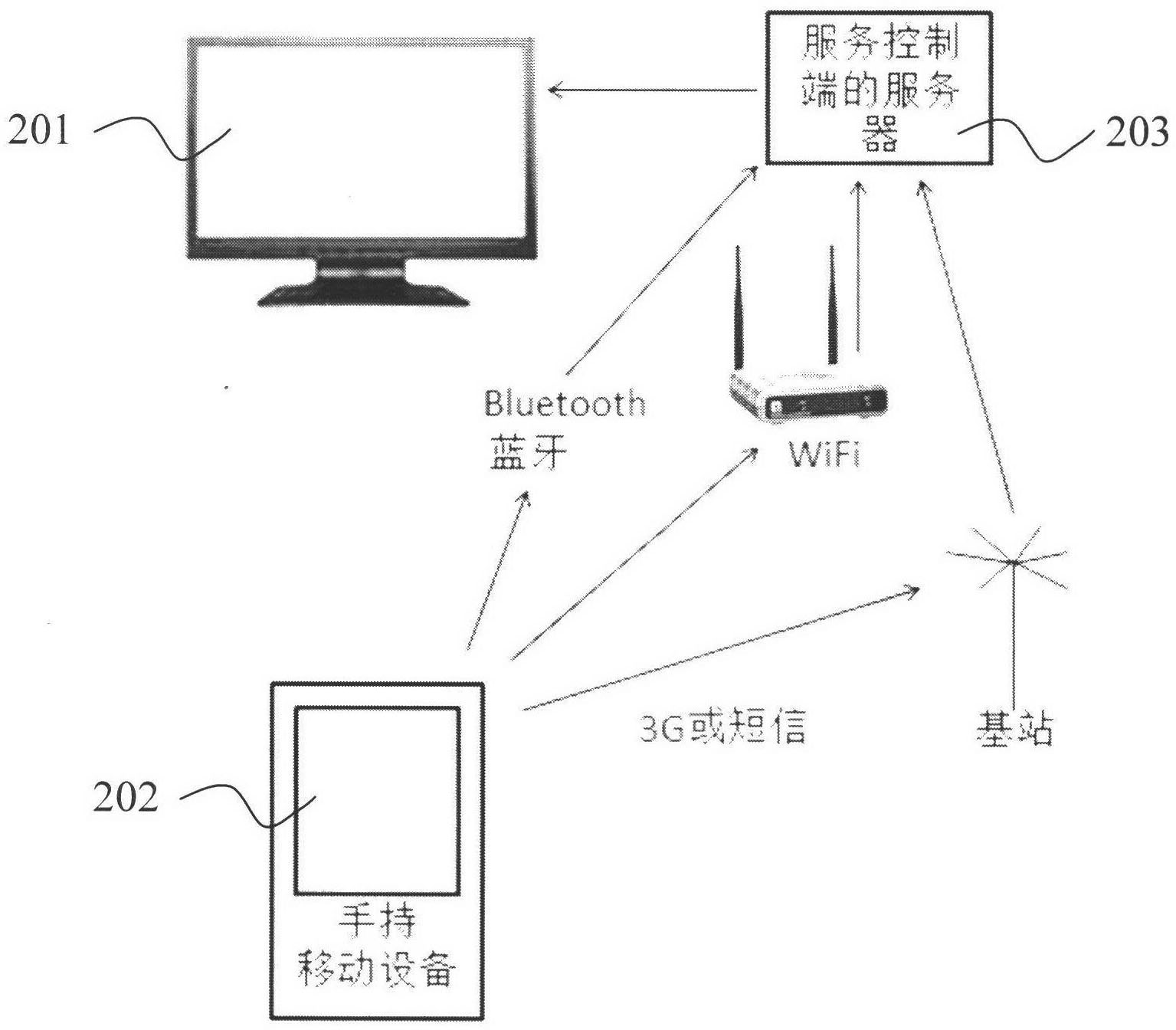

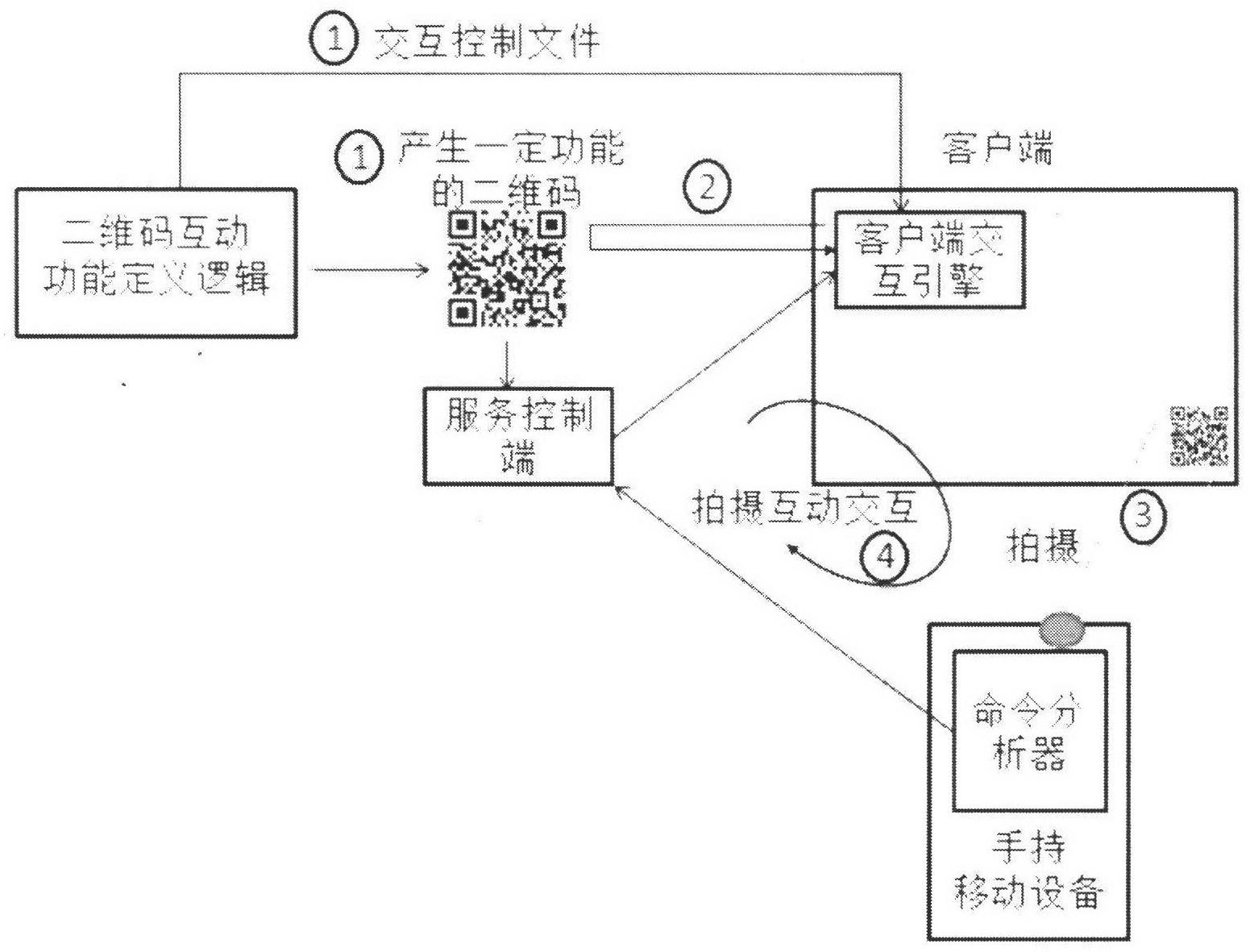

Medium interaction method based on two-dimensional codes and medium interaction system

Active | CN102624697A | With interactive function | Sensing record carriers | Transmission | Interaction systems | Interaction control

An embodiment of the invention provides a medium interaction method based on two-dimensional codes and a medium interaction system. The method includes adding a two-dimensional code carrying a command in scenario content played on a screen of a client; shooting the two-dimensional code appearing in a scenario played on the screen by handheld mobile equipment; analyzing the command carried by the two-dimensional code by the handheld mobile equipment, and then transmitting the command to a service control terminal; transmitting the command to the client after the service control terminal receives the command; and controlling and modifying the scenario content displayed or played on the client according to the content of an interaction control file by an interaction control module embedded into the service control terminal or the client end. The medium interaction method and the medium interaction system have the advantages that a user interacts with a one-way push screen containing the two-dimensional code by the aid of application embedded into the mobile equipment such as a mobile phone and the like, and a frequently used non-contact LED screen can realize an interaction function.

Owner:孟智平

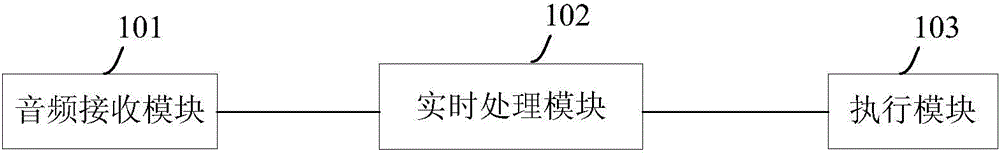

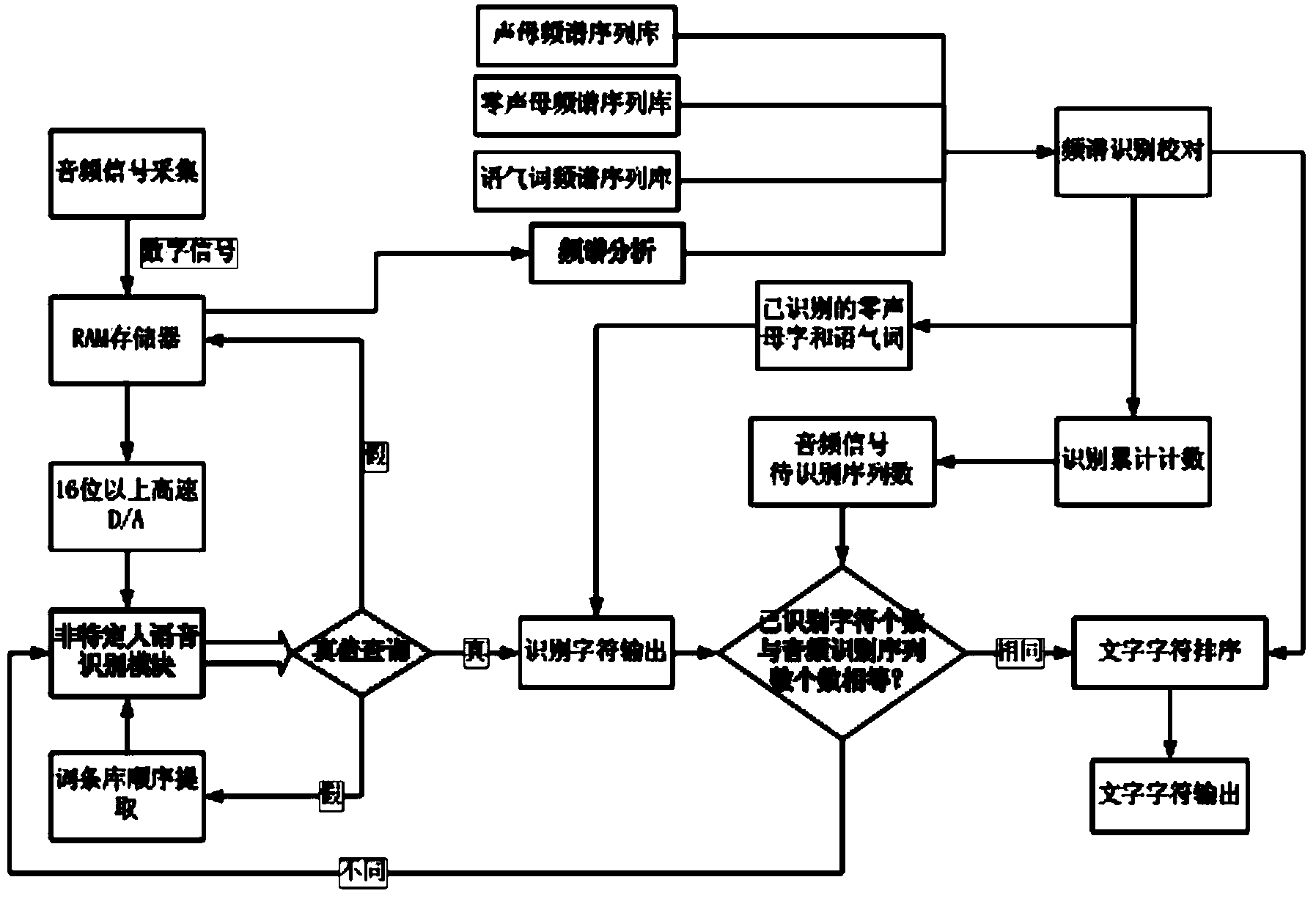

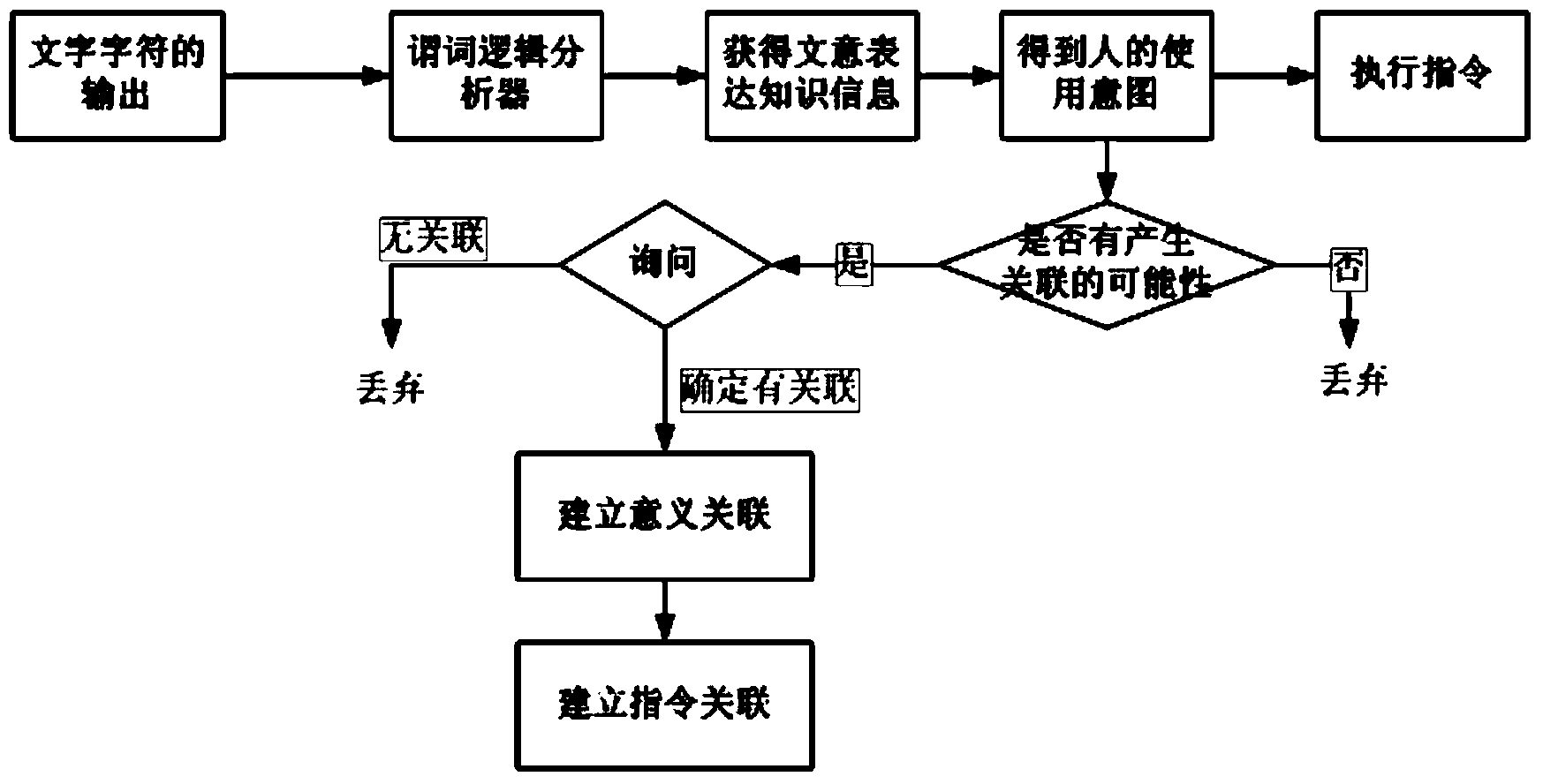

Intelligent interaction system and method

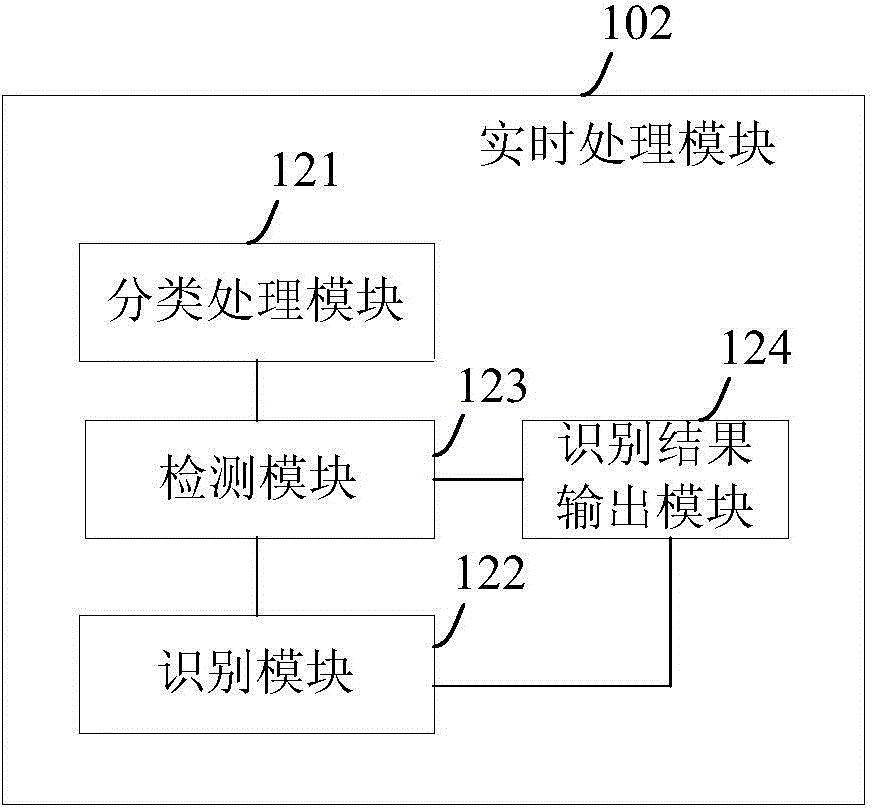

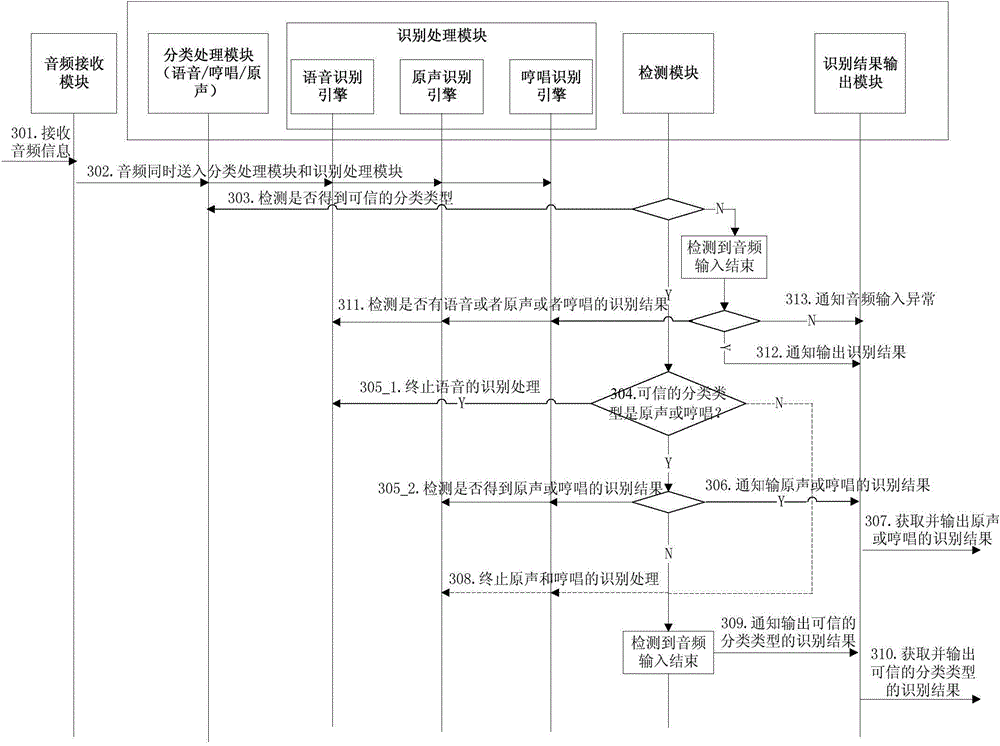

Active | CN104867492A | Improve experience | Improve interaction efficiency | Speech recognition | Special data processing applications | Interaction systems | User input

The invention relates to an intelligent interaction system and method. The system includes an audio receiving module, a real-time processing module and an execution module, wherein the audio receiving module is used for receiving audio information inputted by a user, the real-time processing module is used for performing parallel online real-time processing on the audio information, and the execution module is used for executing corresponding operation according to identification results transmitted by the real-time processing module. The parallel online real-time processing includes the following steps that: classification processing and identification processing corresponding to different types are performed on the audio information; if credible classification types are obtained before the ending of audio input, identification processing on classification types except the credible classification types is terminated; identification results corresponding to the credible classification types can be obtained and are transmitted to the execution module. With the intelligent interaction system and method of the invention adopted, the user can use audio identification and voice interaction functions easily and quickly, and user experience can be enhanced.

Owner:科大讯飞(北京)有限公司

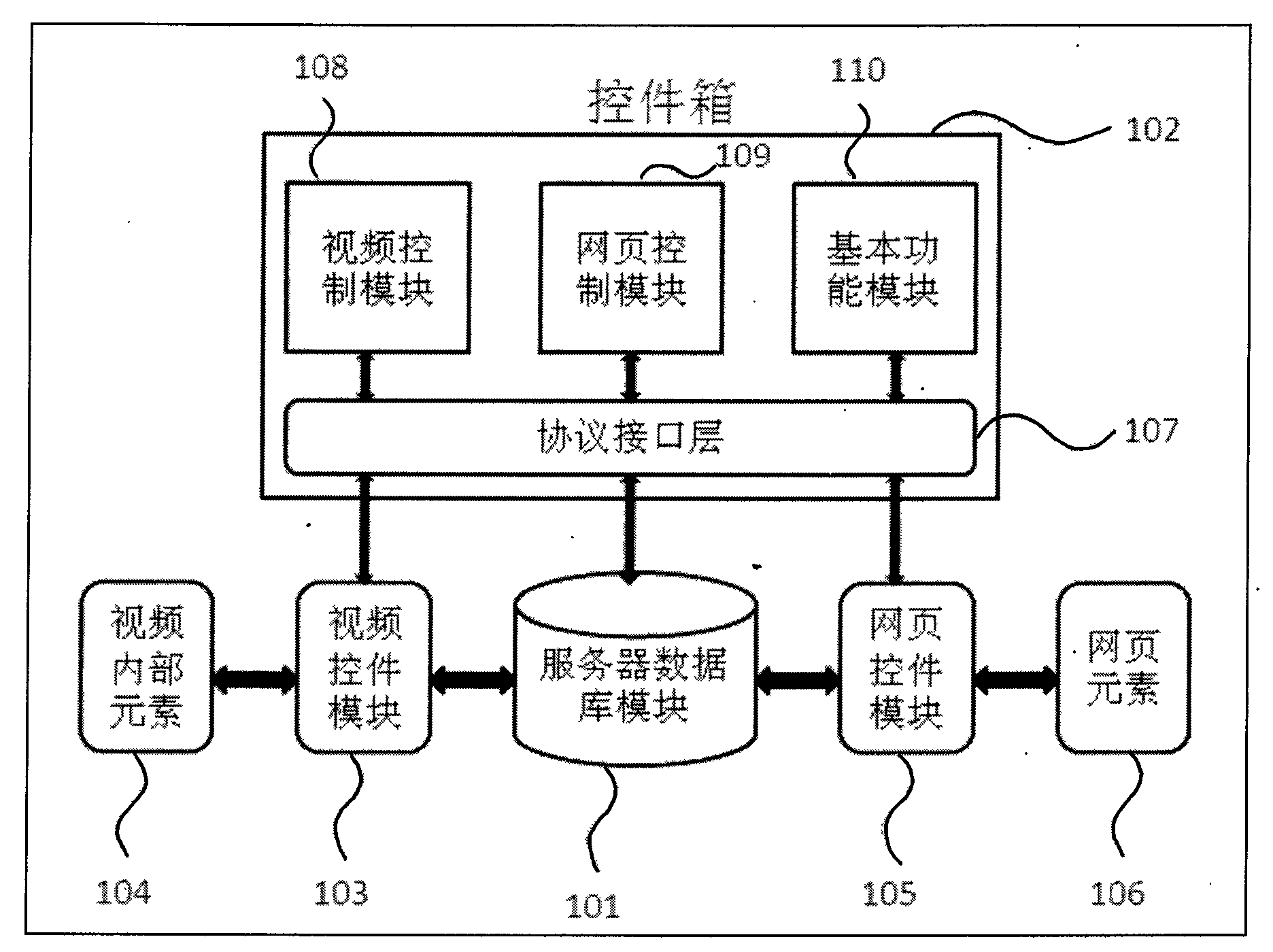

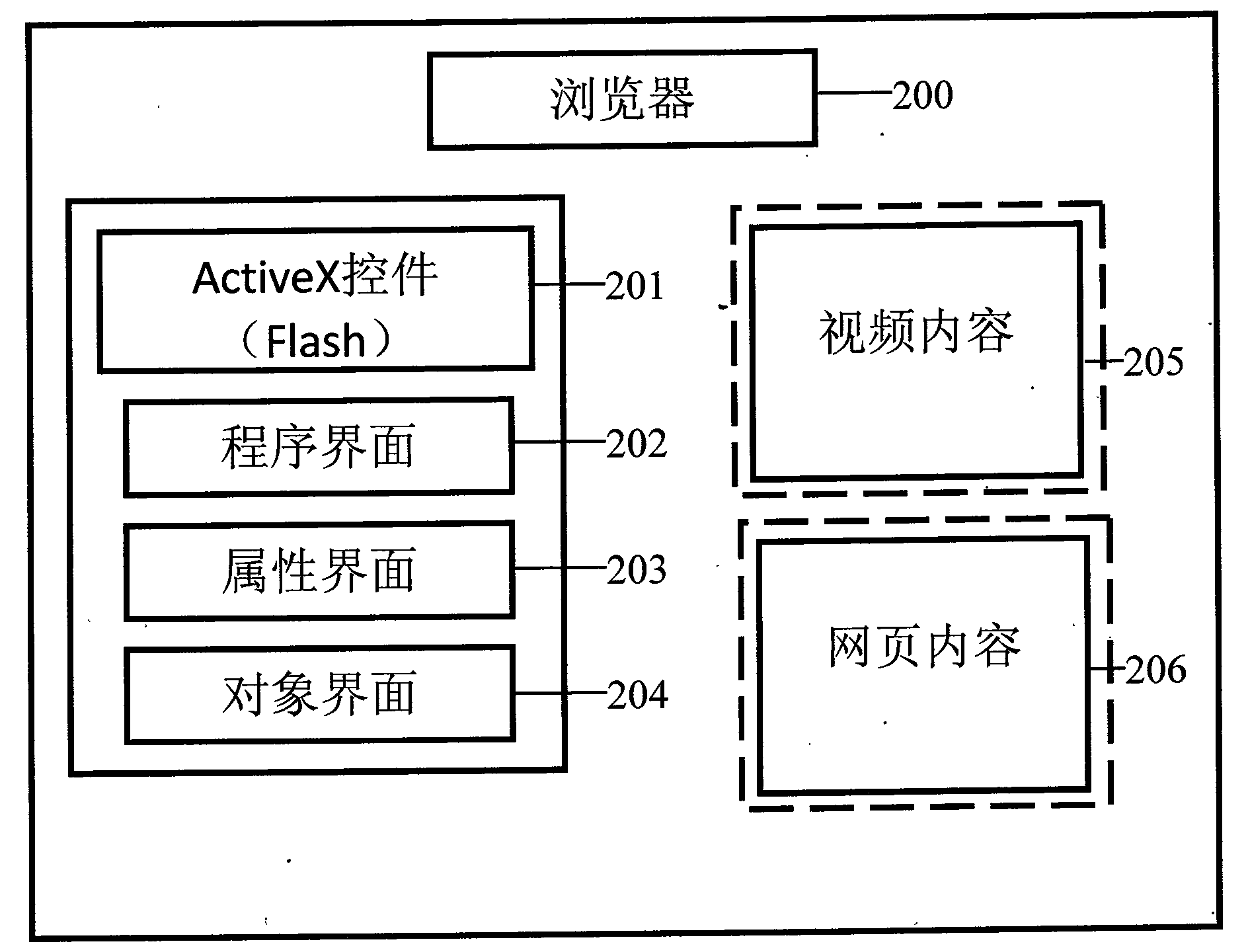

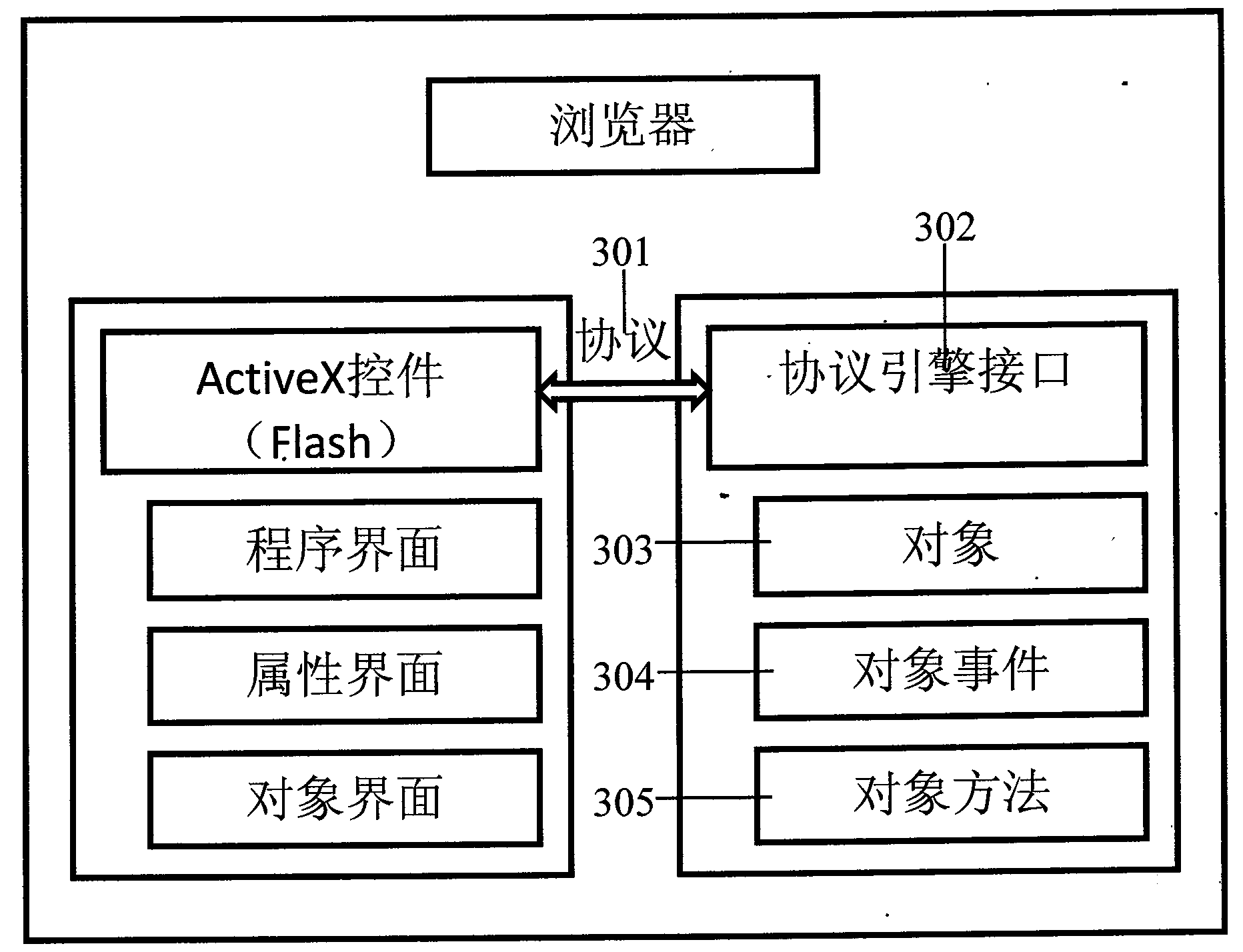

Method and system for interaction of video elements and web page elements in web pages

Inactive | CN101630329A | Simple structure | Realize Personalized Video Editing | Special data processing applications | Specific program execution arrangements | Web browser | Resource description

The invention discloses a method for the interaction of video elements and web page elements in web pages. The method comprises the following steps: video inside elements and web page elements in web pages are established by a video control module and a web page control module in a component control box, an interaction relationship between the video inside elements and the web page elements is constructed, the video inside elements, the web page elements and the interaction relationship between the elements are stored as a resource description file, and the resource description file is transmitted to a server database; a web browser loads and analyzes the resource description file by accessing the server database, videos are played in the web pages, all the video inside elements and all the web page elements are constructed, and the interaction relationship between the elements is shown. The invention also discloses a system for the interaction of the video elements and the web page elements in the web pages. The invention has the advantages that the videos and the web pages can be edited much easily by the method and the system which are provided by the invention, and an interaction function between the video inside elements and the web page elements can be realized.

Owner:孟智平

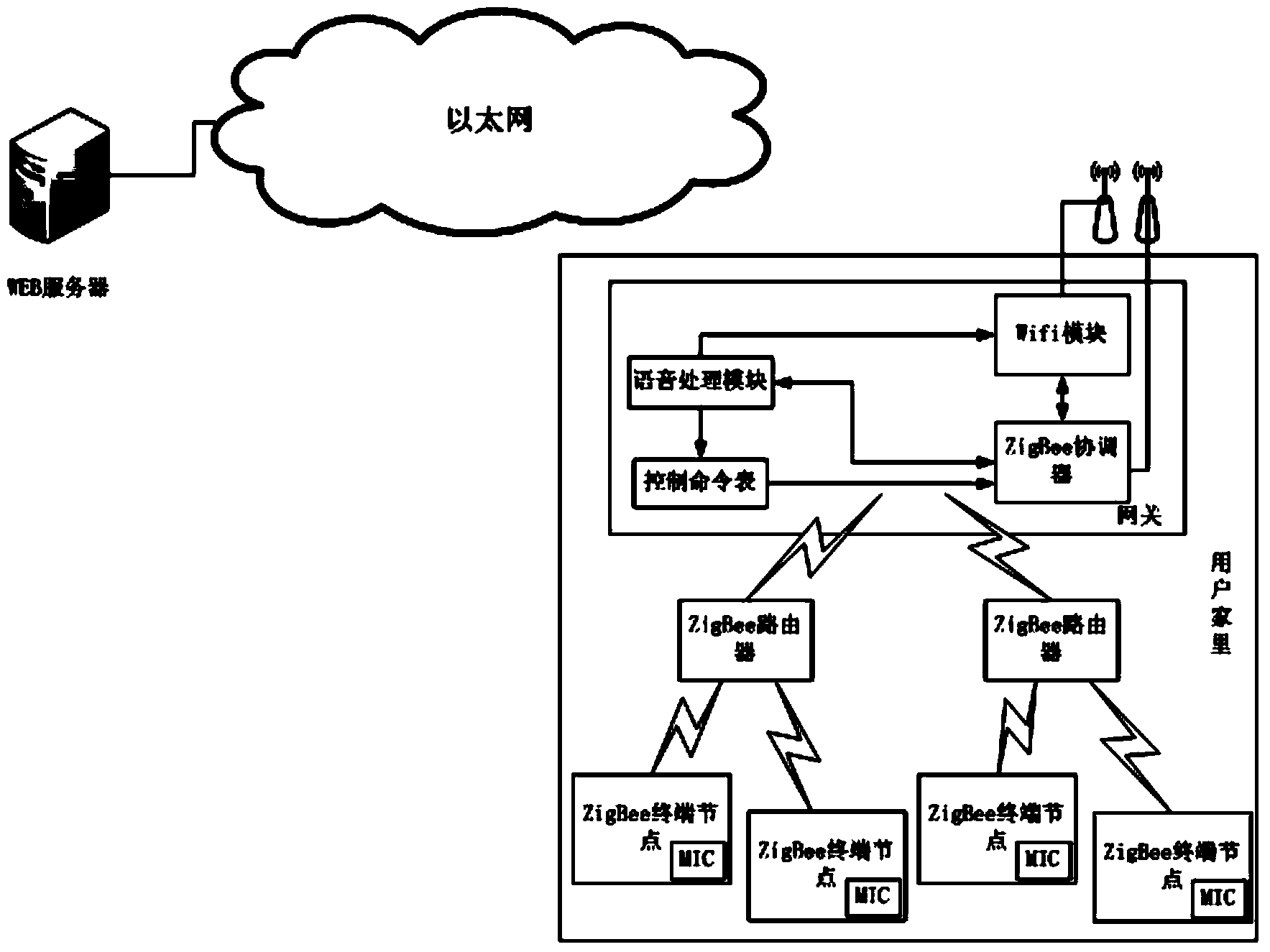

Voice interaction smart home system and voice interaction method

Inactive | CN103745722A | Low cost | Fast operation | Speech recognition | Programme total factory control | Computer terminal | Workload

The invention relates to the technical field of smart home, in particular to a smart home system capable of realizing the voice interaction function and a voice interaction method. The smart home system comprises a hardware terminal and a server terminal, wherein the hardware terminal consists of a gateway, a ZigBee router and ZigBee terminal nodes; a plurality of appliances or sensors can be connected to the ZigBee terminal nodes; all the ZigBee terminal nodes have Mic voice input modules for acquiring voice analog information of users and converting the voice analog information to digital information; the digital information is transmitted to a ZigBee coordinator through the ZigBee router; the gateway consists of the ZigBee coordinator, a Wifi module, a voice processing module and a control command list module. Compared with the prior art, the smart home system has the following advantages: the operating rate of voice recognition can be significantly improved while the hardware cost is only slightly increased; the smart home system has a self-learning function, so that the default programming workload of various voice service environments at the early stage is saved; the accuracy of voice recognition is improved by adopting the spectral analysis method of a voice recognition module.

Owner:SHANGHAI GOLD SOFTWARE DEV

User self-help real-time traffic condition sharing method

Inactive | CN101916509A | Real-time grasp | Accurate grasp | Road vehicles traffic control | Transmission | Computer terminal | Traffic conditions

The invention belongs to the field of traffic management and particularly relates to a user self-help real-time traffic condition sharing method. In the method, a plurality of terminals and a platform are comprised, wherein the terminals are installed on vehicles of different users; and the platform is an information interactive center of all the terminals. A user can transmit a road condition query request to the platform through the terminal; and the platform can be used for sorting and analyzing the feedback of other terminal users in relevant areas and feeding the feedback back to the user. The sharing can be realized among users. By using the interaction function between the terminals and the platform and between the terminals, the invention can realize the real-time and accurate grasp of the users on the road condition information and the share among the terminal users on the road condition information.

Owner:BEIJING CARSMART TECH
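
The query flow described in the abstract above can be illustrated with a minimal sketch. It assumes feedback is a short text label per area and that the platform "sorts and analyzes" by taking the most frequent label; the class name `Platform`, its methods, and the area and terminal identifiers are all hypothetical and do not come from the patent.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass, field


@dataclass
class Platform:
    """Hypothetical information exchange center shared by all terminals."""

    # area name -> list of (terminal_id, condition) feedback entries
    reports: dict = field(default_factory=lambda: defaultdict(list))

    def submit_feedback(self, terminal_id: str, area: str, condition: str) -> None:
        """A terminal shares what its user currently observes in an area."""
        self.reports[area].append((terminal_id, condition))

    def query(self, requester_id: str, area: str) -> dict:
        """Answer a road condition request using other terminals' feedback."""
        conditions = [
            cond for tid, cond in self.reports.get(area, []) if tid != requester_id
        ]
        if not conditions:
            return {"area": area, "condition": None, "reports": 0}
        # Assumed analysis step: majority vote over the collected feedback.
        most_common, _count = Counter(conditions).most_common(1)[0]
        return {"area": area, "condition": most_common, "reports": len(conditions)}


platform = Platform()
platform.submit_feedback("terminal-1", "ring-road-3", "congested")
platform.submit_feedback("terminal-2", "ring-road-3", "congested")
platform.submit_feedback("terminal-3", "ring-road-3", "clear")
answer = platform.query("terminal-4", "ring-road-3")
```

A real platform would presumably weight feedback by recency and location accuracy; the majority vote here only stands in for that analysis.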

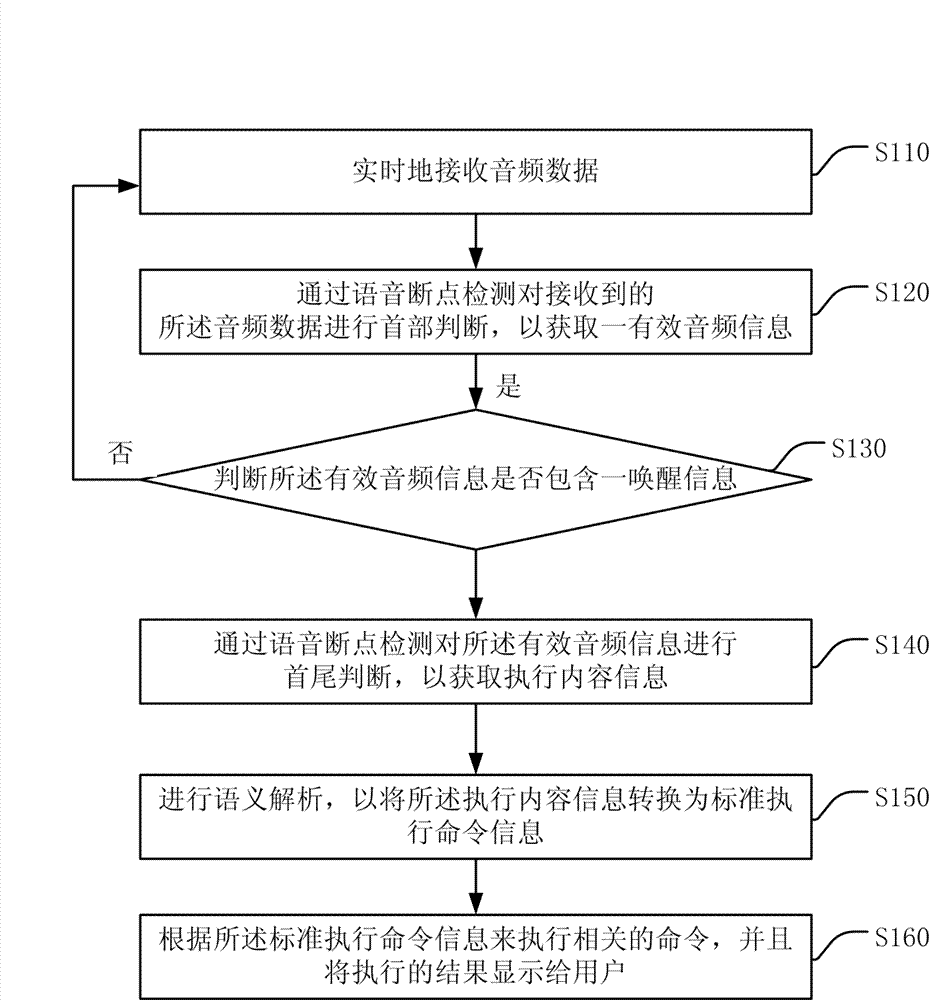

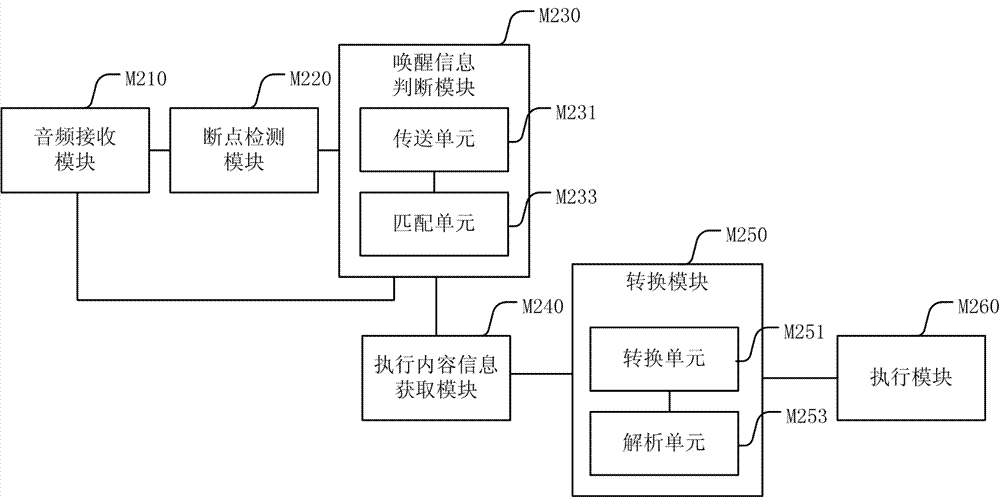

Voice control method and device thereof

The invention discloses a voice control method and a device thereof. The control method comprises the steps of: (a) receiving audio data in real time; (b) performing head judgment on the received audio data through voice breakpoint detection to obtain valid audio information; (c) judging whether the valid audio information includes wake-up information, and if so, proceeding to step (d), otherwise returning to step (a); (d) performing head and tail judgment on the valid audio information through voice breakpoint detection to obtain executive content information; (e) performing semantic parsing to convert the executive content information into standard executive command information; and (f) executing the related command according to the standard executive command information and displaying the execution result to the user. The voice control method and the device thereof provide a novel intelligent voice interaction environment and enable the user to use the voice interaction function efficiently and conveniently.

Owner:何永
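
Steps (a) to (f) of the abstract above can be sketched as a small pipeline. This is only an illustration under strong assumptions: audio frames are replaced by already-recognized words, an empty string stands in for a silent frame, and the wake-up word and command table are made up. None of the names below come from the patent.

```python
from typing import Callable, Iterable, List, Optional

SILENCE = ""          # assumption: an empty string represents a silent frame
WAKE_WORD = "hello"   # hypothetical wake-up word
COMMANDS = {          # hypothetical phrase -> standard command table
    "turn on the light": "LIGHT_ON",
    "turn off the light": "LIGHT_OFF",
}


def trim_head(frames: List[str]) -> List[str]:
    """Step (b): head judgment, drop silence before the first voiced frame."""
    start = 0
    while start < len(frames) and frames[start] == SILENCE:
        start += 1
    return frames[start:]


def trim_head_and_tail(frames: List[str]) -> List[str]:
    """Step (d): head and tail judgment, keep only the voiced span."""
    frames = trim_head(frames)
    end = len(frames)
    while end > 0 and frames[end - 1] == SILENCE:
        end -= 1
    return frames[:end]


def parse(content: List[str]) -> Optional[str]:
    """Step (e): map the spoken content to a standard command, if known."""
    phrase = " ".join(word for word in content if word != SILENCE)
    return COMMANDS.get(phrase)


def handle_utterance(frames: List[str]) -> Optional[str]:
    valid = trim_head(frames)                       # step (b)
    if not valid or valid[0] != WAKE_WORD:          # step (c)
        return None                                 # caller returns to step (a)
    return parse(trim_head_and_tail(valid[1:]))     # steps (d) and (e)


def run(stream: Iterable[List[str]], execute: Callable[[str], str]) -> List[str]:
    results = []
    for frames in stream:                           # step (a): receive audio
        command = handle_utterance(frames)
        if command is not None:
            results.append(execute(command))        # step (f): execute, report
    return results


utterances = [
    ["", "", "hello", "", "turn", "on", "the", "light", "", ""],
    ["turn", "off", "the", "light"],  # no wake-up word, so it is ignored
]
results = run(utterances, lambda command: f"done:{command}")
```

Running head judgment before the wake-up check and full head and tail judgment only afterwards mirrors the ordering in the abstract: the more thorough segmentation is spent only on audio that has already been confirmed as addressed to the device.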

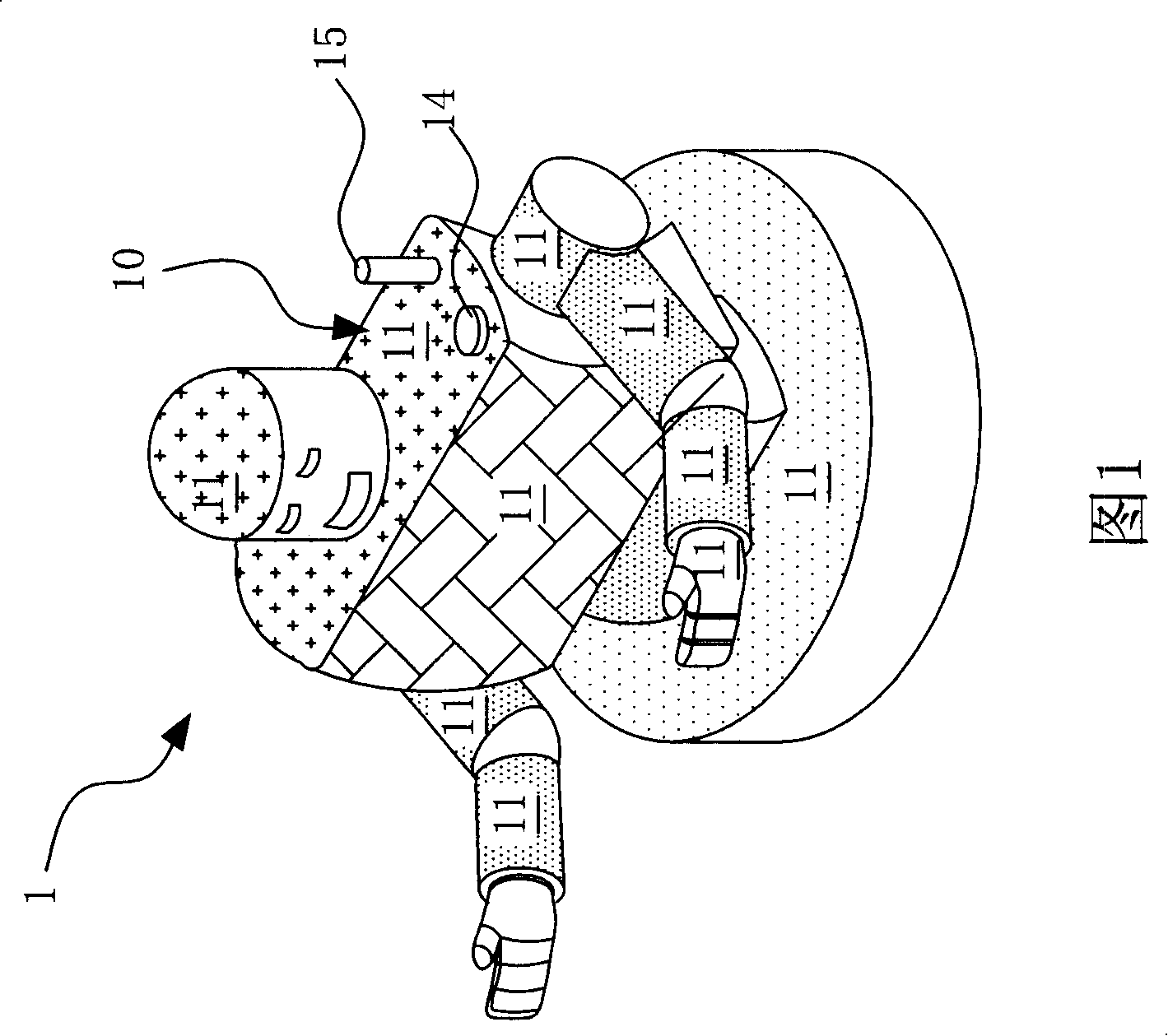

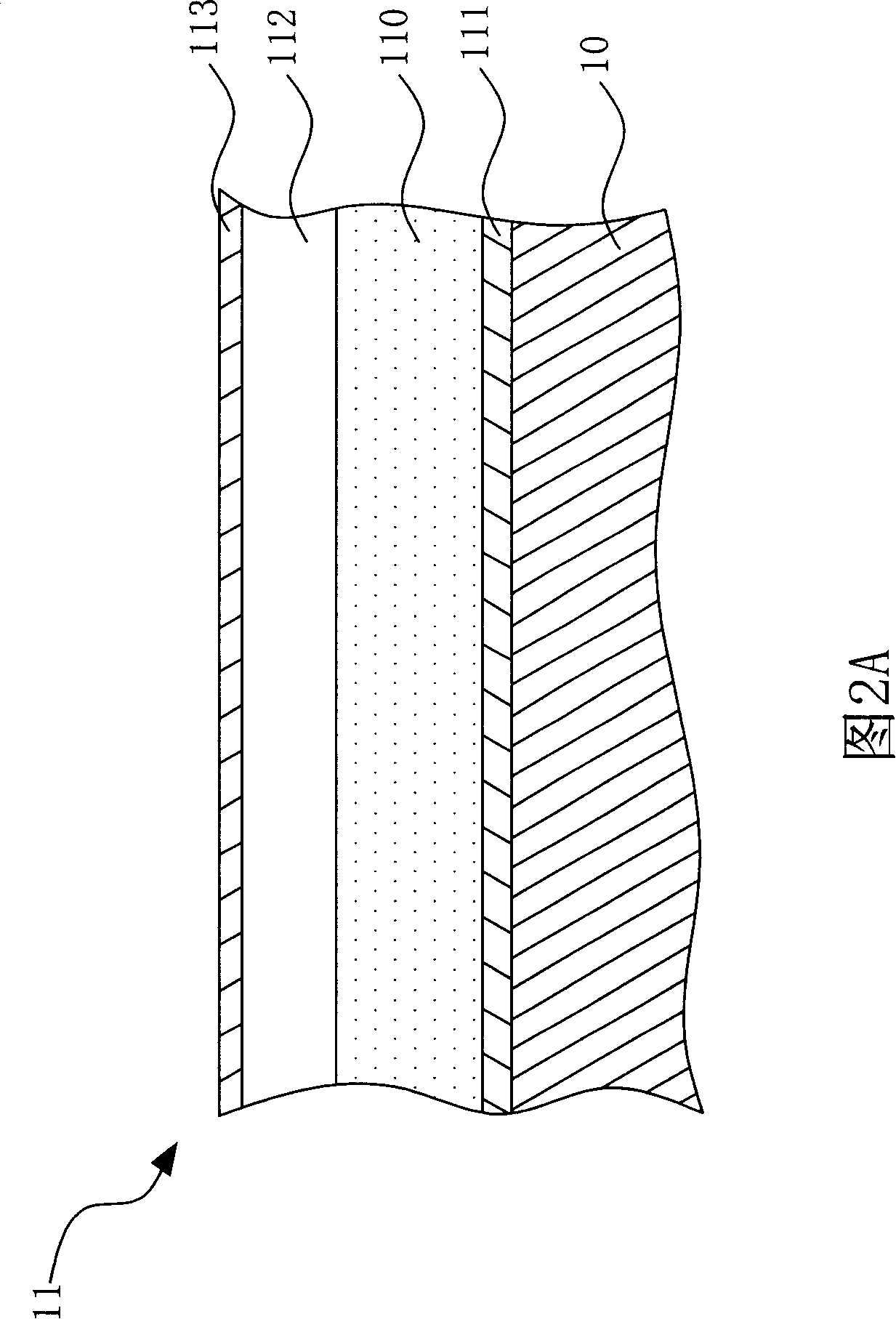

Movable device with surface display information and interaction function

Inactive | CN101206522A | Input/output for user-computer interaction | Self-moving toy figures | Surface display | Computer graphics (images)

The invention provides a movable device with surface information display and an interaction function, in which a skin unit is attached to the surface of a moving object capable of expressing motion. The outer shell thus becomes a skin that can display information, and its colors, patterns and text can be changed under software control. The flexible display device can also be provided with a layer of touch sensing units to sense interactive input signals. In addition, the moving object can integrate an environmental state sensing device or an information identification device, so that environmental state recognition, text recognition, or image and video recognition results can be received as input signals over wired or wireless links. In this way multiple input signals and output expression signals are integrated, and the interactivity of the moving object is improved.

Owner:IND TECH RES INST

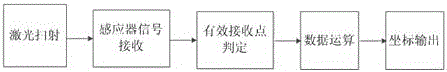

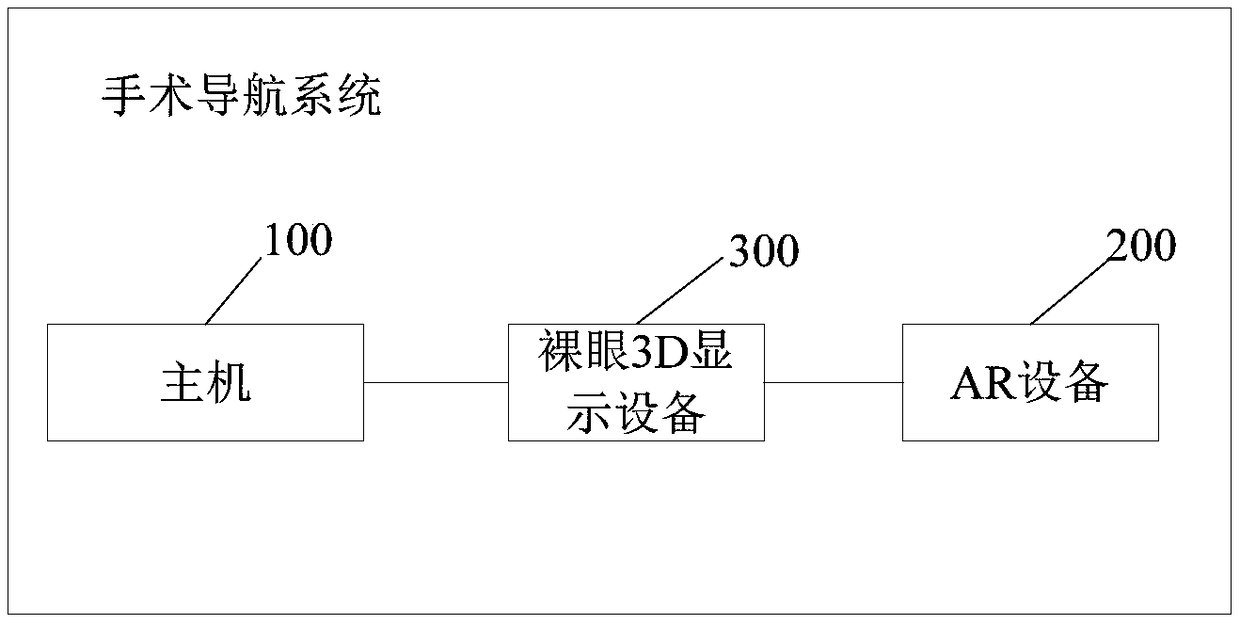

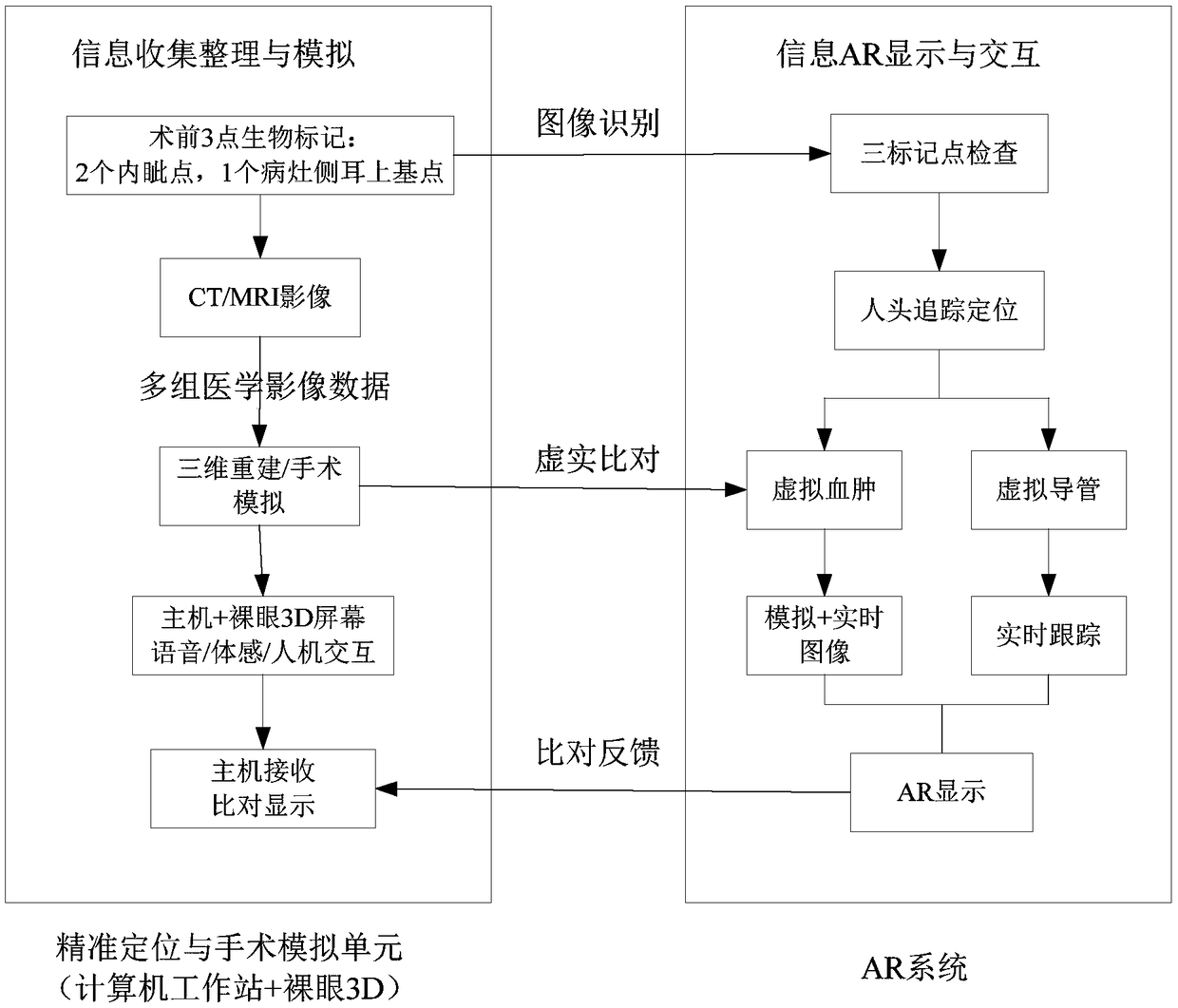

Navigation system for intracerebral hemorrhage puncture surgery based on medical image model reconstruction and localization

Inactive | CN109223121A | Easy to observe | Effectively Assist Minimally Invasive Oriented Puncture Operation of Cerebral Hemorrhage | Surgical needles | Surgical navigation systems | Model reconstruction | Display device

The embodiment of the invention discloses a navigation system for intracerebral hemorrhage puncture surgery based on medical image model reconstruction and localization. The system includes a host computer, an AR device and a naked-eye 3D display device. The host computer receives the medical CT / MRI data of patients with intracerebral hemorrhage, reconstructs multiple groups of models of the patient, aligns the models to obtain a three-dimensional virtual model of the patient's skull and hematoma, and displays the three-dimensional virtual model on the naked-eye 3D display device. According to the 3D virtual model and the angle and depth information, the host computer simulates the surgical navigation and imports it into the connected AR device. The AR device is used for feature point detection, spatial registration between the virtual hematoma and the real hematoma, real-time catheter tracking, holographic display of the virtual hematoma and virtual catheter, and voice and somatosensory interaction with physicians. The embodiment of the invention can reduce medical cost and provide a human-computer interaction function.

Owner:广州狄卡视觉科技有限公司

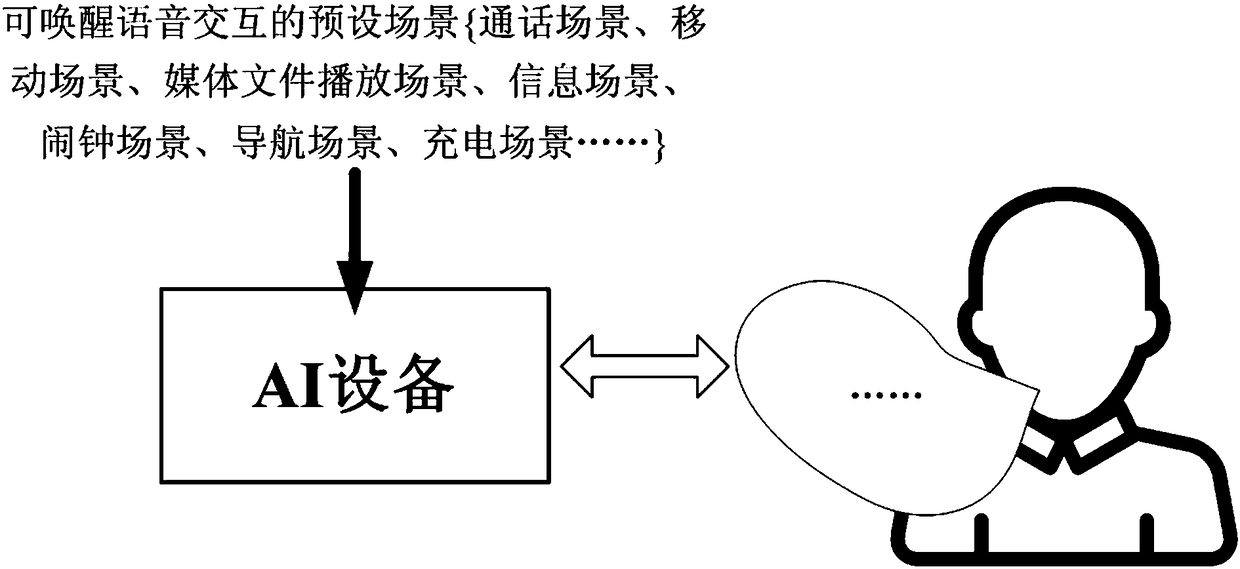

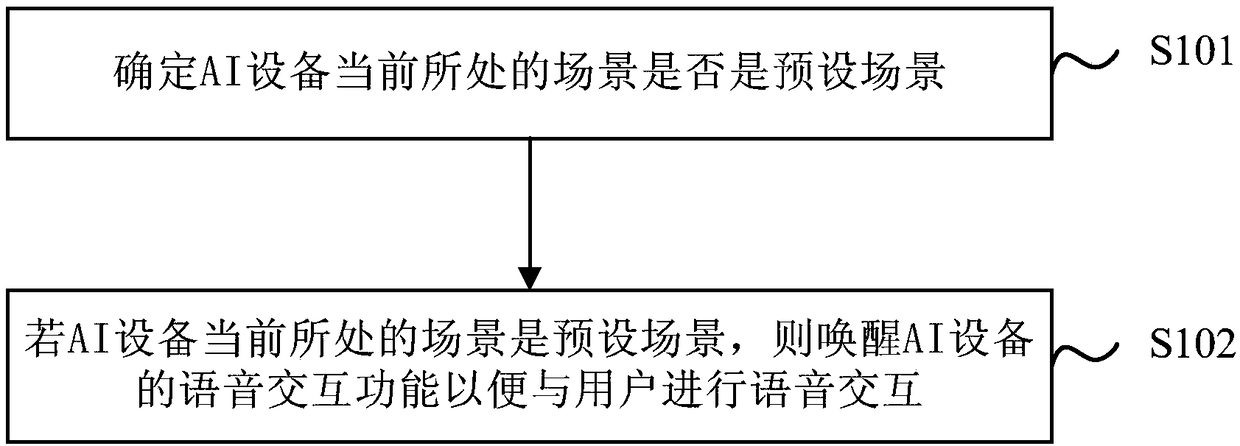

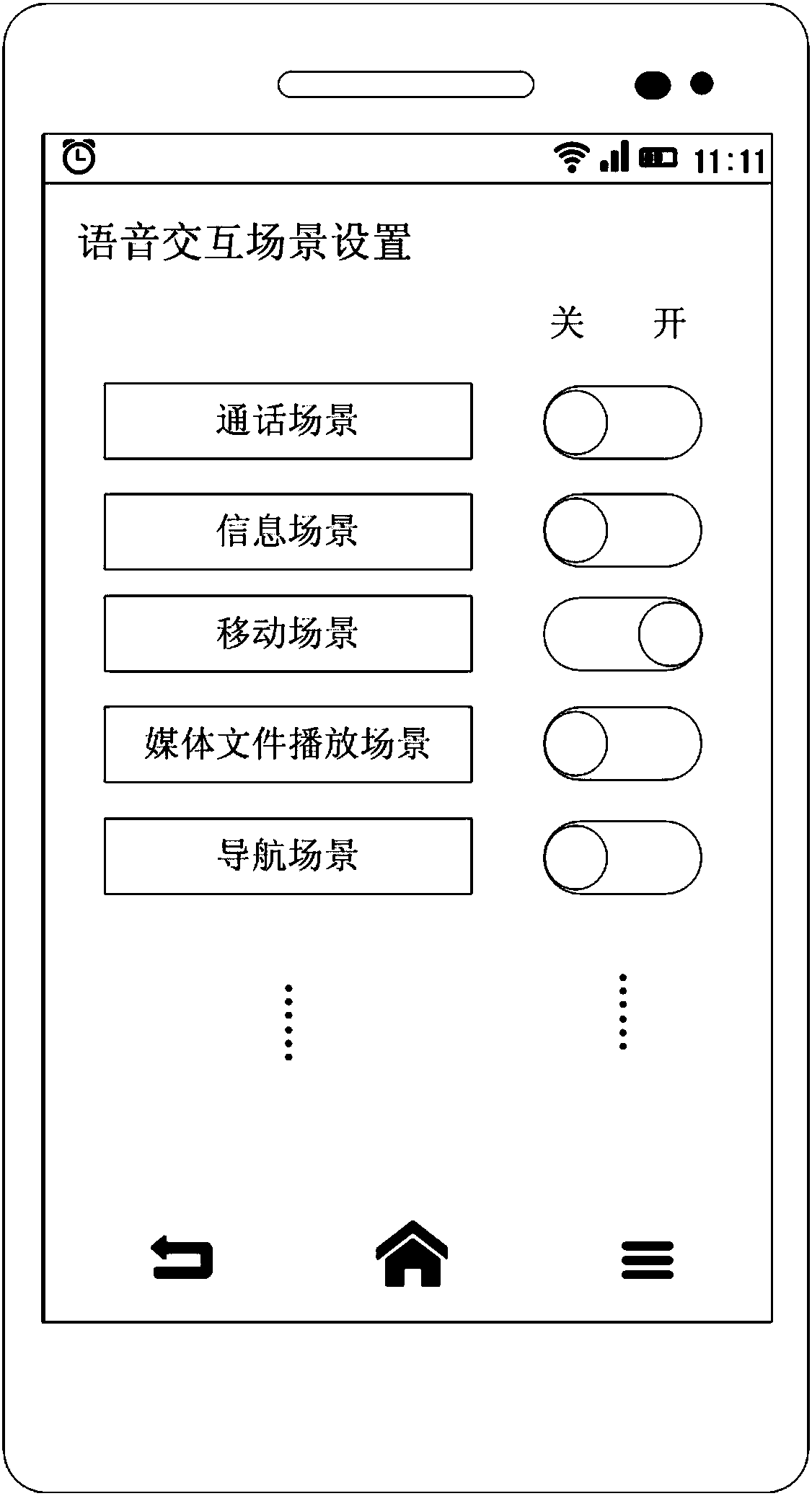

Voice interaction method and device, equipment and storage medium

Inactive | CN108337362A | Simplify the use process | Reduce learning costs | Substation equipment | Speech recognition | Speech sound | Interaction function

The invention provides a voice interaction method and device, equipment and a storage medium. The voice interaction method is applied to AI equipment: it determines whether the scene in which the AI equipment is located is a preset scene and, if so, wakes up the voice interaction function of the AI equipment to enable voice interaction between the AI equipment and a user. Because the voice interaction process is triggered directly by the scene, the step of waking up via a physical action or a wake-up word is omitted, the use of voice interaction is simplified, the learning cost of voice interaction is reduced, and the user experience is improved.

Owner:BAIDU ONLINE NETWORK TECH (BEIJIBG) CO LTD
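
The scene-triggered wake-up in the abstract above amounts to a membership check followed by a state change. The sketch below assumes scenes are identified by simple string labels; the scene names and the `AIDevice` class are hypothetical, and the abstract does not say how scenes are detected or when the function goes back to sleep, so neither is modeled.

```python
from dataclasses import dataclass

# Hypothetical preset scenes; the patent abstract does not enumerate them.
PRESET_SCENES = {"incoming_call", "driving", "media_playback"}


@dataclass
class AIDevice:
    voice_interaction_awake: bool = False

    def on_scene_change(self, scene: str) -> bool:
        """Wake voice interaction directly if the current scene is preset.

        No physical wake-up action or wake-up word is needed in that case.
        Returns whether voice interaction is awake after the check.
        """
        if scene in PRESET_SCENES:
            self.voice_interaction_awake = True
        return self.voice_interaction_awake


device = AIDevice()
awake_on_home_screen = device.on_scene_change("home_screen")
awake_on_call = device.on_scene_change("incoming_call")
```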

Intelligent weeding robot system and control method based on depth vision

Active | CN109511356A | Play a protective effect | Reduced risk of collision | Programme-controlled manipulator | Soil-working equipments | Robotic systems | Drive wheel

The invention discloses an intelligent weeding robot system based on depth vision, and belongs to the technical field of intelligent robots. The system includes an industrial personal computer, a power module, a dolly body, a crawler wheel, a hydraulic claw and weeding equipment. The crawler wheel, driven by a driving wheel drive device, is installed on the dolly body. The hydraulic claw and the weeding equipment are installed on the dolly body through a weeding mechanical arm device used for adjusting their postures. The driving wheel drive device, the hydraulic claw and the weeding equipment are all powered by the power module and controlled by the industrial personal computer. The system further includes a depth camera module for measuring the relative positions of weeds, crops and the dolly body. The system has the advantages that a single mechanical arm can perform the interaction function of two mechanical arms, and that the precise weeding method effectively reduces secondary damage to crops while maintaining weeding efficiency and accuracy, which greatly promotes the application and development of intelligent weeding robots.

Owner:ANHUI AGRICULTURAL UNIVERSITY

