2521 results about "Information fusion" patented technology

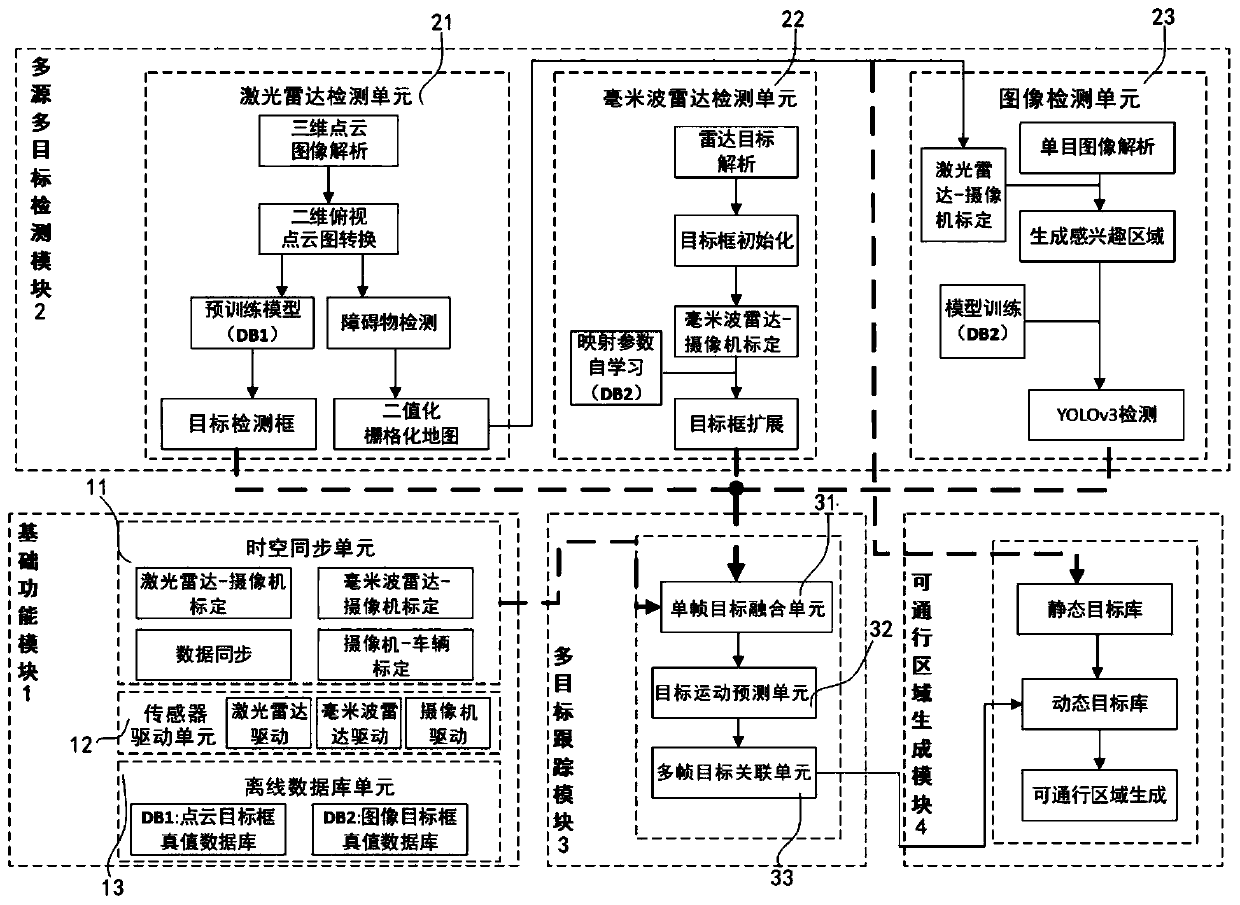

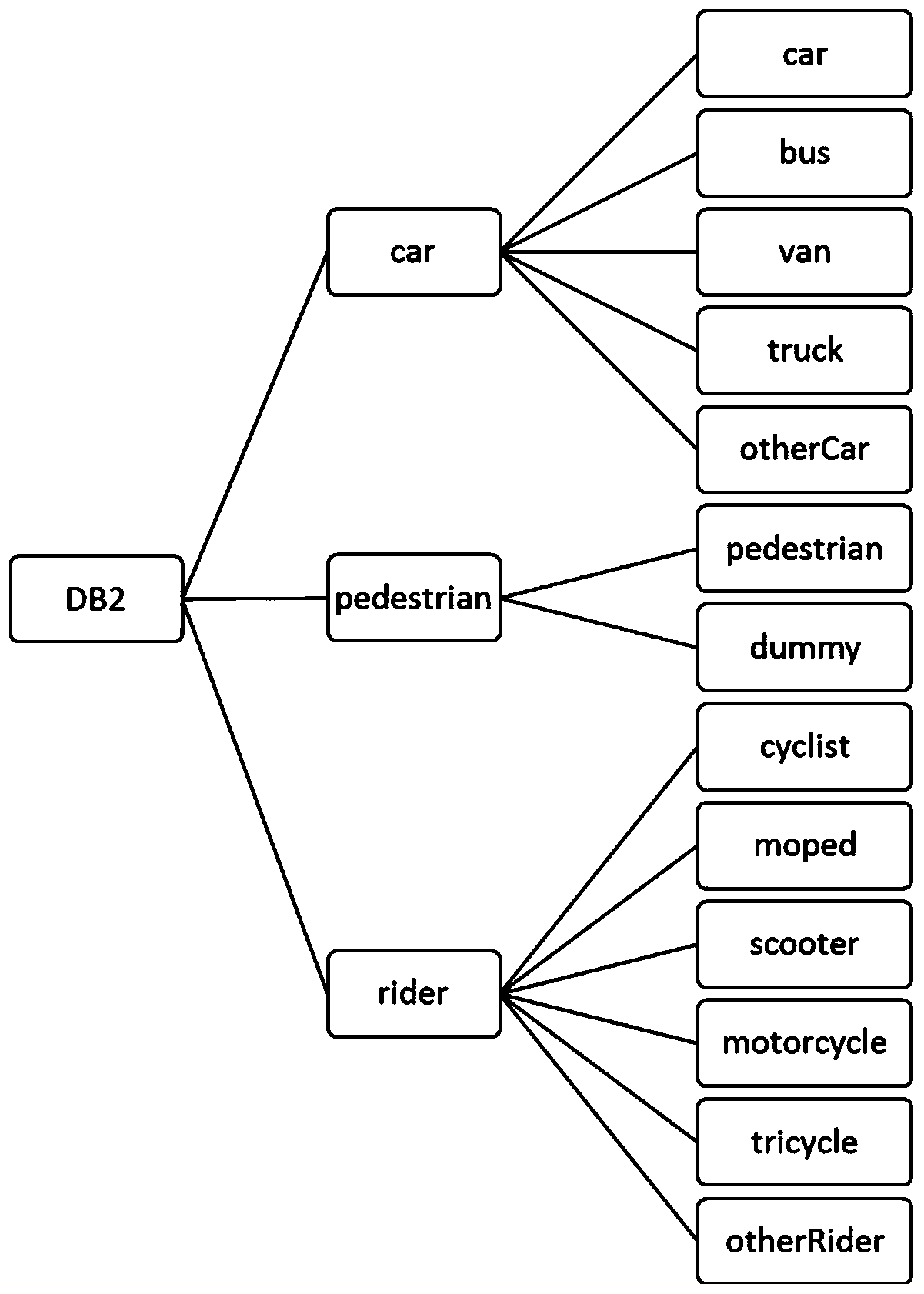

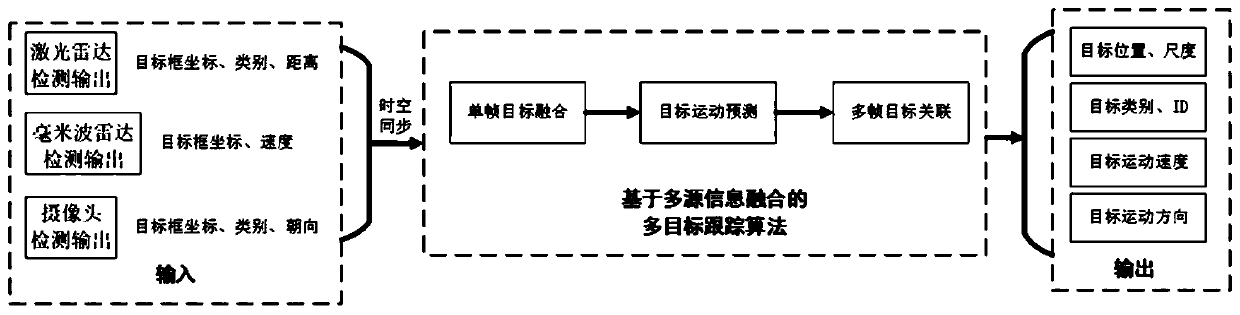

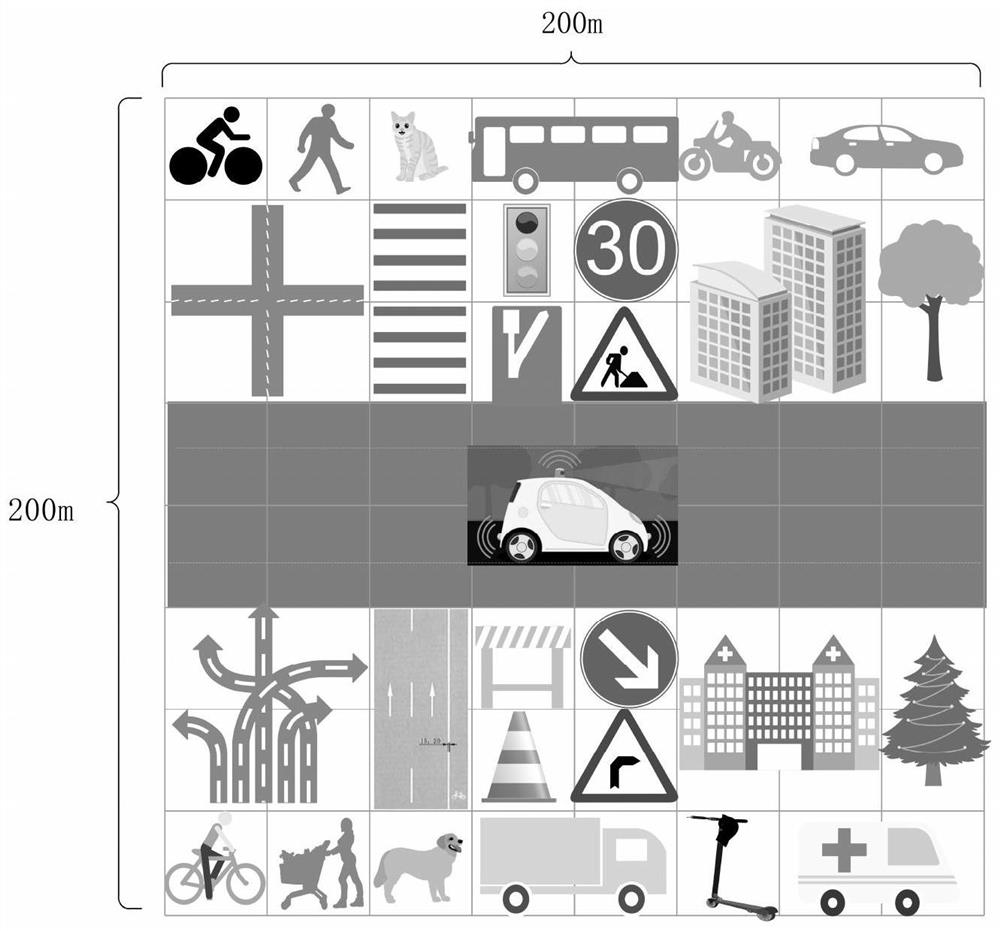

An intelligent vehicle passable area detection method based on multi-source information fusion

The invention discloses an intelligent vehicle passable area detection method based on multi-source information fusion. The method comprises the following steps: S100, collecting obstacle target information around a vehicle detected by a vehicle-mounted sensor, and outputting a static obstacle target library; S200, receiving obstacle target information around the vehicle, carrying out time-space synchronization on the obstacle target information detected by the vehicle-mounted sensor, carrying out single-frame target fusion on all detected obstacle information around the vehicle, carrying out continuous inter-frame multi-target tracking by utilizing motion prediction and multi-frame target association, and outputting a dynamic obstacle target library; and S300, receiving the static obstacle target library and the dynamic obstacle target library output in step S200, and updating the dynamic obstacle target library according to the information of the static obstacle target library to form real-time obstacle target information and generate a passable area. The position, scale, category and motion information of the obstacles around the vehicle and the binarized rasterized map can be accurately obtained while the vehicle is driving, the motion tracks of multiple targets are tracked, and an intelligent vehicle passable area comprising the binarized rasterized map and dynamic obstacle information updated in real time is formed.

Owner:TSINGHUA UNIV
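
The S300 step above (merging a static obstacle library with tracked dynamic obstacles into a binarized raster map) can be illustrated with a minimal sketch. It is not the patented method: the names `DynamicObstacle`, `predict` and `passable_grid`, the constant-velocity motion model and the one-cell obstacle footprint are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class DynamicObstacle:
    """Hypothetical tracked obstacle in the vehicle frame (metres, m/s)."""
    x: float
    y: float
    vx: float
    vy: float


def predict(ob: DynamicObstacle, dt: float) -> DynamicObstacle:
    """Constant-velocity motion prediction (an assumed motion model)."""
    return DynamicObstacle(ob.x + ob.vx * dt, ob.y + ob.vy * dt, ob.vx, ob.vy)


def passable_grid(static_cells, dynamic, rows, cols, cell_size=1.0):
    """Return a binarized grid: 1 = passable, 0 = occupied.

    static_cells: iterable of (row, col) cells from the static library.
    dynamic: iterable of DynamicObstacle, each assumed to occupy one cell.
    """
    grid = [[1] * cols for _ in range(rows)]
    for r, c in static_cells:
        if 0 <= r < rows and 0 <= c < cols:
            grid[r][c] = 0
    for ob in dynamic:
        r = int(ob.y // cell_size)
        c = int(ob.x // cell_size)
        if 0 <= r < rows and 0 <= c < cols:
            grid[r][c] = 0
    return grid


# One static cell plus one obstacle predicted 1 s ahead.
moved = predict(DynamicObstacle(1.5, 0.5, 1.0, 0.0), dt=1.0)
grid = passable_grid({(0, 0)}, [moved], rows=2, cols=4)
```

A real system would also carry obstacle scale and category and would inflate each footprint by the obstacle size; the sketch only shows how the two libraries end up in one binary map.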

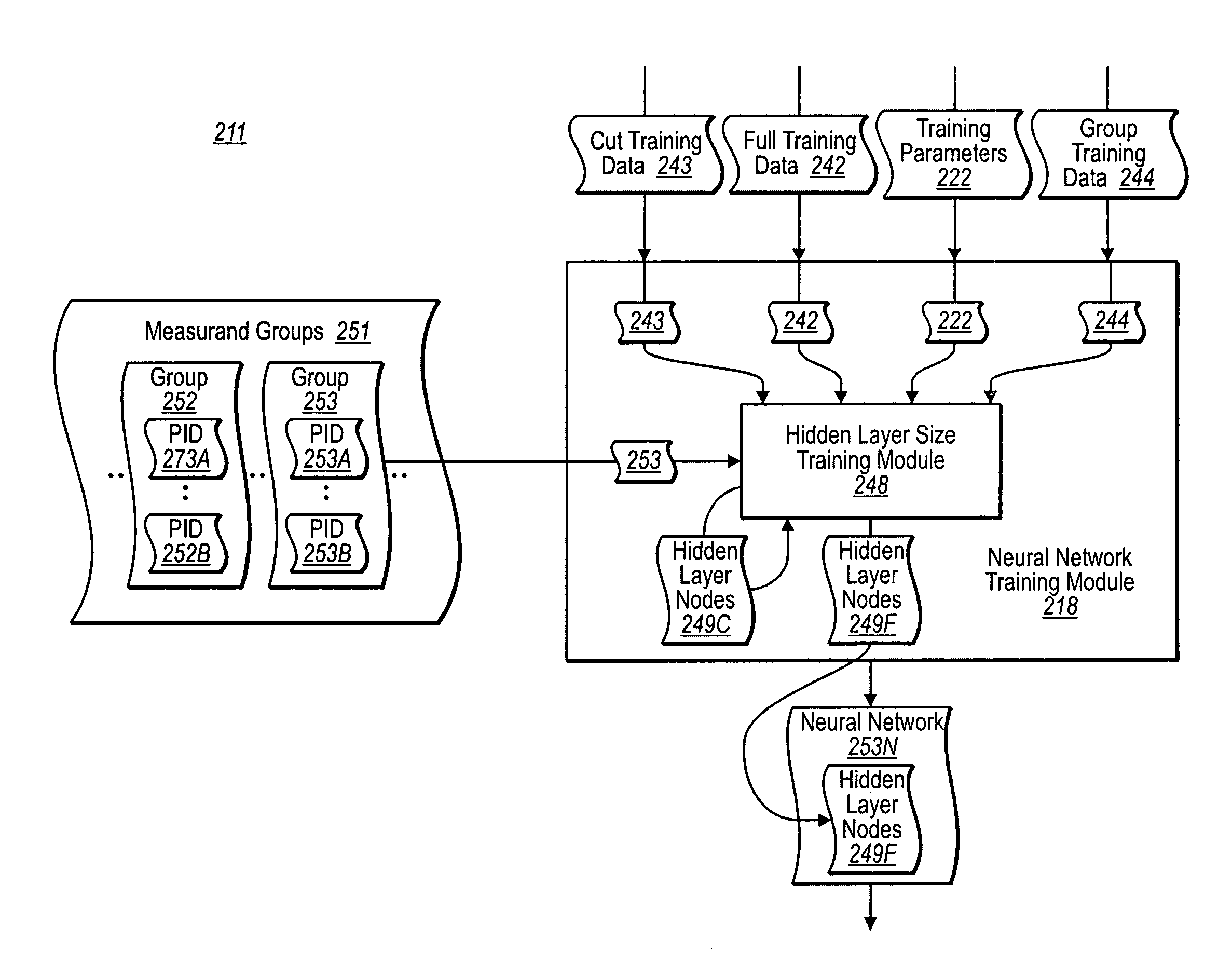

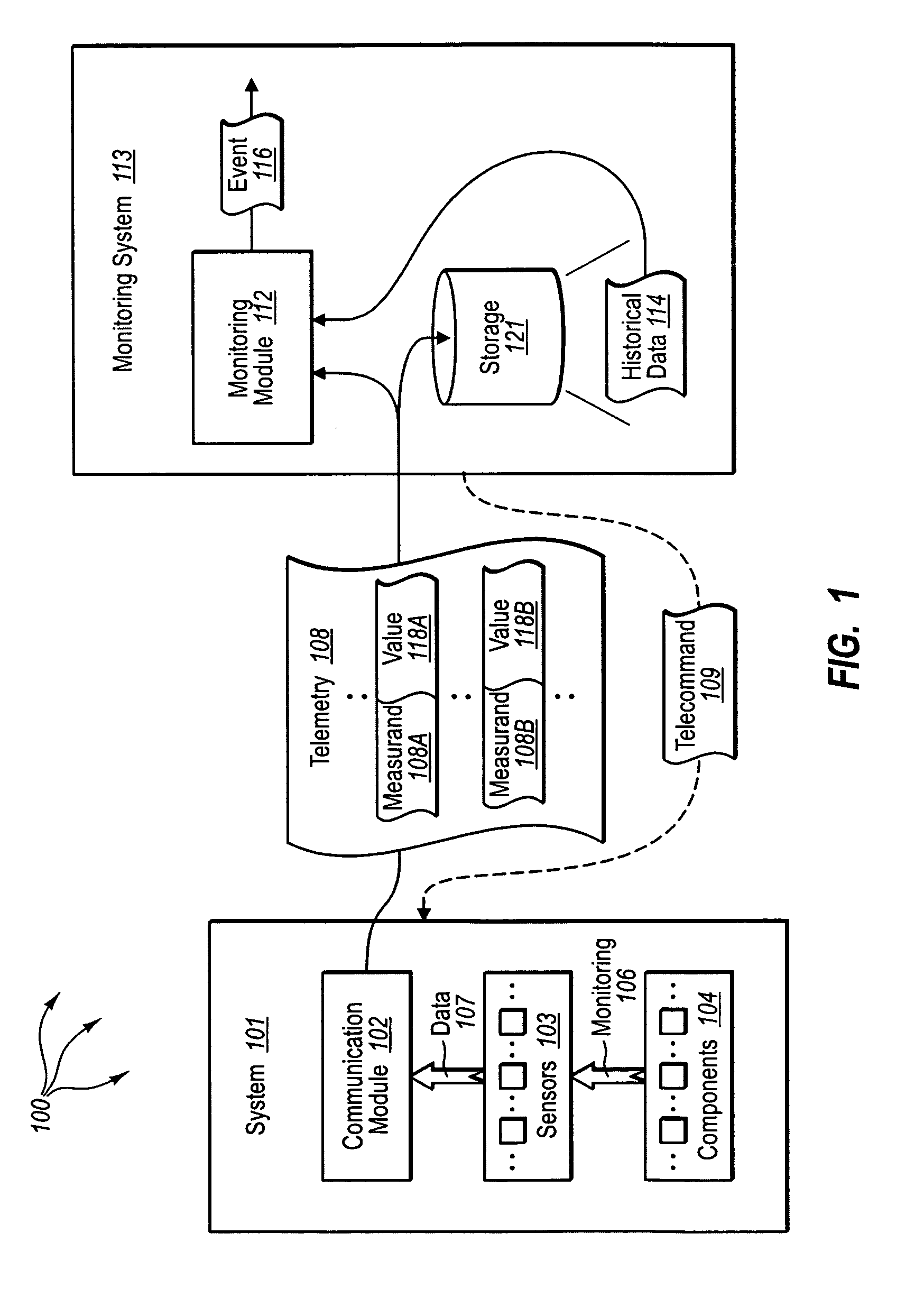

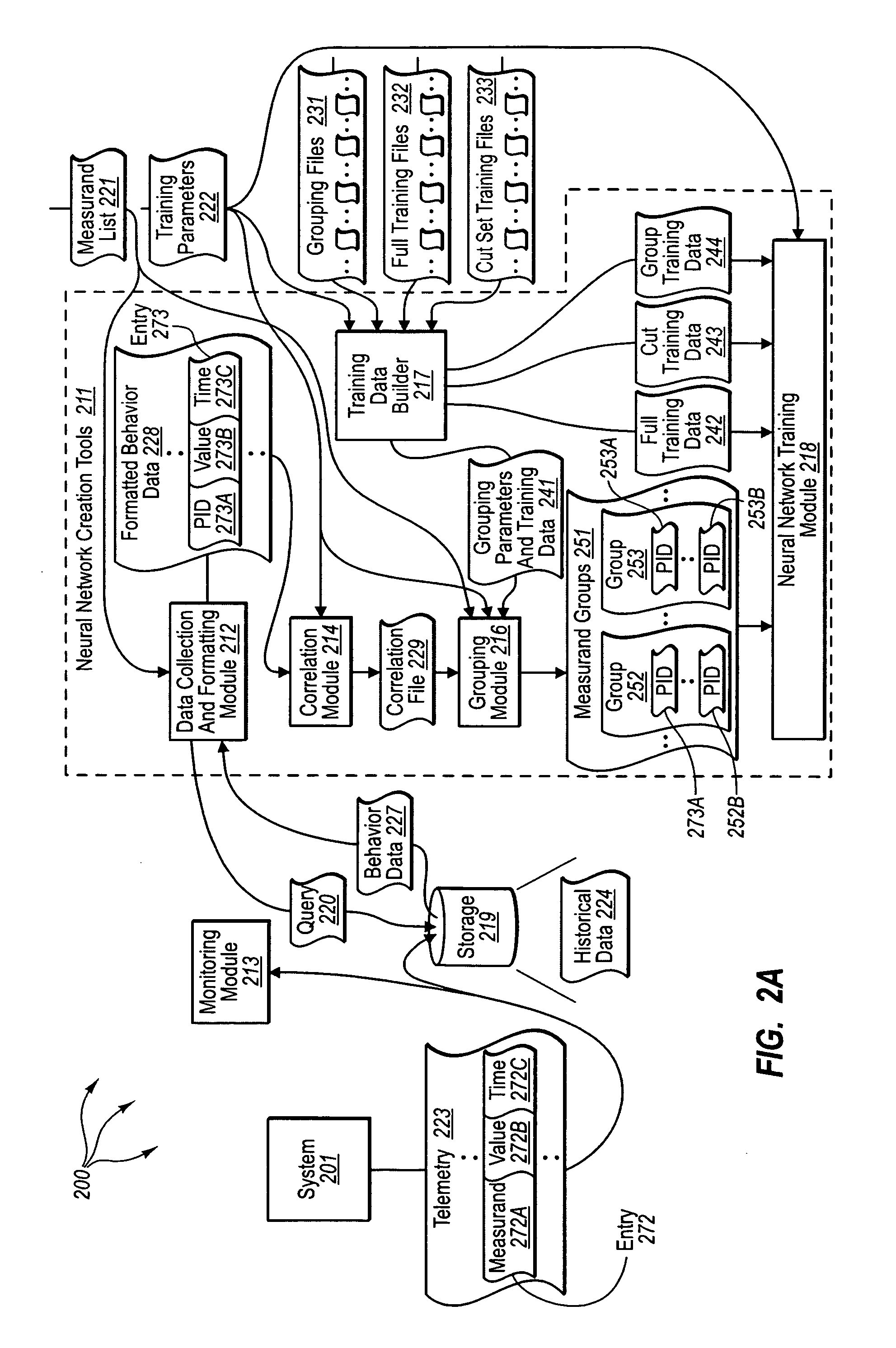

Detecting, classifying, and tracking abnormal data in a data stream

Active | US8306931B1 | Efficiently determine | Efficiently determined | Digital computer details | Digital data | Neural net architecture | Self adaptive

The present invention extends to methods, systems, and computer program products for detecting, classifying, and tracking abnormal data in a data stream. Embodiments include an integrated set of algorithms that enable an analyst to detect, characterize, and track abnormalities in real-time data streams based upon historical data labeled as predominantly normal or abnormal. Embodiments of the invention can detect abnormalities, identify relevant historical contextual similarity, and fuse unexpected and unknown abnormal signatures with other possibly related sensor and source information. The number, size, and connections of the neural networks are all automatically adapted to the data. Further, the adaptation automatically integrates training on unknown and known abnormal signatures within one neural network architecture. The algorithms and neural network architecture are data driven, resulting in more affordable processing. Expert knowledge can be incorporated to enhance the process, but sufficient performance is achievable without any system domain or neural network expertise.

Owner:DATA FUSION & NEURAL NETWORKS

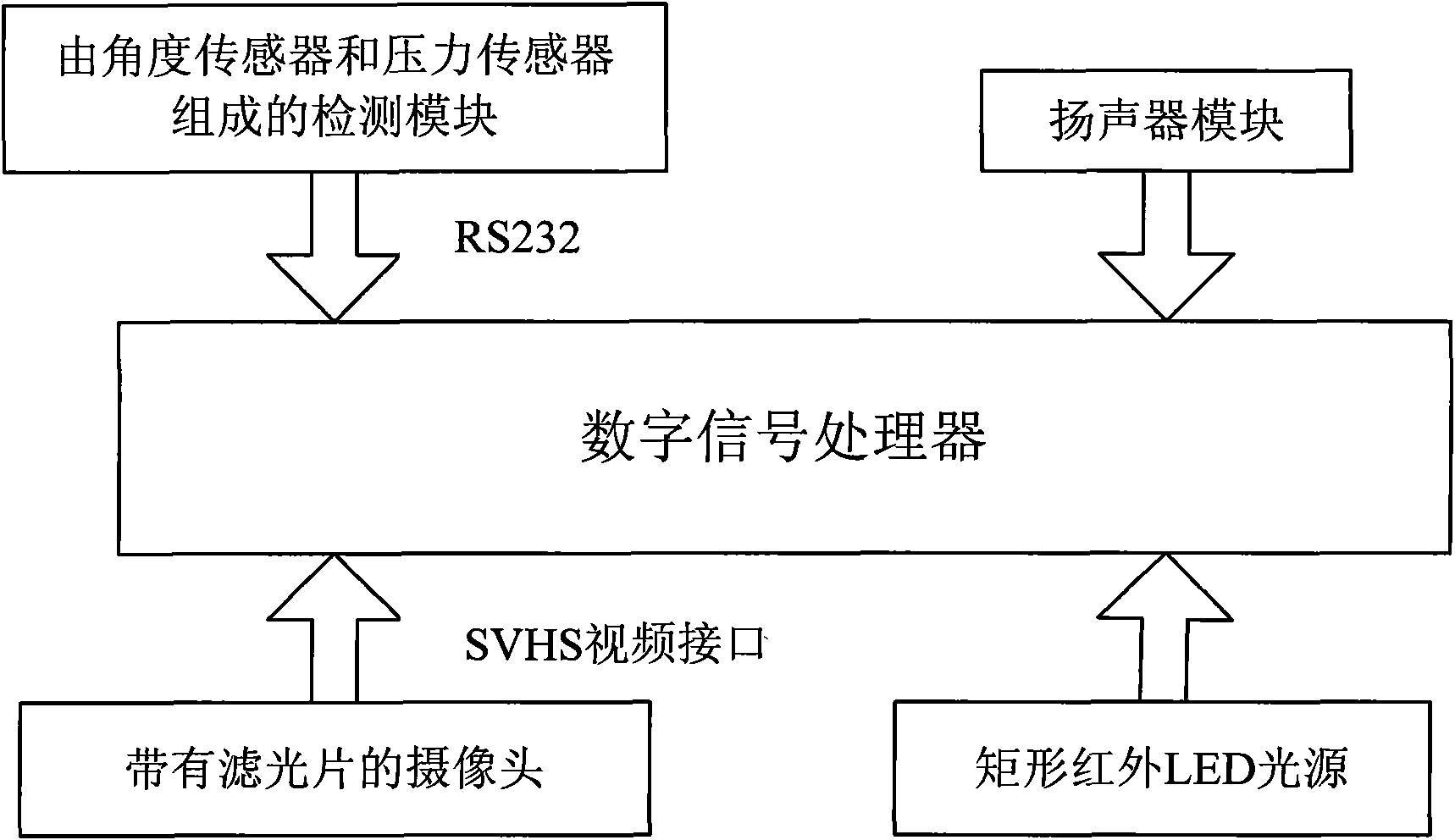

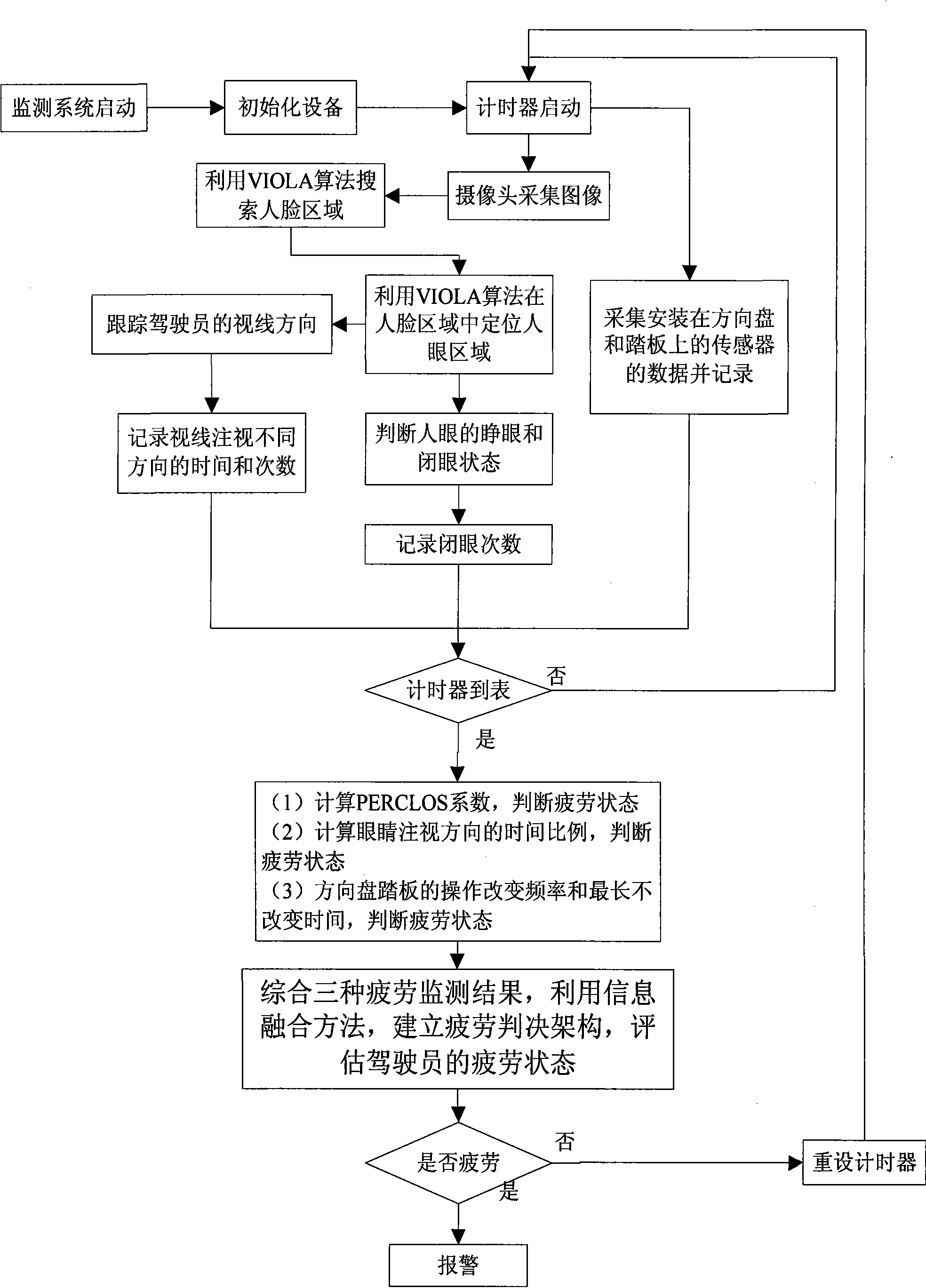

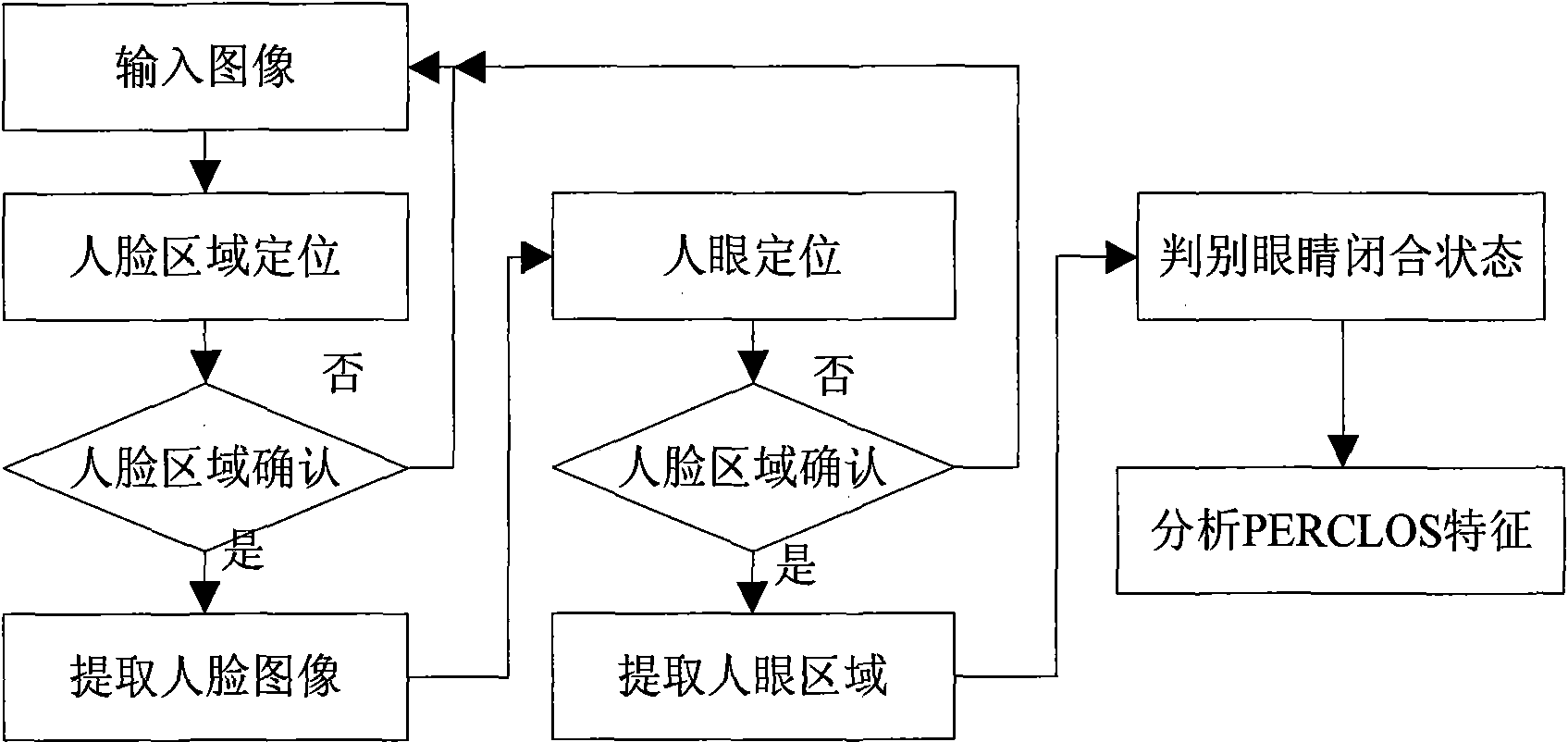

Driver fatigue monitoring device based on multivariate information fusion and monitoring method thereof

Inactive | CN101540090A | Improve computing power | Meet the requirements | Character and pattern recognition | Alarms | Driver/operator | Information integration

The invention discloses a driver fatigue monitoring device based on multivariate information fusion and a monitoring method thereof. The device comprises a digital signal processor, a camera with a filter, a rectangular infrared LED light source module mounted on the car windshield, a speaker module, and a sensor module comprising an angle sensor and a pressure sensor, wherein the camera, the rectangular infrared LED light source module, the speaker module and the sensor module are each connected to the digital signal processor. The monitoring method comprises four procedures: eye feature identification, gaze tracking, driving activity monitoring, and fatigue feature judgment, which merges the fatigue judgment results of the first three procedures to accurately judge the fatigue state of the driver. The invention uses information fusion technology to further develop and improve fatigue driving detection, can judge the fatigue state of the driver objectively, rapidly and accurately in real time, and helps avoid traffic accidents caused by fatigue driving.

Owner:SOUTH CHINA UNIV OF TECH

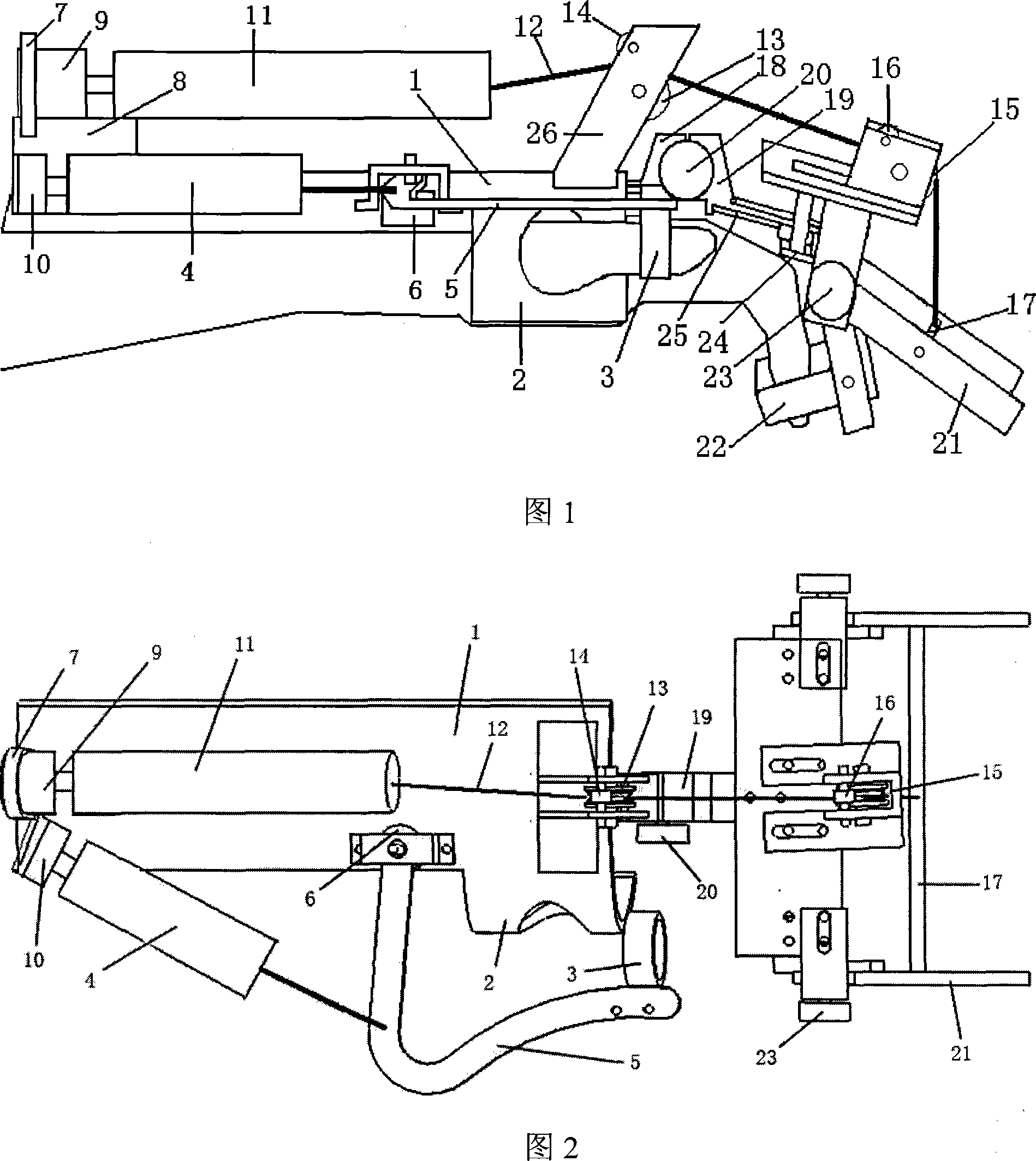

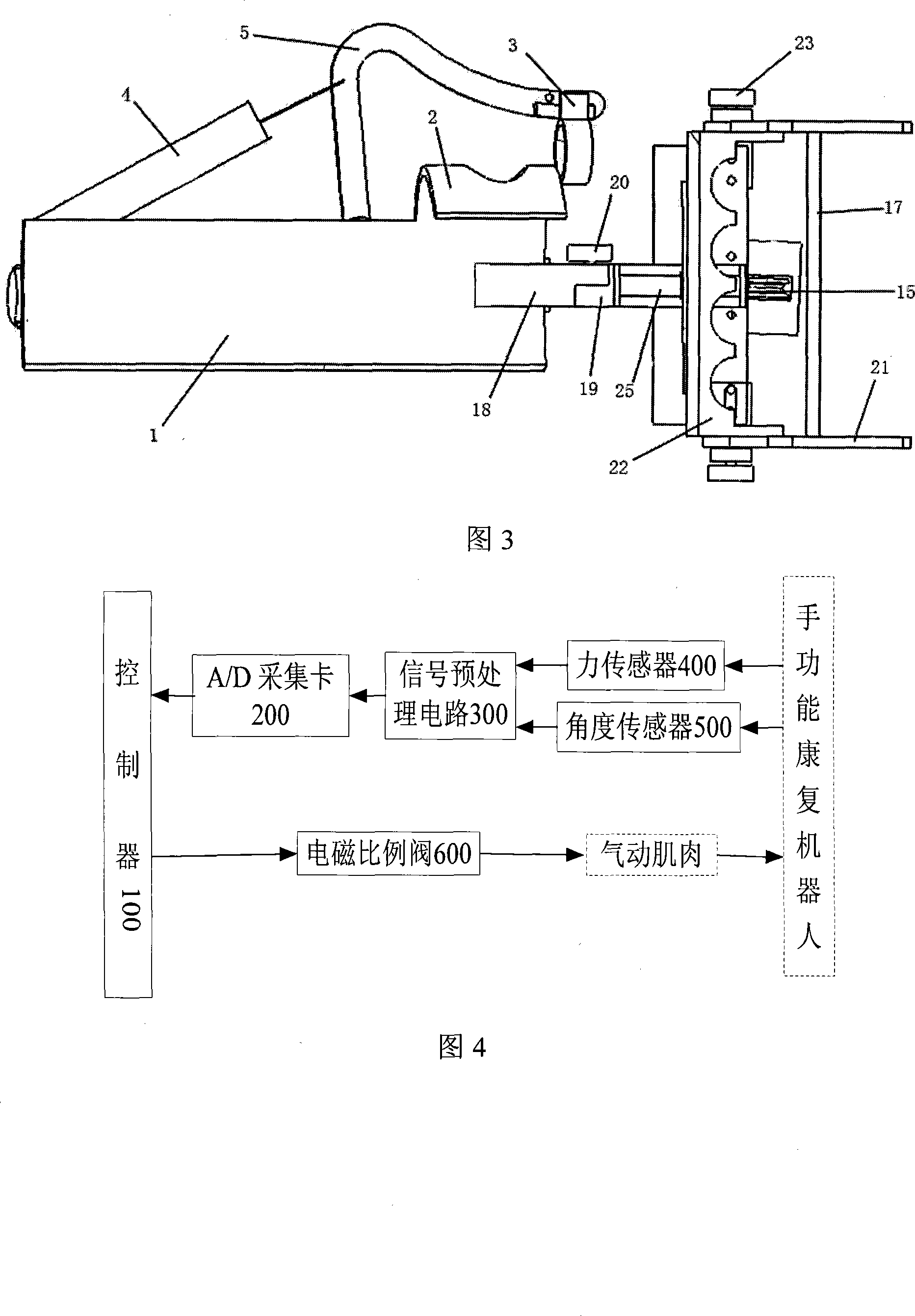

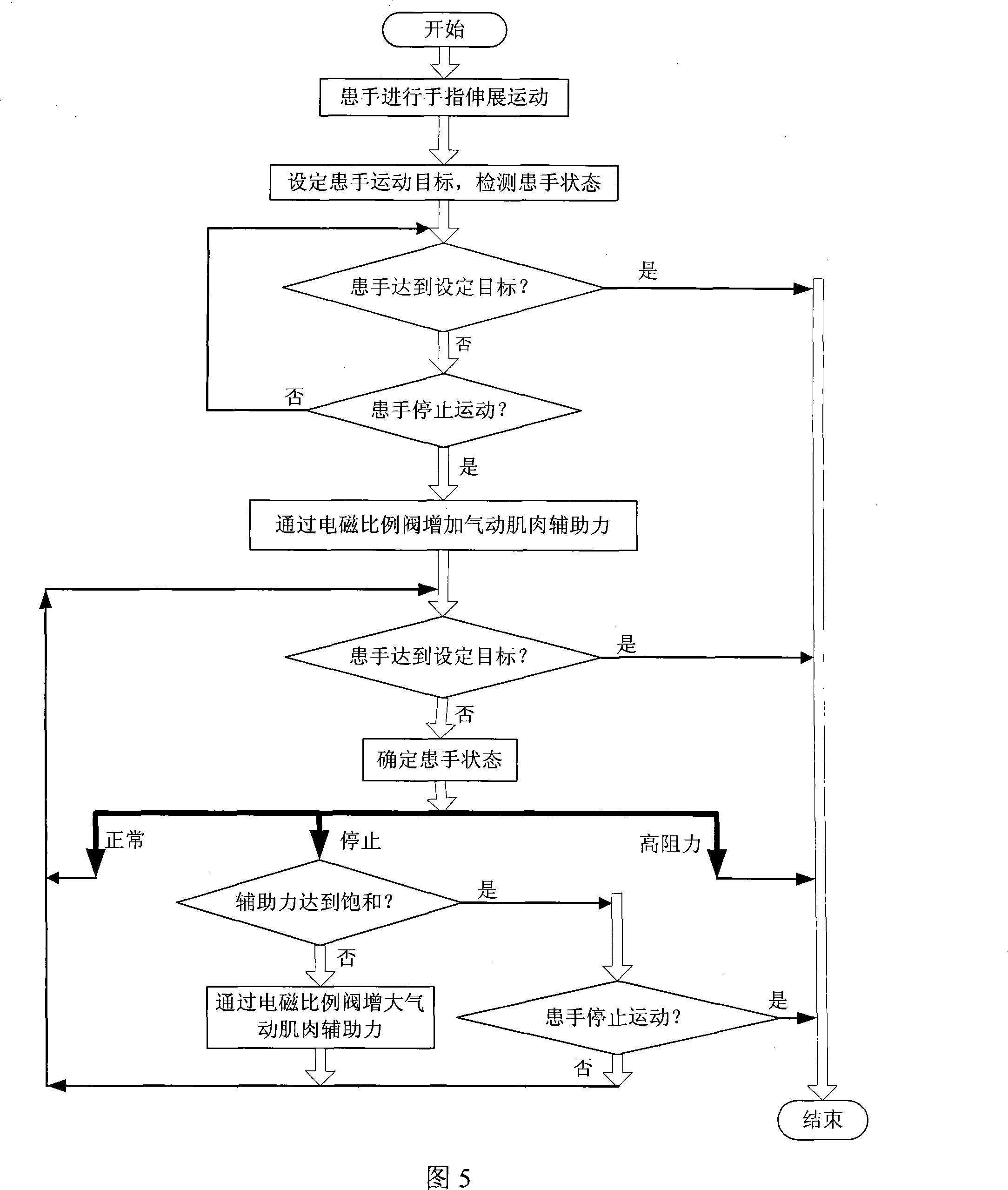

Apparel type robot for healing hand function and control system thereof

Inactive | CN101181176A | Mechanical structure matching | Effective rehabilitation | Gymnastic exercising | Chiropractic devices | Community or | Data information

The invention discloses a wearable hand function rehabilitation robot, mainly used in communities or homes to assist repetitive motor function rehabilitation training for patients with hand motor dysfunction caused by stroke, brain trauma, spinal cord injury or peripheral nerve injury. The robot system extracts the patient's active movement intention by detecting multi-channel surface electromyographic signals of the affected hand, obtains the state of the affected limb by combining data measured by angle and force sensors, and on this basis uses an intelligent control algorithm to carry out rehabilitation training of the affected hand with pneumatic muscle contraction assistance. The rehabilitation robot has multiple degrees of freedom and can assist the affected hand in complex multi-joint movement. Multi-sensor data are fused during rehabilitation and further used to evaluate the rehabilitation effect, and a virtual rehabilitation environment on a computer can improve the patient's engagement and interest in training. The invention has a simple structure, moves flexibly, is safe and reliable, matches the physiological structure of the human hand, and is comfortable to wear.

Owner:HUAZHONG UNIV OF SCI & TECH

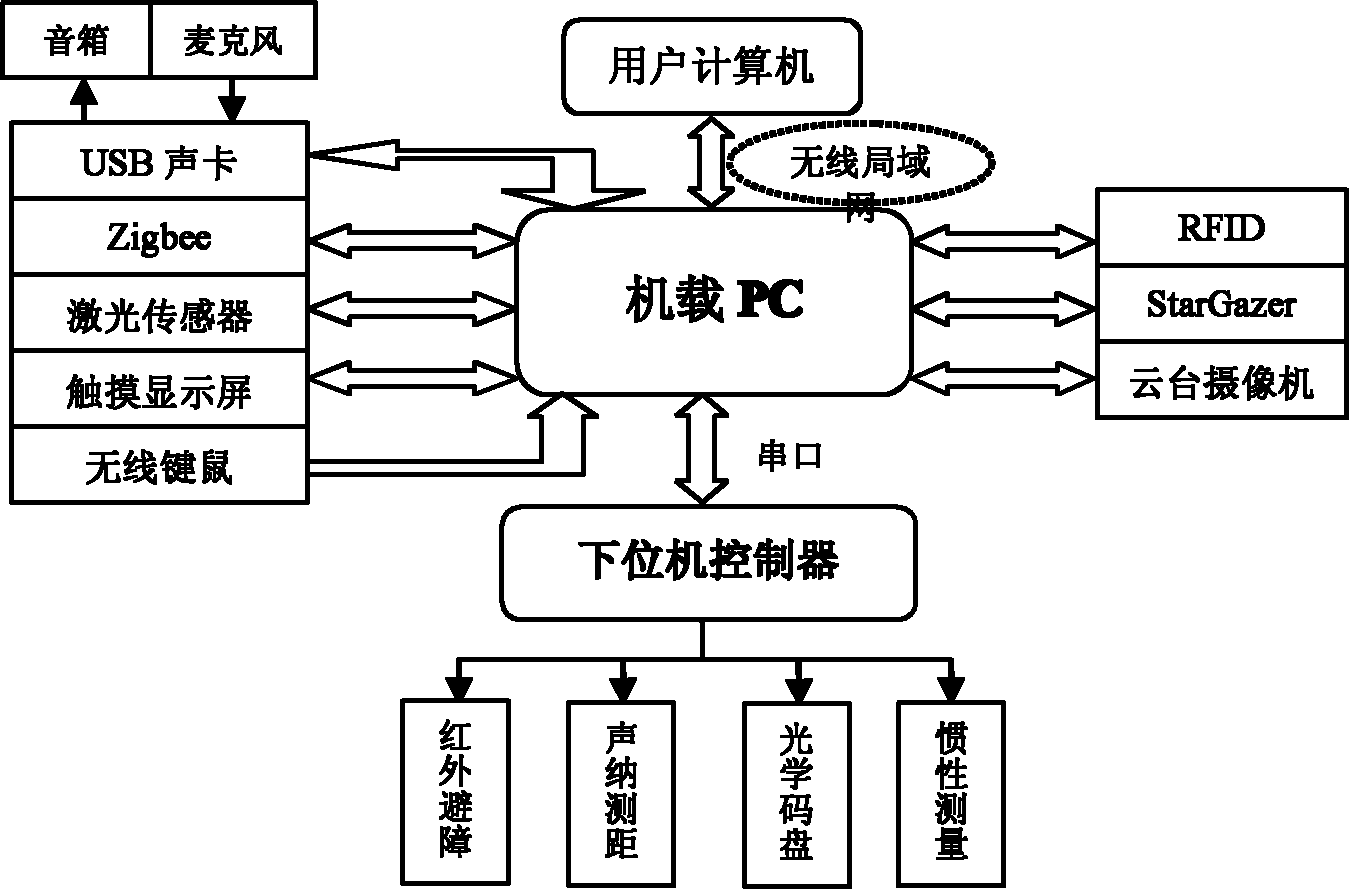

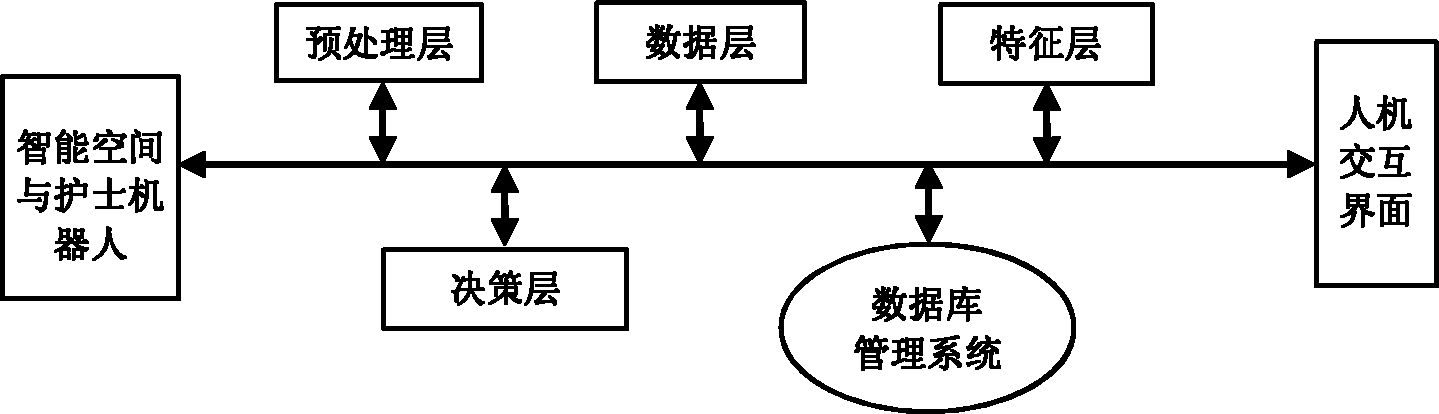

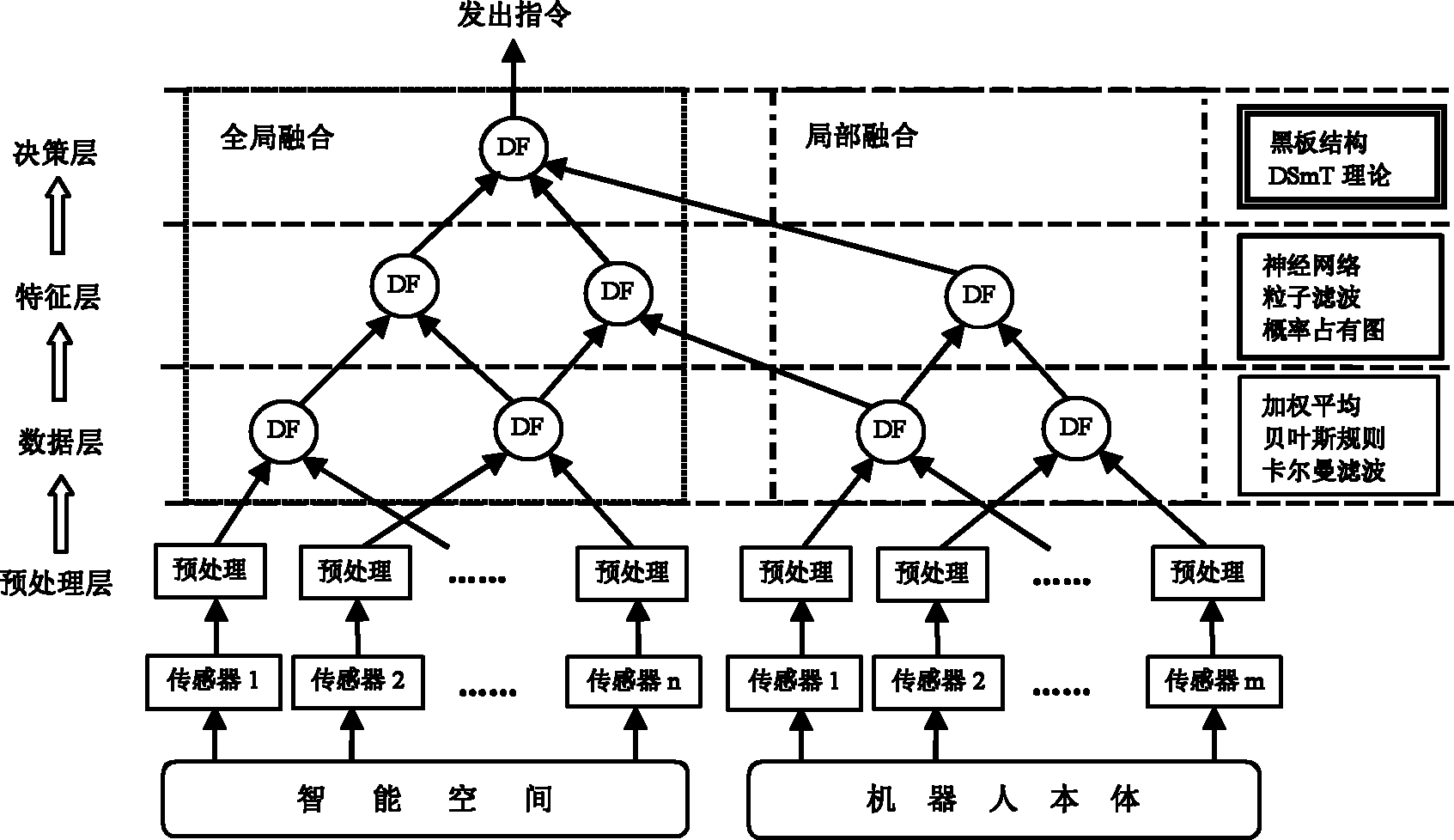

Intelligent space and nurse robot multi-sensor system and information fusion method of intelligent space and nurse robot multi-sensor system

Active | CN102156476A | Improve interoperability | Achieve sharing | Position/course control in two dimensions | Algorithm | Reusability

The invention discloses an intelligent space and nurse robot multi-sensor system. It establishes an artificial-landmark representation model for the intelligent space based on typical QR Code and RFID (radio frequency identification) techniques, builds a multi-mode information representation system based on an improved JDL (Joint Directors of Laboratories) model and a multi-mode information acquisition model based on a distributed data fusion tree, and designs a corresponding fusion algorithm for the characteristics of each layer. The model hides the heterogeneity of the devices and the complexity of the environment. By considering the dynamic, heterogeneous and hierarchical nature of intelligent space information and combining local fusion with global fusion, the fusion efficiency is increased, the fusion precision is ensured, the intelligent space's recognition of people and the environment is improved, the system is easier to integrate, and its expandability and reusability are improved.

Owner:SHANDONG UNIV

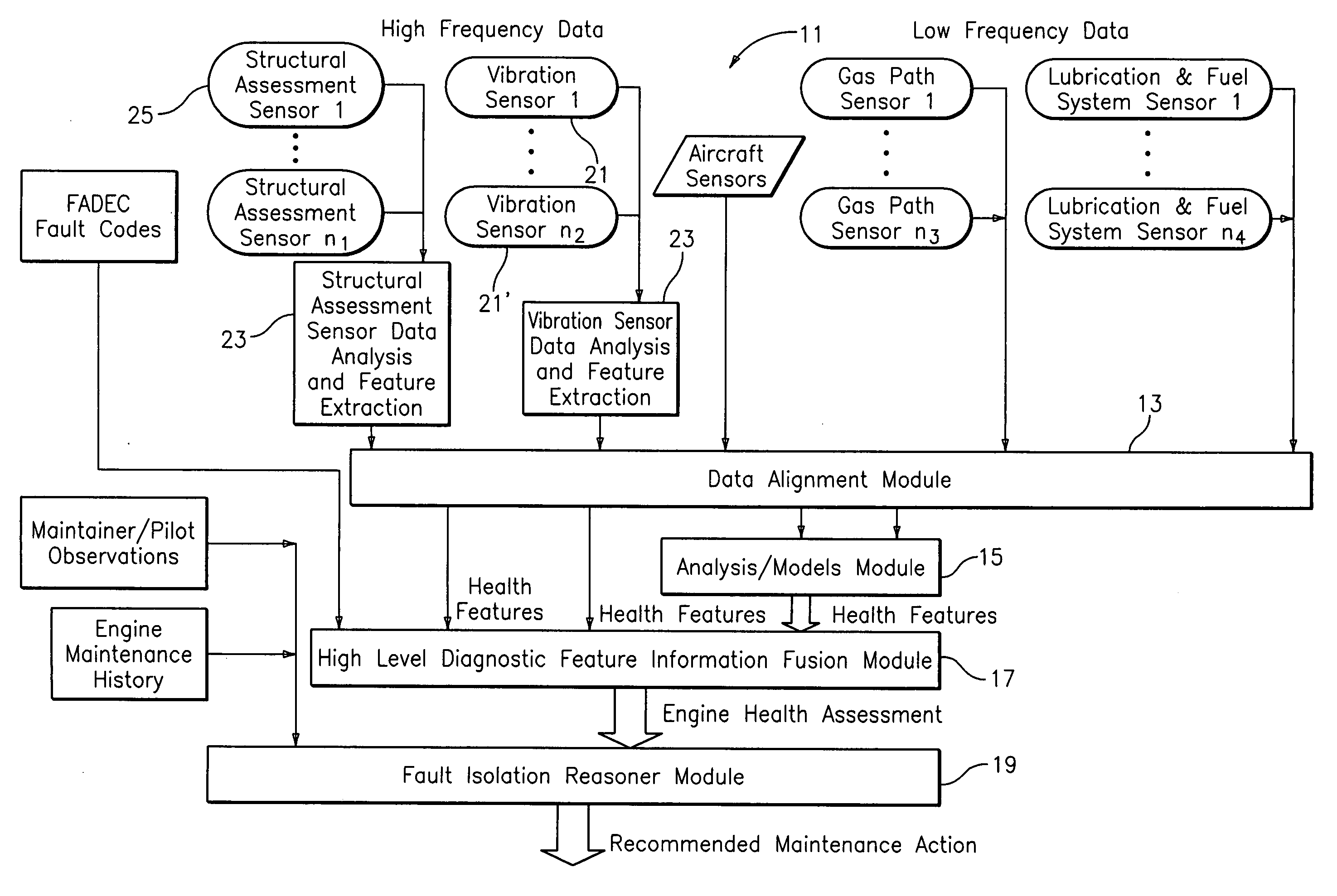

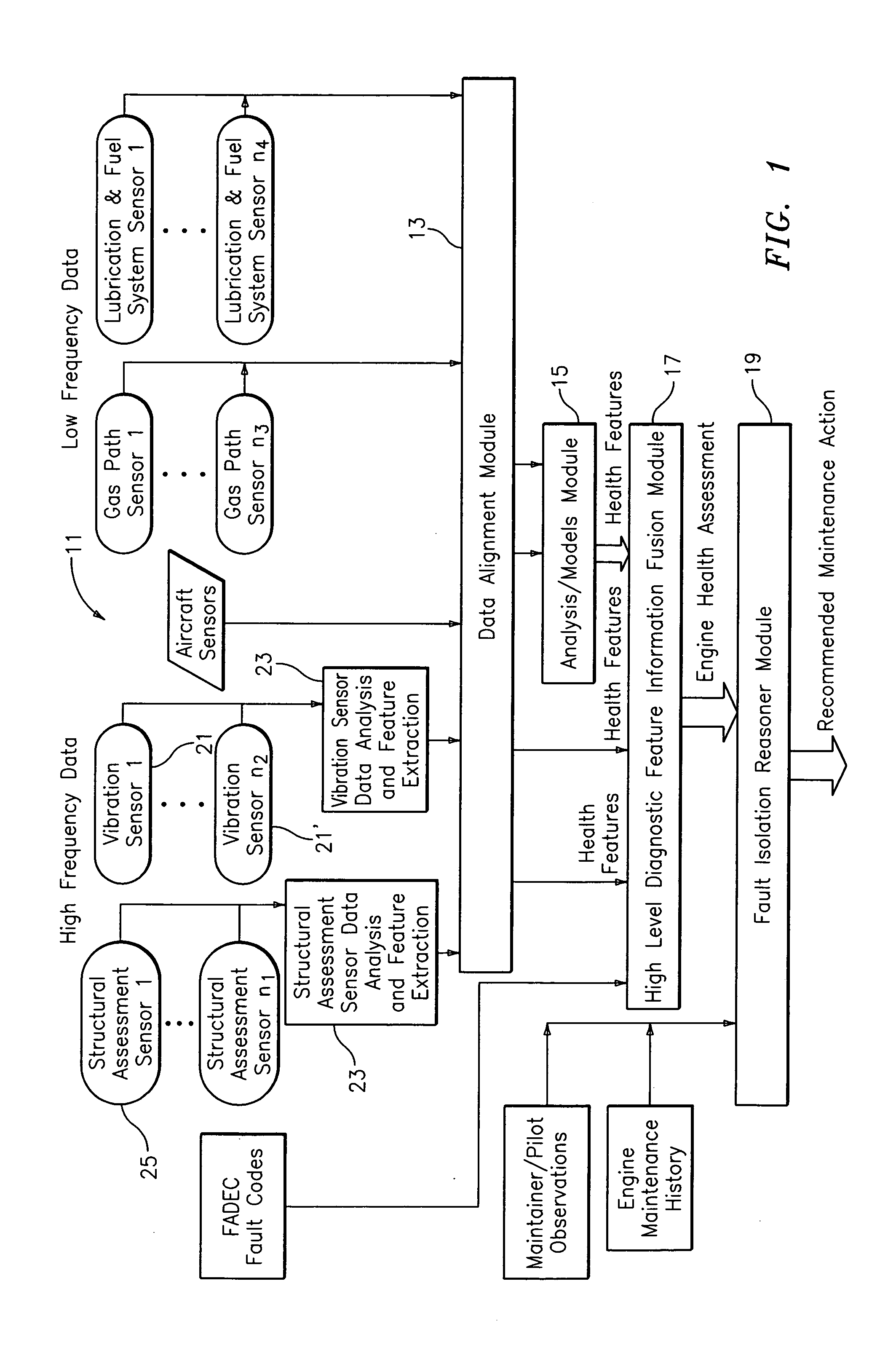

System for gas turbine health monitoring data fusion

An apparatus for assessing health of a device comprising a data alignment module for receiving a plurality of sensory outputs and outputting a synchronized data stream, an analysis module for receiving the synchronized data stream and outputting at least one device health feature, and a high level diagnostic feature information fusion module for receiving the at least one device health feature and outputting a device health assessment.

Owner:RTX CORP
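
The three-module structure in the abstract above (data alignment, analysis, high-level fusion) can be sketched as a small pipeline. This is a hedged illustration only: the function names `align`, `health_features` and `fuse`, the relative-deviation feature, the weights and the 0.1 threshold are all assumptions and do not come from the patent.

```python
def align(streams):
    """Data alignment: keep only timestamps present in every sensor stream.

    streams: dict mapping sensor name -> dict of timestamp -> reading.
    Returns a list of (timestamp, {sensor: reading}) sorted by time.
    """
    common = set.intersection(*(set(s) for s in streams.values()))
    return [(t, {name: s[t] for name, s in streams.items()})
            for t in sorted(common)]


def health_features(aligned, nominal):
    """Analysis: mean relative deviation from a nominal value per sensor."""
    if not aligned:
        raise ValueError("no synchronized samples to analyse")
    feats = {}
    for name, ref in nominal.items():
        devs = [abs(row[name] - ref) / ref for _, row in aligned]
        feats[name] = sum(devs) / len(devs)
    return feats


def fuse(features, weights, threshold=0.1):
    """Fusion: weighted average of the features mapped to an assessment."""
    total = sum(weights[name] for name in features)
    score = sum(weights[name] * features[name] for name in features) / total
    return "degraded" if score > threshold else "healthy"


# Hypothetical readings: exhaust gas temperature and vibration level.
streams = {
    "egt": {0: 600.0, 1: 660.0, 2: 600.0},
    "vib": {1: 1.0, 2: 1.0, 3: 1.0},
}
aligned = align(streams)
feats = health_features(aligned, {"egt": 600.0, "vib": 1.0})
status = fuse(feats, {"egt": 1.0, "vib": 1.0})
```

Dropping unmatched timestamps is the simplest possible alignment; interpolating to a common clock would be an equally reasonable choice.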

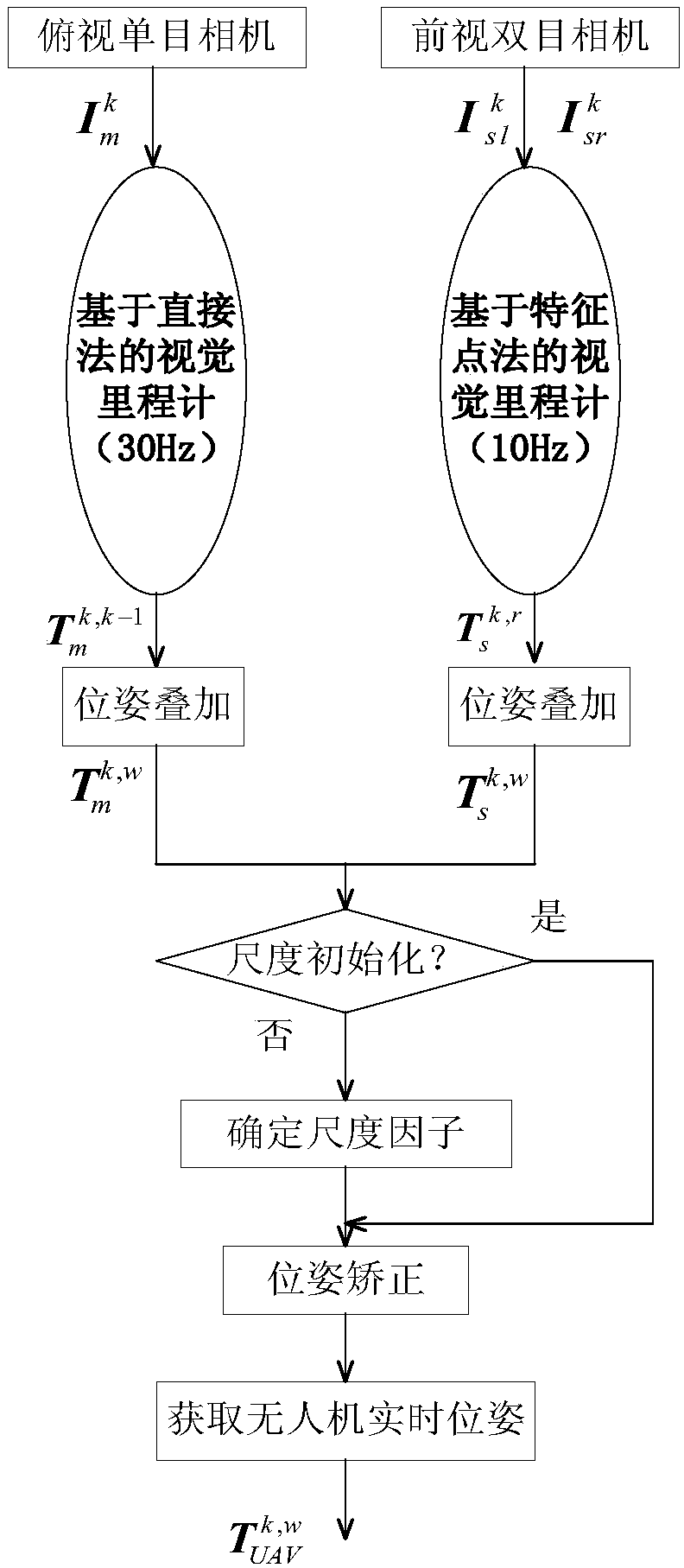

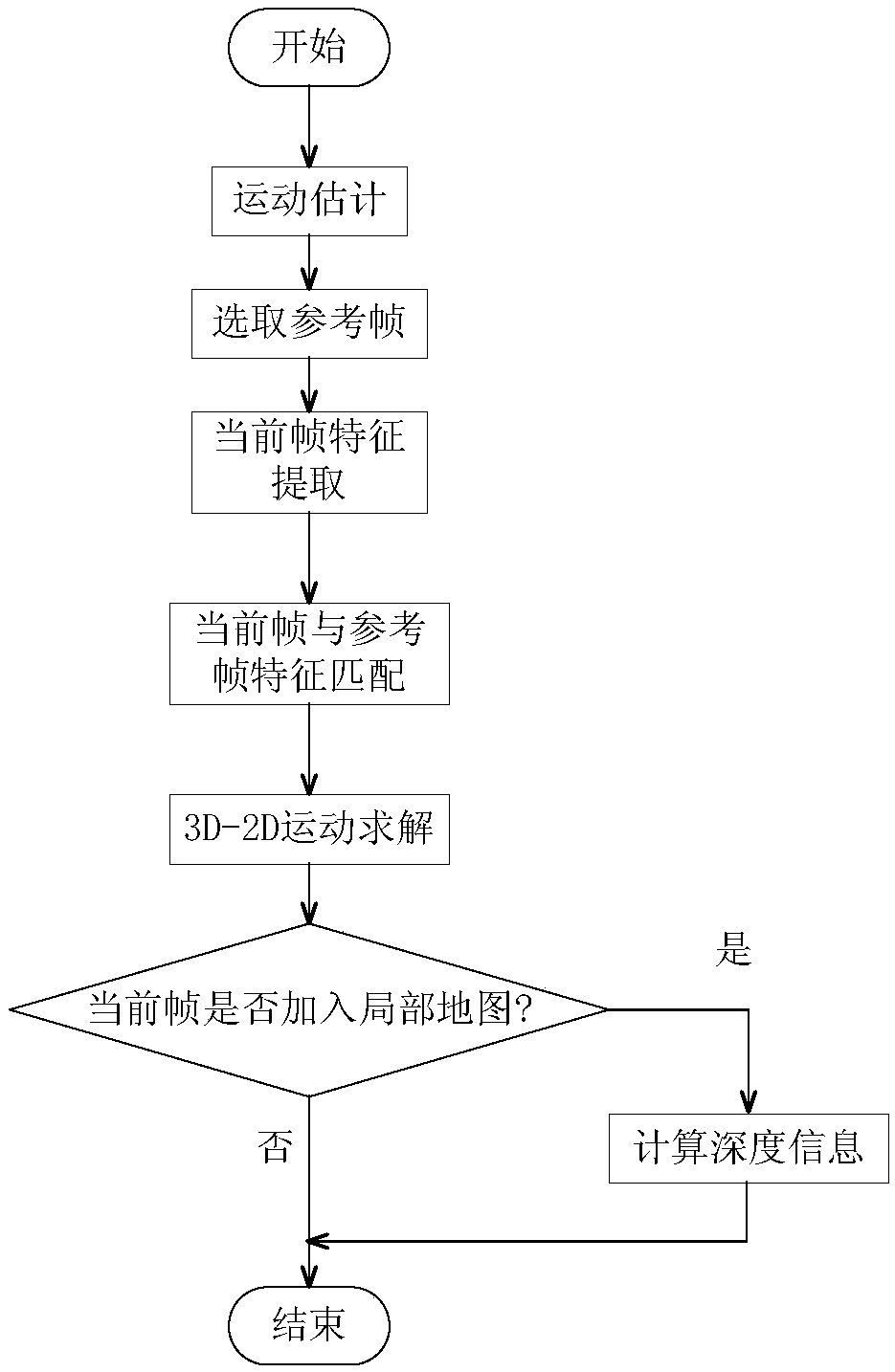

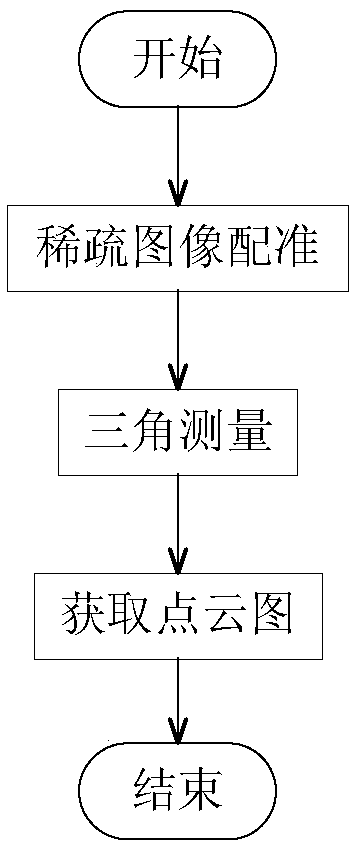

Simultaneous localization and mapping (SLAM) method for unmanned aerial vehicle based on mixed vision odometers and multi-scale map

Active | CN109029417A | Real-time accurate and reliable positioning | Shorten operation time | Navigational calculation instruments | Simultaneous localization and mapping | Environmental perception

The invention discloses a simultaneous localization and mapping (SLAM) method for an unmanned aerial vehicle based on mixed visual odometers and a multi-scale map, and belongs to the technical field of autonomous navigation of unmanned aerial vehicles. According to the SLAM method, a downward-looking monocular camera, a forward-looking binocular camera and an airborne computer are carried on an unmanned aerial vehicle platform; the monocular camera is used for a visual odometer based on the direct method, and the binocular camera is used for a visual odometer based on the feature point method; the mixed visual odometers fuse the output of the two visual odometers to construct a local map for positioning, and the real-time pose of the unmanned aerial vehicle is obtained; the pose is then fed back to the flight control system to control the position of the unmanned aerial vehicle; and the airborne computer transmits the real-time pose and the collected images to a ground station, which plans the flight path in real time according to the constructed global map and sends waypoint information to the unmanned aerial vehicle, thereby achieving autonomous flight. Real-time pose estimation and environmental perception of the unmanned aerial vehicle in GPS-denied environments are achieved, and the intelligence level of the unmanned aerial vehicle is greatly increased.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS

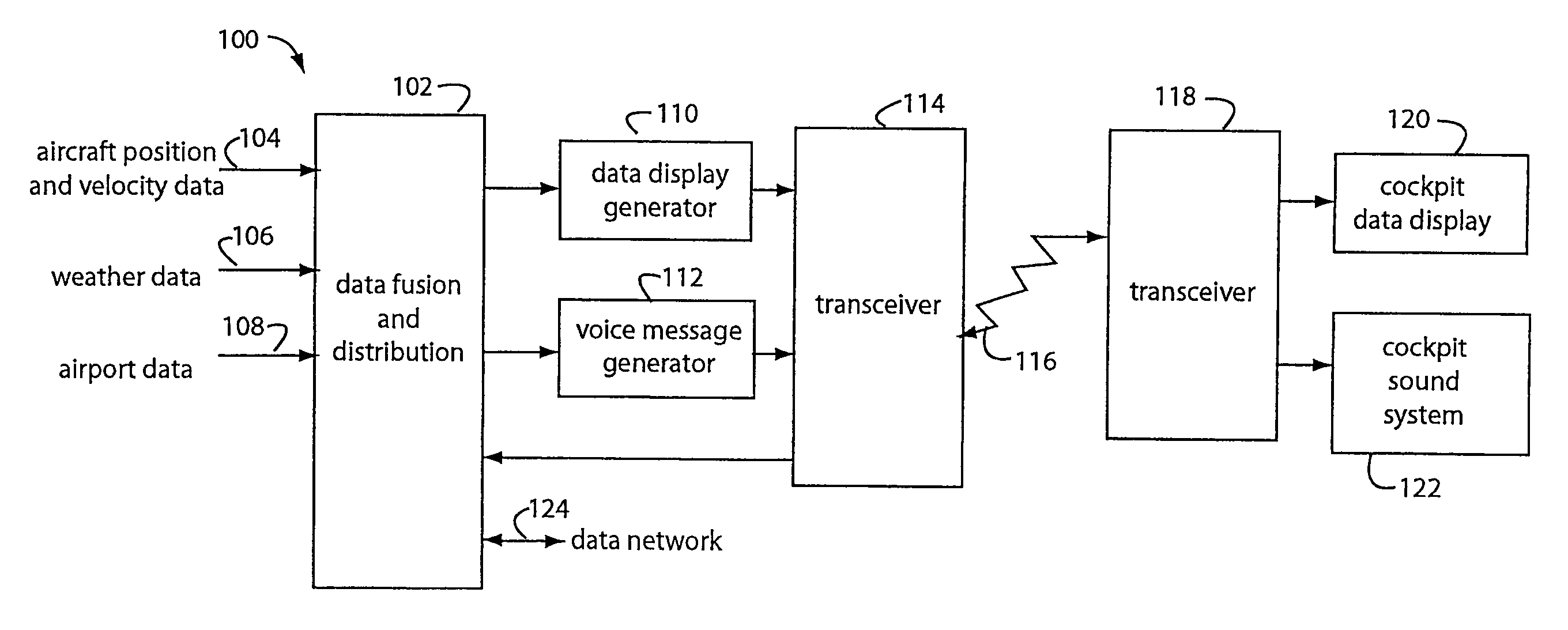

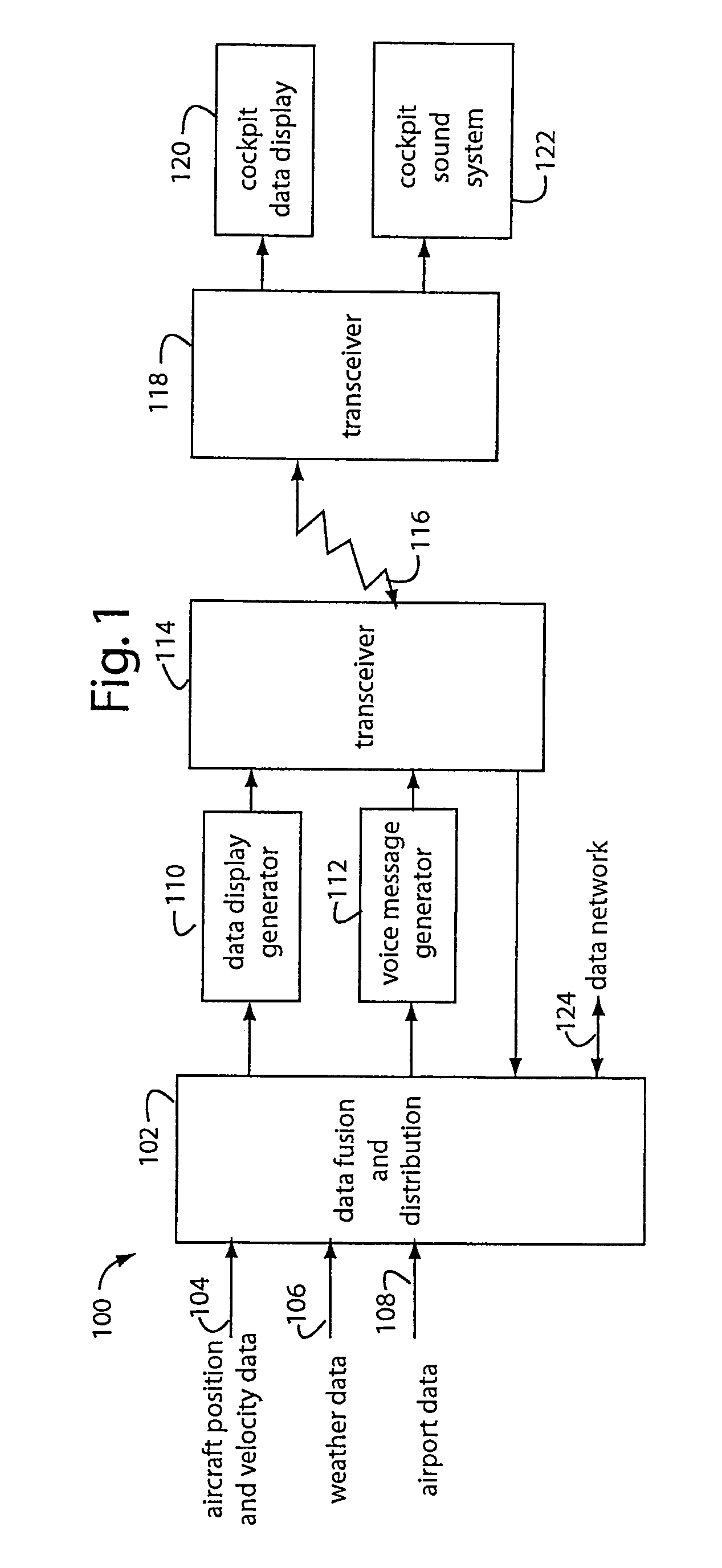

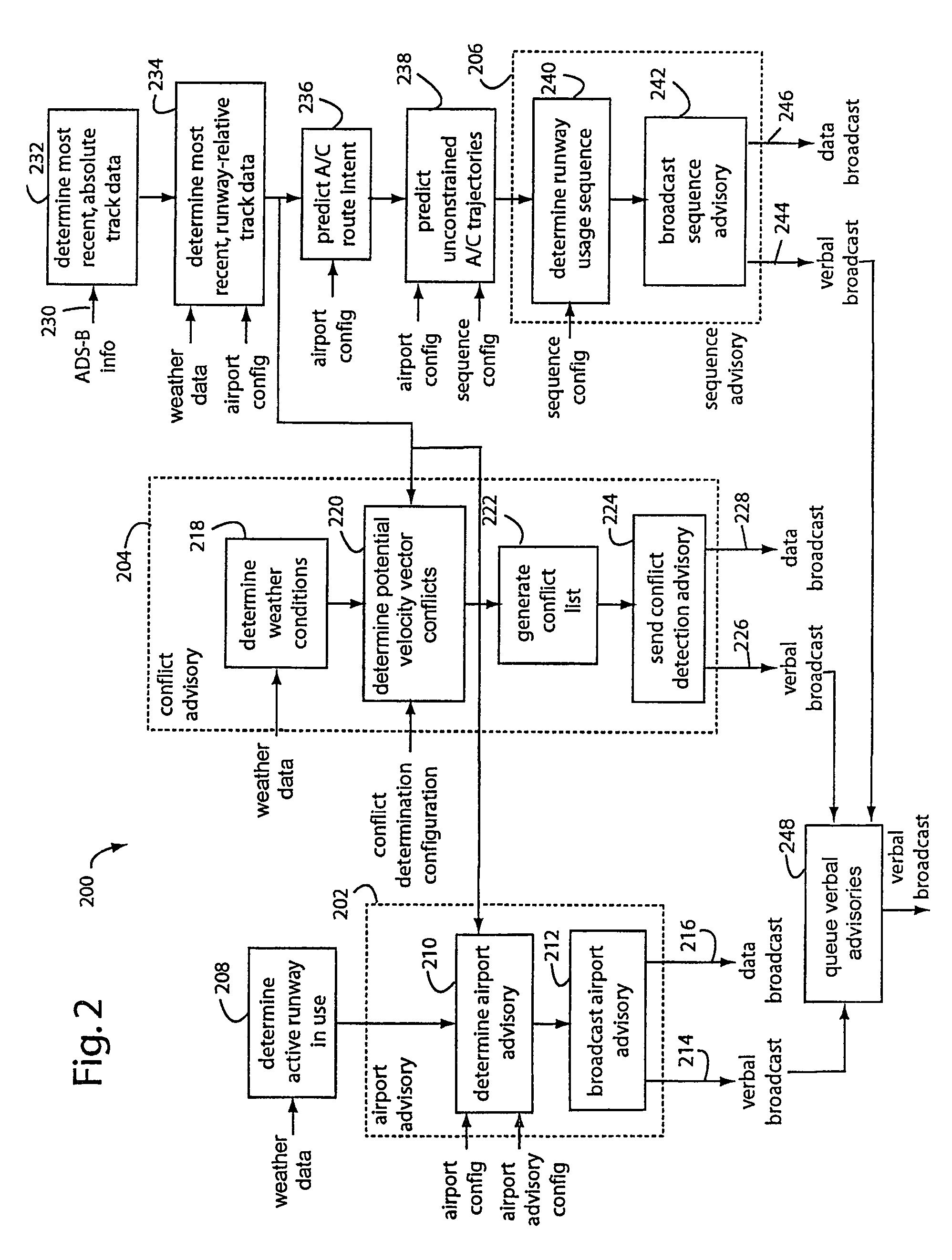

Smart airport automation system

Active | US7385527B1 | Enhances pilot situation awareness | Increase awareness | Analogue computers for traffic | Navigation instruments | Data format | Intelligent machine

A smart airport automation system gathers and reinterprets a wide variety of aircraft and airport related data and information around unattended or non-towered airports. Data is gathered from many different types of sources, and in otherwise incompatible data formats. The smart airport automation system then decodes, assembles, fuses, and broadcasts structured information, in real-time, to aircraft pilots. The fused information is also useful to remotely located air traffic controllers who monitor non-towered airport operations. The system includes a data fusion and distribution computer that imports aircraft position and velocity, weather, and airport specific data. The data inputs are used to compute safe takeoff and landing sequences, and other airport advisory information for participating aircraft.

Owner:ARCHITECTURE TECH

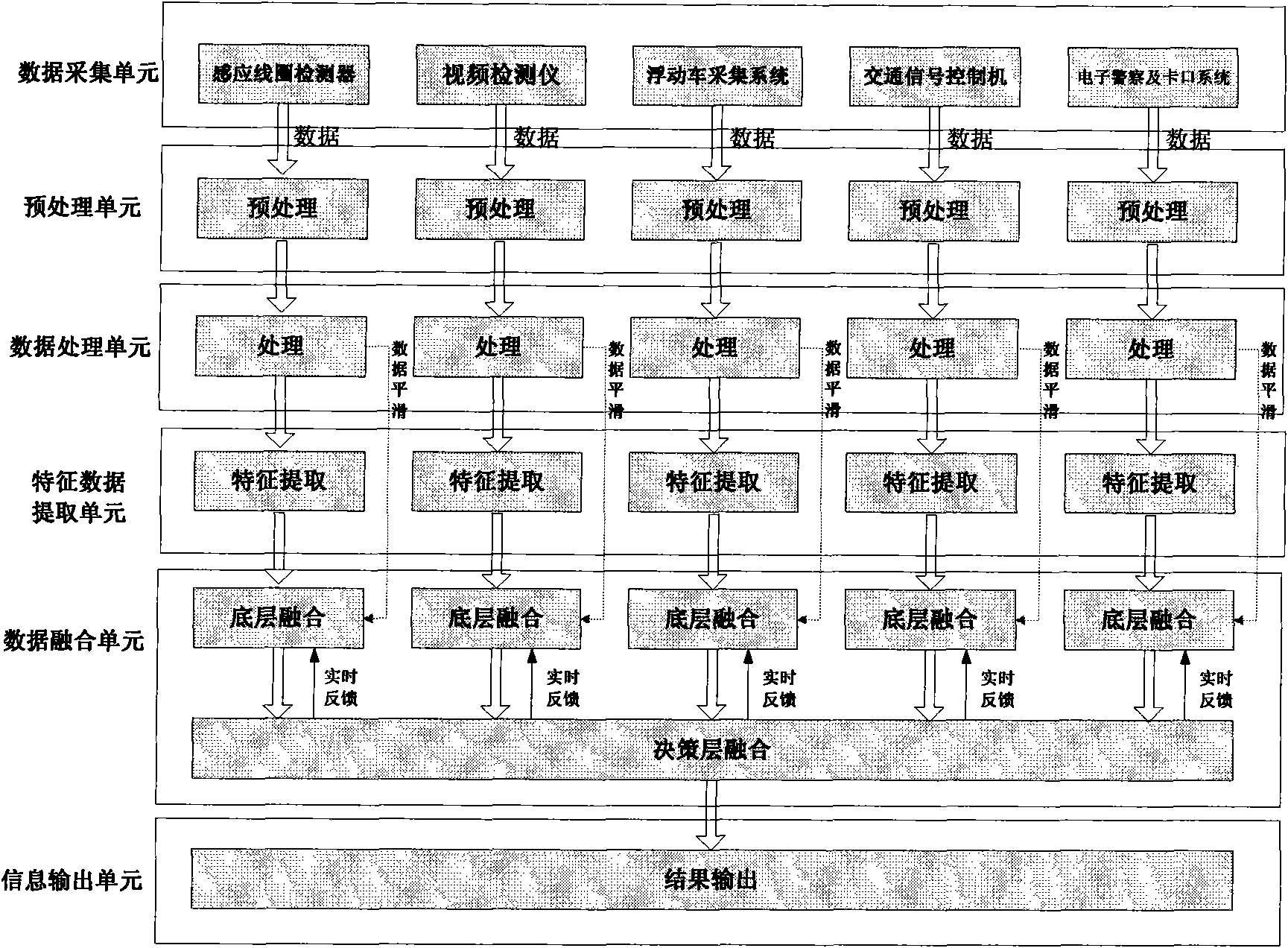

Method and device for fusion processing of multi-source traffic information

Inactive | CN101571997A | Accurate description | Detection of traffic movement | Information integration | Traffic management

The invention relates to a method for fusion processing of multi-source traffic information, characterized by comprising the following steps: (a) acquiring source data from a plurality of detection devices; (b) calibrating the source data; (c) processing the calibrated source data to obtain parameters that each individually reflect the traffic state of a road section; (d) extracting the parameters with the required characteristics to obtain characteristic data; and (e) performing fusion processing on the characteristic data to obtain traffic state information of a road. Because each type of traffic data reflects only part of the traffic state, the method and the device fuse the multi-source traffic information to obtain more accurate traffic state information for road sections and intersections, so that traffic information can be issued and traffic management performed more quickly, accurately and reasonably.

Owner:SHANGHAI BAOKANG ELECTRONICS CONTROL ENG

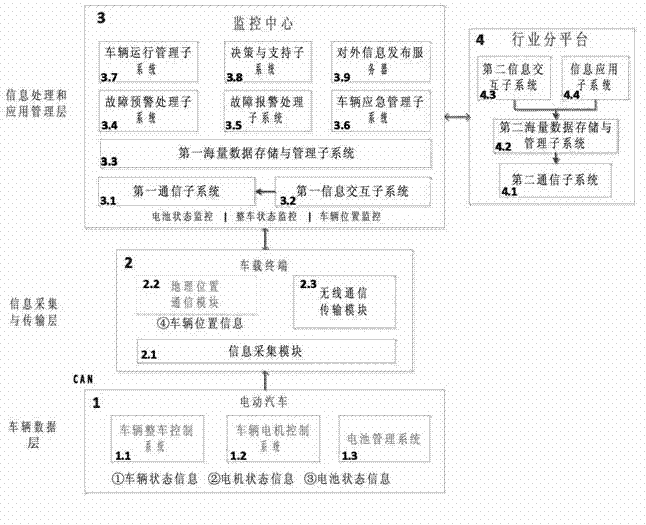

Method and system for remotely monitoring electric vehicle

The invention provides a method and a system for remotely monitoring an electric vehicle, so as to ensure the safe use of batteries, extend battery service life and reduce battery cost. The method comprises comprehensively monitoring, in real time during vehicle operation, the state of the complete vehicle, the battery state (particularly the state of individual cells) and the vehicle position. The system supports information exchange between different monitoring platforms and performs information fusion and processing of mass data. It can provide remote services for the electric vehicle such as fault diagnosis, fault early warning and emergency management, and can further provide scheduling and navigation services for electric energy supply, an energy peak scheduling information service and a vehicle route scheduling information service.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

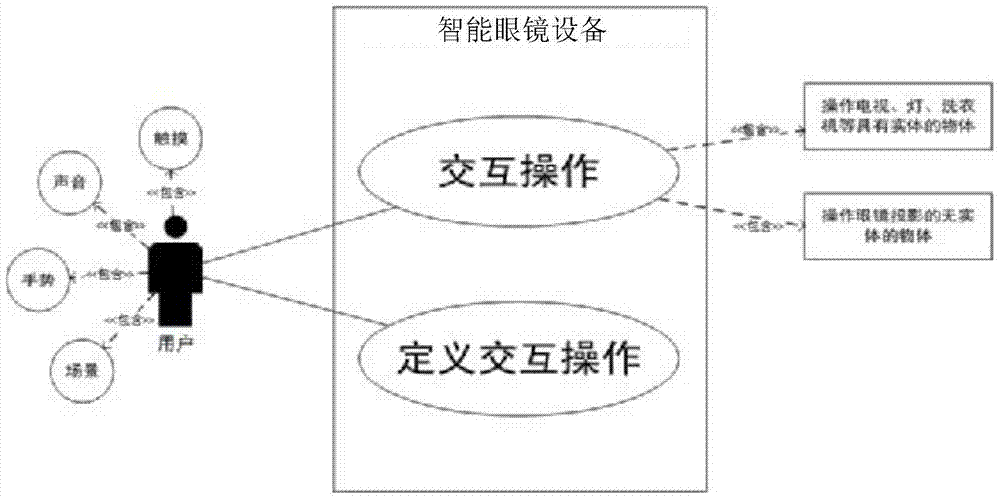

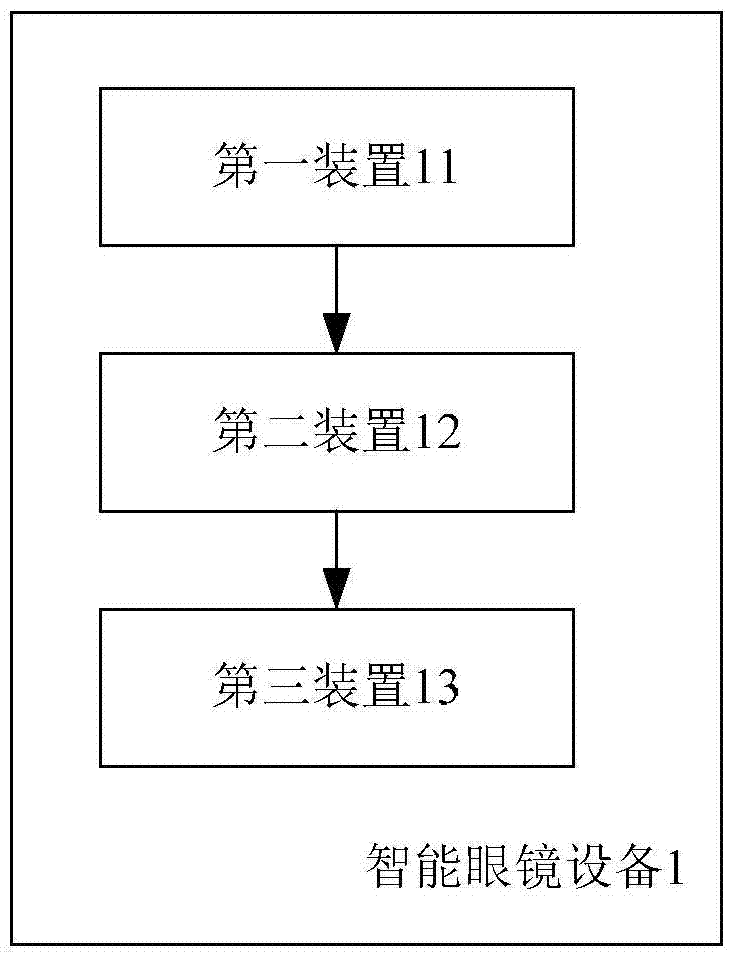

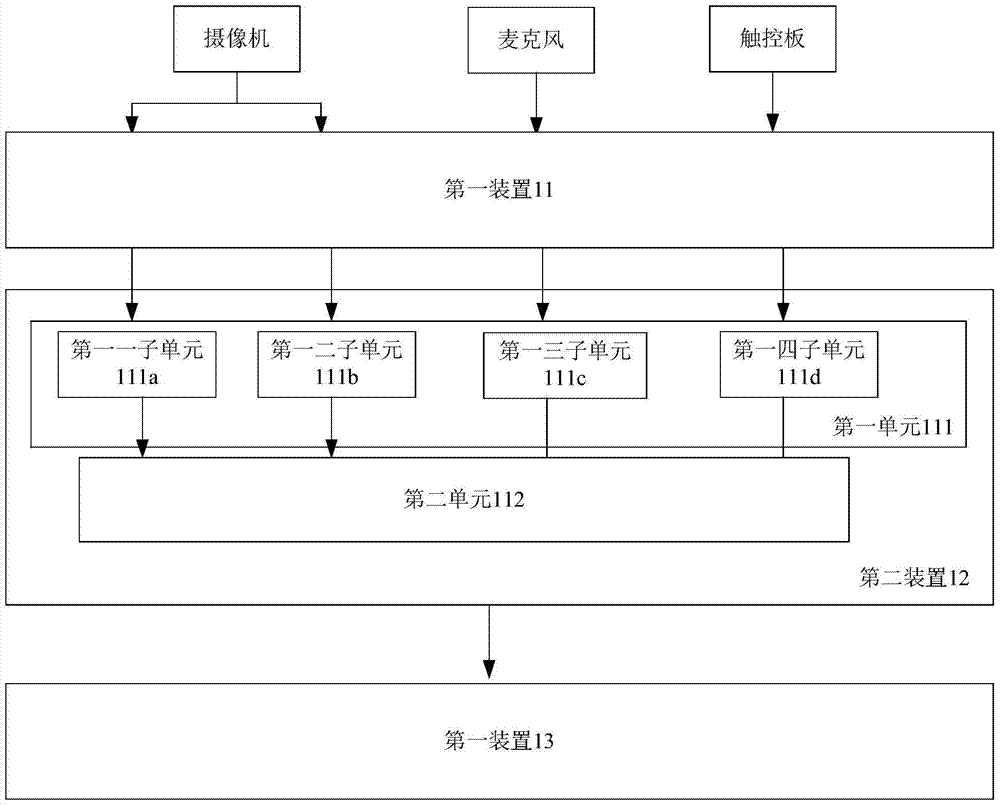

Multimodal input-based interactive method and device

Active | CN106997236A | Improve interactive experience | Increase flexibility | Input/output for user-computer interaction | Character and pattern recognition | Object based | Computer module

The invention aims to provide an intelligent glasses device and method used for performing interaction based on multimodal input and capable of enabling the interaction to be closer to natural interaction of users. The method comprises the steps of obtaining multiple pieces of input information from at least one of multiple input modules; performing comprehensive logic analysis on the input information to generate an operation command, wherein the operation command has operation elements, and the operation elements at least include an operation object, an operation action and an operation parameter; and executing corresponding operation on the operation object based on the operation command. According to the intelligent glasses device and method, the input information of multiple channels is obtained through the input modules and is subjected to the comprehensive logic analysis, the operation object, the operation action and the operation element of the operation action are determined to generate the operation command, and the corresponding operation is executed based on the operation command, so that the information is subjected to fusion processing in real time, the interaction of the users is closer to an interactive mode of a natural language, and the interactive experience of the users is improved.

Owner:HISCENE INFORMATION TECH CO LTD

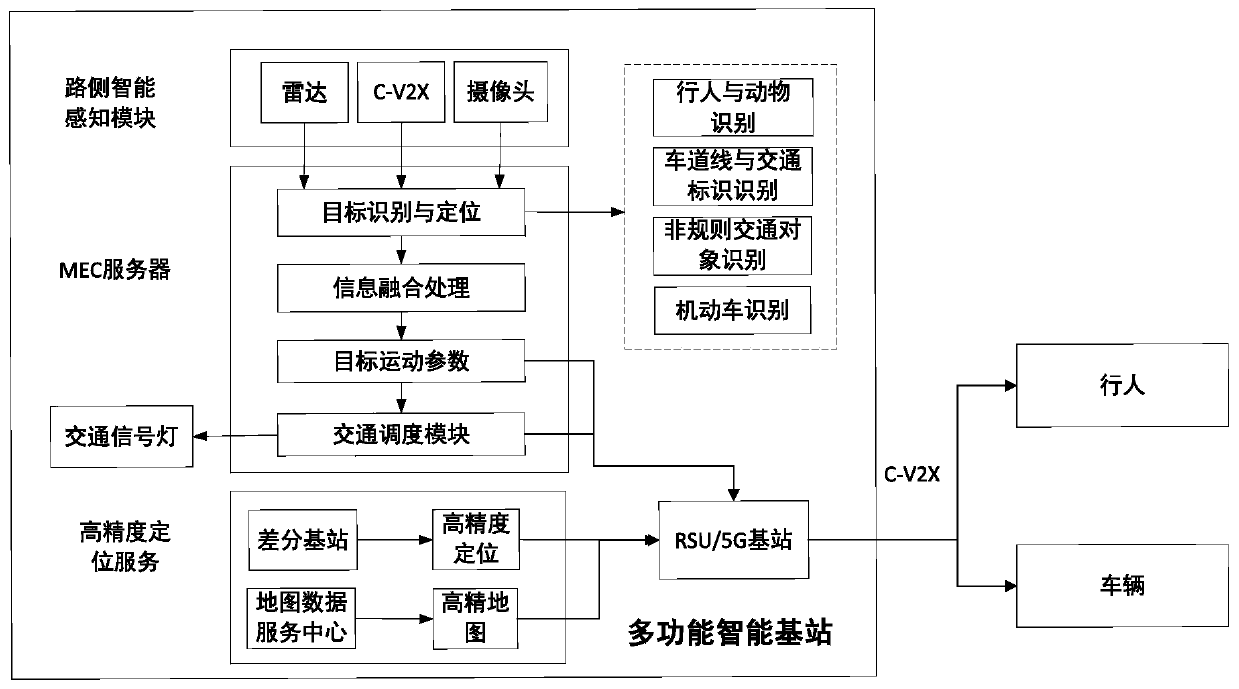

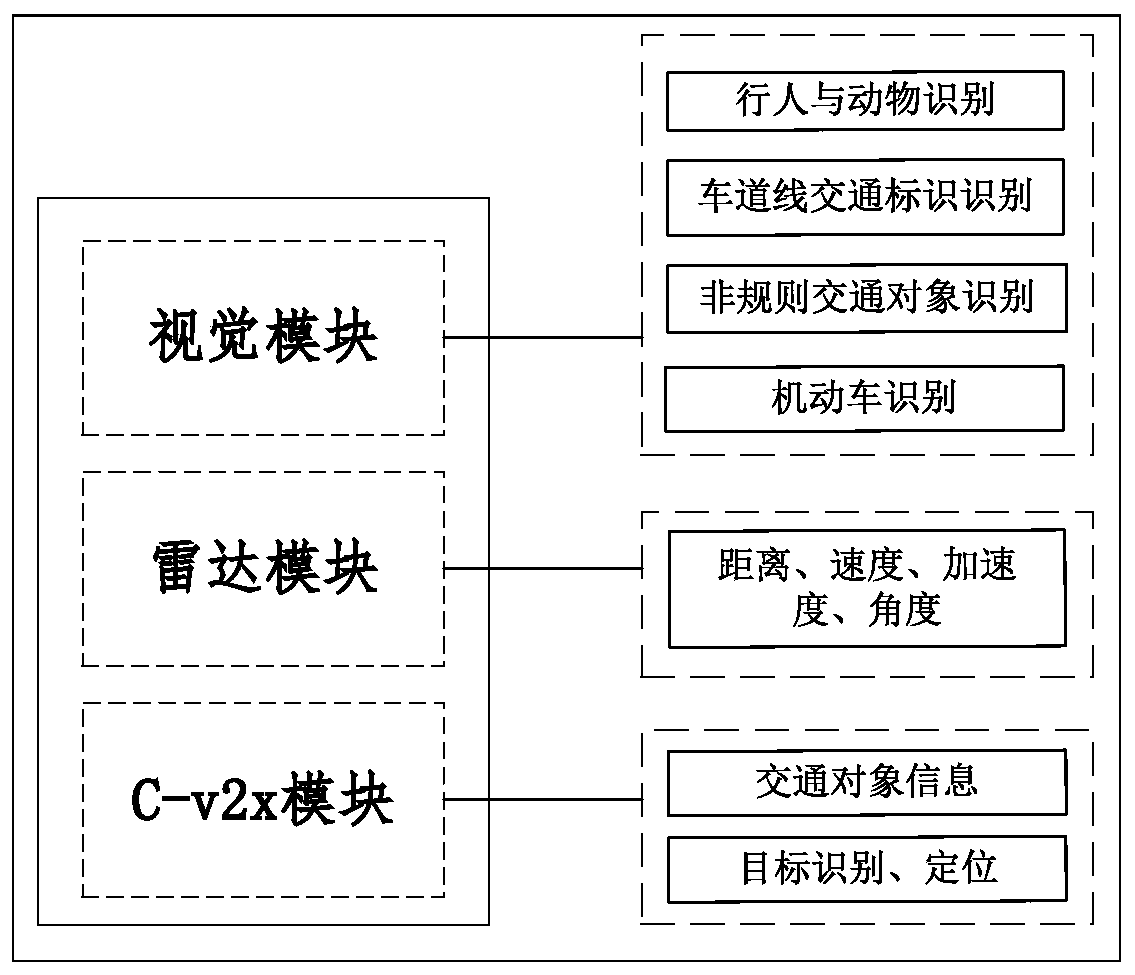

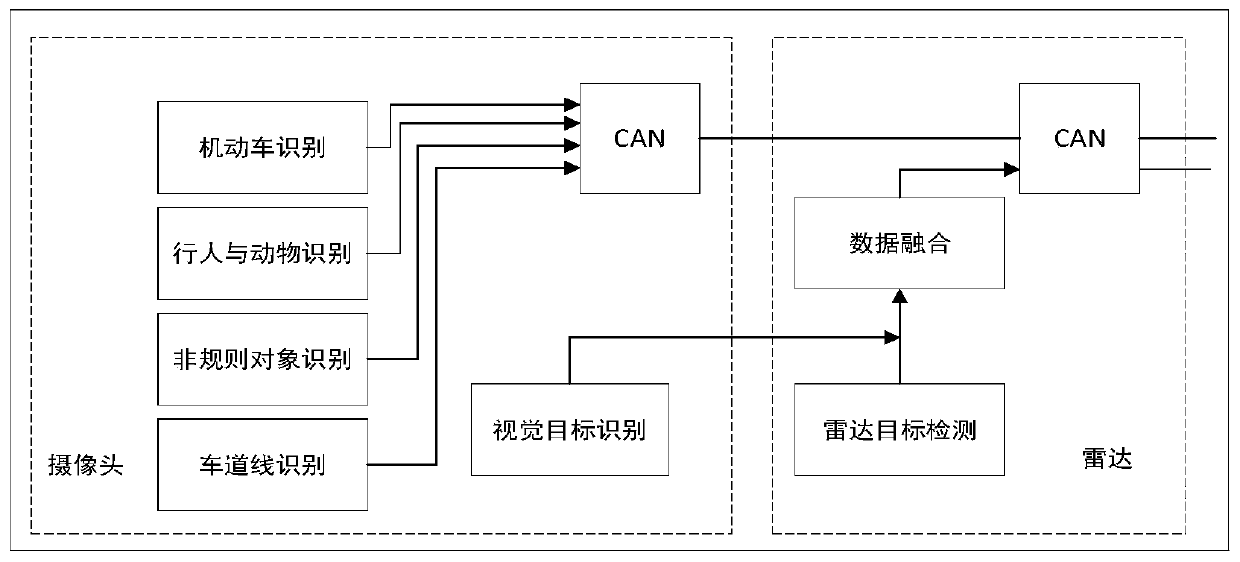

Multifunctional V2X intelligent roadside base station system

Active | CN111554088A | Improve object detection accuracy | Improve accuracy | Controlling traffic signals | Detection of traffic movement | Vehicle driving | Data format

The invention claims a multifunctional V2X intelligent roadside base station system. The system comprises roadside sensing equipment, an MEC server, a high-precision positioning service module, a multi-source intelligent roadside sensing information fusion module and a 5G / LTE-V communication module. An intelligent roadside device integrating C-V2X communication, environmental perception and target recognition, high-precision positioning and the like is designed, which solves the problem that multi-device information fusion and integration are inconvenient in intelligent transportation. In the system, a C-V2X intelligent roadside system architecture and a target-layer multi-source information fusion method are designed. Combined with roadside multi-source cooperative environment sensing, real-time traffic scheduling of the intersection is realized by a traffic scheduling module in the MEC server, and communication and high-precision positioning services are provided for vehicle driving. Finally, the target information after fusion processing is broadcast to other vehicles or pedestrians through a C-V2X RSU (LTE-V2X / 5G V2X and the like) according to an application-layer standard data format, so that driving and traffic safety is improved.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

Intelligent information fusion method of distributed multi-sensor

Inactive | CN103105611A | Achieve high-precision indoor positioning | Acoustic wave reradiation | Sampling instant | Multiple sensor

The invention provides an intelligent information fusion method for distributed multi-sensor systems. The method includes the following steps: (1) collecting signals directly from distributed ultrasonic receivers, sending the signals to a control center, and filtering them with distributed Kalman filtering; (2) after distributed filtering, aligning the data in time and space and aligning the related tracks: the sampling frequency is unified so that every measurement system has current measurement data at each sampling instant, and the space and time coordinate systems are unified; (3) judging errors by a track correlation method, using the deviation between a predicted point and an observed point as the error criterion; and (4) merging the correlated tracks into a new track by optimal fusion estimation. With this method, the optimal fusion of multiple estimated values is achieved through the minimum variance principle, and high-precision ultrasonic indoor positioning is achieved effectively.

Owner:GUANGDONG UNIV OF TECH
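
The minimum variance principle mentioned in the abstract above has a standard closed form when the estimates are unbiased and their errors are independent: each estimate is weighted by the inverse of its variance. The sketch below shows that textbook formula only; whether the patent uses exactly this form is not stated, and the function name `fuse_min_variance` is an assumption.

```python
def fuse_min_variance(estimates):
    """Fuse scalar estimates by inverse-variance weighting.

    estimates: iterable of (value, variance) pairs with variance > 0.
    Assumes unbiased estimates with mutually independent errors.
    Returns (fused_value, fused_variance).
    """
    estimates = list(estimates)
    if not estimates:
        raise ValueError("need at least one estimate")
    if any(var <= 0 for _, var in estimates):
        raise ValueError("variances must be positive")
    inv_sum = sum(1.0 / var for _, var in estimates)
    fused = sum(value / var for value, var in estimates) / inv_sum
    return fused, 1.0 / inv_sum


# Two hypothetical range estimates (metres) of the same target.
value, variance = fuse_min_variance([(10.0, 1.0), (13.0, 2.0)])
```

The fused variance is never larger than the smallest input variance, which is why adding even a noisy receiver can still improve the position estimate under these assumptions.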

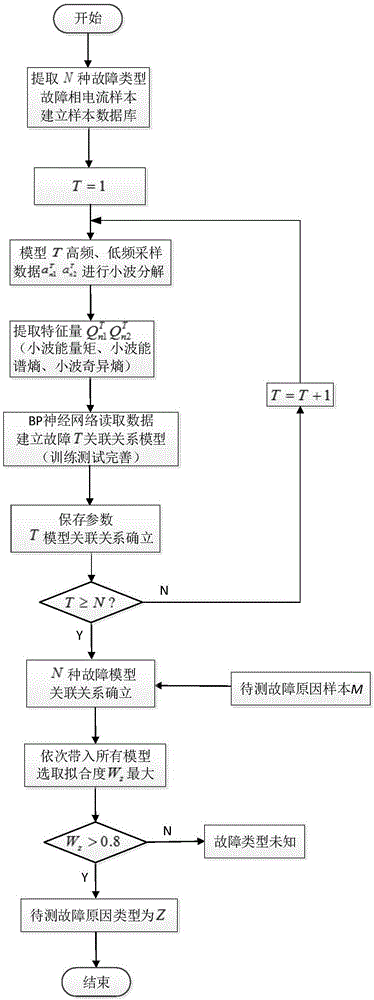

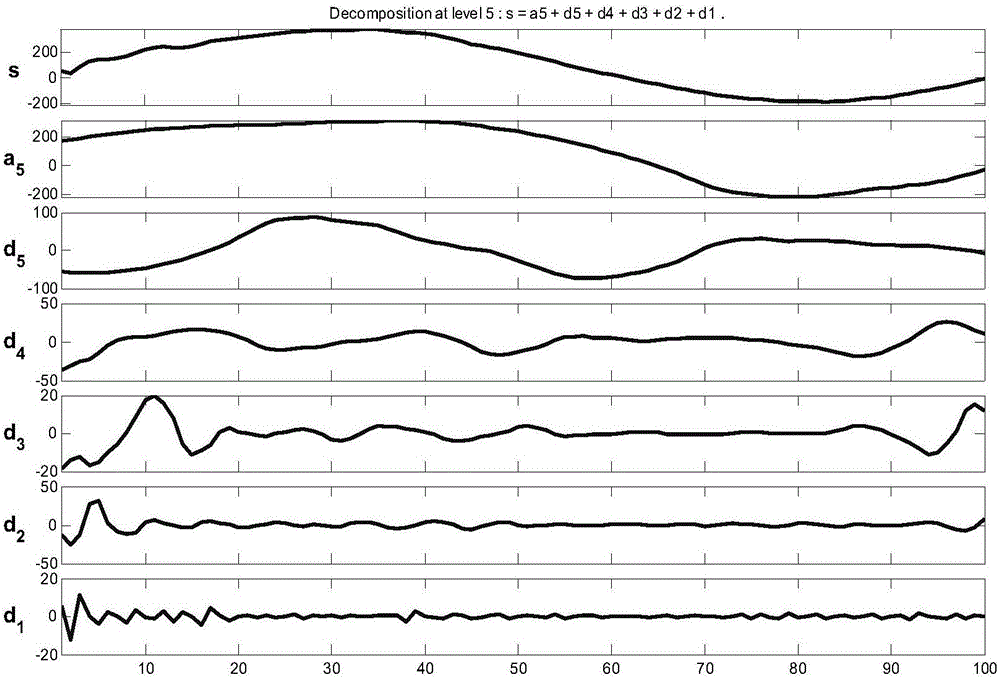

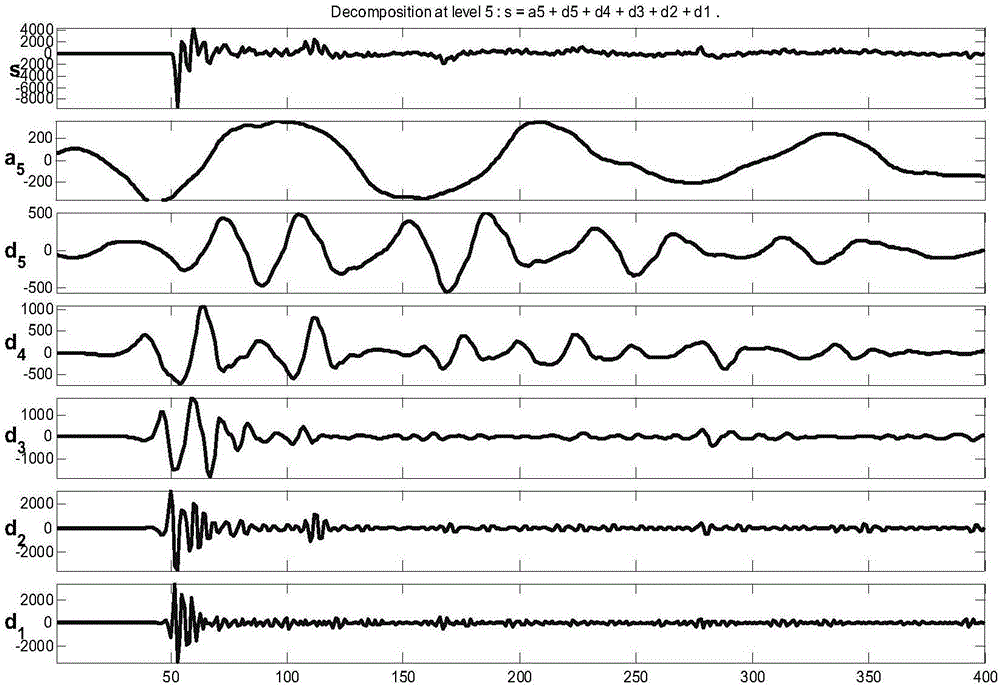

Power transmission line fault reason identification method based on high and low frequency wavelet feature association

Active | CN106405339A | Achieve the purpose of integration | Efficient analysis | Fault location by conductor types | Phase currents | Relational model

The invention discloses a power transmission line fault reason identification method based on high and low frequency wavelet feature association, which comprises the steps of: S1, extracting fault phase current samples of N fault types, and building a sample database; S2, sampling high frequency and low frequency data for a fault type T from the sample database, and performing wavelet decomposition on each; S3, performing feature extraction on the high frequency data wavelet coefficients and the low frequency data wavelet coefficients; S4, building an association relationship model of the fault type T; S5, determining the association relationship models of the N fault types; S6, performing wavelet decomposition on sample data whose fault reason is to be tested, and extracting a feature vector; and S7, substituting the feature vector of the sample under test into the association relationship models of the N fault types, and judging the fault type of the sample. According to the invention, the fault phase current is analyzed and processed by combining wavelet transform theory with information entropy, which makes it possible not only to analyze abruptly changing signals effectively but also to achieve information fusion and improve identification accuracy.

Owner:CHINA SOUTHERN POWER GRID COMPANY +1

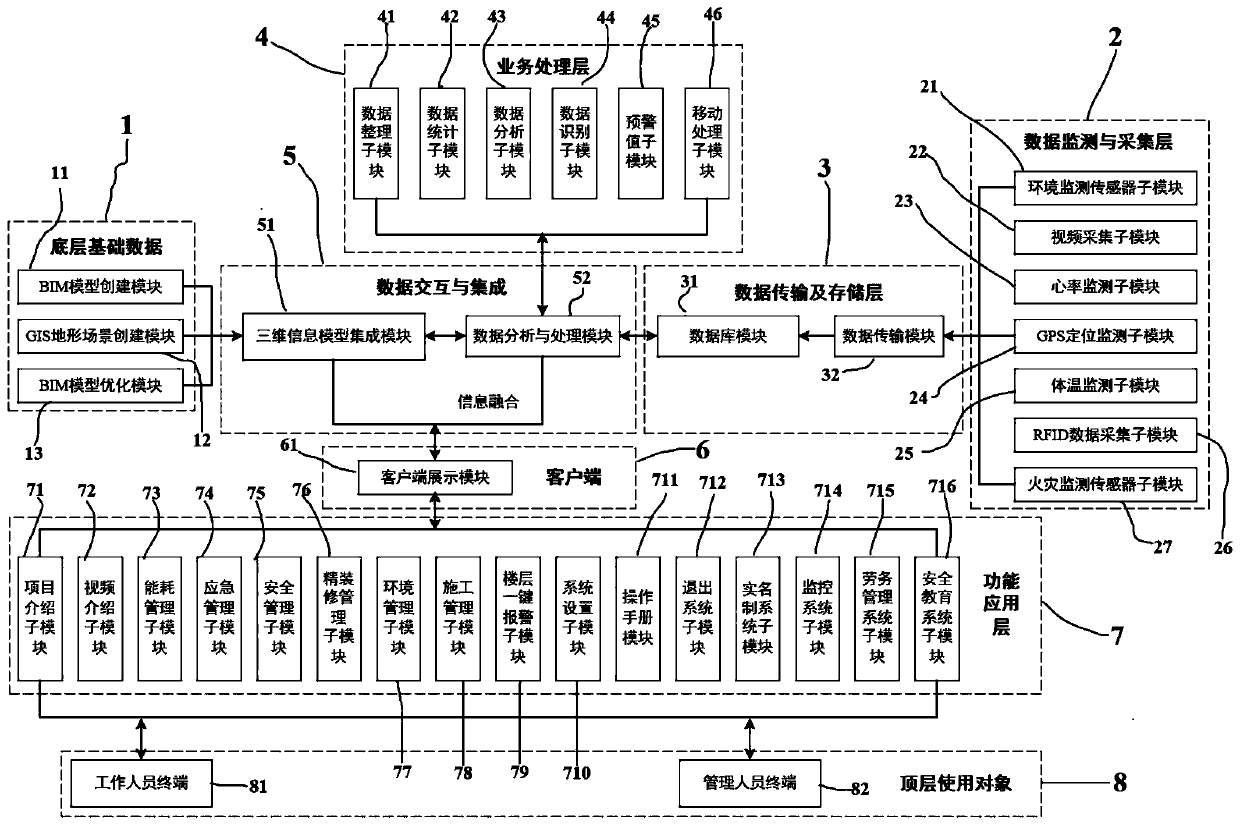

Intelligent construction site comprehensive management system based on BIM and Internet of Things

Pending | CN111598734A | Get good | Convenient statistics | Co-operative working arrangements | Office automation | Informatization | The Internet

The invention discloses an intelligent construction site comprehensive management system based on BIM and the Internet of Things. The intelligent construction site comprehensive management system comprises bottom-layer basic data, a data monitoring and collecting layer, a data transmission and storage layer, a service processing layer, a data interaction and integration layer, a client, a function application layer and a top-layer use object. According to the invention, core application technologies such as BIM, CE, GIS, IoT, RFID and the like can be used to carry out refined management of the personnel, equipment and construction process of a construction site and to realize visual intelligent management. The informatization level of engineering management can be improved, green and ecological construction is achieved, BIM and the Internet of Things are applied in an integrated way, and information fusion for an intelligent construction site is achieved.

Owner:THE SECOND CONSTR ENG CO LTD OF CHINA CONSTR THIRD ENG BUREAU

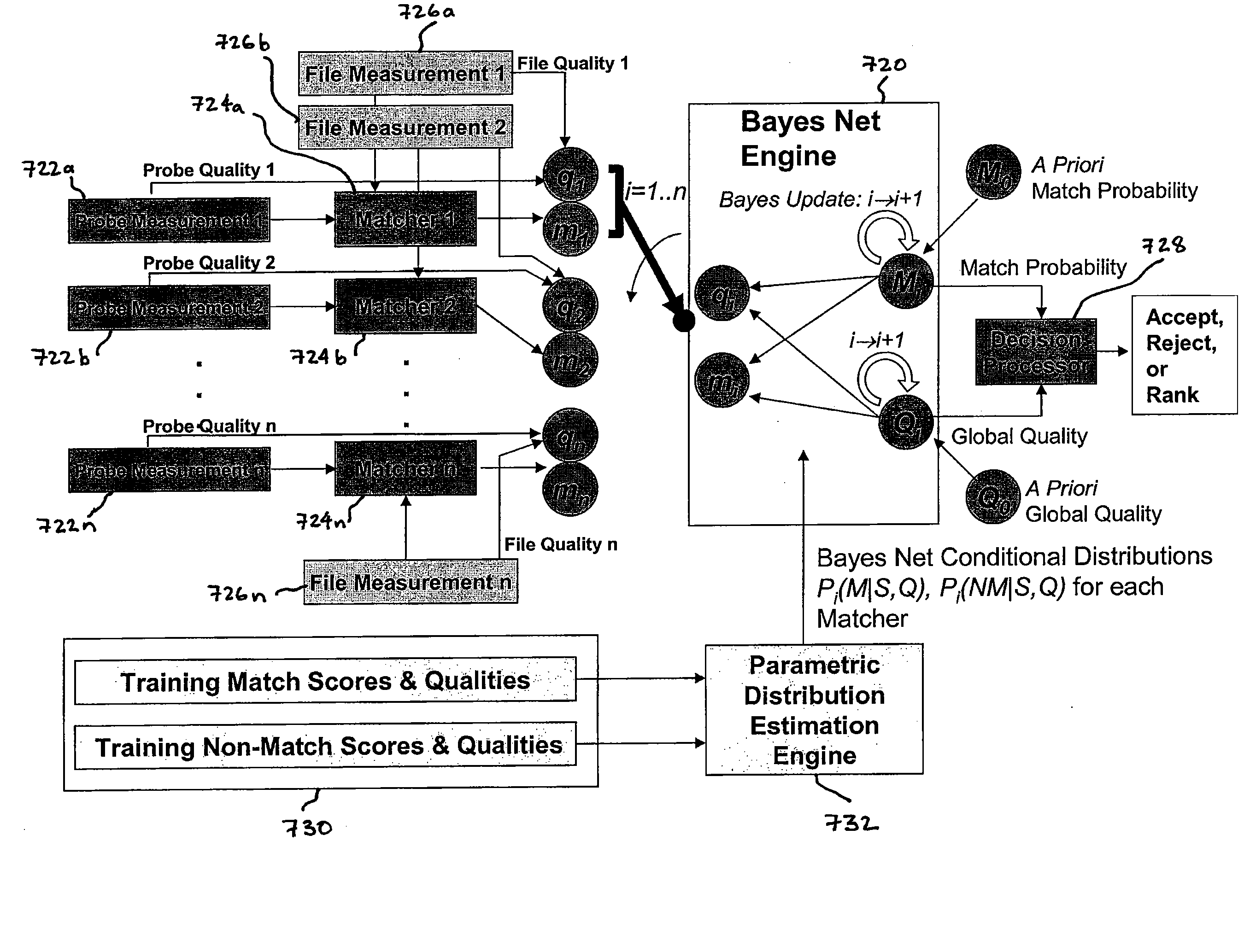

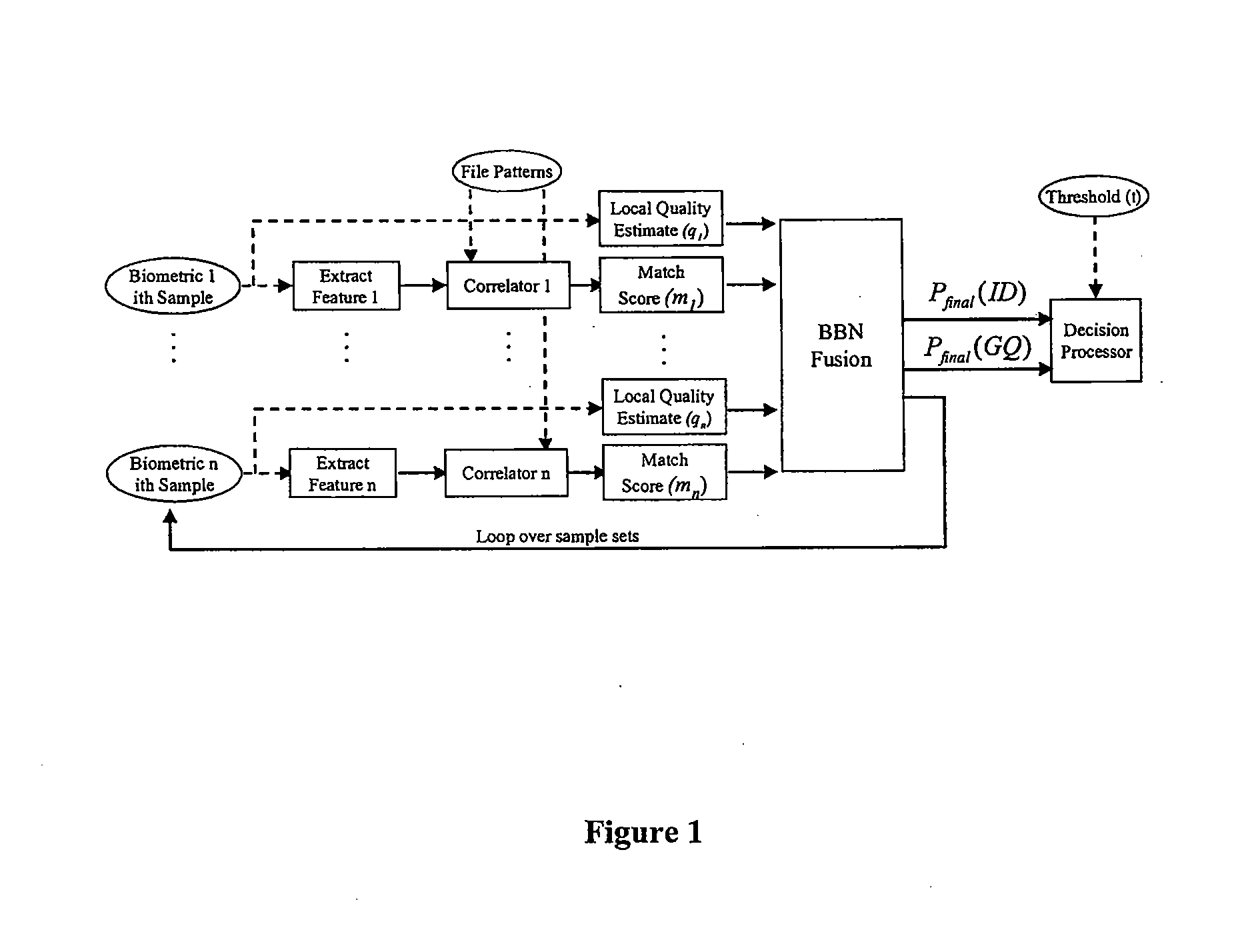

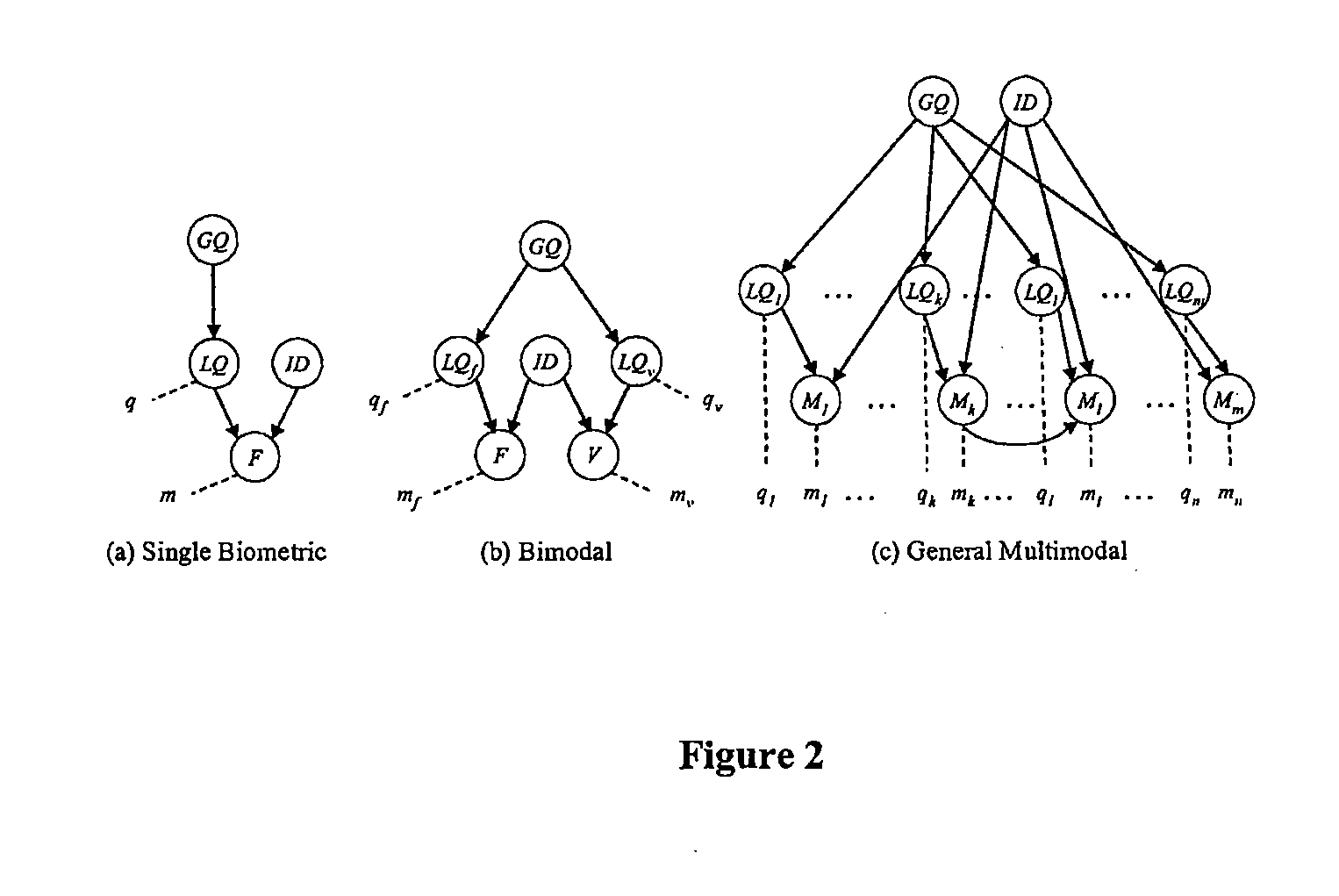

Fusing Multimodal Biometrics with Quality Estimates via a Bayesian Belief Network

Active | US20070172114A1 | Good compatibility | Reduce error rate | Electric signal transmission systems | Image analysis | Biometric fusion | Feature fusion

A Bayesian belief network-based architecture for multimodal biometric fusion is disclosed. Bayesian networks are a theoretically sound, probabilistic framework for information fusion. The architecture incorporates prior knowledge of each modality's capabilities, quality estimates for each sample, and relationships or dependencies between these variables. A global quality estimate is introduced to support decision making.

Owner:THE JOHNS HOPKINS UNIV SCHOOL OF MEDICINE

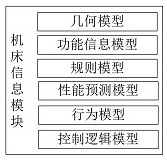

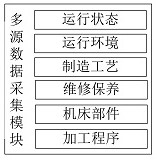

Composite machine tool digital twinning monitoring system

Inactive | CN112162519A | Enable proactive predictive maintenance | Improve monitoring accuracy | Programme control | Computer control | Data acquisition | Monitoring system

The invention discloses a composite machine tool digital twinning monitoring system, which relates to the technical field of digital twinning. The system creates a digital twinning monitoring system using a machine tool information module, realizes multi-platform, multi-data and multi-interface communication based on an OPC UA transmission interface, and constructs a man-machine interaction module; a multi-field data acquisition module acquires multi-source heterogeneous data in real time using different types of sensors, processes the acquired data with information fusion technology to form twin data, and forwards the twin data to a modeling calculation module; the modeling calculation module forms a digital twin of the composite machine tool, driven by the twin data in combination with rules such as constraint, prediction and decision; and a personalized decision module monitors and manages the physical machine tool equipment in real time by reconstructing and optimizing the machine tool monitoring twin model in real time. The system simplifies monitoring of composite machine tool operation, improves the monitoring precision of the machine tool system, and enables proactive predictive maintenance of the composite machine tool.

Owner:GUILIN UNIV OF ELECTRONIC TECH

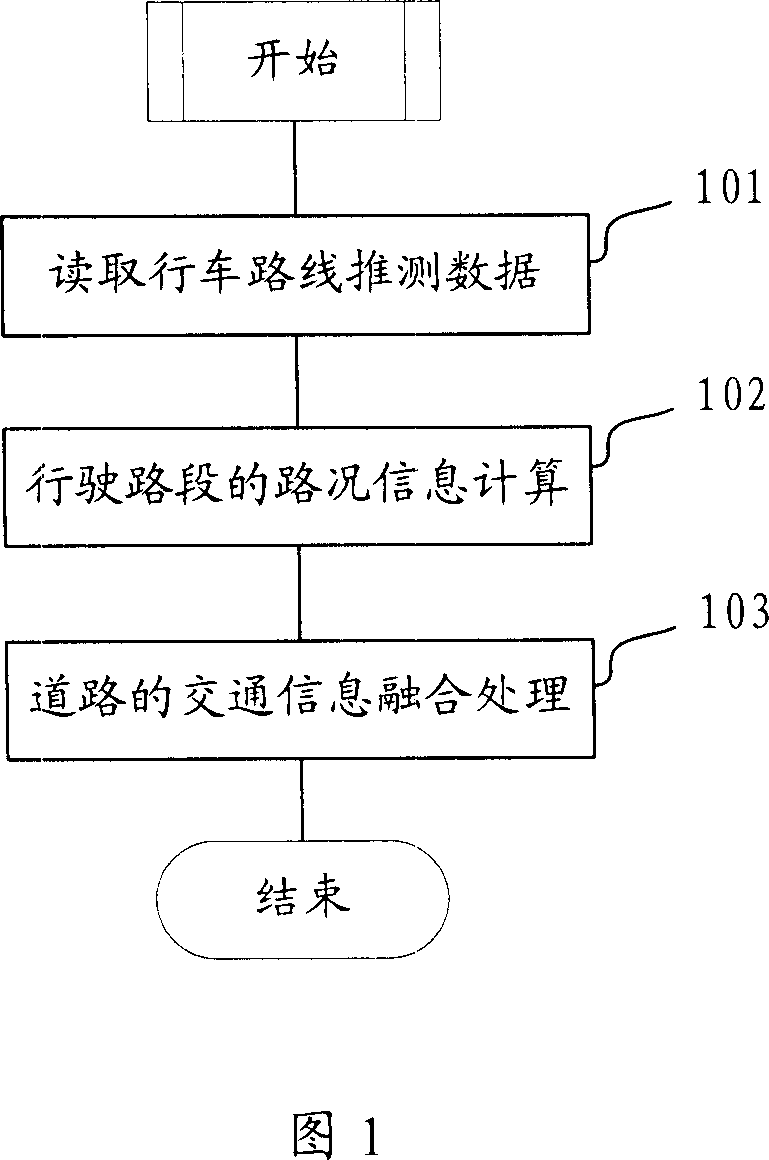

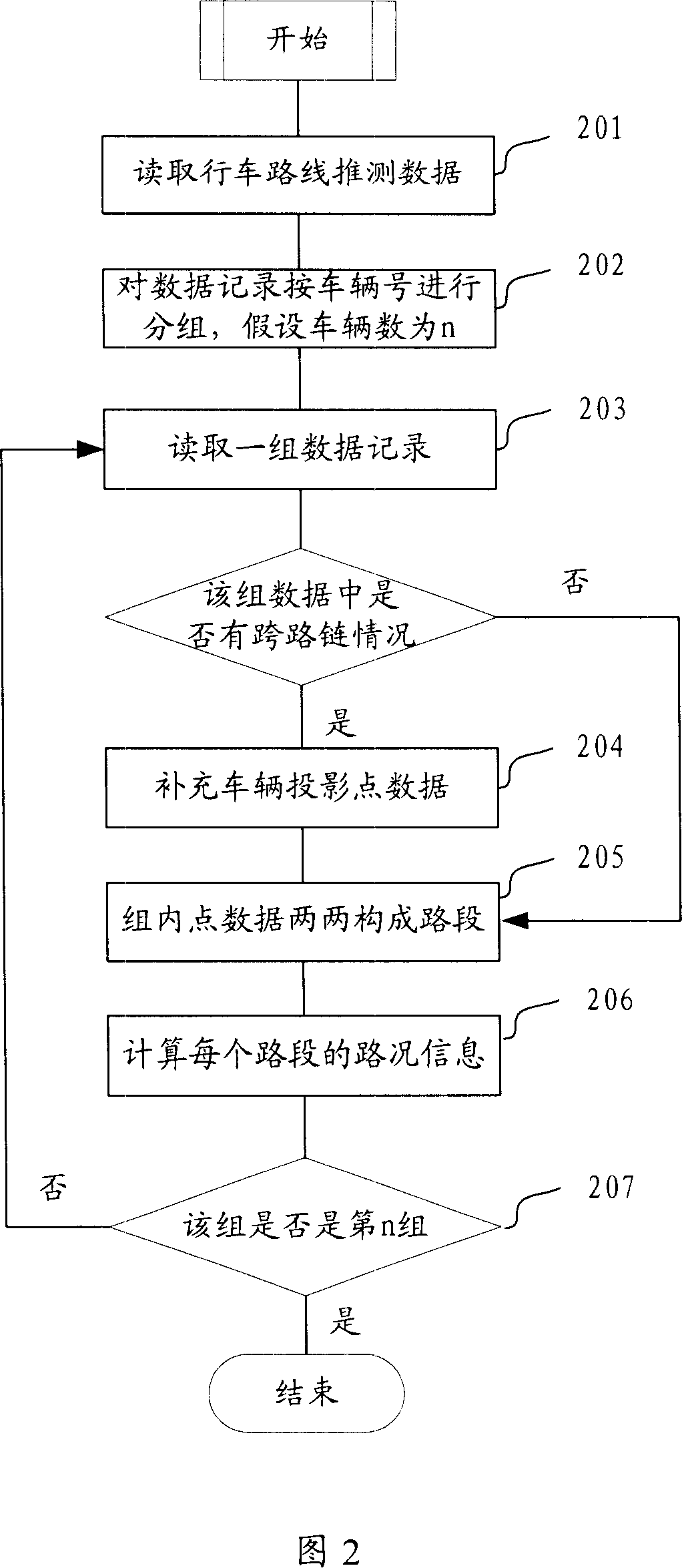

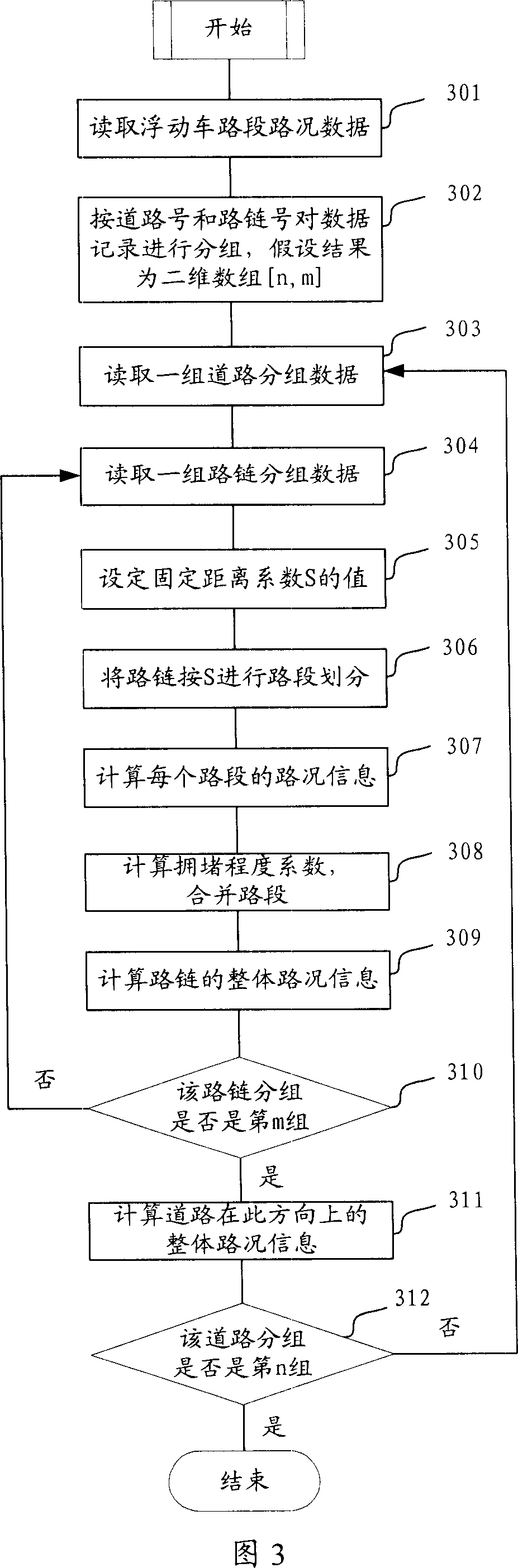

Traffic information fusion processing method and system

The invention discloses a traffic information fusion processing method and system, to resolve the problem that floating car data reflects only the traffic information along each car's own route and cannot be used directly to calculate traffic information for the entire road. The method includes: iteratively reading source data from all floating cars in a cycle; for the source data from each car, calculating traffic information in different time segments; dividing each road link that forms part of a trace into road segment units, and calculating the traffic information of each unit; and, according to the traffic information of the units, calculating the traffic information of each road link and then the synthesized traffic information of each road.

Owner:CENNAVI TECH
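
The unit-to-link aggregation described above can be sketched as follows. The abstract does not say how unit values are combined, so this example makes its own assumptions: "traffic information" is taken to be speed, each unit's speed is the mean of its floating car samples, and the link speed is covered length divided by total travel time. The names `unit_speeds` and `link_speed` are hypothetical.

```python
from collections import defaultdict


def unit_speeds(samples):
    """Average floating car speed per road segment unit.

    samples: iterable of (unit_id, speed_m_per_s) from all cars in a cycle.
    """
    by_unit = defaultdict(list)
    for unit_id, speed in samples:
        by_unit[unit_id].append(speed)
    return {u: sum(v) / len(v) for u, v in by_unit.items()}


def link_speed(unit_lengths, speeds):
    """Link speed as covered length over travel time.

    unit_lengths: dict of unit_id -> length in metres for one road link.
    speeds: dict of unit_id -> mean speed; units without data are skipped.
    Returns None when no unit of the link has usable data.
    """
    length = 0.0
    travel_time = 0.0
    for unit_id, unit_len in unit_lengths.items():
        speed = speeds.get(unit_id)
        if speed is None or speed <= 0:
            continue
        length += unit_len
        travel_time += unit_len / speed
    return length / travel_time if travel_time > 0 else None


samples = [("a", 10.0), ("a", 10.0), ("b", 20.0)]
speed = link_speed({"a": 100.0, "b": 100.0}, unit_speeds(samples))
```

Dividing length by travel time weights slow units more heavily than a plain average of speeds would, which matches how a driver experiences the link.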

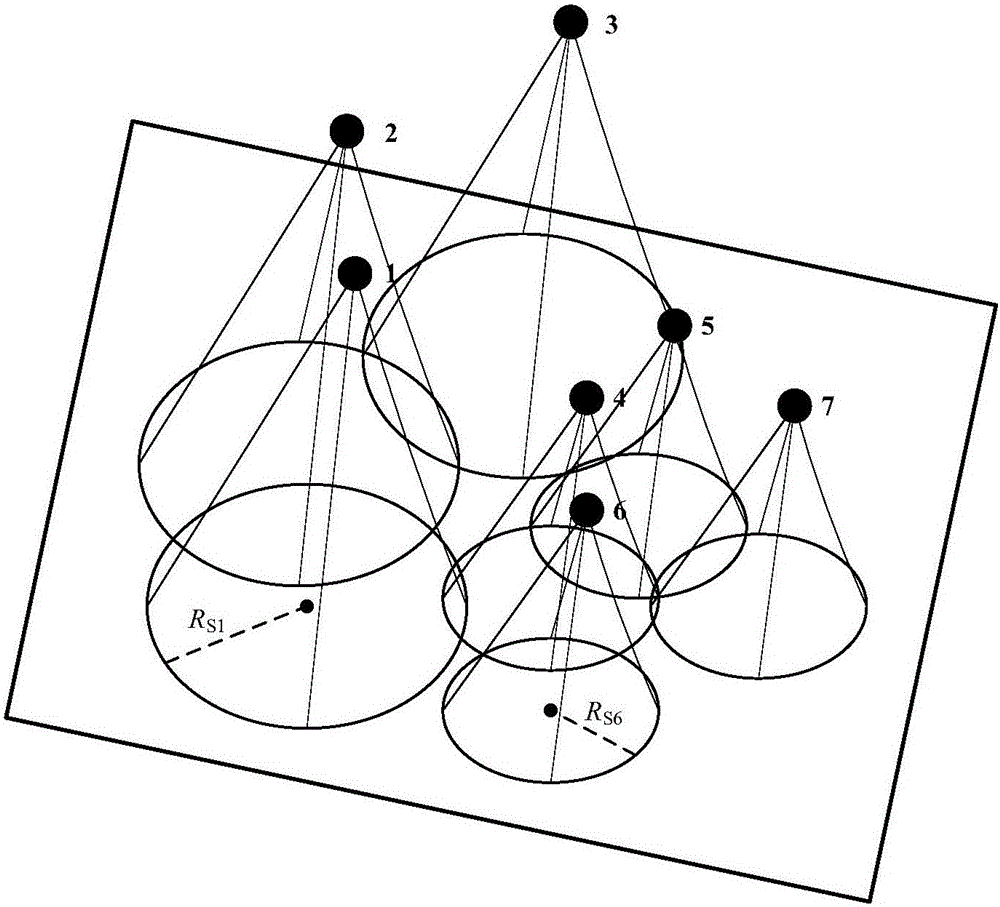

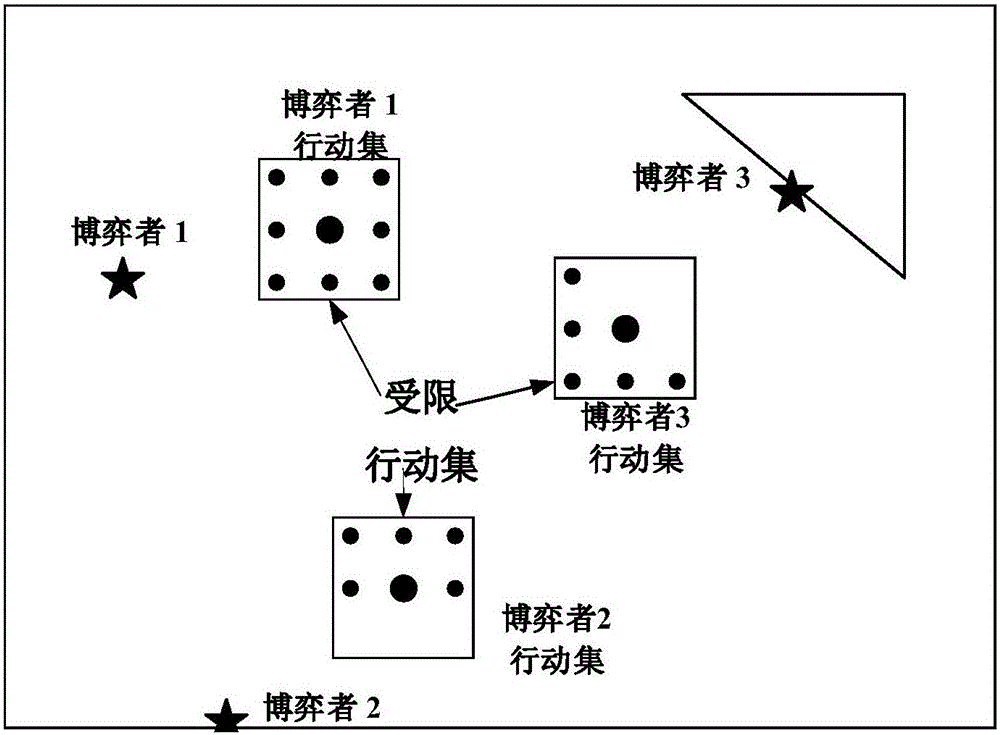

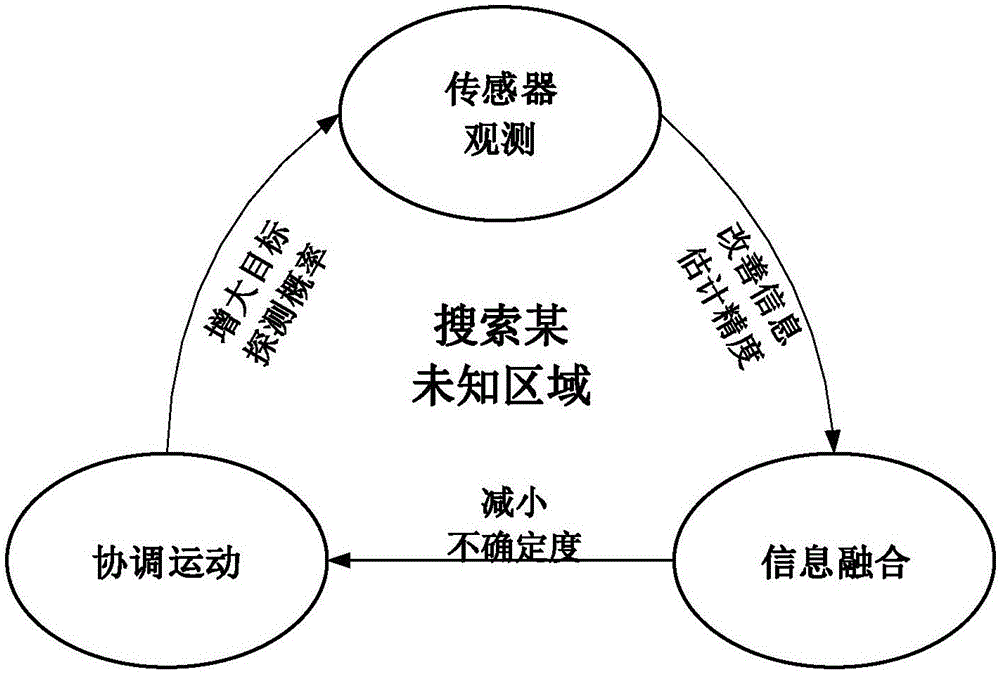

Potential game-based multi-unmanned aerial vehicle cooperative search method

Active | CN105700555A | Overcome locality | Achieve consistency | Target-seeking control | Adaptive control | Search problem | Control manner

The invention discloses a potential game-based multi-unmanned aerial vehicle cooperative search method. The method comprises steps: 1, modeling of a multi-unmanned aerial vehicle cooperative search problem is carried out; 2, potential game modeling for a multi-unmanned aerial vehicle cooperative motion and potential game solution in a double log-linear learning method are carried out; 3, a probability map is updated according to sensor detection information, information fusion is carried out on the updated probability map, and a target existence probability is acquired; and 4, uncertainty is updated according to the target existence probability, and multi-unmanned aerial vehicle cooperative search is carried out. The method of the invention can realize multi-unmanned aerial vehicle cooperative search, and comprises steps of cooperative motion based on the potential game, updating of the probability map, information fusion and the like, and due to the self distributed control mode, the calculation is simple, the robustness is strong, and external disturbance can be effectively handled.

Owner:BEIHANG UNIV
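
Step 3 above (updating a probability map from sensor detections and fusing the result) is commonly done with a per-cell Bayesian update. The sketch below shows that common approach, not the patent's specific formulas: the detection probability `p_d`, false alarm probability `p_f`, the function names, and the choice to fuse several UAVs by applying their observations in sequence (which assumes independent observations) are all assumptions.

```python
def update_cell(prior, detected, p_d=0.9, p_f=0.1):
    """Bayesian update of the target existence probability of one cell.

    prior: probability that a target is in the cell before the observation.
    detected: True if the sensor reported a target in the cell.
    p_d: assumed probability of detection given a target is present.
    p_f: assumed probability of a false alarm given no target is present.
    """
    if detected:
        num = p_d * prior
        den = num + p_f * (1.0 - prior)
    else:
        num = (1.0 - p_d) * prior
        den = num + (1.0 - p_f) * (1.0 - prior)
    return num / den


def fuse_observations(prior, observations, p_d=0.9, p_f=0.1):
    """Fuse observations from several UAVs by sequential updates."""
    prob = prior
    for detected in observations:
        prob = update_cell(prob, detected, p_d, p_f)
    return prob


# Two UAVs observe the same cell: one detection, one miss.
posterior = fuse_observations(0.5, [True, False])
```

With symmetric sensor parameters a detection followed by a miss returns the cell to its prior, so conflicting reports cancel instead of accumulating.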

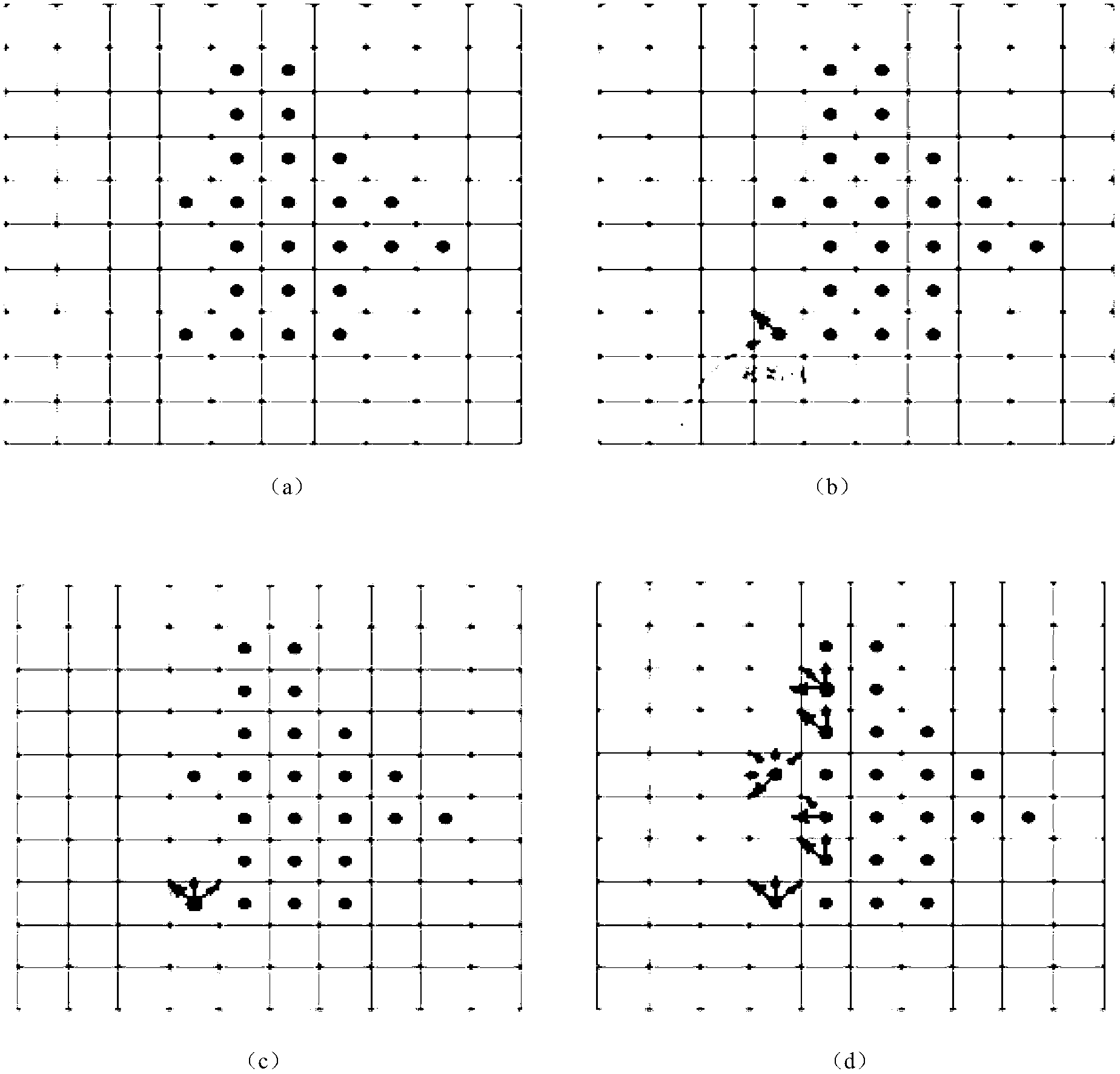

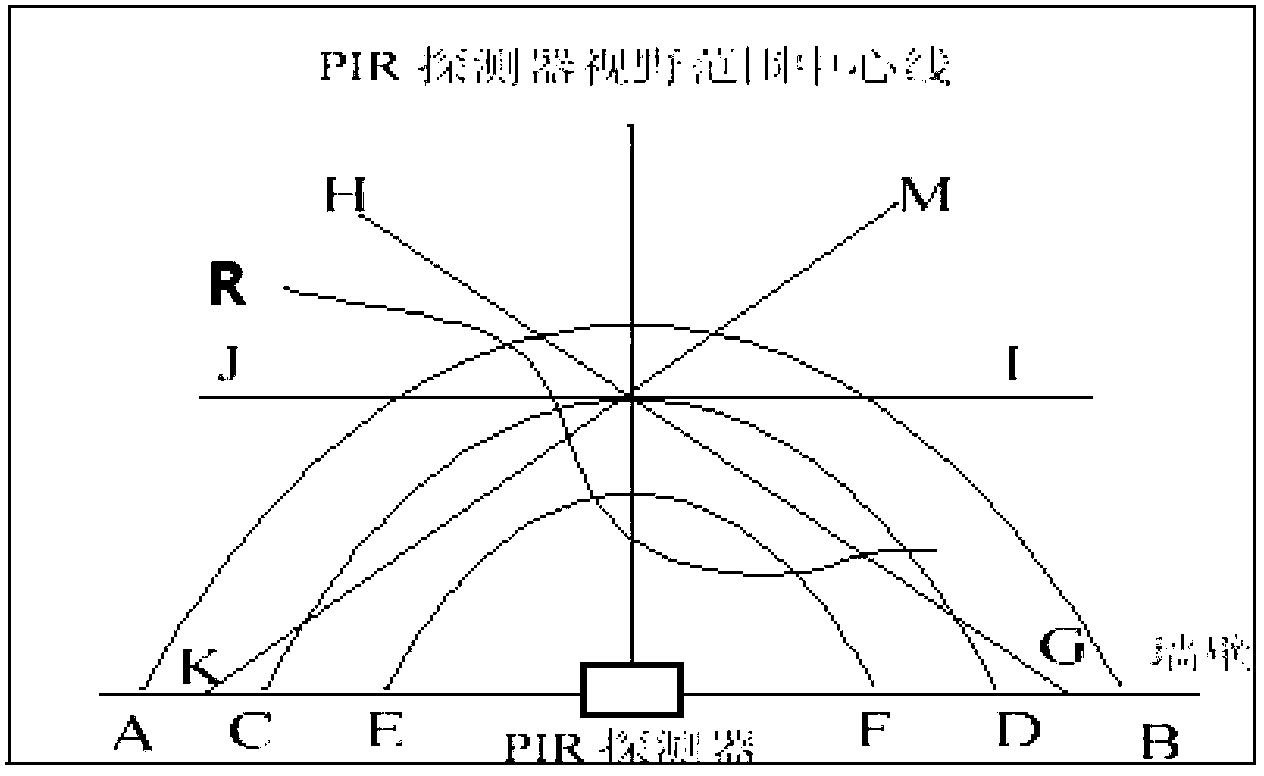

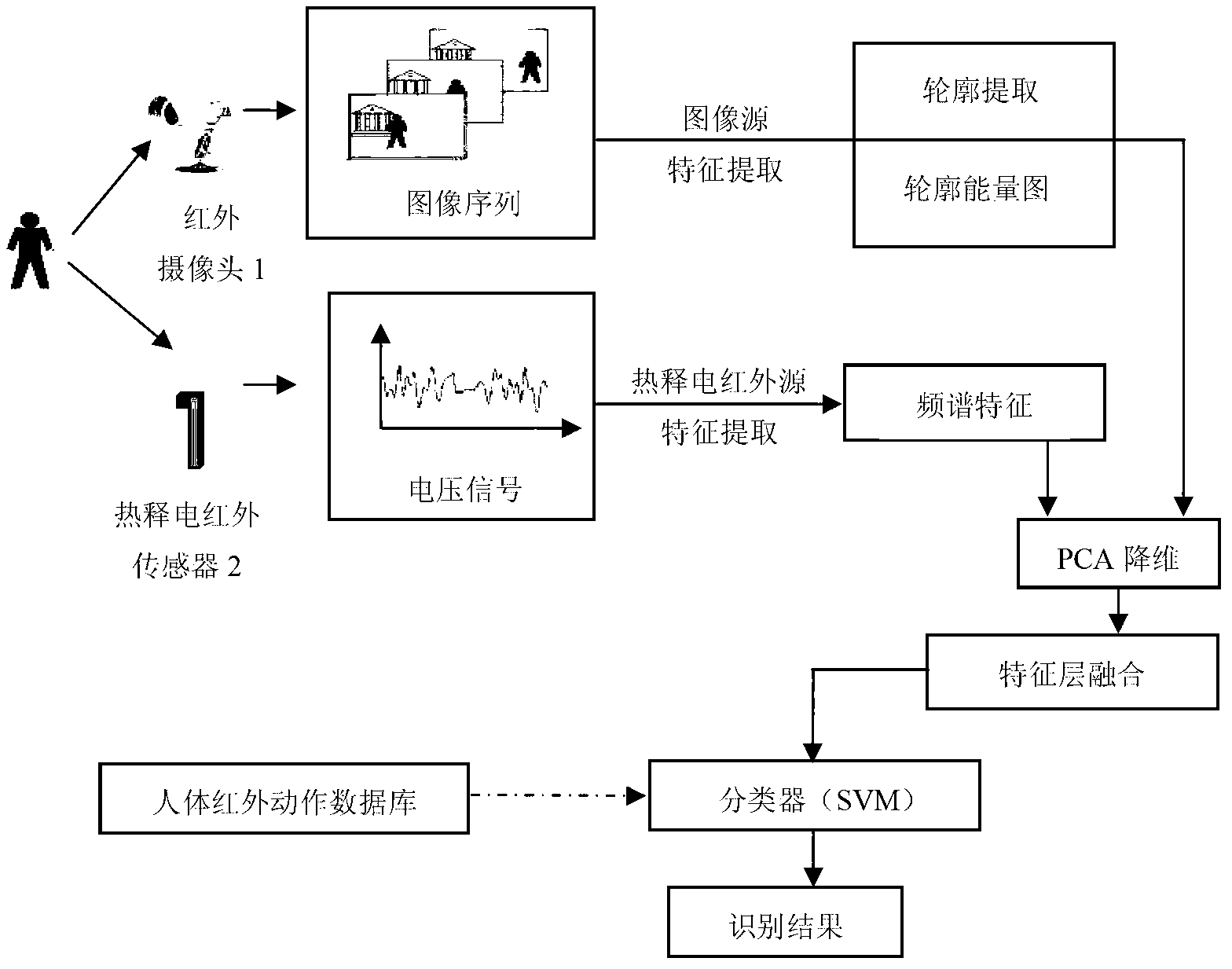

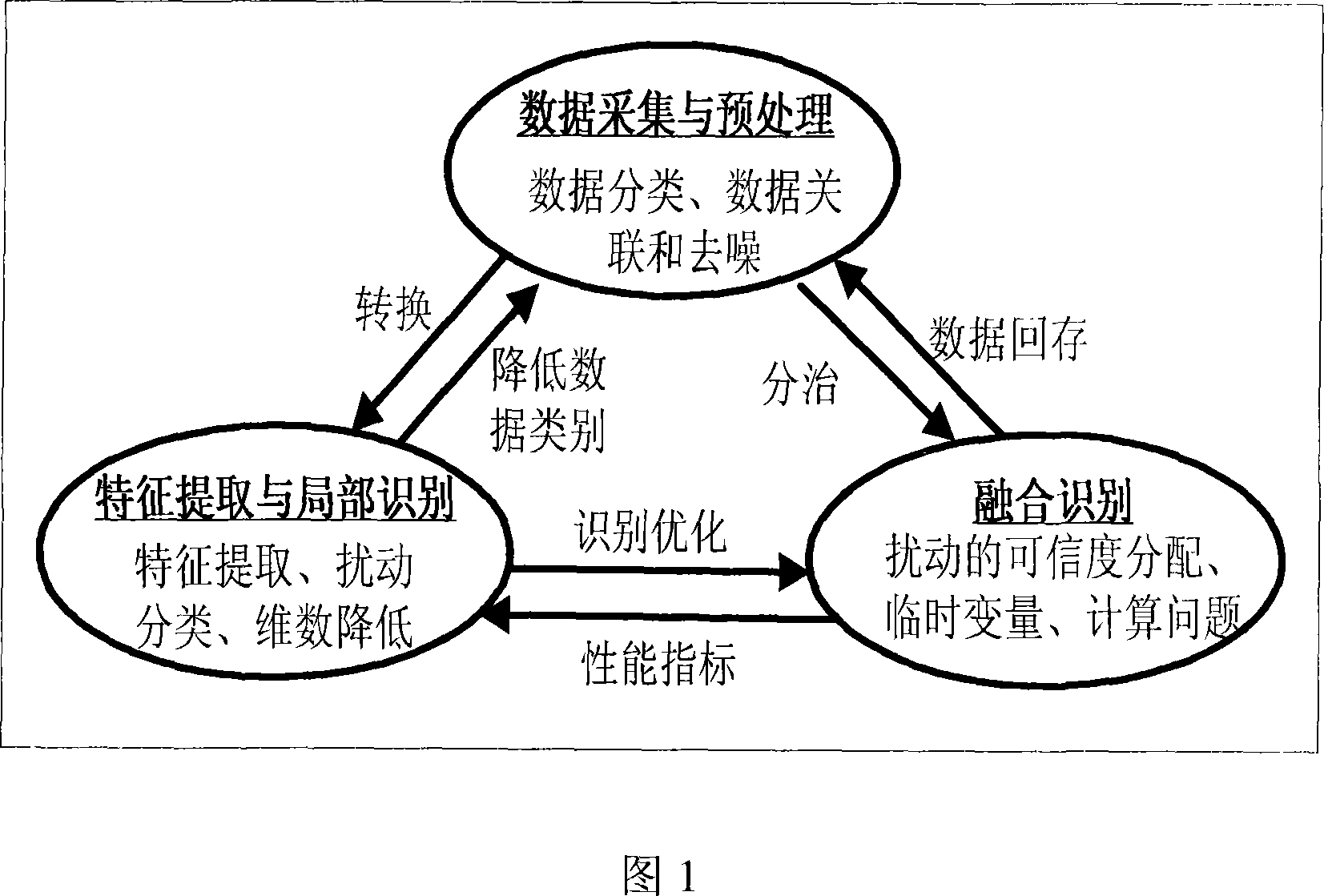

Human action recognition method based on two-channel infrared information fusion

Inactive | CN102799856A | Guaranteed correct recognition rate | Character and pattern recognition | Frequency spectrum | Principal component analysis

The invention discloses a human action recognition method based on two-channel infrared information fusion, and the method comprises the following steps of: collecting a human motion video image through an infrared camera, and collecting a human motion voltage signal through a pyroelectric infrared sensor; respectively carrying out feature extraction on the collected human motion video image and human motion voltage signal, wherein human contour energy is obtained from the human motion video image and spectrum signature is obtained from the human motion voltage signal; respectively carrying out principal component analysis on the human contour energy and the spectrum signature; fusing the principal component analysis results on a feature layer; and carrying out classification and identification on the fused features through the support vector machine method combined with corresponding data in the human infrared action data base. According to the method, multi-level human action information in the infrared image is fully utilized and the human direction information in the output signals of the pyroelectric infrared sensor is fused so as to realize classification and identification on different human actions in different directions and ensure accurate action recognition rate.

Owner:TIANJIN UNIV

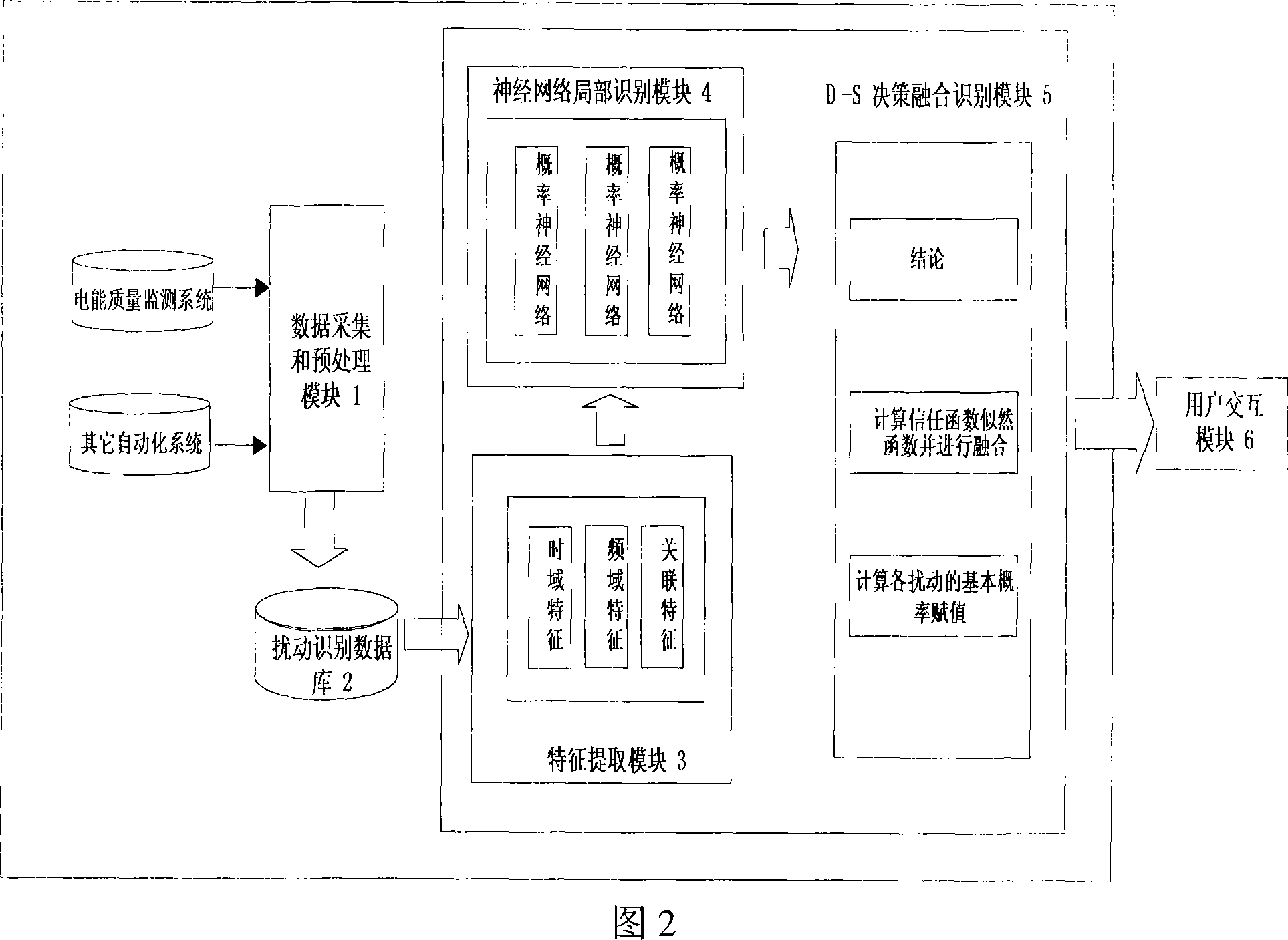

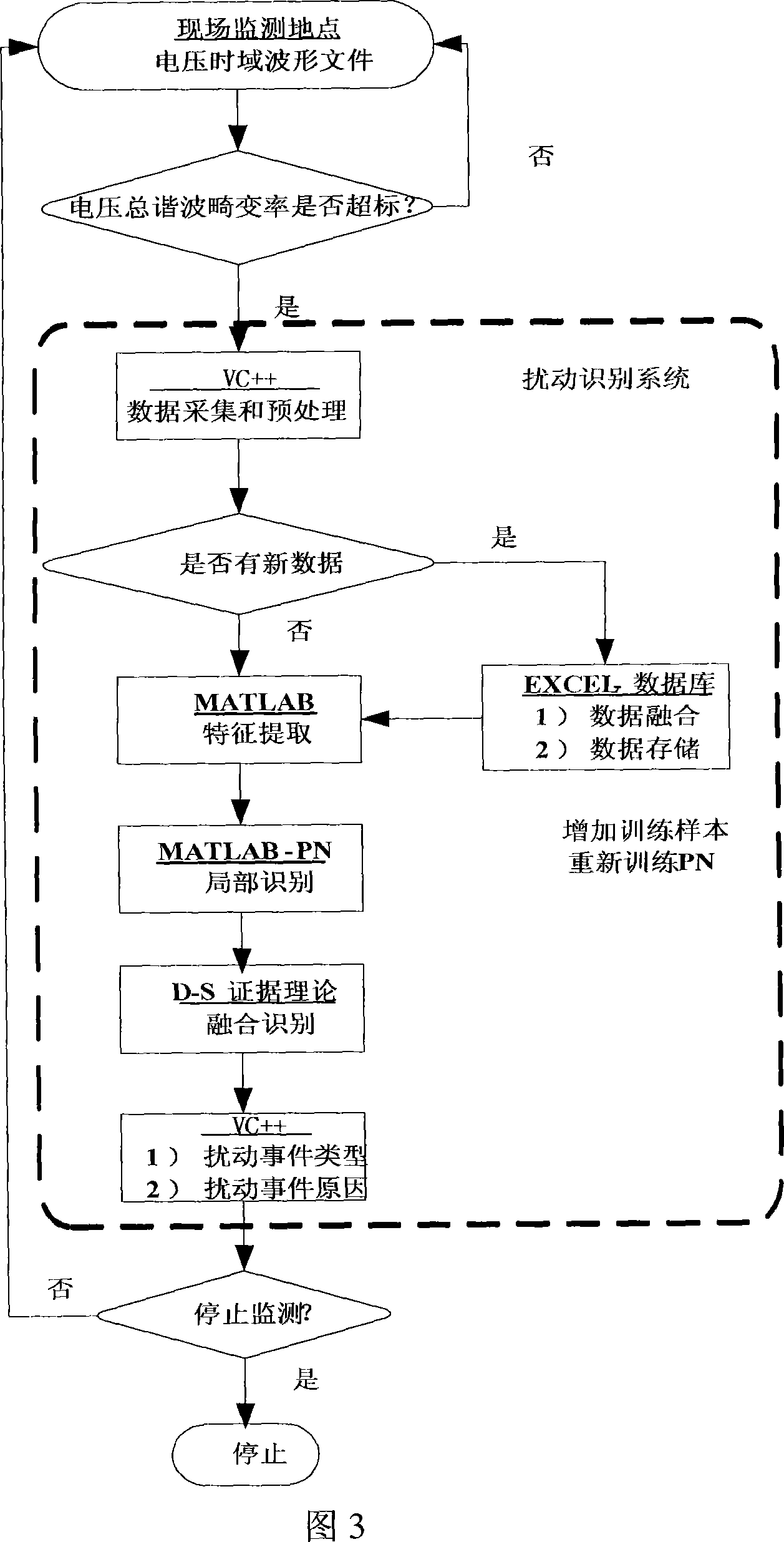

Electrical energy power quality disturbance automatic identification method and system based on information fusion

Inactive | CN101034389A | Realize intelligent identification | Overcome the disadvantage of strong subjectivity | Data processing applications | Data acquisition and logging | Power quality | Nerve network

The invention is an automatic identification method and system for power quality disturbances based on information fusion, characterized by: collecting transient and steady-state measurement data associated with power quality disturbances from the power quality monitoring system and other automation systems, and preprocessing the data (for example, noise removal); extracting features from the disturbance data using a combination of Fourier analysis, wavelet multi-resolution decomposition and correlation function analysis, and building disturbance feature vectors, which serve as the input feature vectors of three probabilistic neural networks that map the feature space to the disturbance space; and treating the outputs of the three probabilistic neural networks as mutually independent bodies of evidence, fusing them with D-S evidence theory, and obtaining the recognition result. By correctly selecting and extracting disturbance feature vectors, the invention feeds the neural networks in parallel for classification and reflects the disturbance from several aspects, thus effectively raising the correct identification rate; it is a first step toward intelligent recognition of power quality disturbances.

Owner:ELECTRIC POWER RES INST STATE GRID JIANGXI ELECTRIC POWER CO
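
The D-S fusion step above can be illustrated with Dempster's rule of combination, which multiplies the masses of intersecting hypotheses and renormalizes by the non-conflicting mass. The sketch below is a generic implementation of that rule; the mass values and the class labels "sag" and "swell" are made up for illustration, and how the patent converts network outputs into masses is not specified in the abstract.

```python
from functools import reduce


def combine(m1, m2):
    """Dempster's rule of combination for two mass functions.

    Each mass function is a dict mapping frozenset (a hypothesis set)
    to a mass; the masses of one function are assumed to sum to 1.
    """
    combined = {}
    conflict = 0.0
    for a, wa in m1.items():
        for b, wb in m2.items():
            inter = a & b
            if inter:
                combined[inter] = combined.get(inter, 0.0) + wa * wb
            else:
                conflict += wa * wb  # mass assigned to the empty set
    if conflict >= 1.0:
        raise ValueError("total conflict: evidence cannot be combined")
    return {k: v / (1.0 - conflict) for k, v in combined.items()}


SAG = frozenset({"sag"})
SWELL = frozenset({"swell"})
ANY = SAG | SWELL  # the whole frame: "cannot tell which"

# Hypothetical outputs of three classifiers treated as evidence bodies.
evidence = [
    {SAG: 0.6, ANY: 0.4},
    {SAG: 0.7, ANY: 0.3},
    {SWELL: 0.5, ANY: 0.5},
]
fused = reduce(combine, evidence)
decision = max(fused, key=fused.get)
```

Mass left on the whole frame expresses a classifier's uncertainty, which is the practical difference from simply multiplying class probabilities.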

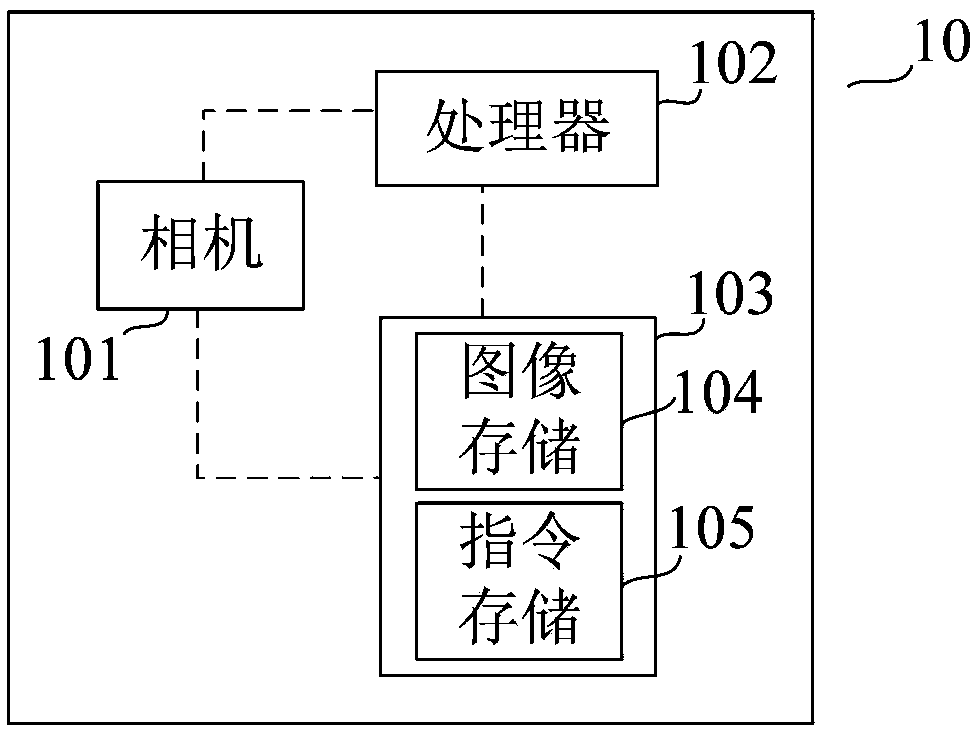

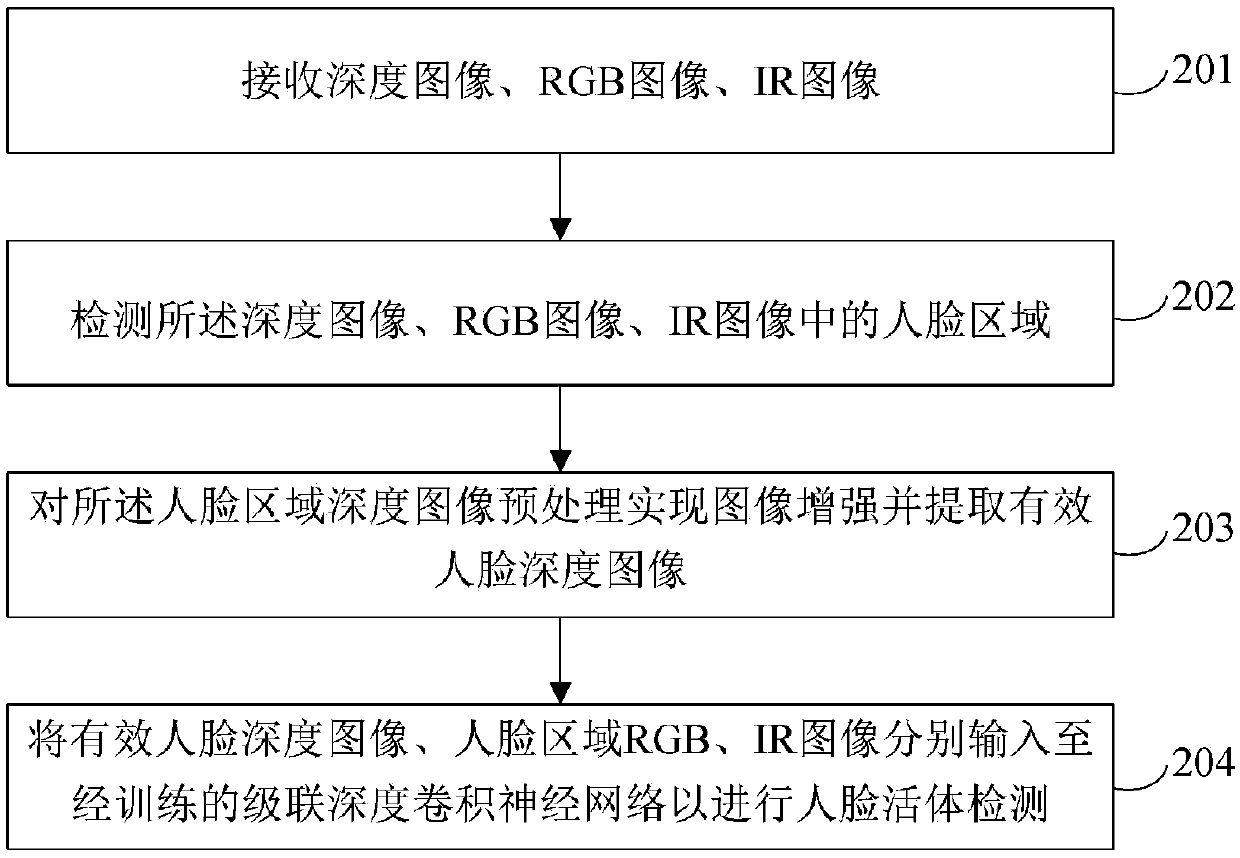

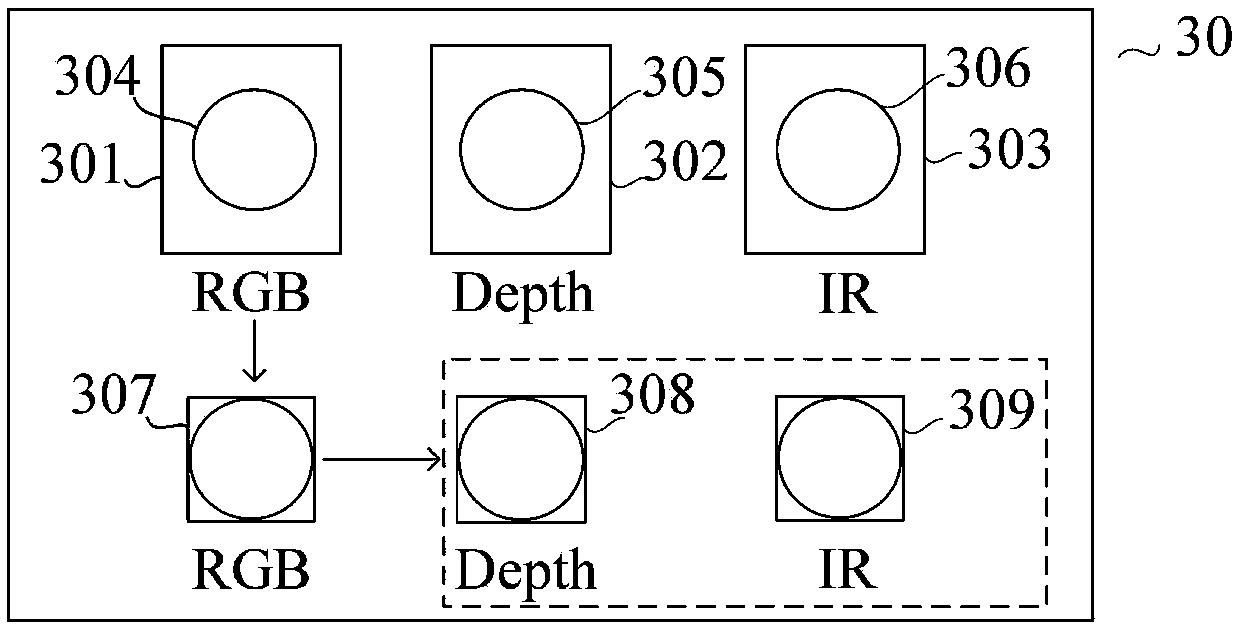

Human face living body detection method and equipment

Active | CN109684924A | Solve the problem that the three-dimensional information of the face cannot be recovered well | Improve accuracy | Image enhancement | Image analysis | Color image | Living body

The invention relates to a human face living body detection method and device. The method comprises the following steps: S1, receiving a depth image, a color image and an infrared image that contain a human face region, and registering them; S2, detecting the human face regions in the depth image, the color image and the infrared image; S3, preprocessing the face region depth image to enhance the image and extract an effective face depth image; and S4, inputting the effective face depth image, the color image of the face region and the infrared image into the trained cascade deep convolutional neural network, and carrying out accurate face living body detection. The device comprises a computer program for realizing the method. Through multi-source information fusion and the cascade deep convolutional neural network, the problem that a traditional monocular color camera cannot recover three-dimensional face information well is solved, and the accuracy of face recognition is improved.

Owner:SHENZHEN ORBBEC CO LTD

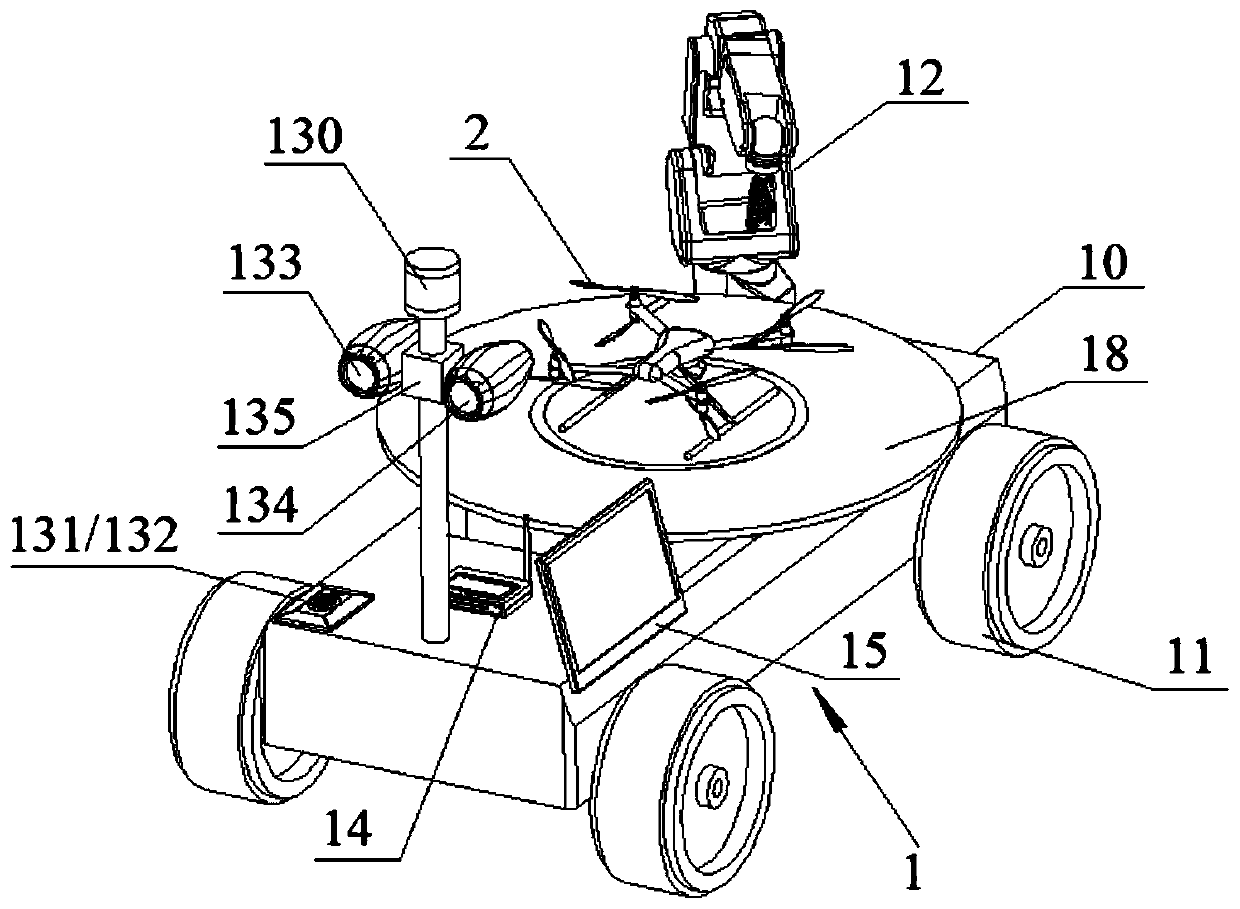

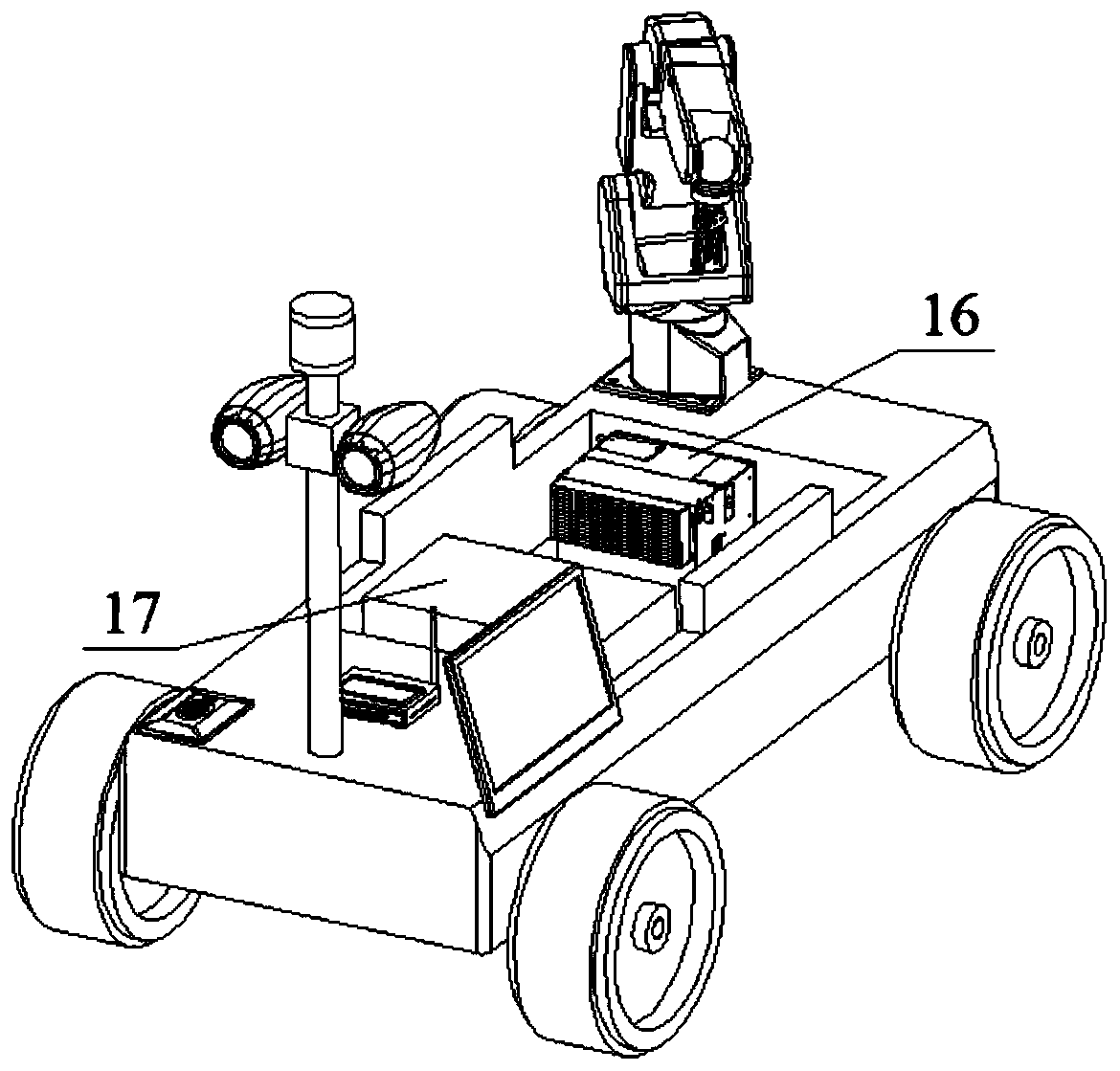

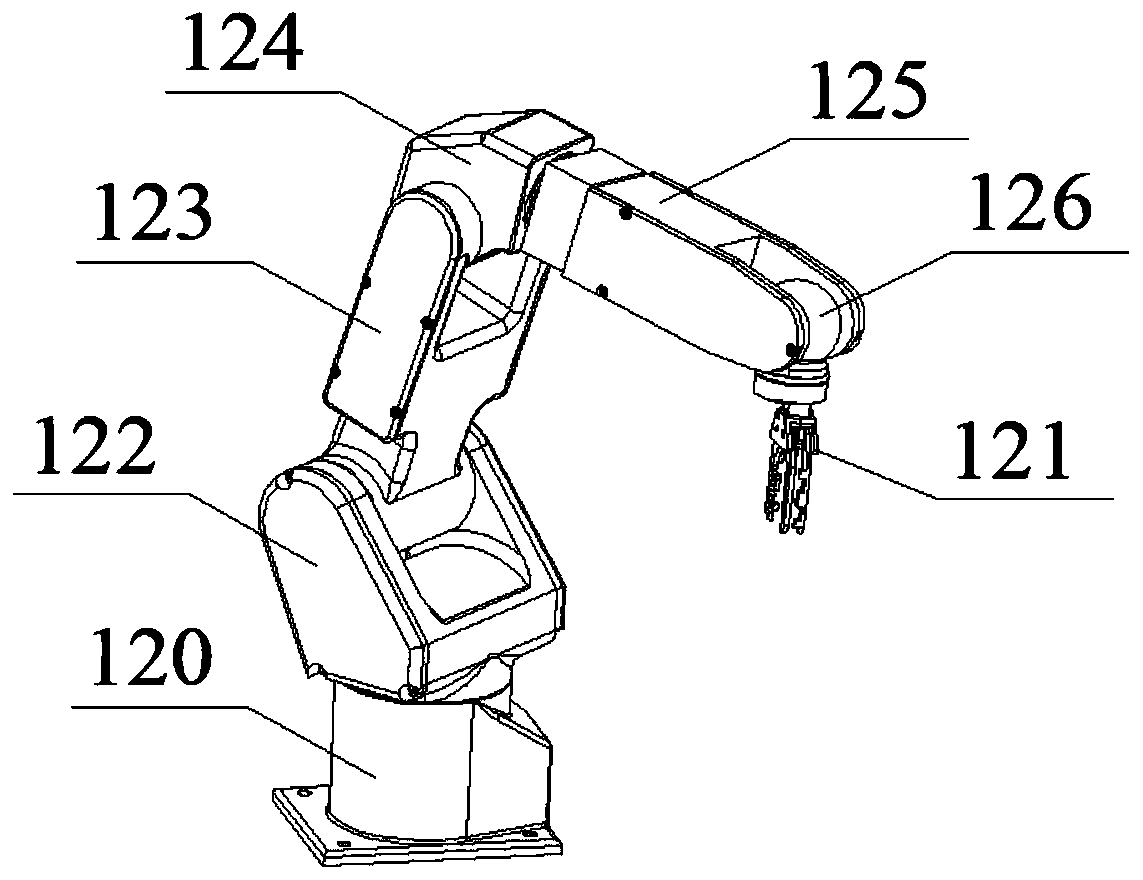

Air-ground cooperative intelligent inspection robot and inspection method

Pending | CN111300372A | Guaranteed all-round | Reduce security operating costs | Manipulator | The Internet | Control engineering

The invention relates to an air-ground cooperative intelligent inspection robot. The robot comprises a robot platform and an unmanned aerial vehicle, wherein the robot platform comprises a vehicle body, wheels, a driving assembly, a mechanical arm, an environment sensing assembly, a communicator, a robot controller and a power supply assembly, and the unmanned aerial vehicle is in communication connection with a base station through the communicator. The inspection method comprises the following steps: air-ground cooperative multi-robot positioning and mapping, specifically sensing-based positioning calculation, map creation and multi-information fusion positioning; and air-ground cooperative tracking and control, specifically unmanned aerial vehicle flight control design, robot platform trajectory tracking control and unmanned aerial vehicle autonomous landing control. The robot can execute given navigation and inspection tasks in all directions and in all weather. By applying technologies such as the Internet of Things and artificial intelligence and integrating environment sensing, dynamic decision making, behavior control and alarm devices, the robot is capable of autonomous sensing, walking, protection and interactive communication, can complete basic, repetitive and dangerous security work, and reduces security operation cost.

Owner:TONGJI ARTIFICIAL INTELLIGENCE RES INST SUZHOU CO LTD

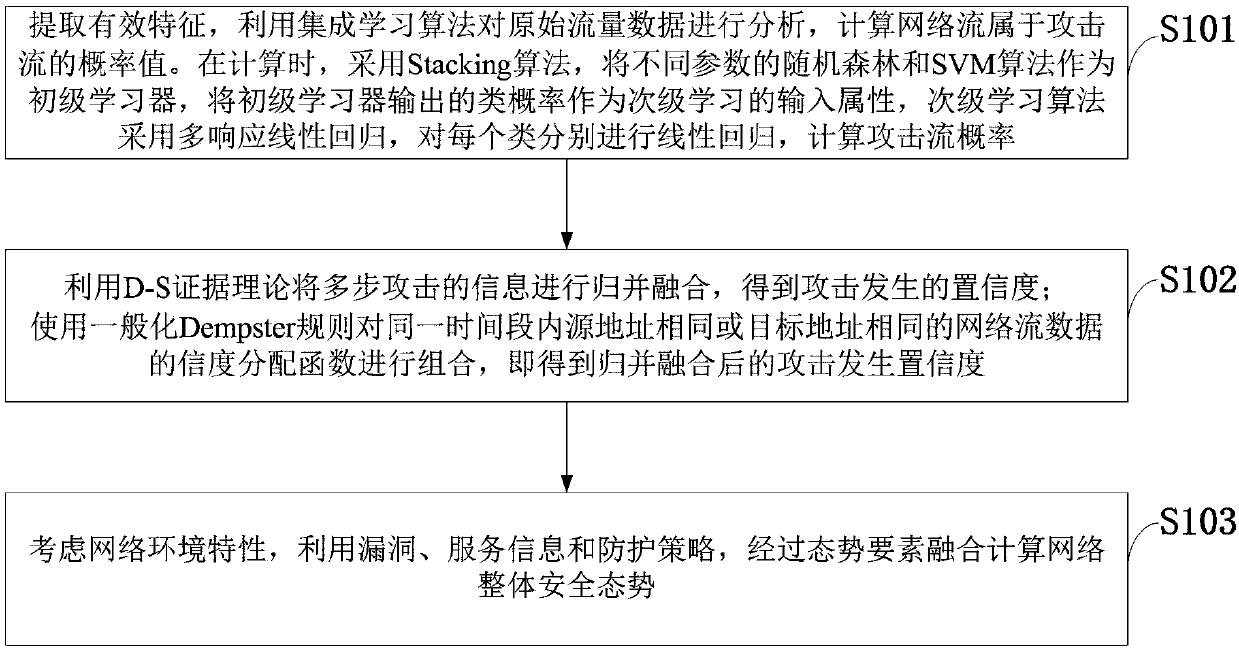

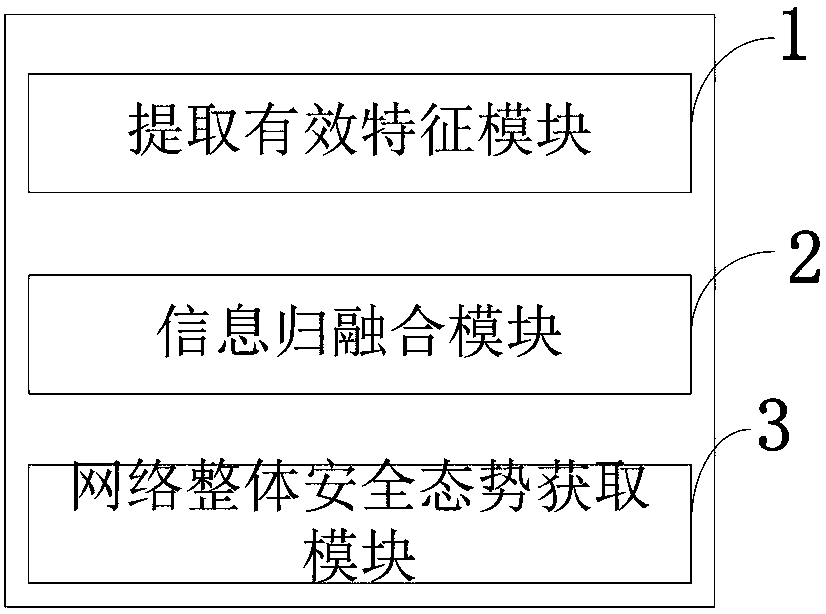

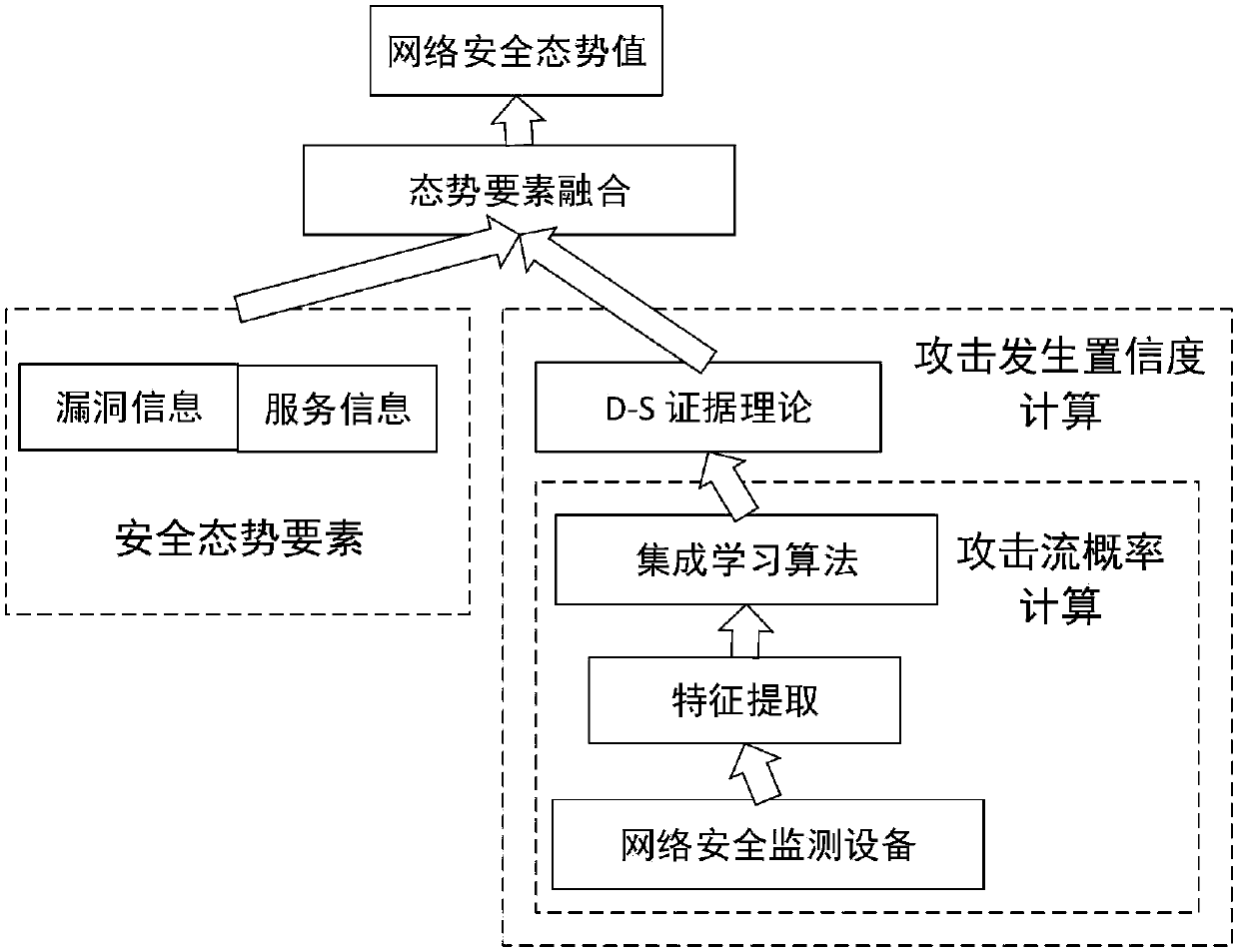

Attack occurrence confidence-based network security situation assessment method and system

Inactive | CN108306894A | Accurately reflect the security situation | Timely response | Data switching networks | Stream data | Network attack

The invention belongs to technical fields characterized by protocols and discloses an attack occurrence confidence-based network security situation assessment method and system. A machine learning technique is used to analyze network stream data and calculate the probability that a network stream is an attack stream; D-S evidence theory is used to fuse the information of multi-step attacks to obtain the confidence of attack occurrence; and the network security situation is calculated by integrating situational factors on the basis of security vulnerability information, network service information, and host protection strategies, so that the accuracy of the assessment is effectively improved. Because the confidence information of the detection equipment is added to the assessment system, the influence of false negatives and false positives can be effectively reduced. An ensemble learning method is adopted, so the accuracy of the confidence calculation can be improved. A network attack is regarded as a dynamic process, and the information of the multi-step attacks is merged. Information fusion technology is adopted, so network environment characteristics such as vulnerabilities, service information, and protection strategies are comprehensively considered.

Owner:XIDIAN UNIV
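
The abstract above names D-S (Dempster-Shafer) evidence theory as the way evidence from multi-step attacks is fused. As a rough illustration only, the following sketch applies the standard Dempster combination rule to two mass functions. The hypothesis names, the mass values, and the function name `combine_dempster` are assumptions made for this example and are not taken from the patent.

```python
from itertools import product


def combine_dempster(m1, m2):
    """Combine two mass functions with Dempster's rule.

    Each mass function maps a frozenset of hypotheses to a mass in [0, 1],
    and the masses of each function are expected to sum to 1.
    """
    combined = {}
    conflict = 0.0
    for (a, mass_a), (b, mass_b) in product(m1.items(), m2.items()):
        intersection = a & b
        if intersection:
            combined[intersection] = combined.get(intersection, 0.0) + mass_a * mass_b
        else:
            # Mass assigned to incompatible hypotheses counts as conflict.
            conflict += mass_a * mass_b
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict; the rule is undefined")
    # Renormalize by the non-conflicting mass.
    return {h: mass / (1.0 - conflict) for h, mass in combined.items()}


# Hypothetical evidence from two attack steps (values are illustrative).
ATTACK = frozenset({"attack"})
NORMAL = frozenset({"normal"})
EITHER = ATTACK | NORMAL  # mass left uncommitted

step1 = {ATTACK: 0.6, NORMAL: 0.1, EITHER: 0.3}
step2 = {ATTACK: 0.7, NORMAL: 0.1, EITHER: 0.2}
fused = combine_dempster(step1, step2)
print(round(fused[ATTACK], 4))
```

In this toy case two moderately confident observations reinforce each other, so the fused belief in an attack is higher than either input alone.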

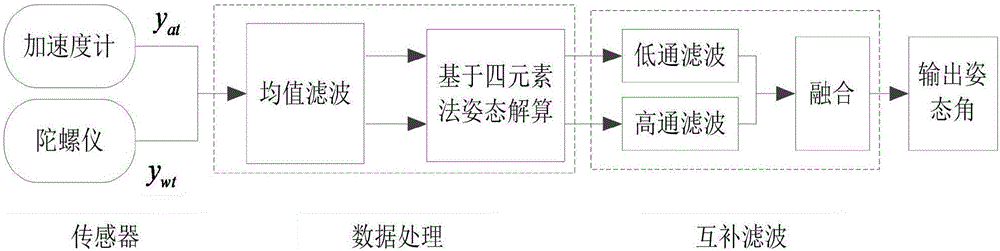

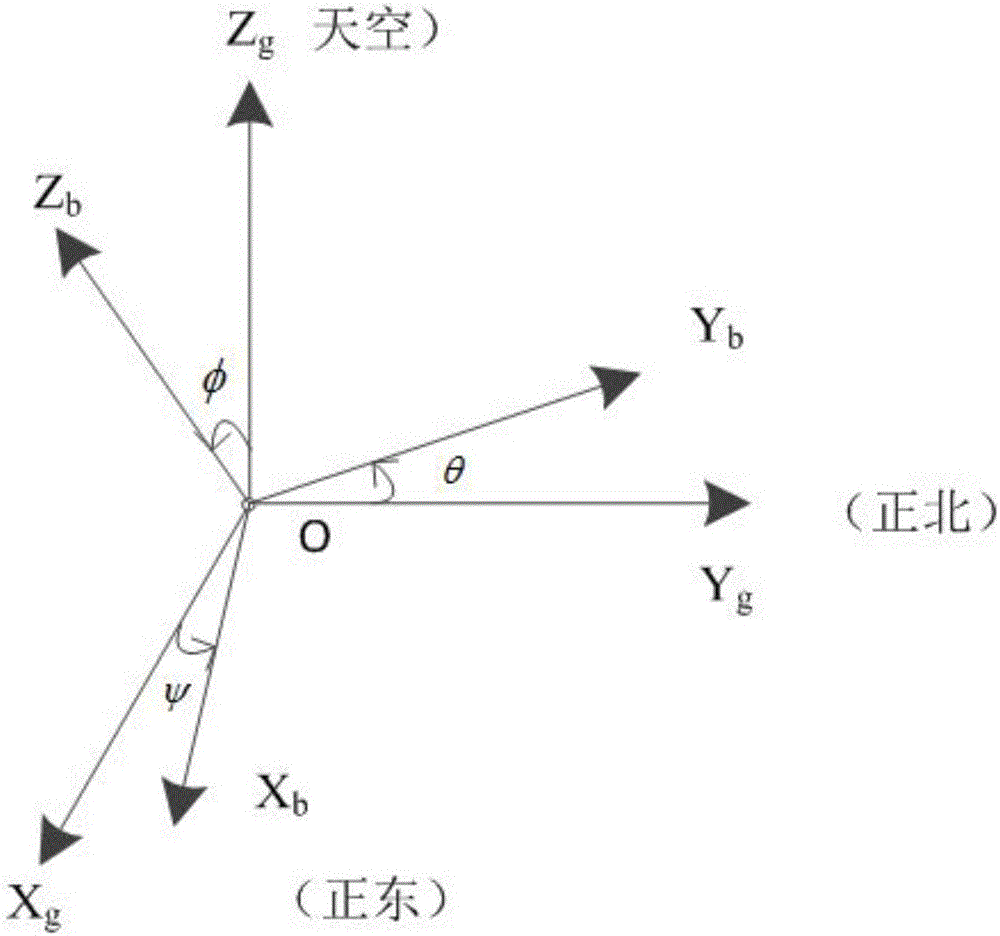

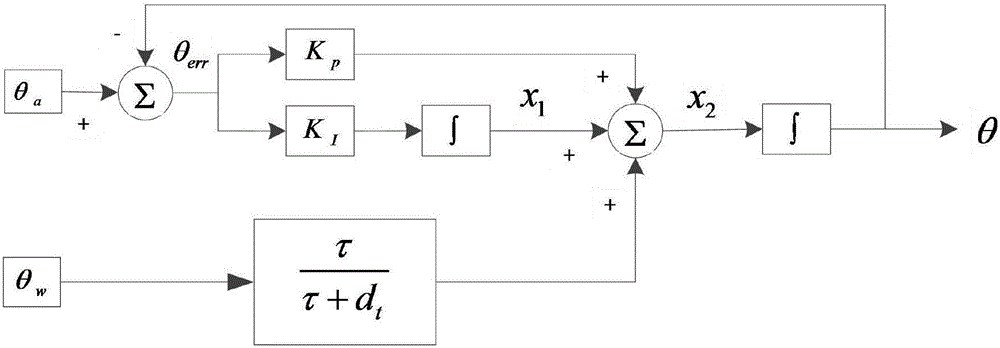

Filtering method for IMU multi sensor data fusion

Inactive | CN106482734A | High measurement accuracy | Improve dynamic performance | Navigation by speed/acceleration measurements | Mathematical model | Complementary filter

The invention provides a filtering method for IMU multi-sensor data fusion. First, data is collected: aircraft attitude data is acquired by an inertial measurement unit (IMU) comprising a gyroscope, an accelerometer, and other sensors, and a mathematical model of the sensor output information is established. Next, the output data is processed, which mainly includes removing interference and noise with a sliding average filter and resolving the rotation angle of each sensor with the quaternion method. A high-pass filter and a low-pass filter are then combined, PI control parameters are added to the low-pass filtering part, and a proportional coefficient Kp and an integral coefficient KI are introduced to design the error-correcting negative feedback of a second-order complementary filter, which fuses the data of the sensors; finally, the attitude angles are output. By introducing and tuning the PI control parameters, a second-order complementary filter algorithm with negative feedback is designed to fuse the information of each sensor and improve the measurement accuracy and dynamic performance of the system.

Owner:湖南优象科技有限公司
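
The abstract above describes a second-order complementary filter whose error feedback uses a proportional gain Kp and an integral gain KI. A minimal single-axis sketch of that general idea follows. The class name, the gain values, and the simulated sensor readings are assumptions for illustration; the patent's actual formulation (including its quaternion step and sliding average prefilter) is not reproduced here.

```python
class PIComplementaryFilter:
    """Single-axis complementary filter with PI error feedback (sketch)."""

    def __init__(self, kp, ki, angle=0.0):
        self.kp = kp          # proportional gain on the angle error
        self.ki = ki          # integral gain, absorbs slow gyro bias
        self.angle = angle    # current fused angle estimate (rad)
        self.integral = 0.0   # accumulated angle error (rad * s)

    def update(self, gyro_rate, accel_angle, dt):
        # Error between the accelerometer-derived angle and the estimate.
        error = accel_angle - self.angle
        self.integral += error * dt
        # The gyro supplies fast dynamics; the PI term corrects slow drift.
        corrected_rate = gyro_rate + self.kp * error + self.ki * self.integral
        self.angle += corrected_rate * dt
        return self.angle


# Hypothetical scenario: the true angle is constant at 0.5 rad, the gyro
# reports only a 0.05 rad/s bias, and the accelerometer reads 0.5 rad.
filt = PIComplementaryFilter(kp=2.0, ki=1.0)
for _ in range(5000):
    angle = filt.update(gyro_rate=0.05, accel_angle=0.5, dt=0.01)
print(round(angle, 3))
```

The integral term is what lets the estimate settle on the accelerometer angle despite a constant gyro bias; with proportional feedback alone a small steady offset would remain.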

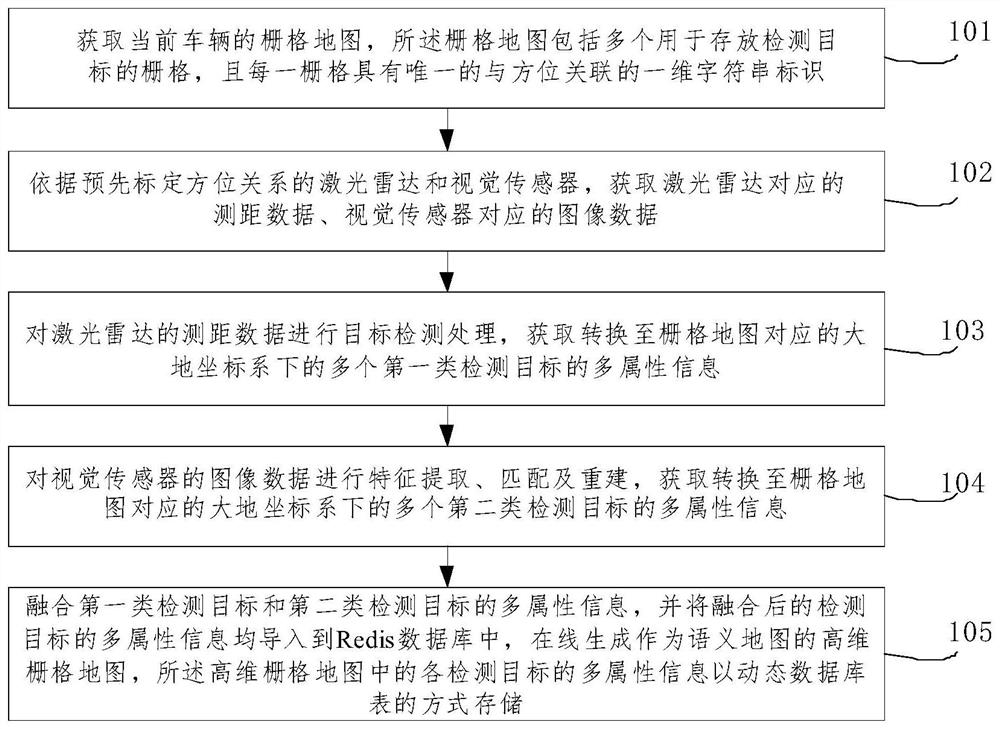

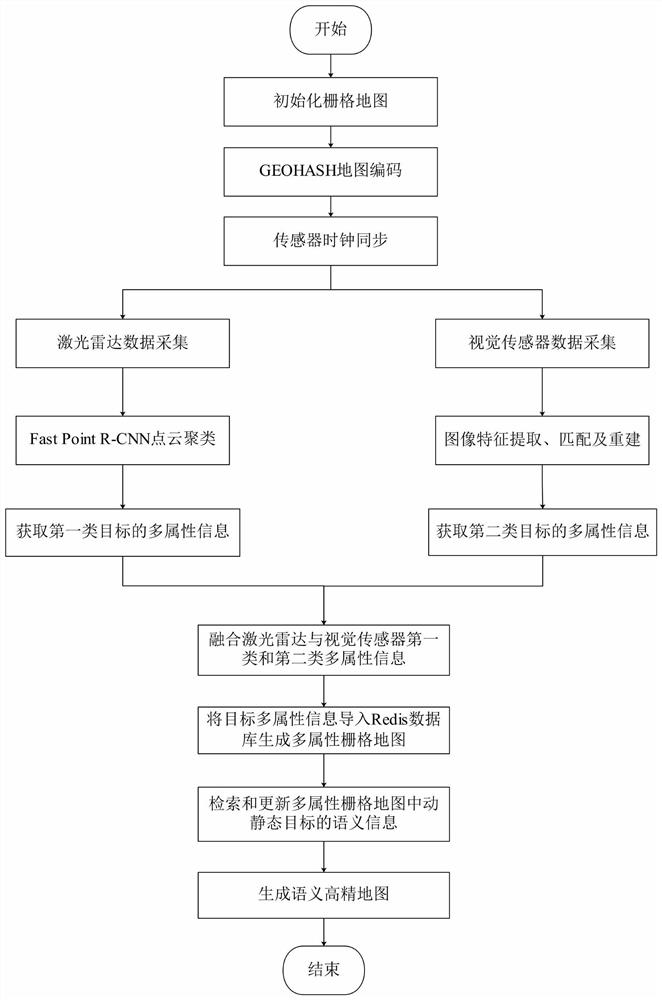

Method for constructing semantic map on line by utilizing fusion of laser radar and visual sensor

Pending | CN111928862A | Easy to drive | Improve reuse efficiency | Instruments for road network navigation | Electromagnetic wave reradiation | Engineering | Vision sensor

The invention relates to a method for constructing a semantic map online by fusing a laser radar and a visual sensor. The method comprises the following steps: acquiring an initialized grid map of the current vehicle, and acquiring the ranging data of the laser radar and the image data of the visual sensor; performing target detection on the ranging data of the laser radar to obtain multi-attribute information of a plurality of first-type detection targets; performing feature extraction and matching on the image data of the visual sensor to obtain multi-attribute information of a plurality of second-type detection targets; and fusing the multi-attribute information of the first-type and second-type detection targets, importing the fused multi-attribute information into a Redis database, generating a high-dimensional grid map that serves as the semantic map, and storing the multi-attribute information of each detection target in the high-dimensional grid map as a dynamic database table. According to the method, the multi-dimensional semantic information of the dynamic and static environments around the vehicle can be represented online in real time.

Owner:廊坊和易生活网络科技股份有限公司

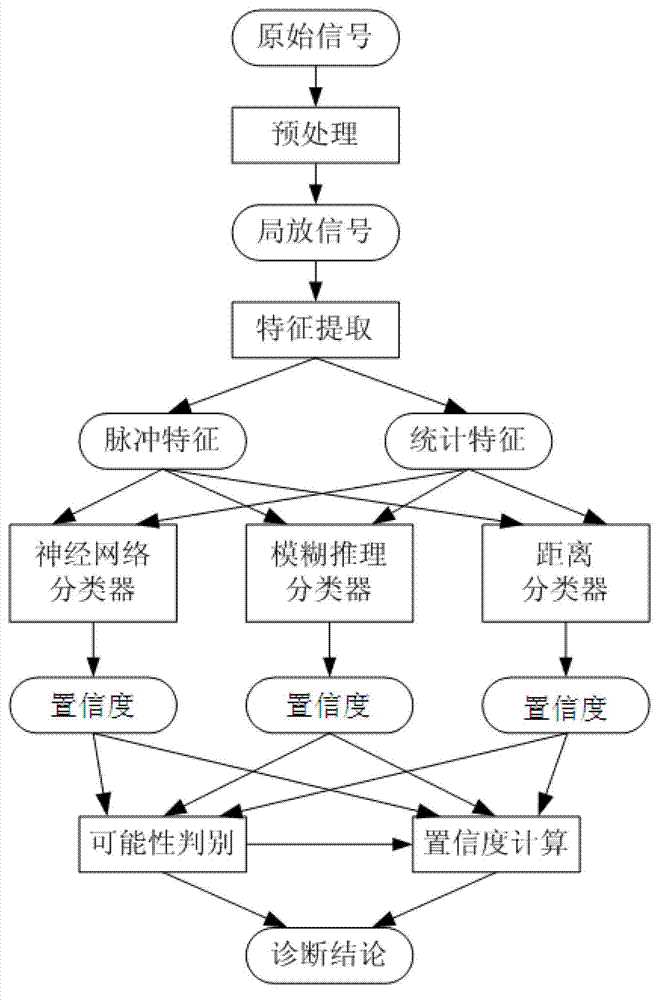

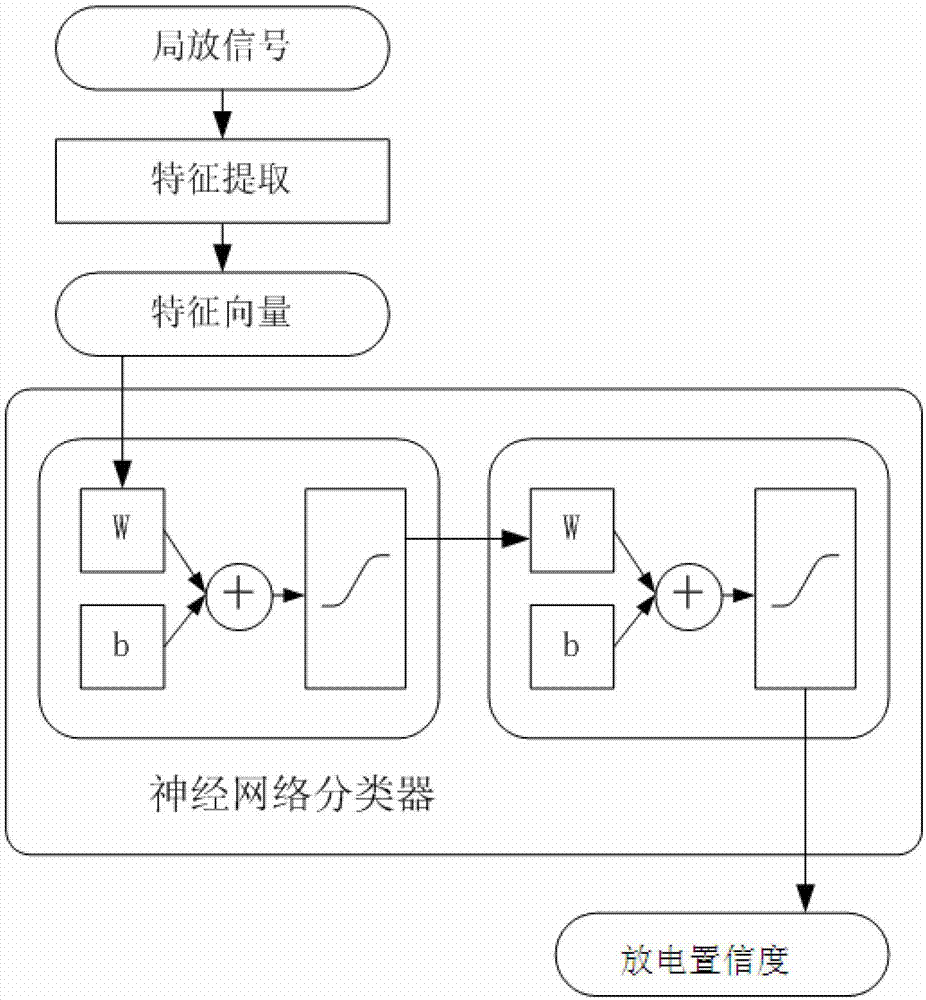

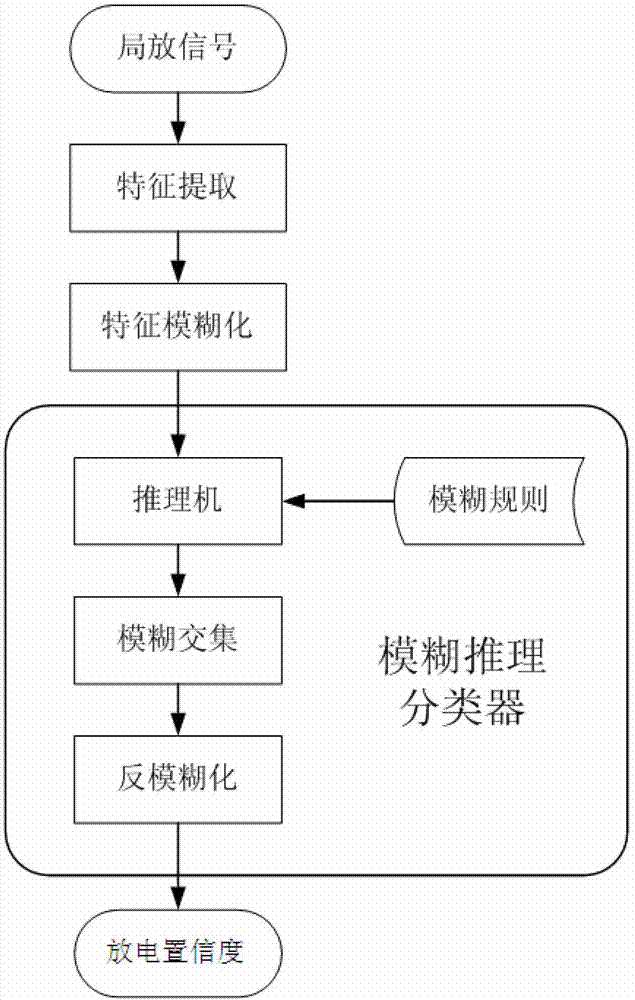

Multi-classifier information fusion partial discharge diagnostic method

Active | CN103323749A | Low resolution accuracy | Testing dielectric strength | Electric power equipment | Corona discharge

A multi-classifier information fusion partial discharge diagnostic method comprises the steps of (1) signal acquisition, in which partial discharge signals of electrical equipment are acquired through a sensor, (2) signal preprocessing, (3) discharge feature extraction, in which pulse features and statistical features are extracted, (4) classifier recognition, in which the extracted feature parameters are used as input vectors and a neural network classifier, a fuzzy reasoning classifier, and a distance discriminant classifier each give confidence coefficients for five discharge types (corona discharge, suspension electrode discharge, free particle discharge, air gap discharge, and creeping discharge), (5) possibility judgment, and (6) final confidence coefficient calculation. With this method, the discharge type can be identified and a specific confidence coefficient can be given, so the result is more accurate and comprehensive. The method can be further applied to fields such as fault diagnosis and pattern recognition, and has broad market prospects and application value.

Owner:SHANGHAI JIAO TONG UNIV

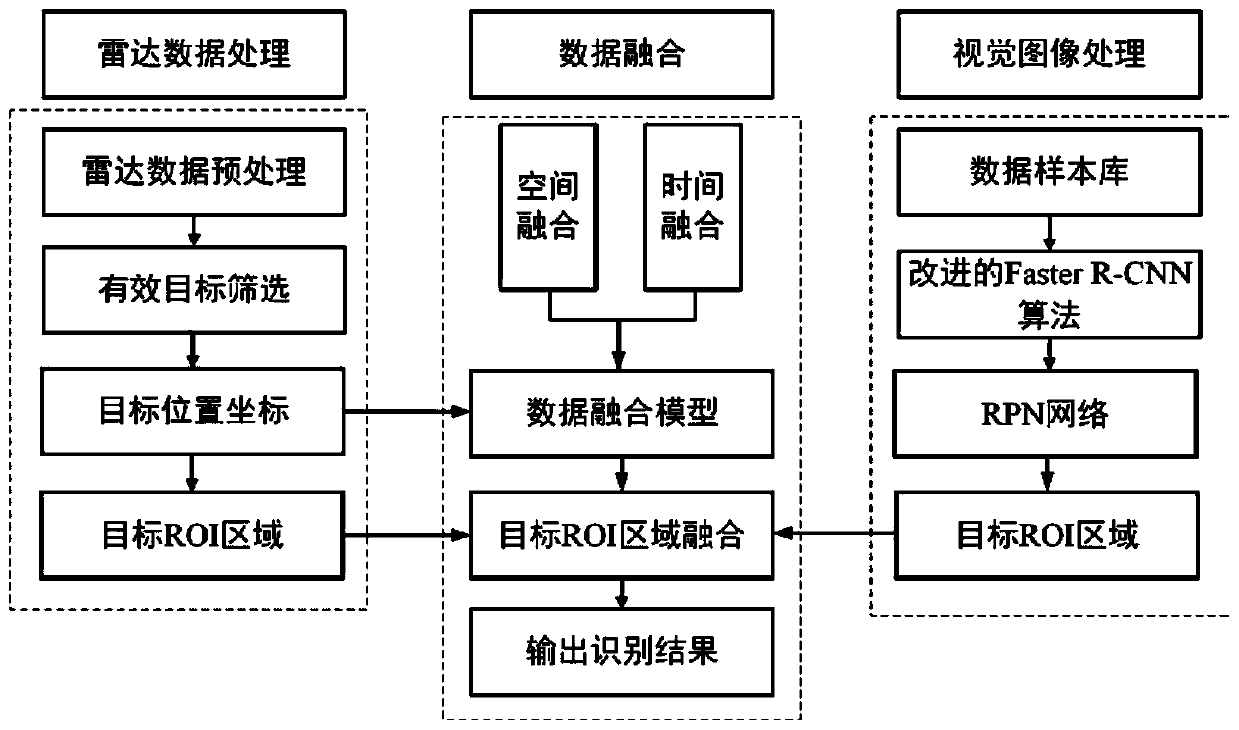

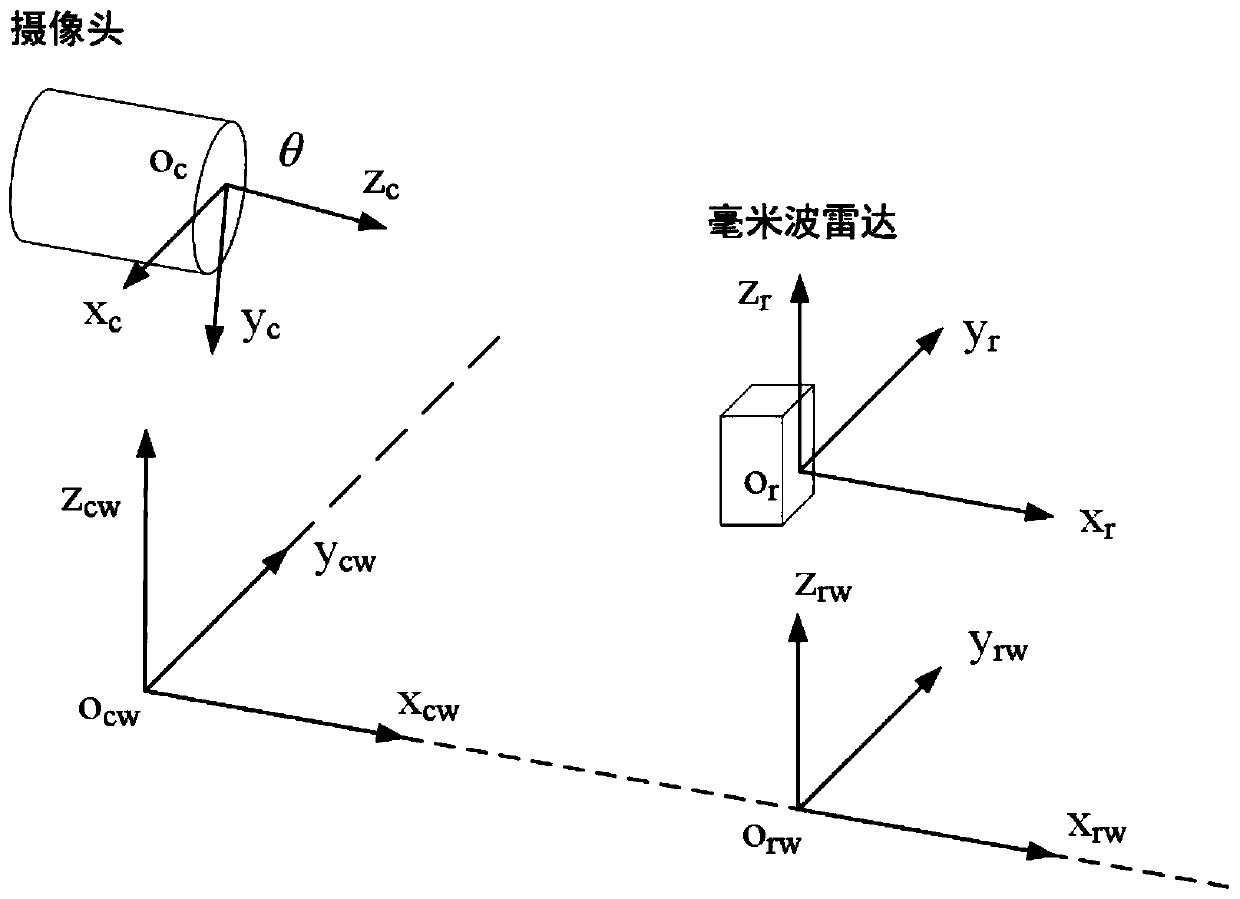

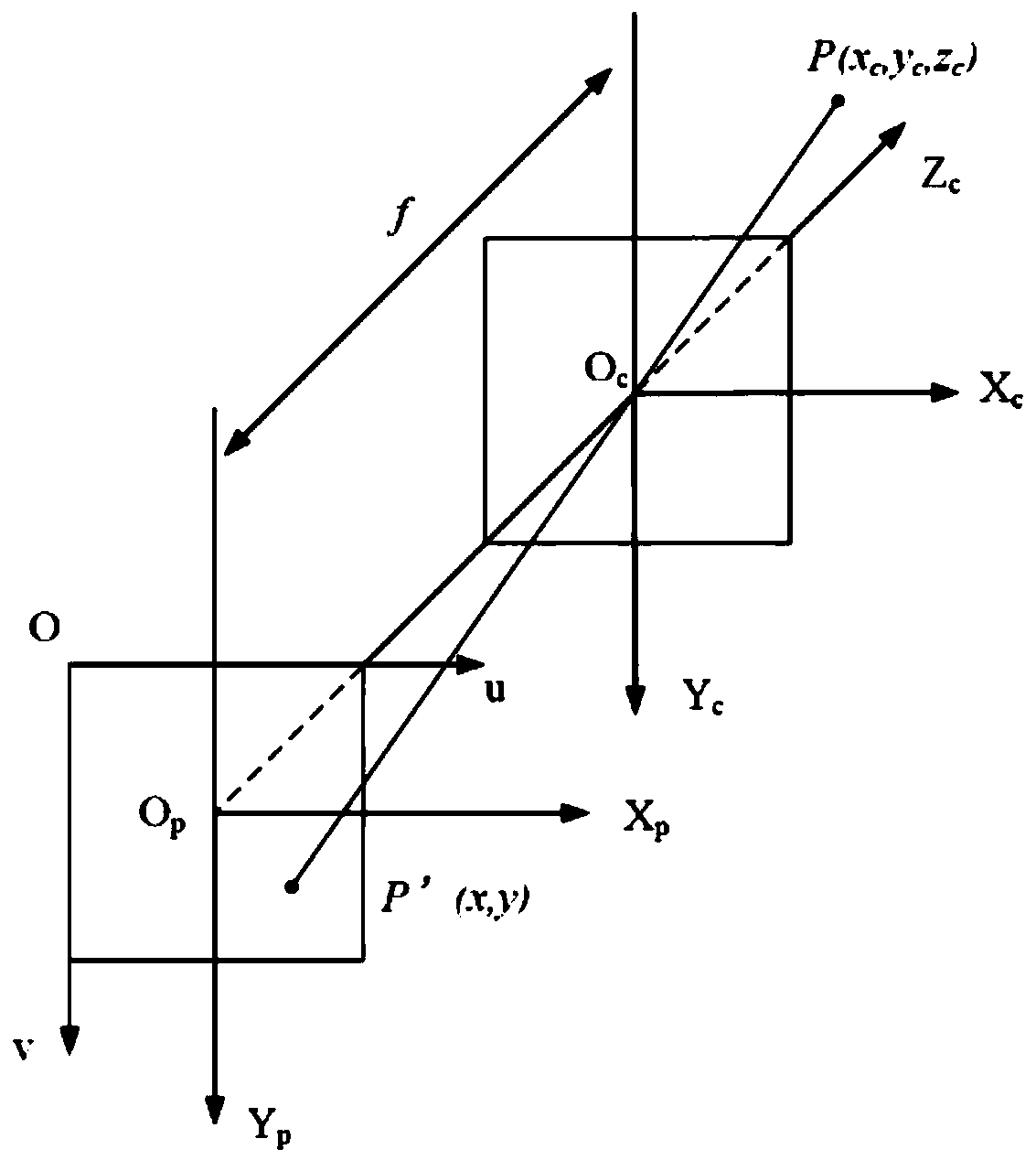

Data fusion dynamic vehicle detection method based on millimeter wave radar and machine vision

Active | CN111368706A | Reduce detection impact | Improve recognition accuracy | Character and pattern recognition | Radio wave reradiation/reflection | Engineering | Radar detection

The invention discloses a data fusion dynamic vehicle detection method based on millimeter-wave radar and machine vision. A millimeter-wave radar data processing module, a vision image processing module, and a data fusion processing module are included. First, through joint sensor calibration, a projection matrix between the millimeter-wave radar and the visual sensor is obtained, and a conversion relationship between the radar coordinate system and the image coordinate system is established. The acquired millimeter-wave radar data is then preprocessed and screened for effective targets, each radar detection target is projected onto the visual image through the conversion relationship, and a target region of interest is obtained from the position of the projected target. Target information fusion is performed according to the overlap between the target region of interest obtained from the image processing algorithm and the target region of interest detected by the millimeter-wave radar. Finally, an image processing algorithm verifies whether there is a vehicle in the fused region of interest. According to the invention, the vehicle ahead can be effectively detected, and the system has good environmental adaptability and stability.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS
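
The abstract above projects radar targets onto the image through a calibrated projection matrix and then fuses targets according to how much the radar and vision regions of interest overlap. The sketch below illustrates those two steps in plain Python. The 3x4 matrix values, the fixed ROI size, the radar point, and the vision box are all assumed for the example, and intersection over union is used here as one plausible overlap measure; the patent does not state which measure or threshold it uses.

```python
def project_to_image(point, projection):
    """Project a 3D radar-frame point to pixel coordinates with a 3x4 matrix."""
    x, y, z = point
    homogeneous = (x, y, z, 1.0)
    u, v, w = (sum(p * h for p, h in zip(row, homogeneous)) for row in projection)
    if w <= 0:
        raise ValueError("point is behind the image plane")
    return u / w, v / w


def roi_around(pixel, width, height):
    """Axis-aligned box (x1, y1, x2, y2) centered on a pixel."""
    u, v = pixel
    return (u - width / 2, v - height / 2, u + width / 2, v + height / 2)


def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    return inter / (area_a + area_b - inter)


# Assumed projection: pinhole intrinsics with the radar frame already
# aligned to the camera frame (x right, y down, z forward).
PROJECTION = [
    [800.0, 0.0, 320.0, 0.0],
    [0.0, 800.0, 240.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
]

radar_target = (1.0, 0.0, 20.0)          # meters, hypothetical detection
pixel = project_to_image(radar_target, PROJECTION)
radar_roi = roi_around(pixel, width=80.0, height=60.0)
vision_roi = (330.0, 200.0, 410.0, 280.0)  # hypothetical detector output

overlap = iou(radar_roi, vision_roi)
same_target = overlap >= 0.5             # threshold is an assumption
print(pixel, round(overlap, 3), same_target)
```

In practice the ROI size would usually scale with the target's range rather than being fixed, and the projection matrix would come from the joint calibration step.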

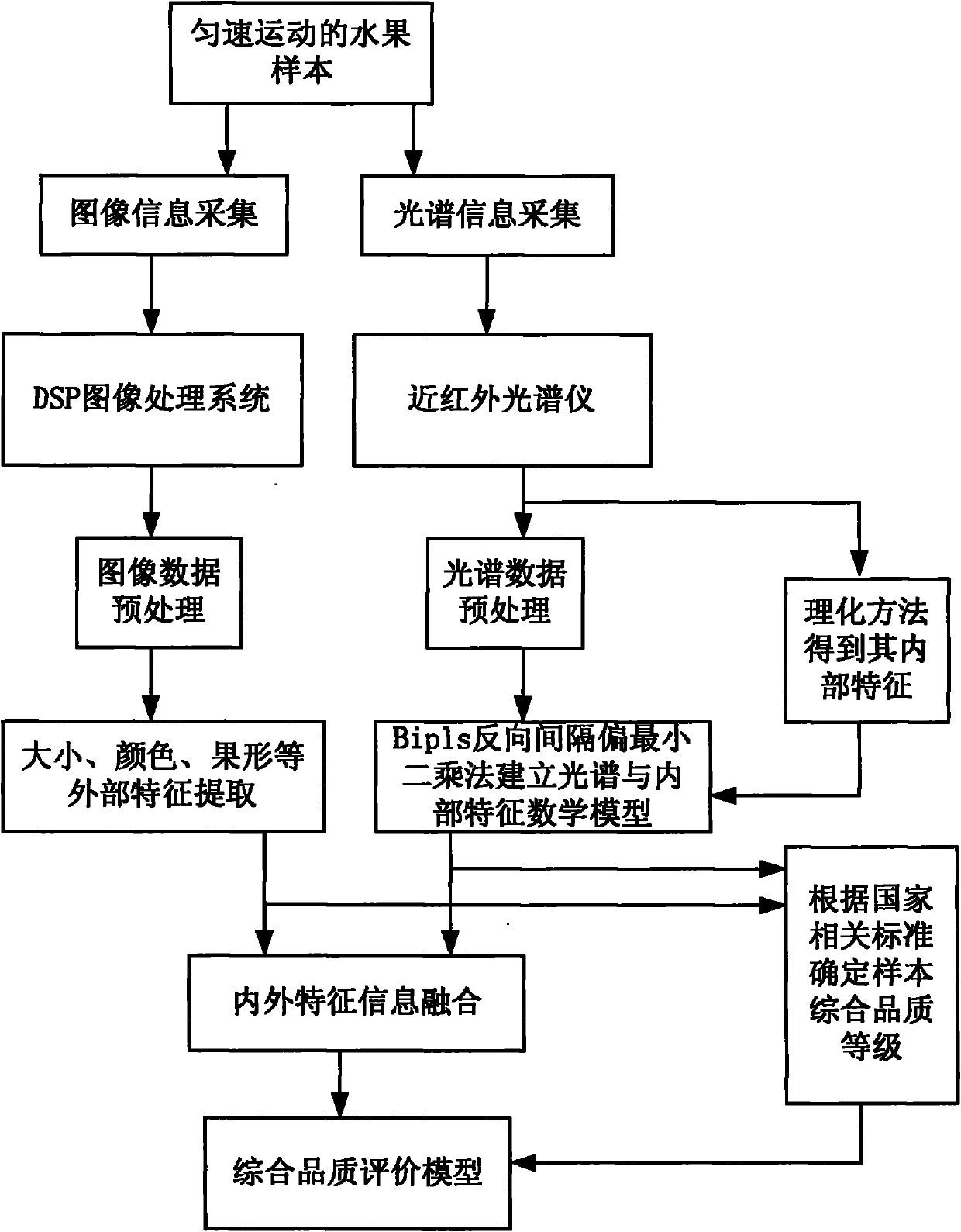

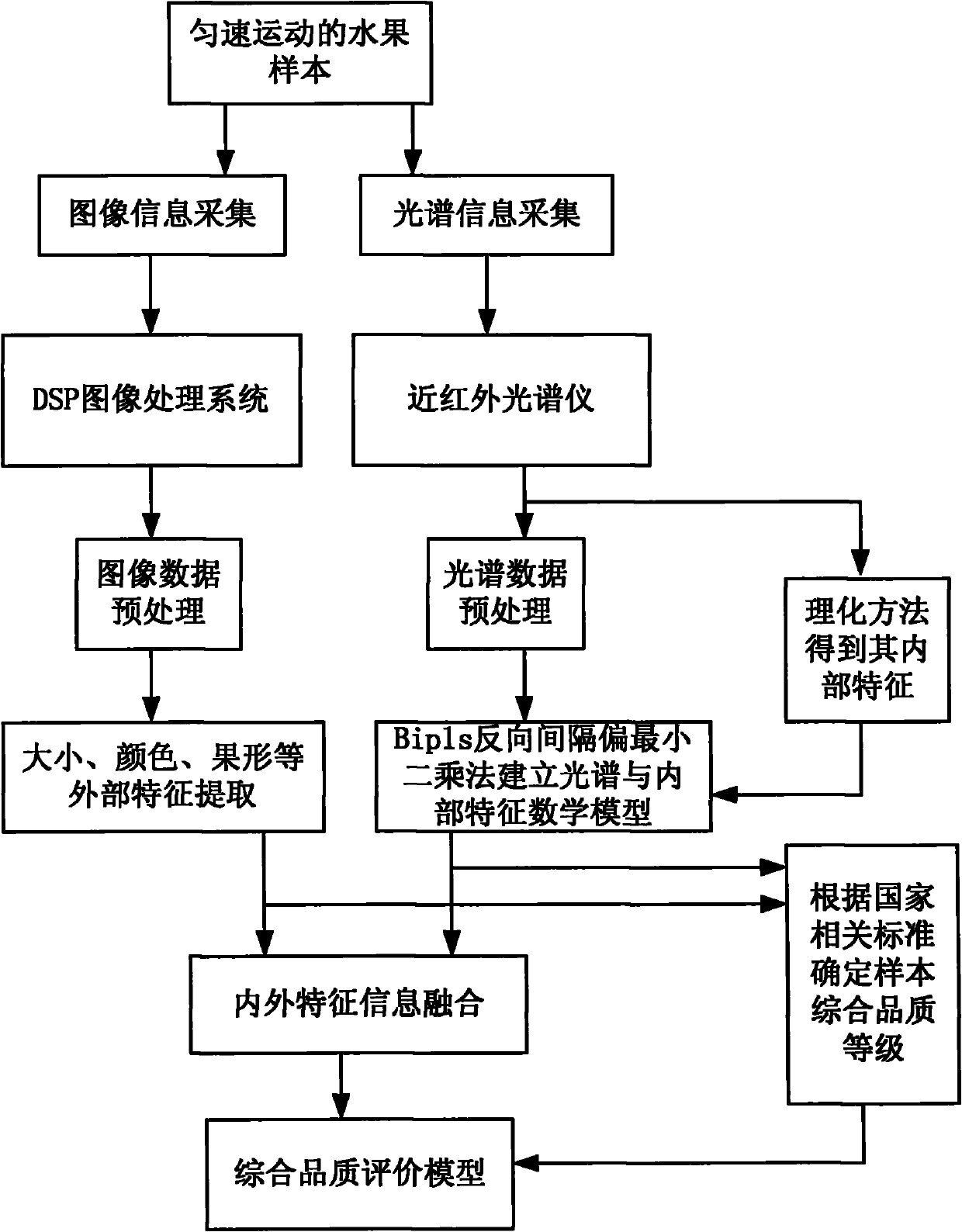

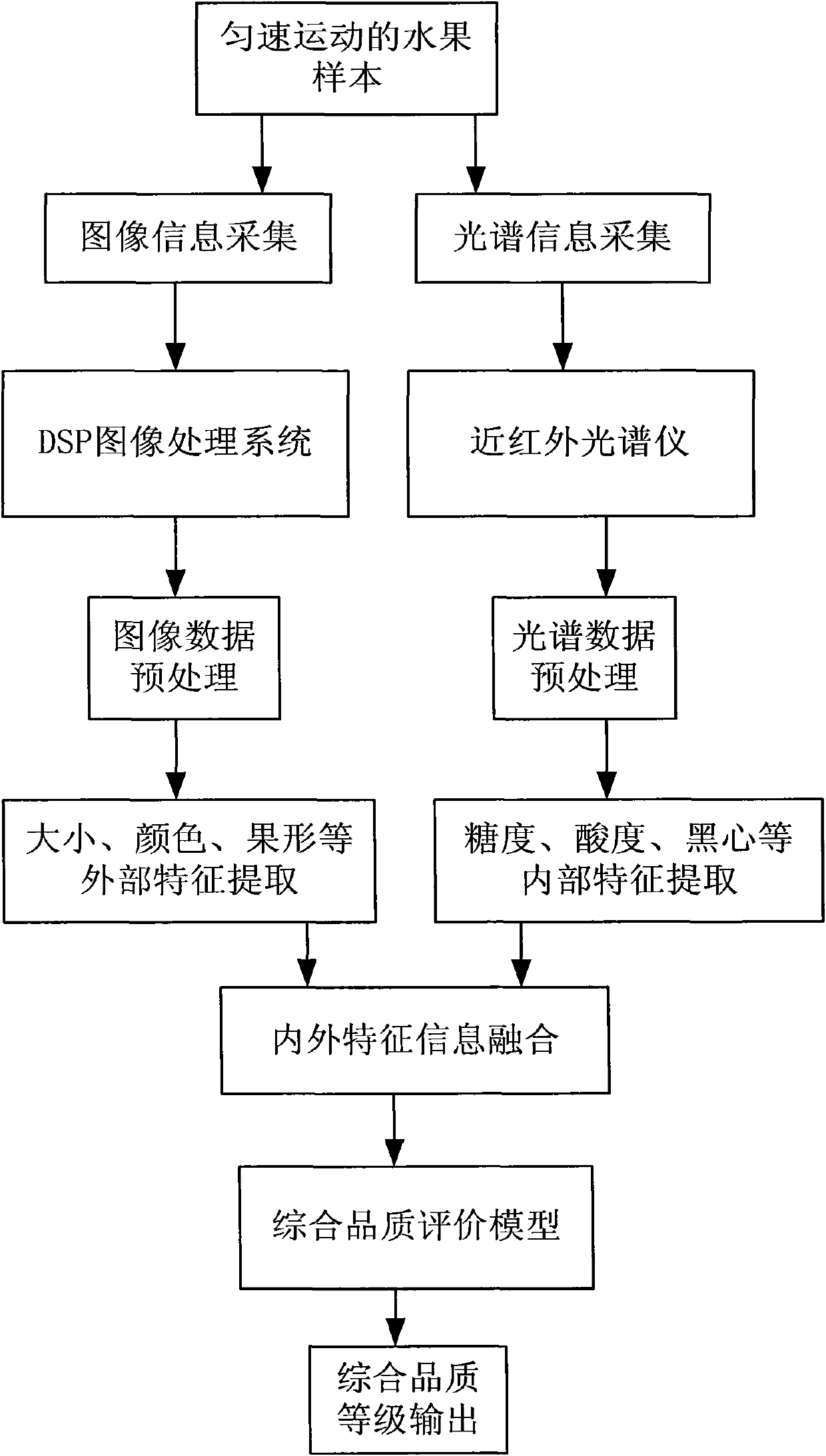

Online nondestructive testing (NDT) method and device for comprehensive internal/external qualities of fruits

Inactive | CN101949686A | Improve real-time performance | Color measuring devices | Using optical means | Imaging processing | Mathematical model

The invention discloses an online nondestructive testing (NDT) method and device for the comprehensive internal and external qualities of fruits. The online NDT method comprises the following steps: a comprehensive quality evaluation model for the fruits is first established by a detection device consisting of a conveying system, a machine vision system, a near-infrared spectroscopy (NIR) system, and a grading system, and the fruits move online at uniform speed through the conveying system; the machine vision system acquires image information of the fruits and extracts their external characteristics; the NIR system acquires spectral information of the fruits; the grading system analyzes the spectral information with a pre-established mathematical model and extracts the internal characteristics of the fruits; and the grading system fuses the internal and external characteristics with a pre-established information fusion model to obtain the comprehensive quality level of the fruits. The method and device can detect the internal and external characteristics of the fruits simultaneously; a DSP high-speed image processing system handles the complex image information, which greatly improves the real-time performance of the system; and information fusion technology is used to detect the comprehensive quality of the fruits online in real time.

Owner:扬州福尔喜果蔬汁机械有限公司

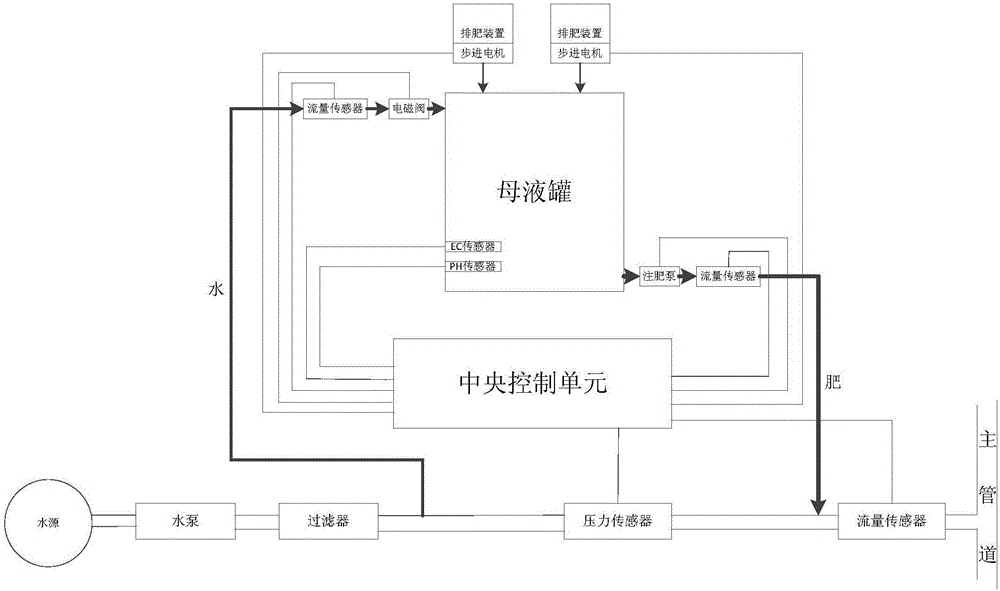

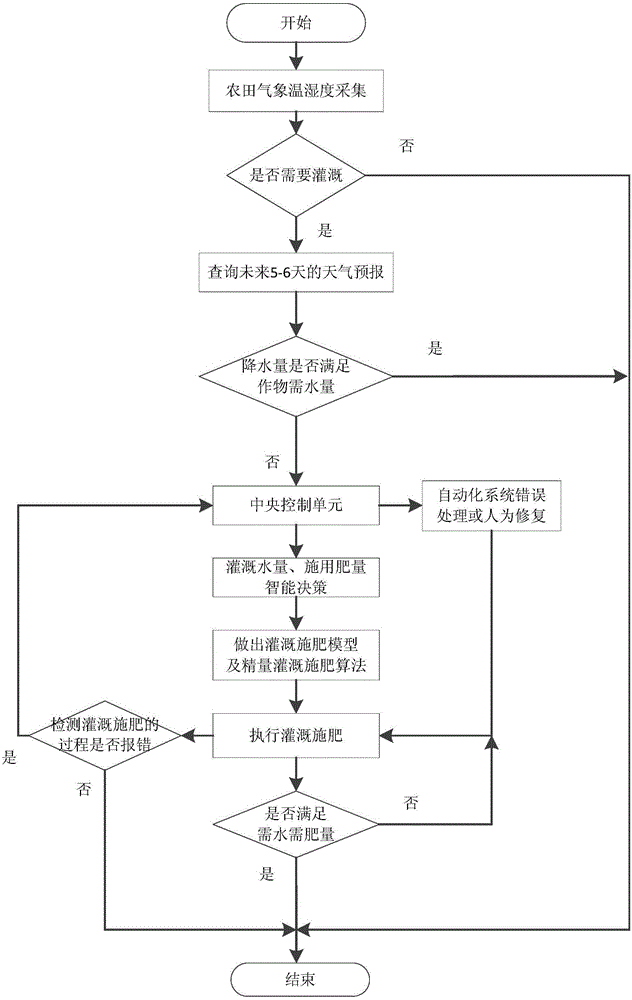

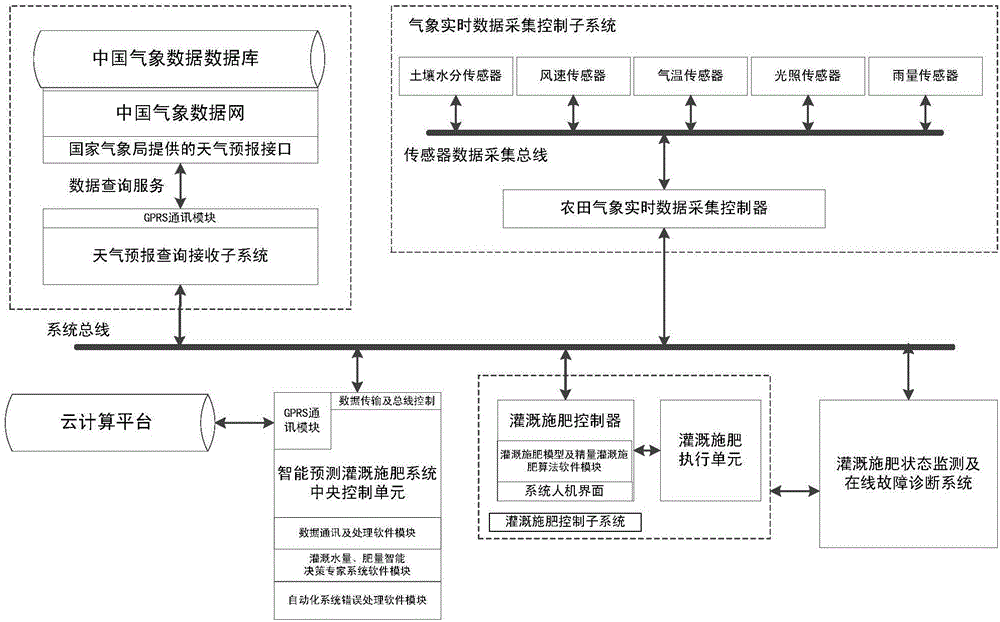

System and method for integrally and intelligently controlling water and fertilizer in field based on multi-source information fusion

Active | CN106707767A | Simple structure | High degree of automation | Adaptive control | Automatic control | Data acquisition

The invention relates to a system and method for integrated, intelligent control of water and fertilizer in the field based on multi-source information fusion. The system comprises a weather forecast inquiry receiving subsystem, a real-time weather data collection and control subsystem, a cloud computing platform, a central control unit, an irrigation and fertilization control subsystem, an irrigation and fertilization state monitoring system, and an online fault detection system. The system is an automatic control system that integrates weather forecast inquiry, crop cloud computing platform inquiry, real-time farmland weather collection, rapid dissolution of solid fertilizer, real-time monitoring and regulation of the mother solution, irrigation and fertilization state monitoring, online fault detection, irrigation and fertilization, and remote intelligent control. According to the invention, factors such as the weather forecast, cloud computing platform data, real-time weather measurements, and the growth vigor of the crops during the growth process can be comprehensively considered and corresponding irrigation and fertilization decisions can be made, so that precise irrigation and precise fertilization are realized; the growth vigor of the crops can be described in real time, and irrigation and fertilization can be performed according to it.

Owner:SHANDONG AGRICULTURAL UNIVERSITY
