Man-machine interaction method and device based on emotion system, and man-machine interaction system

A human-computer interaction and emotion technology in the field of intelligent services. It addresses the problem that the emotional features of a user's voice, facial expression and body movements cannot be recognized at the same time. Its effects are a smoother human-computer interaction process, a higher success rate and a larger amount of data.


Examples

Embodiment 1

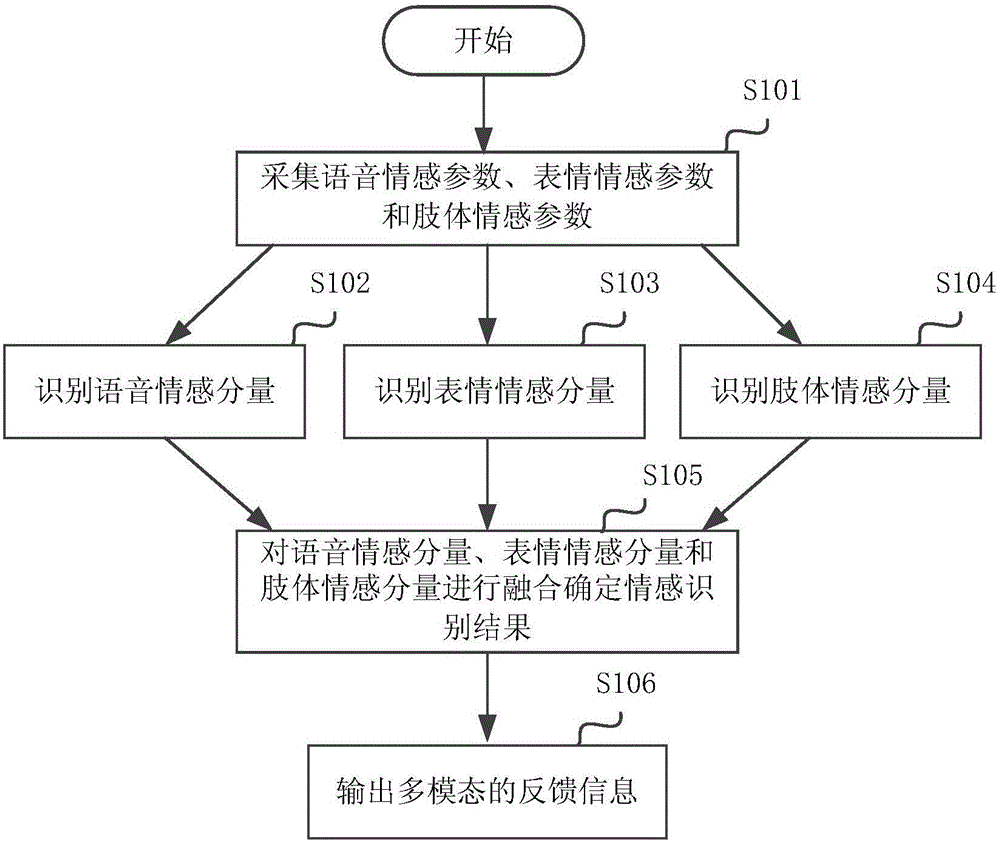

[0058] This embodiment provides a human-computer interaction method, whose steps are shown in Figure 1. The method of this embodiment is described in detail below with reference to Figure 1.

[0059] Firstly, in step S101, speech emotion parameters, expression emotion parameters and body emotion parameters are collected.

[0060] Subsequently, step S102 is performed: a pending speech emotion is calculated from the speech emotion parameters, and the preset speech emotion closest to the pending speech emotion is selected as the speech emotion component. Step S103 is performed: a pending expression emotion is calculated from the expression emotion parameters, and the preset expression emotion closest to the pending expression emotion is selected as the expression emotion component. Step S104 is performed to calculate and obtain the pending physical e...
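The "closest preset emotion" selection in steps S102 to S104 can be read as a nearest-neighbour lookup. The sketch below assumes, purely for illustration, that each emotion is encoded as a point in a two-dimensional (valence, arousal) space and that "closest" means smallest Euclidean distance; the text above does not state either choice, and the preset names and coordinates are hypothetical.

```python
import math
from typing import Dict, Tuple

# Hypothetical encoding: (valence, arousal), each roughly in [-1, 1].
# The patent excerpt does not specify how emotions are represented.
PRESET_SPEECH_EMOTIONS: Dict[str, Tuple[float, float]] = {
    "happy": (0.8, 0.6),
    "sad": (-0.7, -0.4),
    "angry": (-0.6, 0.8),
    "calm": (0.3, -0.5),
}


def closest_preset(pending: Tuple[float, float],
                   presets: Dict[str, Tuple[float, float]]) -> str:
    """Return the name of the preset emotion with the smallest Euclidean
    distance to the pending emotion (assumed similarity measure)."""
    return min(presets, key=lambda name: math.dist(pending, presets[name]))
```

For instance, a pending speech emotion of `(0.7, 0.5)` lies closest to the hypothetical "happy" preset, so "happy" would become the speech emotion component. The same function would serve the expression and body steps with their own preset tables.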

Embodiment 2

[0109] This embodiment provides a human-computer interaction device 600, whose structure is shown in Figure 6. The device includes a parameter acquisition unit 610, a speech emotion recognition unit 620, an expression emotion recognition unit 630, a body emotion recognition unit 640, a fusion unit 650 and a feedback unit 660.

[0110] The parameter acquisition unit 610 collects the speech emotion parameters, expression emotion parameters and body emotion parameters.

[0111] The speech emotion recognition unit 620 calculates a pending speech emotion from the speech emotion parameters and selects, from the preset speech emotions, the one closest to the pending speech emotion as the speech emotion component.

[0112] The expression emotion recognition unit 630 calculates a pending expression emotion from the expression emotion parameters and selects the one closest to the ...
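One way to picture how units 620 to 660 fit together is the Python sketch below. It is not the patented design: the (valence, arousal) encoding, the majority-vote rule standing in for fusion unit 650, and the lookup table standing in for feedback unit 660 are all hypothetical, because this excerpt does not describe how fusion or feedback are computed. It also skips computing a pending emotion from raw parameters; the caller passes pending emotions directly.

```python
import math
from collections import Counter
from dataclasses import dataclass
from typing import Dict, Tuple

Point = Tuple[float, float]  # hypothetical (valence, arousal) encoding


@dataclass
class RecognitionUnit:
    """Plays the role of unit 620, 630 or 640: pending emotion -> closest preset."""
    presets: Dict[str, Point]

    def recognize(self, pending: Point) -> str:
        return min(self.presets, key=lambda name: math.dist(pending, self.presets[name]))


def fuse(speech: str, expression: str, body: str) -> str:
    """Hypothetical rule for fusion unit 650: majority vote, speech breaks ties."""
    label, votes = Counter([speech, expression, body]).most_common(1)[0]
    return label if votes > 1 else speech


# Hypothetical lookup used in place of feedback unit 660; entries are illustrative.
FEEDBACK: Dict[str, Dict[str, str]] = {
    "happy": {"expression": "smile", "speech": "Glad to hear that!", "action": "wave"},
    "sad": {"expression": "concern", "speech": "Is something wrong?", "action": "nod"},
}
NEUTRAL: Dict[str, str] = {"expression": "neutral", "speech": "I see.", "action": "none"}


class InteractionDevice:
    """Sketch of device 600; parameter acquisition (unit 610) is left to the caller."""

    def __init__(self, speech_presets: Dict[str, Point],
                 expression_presets: Dict[str, Point],
                 body_presets: Dict[str, Point]):
        self.speech_unit = RecognitionUnit(speech_presets)          # unit 620
        self.expression_unit = RecognitionUnit(expression_presets)  # unit 630
        self.body_unit = RecognitionUnit(body_presets)              # unit 640

    def interact(self, speech: Point, expression: Point, body: Point) -> Dict[str, str]:
        fused = fuse(
            self.speech_unit.recognize(speech),
            self.expression_unit.recognize(expression),
            self.body_unit.recognize(body),
        )
        return FEEDBACK.get(fused, NEUTRAL)  # stand-in for unit 660
```

With a shared preset table `p = {"happy": (0.8, 0.6), "sad": (-0.7, -0.4), "calm": (0.3, -0.5)}`, calling `InteractionDevice(p, p, p).interact((0.7, 0.5), (0.9, 0.4), (-0.6, -0.3))` maps speech and expression to "happy" and body to "sad", so the vote yields "happy" and the sketch returns the "smile"/"wave" feedback. Majority voting is used only because it is the simplest rule that consumes all three components.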

Embodiment 3

[0117] This embodiment provides a human-computer interaction system. As shown in Figure 7, the system includes a speech sensing device 710, a visual sensing device 720, a human-computer interaction device 600, an output drive device 730, an expression unit 740, a speech unit 750 and an action unit 760.

[0118] The speech sensing device 710 is an audio sensor, such as a microphone, which collects voice information and inputs it to the human-computer interaction device 600. The visual sensing device 720, such as a camera, collects expression information and body information and inputs them to the human-computer interaction device 600.

[0119] The structure of the human-computer interaction device 600 is as described in Embodiment 2 and is not repeated here. The output drive device 730 drives the expression unit 740, the speech unit 750 and/or the action unit 760 to perform actions according to the multimodal feedb...
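The "and/or" in [0119] suggests that output drive device 730 activates only the output units a given feedback calls for. A minimal sketch under that reading follows; the modality keys and the callback interface for units 740, 750 and 760 are hypothetical, and the callbacks only record commands instead of driving real hardware.

```python
from typing import Callable, Dict, List

Actuator = Callable[[str], None]


def make_recorder(log: List[str], unit_id: str) -> Actuator:
    """Hypothetical stand-in for a physical unit: records each command it receives."""
    def actuate(command: str) -> None:
        log.append(f"{unit_id}:{command}")
    return actuate


class OutputDriveDevice:
    """Sketch of output drive device 730: routes each feedback modality to its unit."""

    def __init__(self, units: Dict[str, Actuator]):
        self.units = units  # e.g. {"expression": ..., "speech": ..., "action": ...}

    def drive(self, feedback: Dict[str, str]) -> List[str]:
        """Send each non-empty command to its unit and return the modalities driven."""
        driven = []
        for modality, command in feedback.items():
            unit = self.units.get(modality)
            if unit is not None and command:  # skip modalities the feedback omits
                unit(command)
                driven.append(modality)
        return driven
```

For example, feedback containing only an expression and a speech command drives units 740 and 750 and leaves the action unit 760 idle, which matches the "and/or" wording.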
