548 results about "Feature transformation" patented technology

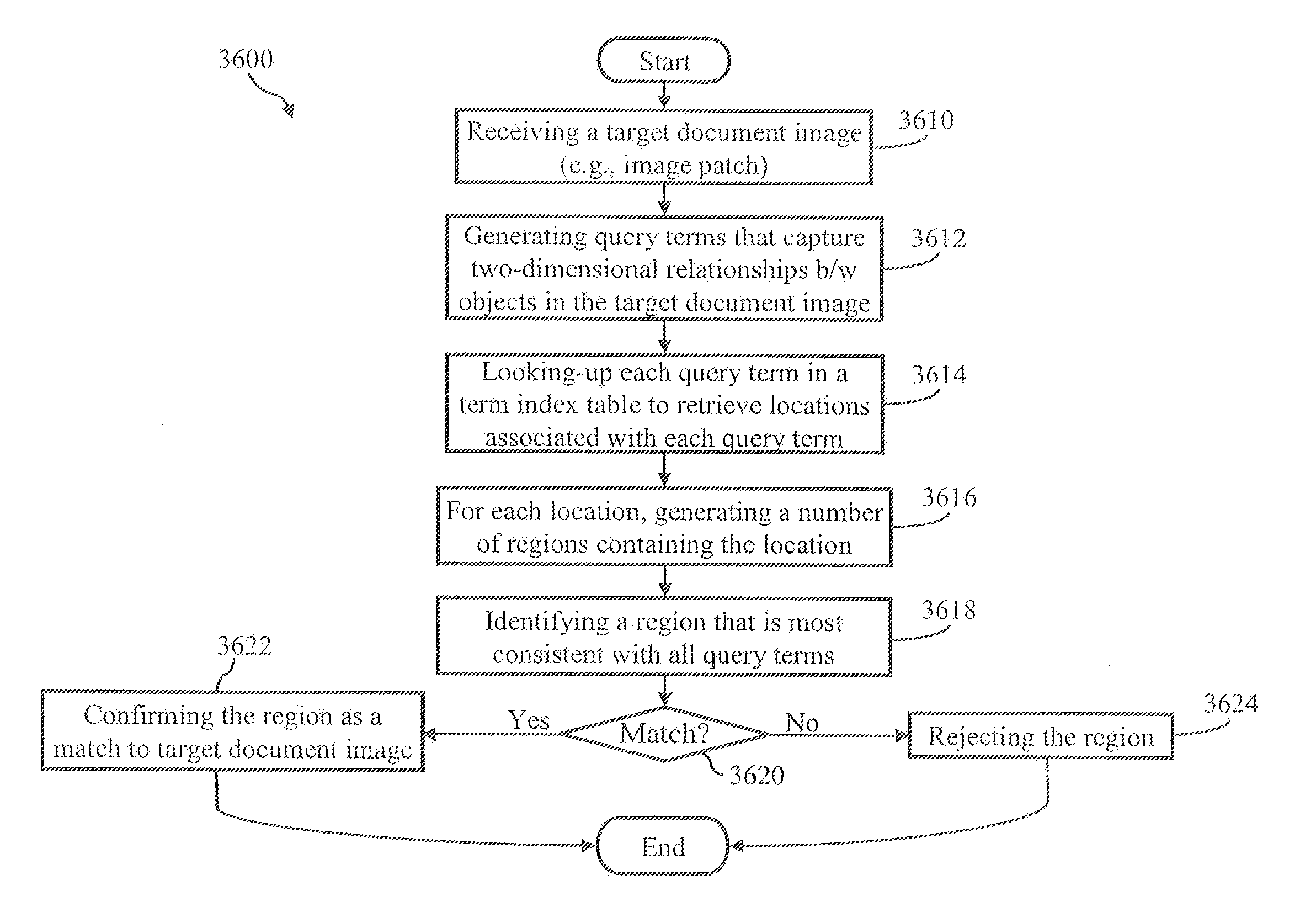

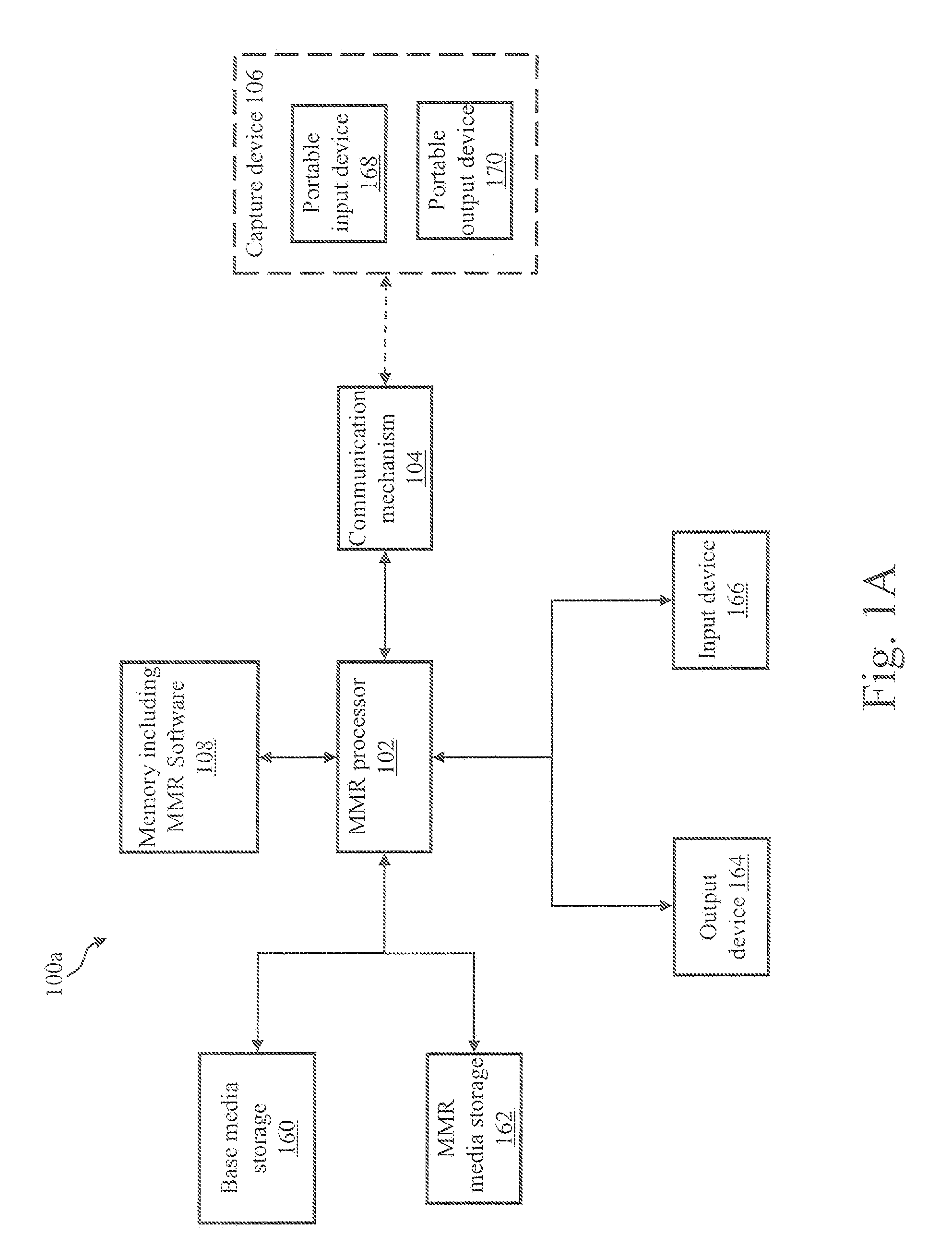

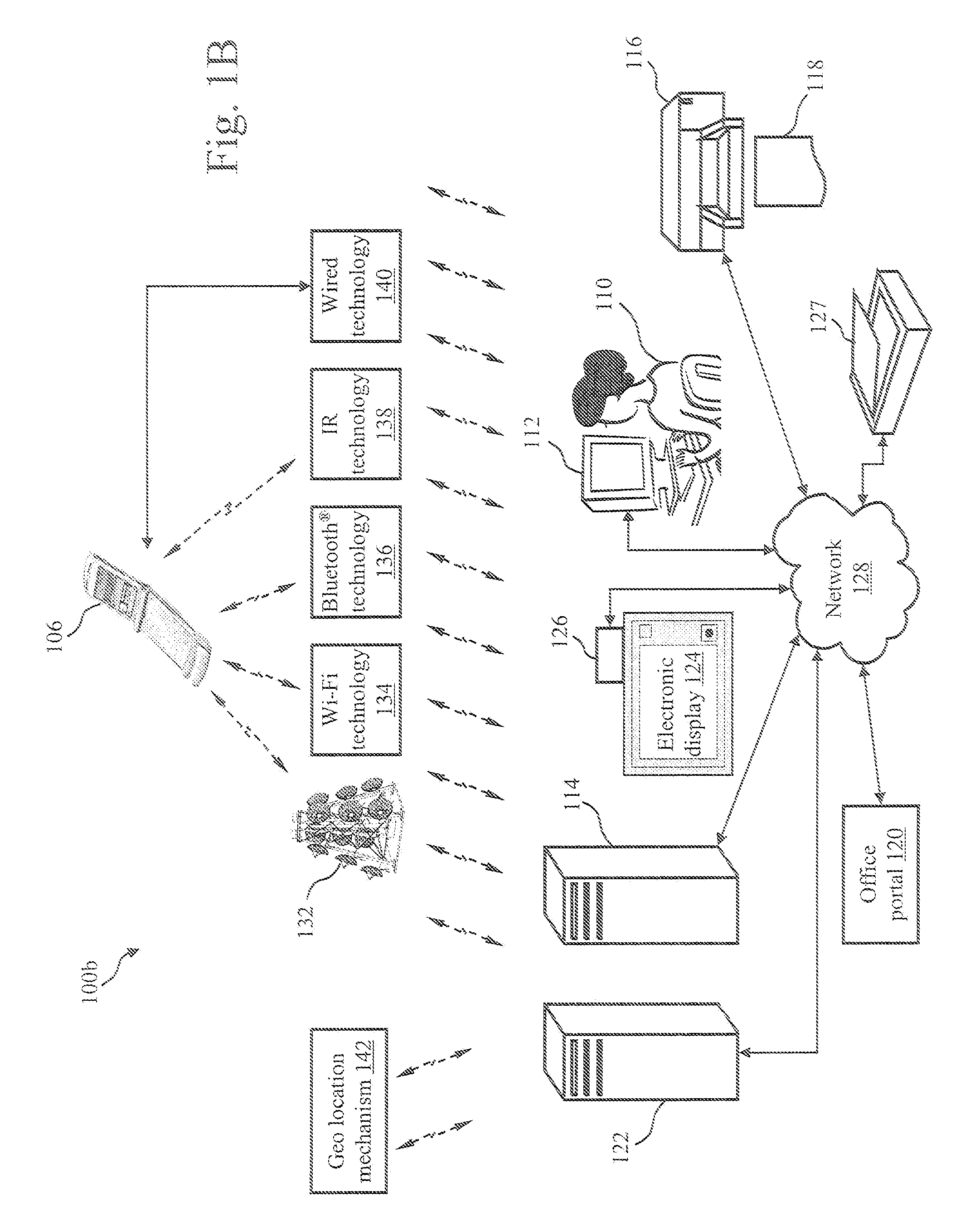

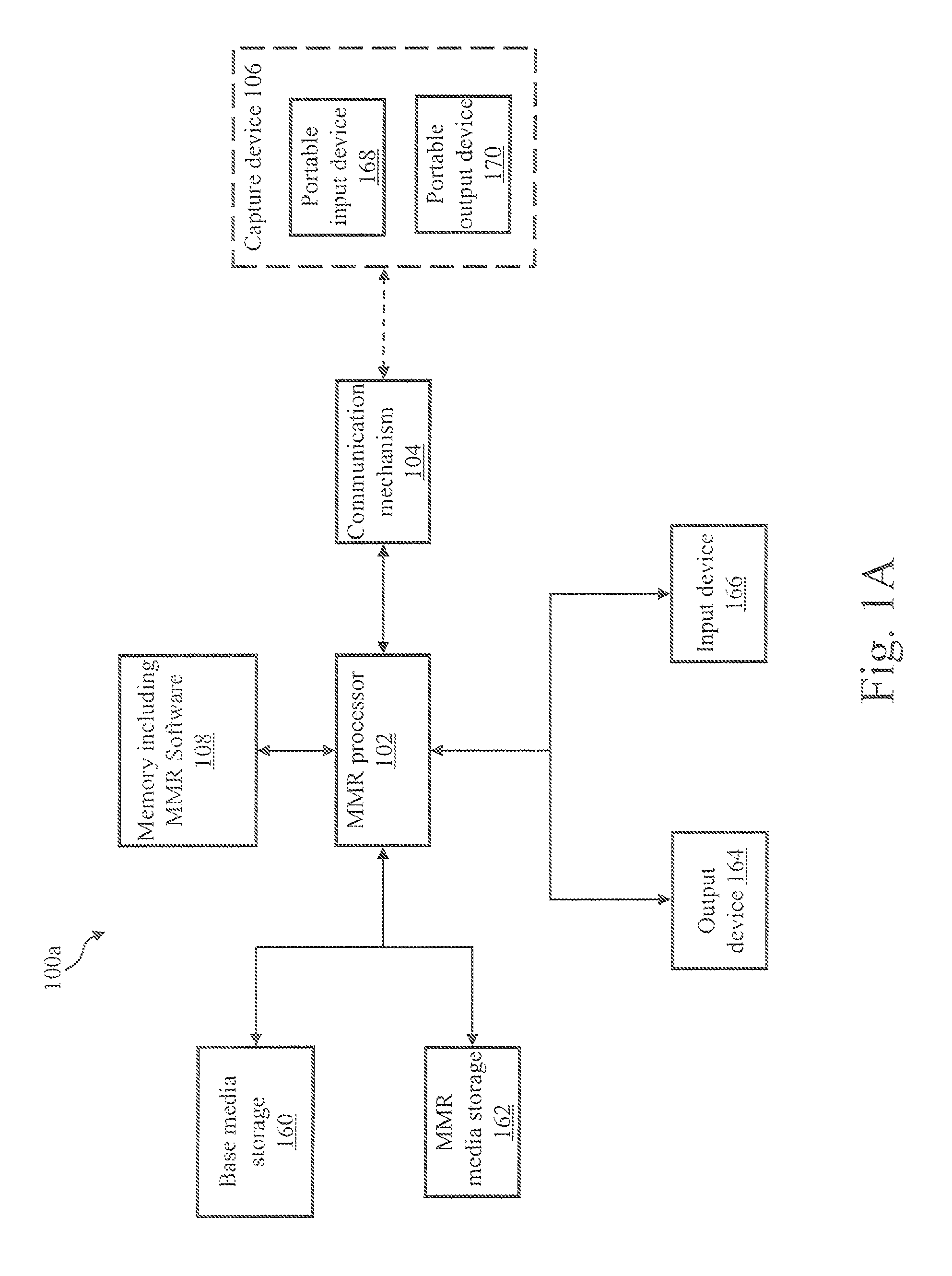

Data organization and access for mixed media document system

Active · US20070047819A1 · Digital data processing details · Character recognition · Digital content · Documentation

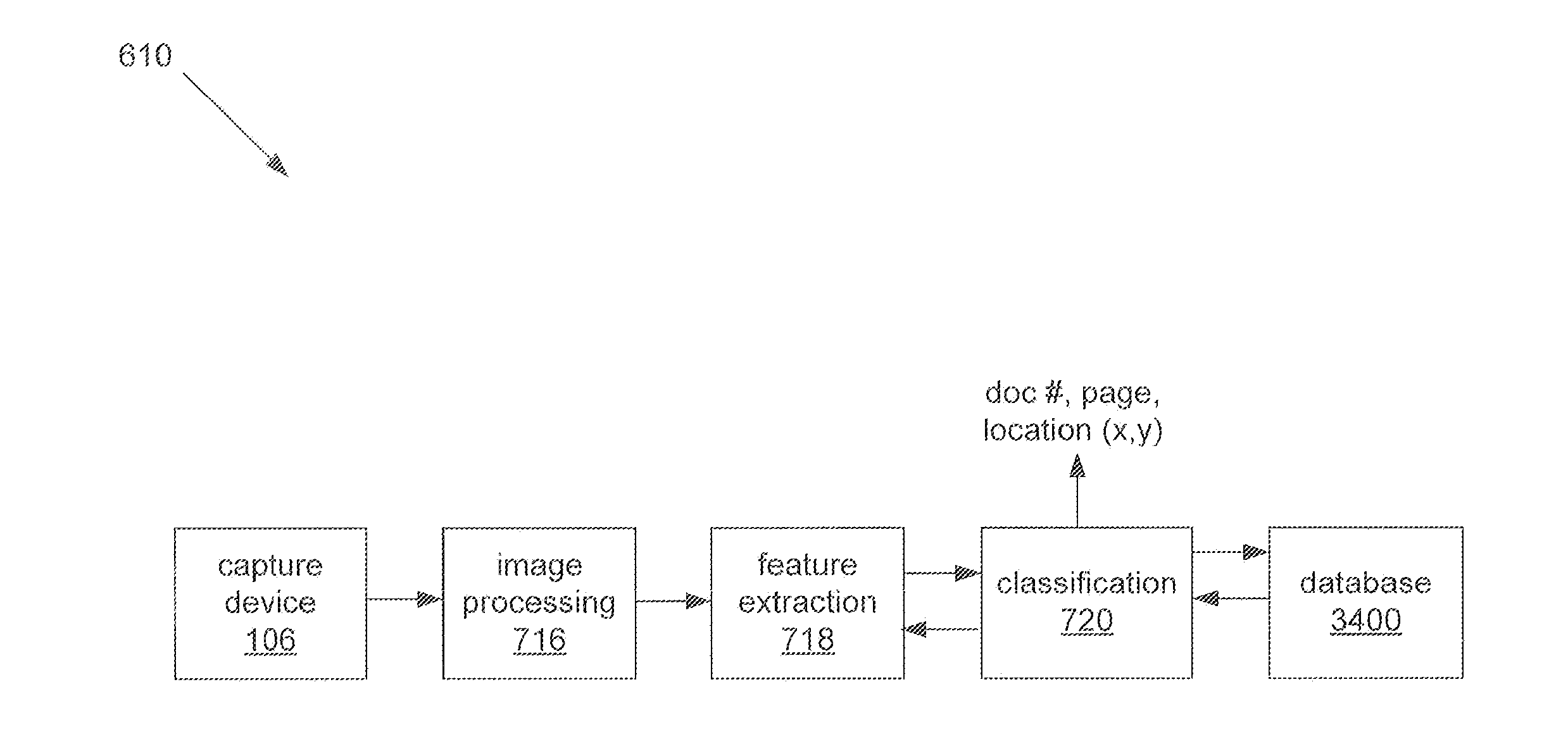

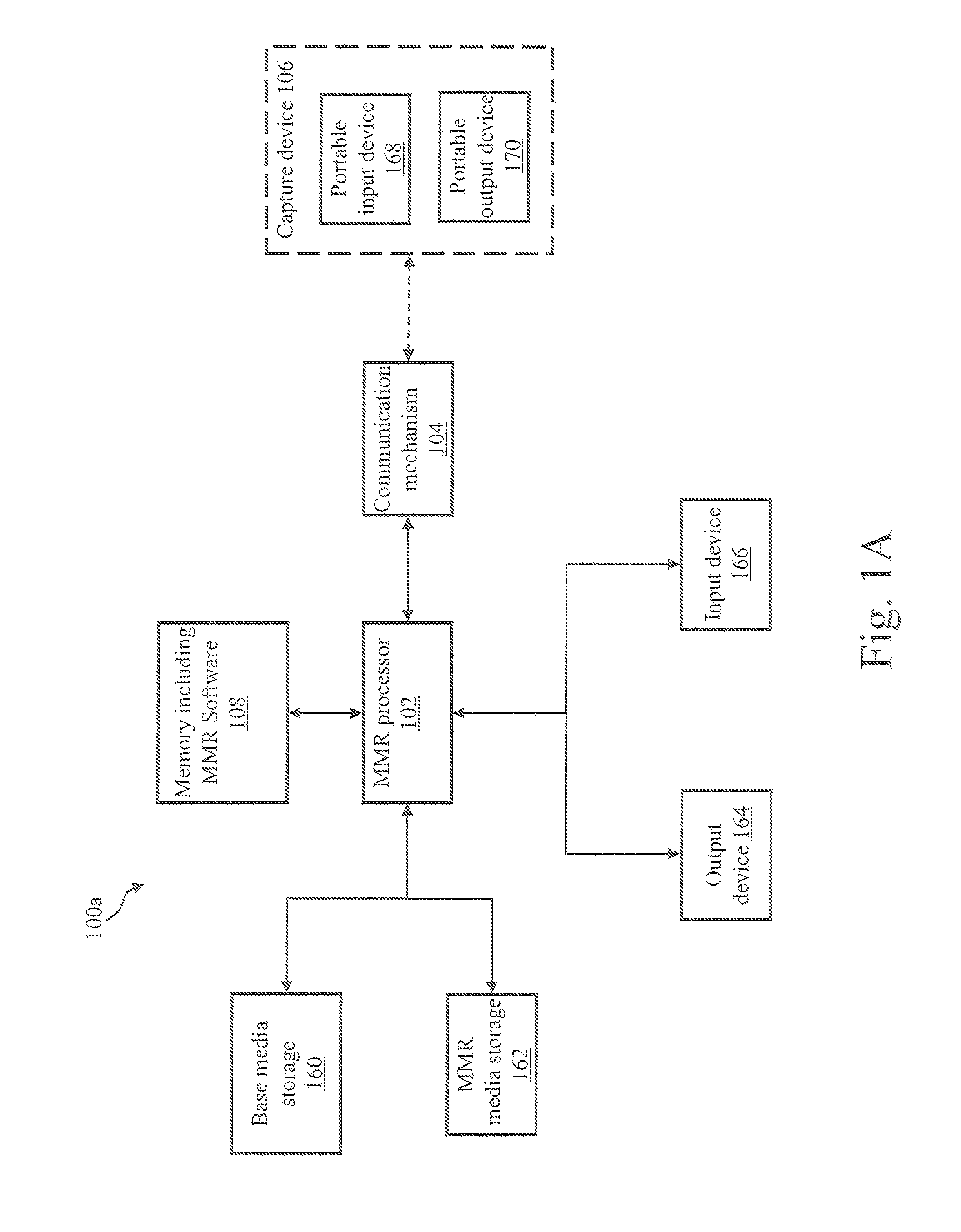

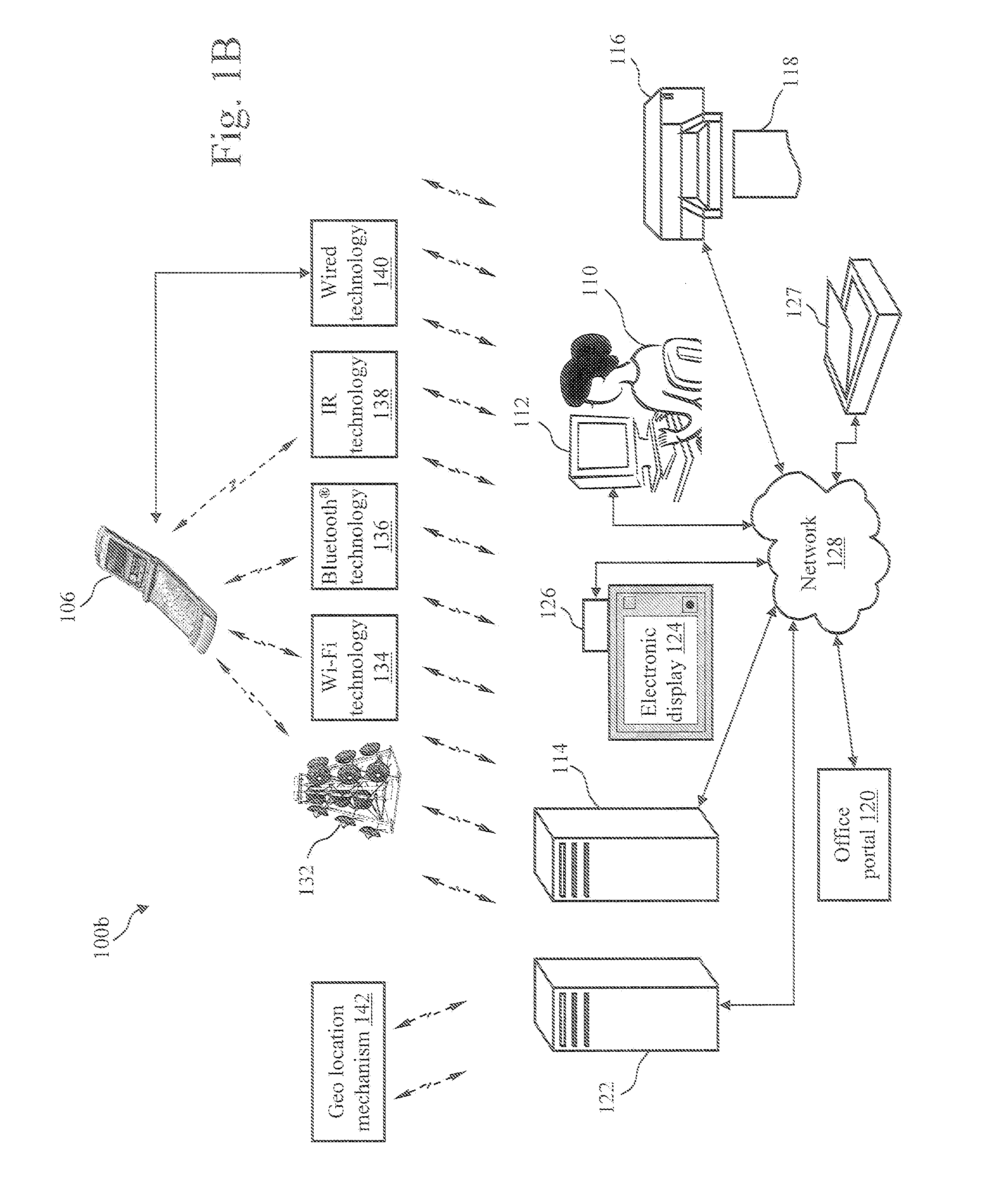

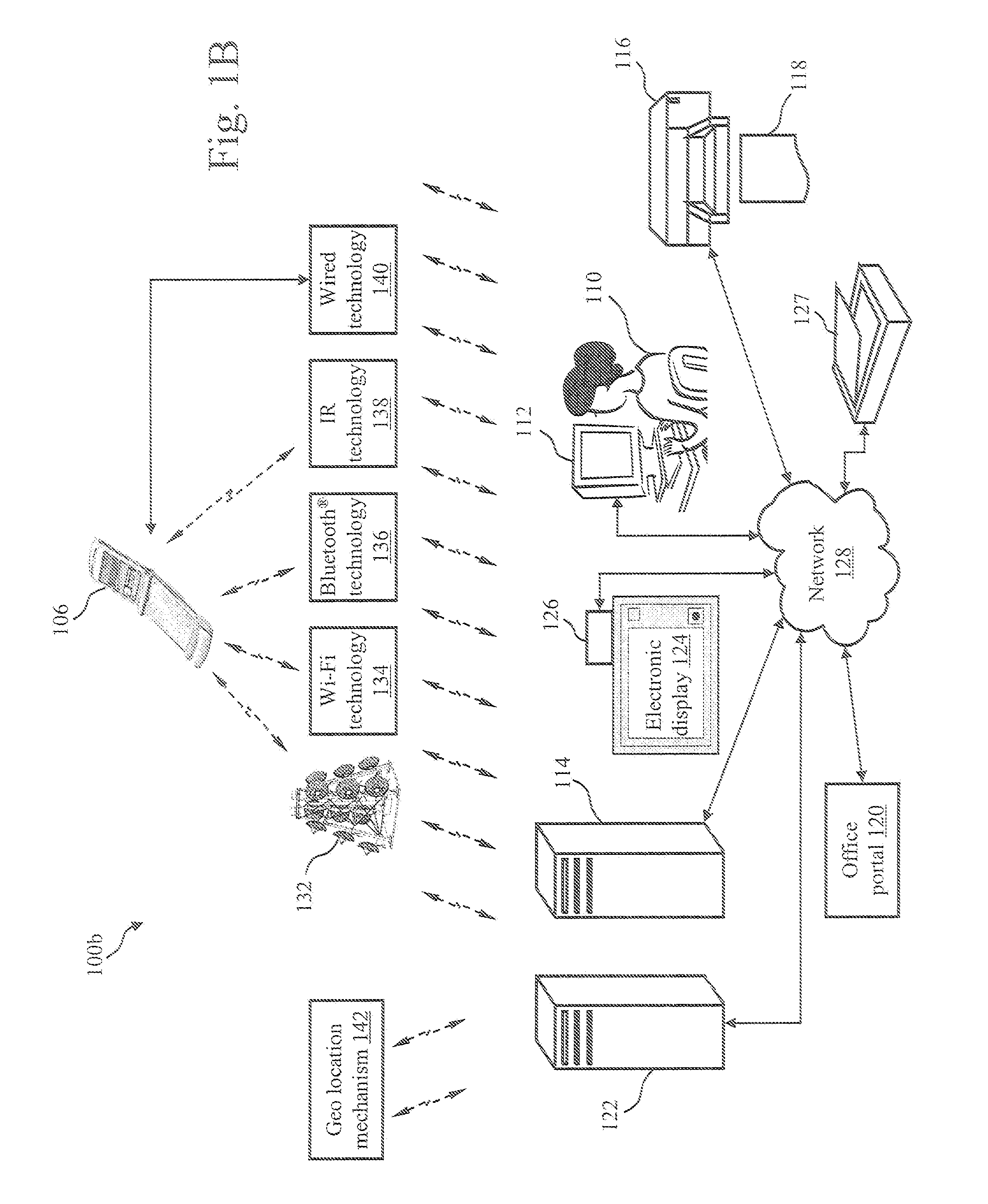

A Mixed Media Reality (MMR) system and associated techniques are disclosed. The MMR system provides mechanisms for forming a mixed media document that includes media of at least two types (e.g., printed paper as a first medium and digital content and / or web link as a second medium). In one particular embodiment, the MMR system includes a content-based retrieval database configured with an index table to represent two-dimensional geometric relationships between objects extracted from a printed document in a way that allows look-up using a text-based index. A ranked set of document, page and location hypotheses can be computed given data from the index table. The techniques effectively transform features detected in an image patch into textual terms (or other searchable features) that represent both the features themselves and the geometric relationship between them. A storage facility can be used to store additional characteristics about each document image patch.

Owner:RICOH KK

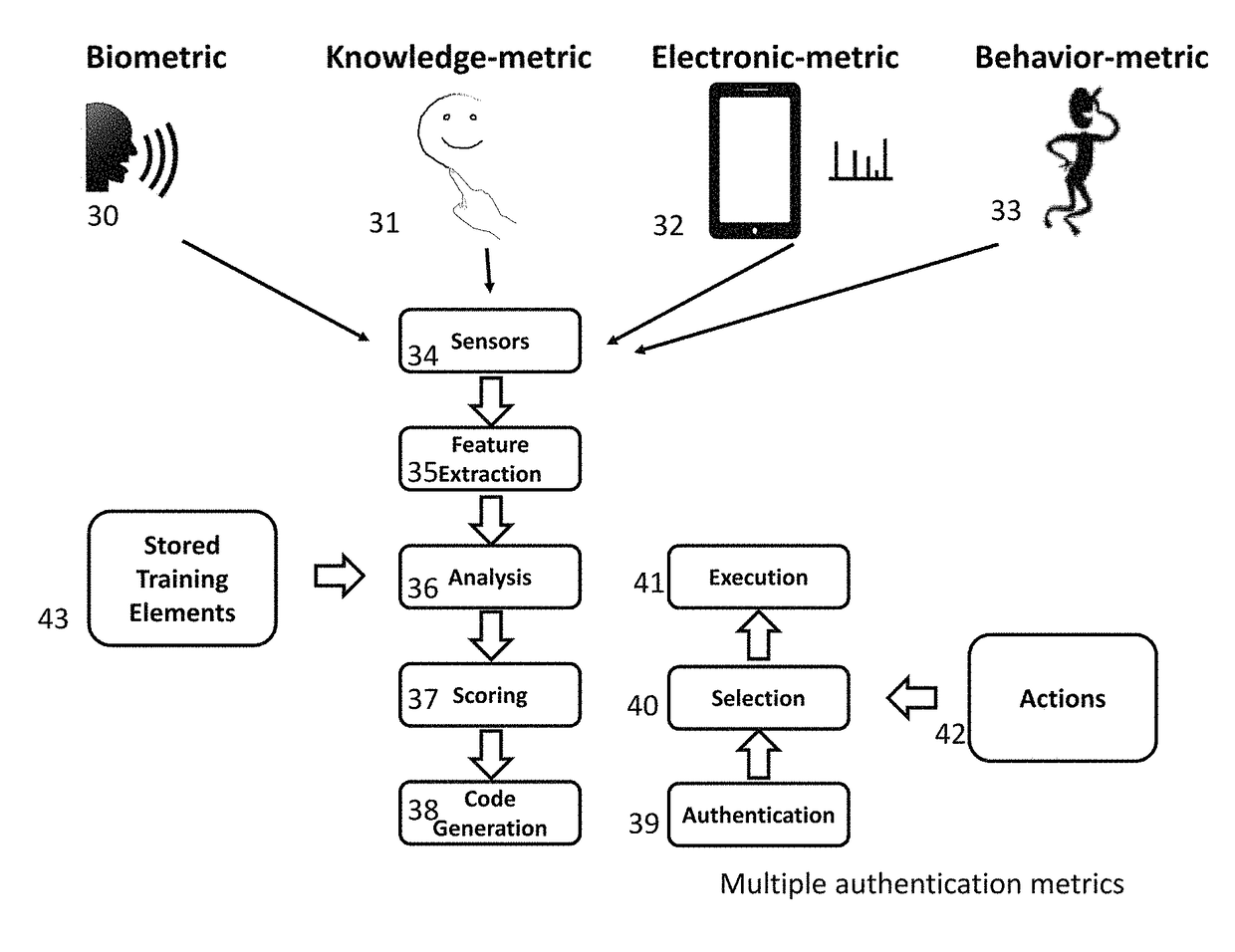

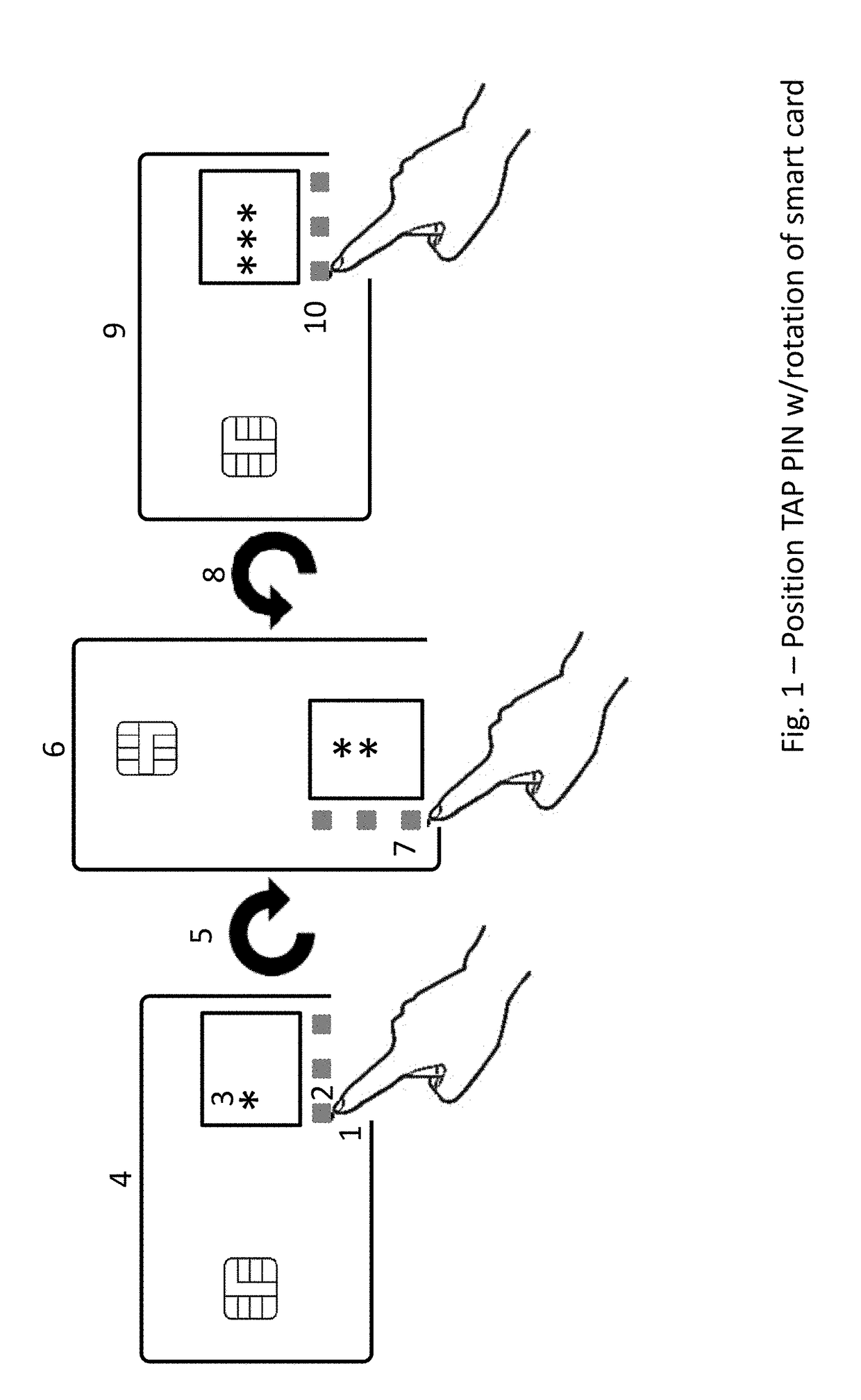

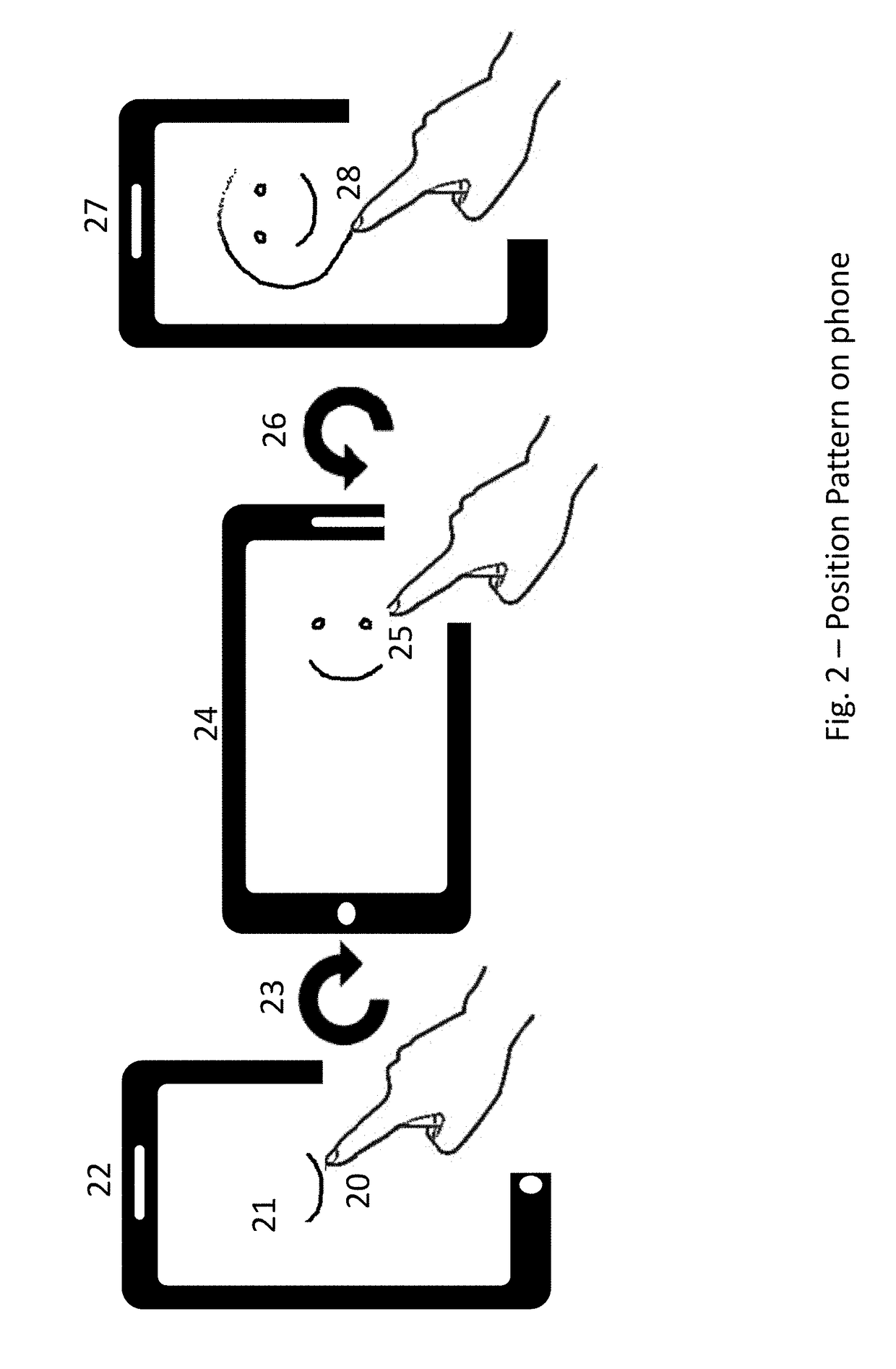

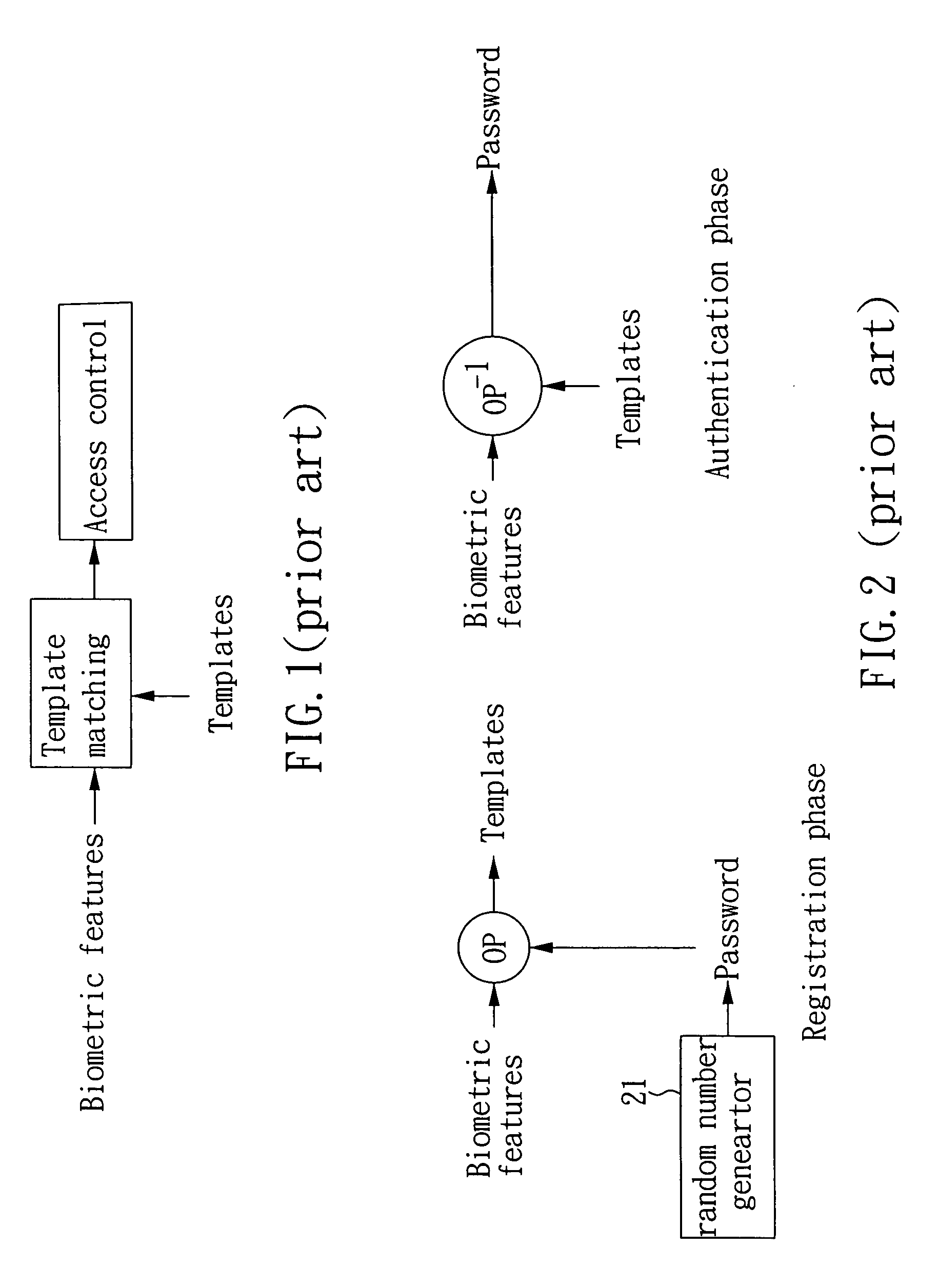

Biometric, Behavioral-Metric, Knowledge-Metric, and Electronic-Metric Directed Authentication and Transaction Method and System

Inactive · US20180012227A1 · Cryptography processing · Protocol authorisation · Payment · Theoretical computer science

A system to authenticate an entity and / or select details relative to an action or a financial account using biometric, behavior-metric, electronic-metric and / or knowledge-metric inputs. These inputs may comprise gestures, facial expressions, body movements, voice prints, sound excerpts, etc. Features are extracted from the inputs and each feature is converted to a risk score, which is then translated to a representative value, such as a letter or a number, i.e., a code or PIN that represents the input. For user authentication, the code is compared with a database of legitimate / authenticated codes. In some embodiments a user selects specific information elements, such as an account or a payment amount, using one or more of a biometric, a behavior-metric, an electronic-metric and / or a knowledge-metric input.

Owner:GARMIN INT

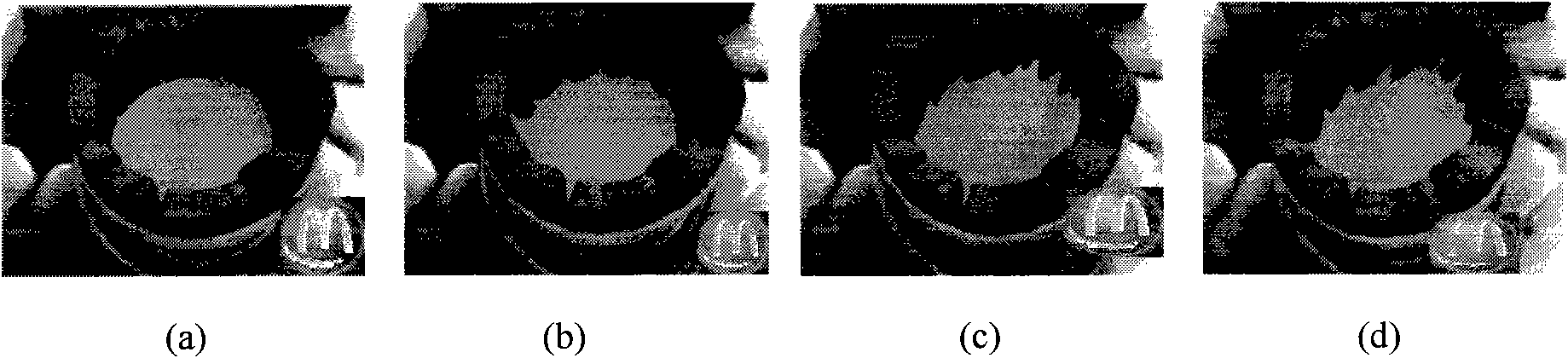

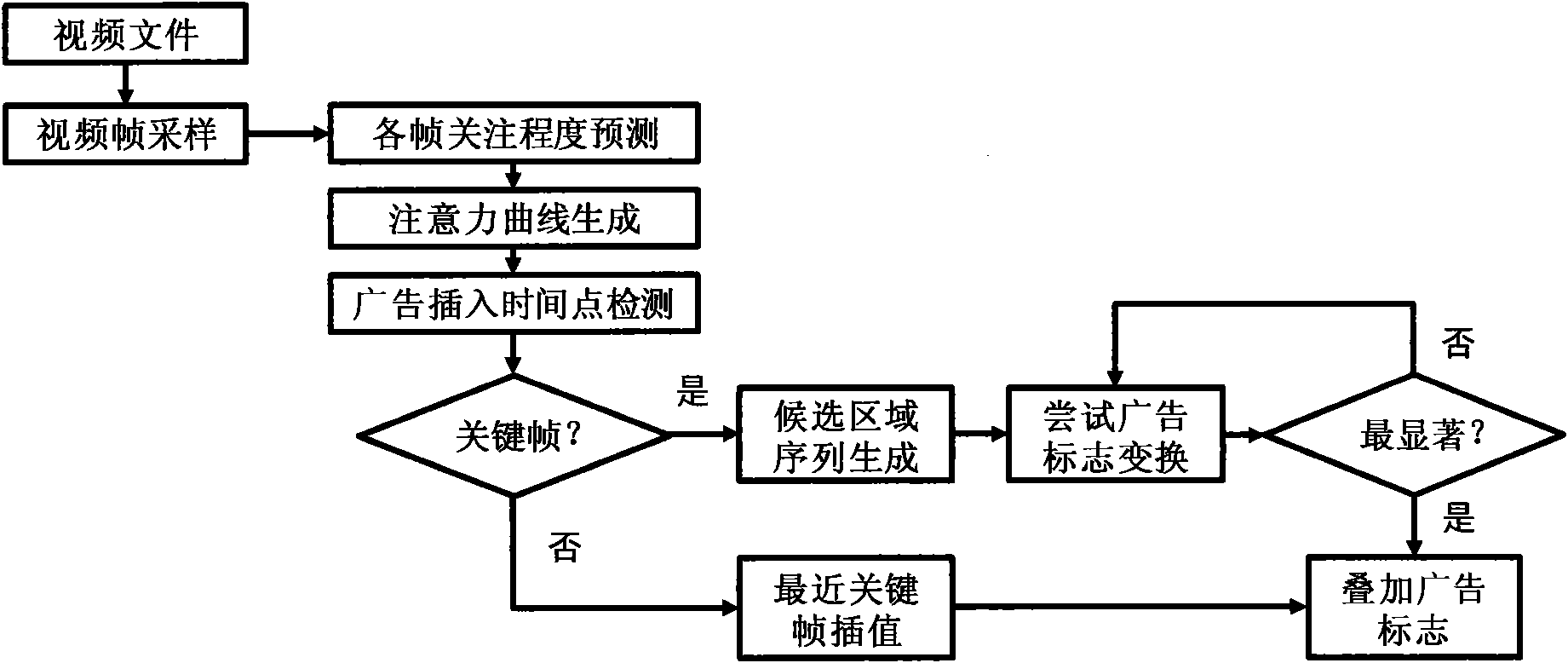

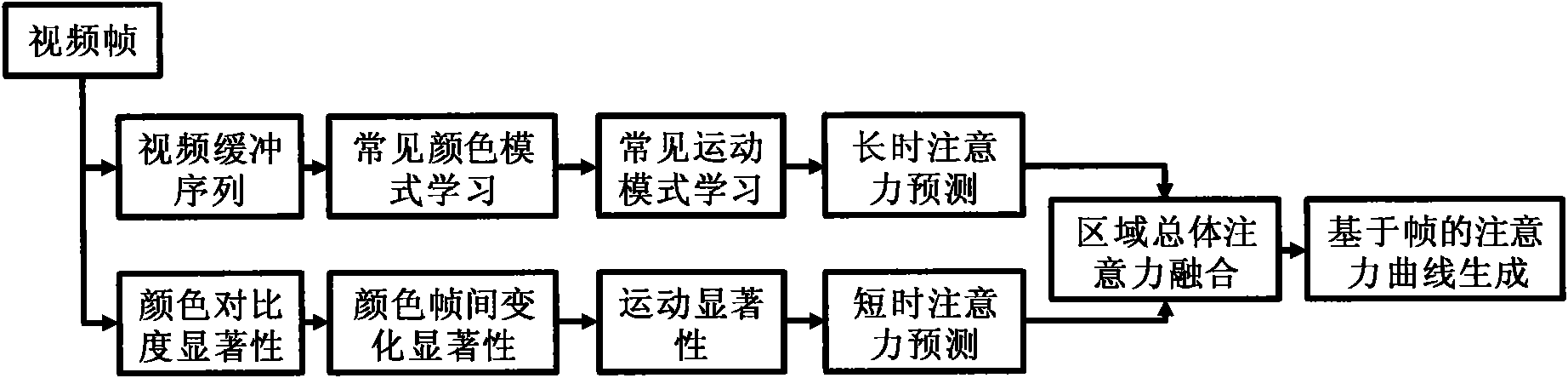

Method and system for inserting and transforming advertisement sign based on visual attention module

Inactive · CN101621636A · Good advertising effect · Attract attention · Television system details · Color television details · Vision based · Proper time

The invention discloses a method and a system for inserting and transforming an advertisement sign based on a visual attention model. The method comprises the following steps: first, predicting the regions of interest in each video frame and the user's degree of attention to each frame on the basis of the constructed visual attention model; second, determining a time point for inserting an advertisement from the curve of the user's per-frame attention, evaluating how suitable each region is for insertion on the basis of the predicted attention distribution, deriving a sequence of candidate insertion regions, and inserting the advertisement in a region with little influence on the video content; and finally, inserting the advertisement sign at the proper time point and position according to the predicted attention distribution, and applying multiple feature transformations to the advertisement sign so that it repeatedly attracts the attention of users or the audience. The method and the system can automatically insert and transform the advertisement sign, and make the inserted sign attract attention repeatedly without interfering with normal viewing.

Owner:PEKING UNIV

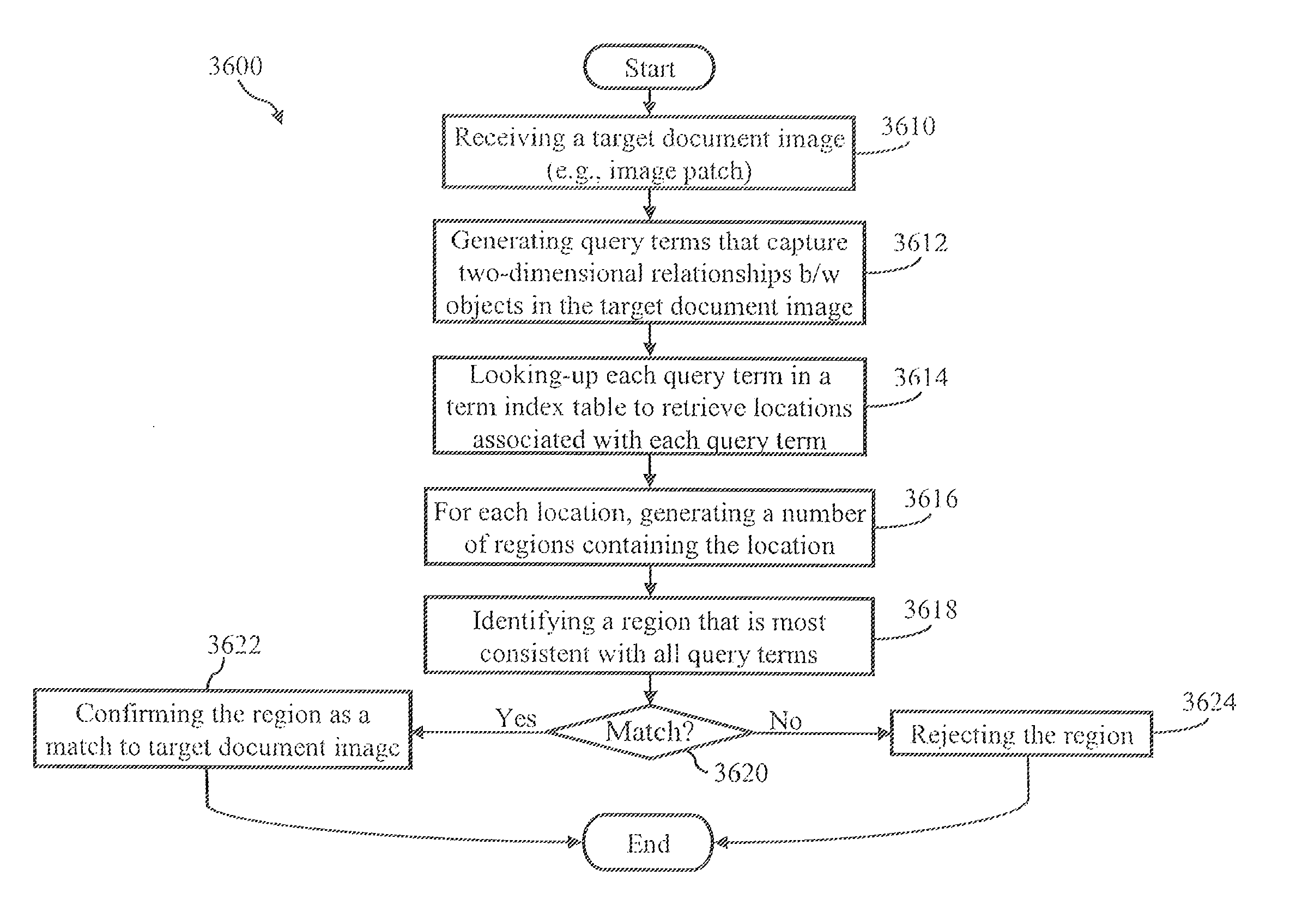

Database for mixed media document system

Active · US20070050411A1 · Multimedia data indexing · Digital data processing details · Digital content · File system

A Mixed Media Reality (MMR) system and associated techniques are disclosed. The MMR system provides mechanisms for forming a mixed media document that includes media of at least two types (e.g., printed paper as a first medium and digital content and / or web link as a second medium). In one particular embodiment, the MMR system includes a content-based retrieval database configured with an index table to represent two-dimensional geometric relationships between objects extracted from a printed document in a way that allows look-up using a text-based index. A ranked set of document, page and location hypotheses can be computed given data from the index table. The techniques effectively transform features detected in an image patch into textual terms (or other searchable features) that represent both the features themselves and the geometric relationship between them. A storage facility can be used to store additional characteristics about each document image patch.

Owner:RICOH KK

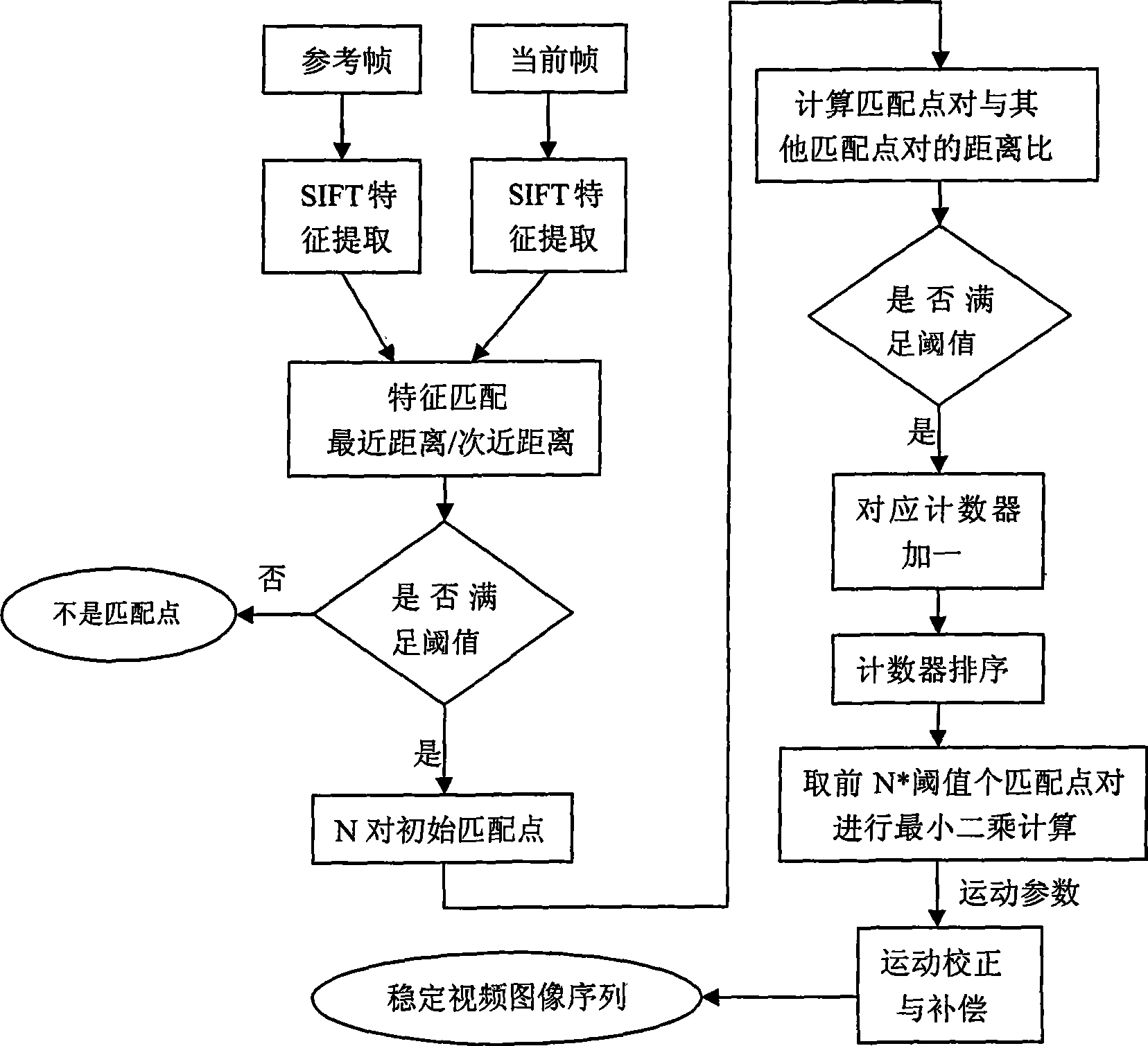

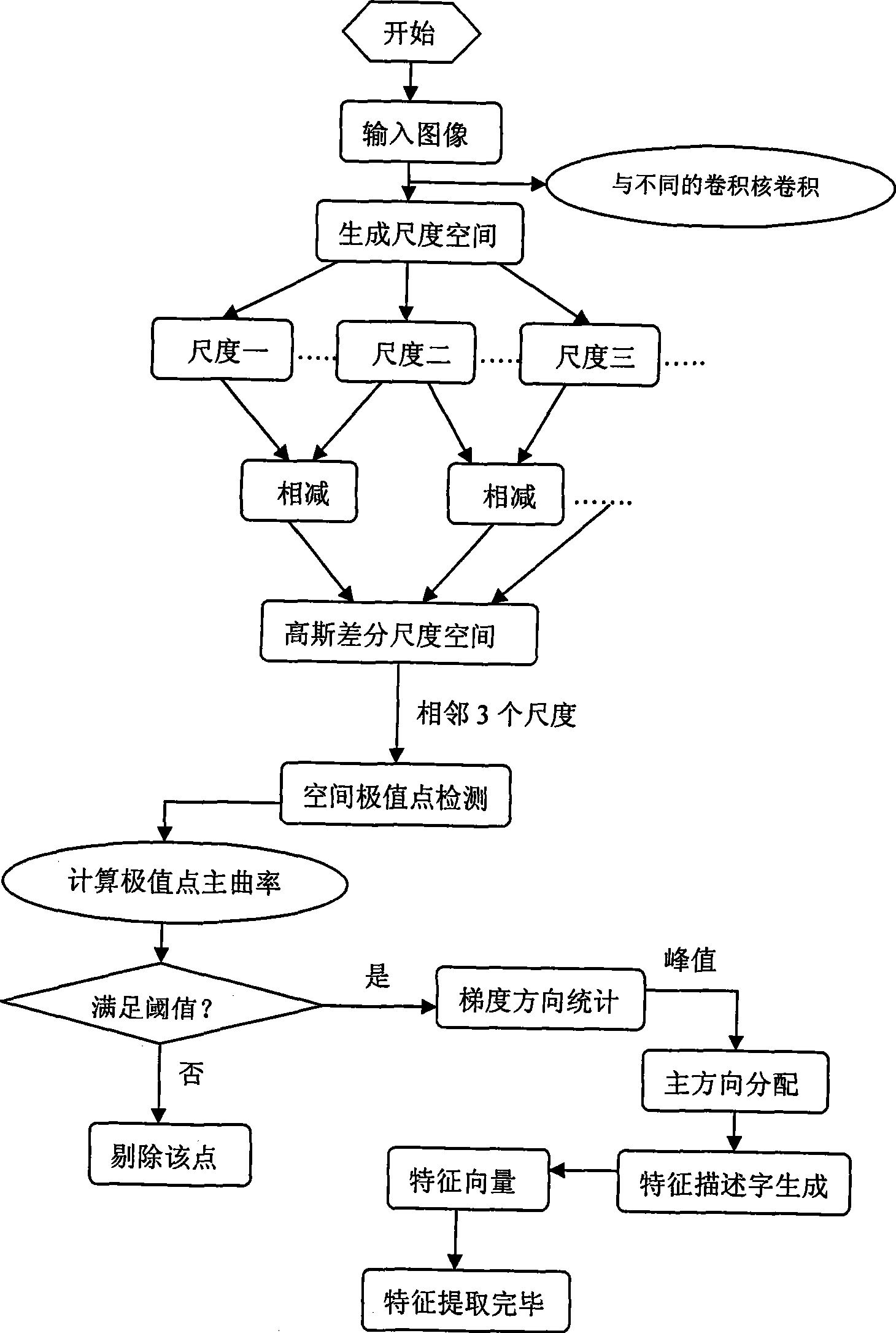

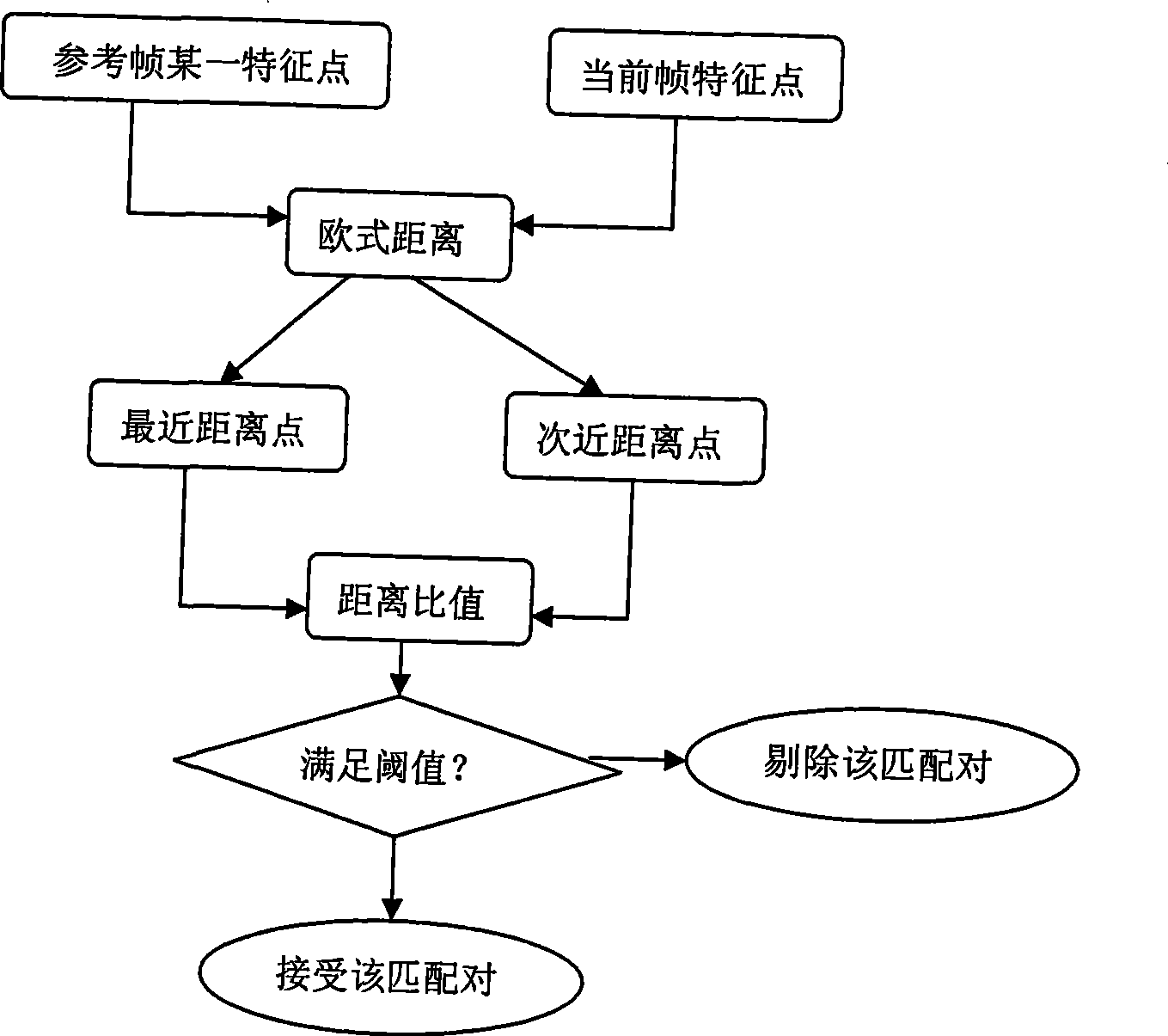

Video image stabilizing method for space based platform hovering

Inactive · CN101383899A · Efficient removal · Stabilize video output · Television system details · Character and pattern recognition · Video sequence · Model parameters

A method for stabilizing video images while an air-based platform is hovering comprises the following steps: first, selecting a frame in the video sequence as a reference frame; extracting feature points from the reference image and the current image of the video sequence using a feature extraction method such as the scale-invariant feature transform (SIFT); preliminarily matching the features, taking Euclidean distance as the matching criterion, to form matched feature point pairs; further screening the matched pairs according to the invariance of the relative positions of feature points in the image background, removing mismatched pairs and pairs located on moving targets; performing a least-squares calculation on the remaining pairs in a six-parameter affine transformation model to obtain the model parameters; and applying correction compensation to the current image to obtain a stable video sequence with a fixed field of view. The invention also proposes switching to a new reference frame at an interval of a certain number of frames, thereby reducing errors and improving stabilization accuracy. The invention can be applied to traffic monitoring, target tracking and other fields, and has broad market prospects and application value.

Owner:BEIHANG UNIV
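The least-squares step in the abstract above can be illustrated with a minimal sketch. It assumes the matched point pairs have already been extracted and screened; the function names `solve_linear` and `fit_affine` and the parameter naming (a to f) are hypothetical and not taken from the patent. The six parameters are fitted by solving the normal equations for x' = a·x + b·y + c and y' = d·x + e·y + f.

```python
def solve_linear(a, b):
    """Solve the small dense system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration (collinear points?)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_affine(src, dst):
    """Least-squares fit of x' = a*x + b*y + c and y' = d*x + e*y + f.

    src and dst are equally long lists of (x, y) points (hypothetical input format).
    """
    if len(src) != len(dst) or len(src) < 3:
        raise ValueError("need at least three matched point pairs")
    # Normal equations (A^T A) p = A^T t, where each row of A is [x, y, 1].
    ata = [[0.0] * 3 for _ in range(3)]
    atx = [0.0] * 3
    aty = [0.0] * 3
    for (x, y), (u, v) in zip(src, dst):
        row = (x, y, 1.0)
        for i in range(3):
            for j in range(3):
                ata[i][j] += row[i] * row[j]
            atx[i] += row[i] * u
            aty[i] += row[i] * v
    a, b, c = solve_linear(ata, atx)
    d, e, f = solve_linear(ata, aty)
    return a, b, c, d, e, f
```

With the fitted parameters, each pixel of the current frame could be mapped back toward the reference frame; that warping step is outside this sketch.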

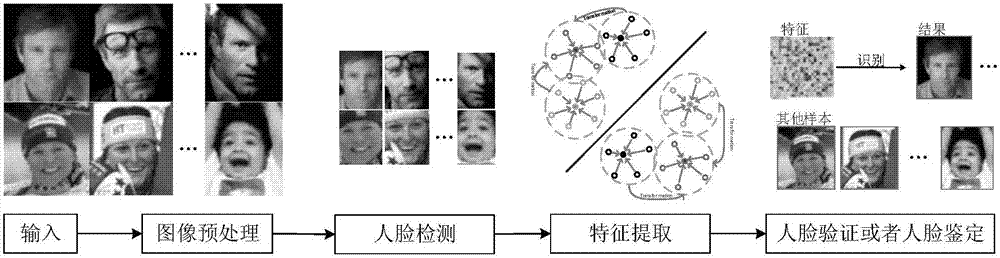

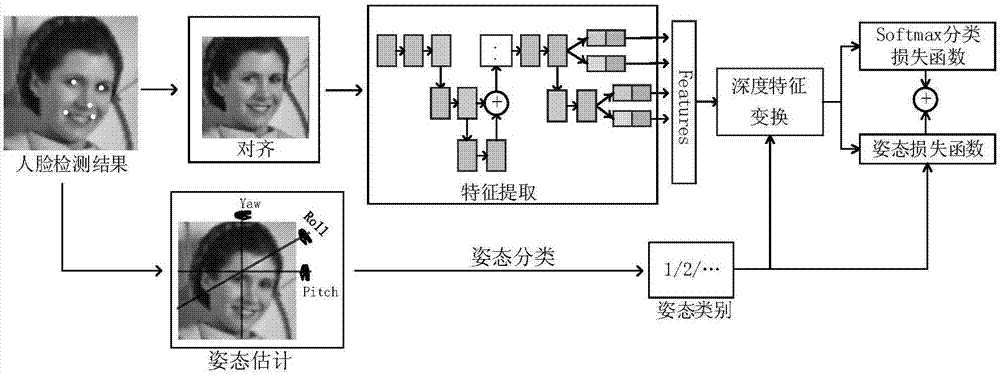

Face recognition method based on deep transformation learning in unconstrained scene

Active · CN107506717A · Enhancing Feature Transformation Learning · Improve robustness · Character and pattern recognition · Neural learning methods · Crucial point · Characteristic space

The invention discloses a face recognition method based on deep transformation learning in an unconstrained scene. The method comprises the following steps: obtaining a face image and detecting face key points; transforming the face image through face alignment, minimizing during alignment the distance between the detected key points and predefined key points; estimating the face pose and classifying the pose estimation results; separating the face poses of multiple samples into different classes; carrying out pose transformation, converting non-frontal face features into frontal face features and calculating the pose transformation loss; and updating the network parameters through a deep transformation learning method until the threshold requirements are met, then terminating. The method introduces feature transformation into a neural network and transforms features of different poses into a shared linear feature space; by calculating the pose loss and learning the pose centers and pose transformations, a simple class change is obtained; and the method can enhance feature transformation learning and improve robustness and the differentiable deep function.

Owner:唐晖

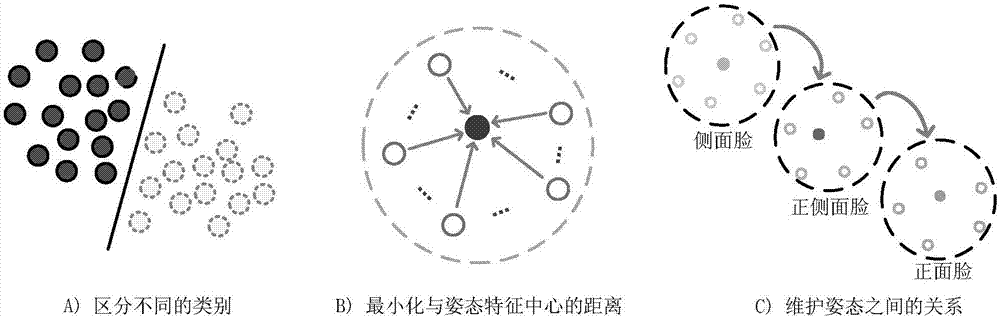

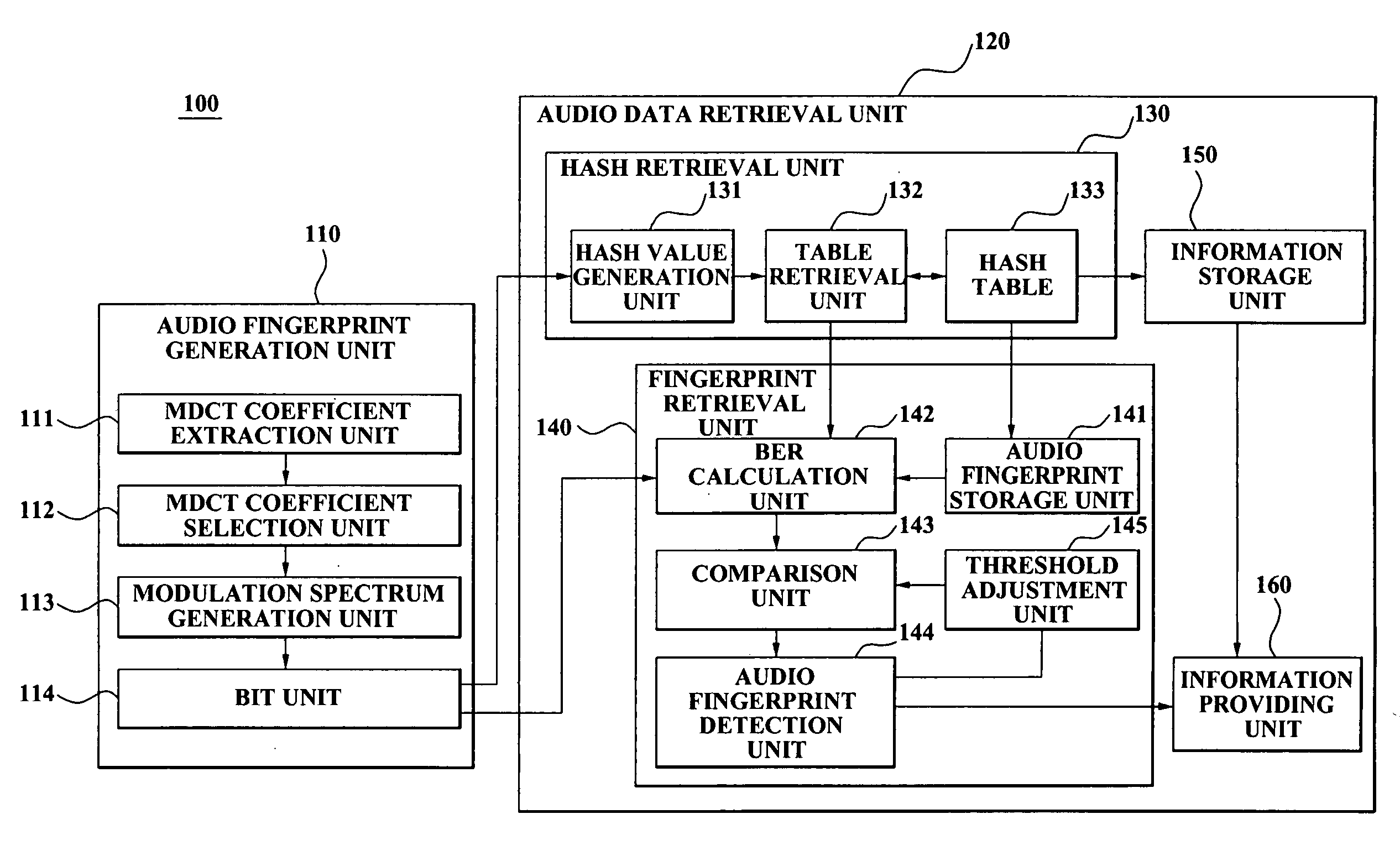

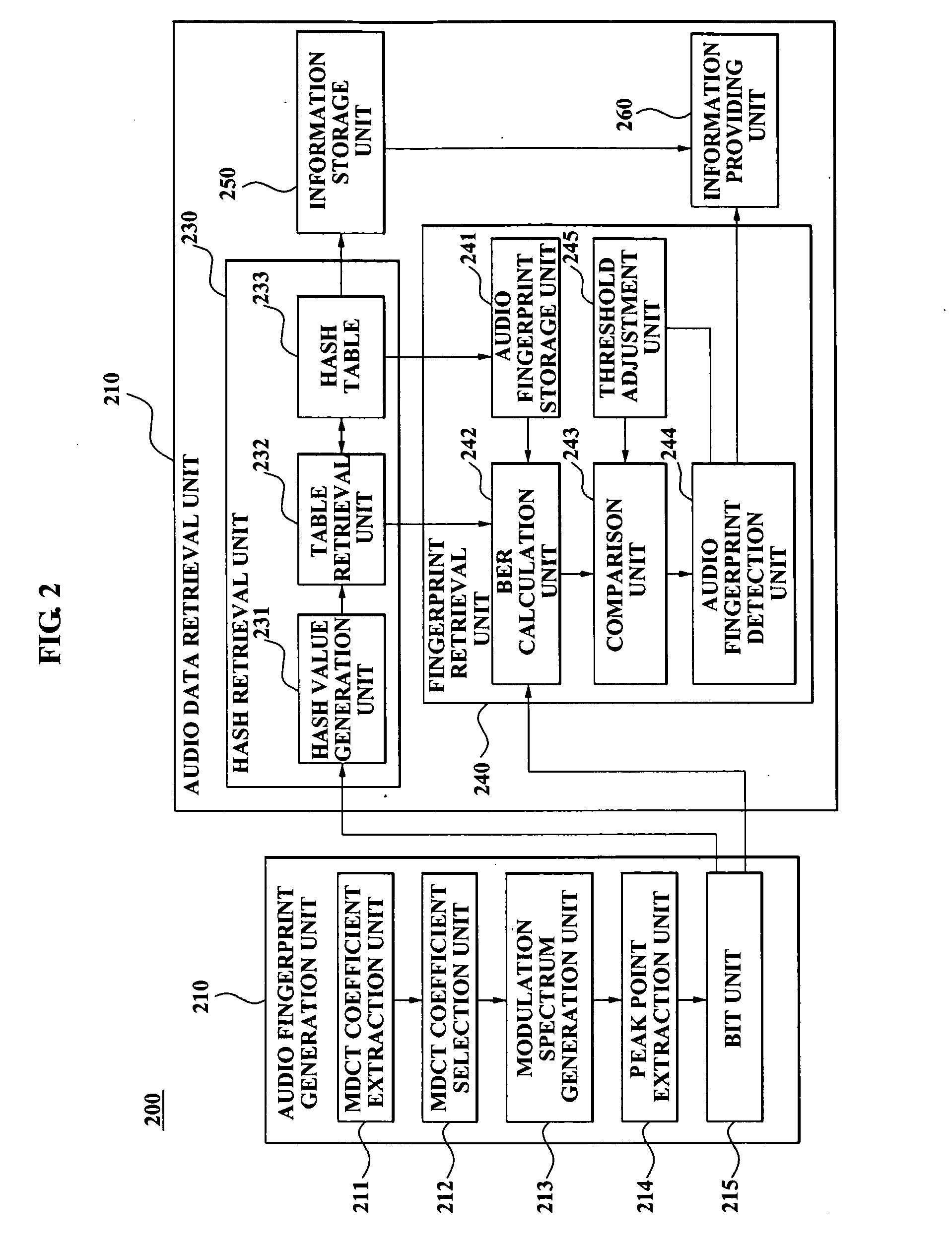

Method, medium, and system for music retrieval using modulation spectrum

Active · US20070192087A1 · Quick search · Noise robust · Digital data information retrieval · Speech analysis · Frequency spectrum · Modulation spectrum

An audio information retrieval method, medium, and system that can rapidly retrieve audio information, even in noisy environments, by extracting a modulation spectrum that is robust against noise, converting features of the extracted modulation spectrum into hash bits, and using a hash table. The audio information retrieval method may include extracting a modulation spectrum from audio data of a compressed domain, converting the extracted modulation spectrum into fingerprint bits, arranging the fingerprint bits in a form of a hash table, converting a received query into an address by a hash function corresponding to the query, and retrieving the audio information by referring to the hash table.

Owner:SAMSUNG ELECTRONICS CO LTD
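The fingerprint-and-hash-table lookup described in the abstract above can be sketched as follows. This is an illustration under stated assumptions, not the patented method: the modulation spectrum is assumed to be already extracted as a list of band energies per frame, the bit rule (one bit per adjacent-band comparison) is a stand-in chosen for simplicity, and the names `to_fingerprint`, `build_table`, and `lookup` are hypothetical.

```python
from collections import Counter, defaultdict


def to_fingerprint(frame):
    """Pack one frame of band energies into an integer, one bit per adjacent-band comparison."""
    bits = 0
    for lower, upper in zip(frame, frame[1:]):
        bits = (bits << 1) | (1 if upper > lower else 0)
    return bits


def build_table(tracks):
    """Map each fingerprint to the (track_id, frame_index) positions where it occurs."""
    table = defaultdict(list)
    for track_id, frames in tracks.items():
        for index, frame in enumerate(frames):
            table[to_fingerprint(frame)].append((track_id, index))
    return table


def lookup(table, query_frames):
    """Return the track with the most fingerprint hits, or None if nothing matches."""
    votes = Counter()
    for frame in query_frames:
        # The fingerprint itself serves as the hash-table address.
        for track_id, _ in table.get(to_fingerprint(frame), []):
            votes[track_id] += 1
    return votes.most_common(1)[0][0] if votes else None
```

Because only the sign of each comparison is kept, small additive noise that does not reorder neighboring bands leaves the fingerprint unchanged, which is one simple way to get the noise robustness the abstract aims for.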

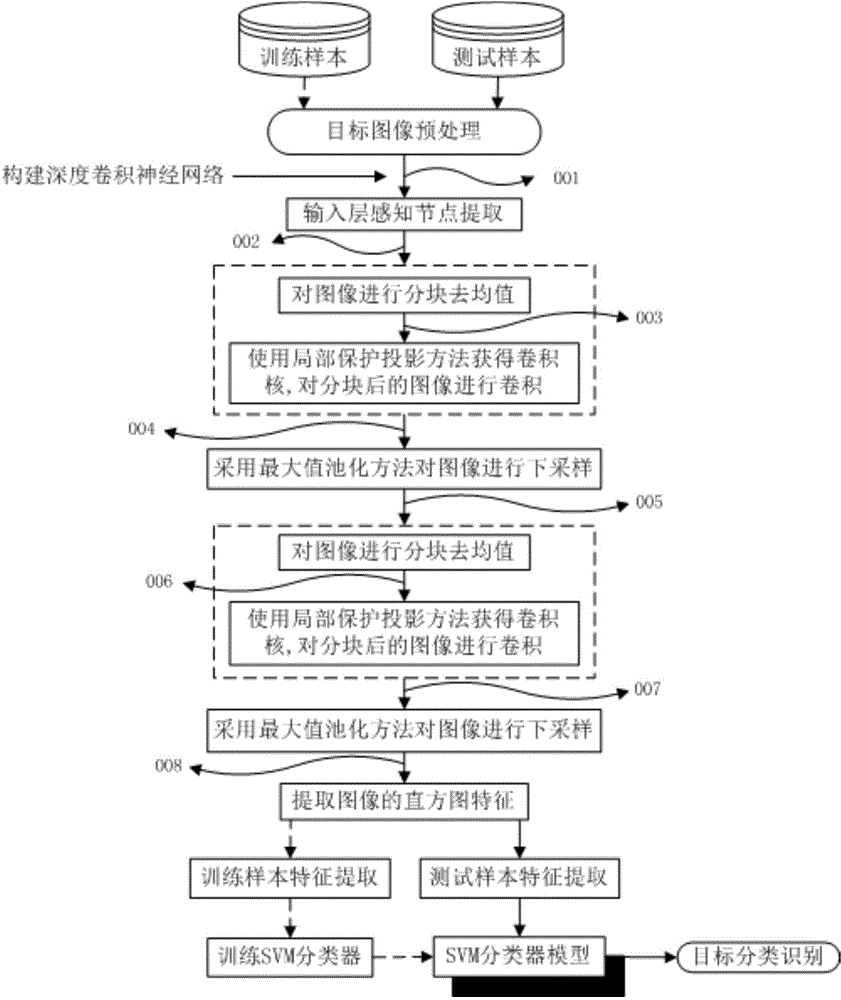

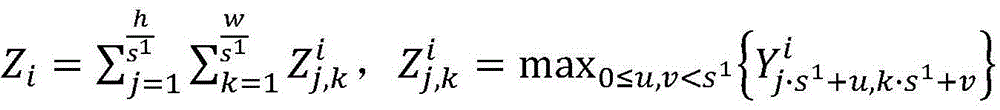

Multi-camera system target matching method based on deep-convolution neural network

Inactive · CN104616032A · Precisely preserve salient features · Preserve distinctive features · Character and pattern recognition · Support vector machine · Support vector machine svm classifier

Disclosed is a multi-camera system target matching method based on a deep convolutional neural network. The method comprises initializing multiple convolution kernels on the basis of a locality preserving projection method, downsampling the images through max pooling, and, through layer-by-layer feature transformation, extracting histogram features with higher robustness and representativeness; performing classification and identification through a multi-category support vector machine (SVM) classifier; and, when a target enters one camera's field of view from another camera's field of view, performing feature extraction on the target and marking it with the corresponding target tag. The method achieves accurate identification of the target in a multi-camera cooperative monitoring area and can be used for target handoff, tracking and the like.

Owner:ZHEJIANG GONGSHANG UNIVERSITY

2D Image Analyzer

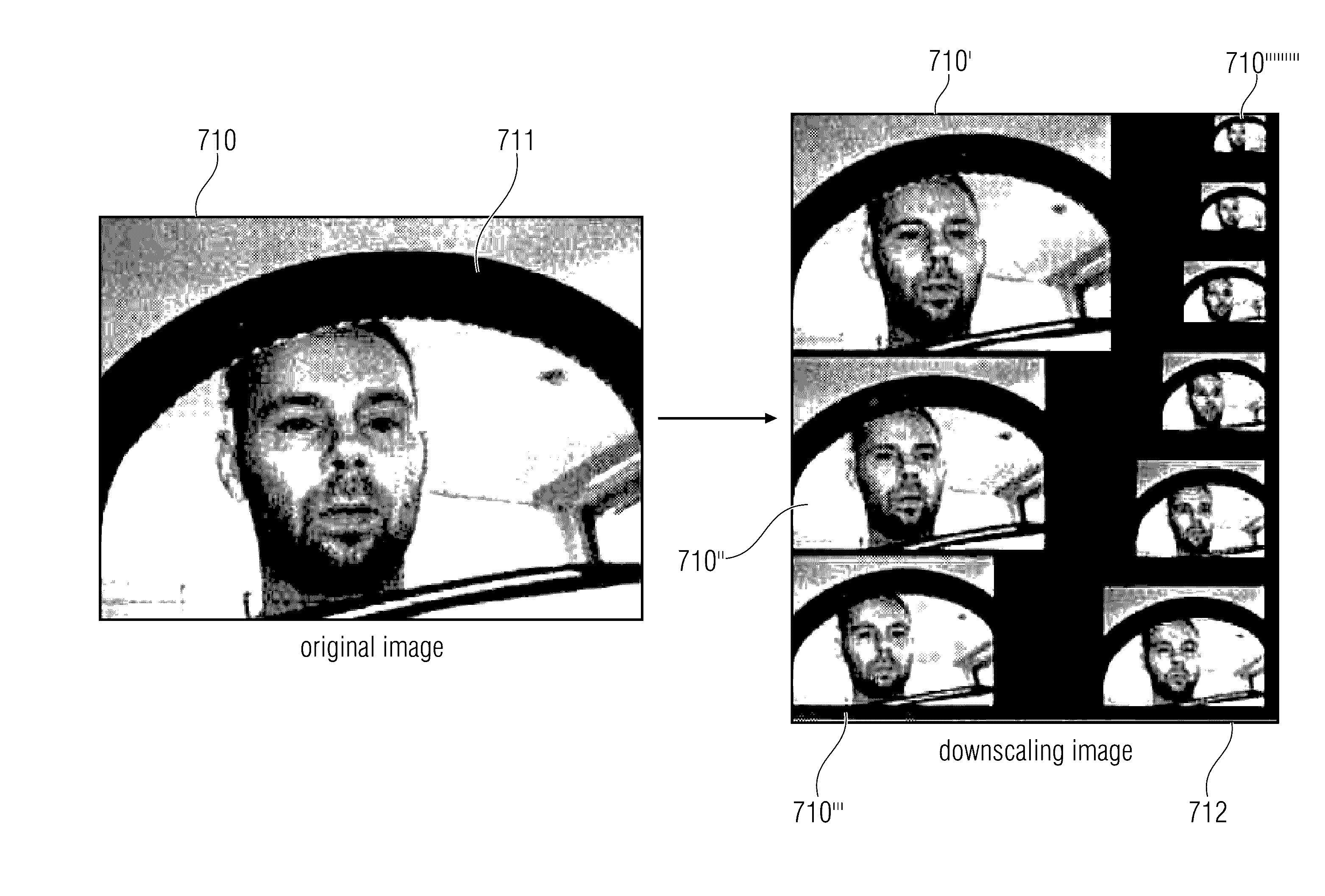

Active · US20170032214A1 · Collected considerably more efficient · Efficient collection · Image enhancement · Image analysis · Viewfinder · Feature transformation

A 2D image analyzer includes an image scaler, an image generator and a pattern finder. The image scaler is configured to scale an image according to a scaling factor. The image generator is configured to produce an overview image including a plurality of copies of the received and scaled image, wherein every copy is scaled by a different scaling factor. The respective position of each copy can be calculated by an algorithm that considers a gap between the scaled images in the overview image, a gap between the scaled image and one or more borders of the overview image, and / or other predefined conditions. The pattern finder is configured to perform a feature transformation and classification of the overview image in order to output a position at which the accordance of the searched pattern and the predetermined pattern is maximal. A post-processing unit for smoothing and correcting the position of local maxima in the classified overview image may also be provided.

Owner:FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG EV

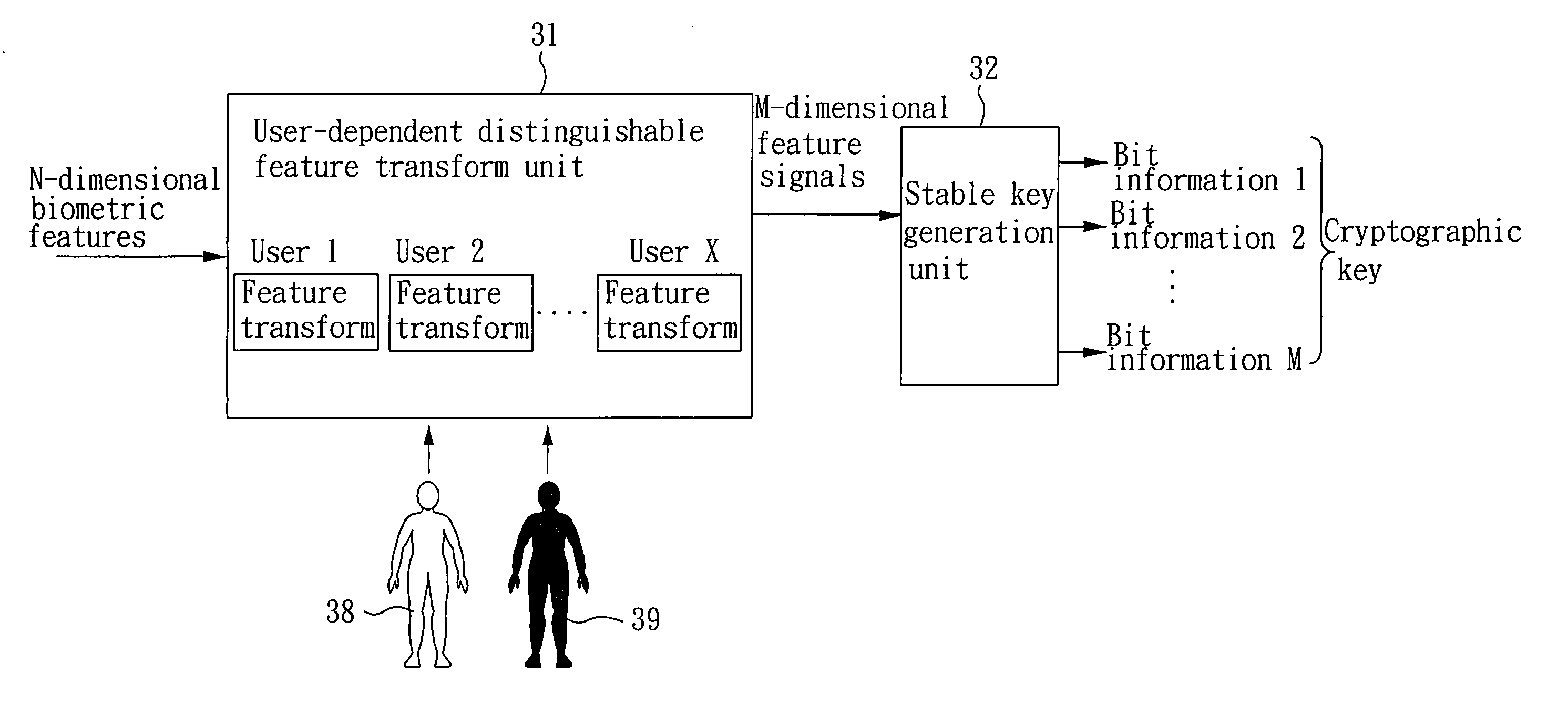

Biometrics-based cryptographic key generation system and method

Active · US20060083372A1 · Increasing length of key · Expand the key space · Computer security arrangements · Speech recognition · Cryptographic key generation · Characteristic space

The present invention provides a biometrics-based cryptographic key generation system and method. A user-dependent distinguishable feature transform unit provides a feature transformation for each authentic user, which receives N-dimensional biometric features and performs a feature transformation to produce M-dimensional feature signals, such that the transformed feature signals of the authentic user are compact in the transformed feature space while those of other users presumed as imposters are either diverse or far away from those of the authentic user. A stable key generation unit receives the transformed feature signals to produce a cryptographic key based on bit information respectively provided by the M-dimensional feature signals, wherein the length of the bit information provided by the feature signal of each dimension is proportional to the degree of distinguishability in the dimension.

Owner:A10 NETWORKS

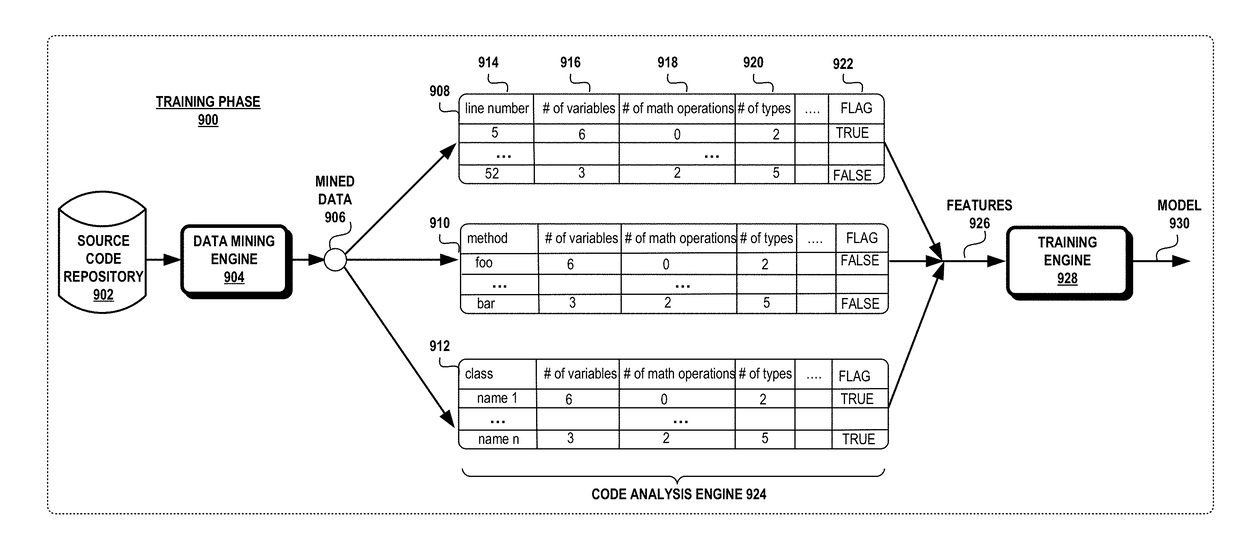

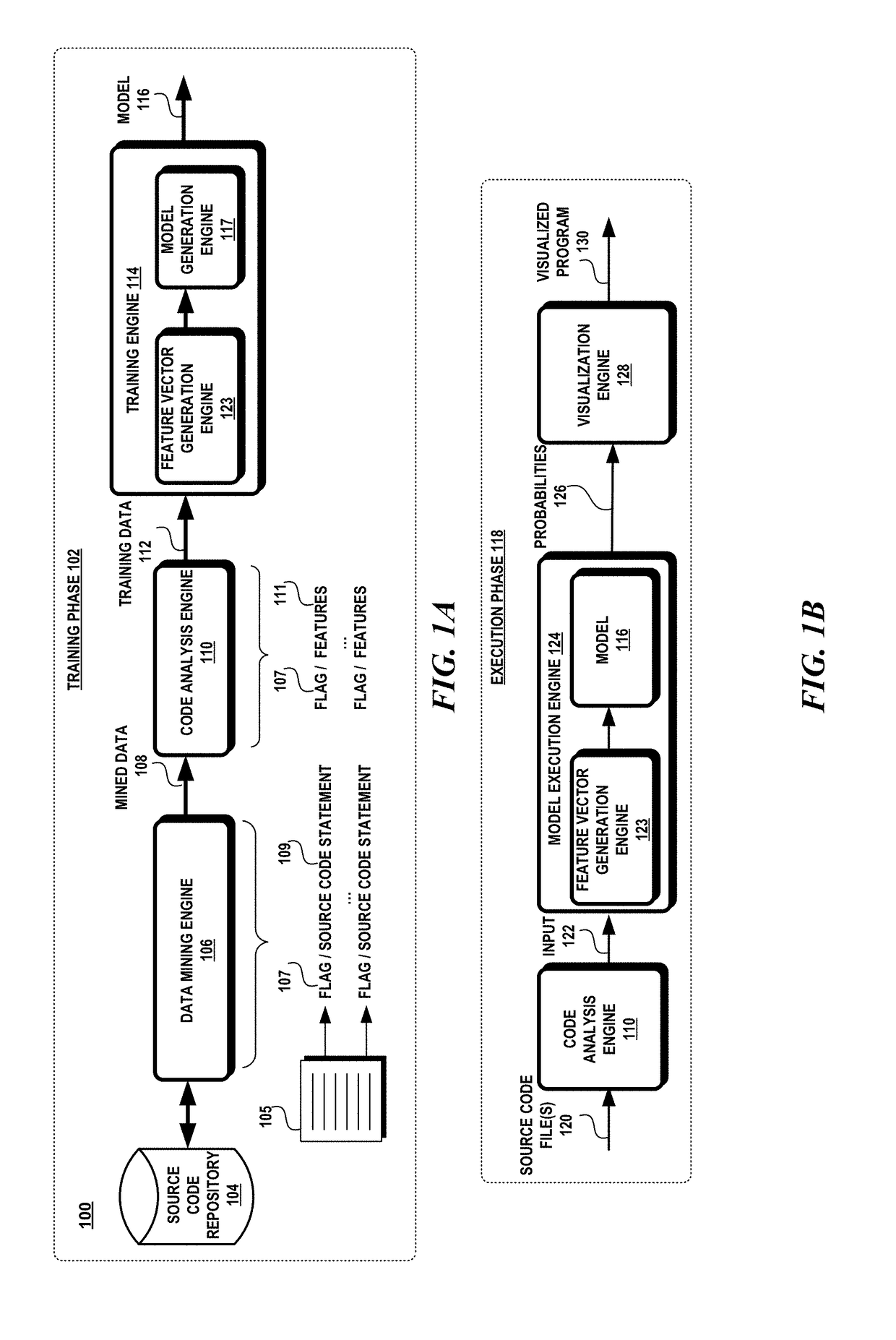

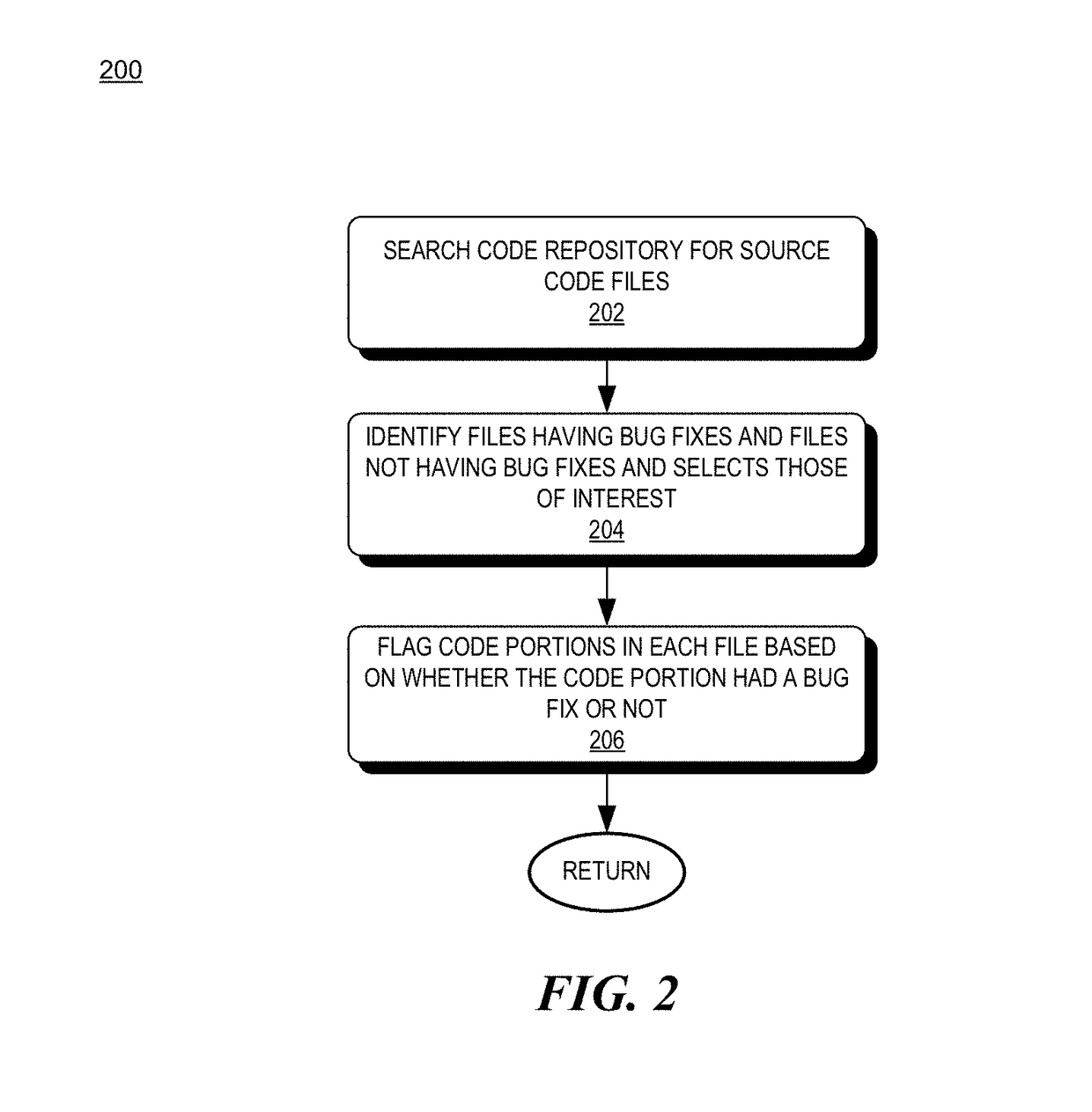

Source code bug prediction

Inactive · US20180150742A1 · Well formed · Reliability/availability analysis · Software testing/debugging · Feature vector · Software bug

A probabilistic machine learning model is generated to identify potential bugs in a source code file. Source code files with and without bugs are analyzed to find features indicative of a pattern of the context of a software bug, wherein the context is based on a syntactic structure of the source code. The features may be extracted from a line of source code, a method, a class and / or any combination thereof. The features are then converted into a binary representation of feature vectors that train a machine learning model to predict the likelihood of a software bug in a source code file.

Owner:MICROSOFT TECH LICENSING LLC
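The conversion of extracted features into binary feature vectors, as described in the abstract above, might look like the following minimal sketch. It uses a crude regular-expression tokenizer as a stand-in for real syntactic features (the abstract bases its features on the syntactic structure of the code, which this sketch does not model), and the names `tokenize`, `build_vocabulary`, and `to_binary_vector` are hypothetical.

```python
import re

# Identifiers/keywords, or any single punctuation character; numeric literals are ignored.
TOKEN = re.compile(r"[A-Za-z_]\w*|[^\sA-Za-z_0-9]")


def tokenize(line):
    """Split one line of source code into coarse tokens (a stand-in for syntactic features)."""
    return TOKEN.findall(line)


def build_vocabulary(lines):
    """Assign each distinct token a fixed vector position, in order of first appearance."""
    vocab = {}
    for line in lines:
        for token in tokenize(line):
            vocab.setdefault(token, len(vocab))
    return vocab


def to_binary_vector(line, vocab):
    """Encode a line as a 0/1 vector: 1 where the token is present, 0 elsewhere."""
    vector = [0] * len(vocab)
    for token in tokenize(line):
        index = vocab.get(token)
        if index is not None:  # tokens unseen during vocabulary building are dropped
            vector[index] = 1
    return vector
```

Vectors produced this way, paired with labels marking lines with and without known bugs, could then be fed to any probabilistic classifier; the choice of model is left open here.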

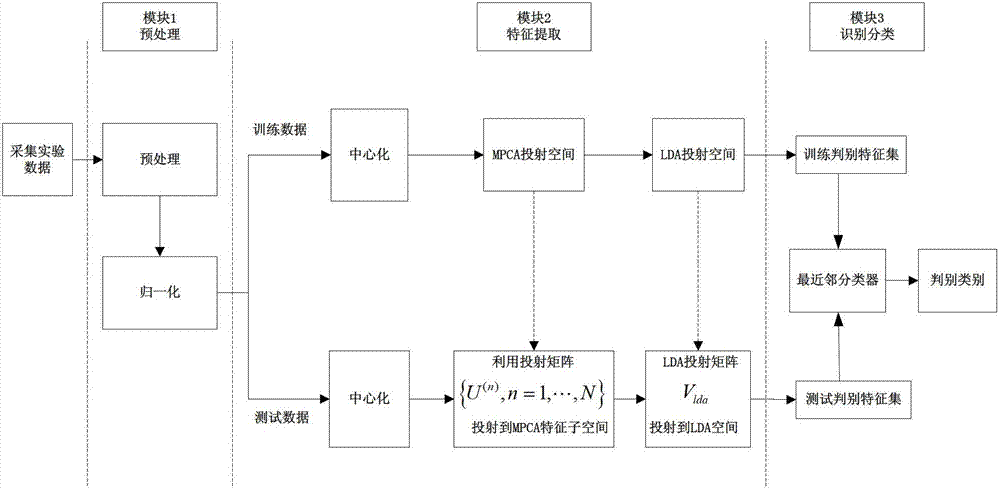

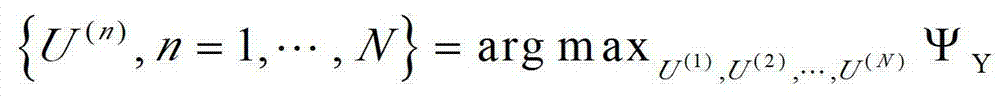

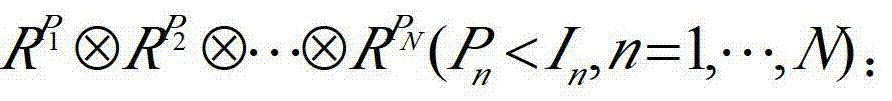

Brain cognitive state judgment method based on polyteny principal component analysis

Inactive · CN103116764A · Good recognition and classification · Character and pattern recognition · Hat matrix · Decomposition

The invention discloses a brain cognitive state judgment method based on polyteny principal component analysis (PCA). The method includes the following steps: first, inputting sample sets and processing the input data; second, calculating the characteristic decomposition of the training sample sets, determining an optimal feature transformation matrix, and projecting the training samples into the tensor feature subspace to obtain the feature tensor sets of the training sets; third, vectorizing the lower-dimensional feature tensor data obtained by dimensionality reduction as the input of linear discriminant analysis (LDA), determining an optimal LDA projection matrix, and projecting the vectorized data into the LDA feature subspace to further extract discriminant feature vectors of the training sets; and fourth, matching the discriminant feature vectors obtained by projecting the training images and the test images, and classifying the features accordingly. According to the method, PCA is applied directly to multi-level tensor data for dimensionality reduction and feature extraction. This overcomes the defect that traditional PCA, by simply reducing dimensionality, destroys the structure and correlation of the original image data and cannot fully preserve the redundancy and structure of the original images; the spatial structure information of functional magnetic resonance imaging (fMRI) data is thereby retained.

Owner:XIDIAN UNIV

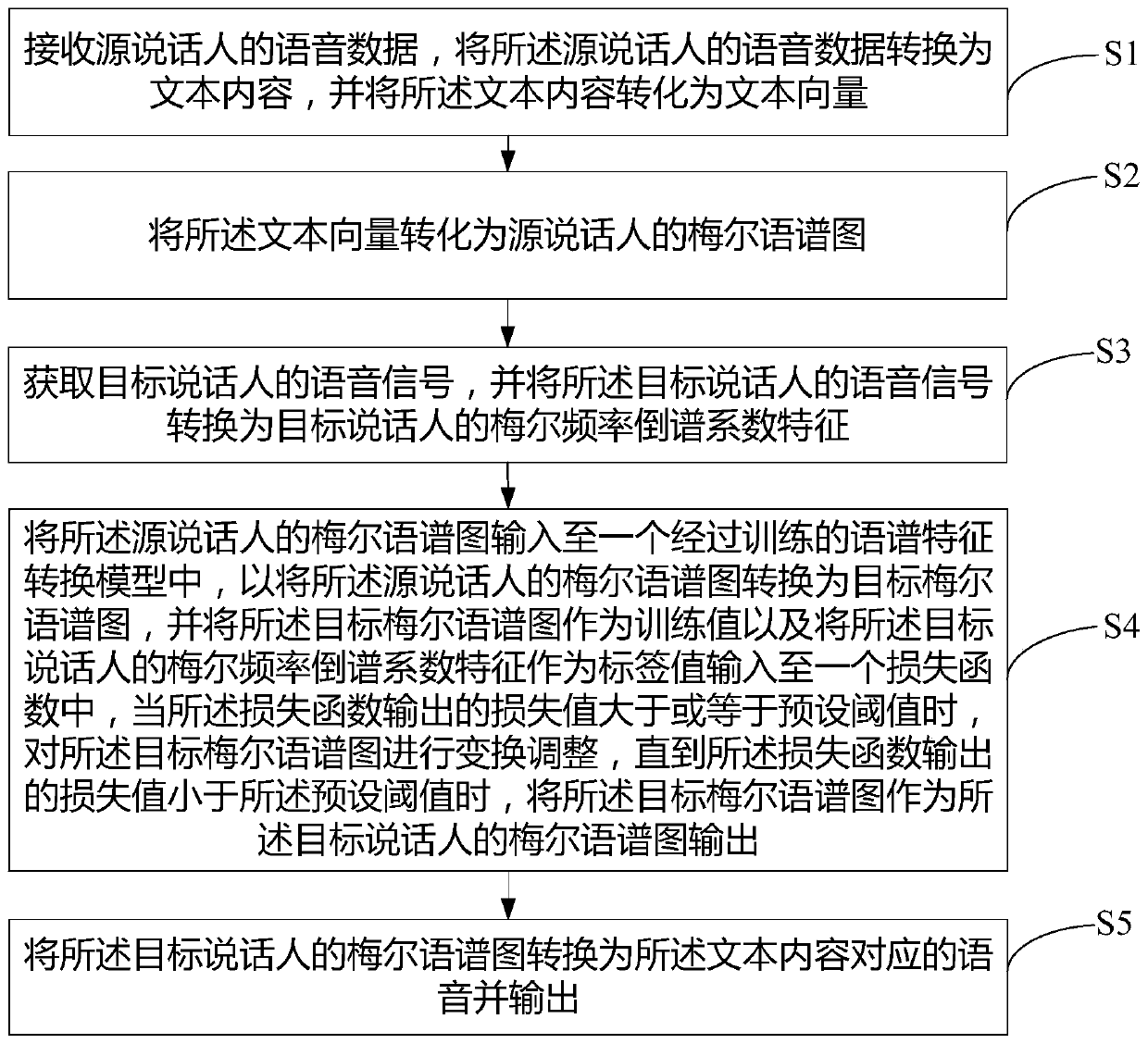

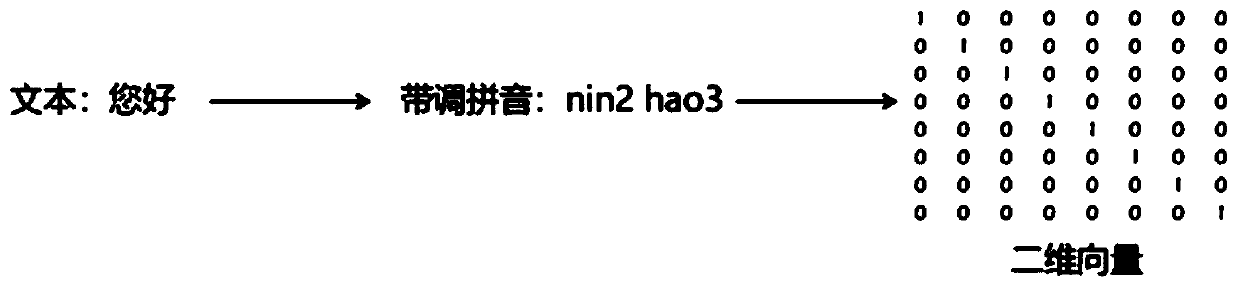

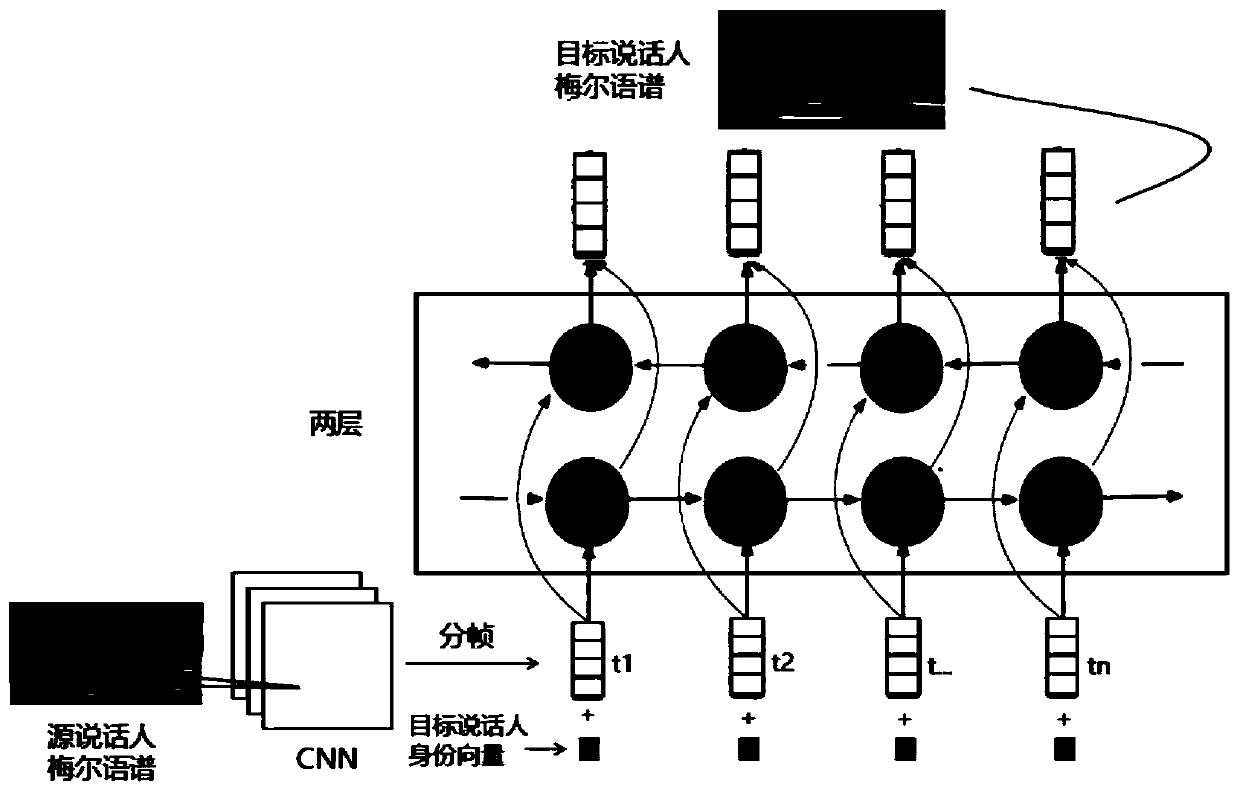

Speech synthesis method and device and computer readable storage medium

Pending · CN110136690A · Realize voice conversion · Speech recognition · Speech synthesis · Mel-frequency cepstrum · Speech synthesis

The invention relates to the technical field of artificial intelligence, and discloses a speech synthesis method. The method comprises the following steps: speech data of a source speaker is converted into text content, and the text content is converted into a text vector; the text vector is converted into a Mel spectrogram of the source speaker; a speech signal of a target speaker is obtained and converted into Mel frequency cepstrum coefficient characteristics of the target speaker; the Mel spectrogram of the source speaker and the Mel frequency cepstrum coefficient characteristics of the target speaker are input into a trained spectral feature transformation model, and a Mel spectrogram of the target speaker is obtained; and the Mel spectrogram of the target speaker is converted into speech corresponding to the text content, and the speech is output. The invention further provides a speech synthesis device and a computer readable storage medium. Accordingly, tone shift of the speech synthesis system can be achieved.

Owner:PING AN TECH (SHENZHEN) CO LTD

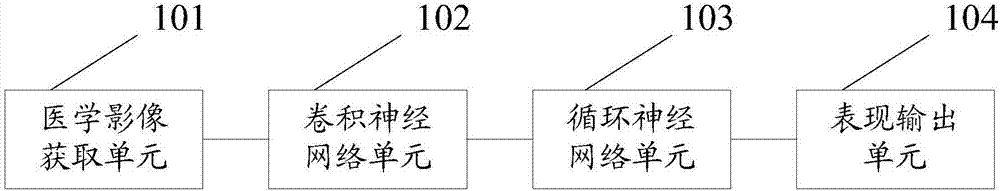

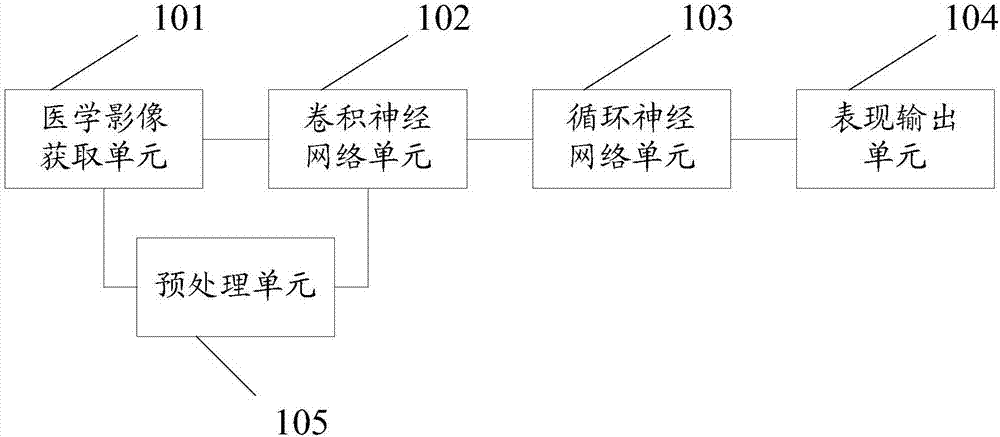

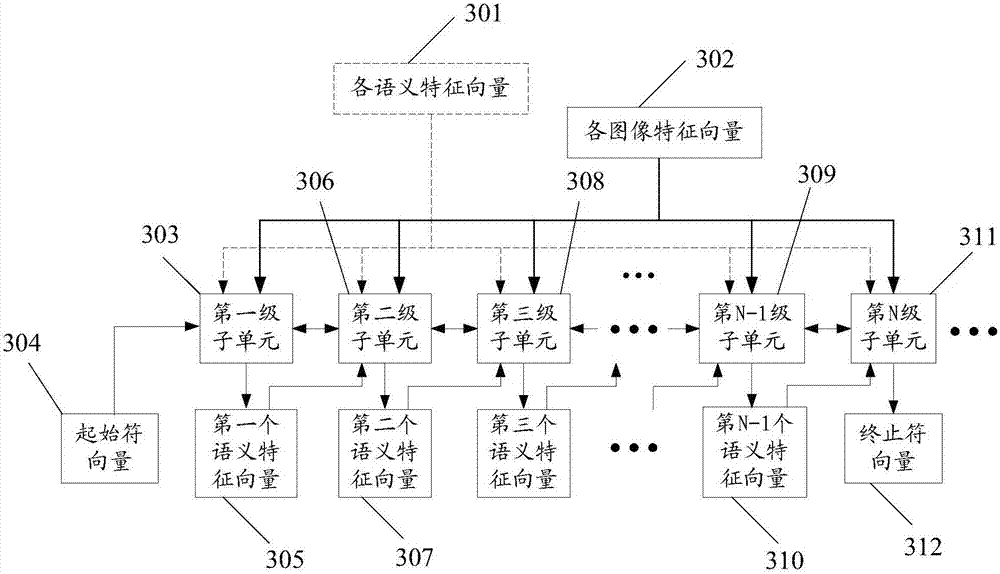

Medical image expression generating system, training method and expression generating method thereof

Inactive · CN107145910A · Easy diagnosis · Improve reading efficiency · Reconstruction from projection · Medical data mining · Feature vector · Feature transformation

The invention discloses a medical image expression generating system, a training method and an expression generating method thereof. After a medical image acquisition unit acquires a two-dimensional medical image, a convolutional neural network unit extracts the image characteristic of the medical image, converts it to an image characteristic vector, and outputs the image characteristic vector to a pre-established first vector space. A recurrent neural network unit determines and outputs the semantic characteristic vector that corresponds to the image characteristic vector, according to a correspondence between the image characteristic vectors in the pre-established first vector space and the semantic characteristic vectors in a second vector space. An expression output unit converts the semantic characteristic vector that matches the image characteristic vector to the corresponding natural language and outputs it. The expression generating system thus makes the medical image simple to read and analyze, and has the advantages of improving image reading efficiency, improving image reading quality and greatly reducing the probability of misdiagnosis.

Owner:BOE TECH GRP CO LTD

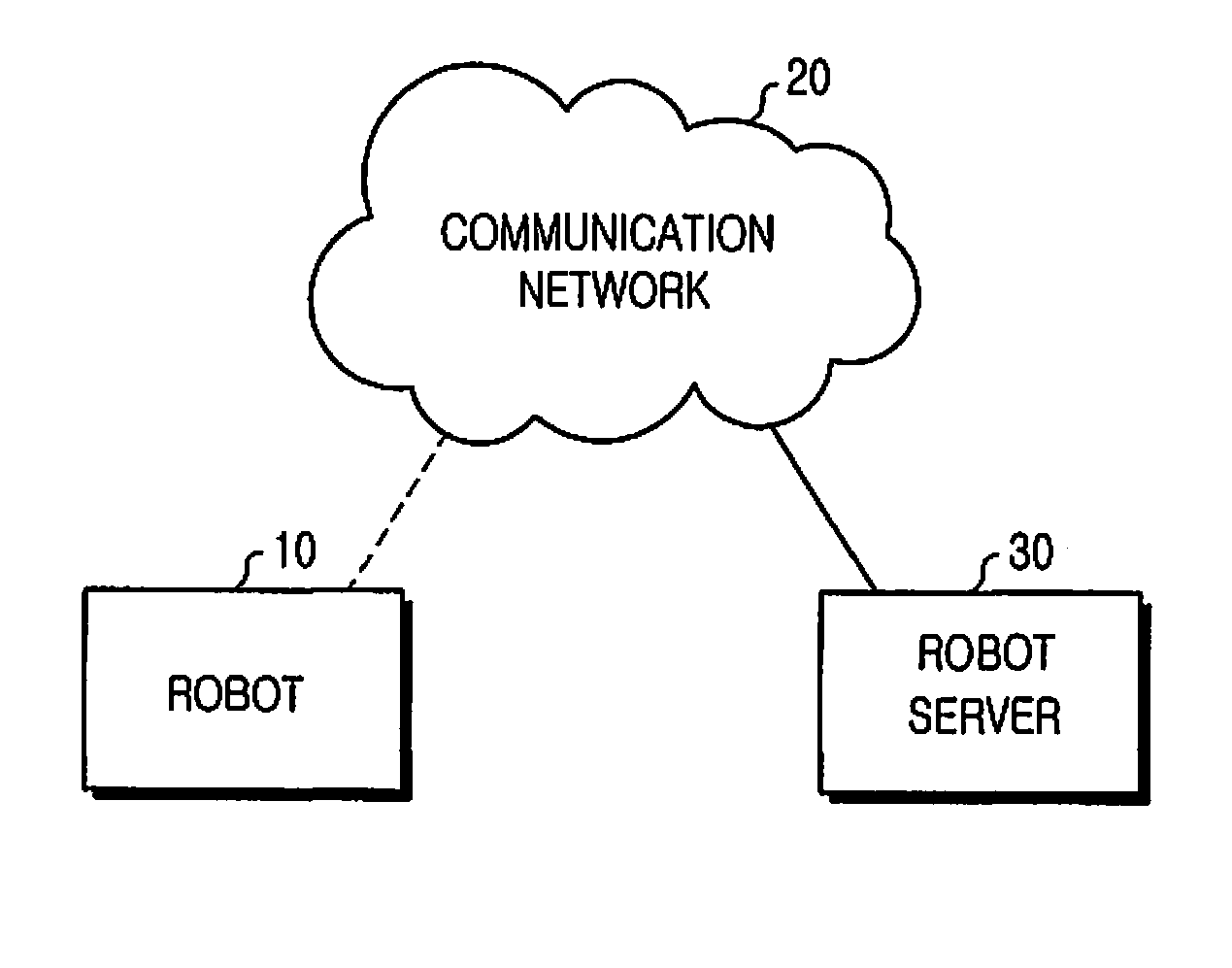

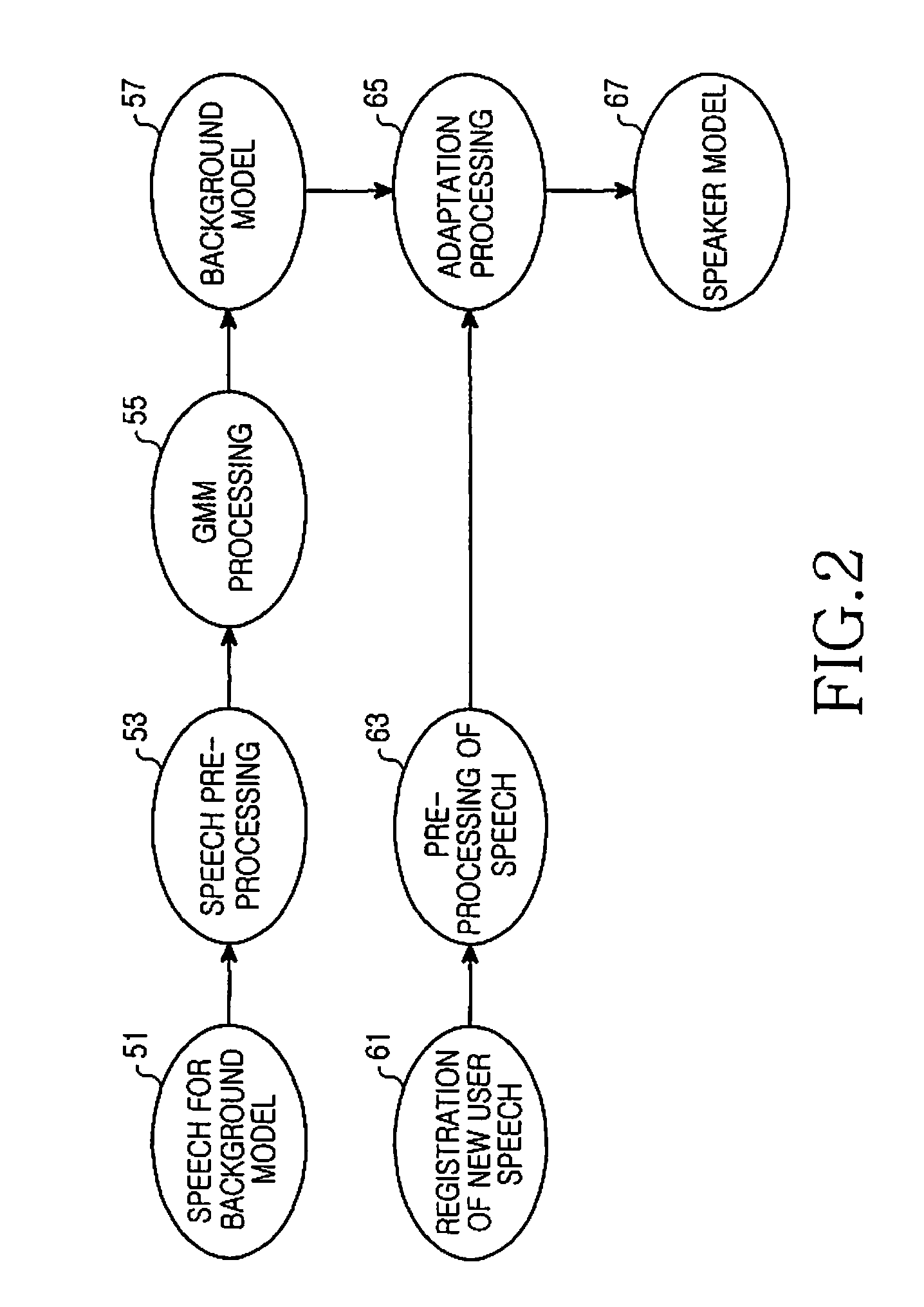

Method and apparatus for speech speaker recognition

Inactive · US20080249774A1 · Accurate speaker identification · Accurate identification · Speech recognition · Feature vector · Principal component analysis

Disclosed is a method for speech speaker recognition of a speech speaker recognition apparatus, the method including detecting effective speech data from input speech; extracting an acoustic feature from the speech data; generating an acoustic feature transformation matrix from the speech data according to each of Principal Component Analysis (PCA) and Linear Discriminant Analysis (LDA), mixing each of the acoustic feature transformation matrixes to construct a hybrid acoustic feature transformation matrix, and multiplying the matrix representing the acoustic feature with the hybrid acoustic feature transformation matrix to generate a final feature vector; and generating a speaker model from the final feature vector, comparing a pre-stored universal speaker model with the generated speaker model to identify the speaker, and verifying the identified speaker.

Owner:SAMSUNG ELECTRONICS CO LTD

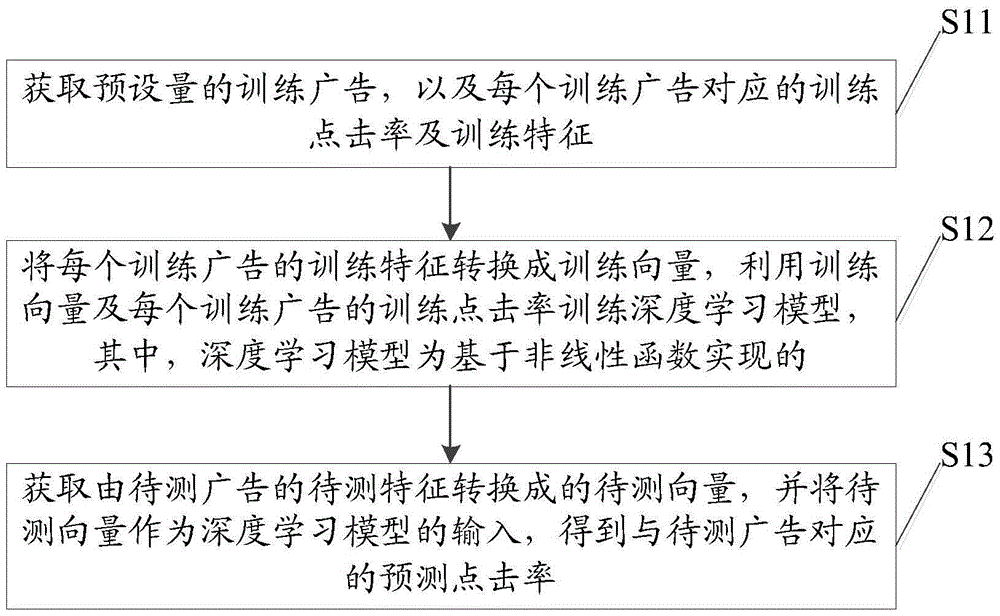

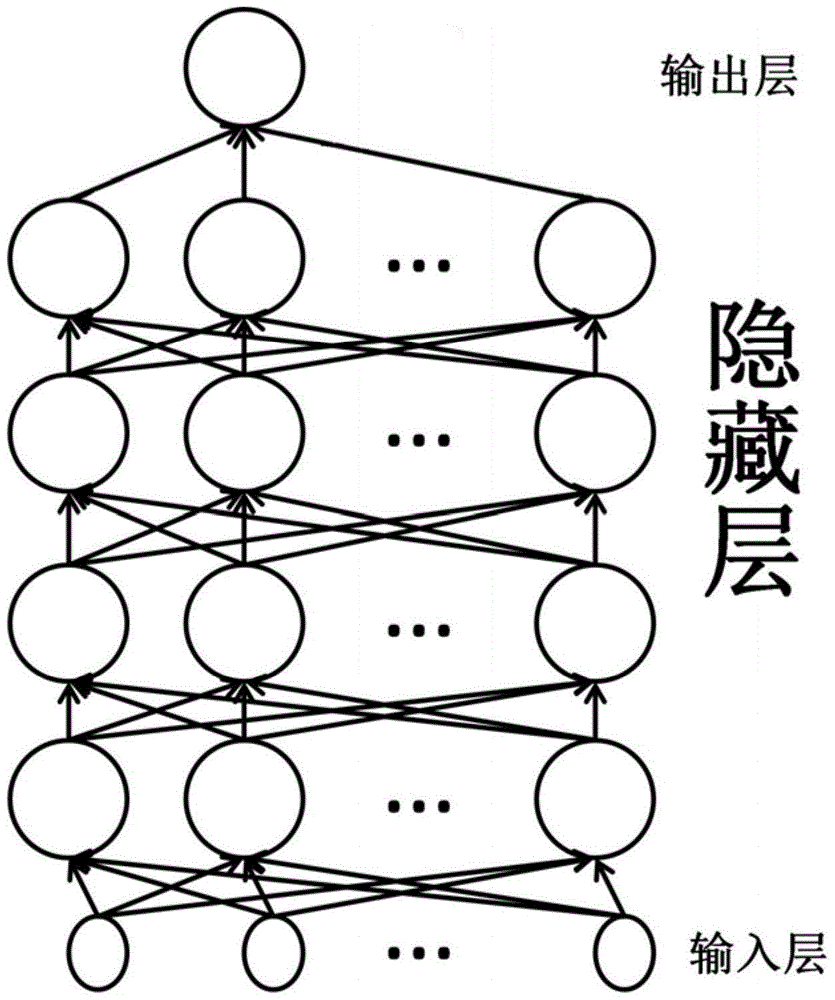

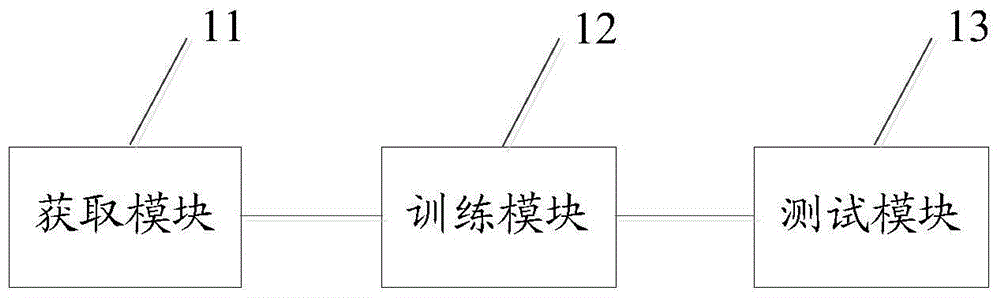

Deep learning-based advertisement click-through rate prediction method and device

The invention discloses a deep learning-based advertisement click-through rate prediction method and device. The method includes the following steps that: a preset number of training advertisements as well as training click-through rates and training characteristics of each training advertisement are acquired; the training characteristics of each training advertisement are converted into training vectors, a deep learning model is trained by using the training vectors and the training click-through rates of each training advertisement, wherein the deep learning model is realized based on a nonlinear function; and a vector to be tested converted from characteristics to be tested of an advertisement to be tested is obtained, and the vector to be tested is adopted as the input of the deep learning model, and a predictive click-through rate corresponding to the advertisement to be tested is obtained. According to the deep learning model in the method of the invention, nonlinear relationships between the characteristics are fully considered, and thus, after the vector to be tested is inputted into the deep learning model, the deep learning model can efficiently and accurately output the predictive click-through rate corresponding to the vector to be tested based on the nonlinear function.

Owner:SHANGHAI TRUELAND INFORMATION & TECH CO LTD

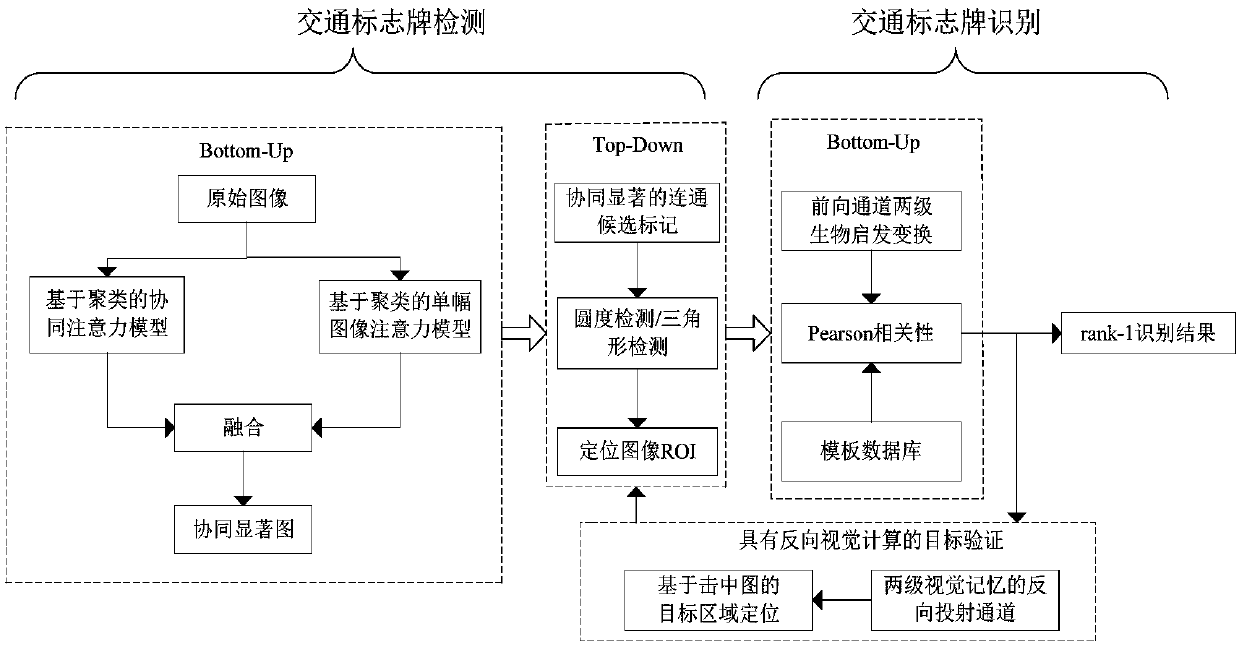

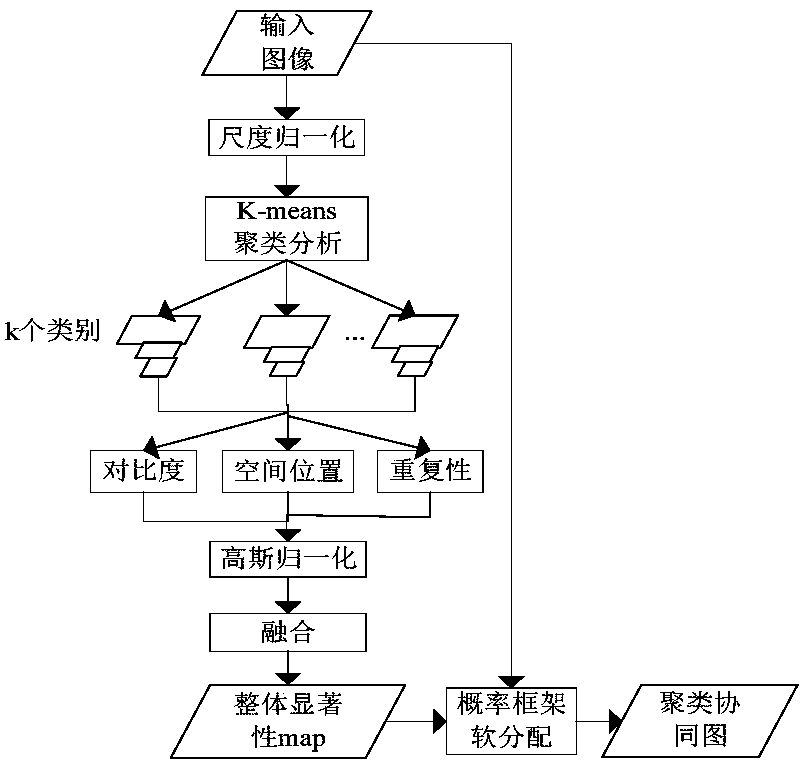

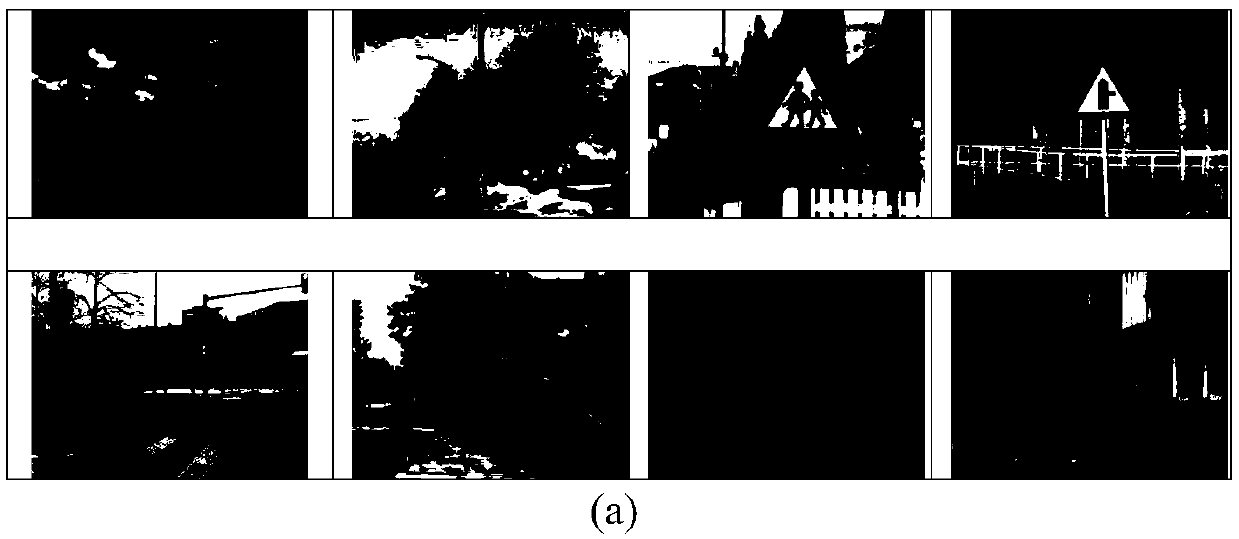

Traffic sign detection and identification method based on collaborative bionic vision in complex city scene

Inactive · CN107909059A · Verify validity · Avoid interference · Image analysis · Character and pattern recognition · Saliency map · Traffic sign detection

The invention discloses a traffic sign detection and identification method based on collaborative bionic vision in a complex city scene, which includes the following steps: A, obtaining multiple to-be-detected images in a continuous scene; B, obtaining a cluster collaboration map of the to-be-detected image set; C, obtaining an attention saliency map of each to-be-detected image; D, obtaining a collaboration saliency map corresponding to each to-be-detected image; E, locating a sign ROI (Region of Interest); F, carrying out two-level biologically inspired transformation on the sign ROI by using a forward channel; and H, using a feature transformation map and traffic sign template images pre-stored in a database to carry out Pearson correlation calculation to complete the identification of the to-be-detected images. In the method, the human brain's visual processing of a target is simulated, and bottom-up and top-down visual processing are integrated. The collaborative nature of global images is considered, so that image location is accurate and identification is robust.

Owner:CENT SOUTH UNIV
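The final identification step in the abstract above, Pearson correlation between a feature transformation map and stored templates, can be sketched in a few lines. The sketch assumes both the feature map and the templates have been flattened to equal-length lists of numbers; the function names `pearson` and `identify` and the template labels are hypothetical.

```python
import math


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    if len(a) != len(b) or not a:
        raise ValueError("sequences must be non-empty and of equal length")
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0  # a constant map carries no pattern to correlate with
    return cov / math.sqrt(var_a * var_b)


def identify(feature_map, templates):
    """Return the label of the template that correlates most strongly with the feature map."""
    return max(templates, key=lambda label: pearson(feature_map, templates[label]))
```

Pearson correlation is invariant to uniform brightness offsets and contrast scaling of the map, which makes it a reasonable similarity measure for template matching under varying lighting.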

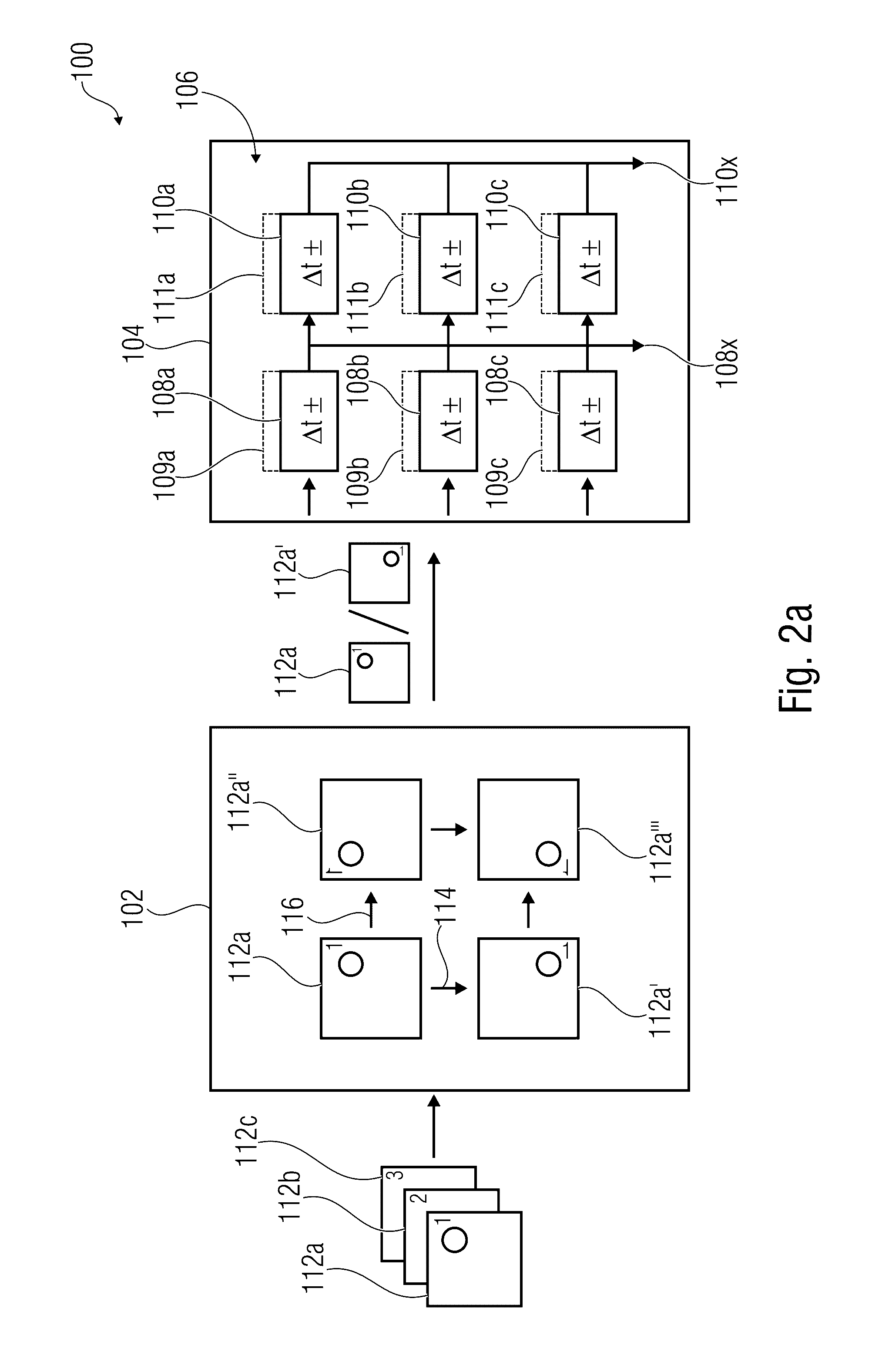

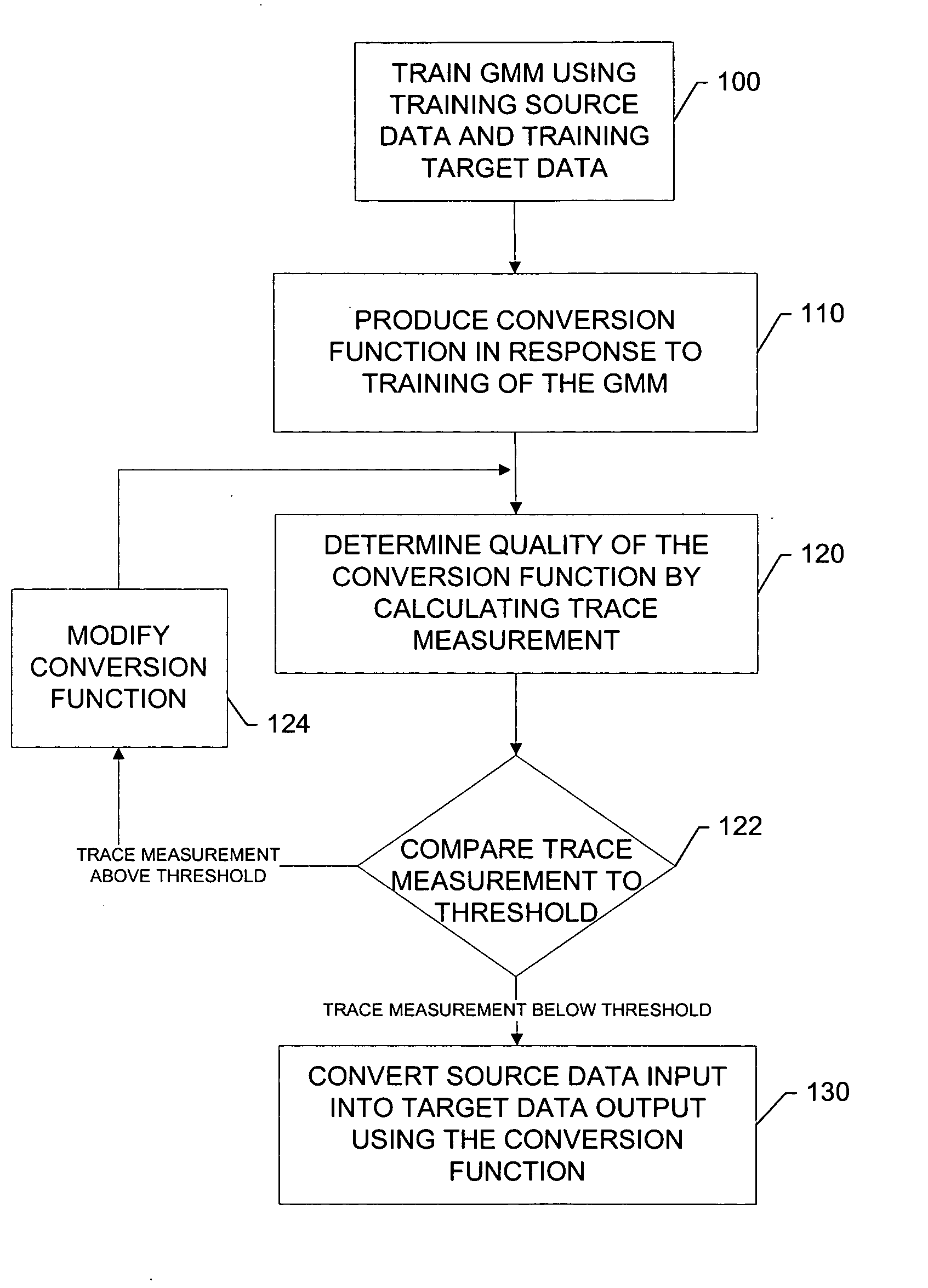

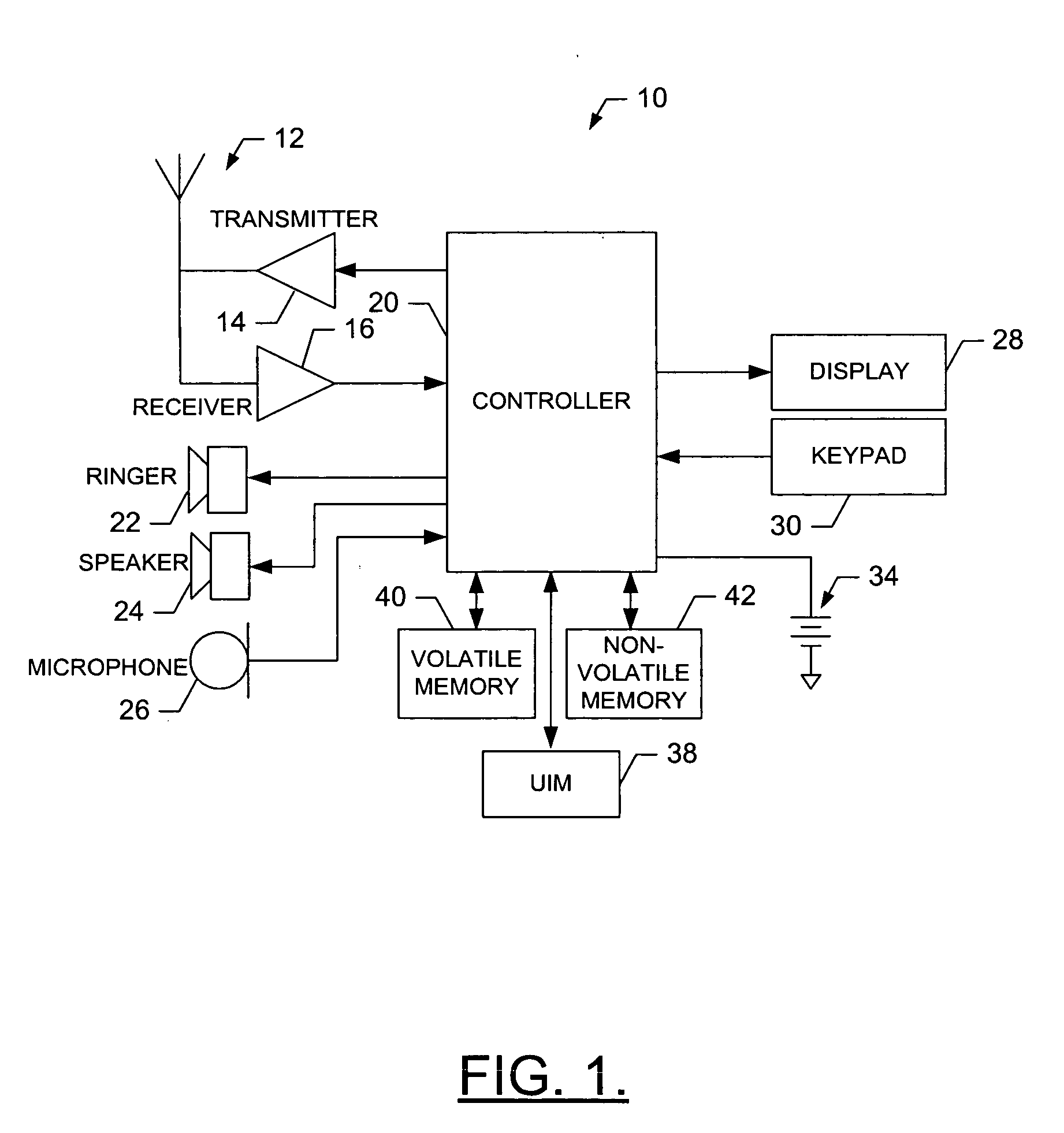

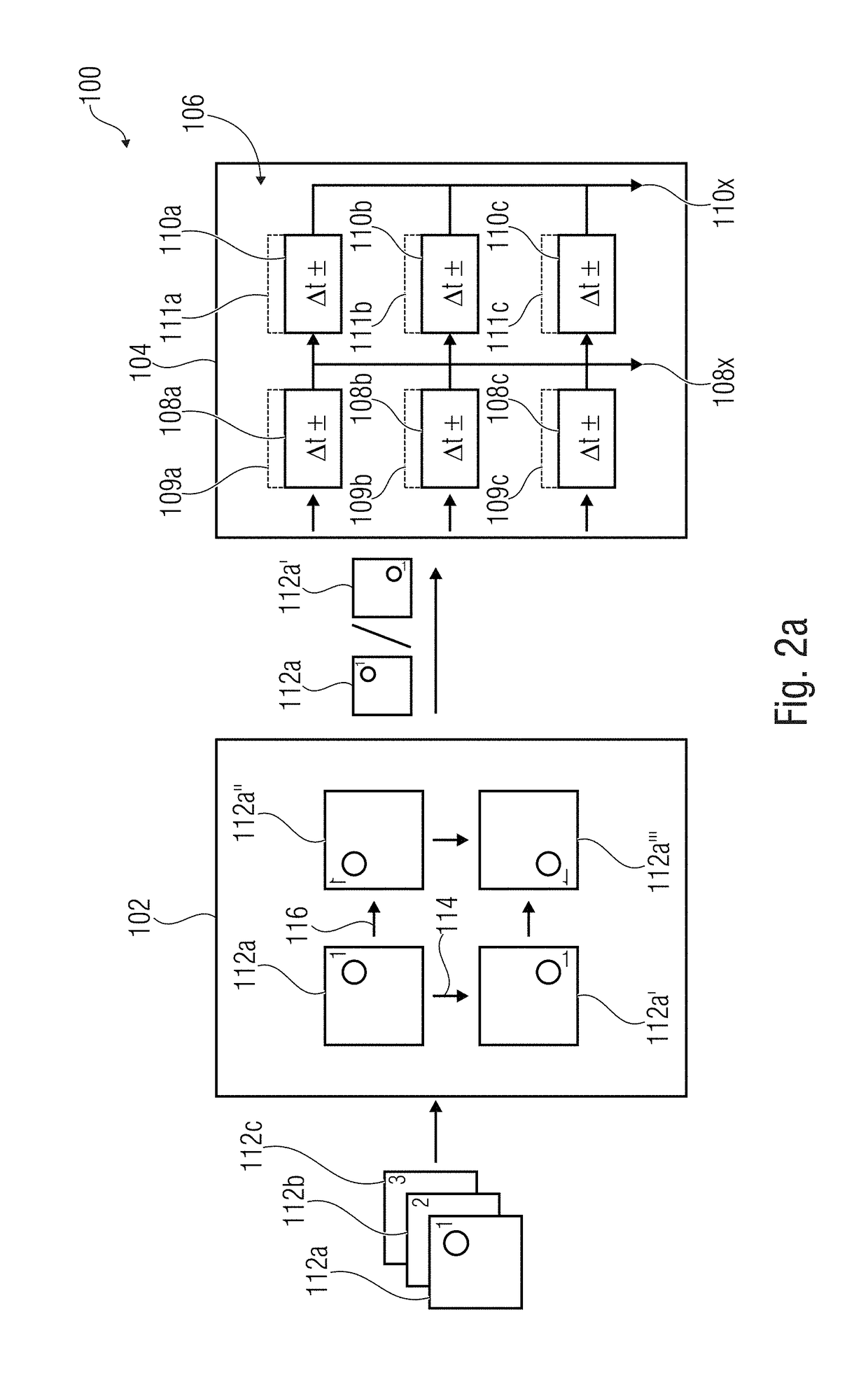

Method, apparatus, mobile terminal and computer program product for providing efficient evaluation of feature transformation

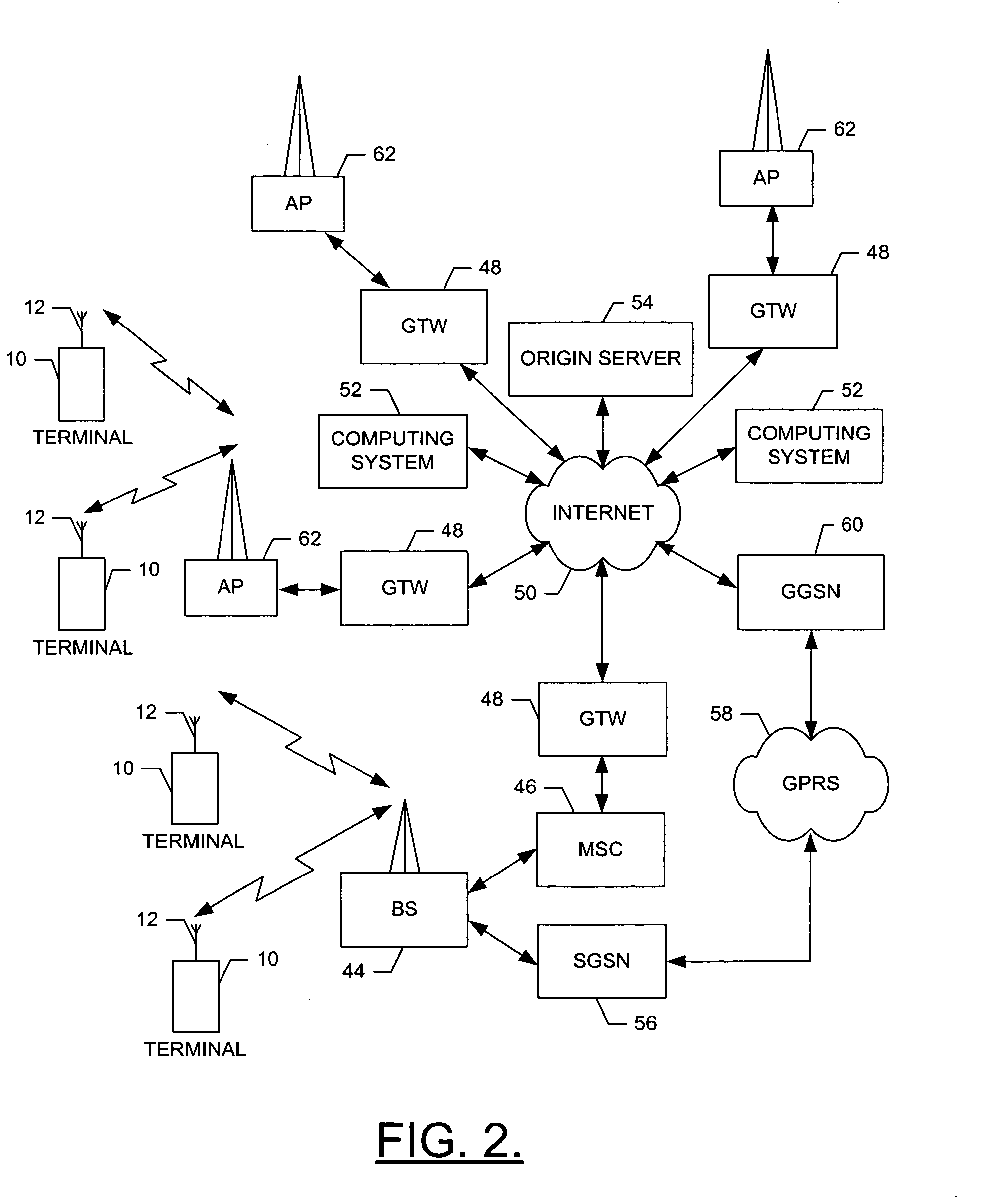

Active · US20070239634A1 · Testing or verification data may be reduced or eliminated · Reduce resource consumption · Digital computer details · Biological neural network models · Feature transformation · Model transformation

An apparatus for providing efficient evaluation of feature transformation includes a training module and a transformation module. The training module is configured to train a Gaussian mixture model (GMM) using training source data and training target data. The transformation module is in communication with the training module. The transformation module is configured to produce a conversion function in response to the training of the GMM. The training module is further configured to determine a quality of the conversion function prior to use of the conversion function by calculating a trace measurement of the GMM.

Owner:HMD GLOBAL

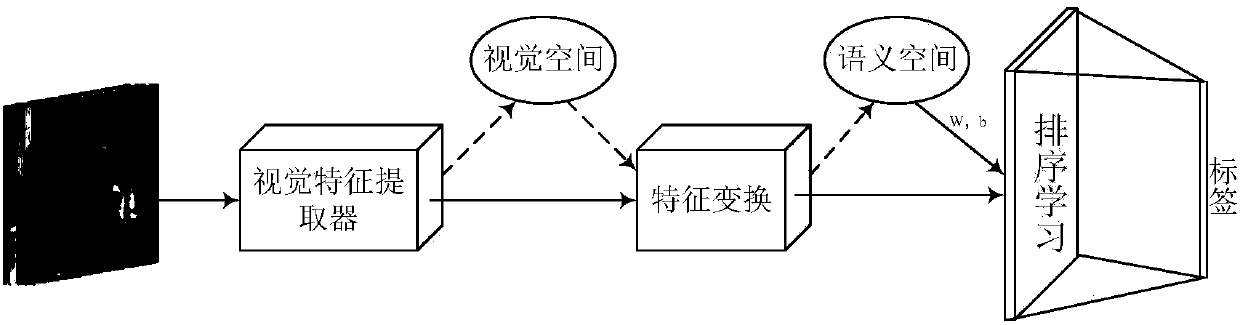

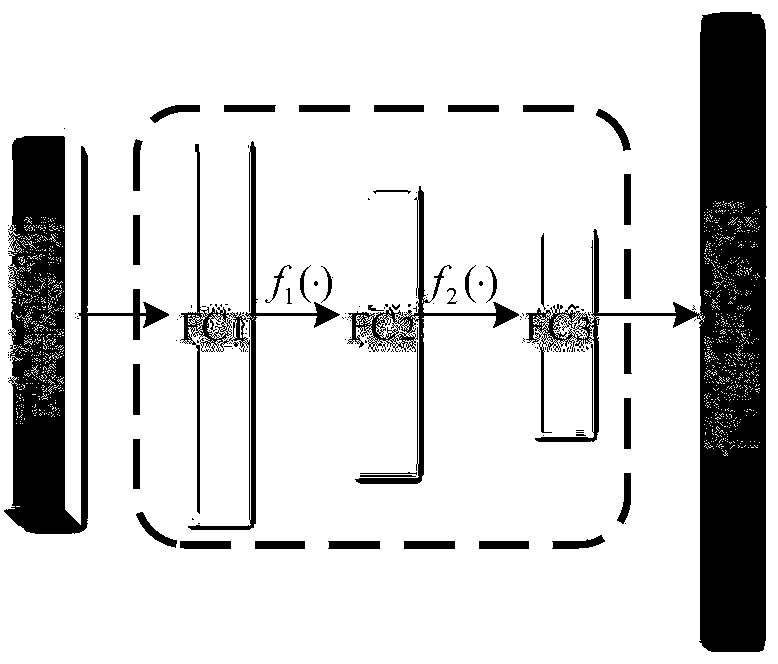

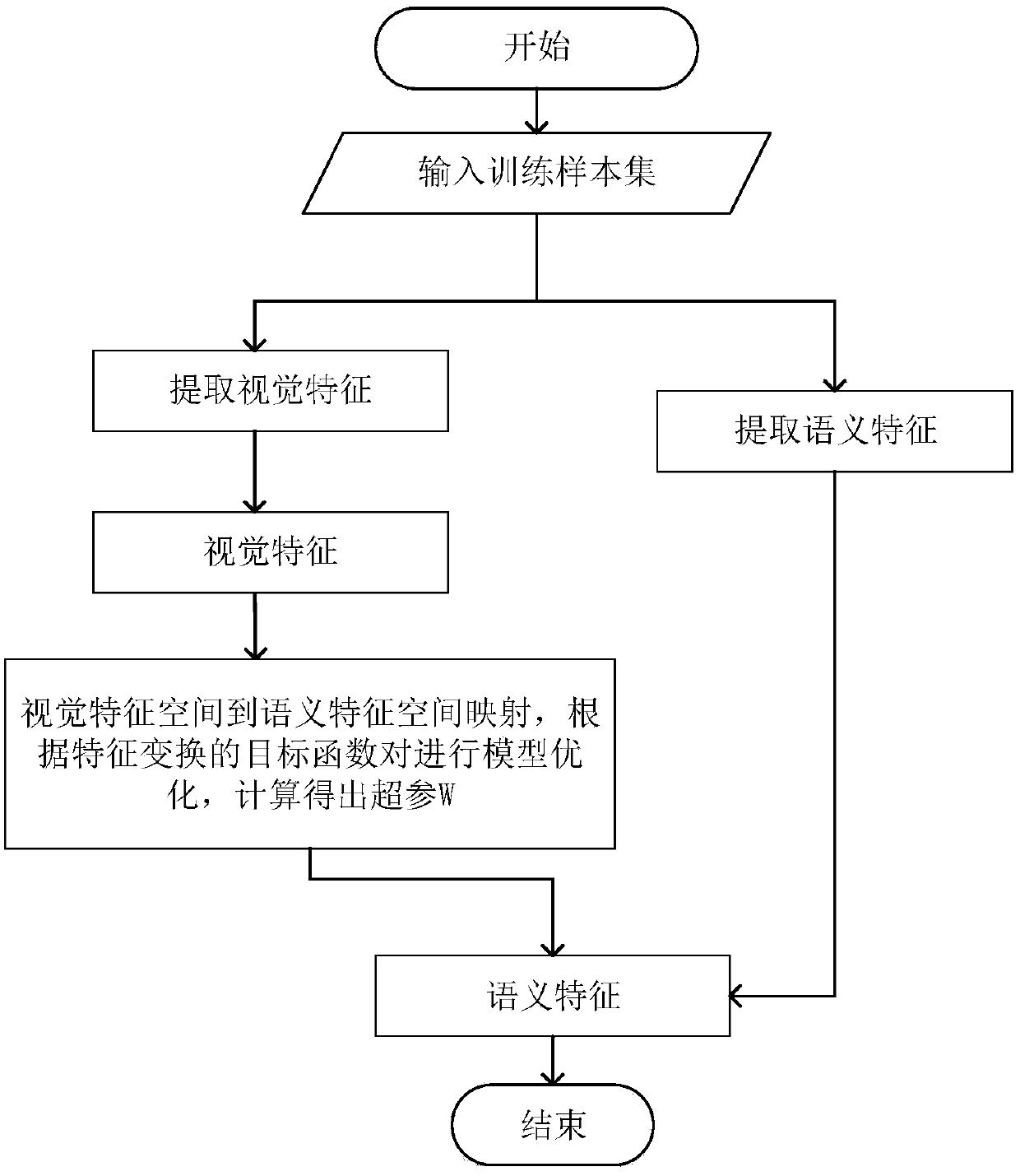

Ranking-learning-based multi-label zero sample classification method

Inactive · CN107766873A · Solve the problem of multi-label labeling · Take full advantage of semantic associations · Character and pattern recognition · Neural learning methods · Learning based · Data set

The invention relates to a multi-label image classification technology oriented to the field of multimedia content understanding and analysis, so as to construct a new classification model and realize a correlation-level-based multi-label classification algorithm. A ranking-learning-based multi-label zero sample classification method provided by the invention comprises the following steps: at a feature extraction stage, carrying out feature description of different modes by using an existing feature extractor to obtain a training data set; at a multi-mode feature transformation stage, giving a training sample set pair including an original image and a corresponding label, between which determined marking information is provided, so as to train the model; and at a classification marking stage, giving an original image of a testing sample and a possible label to carry out testing. At the classification marking stage, the correspondence relationship between the original image and the label is not determined. The ranking-learning-based multi-label zero sample classification method is mainly applied to multi-label image classification.

Owner:TIANJIN UNIV

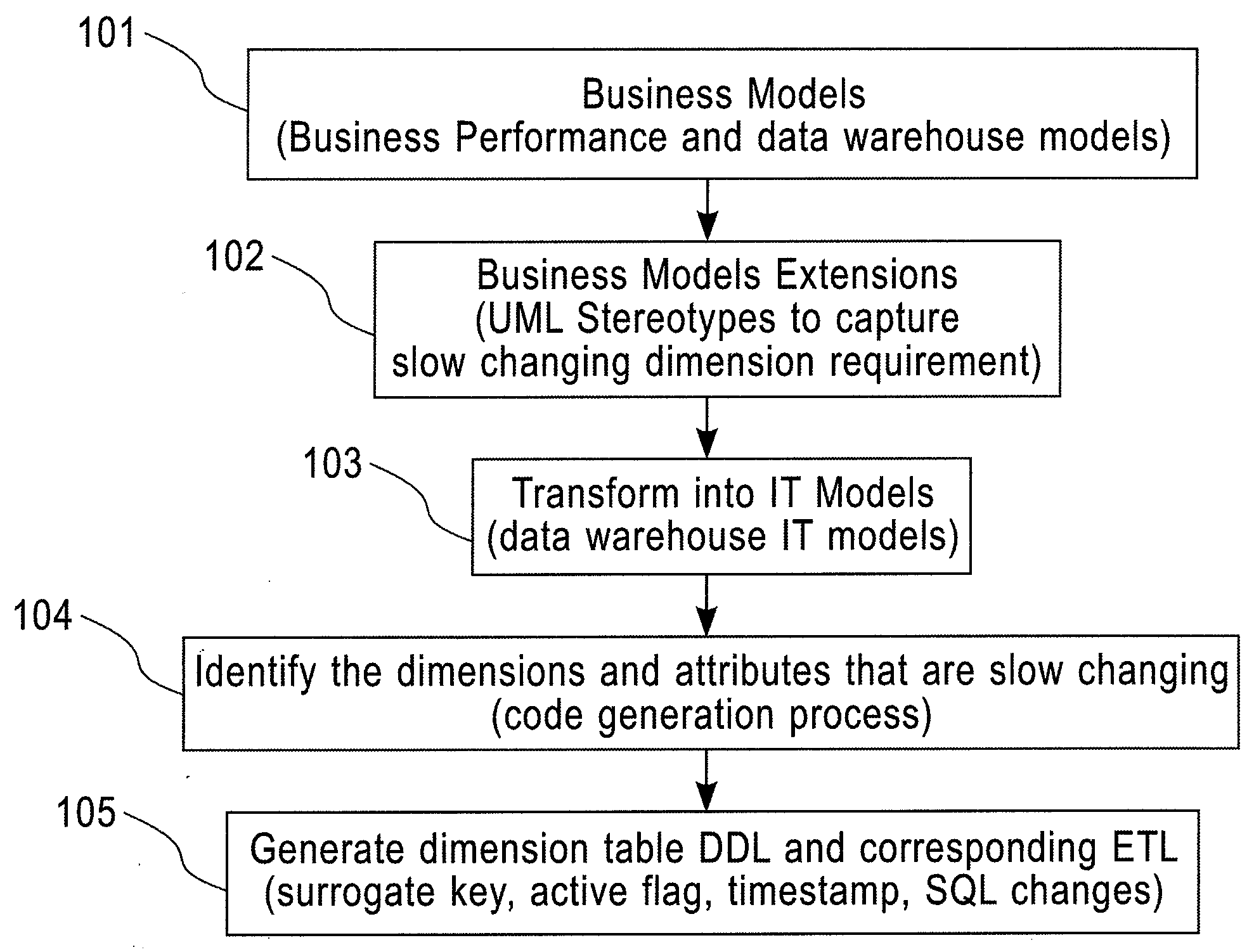

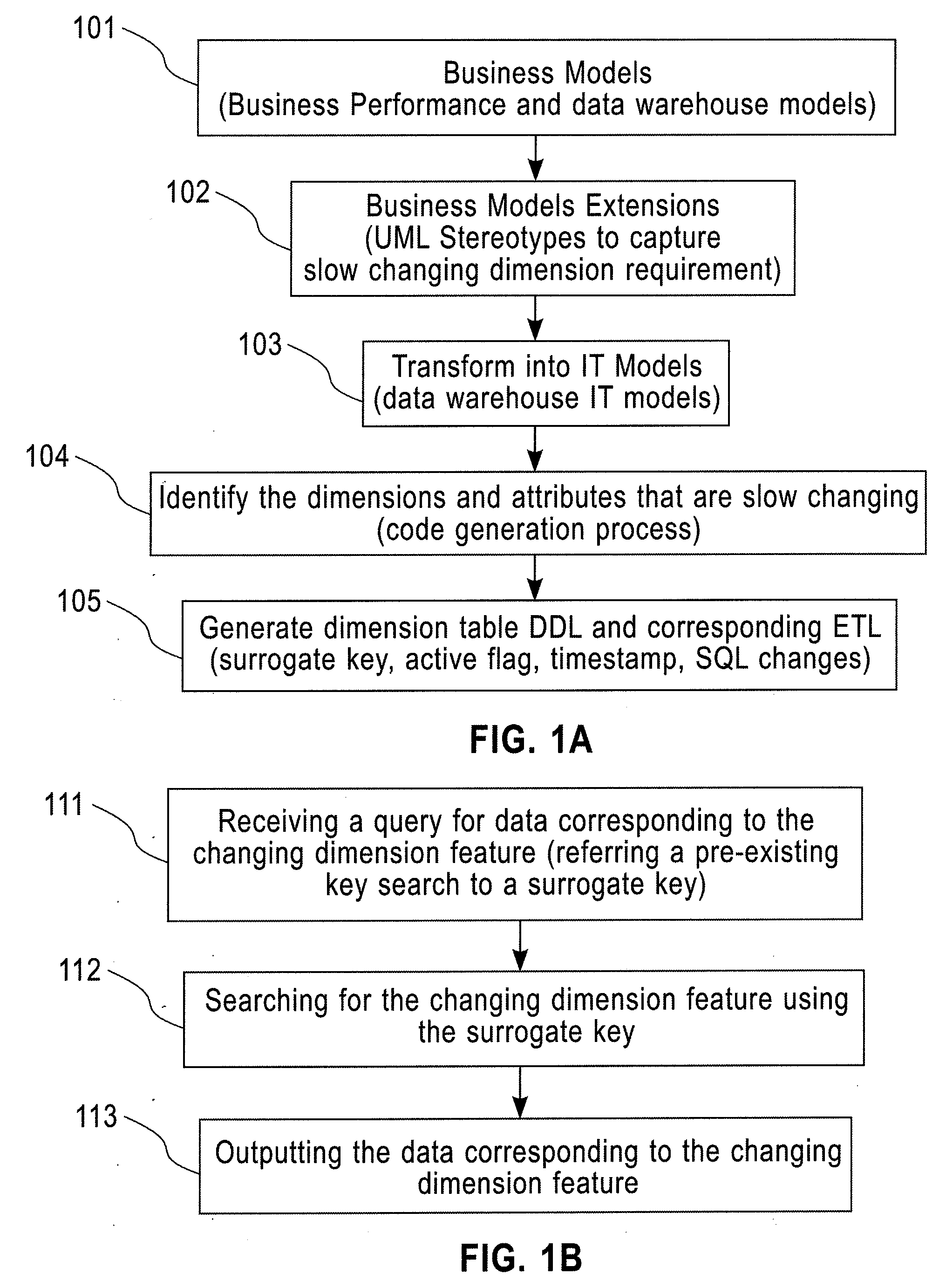

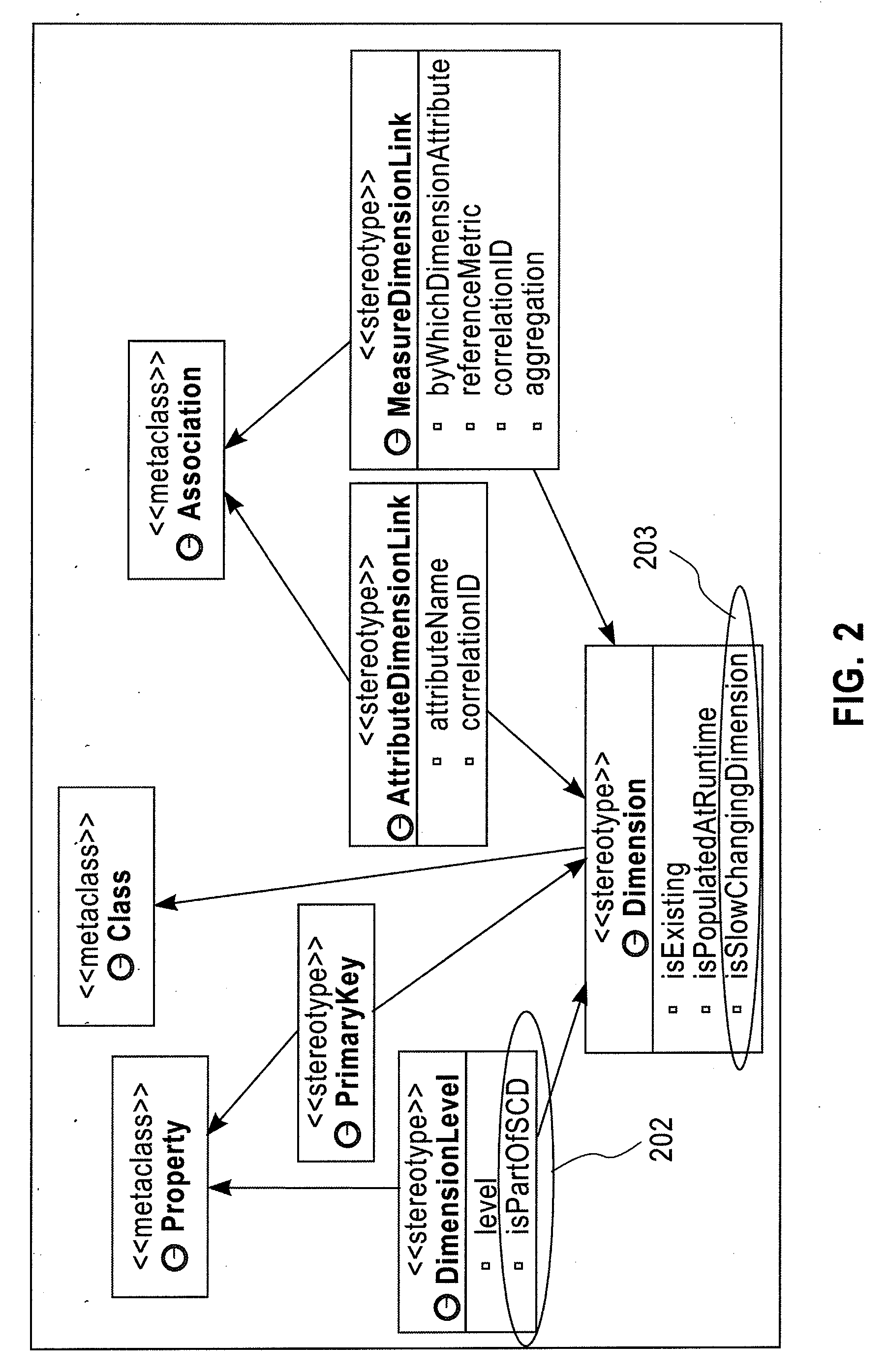

System and Method for Modeling Slow Changing Dimension and Auto Management Using Model Driven Business Performance Management

Inactive · US20090006146A1 · Visual data mining · Structured data browsing · Data warehouse · Business performance management

A system for generating a model for tracking a changing dimension feature of data in a business model includes a memory for storing the business model and computer readable code for modeling the changes in the changing dimension feature, and a processor for executing the computer readable code to perform method steps including capturing the changing dimension feature of the business model, transforming the changing dimension feature into a data warehouse model corresponding to the business model, identifying changing dimensions and attributes in the changing dimension feature according to the data warehouse model, and generating a run-time deployable component for tracking the changing dimension feature based on the identified changing dimensions and attributes.

Owner:IBM CORP

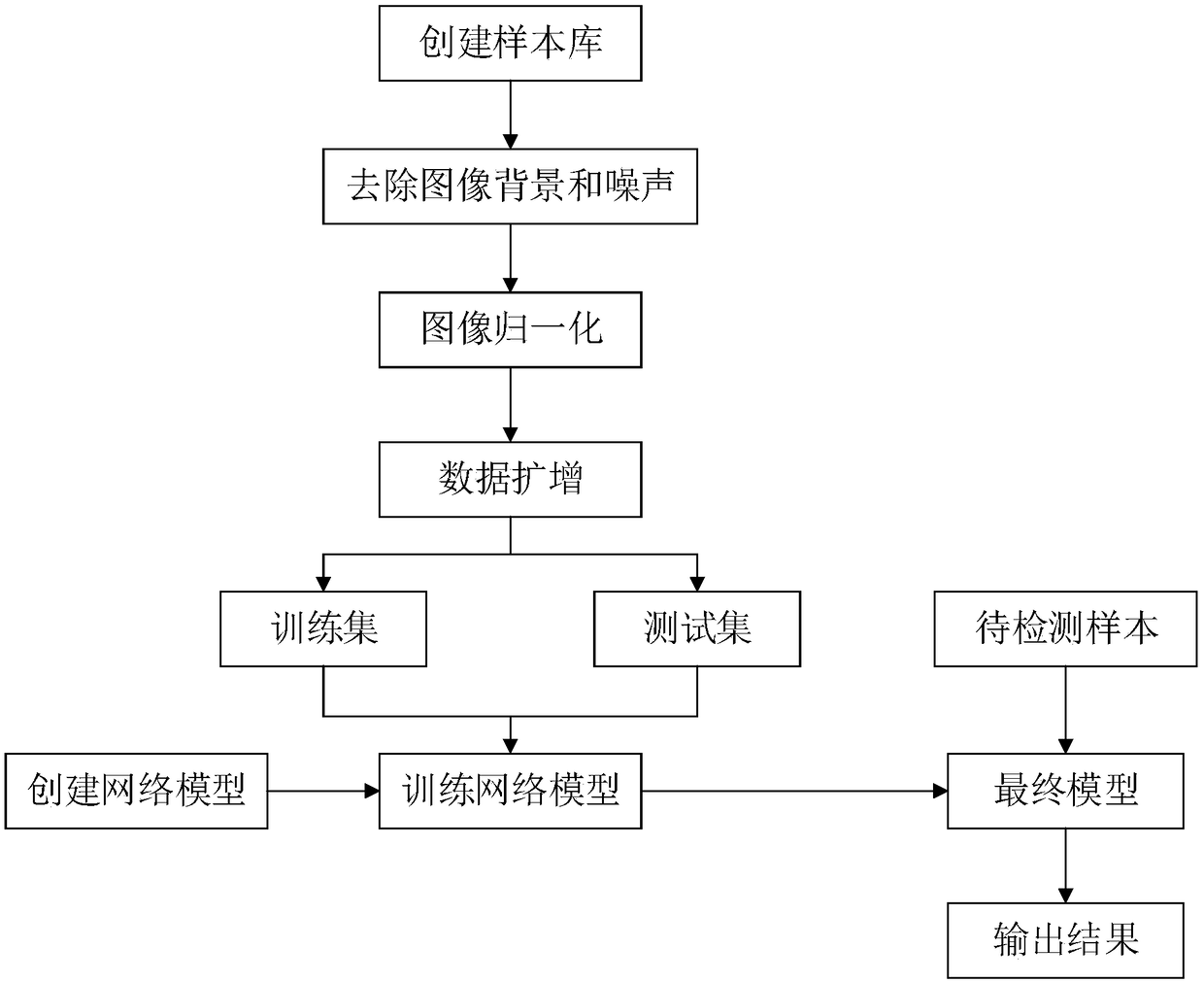

Diabetic retinopathy grade classification method based on deep learning

Inactive · CN108960257A · Get rid of dependency · Strong generalization · Recognition of medical/anatomical patterns · Diabetes retinopathy · Classification methods

The invention provides a diabetic retinopathy grade classification method based on deep learning. The method comprises the steps of: constructing a sample library; removing the backgrounds and noise of the ophthalmoscope photographs in the sample library; normalizing images of different brightness and intensity to the same range by a local mean subtraction method; applying random stretching and rotation to different samples for data augmentation, and constructing a training set and a test set; training an initial deep learning network model by separately establishing an input portion architecture, a multi-branch feature transformation portion architecture and an output portion architecture; and inputting the samples to be tested into the trained deep learning network model for diabetic retinopathy grade classification. Compared with traditional processing methods, the method removes the dependence on prior knowledge and generalizes well; and with the designed multiple grades, a small convolution kernel can be used to extract very small lesion features, making the classification results more reliable.

Owner:NORTHEASTERN UNIV

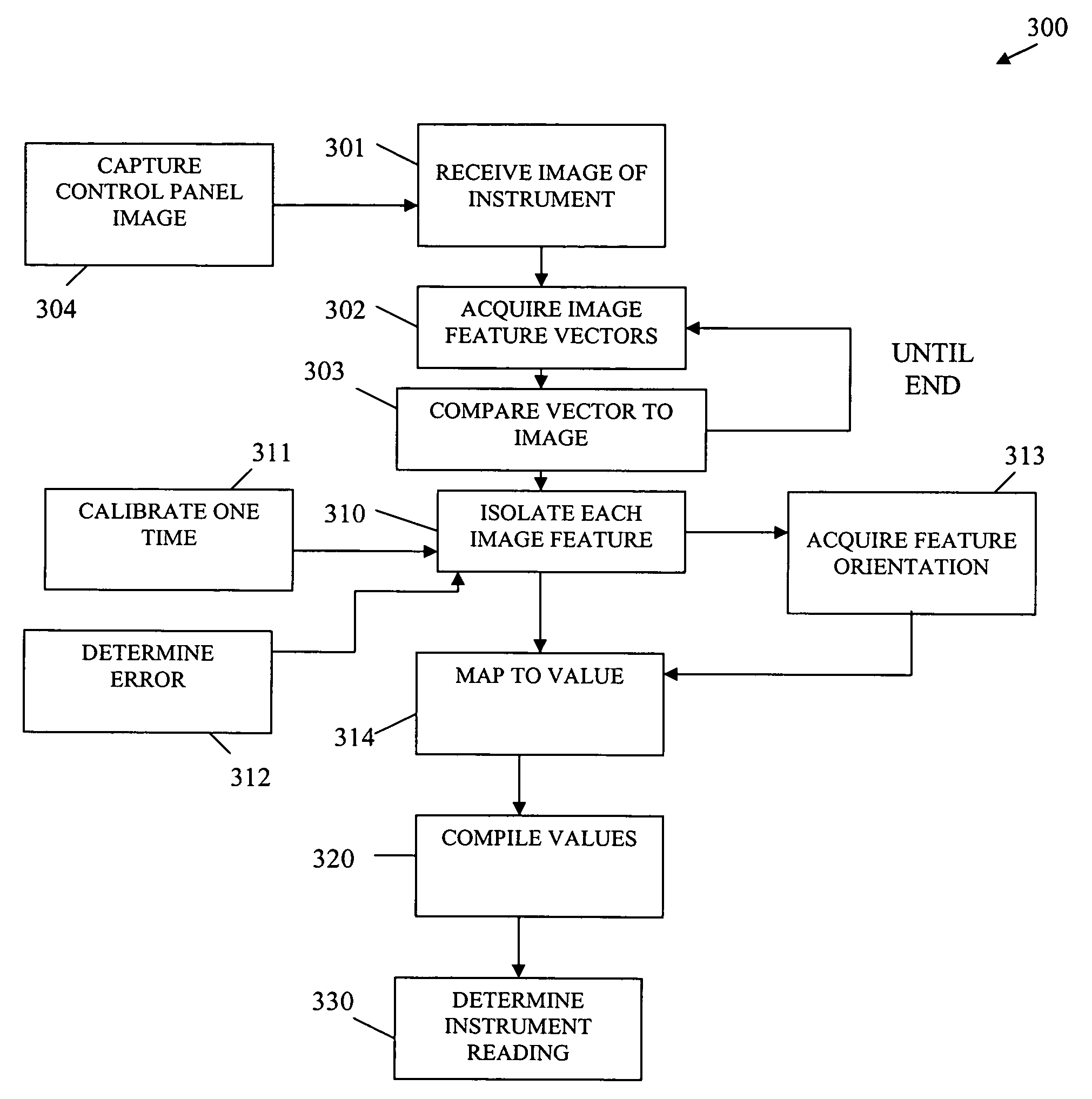

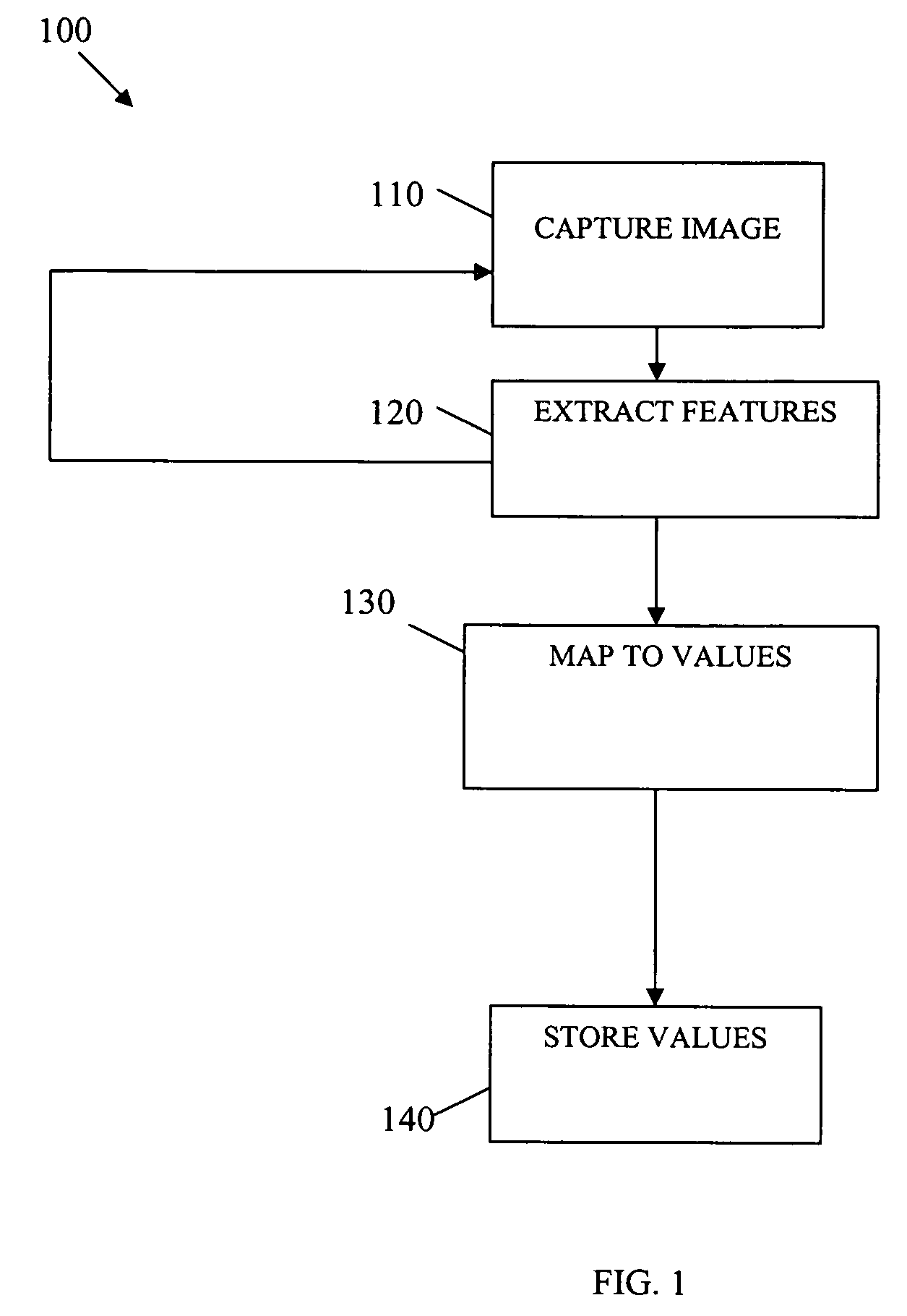

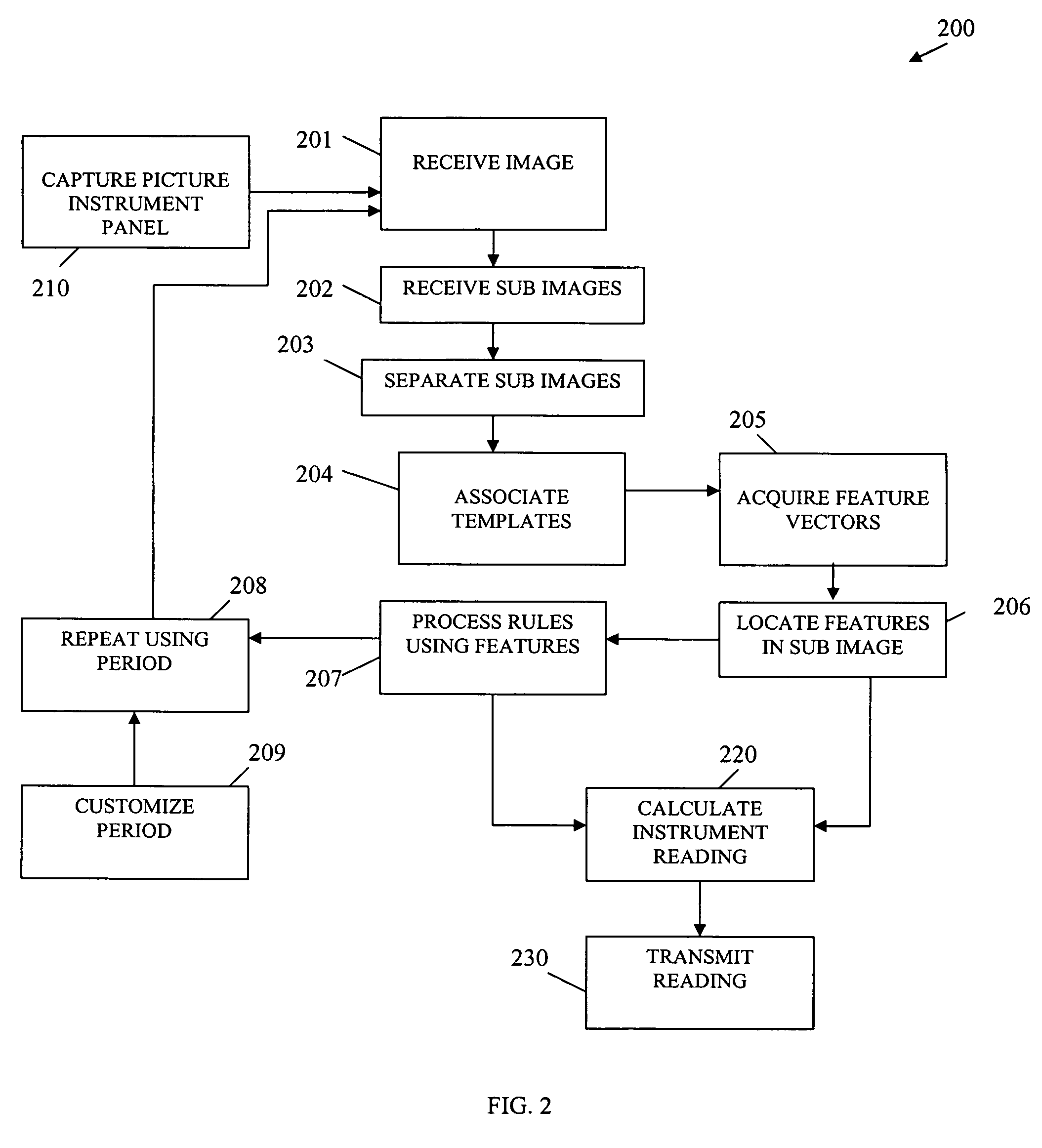

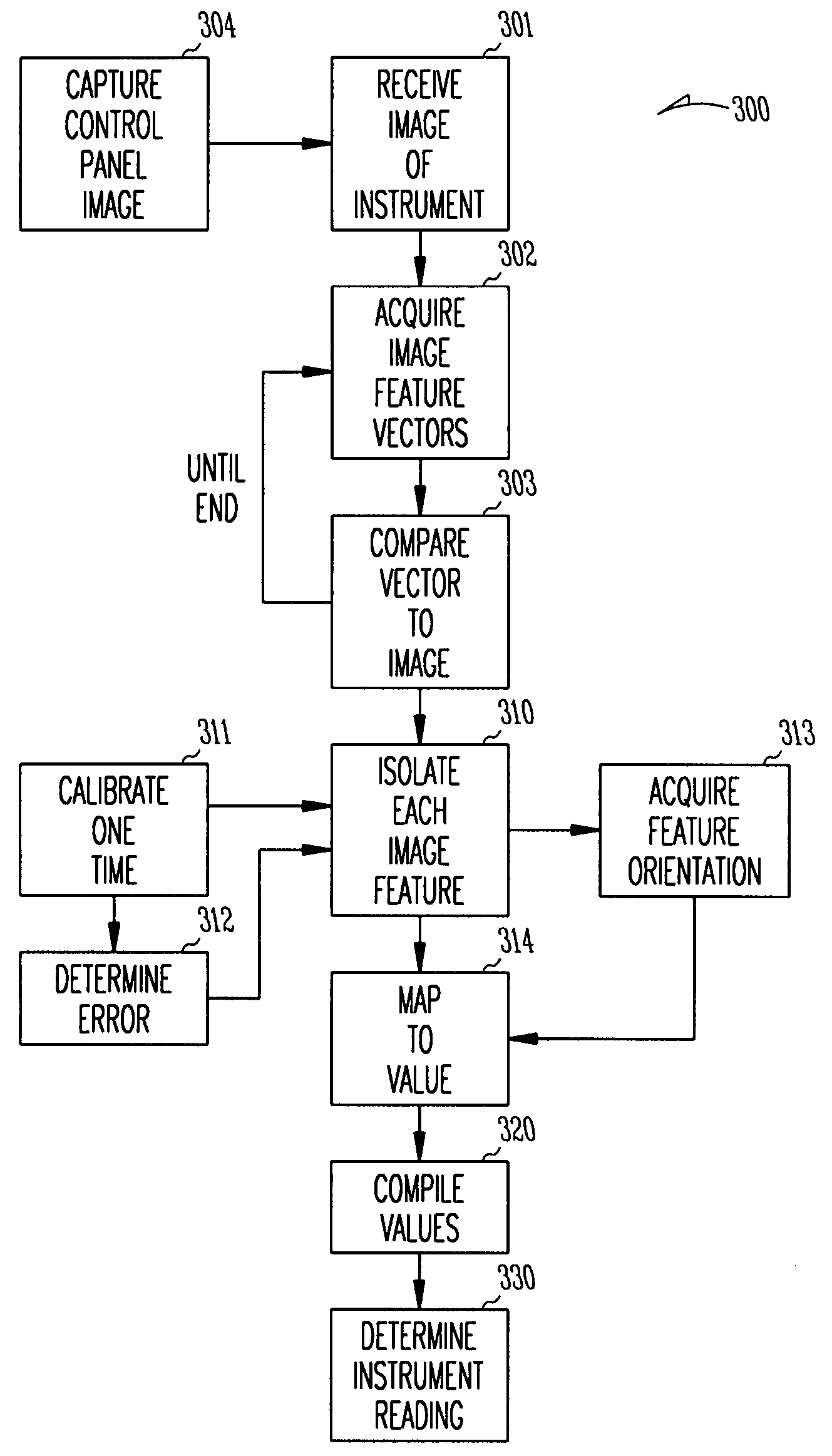

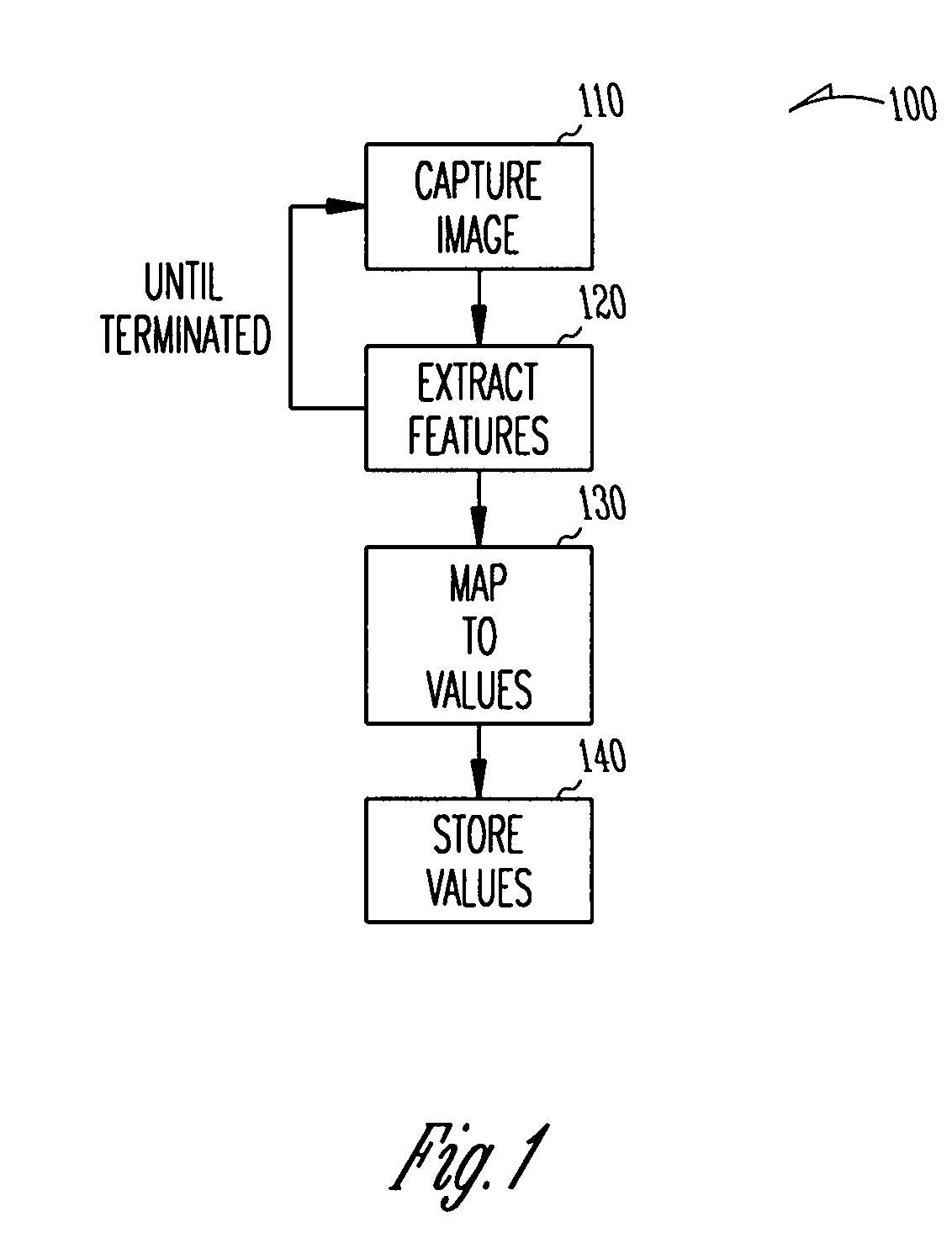

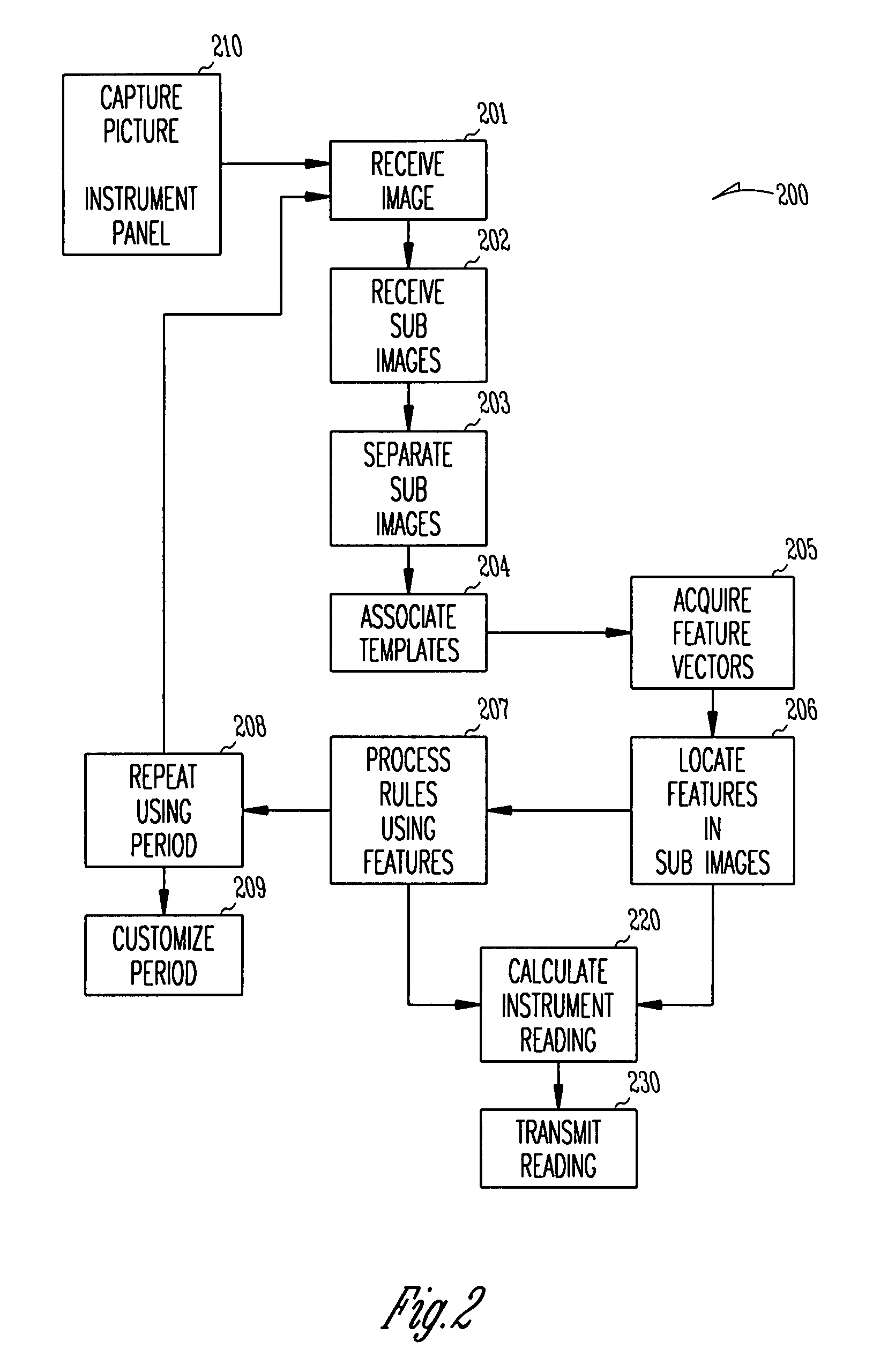

Methods, functional data, and systems for image feature translation

Owner:HONEYWELL INC

Methods, functional data, and systems for image feature translation

Owner:HONEYWELL INT INC

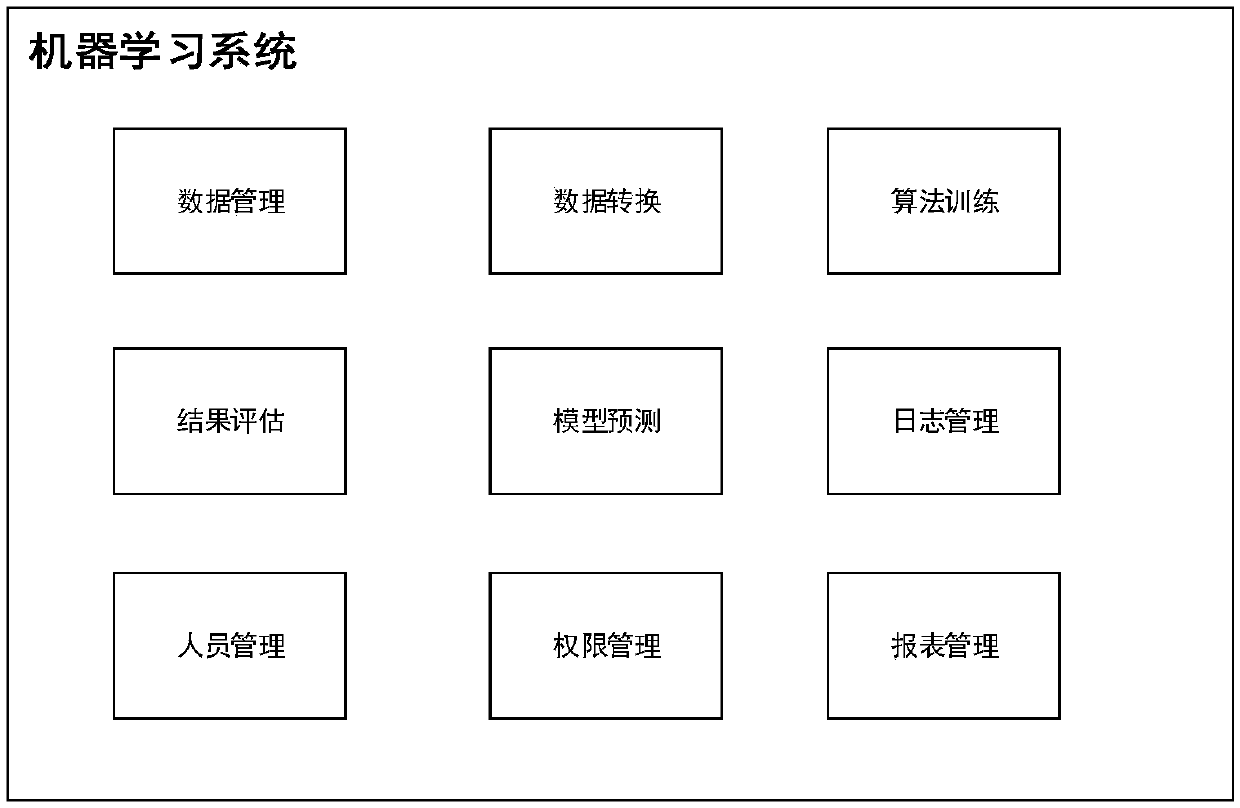

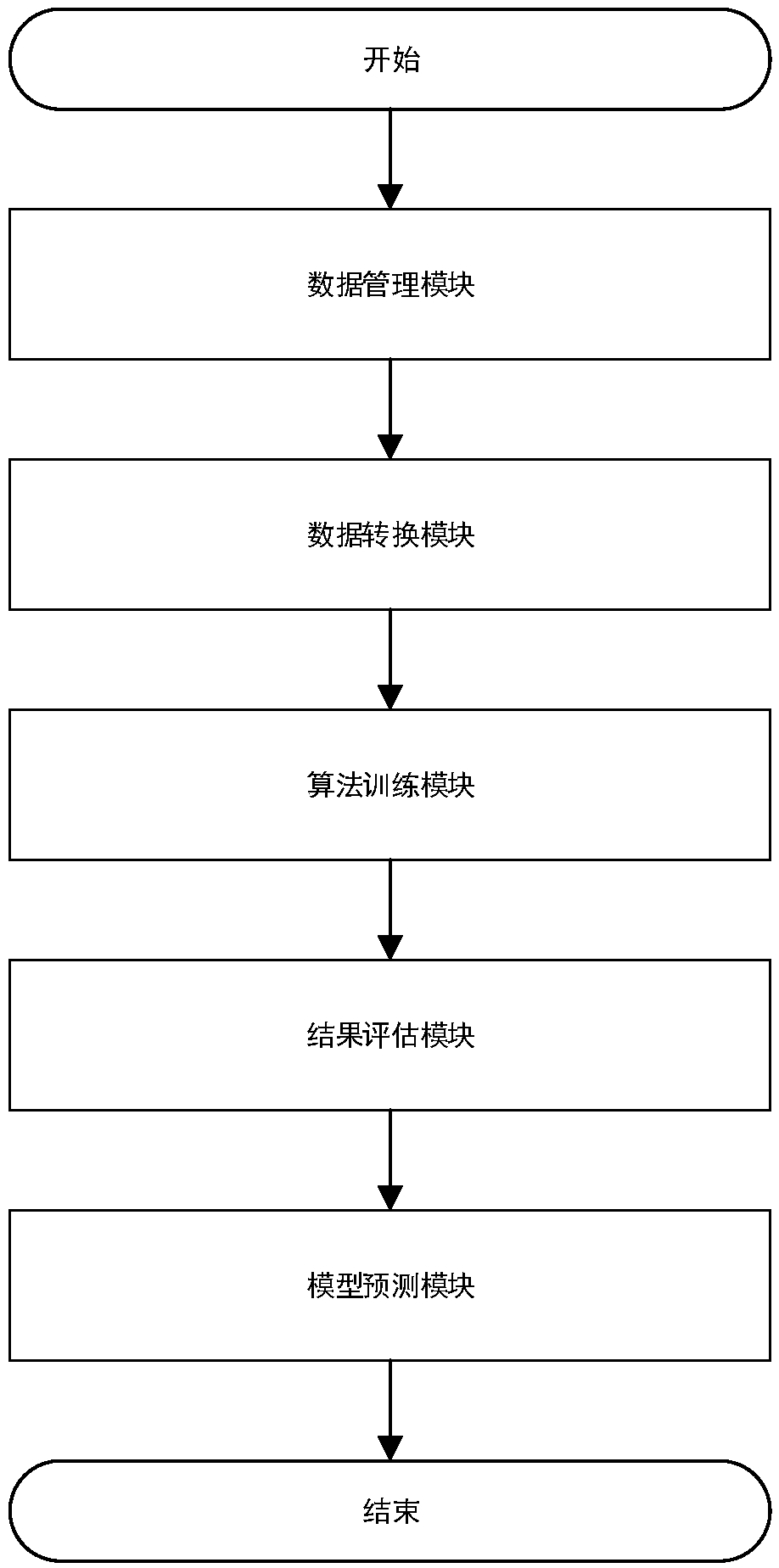

Machine learning-based system and learning method

Inactive · CN107844836A · Reduce complex mathematics knowledge requirements · Auxiliary productivity · Forecasting · Character and pattern recognition · Batch processing · Study methods

The invention discloses a machine learning-based system and a learning method. The system includes a data management module, a data conversion module, an algorithm training module, a result evaluation module and a model prediction module. According to the learning method, the data management module automatically determines the data type of a data source uploaded by a user and preprocesses the data; the user labels the data; the data conversion module substitutes the preprocessed data into a feature conversion algorithm set to obtain feature-converted data; the algorithm training module automatically substitutes the feature-converted data into classification, clustering, or regression algorithms for batch training so as to obtain a batch of classification, clustering, or regression models; the result evaluation module evaluates the standard indicators of these models so as to obtain an optimal model; and the model prediction module predicts on the latest data source with the optimal model. The system can automatically perform batched data processing and algorithm training without manual intervention in the intermediate process, so the learning threshold is lowered and efficiency is improved.

Owner:SUNYARD SYST ENG CO LTD
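The convert, batch-train, evaluate, and pick-the-best loop described in the abstract above can be sketched with two toy feature conversions and two toy classifiers. Everything named here (`identity`, `min_max`, `nearest_centroid`, `one_nearest_neighbor`, `select_best`) is a hypothetical illustration; the patent does not specify which conversions, algorithms, or evaluation indicators are used, and held-out accuracy is chosen here only as a simple stand-in.

```python
def identity(reference, rows):
    """Feature conversion that leaves the data unchanged."""
    return [list(r) for r in rows]


def min_max(reference, rows):
    """Scale each column to [0, 1] using ranges measured on the reference (training) rows."""
    columns = list(zip(*reference))
    lows = [min(c) for c in columns]
    spans = [(max(c) - min(c)) or 1.0 for c in columns]  # avoid dividing by zero
    return [[(v - lo) / s for v, lo, s in zip(r, lows, spans)] for r in rows]


def sq_dist(a, b):
    """Squared Euclidean distance between two feature rows."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest_centroid(xs, ys):
    """Train a nearest-centroid classifier and return its predict function."""
    groups = {}
    for x, y in zip(xs, ys):
        groups.setdefault(y, []).append(x)
    centroids = {y: [sum(c) / len(c) for c in zip(*rows)] for y, rows in groups.items()}
    return lambda x: min(centroids, key=lambda y: sq_dist(x, centroids[y]))


def one_nearest_neighbor(xs, ys):
    """Train a 1-nearest-neighbor classifier and return its predict function."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: sq_dist(x, p[0]))[1]


def select_best(train_x, train_y, test_x, test_y, conversions, trainers):
    """Try every conversion/model pair; return (accuracy, conversion name, model name) of the best."""
    best = None
    for conv_name, convert in conversions.items():
        converted_train = convert(train_x, train_x)
        converted_test = convert(train_x, test_x)
        for model_name, train in trainers.items():
            predict = train(converted_train, train_y)
            hits = sum(predict(x) == y for x, y in zip(converted_test, test_y))
            accuracy = hits / len(test_y)
            if best is None or accuracy > best[0]:
                best = (accuracy, conv_name, model_name)
    return best
```

Fitting each conversion on the training rows only, then applying it to the test rows, keeps the evaluation honest: the test data never influences the scaling ranges.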

Database for mixed media document system

Active · US9405751B2 · Multimedia data indexing · Special data processing applications · Hypothesis · Documentation procedure

A Mixed Media Reality (MMR) system and associated techniques are disclosed. The MMR system provides mechanisms for forming a mixed media document that includes media of at least two types (e.g., printed paper as a first medium and digital content and / or web link as a second medium). In one particular embodiment, the MMR system includes a content-based retrieval database configured with an index table to represent two-dimensional geometric relationships between objects extracted from a printed document in a way that allows look-up using a text-based index. A ranked set of document, page and location hypotheses can be computed given data from the index table. The techniques effectively transform features detected in an image patch into textual terms (or other searchable features) that represent both the features themselves and the geometric relationship between them. A storage facility can be used to store additional characteristics about each document image patch.

Owner:RICOH KK
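As a rough illustration of how image-patch features might be turned into searchable text terms that also encode geometry, the sketch below uses word lengths as the feature and "right of" / "below" adjacency as the geometric relationship. This encoding, the term format, and the function names are assumptions chosen for illustration; the abstract does not specify them. The sketch ranks document/page hypotheses only and leaves out location hypotheses.

```python
from collections import Counter, defaultdict

def patch_terms(lines):
    """Turn a grid of words into text terms that encode word lengths
    (the feature) plus right-of / below adjacency (the geometry)."""
    terms = []
    for r, line in enumerate(lines):
        for c, word in enumerate(line):
            if c + 1 < len(line):
                terms.append(f"h:{len(word)}-{len(line[c + 1])}")
            if r + 1 < len(lines) and c < len(lines[r + 1]):
                terms.append(f"v:{len(word)}-{len(lines[r + 1][c])}")
    return terms

def build_index(pages):
    """Inverted index table: term -> set of (document, page) identifiers."""
    index = defaultdict(set)
    for page_id, lines in pages.items():
        for term in patch_terms(lines):
            index[term].add(page_id)
    return index

def rank_pages(index, patch_lines):
    """Vote for every page that shares a term with the query patch."""
    votes = Counter()
    for term in patch_terms(patch_lines):
        for page_id in index.get(term, ()):
            votes[page_id] += 1
    return votes.most_common()

pages = {
    ("doc1", 1): [["mixed", "media", "reality"], ["printed", "paper", "index"]],
    ("doc2", 1): [["a", "ranked", "set"], ["of", "page", "hypotheses"]],
}
index = build_index(pages)
ranking = rank_pages(index, [["media", "reality"], ["paper", "index"]])
print(ranking)
```

Because the terms are plain strings, the lookup can run on any text-based index, which is the property the abstract highlights.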

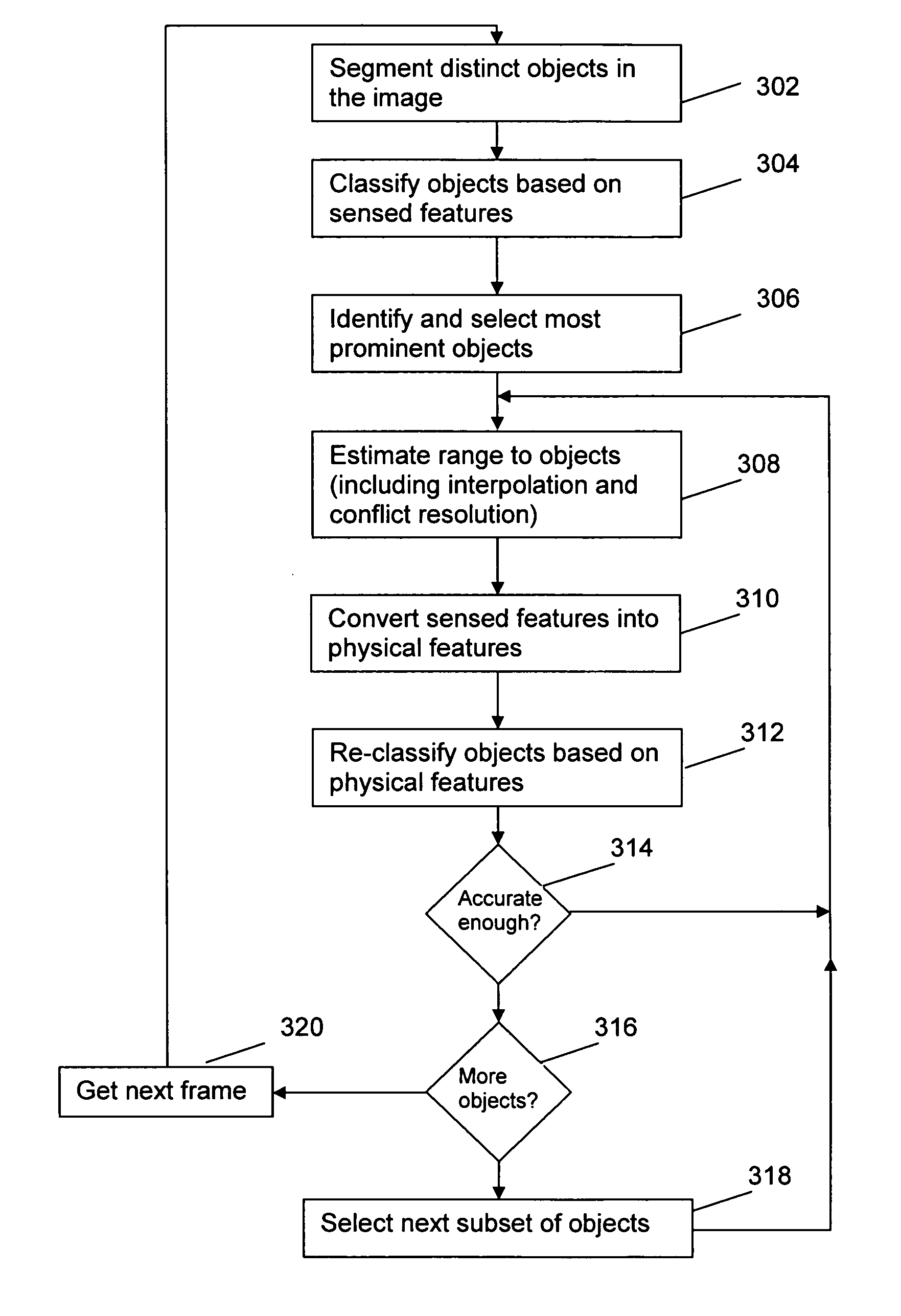

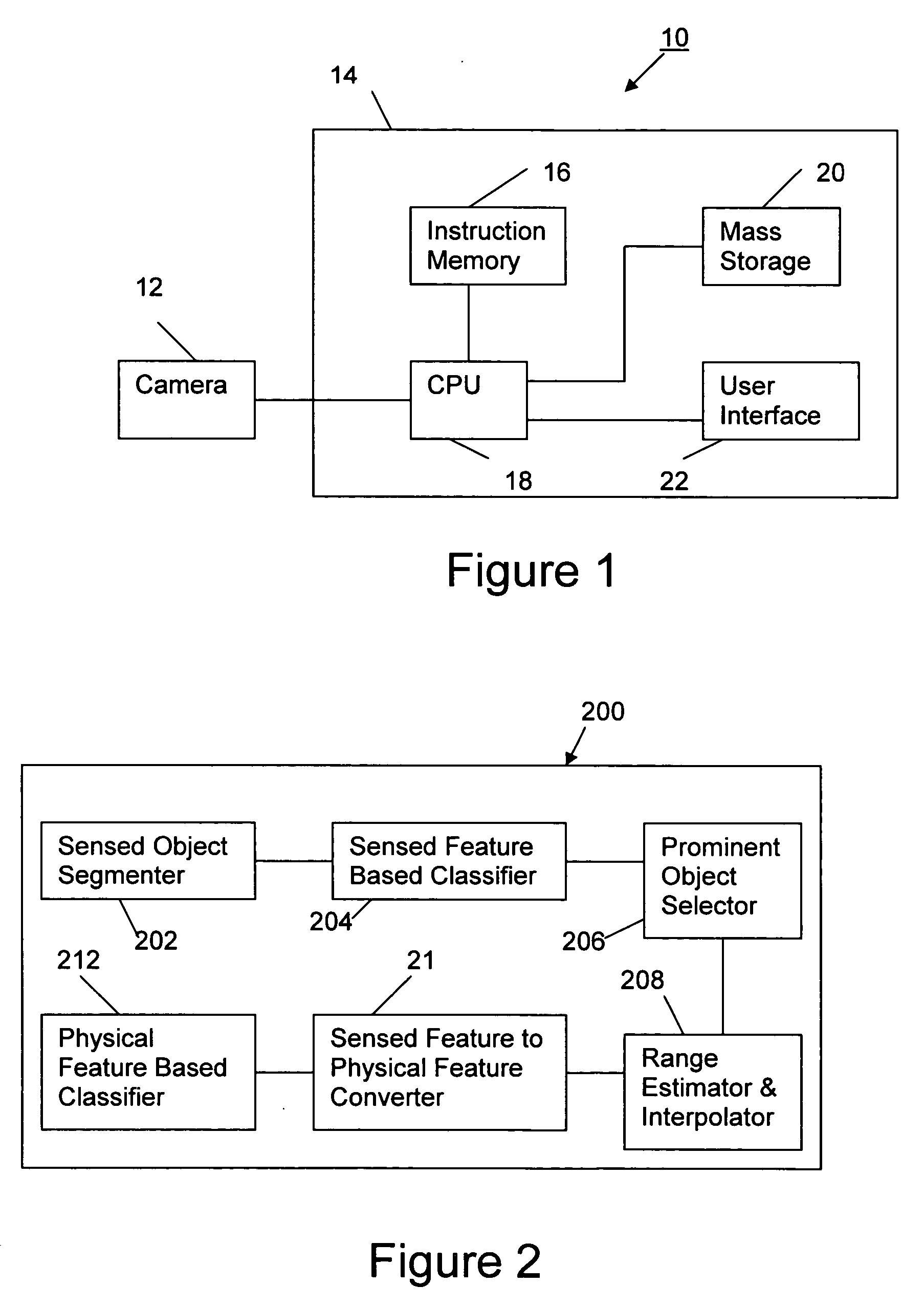

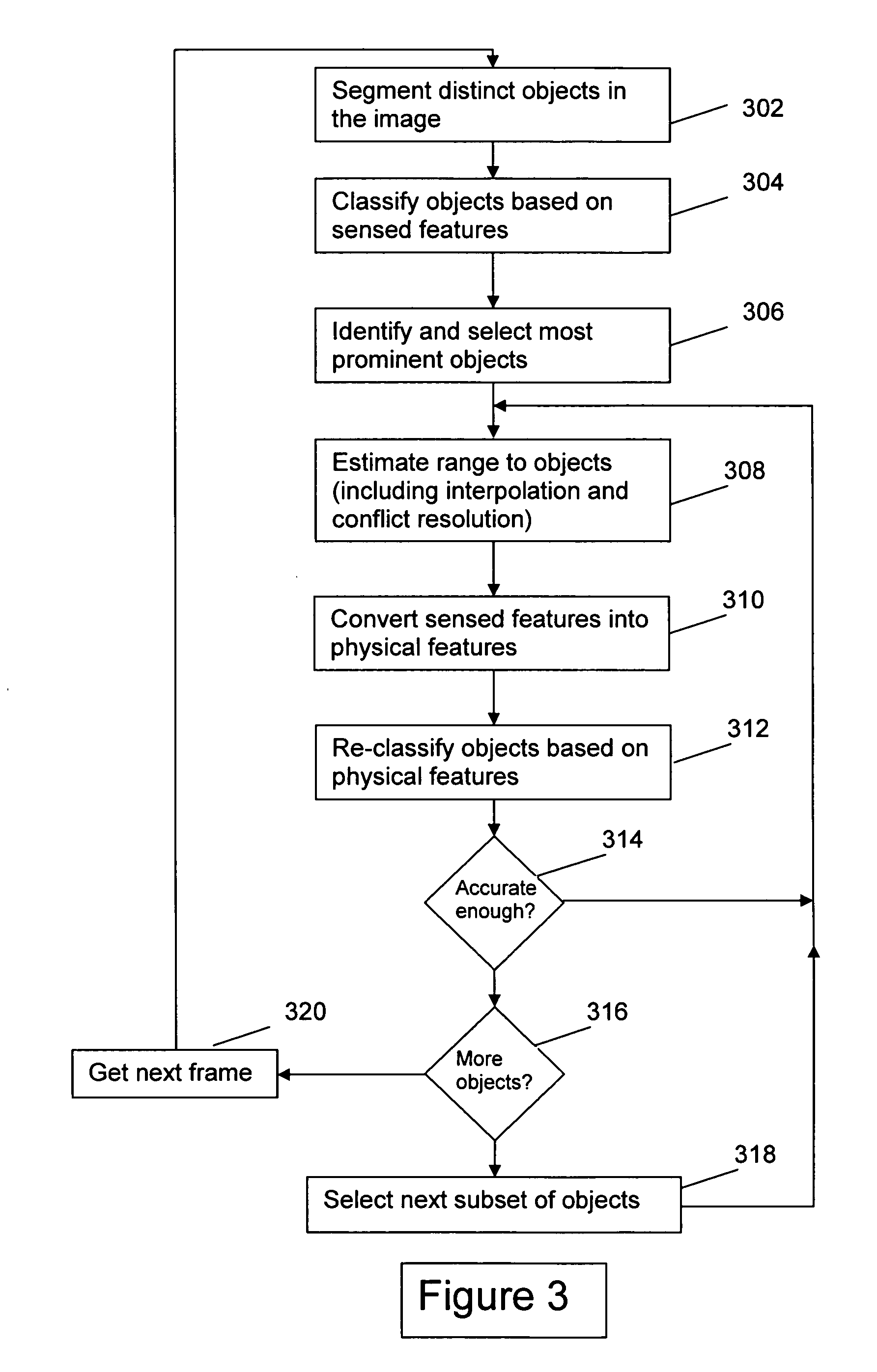

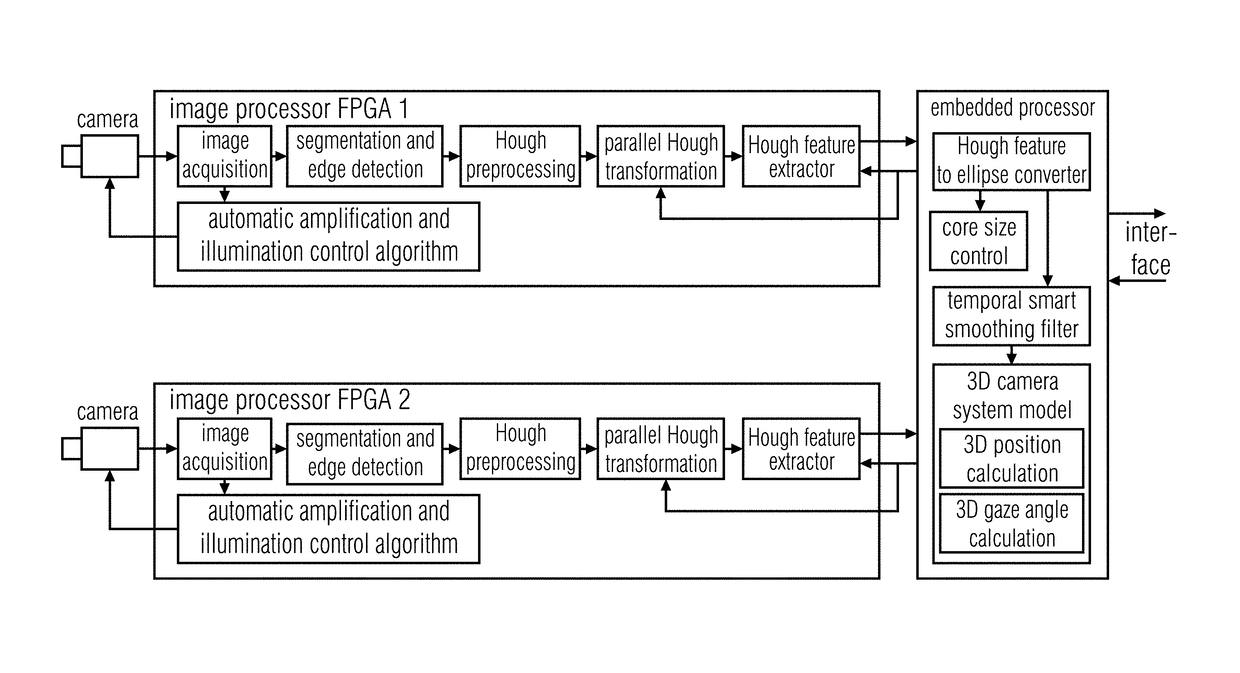

Method and apparatus for identifying physical features in video

Active · US20070122040A1 · Improve accuracy · Improve reliability · Character and pattern recognition · Computer science · Feature transformation

An image is processed by a sensed-feature-based classifier to generate a list of objects assigned to classes. The most prominent objects (those whose classification is most likely to be reliable) are selected for range estimation and interpolation. Based on the range estimation and interpolation, the sensed features are converted to physical features for each object. That subset of objects is then run through a physical-feature-based classifier that re-classifies the objects. Next, the objects and their range estimates are re-run iteratively through range estimation and interpolation, sensed-feature-to-physical-feature conversion, and physical-feature-based classification to continuously increase the reliability of both the classification and the range estimation. The iterations are halted when the reliability reaches a predetermined confidence threshold. In a preferred embodiment, a next subset of objects having the next highest prominence in the same image is selected and the entire iterative process is repeated. This set of iterations includes evaluation of both the first and second subsets of objects. The process can be repeated until all objects have been classified.

Owner:HONEYWELL INT INC
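One pass of the sensed-to-physical conversion described here might look like the sketch below. It assumes a pinhole camera model, a focal length in pixels, per-class typical heights, and linear interpolation of range over image row; none of these specifics come from the abstract, and all names and constants are hypothetical. The patent repeats such passes until a confidence threshold is reached, which the sketch does not model.

```python
import bisect

FOCAL_PX = 800.0                                  # assumed focal length, in pixels
TYPICAL_HEIGHT_M = {"person": 1.7, "car": 1.5}    # assumed per-class physical heights

def estimate_range(label, height_px):
    # Pinhole model: range = focal length * physical height / pixel height.
    return FOCAL_PX * TYPICAL_HEIGHT_M[label] / height_px

def interpolate_range(anchors, row):
    """Linearly interpolate range over image row; anchors is a list of
    (row, range_m) pairs from the prominent objects, sorted by row."""
    rows = [r for r, _ in anchors]
    i = bisect.bisect_left(rows, row)
    if i == 0:
        return anchors[0][1]
    if i == len(anchors):
        return anchors[-1][1]
    (r0, z0), (r1, z1) = anchors[i - 1], anchors[i]
    return z0 + (z1 - z0) * (row - r0) / (r1 - r0)

def physical_height(height_px, range_m):
    # Sensed feature (pixels) -> physical feature (metres).
    return height_px * range_m / FOCAL_PX

def classify_physical(height_m):
    # Toy physical-feature classifier: nearest typical height.
    return min(TYPICAL_HEIGHT_M, key=lambda k: abs(TYPICAL_HEIGHT_M[k] - height_m))

# Prominent objects: (class from the sensed-feature classifier, image row, pixel height).
prominent = [("car", 300, 40.0), ("person", 400, 136.0)]
anchors = sorted((row, estimate_range(label, h)) for label, row, h in prominent)

# A less prominent object at row 350 that is 68 pixels tall.
range_m = interpolate_range(anchors, 350)
height_m = physical_height(68.0, range_m)
label = classify_physical(height_m)
print(range_m, height_m, label)
```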

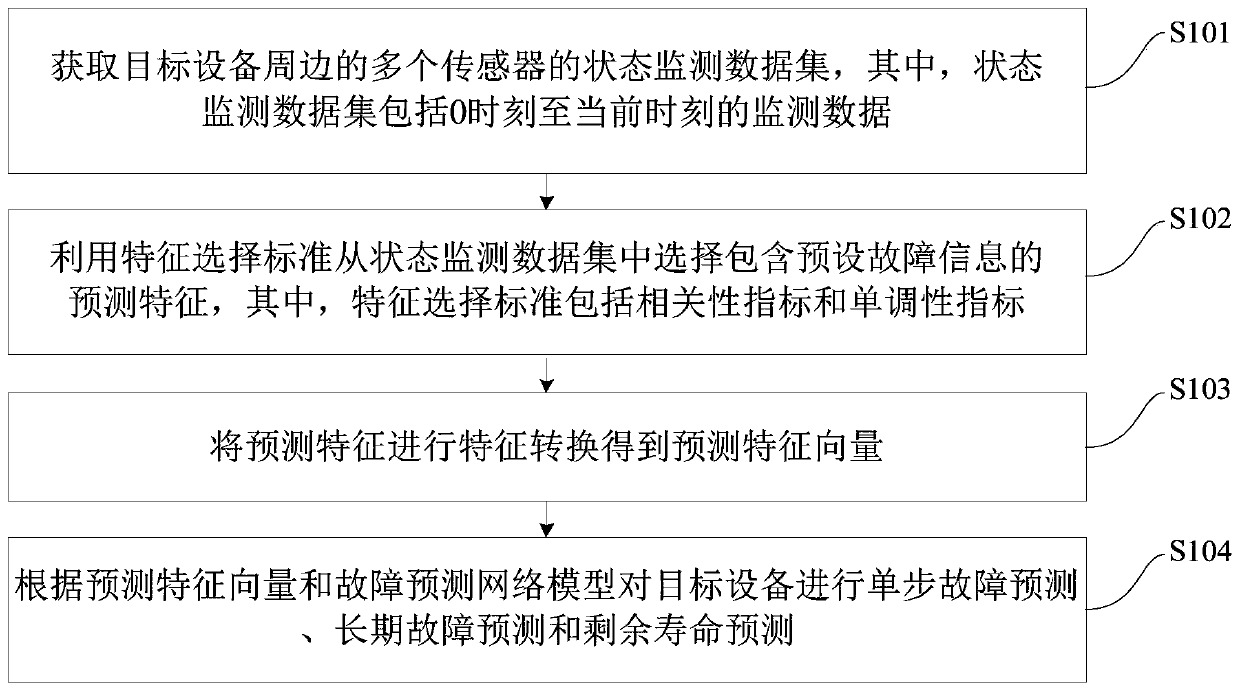

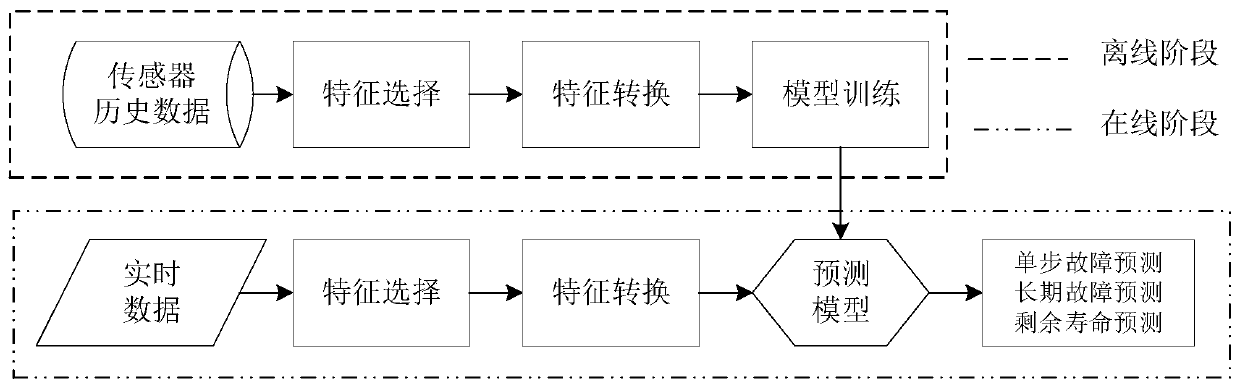

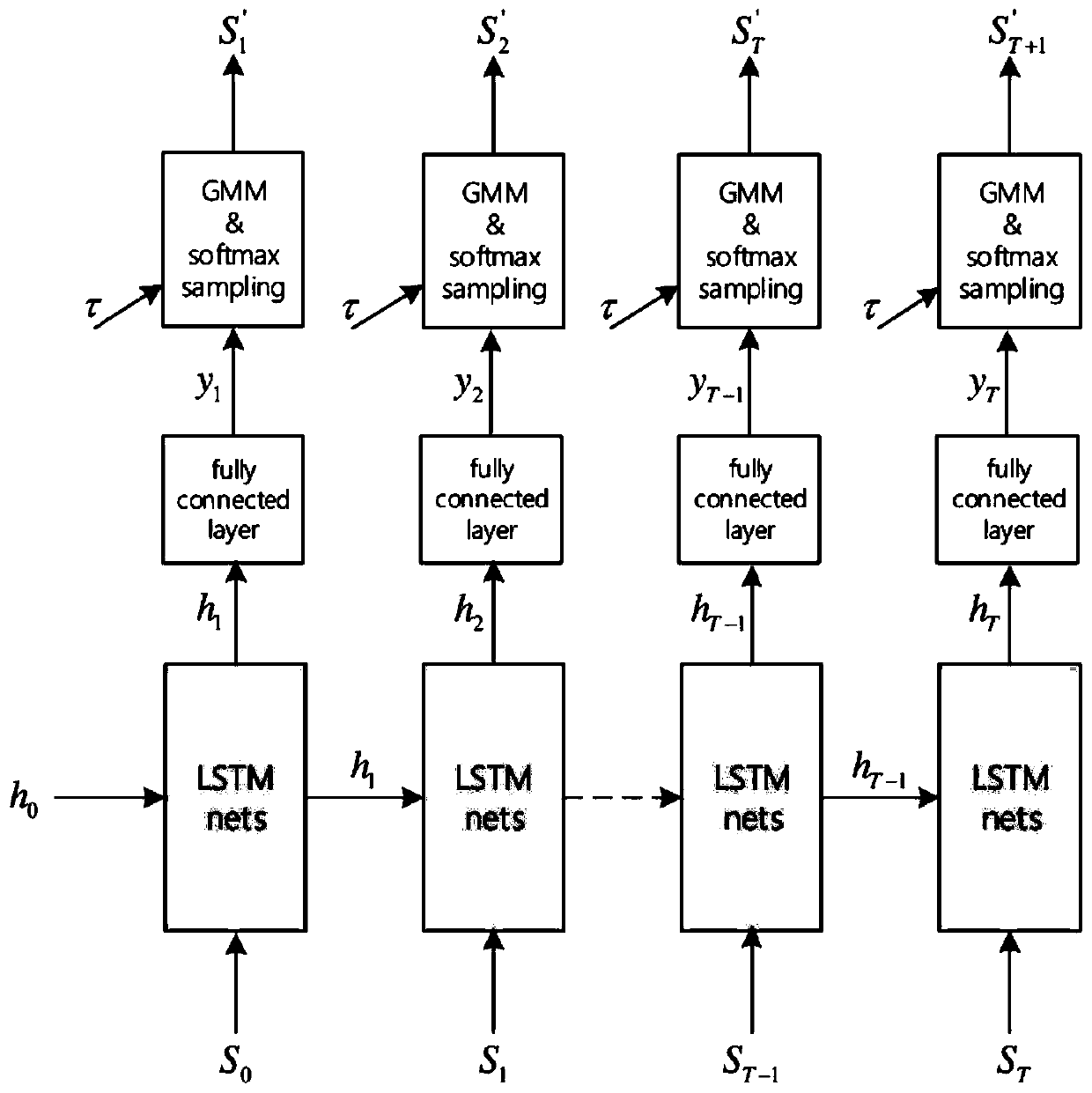

Fault prediction method and device for industrial equipment based on LSTM recurrent neural network

Active · CN109814527A · Achieving long-term forecasts · Addressing Insufficient Prediction Accuracy · Electric testing/monitoring · Neural architectures · Data set · Confidence interval

The invention discloses a fault prediction method and device for industrial equipment based on an LSTM recurrent neural network. The method comprises the following steps: acquiring a state monitoring data set from a plurality of sensors around the target equipment, wherein the state monitoring data set comprises monitoring data from time 0 to the current time; selecting prediction features containing preset fault information from the state monitoring data set using a feature selection criterion, wherein the criterion comprises a correlation index and a monotonicity index; performing feature conversion on the prediction features to obtain a prediction feature vector; and performing single-step fault prediction, long-term fault prediction and residual life prediction on the target equipment according to the prediction feature vector and a fault prediction network model. The method avoids the loss of prediction precision caused by an unreasonable preset fault threshold, gives a confidence interval for single-step performance prediction, and achieves long-term prediction of the performance and residual service life of the equipment.

Owner:TSINGHUA UNIV
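The abstract names a correlation index and a monotonicity index but does not define them. The sketch below uses definitions common in prognostics work (absolute Pearson correlation with time, and the normalized difference between the counts of increases and decreases) and combines them with an equal-weight average. The definitions, the weighting, the threshold, and the sensor names are all assumptions for illustration.

```python
def monotonicity(series):
    """|#increases - #decreases| / (n - 1); 1.0 means strictly monotonic."""
    diffs = [b - a for a, b in zip(series, series[1:])]
    pos = sum(d > 0 for d in diffs)
    neg = sum(d < 0 for d in diffs)
    return abs(pos - neg) / len(diffs)

def time_correlation(series):
    """Absolute Pearson correlation between the series and its time index."""
    n = len(series)
    t_mean = (n - 1) / 2
    x_mean = sum(series) / n
    cov = sum((t - t_mean) * (x - x_mean) for t, x in enumerate(series))
    var_t = sum((t - t_mean) ** 2 for t in range(n))
    var_x = sum((x - x_mean) ** 2 for x in series)
    if var_x == 0:
        return 0.0  # a constant signal carries no trend information
    return abs(cov) / (var_t * var_x) ** 0.5

def select_features(signals, threshold=0.5, weight=0.5):
    """Keep signals whose weighted score reaches the threshold (assumed rule)."""
    scores = {
        name: weight * time_correlation(s) + (1 - weight) * monotonicity(s)
        for name, s in signals.items()
    }
    selected = [name for name, score in scores.items() if score >= threshold]
    return selected, scores

signals = {
    "bearing_temp": [20, 21, 23, 24, 27, 30],   # drifts upward as the fault develops
    "ambient_noise": [5, 3, 6, 2, 5, 4],        # no consistent trend
}
selected, scores = select_features(signals)
print(selected, scores)
```

The selected signals would then go through feature conversion and into the LSTM model, which is outside the scope of this sketch.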

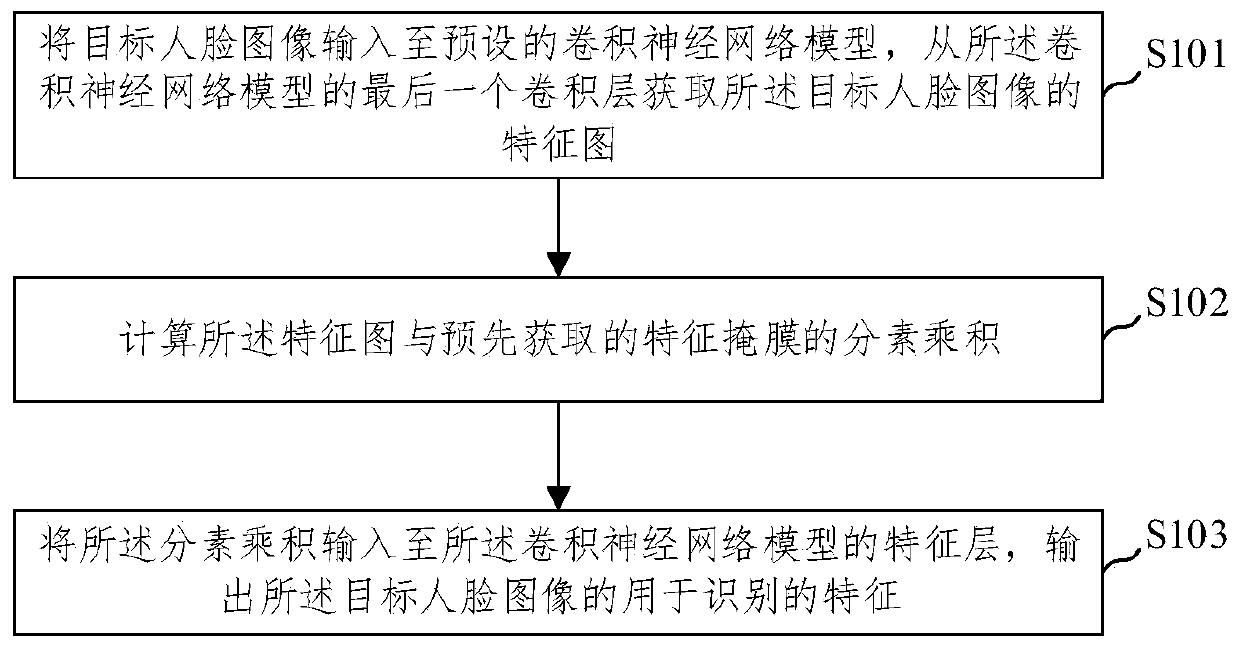

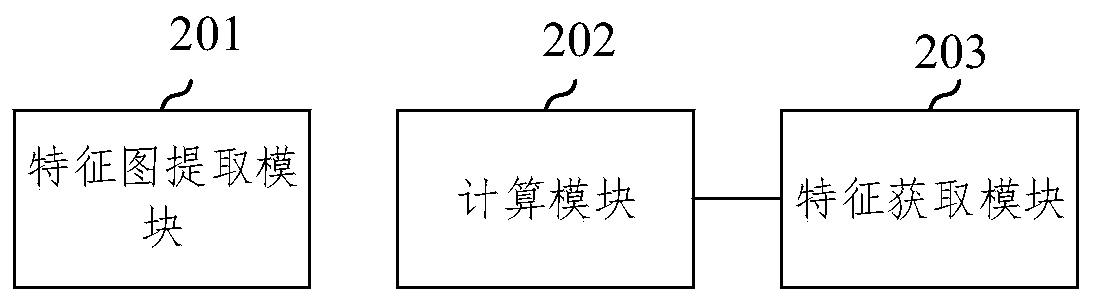

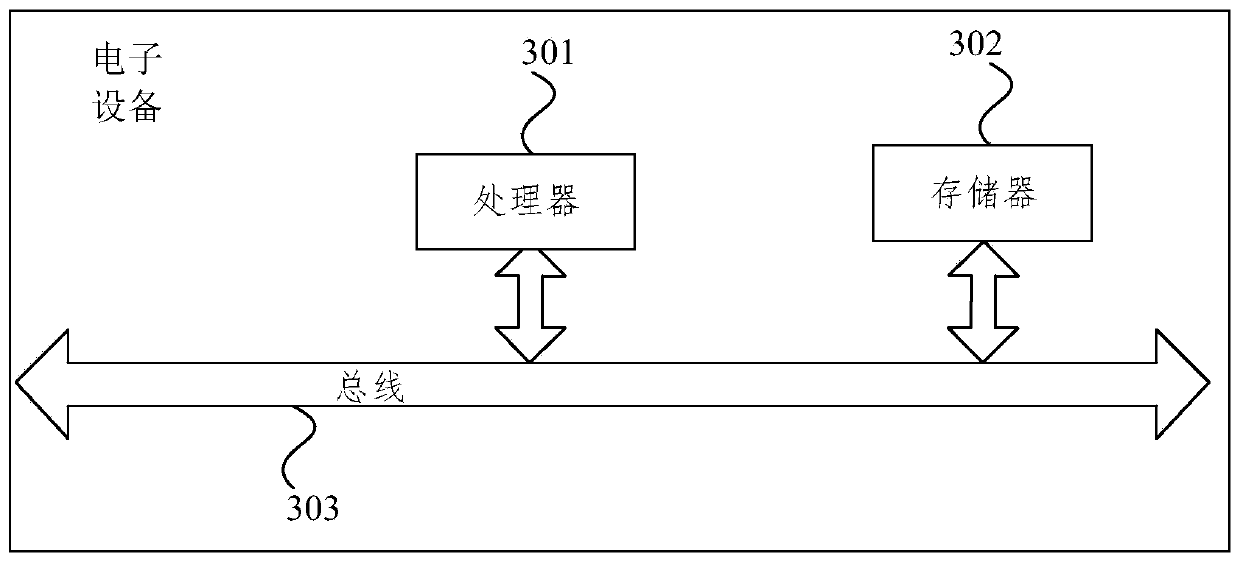

An occluded face recognition method and device based on feature transformation

Active · CN109840477A · Improve recognition accuracy · Simple structure · Character and pattern recognition · Neural architectures · Characteristic response · Network structure

The embodiment of the invention provides an occluded face recognition method and device based on feature transformation. The method comprises the steps of: inputting a target face image into a preset convolutional neural network model and obtaining a feature map of the target face image from the last convolutional layer of the model; calculating a sub-pixel product of the feature map and a pre-obtained feature mask; and inputting the sub-pixel product into a feature layer of the convolutional neural network model to output a feature used for identifying the target face image. By applying a feature mask to images of occluded faces, the feature transformation discards the feature responses of facial areas that are commonly occluded. Engineering implementation is easier, calculation time is shorter, the network structure is simpler, and recognition precision for occluded faces is improved.

Owner:苏州飞搜科技有限公司
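The masking step can be sketched in a few lines. The sketch reads the abstract's "product of the feature map and a pre-obtained feature mask" as an element-wise multiplication, with a single height-by-width mask shared across channels; both readings are assumptions, and the function name and toy values are hypothetical.

```python
def apply_feature_mask(feature_map, mask):
    """Multiply each channel of feature_map (C x H x W nested lists)
    element-wise by one H x W mask shared across channels."""
    return [
        [[v * m for v, m in zip(f_row, m_row)] for f_row, m_row in zip(channel, mask)]
        for channel in feature_map
    ]

# Two 2x2 channels taken, hypothetically, from the last convolutional layer.
feature_map = [
    [[0.5, 1.0], [2.0, 3.0]],
    [[1.5, 0.0], [4.0, 1.0]],
]
# The mask zeroes the lower row, standing in for a commonly occluded lower face area.
mask = [[1.0, 1.0], [0.0, 0.0]]

masked = apply_feature_mask(feature_map, mask)
print(masked)
```

The masked map would then be passed to the network's feature layer in place of the original feature map.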

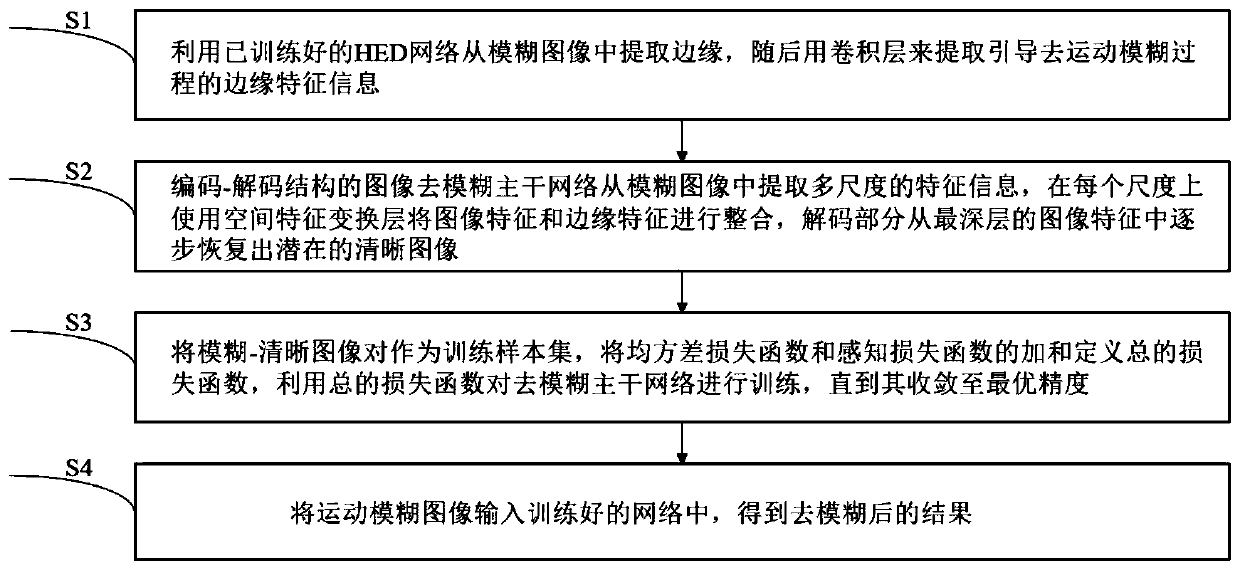

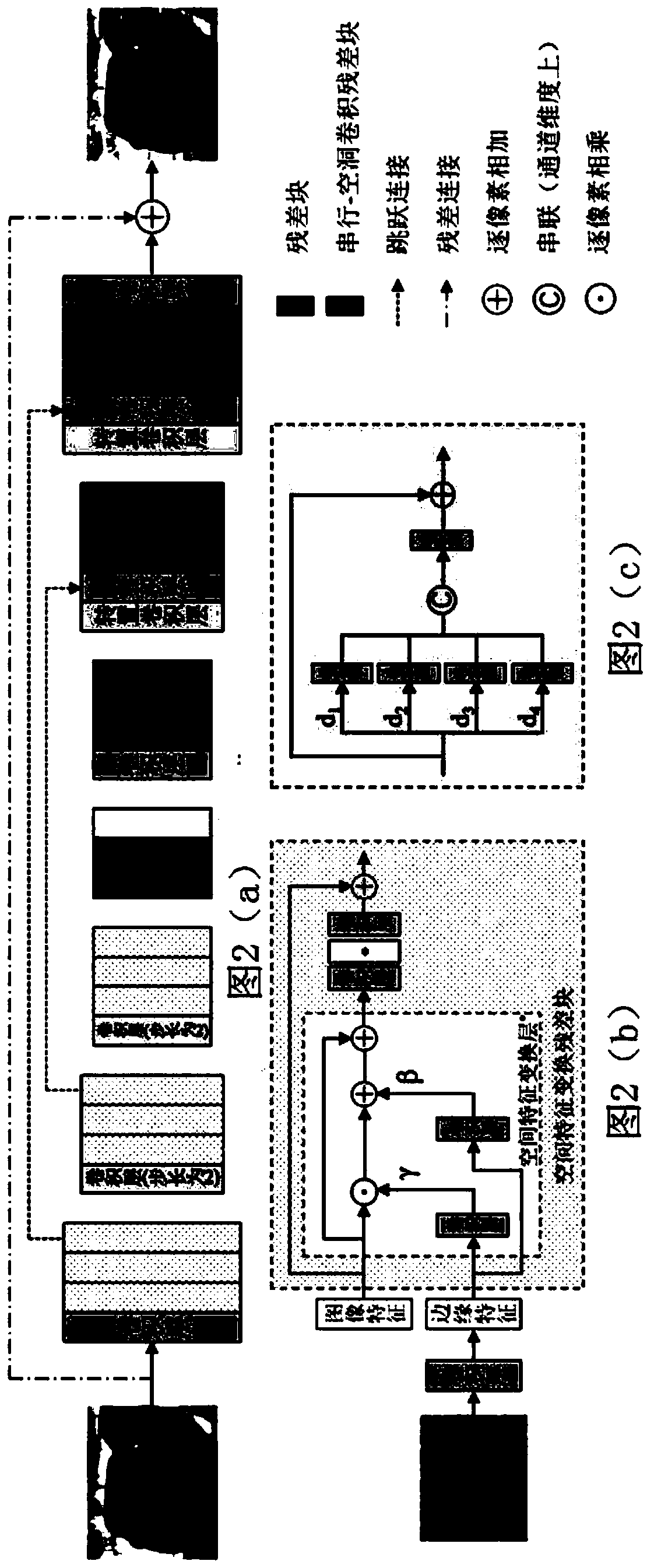

Edge-based deep learning image motion blur removing method

Active · CN111028177A · Improve generalization ability · Simple structure · Image enhancement · Image analysis · Deblurring · Imaging Feature

The invention relates to image restoration technology, in particular to an edge-based deep learning method for removing image motion blur, which comprises the following steps: extracting edges from a blurred image using a trained HED network, then extracting edge feature information with a convolution layer to guide the motion blur removal process; extracting multi-scale feature information from the blurred image with a deblurring backbone network, integrating image features and edge features at each scale using a spatial feature transformation layer, with a decoding part gradually recovering a latent sharp image from the deepest image features; taking blurred/sharp image pairs as the training sample set, defining a total loss function as the sum of a mean square error loss function and a perceptual loss function, and training the deblurring backbone network with the total loss function until it converges to optimal precision; and inputting the motion-blurred image into the trained deblurring backbone network to obtain a deblurred result. The method effectively integrates image features and edge features, and the deblurring effect is remarkable.

Owner:WUHAN UNIV
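Two pieces of this abstract lend themselves to a small sketch: the spatial feature transformation layer and the summed loss. The sketch assumes the affine form often used for such layers (a per-position scale and shift, which in a real network would be predicted from the edge features by small convolution layers) and uses a toy stand-in for the pretrained network behind the perceptual loss. The function names, the affine form, and the stand-in feature extractor are assumptions, not details from the patent.

```python
def sft_modulate(image_feat, gamma, beta):
    """Assumed spatial feature transform: out = gamma * feat + beta, element-wise.
    gamma and beta stand for maps derived from the edge features."""
    return [
        [g * f + b for f, g, b in zip(f_row, g_row, b_row)]
        for f_row, g_row, b_row in zip(image_feat, gamma, beta)
    ]

def mse(a, b):
    """Mean square error between two 2D arrays given as nested lists."""
    sq = [(x - y) ** 2 for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b)]
    return sum(sq) / len(sq)

def total_loss(pred, target, feature_fn):
    # Sum of pixel-space MSE and a perceptual term measured in feature space.
    return mse(pred, target) + mse(feature_fn(pred), feature_fn(target))

def horizontal_gradient(img):
    # Hypothetical stand-in for a pretrained feature extractor.
    return [[b - a for a, b in zip(row, row[1:])] for row in img]

fused = sft_modulate([[1.0, 2.0]], gamma=[[2.0, 0.5]], beta=[[0.0, 1.0]])
loss = total_loss([[0.0, 1.0, 2.0]], [[0.0, 1.0, 4.0]], horizontal_gradient)
print(fused, loss)
```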

2D image analyzer

Active · US10074031B2 · Collected considerably more efficient · Efficient collection · Image enhancement · Image analysis · Viewfinder · Feature transformation

Owner:FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG EV
