77 patent results for "Batch training"

Batch Training. Running algorithms that require the full data set for every update can be expensive when the data set is large. To scale inference, we can use batch training, which trains the model on only a subsample (a batch) of the data at a time.
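To make the definition concrete, here is a minimal mini-batch gradient descent sketch in plain Python. Each update uses only a shuffled subsample of the data rather than the full set. The one-variable linear model, the learning rate, the batch size, and the helper names `minibatches` and `train_linear_sgd` are illustrative assumptions, not taken from any of the patents below.

```python
import random

def minibatches(data, batch_size, rng):
    """Yield shuffled, non-overlapping subsamples (mini-batches) of `data`."""
    indices = list(range(len(data)))
    rng.shuffle(indices)
    for start in range(0, len(indices), batch_size):
        yield [data[i] for i in indices[start:start + batch_size]]

def train_linear_sgd(data, batch_size=8, lr=0.1, epochs=200, seed=0):
    """Fit y ~ w*x + b by mini-batch gradient descent on mean squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for batch in minibatches(data, batch_size, rng):
            n = len(batch)
            # Gradient of the mean squared error, estimated on this batch only.
            grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / n
            grad_b = sum(2 * (w * x + b - y) for x, y in batch) / n
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b

# Synthetic, noise-free data from y = 2x + 1 with x in [0, 1).
data = [(i / 100, 2 * (i / 100) + 1) for i in range(100)]
w, b = train_linear_sgd(data)
```

Each epoch makes about `len(data) / batch_size` cheap updates instead of one expensive full-data update, which is the scaling argument made above.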

Supervised snore source identifying method

Active | CN106821337A | Few weight parameters | The recognition result is accurate | Diagnostic recording/measuring | Sensors | Batch training | Frequency spectrum

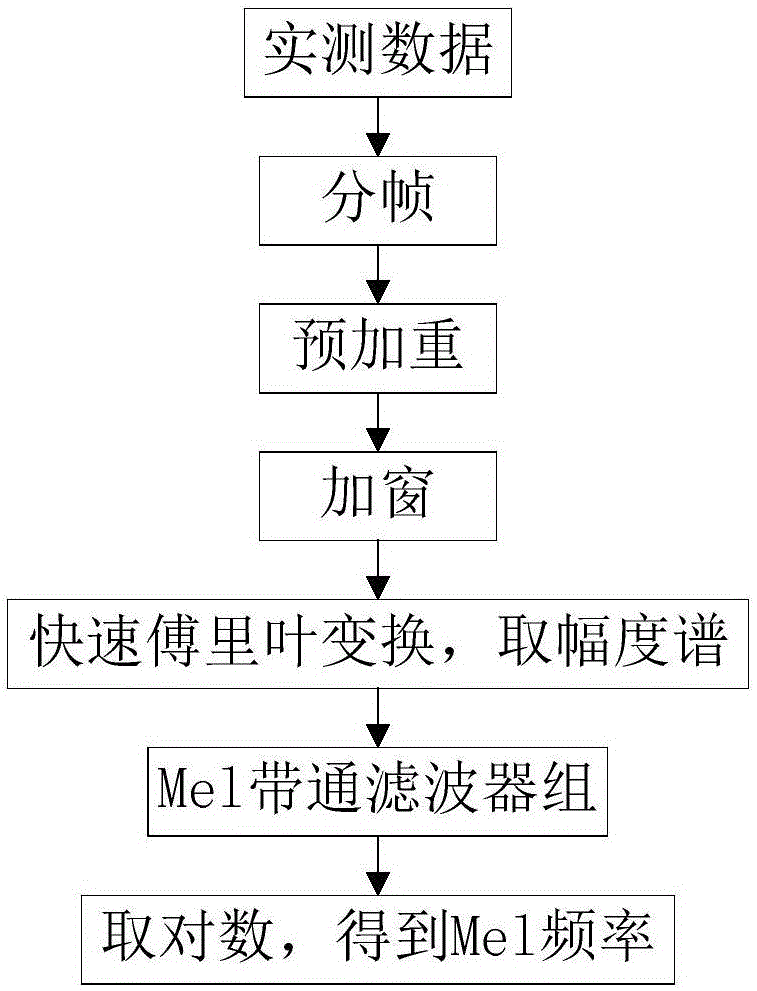

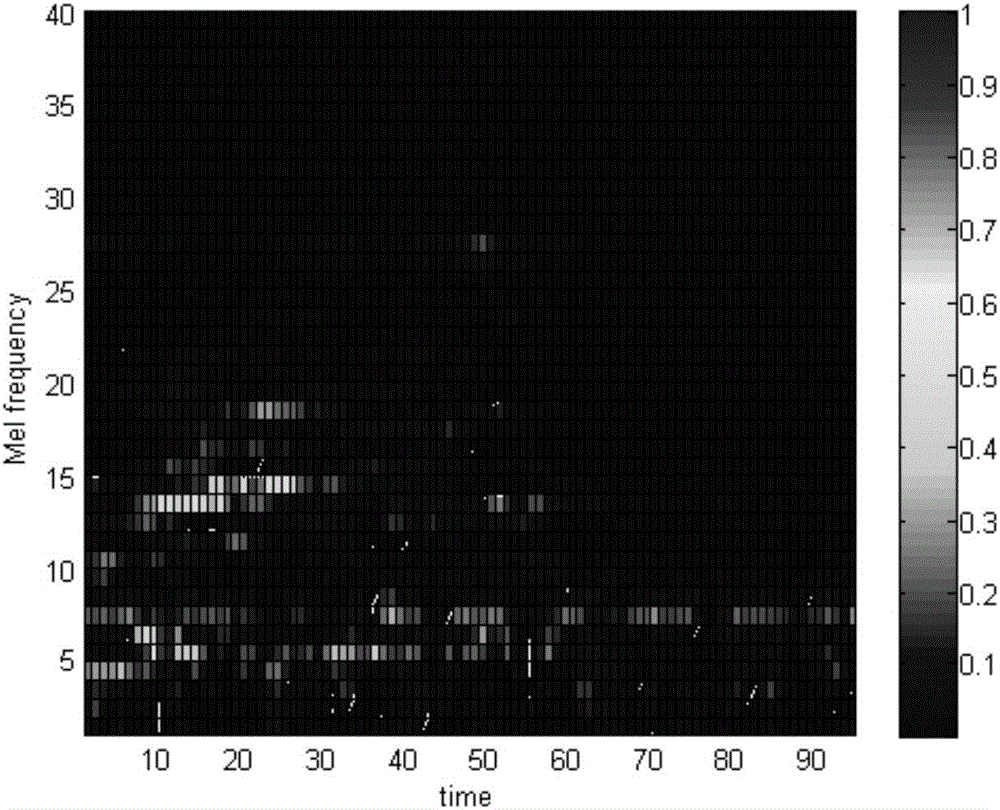

The invention discloses a supervised snore source identification method. The method includes data preprocessing, training, and identification. First, Mel-frequency conversion is performed on measured snore data to obtain data samples. Second, the structure of a convolutional neural network (CNN) is configured: the number of output feature maps and the kernel size of each convolutional layer, the pooling size, the learning rate for weight updates, the number of samples per training batch, and the number of training iterations. Third, snore time-frequency spectrograms from the training set are used as the CNN input; the configured network is initialized, and training proceeds through forward propagation, backward error propagation, and weight and bias updates until the specified number of iterations is reached. Finally, the test set is fed to the trained network model to obtain the identification results. The method can effectively identify the snore source, with accurate identification results and good performance.

Owner:NANJING UNIV OF SCI & TECH
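The Mel-frequency conversion step in the snore-identification abstract above maps frequencies onto a perceptual scale before spectrograms are built. The abstract does not say which Mel formula is used; the sketch below assumes the common O'Shaughnessy form m = 2595 log10(1 + f/700), and the band count and frequency range in the example are illustrative.

```python
import math

def hz_to_mel(f_hz):
    """Convert a frequency in Hz to mels (O'Shaughnessy formula, an assumption here)."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)

def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)

def mel_band_edges(f_min, f_max, n_bands):
    """Return n_bands + 2 edge frequencies in Hz, evenly spaced on the Mel scale,
    as typically used to place triangular Mel filters over a spectrum."""
    m_lo, m_hi = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (m_hi - m_lo) / (n_bands + 1)
    return [mel_to_hz(m_lo + i * step) for i in range(n_bands + 2)]

# Hypothetical setup: 40 Mel bands covering 0-8000 Hz.
edges = mel_band_edges(0.0, 8000.0, 40)
```

The edges are dense at low frequencies and sparse at high ones, which is why Mel spectrograms emphasize the low-frequency content where most snore energy is expected to lie.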

Machine learning-based system and learning method

Inactive | CN107844836A | Reduce complex mathematics knowledge requirements | Auxiliary productivity | Forecasting | Character and pattern recognition | Batch processing | Study methods

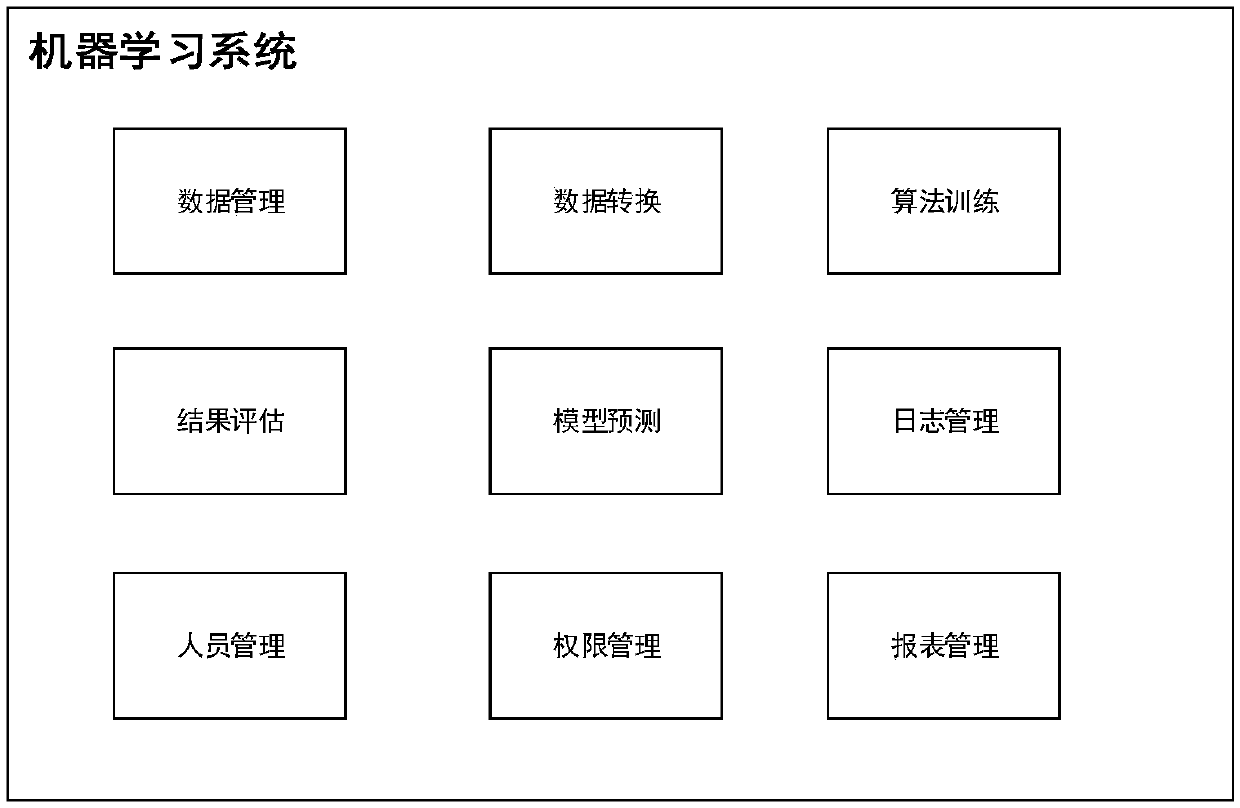

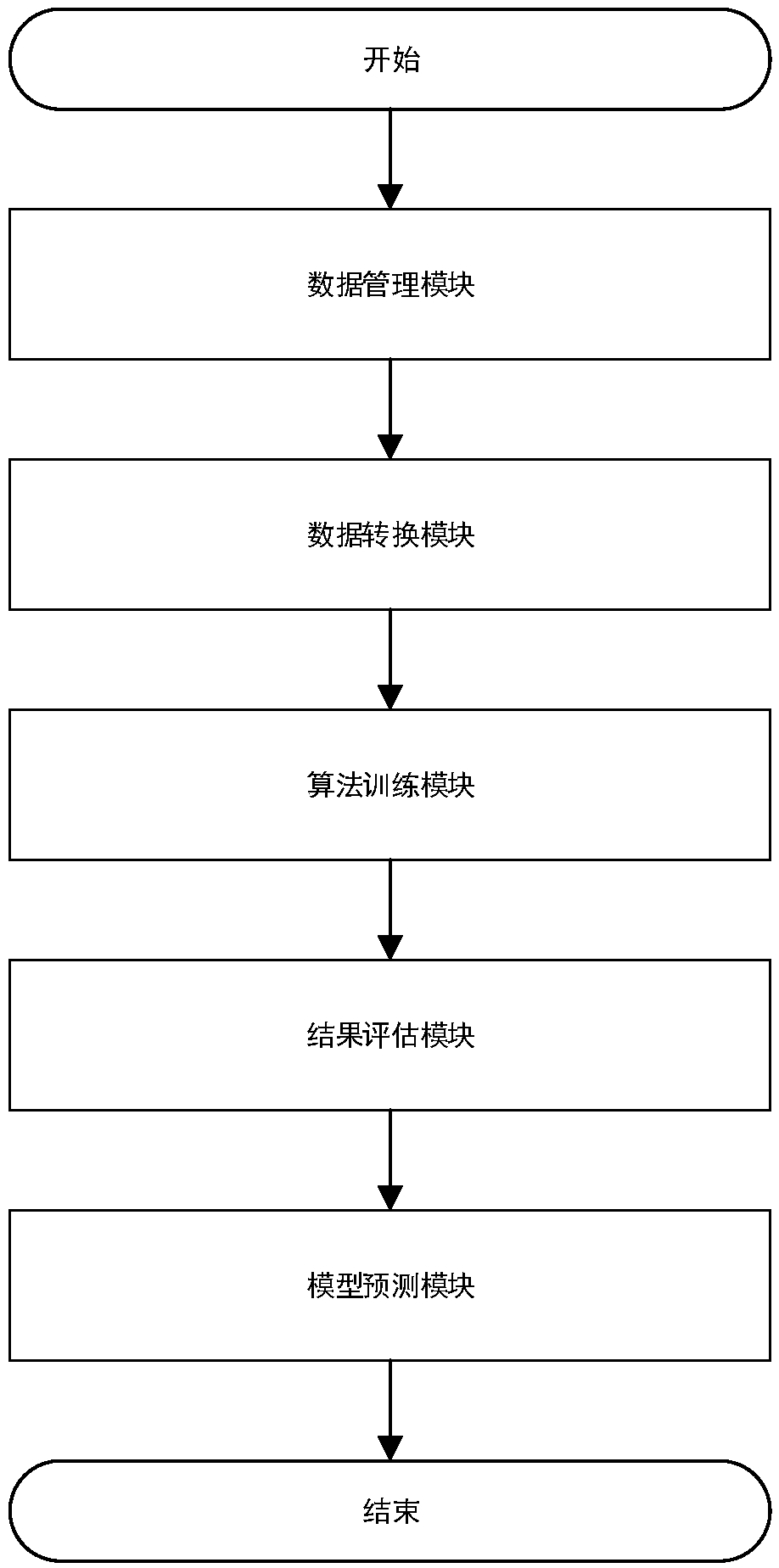

The invention discloses a machine learning-based system and learning method. The system includes a data management module, a data conversion module, an algorithm training module, a result evaluation module, and a model prediction module. In the learning method, the data management module automatically determines the data type of a data source uploaded by a user and preprocesses the data; the user labels the data; the data conversion module feeds the preprocessed data into a set of feature conversion algorithms to obtain feature-converted data; the algorithm training module automatically feeds the feature-converted data into classification, clustering, or regression algorithms for batch training, producing a batch of classification, clustering, or regression models; the result evaluation module evaluates the standard metrics of these models to select an optimal model; and the model prediction module applies the optimal model to the latest data source. The system performs batched data processing and algorithm training automatically, without manual intervention in the intermediate steps, which lowers the barrier to use and can improve efficiency.

Owner:SUNYARD SYST ENG CO LTD
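The train-many, evaluate, keep-the-best loop in the system abstract above can be sketched as follows. The abstract does not list its algorithm set or metrics, so the two toy classifiers (`majority_class`, `nearest_centroid`), the accuracy metric, and the tiny data set are all hypothetical stand-ins.

```python
from statistics import mean

def majority_class(train):
    """Baseline classifier: always predict the most frequent training label."""
    labels = [y for _, y in train]
    top = max(set(labels), key=labels.count)
    return lambda x: top

def nearest_centroid(train):
    """Predict the label whose mean feature vector is closest (squared Euclidean)."""
    centroids = {}
    for label in {y for _, y in train}:
        rows = [x for x, y in train if y == label]
        centroids[label] = [mean(col) for col in zip(*rows)]
    def predict(x):
        return min(centroids,
                   key=lambda c: sum((a - b) ** 2 for a, b in zip(x, centroids[c])))
    return predict

def accuracy(model, data):
    return sum(model(x) == y for x, y in data) / len(data)

def select_best(trainers, train, valid):
    """Train every candidate in one batch, score each on validation data,
    and return (name, model, score) for the best one."""
    scored = []
    for name, trainer in trainers.items():
        model = trainer(train)
        scored.append((accuracy(model, valid), name, model))
    score, name, model = max(scored, key=lambda t: t[0])
    return name, model, score

train = [([0.0, 0.0], "a"), ([0.1, 0.2], "a"), ([0.2, 0.1], "a"),
         ([1.0, 1.0], "b"), ([0.9, 1.1], "b")]
valid = [([0.05, 0.1], "a"), ([1.05, 0.95], "b")]
name, best, score = select_best(
    {"majority_class": majority_class, "nearest_centroid": nearest_centroid},
    train, valid)
```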

A multi-fault diagnosis method for complex equipment based on DNN

Active | CN109034368A | Efficient identification | Effectively identify the root cause of multiple faults | Neural architectures | Neural learning methods | Batch training | Data set

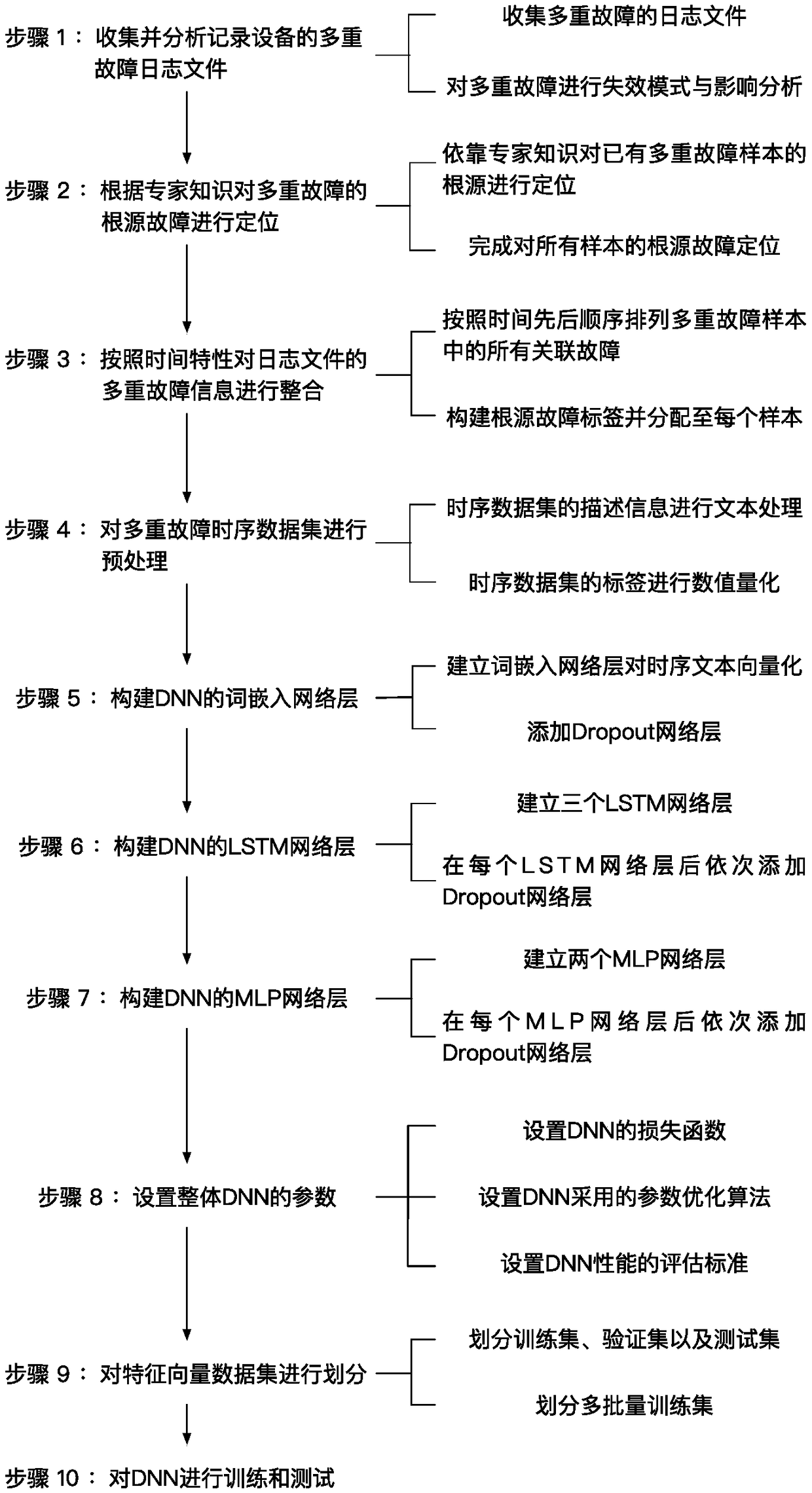

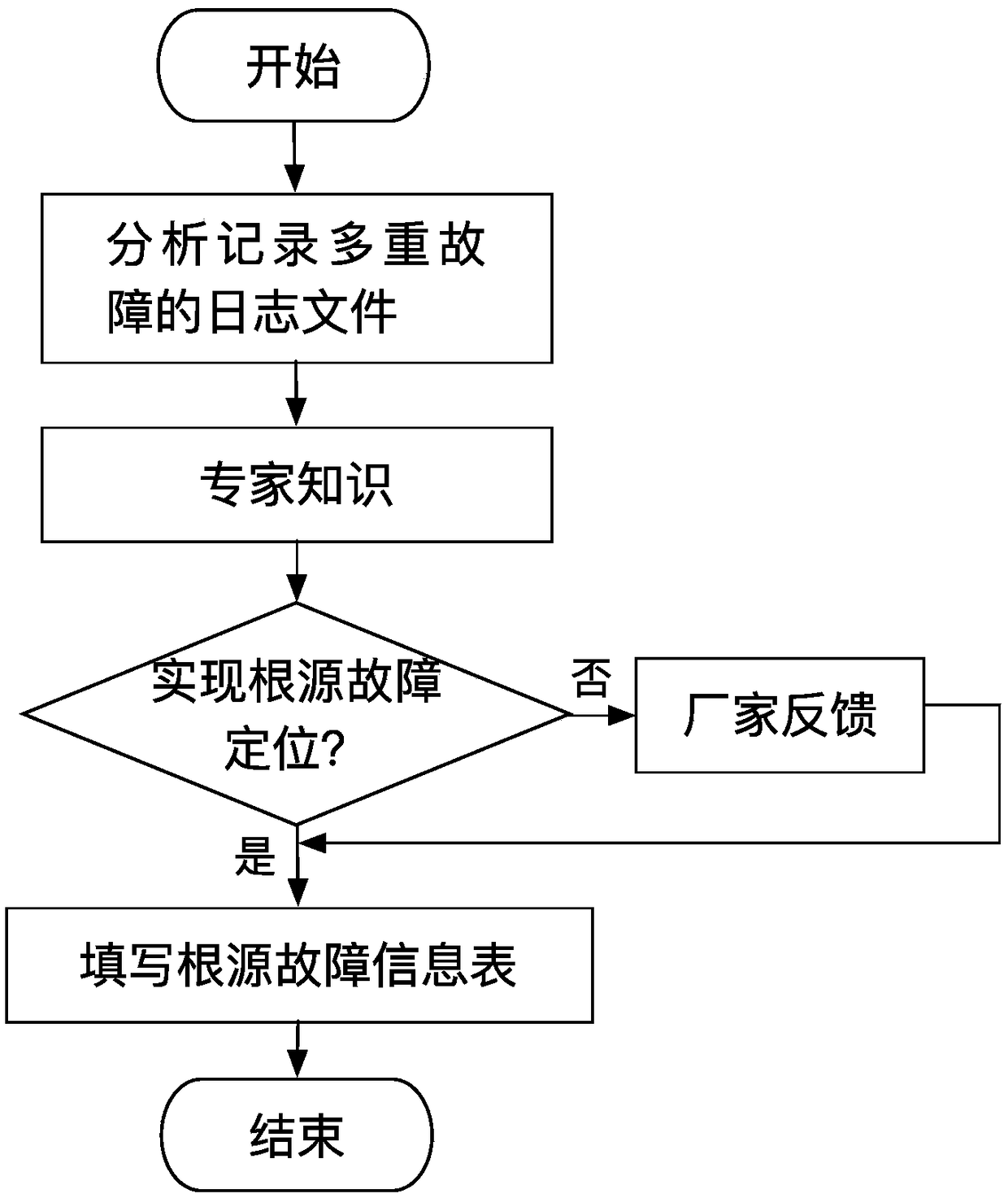

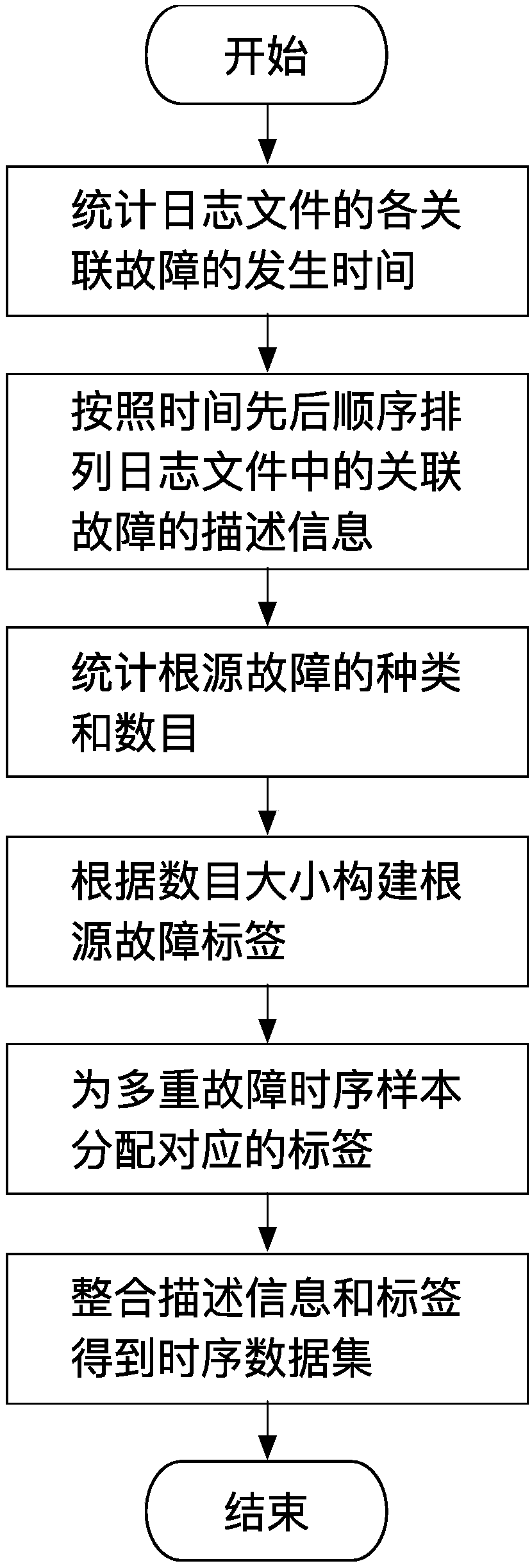

The invention provides a DNN-based method for diagnosing multiple faults in complex equipment, comprising the following steps: (1) collecting multiple-fault logs of the equipment, and counting and summarizing the fault information; (2) locating the root causes of multiple faults using expert knowledge; (3) integrating the log information by time to obtain a time-series data set; (4) preprocessing the time-series data set; (5) numerically encoding the data set, using word embedding as the first layer of the DNN, and adding Dropout after that layer; (6) building an LSTM layer; (7) building an MLP layer; (8) setting the DNN learning parameters; (9) partitioning the data set; and (10) training and testing the DNN on the partitioned data sets. The invention processes the multiple-fault logs into a sequential data set and builds a DNN consisting of a word-embedding layer, an LSTM layer, and an MLP layer. After the data set is partitioned, the DNN is trained in batches on the training and validation sets, and its accuracy in identifying the root causes of faults is evaluated on the test set.

Owner:BEIHANG UNIV
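Steps (9) and (10) of the DNN fault-diagnosis abstract above partition the data and then train in batches. A minimal sketch of that partitioning, assuming a 70/15/15 split and a batch size of 16 (neither is given in the abstract):

```python
import random

def split_dataset(samples, train_pct=70, valid_pct=15, seed=42):
    """Shuffle and split samples into training, validation and test subsets.
    Percentages are integers to avoid floating-point rounding in the split sizes."""
    items = list(samples)
    random.Random(seed).shuffle(items)
    n_train = len(items) * train_pct // 100
    n_valid = len(items) * valid_pct // 100
    train = items[:n_train]
    valid = items[n_train:n_train + n_valid]
    test = items[n_train + n_valid:]  # whatever remains is held out for testing
    return train, valid, test

def batches(samples, batch_size):
    """Cut a list into consecutive mini-batches (the last one may be shorter)."""
    return [samples[i:i + batch_size] for i in range(0, len(samples), batch_size)]

train, valid, test = split_dataset(range(100))
train_batches = batches(train, 16)
```

Shuffling before the split keeps time-ordered logs from putting all late faults into the test set; whether that is appropriate for a given fault log is a judgment call the abstract does not address.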

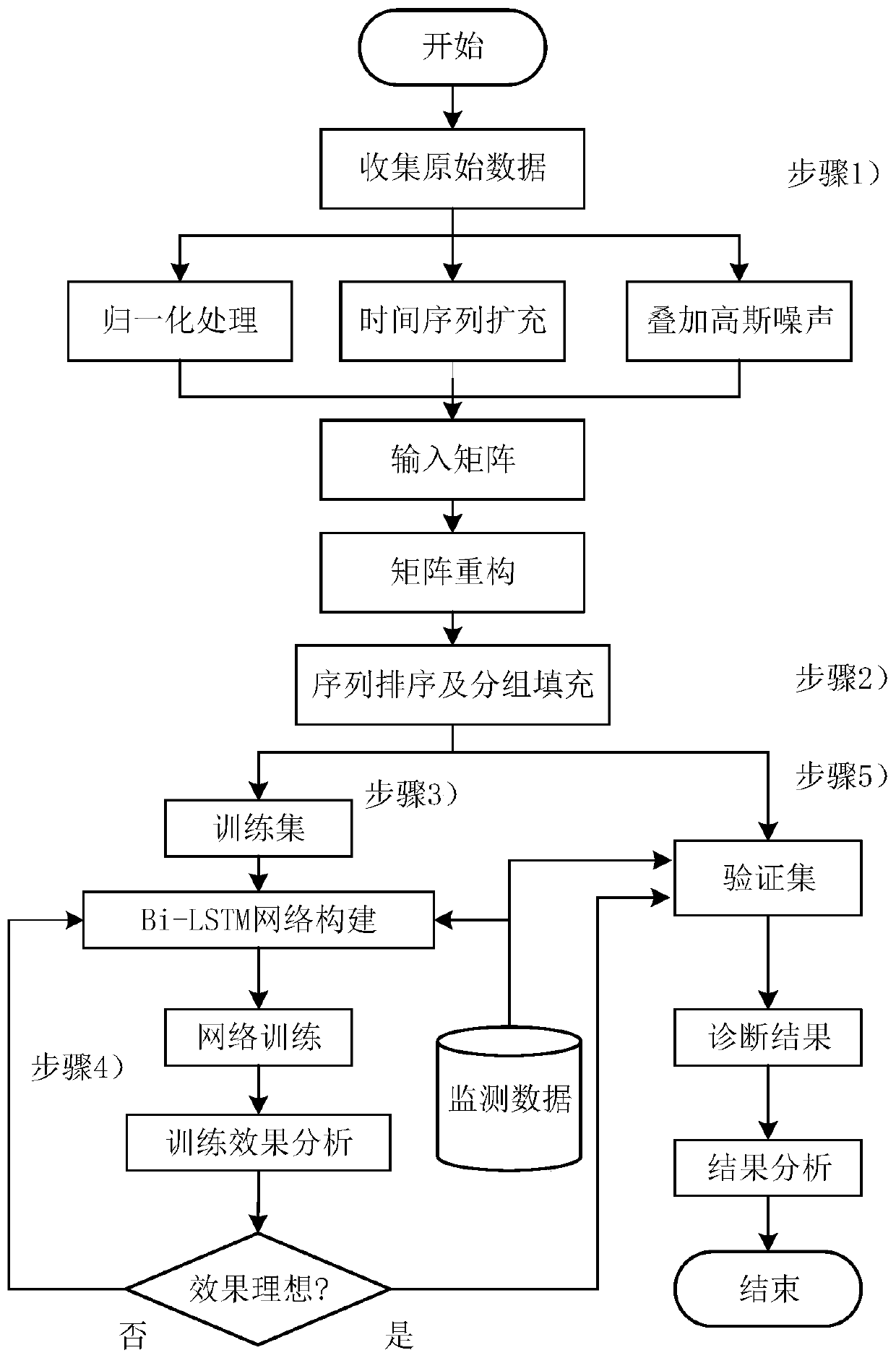

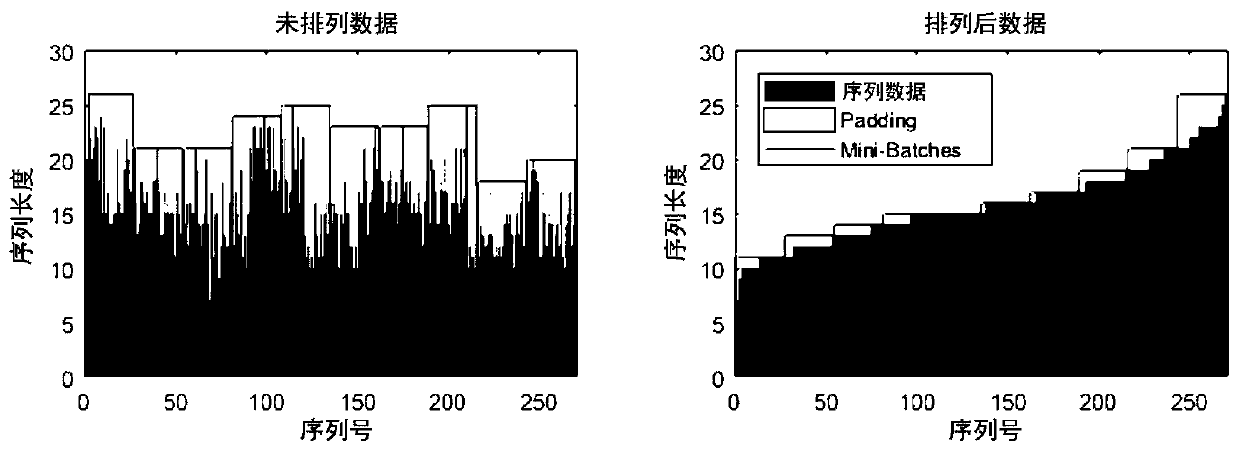

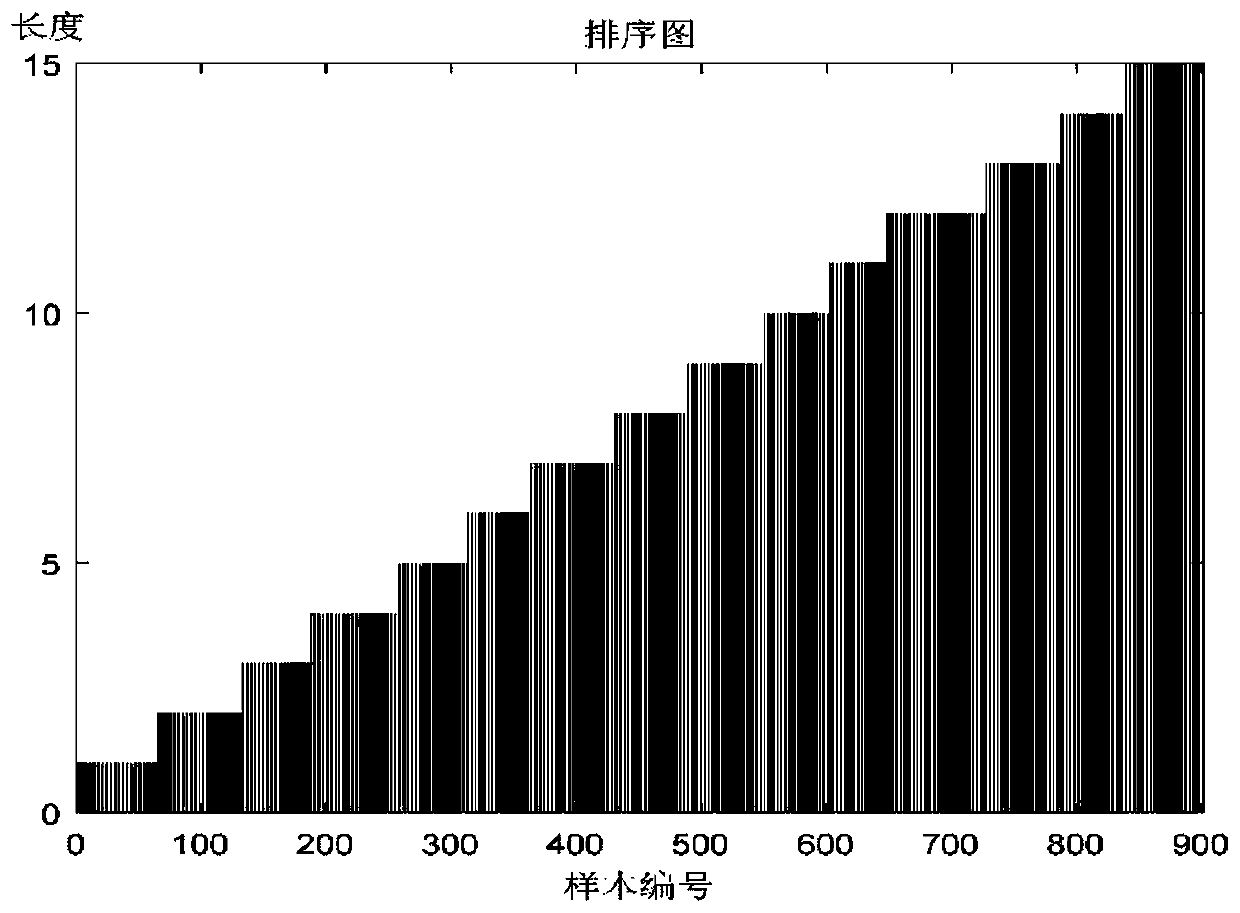

Transformer fault diagnosis method based on Bi-LSTM and analysis of dissolved gas in oil

Active | CN110501585A | Improve accuracy | Improve portability | Electrical testing | Neural architectures | Frame based | Algorithm

The invention discloses a transformer fault diagnosis method based on Bi-LSTM and dissolved gas analysis (DGA) of transformer oil. The method comprises: collecting fault DGA monitoring data from each substation; normalizing the data, expanding the sequences, superimposing noise, and so on; and extracting fault feature information using a non-coding ratio method. The DGA sequences are then sorted by length, grouped, and padded, and the groups are divided into a training set and a validation set. A Bi-LSTM deep learning framework is constructed and trained on the input data, after which diagnosis and network updates are carried out with actual test data to obtain a fault diagnosis model with high diagnostic accuracy and portability. The invention effectively reduces the influence of noise and errors in DGA monitoring on diagnosis, and the Bi-LSTM model accounts for the complex correlations between different sequences. By introducing sequence sorting, grouping, and padding, the batch training strategy solves the problem of different transformers having different sampling lengths in practical engineering.

Owner:WUHAN UNIV
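The sort-group-pad idea in the Bi-LSTM abstract above lets sequences of different lengths share a batch with little wasted padding. A minimal sketch, assuming zero padding and a batch size of 2 for the example; keeping the true lengths would let a recurrent layer ignore padded steps, though the abstract does not say how padding is handled downstream.

```python
def bucket_and_pad(sequences, batch_size, pad_value=0.0):
    """Sort sequences by length, group neighbours into batches, and pad each batch
    to the length of its longest member. Returns (padded_batch, lengths) pairs."""
    order = sorted(range(len(sequences)), key=lambda i: len(sequences[i]))
    result = []
    for start in range(0, len(order), batch_size):
        group = [sequences[i] for i in order[start:start + batch_size]]
        max_len = max(len(s) for s in group)
        padded = [list(s) + [pad_value] * (max_len - len(s)) for s in group]
        result.append((padded, [len(s) for s in group]))
    return result

# Hypothetical DGA-like sequences of unequal sampling length.
seqs = [[1.0, 2.0, 3.0], [4.0], [5.0, 6.0], [7.0, 8.0, 9.0, 10.0]]
result = bucket_and_pad(seqs, batch_size=2)
```

Because similar lengths end up together, each batch is padded only to its own maximum rather than to the longest sequence in the whole data set.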

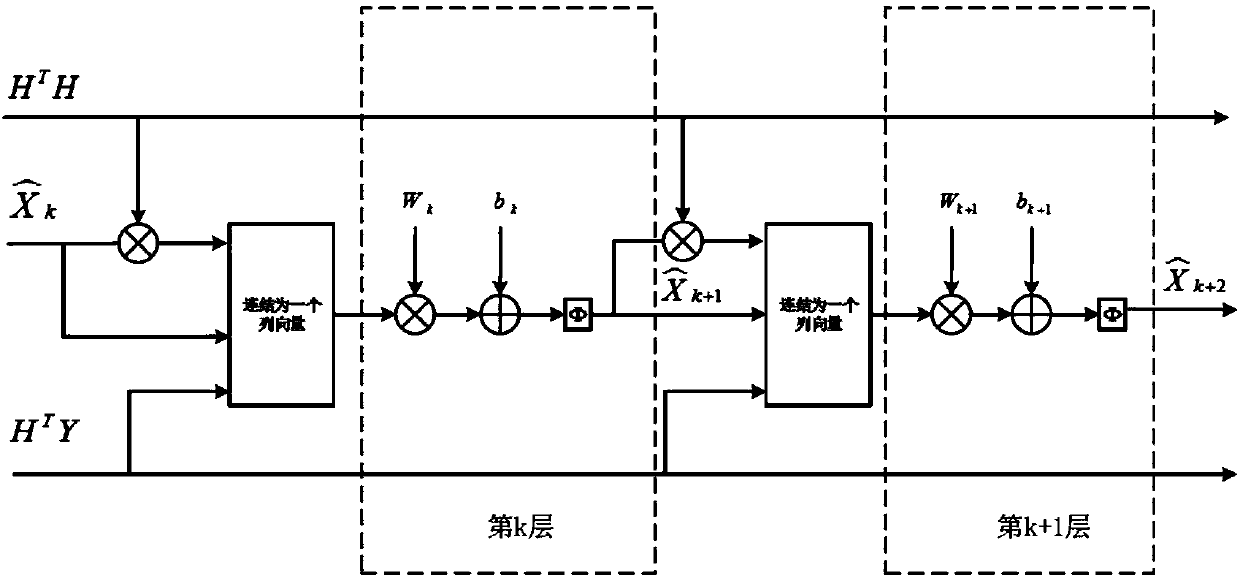

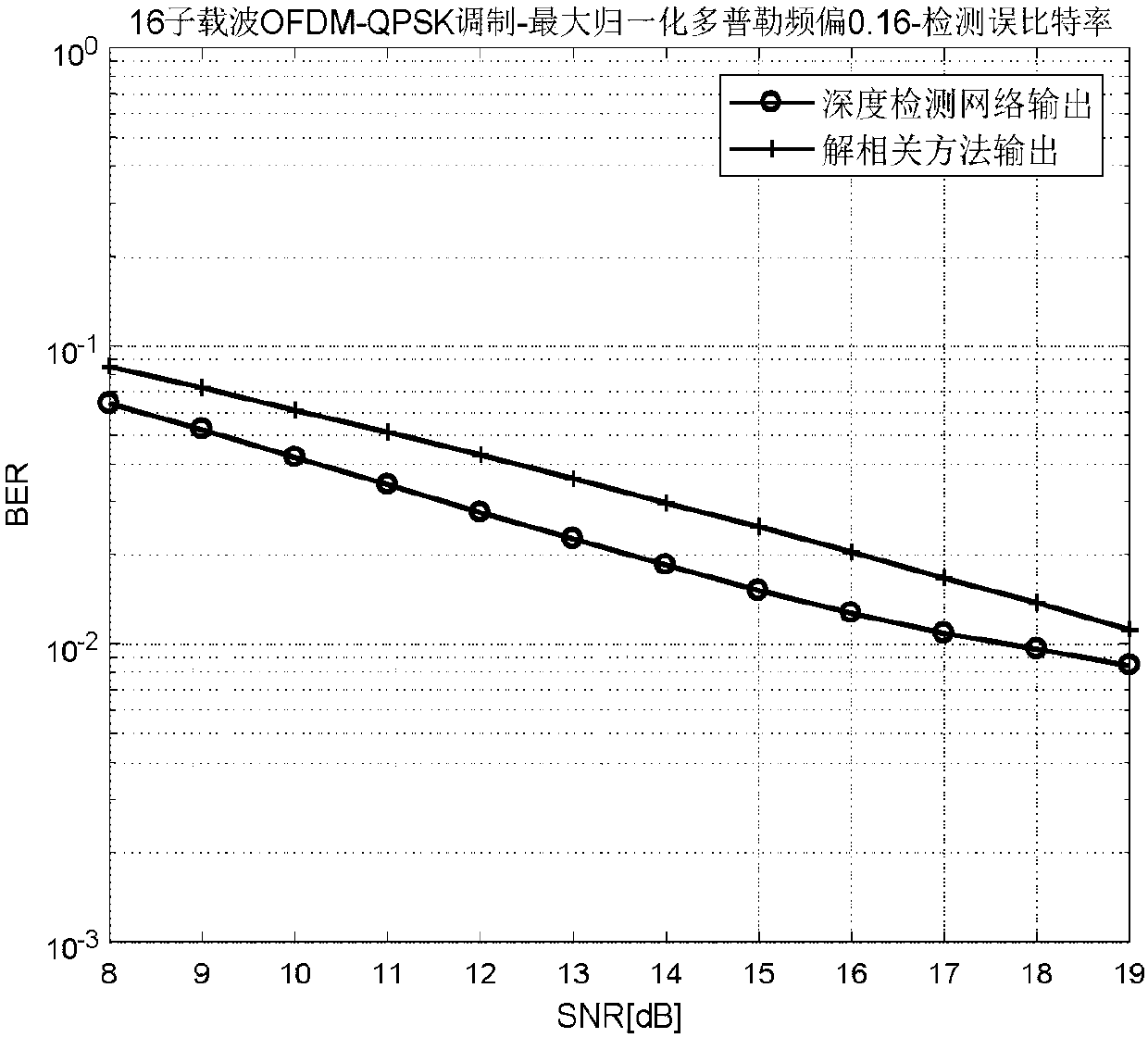

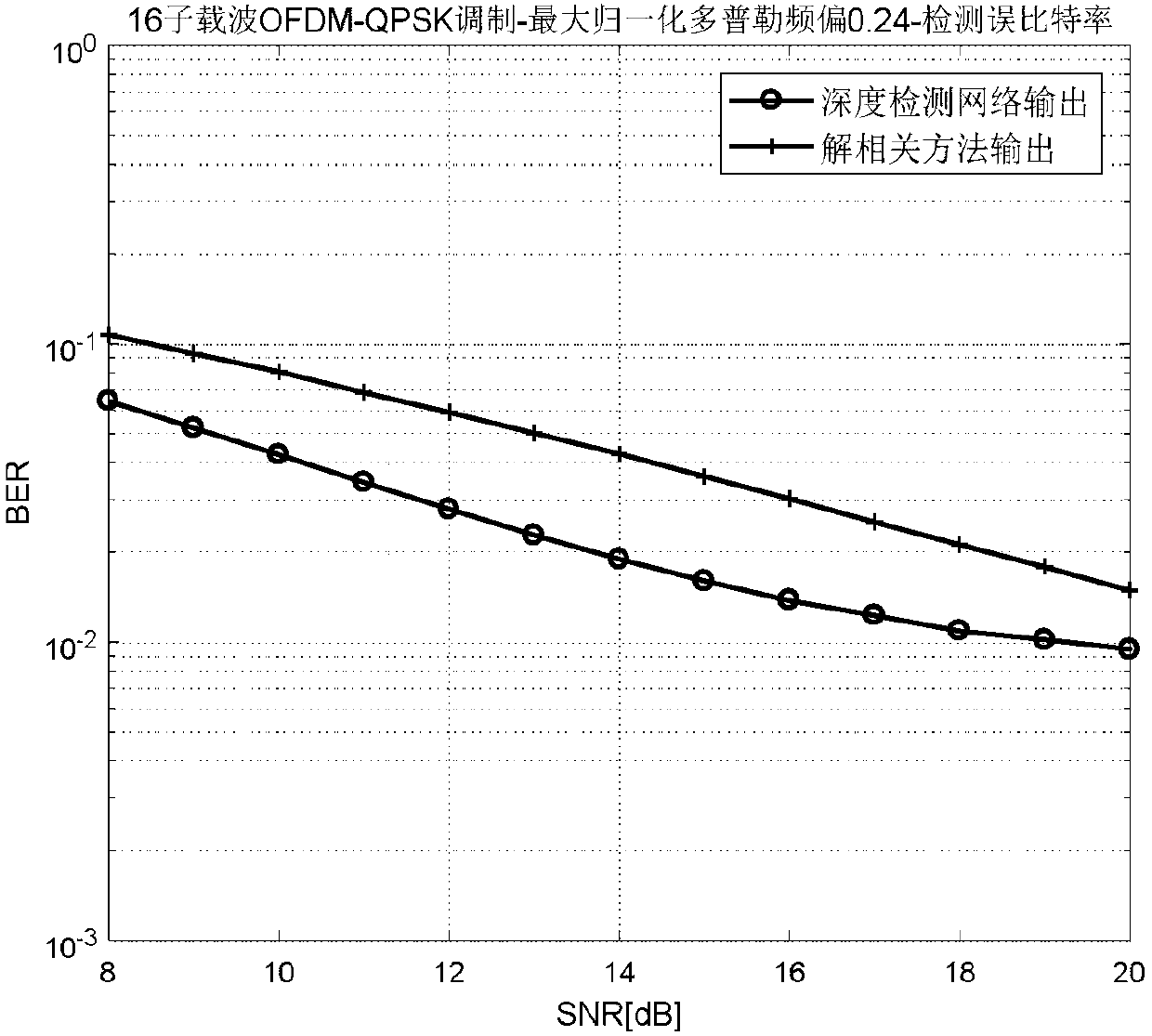

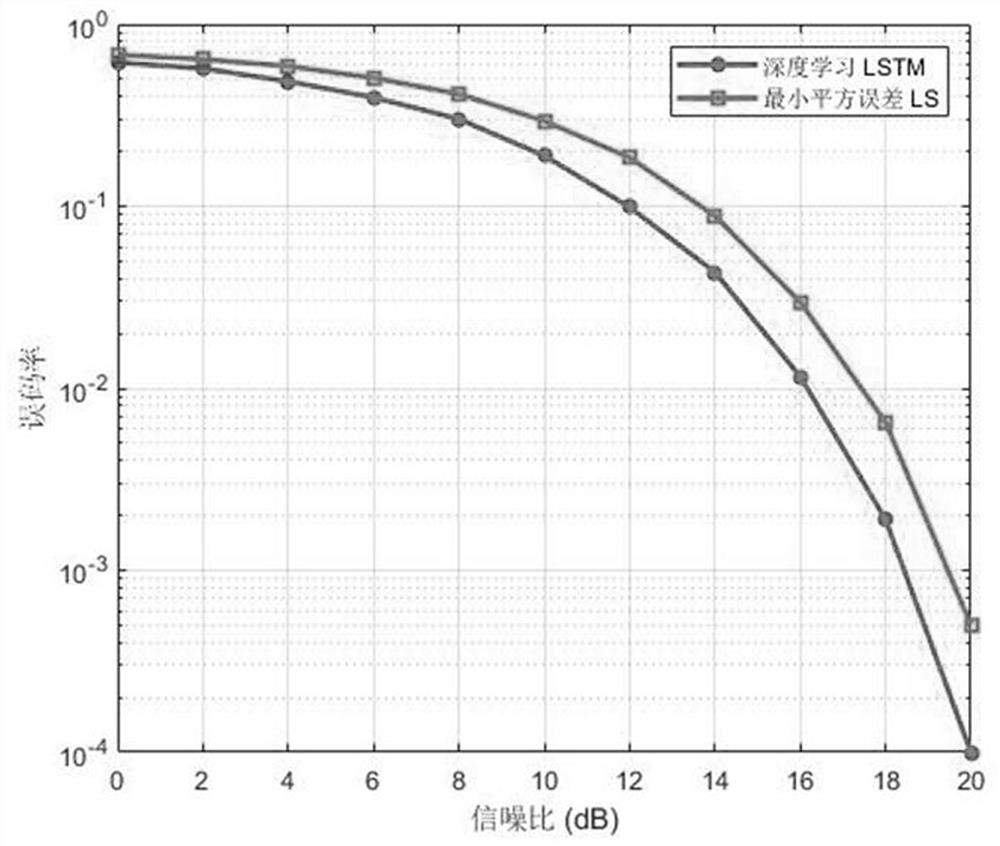

Inter-carrier interference resistant OFDM detection method based on deep learning

Active | CN108540419A | Solve demodulation problems | Improve performance | Phase-modulated carrier systems | Multi-frequency code systems | Phase noise | Carrier signal

The invention discloses a deep learning-based OFDM detection method that resists inter-carrier interference (ICI). It can be applied to high-speed mobile OFDM communication systems and to OFDM systems with relatively large carrier phase noise in the millimeter-wave band, and it effectively resists the ICI caused by Doppler frequency offset and phase noise. A deep network structure that approximates the maximum-likelihood (ML) detector is designed by deep unfolding of the projected gradient descent method. Training uses the Adam algorithm in mini-batch mode: each batch contains multiple input and output OFDM symbols and the corresponding channel matrices H, so each batch reflects how the channel changes over a period of time. During training, different types of channel information are obtained first, and deep learning is then performed on this channel information until the loss function converges to a small value. The trained deep detection network demodulates the OFDM signal, effectively improving the performance of OFDM systems affected by ICI from large Doppler frequency offsets or phase noise.

Owner:SOUTHEAST UNIV
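The OFDM abstract above trains with Adam on mini-batches. The sketch below shows only the standard Adam update rule (bias-corrected first and second moment estimates) on a toy problem, estimating a mean from mini-batches; it is not the unfolded detector itself, and the toy data, learning rate, and batch size are assumptions.

```python
import math

class Adam:
    """Adam optimizer for a list of scalar parameters (standard Kingma-Ba update)."""
    def __init__(self, n_params, lr=0.02, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = [0.0] * n_params  # first-moment (mean) estimates
        self.v = [0.0] * n_params  # second-moment (uncentred variance) estimates
        self.t = 0

    def step(self, params, grads):
        self.t += 1
        out = []
        for i, (p, g) in enumerate(zip(params, grads)):
            self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * g
            self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * g * g
            m_hat = self.m[i] / (1 - self.beta1 ** self.t)  # bias correction
            v_hat = self.v[i] / (1 - self.beta2 ** self.t)
            out.append(p - self.lr * m_hat / (math.sqrt(v_hat) + self.eps))
        return out

data = [2.5, 3.5] * 50   # toy targets whose mean is 3.0
theta = [0.0]            # single parameter: the estimate of the mean
opt = Adam(n_params=1)
for epoch in range(200):
    for start in range(0, len(data), 10):  # mini-batches of 10 samples
        batch = data[start:start + 10]
        # Gradient of the batch mean of (theta - y)^2 with respect to theta.
        grad = sum(2 * (theta[0] - y) for y in batch) / len(batch)
        theta = opt.step(theta, [grad])
```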

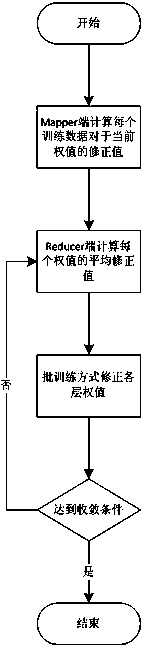

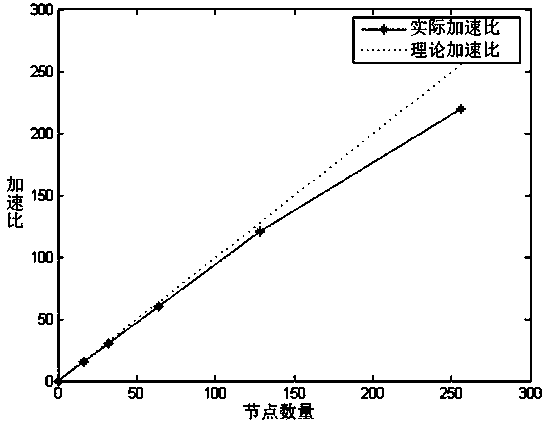

BP neural-network classification method based on Hadoop

Inactive | CN103544528A | Increase training speed | Good effect | Neural learning methods | Batch training | Network model

The invention discloses a Hadoop-based BP neural network classification method comprising the following steps. The data are preprocessed. Map tasks are started on the Mapper of every node of the Hadoop platform; each Mapper takes one training sample, uses it to compute modification values for the weights of the current network, and sends these values to the Reducers. Reduce tasks are started on the Reducer of every node; each Reducer collects all modification values for one weight and outputs their average. The weights of all layers are then modified in batch-training fashion. These steps are repeated until the error reaches the preset precision or the number of training iterations exceeds the preset maximum, yielding the BP neural network model; otherwise, iteration continues. The method enables parallel computation of BP neural network training.

Owner:NANJING UNIV +1
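The Map/Reduce split in the Hadoop abstract above can be simulated in a single process: the mapper turns one sample into per-weight modification values, a shuffle groups them by weight, and the reducer averages them. To stay short, the sketch replaces the BP network with a single linear neuron under squared error; the mapper and reducer names, learning rate, and data are illustrative and do not use the Hadoop API.

```python
from collections import defaultdict

def mapper(weights, sample):
    """Map step: emit (weight_index, modification) pairs computed from ONE sample.
    The 'network' here is a single linear neuron, a simplification of a BP network."""
    x, y = sample
    error = sum(w * xi for w, xi in zip(weights, x)) - y
    for i, xi in enumerate(x):
        yield i, -error * xi  # negative gradient of 0.5 * error**2 w.r.t. weights[i]

def reducer(index, values):
    """Reduce step: average all modifications proposed for one weight."""
    values = list(values)
    return index, sum(values) / len(values)

def batch_update(weights, samples, lr=0.5):
    grouped = defaultdict(list)  # stands in for Hadoop's shuffle: group values by key
    for sample in samples:
        for key, value in mapper(weights, sample):
            grouped[key].append(value)
    averaged = dict(reducer(k, v) for k, v in grouped.items())
    return [w + lr * averaged[i] for i, w in enumerate(weights)]

samples = [([1.0, 0.0], 2.0), ([0.0, 1.0], -1.0), ([1.0, 1.0], 1.0)]
weights = [0.0, 0.0]
for _ in range(200):  # repeat until the error is small (fixed count here)
    weights = batch_update(weights, samples)
```

Since each weight's averaging is independent, the reduce work parallelizes across weights, while the map work parallelizes across samples.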

A method of link prediction for complex networks

Active | CN109214599A | Overcoming the disadvantages of uniform processing networks | Easy to handle | Forecasting | Systems biology | NODAL | Batch training

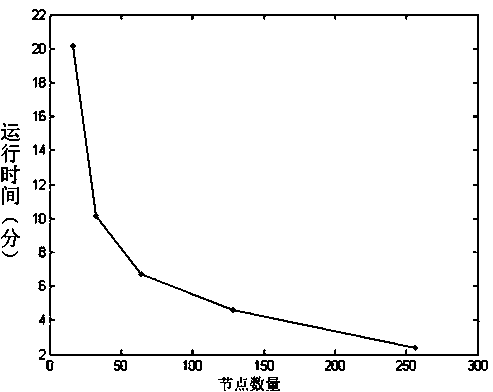

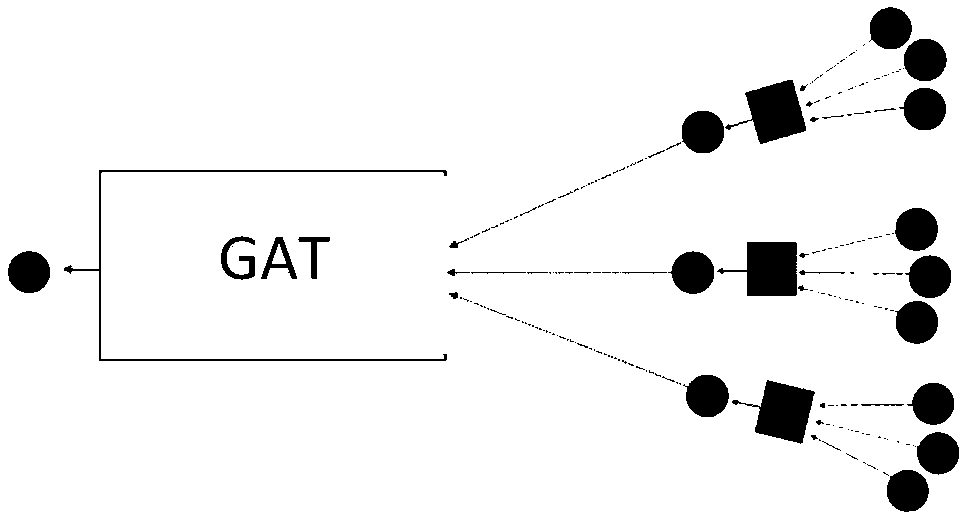

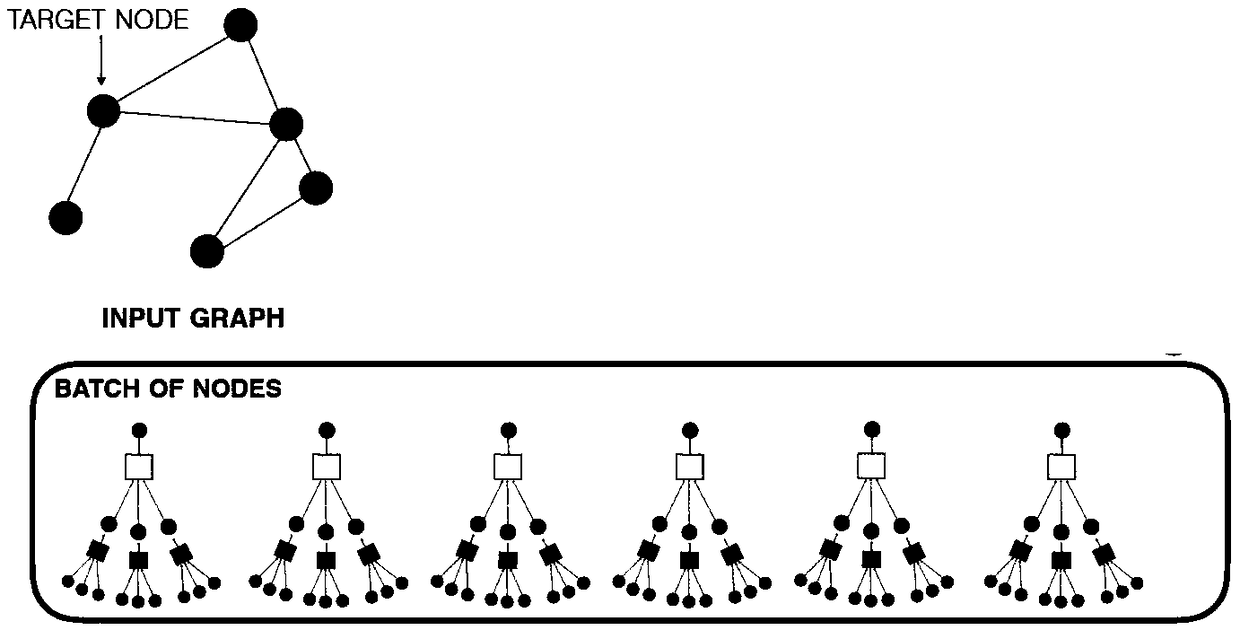

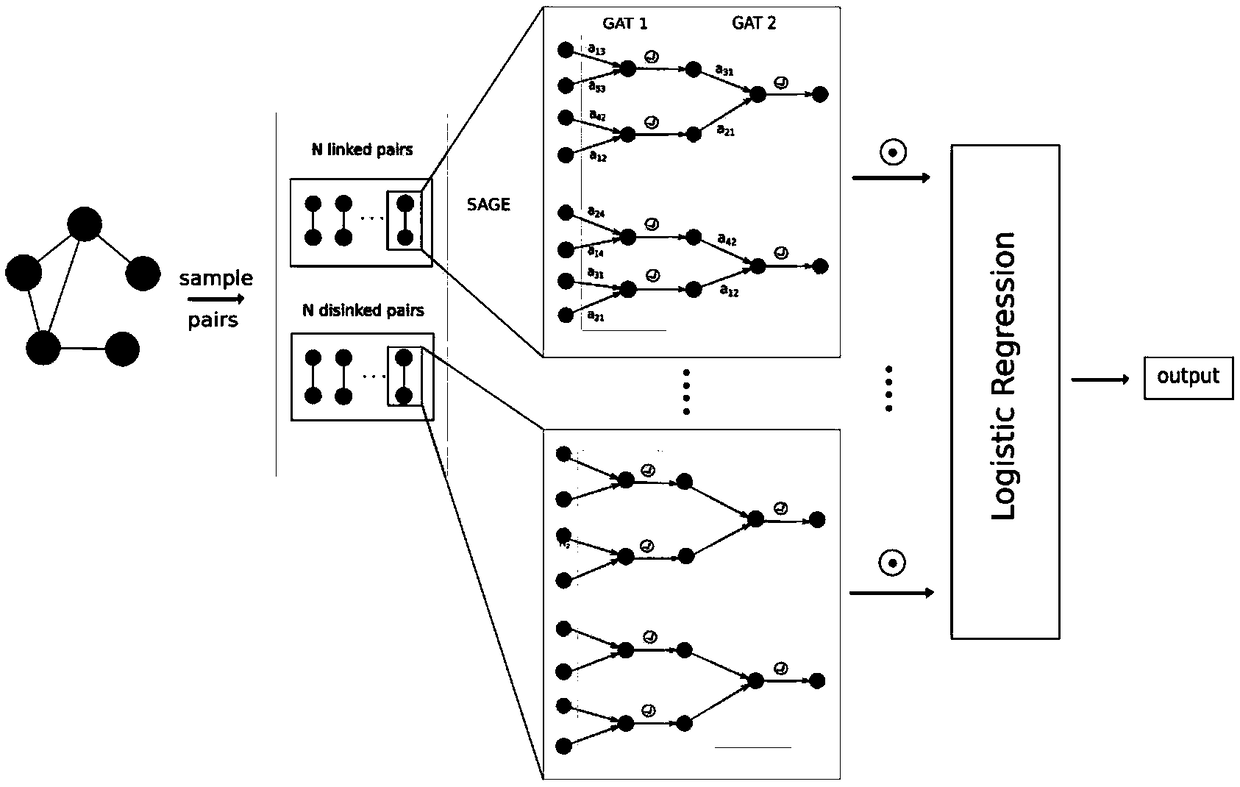

The invention provides a link prediction method for complex networks, consisting of an end-to-end link prediction model based on a graph attention network (GAT) and a batch training method for that model. The key to the model is learning each node's attention distribution over its neighbors. Model training and prediction comprise: (1) inputting the topology of an unweighted, undirected, homogeneous network; (2) sampling all nodes according to the topology of the training set, so as to divide the network into batches; (3) feeding the batched training set into the model to train its parameters; and (4) inputting the node pairs to be predicted, for which the model outputs the probability that an edge connects each pair. The model is end-to-end, and the batch training method makes it suitable for large-scale complex networks.

Owner:BEIJING NORMAL UNIVERSITY +1
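Step (2) of the link-prediction abstract above batches the network by sampling nodes from its topology. The exact sampling scheme is not given; the sketch assumes uniform sampling of at most `k` neighbours per node, so that each batch only needs a bounded neighbourhood for the attention layer.

```python
import random

def sample_neighbors(adj, nodes, k, rng):
    """For each node, keep at most k randomly chosen neighbours (without replacement)."""
    return {n: rng.sample(sorted(adj[n]), min(k, len(adj[n]))) for n in nodes}

def node_batches(adj, batch_size, k, seed=0):
    """Split all nodes into batches; each batch carries a sampled neighbourhood
    so a GAT-style layer only attends over a bounded subgraph per step."""
    rng = random.Random(seed)
    nodes = sorted(adj)
    rng.shuffle(nodes)
    for start in range(0, len(nodes), batch_size):
        batch = nodes[start:start + batch_size]
        yield batch, sample_neighbors(adj, batch, k, rng)

# Hypothetical undirected graph as an adjacency map (node 4 is isolated).
adj = {0: {1, 2, 3}, 1: {0, 2}, 2: {0, 1, 3}, 3: {0, 2}, 4: set()}
batches = list(node_batches(adj, batch_size=2, k=2))
```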

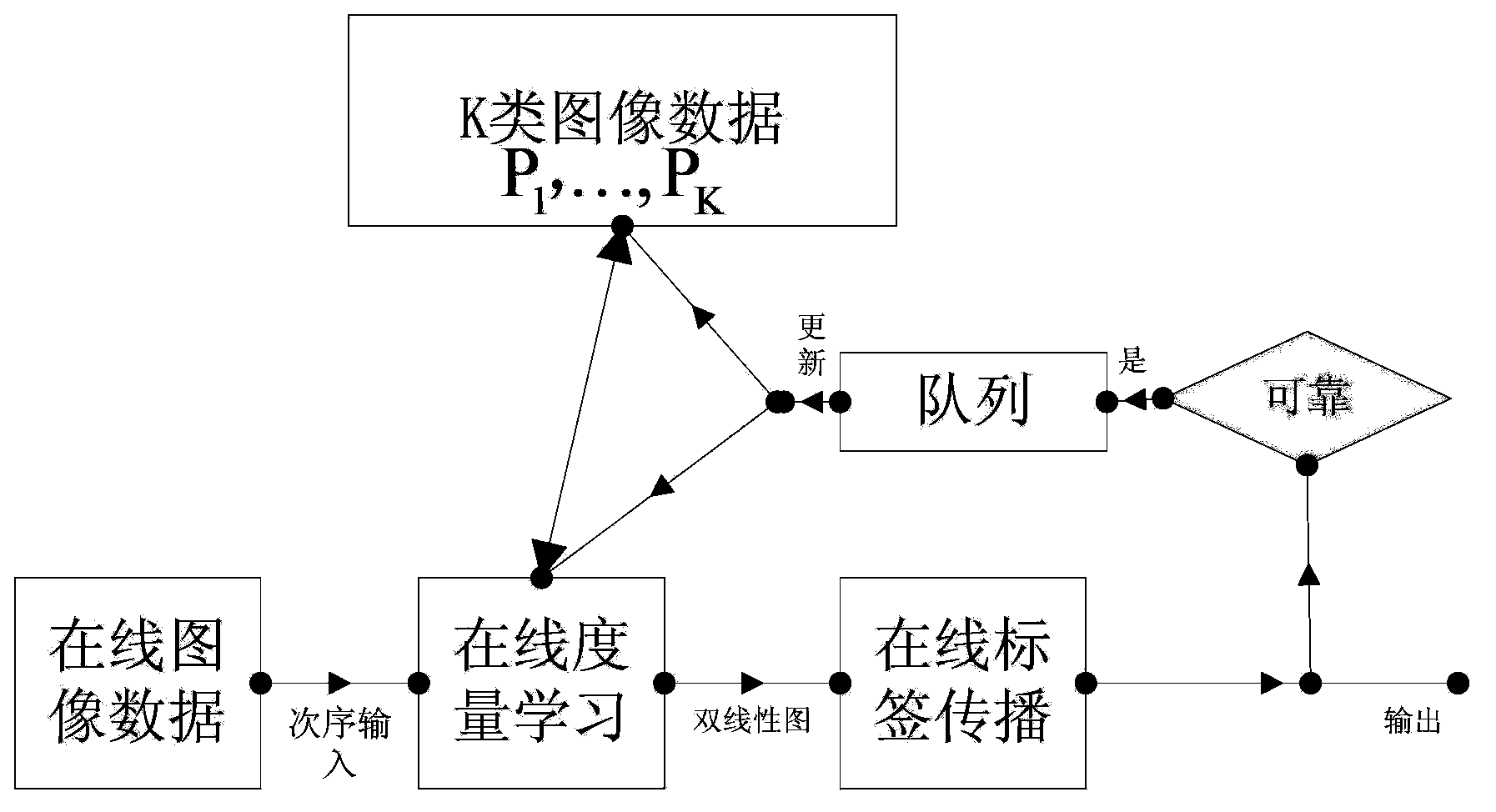

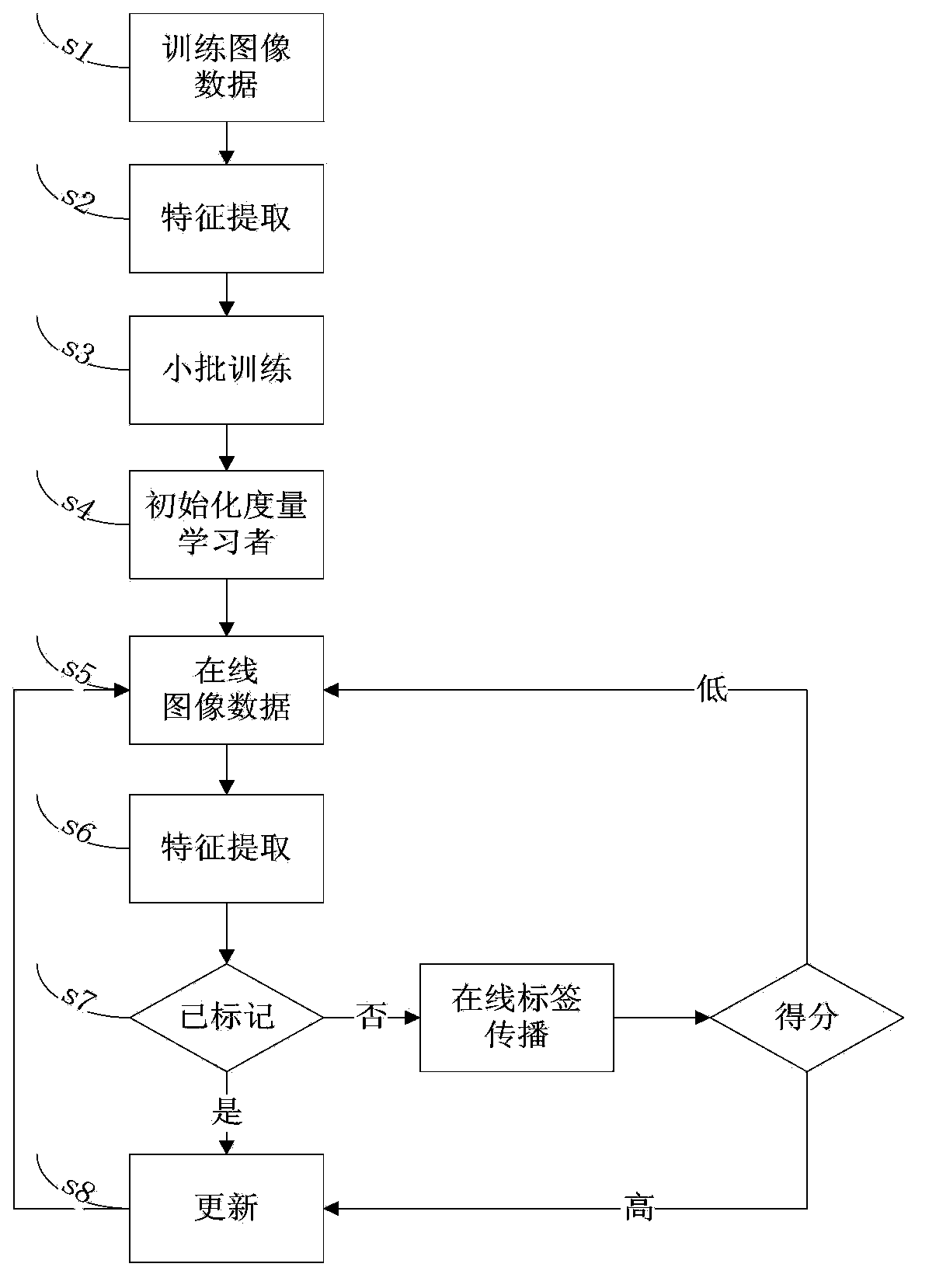

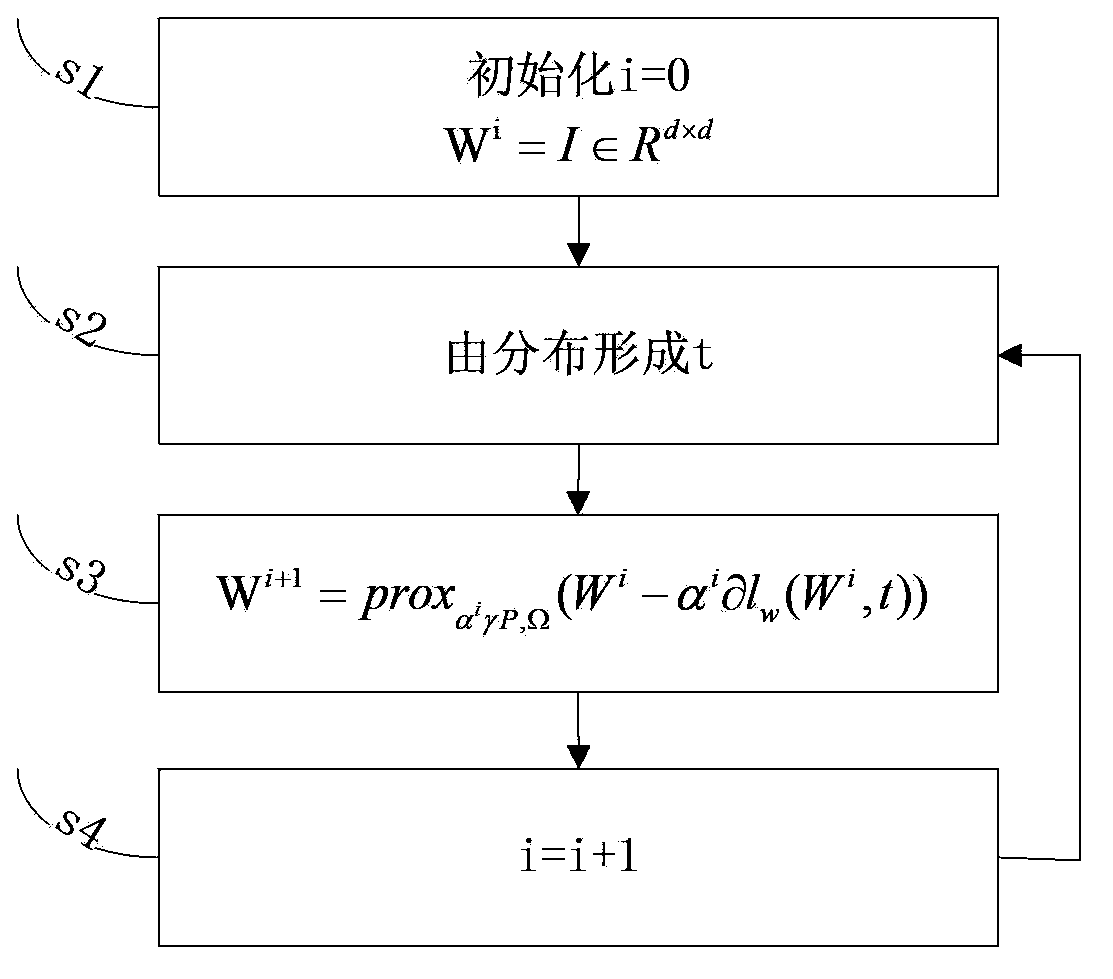

Low-rank constraint online self-supervised learning scene classification method

Inactive | CN103793713A | Guaranteed accuracy | Achieve self-renewal | Character and pattern recognition | Feature vector | Batch training

The invention relates to a low-rank constrained, online, self-supervised scene classification method. The method comprises: performing training and feature extraction on offline image data; carrying out mini-batch training to obtain an initial metric learner; sequentially inputting online images and extracting image features; and determining whether each image feature has a label. If it has a label, the metric learner is updated. If it has no label, the similarity between the image feature and each training sample is measured, and a generated bidirectional linear graph is used to propagate labels; the feature vector similarity scores of the sample are then evaluated, and if the scores are high the metric learner is updated, otherwise the next online image is input. The method gradually updates itself, combines useful information from labeled and unlabeled samples, and handles online scene classification within a unified online self-updating framework, achieving automatic online scene classification while ensuring classification accuracy and improving work efficiency.

Owner:SHENYANG INST OF AUTOMATION - CHINESE ACAD OF SCI

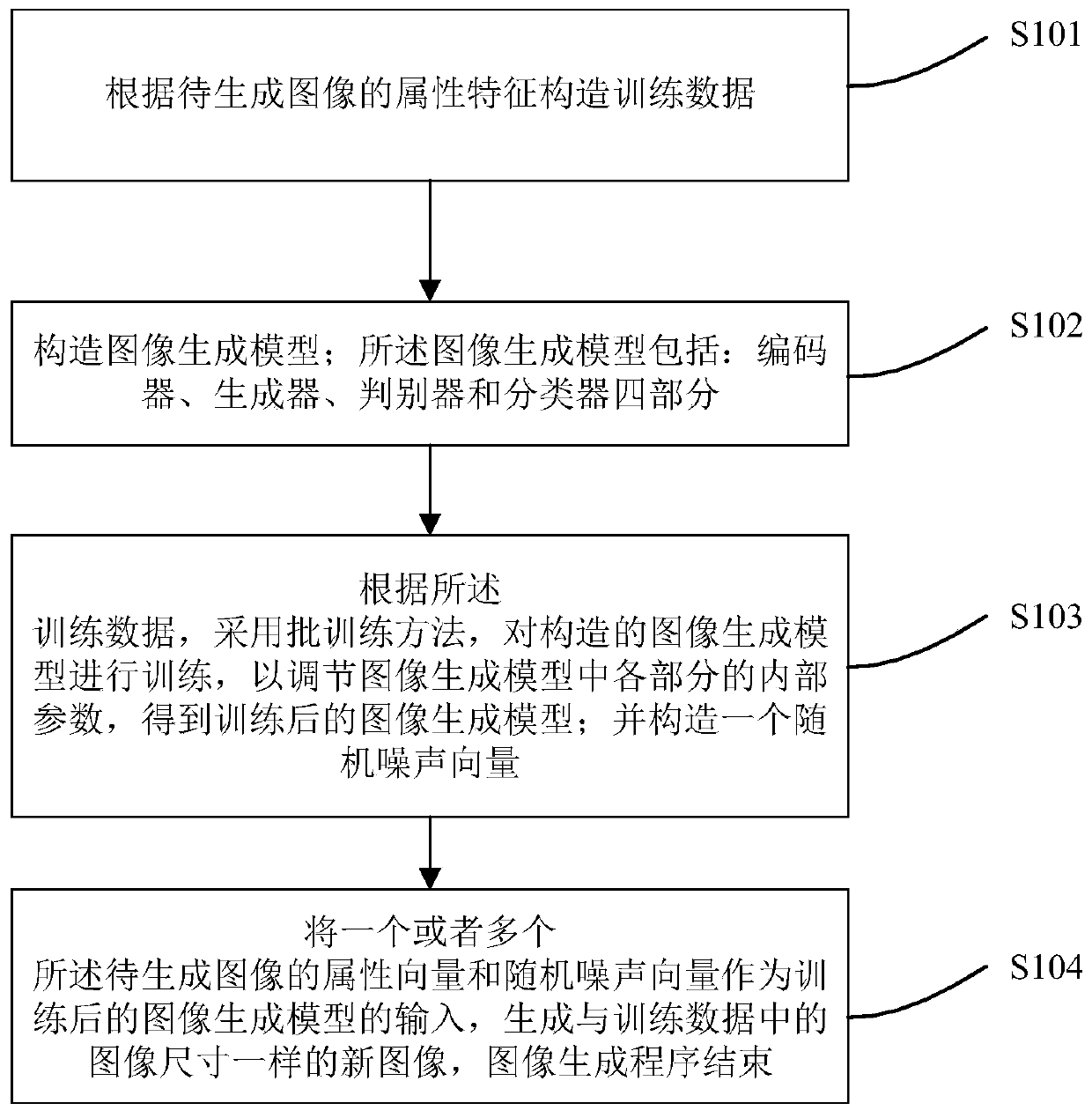

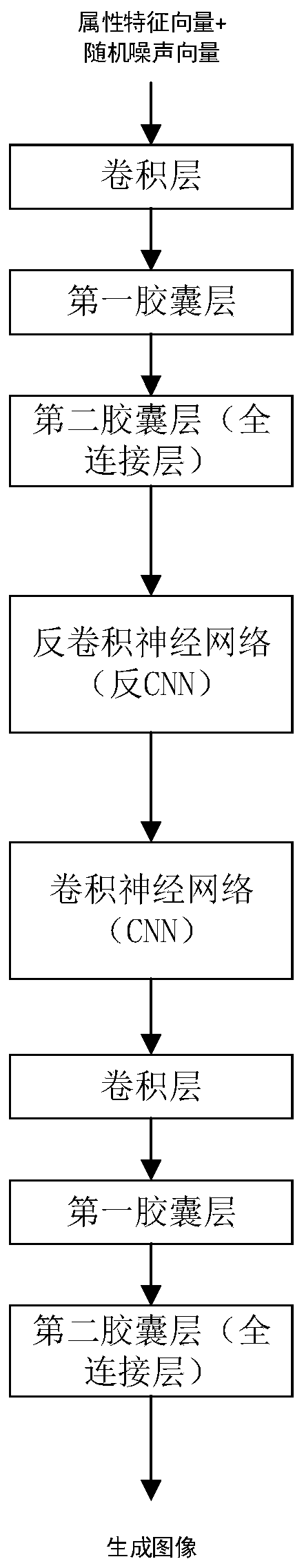

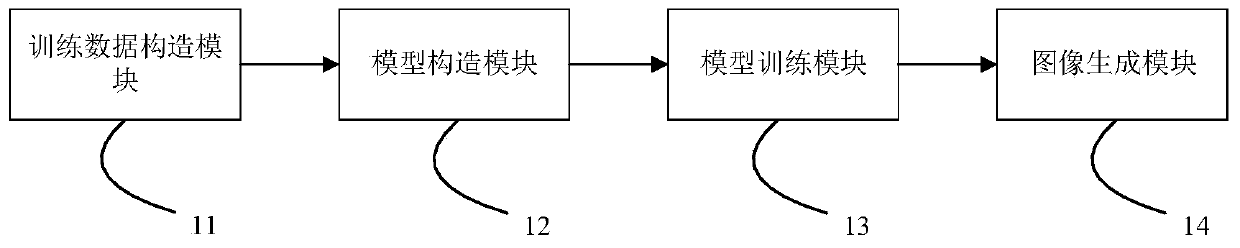

Image generation method and system based on a capsule network

Inactive | CN109871888A | Fully generated | Generate diverse and authentic | Character and pattern recognition | Neural architectures | Batch training | Real image

The invention provides an image generation method and system based on a capsule network. The method comprises: first, constructing training data according to the attribute characteristics of the images to be generated; constructing an image generation model; training the model with a batch training method on the training data to obtain a trained image generation model; and constructing a random noise vector. Finally, the attribute vector of the image to be generated and the random noise vector are used as inputs to the trained model, which generates a new image of the same size as the images in the training data. Using a capsule network as the encoder in the image generation model helps the training process converge faster; in addition, compared with the pooling used in convolutional neural networks, the dynamic routing algorithm of the capsule network generalizes features more robustly, so more diverse and realistic images can be generated.

Owner:CHINA UNIV OF GEOSCIENCES (WUHAN)

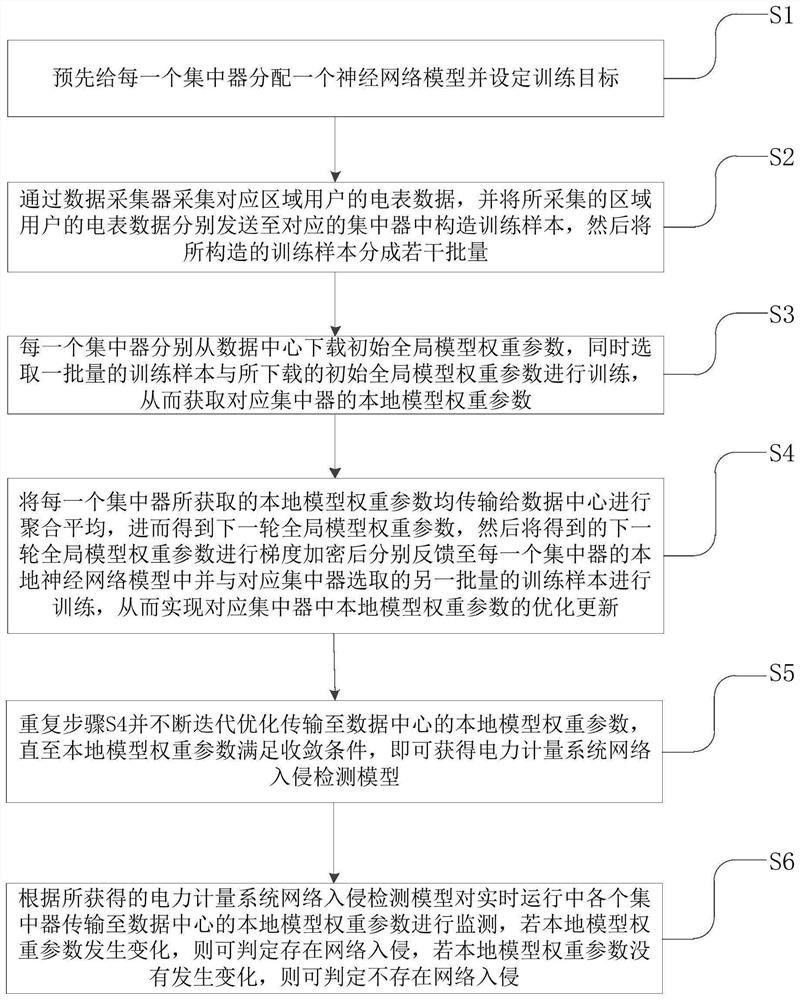

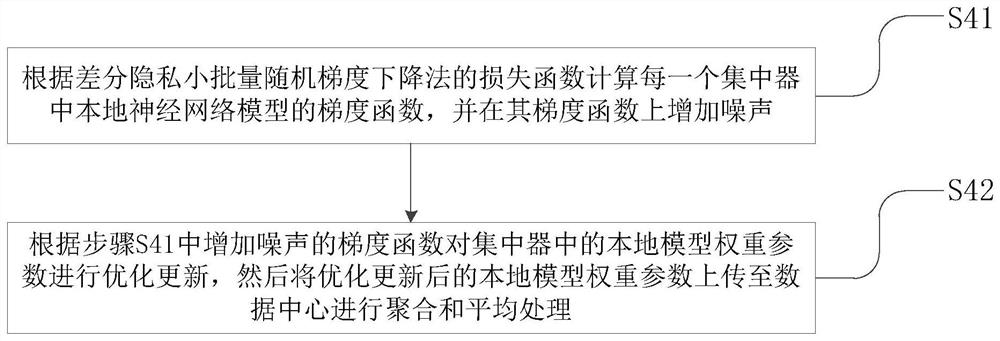

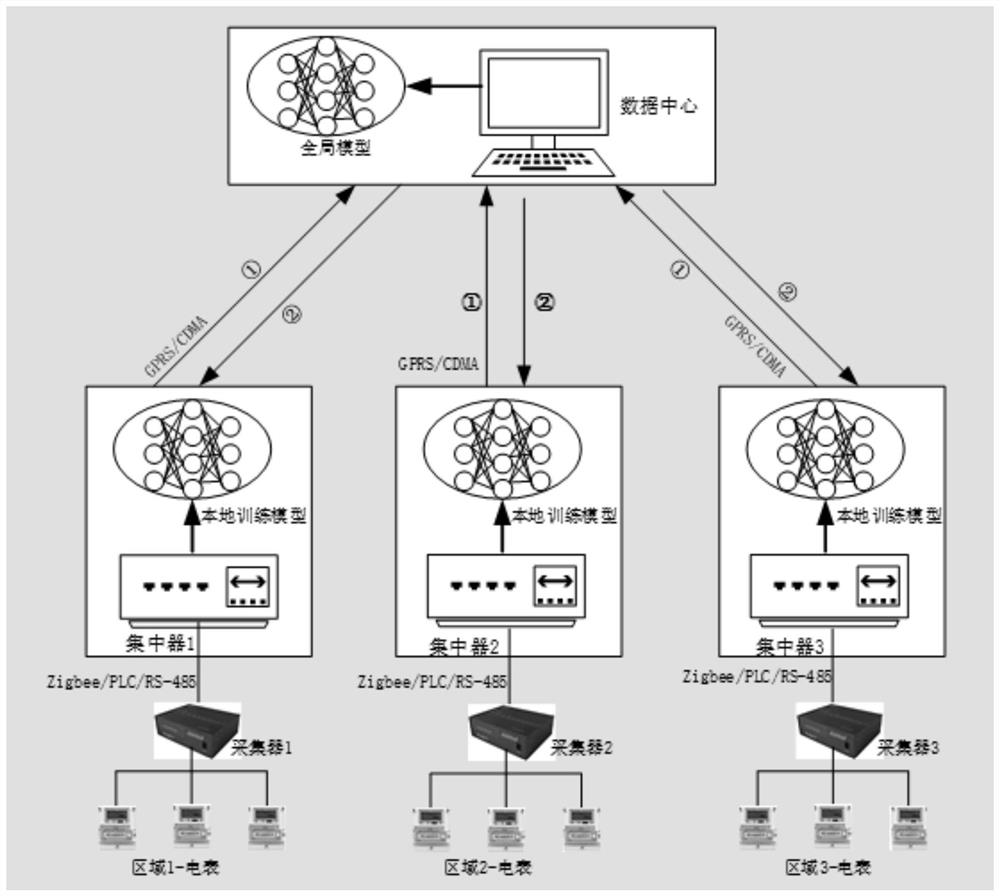

Power measurement system network intrusion detection method based on federated learning framework

Pending | CN112800461A | Privacy protection | Reduce communication costs | Data processing applications | Digital data protection | Batch training | Data center

The invention discloses a network intrusion detection method for an electric power metering system based on a federated learning framework. The method comprises: S1, each concentrator obtains a local model and sets a target; S2, user electricity meter data are acquired and sent to the concentrator to construct multiple batches of training samples; S3, each concentrator downloads the global model weight parameters from the data center and trains the model on a selected batch of training samples to obtain its local model weight parameters; S4, the local model weight parameters are transmitted to the data center and averaged to obtain the next round's global model weight parameters, which are fed back to the concentrators to optimize their respective local model weight parameters; S5, step S4 is repeated to obtain a detection model; and S6, the detection model is used to determine whether a network intrusion exists in the system during real-time operation. The method protects user privacy while ensuring the detection rate, and reduces communication time and computing resources.

Owner:SHENZHEN POWER SUPPLY BUREAU +1
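Step S4 of the federated-learning abstract above aggregates the concentrators' local weights at the data center by averaging. A minimal sketch of that aggregation follows. The optional weighting by each client's sample count follows the well-known FedAvg scheme and is an assumption, since the abstract only says "aggregation averaging".

```python
def federated_average(local_weights, sample_counts=None):
    """Aggregate local weight vectors into new global weights.
    With sample_counts, each client is weighted by its number of training samples
    (FedAvg-style); without them, a plain average is used."""
    n_clients = len(local_weights)
    if sample_counts is None:
        sample_counts = [1] * n_clients
    total = sum(sample_counts)
    dim = len(local_weights[0])
    return [
        sum(w[j] * c for w, c in zip(local_weights, sample_counts)) / total
        for j in range(dim)
    ]

# Hypothetical round: two concentrators report their local weights.
plain = federated_average([[1.0, 2.0], [3.0, 4.0]])
weighted = federated_average([[1.0, 2.0], [3.0, 4.0]], sample_counts=[1, 3])
```

Only weight vectors travel to the data center; the raw meter readings stay at the concentrators, which is the privacy argument the abstract makes.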

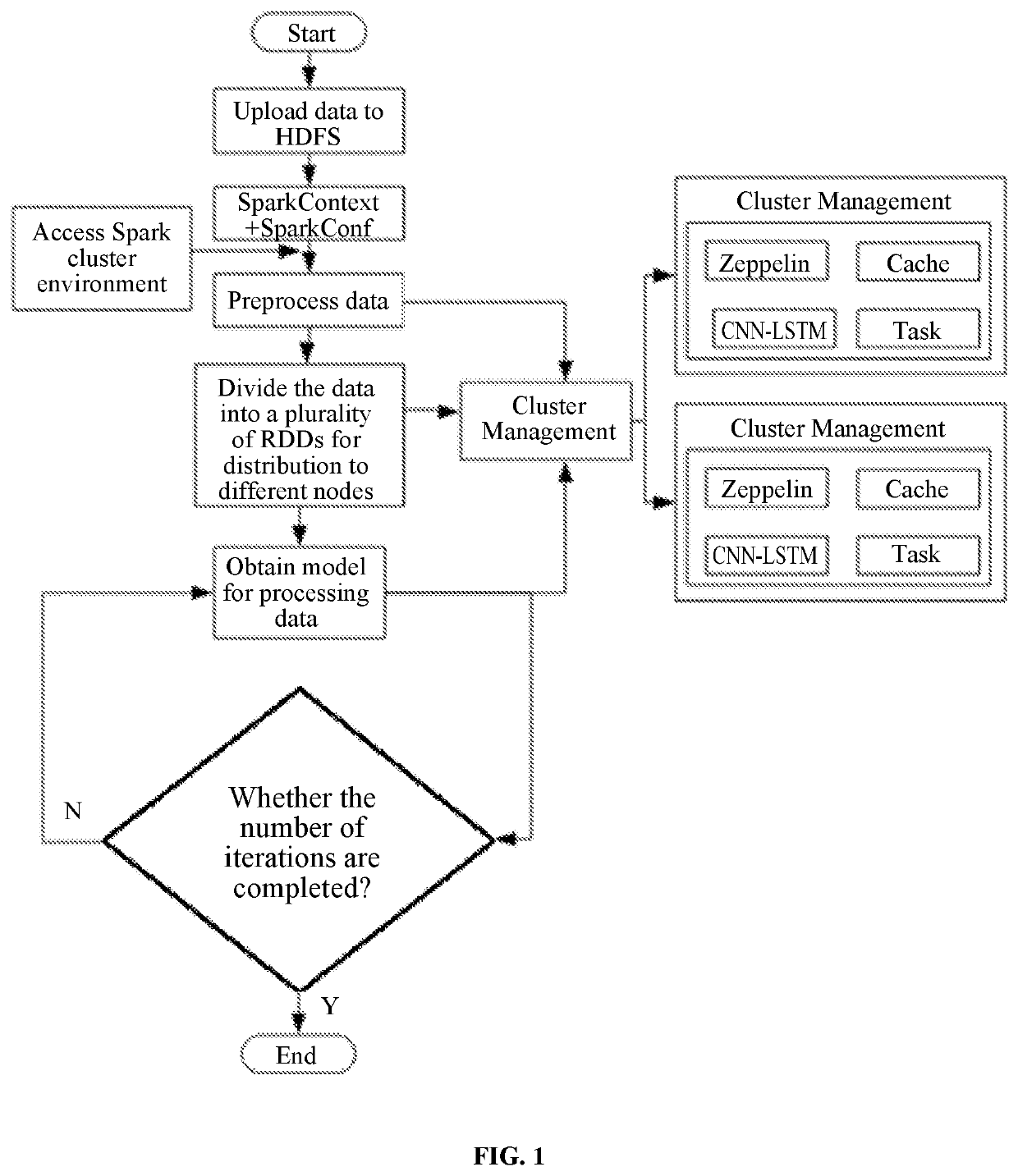

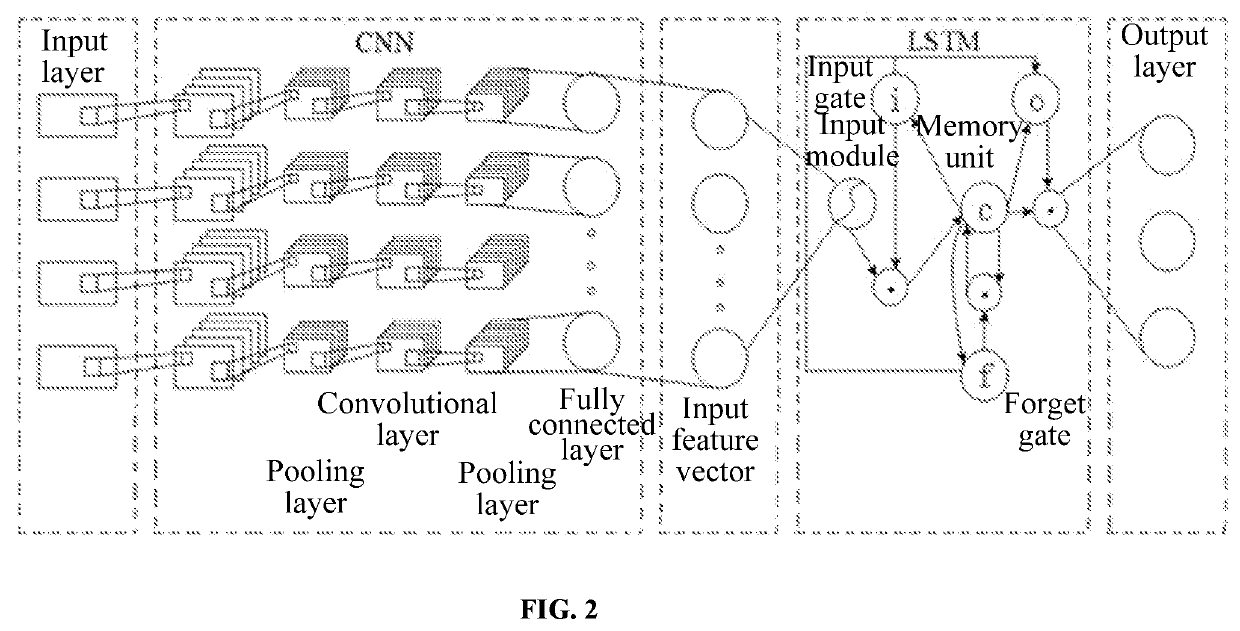

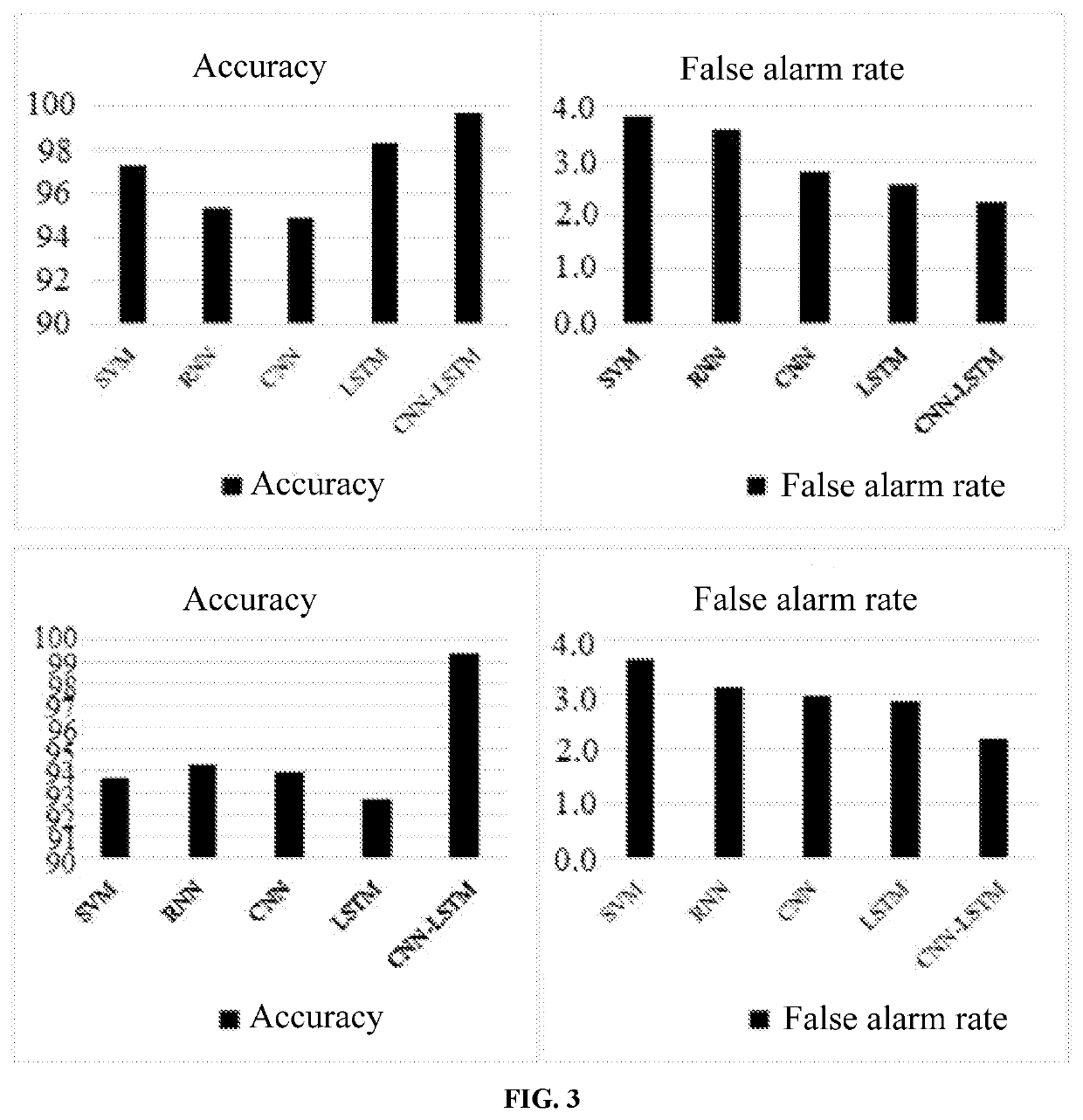

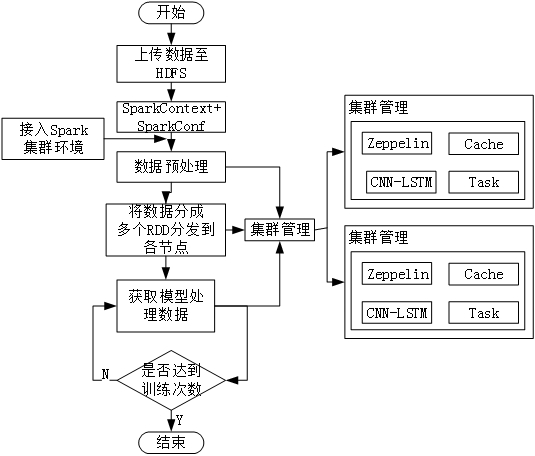

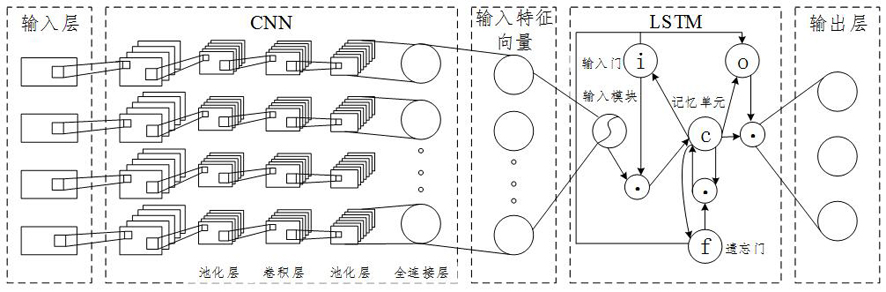

Intrusion detection method and system for internet of vehicles based on spark and deep learning

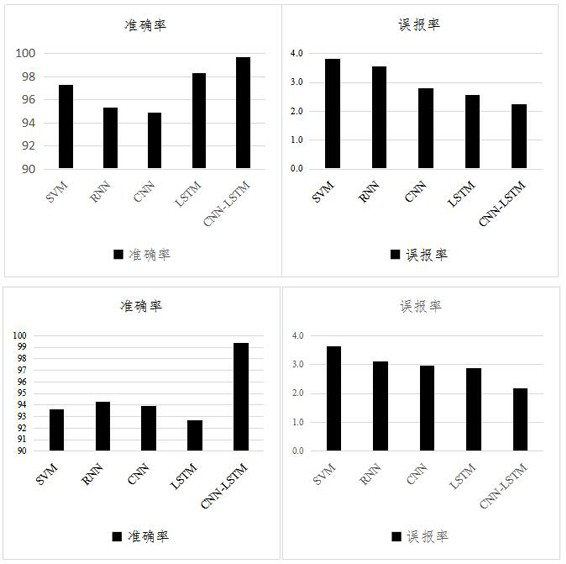

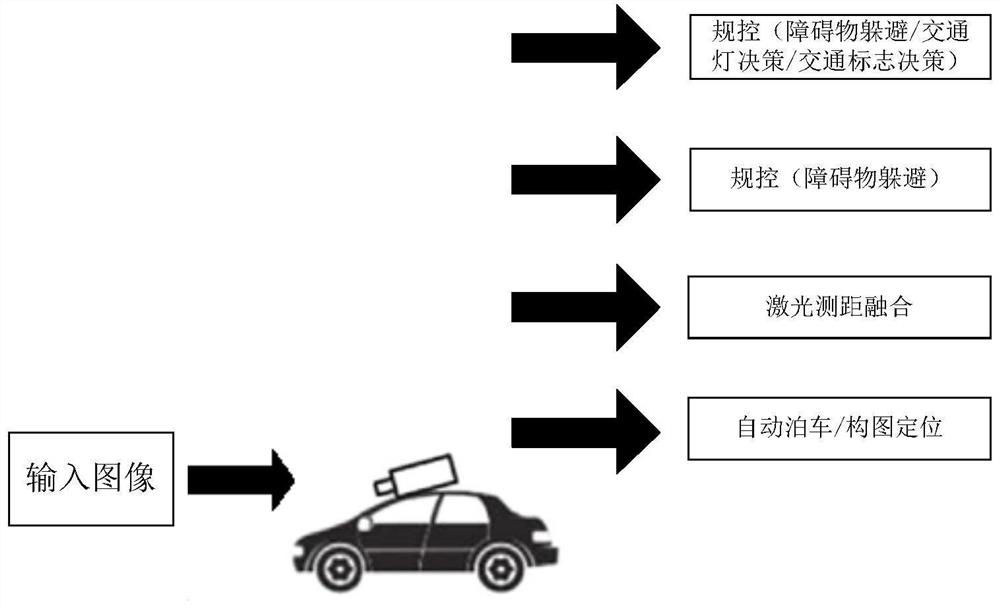

Active | US20220217170A1 | Improve accuracy | Simple calculation | Particular environment based services | Vehicle wireless communication service | Batch training | Data set

An intrusion detection method and system for Internet of Vehicles based on Spark and combined deep learning are provided. The method includes the following steps: S1: setting up Spark distributed cluster; S2: initializing the Spark distributed cluster, constructing a convolutional neural network (CNN) and long short-term memory (LSTM) combined deep learning algorithm model, initializing parameters, and uploading collected data to a Hadoop distributed file system (HDFS); S3: reading the data from the HDFS for processing, and inputting the data to the CNN-LSTM combined deep learning algorithm model, for recognizing the data; and S4: dividing the data into multiple resilient distributed datasets (RDDs) for batch training with a preset number of iterations.

Owner:NANJING UNIV OF SCI & TECH

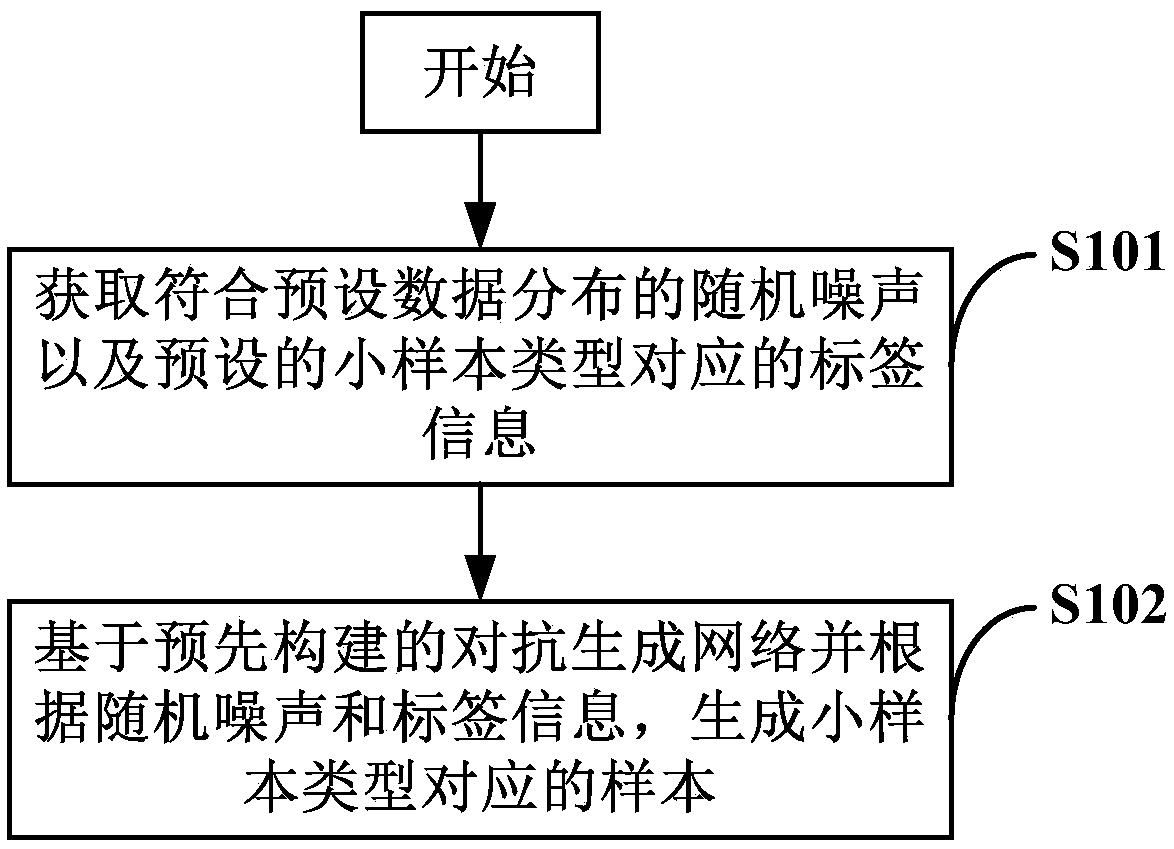

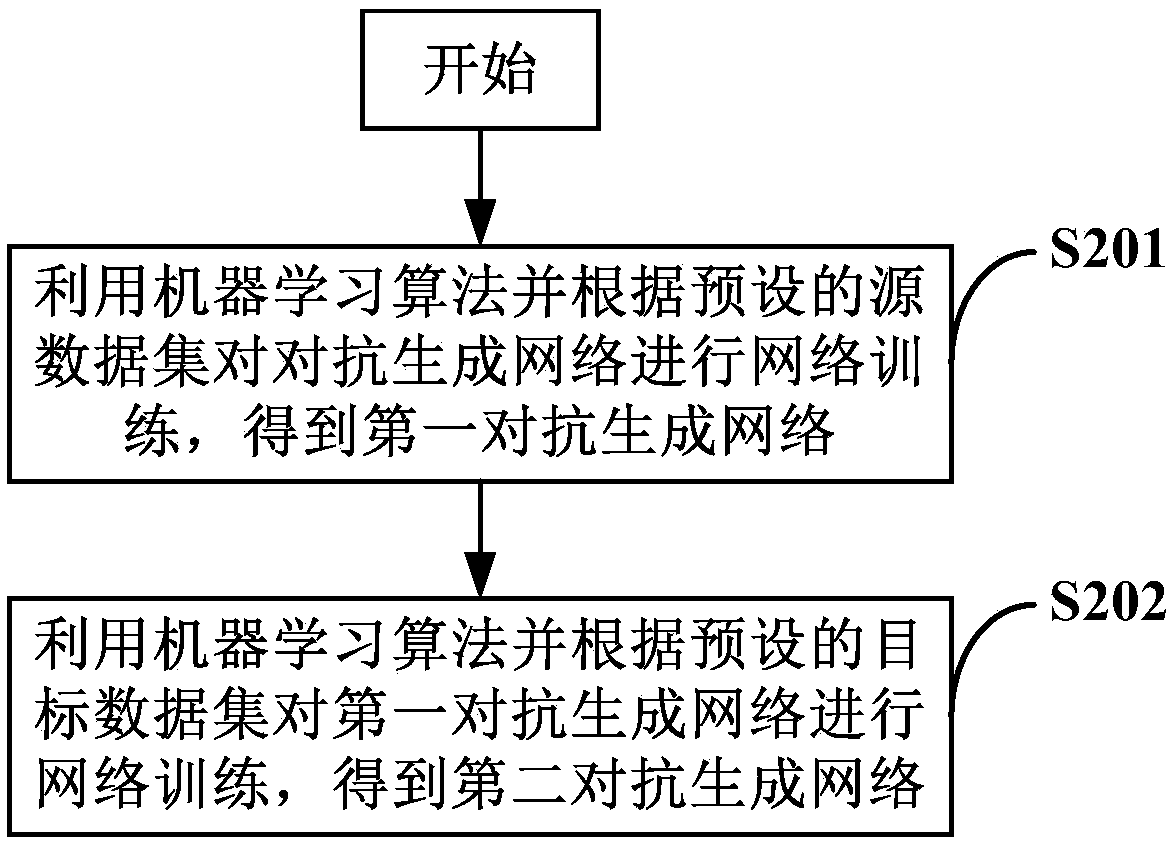

Small sample generation method and device based on generative adversarial network

The invention relates to the technical field of deep learning, in particular to a small-sample generation method and device based on a generative adversarial network (GAN). It addresses the technical problem of how to generate sample data with a GAN when only a small amount of sample data is available. The method generates samples of the small-sample classes from random noise and label information. In this process, the GAN is trained using transfer learning and batch training, so that it can be effectively transferred to sample-generation tasks with only a small number of samples.

Owner:NAT COMP NETWORK & INFORMATION SECURITY MANAGEMENT CENT +1

Deep learning intrusion detection method and system based on Spark Internet of Vehicles combination

Active | CN111970309A | Improve detection accuracy | Simple calculation | Particular environment based services | Vehicle wireless communication service | Batch training | The Internet

The invention discloses a deep learning intrusion detection method and system for the Internet of Vehicles that combines Spark with a combined CNN-LSTM deep learning model. The method comprises: S1, establishing a Spark distributed cluster; S2, initializing the cluster, constructing a CNN-LSTM combined deep learning algorithm model, initializing parameters, and uploading the collected data to HDFS; S3, reading the data from HDFS for processing, inputting it into the CNN-LSTM model, and recognizing the data; and S4, dividing the data into multiple RDDs for batch training and iterating a preset number of times. The method provides fast and accurate intrusion detection for the Internet of Vehicles, enabling detection tasks to be completed accurately in a short time even with limited computing power, a complex application environment, and a large number of network nodes, and thereby provides a safe and reliable communication environment.

Owner:NANJING UNIV OF SCI & TECH

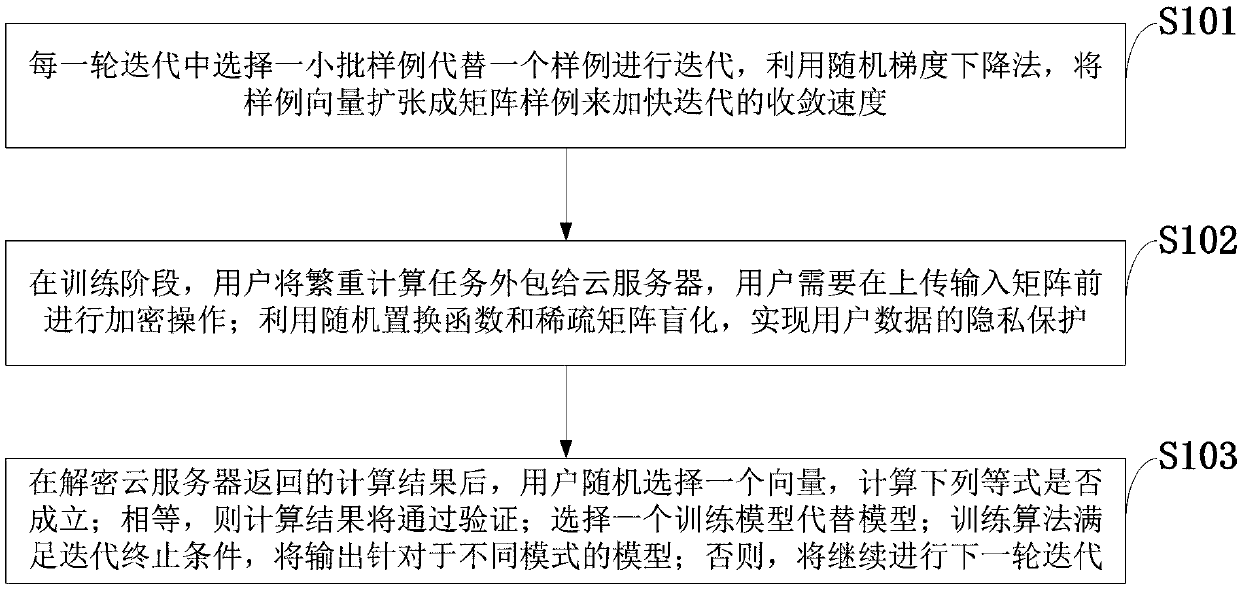

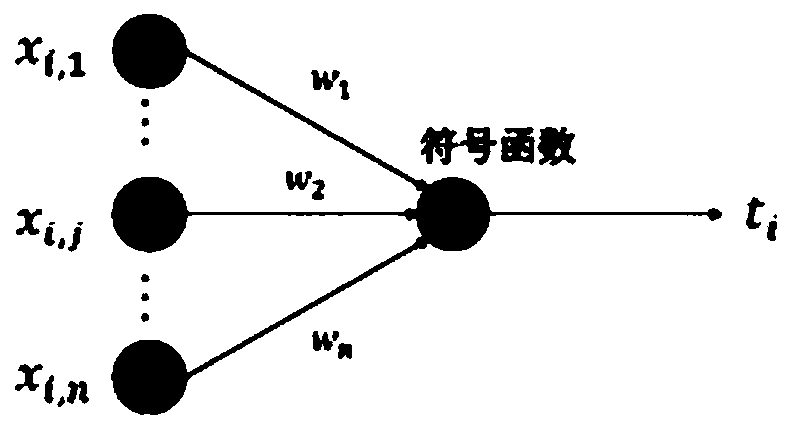

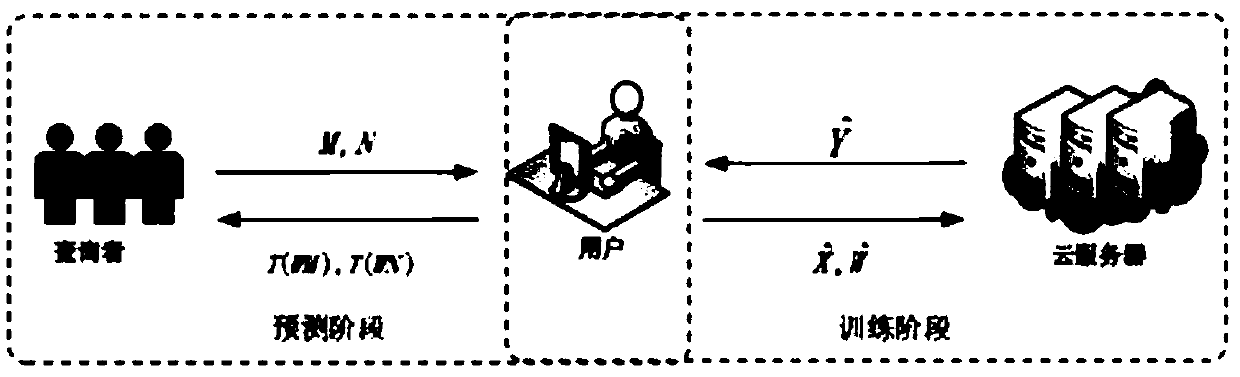

Verifiable privacy protection single-layer perceptron batch training method

Active | CN108647525A | Realize privacy protection | Improve protection | Digital data protection | Neural architectures | Privacy protection | Machine learning

The invention belongs to the technical field of identification using electronic devices and discloses a verifiable, privacy-preserving batch training method for single-layer perceptrons and a pattern recognition system. From the same set of training samples, training can be carried out for different patterns, yielding several different trained models. In each iteration, a small batch of samples rather than a single sample is selected; using stochastic gradient descent, sample vectors are expanded into a sample matrix to speed up convergence. In the training phase, the user outsources heavy computing tasks to a cloud server, encrypting the input matrix before uploading it. A random permutation function and sparse-matrix blinding protect the privacy of the user data. The method is the first to incorporate a verification mechanism into a single-layer perceptron training scheme: if the cloud server returns an incorrect result, the user can detect it with probability 100%.

Owner:XIDIAN UNIV
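The core of the perceptron abstract above (stacking a small batch of sample vectors into a matrix and making one update per batch) can be sketched without the encryption, outsourcing, and verification layers, which are omitted here. The averaged perceptron correction, batch size, and toy data are assumptions.

```python
def predict(w, x):
    """Sign activation of a single-layer perceptron (bias folded into x[0] = 1)."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) >= 0 else -1

def train_perceptron_minibatch(X, y, batch_size=2, lr=1.0, epochs=20):
    """One weight update per mini-batch: average the perceptron corrections
    of all misclassified rows in the batch matrix."""
    w = [0.0] * len(X[0])
    for _ in range(epochs):
        for start in range(0, len(X), batch_size):
            Xb = X[start:start + batch_size]  # the batch as a matrix (list of rows)
            yb = y[start:start + batch_size]
            update = [0.0] * len(w)
            for xi, yi in zip(Xb, yb):
                if predict(w, xi) != yi:
                    update = [u + yi * v for u, v in zip(update, xi)]
            w = [wi + lr * u / len(Xb) for wi, u in zip(w, update)]
    return w

# Toy linearly separable data; the first column is the constant bias input.
X = [[1, 2.0, 1.0], [1, -1.0, -2.0], [1, 1.5, 2.0], [1, -2.0, -1.0]]
y = [1, -1, 1, -1]
w = train_perceptron_minibatch(X, y)
```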

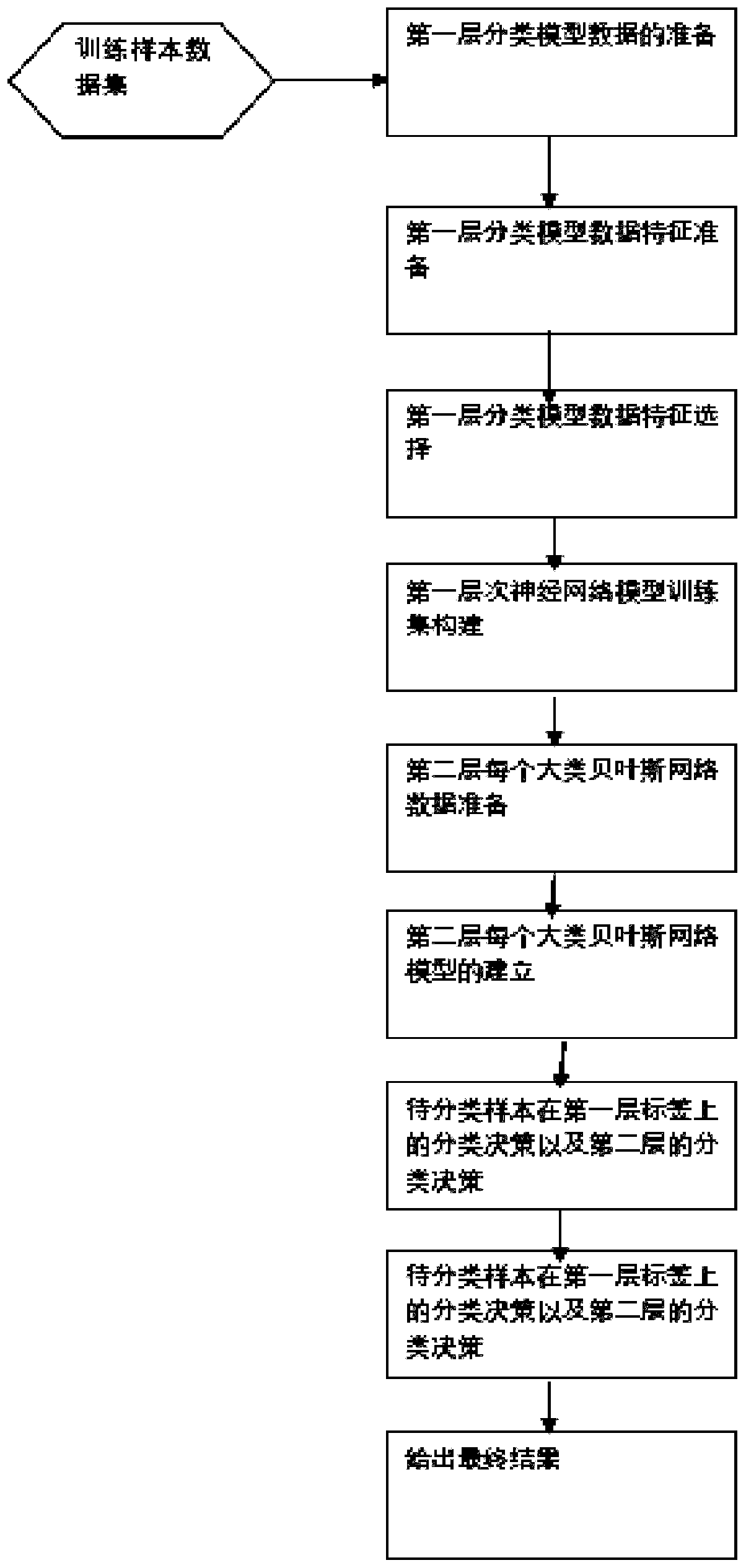

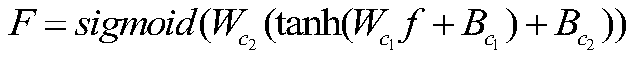

Multi-level progressive classification method and system based on neural network and Bayesian model

Pending | CN109784387A | Improve accuracy | Fast training | Character and pattern recognition | Feature vector | Batch training

The invention provides a multi-level progressive classification method and system based on a neural network and a Bayesian model. The method comprises: preprocessing data for the neural network, preparing feature vectors and prediction targets for training the first-level neural network model; training a neural network model on the prepared data to build a hierarchical classifier for the major classes; training a Bayesian network model for the categories within each major class; and predicting the samples to be classified. The method exploits the strengths and weaknesses of the different models, carries out batch training on massive data level by level as required, determines the labels of samples through a series of models, and provides corresponding solutions for training and prediction with multiple models.

Owner:TIANJIN NANKAI UNIV GENERAL DATA TECH

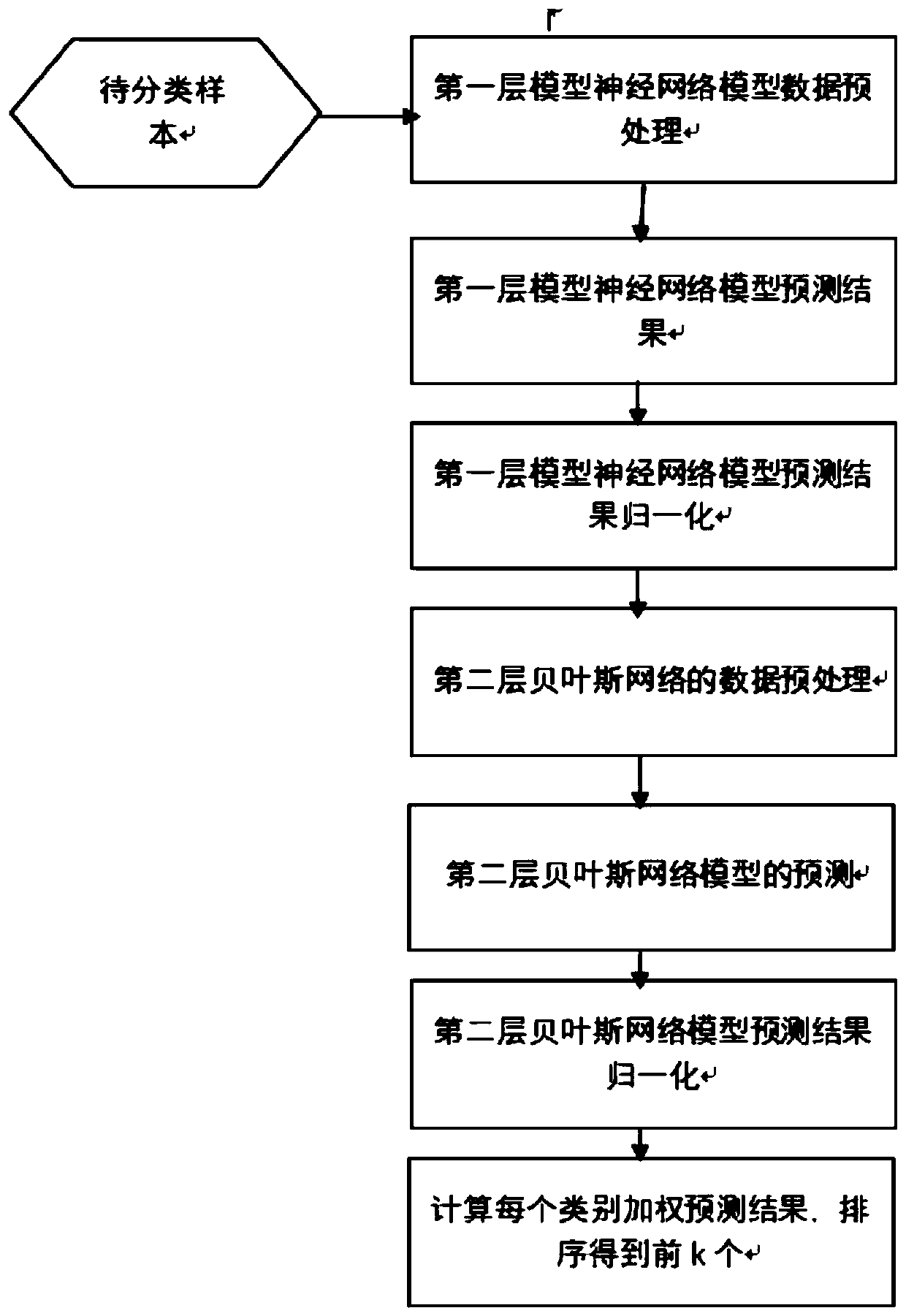

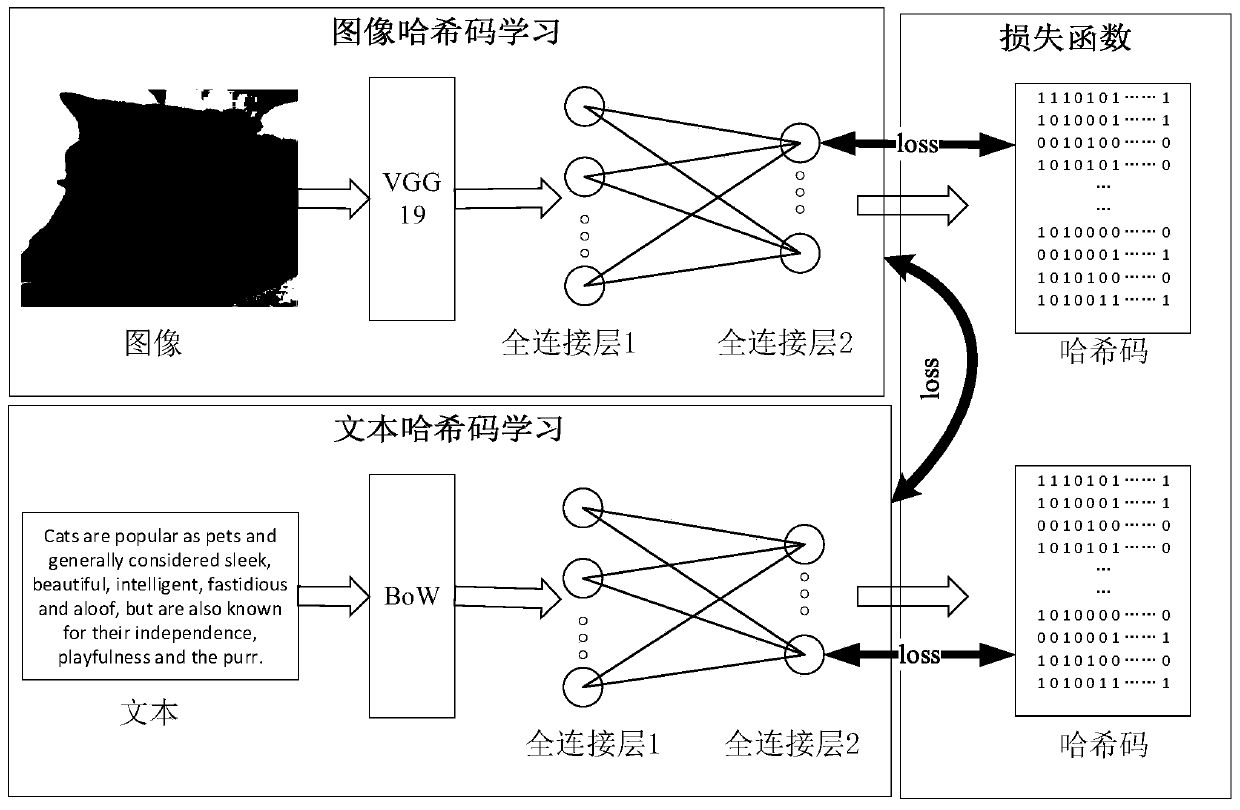

Image-text cross-modal hash retrieval method based on large-batch training

Active | CN111209415A | High precision | Increase training speed | Digital data information retrieval | Energy efficient computing | Pattern recognition | Batch training

The invention relates to an image-text cross-modal hash retrieval method based on large-batch training, in the field of cross-modal retrieval. Existing deep learning-based cross-modal hash retrieval methods, particularly triplet-based deep cross-modal hashing, train with small batches, which makes training slow, limits the number of samples seen per update, and yields poor gradients, all of which degrade retrieval performance. The method comprises: preprocessing the image and text data; mapping them to hash codes; establishing an objective loss function L; training the model on triplet data fed in large batches; and performing cross-modal hash retrieval with the trained model. Feeding triplet data in large batches shortens each training round, and because more training samples contribute to each parameter update, better gradients are obtained. Orthogonal regularization is applied to the weights so that gradients are preserved during backpropagation, making training more stable and improving retrieval accuracy.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
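The cross-modal hashing abstract above trains on triplets in large batches but does not spell out its loss L. The sketch assumes a standard hinge triplet loss on squared distances, averaged over a batch, plus sign binarization to hash codes; margin and vectors are illustrative.

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def triplet_loss(anchor, positive, negative, margin=1.0):
    """Hinge triplet loss: push d(anchor, negative) at least `margin` beyond d(anchor, positive)."""
    return max(0.0, squared_distance(anchor, positive)
               - squared_distance(anchor, negative) + margin)

def batch_triplet_loss(triplets, margin=1.0):
    """Mean loss over a batch; a larger batch averages more triplets per update,
    which is the motivation for large-batch training in the abstract."""
    return sum(triplet_loss(a, p, n, margin) for a, p, n in triplets) / len(triplets)

def to_hash_code(features):
    """Binarise a real-valued embedding into a +1/-1 hash code."""
    return [1 if v >= 0 else -1 for v in features]

# Hypothetical (image anchor, matching text, non-matching text) embeddings.
triplets = [
    ([1.0, 0.0], [1.0, 0.0], [0.0, 1.0]),  # already well separated: zero loss
    ([0.0, 0.0], [1.0, 0.0], [0.0, 0.5]),  # negative closer than positive: penalised
]
loss = batch_triplet_loss(triplets)
```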

Flower recognition method based on convolutional neural network (CNN) with ReLU activation function

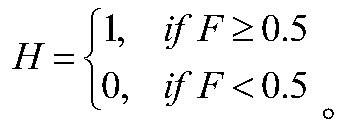

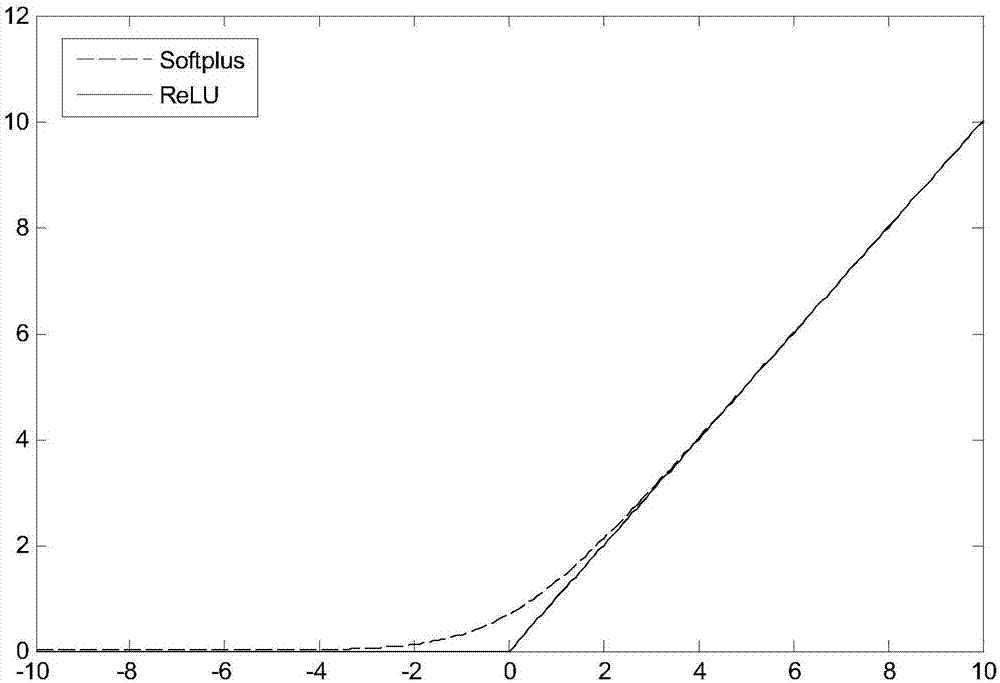

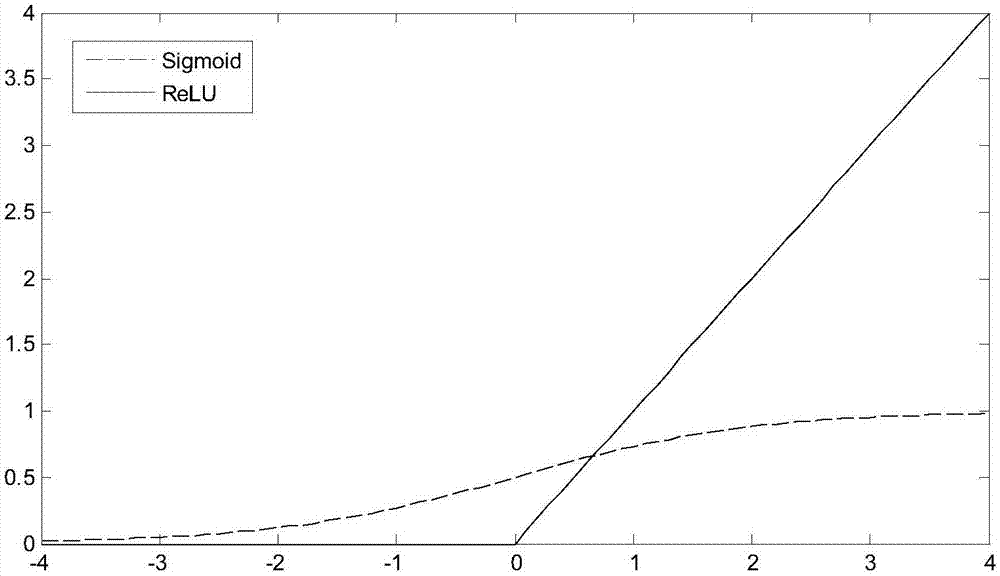

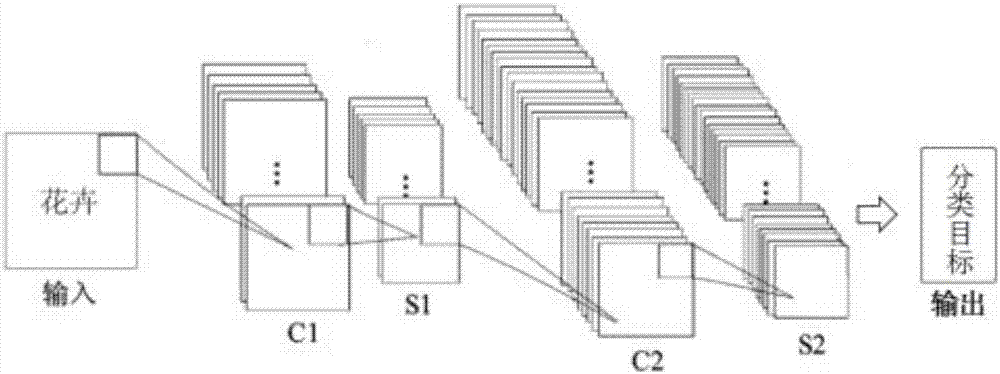

Inactive | CN107516128A | Reduce the number of parameters | Increase training speed | Character and pattern recognition | Neural architectures | Batch training | Activation function

The invention discloses a flower recognition method based on a convolutional neural network (CNN) with the ReLU activation function, in the technical field of image recognition. The method comprises: setting the basic parameters of the CNN; initializing the weights and bias terms and designing the convolutional and down-sampling layers one by one; generating a random sequence and selecting 50 samples at a time for batch training, completing the forward pass, error back-propagation, and gradient calculation, and applying the summed gradient to the weights in the next weight update; and calling the configured training and update functions for training, then testing the accuracy on samples. The method recognizes flowers effectively and quickly under varying lighting, rotation, and occlusion conditions.

Owner:NANJING UNIV OF POSTS & TELECOMM
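The flower-recognition abstract above draws a random sequence, takes 50 samples at a time, and applies a summed gradient to the weights. A minimal sketch of those two pieces, with a hypothetical sample count and learning rate; the CNN itself is omitted.

```python
import random

def random_batches(n_samples, batch_size=50, seed=0):
    """Generate a random permutation of sample indices and cut it into batches."""
    order = list(range(n_samples))
    random.Random(seed).shuffle(order)
    return [order[i:i + batch_size] for i in range(0, n_samples, batch_size)]

def update_with_batch_gradient(weights, per_sample_grads, lr):
    """Sum per-sample gradients over the batch and apply one weight update."""
    grad_sum = [sum(g[j] for g in per_sample_grads) for j in range(len(weights))]
    return [w - lr * g for w, g in zip(weights, grad_sum)]

batches = random_batches(1234)  # hypothetical data set of 1234 images
new_w = update_with_batch_gradient([1.0, 1.0], [[0.5, 0.0], [0.5, 1.0]], lr=0.1)
```

Summing rather than averaging makes the effective step size grow with the batch size, so the learning rate has to be chosen with the batch size of 50 in mind.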

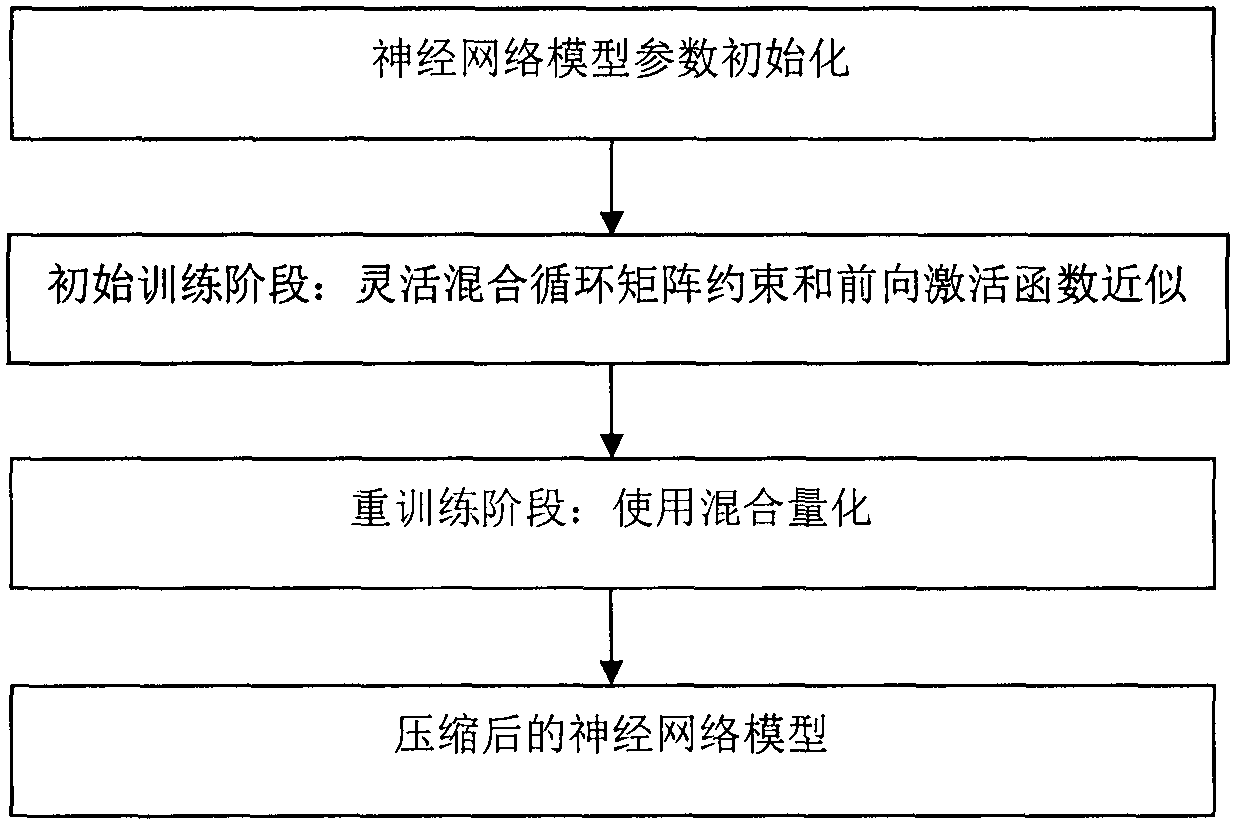

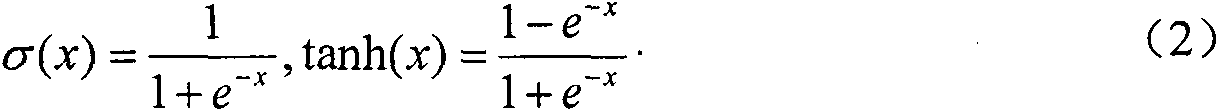

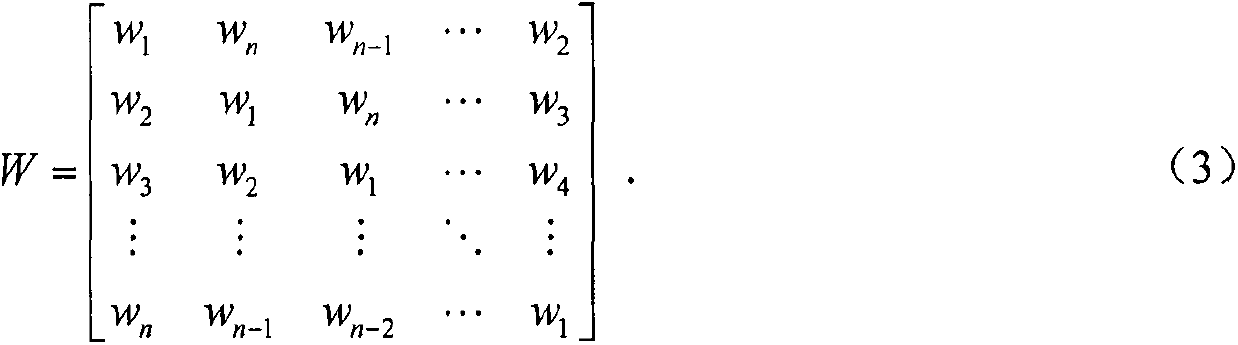

Multi-mechanism mixed recurrent neural network model compression method

The invention discloses a multi-mechanism hybrid compression method for recurrent neural network models, comprising: A, circulant matrix restriction: restricting some of the parameter matrices in the recurrent neural network to circulant matrices, and modifying the backward gradient propagation algorithm so that the network can be batch-trained with circulant matrices; B, forward activation function approximation: replacing the nonlinear activation functions with hardware-friendly linear functions in the forward pass while keeping the backward gradient update unchanged; C, hybrid quantization: applying different quantization schemes to different parameters according to their differing error tolerances; and D, a two-stage training mechanism: dividing model training into an initial training phase and a retraining phase, each emphasizing a different compression method, which avoids interference between the compression methods and minimizes the accuracy loss they cause. By combining several compression mechanisms, the method greatly reduces the number of model parameters, is suitable for memory-limited, low-latency embedded systems that need recurrent neural networks, and is innovative with good application prospects.

Owner:Nanjing Fengxing Technology Co., Ltd. (南京风兴科技有限公司)
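Step A of the RNN-compression abstract above restricts weight matrices to circulant form. An n x n circulant matrix is fully determined by its first column, so only n parameters are stored instead of n². The sketch shows the structure and a matrix-vector product computed directly from those n parameters (a circular convolution). It uses the straightforward O(n²) loop; an FFT-based product, which runs in O(n log n), is the usual faster route but is not shown.

```python
def circulant(first_col):
    """Build the full n x n circulant matrix C[i][j] = c[(i - j) mod n]."""
    n = len(first_col)
    return [[first_col[(i - j) % n] for j in range(n)] for i in range(n)]

def circulant_matvec(first_col, x):
    """Compute C @ x from the n stored parameters without materialising C."""
    n = len(first_col)
    return [sum(first_col[(i - j) % n] * x[j] for j in range(n)) for i in range(n)]

c = [1, 2, 3]           # the only trainable parameters of a 3 x 3 weight matrix
C = circulant(c)
y = circulant_matvec(c, [0, 1, 0])
```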

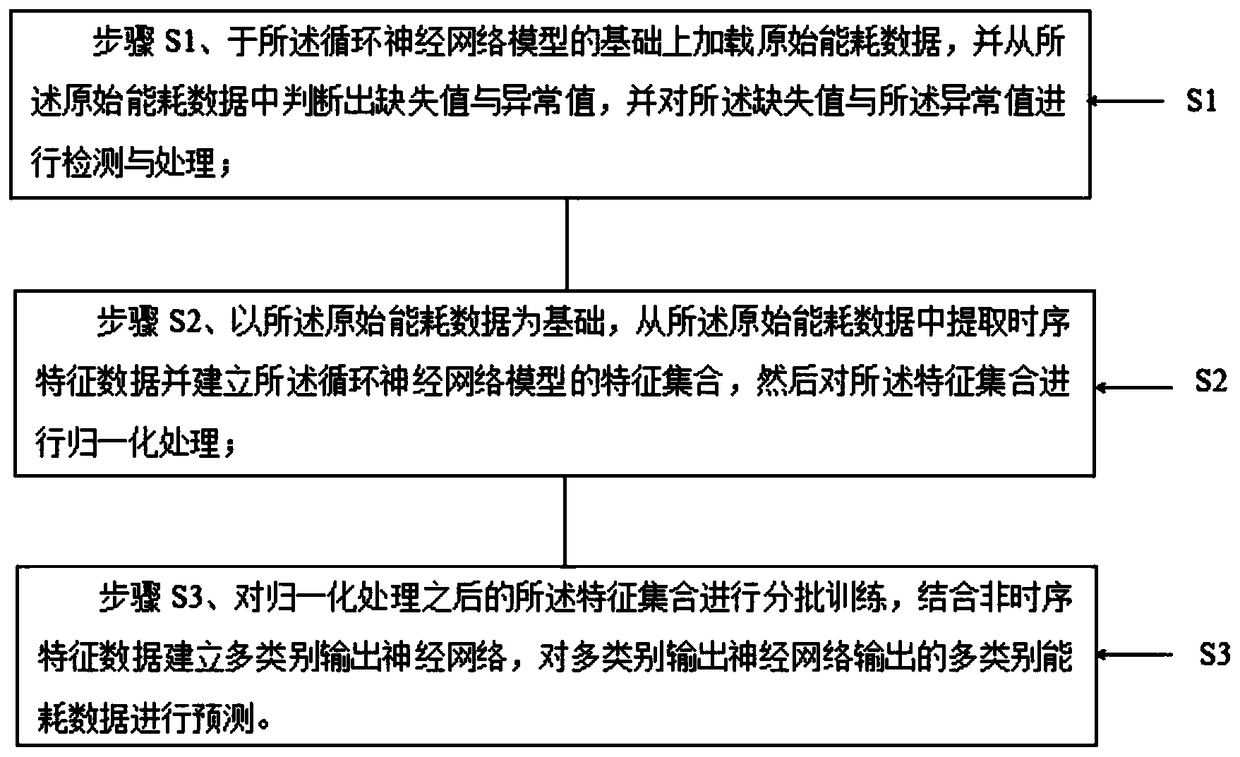

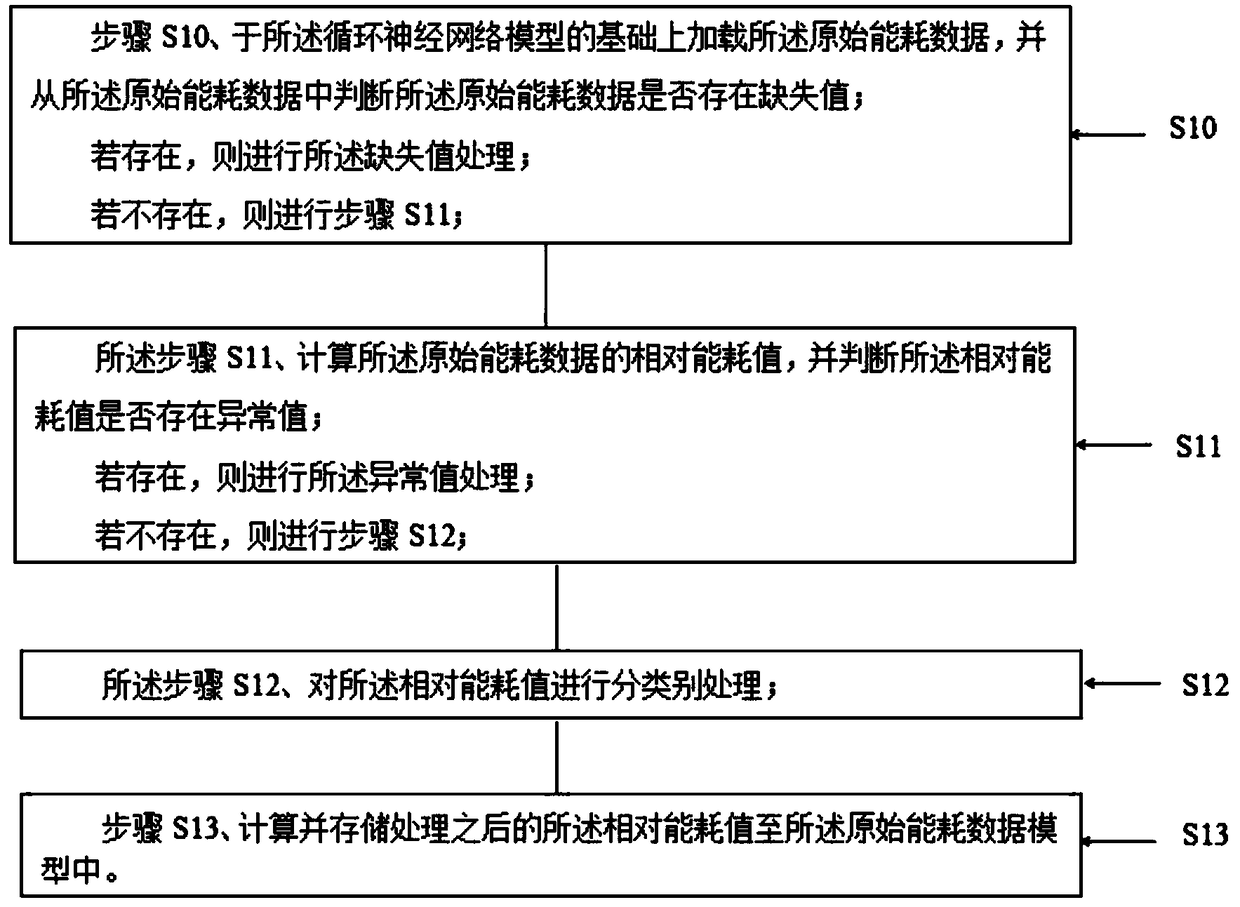

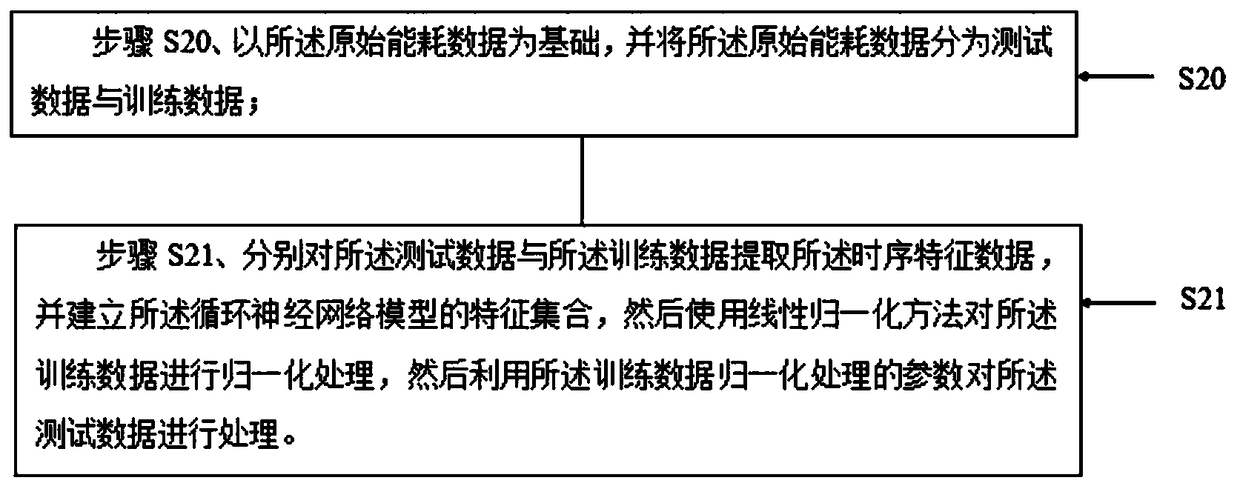

A multi-class energy consumption forecasting method based on a recurrent neural network

The invention discloses a multi-class energy consumption prediction method based on a recurrent neural network (RNN). An RNN model is formed by pre-training, and the method comprises the following steps: S1, loading raw energy consumption data on the basis of the RNN model, identifying missing and abnormal values in the raw data, and detecting and handling them; S2, extracting time-series feature data from the raw energy consumption data, building a feature set for the RNN model, and normalizing it; and S3, carrying out batch training on the normalized feature set, building a multi-class output neural network by combining it with non-time-series feature data, and predicting the multi-class energy consumption data with this network. The advantage is that the multi-class output neural network uses both the time-series and the non-time-series feature data of the raw energy consumption data to predict energy consumption.

Owner:Luban Software Co., Ltd. (鲁班软件股份有限公司) +1

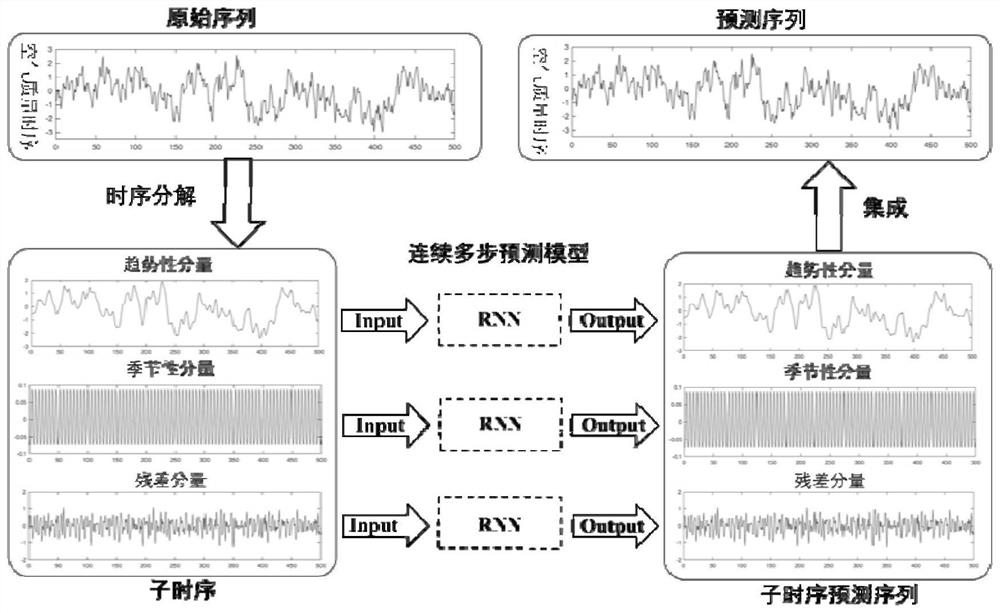

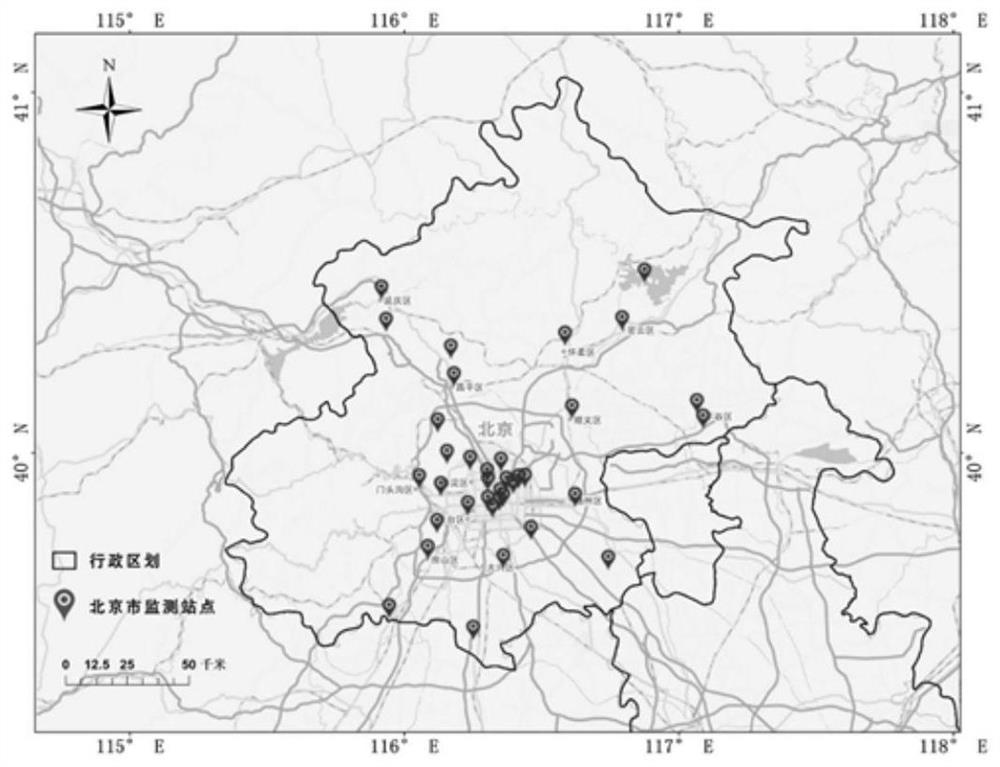

Air quality prediction method based on seasonal recurrent neural network

The invention provides an air quality prediction method based on a seasonal recurrent neural network. The method comprises: recording long time series of data from air quality monitoring stations; preprocessing the monitoring data, analyzing the seasonal and periodic characteristics of the long air quality time series, and determining their seasonal frequency; decomposing the time series according to the seasonal and periodic characteristics of the air quality parameters into a trend component, a seasonal component, and a residual component; constructing a large batch of training samples, building an initial three-channel recurrent neural network, and obtaining an optimal three-channel recurrent network model through training; using the optimal model to predict the future value of each component from its historical values, giving predicted trend, seasonal, and residual components; and combining the component predictions into the final air quality prediction sequence.

Owner:CHINA UNIV OF GEOSCIENCES (WUHAN)

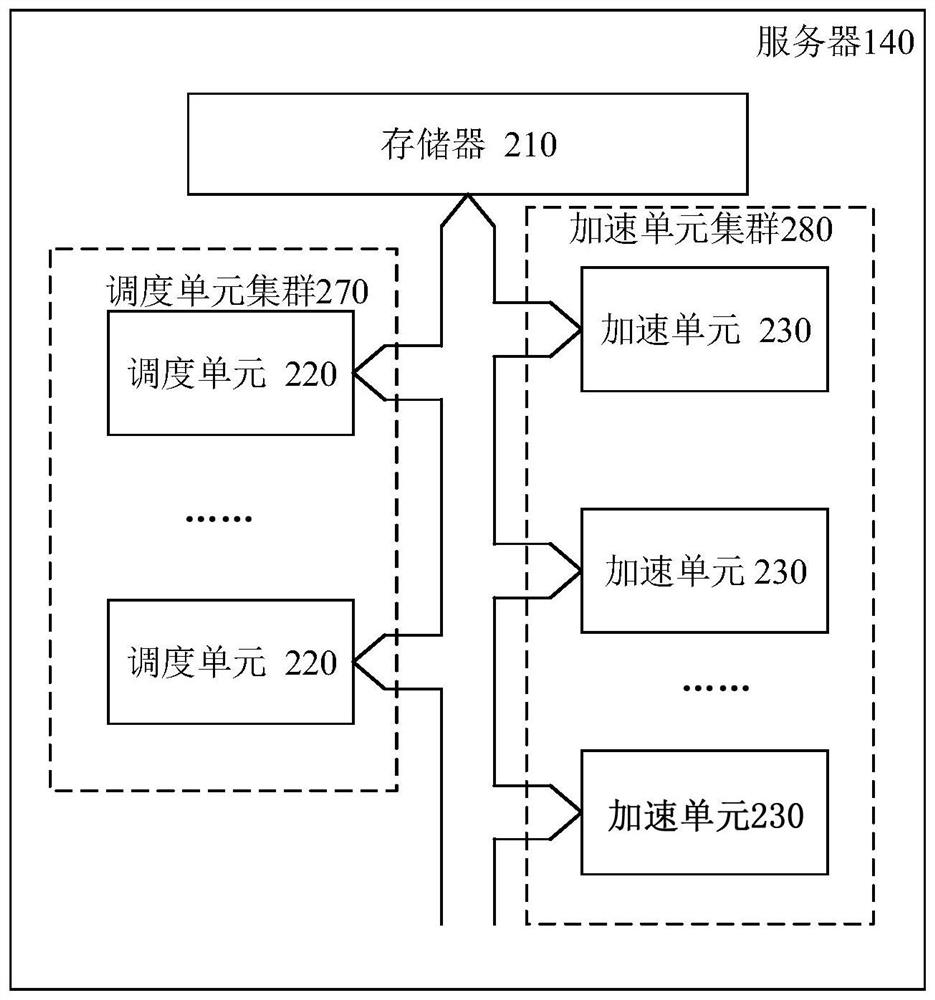

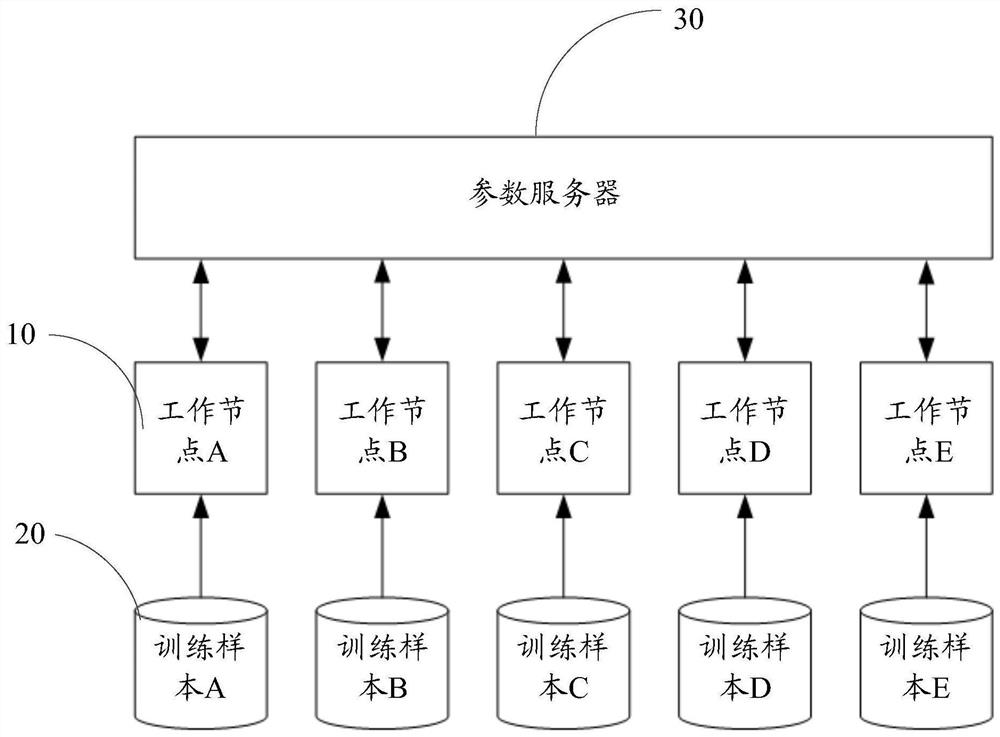

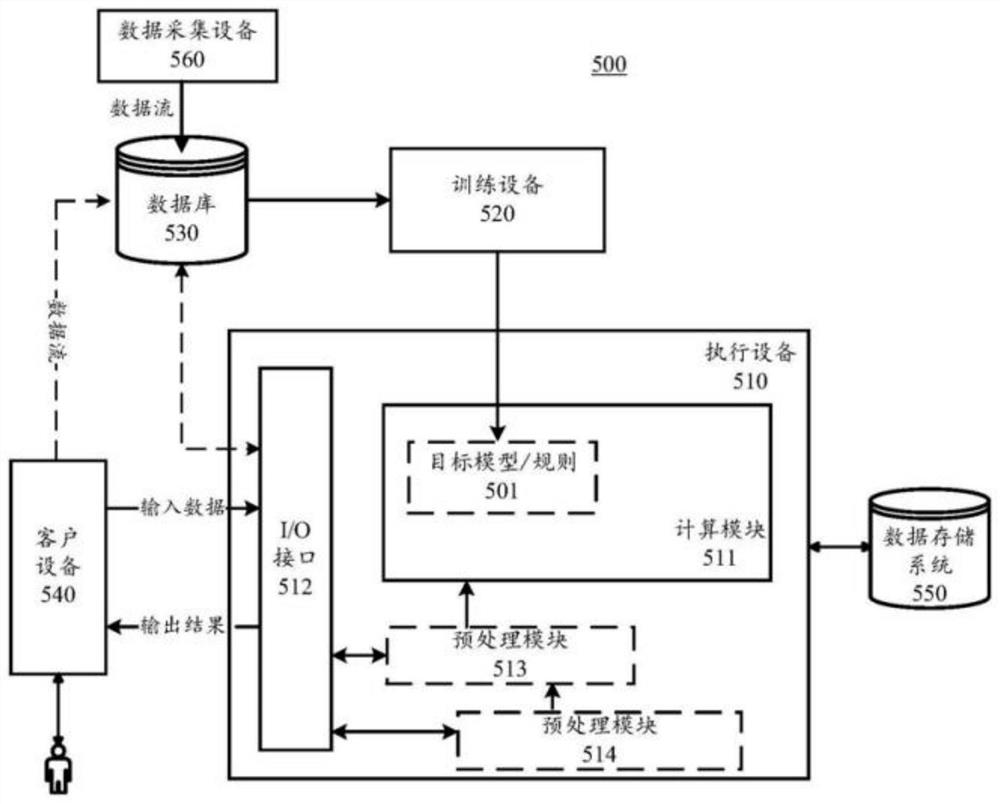

Distributed training method and device for deep learning model, and computing equipment

Pending | CN113642734A | Improve training efficiency | Reduce communication frequency | Machine learning | Batch training | Data set

The invention discloses a distributed training method and device for a deep learning model, and computing equipment. The method comprises: in each training step, acquiring a predetermined number of training samples from a training data set as batch training data; calculating the gradient of the model parameters on the batch training data as a local gradient; accumulating the local gradients over a preset number of training steps to obtain an accumulated gradient; communicating with the other computing nodes to exchange accumulated gradients; and calculating the average of the accumulated gradients of all computing nodes and updating the model parameters based on this average.

Owner:ALIBABA GRP HLDG LTD
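The distributed-training abstract above accumulates local gradients over several steps and only then exchanges them, which cuts communication rounds. A single-process sketch follows. The `grad_fn`, the two simulated nodes, and the learning rate are hypothetical, and `average_across_nodes` merely stands in for a real all-reduce. Whether the average should also be divided by the number of accumulation steps is not stated in the abstract; this sketch does not divide.

```python
def local_accumulated_gradient(grad_fn, params, batches):
    """Sum the gradients of several consecutive training steps (gradient accumulation)."""
    acc = [0.0] * len(params)
    for batch in batches:
        acc = [a + g for a, g in zip(acc, grad_fn(params, batch))]
    return acc

def average_across_nodes(node_grads):
    """Stand-in for the communication step: the mean of all nodes' accumulated
    gradients, as an all-reduce followed by division would produce."""
    n = len(node_grads)
    return [sum(g[j] for g in node_grads) / n for j in range(len(node_grads[0]))]

# Hypothetical model: one parameter fitted to the batch mean under squared error.
grad_fn = lambda params, batch: [2 * (params[0] - sum(batch) / len(batch))]
params = [0.0]
node_a = local_accumulated_gradient(grad_fn, params, [[1.0, 3.0], [2.0]])
node_b = local_accumulated_gradient(grad_fn, params, [[4.0], [6.0, 2.0]])
avg = average_across_nodes([node_a, node_b])
params = [p - 0.1 * g for p, g in zip(params, avg)]  # one communication, one update
```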

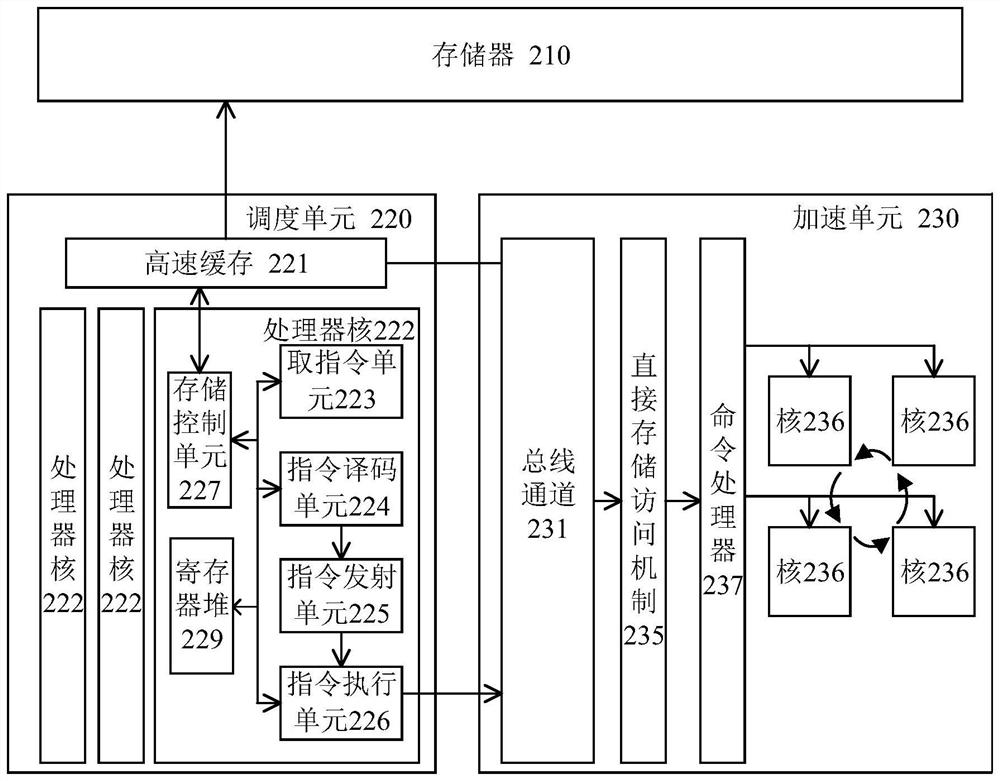

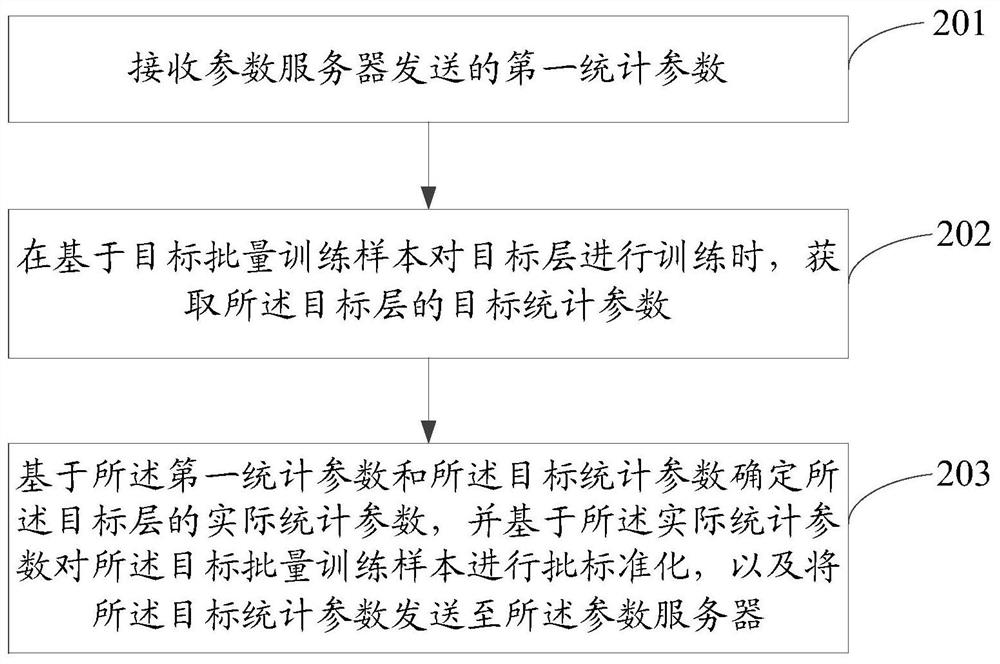

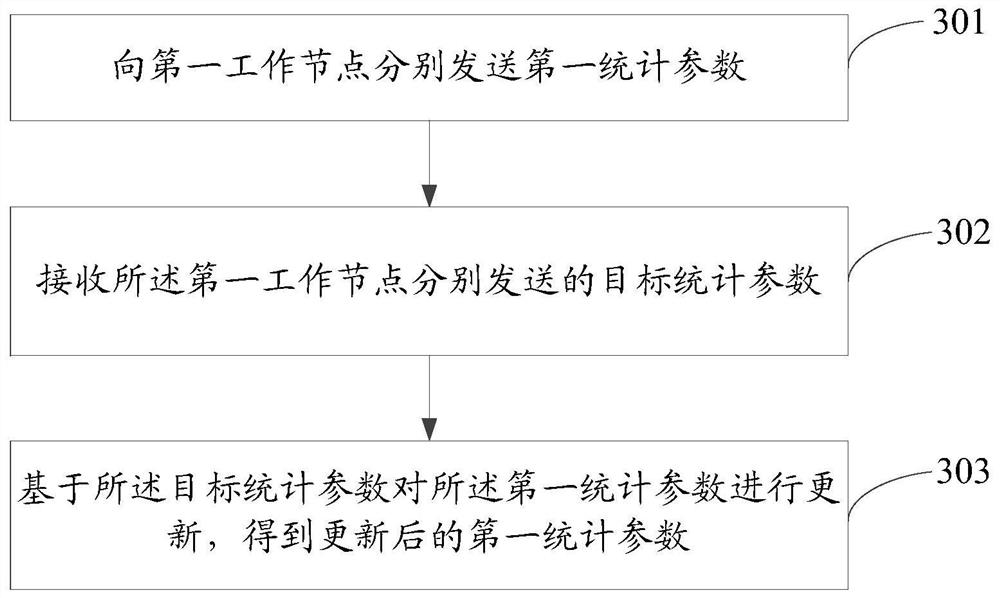

Deep learning model training method, working node and parameter server

Pending | CN112016699A | Improve training efficiency | Shorten the time | Machine learning | Batch training | Engineering

The embodiments of the invention provide a deep learning model training method, a working node, and a parameter server. The training method applied to the working node comprises the steps of: receiving a first statistical parameter sent by the parameter server, the first statistical parameter being determined by the parameter server from historical training data of a target layer of a target model; when the target layer is trained on a target batch of training samples, obtaining target statistical parameters of the target layer, namely the statistical parameters of the target batch; and determining the actual statistical parameter of the target layer from the first statistical parameter and the target statistical parameter, performing batch normalization on the target batch using the actual statistical parameter, and sending the target statistical parameter to the parameter server. The embodiments can improve the training efficiency of deep learning models.

Owner:LYNXI TECH CO LTD
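
The abstract does not say how the first (historical) statistics and the target (current-batch) statistics are combined. The sketch below assumes, purely for illustration, a fixed convex blend with weight `alpha`, and a parameter server that keeps an exponential moving average of the batch statistics it receives. `ParameterServer`, `worker_batch_norm`, `alpha` and `momentum` are hypothetical names, and the learnable scale and shift of batch normalization are omitted.

```python
import math


class ParameterServer:
    """Hypothetical server holding running per-feature statistics for one layer."""

    def __init__(self, num_features, momentum=0.9):
        self.mean = [0.0] * num_features
        self.var = [1.0] * num_features
        self.momentum = momentum

    def get_stats(self):
        return list(self.mean), list(self.var)

    def push_stats(self, batch_mean, batch_var):
        # Exponential moving average of the statistics reported by workers.
        m = self.momentum
        self.mean = [m * old + (1 - m) * new for old, new in zip(self.mean, batch_mean)]
        self.var = [m * old + (1 - m) * new for old, new in zip(self.var, batch_var)]


def batch_stats(batch):
    """Per-feature mean and (biased) variance of a batch given as a list of rows."""
    n = len(batch)
    columns = list(zip(*batch))
    mean = [sum(col) / n for col in columns]
    var = [sum((v - mu) ** 2 for v in col) / n for col, mu in zip(columns, mean)]
    return mean, var


def worker_batch_norm(batch, server, alpha=0.5, eps=1e-5):
    hist_mean, hist_var = server.get_stats()  # "first statistical parameter"
    cur_mean, cur_var = batch_stats(batch)    # "target statistical parameter"
    # Assumed combination rule: convex blend of historical and current statistics.
    mean = [alpha * h + (1 - alpha) * c for h, c in zip(hist_mean, cur_mean)]
    var = [alpha * h + (1 - alpha) * c for h, c in zip(hist_var, cur_var)]
    normalized = [
        [(v - mu) / math.sqrt(s + eps) for v, mu, s in zip(row, mean, var)]
        for row in batch
    ]
    server.push_stats(cur_mean, cur_var)      # report this batch's statistics
    return normalized


if __name__ == "__main__":
    server = ParameterServer(num_features=2)
    print(worker_batch_norm([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]], server))
    print(server.get_stats())
```

With `alpha=0` this reduces to ordinary per-batch normalization; with `alpha=1` it relies entirely on the server's historical statistics.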

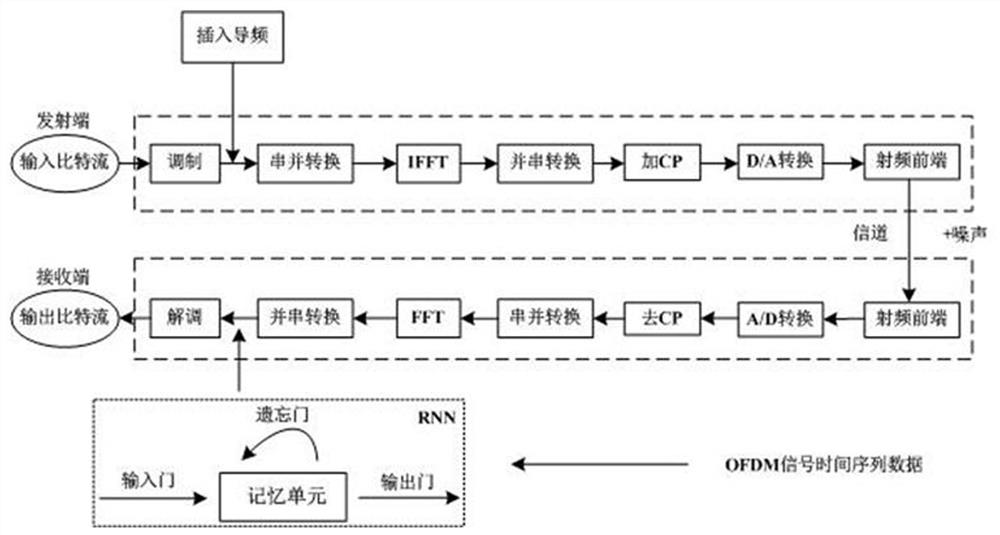

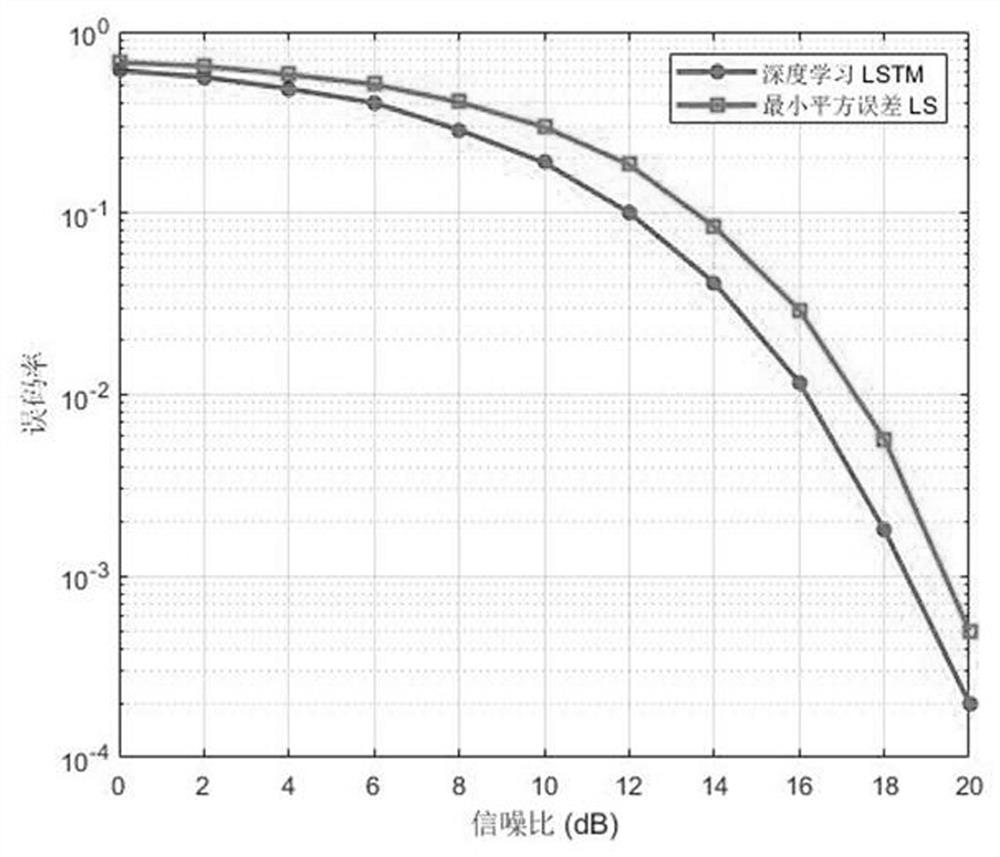

OFDM signal detection method based on RNN neural network

Active | CN111865863A | Solve signal reception problems | Improve spectrum detection performance | Multi-frequency code systems | Neural architectures | Batch training | Frequency spectrum

The invention discloses an OFDM signal detection method based on an RNN, which can be applied to cognitive radio spectrum sensing in wireless mobile communication. It improves the ability of the OFDM receiver to recover the demodulated signal and thereby improves the signal detection performance of cognitive radio spectrum sensing. Based on an analysis of the OFDM system, an OFDM system framework is first built and used to generate a time-series data set for model learning; the time-series signal data set is then learned by an LSTM model, a type of RNN; and the Adam optimizer is combined with mini-batch training to optimize the parameters quickly. This realizes end-to-end spectrum signal detection, effectively solves the signal reception problem of OFDM systems with narrowband interference (NBI) and inter-carrier interference (ICI), and improves the spectrum detection performance of the system.

Owner:SHANDONG UNIV
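
Adam combined with mini-batch training, as named in the abstract, can be sketched independently of the LSTM detector. The example applies the standard Adam update to a toy linear-regression problem fed in shuffled mini-batches; the model, data, `fit_line` helper and hyperparameters are illustrative assumptions and do not reproduce the patented OFDM detector.

```python
import math
import random


class Adam:
    """Standard Adam update over a flat list of scalar parameters."""

    def __init__(self, num_params, lr=0.01, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = [0.0] * num_params  # first-moment (mean) estimates
        self.v = [0.0] * num_params  # second-moment (uncentered variance) estimates
        self.t = 0

    def step(self, params, grads):
        self.t += 1
        updated = []
        for i, (p, g) in enumerate(zip(params, grads)):
            self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * g
            self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * g * g
            m_hat = self.m[i] / (1 - self.beta1 ** self.t)  # bias correction
            v_hat = self.v[i] / (1 - self.beta2 ** self.t)
            updated.append(p - self.lr * m_hat / (math.sqrt(v_hat) + self.eps))
        return updated


def fit_line(epochs=100, batch_size=16, seed=1):
    """Fit y = 2x + 1 with Adam on shuffled mini-batches; returns [slope, intercept]."""
    rng = random.Random(seed)
    data = [(x, 2.0 * x + 1.0) for x in (rng.uniform(-1.0, 1.0) for _ in range(256))]
    params = [0.0, 0.0]
    opt = Adam(num_params=2)
    for _ in range(epochs):
        rng.shuffle(data)
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            residuals = [(params[0] * x + params[1] - y, x) for x, y in batch]
            grad_w = sum(2.0 * r * x for r, x in residuals) / len(batch)
            grad_b = sum(2.0 * r for r, _ in residuals) / len(batch)
            params = opt.step(params, [grad_w, grad_b])
    return params


if __name__ == "__main__":
    print(fit_line())
```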

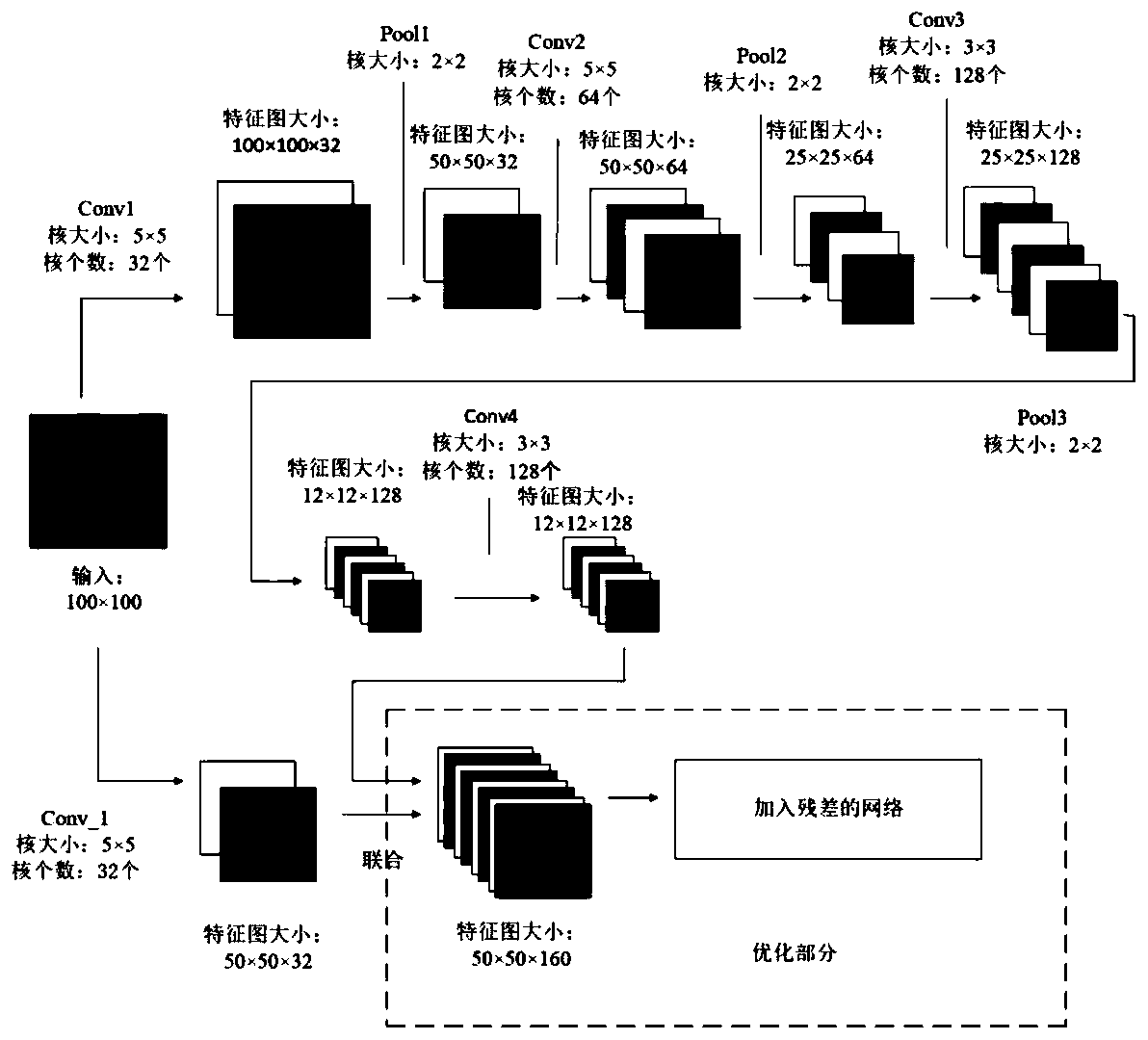

Convolutional neural network (CNN) based rain measurement identification of marine radar

Active | CN110568441A | Good convergence and stable state | Neural architectures | Neural learning methods | Batch training | Network model

The invention provides a CNN-based rain measurement identification method for marine radar. The method comprises a model building process, a model optimization process and a model training process. Starting from the classical LeNet-5 CNN model, a multi-level residual CNN model is established; a training set is built from samples of light, moderate and heavy rain and fed into the multi-level residual CNN to train the network model. The loss is computed with a cross-entropy loss function, the minimum loss is reached quickly, and a batch training mode gives good, stable convergence.

Owner:DALIAN MARITIME UNIVERSITY

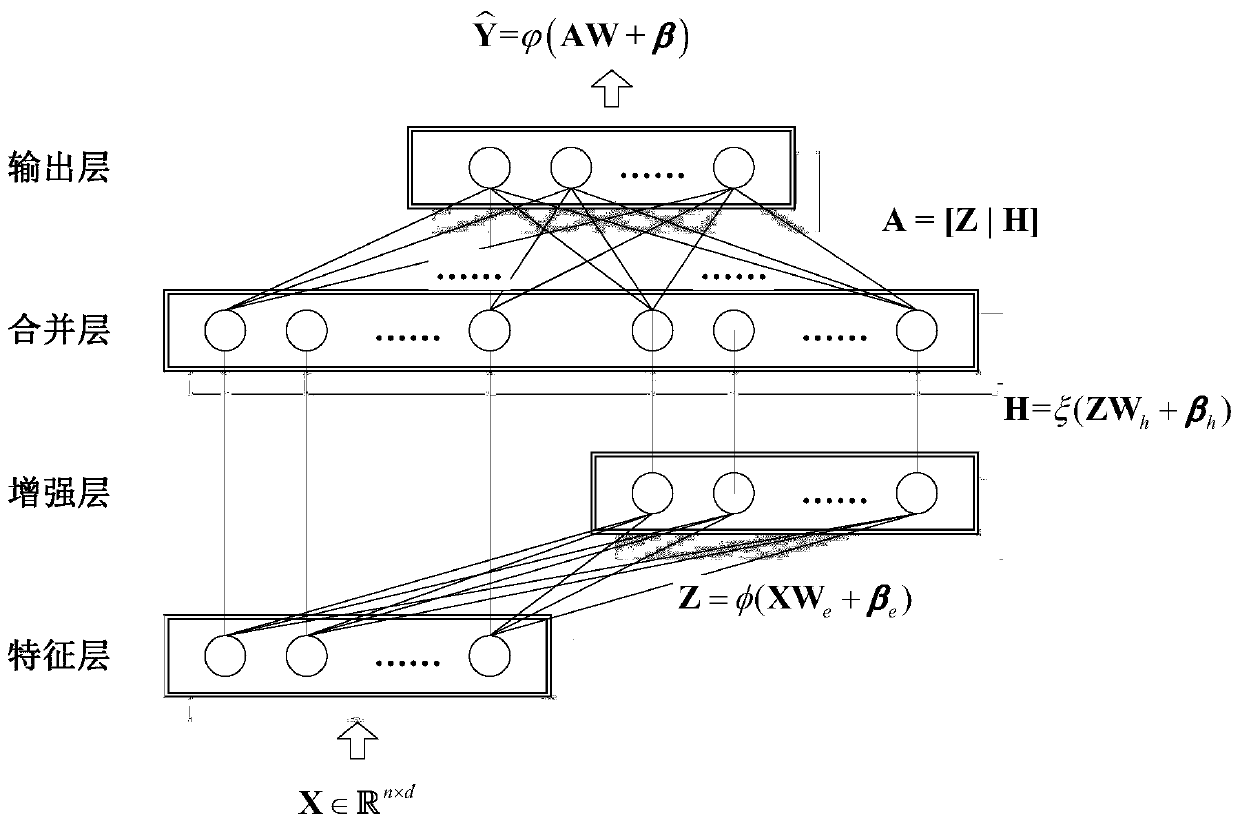

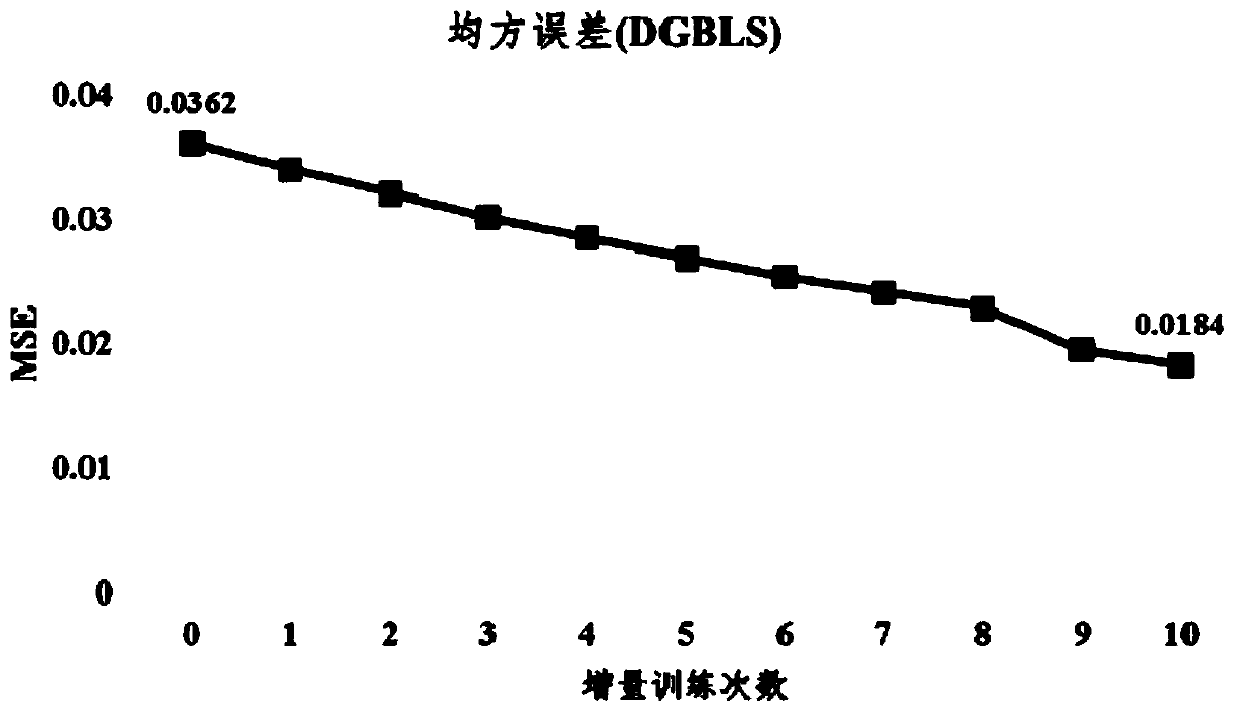

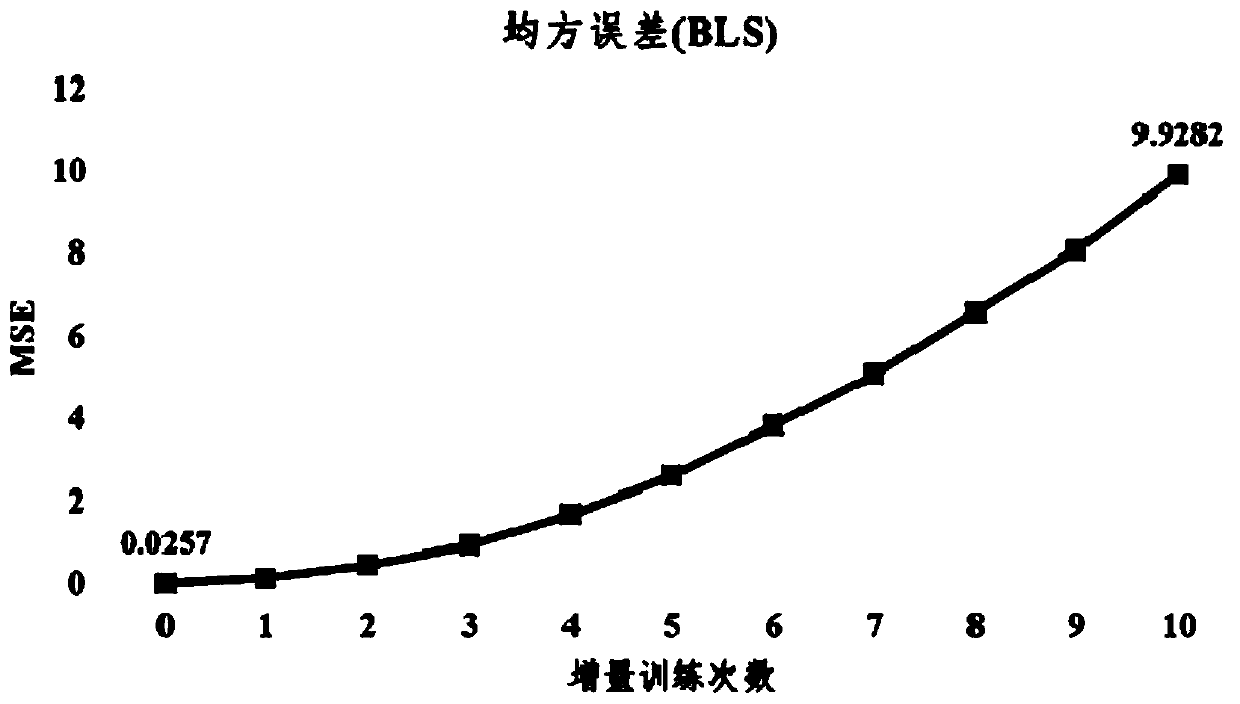

Implementation method of gradient descent width learning system

The invention provides an implementation method of a gradient descent width learning system. The system consists of three dense layers (a feature layer, an enhancement layer and an output layer) plus a merging layer. The feature layer maps the input data to mapped features by random mapping, forming feature nodes; the enhancement layer enhances the mapped features output by the feature layer, forming enhancement nodes; the merging layer merges the outputs of the feature nodes and the enhancement nodes and feeds them as a whole into the output layer; and the output layer maps the output of the merging layer to the final output of the network. During training, small batches of training samples are fed into the system continuously, and the network weights are updated by gradient descent so that the mean square error (MSE) loss gradually decreases. When the system implemented by this method is trained continuously on small batches, its regression performance under batch training is markedly improved, and the method can be applied to regression tasks.

Owner:CHONGQING UNIV
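
A gradient-descent width learning system (more commonly called a broad learning system) can be sketched in plain Python. The following are assumptions, not details from the abstract: one-dimensional input, a linear random feature mapping, `tanh` enhancement nodes, and feature and enhancement weights that stay fixed at their random values, so that mini-batch gradient descent on the MSE loss updates only the output-layer weights. Function names and layer sizes are hypothetical.

```python
import math
import random


def make_bls(in_dim, n_feat, n_enh, rng):
    """Random feature/enhancement weights (kept fixed here) plus trainable output weights."""
    return {
        "Wf": [[rng.gauss(0.0, 1.0) for _ in range(in_dim)] for _ in range(n_feat)],
        "We": [[rng.gauss(0.0, 1.0) for _ in range(n_feat)] for _ in range(n_enh)],
        "Wo": [0.0] * (n_feat + n_enh + 1),  # +1 for a bias input
    }


def merged_nodes(model, x):
    feats = [sum(w * xi for w, xi in zip(row, x)) for row in model["Wf"]]  # feature nodes
    enh = [math.tanh(sum(w * f for w, f in zip(row, feats))) for row in model["We"]]  # enhancement nodes
    return feats + enh + [1.0]  # merging layer: concatenation plus a constant bias input


def predict(model, x):
    return sum(w * h for w, h in zip(model["Wo"], merged_nodes(model, x)))


def mse(model, data):
    return sum((predict(model, x) - y) ** 2 for x, y in data) / len(data)


def train(model, data, rng, lr=0.01, epochs=100, batch_size=16):
    data = list(data)
    for _ in range(epochs):
        rng.shuffle(data)
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            grad = [0.0] * len(model["Wo"])
            for x, y in batch:
                h = merged_nodes(model, x)
                err = sum(w * hi for w, hi in zip(model["Wo"], h)) - y
                for i, hi in enumerate(h):
                    grad[i] += 2.0 * err * hi / len(batch)  # d(MSE)/d(Wo[i])
            model["Wo"] = [w - lr * g for w, g in zip(model["Wo"], grad)]
    return model


if __name__ == "__main__":
    rng = random.Random(0)
    data = [([x], math.sin(math.pi * x)) for x in (rng.uniform(-1.0, 1.0) for _ in range(256))]
    model = make_bls(in_dim=1, n_feat=5, n_enh=10, rng=rng)
    print("MSE before:", mse(model, data))
    train(model, data, rng)
    print("MSE after:", mse(model, data))
```

Keeping the random layers fixed mirrors the classic broad learning idea of cheap random features; a fuller version would also backpropagate into the feature and enhancement weights.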

Model training method and related equipment

Pending | CN113191241A | Save storage space | Achieve resistance to catastrophic forgetting | Semantic analysis | Scene recognition | Batch training | Engineering

The embodiment of the invention provides a model training method applied to the field of artificial intelligence. The method comprises: obtaining a first neural network model and M batches of training samples, M being a positive integer greater than 1; determining a target incremental training method according to the sample distribution characteristics between the M batches, where these characteristics are related to the degree of catastrophic forgetting the model suffers when it is trained incrementally on those batches, and the target incremental training method is used to resist catastrophic forgetting during incremental training; and performing self-supervised training on the first neural network model with the M batches of training samples through the target incremental training method to obtain a second neural network model. The method shortens training time and saves data storage space while balancing efficiency and performance.

Owner:HUAWEI TECH CO LTD

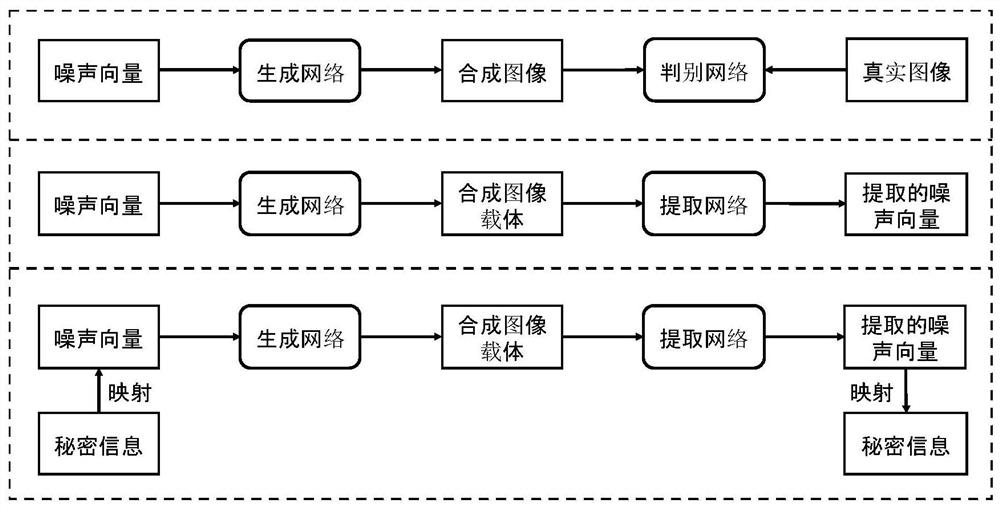

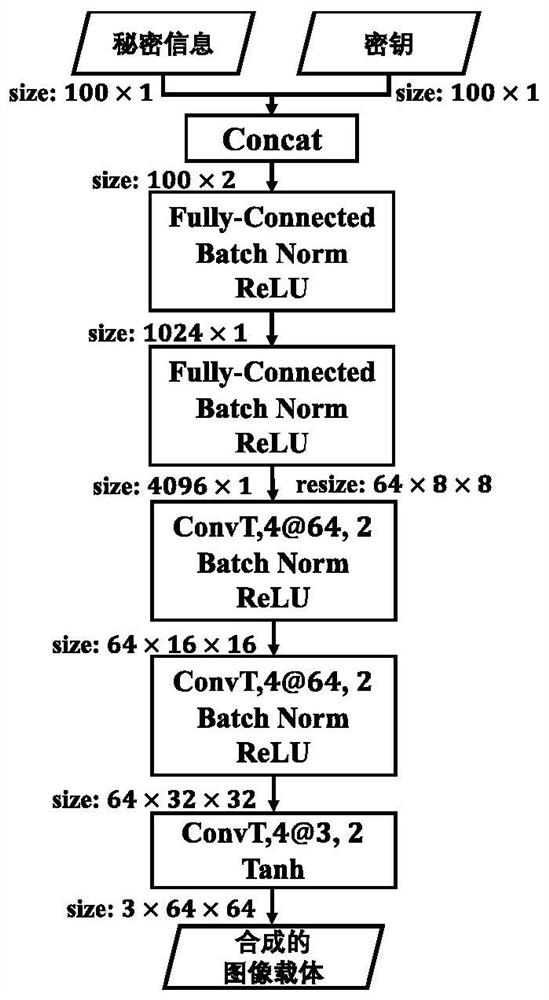

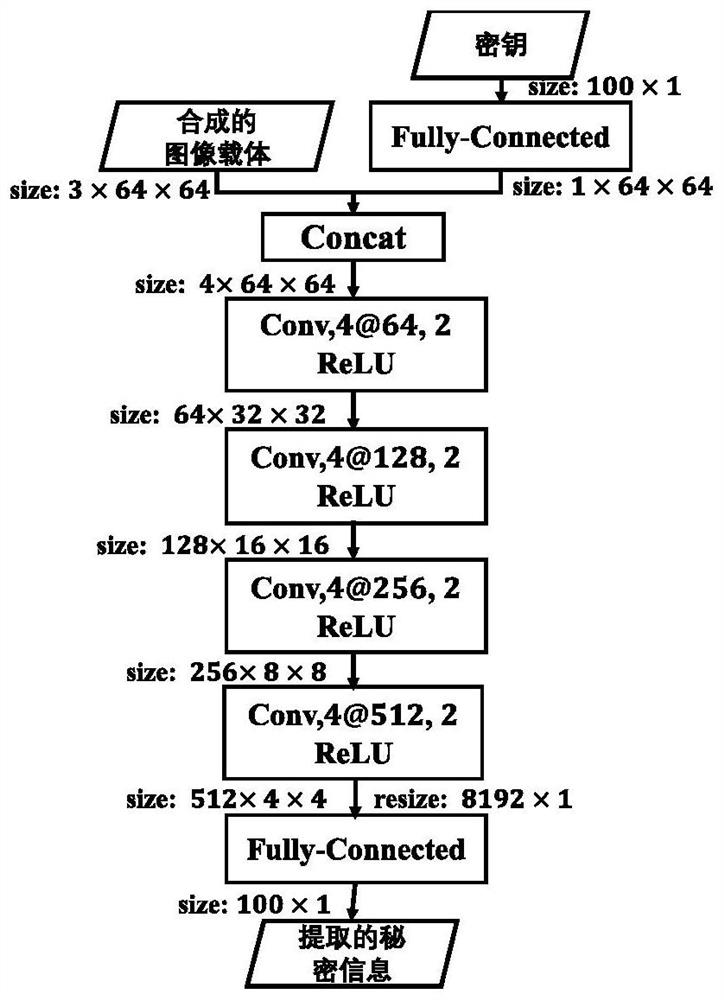

GAN-based carrier image synthesis steganography method

Pending | CN112115490A | Won't crack | Improve security | Digital data protection | Neural architectures | Pattern recognition | Batch training

The invention relates to a GAN-based carrier image synthesis steganography method, comprising the steps of: cutting each image in a data set into images of the same size to form a real image data set; constructing a generation network G, a discrimination network D, a forensics network F and an extraction network E, initializing the parameters of G, D and E, and setting the parameters of F to preset values; training the initialized G, D and E in a batch training mode to obtain the trained generation network G, discrimination network D and extraction network E; inputting the secret information to be embedded and a preset secret key into the trained generation network G to obtain a synthetic carrier image; and inputting the synthesized carrier image and the preset secret key into the trained extraction network E to extract the secret information. The method strengthens the security of the algorithm and improves the naturalness of the synthesized carrier images.

Owner:NINGBO UNIV

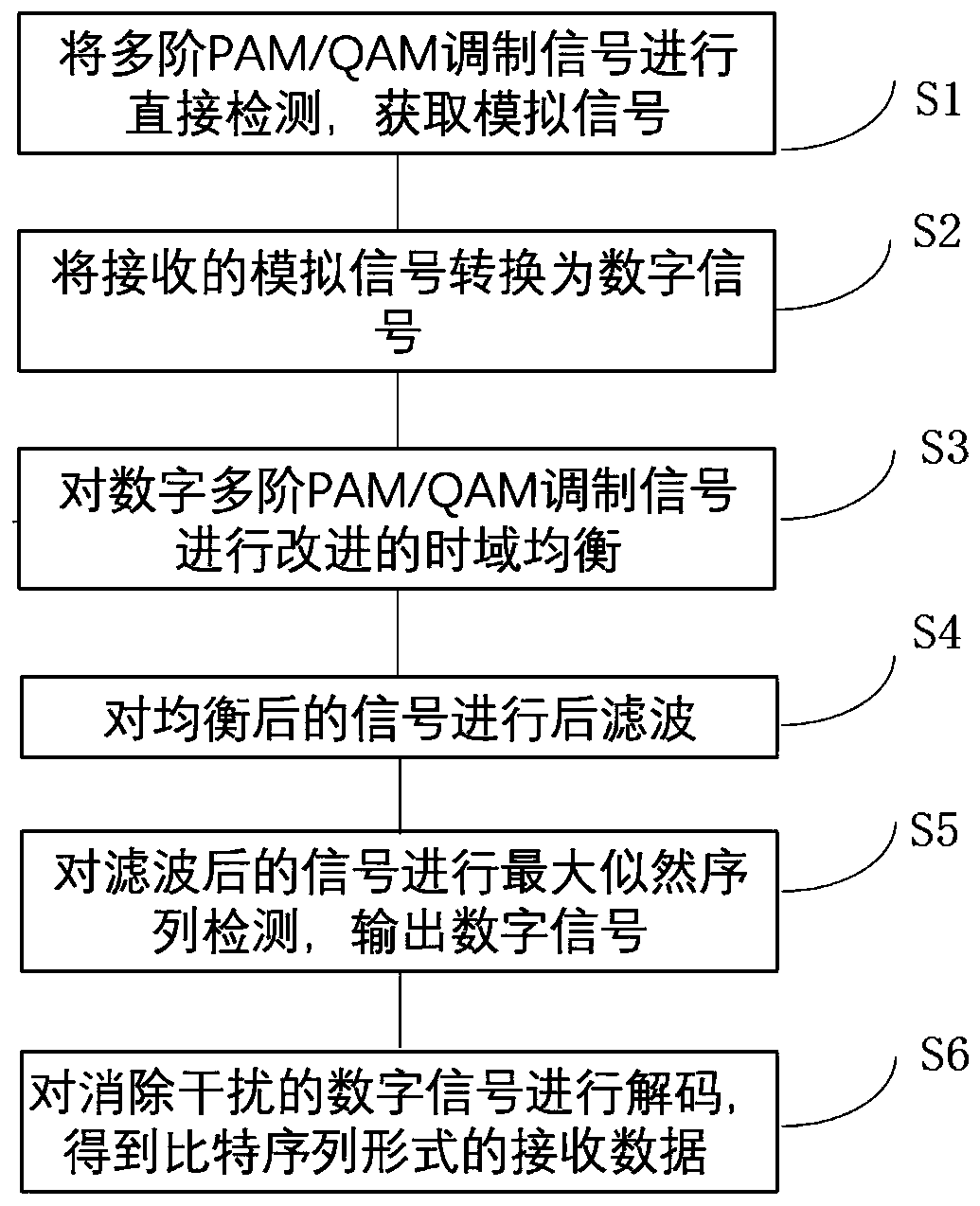

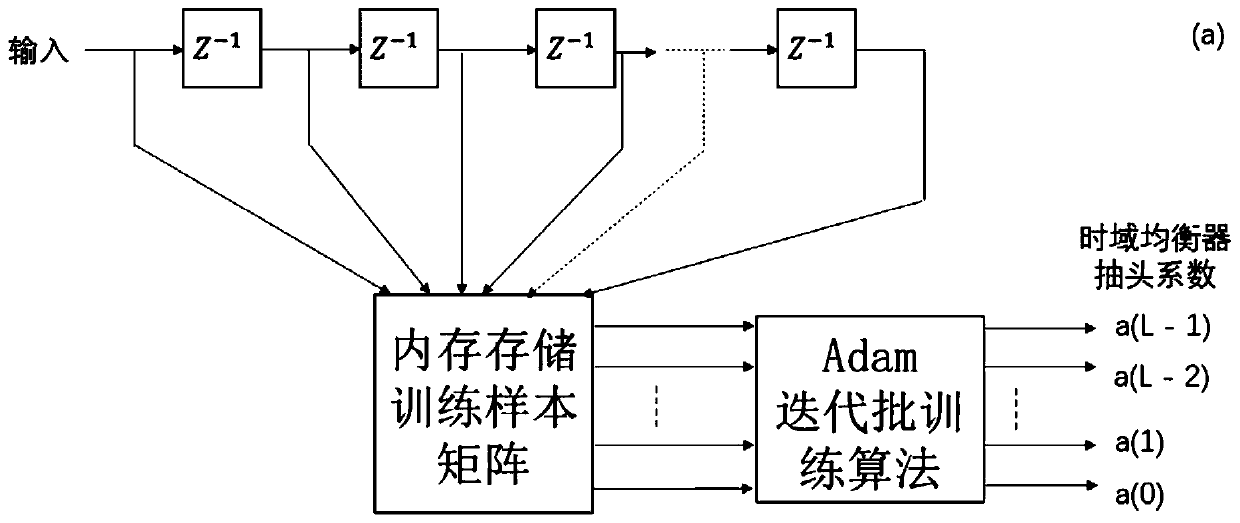

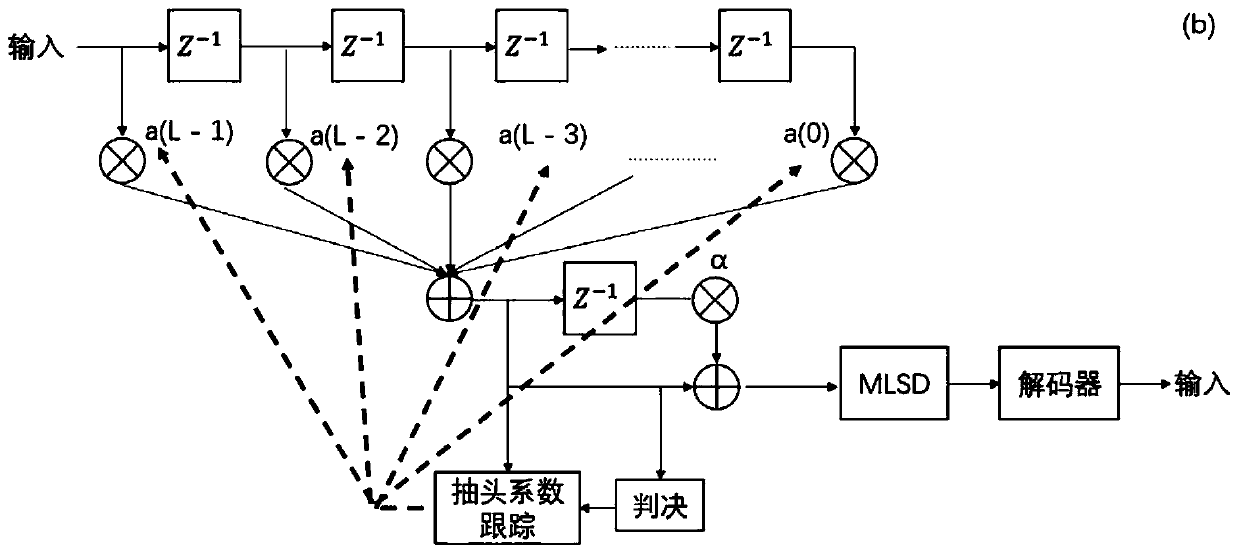

Data receiving method and receiving system based on adaptive moment estimation

Active | CN109981502A | Enhanced in-band noise | Improve performance | Error prevention | Equalisers | Algorithm | Combined method

The invention discloses a data receiving method based on adaptive moment estimation (Adam), which eliminates intersymbol interference with a combination of a time-domain feedforward equalizer, a post filter and maximum likelihood sequence detection, improved on the basis of the adaptive moment estimation method. Specifically, a training sample matrix is constructed from a small number of training sequences; the matrix is used for iterative batch training of the time-domain equalizer tap coefficients with the adaptive moment estimation algorithm, yielding the ideal tap coefficients; and post-filtering and maximum likelihood detection are applied to the equalized signal to obtain a signal with the interference removed. Replacing the traditional sample-by-sample training of the time-domain feedforward equalizer with efficient iterative batch equalization training based on adaptive moment estimation gives strong interference cancellation while increasing the proportion of payload.

Owner:JINAN UNIVERSITY
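
The core of the receiver above, iterative batch training of time-domain feedforward equalizer taps with adaptive moment estimation, can be sketched as follows. The channel `r[n] = s[n] + 0.5*s[n-1]`, the BPSK training symbols, the tap count, the learning rate and the helper names are illustrative assumptions; the post filter and maximum likelihood sequence detection stages are not modelled.

```python
import math
import random


def build_training_matrix(received, num_taps):
    """Row n holds [r[n], r[n-1], ..., r[n-num_taps+1]] for one training symbol."""
    return [[received[n - k] for k in range(num_taps)]
            for n in range(num_taps - 1, len(received))]


def train_ffe_adam(rows, targets, iters=1500, lr=0.01, beta1=0.9, beta2=0.999, eps=1e-8):
    """Iterative batch training of FFE taps with Adam: each iteration uses the whole matrix."""
    taps = [0.0] * len(rows[0])
    m = [0.0] * len(taps)
    v = [0.0] * len(taps)
    for t in range(1, iters + 1):
        grad = [0.0] * len(taps)
        for row, s in zip(rows, targets):
            err = sum(w * r for w, r in zip(taps, row)) - s
            for k, r in enumerate(row):
                grad[k] += 2.0 * err * r / len(rows)  # gradient of the mean squared error
        for k, g in enumerate(grad):
            m[k] = beta1 * m[k] + (1 - beta1) * g
            v[k] = beta2 * v[k] + (1 - beta2) * g * g
            m_hat = m[k] / (1 - beta1 ** t)  # bias-corrected moments
            v_hat = v[k] / (1 - beta2 ** t)
            taps[k] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return taps


def simulate(num_symbols=100, num_taps=5, seed=2):
    rng = random.Random(seed)
    symbols = [rng.choice([-1.0, 1.0]) for _ in range(num_symbols)]
    # Toy ISI channel (assumption): r[n] = s[n] + 0.5 * s[n-1]
    received = [s + 0.5 * (symbols[n - 1] if n > 0 else 0.0) for n, s in enumerate(symbols)]
    rows = build_training_matrix(received, num_taps)
    targets = symbols[num_taps - 1:]
    taps = train_ffe_adam(rows, targets)
    outputs = [sum(w * r for w, r in zip(taps, row)) for row in rows]
    mse = sum((o - s) ** 2 for o, s in zip(outputs, targets)) / len(targets)
    decision_errors = sum(1 for o, s in zip(outputs, targets) if (o > 0) != (s > 0))
    return taps, mse, decision_errors


if __name__ == "__main__":
    print(simulate())
```

For this toy channel the ideal equalizer is the truncated inverse `1, -0.5, 0.25, -0.125, ...`, so the trained taps should land close to those values.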

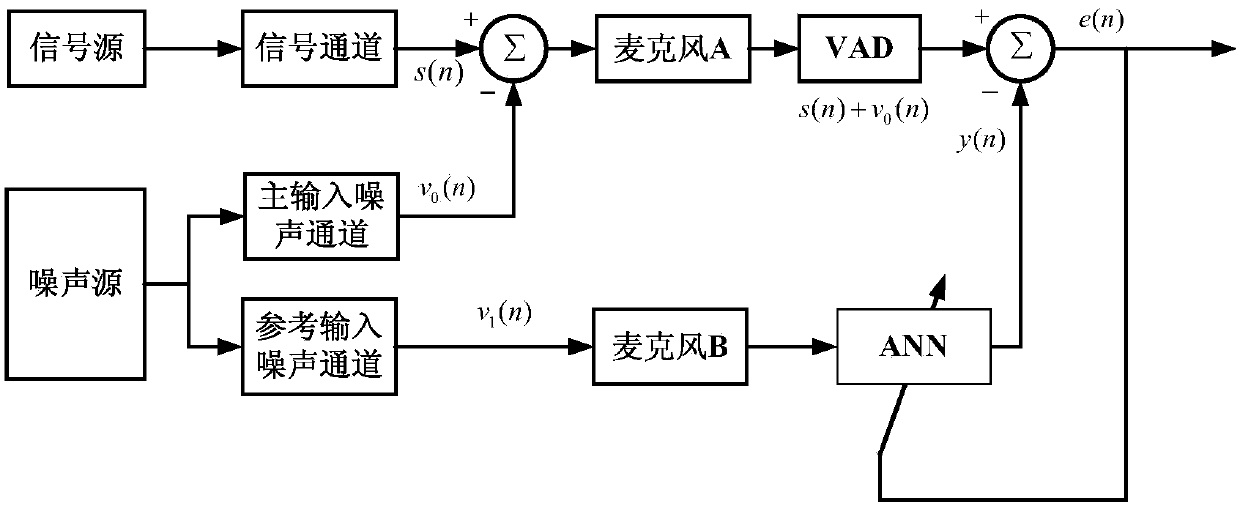

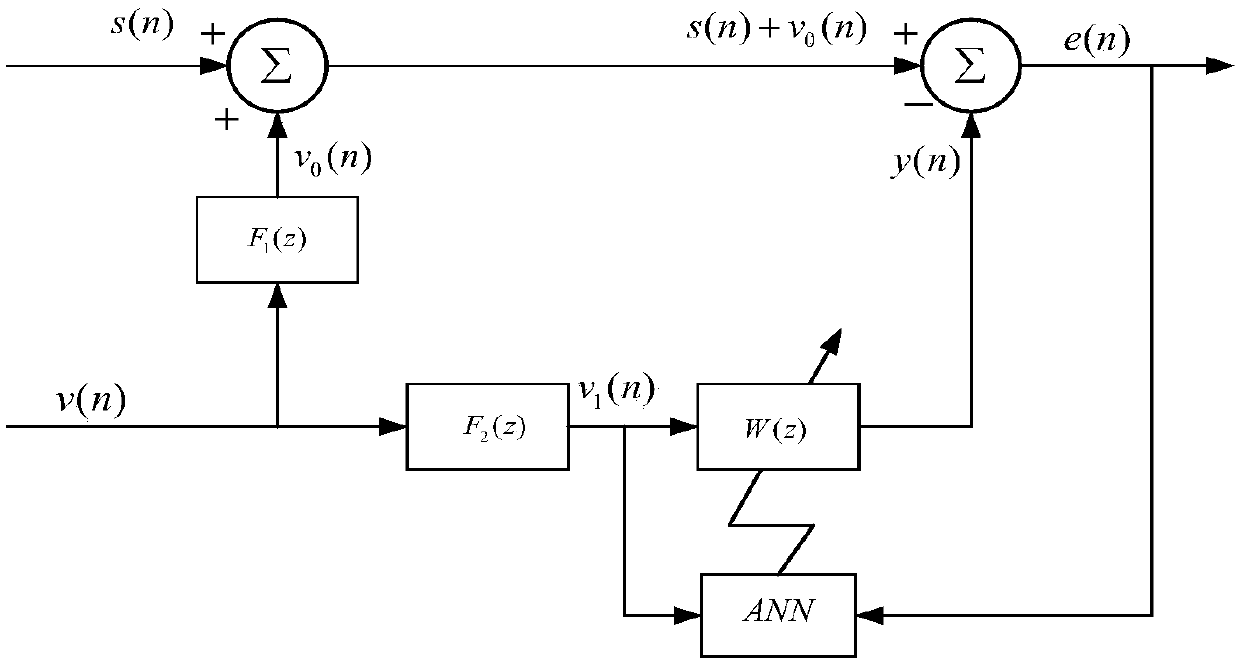

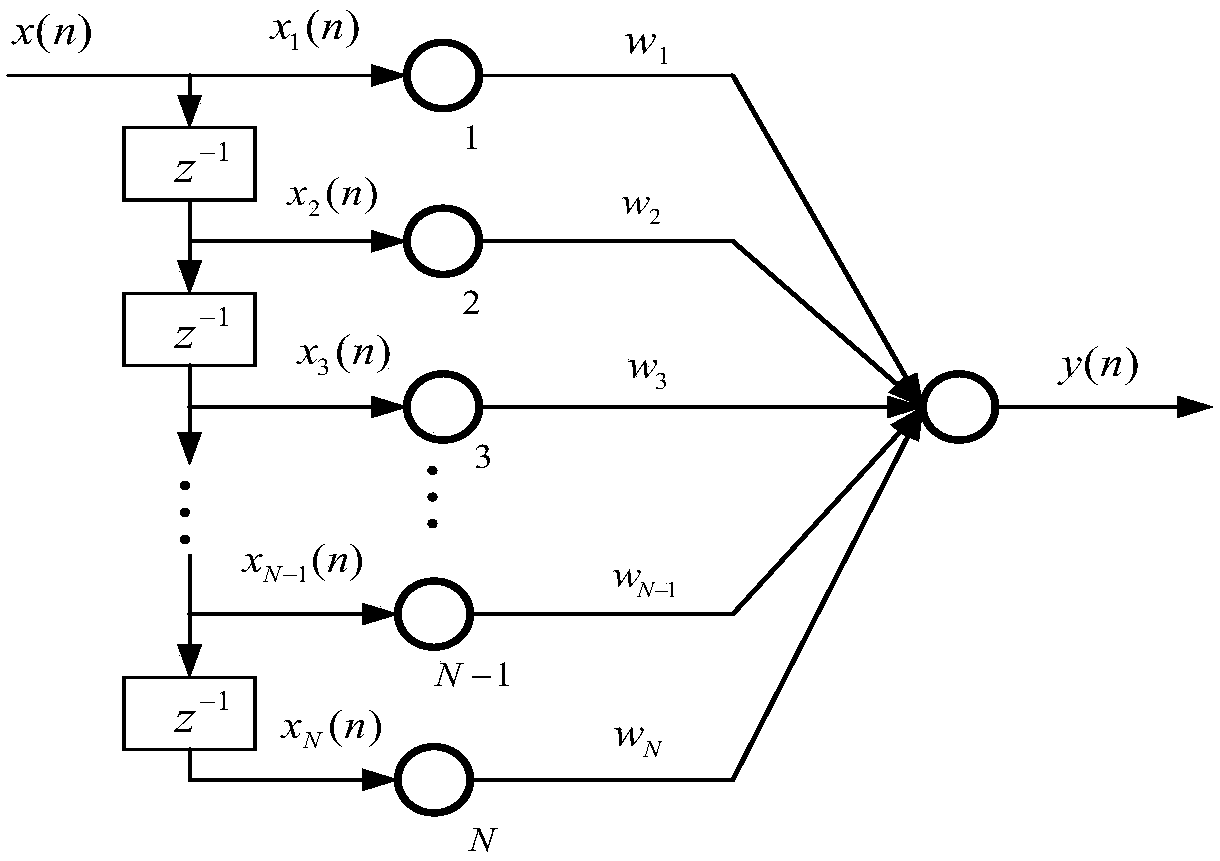

Noise elimination method based on VAD and ANN

The invention discloses a noise elimination method based on VAD and ANN. The method comprises: first, using VAD (voice activity detection) to divide noisy speech into voice frames and noise frames; second, applying different ANN-based noise reduction to the voice frames and the noise frames; and third, training on the reference noise corresponding to voice frames sequentially, and on the reference noise corresponding to noise frames in batches. Because batch training of the neural network converges quickly and with high precision, the method handles the noise frames effectively: on the one hand, the adaptive filter converges within a single noise frame; on the other hand, convergence precision is improved.

Owner:SICHUAN CHANGHONG ELECTRIC CO LTD
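
The difference between the sequential and batch training modes mentioned above can be shown with a single linear neuron acting as an adaptive noise canceller driven by reference noise. This is a sketch under stated assumptions: the two-tap noise path, the frame length, the learning rates and the function names are hypothetical, and the VAD stage is not modelled (the example frame is assumed to be noise-only).

```python
import random


def make_noise_frame(rng, length=160, path=(0.8, -0.3)):
    """Noise-only frame: primary input is the reference noise passed through an unknown 2-tap path."""
    ref = [rng.gauss(0.0, 1.0) for _ in range(length + 1)]
    frame = []
    for n in range(1, length + 1):
        x = [ref[n], ref[n - 1]]
        frame.append((x, path[0] * x[0] + path[1] * x[1]))
    return frame


def sequential_train(weights, frame, lr):
    """Sequential (per-sample) training: update after every sample, LMS style."""
    w = list(weights)
    for x, target in frame:
        err = target - sum(wi * xi for wi, xi in zip(w, x))
        w = [wi + lr * err * xi for wi, xi in zip(w, x)]
    return w


def batch_train(weights, frame, lr, epochs):
    """Batch training: average the update over the whole frame, then apply it once per epoch."""
    w = list(weights)
    for _ in range(epochs):
        step = [0.0] * len(w)  # negative gradient of 0.5 * mean squared error
        for x, target in frame:
            err = target - sum(wi * xi for wi, xi in zip(w, x))
            for i, xi in enumerate(x):
                step[i] += err * xi / len(frame)
        w = [wi + lr * s for wi, s in zip(w, step)]
    return w


if __name__ == "__main__":
    rng = random.Random(3)
    frame = make_noise_frame(rng)
    print("sequential:", sequential_train([0.0, 0.0], frame, lr=0.05))
    print("batch:     ", batch_train([0.0, 0.0], frame, lr=0.5, epochs=200))
```

Sequential training adjusts the weights after every sample, which suits tracking inside speech; batch training takes fewer, smoother steps computed over the whole noise frame.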

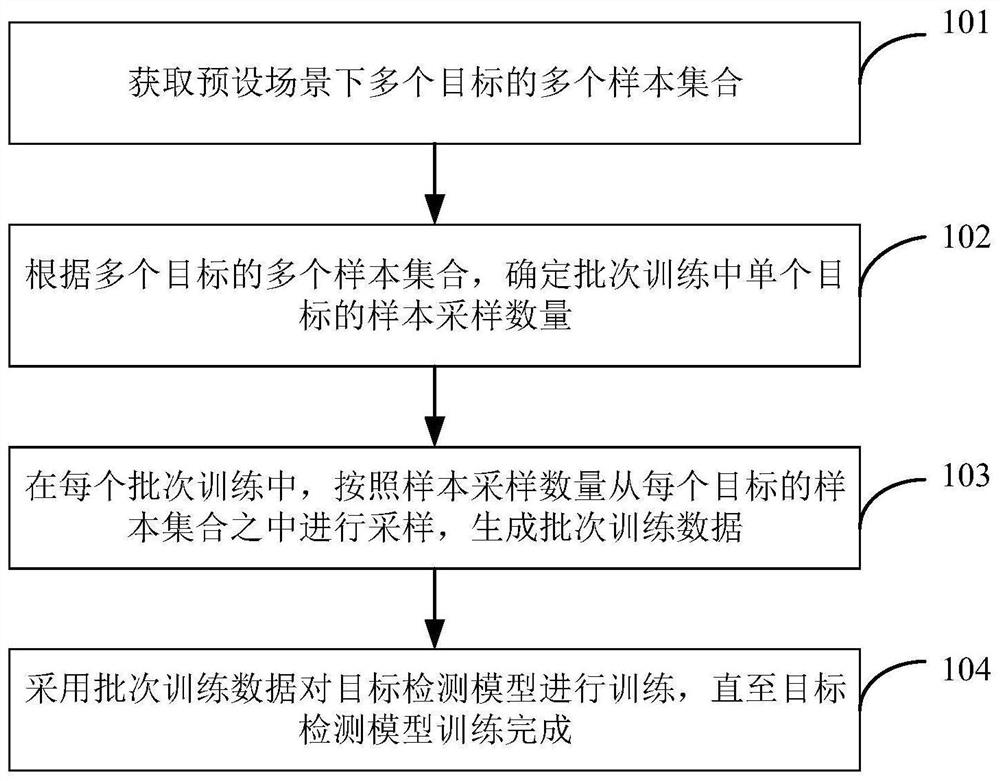

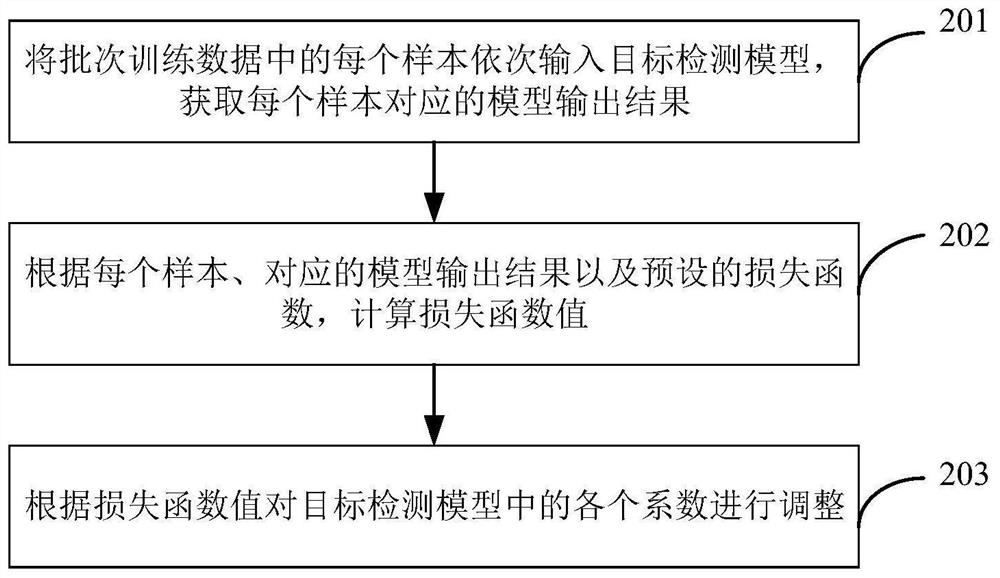

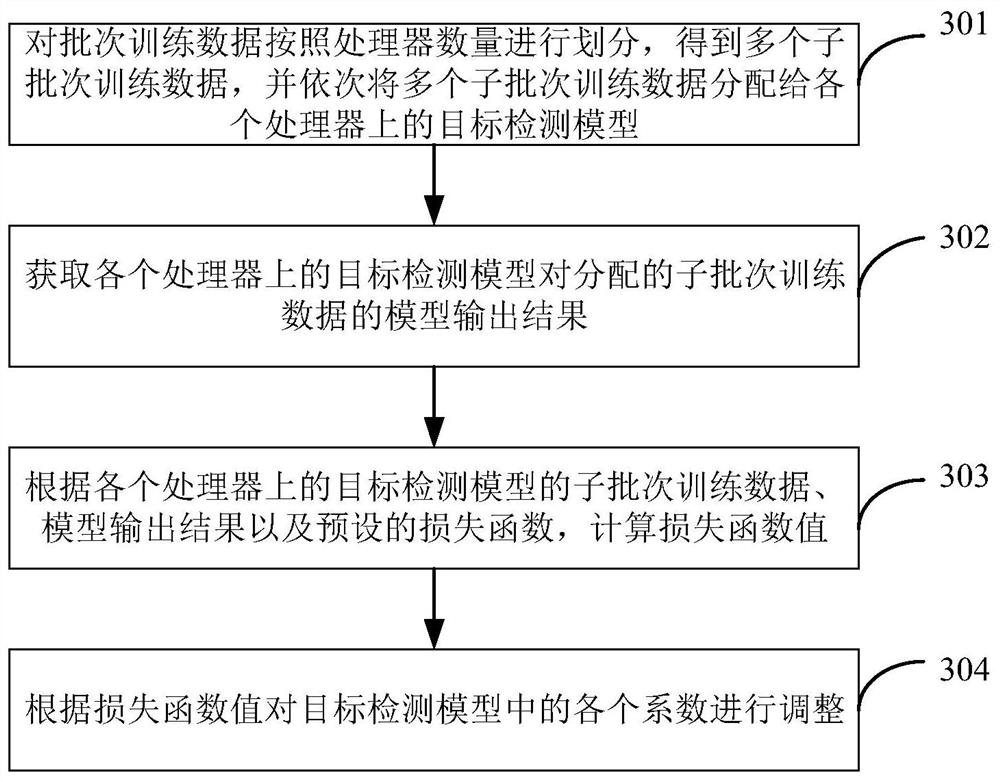

Target detection model training method and device, electronic equipment and storage medium

The invention discloses a target detection model training method and device, electronic equipment and a storage medium, and relates to the technical fields of deep learning and computer vision. The specific implementation scheme is as follows: first, acquiring a plurality of sample sets for a plurality of targets in a preset scene; determining, from these sample sets, the number of samples to draw for each target in batch training; then, in each training batch, sampling from each target's sample set according to that number to form batch training data; and training the target detection model with the batch training data until training is complete. Because each target is sampled according to its sampling number, the training data contain the same or approximately the same number of samples per target, which ensures the detection efficiency and accuracy of the trained target detection model for each target.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD
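
The per-target sampling step can be sketched as below. The abstract does not give the rule for deriving each target's sampling number, so the even split in `per_target_quota` is an assumption, as is sampling with replacement when a target has fewer samples than its quota; both function names are hypothetical.

```python
import random


def per_target_quota(sample_sets, batch_size):
    """Assumed rule: split the batch as evenly as possible across targets."""
    base, extra = divmod(batch_size, len(sample_sets))
    return {name: base + (1 if i < extra else 0)
            for i, name in enumerate(sorted(sample_sets))}


def sample_batch(sample_sets, batch_size, rng):
    quota = per_target_quota(sample_sets, batch_size)
    batch = []
    for name, k in quota.items():
        pool = sample_sets[name]
        if len(pool) >= k:
            batch.extend(rng.sample(pool, k))     # enough samples: draw without replacement
        else:
            batch.extend(rng.choices(pool, k=k))  # rare target: draw with replacement
    rng.shuffle(batch)
    return batch


if __name__ == "__main__":
    sets = {
        "car": [("car", i) for i in range(1000)],
        "person": [("person", i) for i in range(50)],
        "sign": [("sign", i) for i in range(3)],
    }
    print(sample_batch(sets, 32, random.Random(0)))
```

Even a target with only three samples contributes about a third of each batch here, which is the balancing effect the abstract describes.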

© 2025 PatSnap. All rights reserved.