351 results about "Model compression" patented technology

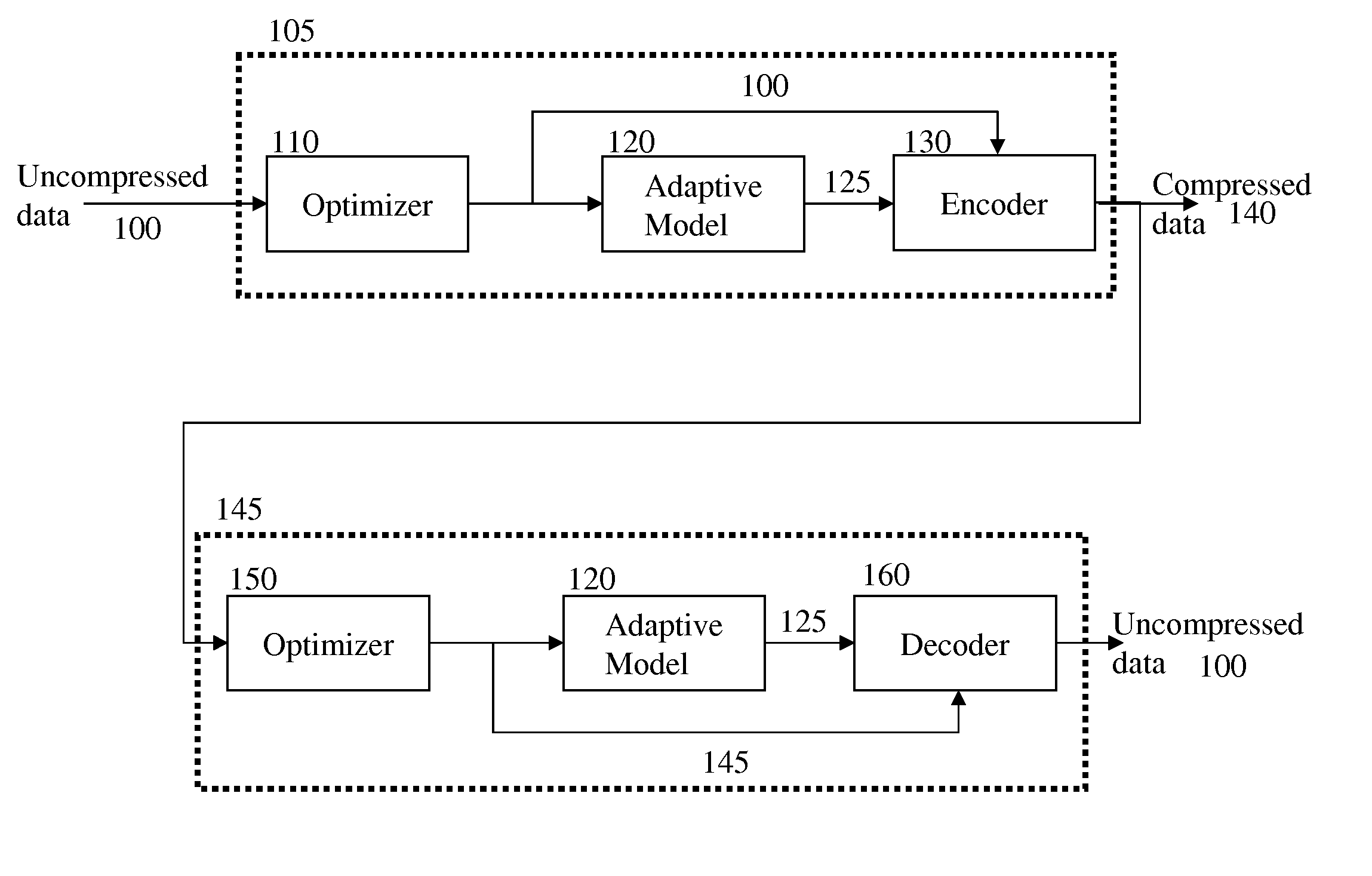

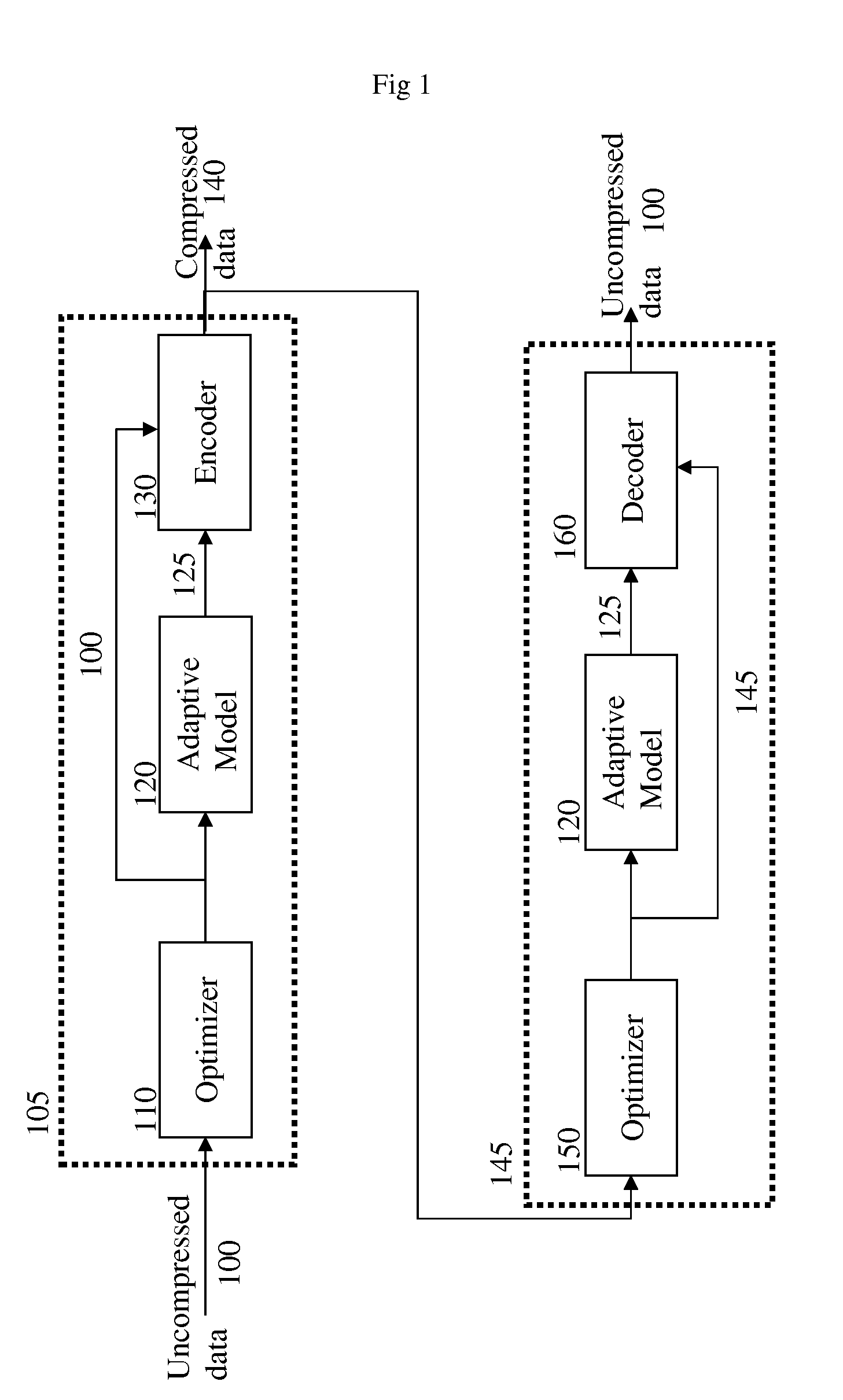

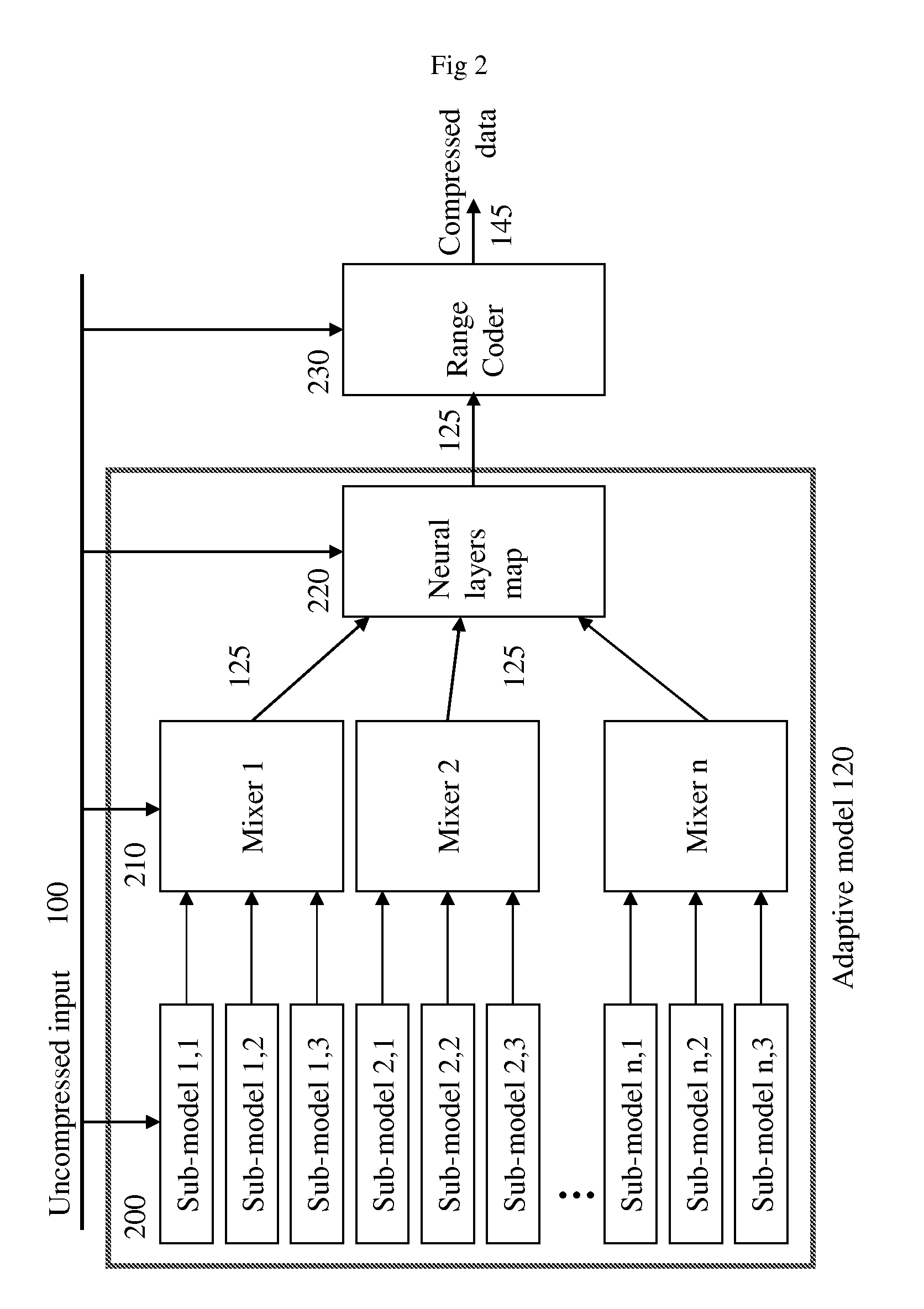

Lossless Data Compression Using Adaptive Context Modeling

The present invention is a system and method for lossless compression of data. The invention consists of a neural network data compressor composed of N levels of neural network that uses a weighted average of N pattern-level predictors. The approach combines context mixing algorithms with network learning models. The invention replaces the PPM predictor, which matches the context of the last few characters to previous occurrences in the input, with an N-layer neural network trained by back propagation to assign pattern probabilities when given the context as input. The N-layer network described below learns and predicts in a single pass, and compresses a similar quantity of patterns according to their adaptive context models, which are generated in real time. This context flexibility makes the described system and method suited for compressing any type of data, including inputs that combine different data types.

Owner:INFIMA
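
The idea of mixing several context predictors with a network that learns and predicts in a single pass can be illustrated with a small sketch. This is not the patented N-layer network: it assumes a single logistic mixing neuron over bit-level predictions, in the style of PAQ-type context mixing, and the names `Mixer`, `stretch`, `squash` and the learning rate are illustrative choices.

```python
import math

def stretch(p):
    """Map a probability to the logit domain."""
    return math.log(p / (1.0 - p))

def squash(x):
    """Inverse of stretch: map a logit back to a probability."""
    return 1.0 / (1.0 + math.exp(-x))

class Mixer:
    """One-neuron mixer: combines N predictor outputs and learns online."""

    def __init__(self, n, lr=0.1):
        self.w = [1.0 / n] * n   # start from a plain average in the logit domain
        self.lr = lr
        self.x = [0.0] * n
        self.p = 0.5

    def predict(self, probs):
        # Clamp inputs so stretch() never sees exactly 0 or 1.
        self.x = [stretch(min(max(p, 1e-6), 1.0 - 1e-6)) for p in probs]
        self.p = squash(sum(w * x for w, x in zip(self.w, self.x)))
        return self.p

    def update(self, bit):
        # Gradient step on coding cost: predictors that agreed with the
        # observed bit gain weight, the others lose weight.
        err = bit - self.p
        self.w = [w + self.lr * err * x for w, x in zip(self.w, self.x)]
```

Calling `predict` and then `update` once per input bit gives the single-pass behaviour: the mixed probability would be handed to an arithmetic coder before the true bit is revealed to the mixer.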

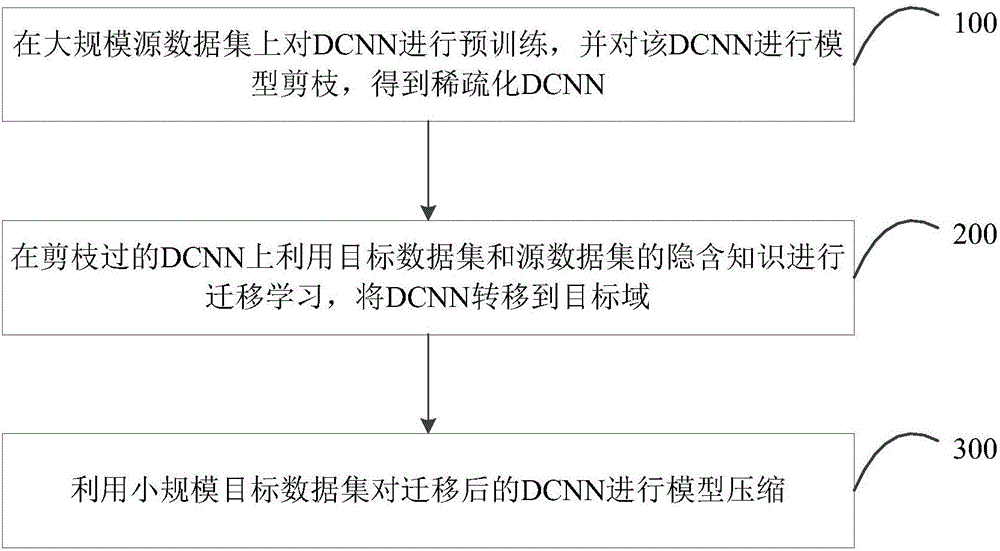

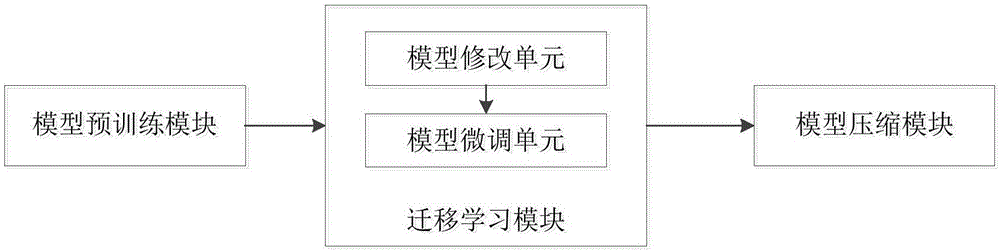

Deep convolution neural network training method and device

Inactive; CN106355248A; Improve predictive performance; Improve transfer learning capabilities; Physical realisation; Neural learning methods; Data set; Algorithm

The present invention relates to the field of deep learning, in particular to a deep convolutional neural network (DCNN) training method and device. The method comprises the steps of: a, pretraining the DCNN on a large-scale data set and pruning the DCNN; b, performing transfer learning on the pruned DCNN; c, performing model compression and pruning on the transferred DCNN with the small-scale target data set. In transferring from the large-scale source data set to the small-scale target data set, model compression and pruning are applied to the DCNN, combining the transfer learning method with the advantages of model compression, so as to improve transfer learning ability, reduce the risk of overfitting and the deployment difficulty on the small-scale target data set, and improve the prediction ability of the model on the target data set.

Owner:SHENZHEN INST OF ADVANCED TECH

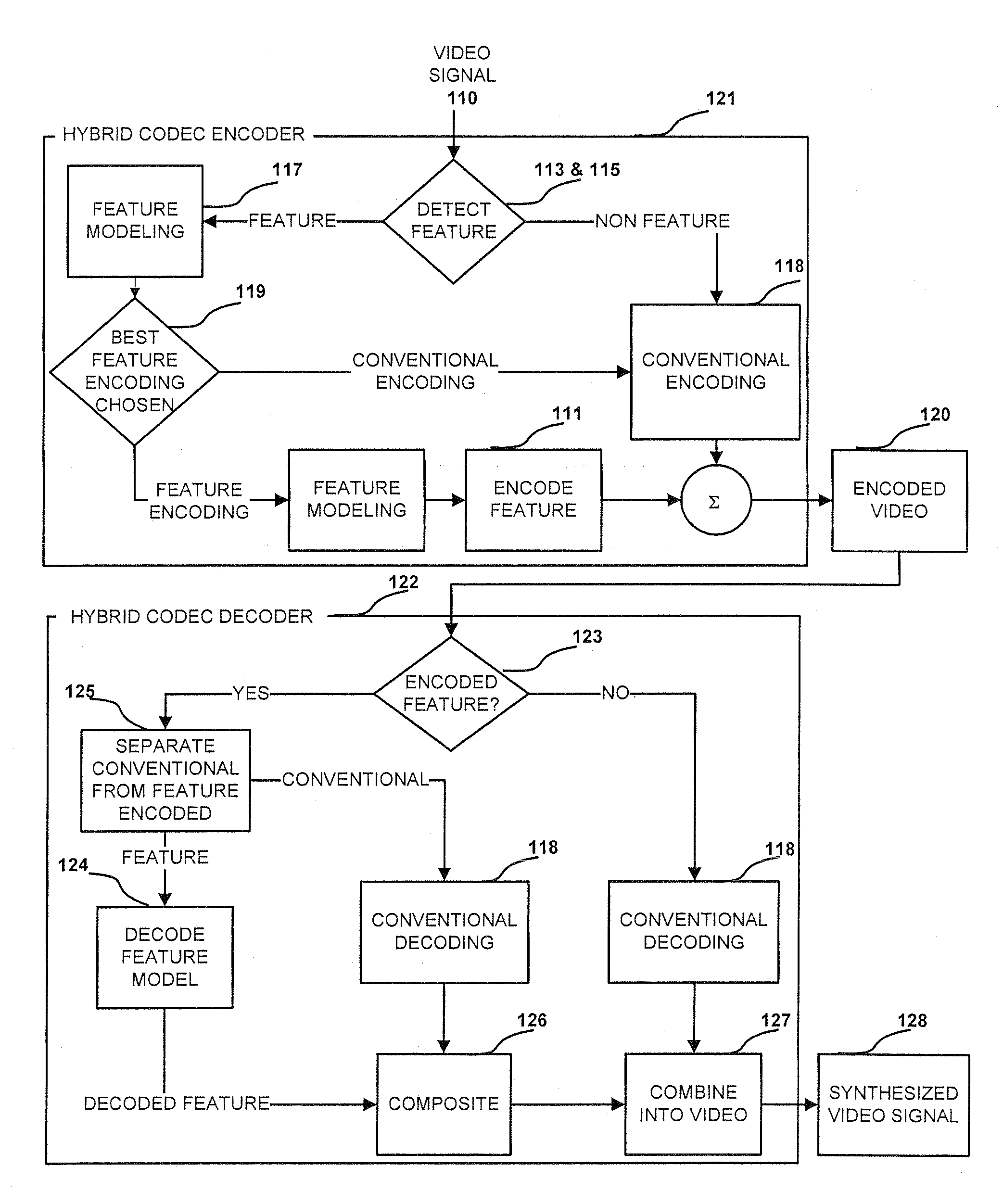

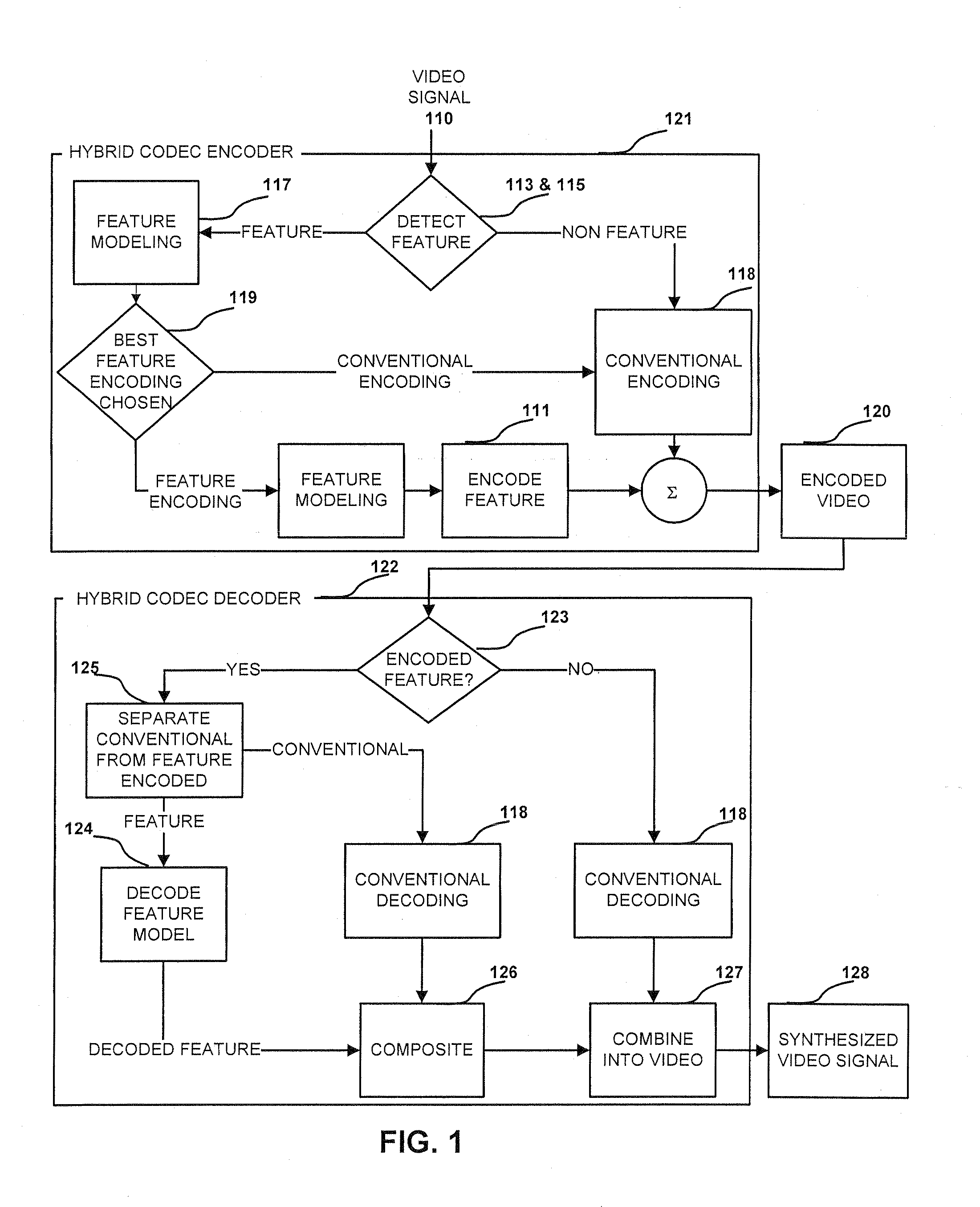

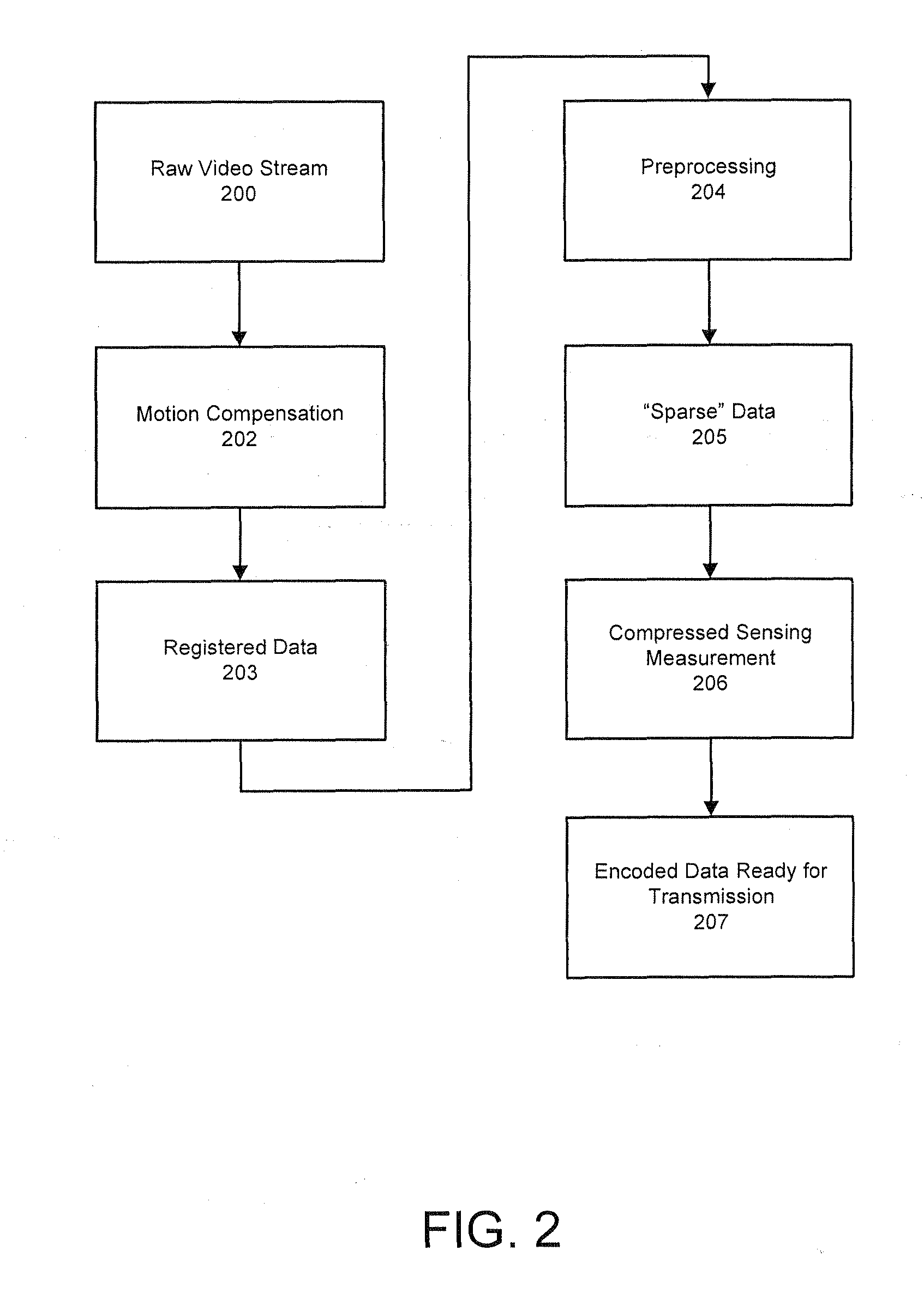

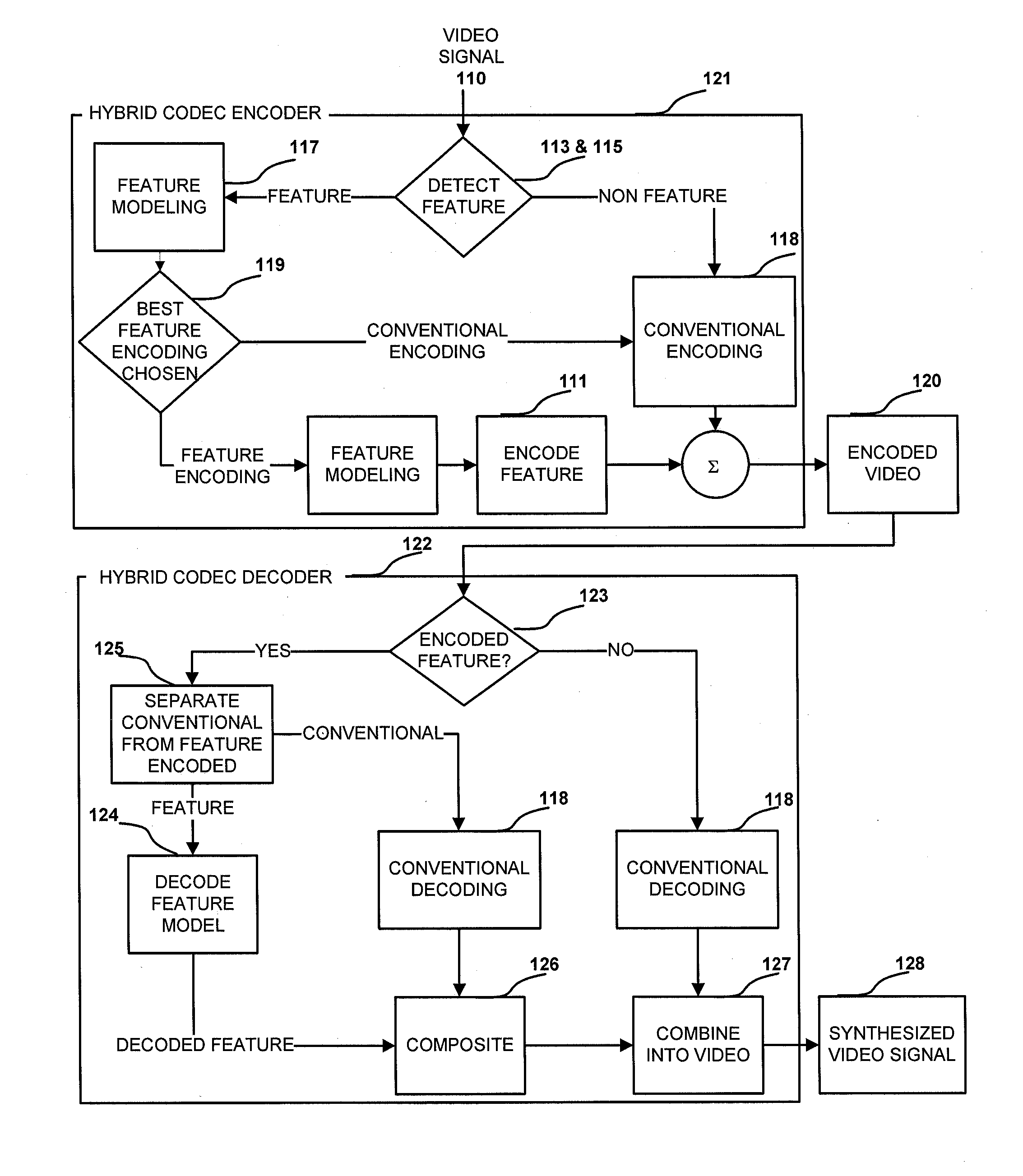

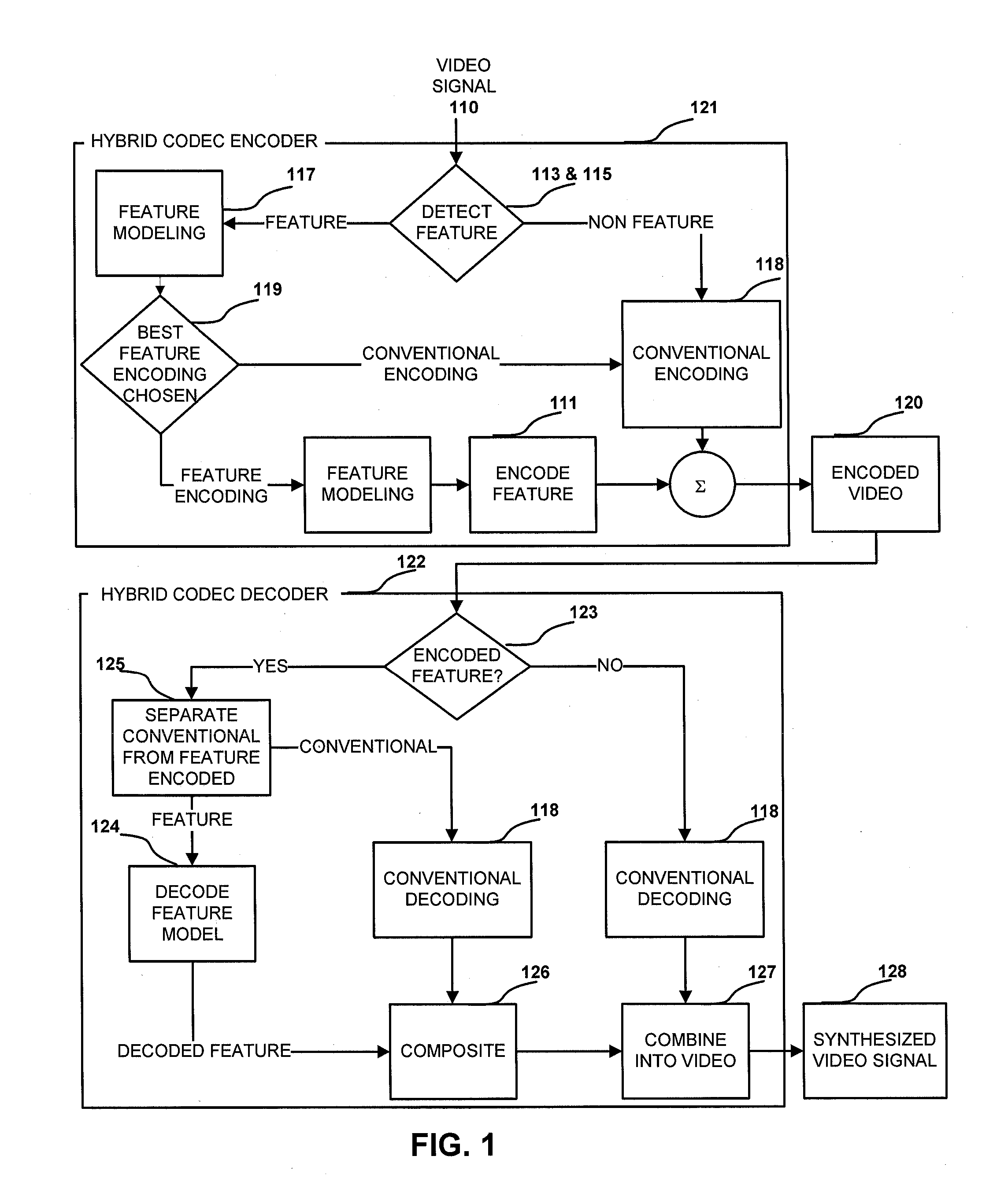

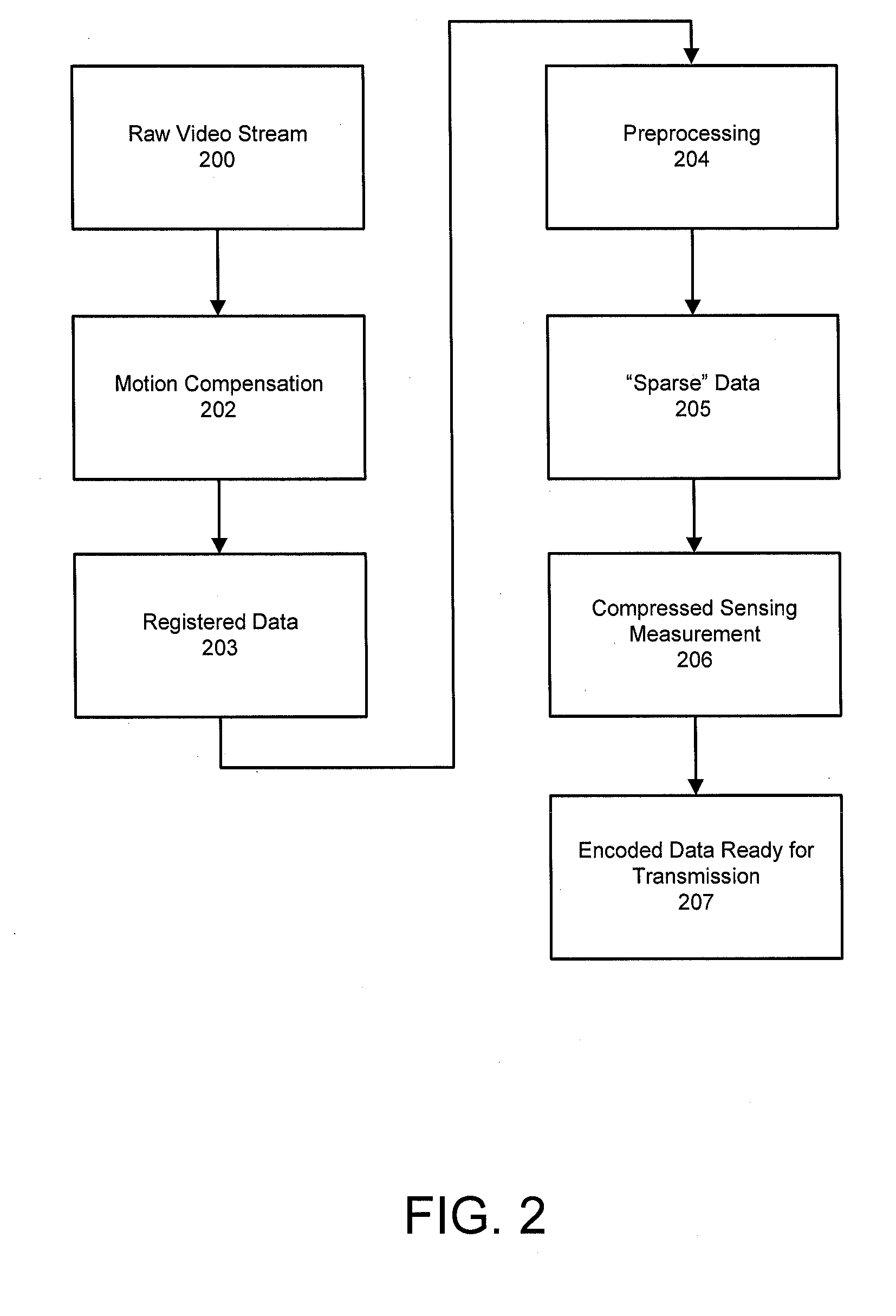

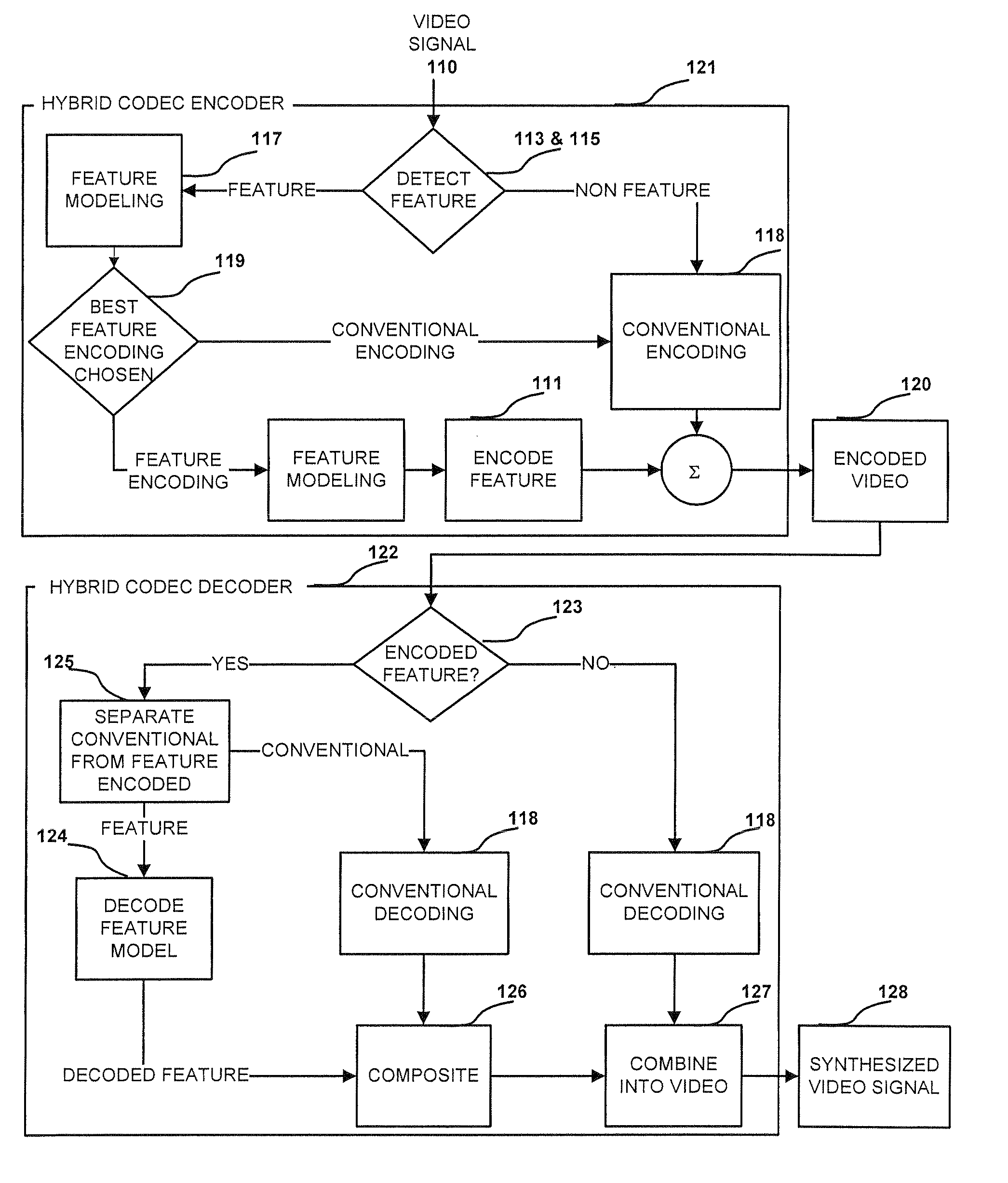

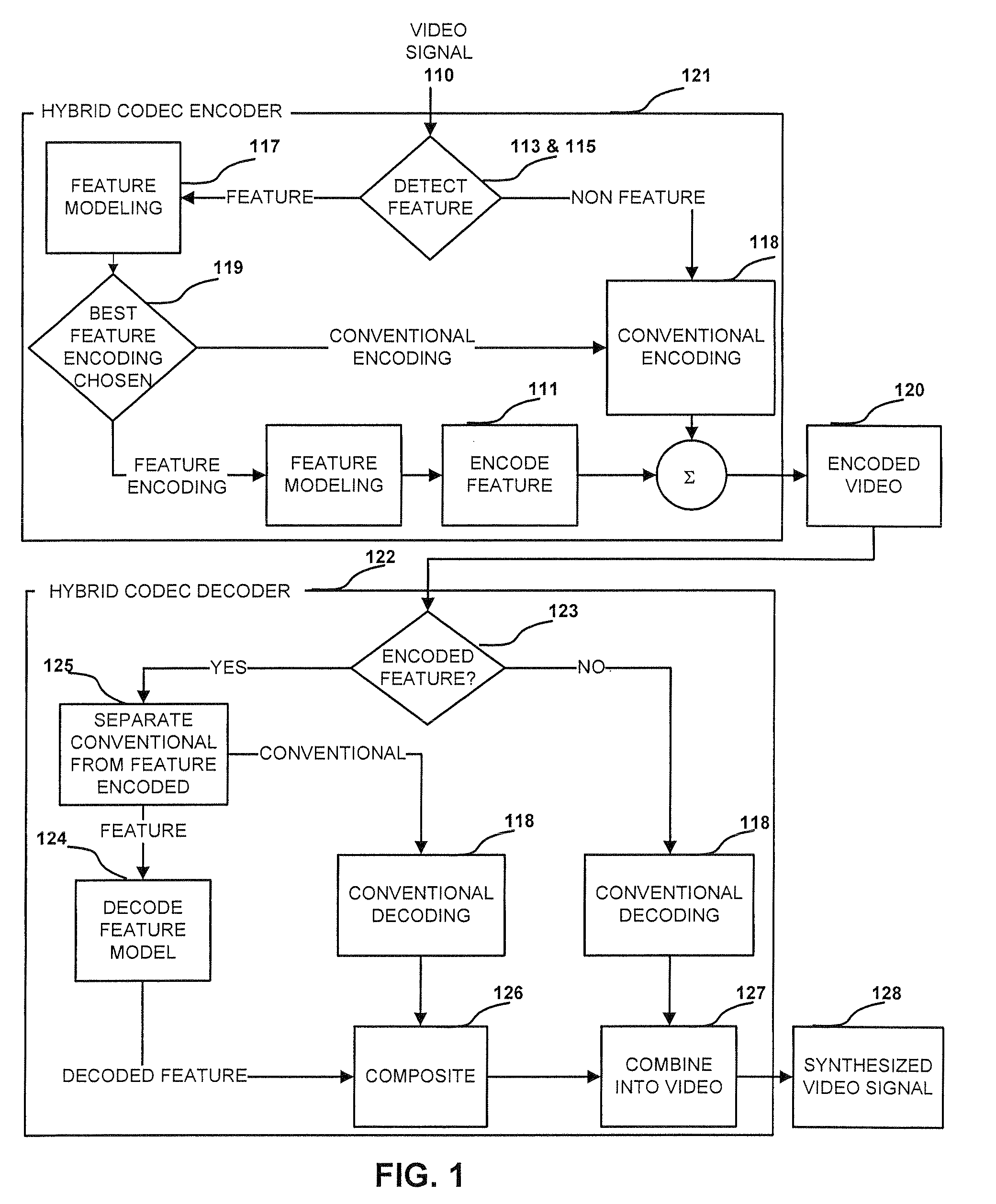

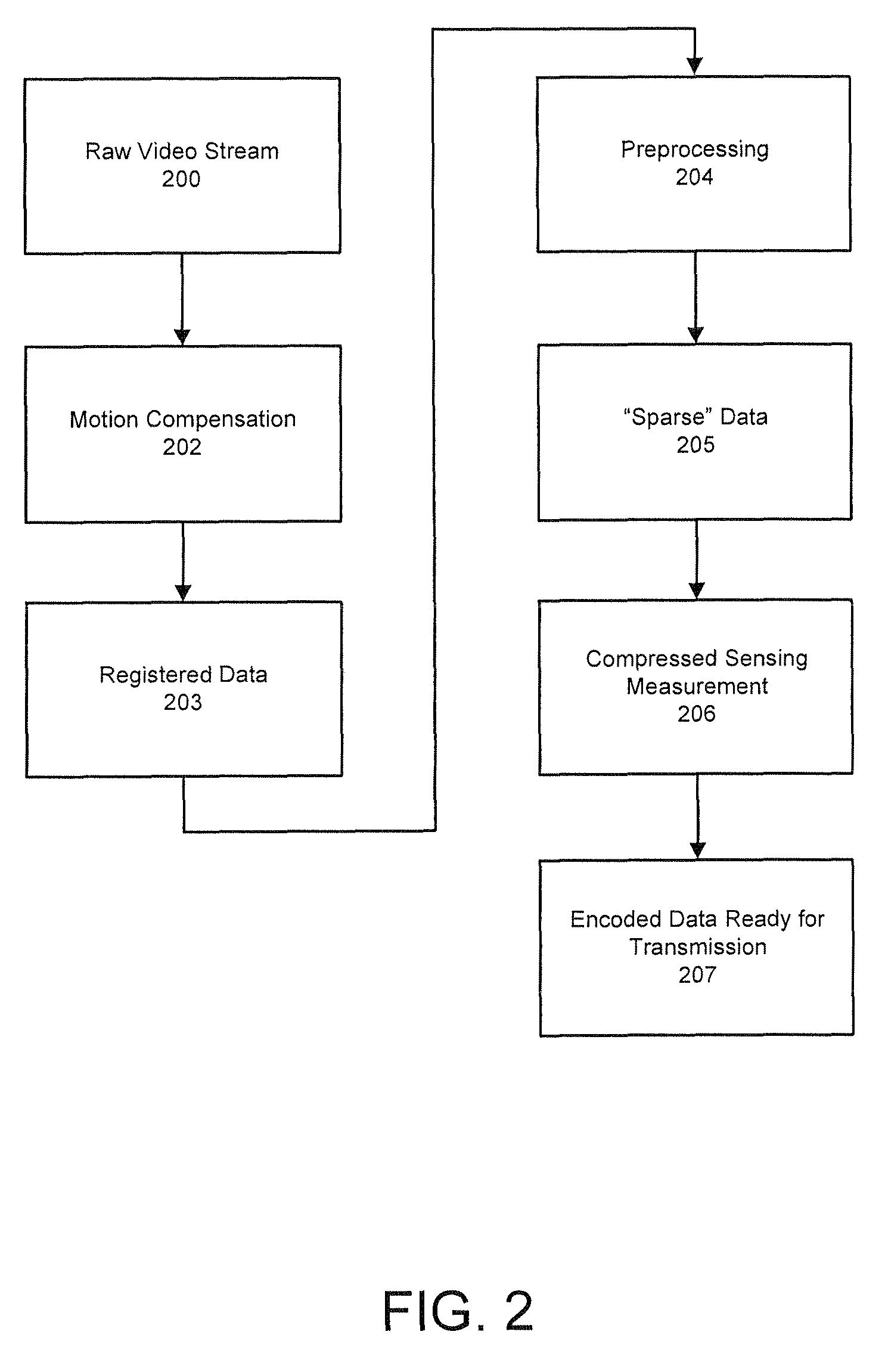

Feature-Based Video Compression

Active; US20110182352A1; Increase the number of; Reduce area; Image analysis; Picture reproducers using cathode ray tubes; Feature set; Feature based

Systems and methods of processing video data are provided. Video data having a series of video frames is received and processed. One or more instances of a candidate feature are detected in the video frames. The previously decoded video frames are processed to identify potential matches of the candidate feature. When a substantial amount of portions of previously decoded video frames include instances of the candidate feature, the instances of the candidate feature are aggregated into a set. The candidate feature set is used to create a feature-based model. The feature-based model includes a model of deformation variation and a model of appearance variation of instances of the candidate feature. The feature-based model compression efficiency is compared with the conventional video compression efficiency.

Owner:EUCLID DISCOVERIES LLC

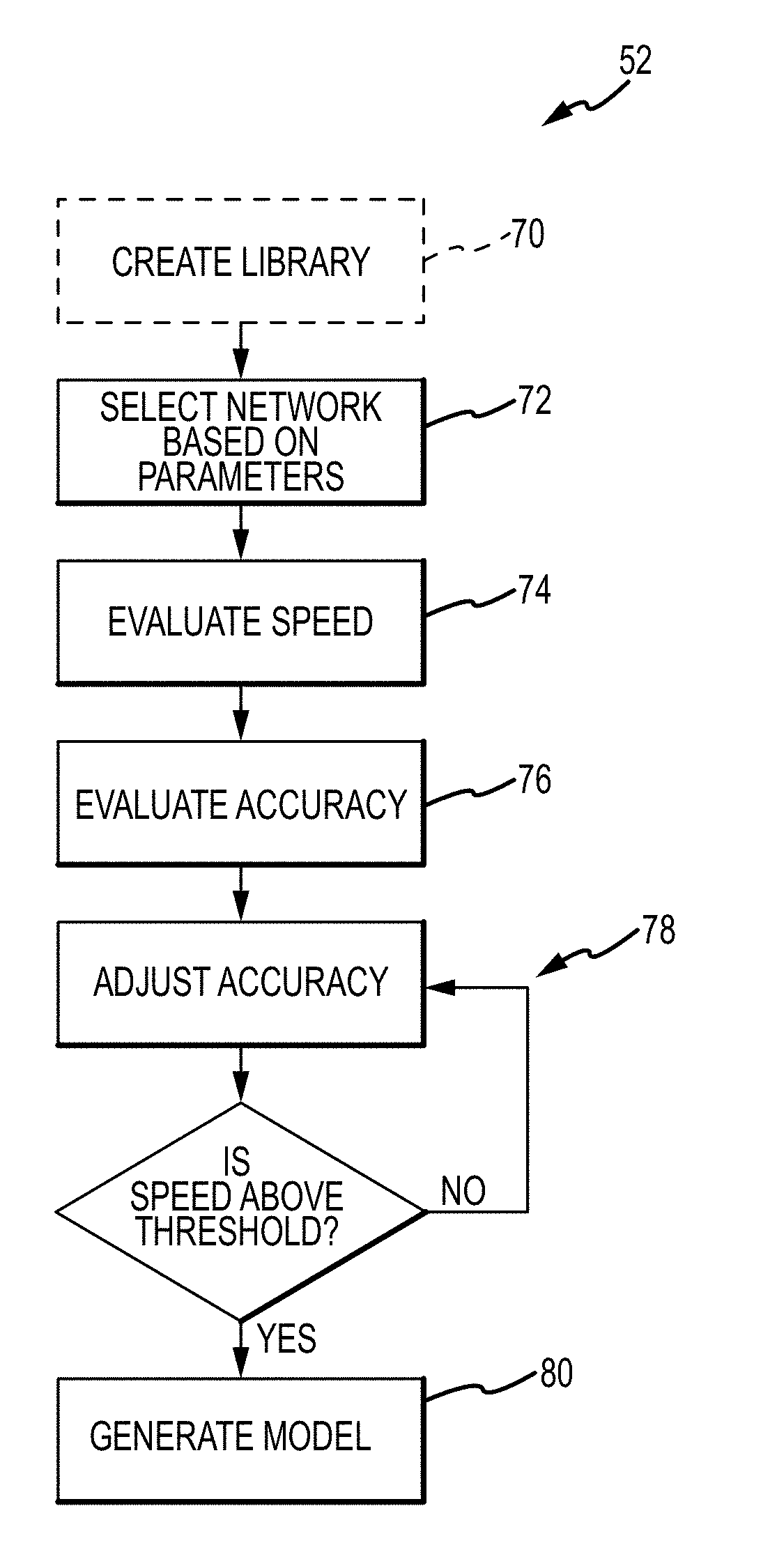

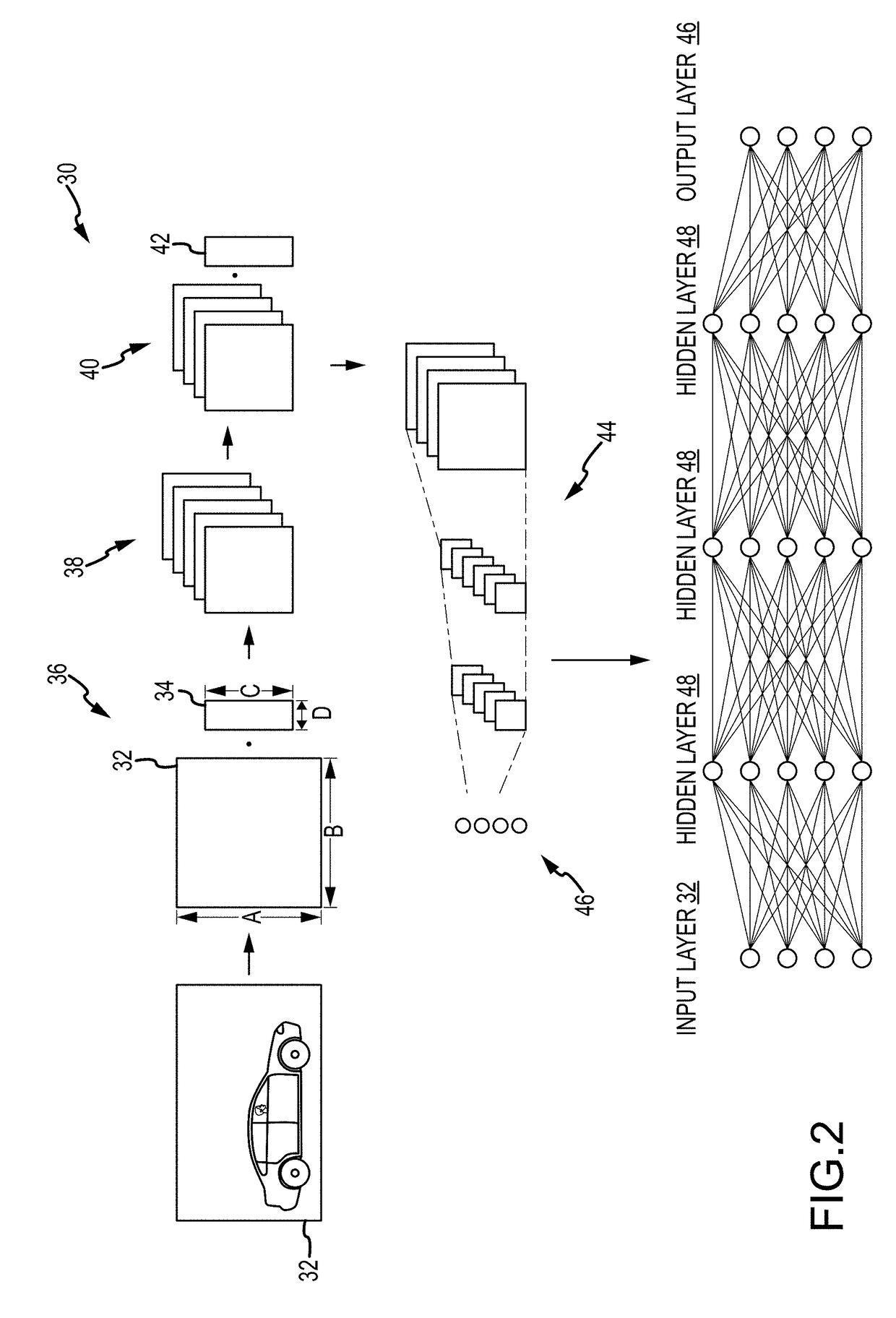

System and method for model compression of neural networks for use in embedded platforms

Embodiments of the present disclosure include a non-transitory computer-readable medium with computer-executable instructions stored thereon executed by one or more processors to perform a method to select and implement a neural network for an embedded system. The method includes selecting a neural network from a library of neural networks based on one or more parameters of the embedded system, the one or more parameters constraining the selection of the neural network. The method also includes training the neural network using a dataset. The method further includes compressing the neural network for implementation on the embedded system, wherein compressing the neural network comprises adjusting at least one float of the neural network.

Owner:BOSSA NOVA ROBOTICS IP +1

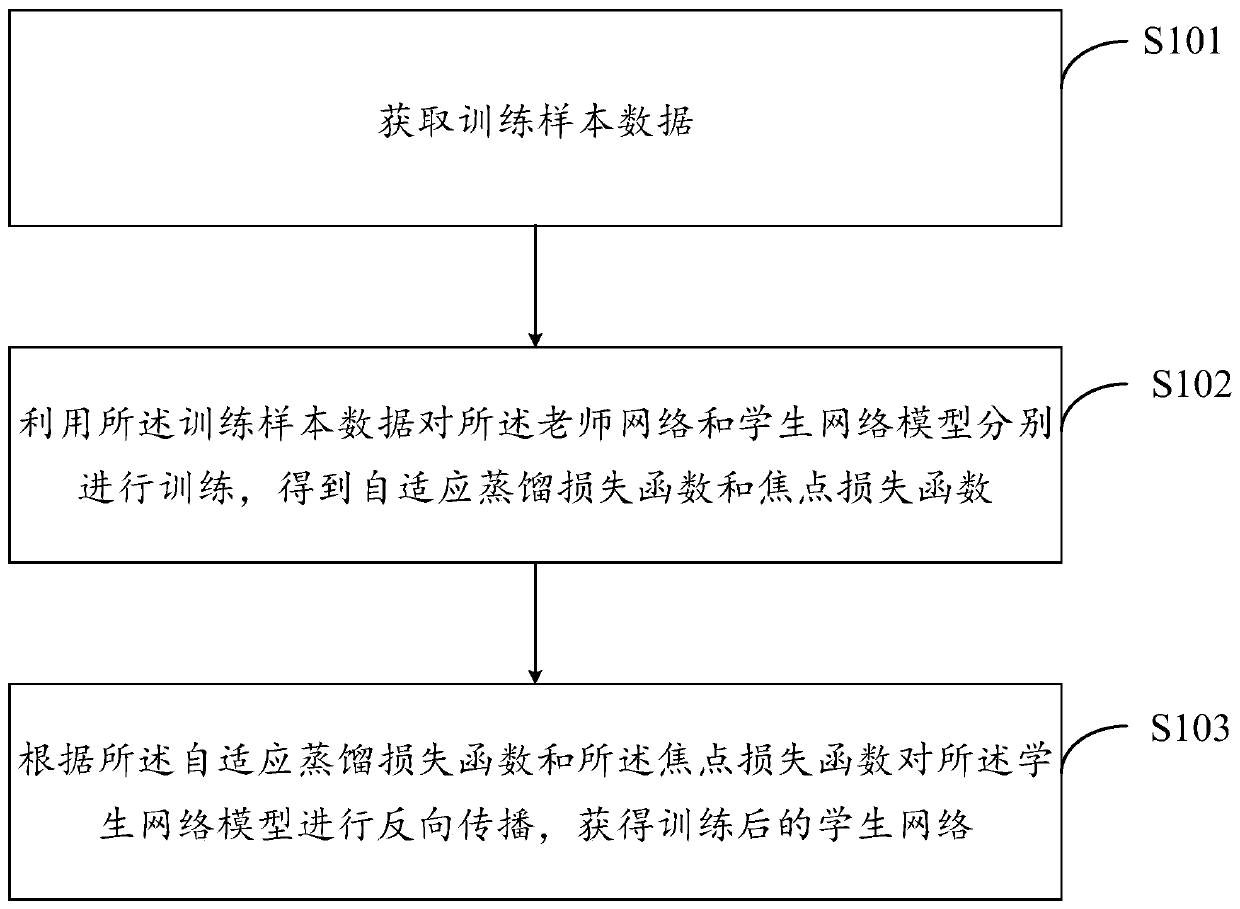

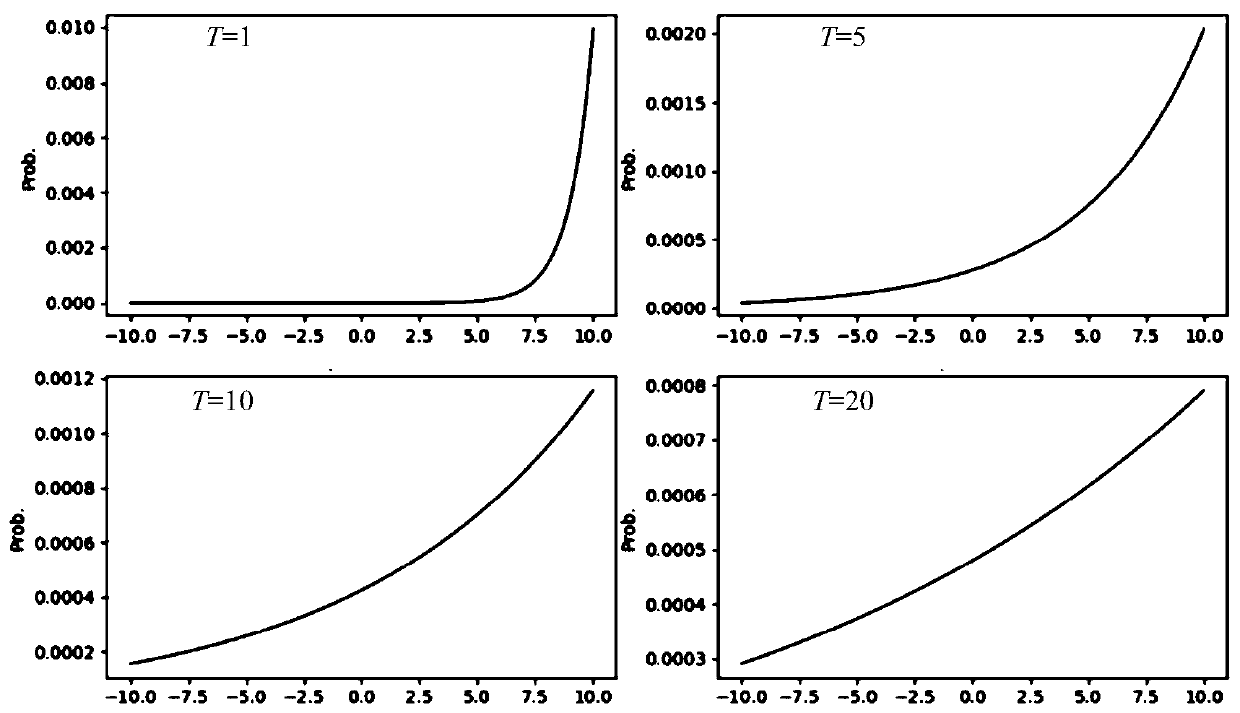

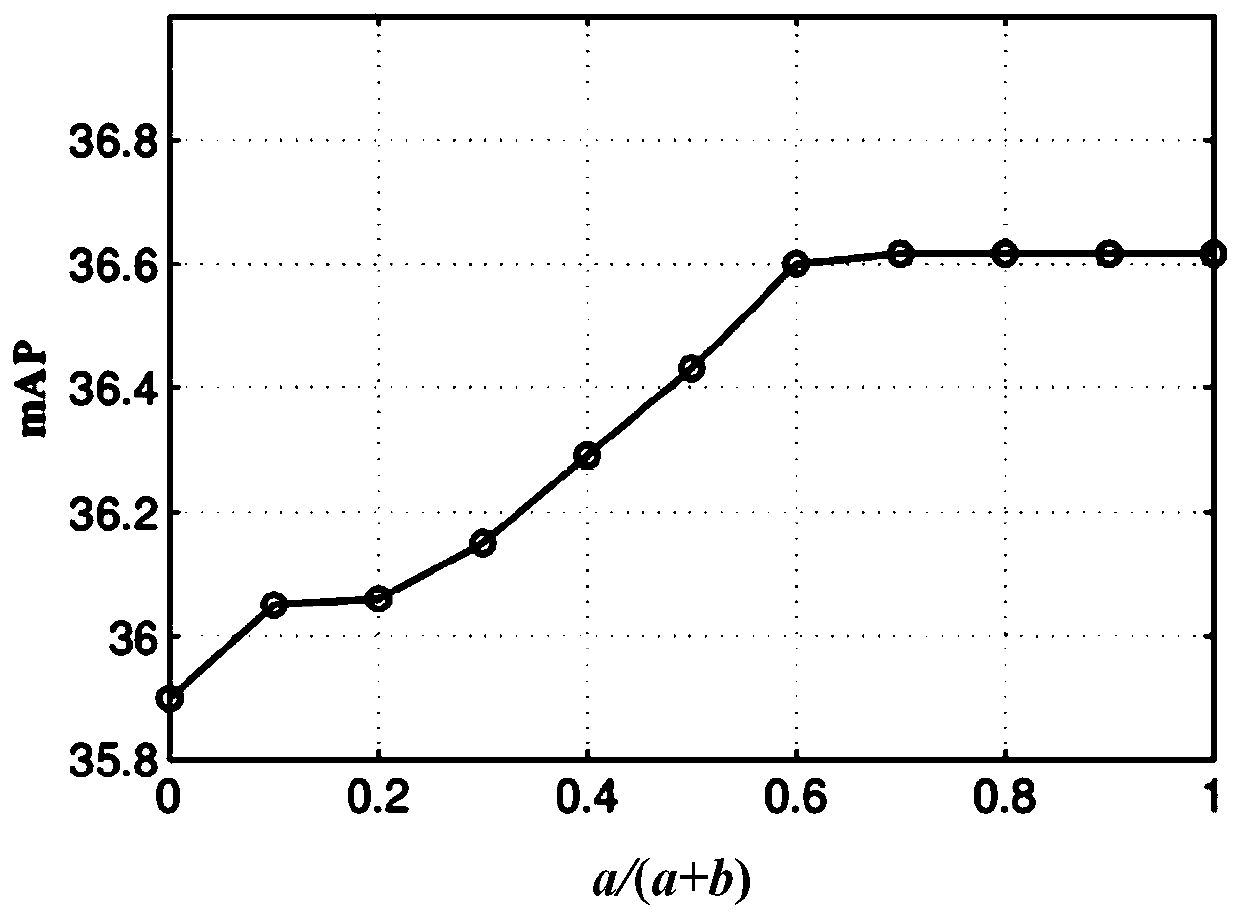

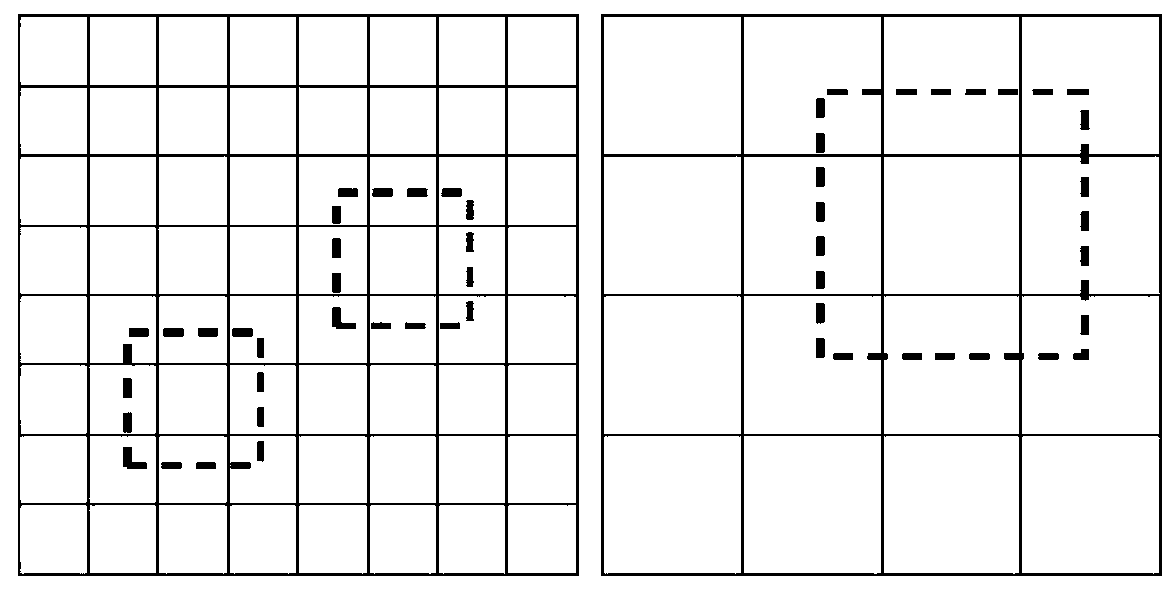

Model compression method and device, electronic equipment and computer storage medium

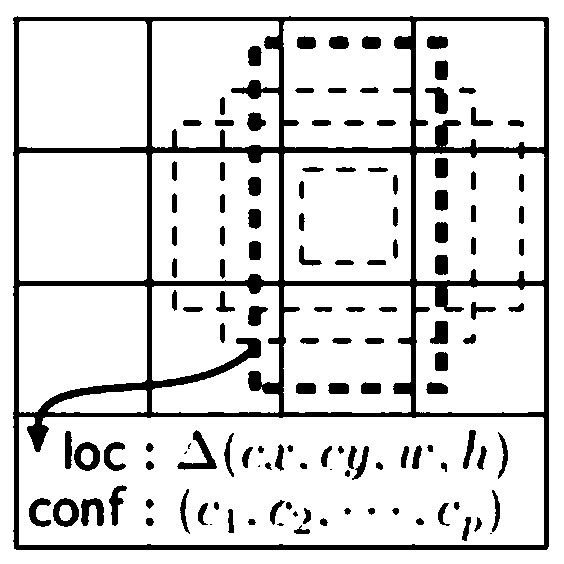

Inactive; CN109711544A; Improve object detection performance; Neural learning methods; Distillation; Network model

The invention provides a model compression method and device, electronic equipment and a computer storage medium. The method comprises the steps of: acquiring training sample data, the training sample data comprising labeled sample data; training the teacher network model and the student network model respectively using the training sample data to obtain an adaptive distillation loss function and a focal loss function; and performing back propagation on the student network model according to the adaptive distillation loss function and the focal loss function to obtain a trained student network.

Owner:BEIJING SENSETIME TECH DEV CO LTD
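
As a rough illustration of training a student against a teacher with two loss terms, the sketch below combines a standard temperature-scaled distillation loss with a focal loss on the labels. It is a stand-in: the abstract's adaptive distillation loss is not specified here, so plain KL-based distillation is used instead, and the function names, temperature `T`, `gamma` and mixing factor `alpha` are assumptions.

```python
import math

def softmax(logits, T=1.0):
    """Softmax with temperature T (higher T gives softer probabilities)."""
    m = max(logits)
    exps = [math.exp((z - m) / T) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]

def kd_loss(student_logits, teacher_logits, T=2.0):
    """KL(teacher || student) on temperature-softened outputs, scaled by T^2."""
    p_t = softmax(teacher_logits, T)
    p_s = softmax(student_logits, T)
    return T * T * sum(pt * math.log(pt / ps) for pt, ps in zip(p_t, p_s) if pt > 0)

def focal_loss(student_logits, label, gamma=2.0):
    """Focal loss: cross entropy down-weighted for well-classified samples."""
    p = softmax(student_logits)[label]
    return -((1.0 - p) ** gamma) * math.log(p)

def total_loss(student_logits, teacher_logits, label, alpha=0.5):
    """Weighted sum of the distillation term and the label term."""
    return (alpha * kd_loss(student_logits, teacher_logits)
            + (1.0 - alpha) * focal_loss(student_logits, label))
```

In a real training loop the gradient of `total_loss` with respect to the student's parameters would be back-propagated while the teacher stays fixed.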

Unmanned aerial vehicle detection method based on deep learning

Active; CN109753903A; Easy to control; Easy to detect; Character and pattern recognition; Vehicle detection; Remote sensing

The invention discloses an unmanned aerial vehicle detection method based on deep learning. The method comprises the steps of: collecting data; data augmentation; constructing a deep convolutional neural network model; performing model training; performing model deployment; and applying the model. A cascaded convolutional neural network model is used, and a correction module is added, so that the detection performance of the model can be further improved with only a small amount of human intervention in the detection process. Through model compression, the model can run on embedded equipment in real time. The method can determine the type of the unmanned aerial vehicle and, combined with a database, can report its various performance parameters, which facilitates control over the unmanned aerial vehicle.

Owner:北航(四川)西部国际创新港科技有限公司

Feature-Based Video Compression

Inactive; US20120155536A1; Facilitates identification and segmentation; Reduce in quantity; Image analysis; Picture reproducers using cathode ray tubes; Feature set; Feature based

Owner:EUCLID DISCOVERIES LLC

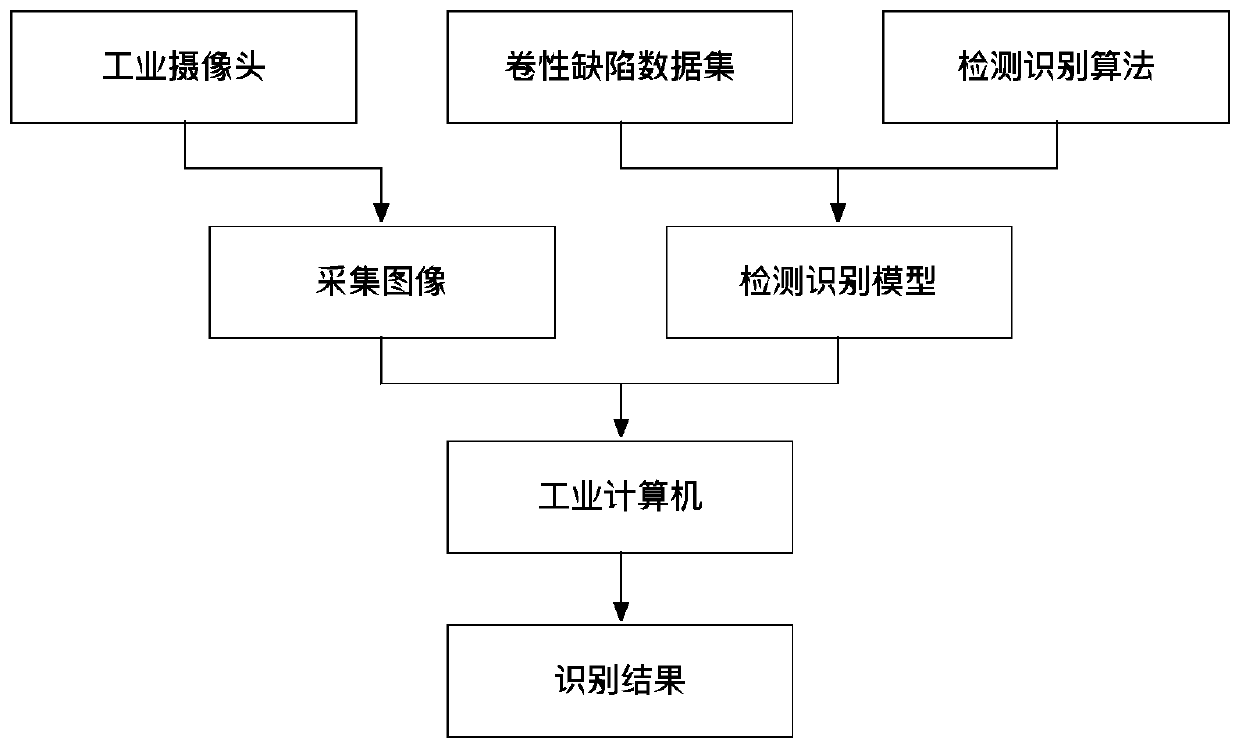

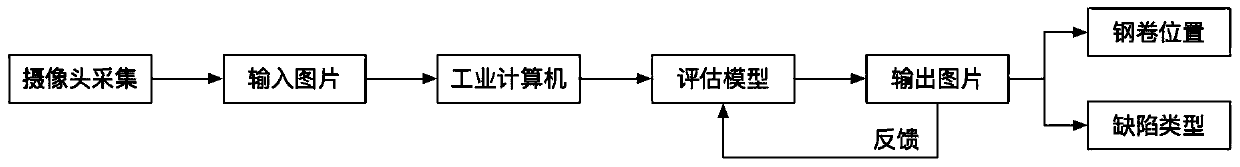

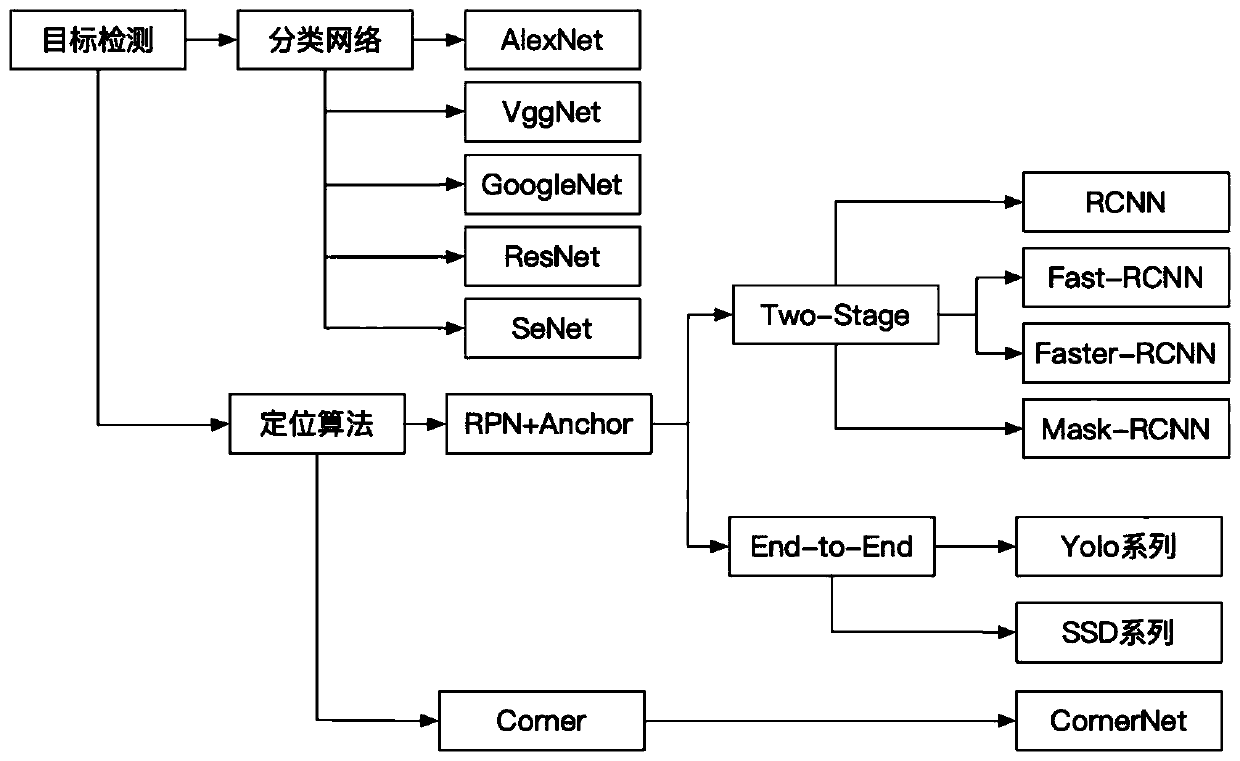

Steel coil shape defect detection and recognition method based on target detection

Inactive; CN110197170A; Improve automation performance; Low cost; Data processing applications; Character and pattern recognition; Data set; Materials science

The invention belongs to the field of steel coil rolling and relates to a steel coil shape defect detection and recognition method based on target detection. A large number of steel coil shape pictures are collected on site to construct a coil shape defect data set. The Faster-RCNN target detection algorithm, which offers high recognition precision and high detection speed, is used to detect and identify coil shape defects in the traditional steel production industry. The Faster-RCNN model is also compressed by pruning so that it can meet industrial embedded requirements. The method applies modern intelligent detection technology to industrial inspection of steel coils.

Owner:UNIV OF SCI & TECH BEIJING

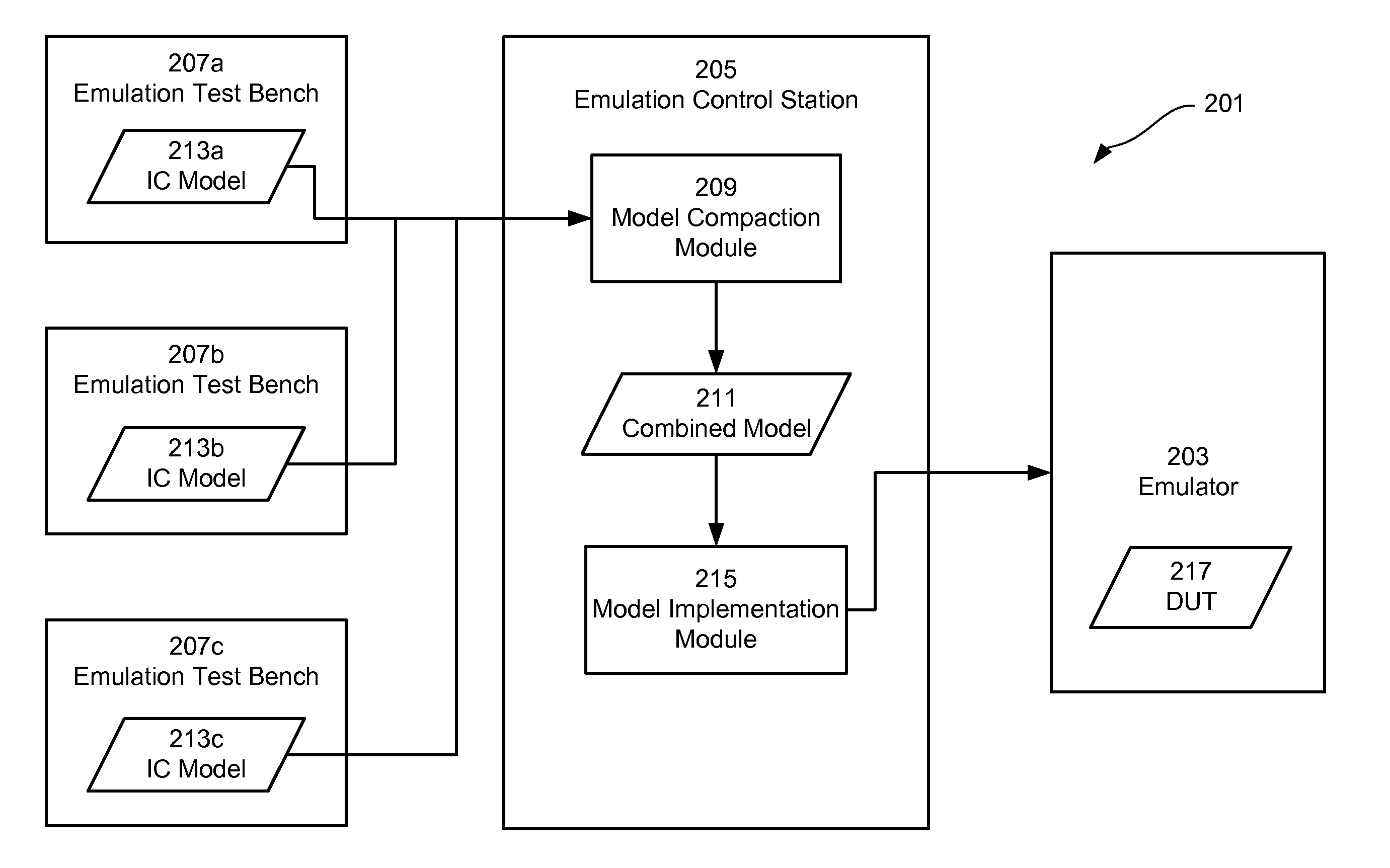

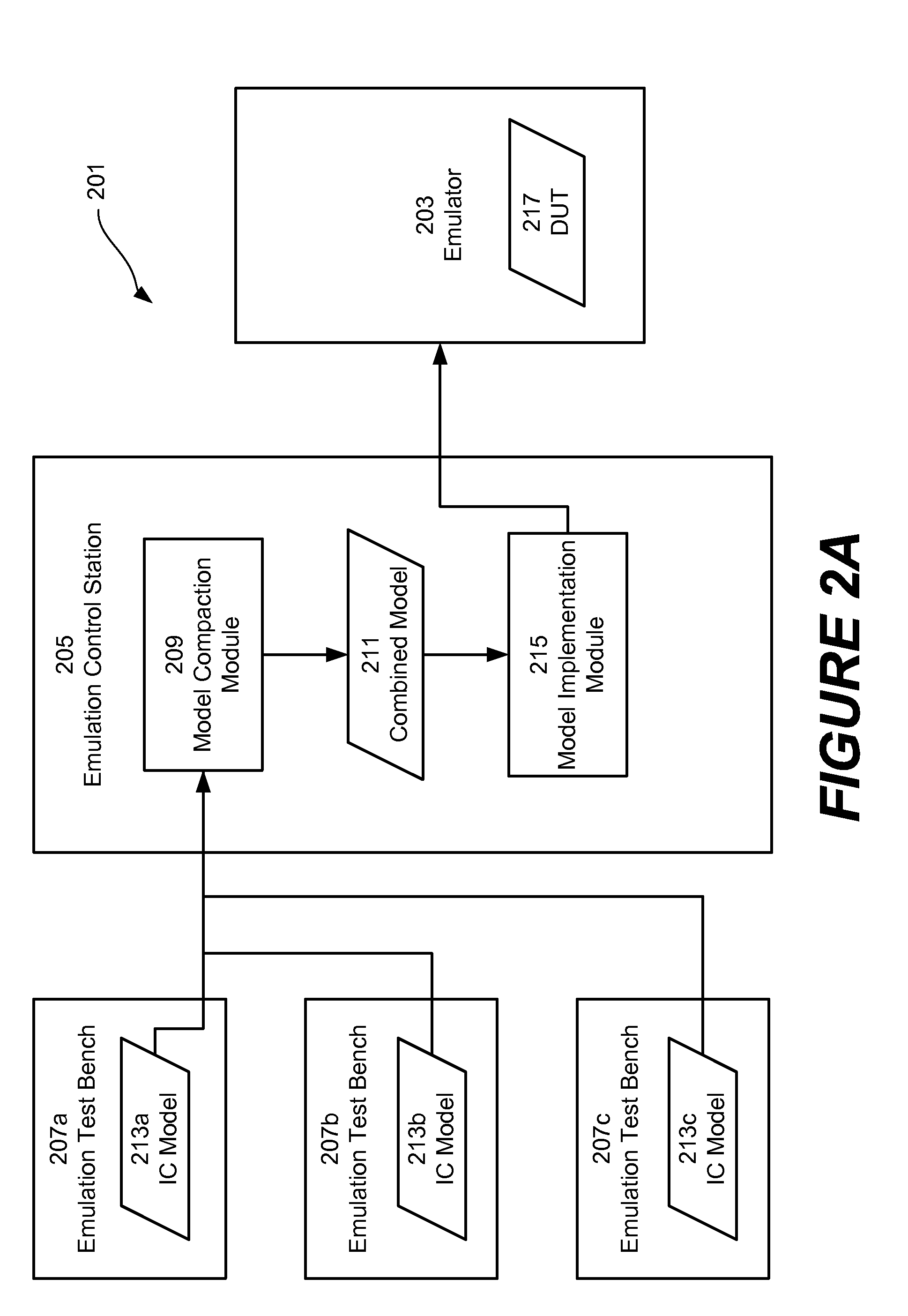

Partitionless Multi User Support For Hardware Assisted Verification

Inactive; US20140052430A1; High simulation; CAD circuit design; Special data processing applications; Parallel computing; Computer module

Embodiments of the disclosed technology are directed toward facilitating the concurrent emulation of multiple electronic designs in a single emulator without partition restrictions. In certain exemplary embodiments, an emulation environment comprising an emulator and an emulation control station is provided. The emulation control station includes a model compaction module that is configured to combine multiple design models into a combined model. In some implementations, the design models are merged to form the combined model, where each design model is represented as a virtual design with the combined model. Subsequently, the emulator can be configured to implement the combined model. Furthermore, an emulation clock control component is provided that allows for portions of the emulated combined model to be “stalled” during emulation without affecting other portions.

Owner:MENTOR GRAPHICS CORP

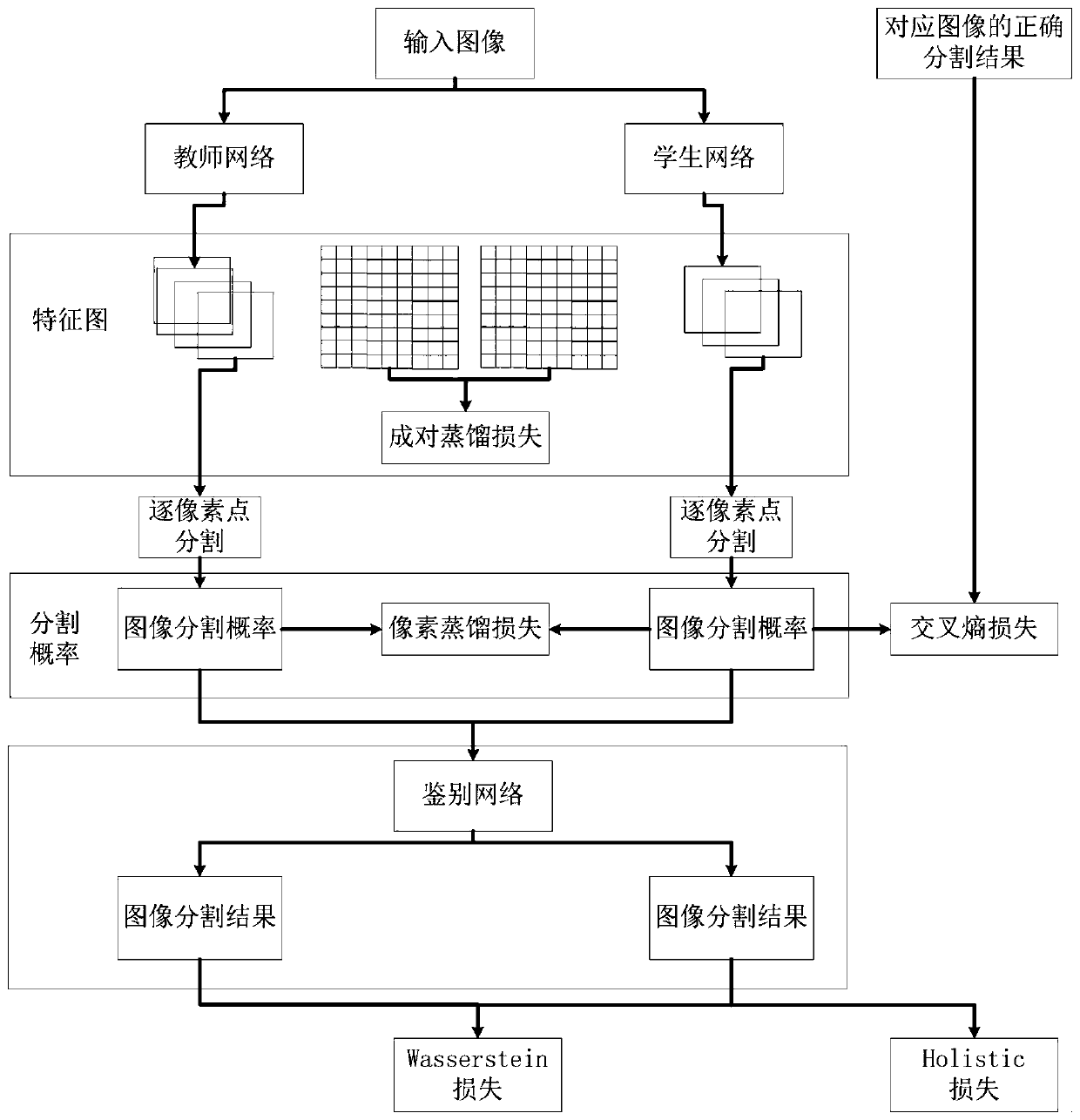

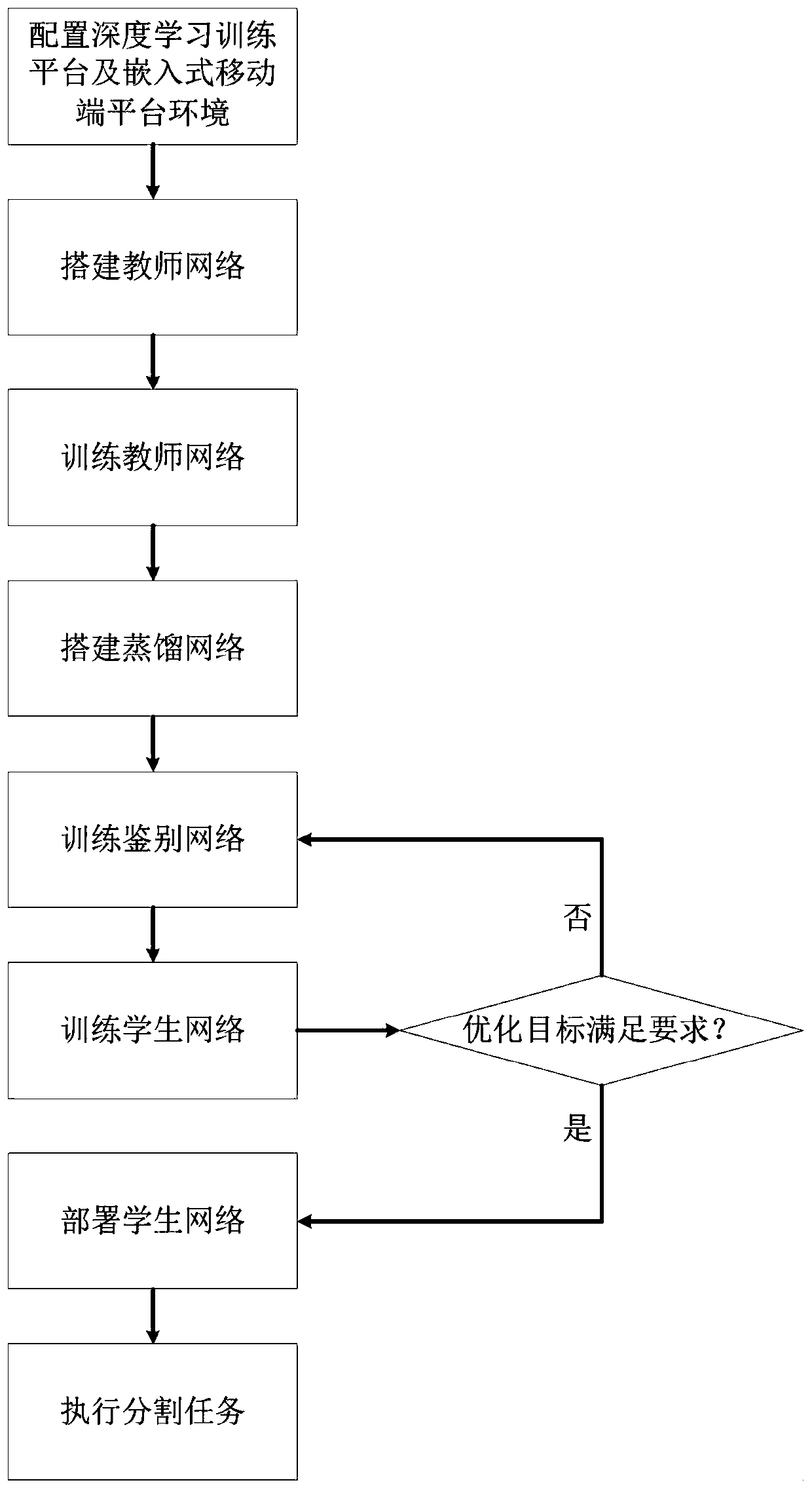

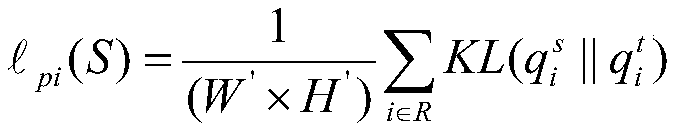

Deep learning semantic segmentation model compression method for embedded mobile terminal

Inactive; CN110059740A; Reduce the amount of parameters; Speed up forward speculation; Character and pattern recognition; Neural architectures; Computer science; Model compression

The invention discloses a deep learning semantic segmentation model compression method for an embedded mobile terminal. The teacher network weights obtained by training are fixed, while the identification network and the student network are trained continuously. Distillation is performed at three levels (pairwise distillation, pixel-wise distillation and holistic distillation), and an overall optimization target (cross-entropy loss, pixel-wise distillation loss, pairwise distillation loss and holistic distillation loss) is optimized. As a result, the number of parameters of the distilled student network and its forward computation time are greatly reduced, at the cost of only a very small reduction in IoU (intersection over union). This solves the problem that an embedded mobile terminal cannot carry a large deep learning network when GPU capacity and power supply are limited: task computation time is greatly shortened, and the embedded mobile terminal platform can carry a complex deep network model.

Owner:HANGZHOU DIANZI UNIV

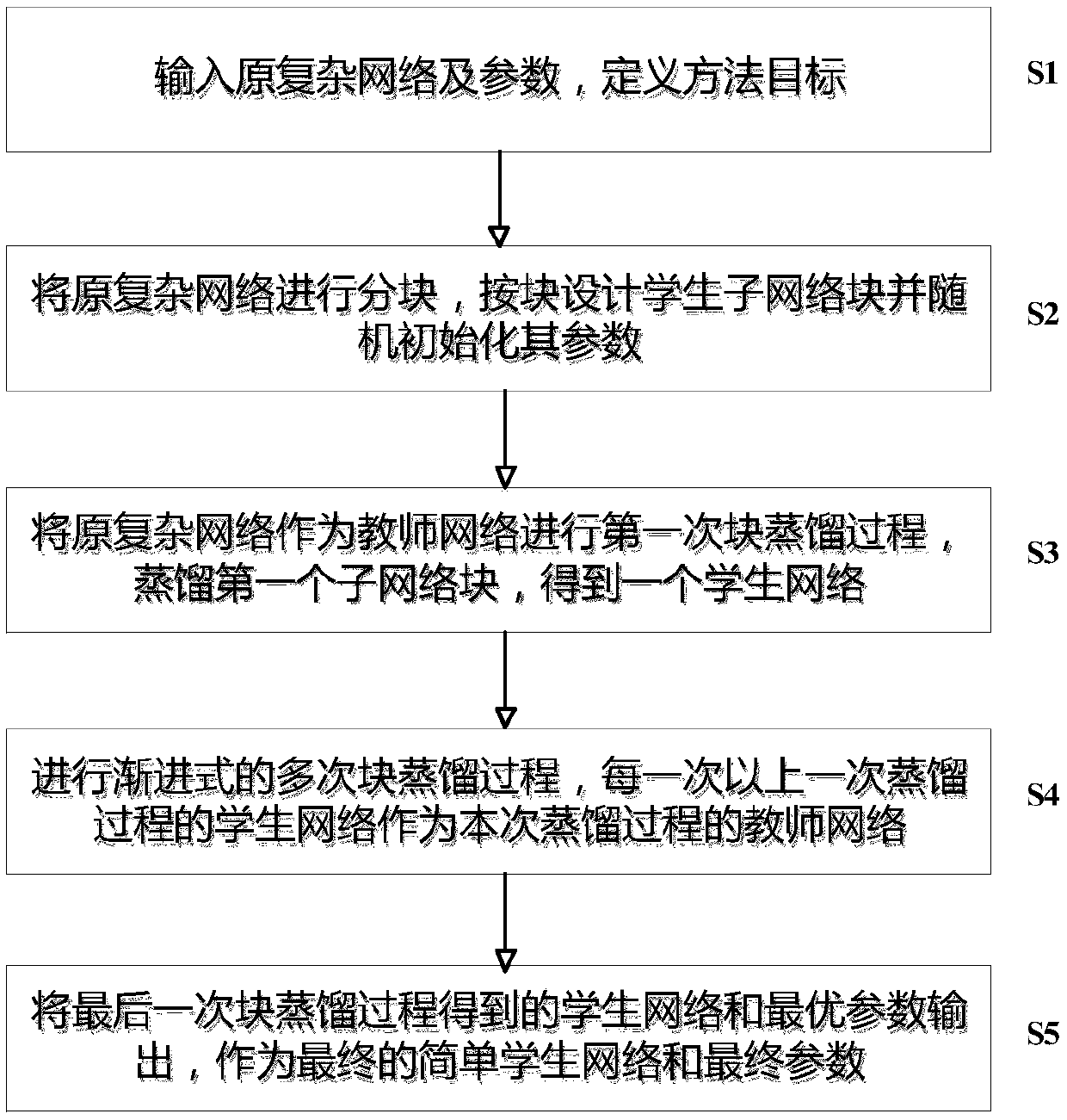

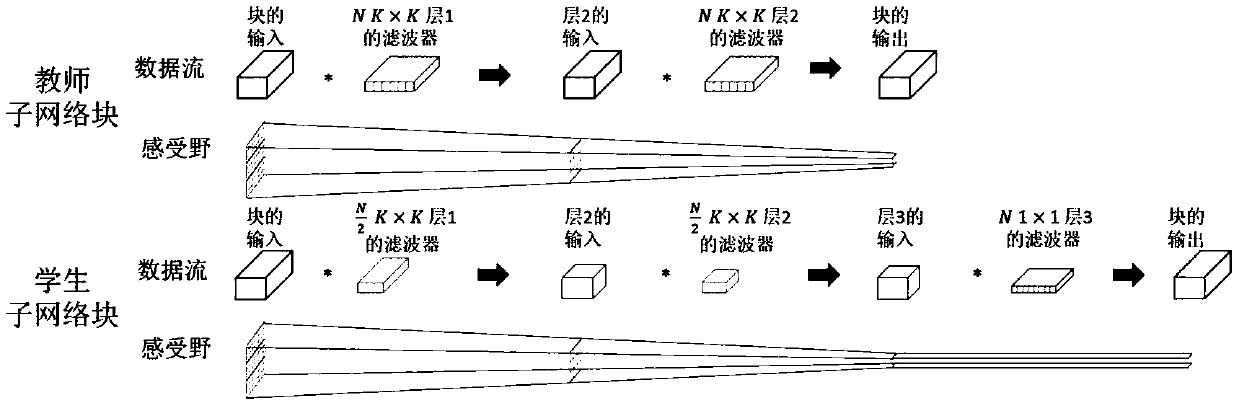

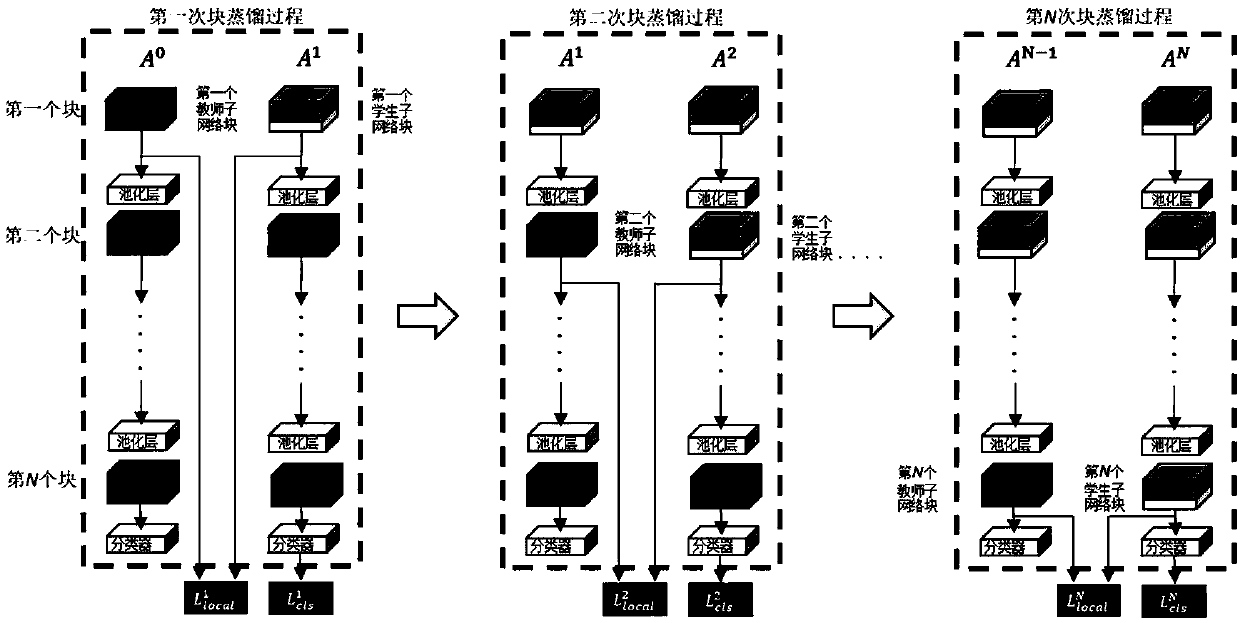

Progressive block knowledge distillation method for neural network acceleration

Inactive; CN108921294A; Efficient compression; Effective expansion; Neural learning methods; Nerve network; Hardware architecture

The invention discloses a progressive block knowledge distillation method for neural network acceleration. The method comprises the following steps: inputting an original complex network and related parameters; dividing the original complex network into a plurality of sub-network blocks, designing student sub-network blocks and randomly initializing their parameters; taking the input original complex network as the teacher network of the first block distillation process and obtaining a student network after that process is completed, in which the first student sub-network block has optimum parameters; taking the student network obtained in the previous block distillation process as the teacher network of the next block distillation process so as to obtain the next student network, in which the student sub-network blocks whose distillation is finished have optimum parameters; and obtaining a final simple student network and optimum parameters after all sub-network block distillation processes are completed. The method achieves model compression and acceleration on common hardware architectures, is simple to implement, and is an effective and practical deep network model compression and acceleration algorithm.

Owner:ZHEJIANG UNIV

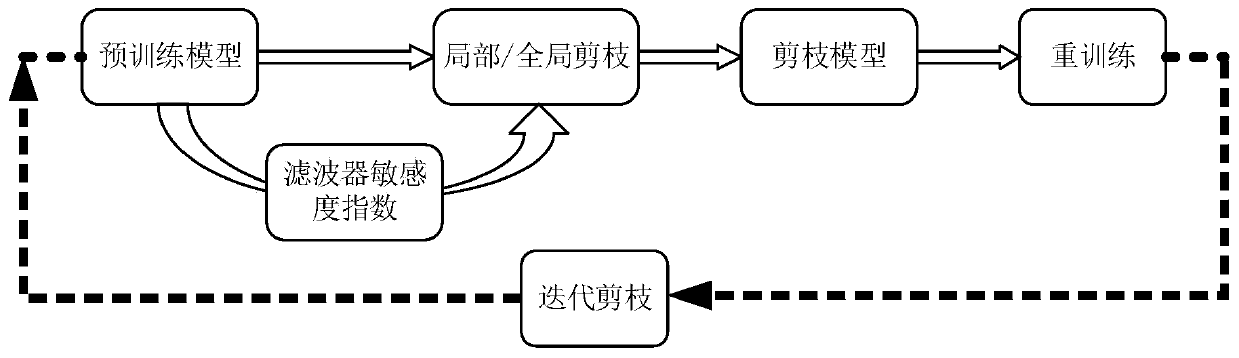

Structured network model compression acceleration method based on multistage pruning

Active; CN110619385A; Improve operational efficiency; Reduce occupancy; Neural architectures; Neural learning methods; Video memory; Rate curve

The invention discloses a structured network model compression acceleration method based on multistage pruning, and belongs to the technical field of model compression acceleration. The method comprises the following steps: obtaining a pre-trained model and training it to obtain an initial complete network model; measuring the sensitivity of the convolutional layers and obtaining a sensitivity-pruning rate curve for each convolutional layer by controlling variables; carrying out single-layer pruning in order of sensitivity from low to high, then fine-tuning and retraining the network model; selecting samples as a validation set and measuring the information entropy of each filter's output feature map; performing iterative flexible pruning in order of output entropy, then fine-tuning and retraining the network model; and hard pruning, in which the network model is retrained to recover network performance and a lightweight model is obtained and stored. The method can compress a large-scale convolutional neural network while maintaining the original network performance, reducing the network's local memory occupation as well as the floating point operations and video memory occupation during operation, which makes the network lightweight.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

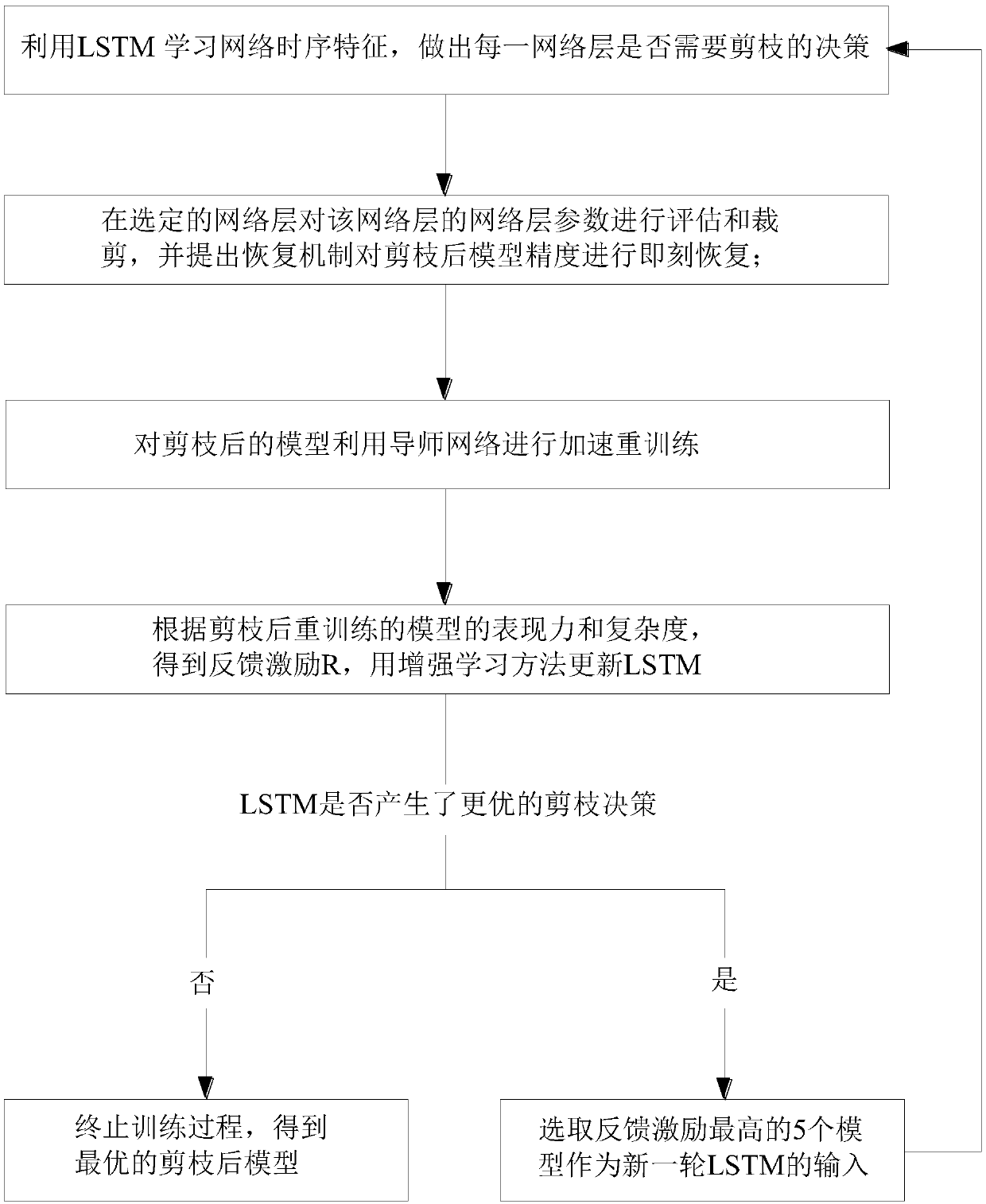

Model compression method based on pruning sequence active learning

Inactive; CN109657780A; Guaranteed accuracy; Multi-angle assessment; Neural architectures; Neural learning methods; Data set; Algorithm

The invention provides a model compression method based on pruning sequence active learning, in the form of an end-to-end pruning framework based on sequential active learning. The method actively learns the importance of each network layer, generates a pruning priority, and makes reasonable pruning decisions, which addresses the shortcomings of existing simple sequential pruning methods. Pruning is carried out first on the network layer with the least influence and proceeds step by step from easy to difficult, so that the loss of model precision during pruning is minimized. Meanwhile, guided by the final loss of the model, the importance of each convolution kernel is evaluated from multiple angles in an efficient, flexible and rapid manner, which ensures the correctness and effectiveness of compression throughout the process and provides technical support for subsequently porting large models to portable equipment. Experimental results show that the method leads on multiple data sets and multiple model structures, can greatly compress the model volume while preserving model precision, and has strong prospects for practical application.

Owner:TSINGHUA UNIV

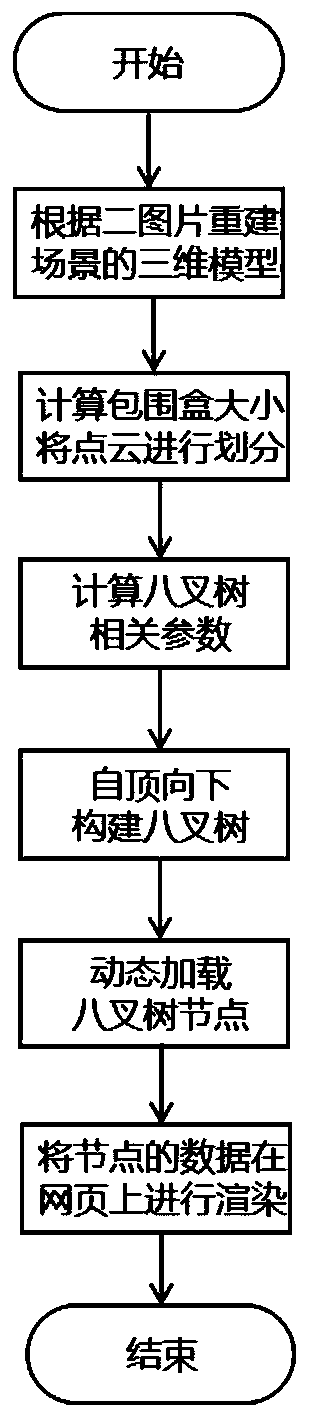

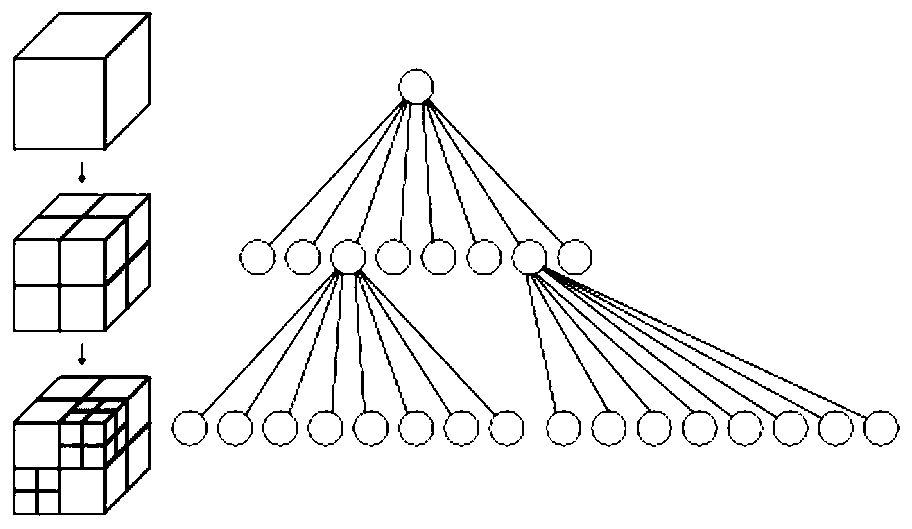

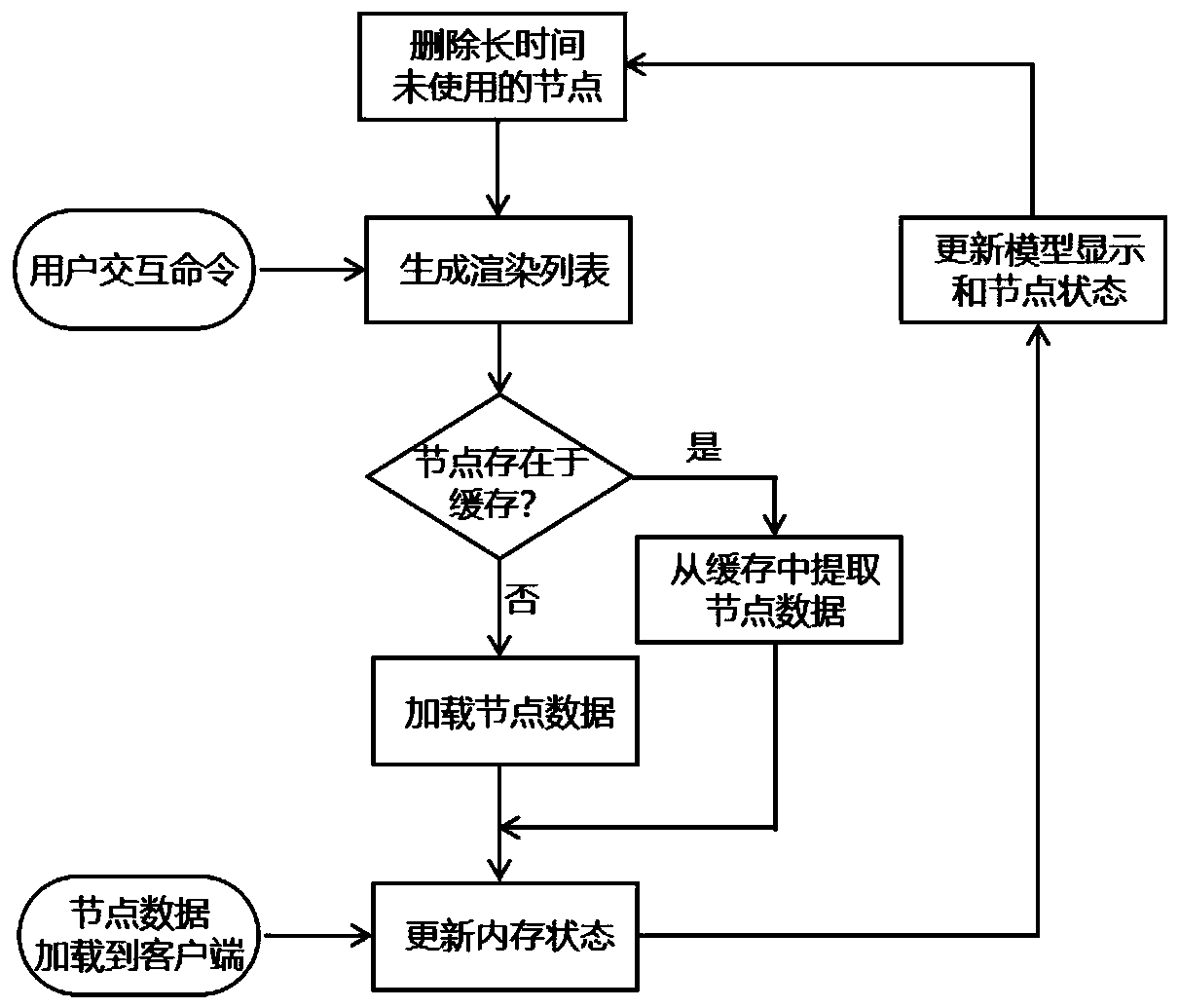

Large-scale three-dimensional scene webpage display method based on model compression and asynchronous loading

Active; CN110070613A; Improve loading speed; Improve fluency; 3D-image rendering; 3D modelling; Point cloud; Computer graphics (images)

The invention provides a large three-dimensional scene webpage display method based on model compression and asynchronous loading, and relates to the technical field of computer graphics. The method comprises the following steps: 1, obtaining two-dimensional pictures of a scene to be reconstructed, and generating a three-dimensional model of the scene using a three-dimensional reconstruction technique; 2, dividing the point cloud space of the three-dimensional model into small cubes and organizing them as structured data with an octree; 3, calculating the node size and depth of the octree model; 4, constructing the octree model; 5, compressing the octree model requested by the user on the server side, and transmitting the compressed octree model over the network to a browser for rendering and display; and 6, dynamically loading the nodes of the octree model on the webpage according to a level-of-detail technique and rendering them at the same time, finally obtaining the three-dimensional scene graph of the scene to be reconstructed. The method ensures real-time model rendering in webpage model display and greatly shortens the user's waiting time.

Owner:NORTHEASTERN UNIV
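
Steps 2 to 4 and the level-of-detail loading in step 6 can be sketched as follows. This is a minimal illustration only: it builds an octree over a point cloud and lists the nodes a client would load at a given detail level, and it omits the server-side compression and the browser rendering. The names `OctreeNode`, `build`, `nodes_up_to_depth`, `capacity` and `max_depth` are assumptions, not terms from the patent.

```python
class OctreeNode:
    def __init__(self, center, half, depth):
        self.center = center      # (x, y, z) centre of this cube
        self.half = half          # half of the cube's edge length
        self.depth = depth
        self.points = []          # filled only for leaf nodes
        self.children = None      # list of 8 children for inner nodes

def build(points, center, half, depth=0, max_depth=4, capacity=2):
    """Recursively split a cube into 8 octants until it holds few points."""
    node = OctreeNode(center, half, depth)
    if len(points) <= capacity or depth == max_depth:
        node.points = list(points)
        return node
    buckets = [[] for _ in range(8)]
    for p in points:
        # One bit per axis: set when the point lies on the upper side.
        idx = (int(p[0] >= center[0])
               | (int(p[1] >= center[1]) << 1)
               | (int(p[2] >= center[2]) << 2))
        buckets[idx].append(p)
    q = half / 2
    node.children = []
    for idx, bucket in enumerate(buckets):
        child_center = tuple(c + (q if (idx >> axis) & 1 else -q)
                             for axis, c in enumerate(center))
        node.children.append(build(bucket, child_center, q, depth + 1, max_depth, capacity))
    return node

def nodes_up_to_depth(node, lod):
    """Yield the nodes a viewer would load for level of detail `lod`."""
    yield node
    if node.children is not None and node.depth < lod:
        for child in node.children:
            yield from nodes_up_to_depth(child, lod)

def count_points(node):
    """Total number of points stored in the leaves below `node`."""
    if node.children is None:
        return len(node.points)
    return sum(count_points(c) for c in node.children)
```

A viewer could request `nodes_up_to_depth(root, 1)` first and fetch deeper nodes asynchronously as the camera approaches, which is the general idea behind loading by level of detail.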

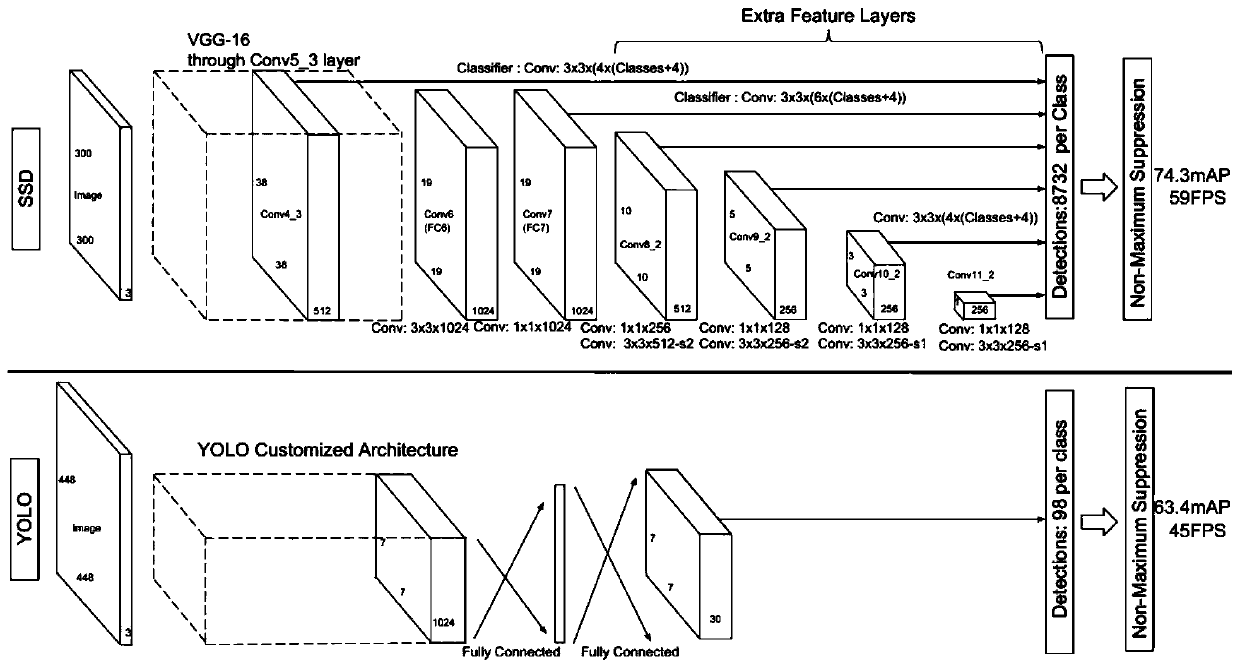

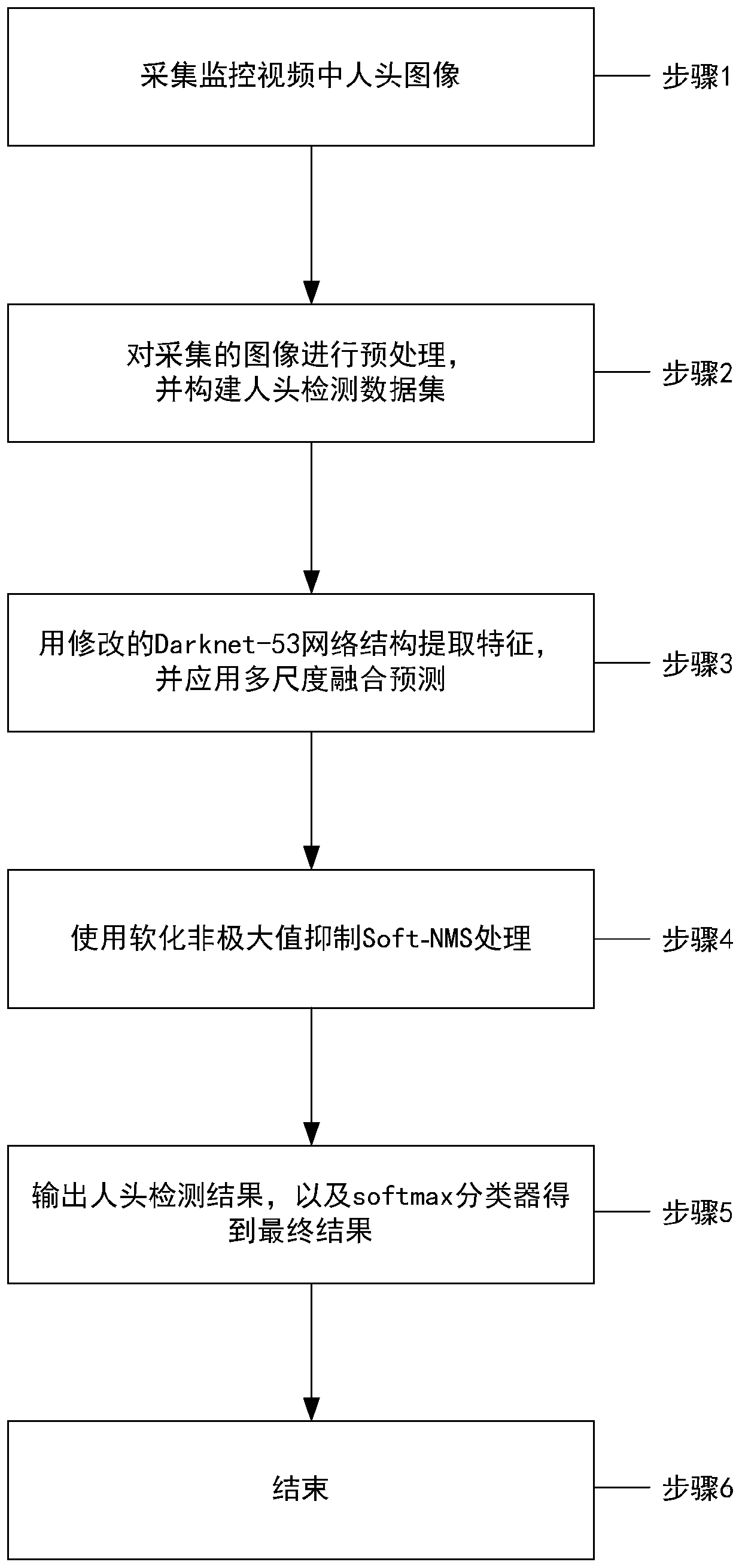

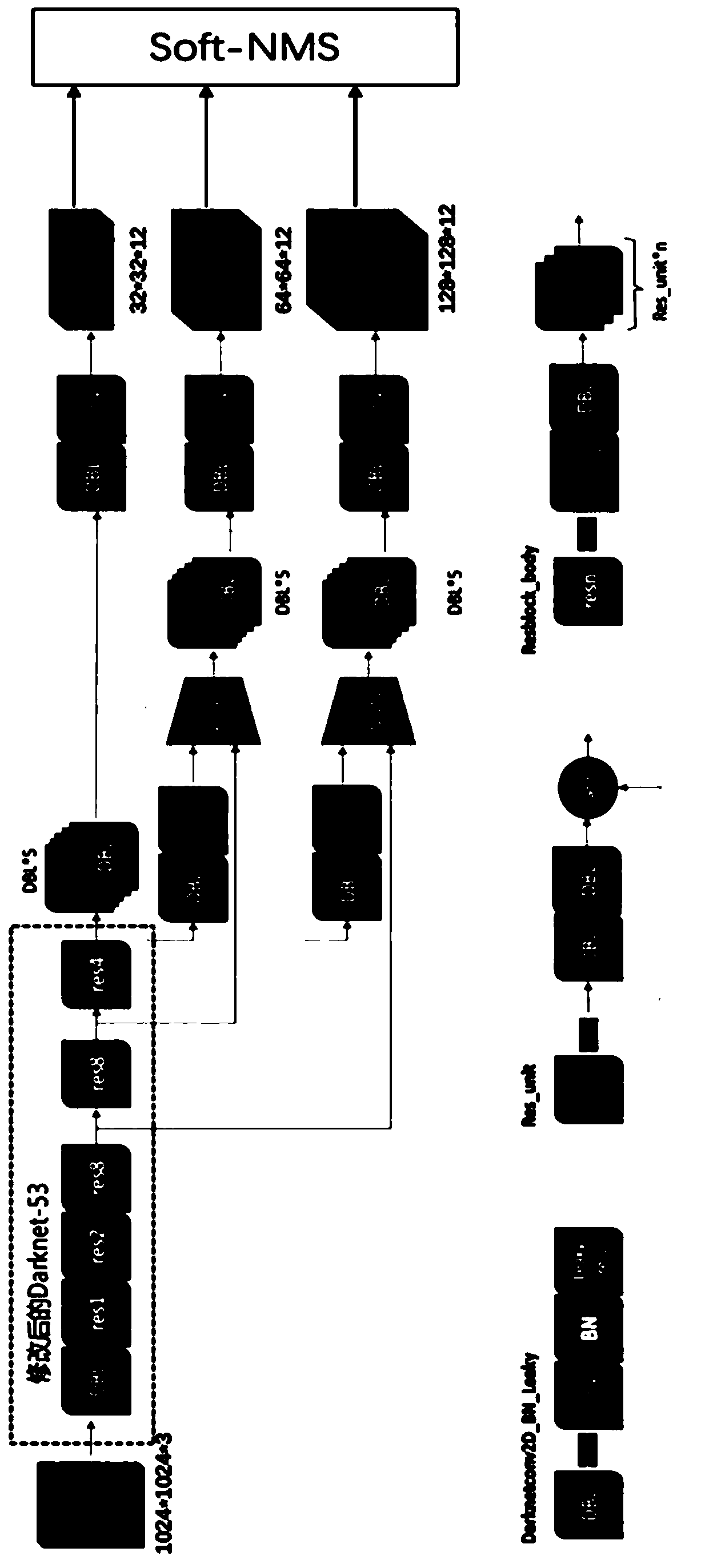

Chef cap and mask wearing detection method based on deep learning

Pending; CN111062429A; Small size; High degree of generalization; Biometric pattern recognition; Neural architectures; Data set; Engineering

The invention provides a chef hat and mask wearing detection method based on deep learning. The method comprises the steps of: collecting head images in a kitchen scene; preprocessing the head images and constructing a head detection data set; feeding the training set into a convolutional feature extractor to extract features related to the chef hat and the mask, determining the number of anchor boxes with a K-means clustering method to generate predicted head bounding boxes, and taking the intersection over union of a candidate box and a ground-truth box as the evaluation standard; inputting the training set data into a YOLOv3 network for repeated training to obtain the weights and biases of the convolutional layers, and outputting the loss function value of the training set data; and compressing the trained model to meet real-time detection conditions, then detecting head images with the trained model and outputting results that include the predicted bounding boxes and the categories of chef hat and mask.

Owner:SHANGHAI DIANZE INTELLIGENT TECH CO LTD
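
The anchor-box step can be illustrated with the commonly used YOLO-style clustering, in which boxes are compared by intersection over union of their widths and heights rather than by Euclidean distance. The sketch below assumes that variant; the function names and the deterministic initialisation are illustrative, and the abstract does not give the exact procedure.

```python
def iou_wh(a, b):
    """IoU of two boxes given as (width, height), aligned at a common corner."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    return inter / (a[0] * a[1] + b[0] * b[1] - inter)

def kmeans_anchors(boxes, k, iters=50):
    """Cluster (width, height) pairs into k anchors using IoU as similarity."""
    # Deterministic initialisation: evenly spaced picks from the input list.
    centroids = [boxes[i * len(boxes) // k] for i in range(k)]
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for box in boxes:
            best = max(range(k), key=lambda c: iou_wh(box, centroids[c]))
            clusters[best].append(box)
        new = []
        for c, members in enumerate(clusters):
            if members:
                new.append((sum(m[0] for m in members) / len(members),
                            sum(m[1] for m in members) / len(members)))
            else:
                new.append(centroids[c])   # keep an empty cluster's centroid
        if new == centroids:
            break
        centroids = new
    return centroids
```

The same `iou_wh` idea, extended to boxes with positions, is what scores a candidate box against a ground-truth box during evaluation.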

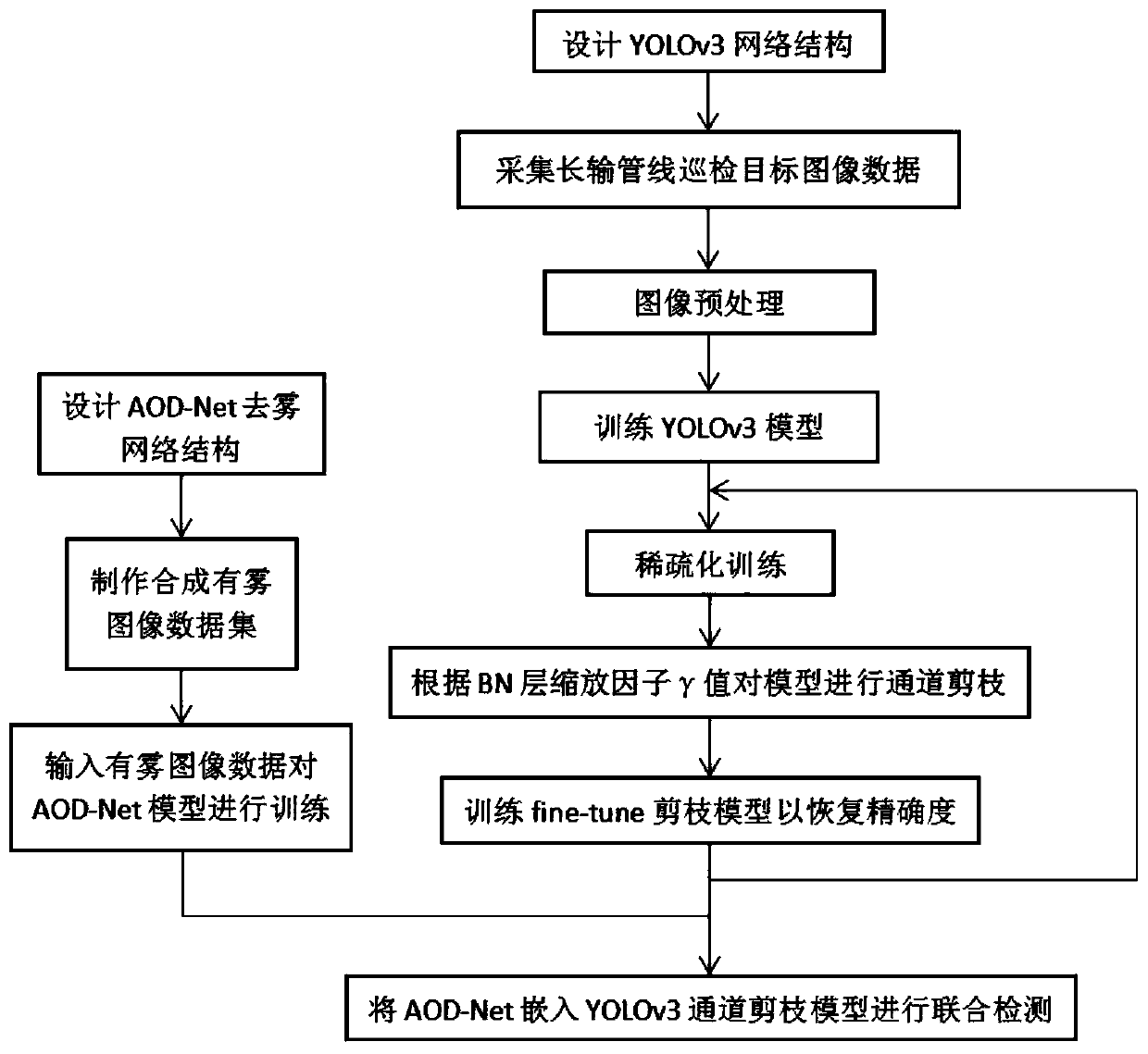

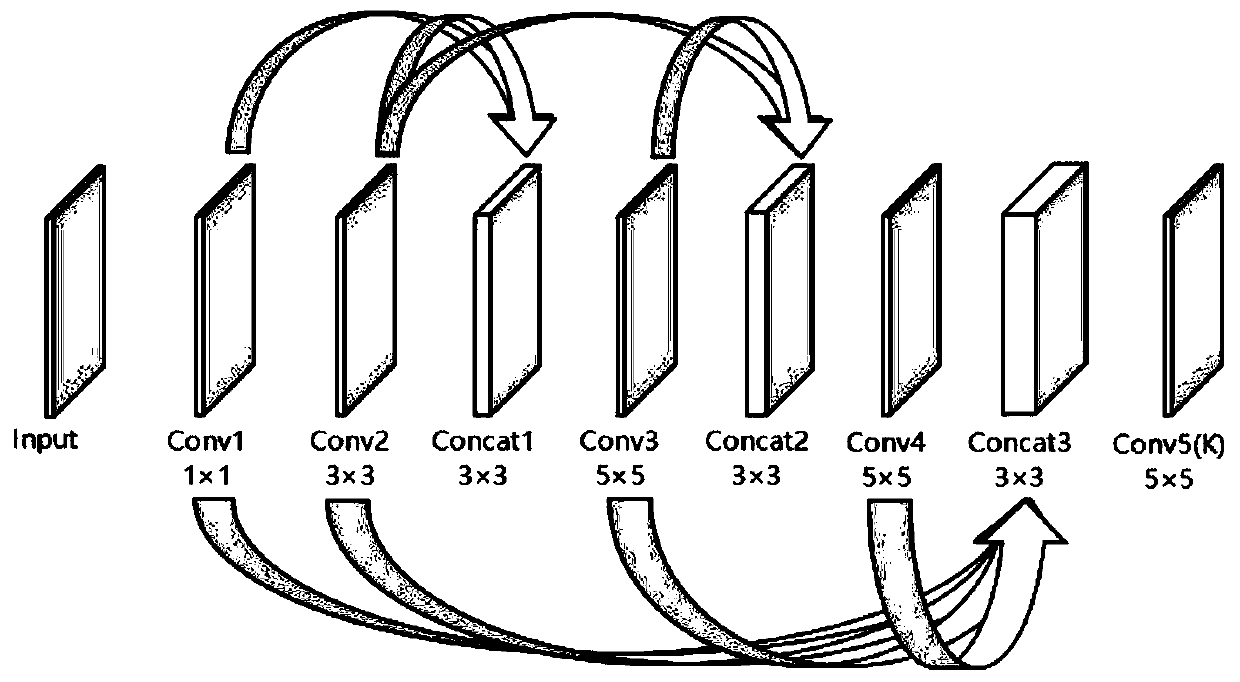

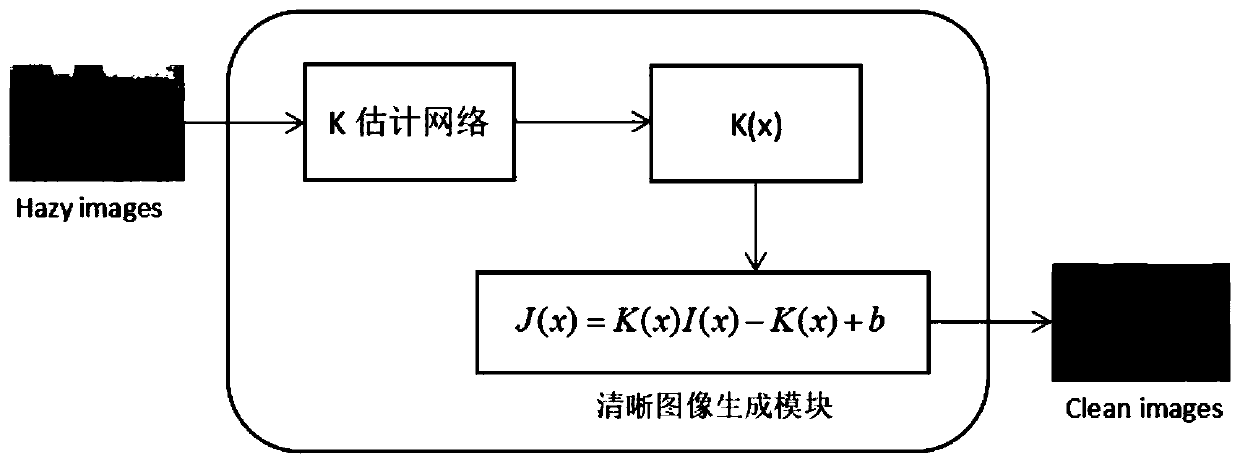

Long-distance pipeline inspection method based on YOLOv3 pruning network and deep learning defogging model

Active; CN111461291A; Improving the accuracy of subsequent target detection tasks; Improve efficiency; Image enhancement; Internal combustion piston engines; Imaging processing; Algorithm

The invention belongs to an image processing technology based on deep learning, and particularly relates to a long-distance pipeline inspection method based on a YOLOv3 pruning network and a deep learning defogging model. The method comprises the following steps: 1, constructing and training an AOD-Net defogging network model; 2, designing a YOLOv3 backbone network and a loss function; 3, performing image data acquisition and training on the target area in an unmanned aerial vehicle inspection mode; 4, performing compression and accelerated calculation on the YOLOv3 model through a scaling factor gamma pruning method based on a BN layer; 5, deploying the AOD-Net and YOLOv3 joint model to an embedded module of the unmanned aerial vehicle for target task detection; and 6, returning the inspection task detection result of the long-distance pipeline of the unmanned aerial vehicle to the background system in real time. The system is used for being deployed on an unmanned aerial vehicle embedded module to perform long-distance pipeline inspection work, and the labor cost is greatly reduced while high detection precision, good real-time performance and high efficiency are guaranteed.

Owner:XIAN UNIV OF SCI & TECH

Feature-based hybrid video codec comparing compression efficiency of encodings

Active; US8942283B2; Increase the number of; Reduce area; Image analysis; Picture reproducers using cathode ray tubes; Feature set; Feature based

Systems and methods of processing video data are provided. Video data having a series of video frames is received and processed. One or more instances of a candidate feature are detected in the video frames. The previously decoded video frames are processed to identify potential matches of the candidate feature. When a substantial amount of portions of previously decoded video frames include instances of the candidate feature, the instances of the candidate feature are aggregated into a set. The candidate feature set is used to create a feature-based model. The feature-based model includes a model of deformation variation and a model of appearance variation of instances of the candidate feature. The feature-based model compression efficiency is compared with the conventional video compression efficiency.

Owner:EUCLID DISCOVERIES LLC

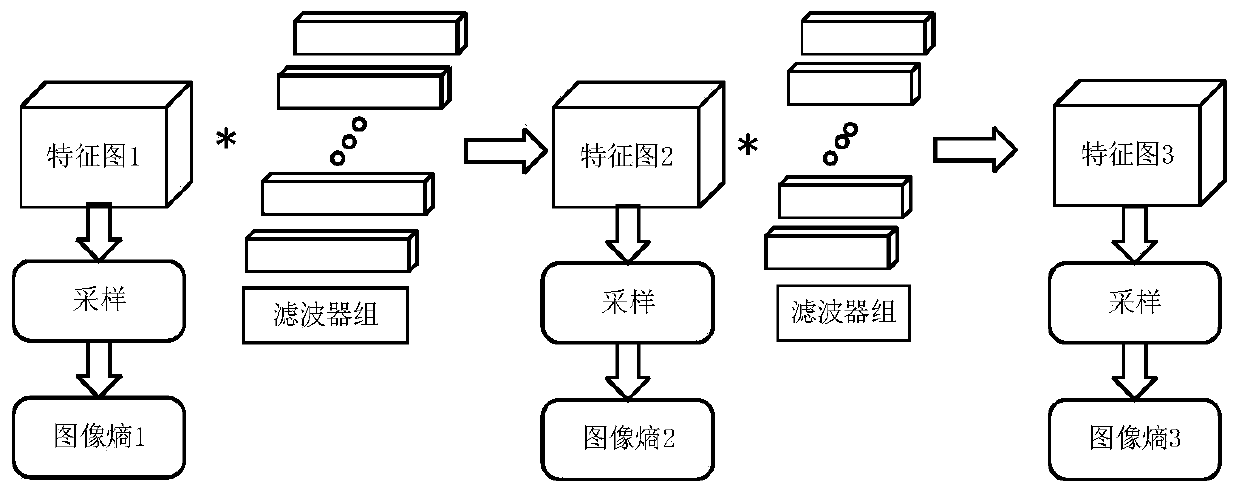

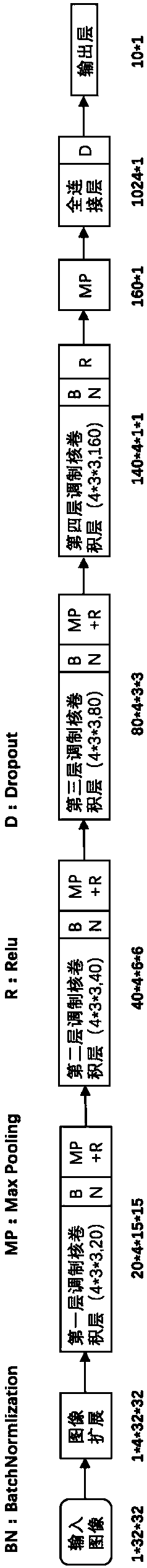

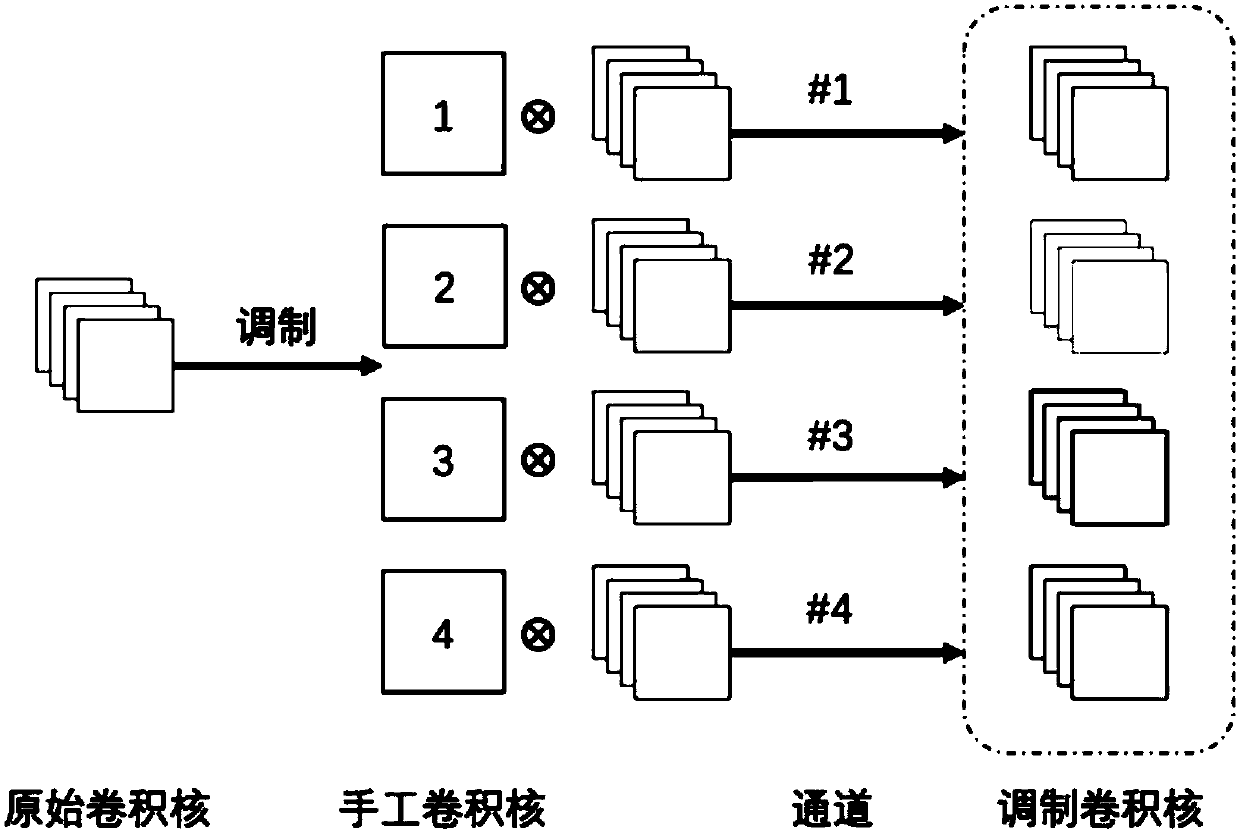

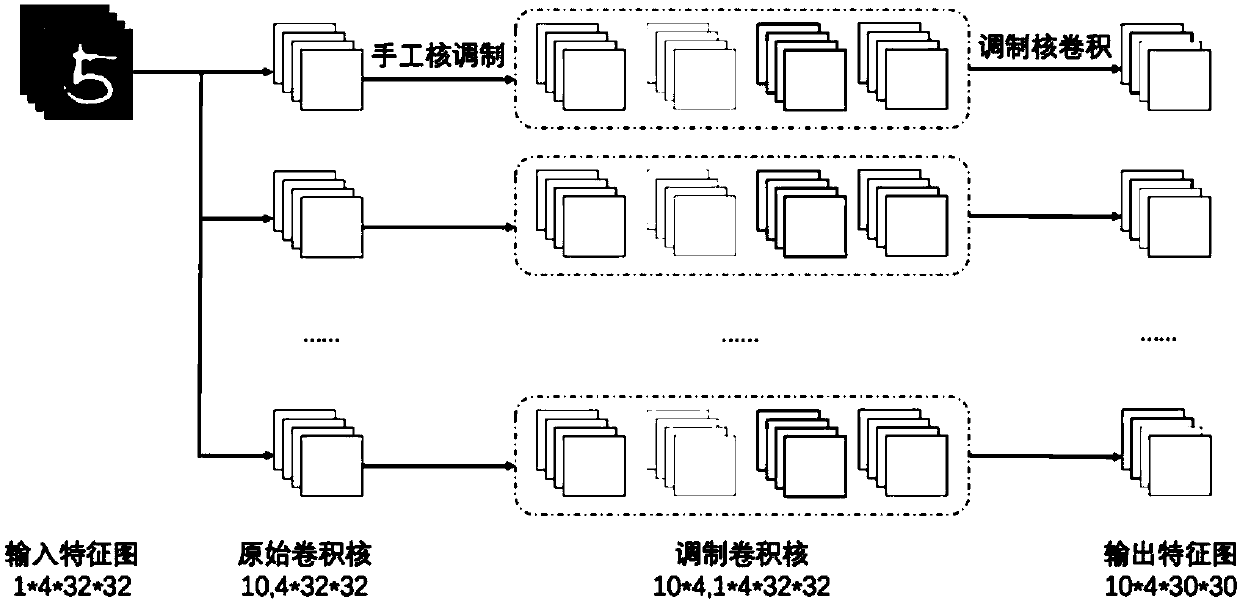

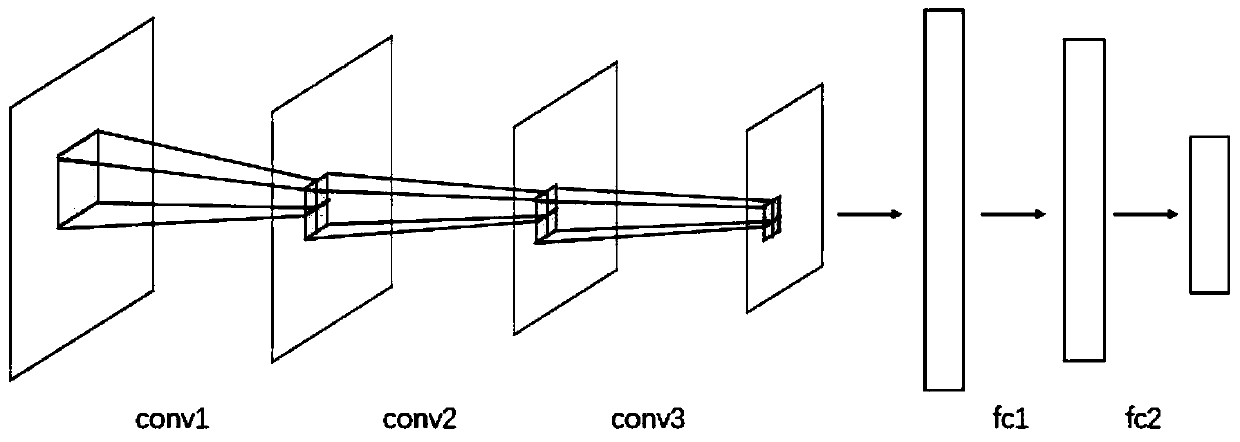

Convolutional neural network construction method

Active; CN107633296A; Reduce performance; Feature optimization or enhancement; Neural architectures; Neural learning methods; Network structure; Convolution theorem

The present invention discloses a convolutional neural network construction method and belongs to the field of neural network technology. During the forward pass of the convolutional neural network, each original convolution kernel is modulated by manually adjusting the dot product of a kernel with the original convolution kernel; the resulting modulated convolution kernel replaces the original convolution kernel in the forward pass, which achieves a feature enhancement effect. The method greatly optimizes the neural network, reducing the total number of kernels that the network must learn. In addition, by generating sub-convolution kernels through modulation to arrange the redundantly learned kernels of the original network structure, model compression can also be achieved.

Owner:NO 54 INST OF CHINA ELECTRONICS SCI & TECH GRP

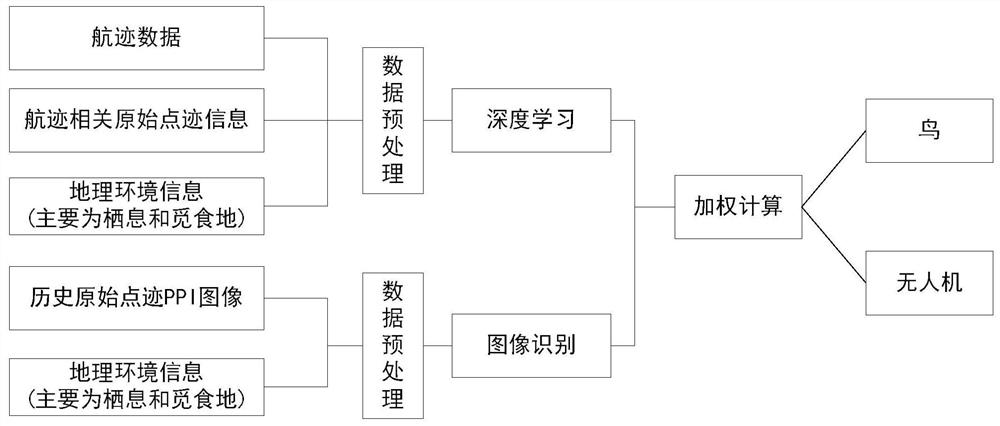

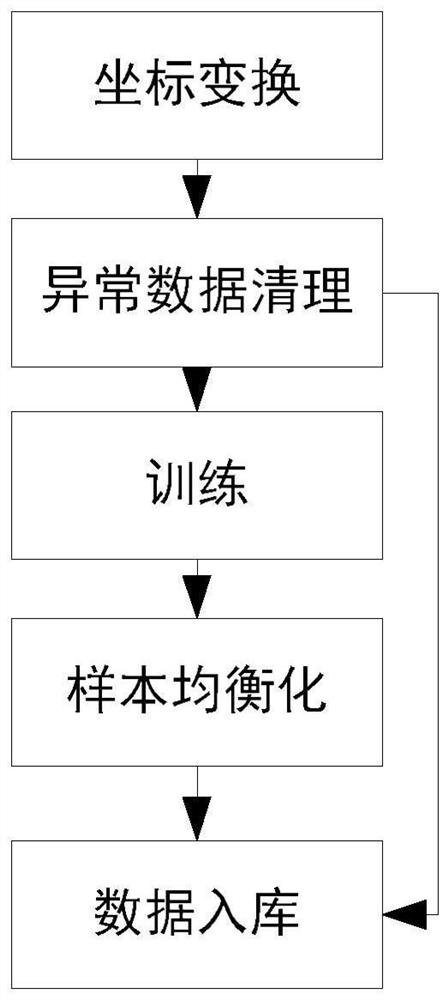

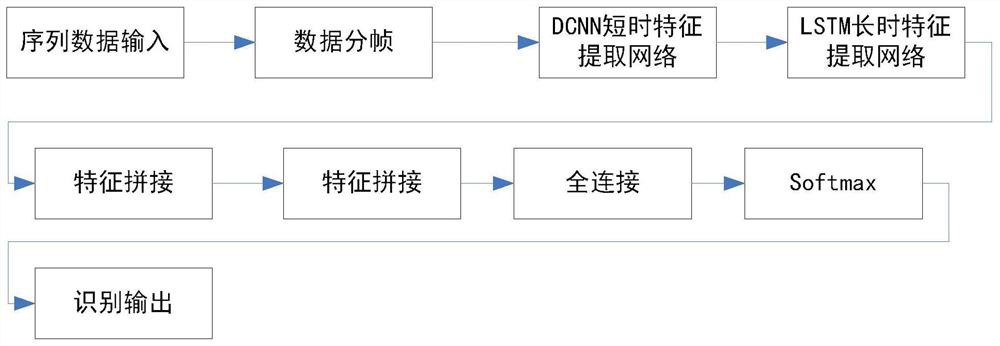

Classification and identification method for low, slow and small targets

Pending; CN112434643A; Improve classification recognition ability; Accurate classification; Character and pattern recognition; Neural architectures; Network model; Term memory

A disclosed classification and identification method for low, slow and small targets is accurate in identification and short in identification time. The method is realized through the following technical scheme: different target track data are taken as training samples for a data preprocessing module, based on PPI images formed from the original trace points; the data preprocessing module performs data prediction and preprocessing on the obtained track-related plot data, generates a training set, and constructs a deep learning network model and a network optimization module that apply a deep convolutional network (DCNN) followed by a long short-term memory network (LSTM), extract two groups of features from the framed data, splice them into joint features, and perform deep learning; image recognition target track data are input into a weighting calculation module in real time, and the trained network model is compressed and accelerated through weighting calculation and model pruning, so that the depth features needed for accurate classification are obtained and small birds and unmanned aerial vehicles are accurately classified and recognized.

Owner:LINGBAYI ELECTRONICS GRP

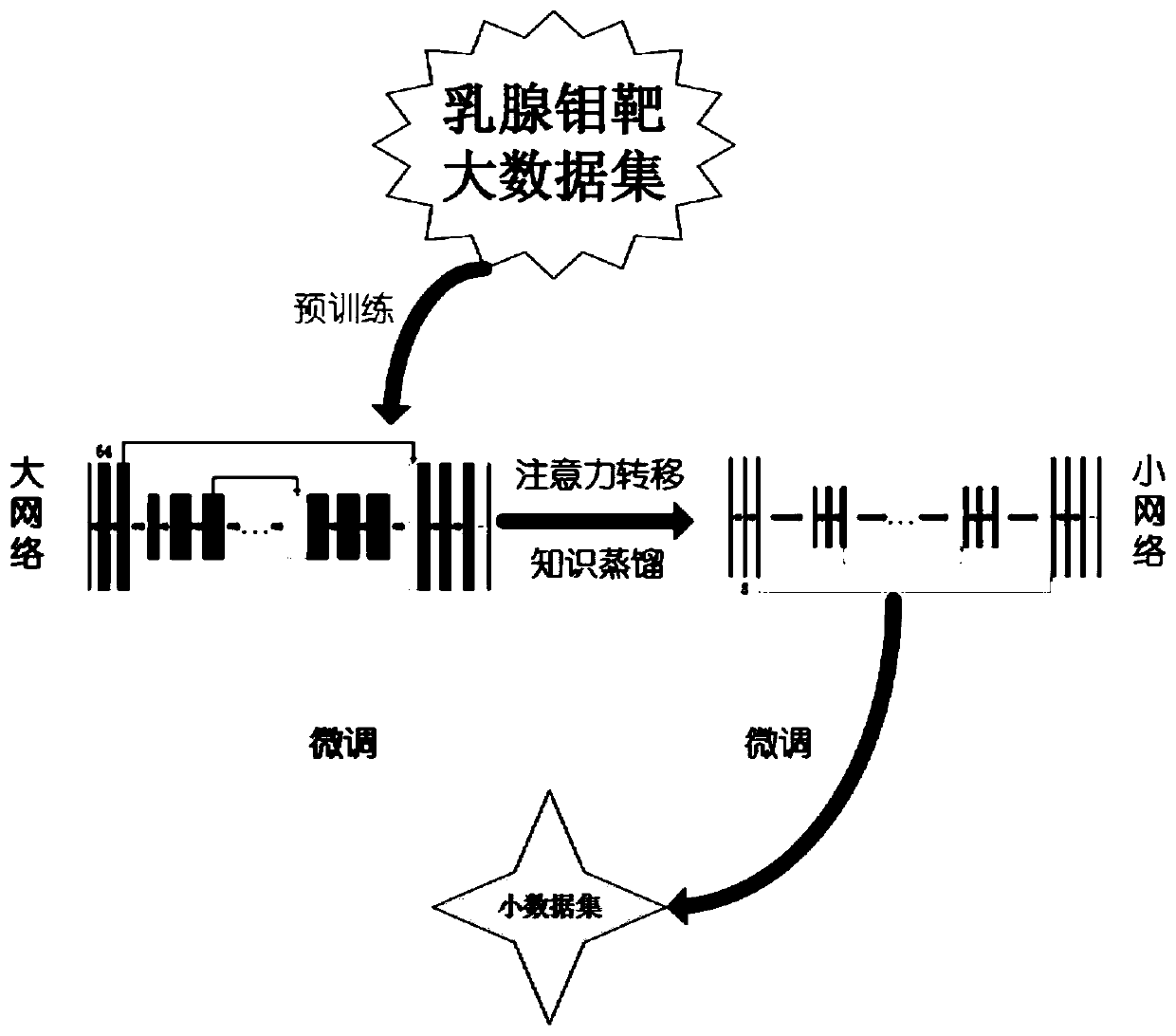

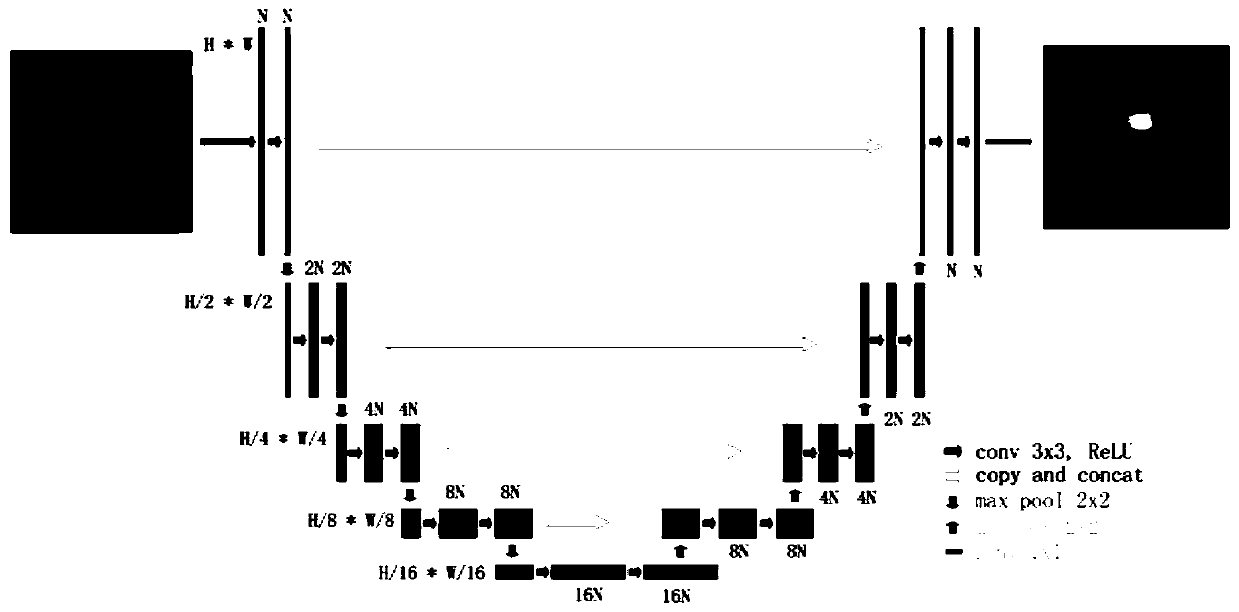

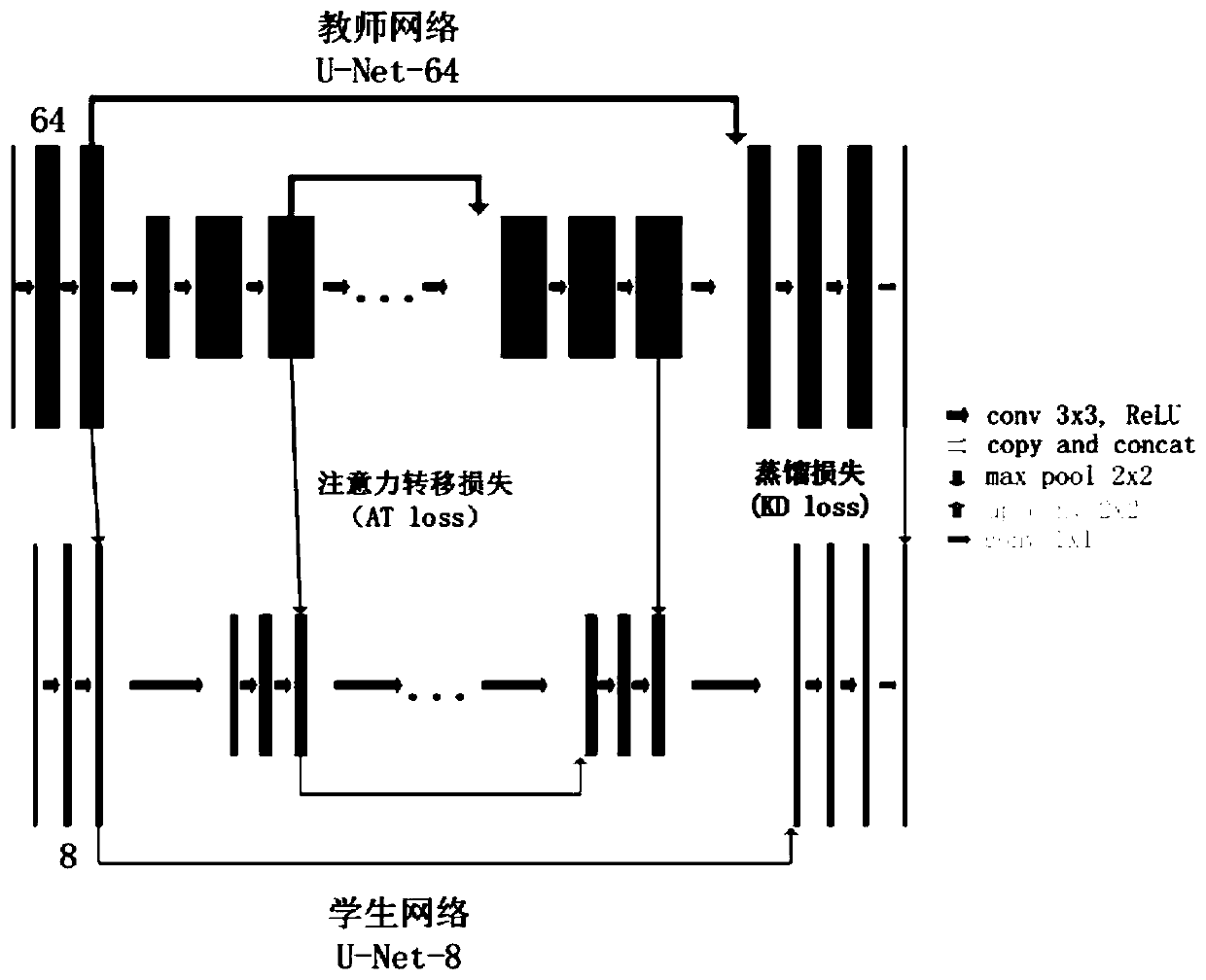

Convolutional neural network automatic segmentation method and system for mammary molybdenum target data set

Inactive; CN110059717A; High fine-tuning precision; Fast test; Image enhancement; Image analysis; Reduced model; Model parameters

The invention discloses a convolutional neural network automatic segmentation method and system for a mammary molybdenum target data set, which can obviously reduce model parameters and improve the practicability while ensuring the precision of a deep learning model on a mammary molybdenum target small data set. The method comprises the following steps: pre-training a convolutional neural big network on a mammary molybdenum target big data set; performing model compression on the trained convolutional neural large network by adopting attention transfer and knowledge distillation methods to obtain a convolutional neural small network; and carrying out fine tuning on the convolutional neural small network on the mammary molybdenum target small data set.

Owner:SHANDONG UNIV

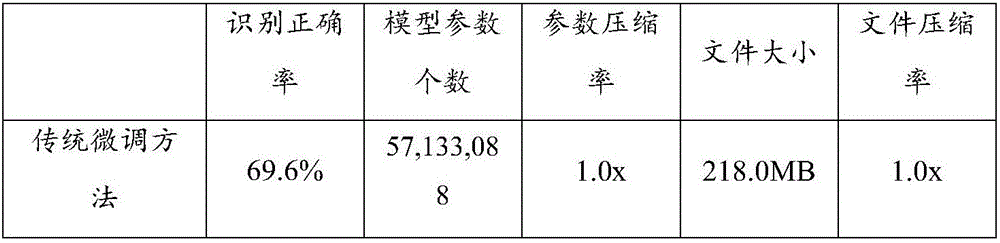

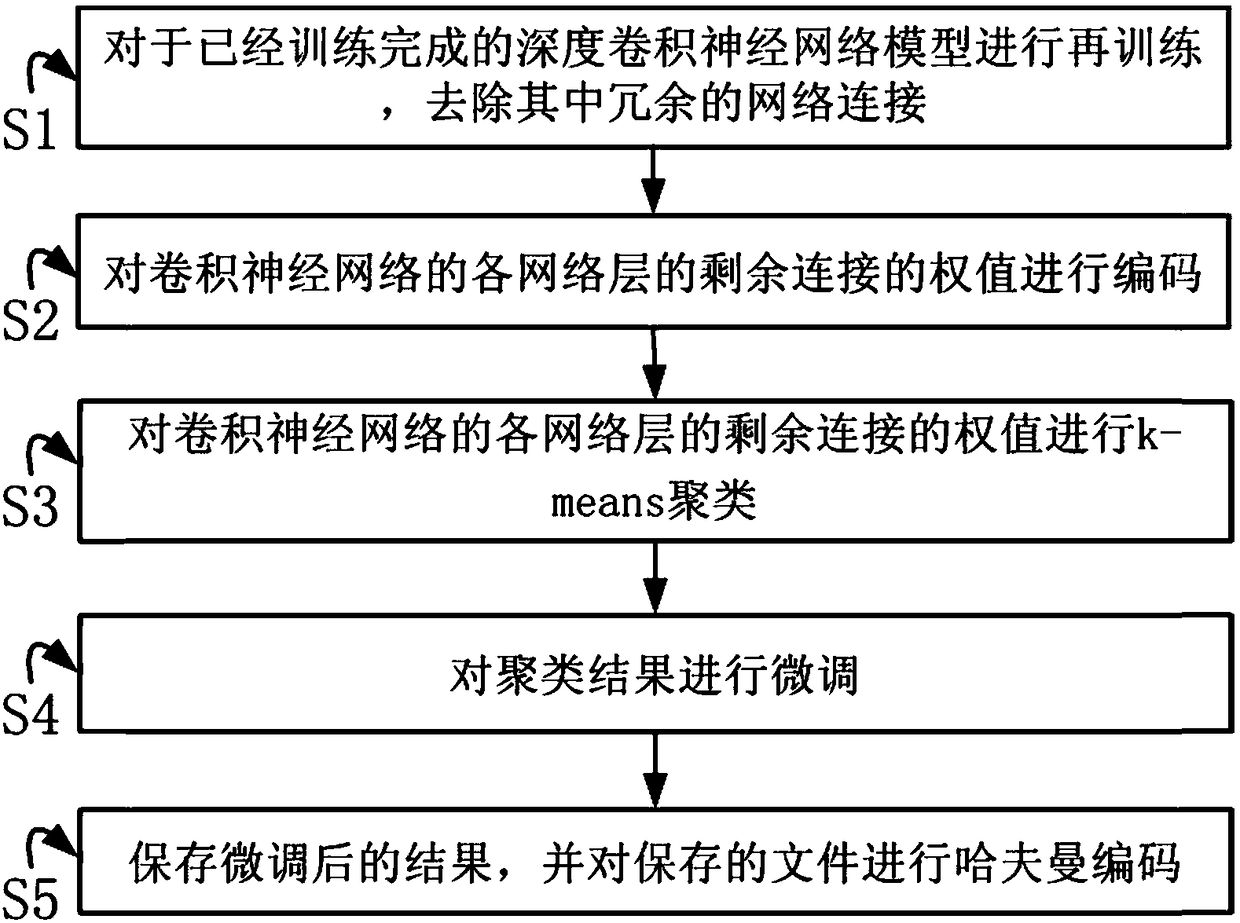

Method for deep convolutional neural network model compression

Inactive; CN108322221A; Stable removal; Increase the compression factor; Code conversion; Character and pattern recognition; Network connection; Data mining

The invention discloses a method for deep convolutional neural network model compression. The method comprises the steps of: retraining a trained deep convolutional neural network model to remove redundant network connections; encoding the weights of the remaining connections of each network layer; applying k-means clustering to the weights of the remaining connections of each network layer; fine-tuning the clustering results; and saving the fine-tuned results and applying Huffman coding to the saved file. By setting a dynamic threshold, connections can be removed gently so that the network can recover from the removal, which yields a higher compression factor for the same loss in accuracy. In encoding the remaining connections, the improved CSR encoding method reduces the number of bits needed to represent an index value, which reduces the size of the compressed file and increases the compression ratio.

Owner:SOUTH CHINA UNIV OF TECH +1
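
The prune, cluster and entropy-code pipeline can be sketched on a flat list of weights. This is a simplified illustration under several assumptions: a fixed magnitude threshold stands in for the dynamic threshold, the retraining and fine-tuning steps are omitted, the improved CSR index encoding is not shown, and all function names are hypothetical.

```python
import heapq
from collections import Counter

def prune(weights, threshold):
    """Zero out connections whose magnitude is below the threshold."""
    return [w if abs(w) >= threshold else 0.0 for w in weights]

def assign_clusters(values, centroids):
    """Index of the nearest centroid for every value."""
    return [min(range(len(centroids)), key=lambda c: abs(v - centroids[c]))
            for v in values]

def kmeans_1d(values, k, iters=20):
    """Weight sharing: cluster values into k centroids (k >= 2, linear init)."""
    lo, hi = min(values), max(values)
    centroids = [lo + (hi - lo) * i / (k - 1) for i in range(k)]
    for _ in range(iters):
        assign = assign_clusters(values, centroids)
        for c in range(k):
            members = [v for v, a in zip(values, assign) if a == c]
            if members:
                centroids[c] = sum(members) / len(members)
    return centroids, assign_clusters(values, centroids)

def huffman_code_lengths(symbols):
    """Code length in bits that Huffman coding assigns to each symbol."""
    freq = Counter(symbols)
    if len(freq) == 1:
        return {next(iter(freq)): 1}
    # Heap entries: (count, unique tie-breaker, {symbol: depth so far}).
    heap = [(n, i, {s: 0}) for i, (s, n) in enumerate(freq.items())]
    heapq.heapify(heap)
    tie = len(heap)
    while len(heap) > 1:
        n1, _, d1 = heapq.heappop(heap)
        n2, _, d2 = heapq.heappop(heap)
        merged = {s: depth + 1 for s, depth in {**d1, **d2}.items()}
        heapq.heappush(heap, (n1 + n2, tie, merged))
        tie += 1
    return heap[0][2]
```

After clustering, each surviving weight is stored as a small cluster index plus a shared codebook of centroids, and the index stream is what Huffman coding shortens.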

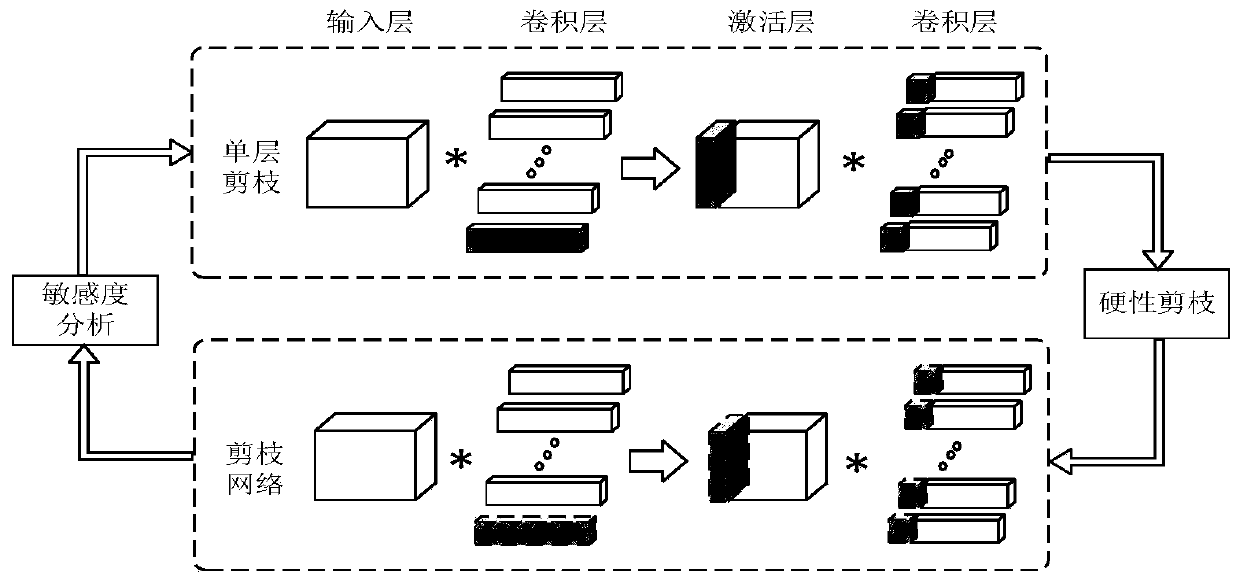

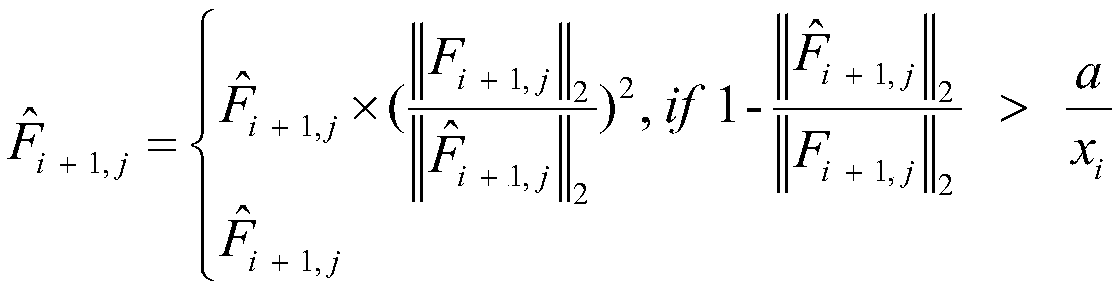

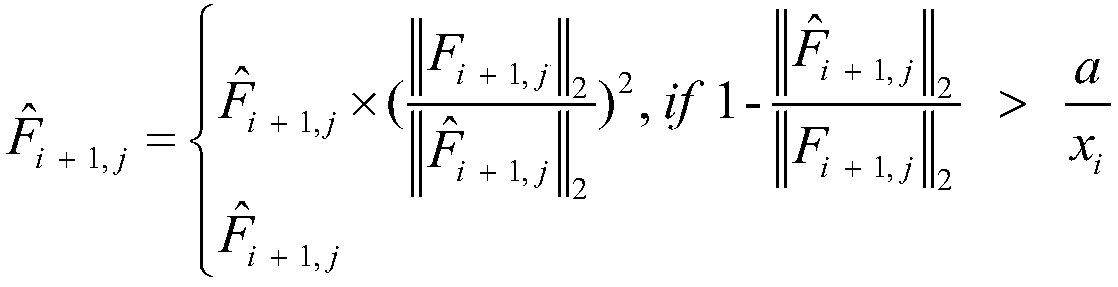

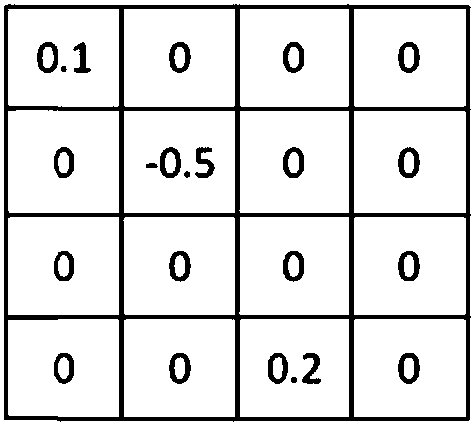

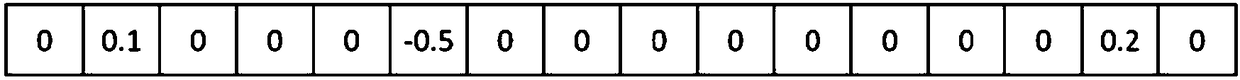

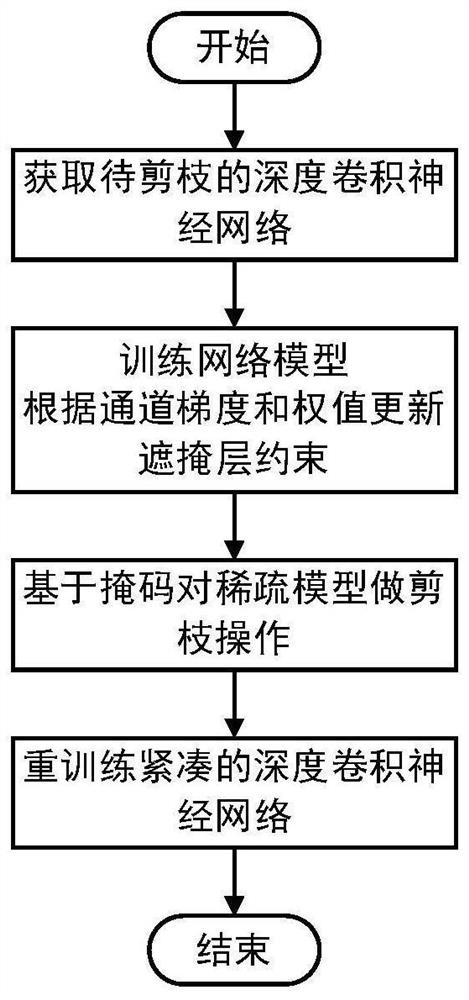

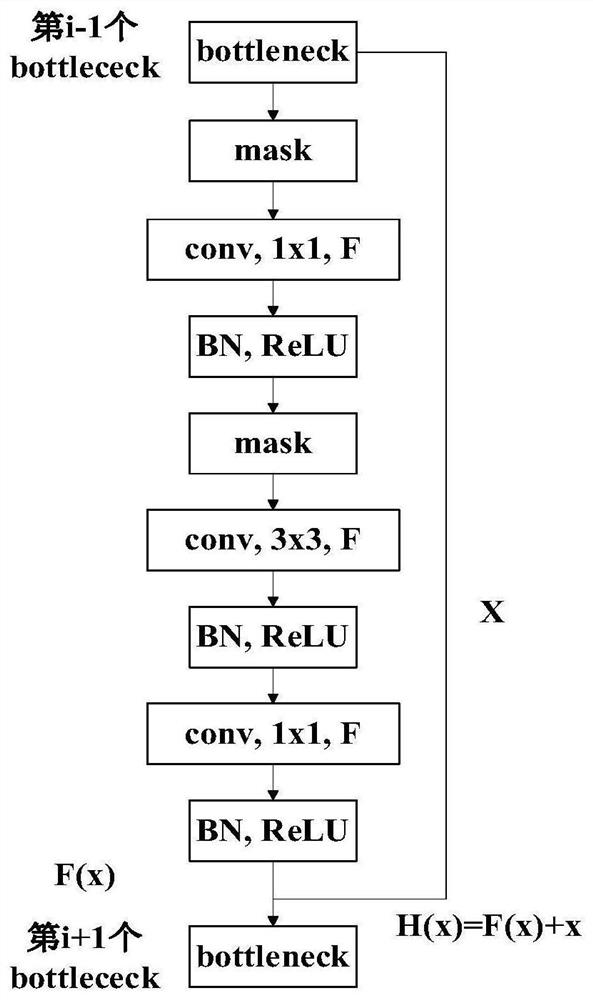

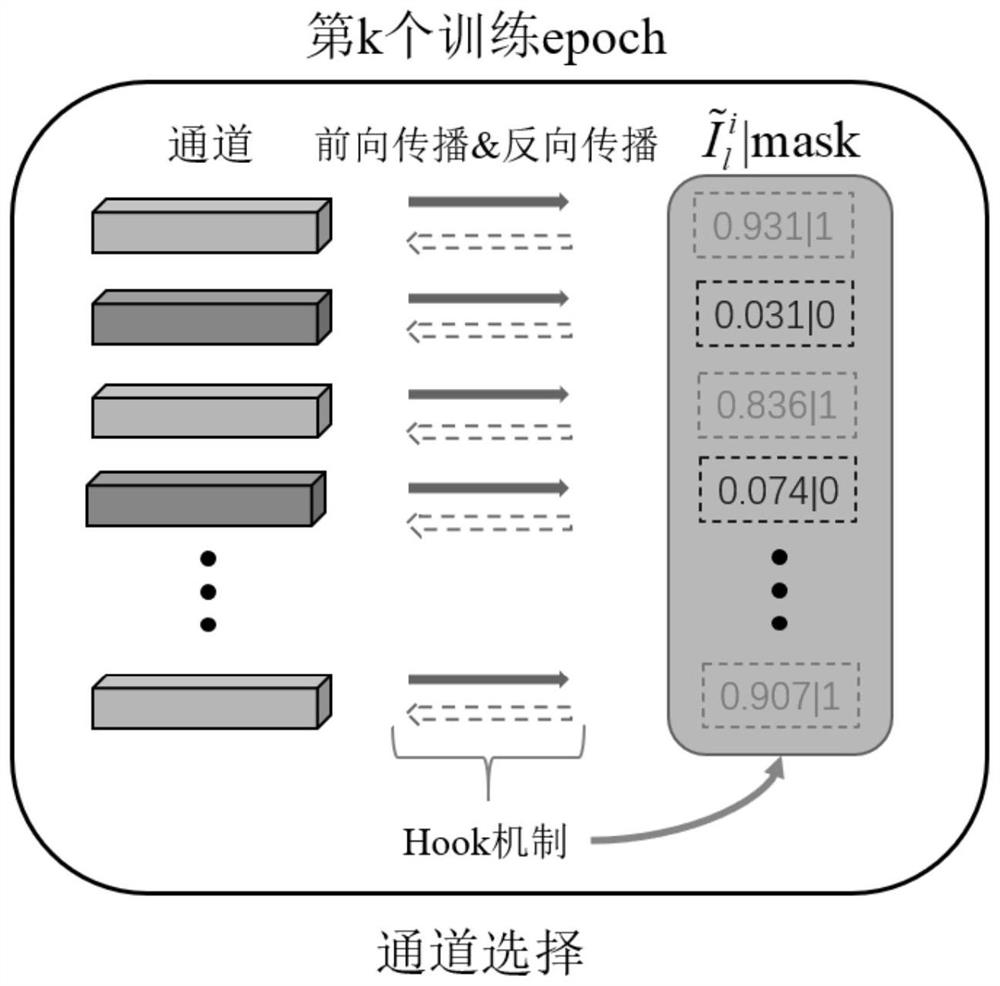

Flexible deep learning network model compression method based on channel gradient pruning

Pending; CN112396179A; Trim controllable; Improve predictability; Neural architectures; Neural learning methods; Data set; Algorithm

The invention discloses a flexible deep learning network model compression method based on channel gradient pruning. The method comprises the steps of: 1, adding a masking layer constraint to an original network to obtain a to-be-pruned deep convolutional neural network model; 2, using the absolute value of the product of the channel gradient and the weight as the importance criterion to update the masking layer constraint of each channel, obtaining a mask and a sparse model; 3, pruning the sparse model based on the mask; and 4, retraining the compact deep convolutional neural network model. The invention further reports the effect of applying the method to a real object recognition app: the recognition speed of the pruned model is greatly improved. This addresses the problem that deep neural network models, because of their high storage, memory and computing requirements, cannot be used in practical object recognition apps or deployed to embedded devices, smartphones and similar devices, and thereby expands the application range of deep neural networks.

Owner:ZHEJIANG UNIV OF TECH

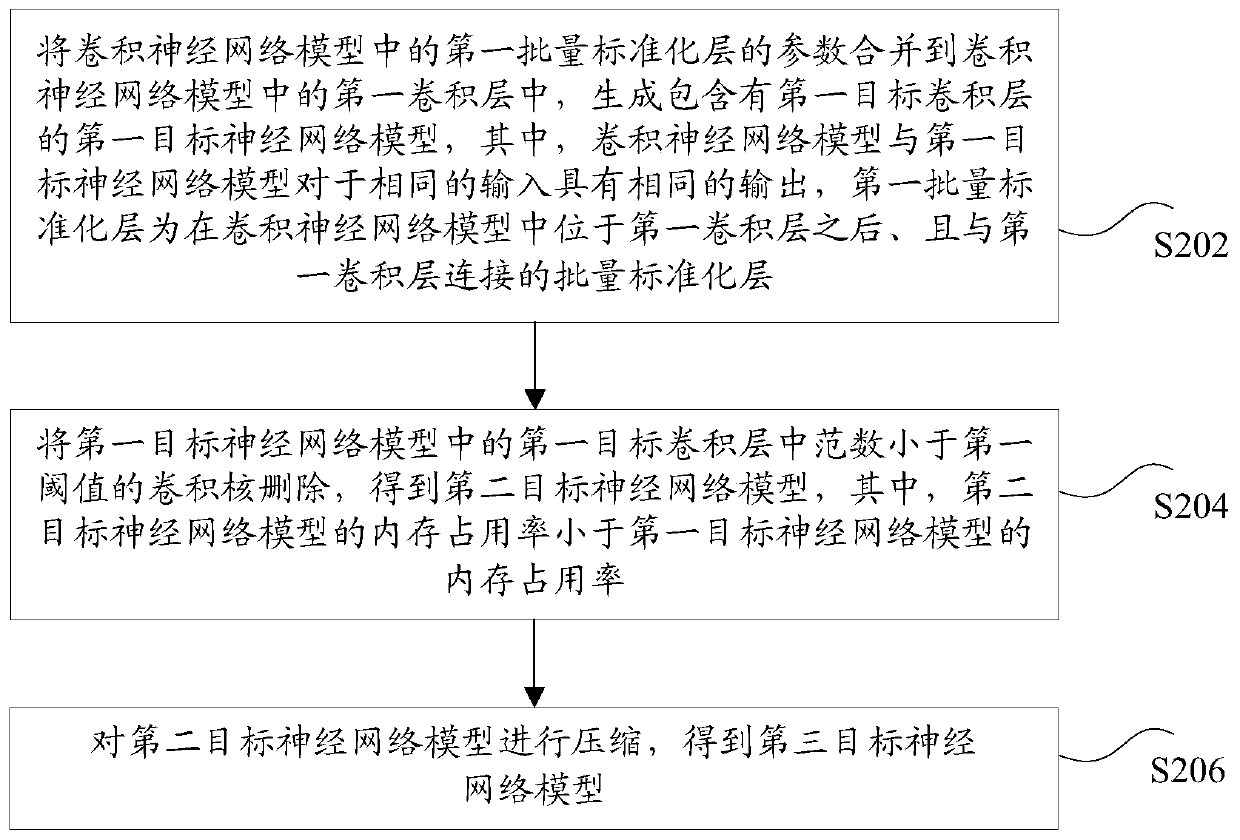

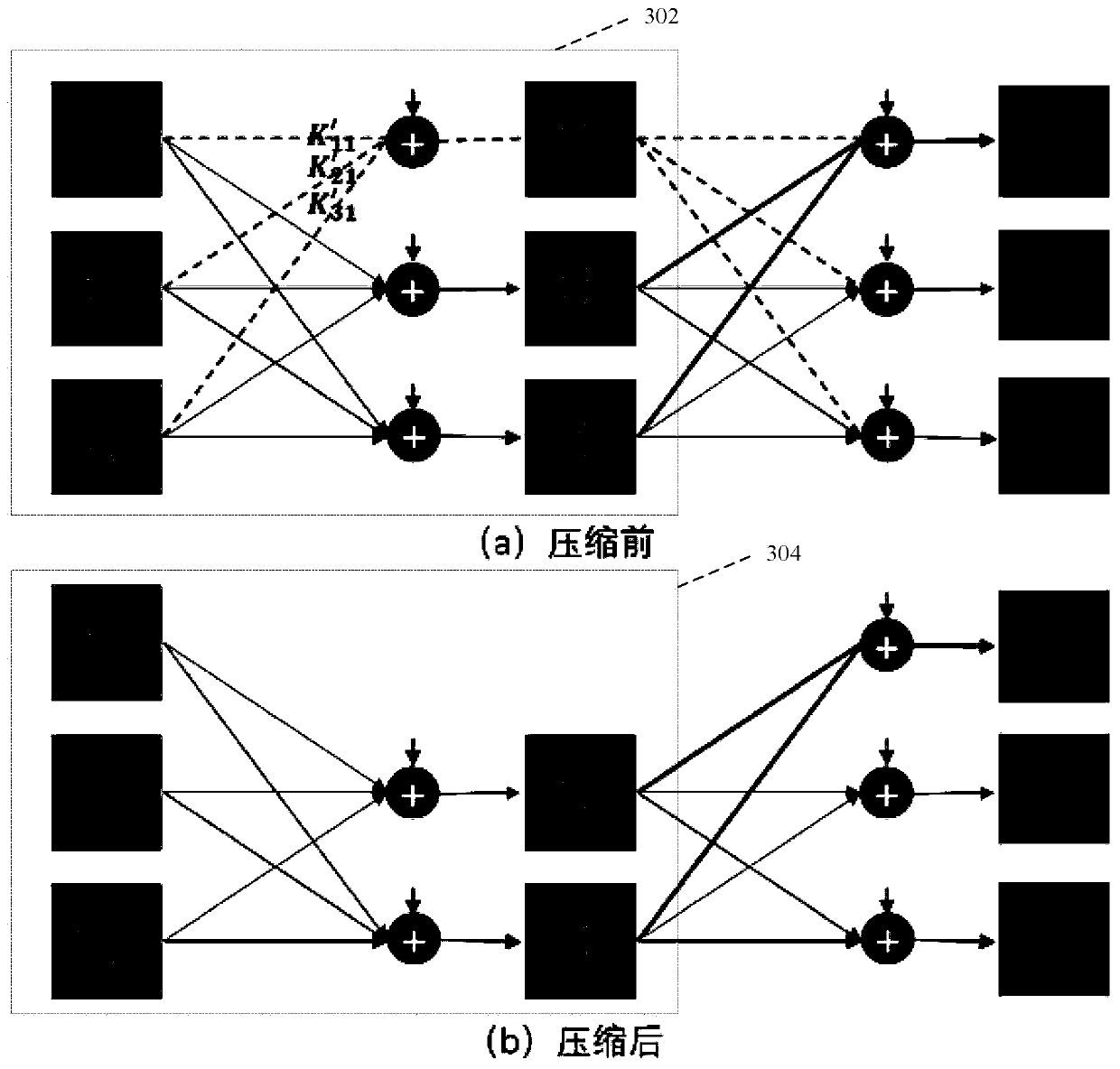

Convolutional neural network model compression method and device, storage medium and electronic device

Active; CN110033083A; Reduce memory usage; Improve efficiency; Neural architectures; Energy efficient computing; Nerve network; Network model

The invention discloses a convolutional neural network model compression method and device, a storage medium and an electronic device. The method comprises the steps of: merging the parameters of a first batch normalization layer in a convolutional neural network model into a first convolutional layer of that model to generate a first target neural network model comprising a first target convolutional layer, where the convolutional neural network model and the first target neural network model produce the same output for the same input; deleting convolution kernels whose norm is smaller than a first threshold from the first target convolutional layer to obtain a second target neural network model; and compressing the second target neural network model to obtain a third target neural network model. This solves the technical problems of low use efficiency and poor flexibility of neural network models in the prior art.

Owner:TENCENT TECH (SHENZHEN) CO LTD
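
Merging normalization parameters into the preceding layer, and then dropping kernels with a small norm, can be sketched as below. The example assumes inference-time batch normalization with fixed statistics and represents each output channel's kernel as a flattened list of weights, so a plain dot product stands in for the convolution. All names are illustrative.

```python
import math

def linear(weights, biases, x):
    """One output per kernel: dot(kernel, x) + bias."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, biases)]

def batch_norm(y, gamma, beta, mean, var, eps=1e-5):
    """Inference-time batch normalization applied per output channel."""
    return [g * (v - m) / math.sqrt(s + eps) + bt
            for v, g, bt, m, s in zip(y, gamma, beta, mean, var)]

def fold_bn(weights, biases, gamma, beta, mean, var, eps=1e-5):
    """Fold BN into the layer: W' = W * scale, b' = (b - mean) * scale + beta."""
    folded_w, folded_b = [], []
    for row, b, g, bt, m, s in zip(weights, biases, gamma, beta, mean, var):
        scale = g / math.sqrt(s + eps)
        folded_w.append([w * scale for w in row])
        folded_b.append((b - m) * scale + bt)
    return folded_w, folded_b

def prune_by_norm(weights, biases, threshold):
    """Drop kernels whose L2 norm is below the threshold; return kept indices."""
    keep = [i for i, row in enumerate(weights)
            if math.sqrt(sum(w * w for w in row)) >= threshold]
    return [weights[i] for i in keep], [biases[i] for i in keep], keep
```

The folded layer reproduces the output of the original layer followed by batch normalization, which is the "same output for the same input" property. In a full network, the next layer's input channels would also need to be trimmed to match `keep`.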

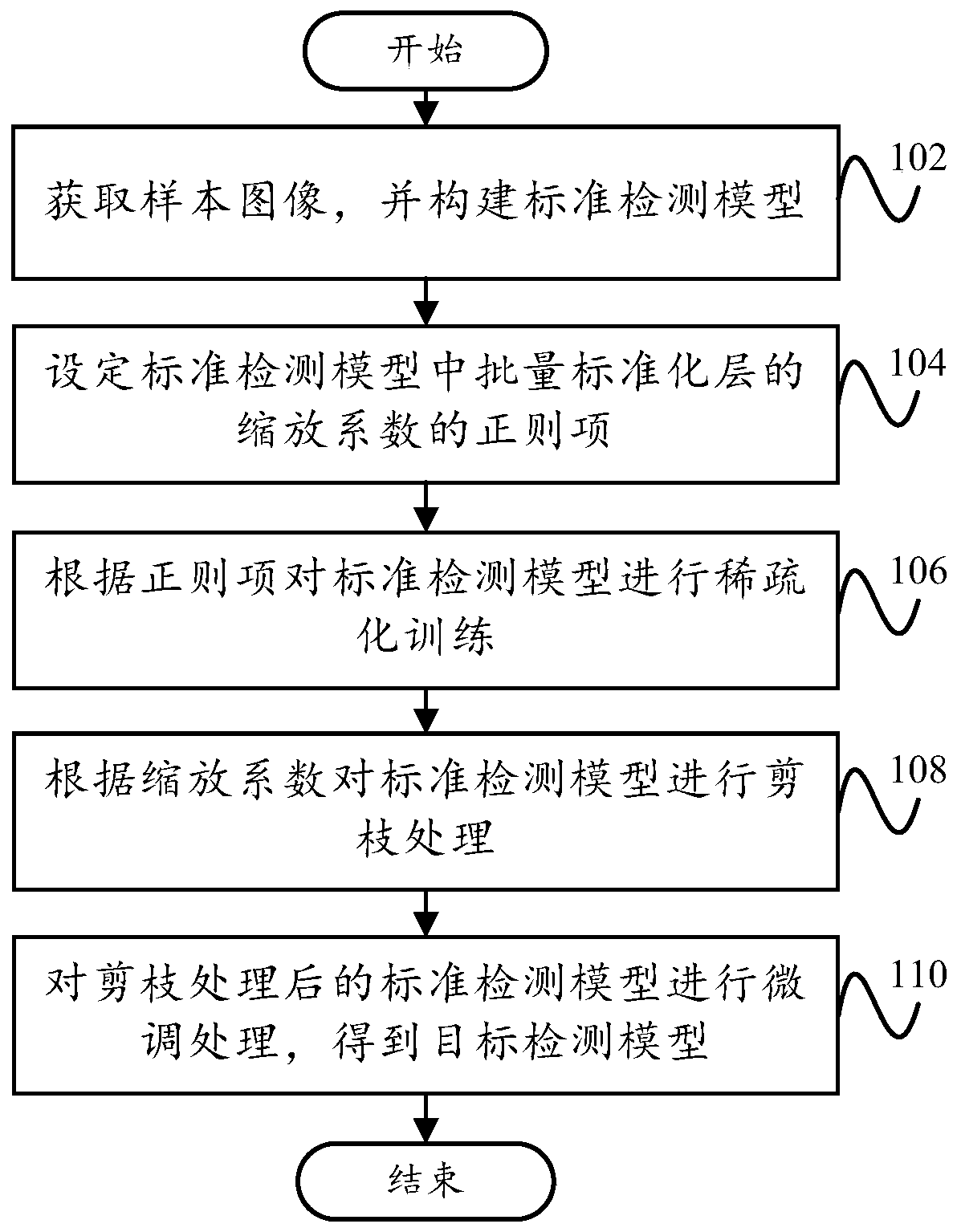

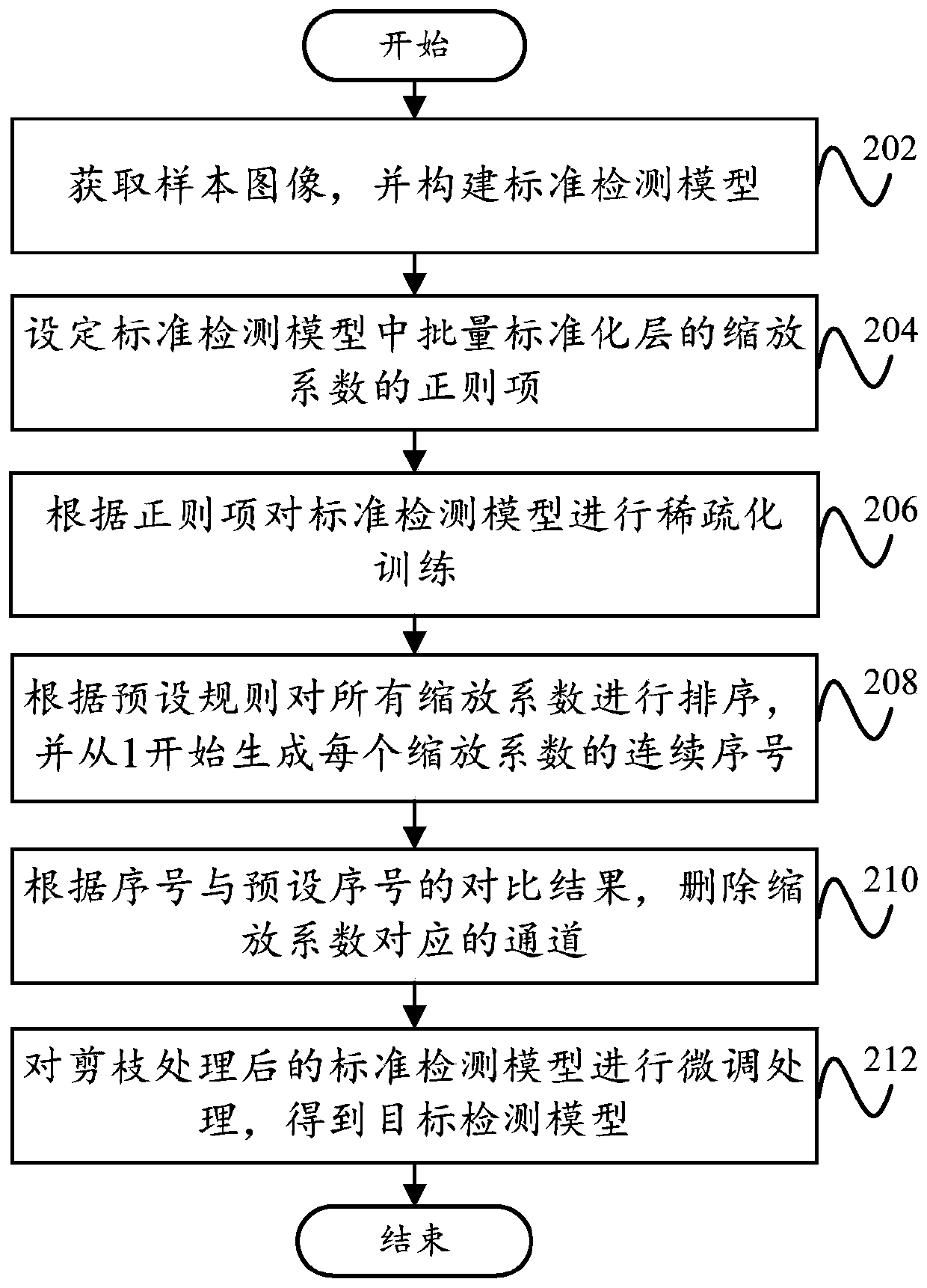

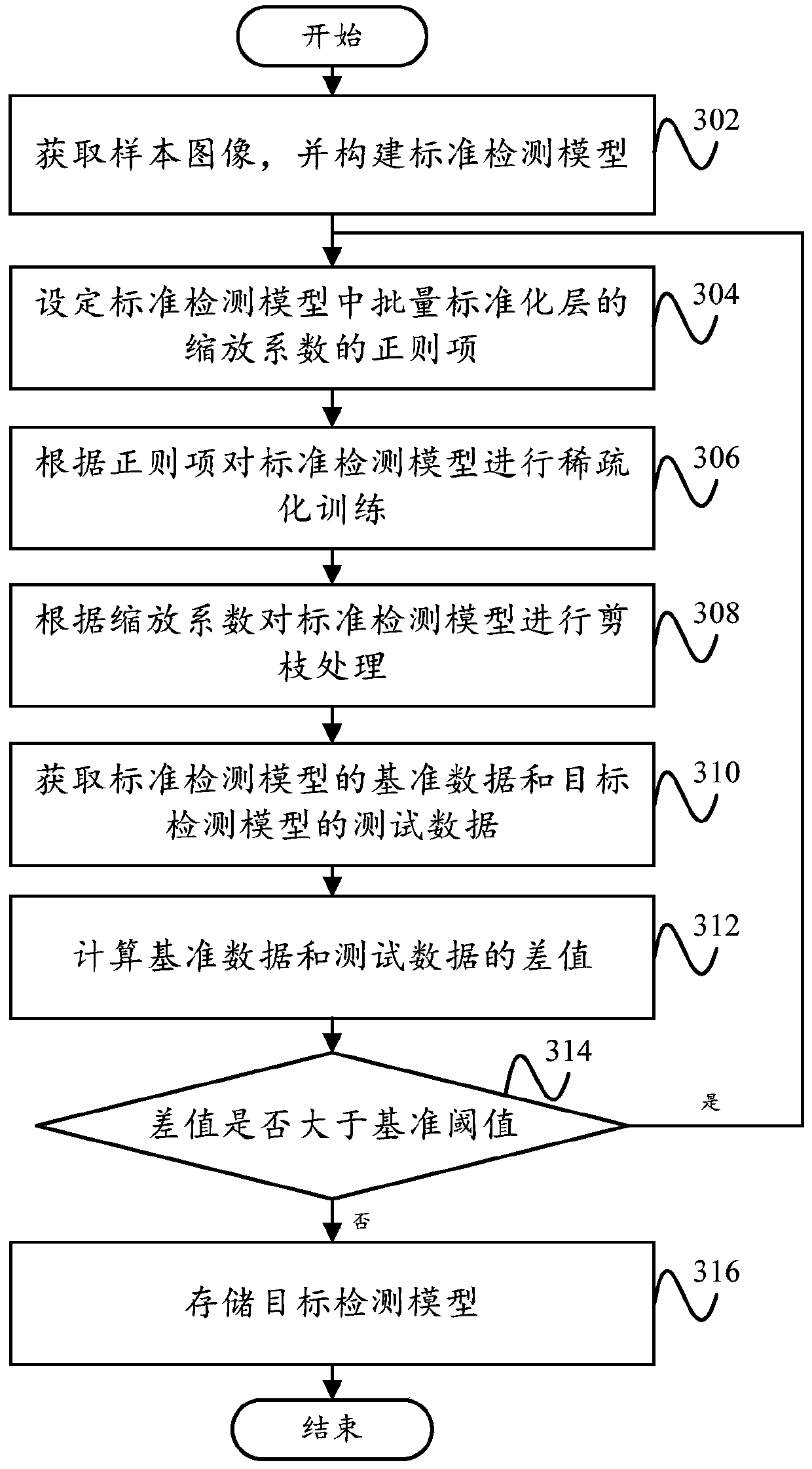

Model compression method and device, target detection equipment and storage medium

Pending; CN111325342A; Guaranteed detection accuracy; High input-output ratio; Neural architectures; Neural learning methods; Algorithm; Sample image

The invention provides a model compression method and device, target detection equipment and a storage medium. The compression method comprises the following steps: acquiring sample images and constructing a standard detection model; setting a regularization term on the scaling coefficients of the batch normalization layers in the standard detection model; performing sparse training on the standard detection model according to the regularization term; pruning the standard detection model according to the scaling coefficients; and fine-tuning the pruned standard detection model to obtain a target detection model. The compression method preserves the detection precision of the target detection model while greatly increasing its detection speed, which greatly improves the input-output ratio of practical engineering. It runs directly on mature frameworks without the support of a special algorithm library, which improves its generality.

Owner:ZICT TECH CO LTD
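
The regularization and pruning steps can be illustrated with a small sketch. It assumes an L1 penalty on the batch normalization scaling coefficients and a single global pruning ratio, which is one common way to realise this kind of scheme; the abstract does not state the exact form of the regularization term, and the function names are hypothetical.

```python
def l1_penalty(gammas, lam):
    """Regularization term added to the training loss: lam * sum(|gamma|)."""
    return lam * sum(abs(g) for layer in gammas for g in layer)

def l1_subgradient(g, lam):
    """Extra gradient the penalty contributes to one scaling coefficient."""
    if g > 0:
        return lam
    if g < 0:
        return -lam
    return 0.0

def channel_masks(gammas, prune_ratio):
    """Keep-masks per layer: prune the globally smallest |gamma| channels."""
    flat = sorted(abs(g) for layer in gammas for g in layer)
    k = int(len(flat) * prune_ratio)
    threshold = flat[k] if k < len(flat) else float("inf")
    return [[abs(g) >= threshold for g in layer] for layer in gammas]
```

During sparse training the subgradient pushes unimportant scaling coefficients toward zero; the masks then mark which channels survive, and the slimmer model is fine-tuned afterwards.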

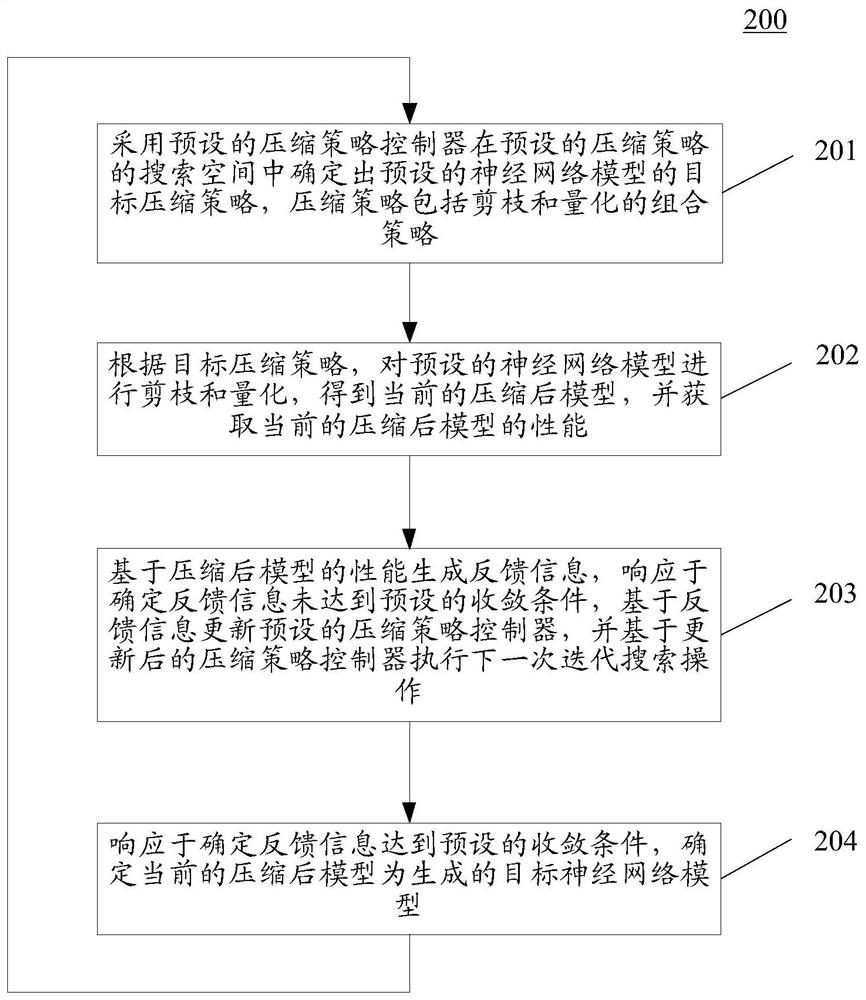

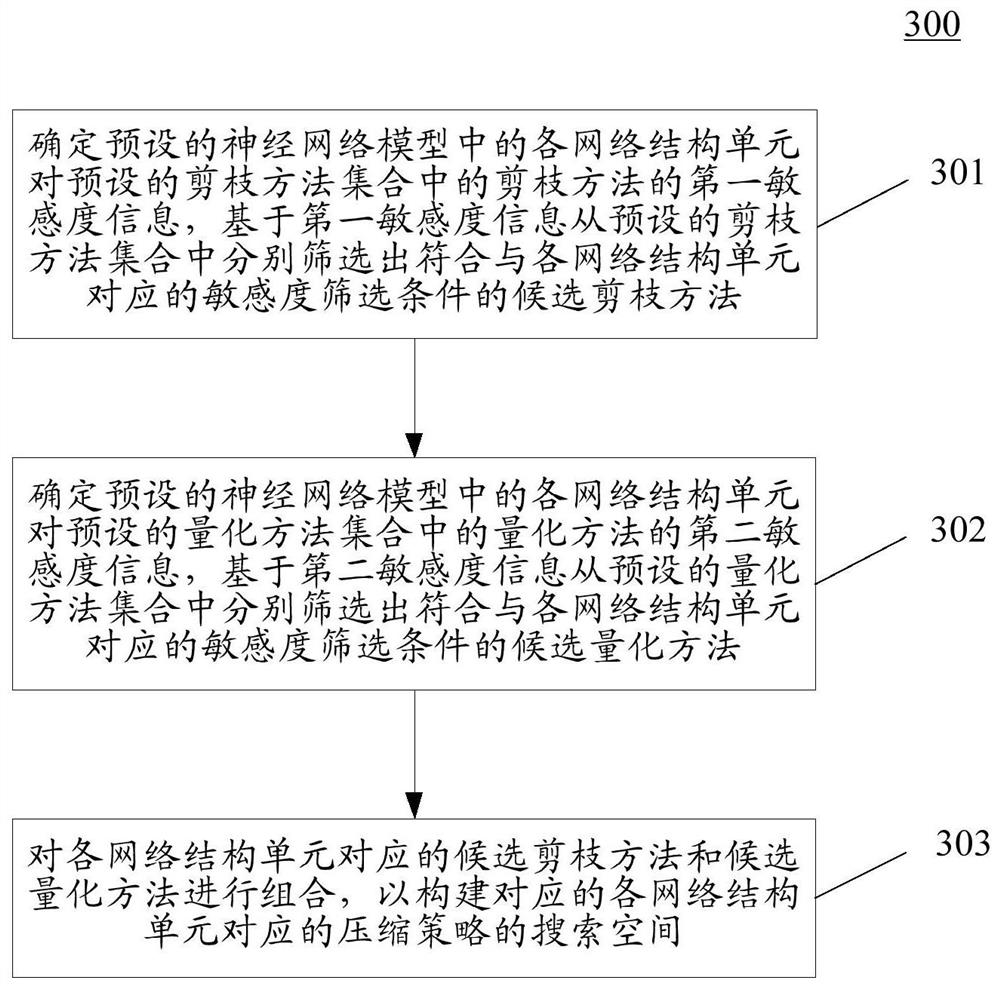

Method and device for generating neural network model, electronic equipment and storage medium

Active | CN111667054A | Guaranteed accuracy | Easy to understand | Neural architectures | Energy efficient computing | Imaging processing | Iterative search

The embodiment of the invention discloses a method and device for generating a neural network model, electronic equipment, and a storage medium, and relates to the technical fields of artificial intelligence, deep learning, and image processing. In the specific implementation scheme, the method executes a plurality of iterative search operations, where each iterative search operation comprises the following steps: determining a target compression strategy for a preset neural network model in the search space of preset compression strategies by using a preset compression strategy controller, where a compression strategy is a combined pruning and quantization strategy; pruning and quantizing the preset neural network model according to the target compression strategy to obtain a current compressed model, and obtaining the performance of the current compressed model; and generating feedback information based on the performance of the compressed model, and determining the current compressed model as the generated target neural network model in response to determining that the feedback information reaches a preset convergence condition. With this method, the optimal model compression strategy can be searched.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD
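The iterative search loop in the abstract above can be sketched as follows. The abstract does not describe how the controller works, so this sketch swaps in plain random sampling as a stand-in controller. The `evaluate` callback (which would prune, quantize, and score the model), the search-space keys, and the score threshold used as the convergence condition are all hypothetical.

```python
import random

def search_compression_strategy(evaluate, search_space, target_score,
                                max_iters=50, seed=0):
    """Sample joint pruning/quantization strategies until one converges.

    evaluate(strategy) is assumed to compress the model with the given
    strategy and return a scalar performance score (higher is better).
    Returns (best_strategy, best_score, iterations_used).
    """
    if max_iters < 1:
        raise ValueError("max_iters must be at least 1")
    rng = random.Random(seed)
    best_strategy, best_score = None, None
    for step in range(1, max_iters + 1):
        # Stand-in controller: pick one option per strategy dimension.
        strategy = {name: rng.choice(options)
                    for name, options in search_space.items()}
        score = evaluate(strategy)  # feedback for this iteration
        if best_score is None or score > best_score:
            best_strategy, best_score = strategy, score
        if score >= target_score:   # assumed convergence condition
            break
    return best_strategy, best_score, step
```

A learned controller would use the feedback to bias later samples toward promising strategies; the random version only shows the shape of the loop.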

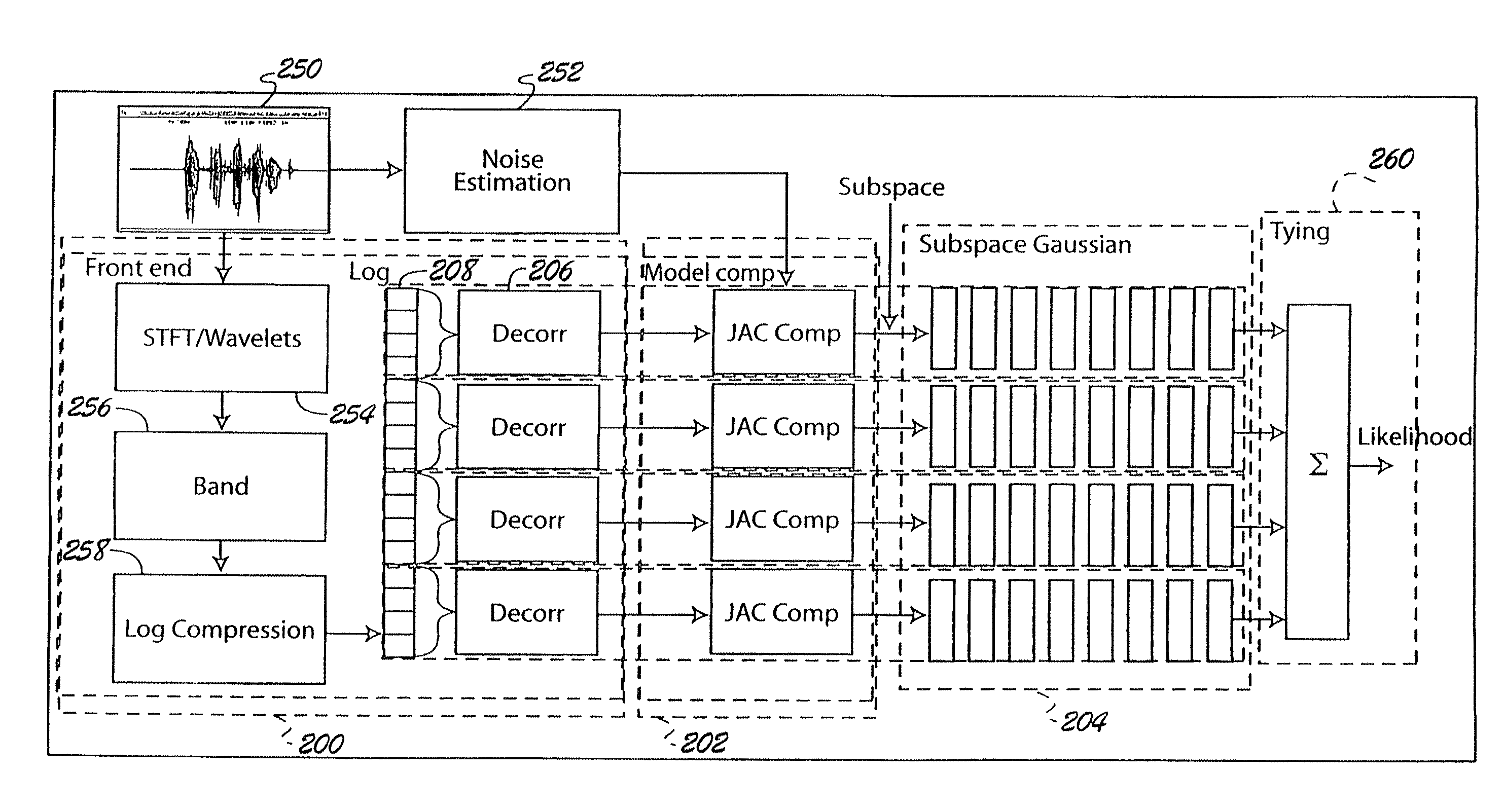

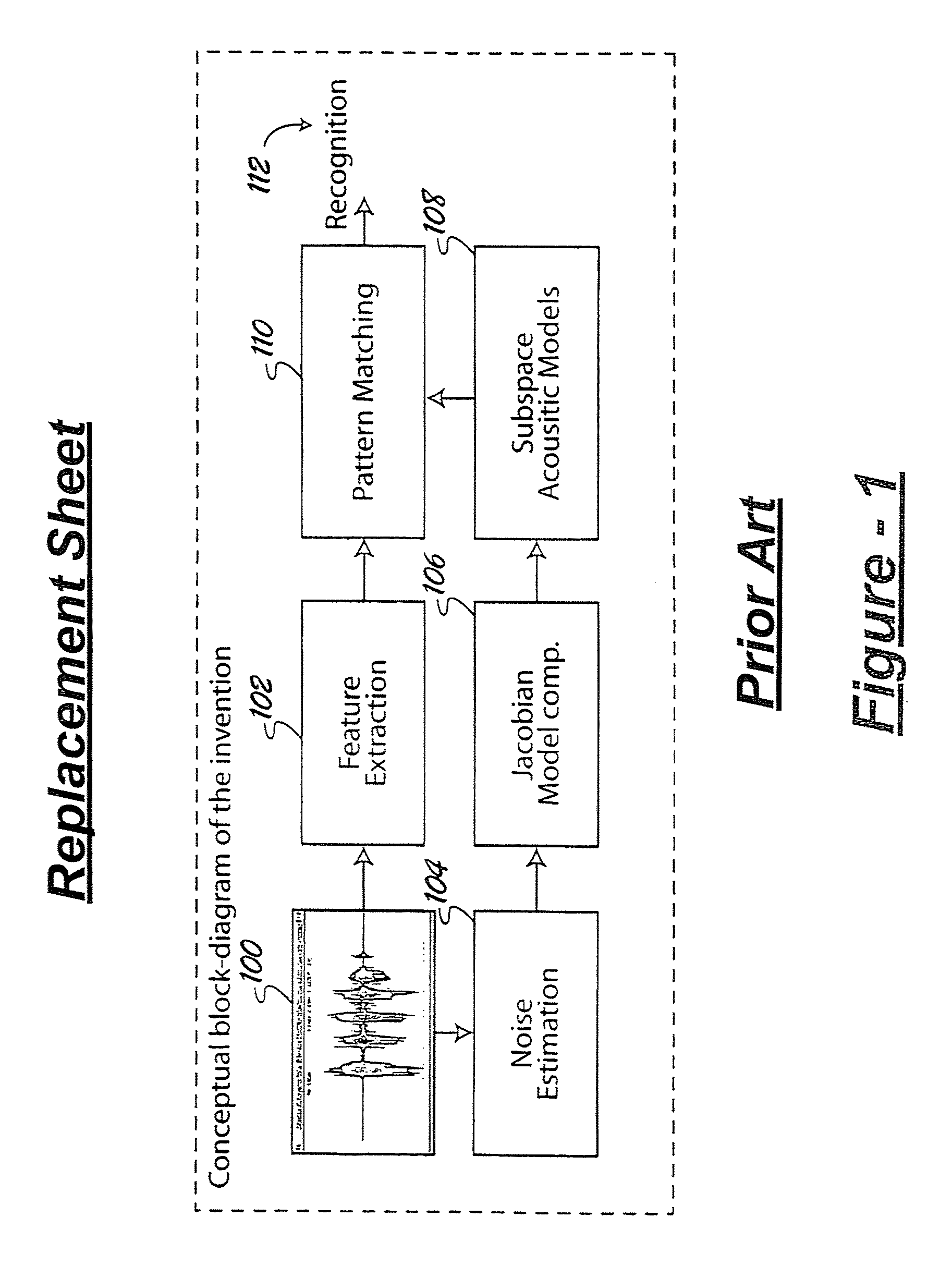

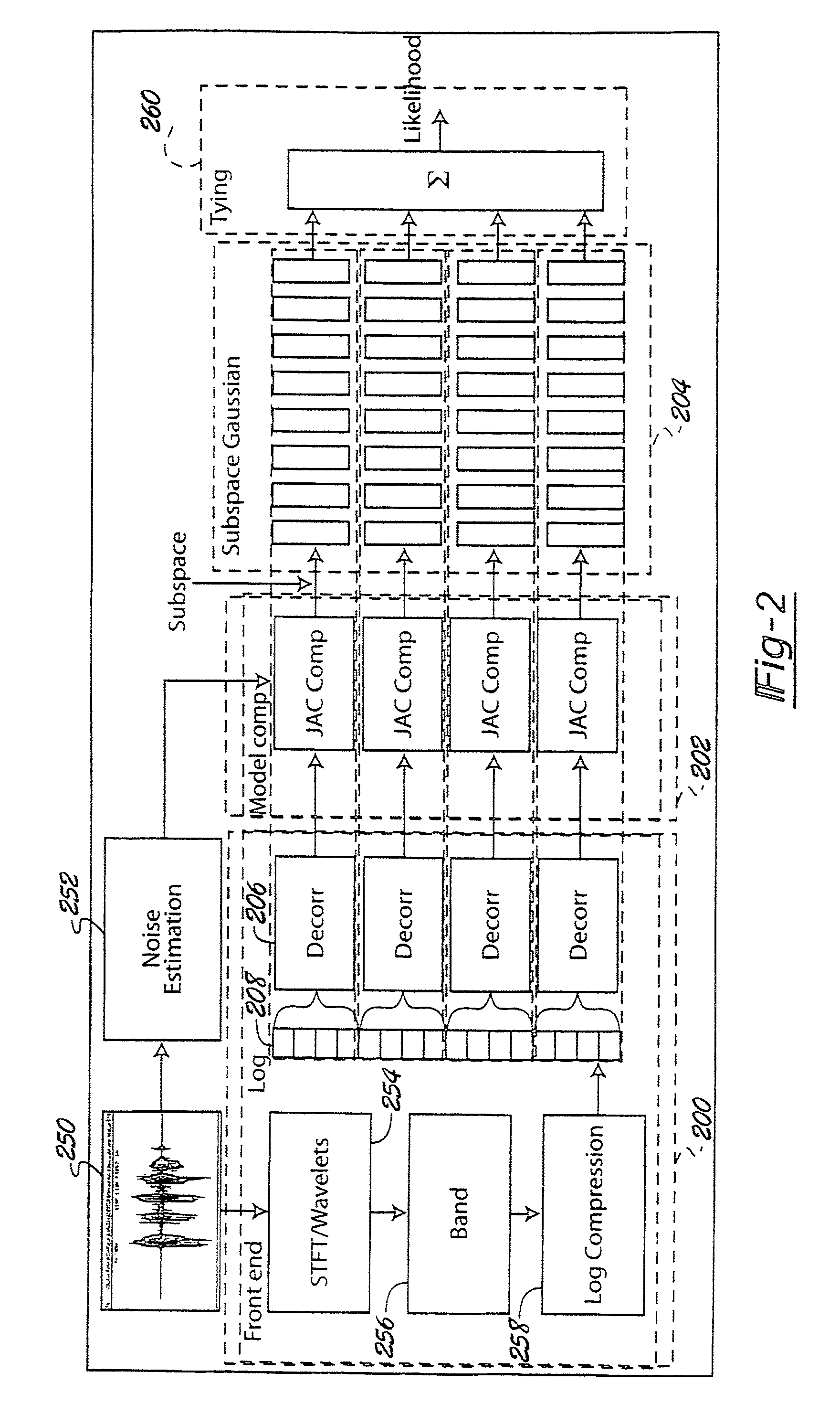

Block-diagonal covariance joint subspace tying and model compensation for noise robust automatic speech recognition

Inactive | US7729909B2 | Reduce size and computational complexity | Improve accuracy | Speech recognition | Covariance | Automatic speech

Owner:SOVEREIGN PEAK VENTURES LLC

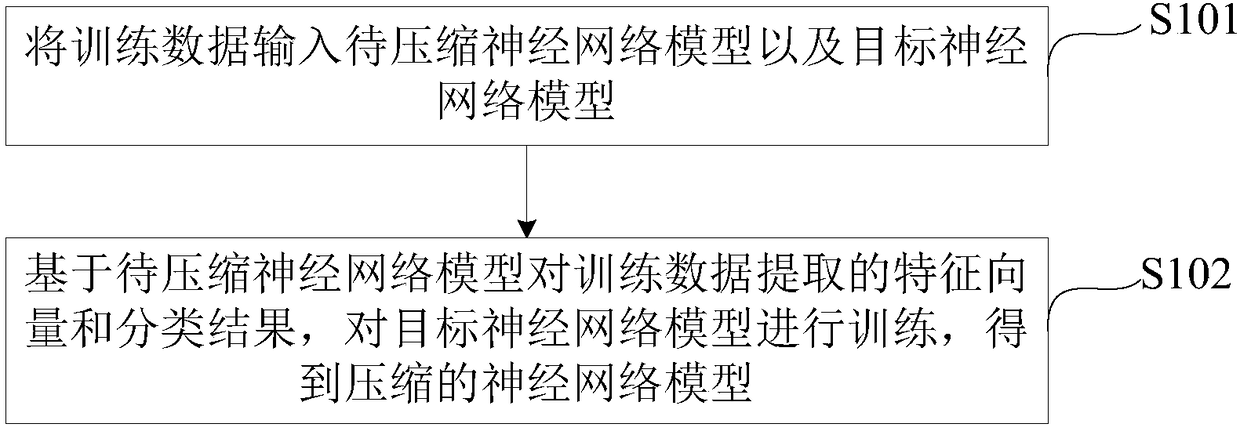

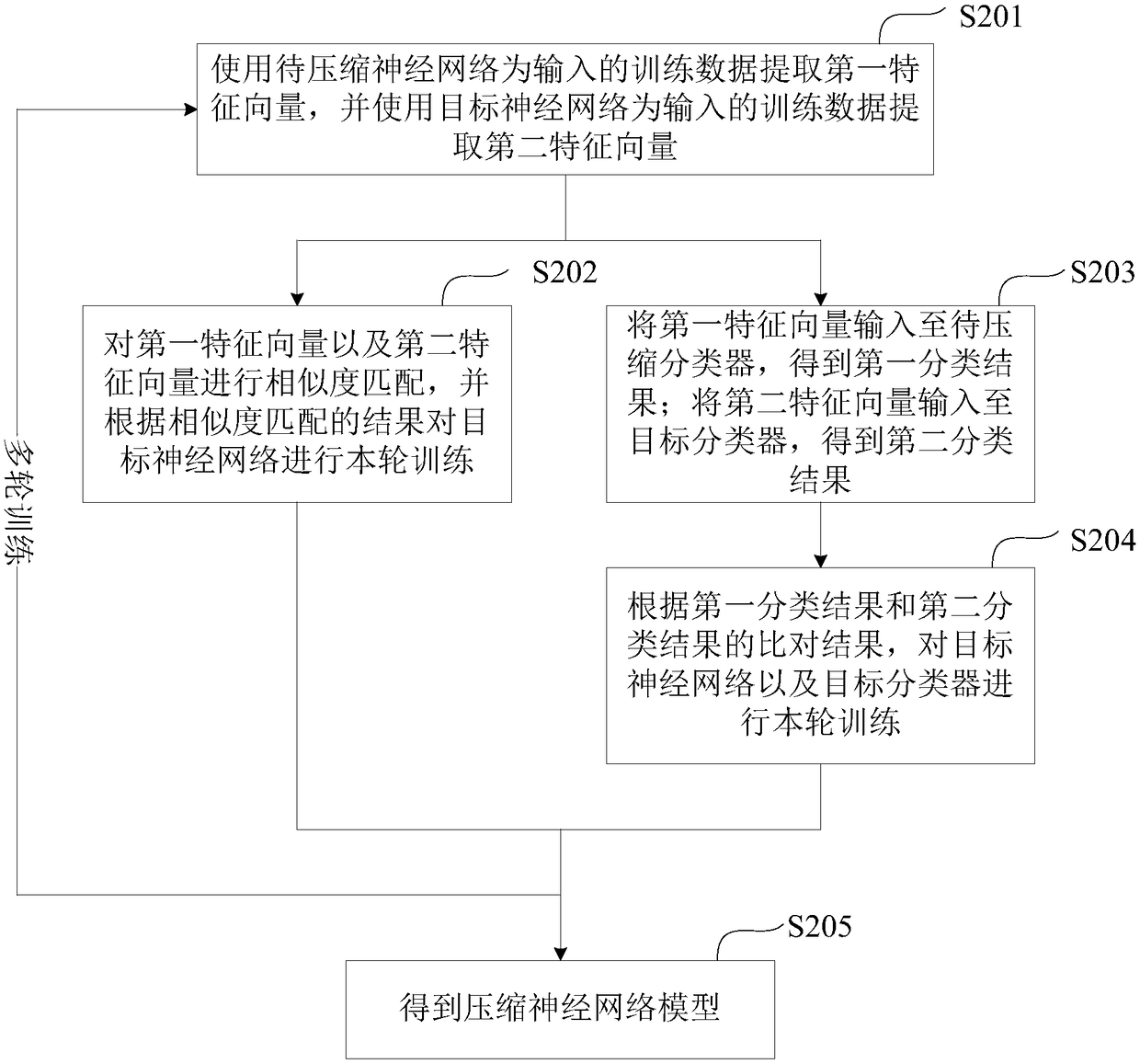

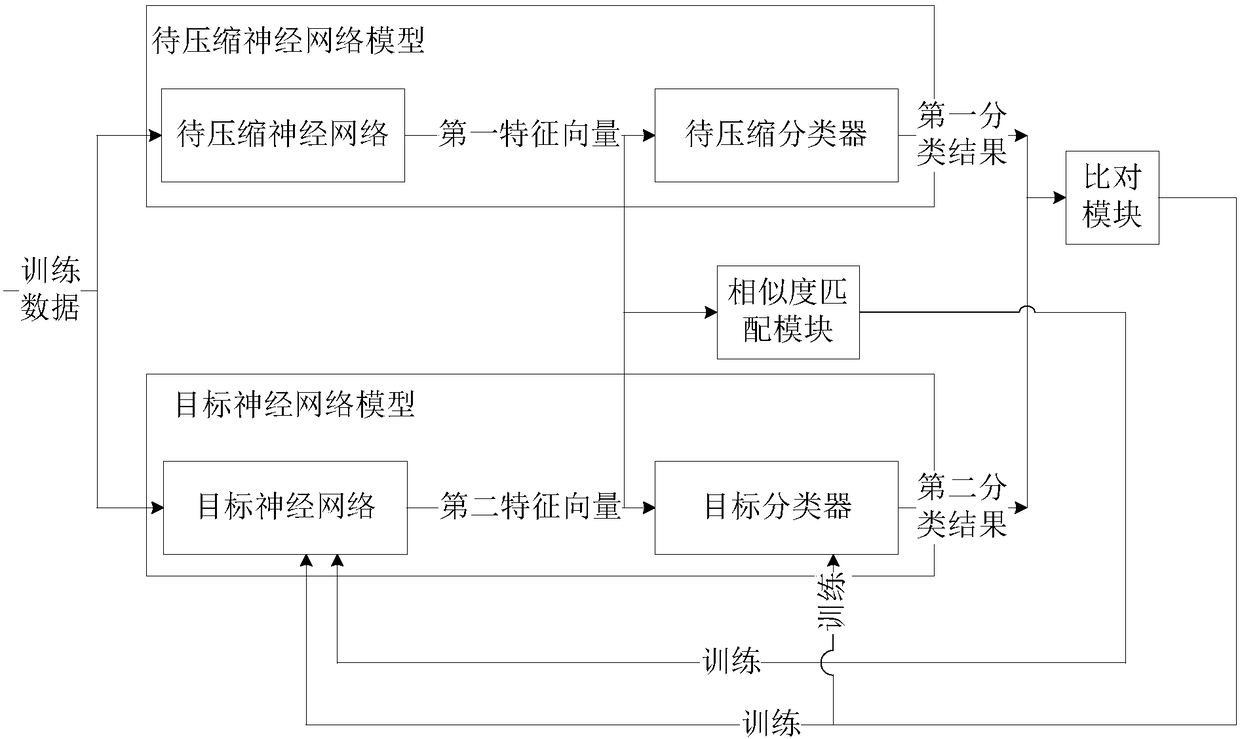

Neural network model compression method and apparatus

Active | CN108510083A | Without loss of precision | Guaranteed accuracy | Neural learning methods | Feature vector | Nerve network

The invention provides a neural network model compression method and apparatus. The method comprises the steps of inputting training data to a to-be-compressed neural network model and to a target neural network model; and training the target neural network model, based on the feature vector and the classification result that the to-be-compressed neural network model extracts for the training data, to obtain a compressed neural network model, where the parameter count of the target neural network model is smaller than that of the to-be-compressed neural network model. Because the feature vector and the classification result from the to-be-compressed model guide the training of the target model, the finally obtained compressed model and the to-be-compressed model give the same classification results for the same training data. The model compression process therefore causes no precision loss, the model size can be reduced while precision is preserved, and the demands on both precision and model size can be met.

Owner:GUOXIN YOUE DATA CO LTD
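One way to read the abstract above is as a distillation objective with two terms: one pulling the smaller model's feature vector toward the larger model's, and one pulling its classification output toward the larger model's. The sketch below assumes mean squared error for the feature term, KL divergence for the classification term, and a mixing weight `alpha`; none of these choices is stated in the abstract, and the function names are hypothetical.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_feat, student_feat,
                      teacher_logits, student_logits, alpha=0.5):
    """Weighted sum of a feature-matching term and an output-matching term.

    Assumes both feature vectors have the same length; in practice a
    projection layer is often needed when the widths differ.
    """
    feat_term = sum((t - s) ** 2
                    for t, s in zip(teacher_feat, student_feat)) / len(teacher_feat)
    p_t = softmax(teacher_logits)
    p_s = softmax(student_logits)
    kl_term = sum(pt * math.log(pt / max(ps, 1e-12))
                  for pt, ps in zip(p_t, p_s) if pt > 0)
    return alpha * feat_term + (1 - alpha) * kl_term
```

The loss is zero when the smaller model reproduces both the features and the output distribution of the larger model, which matches the abstract's goal of identical classification results on the training data.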

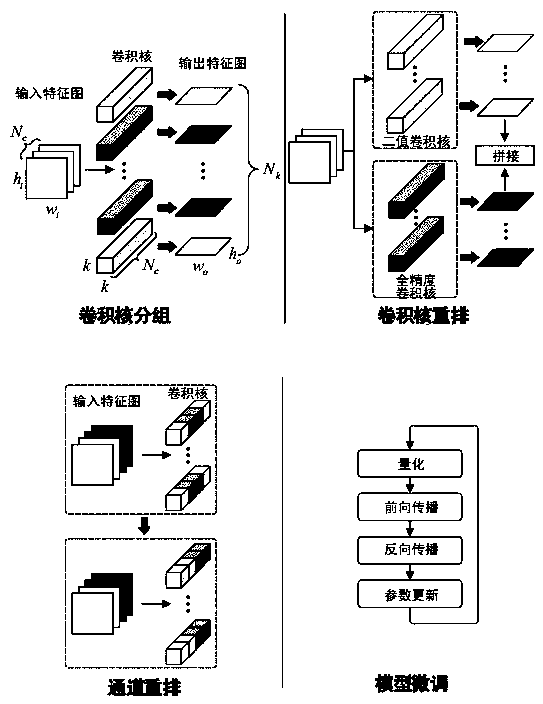

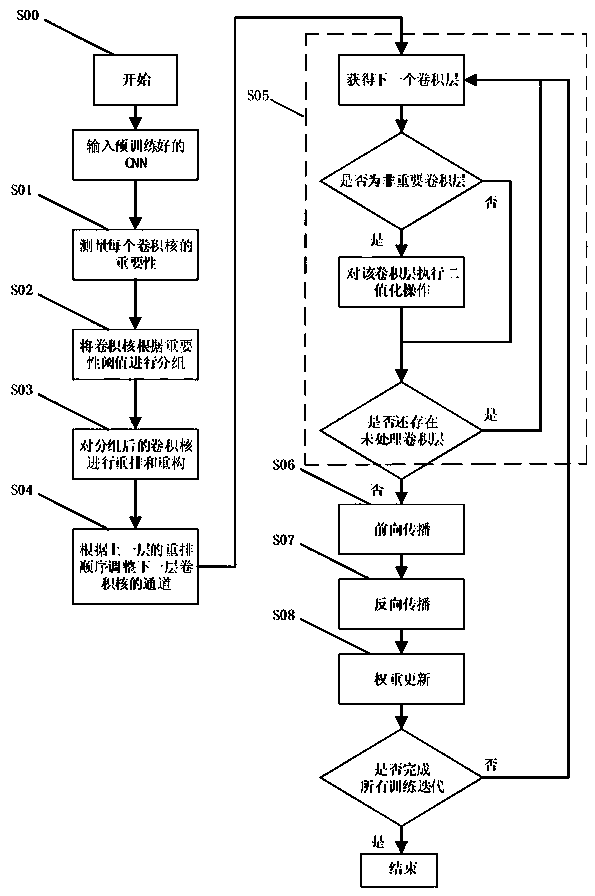

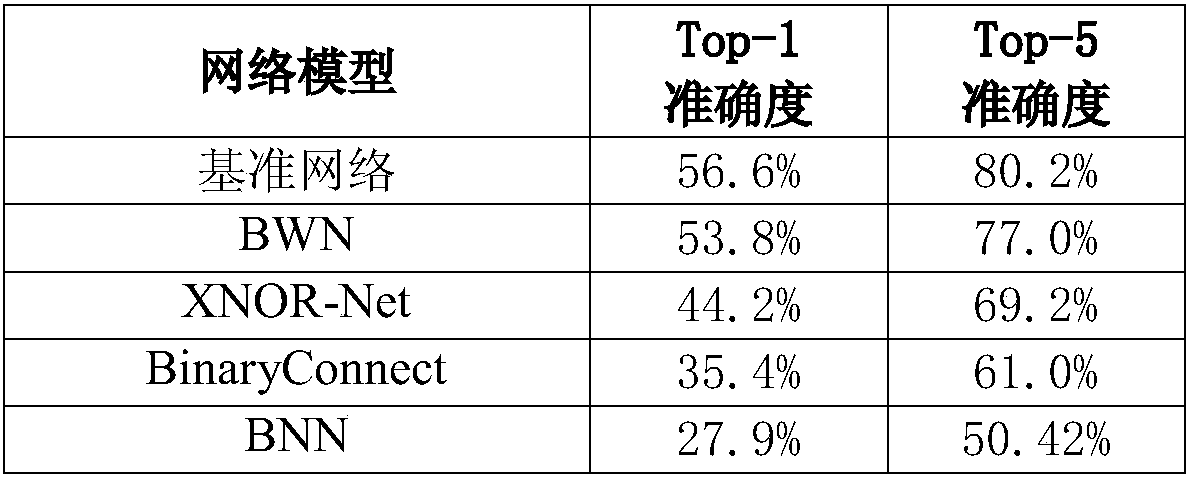

A partial binary convolution method suitable for embedded devices

The invention discloses a partial binary convolution method suitable for embedded devices, which belongs to the model compression methods of deep learning. The method comprises the following steps: Step 1, for each convolution layer of a given CNN, measuring the importance of each convolution kernel according to the statistics of its output feature map, and dividing the convolution kernels of each layer into two groups, important and non-important; Step 2, rearranging the two groups of convolution kernels in storage so that kernels of the same group occupy adjacent storage positions, and recording the rearrangement order to generate a new convolution layer; Step 3, changing the channel order of the convolution kernels of the next convolution layer according to the rearrangement order of Step 2; Step 4, fine-tuning the CNN processed in the above steps, applying binary quantization to the convolution kernels classified as non-important, and gradually recovering the accuracy of the whole network by iterating quantization and training. The invention preserves the accuracy of the given CNN on large data sets and reduces the computation and storage overhead of the convolutional neural network on embedded devices.

Owner:CHONGQING UNIV
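Steps 1 to 4 of the abstract above can be sketched on flattened kernels. The sketch assumes that the importance scores are already computed, that the important group is stored first, and that binarization keeps the sign of each weight scaled by the kernel's mean absolute value; the abstract only says "binary quantization", so that scaling rule is an assumption, as are the function names.

```python
def binarize(kernel):
    """Replace each weight by +/- the kernel's mean absolute value."""
    scale = sum(abs(w) for w in kernel) / len(kernel)
    return [scale if w >= 0 else -scale for w in kernel]

def partial_binarize(kernels, importance, num_important):
    """Reorder kernels by importance and binarize the non-important group.

    Returns (new_kernels, order), where order[j] is the original index of
    the kernel now stored at position j.
    """
    order = sorted(range(len(kernels)),
                   key=lambda i: importance[i], reverse=True)
    reordered = [kernels[i] for i in order]
    new_kernels = (reordered[:num_important]
                   + [binarize(k) for k in reordered[num_important:]])
    return new_kernels, order

def permute_input_channels(next_kernels, order):
    """Reorder the input channels of the next layer to match `order`.

    next_kernels[o][c] holds the weight(s) that output channel o applies
    to input channel c.
    """
    return [[k[c] for c in order] for k in next_kernels]
```

Storing each group contiguously lets an embedded runtime process the full-precision block and the binary block with separate routines, and the channel permutation of the next layer keeps the network's output unchanged by the reordering.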

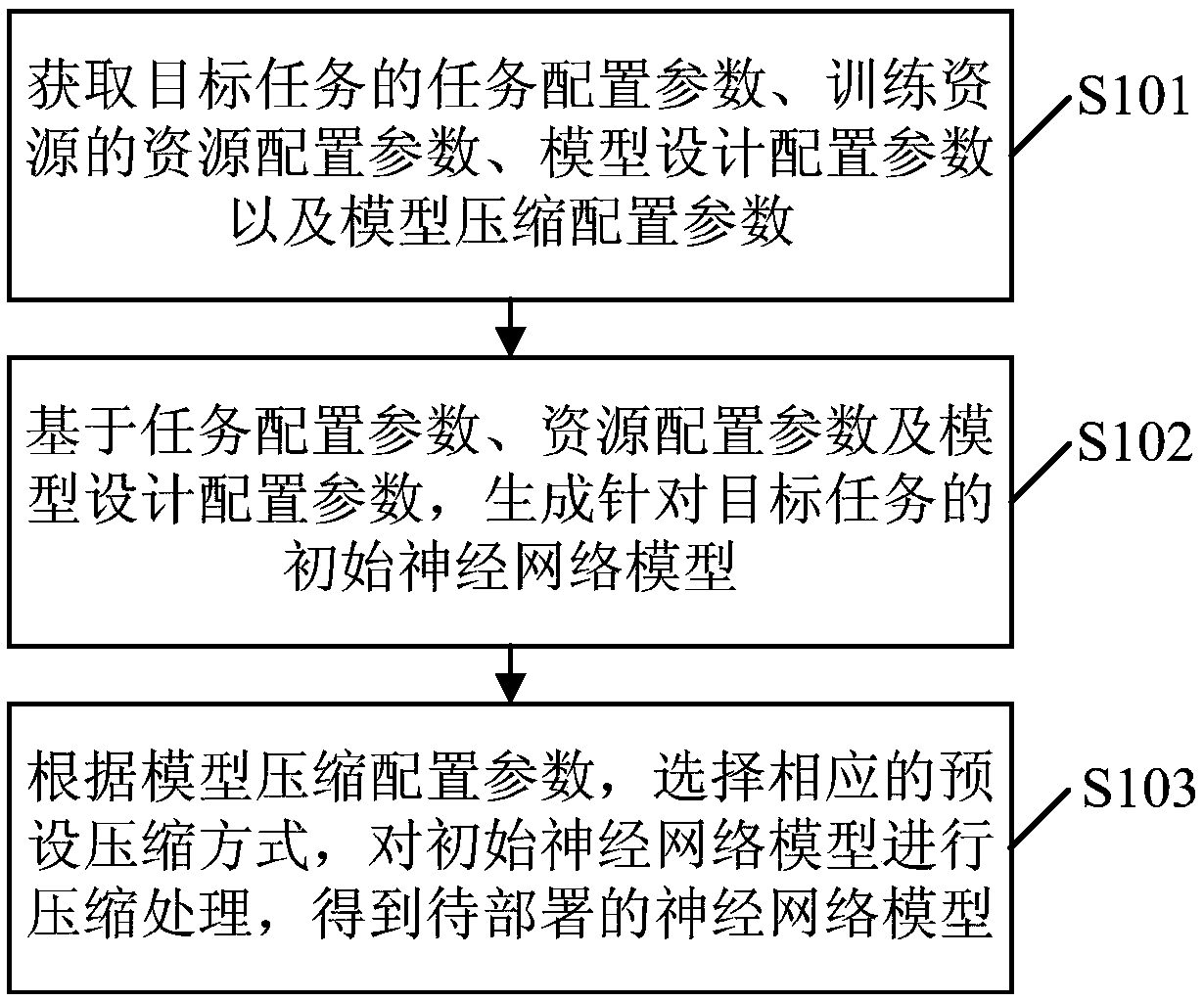

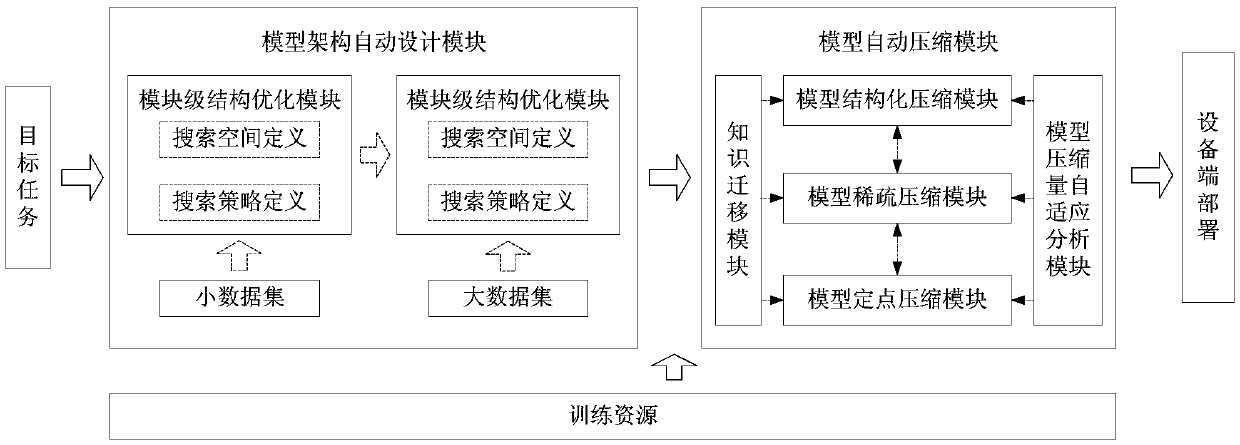

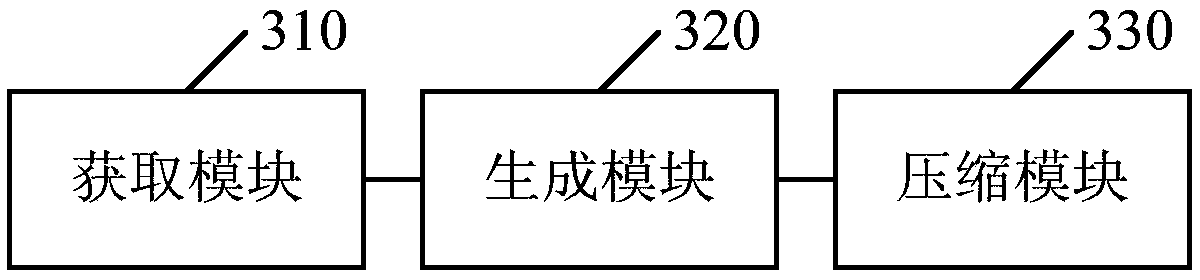

Neural network model determination method and device

Active | CN111144561A | Reduce development costs | No human intervention required | Energy efficient computing | Neural learning methods | Algorithm | Simulation

The embodiment of the invention provides a neural network model determination method and device. The method comprises the steps of obtaining a task configuration parameter of a target task, a resource configuration parameter of a training resource, a model design configuration parameter, and a model compression configuration parameter; generating an initial neural network model for the target task based on the task configuration parameter, the resource configuration parameter, and the model design configuration parameter; and selecting a corresponding preset compression mode according to the model compression configuration parameter and compressing the initial neural network model to obtain a to-be-deployed neural network model. This scheme improves development efficiency in the neural network model determination process.

Owner:HANGZHOU HIKVISION DIGITAL TECH

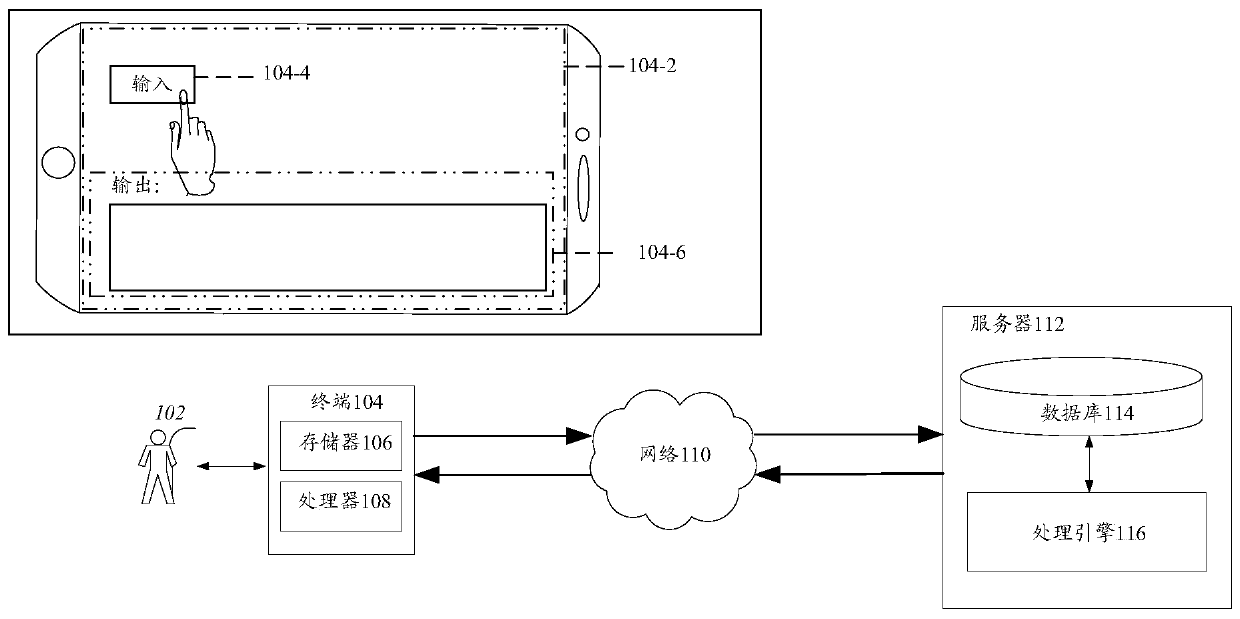

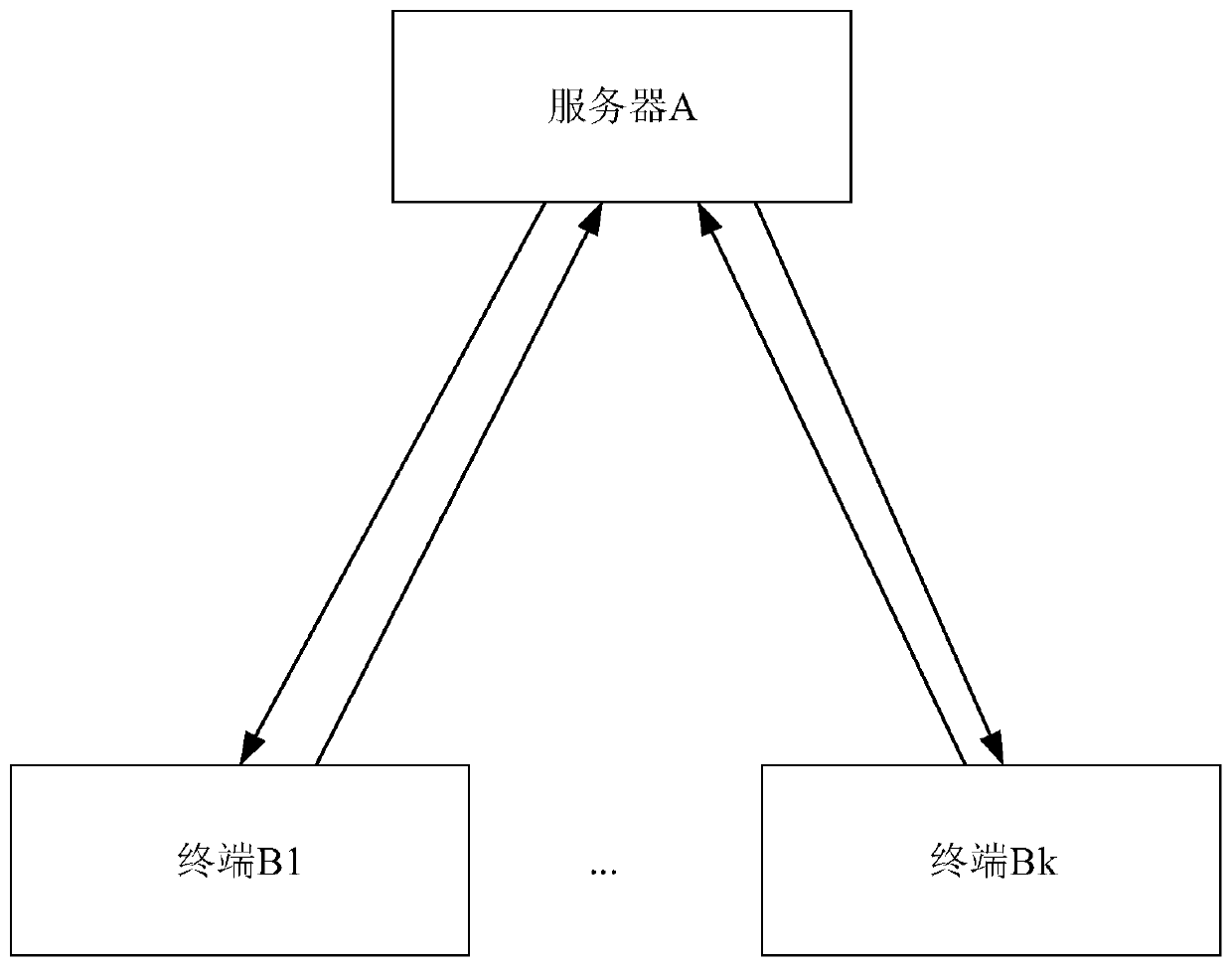

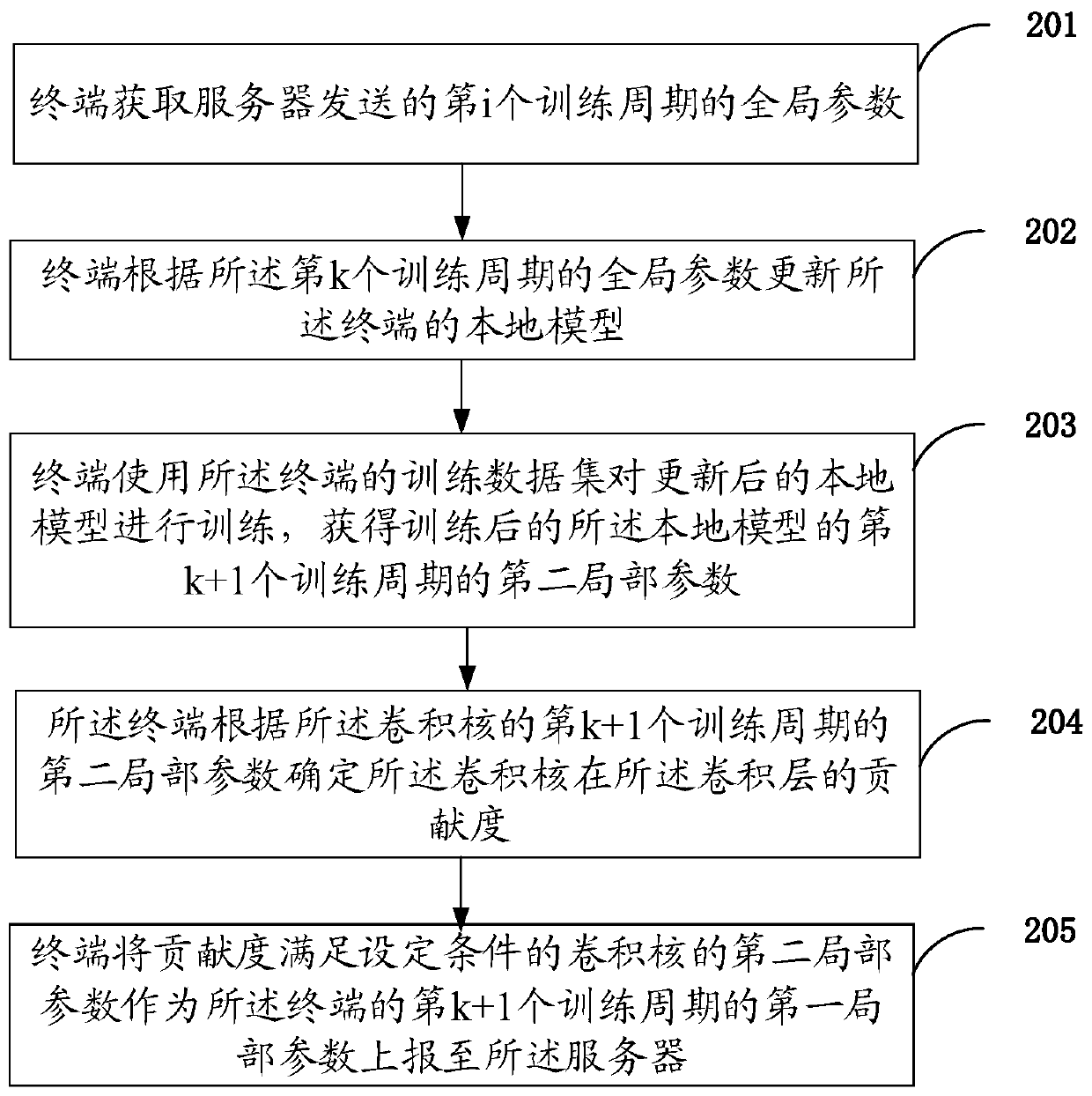

Model compression method and device

Active | CN110309847A | Guaranteed calculation accuracy | Improve compression performance | Character and pattern recognition | Neural architectures | Training period | Data set

The embodiment of the invention discloses a model compression method and device. The method comprises the following steps: a terminal obtains the global parameters of the kth training period sent by a server; the terminal updates its local model according to the global parameters of the kth training period; the terminal trains the updated local model on its own training data set to obtain the second local parameters of the (k + 1)th training period of the trained local model; for each convolution kernel of at least one convolution layer of the local model, the terminal determines the contribution degree of the convolution kernel within the convolution layer according to the kernel's second local parameters of the (k + 1)th training period; and the terminal reports to the server the second local parameters of the convolution kernels whose contribution degree meets a set condition, as the terminal's first local parameters of the (k + 1)th training period.

Owner:WEBANK (CHINA)
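The selective reporting step in the abstract above can be sketched as a filter over one layer's kernels. The abstract does not define the contribution degree or the set condition, so this sketch assumes the contribution of a kernel is its share of the layer's total L1 norm and that the condition is a minimum share; `select_kernels_to_report` and `min_share` are hypothetical names.

```python
def select_kernels_to_report(layer_kernels, min_share):
    """Pick the kernels of one layer that a terminal uploads to the server.

    layer_kernels[i] is the flattened weight list of kernel i after local
    training. Returns a dict mapping kernel index to its weights for every
    kernel whose share of the layer's total L1 norm is at least min_share.
    """
    norms = [sum(abs(w) for w in kernel) for kernel in layer_kernels]
    total = sum(norms)
    if total == 0:
        return {}  # nothing informative to report
    return {i: kernel
            for i, (kernel, norm) in enumerate(zip(layer_kernels, norms))
            if norm / total >= min_share}
```

Uploading only the selected kernels reduces the communication volume per training period; the server would need the kernel indices, as returned here, to merge the partial update into the global parameters.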


© 2025 PatSnap. All rights reserved.