Model compression method based on active learning of pruning order

A model compression method based on active learning, applied in the field of neural network models. It addresses three shortcomings of existing pruning approaches: the pruning strategy is too simple, the influence of the convolutional layers is not considered, and overall model accuracy is reduced. The method compresses model volume while minimizing the loss of model accuracy, and has strong prospects for practical application.


Detailed Description of the Embodiments

[0033] Taking the pruning of convolutional layers only as an example, the model compression method provided by the present invention is described in detail below.

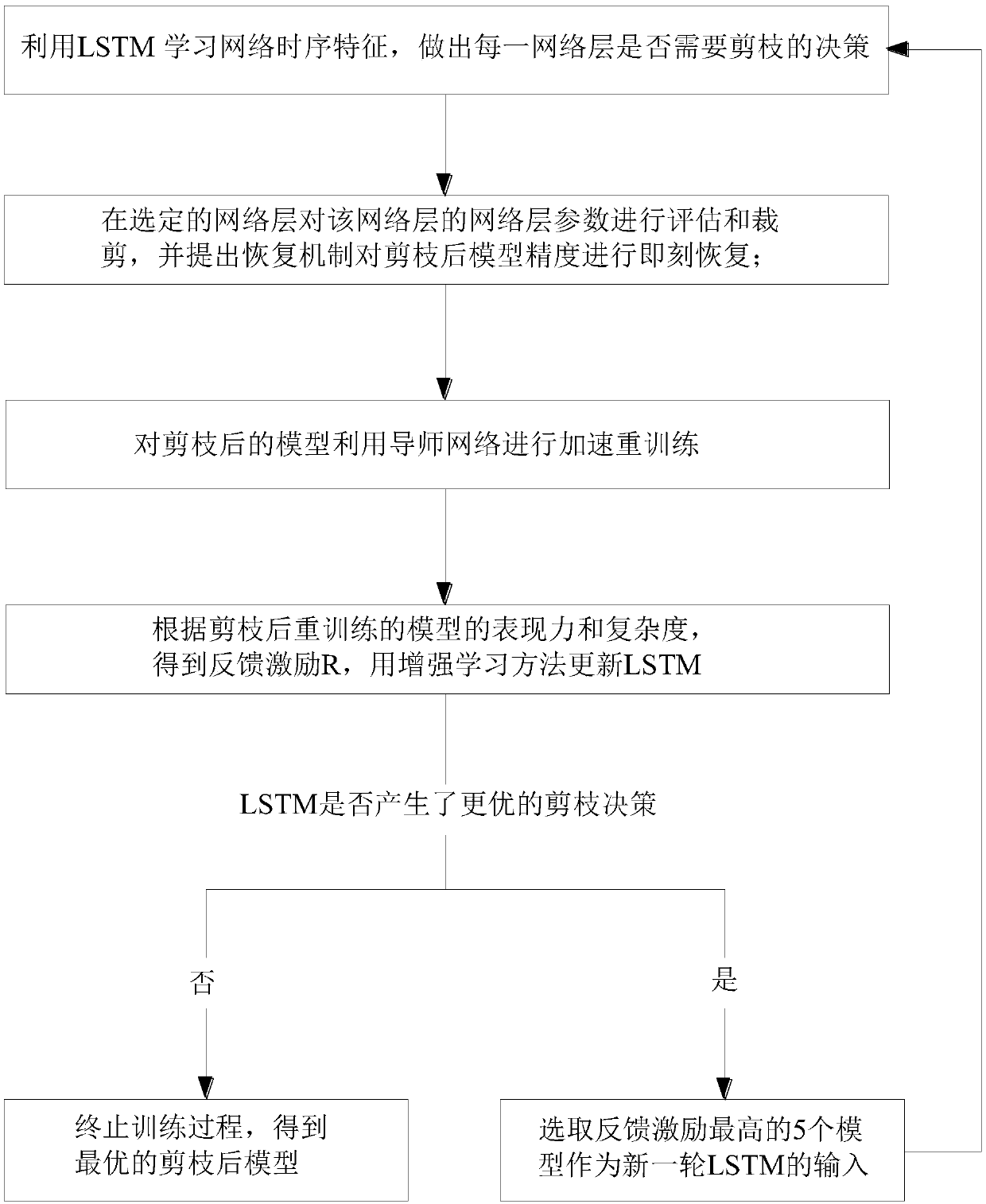

[0034] As shown in Figure 1, the model compression method based on active learning of pruning order includes the following steps:

[0035] S1. Use an LSTM (Long Short-Term Memory) network to learn the sequential characteristics of the network's layers and decide, for each convolutional layer, whether it should be pruned;

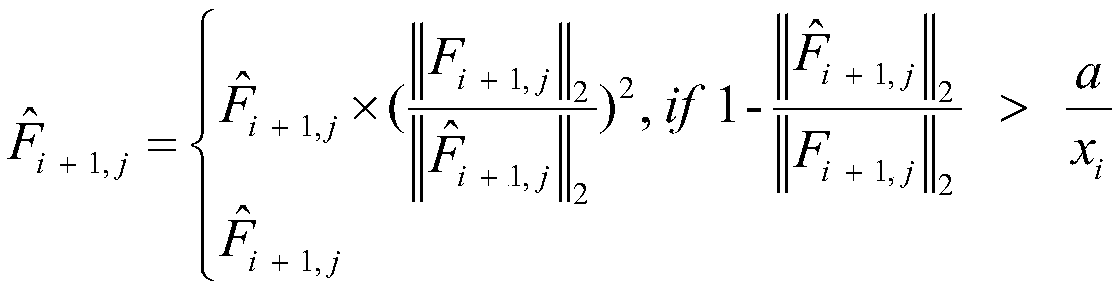

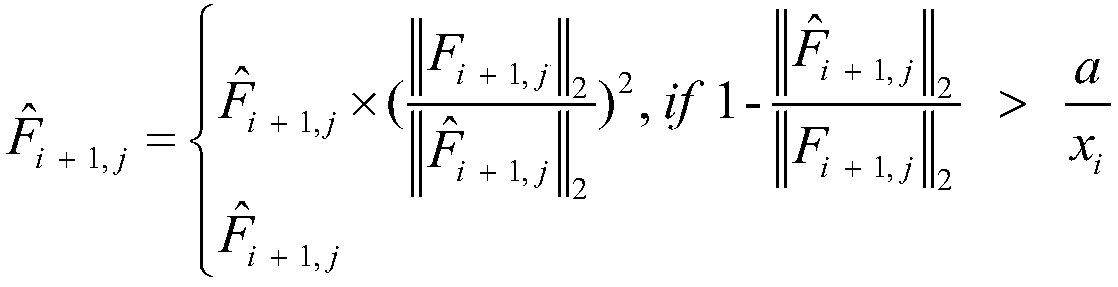
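The layer-selection decision in S1 can be sketched as an LSTM that reads one feature vector per convolutional layer, in network order, and emits a prune/keep decision for each layer. This is a minimal, untrained illustration under stated assumptions: the per-layer features, the hidden size, the linear decision head, the 0.5 threshold, and the function name `decide_layers_to_prune` are all hypothetical, and how the patent trains the LSTM is not shown here.

```python
import math
import random
from typing import List, Tuple


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


class LSTMCell:
    """Minimal LSTM cell in pure Python with small random (untrained) weights."""

    def __init__(self, input_size: int, hidden_size: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        n = input_size + hidden_size
        # One weight matrix (hidden_size x n) and one bias vector per gate:
        # input gate "i", forget gate "f", cell candidate "g", output gate "o".
        self.W = {gate: [[rng.uniform(-0.5, 0.5) for _ in range(n)] for _ in range(hidden_size)]
                  for gate in "ifgo"}
        self.b = {gate: [0.0] * hidden_size for gate in "ifgo"}

    def step(self, x: List[float], h: List[float], c: List[float]) -> Tuple[List[float], List[float]]:
        z = list(x) + list(h)  # concatenate current input with previous hidden state

        def affine(gate: str) -> List[float]:
            return [sum(w * v for w, v in zip(row, z)) + bias
                    for row, bias in zip(self.W[gate], self.b[gate])]

        i = [sigmoid(a) for a in affine("i")]
        f = [sigmoid(a) for a in affine("f")]
        g = [math.tanh(a) for a in affine("g")]
        o = [sigmoid(a) for a in affine("o")]
        c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
        h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
        return h_new, c_new


def decide_layers_to_prune(layer_features: List[List[float]], hidden_size: int = 8,
                           threshold: float = 0.5, seed: int = 0) -> Tuple[List[float], List[bool]]:
    """Hypothetical S1 controller: returns a pruning probability and a
    prune (True) / keep (False) decision for every convolutional layer."""
    cell = LSTMCell(len(layer_features[0]), hidden_size, seed)
    rng = random.Random(seed + 1)
    w_out = [rng.uniform(-0.5, 0.5) for _ in range(hidden_size)]  # linear decision head
    h, c = [0.0] * hidden_size, [0.0] * hidden_size
    probs: List[float] = []
    decisions: List[bool] = []
    for x in layer_features:
        h, c = cell.step(x, h, c)  # hidden state carries context from earlier layers
        p = sigmoid(sum(w * hk for w, hk in zip(w_out, h)))
        probs.append(p)
        decisions.append(p > threshold)
    return probs, decisions


# Hypothetical per-layer features: [relative depth, out_channels / 512, kernel_size / 7]
features = [[0.0, 64 / 512, 3 / 7], [0.5, 128 / 512, 3 / 7], [1.0, 256 / 512, 3 / 7]]
probs, prune = decide_layers_to_prune(features)
```

The recurrent state is what lets the decision for a later layer depend on the layers before it, which is the point of using a sequence model here rather than scoring each layer independently.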

[0036] S2. Evaluate and prune the convolution kernels in the selected convolutional layers. The kernel evaluation method takes into account the correlation between adjacent (preceding and following) convolutional layers and uses a non-data-driven method to quickly evaluate the importance of each kernel. A recovery mechanism is also proposed to restore model accuracy immediately after pruning;
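One way to picture a data-free importance score that couples two adjacent layers is sketched below. The specific formula is an assumption, not the patent's: filter `j` of the current layer is scored by its own L1 norm multiplied by the L1 norm of the input-channel-`j` slices it feeds in the next layer, so a filter is judged both by its weights and by how strongly the following layer uses its output. The function names are hypothetical, biases and batch-norm parameters are ignored, and the recovery mechanism is not sketched because the source gives no detail on it.

```python
from typing import List, Sequence, Set, Tuple


def l1(tensor) -> float:
    """Sum of absolute values over an arbitrarily nested list of numbers."""
    if isinstance(tensor, (int, float)):
        return abs(tensor)
    return sum(l1(t) for t in tensor)


def filter_importance(w_cur: Sequence, w_next: Sequence) -> List[float]:
    """Hypothetical score for output filter j of the current layer.

    w_cur has shape [out_c][in_c][k][k]; w_next has shape [out_c2][out_c][k][k].
    Score = L1(filter j) * L1(all next-layer weights reading channel j).
    """
    scores = []
    for j, filt in enumerate(w_cur):
        downstream = sum(l1(out_filter[j]) for out_filter in w_next)
        scores.append(l1(filt) * downstream)
    return scores


def prune_filters(w_cur: Sequence, w_next: Sequence, n_prune: int) -> Tuple[list, list, List[int]]:
    """Drop the n_prune lowest-scoring filters from the current layer and the
    matching input channels from the next layer, keeping shapes consistent."""
    scores = filter_importance(w_cur, w_next)
    order = sorted(range(len(scores)), key=lambda j: scores[j])
    drop: Set[int] = set(order[:n_prune])
    keep = [j for j in range(len(w_cur)) if j not in drop]
    new_cur = [w_cur[j] for j in keep]
    new_next = [[out_filter[j] for j in keep] for out_filter in w_next]
    return new_cur, new_next, sorted(drop)


# Toy example with 1x1 kernels: 3 filters (1 input channel) feeding 2 next-layer filters.
w_cur = [[[[1.0]]], [[[0.1]]], [[[2.0]]]]
w_next = [[[[1.0]], [[1.0]], [[1.0]]], [[[0.5]], [[0.5]], [[0.5]]]]
new_cur, new_next, dropped = prune_filters(w_cur, w_next, n_prune=1)
```

Because no forward pass over training data is needed, a score of this kind can be computed for every layer in one sweep over the weights, which matches the "quickly evaluate" goal stated in S2.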

[0037] S3. Use a tutor (teacher) network to accelerate retraining of the pruned model;
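If the tutor network in S3 is used the way a teacher is used in standard knowledge distillation (an assumption; the source does not give the loss), retraining the pruned model could minimise a loss like the one sketched below. It is the well-known Hinton-style distillation loss, swapped in here for illustration: a temperature-softened KL term pulling the pruned (student) model toward the tutor's outputs, plus ordinary cross-entropy on the true label. The temperature `T = 4.0`, weight `alpha = 0.7`, and the function names are hypothetical choices.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: List[float], tutor_logits: List[float], label: int,
                      temperature: float = 4.0, alpha: float = 0.7) -> float:
    """Per-sample loss: alpha * T^2 * KL(tutor_T || student_T) + (1 - alpha) * CE(label, student)."""
    p_t = softmax(tutor_logits, temperature)
    p_s = softmax(student_logits, temperature)
    # KL divergence between the softened tutor and student distributions.
    kl = sum(pt * (math.log(pt) - math.log(ps)) for pt, ps in zip(p_t, p_s) if pt > 0)
    # Standard cross-entropy against the ground-truth label at temperature 1.
    hard = -math.log(softmax(student_logits)[label])
    # T^2 keeps the soft-target gradient scale comparable across temperatures.
    return alpha * temperature ** 2 * kl + (1 - alpha) * hard


same = distillation_loss([2.0, 0.0, 0.0], [2.0, 0.0, 0.0], label=0)
diff = distillation_loss([2.0, 0.0, 0.0], [0.0, 2.0, 0.0], label=0)
```

The soft targets carry the tutor's relative confidence across all classes, which is the usual explanation for why distillation recovers accuracy in fewer epochs than retraining on hard labels alone.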

[0038] S4. Calculate the expressive power a...
