112 results about "Convolution theorem" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

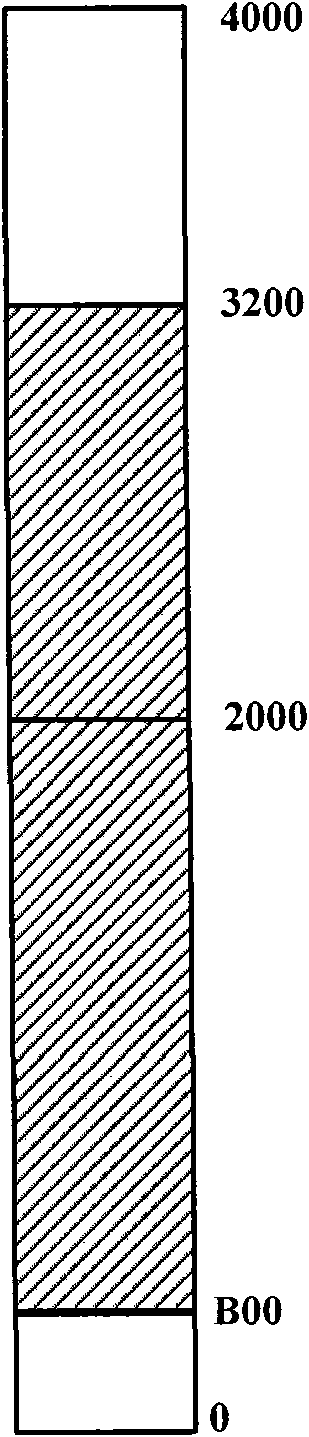

Application Year

Inventor

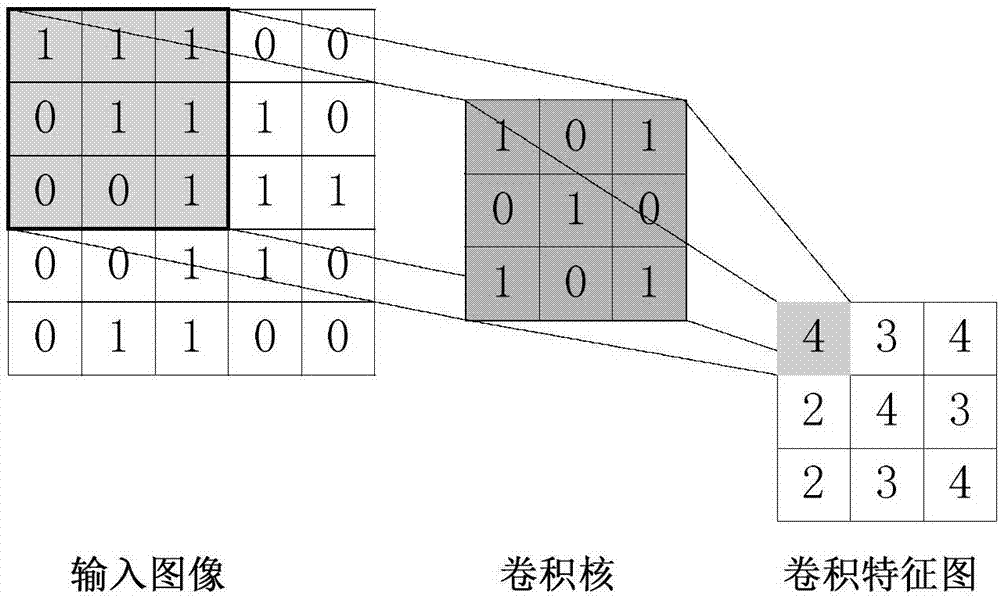

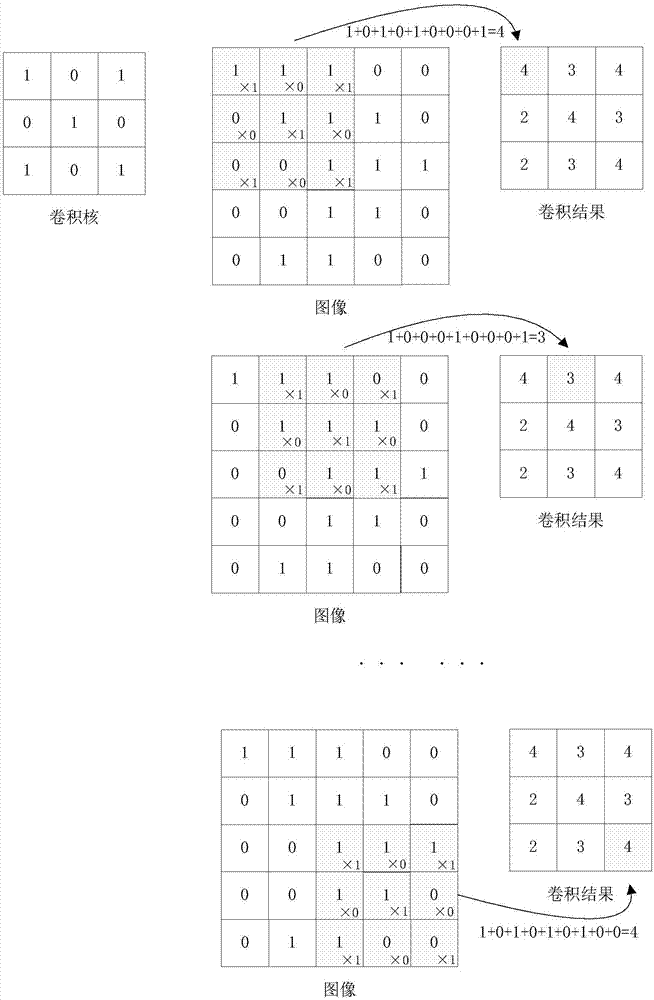

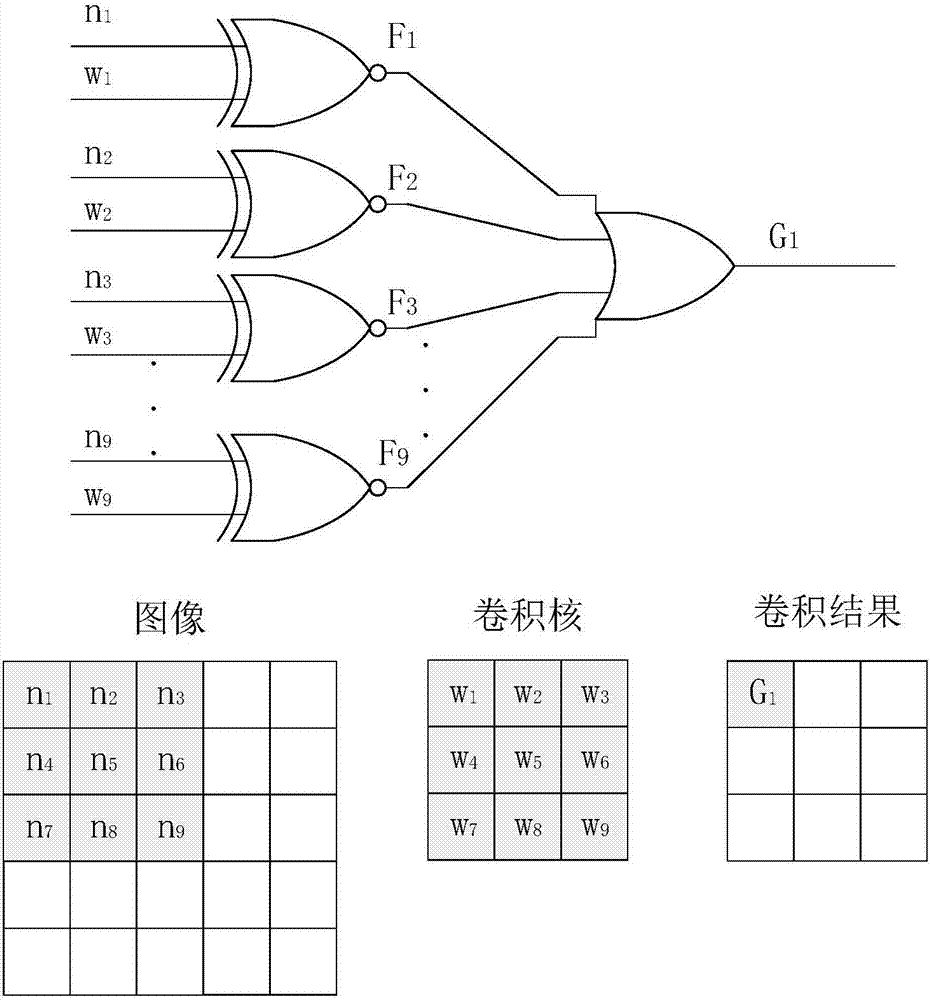

In mathematics, the convolution theorem states that under suitable conditions the Fourier transform of a convolution of two signals is the pointwise product of their Fourier transforms. In other words, convolution in one domain (e.g., time domain) equals point-wise multiplication in the other domain (e.g., frequency domain). Versions of the convolution theorem are true for various Fourier-related transforms.
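The statement above can be checked numerically. The following is a minimal sketch, assuming finite 1-D sequences and a naive discrete Fourier transform; the function names (`dft`, `convolve_direct`, `convolve_via_dft`) are illustrative and do not come from any of the patents listed here. Zero-padding both inputs to the full output length is what makes the circular convolution implied by the DFT agree with ordinary linear convolution.

```python
import cmath

def dft(x, inverse=False):
    """Naive O(n^2) discrete Fourier transform (kept simple for clarity)."""
    n = len(x)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        s = sum(x[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n) for t in range(n))
        out.append(s / n if inverse else s)
    return out

def convolve_direct(x, h):
    """Linear convolution computed from the defining sum."""
    y = [0.0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        for j, hj in enumerate(h):
            y[i + j] += xi * hj
    return y

def convolve_via_dft(x, h):
    """Same result via the convolution theorem: transform, multiply, invert."""
    n = len(x) + len(h) - 1                      # zero-pad so circular == linear
    xp = list(x) + [0.0] * (n - len(x))
    hp = list(h) + [0.0] * (n - len(h))
    product = [a * b for a, b in zip(dft(xp), dft(hp))]   # pointwise product
    return [v.real for v in dft(product, inverse=True)]

x = [1.0, 2.0, 3.0, 4.0]
h = [0.5, -1.0, 0.25]
direct = convolve_direct(x, h)
via_dft = convolve_via_dft(x, h)
```

Both lists agree up to floating-point rounding. In practice a fast Fourier transform would replace the naive `dft`, which is where the speed advantage for long sequences comes from.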

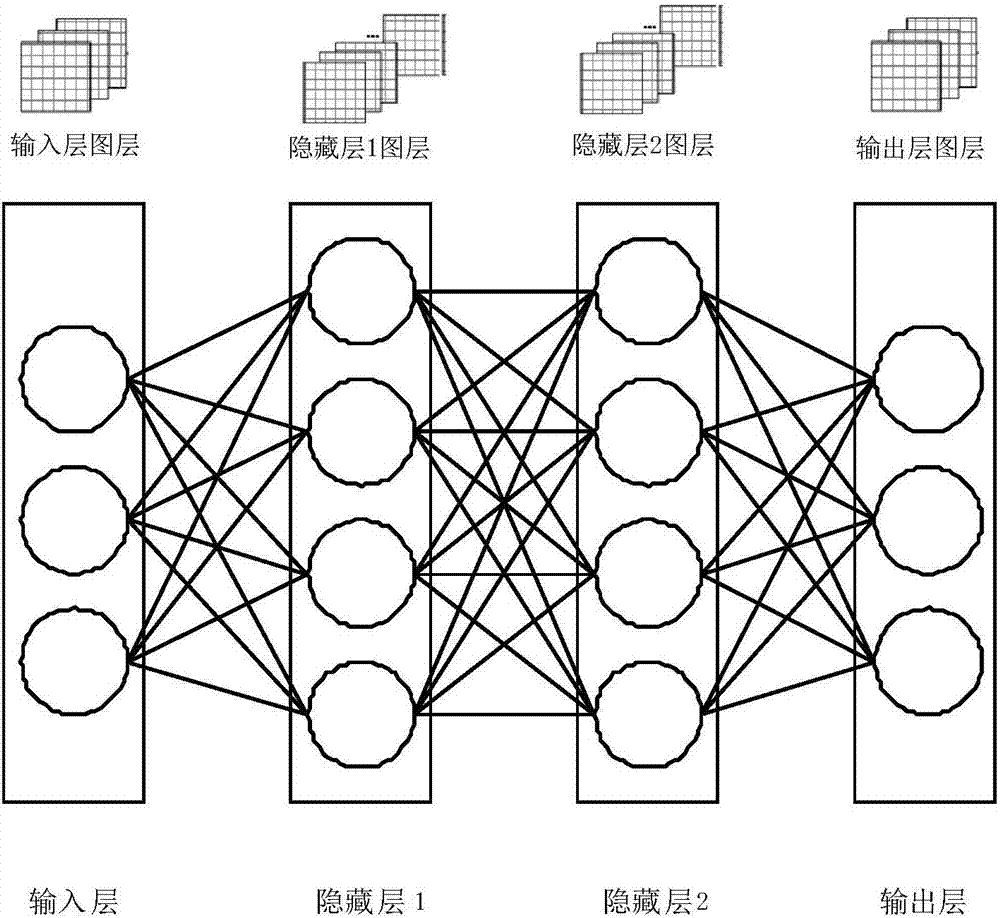

Small target rapid detection method based on deep convolution neural network

Inactive | CN106599827A | Increase the number of | Reduce computational complexity | Biological neural network models | Scene recognition | Nerve network | Computation complexity

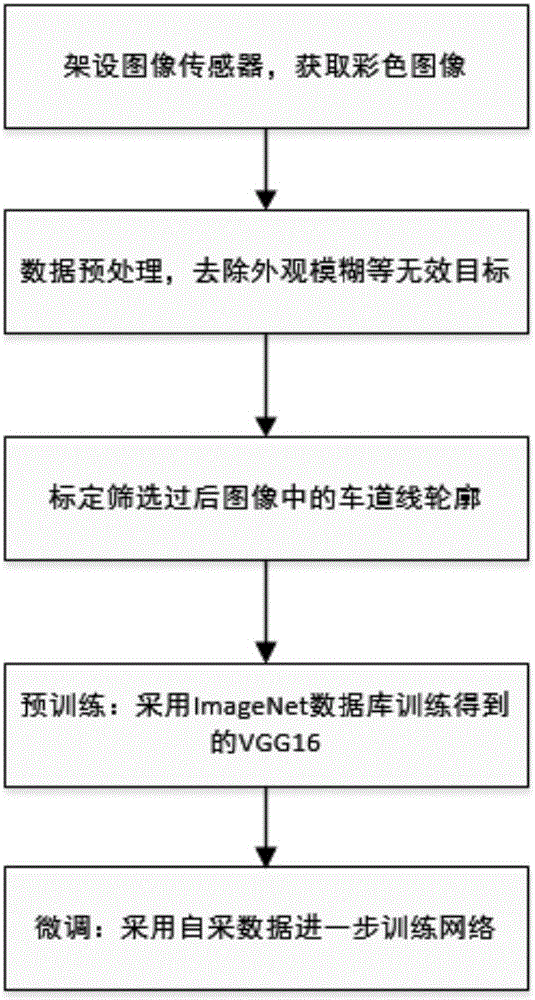

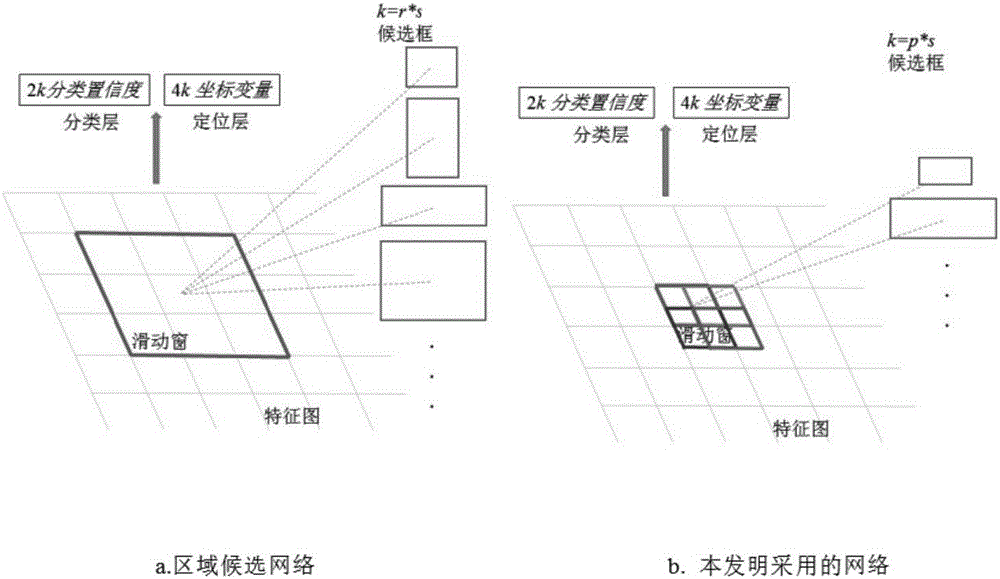

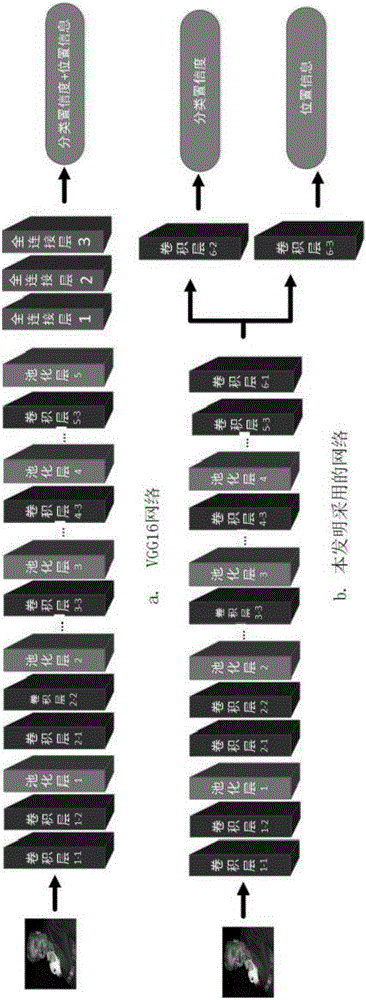

The invention discloses a small target rapid detection method based on a deep convolution neural network. The deep convolution neural network is improved by the following steps: selecting the sliding windows on the convolution feature map of the last shared convolution layer of a VGG16 network as candidate boxes, wherein the sliding windows have half-pixel precision; deleting the fifth pooling layer while retaining the other convolution layers and pooling layers; adding a convolution layer with a 3*3 convolution kernel; and replacing all fully connected layers in the network with two convolution layers with 1*1 convolution kernels. The resulting network is trained on collected data to obtain a small target classification model, which is then used to detect small targets. The method reduces computational complexity and improves the detection rate of small targets.

Owner:ZHEJIANG GONGSHANG UNIVERSITY +1

Image target recognition method based on optimized convolution architecture

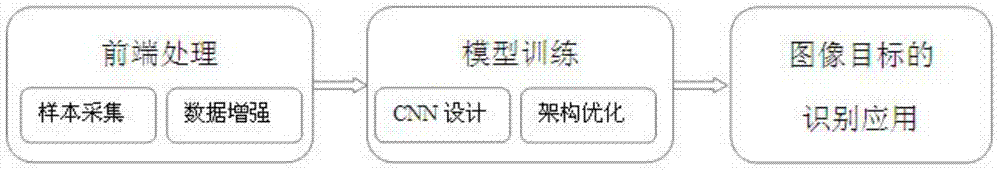

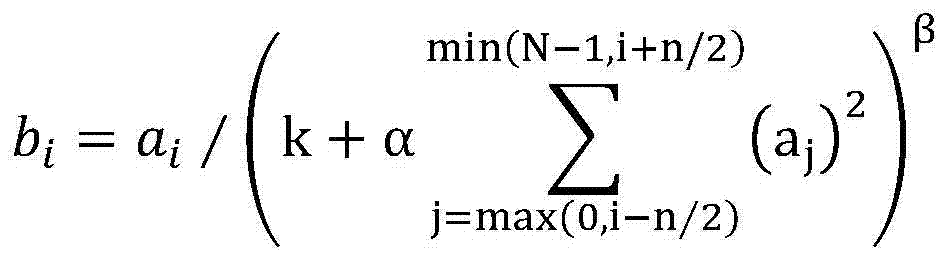

Inactive | CN104517122A | Expand the training sample set | Reduce overfitting | Character and pattern recognition | Neural learning methods | Pattern recognition | Activation function

The invention discloses an image target recognition method based on an optimized convolution architecture. The method includes collecting and enhancing input images to form samples; training on the samples with the optimized convolution architecture; and classifying image targets with the trained convolution architecture. The optimizations of the convolution architecture include the ReLU activation function, local response normalization, overlapped merging of convolution areas, neuron-connection dropout, and heuristic learning. Compared with the prior art, the method can expand the set of labeled samples, supports classification of many objects, achieves fast training convergence and a high image target recognition rate, and is more robust.

Owner:ZHEJIANG UNIV

Natural scene text identification method based on convolution attention network

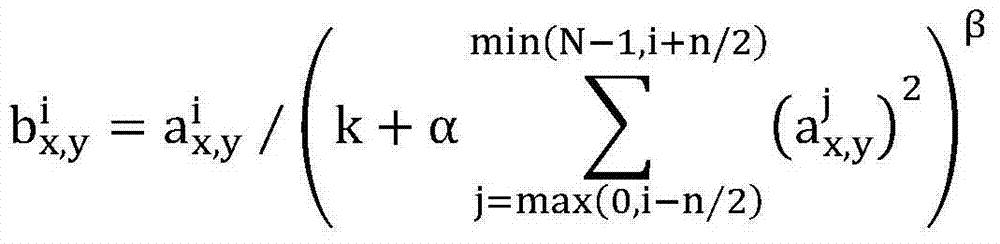

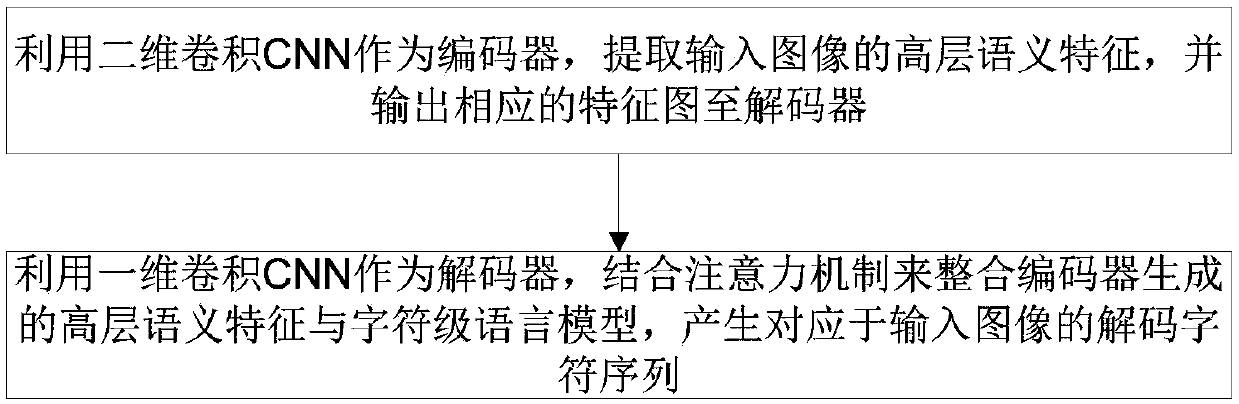

Active | CN108615036A | Easy to parallelize | Reduce complexity | Neural architectures | Neural learning methods | Pattern recognition | Convolution theorem

The invention discloses a natural scene text identification method based on a convolution attention network. The method comprises the following steps: using a two-dimensional convolution CNN as an encoder, extracting high-level semantic features of an input image, and outputting the corresponding feature map to a decoder; and using a one-dimensional convolution CNN as the decoder, combined with an attention mechanism, to integrate the high-level semantic features formed by the encoder with a character-level language model, thus forming a decoded character sequence corresponding to the input image. For a sequence of length n, the method models the character sequence with a CNN whose convolution kernel size is s, so only O(n/s) operations are needed to capture long-term dependencies, which greatly reduces the algorithm complexity. In addition, because of the characteristics of the convolution operation, the CNN parallelizes better than an RNN and so makes better use of GPU resources. More importantly, a deep model can be obtained by stacking convolution layers, which improves higher-level abstract representation and model accuracy.

Owner:UNIV OF SCI & TECH OF CHINA

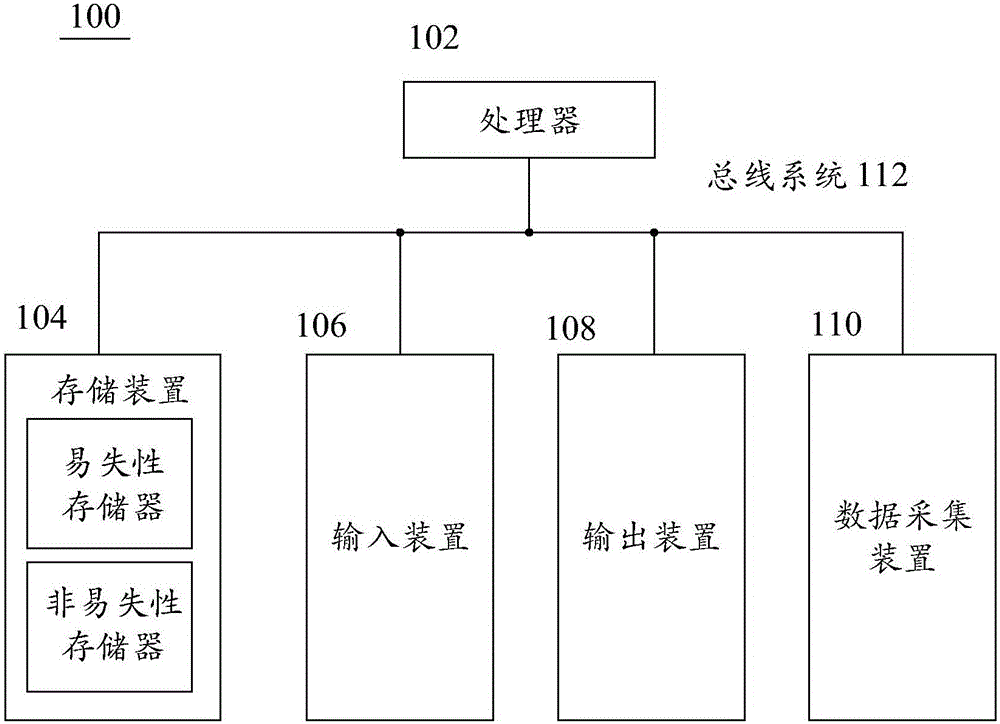

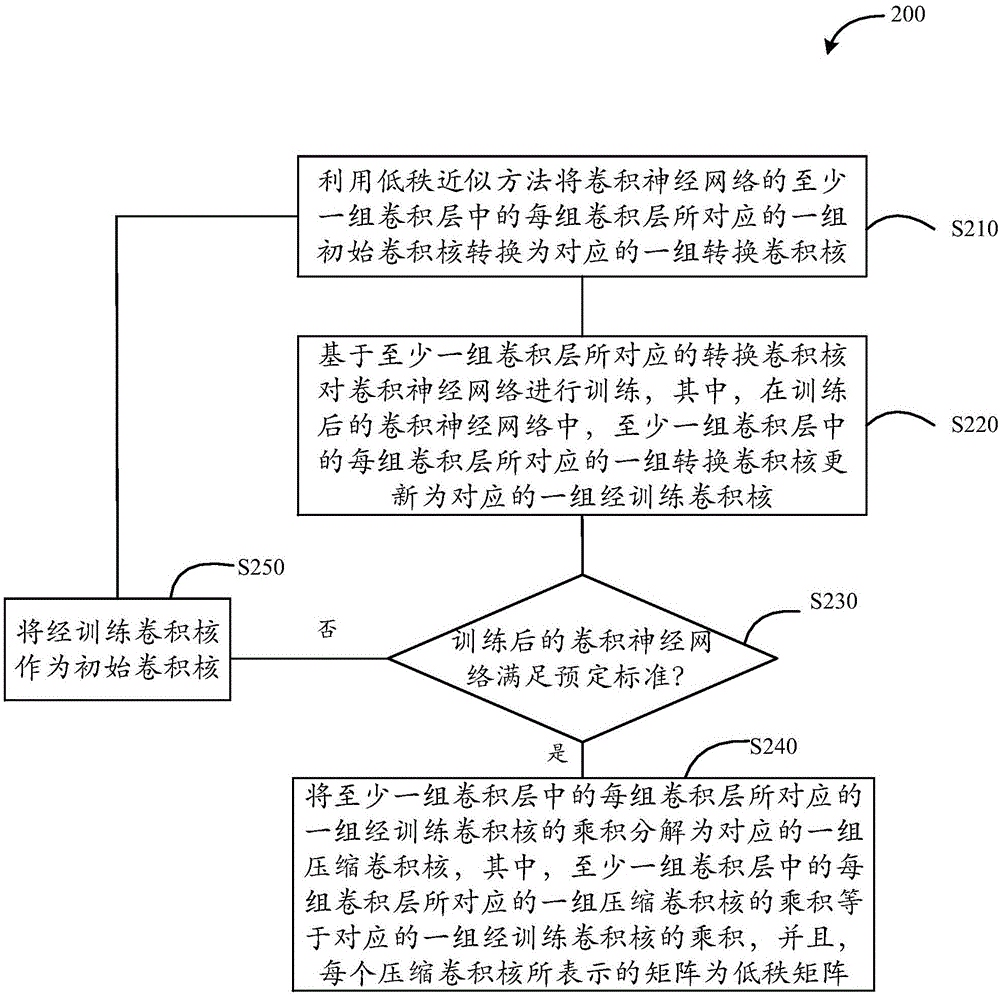

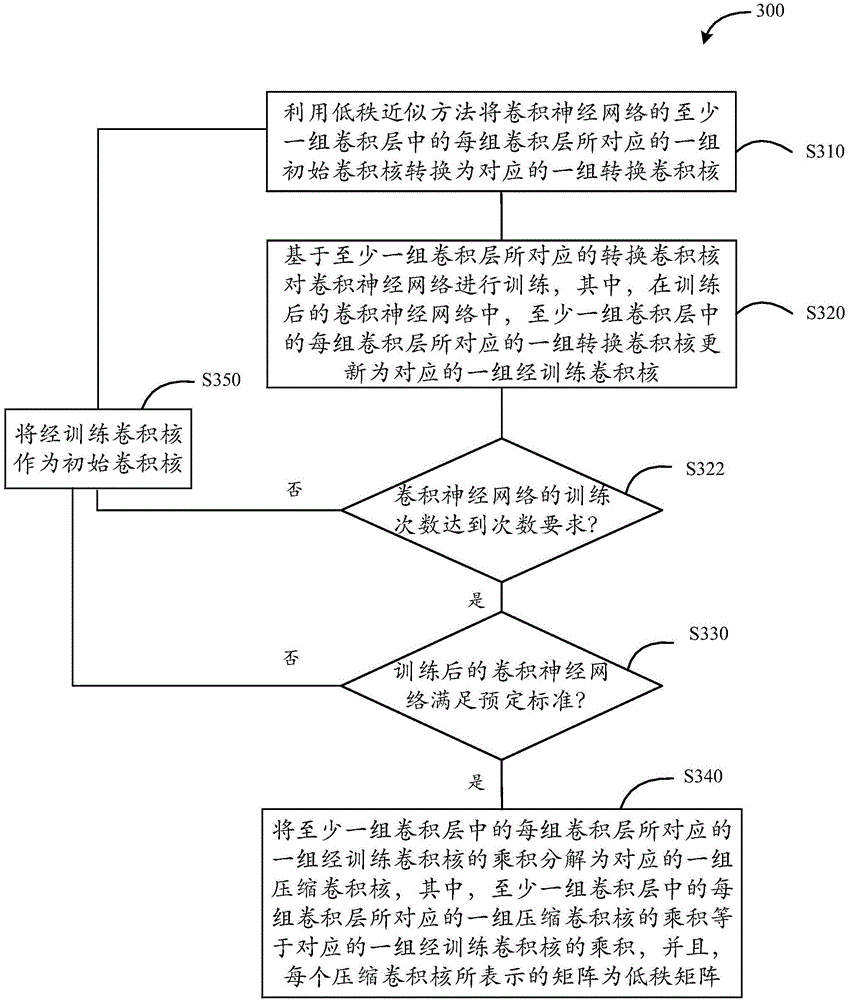

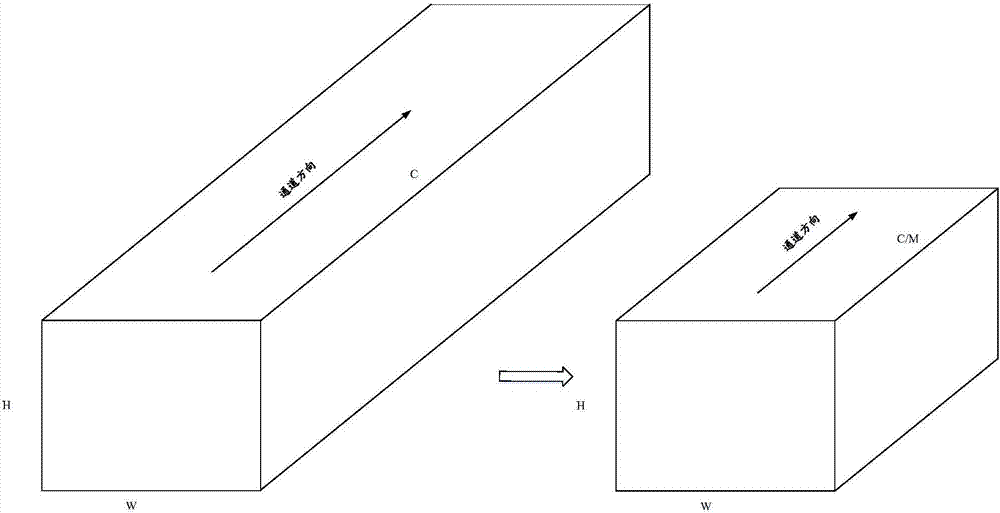

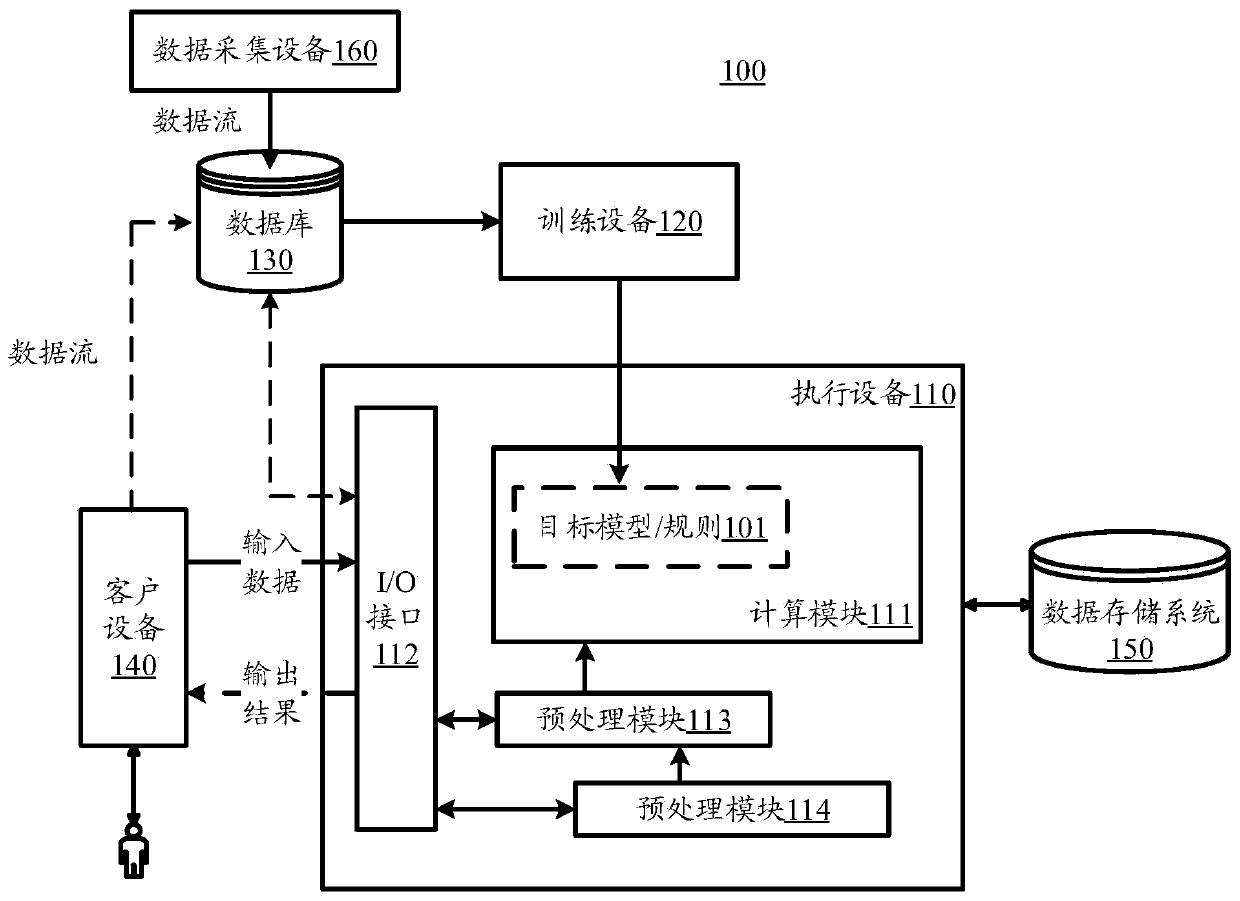

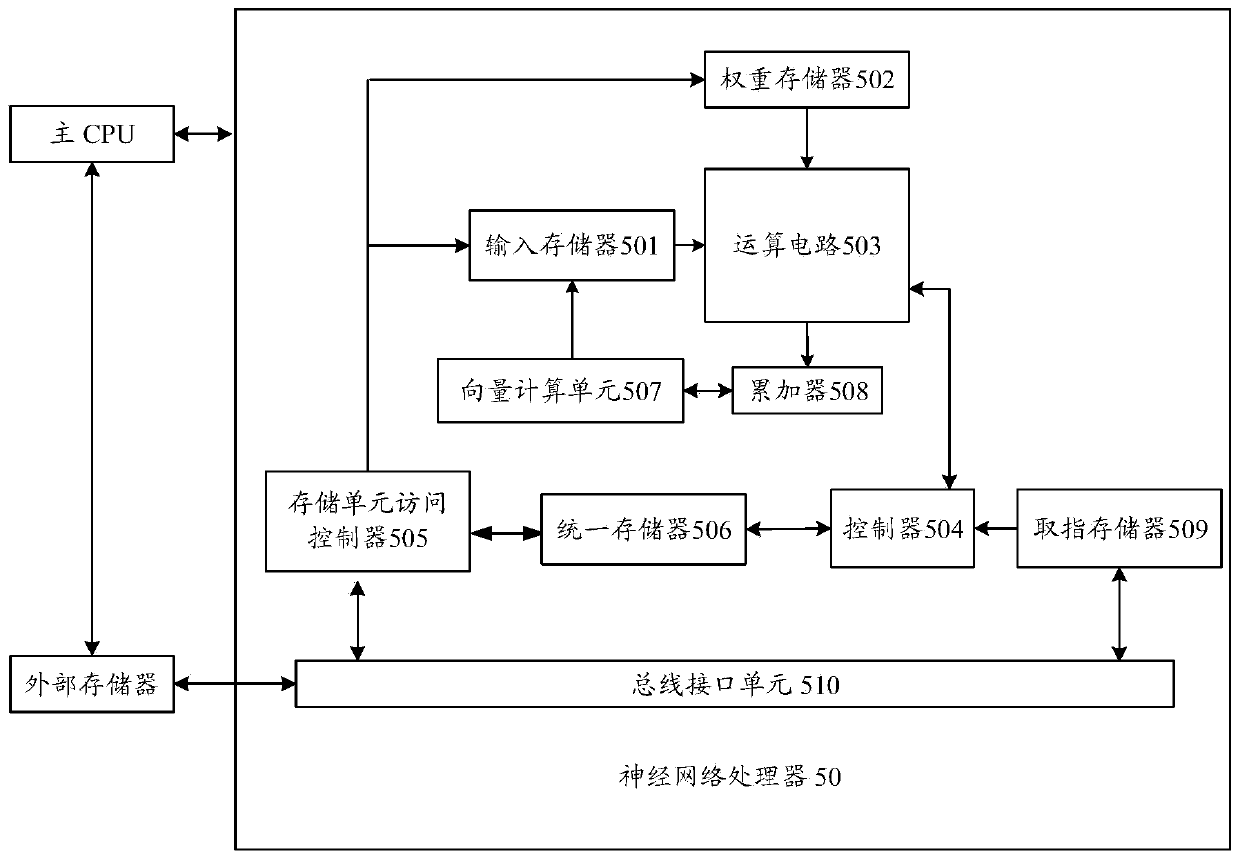

Neural network training method, neural network training device, data processing method and data processing device

Inactive | CN106326985A | Improve processing efficiency | Small amount of calculation | Neural architectures | Neural learning methods | Nerve network | Algorithm

The invention provides a neural network training method, a neural network training device, a data processing method and a data processing device. The neural network training method comprises the following steps: S210, transforming a set of initial convolution kernels corresponding to each of at least one set of convolution layers of a convolutional neural network into a corresponding set of transformed convolution kernels by use of a low-rank approximation method; S220, training the convolutional neural network based on the transformed convolution kernels corresponding to the at least one set of convolution layers; S230, judging whether the trained convolutional neural network meets a predetermined standard, going to S240 if the trained convolutional neural network meets the predetermined standard, or going to S250; S240, decomposing the product of the set of trained convolution kernels corresponding to each of the at least one set of convolution layers into a corresponding set of compressed convolution kernels; and S250, taking the set of trained convolution kernels corresponding to each of the at least one set of convolution layers as a set of initial convolution kernels corresponding to the set of convolution layers, and returning to S210. Through the methods and the devices, the amount of computation can be saved.

Owner:BEIJING KUANGSHI TECH +1

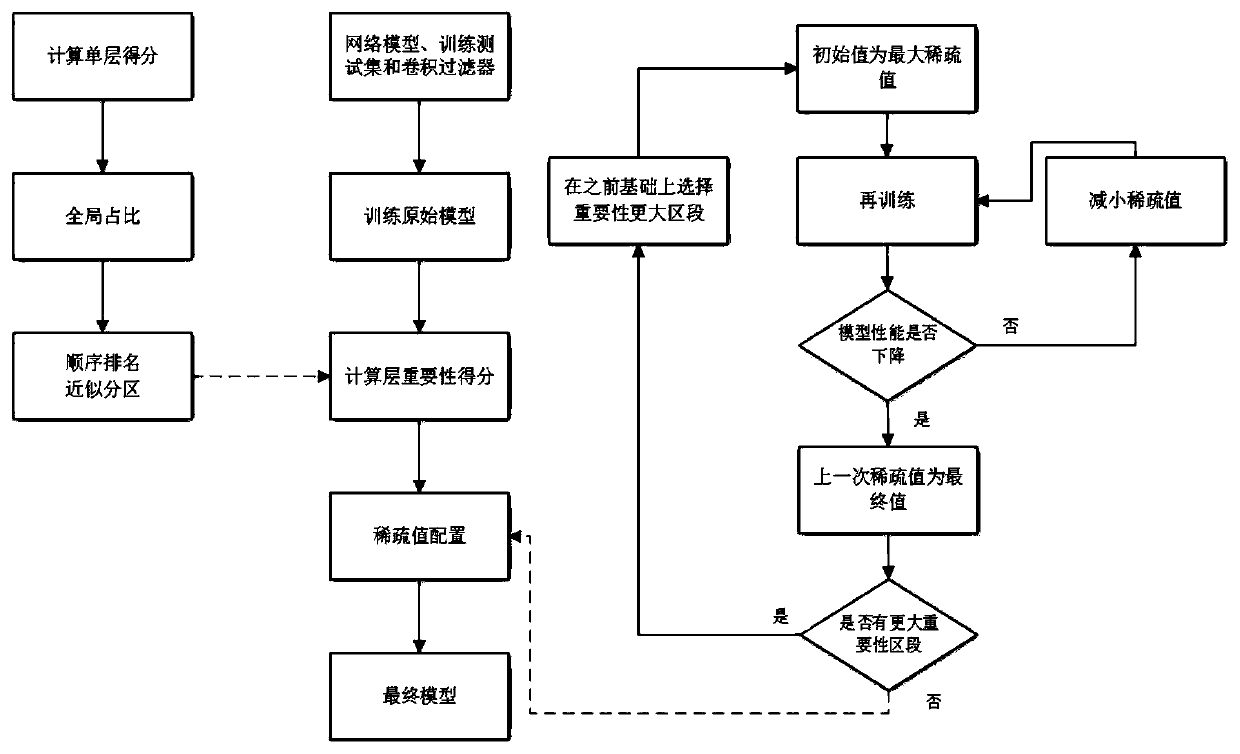

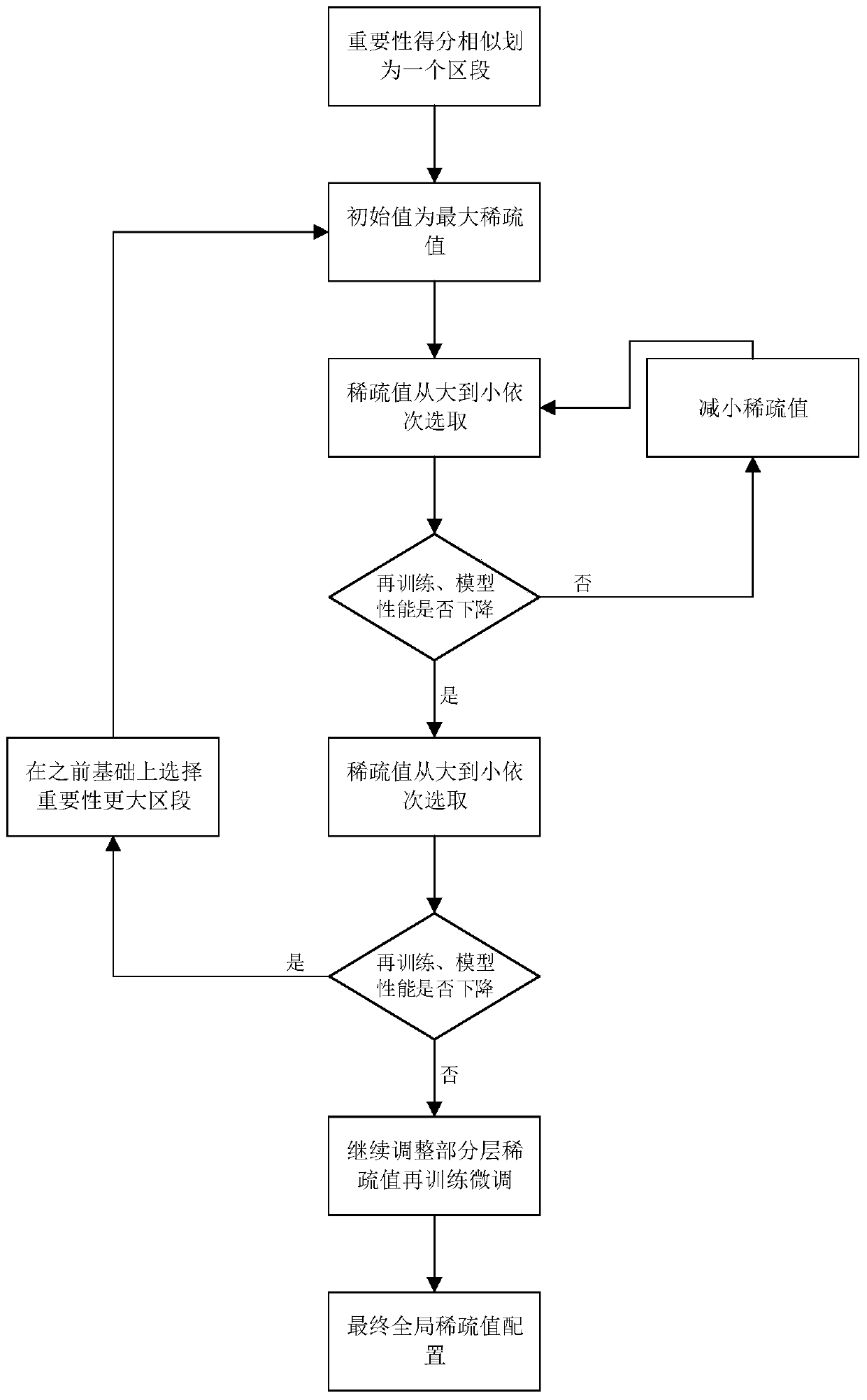

A neural network structured pruning compression optimization method for a convolutional layer

Inactive | CN109886397A | Potential for huge computational optimization | Fast operation | Neural architectures | Nerve network | Convolution filter

The invention discloses a neural network structured pruning compression optimization method for convolutional layers. The method comprises the following steps: (1) assigning a sparsity value to each convolutional layer: (1.1) training an original model, obtaining the weight parameters of each prunable convolutional layer, and calculating the importance score of each convolutional layer; (1.2) ordering the importance scores from small to large, dividing the range between the minimum and maximum values into equal segments, assigning sparsity values from small to large to the convolution layers of each segment in sequence, and adjusting through model training to obtain the sparsity configuration of all prunable convolution layers; (2) structured pruning: selecting convolution filters according to the sparsity values determined in step (1.2) and carrying out structured pruning training, wherein only one convolution filter is used for each convolution layer. With the optimization method provided by the invention, a deep neural network can be run more conveniently on a resource-limited platform, the parameter storage space is reduced, and model operation is accelerated.

Owner:XI AN JIAOTONG UNIV

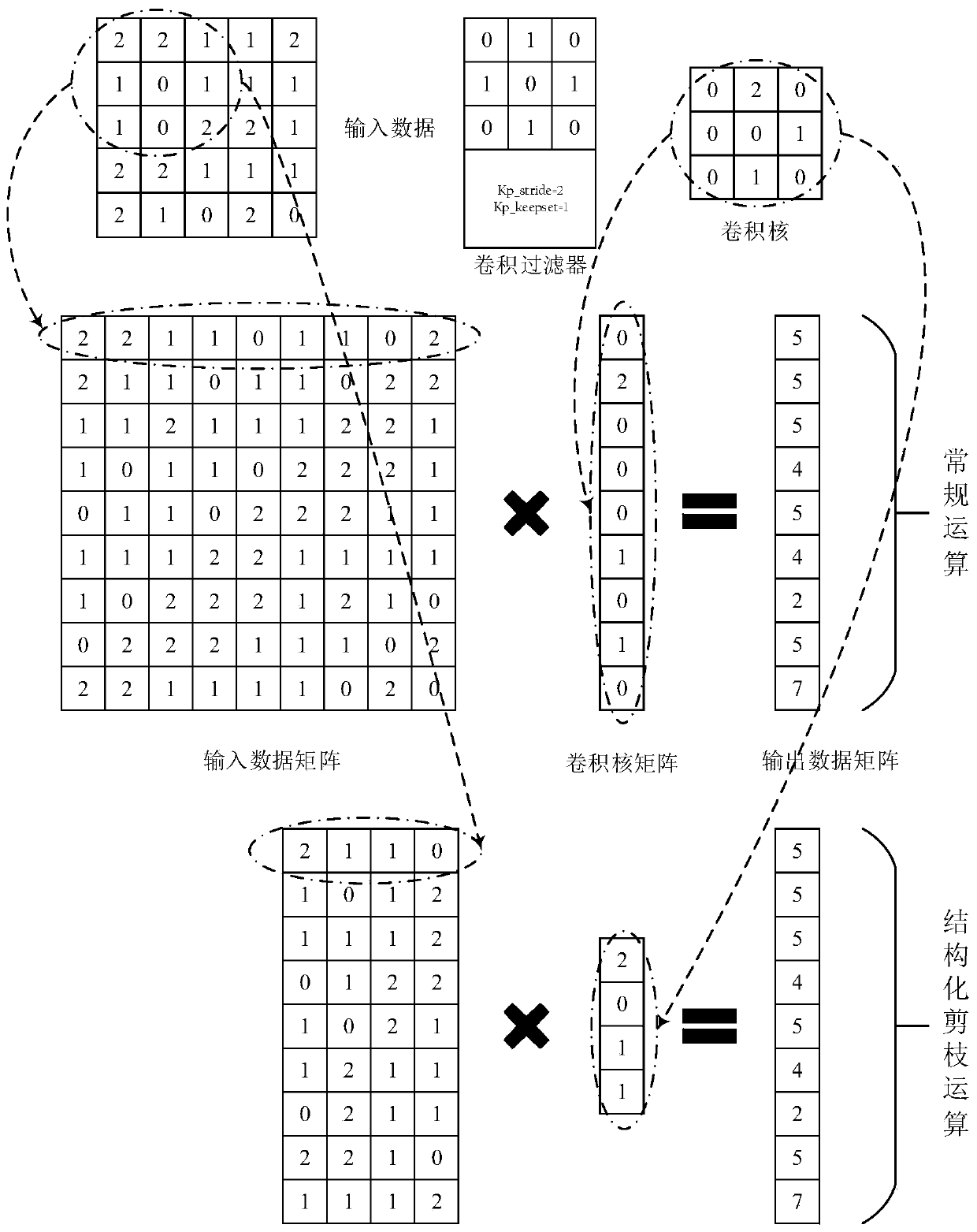

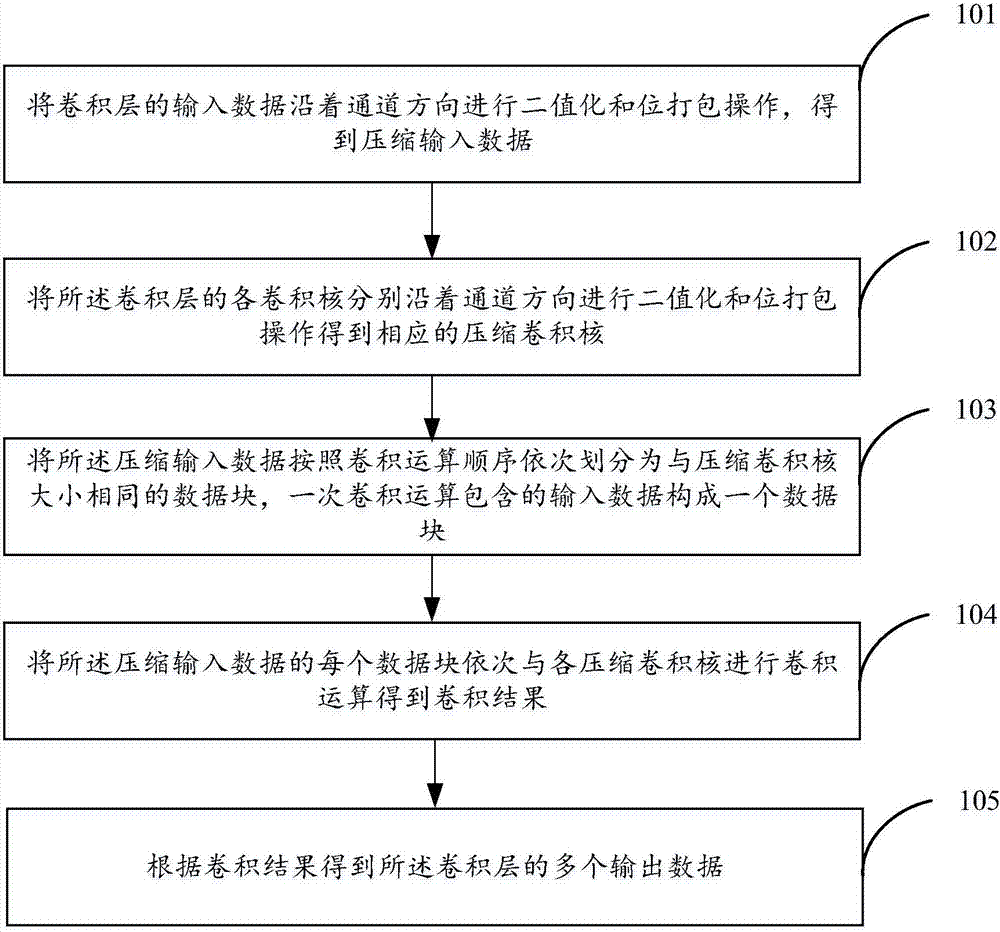

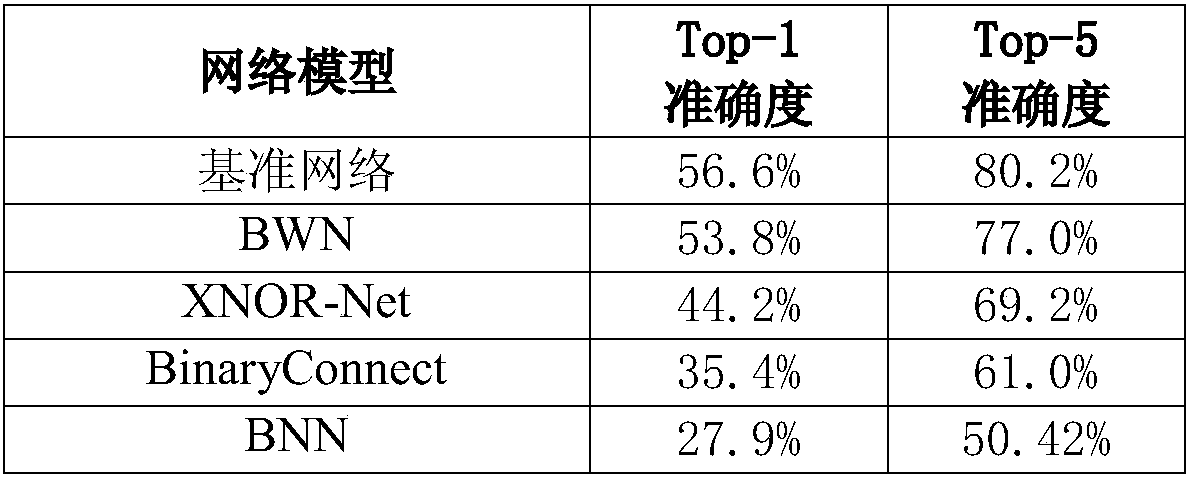

Neural network optimizing method and device

Active | CN107145939A | Bitwise operations are good at | Heavy computation | Kernel methods | Code conversion | Nerve network | Algorithm

The invention discloses a neural network optimizing method and device that address the low calculation speed and poor timeliness of prior-art neural network technology. The method comprises: binarizing the input data of a convolution layer along the channel direction and packing the bits to obtain compressed input data; binarizing the convolution kernels of each convolution layer and packing the bits to obtain the corresponding compressed convolution kernels; dividing the compressed input data, in calculation order, into data blocks of the same size as the convolution kernels, with the input data involved in one convolution calculation forming one data block; performing convolution calculations on each data block of the compressed input data in turn with the compressed convolution kernels to obtain the convolution results; and obtaining a plurality of output data for the convolution layer from the convolution results. The technical scheme of the invention makes neural network calculation faster and more timely.
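One way to picture the bit-packed convolution step is the XOR-and-popcount dot product commonly used for binarized networks. The sketch below is an assumption about how such a step can work, not the patent's actual procedure: values are binarized by sign, a set bit stands for +1 and a cleared bit for -1, and the helper names (`pack_signs`, `binary_dot`, `reference_dot`) are hypothetical.

```python
def pack_signs(values):
    """Binarize by sign and pack into one integer: bit i is 1 when values[i] >= 0."""
    word = 0
    for i, v in enumerate(values):
        if v >= 0:
            word |= 1 << i
    return word

def binary_dot(a_bits, b_bits, n):
    """Dot product of two length-n {-1, +1} vectors given their packed bits."""
    mismatches = bin(a_bits ^ b_bits).count("1")   # positions where the signs differ
    return n - 2 * mismatches                      # matches minus mismatches

def reference_dot(a, b):
    """Unpacked reference: binarize to +/-1, then multiply and sum."""
    sign = lambda v: 1 if v >= 0 else -1
    return sum(sign(x) * sign(y) for x, y in zip(a, b))

block = [0.7, -1.2, 0.1, -0.3, 2.0, -0.5, 0.9, 0.4]     # one data block (made-up values)
kernel = [-0.6, -0.8, 0.3, 0.2, 1.1, -0.1, -0.7, 0.5]   # one kernel of the same size
packed_result = binary_dot(pack_signs(block), pack_signs(kernel), len(block))
```

For these inputs five sign pairs agree and three disagree, so both `packed_result` and `reference_dot(block, kernel)` equal 2. The packed form replaces eight multiplications with one XOR and one bit count.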

Owner:BEIJING TUSEN ZHITU TECH CO LTD

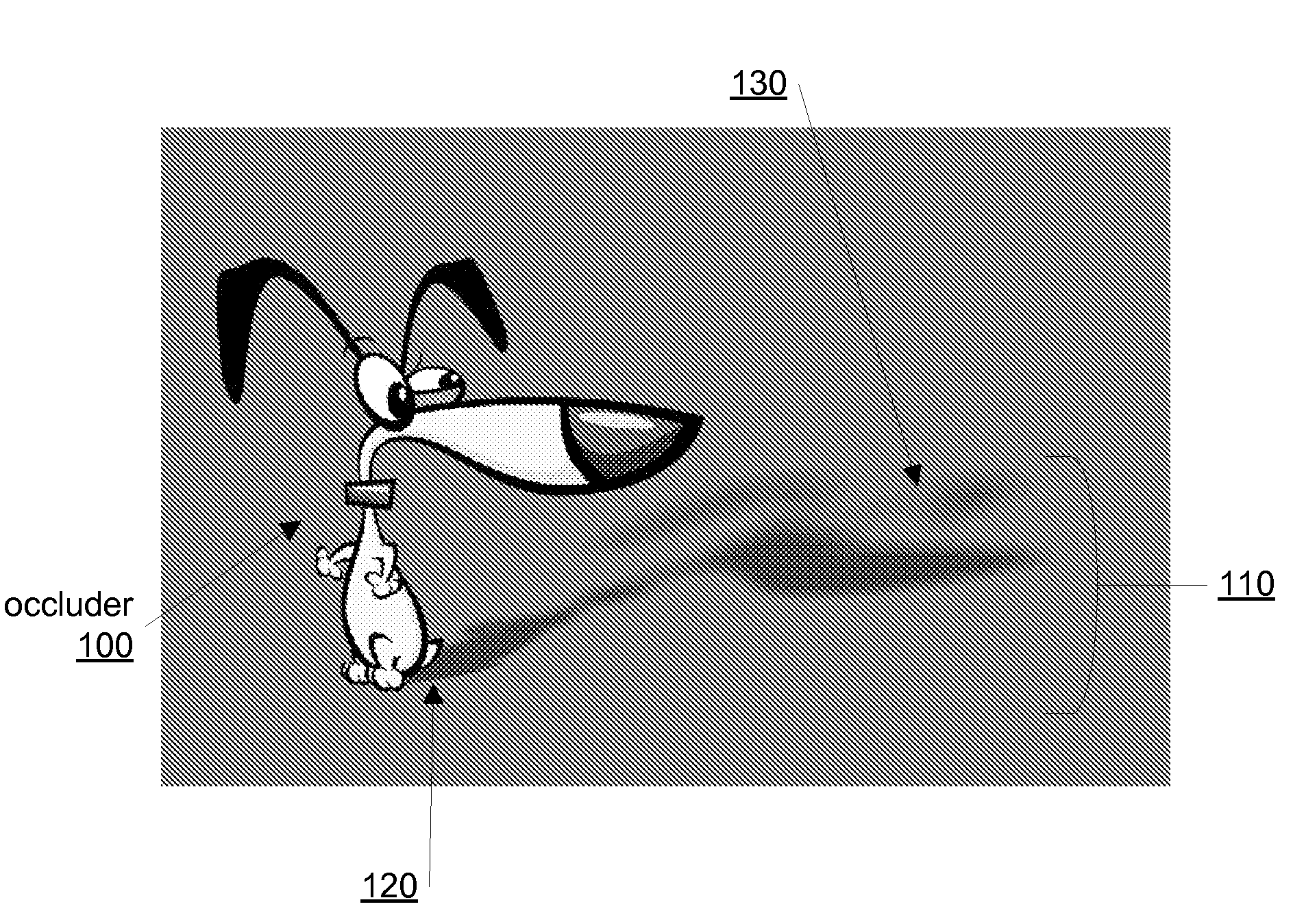

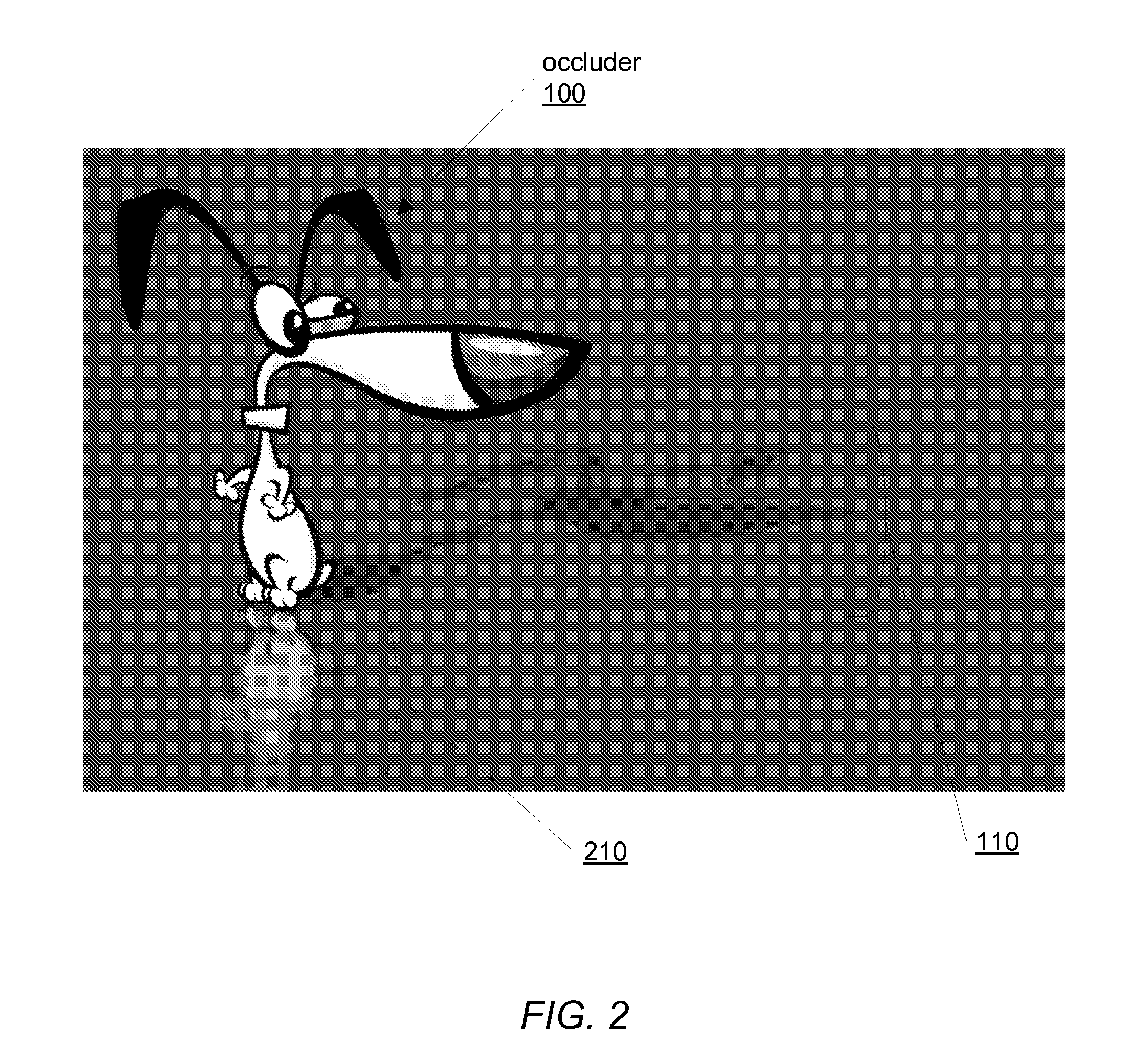

Spatially-Varying Convolutions for Rendering Soft Shadow Effects

Active | US20090033661A1 | Enhance interest | Improve usability | Cathode-ray tube indicators | 3D-image rendering | Convolution filter | Linear filter

Soft shadows may include areas that are less clear (more blurry) than other regions. For instance, an area of shadow that is closer to the shadow caster may be clearer than a region that is farther from the shadow caster. When generating a soft shadow, the total amount of light reaching each point on the shadow receiving surface is calculated according to a spatially-varying convolution kernel of the occluder's transparency information. Ray-tracing, traditionally used to determine a spatially varying convolution, can be very CPU intensive. Instead of using ray-tracing, data structures, such as MIP-maps and summed-area tables, or separable linear filters may be used to compute the spatially-varying convolution. For example, a two-dimensional convolution may be computed as two spatially-varying, separable, linear convolution filters—one computing a horizontal component and the other a vertical component of the final 2D convolution.
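The separable-filter idea can be illustrated with a simplified case. The sketch below assumes a spatially invariant kernel that is the outer product of a vertical and a horizontal 1-D filter, which is a simplification of the spatially-varying filters the abstract describes; the function names and sample data are illustrative only. It shows that one horizontal pass followed by one vertical pass reproduces the direct 2-D result.

```python
def filter_2d(image, kernel):
    """Direct 2-D filtering over the 'valid' region (no padding)."""
    kh, kw = len(kernel), len(kernel[0])
    rows, cols = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for c in range(cols)] for r in range(rows)]

def filter_rows(image, taps):
    """1-D horizontal pass applied to every row."""
    k = len(taps)
    return [[sum(row[c + j] * taps[j] for j in range(k))
             for c in range(len(row) - k + 1)] for row in image]

def filter_cols(image, taps):
    """1-D vertical pass applied to every column."""
    k = len(taps)
    return [[sum(image[r + i][c] * taps[i] for i in range(k))
             for c in range(len(image[0]))] for r in range(len(image) - k + 1)]

vertical = [0.25, 0.5, 0.25]      # hypothetical blur taps
horizontal = [0.25, 0.5, 0.25]
kernel_2d = [[v * h for h in horizontal] for v in vertical]   # outer product

image = [[0, 0, 4, 8, 8],
         [0, 4, 8, 8, 8],
         [4, 8, 8, 8, 4],
         [8, 8, 8, 4, 0]]

direct = filter_2d(image, kernel_2d)
two_pass = filter_cols(filter_rows(image, horizontal), vertical)
```

For a k-by-k kernel the direct form costs k*k multiplications per output pixel, while the two passes cost 2k, which is the saving that makes separable filters attractive for blur-like effects.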

Owner:ADOBE SYST INC

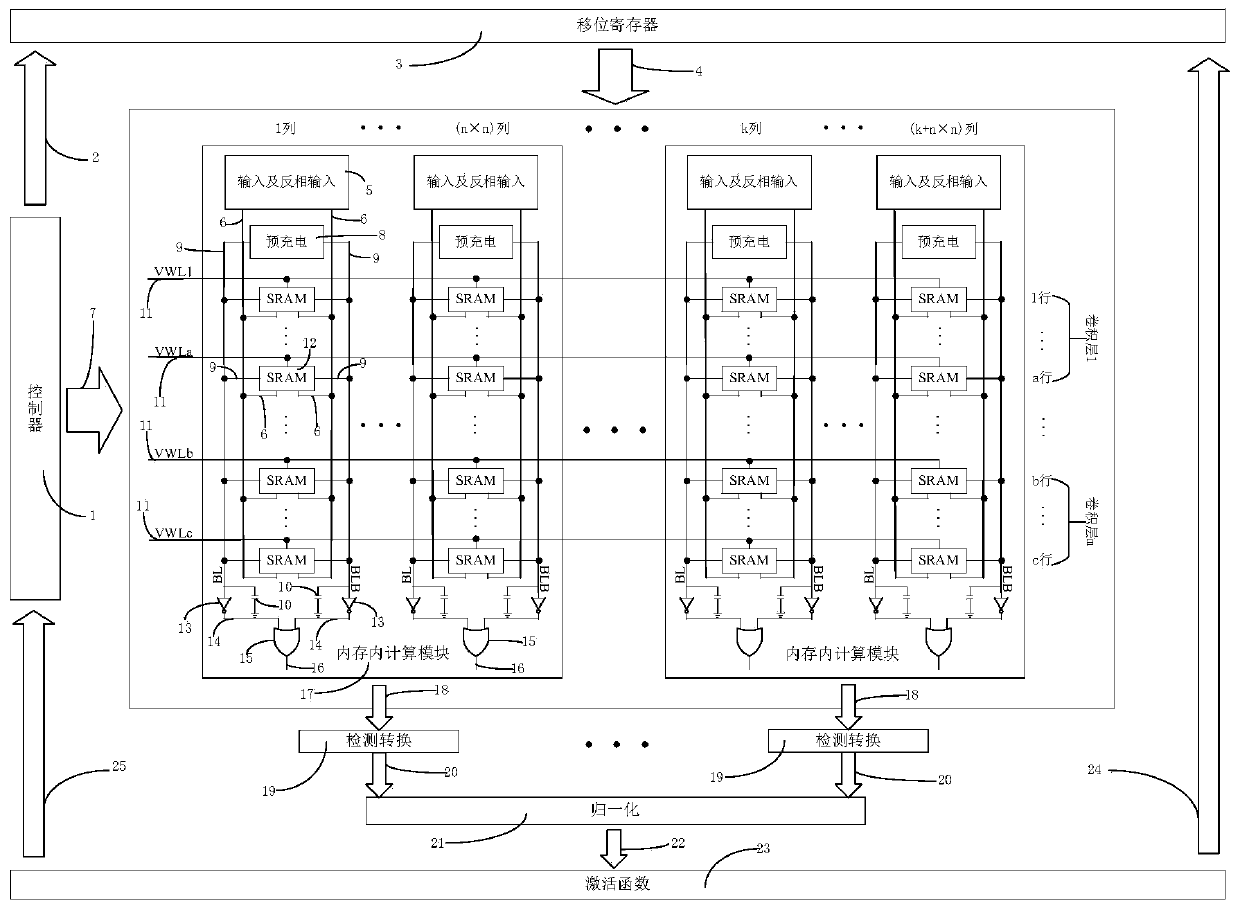

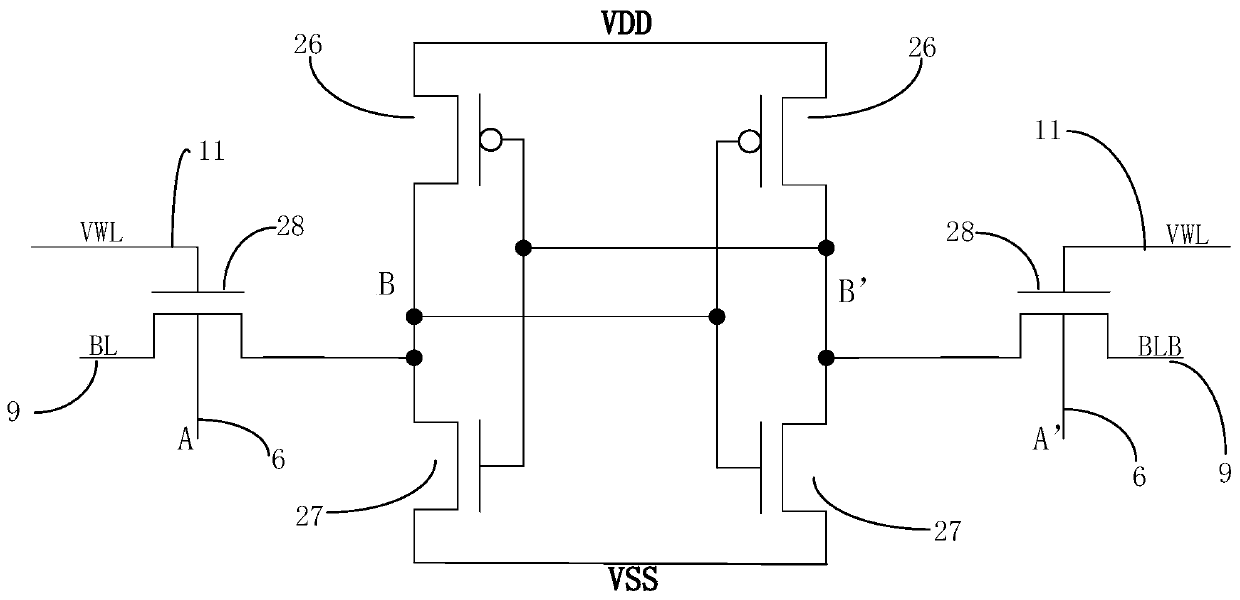

FD-SOI process-based calculation accelerator in binary convolutional neural network memory

Active | CN109784483A | Improve convolution processing speed | Save storage space | Neural architectures | Energy efficient computing | MOSFET | Algorithm

The invention belongs to the technical field of neural networks, and relates to an FD-SOI process-based calculation accelerator in binary convolutional neural network memory. The accelerator uses the back gate voltage of the FD-SOI MOSFET to adjust its threshold voltage and thereby perform XOR processing of the data. The convolution kernel parameters of the convolutional neural network are flattened to one dimension and stored in the memory, and the convolution of the kernel with the network data is realized by XOR operations performed by the FD-SOI MOSFET. Under the premise of in-memory calculation, and compared with the traditional convolution process, the convolution is completed with XOR operations while maintaining high precision, which greatly improves the convolution processing speed of the neural network and reduces parameter storage space, data transfer, and computing power consumption.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

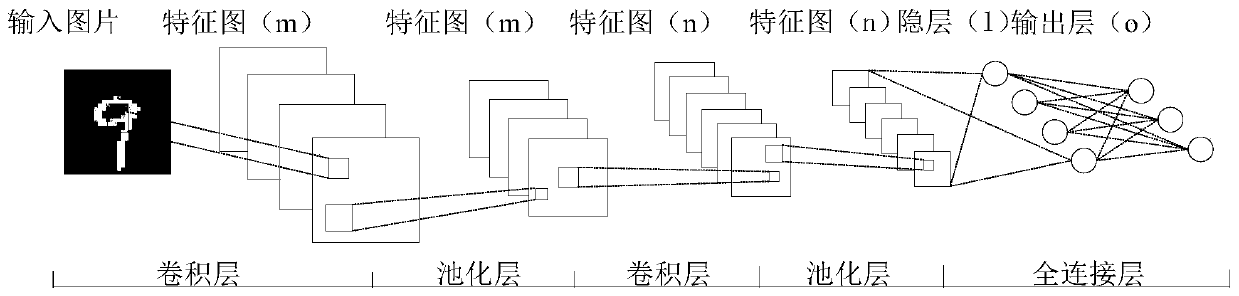

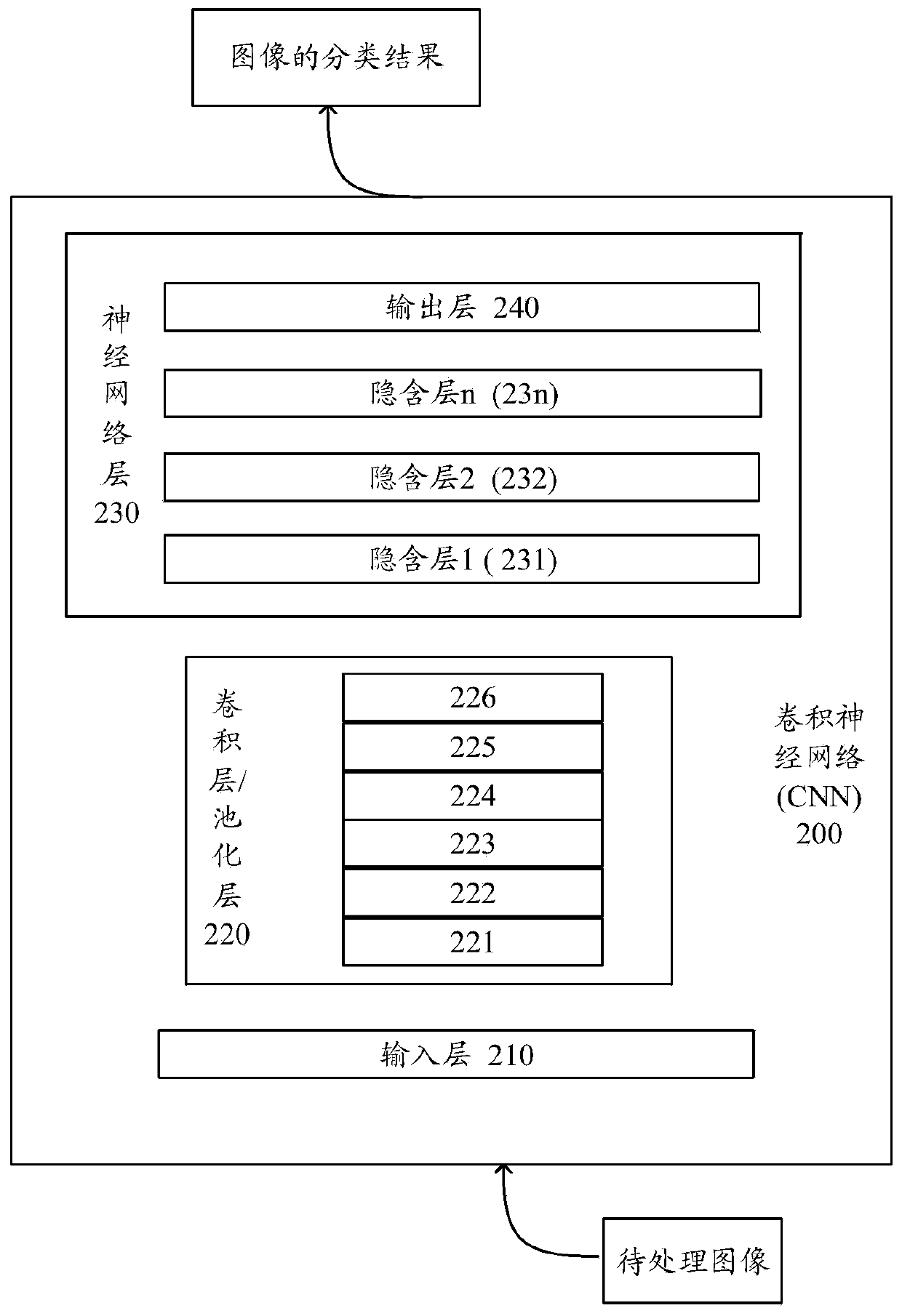

Image classification method and device, data processing method and device

Active | CN110188795A | Reduce storage overhead | Save storage space | Character and pattern recognition | Neural architectures | Classification methods | Computer vision

The invention provides an image classification method and device, and relates to the field of artificial intelligence, in particular to the field of computer vision. The image classification method comprises the following steps: obtaining the convolution kernel parameters of a reference convolution kernel of a neural network and a mask tensor of the neural network, and performing a Hadamard operation on the reference convolution kernel and the mask tensor corresponding to it to obtain a plurality of sub-convolution kernels; and performing convolution processing on the to-be-processed image with the plurality of sub-convolution kernels, and classifying the to-be-processed image according to the convolution feature map finally obtained to produce a classification result. Because the mask tensor occupies less storage space than a convolution kernel, devices with limited storage resources can deploy a neural network comprising the reference convolution kernel and the mask tensor, and thereby achieve image classification.
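The derivation of sub-convolution kernels from one reference kernel can be sketched with a plain element-wise product. The example below assumes 3x3 kernels and binary masks, which is an illustrative choice; the abstract does not state the shape or value range of the mask tensor, and the names used here are hypothetical.

```python
def hadamard(a, b):
    """Element-wise (Hadamard) product of two equally shaped 2-D lists."""
    return [[x * y for x, y in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]

# One stored reference kernel (made-up values).
reference_kernel = [[1.0, -2.0, 0.5],
                    [0.0, 3.0, -1.0],
                    [2.0, 1.0, -0.5]]

# Hypothetical binary masks; each one selects a different subset of weights.
masks = [
    [[1, 0, 1],
     [0, 1, 0],
     [1, 0, 1]],
    [[0, 1, 0],
     [1, 1, 1],
     [0, 1, 0]],
]

# Each mask yields one sub-kernel, so several kernels share one set of weights.
sub_kernels = [hadamard(reference_kernel, mask) for mask in masks]
```

Under this assumption only the reference kernel needs full-precision storage; a binary mask needs one bit per weight, which is consistent with the storage argument made in the abstract.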

Owner:HUAWEI TECH CO LTD

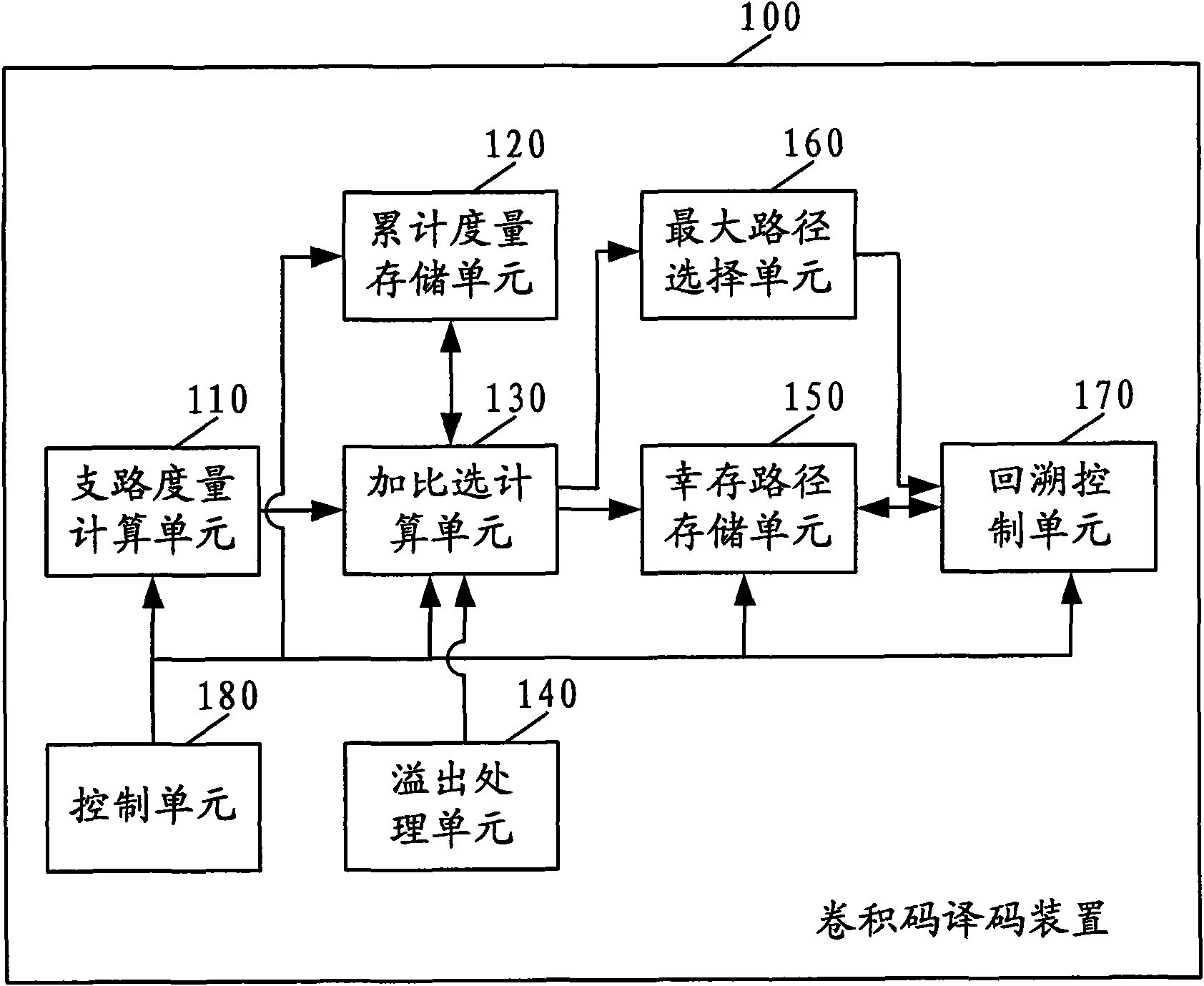

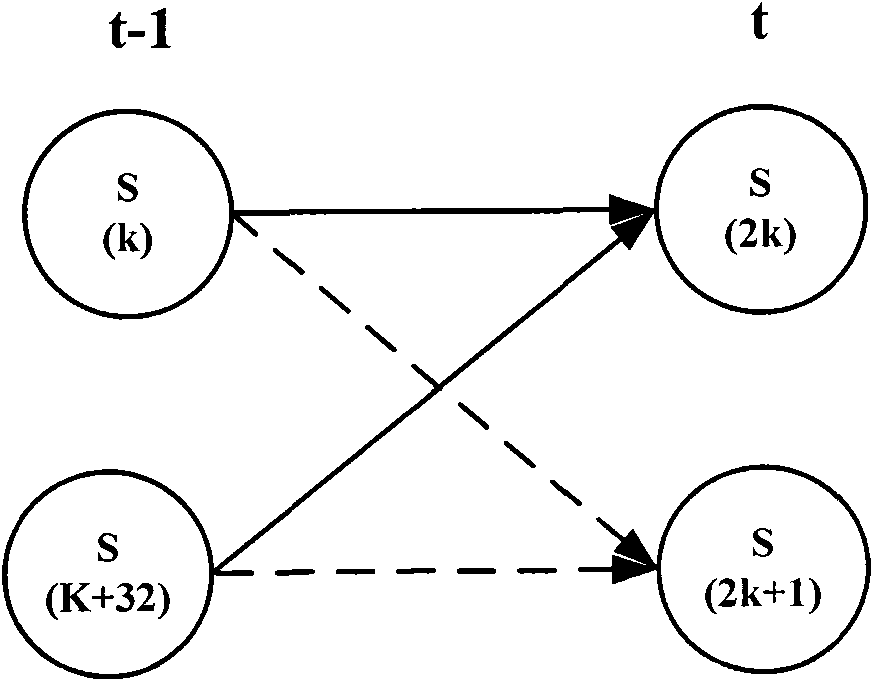

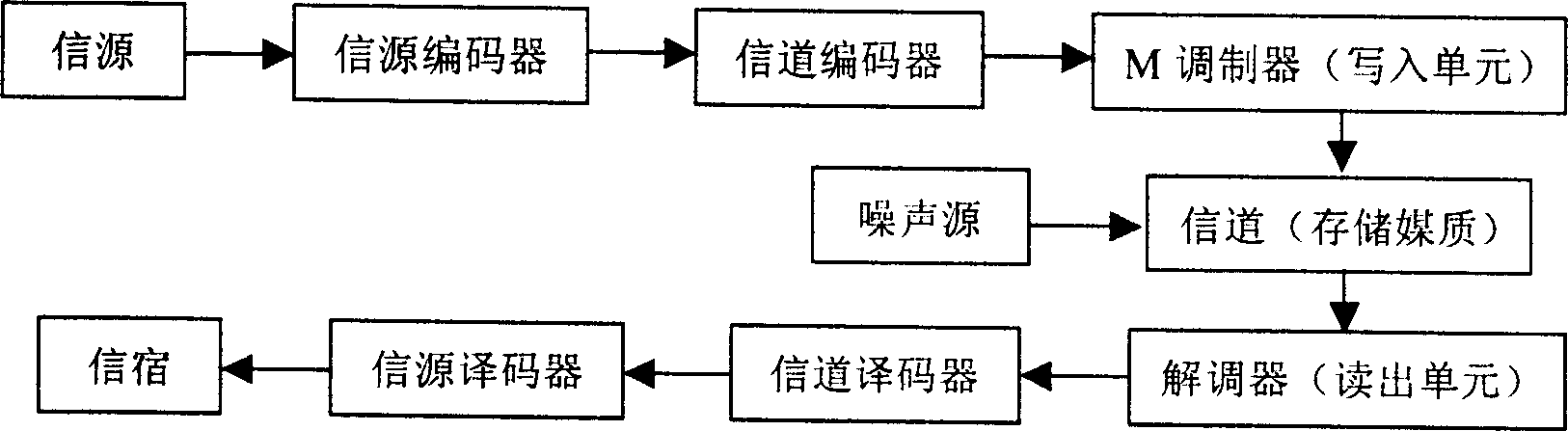

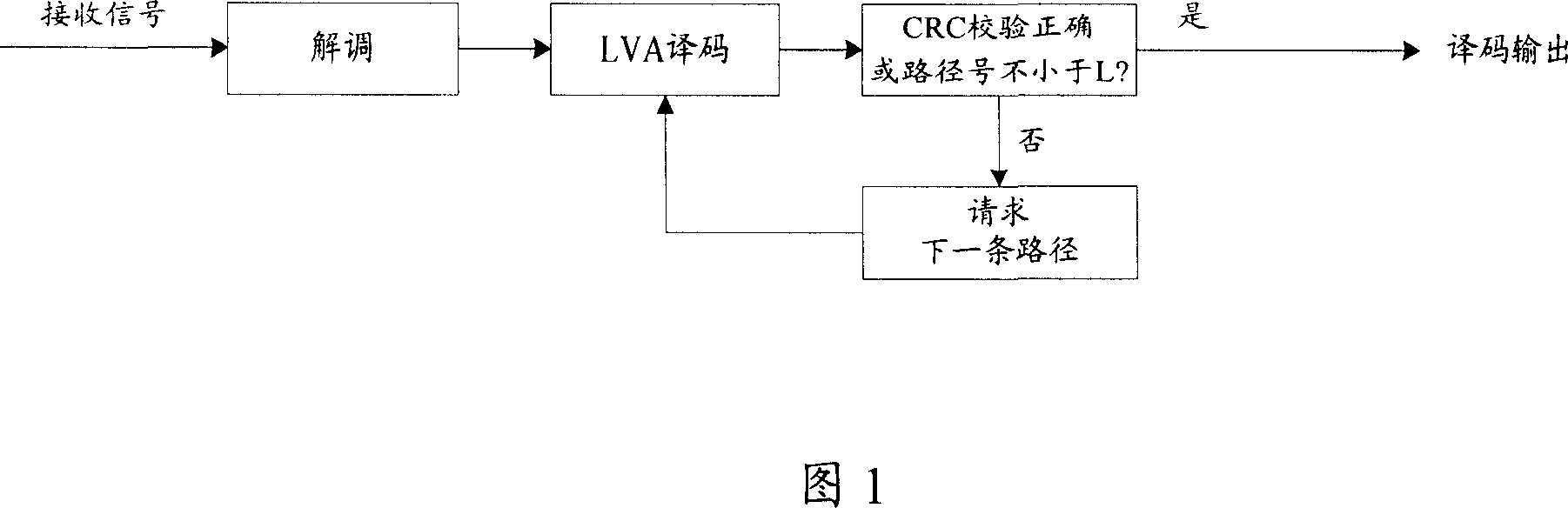

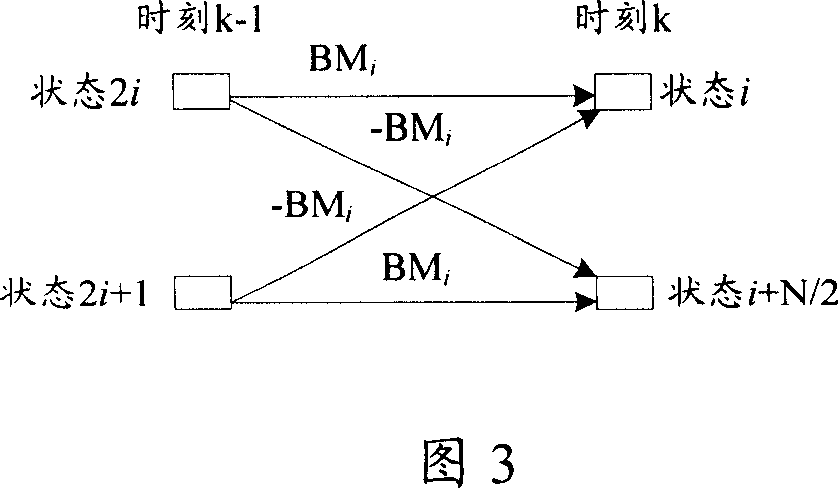

Method and device for decoding convolution code

Active | CN101997553A | High speed | Improve performance | Error correction/detection using convolutional codes | Convolutional code | Convolution theorem

The invention discloses a method and device for decoding a convolution code. The device comprises a branch measure calculating unit, an accumulated measure storage unit, an add-compare-select calculating unit, an overflow processing unit, a survival path storage unit and a backtracking control unit, wherein the branch measure calculating unit is used for calculating a branch measure value; the accumulated measure storage unit is used for storing an accumulated survival path measure value; the add-compare-select calculating unit is used for carrying out adding-comparison-selection operation; the overflow processing unit is used for generating a subtraction enabling signal according to the state change of the highest order of the accumulated measure value in the accumulating process and controlling the add-compare-select calculating unit to carry out subtraction operation on the accumulated survival path measure value; the survival path storage unit is used for storing a survival path; and the backtracking control unit is used for backtracking the survival path and outputting a decoding result. The method and the device can solve the overflow problem of the survival path measure value in the accumulating process.

Owner:SANECHIPS TECH CO LTD

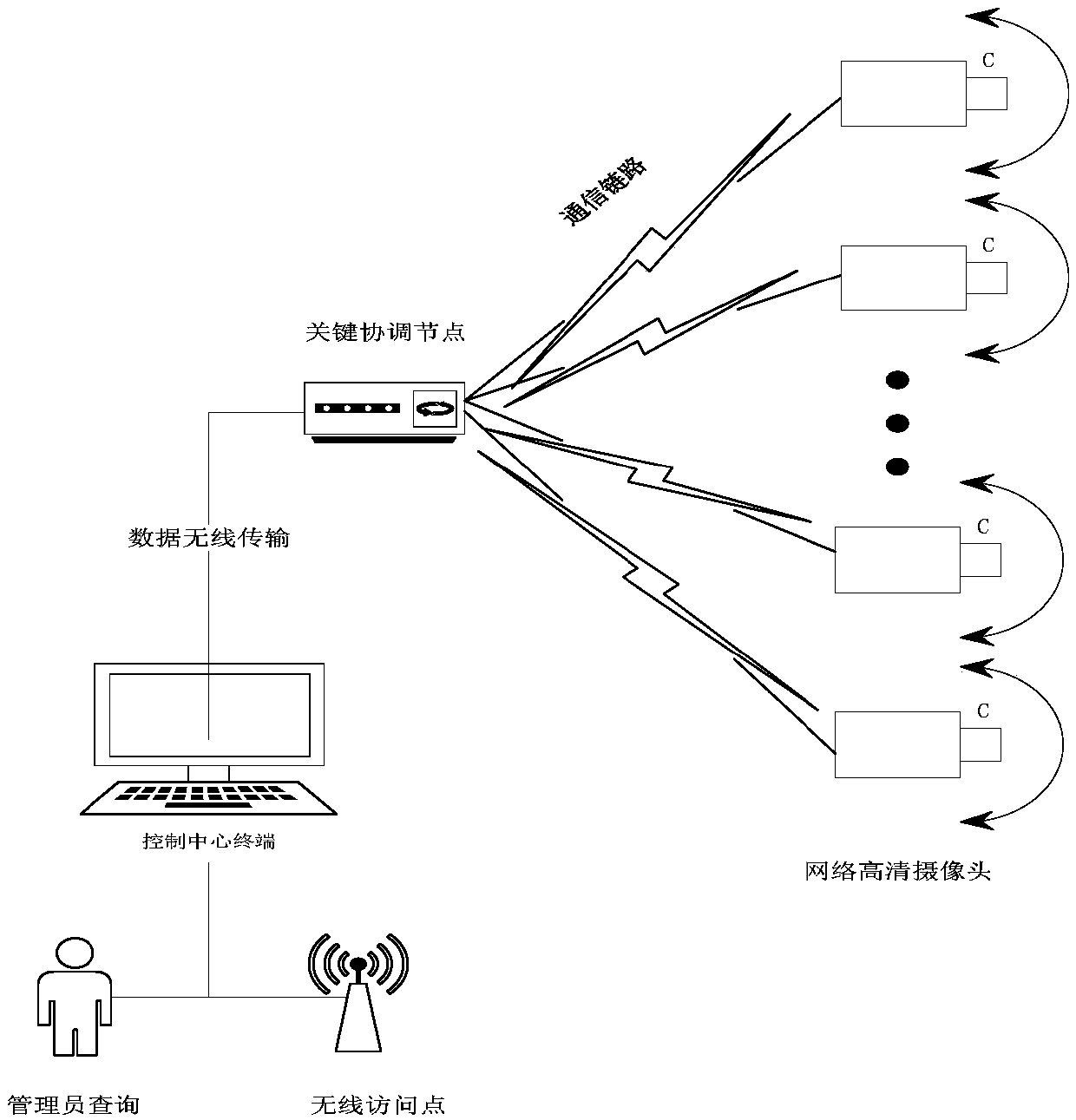

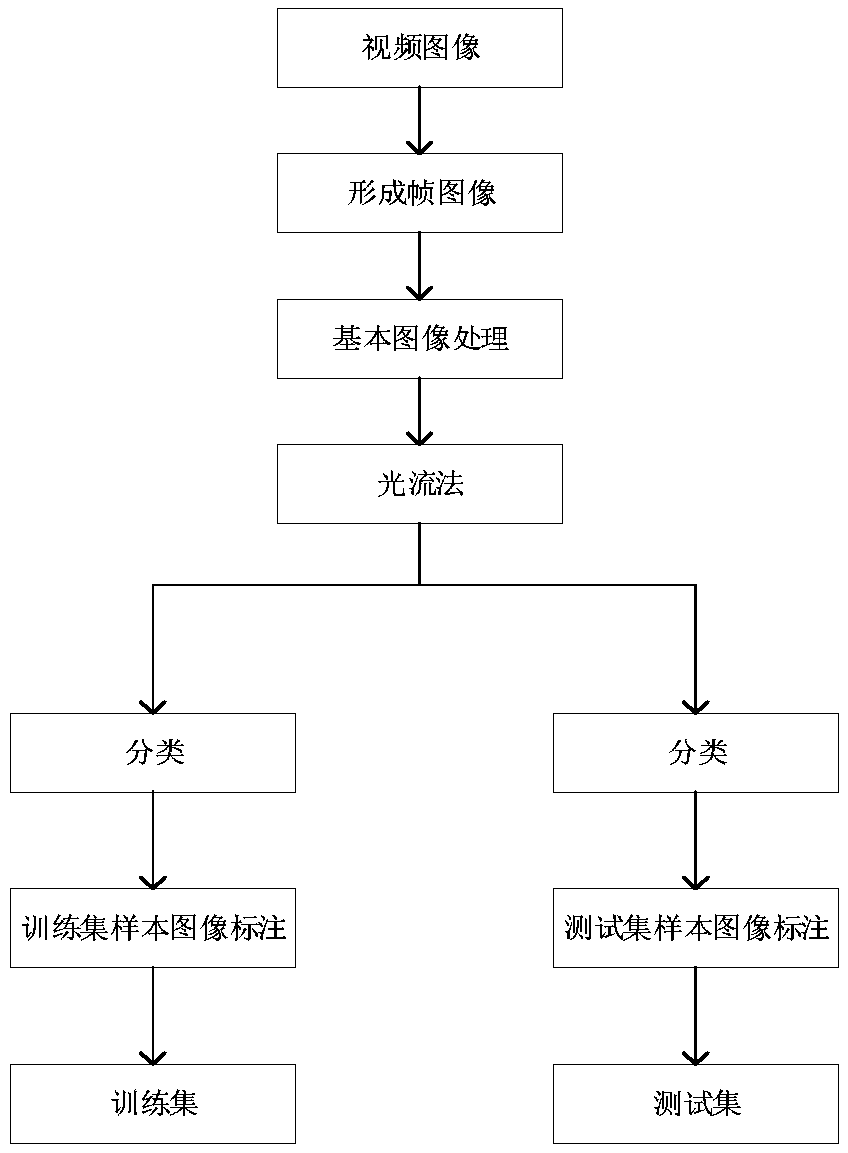

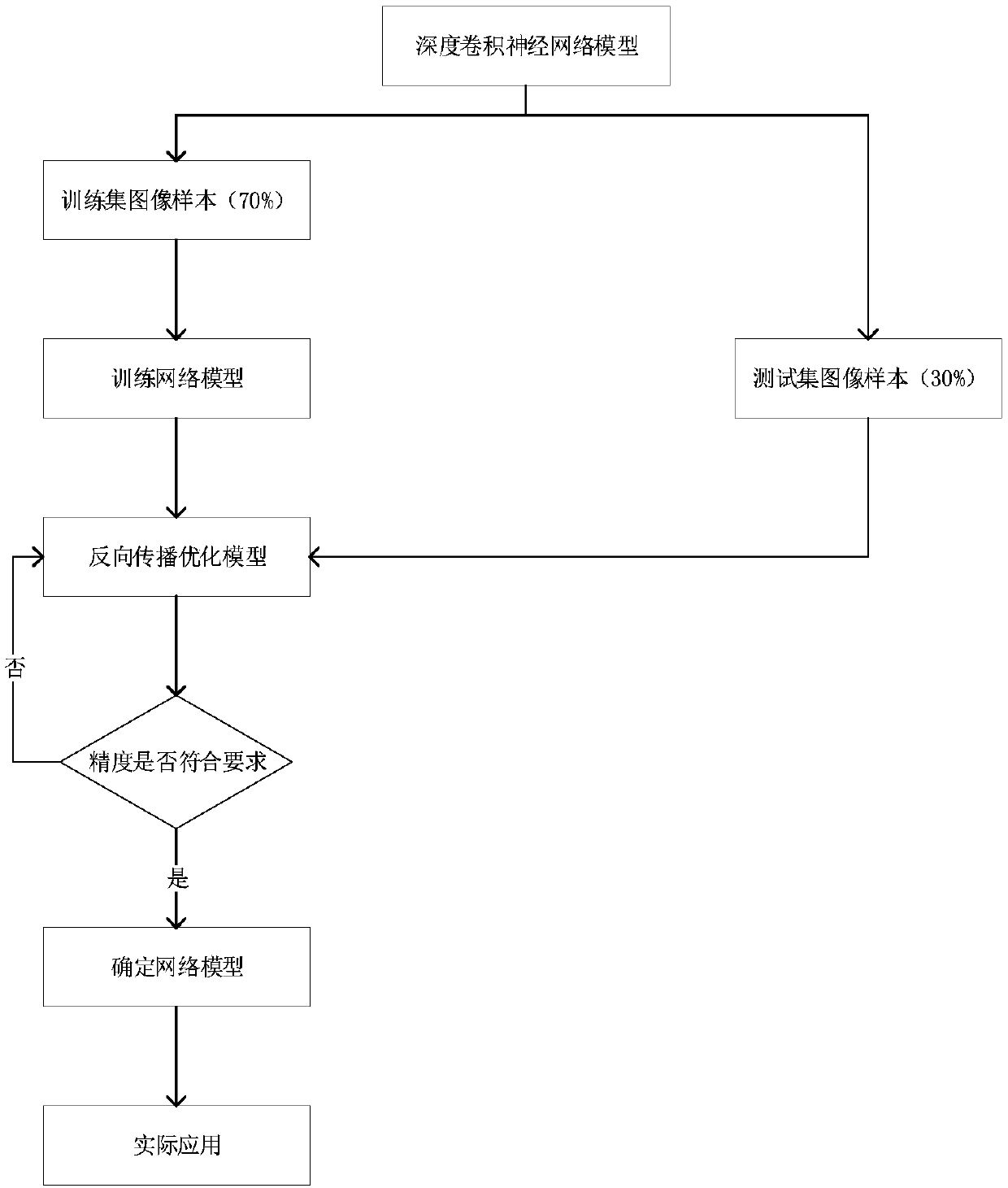

Forest fire detection method based on deep convolutional model with convolution kernels of multiple sizes

Inactive | CN108921039A | Efficient collection | Improve accuracy | Forest fire alarms | Character and pattern recognition | Optical flow | Convolution theorem

The invention discloses a forest fire detection method based on a deep convolutional model with convolution kernels of multiple sizes, and relates to the technical field of deep learning video recognition. The method comprises the steps of collecting video image information; wirelessly transmitting data; forming an optical flow field; building a convolutional neural network model in deep learning; predicting a result; and the like. By training the deep convolutional neural network model with convolution kernels of different sizes, an existing algorithm is improved. In combination with existing hardware conditions, the forest fire judgment speed is increased and the prediction precision of the whole model is improved, which effectively addresses the problems that forest fires are not discovered in time and that the resulting economic loss is relatively serious. The method therefore has a certain practical value.

Owner:南京启德电子科技有限公司

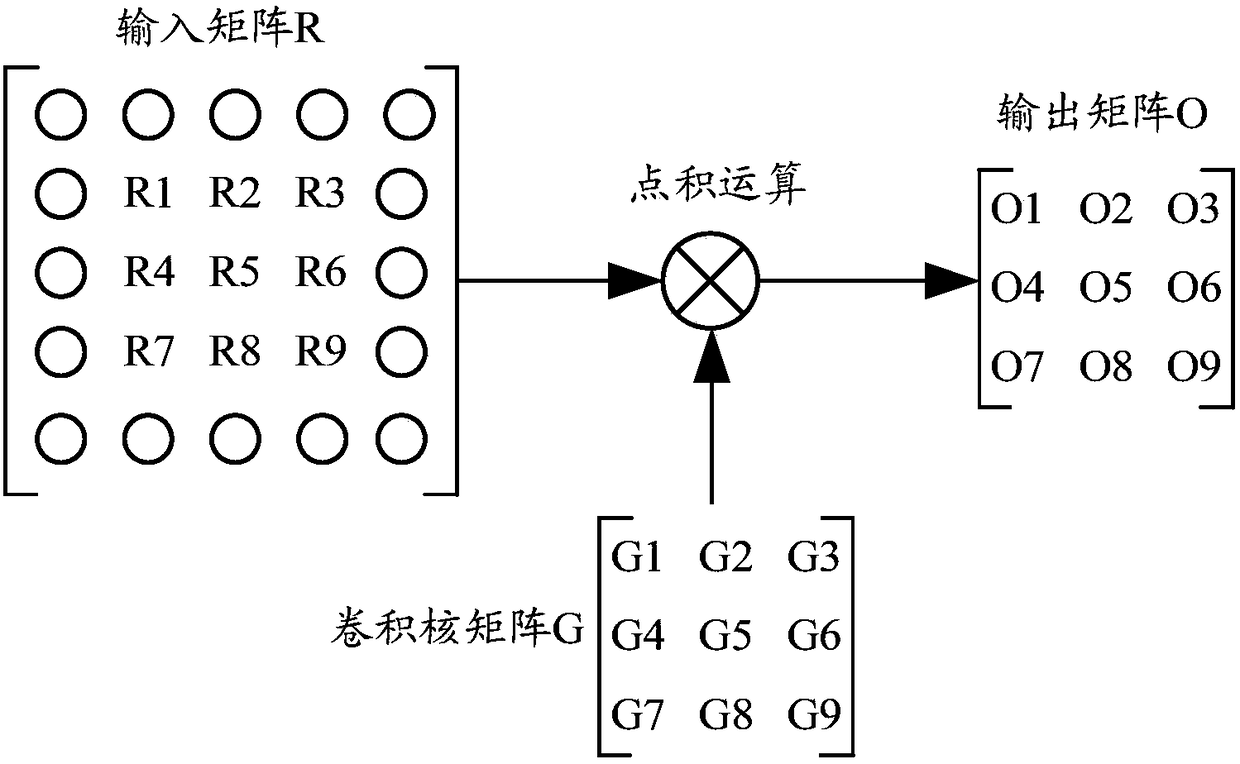

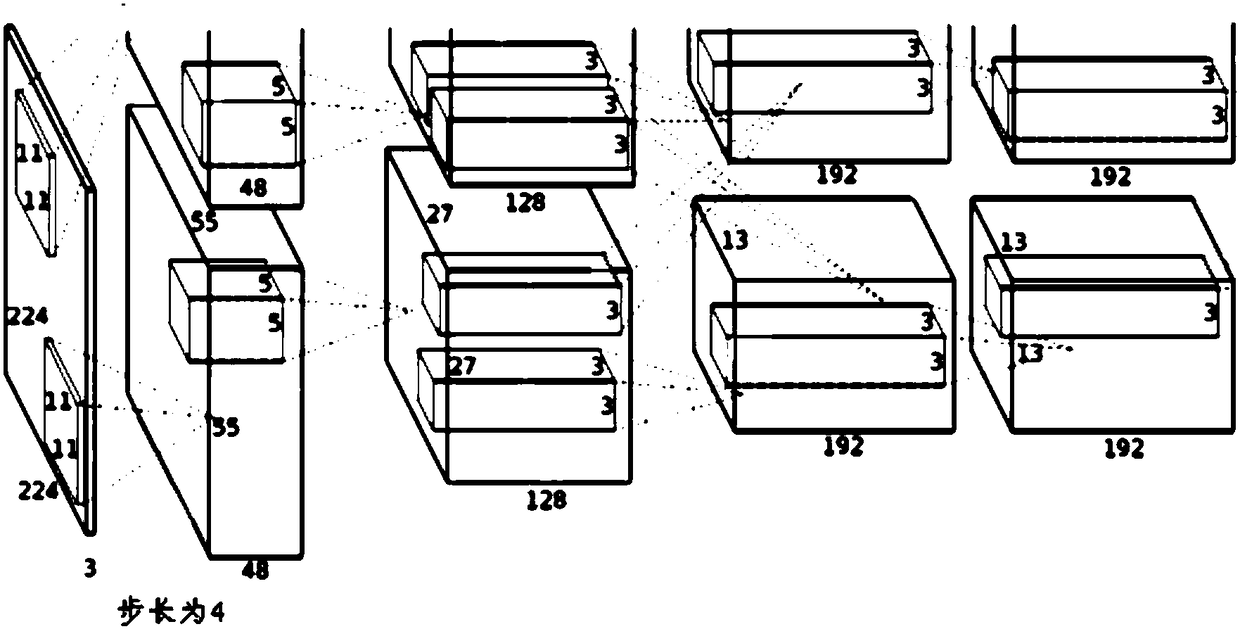

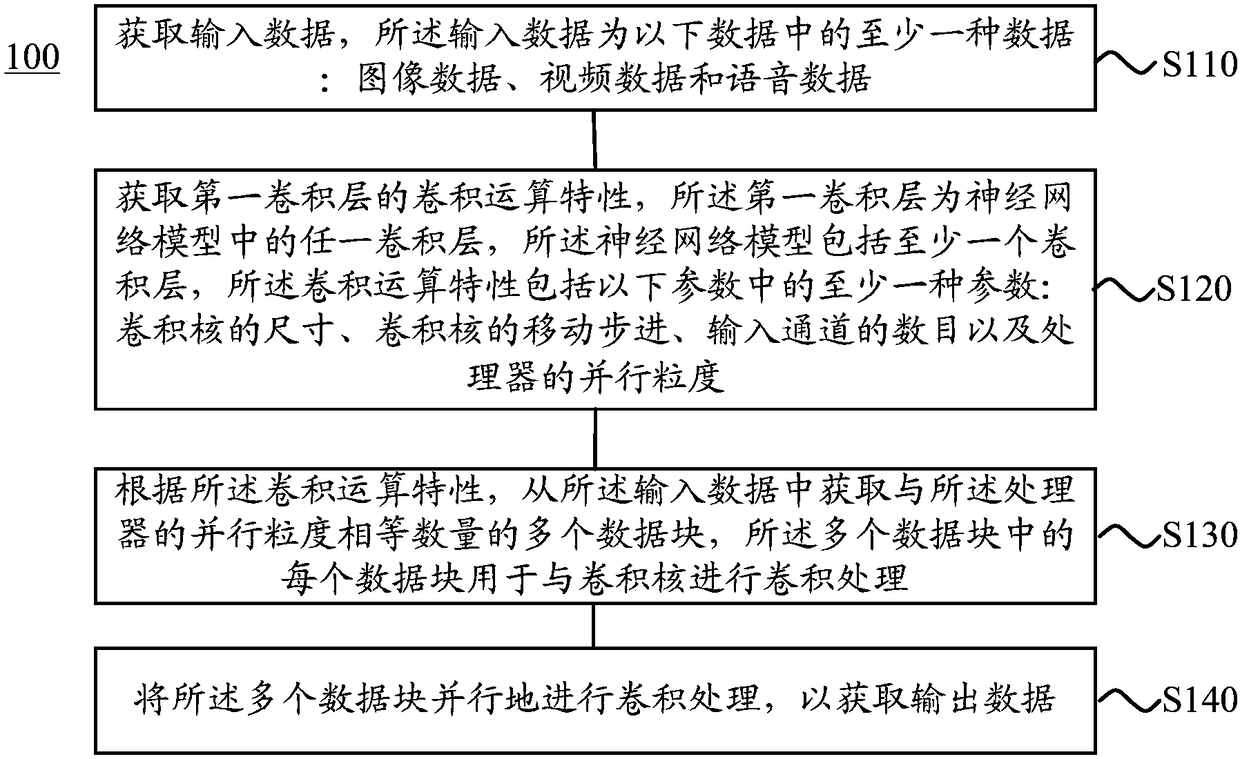

Method, device and system for data processing

The embodiment of the invention discloses a method, device and system for data processing. The method comprises the steps of obtaining input data; obtaining convolution operation characteristics of a first convolution layer, wherein the first convolution layer is any convolution layer in a neural network model, the neural network model comprises at least one convolution layer, and the convolution operation characteristics comprise at least one of the following parameters: the size of a convolution kernel, the moving step of the convolution kernel, the number of input channels and the parallel granularity of a processor; obtaining a plurality of data blocks with the number being the same as the parallel granularity of the processor from the input data according to the convolution operation characteristics, wherein each of the plurality of data blocks is used for performing convolution processing with the convolution kernel; and performing convolution processing on the plurality of data blocks in parallel so as to obtain output data. The method, device and system disclosed by the embodiment of the invention can adaptively select an optimal parallel operation according to the convolution operation characteristics of different convolution layers, so that the parallelism of the convolution operation is improved.

Owner:HUAWEI TECH CO LTD

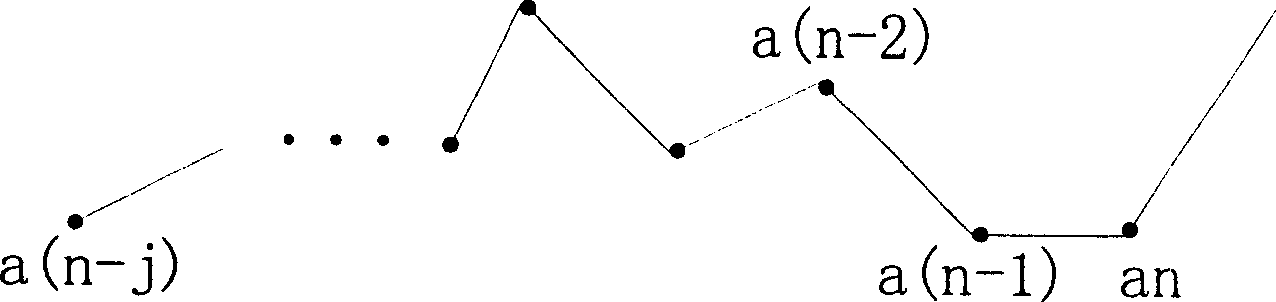

Encoding method and encoder for tailing convolution codes

Inactive | CN1855732A | Overcome the disadvantages of relatively high complexity and difficulty in implementation | Reduce computational complexity | Error correction/detection using convolutional codes | Decoding methods | Computation complexity

The invention comprises the following steps: using the accumulated metrics of an initial window, an incorrect initial path is merged into a correct path, and the state of the optimal node at the end of the initial window is used as the initial decoding state of the tail-biting convolution code; based on this initial decoding state and a delay window, Viterbi decoding of the tail-biting convolution code is performed with a full decoding trace-back.

Owner:ZTE CORP

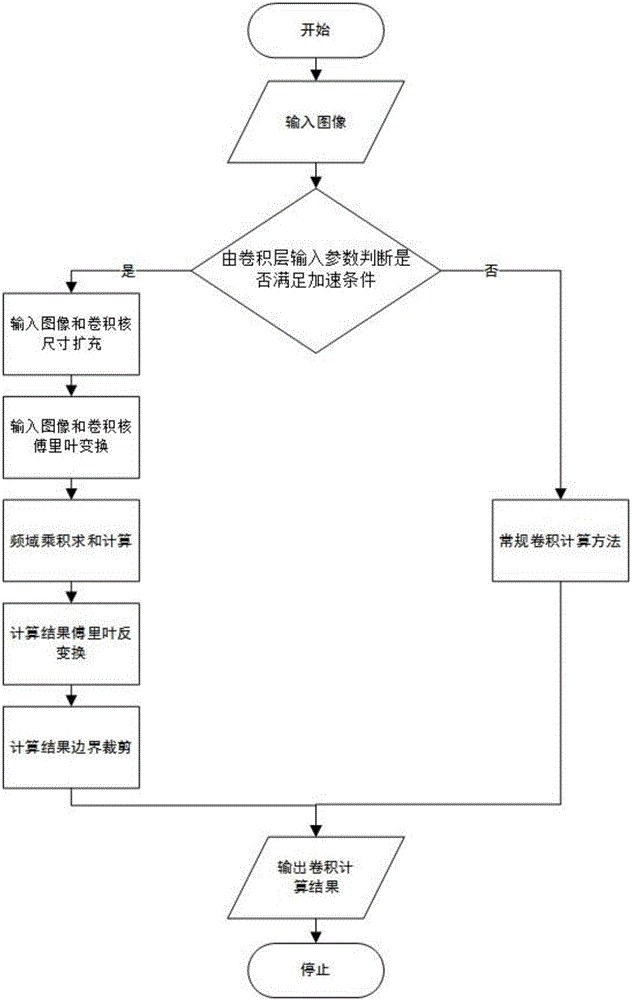

Convolution theorem based face verification accelerating method

Active | CN106709441A | No loss of precision | Will not affect accuracy | Character and pattern recognition | Convolution theorem | Frequency domain

The invention relates to a convolution theorem based face verification accelerating method, and belongs to the field of face verification in computer vision. For a face verification system that adopts CNN (Convolutional Neural Network) technology and runs on a GPU parallel computation platform, a convolution theorem method replaces the conventional convolution computation for every convolution layer that meets an acceleration condition. The convolution theorem shows that convolution in the space domain is equivalent to a product in the frequency domain. By transforming time-consuming convolution computation into product computation in the frequency domain, the computation amount can be significantly reduced and the computation speed of a CNN is increased. To address the heavy computational burden and slow operation speed of face verification systems, the method noticeably improves the operation speed of the face verification system and its capacity for processing mass data.

Owner:深圳市小枫科技有限公司

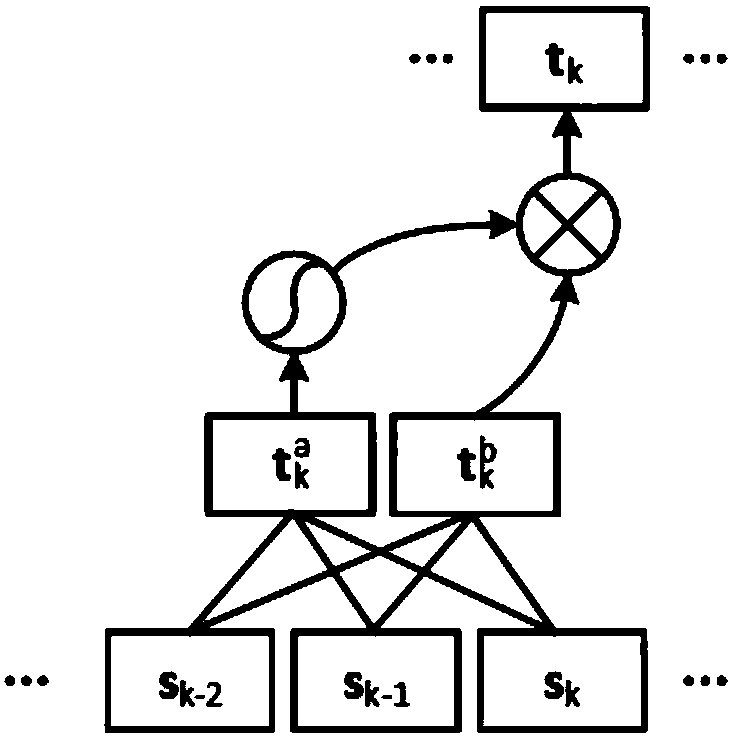

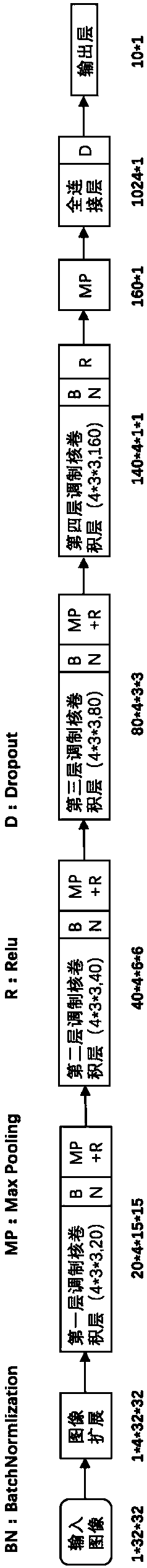

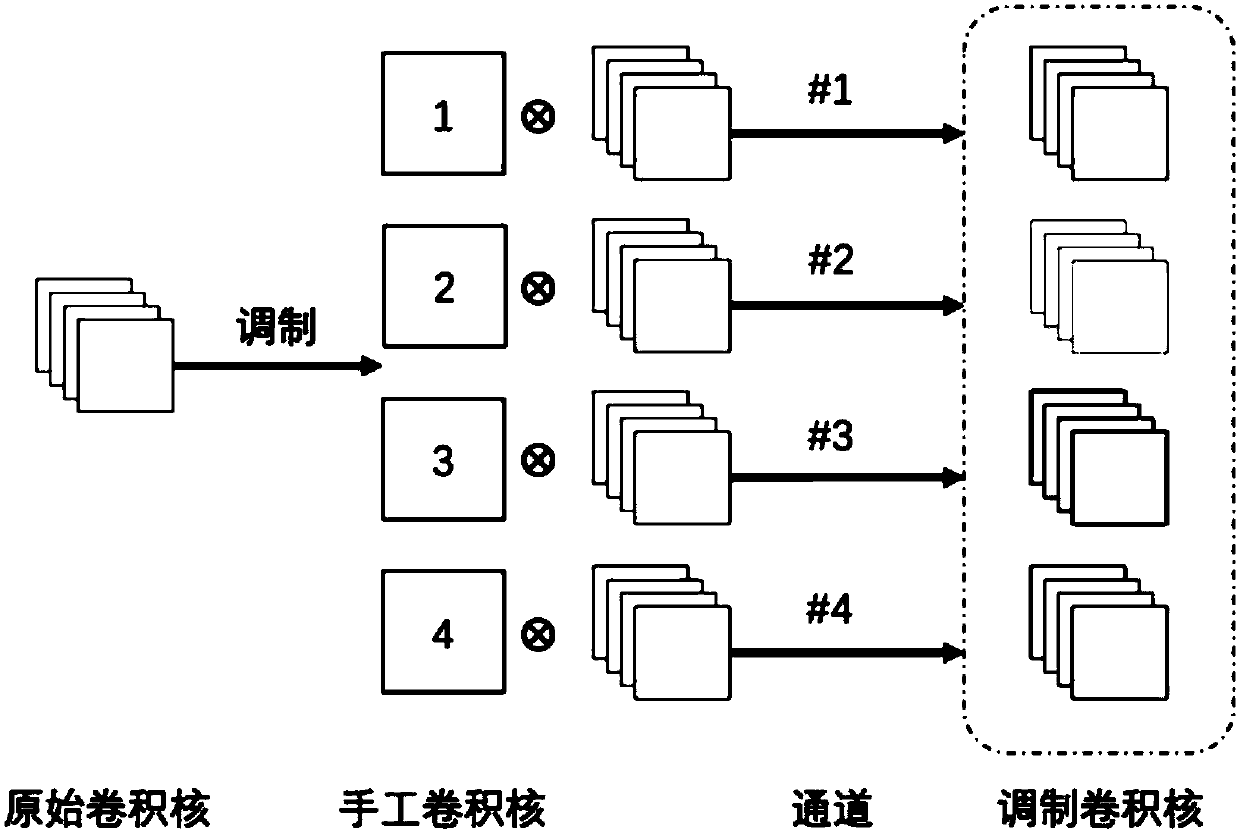

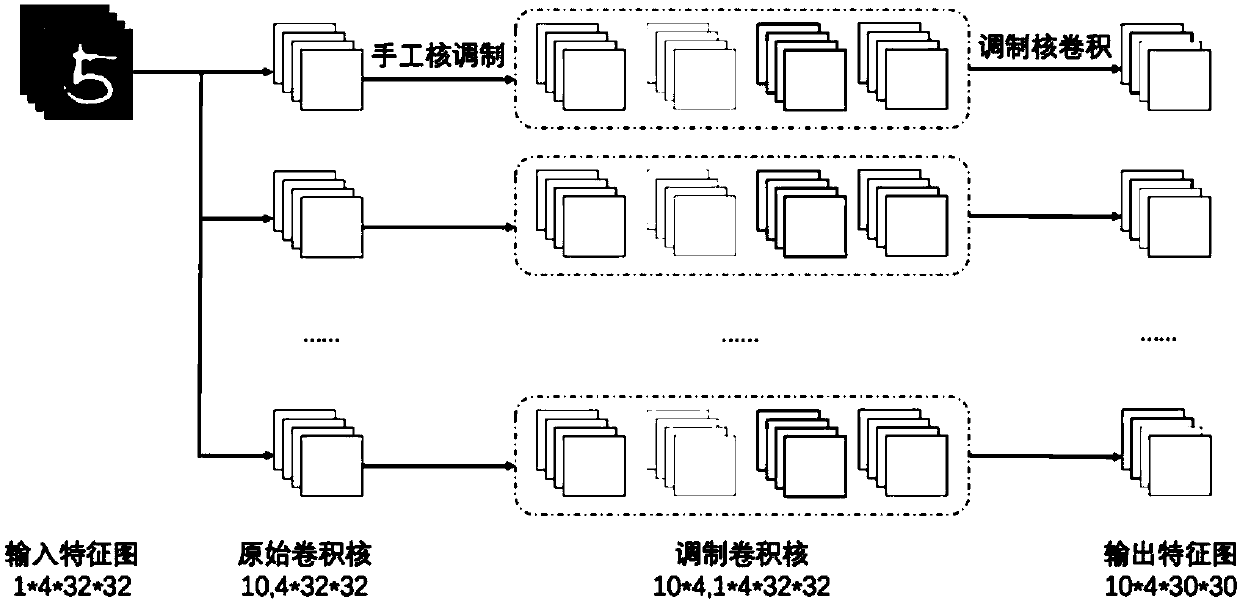

Convolutional neural network construction method

Active | CN107633296A | Reduce performance | Feature optimization or enhancement | Neural architectures | Neural learning methods | Network structure | Convolution theorem

The present invention discloses a convolutional neural network construction method, and belongs to the neural network technology field. According to the present invention, during forward propagation of the convolutional neural network, each original convolution kernel is modulated by taking the dot product of a manually adjusted kernel with the original convolution kernel, and the modulated convolution kernel replaces the original convolution kernel in the forward pass, thereby realizing a feature enhancement effect. According to the method of the present invention, the neural network is optimized greatly, so that the total number of kernels that the network must learn is reduced. In addition, generating sub-convolution kernels by modulation to replace the redundantly learned kernels of the original network structure also achieves model compression.

Owner:NO 54 INST OF CHINA ELECTRONICS SCI & TECH GRP

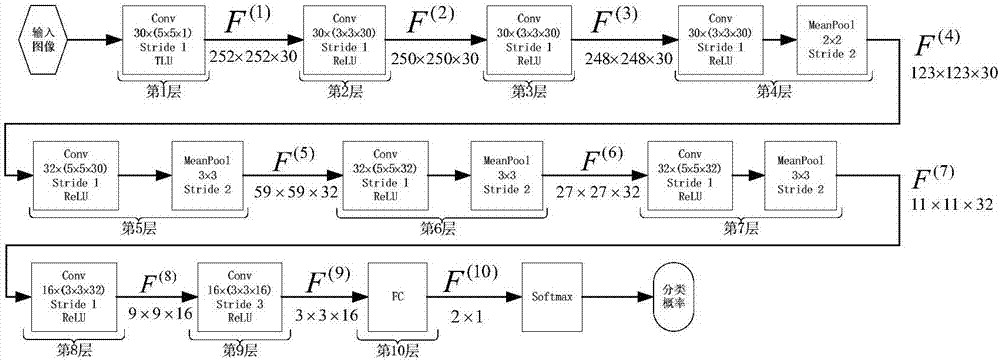

Convolutional neural network-based digital image steganalysis method

Inactive | CN107330845A | Improve performance | Improve accuracy | Image data processing details | Neural architectures | Steganalysis | Activation function

The present invention designs a convolutional neural network-based digital image steganalysis method. The method comprises the following steps: S1, constructing a convolutional neural network formed by connecting multiple convolutional layers in series; S2, for the first convolutional layer, initializing the convolution kernel with a high-pass filter and adopting a truncated linear unit as the activation function of the layer; S3, inputting a digital image into the convolutional neural network, which outputs whether or not the digital image has undergone steganography.

Owner:SUN YAT SEN UNIV

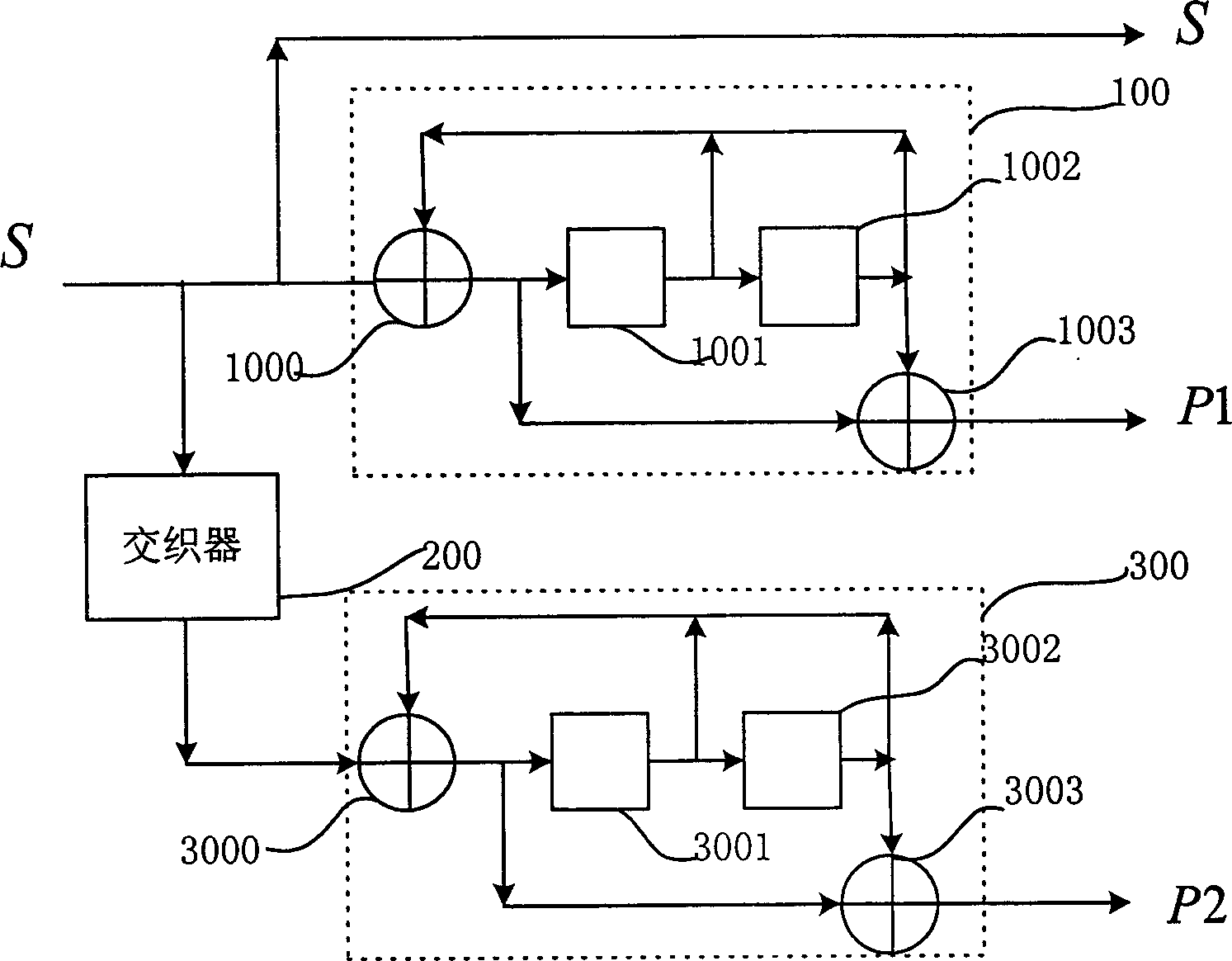

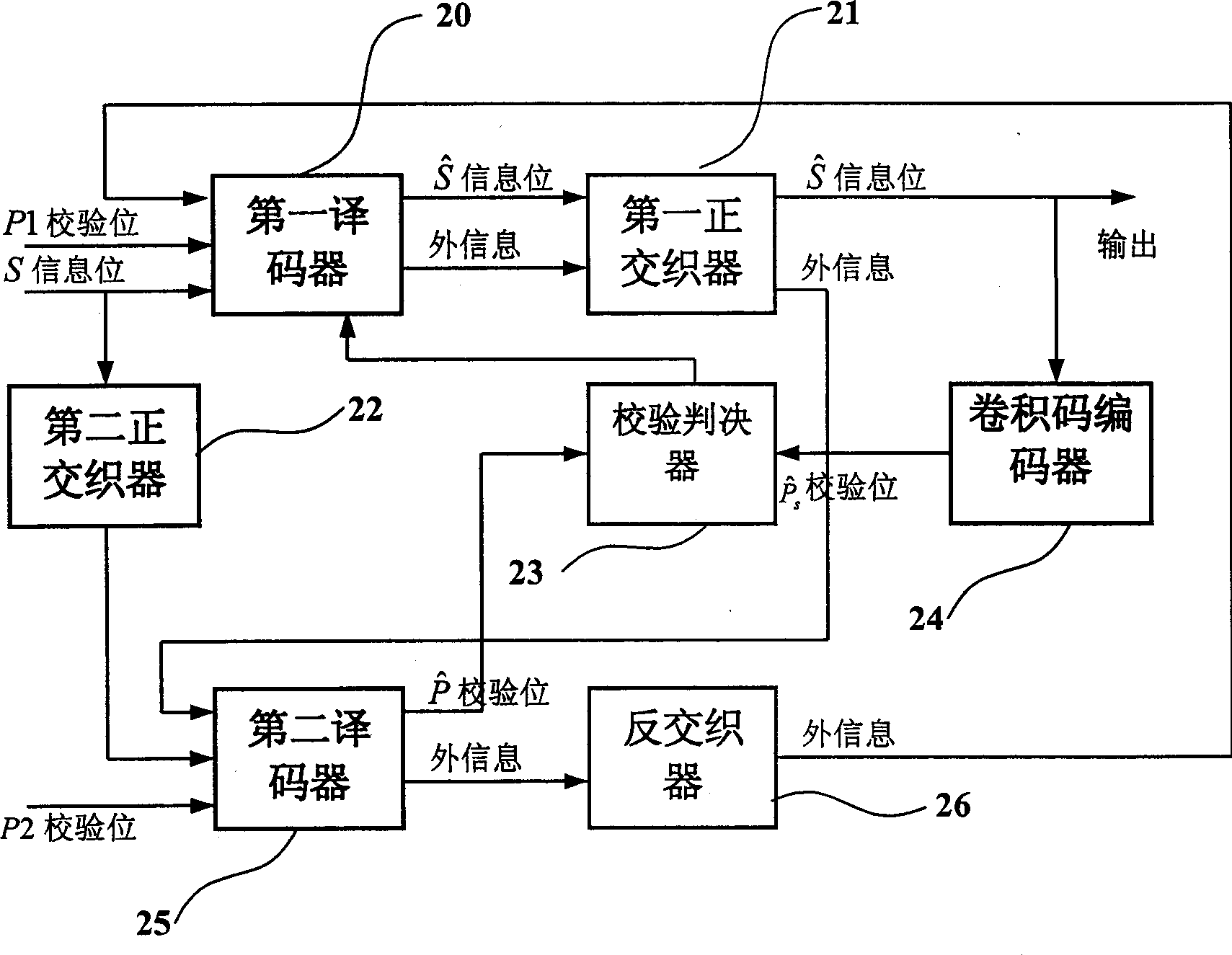

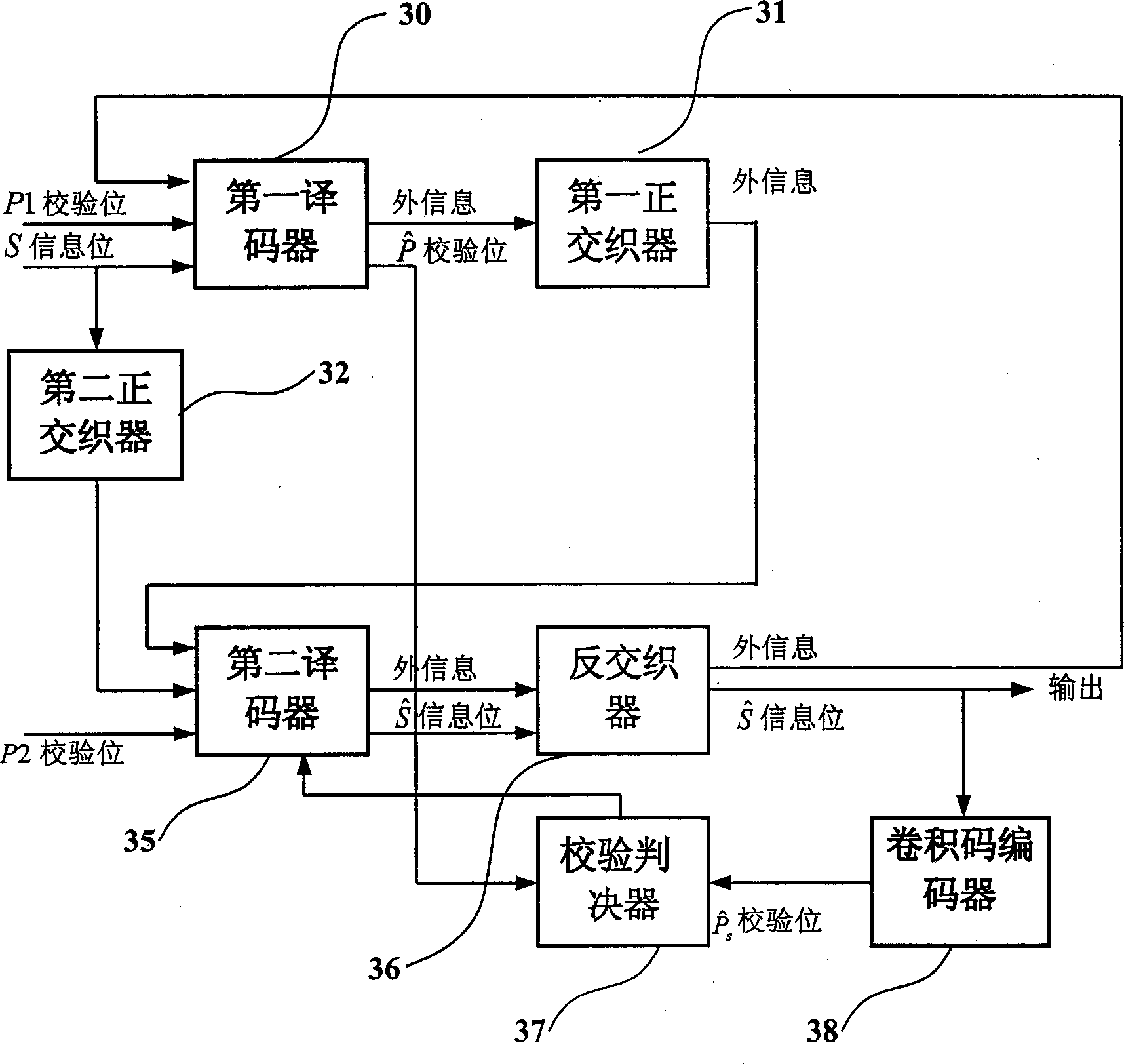

Iterative encode method and system

Inactive | CN1780152A | Reduce the number of iterations | Reduce latency | Error correction/detection by combining multiple code structures | Theoretical computer science | Convolutional code

An iterative decoding system and method for high-speed decoding of Turbo codes, characterized in that during the iterative decoding procedure the hard decision values for the information bits and check bits are output and checked against the constraint relation of the convolution code, and the iteration is stopped if the constraint is met.

Owner:PANASONIC CORP

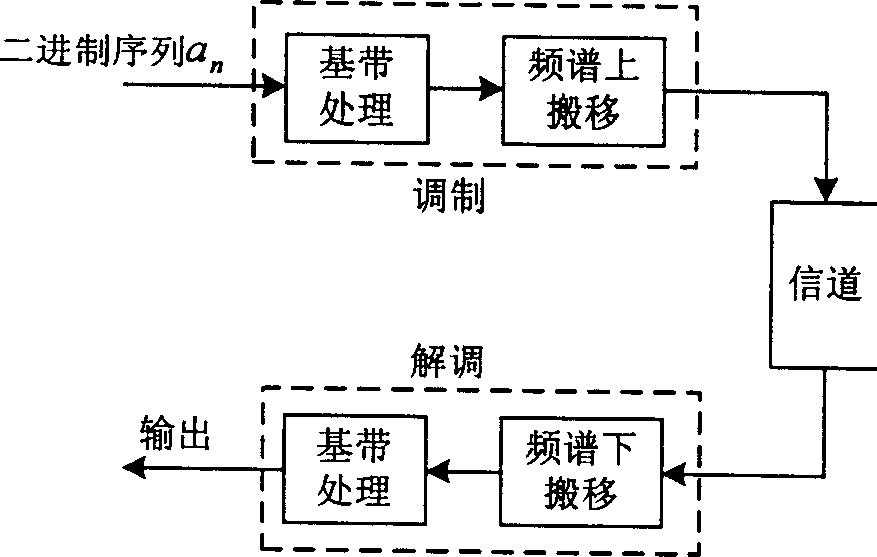

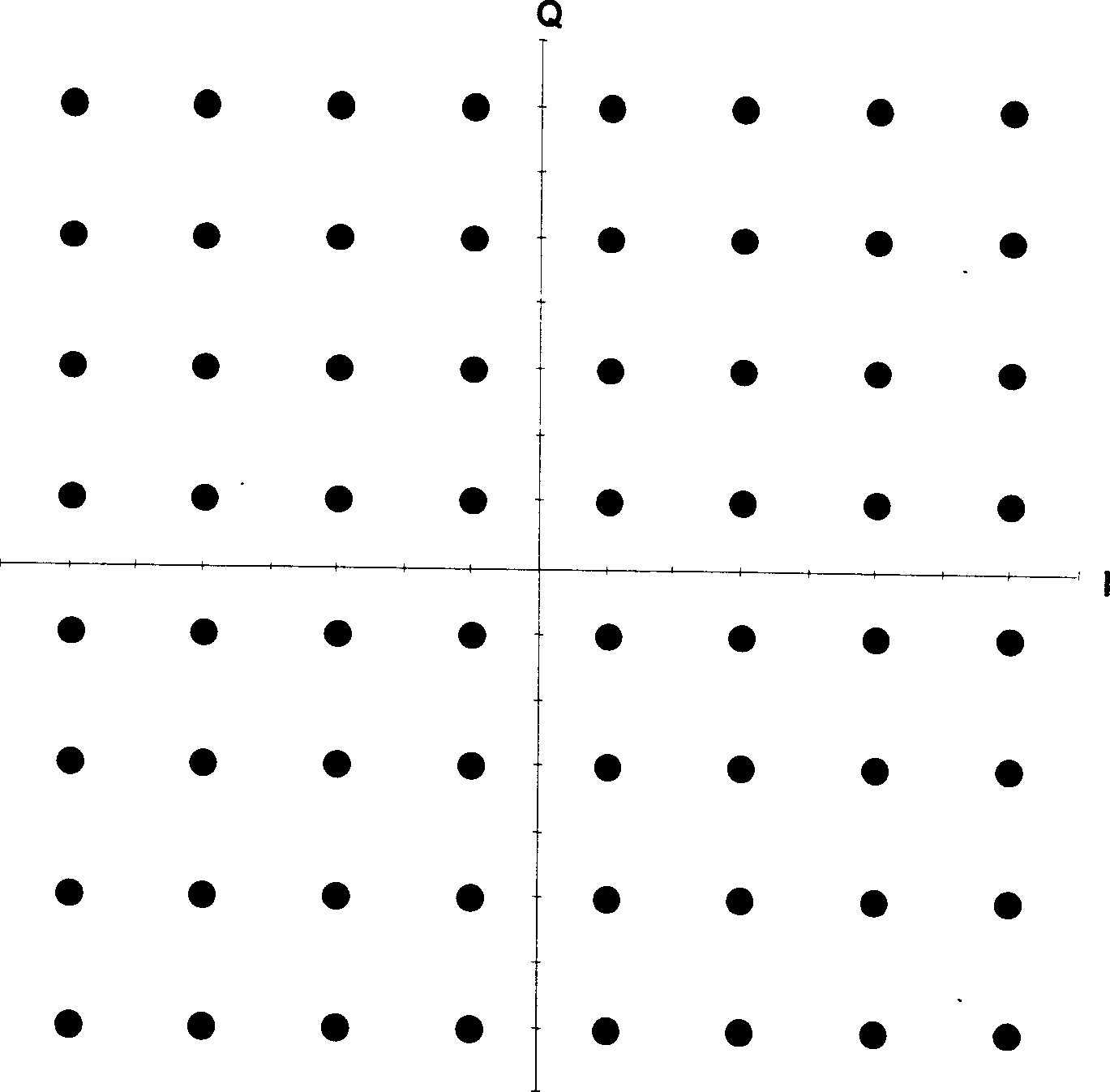

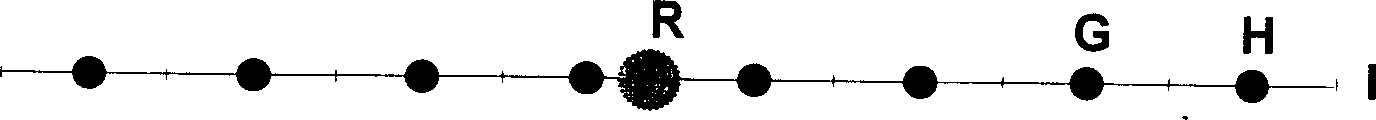

Minimum Euclidean distance maximized constellation mapping cascade error-correction coding method

Inactive | CN1430353A | Improve error correction performance | Error correction/detection using convolutional codes | Orthogonal multiplex | Theoretical computer science | Convolutional code

A cascaded error-correcting coding method with constellation mapping that maximizes the minimum Euclidean distance includes encoding the input digital signals with a systematic convolution code, applying random bit interleaving to the data, and performing mQAM modulation on the data. The symbol constellation mapping conforms to maximization of the minimum Euclidean distance between related codes. The symbol constellation mapping code, acting as the inner code of the cascaded error-correcting code, and the systematic convolution code together constitute a cascaded systematic convolution code. The method has high error-correcting performance with fewer iterative operations.

Owner:TSINGHUA UNIV

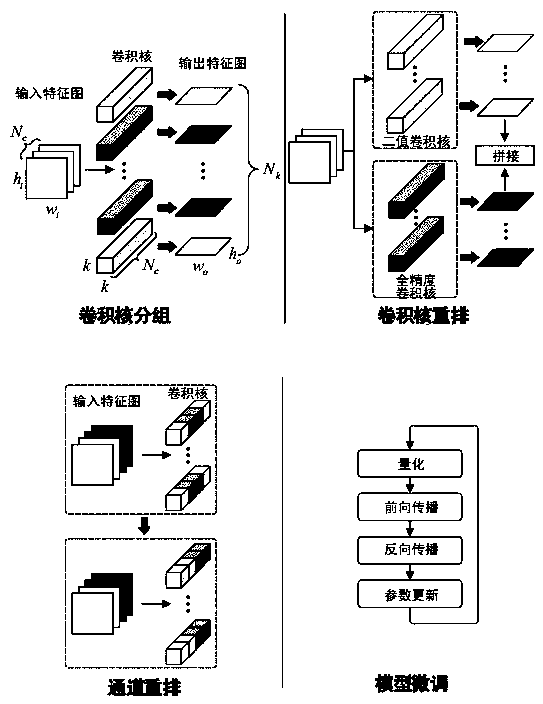

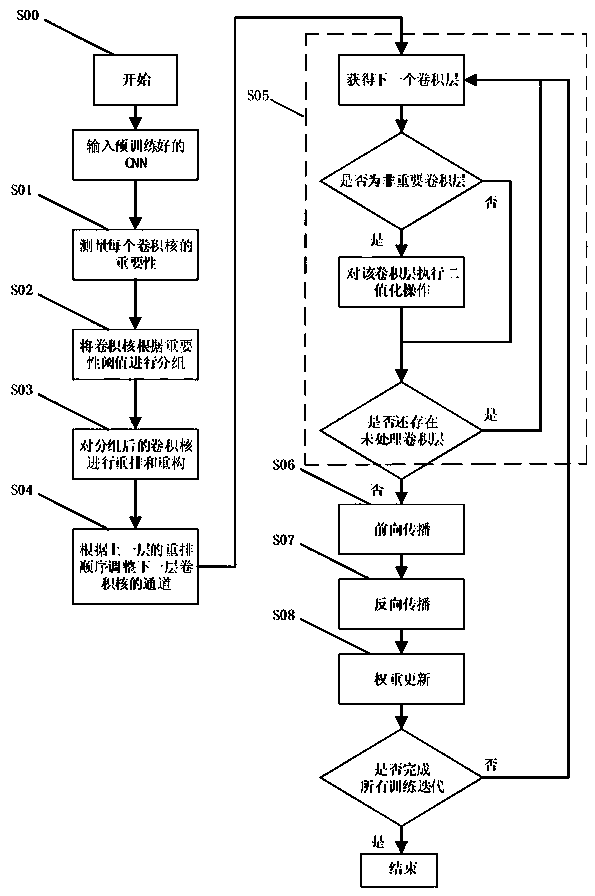

A partial binary convolution method suitable for embedded devices

The invention discloses a partial binary convolution method suitable for embedded devices, which is a model compression method for deep learning. The method comprises the following steps: 1. for each convolution layer of a given CNN, measuring the importance of each convolution kernel according to the statistics of each output feature map, and dividing the convolution kernels of each layer into two groups; 2. rearranging the two groups of convolution kernels in storage so that kernels of the same group are stored adjacently, and recording the rearrangement order to generate a new convolution layer; 3. changing the channel order of the convolution kernels of the next convolution layer according to the rearrangement order of step 2; 4. fine-tuning the CNN produced by the above steps, applying binary quantization to the part of each convolution layer classified as non-important, and gradually recovering the accuracy of the whole network through iterative quantization and training. The invention preserves the accuracy of the given CNN on large data sets and reduces the calculation and storage overhead of the convolutional neural network on embedded devices.

Owner:CHONGQING UNIV

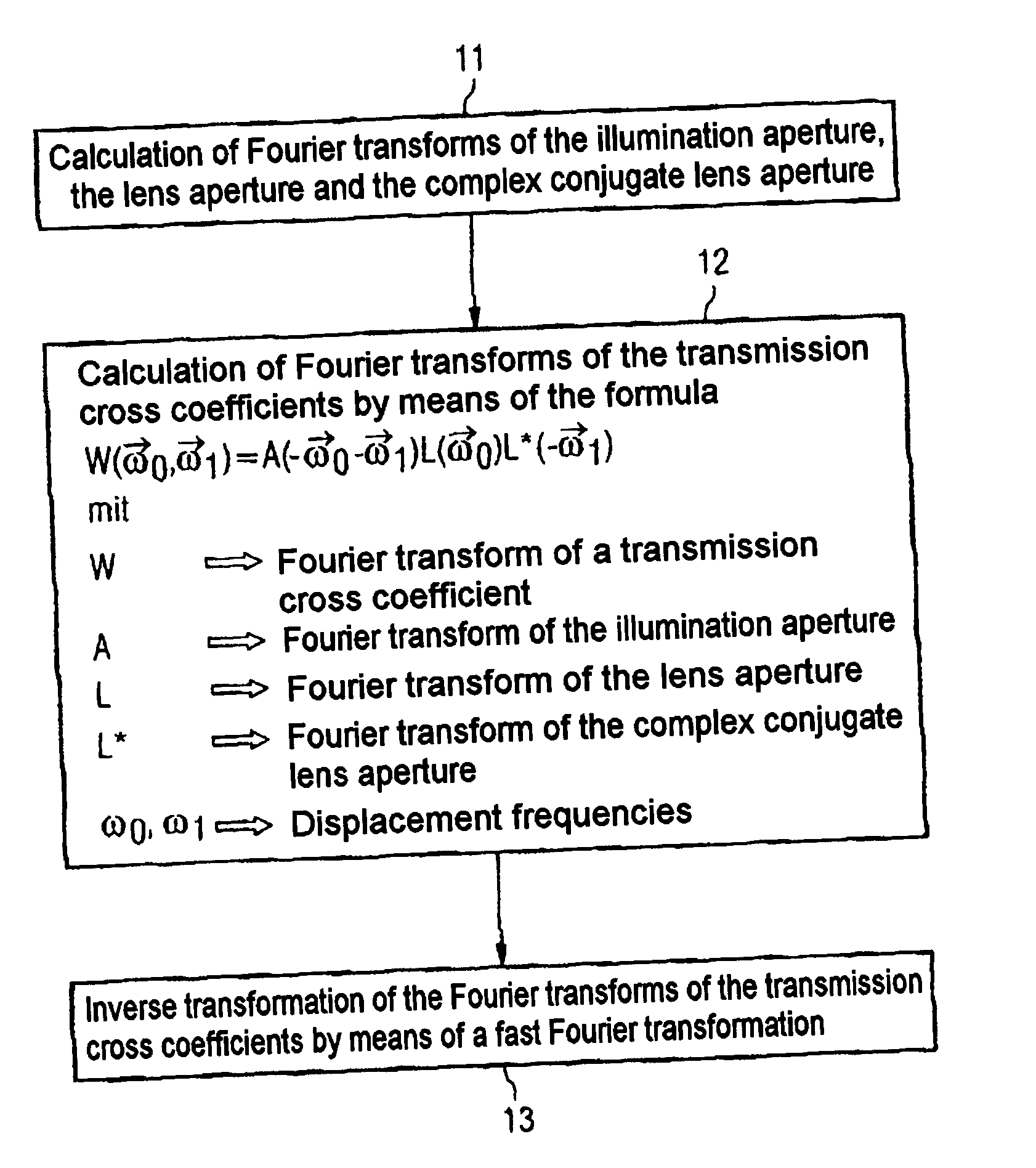

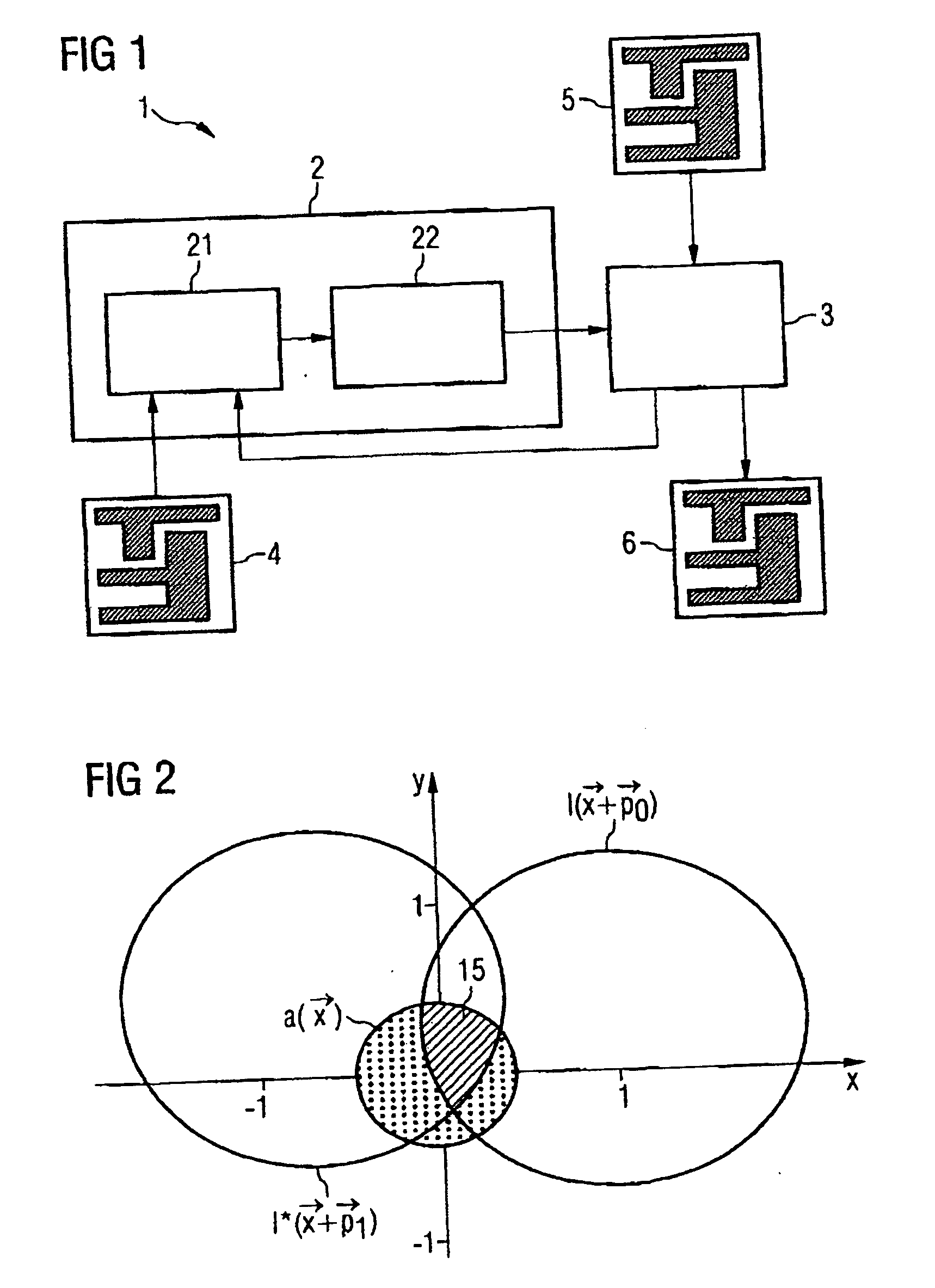

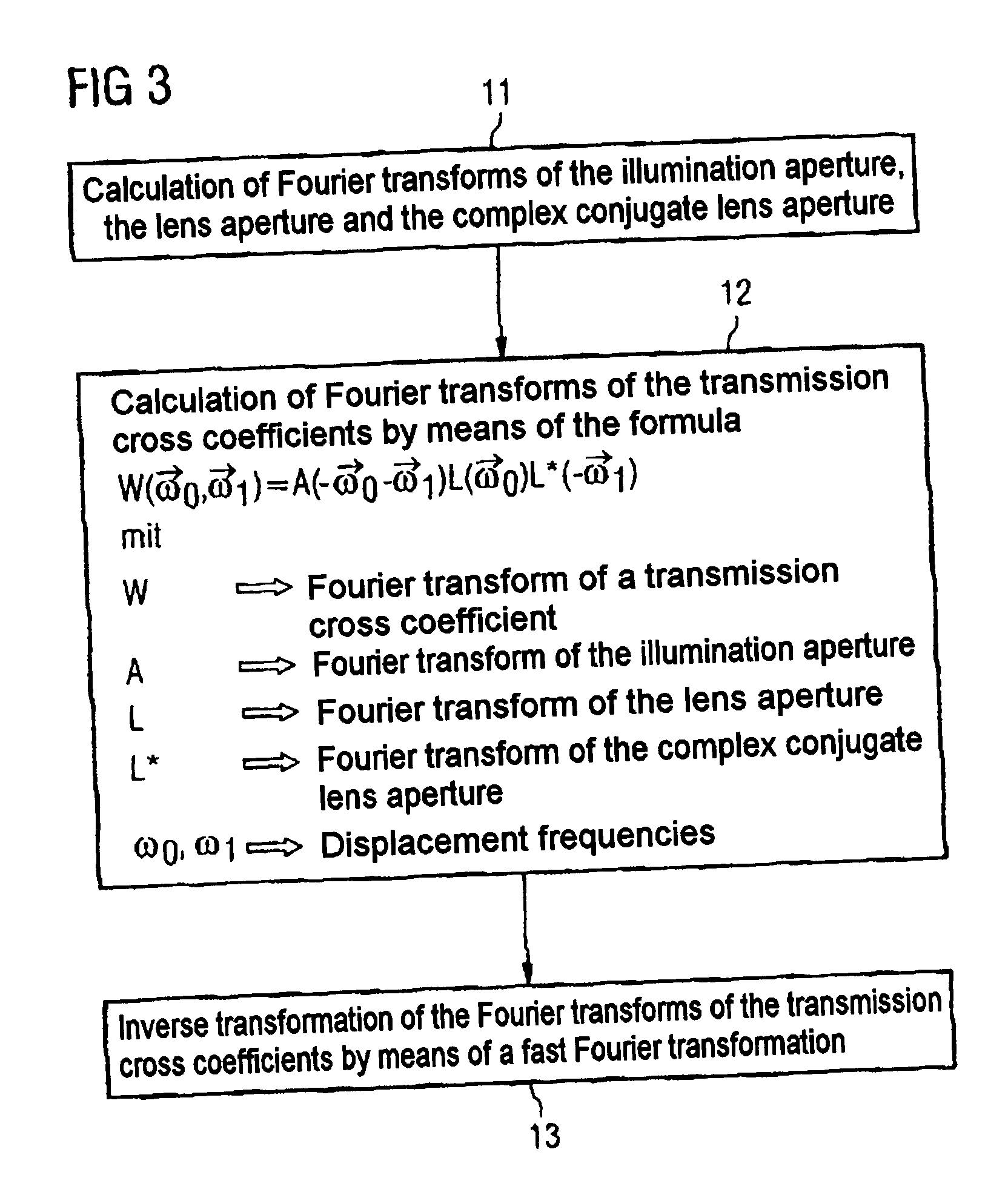

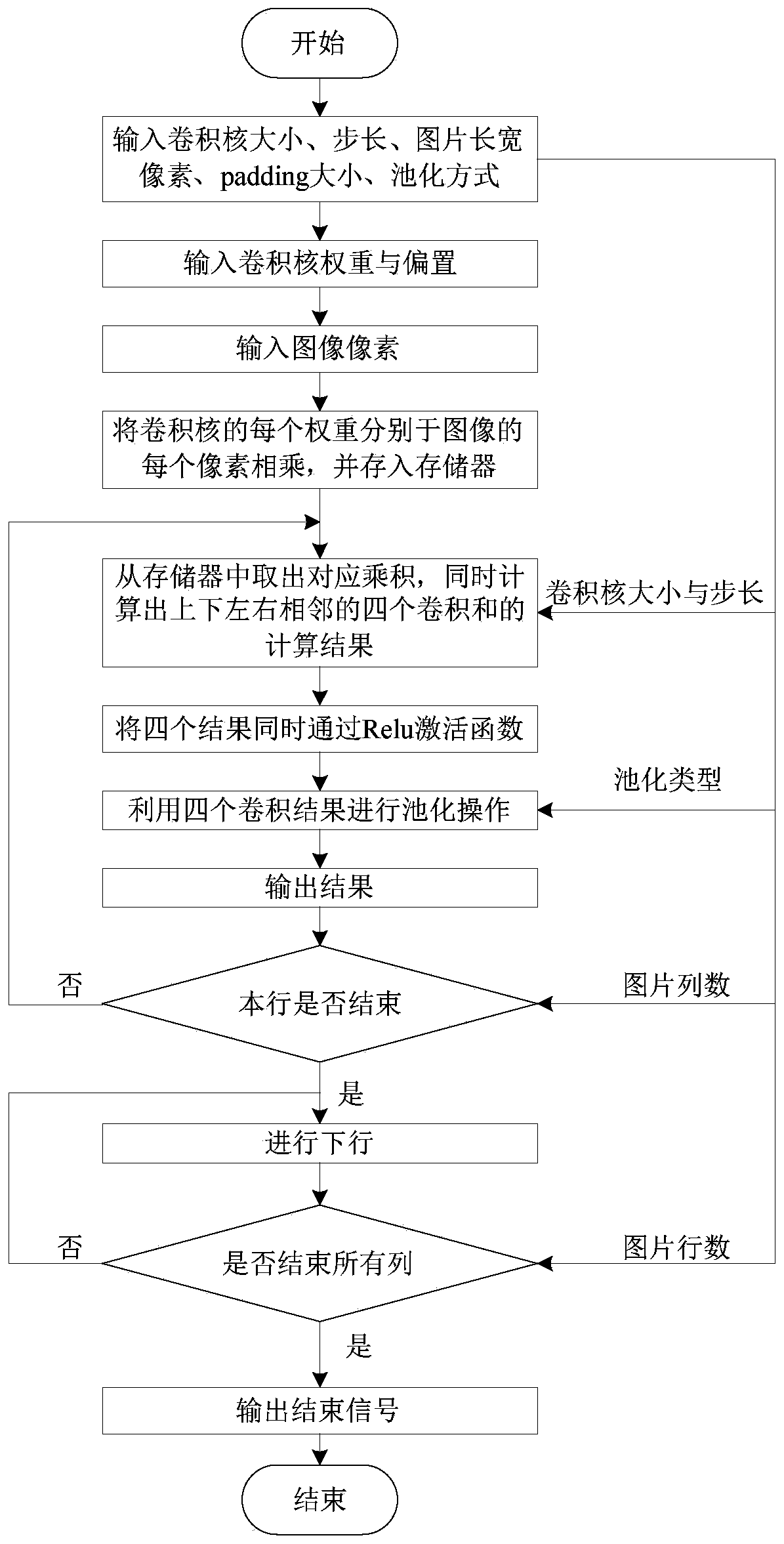

Method for determining a matrix of transmission cross coefficients in an optical proximity correction of mask layouts

Inactive | US20060009957A1 | Fast and efficient establishment | Provide quickly | Photomechanical apparatus | Digital computer details | Fast Fourier transform | Grating

The present invention relates to a method for determining a matrix of transmission cross coefficients w for an optical modeling in an optical proximity correction of mask layouts. In a first step, there is calculation of Fourier transforms of an illumination aperture, a lens aperture and a complex conjugate lens aperture, which are present in the form of image matrices with a predetermined raster. A second step involves calculating Fourier transforms for the transmission cross coefficients w from the Fourier transforms by means of a convolution theorem in order to obtain the matrix of the Fourier transforms of the transmission cross coefficients w. A further step involves inverse-transforming the Fourier transforms of the transmission cross coefficients w by means of a fast Fourier transformation in order to obtain the matrix of the transmission cross coefficients w for the optical modeling in the optical proximity correction of mask layouts.

Owner:POLARIS INNOVATIONS

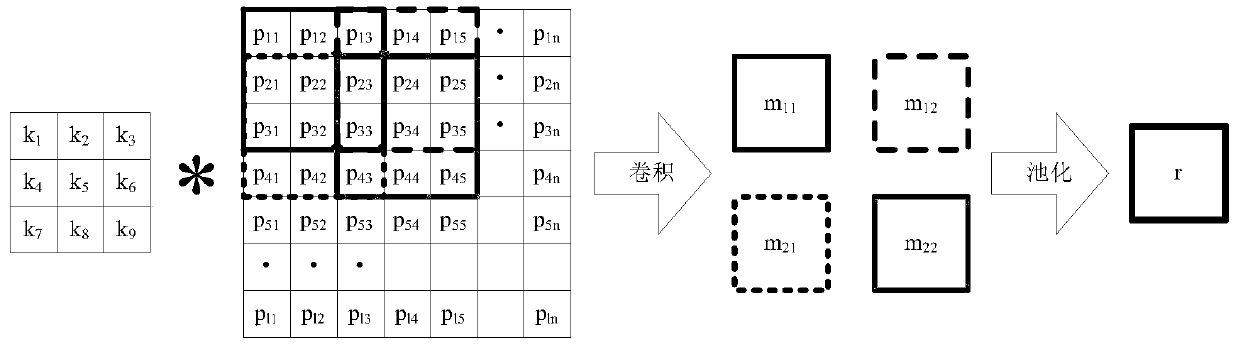

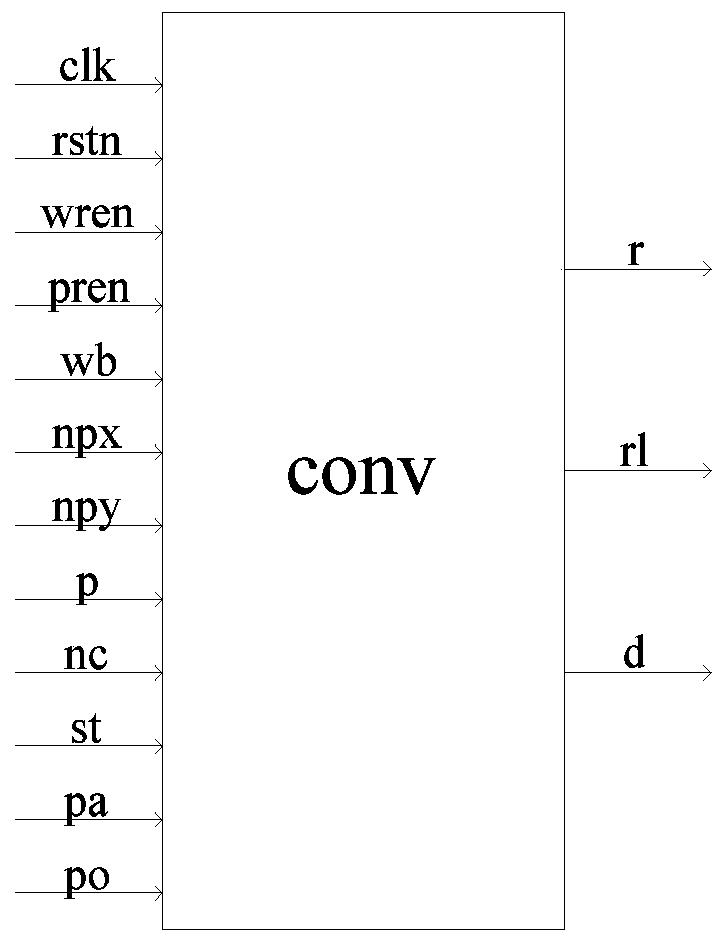

Universal convolution-pooling synchronous processing convolution kernel system

Active | CN109978161A | Size boost | Speed up design methods | Neural architectures | Physical realisation | Algorithm | Convolution theorem

The invention discloses a universal convolution-pooling synchronous processing convolution kernel system, and belongs to the technical field of convolutional neural network acceleration in machine learning. Existing machine learning methods are realized in software, which brings problems such as limited computing power and higher cost. According to the present invention, machine learning is realized through a hardware design, the convolutional neural network is accelerated by processing convolution and pooling synchronously, and machine learning can be carried out quickly and efficiently with low power consumption while the accuracy remains unchanged. In existing convolutional neural networks, a convolution kernel generally has a fixed size and cannot adapt to various design requirements, whereas the convolution kernel of the invention can change parameters such as its size and step length and can therefore adapt to design requirements under various conditions.

Owner:JILIN UNIV

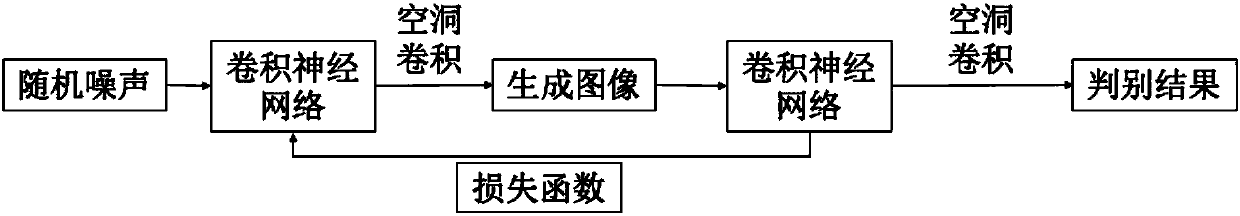

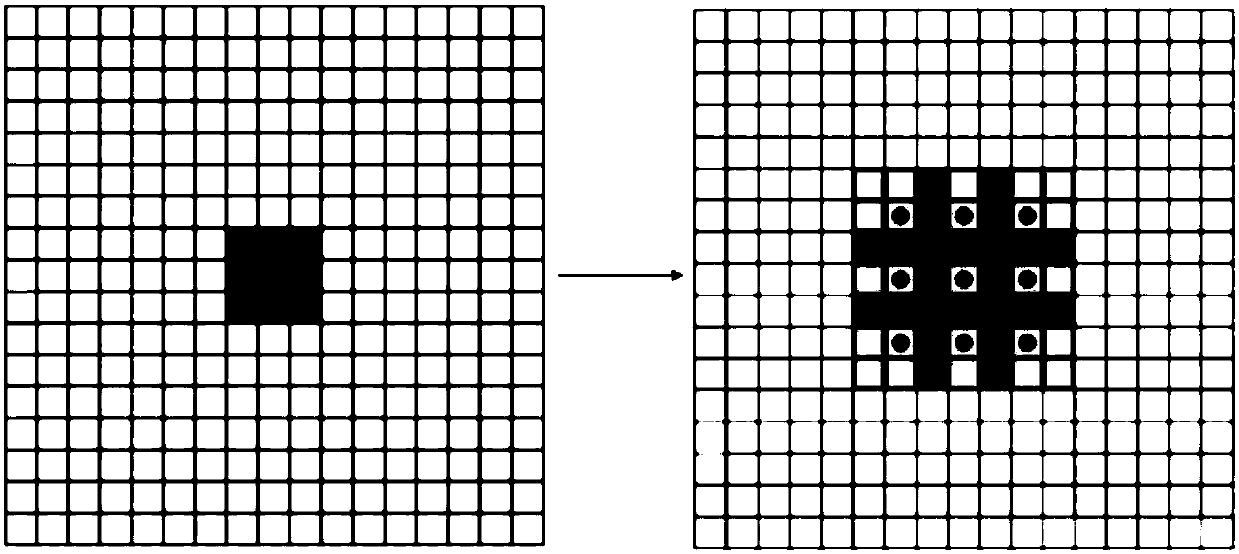

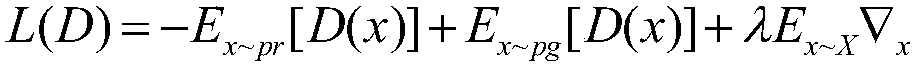

Dilated-convolution method based on deep convolutional adversarial network model

Inactive | CN107871142A | Fast network training | Stable Network Training | Character and pattern recognition | Neural architectures | Generative adversarial network | Convolution theorem

The invention discloses a dilated-convolution method based on a deep convolutional adversarial network model, and belongs to the fields of deep learning and neural networks. The dilated-convolution method includes the following steps: S1, constructing an original generative adversarial network (GAN) model; S2, constructing deep convolutional neural networks to use the same as a generator and a discriminator; S3, initializing random noises, and inputting the same into the generator; S4, utilizing dilated convolution to carry out convolution operations on images in the neural network; and S5, inputting a loss function value, which is obtained by the dilated-convolution operations, into the generator for subsequent training. According to the dilated-convolution method based on the deep convolutional adversarial network model constructed in the invention, a convolution manner of the discriminator and the generator after receiving a picture is changed, the discriminator and the generator are enabled to learn features of the image in a larger range, and thus robustness of the entire network training model can be improved.
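The effect of dilation on the range each output sees can be shown in one dimension. The following is a minimal sketch, assuming the cross-correlation form of convolution that deep learning frameworks typically use and no padding; `dilated_conv1d` is an illustrative name, not taken from the patent.

```python
def dilated_conv1d(signal, kernel, dilation=1):
    """1-D dilated convolution (cross-correlation form, 'valid' region only).

    Kernel taps are spaced `dilation` samples apart, so a kernel with k taps
    covers (k - 1) * dilation + 1 input samples without adding weights.
    """
    span = (len(kernel) - 1) * dilation + 1
    return [sum(kernel[j] * signal[i + j * dilation] for j in range(len(kernel)))
            for i in range(len(signal) - span + 1)]

signal = [1, 2, 3, 4, 5, 6, 7]
kernel = [1, 0, -1]

dense = dilated_conv1d(signal, kernel, dilation=1)   # each output spans 3 samples
wide = dilated_conv1d(signal, kernel, dilation=2)    # each output spans 5 samples
```

With the same three weights, `dilation=2` compares samples four positions apart instead of two, which is the sense in which the discriminator and generator can learn features over a larger range.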

Owner:SOUTH CHINA UNIV OF TECH

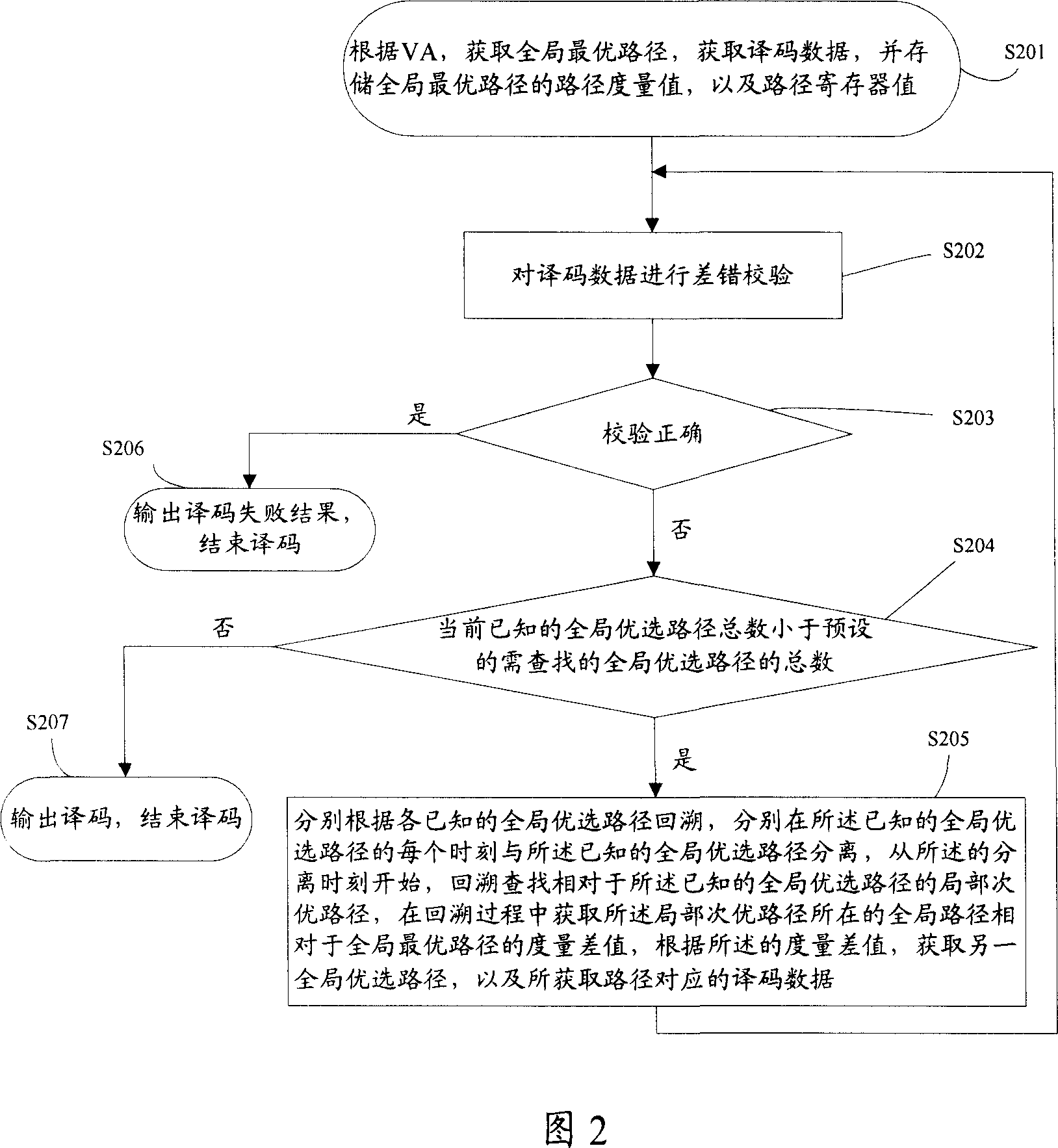

A coding method of convolution code

Active | CN1968024A | Simple calculation | Save storage space | Error correction/detection using convolutional codes | Other decoding techniques | Theoretical computer science | Convolutional code

The invention relates to a convolutional code decoding method, which comprises: obtaining a globally optimal path with the Viterbi algorithm and obtaining the decoded data; checking the decoded data; if the check passes, outputting the decoded data and ending; otherwise, searching for further globally optimal paths and their decoded data as follows: based on the known globally optimal paths, branching away from the selected path at each time step of each globally optimal path, searching for local suboptimal paths, obtaining the difference between the corresponding globally optimal path and the suboptimal path, and obtaining another globally optimal path and its decoded data; checking that decoded data and, if the check fails, continuing to search for further globally optimal paths.

Owner:HUAWEI TECH CO LTD

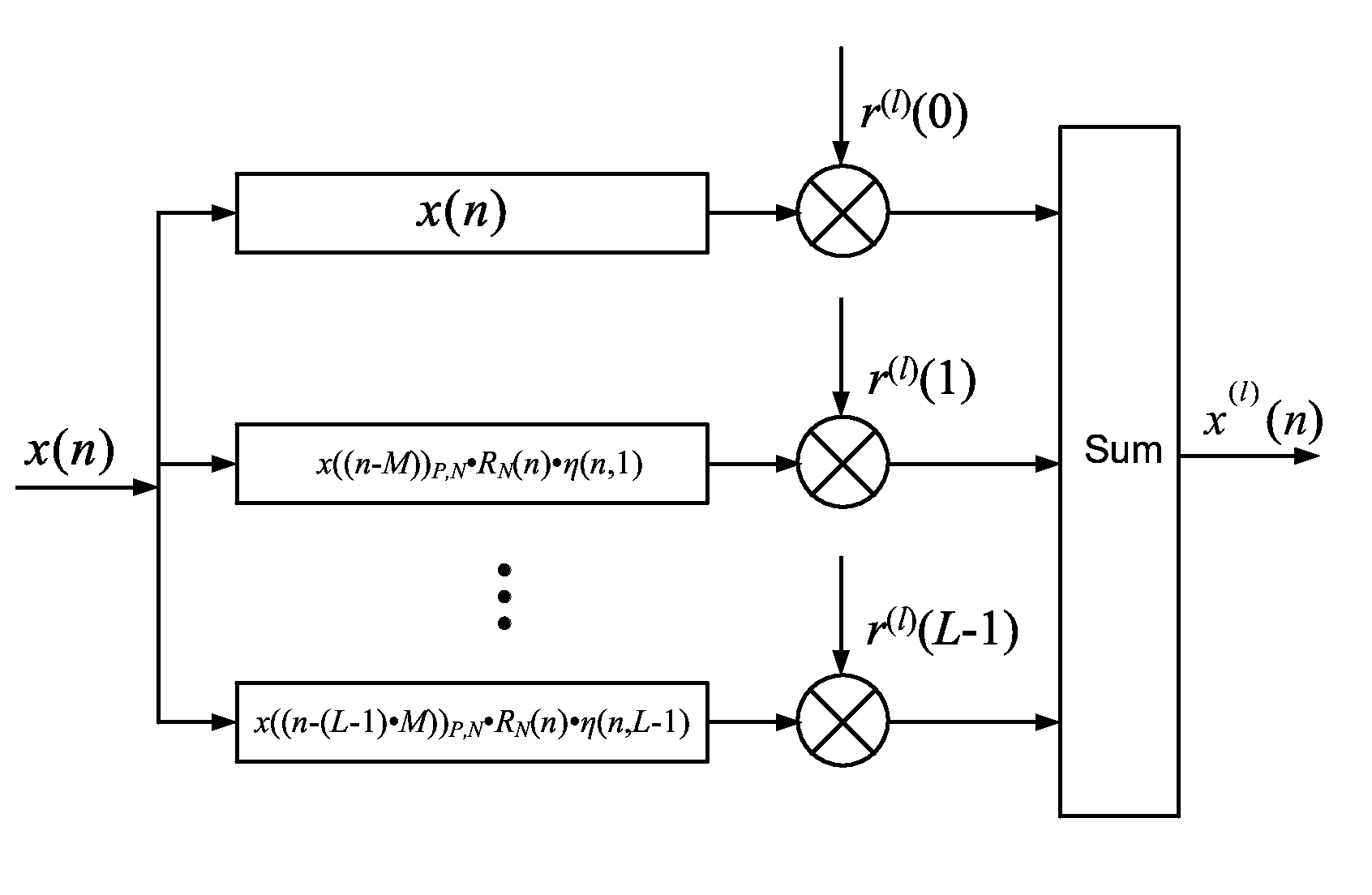

Low complexity PAPR suppression method in FRFT-OFDM system

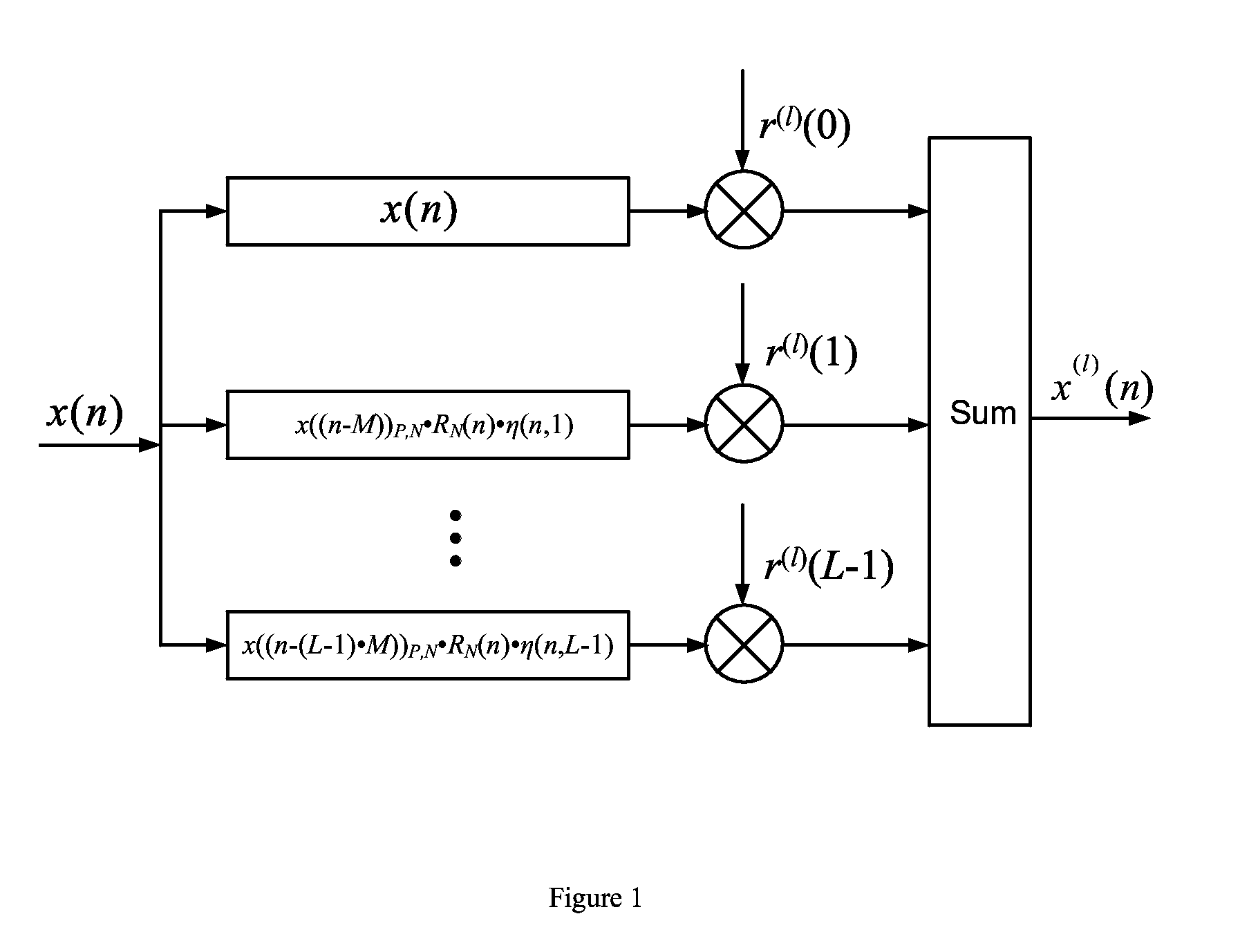

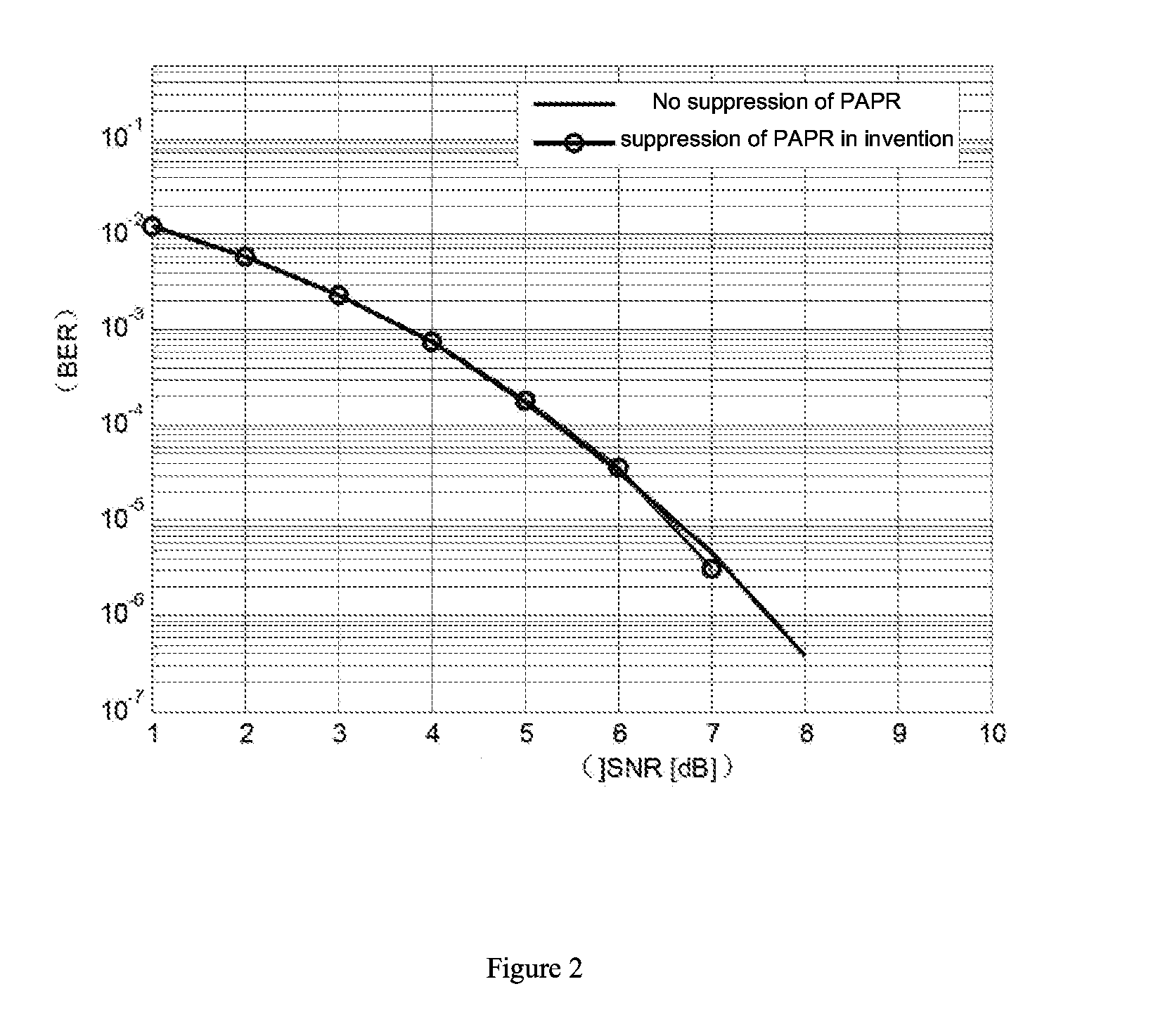

Inactive | US20160043888A1 | Reduce complexity | High PAPR | Secret communication | Multi-frequency code systems | Computation complexity | Computer science

The invention relates to a low-complexity method for suppressing PAPR in an FRFT-OFDM system. It belongs to the field of broadband wireless digital communications technology and can be used to reduce the PAPR of an FRFT-OFDM system. The method is based on a fractional random phase sequence and the fractional circular convolution theorem, and it effectively reduces the PAPR of the system while keeping the system reliable. Its advantages are simple system implementation and low computational complexity. When the number of candidate signals is the same, the PAPR performance of the proposed method was found to be almost the same as that of SLM and better than that of PTS. More importantly, the proposed method has lower computational complexity than both SLM and PTS.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

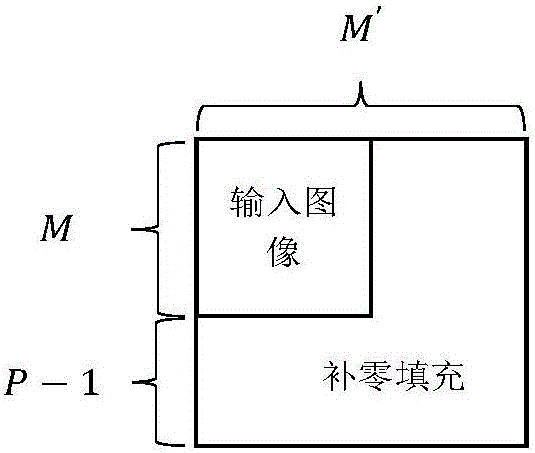

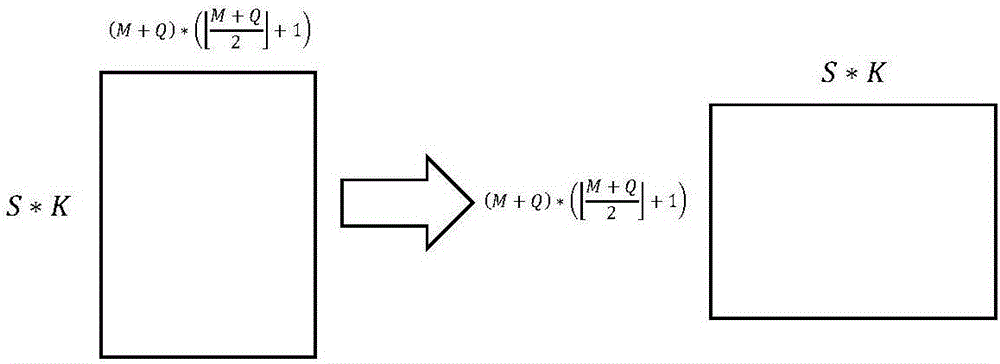

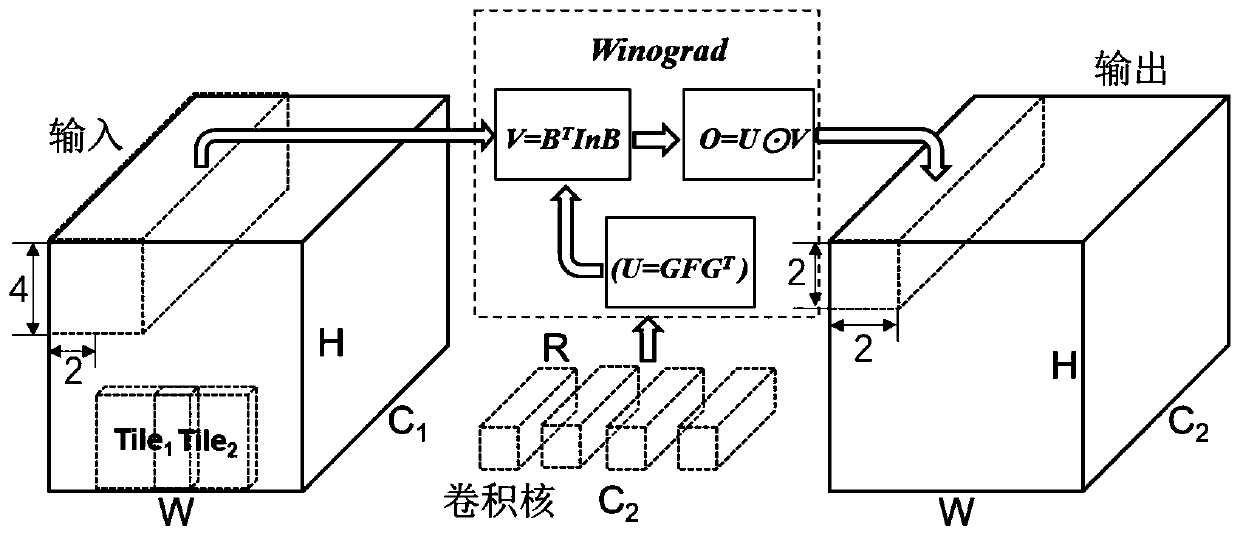

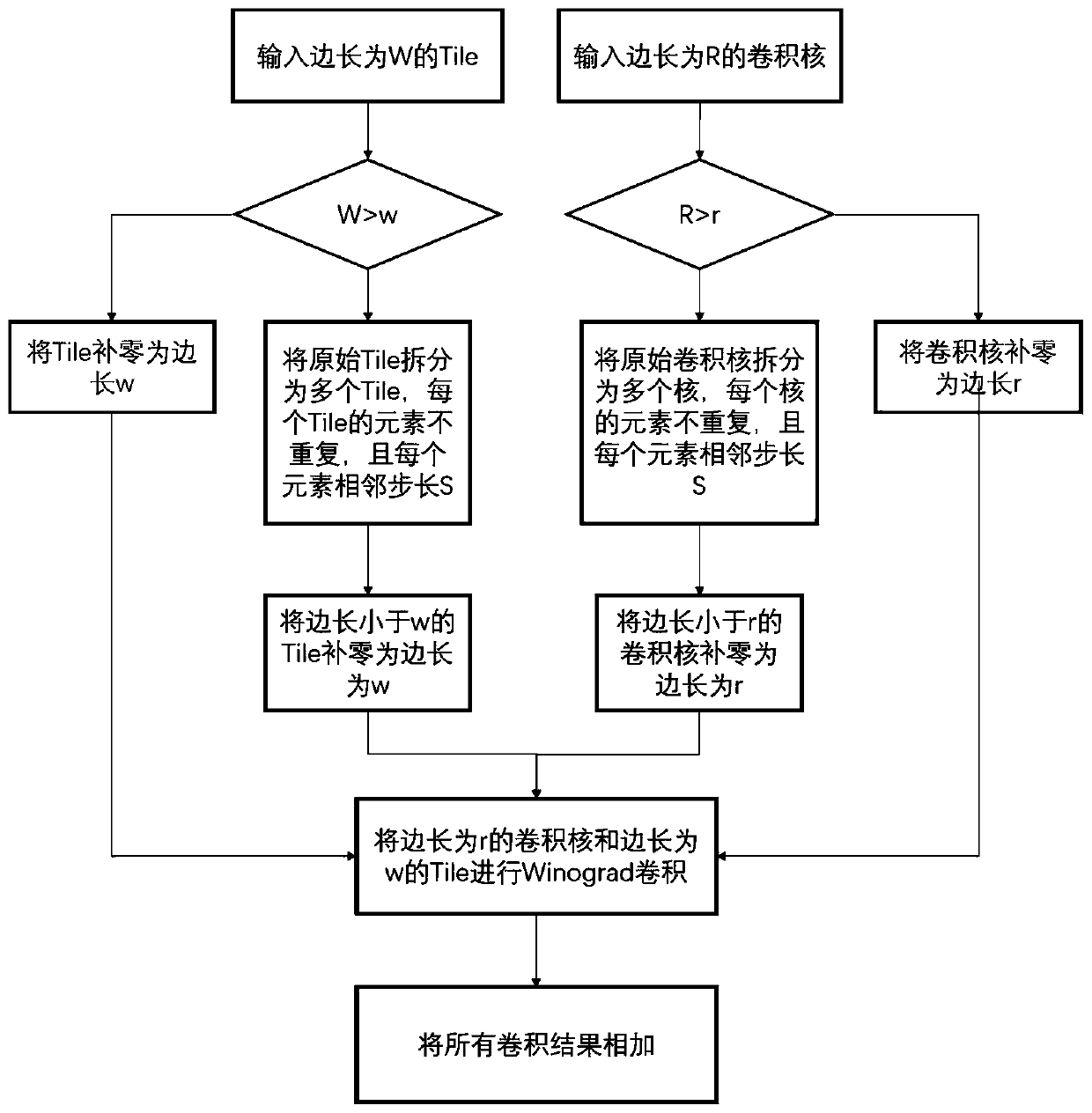

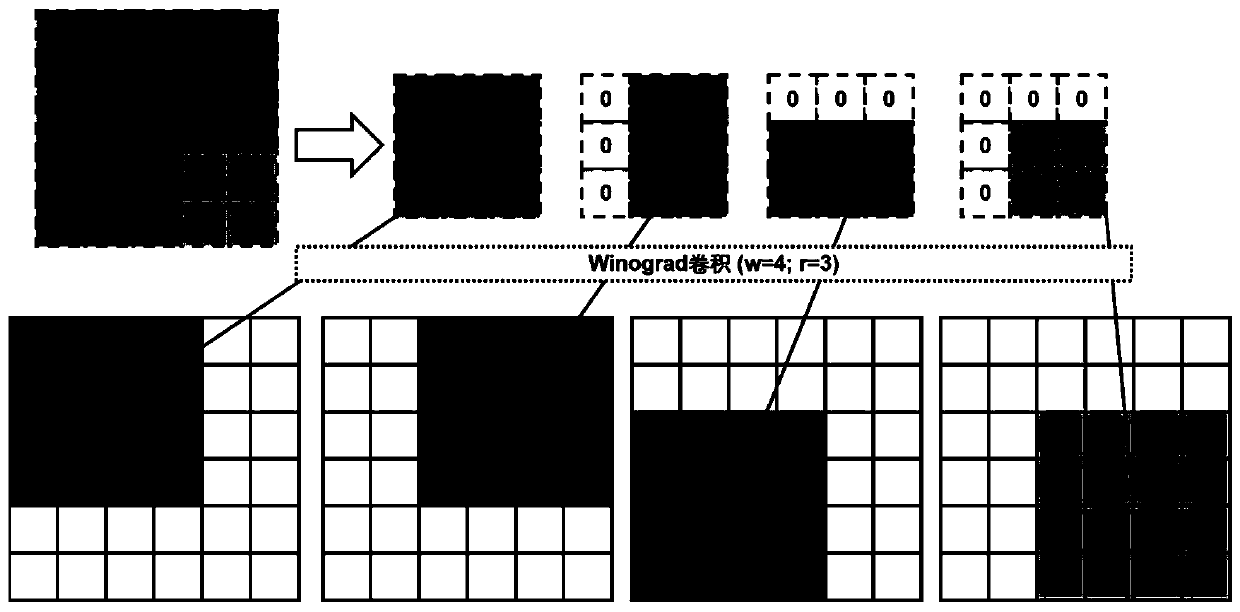

Winograd convolution splitting method for convolutional neural network accelerator

Active | CN110533164A | Improve performance | Increase power consumption | Neural architectures | Energy efficient computing | Theoretical computer science | Convolution theorem

The invention discloses a Winograd convolution splitting method for a convolutional neural network accelerator. The method comprises the following steps: 1) reading an input and a convolution kernel of any size from a cache of the convolutional neural network accelerator; 2) judging, according to the convolution kernel size and the input size, whether convolution splitting is required, and if so, carrying out the next step; 3) splitting the convolution kernel according to the size and the step length of the convolution kernel, and splitting the input according to the size and the step length of the input; 4) combining and zero-filling the split elements according to the size of the convolution kernel, and combining and zero-filling the split elements according to the input size; 5) performing Winograd convolution on each pair of split input and convolution kernel; 6) accumulating the Winograd convolution results of each input and convolution kernel; and 7) storing the accumulation results in a cache of the convolutional neural network accelerator, so that the accelerator can support convolutions of various shapes with a single Winograd acceleration unit.

Owner:XI AN JIAOTONG UNIV
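The split, zero-fill, and accumulate steps in the abstract above can be sketched in one dimension. The sketch assumes a single Winograd F(2,3) unit (two outputs of a 3-tap filter from four inputs, using four multiplications), splits a longer kernel into zero-padded 3-tap pieces, and sums the partial results. It is a simplified illustration with stride 1, not the accelerator's actual data path, and the function names are hypothetical.

```python
def winograd_f23(d, g):
    """Two outputs of a 3-tap correlation over 4 inputs, using 4 multiplies."""
    m1 = (d[0] - d[2]) * g[0]
    m2 = (d[1] + d[2]) * (g[0] + g[1] + g[2]) / 2
    m3 = (d[2] - d[1]) * (g[0] - g[1] + g[2]) / 2
    m4 = (d[1] - d[3]) * g[2]
    return [m1 + m2 + m3, m2 - m3 - m4]

def direct_correlate(x, g):
    """Reference result: valid-mode sliding dot product."""
    n_out = len(x) - len(g) + 1
    return [sum(x[i + k] * g[k] for k in range(len(g))) for i in range(n_out)]

def split_winograd_correlate(x, g):
    """Same result, computed only with the fixed-size F(2,3) unit."""
    x, g = list(x), list(g)
    n_out = len(x) - len(g) + 1
    out = [0.0] * n_out
    for offset in range(0, len(g), 3):
        piece = g[offset:offset + 3]
        piece += [0] * (3 - len(piece))          # zero-fill the last piece
        for i in range(0, n_out, 2):
            tile = x[offset + i:offset + i + 4]
            tile += [0] * (4 - len(tile))        # zero-fill past the input edge
            y = winograd_f23(tile, piece)
            for t in range(2):
                if i + t < n_out:
                    out[i + t] += y[t]           # accumulate partial results
    return out
```

Calling `split_winograd_correlate(x, g)` with a 5-tap kernel should agree with `direct_correlate(x, g)` up to floating-point rounding, even though only 3-tap Winograd tiles are ever evaluated.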

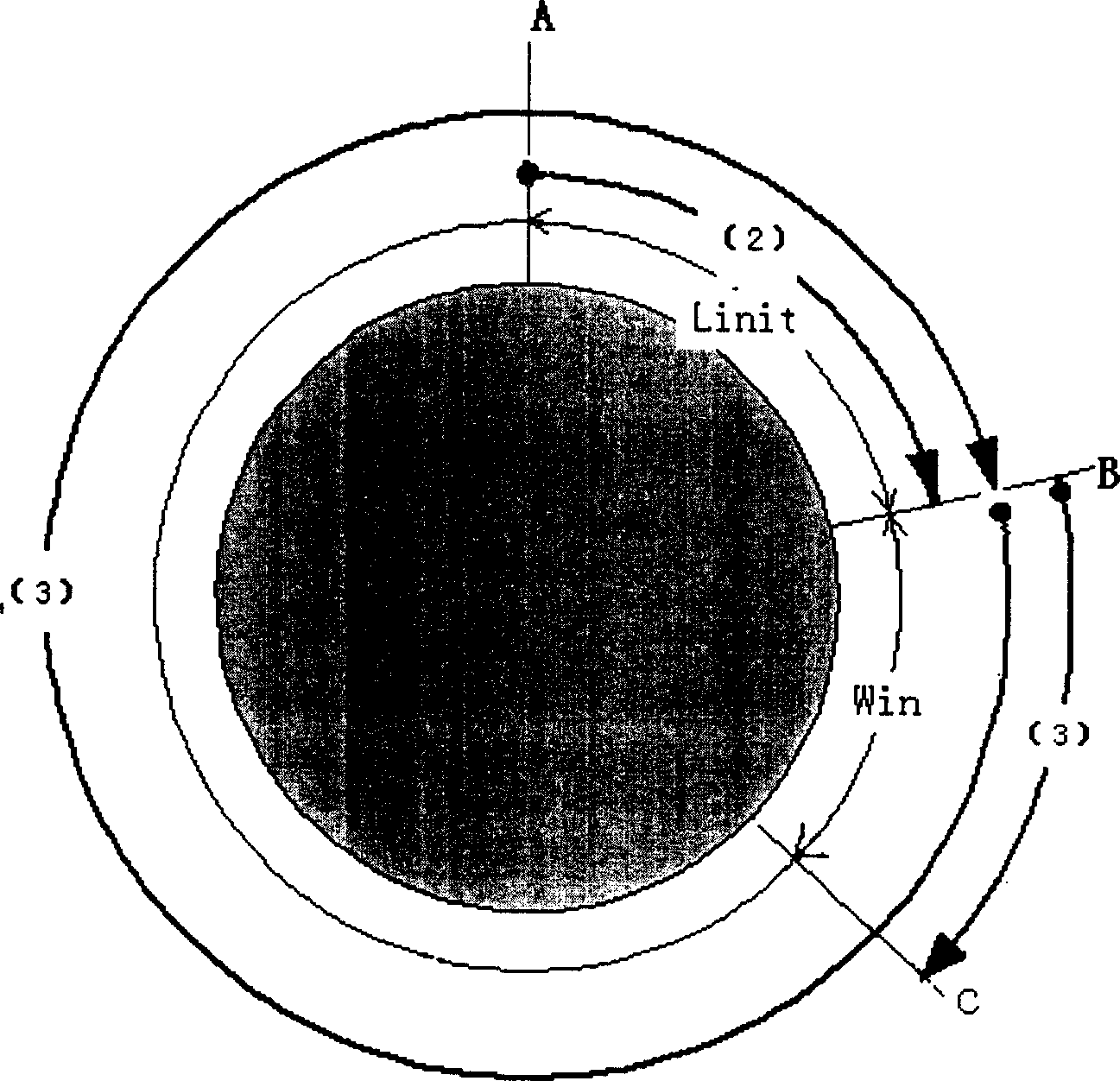

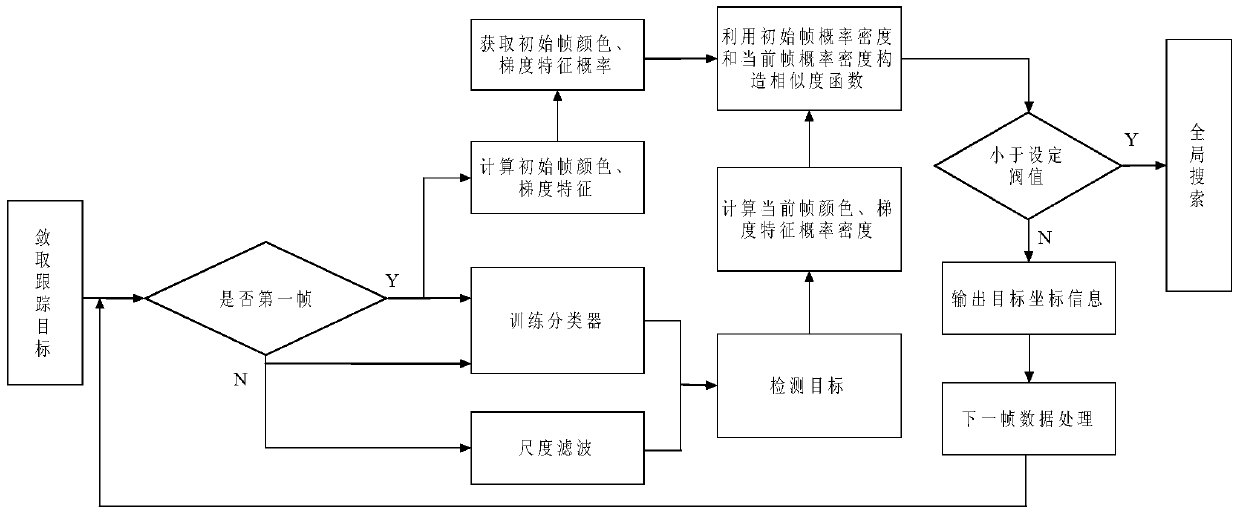

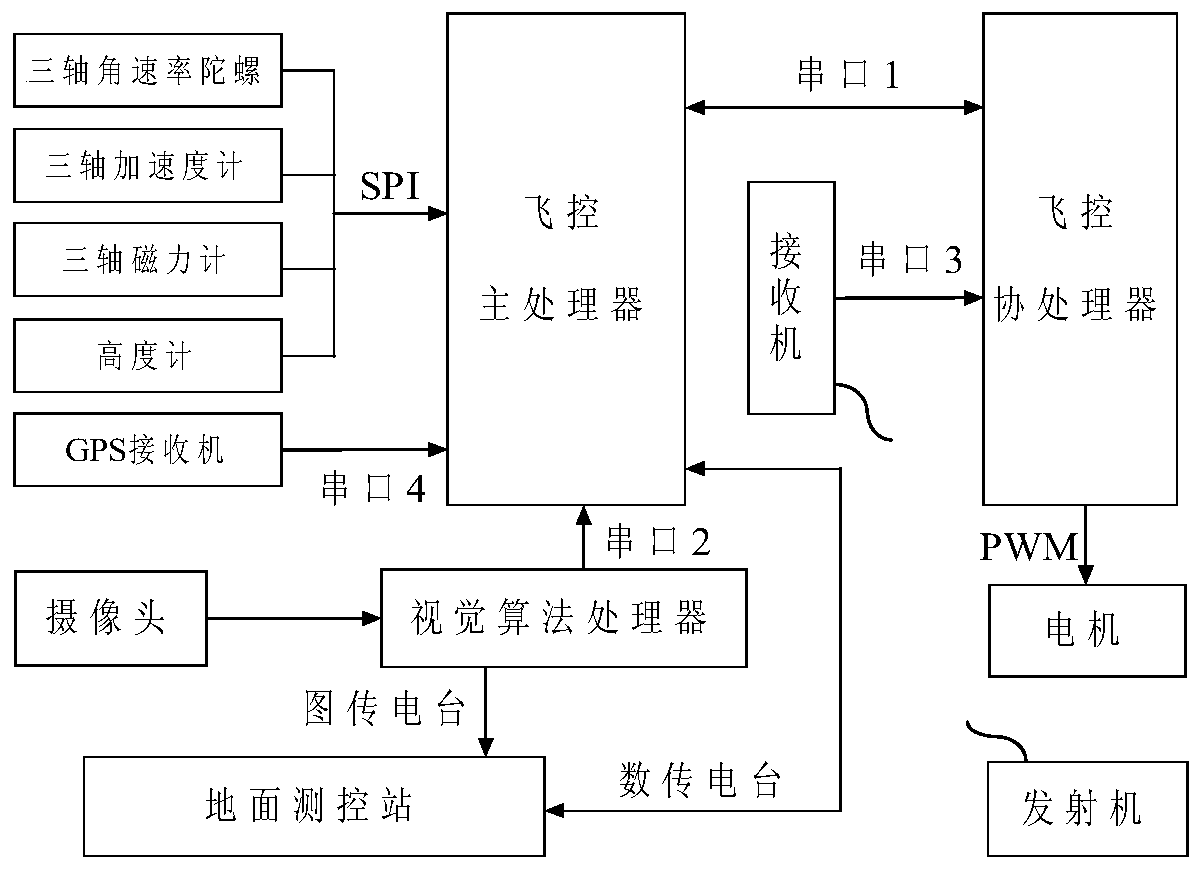

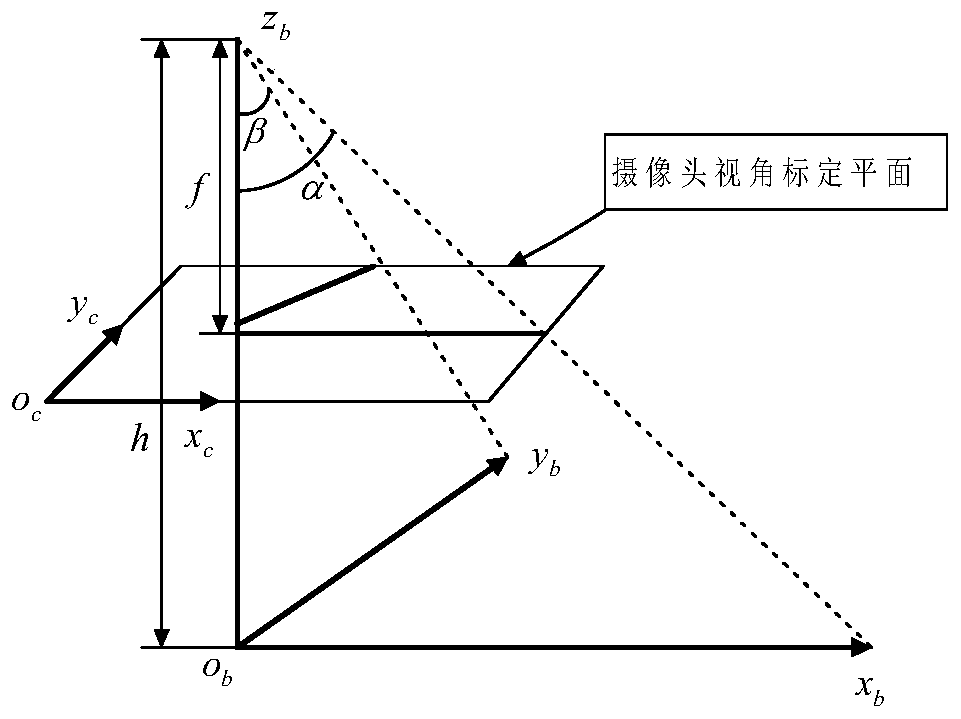

An unmanned aerial vehicle visual target tracking method based on scale adaptive kernel correlation filtering

Active | CN109816698A | Optimal Tracking Scale Fixed Problem | High precision | Image analysis | Internal combustion piston engines | Pattern recognition | Correlation filter

The invention discloses an unmanned aerial vehicle visual target tracking method based on scale-adaptive kernel correlation filtering, which comprises the following steps: selecting a tracking target, calculating the initial color and gradient probability densities of the tracking target in the first frame, and, using the kernel correlation filtering algorithm on the first frame of data, training a classifier and detecting the central position of the target; from the second frame onward, establishing a one-dimensional kernel correlation filter to detect changes in the target scale, and computing the kernel correlation filtering by means of the convolution theorem; constructing a similarity function from the current target features and the initial features; if the similarity is smaller than a set threshold, treating the target as inaccurately identified or lost and entering a global search; otherwise treating the target as identified and tracked, and obtaining its position information; and sending the position information of the tracking target to the unmanned aerial vehicle flight control system in real time to control the position of the unmanned aerial vehicle. The method addresses the fixed tracking scale of the kernel correlation filtering algorithm and effectively improves the tracking precision of target features.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS
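The role of the convolution theorem in the tracking entry above can be illustrated with a one-dimensional circular correlation: multiplying the conjugated spectrum of a template by the spectrum of a signal gives the correlation at every shift at once. The sketch below uses a naive DFT from the standard library so that it is self-contained; a practical tracker would use an FFT, and this does not reproduce the classifier training or scale estimation of the patent. All function names are assumptions.

```python
import cmath

def dft(x, sign=-1):
    """Naive O(n^2) DFT; sign=+1 gives the unnormalised inverse transform."""
    n = len(x)
    return [sum(x[t] * cmath.exp(sign * 2j * cmath.pi * f * t / n) for t in range(n))
            for f in range(n)]

def circular_correlation_direct(x, y):
    """c[k] = sum_t x[t] * y[(t + k) mod n], computed shift by shift."""
    n = len(x)
    return [sum(x[t] * y[(t + k) % n] for t in range(n)) for k in range(n)]

def circular_correlation_spectral(x, y):
    """Same values from one pointwise product in the frequency domain (real x)."""
    n = len(x)
    X, Y = dft(x), dft(y)
    product = [a.conjugate() * b for a, b in zip(X, Y)]
    return [v.real / n for v in dft(product, sign=+1)]

template = [0, 1, 3, 1, 0, 0, 0, 0]
shifted = [0, 0, 0, 1, 3, 1, 0, 0]   # the template moved right by two samples
response = circular_correlation_spectral(template, shifted)
peak_shift = max(range(len(response)), key=response.__getitem__)
```

Here the location of the response peak recovers the displacement of the pattern, which is the same principle a correlation filter uses to locate a target in a new frame.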

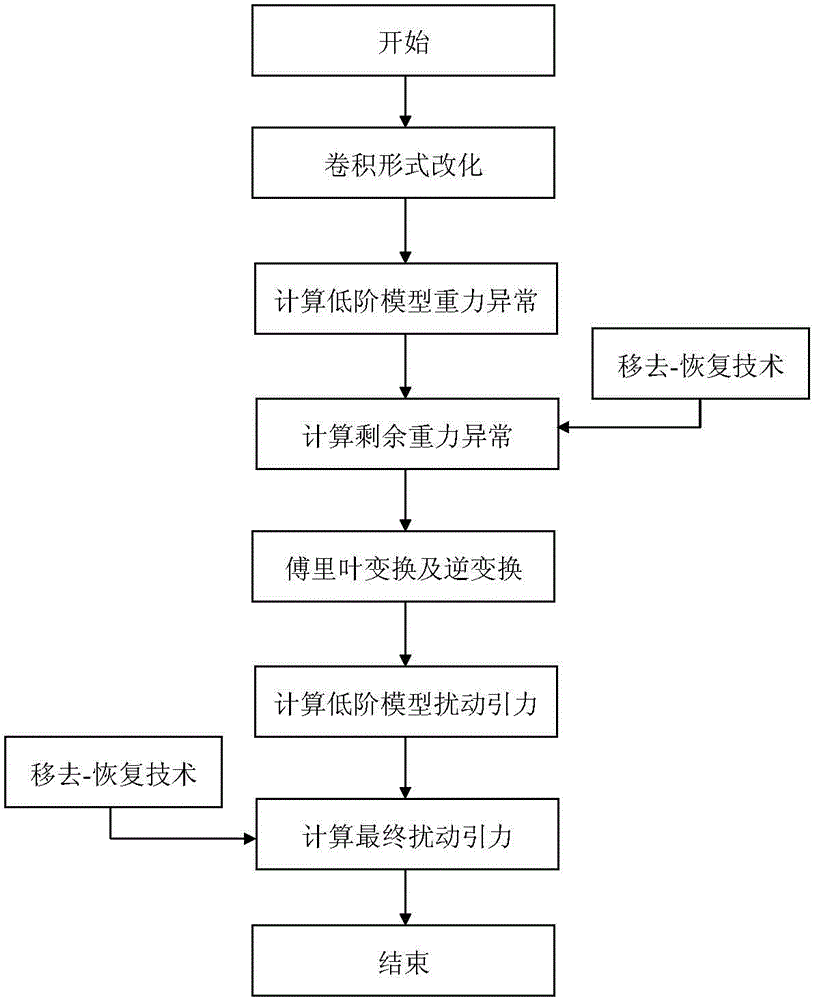

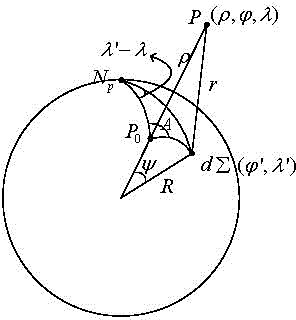

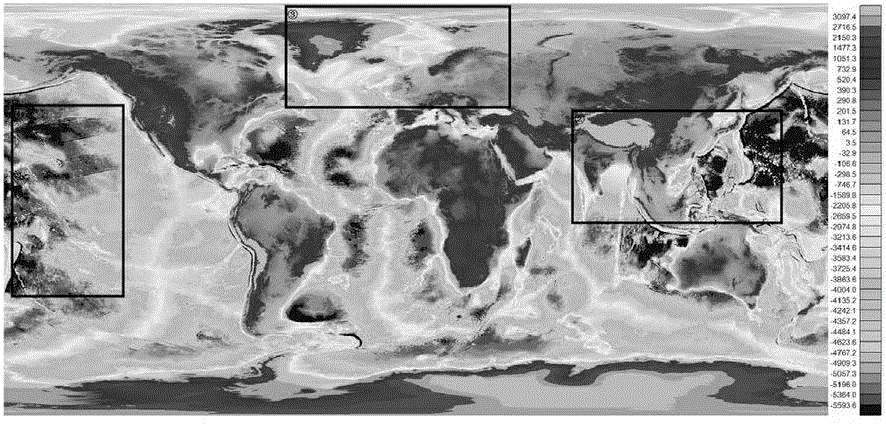

Spatial layering disturbance gravitational field grid model rapid construction method

Inactive | CN104834320A | Rapid positioning | Quick control | Target-seeking control | Massive gravity | Gravity anomaly

The present invention relates to a method for rapidly constructing a spatially layered grid model of the disturbance gravitational field, which addresses the problem of obtaining the disturbance gravitation at any height level above the Earth rapidly and with high precision. The method comprises the following steps: rewriting the conventional integration formula in convolution form so that it can be evaluated rapidly using the convolution theorem; calculating the low-order model gravity anomaly and, from it, the residual gravity anomaly; carrying out the Fourier transform and its inverse to obtain the residual disturbance gravitation; calculating the low-order model disturbance gravitation and combining it with the residual to obtain the final disturbance gravitation; and comparing the precision and the computational efficiency of the result with those of the conventional method. The method is easy to operate and apply, facilitates rapid, real-time positioning and control of spacecraft in motion, helps guarantee the safety of the spacecraft, and has strong practical value.

Owner:THE PLA INFORMATION ENG UNIV
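The first step of the entry above, rewriting an integral as a convolution so it can be evaluated with Fourier transforms, can be sketched on a one-dimensional grid. The sketch assumes a kernel that depends only on the offset between grid points (the `kernel` function here is a made-up smooth placeholder, not the geodetic kernel of the patent) and zero-pads both sequences so that the circular product in the frequency domain equals the linear sum. A naive DFT stands in for an FFT to keep the example self-contained.

```python
import cmath

def dft(x, sign=-1):
    """Naive O(n^2) DFT; sign=+1 gives the unnormalised inverse transform."""
    n = len(x)
    return [sum(x[t] * cmath.exp(sign * 2j * cmath.pi * f * t / n) for t in range(n))
            for f in range(n)]

def kernel(offset):
    """Hypothetical kernel that depends only on the grid offset."""
    return 1.0 / (1.0 + offset * offset)

def integrate_direct(values):
    """r[i] = sum_j kernel(i - j) * values[j], evaluated point by point."""
    n = len(values)
    return [sum(kernel(i - j) * values[j] for j in range(n)) for i in range(n)]

def integrate_spectral(values):
    """Same sums via zero-padded transforms and one pointwise product."""
    n = len(values)
    taps = [kernel(m - (n - 1)) for m in range(2 * n - 1)]  # offsets -(n-1)..(n-1)
    size = n + len(taps) - 1                                # long enough to avoid wrap-around
    a = dft(list(values) + [0.0] * (size - n))
    b = dft(taps + [0.0] * (size - len(taps)))
    full = [v.real / size for v in dft([p * q for p, q in zip(a, b)], sign=+1)]
    return full[n - 1:2 * n - 1]                            # keep the n grid points
```

With an FFT in place of `dft`, the spectral route costs on the order of n log n operations instead of n squared, which is where the speed-up claimed for convolution-form integration comes from.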

Binary convolutional device and corresponding binary convolutional neural network processor

Active | CN107203808A | Reduce bit width | Improve computing efficiency | Physical realisation | Parallel computing | Convolution theorem

The present invention provides a binary convolutional device and a corresponding binary convolutional neural network processor. The binary convolutional device comprises: an XNOR gate that takes as input the elements of the convolution kernel in use and the corresponding elements of the data to be convolved, both in binary form; and an accumulation device that takes the output of the XNOR gate as input and accumulates it to output a binary convolution result. With this scheme, the bit width of the data used in the computation is reduced, which improves operation efficiency and reduces storage requirements and energy consumption.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI
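A software analogue of the XNOR-plus-accumulate idea in the entry above is sketched below. It assumes the common encoding in which the values +1 and -1 are stored as bits 1 and 0, so that the dot product of two n-element vectors equals twice the number of agreeing bits minus n. That mapping and the function names are assumptions made for illustration; the abstract itself only specifies an XNOR gate followed by an accumulator.

```python
def encode(values):
    """Pack a sequence of +1/-1 values into an int (bit i is 1 for +1)."""
    word = 0
    for i, v in enumerate(values):
        if v == 1:
            word |= 1 << i
    return word

def xnor_dot(a_bits, b_bits, n):
    """Dot product of two packed +1/-1 vectors of length n."""
    mask = (1 << n) - 1
    agree = ~(a_bits ^ b_bits) & mask   # XNOR: 1 where the two bits match
    matches = bin(agree).count("1")     # accumulate the XNOR outputs
    return 2 * matches - n

def binary_conv1d(signal, kernel):
    """Valid-mode sliding dot product using only XNOR and bit counting."""
    k = len(kernel)
    kernel_bits = encode(kernel)
    return [xnor_dot(encode(signal[i:i + k]), kernel_bits, k)
            for i in range(len(signal) - k + 1)]

result = binary_conv1d([1, -1, 1, 1, -1], [1, 1, -1])
```

Each multiply-accumulate over n values collapses into one XNOR over an n-bit word and one population count, which is why binarised networks need less storage and simpler arithmetic units.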

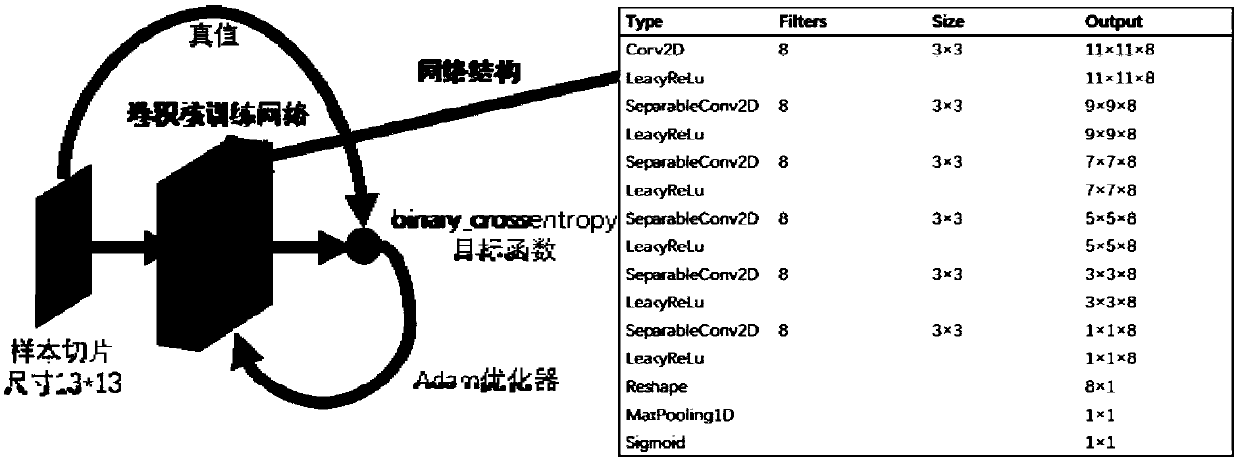

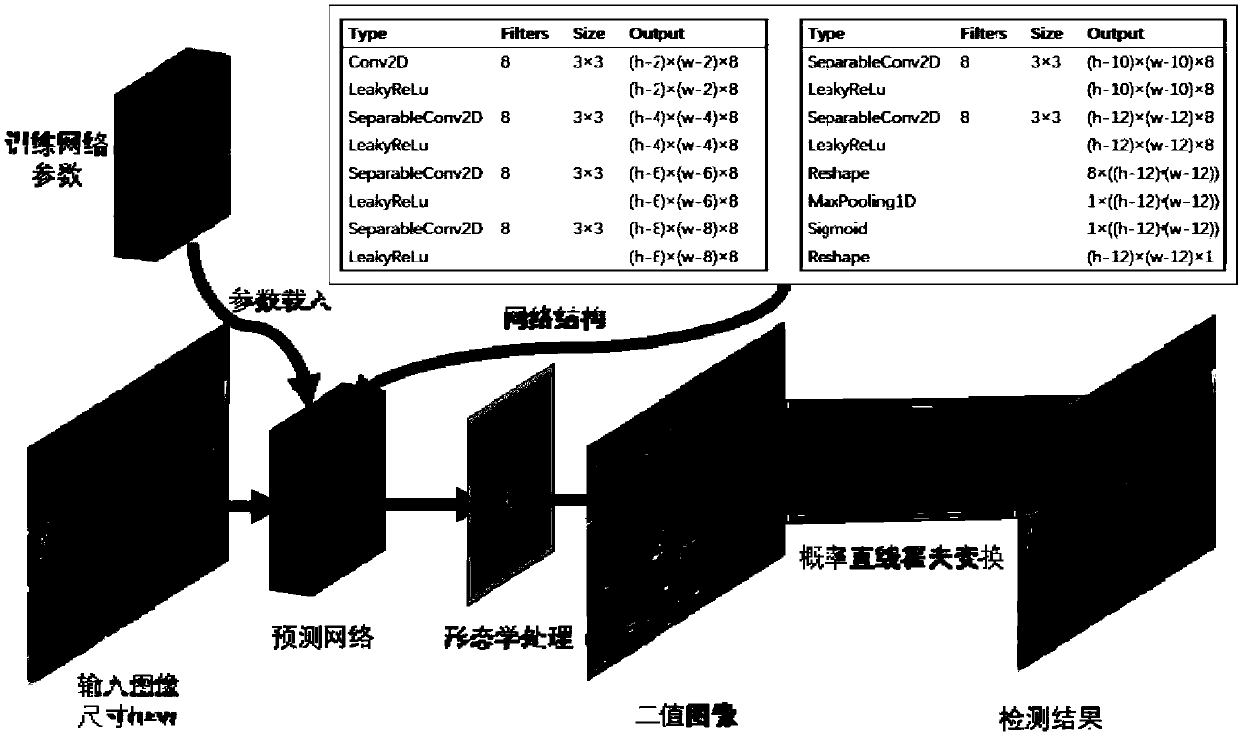

Training method and detection method of electric wire based on depth separable convolution neural network

Active | CN109543595A | Reduce false positives | Fast prediction | Character and pattern recognition | Neural architectures | Hough transform | Nerve network

The training method and detection method for electric wires based on a depthwise separable convolutional neural network comprise the steps of: constructing a neural network using depthwise separable convolution; training convolution kernels with uniformly distributed mini-slices, wherein the trained convolution kernels are used for feature extraction from infrared gray-scale images; binarizing the image according to a threshold value, removing small-area regions, and connecting linear regions by the probabilistic Hough transform. The invention trains, by a machine learning method, a convolution kernel capable of extracting infrared wire features, and can effectively extract wire features from infrared gray-scale images. Combined with morphological processing and the linear Hough transform, wire detection can be carried out in real time.

Owner:SHANGHAI JIAO TONG UNIV

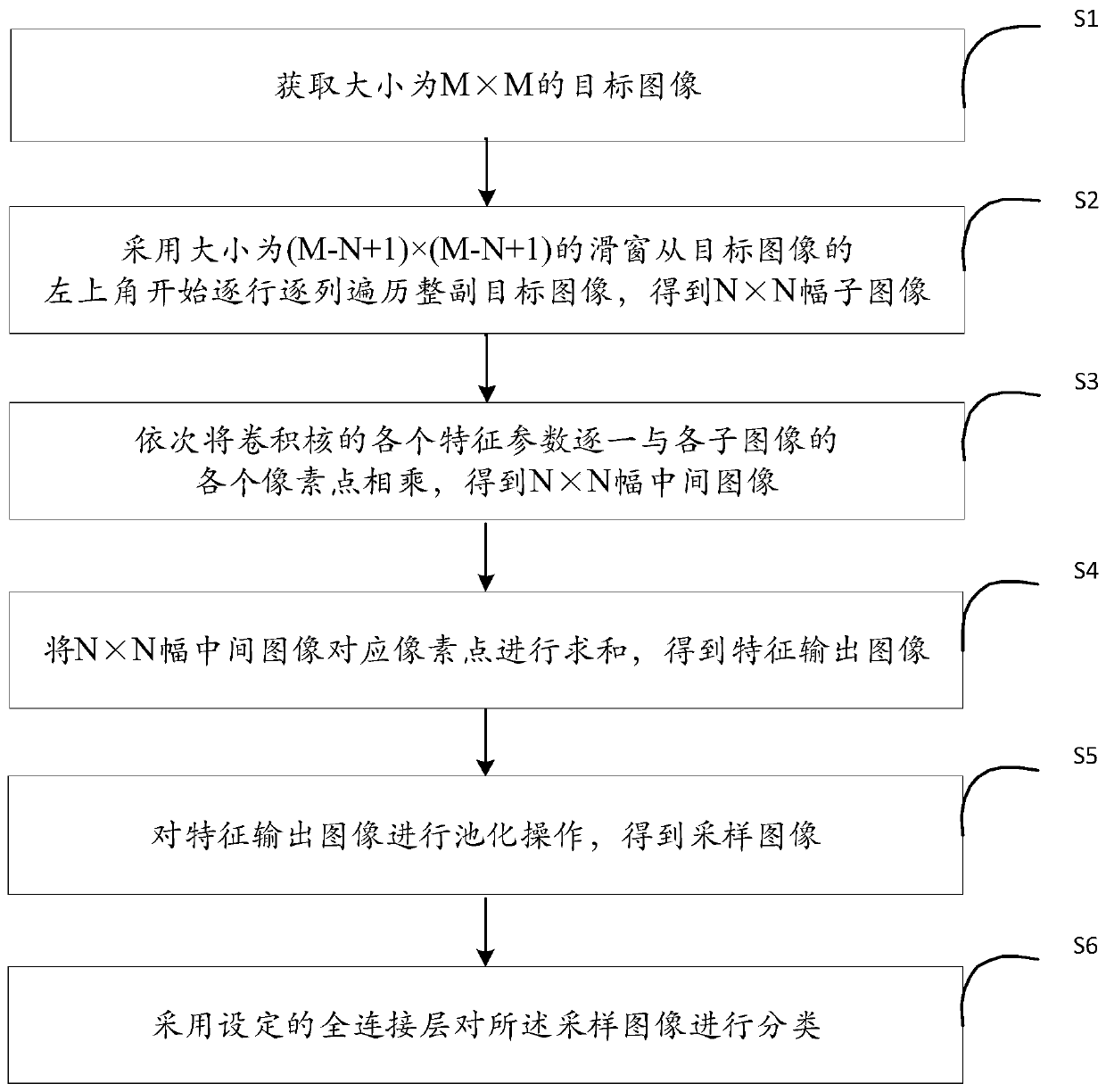

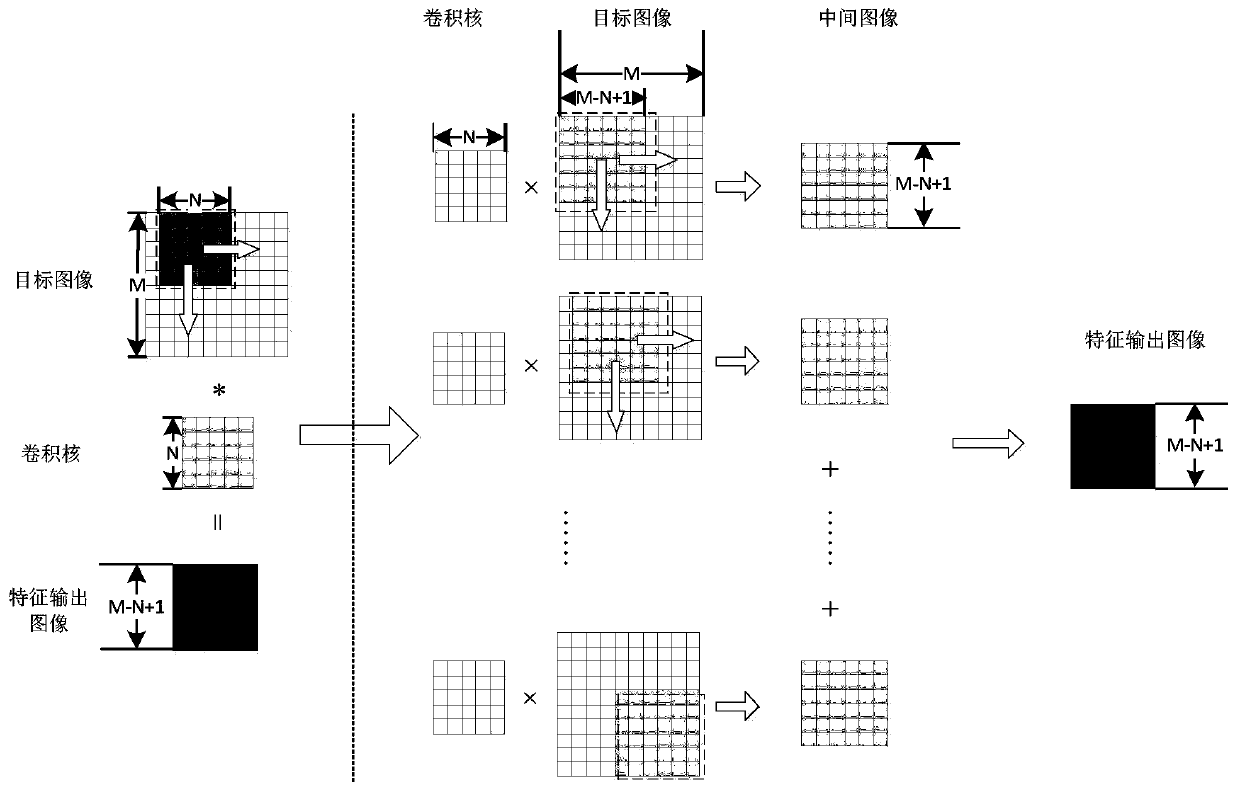

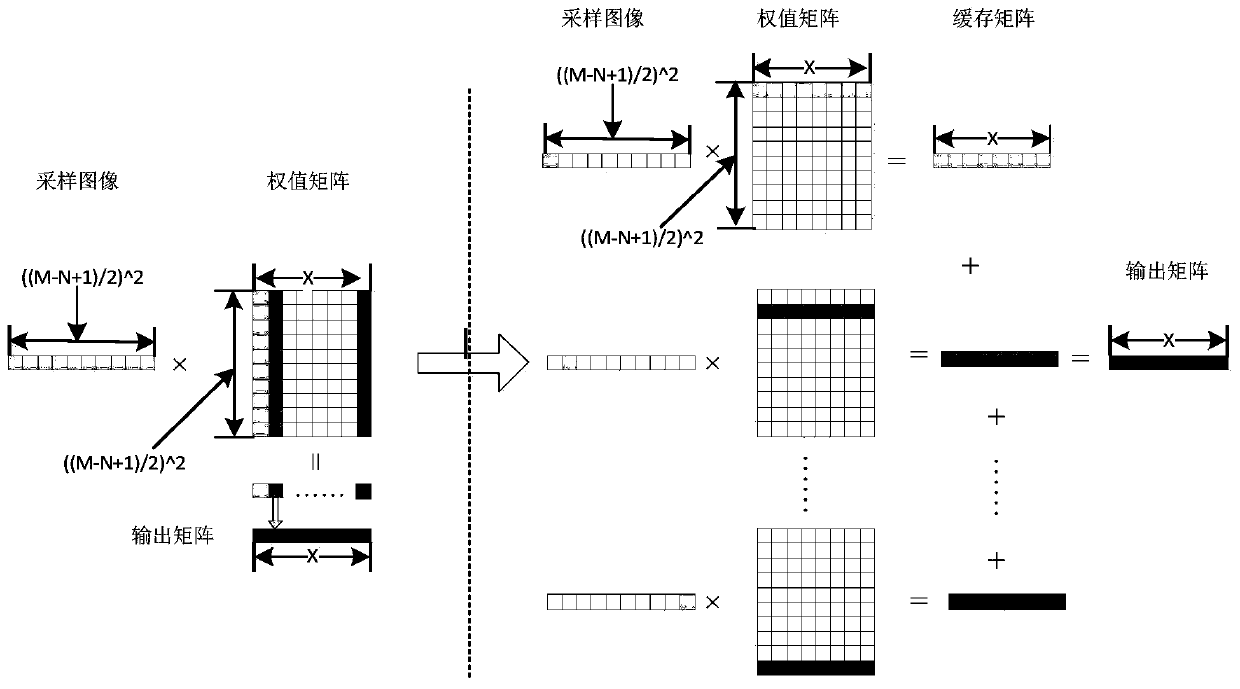

A target classification method based on a convolutional neural network

Active | CN109784372A | Reduce the number of jumps | Improve processing efficiency | Character and pattern recognition | Neural architectures | Intermediate image | Slide window

The invention provides a target classification method based on a convolutional neural network. Rather than traversing the target image directly with a convolution kernel, the method uses a sliding window of the same size as the output feature image to traverse the whole target image row by row and column by column; the pixels of the target image covered by the window at each position are extracted as a sub-image; each characteristic parameter of the convolution kernel is multiplied by its corresponding sub-image to obtain an intermediate image; and finally the sum of the intermediate images is taken as the output feature image. While producing the same convolution result as existing convolution implementations, the method divides the convolution operation into single-point multiplications and additions, which minimizes the number of address jumps when a microprocessor reads data during the convolution and thereby greatly improves hardware processing efficiency.

Owner: BEIJING INSTITUTE OF TECHNOLOGY
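The reordering described in the entry above can be sketched as follows: instead of sliding the kernel over the image, each kernel weight is paired with the output-sized sub-image that starts at that weight's offset, the scaled sub-images form intermediate images, and their sum is the output feature image. This is a plain-Python illustration with stride 1 and no padding; the function names are hypothetical and the memory-access benefit depends on the hardware, which the sketch does not model.

```python
def conv2d_direct(image, kernel):
    """Conventional form: one kernel-sized window per output pixel."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for c in range(ow)] for r in range(oh)]

def conv2d_by_subimages(image, kernel):
    """Reordered form: one output-sized sub-image per kernel weight."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    out = [[0] * ow for _ in range(oh)]
    for i in range(kh):
        for j in range(kw):
            weight = kernel[i][j]
            # Sub-image: the oh x ow block of the image starting at (i, j).
            for r in range(oh):
                row = image[r + i]           # contiguous read along one row
                for c in range(ow):
                    out[r][c] += weight * row[c + j]
    return out

image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
kernel = [[1, 0], [0, -1]]
feature = conv2d_by_subimages(image, kernel)
```

Both functions perform the same multiplications and additions; only the loop order changes, so the inner loop in the reordered form walks along consecutive pixels of one image row.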

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com