Forest fire detection method based on deep convolutional model with convolution kernels of multiple sizes

A forest fire detection technology based on deep convolution, applied in the field of deep convolutional neural network models. It addresses the slow detection speed and poor processing results of forest fire detection, mitigates the local-minimum problem, and offers low cost and high precision.
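The summary does not specify the kernel sizes, layer layout, or weights of the multi-size convolution model. As a rough illustration only, the sketch below assumes one common reading of "convolution kernels of multiple sizes". Several odd-sized kernels (here 1×1, 3×3 and 5×5) are applied in parallel to the same input with zero "same" padding and followed by ReLU. Their outputs are then stacked as channels, in the style of an Inception module. The function names and the averaging kernels are hypothetical; a trained model would learn its kernel weights.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_same(image: Matrix, kernel: Matrix) -> Matrix:
    """2-D cross-correlation (what CNN conv layers compute) with zero 'same' padding.

    Assumes an odd-sized square kernel, so the output has the input's shape.
    """
    h, w = len(image), len(image[0])
    k = len(kernel)
    pad = k // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in range(k):
                for dj in range(k):
                    y, x = i + di - pad, j + dj - pad
                    if 0 <= y < h and 0 <= x < w:  # pixels outside the image count as zero
                        acc += image[y][x] * kernel[di][dj]
            out[i][j] = acc
    return out


def relu(m: Matrix) -> Matrix:
    return [[max(0.0, v) for v in row] for row in m]


def multi_size_block(image: Matrix, kernels: List[Matrix]) -> List[Matrix]:
    """Apply kernels of different sizes in parallel; each result becomes one channel."""
    return [relu(conv2d_same(image, k)) for k in kernels]


def box_kernel(size: int) -> Matrix:
    """Hypothetical averaging kernel standing in for learned weights."""
    return [[1.0 / (size * size)] * size for _ in range(size)]


if __name__ == "__main__":
    # A 5x5 toy frame with one bright pixel (e.g. a flame hotspot) in the centre.
    frame = [[0.0] * 5 for _ in range(5)]
    frame[2][2] = 9.0
    channels = multi_size_block(frame, [box_kernel(1), box_kernel(3), box_kernel(5)])
    for size, ch in zip((1, 3, 5), channels):
        print(f"{size}x{size}: centre={ch[2][2]:.2f} corner={ch[0][0]:.2f}")
```

Larger kernels spread the response of the single bright pixel over a wider area. One common motivation for combining several kernel sizes is that small kernels preserve fine detail while larger ones respond to broader structures.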

Embodiment Construction

[0019] The following describes specific embodiments of the present invention in conjunction with the accompanying drawings.

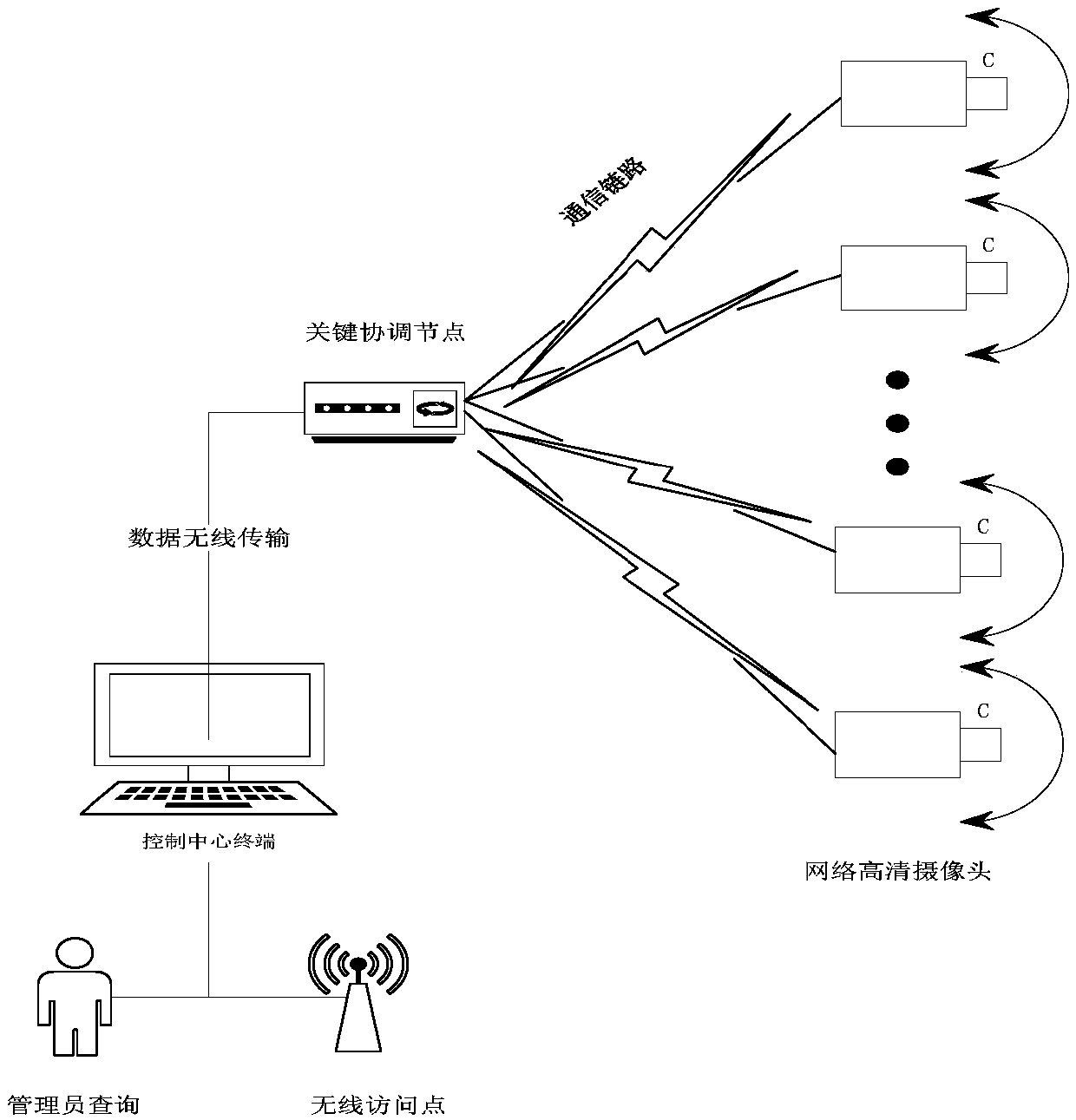

[0020] Figure 1 is a schematic diagram of the system structure of the forest fire detection method based on a deep convolutional model with multi-size convolution kernels. The system mainly consists of two parts: the video surveillance network and the forest fire detection model. The former captures real-time conditions in the forest and transmits the video to the control center; the latter determines, from the collected data, whether a fire has occurred and the trend of the fire.
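The paragraph describes the data flow but not how the model's output becomes a "fire / no fire" decision or a trend. The sketch below is one hypothetical way to structure the control-center side. It assumes the detection model can be wrapped as a function that returns a per-frame fire score in [0, 1]. Several elements are assumptions rather than details from the patent: the threshold, the least-squares slope used as the trend estimate, the trend labels, and the `bright_fraction` stand-in for the CNN.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

Frame = List[List[float]]  # grayscale intensities in [0, 1]


@dataclass
class FireReport:
    fire_detected: bool
    trend: str  # hypothetical labels: "growing", "shrinking" or "stable"


def analyse_clip(
    frames: Sequence[Frame],
    fire_score: Callable[[Frame], float],
    threshold: float = 0.5,   # assumed decision threshold
    slope_tol: float = 0.01,  # assumed dead band for "stable"
) -> FireReport:
    """Score every frame, flag a fire if any score passes the threshold,
    and estimate the trend from the least-squares slope of score vs. frame index."""
    scores = [fire_score(f) for f in frames]
    detected = any(s >= threshold for s in scores)
    n = len(scores)
    if n < 2:
        return FireReport(detected, "stable")
    mean_t = (n - 1) / 2
    mean_s = sum(scores) / n
    num = sum((t - mean_t) * (s - mean_s) for t, s in enumerate(scores))
    den = sum((t - mean_t) ** 2 for t in range(n))
    slope = num / den
    if slope > slope_tol:
        trend = "growing"
    elif slope < -slope_tol:
        trend = "shrinking"
    else:
        trend = "stable"
    return FireReport(detected, trend)


def bright_fraction(frame: Frame, level: float = 0.8) -> float:
    """Hypothetical stand-in for the CNN: fraction of pixels brighter than `level`."""
    pixels = [v for row in frame for v in row]
    return sum(v > level for v in pixels) / len(pixels)


# Three 2x2 frames in which the bright region covers 1/4, 2/4, then 4/4 of the pixels.
clip = [
    [[0.9, 0.1], [0.1, 0.1]],
    [[0.9, 0.9], [0.1, 0.1]],
    [[0.9, 0.9], [0.9, 0.9]],
]
print(analyse_clip(clip, bright_fraction))  # FireReport(fire_detected=True, trend='growing')
```

Keeping the model behind a plain callable lets the decision and trend logic be tested without the CNN, and the stand-in can later be replaced by the trained classifier.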

[0021] The monitoring network is mainly implemented with network high-definition cameras installed at suitable locations in the forest. The captured footage must cover the entire forest and mainly records dynamic substances or objects in the forest, such as smoke and flames; the collected video is saved and transmitted to the control center. The video ...
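The text does not say how the system separates dynamic content such as smoke and flames from the static forest background. Frame differencing is a simple, widely used technique for that. It is swapped in here purely for illustration and is not stated as the patent's method. Pixels whose intensity changes by more than a threshold between consecutive frames are marked as moving. The function names and the 0.1 threshold are hypothetical.

```python
from typing import List

Frame = List[List[float]]  # grayscale intensities in [0, 1]


def motion_mask(prev: Frame, curr: Frame, threshold: float = 0.1) -> List[List[bool]]:
    """Mark pixels whose intensity changed by more than `threshold` between two frames."""
    return [
        [abs(c - p) > threshold for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev, curr)
    ]


def changed_fraction(mask: List[List[bool]]) -> float:
    """Share of pixels flagged as moving (True counts as 1)."""
    cells = [m for row in mask for m in row]
    return sum(cells) / len(cells)


# Static background, then a brighter column appears (e.g. rising smoke).
prev = [[0.2, 0.2, 0.2], [0.2, 0.2, 0.2]]
curr = [[0.2, 0.7, 0.2], [0.2, 0.6, 0.2]]
mask = motion_mask(prev, curr)
print(mask)  # [[False, True, False], [False, True, False]]
print(changed_fraction(mask))
```

Real footage would need noise suppression (for example, ignoring swaying foliage), so in practice the threshold would have to be tuned to the camera and scene.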
