A neural network structured pruning compression optimization method for a convolutional layer

A network structure and optimization technology, applied in the fields of biological neural network models and neural architectures, that addresses three problems: large training datasets cannot be used smoothly, computing and storage resources cannot be saved, and large computing resources are consumed. It offers large computing-acceleration potential, convenient operation, and storage savings.

Embodiment Construction

[0033] The present invention will be further described in detail below in conjunction with the drawings and specific embodiments.

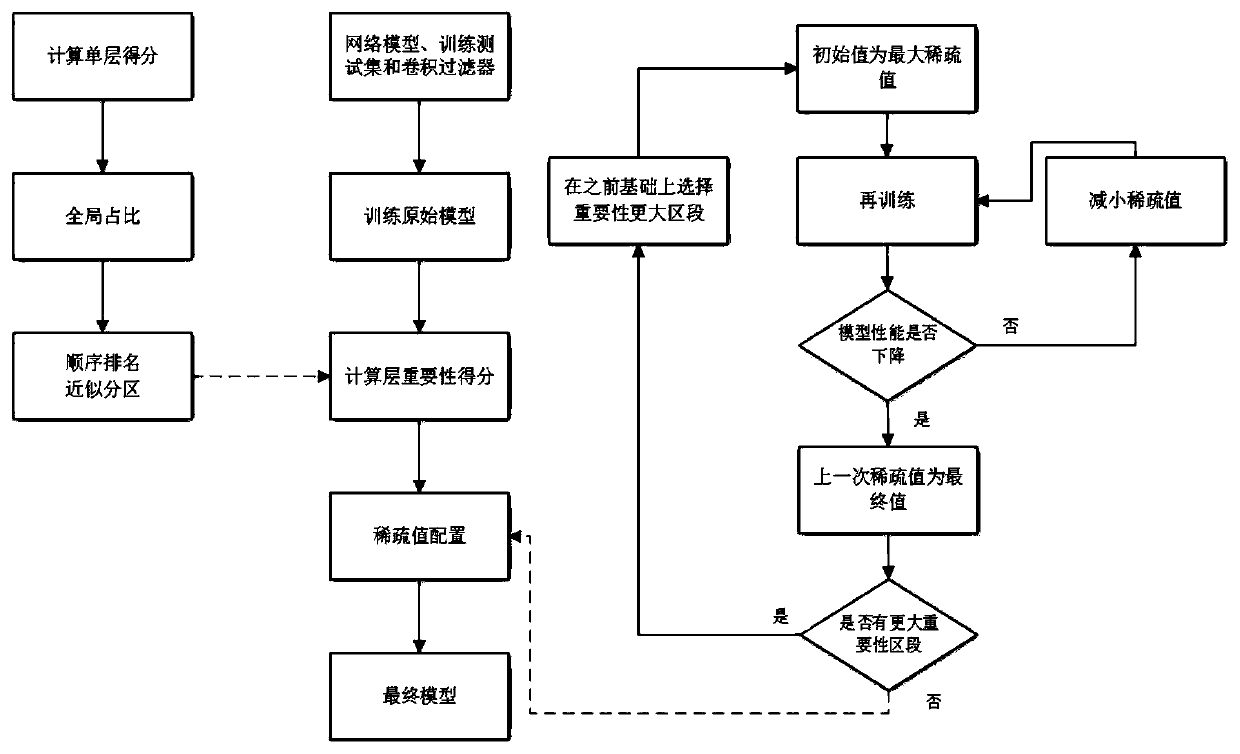

[0034] See Figure 1, a schematic diagram of the overall structured pruning compression optimization principle. In an embodiment of the present invention, a structured pruning compression optimization method for the convolutional layers of a deep neural network comprises two steps: allocating sparsity values to each convolutional layer, and structured pruning.
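Structured pruning typically removes whole convolution filters (output channels) rather than individual weights, so the pruned layer stays dense and directly yields compute and storage savings. The following is a minimal sketch of that step, assuming each layer's weights are given as a list of flattened filters and that filters are ranked by L1 norm. L1-norm ranking is a common criterion, not necessarily the one this patent uses, and `prune_filters` and `l1_norm` are hypothetical helpers.

```python
from typing import List, Tuple

# One output filter, flattened over (in_channels, kh, kw). Hypothetical layout.
Filter = List[float]


def l1_norm(f: Filter) -> float:
    """L1 norm of one filter (assumed ranking criterion)."""
    return sum(abs(w) for w in f)


def prune_filters(filters: List[Filter], sparsity: float) -> Tuple[List[Filter], List[int]]:
    """Remove the fraction `sparsity` of whole filters with the smallest L1 norm.

    Returns the kept filters and their original indices. In a real network the
    matching input channels of the next layer would have to be removed too.
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(filters) * sparsity)
    # Rank filter indices by ascending L1 norm; the first n_prune are dropped.
    order = sorted(range(len(filters)), key=lambda i: l1_norm(filters[i]))
    keep = sorted(order[n_prune:])  # restore the original channel order
    return [filters[i] for i in keep], keep


if __name__ == "__main__":
    layer = [[0.1, -0.2], [1.0, 0.5], [0.0, 0.05], [-0.7, 0.3]]
    kept, idx = prune_filters(layer, 0.5)
    print(idx)  # filters 1 and 3 have the largest L1 norms
```

Returning the kept indices is a deliberate choice: the per-layer sparsity only tells how many filters to drop, while the indices are what the following layer needs to stay shape-consistent.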

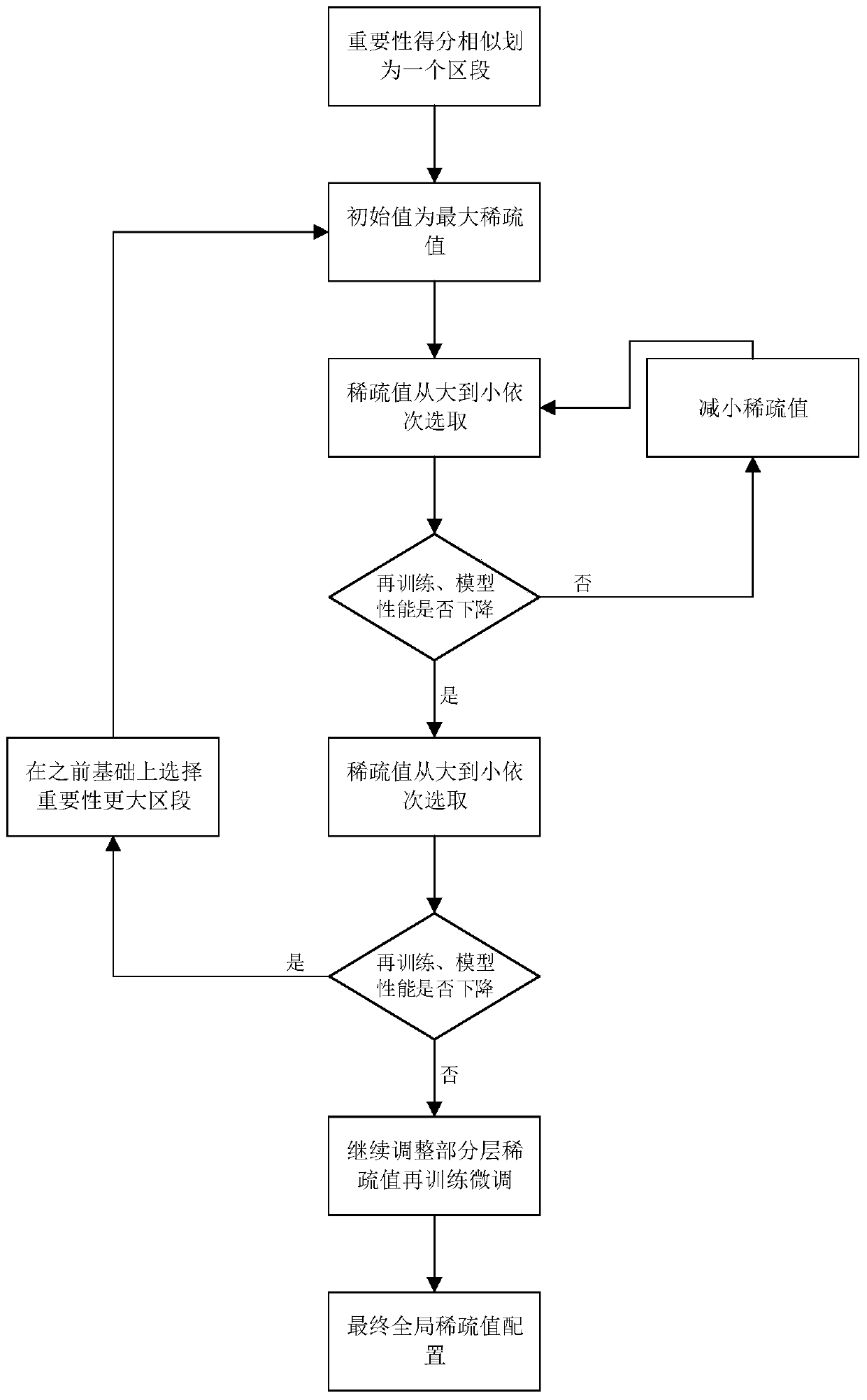

[0035] (1) The steps for allocating sparsity values to the convolutional layers are as follows. First, the original model is trained to obtain the parameter data of each prunable convolutional layer, and a single-layer importance score $M_l$ is calculated for each layer $l$. The importance scores of all layers are summed to obtain $M = \sum_l M_l$, and the overall importance $D_l$ of each layer is calculated. The layers are then ranked by $D_l$ in ascending order, and according to the maximum and minimum values of $D_l$, they are divided into eq...
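The allocation step can be sketched as follows. The text does not give the formula for $M_l$ or $D_l$, and the description of the interval split is truncated, so every such detail here is an assumption. $M_l$ is taken as the mean filter L1 norm (hypothetical), $D_l = M_l / M$ (hypothetical), and the range $[\min D_l, \max D_l]$ is split into equal-width intervals, one per candidate sparsity level, with the least important layers receiving the most pruning (assumed). `layer_importance` and `allocate_sparsity` are hypothetical names.

```python
from typing import Dict, List, Sequence


def layer_importance(filters: List[List[float]]) -> float:
    """HYPOTHETICAL single-layer score M_l: mean L1 norm of the layer's filters."""
    return sum(sum(abs(w) for w in f) for f in filters) / len(filters)


def allocate_sparsity(scores: Dict[str, float], levels: Sequence[float]) -> Dict[str, float]:
    """Assign each layer a sparsity value from `levels`.

    scores: M_l per layer name.
    levels: one sparsity per equal-width interval, ordered highest first,
            so layers with the smallest D_l get the largest sparsity (assumption).
    """
    total = sum(scores.values())                             # M = sum_l M_l
    d = {name: m / total for name, m in scores.items()}      # D_l = M_l / M (assumed)
    ranked = sorted(d, key=lambda name: d[name])             # ascending D_l
    lo, hi = d[ranked[0]], d[ranked[-1]]
    k = len(levels)
    width = (hi - lo) / k                                    # equal-width intervals (assumed)
    result: Dict[str, float] = {}
    for name in ranked:
        # Interval index in [0, k-1]; the maximum D_l is clamped into the last bin.
        idx = k - 1 if width == 0 else min(int((d[name] - lo) / width), k - 1)
        result[name] = levels[idx]
    return result


if __name__ == "__main__":
    m = {"conv1": 4.0, "conv2": 1.0, "conv3": 2.5, "conv4": 2.5}
    print(allocate_sparsity(m, levels=[0.5, 0.3, 0.1]))
```

With these illustrative scores, `conv2` (lowest $D_l$) falls in the first interval and gets sparsity 0.5, `conv3` and `conv4` get 0.3, and `conv1` gets 0.1. The per-layer values could then drive a filter-level pruning step such as L1-norm filter removal.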
