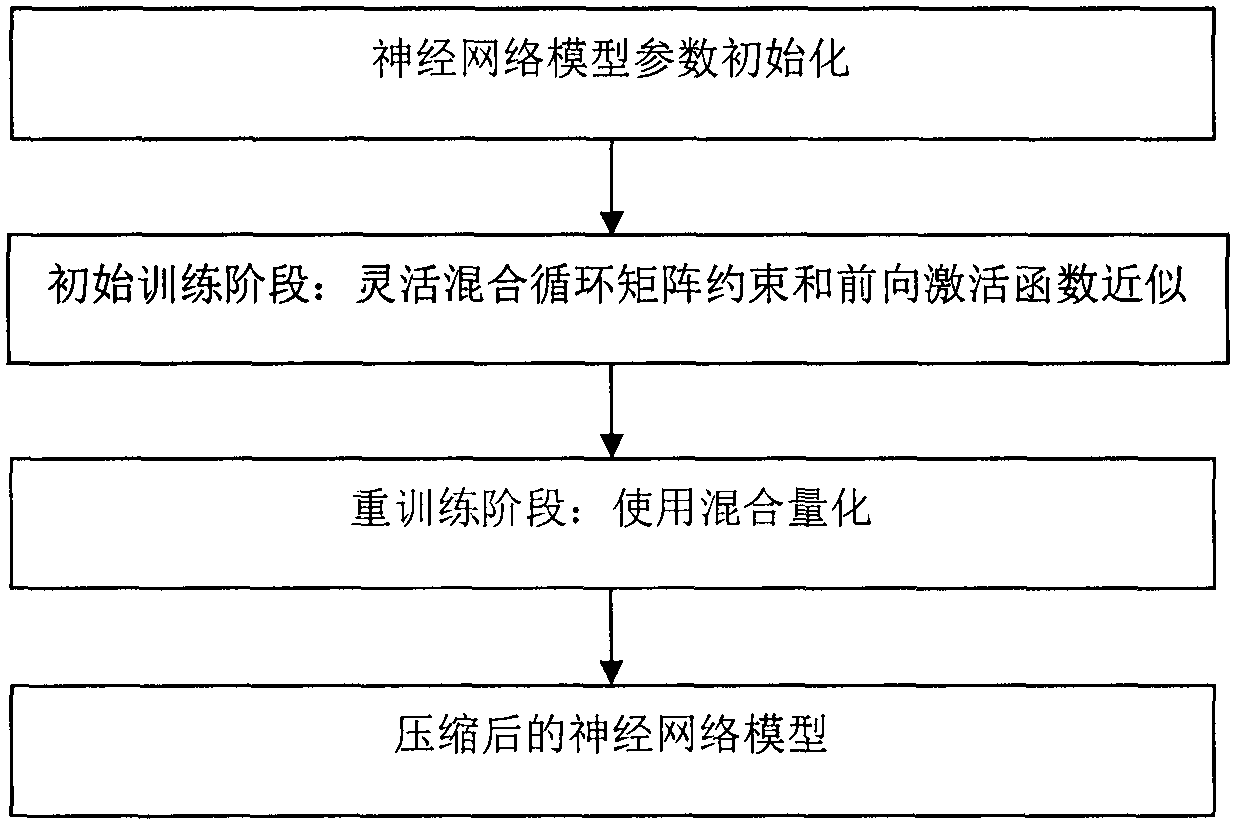

Multi-mechanism mixed recurrent neural network model compression method

A compression method for recurrent neural network models. It addresses the problem that recurrent neural networks cannot fit within the storage resources and computing power of embedded systems, and it is described as having wide application prospects.

Embodiment Construction

[0022] Embodiments of the present invention are described in detail below. Because recurrent neural networks have many variants, this embodiment takes the most basic recurrent neural network as an example. It is intended to explain the present invention and should not be construed as limiting it. The implementation for the other recurrent neural network variants is essentially the same as in this embodiment.

[0023] A typical formulation of a recurrent neural network with m input nodes and n output nodes is defined as:

[0024] $h_t = f(W x_t + U h_{t-1} + b)$, (1)

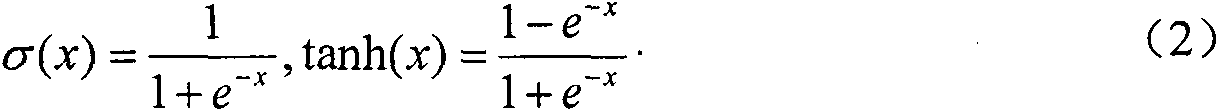

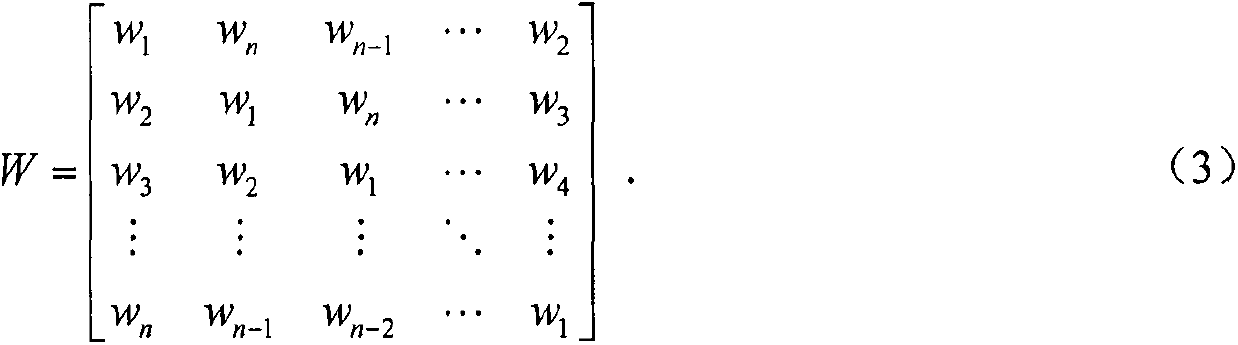

[0025] where $h_t \in \mathbb{R}^{n \times 1}$ is the hidden state of the recurrent neural network at time $t$; $x_t \in \mathbb{R}^{m \times 1}$ is the input vector at time $t$; $W \in \mathbb{R}^{n \times m}$, $U \in \mathbb{R}^{n \times n}$, and $b \in \mathbb{R}^{n \times 1}$ are the model parameters of the recurrent neural network, with $W$ and $U$ the parameter matrices and $b$ the bias term; and $f$ is a nonlinear function, commonly $\sigma$ or $\tanh$:

[0026]
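As an illustration only, the recurrence in equation (1) can be sketched in plain Python. This is a minimal sketch and not the patent's implementation: the function names `rnn_step`, `sigmoid`, and `parameter_count` and all numeric values are assumptions chosen for the example. The sigmoid is taken to be the standard logistic function, 1 / (1 + e^(-z)). The parameter count n·m + n·n + n follows directly from the shapes of W, U, and b given above, and is the quantity a compression method would aim to reduce.

```python
import math


def sigmoid(z):
    """Standard logistic function, one common choice for f."""
    return 1.0 / (1.0 + math.exp(-z))


def rnn_step(W, U, b, x_t, h_prev, f=math.tanh):
    """One step of equation (1): h_t = f(W x_t + U h_{t-1} + b).

    W is n x m, U is n x n, b has length n (all plain Python lists).
    f is applied element-wise; tanh is the default here by assumption.
    """
    n = len(b)
    h_t = []
    for i in range(n):
        pre = b[i]
        pre += sum(W[i][j] * x_t[j] for j in range(len(x_t)))
        pre += sum(U[i][k] * h_prev[k] for k in range(n))
        h_t.append(f(pre))
    return h_t


def parameter_count(m, n):
    """Number of scalars stored in W (n*m), U (n*n), and b (n)."""
    return n * m + n * n + n


# Hypothetical toy model with m = 3 inputs and n = 2 hidden units.
m, n = 3, 2
W = [[0.1, -0.2, 0.3], [0.0, 0.5, -0.1]]
U = [[0.2, 0.0], [-0.3, 0.4]]
b = [0.0, 0.1]

h = [0.0] * n  # initial hidden state, assumed to be zero
for x_t in ([1.0, 0.0, -1.0], [0.5, 0.5, 0.5]):
    h = rnn_step(W, U, b, x_t, h)

print(h, parameter_count(m, n))
```

Because U contributes n·n parameters, the storage cost grows quadratically with the hidden size, which is why the hidden-to-hidden matrix is usually a significant share of the model size on embedded targets.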

[0027] Such as...
