Patented technology related to "Circulant matrix" (137 results)

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

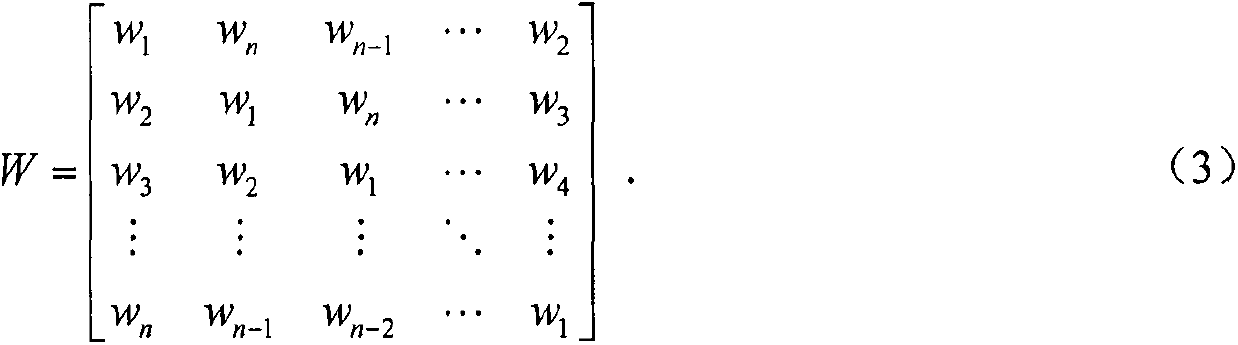

In linear algebra, a circulant matrix is a special kind of Toeplitz matrix where each row vector is rotated one element to the right relative to the preceding row vector. In numerical analysis, circulant matrices are important because they are diagonalized by a discrete Fourier transform, and hence linear equations that contain them may be quickly solved using a fast Fourier transform. They can be interpreted analytically as the integral kernel of a convolution operator on the cyclic group Cₙ and hence frequently appear in formal descriptions of spatially invariant linear operations.
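
As a minimal NumPy sketch (the helper names `circulant` and `solve_circulant` are hypothetical), the DFT diagonalization turns the solution of C x = b into an O(n log n) computation. C x is the circular convolution of the first column c with x, so the system reduces to a pointwise division in the frequency domain. The sketch assumes C is invertible, that is, no entry of the DFT of c is zero.

```python
import numpy as np

def circulant(c):
    """Circulant matrix with first column c; each row is the previous row rotated right."""
    c = np.asarray(c)
    return np.column_stack([np.roll(c, k) for k in range(len(c))])

def solve_circulant(c, b):
    """Solve C x = b in O(n log n) without forming C.

    C x is the circular convolution of c and x, so fft(C x) = fft(c) * fft(x)
    and x = ifft(fft(b) / fft(c)). Assumes every entry of fft(c) is nonzero.
    """
    return np.fft.ifft(np.fft.fft(b) / np.fft.fft(c))
```

With c = [4, 1, 0, 1] and b = [1, 2, 3, 4], the real part of `solve_circulant(c, b)` matches `np.linalg.solve(circulant(c), b)`. For real inputs, the imaginary part is only rounding noise.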

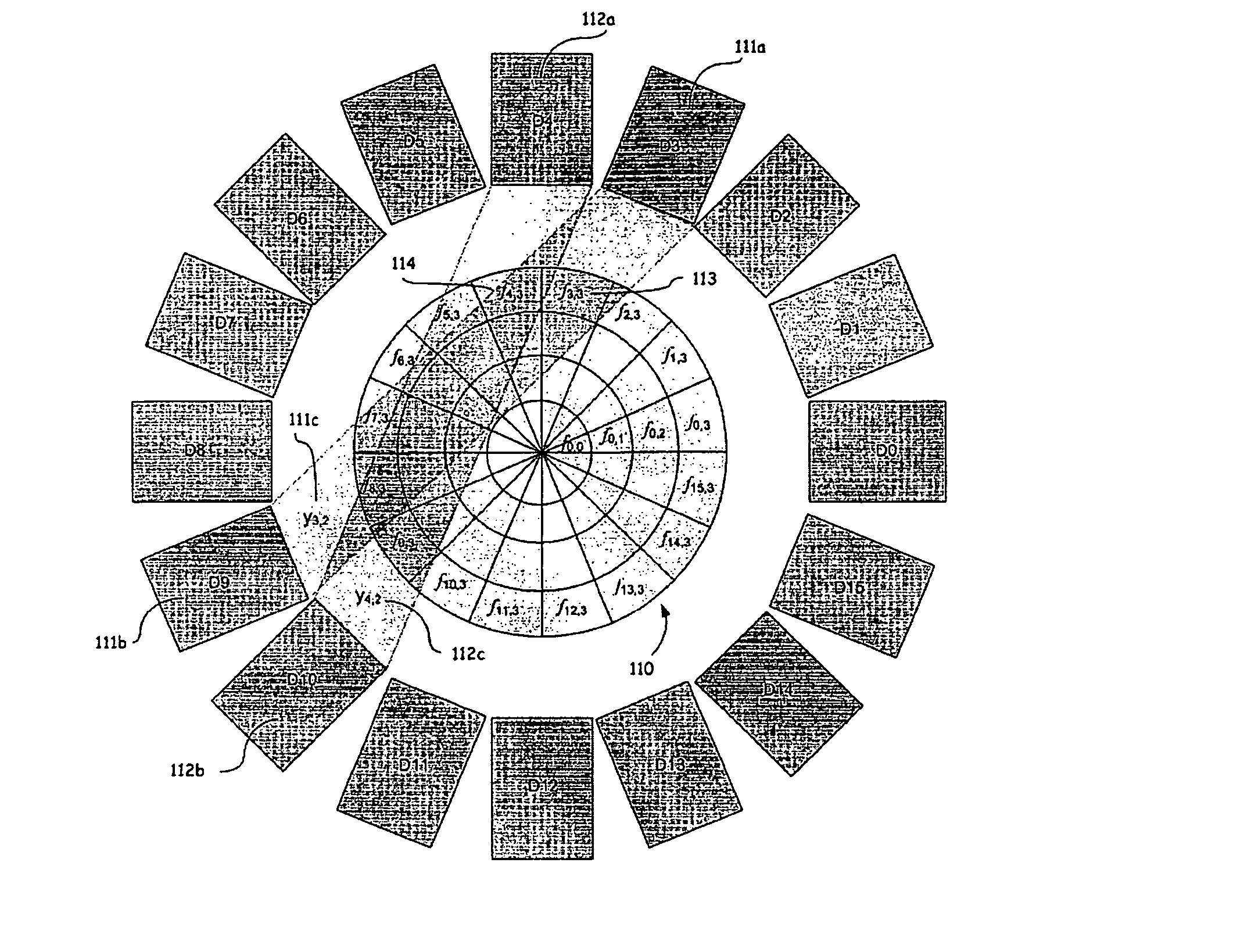

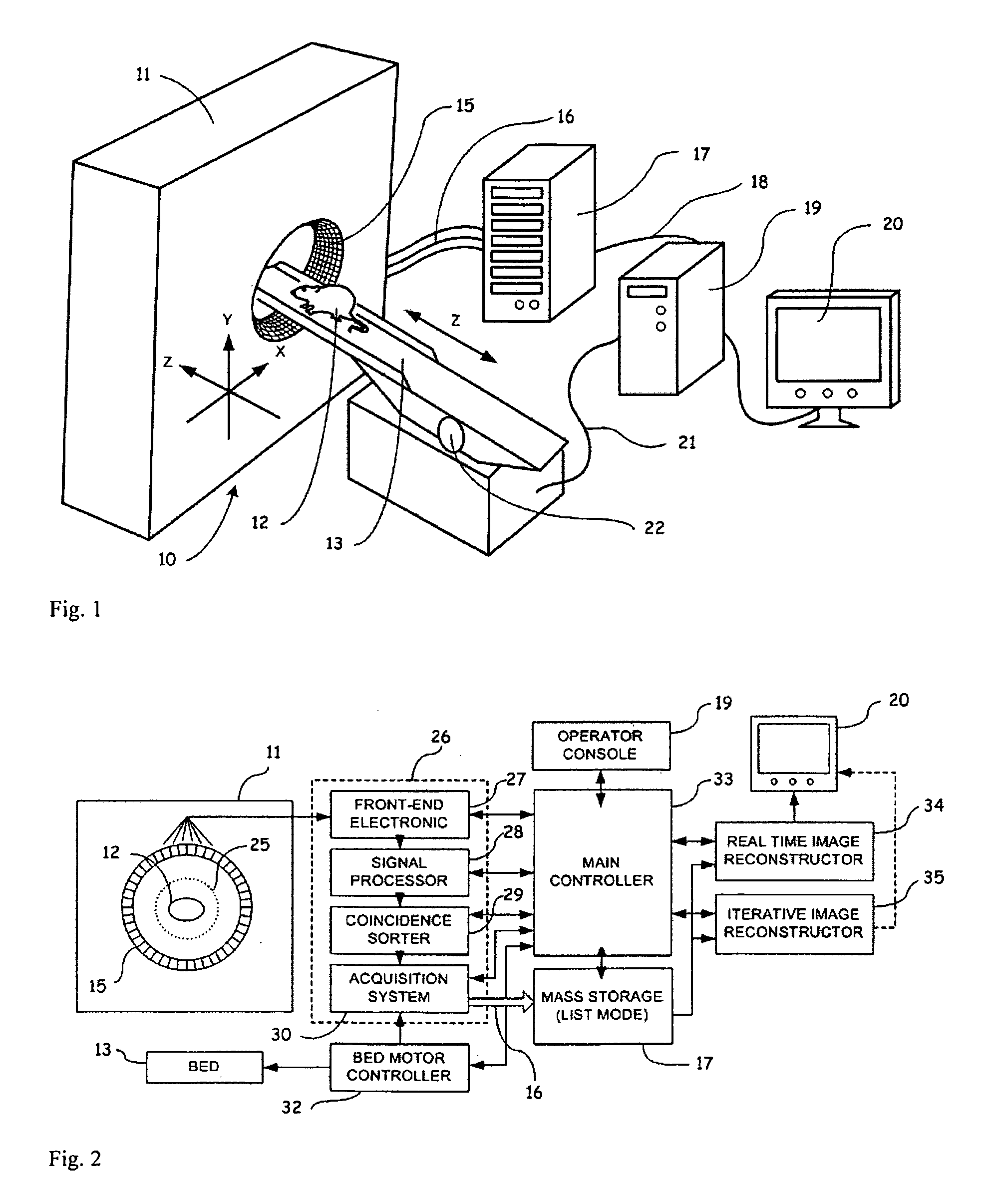

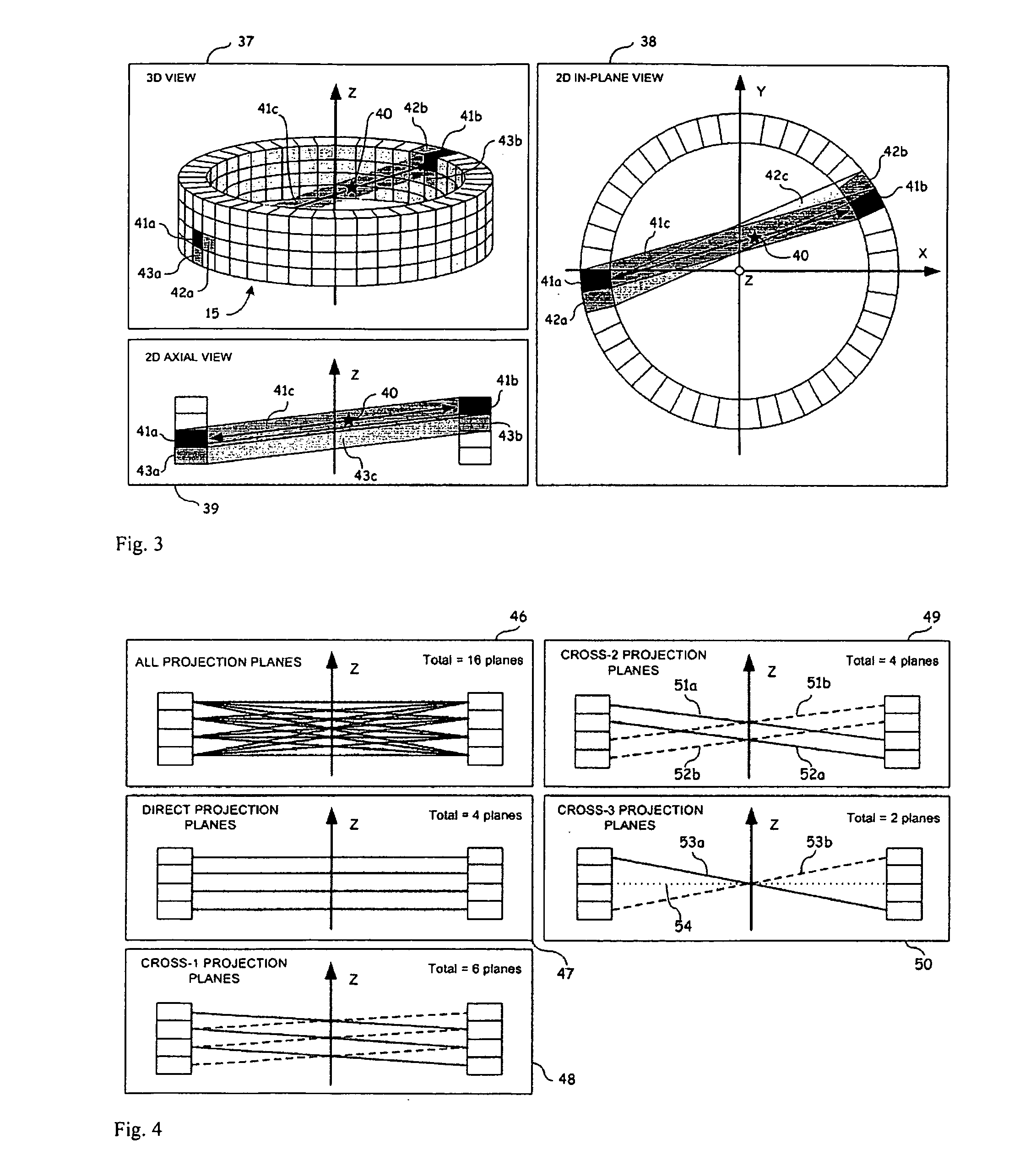

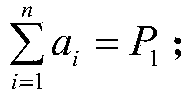

Image Reconstruction Methods Based on Block Circulant System Matrices

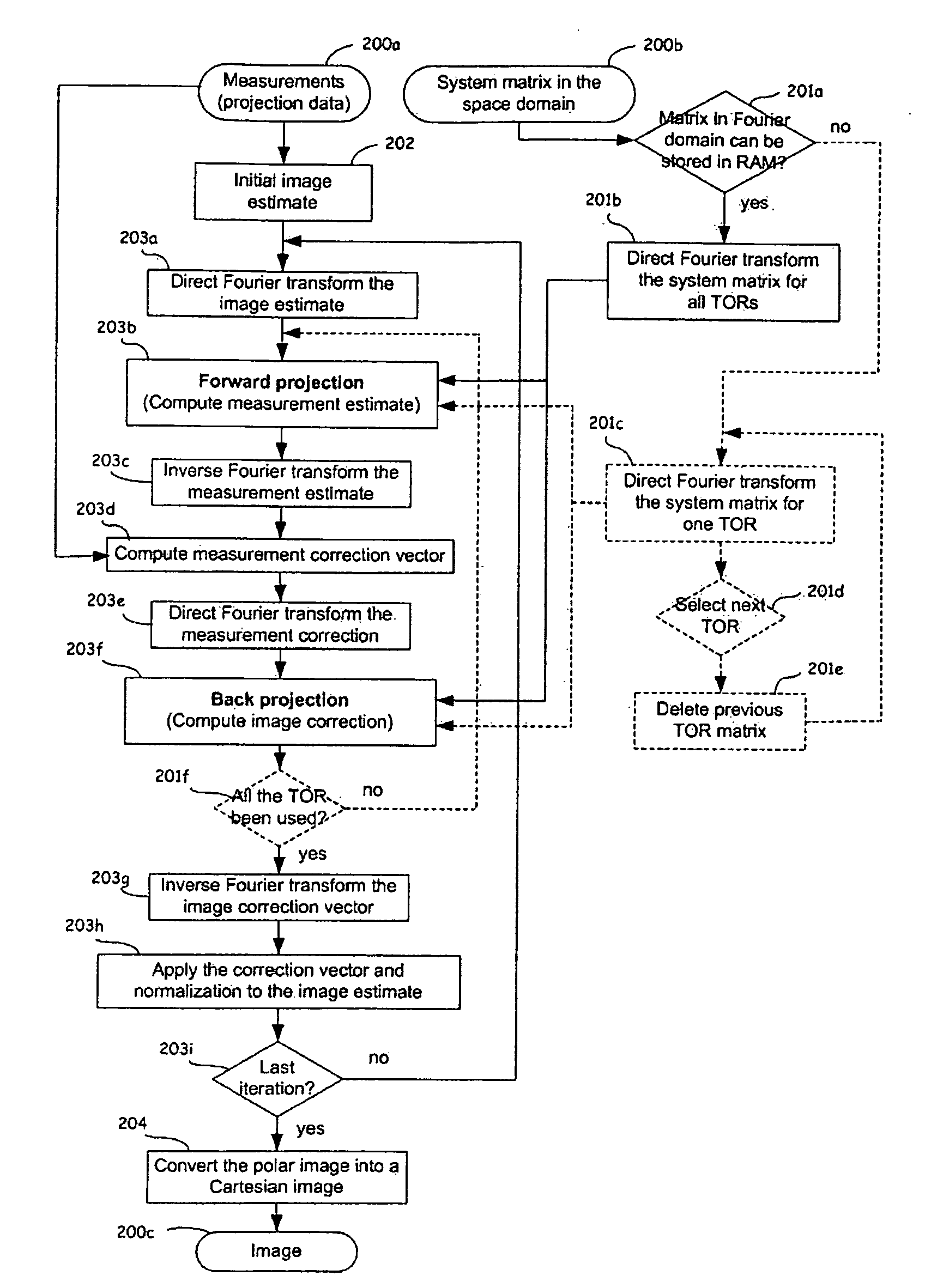

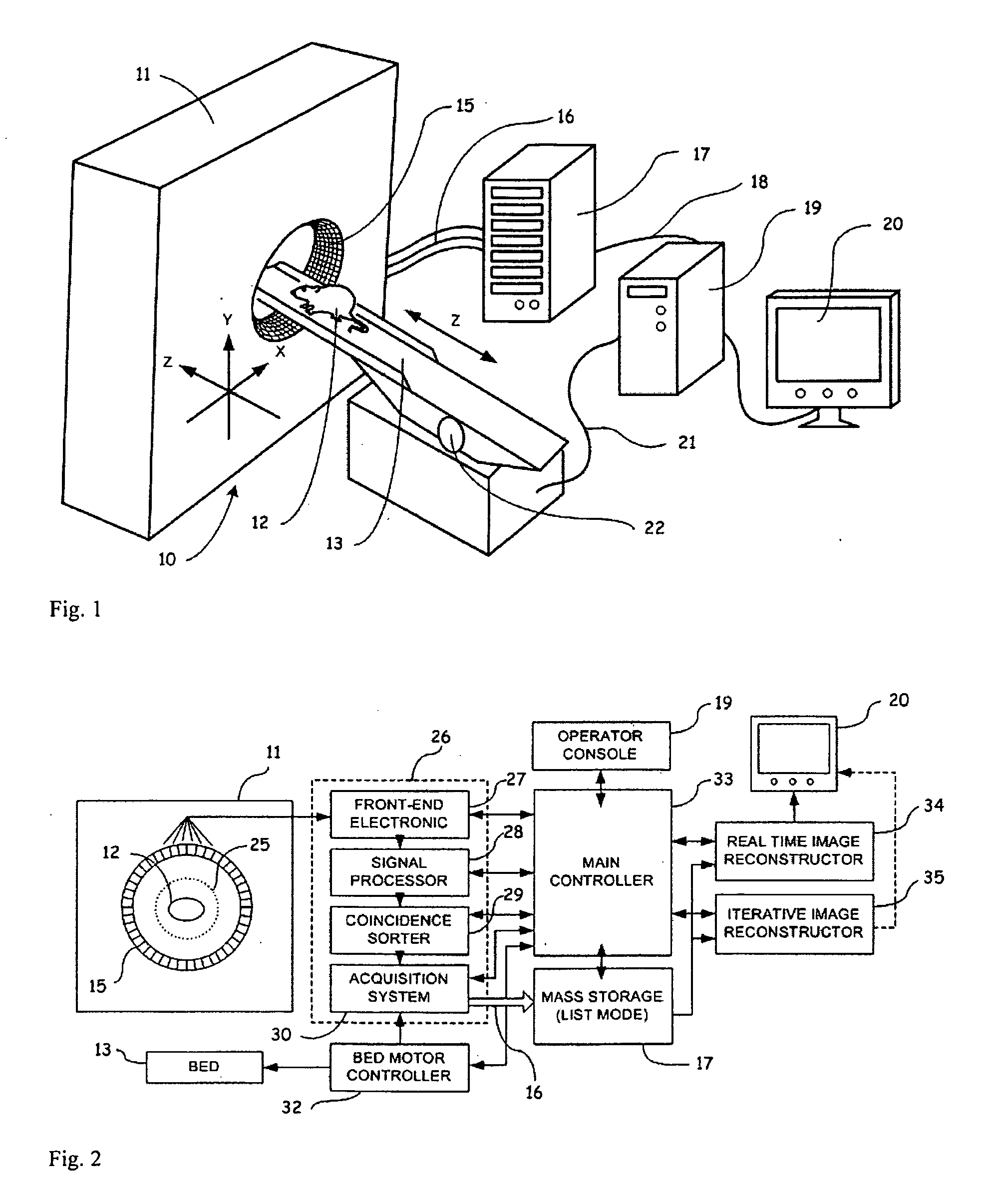

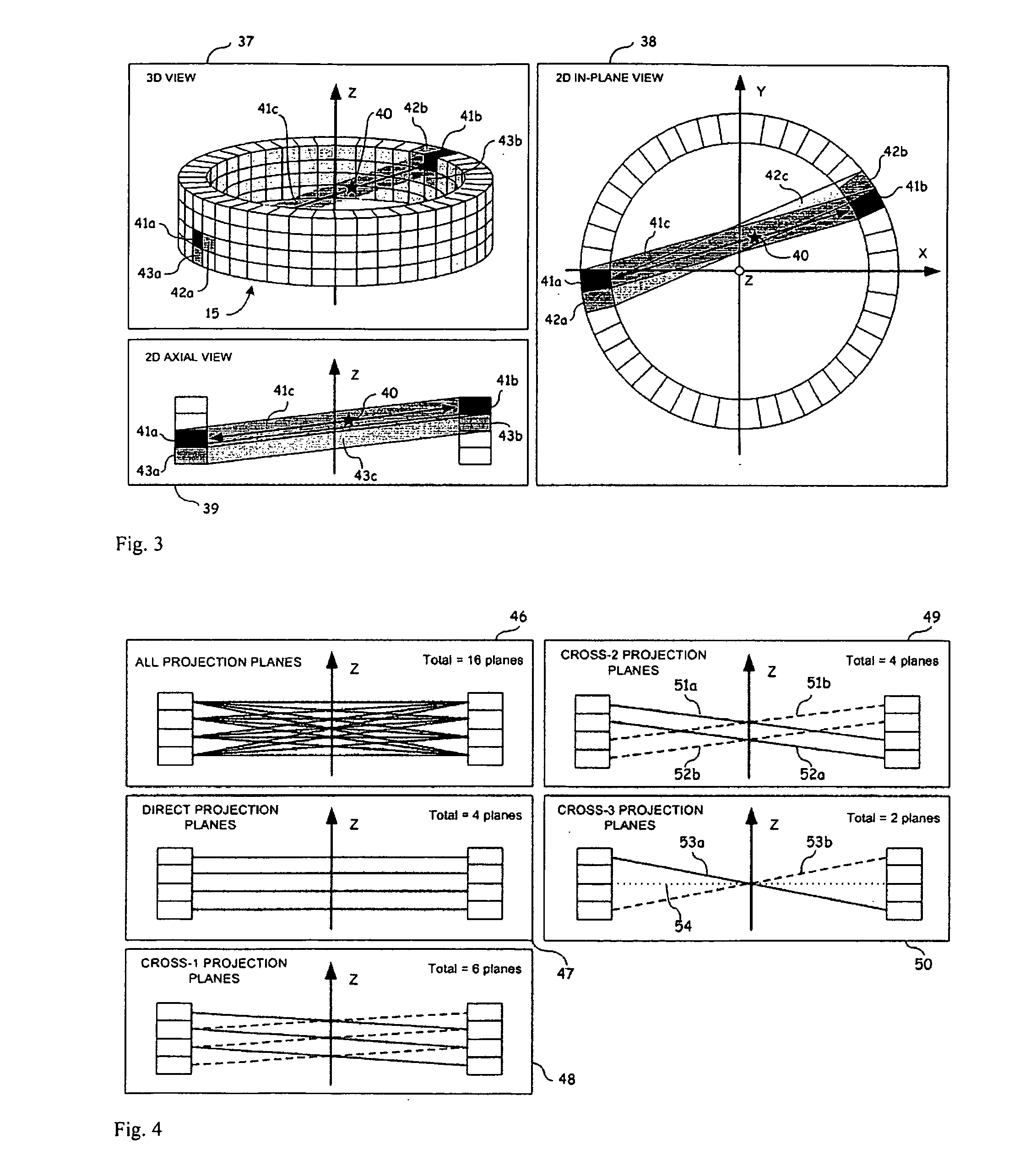

Inactive · US20090123048A1 · Tags: Minimized in size, Fast computer, Reconstruction from projection, Material analysis using wave/particle radiation, In plane, Lines of response

An iterative image reconstruction method used with an imaging system that generates projection data comprises: collecting the projection data; choosing a polar or cylindrical image definition comprising a polar or cylindrical grid representation and a number of basis functions positioned according to that grid, such that the number of basis functions at each radius position of the grid is a factor of the number of in-plane symmetries between the lines of response along which the imaging system measures the projection data; obtaining a system probability matrix that relates each projection datum to each basis function of the polar or cylindrical image definition; restructuring the system probability matrix into a block circulant matrix and converting it into the Fourier domain; storing the projection data in a measurement data vector; providing an initial polar or cylindrical image estimate; for each iteration, recalculating the polar or cylindrical image estimate with an iterative solver based on forward and back projection operations that use the system probability matrix in the Fourier domain; and converting the polar or cylindrical image estimate into a Cartesian image representation to obtain the reconstructed image.

Owner:SOCPRA SCI SANTE & HUMAINES S E C
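
The Fourier-domain forward and back projection in the claim can be sketched as follows. The sketch assumes the system matrix has already been restructured so that block (i, k) equals A[(i - k) mod N] for N rotational symmetries. The helper names are hypothetical, and the geometry-specific steps (polar grid, symmetry detection, the iterative solver itself) are omitted. The point is that each product needs only N small matrix-vector products after an FFT along the block index.

```python
import numpy as np

def block_circulant(blocks):
    """Dense reference: block (i, k) of the result is blocks[(i - k) % N]."""
    N = len(blocks)
    return np.block([[blocks[(i - k) % N] for k in range(N)] for i in range(N)])

def block_circulant_matvec(blocks, x):
    """Forward projection y = B x via an FFT over the block index.

    blocks has shape (N, m, n) and x has length N * n (real values assumed).
    """
    N, m, n = blocks.shape
    A_hat = np.fft.fft(blocks, axis=0)             # one m x n matrix per frequency
    X_hat = np.fft.fft(x.reshape(N, n), axis=0)
    Y_hat = np.einsum("fmn,fn->fm", A_hat, X_hat)  # N small products instead of one big one
    return np.fft.ifft(Y_hat, axis=0).real.reshape(N * m)

def block_circulant_rmatvec(blocks, y):
    """Back projection B^T y, using the conjugate transpose of each frequency block."""
    N, m, n = blocks.shape
    A_hat = np.fft.fft(blocks, axis=0)
    Y_hat = np.fft.fft(y.reshape(N, m), axis=0)
    Z_hat = np.einsum("fmn,fm->fn", A_hat.conj(), Y_hat)
    return np.fft.ifft(Z_hat, axis=0).real.reshape(N * n)
```

In an iterative solver, `A_hat` would be computed once and reused by every forward and back projection. It is recomputed here only to keep each function self-contained.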

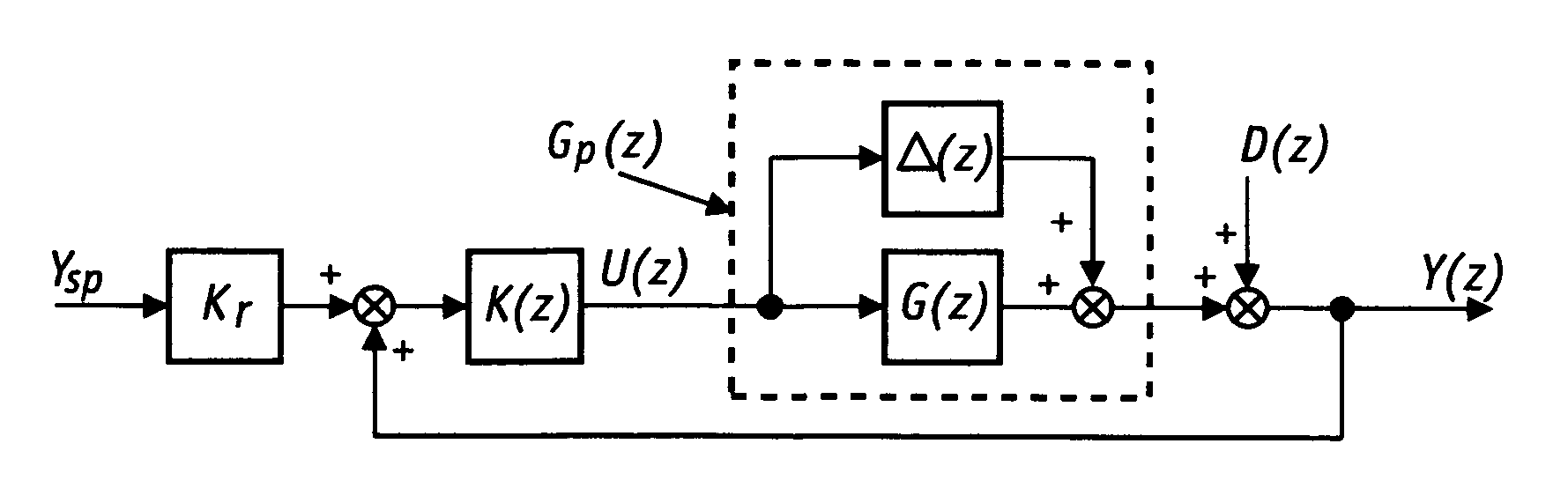

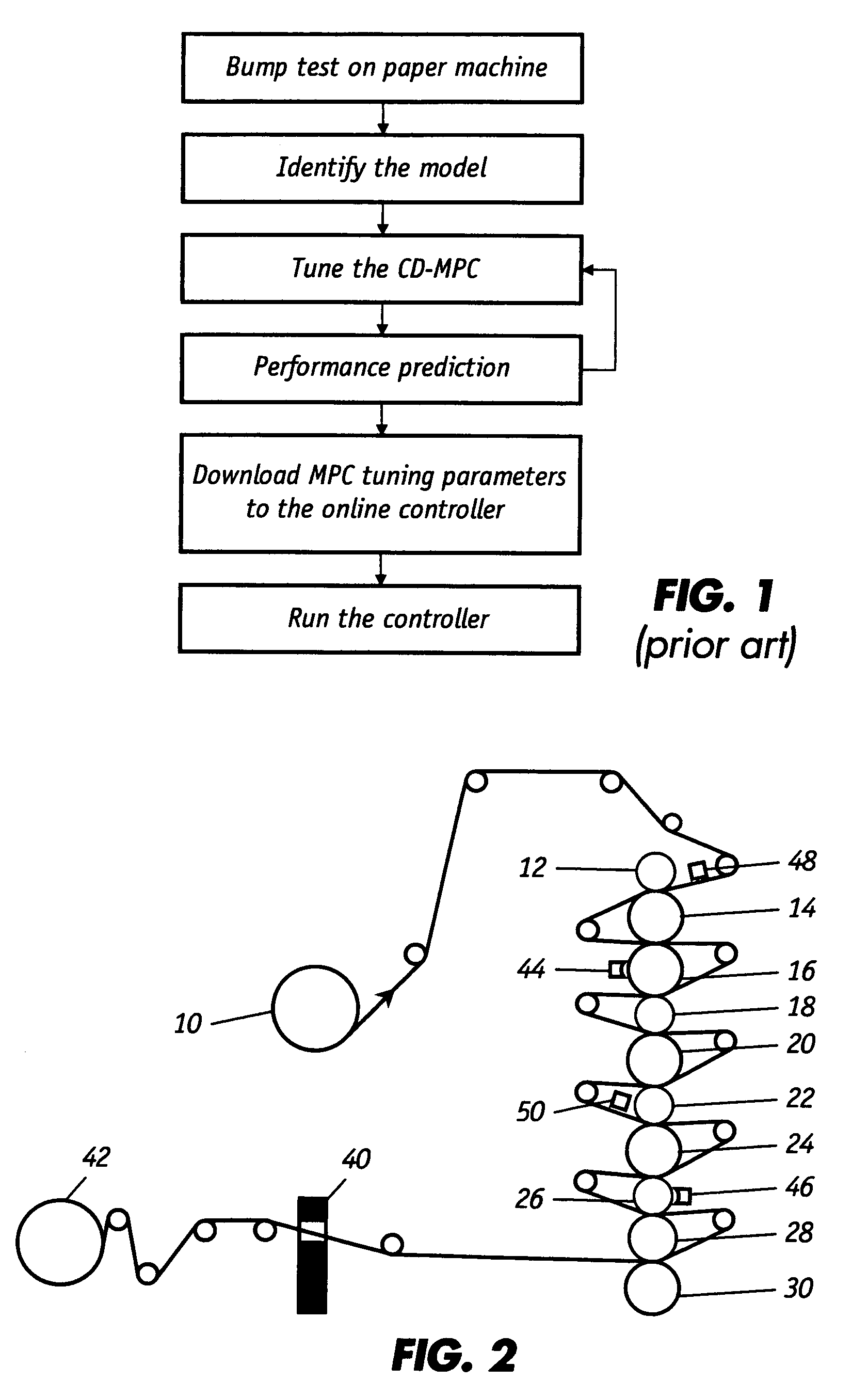

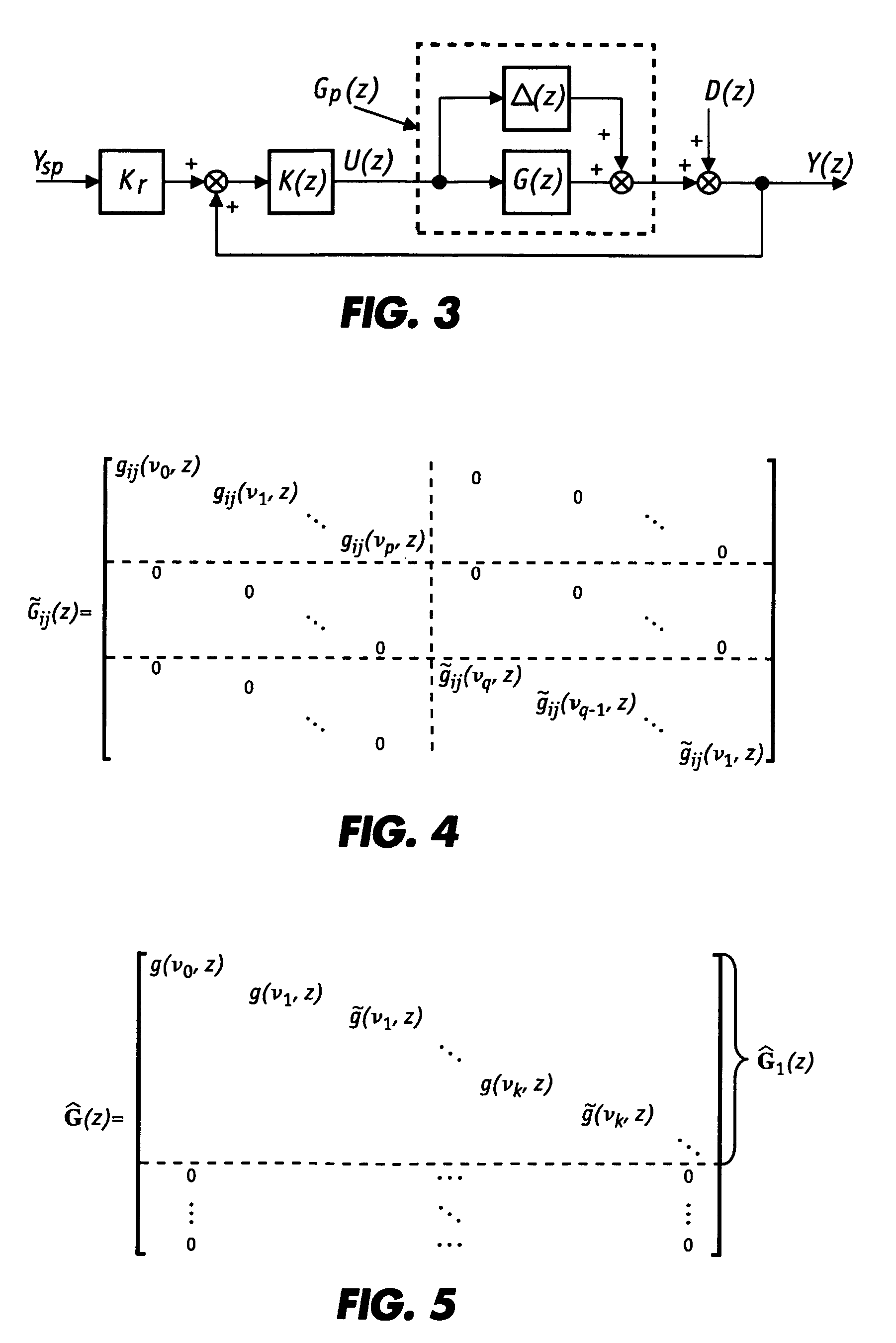

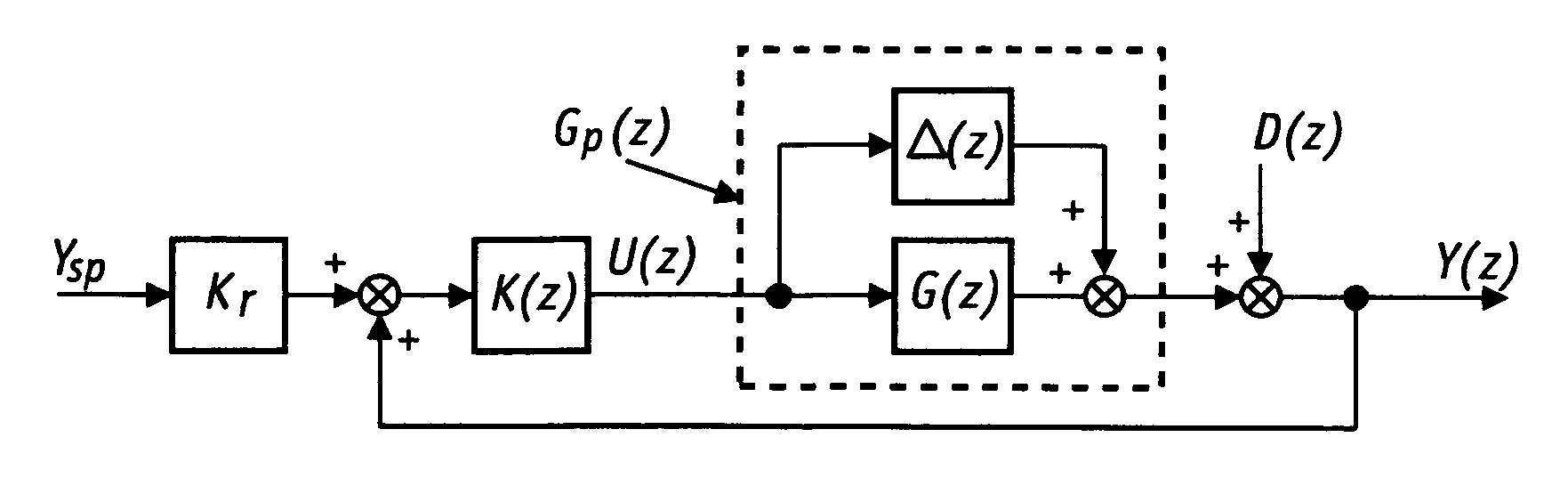

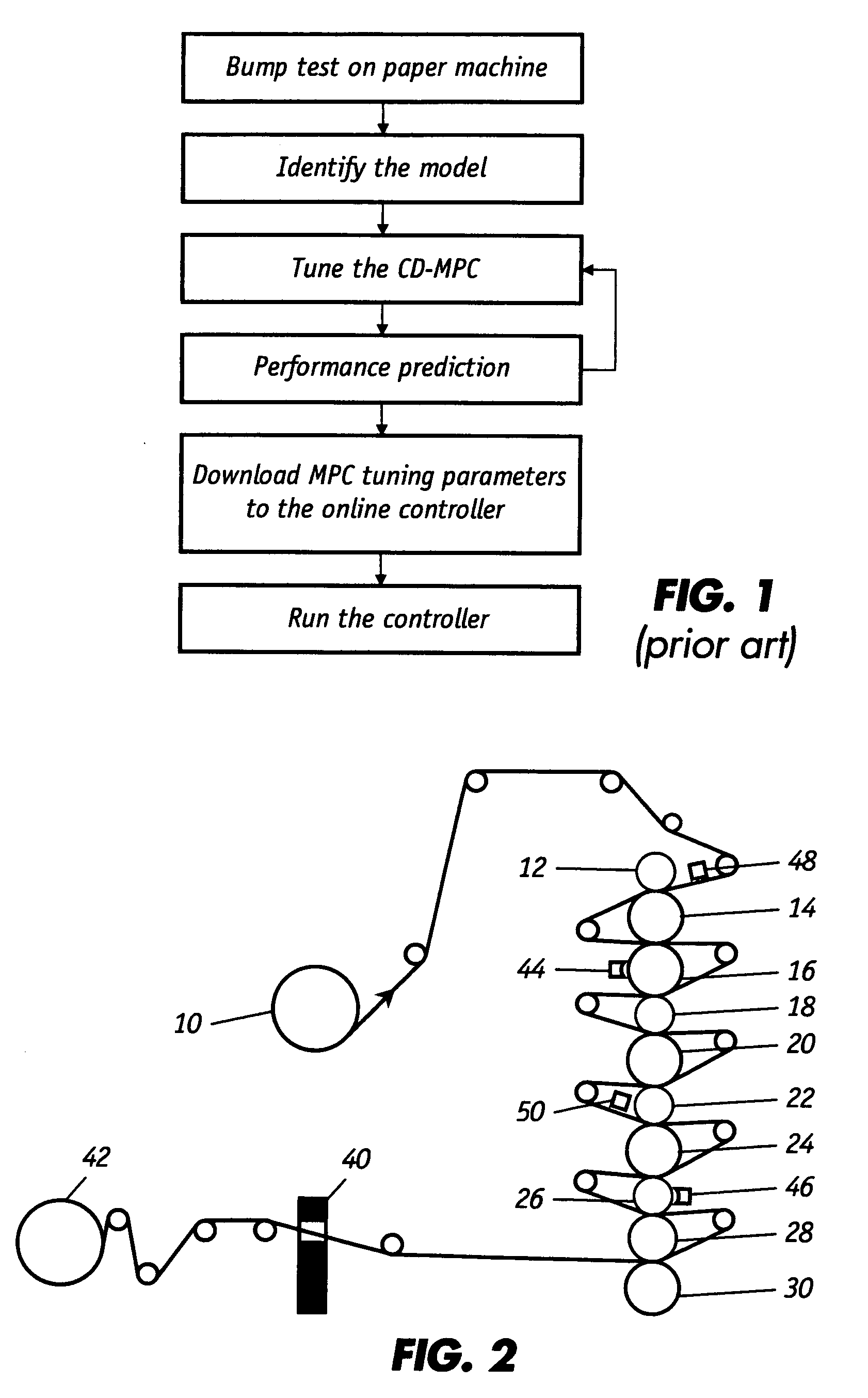

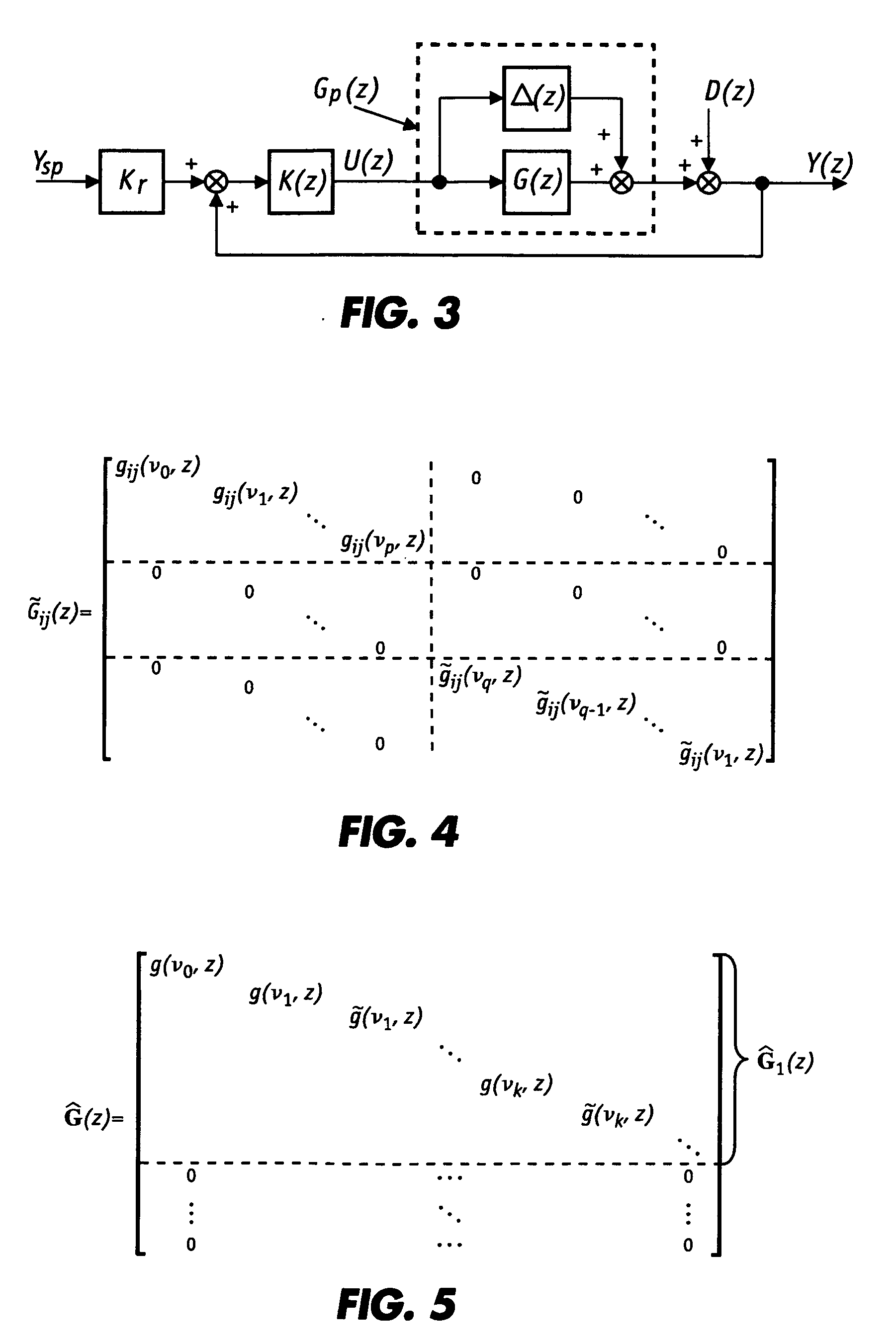

Automated tuning of large-scale multivariable model predictive controllers for spatially-distributed processes

Active · US7650195B2 · Tags: Analogue computers for chemical processes, Digital computer details, Predictive controller, Closed loop

An automated tuning method for a large-scale multivariable model predictive controller for multiple-array papermaking machine cross-directional (CD) processes can significantly improve controller performance compared with traditional controllers. Paper machine CD processes are large-scale, spatially distributed dynamical systems. Because these systems are (almost) spatially invariant, their closed-loop transfer functions are approximated by transfer matrices with rectangular circulant matrix blocks, whose input and output singular vectors are Fourier components of dimension equal to either the number of actuators or the number of measurements. This approximation allows the model predictive controller to be tuned by a numerical search over optimization weights that shapes the closed-loop transfer functions in the two-dimensional frequency domain for performance and robustness. A novel scaling method is used to scale the inputs and outputs of the multivariable system in the spatial frequency domain.

Owner:HONEYWELL ASCA INC
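
The singular-vector property can be checked numerically in the simpler square case. The patent works with rectangular circulant blocks, which this sketch does not cover. For a circulant C with first column c, each Fourier vector is an eigenvector whose eigenvalue is an entry of the DFT of c. Because C is normal, its singular values are the magnitudes of those entries, i.e. the gains at each spatial frequency. The names and the example profile are hypothetical.

```python
import numpy as np

def circulant(c):
    """Circulant matrix with first column c (each row rotated right from the previous)."""
    c = np.asarray(c, dtype=float)
    return np.column_stack([np.roll(c, k) for k in range(len(c))])

def fourier_mode(n, k):
    """Spatial Fourier vector v[p] = exp(2*pi*i*p*k/n)."""
    return np.exp(2j * np.pi * np.arange(n) * k / n)

def spatial_frequency_gains(c):
    """Singular values of circulant(c), indexed by spatial frequency.

    fourier_mode(n, k) is an eigenvector of circulant(c) with eigenvalue fft(c)[k];
    a circulant matrix is normal, so its singular values are |fft(c)|.
    """
    return np.abs(np.fft.fft(c))
```

Take a symmetric actuator response such as c = [1, 0.5, 0, 0, 0, 0.5]. Its gains are 1 + cos(2πk/6), so the lowest spatial frequency (k = 0) has gain 2 and the highest (k = 3) has gain 0.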

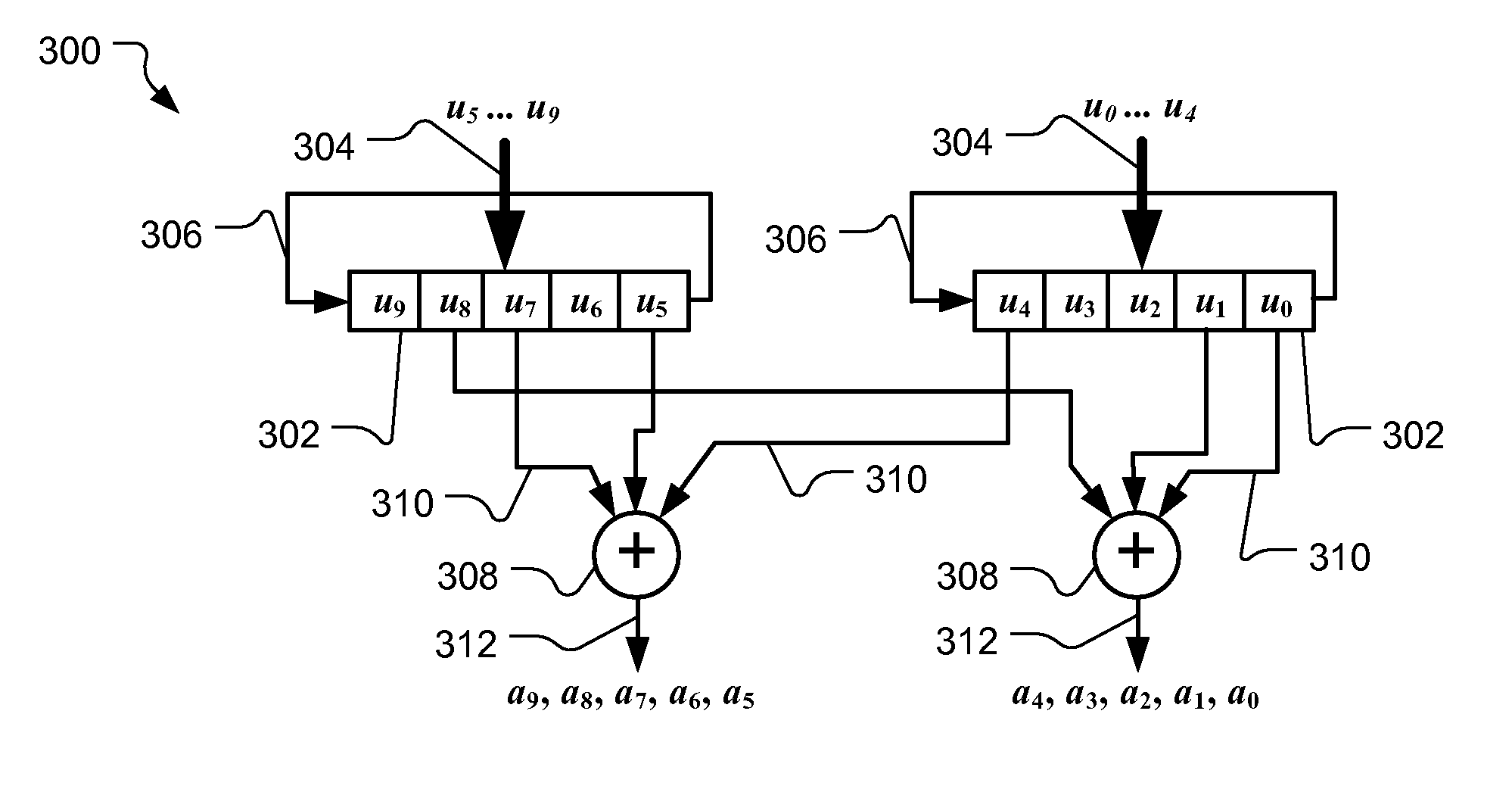

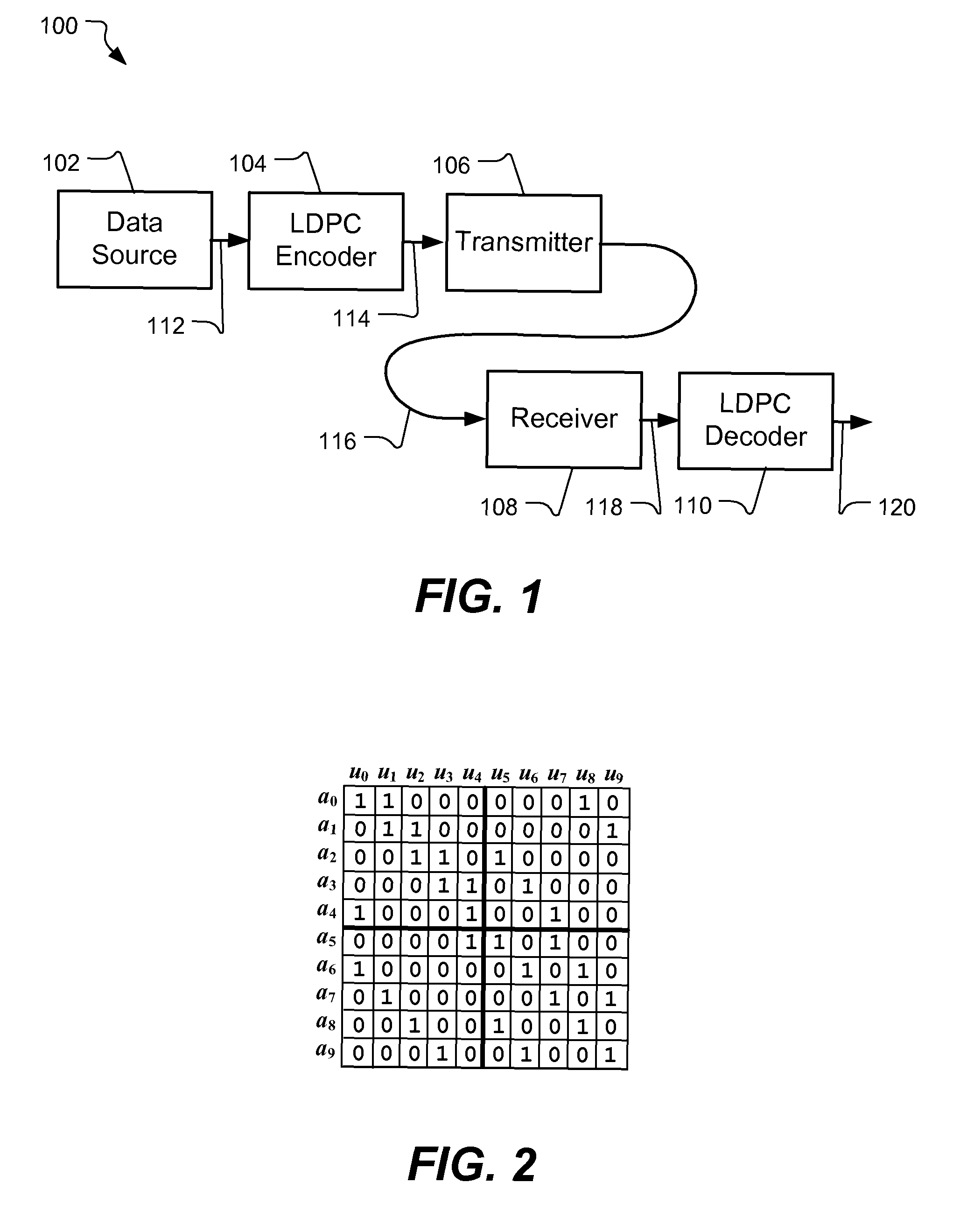

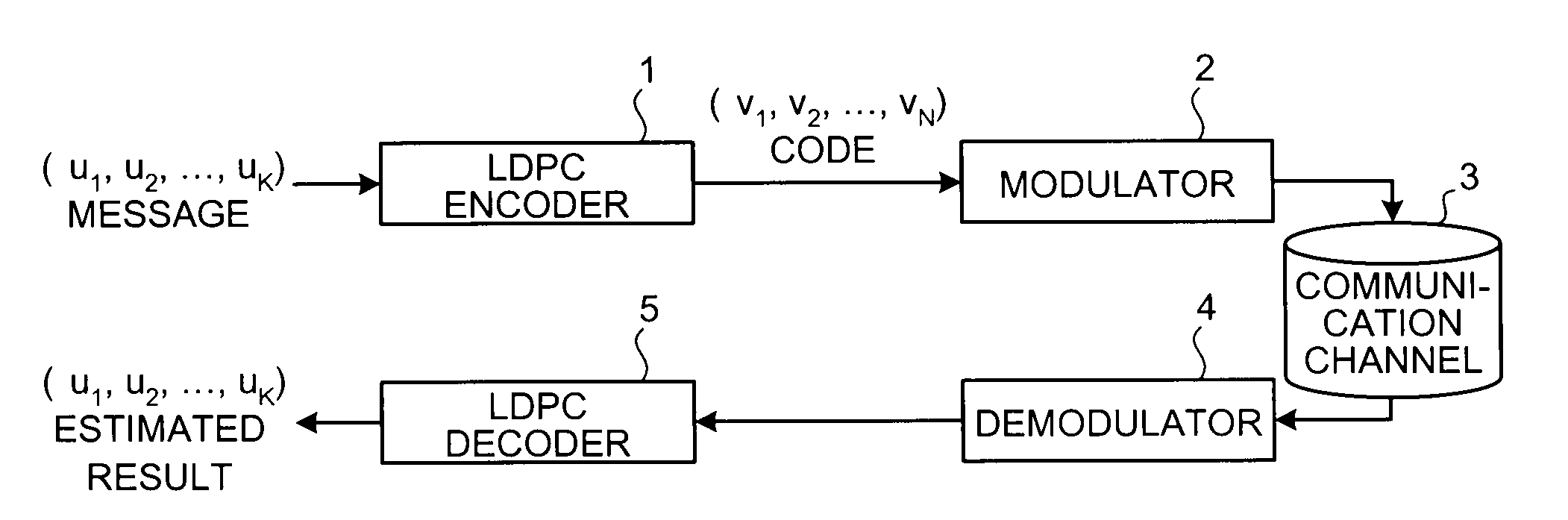

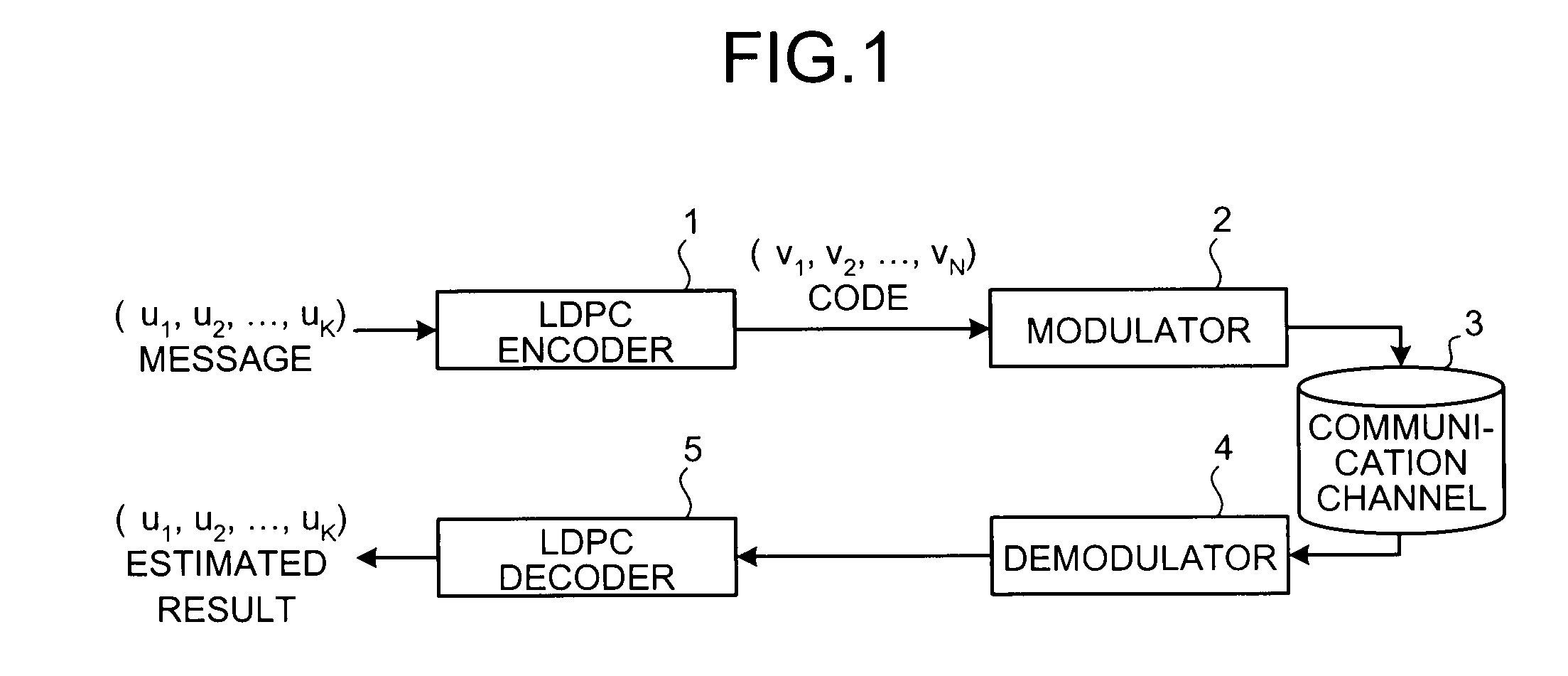

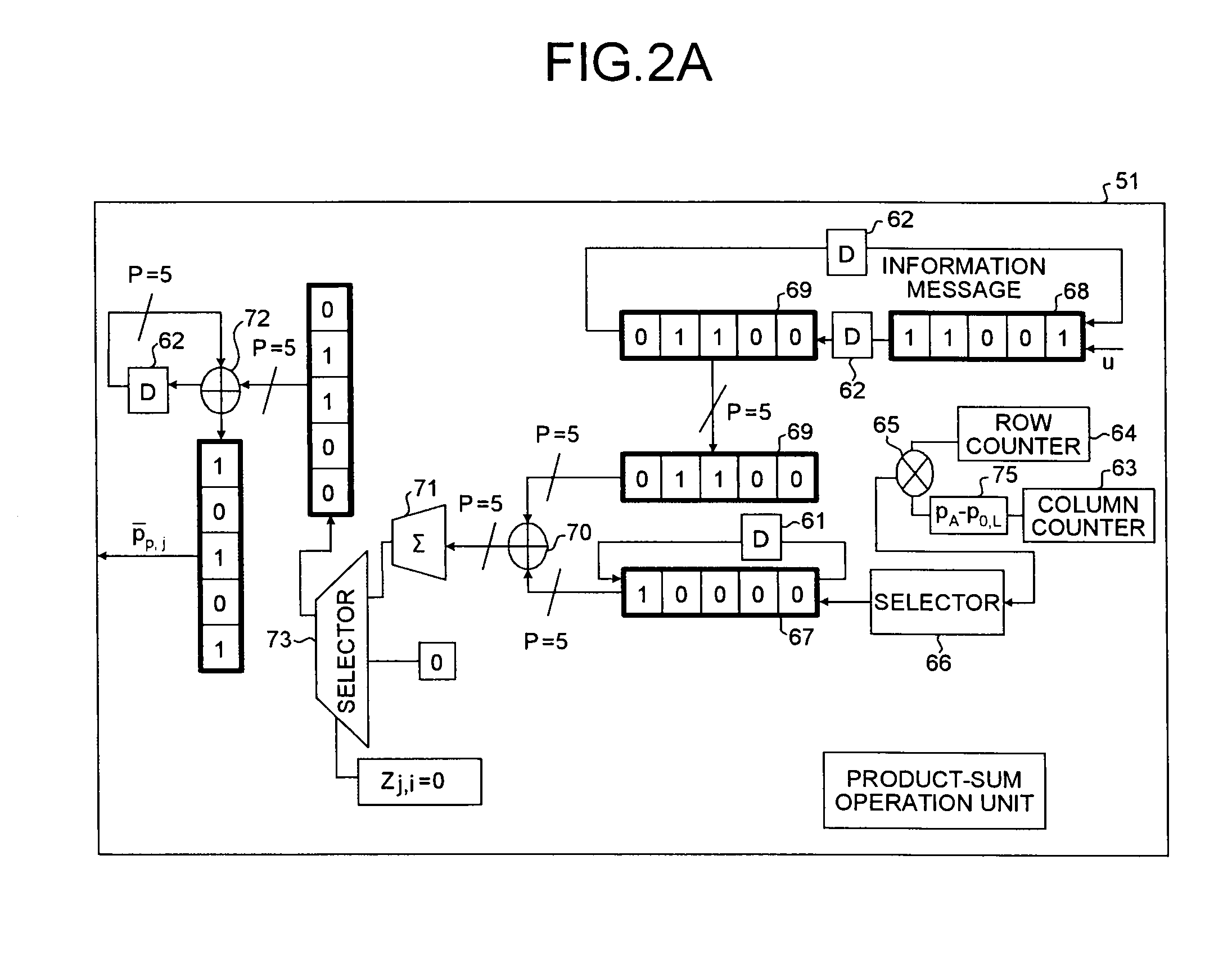

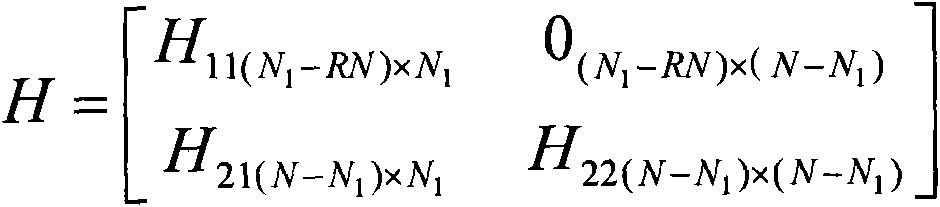

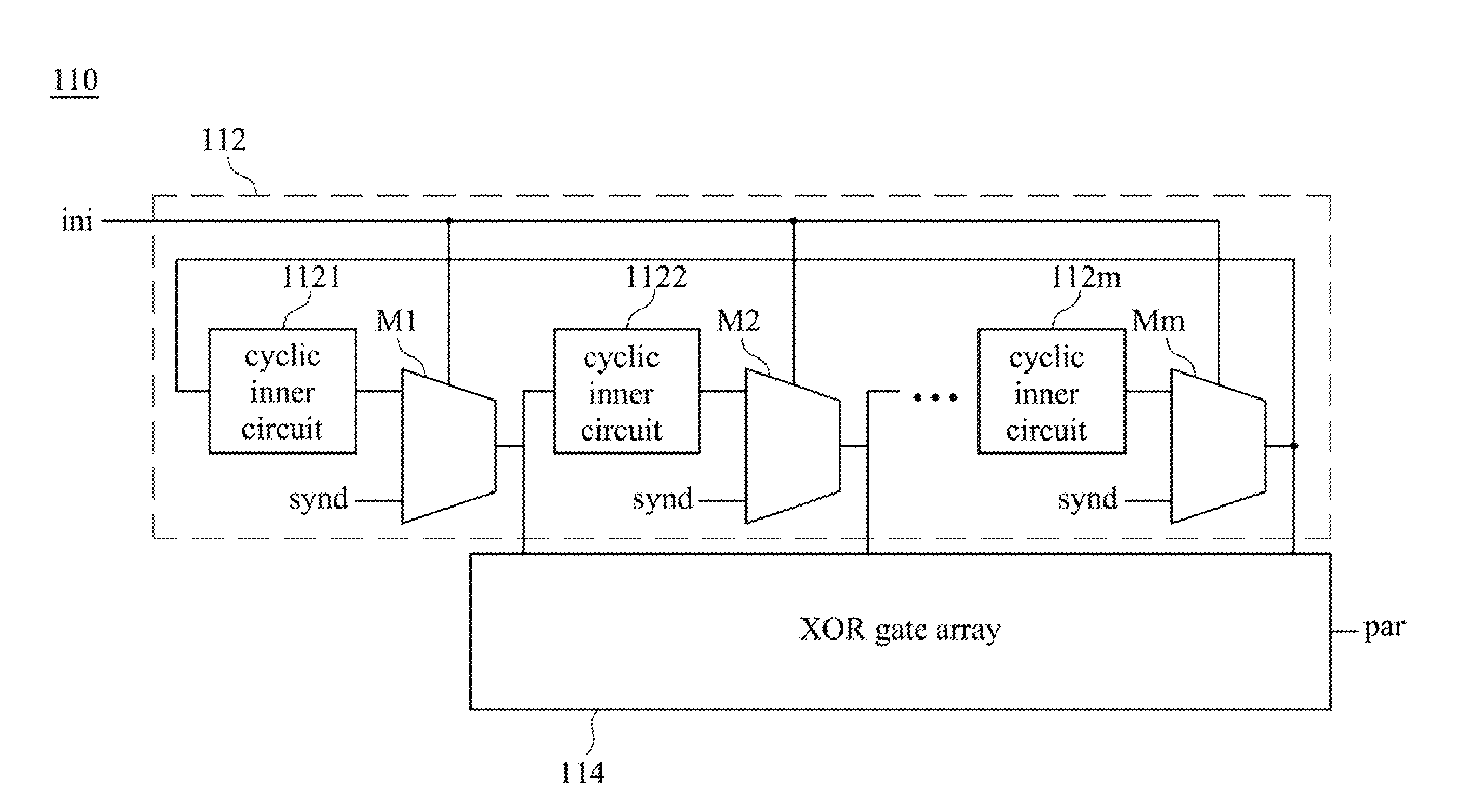

Encoder for low-density parity check codes

Encoding of a low-density parity check code uses a block-circulant encoding matrix built from circulant matrices. Encoding can include partitioning data into a plurality of data segments. The data segments are each circularly rotated. A plurality of XOR summations are formed for each rotation of the data segments to produce output symbols. The XOR summations use data from the data segments defined by the circulant matrices. Output symbols are produced in parallel for each rotation of the data segments.

Owner:L3 TECH INC

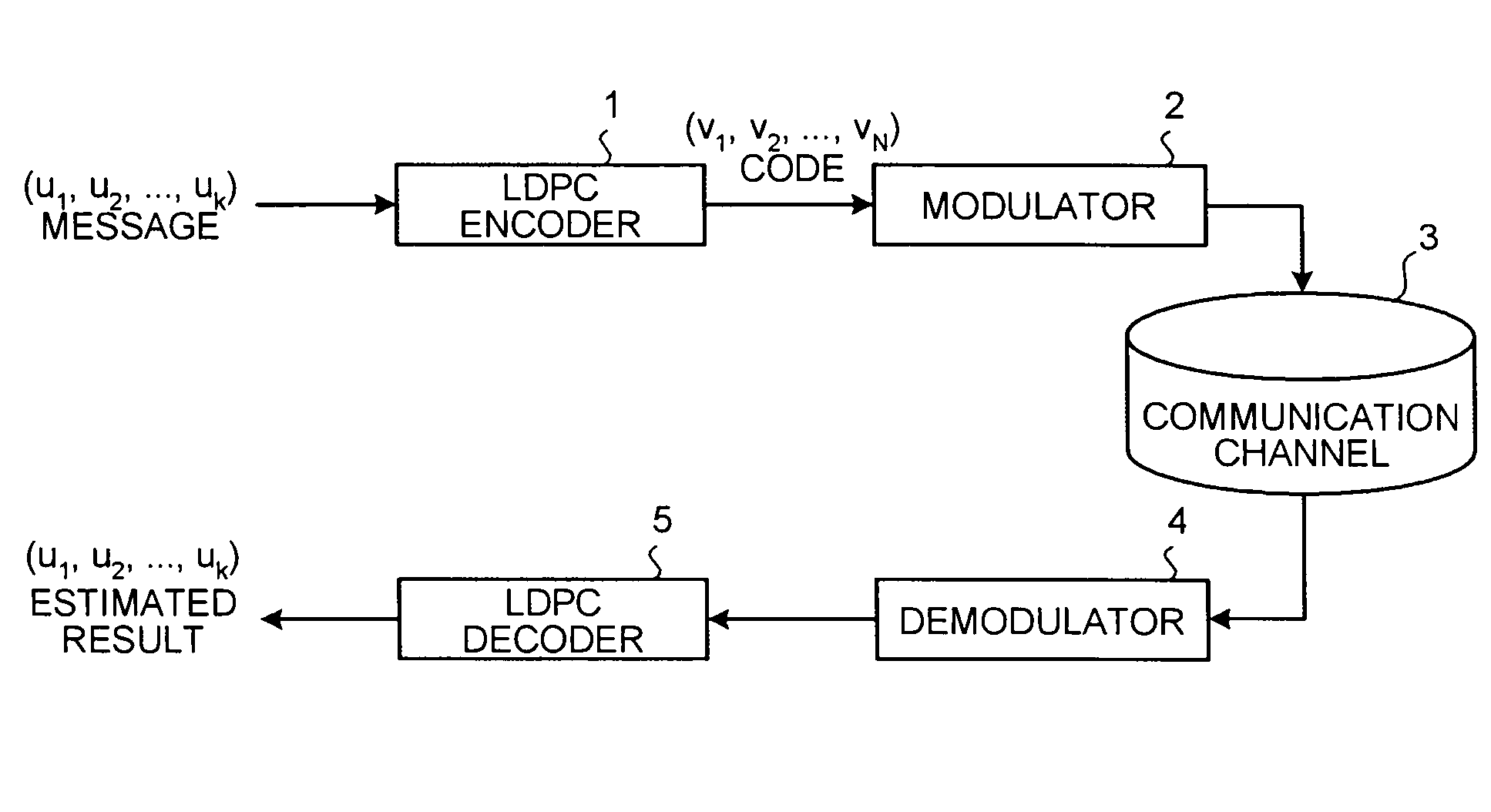

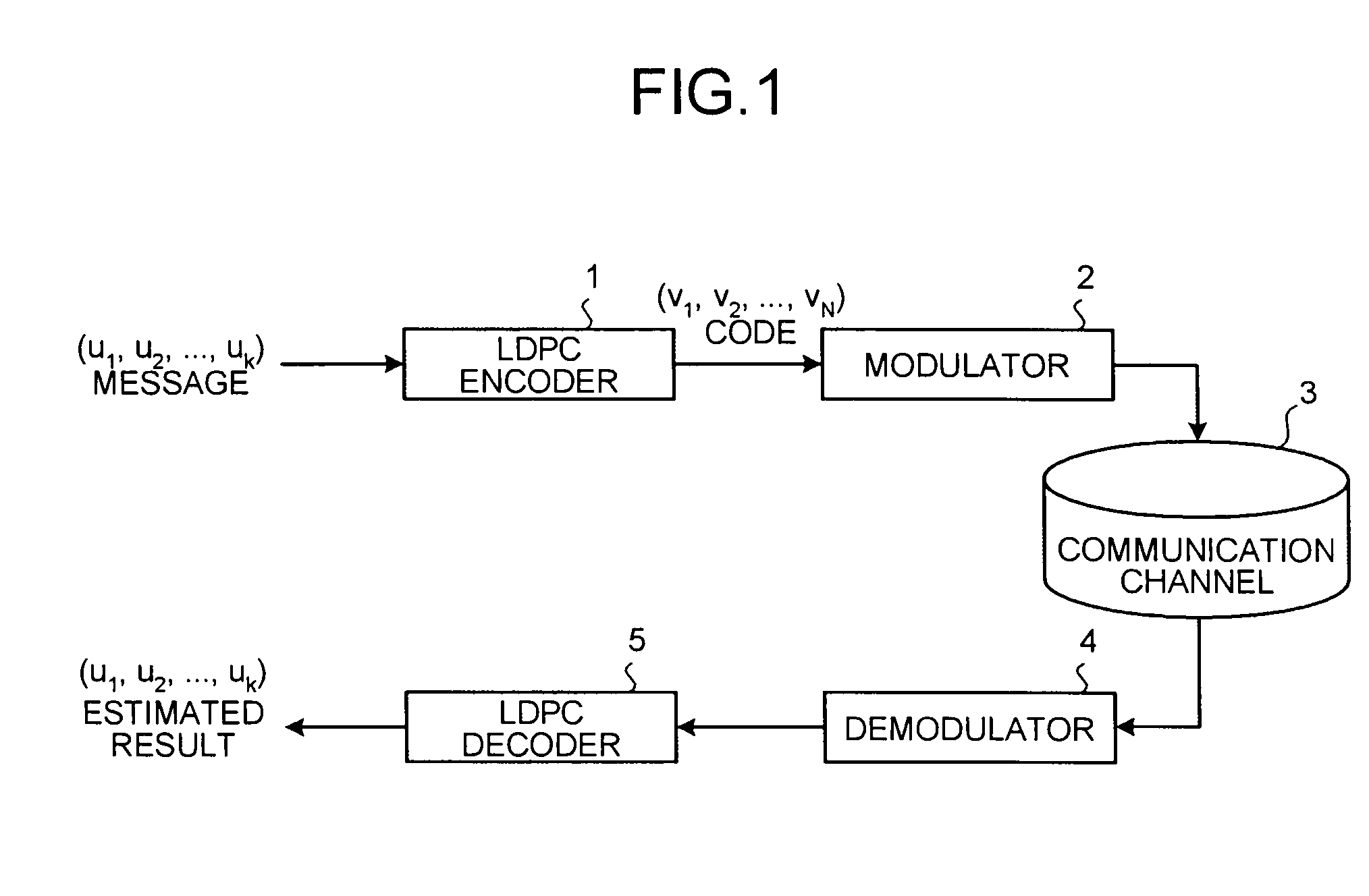

Simplified LDPC encoding for digital communications

Active · US20060036926A1 · Tags: Simple method, Error correction/detection using LDPC codes, Code conversion, Theoretical computer science, Parity-check matrix

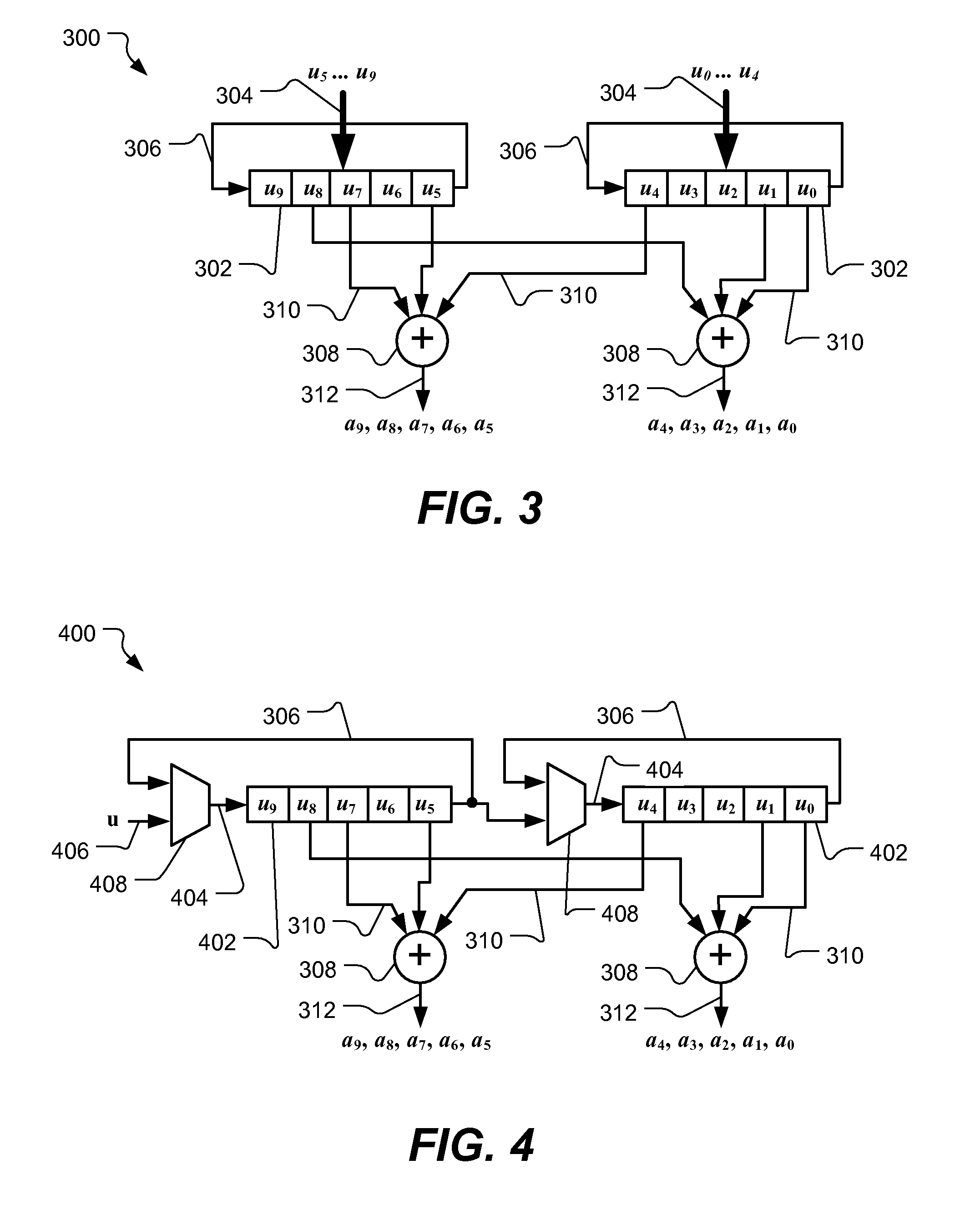

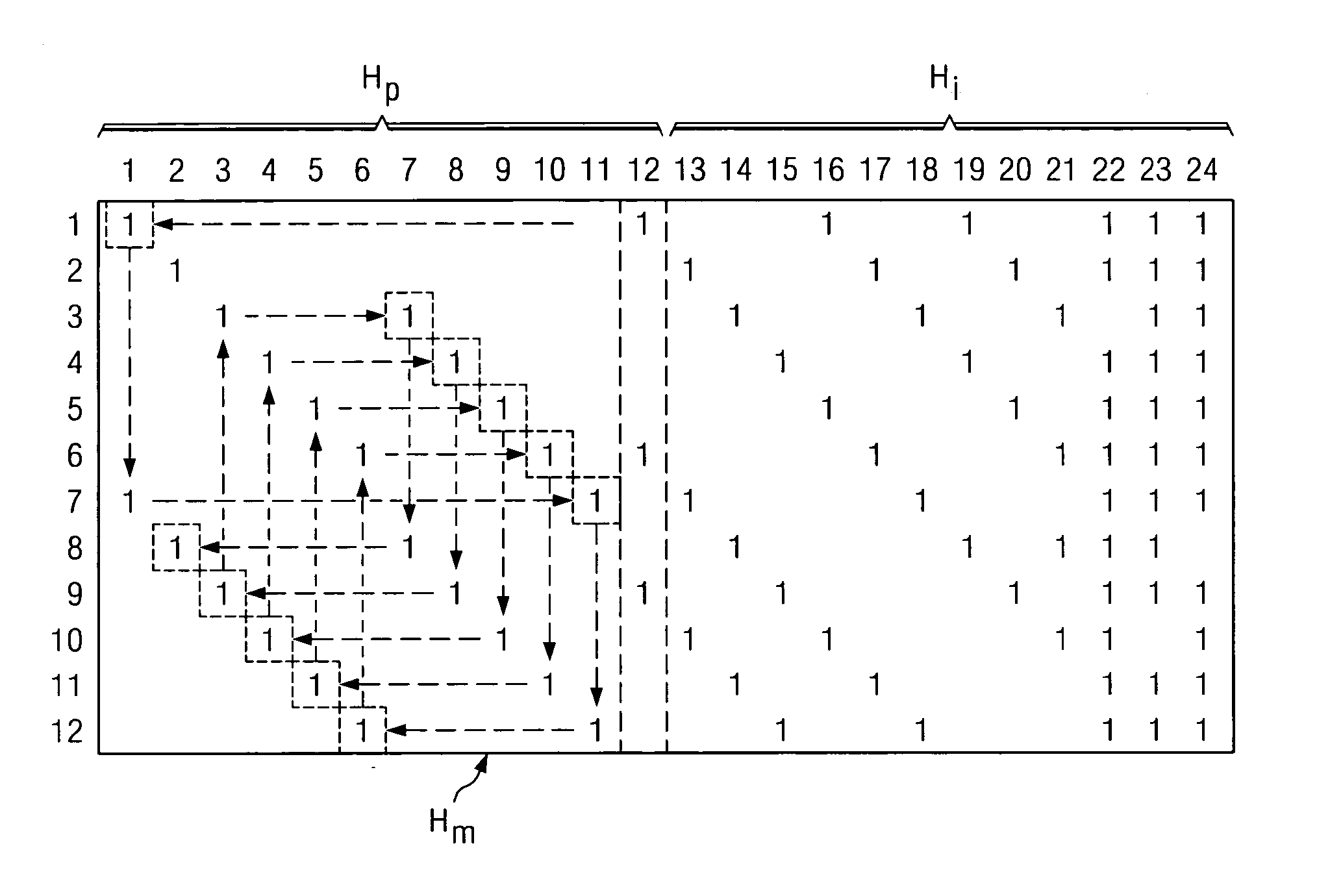

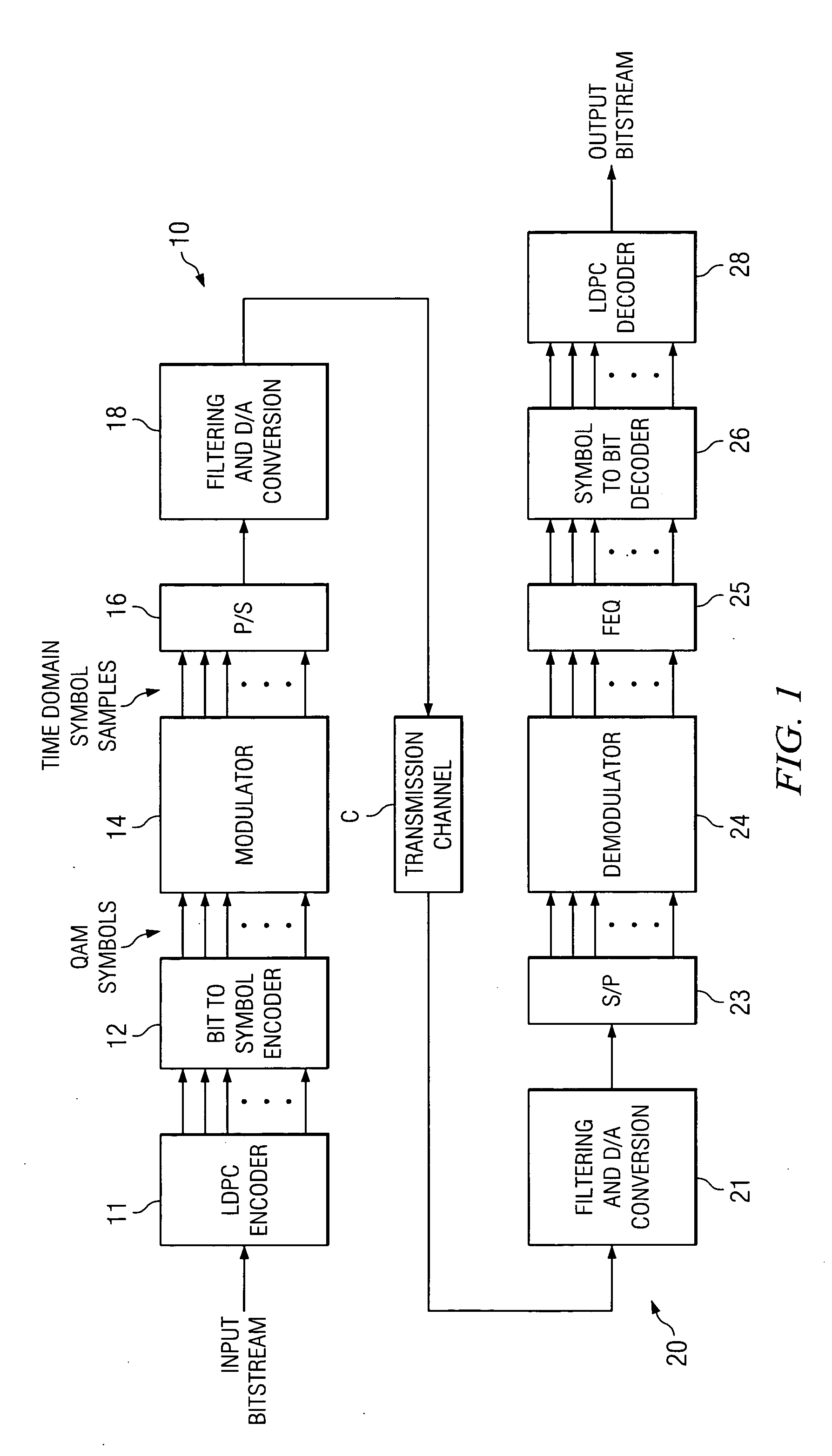

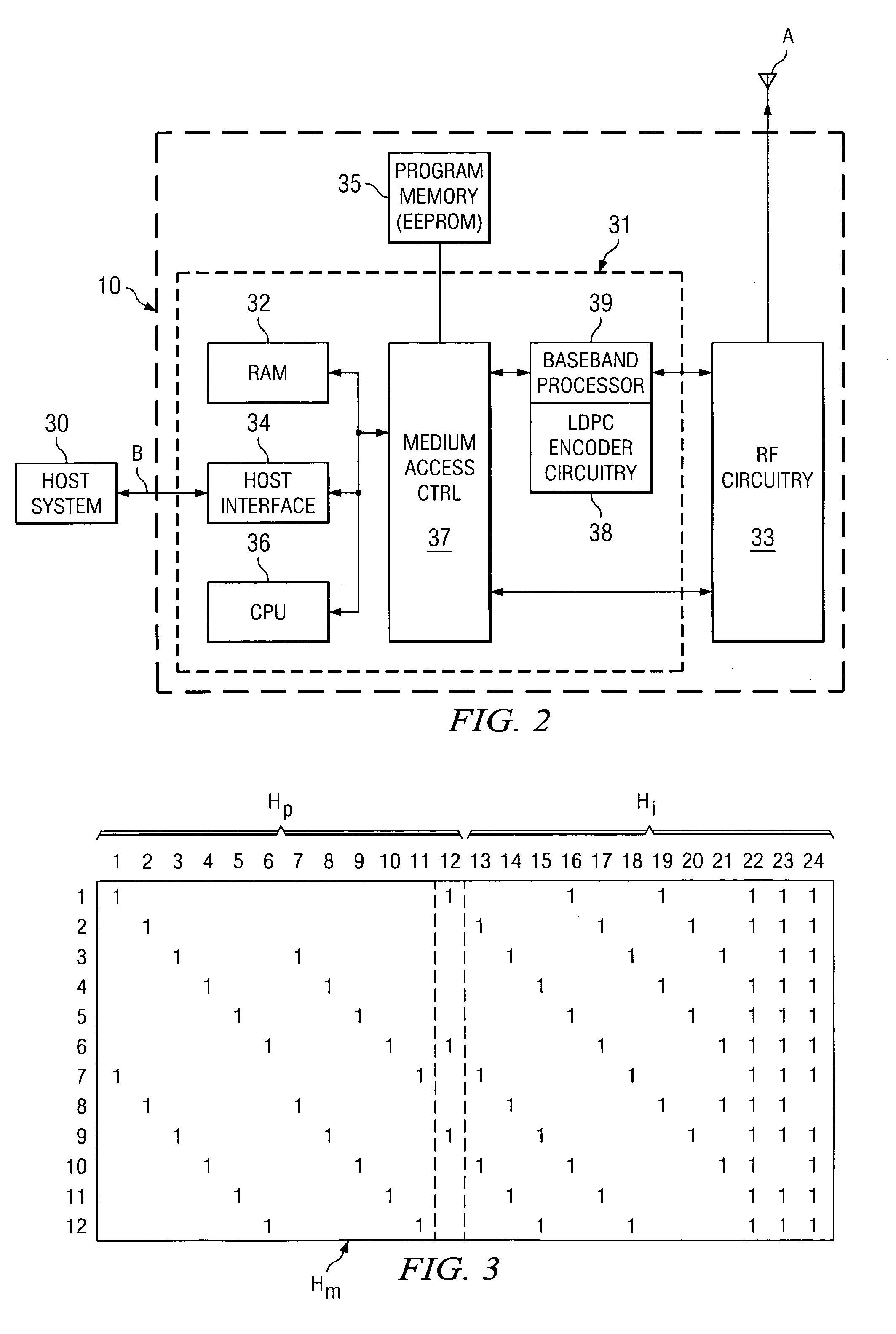

Encoder circuitry for applying a low-density parity check (LDPC) code to information words is disclosed. The encoder circuitry takes advantage of a macro matrix arrangement of the LDPC parity check matrix in which the parity portion of the parity check matrix is arranged as a macro matrix in which all block columns but one define a recursion path. The parity check matrix is factored so that the last block column of the parity portion includes an invertible cyclic matrix as its entry in a selected block row, with all other parity portion columns in that selected block row being zero-valued, thus permitting solution of the parity bits for that block column from the information portion of the parity check matrix and the information word to be encoded. Solution of the other parity bits can then be readily performed, from the original (non-factored) parity portion of the parity check matrix, following the recursion path.

Owner:TEXAS INSTR INC

Fast computation of multi-input-multi-output decision feedback equalizer coefficients

Inactive · US7113540B2 · Tags: Lower requirement, Rapid production, Multiple-port networks, Delay line applications, Multi input, Optimal decision

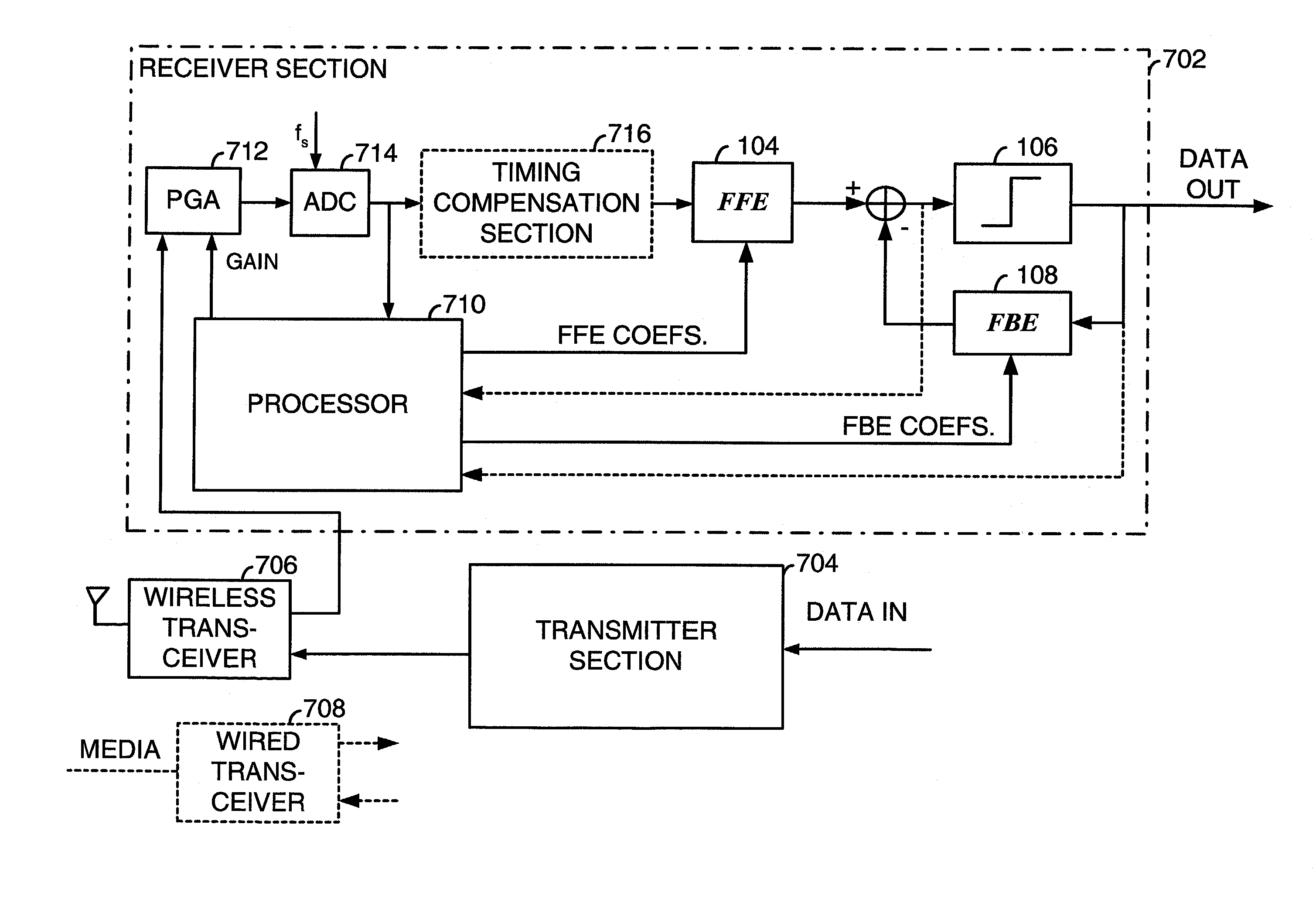

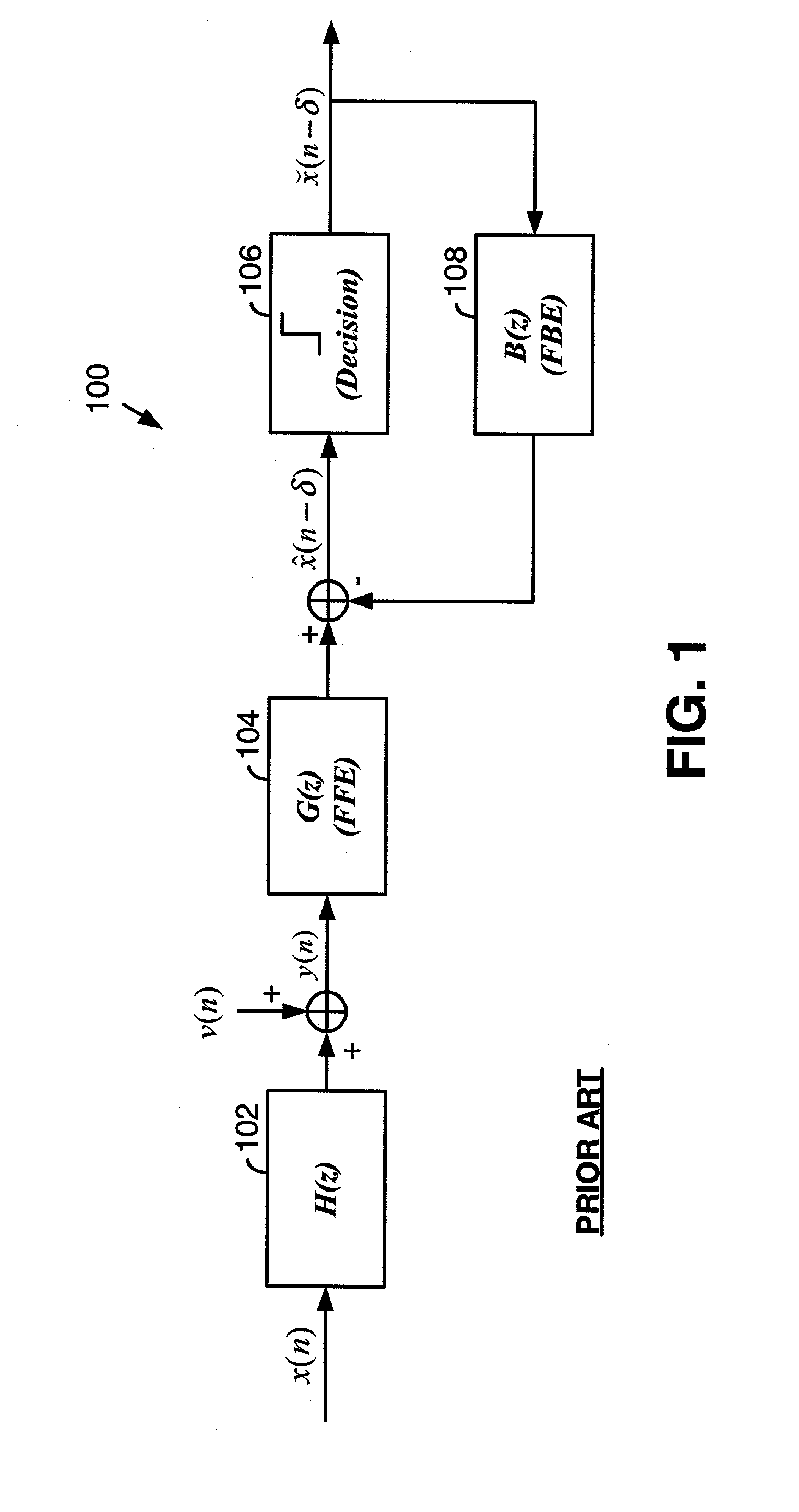

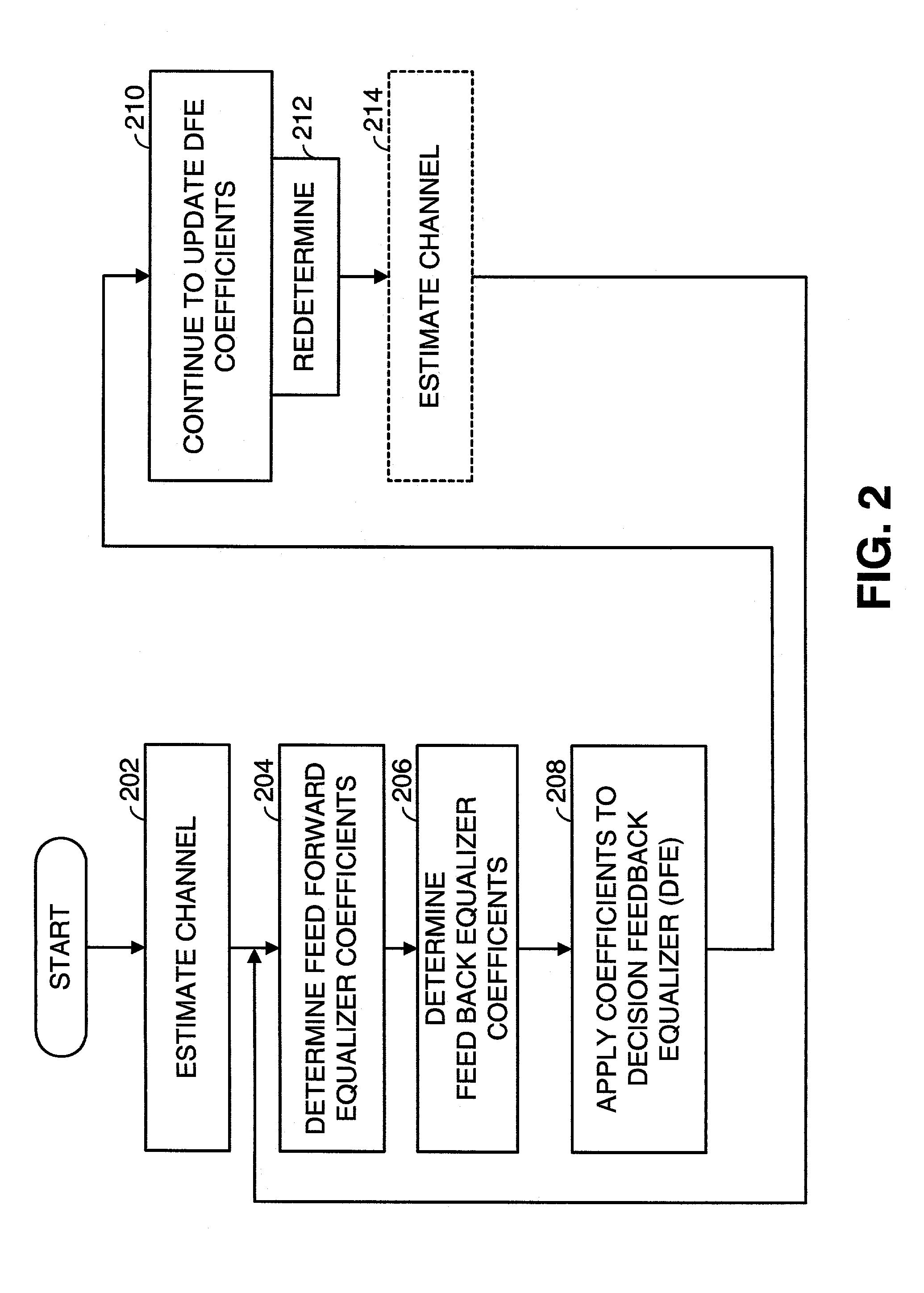

Multi-Input-Multi-Output (MIMO) optimal Decision Feedback Equalizer (DFE) coefficients are determined from a channel estimate h by casting the MIMO DFE coefficient problem as a standard recursive least squares (RLS) problem and solving it. In one embodiment, a fast recursive method, e.g. the fast transversal filter (FTF) technique, is used to compute the Kalman gain of the RLS problem, which is then used directly to compute the MIMO Feed Forward Equalizer (FFE) coefficients gopt. The complexity of a conventional FTF algorithm is reduced to one third of its original value by choosing the length of the MIMO Feed Back Equalizer (FBE) coefficients bopt (of the DFE) so as to force the FTF algorithm to use a lower triangular matrix. The MIMO FBE coefficients bopt are computed by convolving the MIMO FFE coefficients gopt with the channel impulse response h. To perform this operation, the convolution matrix that characterizes the channel impulse response h is extended to a larger circulant matrix. With this extended circulant structure, the convolution of gopt with h can easily be performed in the frequency domain.

Owner:AVAGO TECH INT SALES PTE LTD
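
Below is a minimal NumPy sketch of the frequency-domain convolution step. It assumes matrix-valued taps g[k] (a x b) and h[k] (b x c) and the product order g[k] @ h[n - k]; the abstract does not fix these conventions, and the function names are hypothetical. Zero-padding both sequences to length Lg + Lh - 1 is what extending the convolution matrix to a larger circulant matrix amounts to: the circular convolution then equals the linear one. The direct loop is included only as a reference.

```python
import numpy as np

def mimo_convolve_fft(g, h):
    """Linear convolution of matrix-valued tap sequences via zero-padded FFTs.

    g has shape (Lg, a, b) and h has shape (Lh, b, c); the result r has shape
    (Lg + Lh - 1, a, c) with r[n] = sum_k g[k] @ h[n - k].
    """
    L = g.shape[0] + h.shape[0] - 1          # circulant size that avoids wrap-around
    G = np.fft.fft(g, n=L, axis=0)
    H = np.fft.fft(h, n=L, axis=0)
    R = np.einsum("fab,fbc->fac", G, H)      # one small matrix product per frequency
    return np.fft.ifft(R, axis=0)

def mimo_convolve_direct(g, h):
    """Time-domain reference implementation of the same convolution."""
    L = g.shape[0] + h.shape[0] - 1
    r = np.zeros((L, g.shape[1], h.shape[2]), dtype=np.result_type(g, h))
    for k in range(g.shape[0]):
        for m in range(h.shape[0]):
            r[k + m] += g[k] @ h[m]
    return r
```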

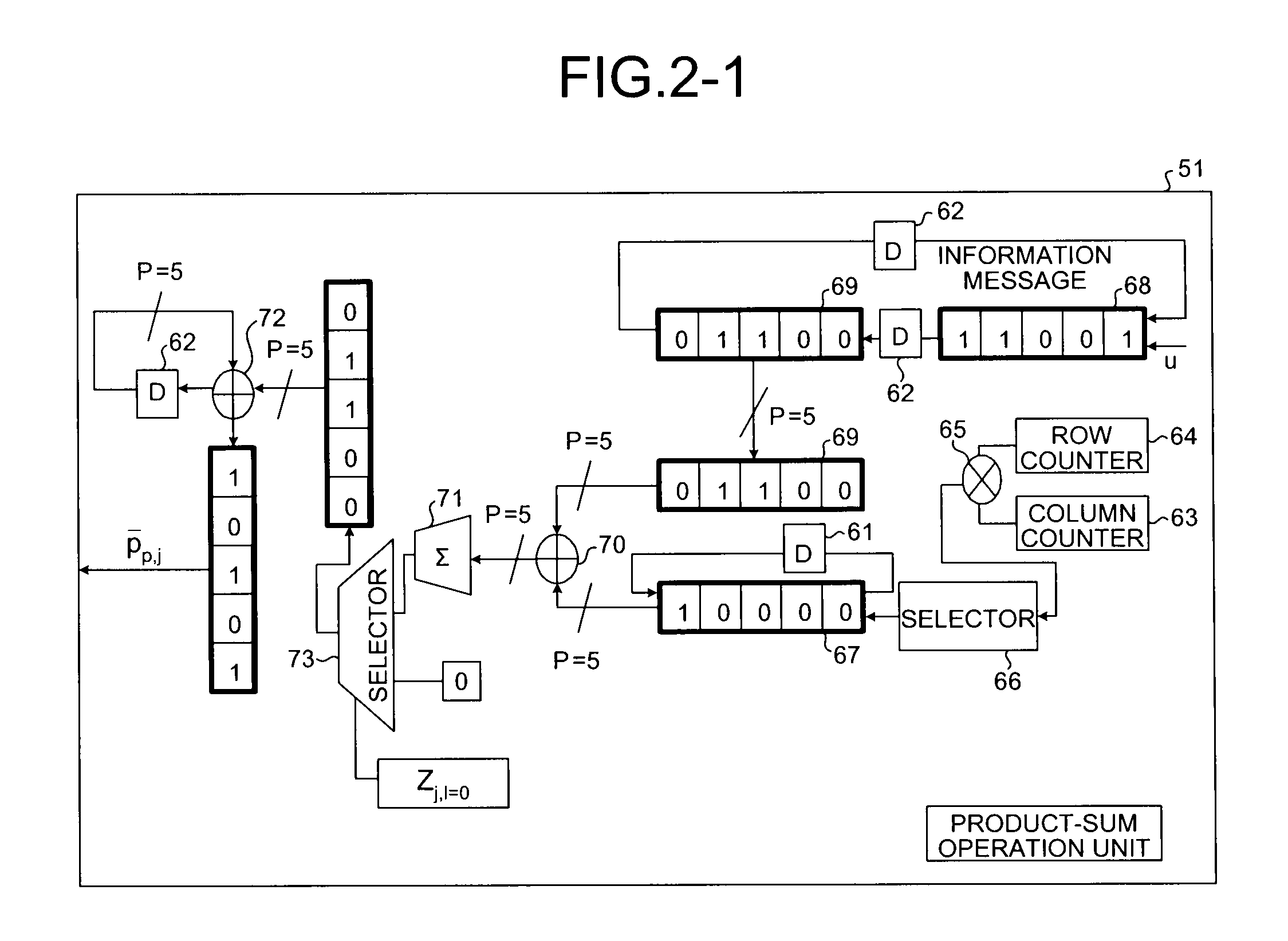

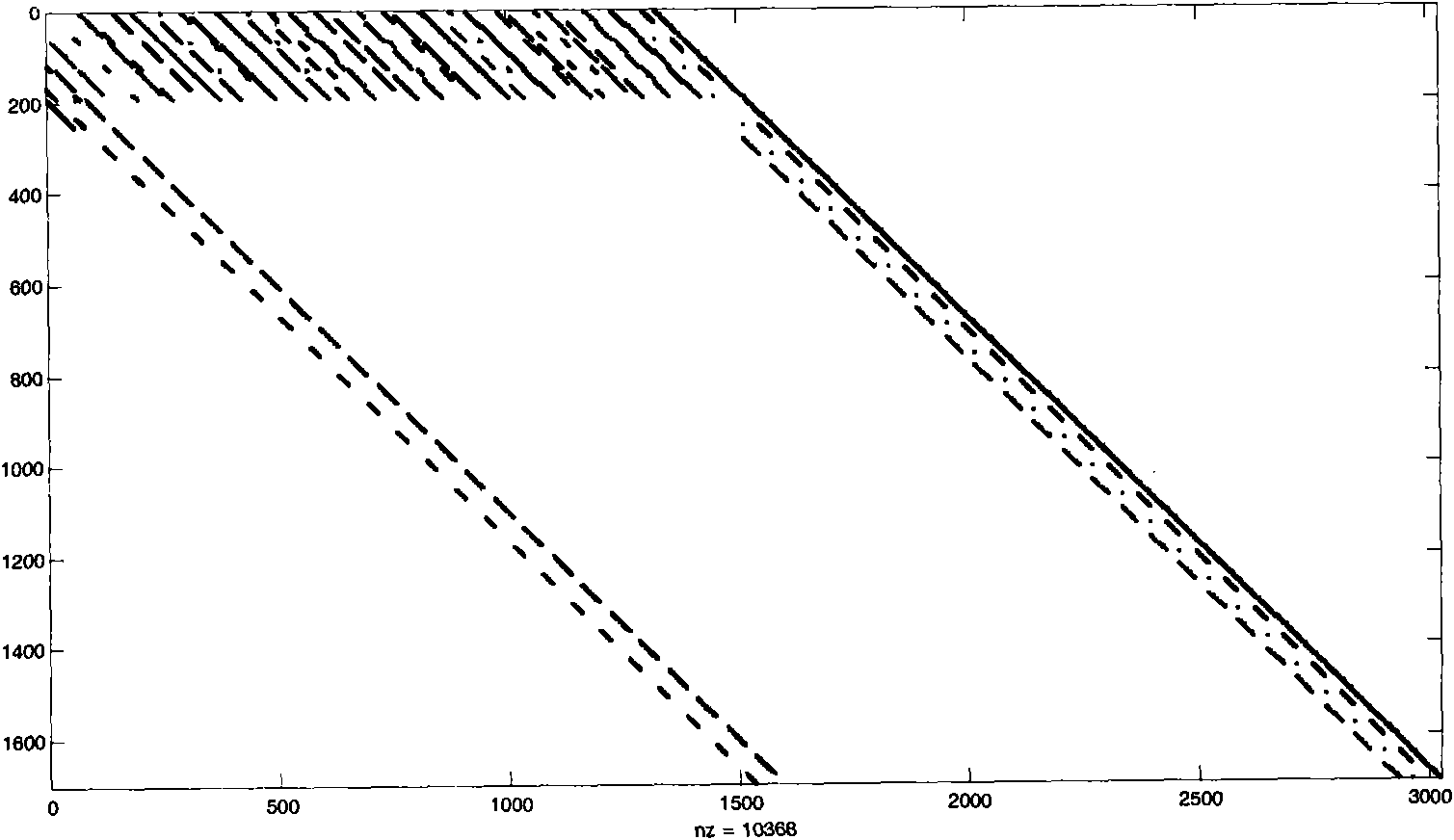

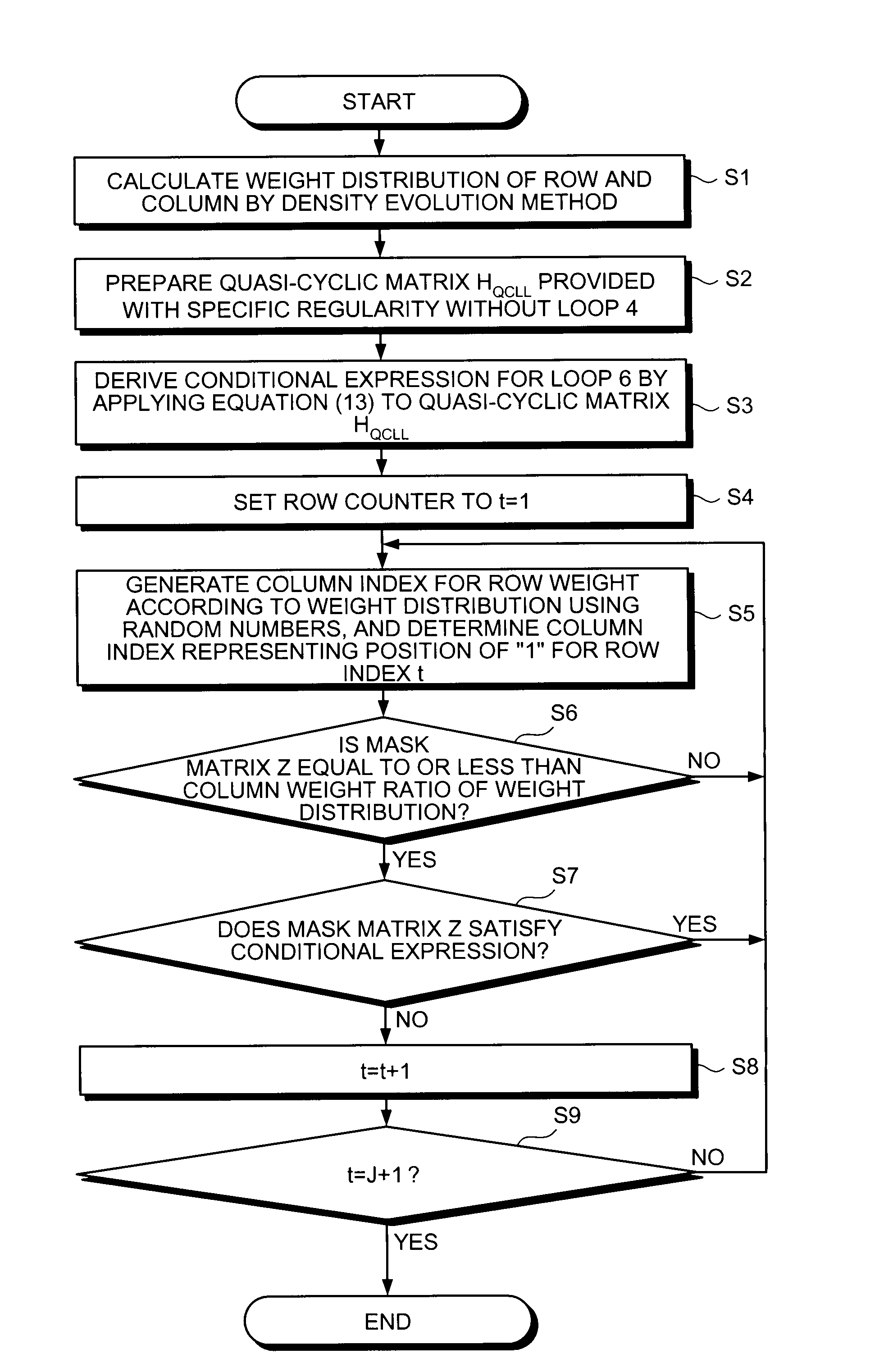

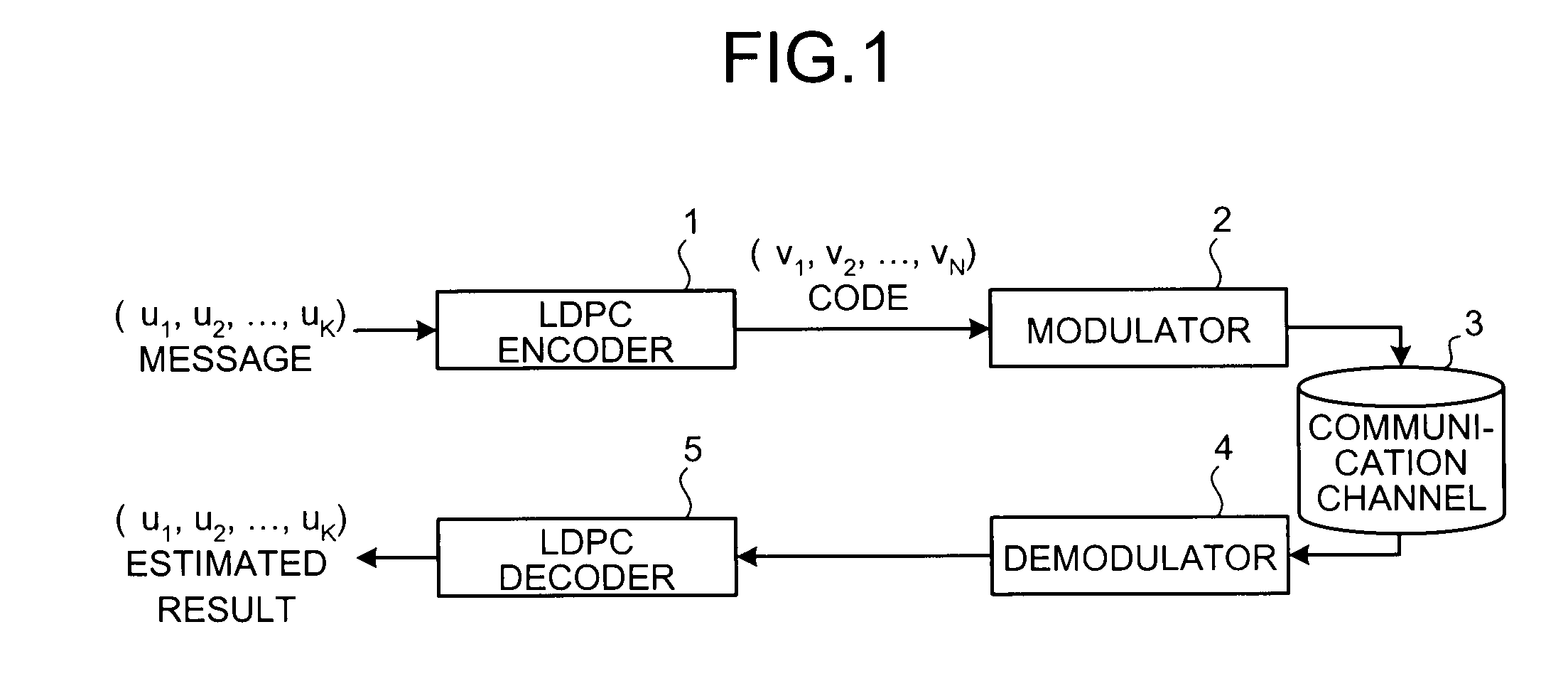

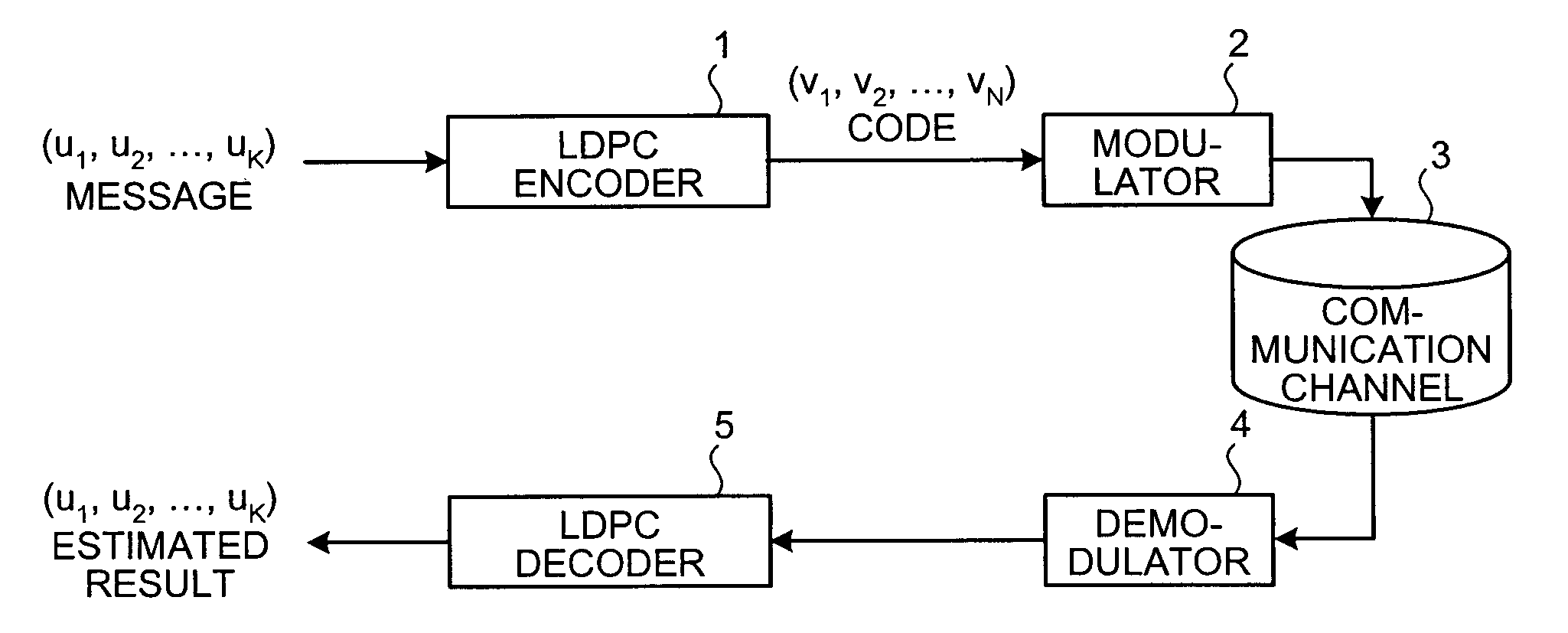

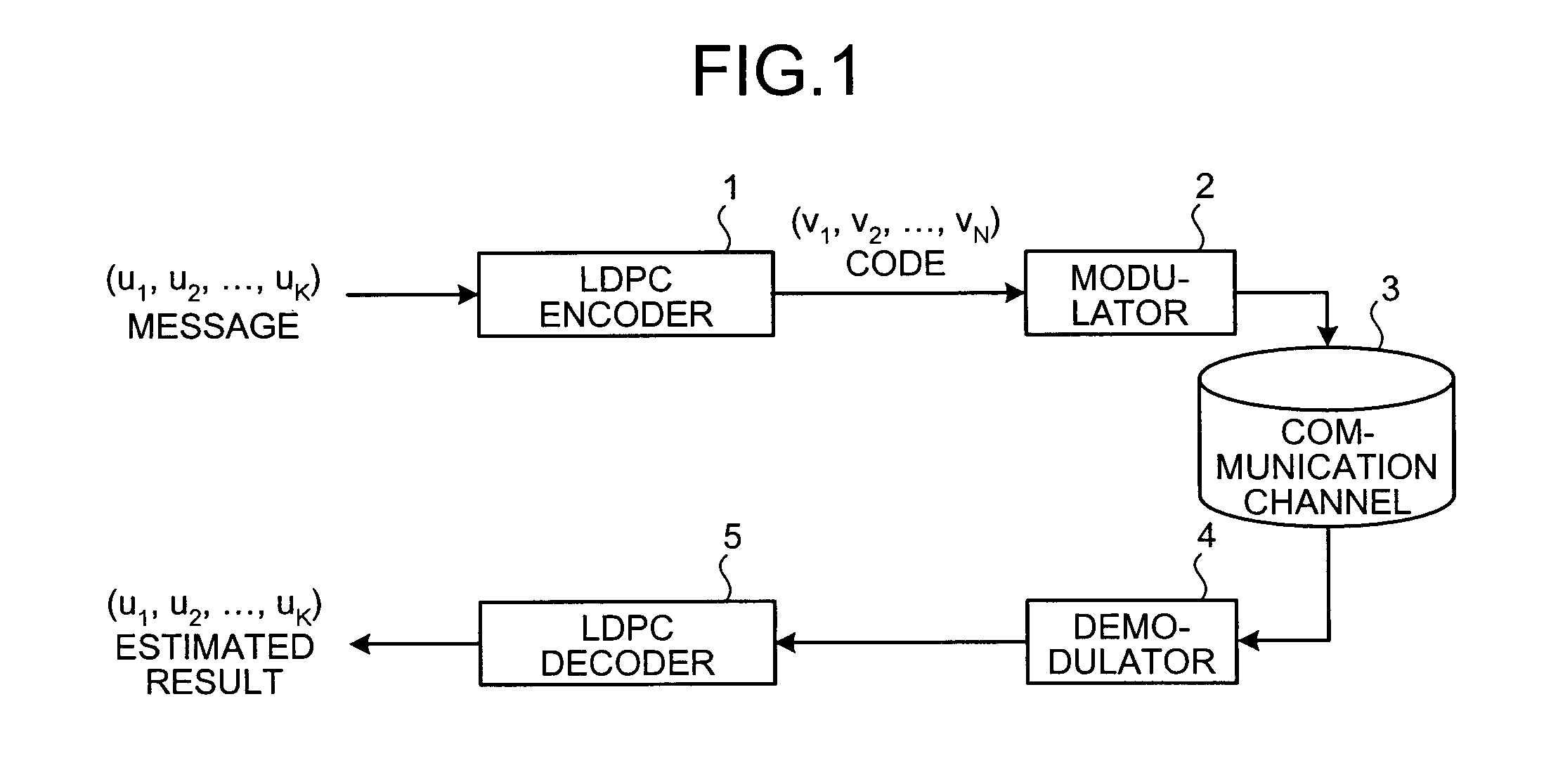

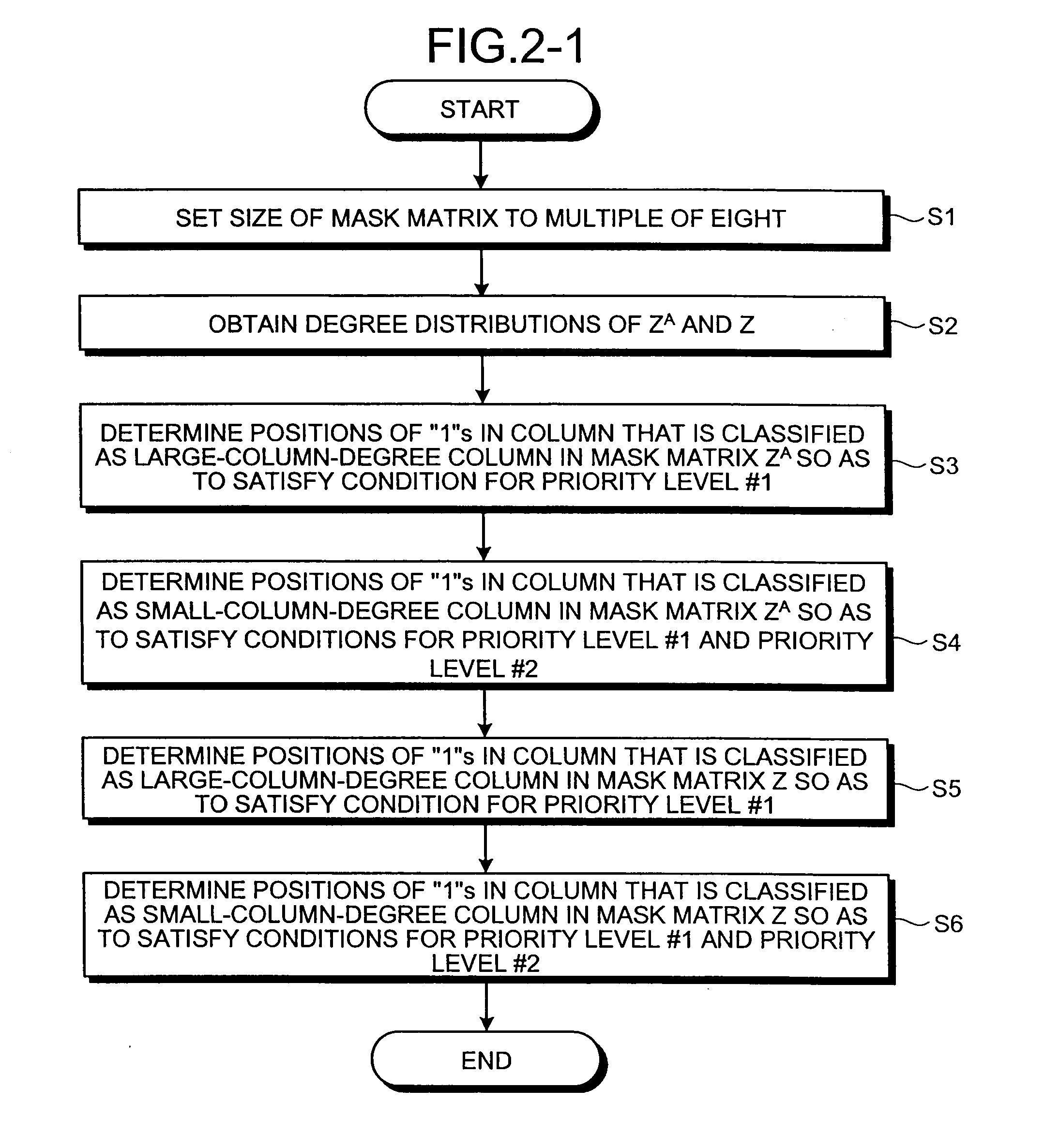

Test matrix generating method, encoding method, decoding method, communication apparatus, communication system, encoder and decoder

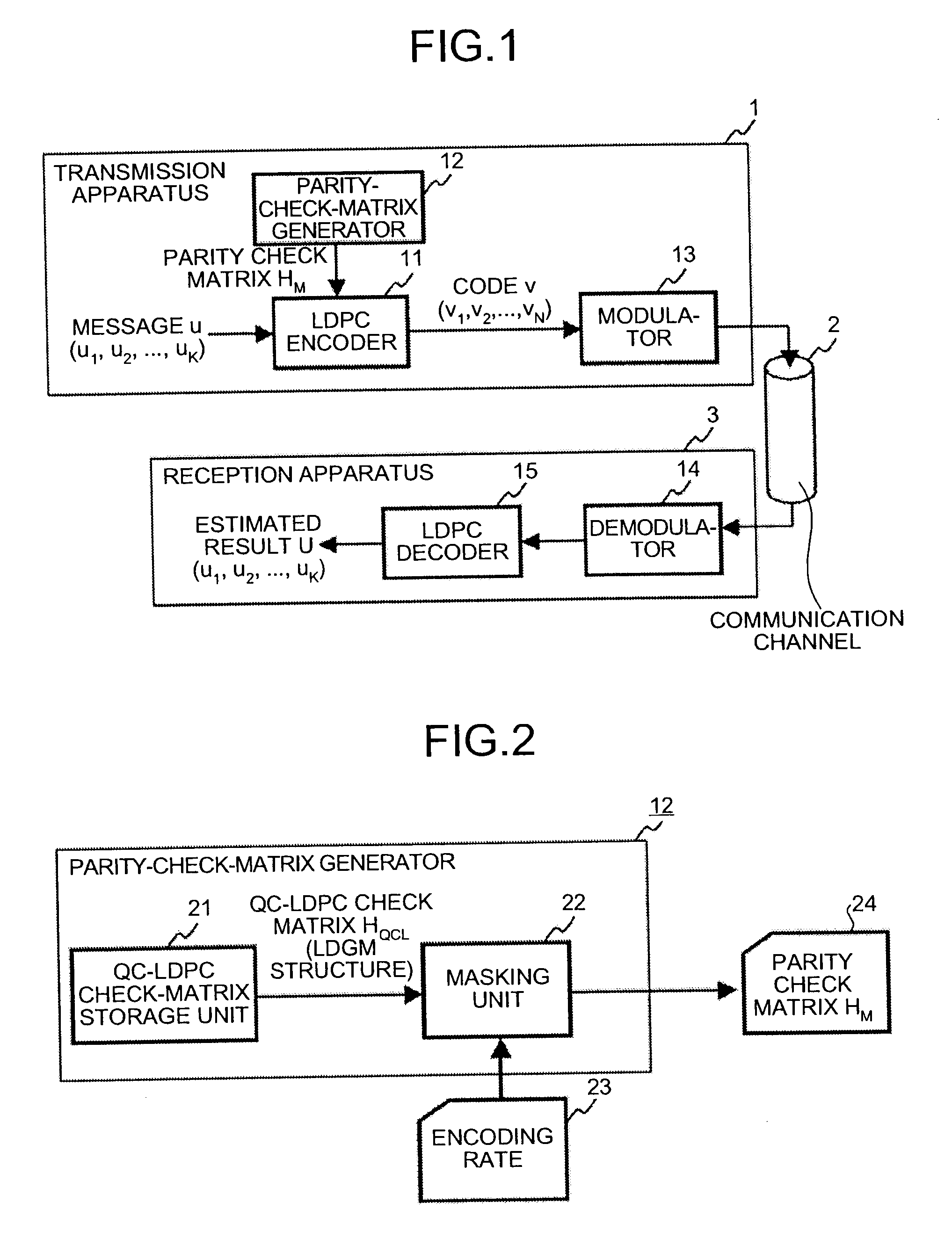

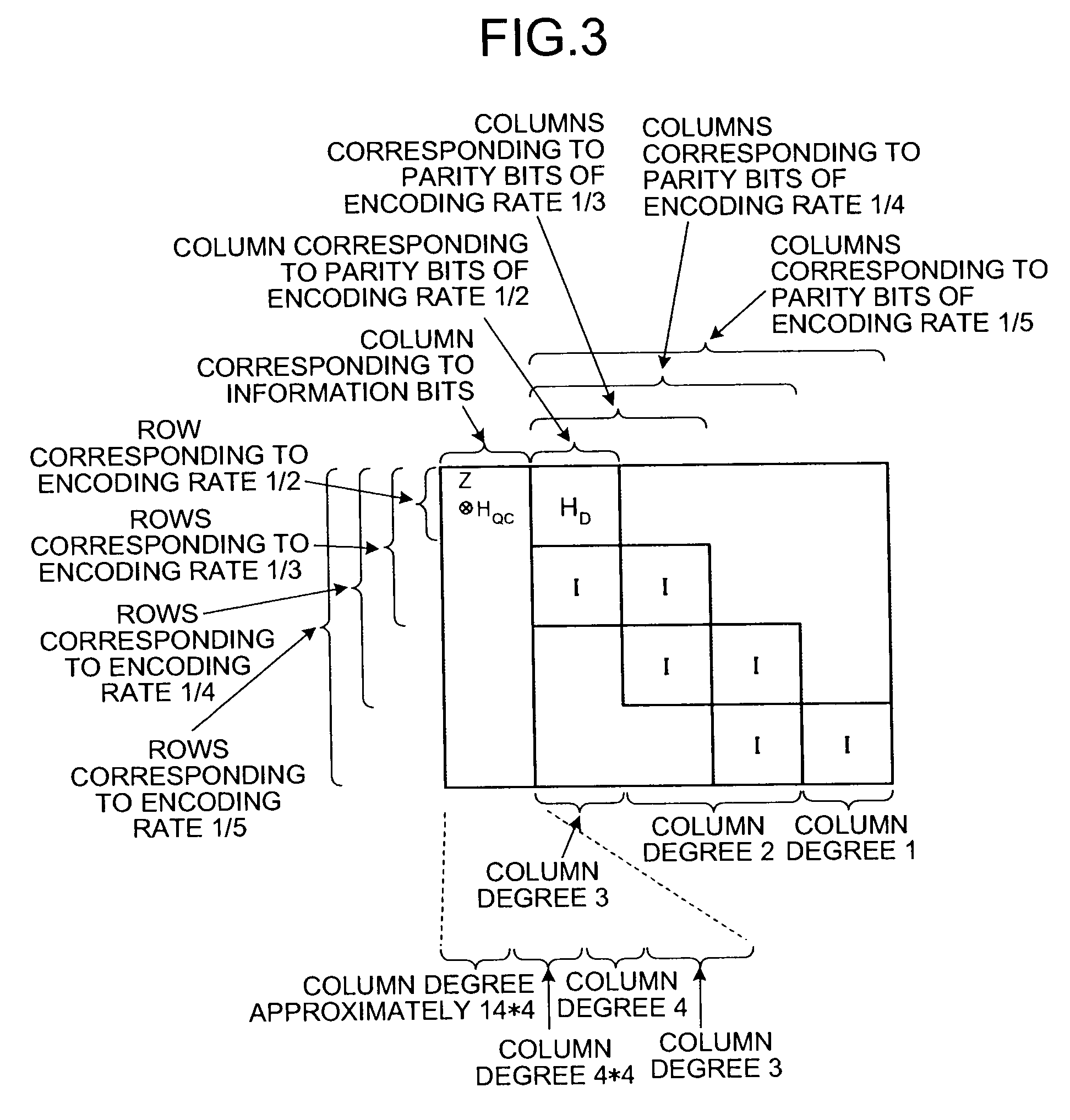

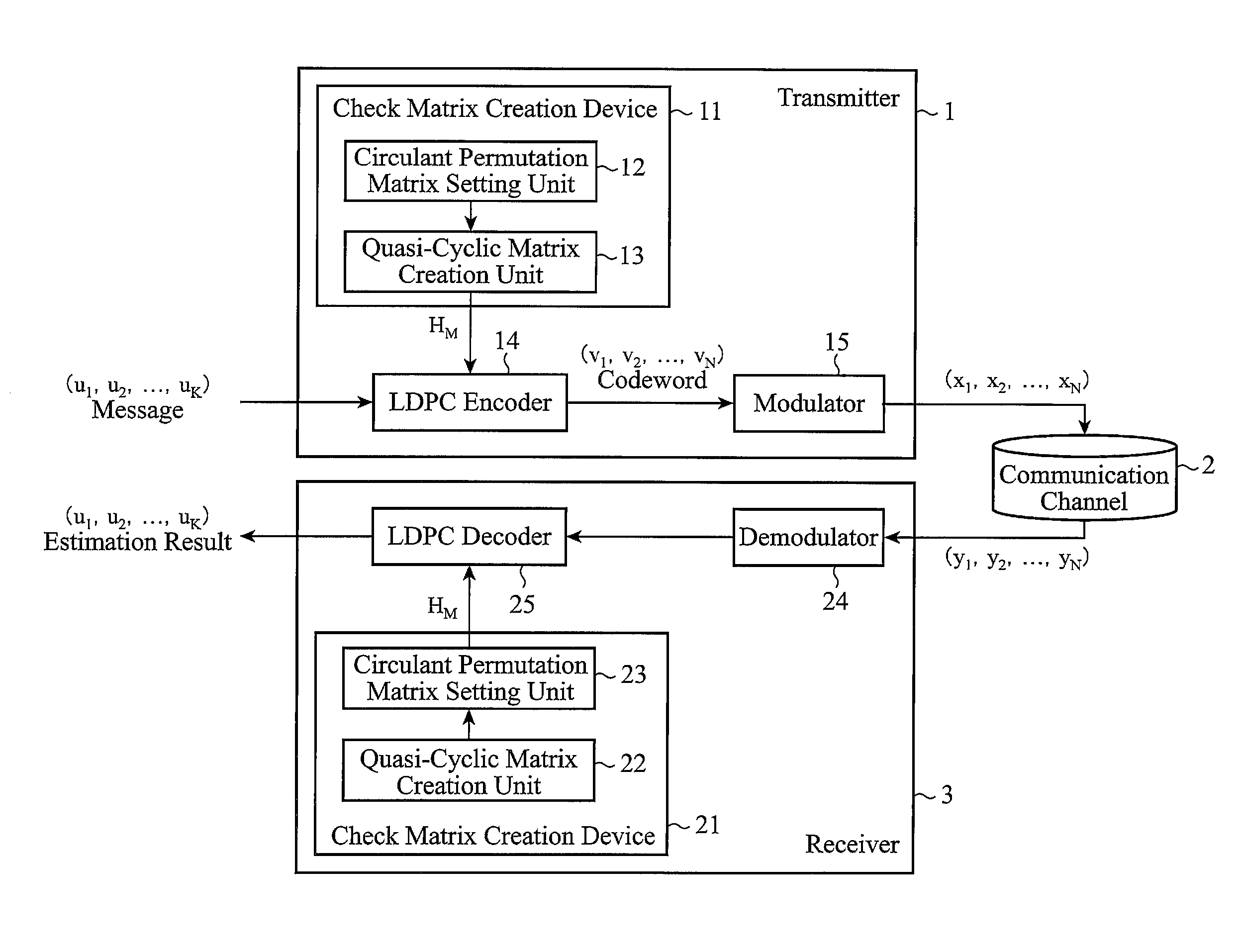

Active · US20090265600A1 · Tags: Promote generation, Reduce circuit size, Error prevention, Error correction/detection using multiple parity bits, Decoding methods, Communications system

A regular quasi-cyclic matrix is prepared, a conditional expression that guarantees a predetermined minimum loop in the parity check matrix is derived, and a mask matrix for converting specific cyclic permutation matrices into zero-matrices is generated based on the conditional expression and a predetermined weight distribution. The specific cyclic permutation matrices are converted into zero-matrices to generate an irregular masked quasi-cyclic matrix. An irregular parity check matrix is then generated in which the masked quasi-cyclic matrix and a matrix of cyclic permutation matrices arranged in a staircase manner are placed in predetermined locations.

Owner:RAKUTEN GRP INC
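
The masking step can be illustrated with a small sketch. It assumes an exponent (shift) matrix that assigns a cyclic shift to every block and a 0/1 mask of the same shape. The helper names are hypothetical, and the patent's conditional expression for the minimum loop and its weight distribution are not reproduced. Masked positions become all-zero blocks, which is how a regular quasi-cyclic matrix becomes irregular.

```python
import numpy as np

def cyclic_permutation(size, shift):
    """size x size identity with its columns cyclically shifted right by `shift`."""
    return np.roll(np.eye(size, dtype=np.uint8), shift, axis=1)

def masked_qc_matrix(exponents, mask, size):
    """Expand an exponent matrix into a binary quasi-cyclic matrix.

    Block (i, j) is the cyclic permutation matrix with shift exponents[i][j]
    where mask[i][j] == 1, and the size x size zero matrix where mask[i][j] == 0.
    """
    zero = np.zeros((size, size), dtype=np.uint8)
    rows = []
    for e_row, m_row in zip(exponents, mask):
        rows.append([cyclic_permutation(size, e) if m else zero for e, m in zip(e_row, m_row)])
    return np.block(rows)
```

For example, masking the 2 x 3 exponent matrix [[0, 1, 2], [0, 2, 4]] with [[1, 1, 0], [1, 0, 1]] at size 5 gives a 10 x 15 matrix whose first five columns have weight 2 and the remaining ten have weight 1.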

Automated tuning of large-scale multivariable model predictive controllers for spatially-distributed processes

Active · US20070100476A1 · Tags: Digital computer details, Analogue computers for chemical processes, Closed loop, Predictive controller

An automated tuning method for a large-scale multivariable model predictive controller for multiple-array papermaking machine cross-directional (CD) processes can significantly improve controller performance compared with traditional controllers. Paper machine CD processes are large-scale, spatially distributed dynamical systems. Because these systems are (almost) spatially invariant, their closed-loop transfer functions are approximated by transfer matrices with rectangular circulant matrix blocks, whose input and output singular vectors are Fourier components of dimension equal to either the number of actuators or the number of measurements. This approximation allows the model predictive controller to be tuned by a numerical search over optimization weights that shapes the closed-loop transfer functions in the two-dimensional frequency domain for performance and robustness. A novel scaling method is used to scale the inputs and outputs of the multivariable system in the spatial frequency domain.

Owner:HONEYWELL ASCA INC

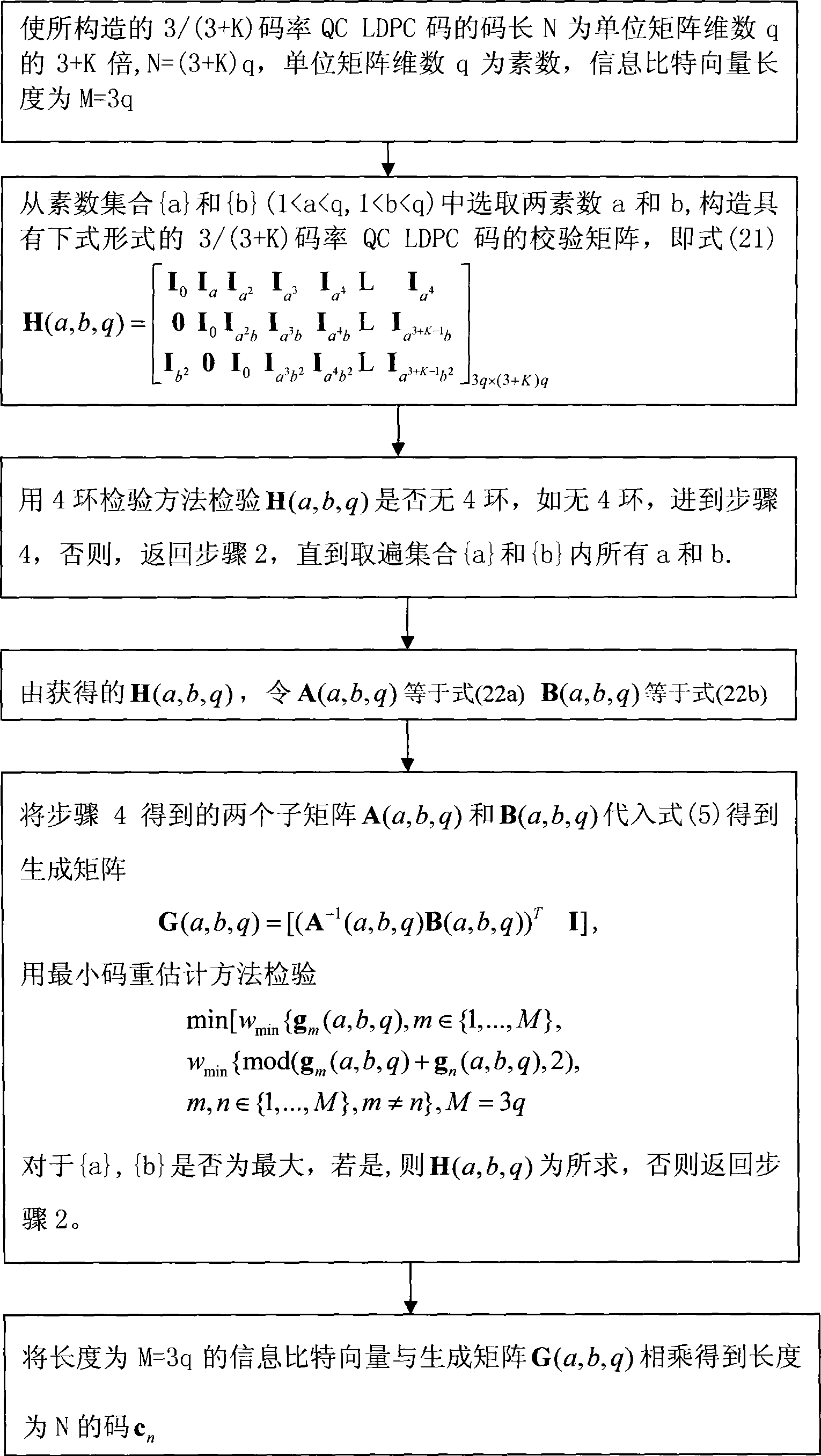

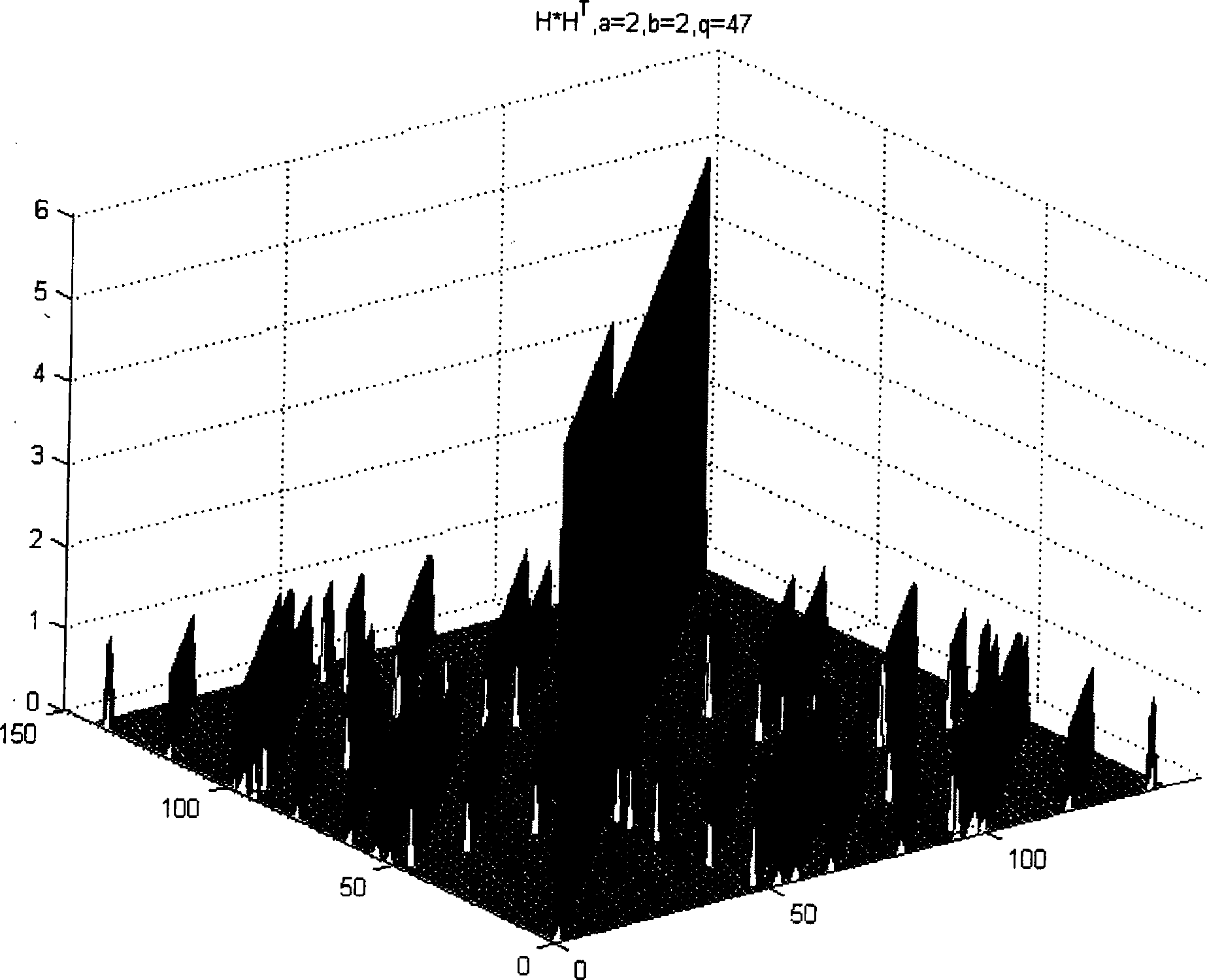

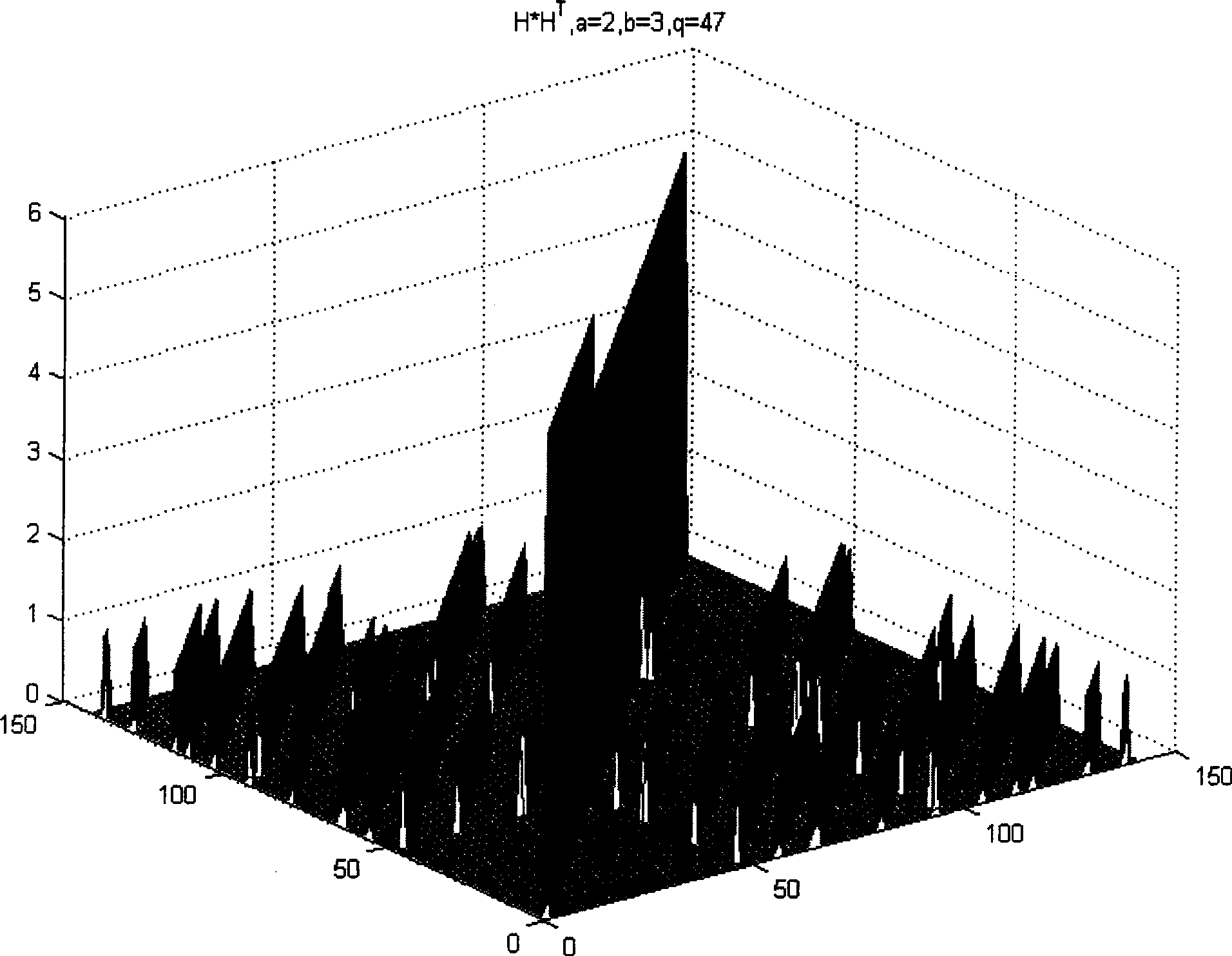

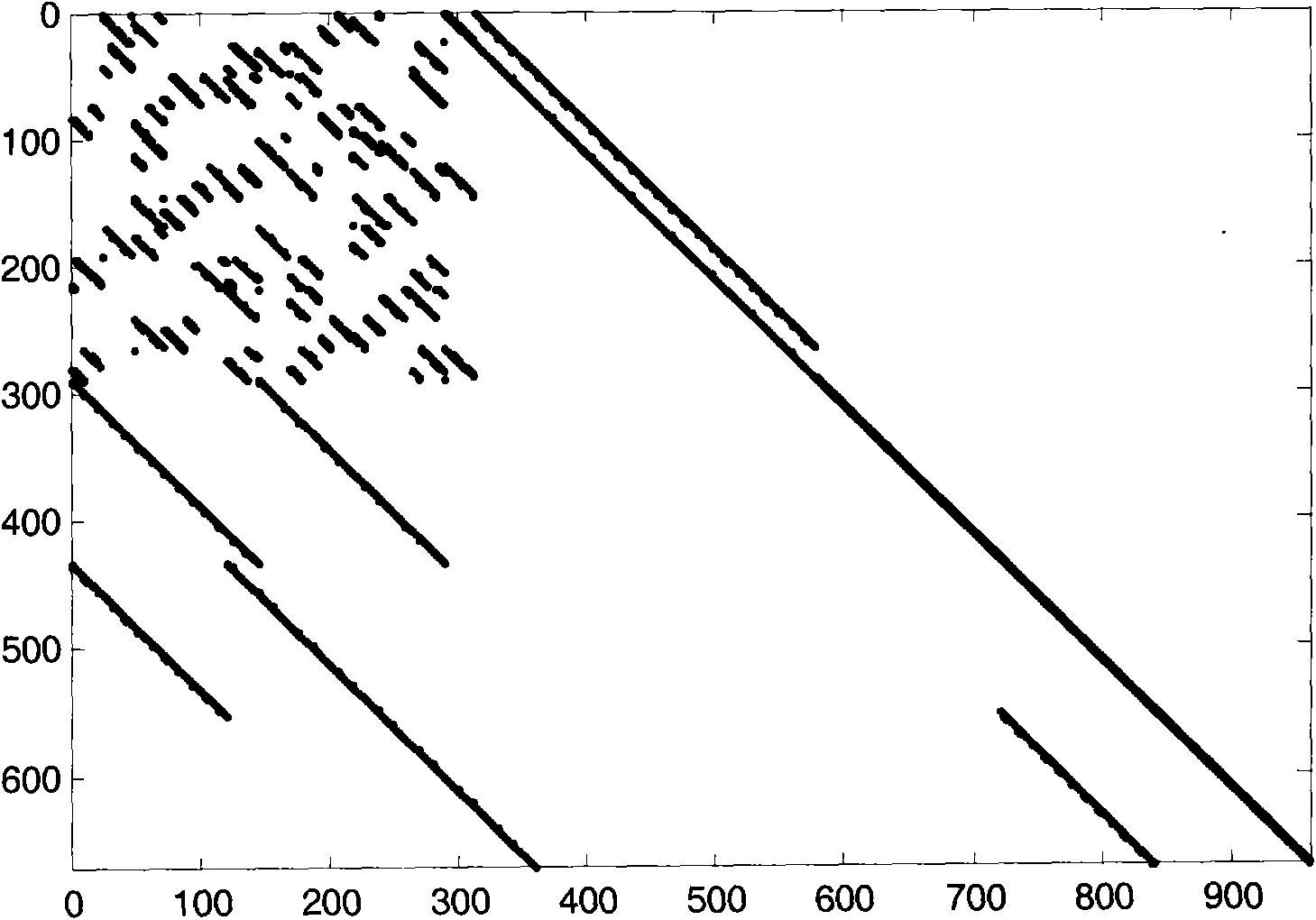

LDPC constructing method with short ring or low duplicate code

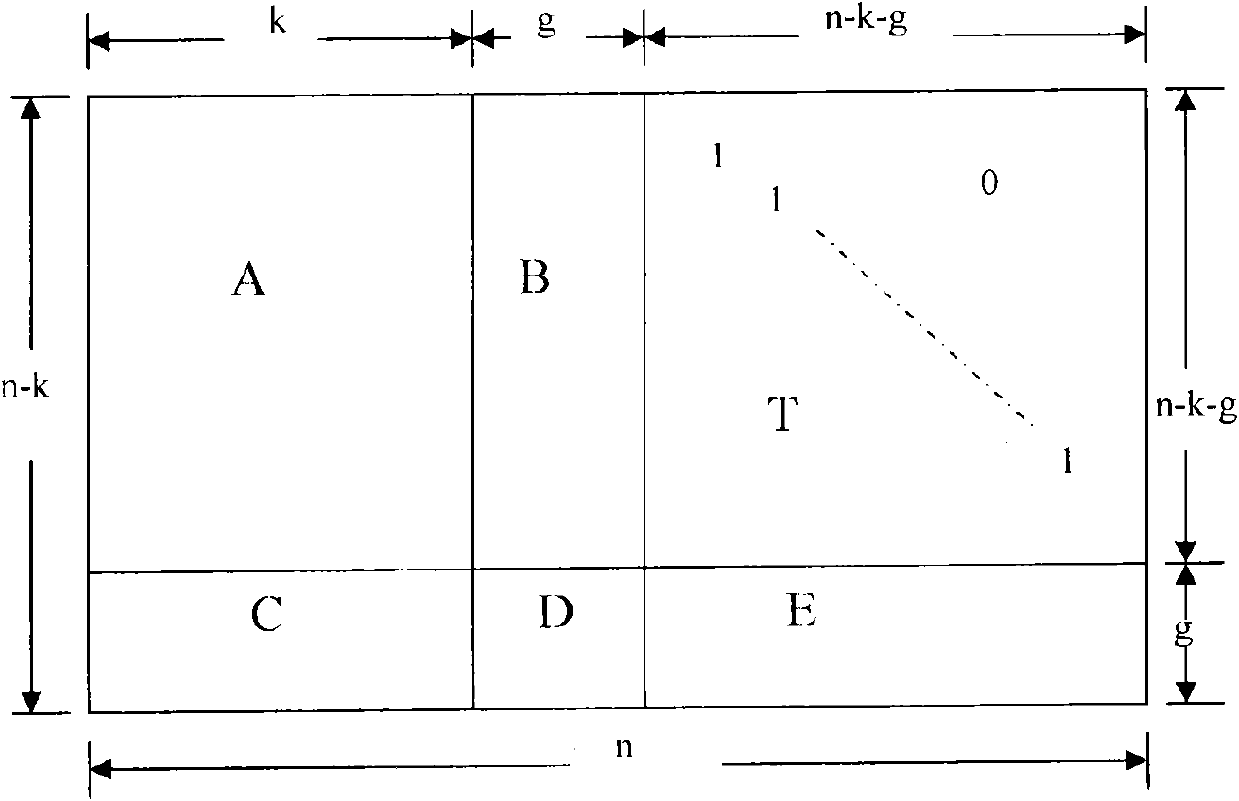

Inactive · CN101488761A · Tags: Solve the problem of low-code repeated code words, Code major, Error correction/detection using multiple parity bits, Linear coding, Short loop

The invention discloses an algebraic construction method for LDPC (Low Density Parity Check) codes based on cyclic matrices. The design parameters of the cyclic matrices are adjusted using a short-loop check and a minimum-code-weight check. These parameters are nonnegative prime numbers a and b that satisfy two constraint conditions, together with the dimension q of an identity matrix. The dimension q of the shifted identity matrix and the choice of primes affect the error-rate characteristics of the designed LDPC code. The invention solves the problems of short loops and low-weight codewords that appear in existing QC-LDPC code designs. The method can check whether low-weight codewords exist in the designed code, and thereby whether 4-loops exist. The disclosed irregular quasi-cyclic LDPC code structure divides the check matrix H into two submatrices A and B, gives a nonsingular structure for submatrix A, and builds a generator matrix from A and B, with which direct linear encoding is performed. The embodiment validates the efficiency and good bit-error-rate performance of the method.

Owner:BEIJING JIAOTONG UNIV
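
A common way to check a quasi-cyclic code for 4-cycles works directly on the exponent matrix. With q x q cyclic permutation blocks, a 4-cycle exists exactly when E[i1][j1] - E[i1][j2] + E[i2][j2] - E[i2][j1] ≡ 0 (mod q) for some pair of block rows and pair of block columns. The sketch below uses that standard condition, which is not necessarily the exact check claimed in this patent. It also includes a brute-force check on the expanded binary matrix: two distinct columns that share two or more rows form a 4-cycle. `None` marks a zero block, and all names are hypothetical.

```python
import itertools
import numpy as np

def has_4_cycle(exponents, q):
    """Check an exponent matrix for 4-cycles (None marks a zero block)."""
    rows, cols = len(exponents), len(exponents[0])
    for i1, i2 in itertools.combinations(range(rows), 2):
        for j1, j2 in itertools.combinations(range(cols), 2):
            e11, e12 = exponents[i1][j1], exponents[i1][j2]
            e21, e22 = exponents[i2][j1], exponents[i2][j2]
            if None in (e11, e12, e21, e22):
                continue
            if (e11 - e12 + e22 - e21) % q == 0:
                return True
    return False

def expand(exponents, q):
    """Binary matrix with q x q cyclic permutation blocks (zero block for None)."""
    eye = np.eye(q, dtype=int)
    zero = np.zeros((q, q), dtype=int)
    return np.block([[zero if e is None else np.roll(eye, e, axis=1) for e in row]
                     for row in exponents])

def has_4_cycle_bruteforce(exponents, q):
    """Two distinct columns sharing two or more rows form a 4-cycle."""
    H = expand(exponents, q)
    overlap = H.T @ H
    np.fill_diagonal(overlap, 0)
    return bool(overlap.max() >= 2)
```

For instance, the exponent matrix [[0, 0, 0], [0, 1, 2], [0, 2, 4]] with prime q = 5 has no 4-cycles, while an all-zero 2 x 2 exponent matrix does.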

Check matrix generating method, encoding method, decoding method, communication device, encoder, and decoder

Inactive · US20090063930A1 · Tags: Data representation error detection/correction, Code conversion, Decoding methods, Parity-check matrix

A regular quasi-cyclic matrix is generated with cyclic permutation matrices and specific regularity given to the cyclic permutation matrices. A mask matrix for making the regular quasi-cyclic matrix into an irregular quasi-cyclic matrix is generated. An irregular masked quasi-cyclic matrix is generated by converting a specific cyclic permutation matrix in the regular quasi-cyclic matrix into a zero-matrix using a mask matrix supporting a specific encoding rate. An irregular parity check matrix with an LDGM structure is generated with a masked quasi-cyclic matrix and a matrix in which the cyclic permutation matrices are arranged in a staircase manner.

Owner:MITSUBISHI ELECTRIC CORP

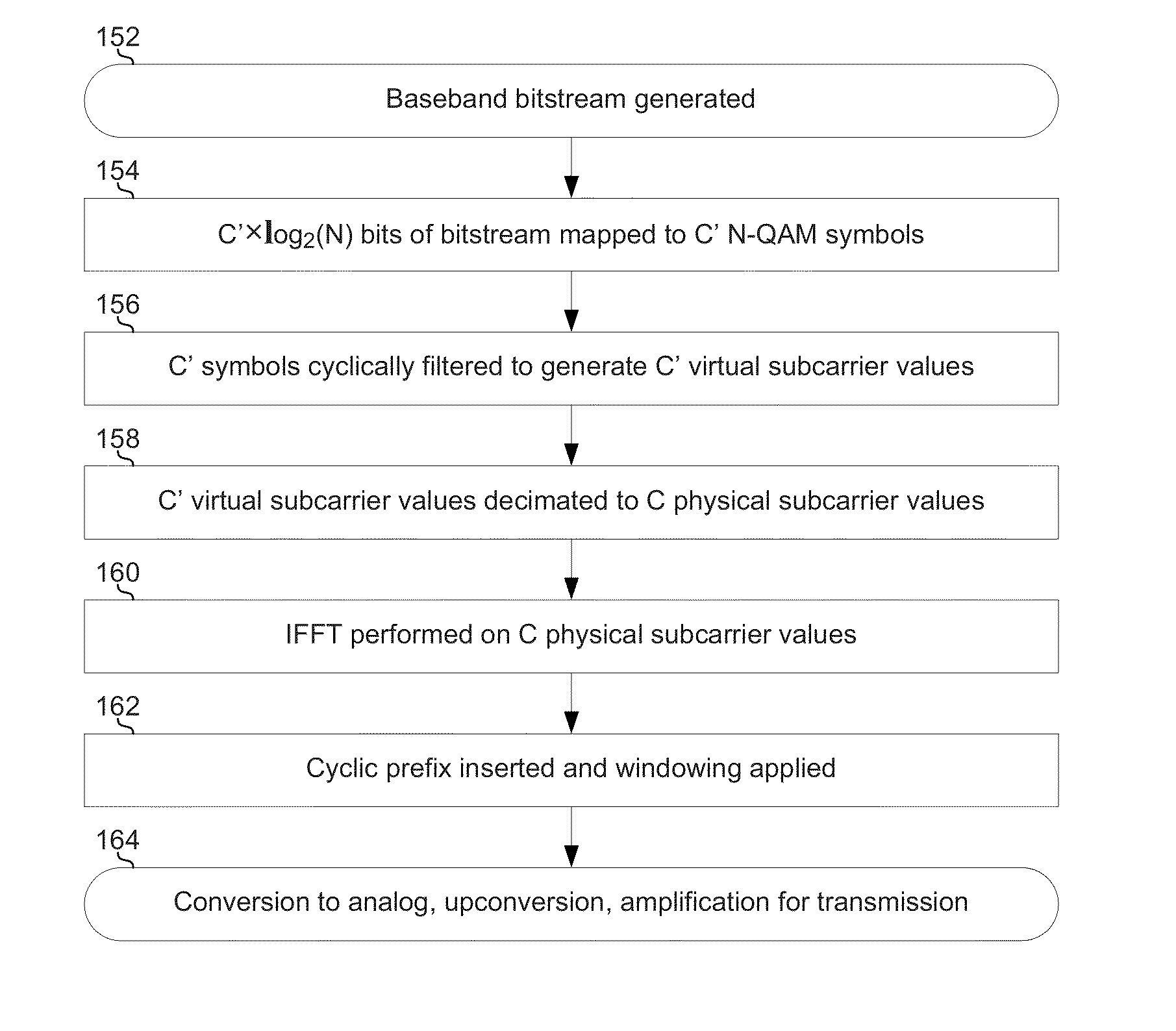

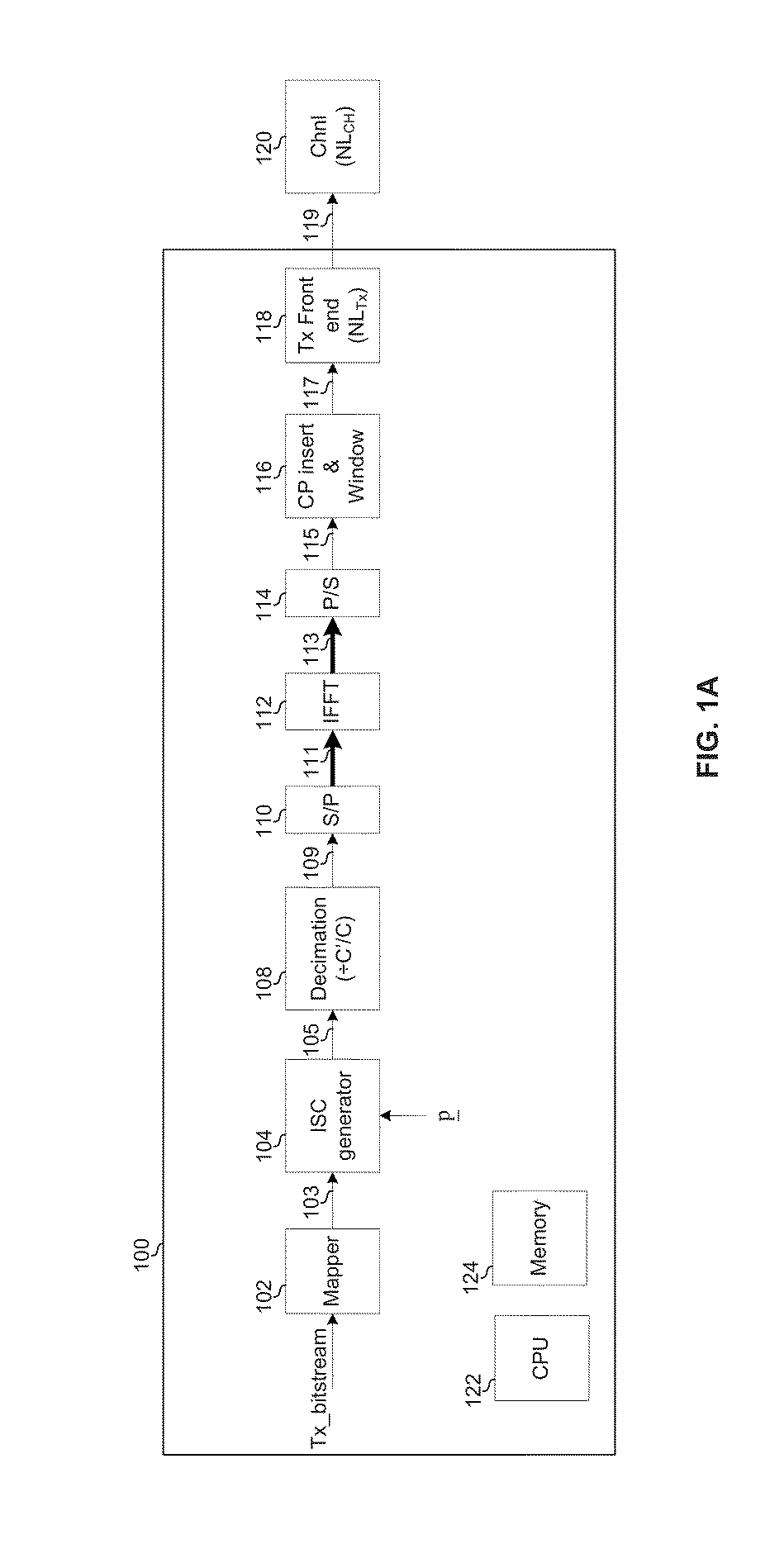

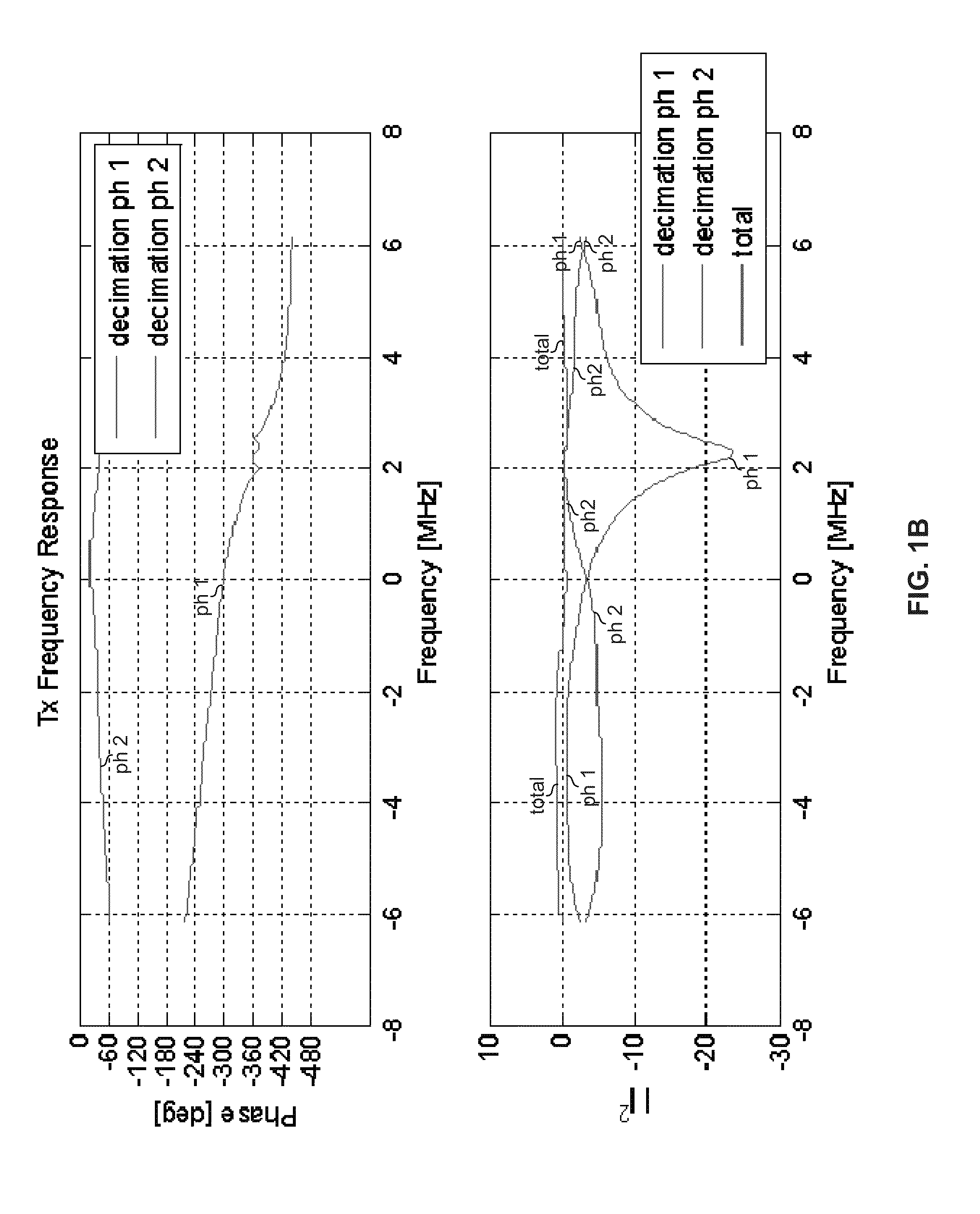

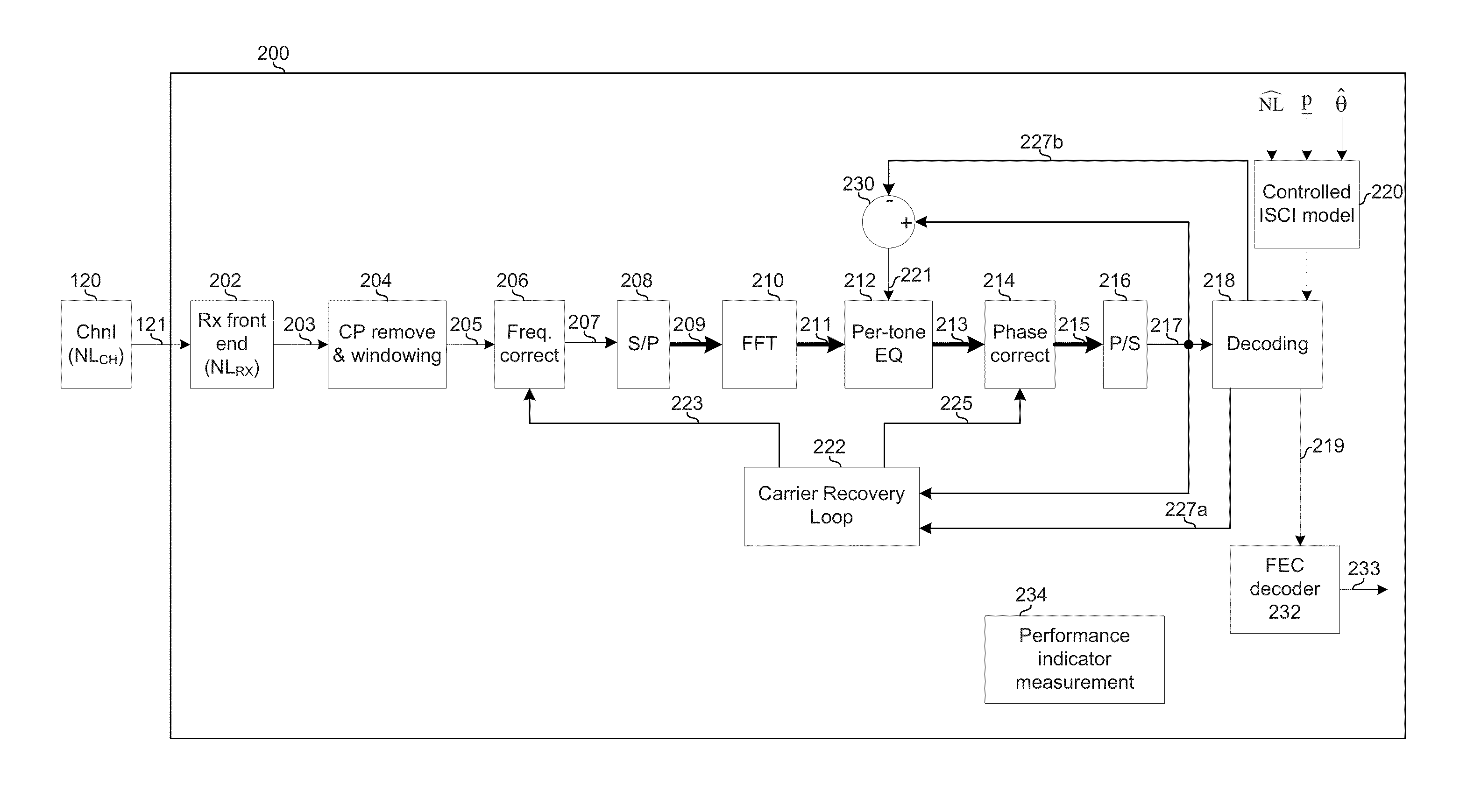

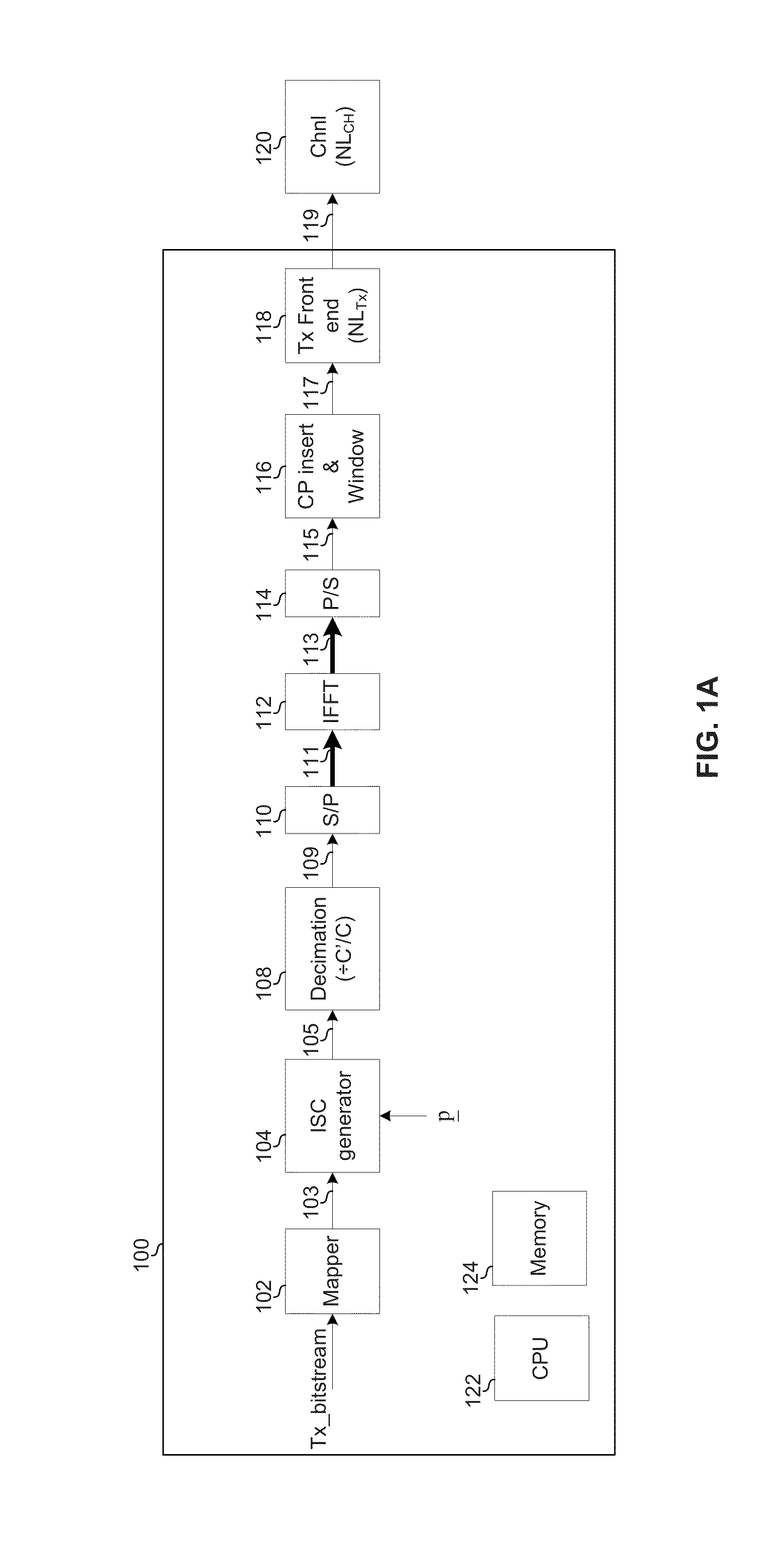

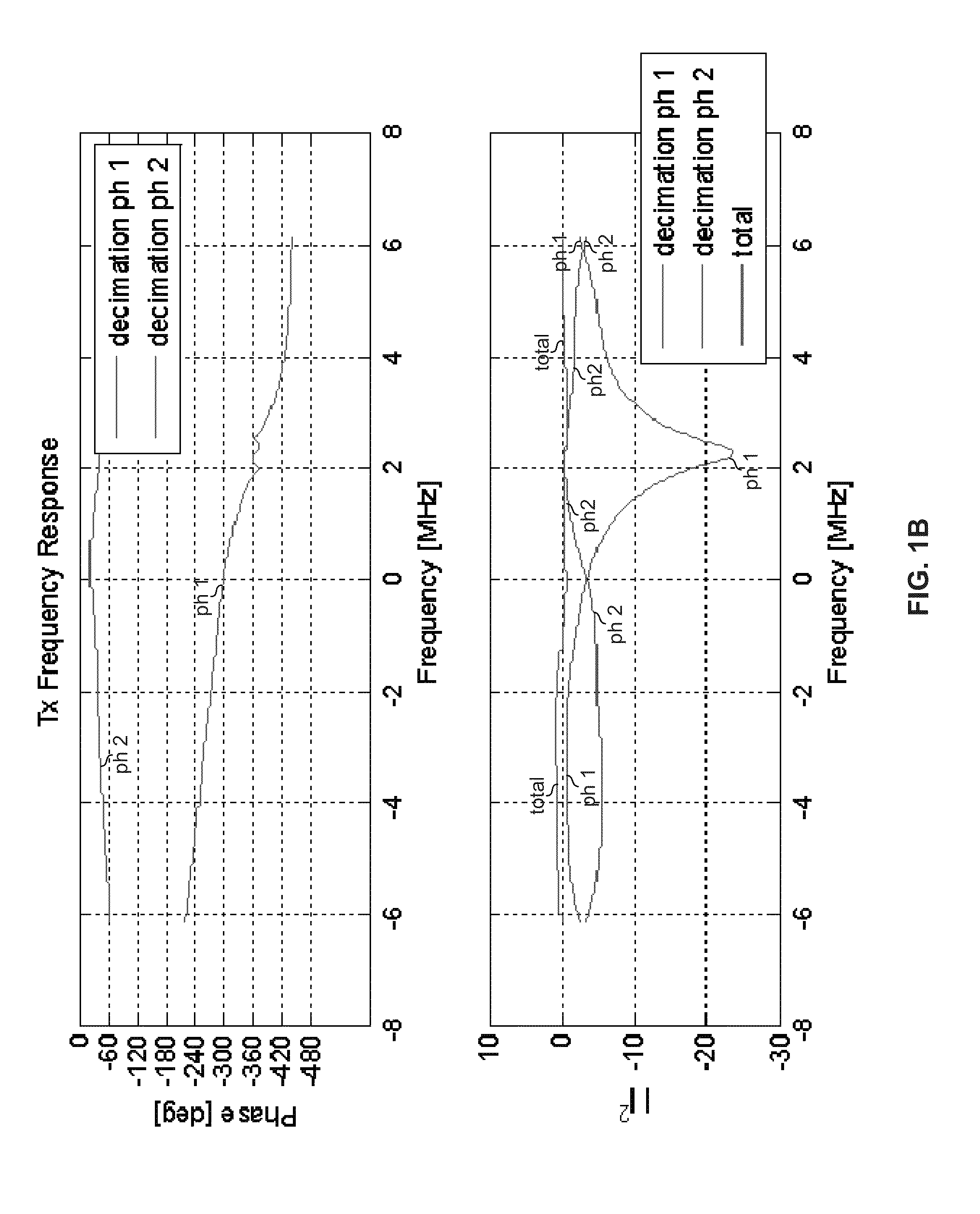

Highly-spectrally-efficient transmission using orthogonal frequency division multiplexing

A transmitter may map, using a selected modulation constellation, each of C′ bit sequences to a respective one of C′ symbols, where C′ is a number greater than one. The transmitter may process the C′ symbols to generate C′ inter-carrier correlated virtual subcarrier values. The transmitter may decimate the C′ virtual subcarrier values down to C physical subcarrier values, C being a number less than C′. The transmitter may transmit the C physical subcarrier values on C orthogonal frequency division multiplexed (OFDM) subcarriers. The modulation constellation may be an N-QAM constellation, where N is an integer. The processing may comprise filtering the C′ symbols using an array of C′ filter tap coefficients. The filtering may comprise cyclic filtering. The filtering may comprise multiplication by a circulant matrix populated with the C′ filter tap coefficients.

Owner:AVAGO TECH INT SALES PTE LTD

Image reconstruction methods based on block circulant system matrices

Inactive · US7983465B2 · Tags: Minimized in size, Fast computer, Reconstruction from projection, Material analysis using wave/particle radiation, Lines of response, In plane

An iterative image reconstruction method used with an imaging system that generates projection data comprises: collecting the projection data; choosing a polar or cylindrical image definition comprising a polar or cylindrical grid representation and a number of basis functions positioned according to that grid, such that the number of basis functions at each radius position of the grid is a factor of the number of in-plane symmetries between the lines of response along which the imaging system measures the projection data; obtaining a system probability matrix that relates each projection datum to each basis function of the polar or cylindrical image definition; restructuring the system probability matrix into a block circulant matrix and converting it into the Fourier domain; storing the projection data in a measurement data vector; providing an initial polar or cylindrical image estimate; for each iteration, recalculating the polar or cylindrical image estimate with an iterative solver based on forward and back projection operations that use the system probability matrix in the Fourier domain; and converting the polar or cylindrical image estimate into a Cartesian image representation to obtain the reconstructed image.

Owner:SOCPRA SCI SANTE & HUMAINES S E C

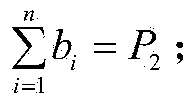

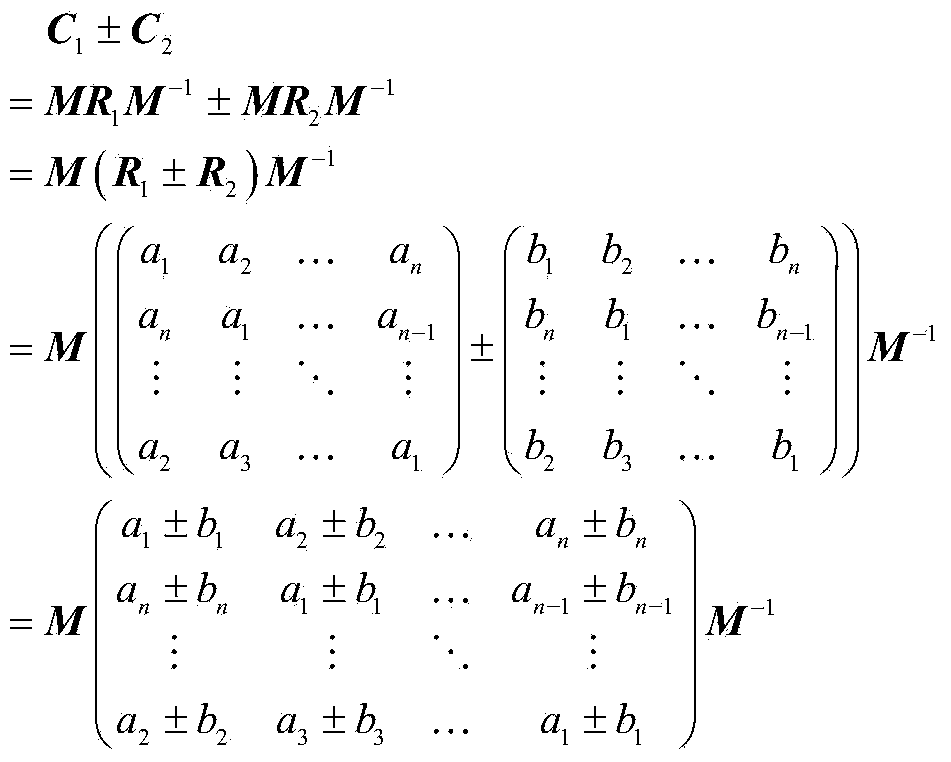

Circulant matrix transformation based and ciphertext computation supportive encryption method

The invention discloses an encryption method, based on circulant matrix transformation, that supports computation on ciphertext. The method includes the following steps. First, the original data are encrypted by converting them into vectors, converting the vectors into circulant matrices, and encrypting these with a key matrix to obtain an encrypted outsourced matrix. Second, the computational parameters are encrypted in the same way (vectors, then circulant matrices, then key-matrix encryption) to obtain an encrypted computational-parameter matrix. Third, the arithmetic operations are performed on the encrypted matrices to obtain an encrypted result. Fourth, the encrypted result is decrypted with the key matrix to obtain a circulant matrix, and the entries of one row or column of that circulant matrix are summed to obtain the plaintext result. The method is particularly suitable for outsourced storage and computation of confidential data in a cloud computing environment and can be used to protect the confidential data of individuals or enterprises. It supports mixed sequences of an unlimited number of additions, subtractions, multiplications, and divisions on encrypted numerical data.

Owner:XI AN JIAOTONG UNIV
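
A property of circulant matrices explains why summing one row can recover the result of all four arithmetic operations. Every row of a circulant sums to the same value s, i.e. C·1 = s·1. That row sum is therefore carried through addition, subtraction, multiplication, and inversion of circulants. The sketch below is hypothetical: it splits a value into a random vector with the right sum and omits the key-matrix encryption step, whose form the abstract does not specify.

```python
import numpy as np

def circulant(v):
    """Circulant matrix with first column v; every row and column sums to sum(v)."""
    v = np.asarray(v, dtype=float)
    return np.column_stack([np.roll(v, k) for k in range(len(v))])

def encode_value(x, n, rng):
    """Hypothetical encoding: a random length-n vector whose entries sum to x."""
    v = rng.standard_normal(n)
    v[-1] = x - v[:-1].sum()
    return circulant(v)

def decode_value(C):
    """Recover the scalar by summing one row of the circulant matrix."""
    return float(C[0].sum())
```

With A encoding a and B encoding b, the row sums of A + B, A - B, A @ B and A @ inv(B) are a + b, a - b, a·b and a/b (the last assuming B is invertible).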

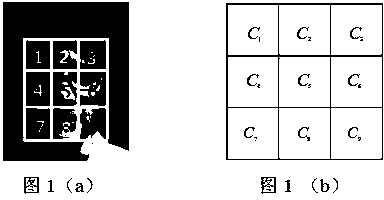

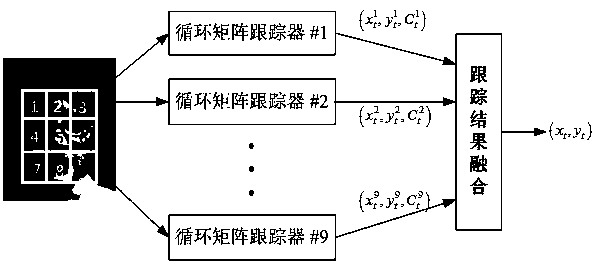

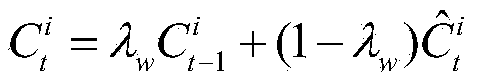

Cyclic matrix video tracking method with partition

Active · CN103886325A · Tags: Improve robustness, Robust, Character and pattern recognition, Pattern recognition, Video processing

The invention discloses a partitioned cyclic-matrix video tracking method. The method comprises: dividing the target into several sub-targets of the same size and preprocessing each sub-target with a cosine window; performing cyclic-matrix tracking on each sub-target separately to obtain its tracking result and computing the confidence of that result; and combining the sub-target tracking results and confidences to obtain the actual position of the target. By partitioning the target and tracking each sub-target separately, the method makes full use of the spatial distribution of the target and background and improves the robustness of the video tracking algorithm. It can robustly track targets in complex-scene videos and effectively handles target scale changes, severe occlusion, rapid target changes, 3D rotation, and similar conditions. Tracking is also fast, reaching dozens of frames per second, which fully meets real-time requirements.

Owner:ZHEJIANG UNIV
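
Cyclic-matrix tracking of this kind is usually built on a correlation filter trained over all cyclic shifts of a patch. Because that data matrix is circulant, ridge regression decouples into independent per-frequency divisions. The 1-D sketch below assumes that linear, MOSSE/KCF-style formulation; the patent's exact model, the 2-D case, and the per-sub-target fusion are not shown. The function names and the regularizer `lam` are hypothetical.

```python
import numpy as np

def cosine_window(n):
    """Hann (cosine) window used to taper a patch before the FFT."""
    return np.hanning(n)

def train_filter(x, y, lam=1e-4):
    """Ridge regression over all cyclic shifts of x, solved per frequency.

    The shifted samples form a circulant data matrix, so the normal equations
    decouple in the Fourier domain: H = conj(X) Y / (conj(X) X + lam).
    """
    X = np.fft.fft(x)
    Y = np.fft.fft(y)
    return np.conj(X) * Y / (np.conj(X) * X + lam)

def detect(H, z):
    """Response over all cyclic shifts of a new patch z; the argmax is the estimated shift."""
    return np.real(np.fft.ifft(H * np.fft.fft(z)))
```

In a partitioned tracker, one such filter would be trained per sub-target on a windowed patch such as `cosine_window(n) * patch`. The height of each response peak is one plausible confidence measure, but that choice is an assumption, not something stated in the abstract.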

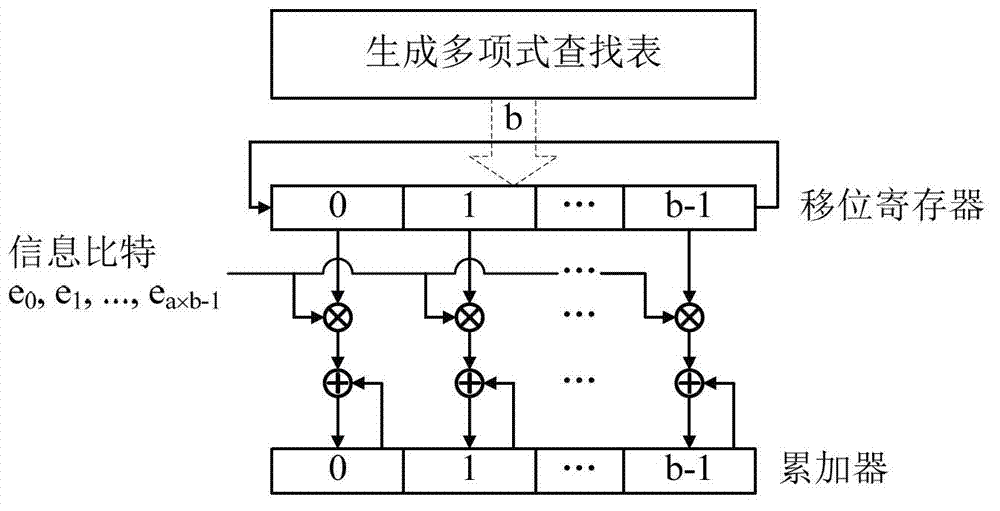

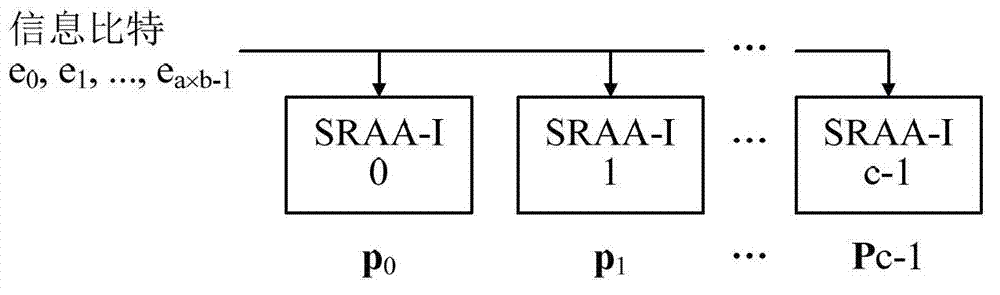

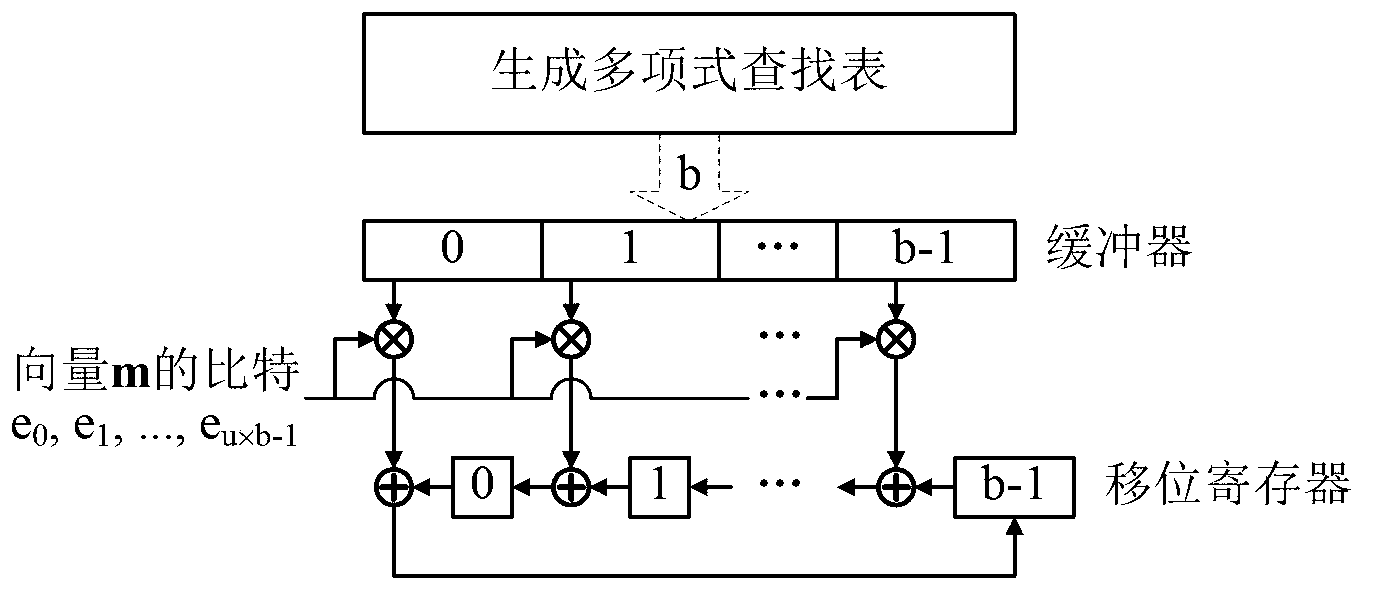

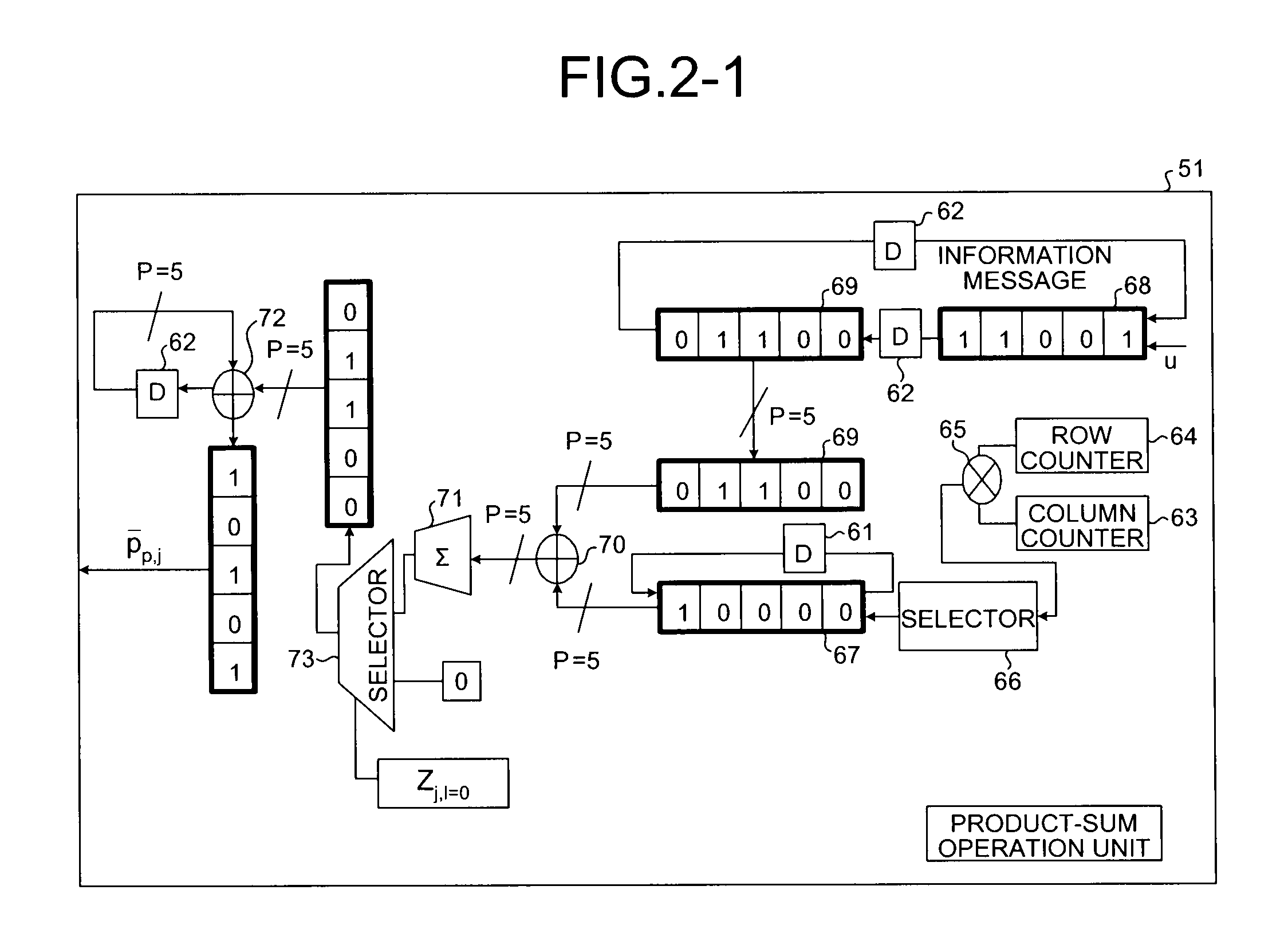

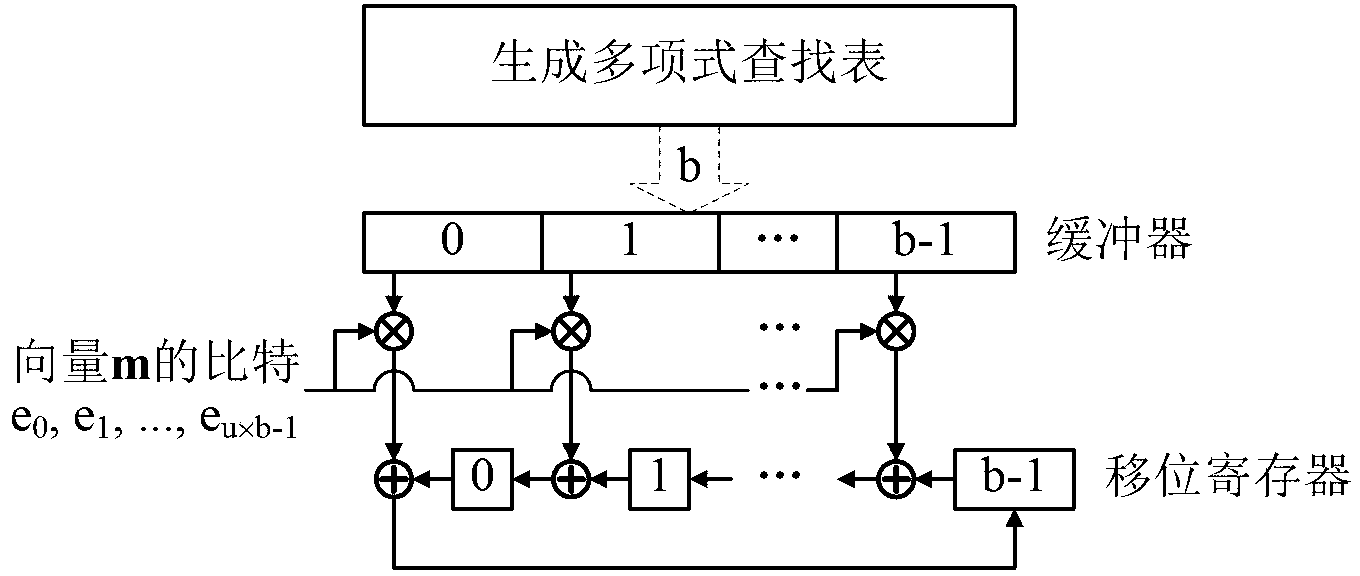

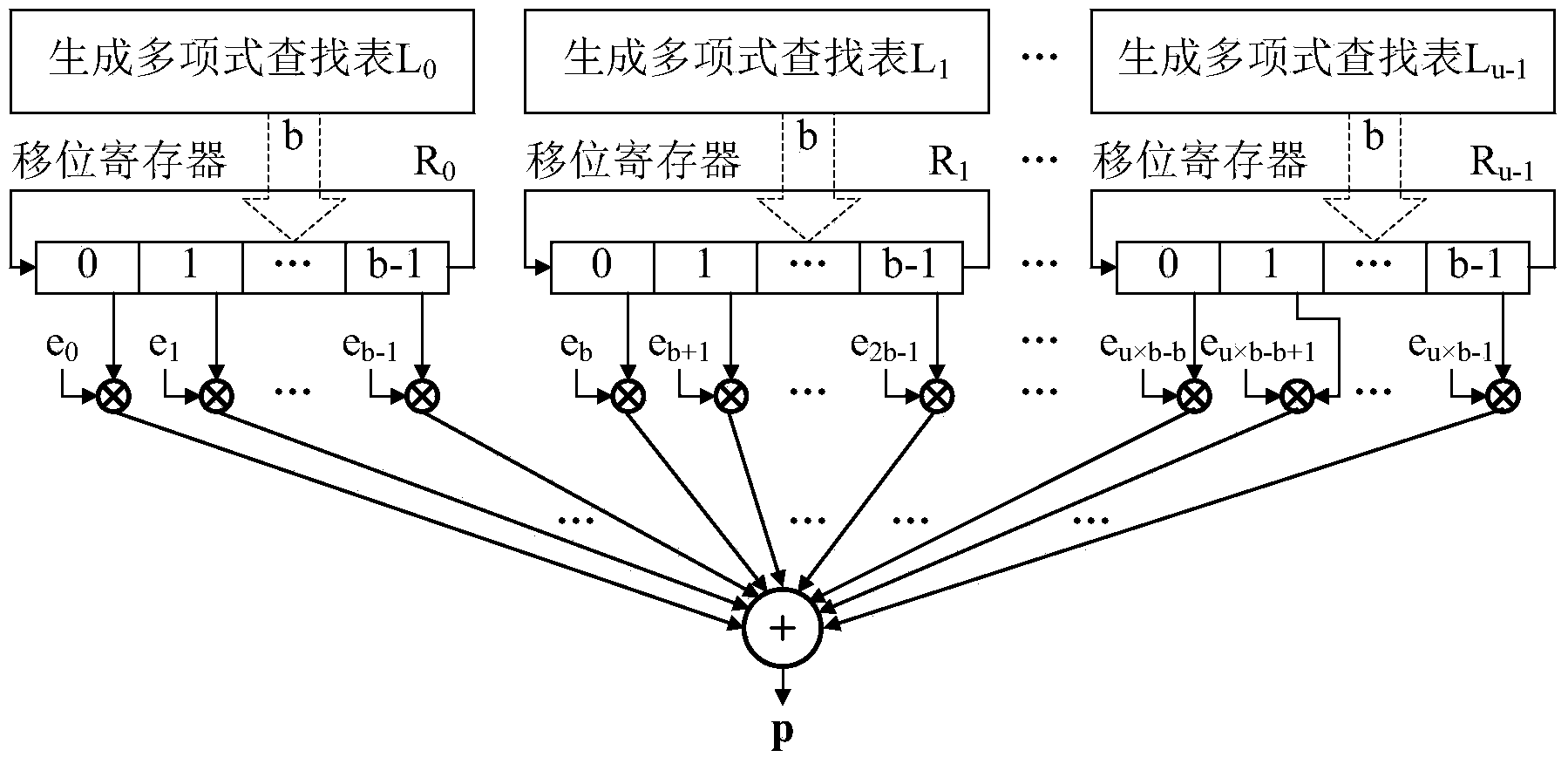

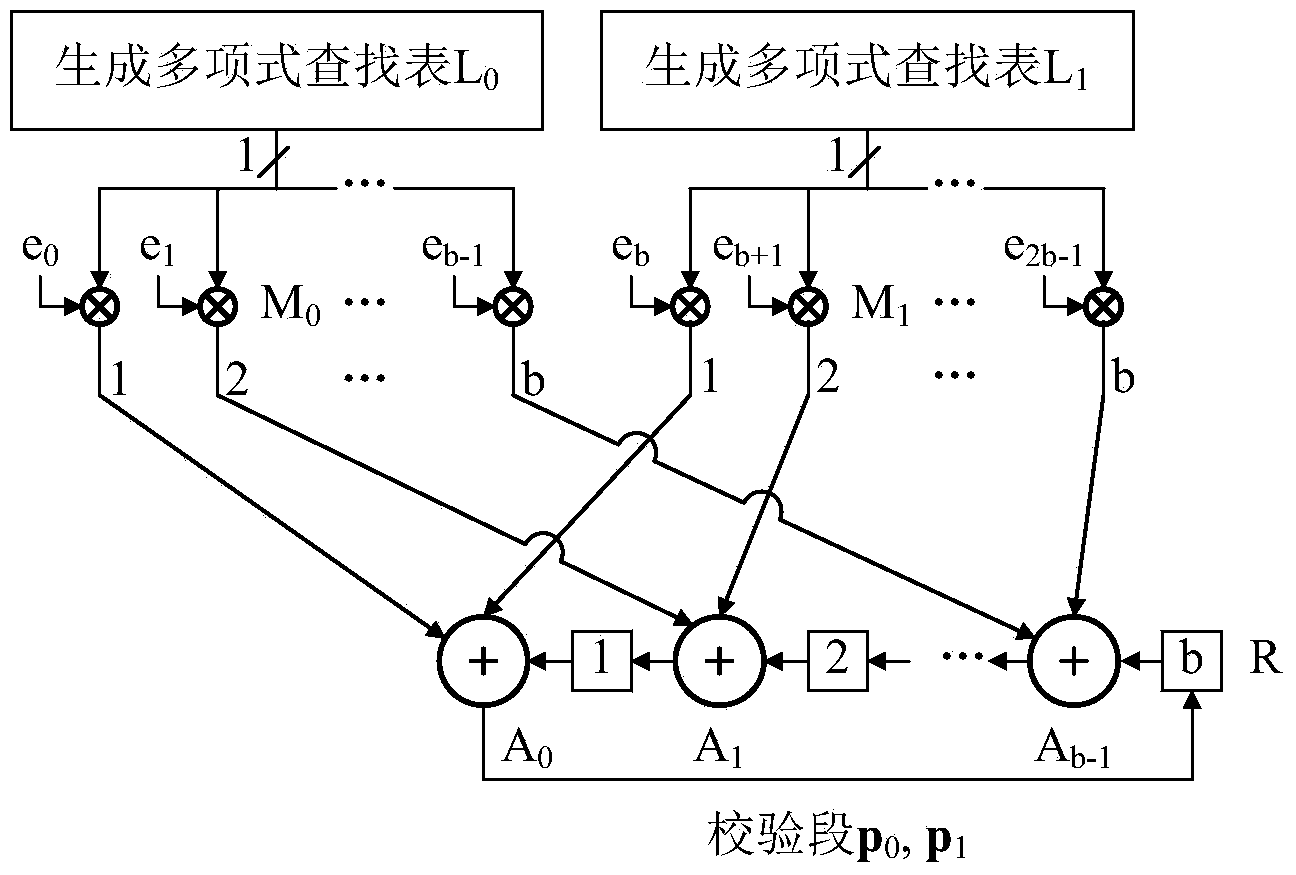

Quasi-cyclic LDPC serial encoder based on ring shift left

Inactive · CN103248372A · Tags: Simple structure, Reduce power consumption, Error correction/detection using multiple parity bits, Code conversion, Shift register, Binary multiplier

The invention provides a quasi-cyclic LDPC serial encoder based on ring shift left (rotate left). The encoder includes: c generator-polynomial lookup tables that prestore the generator polynomials of all cyclic matrices in the generator matrix; c b-bit binary multipliers that perform scalar multiplication of an information bit by a generator polynomial; c b-bit binary adders that add (modulo 2) each product to the contents of a shift register; and c b-bit shift registers that store the sum after it is rotated left by 1 bit. At the end, the check data are held in the c shift registers. The serial encoder has few registers, a simple structure, low power consumption, and low cost.

Owner:RONGCHENG DINGTONG ELECTRONICS INFORMATION SCI & TECH CO LTD
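
The shift-register architecture can be simulated bit by bit. In this sketch (hypothetical names; plain Python lists as bit vectors), `gens[i][j]` is the generator, i.e. the first row, of the b x b circulant in block row i and block column j. Row k of each circulant equals the first row rotated right by k. Each input bit is ANDed with the generators and XORed into one register per block column, and the sum is rotated left by one bit. After a full block of b bits, every contribution has been rotated into place, so the registers end up holding m·F over GF(2). A dense reference multiplication is included for comparison.

```python
def rotl(bits):
    """Rotate a bit list left by one position."""
    return bits[1:] + bits[:1]

def qc_serial_multiply(m, gens, b):
    """Serially compute m * F over GF(2) for a quasi-cyclic matrix F.

    One b-bit register per block column: XOR in (input bit AND generator),
    then rotate left by one bit, once per input bit.
    """
    n_cols = len(gens[0])
    regs = [[0] * b for _ in range(n_cols)]
    for i, row_gens in enumerate(gens):
        for k in range(b):
            bit = m[i * b + k]
            for j in range(n_cols):
                summed = [r ^ (bit & g) for r, g in zip(regs[j], row_gens[j])]
                regs[j] = rotl(summed)
    return [bit for reg in regs for bit in reg]

def circulant_from_first_row(g):
    """Row k is the first row g rotated right by k."""
    b = len(g)
    return [[g[(c - k) % b] for c in range(b)] for k in range(b)]

def qc_dense_multiply(m, gens, b):
    """Reference: expand every circulant and multiply over GF(2)."""
    n_cols = len(gens[0])
    out = [0] * (n_cols * b)
    for i, row_gens in enumerate(gens):
        for j, g in enumerate(row_gens):
            C = circulant_from_first_row(g)
            for k in range(b):
                if m[i * b + k]:
                    for c in range(b):
                        out[j * b + c] ^= C[k][c]
    return out
```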

Highly-Spectrally-Efficient Transmission Using Orthogonal Frequency Division Multiplexing

A transmitter may map, using a selected modulation constellation, each of C′ bit sequences to a respective one of C′ symbols, where C′ is a number greater than one. The transmitter may process the C′ symbols to generate C′ inter-carrier correlated virtual subcarrier values. The transmitter may decimate the C′ virtual subcarrier values down to C physical subcarrier values, C being a number less than C′. The transmitter may transmit the C physical subcarrier values on C orthogonal frequency division multiplexed (OFDM) subcarriers. The modulation constellation may be an N-QAM constellation, where N is an integer. The processing may comprise filtering the C′ symbols using an array of C′ filter tap coefficients. The filtering may comprise cyclic filtering. The filtering may comprise multiplication by a circulant matrix populated with the C′ filter tap coefficients.

Owner:AVAGO TECH INT SALES PTE LTD

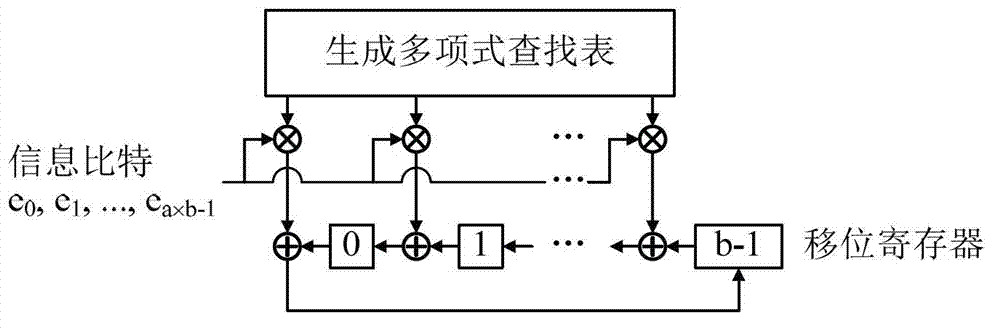

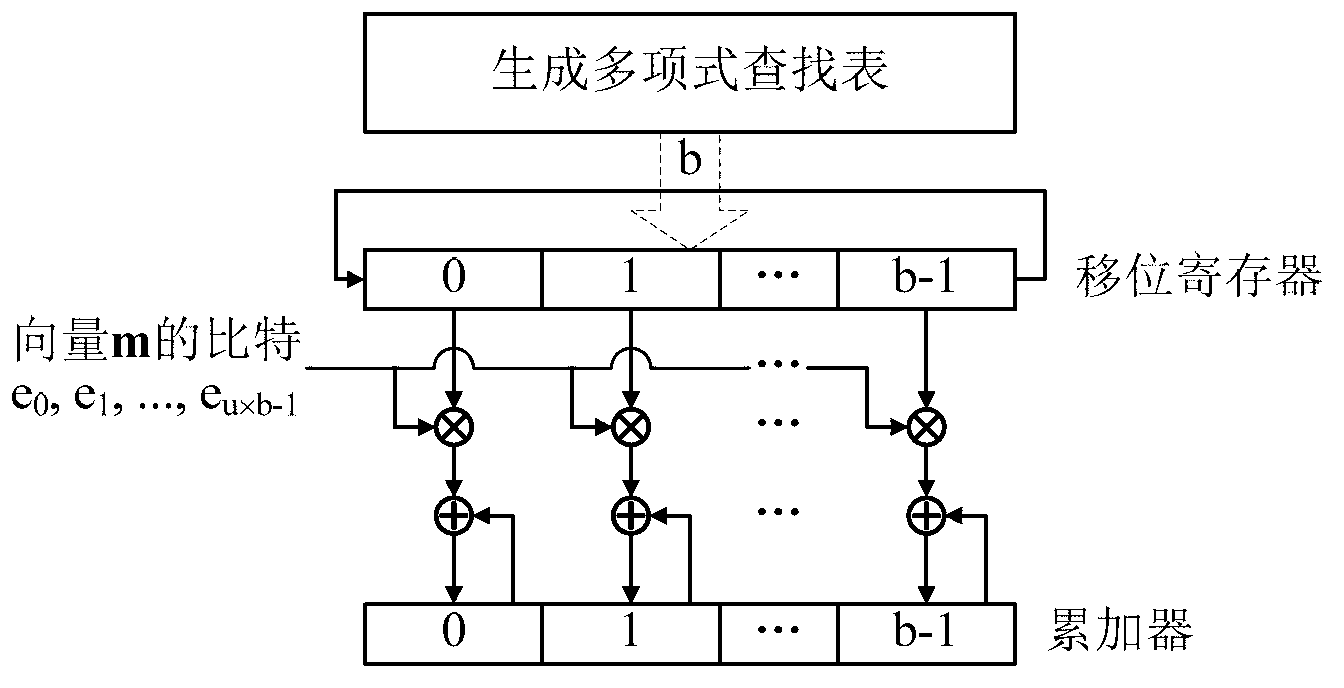

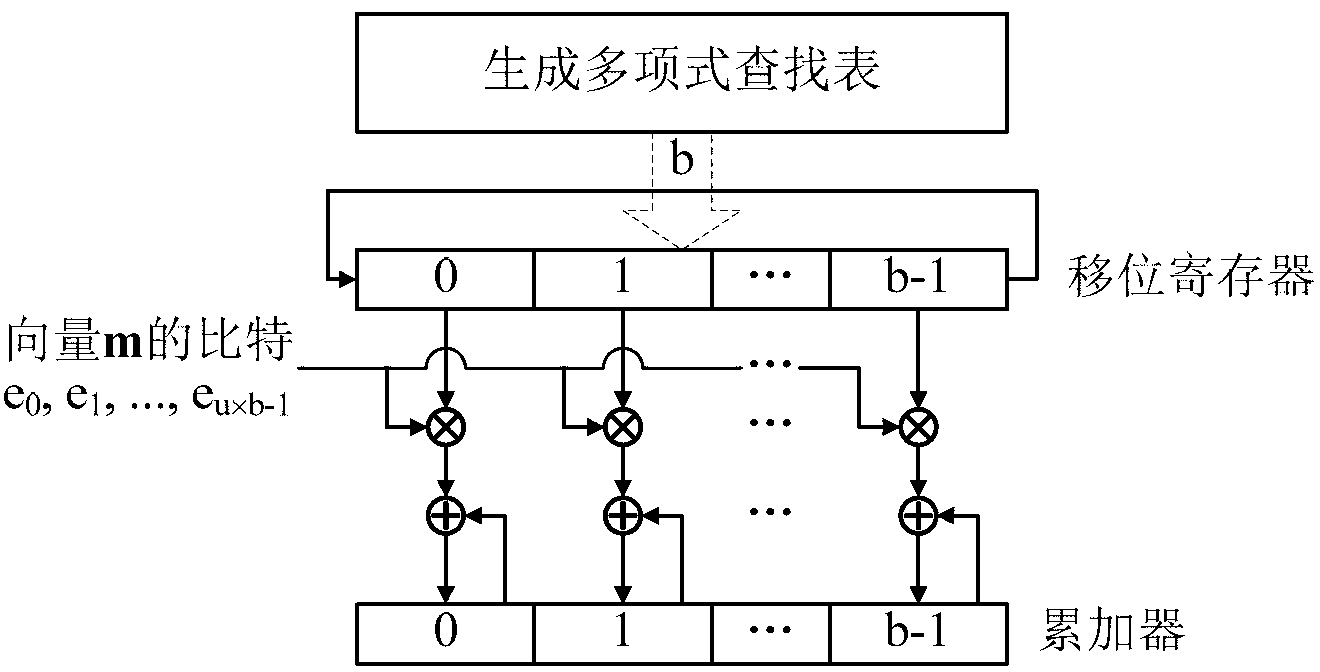

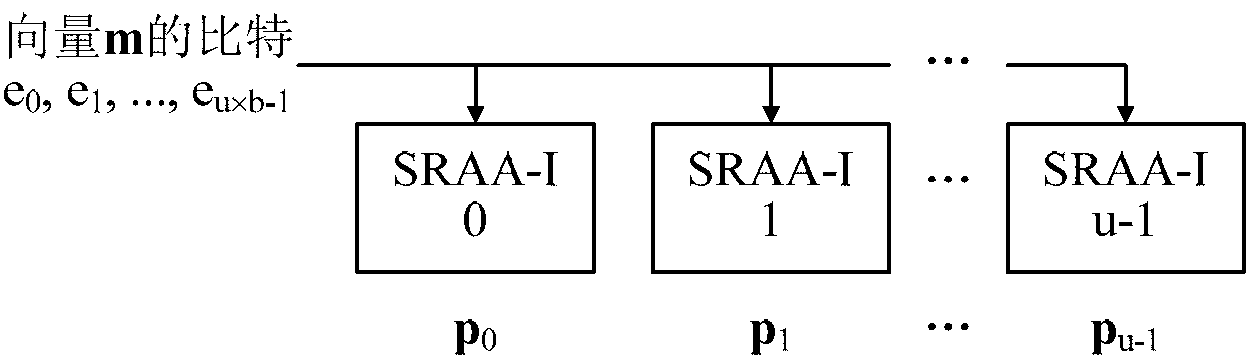

Quasi-cyclic matrix serial multiplier based on rotate left

Inactive · CN103268217A · Tags: Simple structure, Reduce power consumption, Digital data processing details, Shift register, Binary multiplier

The invention provides a quasi-cyclic matrix serial multiplier based on rotate left. The multiplier computes the product of a vector m and a quasi-cyclic matrix F in QC-LDPC (quasi-cyclic low-density parity-check) approximate lower triangular encoding. It comprises u generator-polynomial lookup tables, u b-bit binary multipliers, u b-bit binary adders, and u b-bit shift registers. The lookup tables prestore the generator polynomials of the cyclic matrices in F. The b-bit binary multipliers perform scalar multiplication of a data bit of vector m by a generator polynomial. The b-bit binary adders add (modulo 2) the products to the shift register contents. The b-bit shift registers store the sums after a 1-bit left rotation. The multiplier has few registers, a simple structure, low power consumption, and low cost.

Owner:RONGCHENG DINGTONG ELECTRONICS INFORMATION SCI & TECH CO LTD

LDPC code constructing method based on coding cooperative communication

Inactive · CN101800618A · Tags: Reduce complexity, Improve performance, Error prevention/detection by using return channel, Error correction/detection using multiple parity bits, Round complexity, Partition of unity

The invention discloses an LDPC code construction method based on coded cooperative communication. The method comprises the following steps: 1. choose a basis matrix W(1) based on finite-field multiplicative inverses; 2. multiply each row of the basis matrix by the multiplicative inverse of a certain element of the basis matrix, so as to produce the multiplicative identity in the basis matrix; 3. expand the basis matrix to obtain an expansion matrix containing a diagonal; 4. replace the submatrices above the diagonal with zero matrices to obtain a lower-triangular quasi-cyclic matrix with a nested structure. The invention can flexibly construct nested quasi-cyclic matrices suitable for coded cooperation (CC) schemes. It reduces both the code-construction complexity and the coding complexity at the relay node and the destination node. In addition, the codes contain no short cycles, and their performance is clearly better than that of the traditional decode-and-forward (DF) scheme.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

Check matrix generating method

Inactive · US20100229066A1 · Tags: Reduce circuit size, Code conversion, Single error correction, Parity-check matrix, Theoretical computer science

An irregular parity check matrix is generated which has an LDGM structure in which a masking quasi-cyclic matrix and a matrix in which cyclic permutation matrices are arranged in a staircase manner are arranged in a predetermined location. A mask matrix capable of supporting a single encoding rate for making the regular quasi-cyclic matrix into an irregular quasi-cyclic matrix is generated. The irregular parity check matrix is masked using a generated mask matrix, and a parity check matrix is generated combining a masked irregular parity check matrix with a lower triangular matrix formed in a staircase manner to satisfy a single encoding rate.

Owner:MITSUBISHI ELECTRIC CORP
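
When the parity part of a check matrix is a lower-triangular staircase, encoding needs no matrix inversion. The parity bits follow from a running XOR of the syndrome of the information part. The sketch assumes the simplest bit-level staircase (ones on the diagonal and the subdiagonal), whereas the patent arranges cyclic permutation matrices in that pattern. `A` stands in for the masked quasi-cyclic part, and the function names are hypothetical.

```python
import numpy as np

def staircase(n):
    """n x n binary staircase: ones on the main diagonal and the subdiagonal."""
    return np.eye(n, dtype=np.int64) + np.eye(n, k=-1, dtype=np.int64)

def encode_staircase(A, s):
    """Parity bits p such that [A | staircase] @ [s, p] = 0 (mod 2).

    Row i of the staircase gives p[i-1] + p[i] = (A s)[i], so p is a
    running XOR of the syndrome A s; no matrix inversion is needed.
    """
    syndrome = (np.asarray(A, dtype=np.int64) @ np.asarray(s, dtype=np.int64)) % 2
    p = np.zeros_like(syndrome)
    running = 0
    for i, bit in enumerate(syndrome):
        running ^= int(bit)
        p[i] = running
    return p
```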

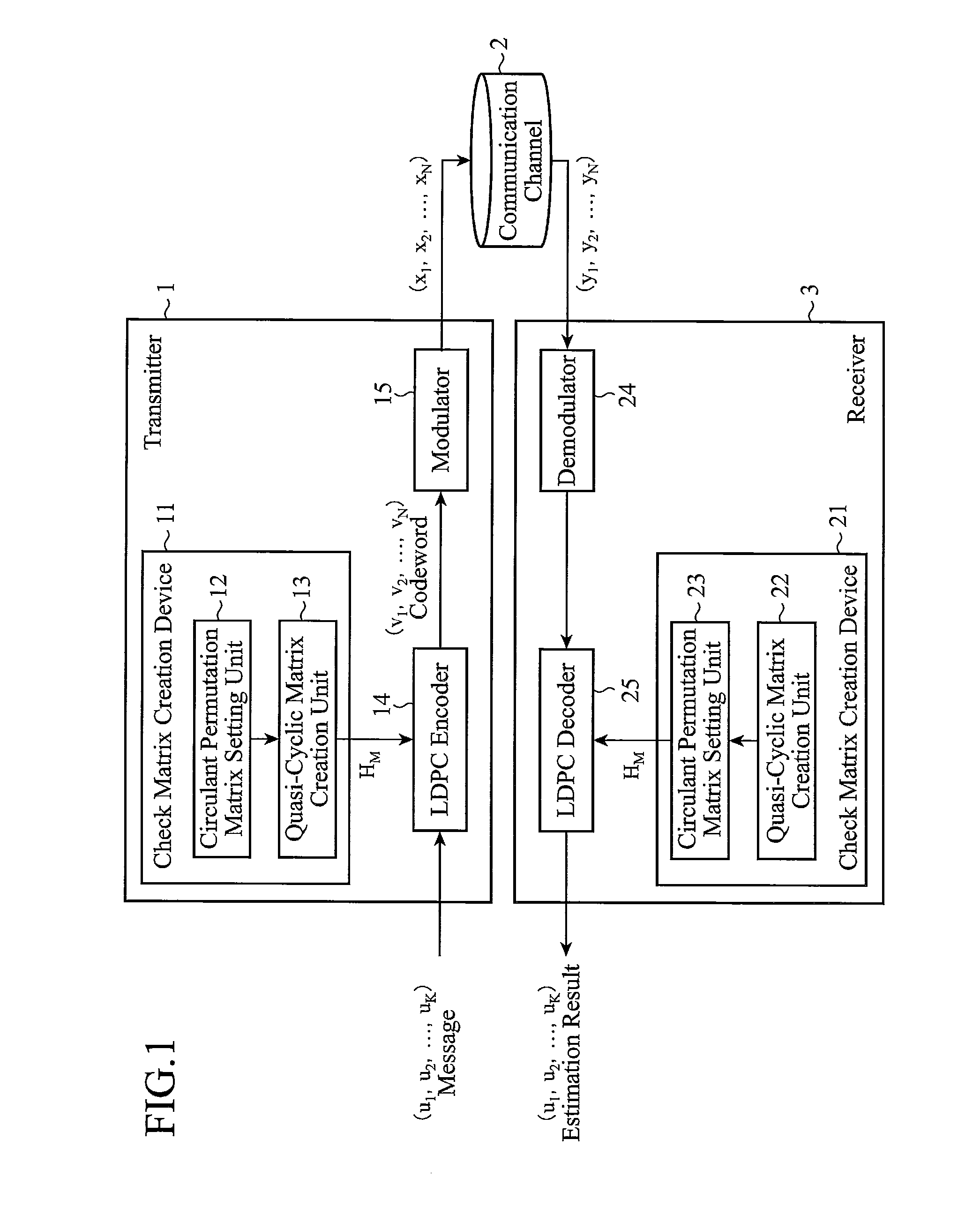

Check matrix creation device, check matrix creation method, check matrix creation program, transmitter, receiver, and communication system

Inactive · US20110154151A1 · Tags: Improve performance, Error correction/detection using multiple parity bits, Code conversion, Communications system, Engineering

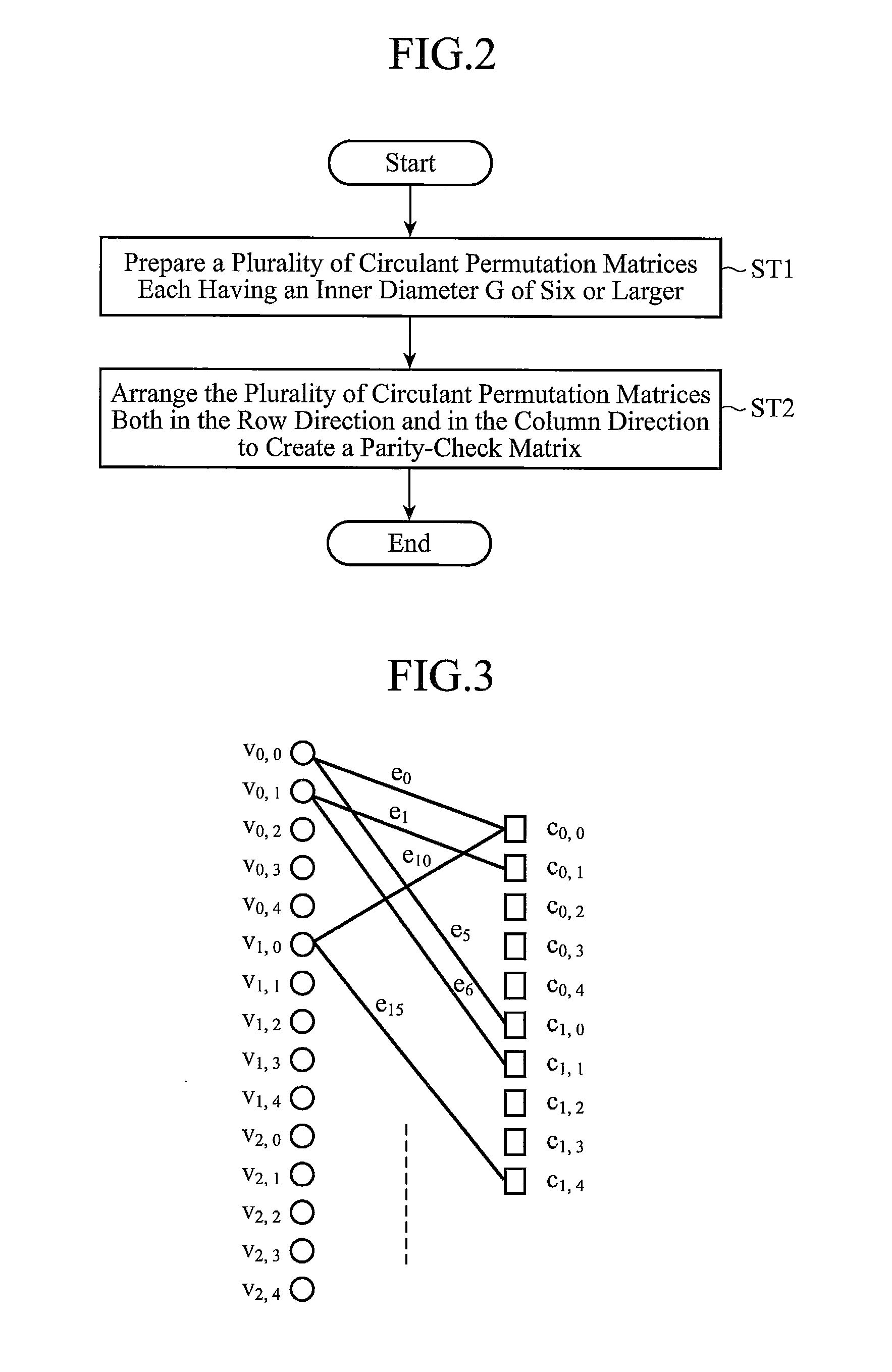

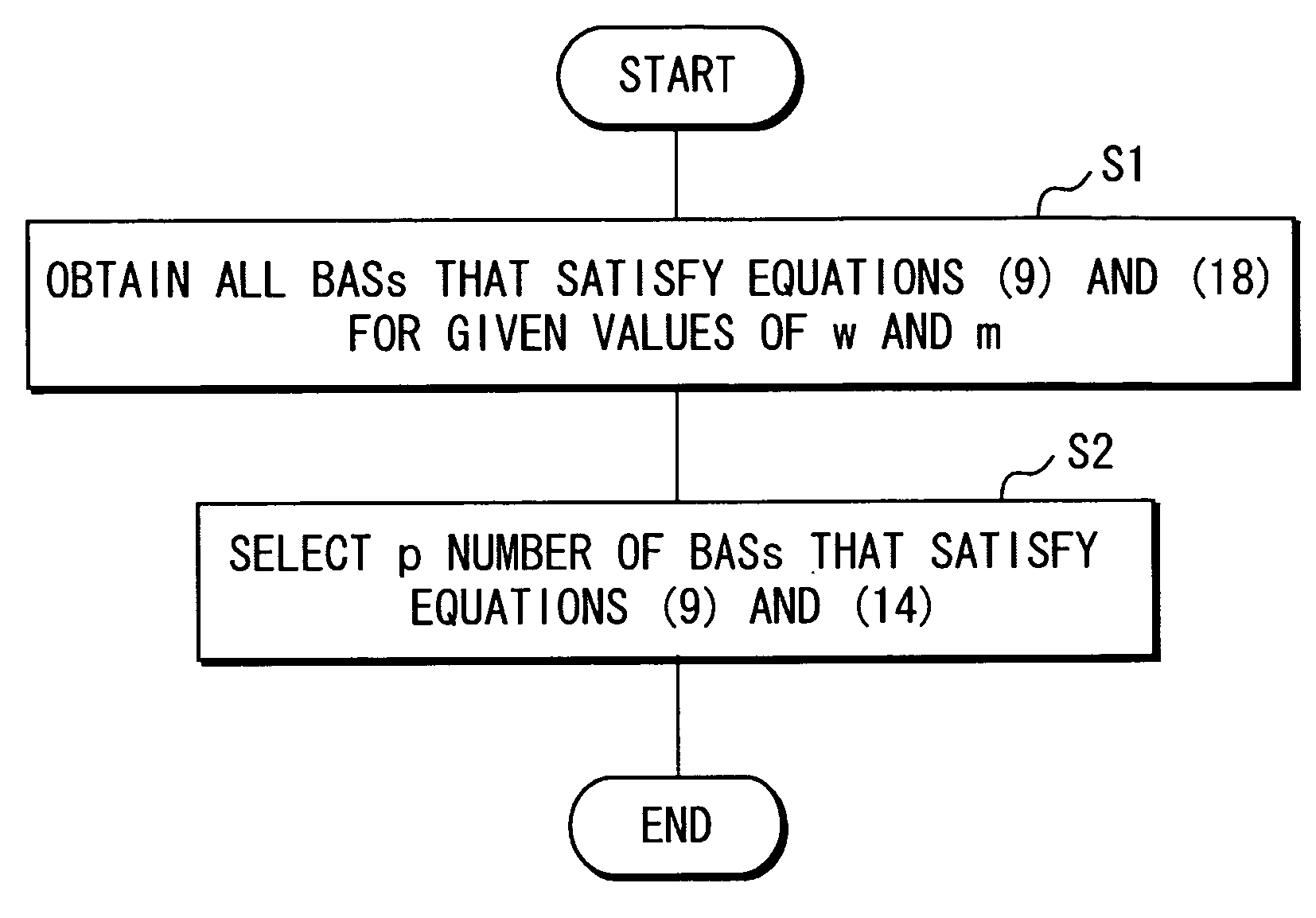

A check matrix creation device includes a circulant permutation matrix setting unit 12 for preparing a plurality of circulant permutation matrices each having an inner diameter of six or larger, and a quasi-cyclic matrix creation unit 13 for arranging the plurality of circulant permutation matrices prepared by the circulant permutation matrix setting unit 12 both in a row direction and in a column direction to create a quasi-cyclic matrix.

Owner:MITSUBISHI ELECTRIC CORP

Encoding method to QC code

Active · US20070094582A1 · Tags: Reduce circuit size, Improve decoding performance, Error detection/correction, Code conversion, Theoretical computer science, Parity-check matrix

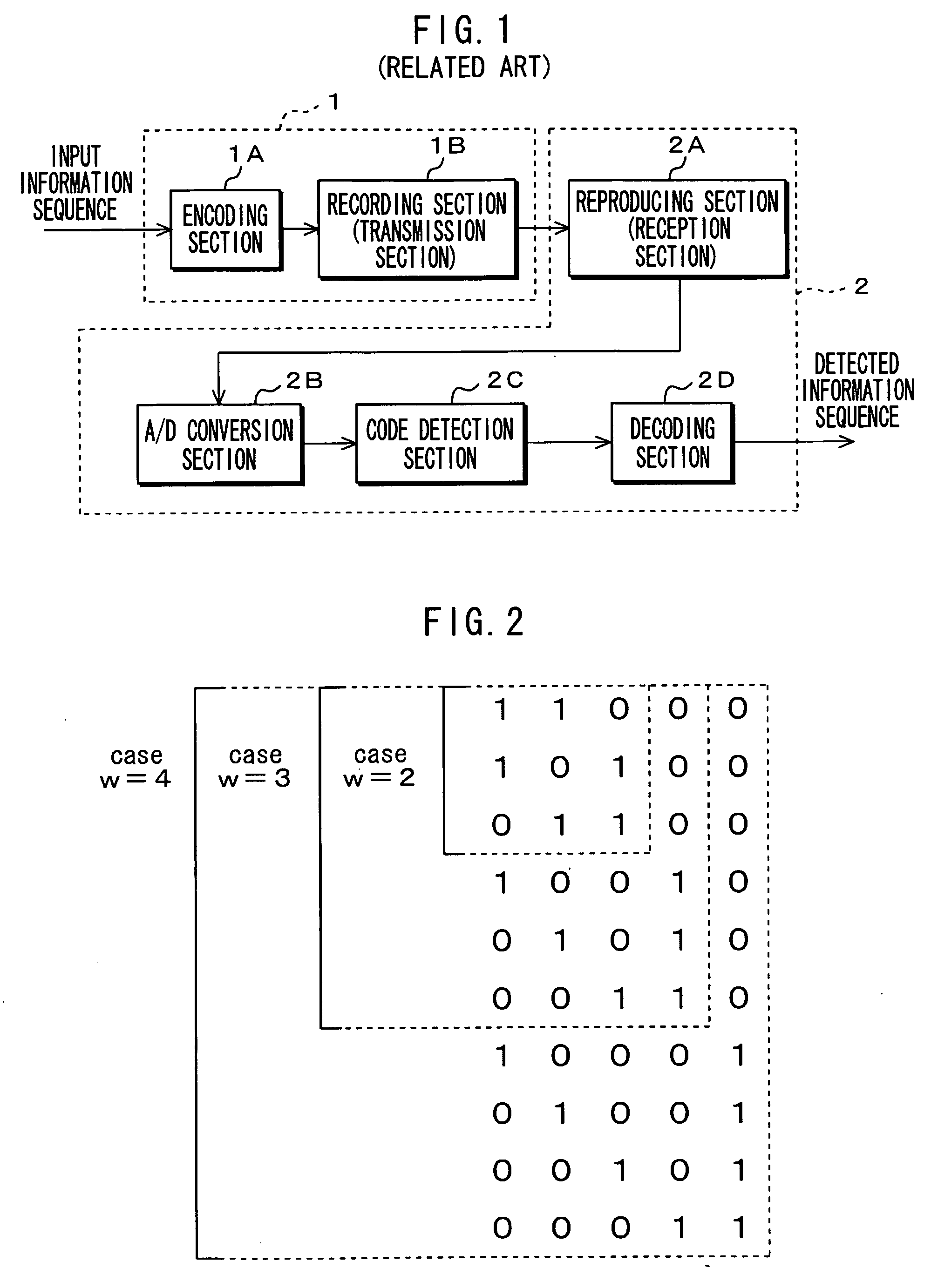

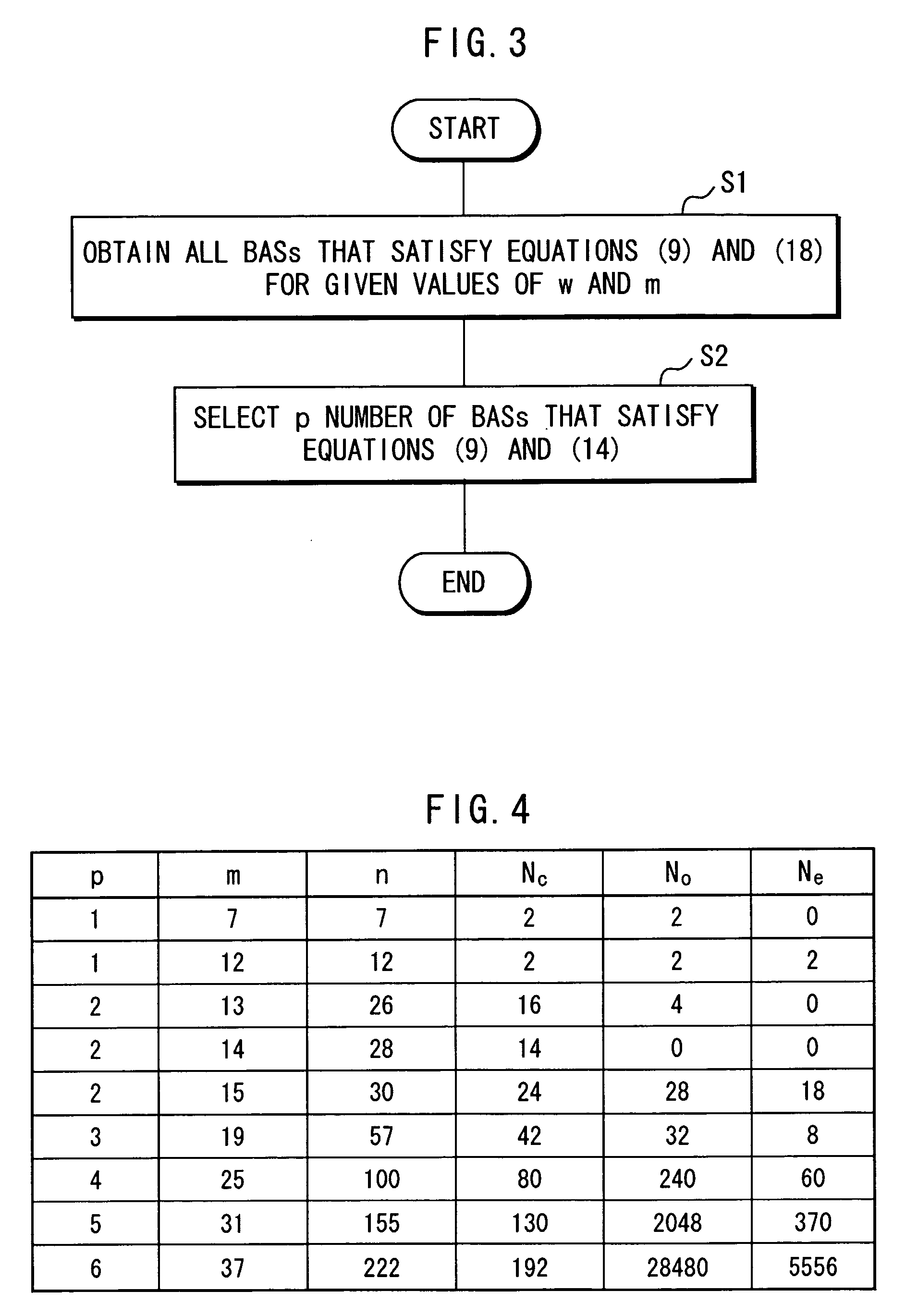

In an encoding method for a self-orthogonal QC code whose parity check matrix is expressed by at least one circulant matrix, a code sequence that satisfies the check matrix is generated. The check matrix is designed so that the column weight w of each circulant matrix is three or larger and the minimum Hamming distance of the code is w+2 or larger.

Owner:SONY SEMICON SOLUTIONS CORP

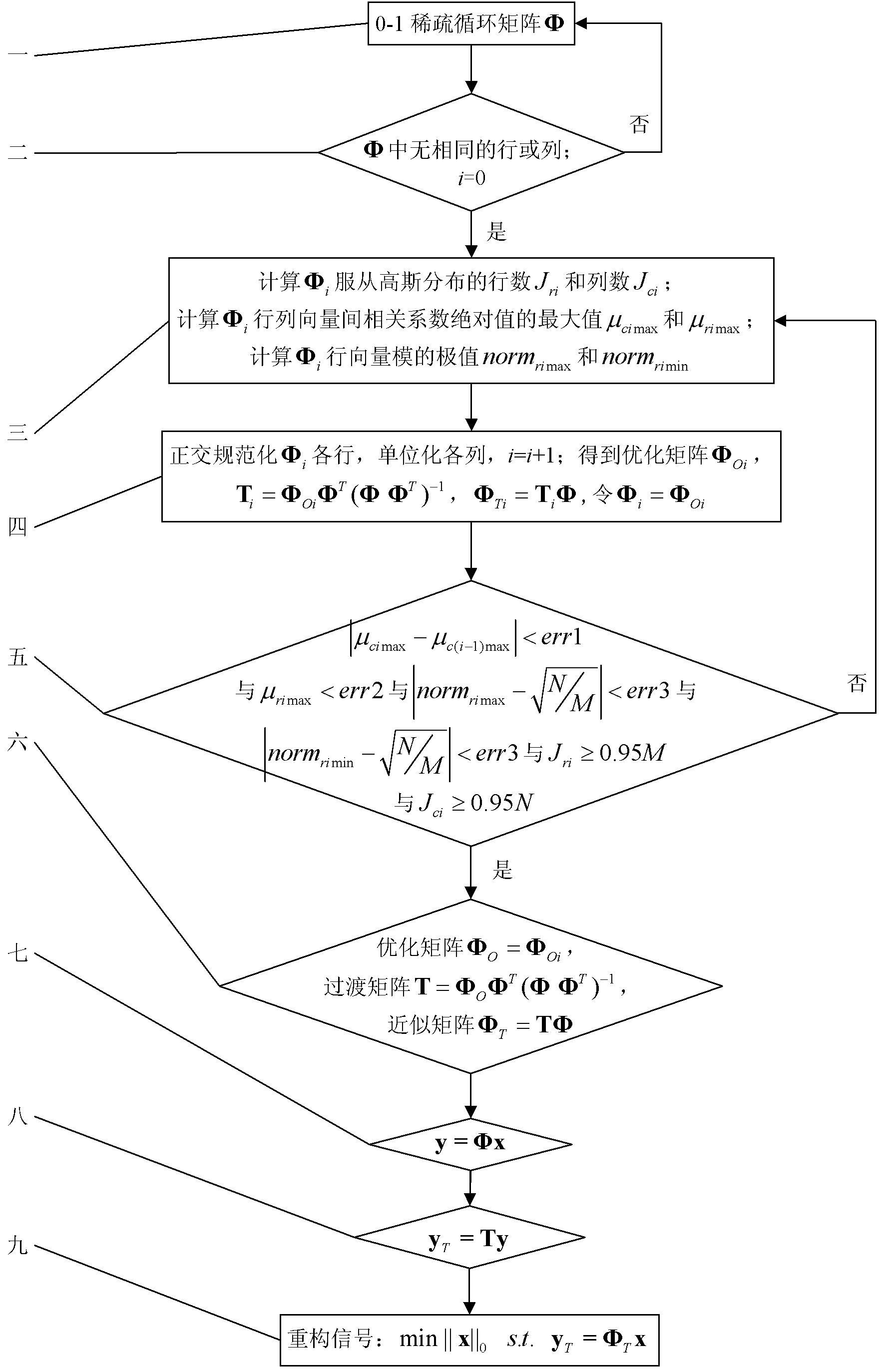

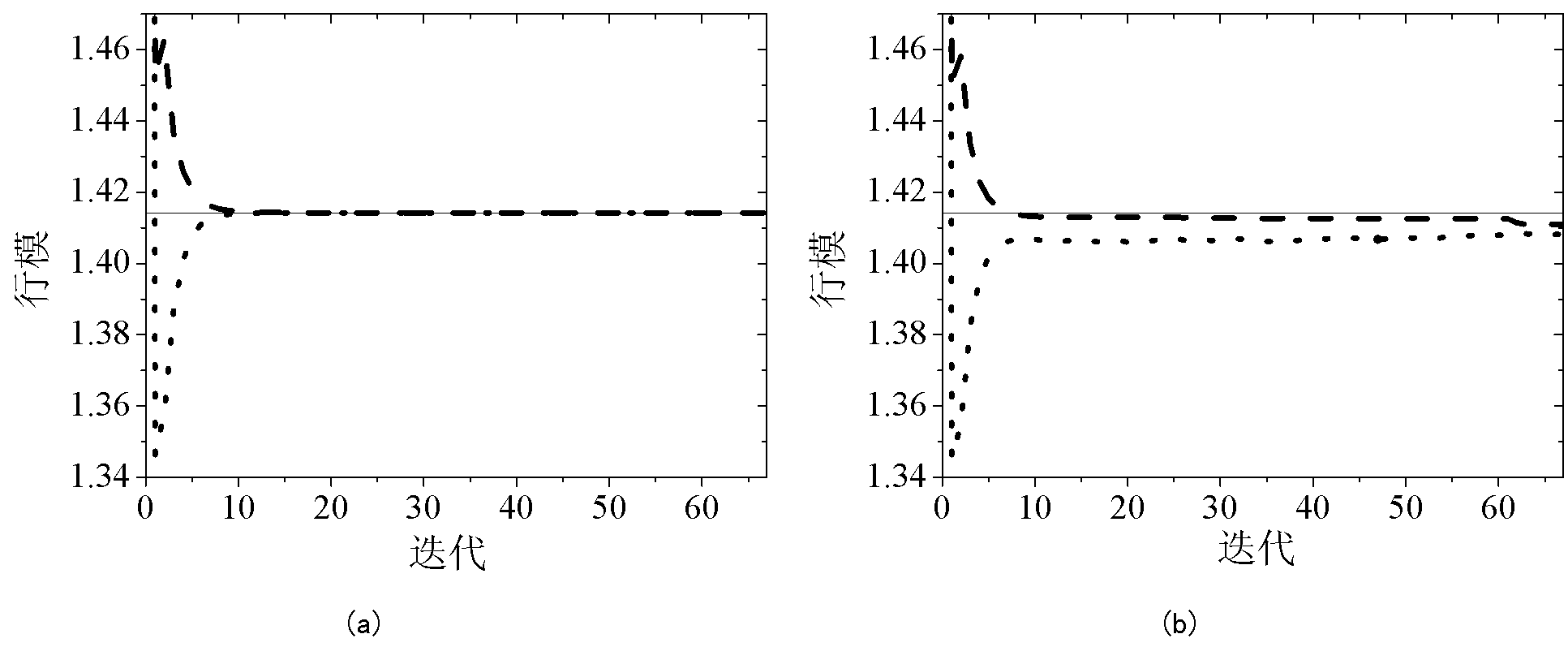

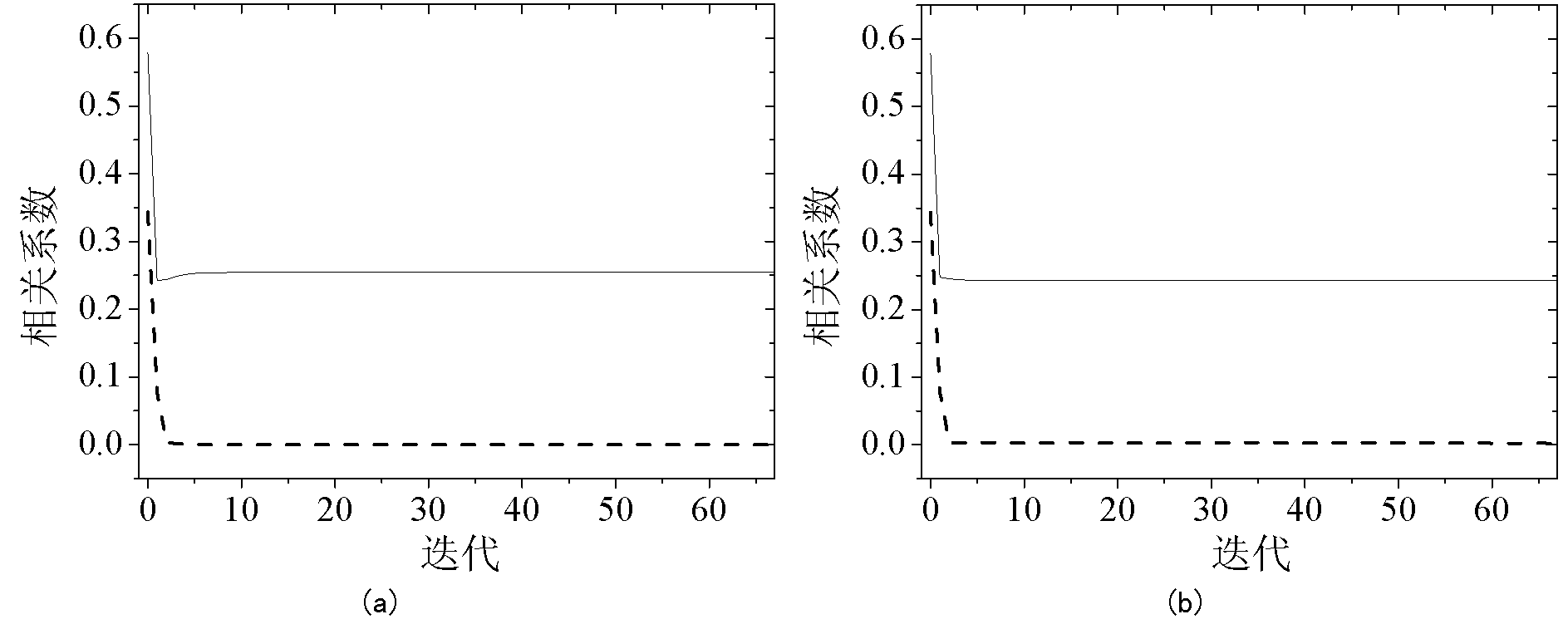

Approximation optimization and signal acquisition reconstruction method for 0-1 sparse cyclic matrix

Active · CN102801428A · Tags: Universal, Simplify hardware design, Code conversion, Complex mathematical operations, Reconstruction method, Transition matrices

The invention discloses an approximation optimization and signal acquisition/reconstruction method for a 0-1 sparse cyclic matrix, in the field of measurement matrix design and optimization for compressive sensing. It provides an a posteriori optimization method that is easy to implement in hardware and preserves signal reconstruction quality: a 0-1 sparse cyclic matrix is used in the measurement stage, and a Gaussian matrix is used in the reconstruction stage. In the i-th iteration, the method orthonormalizes the row vectors and normalizes the column vectors of the measurement matrix obtained in the (i-1)-th iteration. It then optimizes the 0-1 sparse cyclic matrix using three criteria: the maximum absolute correlation coefficient between the row and column vectors, the convergence stability of the row-vector norms, and the number of rows and columns that follow a Gaussian distribution. The a posteriori optimization of the measured data and the measurement matrix is completed by solving for a transition matrix and an approximation matrix. The method helps move compressive sensing from theoretical study toward practical use.

Owner:GUANGXI UNIVERSITY OF TECHNOLOGY
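
The measurement-side structure and one of the listed criteria can be sketched as follows. Rows of a 0-1 sparse cyclic matrix are cyclic shifts of one random binary row. Mutual coherence is the largest absolute correlation between distinct normalized columns. The iterative orthonormalization, transition matrix, and Gaussian reconstruction matrix of the patent are not reproduced; the sizes and names are hypothetical.

```python
import numpy as np

def sparse_binary_circulant(n, m, ones, rng):
    """First m rows of an n x n circulant built from a random 0-1 row with `ones` nonzeros."""
    first = np.zeros(n)
    first[rng.choice(n, size=ones, replace=False)] = 1.0
    return np.stack([np.roll(first, k) for k in range(m)])  # row k: first row rotated right by k

def mutual_coherence(Phi):
    """Largest absolute normalized inner product between distinct nonzero columns."""
    norms = np.linalg.norm(Phi, axis=0)
    keep = norms > 0
    cols = Phi[:, keep] / norms[keep]
    G = np.abs(cols.T @ cols)
    np.fill_diagonal(G, 0.0)
    return float(G.max())
```

Because the matrix is fully determined by one binary row, hardware only needs to store that row and a shift register, which is the practical appeal of cyclic measurement matrices. An optimization loop would then try to lower `mutual_coherence` while keeping that structure.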

Test matrix generating method, encoding method, decoding method, communication apparatus, communication system, encoder and decoder

Active · US8103935B2 · Tags: Promote generation, Reduce circuit size, Error prevention, Error detection/correction, Decoding methods, Communications system

A regular quasi-cyclic matrix is prepared, a conditional expression that guarantees a predetermined minimum loop in the parity check matrix is derived, and a mask matrix for converting specific cyclic permutation matrices into zero-matrices is generated based on the conditional expression and a predetermined weight distribution. The specific cyclic permutation matrices are converted into zero-matrices to generate an irregular masked quasi-cyclic matrix. An irregular parity check matrix is then generated in which the masked quasi-cyclic matrix and a matrix of cyclic permutation matrices arranged in a staircase manner are placed in predetermined locations.

Owner:RAKUTEN GRP INC

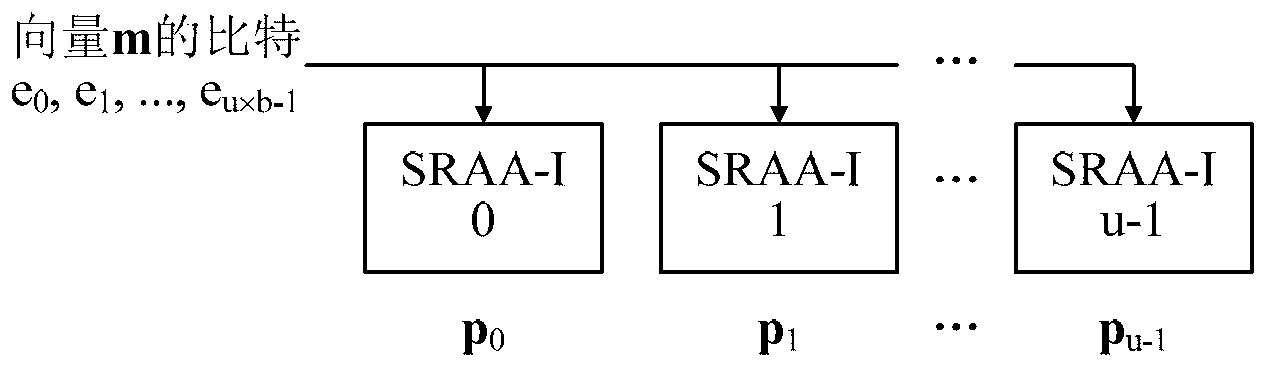

Rotate left-based quasi-cyclic (QC) matrix serial multiplier in deep space communication

Inactive · CN103236850A · Tags: Simple structure, Reduce power consumption, Error correction/detection using multiple parity bits, Shift register, Binary multiplier

The invention provides a rotate-left-based quasi-cyclic (QC) matrix serial multiplier for deep space communication. It multiplies a vector m by a quasi-cyclic matrix F in the approximate lower triangular encoding of the multi-code-class QC-LDPC codes standardized by the Consultative Committee for Space Data Systems (CCSDS) for deep space communication. The multiplier comprises: 4 generator-polynomial lookup tables that prestore the cyclic-matrix generator polynomials of the F matrices of all code classes; 4 2048-bit binary multipliers that perform scalar multiplication of a data bit of vector m by a generator polynomial; 4 2048-bit binary adders that add (modulo 2) the products to the shift register contents; and 4 2048-bit shift registers that store the sums after a 1-bit left rotation. The multiplier is compatible with all code classes and has few registers, a simple structure, low power consumption, and low cost.

Owner:RONGCHENG DINGTONG ELECTRONICS INFORMATION SCI & TECH CO LTD

Check-matrix generating method, encoding method, communication apparatus, communication system, and encoder

Inactive · US20100058140A1 · Tags: Wide range, Code conversion, Single error correction, Communications system, Algorithm

A regular quasi-cyclic matrix is generated in which specific regularity is given to cyclic permutation matrices. A mask matrix capable of supporting a plurality of encoding rates is generated. A specific cyclic permutation matrix in the regular quasi-cyclic matrix is converted into a zero-matrix using a mask matrix corresponding to a specific encoding rate to generate an irregular masking quasi-cyclic matrix. An irregular parity check matrix with an LDGM structure is generated in which the masking quasi-cyclic matrix and a matrix in which the cyclic permutation matrices are arranged in a staircase manner are arranged in a predetermined location.

Owner:MITSUBISHI ELECTRIC CORP

ROL quasi-cyclic matrix multiplier for full parallel input in WPAN

Inactive · CN103902509A · Tags: Simple structure, Reduce register, Computation using non-contact making devices, Complex mathematical operations, Shift register, Binary multiplier

The invention provides a rotate-left (ROL) quasi-cyclic matrix multiplier with fully parallel input for WPAN. It multiplies a vector m by a quasi-cyclic matrix F in the approximate triangular encoding of the QC-LDPC codes in the WPAN standard. The multiplier comprises: two generator-polynomial lookup tables that prestore the generator polynomials of all cyclic matrices in F; two 21-bit binary multipliers that perform scalar multiplication of segments of vector m by generator-polynomial bits; 21 three-bit binary adders that add (modulo 2) the products to the shift register contents; and a 21-bit shift register that stores the sum after a 1-bit left rotation. The fully parallel-input multiplier is suited to the QC-LDPC codes of the WPAN standard and has fewer registers, low power consumption, low cost, a high operating frequency, and high throughput.

Owner:RONGCHENG DINGTONG ELECTRONICS INFORMATION SCI & TECH CO LTD

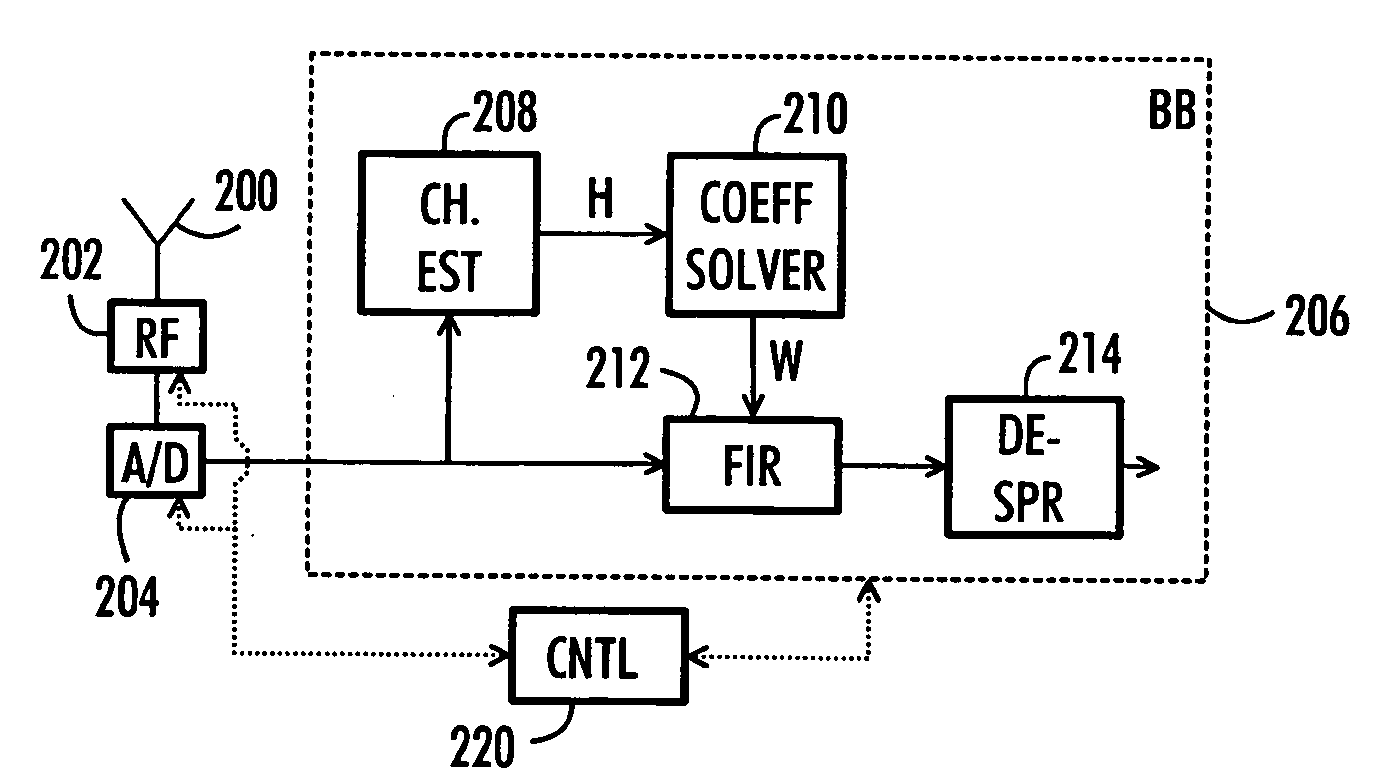

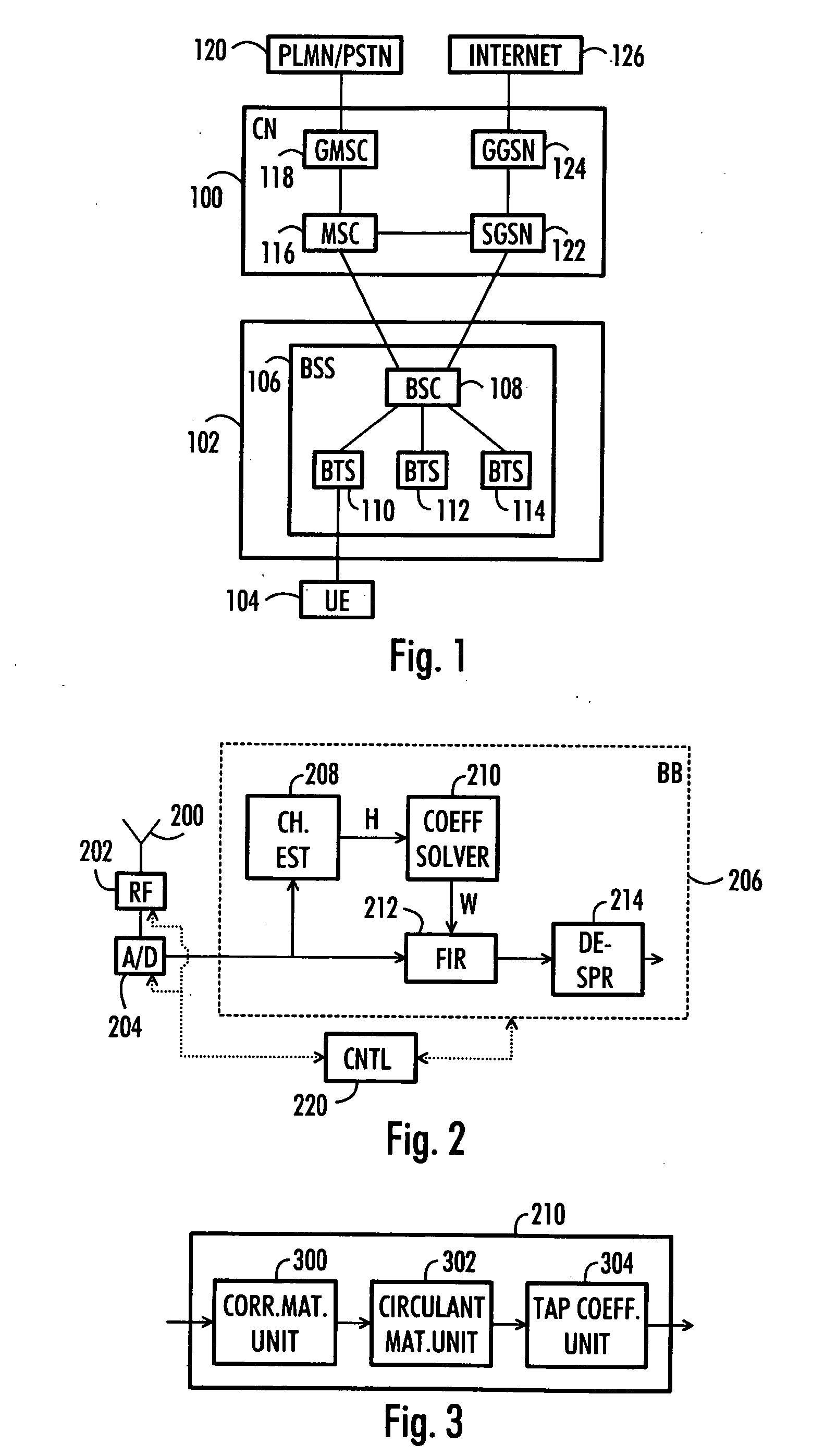

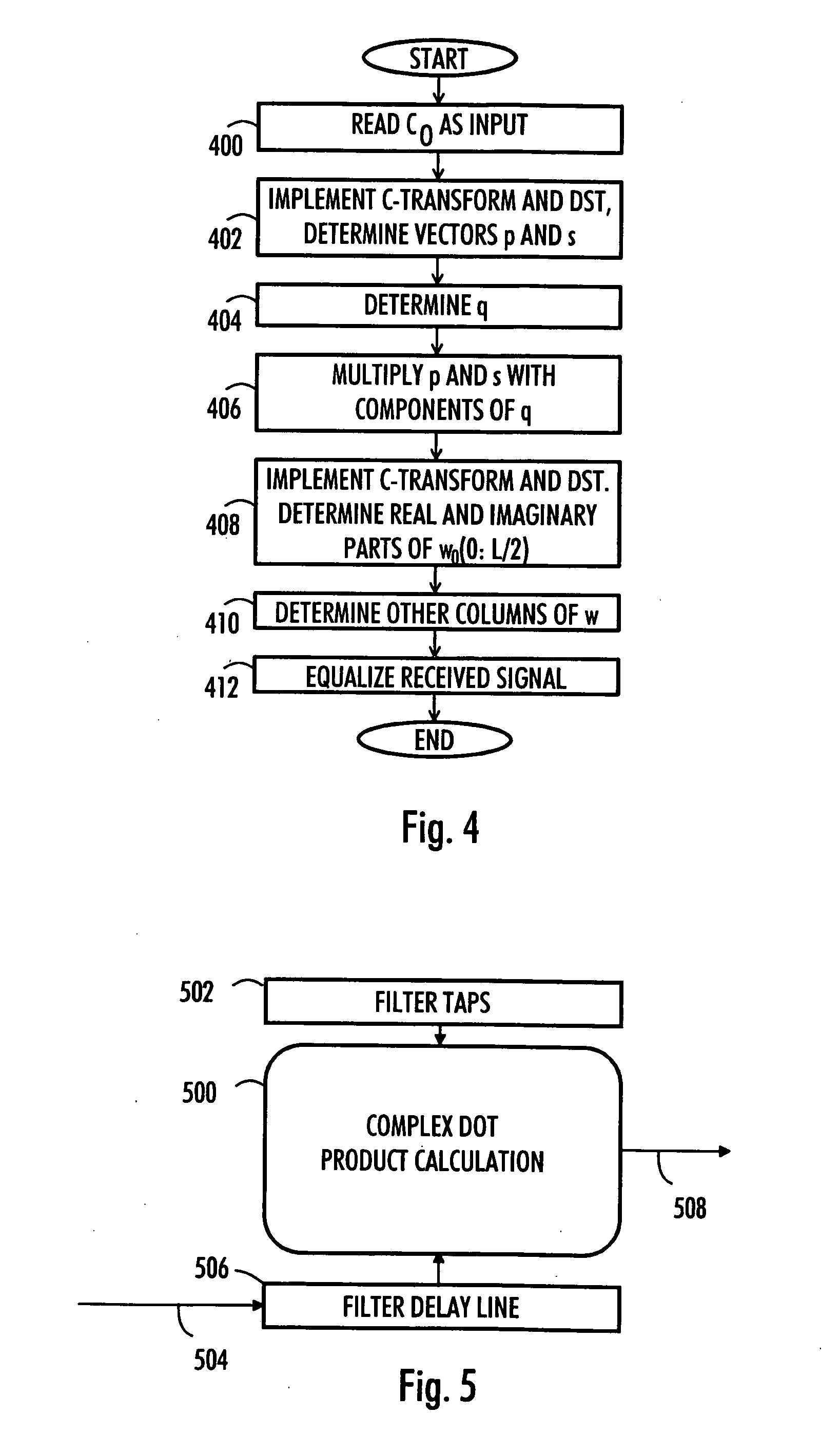

Signal processing method, receiver and equalizing method in receiver

Inactive | US20070253514A1 | Reduce in quantity; Reduced implementation time; Multiple-port networks; Delay line applications; Equalization; Circulant matrix

A signal processing method, a receiver, and an equalizing method are provided. The receiver comprises:

- an estimator that estimates a channel coefficient matrix from a received signal;
- a first calculation unit that determines a channel correlation matrix based on the channel coefficient matrix;
- a converter that converts the channel correlation matrix into a circulant matrix.

A second calculation unit determines the equalization filter coefficients. It applies a first transform to the real parts of a first subset of the terms in the first column of the circulant matrix, and a second transform to the imaginary parts of a second subset of those terms. An equalizer then equalizes the received signal using the determined coefficients.
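
The benefit of converting a correlation matrix into a circulant matrix shows up in a short sketch. Once the matrix is circulant, the linear system for the filter coefficients is solved with FFTs instead of a general matrix inverse.

Two choices here are assumptions, not the patent's method:

- Strang's circulant approximation of a Hermitian Toeplitz matrix stands in for the patent's unspecified converter.
- A plain complex FFT stands in for the patent's separate transforms on real and imaginary parts.

Both the function names and the channel vector are hypothetical.

```python
import numpy as np


def strang_circulant(t):
    """First column of Strang's circulant approximation to the Hermitian
    Toeplitz matrix whose first column is t (assumed conversion rule)."""
    t = np.asarray(t, dtype=complex)
    n = len(t)
    c = t.copy()
    for k in range(n // 2 + 1, n):
        c[k] = np.conj(t[n - k])   # wrap the central diagonals around
    return c


def circulant_matvec(c, x):
    """C @ x with C[i, j] = c[(i - j) % n]: circular convolution via the DFT."""
    return np.fft.ifft(np.fft.fft(c) * np.fft.fft(x))


def circulant_solve(c, b):
    """Solve C x = b. The DFT of c holds the eigenvalues of C, so the solve is a
    pointwise division in the frequency domain (O(n log n))."""
    eig = np.fft.fft(c)
    if np.any(np.isclose(eig, 0)):
        raise ValueError("circulant matrix is singular")
    return np.fft.ifft(np.fft.fft(b) / eig)


# Hypothetical correlation lags and channel vector.
c = strang_circulant([2.0, 0.5 + 0.2j, 0.1, 0.05])
w = circulant_solve(c, np.array([1.0, 0.3 - 0.1j, 0.0, 0.2j]))
```

This is also why circulant matrices matter numerically: the DFT diagonalizes them, so inverting one reduces to n scalar divisions.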

Owner:WSOU INVESTMENTS LLC

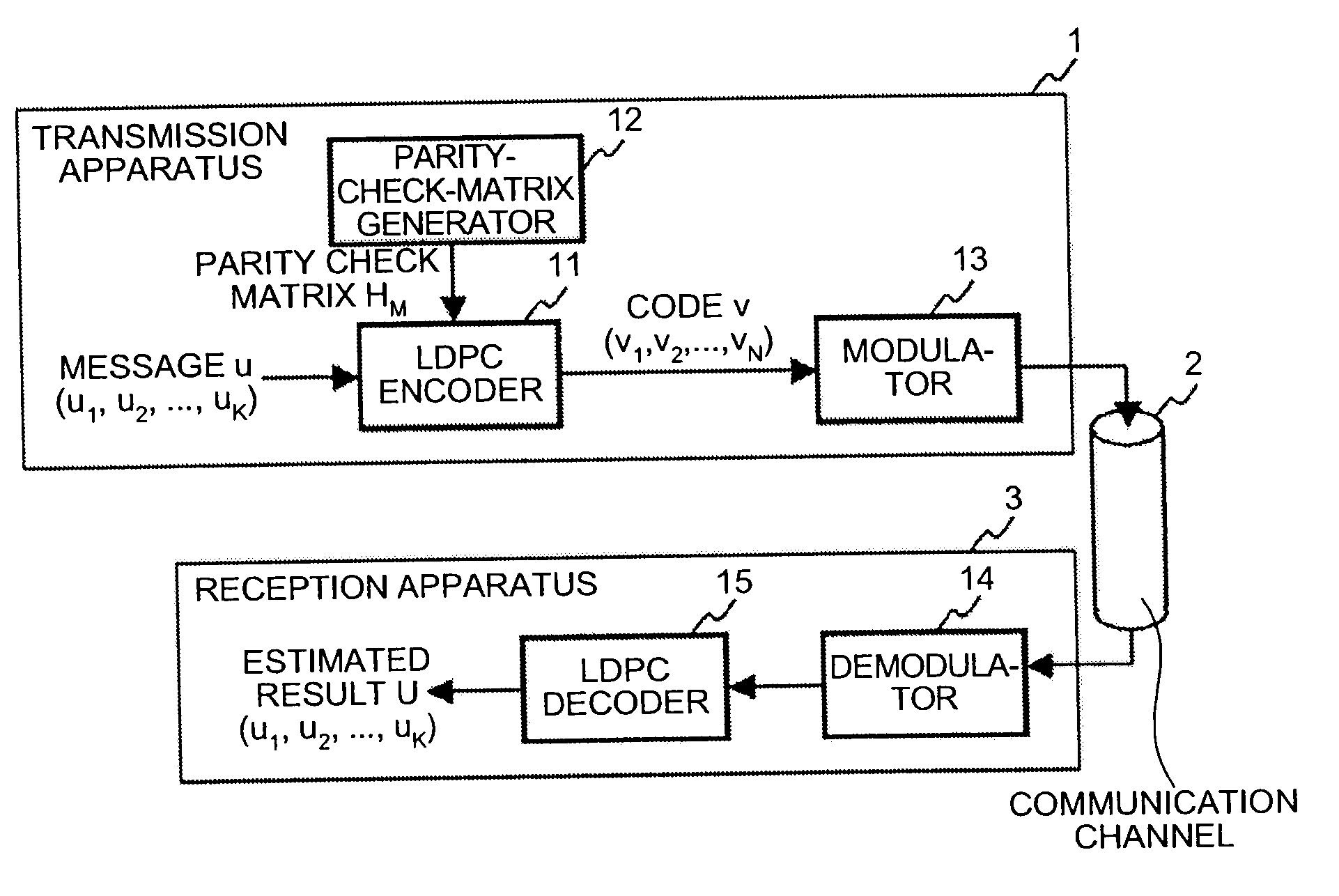

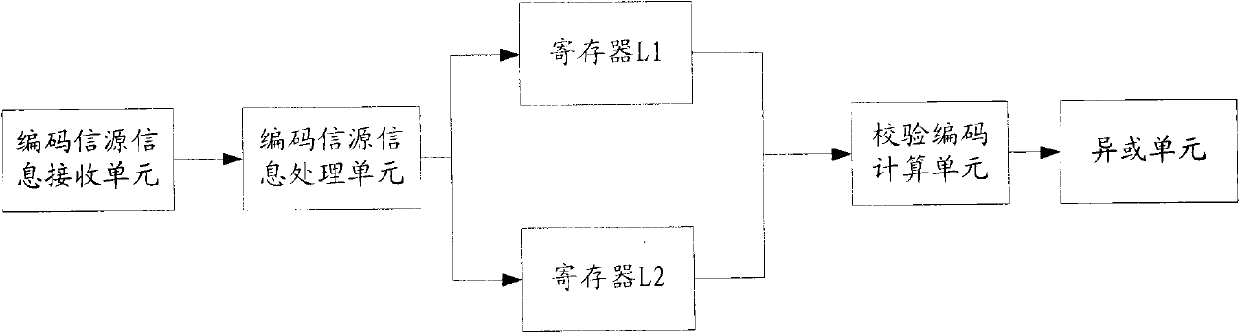

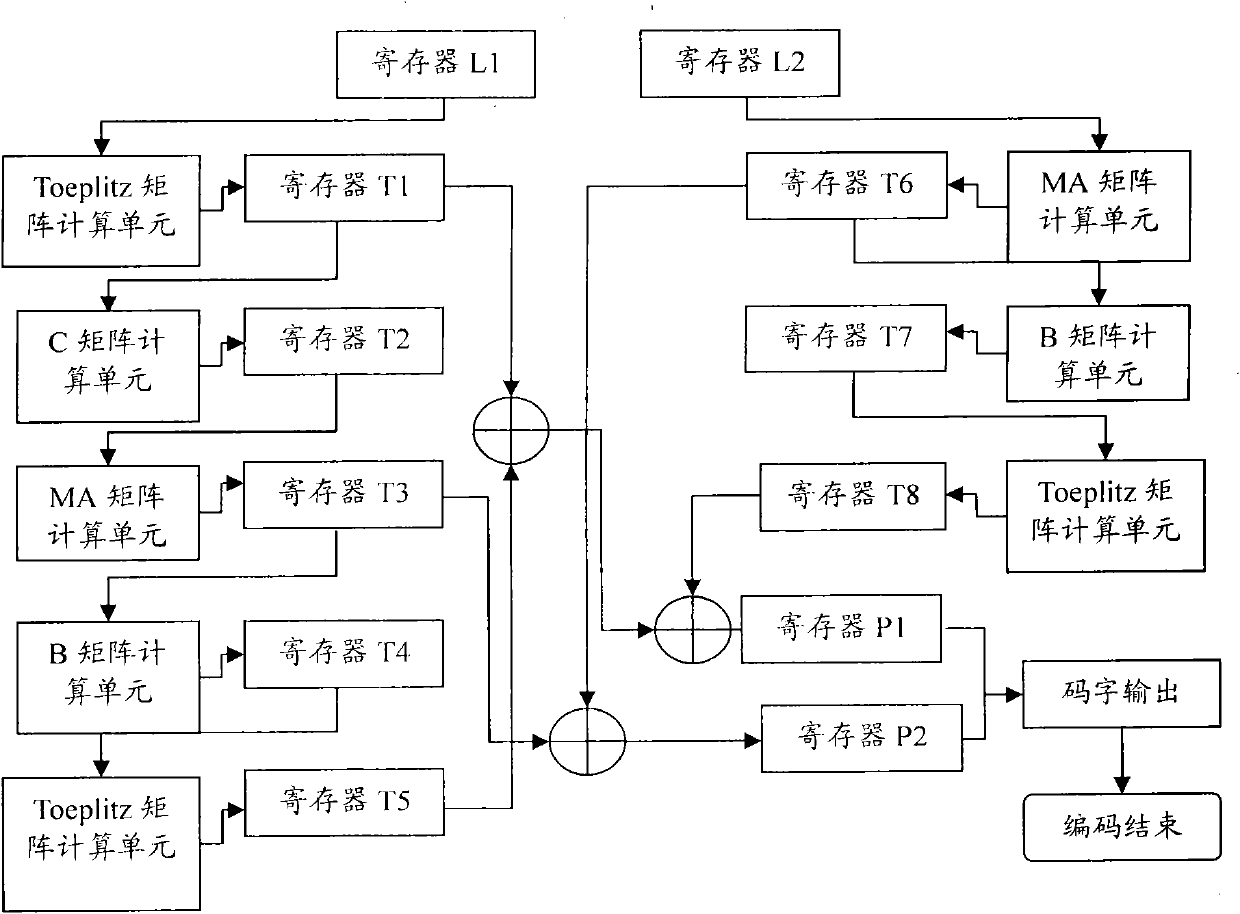

Device and method for encoding LDPC code

Active | CN101640543A | Save resources; Easy to code; Error correction/detection using multiple parity bits; Production line; Processor register

The invention applies to the technical field of encoding and provides a method and a device for encoding an LDPC code. The encoding device comprises:

- a storage unit that stores the source information to be encoded;
- a check-encoding calculation unit that computes on the source information held in a register, according to a check matrix with a Toeplitz structure;
- an exclusive-OR unit that XORs the computed results and outputs them.

The device and method partition the check matrix and encode the received source information according to the partitioned check matrix, each partition of which has a Toeplitz structure. In embodiments of the invention, the values along each diagonal of the matrix are identical. This gives the matrix excellent regularity and lends itself to pipelined operation. The sparse quasi-cyclic matrix structure also makes encoding much more convenient and saves hardware resources.
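
The regularity that enables pipelining comes from a simple property. In a Toeplitz matrix, each row is the previous row shifted right by one position, with a single new entry entering at the left. So the rows can be produced by a shift register fed from the first column, and only the first row and first column need to be stored.

The sketch below computes a Toeplitz matrix-vector product over GF(2) this way. The function names are hypothetical, and the patent's actual partitioning of the check matrix is not reproduced.

```python
def toeplitz_rows(first_col, first_row):
    """Yield the rows of a Toeplitz matrix by shifting: each row is the previous
    row shifted right by one, with the next first-column entry entering at the left."""
    assert first_col[0] == first_row[0], "corner entry must agree"
    row = list(first_row)
    yield row
    for new_lead in first_col[1:]:
        row = [new_lead] + row[:-1]
        yield row


def toeplitz_gf2_matvec(first_col, first_row, u):
    """p = T u over GF(2): one AND/XOR reduction per row, one row per shift step."""
    return [sum(t & x for t, x in zip(row, u)) % 2
            for row in toeplitz_rows(first_col, first_row)]


print(toeplitz_gf2_matvec([1, 0, 1], [1, 1, 0, 0], [1, 0, 1, 1]))  # [1, 1, 1]
```

An m-by-n Toeplitz block is described by m + n - 1 bits rather than m*n. Each step of the loop reuses all but one entry of the previous row, which is what a hardware pipeline would exploit.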

Owner:SHENZHEN COSHIP ELECTRONICS CO LTD

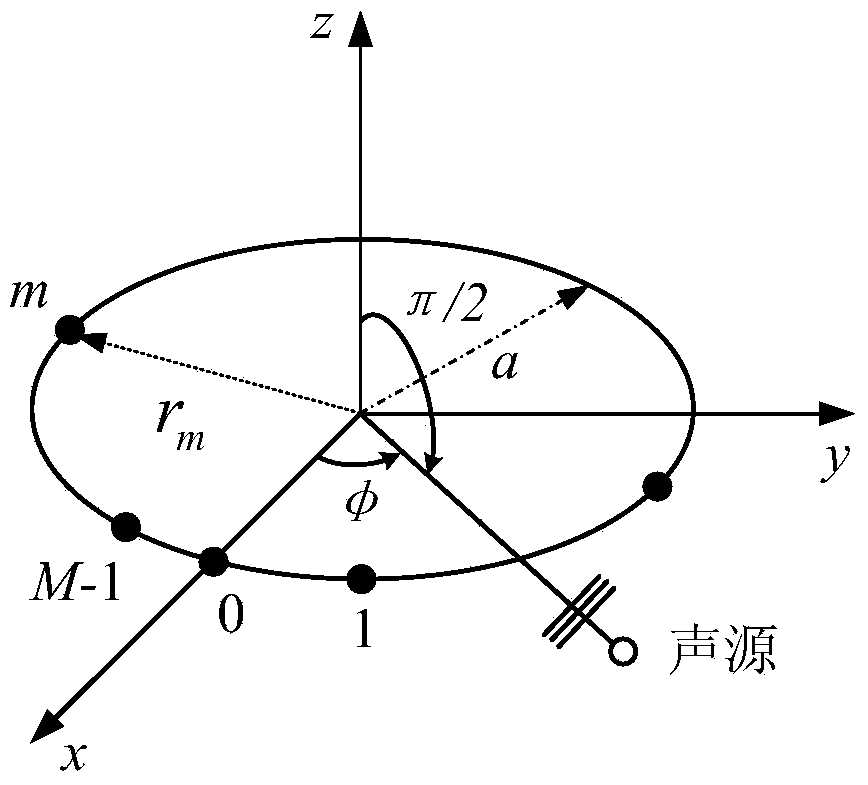

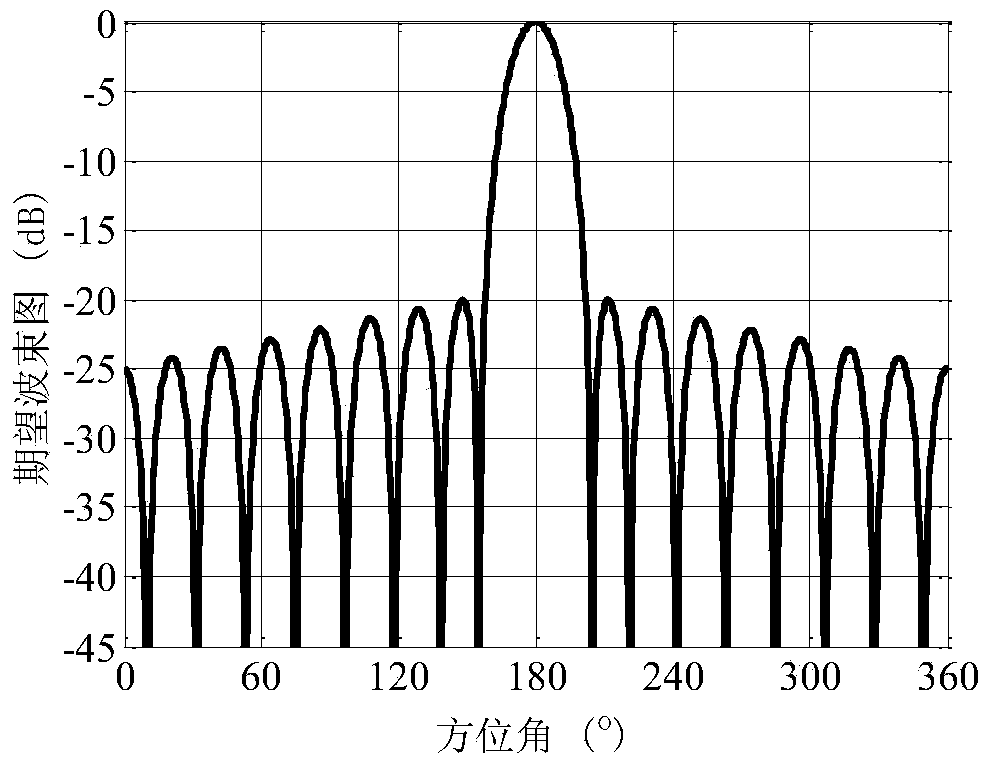

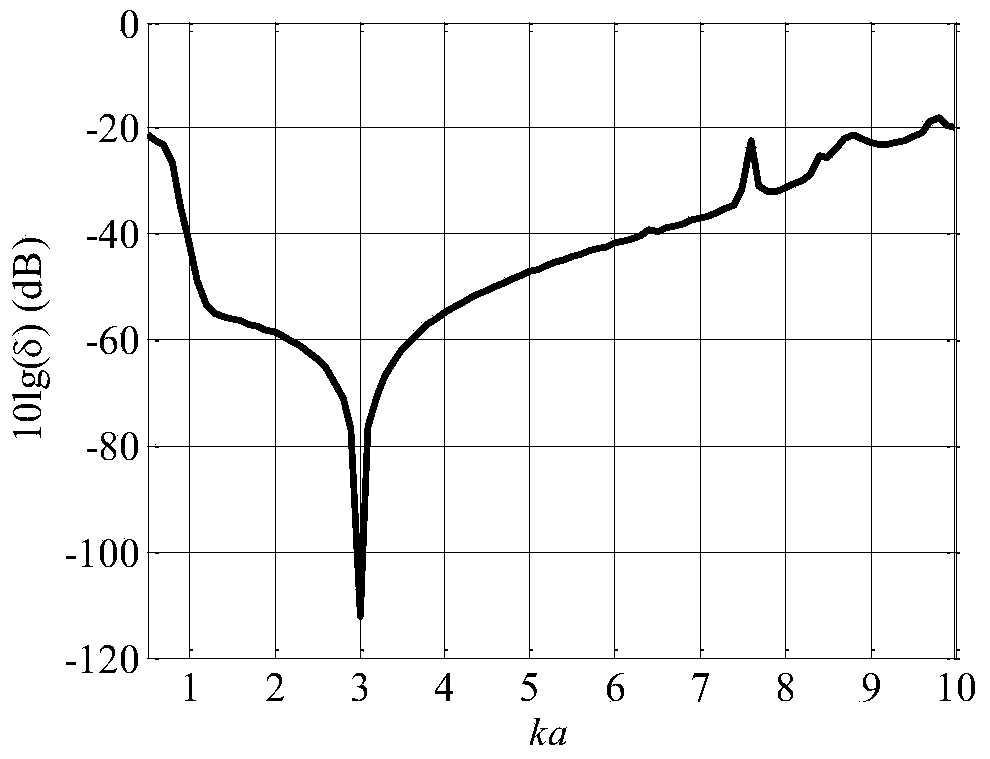

Method for designing circular array constant beamwidth beam former

Active | CN103903609A | Simple method; Small square error; Sound producing devices; Closed expression; Beam pattern

The invention relates to a method for designing a constant-beamwidth beamformer for a circular array. The method is simple and precise, and it is formulated for a uniform circular array. It exploits the properties of circulant matrices to give an exact solution to the least-squares beam pattern synthesis problem for circular arrays. Both the synthesized beam and the minimum squared error are then expressed simply as superpositions of subcomponents. When the desired beam is transformed into a suitable form, a closed-form expression for the weight vector of the constant-beamwidth beamformer is obtained. The method overcomes the complexity and imprecision of prior-art approaches: the optimal solution is represented exactly as a sum of subcomponents, and a closed-form expression exists.
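
Circulant structure appears here because of the array's symmetry. For a uniform circular array sampled on a uniform azimuth grid, rotating the array by one element only permutes the sample angles. The Gram matrix of the least-squares problem is therefore circulant, and the normal equations can be solved with FFTs.

The sketch below is a generic least-squares synthesis, not the patent's closed-form constant-beamwidth design. Its assumptions are:

- a narrowband steering model exp(j*k*r*cos(phi - 2*pi*n/N));
- a hypothetical desired pattern;
- hypothetical function names.

```python
import numpy as np


def uca_steering(n_elem, kr, phi):
    """Assumed steering vector of an N-element uniform circular array (kr = k * radius)."""
    angles = 2 * np.pi * np.arange(n_elem) / n_elem
    return np.exp(1j * kr * np.cos(phi - angles))


def ls_weights_fft(n_elem, kr, desired, n_samples):
    """Weights w minimizing sum_k |w^H a(phi_k) - p(phi_k)|^2 on a uniform azimuth grid.

    Normal equations: (A A^H) w = A conj(p). Because n_samples is a multiple of
    n_elem, rotating the array maps the grid onto itself, so A A^H is circulant.
    """
    assert n_samples % n_elem == 0, "grid must be invariant under array rotation"
    phis = 2 * np.pi * np.arange(n_samples) / n_samples
    A = np.stack([uca_steering(n_elem, kr, p) for p in phis], axis=1)  # N x K
    R = A @ A.conj().T                 # Gram matrix, circulant for this geometry
    rhs = A @ np.conj(desired(phis))
    c = R[:, 0]                        # first column defines the circulant
    w = np.fft.ifft(np.fft.fft(rhs) / np.fft.fft(c))
    return w, R, rhs


desired = lambda phi: ((1 + np.cos(phi)) / 2) ** 2   # hypothetical target beam
w, R, rhs = ls_weights_fft(8, np.pi, desired, 64)
```

The inverse DFT writes w as a sum of one term per circular-harmonic mode. This is consistent with the abstract's description of the optimum as a superposition of subcomponents, although the patent's own closed form is not reproduced here.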

Owner:NORTHWESTERN POLYTECHNICAL UNIV

Double qc-LDPC code

Active | US20140298132A1 | Improve regularity; Code conversion; Coding details; Parity-check matrix; Theoretical computer science

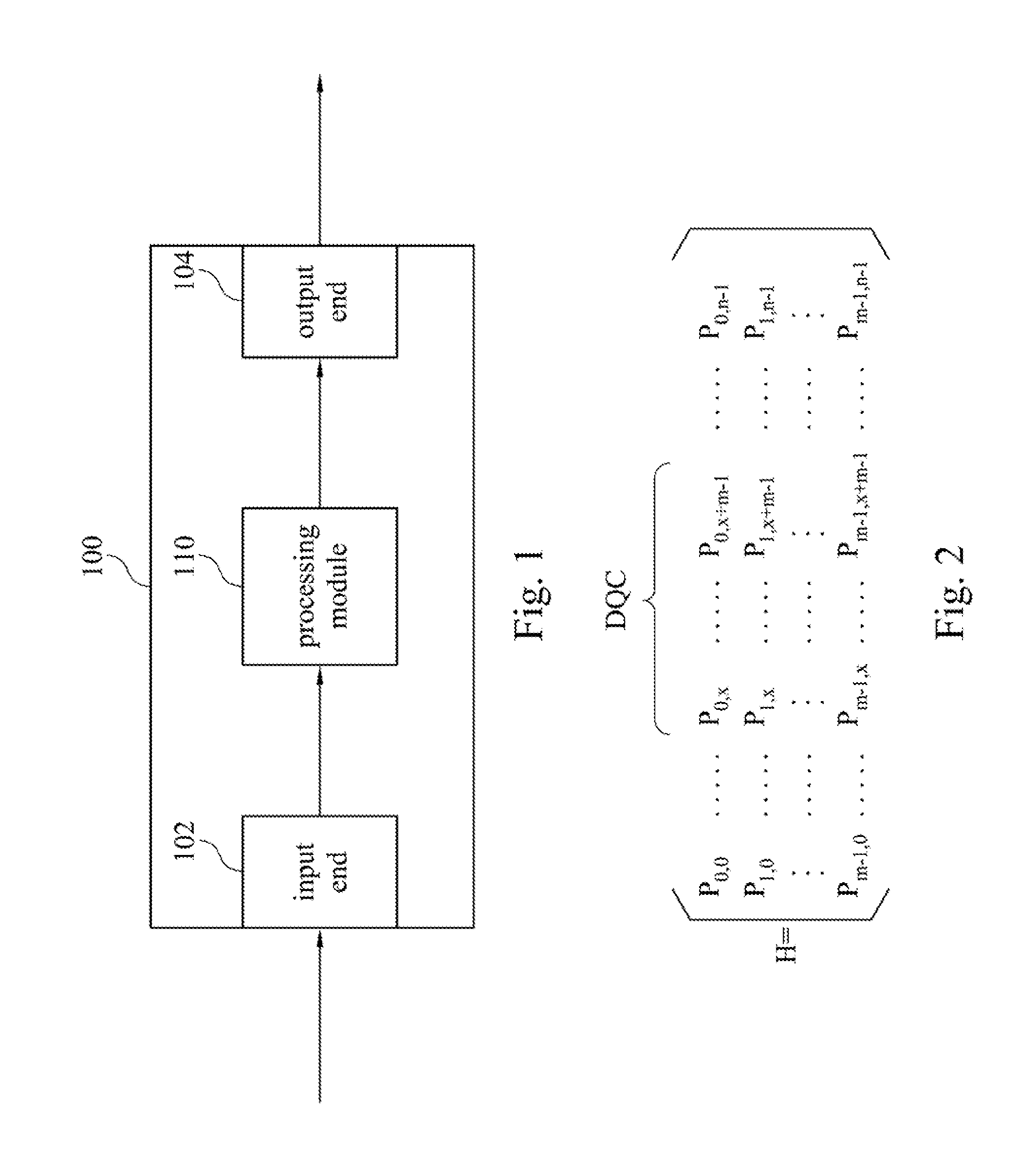

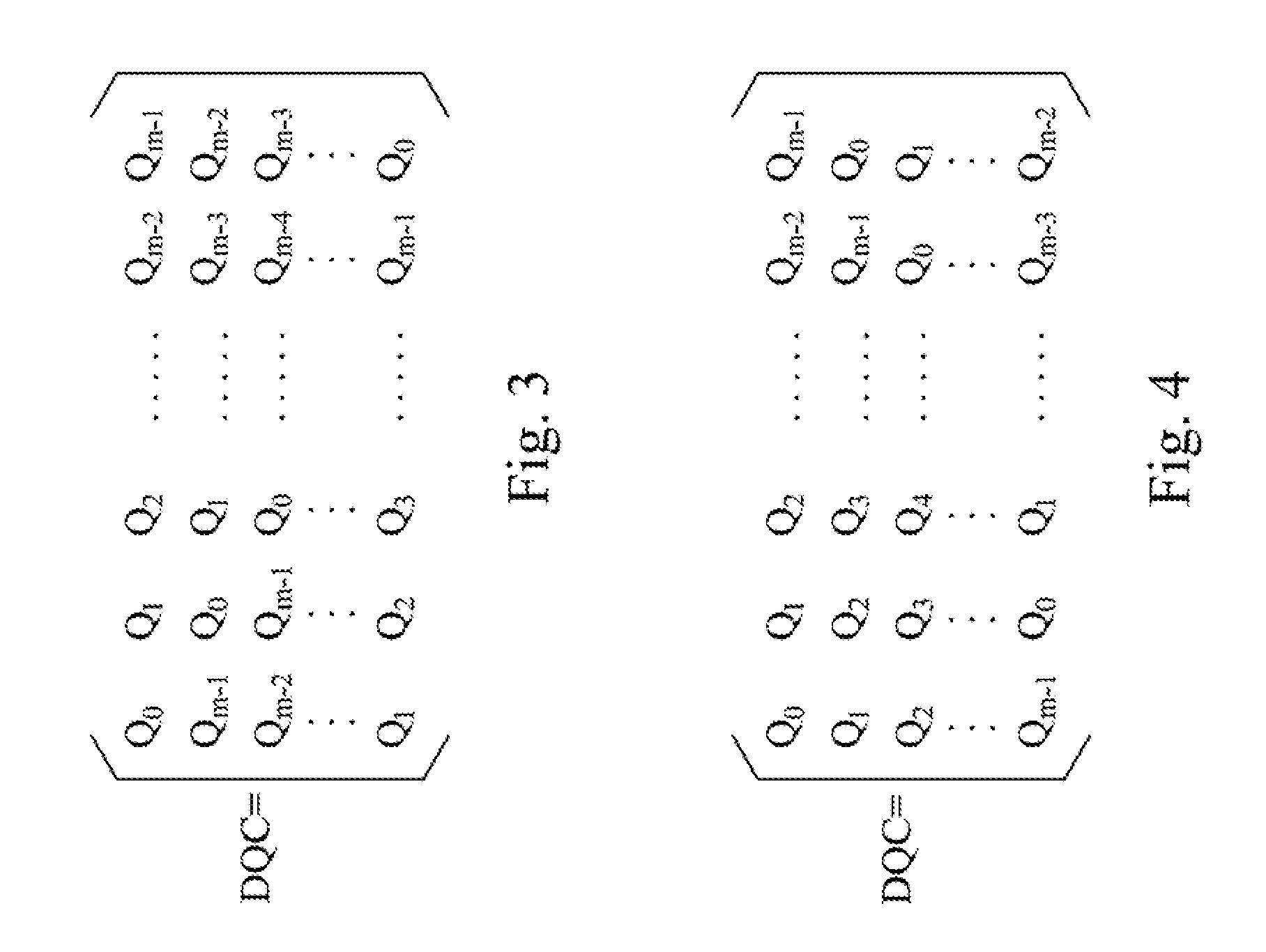

A double quasi-cyclic low-density parity-check (DQC-LDPC) code and a corresponding processor are disclosed. The parity-check matrix of a DQC-LDPC code is highly regular. The corresponding processor includes an input end, an output end, and a processing module. The parity-check matrix includes a double quasi-cyclic matrix made up of a plurality of sub-matrices arranged in an array. Each sub-matrix contains a plurality of entries and is a circulant matrix, its entries cyclically shifted from one row to the next. The double quasi-cyclic matrix is in turn circulant at the block level, its sub-matrices cyclically shifted from one block row to the next. The processing module is configured to process an input signal and output an output signal corresponding to the parity-check matrix of the low-density parity-check (LDPC) code.
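
The two levels of cyclic structure can be illustrated with a sketch that builds such a matrix from the generators of its first block row alone. Its conventions are assumptions:

- within a block, each row is the previous row rotated right by one, as in the introduction's definition;
- at block level, each block row is the previous block row rotated right by one block.

The patent's actual generators are not given, so the example values and the helper names are hypothetical.

```python
def ror(v, k):
    """Rotate a list right by k positions."""
    k %= len(v)
    return v[-k:] + v[:-k] if k else list(v)


def circulant(first_row):
    """Circulant block: row i is the first row rotated right by i."""
    return [ror(first_row, i) for i in range(len(first_row))]


def dqc_matrix(gens):
    """Double quasi-cyclic matrix from the generators of its first block row.

    Block (r, c) is circulant(gens[(c - r) % nb]), so each block row is the
    previous one rotated right by one block, and every block is circulant."""
    nb, z = len(gens), len(gens[0])
    H = []
    for r in range(nb):
        blocks = [circulant(gens[(c - r) % nb]) for c in range(nb)]
        for i in range(z):
            H.append([bit for blk in blocks for bit in blk[i]])
    return H


gens = [[1, 0, 0, 0], [0, 1, 1, 0], [0, 0, 0, 0]]   # hypothetical generators
H = dqc_matrix(gens)                                # 12 x 12
```

In this example, 3 generators of 4 bits (12 bits) determine all 144 entries of H. That compactness is the kind of regularity a hardware processor can exploit.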

Owner:NATIONAL TSING HUA UNIVERSITY

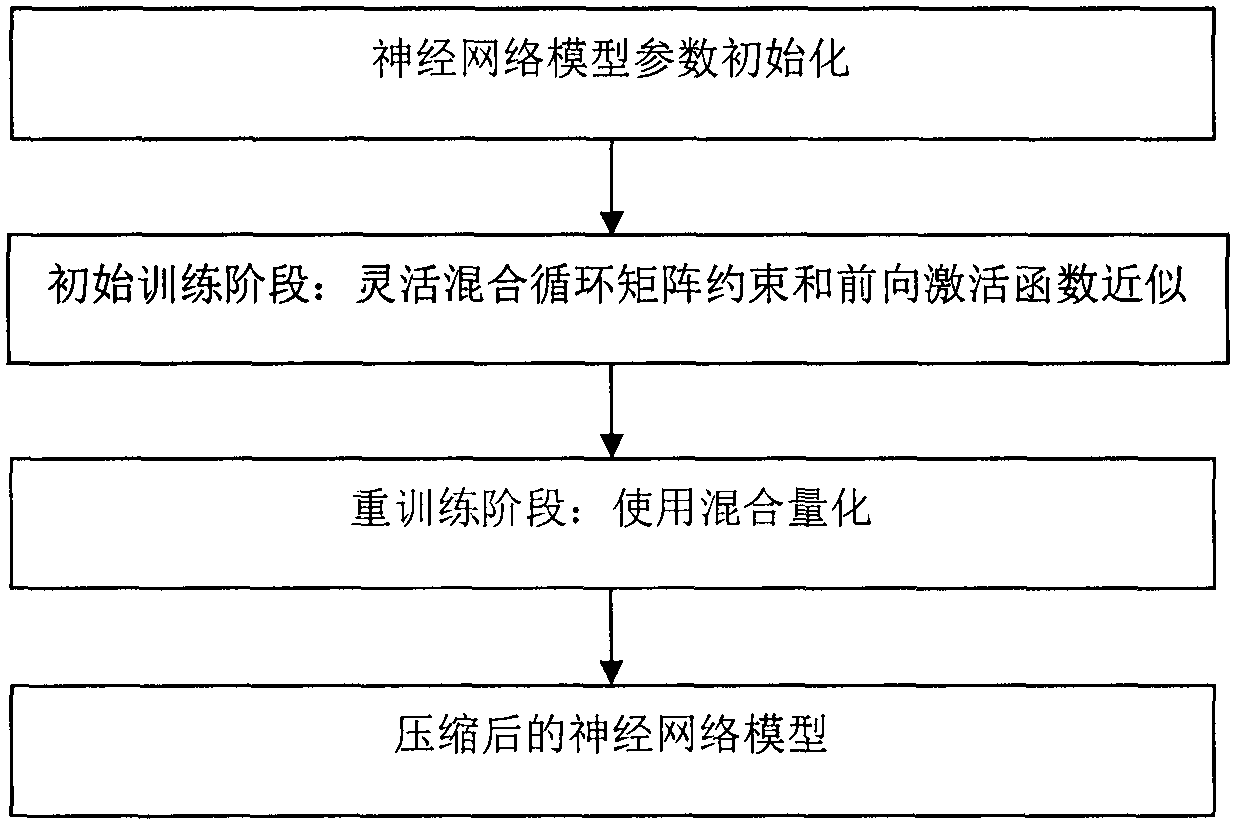

Multi-mechanism mixed recurrent neural network model compression method

The invention discloses a multi-mechanism hybrid compression method for recurrent neural network models. The method comprises four steps:

- A. Circulant matrix restriction: some of the parameter matrices in the recurrent neural network are restricted to circulant matrices, and the backward gradient propagation algorithm is updated so that the network can be trained in batches with circulant matrices.
- B. Forward activation function approximation: nonlinear activation functions are replaced with hardware-friendly linear functions during the forward pass, while the backward gradient update is left unchanged.
- C. Hybrid quantization: different quantization schemes are applied to different parameters according to how tolerant each parameter of the recurrent neural network is to error.
- D. Two-stage training: model training is divided into an initial training phase and a retraining phase.

Each phase emphasizes a different compression method, which avoids interference between the methods and minimizes the accuracy loss they introduce. By combining several compression mechanisms, the method can greatly reduce the number of model parameters. It suits memory-constrained, low-latency embedded systems that need recurrent neural networks, and it is innovative with good application prospects.
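
The circulant restriction in step A can be sketched as a single weight block. An n-by-n weight matrix is replaced by a circulant generated by one length-n vector w. The forward pass becomes an FFT-based circular convolution, and the backward pass needs gradients with respect to w rather than to a full matrix.

This is a generic illustration with hypothetical function names. It does not reproduce the patent's batch training procedure, activation approximation, or quantization.

```python
import numpy as np


def circ_forward(w, x):
    """y = C(w) @ x with C(w)[i, j] = w[(i - j) % n]; n parameters instead of n*n."""
    return np.real(np.fft.ifft(np.fft.fft(w) * np.fft.fft(x)))


def circ_backward(w, x, grad_y):
    """Gradients of a scalar loss L given grad_y = dL/dy (real inputs assumed).

    dL/dw[k] = sum_i grad_y[i] * x[(i - k) % n]   (circular cross-correlation)
    dL/dx[j] = sum_i grad_y[i] * w[(i - j) % n]   (i.e. C(w).T @ grad_y)
    """
    gy = np.fft.fft(grad_y)
    grad_w = np.real(np.fft.ifft(gy * np.conj(np.fft.fft(x))))
    grad_x = np.real(np.fft.ifft(gy * np.conj(np.fft.fft(w))))
    return grad_w, grad_x
```

Only the n entries of w are trained, and the n-by-n matrix is never formed. For a batch, the same calls apply along the last axis, with grad_w summed over the batch dimension.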

Owner:南京风兴科技有限公司 (Nanjing Fengxing Technology Co., Ltd.)

© 2025 PatSnap. All rights reserved.