629 results about how to achieve "The recognition result is accurate" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

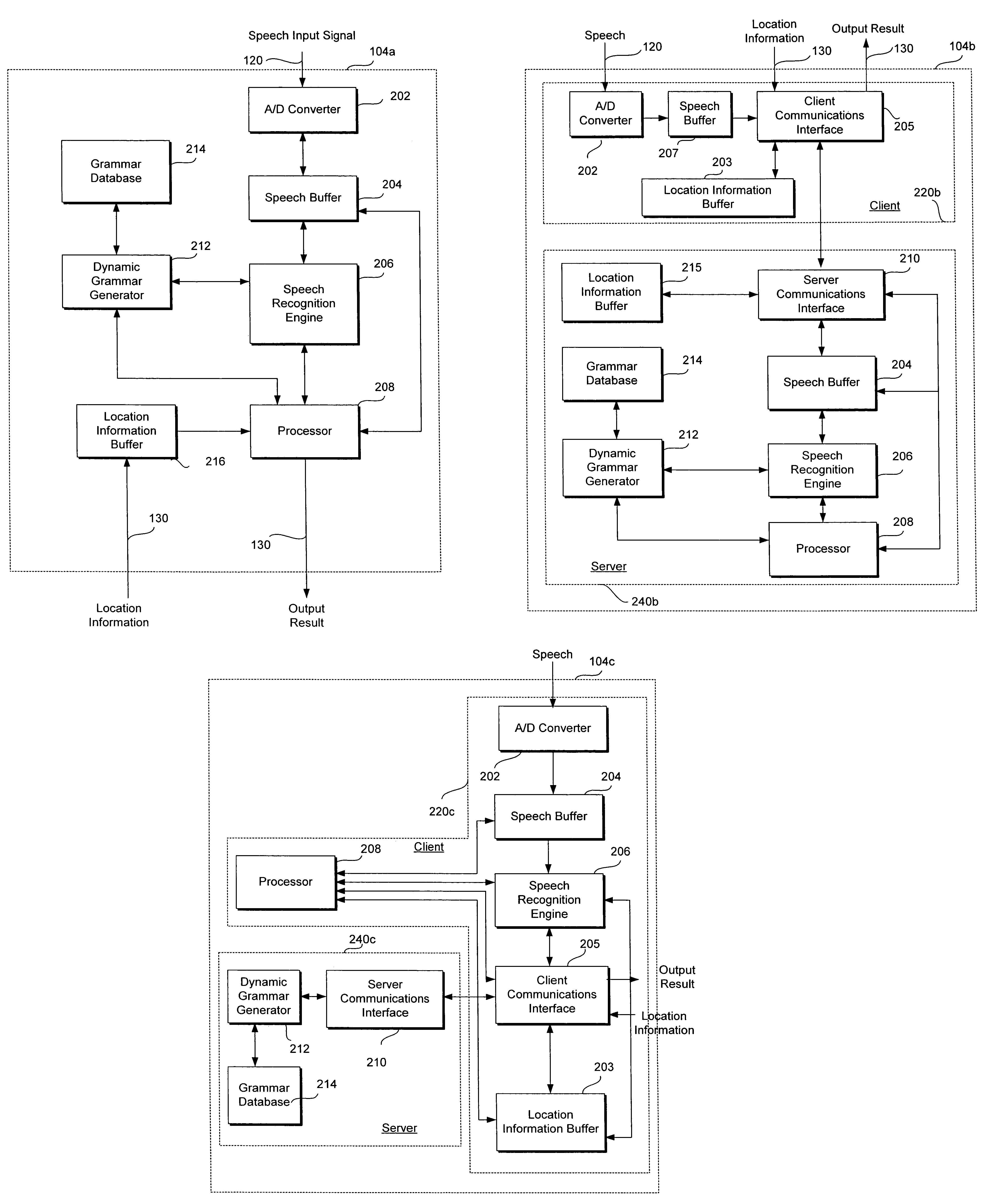

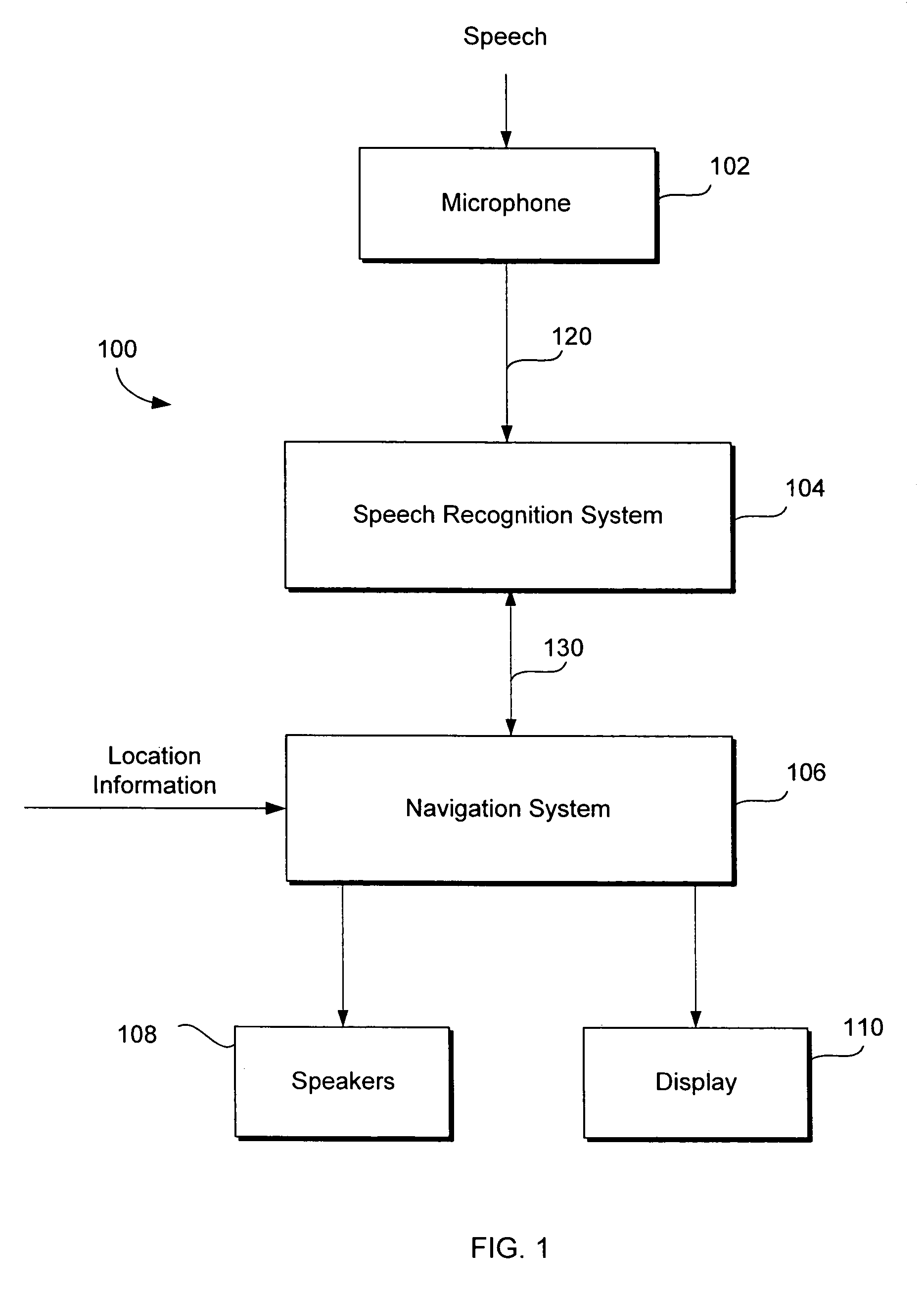

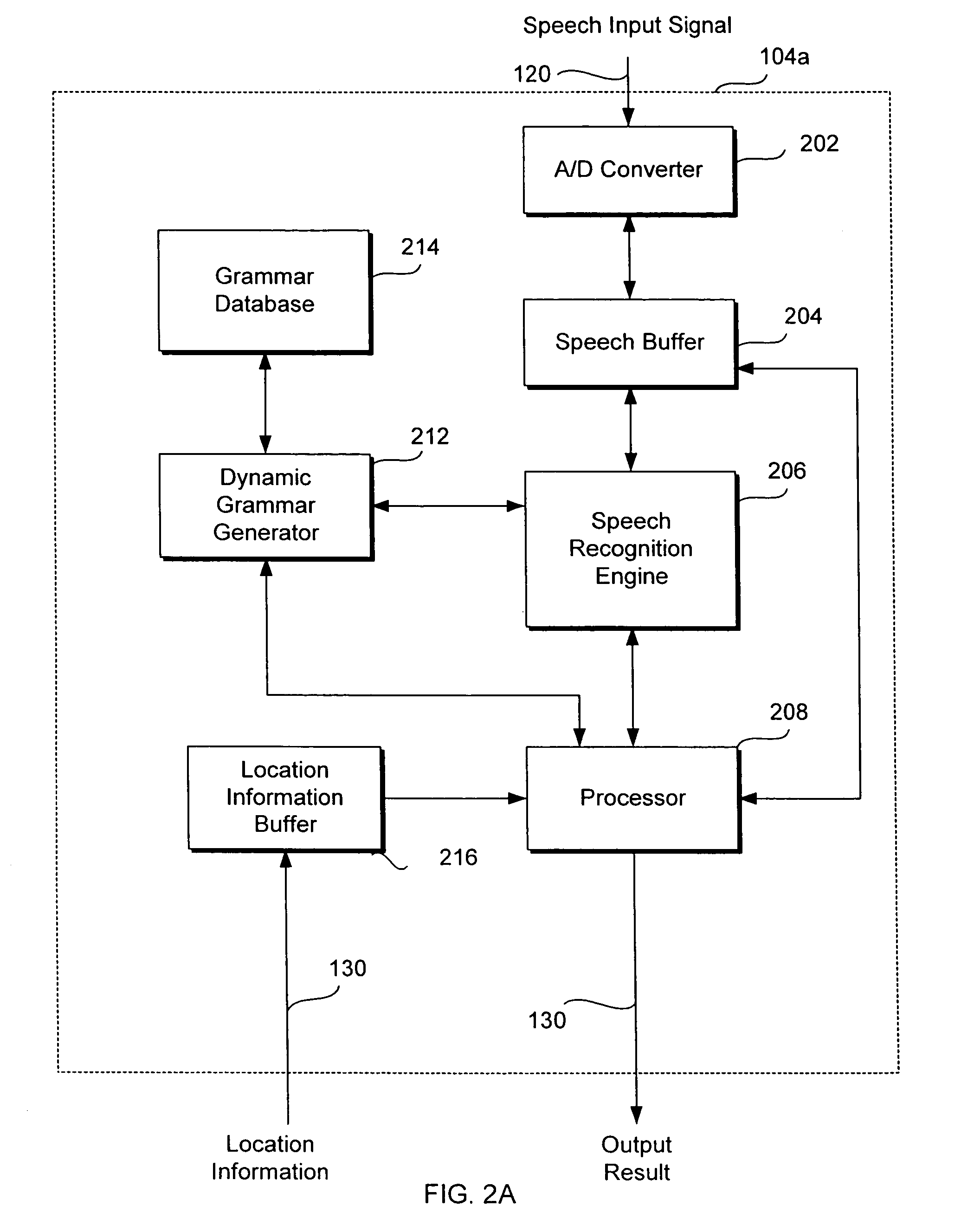

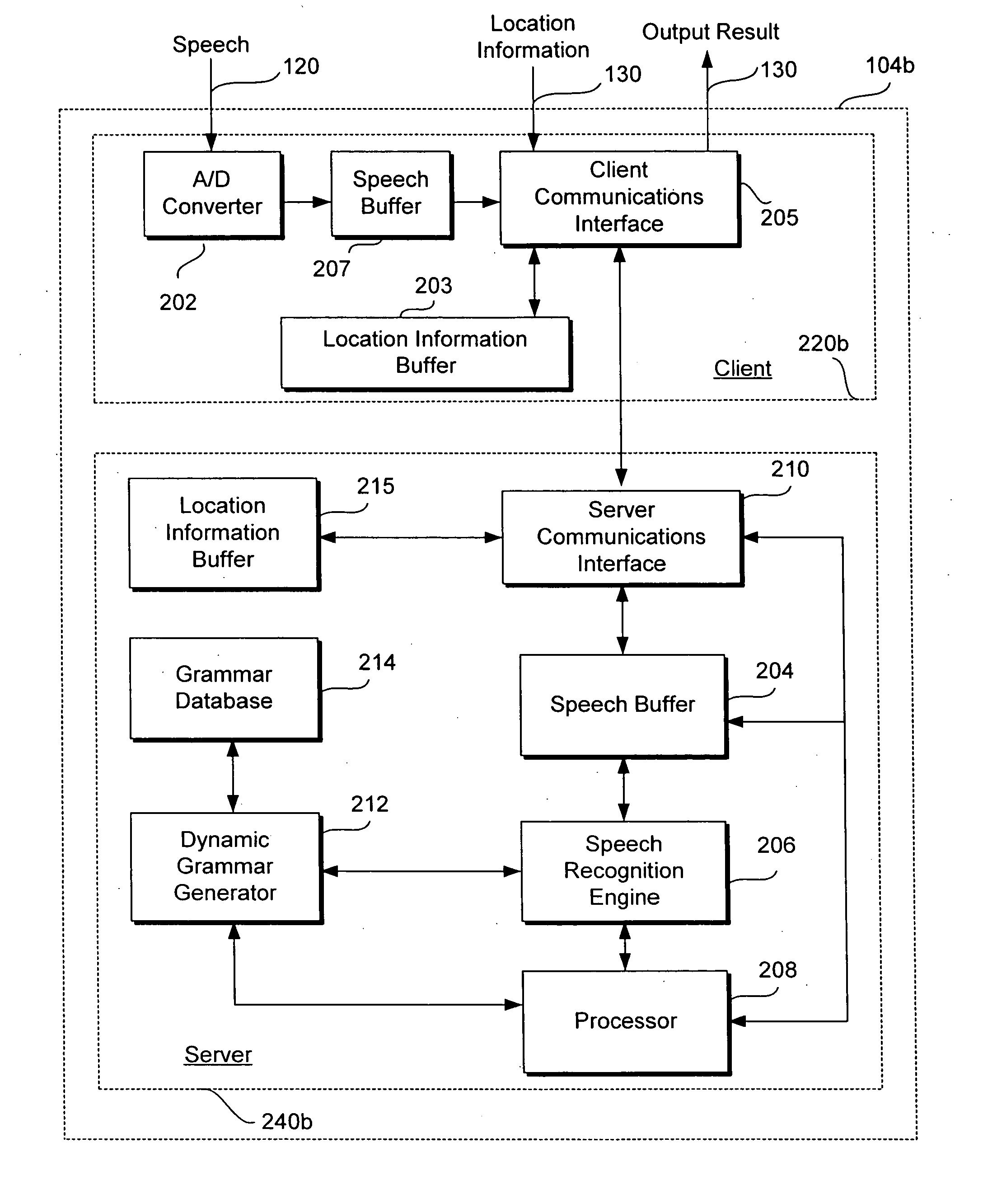

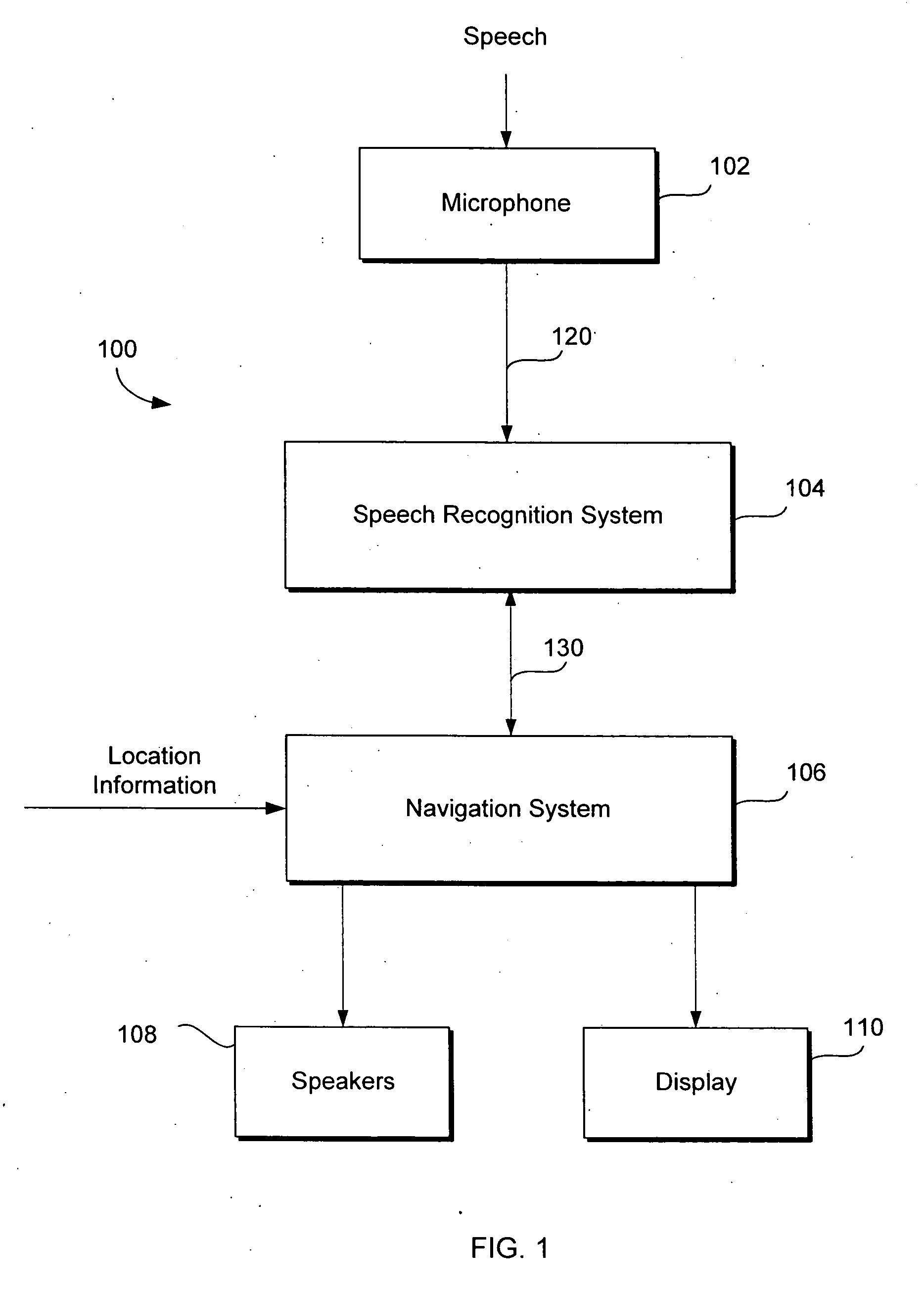

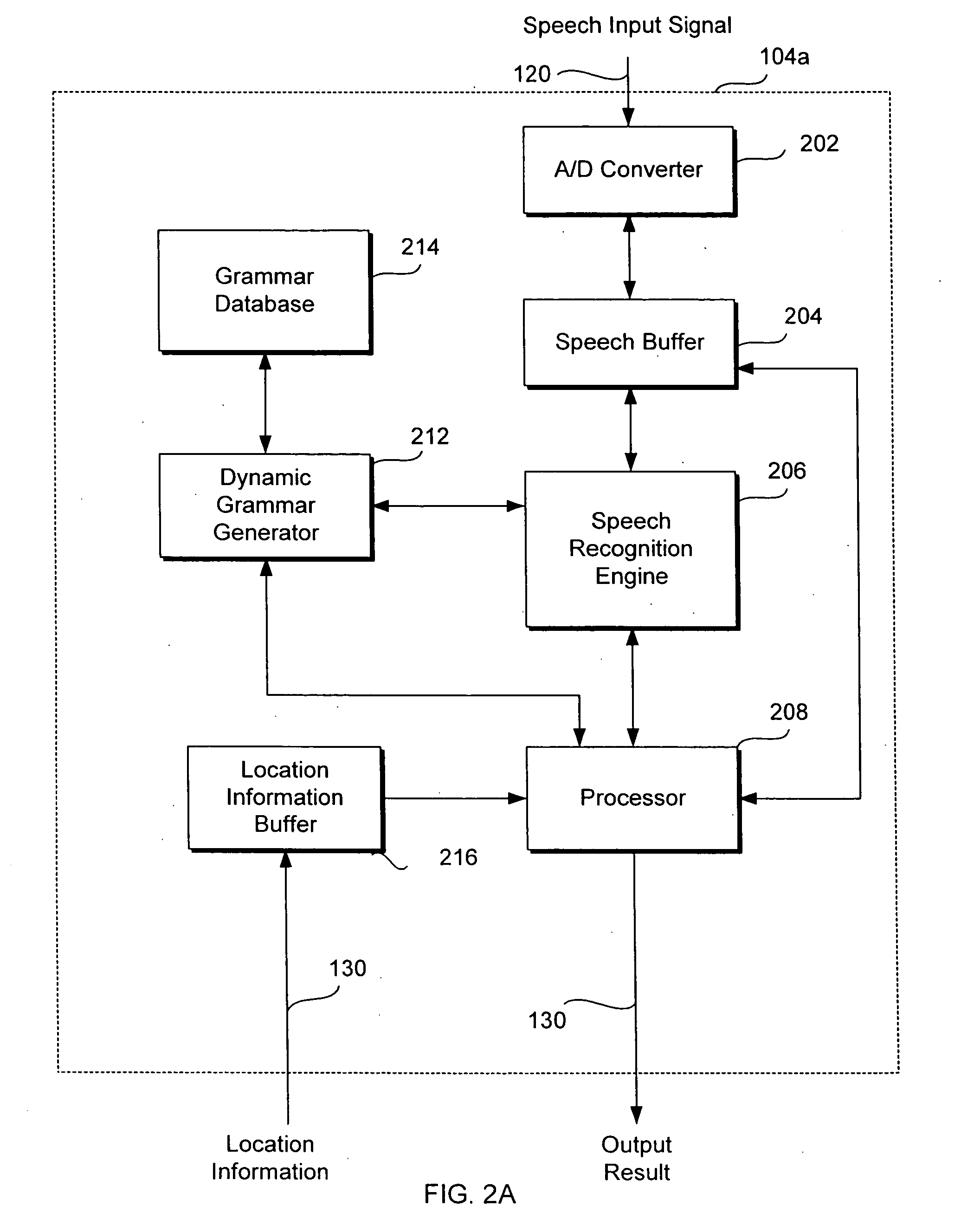

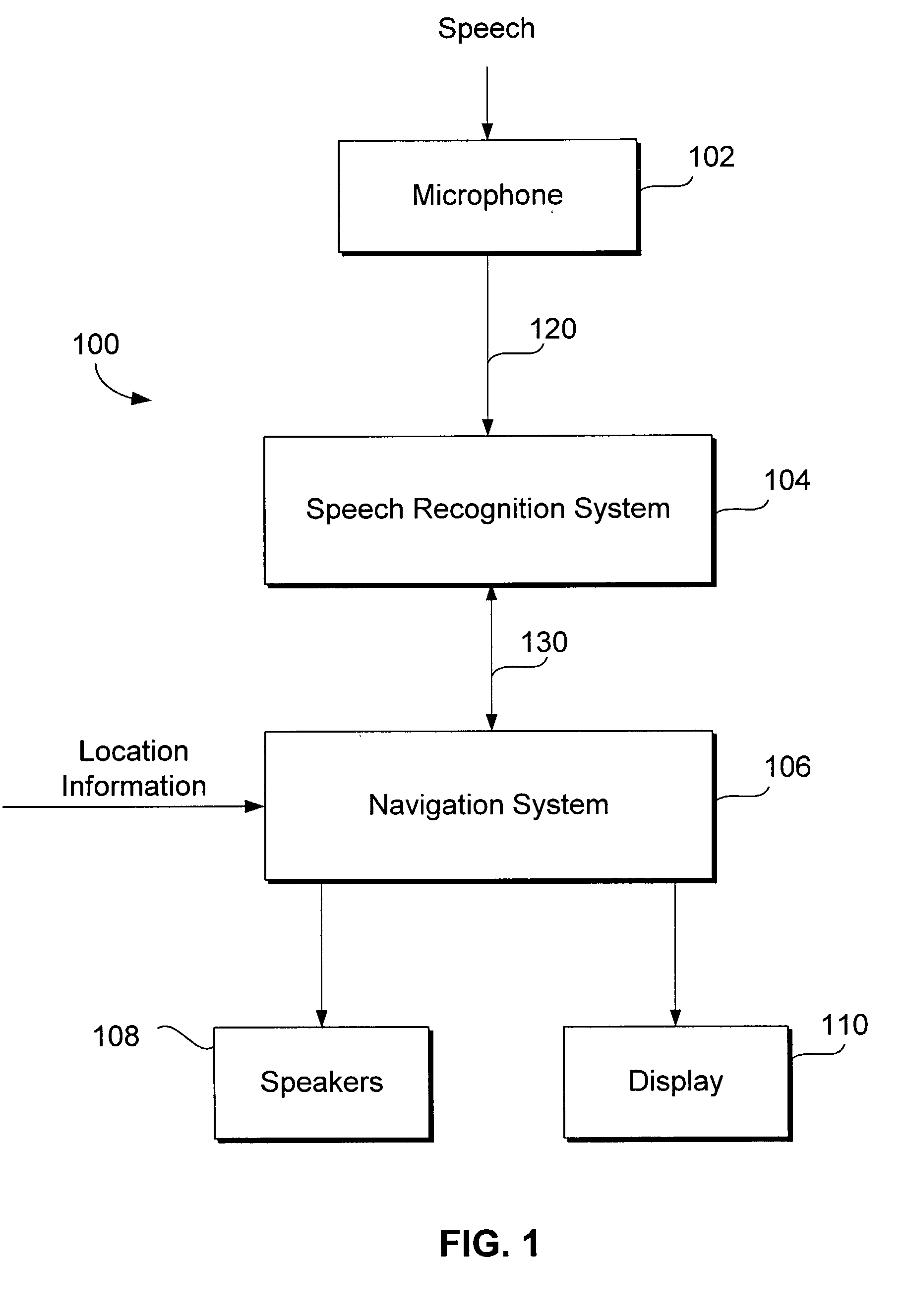

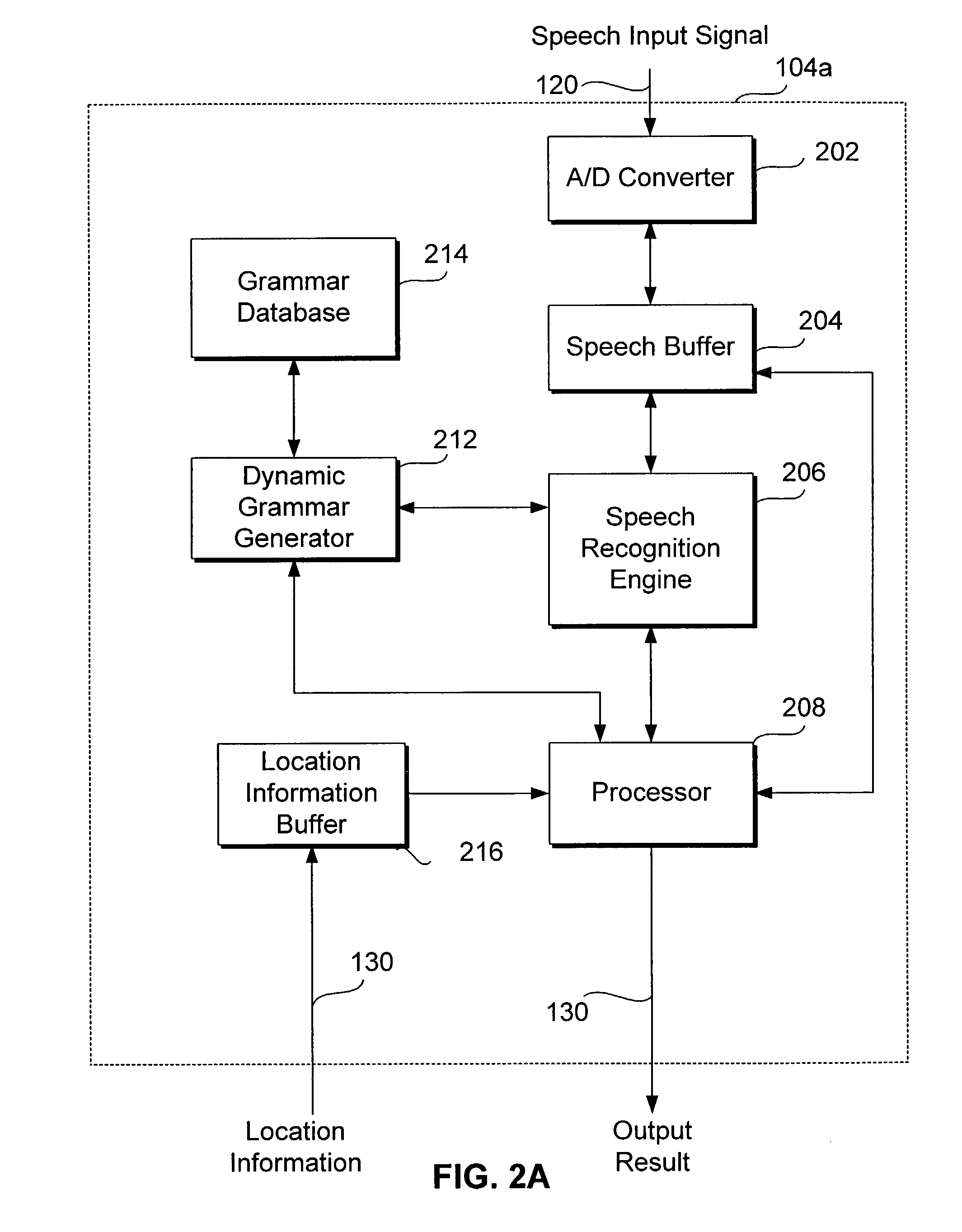

Method and system for speech recognition using grammar weighted based upon location information

Inactive | US7328155B2 | Overcome inaccurate recognition | The recognition result is accurate | Speech recognition | Speech identification | Navigation system

A speech recognition method and system for use in a vehicle navigation system utilize grammar weighted based upon geographical information regarding the locations corresponding to the tokens in the grammars and / or the location of the vehicle for which the vehicle navigation system is used, in order to enhance the performance of speech recognition. The geographical information includes the distances between the vehicle location and the locations corresponding to the tokens, as well as the size, population, and popularity of the locations corresponding to the tokens.

Owner:TOYOTA INFOTECHNOLOGY CENT CO LTD
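
As a rough illustration of location-weighted grammars, the sketch below scores each place-name token by its distance from the vehicle and by its population. The scoring formula, the `scale_km` parameter, and the sample tokens are assumptions made for this example; the abstract does not give the actual weighting function.

```python
import math

def haversine_km(a, b):
    """Great-circle distance in km between two (lat, lon) pairs given in degrees."""
    lat1, lon1, lat2, lon2 = map(math.radians, (a[0], a[1], b[0], b[1]))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))

def grammar_weights(vehicle, tokens, scale_km=50.0):
    """Return normalized weights; nearer and more populous places score higher.

    tokens maps a place name to ((lat, lon), population).
    The formula is a hypothetical stand-in, not the patented one.
    """
    raw = {}
    for name, (pos, population) in tokens.items():
        distance = haversine_km(vehicle, pos)
        raw[name] = math.log10(population + 10) / (1.0 + distance / scale_km)
    total = sum(raw.values())
    return {name: w / total for name, w in raw.items()}

# Two hypothetical, equally sized towns with similar-sounding names.
tokens = {
    "Springfield A": ((37.0, -122.0), 30_000),
    "Springfield B": ((40.0, -75.0), 30_000),
}
weights = grammar_weights((37.1, -122.1), tokens)
```

A recognizer could multiply its acoustic score for each token by these weights, so that the nearby town wins when the two names sound alike.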

Method and system for speech recognition using grammar weighted based upon location information

Inactive | US20050080632A1 | Overcome inaccurate recognition | The recognition result is accurate | Speech recognition | Navigation system | Speech sound

A speech recognition method and system for use in a vehicle navigation system utilize grammar weighted based upon geographical information regarding the locations corresponding to the tokens in the grammars and / or the location of the vehicle for which the vehicle navigation system is used, in order to enhance the performance of speech recognition. The geographical information includes the distances between the vehicle location and the locations corresponding to the tokens, as well as the size, population, and popularity of the locations corresponding to the tokens.

Owner:TOYOTA INFOTECHNOLOGY CENT CO LTD

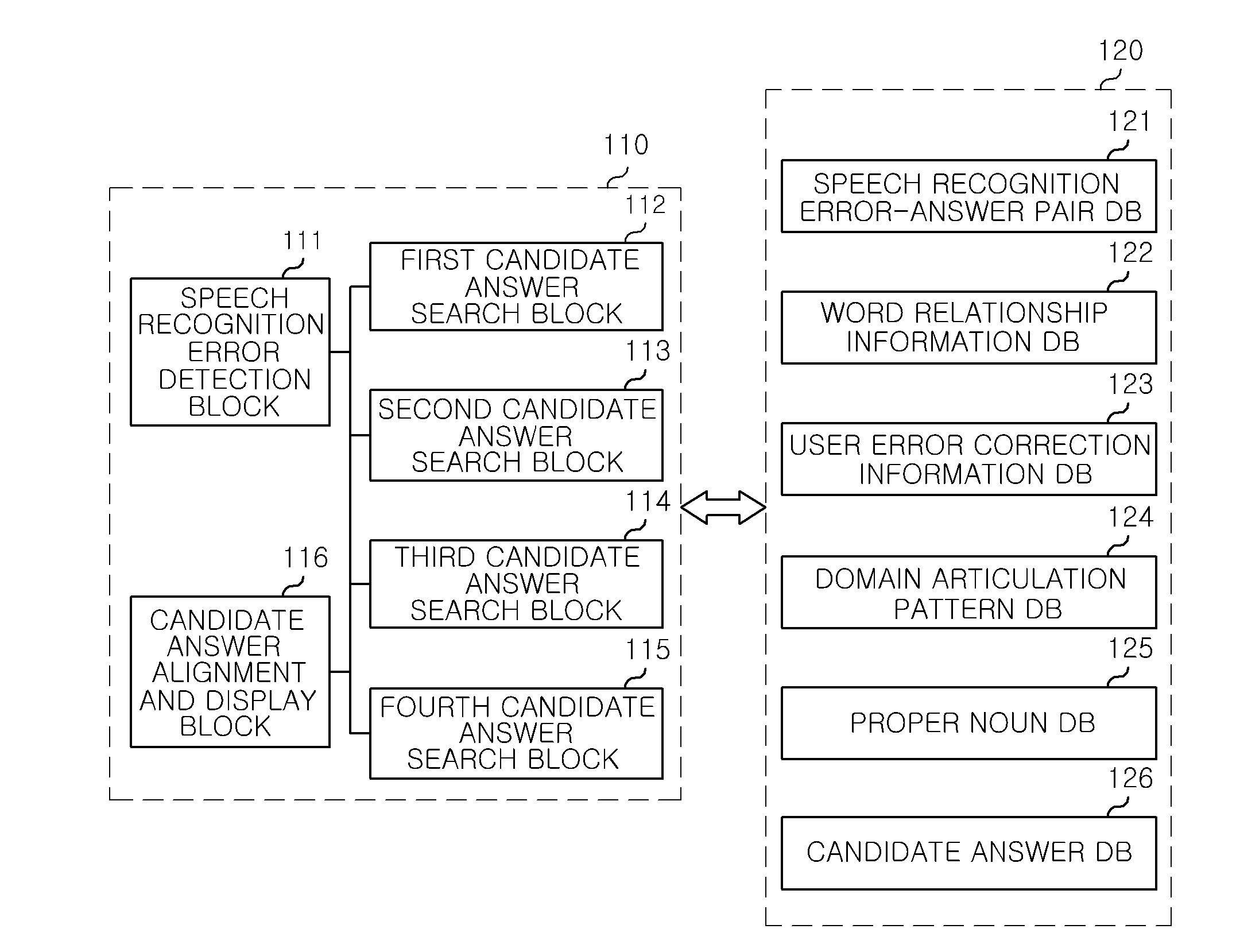

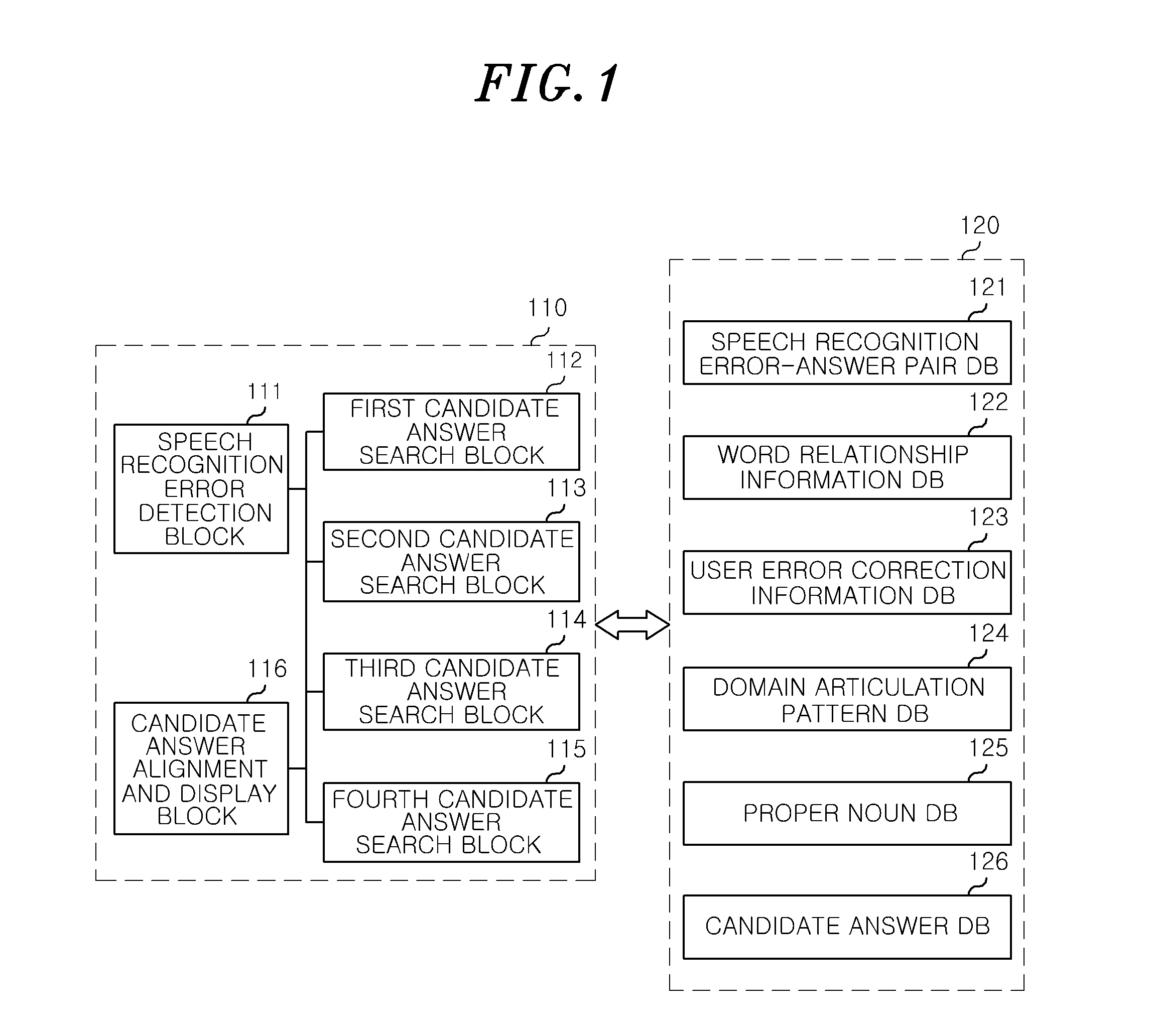

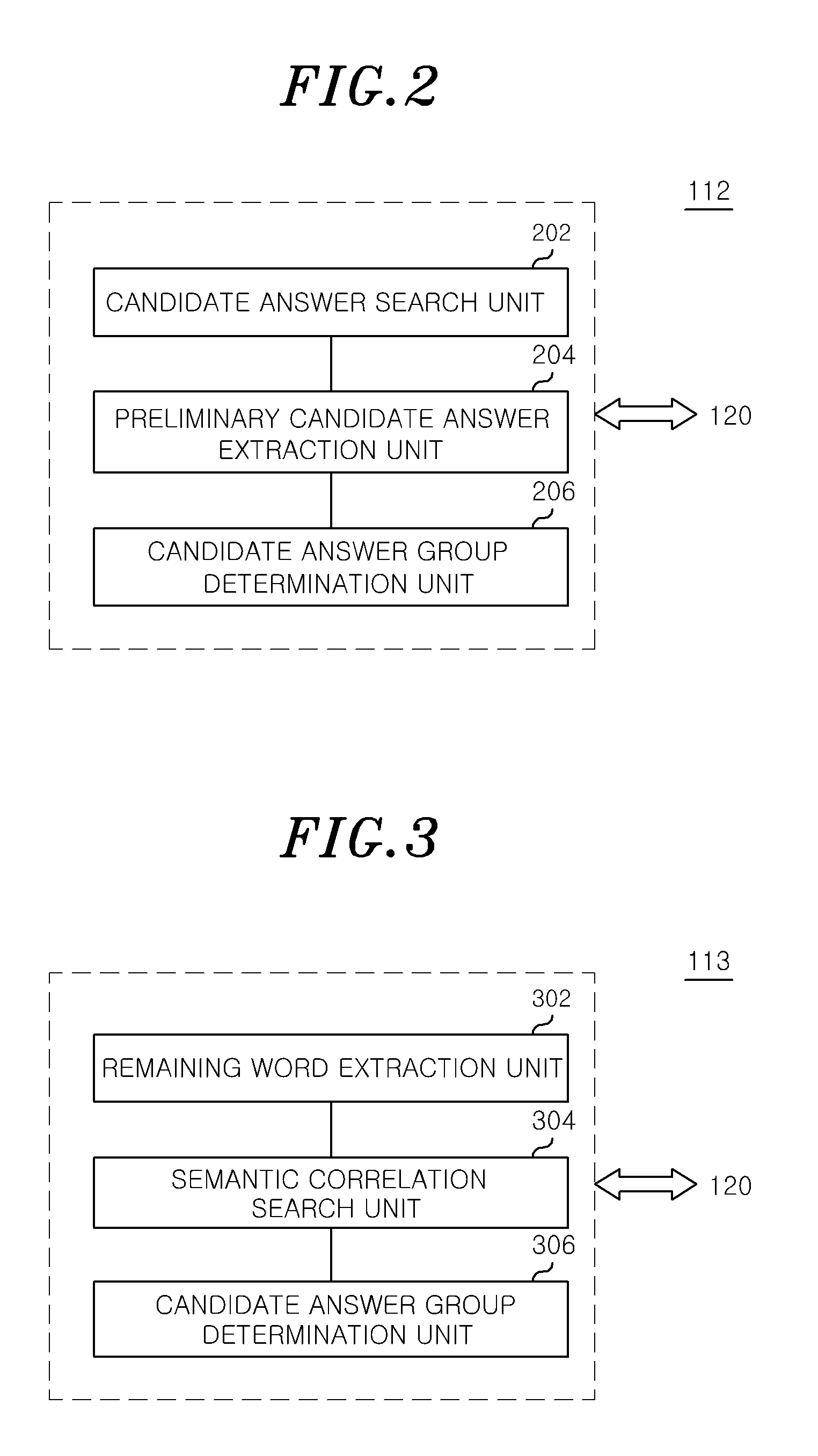

Method and apparatus for correcting error in speech recognition system

Inactive | US20140195226A1 | Easy to handle | Improve user convenience | Metal sawing devices | Speech recognition | Speech identification | A domain

A method of correcting errors in a speech recognition system includes a process of searching a speech recognition error-answer pair DB based on a sound model for a first candidate answer group for a speech recognition error, a process of searching a word relationship information DB for a second candidate answer group for the speech recognition error, a process of searching a user error correction information DB for a third candidate answer group for the speech recognition error, a process of searching a domain articulation pattern DB and a proper noun DB for a fourth candidate answer group for the speech recognition error, and a process of aligning candidate answers within each of the retrieved candidate answer groups and displaying the aligned candidate answers.

Owner:ELECTRONICS & TELECOMM RES INST

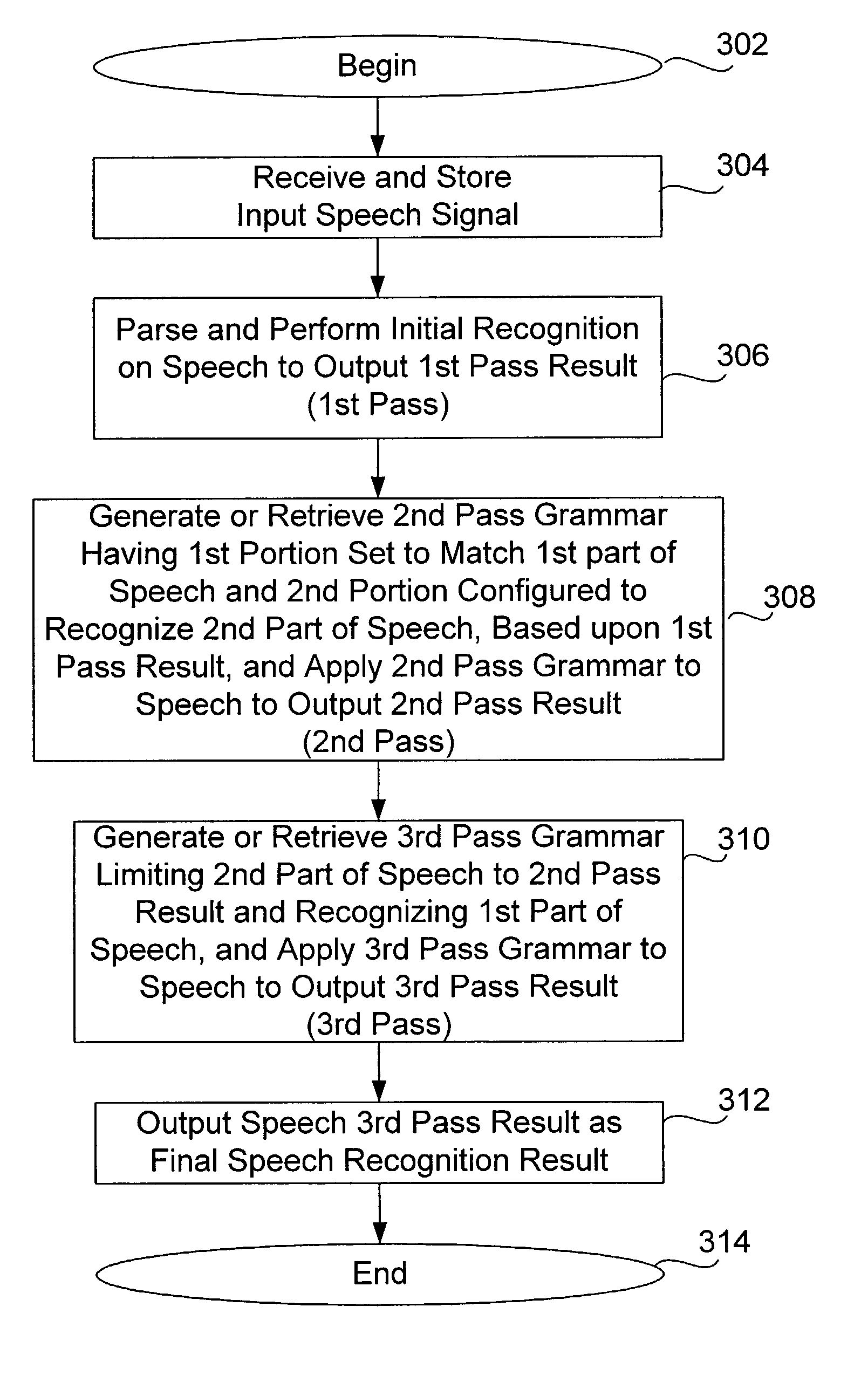

Multiple pass speech recognition method and system

Inactive | US7184957B2 | Overcome inaccurate recognition | The recognition result is accurate | Speech recognition | Speech identification | Multiple pass

A multiple pass speech recognition method includes a first pass and a second pass. The first pass recognizes an input speech signal to generate a first pass result. The second pass generates a first grammar having a portion set to match a first part of the input speech signal, based upon the context of the first pass result, and generates a second pass result. The method may further include a third pass grammar limiting the second part of the input speech signal to the second pass result. The third pass grammar includes a model corresponding to the first part of the input speech signal and varying within the second pass result. The third pass compares the first part of the input speech signal to the model while limiting the second part of the input speech signal to the second pass result.

Owner:TOYOTA INFOTECHNOLOGY CENT CO LTD

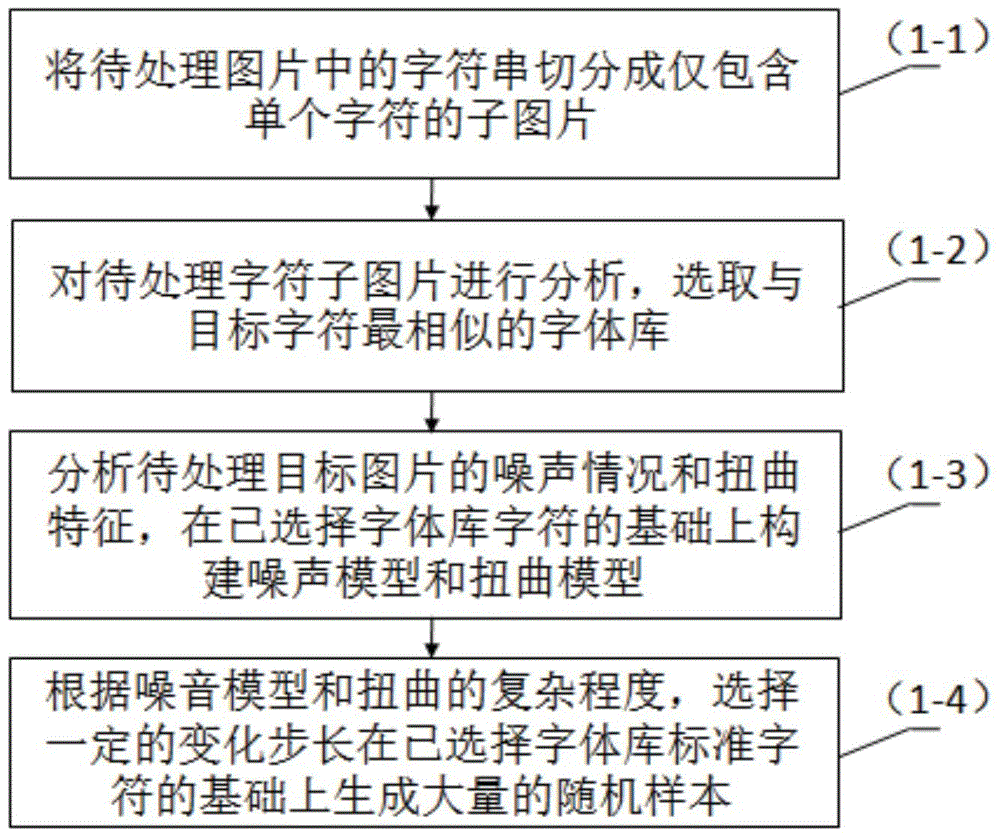

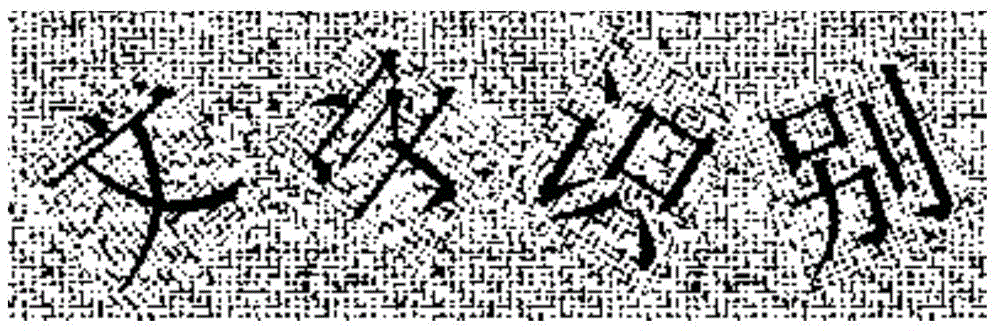

Complex character recognition method based on deep learning

Active | CN104966097A | The recognition result is accurate | Meet the need for identification | Neural learning methods | Character recognition | Noise reduction | Optical character recognition

The invention relates to the field of image recognition, and especially relates to a complex character recognition method based on deep learning. Through the analysis of character complexity, a training sample, which contains a to-be-recognized image noise model and a distortion characteristic model, generated by a random sample generator is employed for the training of a deep neural network. The training sample comprises complex noise and distortion, and can meet the demands of the recognition of various types of complex characters. A few of manually annotated first training sample sets and a large amount of randomly generated second training sample sets are mixed and then inputted to the deep neural network, thereby solving a problem that a large number of manually annotated training samples are needed for character recognition through the deep neural network. Moreover, the most advanced deep neural network is employed for automatic learning under the condition that the noise and distortion of a to-be-recognized image are retained, thereby avoiding information loss caused by noise reduction in a conventional OCR method, and improving the recognition accuracy.

Owner:成都数联铭品科技有限公司

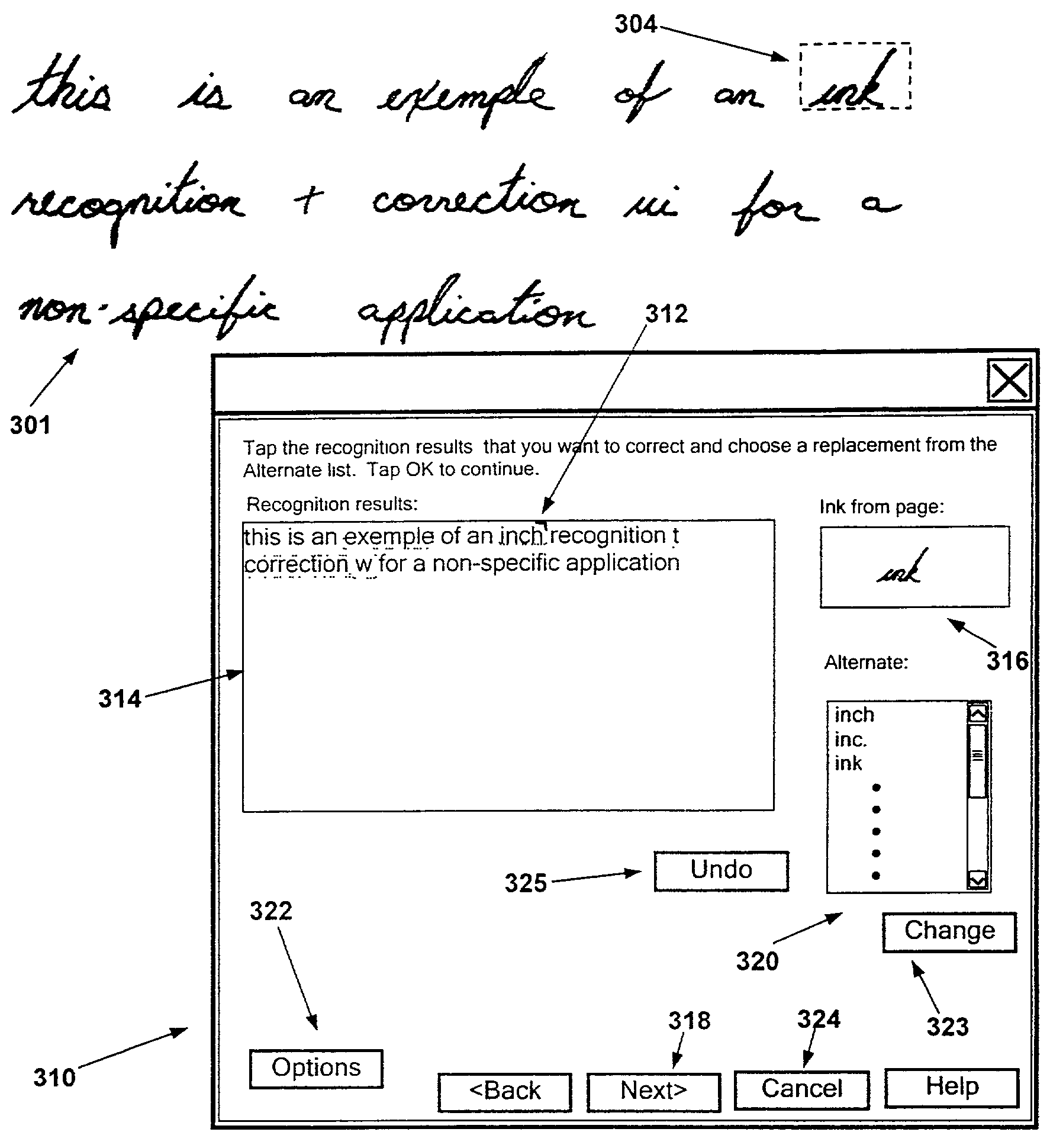

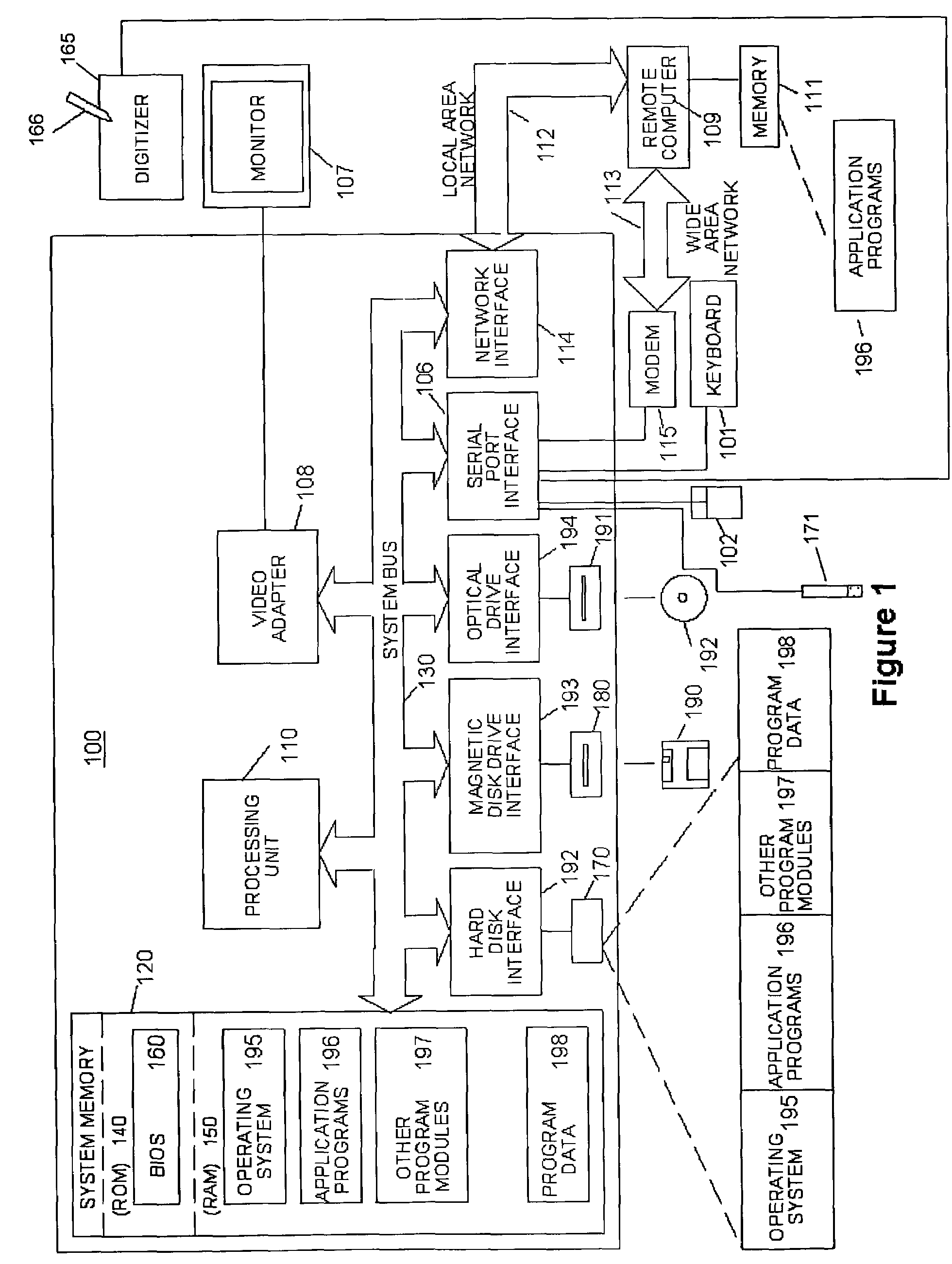

Correcting recognition results associated with user input

Inactive | US7137076B2 | Improve recognition accuracy | The recognition result is accurate | Character and pattern recognition | Cathode-ray tube indicators | Pattern recognition | User input

Recognition results associated with handwritten electronic ink, voice recognition results or other forms of user input can be corrected by designating at least a portion of a visual display. Recognition results corresponding to the designated portion, and optionally, additional recognition results to provide context, are displayed. Portions of the displayed recognition results are then selected, and alternate recognition results made available. Alternate recognition results can be chosen, and the selected recognition results modified based upon the chosen alternate. The invention may include different user interfaces.

Owner:MICROSOFT TECH LICENSING LLC

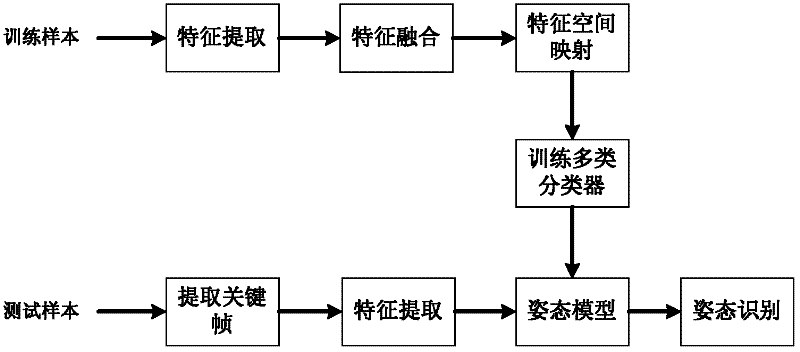

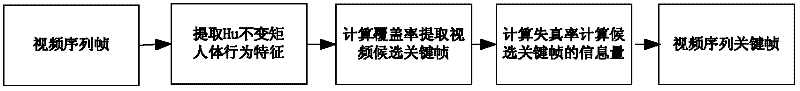

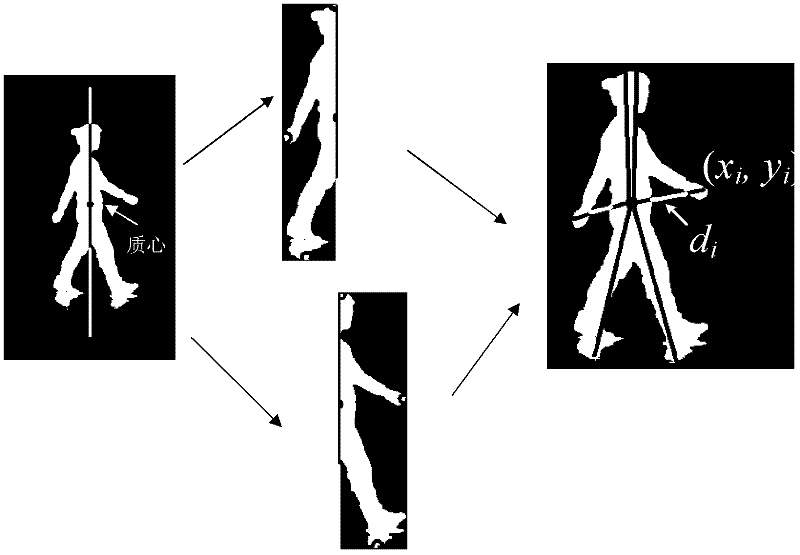

Human body posture identification method based on multi-characteristic fusion of key frame

Active | CN102682302A | Describe well | Efficient analysis | Image analysis | Character and pattern recognition | Human body | Feature vector

The invention provides a human body posture identification method based on multi-characteristic fusion of a key frame, comprising the following steps of: (1) extracting Hu invariant moment characteristics from a video image; calculating a covering rate of an image sequence; extracting the highest covering percentage of the covering rate as a candidate key frame; then calculating a distortion rate of the candidate key frame and extracting the minimum distortion percentage as the key frame; (2) carrying out extraction of a foreground image on the key frame to obtain the foreground image of a moving human body; (3) extracting characteristic information of the key frame, wherein the characteristic information comprises a six-planet model, a six-planet angle and eccentricity; obtaining a multi-characteristic fused image characteristic vector; and (4) utilizing a one-to-one trained classification model, wherein the classification model is a posture classifier based on an SVM (support vector machine); and identifying a posture. The human body posture identification method has the advantages of simplified calculation, good stability and good robustness.

Owner:ZHEJIANG UNIV OF TECH

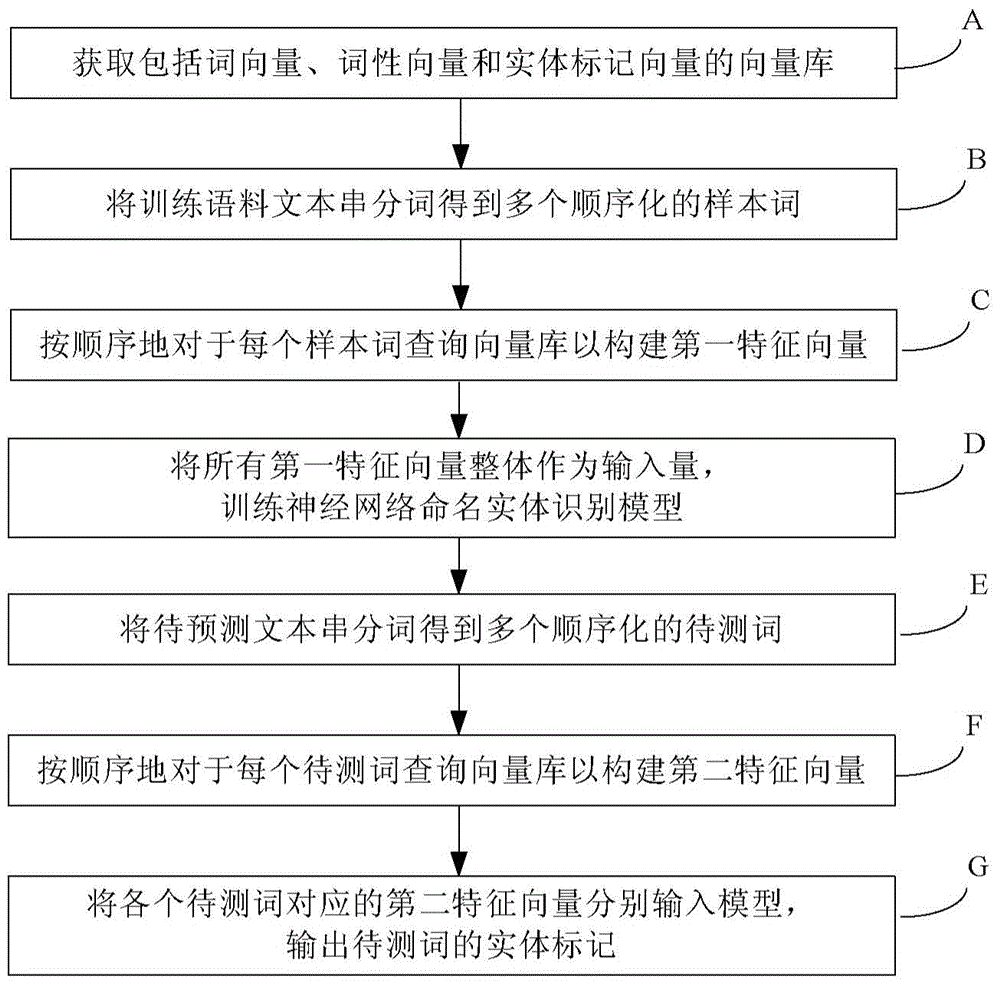

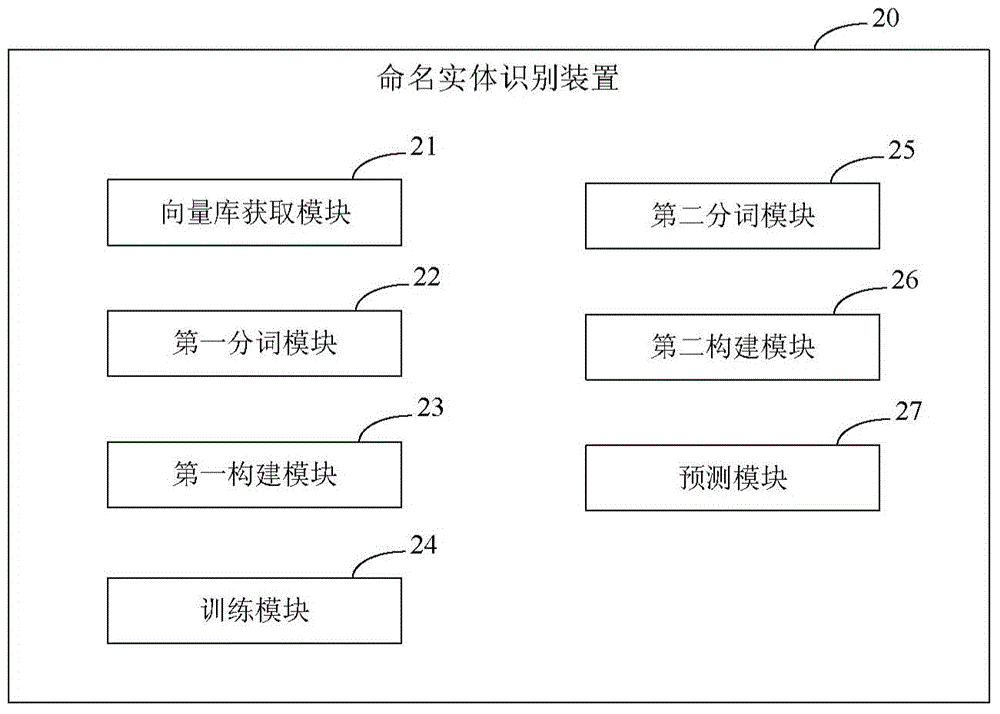

Named entity identification method and device

Active | CN104899304A | Comprehensive information considered | The recognition result is accurate | Semantic analysis | Special data processing applications | Identification device | Algorithm

The invention provides a named entity identification method and a named entity identification device capable of accurately identifying a named entity, in particular a named entity in the field of e-business. The method comprises: acquiring a vector library; carrying out word segmentation on a training corpus text string to obtain a plurality of sample words; querying the vector library for each sample word sequentially to obtain a first feature vector which comprises a word vector and a word class vector corresponding to the same word as well as an entity marking vector corresponding to the last word of the sample word; taking all the first feature vectors integrally as an input quantity, and training a named entity identification model of a neural network; carrying out word segmentation on a to-be-predicted text string to obtain a plurality of to-be-tested words; querying the vector library for each sample word sequentially to obtain a second feature vector which comprises a word vector and a word class vector corresponding to the same word as well as an entity marking vector corresponding to the last word of the sample word; respectively inputting the second feature vectors corresponding to all the to-be-tested words into the model, and outputting entity identifiers of the to-be-tested words.

Owner:BEIJING JINGDONG SHANGKE INFORMATION TECH CO LTD +1

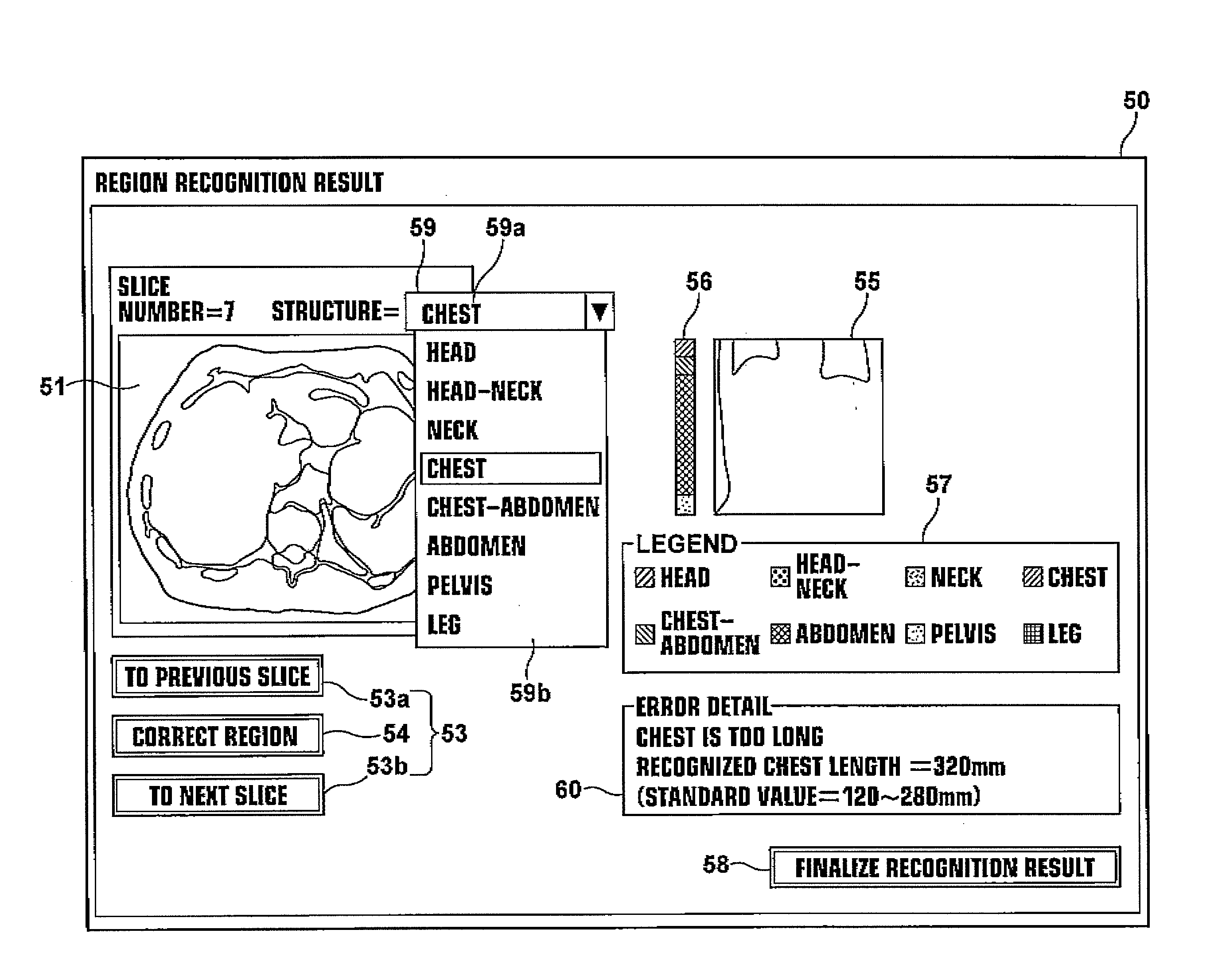

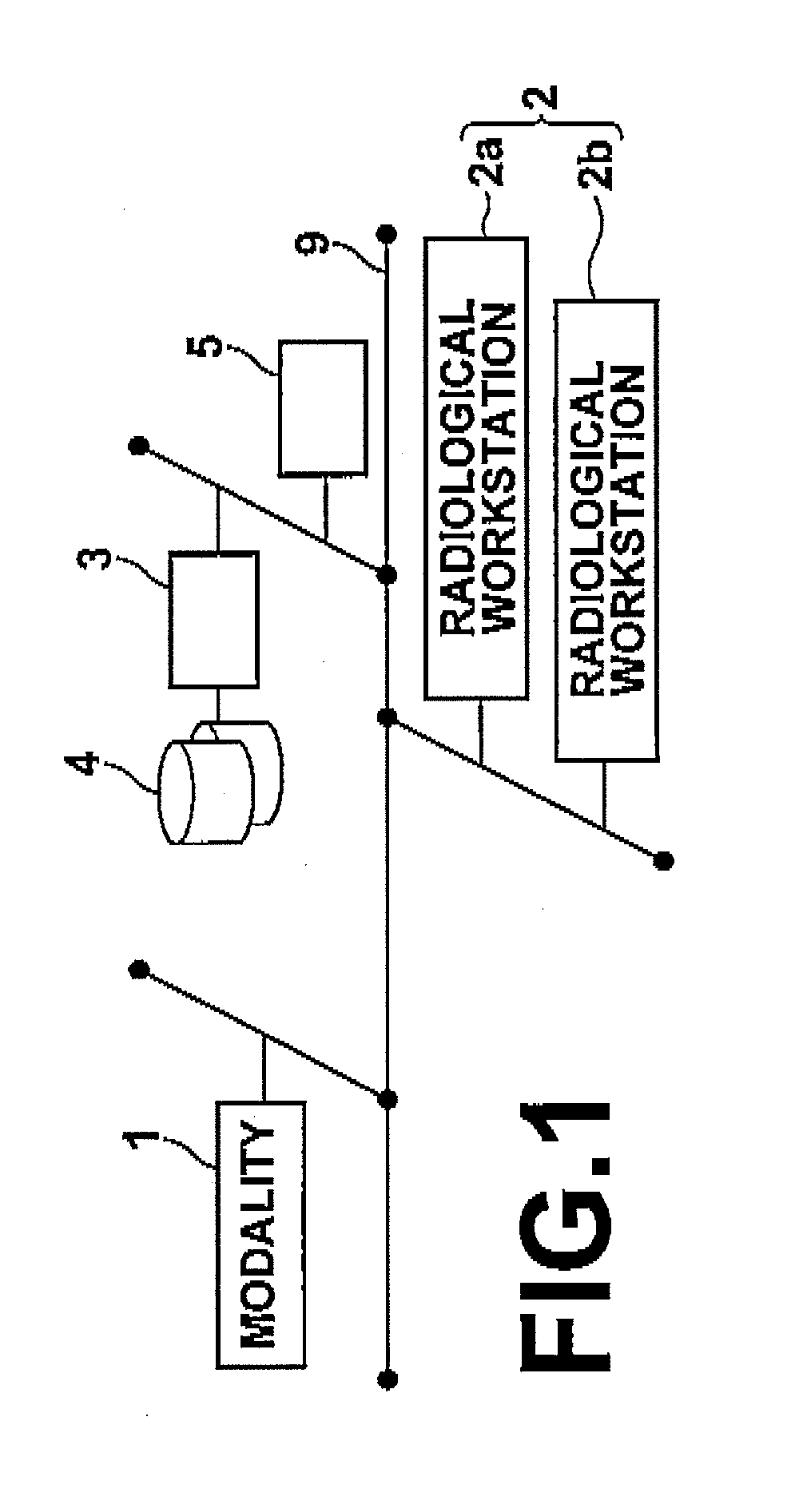

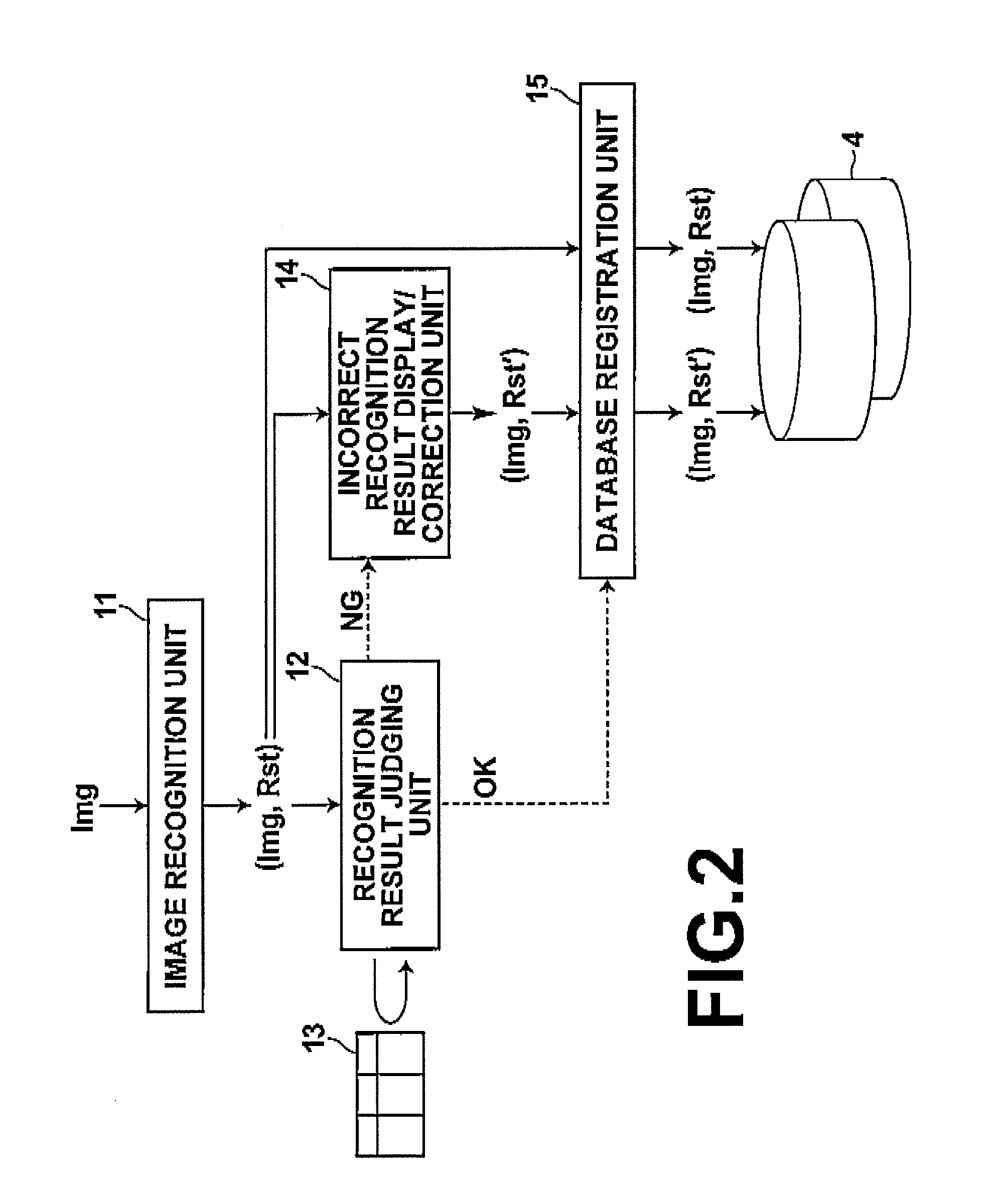

Method, apparatus, and program for judging image recognition results, and computer readable medium having the program stored therein

Active | US20080260226A1 | The recognition result is accurate | Easy to understand | Character and pattern recognition | Pattern recognition | Anatomical measurement

To obtain more accurate image recognition results while alleviating the burden on the user to check the image recognition results. An image recognition unit recognizes a predetermined structure in an image representing a subject, then a recognition result judging unit measures the predetermined structure on the image recognized by the image recognition unit to obtain a predetermined anatomical measurement value of the predetermined structure, automatically judges whether or not the anatomical measurement value falls within a predetermined standard range, and, if it is outside of the range, judges the image recognition result to be incorrect.

Owner:FUJIFILM CORP
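
The judging step described above amounts to a range check on a measured value. The following is a minimal sketch under that reading; the function name, the millimetre unit, and the example range are hypothetical and are not taken from the patent.

```python
def judge_recognition(measurement_mm, standard_range_mm):
    """Return True if the measured value lies inside the standard range.

    A False result means the recognition result is judged incorrect
    and should be flagged for the user to check.
    """
    low, high = standard_range_mm
    return low <= measurement_mm <= high

# Hypothetical standard range for some anatomical structure, in mm.
STANDARD_RANGE_MM = (20.0, 30.0)

accepted = judge_recognition(25.0, STANDARD_RANGE_MM)   # inside the range
rejected = judge_recognition(45.0, STANDARD_RANGE_MM)   # outside the range
```

Only results that fail the check need manual review, which is how such a scheme would reduce the checking burden on the user.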

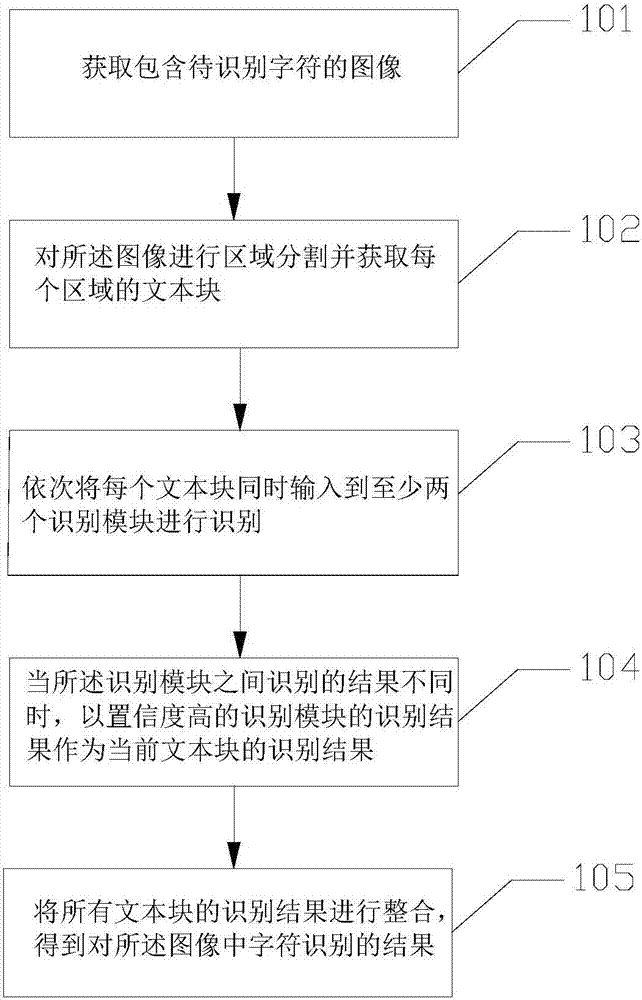

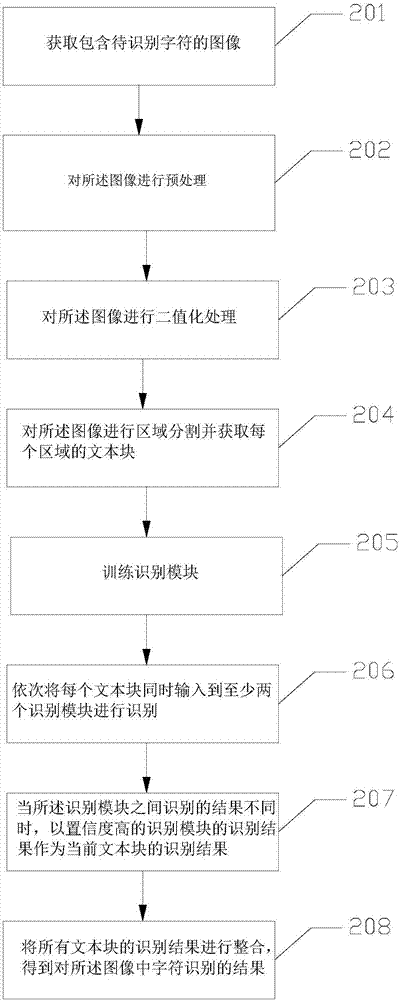

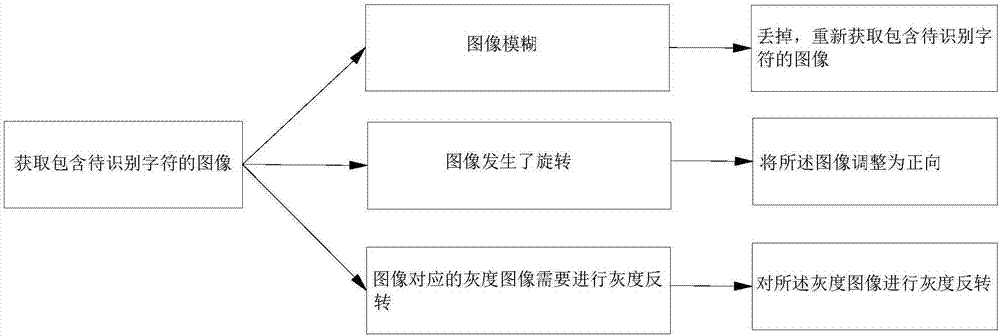

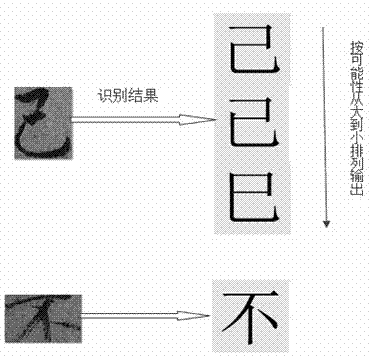

Character recognition method and character recognition device

Inactive | CN107403130A | Accurate identification | The recognition result is accurate | Character recognition | Confidence measures | Character recognition

The application provides a character recognition method and a character recognition device. The character recognition method includes: acquiring an image containing to-be-recognized characters; carrying out area segmentation on the image and acquiring a text block of each area; sequentially inputting each text block into at least two recognition modules at the same time for recognition, wherein each of the recognition modules has a respective confidence degree, and the confidence degrees of the recognition modules are different; taking a recognition result of the recognition module with the high confidence degree as a recognition result of the current text block when recognition results of the recognition modules are different; and integrating recognition results of all the text blocks to obtain a result of recognition for the characters in the image.

Owner:BEIJING YUANLI WEILAI SCI & TECH CO LTD
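
The confidence-based arbitration between recognition modules can be sketched as follows. The two recognizers here are toy stand-ins (simple string functions) and the confidence values are invented for illustration; a real system would plug in actual OCR engines.

```python
def recognize_block(block, modules):
    """Pick a result for one text block.

    modules is a list of (confidence, recognizer) pairs, where each
    recognizer maps a block to a string. If all modules agree, that
    result is used; otherwise the most confident module wins.
    """
    results = [(confidence, recognizer(block)) for confidence, recognizer in modules]
    if len({text for _, text in results}) == 1:
        return results[0][1]
    return max(results, key=lambda r: r[0])[1]

def recognize_image(blocks, modules):
    """Integrate the per-block results into one string for the whole image."""
    return "".join(recognize_block(block, modules) for block in blocks)

# Toy stand-in recognizers: the second one confuses "o" with "0".
def module_a(block):
    return block.upper()

def module_b(block):
    return block.replace("o", "0").upper()

modules = [(0.9, module_a), (0.7, module_b)]
text = recognize_image(["foo", "bar"], modules)
```

On the first block the modules disagree ("FOO" against "F00") and the higher-confidence module decides; on the second they agree.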

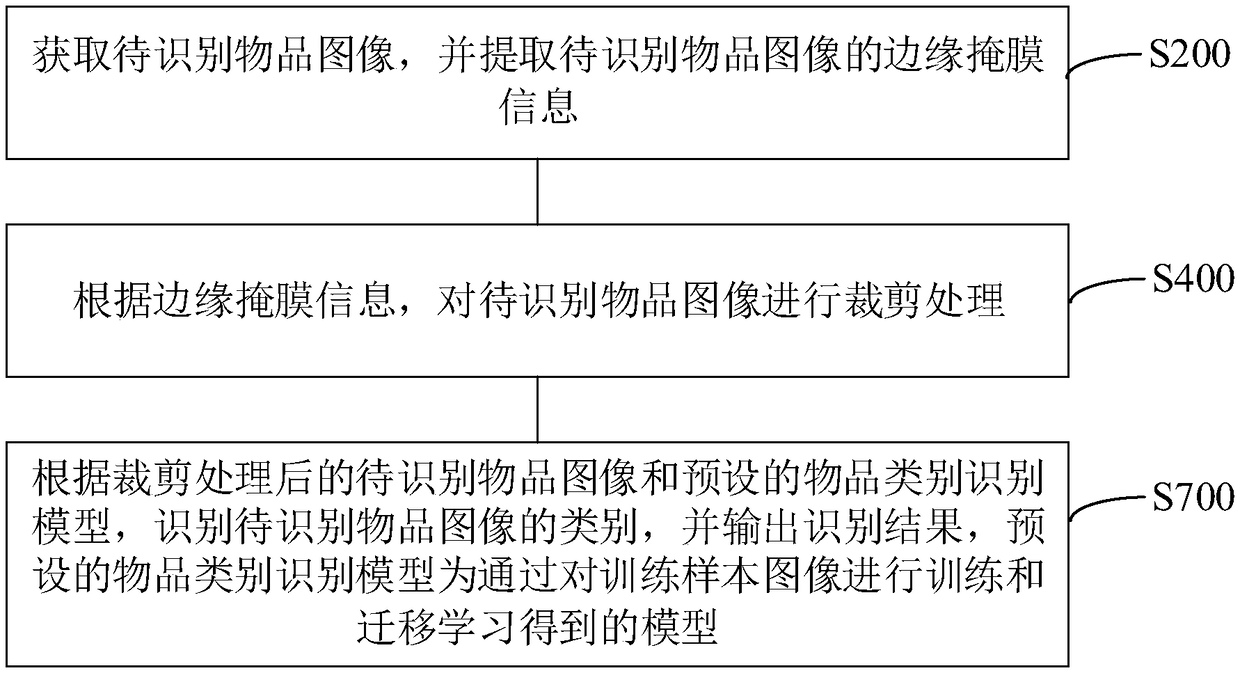

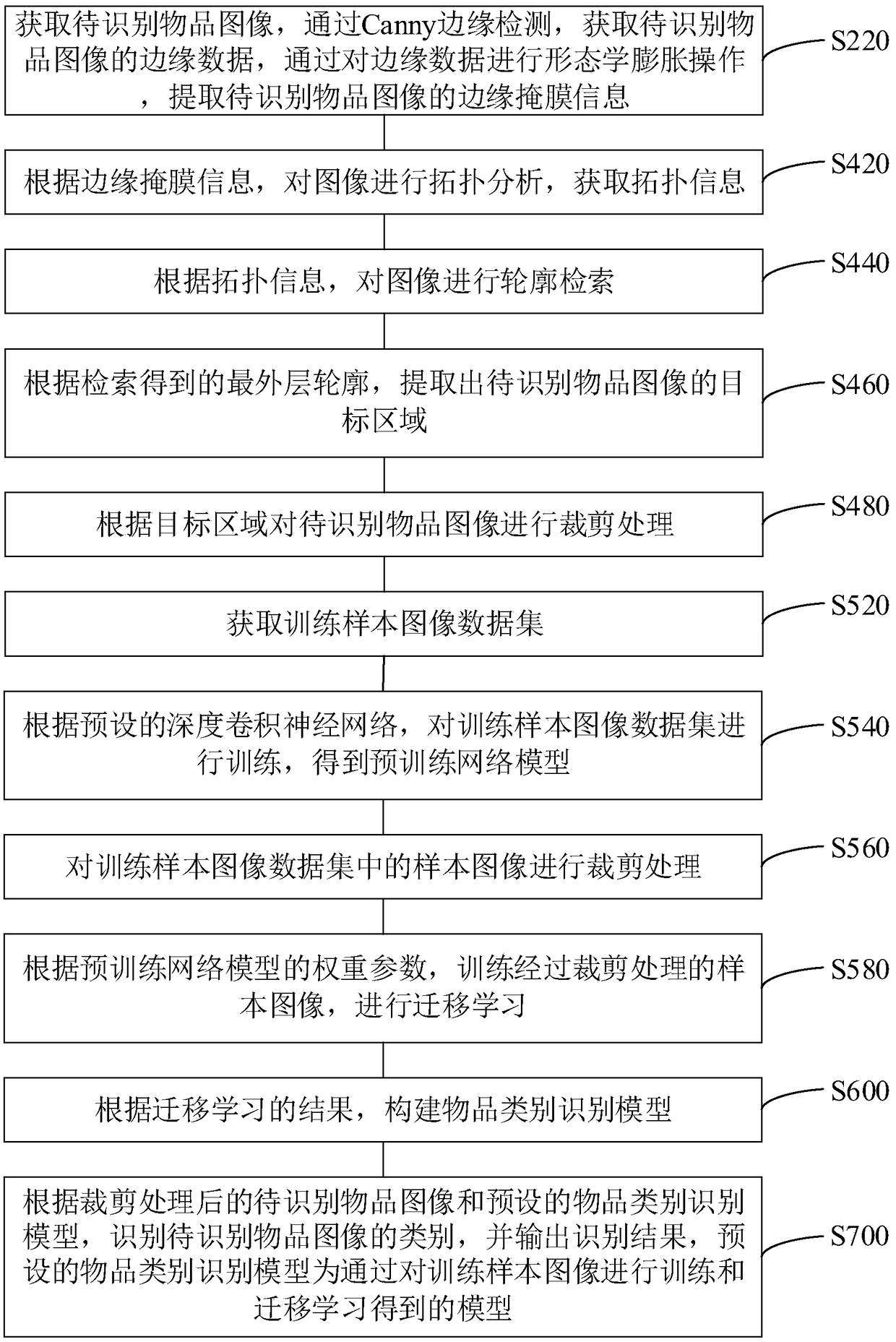

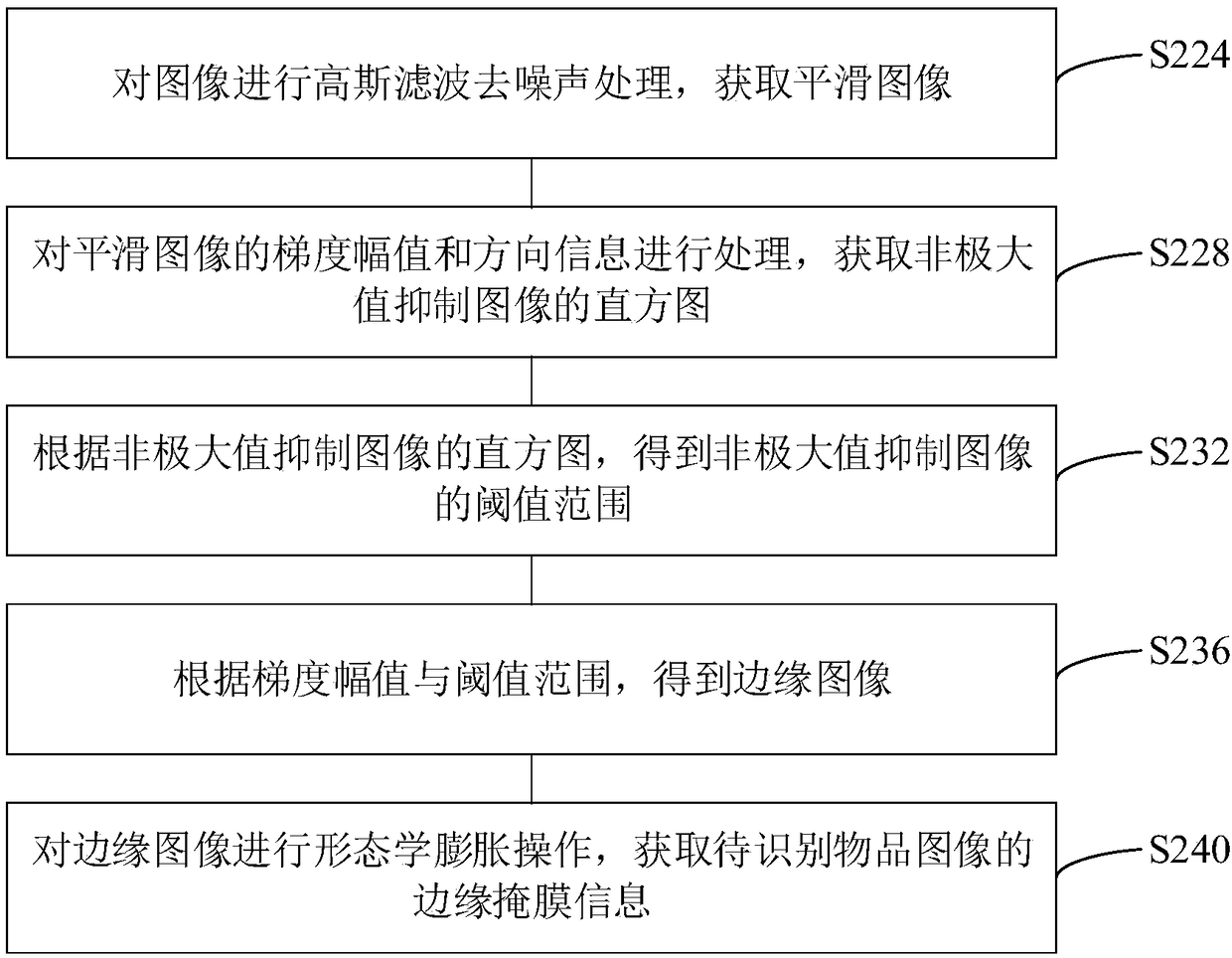

Article category recognition method and device, computer equipment and storage medium

Inactive | CN108647588A | Reduce distractions | The recognition result is accurate | Character and pattern recognition | Neural architectures | Category recognition | Sample image

The invention relates to an article category recognition method and device, computer equipment and a storage medium. The method comprises the steps that a to-be-recognized article image is acquired, and edge mask information of the to-be-recognized article image is extracted; according to the edge mask information, clipping processing is performed on the to-be-recognized article image; and according to the to-be-recognized article image obtained after clipping processing and a preset article category recognition model, the category of the to-be-recognized article image is recognized, and a recognition result is output, wherein the preset article category recognition model is a model obtained by performing training and transfer learning on training sample images. Through the article category recognition model obtained through pre-training and transfer learning, the control requirement on article accuracy is improved; and by use of the article category recognition model to recognize the to-be-recognized article image, the article recognition result can be accurately acquired.

Owner:广州绿怡信息科技有限公司

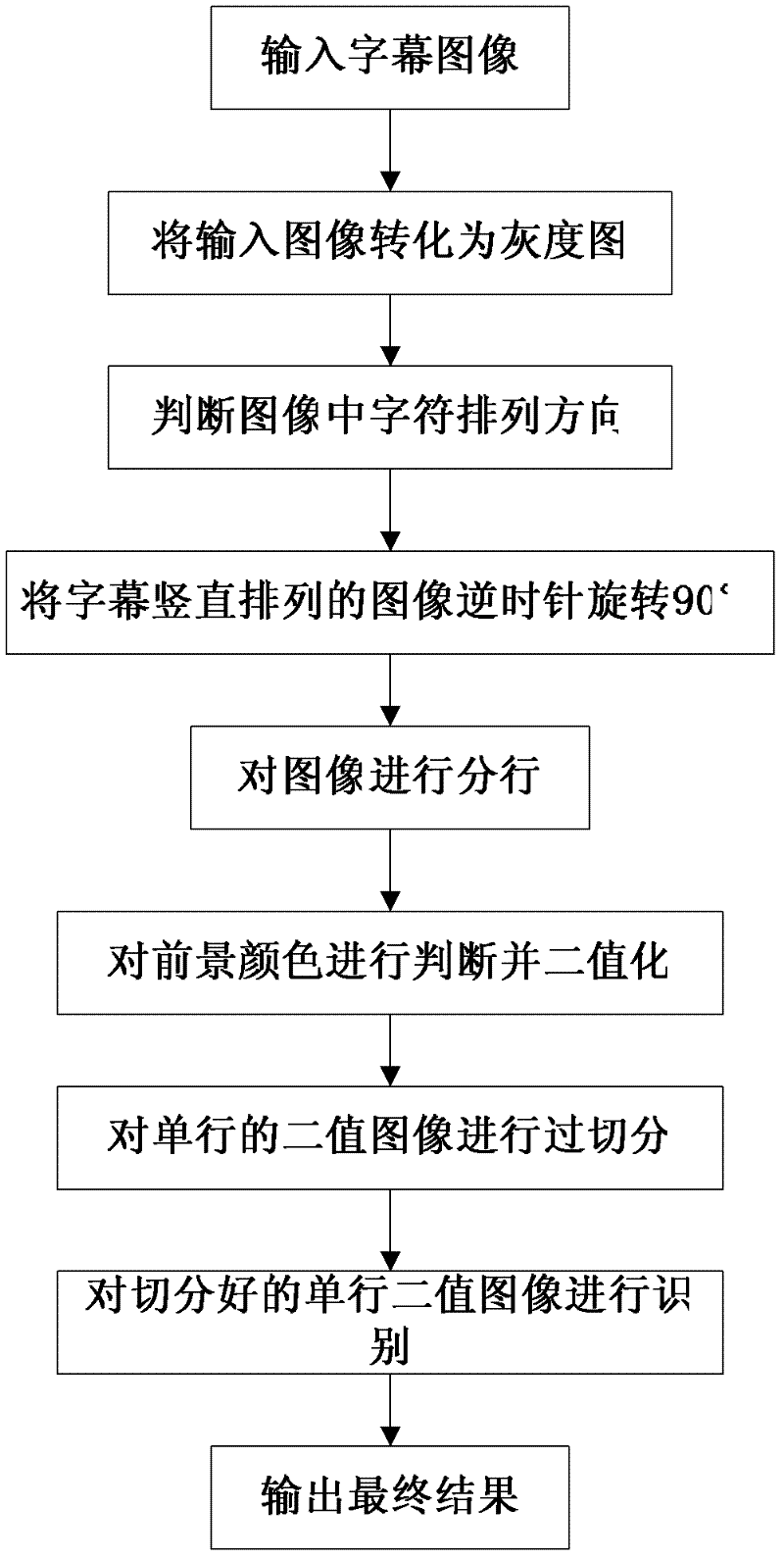

Video caption text extraction and identification method

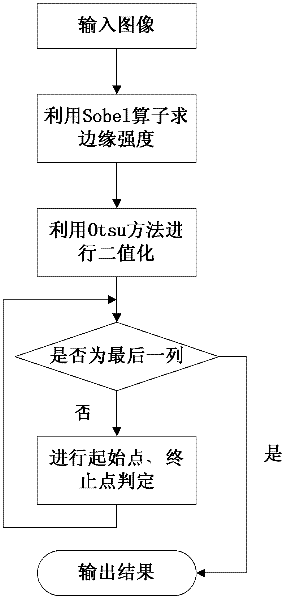

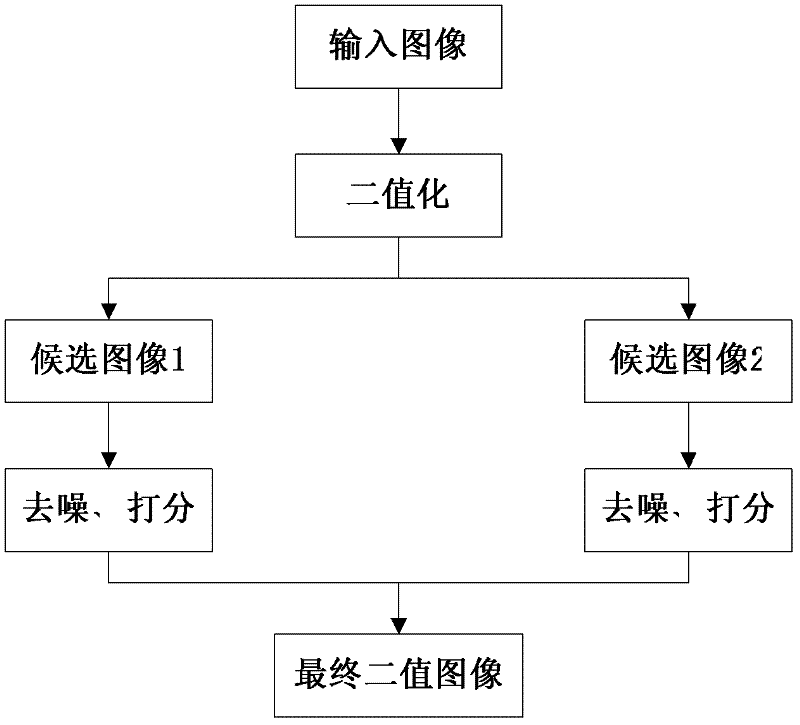

Active | CN102332096A | Avoid Segmentation Errors | Effective segmentation | Character and pattern recognition | Pattern recognition | Horizontal and vertical

The invention discloses a video caption text extraction and identification method which comprises the following steps of: inputting an image of a caption area in a video; converting the input image into a grayscale; judging the arrangement direction of characters in the caption area; counterclockwise rotating the caption area in which vertical arrangement is adopted 90 degrees to obtain a horizontal caption area; lining the caption area to obtain single-line caption images; automatically judging foreground colors of the single-line caption images to obtain binary single-line caption images; over-segmenting the binary single-line caption images to obtain character segment sequences; and performing text line identification on the over-segmented binary single-line caption images. By utilizing the method, horizontal and vertical video caption text lines can be effectively segmented, the foreground colors of the characters can be accurately judged, noises can be filtered, and accurate character segmentation and identification results can be obtained; and the method can be applicable to a plurality of purposes such as video and image content editing, indexing, retrieving and the like.

Owner:北京中科阅深科技有限公司

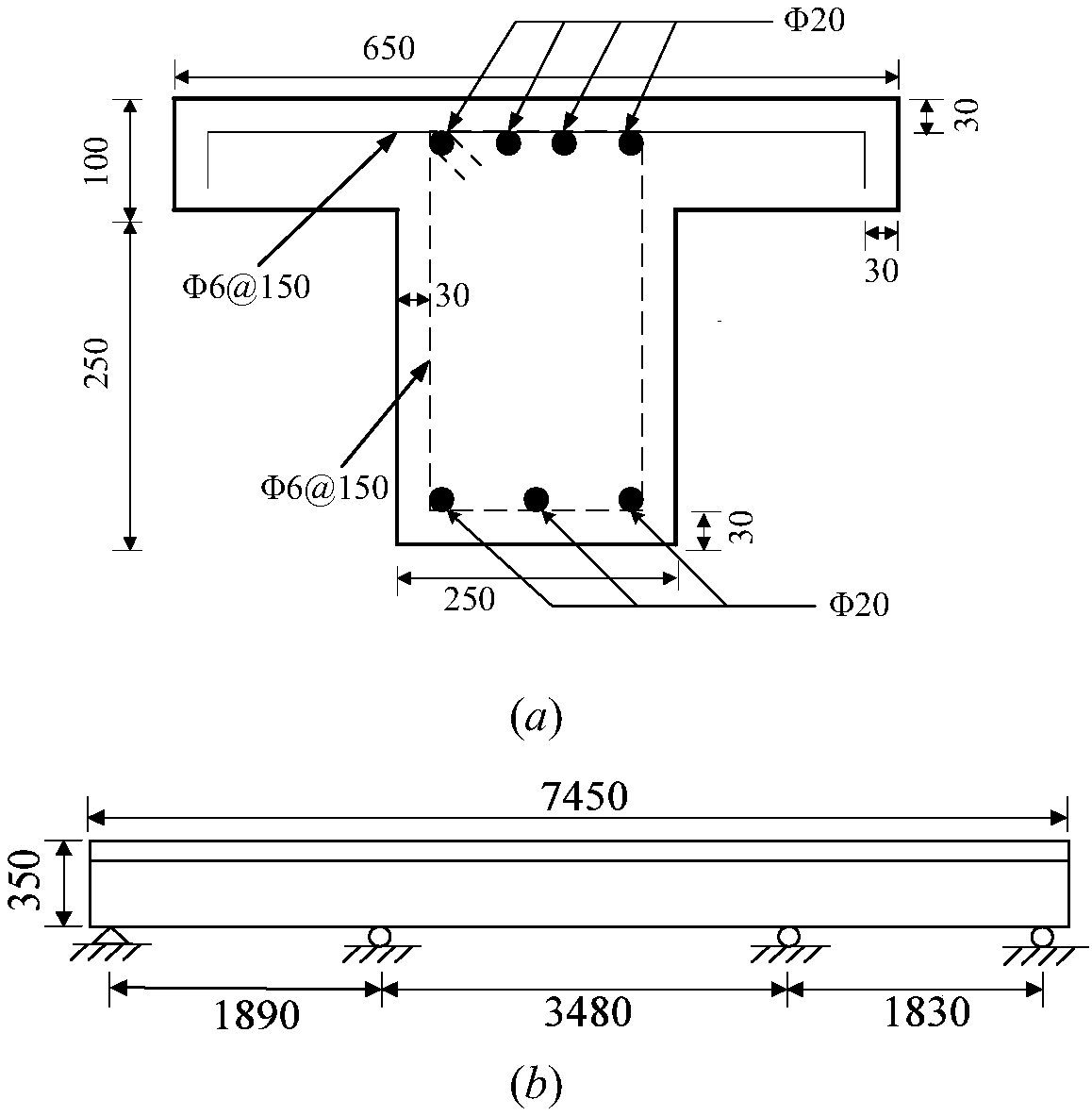

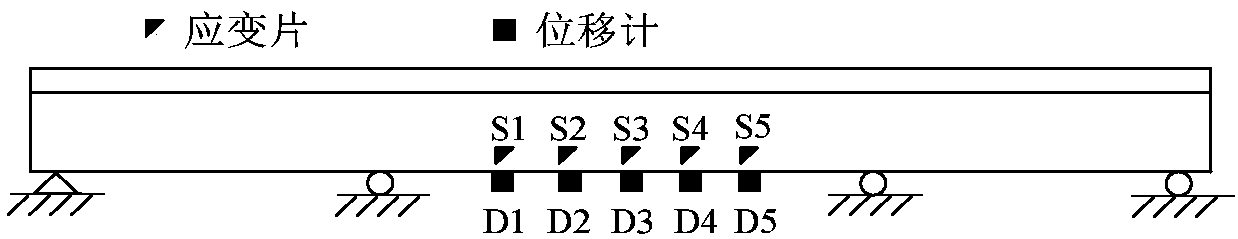

Optimized bridge damage identification method based on neural network

Inactive | CN104200004A | Structural damage identification error is small | Indicate damage | Biological neural network models | Character and pattern recognition | Data mining | Artificial intelligence

The invention discloses an optimized bridge damage identification method based on a neural network. The optimized bridge damage identification method based on the neural network comprises the steps of S1, constructing sample data; S2, determining network topology; S3, conducting training and testing; S4, identifying damage, wherein real-time strain data of a bridge are input into a trained BP neural network, so that damage identification of the bridge is achieved, the real-time strain data of the bridge are obtained through sensors which are arranged optimally, the minimum number Ymin of unidentifiable models serves as an object function, and the arrangement positions of the sensors corresponding to Ymin is the optimal sensor arrangement. By the adoption of the optimized bridge damage identification method based on the neural network, various possible damage conditions of a structure can be identified to the maximum extent through the minimum number of sensors, and an identification result is high in precision and tends to be stable.

Owner:NORTHEASTERN UNIV

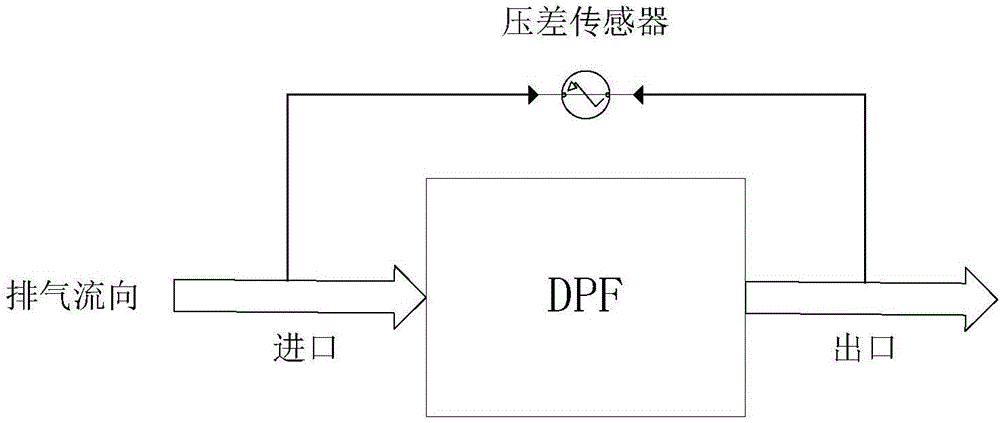

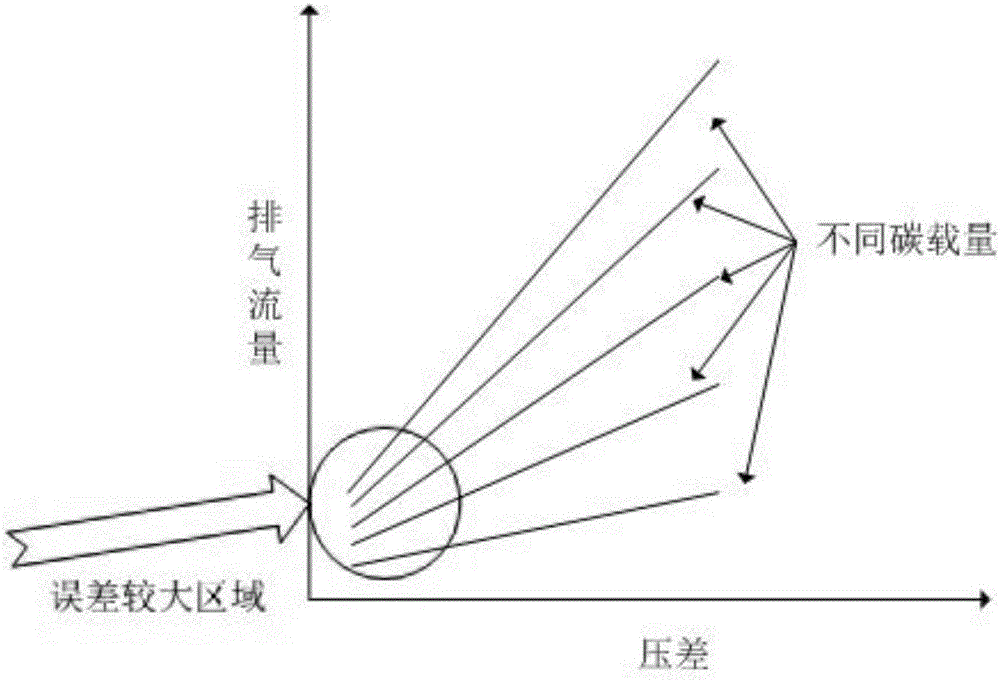

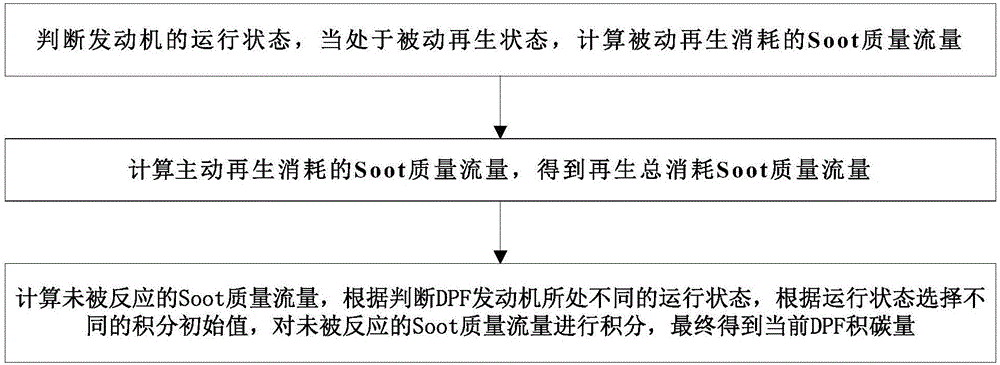

Carbon deposit amount calculation method of diesel engine particulate filter

Active | CN106481419A | Reduce economy | Reduce risk | Internal combustion piston engines | Exhaust treatment electric control | Carbon deposit | Soot

The invention discloses a carbon deposit amount calculation method of a diesel engine particulate filter (DPF). The method comprises the following steps: the running state of the engine is judged, and when the engine is in the passive regeneration state, the soot mass flow consumed by passive regeneration is calculated; the soot mass flow consumed by active regeneration is calculated, and the total soot mass flow consumed by regeneration is obtained; the unreacted soot mass flow is calculated; different integral initial values are selected according to the running state of the DPF engine, and the unreacted soot mass flow is integrated to obtain the current DPF carbon deposit amount. The working state of the aftertreatment system is represented through exhaust substance components and exhaust physical parameters, so the DPF carbon deposit amount is identified more precisely.

Owner:清华大学苏州汽车研究院(吴江) +1
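
The integration step in this abstract can be pictured as accumulating the net soot mass flow over time. The sketch below assumes a fixed sampling interval and simple forward-Euler integration; the function name, units, and sample values are hypothetical, and the patent's models for the individual flows are not reproduced here.

```python
def integrate_soot(initial_g, samples, dt_s):
    """Integrate unreacted soot mass flow to estimate the DPF soot load in grams.

    initial_g: integral initial value, chosen according to the running state.
    samples:   iterable of (emitted, passive_burn, active_burn) flows in g/s.
    dt_s:      sampling interval in seconds (assumed constant).
    """
    load = initial_g
    for emitted, passive_burn, active_burn in samples:
        # Unreacted flow = soot entering the filter minus soot burned off.
        net_flow = emitted - (passive_burn + active_burn)
        # The load cannot become negative.
        load = max(0.0, load + net_flow * dt_s)
    return load

# 100 seconds of made-up operation with passive regeneration only.
samples = [(0.02, 0.005, 0.0)] * 100
soot_load_g = integrate_soot(5.0, samples, dt_s=1.0)
```

With these invented numbers the load grows by 0.015 g each second, from 5.0 g to about 6.5 g.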

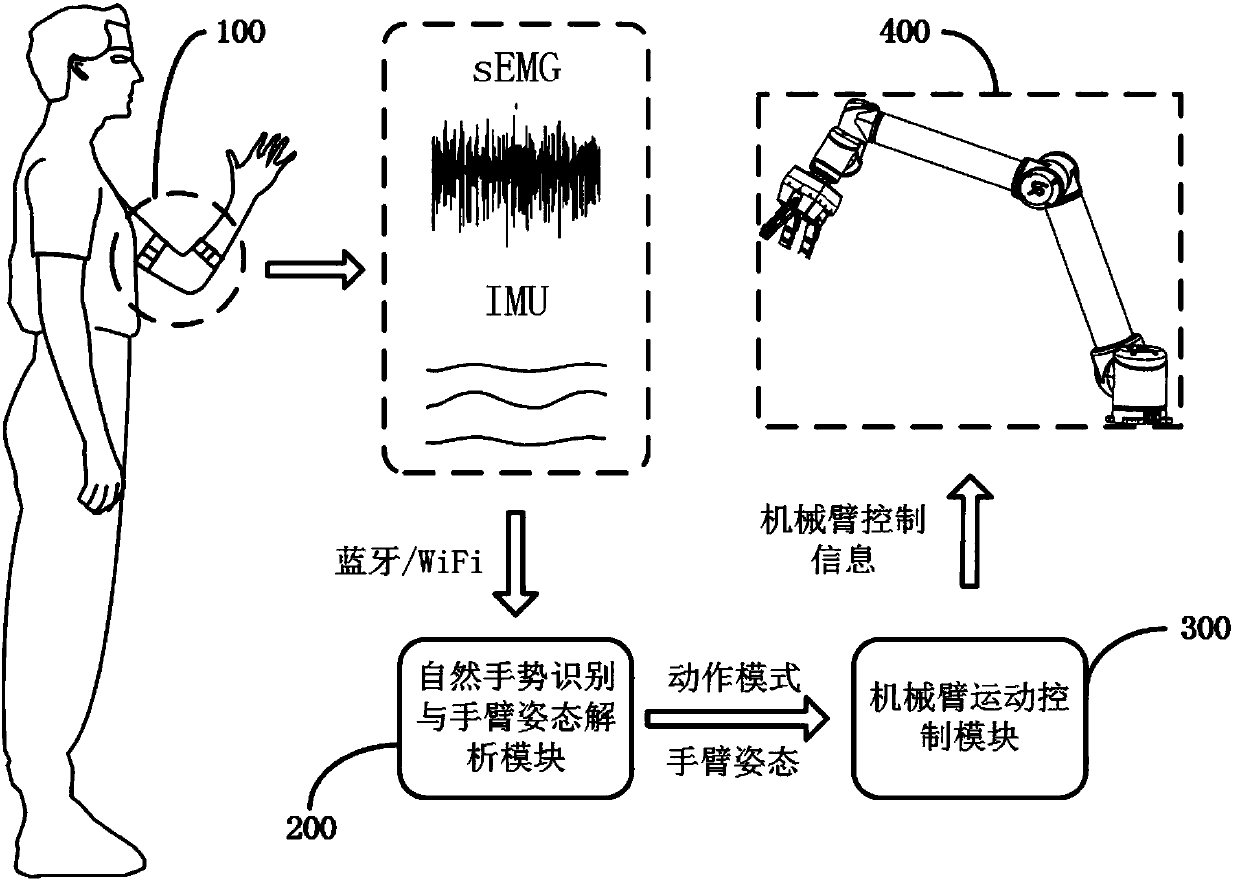

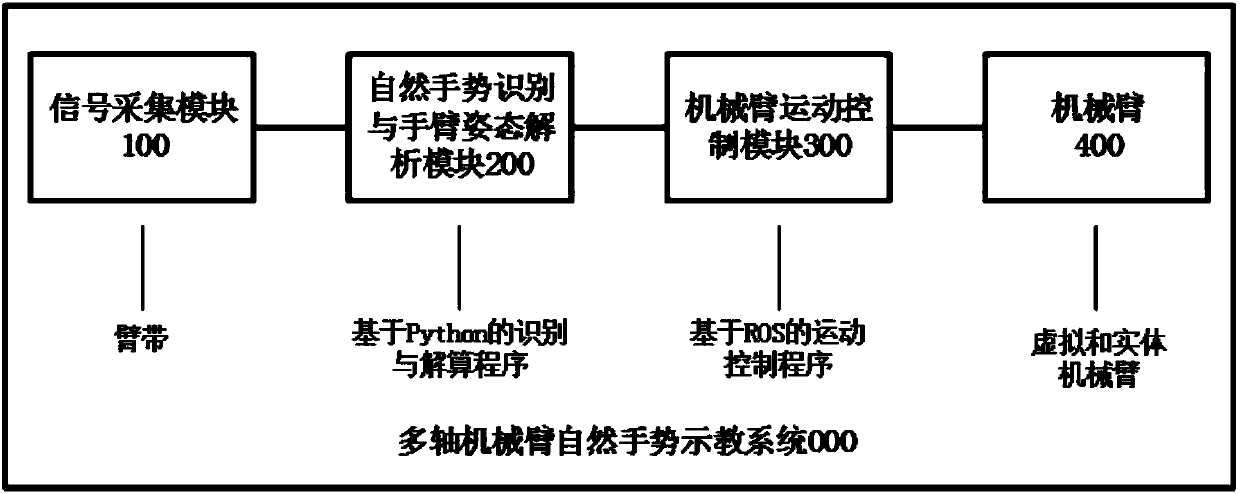

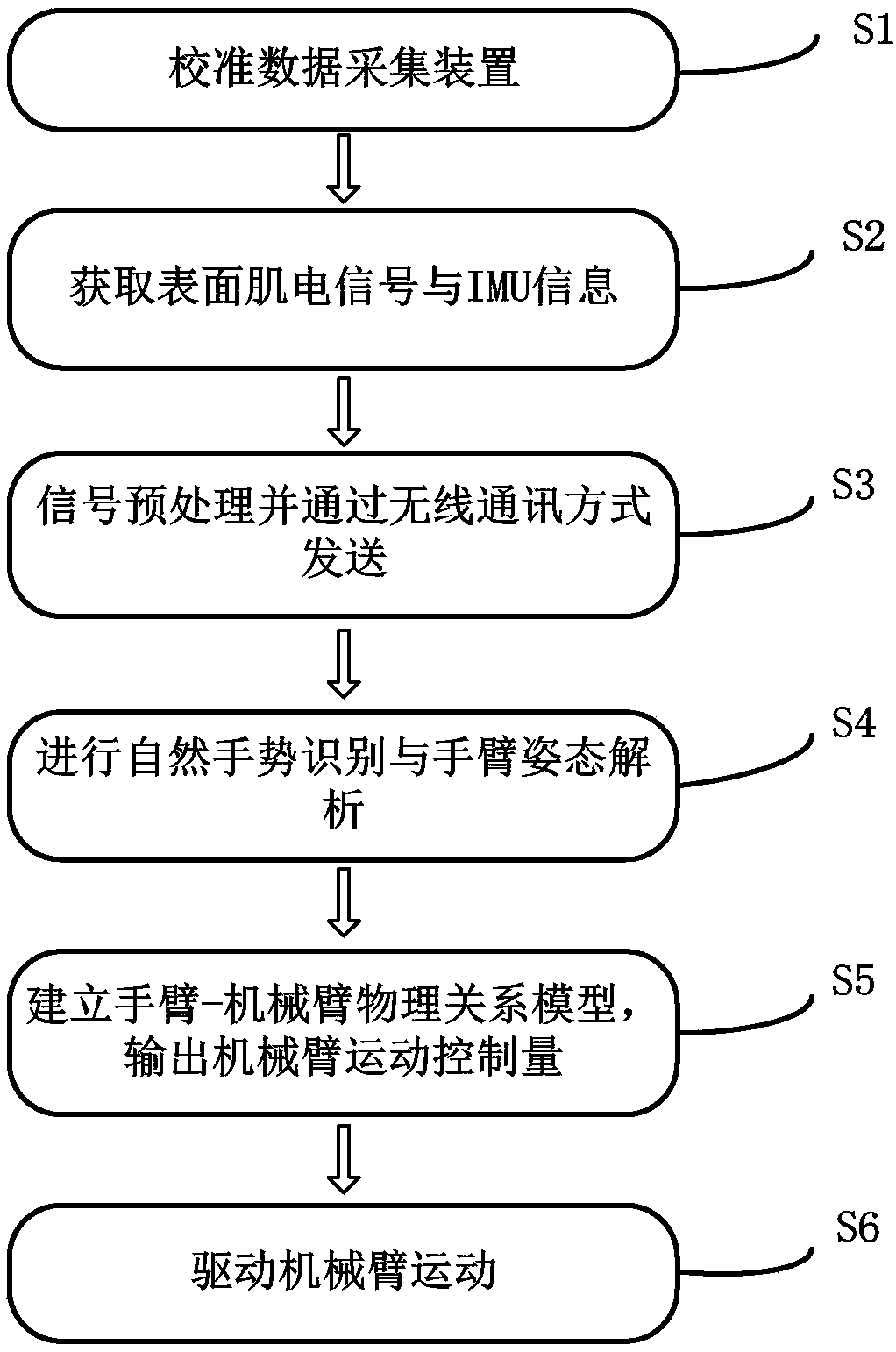

Physical gesture movement control system and method for multi-shaft mechanical arm

Inactive | CN107553499A | Accurate identification | Control nature | Programme-controlled manipulator | Arm surface | Human body

The invention relates to a movement control system and method for a multi-shaft mechanical arm, in which users control the movement of the mechanical arm through natural gestures and arm motions. The physical gesture is recognized and the arm posture is solved by detecting myoelectric signals and IMU motion information on the surface of the human arm; the mechanical arm is controlled to reproduce the arm motion of the human body by establishing an arm-to-mechanical-arm physical relationship model; and the grabbing operation of the end actuator of the mechanical arm is controlled by the natural gesture. The movement control system and method can be widely used in fields such as remote operation of mechanical arms and teaching; intelligent control over a robot is realized, and the aims of human-computer interaction and intelligent interaction are achieved.

Owner:SHANGHAI JIAO TONG UNIV +1

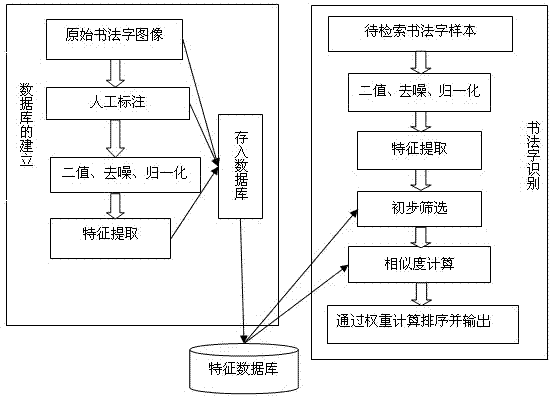

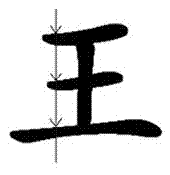

Calligraphy character identifying method

Inactive | CN103093240A | Small amount of calculation | The recognition result is accurate | Character and pattern recognition | Chinese characters | Feature data

The invention discloses a calligraphy character identifying method. A single calligraphy character image is collected, and the Chinese character semantics corresponding to that image are manually annotated. After binarization, denoising and normalization are applied to the single calligraphy character image, its calligraphy character feature information is extracted and stored in a feature database. The feature information comprises the four boundary point positions of the calligraphy character, and the average stroke crossing numbers, projection values and outline points in the horizontal and vertical directions. Then the to-be-identified single calligraphy character image is processed: its feature information is extracted, and after preliminary screening, shape matching is performed to find calligraphy characters in the feature database whose shape is similar to that of the to-be-identified character. Finally, weights are calculated for calligraphy character images with the same semantics, those images are merged, and the identification results are given. The method has a small amount of calculation, gives an accurate identification result in a short time, and places no specific requirements on the to-be-identified calligraphy character image provided by users.

Owner:ZHEJIANG UNIV

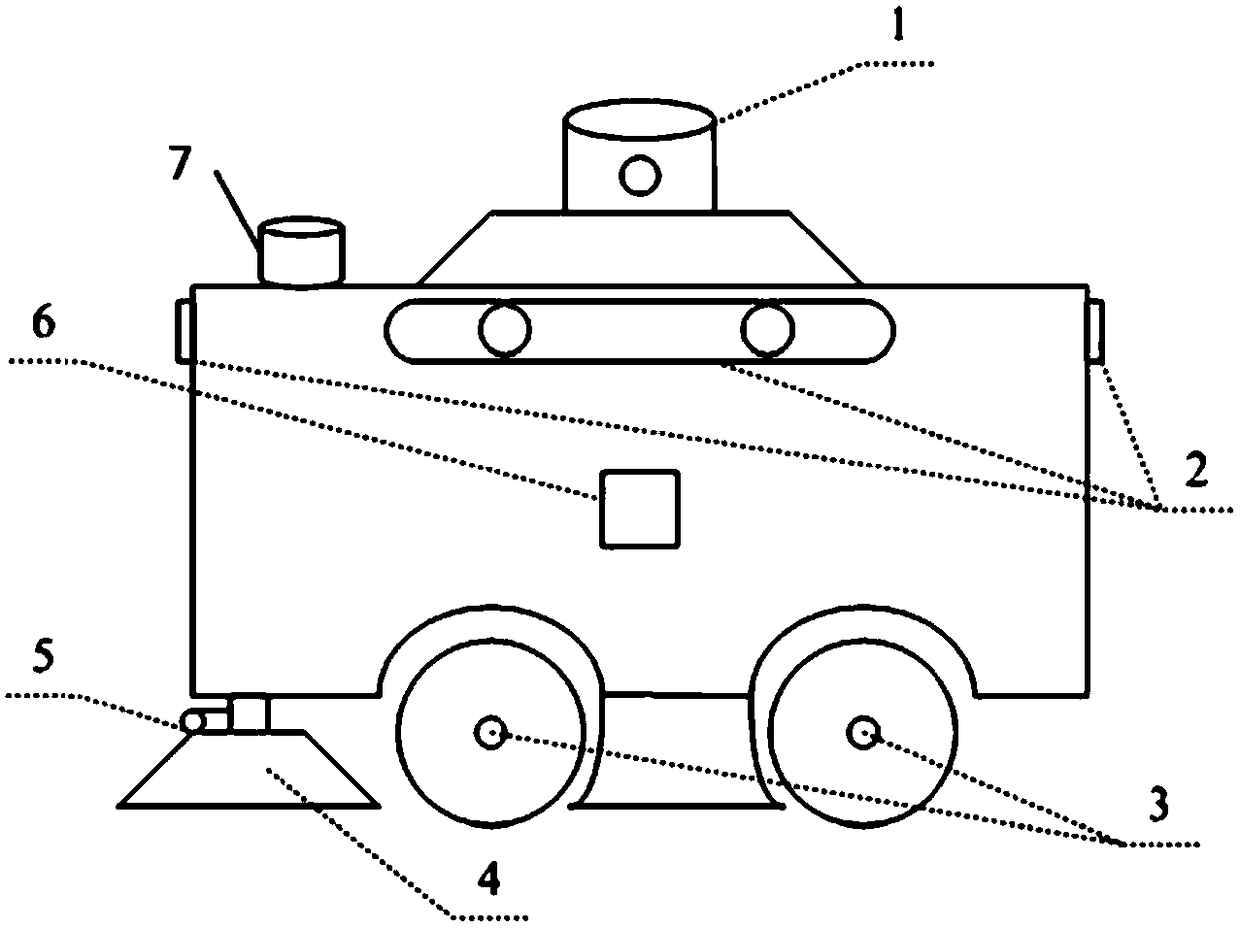

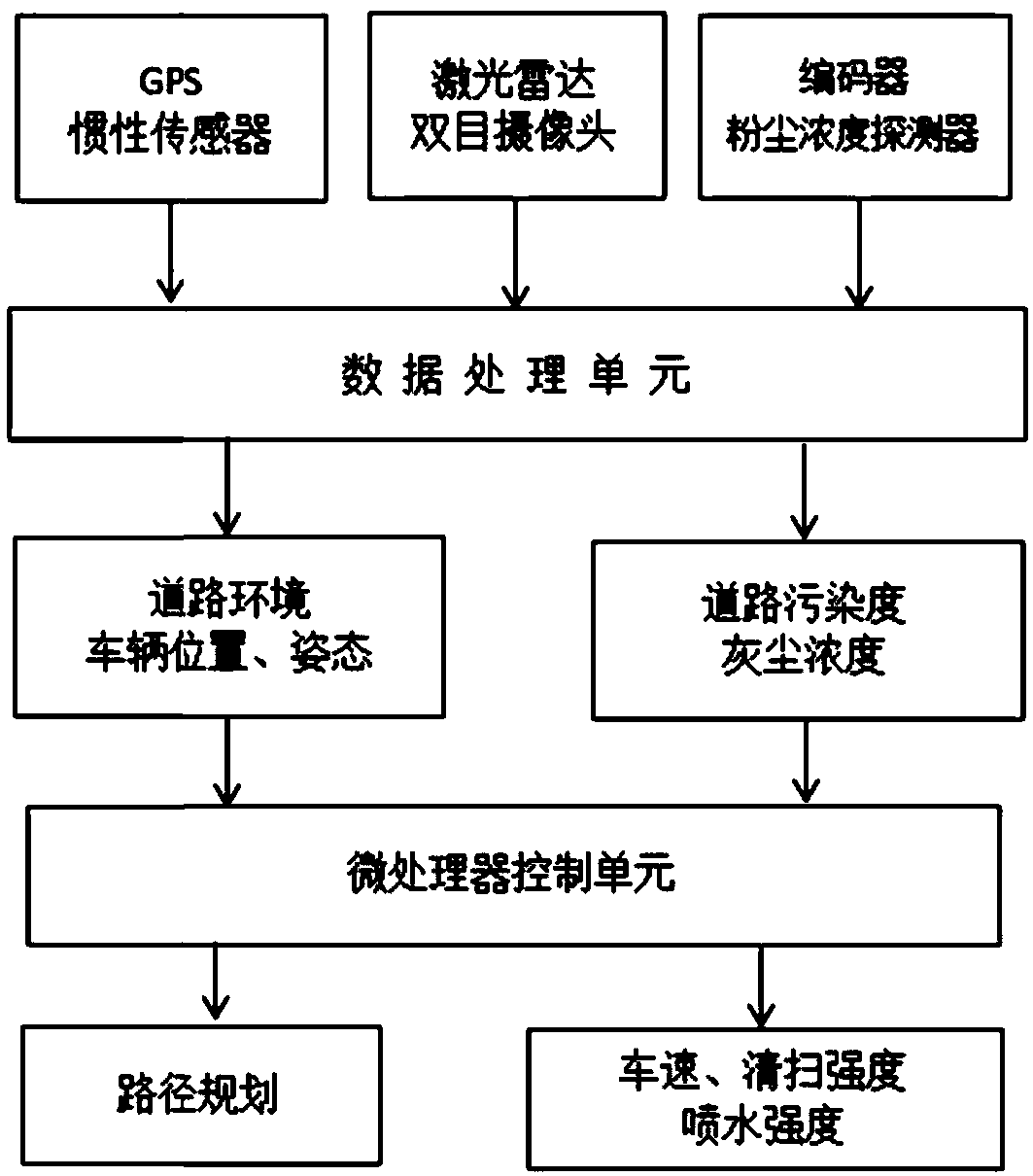

Intelligent road sweeper and a road pollutant identification method and a control method thereof

Active | CN109024417A | Good safety and reliability | Improve cleaning quality | Road cleaning | Pollutant | Network model

The invention discloses an intelligent road sweeper and a road pollutant identification method and a control method thereof. The road sweeper comprises a road sweeper body, a laser radar, a camera, an encoder, a dust concentration detector, an inertial sensor, a GPS system and a processing controller. The method for identifying road pollutants includes acquiring RGB images of clean road surfaces and of the road facilities to be cleaned, converting the acquired RGB images into HSV space, and obtaining a BP neural network model capable of identifying the clean road surface and road facilities. Real-time image information of the road to be cleaned is input to the BP neural network model to identify the pollutants. The method utilizes the HSV features of a road image, which carry more information than the gray value of a single image, and therefore identifies pollutants such as garbage more effectively.

Owner:CHANGAN UNIV

Objectionable image distinguishing method integrating skin color, face and sensitive position detection

Inactive | CN103366160A | Avoid multi-scale searches | Solve the detection speed is slow | Character and pattern recognition | Face detection | Human body

The invention relates to an objectionable image distinguishing method integrating skin color, face and sensitive position detection. The method comprises the following steps that a skin color model is firstly built, the face detection is carried out, the constituted feature vector of skin color and face features is extracted, a SVM (support vector machine) algorithm is utilized for training, and a SVM classifier is obtained; then, by aiming at the female breast in the local key position of the human body, SIFT (scale-invariant feature transform) features are extracted, an Adaboost algorithm is utilized for training, and an Adaboost classifier is obtained; next, by aiming at the female private parts in the local key position of the human body, the trunk region of the human body is determined, haar-like features are utilized as a template for carrying out searching and matching in the trunk region of the human body; and finally, the SVM classifier, the Adaboost classifier and the template matching method are adopted for carrying out image detection, a C4.5 decision-making tree method is utilized for integrating detection results, a decision-making tree model is built, the decision-making tree model is adopted for recognizing objectionable images, and the final distinguishing results are given. The objectionable image distinguishing method has the advantages that the detection accuracy is improved, and meanwhile, the execution speed is ensured.

Owner:XI AN JIAOTONG UNIV

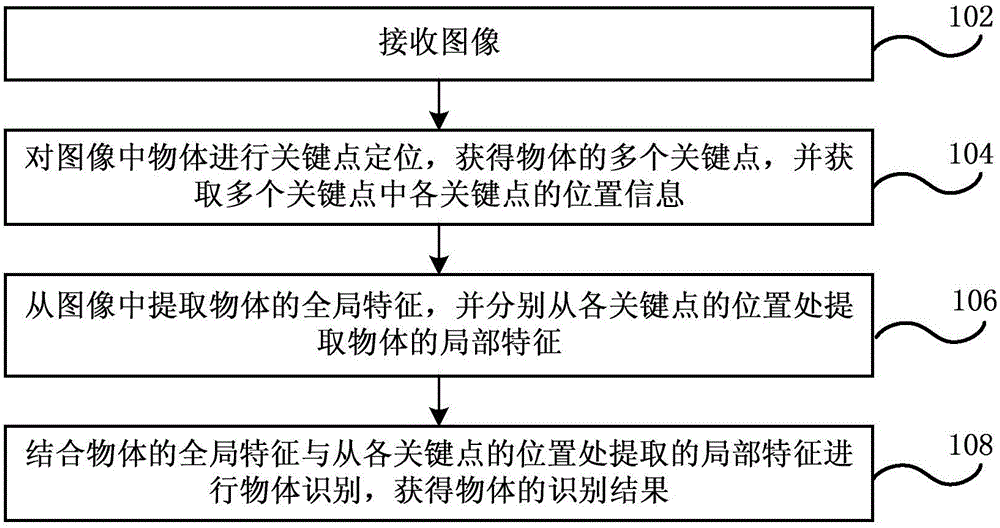

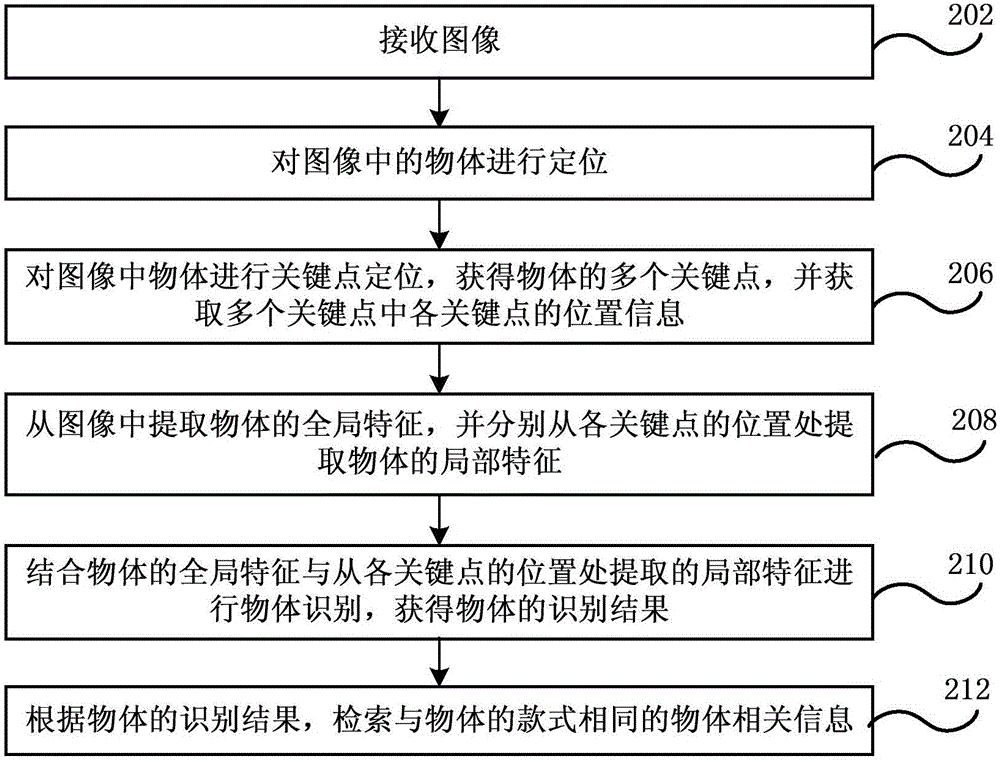

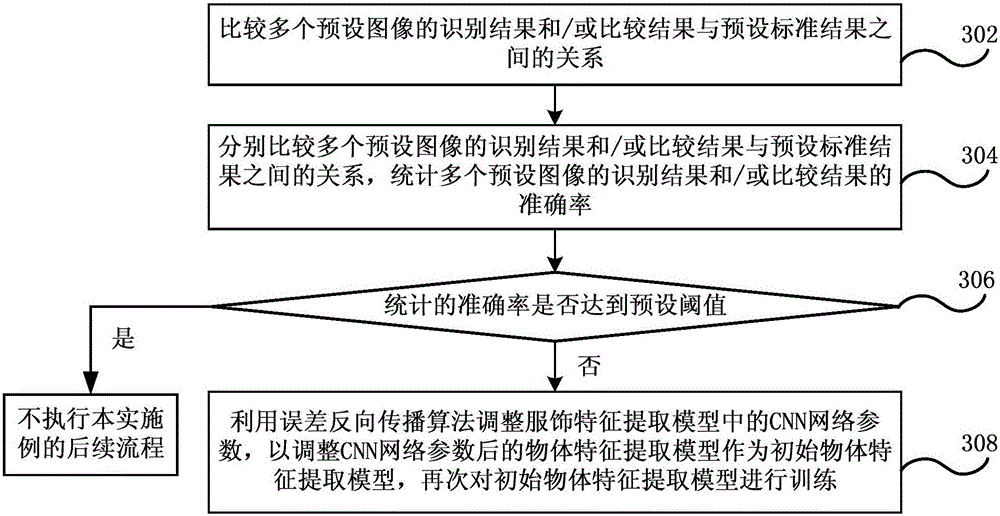

Object recognition method and device, data processing device and terminal equipment

Active | CN106126579A | Accurate identification | The recognition result is accurate | Special data processing applications | Visibility | Terminal equipment

The embodiment of the invention discloses an object recognition method and device, a data processing device and terminal equipment. The method comprises the following steps: receiving an image; performing key point localization on an object in the image to obtain a plurality of key points of the object, and acquiring position information of each of the plurality of key points, wherein the position information includes position coordinates and visibility states; extracting a global feature of the object from the image, and extracting local features of the object from the positions of the key points respectively; and performing object recognition according to the global feature of the object and the local features extracted from the positions of the key points to obtain a recognition result of the object. Through adoption of the object recognition method and device, the object recognition effect can be improved.

Owner:BEIJING SENSETIME TECH DEV CO LTD

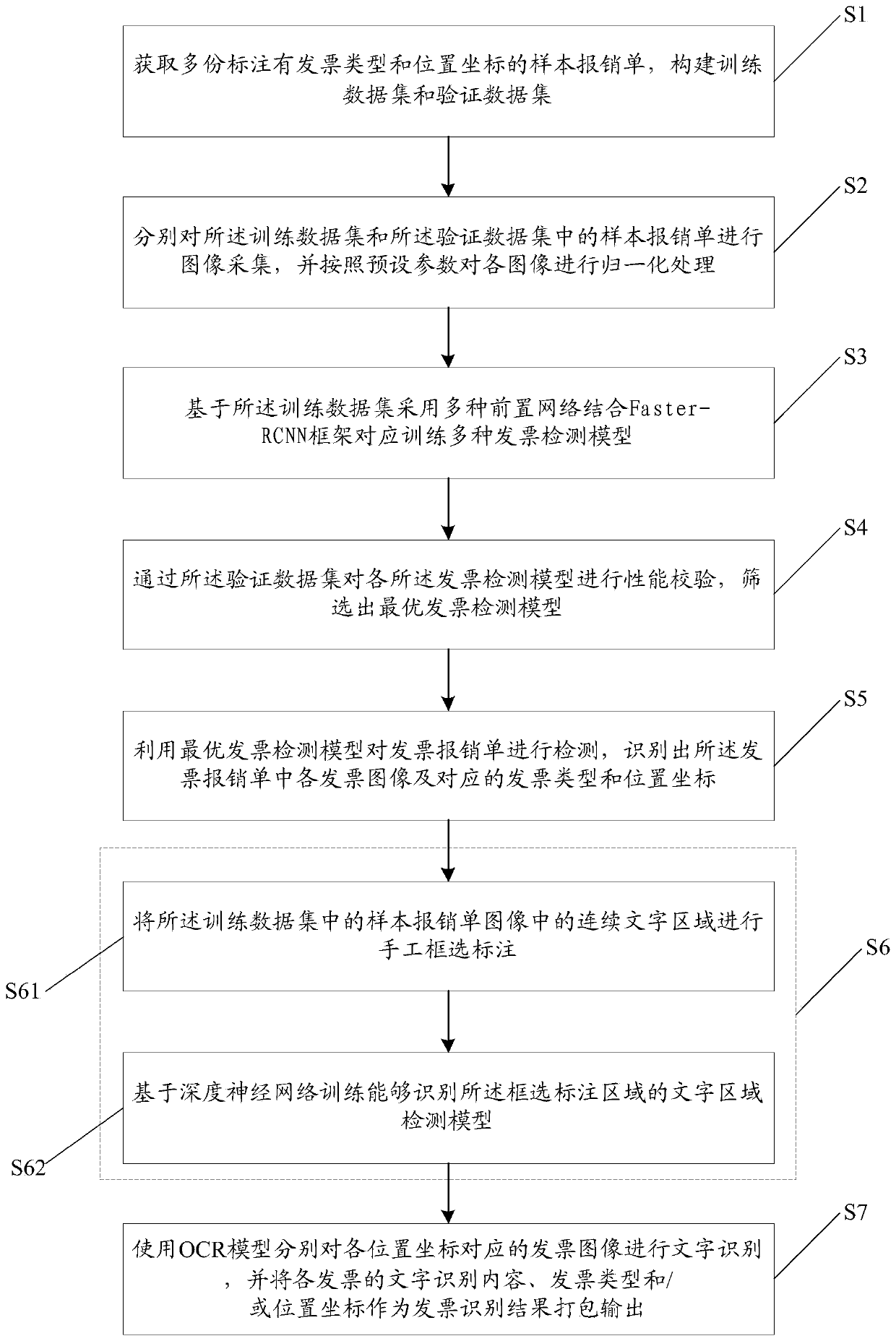

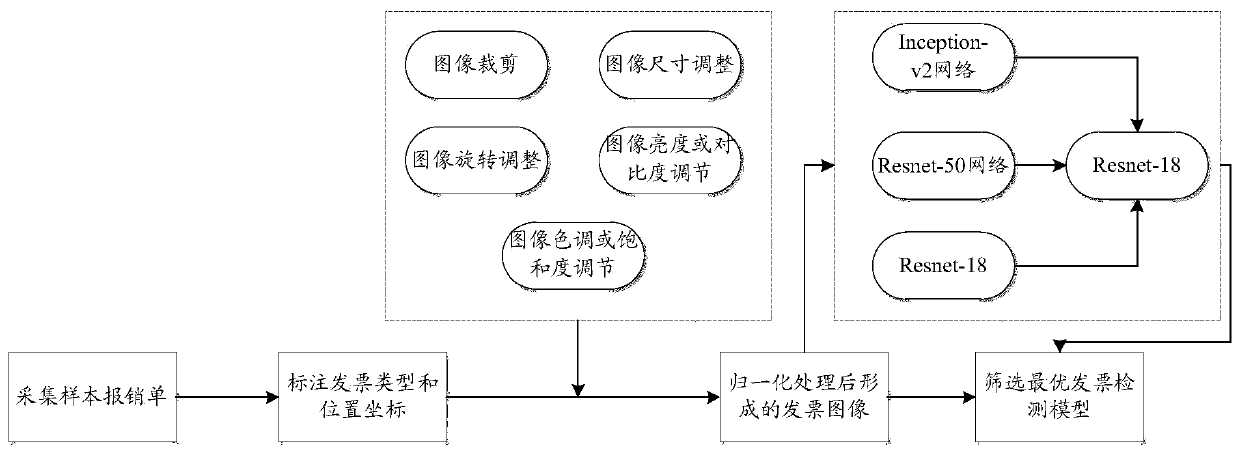

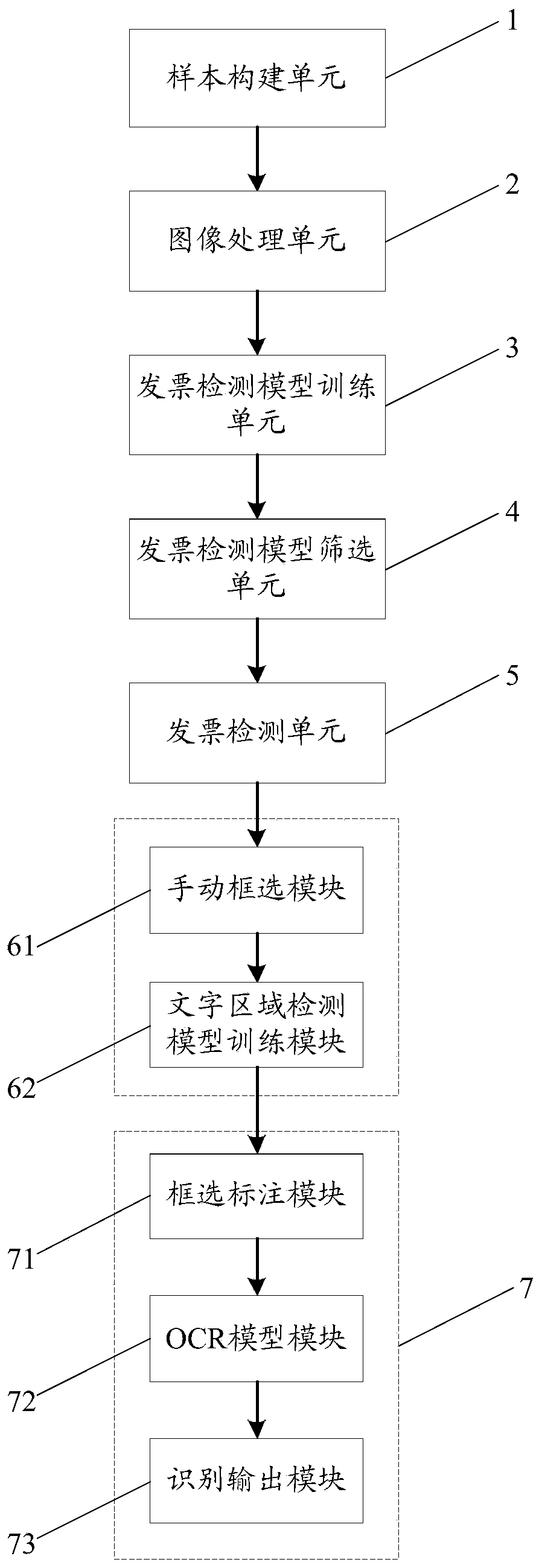

Invoice recognition method and system based on deep learning

Inactive | CN109977957A | Efficient and accurate identification | Guaranteed validity | Paper-money testing devices | Neural architectures | Invoice type | Data set

The invention discloses an invoice recognition method and system based on deep learning, relates to the technical field of invoice recognition, and solves the technical problem of inaccurate invoice OCR data acquisition caused by the variety of invoice types and nonstandard invoice pasting in the prior art. The method comprises the following steps: obtaining a plurality of sample reimbursement lists marked with invoice types and position coordinates, and constructing a training data set and a verification data set; based on the training data set, combining multiple front networks with a Faster-RCNN framework to train a corresponding plurality of invoice detection models; carrying out performance verification on each invoice detection model through the verification data set, and screening out an optimal invoice detection model; detecting the invoice reimbursement list by utilizing the optimal invoice detection model, and identifying each invoice image in the invoice reimbursement list and the corresponding invoice types and position coordinates; and carrying out character recognition on the invoice images corresponding to the position coordinates by using an OCR model, and packaging and outputting the character recognition content, the invoice type and / or the position coordinates of each invoice as invoice recognition results.

Owner:SUNING COM CO LTD

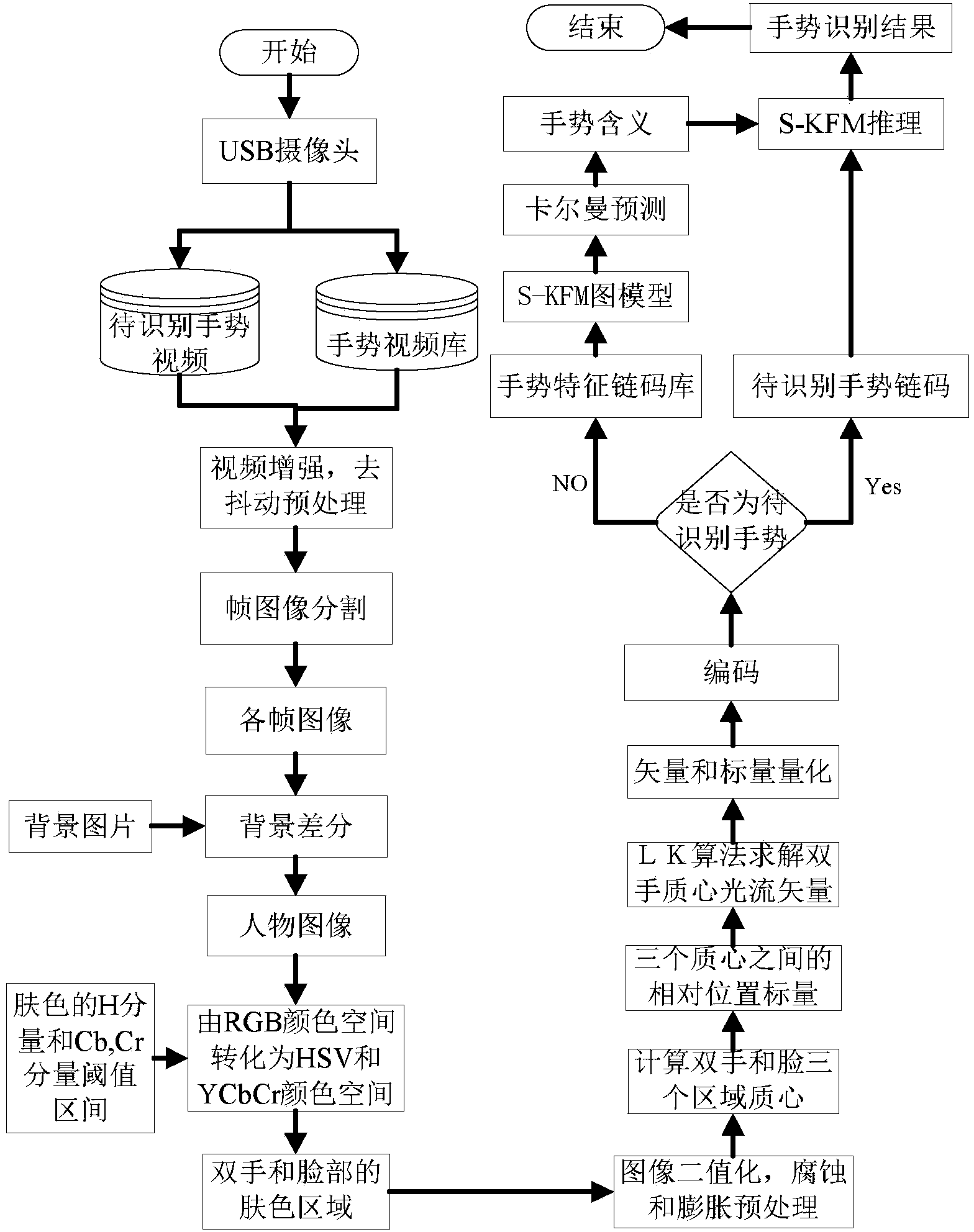

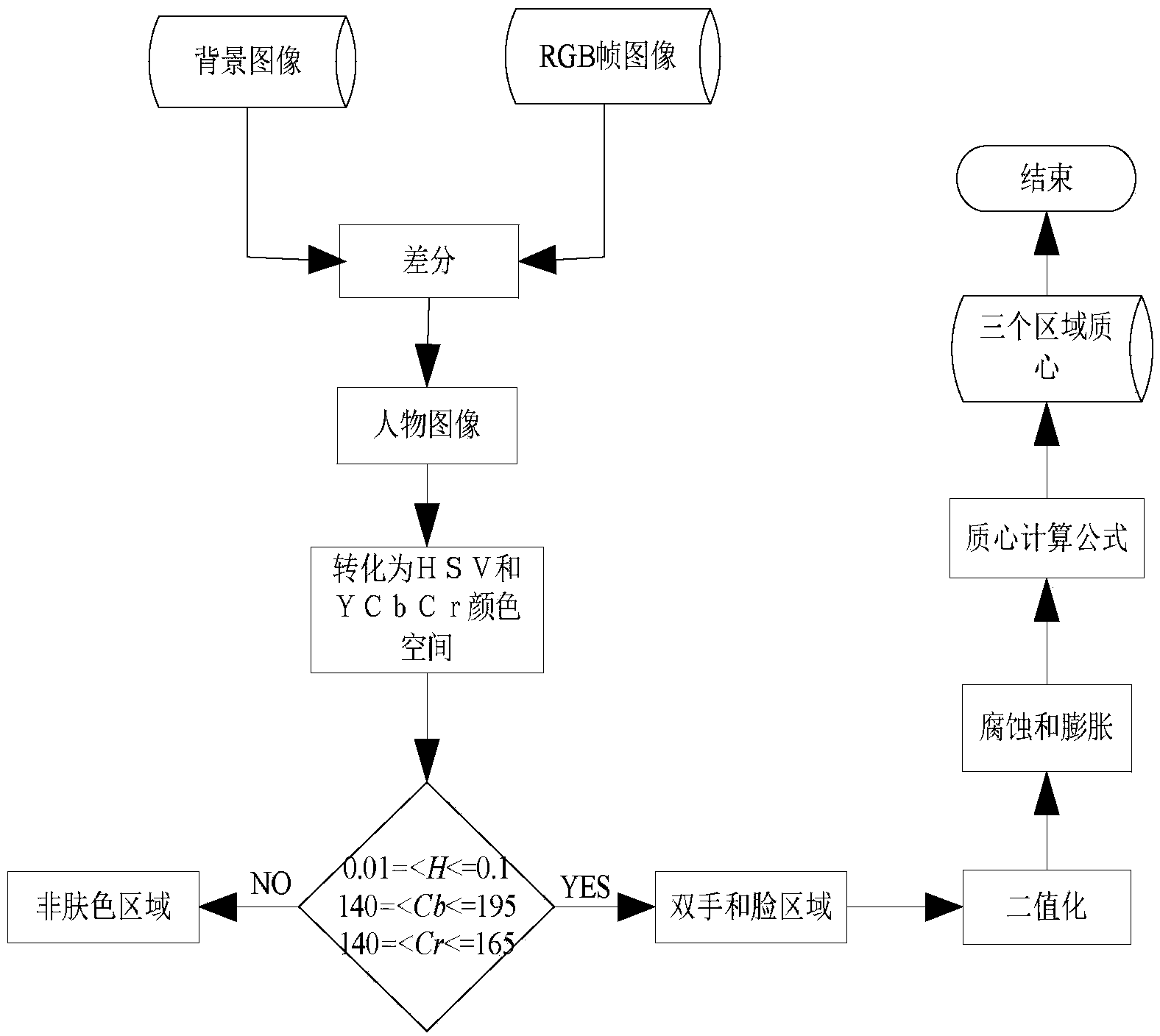

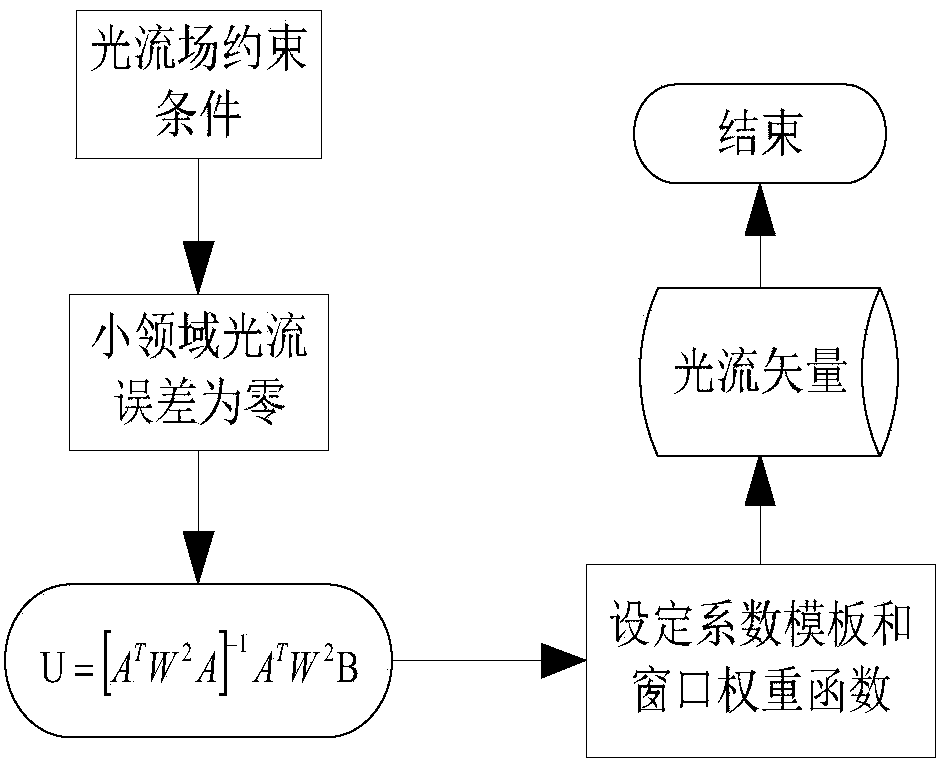

Hand gesture recognition method based on switching Kalman filtering model

Inactive | CN104050488A | The recognition result is accurate | Reduce recognition errors | Image enhancement | Character and pattern recognition | Pattern recognition | Skin color

The invention discloses a hand gesture recognition method based on a switching Kalman filtering model. The hand gesture recognition method based on a switching Kalman filtering model comprises the steps that a hand gesture video database is established, and the hand gesture video database is pre-processed; image backgrounds of video frames are removed, and two hand regions and a face region are separated out based on a skin color model; morphological operation is conducted on the three areas, mass centers are calculated respectively, and the position vectors of the face and the two hands and the position vector between the two hands are obtained; an optical flow field is calculated, and the optical flow vectors of the mass centers of the two hands are obtained; a coding rule is defined, the two optical flow vectors and the three position vectors of each frame of image are coded, so that a hand gesture characteristic chain code library is obtained; an S-KFM graph model is established, wherein a characteristic chain code sequence serves as an observation signal of the S-KFM graph model, and a hand gesture posture meaning sequence serves as an output signal of the S-KFM graph model; optimal parameters are obtained by conducting learning with the characteristic chain code library as a training sample of the S-KFM; relevant steps are executed again for a hand gesture video to be recognized, so that a corresponding characteristic chain code is obtained, reasoning is conducted with the corresponding characteristic chain code serving as input of the S-KFM, and finally a hand gesture recognition result is obtained.

Owner:XIAN TECHNOLOGICAL UNIV

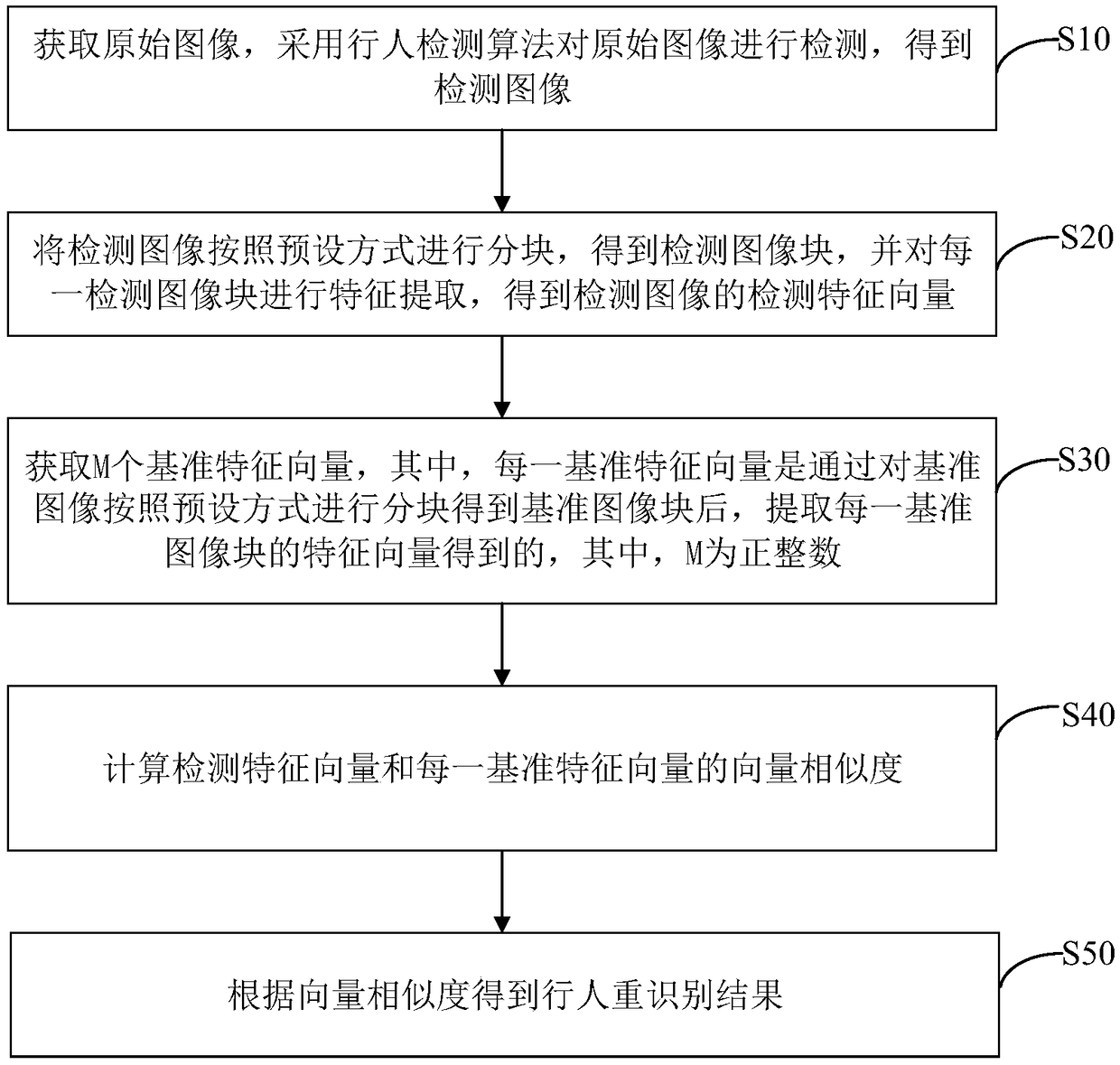

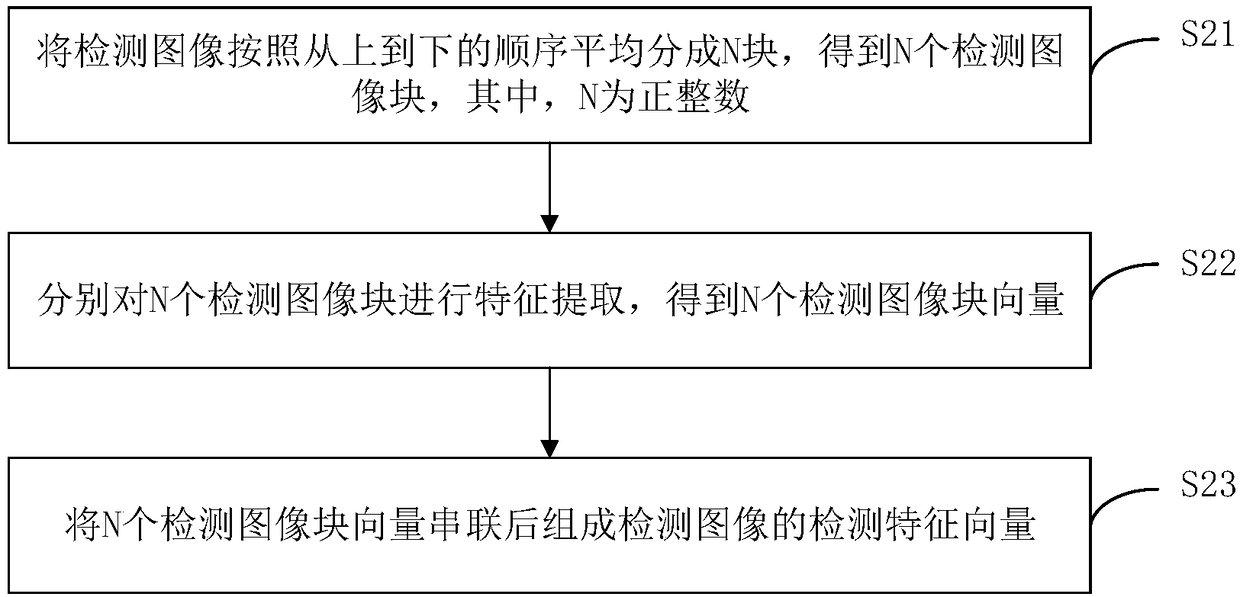

Pedestrian re-identification method, device, computer device and storage medium

Pending | CN109271870A | Improve accuracy | Small amount of calculation | Character and pattern recognition | Internal combustion piston engines | Feature vector | Feature extraction

The invention discloses a pedestrian re-identification method, a device, a terminal device and a storage medium. The method comprises the following steps: obtaining an original image; detecting the original image by adopting a pedestrian detection algorithm to obtain a detection image; dividing the detection image into blocks according to a preset mode to obtain detection image blocks, and extracting features of each detection image block to obtain detection feature vectors of the detection image; obtaining M reference feature vectors, wherein, each reference feature vector is obtained by extracting the feature vectors of each reference image block after the reference image block is obtained by partitioning the reference image in a preset manner, wherein M is a positive integer; calculating vector similarity between the detected feature vector and each reference feature vector; obtaining pedestrian re-recognition results according to vector similarity. The pedestrian re-recognition method combines the advantages of local and global features of pedestrian images, and improves the accuracy of pedestrian re-recognition.

Owner:PING AN TECH (SHENZHEN) CO LTD
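
The block-wise matching described above can be sketched with plain Python lists. This example assumes grayscale images stored as lists of rows, horizontal stripes as the "preset" partition, mean intensity as the per-block feature, and cosine similarity as the vector similarity; all of these are illustrative choices, since the abstract does not specify them.

```python
import math

def block_features(image, n_blocks):
    """Split the image rows into n_blocks horizontal stripes and return the
    mean intensity of each stripe (assumes len(image) >= n_blocks)."""
    step = len(image) // n_blocks
    features = []
    for i in range(n_blocks):
        stripe = image[i * step:(i + 1) * step]
        pixels = [p for row in stripe for p in row]
        features.append(sum(pixels) / len(pixels))
    return features

def cosine(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def best_match(detection, references, n_blocks=2):
    """Return the index of the most similar reference image and all similarities."""
    query = block_features(detection, n_blocks)
    sims = [cosine(query, block_features(ref, n_blocks)) for ref in references]
    return max(range(len(sims)), key=sims.__getitem__), sims

# Tiny made-up 4x2 images: dark top half, bright bottom half.
detection = [[10, 10], [10, 10], [200, 200], [200, 200]]
references = [
    [[200, 200], [200, 200], [10, 10], [10, 10]],   # inverted layout
    [[12, 12], [12, 12], [190, 190], [190, 190]],   # similar layout
]
index, sims = best_match(detection, references)
```

Because each stripe contributes its own feature, the inverted reference scores low even though its overall brightness matches the query, which is the benefit of keeping local structure alongside global appearance.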

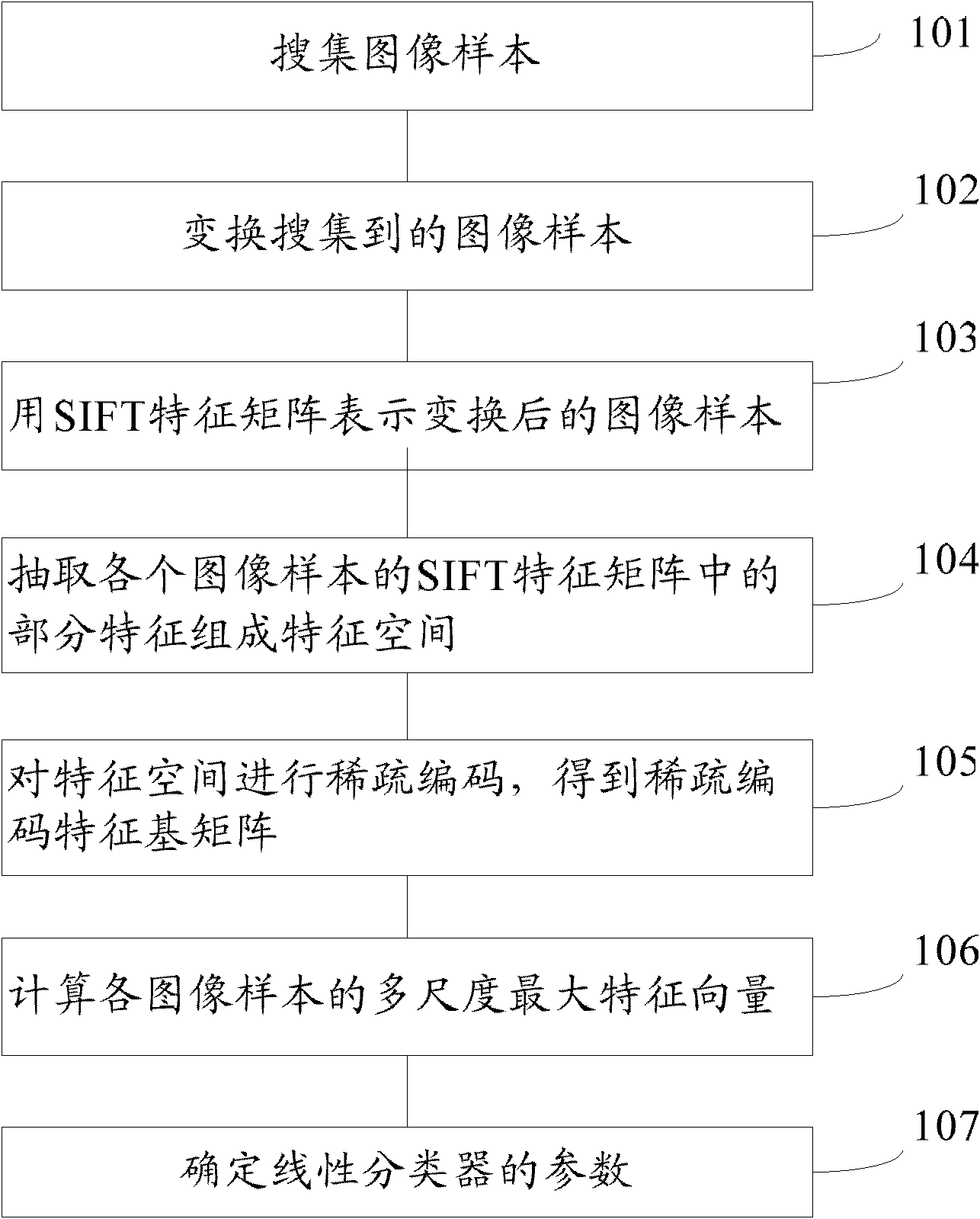

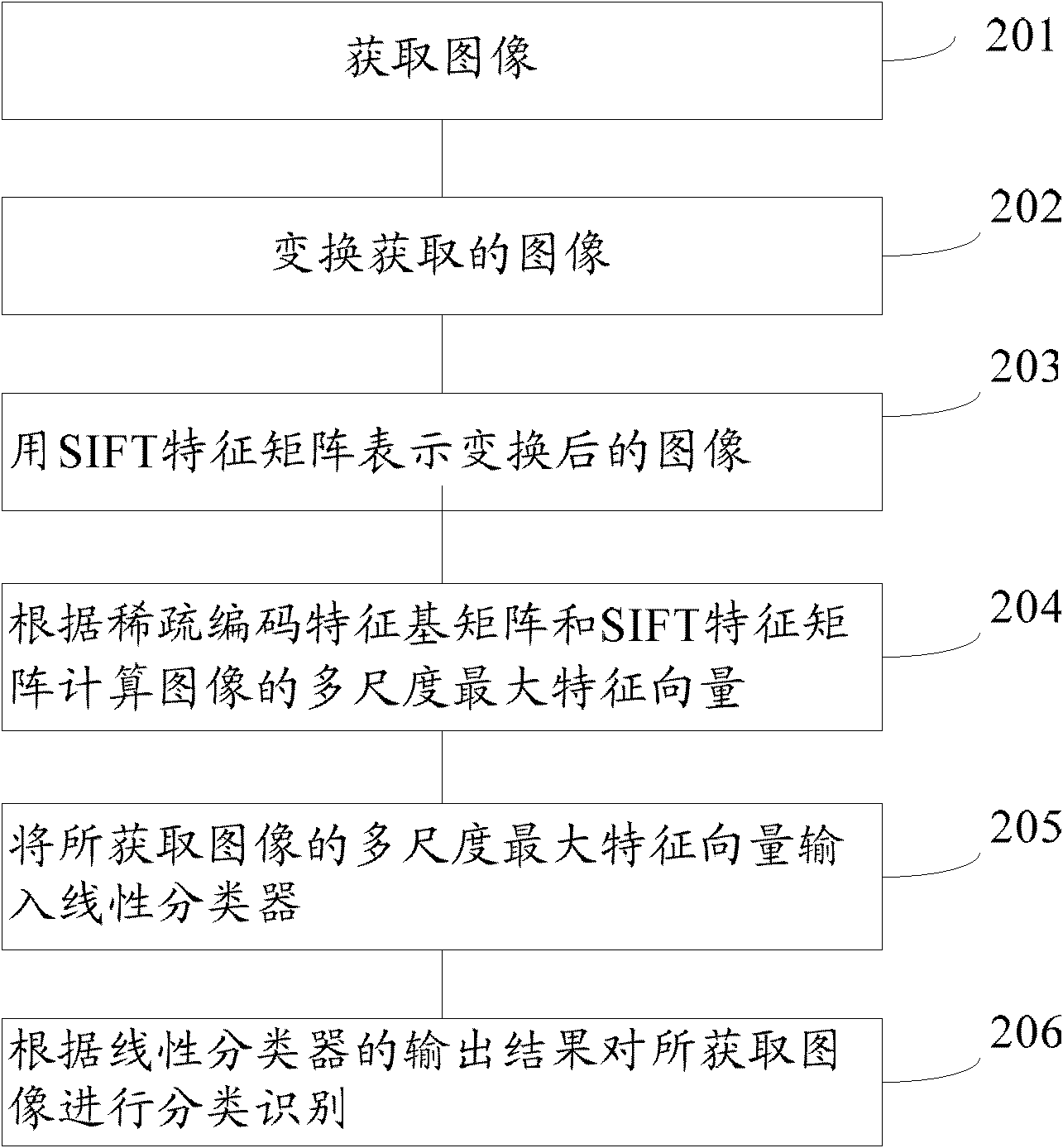

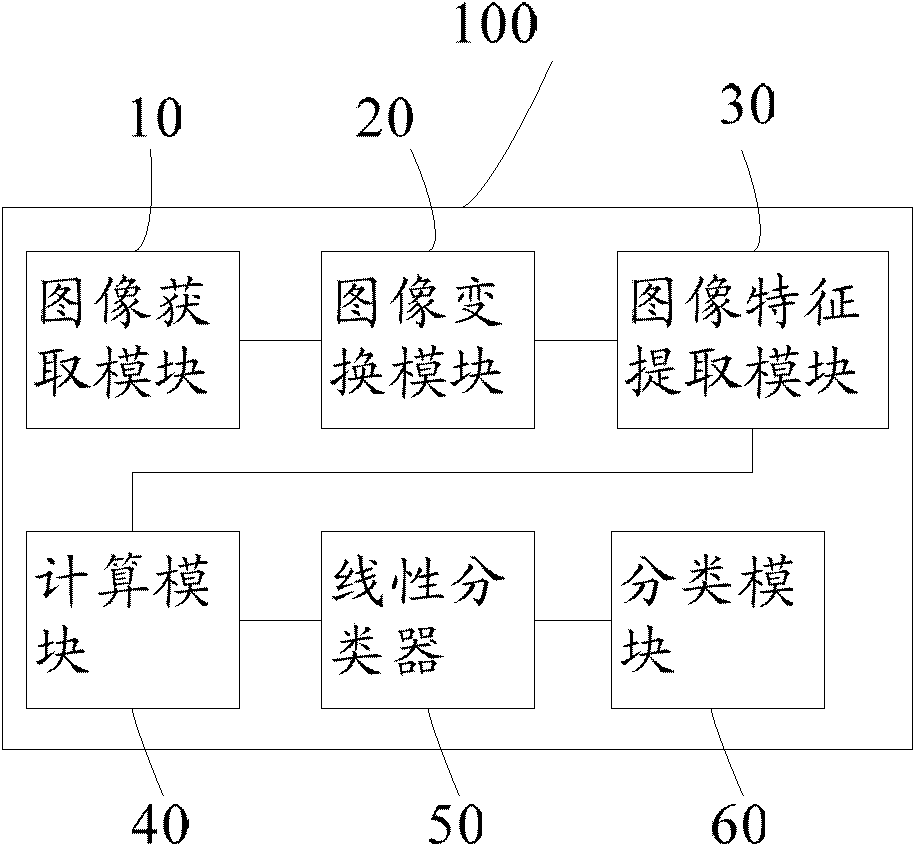

Training method for vehicle identification model, and vehicle identification method and apparatus

Active | CN102651075A | Quick identification | Improve accuracy | Character and pattern recognition | Feature vector | Linear classifier

The invention provides a training method for a vehicle identification model, comprising the following steps of: collecting image samples, transforming the collected image samples, representing a transformed image sample by a feature matrix with constant size but transformable features, extracting partial features in the feature matrix with constant size but transformable features of each image sample to compose a feature space, performing sparse coding on the feature space to obtain a sparse coding feature basis matrix, computing the maximum multi-scale feature vector of each image sample, and determining the parameter of a linear classifier. By the training method for the vehicle identification model, the complexity, occupied memory space and computing time of an algorithm can be reduced, so that the vehicle identification is implemented fast; and meanwhile, the vehicle identification precision is improved. The invention further provides a vehicle identification method and apparatus based on the training method for the vehicle identification model.

Owner:甘肃煜城智慧停车科技有限责任公司

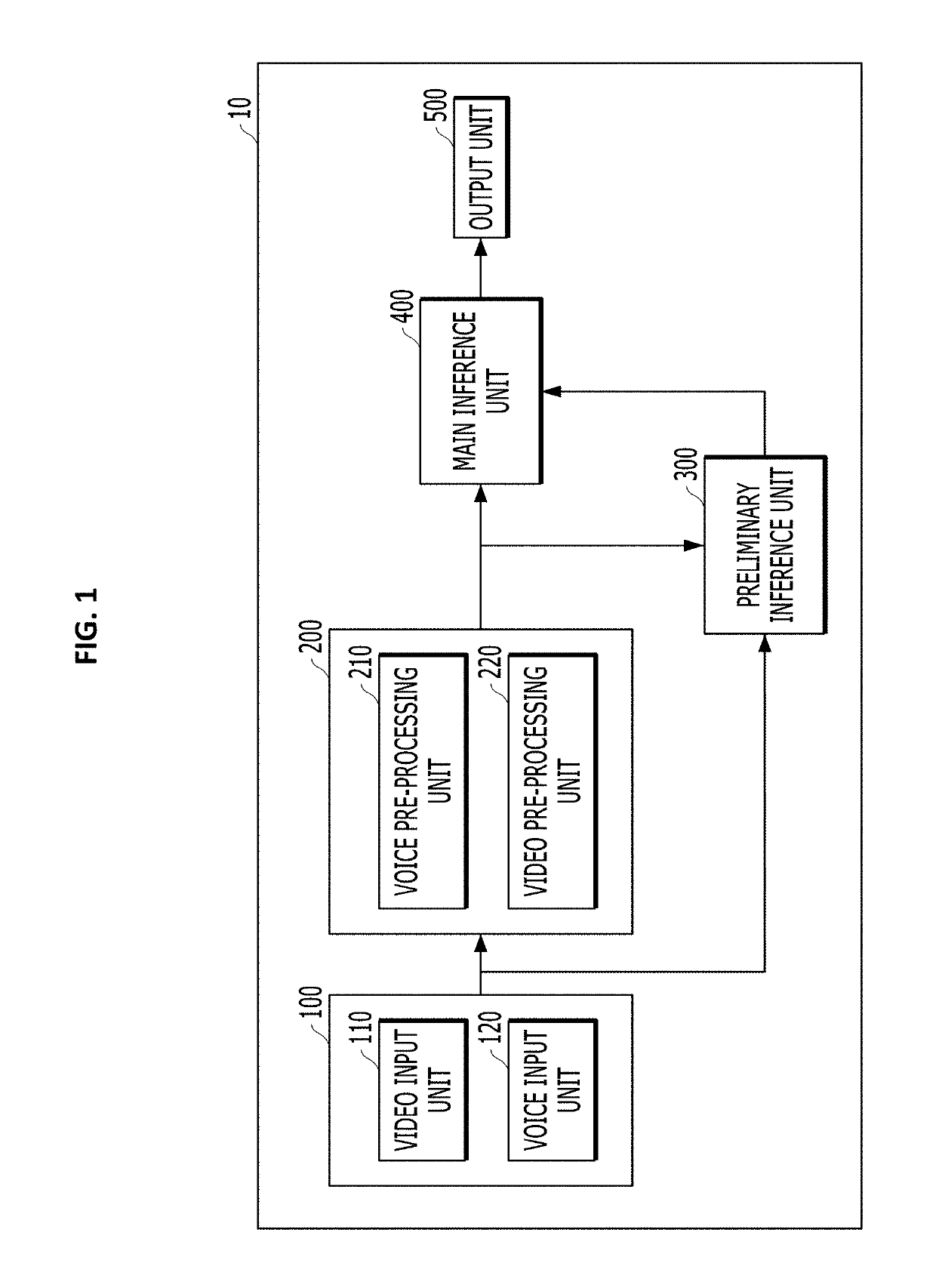

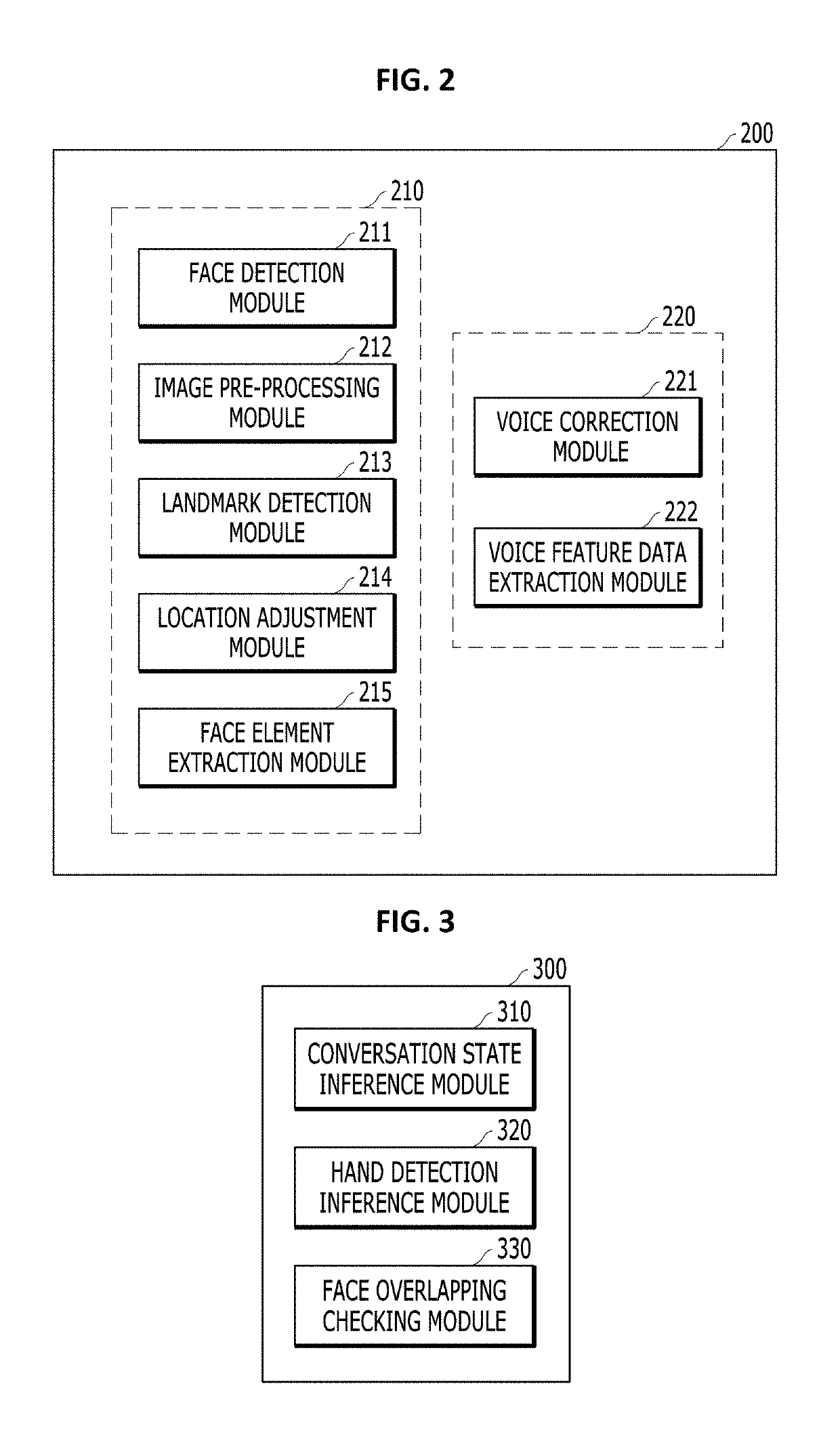

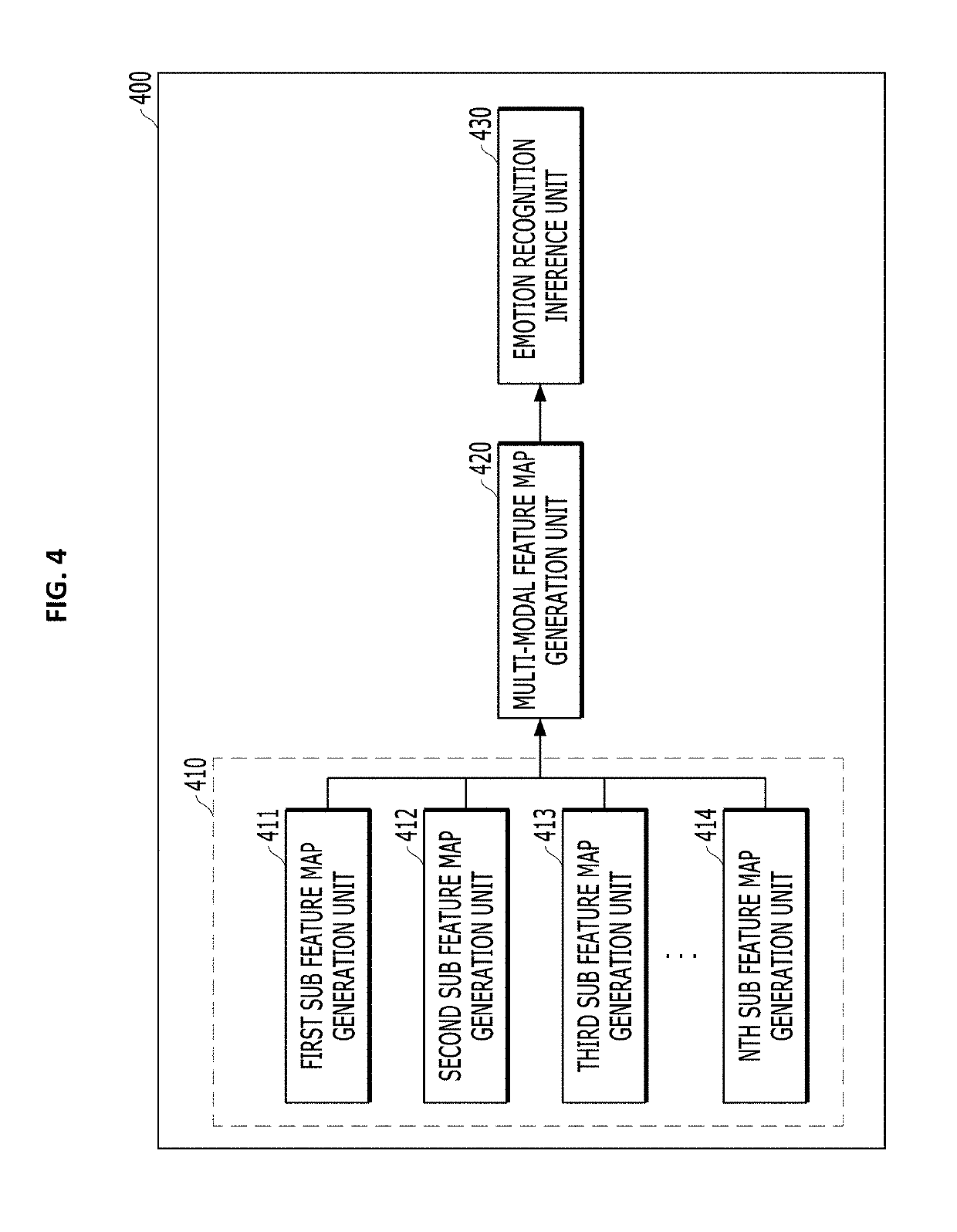

Multi-modal emotion recognition device, method, and storage medium using artificial intelligence

Active | US20190163965A1 | Accurate confirmation | Error minimization | Speech analysis | Acquiring/recognising facial features | Data pre-processing | Voice data

A multi-modal emotion recognition system is disclosed. The system includes a data input unit for receiving video data and voice data of a user; a data pre-processing unit including a voice pre-processing unit for generating voice feature data from the voice data and a video pre-processing unit for generating one or more face feature data from the video data; and a preliminary inference unit for generating, based on the video data, situation determination data as to whether or not the user's situation changes according to a temporal sequence. The system further comprises a main inference unit for generating at least one sub feature map based on the voice feature data or the face feature data, and inferring the user's emotion state based on the sub feature map and the situation determination data.

Owner:GENESIS LAB

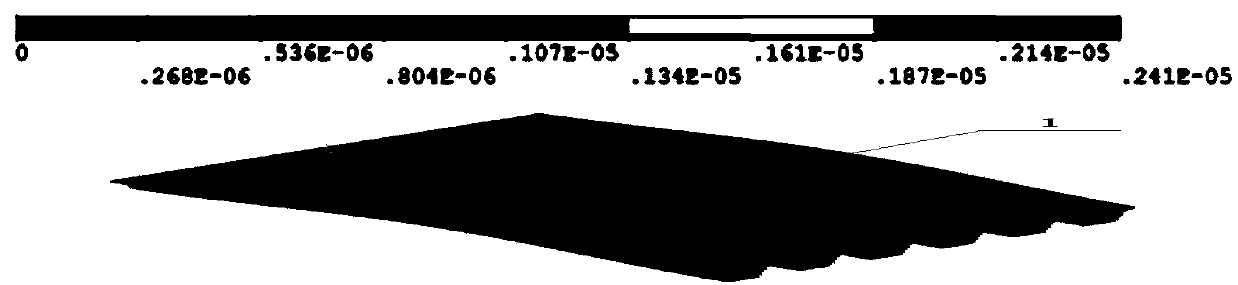

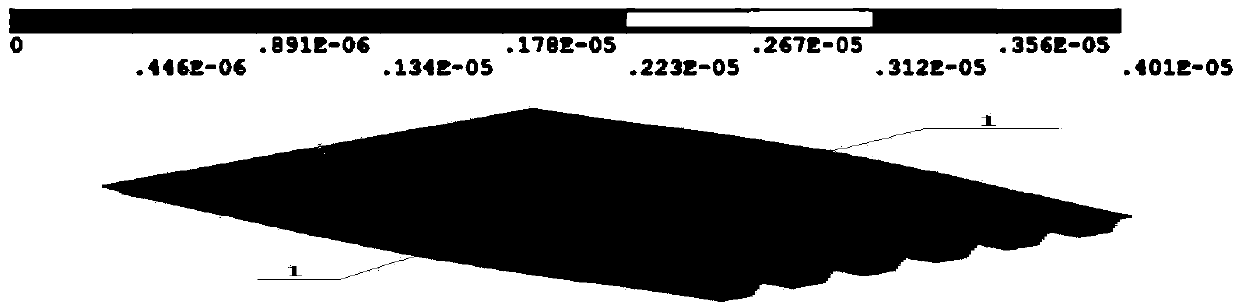

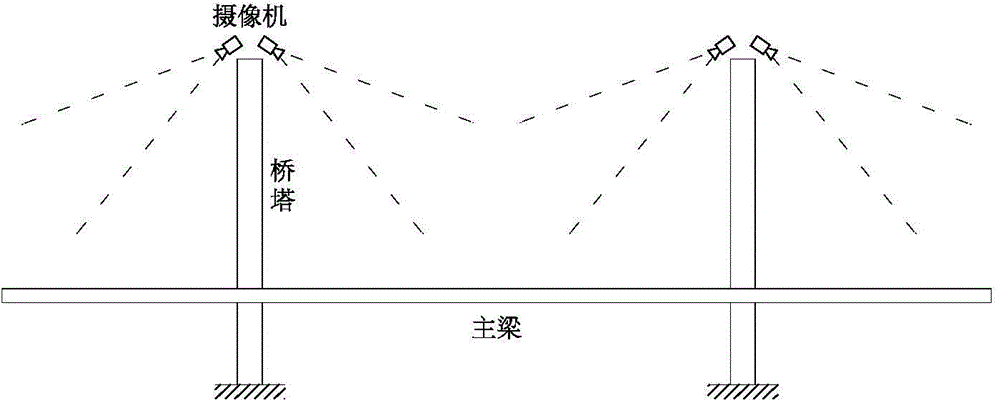

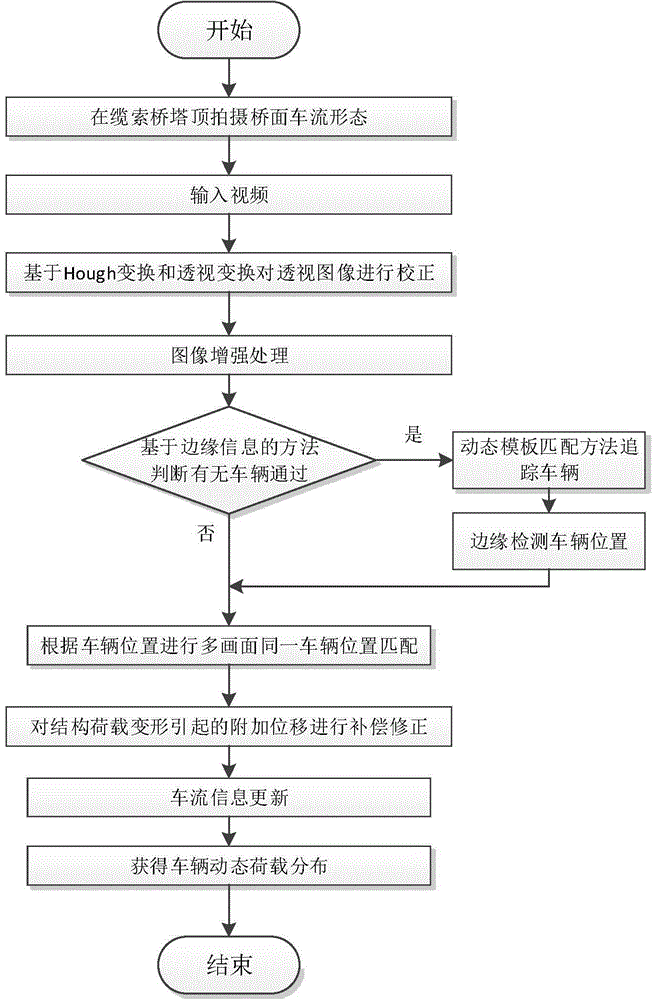

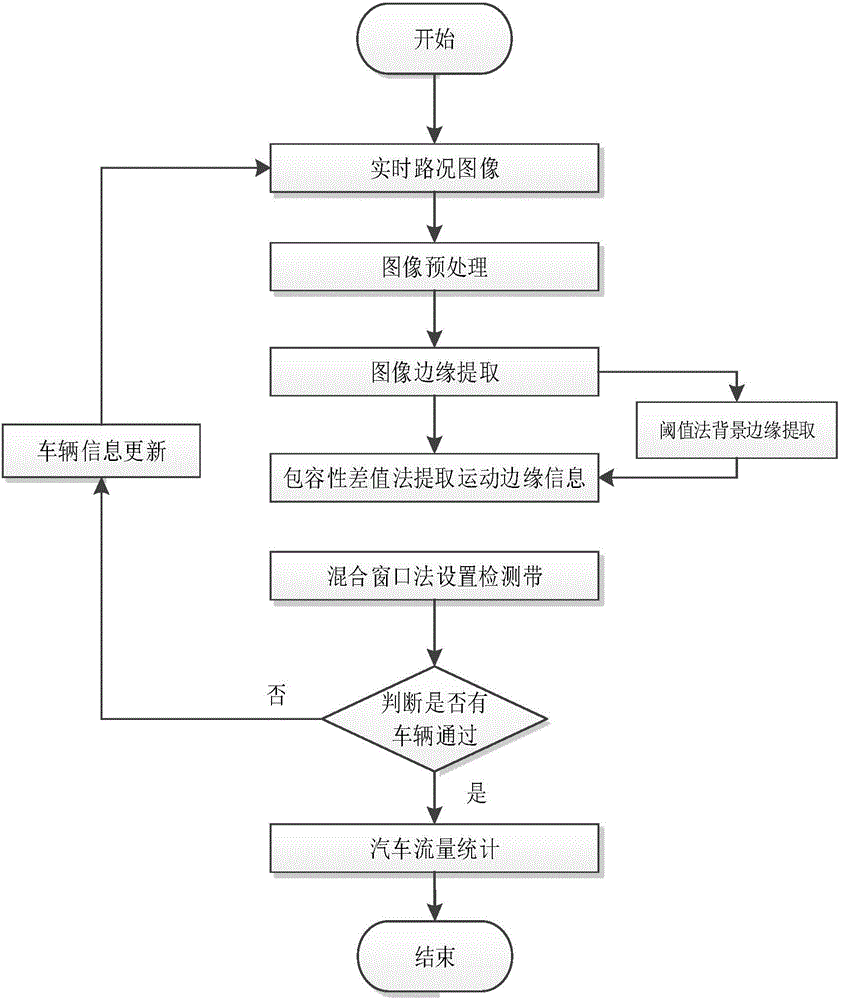

Cable rope bearing bridge deck vehicle load distribution real-time detection method

Inactive | CN104599249A | Quality improvement | Solve occlusion | Image enhancement | Image analysis | Real time tracking | Engineering

The invention discloses a real-time method for detecting vehicle load distribution on the deck of a cable-supported bridge. The method comprises the steps of: capturing a bridge deck image; applying perspective correction and enhancement to the image to obtain an enhanced bridge deck image, and detecting vehicles on the deck with an edge-information-based detection method; tracking each vehicle image and correcting the deformed shape of the vehicle in the acquired image; and, using the bridge deck as an absolute coordinate system, accurately drawing the running track of the vehicle tires on the deck in each video section, then splicing the tracks of the same vehicle from different video sections according to a same-track principle to obtain the running track of each vehicle on the deck and achieve real-time vehicle load tracking. The method has a wide application range and low cost, delivers detection results in real time, and is little affected by the environment. Perspective correction and image enhancement yield high-quality images at the early stage, and dynamic template matching handles the vehicle occlusion caused by oblique image acquisition.

Owner:CHONGQING UNIV
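
Mapping tire positions from image pixels into a deck-fixed coordinate system, as the bridge deck abstract above describes, is commonly done with a planar homography. The sketch below assumes such a 3x3 matrix is already known from camera calibration; the abstract does not say how its perspective correction is computed, and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Homography = Sequence[Sequence[float]]  # 3x3 matrix in row-major order


def to_deck_coordinates(h: Homography, pixel: Point) -> Point:
    """Map one image pixel to deck-plane coordinates with homography h."""
    x, y = pixel
    u = h[0][0] * x + h[0][1] * y + h[0][2]
    v = h[1][0] * x + h[1][1] * y + h[1][2]
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    if w == 0:
        raise ValueError("pixel maps to a point at infinity")
    # Divide by the homogeneous coordinate to undo the perspective effect.
    return (u / w, v / w)


def track_on_deck(h: Homography, pixel_track: Sequence[Point]) -> List[Point]:
    """Convert a tire track given in pixels into deck coordinates."""
    return [to_deck_coordinates(h, p) for p in pixel_track]
```

Once tracks from several cameras are expressed in the same deck coordinates, tracks of the same vehicle can be compared and spliced directly.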

Bridge influence line identification method and system

Active | CN107588915A | High precision | Suppresses unphysical fluctuations | Sustainable transportation | Elasticity measurement | Mobile vehicle | Influence line

The invention discloses a bridge influence line identification method and system. The method comprises the following steps: (1) building a mathematical model for influence line identification; (2) identifying the influence line based on a B-spline curve. The method uses measured information about moving vehicles and the bridge response they cause, and adopts regularization theory and a B-spline curve construction method, which effectively alleviates the excessive sensitivity of influence line identification to errors such as measurement noise. The method offers high identification precision and strong potential for subsequent engineering application. Compared with conventional methods, it identifies the influence line directly from measured dynamic bridge response data, is simple and fast to run, can be used for real-time monitoring of key bridge indexes, effectively suppresses influence line fluctuations that have no physical meaning, and improves identification precision.

Owner:XIAMEN UNIV

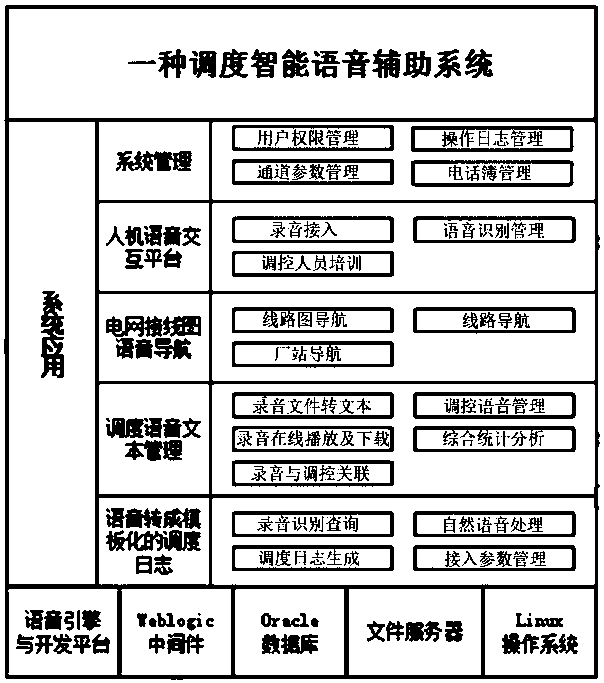

Intelligent voice auxiliary system for dispatching

Inactive | CN111489748A | Access speed optimization | Easy to identify | Circuit arrangements | Speech recognition | Wiring diagram | Engineering

The invention discloses an intelligent voice auxiliary system for dispatching. The system comprises a system management module, a man-machine voice interaction platform module, a power grid wiring diagram navigation module, a voice-to-templated dispatching log module and a dispatching voice text management module. The system makes full use of advanced voice recognition technology to provide functions such as dispatching information recording and voice navigation, and raises the level of intelligence of dispatching telephone voice and power grid dispatching management. It builds a man-machine voice interaction platform for dispatching, automatically recognizes dispatching voice and converts it into text, and provides the underlying basis for other voice-recognition-based functions such as dispatching resource navigation.

Owner:GUANGXI POWER GRID CORP

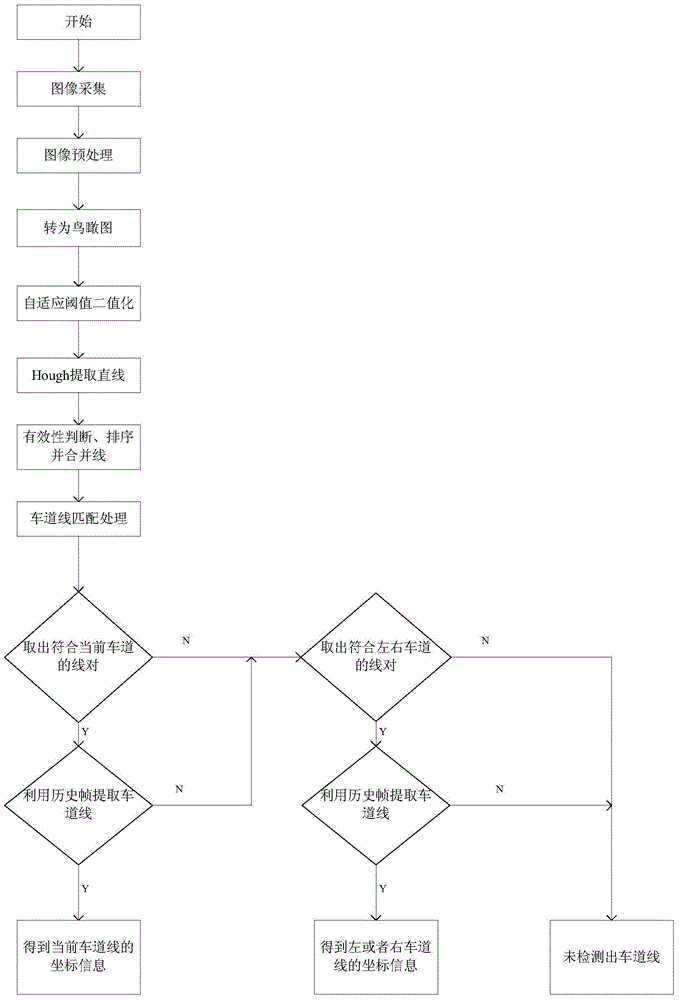

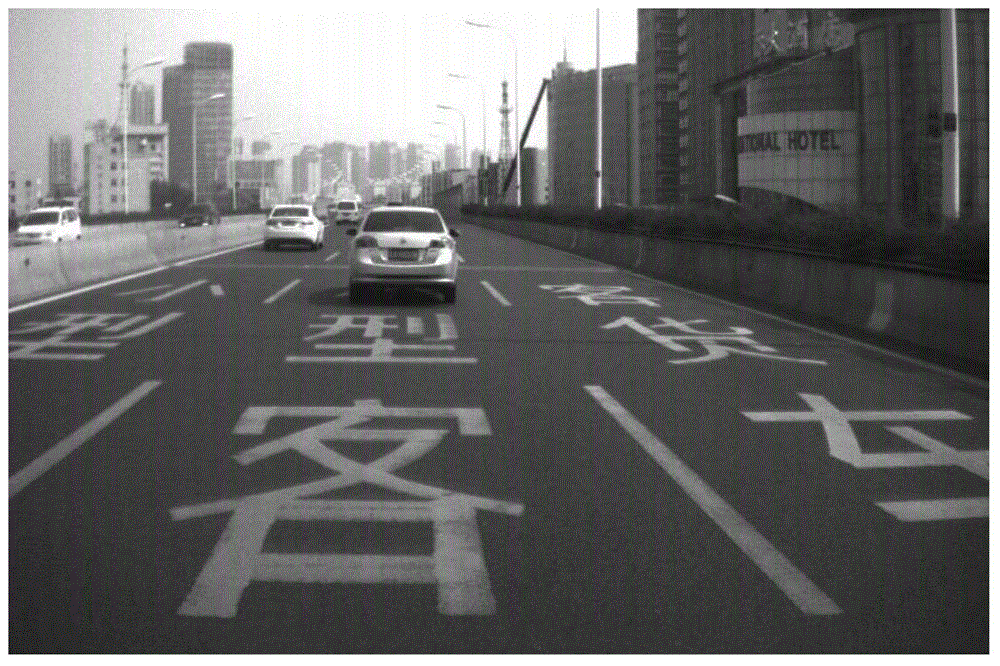

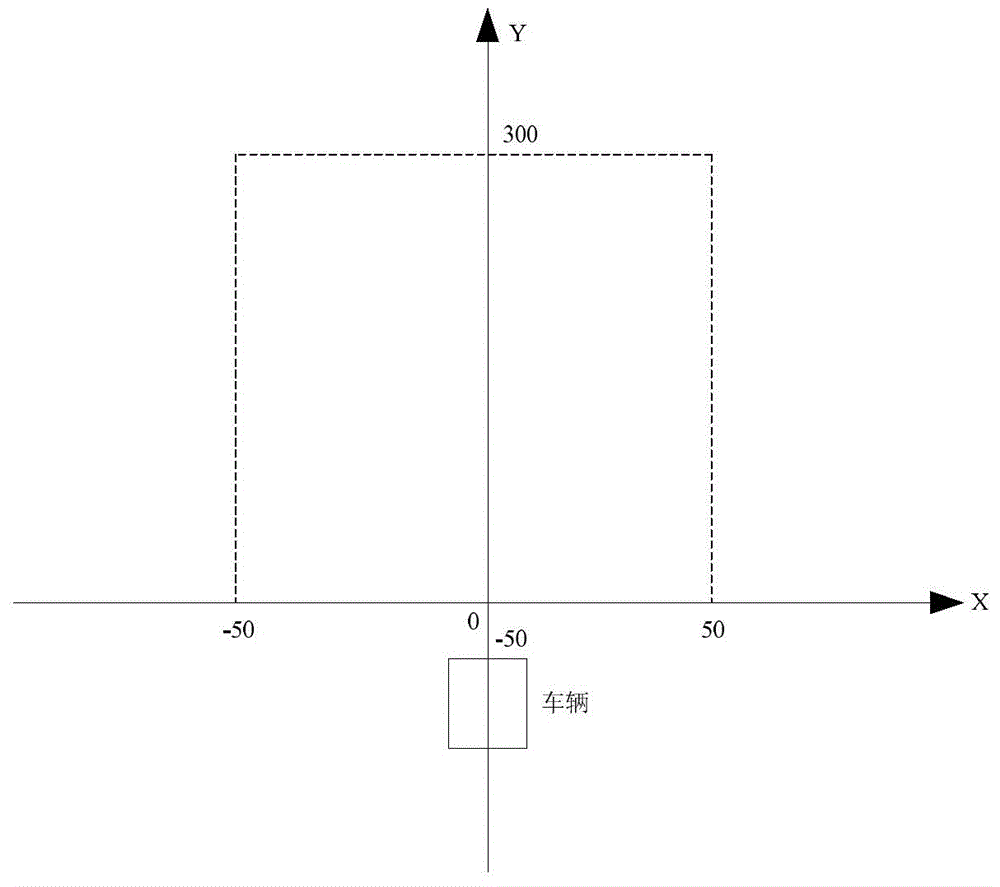

Lane line historical frame recognition method

Active | CN104700072A | Small size | Efficient use of | Character and pattern recognition | Hough transform | Computer graphics (images)

The invention discloses a lane line historical frame recognition method. The method comprises: preprocessing the acquired road images with a Gaussian filter and converting them into bird's-eye views by inverse projection; binarizing the bird's-eye views with an adaptive-threshold binarization method and applying a Hough transform to extract lines; and determining which lines in the Hough transform results are lane lines based on the line angle LX.theta, the line distance LX.rho, the line vote count LX.V and the line initial point distance LX.S of each line LX, where X = 1, 2, ..., N, together with the spacing and positions of the lane lines obtained in the previous period. The method effectively removes interference from complex road conditions such as road-surface text, multiple distracting lines, shadow occlusion, lane line damage and stain coverage, and greatly improves the recognition rate and stability of lane lines. It can be widely applied in driver-assistance safety systems to help drivers keep the car in its lane in monotonous driving environments.

Owner:HEFEI INSTITUTES OF PHYSICAL SCIENCE - CHINESE ACAD OF SCI
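
The selection step of the lane line abstract above, which picks lane lines from the Hough results using each line's parameters and the previous period's lane positions, might look roughly like the sketch below. The thresholds, the angle convention (degrees of deviation from vertical in the bird's-eye view) and the matching rule are all assumptions, and the abstract does not say how LX.S enters the decision, so it is stored but not used here.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class HoughLine:
    theta: float  # LX.theta: deviation from vertical, in degrees (assumed convention)
    rho: float    # LX.rho: line distance, in pixels
    votes: int    # LX.V: Hough accumulator votes
    start: float  # LX.S: initial point distance (not used by this sketch)


def match_lane_lines(
    candidates: Sequence[HoughLine],
    previous_rhos: Sequence[float],
    max_theta: float = 10.0,      # hypothetical threshold
    min_votes: int = 30,          # hypothetical threshold
    max_rho_shift: float = 15.0,  # hypothetical threshold
) -> List[Optional[HoughLine]]:
    """For each lane line of the previous frame, pick the best current line.

    A candidate is plausible if it is nearly vertical, has enough votes and
    lies close to the previous lane position; among plausible candidates the
    one with the most votes wins. None means the lane was not found.
    """
    matched: List[Optional[HoughLine]] = []
    for prev_rho in previous_rhos:
        plausible = [
            c for c in candidates
            if abs(c.theta) <= max_theta
            and c.votes >= min_votes
            and abs(c.rho - prev_rho) <= max_rho_shift
        ]
        matched.append(max(plausible, key=lambda c: c.votes) if plausible else None)
    return matched
```

Using the previous frame as a prior is what lets short-lived clutter such as shadows or painted text be rejected even when it produces strong Hough lines.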

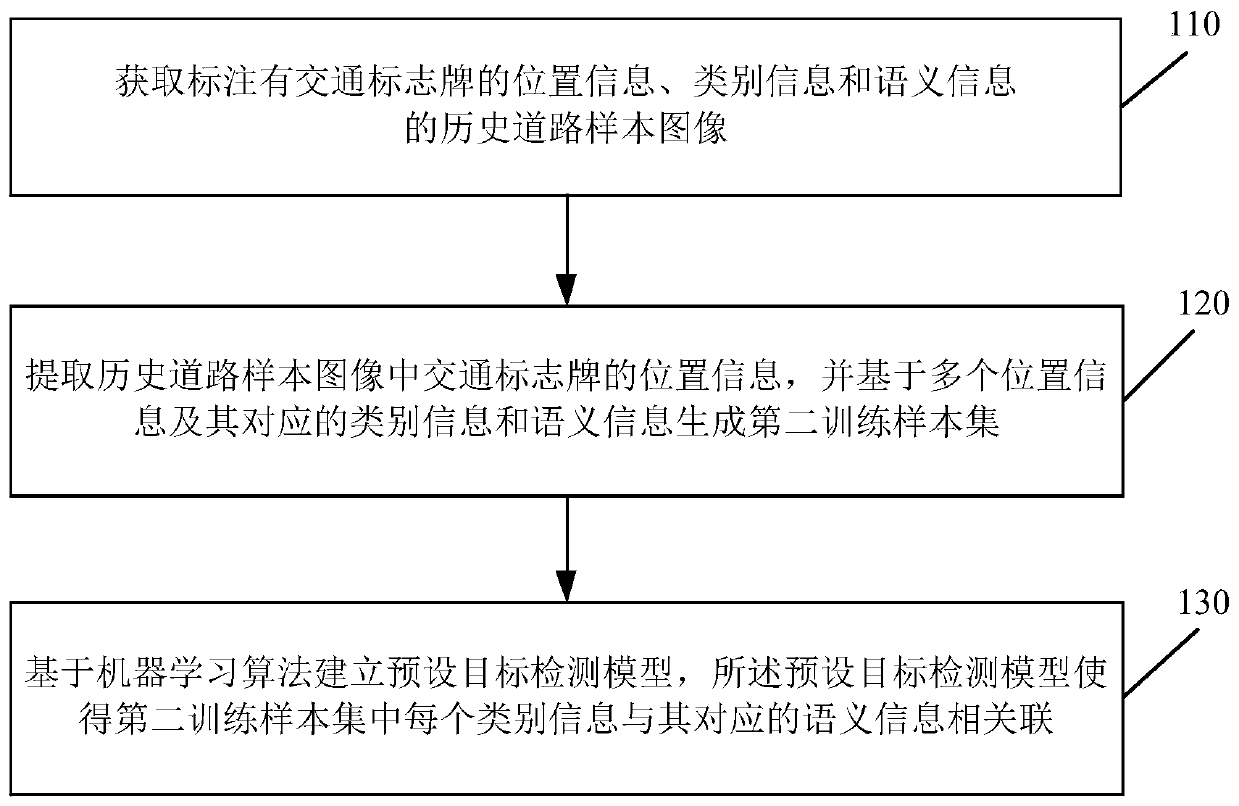

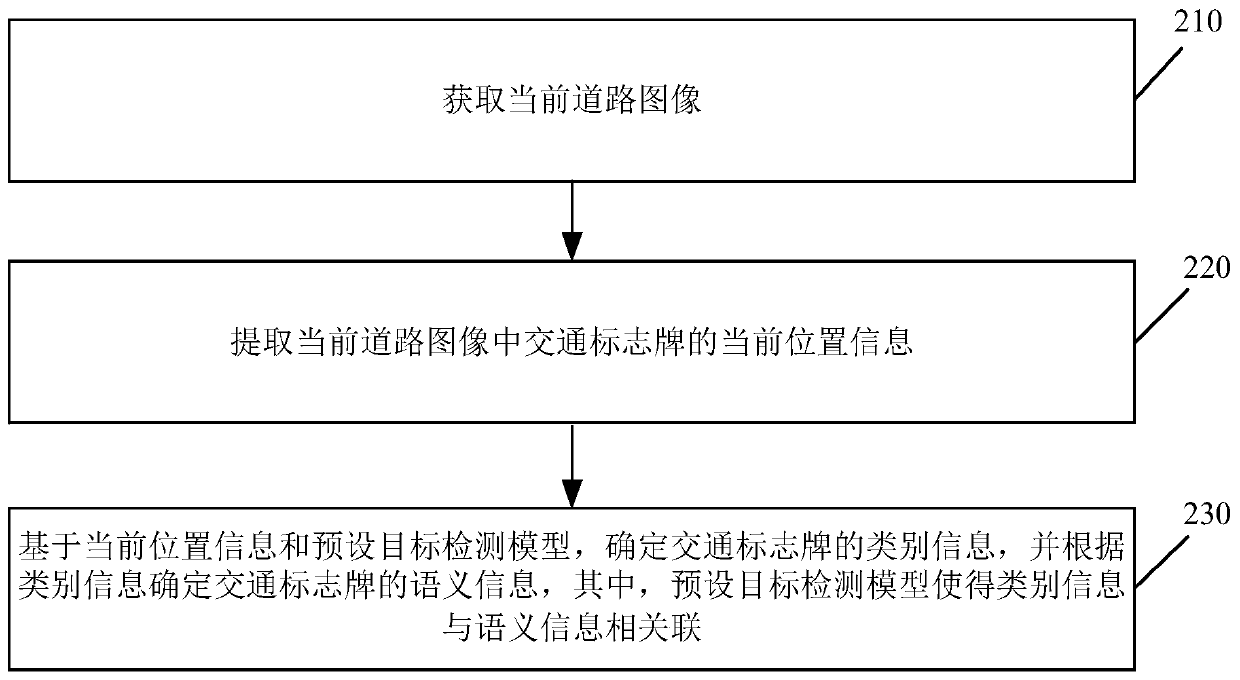

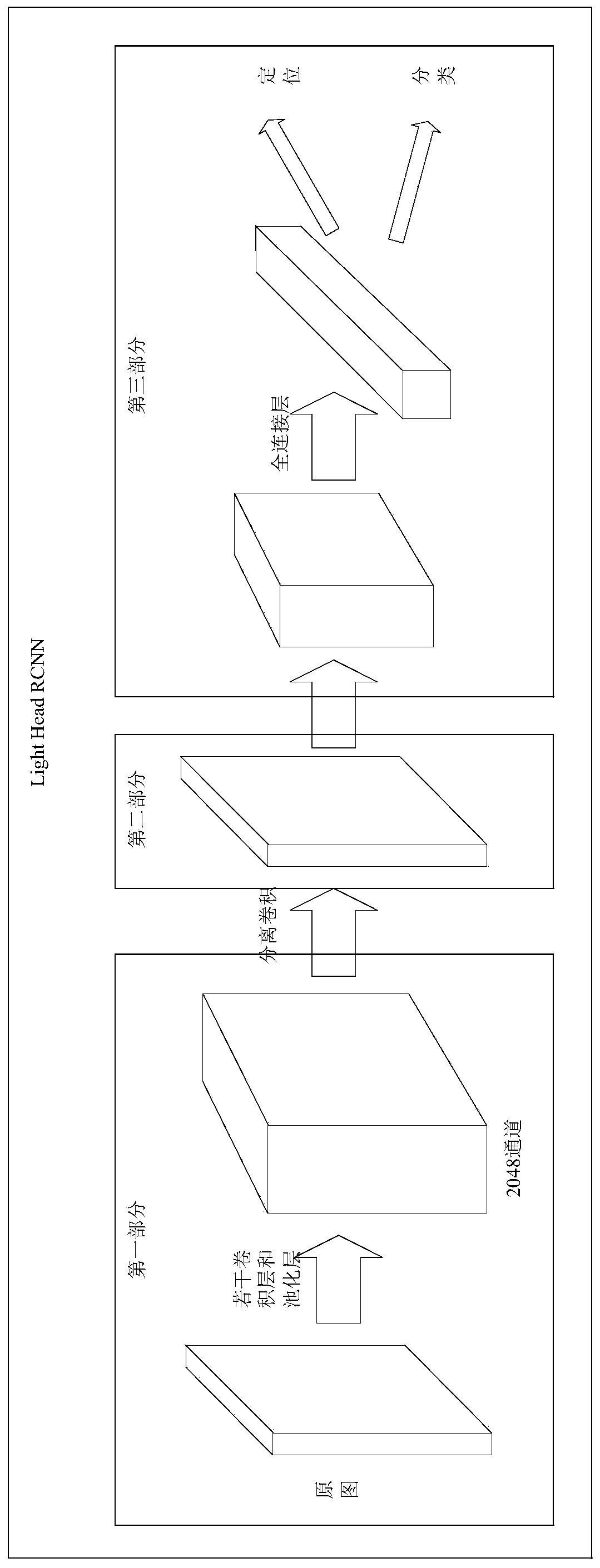

Traffic sign recognition method and device and training method and device of neural network model

Inactive | CN111488770A | The recognition result is accurate | Improve recognition accuracy | Character and pattern recognition | Neural architectures | Traffic sign recognition | Feature extraction

The embodiment of the invention discloses a traffic sign recognition method and device, and a training method and device for a neural network model. The traffic sign recognition method comprises the steps of: acquiring position information and category information of a current sub-image of a traffic sign board area in a current road image, wherein the position information and the category information are obtained by performing feature extraction on a to-be-recognized traffic sign board image in the current road image with a preset target detection model; according to the position information and the category information, performing feature extraction on the current sub-image with a convolutional neural network (CNN) to obtain a feature sequence of the current sub-image; and obtaining target semantic information corresponding to the current sub-image according to the feature sequence and a preset convolutional recurrent neural network (CRNN) model, wherein the CRNN model associates the feature sequence of an image with the corresponding semantic information. This technical scheme improves the recognition precision of traffic signs.

Owner:MOMENTA SUZHOU TECH CO LTD

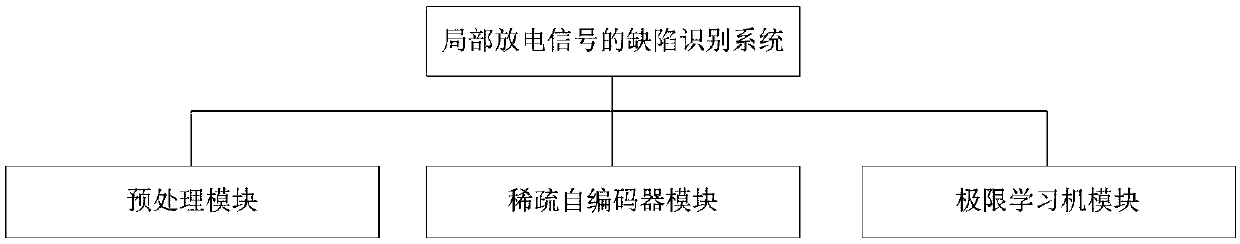

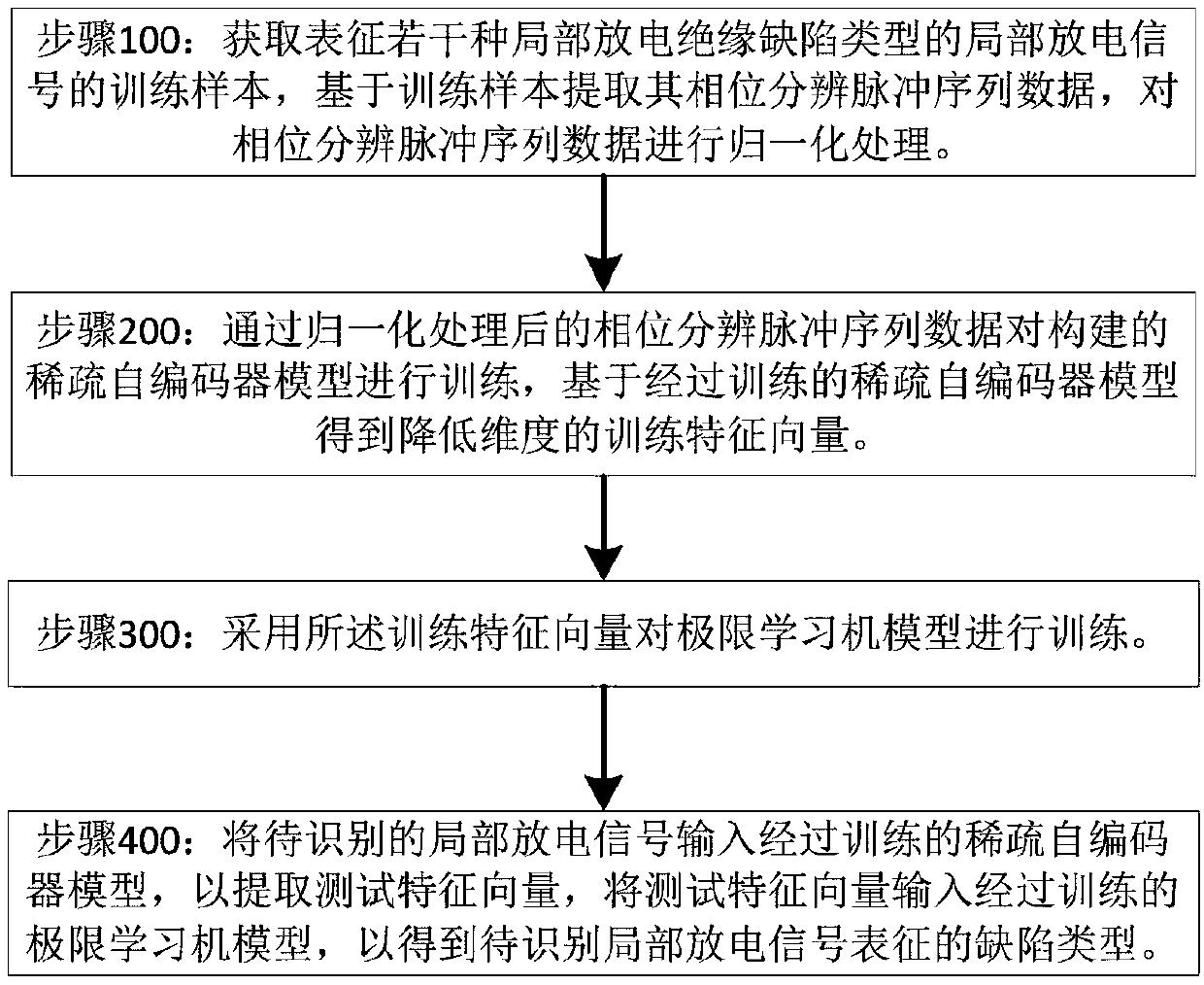

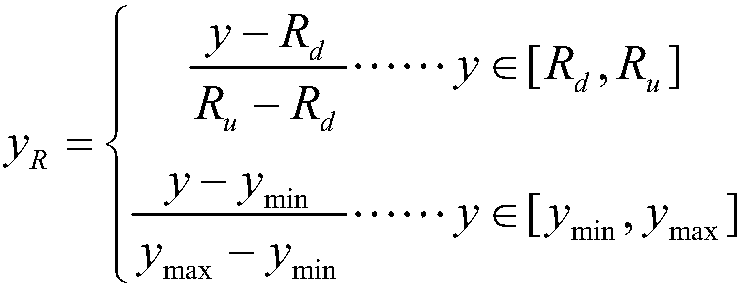

A defect recognition method and a system of a partial discharge signal

Inactive | CN109102012A | Timely identification of defects | The recognition result is accurate | Testing dielectric strength | Character and pattern recognition | Feature vector | Learning machine

The invention discloses a defect identification method for partial discharge (PD) signals, which comprises the following steps: (1) acquiring training samples of partial discharge signals representing several partial discharge insulation defect types, extracting phase-resolved pulse sequence data from the training samples, and normalizing the phase-resolved pulse sequence data; (2) training a sparse autoencoder model with the normalized phase-resolved pulse sequence data, and obtaining dimension-reduced training feature vectors from the trained sparse autoencoder model; (3) training an extreme learning machine model with the training feature vectors; (4) inputting the PD signal to be identified into the trained sparse autoencoder model to extract a test feature vector, and inputting the test feature vector into the trained extreme learning machine model to obtain the defect type represented by the PD signal to be identified. The invention also discloses a defect identification system for partial discharge signals.

Owner:SHANGHAI JIAO TONG UNIV
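
Steps (3) and (4) of the partial discharge abstract above rely on an extreme learning machine: a single hidden layer with fixed random weights whose output weights are obtained in closed form. The sketch below is a minimal ridge-regularized variant in plain Python, not the patent's implementation. It assumes the feature vectors have already been reduced by the sparse autoencoder, and the class name, hidden-layer size and ridge value are illustrative choices.

```python
import math
import random
from typing import List, Sequence

Matrix = List[List[float]]


def solve(a: Matrix, b: Matrix) -> Matrix:
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    aug = [row_a[:] + row_b[:] for row_a, row_b in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(n):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [[aug[i][n + j] / aug[i][i] for j in range(len(b[0]))] for i in range(n)]


class ExtremeLearningMachine:
    def __init__(self, n_inputs: int, n_hidden: int, n_classes: int,
                 ridge: float = 1e-3, seed: int = 0) -> None:
        rng = random.Random(seed)
        # Hidden-layer weights and biases are random and never trained.
        self.w = [[rng.uniform(-1, 1) for _ in range(n_inputs)] for _ in range(n_hidden)]
        self.b = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.n_classes = n_classes
        self.ridge = ridge
        self.beta: Matrix = []

    def _hidden(self, x: Sequence[float]) -> List[float]:
        """Sigmoid activations of the random hidden layer."""
        return [1.0 / (1.0 + math.exp(-(sum(wi * xi for wi, xi in zip(w, x)) + b)))
                for w, b in zip(self.w, self.b)]

    def fit(self, xs: Sequence[Sequence[float]], labels: Sequence[int]) -> None:
        h = [self._hidden(x) for x in xs]
        t = [[1.0 if k == y else 0.0 for k in range(self.n_classes)] for y in labels]
        m = len(self.w)
        # Normal equations: (H^T H + ridge * I) beta = H^T T
        gram = [[sum(row[i] * row[j] for row in h) + (self.ridge if i == j else 0.0)
                 for j in range(m)] for i in range(m)]
        rhs = [[sum(row[i] * tgt[k] for row, tgt in zip(h, t))
                for k in range(self.n_classes)] for i in range(m)]
        self.beta = solve(gram, rhs)

    def predict(self, x: Sequence[float]) -> int:
        """Return the index of the defect class with the highest score."""
        h = self._hidden(x)
        scores = [sum(h[i] * self.beta[i][k] for i in range(len(h)))
                  for k in range(self.n_classes)]
        return max(range(self.n_classes), key=lambda k: scores[k])
```

Because only the output weights are fitted, training reduces to one linear solve, which is the usual reason for choosing an extreme learning machine over an iteratively trained classifier.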

© 2025 PatSnap. All rights reserved.