425 results for "Category recognition" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

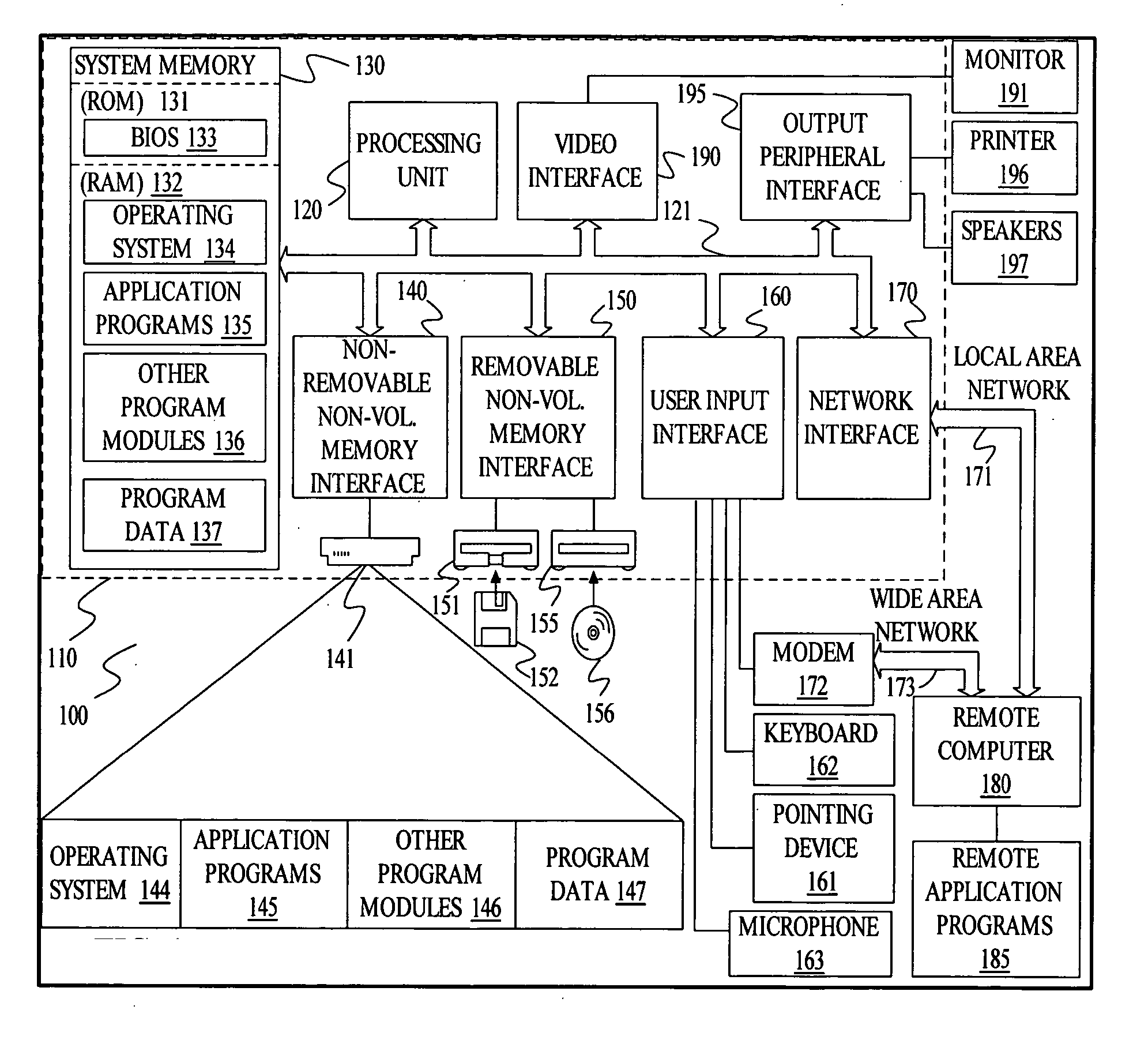

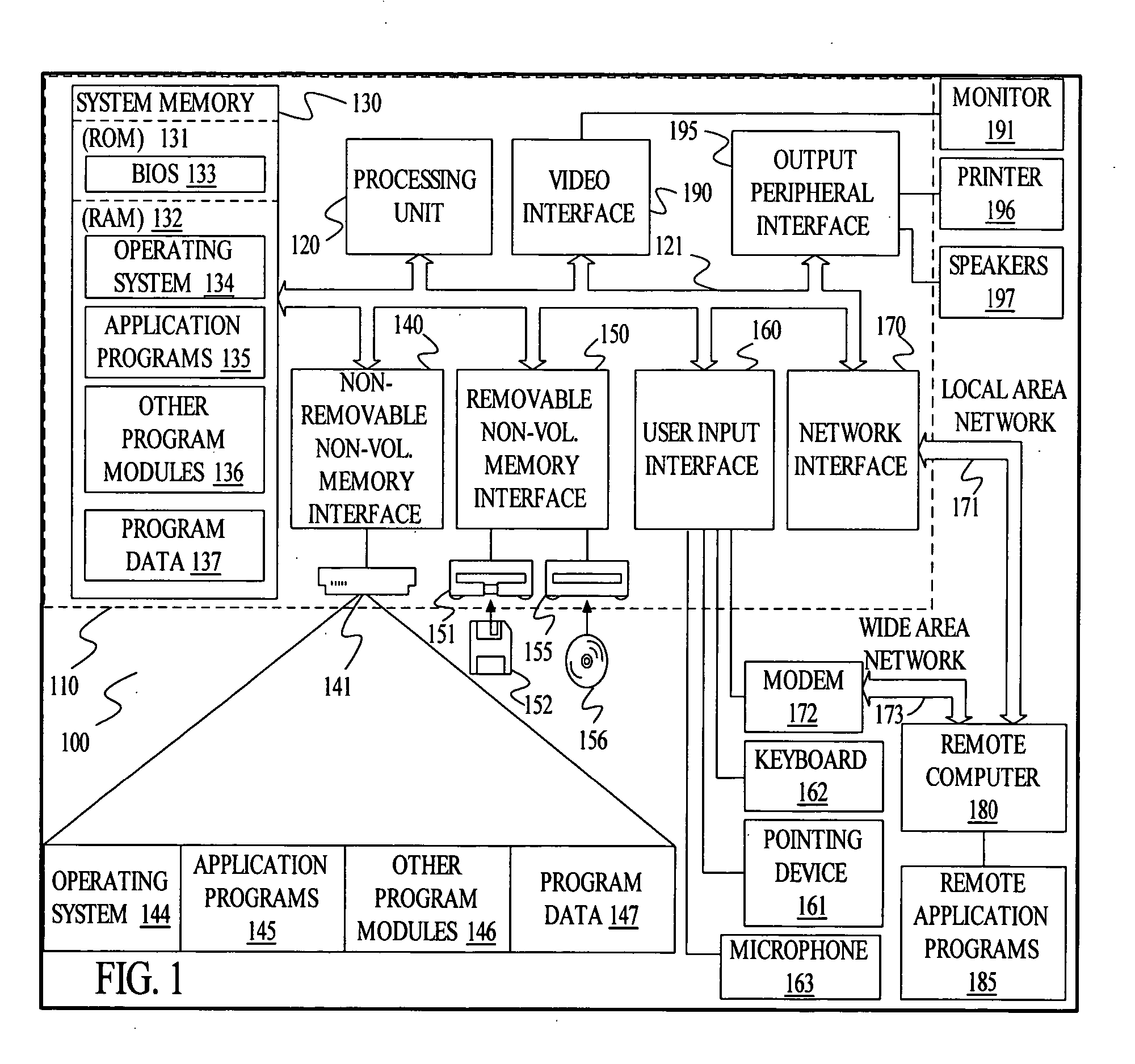

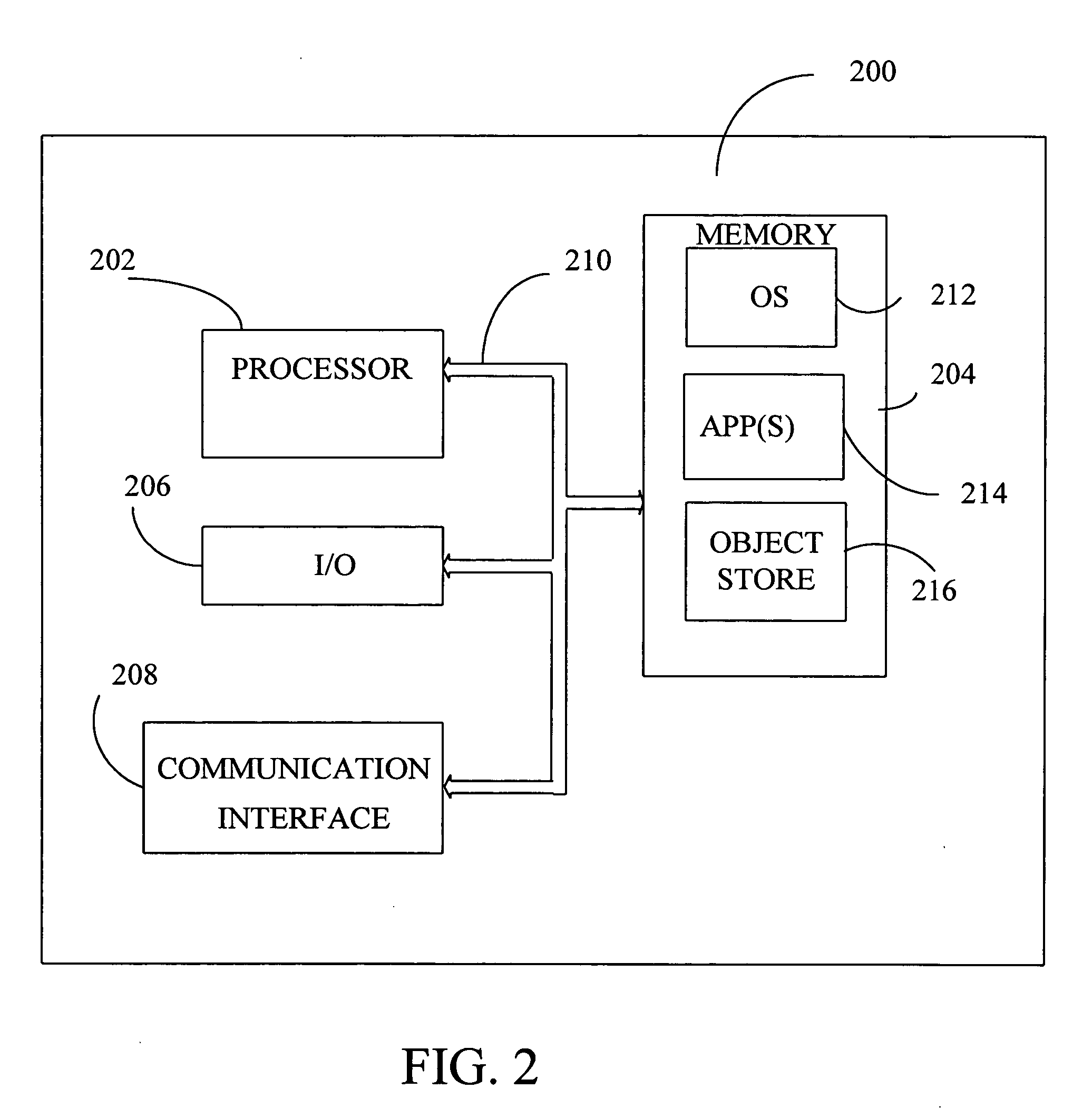

Method and system for gesture category recognition and training using a feature vector

Status: Inactive | Publication: US6249606B1 | Tags: Image analysis, Cathode-ray tube indicators, Category recognition, Application software

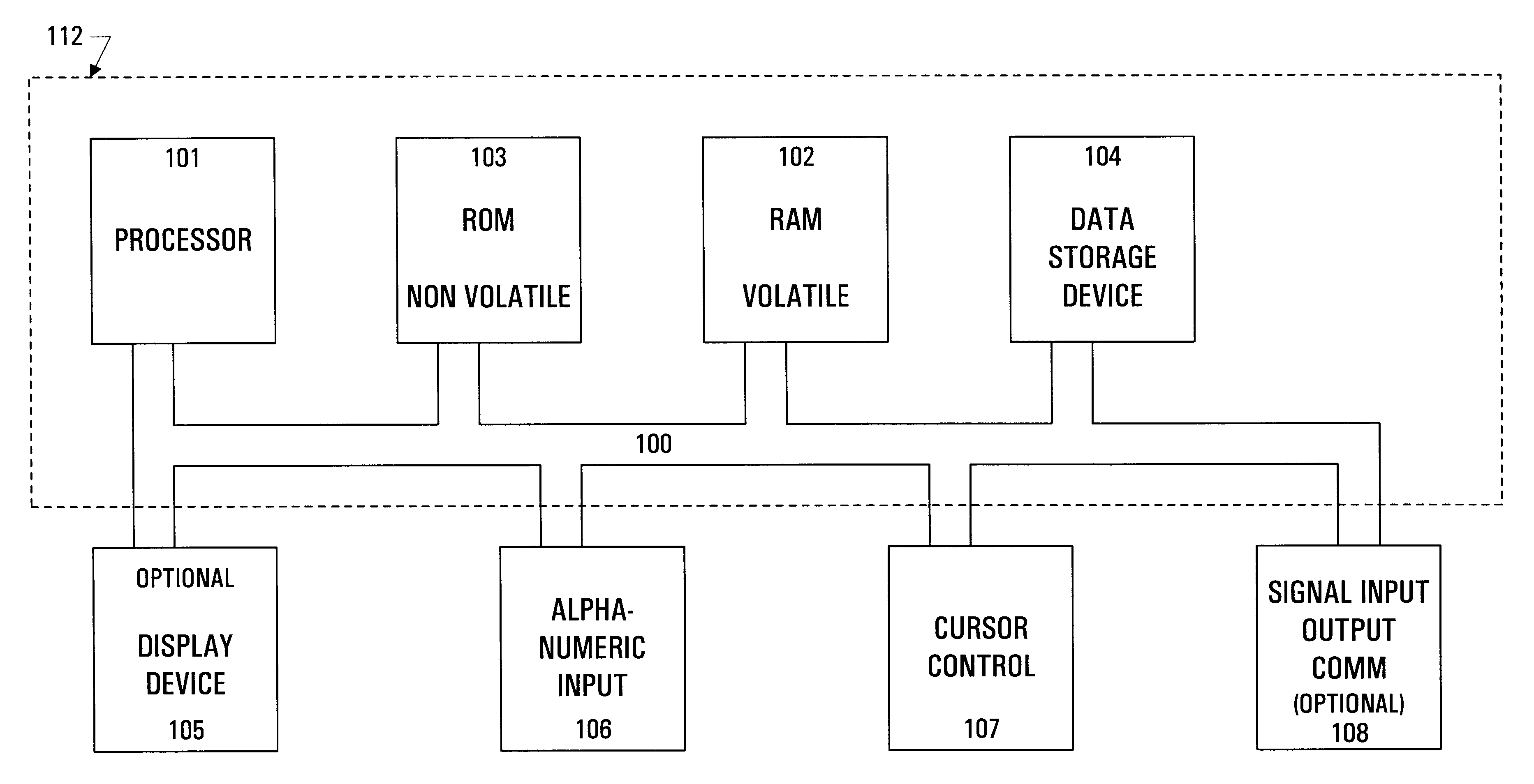

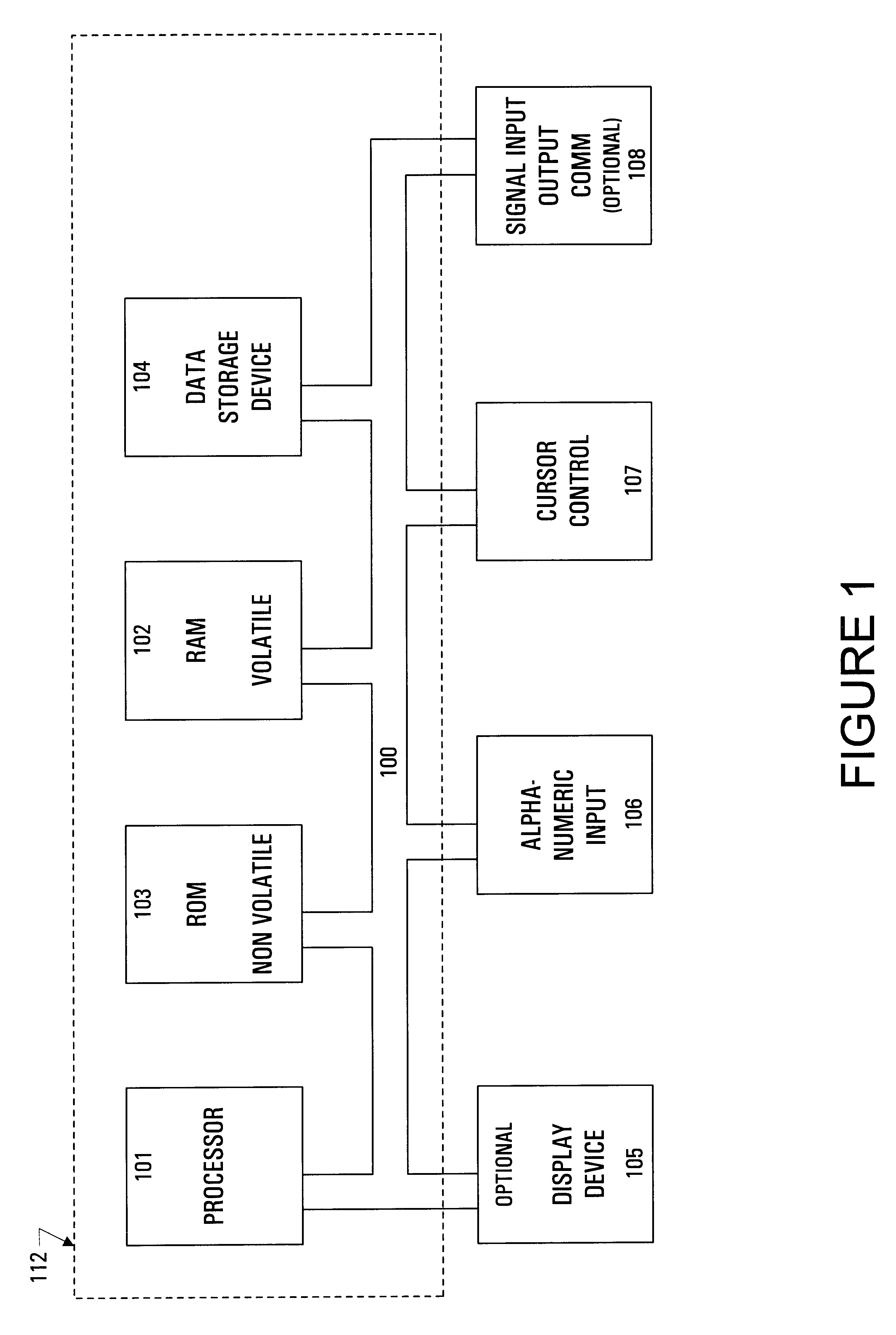

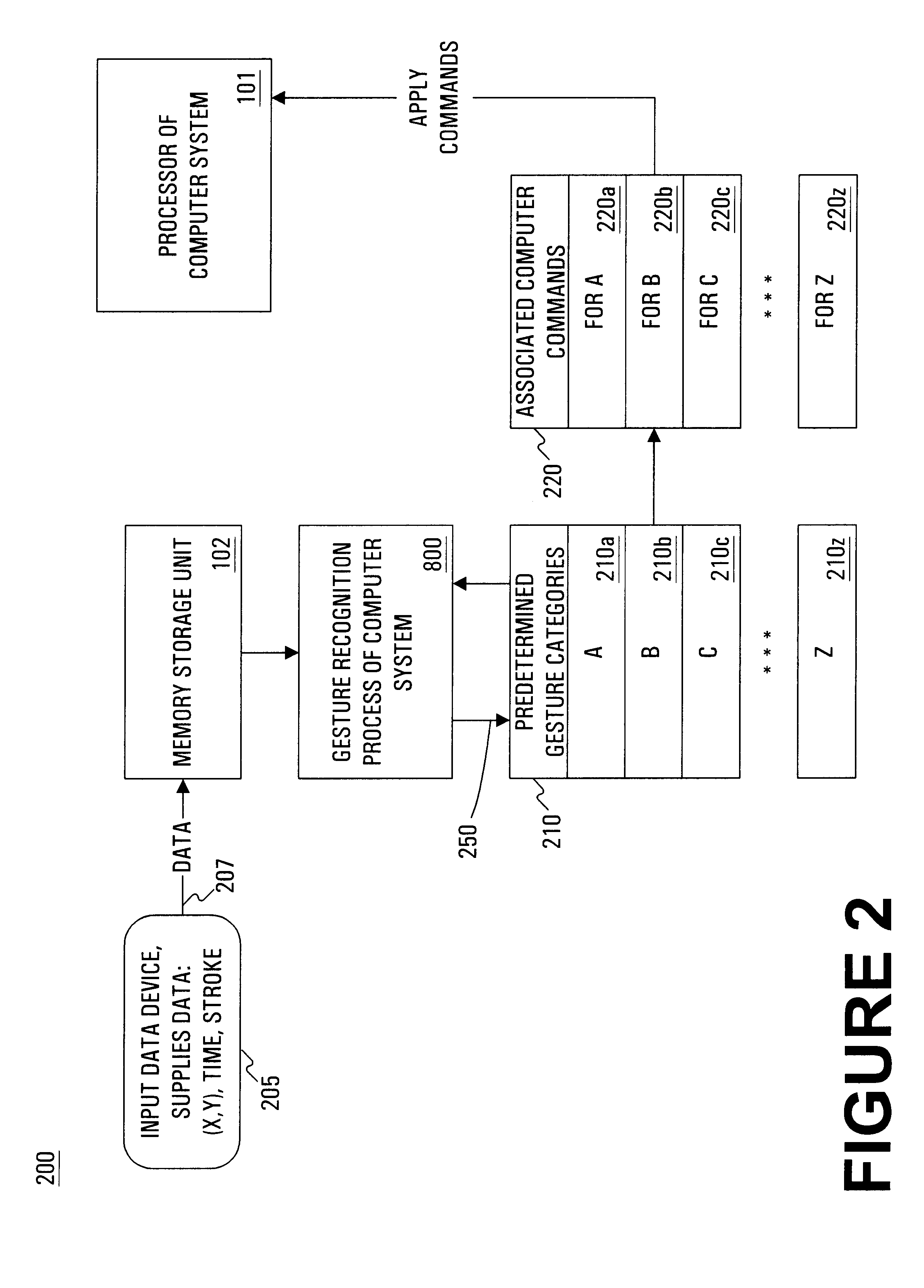

A computer-implemented method and system for gesture category recognition and training. Generally, a gesture is a hand- or body-initiated movement of a cursor directing device that outlines a particular pattern, in particular directions, within particular periods of time. The present invention allows a computer system to accept user input in the form of gesture data made with the cursor directing device. In one embodiment, a mouse device is used, but the present invention is equally well suited for use with other cursor directing devices (e.g., a track ball, a finger pad, an electronic stylus, etc.). In one embodiment, gesture data is accepted by pressing a key on the keyboard and then moving the mouse (with the mouse button pressed) to trace out the gesture. Mouse position information and time stamps are recorded. The present invention then determines a multi-dimensional feature vector based on the gesture data. The feature vector is then passed through a gesture category recognition engine that, in one implementation, uses a radial basis function neural network to associate the feature vector with a pre-existing gesture category. Once the category is identified, the set of user commands associated with it is applied to the computer system. The user commands can originate from an automatic process that extracts the commands associated with the menu items of a particular application program. The present invention also supports user training, so that user-defined gestures and their associated computer commands can be programmed into the computer system.

Owner:ASSOCIATIVE COMPUTING +1
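
The recognition pipeline in the abstract (record positions and time stamps, build a feature vector, score it with a radial basis function network) can be sketched as below. This is a minimal illustration under stated assumptions, not the patented method: the four features (net dx, net dy, path length, duration) are hypothetical, and `rbf_classify` uses one Gaussian unit per stored prototype with unit output weights instead of trained weights.

```python
import math
from typing import Dict, List, Sequence, Tuple

# One recorded mouse sample: (x, y, timestamp in seconds).
Point = Tuple[float, float, float]


def gesture_features(points: Sequence[Point]) -> List[float]:
    """Hypothetical 4-D feature vector: net dx, net dy, path length, duration."""
    x0, y0, t0 = points[0]
    x1, y1, t1 = points[-1]
    # Sum of straight-line distances between consecutive mouse positions.
    path = sum(math.dist(a[:2], b[:2]) for a, b in zip(points, points[1:]))
    return [x1 - x0, y1 - y0, path, t1 - t0]


def rbf_classify(vec: Sequence[float],
                 centers: Dict[str, List[List[float]]],
                 sigma: float = 1.0) -> str:
    """Return the category whose Gaussian RBF units respond most strongly."""
    def activation(center: Sequence[float]) -> float:
        d2 = sum((v - c) ** 2 for v, c in zip(vec, center))
        return math.exp(-d2 / (2.0 * sigma ** 2))

    # Output score per category = sum of its hidden-unit activations (unit weights).
    scores = {cat: sum(activation(c) for c in cs) for cat, cs in centers.items()}
    return max(scores, key=scores.get)
```

A horizontal drag such as `[(0, 0, 0.0), (5, 0, 0.1), (10, 0, 0.2)]` produces the vector `[10, 0, 10.0, 0.2]`, which lands closest to a hypothetical `"swipe_right"` prototype. In practice the features would need scaling so that no single dimension dominates the distance.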

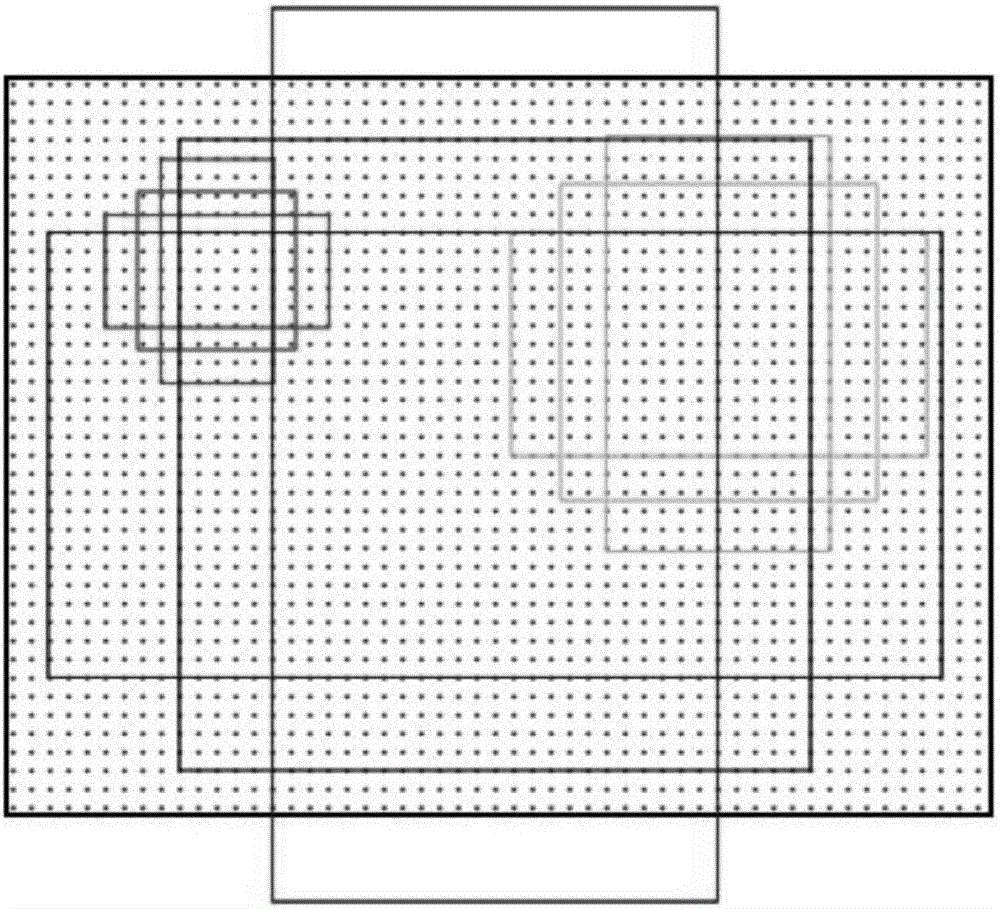

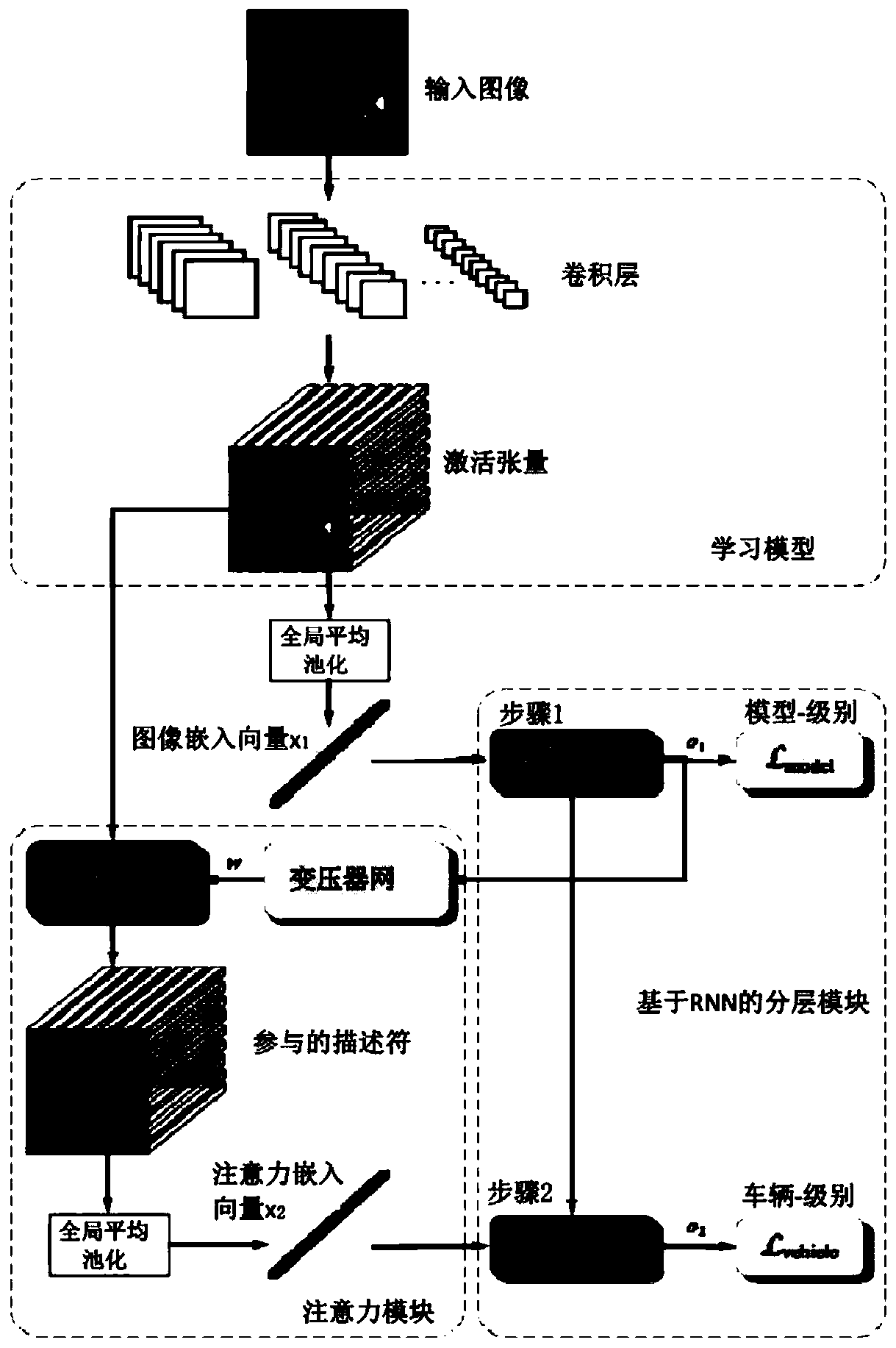

Vehicle type recognition method based on rapid R-CNN deep neural network

Status: Active | Publication: CN106250812A | Tags: Scalable, Quick Subclass Identification, Character and pattern recognition, Neural learning methods, Category recognition, Neural network

The invention discloses a vehicle type recognition method based on a rapid R-CNN deep neural network, which mainly comprises unsupervised deep learning, a multilayer CNN (convolutional neural network), a region proposal network, network sharing, and a softmax classifier. The method implements a genuinely end-to-end vehicle detection and recognition framework using a single rapid R-CNN network, and performs fast vehicle sub-category recognition with high accuracy and robustness while accommodating the diversity of vehicle shapes, illumination conditions, and backgrounds.

Owner:Tang Yiping (汤一平)

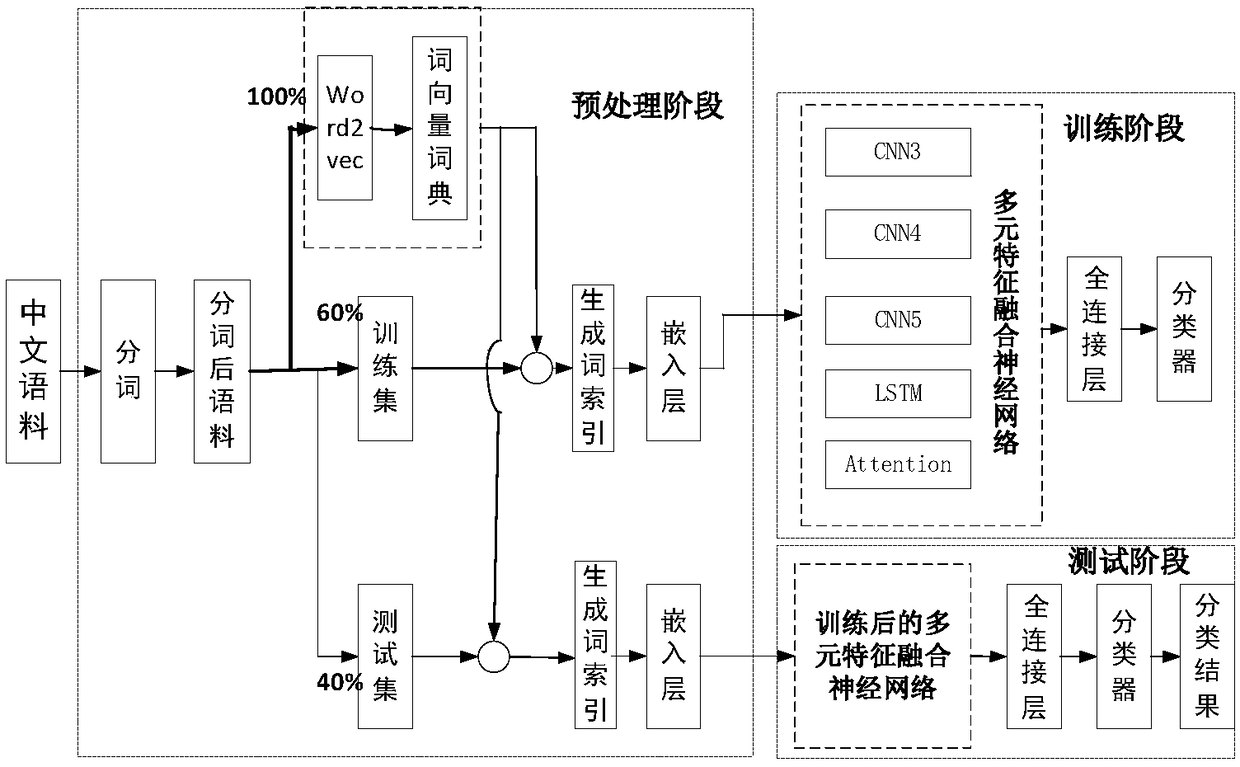

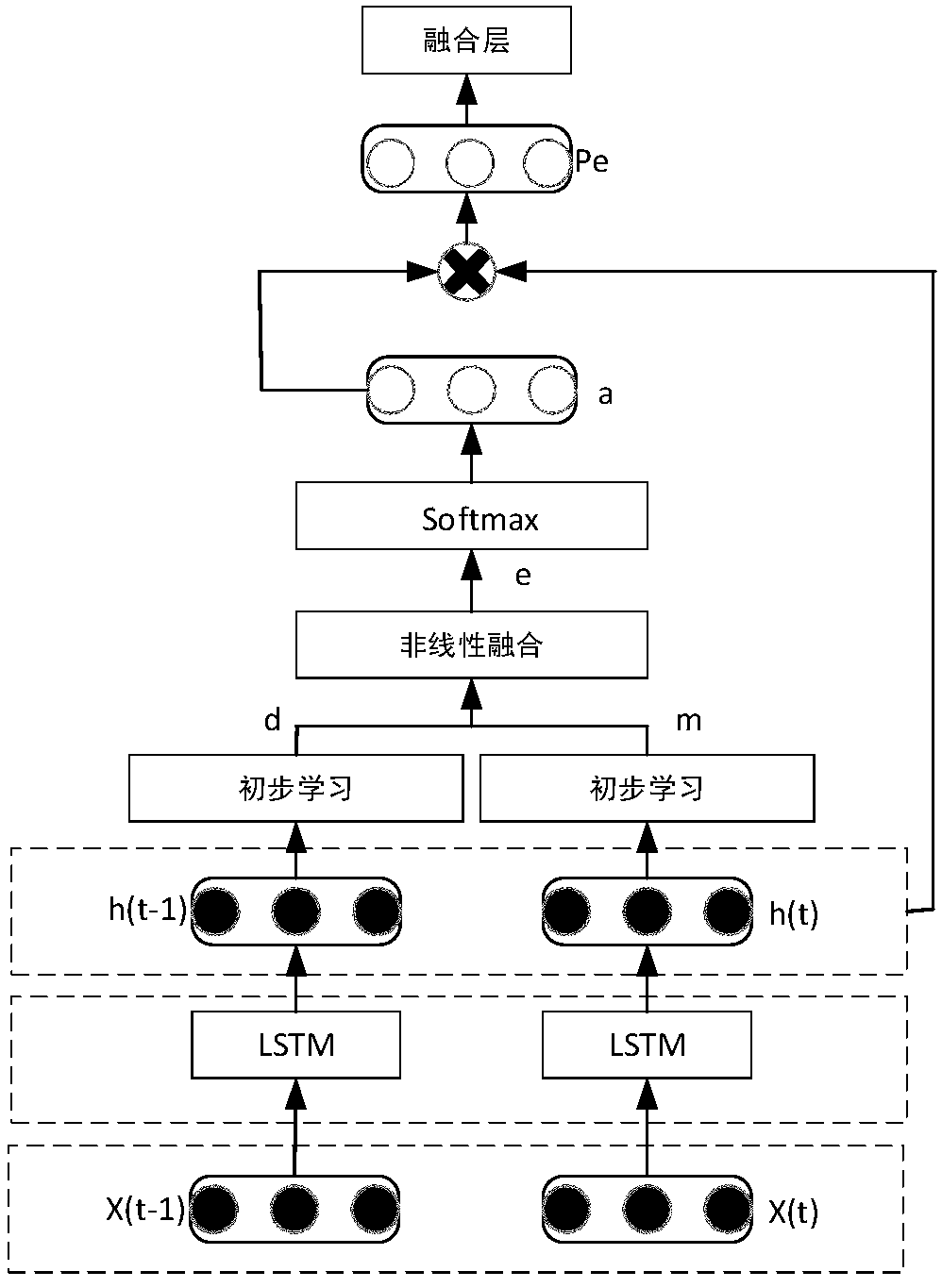

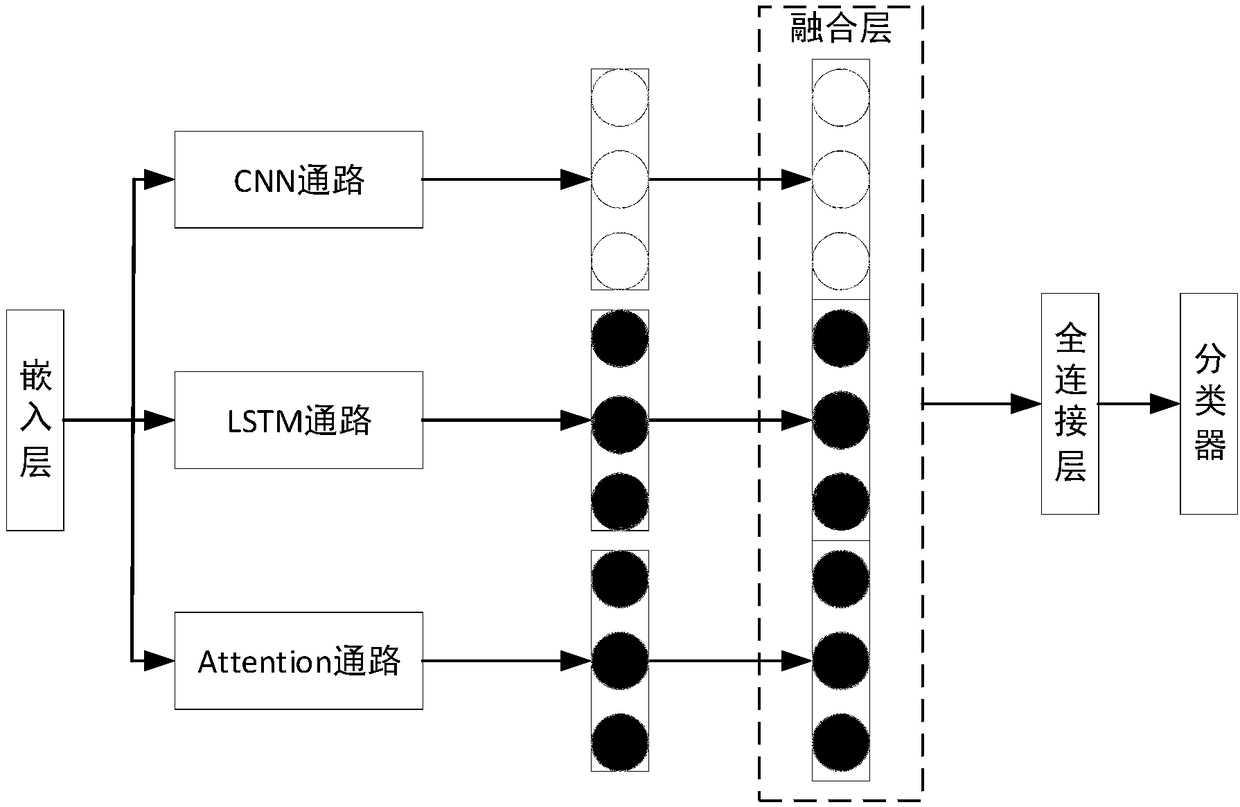

Multi-feature-fusion Chinese text classification method based on Attention neural network

Status: Active | Publication: CN108460089A | Tags: Excavate fully and comprehensively, Improve classification accuracy, Character and pattern recognition, Neural architectures, Category recognition, Granularity

The invention discloses a multi-feature-fusion Chinese text classification method based on an Attention neural network, in the field of natural language processing. To further improve the accuracy of Chinese text classification, the method fully exploits features of the text data at three convolution-kernel granularities by fusing three CNN paths; captures the interconnections among the text data by fusing an LSTM path; and, in particular, incorporates a proposed Attention algorithm model so that the more important data features play a greater role in recognizing Chinese text classes, which improves the model's ability to recognize those classes. Experimental results show that, under the same experimental conditions, the proposed model achieves significantly higher Chinese text classification accuracy than a CNN model, an LSTM model, and a combined CNN-LSTM model, and is therefore better suited to Chinese text classification tasks with high accuracy requirements.

Owner:HAINAN NORMAL UNIV
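
The abstract does not give the attention formula, so the following is only a sketch of one common way to fuse several path outputs (for example three CNN paths and one LSTM path) with attention: score each path vector against a query vector by dot product, normalize the scores with softmax, and take the weighted sum. The `query` vector stands in for a learned parameter and is a hypothetical input here.

```python
import math
from typing import List, Sequence, Tuple


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention_fuse(paths: Sequence[Sequence[float]],
                   query: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Fuse equal-length path feature vectors using dot-product attention weights."""
    scores = [sum(p_i * q_i for p_i, q_i in zip(p, query)) for p in paths]
    weights = softmax(scores)
    dim = len(paths[0])
    fused = [sum(w * p[d] for w, p in zip(weights, paths)) for d in range(dim)]
    return fused, weights
```

With a zero query, every path receives the same weight and the result is a plain average. A query aligned with one path's features shifts weight toward that path, which is the "more important features play a greater role" effect the abstract describes.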

Speech recognition using categories and speech prefixing

Status: Inactive | Publication: US20050203740A1 | Tags: Easy to identify, Easily direct text, Speech recognition, Category recognition, Speech sound

Speech recognition utilizing categories and prefixes is disclosed. Categories identify types of recognition and allow different grammars and prefixes for each category. Categories can be directed to specific applications and / or program modules. Uttering a prefix allows users to easily direct text to specific grammars for enhanced recognition, and also to direct the recognized text to the appropriate application / module.

Owner:MICROSOFT TECH LICENSING LLC
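
Prefix-based routing can be illustrated with a small lookup table. This is a simplified sketch: in the disclosure the prefix selects the grammar used *during* recognition, while here it is applied to text that has already been recognized. The prefix words, category names, and module names in `PREFIXES` are hypothetical.

```python
from typing import Dict, Optional, Tuple

# Hypothetical prefix table: spoken prefix word -> (category, target module).
PREFIXES: Dict[str, Tuple[str, str]] = {
    "search": ("web_query", "browser"),
    "dictate": ("free_text", "editor"),
    "call": ("contact_name", "phone"),
}


def route_utterance(text: str) -> Optional[Tuple[str, str, str]]:
    """Split off a leading prefix word; return (category, module, remaining text)."""
    head, _, rest = text.strip().partition(" ")
    entry = PREFIXES.get(head.lower())
    if entry is None:
        return None  # no known prefix: caller falls back to a default grammar
    category, module = entry
    return category, module, rest
```

For example, `route_utterance("Search weather in Paris")` sends `"weather in Paris"` to the hypothetical `browser` module under the `web_query` category.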

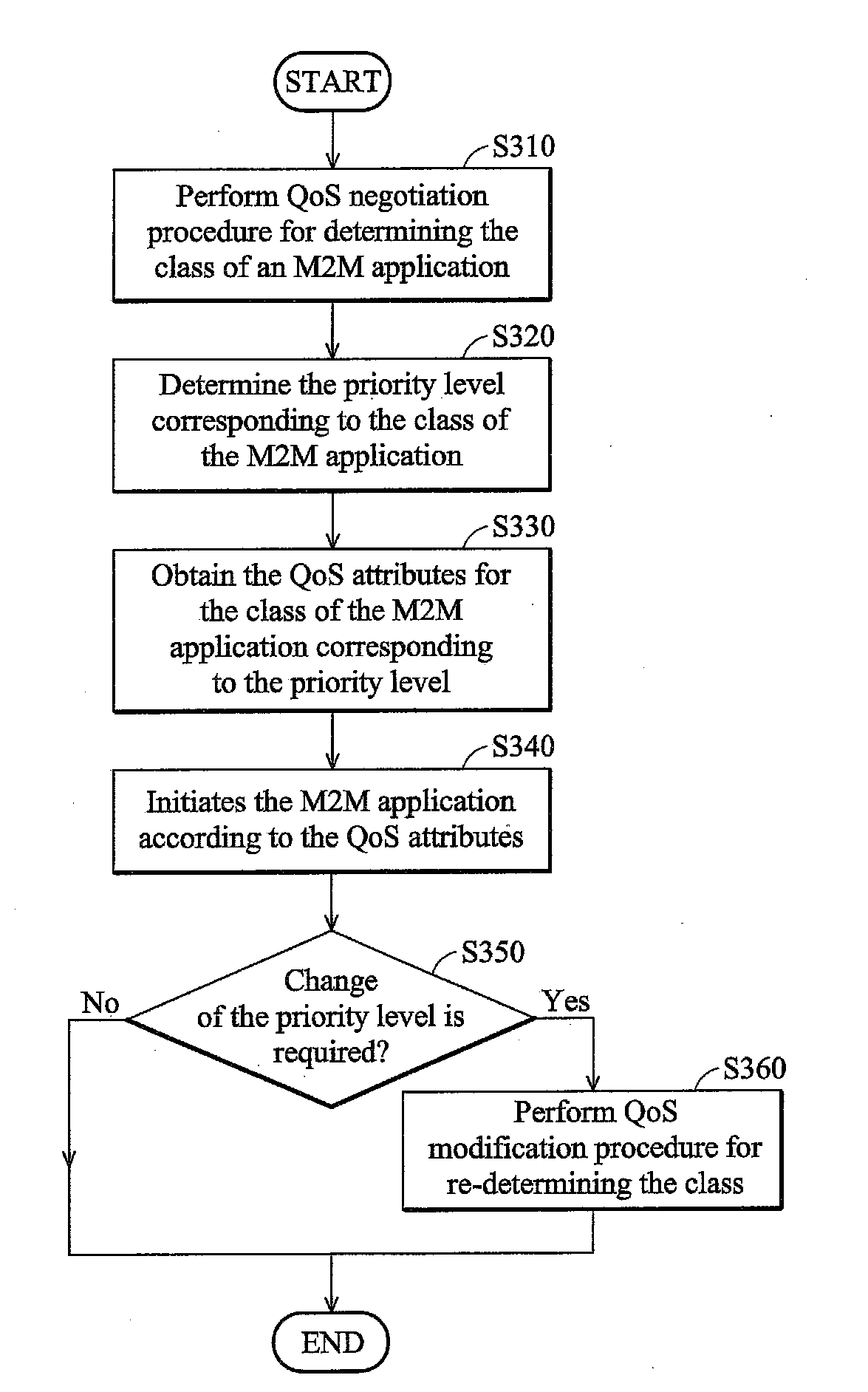

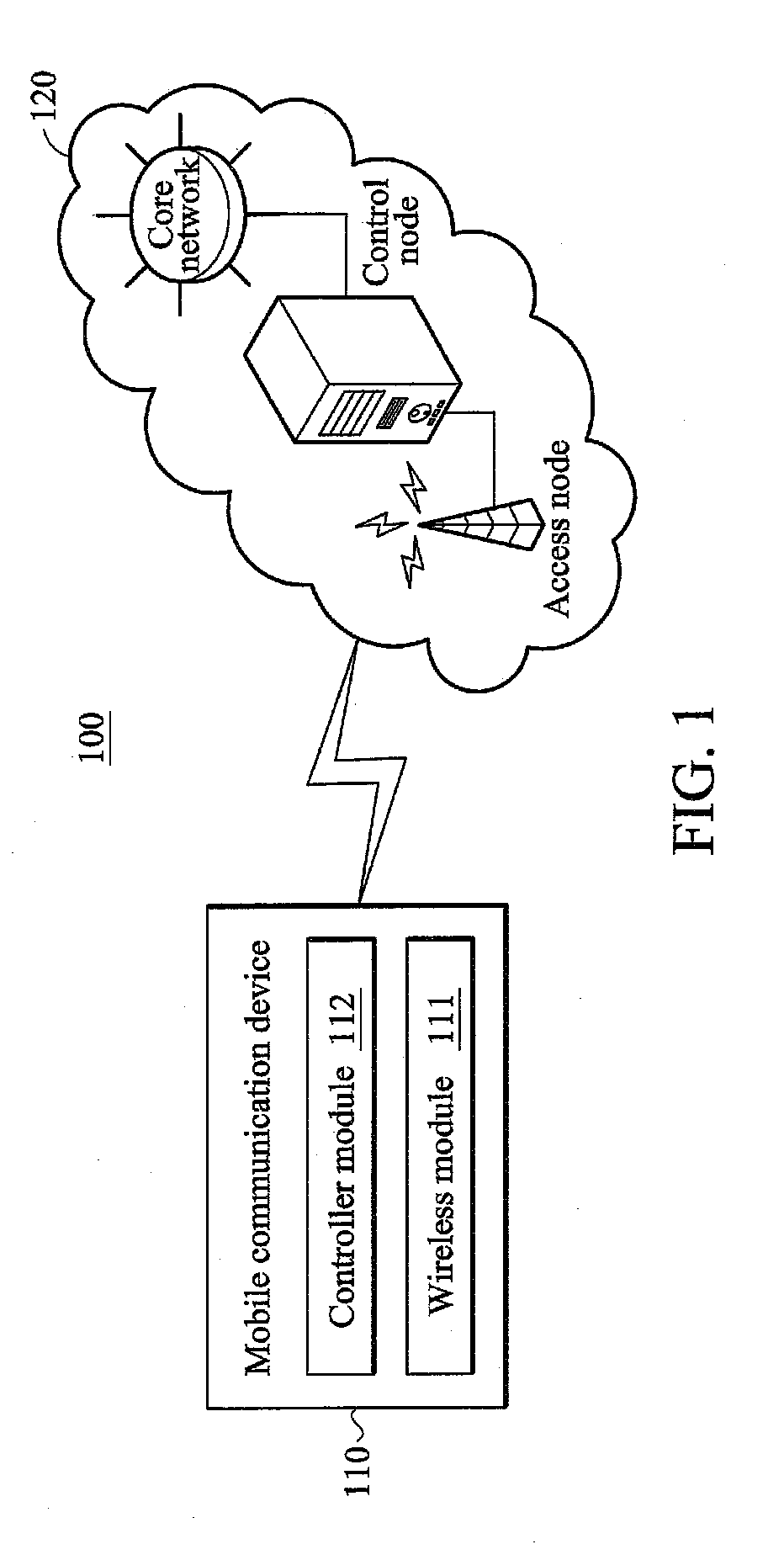

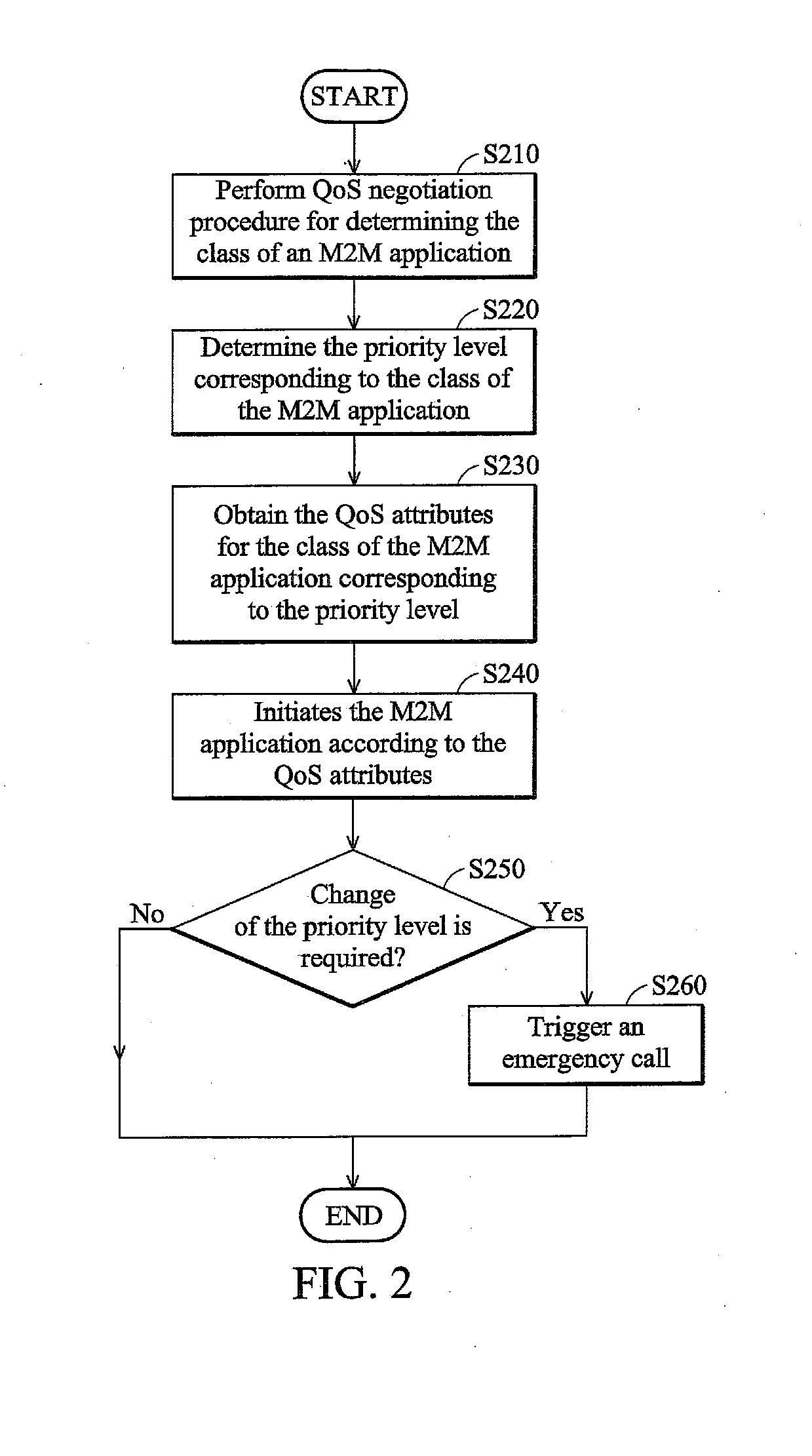

Class identification methods for machine-to-machine (M2M) applications, and apparatuses and systems using the same

Status: Active | Publication: US20120117140A1 | Tags: Network traffic/resource management, Multiple digital computer combinations, Category recognition, Wireless transmission

A mobile communication device for application-based class identification is provided with a wireless module and a controller module. The wireless module performs wireless transmissions and receptions to and from a service network. The controller module determines a class of a Machine-to-Machine (M2M) application, and determines a priority level corresponding to the class of the M2M application. Also, the controller module initiates the M2M application via the wireless module according to at least one M2M parameter corresponding to the priority level.

Owner:APPLE INC

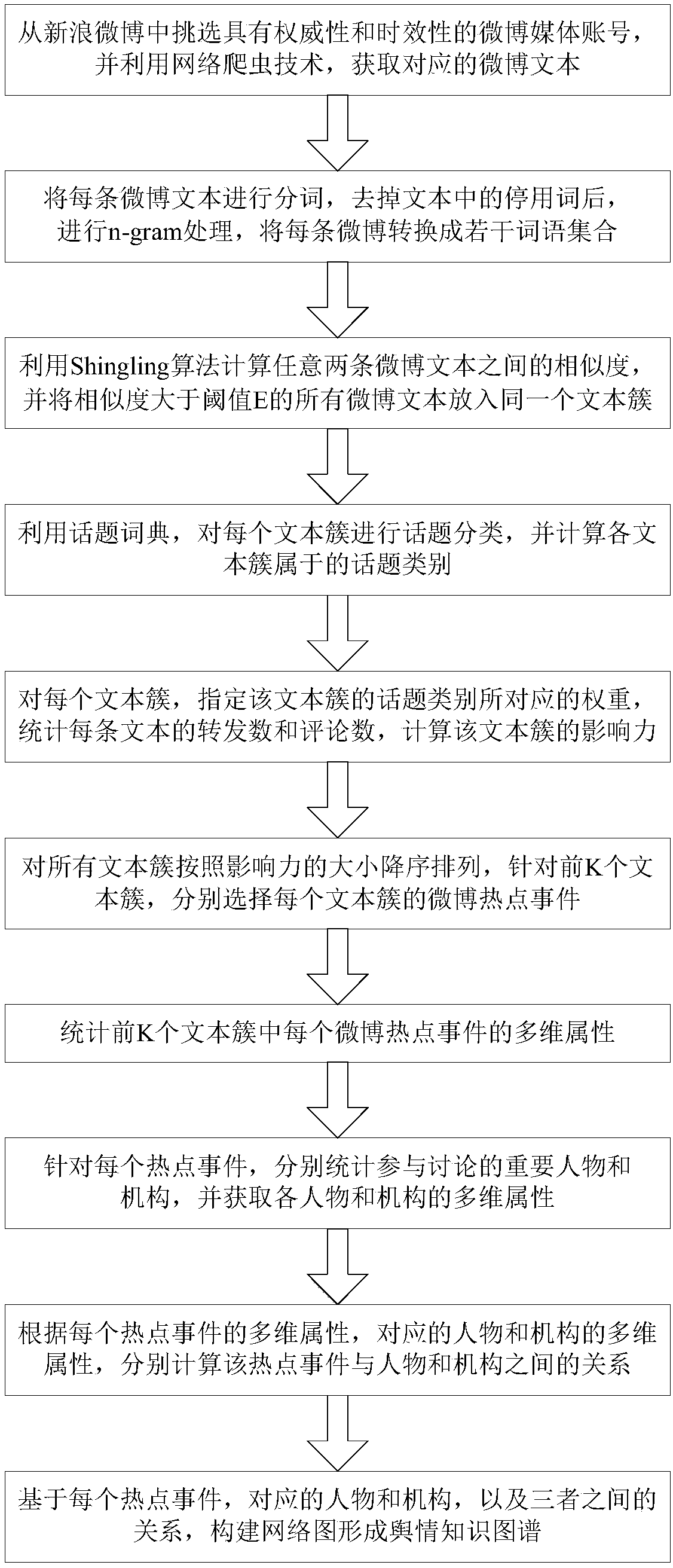

Method for constructing public opinion knowledge map based on hot events

Status: Active | Publication: CN107633044A | Tags: Easy to handle, Realize all-round analysis, Data processing applications, Special data processing applications, Category recognition, Directional analysis

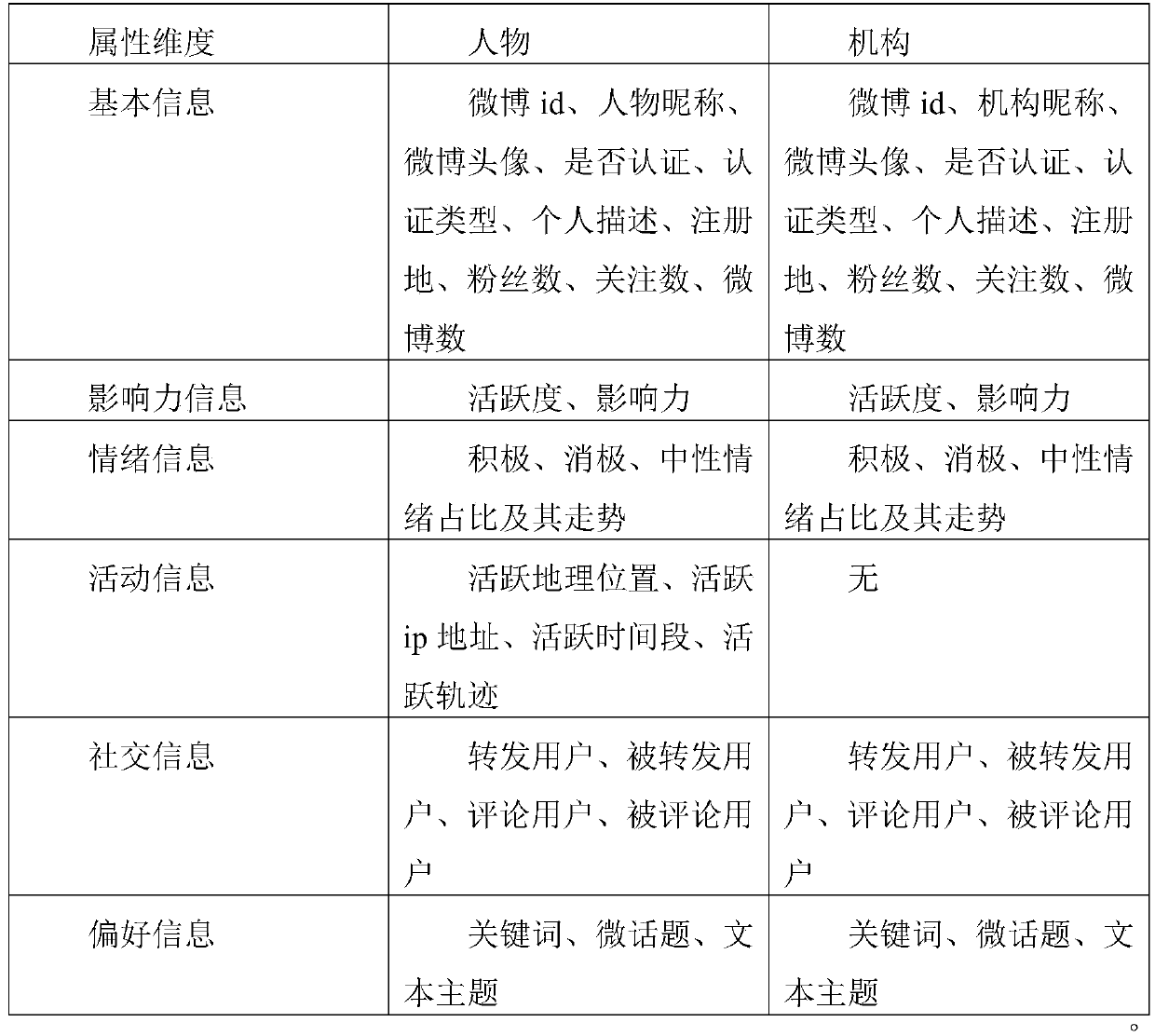

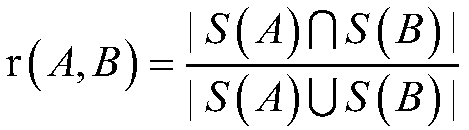

The present invention discloses a method for constructing a public opinion knowledge map based on hot events, and belongs to the field of natural language processing. The method comprises: obtaining microblog texts in real time, processing each microblog text, constructing text clusters, calculating the topic category to which each text cluster belongs, identifying hot events in each cluster by category, and collecting statistics on the multi-dimensional attributes of each hot event; identifying the key people and organizations involved in discussing the hot events and obtaining their multi-dimensional attributes; and constructing a multi-dimensional attribute system and the relationship types among events, people, and organizations, taking these relationships as associations to construct a public opinion knowledge map. With the disclosed method, hot events, people, and organizations can be described from multiple dimensions and analyzed from all directions; and, according to actual needs, different topic categories can be weighted differently, so that public opinion knowledge maps for different topics can be constructed.

Owner:NAT COMP NETWORK & INFORMATION SECURITY MANAGEMENT CENT

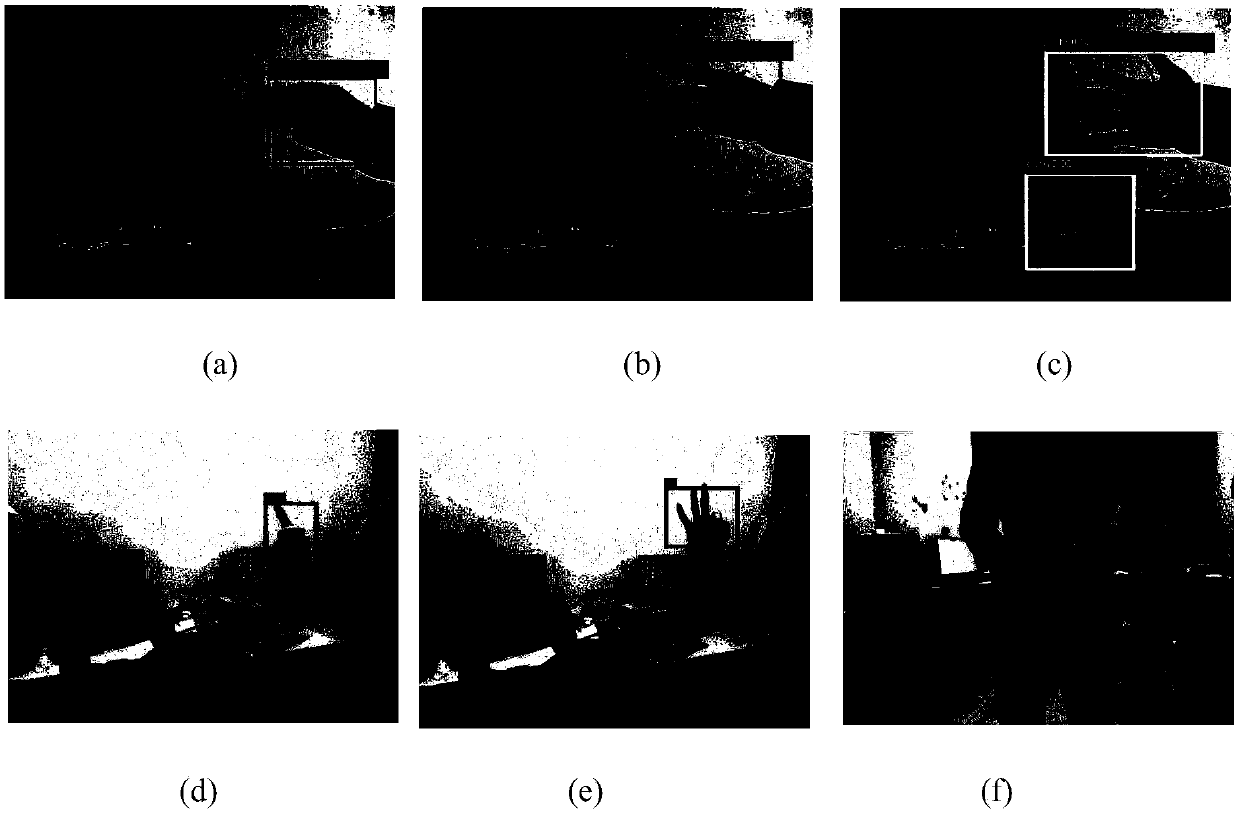

Computer vision-based dynamic gesture recognition method

Status: Active | Publication: CN107808143A | Tags: Easy extraction, Simple steps, Character and pattern recognition, Neural architectures, Category recognition, State of art

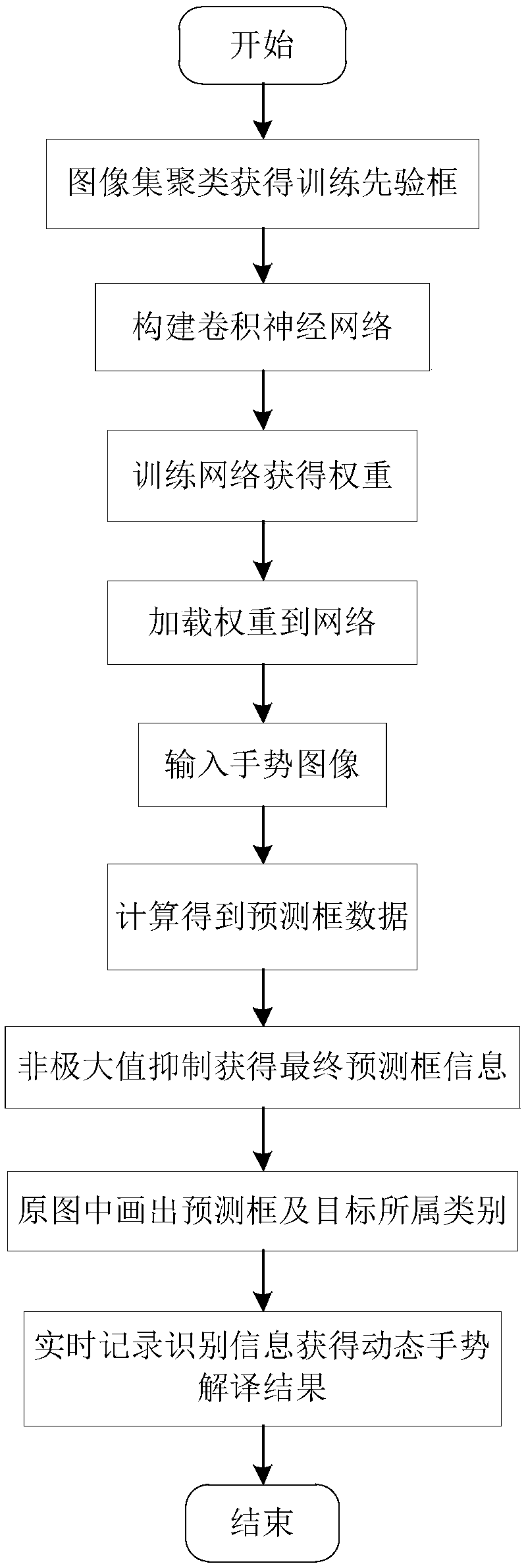

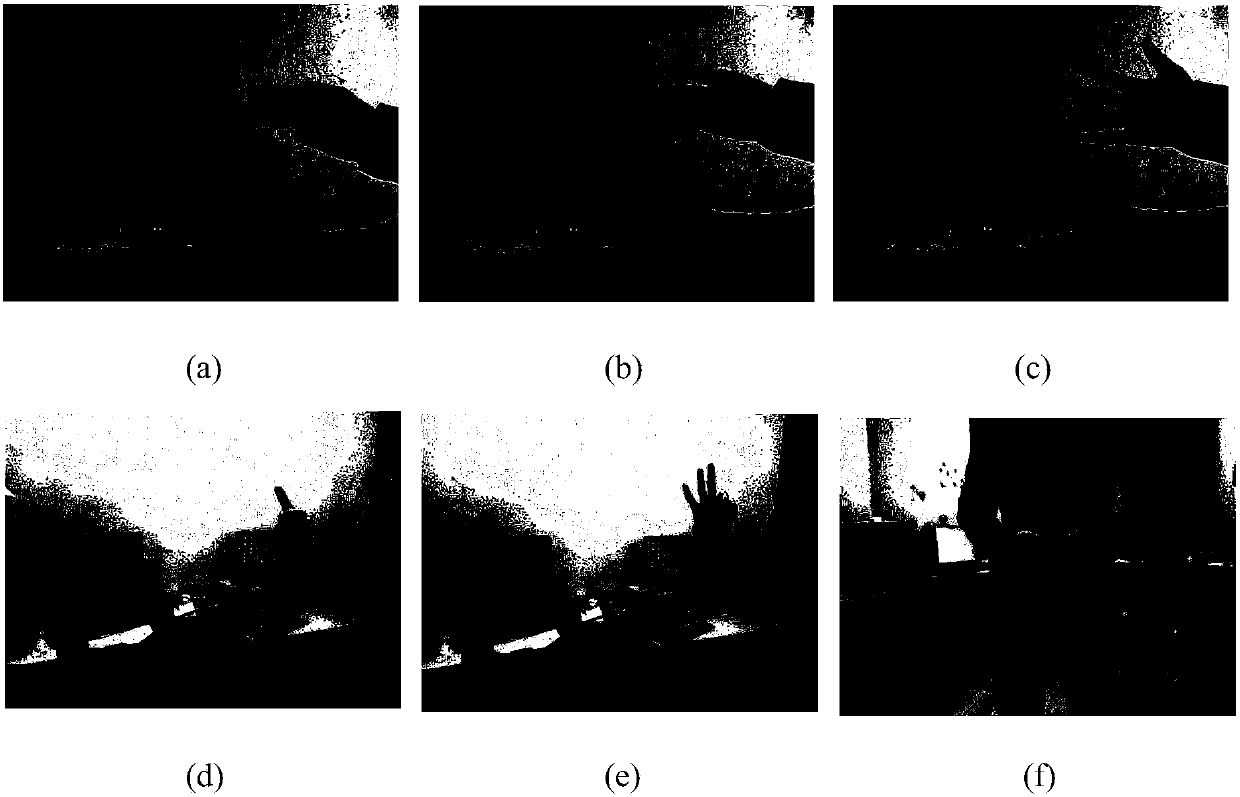

The invention discloses a computer vision-based dynamic gesture recognition method that aims to solve gesture recognition under complex backgrounds. The method comprises the following steps: acquiring a gesture data set and labelling it manually; clustering the ground-truth boxes of the labelled image set to obtain trained prior (anchor) boxes; constructing an end-to-end convolutional neural network that predicts a target's position, size, and category simultaneously; training the network to obtain its weights; loading the weights into the network; inputting a gesture image for recognition; processing the resulting position coordinates and category information with non-maximum suppression to obtain the final recognition result image; and recording the recognition information in real time to obtain a dynamic gesture interpretation result. The method overcomes the prior-art drawback that hand detection and category recognition are carried out in separate steps, greatly simplifies the gesture recognition process, improves recognition accuracy and speed, strengthens the robustness of the recognition system, and realizes dynamic gesture interpretation.

Owner:XIDIAN UNIV
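
The non-maximum suppression step named in the abstract can be sketched as greedy, per-class NMS: sort detections by score, keep the best one, and drop any later detection of the same class whose intersection-over-union with a kept box exceeds a threshold. The per-class variant and the 0.5 threshold are assumptions; the abstract does not specify which NMS variant is used.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), with x2 > x1 and y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float], labels: Sequence[int],
        iou_thresh: float = 0.5) -> List[int]:
    """Greedy per-class NMS; returns indices of the kept detections, best first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep i unless a higher-scoring box of the same class overlaps it too much.
        if all(labels[i] != labels[k] or iou(boxes[i], boxes[k]) <= iou_thresh
               for k in keep):
            keep.append(i)
    return keep
```

Two heavily overlapping boxes of the same gesture class collapse to the higher-scoring one. If the classes differ, both are kept, so one hand can still carry competing category hypotheses.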

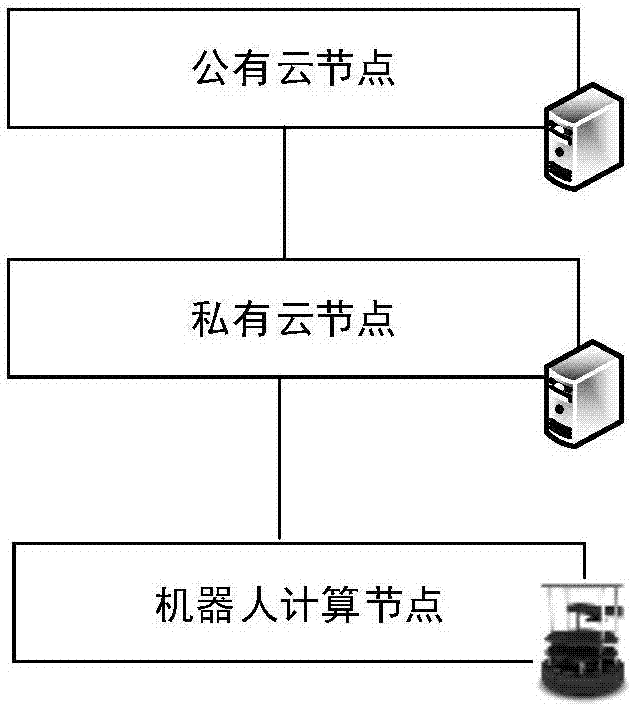

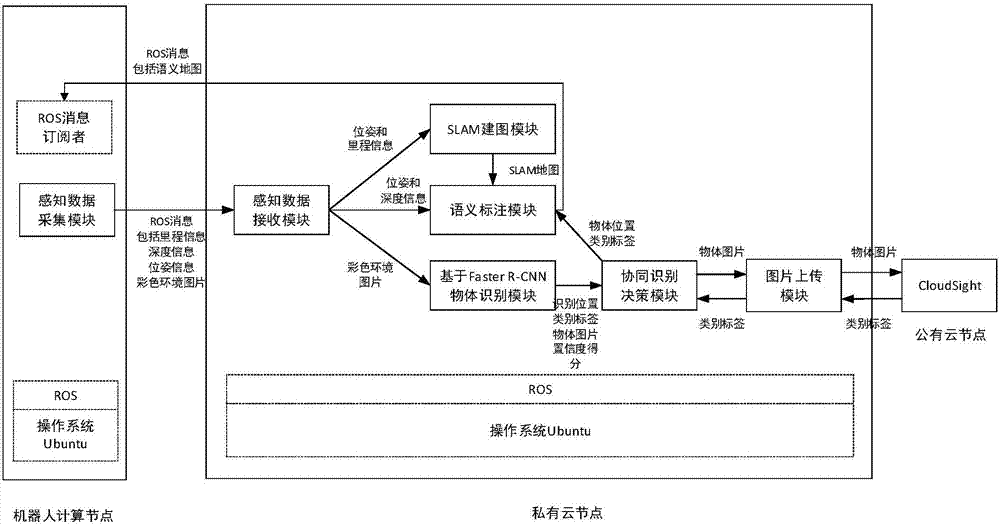

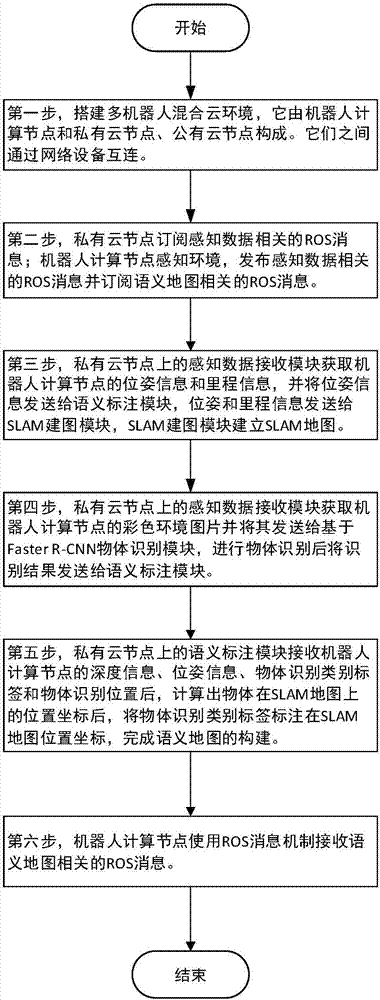

Semantic map construction method based on cloud robot mixed cloud architecture

Status: Active | Publication: CN107066507A | Tags: Reduce computing load, Improve accuracy, Character and pattern recognition, Geographical information databases, Computer science, Semantic map

The invention discloses a semantic map construction method based on a cloud robot mixed cloud architecture, which aims to strike a proper balance between improving object identification accuracy and shortening identification time. A mixed cloud consisting of a robot, a private cloud node, and a public cloud node is constructed. The private cloud node obtains environment pictures taken by the robot, together with odometer and position data, through the ROS (Robot Operating System) message mechanism, and uses SLAM (Simultaneous Localization and Mapping) to draw a geometric map of the environment in real time from the odometer and position data. The private cloud node performs object identification on the environment pictures and uploads any object that may have been misidentified to the public cloud node for identification. The private cloud node then maps the object category labels returned by the public cloud node onto the SLAM map; placing each label at its corresponding map position completes the semantic map. The method lightens the robot's local computing load, minimizes request response time, and improves object identification accuracy.

Owner:NAT UNIV OF DEFENSE TECH
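
The abstract does not say how the private cloud node decides that an object "may be wrongly identified." One plausible reading is a confidence threshold, sketched below. The threshold value, the `(label, confidence)` return type, and the two model callables (which stand in for the private-cloud recognizer and the public-cloud service) are all hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

Detection = Tuple[str, float]  # (category label, confidence)


def identify_objects(crops: Sequence[object],
                     local_model: Callable[[object], Detection],
                     cloud_model: Callable[[object], Detection],
                     threshold: float = 0.8) -> List[str]:
    """Label each crop locally; re-query the public cloud when confidence is low."""
    labels: List[str] = []
    for crop in crops:
        label, conf = local_model(crop)
        if conf < threshold:  # treated as "possibly misidentified"
            label, _ = cloud_model(crop)
        labels.append(label)
    return labels
```

Only low-confidence crops make the slower round trip to the public cloud. This is the accuracy-versus-response-time balance the method aims for.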

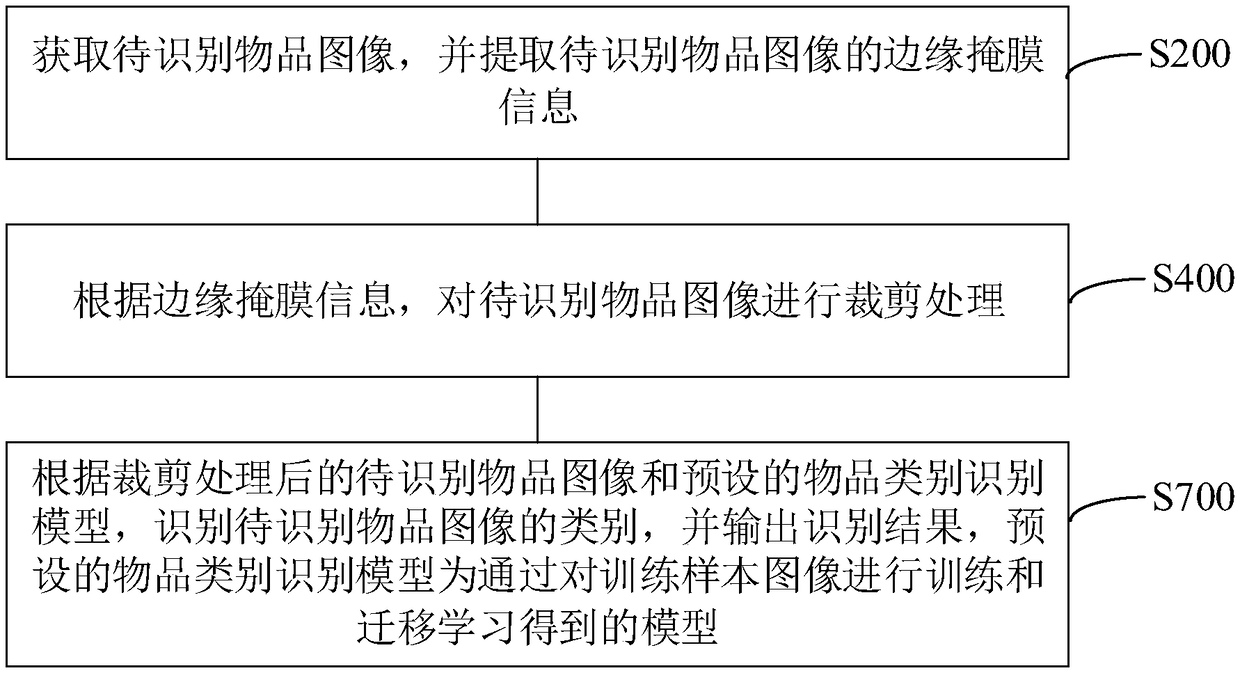

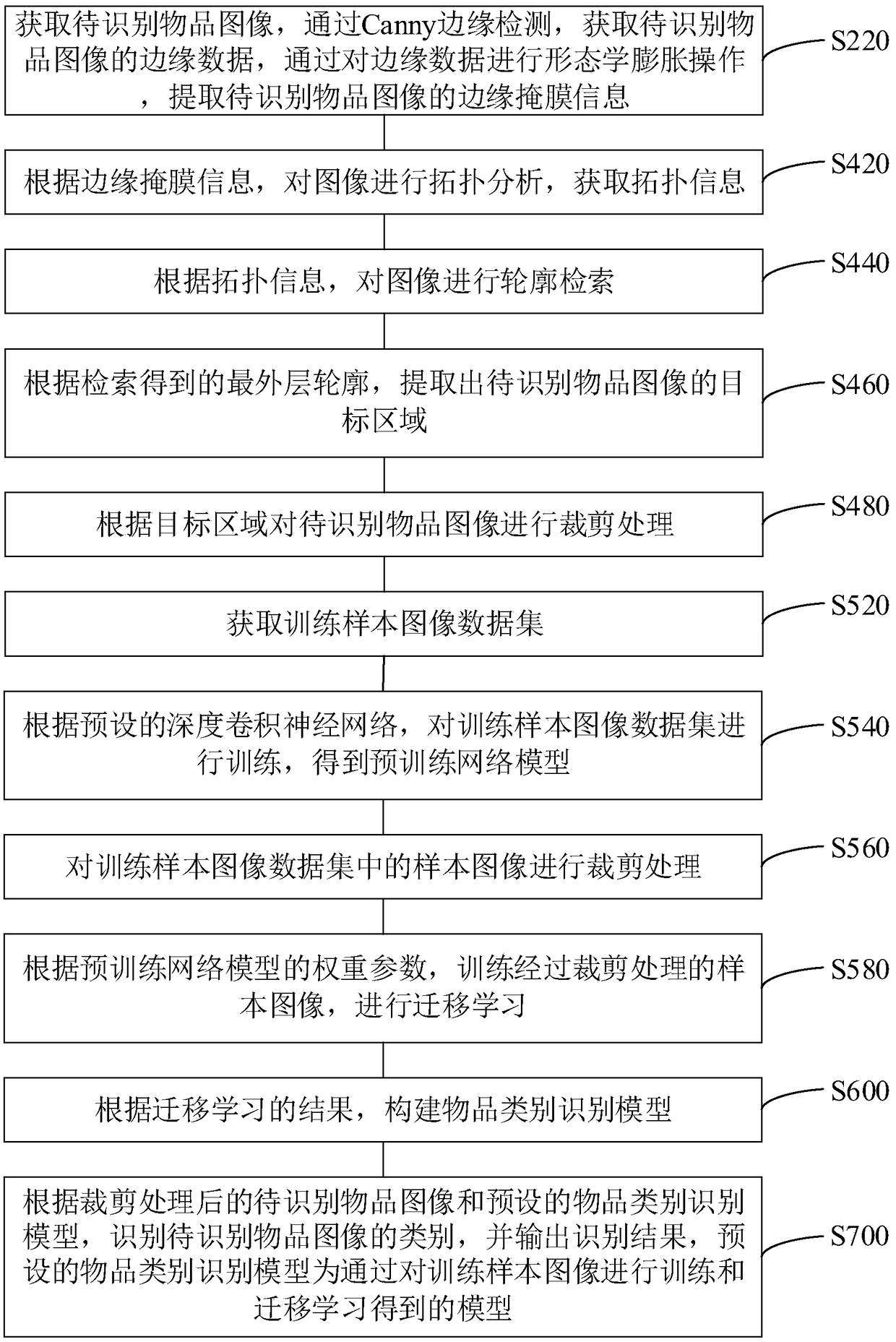

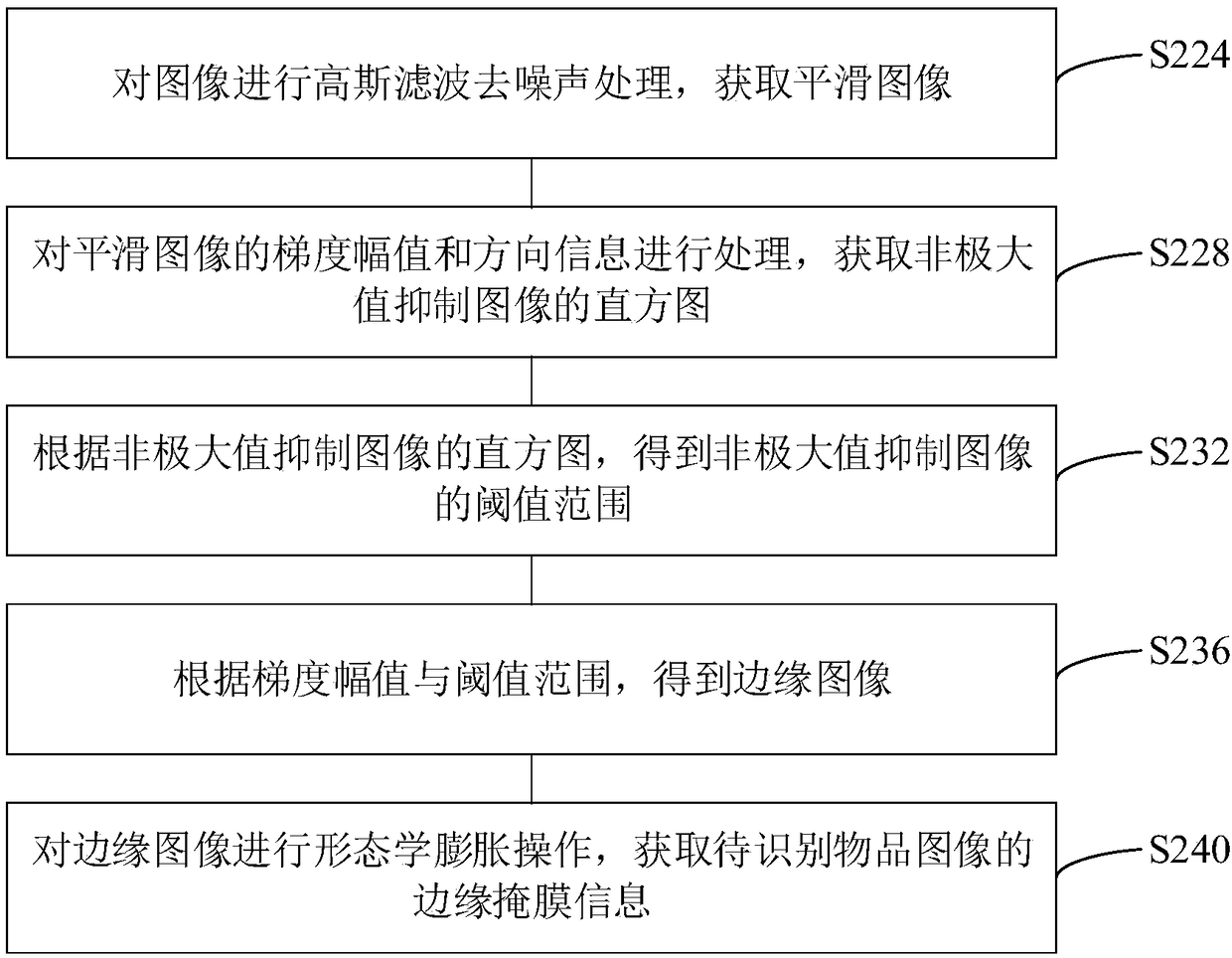

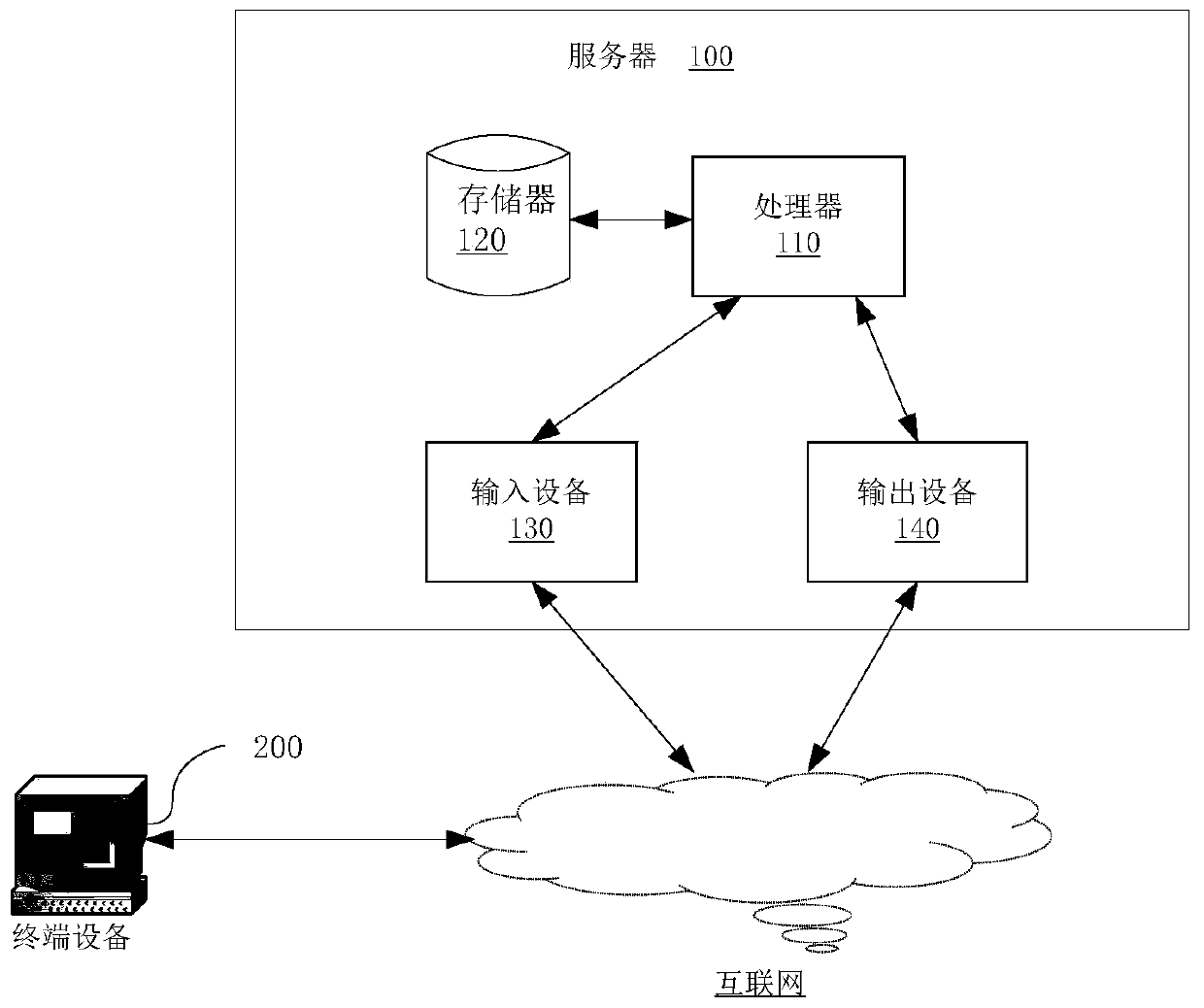

Article category recognition method and device, computer equipment and storage medium

Status: Inactive | Publication: CN108647588A | Tags: Reduce distractions, The recognition result is accurate, Character and pattern recognition, Neural architectures, Category recognition, Sample image

The invention relates to an article category recognition method and device, computer equipment, and a storage medium. The method comprises: acquiring an image of the article to be recognized and extracting edge mask information from it; cropping the image according to the edge mask information; and recognizing the article's category from the cropped image with a preset article category recognition model and outputting the recognition result. The preset model is obtained by training on sample images and applying transfer learning. Because the model is obtained through pre-training and transfer learning, it meets higher accuracy requirements for articles, and using it to recognize the article image yields an accurate recognition result.

Owner:Guangzhou Lvyi Information Technology Co., Ltd. (广州绿怡信息科技有限公司)
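
The cropping step ("according to the edge mask information, clipping processing is performed") can be sketched as cropping to the bounding box of the nonzero mask pixels. This sketch assumes the edge mask has already been extracted as a binary grid aligned with a grayscale image, and it uses plain nested lists so that it stays self-contained. How the mask is produced is not shown.

```python
from typing import List, Sequence

Grid = List[List[int]]


def crop_to_mask(image: Sequence[Sequence[int]], mask: Sequence[Sequence[int]]) -> Grid:
    """Crop a 2-D image to the bounding box of the nonzero pixels in `mask`."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        return [list(row) for row in image]  # empty mask: keep the whole image
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    r0, r1, c0, c1 = rows[0], rows[-1], cols[0], cols[-1]
    return [list(image[r][c0:c1 + 1]) for r in range(r0, r1 + 1)]
```

The cropped result, not the full frame, is what would be passed to the category recognition model, which matches the abstract's goal of reducing background distractions.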

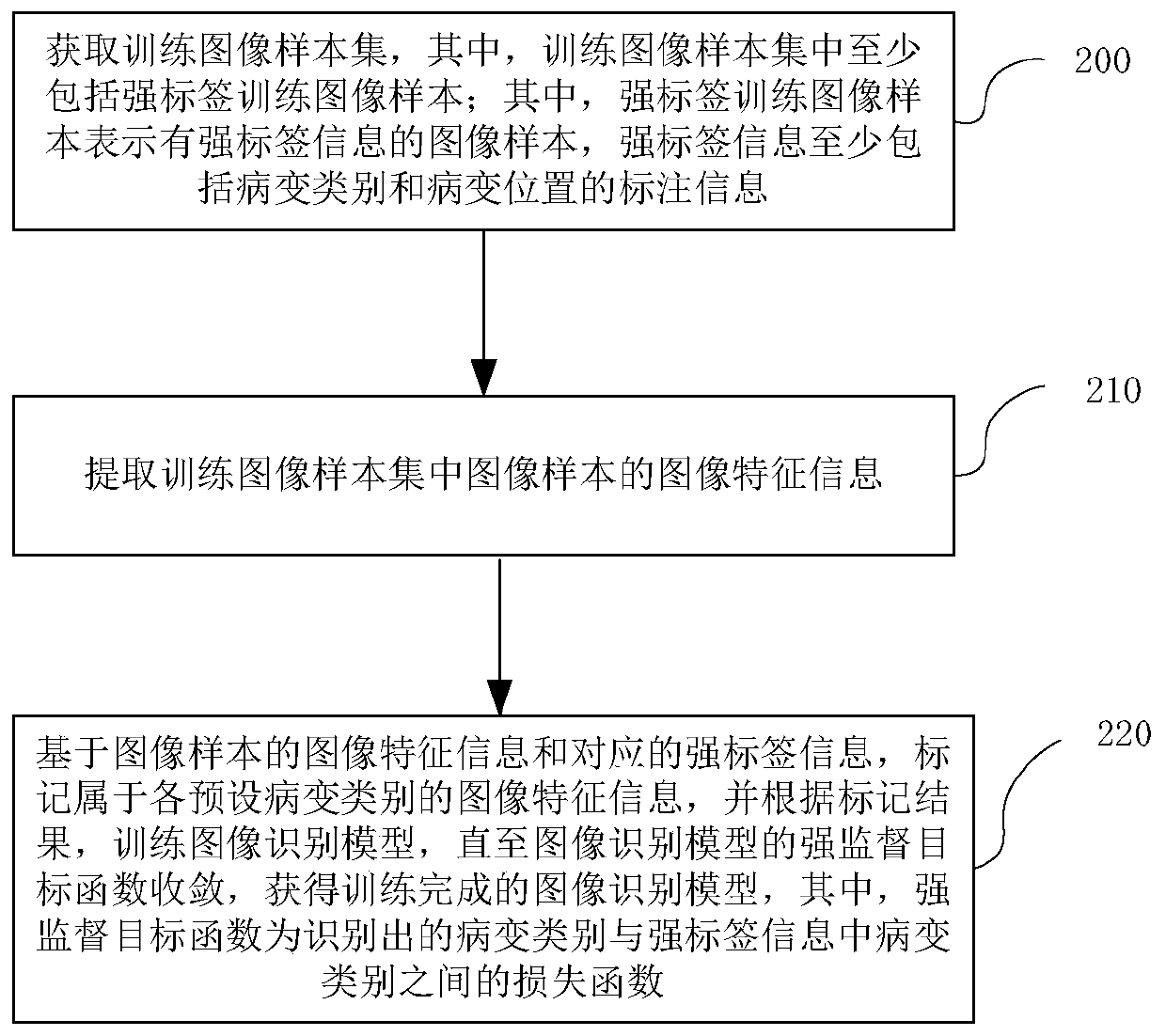

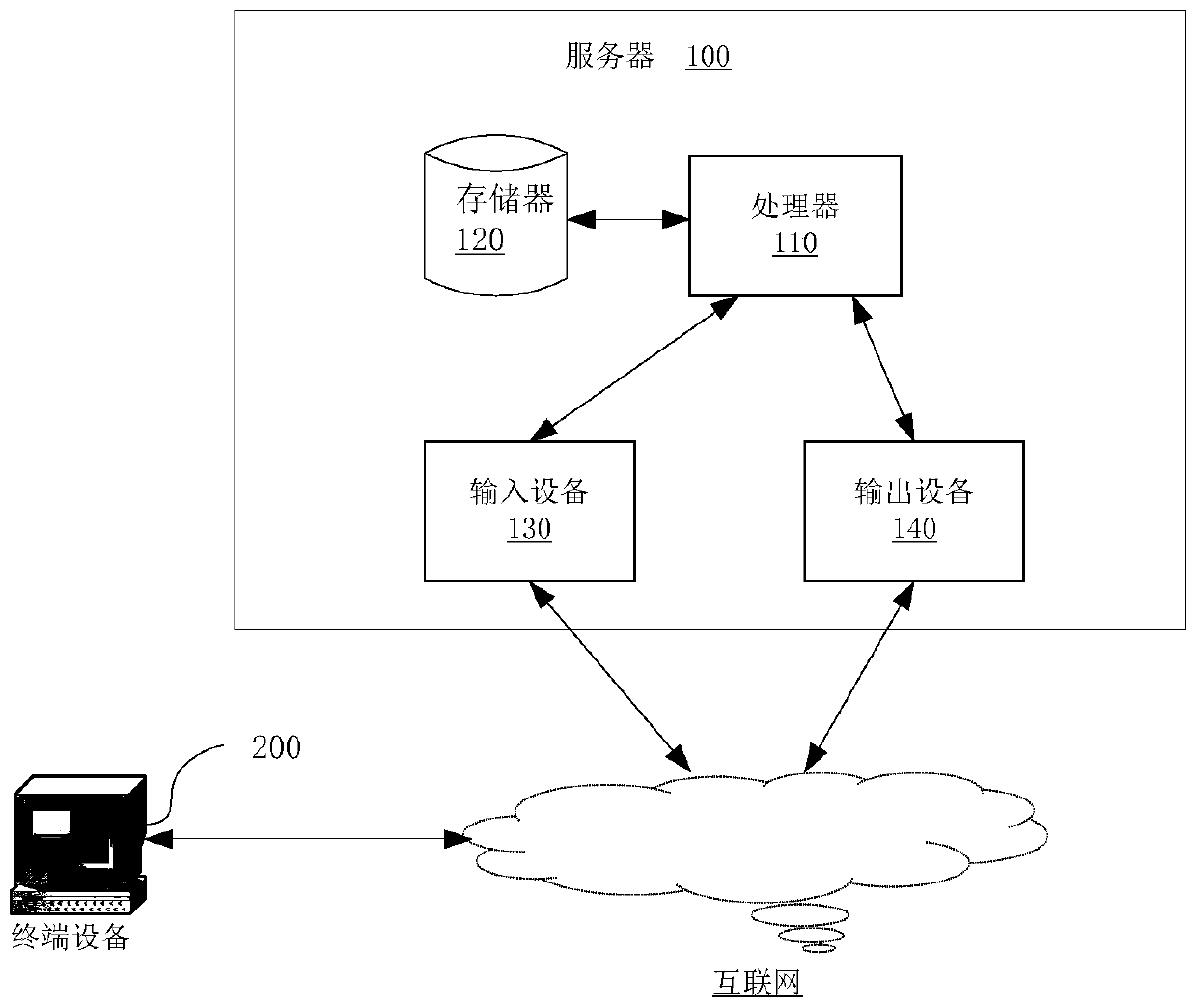

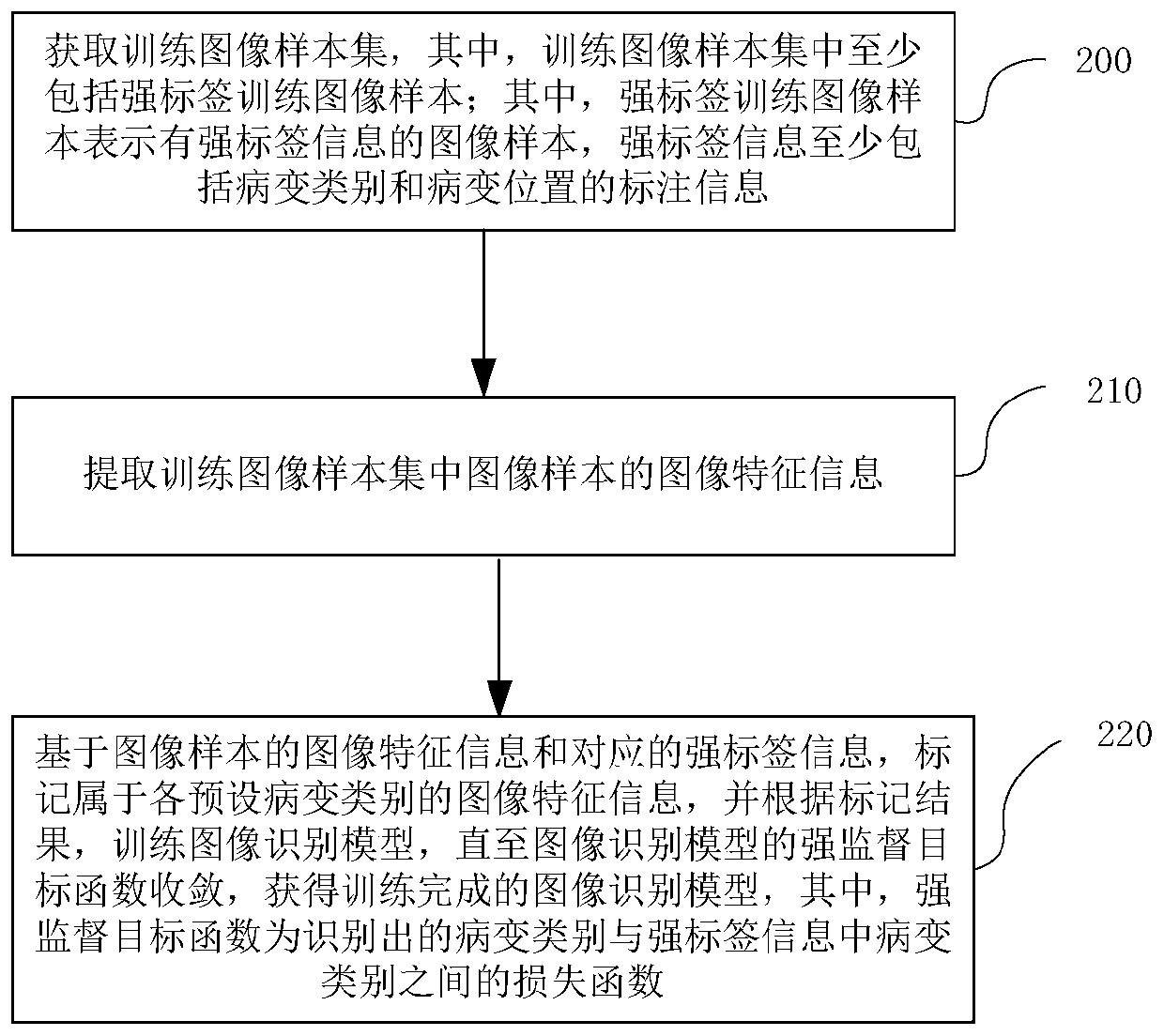

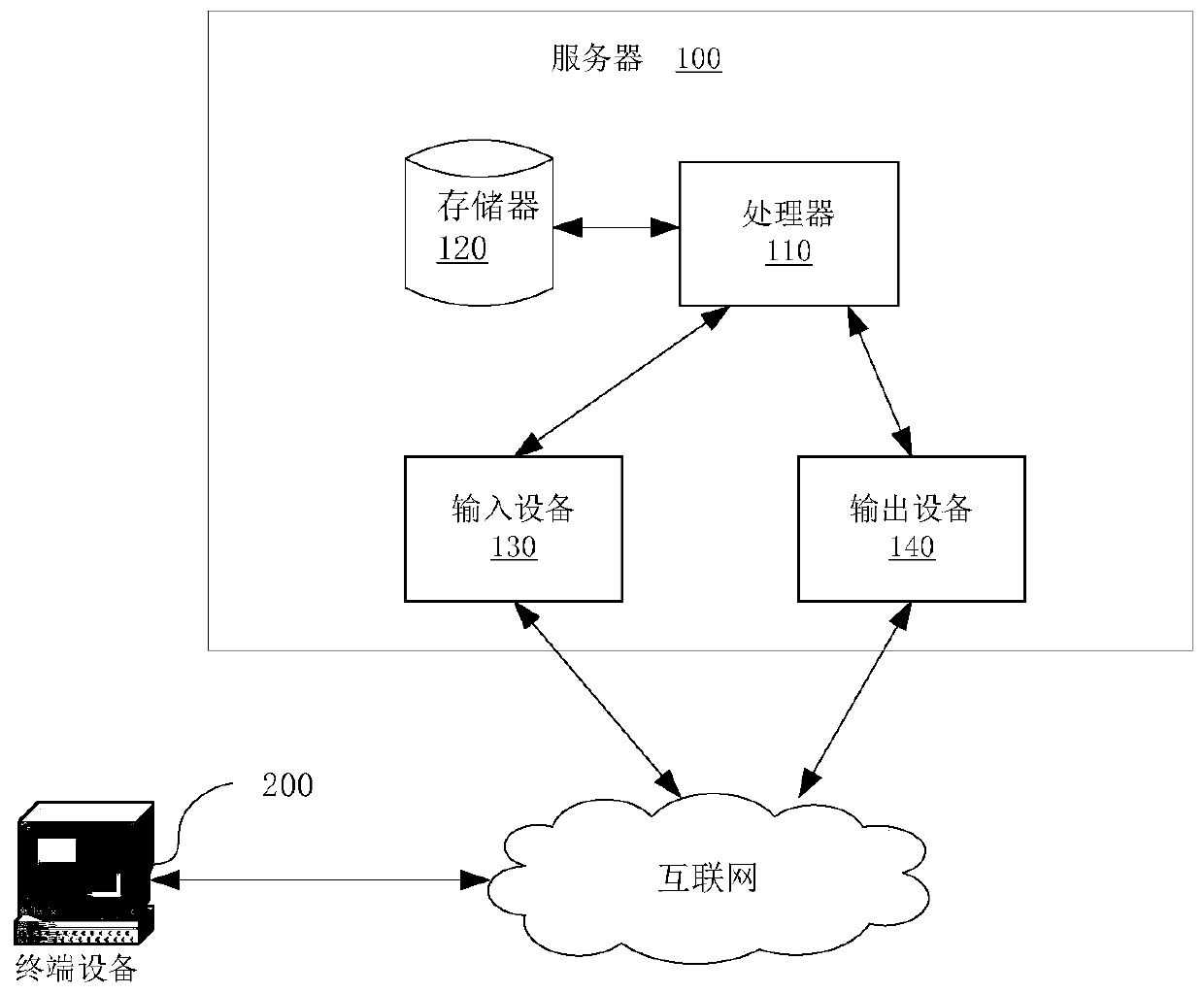

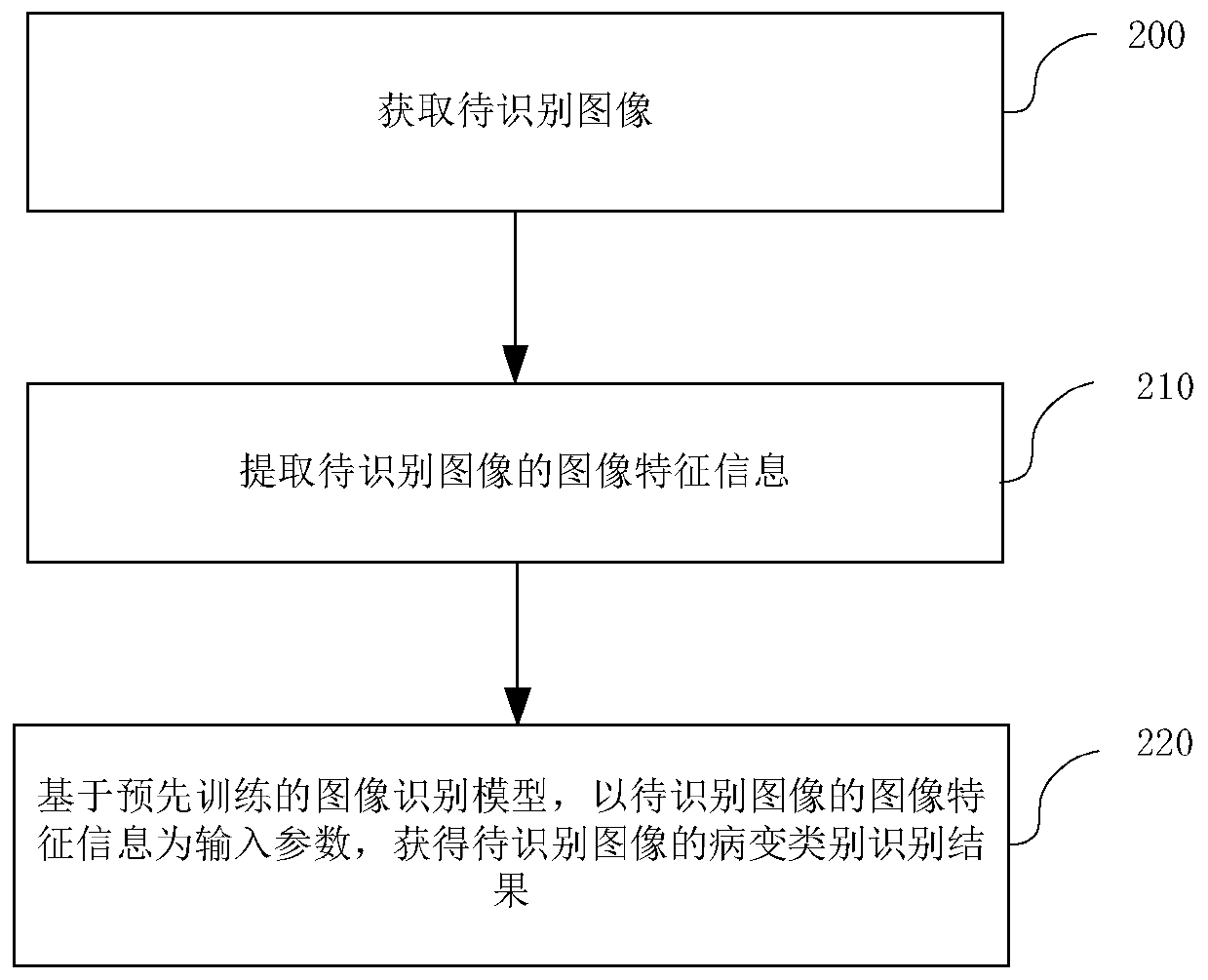

Image recognition model training and image recognition method, device and system

Status: Active | Publication: CN110009623A | Tags: Accurate distinction, Accurate prediction, Image enhancement, Medical data mining, Category recognition, Imaging Feature

The invention relates to the technical field of computers, in particular to an image recognition model training and image recognition method, device and system, and the method comprises the steps: obtaining a training image sample set which at least comprises strong label training image samples, wherein the strong label training image sample represents an image sample with strong label information, and the strong label information at least comprises the lesion category and the labeling information of the lesion position; extracting image feature information of image samples in the training image sample set; based on the image feature information and the corresponding strong label information, marking the image feature information belonging to each preset lesion category, and training an image recognition model according to the marking result until the strong supervision objective function converges to obtain a trained image recognition model, thereby obtaining a lesion category recognition result of the to-be-recognized image based on the image recognition model. The image feature information of a certain lesion category can be positioned more accurately according to the lesion position, noise is reduced, and reliability and accuracy are improved.

Owner:Tencent Healthcare (Shenzhen) Co., Ltd. (腾讯医疗健康(深圳)有限公司)

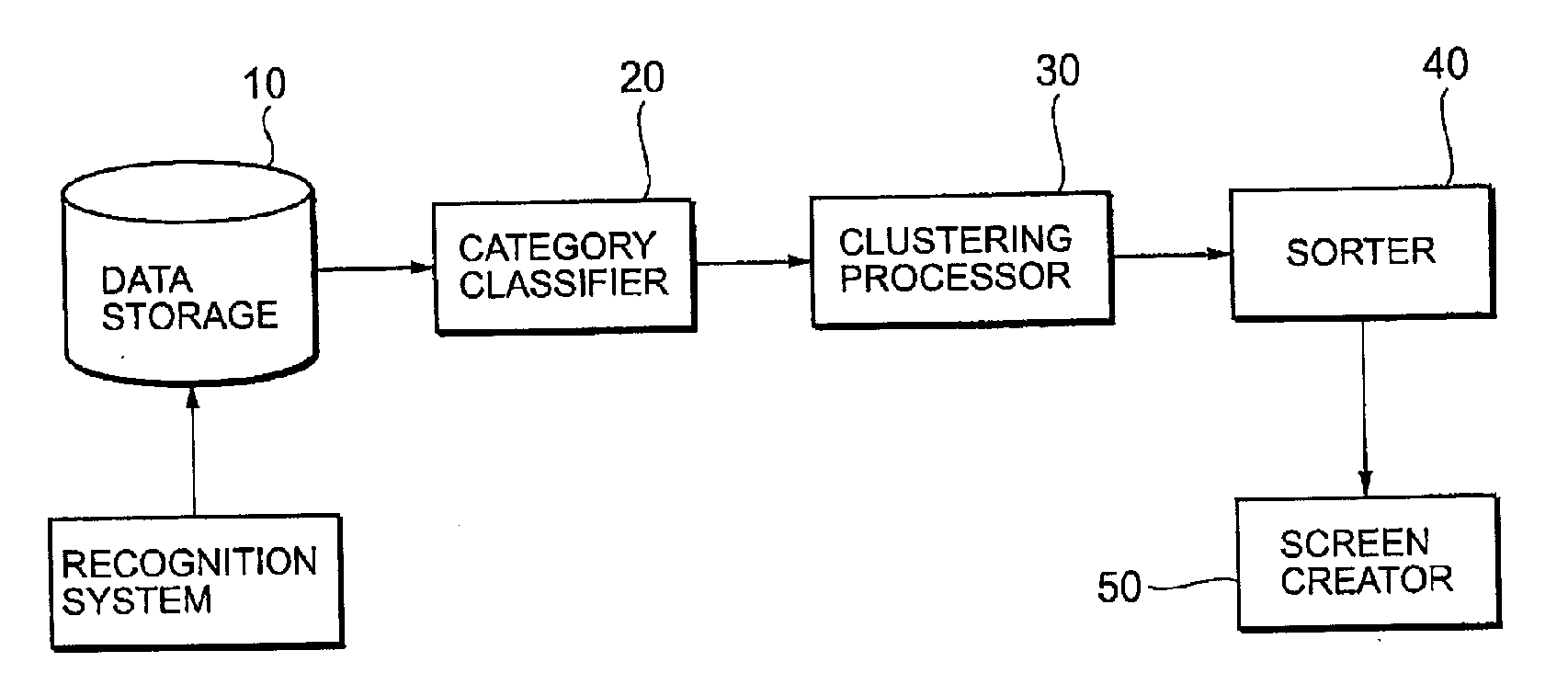

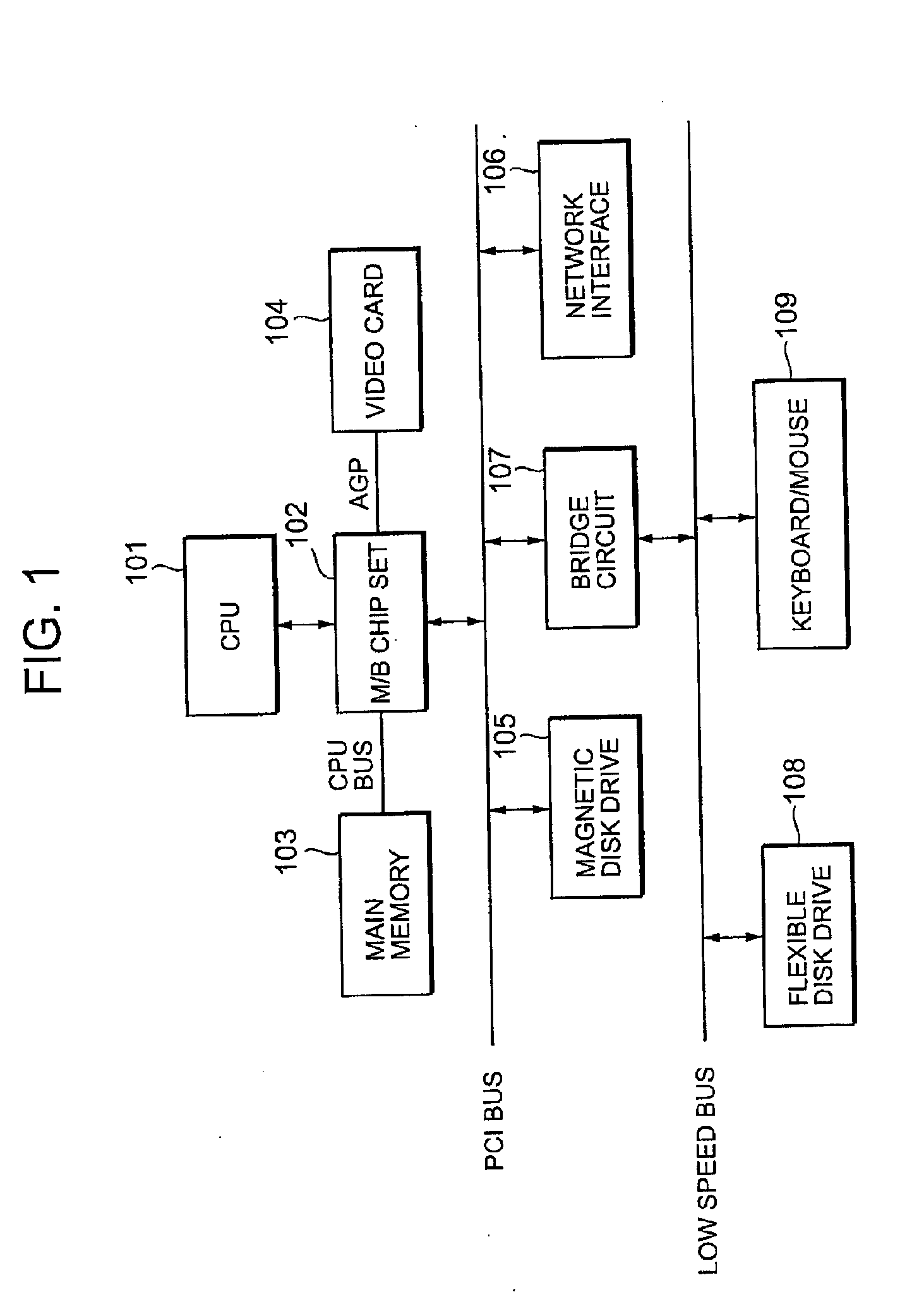

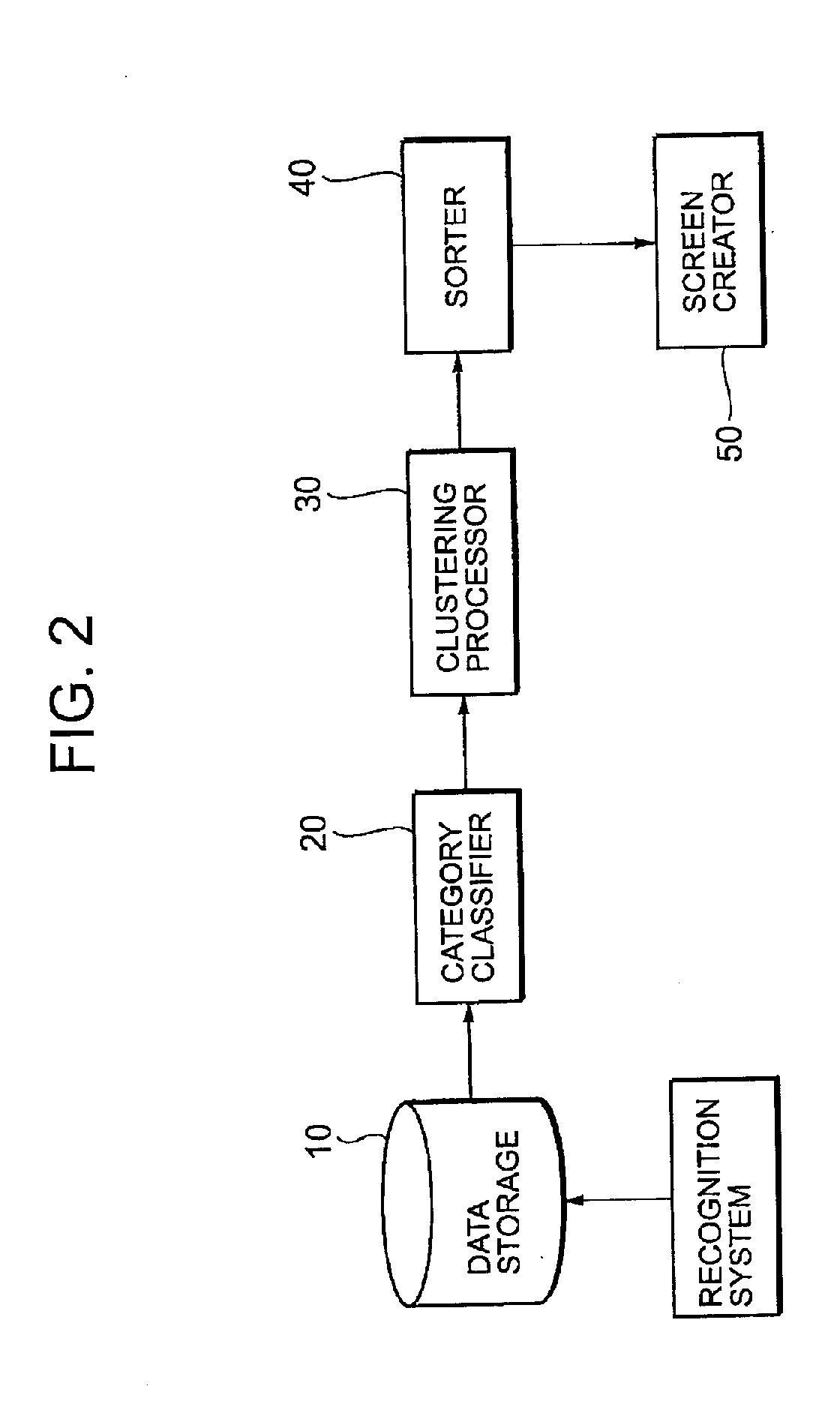

Device for Outputting Character Recognition Results, Character Recognition Device, and Method and Program Therefor

Status: Inactive | Publication: US20050232495A1 | Tags: Efficiently review and correct, Reduce workload, Character recognition, Category recognition, Cluster based

An output mechanism of a character recognition device includes a category classifier for classifying image data of characters to be recognized for each category recognized in character recognition processing, a clustering processor for determining feature values related to shapes of characters included in the image data in each category classified by the category classifier, and for classifying the image data into one or more clusters based on the feature values, and a screen creator for creating a confirmation screen for displaying the image data for each cluster classified by the clustering processor.

Owner:IBM CORP

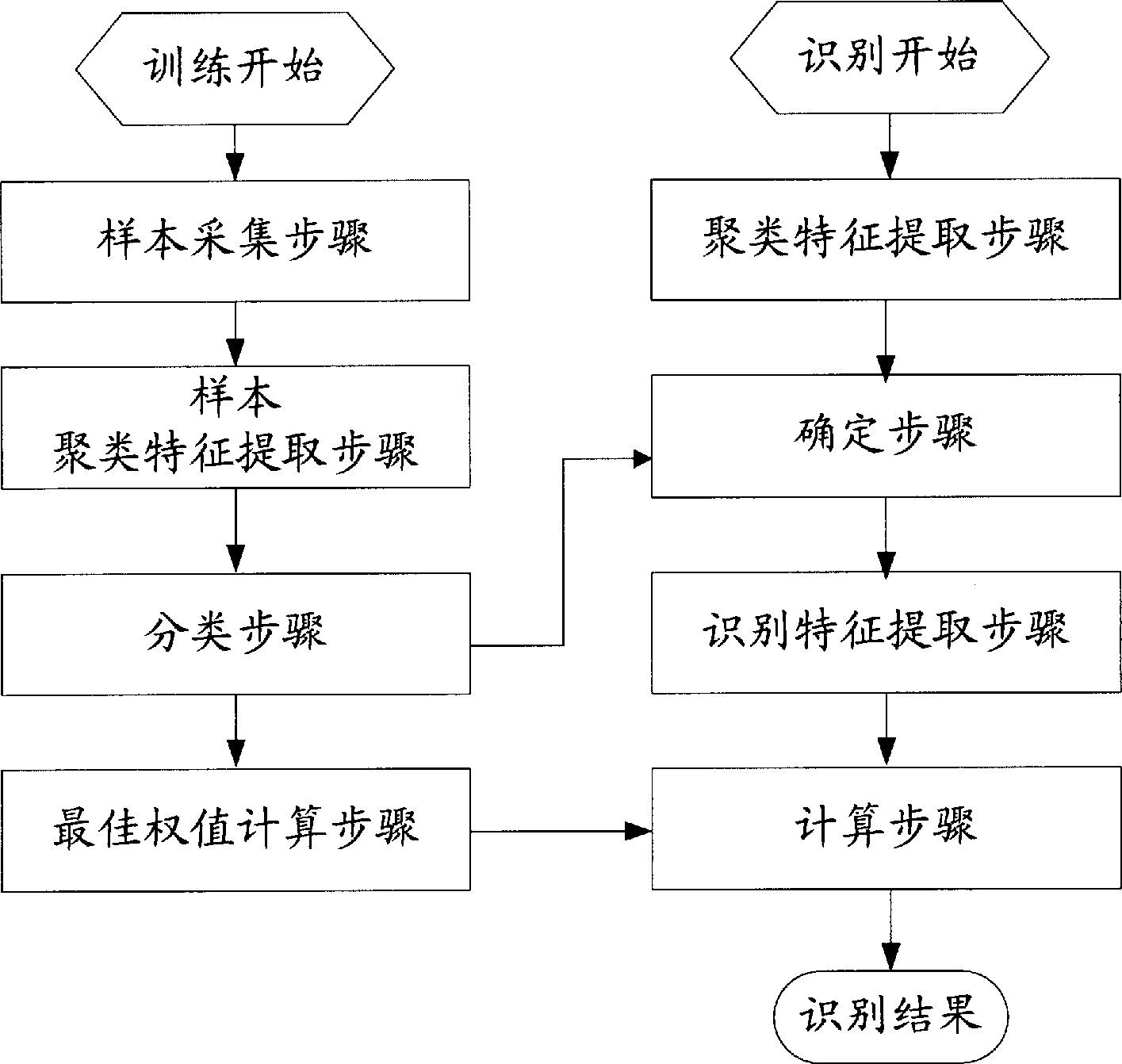

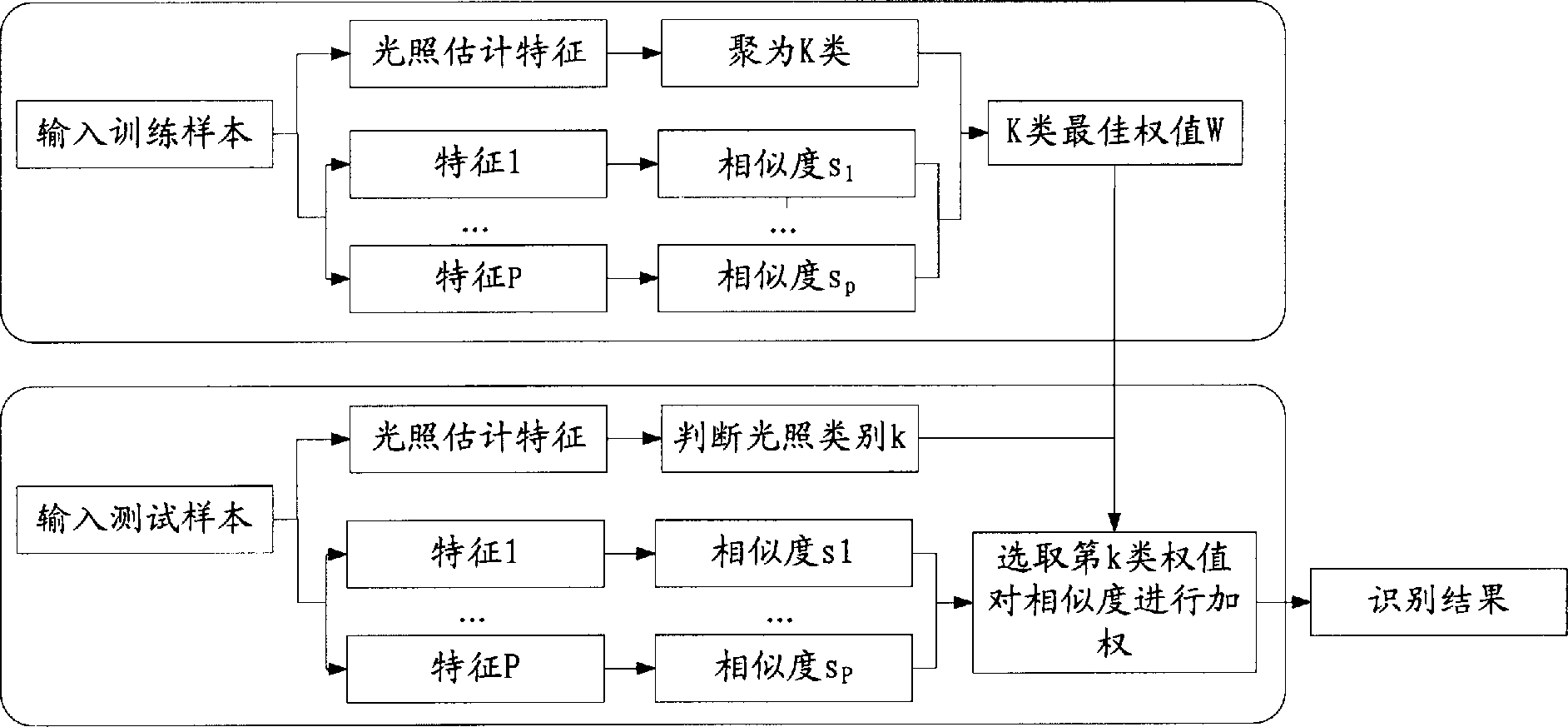

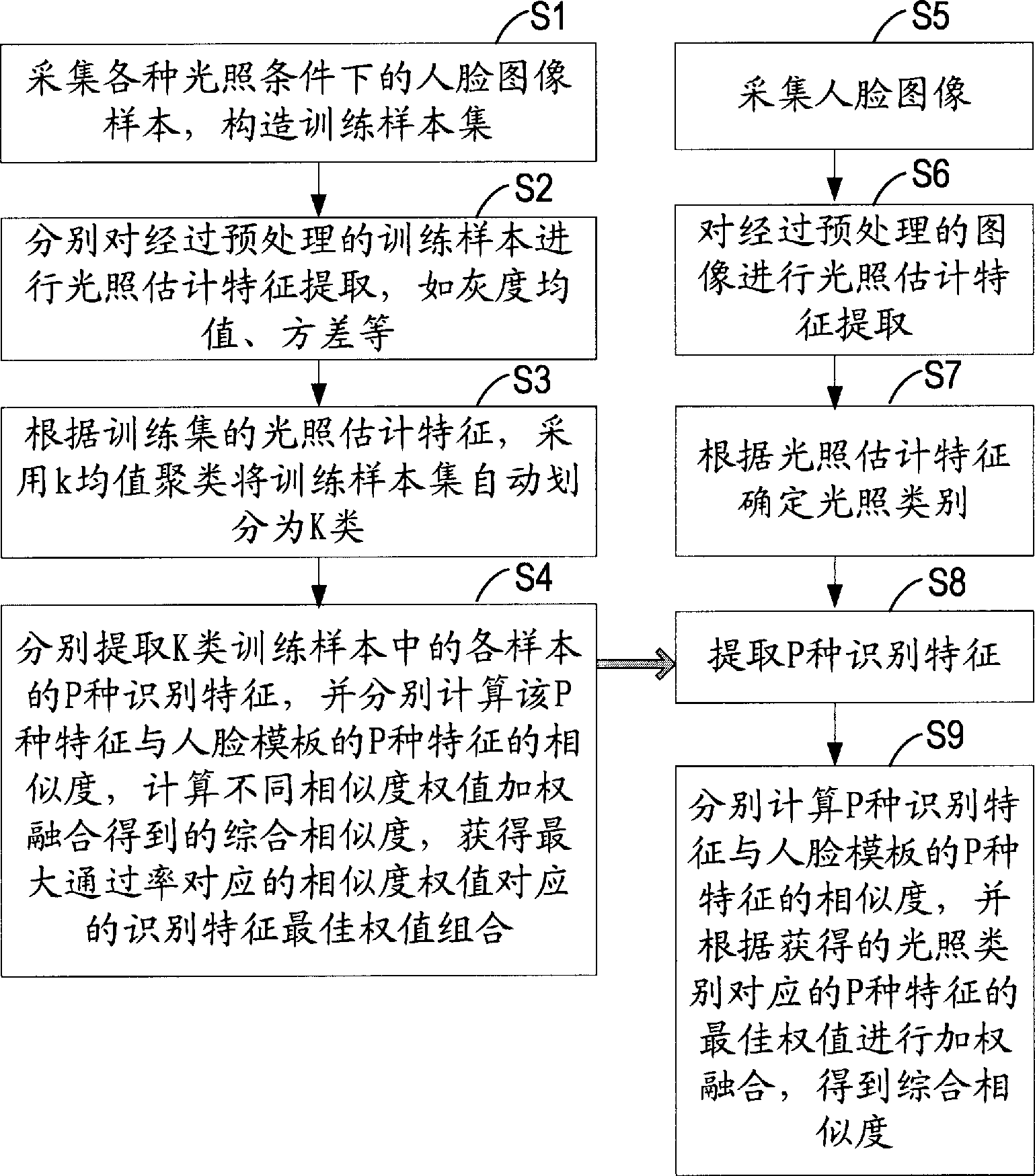

Face recognition method and device

Status: Active | Publication: CN103136504A | Tags: Good multi-feature fusion performance, Character and pattern recognition, Feature extraction, Image matching

The invention provides a face recognition method and device. The method includes a clustering feature extraction step, a determining step, a recognition feature extraction step, and a calculation step. The clustering feature extraction step extracts clustering features from a preprocessed face image. The determining step uses the clustering features extracted from the face image to determine which clustering feature category, trained and acquired in advance, the face image matches. The recognition feature extraction step extracts P kinds of recognition features from the preprocessed face image, where P is a natural number greater than one. The calculation step calculates the similarity between each of the P recognition features and the corresponding feature of a pre-registered face template. According to the clustering feature category found in the determining step, it then determines the best weight combination of the P recognition features for weighted fusion, which yields the comprehensive similarity between the face image and the face template. The method can effectively improve face recognition performance.

Owner:HANVON CORP
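
The calculation step (P per-feature similarities fused with weights chosen by the face's clustering category) can be sketched as follows. Cosine similarity and the contents of the weight table are assumptions made for illustration. The abstract says only that the best weight combination for each clustering category is determined in advance.

```python
import math
from typing import Dict, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def fused_similarity(probe: Sequence[Sequence[float]],
                     template: Sequence[Sequence[float]],
                     cluster: str,
                     weight_table: Dict[str, Sequence[float]]) -> float:
    """Combine the P per-feature similarities using cluster-specific weights."""
    sims = [cosine(p, t) for p, t in zip(probe, template)]
    weights = weight_table[cluster]  # one weight per feature kind, precomputed offline
    return sum(w * s for w, s in zip(weights, sims))
```

The same probe and template can produce different fused scores depending on the clustering category. That dependency is the point of the design: feature kinds that are more reliable for one cluster of faces receive more weight there.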

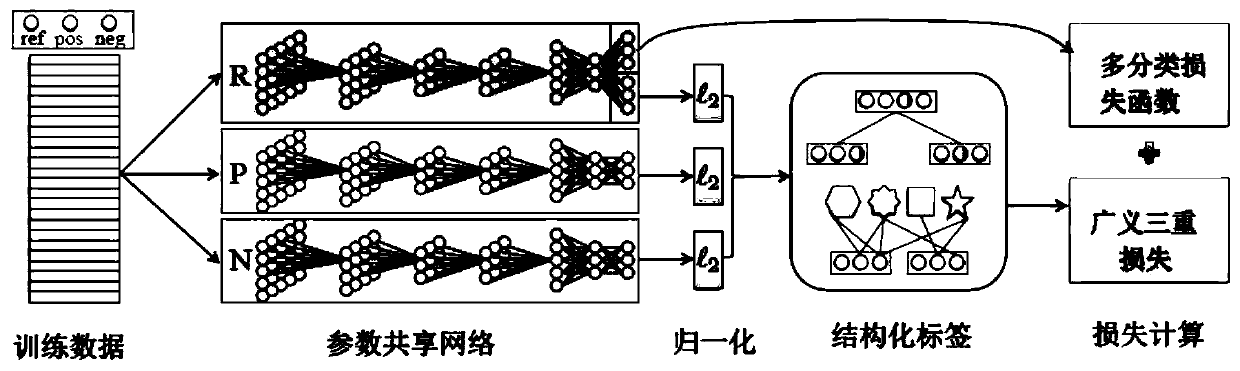

Mixed-granularity object recognition model training and recognition method and device and storage medium

Status: Pending | Publication: CN110458233A | Tags: Increase the gap, Improve accuracy, Biometric pattern recognition, Energy efficient computing, Category recognition, Granularity

The invention relates to the technical field of the Internet and discloses a mixed-granularity object recognition model training and recognition method, a device, and a storage medium. The training method comprises: acquiring sample images and determining their category labels, which comprise fine-grained categories and coarse-grained categories; performing image category recognition training on an initial deep learning model based on the sample images and their category labels to obtain a pre-trained model; and adjusting the fine-grained branch classification module of the pre-trained model, with the objective of enlarging the feature differences between fine-grained categories, to obtain a mixed-granularity object recognition model. With the invention, coarse-grained and fine-grained category identification can be carried out in the same network structure, and the accuracy of fine-grained category identification is improved.

Owner:TENCENT CLOUD COMPUTING BEIJING CO LTD

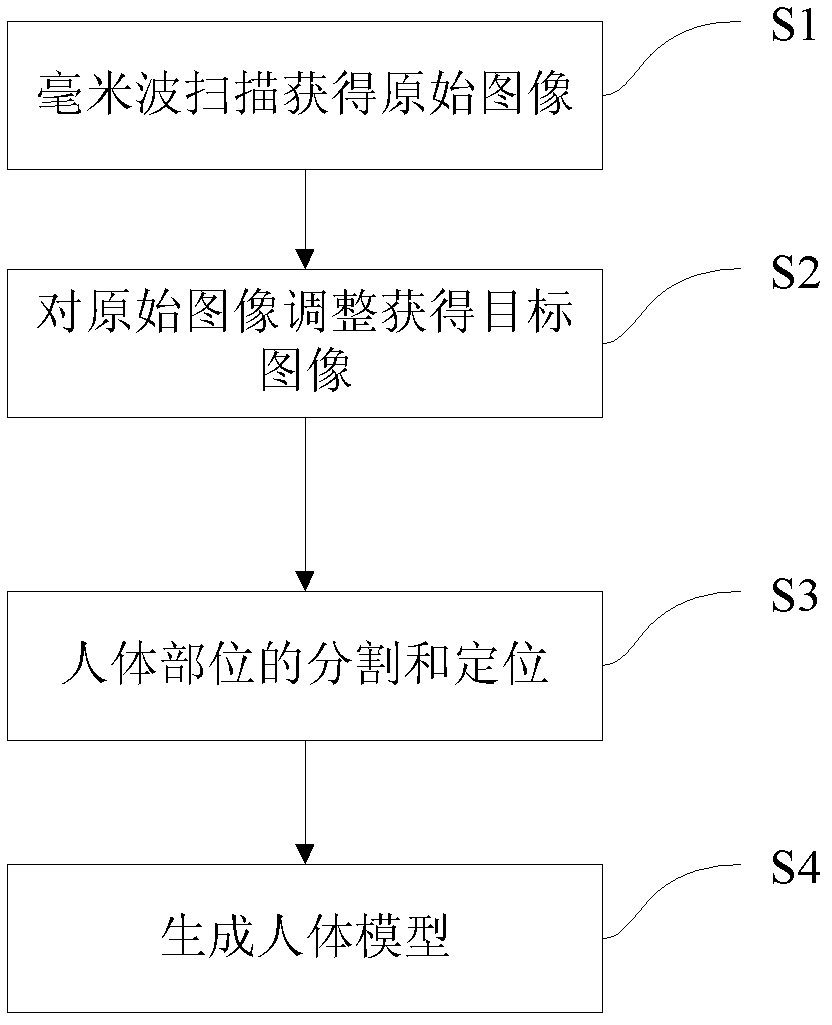

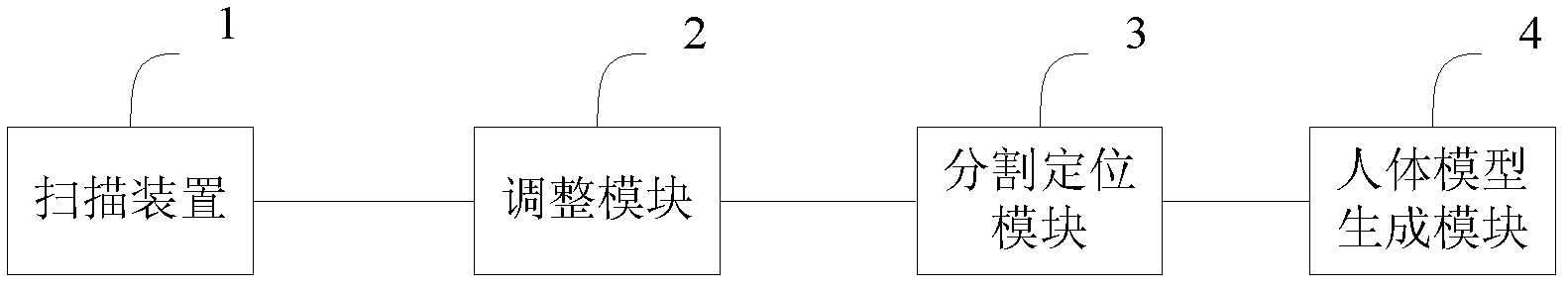

Automatic detection and identification apparatus of concealed object

Status: Active | Publication: CN102629315A | Tags: Achieving identifiability, Achieving processing power, Character and pattern recognition, Category recognition, Computer module

The invention discloses an automatic detection and identification apparatus for concealed objects. The apparatus comprises a scanning device, an adjusting module, a segmentation and positioning module, a bar-and-rod combination model generation module, a non-human-body object preliminary detection module, a non-human-body object distribution module, and a category recognition module. The apparatus turns the detection and identification of concealed objects from a manual process into an automatic one, which reduces staffing requirements, decreases human error, and shortens detection and interpretation time.

Owner:BEIJING INST OF RADIO METROLOGY & MEASUREMENT

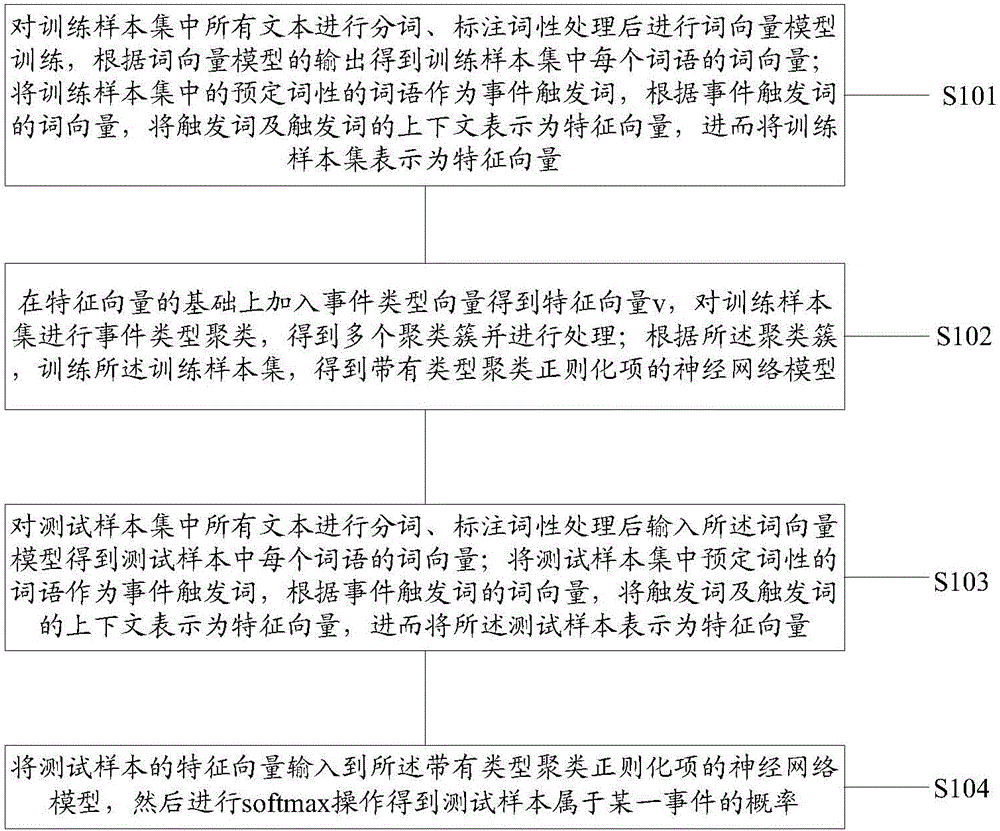

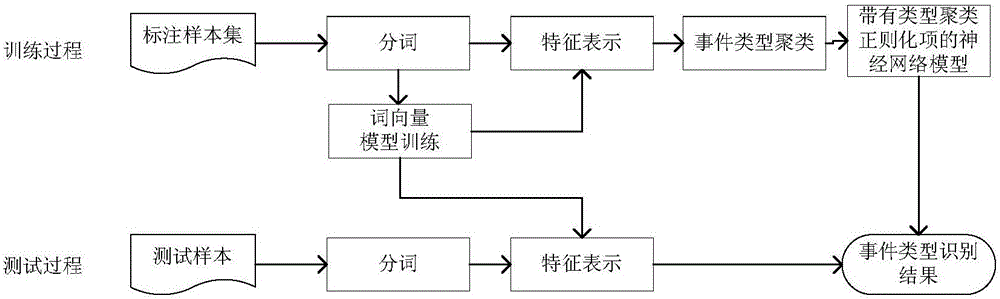

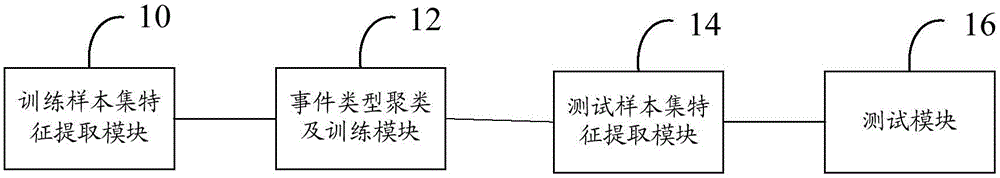

Event type recognition method and device

Status: Active | Publication: CN106095928A | Tags: Easy to share, Solve the problems caused by data imbalance, Natural language data processing, Special data processing applications, Annotation, Test sample

The invention discloses an event type recognition method and device. The method comprises the following steps: word segmentation is performed on all texts in a training set; after part-of-speech processing, a word vector space model is trained, text features are extracted, and each text is represented as a feature vector; event type clustering is performed on the training set, and a neural network model with type-cluster regularization terms is trained; test samples undergo the same segmentation and part-of-speech processing and are represented with the trained word vector model; and event type recognition is performed with the neural network model with type-cluster regularization terms. By sharing type information within the same cluster, the technical scheme alleviates the problems caused by imbalanced annotation data.

Owner:NAT COMP NETWORK & INFORMATION SECURITY MANAGEMENT CENT

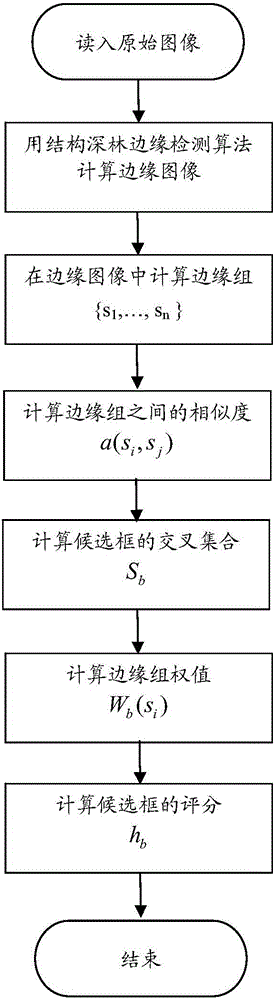

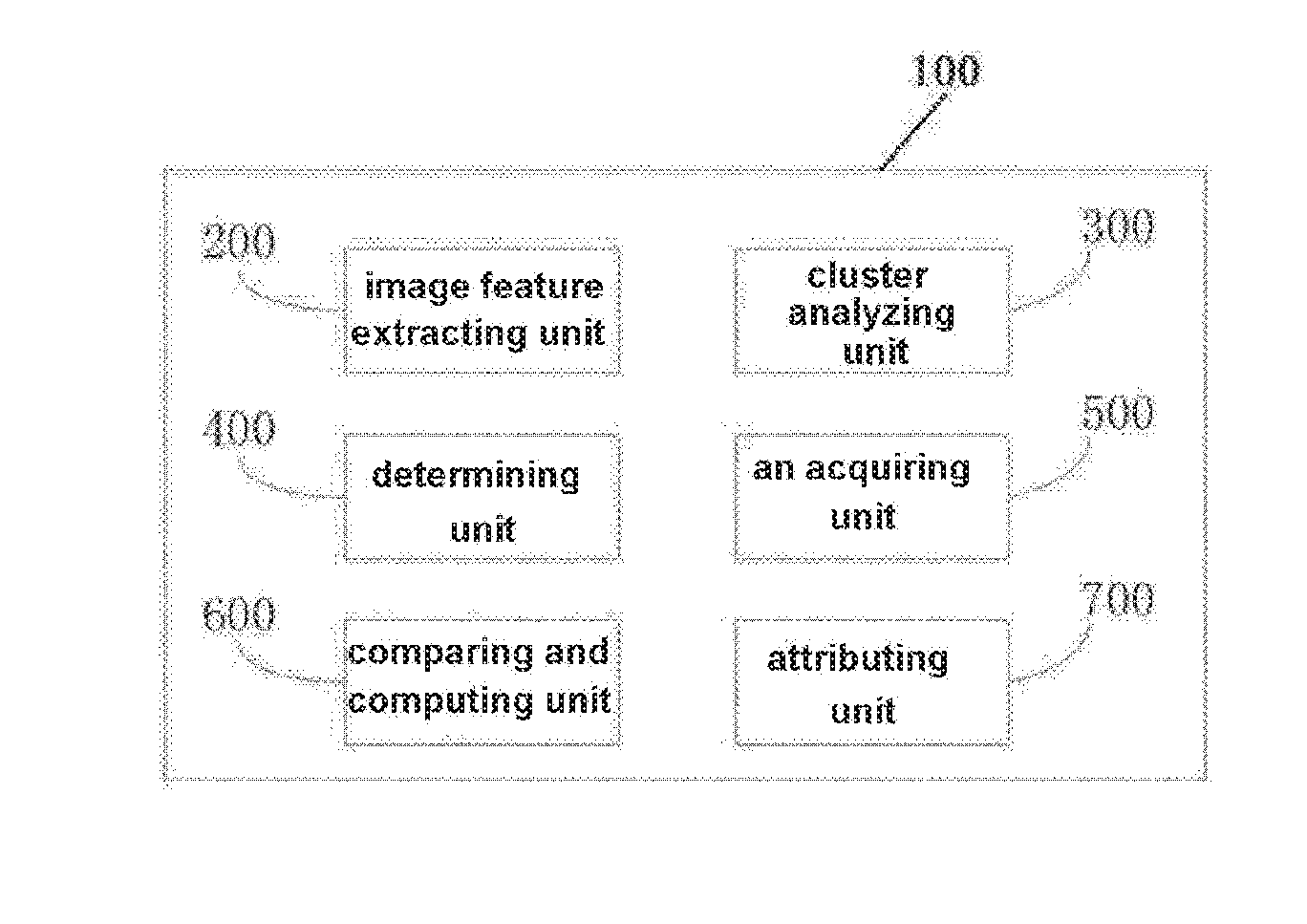

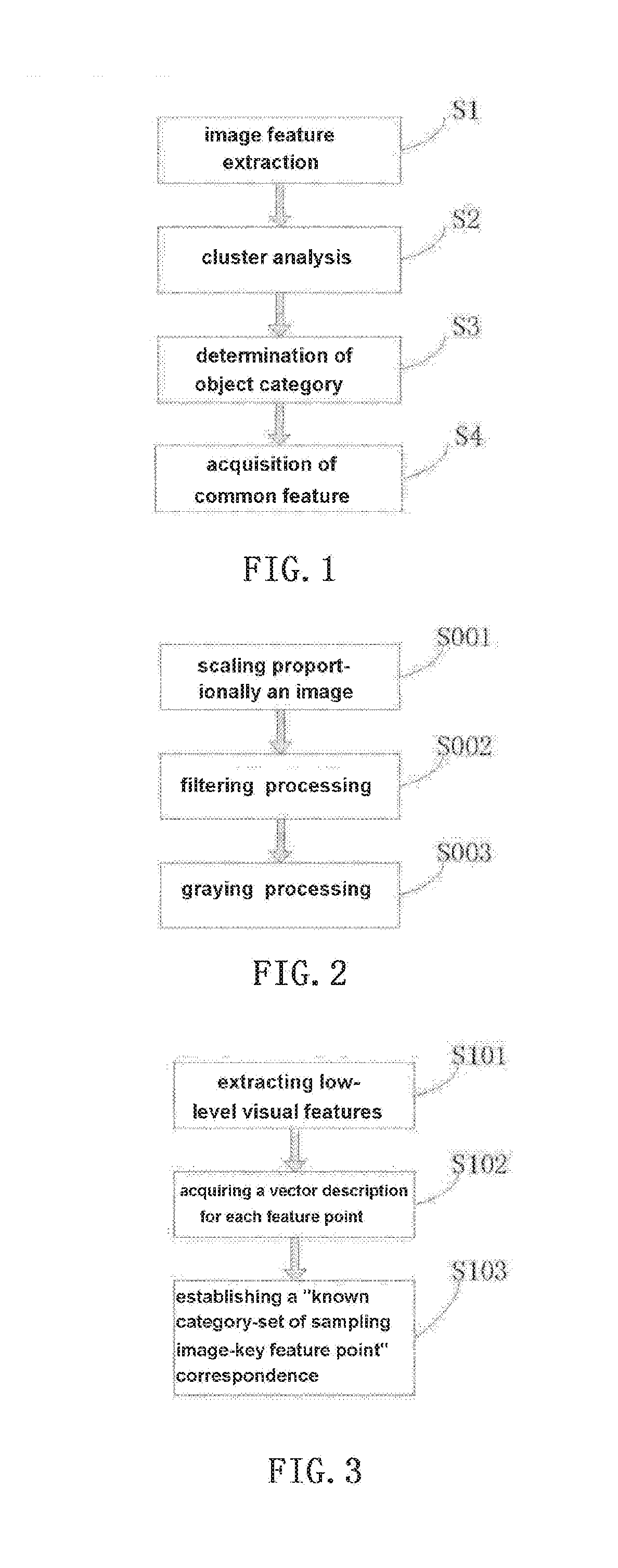

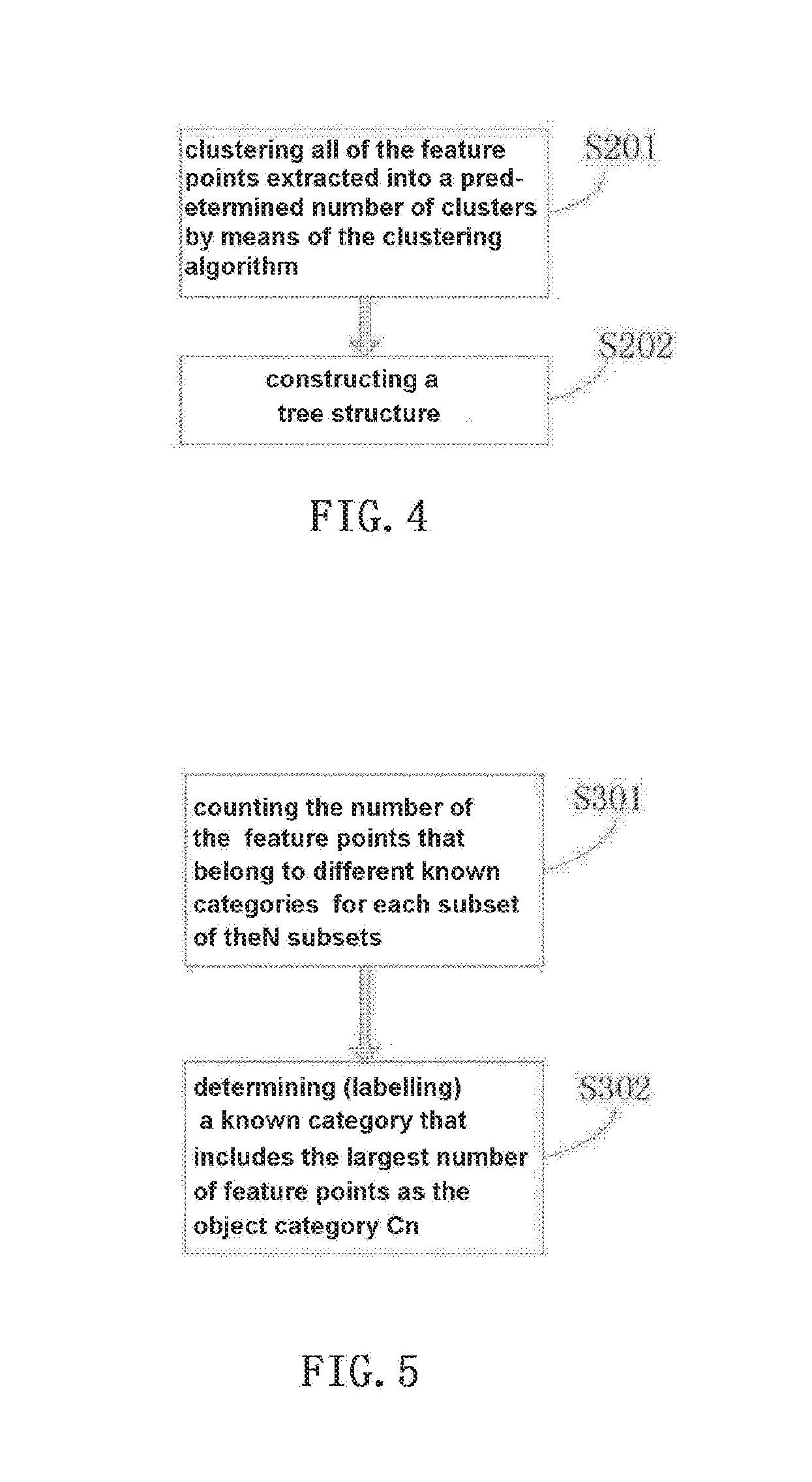

Image object category recognition method and device

Status: Active | Publication: US20160267359A1 | Tags: Improve accuracy, Increase speed, Image enhancement, Image analysis, Category recognition, Feature extraction

The present invention relates to an image object category recognition method and device. The recognition method comprises an off-line autonomous learning process of a computer, which mainly comprises the following steps: image feature extracting, cluster analyzing and acquisition of an average image of object categories. In addition, the method of the present invention also comprises an on-line automatic category recognition process. The present invention can significantly reduce the amount of computation, reduce computation errors and improve the recognition accuracy significantly in the recognition process.

Owner:BEIJING JINGDONG SHANGKE INFORMATION TECH CO LTD +1
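
The split between an off-line learning stage (build an average per object category) and an on-line recognition stage can be sketched as a nearest-mean classifier. This sketch makes simplifying assumptions: it averages precomputed feature vectors rather than pixel images, omits the cluster-analysis step, and uses squared Euclidean distance.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def fit_category_means(samples: Sequence[Tuple[Sequence[float], str]]) -> Dict[str, List[float]]:
    """Off-line step: average the feature vectors of each category."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = defaultdict(int)
    for vec, label in samples:
        acc = sums.setdefault(label, [0.0] * len(vec))
        for i, v in enumerate(vec):
            acc[i] += v
        counts[label] += 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def classify(vec: Sequence[float], means: Dict[str, List[float]]) -> str:
    """On-line step: pick the category whose mean is closest (squared L2 distance)."""
    return min(means, key=lambda lab: sum((v - m) ** 2 for v, m in zip(vec, means[lab])))
```

On-line cost grows with the number of categories rather than the number of training images, which is consistent with the abstract's claim of reduced computation.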

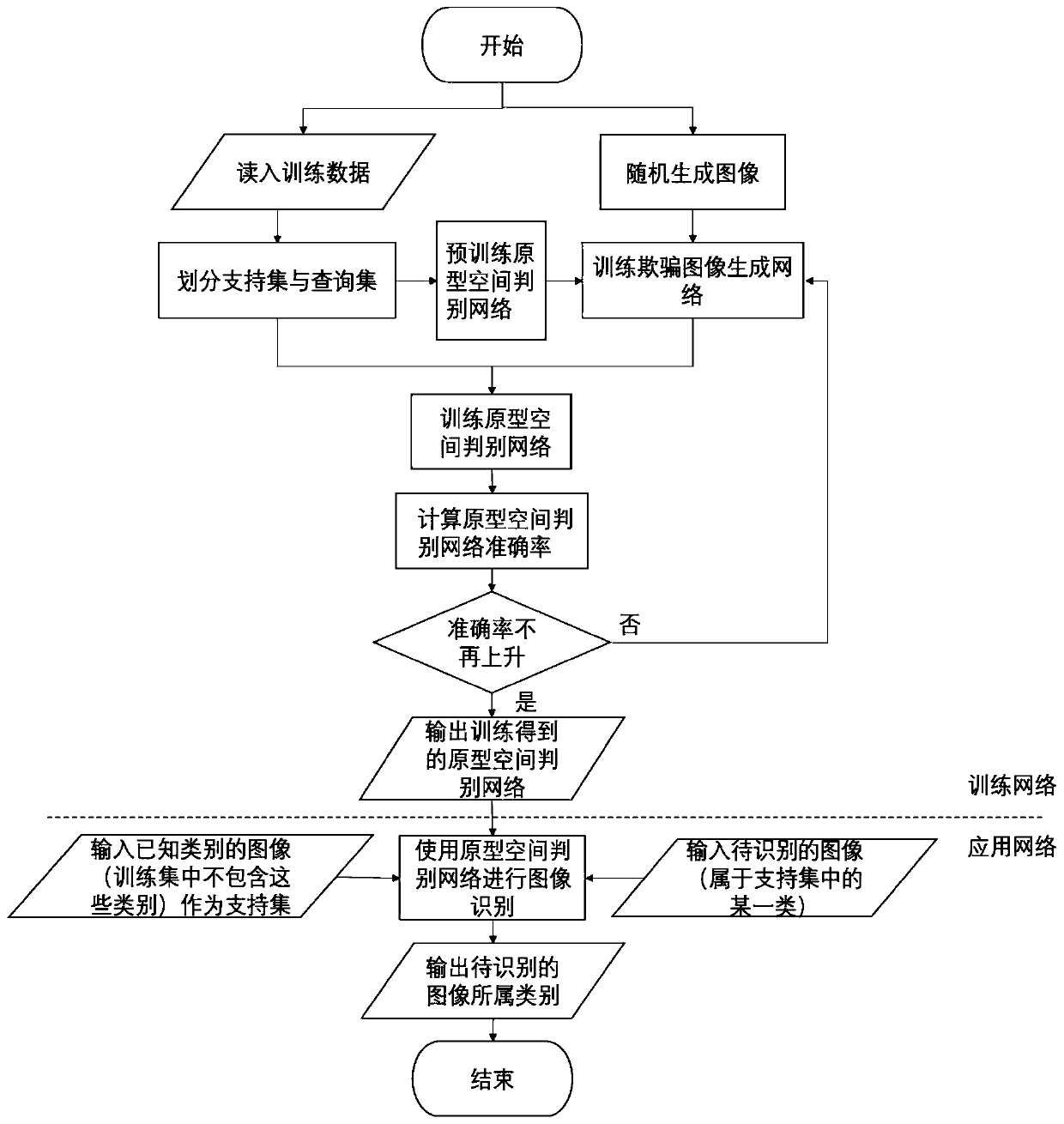

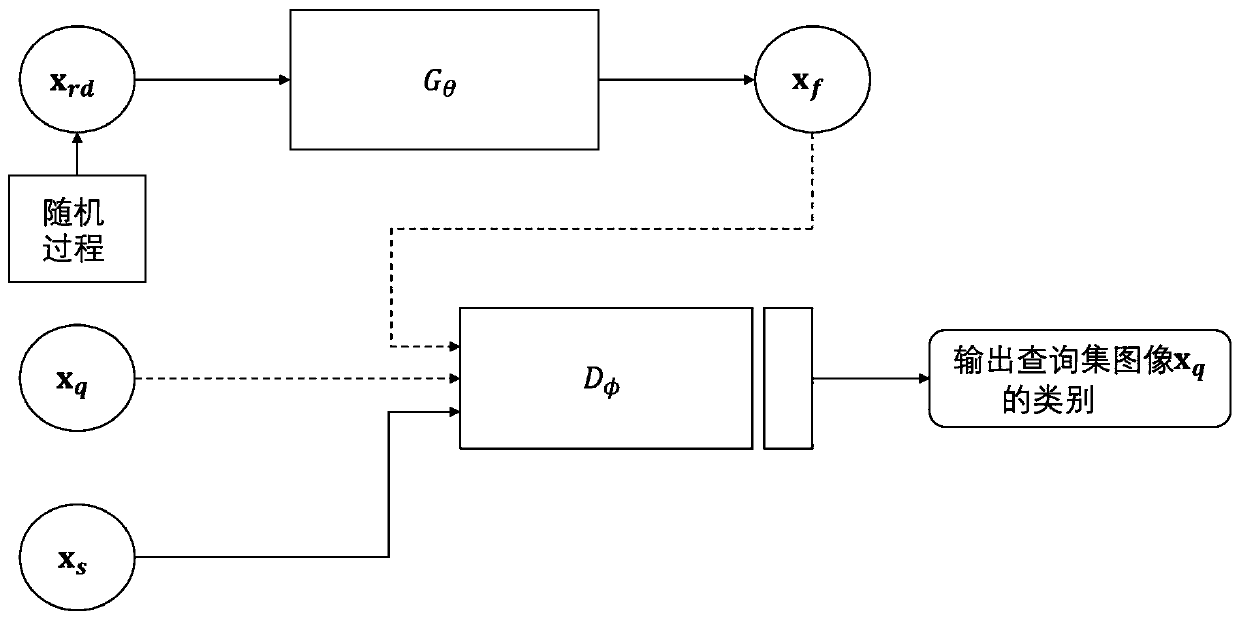

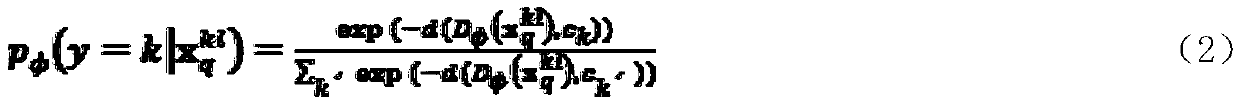

Small sample image recognition method based on deep learning

The invention relates to a small-sample image recognition method based on deep learning. The method comprises the following steps: 1, dividing a training set; 2, generating a noise image; 3, pre-training a prototype space discrimination network; 4, training a deception image generation network; 5, training the prototype space discrimination network; 6, repeating steps 4 and 5 in alternating (cross-iteration) training until a preset number of iterations is reached or the accuracy no longer improves; 7, performing image category recognition. Without changing the trained model, the method uses a few labeled samples of each class to generalize to rare classes, so that new classes not seen during training can be recognized without extra training, and the image recognition accuracy is high.

Owner:JILIN UNIV

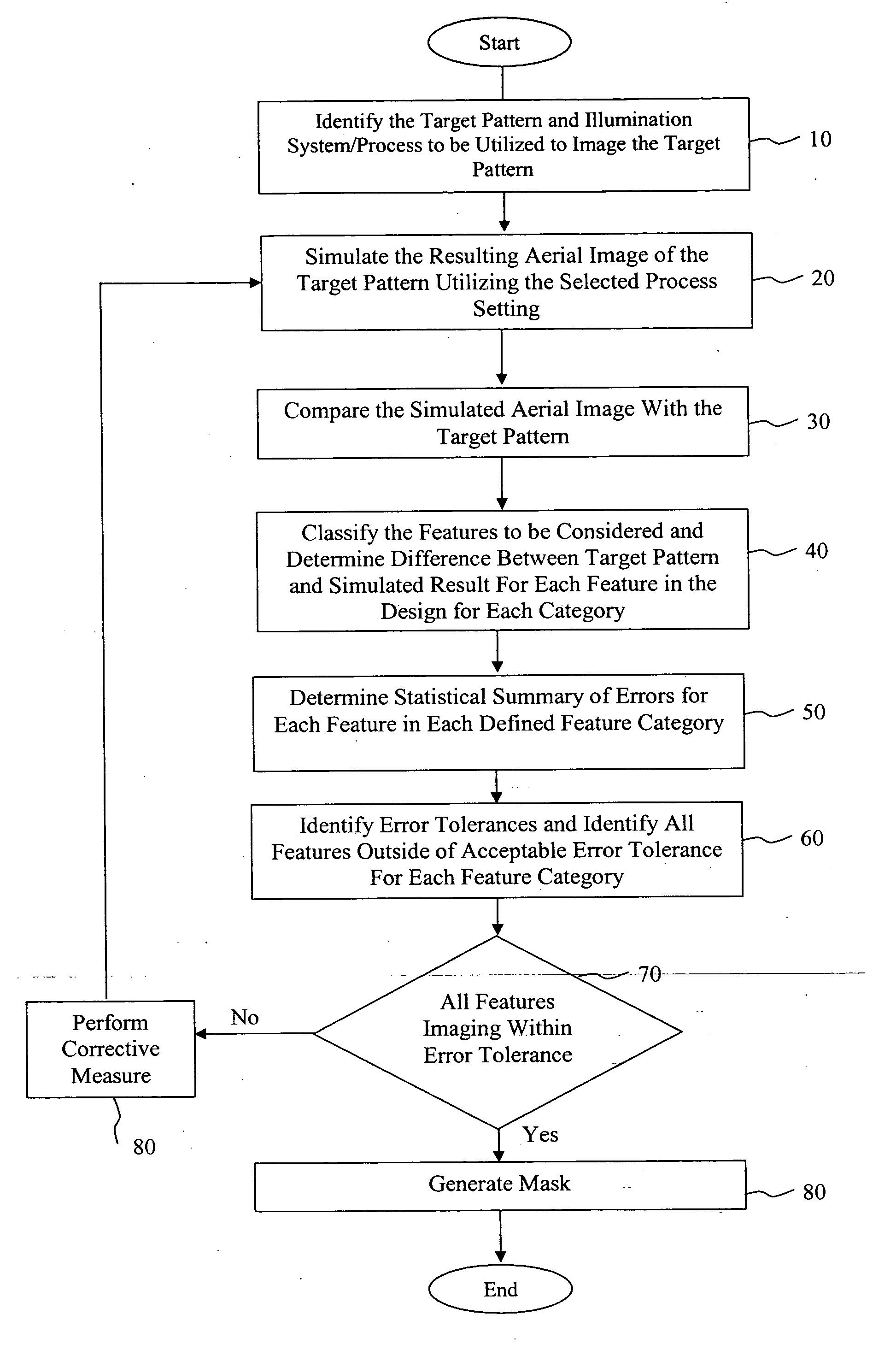

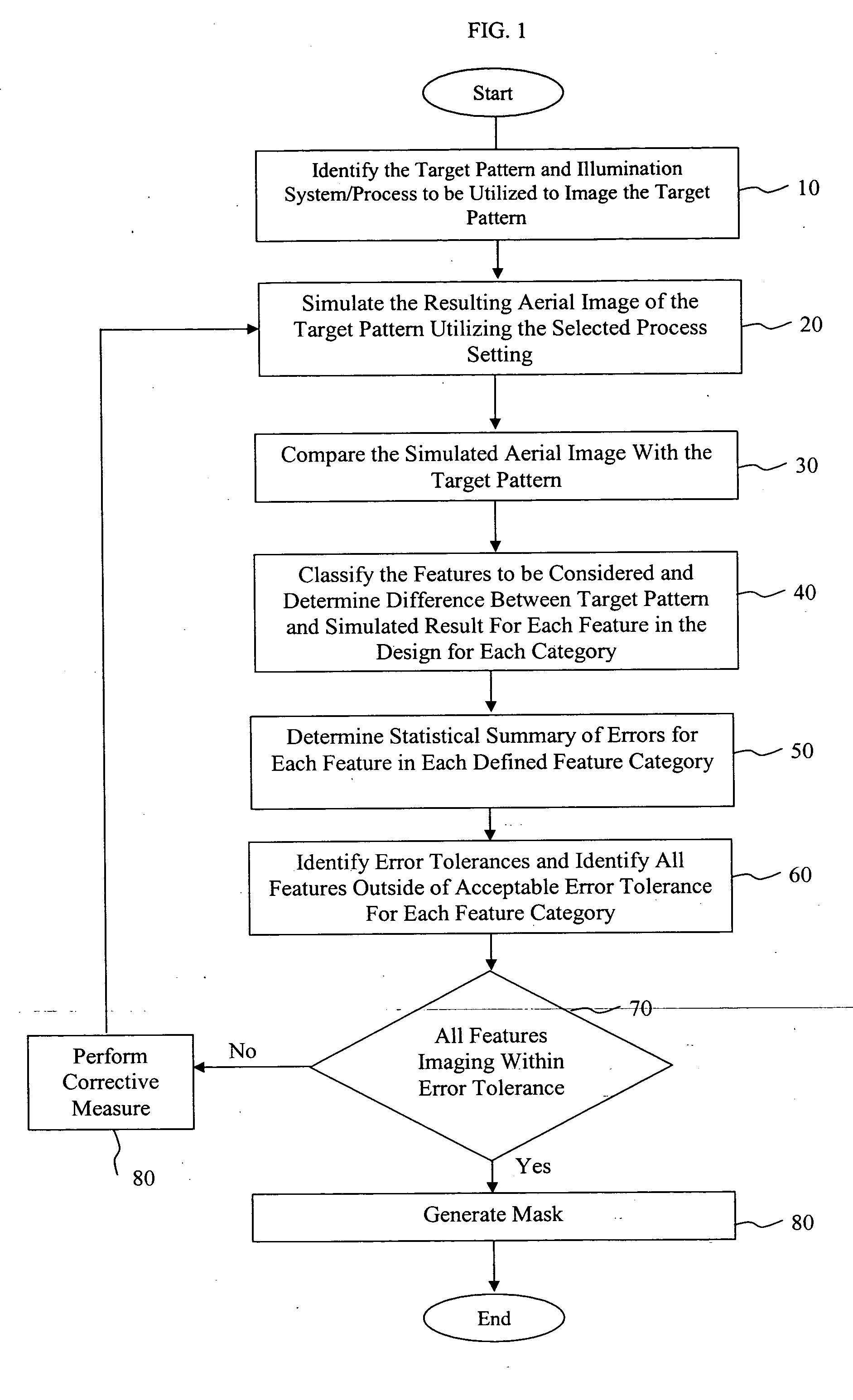

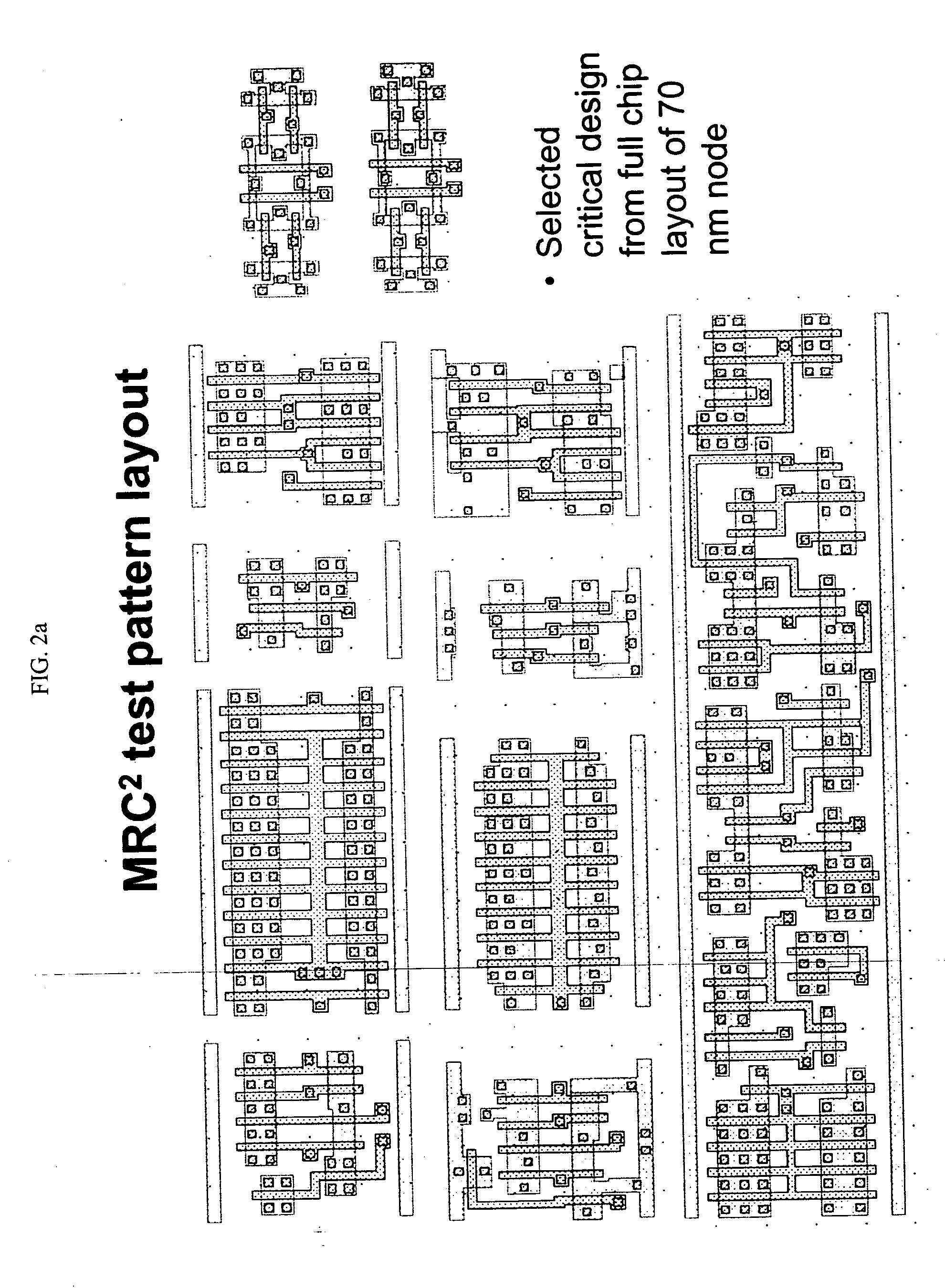

Method for performing full-chip manufacturing reliability checking and correction

Status: Active | Publication: US20060080633A1 | Tags: Suitable for use, Minimize time, Photomechanical apparatus, Character and pattern recognition, Pattern recognition, Process patterns

A method of generating a mask for use in an imaging process pattern. The method includes the steps of: (a) obtaining a desired target pattern having a plurality of features to be imaged on a substrate; (b) simulating a wafer image utilizing the target pattern and process parameters associated with a defined process; (c) defining at least one feature category; (d) identifying features in the target pattern that correspond to the at least one feature category, and recording an error value for each feature identified as corresponding to the at least one feature category; and (e) generating a statistical summary which indicates the error value for each feature identified as corresponding to the at least one feature category.

Owner:ASML NETHERLANDS BV
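
Steps (d) and (e), which record an error value for each feature matched to a category and then produce a statistical summary, can be sketched as a group-by. The feature identifiers, the category names (such as `line_end`), and the chosen statistics (count, mean, maximum absolute error) are hypothetical. The claim does not fix which statistics appear in the summary.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def summarize_errors(features: Iterable[Tuple[str, str, float]]) -> Dict[str, Dict[str, float]]:
    """features: (feature_id, category, error_value) triples from the simulated wafer image."""
    by_cat: Dict[str, List[float]] = defaultdict(list)
    for _fid, category, err in features:
        by_cat[category].append(err)
    return {
        cat: {"count": len(errs), "mean": mean(errs), "max_abs": max(abs(e) for e in errs)}
        for cat, errs in by_cat.items()
    }
```

A per-category summary lets a reviewer see which feature types are systematically off target before opening individual error sites.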

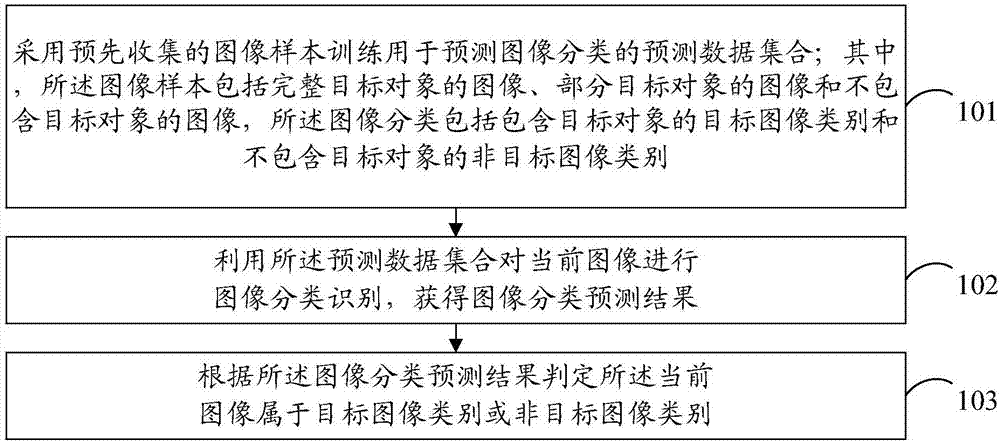

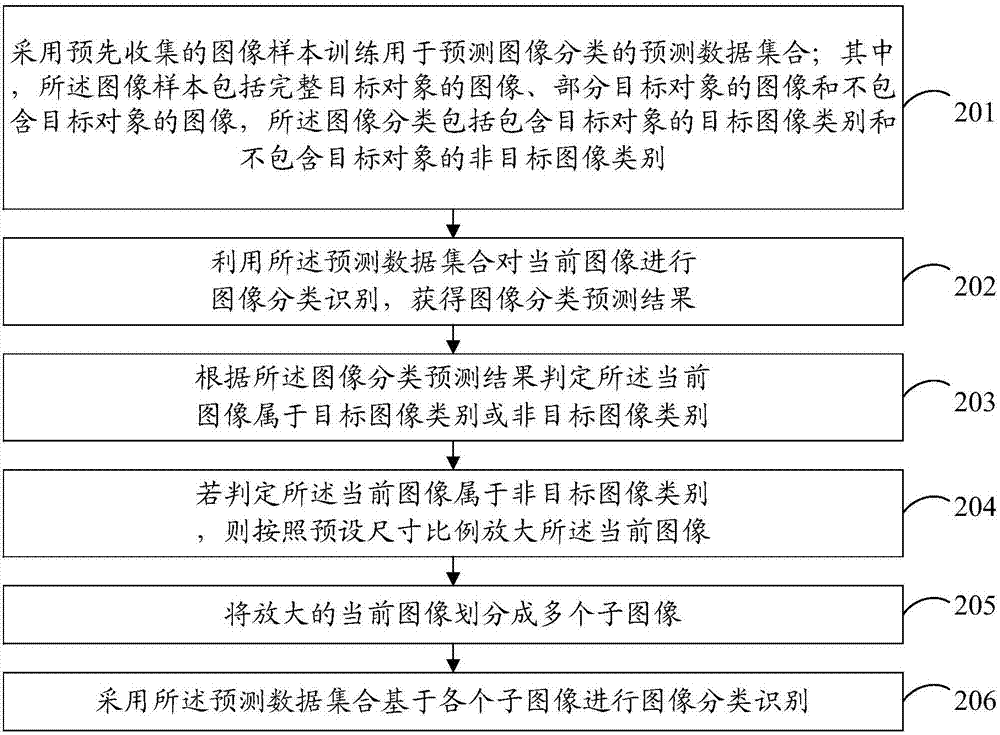

Image recognition method and apparatus

Status: Inactive | Publication: CN107292229A | Tags: Resolve irregularities, Resolve identifiability, Biometric pattern recognition, Category recognition, Non targeted

The present invention provides an image recognition method and apparatus. The method comprises the following steps: training, with pre-collected image samples, a prediction image set for predicting image categories, where the image samples include images of a complete target object, images of part of the target object, and images not containing the target object, and the image categories include a target image category (containing the target object) and a non-target image category (not containing it); using the prediction image set to perform image category recognition on a current image to obtain an image category prediction result; and judging from that result whether the current image belongs to the target or the non-target image category. The image recognition method and apparatus provided by the embodiments of the present invention can improve the accuracy of image recognition.

Owner:BEIJING SANKUAI ONLINE TECH CO LTD

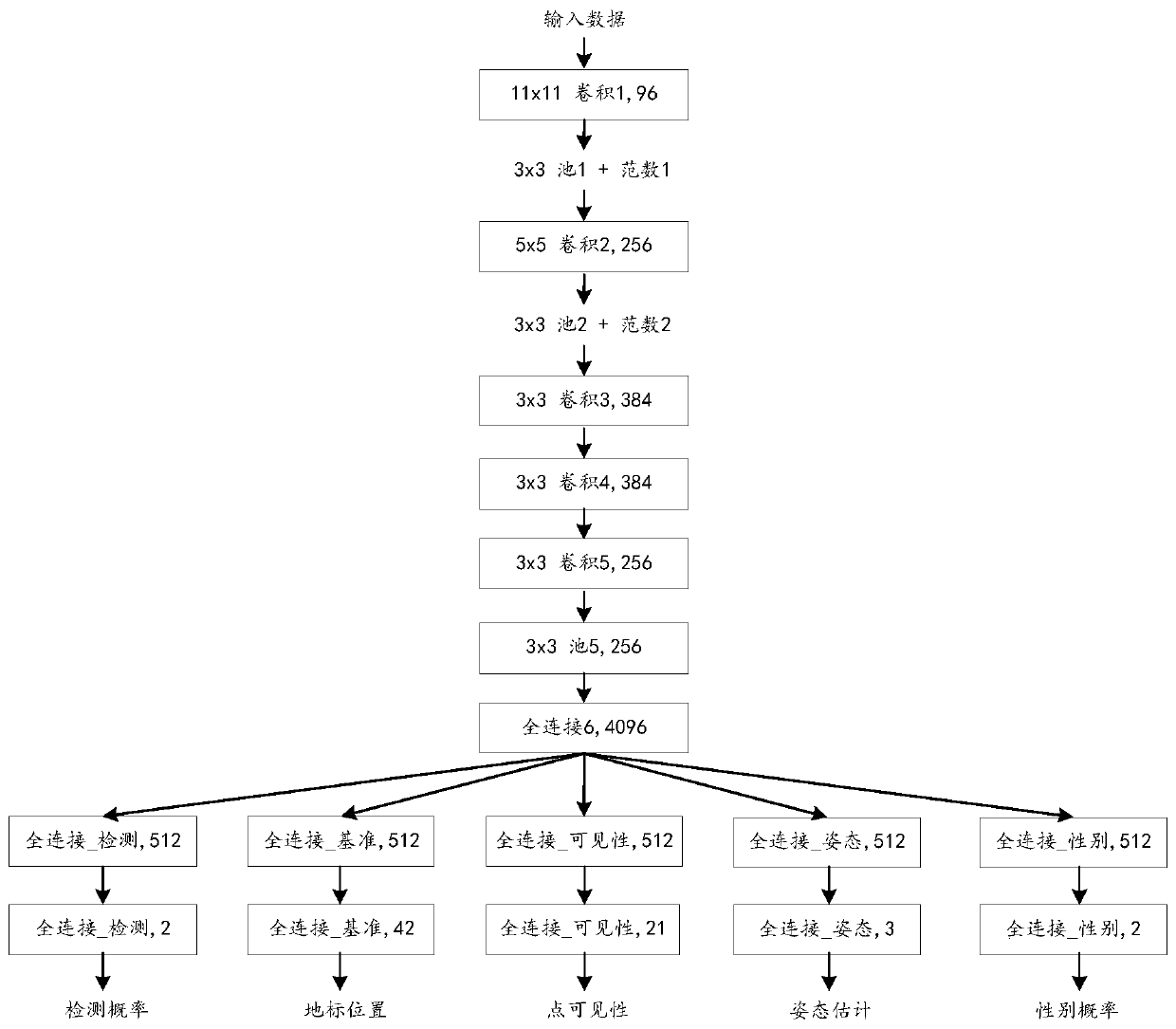

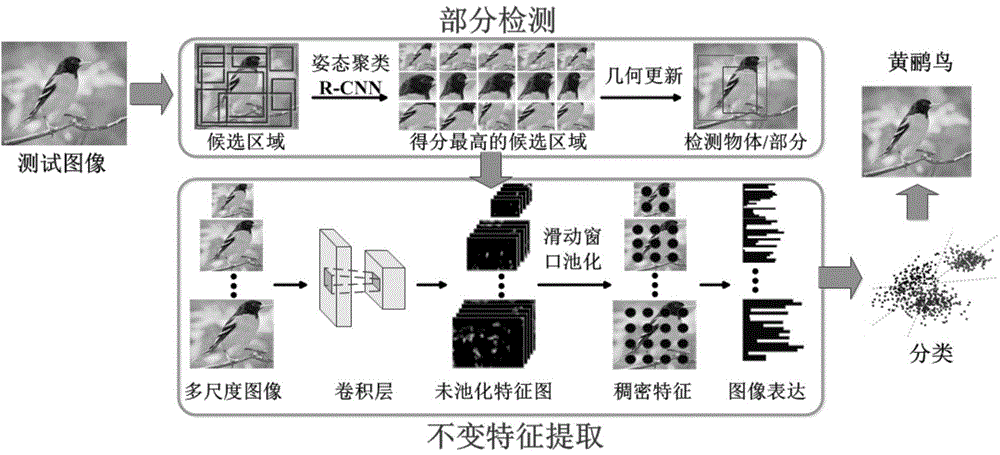

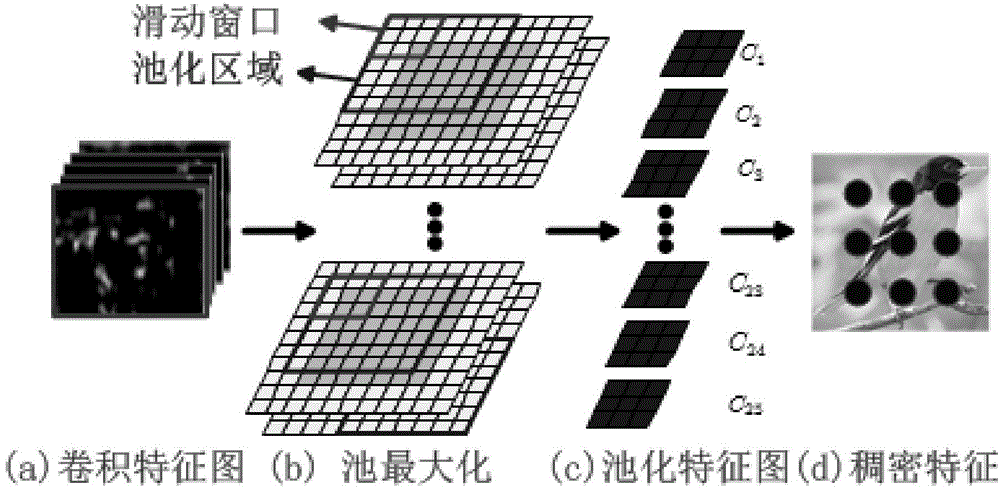

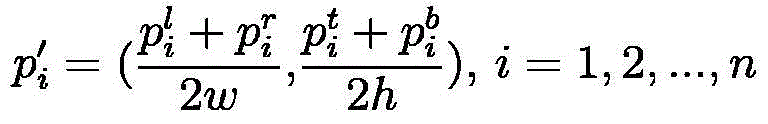

Fine granularity classification recognition method and object part location and feature extraction method thereof

Status: Active | Publication: CN104573744A | Tags: Easy to identify, Accurate Part Positioning Accuracy, Character and pattern recognition, Feature extraction, Granularity

The invention provides a fine-grained classification recognition method, together with an object part localization and feature extraction method for it, which handle object part localization and feature representation in fine-grained classification recognition well. For object part localization, a series of part detectors trained by supervised learning is used. Because the targets to be localized are affected by pose changes and deformation, the methods detect only parts with small deformation, and a pose clustering method is used to train different detectors for the same object part, so that changes in object pose are taken into account. For the feature representation of objects or parts, features are extracted at multiple scales and multiple positions and then fused into the final object representation, so that the features have a degree of scale and translation invariance. Because object part localization and feature representation complement each other, the methods can effectively improve the accuracy of fine-grained classification recognition.

Owner:SHANGHAI JIAO TONG UNIV

Digestive tract endoscope image recognition model training and recognition method, device and system

Status: Active | Publication: CN110473192A | Tags: Accurate distinction, Accurate prediction, Image enhancement, Medical data mining, Category recognition, Imaging Feature

The invention relates to the technical field of computers, mainly to computer vision technology in artificial intelligence, and in particular to a digestive tract endoscope image recognition model training and recognition method, device, and system. The method comprises: obtaining a training digestive tract endoscope image sample set that at least comprises strong-label digestive tract endoscope training image samples; based on the image feature information and the corresponding strong label information, marking the image feature information belonging to each preset lesion category; and training the digestive tract endoscope image recognition model according to the marking result until the strong supervision objective function converges, thereby obtaining the trained model, from which a lesion category recognition result is obtained for a digestive tract endoscope image to be recognized. Image feature information of a given lesion category can be located more accurately according to the lesion position, noise is reduced, and reliability and accuracy are improved.

Owner:TENCENT HEALTHCARE (SHENZHEN) CO LTD

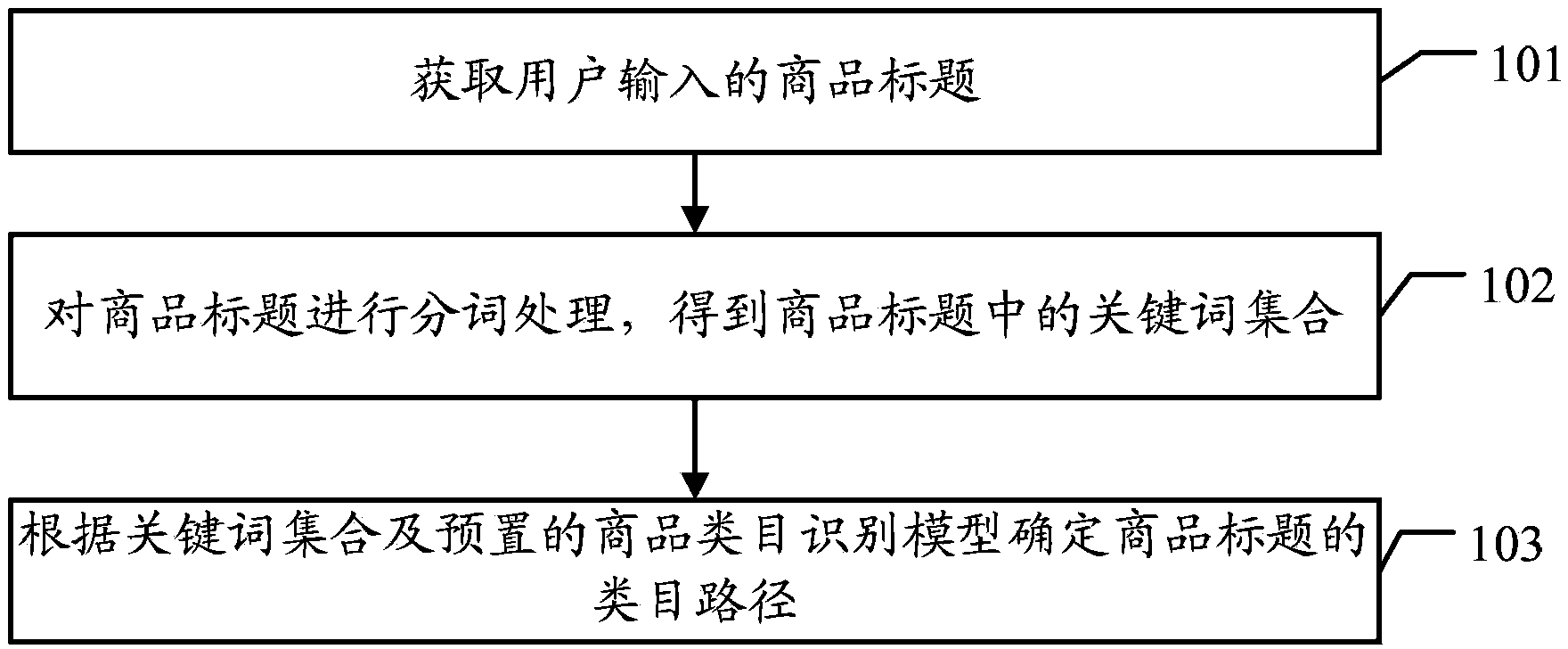

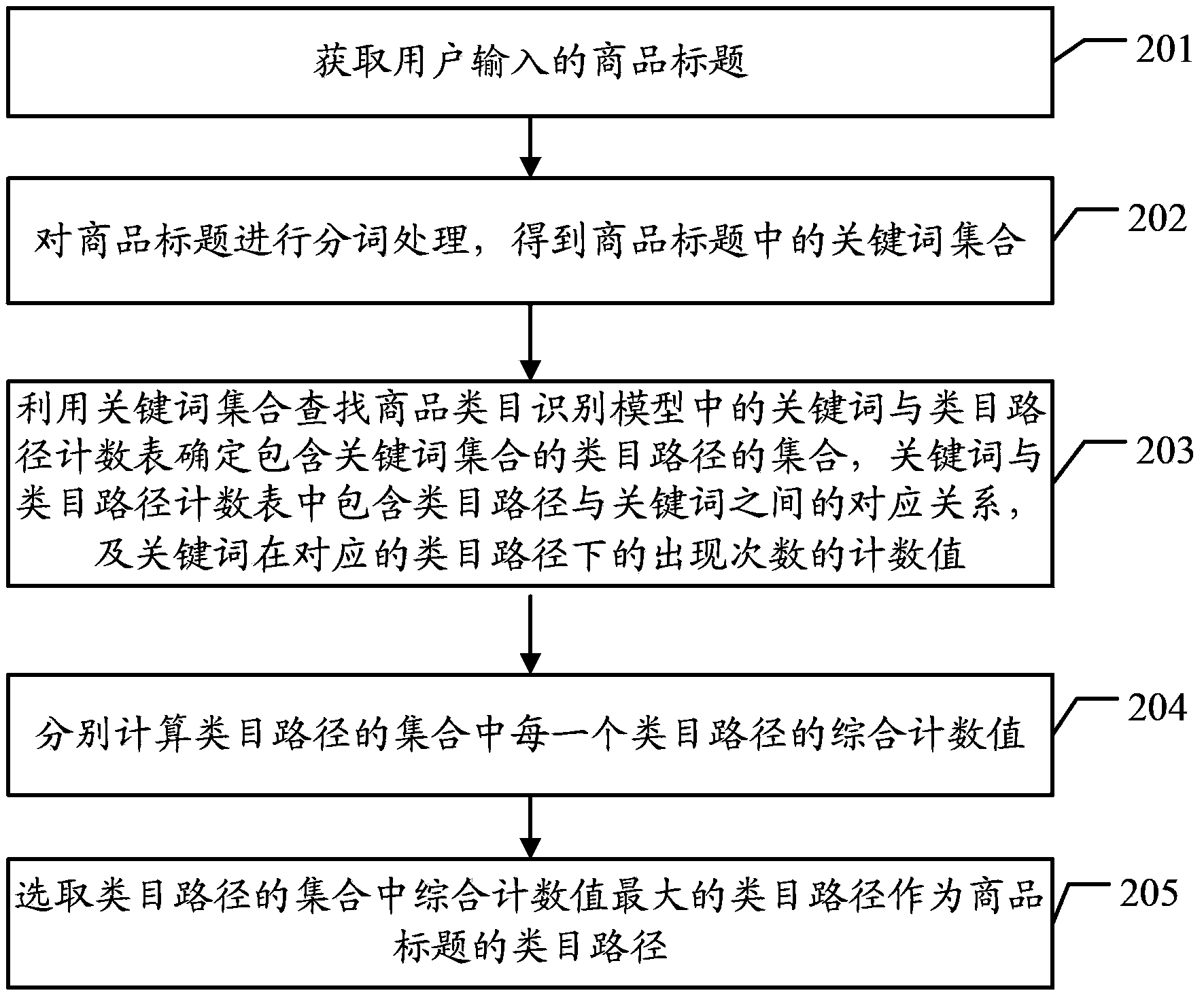

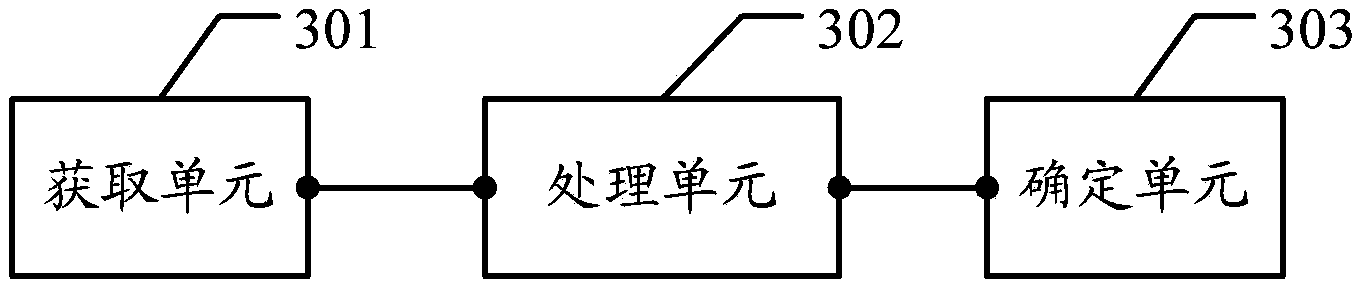

Category path recognition method and system

Status: Active | Publication: CN103902545A | Tags: Avoid error conditions, Realize identification, Web data indexing, Commerce, Category recognition, User input

Solutions of category path recognition are described. A commodity title input by a user is obtained. Word segmentation is performed on the commodity title to obtain a keyword set comprising keywords comprised in the commodity title. A category path of the commodity title is determined according to the keyword set and a preconfigured commodity category recognition model, wherein the commodity category recognition model includes correspondences between a plurality of keywords and a plurality of category paths and a counting value of the number of occurrences of each of the plurality of keywords under each corresponding category path.

Owner:BEIJING JINGDONG SHANGKE INFORMATION TECH CO LTD
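
The commodity category recognition model described above stores, for each keyword, how often it occurs under each category path. One simple way to use those counts is to add them up across the title's keywords and pick the path with the highest total, as sketched below. The summed-count scoring rule and the example paths are assumptions, and the keywords are assumed to come from a word segmenter that is not shown.

```python
from typing import Dict, Iterable

# Hypothetical model: keyword -> {category path -> occurrence count under that path}.
Model = Dict[str, Dict[str, int]]


def recognize_category_path(keywords: Iterable[str], model: Model) -> str:
    """Sum each path's keyword counts and return the highest-scoring path."""
    totals: Dict[str, int] = {}
    for kw in keywords:
        for path, count in model.get(kw, {}).items():
            totals[path] = totals.get(path, 0) + count
    if not totals:
        raise ValueError("none of the keywords appear in the model")
    return max(totals, key=totals.get)
```

An ambiguous keyword such as "apple" contributes to several paths. The other keywords in the title, such as "fresh" or "iphone", then tip the decision, which is how keyword co-occurrence helps avoid misclassification.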

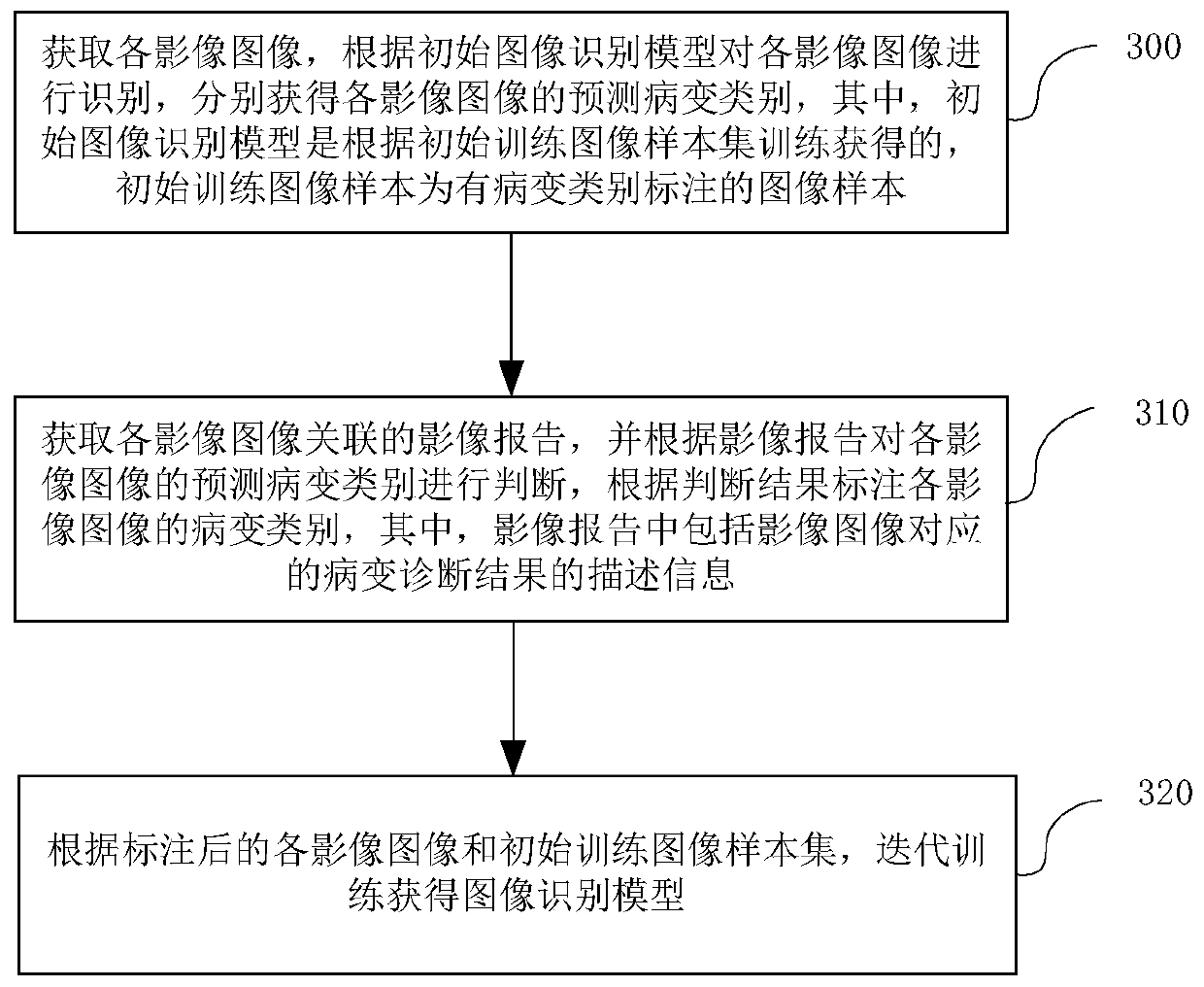

Image recognition model training method, device and system, and image recognition method, device and system

Status: Active | Publication: CN110909780A | Tags: Improve accuracy, Low cost, Recognition of medical/anatomical patterns, Category recognition, Nuclear medicine

The invention relates to the technical field of computers, and in particular to an image recognition model training method, device, and system, and an image recognition method, device, and system. Each image is recognized with an initial image recognition model to obtain its predicted lesion category, and the predicted category is checked against the image report associated with that image; the lesion category of each image is labelled according to the result; iterative training on the labelled images together with an initial training image sample set yields an image recognition model; and the trained model can then recognize the lesion category of an image to be recognized and determine its lesion category recognition result. Because the iterative training uses the image reports, no additional labelling cost is incurred, the iteration rate is improved, and recognition accuracy can improve with continued iterative updating.

Owner:TENCENT TECH (SHENZHEN) CO LTD

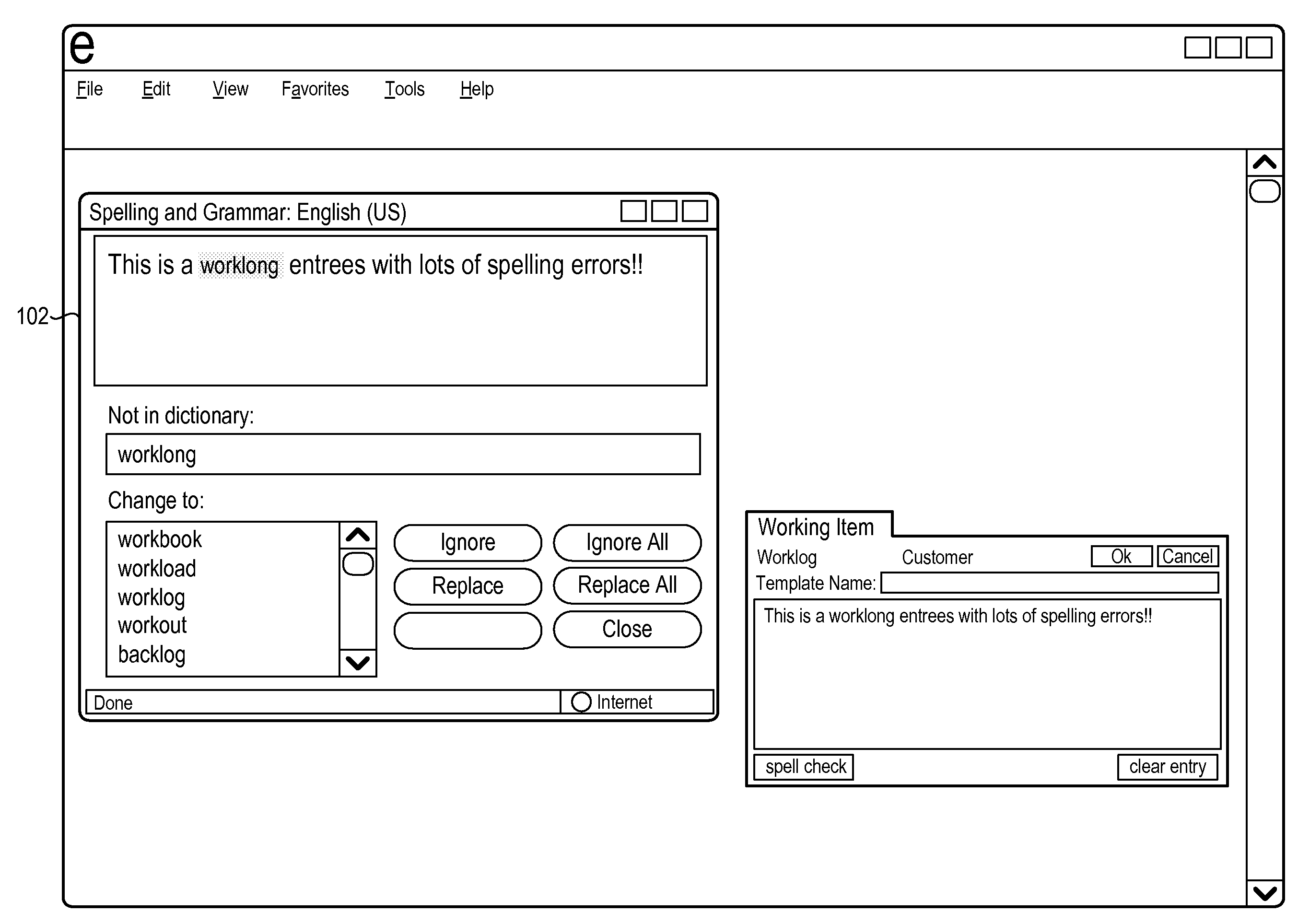

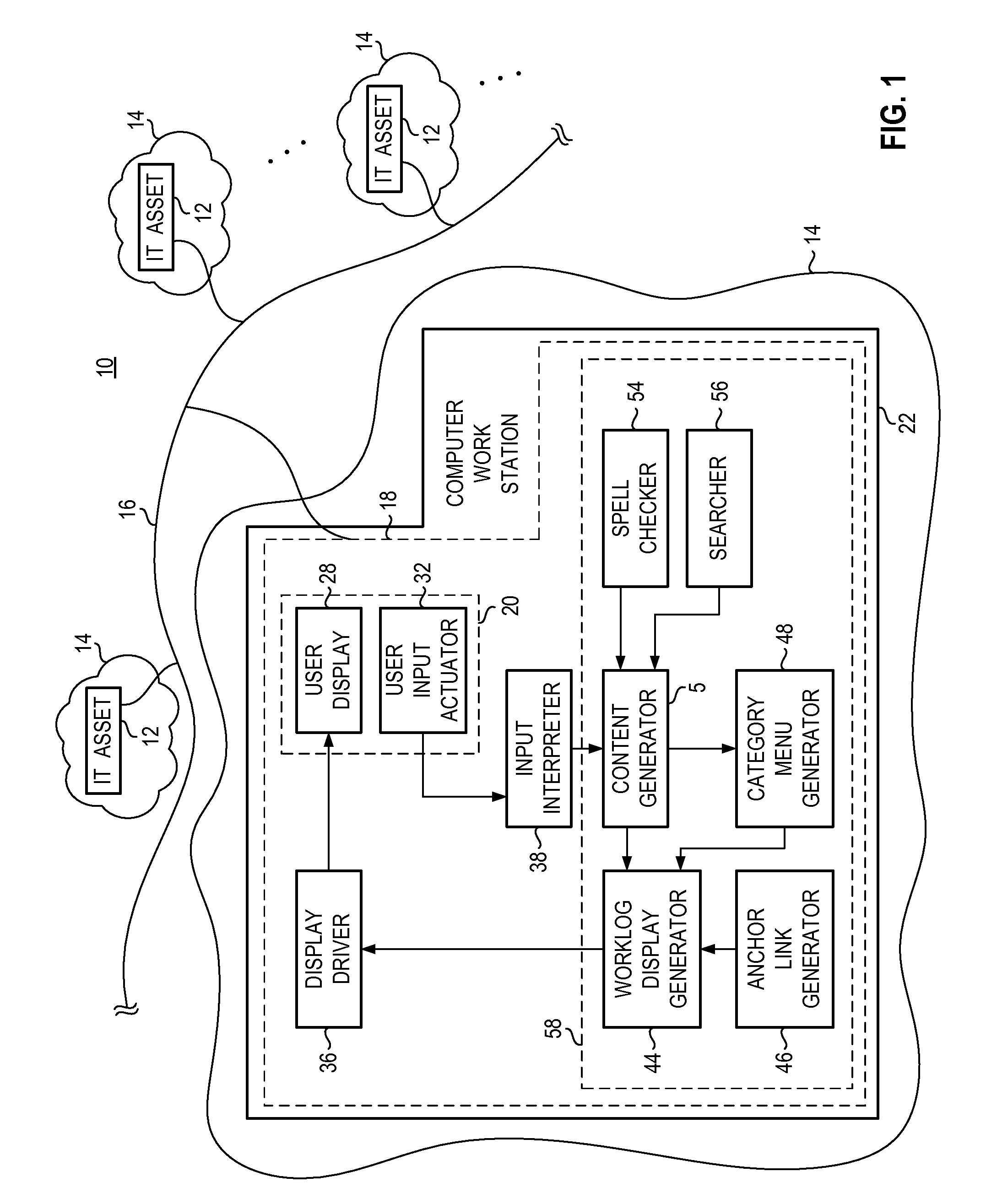

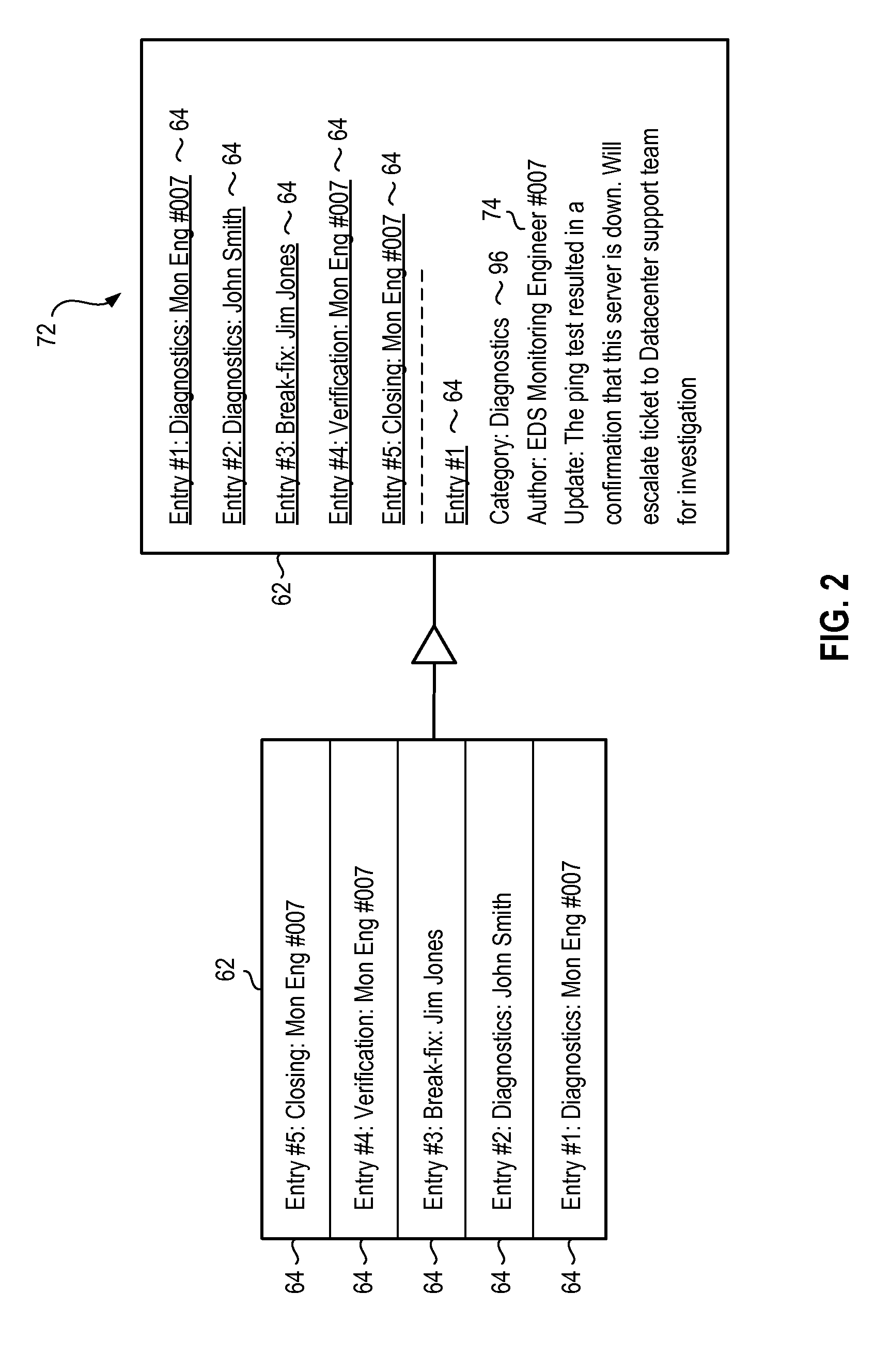

Apparatus, and associated method, for generating an information technology incident report

Status: Active | Publication: US20090055720A1 | Tags: Precise positioning, Improve readability, Input/output for user-computer interaction, Natural language data processing, Category recognition, Incident report

Apparatus, and an associated method, for generating a trouble ticket related to an IT incident. When an IT incident occurs, a worklog is formed by a reporter that enters information associated with the incident. Successive inputs, made by appropriate personnel, are made to update the status of the incident. A table-of-contents is formed, associated with the collection of entries of information. And, each entry of information is categorized, to identify the entry by an associated category.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

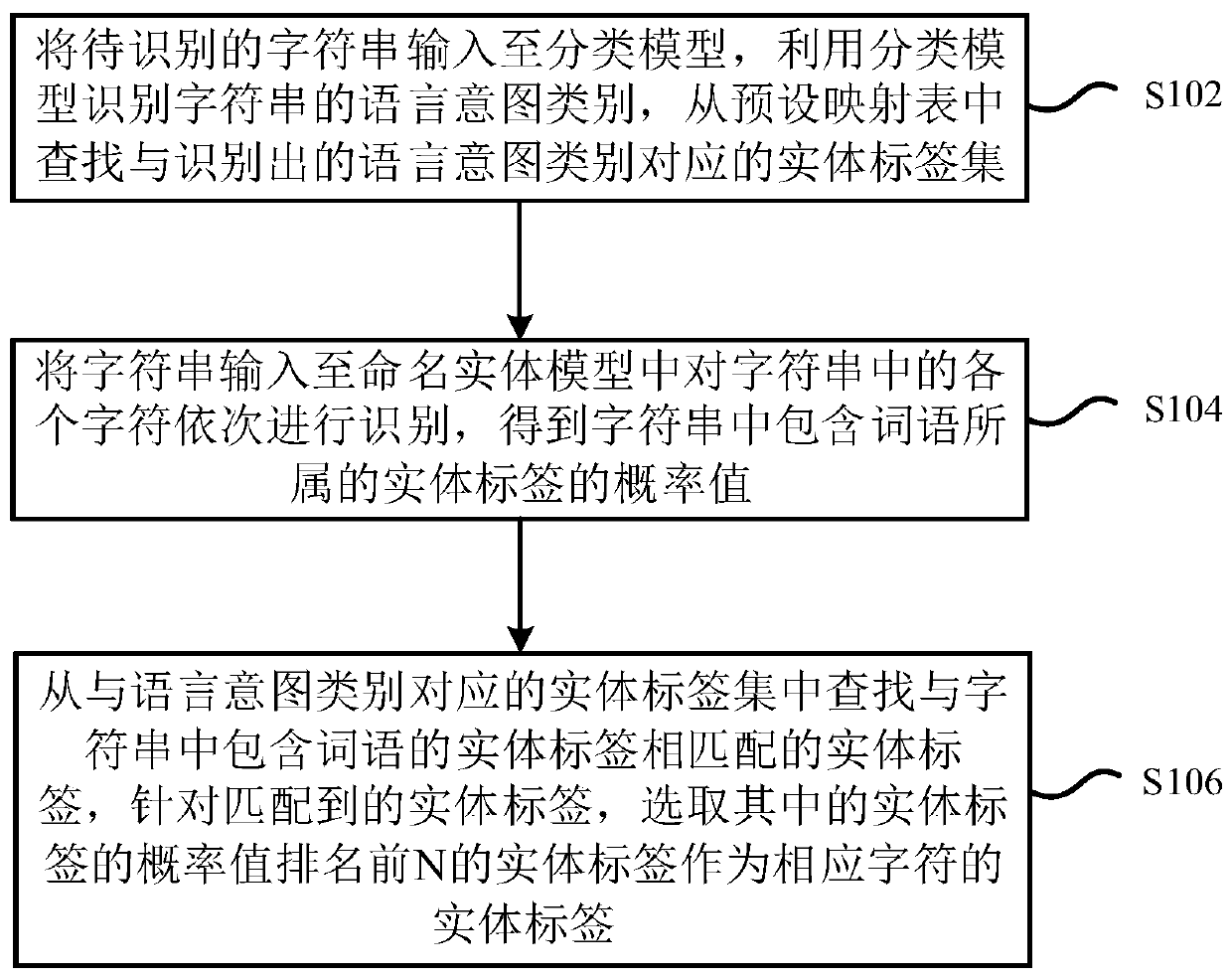

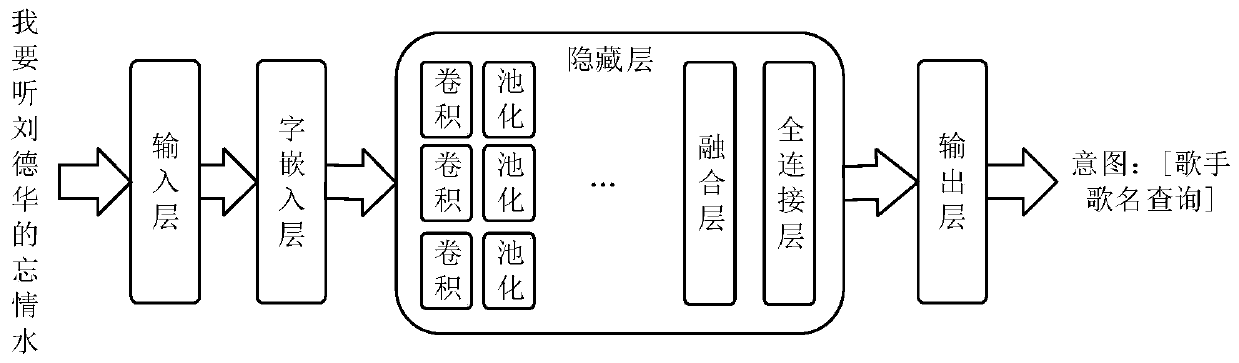

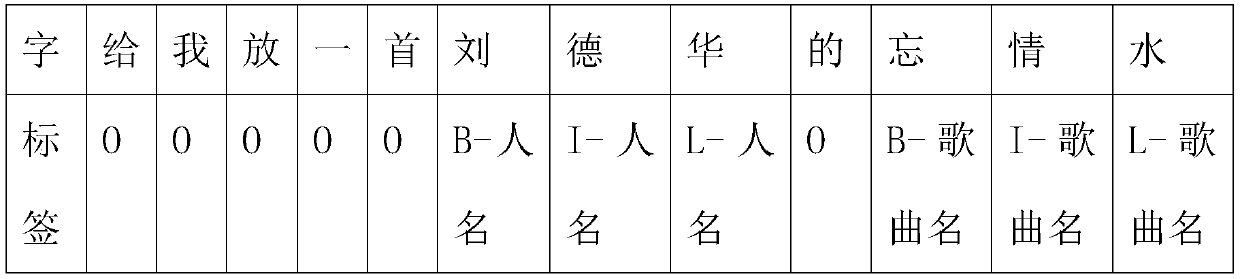

Named entity identification method based on neural network and computer storage medium

Status: Active | Publication: CN110516247A | Tags: Improve accuracy, Reduce false recognition rate, Neural architectures, Special data processing applications, Category recognition, Named-entity recognition

The invention provides a named entity recognition method based on a neural network and a computer storage medium. The method comprises: inputting a character string to be recognized into a classification model, which recognizes the string's language intention category, and looking up the entity label set corresponding to that category in a preset mapping table; inputting the character string into a named entity model, which identifies each character in turn to obtain probability values for the entity tags that character may belong to; and matching these tags against the entity label set of the intention category and, among the matched tags, selecting those whose probability values rank in the top N as the entity labels of the corresponding character. Incorrect entity tags in the named entity model's output are thus filtered out using the intention category recognized by the classification model, which reduces the named entity model's misrecognition rate.

Owner:ECARX (HUBEI) TECHCO LTD
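
The intent-based tag filtering in CN110516247A can be sketched as follows, assuming the classification model has already produced an intention category and the named entity model has produced per-character tag probabilities. The mapping table `INTENT_LABELS`, its intent names, and the BIO-style tags are hypothetical placeholders, and the two neural models themselves are not shown.

```python
from typing import Dict, List, Set

# Hypothetical preset mapping table: intention category -> allowed entity labels.
INTENT_LABELS: Dict[str, Set[str]] = {
    "navigation": {"O", "B-LOC", "I-LOC"},
    "music": {"O", "B-SONG", "I-SONG", "B-SINGER", "I-SINGER"},
}


def filter_entity_tags(
    intent: str,
    char_probs: List[Dict[str, float]],
    top_n: int = 1,
) -> List[List[str]]:
    """For each character, keep only tags allowed by the intent, ranked by probability.

    intent: output of the (separate) classification model.
    char_probs: per-character tag probabilities from the named entity model.
    """
    allowed = INTENT_LABELS[intent]
    result: List[List[str]] = []
    for probs in char_probs:
        # Keep only tags that appear in the intent's label set.
        matched = {tag: p for tag, p in probs.items() if tag in allowed}
        # Rank the matched tags by probability and keep the top N.
        ranked = sorted(matched, key=matched.__getitem__, reverse=True)
        result.append(ranked[:top_n])
    return result


# Example: the NER model prefers song tags, but the intent says "navigation".
probs = [
    {"B-SONG": 0.6, "B-LOC": 0.3, "O": 0.1},
    {"I-SONG": 0.5, "I-LOC": 0.4, "O": 0.1},
]
print(filter_entity_tags("navigation", probs))  # [['B-LOC'], ['I-LOC']]
```

The song tags score higher from the NER model alone, but they are discarded because they are not in the label set for the recognized intent.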

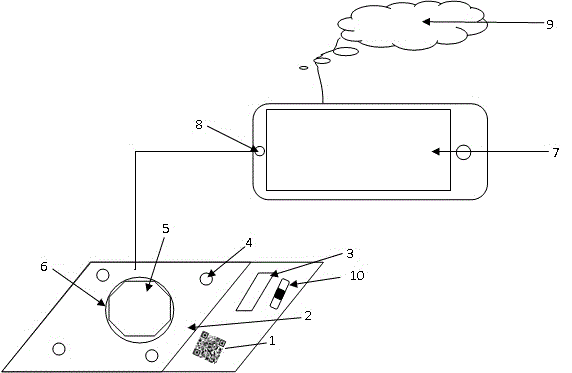

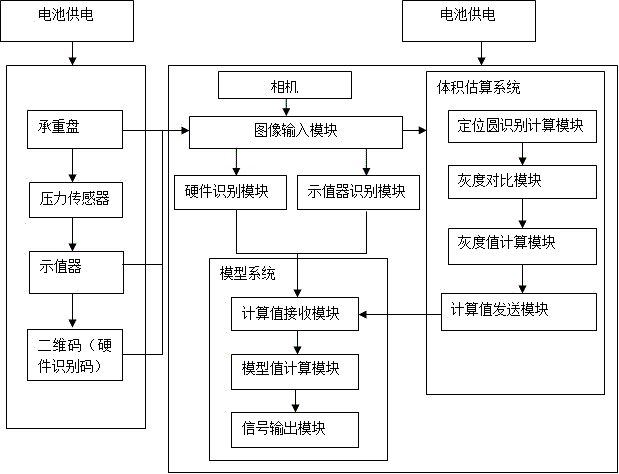

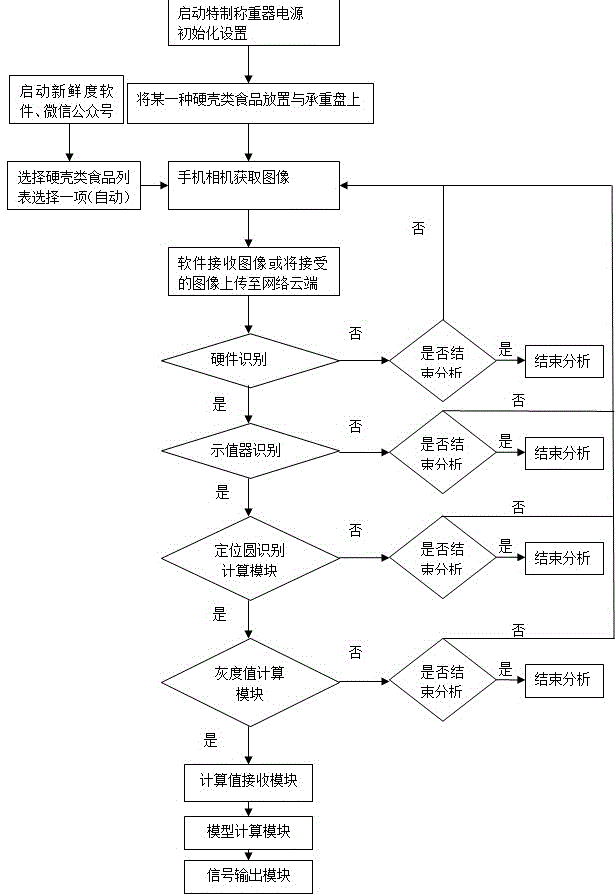

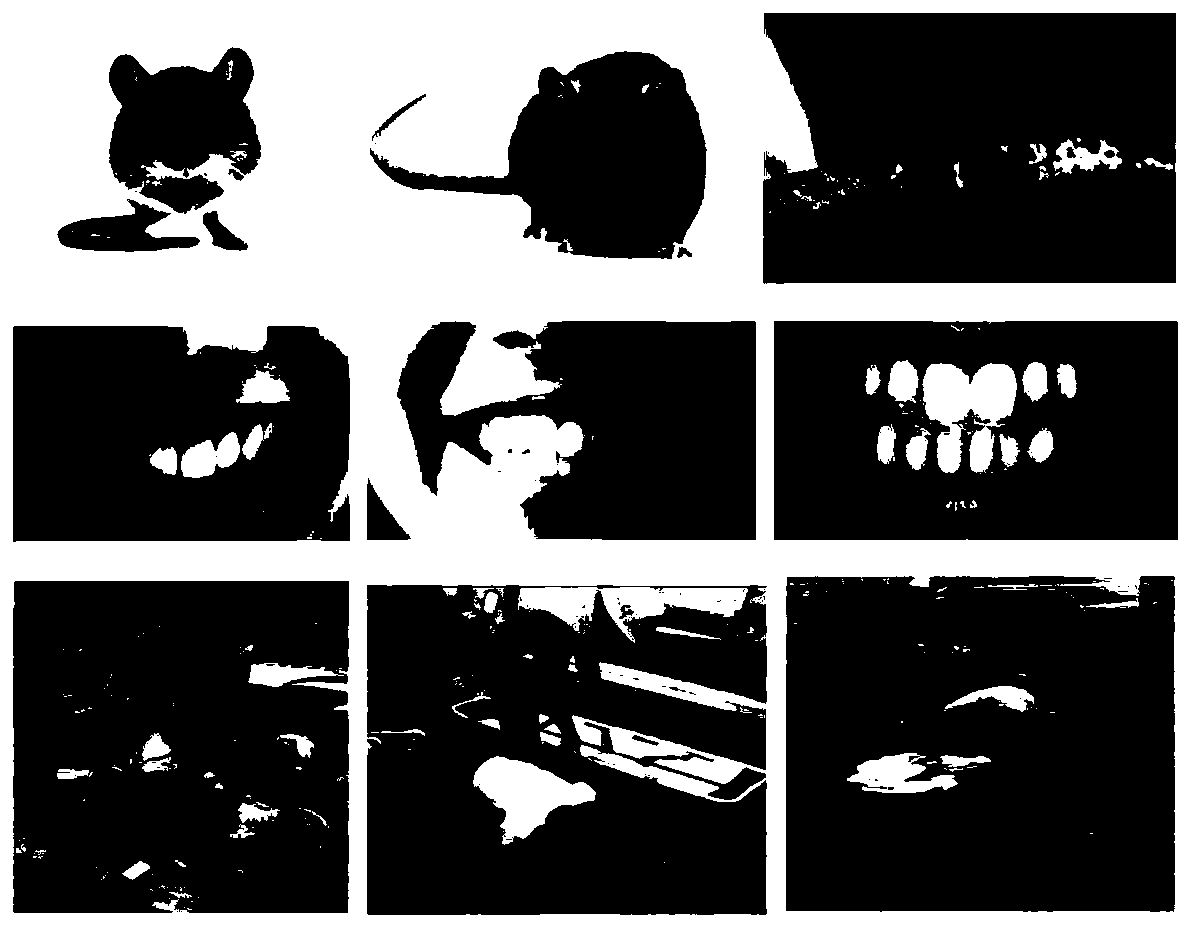

Shell food freshness determination system based on density model and method thereof

Inactive | CN105547915A | Freshness real-time detection | Specific gravity measurement | Category recognition | Illuminance

The invention relates to the food field, and in particular to a shell food freshness determination system based on a density model, and a method of using the system. A freshness weigher includes a two-dimensional code for recognition by the software, a groove for holding the food, a spirit level, a weighing pan for measuring the mass of the shell food, and a positioning circle for estimating its size. The freshness software (an app, a WeChat account, a microblog account, or a cloud program) captures an image with a phone camera under a normal daylight lamp (illuminance 100-160 lux) and, from that image, performs category recognition, mass recognition, and size estimation of the shell food. The results are automatically substituted into a judging model, which is intelligent, provides real-time feedback, and, when a network connection is available, can have its control parameters updated in real time. The system is mainly intended for real-time freshness detection of some types of shell food in daily life. By solving the set of judging models, a series of judging values and freshness conclusions are obtained without measuring every specific index, and the information is transmitted in real time. The results are judged against an indication interval with a given confidence level.

Owner:BEIJING WONDER TECH CO LTD
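
The abstract does not give the density model's formulas. As a rough illustration only, the sketch below assumes density is computed as measured mass divided by a volume estimated from the recognized size (approximated here as an ellipsoid), then checked against a per-category reference interval. The ellipsoid shape, the `REFERENCE_INTERVALS` table and its numbers, and the function names are all hypothetical.

```python
import math
from typing import Dict, Tuple

# Hypothetical reference density intervals (g/cm^3) per recognized category.
# The real values, and which side of the interval indicates spoilage, are not
# given in the abstract.
REFERENCE_INTERVALS: Dict[str, Tuple[float, float]] = {
    "clam": (0.95, 1.25),
}


def ellipsoid_volume(a_cm: float, b_cm: float, c_cm: float) -> float:
    """Volume (cm^3) of an ellipsoid with semi-axes a, b, c given in cm."""
    return 4.0 / 3.0 * math.pi * a_cm * b_cm * c_cm


def density_check(
    category: str, mass_g: float, semi_axes_cm: Tuple[float, float, float]
) -> Tuple[float, bool]:
    """Return (density, inside_reference_interval) for one item."""
    density = mass_g / ellipsoid_volume(*semi_axes_cm)
    low, high = REFERENCE_INTERVALS[category]
    return density, low <= density <= high


# Hypothetical item: mass read from the weighing pan, size from the positioning circle.
print(density_check("clam", 30.0, (2.5, 2.0, 1.5)))
```

A statistical version would replace the fixed bounds with an interval derived from reference measurements at the chosen confidence level.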

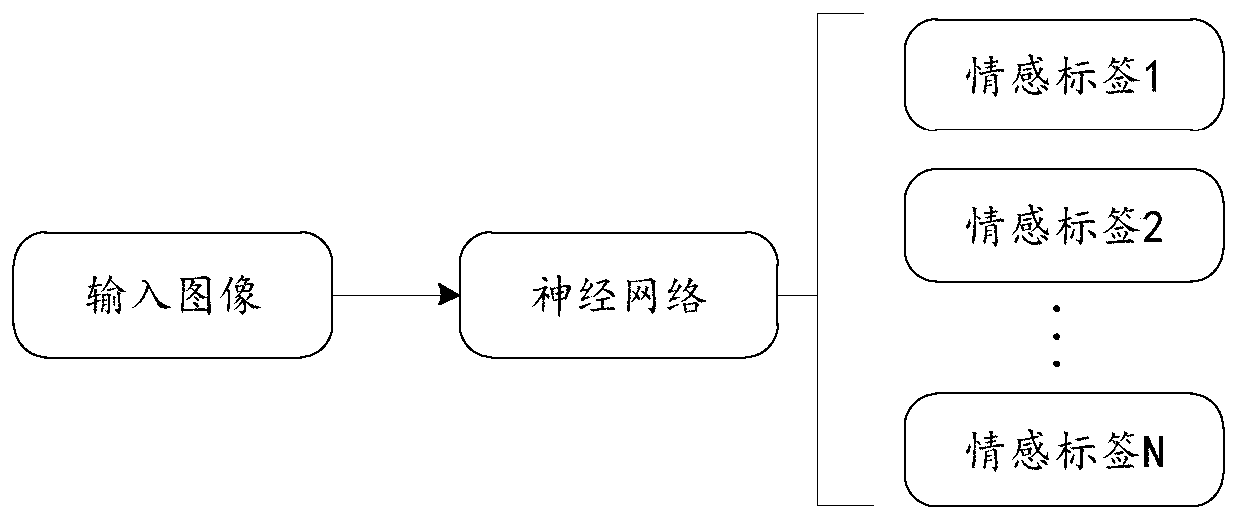

Image emotion recognition method and device, equipment and storage medium

Pending | CN110852360A | Easy to learn | Improve the effect of emotion recognition | Character and pattern recognition | Neural architectures | Category recognition | Medicine

The invention relates to the technical field of computers, and in particular to an image emotion recognition method, device, equipment, and storage medium. The semantic information and emotion information of an image are decoupled, and a corresponding emotion recognition sub-model is constructed for each semantic category. In use, a semantic recognition sub-model identifies the semantic category of an image, and a target semantic category is determined from that result. The target emotion recognition sub-model is then selected according to the correspondence between semantic categories and emotion recognition sub-models, and it performs emotion recognition to obtain the emotion recognition result of the image. This can greatly improve the precision of image emotion recognition.

Owner:TENCENT TECH (SHENZHEN) CO LTD
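
The routing idea in CN110852360A (recognize the semantic category first, then hand the image to that category's emotion sub-model) can be sketched with stub models. `recognize_emotion` and the stub categories are hypothetical. Taking the highest-scoring category as the target semantic category is an assumption, since the abstract only says the target is determined from the identification result.

```python
from typing import Any, Callable, Dict, Tuple

SemanticModel = Callable[[Any], Dict[str, float]]  # image -> score per semantic category
EmotionModel = Callable[[Any], str]                # image -> emotion label


def recognize_emotion(
    image: Any,
    semantic_model: SemanticModel,
    emotion_models: Dict[str, EmotionModel],
) -> Tuple[str, str]:
    """Route the image to the emotion sub-model of its target semantic category."""
    scores = semantic_model(image)
    # Assumed rule: the target semantic category is the highest-scoring one.
    target = max(scores, key=scores.__getitem__)
    return target, emotion_models[target](image)


# Stub models standing in for trained networks (hypothetical).
semantic = lambda img: {"landscape": 0.2, "portrait": 0.8}
emotion = {"landscape": lambda img: "calm", "portrait": lambda img: "happy"}
print(recognize_emotion("img.jpg", semantic, emotion))  # ('portrait', 'happy')
```

Because each sub-model only sees images of one semantic category, it can specialize in the emotion cues relevant to that category.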

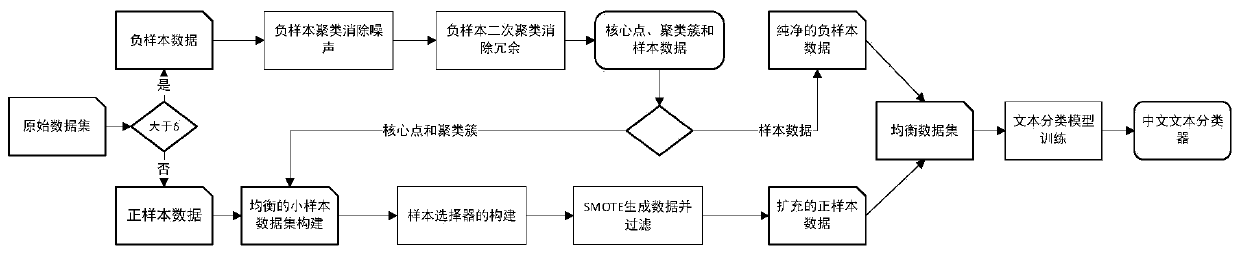

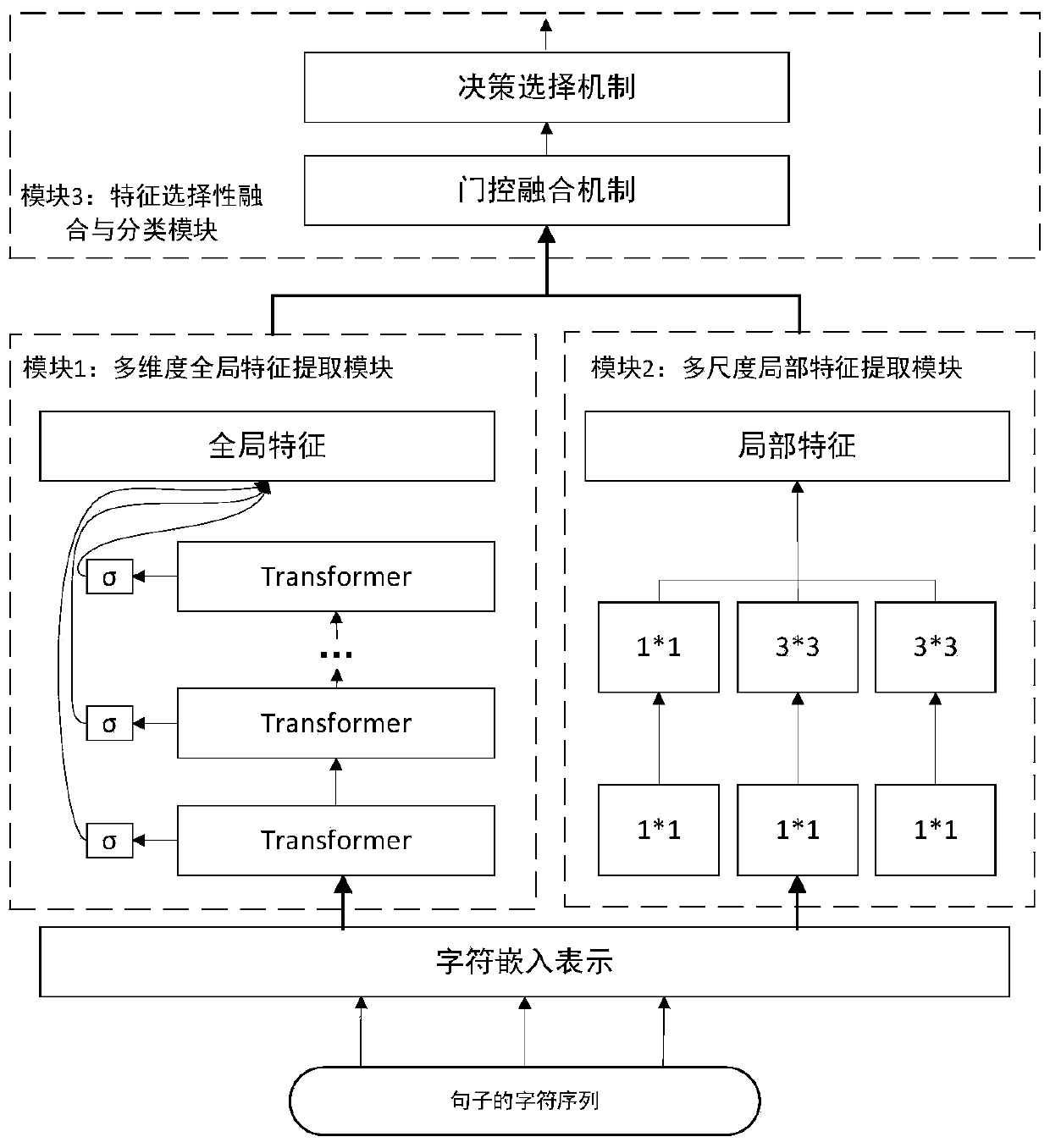

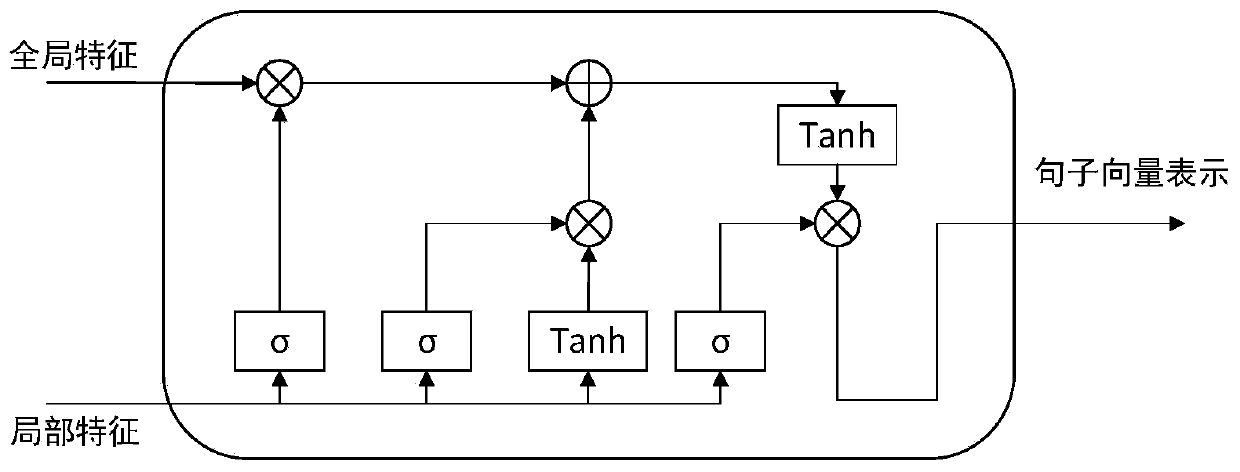

Chinese text category recognition system and method for unbalanced data sampling

Pending | CN111581385A | Overcoming classification performance degradation | Solve the problem of impurity | Natural language data processing | Neural architectures | Category recognition | Data set

The invention discloses a Chinese text category recognition system and method for unbalanced data sampling. The method comprises the following steps: first, a text encoder encodes the Chinese text into sentence vector representations; second, redundant and noisy data are removed from the negative samples by applying the DBSCAN clustering algorithm multiple times, while for the positive samples a deep learning sample selector is constructed to filter randomly generated samples and select high-quality ones to supplement the positive set; finally, a text decoder decodes the selected samples back into text, which, together with the processed negative samples, forms a balanced data set for training a text classification model. The method processes the data by mixed sampling: redundant data are removed from the negative samples, and a deep learning sample selector filters the generated positive samples so that high-quality samples are chosen to train the classifier, which improves text classification performance.

Owner:XI AN JIAOTONG UNIV
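
The negative-sample cleaning step can be approximated as follows. This is a minimal pure-Python sketch that assumes "multi-time" DBSCAN means one pass per value in a hypothetical `eps_schedule`, that points labeled as noise are discarded, and that redundant samples are removed by an extra near-duplicate filter (`dup_eps`). All parameter values are illustrative. The positive-sample selector and the text encoder and decoder are not shown.

```python
import math
from typing import List, Optional, Sequence, Tuple

Vector = Tuple[float, ...]


def dbscan(points: Sequence[Vector], eps: float, min_samples: int) -> List[int]:
    """Plain DBSCAN: returns a cluster id per point, or -1 for noise."""
    labels: List[Optional[int]] = [None] * len(points)  # None = unvisited

    def neighbors(i: int) -> List[int]:
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_samples:
            labels[i] = -1  # noise for now; may become a border point later
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # former noise point becomes a border point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_seeds = neighbors(j)
            if len(j_seeds) >= min_samples:  # core point: keep expanding
                queue.extend(j_seeds)
    return [label if label is not None else -1 for label in labels]


def clean_negatives(
    vectors: Sequence[Vector],
    eps_schedule: Sequence[float] = (0.5, 0.3),  # hypothetical pass schedule
    min_samples: int = 3,
    dup_eps: float = 0.05,  # hypothetical near-duplicate threshold
) -> List[Vector]:
    kept = list(vectors)
    for eps in eps_schedule:  # one DBSCAN pass per eps value; drop noise each time
        labels = dbscan(kept, eps, min_samples)
        kept = [v for v, label in zip(kept, labels) if label != -1]
    deduped: List[Vector] = []
    for v in kept:  # drop near-duplicates (redundant samples)
        if all(math.dist(v, u) > dup_eps for u in deduped):
            deduped.append(v)
    return deduped


negatives = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (0.1, 0.1), (0.1, 0.1), (5.0, 5.0)]
print(clean_negatives(negatives))  # [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (0.1, 0.1)]
```

Here the isolated point is removed as noise and the repeated point is removed as redundant, leaving a smaller, denser negative set.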

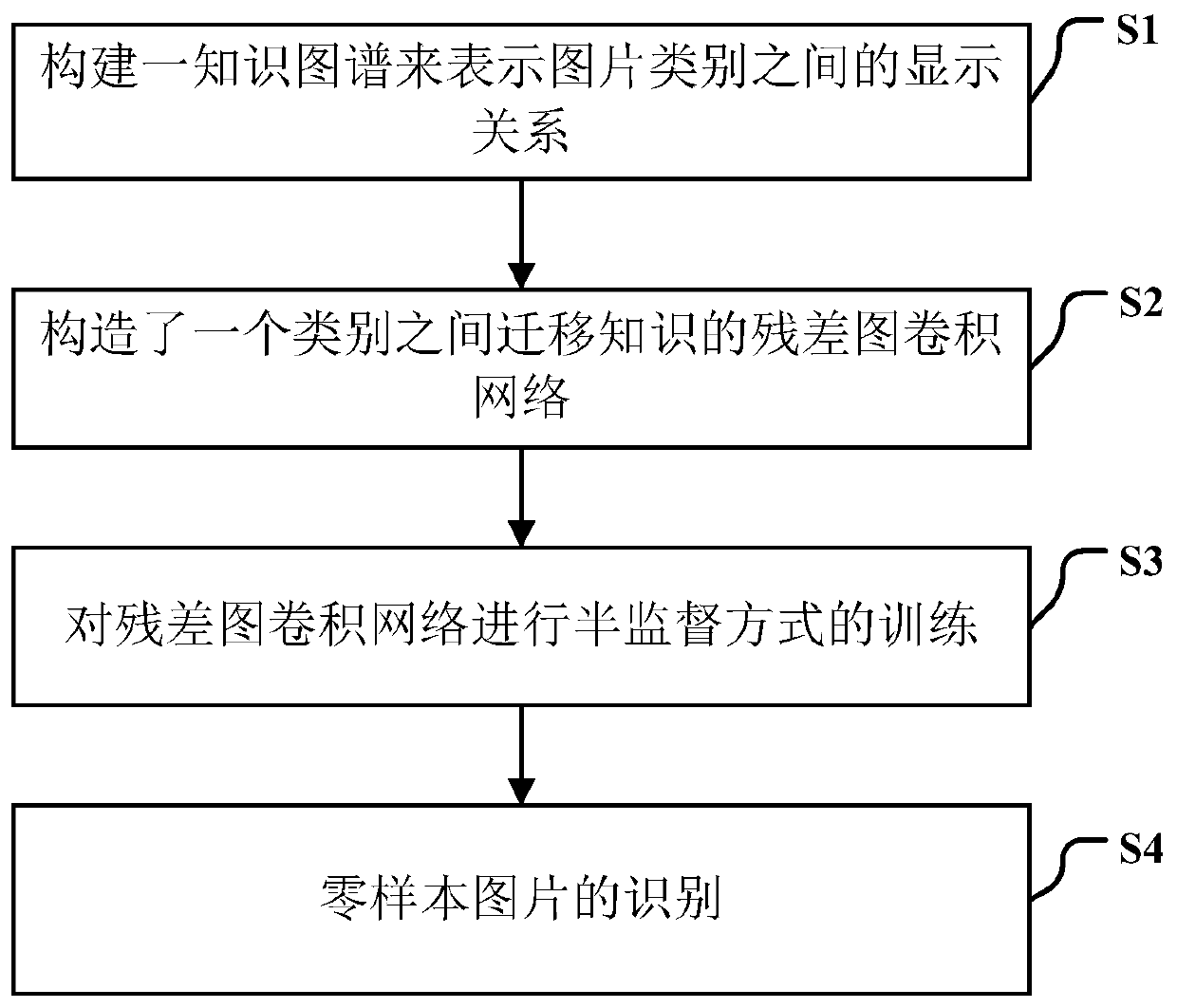

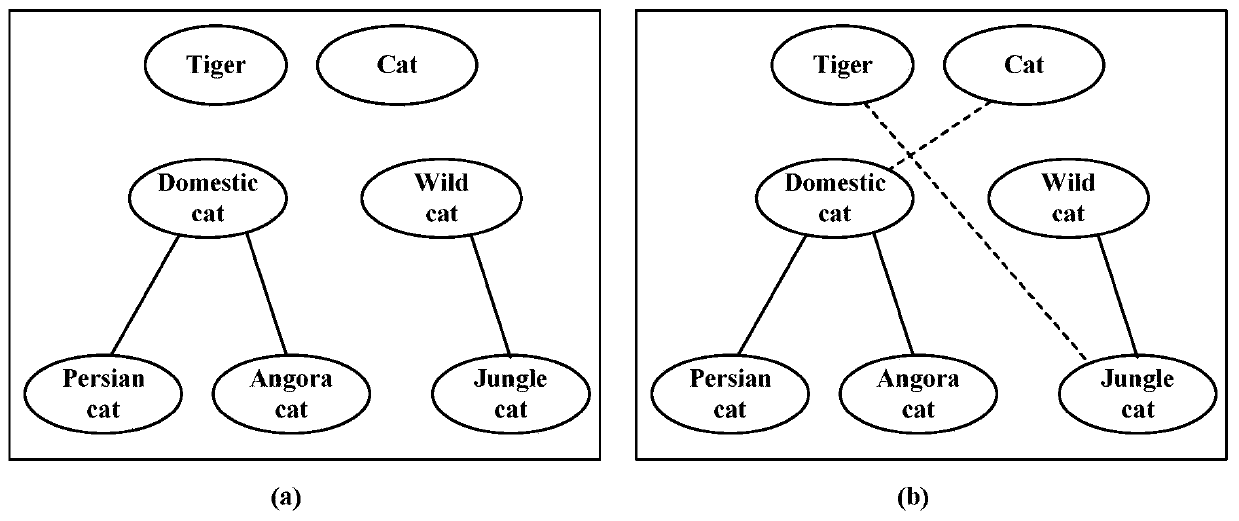

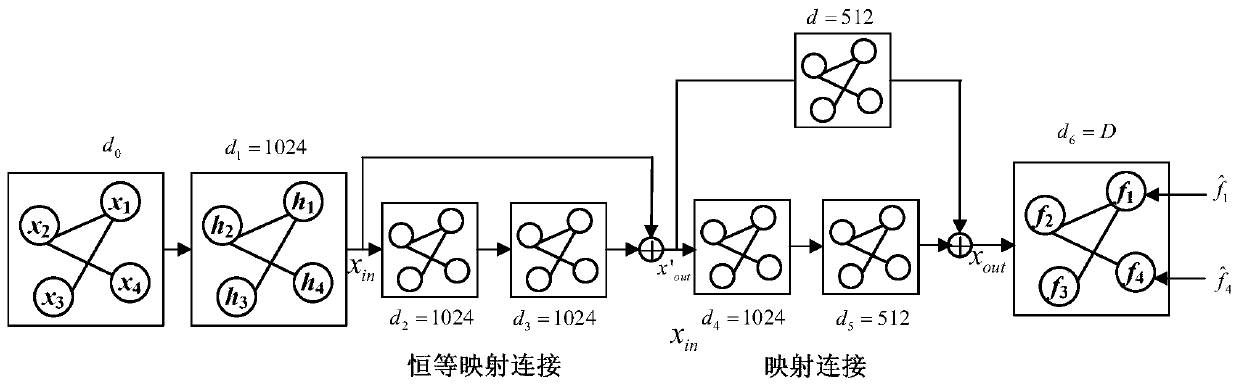

Class identification method for zero sample picture

Active | CN110222771A | Avoid blur | Avoid uncertainty | Character and pattern recognition | Neural architectures | Category recognition | Algorithm

The invention discloses a class identification method for zero-shot (zero-sample) pictures. The method constructs a knowledge graph from human knowledge to represent explicit relationships among the categories, which avoids having to learn implicit relationships and the fuzzy, uncertain relationships between categories in a semantic space. A residual graph convolutional network is also constructed and trained to transfer knowledge between categories, and categories are recognized by selecting the maximum inner product value, which improves category recognition accuracy for zero-shot pictures.

Owner:成都澳海川科技有限公司
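
The two ideas in this abstract, propagating knowledge over a category graph with a residual connection and recognizing by maximum inner product, can be illustrated in simplified form. The sketch below is not the patented network. It replaces the trained residual convolutional network over the knowledge graph with a single parameter-free step (each class vector plus the mean of its neighbors' vectors). The class names, graph, vectors, and function names are hypothetical.

```python
from typing import Dict, List, Sequence


def residual_propagate(
    embeddings: Dict[str, List[float]],
    graph: Dict[str, List[str]],
) -> Dict[str, List[float]]:
    """One residual propagation step over the knowledge graph.

    Each class vector becomes its own vector plus the mean of its neighbors'
    vectors (no learned weights or nonlinearity: a simplification).
    """
    out: Dict[str, List[float]] = {}
    for cls, vec in embeddings.items():
        nbrs = graph.get(cls, [])
        if nbrs:
            mean = [sum(embeddings[n][k] for n in nbrs) / len(nbrs) for k in range(len(vec))]
        else:
            mean = [0.0] * len(vec)
        out[cls] = [v + m for v, m in zip(vec, mean)]  # residual connection
    return out


def classify(feature: Sequence[float], class_vectors: Dict[str, List[float]]) -> str:
    """Return the class whose vector has the maximum inner product with the feature."""
    return max(class_vectors, key=lambda c: sum(f * w for f, w in zip(feature, class_vectors[c])))


# "zebra" is an unseen class linked to two seen classes in the knowledge graph.
embeddings = {"horse": [1.0, 0.0, 0.0], "tiger": [0.0, 1.0, 0.0], "zebra": [0.0, 0.0, 1.0]}
graph = {"zebra": ["horse", "tiger"]}
class_vectors = residual_propagate(embeddings, graph)
print(classify([0.4, 0.4, 0.9], class_vectors))  # zebra
```

The unseen class inherits part of its vector from related seen classes, which is what lets an image feature be matched to it without any training images of that class.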

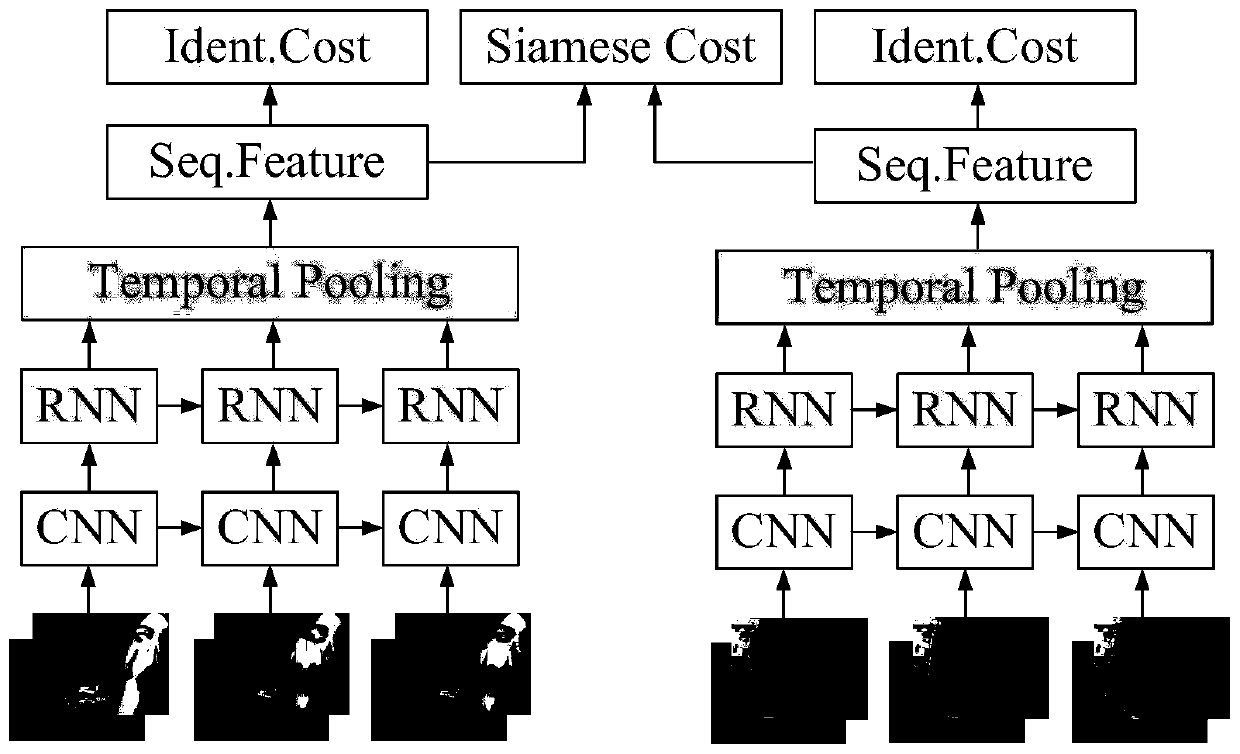

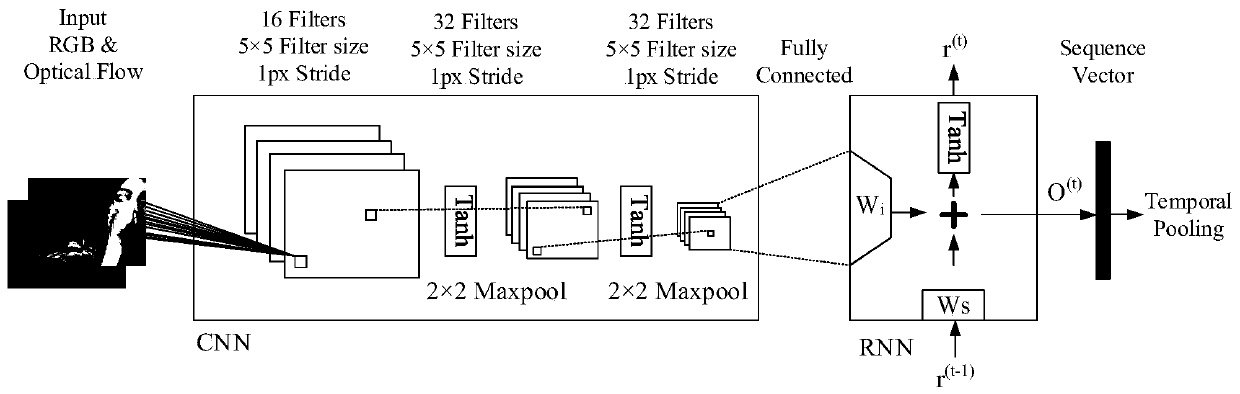

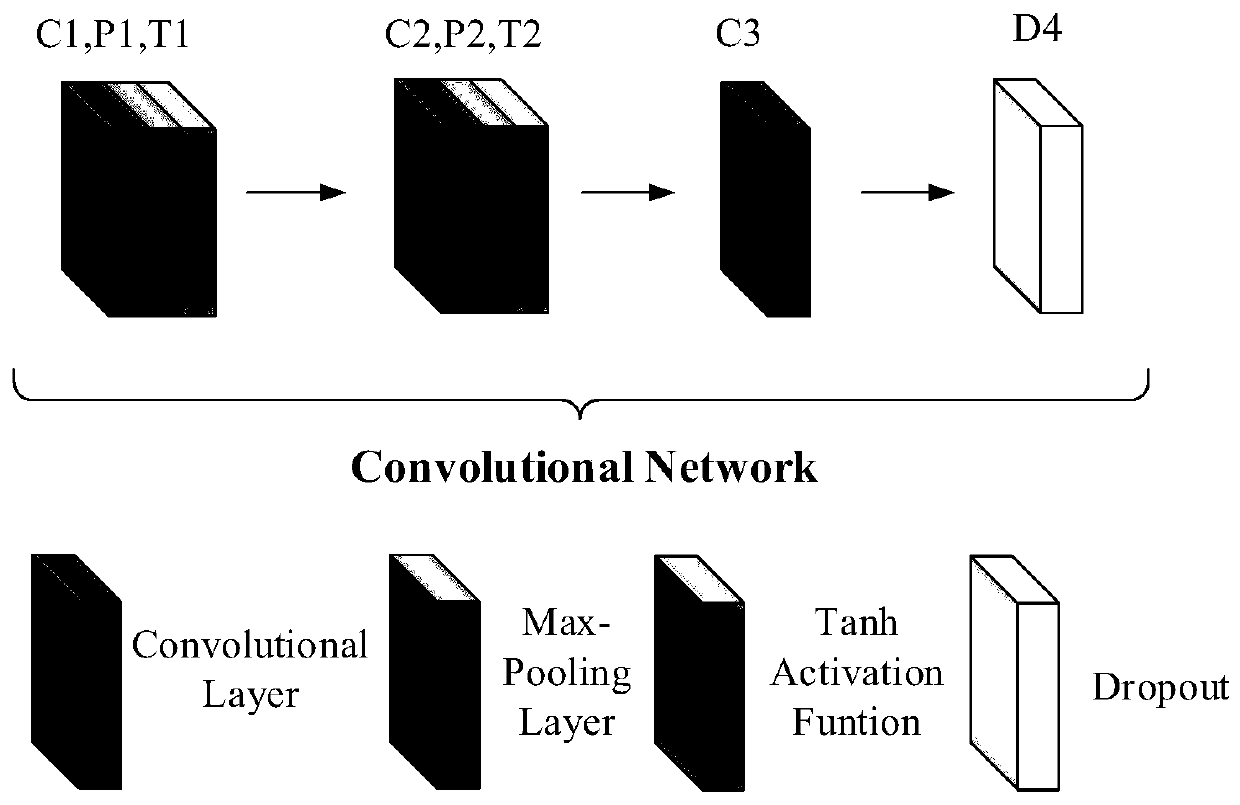

Human body action recognition method based on cyclic convolutional neural network

Active | CN110503053A | High precision | Easy to capture | Character and pattern recognition | Neural architectures | Human body | Category recognition

The invention discloses a human body action recognition method based on a cyclic (recurrent) convolutional neural network. It belongs to the fields of image classification, pattern recognition, and machine learning, and addresses problems such as low action recognition precision caused by variation within and between action categories, and by videos composed of continuous frames. The method comprises: constructing a data set by randomly selecting pairs of equal-length sequences from a public data set, where each frame of each sequence includes an RGB image and an optical flow image; constructing a Siamese (twin) network, each branch of which consists of a CNN layer, an RNN layer, and a Temporal Pooling layer in sequence; constructing an 'identification-verification' joint loss function; training the deep convolutional neural network with the joint loss function on the data set; and passing a pair of human action sequences to be recognized through the trained deep convolutional neural network and the trained 'identification-verification' joint loss function in sequence to obtain the action category recognition result for the pair. The method is used for human action recognition in images.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
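
An 'identification-verification' joint loss for a Siamese network is commonly built from a per-sequence classification (identification) term plus a pairwise (verification) term. The sketch below assumes mean temporal pooling, softmax cross-entropy for identification, and a contrastive loss with a hypothetical `margin` for verification. The abstract does not specify these forms, and the CNN and RNN layers are omitted.

```python
import math
from typing import List, Sequence


def temporal_pool(frames: Sequence[Sequence[float]]) -> List[float]:
    """Mean-pool per-frame (e.g. RNN output) features over time into one vector."""
    n = len(frames)
    return [sum(f[k] for f in frames) / n for k in range(len(frames[0]))]


def cross_entropy(logits: Sequence[float], label: int) -> float:
    """Identification term: softmax cross-entropy, computed stably."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum - logits[label]


def contrastive(a: Sequence[float], b: Sequence[float], same: bool, margin: float = 2.0) -> float:
    """Verification term: pull same-class pairs together, push others beyond the margin."""
    d = math.dist(a, b)
    return d * d if same else max(0.0, margin - d) ** 2


def joint_loss(
    feat_a: Sequence[float], feat_b: Sequence[float],
    logits_a: Sequence[float], logits_b: Sequence[float],
    label_a: int, label_b: int, weight: float = 1.0,
) -> float:
    """Identification loss for both sequences plus weighted verification loss for the pair."""
    ident = cross_entropy(logits_a, label_a) + cross_entropy(logits_b, label_b)
    verif = contrastive(feat_a, feat_b, label_a == label_b)
    return ident + weight * verif


pooled = temporal_pool([[1.0, 2.0], [3.0, 4.0]])
print(pooled)  # [2.0, 3.0]
```

The identification term teaches each branch to name the action, while the verification term shapes the pooled feature space so that sequences of the same action lie close together.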

© 2025 PatSnap. All rights reserved.