Computer vision-based dynamic gesture recognition method

A computer vision and dynamic gesture technology in the field of image processing. It addresses the strict background-color requirements, cumbersome recognition steps, and long processing time of existing methods, and achieves fast recognition, simple steps, and freedom from the requirement for a single-color background.


Examples

Embodiment 1

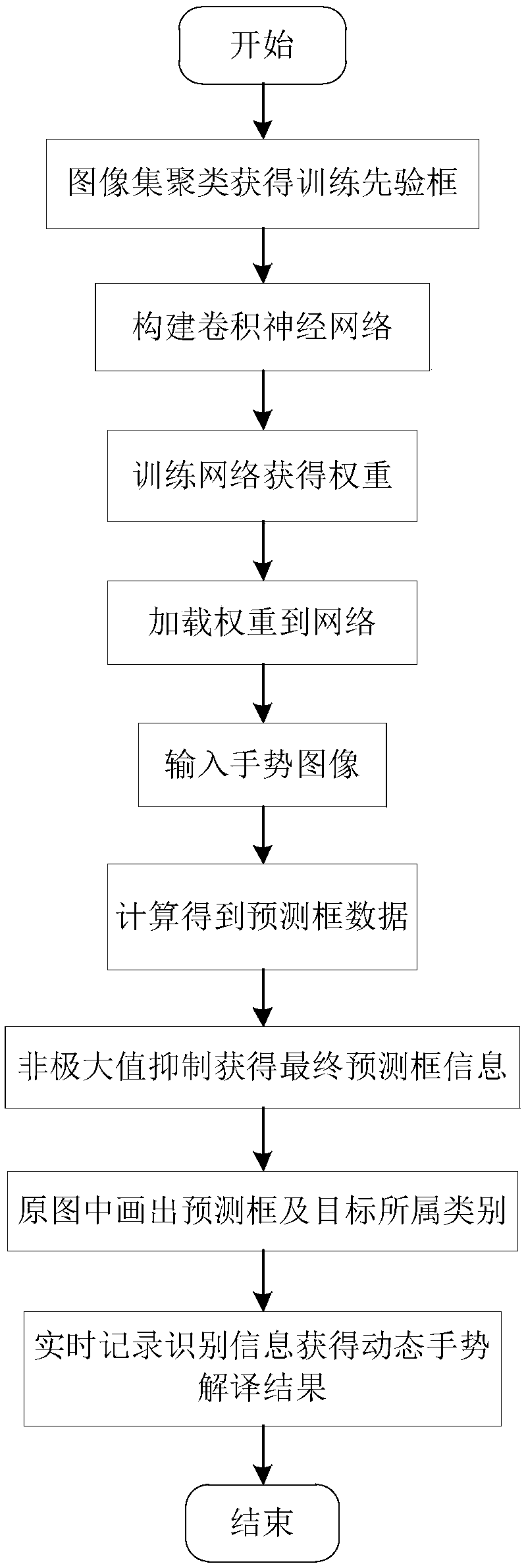

[0035] As a natural and intuitive means of communication, gestures have broad application prospects. Prescribed gestures can be used to control smart devices in virtual reality, gesture recognition can serve as a sign-language interpreter to help deaf-mute people communicate, and driverless vehicles can automatically recognize traffic-police gestures. At present, vision-based gesture recognition generally follows the traditional approach of first segmenting the gesture and then classifying it. This approach requires high-quality images and struggles with gestures against complex backgrounds, which has limited the development of gesture recognition applications. Addressing this situation, the present invention proposes a dynamic gesture recognition method based on computer vision which, as shown in Figure 1, includes the following steps:

[0036] (1) Collect gesture images: Divide the collected gesture images into a train...

Embodiment 2

[0054] The computer vision-based dynamic gesture recognition method is the same as in Embodiment 1. In step (2) of the present invention, clustering the manually labeled ground-truth boxes specifically includes the following steps:

[0055] (2a) Read the manually labeled ground-truth box data of the training-set and test-set samples;

[0056] (2b) Set the number of cluster centers and apply the k-means clustering algorithm, using the distance measure d(box, centroid) defined by the following formula, to obtain the prior boxes:

[0057] $$d(\text{box}, \text{centroid}) = 1 - \mathrm{IOU}(\text{box}, \text{centroid})$$

[0058] Here, centroid denotes a randomly selected cluster-center box, box denotes any other ground-truth box, and IOU(box, centroid) measures the similarity between that box and the center box as the ratio of their overlap: the area of the intersection of the two boxes divided by the area of their union, that is, $\mathrm{IOU}(\text{box}, \text{centroid}) = \dfrac{\operatorname{area}(\text{box} \cap \text{centroid})}{\operatorname{area}(\text{box} \cup \text{centroid})}$.
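The clustering in steps (2a) and (2b) can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented implementation: it assumes each labeled box is reduced to its (width, height) and that a box and a centroid are compared as if they shared the same center (the convention used for YOLO-style anchor clustering), so IOU depends only on box sizes. The function names `iou_wh`, `distance`, and `kmeans_anchors`, the mean-size centroid update, and the fixed random seed are all hypothetical choices.

```python
import random
from typing import List, Tuple

Box = Tuple[float, float]  # (width, height) of a labeled box (assumed representation)


def iou_wh(box: Box, centroid: Box) -> float:
    """IOU of two boxes assumed to share the same center, so only their sizes matter."""
    inter = min(box[0], centroid[0]) * min(box[1], centroid[1])
    union = box[0] * box[1] + centroid[0] * centroid[1] - inter
    return inter / union


def distance(box: Box, centroid: Box) -> float:
    """d(box, centroid) = 1 - IOU(box, centroid)."""
    return 1.0 - iou_wh(box, centroid)


def kmeans_anchors(boxes: List[Box], k: int, iters: int = 100, seed: int = 0) -> List[Box]:
    """Hypothetical k-means over box sizes using 1 - IOU as the distance."""
    rng = random.Random(seed)
    centroids = rng.sample(boxes, k)  # randomly selected initial cluster-center boxes
    for _ in range(iters):
        # Assignment step: each box joins the centroid with the smallest 1 - IOU.
        clusters: List[List[Box]] = [[] for _ in range(k)]
        for b in boxes:
            idx = min(range(k), key=lambda c: distance(b, centroids[c]))
            clusters[idx].append(b)
        # Update step: move each centroid to the mean width and height of its members.
        new_centroids: List[Box] = []
        for c, members in enumerate(clusters):
            if members:
                w = sum(m[0] for m in members) / len(members)
                h = sum(m[1] for m in members) / len(members)
                new_centroids.append((w, h))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's old center
        if new_centroids == centroids:  # assignments have stabilized
            break
        centroids = new_centroids
    return sorted(centroids)  # prior boxes, ordered by width
```

For example, `kmeans_anchors([(10, 12), (11, 13), (9, 11), (50, 60), (52, 58), (48, 62)], k=2)` separates the small and large boxes and returns prior boxes of about (10, 12) and (50, 60). Using 1 - IOU rather than Euclidean distance keeps large boxes from dominating the clustering merely because their widths and heights are larger numbers.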

[0...

Embodiment 3

[0061] The computer vision-based dynamic gesture recognition method is the same as in Embodiments 1 and 2. In step (3) of the present invention, constructing the convolutional neural network includes the following steps:

[0062] (3a) Taking the GoogLeNet convolutional neural network as a basis, use simple 1×1 and 3×3 convolution kernels to construct a convolutional neural network containing G convolutional layers and 5 pooling layers. In this example, G = 25.
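Step (3a) fixes only the layer counts (25 convolutional layers, 5 pooling layers) and the kernel sizes. The sketch below shows one hypothetical way to arrange them and how the pooling layers determine the output grid size. The stage split `(1, 1, 3, 5, 9)` plus a 6-layer head, the alternation of 3×3 convolutions with 1×1 channel-reduction convolutions, 2×2 stride-2 max-pooling, "same"-padded stride-1 convolutions, and the 416-pixel input are all assumptions, not details from the text.

```python
from typing import List, Tuple

Layer = Tuple[str, int]  # ("conv", kernel_size) or ("pool", stride); hypothetical encoding


def build_layer_plan(stage_convs: Tuple[int, ...] = (1, 1, 3, 5, 9), head_convs: int = 6) -> List[Layer]:
    """Assumed layout: conv stages each followed by a pool, then a conv-only head."""
    plan: List[Layer] = []
    for n in stage_convs:
        # Alternate 3x3 feature convolutions with 1x1 channel-reduction convolutions.
        plan += [("conv", 3 if i % 2 == 0 else 1) for i in range(n)]
        plan.append(("pool", 2))  # 2x2 max-pool with stride 2 halves the feature map
    plan += [("conv", 3 if i % 2 == 0 else 1) for i in range(head_convs)]
    return plan


def output_grid(plan: List[Layer], input_size: int) -> int:
    """Spatial size after the plan; stride-1 'same' convolutions leave it unchanged."""
    size = input_size
    for kind, value in plan:
        if kind == "pool":
            size //= value
    return size


plan = build_layer_plan()
n_conv = sum(1 for kind, _ in plan if kind == "conv")
n_pool = sum(1 for kind, _ in plan if kind == "pool")
print(n_conv, n_pool, output_grid(plan, 416))  # prints: 25 5 13
```

Under these assumptions the five pooling layers downsample by 2^5 = 32, so a 416 × 416 input yields a 13 × 13 grid, i.e. S² = 169 cells in the notation of the loss function.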

[0063] (3b) Train the constructed convolutional network using the loss function given by the following formula:

[0064]

[0065] Here, the first term of the loss function is the coordinate loss of the center point of the predicted target box, where $\lambda_{\text{coord}}$ is the coordinate loss coefficient, $1 \le \lambda_{\text{coord}} \le 5$, taken as 3 in this example to ensure that the predicted location of the gesture is accurate; $S^2$ denotes the number of grid cells the image is divided into, and B indicates the num...
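The loss formula itself is not reproduced in this text. Assuming its first term has the standard YOLO form $\lambda_{\text{coord}}\sum_{i=0}^{S^2-1}\sum_{j=0}^{B-1}\mathbb{1}_{ij}^{\text{obj}}\left[(x_i-\hat{x}_i)^2+(y_i-\hat{y}_i)^2\right]$, where $\mathbb{1}_{ij}^{\text{obj}}$ is 1 when box predictor j in cell i is responsible for a gesture, it could be computed as in the sketch below. The indicator, the reading of B as the number of box predictions per cell, and the function name `center_coord_loss` are hypothetical; only $\lambda_{\text{coord}} = 3$ and the range $1 \le \lambda_{\text{coord}} \le 5$ come from the text.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) center coordinates

LAMBDA_COORD = 3.0  # value used in this example; the text allows 1 <= lambda_coord <= 5


def center_coord_loss(pred: List[List[Point]],
                      truth: List[List[Point]],
                      responsible: List[List[bool]],
                      lambda_coord: float = LAMBDA_COORD) -> float:
    """Assumed YOLO-style center-point term.

    All three inputs are indexed [cell i in 0..S^2-1][box j in 0..B-1];
    responsible[i][j] plays the role of the indicator 1_ij^obj.
    """
    total = 0.0
    for i in range(len(pred)):
        for j in range(len(pred[i])):
            if responsible[i][j]:
                (x_hat, y_hat), (x, y) = pred[i][j], truth[i][j]
                total += (x - x_hat) ** 2 + (y - y_hat) ** 2  # squared center error
    return lambda_coord * total
```

With S² = 4 cells, B = 1, and a single responsible prediction at (0.25, 0.5) against a true center of (0.5, 0.75), the term is 3 × (0.0625 + 0.0625) = 0.375; predictions in non-responsible cells contribute nothing. Setting $\lambda_{\text{coord}} > 1$ scales up the penalty for center-point errors, which is how the stated goal of accurate gesture localization enters training.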
