301 patent results for "Natural user interface"

In computing, a natural user interface (NUI), or natural interface, is a user interface that is effectively invisible and remains invisible as the user continuously learns increasingly complex interactions. The word "natural" is used because most computer interfaces use artificial control devices whose operation has to be learned.

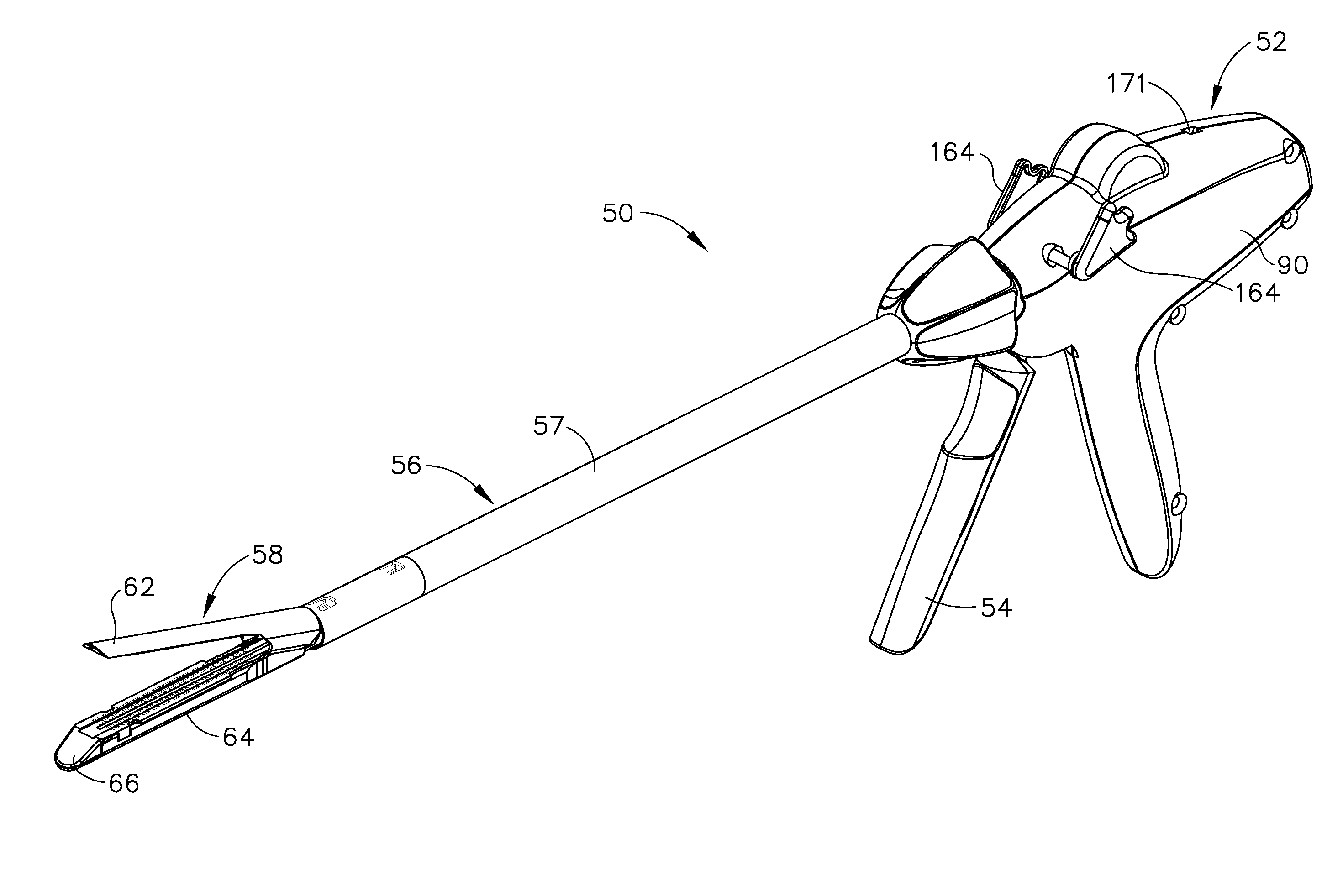

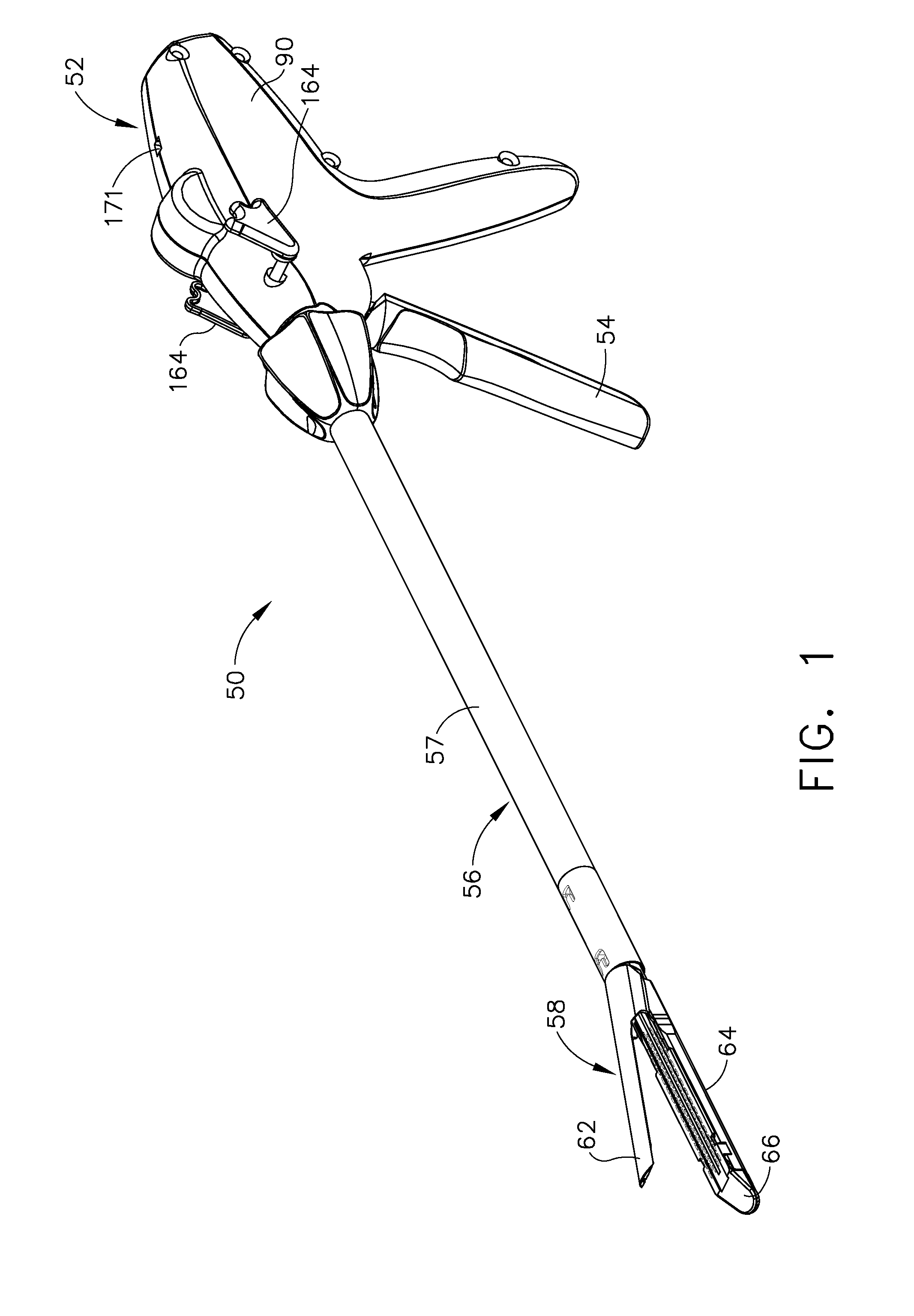

Surgical instrument having a multiple rate directional switching mechanism

A surgical instrument has a remotely controllable user interface and a firing drive configured to generate a rotary firing motion upon a first actuation of the remotely controllable user interface and a rotary retraction motion upon another actuation of the remotely controllable user interface. When the remotely controllable user interface operates a first drive member, the first actuation advances a cutting member a first distance; when it operates a second drive member, the other actuation retracts the cutting member a second distance, which is greater than the first distance.

Owner:CILAG GMBH INT

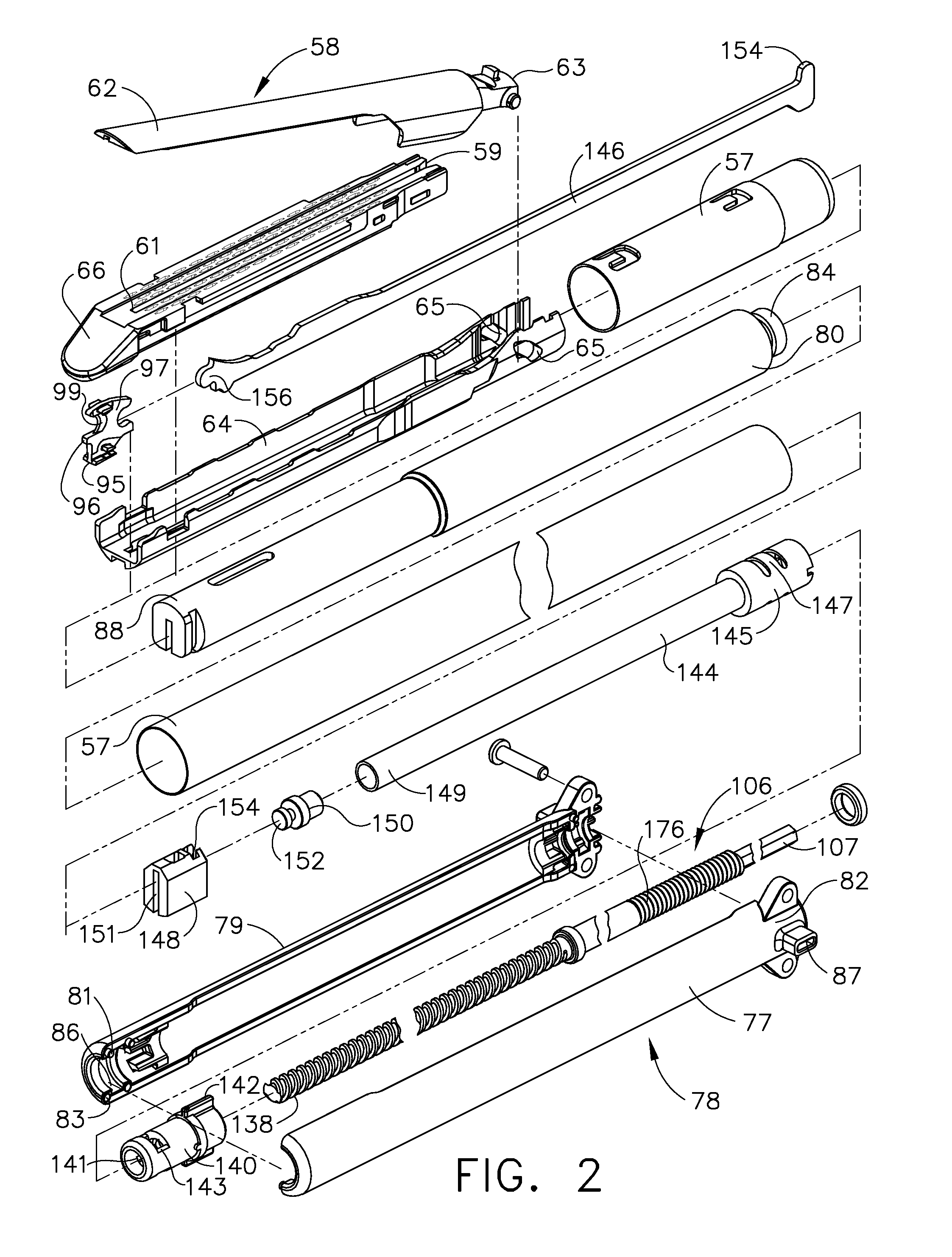

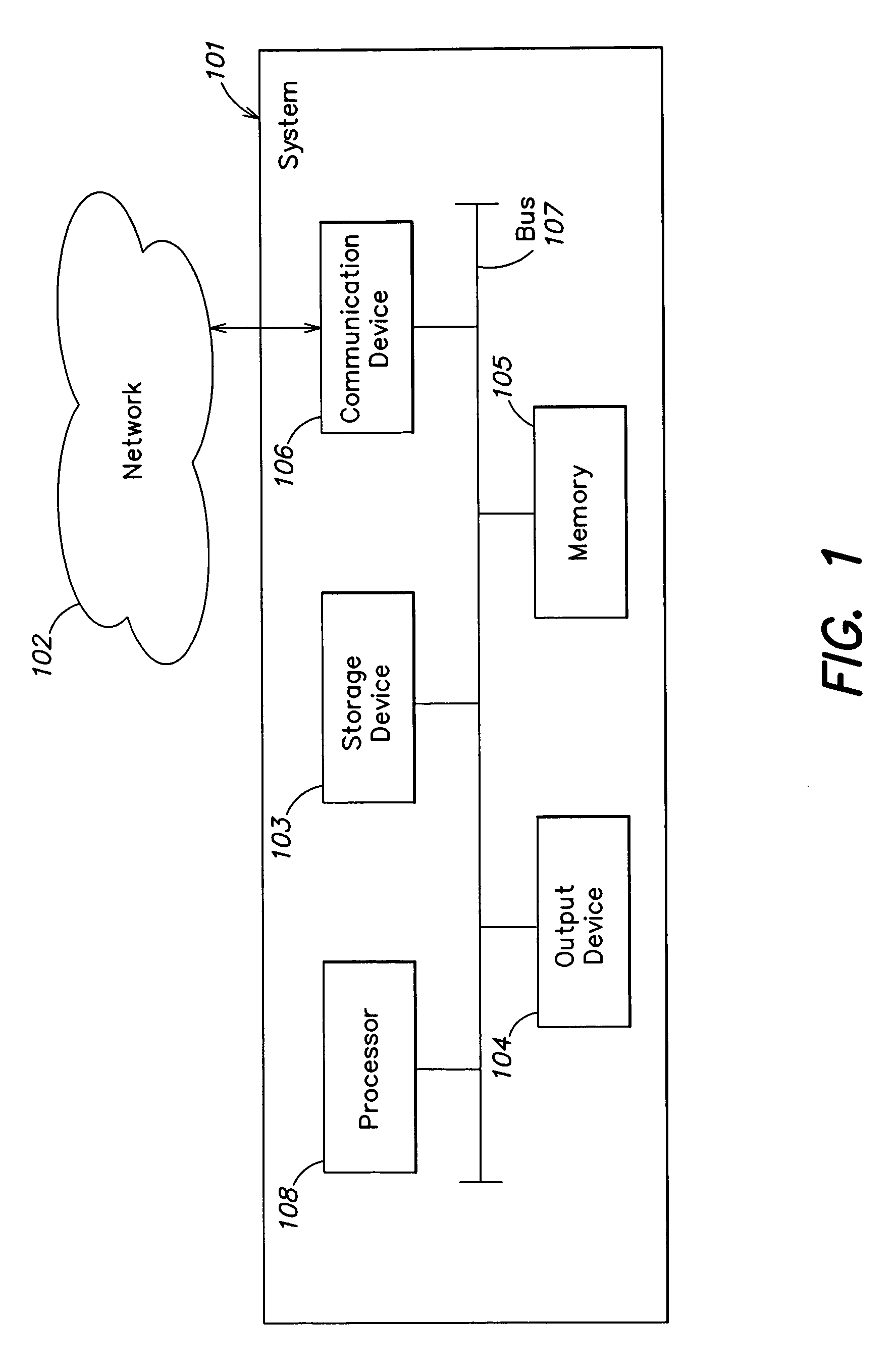

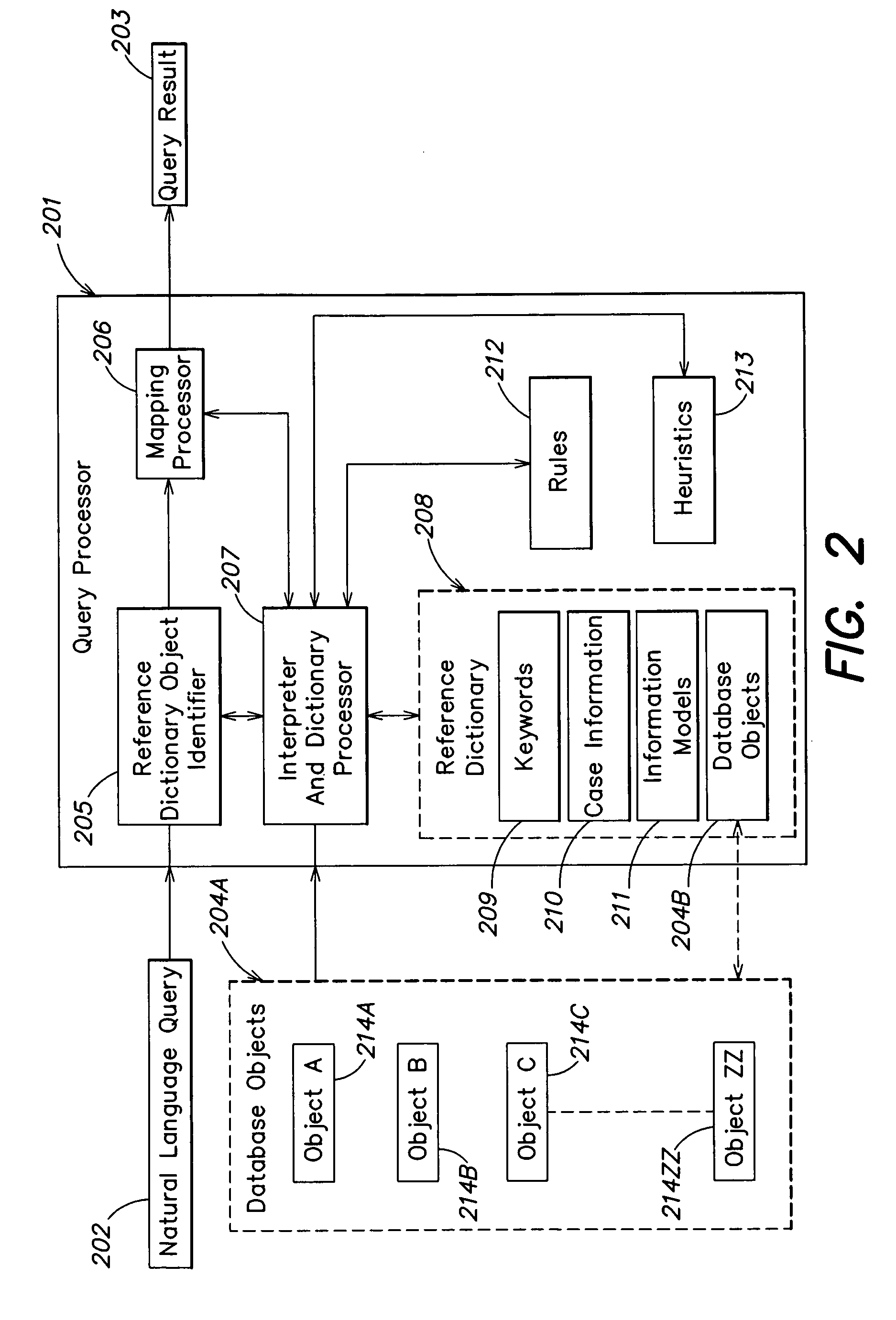

Natural language interface using constrained intermediate dictionary of results

Inactive | US7177798B2 | Tags: Not assure, Speech recognition, Special data processing applications, R language, Information type

A method for processing a natural language input provided by a user includes: providing a natural language query input to the user; performing, based on the input, a search of one or more language-based databases; providing, through a user interface, a result of the search to the user; identifying, for the one or more language-based databases, a finite number of database objects; and determining a plurality of combinations of the finite number of database objects. The one or more language-based databases include at least one metadata database including at least one of a group of information types including case information, keywords, information models, and database values.

Owner:RENSSELAER POLYTECHNIC INST
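
The abstract does not spell out how the combinations are formed. As a rough illustration only, the sketch below matches query words against a hypothetical word-to-object vocabulary to obtain a finite set of database objects, then enumerates every non-empty combination of them as a candidate interpretation. `candidate_interpretations` and the vocabulary format are assumptions, not the patented method.

```python
from itertools import combinations


def candidate_interpretations(query: str, vocabulary: dict[str, str]) -> list[tuple[str, ...]]:
    """Enumerate combinations of database objects referenced by a natural-language query.

    `vocabulary` is a hypothetical mapping from query words to database objects.
    """
    words = set(query.lower().split())
    # Finite set of database objects that the query mentions (sorted for stable output).
    matched = sorted({obj for word, obj in vocabulary.items() if word in words})
    result: list[tuple[str, ...]] = []
    # Every non-empty combination is a candidate reading of the query.
    for size in range(1, len(matched) + 1):
        result.extend(combinations(matched, size))
    return result


vocab = {"salary": "EMPLOYEE.salary", "employees": "EMPLOYEE", "boston": "OFFICE.city"}
for combo in candidate_interpretations("salary of employees in Boston", vocab):
    print(combo)
```

Because n matched objects yield 2^n - 1 combinations, a practical system would need to prune or rank candidates; the sketch does neither.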

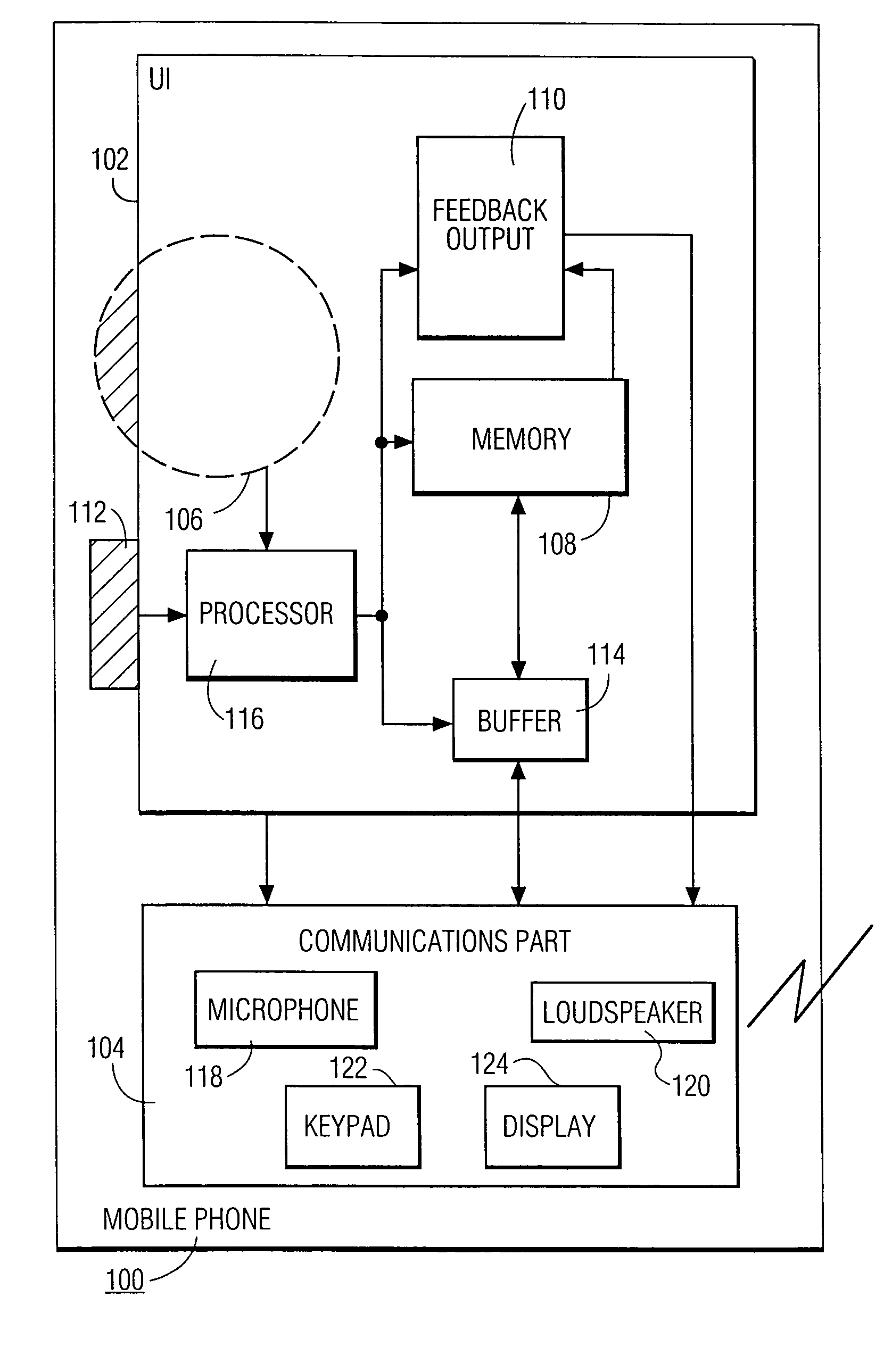

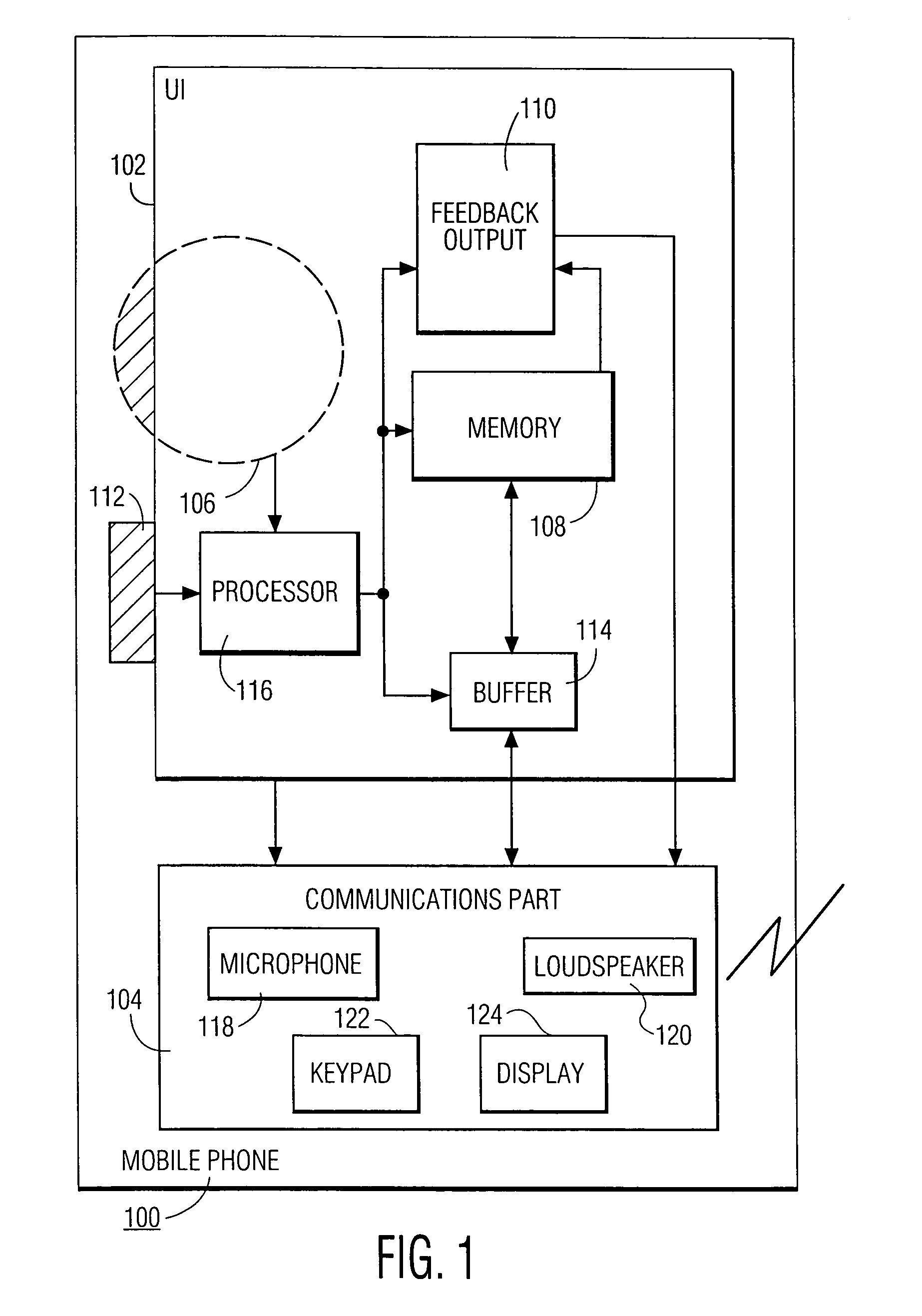

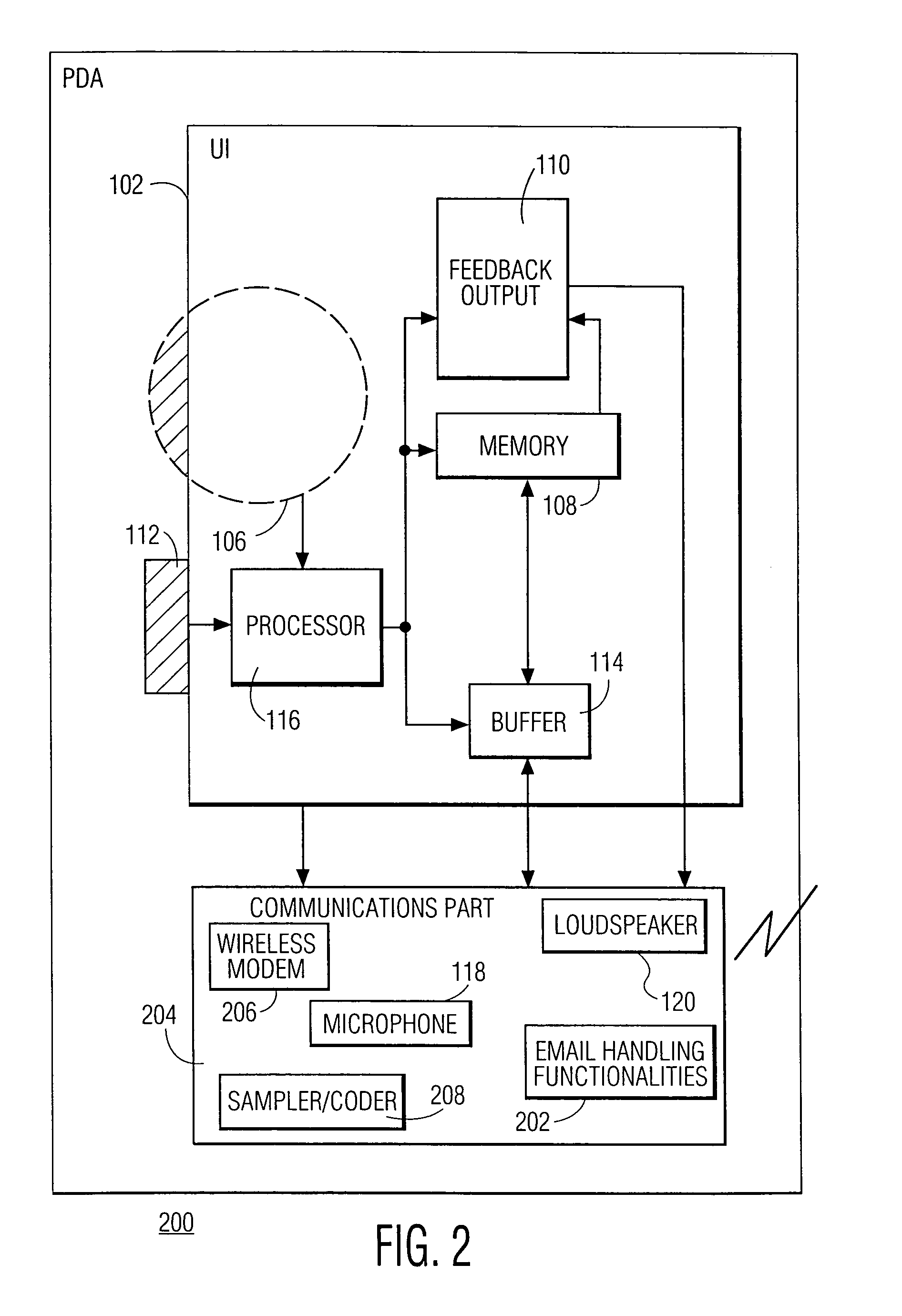

Hand-ear user interface for hand-held device

Inactive | US6978127B1 | Tags: Small size, Input/output for user-computer interaction, Interconnection arrangements, Information processing, Personalization

A hand-held information processing device, such as a mobile phone, has a thumb wheel that lets the user scan a circular array of options. Each option is represented by its own audio output, which is played when the wheel is turned a notch up or down. This enables the user to select an option with one hand and without having to look at the device. It also allows a form factor smaller than that of a conventional mobile phone, since no keypad is needed to enter digits when making a call from a personalized directory.

Owner:KONINKLIJKE PHILIPS ELECTRONICS NV
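
To make the thumb-wheel interaction concrete, here is a minimal sketch assuming a ring of labelled options where each notch moves the focus by one and wraps around. `ThumbWheelMenu` is a hypothetical name, and returning the label stands in for playing the option's audio.

```python
class ThumbWheelMenu:
    """Hypothetical model of a circular array of options scanned with a thumb wheel."""

    def __init__(self, options: list[str]) -> None:
        if not options:
            raise ValueError("menu needs at least one option")
        self.options = options
        self.index = 0

    def turn(self, notches: int) -> str:
        """Rotate the wheel by `notches` (negative = down) and return the option now in focus.

        A real device would play the option's audio clip here; returning the label
        stands in for that.
        """
        # Modulo wraps around the ring in both directions (Python's % is non-negative here).
        self.index = (self.index + notches) % len(self.options)
        return self.options[self.index]

    def select(self) -> str:
        return self.options[self.index]


menu = ThumbWheelMenu(["Alice", "Bob", "Carol"])
print(menu.turn(1))   # Bob
print(menu.turn(-2))  # Carol (wraps around)
```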

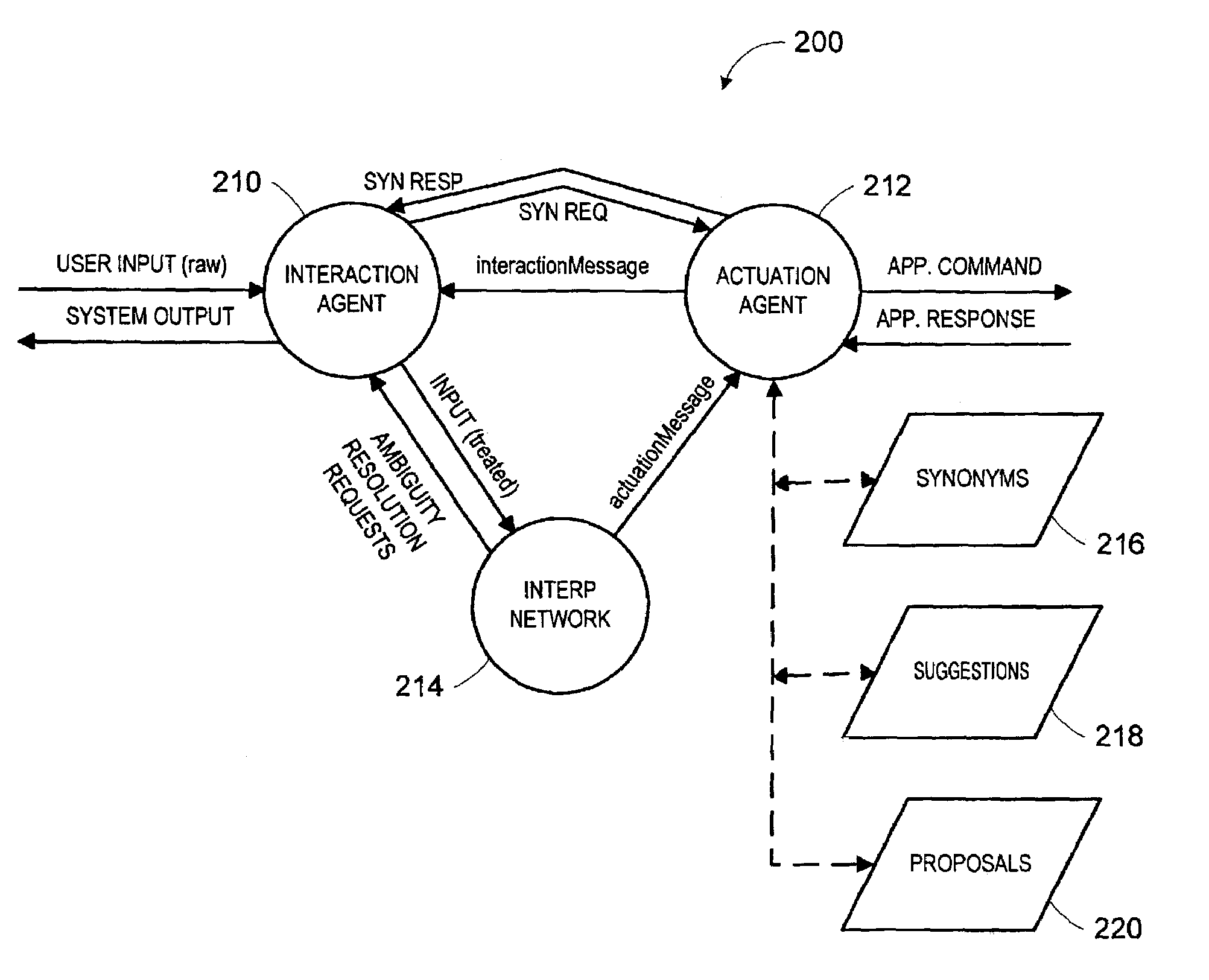

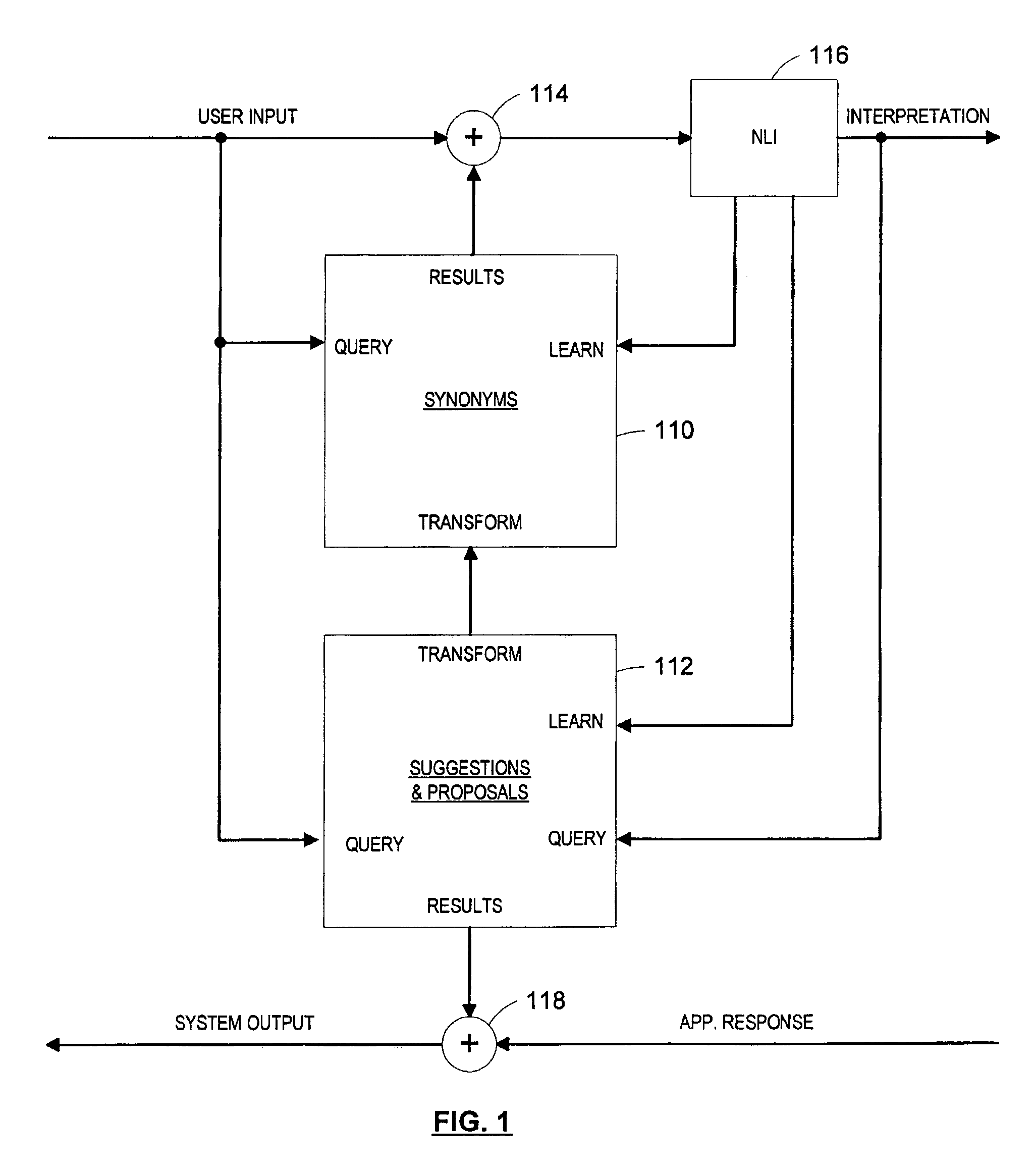

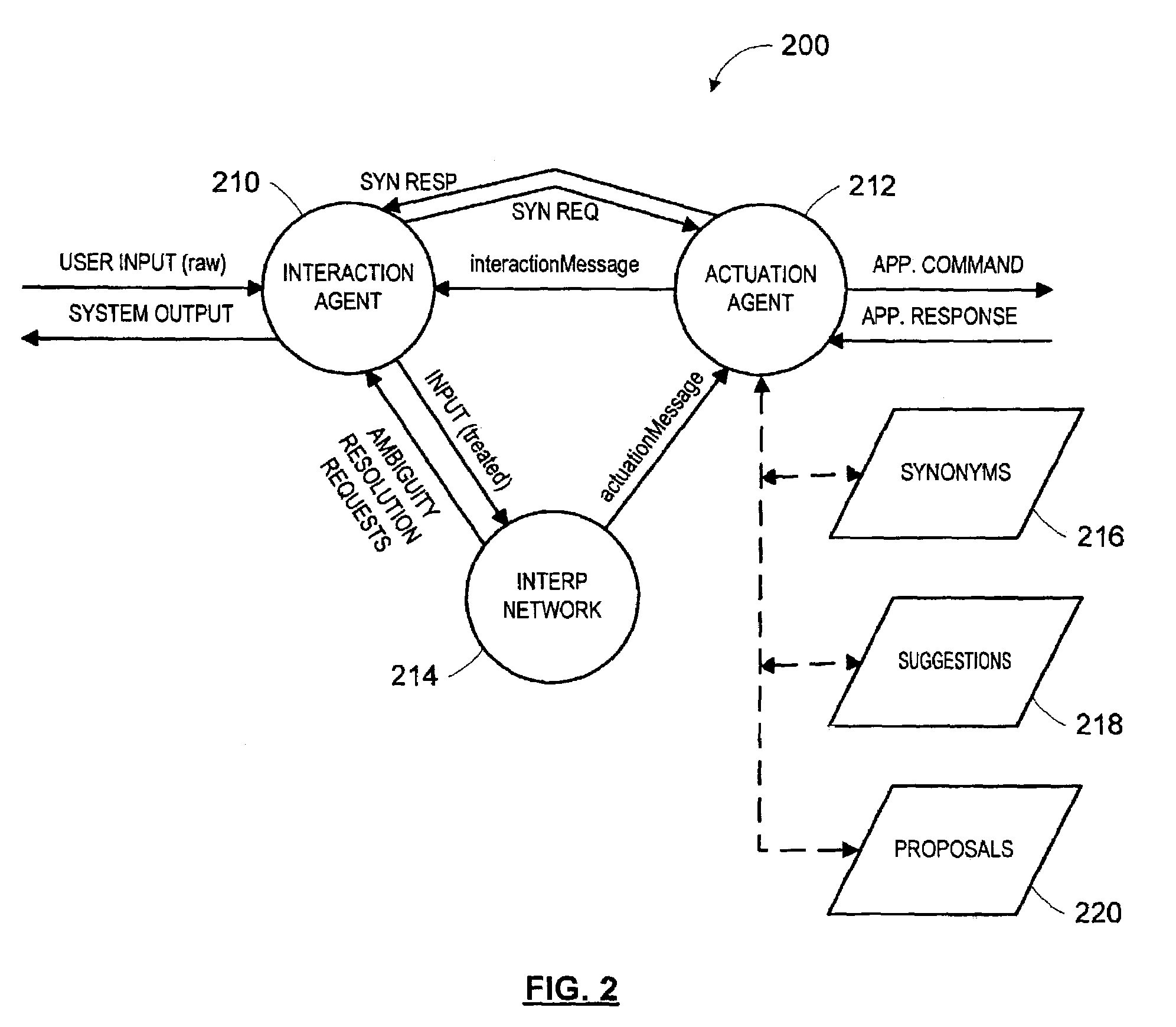

Synonyms mechanism for natural language systems

Roughly described, a natural language interface to a back-end application incorporates synonyms automatically added to user input to enhance the natural language interpretation. Synonyms can be learned from user input and written into a synonyms database. Their selection can be based on tokens identified in user input. Natural language interpretation can be performed by agents arranged in a network, which parse the user input in a distributed manner. In an embodiment, a particular agent of the natural language interpreter receives a first message that includes the user input, returns a message claiming at least a portion of the user input, and subsequently receives a second message delegating actuation of at least that portion to the particular agent.

Owner:IANYWHERE SOLUTIONS
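
A minimal sketch of the synonym-expansion idea, assuming a simple in-memory dictionary in place of the synonyms database and whitespace tokenization. It does not model the distributed agent network; `SynonymStore`, `learn`, and `expand` are hypothetical names.

```python
from collections import defaultdict


class SynonymStore:
    """Hypothetical in-memory stand-in for the synonyms database."""

    def __init__(self) -> None:
        self._synonyms: dict[str, set[str]] = defaultdict(set)

    def learn(self, word: str, synonym: str) -> None:
        # Store the relation symmetrically so either word expands to the other.
        w, s = word.lower(), synonym.lower()
        self._synonyms[w].add(s)
        self._synonyms[s].add(w)

    def expand(self, user_input: str) -> list[str]:
        """Return the input tokens followed by any known synonyms (deduplicated, order kept)."""
        tokens = user_input.lower().split()
        expanded = list(tokens)
        for tok in tokens:
            # .get does not insert missing keys into the defaultdict.
            for syn in sorted(self._synonyms.get(tok, ())):
                if syn not in expanded:
                    expanded.append(syn)
        return expanded


store = SynonymStore()
store.learn("film", "movie")
print(store.expand("show film times"))  # ['show', 'film', 'times', 'movie']
```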

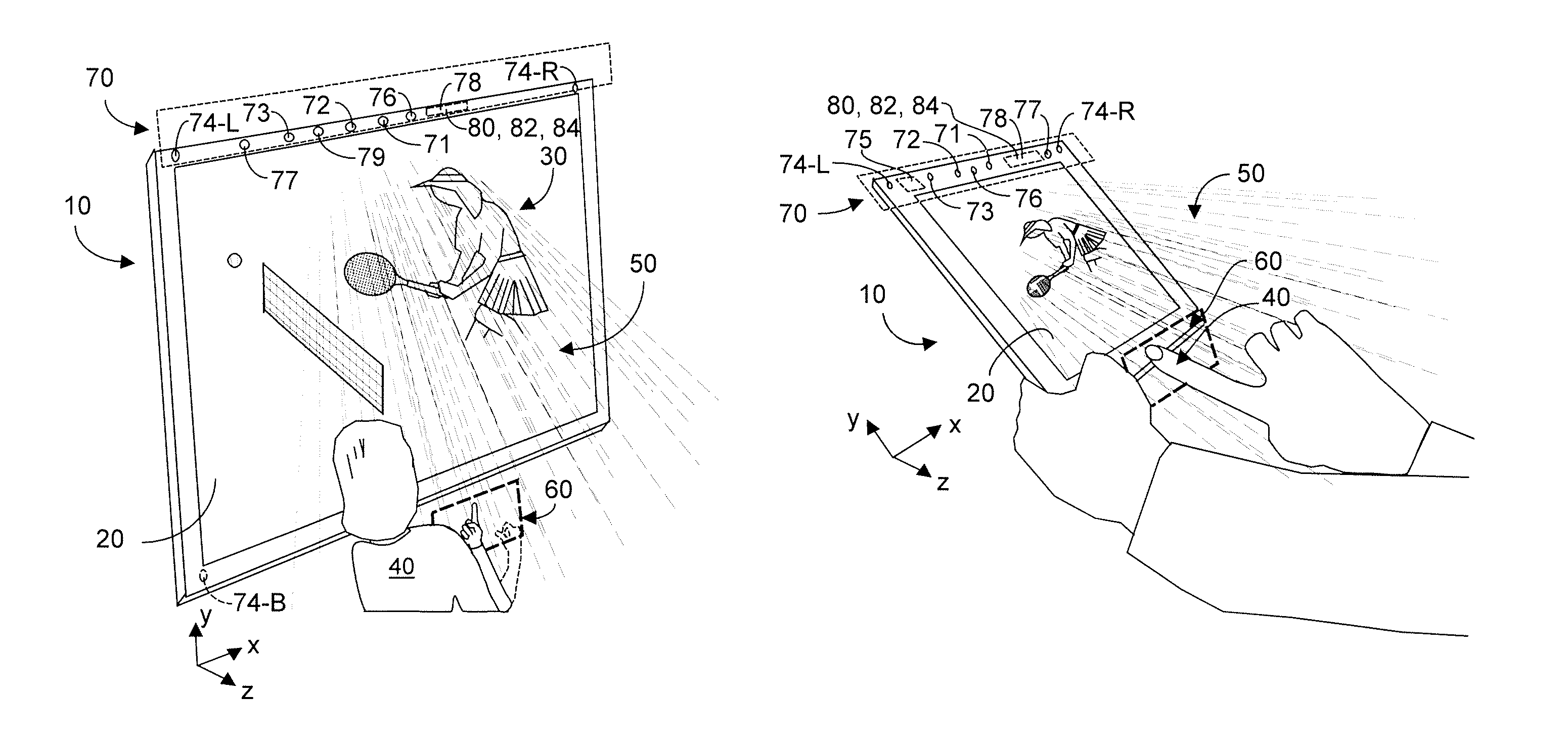

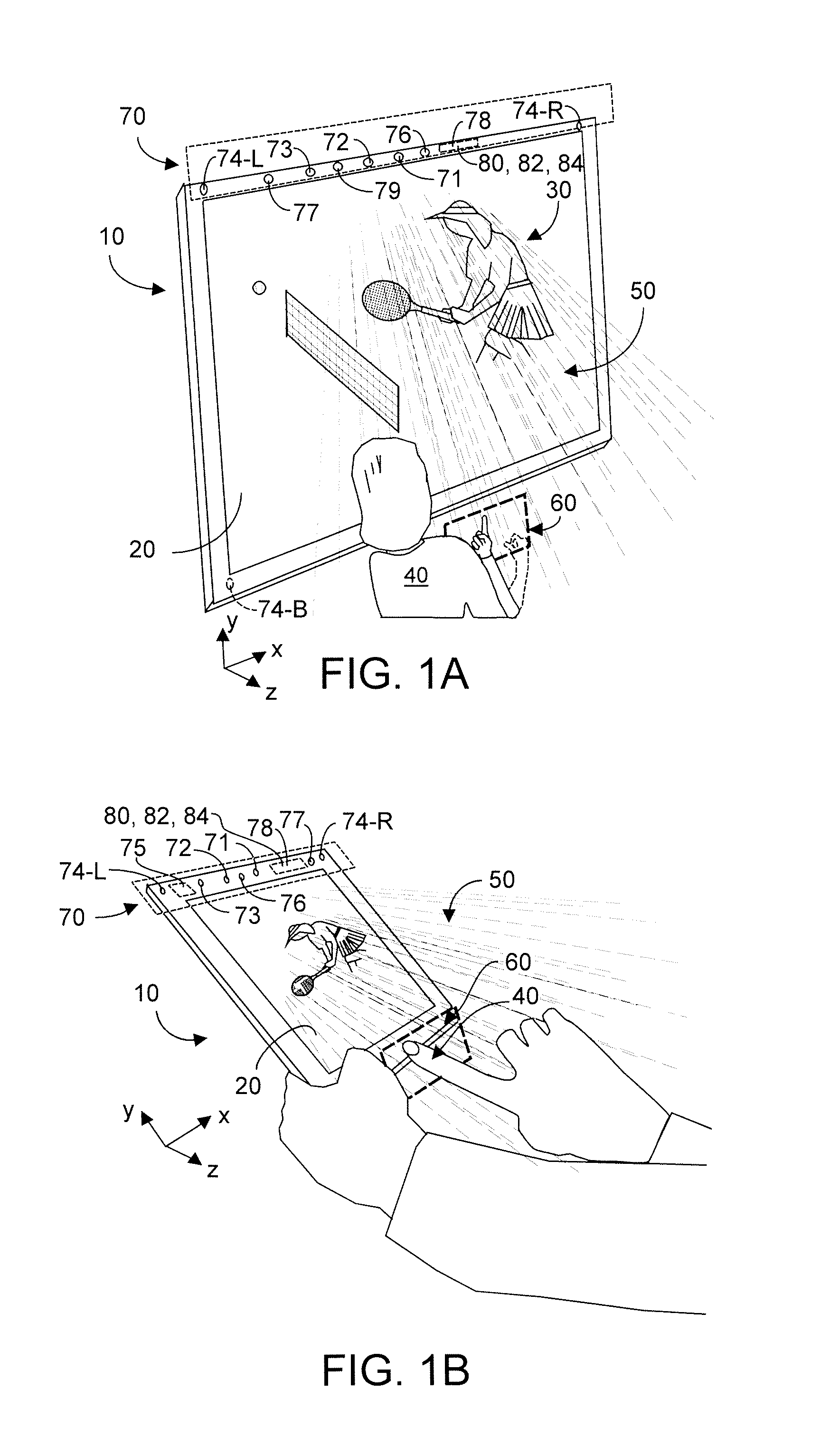

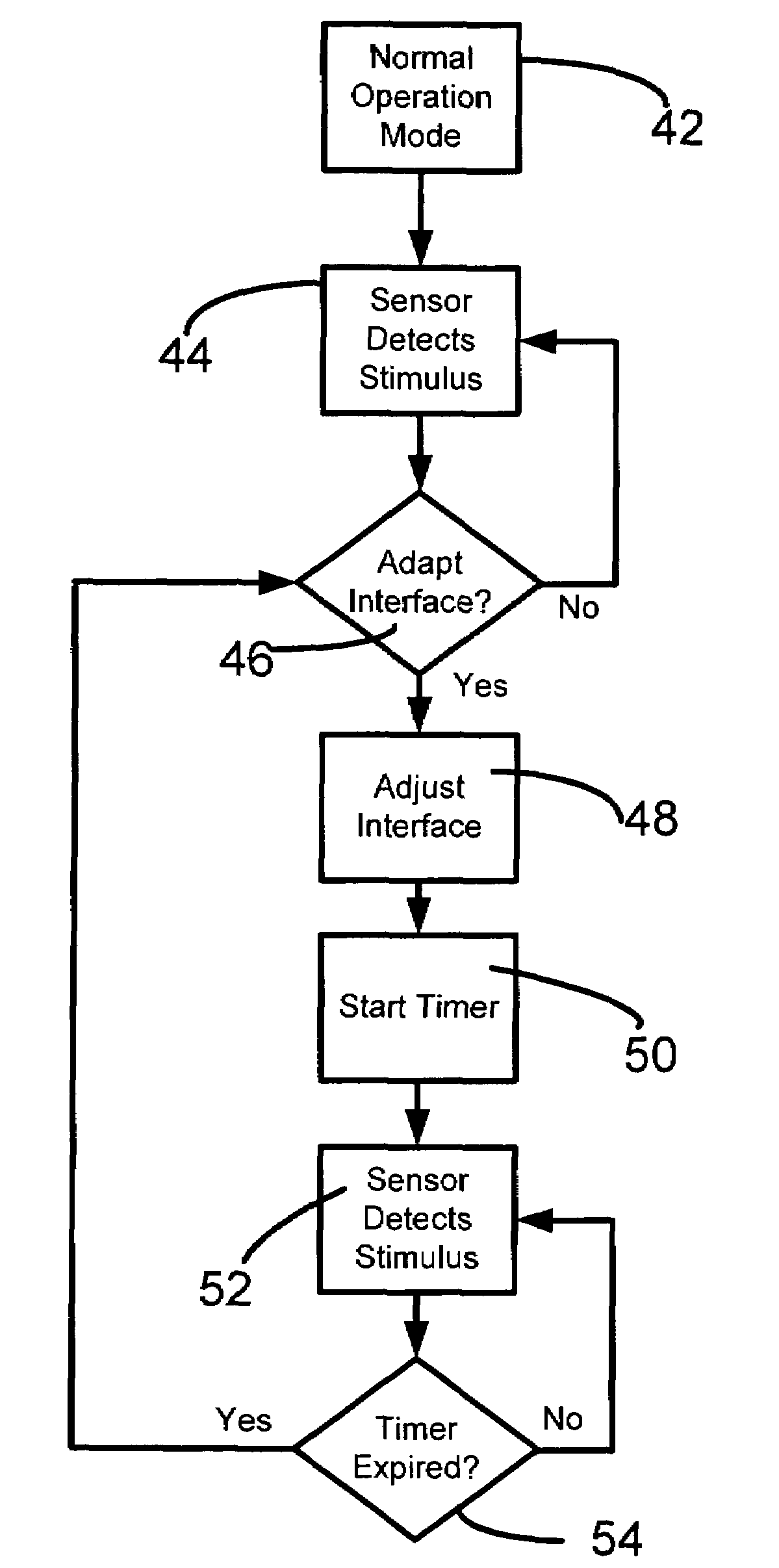

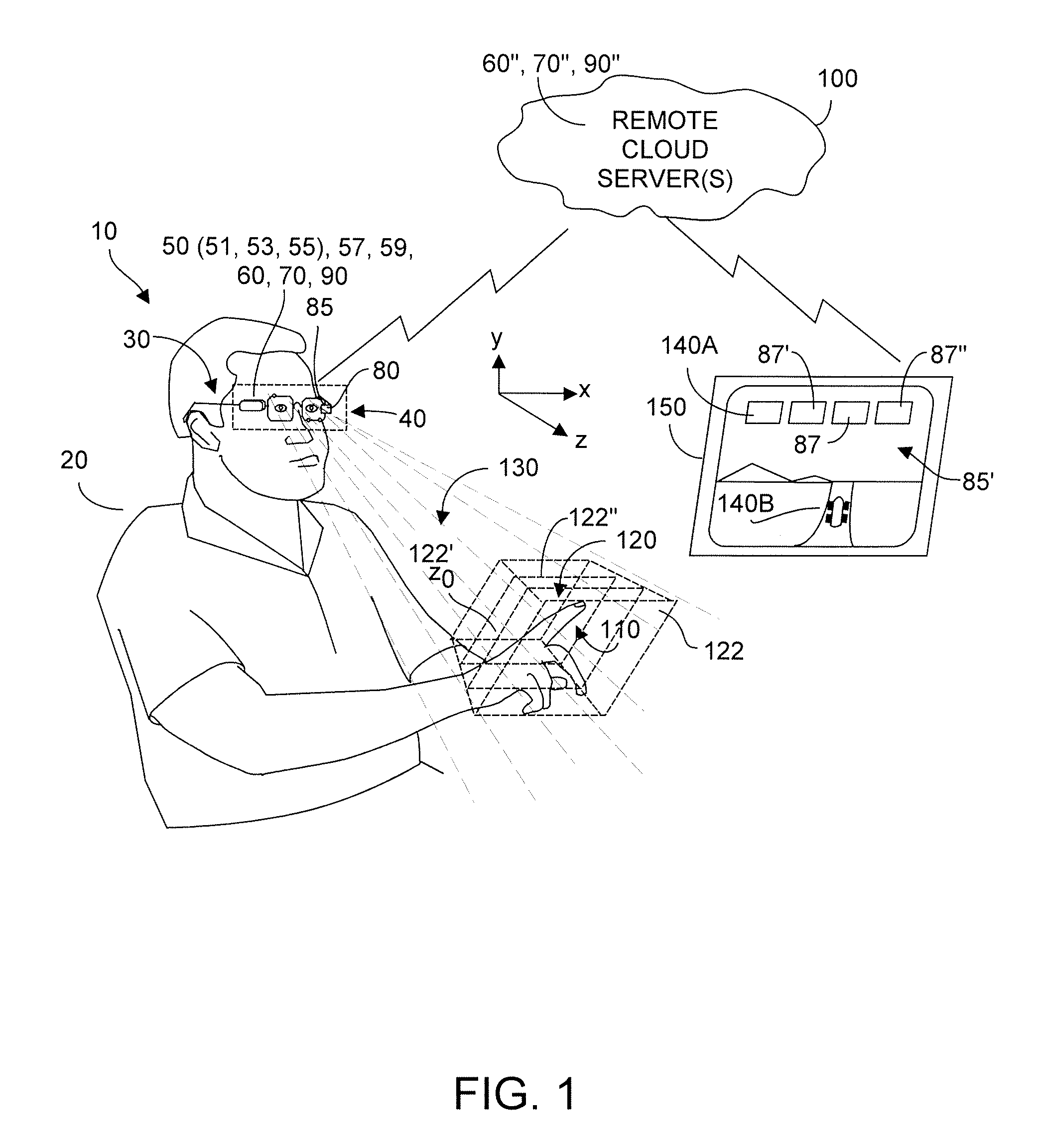

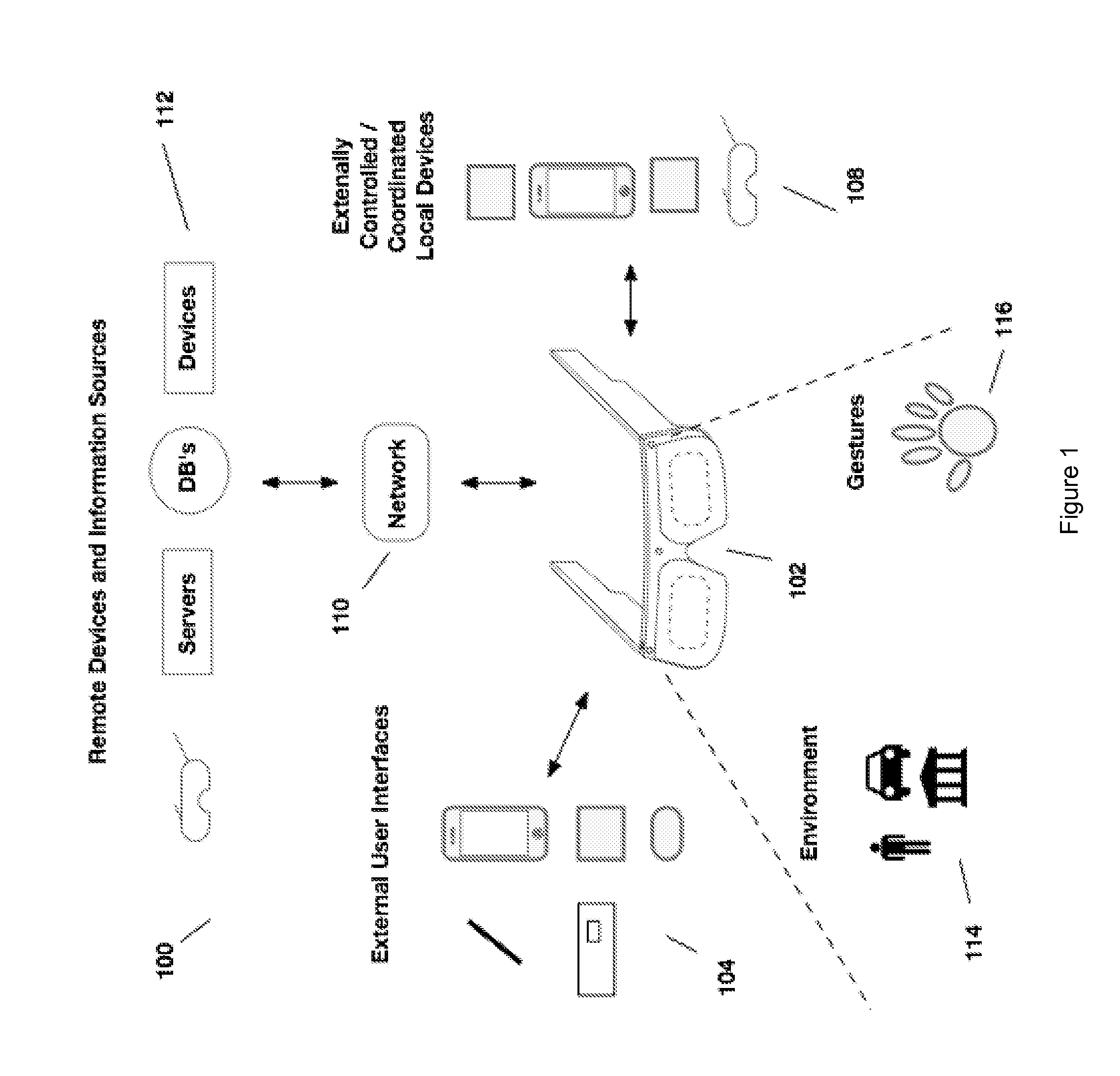

Method and system enabling natural user interface gestures with an electronic system

Active | US8854433B1 | Tags: Speed up the process, Reduce power consumption, Input/output for user-computer interaction, Closed circuit television systems, Electronic systems, Hand movements

An electronic device coupleable to a display screen includes a camera system that acquires optical data of a user comfortably gesturing in a user-customizable interaction zone having a z0 plane, while controlling operation of the device. Subtle gestures include hand movements commenced in a dynamically resizable and relocatable interaction zone. Preferably, (x,y,z) locations in the interaction zone are mapped to two-dimensional display screen locations. Detected user hand movements can signal the device that an interaction is occurring in gesture mode. The device can respond by presenting a GUI on the display screen and by creating user feedback, including haptic feedback. User three-dimensional interaction can manipulate displayed virtual objects, including releasing such objects. User hand gesture trajectory clues enable the device to anticipate probable user intent and to update display screen renderings appropriately.

Owner:KAYA DYNAMICS LLC
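
One plausible reading of the mapping step, sketched under stated assumptions: the interaction zone is an axis-aligned box, a hand counts as engaged only when it is nearer than the z0 plane, and (x, y) is scaled linearly onto screen pixels. `InteractionZone` and its fields are hypothetical; the patent's actual mapping may differ.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class InteractionZone:
    """Hypothetical axis-aligned interaction zone in camera coordinates (metres)."""
    x_min: float
    x_max: float
    y_min: float
    y_max: float
    z0: float  # assumed engagement plane: hands nearer than z0 count as interacting

    def to_screen(self, x: float, y: float, z: float,
                  width: int, height: int) -> Optional[tuple[int, int]]:
        """Map a hand location (x, y, z) to a pixel (column, row), or None if not engaged."""
        if z > self.z0:
            return None  # hand is behind the z0 plane, so no interaction is reported
        # Normalise to [0, 1]; clamping keeps points just outside the zone on the screen edge.
        u = min(max((x - self.x_min) / (self.x_max - self.x_min), 0.0), 1.0)
        v = min(max((y - self.y_min) / (self.y_max - self.y_min), 0.0), 1.0)
        # Screen rows grow downward, while camera y is assumed to grow upward.
        return round(u * (width - 1)), round((1.0 - v) * (height - 1))


zone = InteractionZone(x_min=-0.2, x_max=0.2, y_min=-0.1, y_max=0.1, z0=0.5)
print(zone.to_screen(0.0, 0.0, 0.4, width=101, height=101))  # (50, 50), centre of the zone
print(zone.to_screen(0.0, 0.0, 0.6, width=101, height=101))  # None, hand behind z0
```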

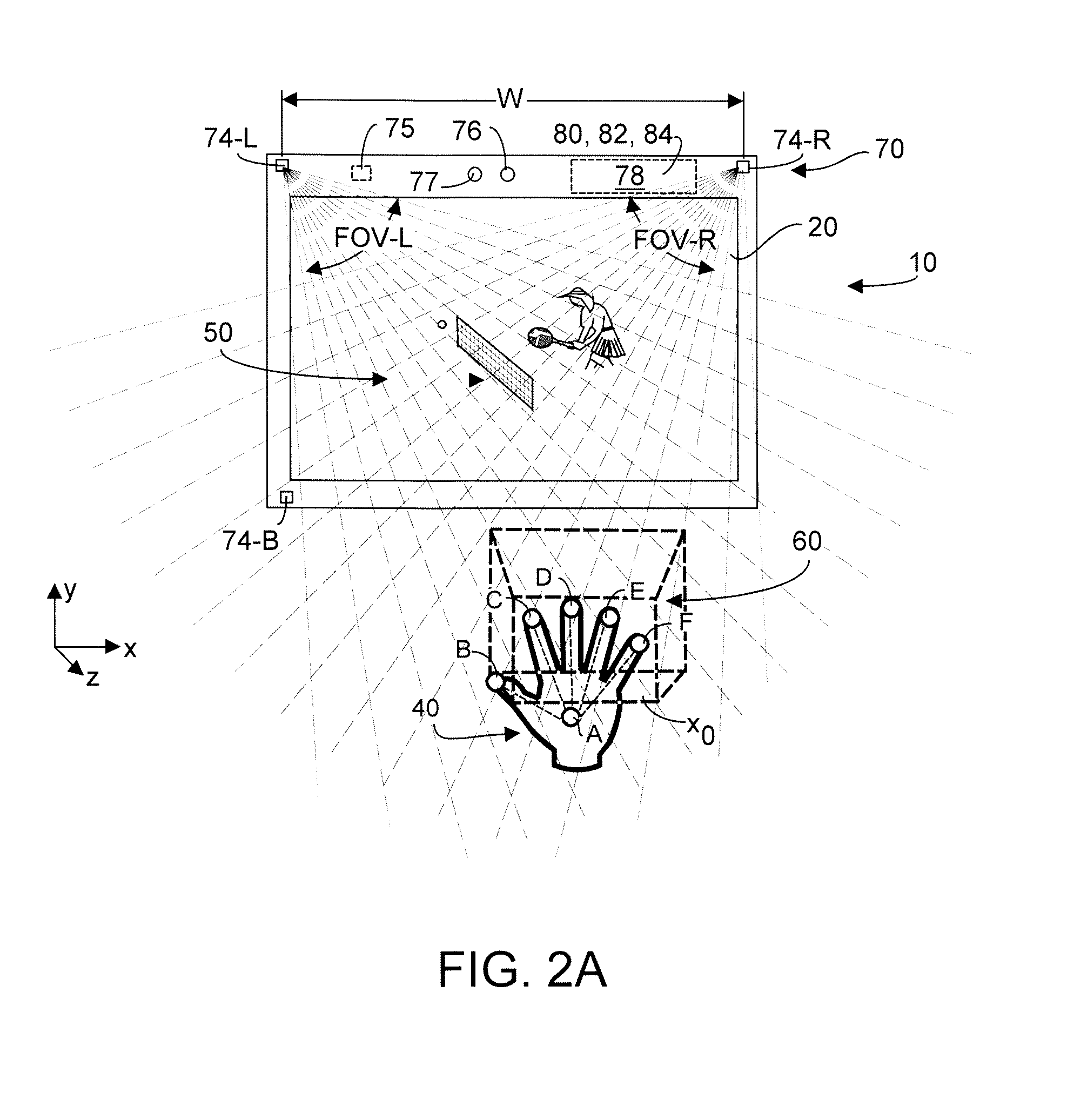

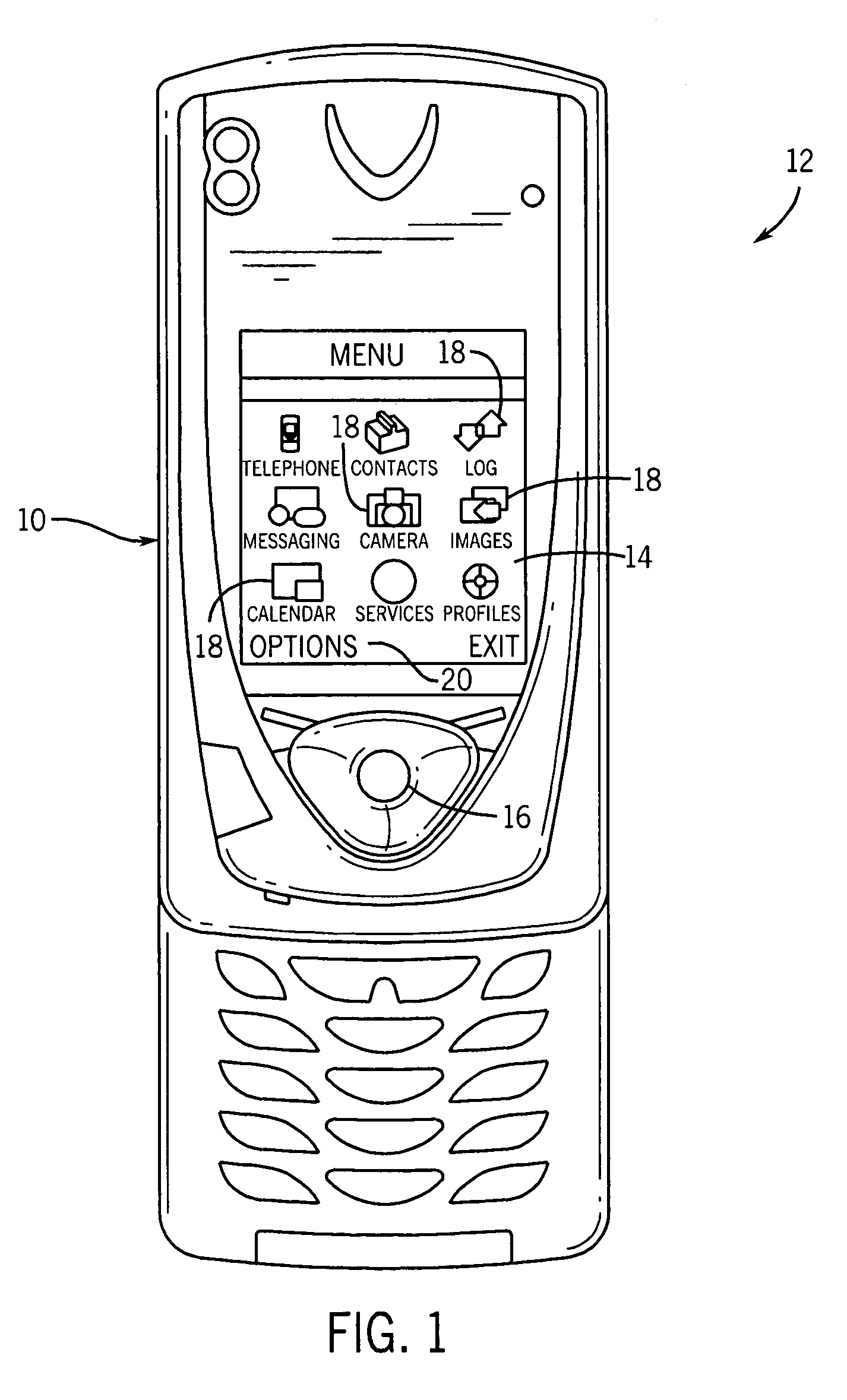

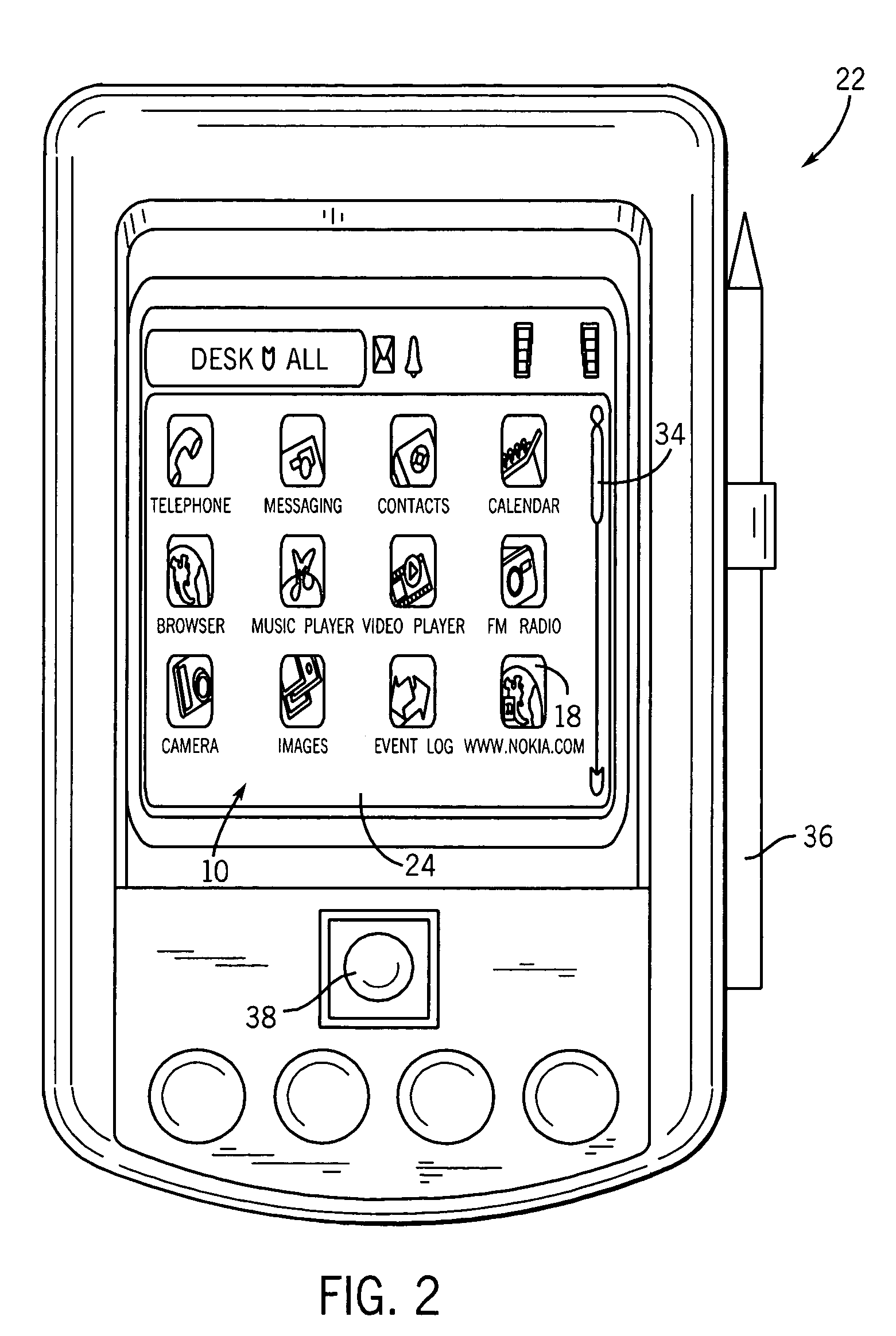

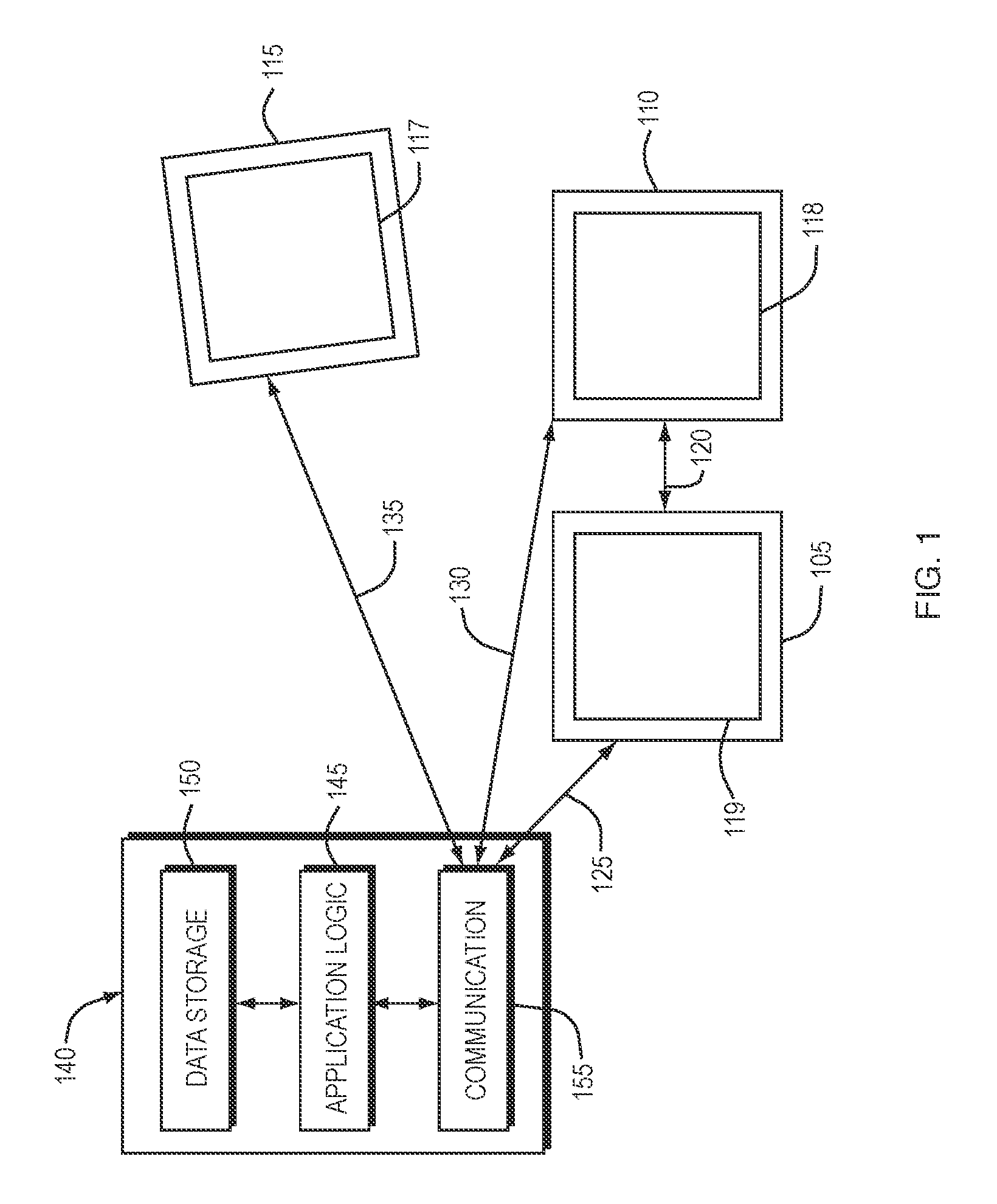

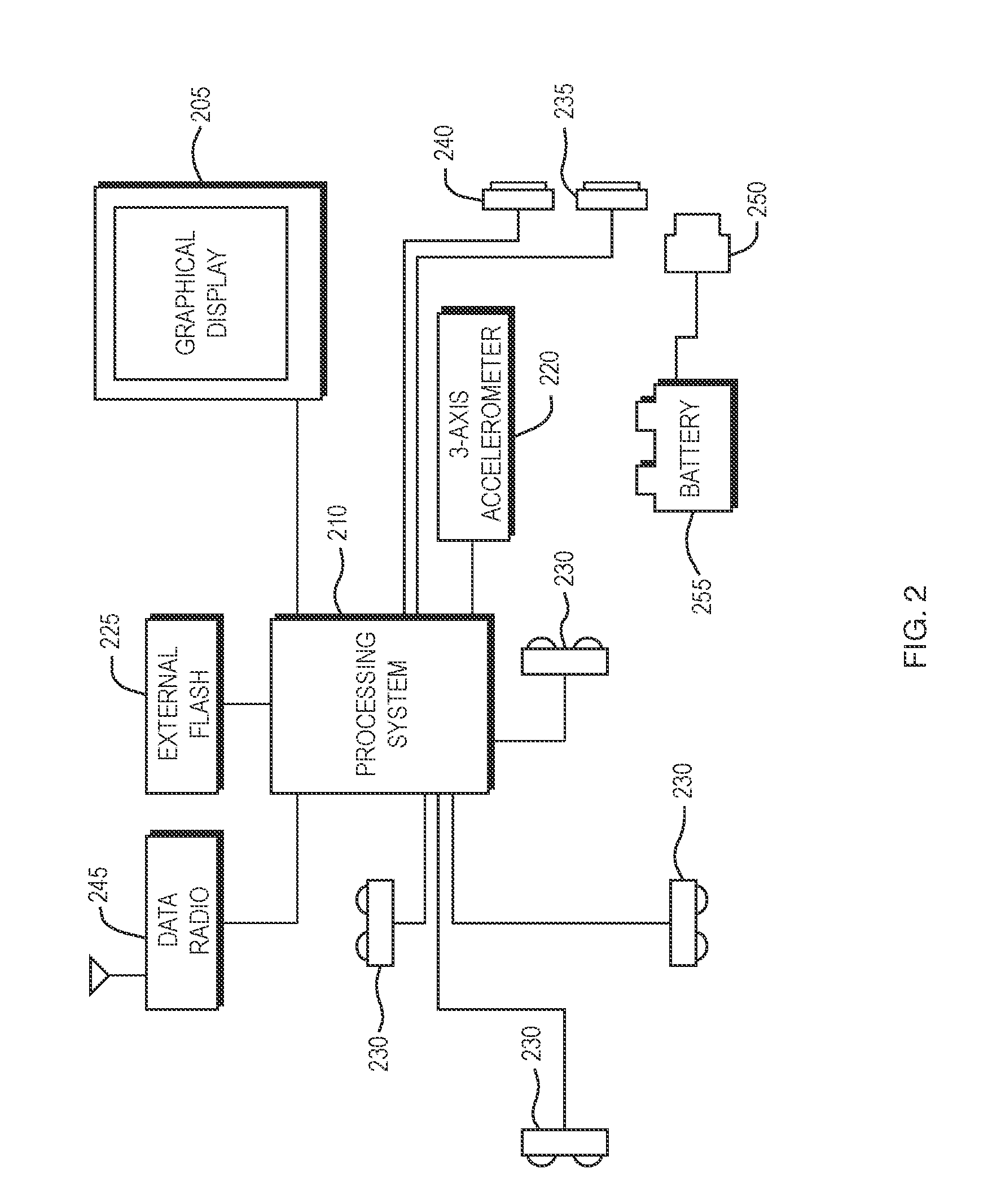

Adaptive user interface input device

Active | US7401300B2 | Tags: Digital data processing details, Devices with sensor, Adaptive user interface, User input

The present invention relates to an adaptable user interface. The adaptable user interface provides a more error-free input function, as well as greater ease of use, when used in certain situations, such as while the user is moving. A user interface in accordance with the principles of the present invention comprises a user input, at least one sensor, and a display unit functionally in communication with the at least one sensor and adapted to change its user interface input mode. The user interface can adapt its input mode in response to a stimulus sensed by the sensor.

Owner:NOKIA TECHNOLOGIES OY
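
The abstract leaves the sensor and the adaptation rule open. As one hypothetical example, accelerometer readings could trigger a switch to larger touch targets when the device appears to be moving; the threshold, mode names, and `choose_input_mode` are assumptions for illustration.

```python
from statistics import pstdev


def choose_input_mode(accel_magnitudes: list[float], moving_threshold: float = 0.15) -> str:
    """Pick a user-interface input mode from recent accelerometer magnitudes (in g).

    Assumption: if |a| fluctuates by more than `moving_threshold` (population standard
    deviation), the user is probably walking, so the UI switches to larger touch targets.
    """
    if len(accel_magnitudes) < 2:
        return "standard"  # not enough samples to judge motion
    return "large_targets" if pstdev(accel_magnitudes) > moving_threshold else "standard"


print(choose_input_mode([1.00, 1.01, 0.99, 1.00]))  # standard (device at rest)
print(choose_input_mode([0.60, 1.40, 0.70, 1.30]))  # large_targets (user moving)
```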

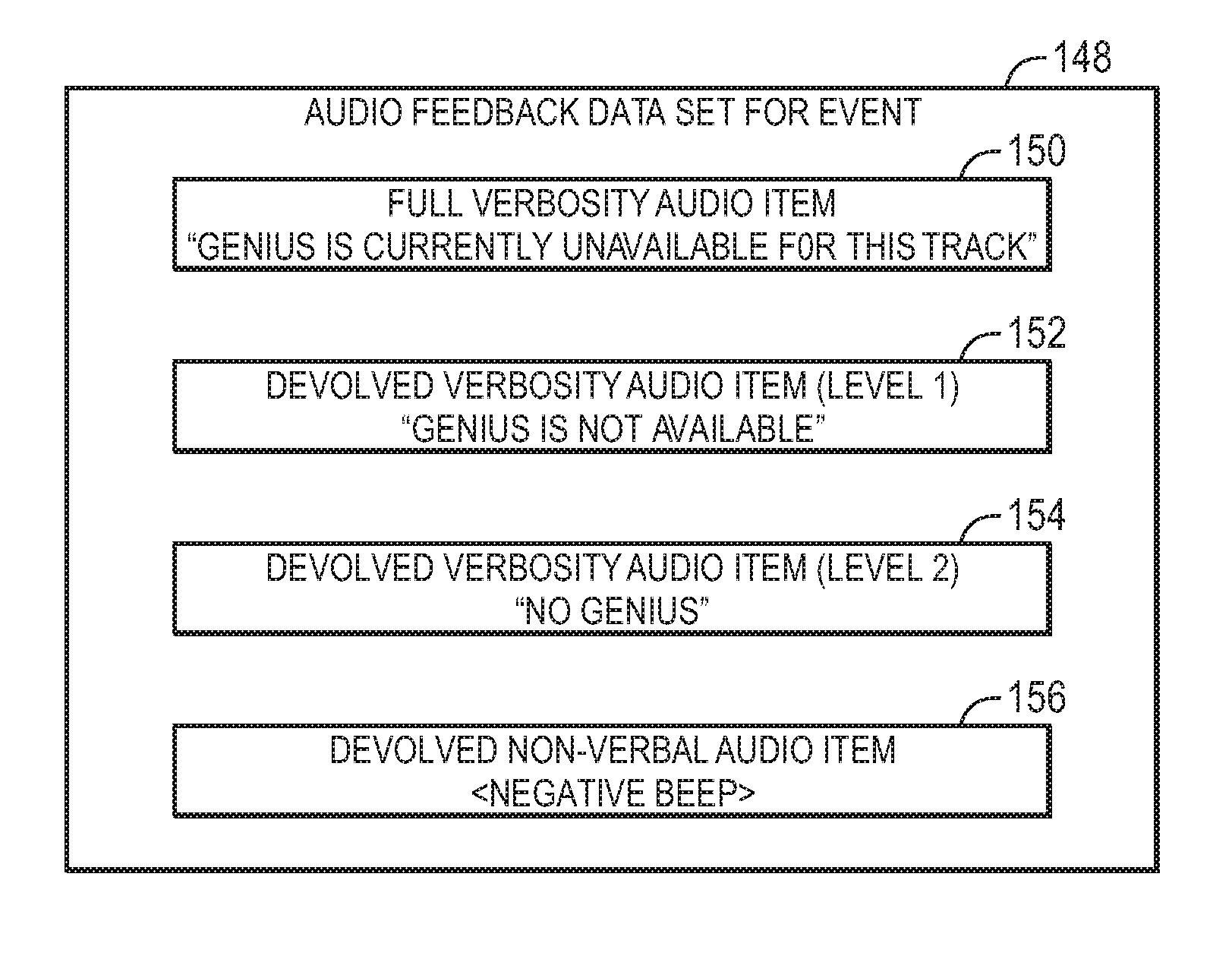

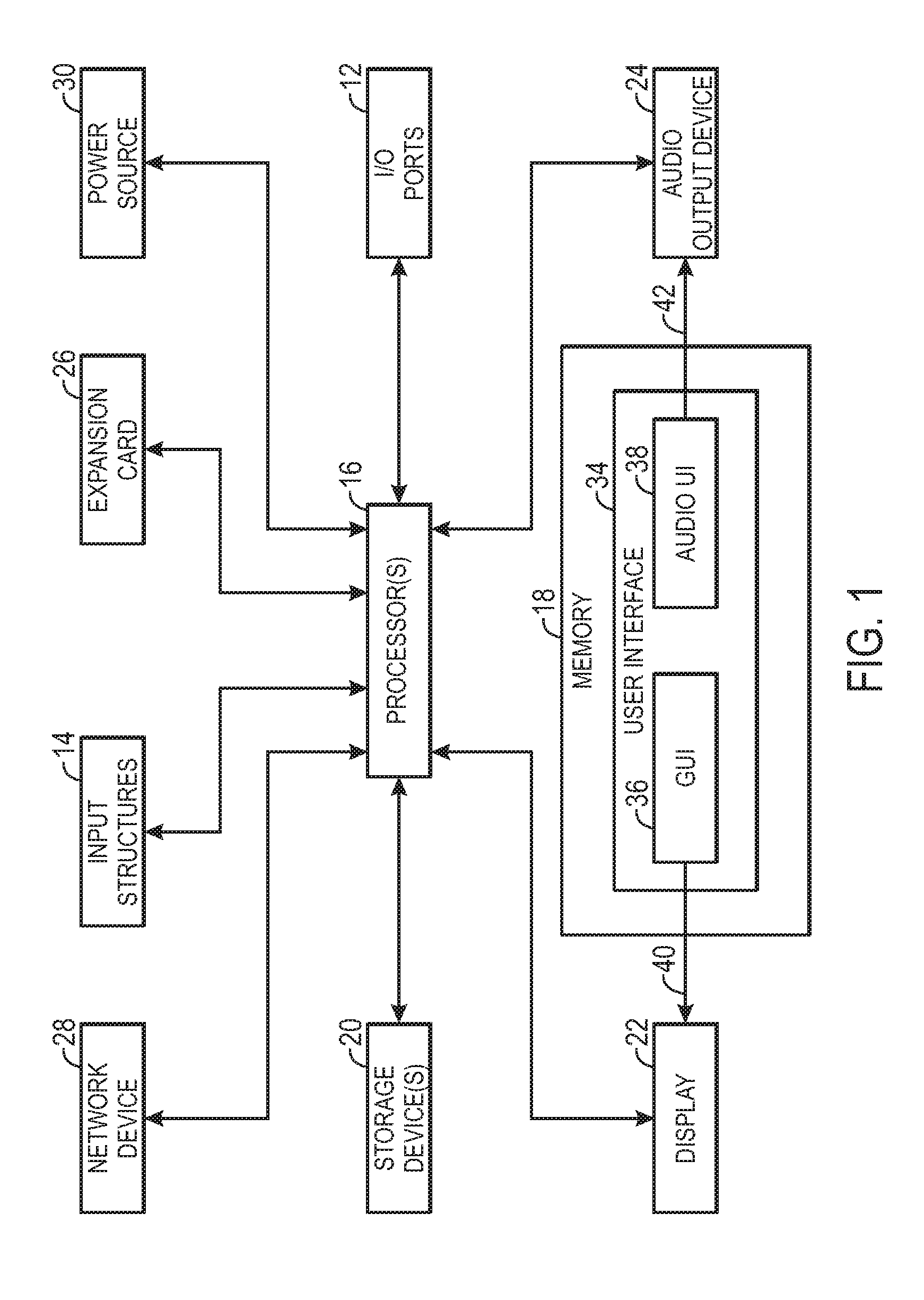

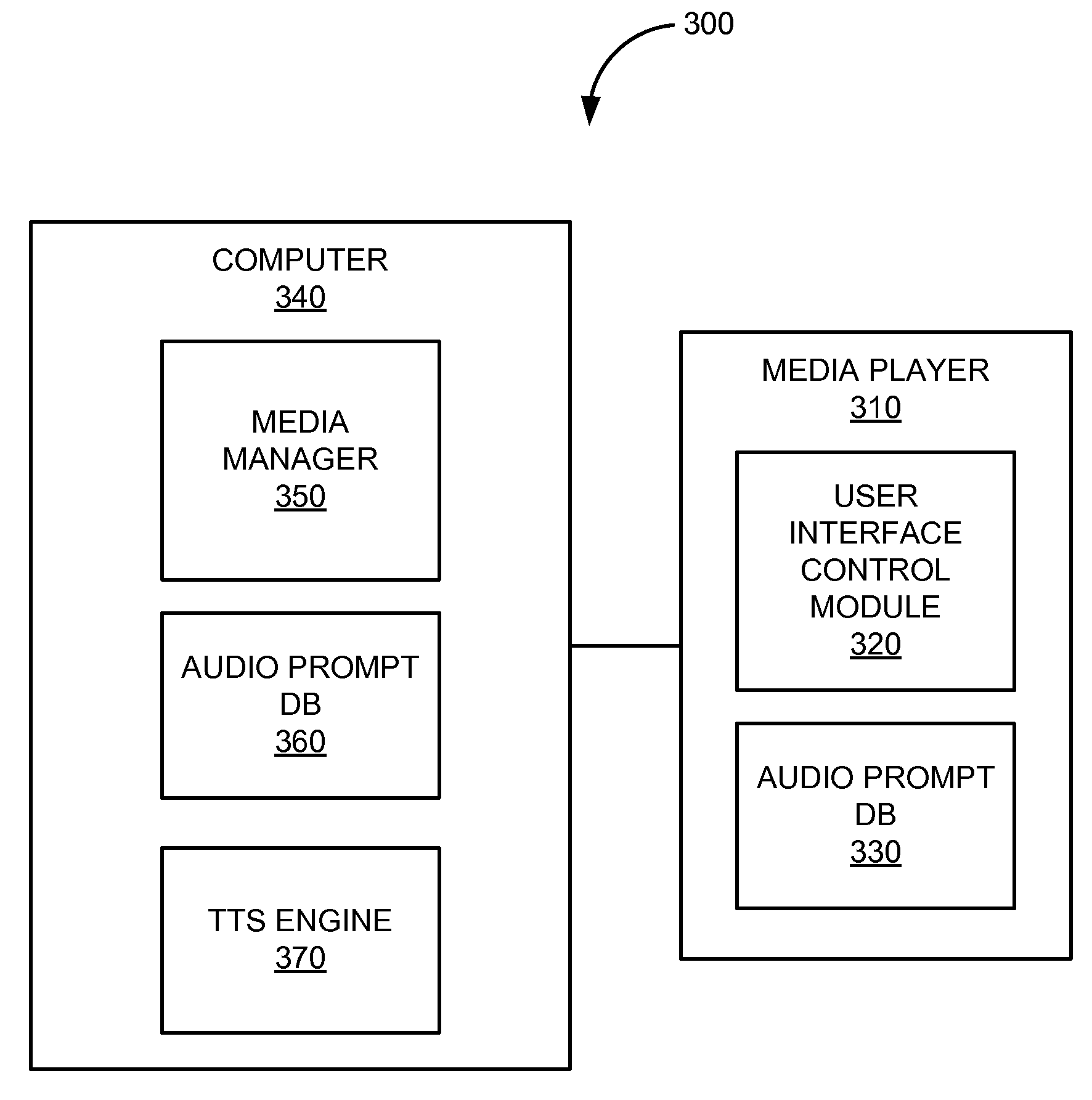

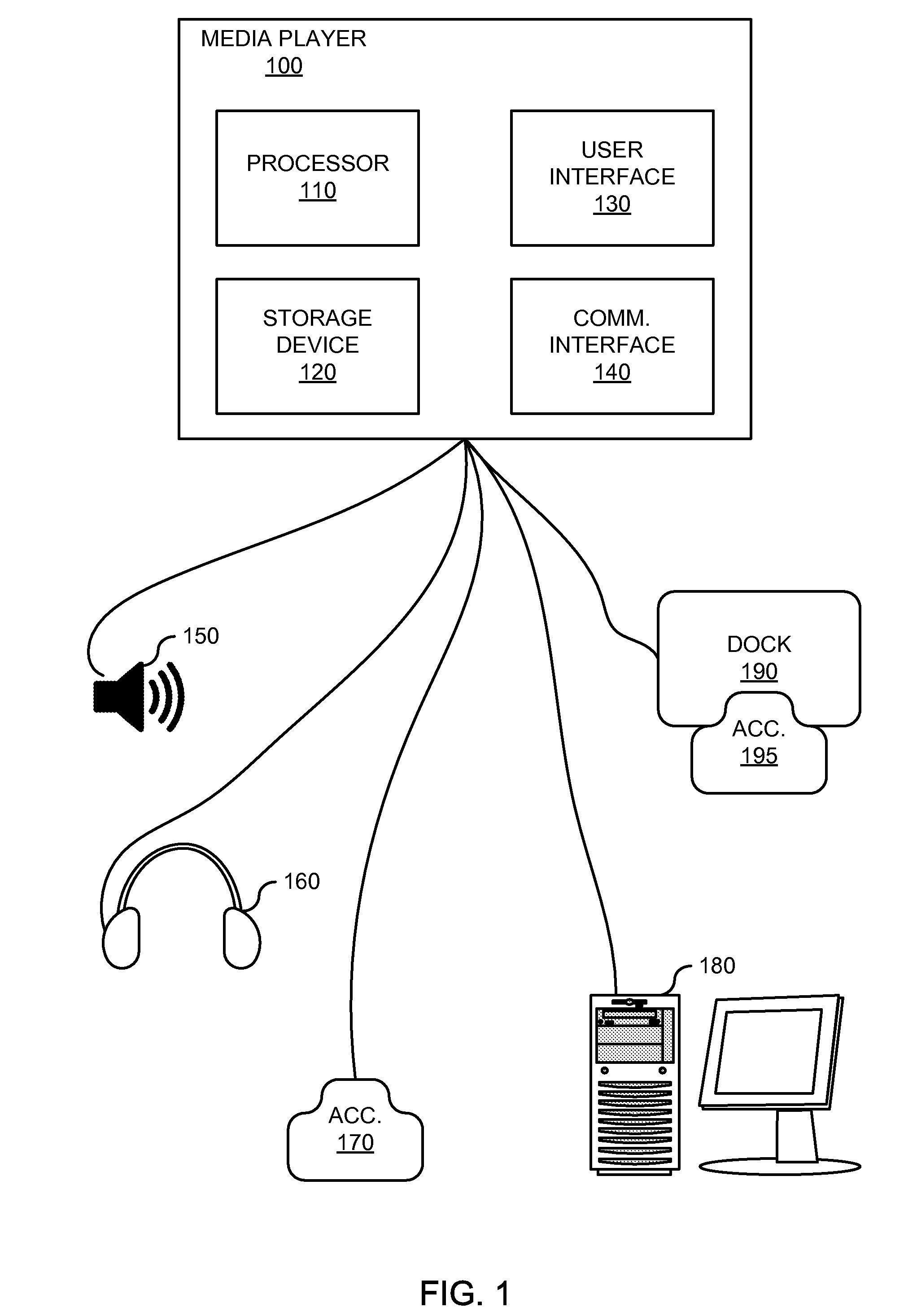

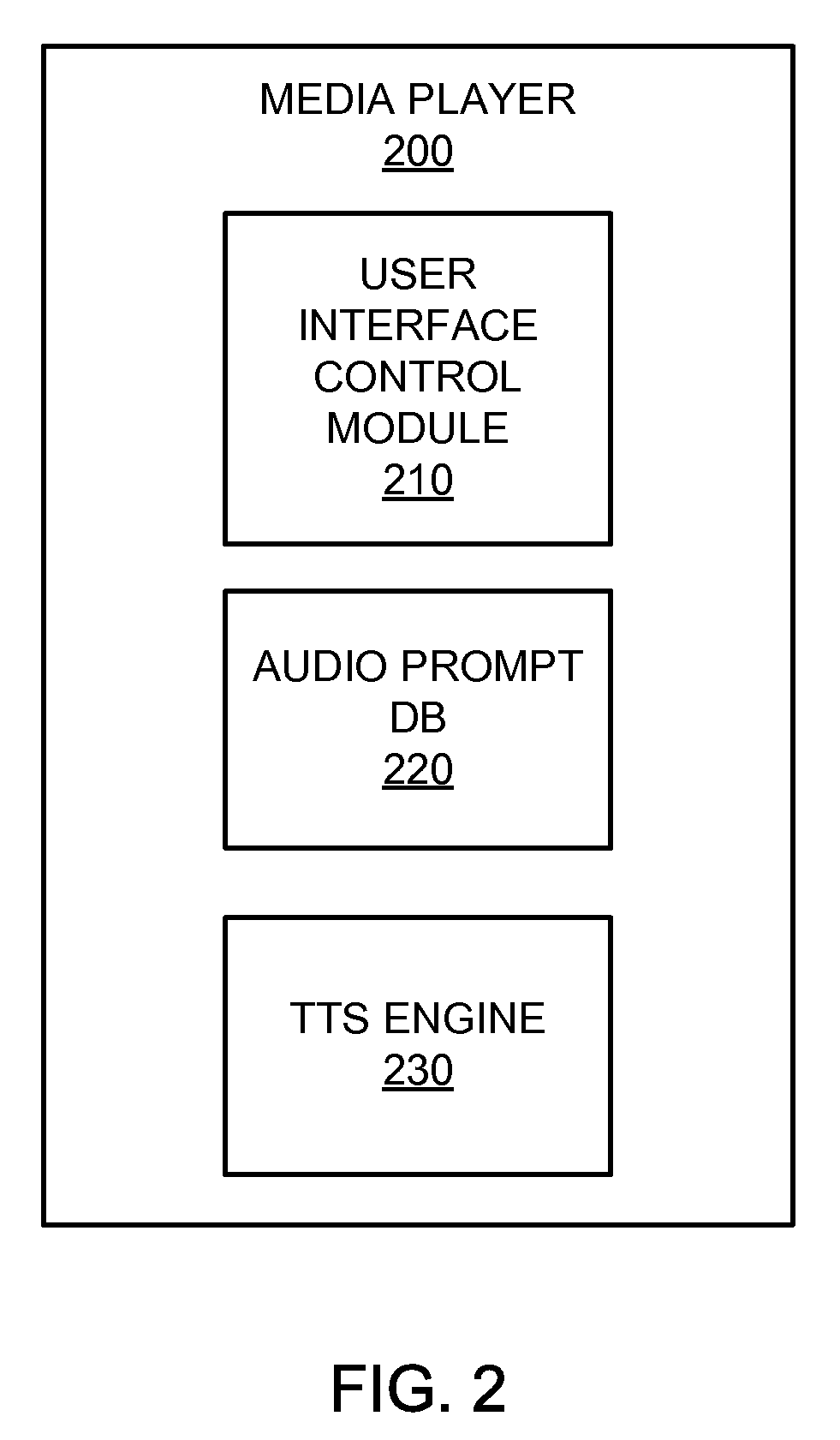

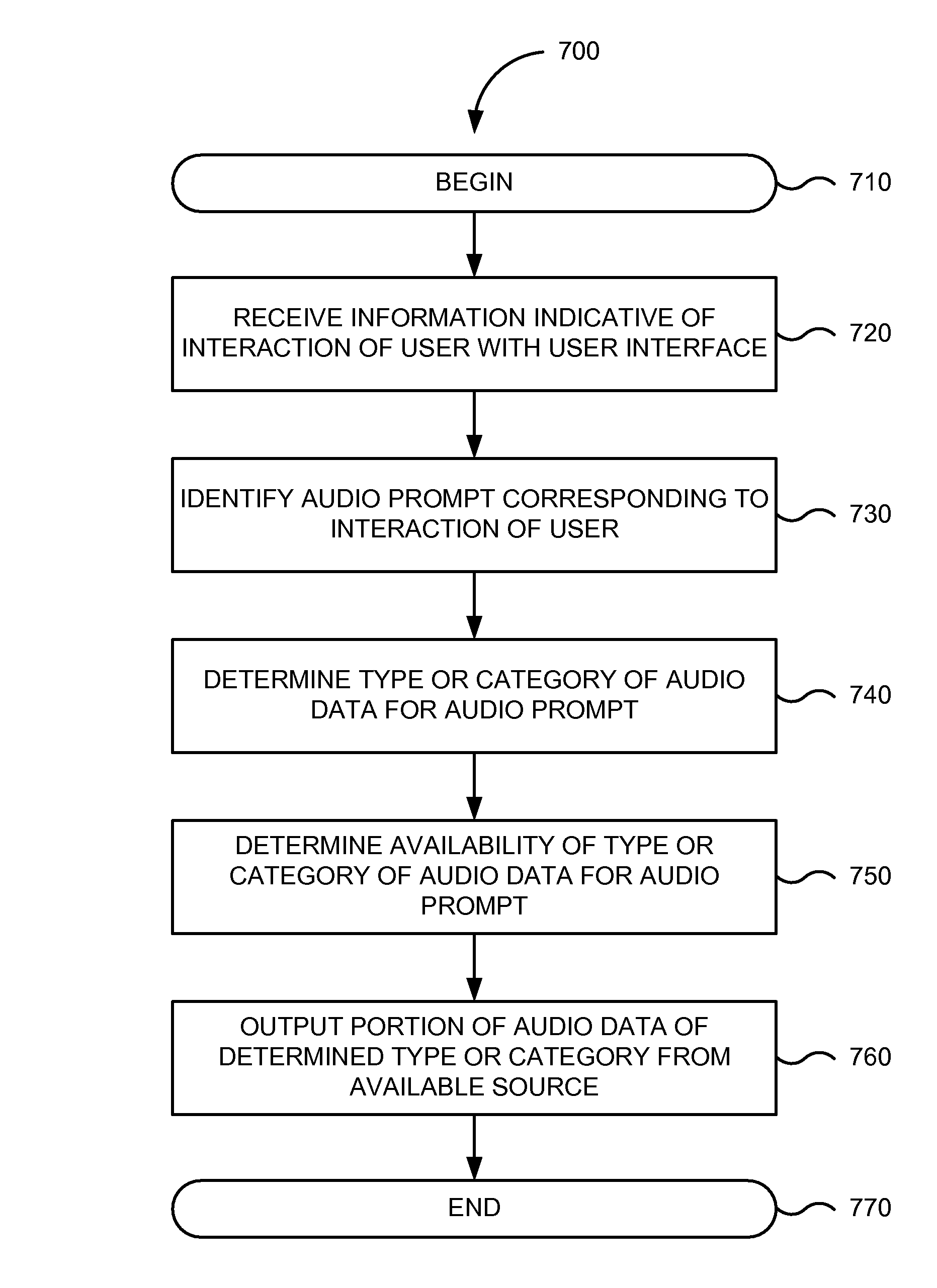

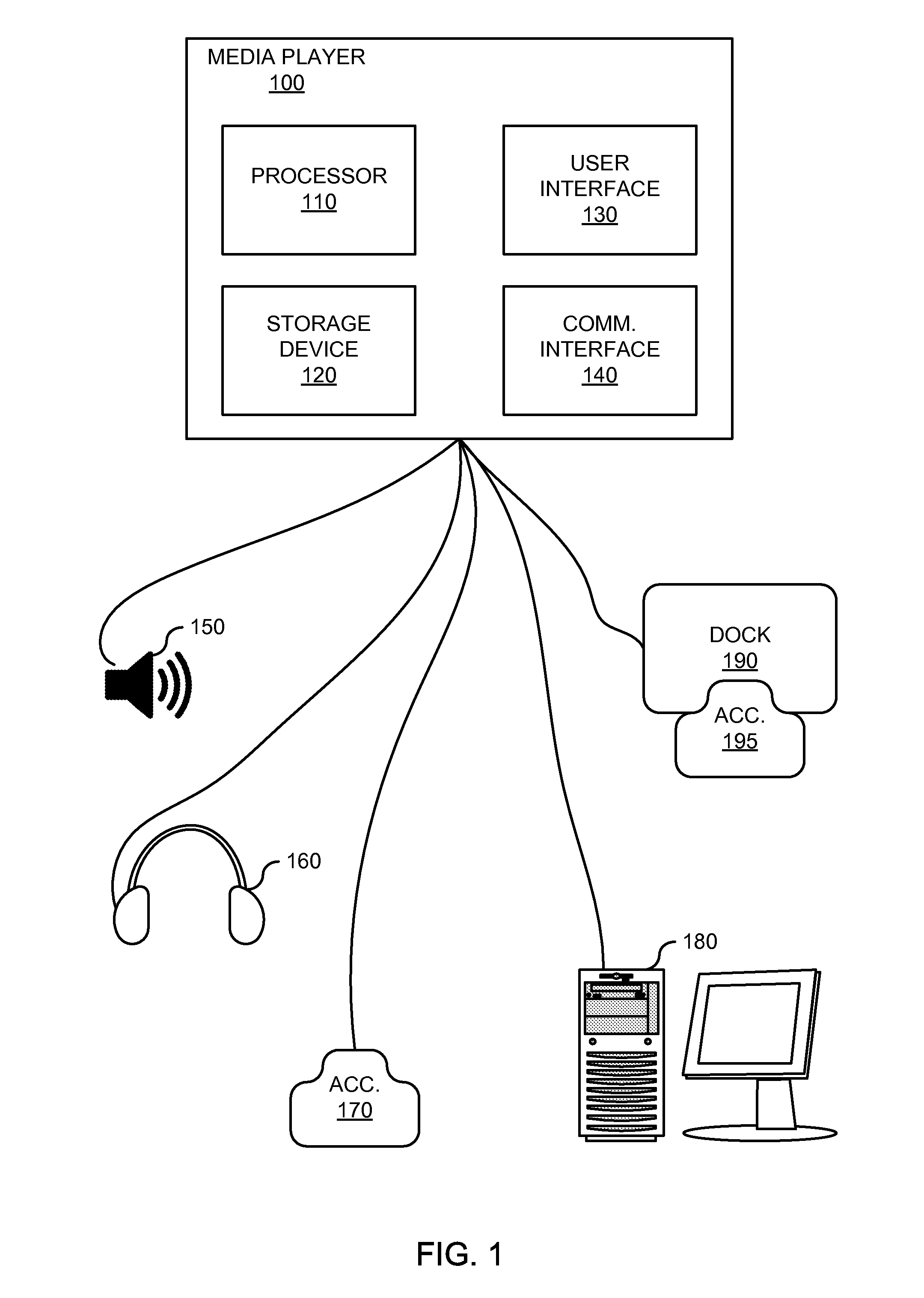

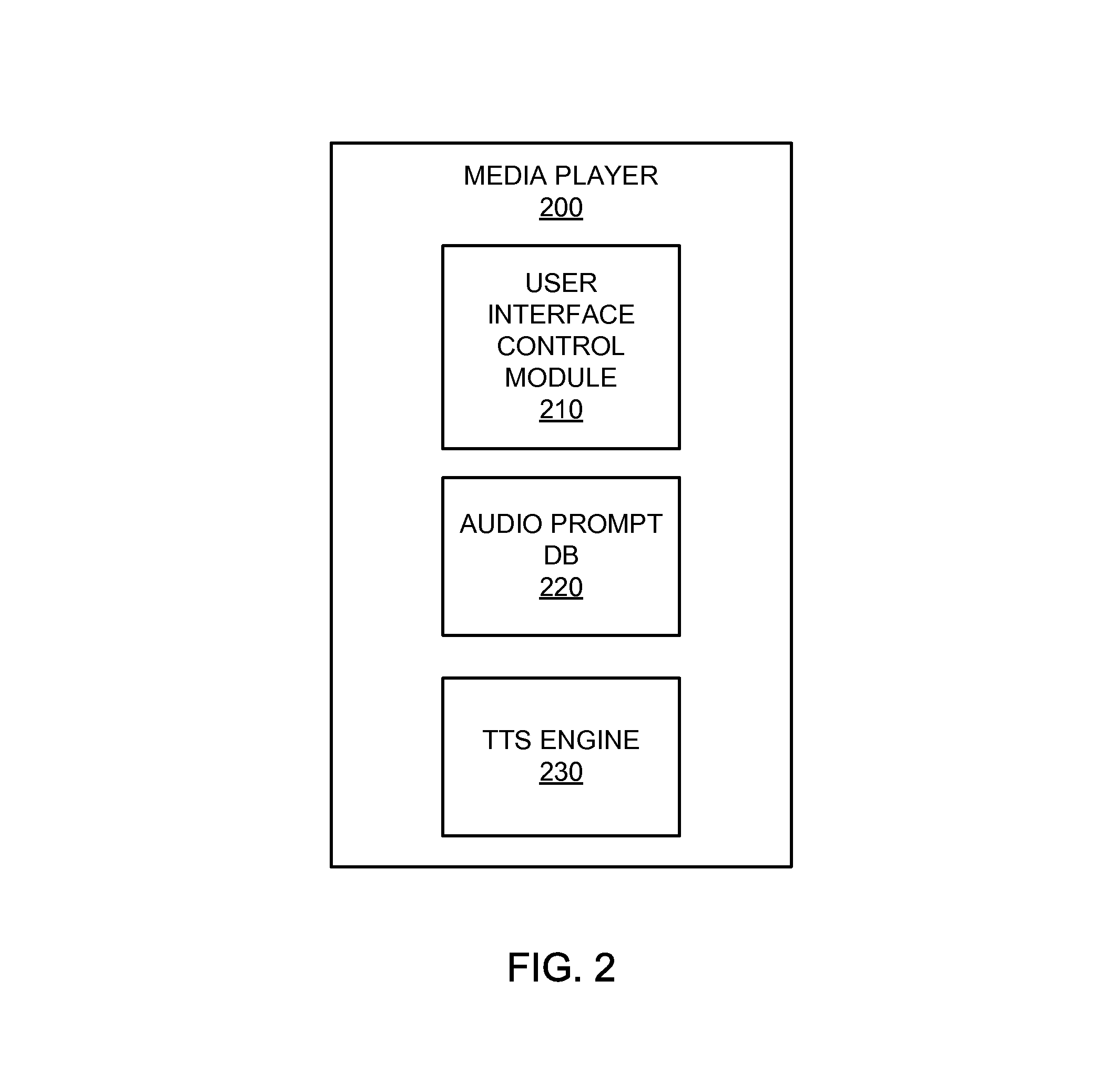

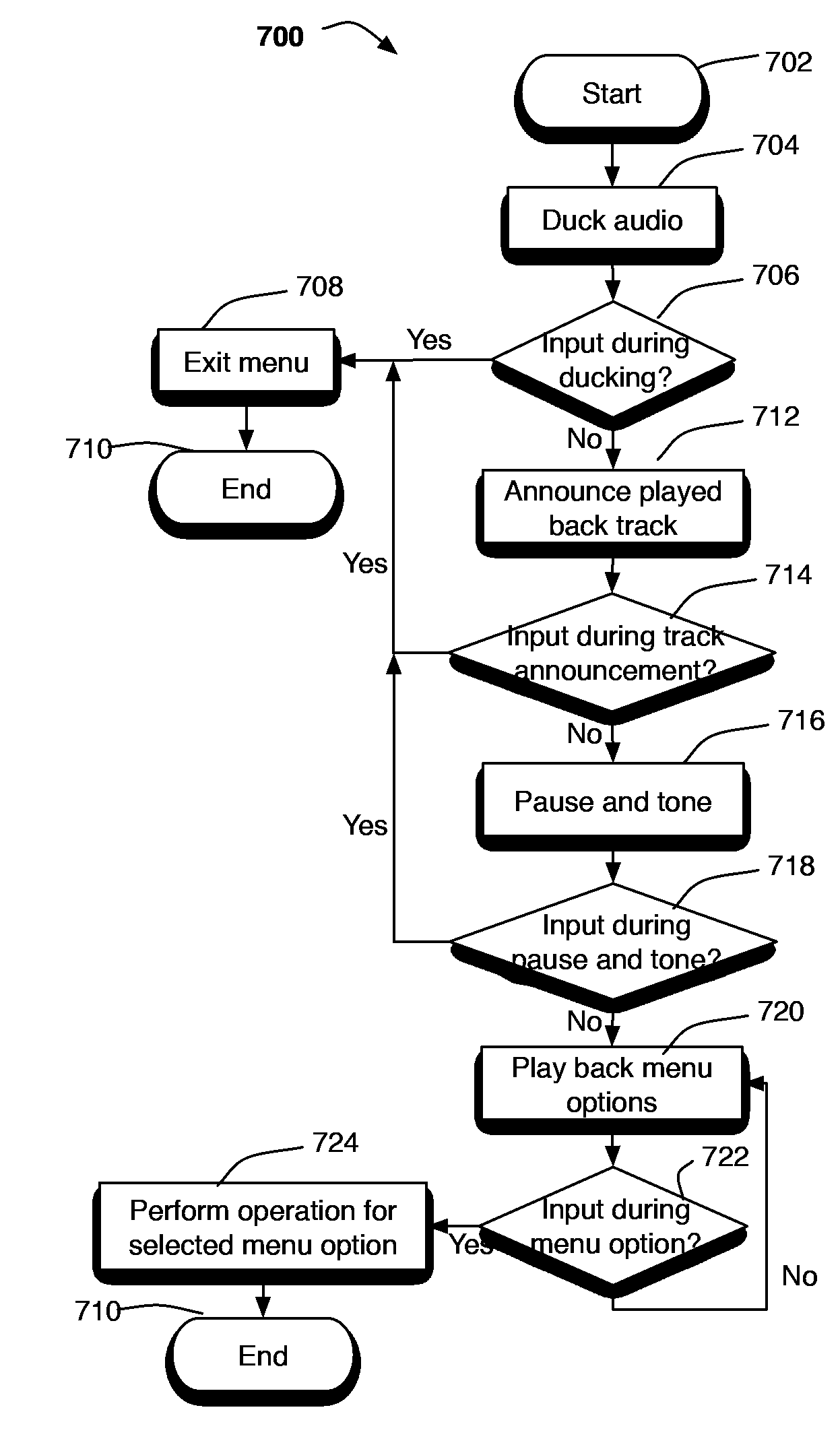

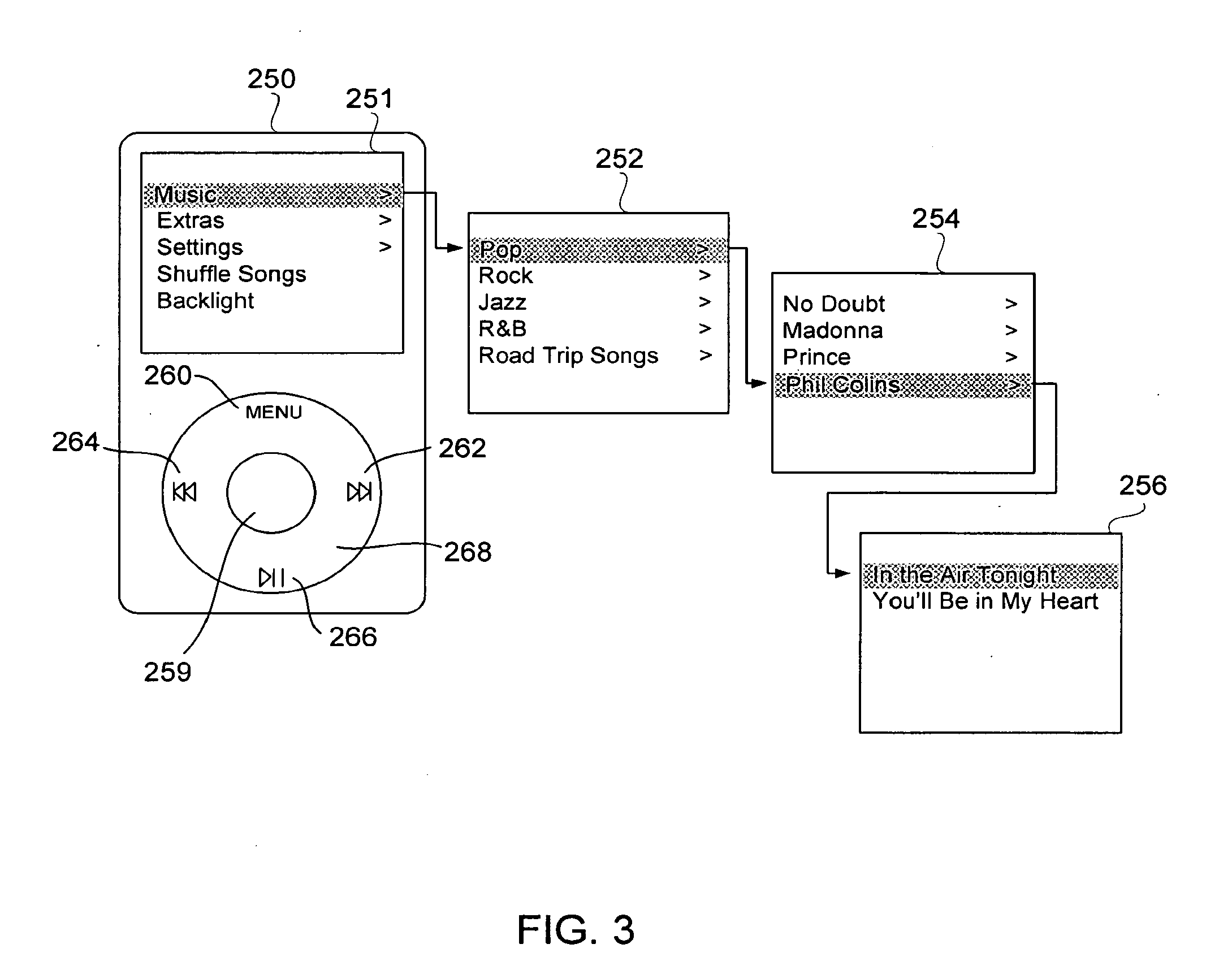

Adaptive audio feedback system and method

Active | US8381107B2 | Tags: Avoid overwhelming a user with repetitive and highly verbose information, Reduce verbosity, Sound input/output, Speech recognition, Natural user interface, Self adaptive

Various techniques for adaptively varying audio feedback data on an electronic device are provided. In one embodiment, an audio user interface implementing certain aspects of the present disclosure may devolve or evolve the verbosity of audio feedback in response to user interface events based at least partially upon the verbosity level of audio feedback provided during previous occurrences of the user interface event. In another embodiment, an audio user interface may be configured to vary the verbosity of audio feedback associated with a navigable list of items based at least partially upon the speed at which a user navigates the list. In a further embodiment, an audio user interface may be configured to vary audio feedback verbosity based upon the contextual importance of a user interface event. Electronic devices implementing the present techniques provide an improved user experience with regard to audio user interfaces.

Owner:APPLE INC
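
A minimal sketch of two of the embodiments above (verbosity devolving with repeated occurrences of an event and with fast list navigation), plus an override for contextually important events. The three levels, the speed threshold, and the class name are assumptions, not Apple's implementation.

```python
from collections import Counter

# Hypothetical verbosity levels, from most to least verbose.
LEVELS = ("full sentence", "short phrase", "tone only")


class AdaptiveAudioFeedback:
    def __init__(self, fast_nav_items_per_s: float = 4.0) -> None:
        self._seen: Counter[str] = Counter()
        self._fast = fast_nav_items_per_s  # assumed threshold for "fast" list navigation

    def level_for(self, event: str, nav_speed: float = 0.0, important: bool = False) -> str:
        # Devolve one level for each earlier occurrence of the same event, capped at the last level.
        idx = min(self._seen[event], len(LEVELS) - 1)
        if nav_speed >= self._fast:
            idx = len(LEVELS) - 1  # fast scrolling through a list: least verbose
        if important:
            idx = 0  # contextually important events always get full feedback
        self._seen[event] += 1
        return LEVELS[idx]


feedback = AdaptiveAudioFeedback()
for _ in range(3):
    print(feedback.level_for("new_mail"))  # full sentence, then short phrase, then tone only
```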

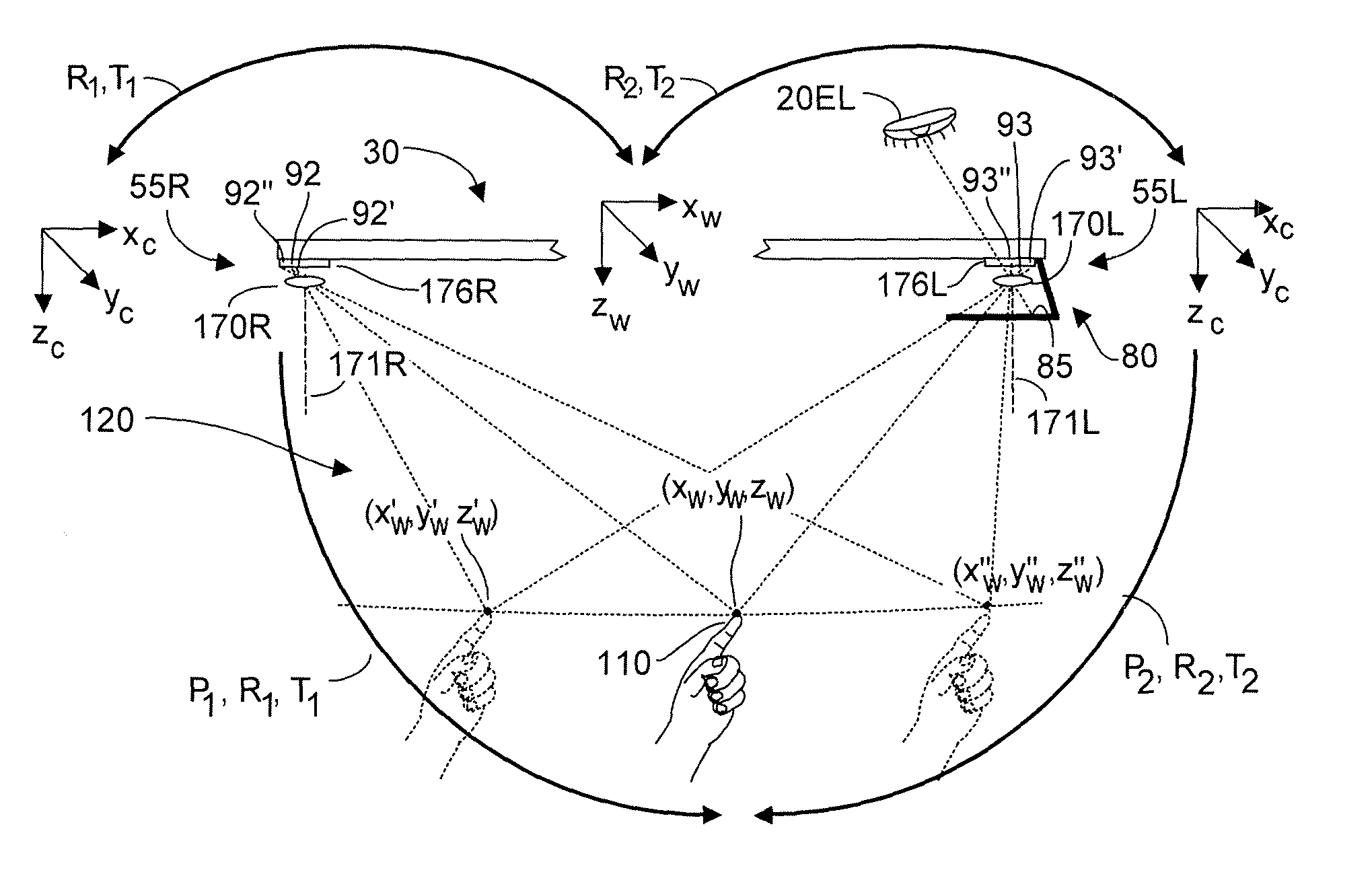

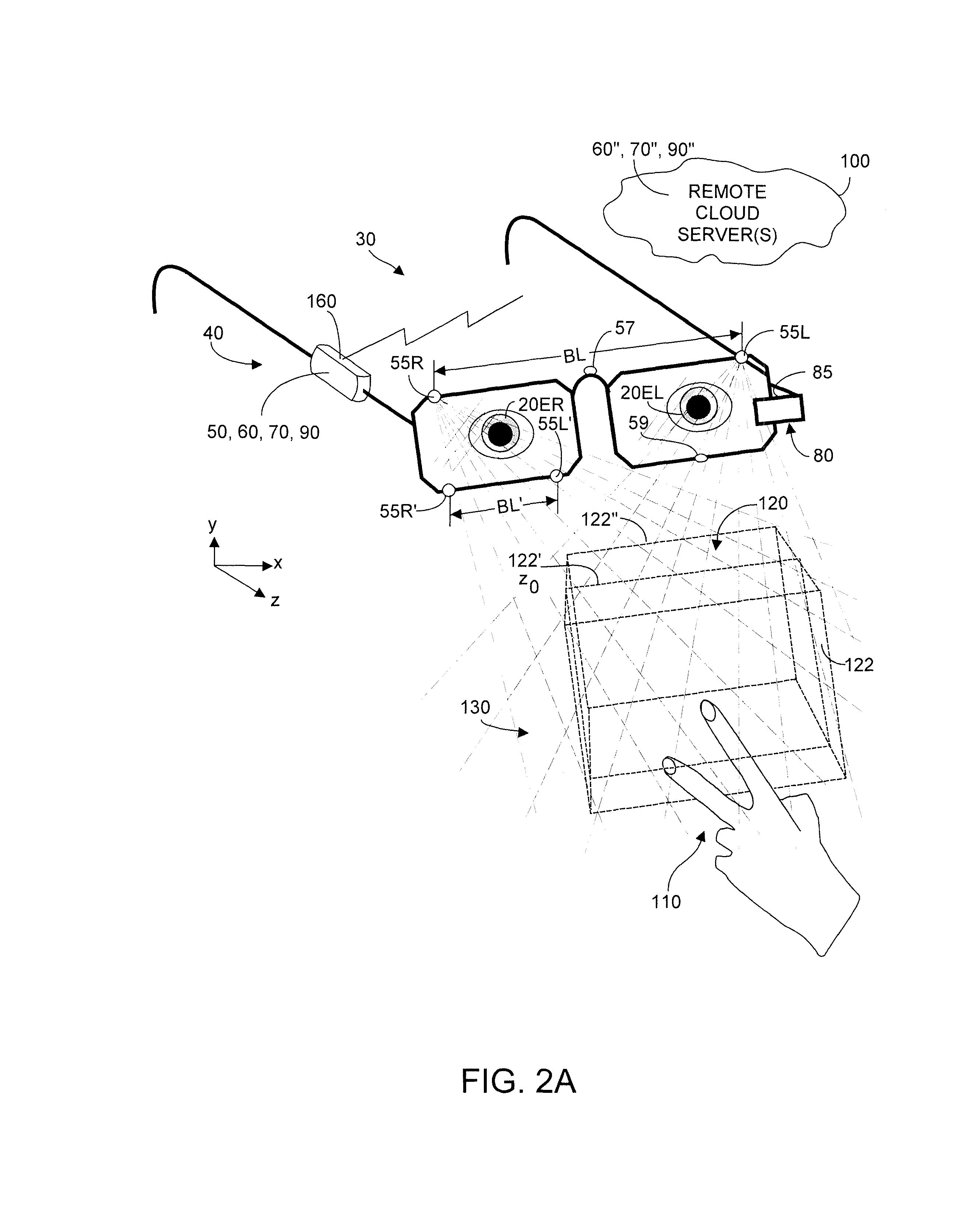

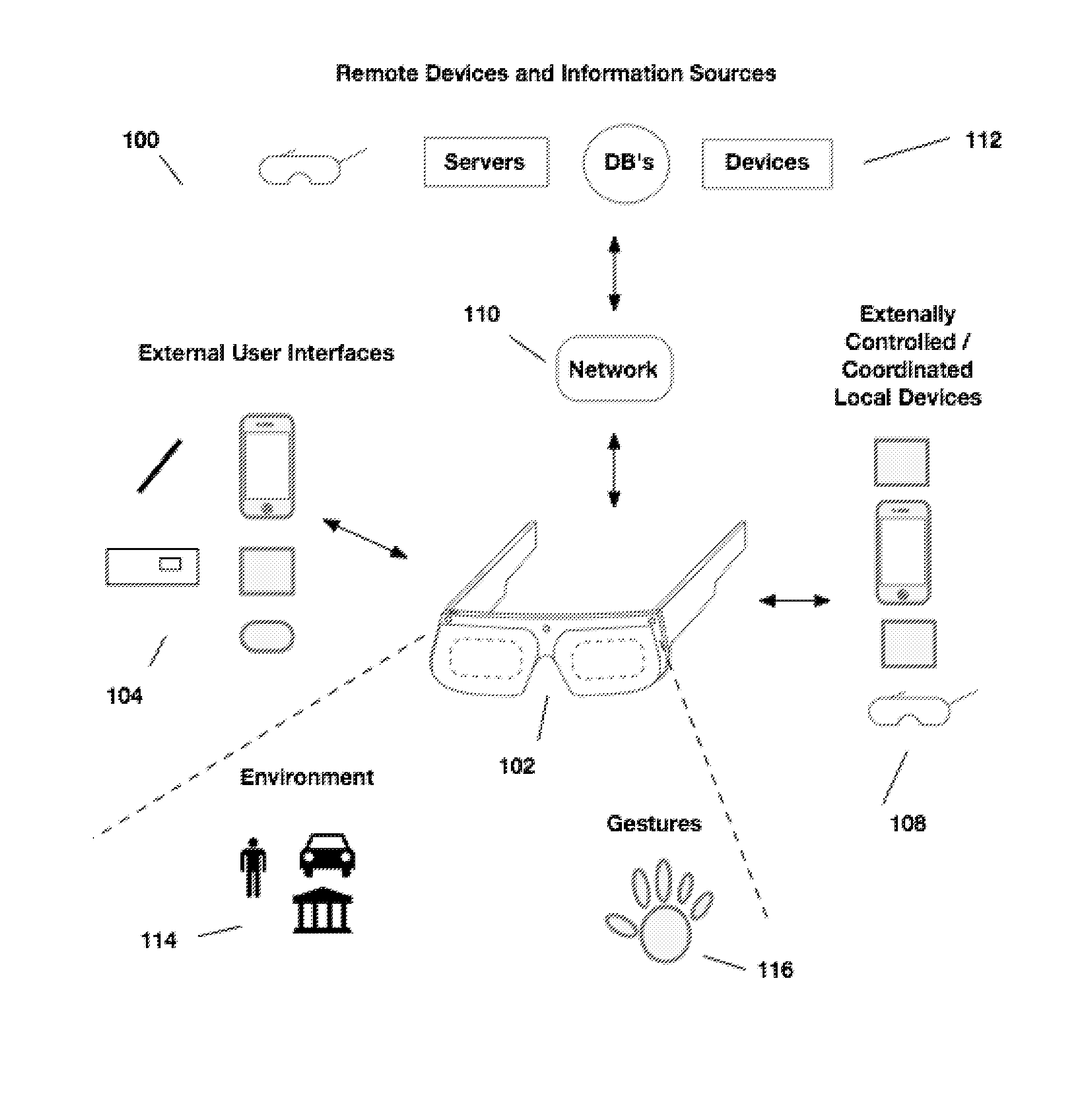

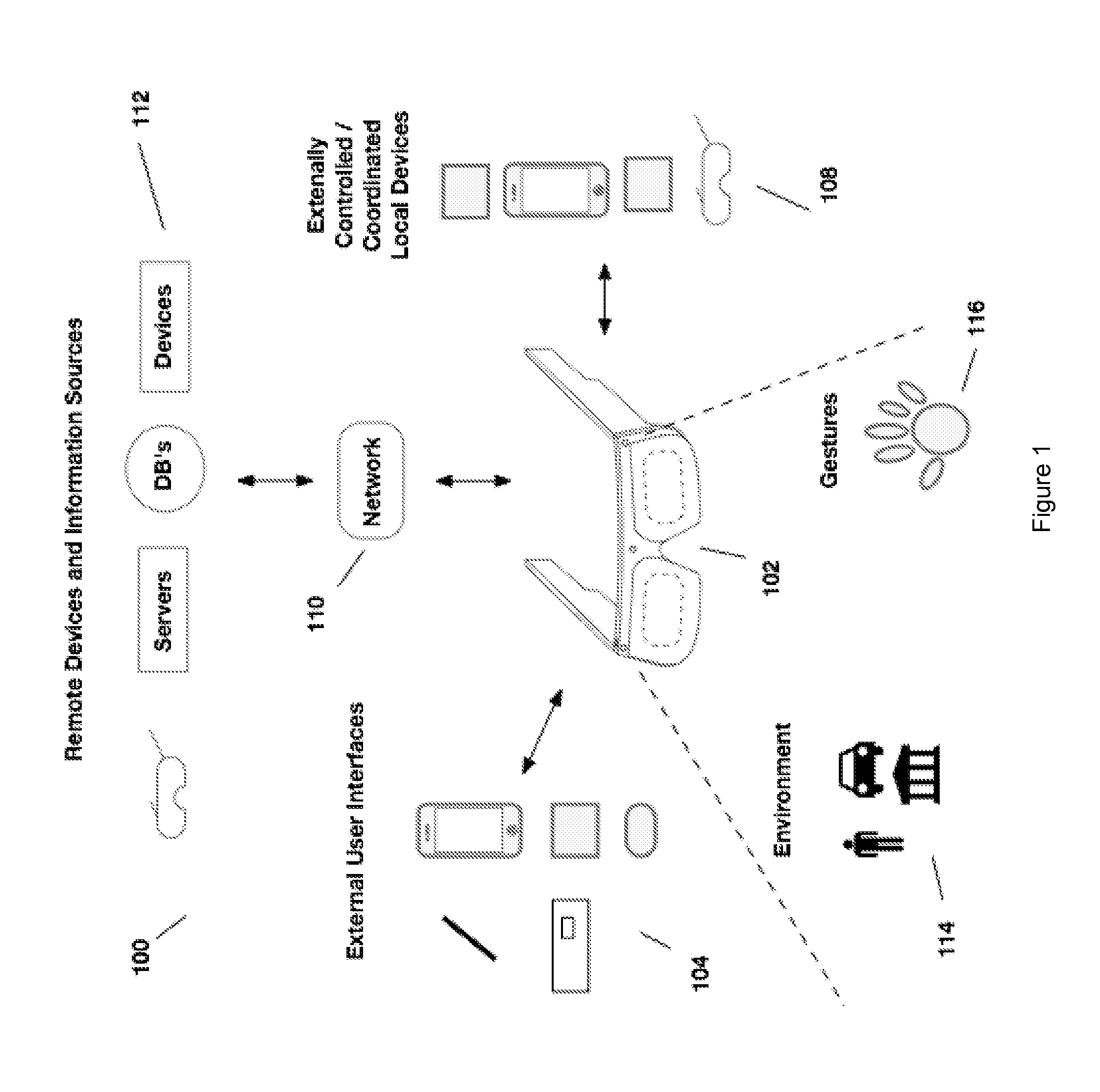

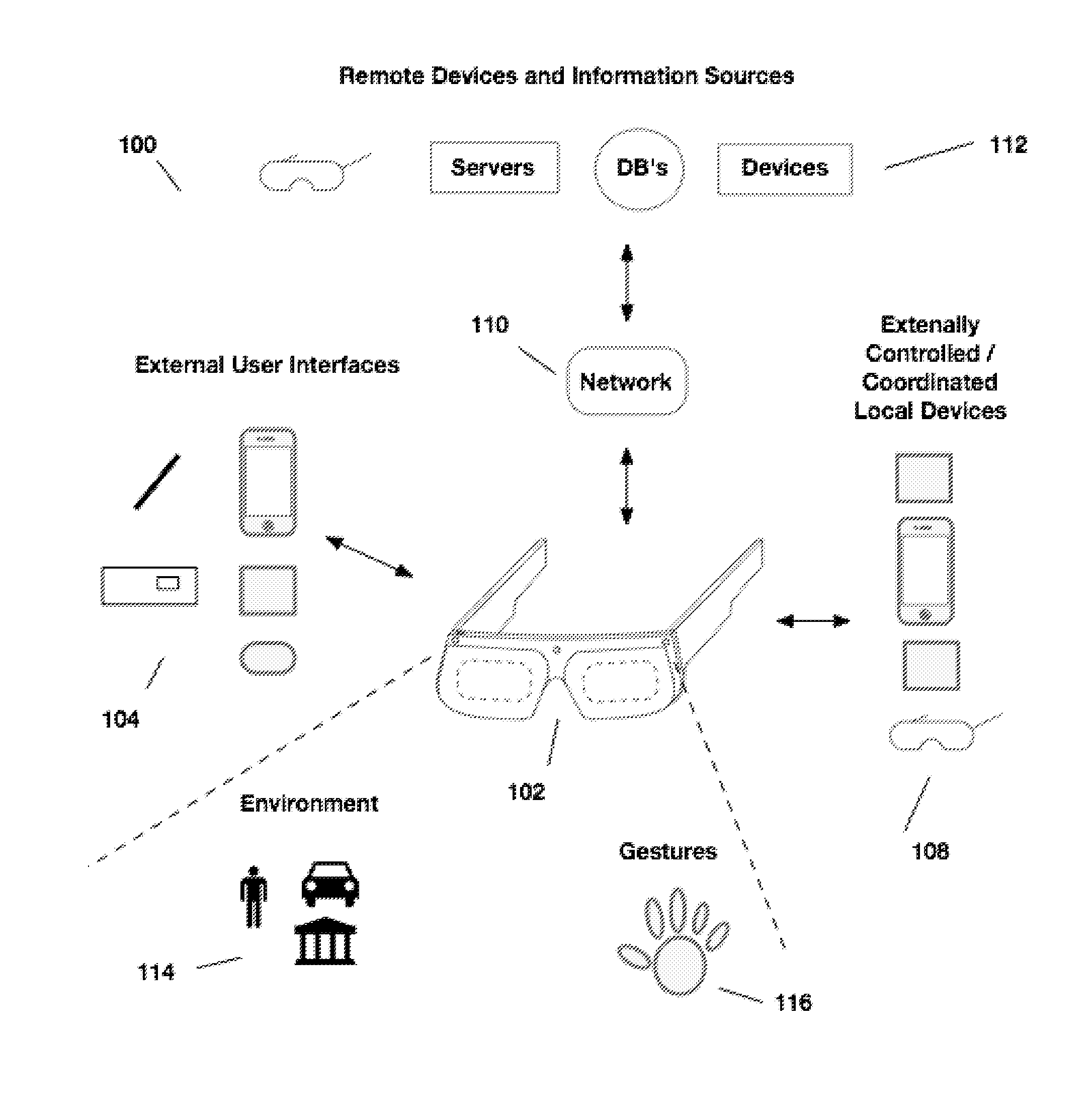

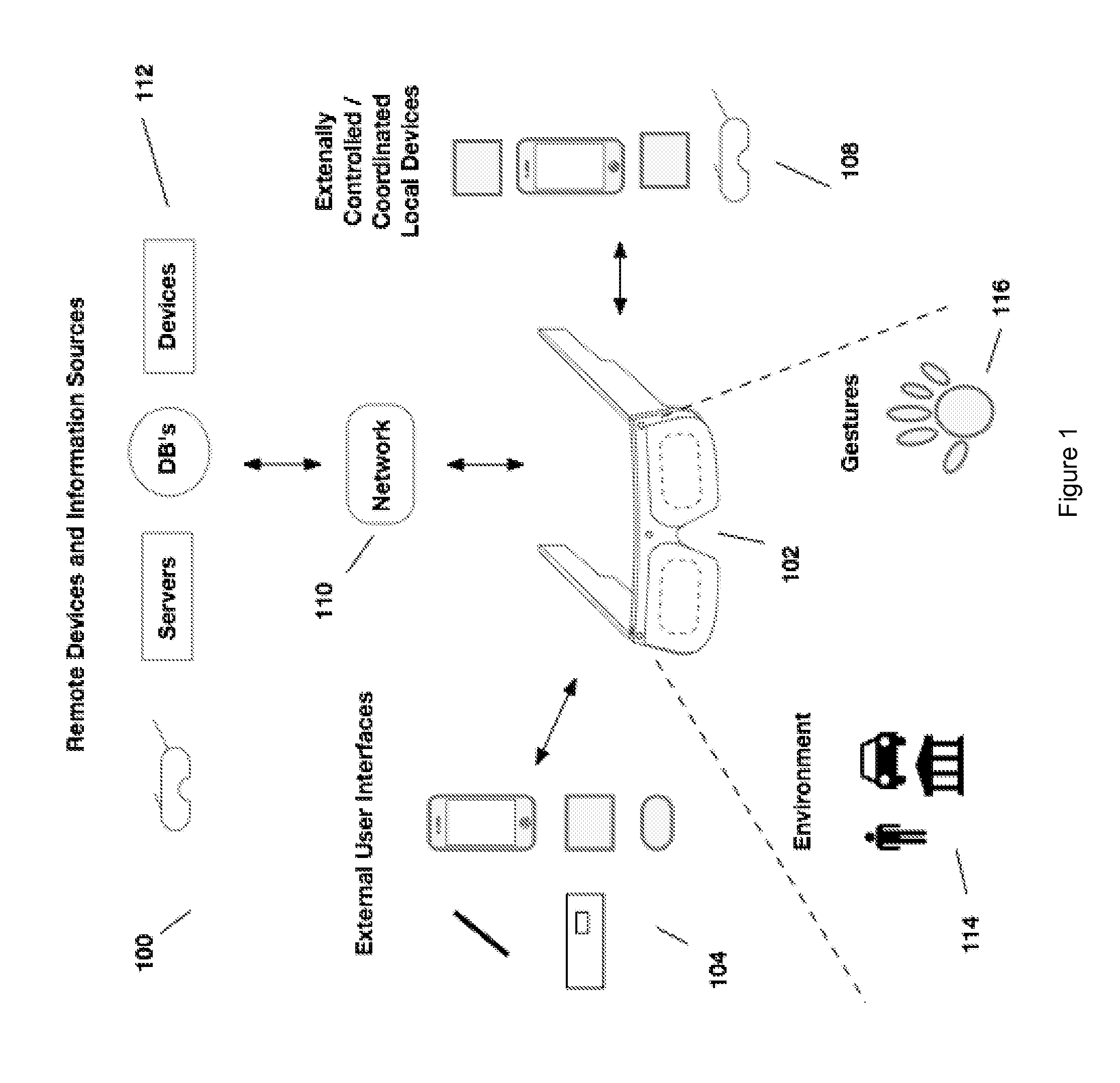

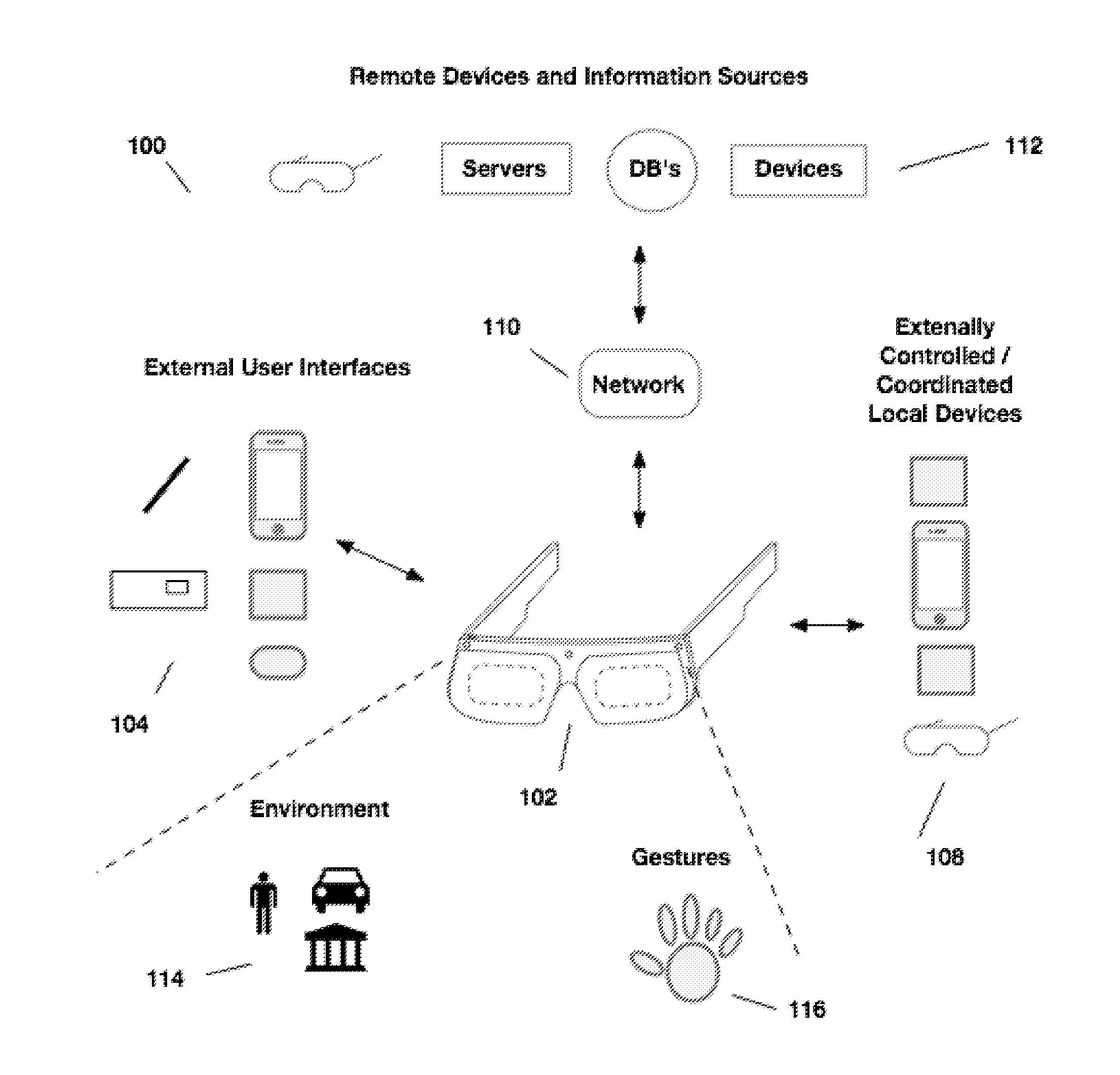

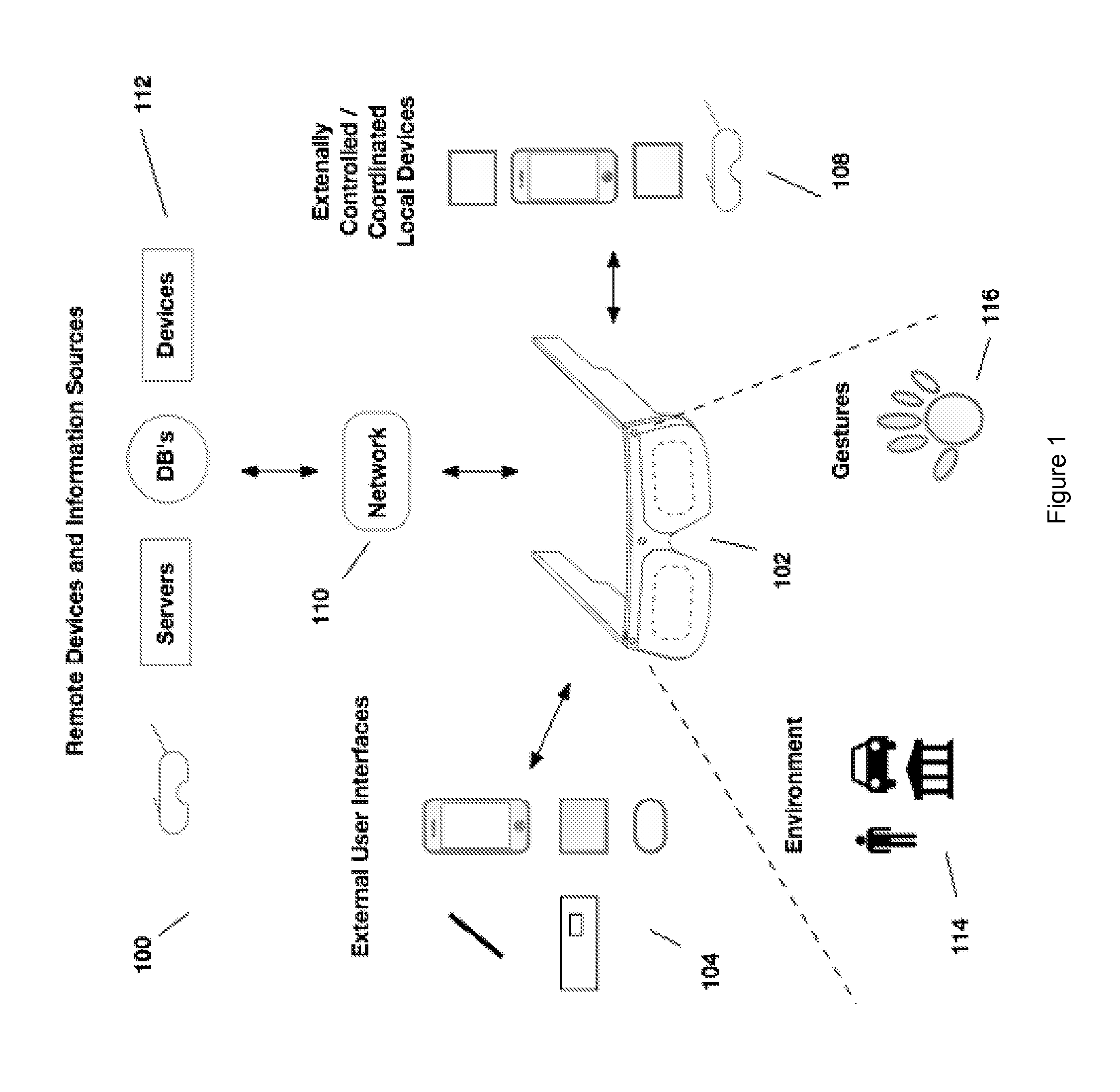

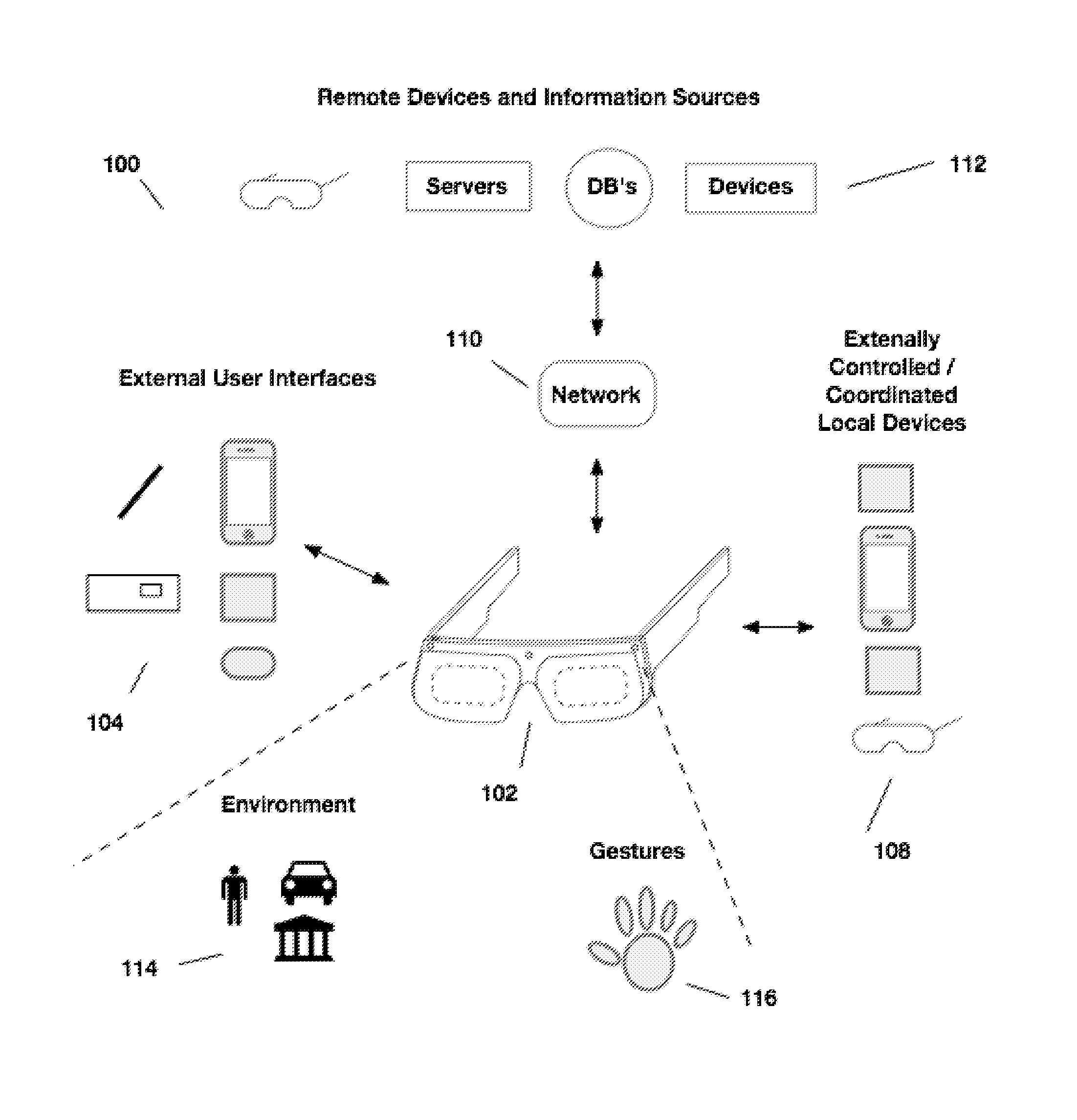

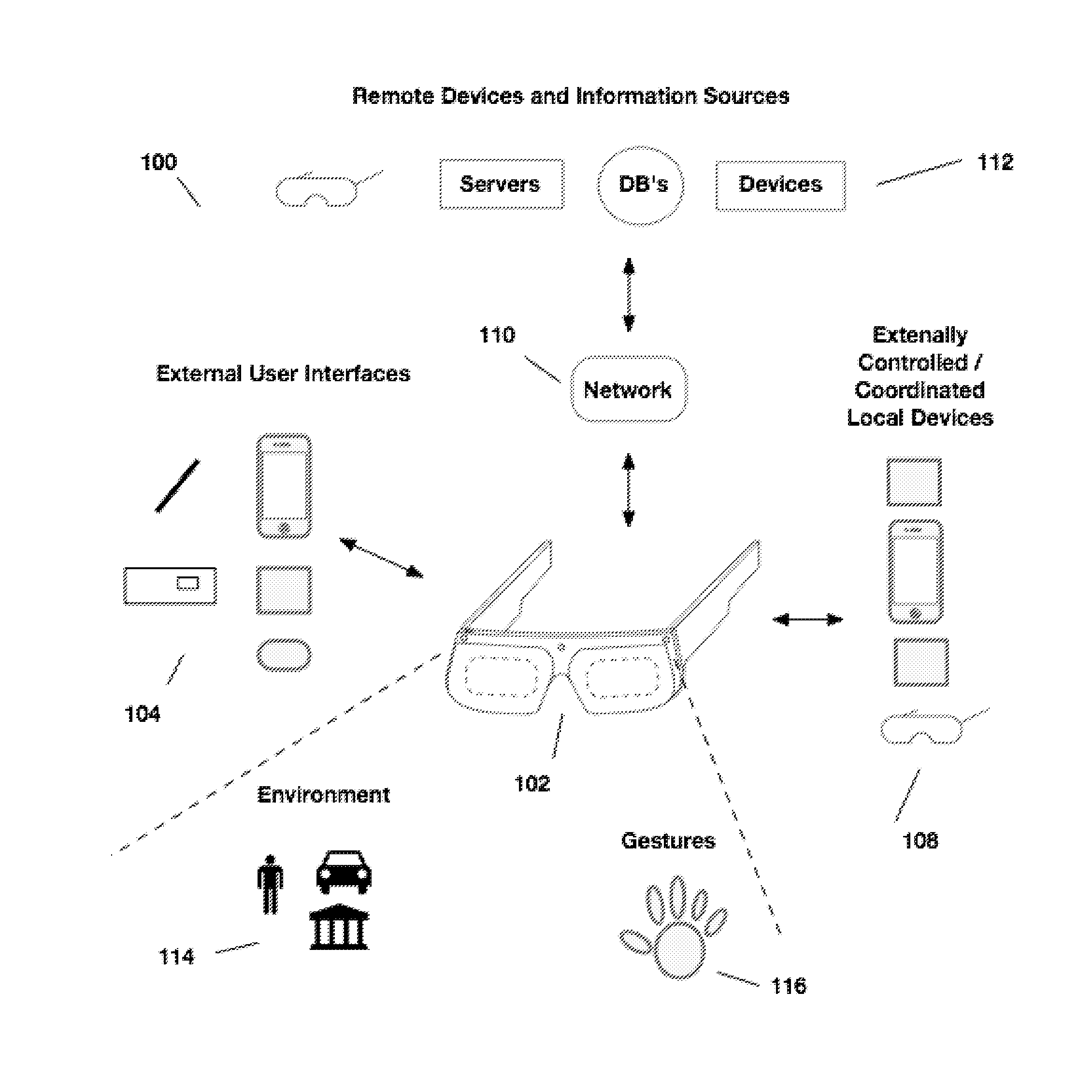

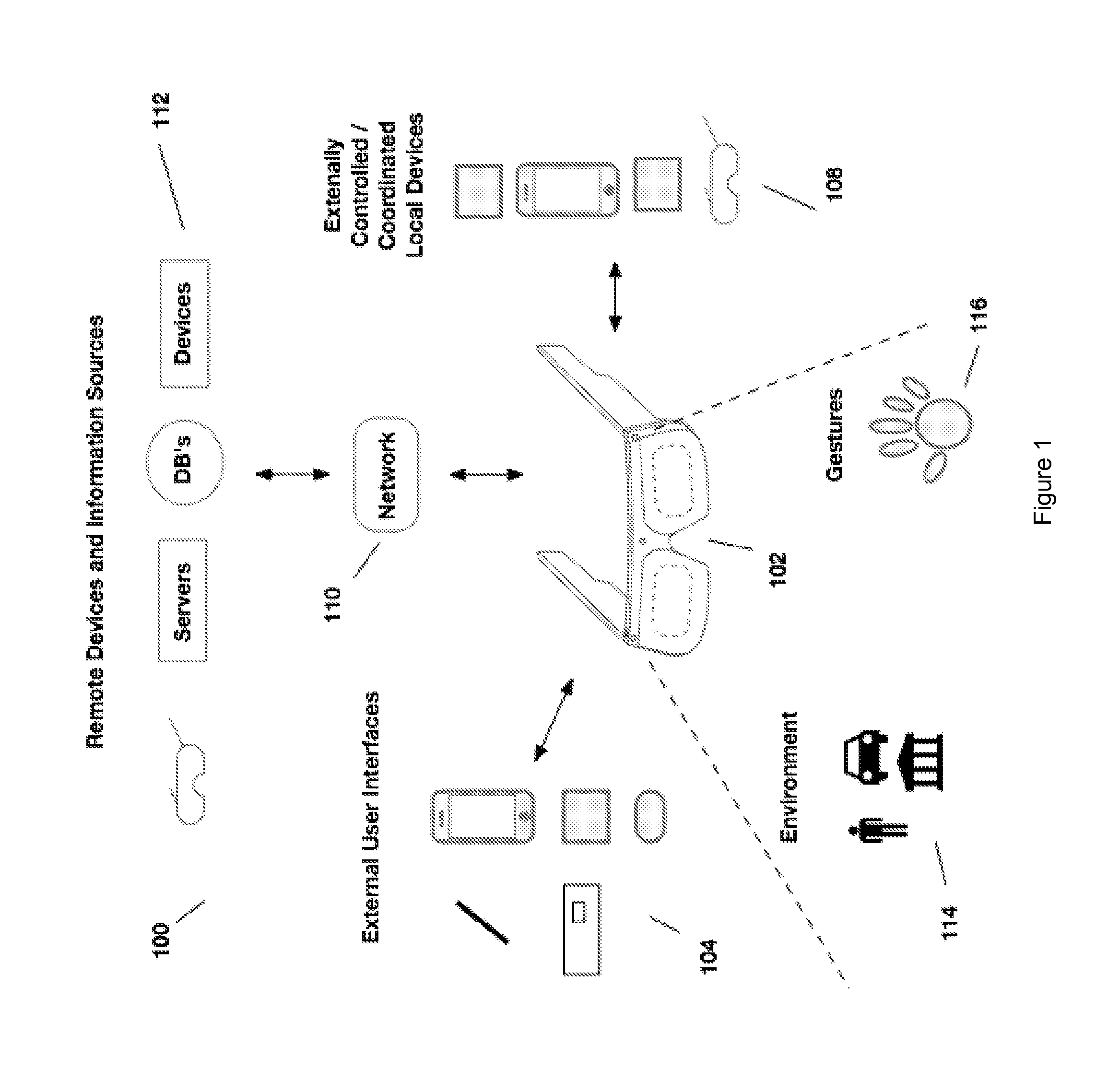

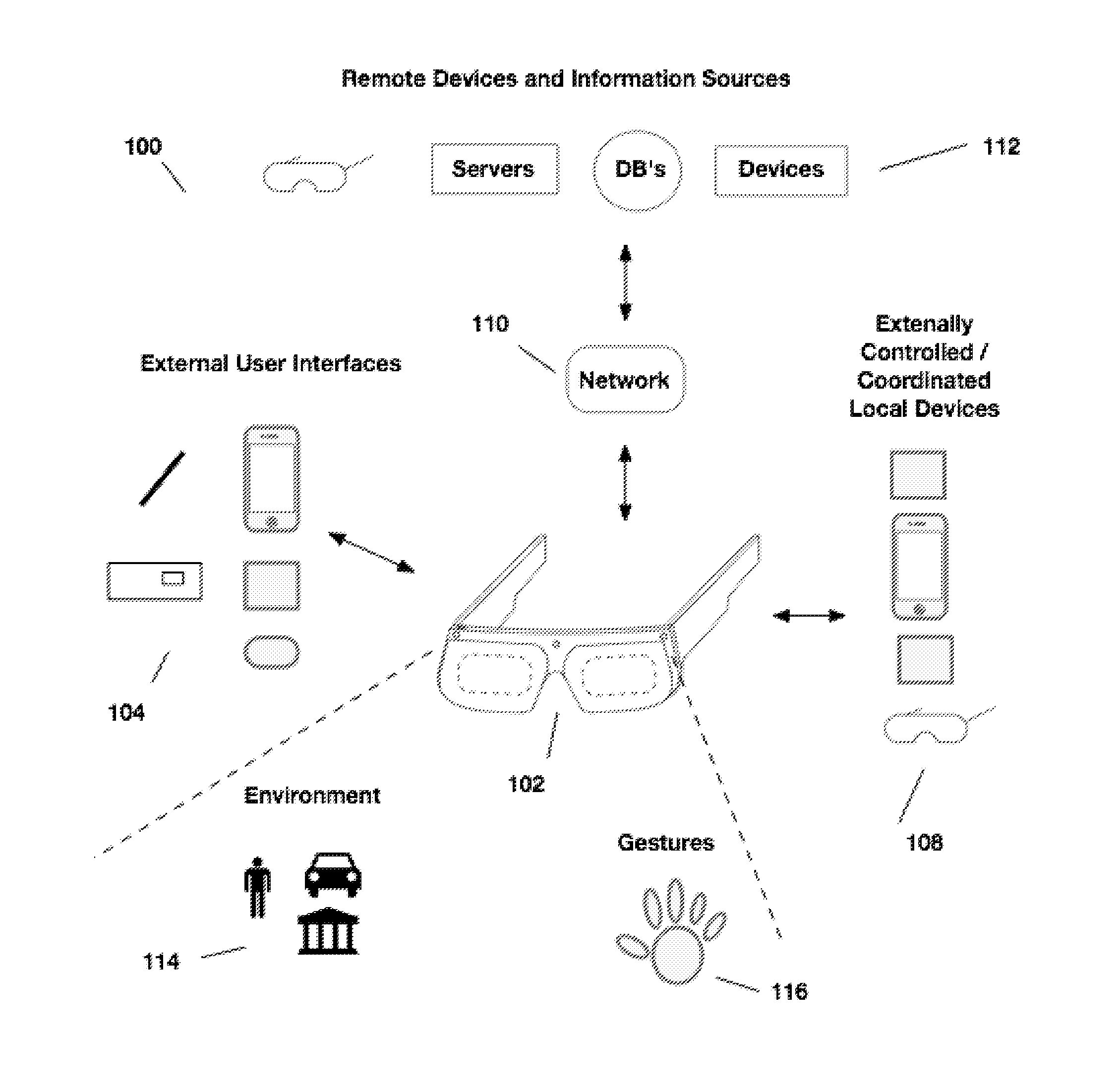

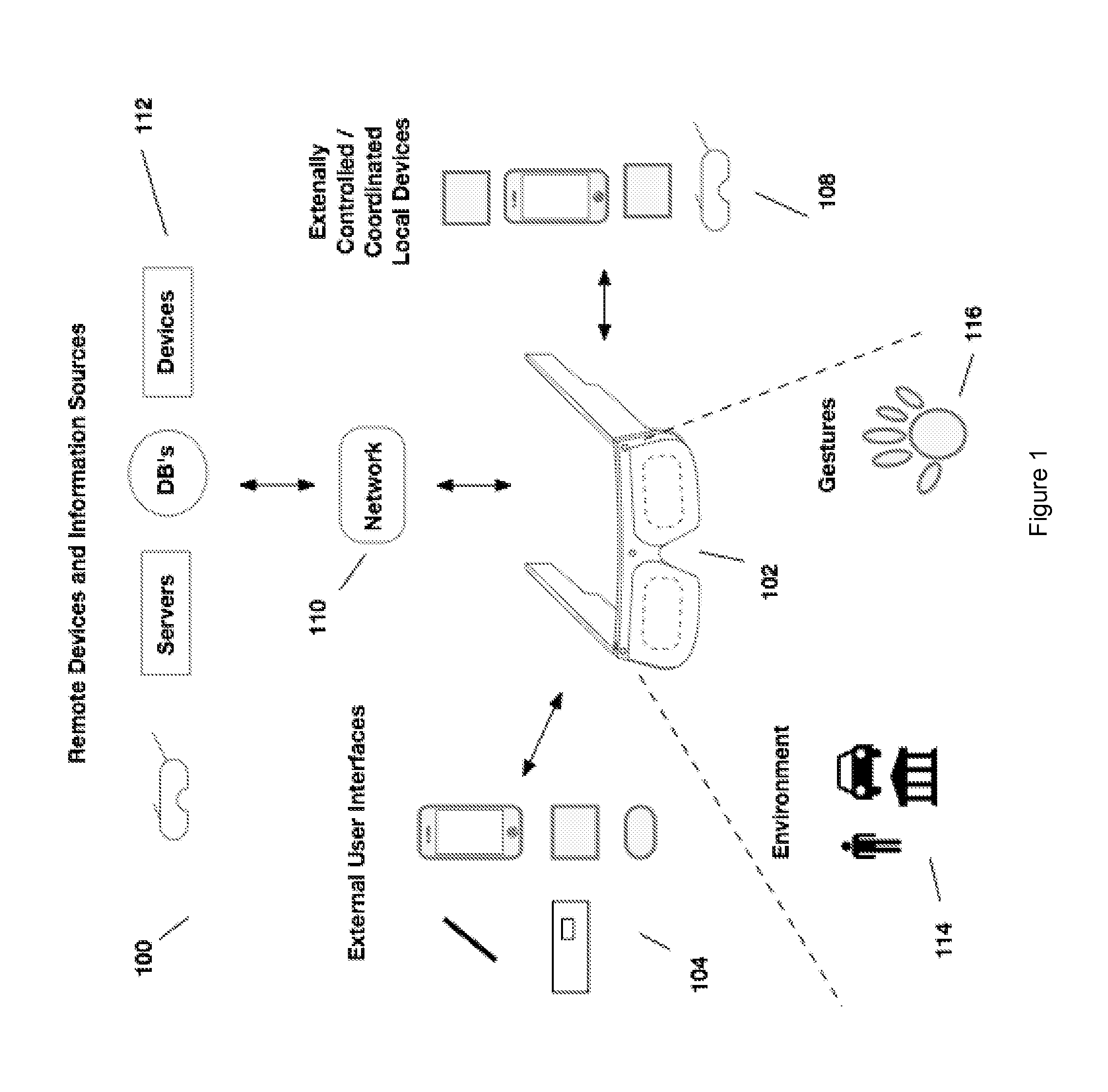

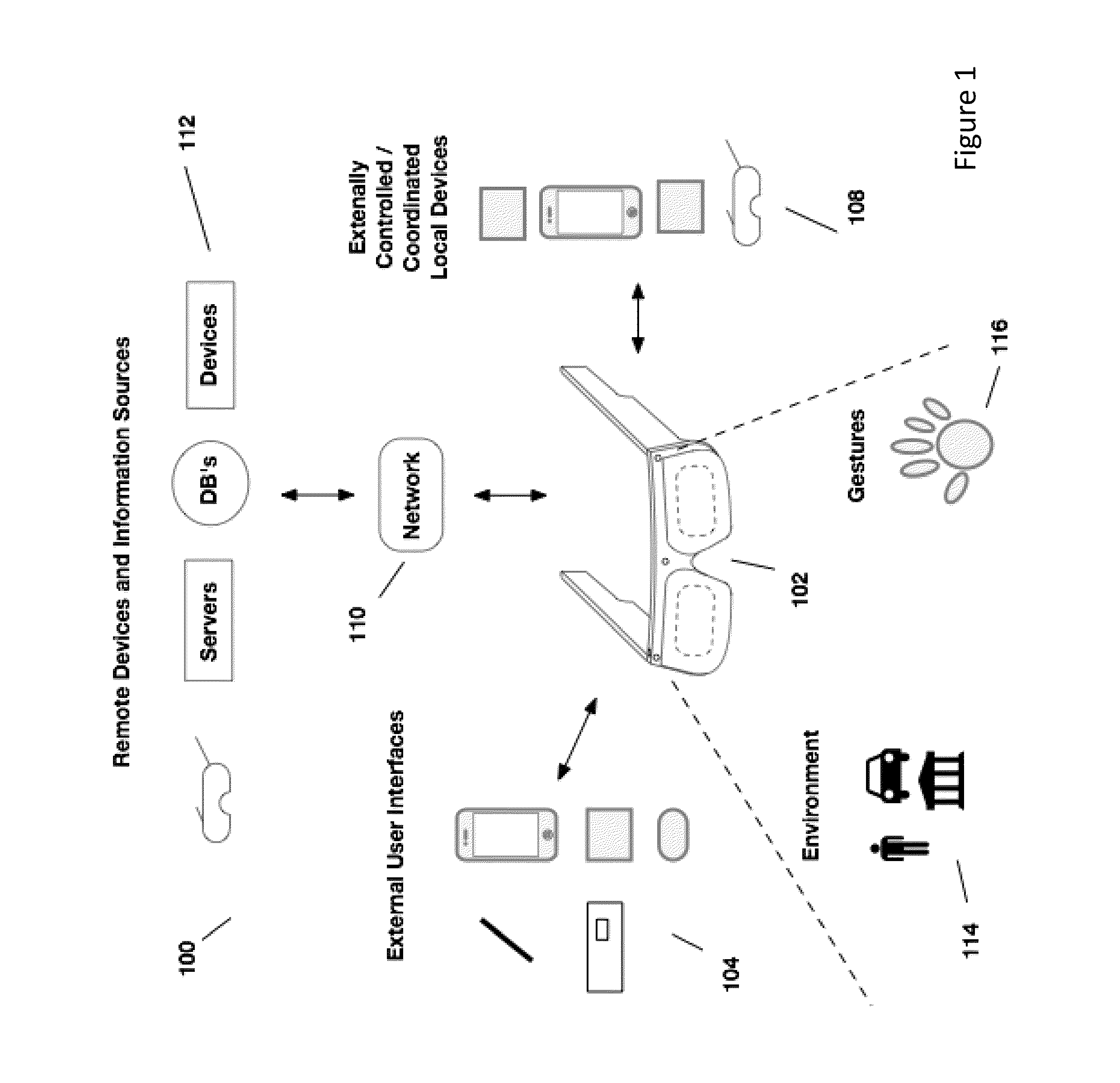

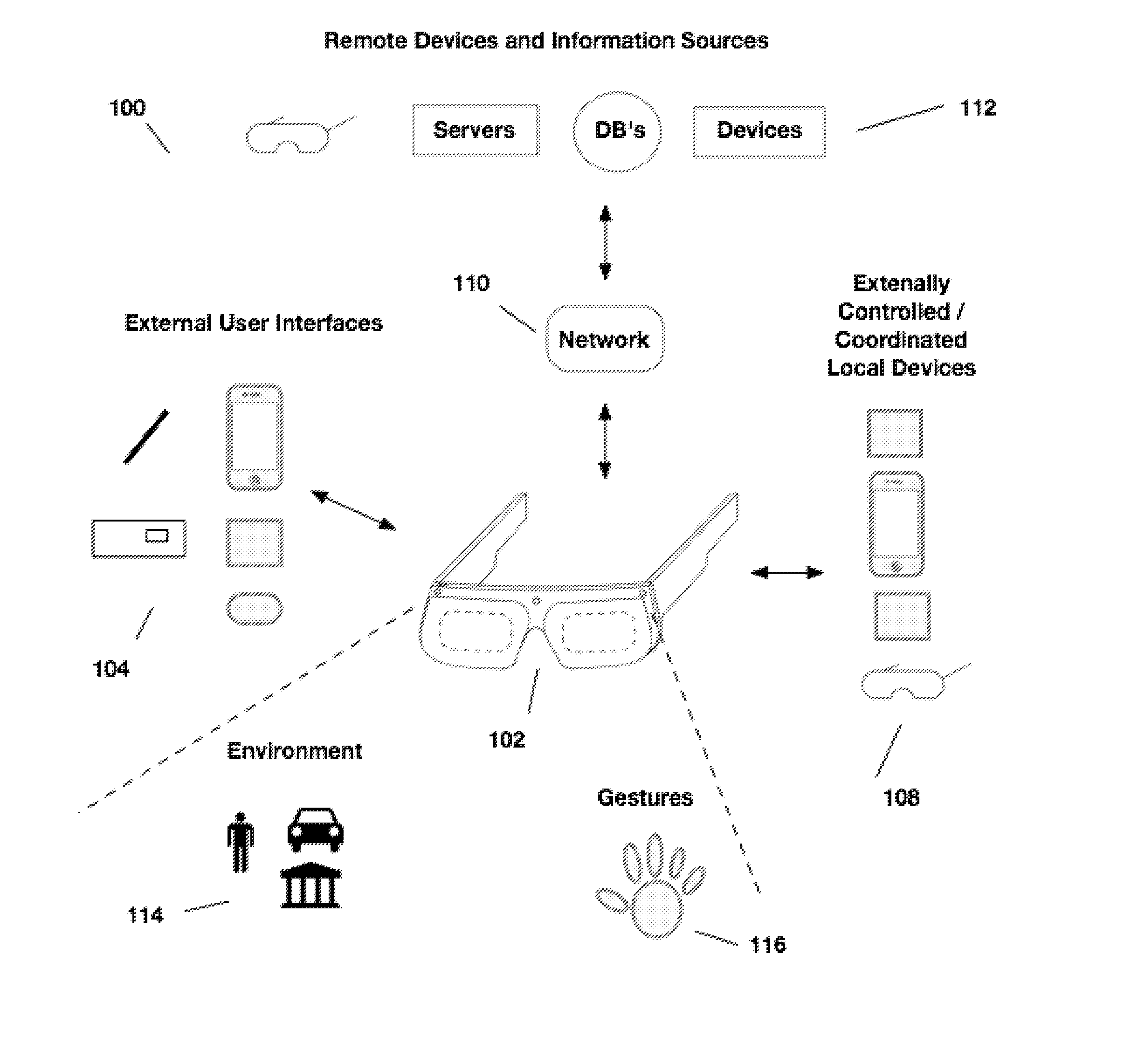

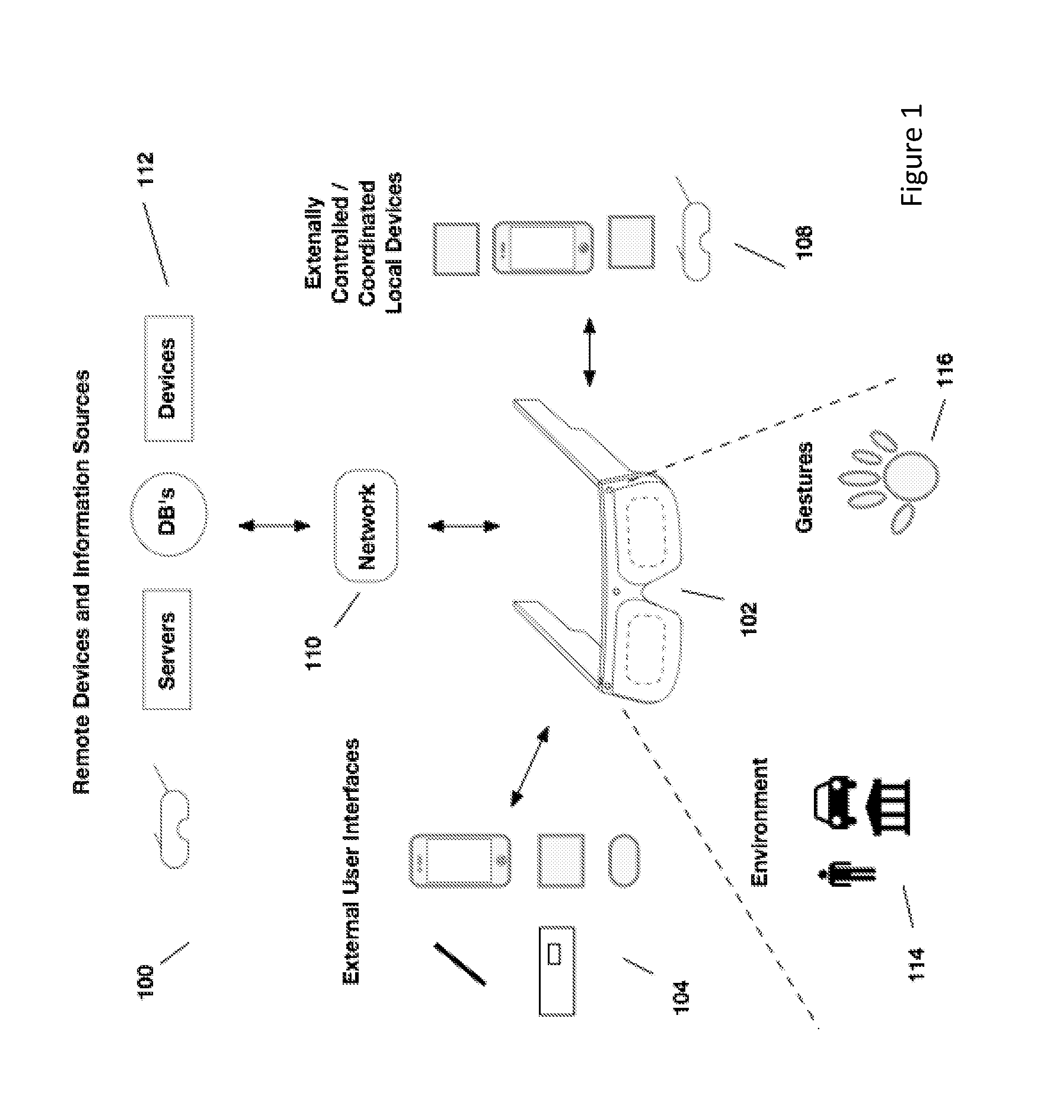

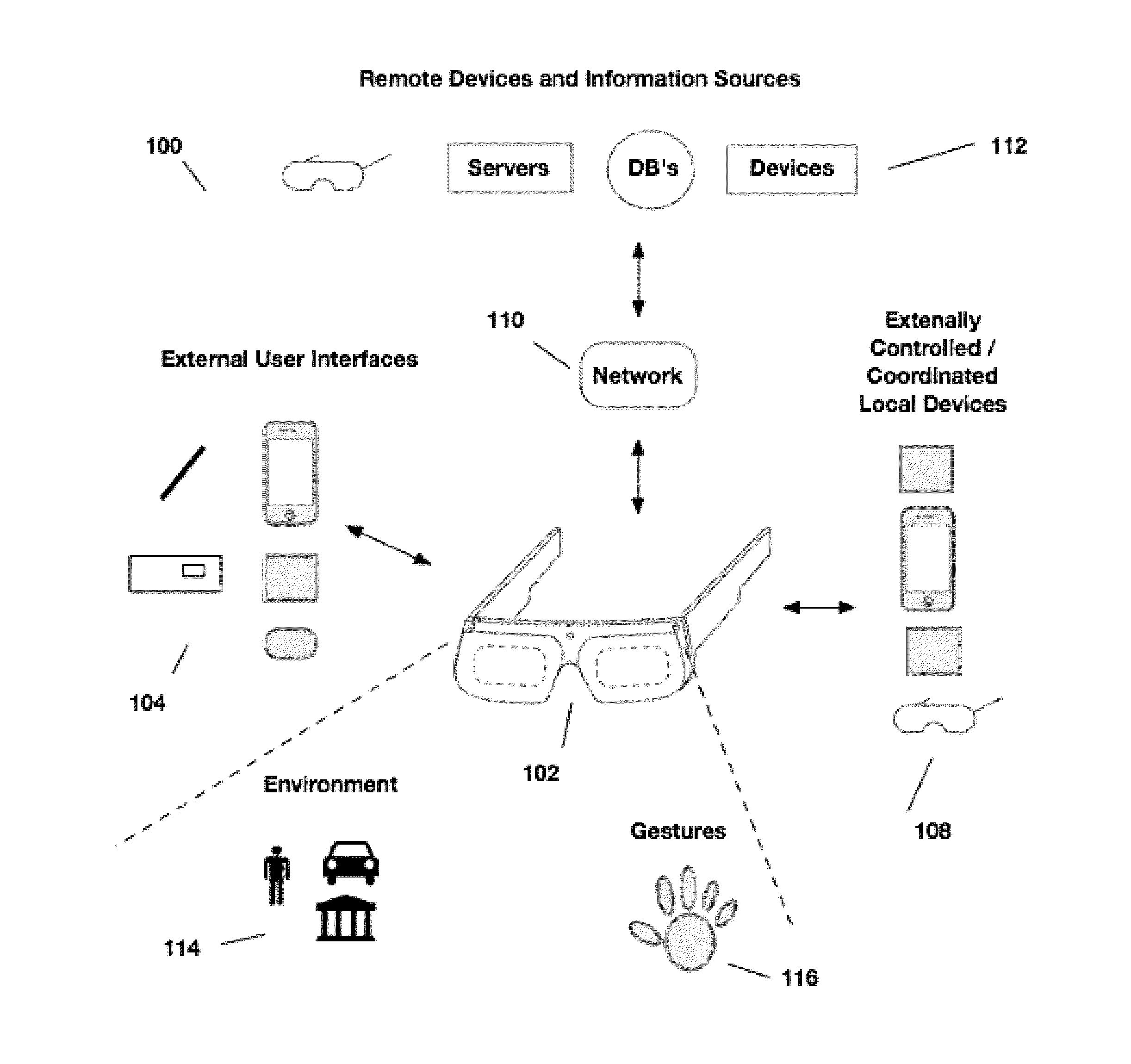

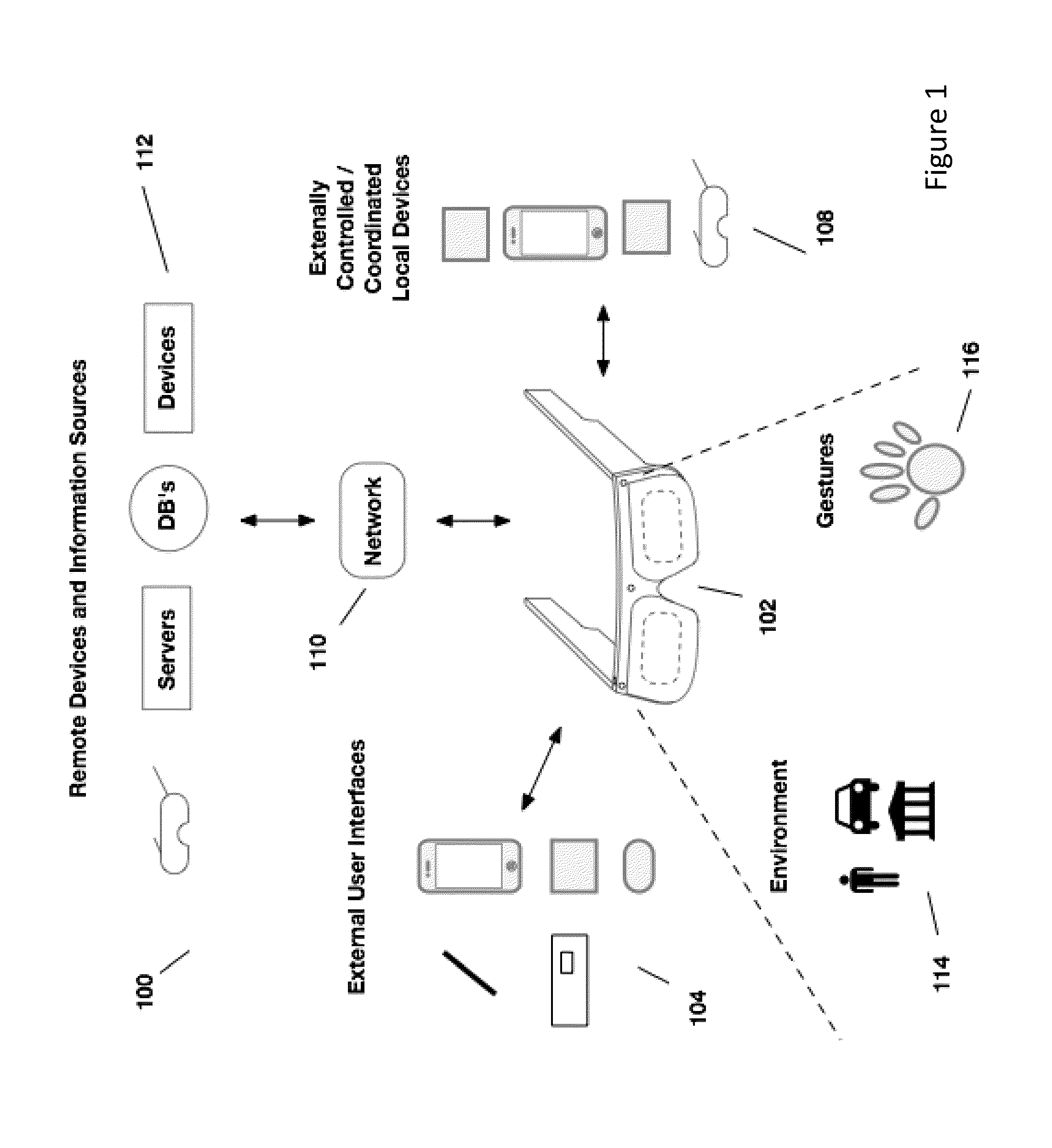

Method and system enabling natural user interface gestures with user wearable glasses

Active | US8836768B1 | Tags: Reduce power consumption, Input/output for user-computer interaction, Cathode-ray tube indicators, Uses eyeglasses, Eyewear

User-wearable eyeglasses include a pair of two-dimensional cameras that optically acquire information about user gestures made with an unadorned user object in an interaction zone while the user views displayed imagery with which the user can interact. Glasses systems intelligently signal-process and map the acquired optical information to rapidly ascertain a sparse set of (x,y,z) locations adequate to identify user gestures. The displayed imagery can be created by glasses systems and presented with a virtual on-glasses display, or can be created and/or viewed off-glasses. In some embodiments the user can see local views directly, augmented with imagery showing internet-provided tags that identify and/or provide information about viewed objects. On-glasses systems can communicate wirelessly with cloud servers and with off-glasses systems that the user can carry in a pocket or purse.

Owner:KAYA DYNAMICS LLC
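
The abstract does not say how (x,y,z) locations are recovered from the two 2D cameras. A common textbook approach for a rectified, calibrated stereo pair is triangulation from disparity, Z = f·B/d, where f is the focal length in pixels, B the camera baseline, and d the horizontal disparity. The sketch shows that standard technique under those assumptions; the patented system may work differently, and `triangulate` is a hypothetical name.

```python
def triangulate(x_left: float, x_right: float, y: float,
                focal_px: float, baseline_m: float,
                cx: float, cy: float) -> tuple[float, float, float]:
    """Recover (X, Y, Z) in metres from one point matched in a rectified stereo pair.

    Assumes both cameras share focal length `focal_px` (pixels) and principal point
    (cx, cy), and are separated horizontally by `baseline_m` metres.
    """
    disparity = x_left - x_right  # pixels; larger disparity means a closer point
    if disparity <= 0:
        raise ValueError("point must have positive disparity")
    z = focal_px * baseline_m / disparity
    # Back-project the left-image pixel through the pinhole model at depth z.
    x = (x_left - cx) * z / focal_px
    y_m = (y - cy) * z / focal_px
    return x, y_m, z


# A fingertip seen 42 px apart by cameras 6 cm apart lies roughly 1 m away.
print(triangulate(340.0, 298.0, 240.0, focal_px=700.0, baseline_m=0.06, cx=320.0, cy=240.0))
```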

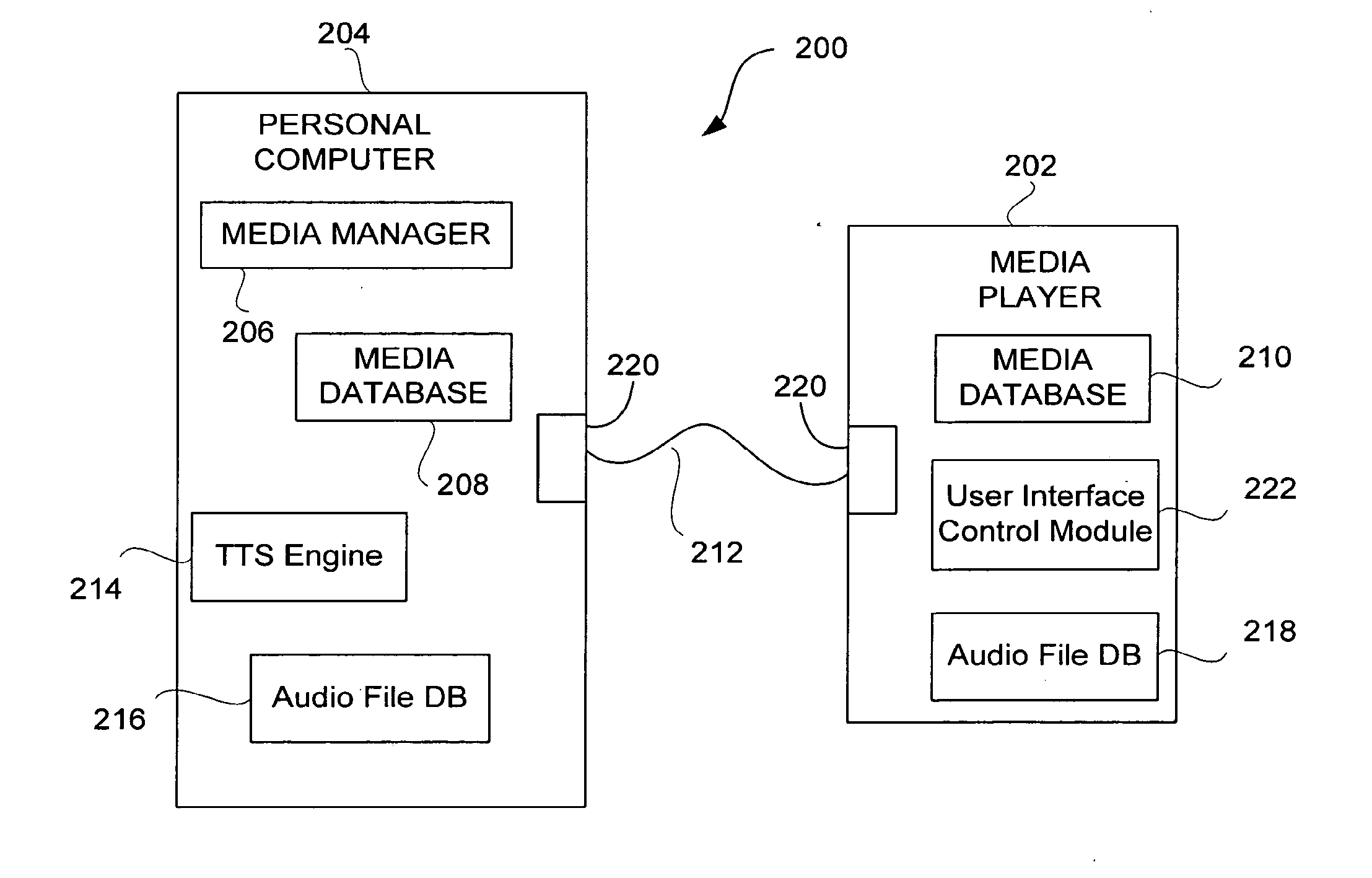

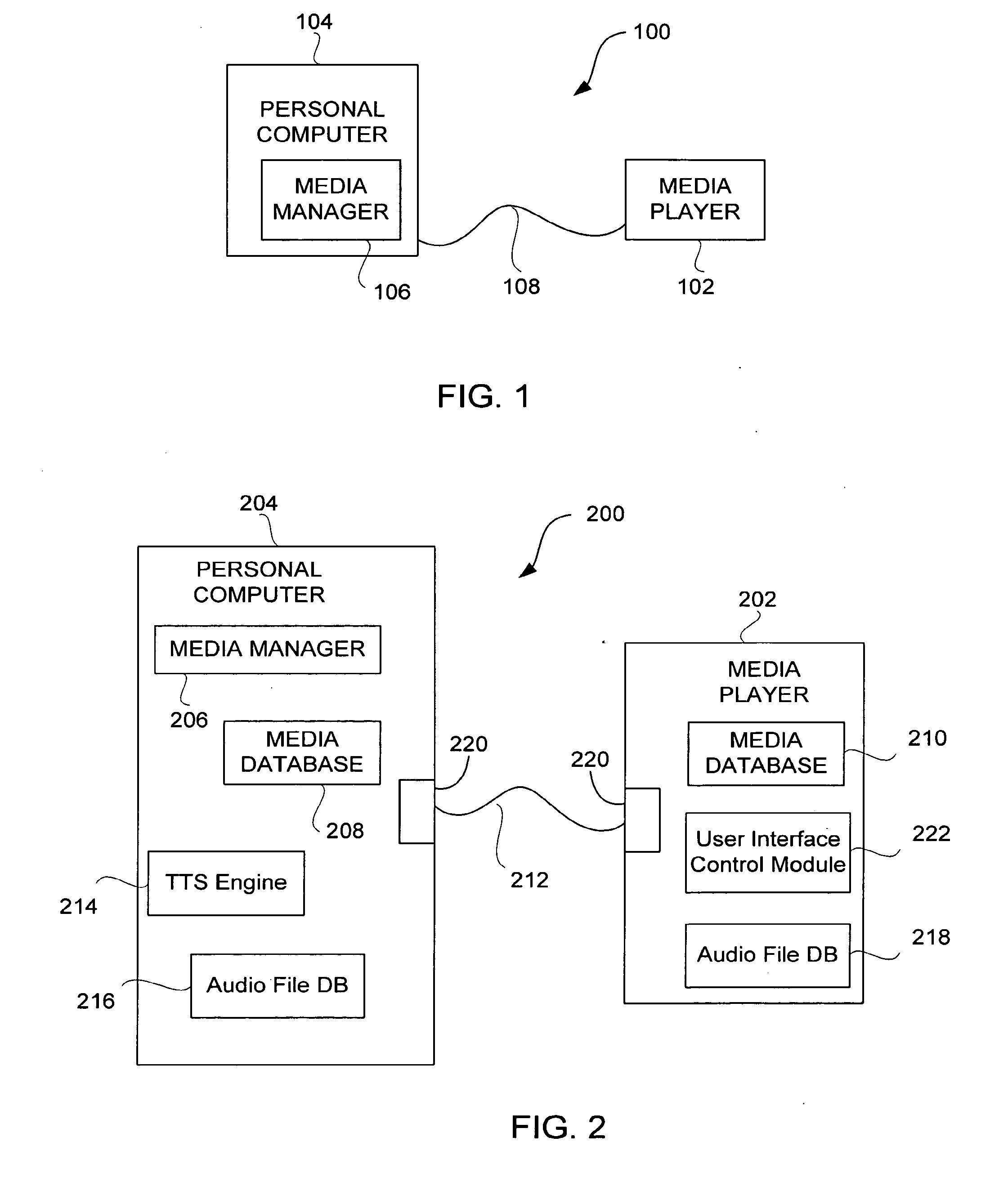

Audio user interface

Inactive | US20100064218A1 | Tags: User may experience, Improve experience, Navigation instruments, Substation equipment, Natural user interface, Human–computer interaction

An audio user interface that provides audio prompts that help a user interact with a user interface of an electronic device is disclosed. The audio prompts can provide audio indicators that allow a user to focus his or her visual attention upon other tasks such as driving an automobile, exercising, or crossing a street, yet still enable the user to interact with the user interface. An intelligent path can provide access to different types of audio prompts from a variety of different sources. The different types of audio prompts may be presented based on availability of a particular type of audio prompt. As examples, the audio prompts may include pre-recorded voice audio, such as celebrity voices or cartoon characters, obtained from a dedicated voice server. Absent availability of pre-recorded or synthesized audio data, non-voice audio prompts may be provided.

Owner:APPLE INC

Audio user interface

Inactive | US8898568B2 | Tags: User may experience, Improve experience, Instruments for road network navigation, Gain control, Natural user interface, Human–computer interaction

An audio user interface that provides audio prompts that help a user interact with a user interface of an electronic device is disclosed. The audio prompts can provide audio indicators that allow a user to focus his or her visual attention upon other tasks such as driving an automobile, exercising, or crossing a street, yet still enable the user to interact with the user interface. An intelligent path can provide access to different types of audio prompts from a variety of different sources. The different types of audio prompts may be presented based on availability of a particular type of audio prompt. As examples, the audio prompts may include pre-recorded voice audio, such as celebrity voices or cartoon characters, obtained from a dedicated voice server. Absent availability of pre-recorded or synthesized audio data, non-voice audio prompts may be provided.

Owner:APPLE INC
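
The availability-based fallback described in these two abstracts (pre-recorded voice, then synthesized audio, then a non-voice cue) can be sketched as a chain of prompt sources. The source interface, the placeholder tone, and `resolve_prompt` are hypothetical.

```python
from typing import Callable, Optional

# A prompt source returns audio for the given text, or None when unavailable (hypothetical interface).
PromptSource = Callable[[str], Optional[bytes]]

NON_VOICE_TONE = b"\x01"  # placeholder for a non-voice earcon


def resolve_prompt(text: str, sources: list[tuple[str, PromptSource]]) -> tuple[str, bytes]:
    """Return (source kind, audio) from the first source that can supply the prompt."""
    for kind, source in sources:
        audio = source(text)
        if audio is not None:
            return kind, audio
    # Nothing pre-recorded or synthesized is available: fall back to a non-voice cue.
    return "non-voice", NON_VOICE_TONE


recorded = {"Next track": b"celebrity-voice-clip"}
sources = [
    ("pre-recorded", recorded.get),
    ("synthesized", lambda text: None),  # e.g. speech synthesis unavailable
]
print(resolve_prompt("Next track", sources)[0])  # pre-recorded
print(resolve_prompt("Shuffle on", sources)[0])  # non-voice
```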

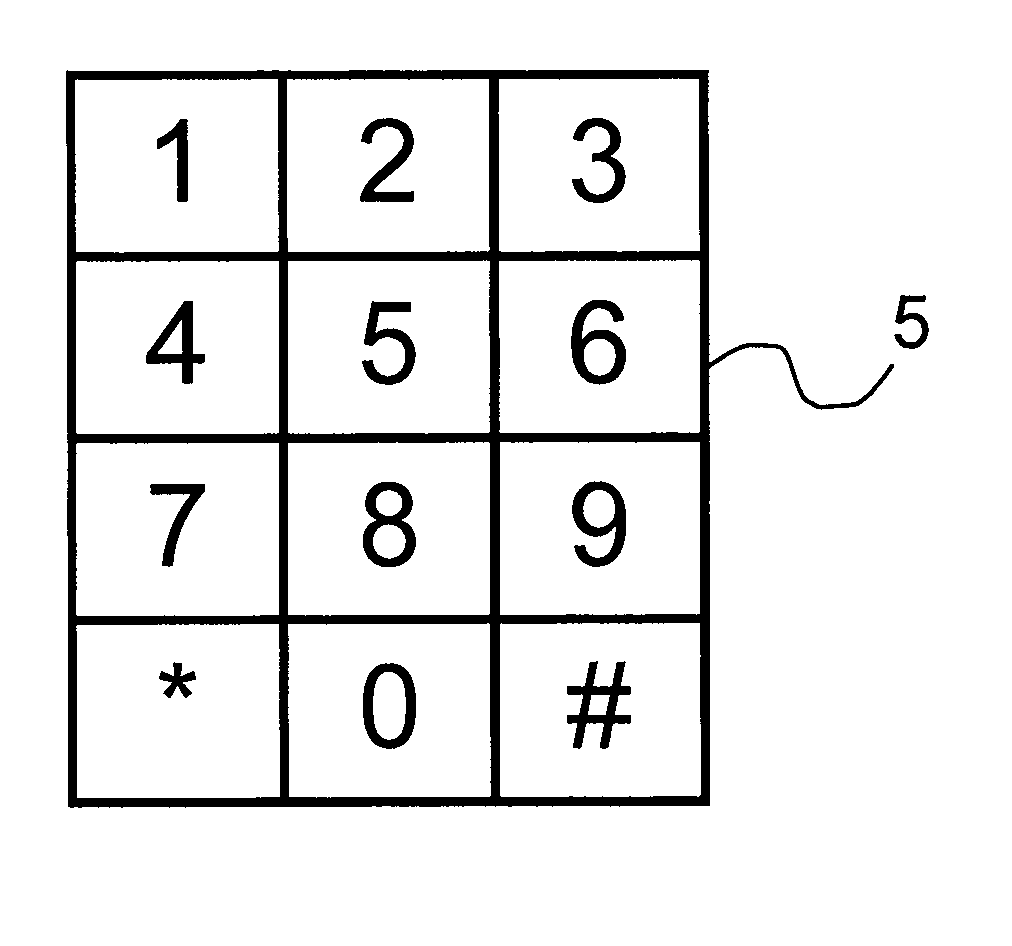

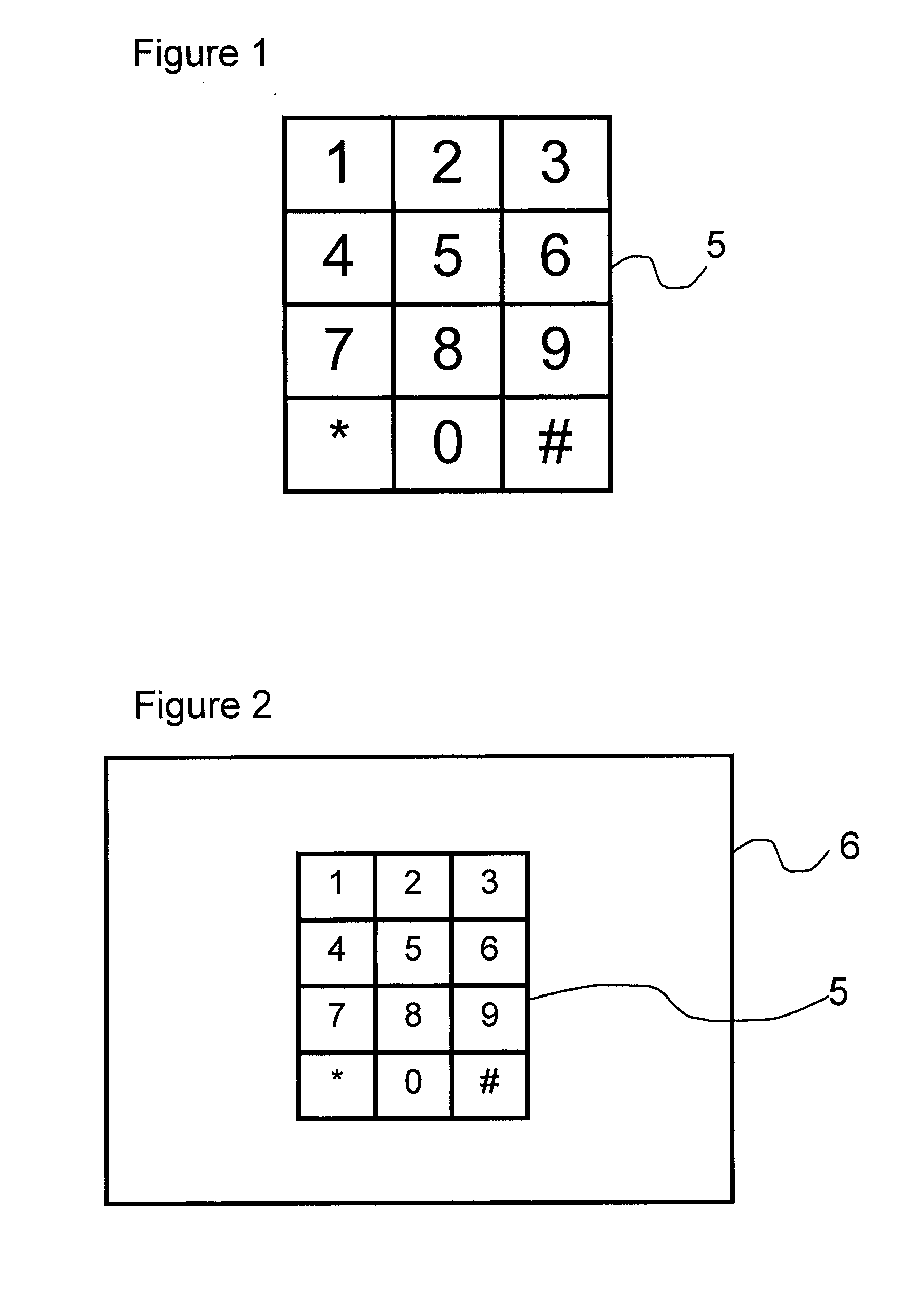

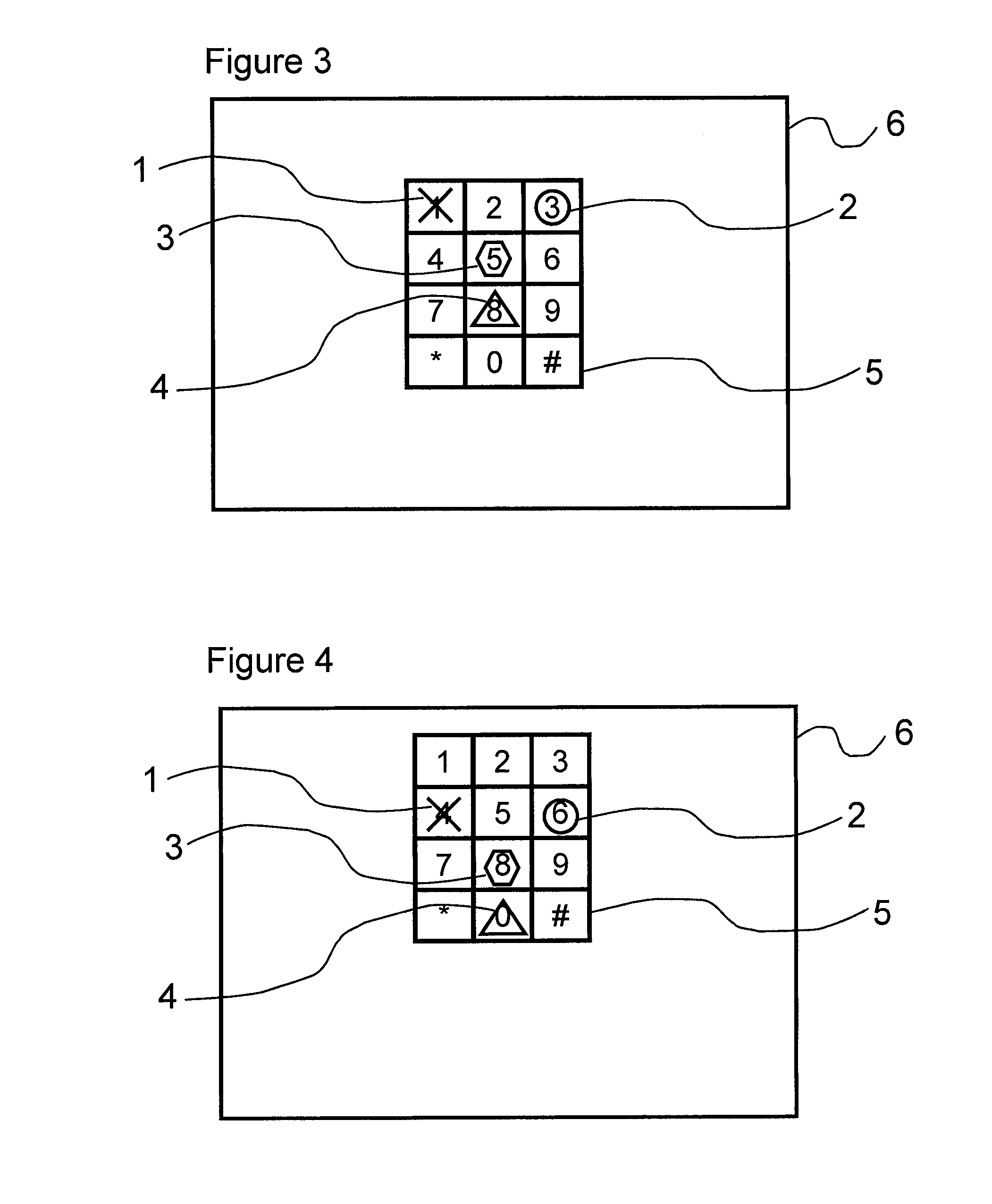

Private data entry

Inactive | US20110006996A1 | Tags: Easy to use, Improve security level, Input/output for user-computer interaction, Actuation objects, Graphics, Graphical user interface

A device and method for creating a private entry display on a touchscreen is provided, wherein the touchscreen includes a plurality of touch cells corresponding to spatial locations on the touchscreen. A graphical user interface is generated for display on the touchscreen for a predefined operation, the graphical user interface including a plurality of input zones. A characteristic of the graphical user interface as displayed on the touchscreen for the predefined operation is altered in order to change the touch cells associated with the graphical user interface. The altered user interface is then displayed on the touchscreen.

Owner:SHARP KK
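
One way to alter which touch cells correspond to which inputs is to shuffle the keypad layout each time the entry screen appears, so that an observer watching finger positions cannot infer the digits. This is a sketch under that assumption, not Sharp's specific method; the function names are hypothetical, and a security-sensitive implementation would pass `random.SystemRandom()` rather than a seeded generator.

```python
import random


def scrambled_keypad(rng: random.Random, keys: str = "0123456789", cols: int = 3) -> list[list[str]]:
    """Assign keys to touch cells in a fresh random order each time the entry screen is shown."""
    order = list(keys)
    rng.shuffle(order)  # changes which touch cells correspond to which key
    # Lay the shuffled keys out row by row; the last row may be shorter.
    return [order[i:i + cols] for i in range(0, len(order), cols)]


def key_at(layout: list[list[str]], row: int, col: int) -> str:
    """Translate a touch on cell (row, col) back into the key currently drawn there."""
    return layout[row][col]


layout = scrambled_keypad(random.SystemRandom())
for row in layout:
    print(" ".join(row))
```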

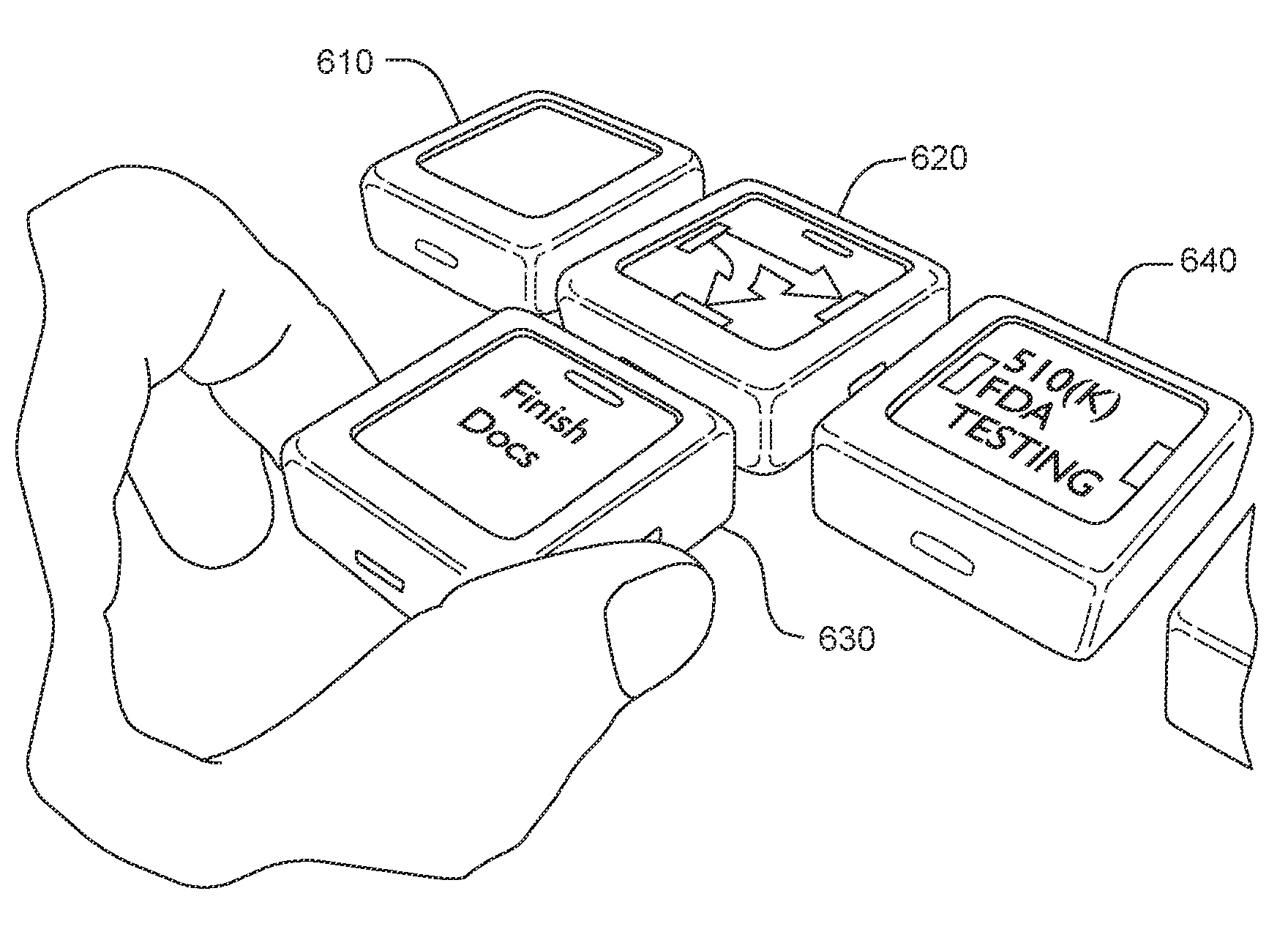

Sensor-based distributed tangible user interface

Inactive | US20090273560A1 | Tags: Improve user interaction, Cathode-ray tube indicators, Input/output processes for data processing, Interactive software, Digital content

A distributed tangible user interface comprises compact, self-powered, tangible user interface manipulative devices having sensing, display, and wireless communication capabilities, along with one or more associated digital content or other interactive software management applications. The manipulative devices display visual representations of digital content or program controls and can be physically manipulated as a group by a user for interaction with the digital information or software application. A controller on each manipulative device receives and processes data from a movement sensor, initiating behavior on the manipulative and / or forwarding the results to a management application that uses the information to manage the digital content, software application, and / or the manipulative devices. The manipulative devices may also detect the proximity and identity of other manipulative devices, responding to and / or forwarding that information to the management application, and may have feedback devices for presenting responsive information to the user.

Owner:MASSACHUSETTS INST OF TECH

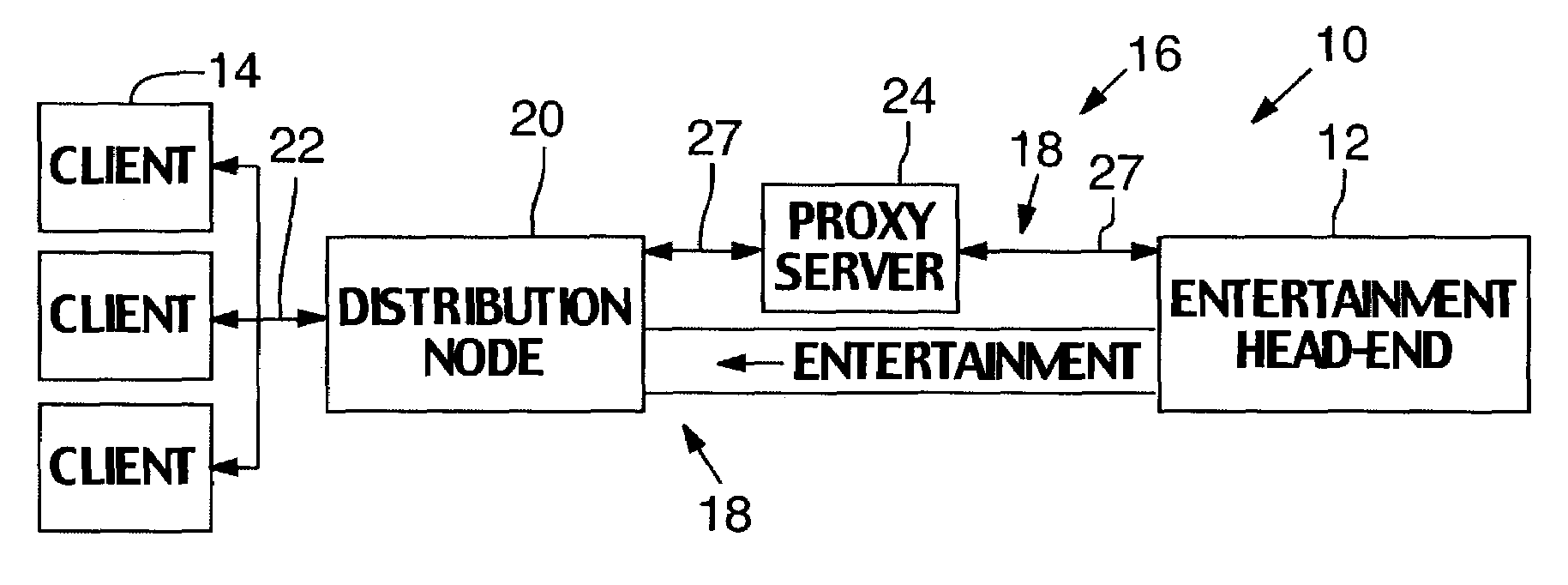

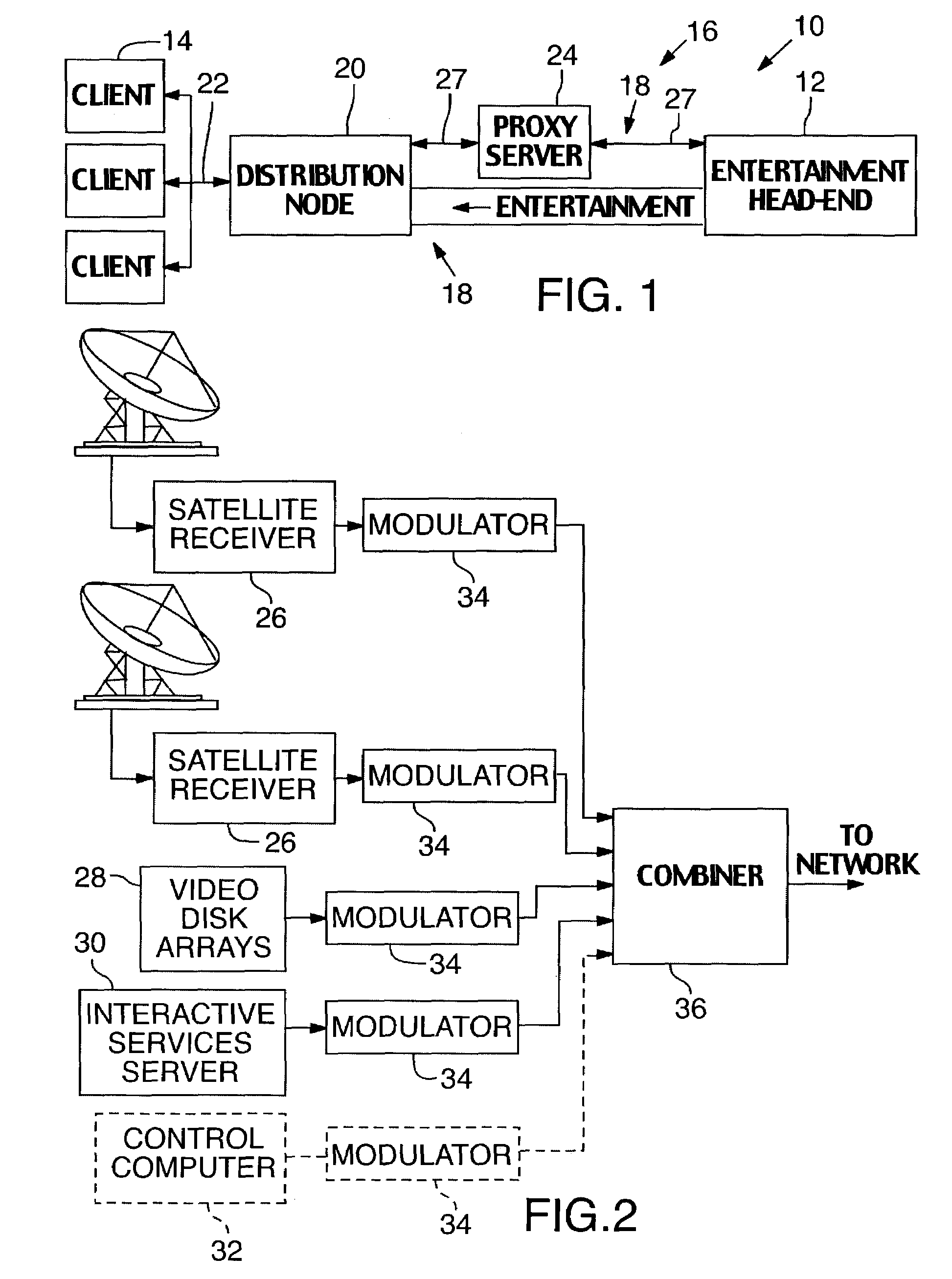

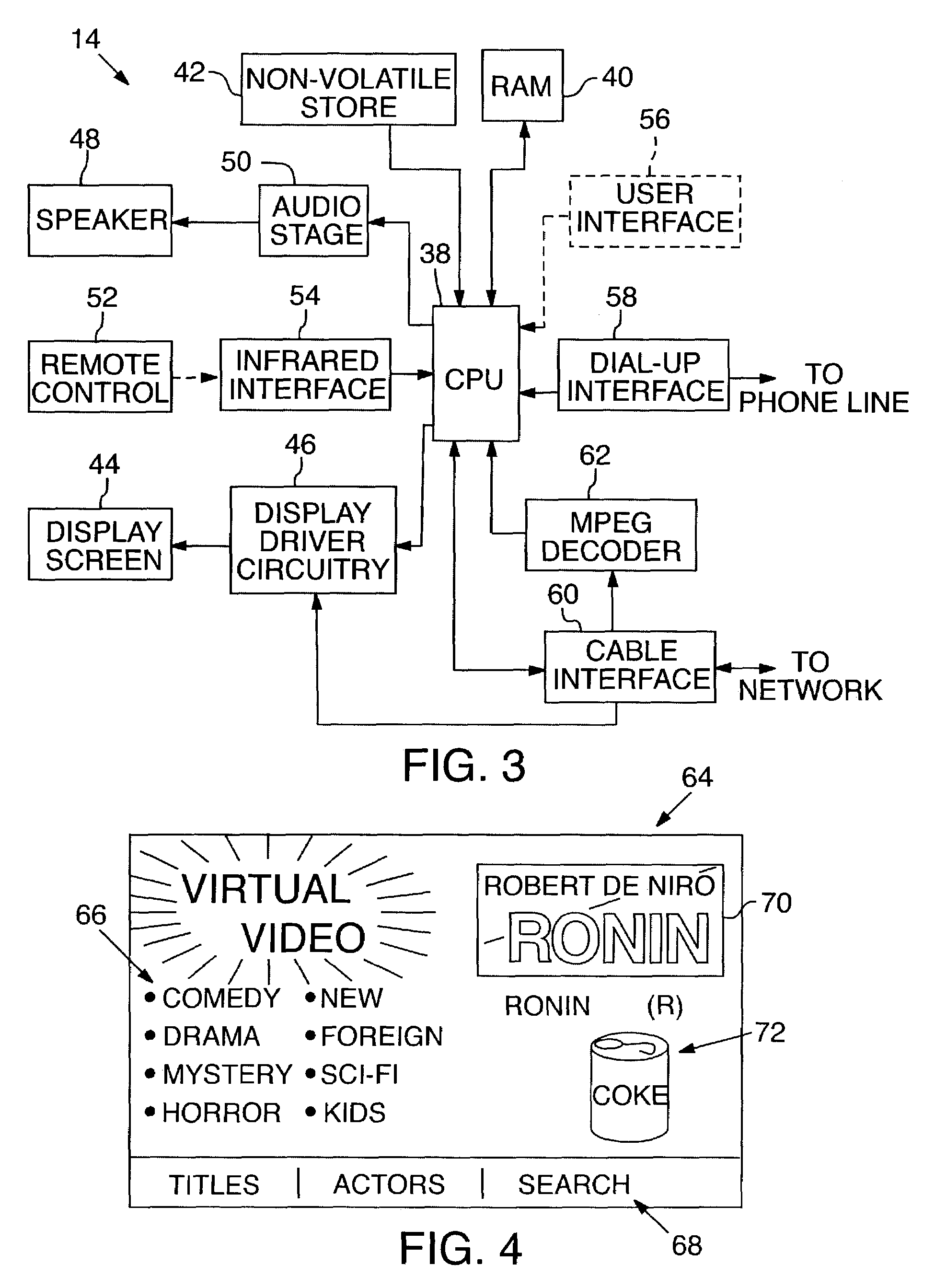

Interactive video programming methods

Inactive | US7392532B2 | Tags: Television system details, Digital computer details, Interactive video, Natural user interface

An entertainment head-end provides broadcast programming, video-on-demand services, and HTML-based interactive programming through a distribution network to client terminals in subscribers' homes. A number of different features are provided, including novel user interfaces, enhanced video-on-demand controls, a variety of interactive services (personalized news, jukebox, games, celebrity chat), and techniques that combine to provide user experiences evocative of conventional television.

Owner:MICROSOFT TECH LICENSING LLC

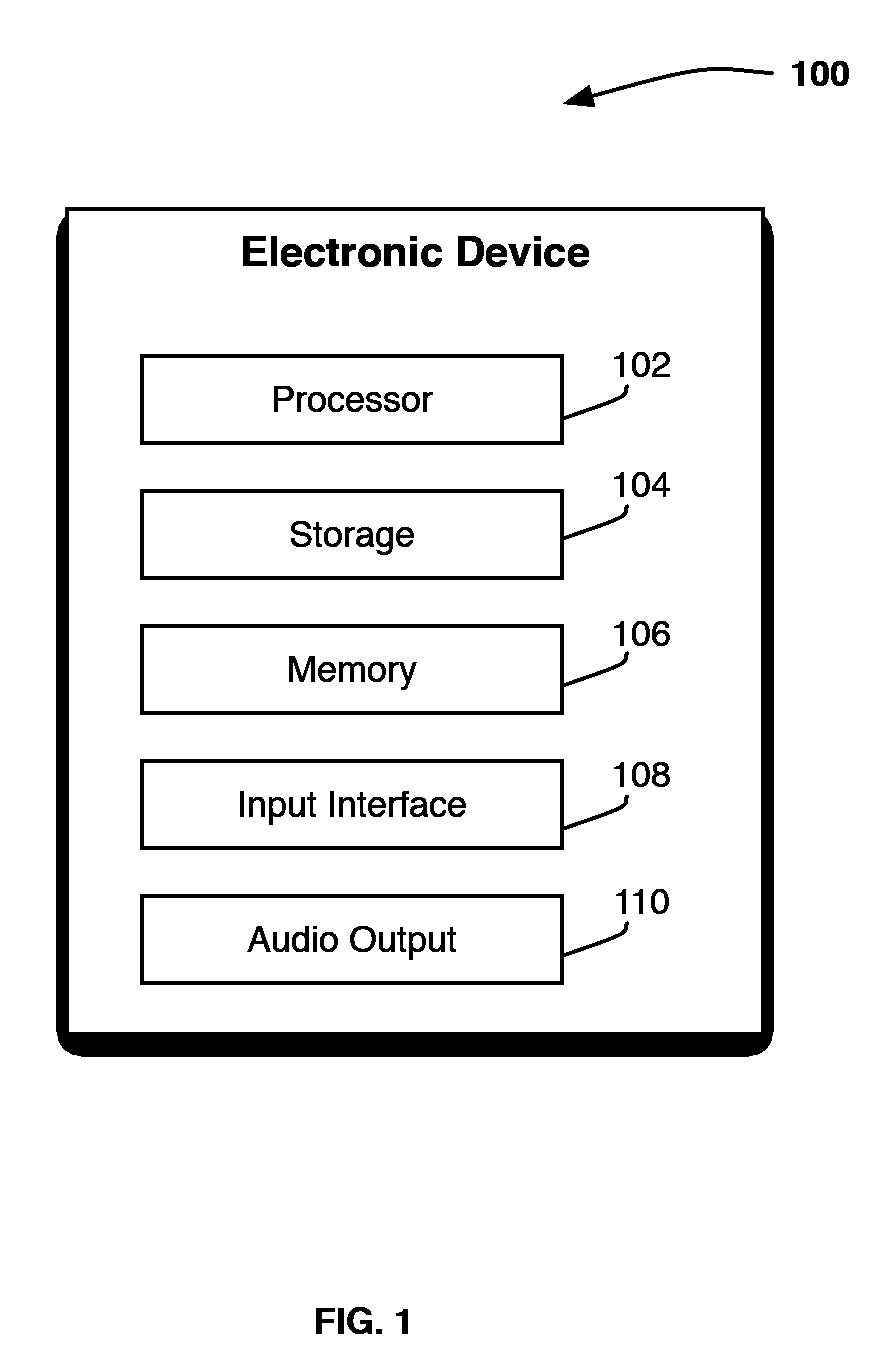

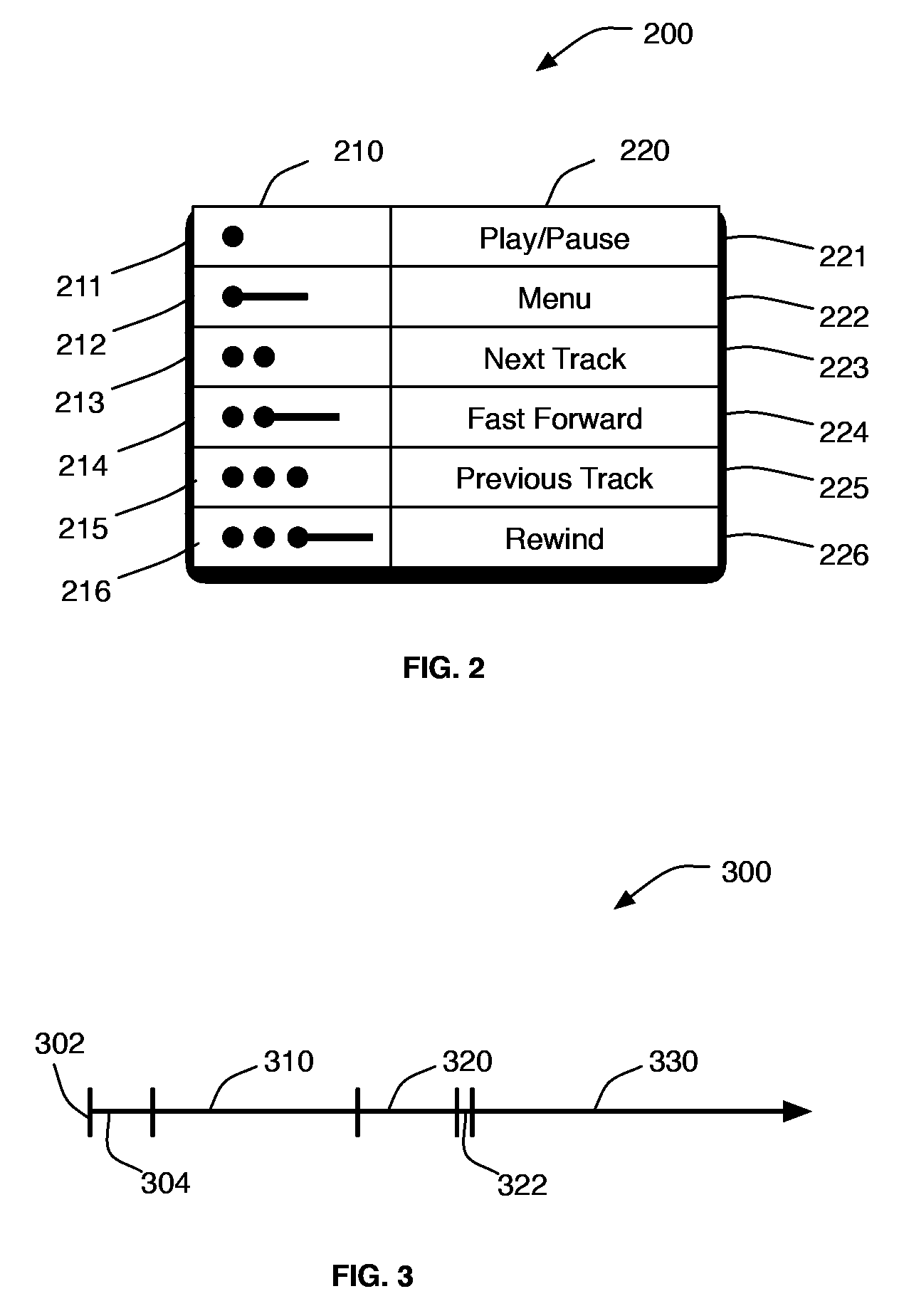

Audio user interface for displayless electronic device

This invention is directed to an audio menu provided in an electronic device having no display. The electronic device can further include an input interface having only a single sensing element (e.g., a single button) for controlling audio playback of the device and for accessing and controlling the device audio menu. In response to a particular input detected by the single sensing element, the electronic device can enable an audio menu mode and play back audio clips associated with different menu options. The user can provide selection instructions using the single sensing element during the playback of an audio clip to select the menu option associated with the played back audio clip. In some embodiments, the audio menu can be multi-dimensional (e.g., the device plays back audio clips for sub-options in response to a selection of a menu option). Suitable menu options can include, for example, groupings of audio (e.g., playlists), options to toggle (e.g., a shuffle option), or options associated with particular metadata tags associated with audio available to the device.

Owner:APPLE INC
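
A rough state-machine sketch of the single-button audio menu, assuming a long press enters menu mode, each option's clip plays in turn, and a press during a clip selects that option. The event names and the class are hypothetical, and only a one-level menu is modelled.

```python
from typing import Optional


class SingleButtonAudioMenu:
    """Hypothetical audio menu driven by one button on a device with no display."""

    def __init__(self, options: list[str]) -> None:
        self.options = options
        self.in_menu = False
        self.current = 0

    def long_press(self) -> str:
        """Enter audio-menu mode and return the first option's clip to play."""
        self.in_menu = True
        self.current = 0
        return self.options[self.current]

    def clip_finished(self) -> str:
        """No selection during the clip: advance (wrapping) to the next option's clip."""
        self.current = (self.current + 1) % len(self.options)
        return self.options[self.current]

    def press(self) -> Optional[str]:
        """A press during a clip selects that clip's option; outside the menu it returns None."""
        if not self.in_menu:
            return None  # normal playback control (e.g. play/pause) is not modelled
        self.in_menu = False
        return self.options[self.current]


audio_menu = SingleButtonAudioMenu(["Playlists", "Shuffle", "Artists"])
audio_menu.long_press()     # plays "Playlists"
audio_menu.clip_finished()  # plays "Shuffle"
print(audio_menu.press())   # Shuffle
```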

Audio user interface for computing devices

Active | US20070180383A1 | Tags: Easy to carry, Carrier indicating arrangements, Sound input/output, Natural user interface, Human–computer interaction

An audio user interface that generates audio prompts that help a user interact with a user interface of a computing device is disclosed. The audio prompts can provide audio indicators that allow a user to focus his or her visual attention upon other tasks such as driving an automobile, exercising, or crossing a street, yet still enable the user to interact with the user interface. As examples, the audio prompts provided can audiblize the spoken version of a user interface selection, such as a selected function or a selected (e.g., highlighted) menu item of a display menu. The computing device can be, for example, a media player such as an MP3 player, a mobile phone, or a personal digital assistant.

Owner:APPLE INC

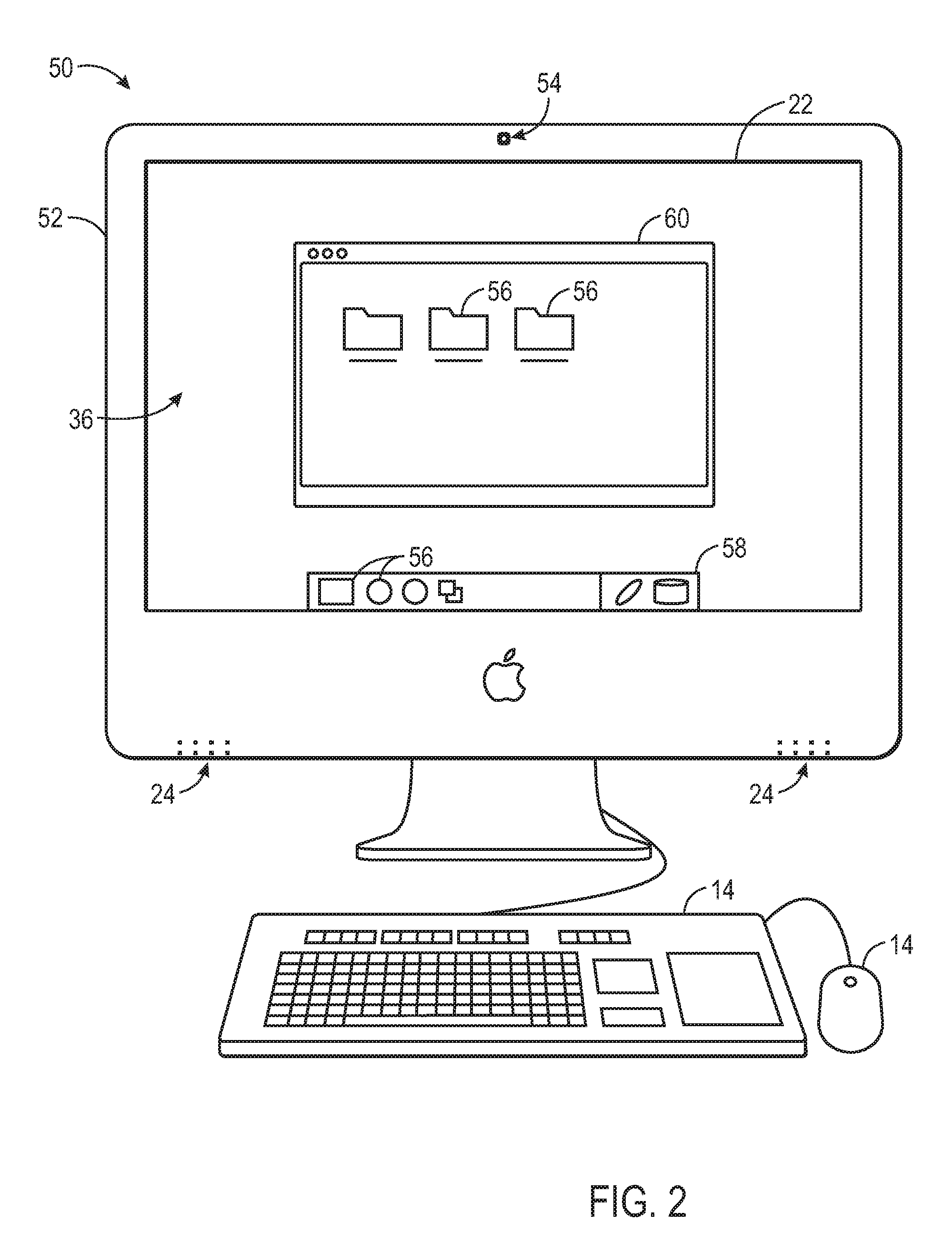

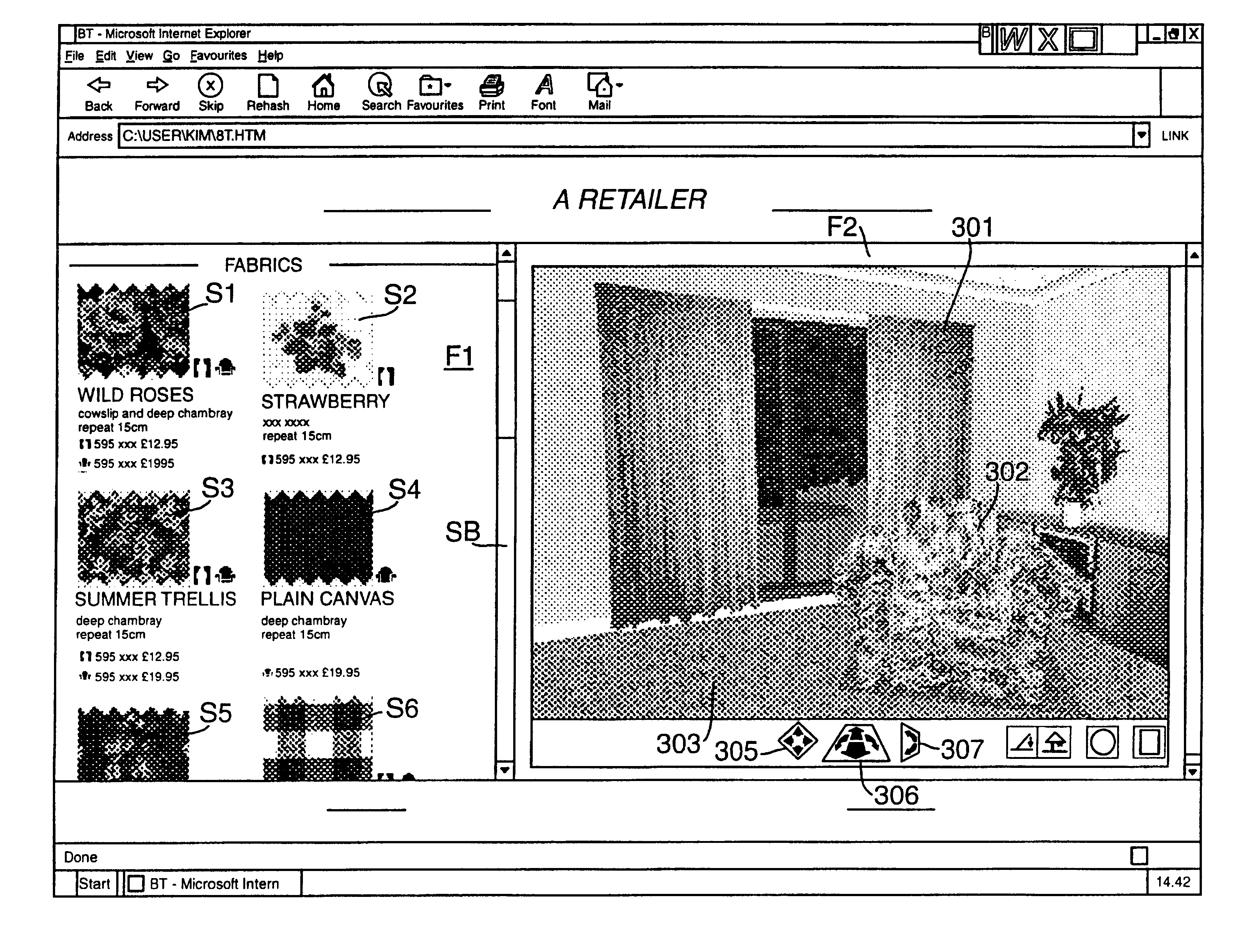

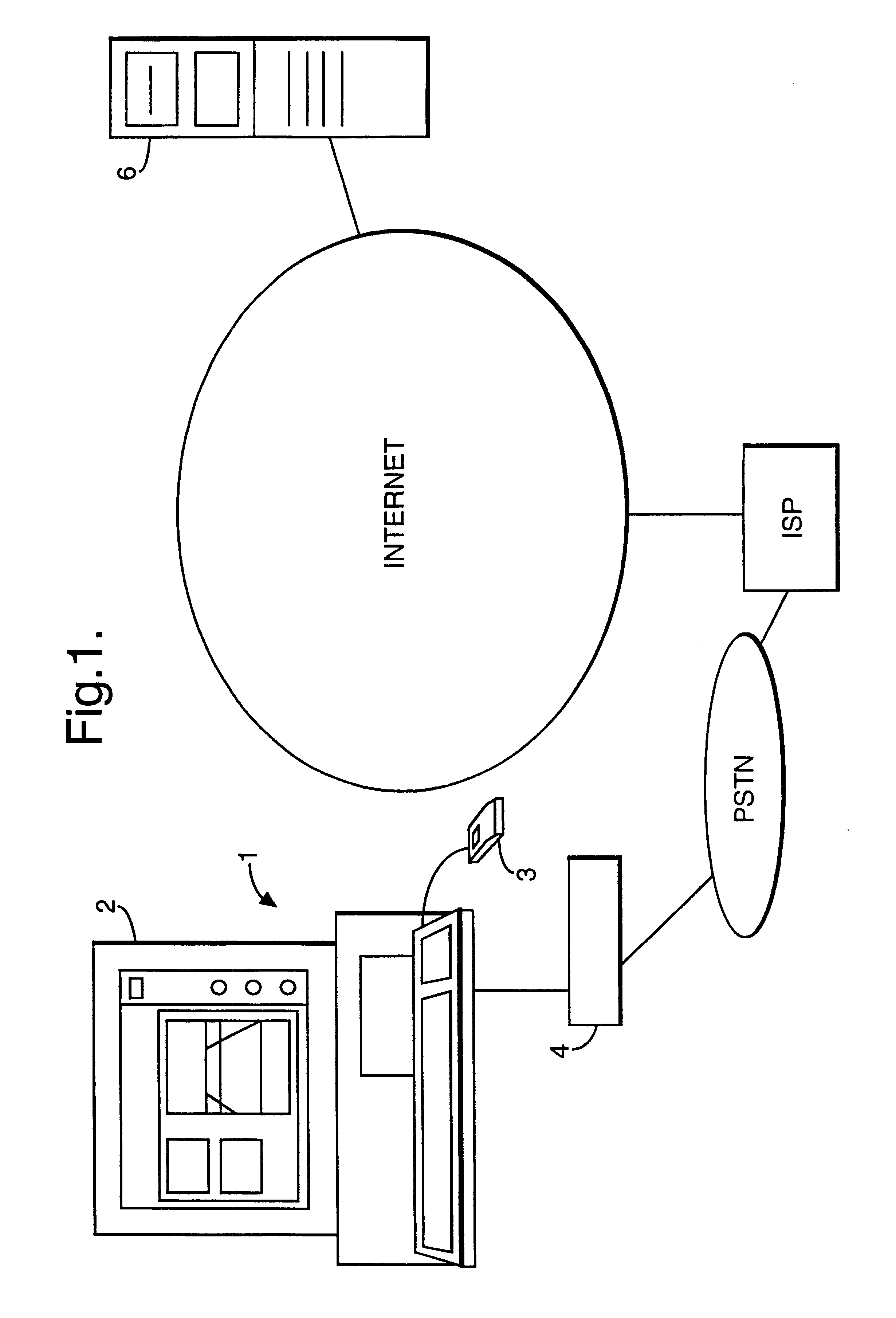

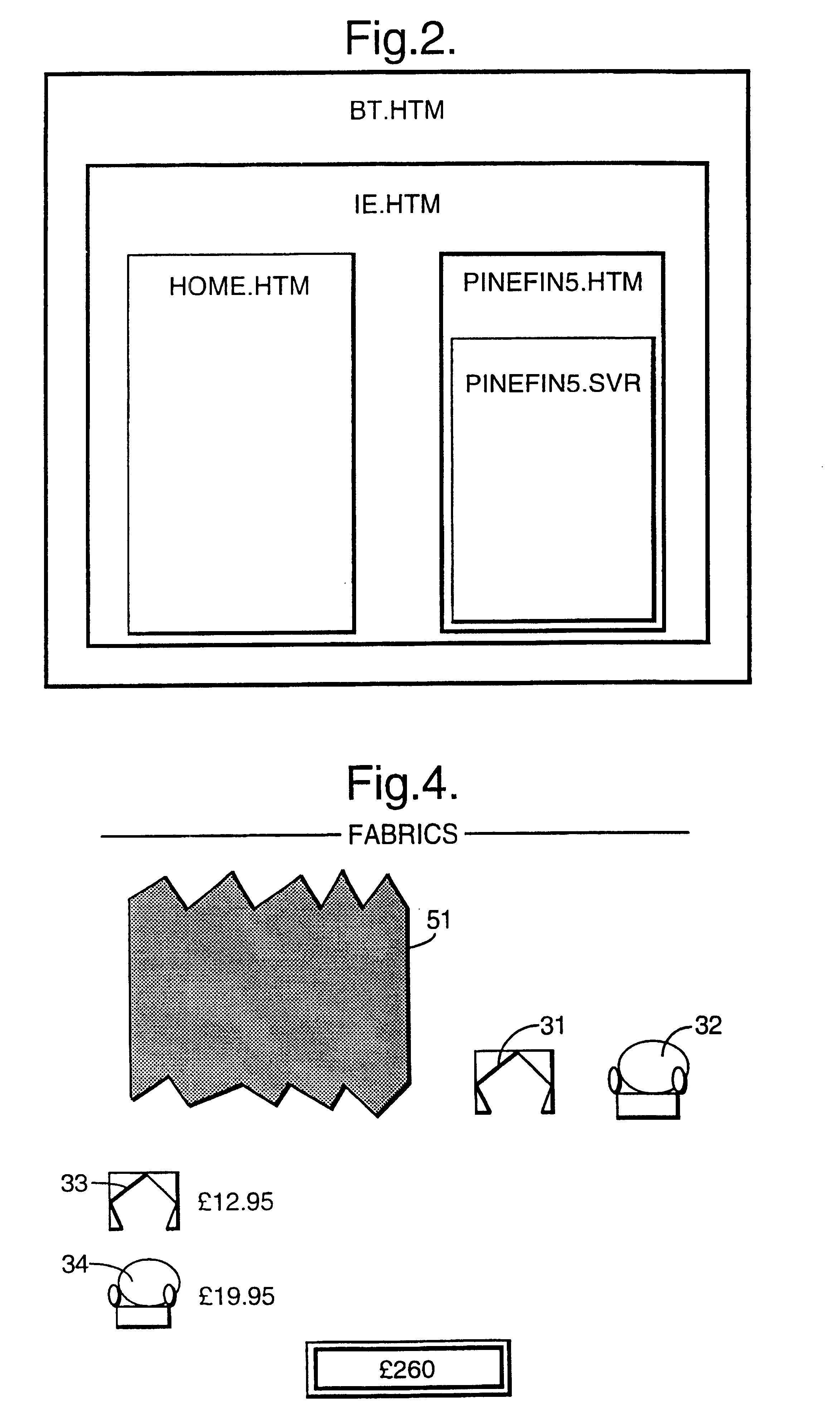

Display terminal user interface with ability to select remotely stored surface finish for mapping onto displayed 3-D surface

Inactive | US6331858B2 | Tags: Realistic assessment, Cathode-ray tube indicators, Two-way working systems, Viewpoints, Zoom

A user interface on a display terminal, such as a personal computer, includes a 3D display region which shows a scene incorporating a number of objects, such as items of furniture. A surface finish selector is also displayed and is used to select a surface finish from a number of alternatives. In the case of items of furniture, these finishes may correspond to different fabrics for upholstery. Surface texture data for a selected finish are automatically downloaded from a remote source and mapped onto the object in the 3D scene. In a preferred implementation, the surface finish selector is a frame of a web page and generates control data which is passed to another frame containing the 3D scene together with movement controls for changing the viewpoint in the scene.

Owner:BRITISH TELECOMM PLC

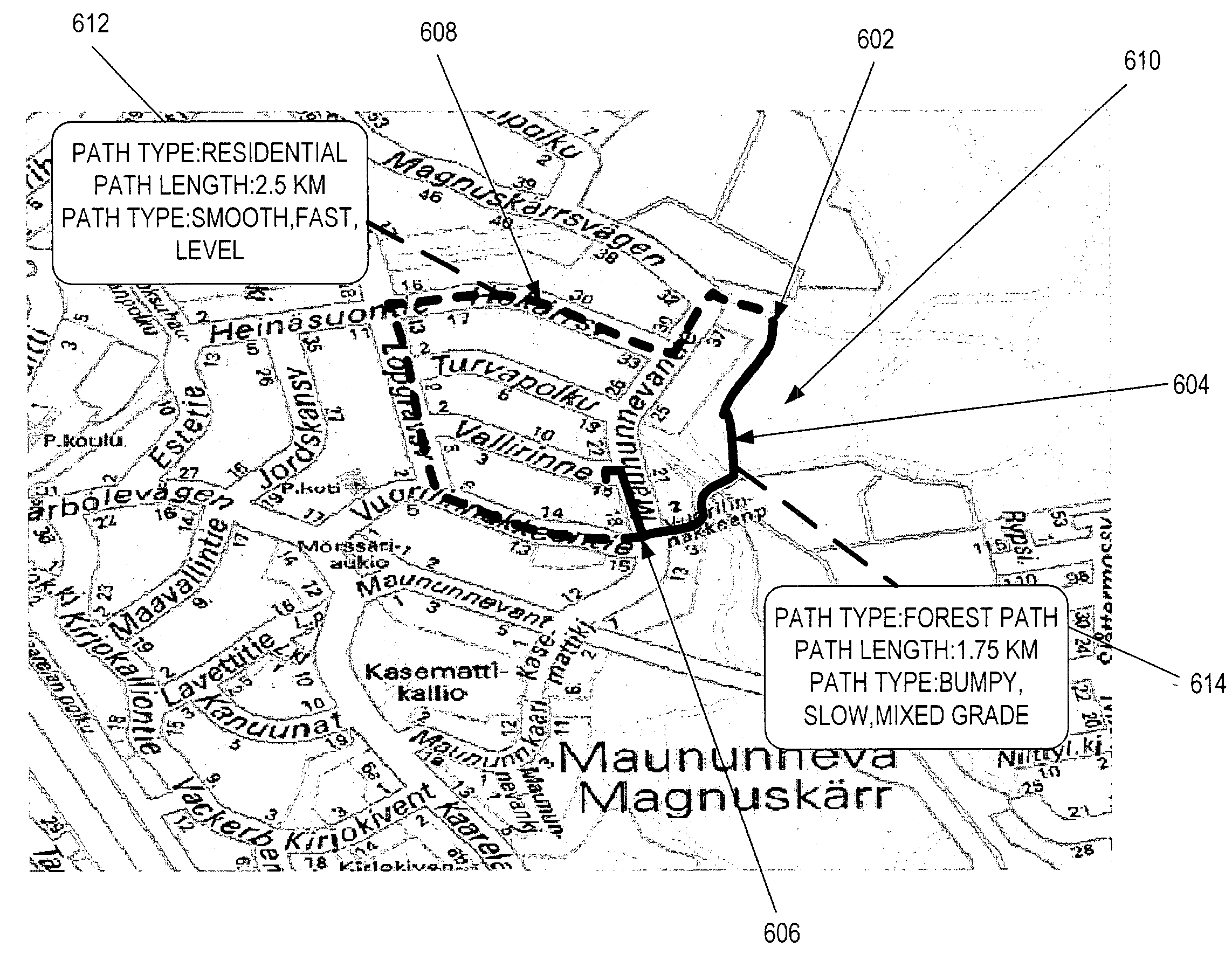

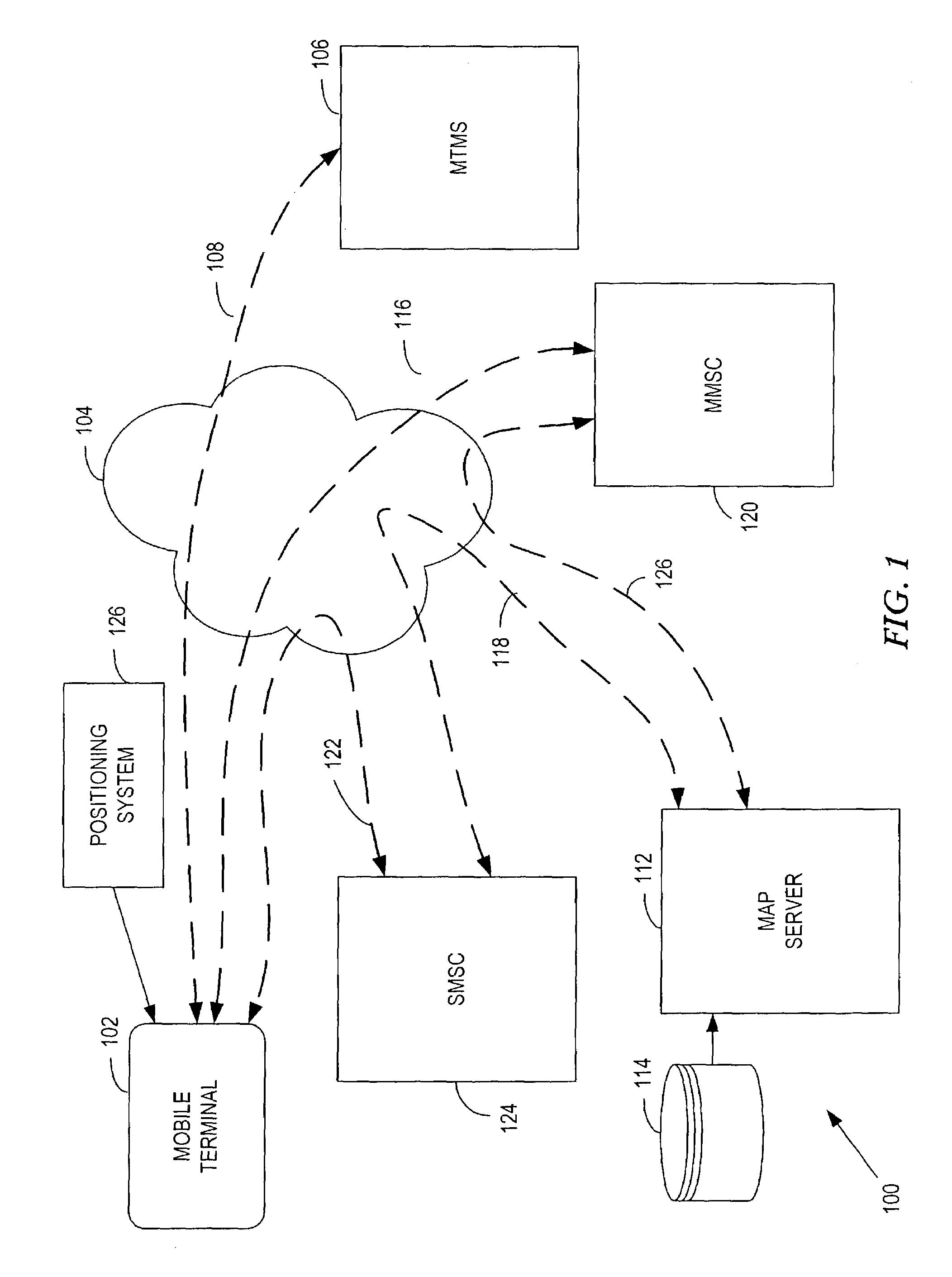

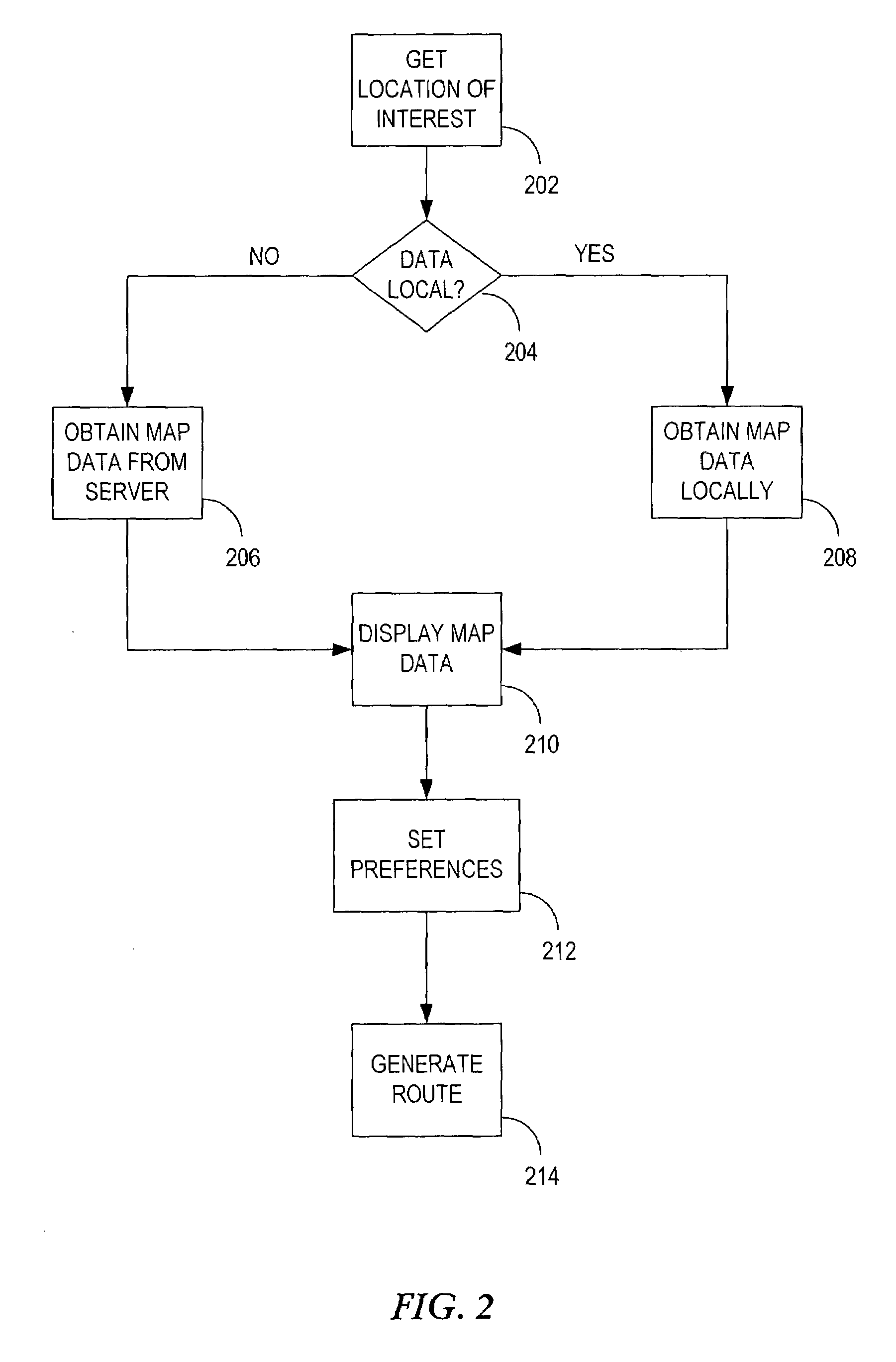

System and method for an intelligent multi-modal user interface for route drawing

Inactive | US7216034B2 | Tags: Facilitate route drawing, Facilitate the route drawing, Instruments for road network navigation, Road vehicles traffic control, Graphics, Display device

A system and method for providing an interactive, multi-modal interface used to generate a route drawing. A mobile terminal display initially displays map data indicative of a user's region of interest. Through a series of audible, visual, and tactile excitations, the user interacts with the route drawing system to generate a desired route to be superimposed upon the user's region of interest. User preferences are used by the system to intelligently aid the user in selection of each segment of the route to be drawn. An analysis of each segment, as well as an analysis of the final route, is performed so that the attributes of the route, along with the graphical representation of the route itself, may be stored either locally within the mobile terminal or remotely within a map server.

Owner:NOKIA TECHNOLOGIES OY

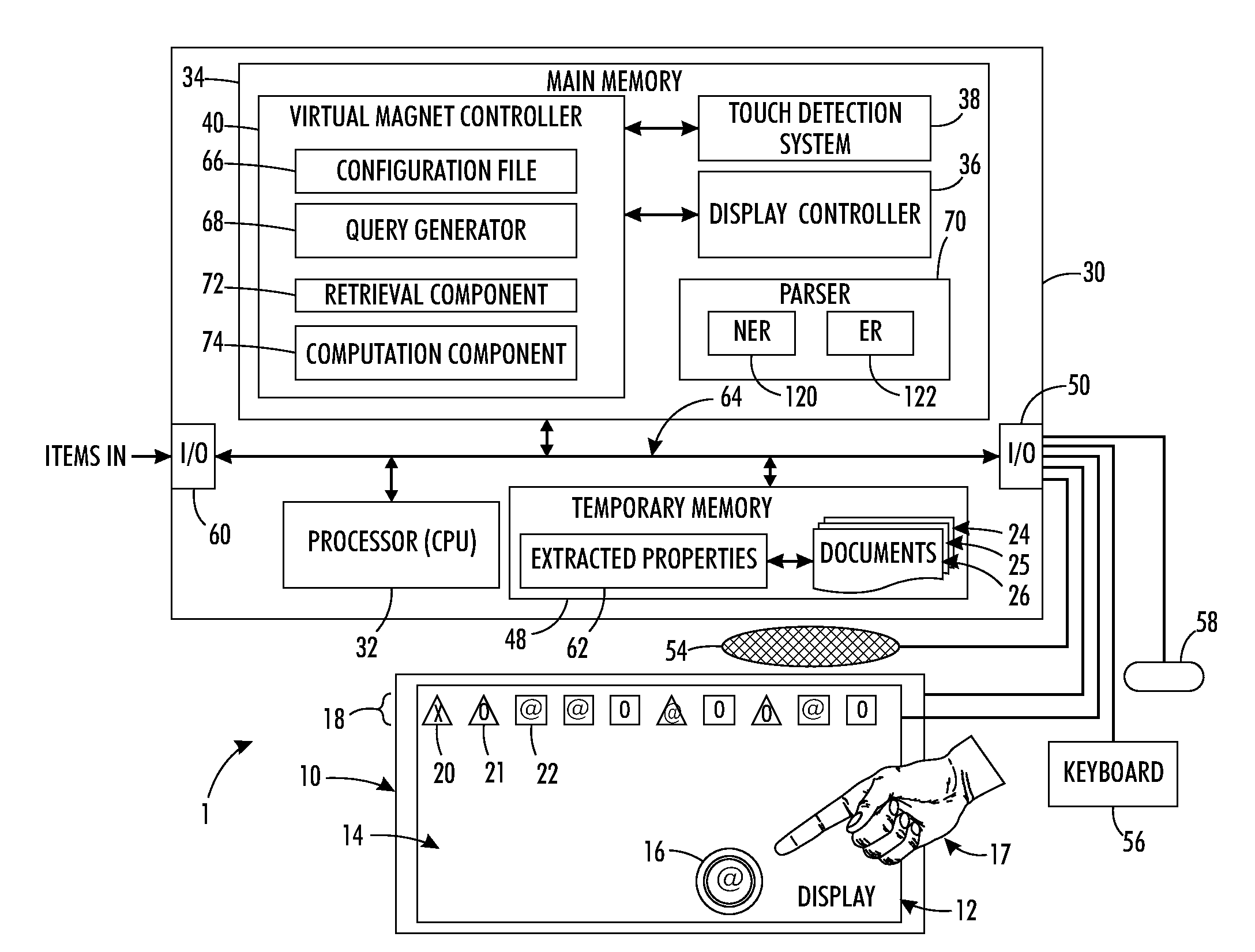

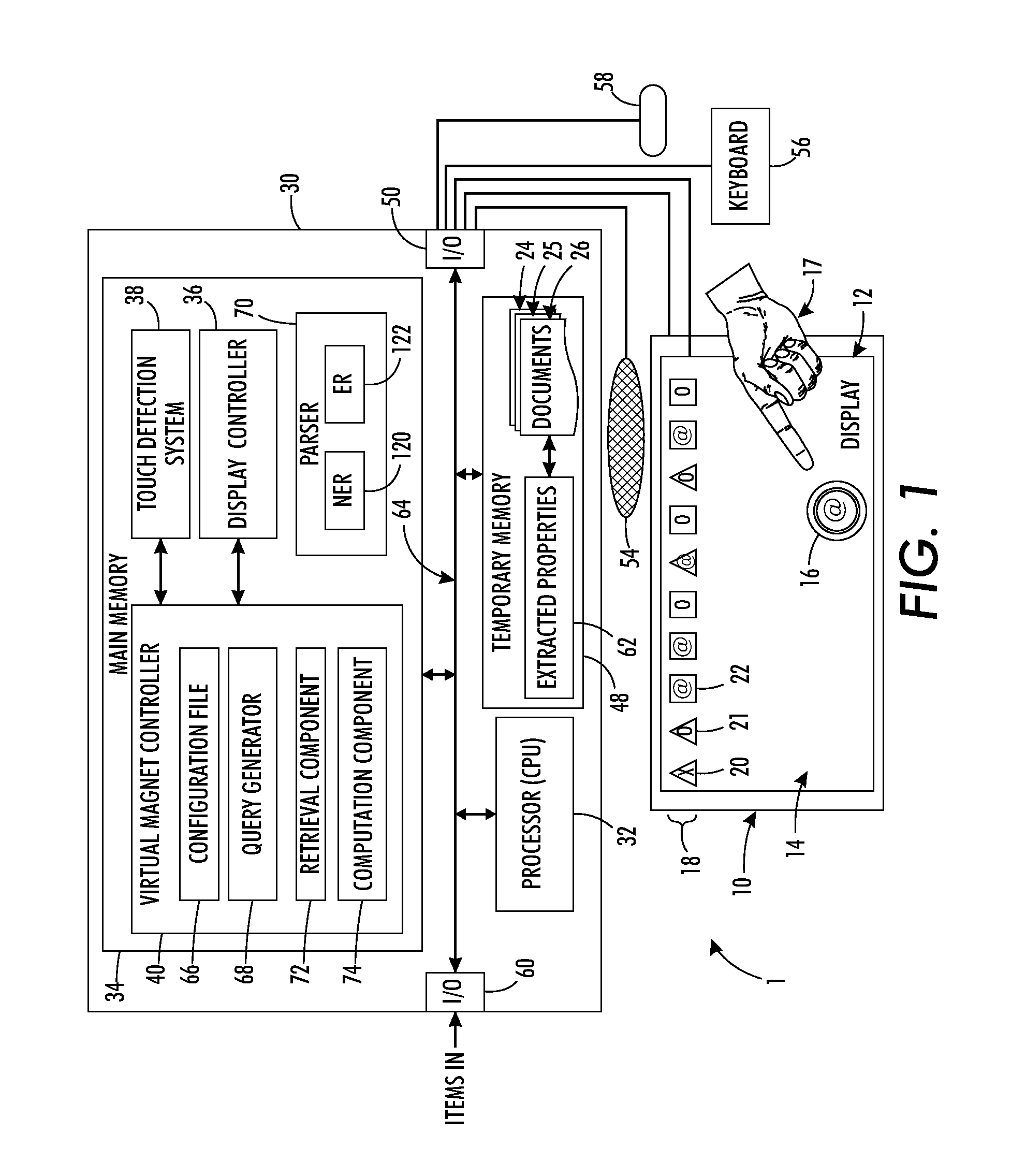

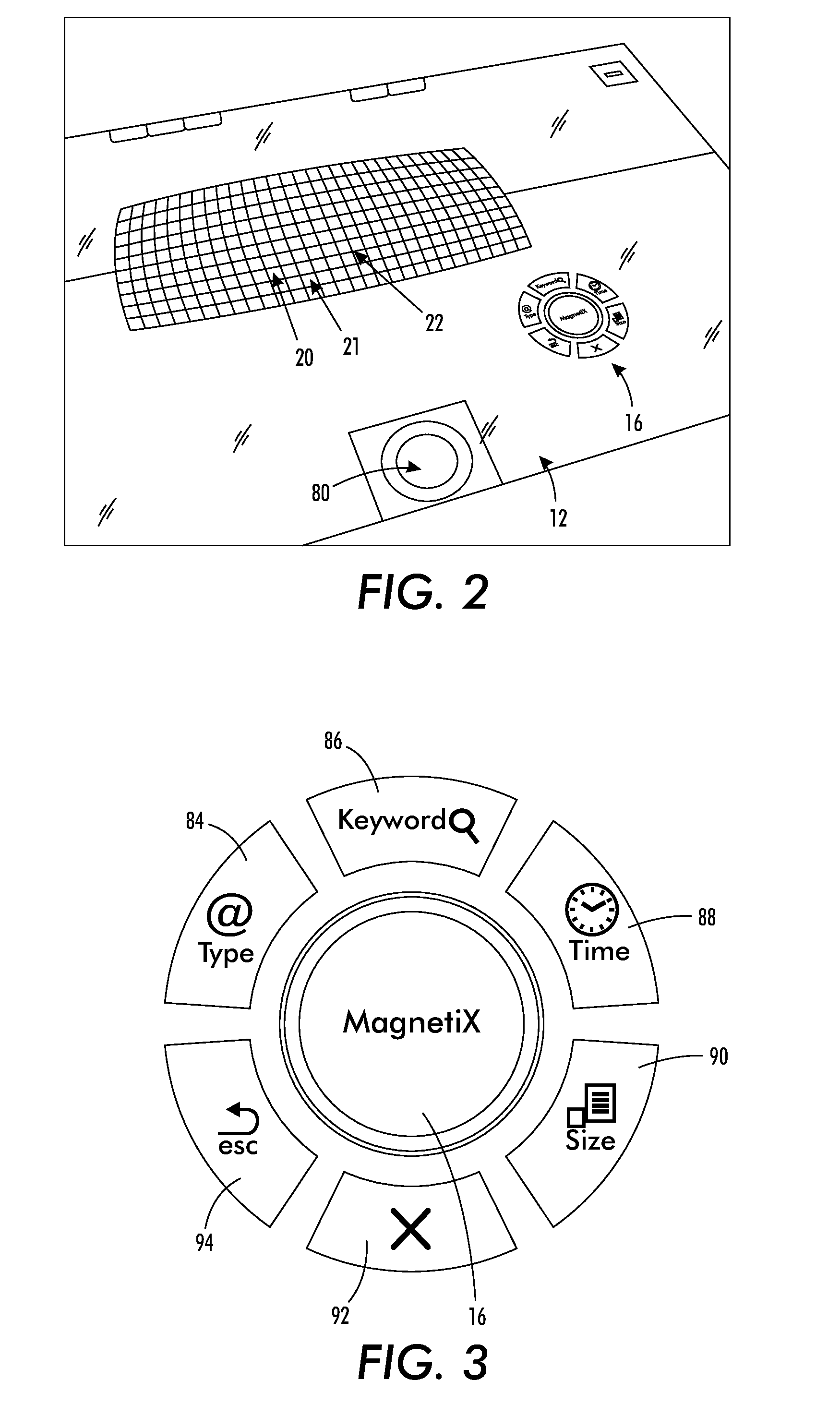

Query generation from displayed text documents using virtual magnets

Inactive | US20120216114A1 | Tags: Input/output for user-computer interaction, Text processing, Graphics, Touch Perception

A system and method are provided for dynamically generating a query using touch gestures. A virtual magnet is movable on a display device of a tactile user interface in response to touch. A user selects one of a set of text documents for review, which is displayed on the display. The system is configured for recognizing a highlighting gesture on the tactile user interface over the displayed document as a selection of a text fragment from the document text. The virtual magnet is populated with a query which is based on the text fragment selected with the highlighting gesture. The populated magnet is able to cause a subset of displayed graphic objects to exhibit a response to the magnet as a function of the query and the text content of the respective documents which the objects represent and / or to cause responsive instances in a text document to be displayed.

Owner:XEROX CORP
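
A simplified sketch of the magnet behaviour, assuming the query is just the set of words in the highlighted fragment and a document object "responds" when it shares enough of those words. A real system would likely weight terms rather than count raw overlap; `magnet_query` and `attracted` are hypothetical names.

```python
import re

_WORD = re.compile(r"[a-z0-9]+")


def magnet_query(fragment: str) -> set[str]:
    """Turn a highlighted text fragment into a set of lower-case query terms."""
    return set(_WORD.findall(fragment.lower()))


def attracted(query: set[str], docs: dict[str, str], min_hits: int = 1) -> list[str]:
    """Return ids of documents sharing at least `min_hits` query terms, strongest match first."""
    scores: dict[str, int] = {}
    for doc_id, text in docs.items():
        hits = len(query & set(_WORD.findall(text.lower())))
        if hits >= min_hits:
            scores[doc_id] = hits
    # Sort by descending overlap, then by id so ties are deterministic.
    return sorted(scores, key=lambda d: (-scores[d], d))


docs = {
    "a": "A natural interface for phones",
    "b": "Battery chemistry notes",
    "c": "Natural user interface gestures",
}
print(attracted(magnet_query("natural user interface"), docs))  # ['c', 'a']
```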

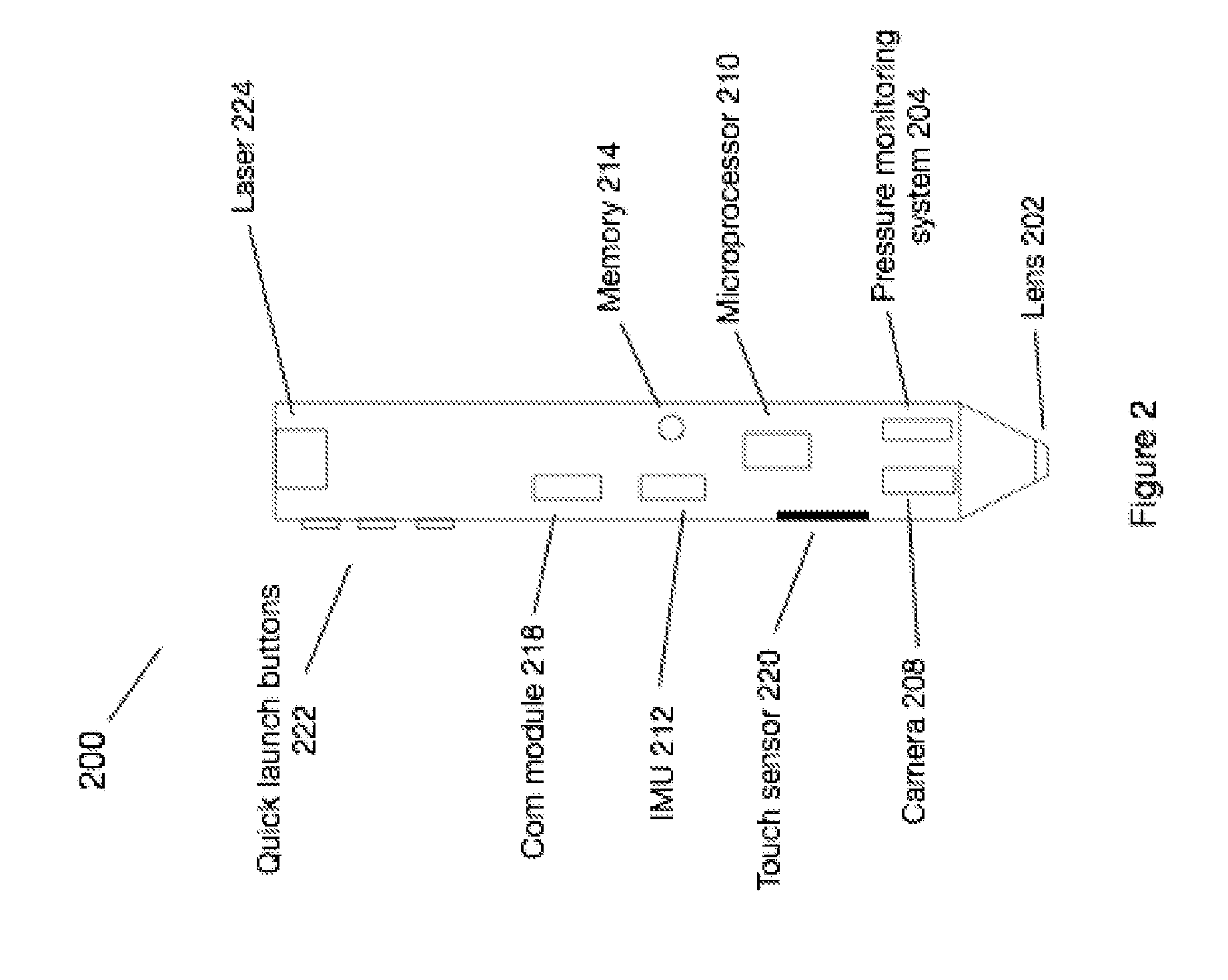

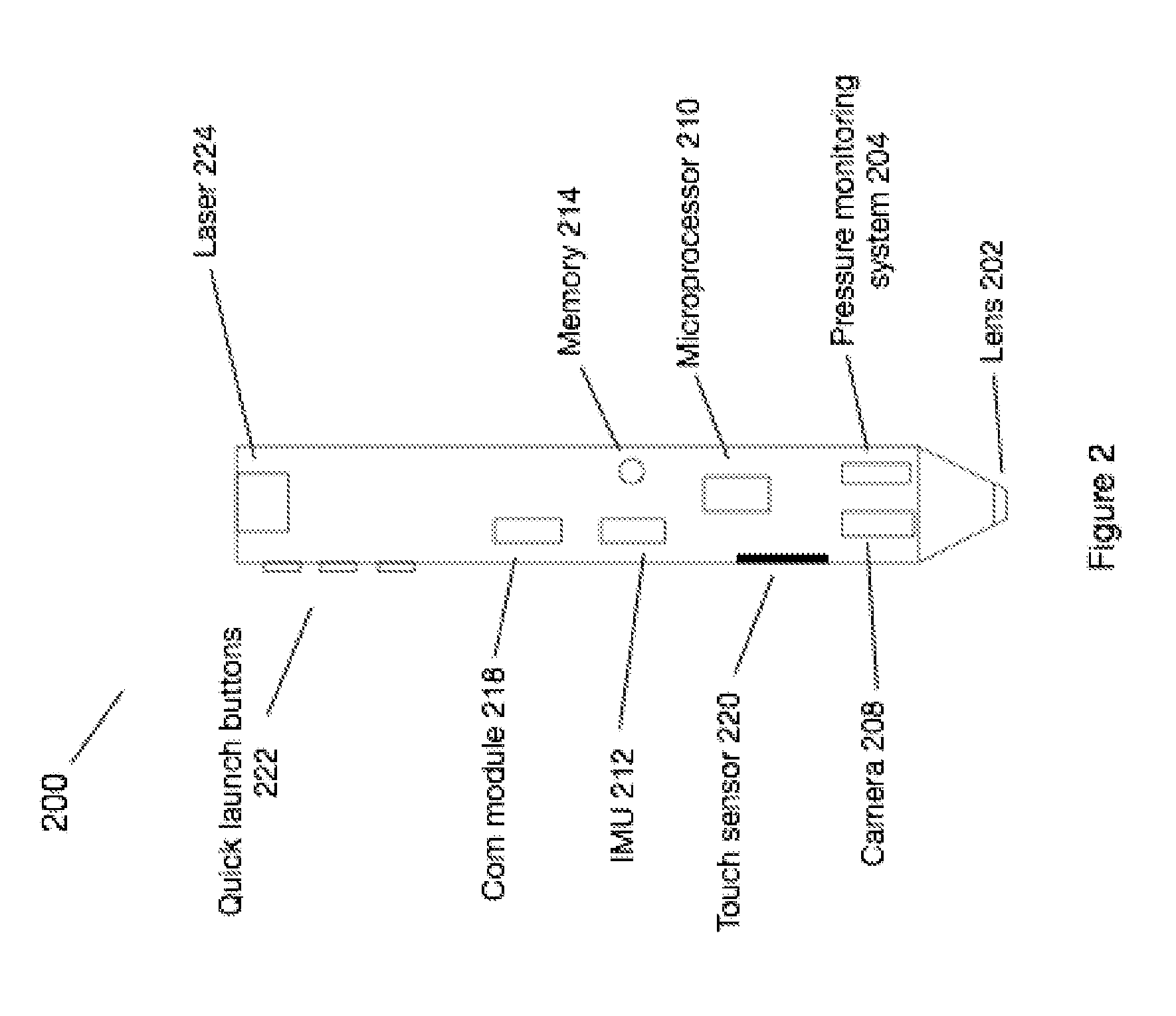

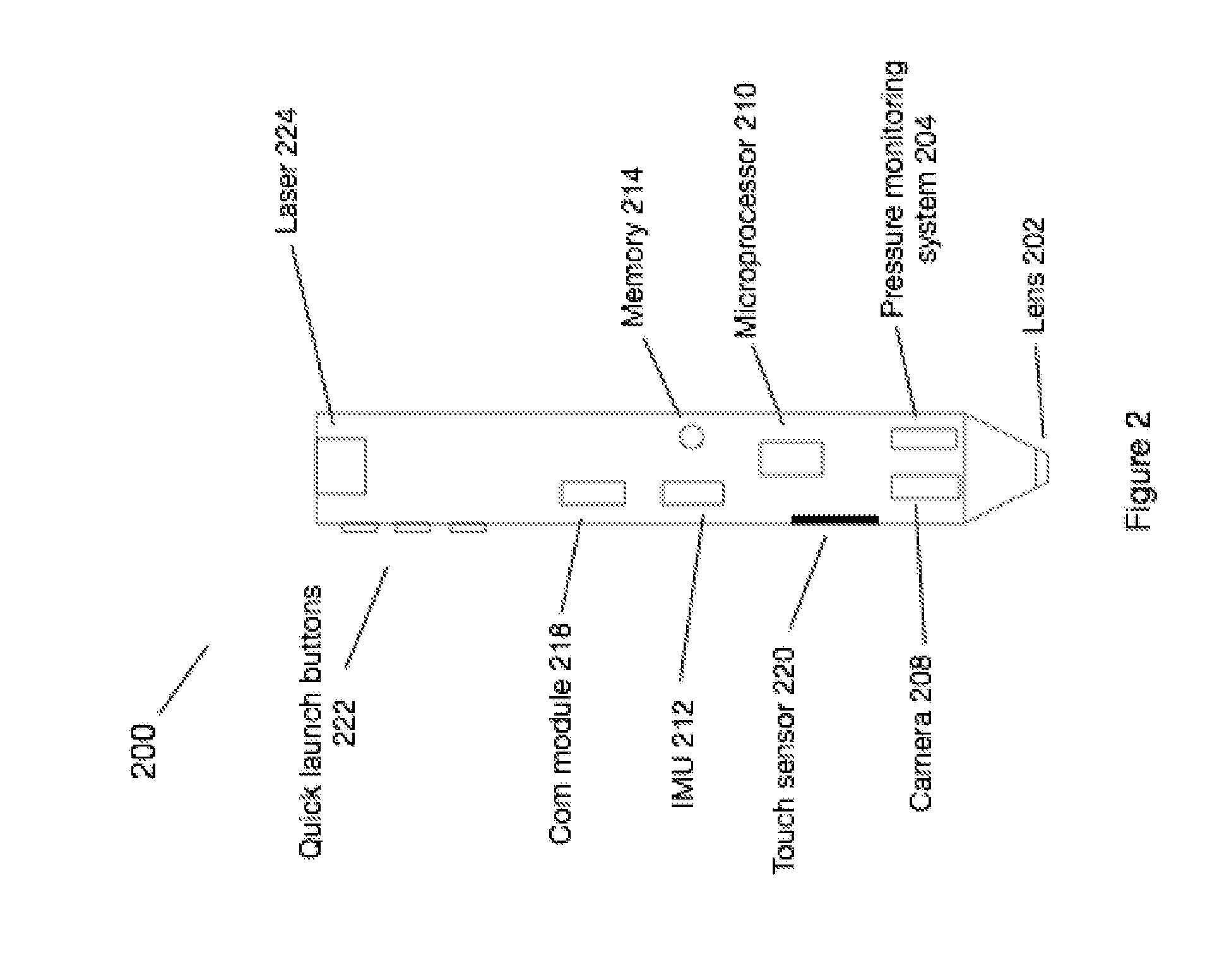

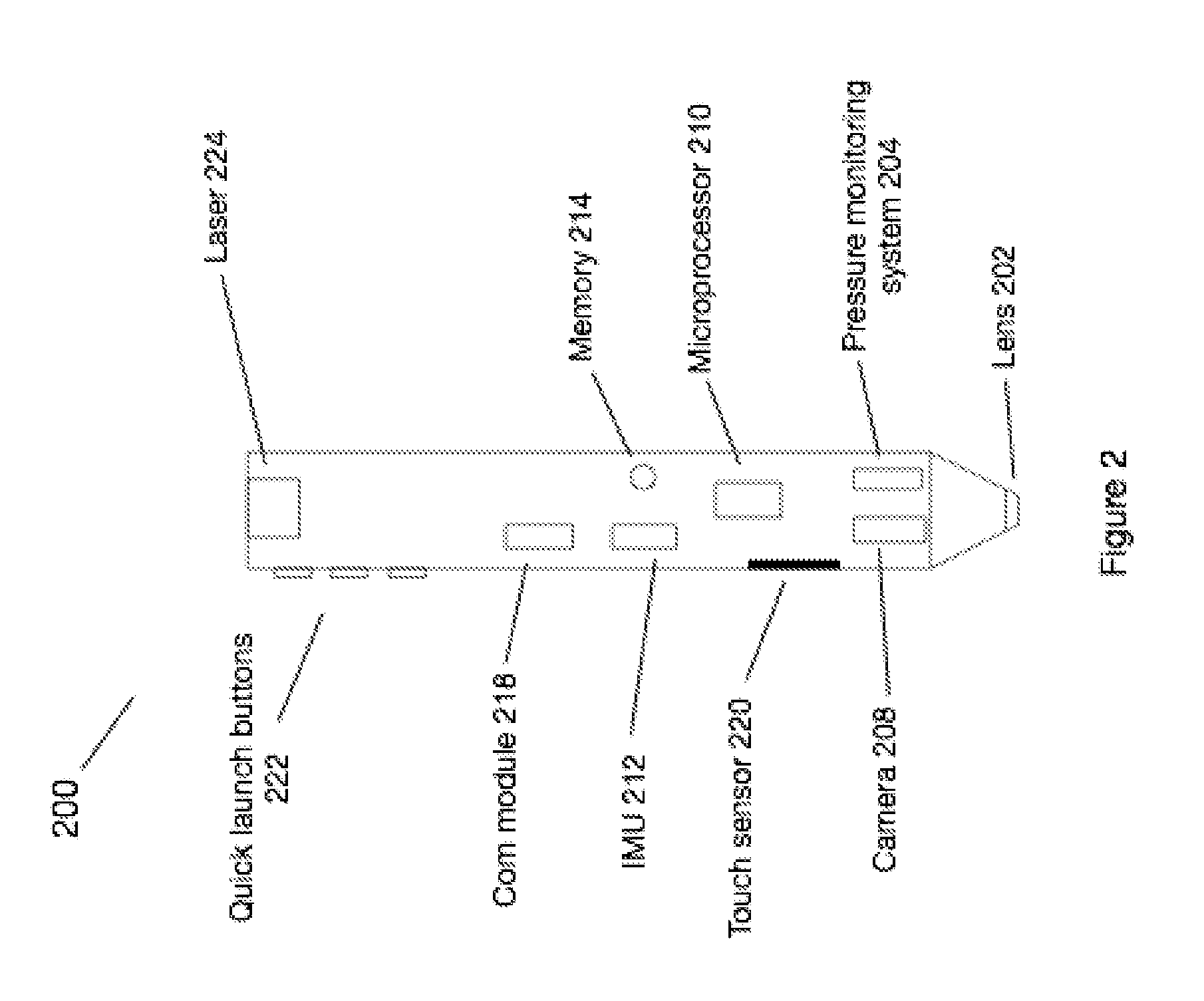

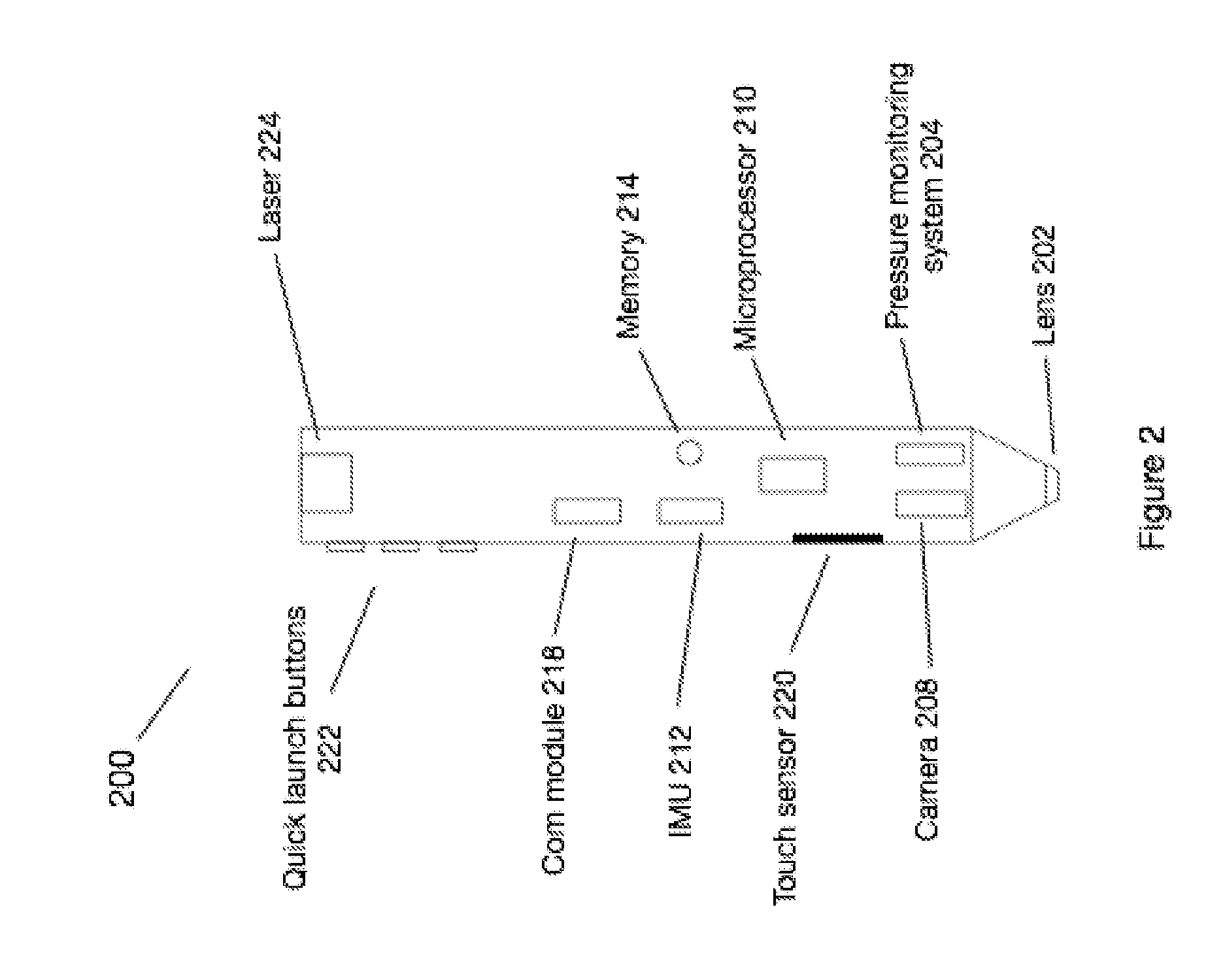

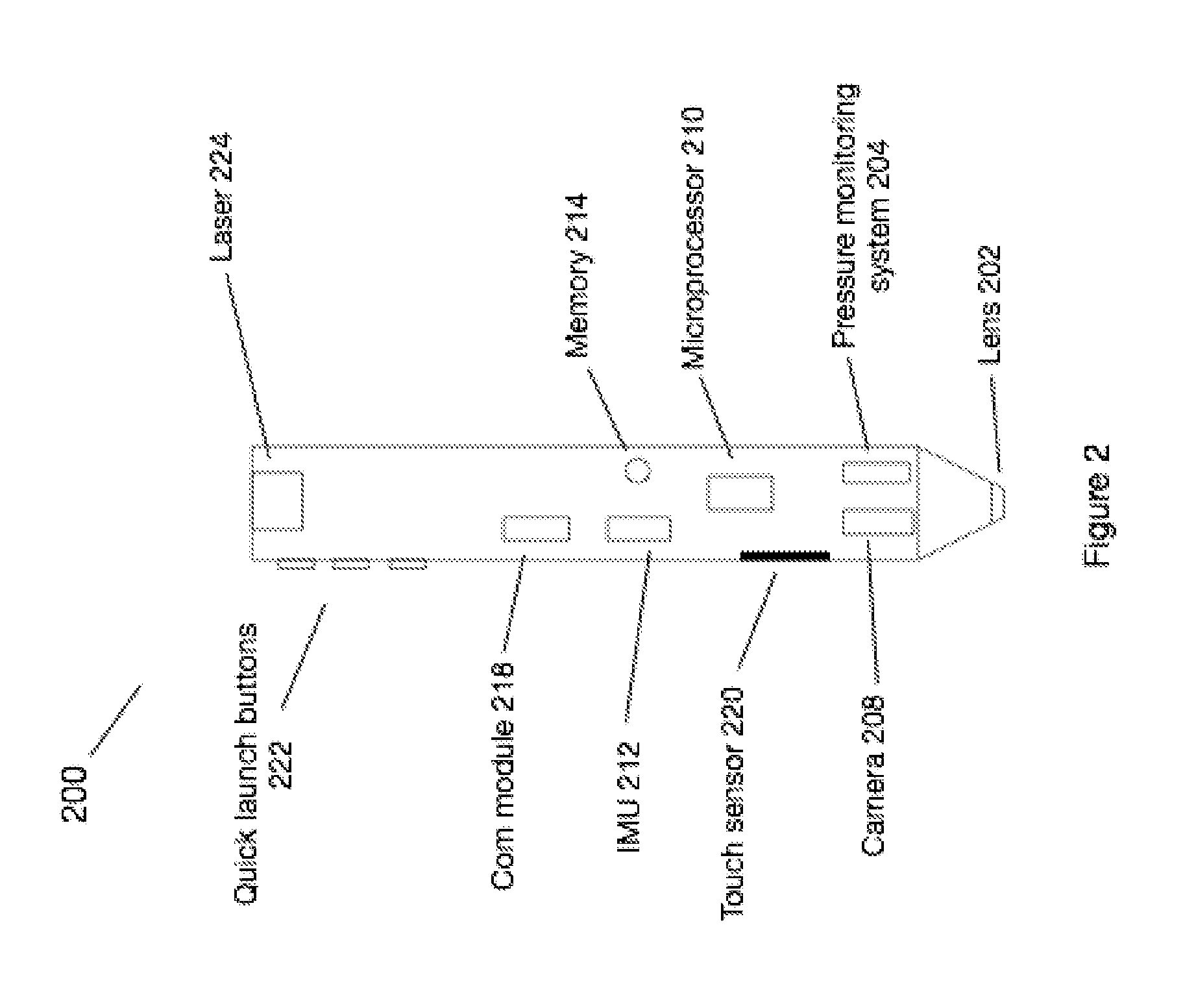

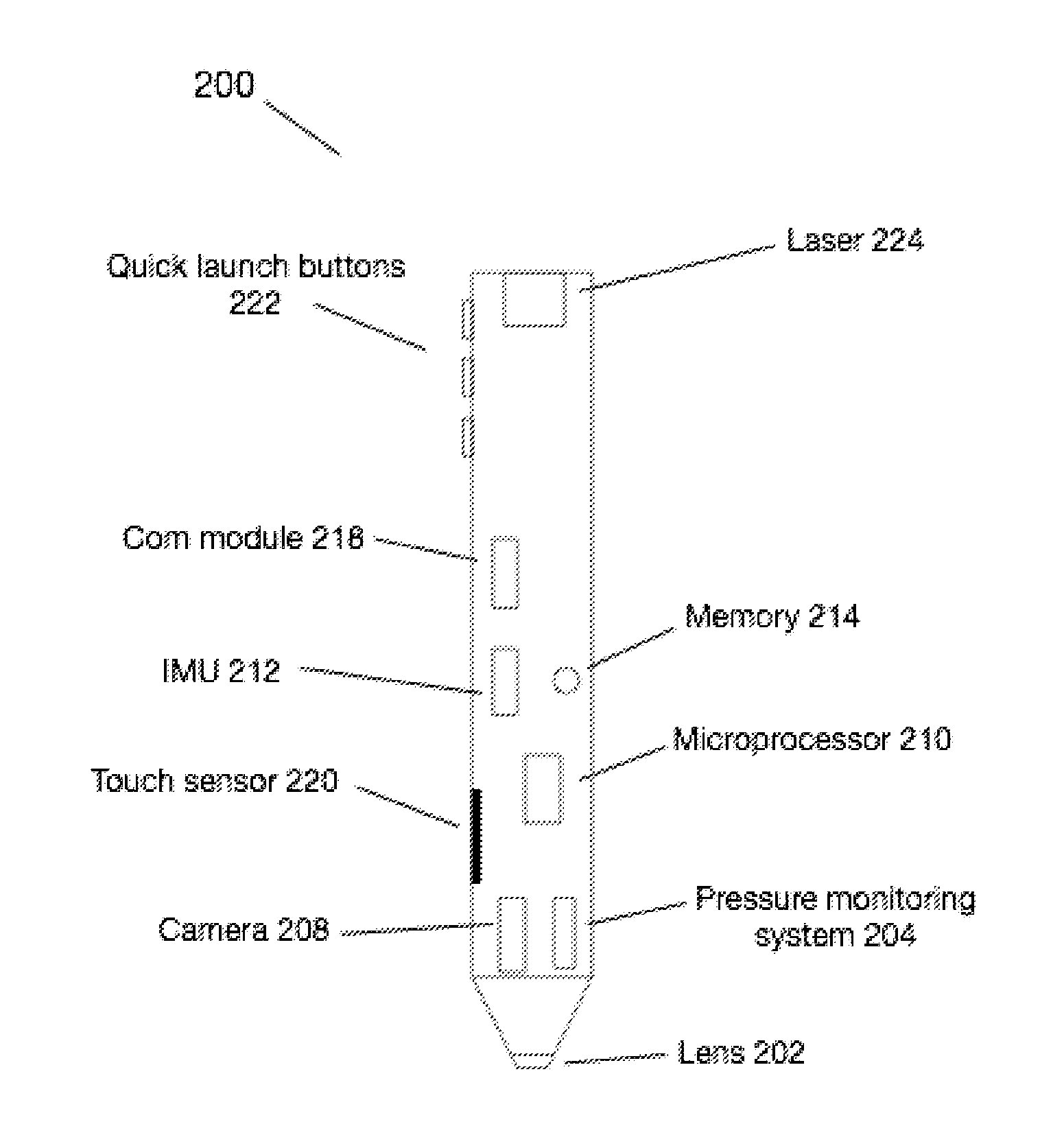

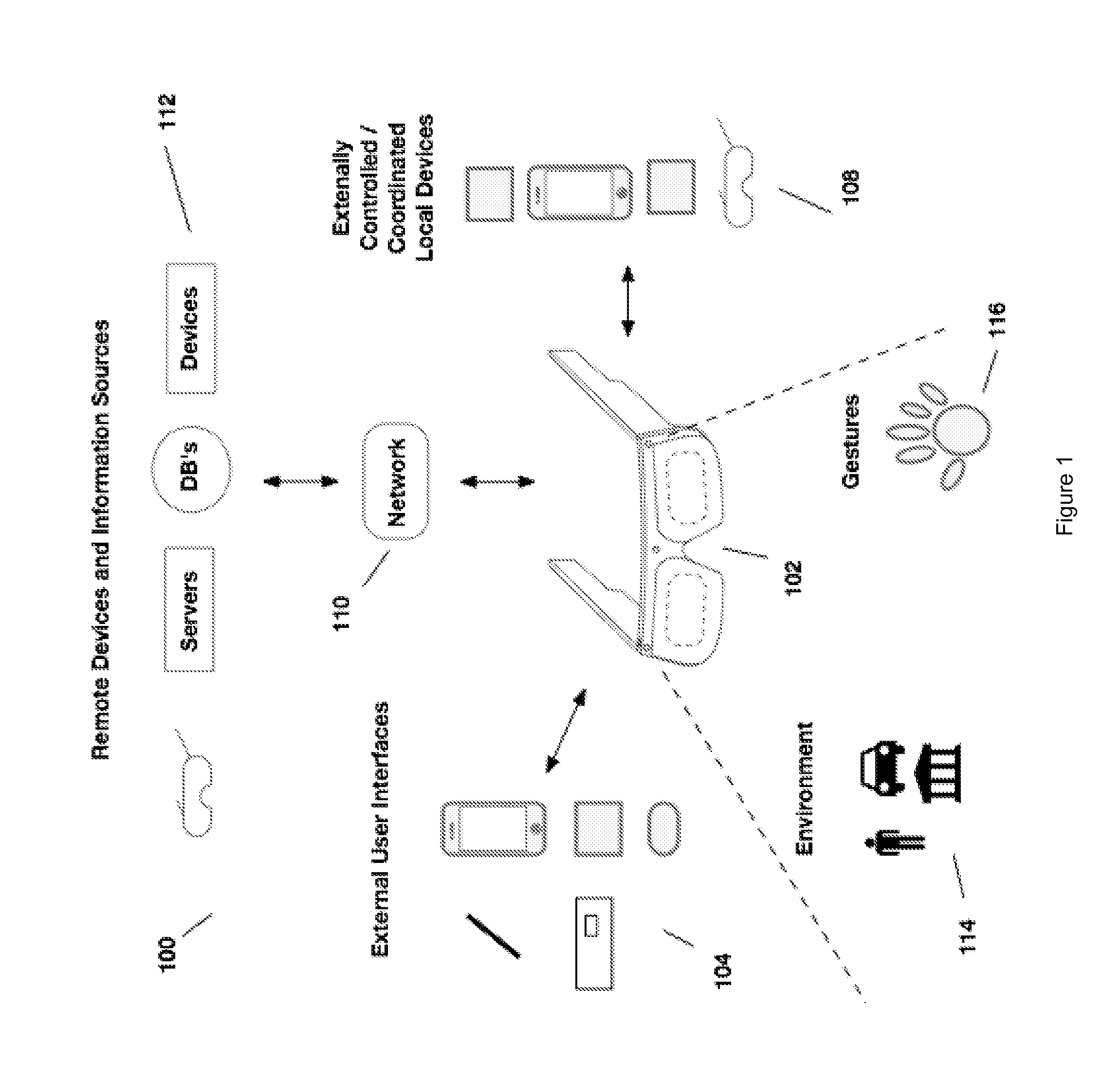

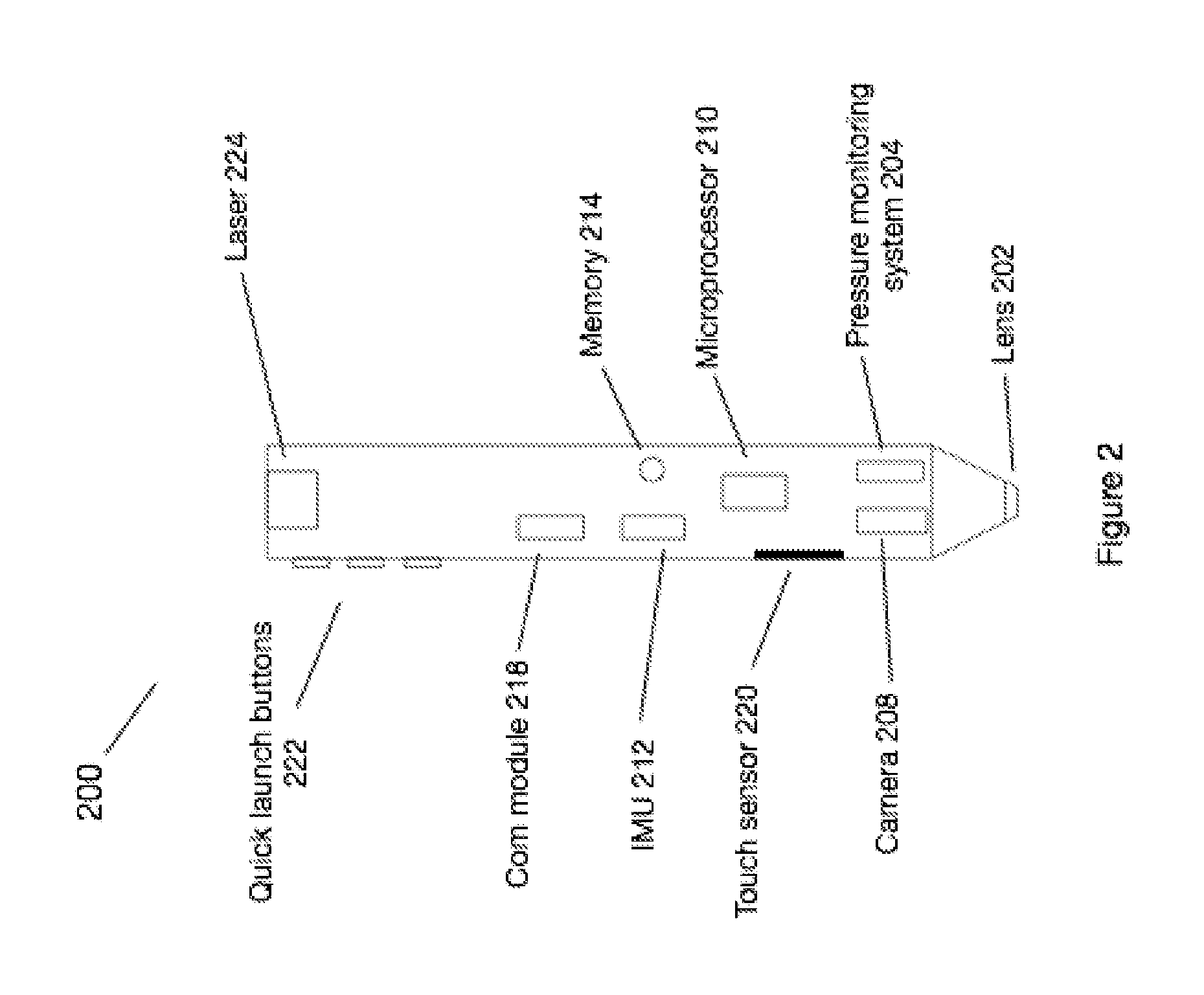

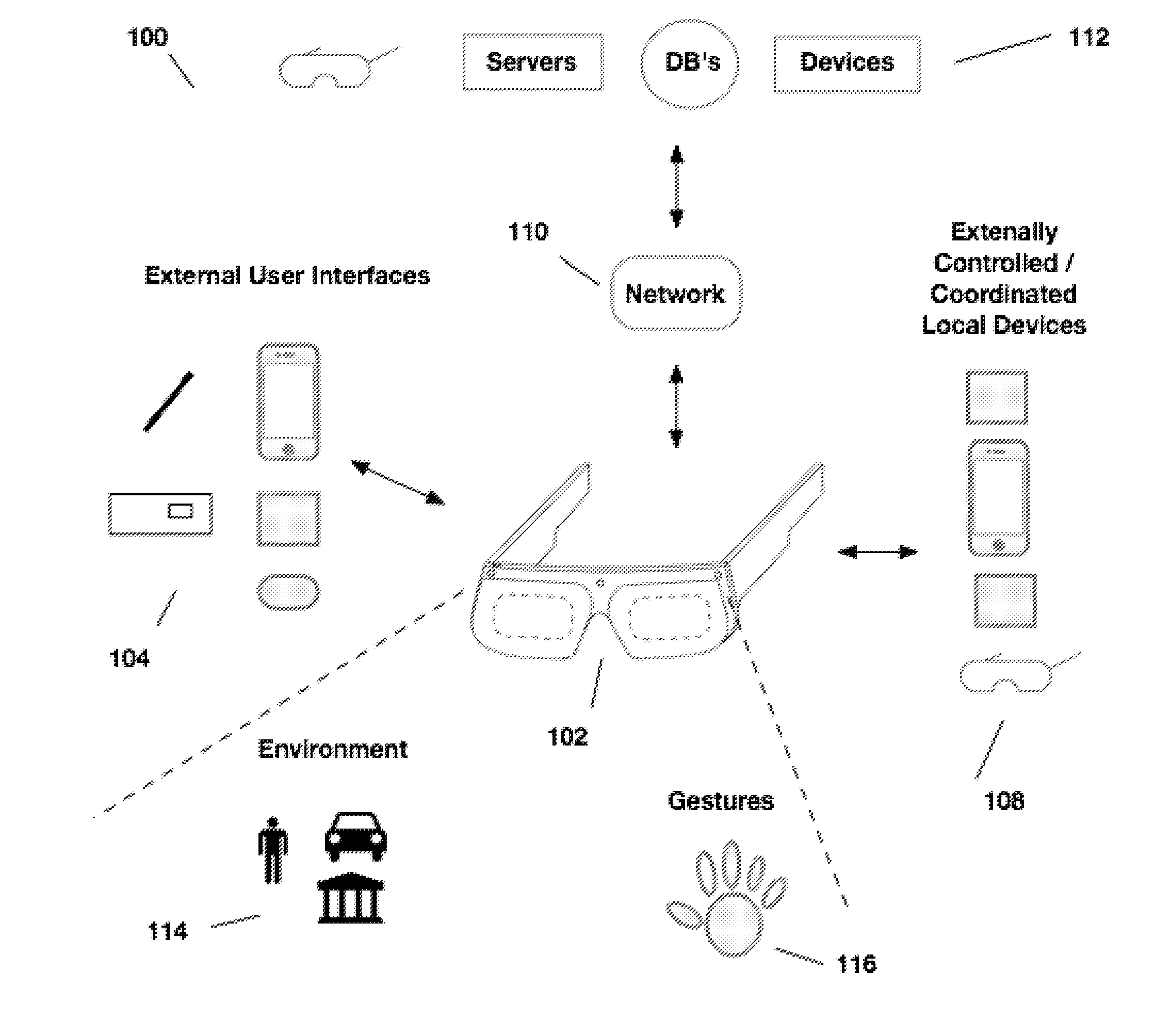

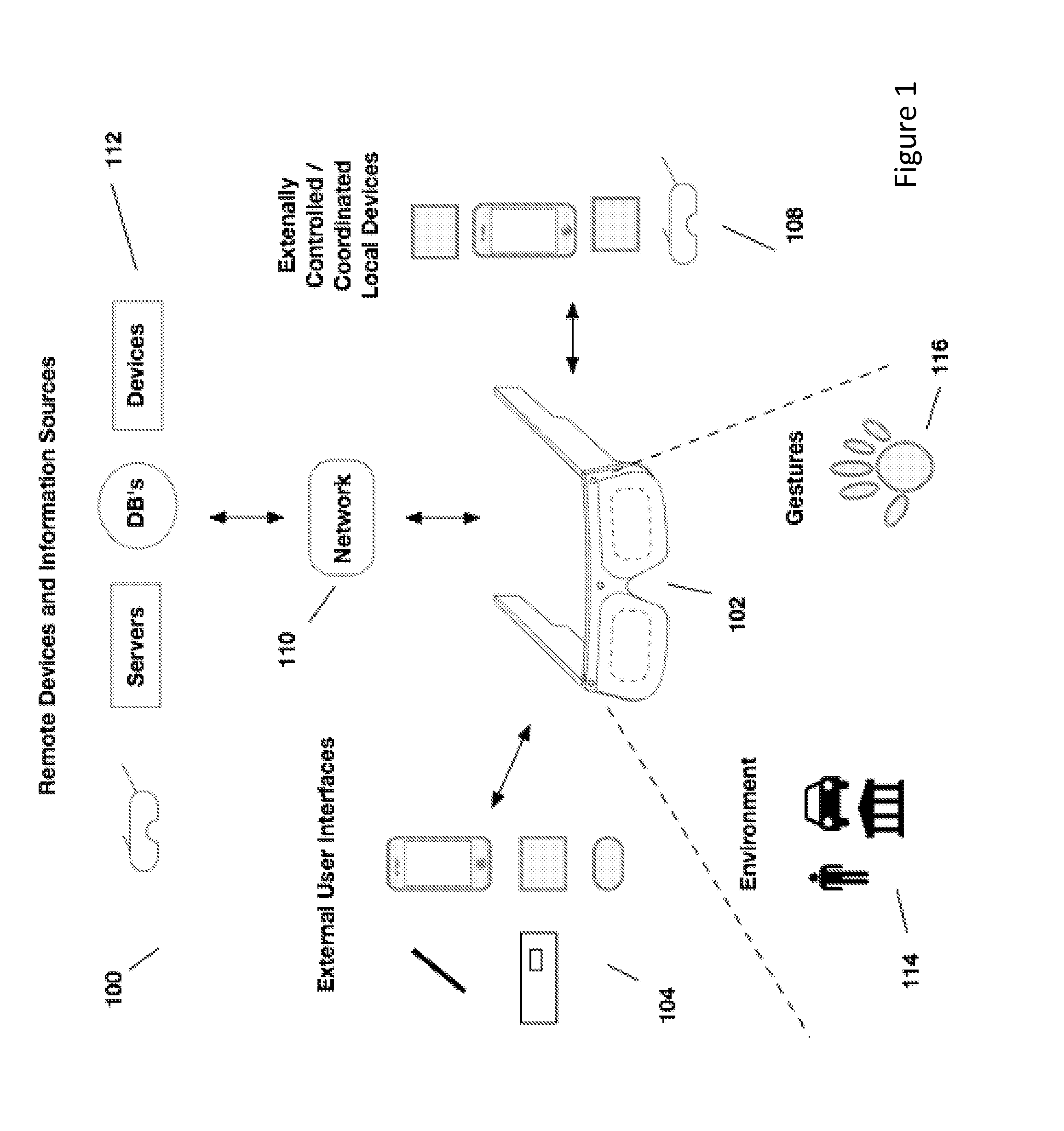

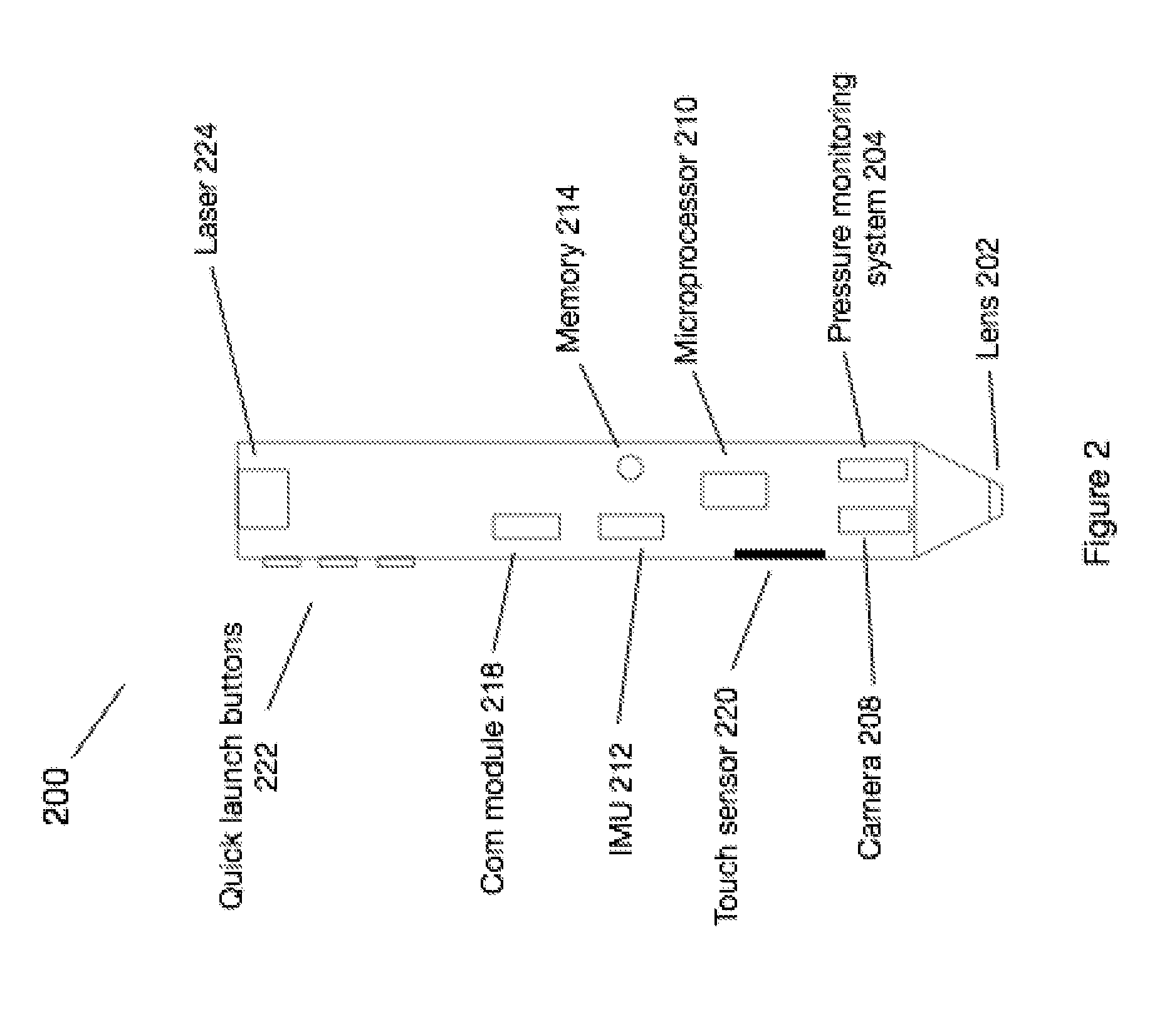

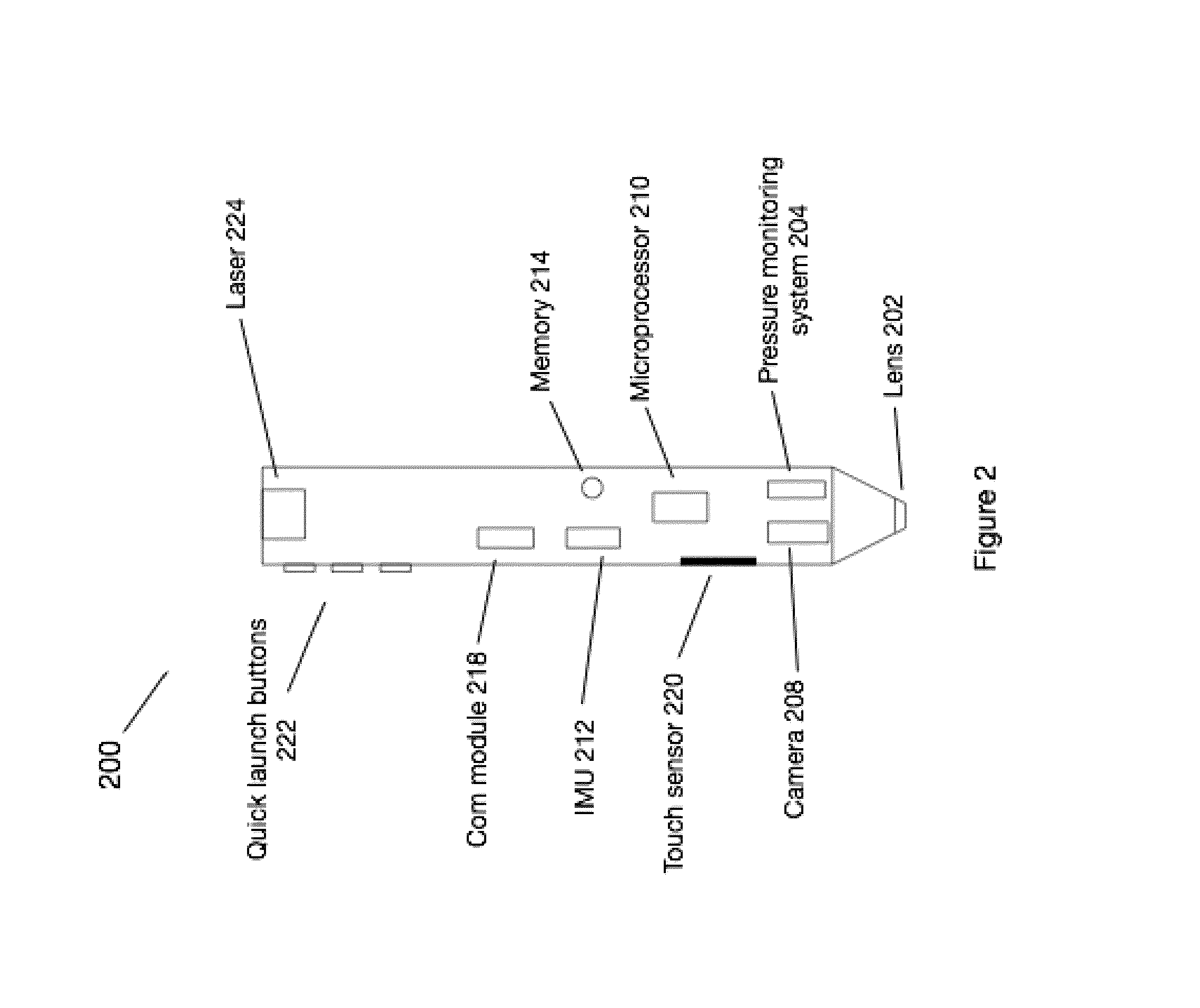

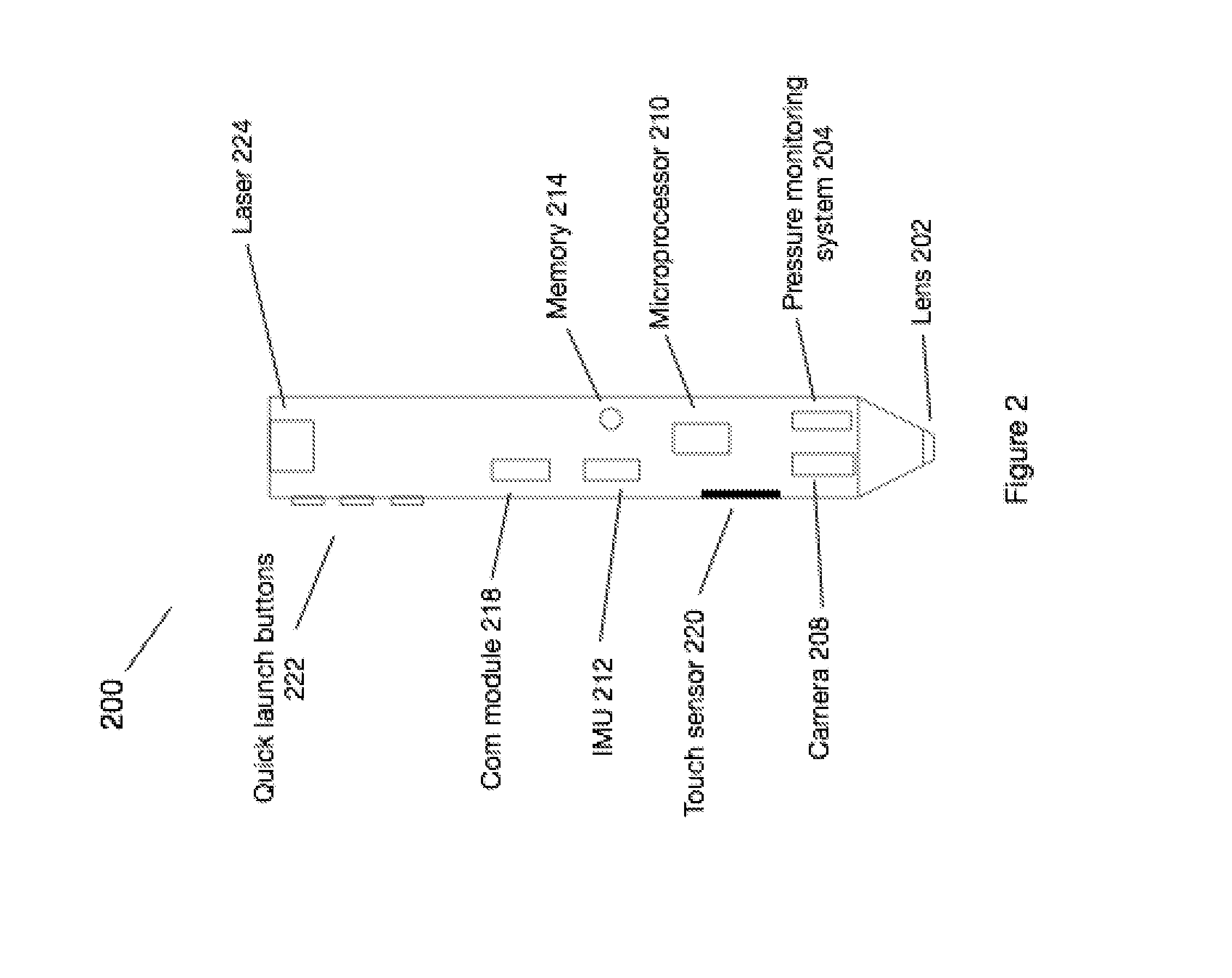

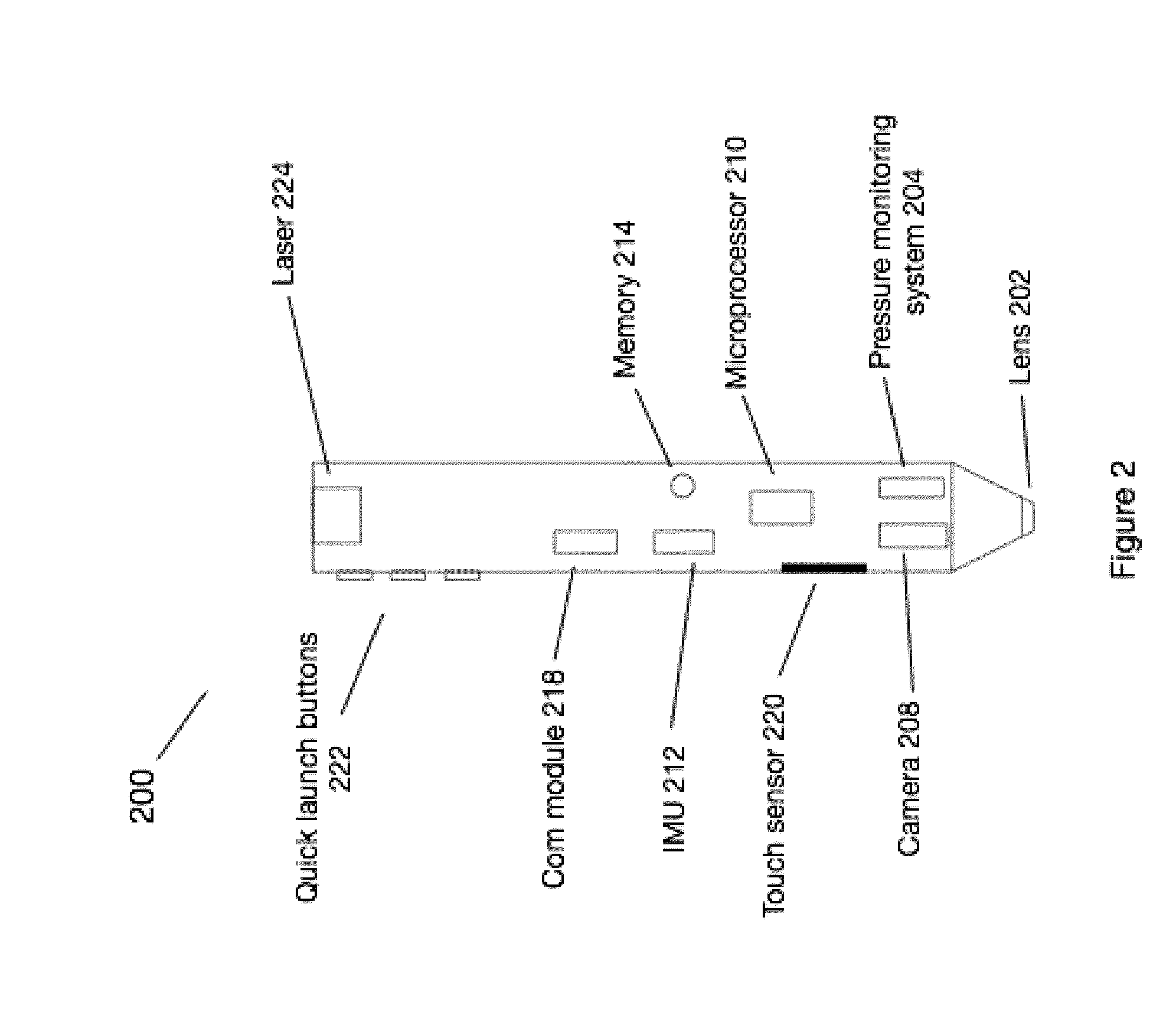

External user interface for head worn computing

Inactive | US20150205401A1 | Tags: Input/output for user-computer interaction, Electronic time-piece structural details, Hand held, Natural user interface

Aspects of the present invention relate to external user interfaces used in connection with head worn computers (HWC). Embodiments relate to an external user interface that has a physical form intended to be hand held. The hand held user interface may be in a form similar to that of a writing instrument, such as a pen. In embodiments, the hand held user interface includes technologies relating to writing surface tip pressure monitoring, lens configurations setting a predetermined imaging distance, user interface software mode selection, quick software application launching, and other interface technologies.

Owner:OSTERHOUT GROUP INC

External user interface for head worn computing

Inactive | US20150205378A1 | Tags: Input/output for user-computer interaction, Electronic time-piece structural details, Combined use, Hand held

Aspects of the present disclosure relate to external user interfaces used in connection with head worn computers (HWC). Embodiments relate to an external user interface that has a physical form intended to be hand held. The hand held user interface may be in a form similar to that of a writing instrument, such as a pen. In embodiments, the hand held user interface includes technologies relating to writing surface tip pressure monitoring, lens configurations setting a predetermined imaging distance, user interface software mode selection, quick software application launching, and other interface technologies.

Owner:OSTERHOUT GROUP INC

External user interface for head worn computing

Inactive | US20150205384A1 | Tags: Electronic time-piece structural details, Cathode-ray tube indicators, Hand held, Natural user interface

Aspects of the present disclosure relate to external user interfaces used in connection with head worn computers (HWC). Embodiments relate to an external user interface that has a physical form intended to be hand held. The hand held user interface may be in a form similar to that of a writing instrument, such as a pen. In embodiments, the hand held user interface includes technologies relating to writing surface tip pressure monitoring, lens configurations setting a predetermined imaging distance, user interface software mode selection, quick software application launching, and other interface technologies.

Owner:OSTERHOUT GROUP INC

External user interface for head worn computing

Inactive | US20150205387A1 | Tags: Electronic time-piece structural details, Cathode-ray tube indicators, Combined use, Hand held

Aspects of the present disclosure relate to external user interfaces used in connection with head worn computers (HWC). Embodiments relate to an external user interface that has a physical form intended to be hand held. The hand held user interface may be in a form similar to that of a writing instrument, such as a pen. In embodiments, the hand held user interface includes technologies relating to writing surface tip pressure monitoring, lens configurations setting a predetermined imaging distance, user interface software mode selection, quick software application launching, and other interface technologies.

Owner:OSTERHOUT GROUP INC

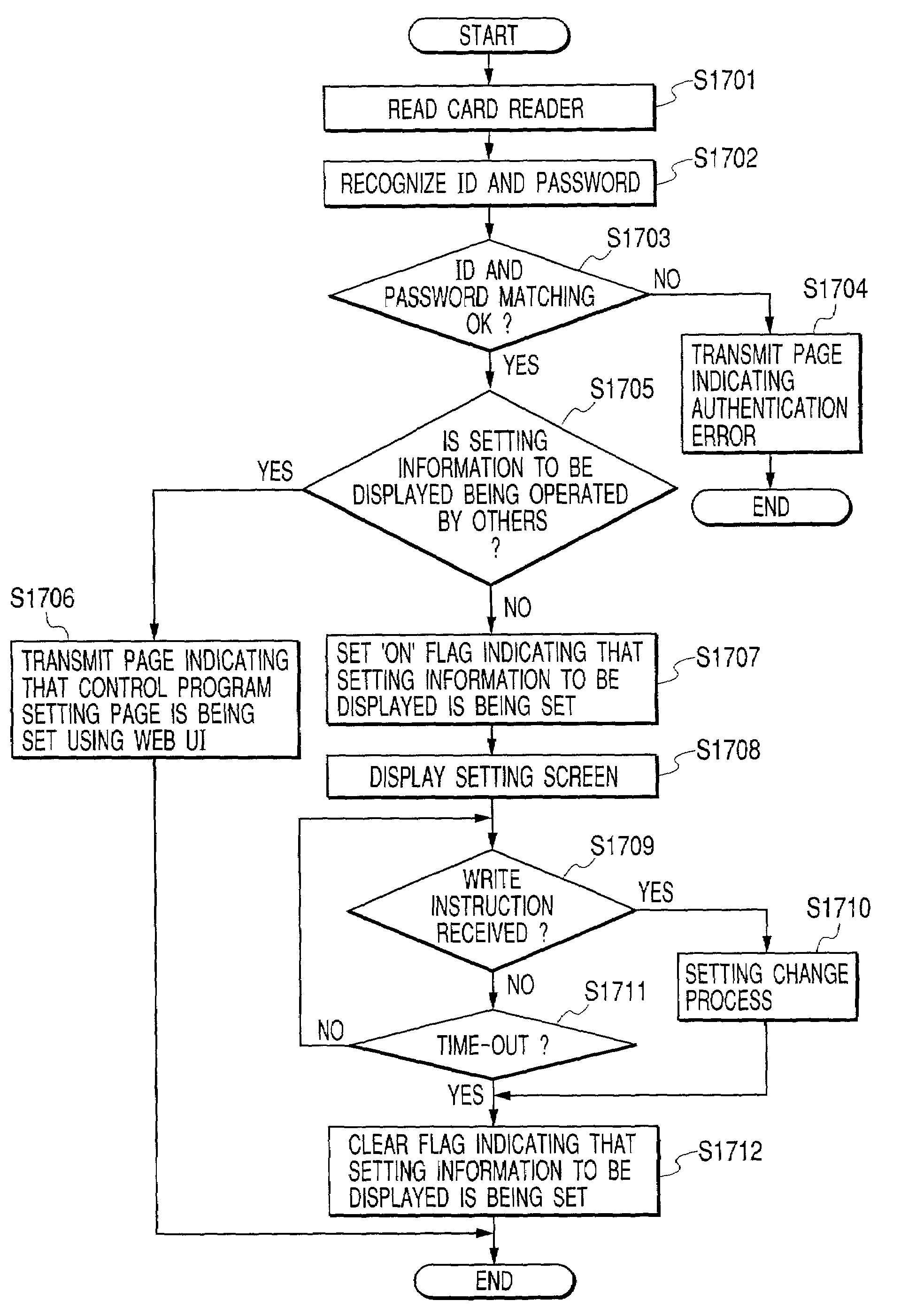

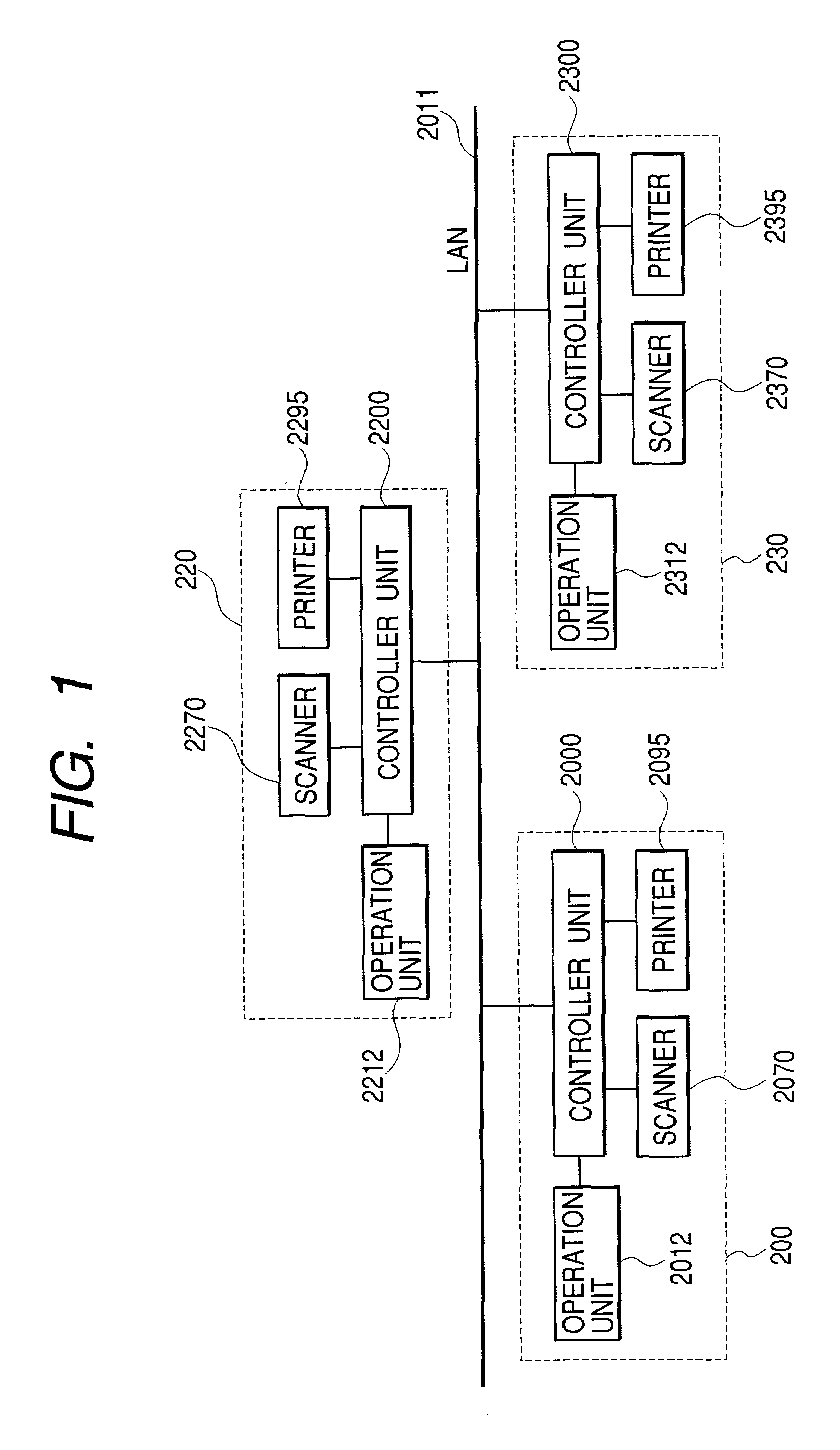

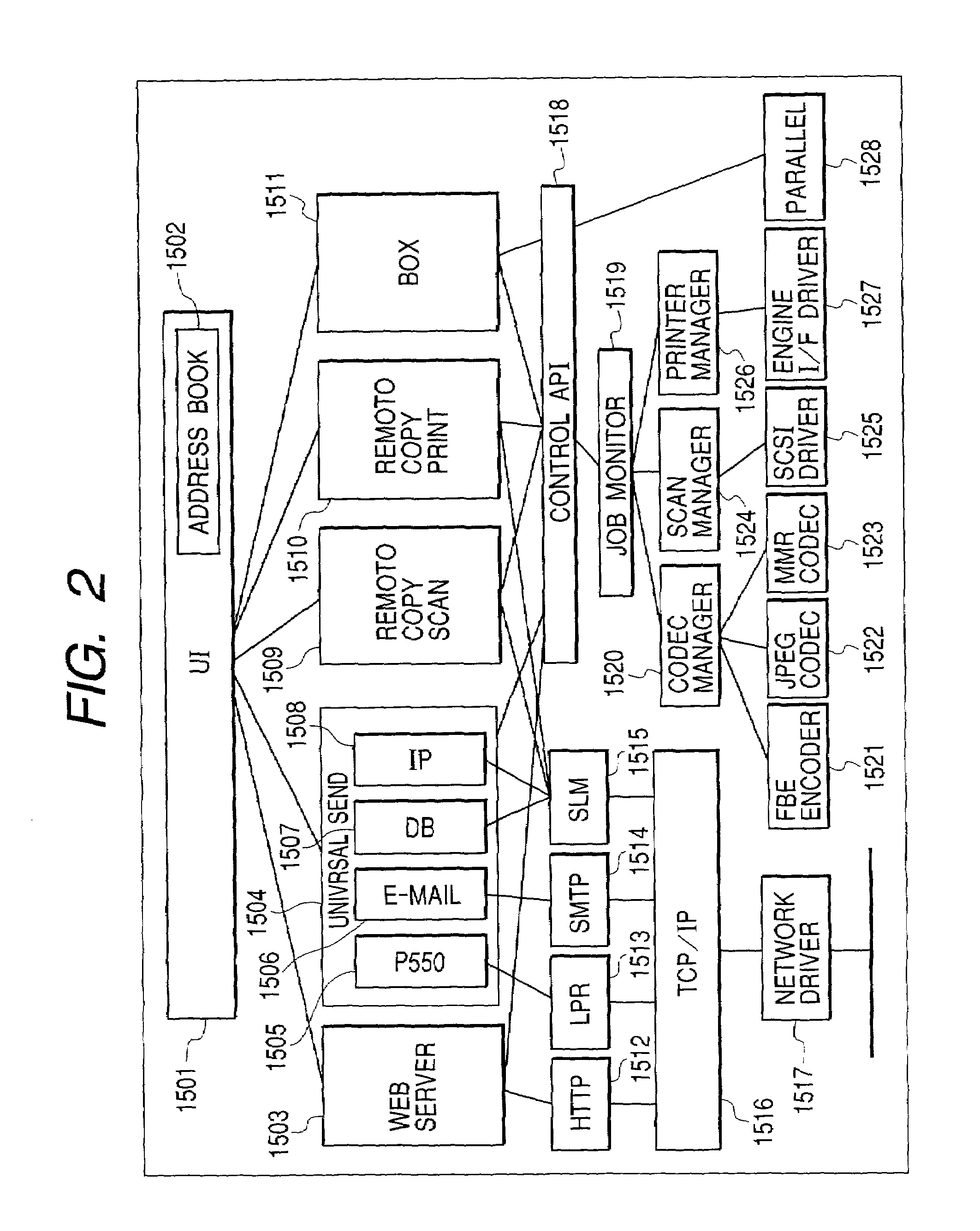

Image processing device, information processing method and computer-readable storage medium storing a control program for performing an operation based on whether a function is being set or requested to be set

Inactive | US7327478B2 | Tags: Avoid inconsistencies, Digital data authentication, Visual presentation, Information processing, Imaging processing

For example, the invention may be embodied as an image processing device that can be operated both from a remote user interface, such as a Web browser, and from a local interface, such as an operation panel or a card reader, together with a computer system that communicates with the device. A system that integrates the authentication processes of the remote and local user interfaces can be provided. In this case, an adjustment is made so that conflicts between operations from the remote user interface and operations from the local user interface do not cause inconsistencies, allowing each user interface to be used in practice.

Owner:CANON KK
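
To illustrate how conflicting remote and local operations might be kept consistent, the sketch below grants one user interface at a time the right to set a given function and refuses the other until it is released. This lock-per-function policy is an assumption for illustration; the abstract does not specify the adjustment mechanism, and `SettingArbiter` is a hypothetical name.

```python
import threading


class SettingArbiter:
    """Hypothetical arbiter: only one user interface at a time may edit a function's settings."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._owner: dict[str, str] = {}

    def begin(self, function: str, ui: str) -> bool:
        """Try to start setting `function` from `ui`; refuse if another UI is mid-setting."""
        with self._lock:
            holder = self._owner.get(function)
            if holder is not None and holder != ui:
                return False  # e.g. the local panel is editing while a Web request arrives
            self._owner[function] = ui
            return True

    def end(self, function: str, ui: str) -> None:
        """Release `function` if `ui` currently holds it."""
        with self._lock:
            if self._owner.get(function) == ui:
                del self._owner[function]


arbiter = SettingArbiter()
print(arbiter.begin("copy", "remote"))  # True
print(arbiter.begin("copy", "local"))   # False, the remote UI is still setting it
arbiter.end("copy", "remote")
print(arbiter.begin("copy", "local"))   # True
```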

External user interface for head worn computing

Active | US20150205373A1 | Tags: Electronic time-piece structural details, Cathode-ray tube indicators, Hand held, Natural user interface

Aspects of the present disclosure relate to external user interfaces used in connection with head worn computers (HWC). Embodiments relate to an external user interface that has a physical form intended to be hand held. The hand held user interface may be in a form similar to that of a writing instrument, such as a pen. In embodiments, the hand held user interface includes technologies relating to writing surface tip pressure monitoring, lens configurations setting a predetermined imaging distance, user interface software mode selection, quick software application launching, and other interface technologies.

Owner:OSTERHOUT GROUP INC

External user interface for head worn computing

Inactive | US20150205402A1 | Tags: Input/output for user-computer interaction, Electronic time-piece structural details, Hand held, Combined use

Aspects of the present disclosure relate to external user interfaces used in connection with head worn computers (HWC). Embodiments relate to an external user interface that has a physical form intended to be hand held. The hand held user interface may be in a form similar to that of a writing instrument, such as a pen. In embodiments, the hand held user interface includes technologies relating to writing surface tip pressure monitoring, lens configurations setting a predetermined imaging distance, user interface software mode selection, quick software application launching, and other interface technologies.

Owner:OSTERHOUT GROUP INC

External user interface for head worn computing

Active | US20150205385A1 | Tags: Electronic time-piece structural details, Cathode-ray tube indicators, Hand held, Natural user interface

Aspects of the present invention relate to external user interfaces used in connection with head worn computers (HWC). Embodiments relate to an external user interface that has a physical form intended to be hand held. The hand held user interface may be in a form similar to that of a writing instrument, such as a pen. In embodiments, the hand held user interface includes technologies relating to writing surface tip pressure monitoring, lens configurations setting a predetermined imaging distance, user interface software mode selection, quick software application launching, and other interface technologies.

Owner:OSTERHOUT GROUP INC

External user interface for head worn computing

Inactive | US20160062118A1 | Tags: Input/output for user-computer interaction, Image analysis, Natural user interface, Human–computer interaction

Owner:OSTERHOUT GROUP INC

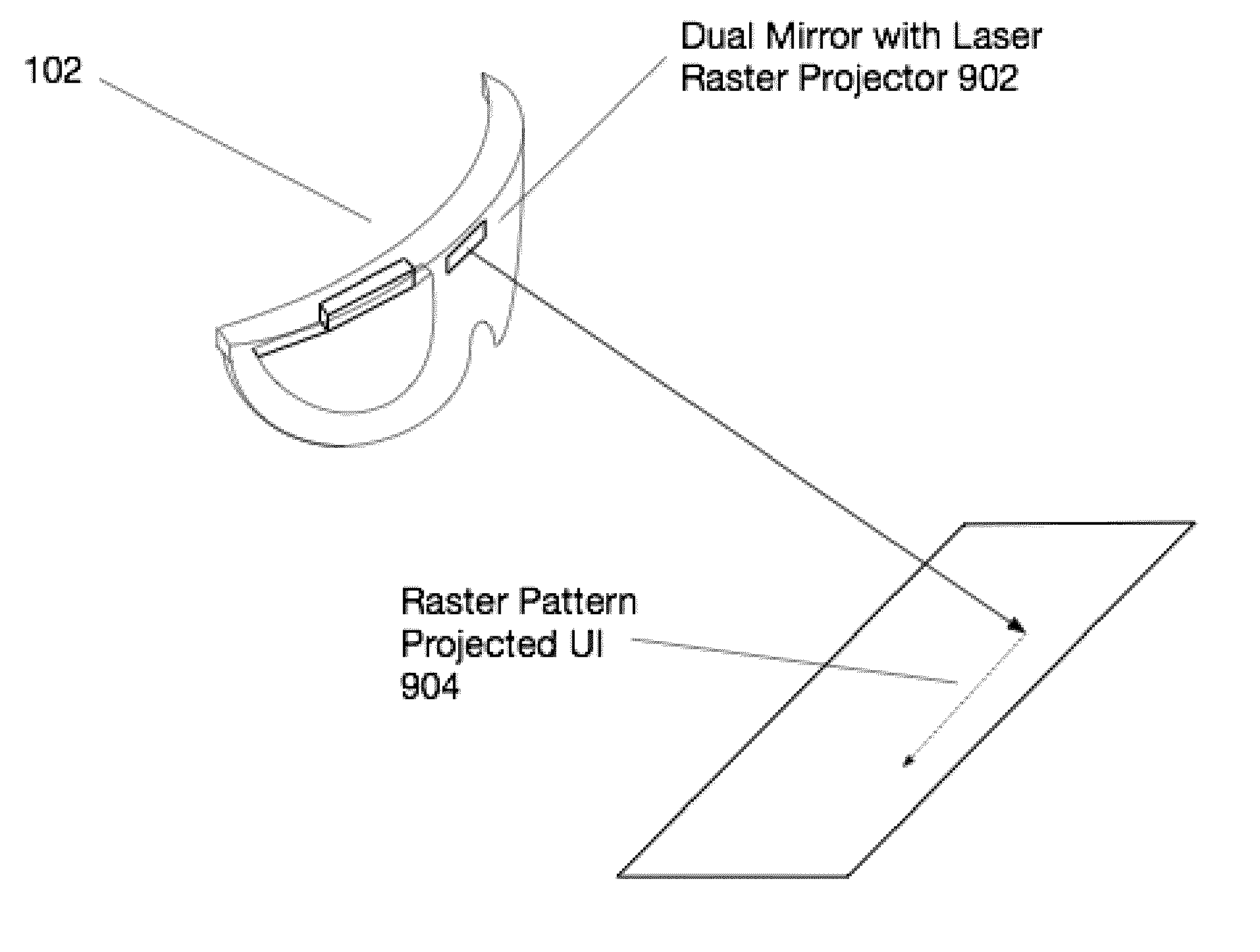

External user interface for head worn computing

Inactive | US20160027414A1 | Tags: Character and pattern recognition, Cathode-ray tube indicators, Natural user interface, Human–computer interaction

Aspects of the present invention relate to the projection of imagery from a head-worn computer, wherein a projector with x-y control and a laser are mounted in the head-worn computer and positioned to project a raster style interactive user interface image onto a nearby surface.

Owner:OSTERHOUT GROUP INC

External user interface for head worn computing

Inactive | US20160025974A1 | Tags: Input/output for user-computer interaction, Character and pattern recognition, Natural user interface, Human–computer interaction

Aspects of the present invention relate to the projection of imagery from a head-worn computer, wherein a projector with x-y control and a laser are mounted in the head-worn computer and positioned to project a raster style interactive user interface image onto a nearby surface.

Owner:OSTERHOUT GROUP INC

External user interface for head worn computing

Active | US20150363975A1 | Tags: Cathode-ray tube indicators, Image data processing, Natural user interface, Human–computer interaction

Owner:OSTERHOUT GROUP INC

© 2025 PatSnap. All rights reserved.