546 results about "Visual attention" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

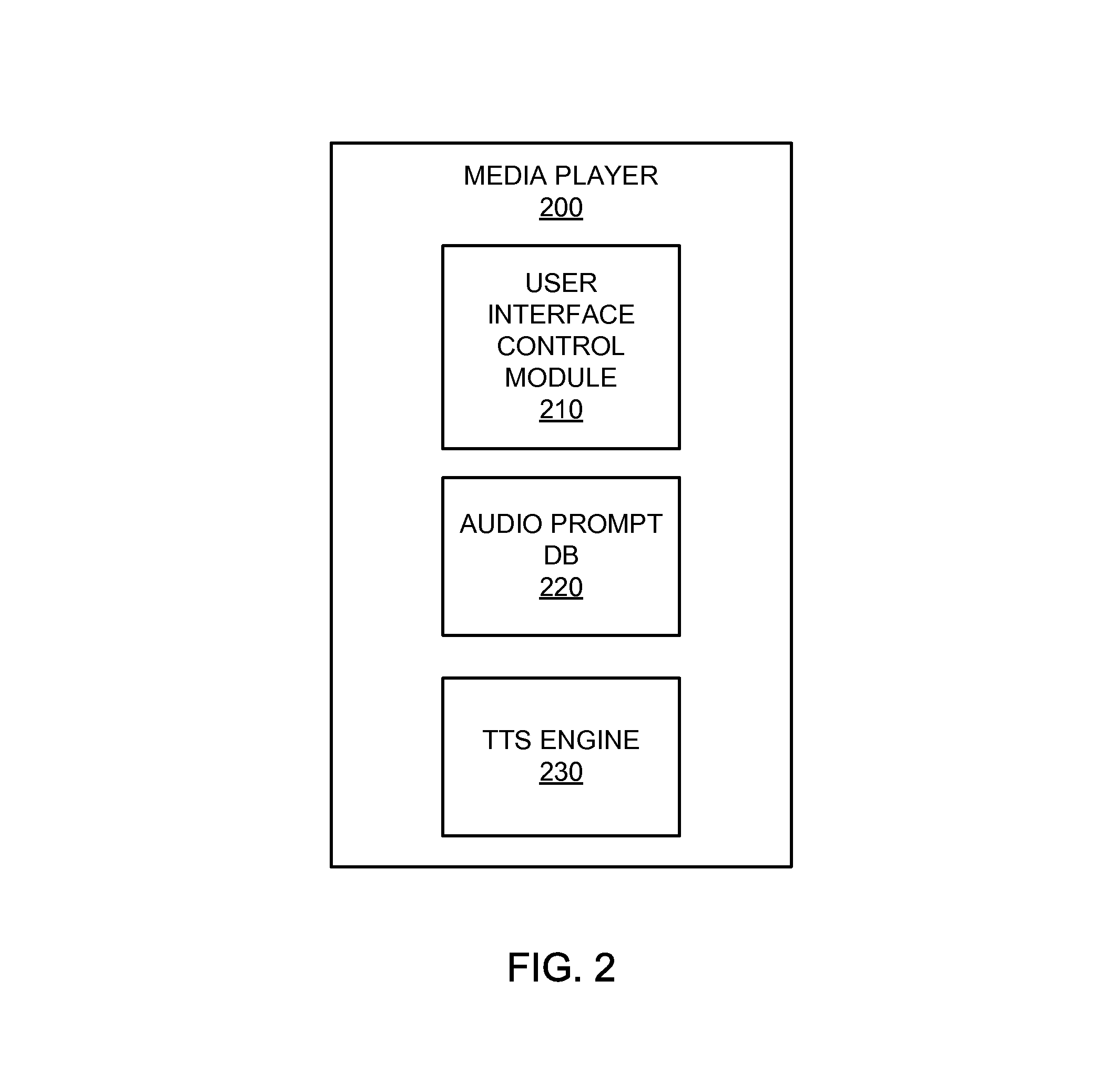

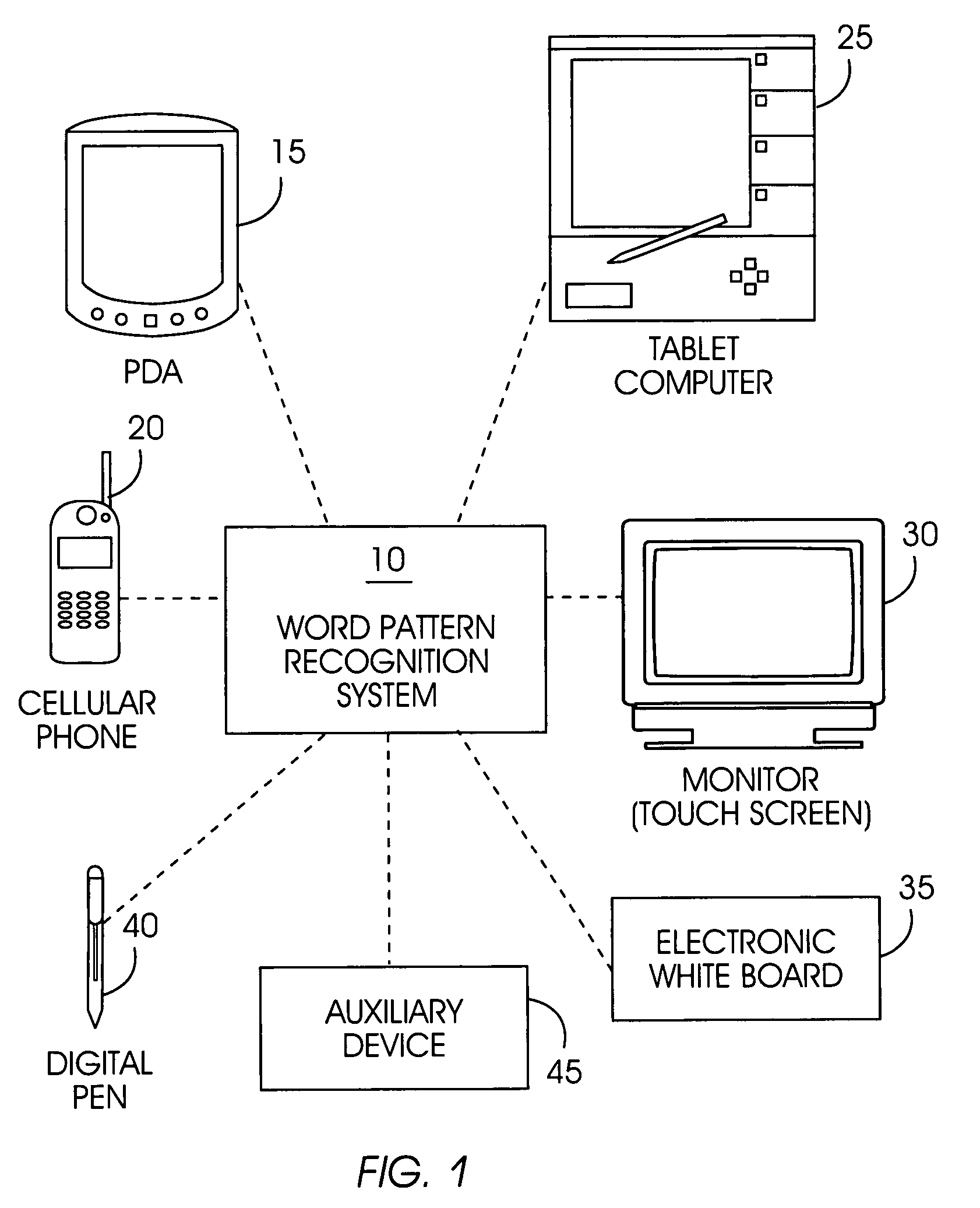

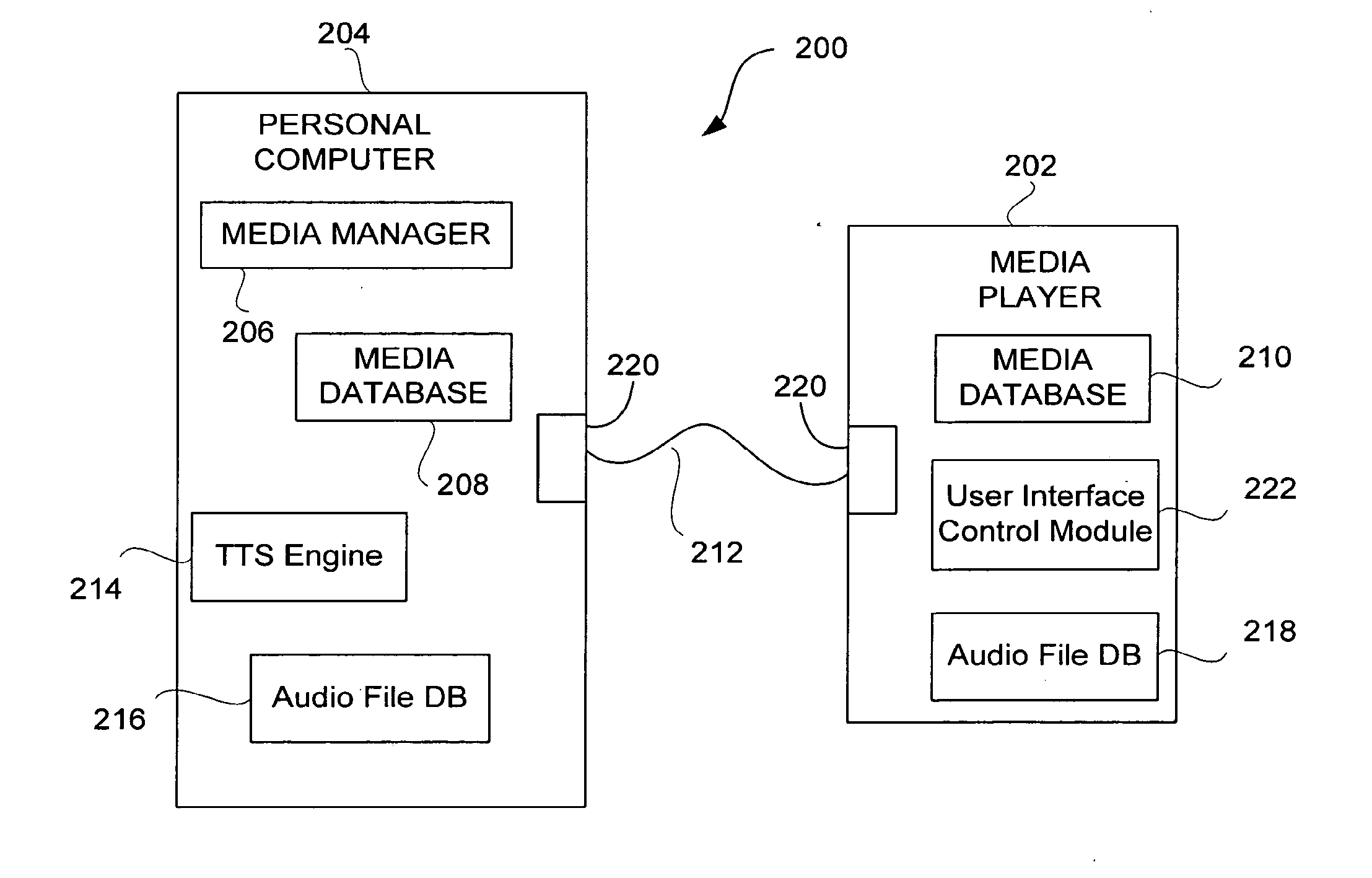

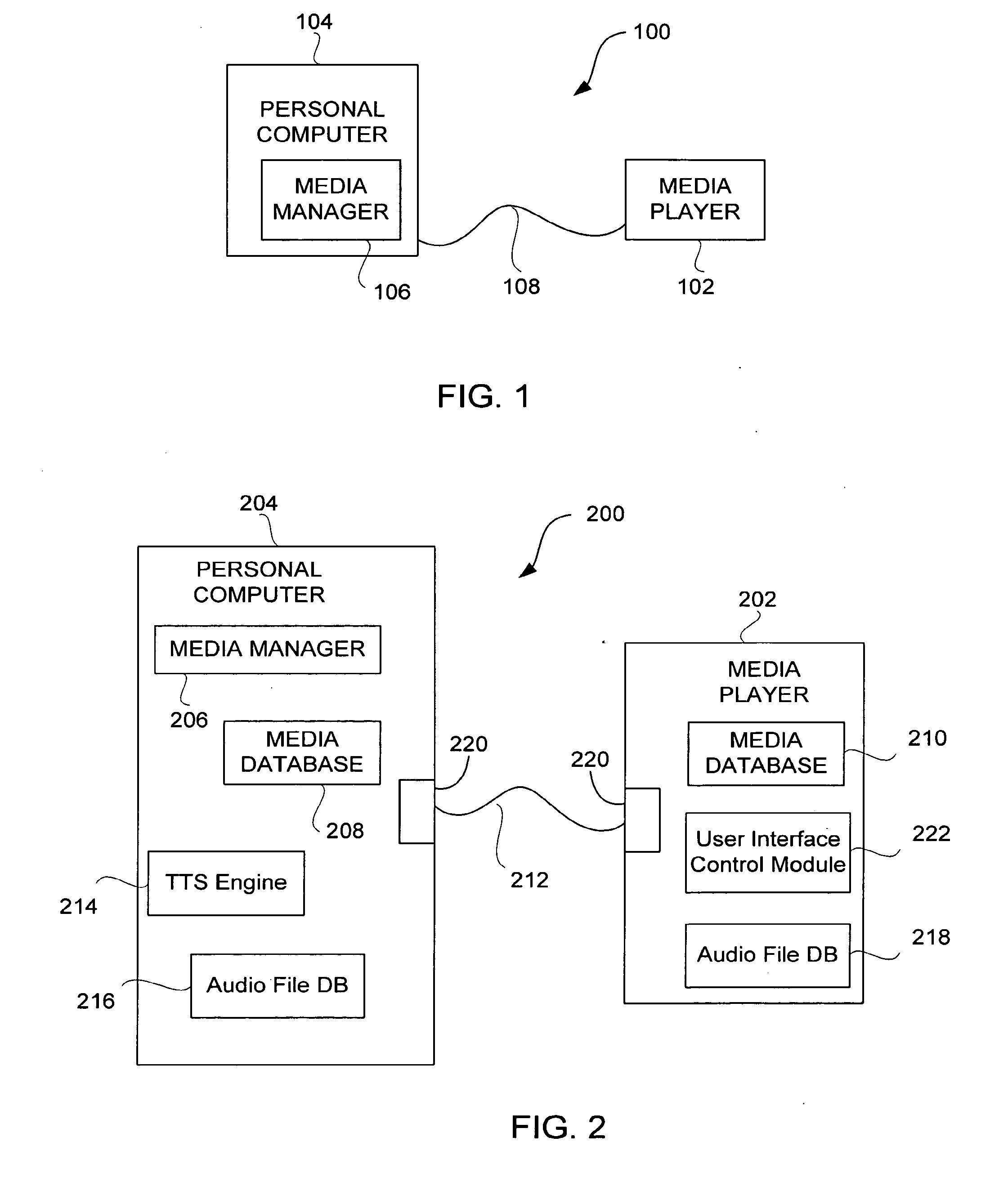

Audio user interface

Inactive | US20100064218A1 | User may experience | Improve experience | Navigation instruments | Substation equipment | Natural user interface | Human–computer interaction

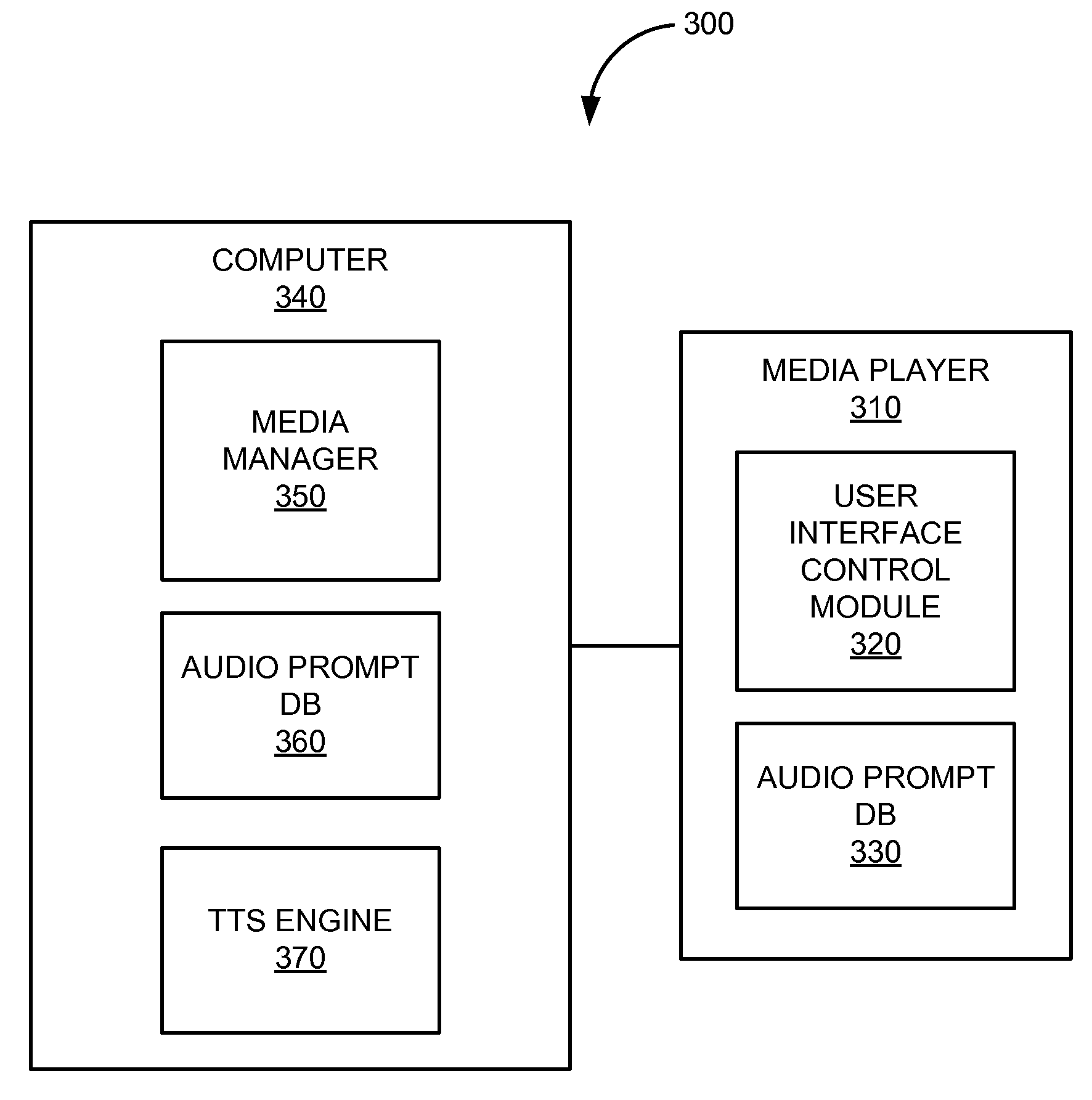

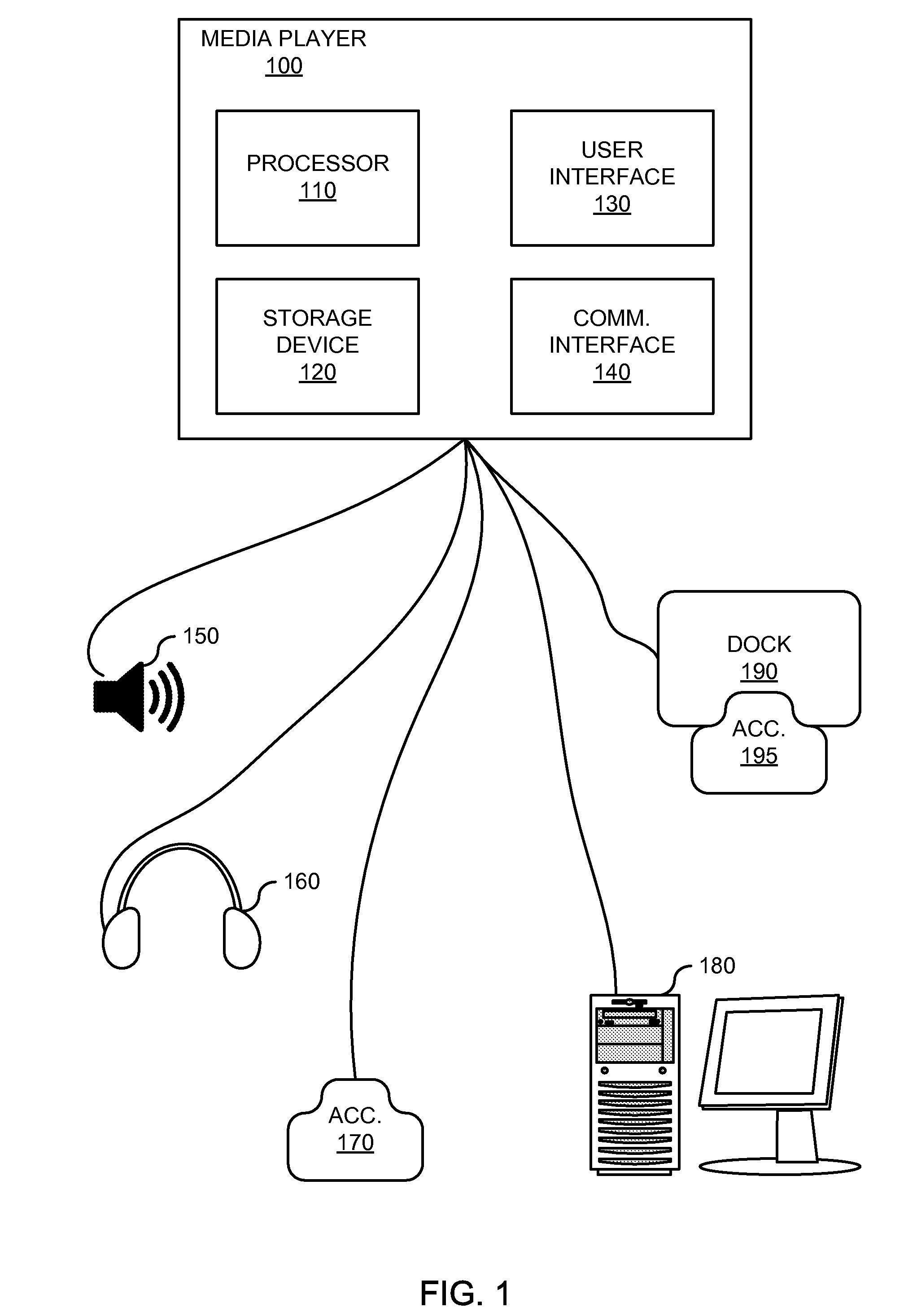

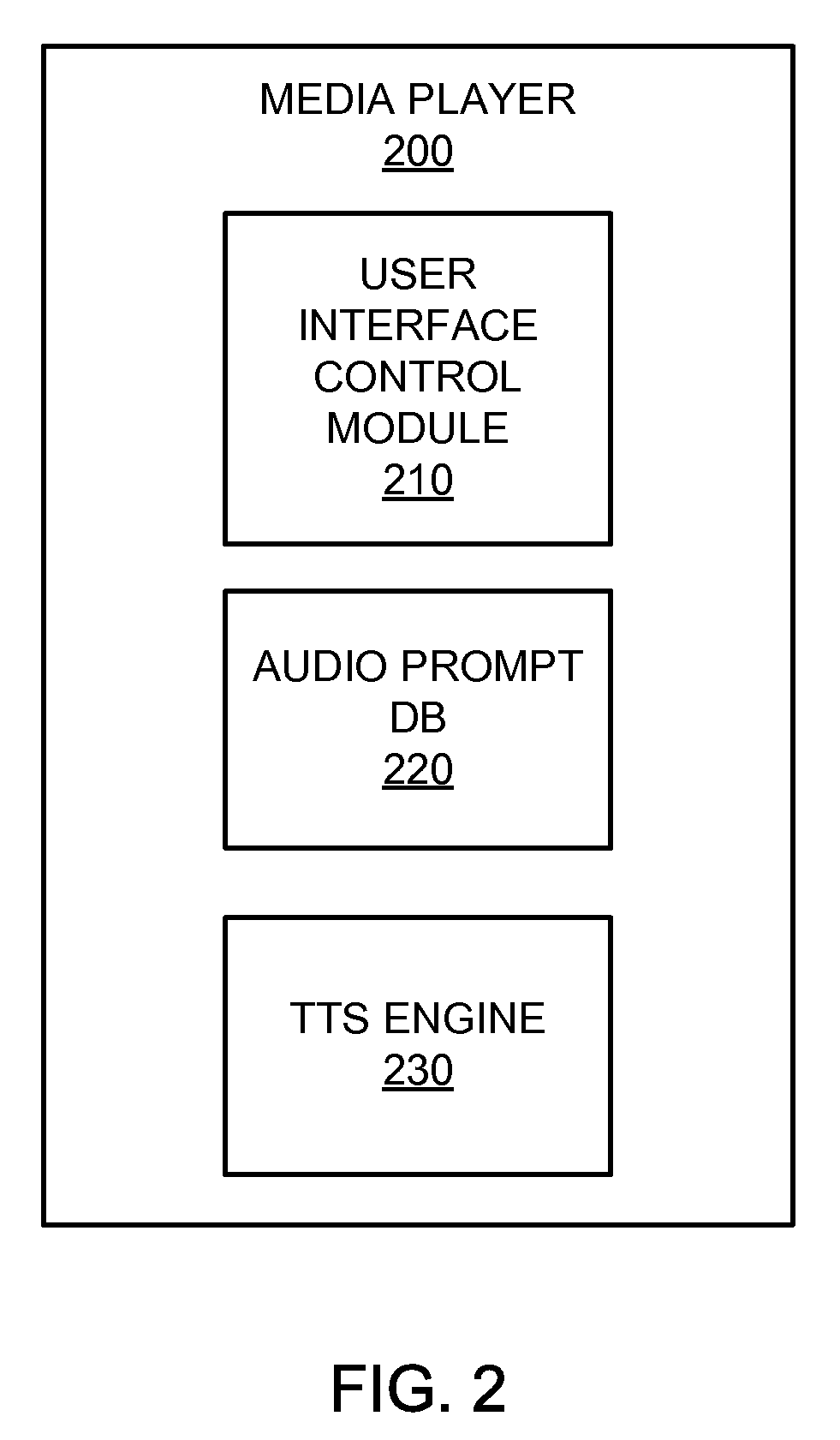

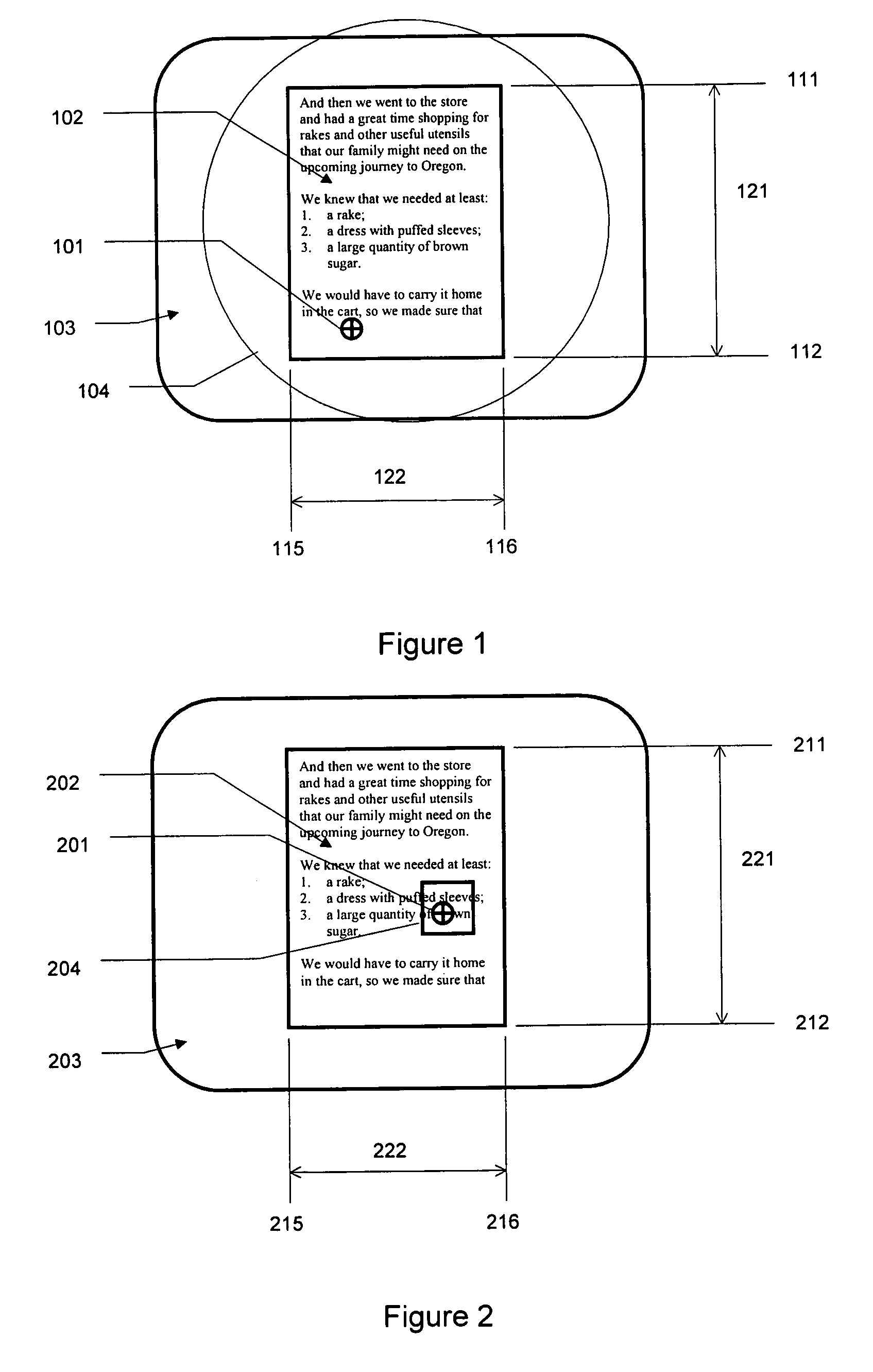

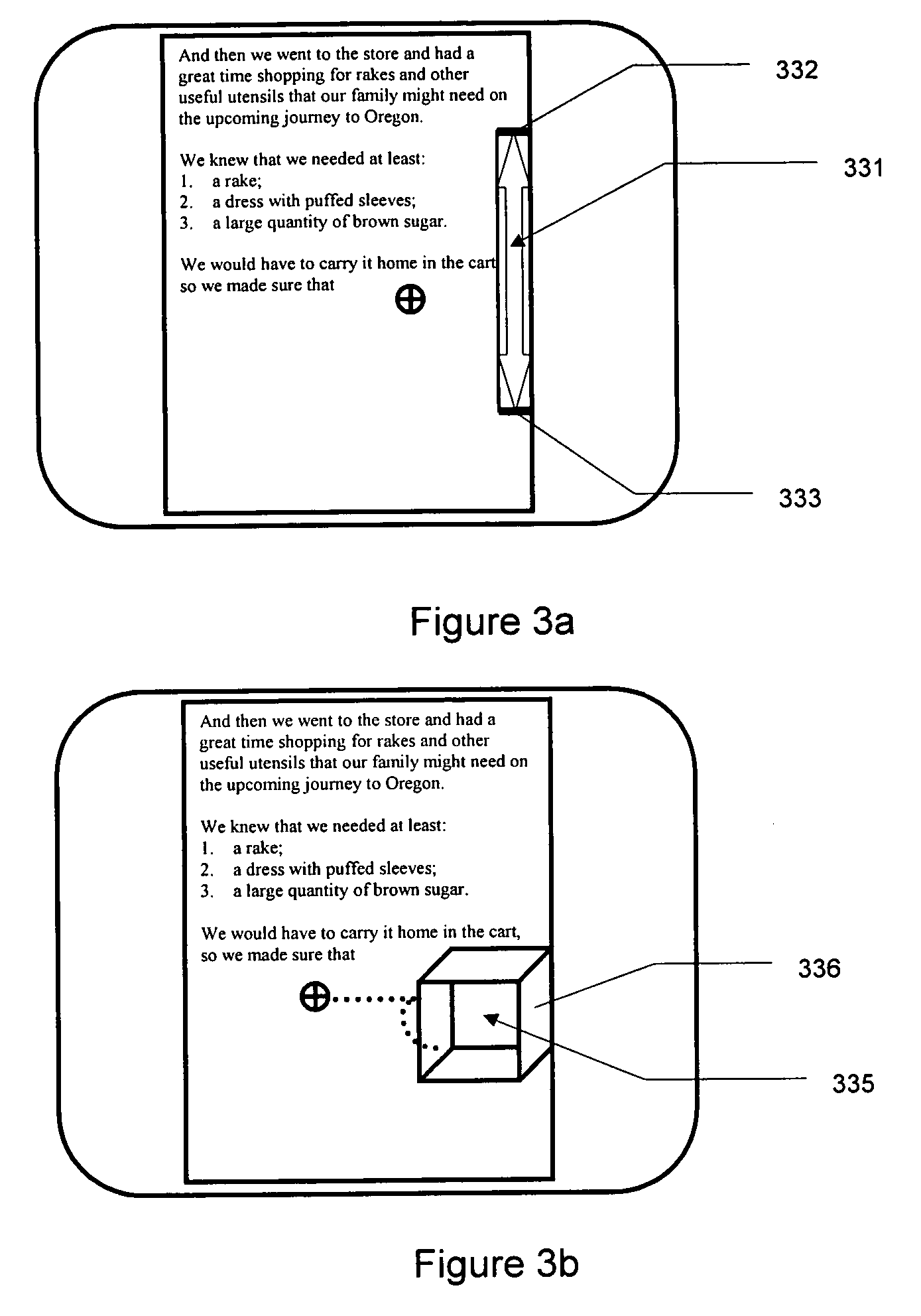

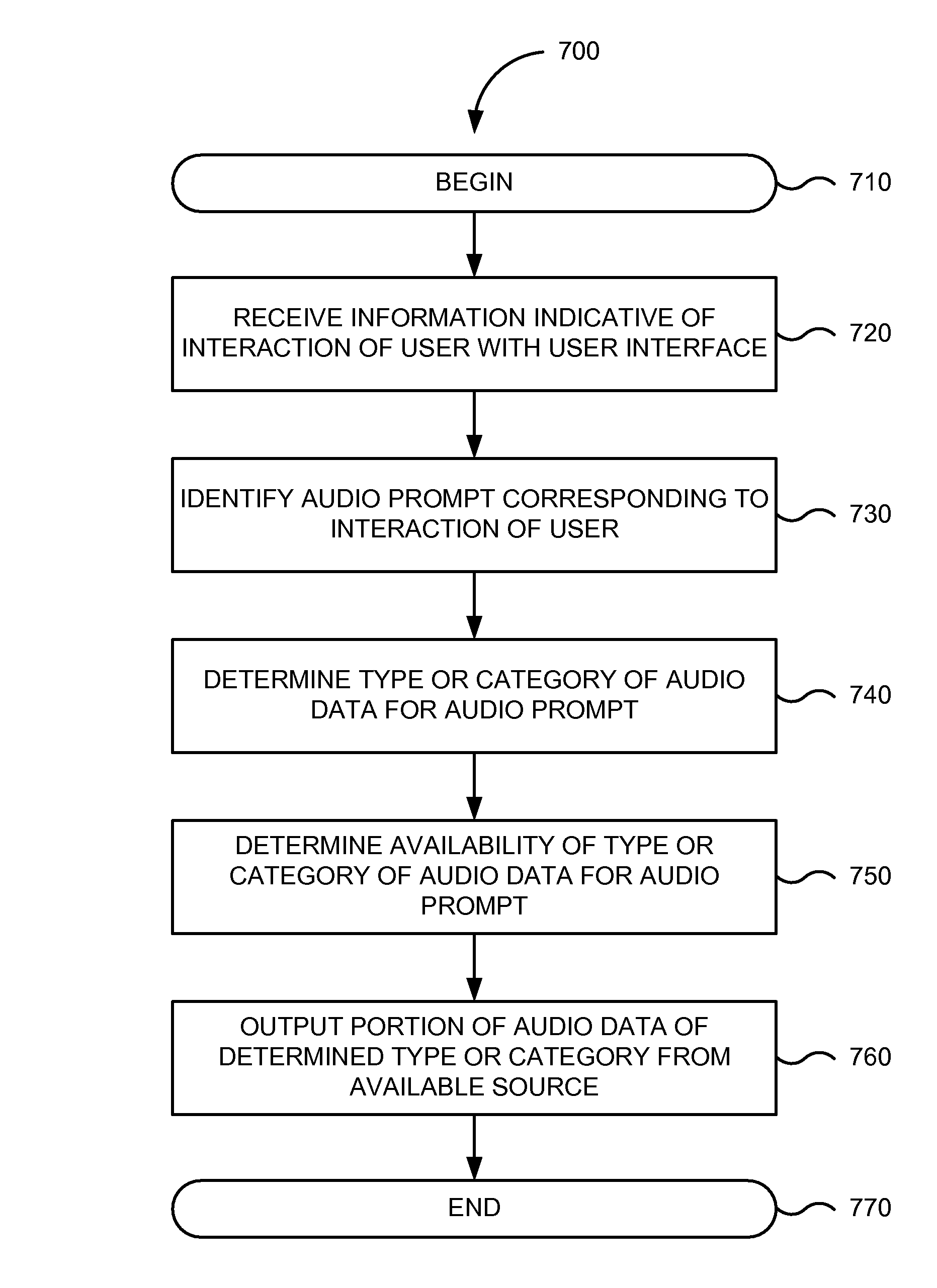

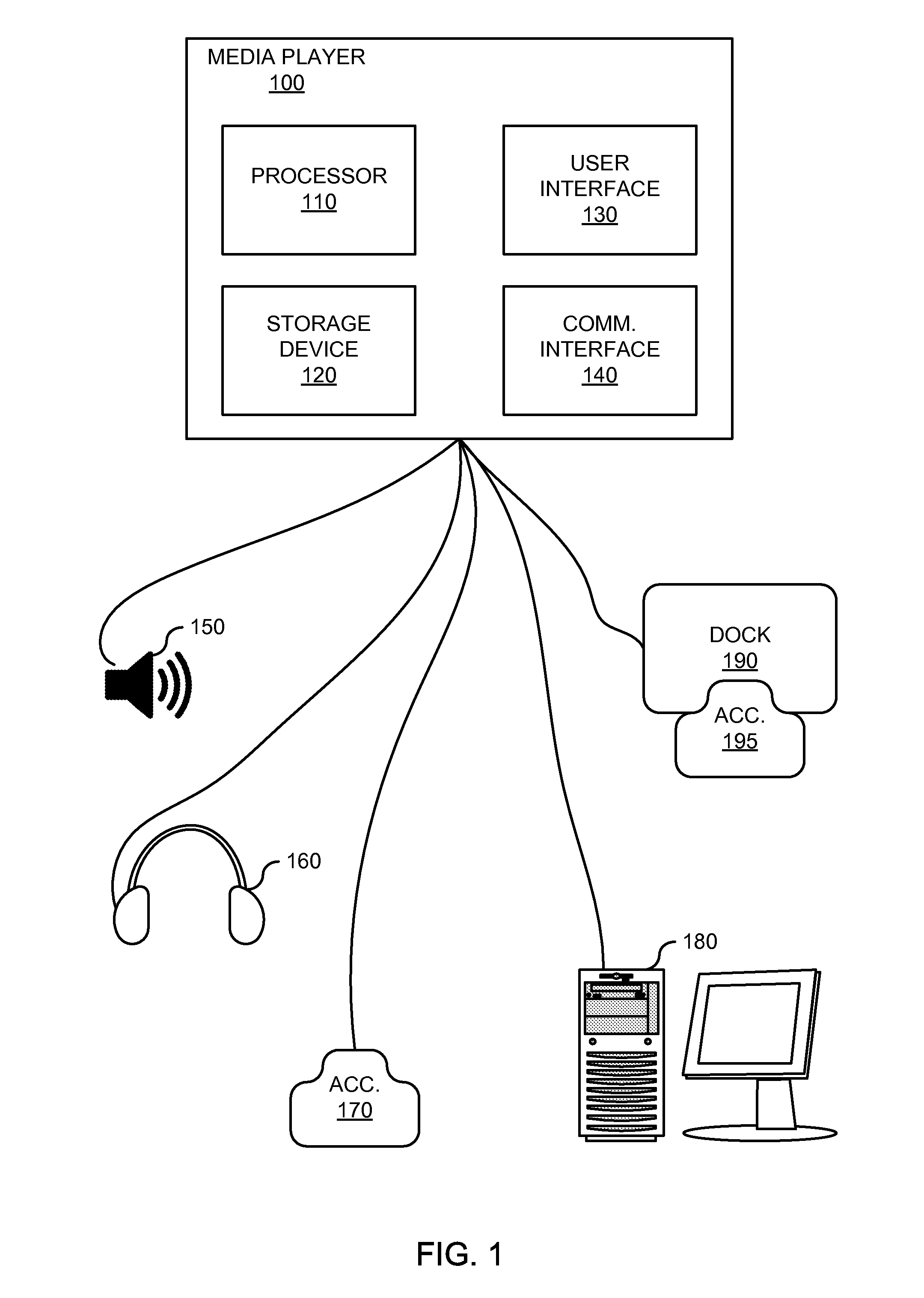

An audio user interface that provides audio prompts that help a user interact with a user interface of an electronic device is disclosed. The audio prompts can provide audio indicators that allow a user to focus his or her visual attention upon other tasks such as driving an automobile, exercising, or crossing a street, yet still enable the user to interact with the user interface. An intelligent path can provide access to different types of audio prompts from a variety of different sources. The different types of audio prompts may be presented based on availability of a particular type of audio prompt. As examples, the audio prompts may include pre-recorded voice audio, such as celebrity voices or cartoon characters, obtained from a dedicated voice server. Absent availability of pre-recorded or synthesized audio data, non-voice audio prompts may be provided.

Owner:APPLE INC
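
The availability-based fallback described in the abstract above could be sketched roughly as follows. This is a minimal illustration, not the patented implementation: the function name `choose_prompt`, the dictionary-based stores, the preference for pre-recorded over synthesized audio, and the `"beep"` placeholder are all assumptions made for the example.

```python
from typing import Dict, Tuple


def choose_prompt(
    item: str,
    prerecorded: Dict[str, str],
    synthesized: Dict[str, str],
) -> Tuple[str, str]:
    """Return (kind, payload) for the best available audio prompt.

    Assumed priority: pre-recorded voice, then synthesized voice,
    then a non-voice cue when no voice audio is available.
    """
    if item in prerecorded:
        return ("prerecorded", prerecorded[item])
    if item in synthesized:
        return ("synthesized", synthesized[item])
    # Neither kind of voice audio is available: fall back to a non-voice prompt.
    return ("non_voice", "beep")


if __name__ == "__main__":
    recorded = {"Play": "play_celebrity.wav"}   # hypothetical file names
    tts = {"Pause": "pause_tts.wav"}
    for menu_item in ("Play", "Pause", "Shuffle"):
        print(menu_item, choose_prompt(menu_item, recorded, tts))
```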

Human-computer interface including haptically controlled interactions

Inactive | US6954899B1 | Lower requirement | Easy to control | Input/output for user-computer interaction | Cathode-ray tube indicators | Present method | Human–machine interface

The present invention provides a method of human-computer interfacing that provides haptic feedback to control interface interactions such as scrolling or zooming within an application. Haptic feedback in the present method allows the user more intuitive control of the interface interactions, and allows the user's visual focus to remain on the application. The method comprises providing a control domain within which the user can control interactions. For example, a haptic boundary can be provided corresponding to scrollable or scalable portions of the application domain. The user can position a cursor near such a boundary, feeling its presence haptically (reducing the requirement for visual attention for control of scrolling of the display). The user can then apply force relative to the boundary, causing the interface to scroll the domain. The rate of scrolling can be related to the magnitude of applied force, providing the user with additional intuitive, non-visual control of scrolling.

Owner:META PLATFORMS INC
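
The abstract above says the scrolling rate "can be related to the magnitude of applied force". One possible mapping is sketched below; the linear gain, the dead zone, the rate cap, and the name `scroll_rate` are assumptions for illustration and are not taken from the patent.

```python
def scroll_rate(
    force: float,
    gain: float = 40.0,
    dead_zone: float = 0.25,
    max_rate: float = 400.0,
) -> float:
    """Map a signed force against a haptic boundary to a signed scroll rate.

    Forces inside the dead zone produce no scrolling, so merely touching
    the boundary does not move the view. Beyond it, the rate grows
    linearly with force magnitude and is capped at max_rate.
    """
    magnitude = abs(force)
    if magnitude <= dead_zone:
        return 0.0
    rate = min(gain * (magnitude - dead_zone), max_rate)
    return rate if force > 0 else -rate


if __name__ == "__main__":
    for f in (0.1, 1.25, -1.25, 100.0):
        print(f, scroll_rate(f))
```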

Audio user interface

Inactive | US8898568B2 | User may experience | Improve experience | Instruments for road network navigation | Gain control | Natural user interface | Human–computer interaction

An audio user interface that provides audio prompts that help a user interact with a user interface of an electronic device is disclosed. The audio prompts can provide audio indicators that allow a user to focus his or her visual attention upon other tasks such as driving an automobile, exercising, or crossing a street, yet still enable the user to interact with the user interface. An intelligent path can provide access to different types of audio prompts from a variety of different sources. The different types of audio prompts may be presented based on availability of a particular type of audio prompt. As examples, the audio prompts may include pre-recorded voice audio, such as celebrity voices or cartoon characters, obtained from a dedicated voice server. Absent availability of pre-recorded or synthesized audio data, non-voice audio prompts may be provided.

Owner:APPLE INC

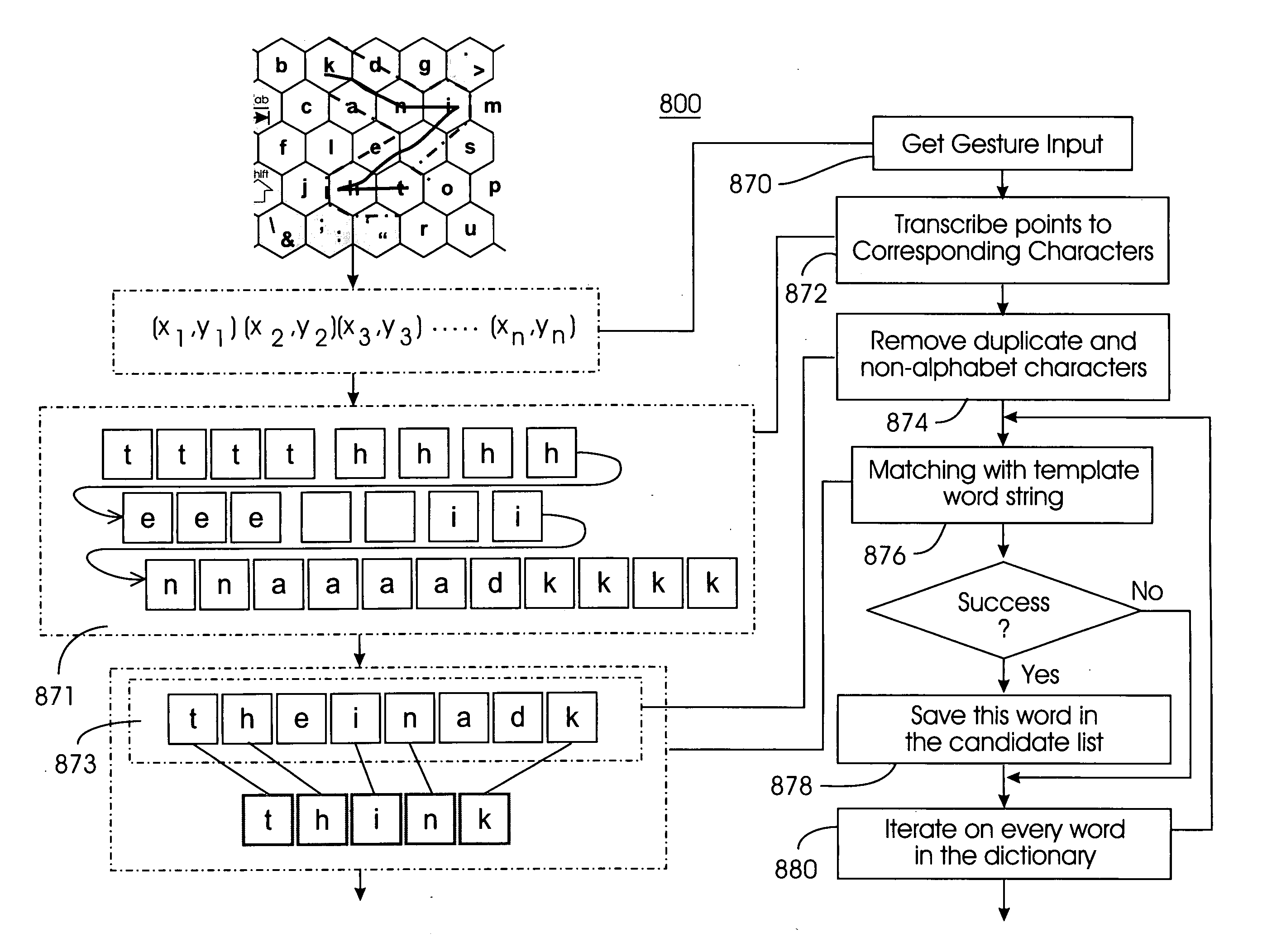

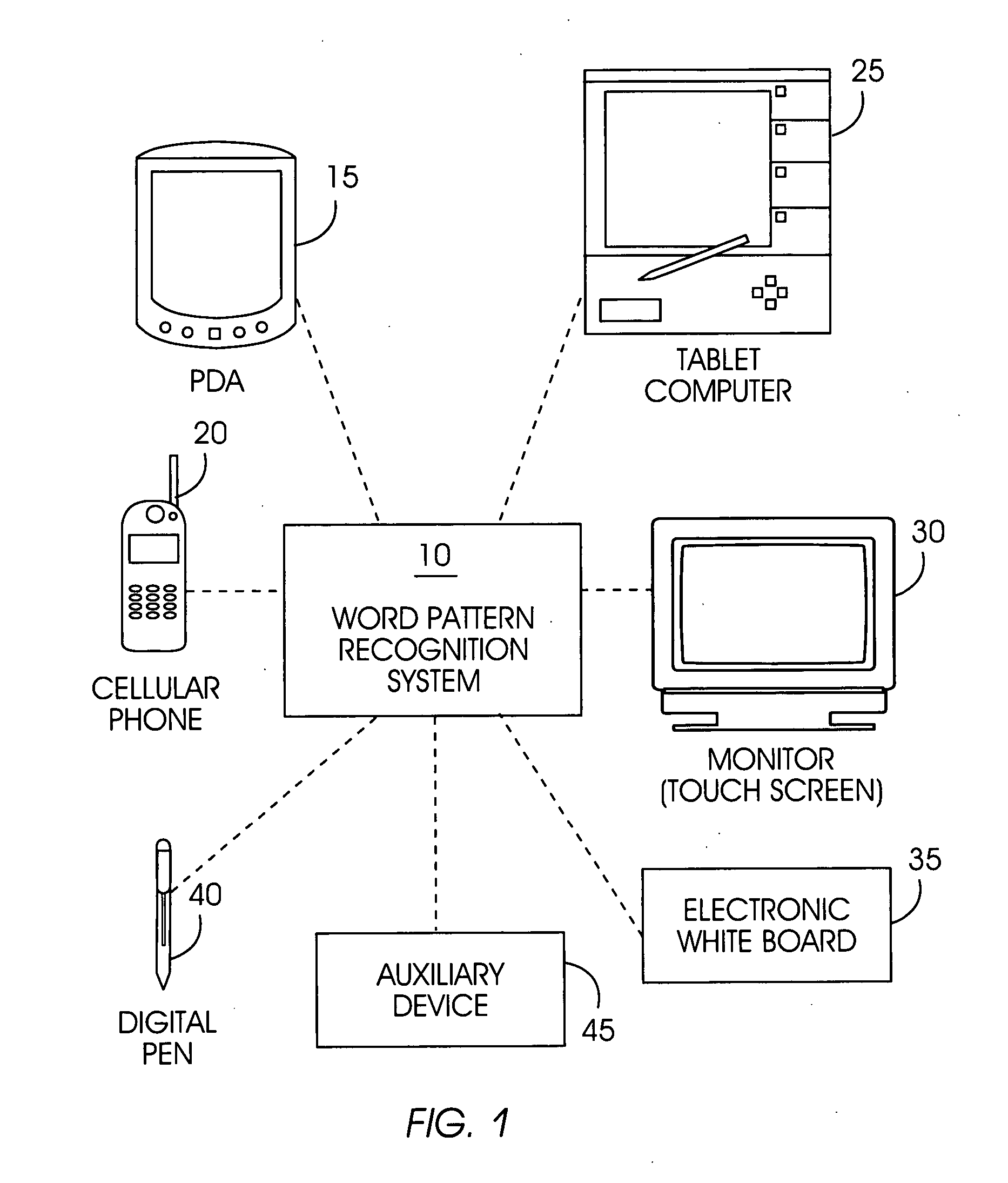

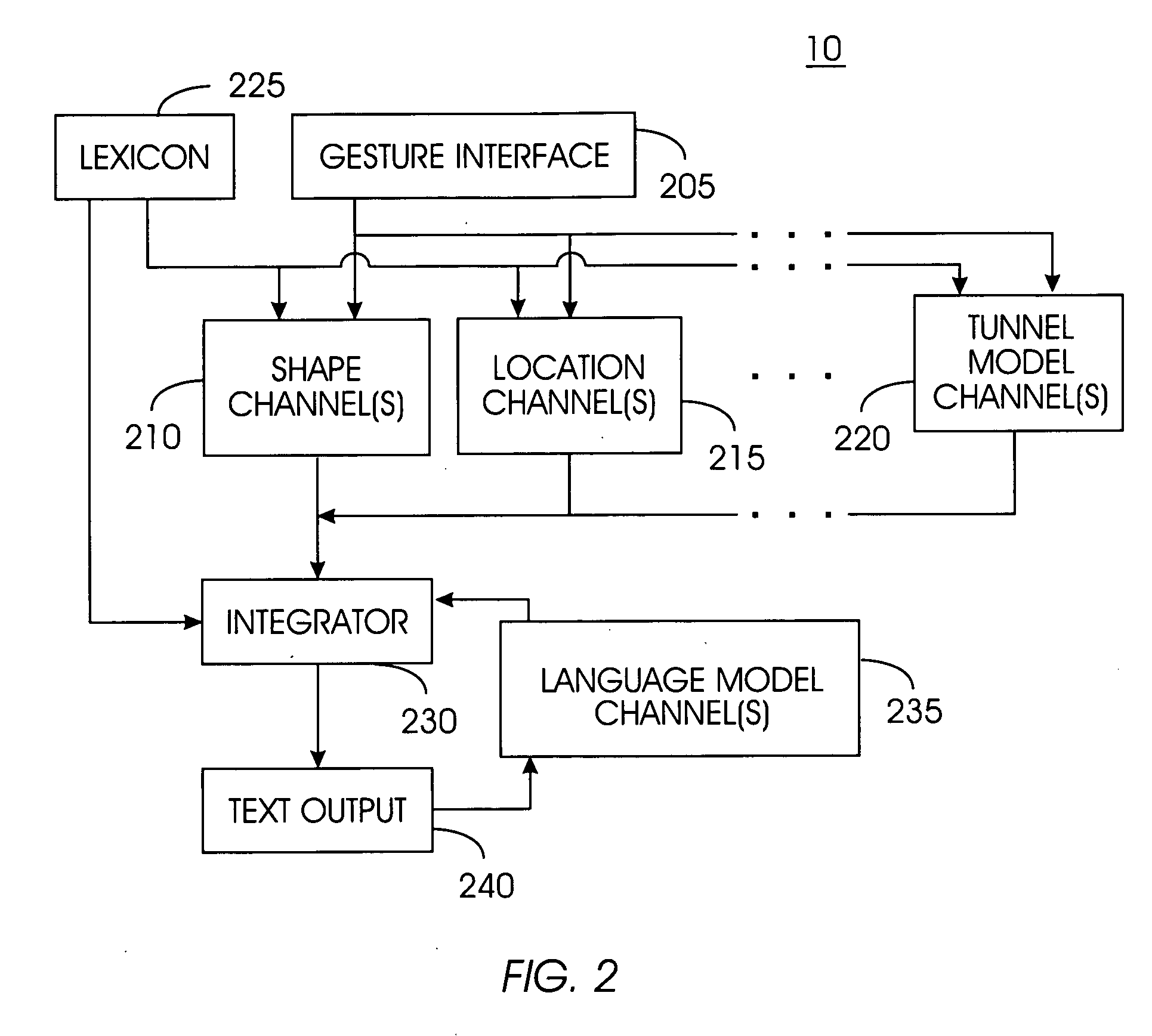

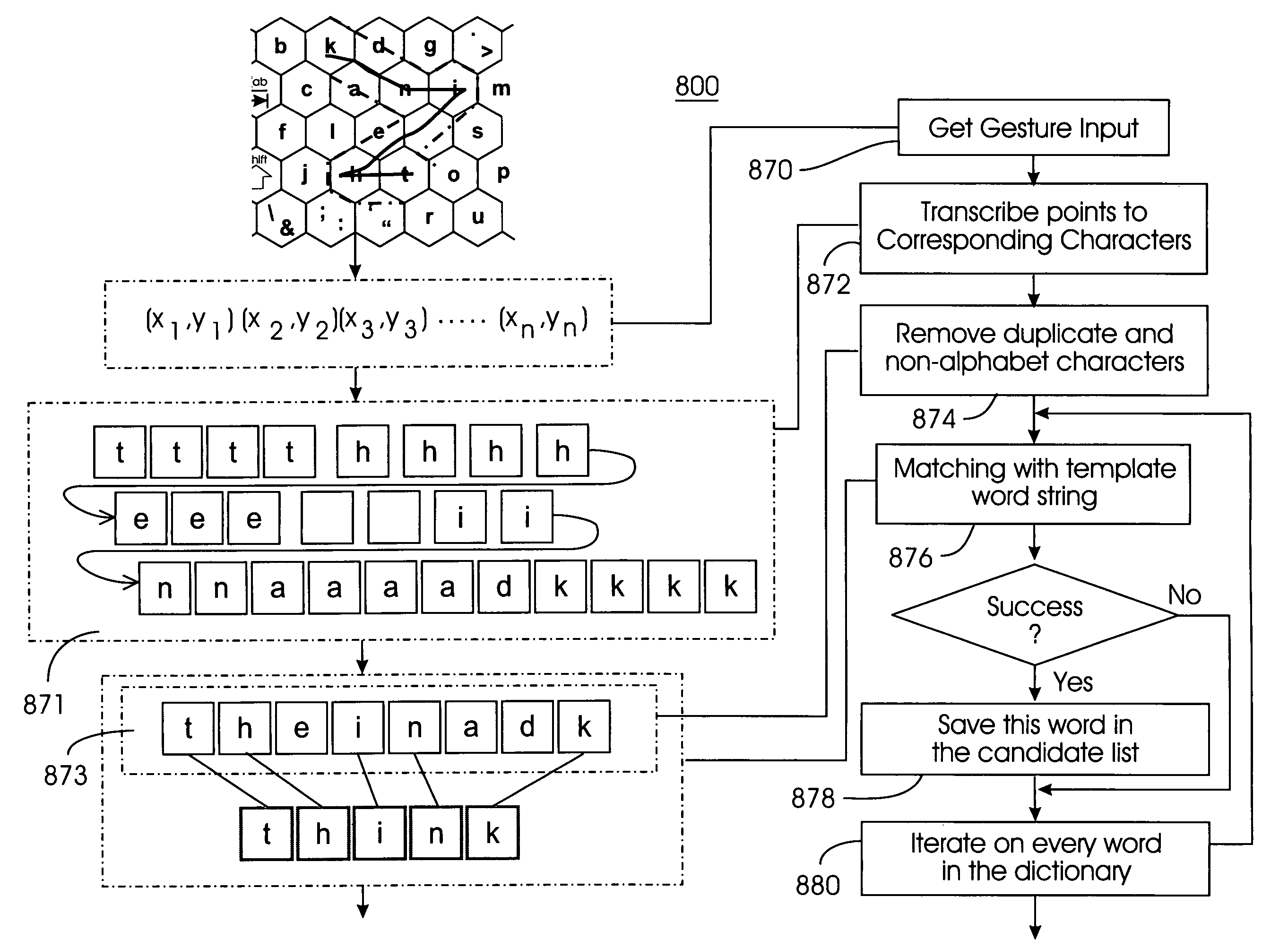

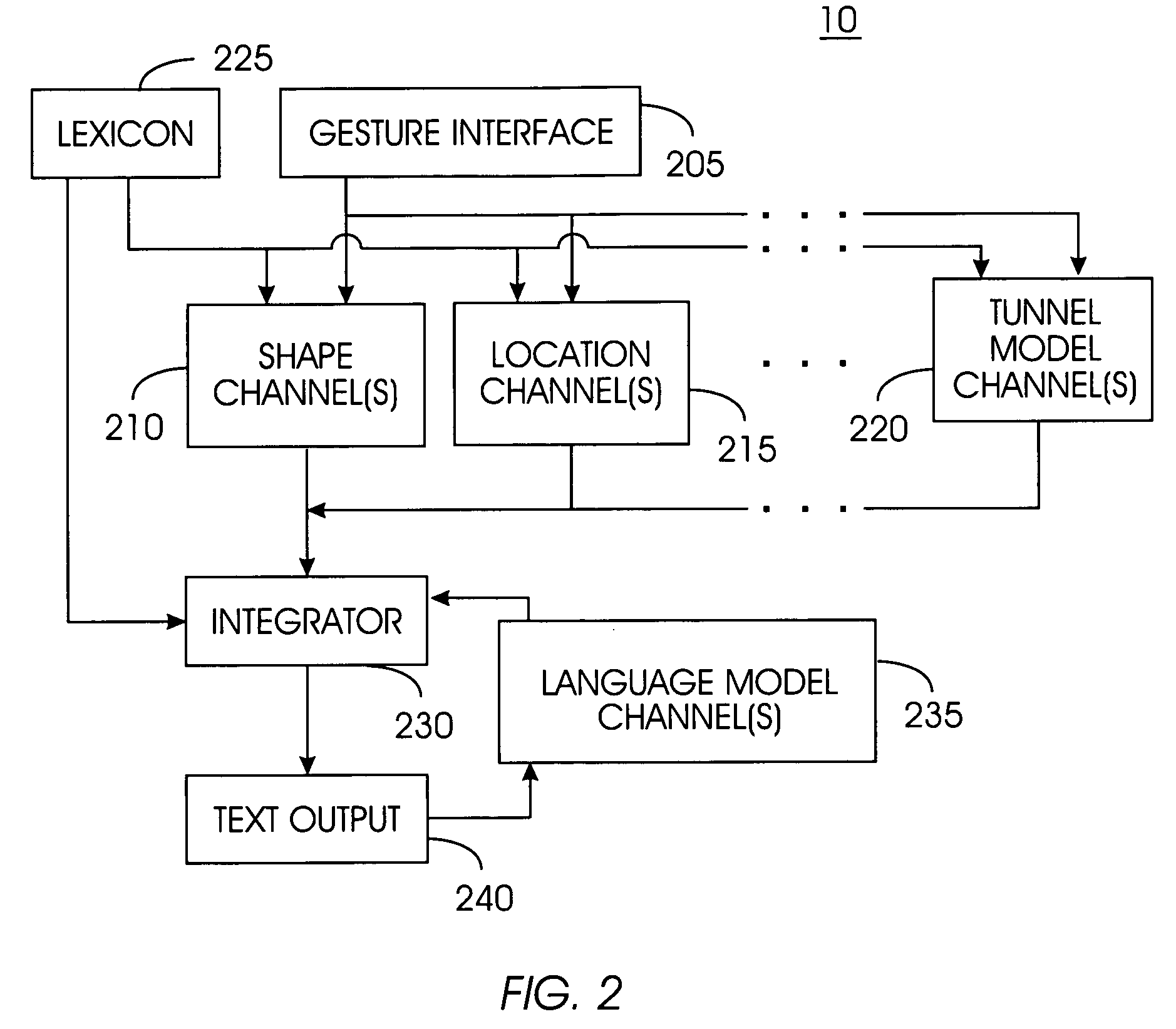

System and method for recognizing word patterns in a very large vocabulary based on a virtual keyboard layout

Active | US20050190973A1 | Reduce usage | Less visual attention | Character and pattern recognition | Special data processing applications | Graphics | Text entry

A word pattern recognition system based on a virtual keyboard layout combines handwriting recognition with a virtual, graphical, or on-screen keyboard to provide a text input method with relative ease of use. The system allows the user to input text quickly with little or no visual attention from the user. The system supports a very large vocabulary of gesture templates in a lexicon, including practically all words needed for a particular user. In addition, the system utilizes various techniques and methods to achieve reliable recognition of a very large gesture vocabulary. Further, the system provides feedback and display methods to help the user effectively use and learn shorthand gestures for words. Word patterns are recognized independent of gesture scale and location. The present system uses language rules to recognize and connect suffixes with a preceding word, allowing users to break complex words into easily remembered segments.

Owner:CERENCE OPERATING CO

System and method for recognizing word patterns in a very large vocabulary based on a virtual keyboard layout

Active | US7706616B2 | Reduce usage | Less visual attention | Character and pattern recognition | Special data processing applications | Graphics | Text entry

A word pattern recognition system based on a virtual keyboard layout combines handwriting recognition with a virtual, graphical, or on-screen keyboard to provide a text input method with relative ease of use. The system allows the user to input text quickly with little or no visual attention from the user. The system supports a very large vocabulary of gesture templates in a lexicon, including practically all words needed for a particular user. In addition, the system utilizes various techniques and methods to achieve reliable recognition of a very large gesture vocabulary. Further, the system provides feedback and display methods to help the user effectively use and learn shorthand gestures for words. Word patterns are recognized independent of gesture scale and location. The present system uses language rules to recognize and connect suffixes with a preceding word, allowing users to break complex words into easily remembered segments.

Owner:CERENCE OPERATING CO

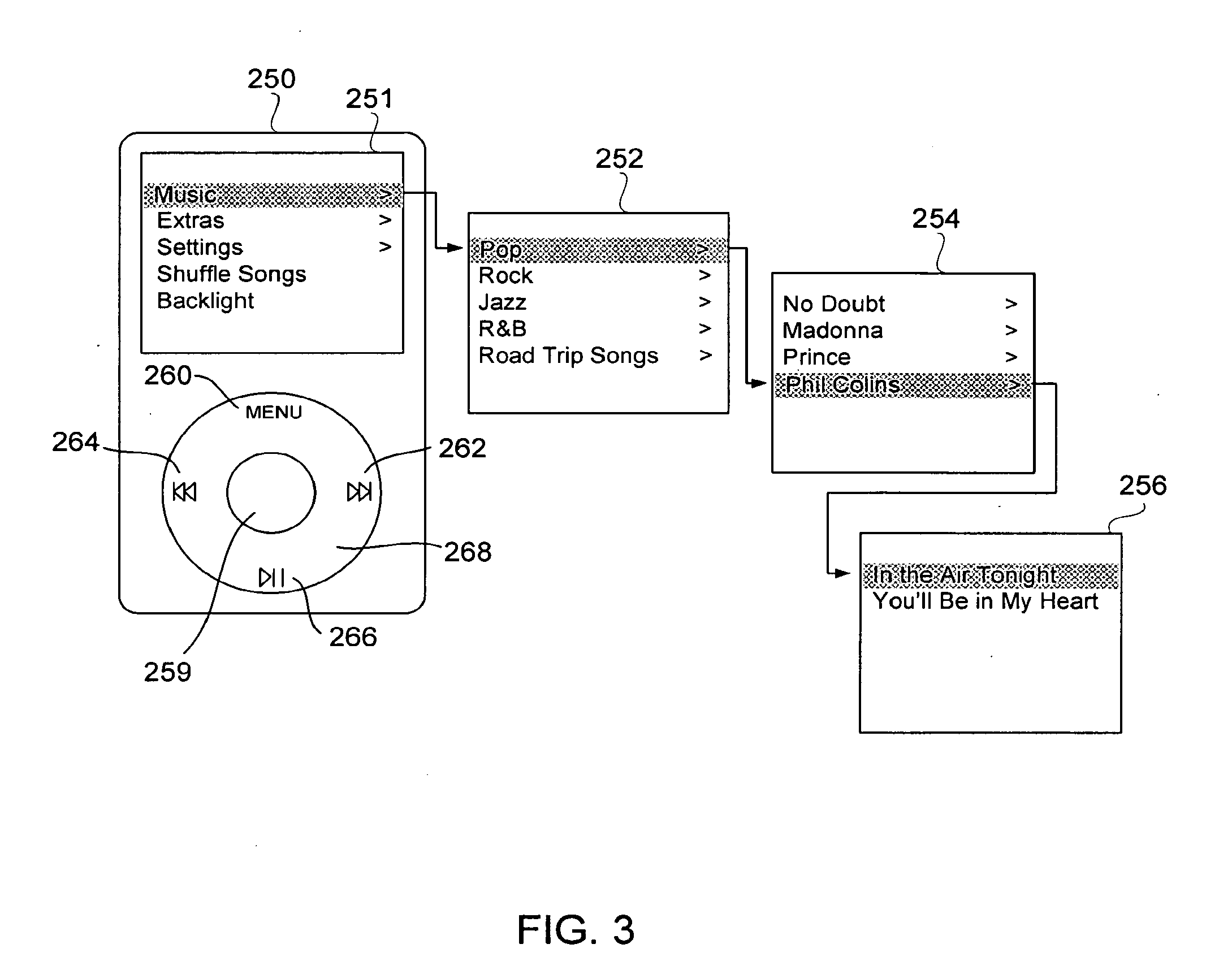

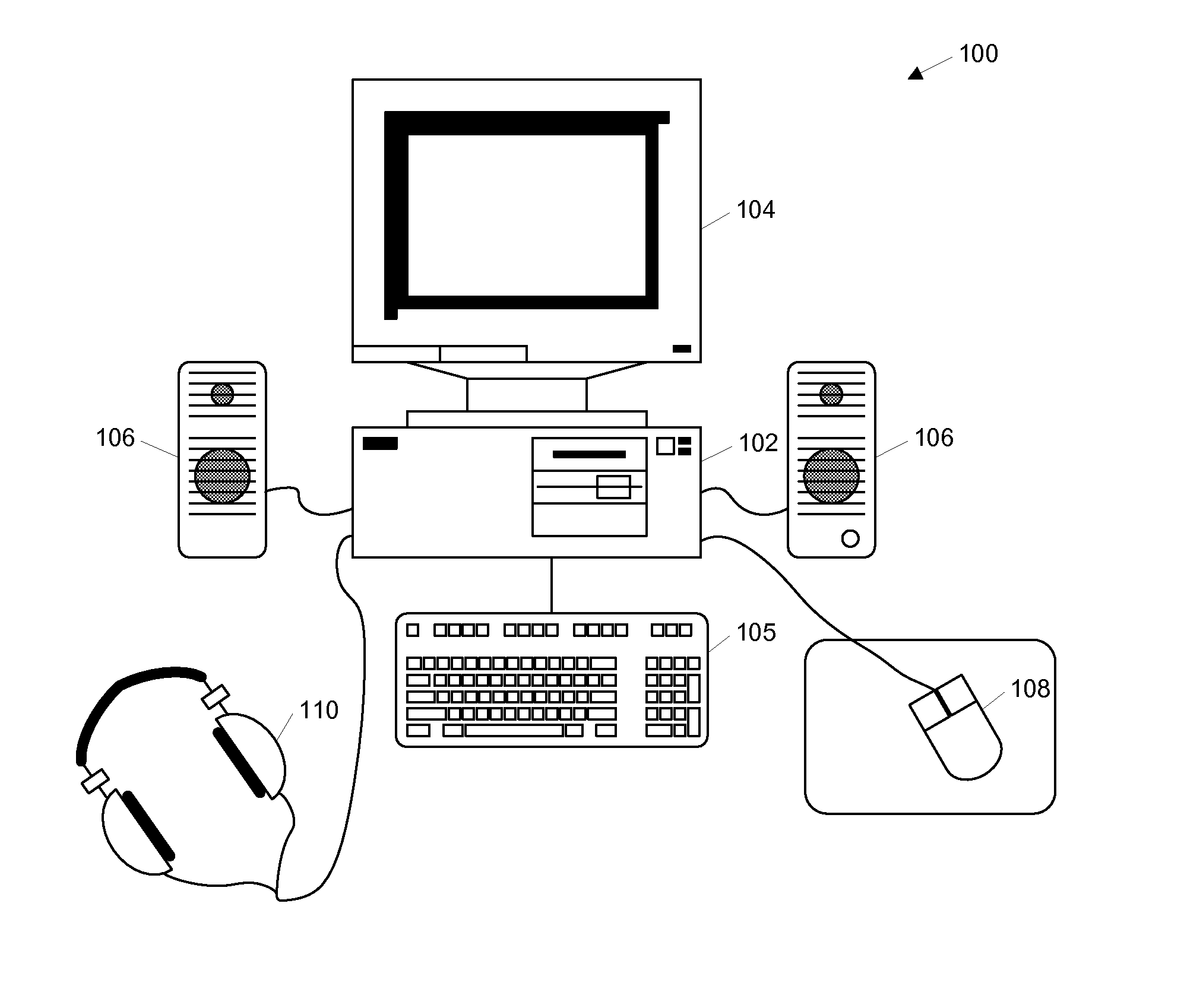

Audio user interface for computing devices

Active | US20070180383A1 | Easy to carry | Carrier indicating arrangements | Sound input/output | Natural user interface | Human–computer interaction

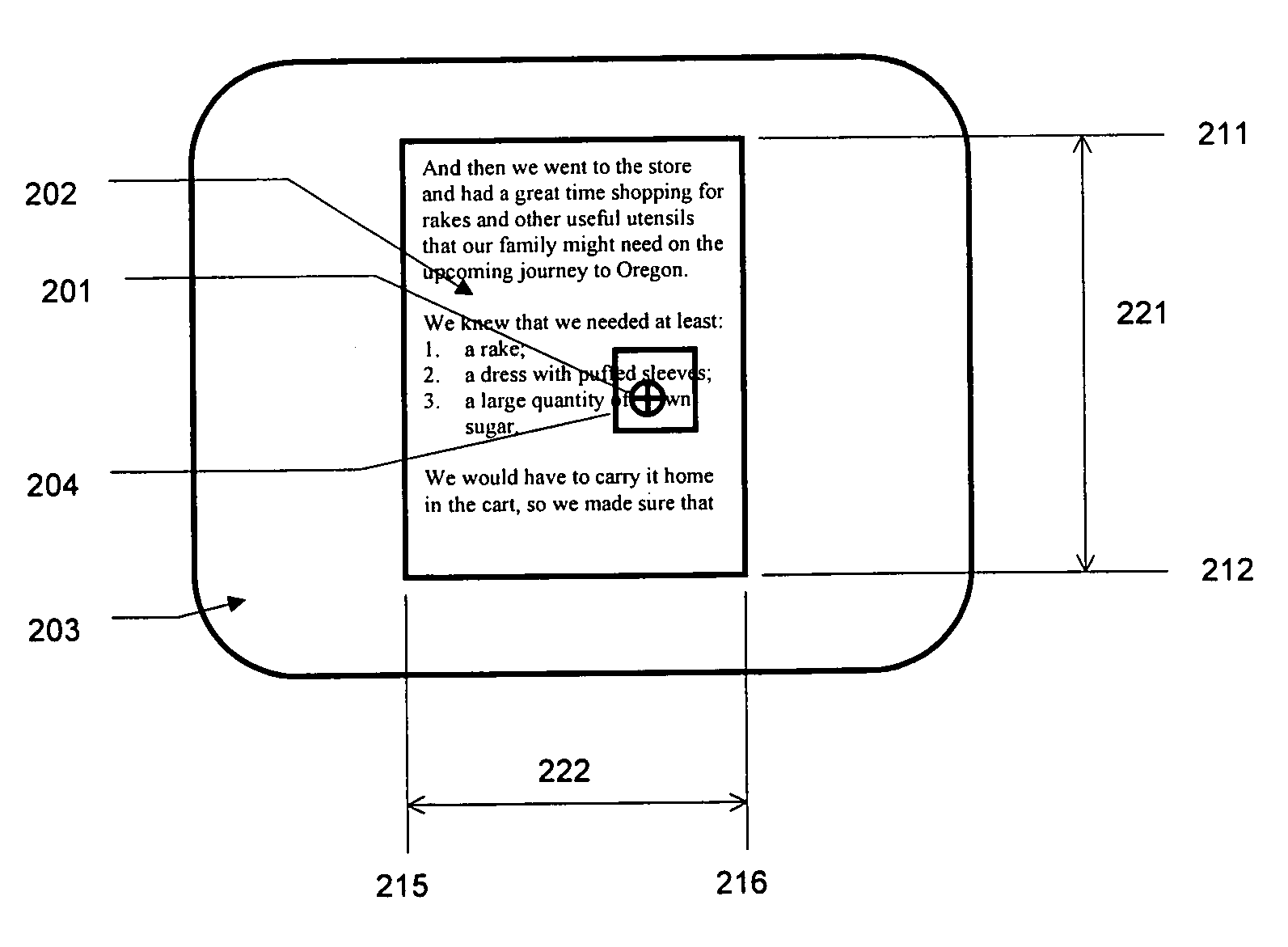

An audio user interface that generates audio prompts that help a user interact with a user interface of a computing device is disclosed. The audio prompts can provide audio indicators that allow a user to focus his or her visual attention upon other tasks such as driving an automobile, exercising, or crossing a street, yet still enable the user to interact with the user interface. As examples, the audio prompts provided can audiblize the spoken version of a user interface selection, such as a selected function or a selected (e.g., highlighted) menu item of a display menu. The computing device can be, for example, a media player such as an MP3 player, a mobile phone, or a personal digital assistant.

Owner:APPLE INC

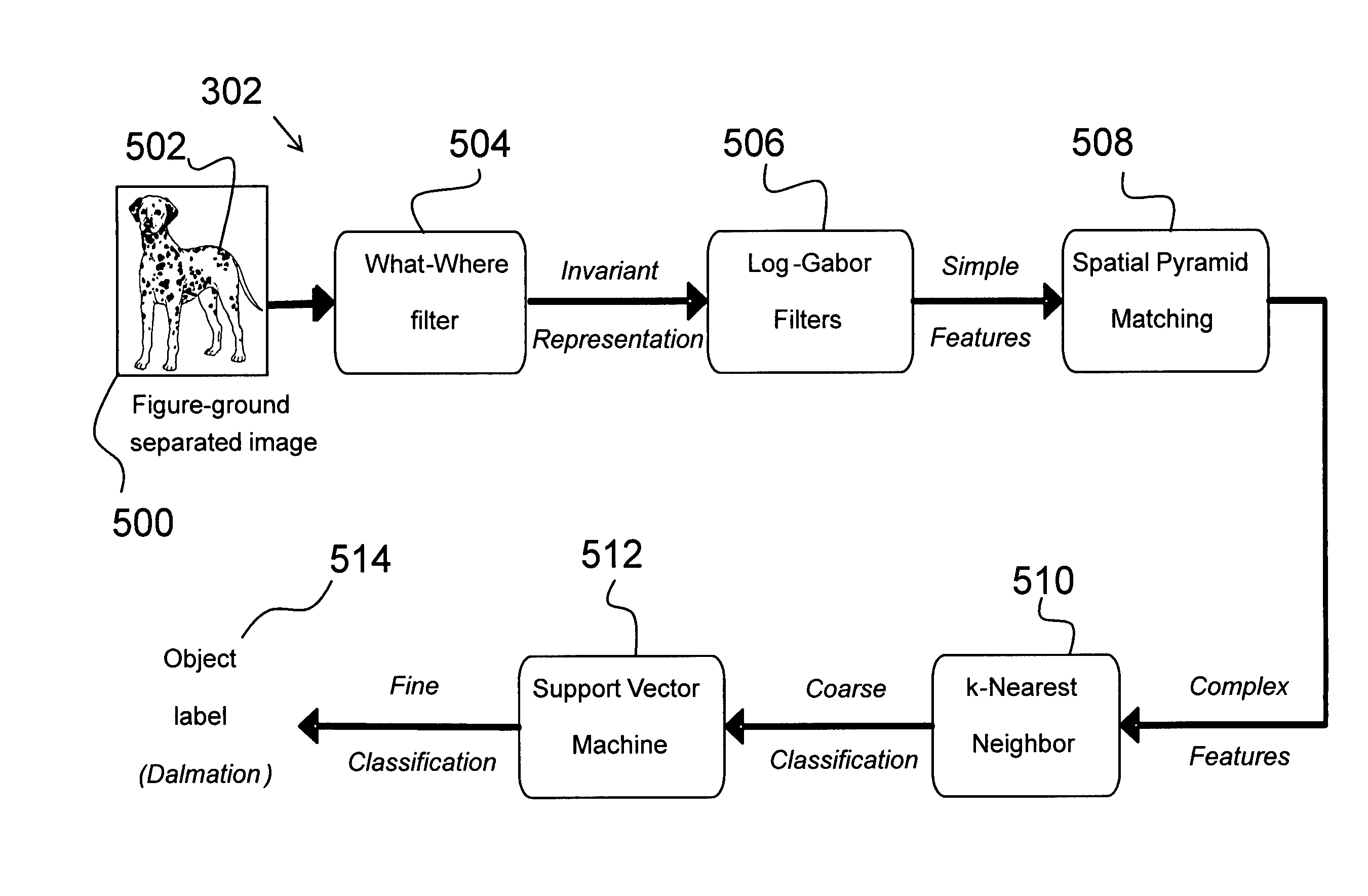

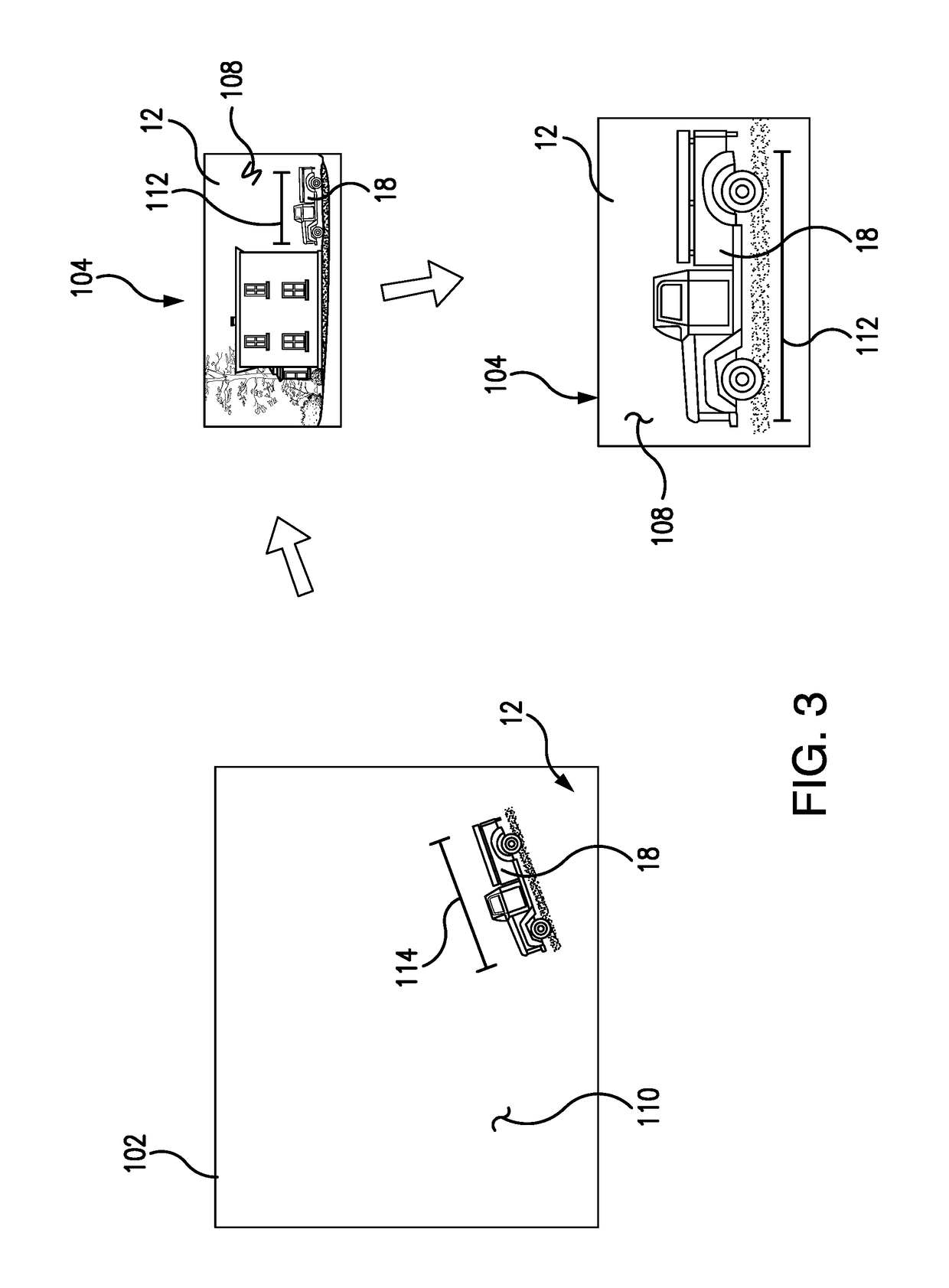

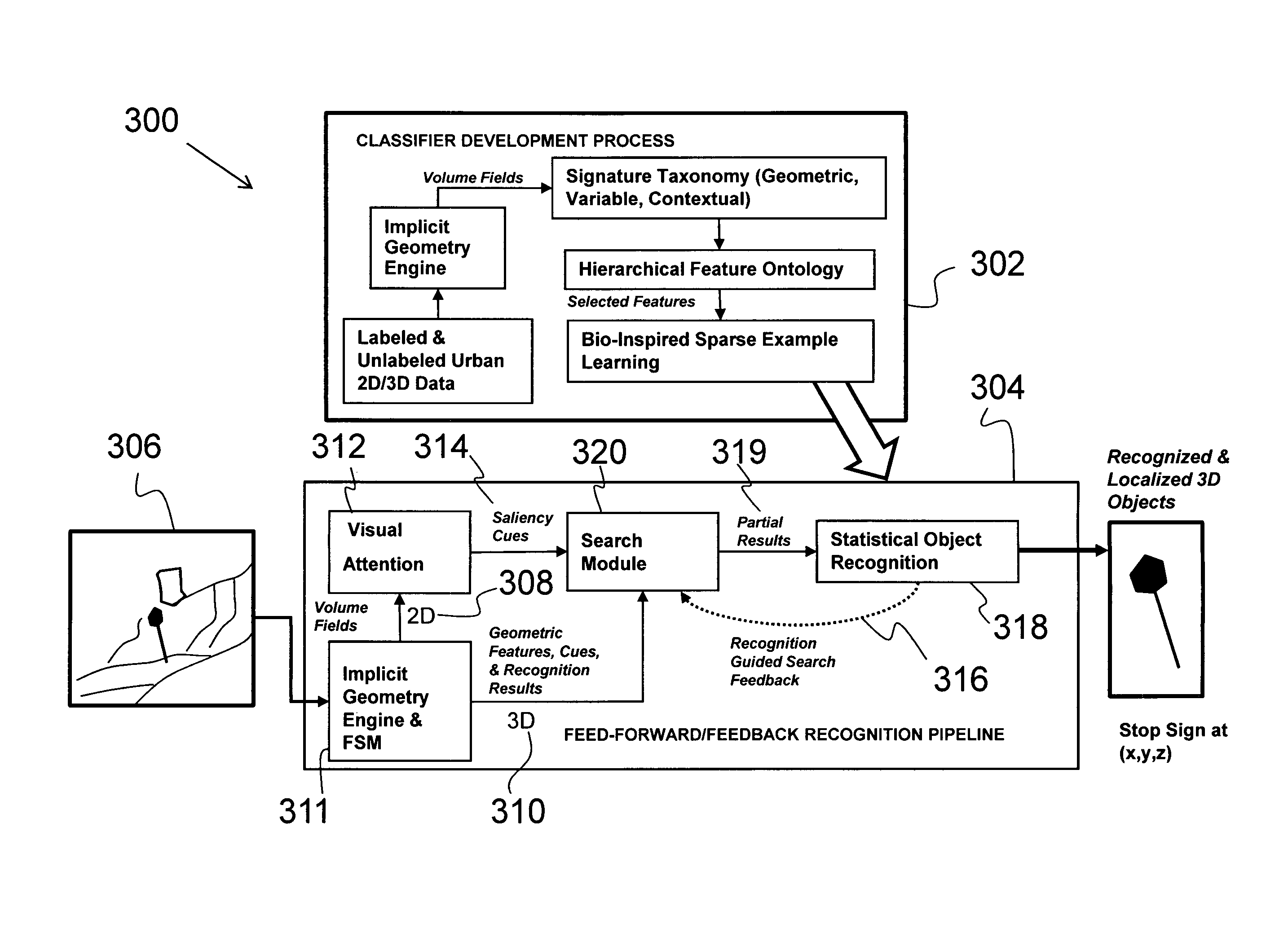

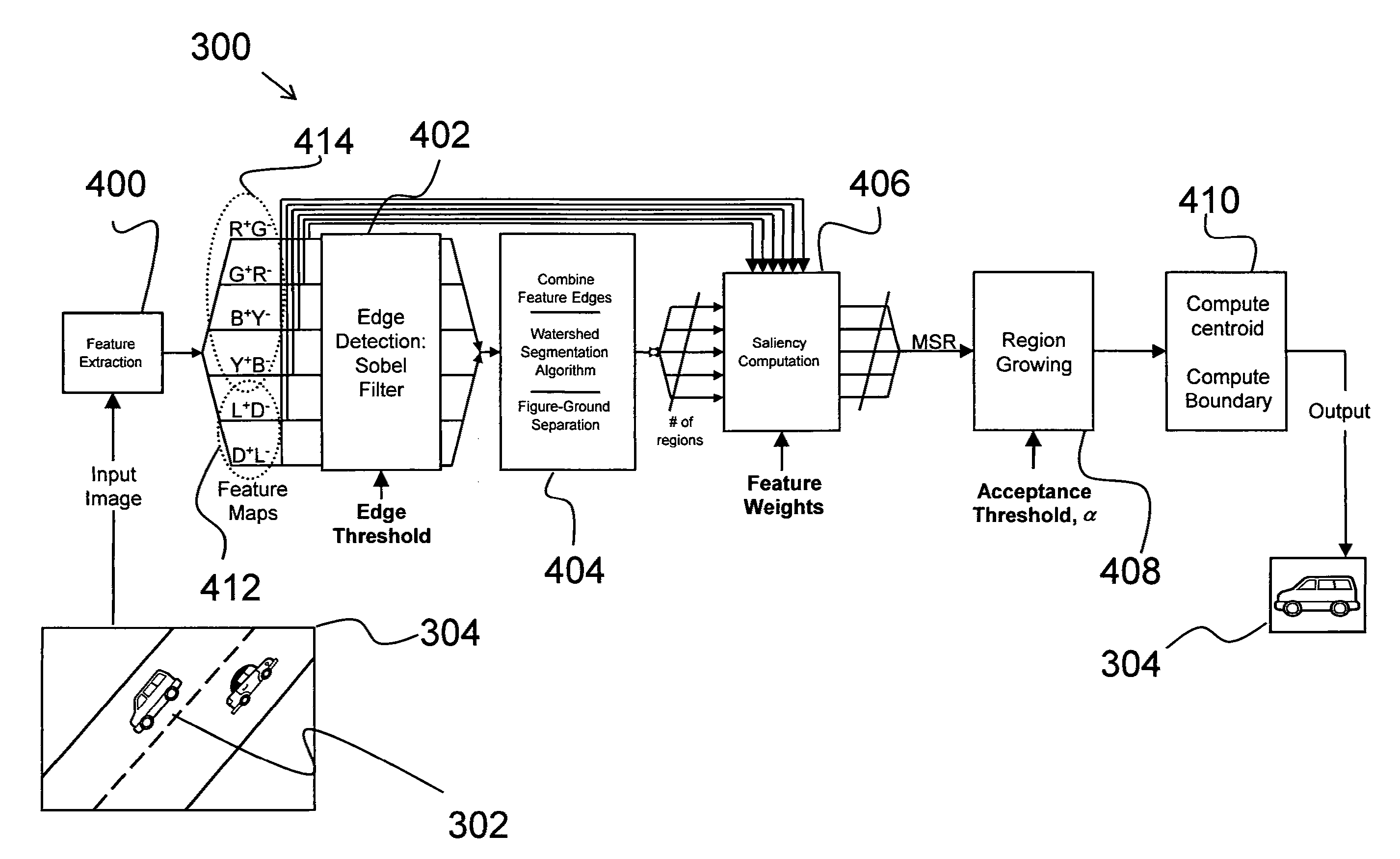

Visual attention and object recognition system

Described is a bio-inspired vision system for object recognition. The system comprises an attention module, an object recognition module, and an online labeling module. The attention module is configured to receive an image representing a scene and find and extract an object from the image. The attention module is also configured to generate feature vectors corresponding to color, intensity, and orientation information within the extracted object. The object recognition module is configured to receive the extracted object and the feature vectors and associate a label with the extracted object. Finally, the online labeling module is configured to alert a user if the extracted object is an unknown object so that it can be labeled.

Owner:HRL LAB

Image style migration method based on deep convolutional neural network

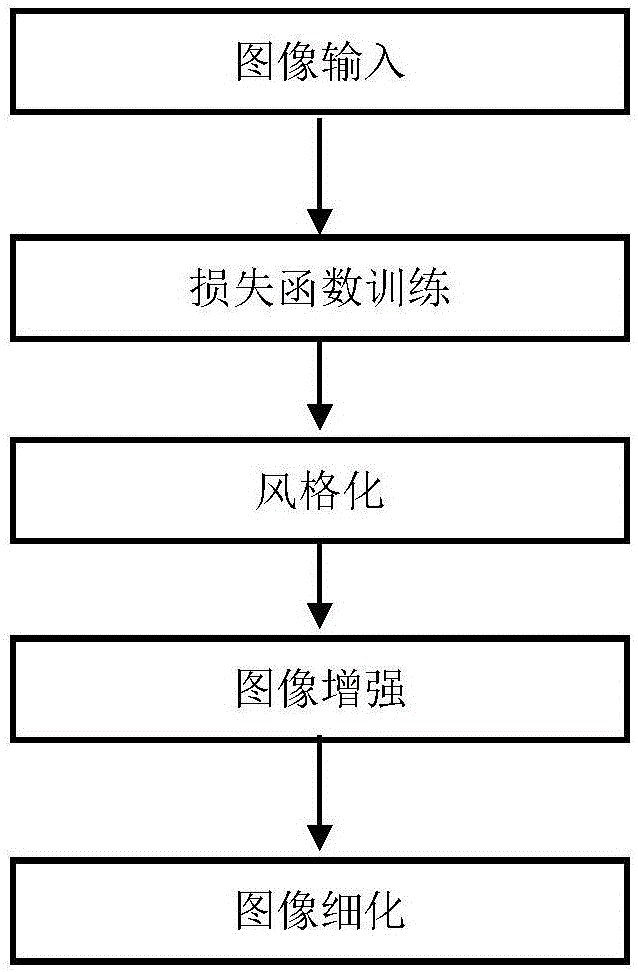

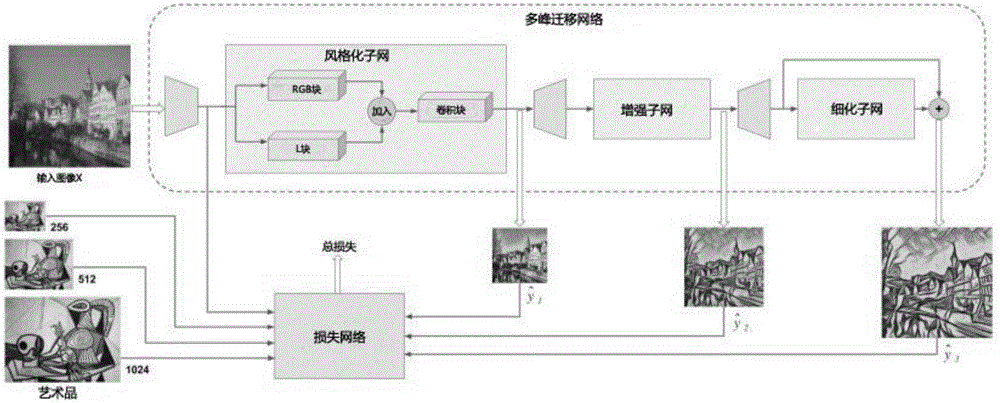

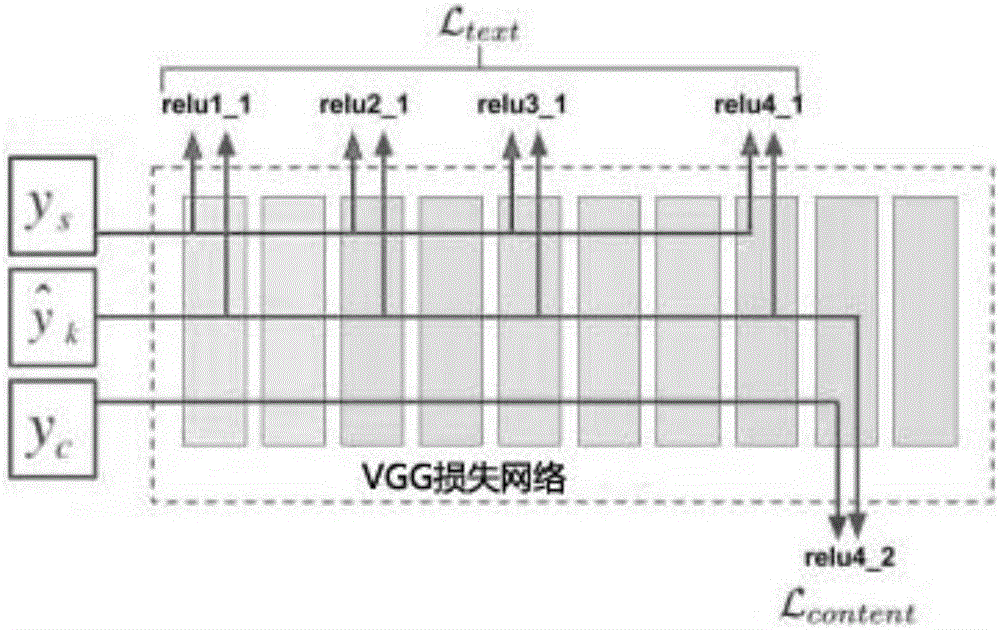

The invention discloses an image text description method based on a visual attention model. The main content comprises the following: image input, loss function training, stylization, image enhancement and image refinement. The process is as follows: an input image is first resized to a content image (256*256) by a bilinear down-sampling layer and then stylized through a style subnet; the stylized result, as the first output image, is up-sampled to 512*512, and the up-sampled image is enhanced through an enhancement subnet to obtain the second output image; the second output image is resized to 1024*1024, and finally a refinement subnet removes locally pixelated artifacts and further refines the result to obtain a high-resolution result. With the image style migration method disclosed by the invention, the brushwork of an artwork can be simulated more closely; multiple models are combined into one network so as to process the increasingly large images shot by modern digital cameras; and the method can be used to train the combined model to realize the migration of multiple artistic styles.

Owner:SHENZHEN WEITESHI TECH

Visual attention system

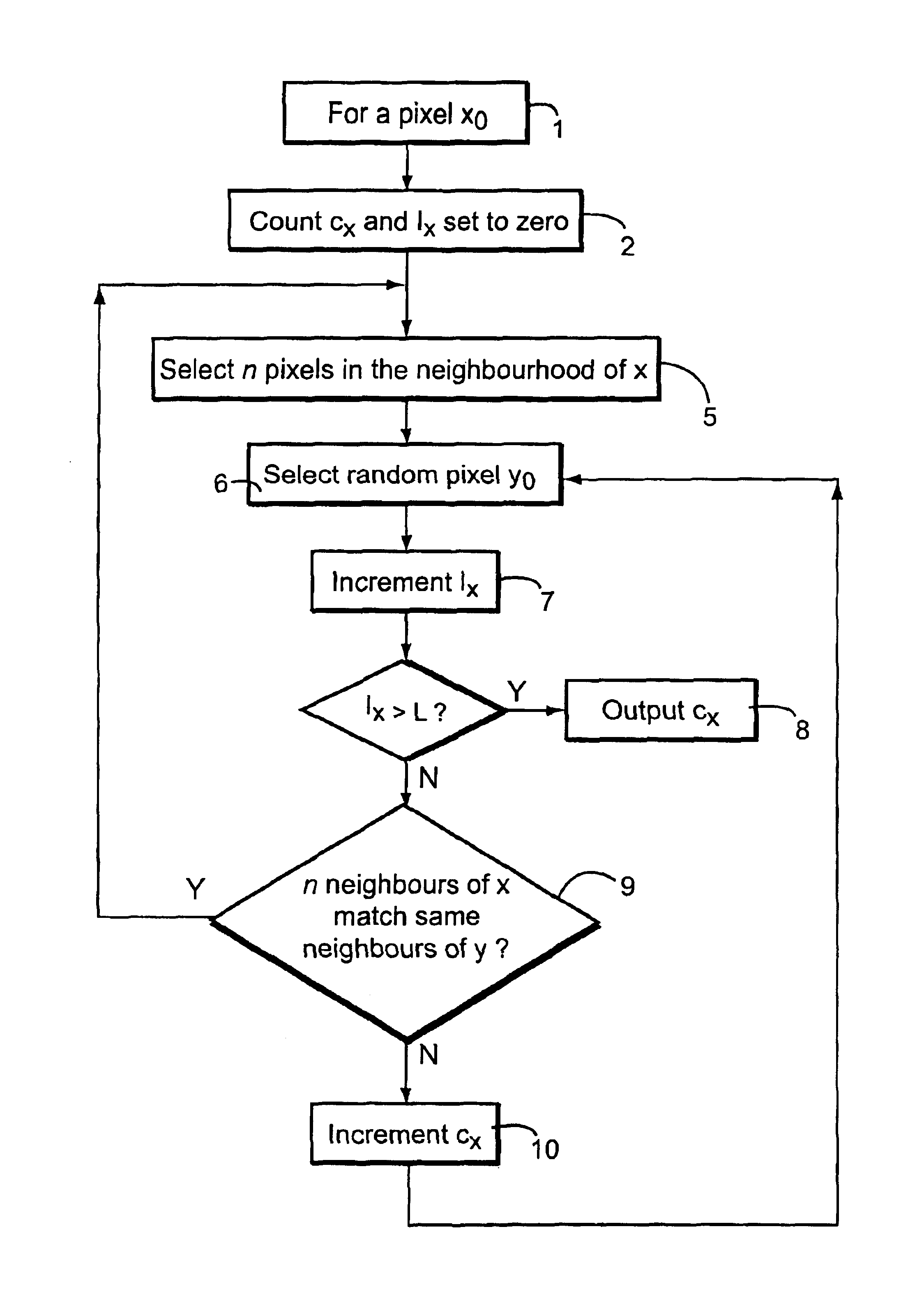

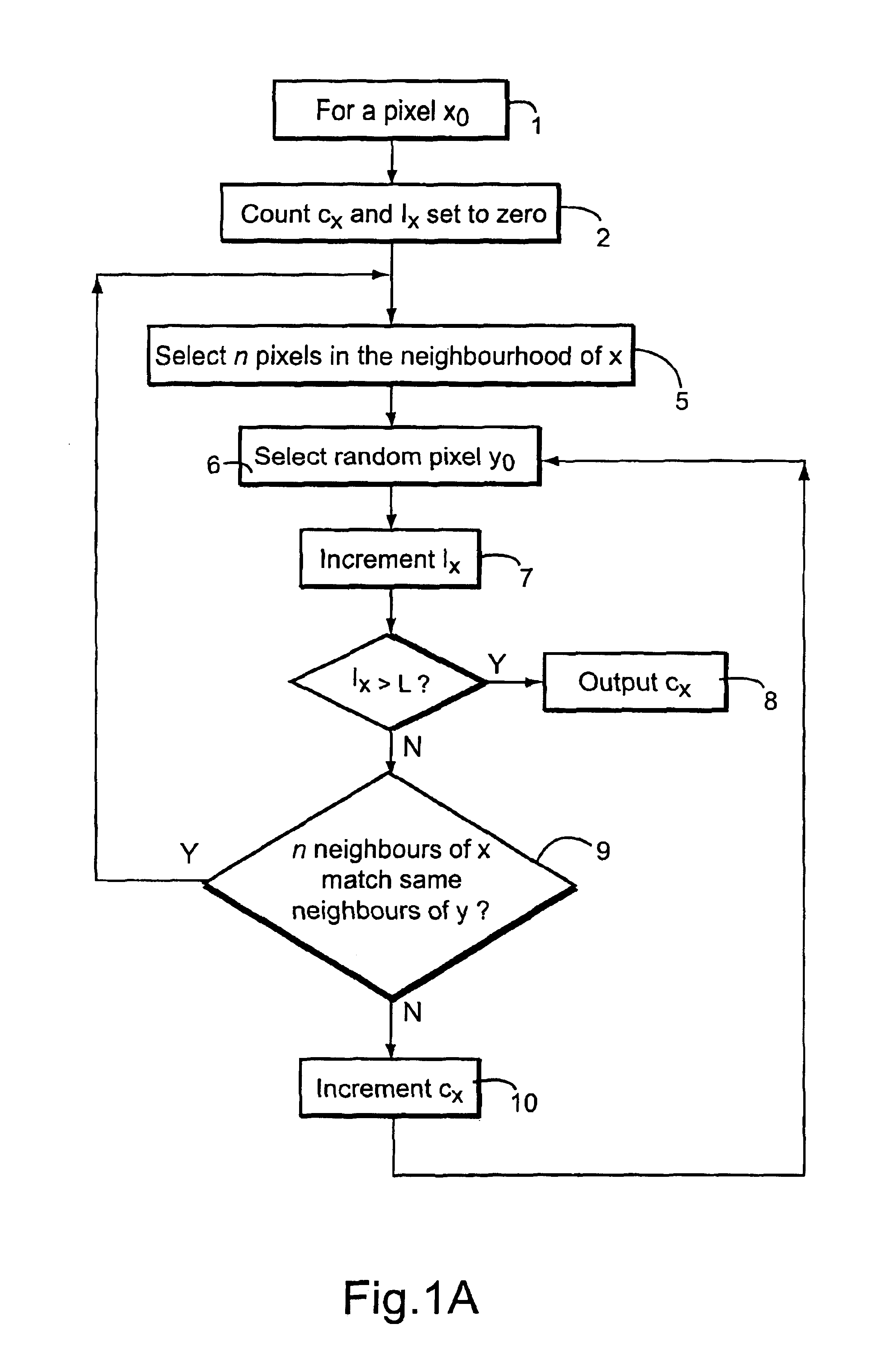

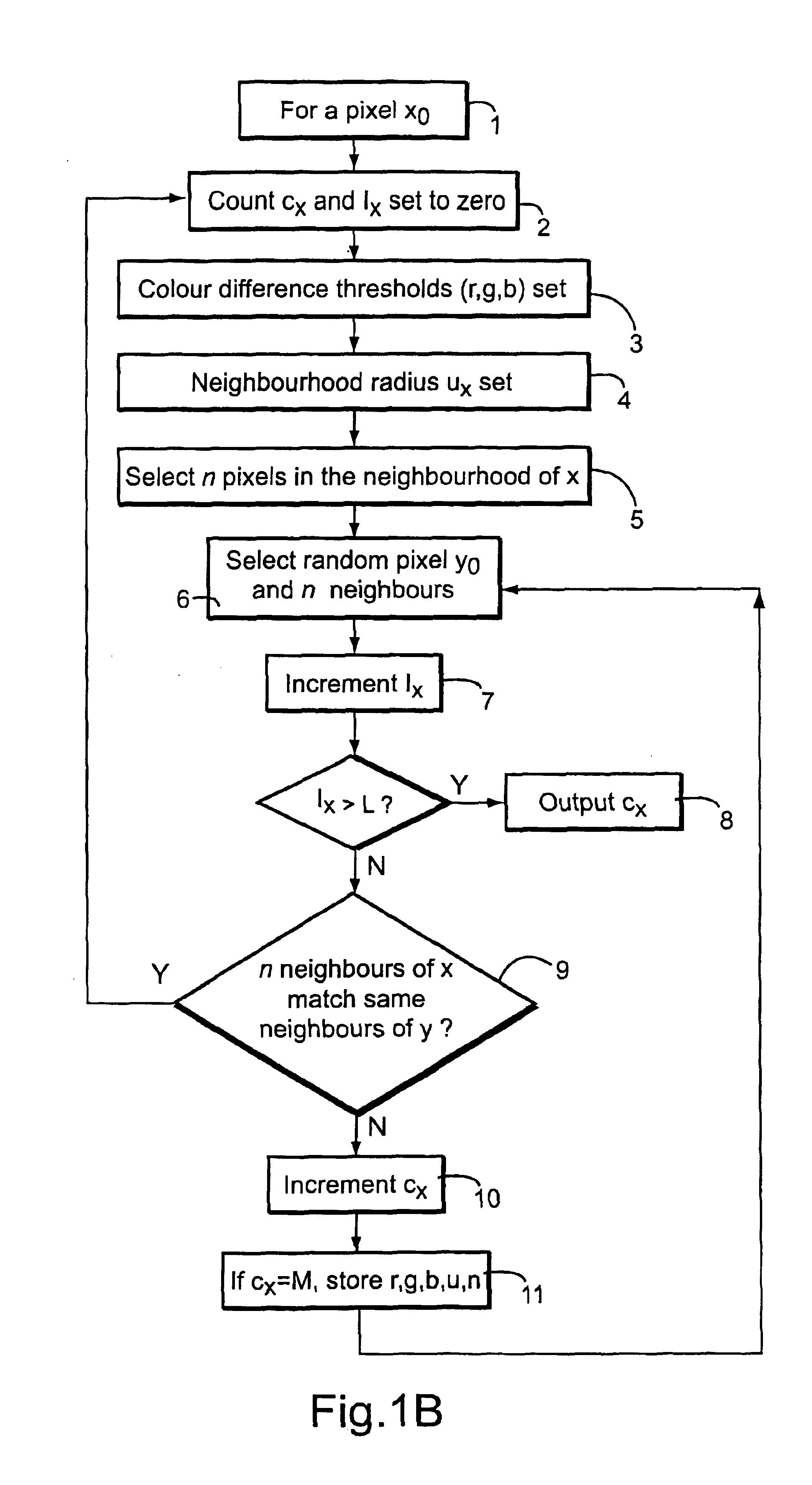

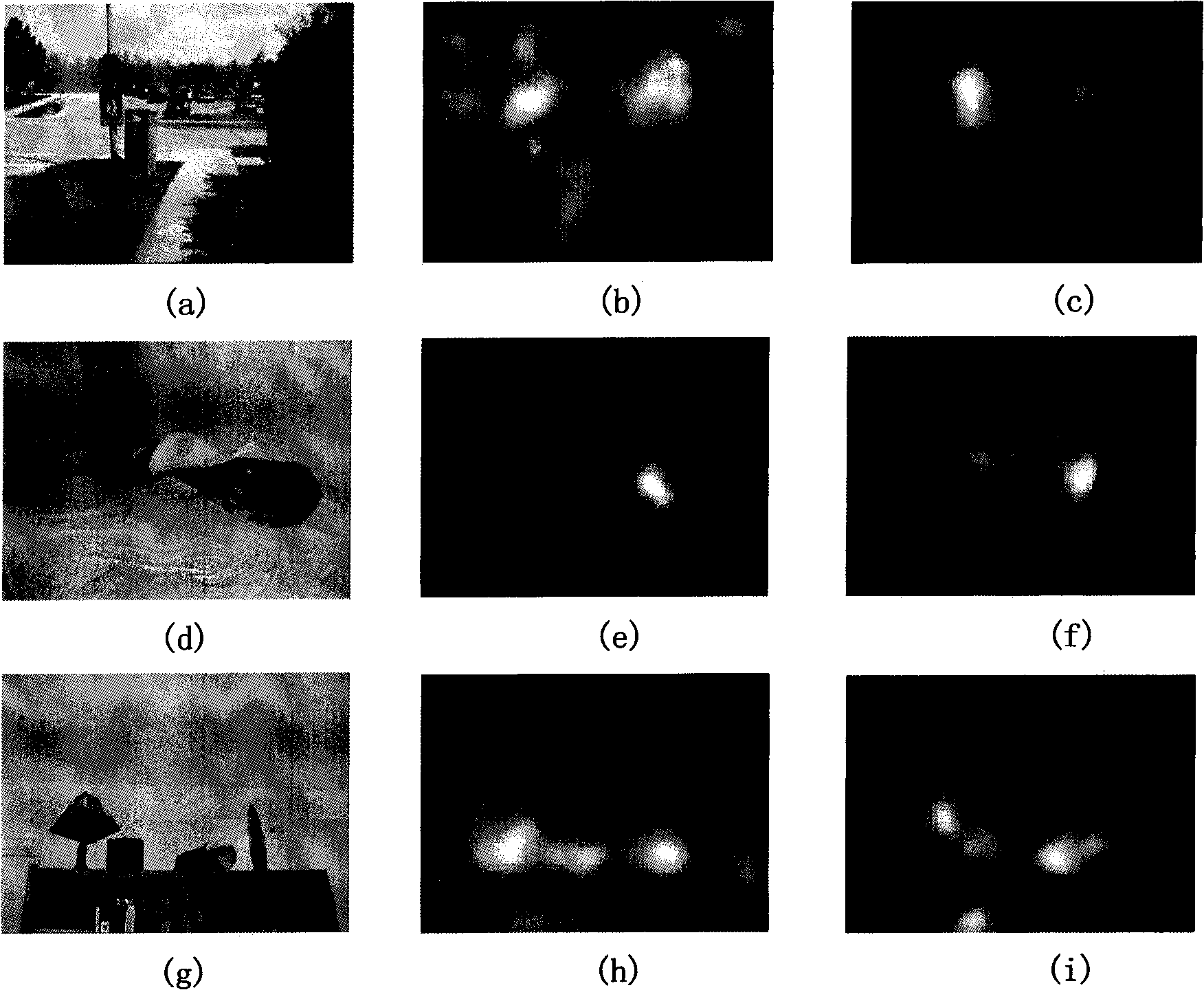

Inactive | US6934415B2 | Reduce usage | Easy to operate | Color television with pulse code modulation | Image analysis | Visibility | Pattern recognition

The most significant features in a visual scene are identified, without prior training, by measuring the difficulty in finding similarities between neighbourhoods in the scene. Pixels in an area that is similar to much of the rest of the scene score low measures of visual attention. On the other hand, a region that possesses many dissimilarities with other parts of the image will attract a high measure of visual attention. A trial and error process is used to find dissimilarities between parts of the image and does not require prior knowledge of the nature of the anomalies that may be present. The use of processing dependencies between pixels is avoided while still providing a straightforward parallel implementation for each pixel. Such techniques are of wide application in searching for anomalous patterns in health screening, quality control processes and in analysis of visual ergonomics for assessing the visibility of signs and advertisements. A measure of significant features can be provided to an image processor in order to provide variable rate image compression.

Owner:BRITISH TELECOMM PLC
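
The trial-and-error comparison of neighbourhoods described in the abstract above might look roughly like the sketch below. It is a simplified illustration under stated assumptions, not the patented procedure: the function name `attention_scores`, the random choice of offsets and comparison pixels, the grey-level threshold test, and all default parameters are hypothetical. Each pixel is scored independently, which is what would allow a per-pixel parallel implementation.

```python
import random
from typing import List


def attention_scores(
    image: List[List[int]],
    trials: int = 200,
    fork_size: int = 3,
    radius: int = 1,
    threshold: int = 0,
    seed: int = 0,
) -> List[List[int]]:
    """Score each interior pixel by how often its neighbourhood fails to match.

    For every trial, a few random offsets around the pixel are compared
    with the same offsets around a randomly chosen pixel elsewhere.
    A mismatch raises the score, so regions unlike the rest of the
    image accumulate high scores. Border pixels are left at zero.
    """
    rng = random.Random(seed)
    height, width = len(image), len(image[0])
    scores = [[0] * width for _ in range(height)]
    for y in range(radius, height - radius):
        for x in range(radius, width - radius):
            for _ in range(trials):
                offsets = [
                    (rng.randint(-radius, radius), rng.randint(-radius, radius))
                    for _ in range(fork_size)
                ]
                cy = rng.randint(radius, height - radius - 1)
                cx = rng.randint(radius, width - radius - 1)
                mismatch = any(
                    abs(image[y + dy][x + dx] - image[cy + dy][cx + dx]) > threshold
                    for dy, dx in offsets
                )
                if mismatch:
                    scores[y][x] += 1
    return scores


if __name__ == "__main__":
    img = [[0] * 7 for _ in range(7)]
    img[3][3] = 9  # a single anomalous pixel in a uniform scene
    for row in attention_scores(img):
        print(row)
```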

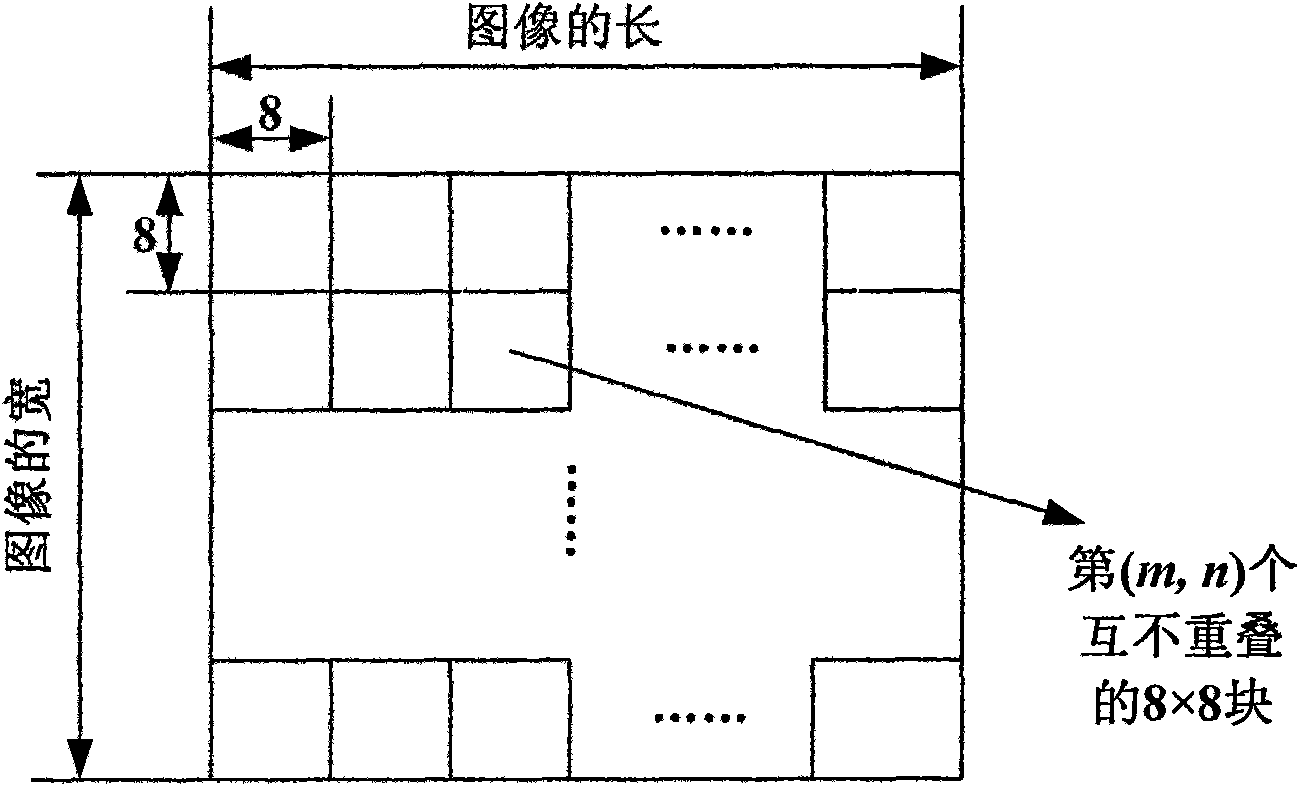

Feature-based dynamic visual attention region extraction method

Inactive | CN101493890A | Eliminate structural constraints | Solve problems where salience needs to be handled independently | Image analysis | Character and pattern recognition | Machine vision | Incremental encoding

The invention relates to a feature-based dynamic visual attention region extraction method in the technical field of machine vision. The method comprises the following steps: first, independent component analysis is used to sparsely decompose a large number of natural images to obtain a group of filtering basis functions and a group of corresponding reconstruction basis functions, and the input images are divided into small RGB blocks of m × m and projected onto the basis to obtain the image features; second, the efficient coding principle is used to measure an incremental coding length index for each feature; third, according to the incremental coding length index, the saliency of each small block is computed by reallocating energy among the features, and finally a saliency map is obtained. The method can shorten the 'time slice' and realize continuous sampling, so that data from different frames jointly guide the saliency processing; this solves the problem that the saliency of different frames must be processed independently, and thereby achieves dynamic behavior.

Owner:SHANGHAI JIAO TONG UNIV

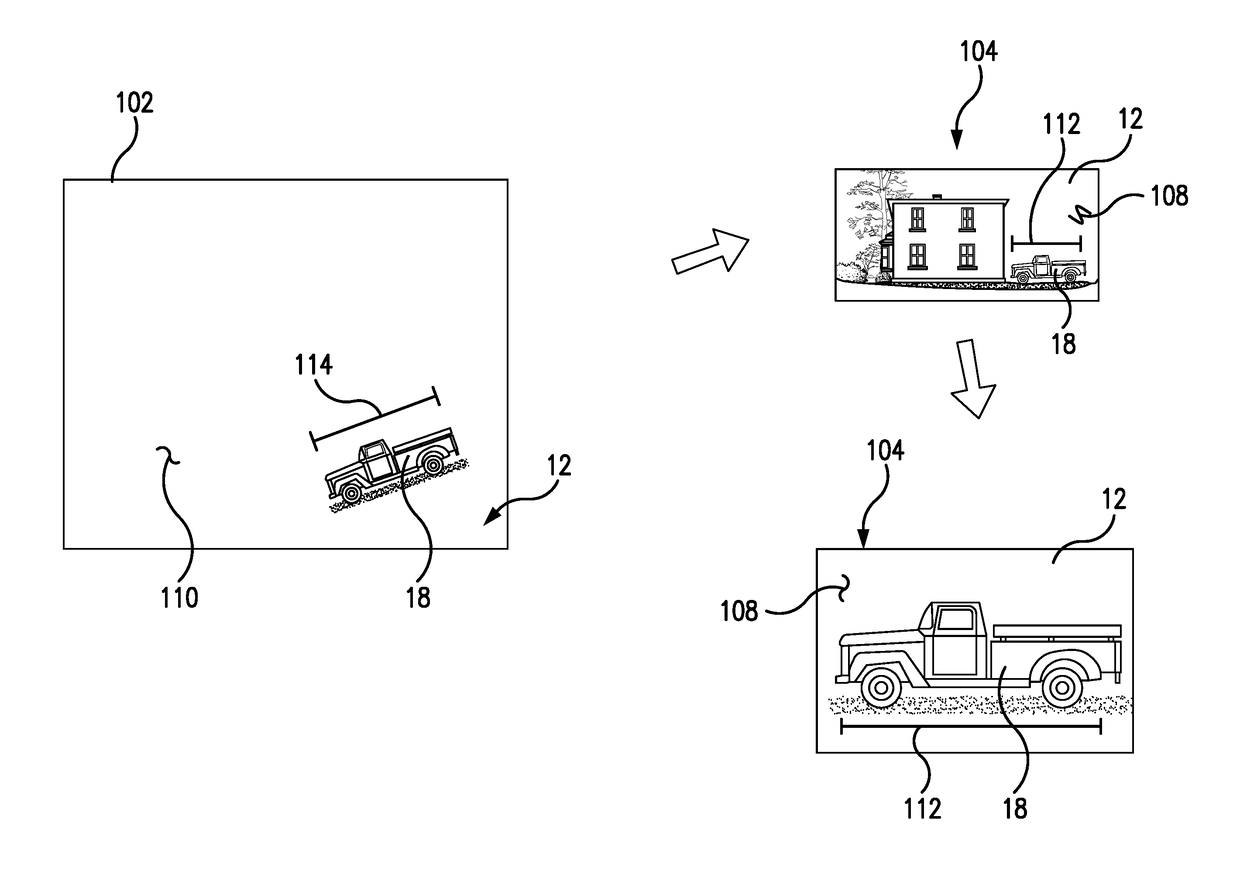

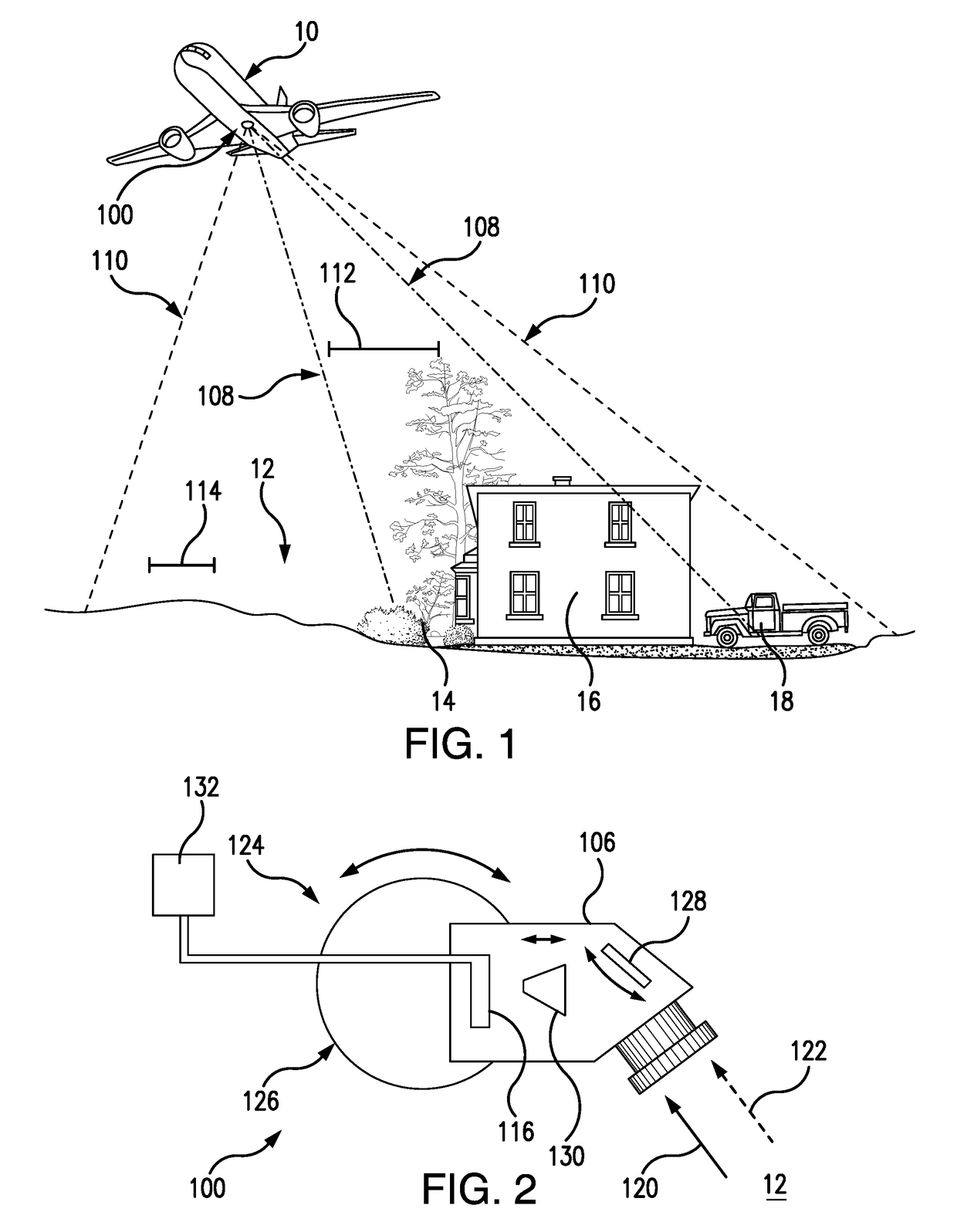

Active visual attention models for computer vision tasks

An imaging method includes obtaining an image with a first field of view and first effective resolution and then analyzing the image with a visual attention algorithm to identify one or more areas of interest in the first field of view. A subsequent image is then obtained for each area of interest with a second field of view and a second effective resolution, the second field of view being smaller than the first field of view and the second effective resolution being greater than the first effective resolution.

Owner:THE BF GOODRICH CO

System for object recognition in colorized point clouds

Described is a system for object recognition in colorized point clouds. The system includes an implicit geometry engine that is configured to receive three-dimensional (3D) colorized cloud point data regarding a 3D object of interest and to convert the cloud point data into implicit representations. The engine also generates geometric features. A geometric grammar block is included to generate object cues and recognize geometric objects using geometric tokens and grammars based on object taxonomy. A visual attention cueing block is included to generate object cues based on 3D geometric properties. Finally, an object recognition block is included to perform a local search for objects using cues from the cueing block and the geometric grammar block and to classify the 3D object of interest as a particular object upon a classifier reaching a predetermined threshold.

Owner:HRL LAB

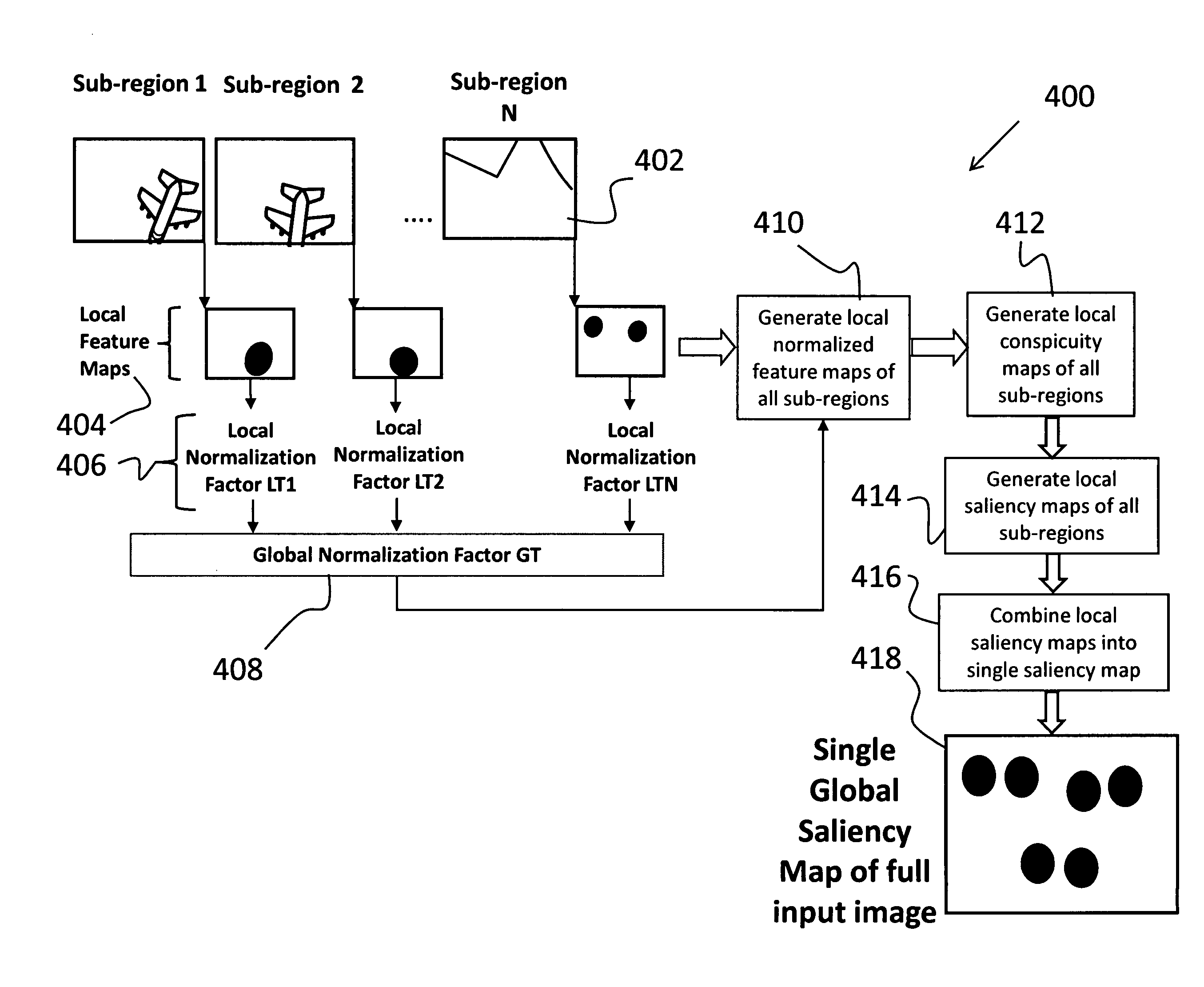

Visual attention system for salient regions in imagery

Described is a system for finding salient regions in imagery. The system improves upon the prior art by receiving an input image of a scene and dividing the image into a plurality of image sub-regions. Each sub-region is assigned a coordinate position within the image such that the sub-regions collectively form the input image. A plurality of local saliency maps are generated, where each local saliency map is based on a corresponding sub-region and a coordinate position representative of the corresponding sub-region. Finally, the plurality of local saliency maps is combined according to their coordinate positions to generate a single global saliency map of the input image of the scene.

Owner:HRL LAB
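
The divide-and-combine structure in the abstract above (sub-regions with coordinate positions, one local saliency map per sub-region, then a single global map) can be sketched as follows. The local saliency measure used here, absolute deviation from the sub-region mean, is only a stand-in chosen for the example, and the names `local_saliency` and `global_saliency` are hypothetical.

```python
from typing import List

Grid = List[List[float]]


def local_saliency(tile: Grid) -> Grid:
    """Stand-in local saliency: absolute deviation from the tile mean."""
    values = [v for row in tile for v in row]
    mean = sum(values) / len(values)
    return [[abs(v - mean) for v in row] for row in tile]


def global_saliency(image: Grid, tile_size: int) -> Grid:
    """Split the image into tiles, score each tile, and reassemble by position."""
    height, width = len(image), len(image[0])
    result = [[0.0] * width for _ in range(height)]
    for top in range(0, height, tile_size):
        for left in range(0, width, tile_size):
            # (top, left) is the coordinate position of this sub-region.
            sub = [row[left:left + tile_size] for row in image[top:top + tile_size]]
            for dy, row in enumerate(local_saliency(sub)):
                for dx, value in enumerate(row):
                    result[top + dy][left + dx] = value
    return result


if __name__ == "__main__":
    img = [[0.0] * 4 for _ in range(4)]
    img[0][0] = 4.0
    for row in global_saliency(img, tile_size=2):
        print(row)
```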

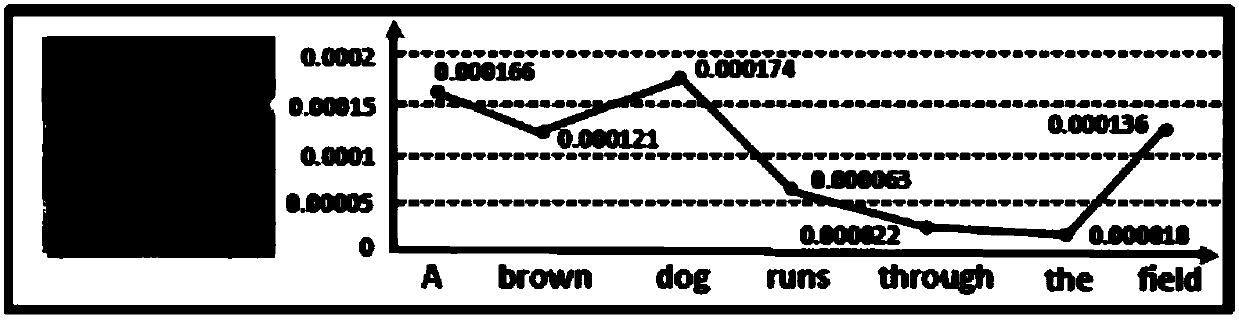

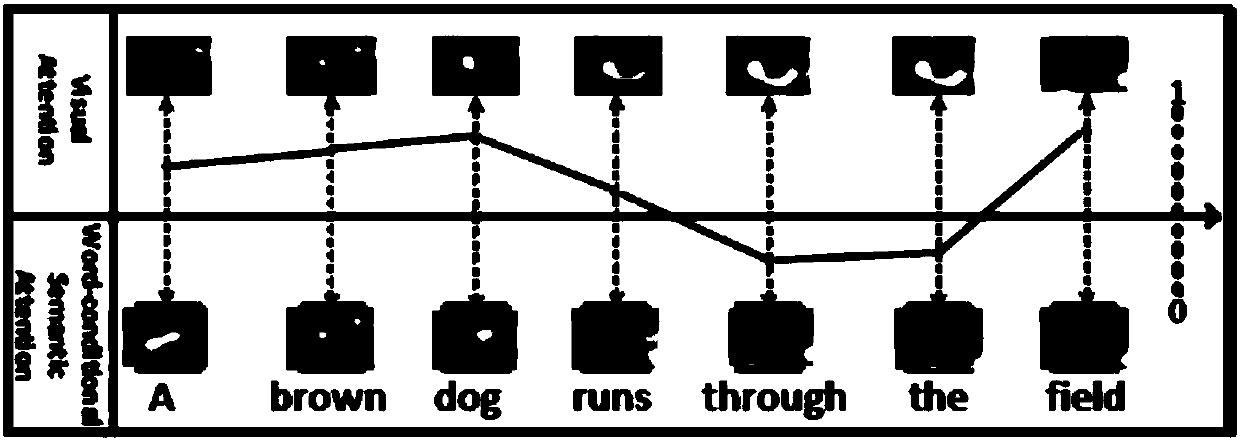

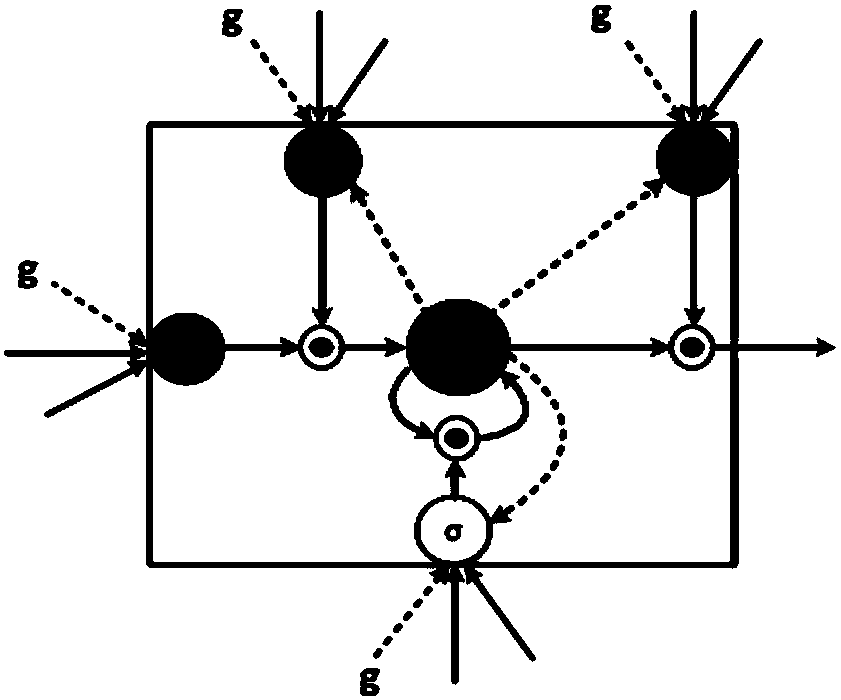

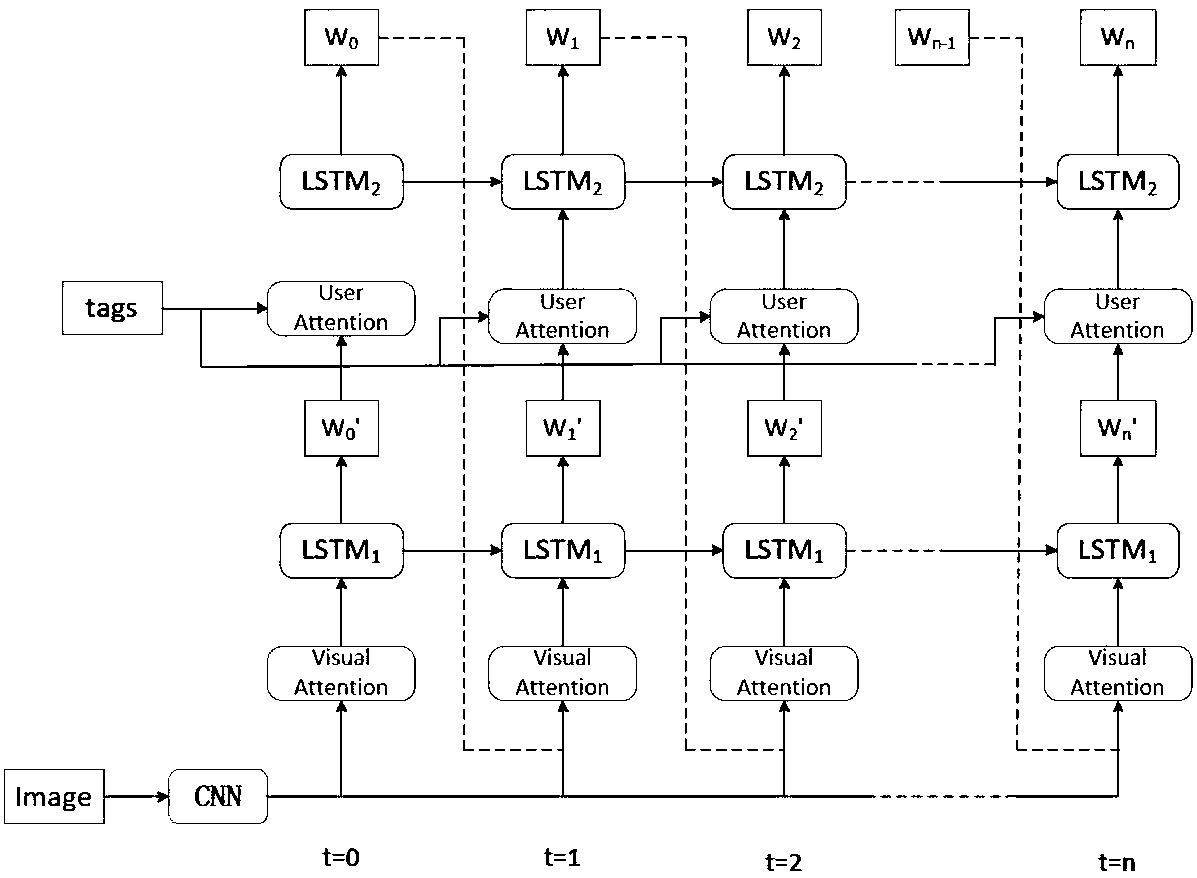

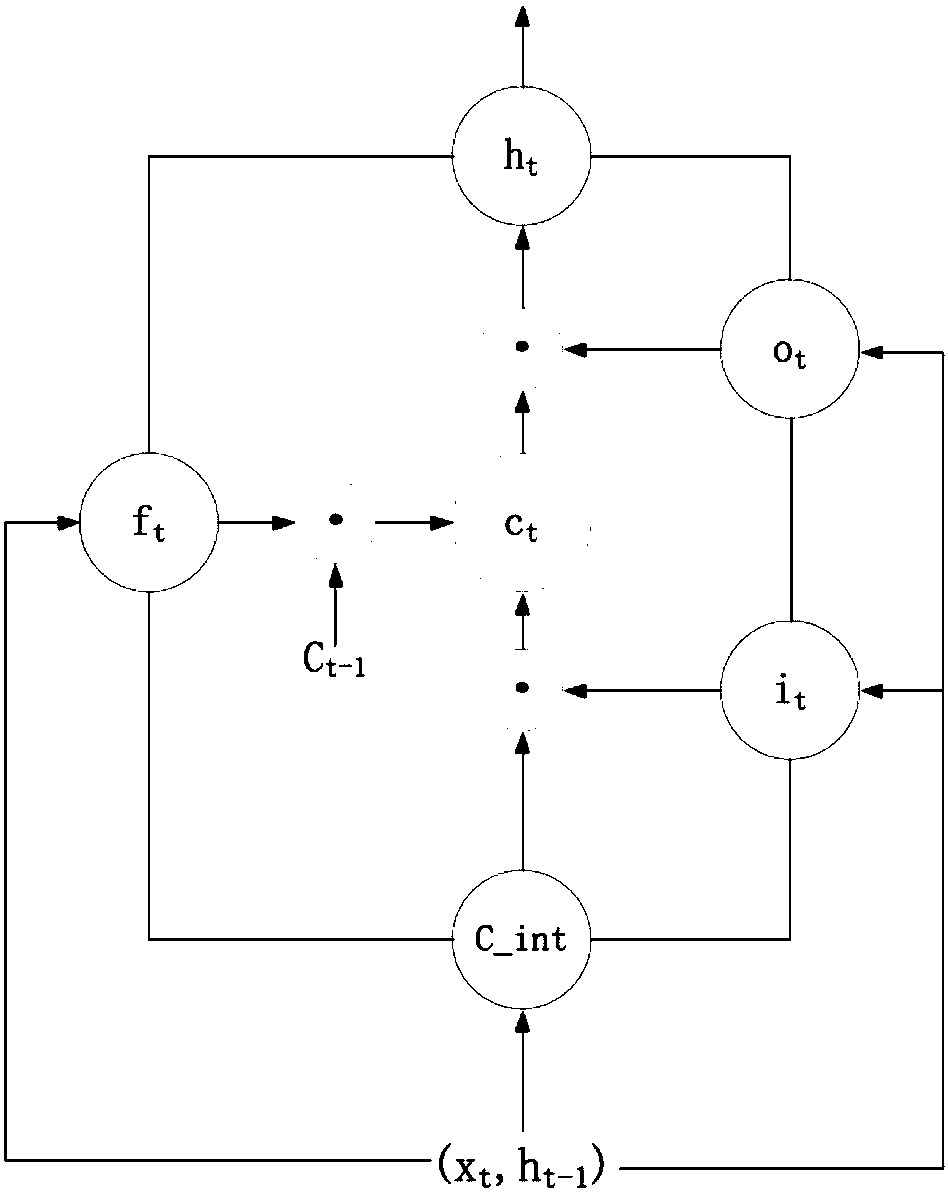

Image subtitle generation method and system fusing visual attention and semantic attention

Active | CN107608943A | Solve build problems | Good effect | Character and pattern recognition | Neural architectures | Attention model | Imaging Feature

The invention discloses an image subtitle generation method and system fusing visual attention and semantic attention. The method comprises the steps of extracting an image feature from each image to be subjected to subtitle generation through a convolutional neural network to obtain an image feature set; building an LSTM model, and transmitting a previously labeled text description corresponding to each image to be subjected to subtitle generation into the LSTM model to obtain time sequence information; in combination with the image feature set and the time sequence information, generating a visual attention model; in combination with the image feature set, the time sequence information and words of a previous time sequence, generating a semantic attention model; according to the visual attention model and the semantic attention model, generating an automatic balance policy model; according to the image feature set and a text corresponding to the image to be subjected to subtitle generation, building a gLSTM model; according to the gLSTM model and the automatic balance policy model, generating words corresponding to the image to be subjected to subtitle generation by utilizing an MLP (multilayer perceptron) model; and performing serial combination on all the obtained words to generate a subtitle.

Owner:CHINA UNIV OF PETROLEUM (EAST CHINA)

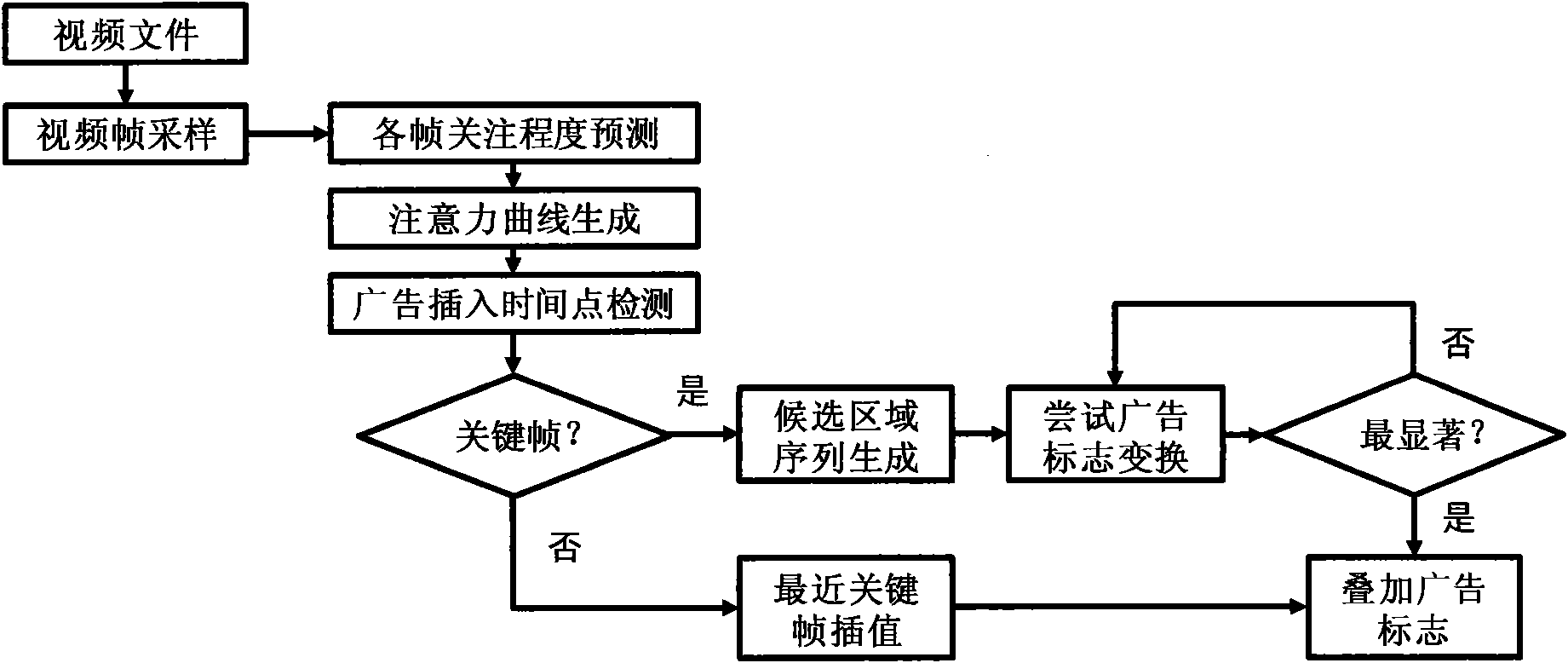

Method and system for inserting and transforming advertisement sign based on visual attention module

Inactive | CN101621636A | Good advertising effect | Attract attention | Television system details | Color television details | Vision based | Proper time

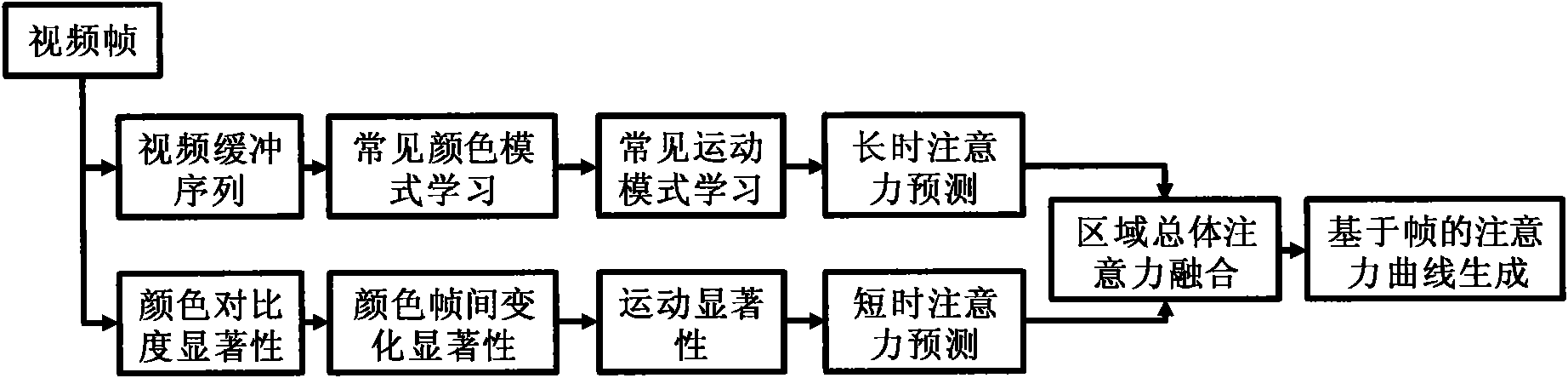

The invention discloses a method and a system for inserting and transforming an advertisement sign based on a visual attention module. The method comprises the following steps: firstly, predicting the regions of interest in each frame of video and the user's degree of attention to each frame on the basis of the constructed visual attention model; secondly, determining a time point for inserting an advertisement according to the curve of the user's attention to each frame, evaluating the suitability of each region for advertisement insertion on the basis of the predicted attention distribution, thereby obtaining a sequence of candidate regions for inserting the advertisement, and inserting the advertisement in a region with little influence on the video content; and finally, inserting the advertisement sign at a proper time point and position according to the predicted attention distribution, and performing multiple feature transformations on the advertisement sign to make it attract the attention of users or the audience repeatedly. The method and the system can effectively perform automatic insertion and transformation of the advertisement sign, and make the inserted advertisement sign attract people's attention repeatedly without affecting normal viewing.

Owner:PEKING UNIV

Visual attention and segmentation system

Described is a bio-inspired vision system for attention and object segmentation capable of computing attention for a natural scene, attending to regions in a scene in their rank of saliency, and extracting the boundary of an attended proto-object based on feature contours to segment the attended object. The attention module can work in both a bottom-up and a top-down mode, the latter allowing for directed searches for specific targets. The region growing module allows for object segmentation that has been shown to work under a variety of natural scenes that would be problematic for traditional object segmentation algorithms. The system can perform at multiple scales of object extraction and possesses a high degree of automation. Lastly, the system can be used by itself for stand-alone searching for salient objects in a scene, or as the front-end of an object recognition and online labeling system.

Owner:HRL LAB

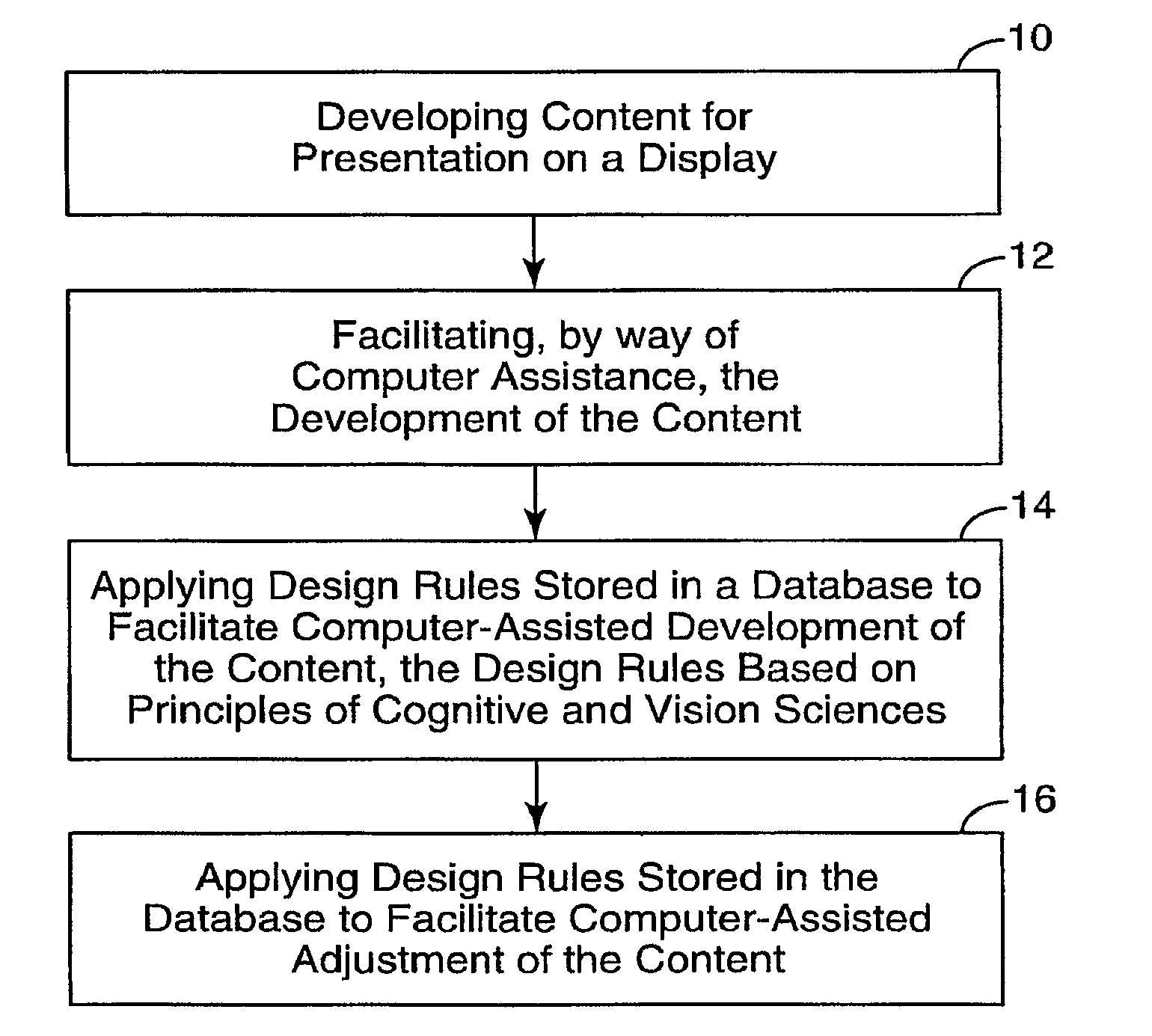

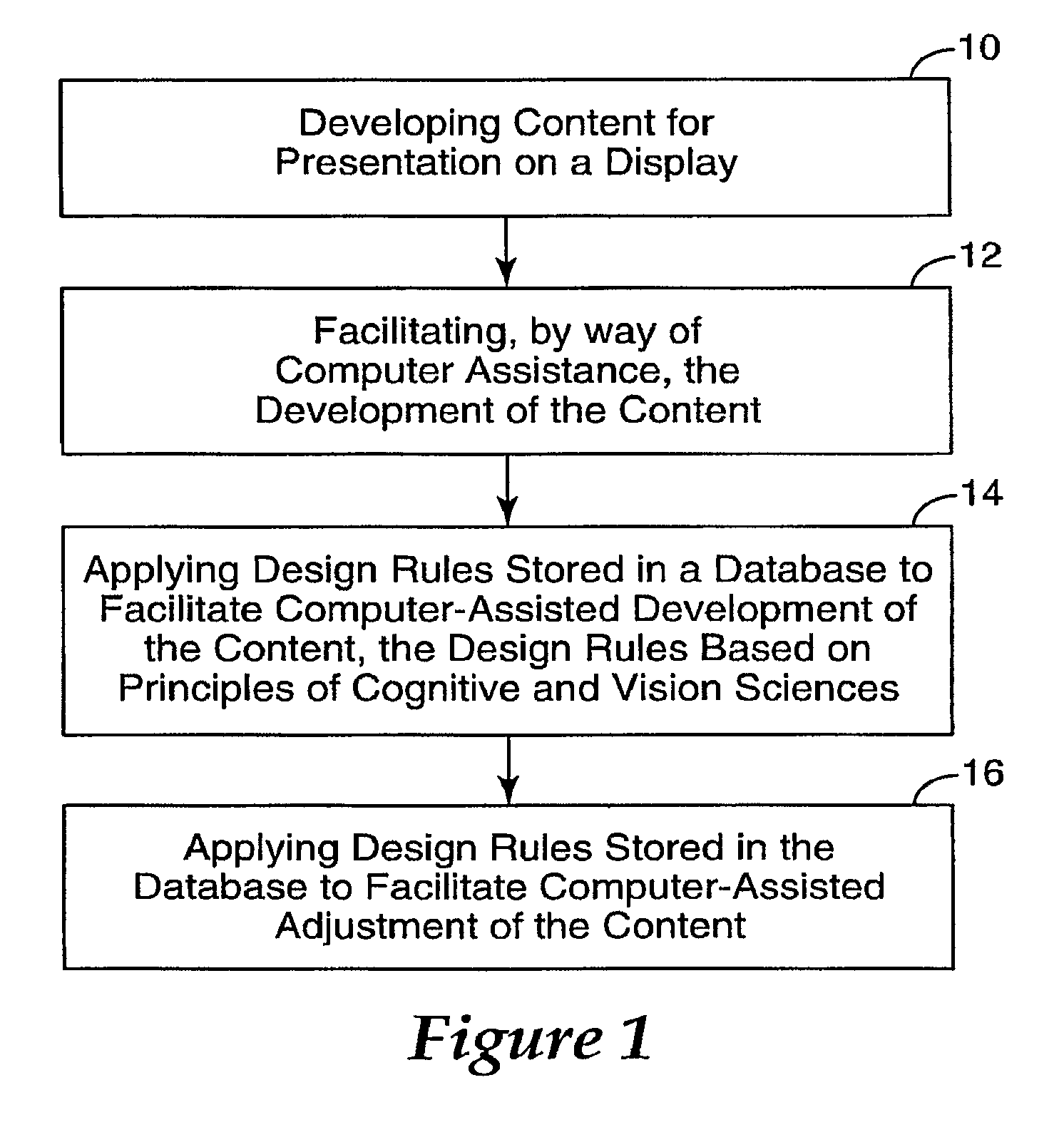

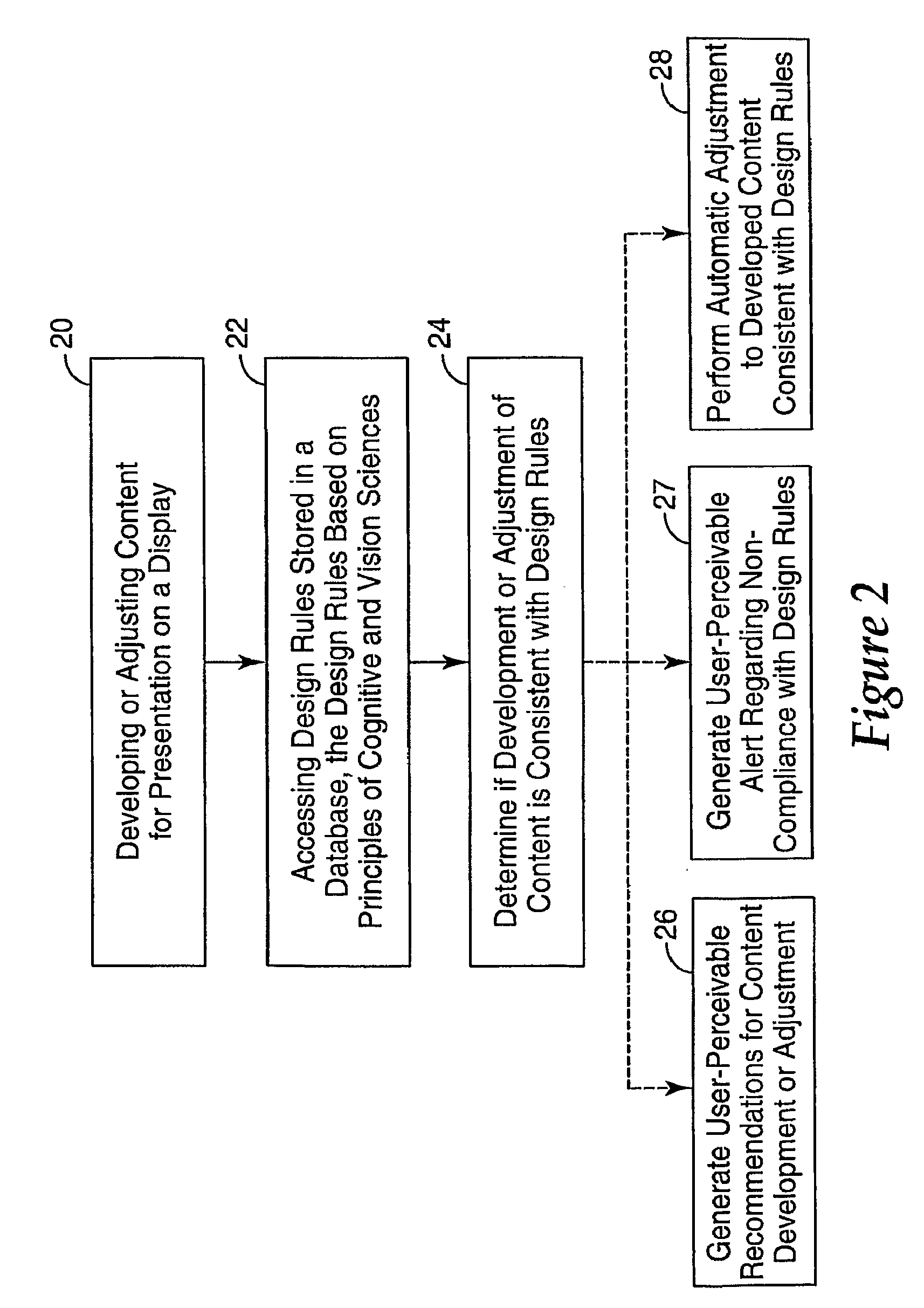

Content development and distribution using cognitive sciences database

Inactive | US20090158179A1 | Maximizing memory retention and recall | Improve readability | Natural language data processing | Inference methods | Graphics | Text recognition

Computer implemented methods and systems facilitate development and distribution of content for presentation on a display or a multiplicity of networked displays, the content including content elements. The content elements may include graphics, text, video clips, still images, audio clips or web pages. The development of the content is facilitated using a database comprising design rules based on principles of cognitive and vision sciences. The database may include design rules based on visual attention, memory, and/or text recognition, for example.

Owner:3M INNOVATIVE PROPERTIES CO

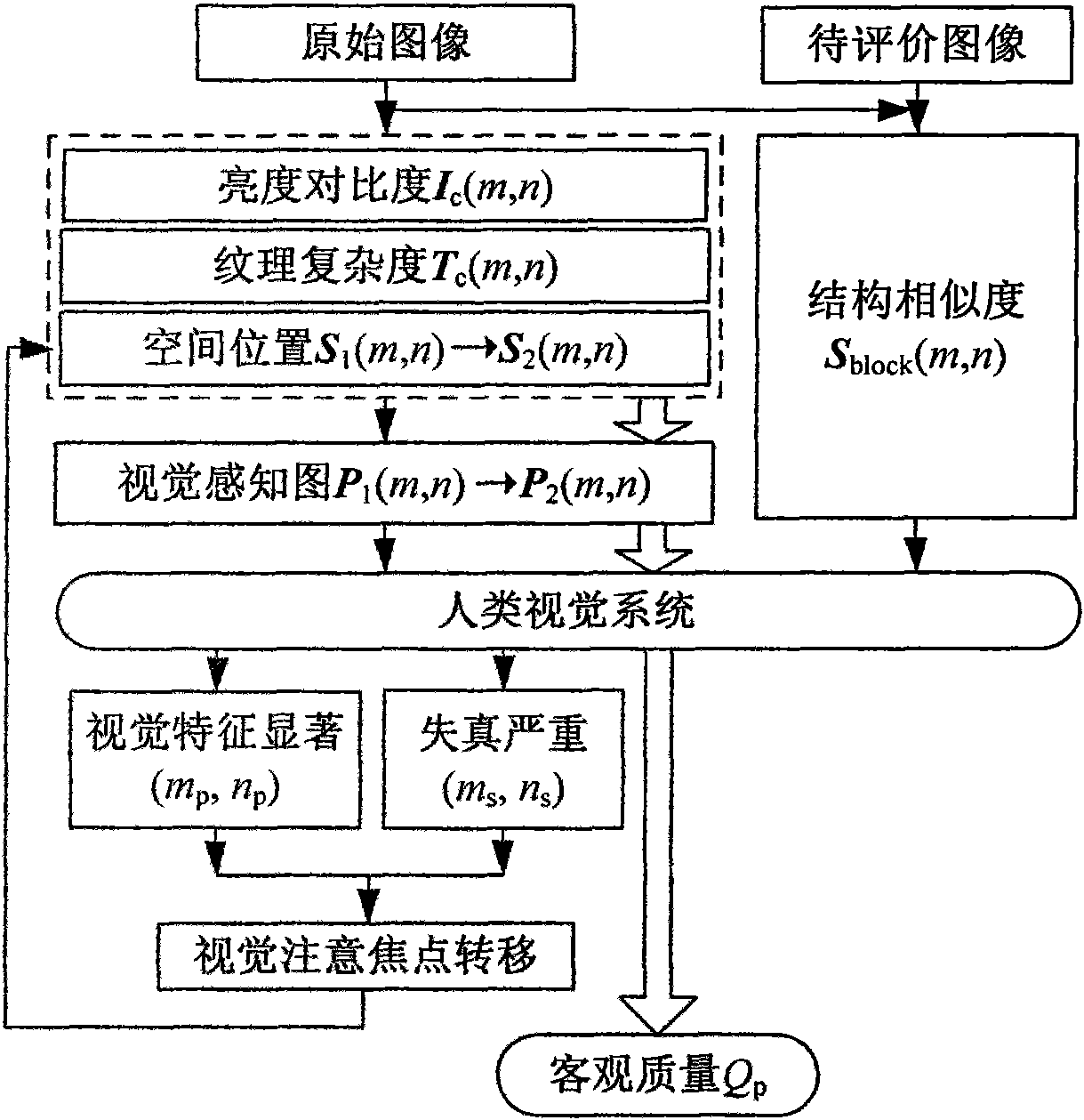

Method for evaluating objective quality of full-reference image

Active | CN101621709A | In line with subjective evaluation | Television systems | Pattern recognition | Objective quality

The invention discloses a method for evaluating the objective quality of a full-reference image based on structural similarity, comprising the following steps: first, using spatial-domain visual characteristics to obtain a visual perceptual map of the original image and find the positions where visual perception is salient; second, using a structural-similarity-based evaluation method to compute the structural similarity map SSIM(i, j) between the original image and the distorted image, calculating the relative quality of the distorted image, and finding the positions of severe distortion; third, defining the principle of visual attention focus transfer, determining a new visual attention focus, and producing a new visual perceptual map after the visual attention focus transfer; and fourth, obtaining the objective evaluation of image quality by weighting the structural similarity with the produced visual perceptual map. The method is suitable for the design of various image coding and processing algorithms as well as for comparing the effects of different algorithms, agrees more closely with human subjective evaluation of images, and has wide application prospects.

Owner:ZHEJIANG UNIV
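
The final step of the abstract above, weighting the structural similarity by the visual perceptual map, could be sketched as a weighted average. Only this pooling step is shown; computing the SSIM map and the perceptual map is out of scope here. Normalizing by the sum of weights and the name `weighted_quality` are assumptions for the example.

```python
from typing import List

Grid = List[List[float]]


def weighted_quality(ssim_map: Grid, perceptual_map: Grid) -> float:
    """Pool a per-pixel SSIM map into one score using perceptual weights.

    Pixels with larger perceptual weight contribute more to the
    final quality score. Both grids must have the same shape.
    """
    weighted_sum = 0.0
    weight_total = 0.0
    for ssim_row, weight_row in zip(ssim_map, perceptual_map):
        for ssim_value, weight in zip(ssim_row, weight_row):
            weighted_sum += ssim_value * weight
            weight_total += weight
    if weight_total == 0:
        raise ValueError("perceptual map must contain a non-zero weight")
    return weighted_sum / weight_total


if __name__ == "__main__":
    ssim = [[1.0, 0.5]]
    weights = [[1.0, 3.0]]  # the distorted pixel is also the more salient one
    print(weighted_quality(ssim, weights))
```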

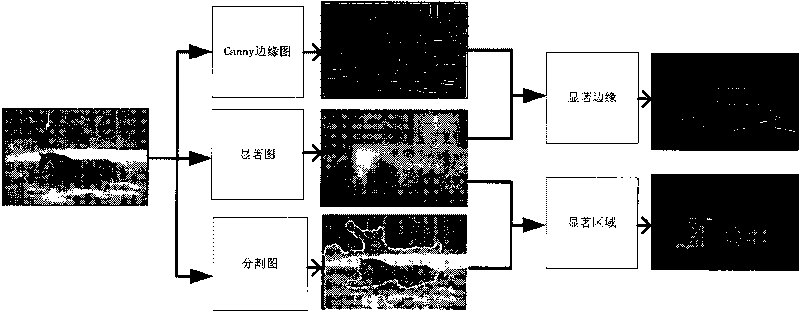

Image semantic retrieving method based on visual attention model

Inactive | CN101706780A | Resolve ambiguity | Improve image retrieval performance | Image enhancement | Special data processing applications | Vision based | Semantic search

The invention provides an image semantic retrieval method based on a visual attention mechanism model. The method is completely data-driven, so it understands the semantics of images from the user's point of view as far as possible without increasing the user's interaction burden, and stays close to the user's perception so as to improve retrieval performance. The method has the following advantages: (1) the visual attention mechanism theory from visual cognition theory is introduced into image retrieval; (2) the method is a completely bottom-up retrieval mode, so it avoids the user burden brought by user feedback; and (3) salient edge information and salient regional information in images are considered simultaneously, realizing an integrated retrieval mode and improving image retrieval performance.

Owner:BEIJING JIAOTONG UNIV

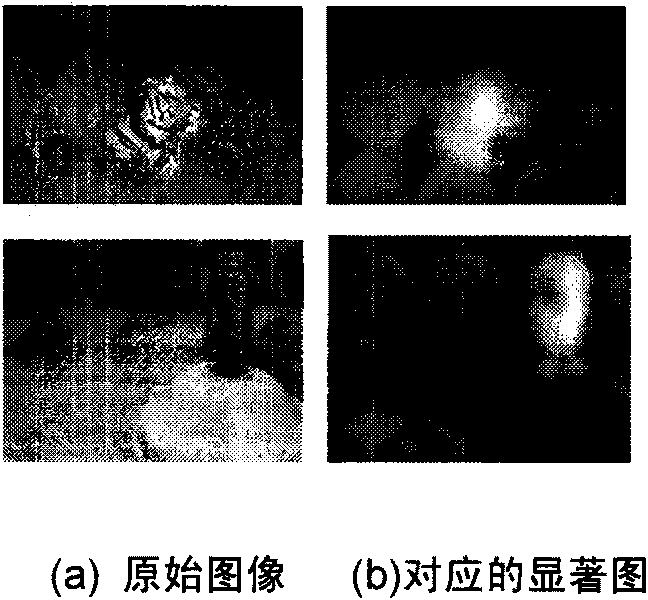

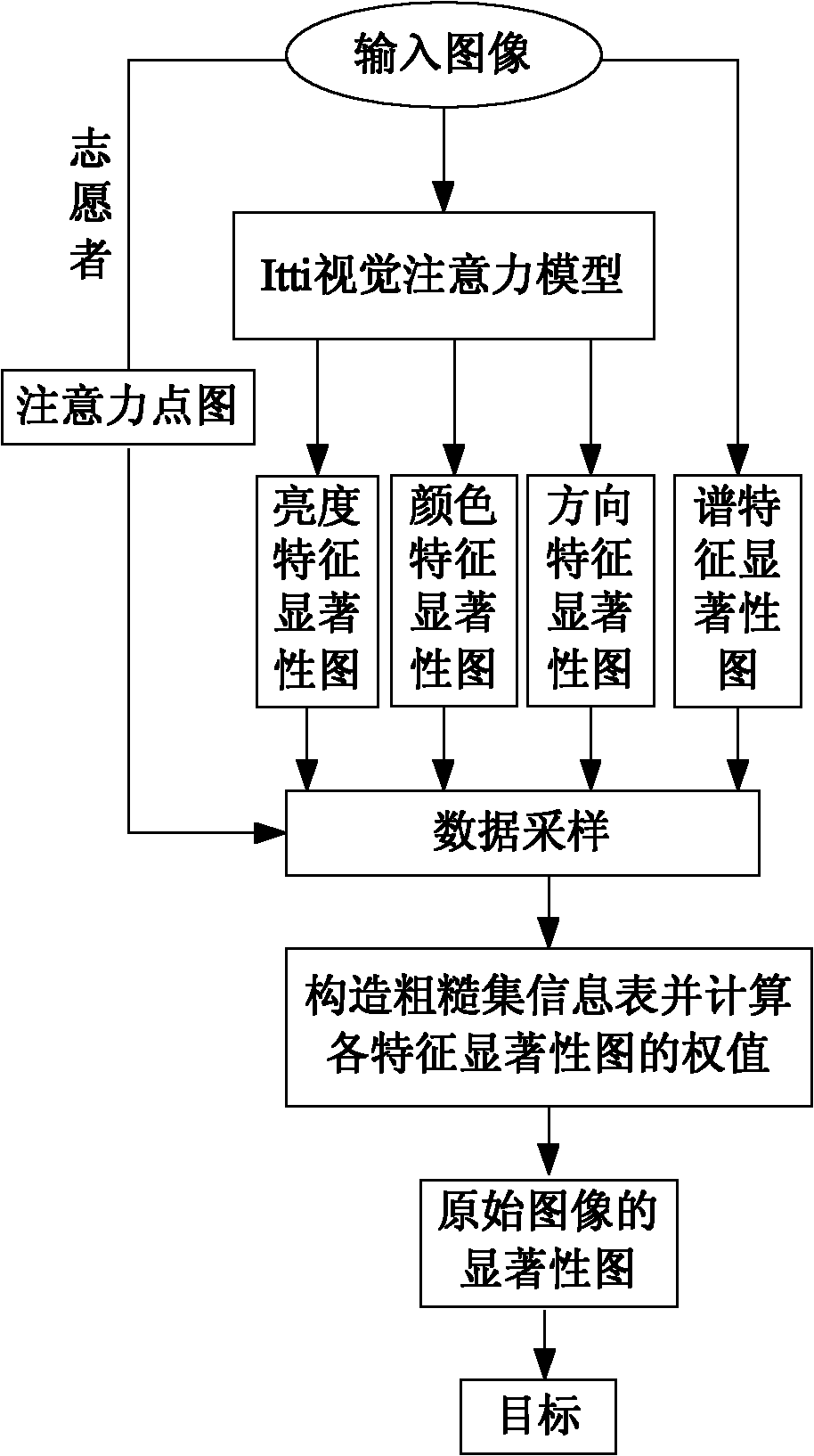

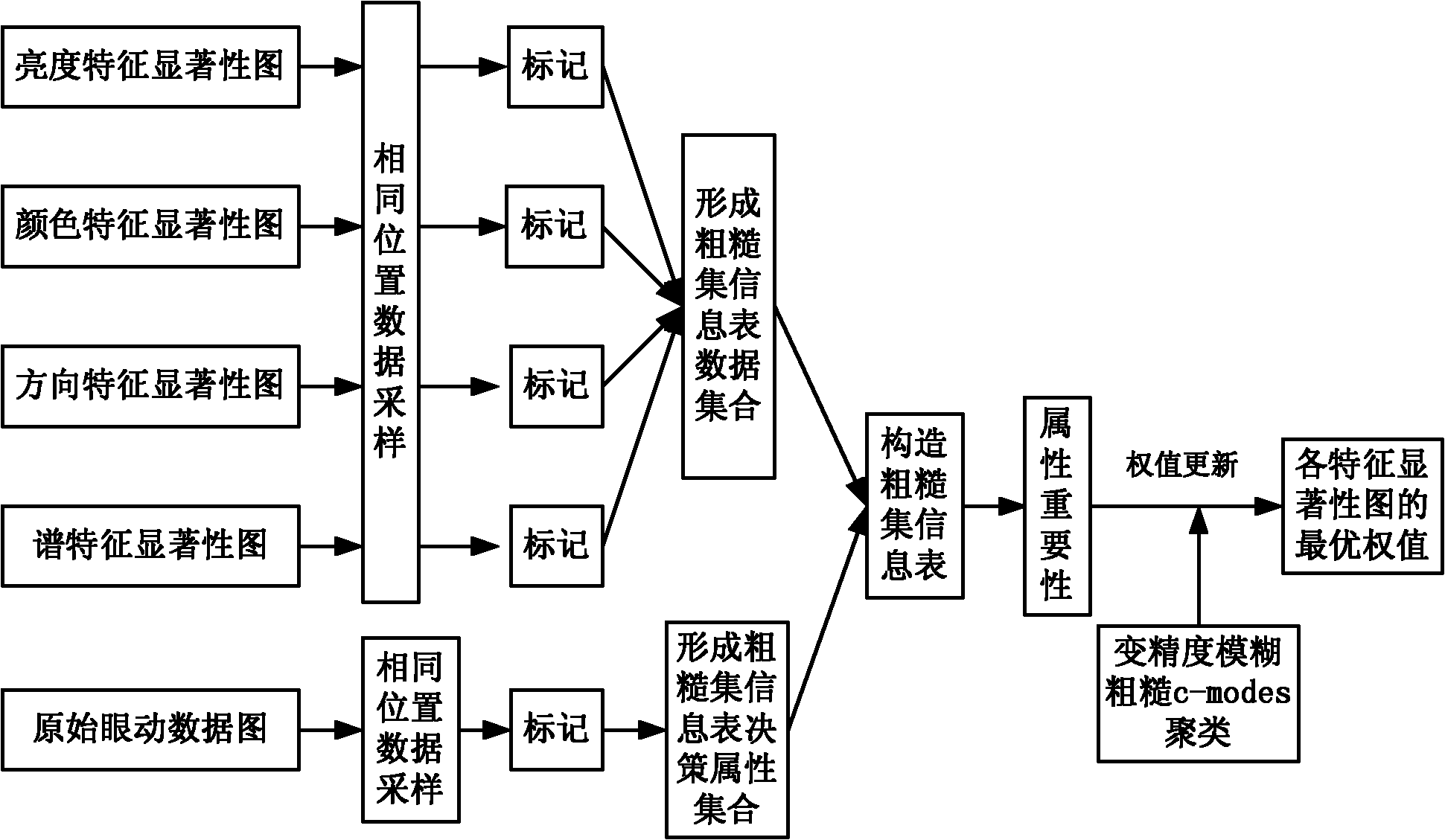

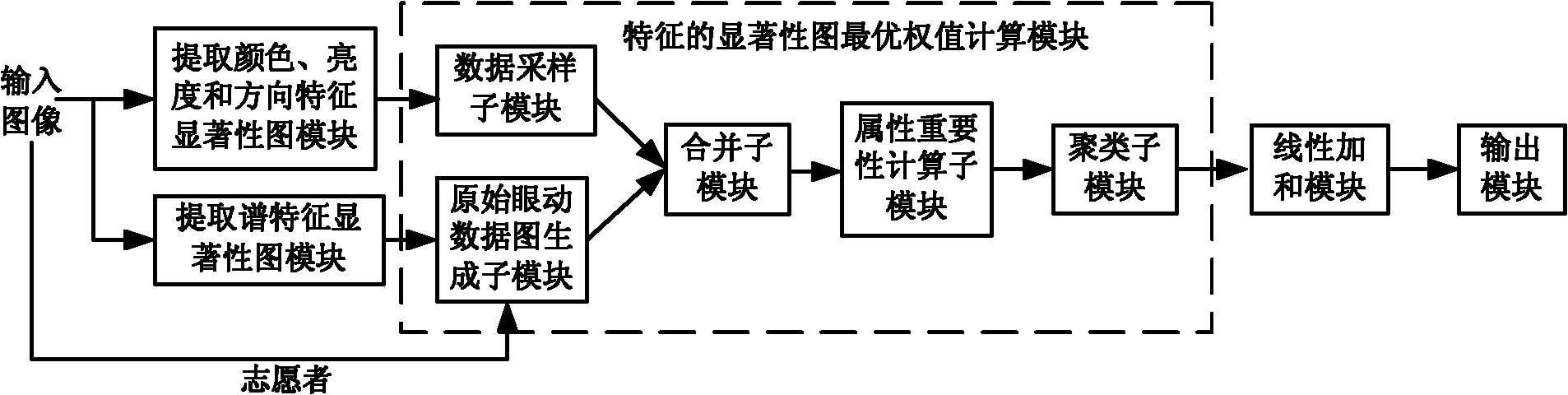

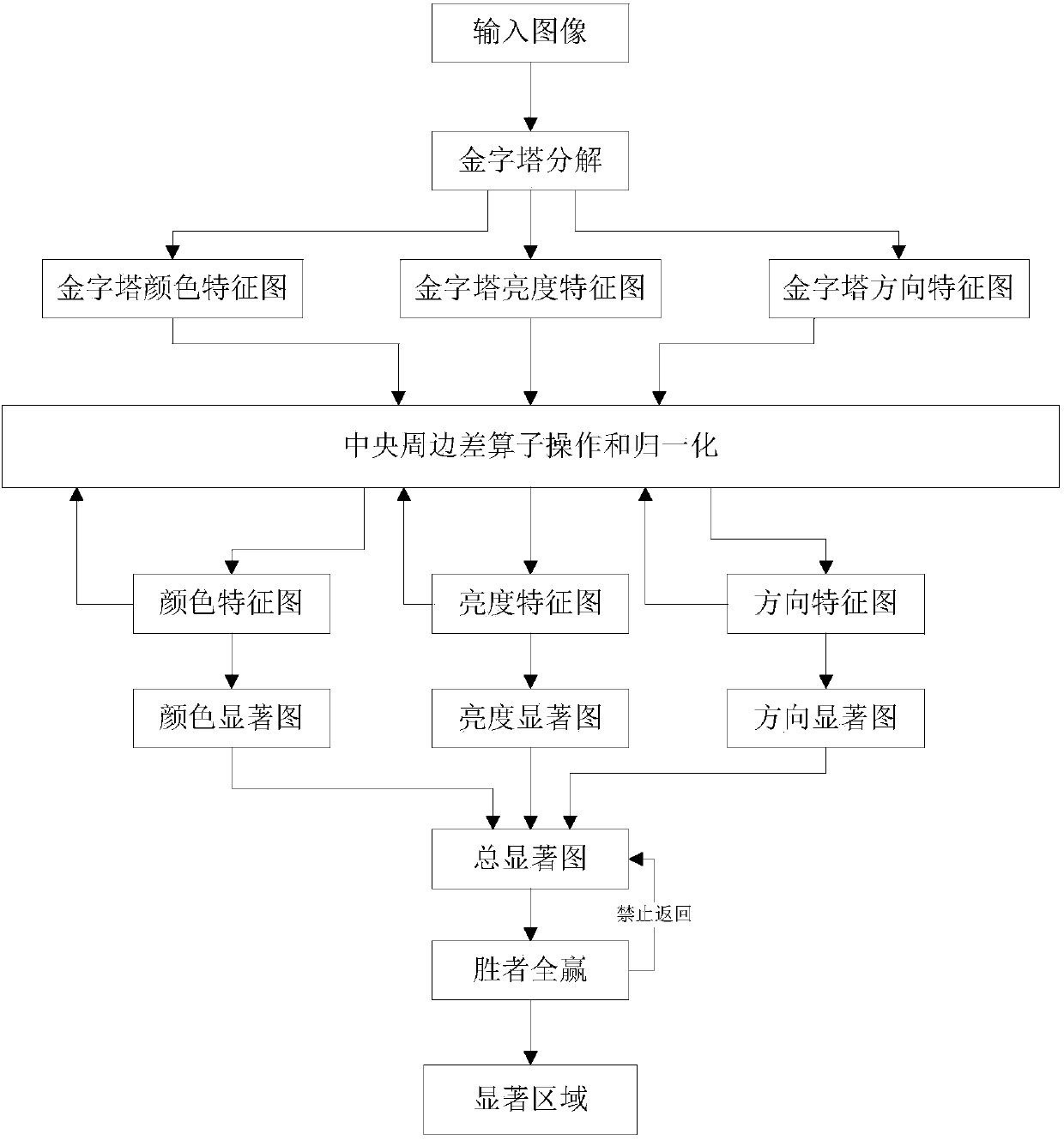

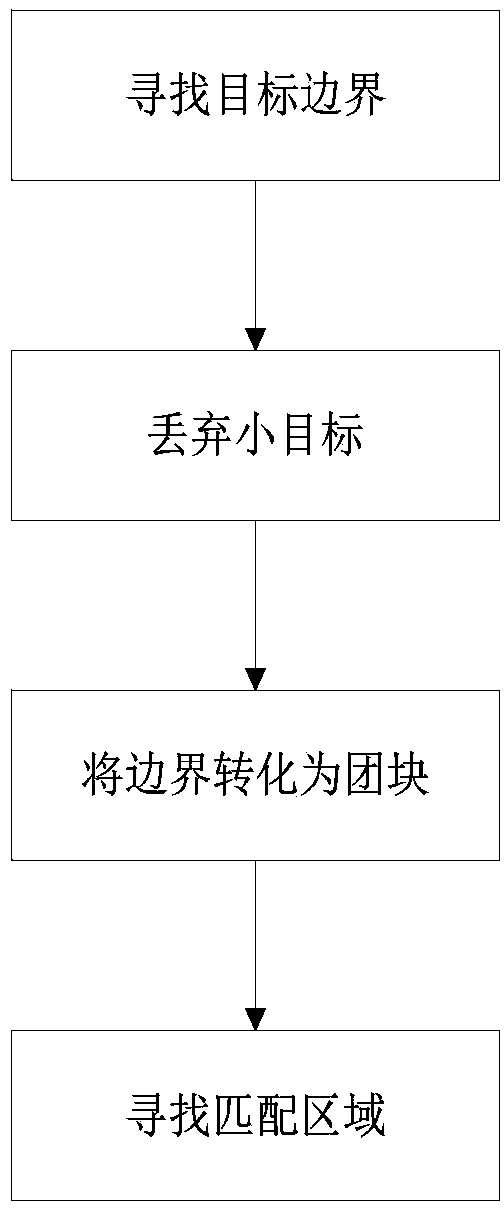

Improved visual attention model-based method of natural scene object detection

Inactive | CN101980248A | Improve accuracy | Increase contribution | Image analysis | Character and pattern recognition | Image extraction | Model extraction

The invention discloses an improved visual attention model-based method of natural scene object detection, which mainly solves the problems of low detection accuracy and high false detection rate in conventional visual attention model-based object detection. The method comprises the following steps: (1) inputting an image to be detected, and extracting feature saliency images of brightness, color and direction by using the visual attention model of Itti; (2) extracting a feature saliency image of the spectrum of the original image; (3) performing data sampling and marking on the feature saliency images of the brightness, the color, the direction and the spectrum and on an attention image of an experimenter to form a final rough set information table; (4) constructing attribute significance according to the rough set information table, and obtaining the optimal weight values of the feature images by clustering; and (5) weighting the feature sub-images to obtain a saliency image of the original image, wherein the saliency area corresponding to the saliency image is the target position area. The method can more effectively detect a visual attention area in a natural scene and locate objects in the visual attention area.

Owner:XIDIAN UNIV

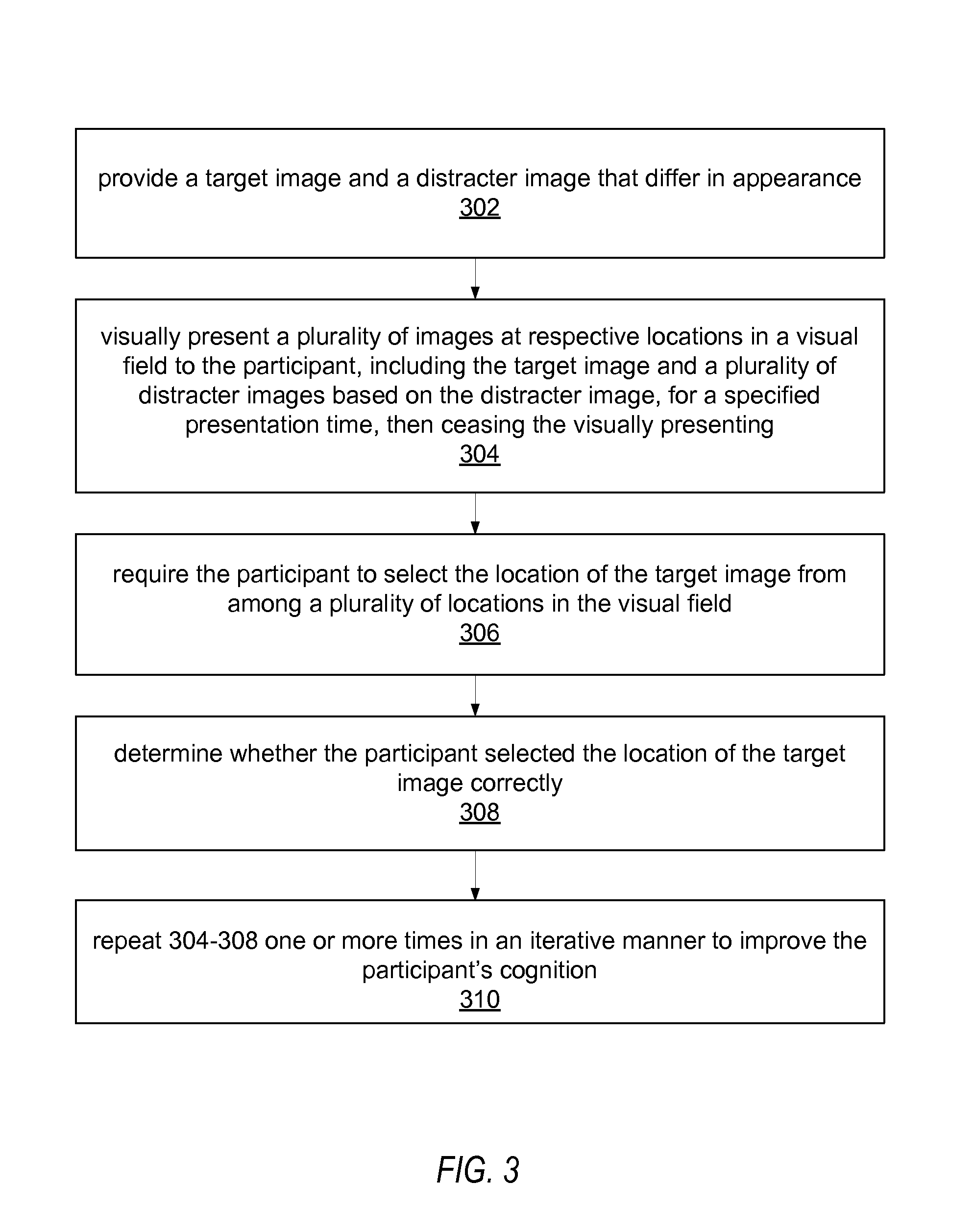

Cognitive training using visual searches

Inactive | US20070218439A1 | Increase awareness | Effective capacity | Educational models | Electrical appliances | Pattern recognition | Visual presentation

Computer-implemented method for enhancing cognition of a participant using visual search. A target image and distracter image are provided for visual presentation. Multiple images, including the target image and multiple distracter images based on the distracter image, are temporarily visually presented at respective locations, then removed. The participant selects the target image location from multiple locations in the visual field, and the selection's correctness / incorrectness is determined. The visually presenting, requiring, and determining are repeated to improve the participant's cognition, e.g., efficiency, capacity and effective spatial extent of a participant's visual attention. In a dual attention version, potential target images differing by a specified attribute are provided, one of which is the target image. An indication of the specified attribute is also displayed. The participant selects the location of the target image from the multiple locations, including the locations of the potential target images, based on the indication.

Owner:POSIT SCI CORP

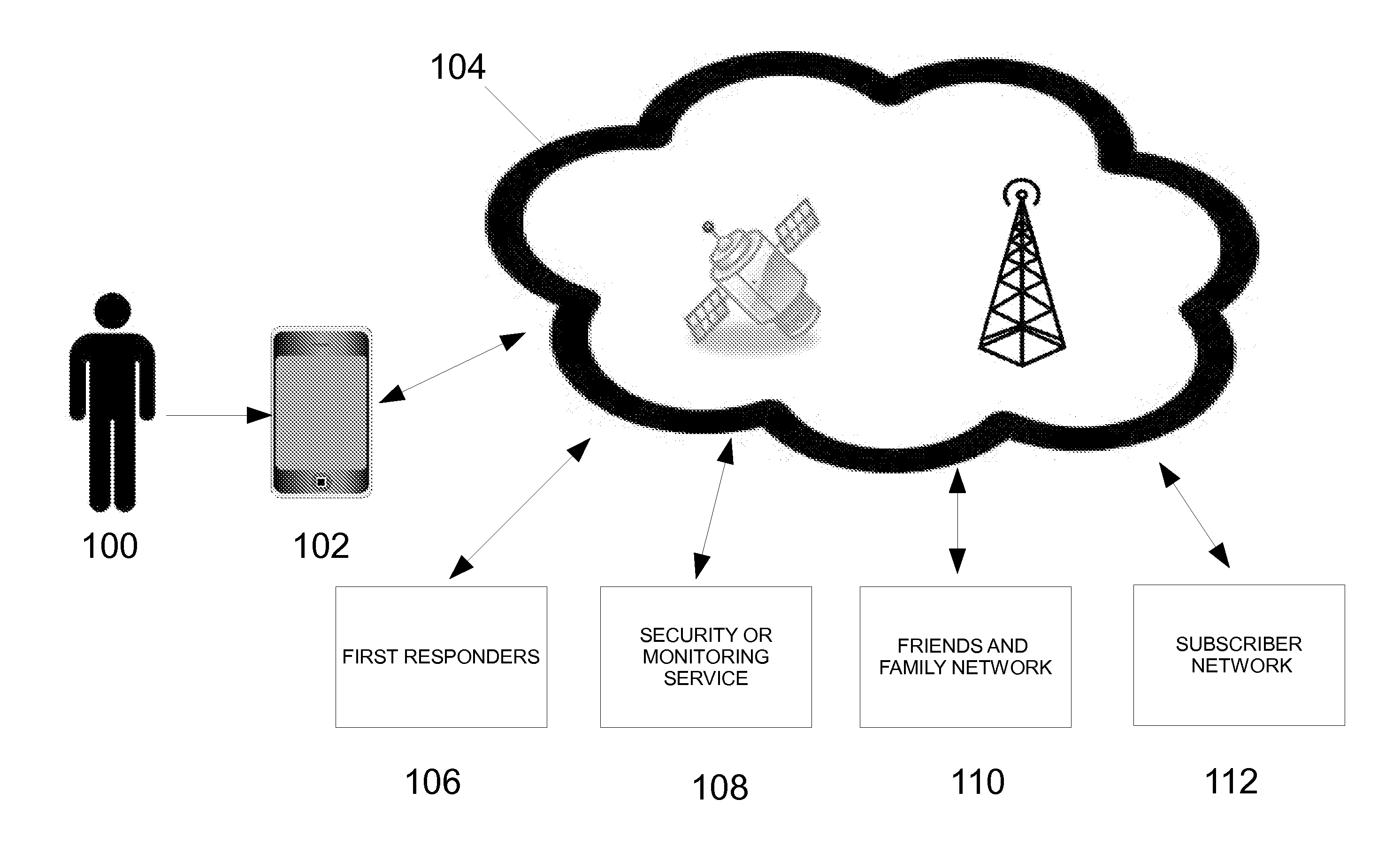

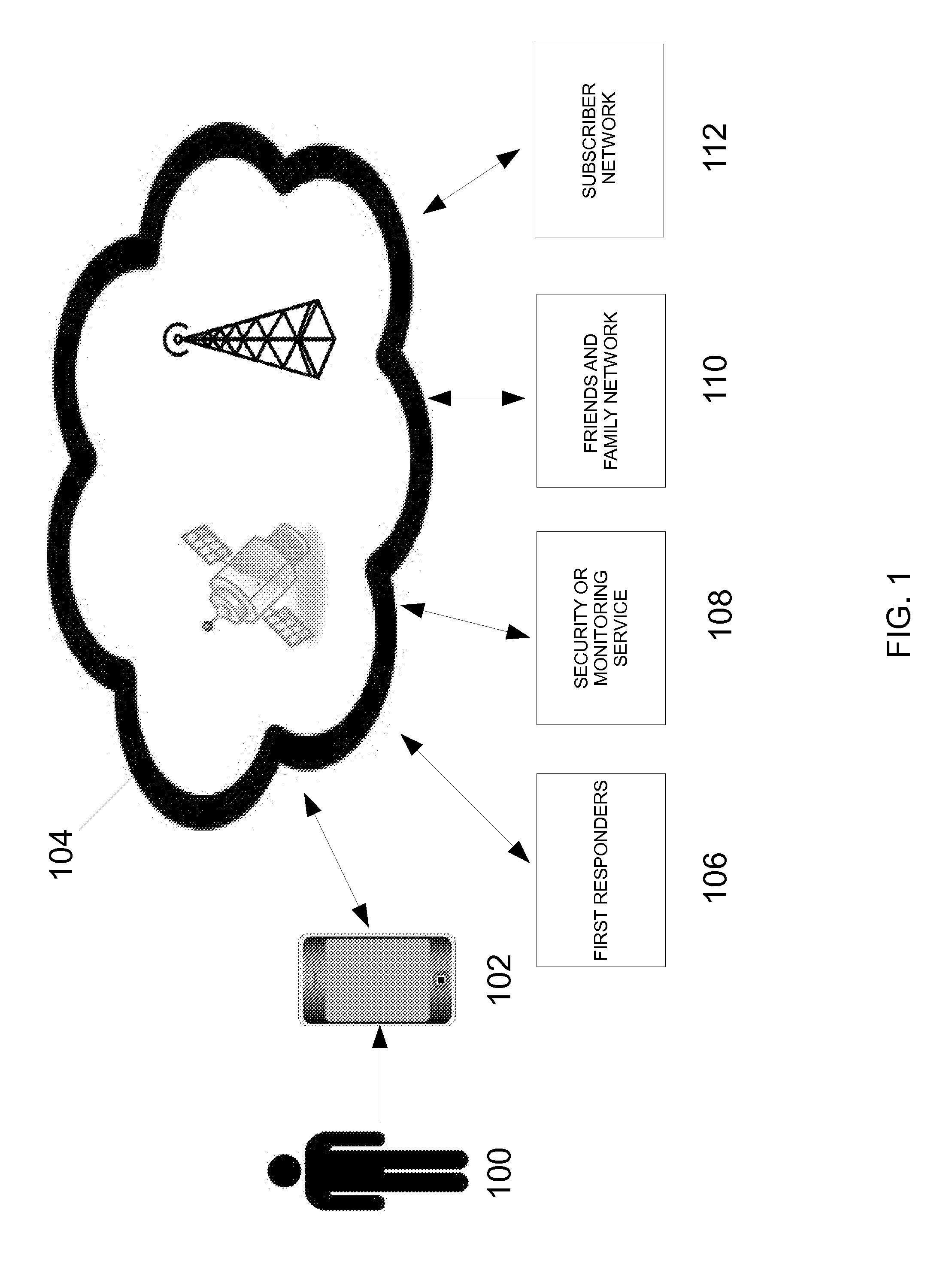

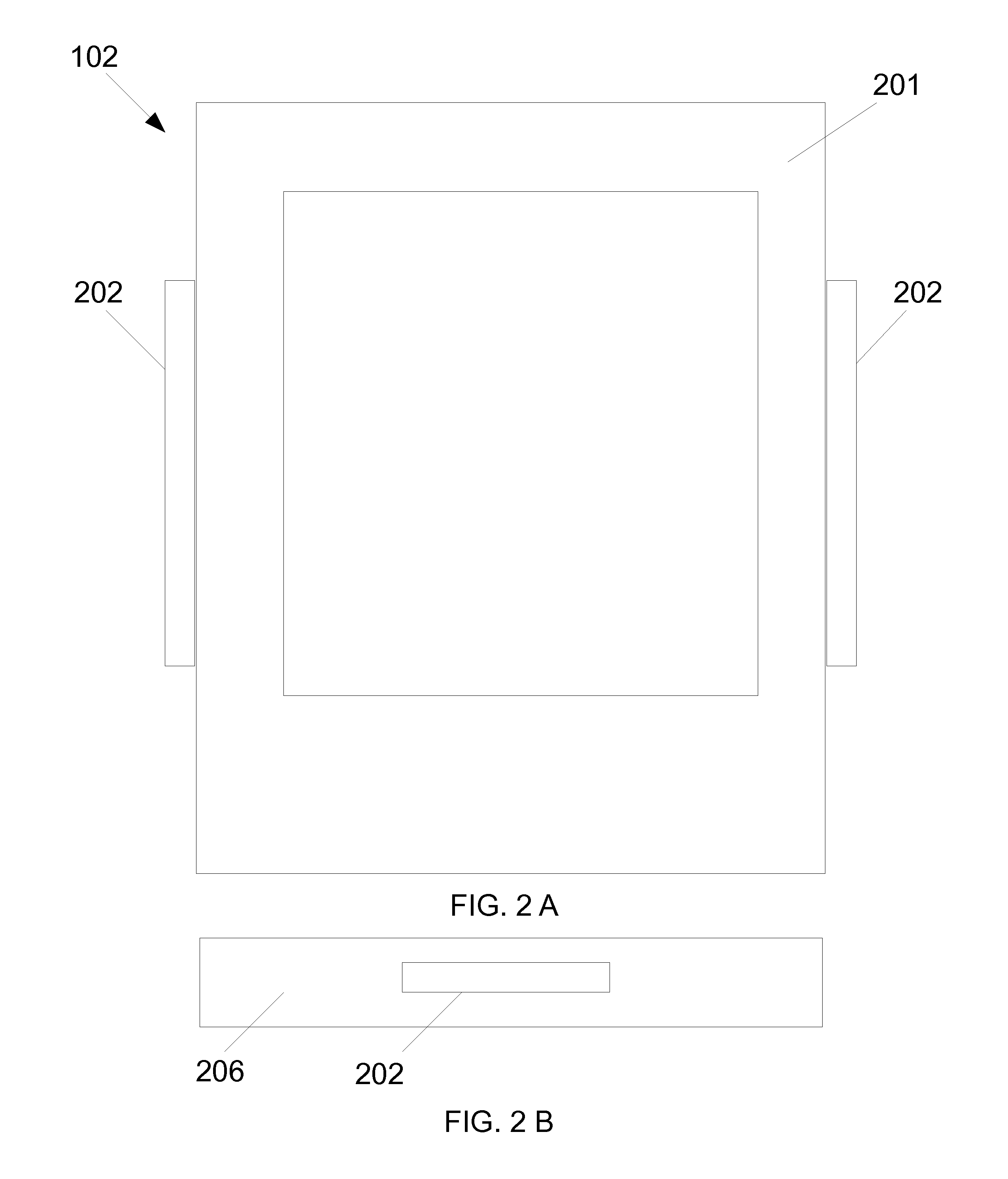

Systems and methods for initiating a distress signal from a mobile device without requiring focused visual attention from a user

Inactive | US20120282886A1 | Emergency connection handling | Substation equipment | Third party | Computer hardware

The disclosure generally relates to systems and methods for allowing a person to activate a distress signal via a portable device, such as a mobile phone, without having to physically look at the portable device. For example, if a victim is being held hostage, attacked, threatened, etc., and cannot use their mobile phone in plain sight of the hostage-takers, the present invention allows the victim to silently activate a distress signal that can be sent to various third-party response providers, such as a 911 dispatch center, a private security/monitoring service and a friends and family network. The distress signal is activated through various software- and/or hardware-based tactile mechanisms and buttons provided on the portable device.

Owner:AMIS DAVID

Method for extracting video interested region based on visual attention

Inactive | CN101651772A | Improve stability | Solve the noise problem | Television system details | Pulse modulation television signal transmission | Shortest distance | Vision based

The invention discloses a method for extracting a video region of interest based on visual attention. The region of interest extracted by the method combines static image domain visual attention, motion visual attention and depth perception attention. The method effectively suppresses the inherent one-sidedness and inaccuracy of each individual visual attention extraction, and solves both the noise problem caused by complex backgrounds in static image domain visual attention and the problem that motion visual attention cannot extract a region of interest whose motion is local and of small amplitude. The method thus improves calculation accuracy, enhances algorithm stability, and extracts regions of interest from backgrounds with complex textures and from moving environments. Furthermore, the region of interest obtained by the method matches the human eye's interest in static texture video frames and in moving objects, and also matches the depth perception characteristic of stereoscopic vision, in which objects with strong depth perception or at short distance attract interest, as well as the semantic characteristics of human stereoscopic vision.

Owner:上海斯派克斯科技有限公司

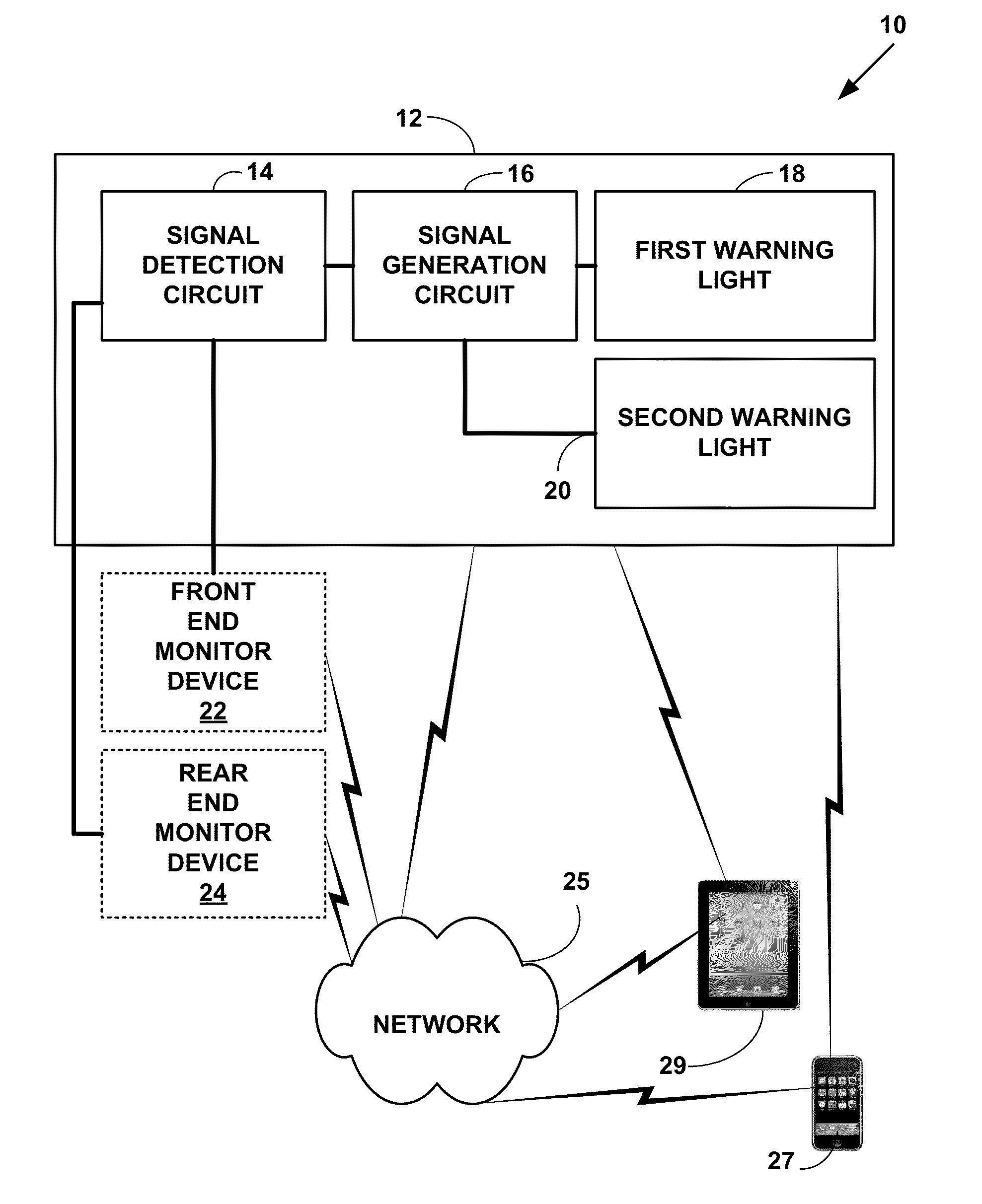

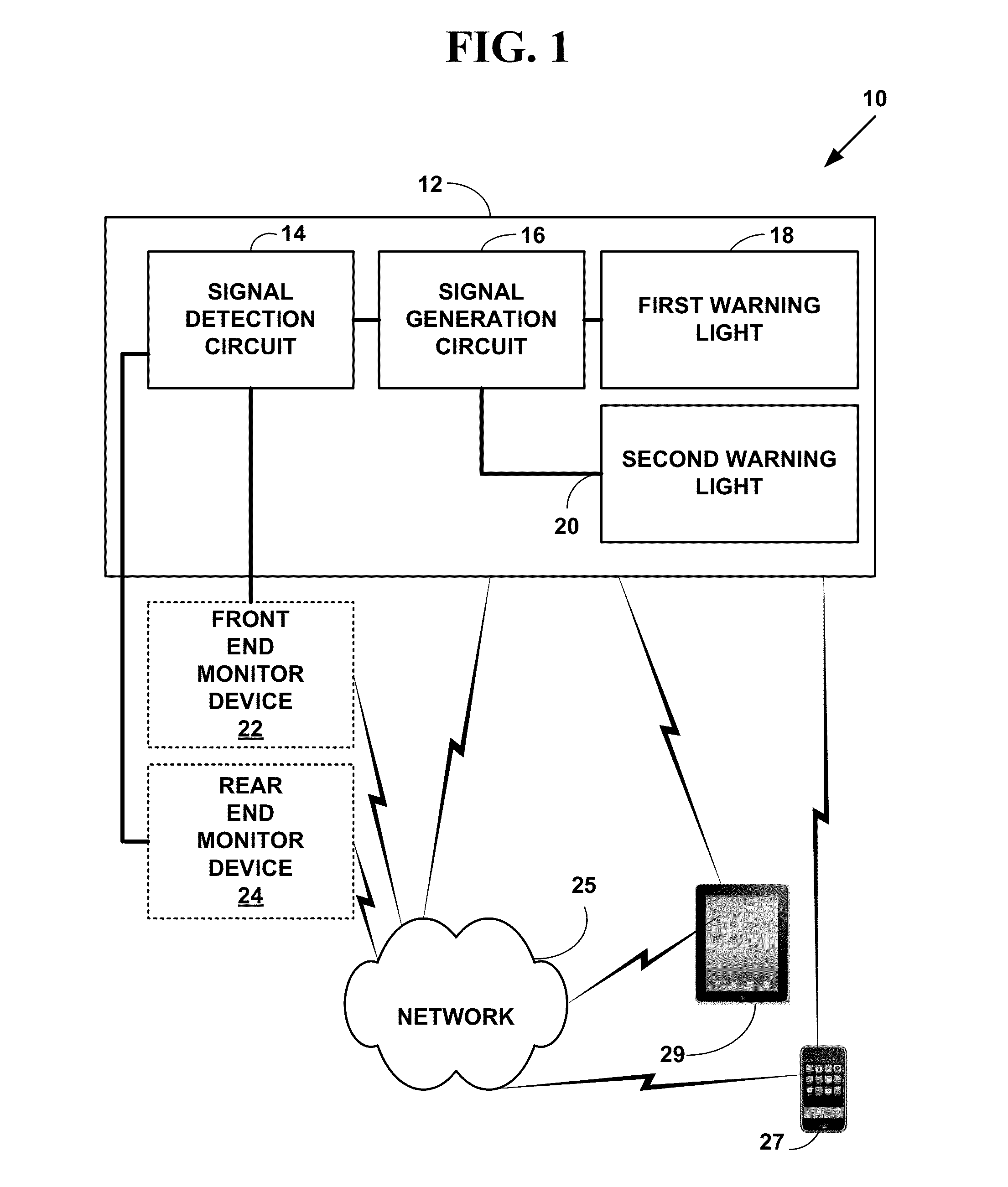

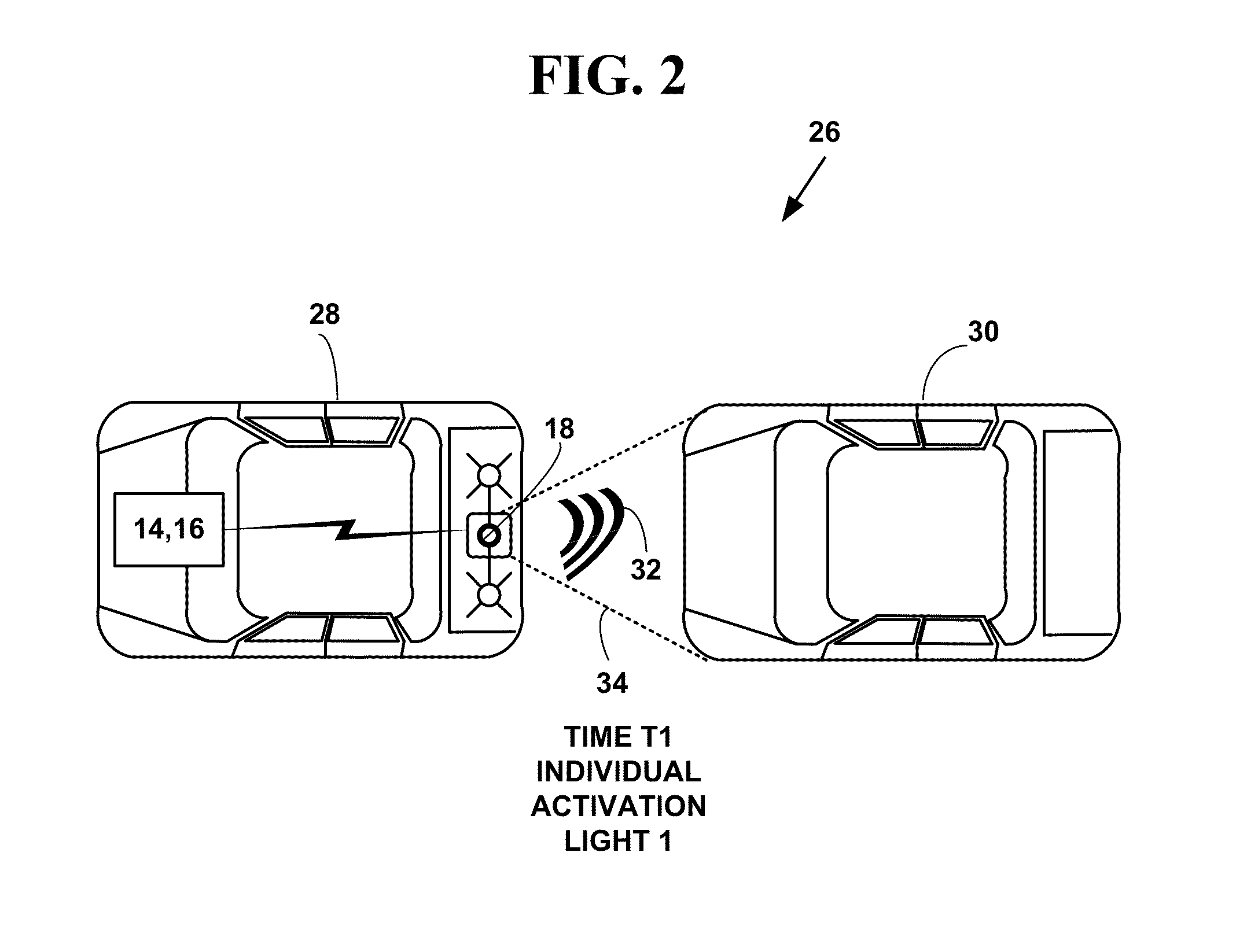

Rear end collision prevention apparatus

Inactive | US20140368324A1 | Reduce and prevent rear end collision | Reducing or preventing driver acclimatization | Anti-collision systems | Optical signalling | Rear-end collision | Engineering

A rear end collision prevention apparatus. The apparatus includes a first warning light that captures a driver's attention, indicating that a slow down or stop event is about to occur or is occurring, and re-focuses the driver's visual attention point, and a different type of second warning light indicating that the vehicle has not yet started re-accelerating after a slowing or stop event. The rear end collision prevention apparatus may be integrated into a Center High Mount Stop Lamp (CHMSL) on a vehicle or used as a separate apparatus. The rear end collision prevention apparatus helps reduce or prevent rear end collisions of vehicles and reduces or prevents driver acclimatization to warning lights in a CHMSL or other rear end collision warning lights.

Owner:SEIFERT JERRY A

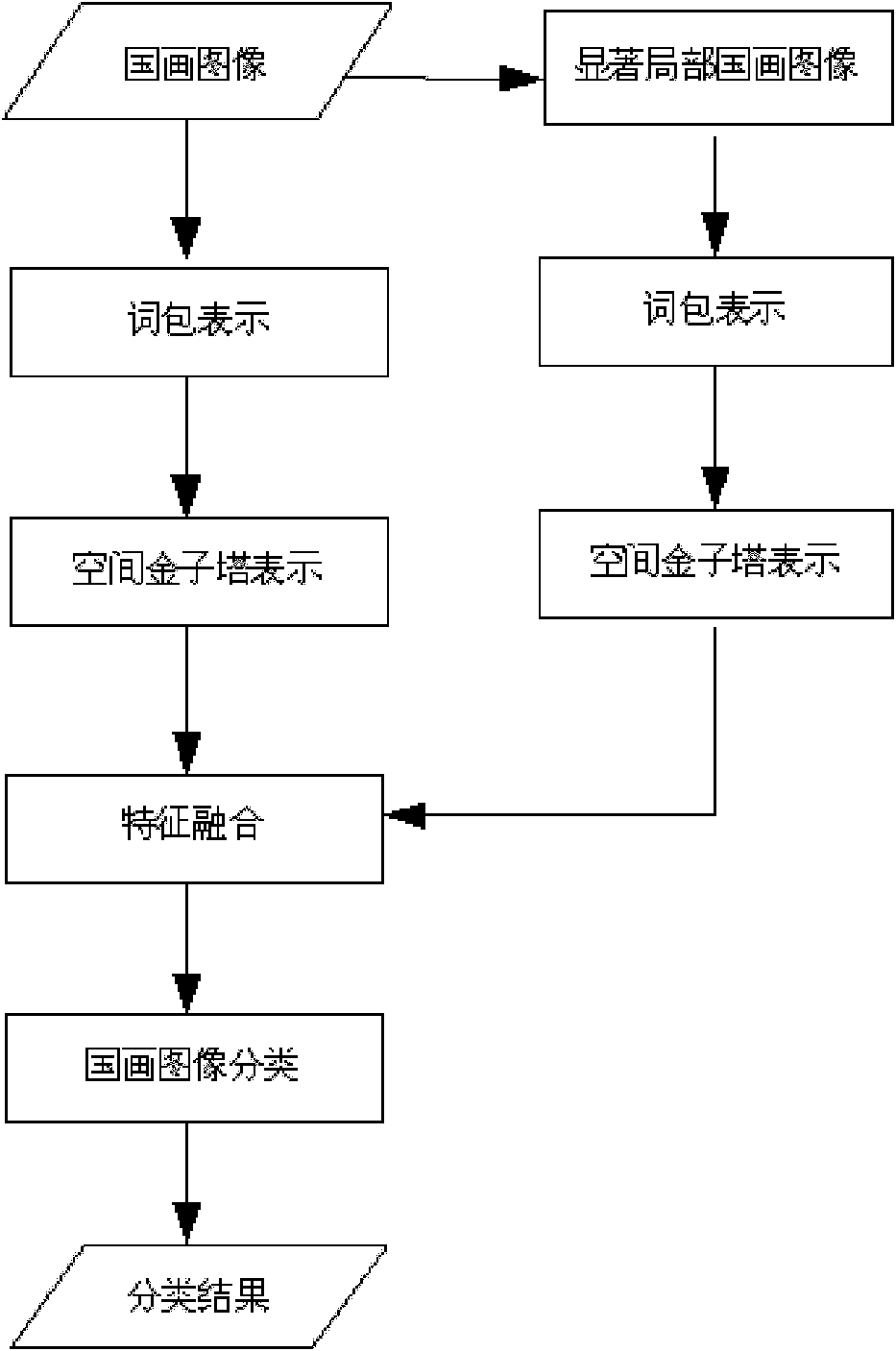

Chinese painting image identifying method based on local semantic concept

Inactive | CN102054178A | Improve accuracy | Achieve integration | Character and pattern recognition | Support vector machine | Classification methods

The invention relates to a Chinese painting image identifying method based on a local semantic concept, which comprises the following steps: 1) collecting images of the Chinese painting works to be identified by using a scanning device and storing the images in a computer; 2) dividing the collected images of the Chinese painting works into a training sample set and a testing sample set by random sampling; 3) extracting a salient region image from each image of the Chinese painting works in the training sample set and the testing sample set by using a visual attention model; 4) establishing a bag-of-words model of the Chinese painting works for the images and corresponding salient region images in the training sample set; 5) generating two corresponding spatial pyramid feature histograms according to the bag-of-words model and a spatial pyramid model; 6) fusing the two spatial pyramid feature histograms generated in step 5) by using a serial fusion method; and 7) identifying the Chinese painting images in the testing sample set by using one or more classification methods among the clustering method, the K nearest neighbor method, the neural network method and the support vector machine method, and outputting the identification result as an identification accuracy rate and a confusion matrix.

Owner:BEIJING UNION UNIVERSITY

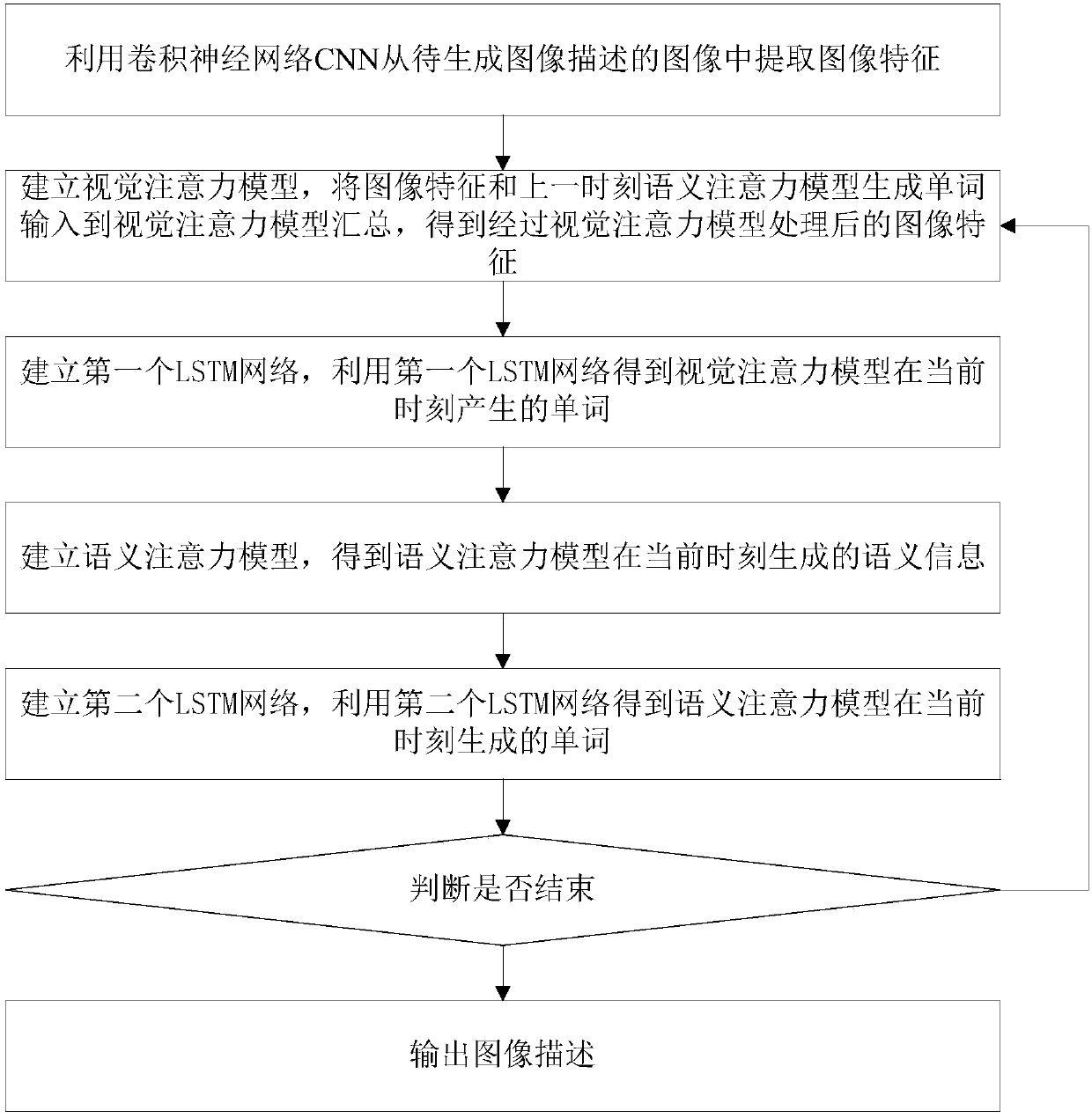

Image description method and system based on vision and semantic attention combined strategy

Inactive | CN107563498A | Reduce dependence | Accurate description | Character and pattern recognition | Neural architectures | Attention model | Semantics

The invention discloses an image description method and system based on a vision and semantic attention combined strategy. The steps include utilizing a convolutional neural network (CNN) to extract image features from an image whose image description is to be generated; utilizing a visual attention model of the image to process the image features, feeding the image features processed by the visual attention model to a first LSTM network to generate words, then utilizing a semantic attention model to process the generated words and predefined labels to obtain semantic information, then utilizing a second LSTM network to process semantics to obtain words generated by the semantic attention model, repeating the abovementioned steps, and finally performing series combination on all the obtained words to generate image description. The method provided by the invention not only utilizes a summary of the input image, but also enriches information in the aspects of vision and semantics, and enables a generated sentence to reflect content of the image more truly.

Owner:CHINA UNIV OF PETROLEUM (EAST CHINA)
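
The abstract above does not say how the visual attention model weights the image features. As a hedged sketch only, the following shows one common form, soft attention with dot-product scoring: each region feature is scored against a query vector (standing in for the LSTM hidden state), the scores are normalized with a softmax, and the weighted sum becomes the context passed on to the word generator. The scoring function and all names are assumptions, and the CNN and LSTM stages are omitted.

```python
import math
from typing import List, Tuple


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(
    regions: List[List[float]], query: List[float]
) -> Tuple[List[float], List[float]]:
    """Return (attention weights, context vector) for a set of region features.

    Dot-product scoring is an assumption; the patent abstract does not
    specify the scoring function.
    """
    scores = [sum(r * q for r, q in zip(region, query)) for region in regions]
    weights = softmax(scores)
    dim = len(regions[0])
    context = [
        sum(w * region[d] for w, region in zip(weights, regions))
        for d in range(dim)
    ]
    return weights, context


if __name__ == "__main__":
    region_features = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
    hidden_state = [2.0, 0.0]  # hypothetical decoder state
    weights, context = attend(region_features, hidden_state)
    print(weights, context)
```

A semantic attention step could reuse the same `attend` function with label embeddings in place of region features, again as an assumption rather than the disclosed design.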

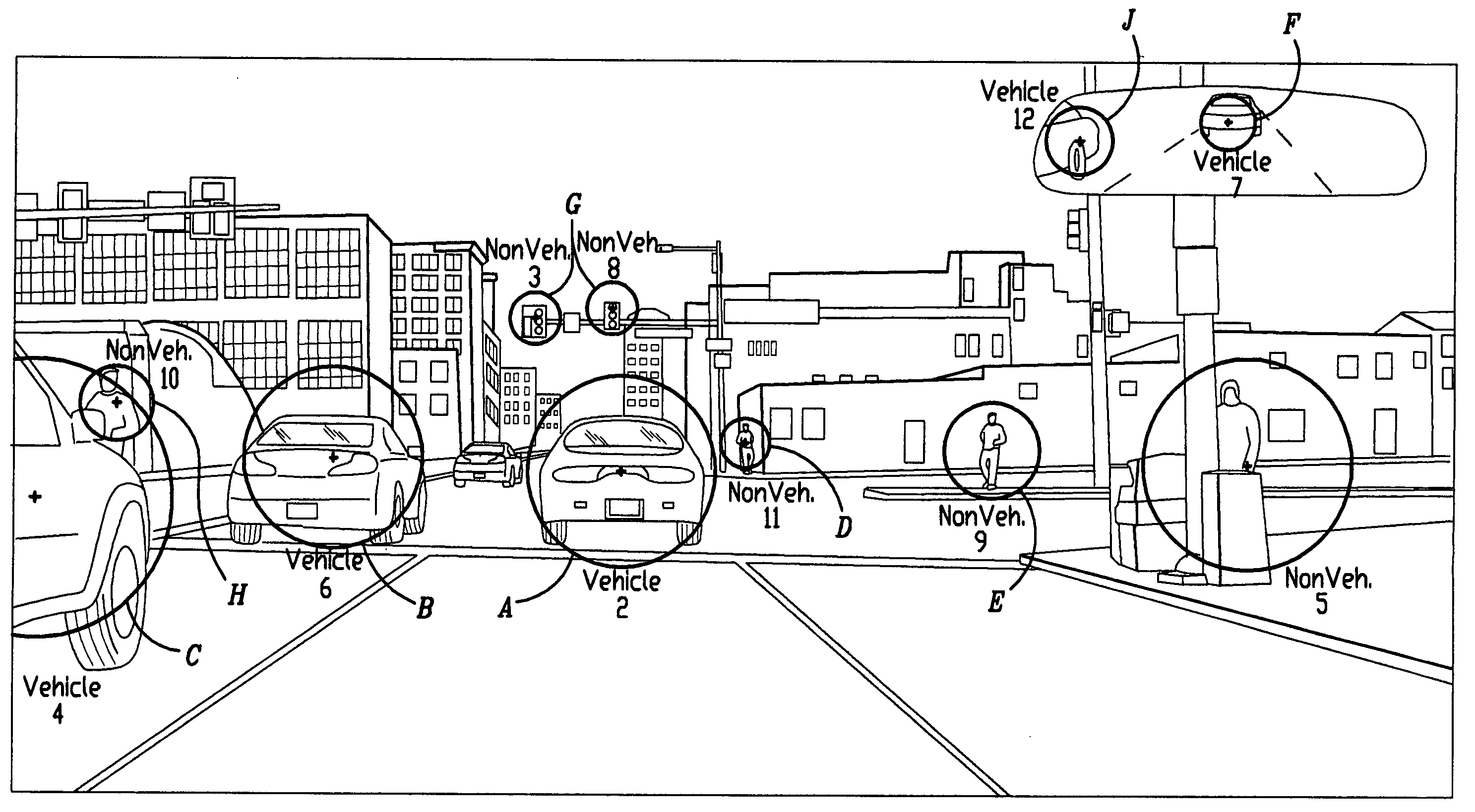

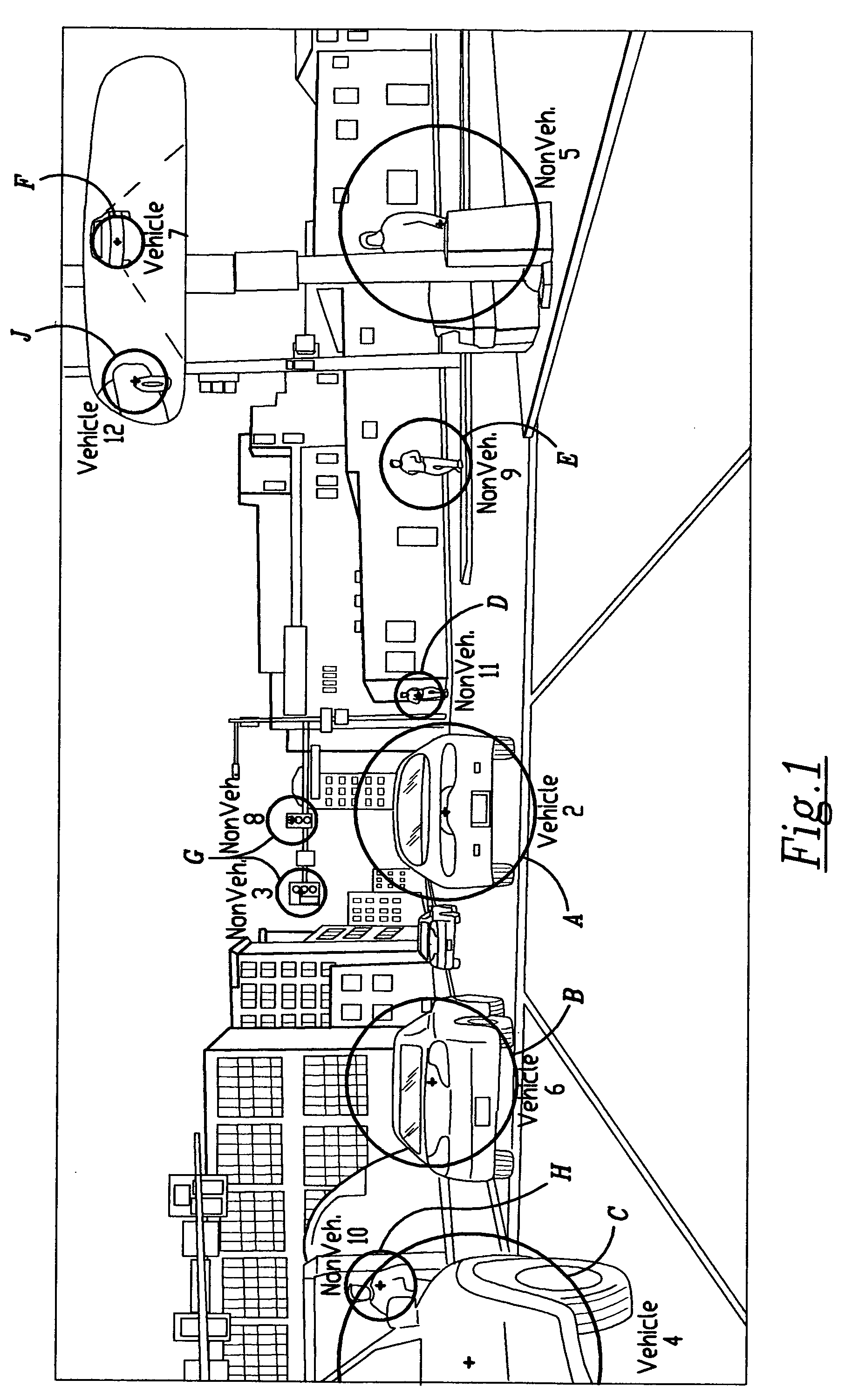

Dynamic object-based assessment and training of expert visual search and scanning skills for operating motor vehicles

Inactive | US20110123961A1 | Effective and feasible to implement | Cosmonautic condition simulations | Simulators | Object based | Skill sets

The present invention is a method and system for assessing and measuring the combination of visual and cognitive processes necessary for safe driving: detecting objects, recognizing particular objects as actual or potential safety or traffic hazards, and continuously switching visual attention between and among objects while virtually driving, according to each object's instantaneous priority as a safety threat or traffic hazard. Together these are termed expert search and scanning skills. The invention references the driver's performance against that of acknowledged experts in this skill, and then provides precise feedback about the type, location, and timing of search and scanning performance errors, plus remedial strategies to correct such errors.

Owner:TRANSANALYTICS HEALTH & SAFETY SERVICES LLC

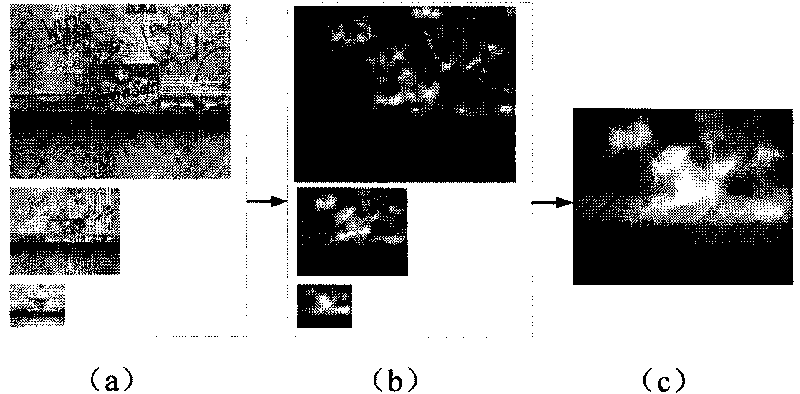

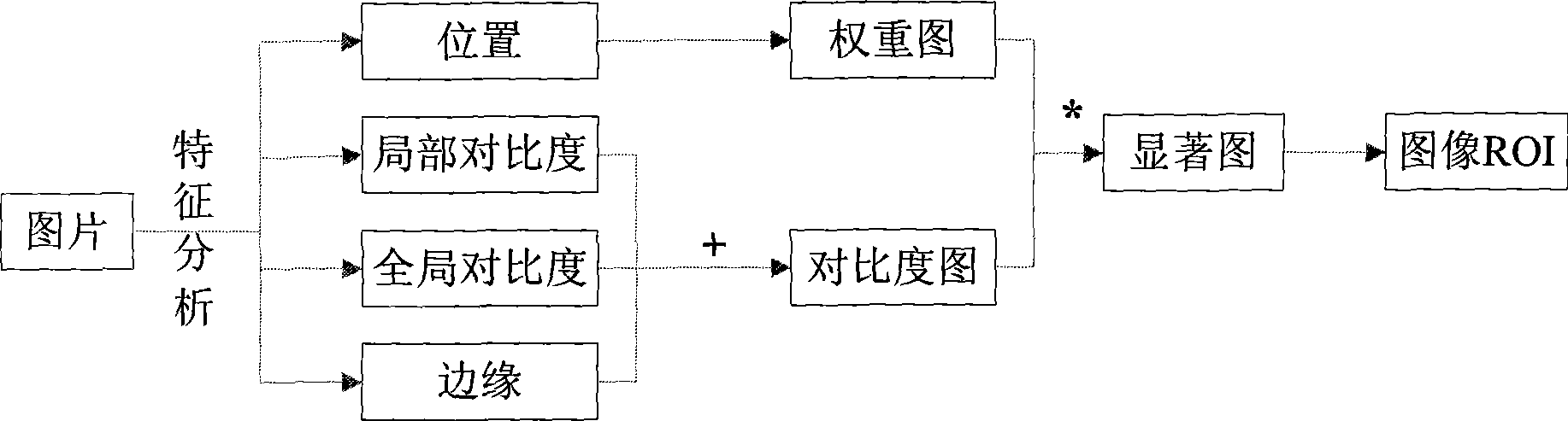

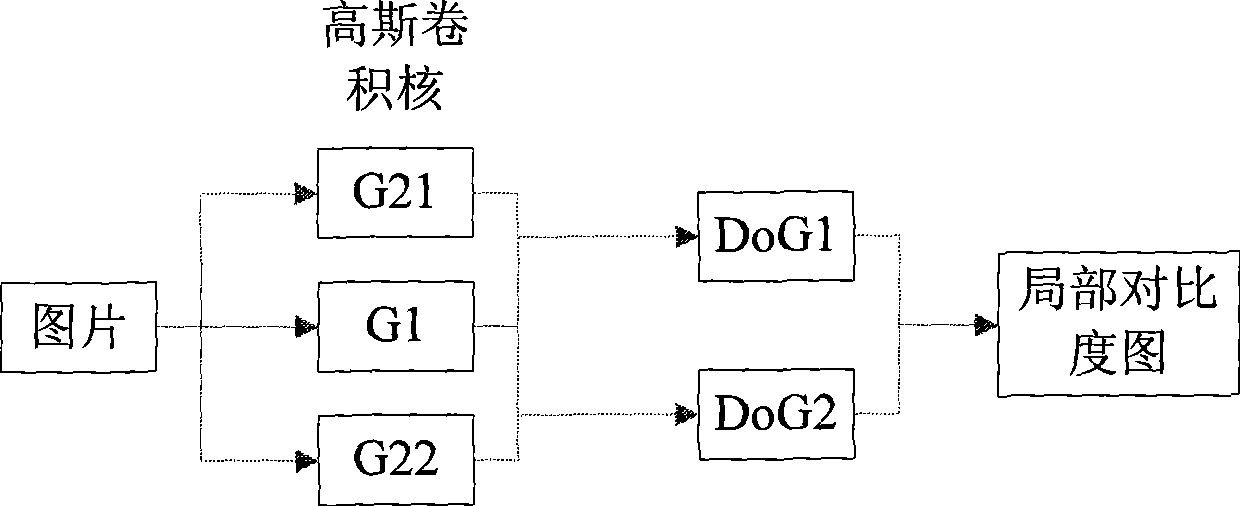

Method for automatically extracting interesting image regions based on human visual attention system

Inactive | CN101533512A | Accurate extraction | Simplify the design process | Image analysis | Pattern recognition | Merge algorithm

The invention discloses a method for automatically extracting regions of interest from images based on the human visual attention system, which mainly solves the problem that existing extraction methods cannot extract multiple regions of interest or edge information. The method comprises the following steps: calculating the local brightness contrast, global brightness contrast, and edges of an input image; fusing the feature maps corresponding to these three features through a global nonlinear normalization merging algorithm to generate a contrast map; calculating the position features of the input image to build a weighting map; building a saliency map for the input image from the contrast map and the weighting map; and segmenting the regions of interest out of the input image according to the saliency map. The method can effectively extract multiple regions of interest from the input image and can be used in image analysis and image compression.

Owner:XIDIAN UNIV

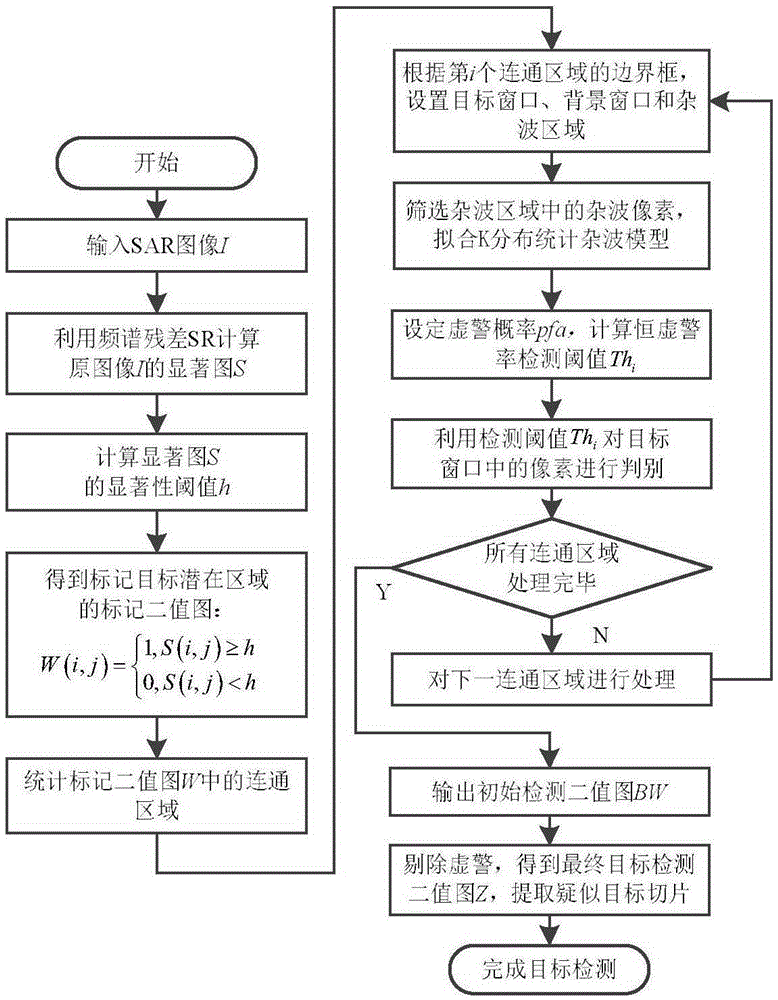

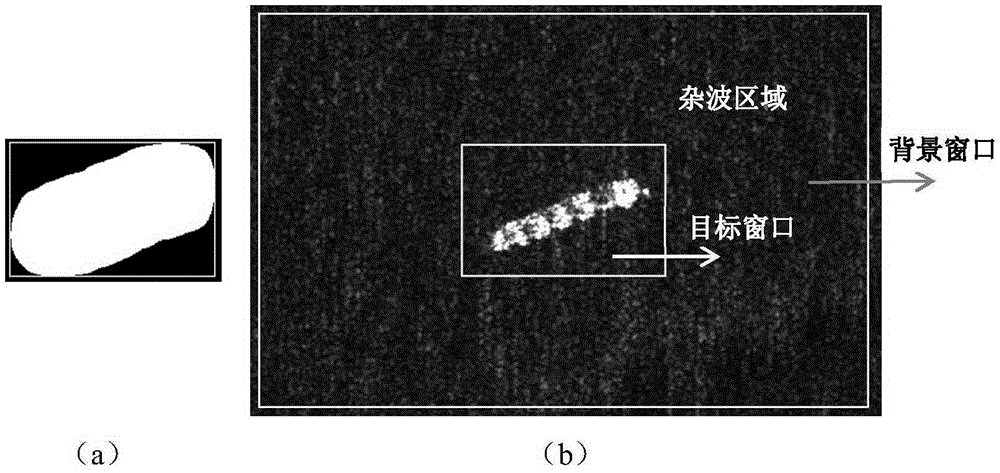

SAR (Synthetic Aperture Radar) image target detection method based on visual attention model and constant false alarm rate

Active | CN105354541A | Accurate acquisition | Reduce false alarms | Character and pattern recognition | Pattern recognition | Frequency spectrum

The present invention discloses an SAR (Synthetic Aperture Radar) image target detection method based on a visual attention model and a constant false alarm rate, which mainly solves the problems of low detection speed and a high clutter false alarm rate in existing SAR image marine ship target detection technology. The method is implemented as follows: extracting a saliency map corresponding to an SAR image according to Fourier spectral residual information; calculating a saliency threshold to select potential target areas on the saliency map; detecting the potential target areas with an adaptive sliding window constant false alarm rate method to obtain an initial detection result; and removing false alarms from the initial detection result to obtain a final detection result, then extracting suspected ship target slices to complete the target detection process. The method offers a high calculation speed, a high target detection rate, and a low false alarm rate; it is also simple and easy to implement, and can be used for marine ship target detection.

Owner:XIDIAN UNIV
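
The constant false alarm rate stage described above can be illustrated with a cell-averaging CFAR detector. Note that this is a simpler, fixed-window variant swapped in for illustration, not the adaptive sliding window method of the patent, and the saliency stage is represented only by an optional candidate mask. The window sizes, the `scale` factor, and all names are assumptions.

```python
from typing import List, Optional


def ca_cfar(
    img: List[List[float]],
    guard: int = 1,
    train: int = 2,
    scale: float = 3.0,
    candidates: Optional[List[List[int]]] = None,
) -> List[List[int]]:
    """Cell-averaging CFAR on a 2D intensity image.

    A pixel is flagged when it exceeds `scale` times the mean of the
    training cells around it. Guard cells next to the pixel under test are
    excluded from that mean. If `candidates` is given, only pixels marked 1
    there are tested (standing in for a saliency-selected area).
    """
    rows, cols = len(img), len(img[0])
    half = guard + train
    detections = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if candidates is not None and not candidates[r][c]:
                continue
            total, count = 0.0, 0
            for rr in range(max(0, r - half), min(rows, r + half + 1)):
                for cc in range(max(0, c - half), min(cols, c + half + 1)):
                    if abs(rr - r) <= guard and abs(cc - c) <= guard:
                        continue  # skip the cell under test and its guard cells
                    total += img[rr][cc]
                    count += 1
            if count and img[r][c] > scale * (total / count):
                detections[r][c] = 1
    return detections


if __name__ == "__main__":
    sea = [[1.0] * 9 for _ in range(9)]
    sea[4][4] = 20.0  # one bright scatterer on uniform clutter
    for row in ca_cfar(sea):
        print(row)
```

Because the threshold follows the local clutter mean rather than a fixed value, the same `scale` can be used across regions with different background levels, which is the general motivation for CFAR detectors.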

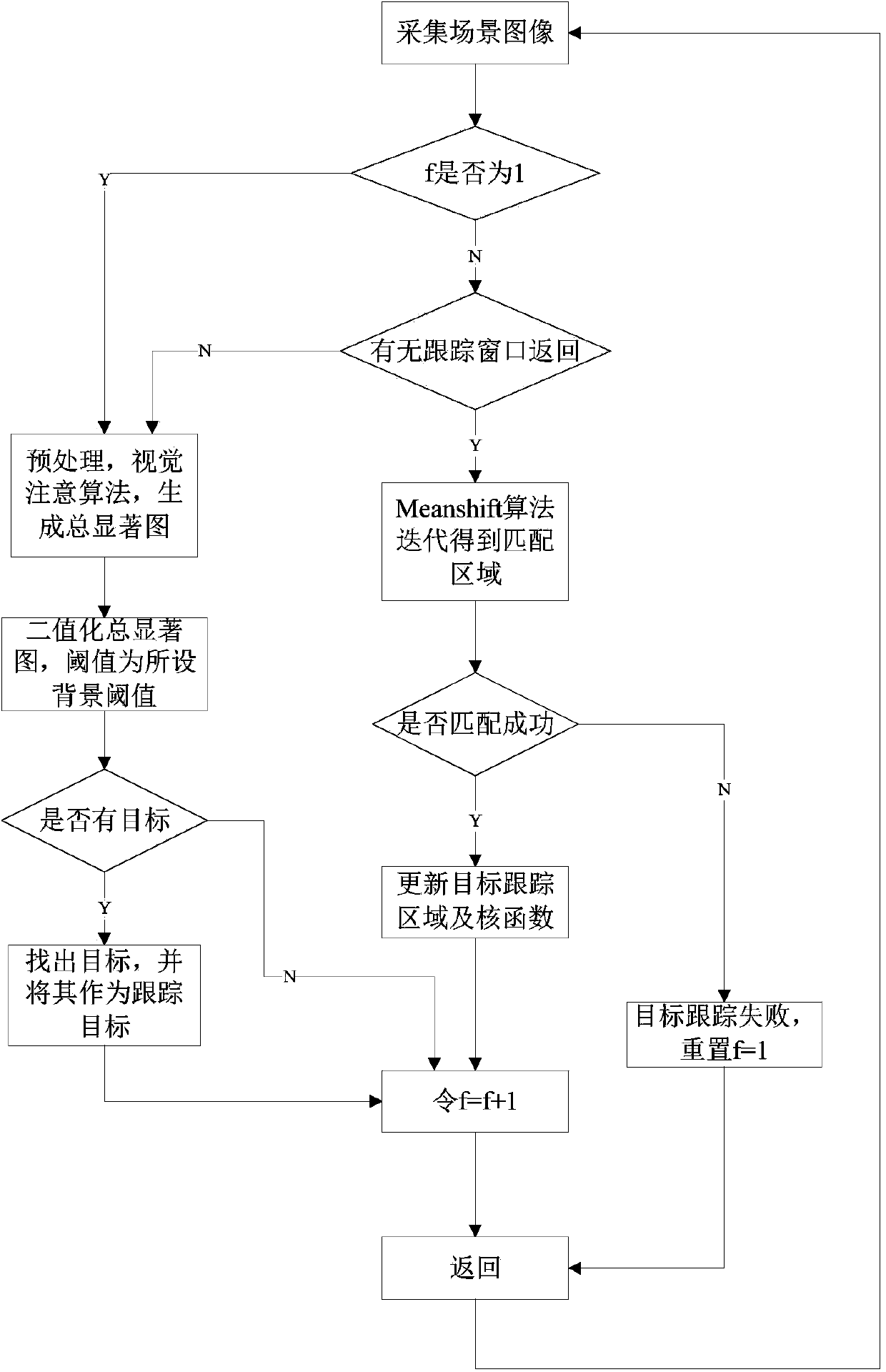

Visual attention and mean shift-based target detection and tracking method

Inactive | CN103745203A | Heavy calculation | Precise positioning | Character and pattern recognition | Night vision | Moving average

The invention discloses a visual attention and mean shift-based target detection and tracking method. The method comprises the following steps: first, extracting the salient region of the first frame in an image sequence using a visual attention method and removing interference from background factors to obtain a moving target; then, replacing the fixed kernel bandwidth of the traditional mean shift method with a dynamically changing bandwidth and tracking the detected moving target with this improved mean shift method. Experimental results show that the method is suitable for both infrared and visible image sequences and achieves a better tracking effect. The method can also provide the positional information of the moving target, which makes accurate positioning of the target possible. It has broad application prospects in military and civil fields such as night vision reconnaissance and security monitoring.

Owner:NANJING UNIV OF SCI & TECH
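
The tracking stage above can be sketched with a basic mean shift iteration whose kernel bandwidth is an argument, so it can change from frame to frame. This is only an illustration: the abstract does not state how the bandwidth is adapted, so the per-frame bandwidths are supplied by the caller here, and the Gaussian kernel, the point-set representation of each frame, and all names are assumptions.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def mean_shift(
    points: Sequence[Point],
    start: Point,
    bandwidth: float,
    max_iter: int = 50,
    tol: float = 1e-3,
) -> Point:
    """Move `start` toward the local density mode of `points` (Gaussian kernel)."""
    x, y = start
    for _ in range(max_iter):
        wsum = sx = sy = 0.0
        for px, py in points:
            d2 = (px - x) ** 2 + (py - y) ** 2
            w = math.exp(-d2 / (2.0 * bandwidth ** 2))
            wsum += w
            sx += w * px
            sy += w * py
        if wsum == 0.0:  # every point is too far away to contribute
            break
        nx, ny = sx / wsum, sy / wsum
        shift = math.hypot(nx - x, ny - y)
        x, y = nx, ny
        if shift < tol:
            break
    return x, y


def track(
    frames: Sequence[Sequence[Point]], start: Point, bandwidths: Sequence[float]
) -> List[Point]:
    """Track across frames, using a different bandwidth for each frame."""
    positions: List[Point] = []
    current = start
    for points, bandwidth in zip(frames, bandwidths):
        current = mean_shift(points, current, bandwidth)
        positions.append(current)
    return positions


if __name__ == "__main__":
    frame1 = [(9.0, 10.0), (11.0, 10.0), (10.0, 9.0), (10.0, 11.0)]
    frame2 = [(11.0, 10.0), (13.0, 10.0), (12.0, 9.0), (12.0, 11.0)]
    print(track([frame1, frame2], start=(8.0, 8.0), bandwidths=[3.0, 3.5]))
```

In a full tracker the starting position would come from the salient region of the first frame, and the points would be replaced by per-pixel weights from a target model.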

