Full-reference method for objective image quality evaluation

An objective image quality evaluation method, applied in the field of digital video, with wide application prospects

Embodiment Construction

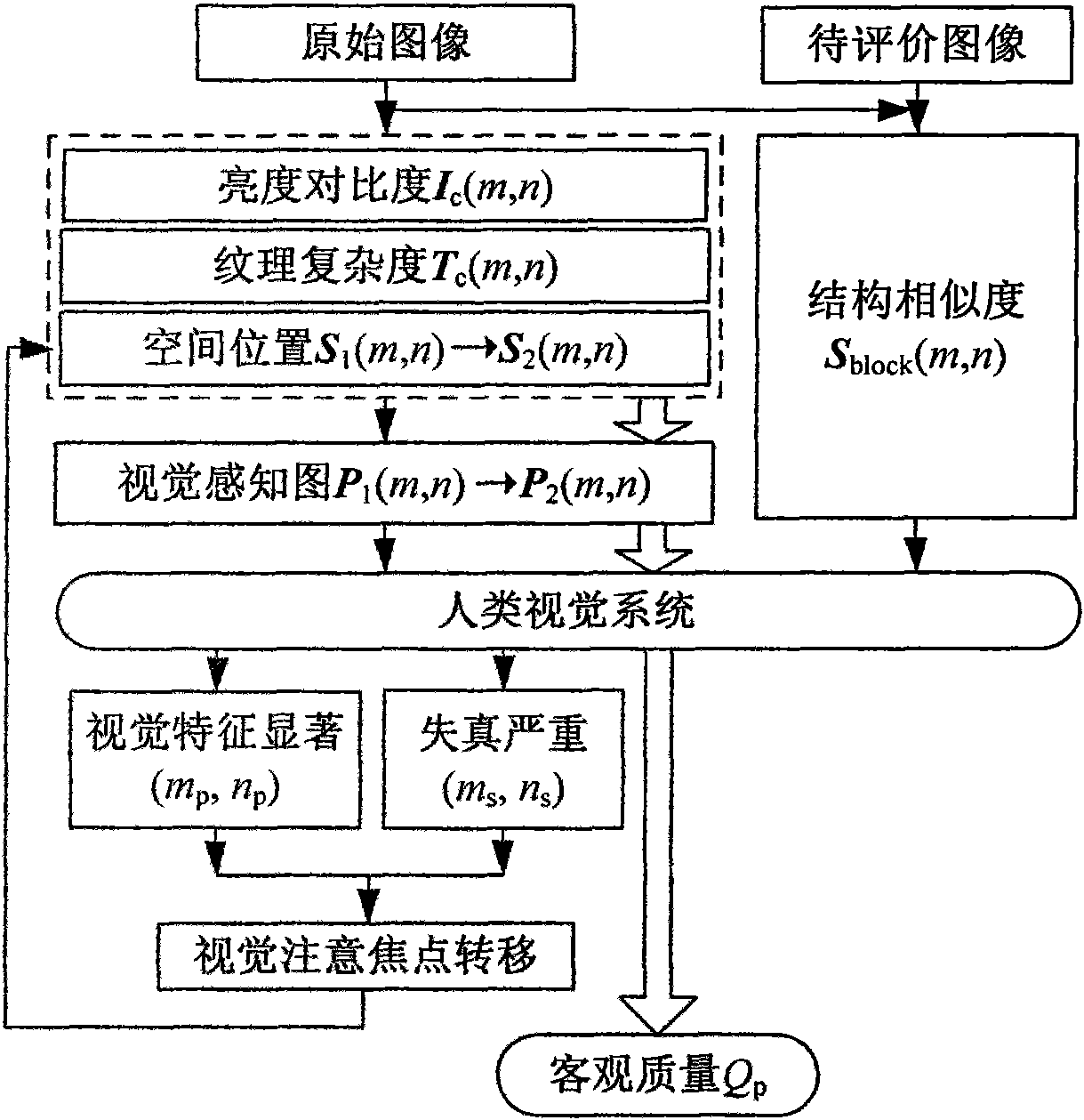

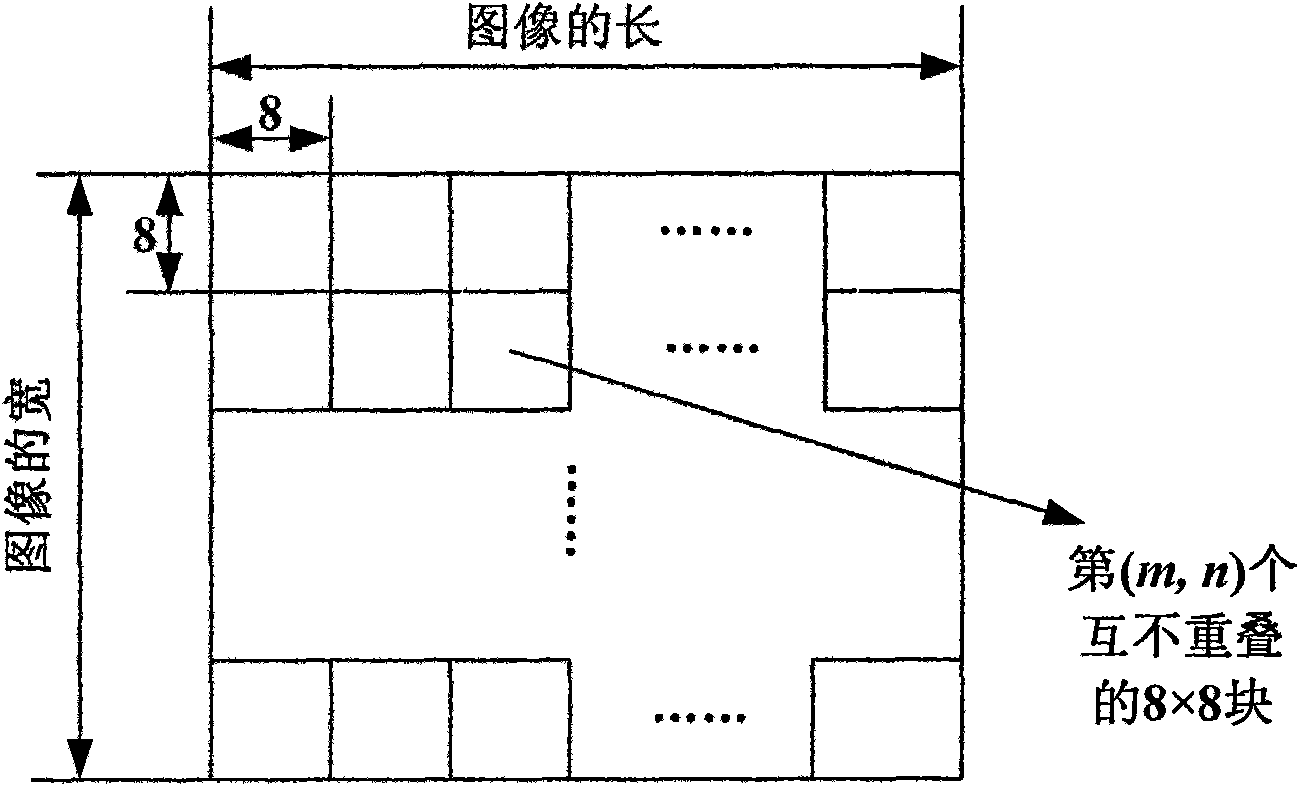

[0042] As shown in Figure 1, the full-reference image objective quality evaluation method based on structural similarity according to the present invention includes:

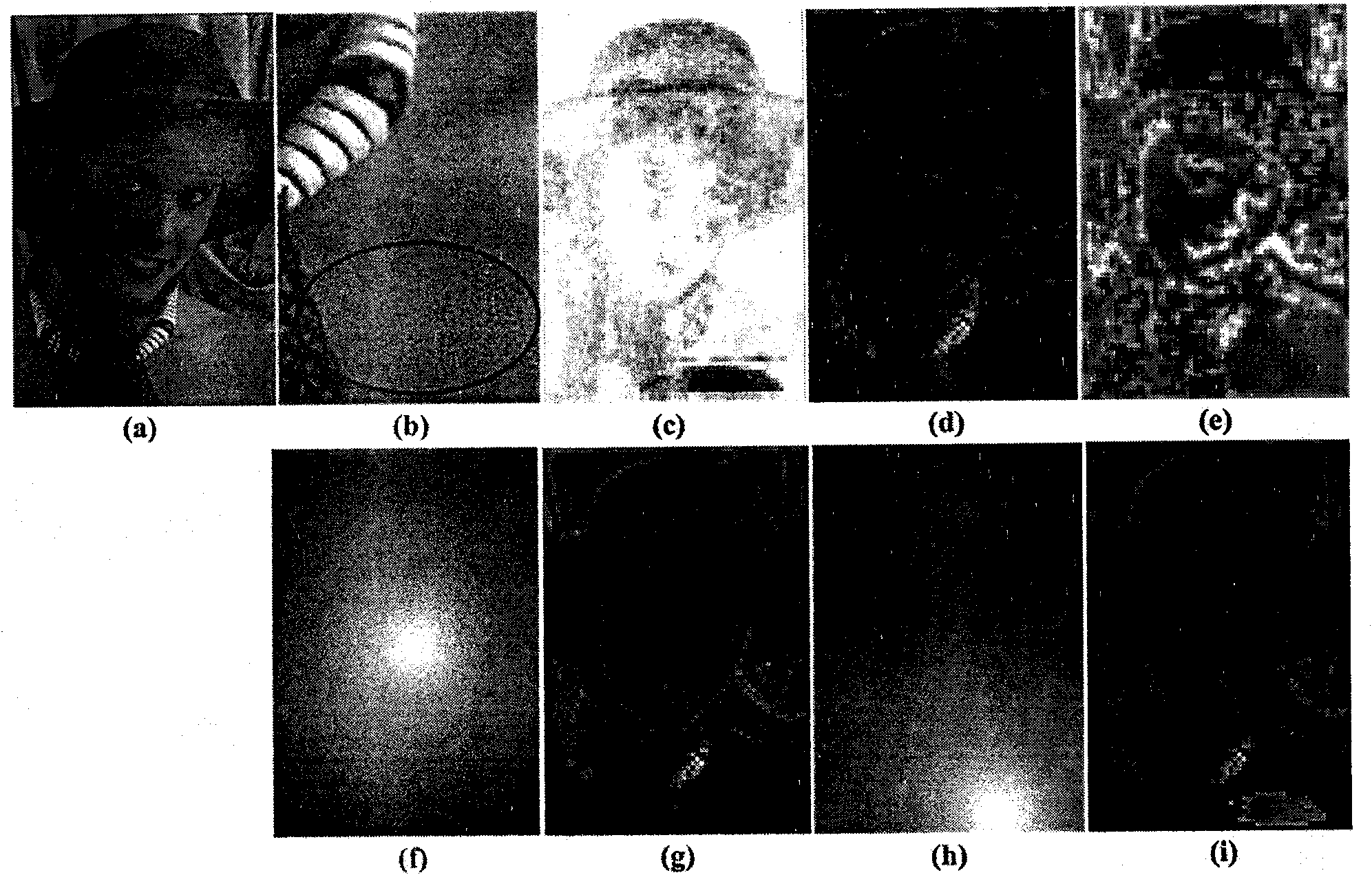

[0043] (1) Use spatial-domain visual features such as brightness contrast, texture complexity, and spatial position to obtain the visual perception map of the original image, and locate the positions where visual perception features are salient;

[0044] (2) Compute the structural similarity map SSIM(i, j) between the original image and the distorted image, where (i, j) are the pixel coordinates; calculate the relative quality of the distorted image; and locate the severely distorted positions;

[0045] (3) Define a rule for shifting the focus of visual attention, determine the new focus of visual attention, and regenerate the visual perception map after the shift;

[0046] (4) Weight the structural similarity with the visual perception maps generated in (1) and (3) to obtain a...
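
Steps (2) and (4) can be illustrated with a minimal, dependency-free Python sketch. It is not the patented implementation: the uniform local window, the constants `C1` and `C2` (the values commonly used for 8-bit images), the helper names `ssim_map`, `most_distorted`, and `weighted_score`, and the weighted-average pooling rule are all assumptions made for illustration. The `weights` argument is a hypothetical placeholder for the visual perception maps of steps (1) and (3), whose construction the text above does not spell out.

```python
# Sketch only: uniform windows and the pooling rule are assumptions,
# not details taken from the source text.
C1 = (0.01 * 255) ** 2  # commonly used SSIM stabilising constants (8-bit range)
C2 = (0.03 * 255) ** 2


def mean(values):
    return sum(values) / len(values)


def window(img, i, j, r):
    """Pixels of the (2r+1) x (2r+1) block centred on (i, j), clipped at borders."""
    h, w = len(img), len(img[0])
    return [img[y][x]
            for y in range(max(0, i - r), min(h, i + r + 1))
            for x in range(max(0, j - r), min(w, j + r + 1))]


def ssim_map(ref, dist, r=1):
    """Per-pixel SSIM(i, j) between a reference and a distorted image."""
    h, w = len(ref), len(ref[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            a, b = window(ref, i, j, r), window(dist, i, j, r)
            mu_a, mu_b = mean(a), mean(b)
            var_a = mean([(p - mu_a) ** 2 for p in a])
            var_b = mean([(q - mu_b) ** 2 for q in b])
            cov = mean([(p - mu_a) * (q - mu_b) for p, q in zip(a, b)])
            out[i][j] = ((2 * mu_a * mu_b + C1) * (2 * cov + C2)) / (
                (mu_a ** 2 + mu_b ** 2 + C1) * (var_a + var_b + C2))
    return out


def most_distorted(smap):
    """Coordinates (i, j) of the lowest SSIM value, i.e. the worst distortion."""
    coords = ((i, j) for i, row in enumerate(smap) for j in range(len(row)))
    return min(coords, key=lambda ij: smap[ij[0]][ij[1]])


def weighted_score(smap, weights):
    """Weighted average of SSIM(i, j); `weights` stands in for a perception map."""
    num = sum(s * w for srow, wrow in zip(smap, weights)
              for s, w in zip(srow, wrow))
    den = sum(w for wrow in weights for w in wrow)
    return num / den


# Toy 4x4 example: a smooth reference and a copy with one corrupted pixel.
ref = [[10 * (i + j) for j in range(4)] for i in range(4)]
dist = [row[:] for row in ref]
dist[2][2] = 200
uniform = [[1.0] * 4 for _ in range(4)]  # placeholder perception map
smap = ssim_map(ref, dist)
score = weighted_score(smap, uniform)
focus = most_distorted(smap)
```

With uniform weights the score reduces to the plain mean of the SSIM map. Replacing `uniform` with a map that emphasises salient or heavily distorted regions changes how much each pixel contributes, which is the general idea behind perception-weighted pooling.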
