Feature-based dynamic visual attention region extraction method

A visual attention and region extraction technology in the field of image processing. It addresses three problems: attention is not continuous over time, multiple frames cannot be taken into account, and existing methods cannot be implemented well.


Examples


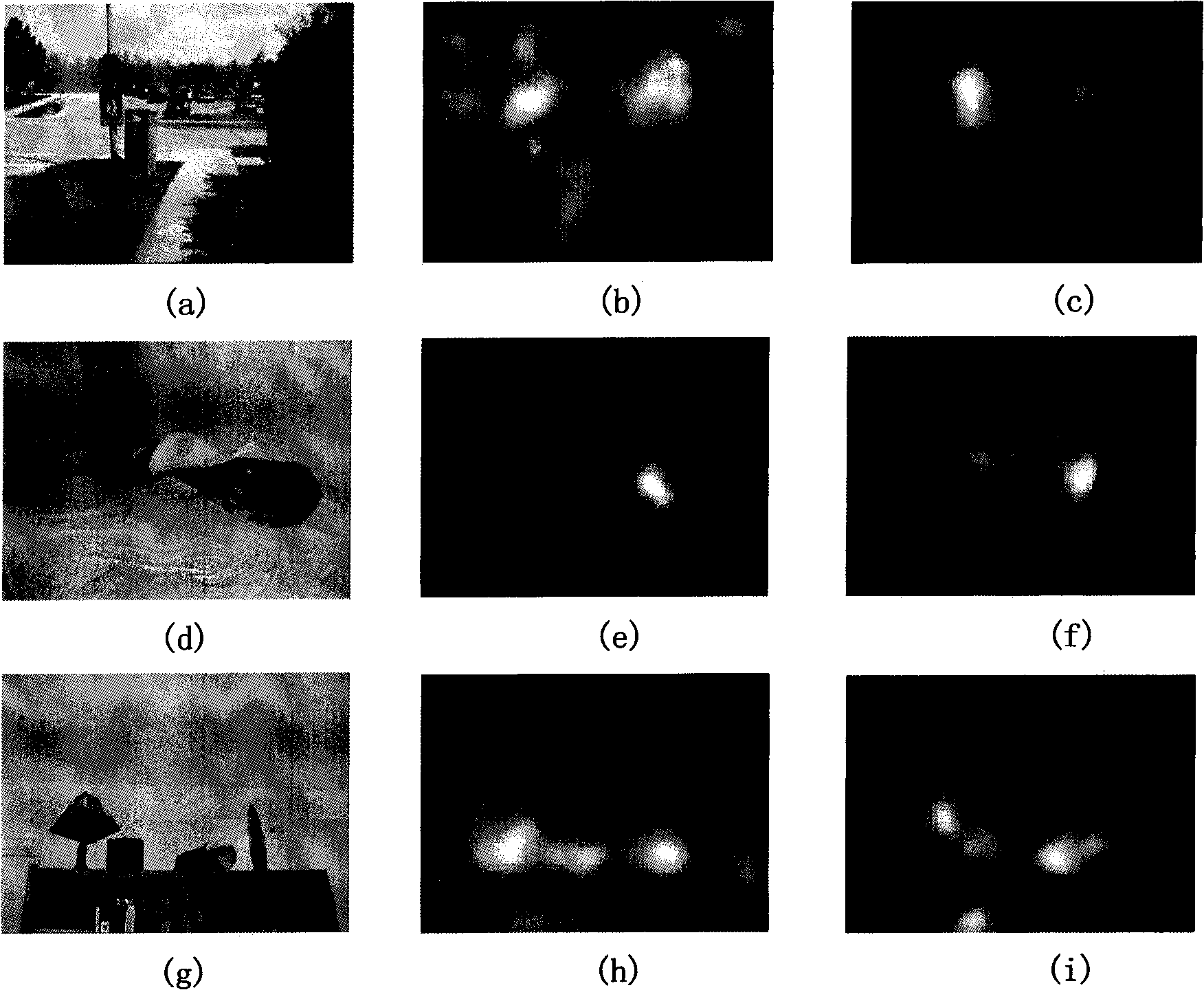

[0054] Example 1: Saliency Maps for Still Images

[0055] The basis functions (A, W) are trained on 8×8 RGB image patches. Each patch is a 192-dimensional vector (8 × 8 pixels × 3 color channels = 192).

[0056] An input picture of size 800×640 is divided into 8000 non-overlapping 8×8 RGB color blocks (800 × 640 / 64 = 8000), so n = 8000, forming the sampling matrix $X = [x_1, x_2, \ldots, x_{8000}]$. The corresponding coefficients of the basis functions, that is, the features of X, are computed as $S = WX$.
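The patch sampling in [0055] and [0056] can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the patented implementation: the image layout (a nested list of `(r, g, b)` tuples), the helper names `extract_patches` and `features`, the row-major flattening order, and the decision to drop partial blocks at the borders are all hypothetical choices not specified by the source.

```python
PATCH = 8  # block side length from [0055]


def extract_patches(image, patch=PATCH):
    """Split an H x W RGB image into non-overlapping patch x patch blocks.

    Hypothetical layout: image[row][col] is an (r, g, b) tuple.
    Each block is flattened row by row into a vector of length
    patch * patch * 3 (192 for 8x8 RGB). Partial blocks at the right and
    bottom borders are dropped (an assumption). Returns [x_1, ..., x_n].
    """
    height, width = len(image), len(image[0])
    patches = []
    for top in range(0, height - height % patch, patch):
        for left in range(0, width - width % patch, patch):
            vec = []
            for r in range(top, top + patch):
                for c in range(left, left + patch):
                    vec.extend(image[r][c])  # append r, g, b of this pixel
            patches.append(vec)
    return patches


def features(W, patches):
    """Compute S = W X: entry S[i][k] is the response of basis row w_i to patch x_k."""
    return [[sum(w_j * x_j for w_j, x_j in zip(w, x)) for x in patches] for w in W]
```

For a 640-row by 800-column image, `extract_patches` yields 80 × 100 = 8000 vectors of length 192, matching n = 8000 in [0056].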

[0057] The activation rate $p$ of each feature is obtained from formula (2.1), and the incremental coding length (ICL) of each feature is then computed from $p$ using formula (2.3).
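Formulas (2.1) and (2.3) are not reproduced in this excerpt, so the sketch below uses assumed stand-ins: the activation rate $p_i$ is taken as feature $i$'s share of the total absolute response in $S$, and the ICL is taken as $-H(p) - p_i - \log p_i - p_i \log p_i$, where $H$ is the entropy of $p$. Both expressions, the function names, and the `eps` guard against $\log 0$ are hypothetical and may differ from the patent's formulas.

```python
import math


def activation_rates(S):
    """Assumed stand-in for (2.1): p_i = sum_k |s_ik| / sum_j sum_k |s_jk|."""
    totals = [sum(abs(s) for s in row) for row in S]
    grand = sum(totals)
    return [t / grand for t in totals]


def entropy(p):
    """Shannon entropy (natural log) of a probability vector."""
    return -sum(q * math.log(q) for q in p if q > 0)


def incremental_coding_length(p, eps=1e-12):
    """Assumed stand-in for (2.3): ICL_i = -H(p) - p_i - log p_i - p_i log p_i.

    eps (hypothetical) clamps zero rates so that log(0) is never evaluated.
    """
    H = entropy(p)
    icl = []
    for q in p:
        q = max(q, eps)
        icl.append(-H - q - math.log(q) - q * math.log(q))
    return icl
```

Under this assumed form, rarely activated features get a positive ICL and dominant features a negative one; for example, with $p = (0.7, 0.2, 0.1)$ the first feature's ICL is negative and the last one's is positive.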

[0058] The salient feature set SF is determined from the incremental coding length of each feature using formula (3.1), and formula (3.2) is used to redistribute energy among the features in the salient feature set. Then, for each image patch $x_k$, its saliency $m_k$ is computed according to formula (3.3), and finally formula (3....
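Formulas (3.1) to (3.3) are likewise not shown here, so the following is only a hedged sketch of the three steps under assumed forms: SF is the set of features with positive ICL, each salient feature receives energy $d_i$ proportional to its ICL (non-salient features receive zero), and the patch saliency is $m_k = \sum_{i \in SF} d_i \, |s_{ik}|$. The use of the absolute response, and all function names, are hypothetical.

```python
def salient_features(icl):
    """Assumed stand-in for (3.1): indices of features whose ICL is positive."""
    return [i for i, v in enumerate(icl) if v > 0]


def redistribute_energy(icl, sf):
    """Assumed stand-in for (3.2): d_i = ICL_i / sum_{j in SF} ICL_j for i in SF, else 0."""
    total = sum(icl[i] for i in sf)
    d = [0.0] * len(icl)
    for i in sf:  # loop body never runs if SF is empty, so no division by zero
        d[i] = icl[i] / total
    return d


def patch_saliency(S, d):
    """Assumed stand-in for (3.3): m_k = sum over salient i of d_i * |s_ik|."""
    n_patches = len(S[0])
    return [
        sum(d[i] * abs(S[i][k]) for i in range(len(S)) if d[i] > 0)
        for k in range(n_patches)
    ]
```

With ICL values `[-0.9, 1.6, 0.4]`, features 1 and 2 form SF and receive energies 0.8 and 0.2; feature 0 contributes nothing to any patch's saliency, however strongly it responds.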


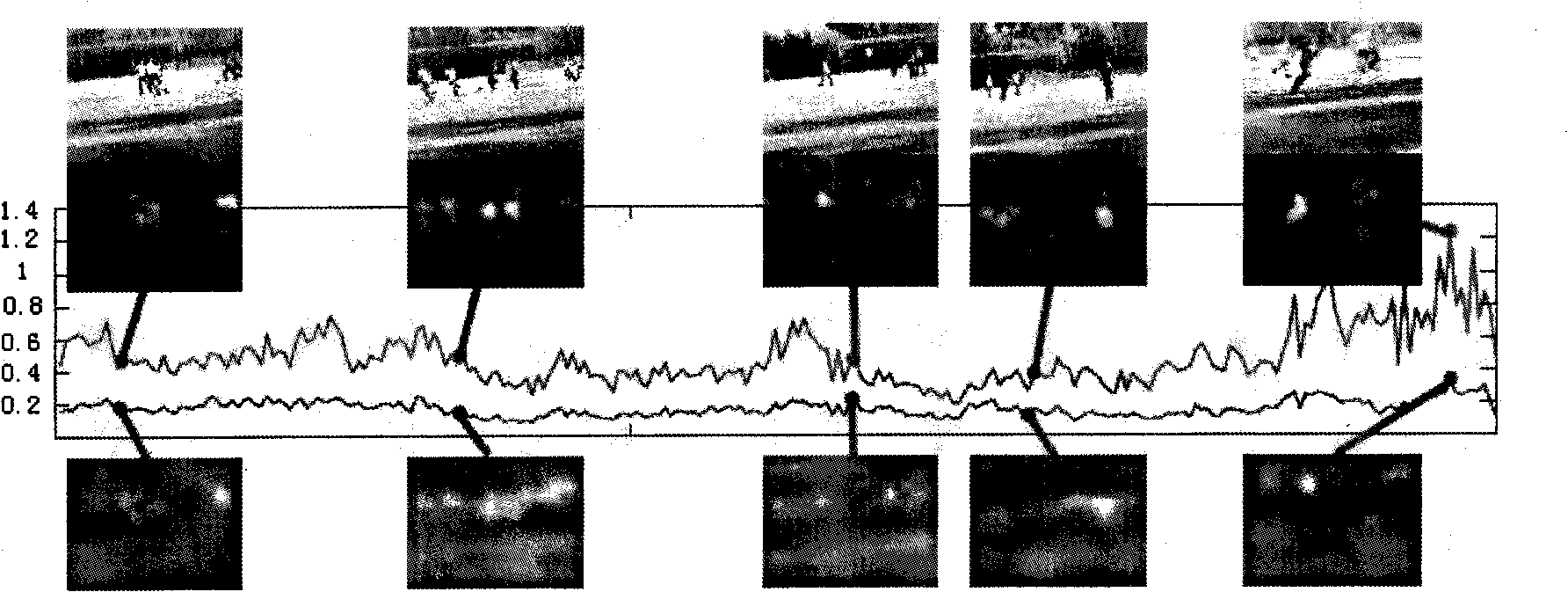

[0060] Example 2: Saliency Maps in Video

[0061] A major advantage of the method of the present invention over previous similar methods is that it is continuous: the incremental coding length is updated continuously. Changes in the distribution of feature activation rates can be modeled in the spatial domain or in the temporal domain. If the temporal variation is modeled as a Laplace distribution and $p^{t}$ denotes the activation rate at the $t$-th frame, then $p^{t}$ can be regarded as a weighted cumulative sum of previous feature responses:

[0062] $p^{t} = \frac{1}{Z}\sum_{\tau=0}^{t-1}\exp\left(\frac{\tau - t}{\lambda}\right) p$ ...
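The equation in [0062] is truncated after its final $p$, so this sketch assumes, hypothetically, that the summed term is a per-frame activation-rate vector $\hat{p}^{\tau}$ measured at frame $\tau$, and that $Z$ simply normalizes the result to sum to 1. Under those assumptions the unnormalized sum $A^{t}$ satisfies $A^{t+1} = e^{-1/\lambda}\,(A^{t} + \hat{p}^{t})$ with $A^{0} = 0$, so it can be updated frame by frame without storing the history. This is one way to realize the continuous updating described in [0061]. The function names are illustrative only.

```python
import math


def temporal_activation_rates(history, lam):
    """Batch form: p^t = (1/Z) * sum_{tau<t} exp((tau - t)/lam) * p_hat^tau.

    history[tau] is the (assumed) activation-rate vector observed at frame tau.
    Z is assumed to normalize the result so its components sum to 1.
    """
    t = len(history)
    weights = [math.exp((tau - t) / lam) for tau in range(t)]
    n = len(history[0])
    acc = [sum(w * frame[i] for w, frame in zip(weights, history)) for i in range(n)]
    Z = sum(acc)
    return [a / Z for a in acc]


def update_accumulator(acc, frame, lam):
    """Streaming form: A^{t+1} = exp(-1/lam) * (A^t + p_hat^t), starting from A^0 = 0."""
    decay = math.exp(-1.0 / lam)
    if acc is None:
        acc = [0.0] * len(frame)
    return [decay * (a + f) for a, f in zip(acc, frame)]
```

Normalizing the streamed accumulator after each frame gives the same $p^{t}$ as the batch form. A small $\lambda$ makes $p^{t}$ track the most recent frame closely, while a large $\lambda$ averages over a longer history.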
