638 results about "Model extraction" patented technology


Multi-feature fusion vehicle re-identification method based on deep learning

Active | CN107729818A | Improve feature extraction | Good presentation skills | Character and pattern recognition | Feature vector | Data set

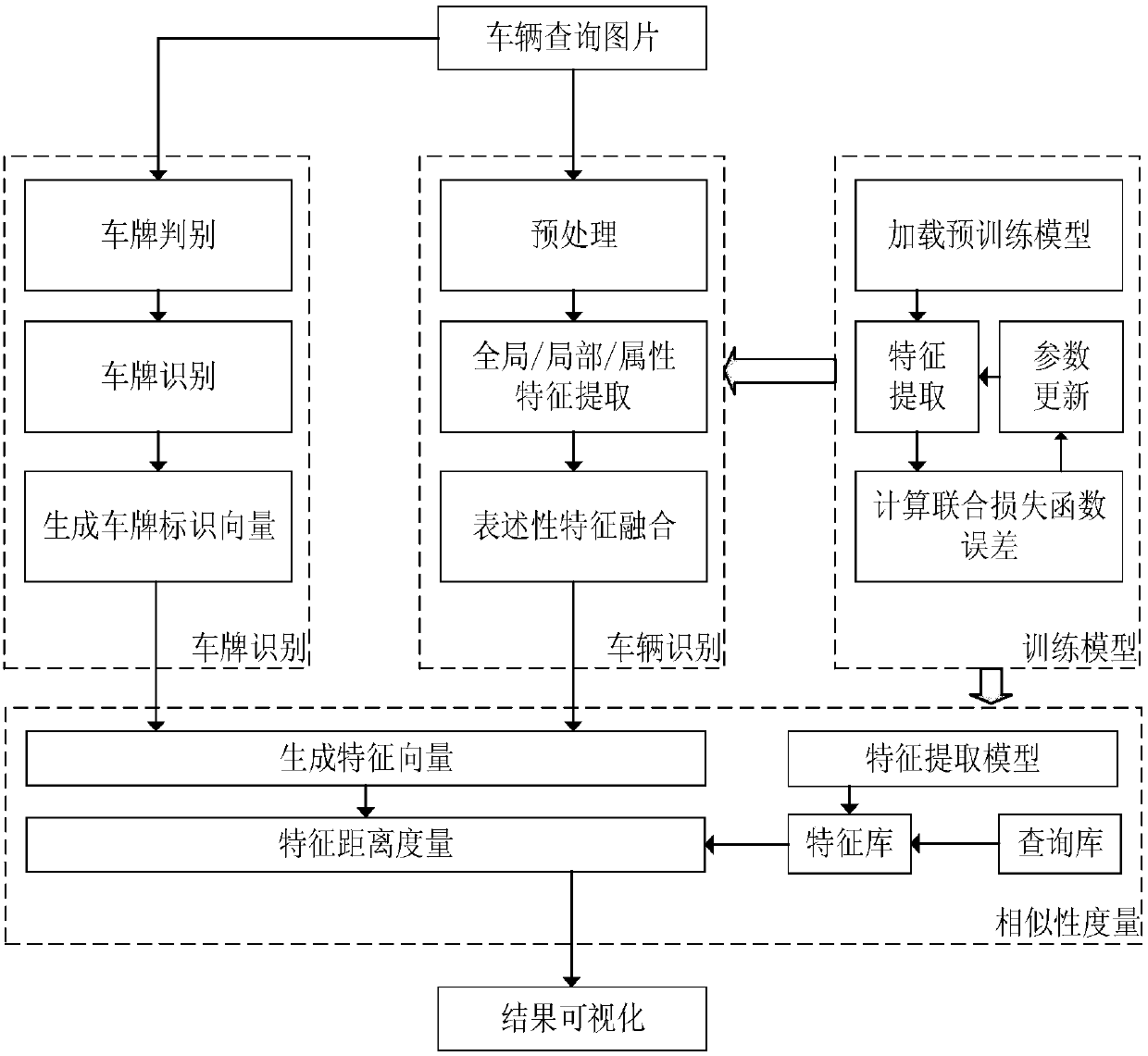

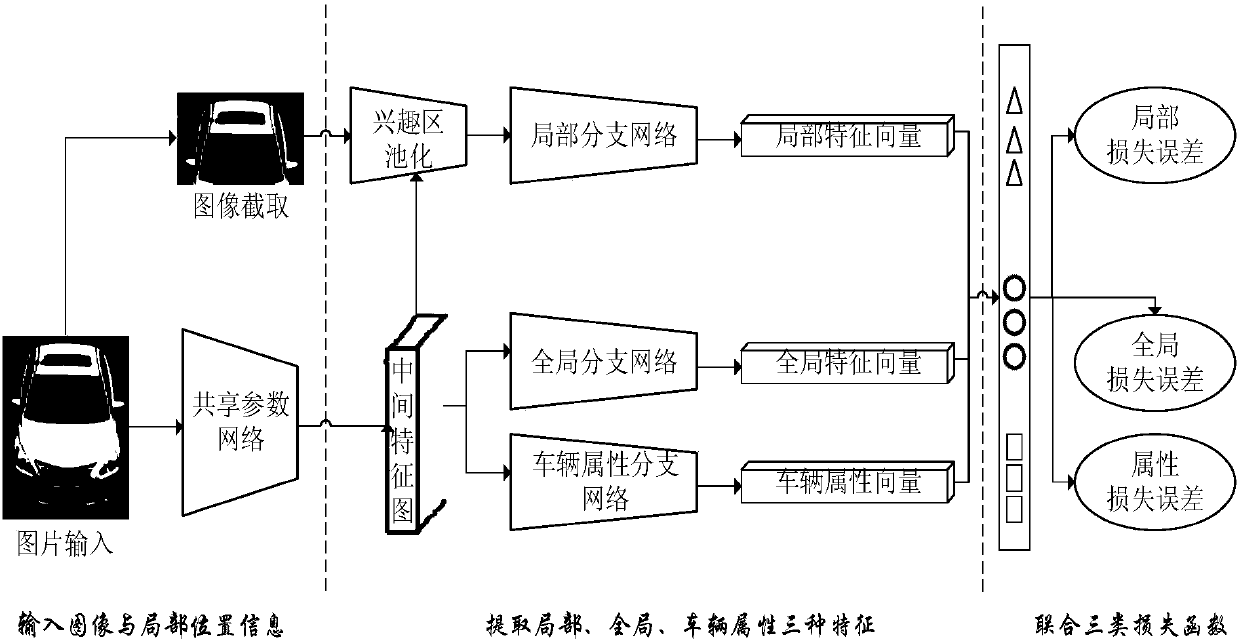

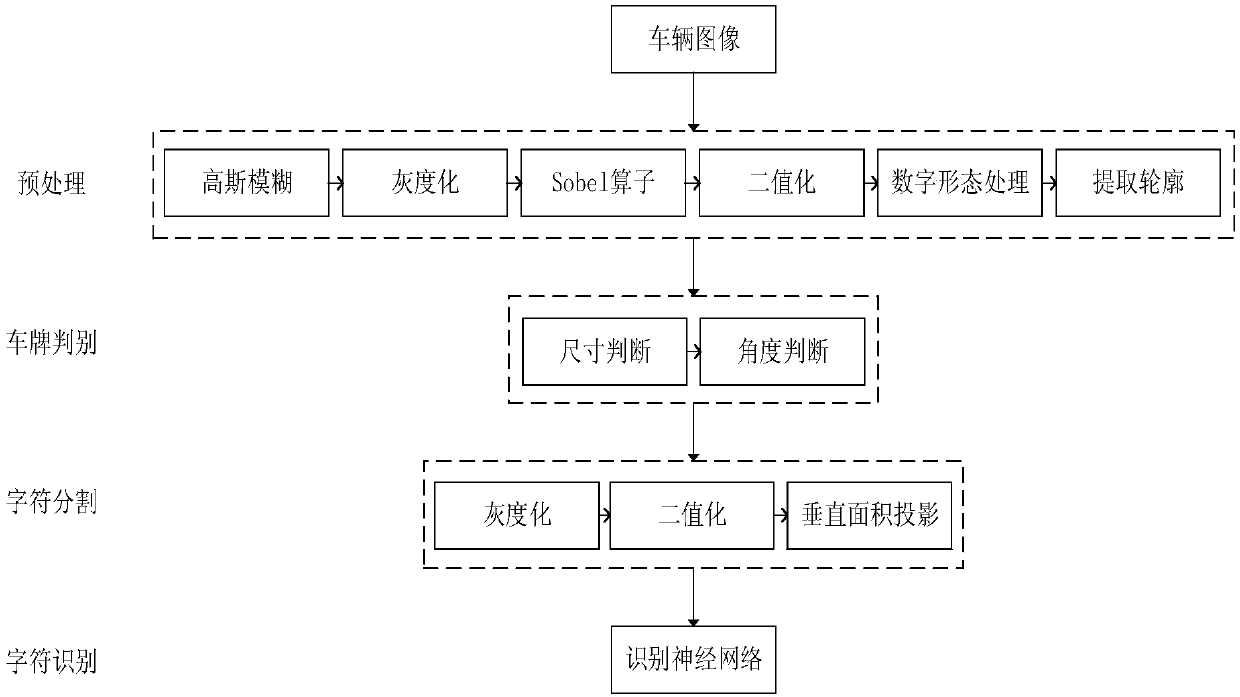

The invention discloses a multi-feature fusion vehicle re-identification method based on deep learning. The method comprises five parts: model training, license plate recognition, vehicle identification, similarity measurement and visualization. A large-scale vehicle data set is used for model training, with a phased joint training policy over multiple loss functions. License plate recognition is performed on each vehicle image, and a license plate identification feature vector is generated according to the recognition result. The trained model extracts the vehicle descriptive features and vehicle attribute features of the image to be analyzed and of each image in the query library, and the vehicle descriptive features and the license plate identification vector are combined into a unique re-identification feature vector for each vehicle image. In the similarity measurement stage, the re-identification feature vector of the image to be analyzed is compared with those of the images in the query library, and the search results that meet the requirements are locked and visualized.

Owner:BEIHANG UNIV
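The combination and similarity-measurement stages can be illustrated with a minimal Python sketch. It assumes the descriptive features and the license plate identification vector are already available as lists of floats, concatenates their L2-normalized forms, and ranks a query library by cosine similarity. The function names and the `plate_weight` parameter are hypothetical and do not come from the patent.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length; an all-zero vector is returned unchanged."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm > 0 else list(vec)


def reid_vector(descriptive, plate_vector, plate_weight=0.5):
    """Concatenate normalized appearance features with a weighted plate vector.

    plate_weight is a hypothetical knob: the patent does not say how the parts are weighted.
    """
    plate = [plate_weight * v for v in l2_normalize(plate_vector)]
    return l2_normalize(l2_normalize(descriptive) + plate)


def rank_gallery(query, gallery):
    """Return gallery ids ordered from most to least similar to the query.

    All vectors are unit length, so the dot product equals the cosine similarity.
    """
    scores = {gid: sum(q * g for q, g in zip(query, vec)) for gid, vec in gallery.items()}
    return sorted(scores, key=scores.get, reverse=True)


query = reid_vector([0.9, 0.1, 0.3], [1.0, 0.0])
gallery = {
    "car_a": reid_vector([0.88, 0.12, 0.31], [1.0, 0.0]),  # similar look, same plate
    "car_b": reid_vector([0.1, 0.9, 0.2], [0.0, 1.0]),     # different look and plate
}
print(rank_gallery(query, gallery))  # ['car_a', 'car_b']
```

Concatenating normalized parts keeps either feature family from dominating purely because of its scale; in practice the weighting would be tuned on validation data.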

Improved visual attention model-based method of natural scene object detection

Inactive | CN101980248A | Improve accuracy | Increase contribution | Image analysis | Character and pattern recognition | Image extraction | Model extraction

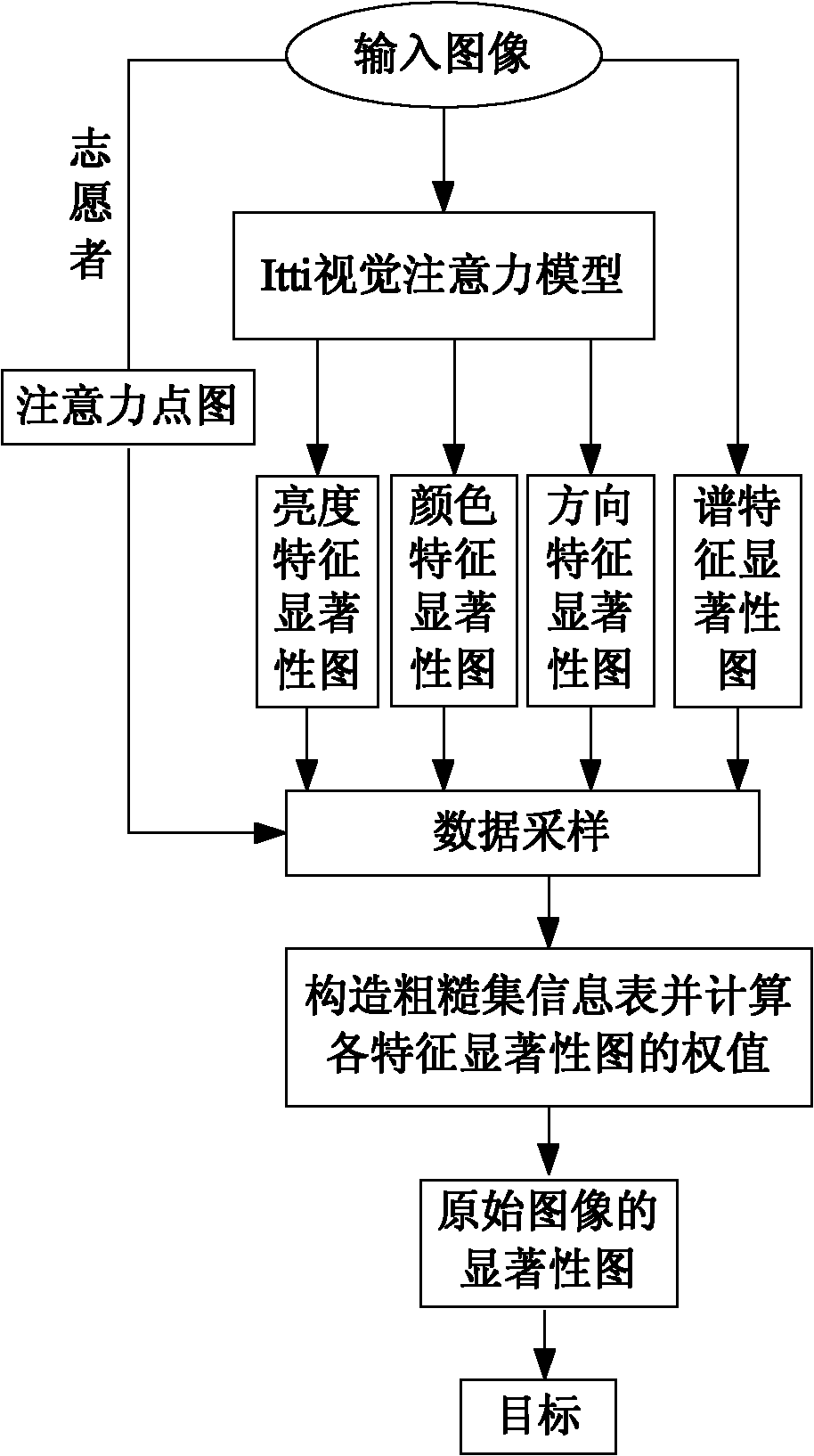

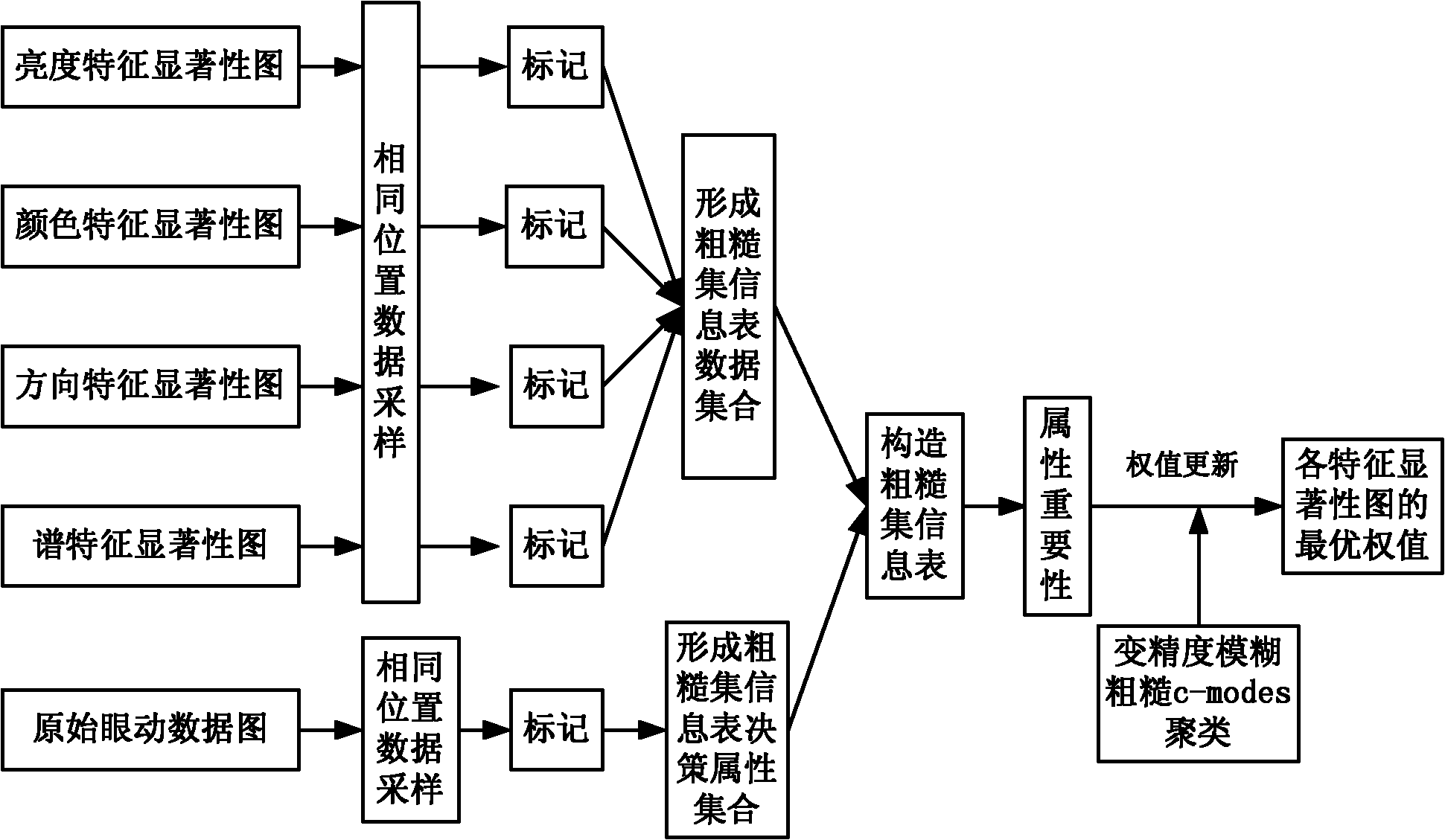

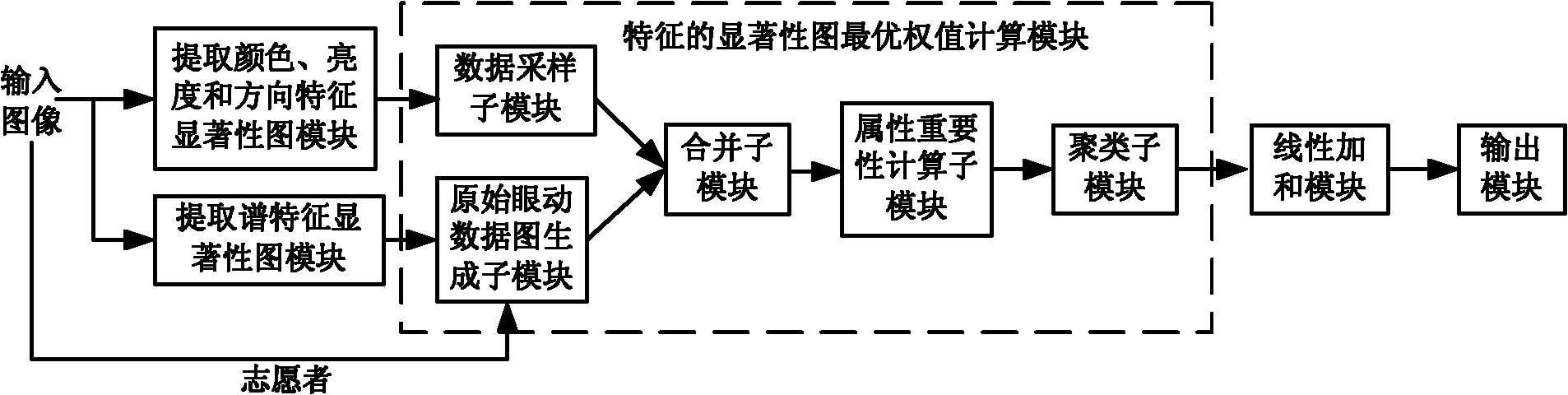

The invention discloses an improved visual attention model-based method for natural scene object detection, which mainly addresses the low detection accuracy and high false detection rate of conventional visual attention model-based object detection. The method comprises the following steps: (1) inputting an image to be detected and extracting brightness, color and orientation feature saliency maps with the Itti visual attention model; (2) extracting a spectral feature saliency map of the original image; (3) sampling and labeling the brightness, color, orientation and spectral feature saliency maps together with an experimenter's attention map to form the final rough set information table; (4) constructing attribute significance from the rough set information table and obtaining the optimal weights of the feature maps by clustering; and (5) weighting the feature sub-maps to obtain the saliency map of the original image, whose salient area is the target position area. The method detects the visual attention area in a natural scene more effectively and locates the objects within it.

Owner:XIDIAN UNIV
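Step (5), weighting the feature maps into one saliency map, can be sketched in Python as follows. The sketch assumes the brightness, color, orientation and spectral saliency maps are equal-sized 2-D lists and that the weights from step (4) are already known. The rough-set and clustering procedure that produces those weights is not reproduced, and the weights and the 0.5 peak-ratio threshold below are illustrative values only.

```python
def normalize_map(m):
    """Min-max normalize a 2-D map (list of rows) to [0, 1]; a flat map becomes all zeros."""
    flat = [v for row in m for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return [[0.0 for _ in row] for row in m]
    return [[(v - lo) / (hi - lo) for v in row] for row in m]


def fuse_saliency(maps, weights):
    """Weighted sum of normalized saliency maps that all share one shape."""
    norm = [normalize_map(m) for m in maps]
    rows, cols = len(maps[0]), len(maps[0][0])
    return [[sum(w * n[r][c] for w, n in zip(weights, norm)) for c in range(cols)]
            for r in range(rows)]


def target_region(saliency, ratio=0.5):
    """Pixels whose fused saliency reaches `ratio` of the peak (threshold is an assumption)."""
    peak = max(v for row in saliency for v in row)
    return {(r, c) for r, row in enumerate(saliency)
            for c, v in enumerate(row) if v >= ratio * peak}


brightness = [[0, 1], [0, 0]]
color = [[0, 2], [1, 0]]
orientation = [[5, 9], [5, 5]]
spectrum = [[0, 0], [0, 1]]
fused = fuse_saliency([brightness, color, orientation, spectrum], [0.4, 0.3, 0.2, 0.1])
print(target_region(fused))  # {(0, 1)}
```

Normalizing each map before weighting matters because the raw feature channels have different value ranges (compare `orientation` with the others above).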

Non-overlapping visual field multiple-camera human body target tracking method

Inactive | CN101616309A | Exact match | High precision | Image analysis | Character and pattern recognition | Human body | Multi camera

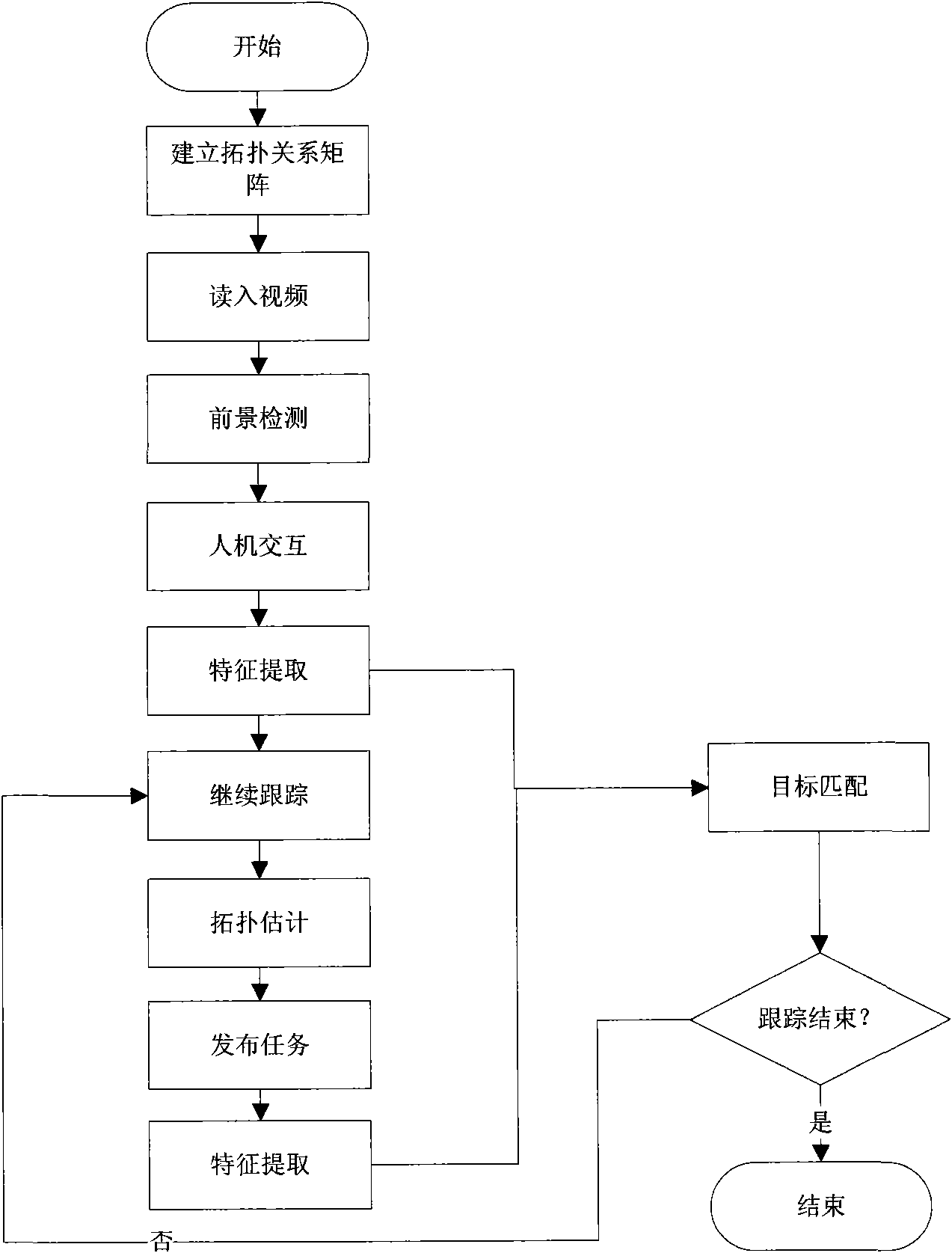

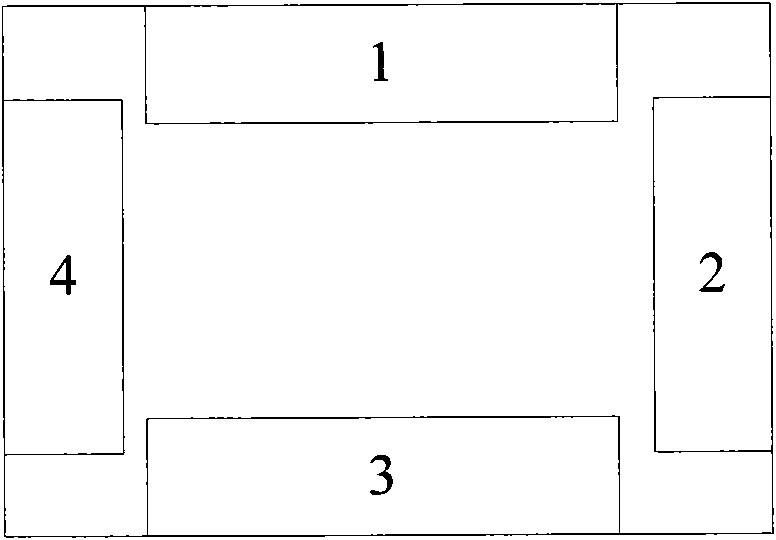

The invention relates to a multi-camera human target tracking method for non-overlapping fields of view, in particular to target matching and tracking in multi-camera video with non-overlapping fields of view when the topological relation among the cameras is known. The method comprises the following steps: first, establishing a topological relation matrix among the cameras; then extracting the foreground image of pedestrian targets and selecting a tracking target within the view of one camera through a human-machine interface; using a Gaussian mixture model to extract the target's appearance features, including a color histogram feature and a UV color feature; managing the tracking task with a tree structure throughout the tracking process; using a Bayesian model to estimate the overall similarity; and searching for the best-matching target according to the overall similarity. The invention has the advantages of high precision, a simple and easily implemented algorithm, and strong real-time performance, and provides a new real-time, reliable method for multi-camera target recognition and tracking.

Owner:SHANGHAI JIAO TONG UNIV
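One way to read "using a Bayesian model to estimate overall similarity" is prior times likelihood: the camera topology supplies a prior on where a person can reappear, and appearance similarity supplies the likelihood. The Python sketch below assumes this reading, uses the Bhattacharyya coefficient between color histograms as the likelihood, and treats the `transition` table as a hypothetical encoding of the topology matrix; none of these specifics come from the patent.

```python
import math


def bhattacharyya(h1, h2):
    """Bhattacharyya coefficient of two histograms, each normalized to sum to 1."""
    s1, s2 = sum(h1), sum(h2)
    return sum(math.sqrt((a / s1) * (b / s2)) for a, b in zip(h1, h2))


def match_posteriors(target_hist, src_cam, candidates, transition):
    """Normalized prior x likelihood over candidate targets in other cameras.

    candidates: list of (candidate_id, camera_id, color_histogram).
    transition[src][dst]: hypothetical probability, read from the topology matrix,
    that a person leaving camera src reappears in camera dst.
    """
    scores = {}
    for cid, cam, hist in candidates:
        prior = transition.get(src_cam, {}).get(cam, 0.0)
        scores[cid] = prior * bhattacharyya(target_hist, hist)
    total = sum(scores.values())
    return {cid: s / total for cid, s in scores.items()} if total > 0 else scores


transition = {"cam1": {"cam2": 0.8, "cam3": 0.2}}
candidates = [
    ("p1", "cam2", [4, 4, 2]),  # same colors, likely camera
    ("p2", "cam3", [4, 4, 2]),  # same colors, unlikely camera
    ("p3", "cam2", [0, 1, 9]),  # different colors, likely camera
]
post = match_posteriors([4, 4, 2], "cam1", candidates, transition)
print(max(post, key=post.get))  # p1
```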

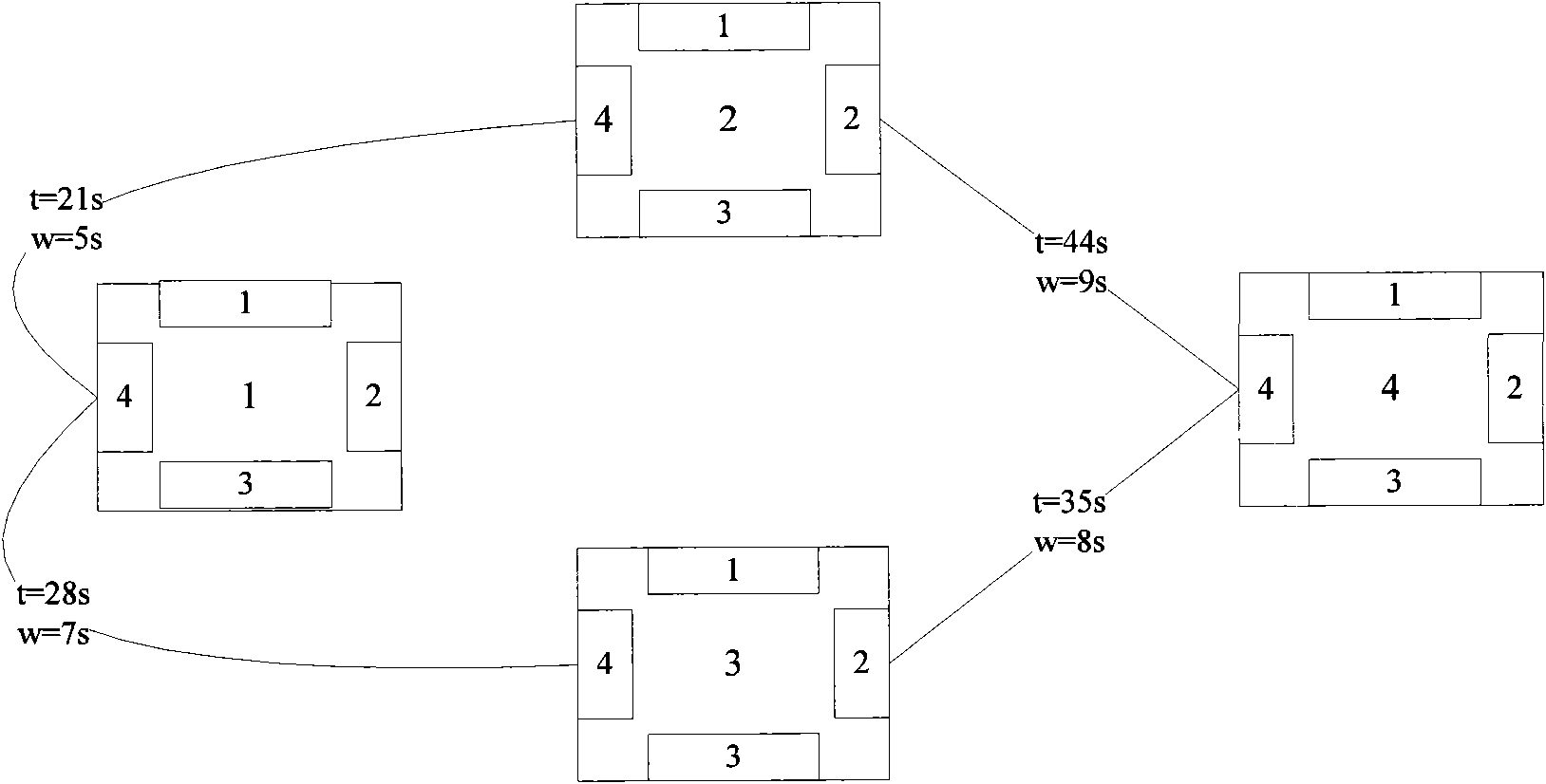

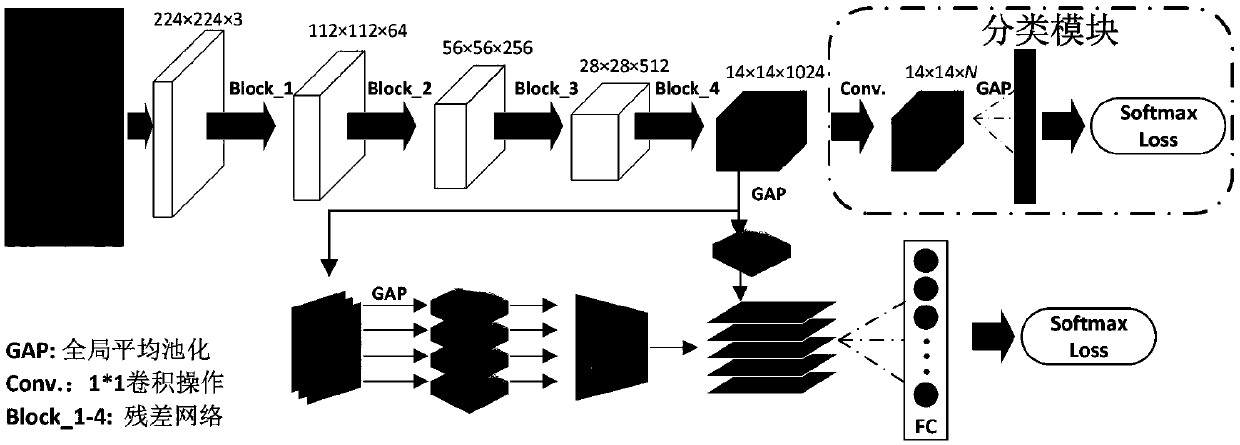

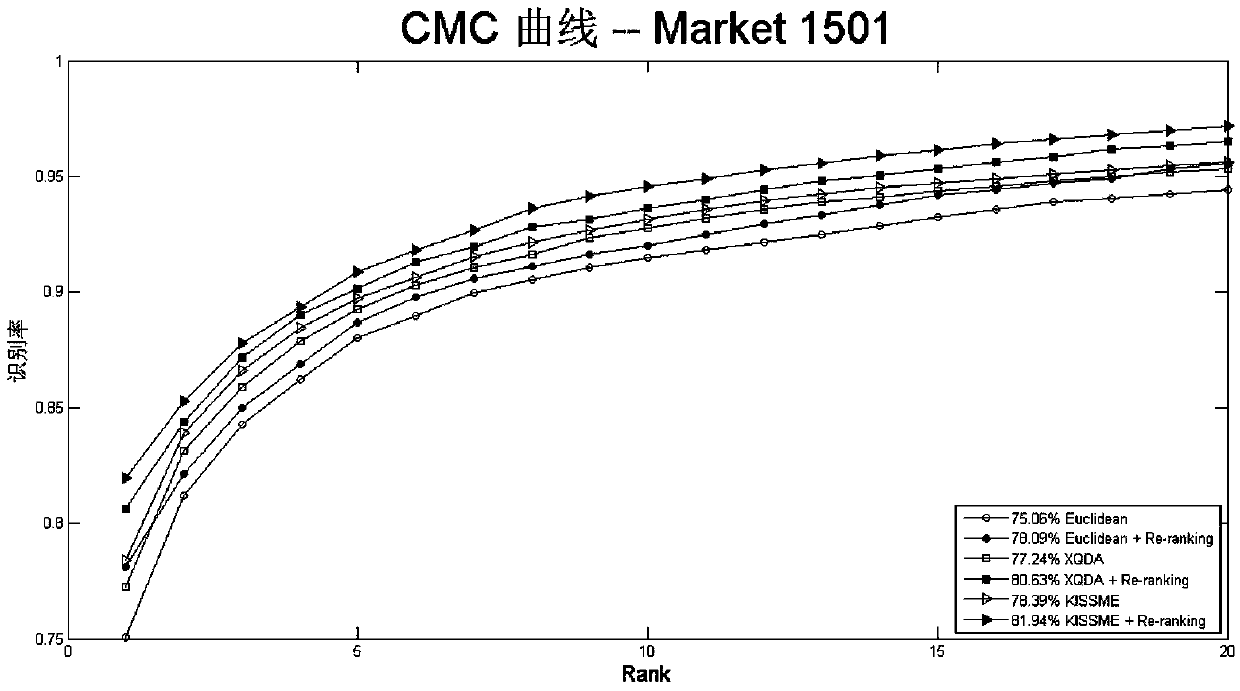

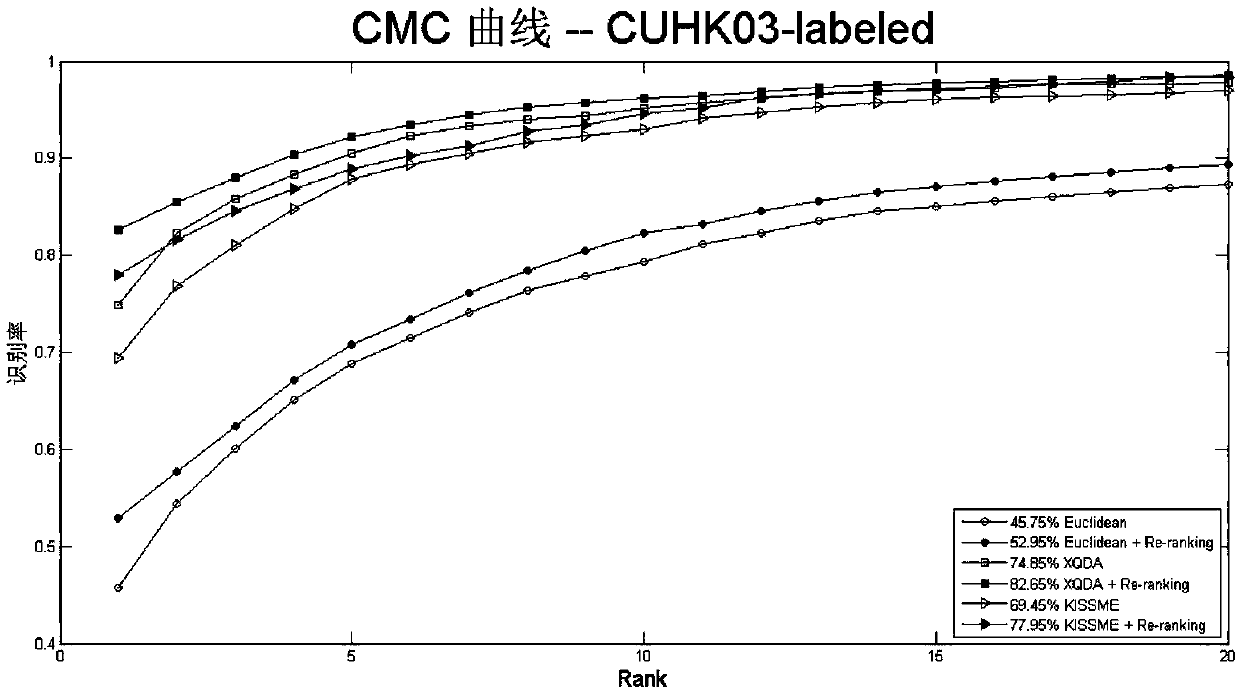

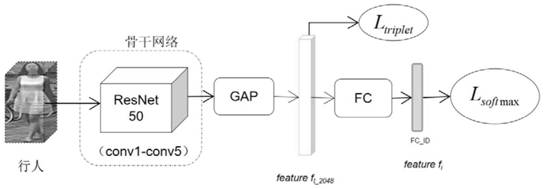

Pedestrian re-identification method based on multi-region feature extraction and fusion

Active | CN108960140A | Reasonable design | Optimizing the Distance Metric | Biometric pattern recognition | Neural architectures | Feature vector | Model extraction

The invention relates to a pedestrian re-identification method based on multi-region feature extraction and fusion, comprising the following steps: using a residual network to extract global features, and adding a pedestrian identity classification module for global feature extraction and optimization in the training stage; constructing a multi-region feature extraction sub-network for local feature extraction, and performing weighted fusion of the local features; setting a loss function that includes the loss of the classification module and the loss of the feature fusion module; training the network to obtain a model, and extracting the feature vectors of the query set and the test set; and, in the measurement stage, recomputing the feature distance with a cross-nearest-neighbor method. The method is reasonably designed, effectively combines global and local features, and optimizes the distance metric, yielding good pedestrian re-identification results and substantially improving the overall matching accuracy of the system.

Owner:ACADEMY OF BROADCASTING SCI STATE ADMINISTATION OF PRESS PUBLICATION RADIO FILM & TELEVISION +1

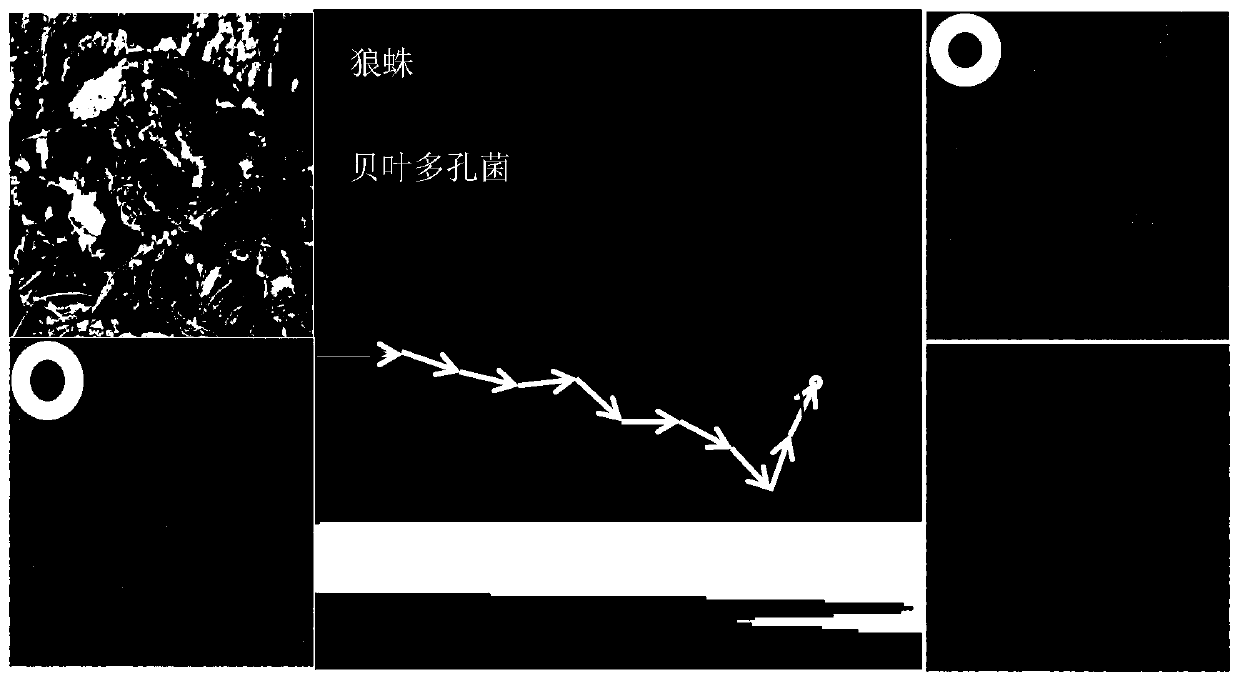

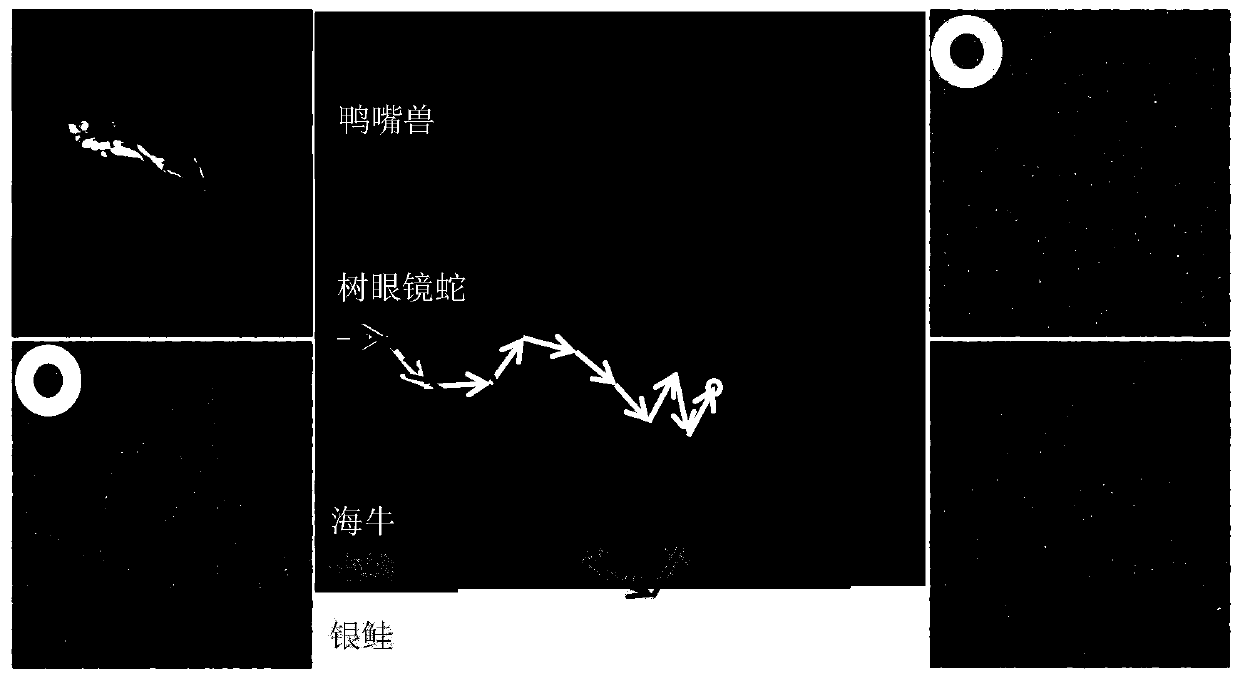

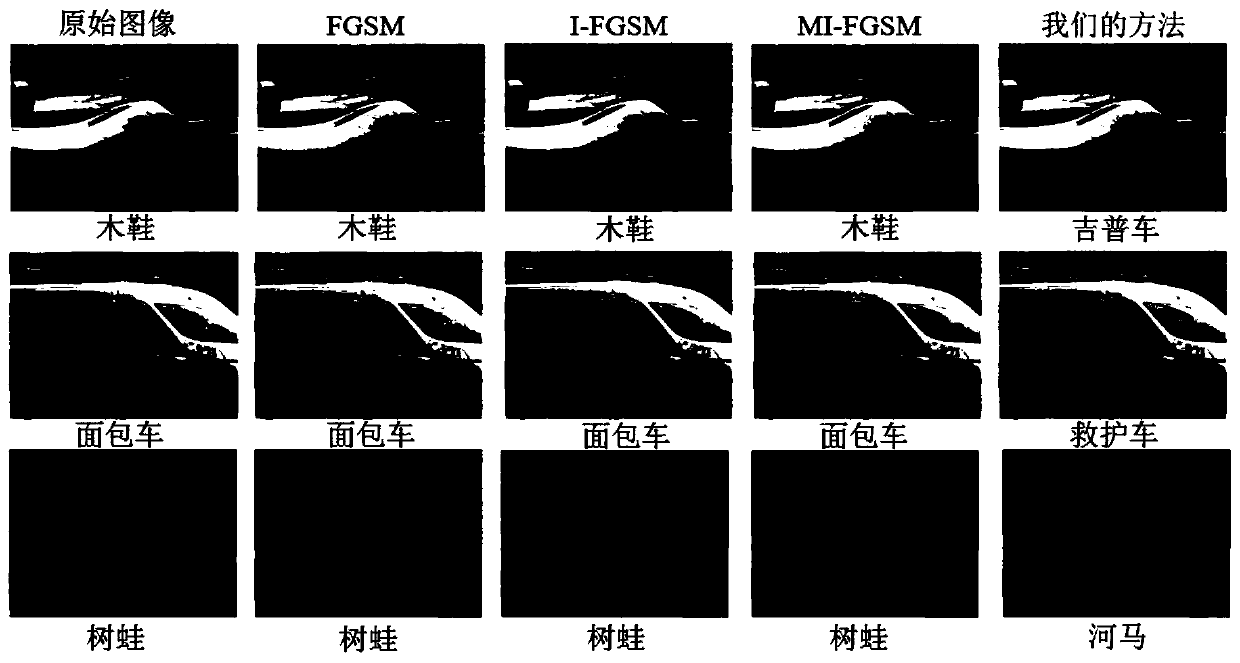

A step size self-adaptive attack resisting method based on model extraction

Active | CN109948663A | Good attack performance | Strong non-black box attack capability | Character and pattern recognition | Neural architectures | Model extraction | Data set

The invention discloses a step-size adaptive adversarial attack method based on model extraction, comprising the following steps: step 1, constructing an image data set; step 2, training a convolutional neural network on the image set IMG to serve as the target model to be attacked; step 3, calculating the cross-entropy loss function to realize model extraction of the convolutional neural network, and initializing the gradient value and the step size g1 of the iterative attack; step 4, forming a new adversarial sample x1; step 5, recalculating the cross-entropy loss function and using the new gradient value to update the step size of the adversarial noise added in the next iteration; step 6, repeating the process of inputting images, calculating the cross-entropy loss, computing the step size and updating the adversarial sample, that is, repeating step 5 T-1 times to obtain the final iterative adversarial sample x'i, and inputting it into the target model for classification to obtain the classification result N(x'i). Compared with the prior art, the method achieves a better attack effect, and compared with current iterative methods it has a stronger non-black-box attack capability.

Owner:TIANJIN UNIV
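The iterate-and-adapt loop of steps 4 to 6 can be sketched in Python on a toy target. Assumptions: a two-feature logistic regression stands in for the target CNN (so the input gradient has a closed form), the update is a basic iterative sign-gradient (I-FGSM-style) step, and the step-size rule, scaling by the ratio of new to old gradient norms, is a hypothetical stand-in because the abstract does not give the formula. The model-extraction part of step 3 is not reproduced.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def loss_and_grad(w, b, x, y):
    """Cross-entropy of a logistic model and its gradient with respect to the input x."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    eps = 1e-12
    loss = -(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps))
    grad = [(p - y) * wi for wi in w]  # d(loss)/dx for logistic regression
    return loss, grad


def adaptive_attack(w, b, x, y, budget=1.0, step=0.1, iters=10):
    """Iterative sign-gradient attack with a step size driven by the gradient norm.

    Step rule (scale by new/old gradient norm) is a hypothetical stand-in for the
    patent's unpublished update; the perturbation is clipped to +/- budget per feature.
    """
    x_adv = list(x)
    _, g = loss_and_grad(w, b, x_adv, y)
    for _ in range(iters):
        x_adv = [xa + step * ((gi > 0) - (gi < 0)) for xa, gi in zip(x_adv, g)]
        x_adv = [min(max(xa, xo - budget), xo + budget) for xa, xo in zip(x_adv, x)]
        _, g_new = loss_and_grad(w, b, x_adv, y)
        old_norm = math.sqrt(sum(v * v for v in g))
        new_norm = math.sqrt(sum(v * v for v in g_new))
        if old_norm > 0:
            step *= new_norm / old_norm
        g = g_new
    return x_adv


w, b = [2.0, -1.0], 0.0
x, y = [1.0, 0.5], 1  # clean input, classified as class 1 (p is about 0.82)
x_adv = adaptive_attack(w, b, x, y)
p_adv = sigmoid(sum(wi * xi for wi, xi in zip(w, x_adv)) + b)
print(x_adv, p_adv < 0.5)
```

With this rule the step grows while the loss keeps rising and shrinks when the gradient flattens; the clipping keeps the adversarial noise inside the chosen budget.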

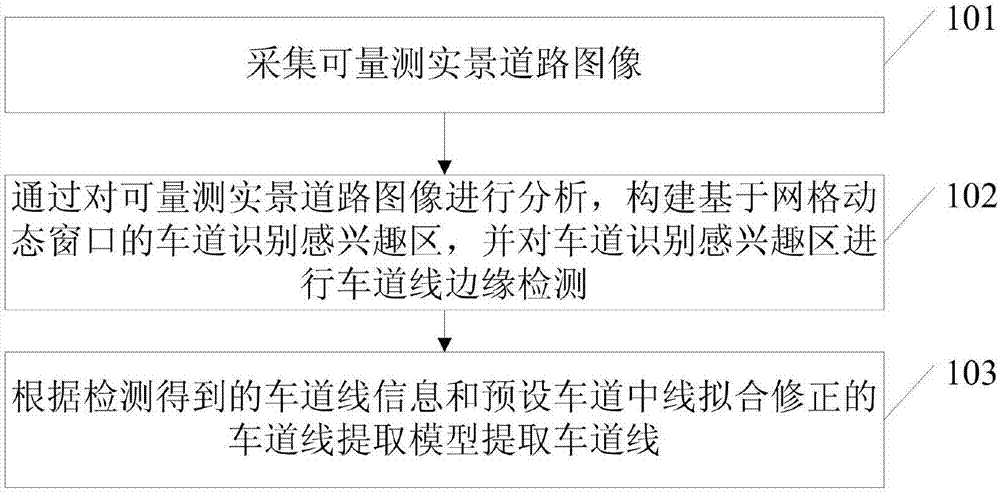

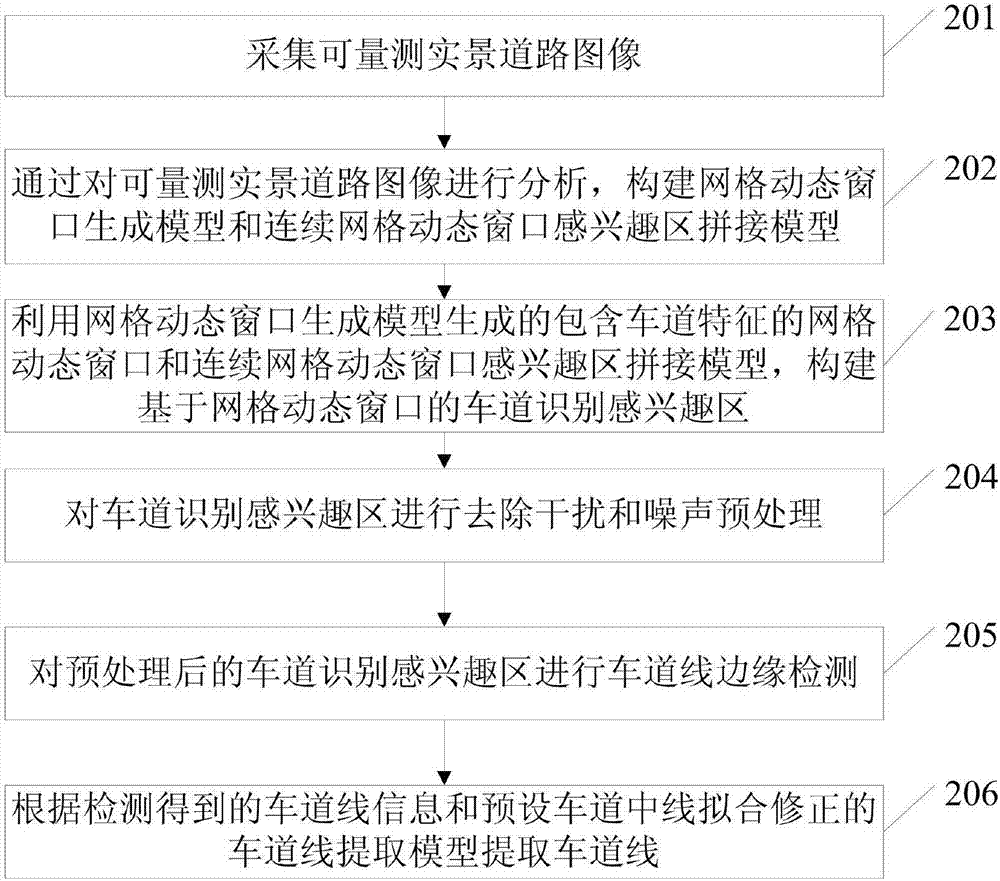

Lane line extraction method and apparatus

Active | CN107341453A | Guaranteed accuracy | High precision | Image enhancement | Image analysis | Model extraction | Computer graphics (images)

The invention discloses a lane line extraction method, apparatus and system, which relate to the field of traffic technology and mainly aim to improve the precision and efficiency of lane line extraction. The method comprises: collecting a measurable real-scene road image; analyzing that image to construct a lane identification region of interest based on a grid dynamic window, and performing lane line edge detection in the region of interest; and extracting the lane lines from the detected lane line information and a preset lane line extraction model corrected by line fitting. The method, apparatus and system are suitable for lane line extraction from measurable real-scene road images.

Owner:BEIJING UNIV OF CIVIL ENG & ARCHITECTURE
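The line-fitting step can be sketched in Python as an ordinary least-squares fit followed by one round of outlier rejection and refitting. This is one simple interpretation of "line fitting correction", not the patent's procedure; fitting x as a function of y (suited to near-vertical lane lines in a forward-facing image) and the `max_residual` value are assumptions.

```python
def fit_lane_line(points):
    """Least-squares fit of x = a*y + b through lane edge points given as (x, y)."""
    n = len(points)
    if n < 2:
        raise ValueError("need at least two edge points")
    sx = sum(x for x, _ in points)
    sy = sum(y for _, y in points)
    syy = sum(y * y for _, y in points)
    sxy = sum(x * y for x, y in points)
    denom = n * syy - sy * sy
    if denom == 0:
        raise ValueError("all edge points lie on one image row")
    a = (n * sxy - sx * sy) / denom
    b = (sx - a * sy) / n
    return a, b


def refit_without_outliers(points, max_residual):
    """Fit once, drop points farther than max_residual (pixels) from the line, refit."""
    a, b = fit_lane_line(points)
    inliers = [(x, y) for x, y in points if abs(x - (a * y + b)) <= max_residual]
    return fit_lane_line(inliers)


edge_points = [(10, 0), (15, 10), (20, 20), (25, 30)]  # lie on x = 0.5*y + 10
print(fit_lane_line(edge_points))                                     # (0.5, 10.0)
print(refit_without_outliers(edge_points + [(40, 20)], max_residual=10))  # (0.5, 10.0)
```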

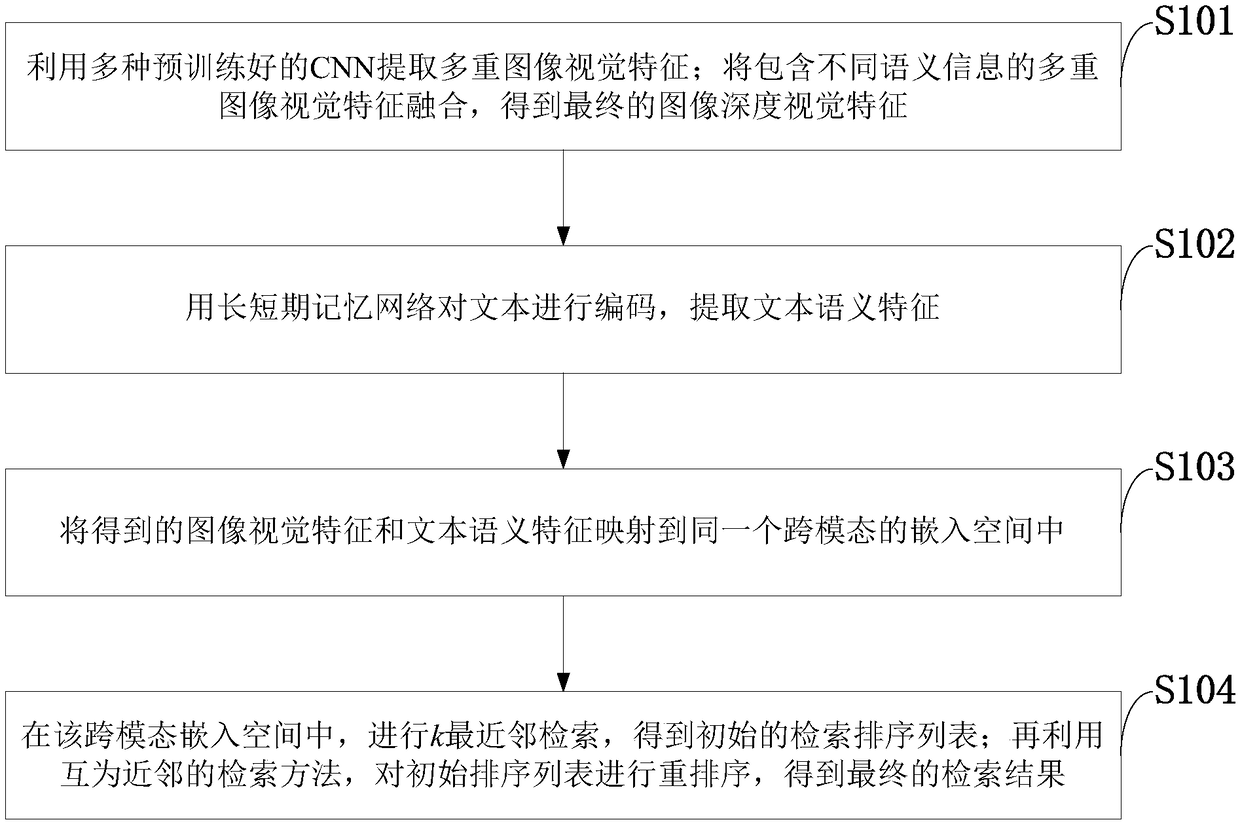

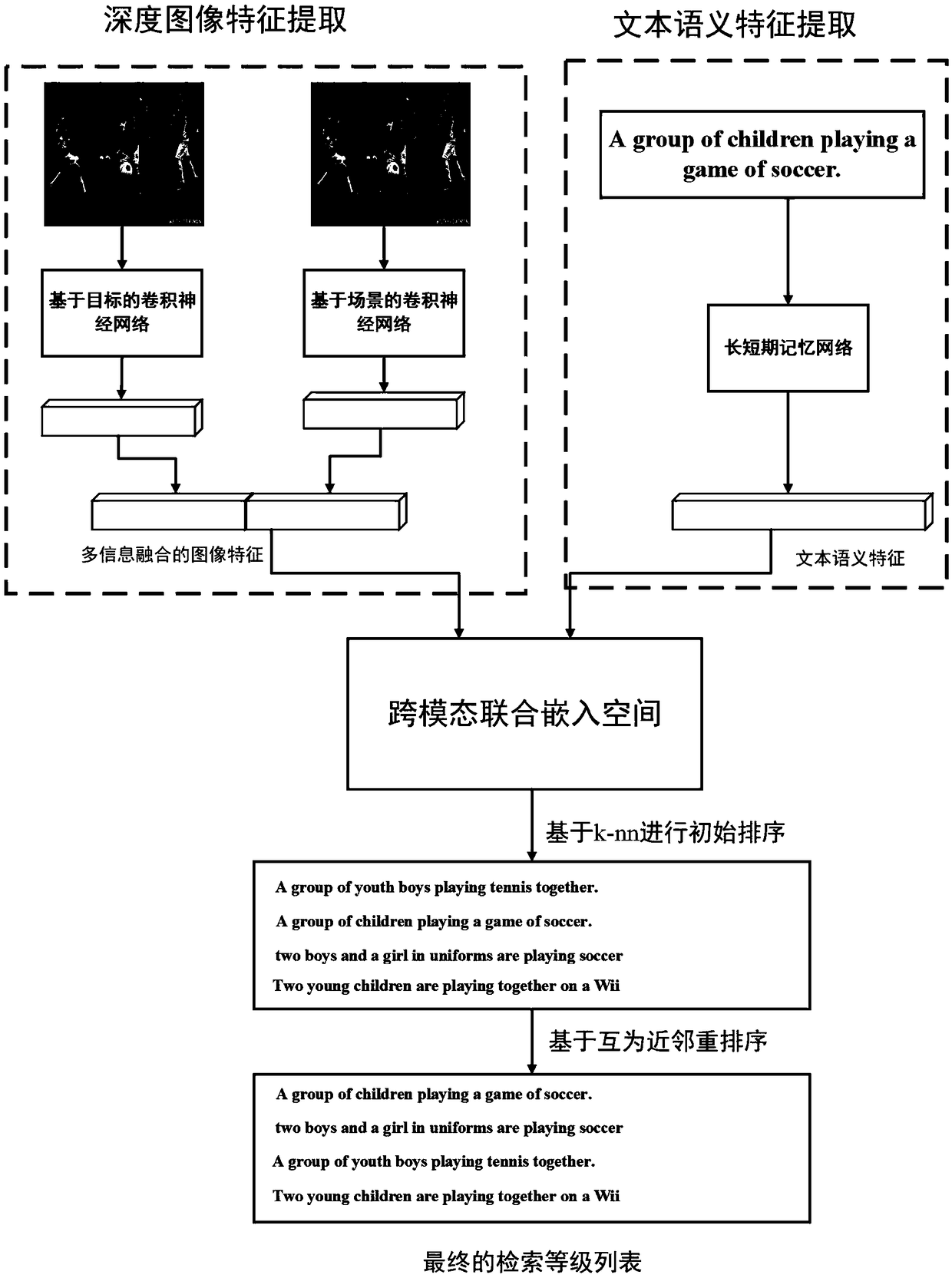

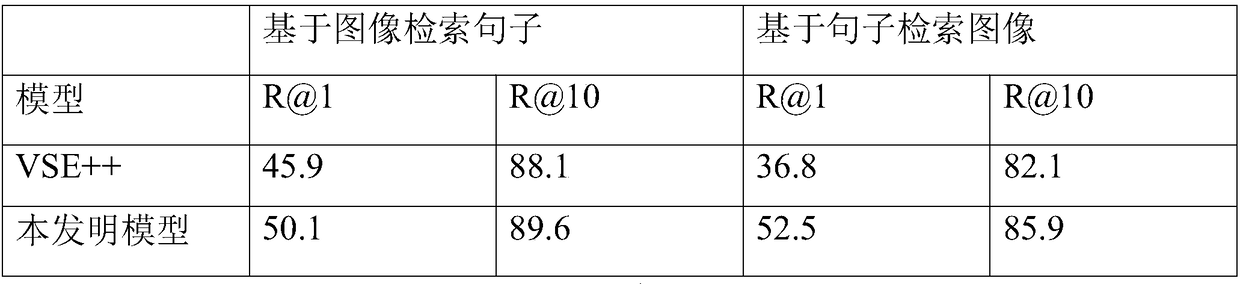

Image-text mutual retrieval method based on complementary semantic alignment and symmetric retrieval

Inactive | CN109255047A | Easy alignment | Reduce mistakes | Character and pattern recognition | Metadata still image retrieval | Semantic alignment | Object based

The invention belongs to the field of computer vision and natural language processing, and discloses an image-text mutual retrieval method based on complementary semantic alignment and symmetric retrieval, comprising: using convolutional neural networks to extract deep visual features of images, namely an object-based convolutional neural network and a scene-based convolutional neural network, so that the visual features contain complementary semantic information about both the objects and the scene; encoding the text with a long short-term memory (LSTM) network and extracting the corresponding semantic features; mapping the visual features and the text features into the same cross-modal embedding space with two mapping matrices; retrieving an initial list in the cross-modal embedding space with the k-nearest-neighbor method; and re-ranking the initial list using the neighborhood relations of symmetric bidirectional retrieval based on the mutual nearest neighbor method, to obtain the final ranked retrieval list. The invention has the advantage of high accuracy.

Owner:XIDIAN UNIV
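The symmetric re-ranking step can be sketched in Python once both modalities live in the shared embedding space. The sketch assumes dot-product similarity and a single `k` for both the forward list and the reverse check; it simply moves mutual nearest neighbors to the front of the initial k-NN list and is not necessarily the exact reordering the patent uses.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def top_k(vec, pool, k):
    """Ids of the k entries of pool (id -> embedding) with the highest dot product to vec."""
    return sorted(pool, key=lambda key: dot(vec, pool[key]), reverse=True)[:k]


def mutual_rerank(query_id, queries, gallery, k):
    """Re-rank the initial k-NN list so mutual nearest neighbours come first.

    A gallery item is mutual when the query is also among that item's k nearest
    queries in the reverse direction; otherwise the initial order is kept.
    """
    initial = top_k(queries[query_id], gallery, k)
    mutual = [g for g in initial if query_id in top_k(gallery[g], queries, k)]
    return mutual + [g for g in initial if g not in mutual]


texts = {"t1": [1.0, 0.0], "t2": [0.0, 1.0], "t3": [0.6, 0.8]}
images = {"iA": [0.85, 0.9], "iB": [0.7, 0.0], "iC": [0.0, 1.0]}
print(top_k(texts["t1"], images, 2))          # ['iA', 'iB']
print(mutual_rerank("t1", texts, images, 2))  # ['iB', 'iA']
```

In the example, image `iA` scores well against almost every text (a "hub"), but `t1` is not among its own two nearest texts, so the reverse check demotes it below `iB`.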

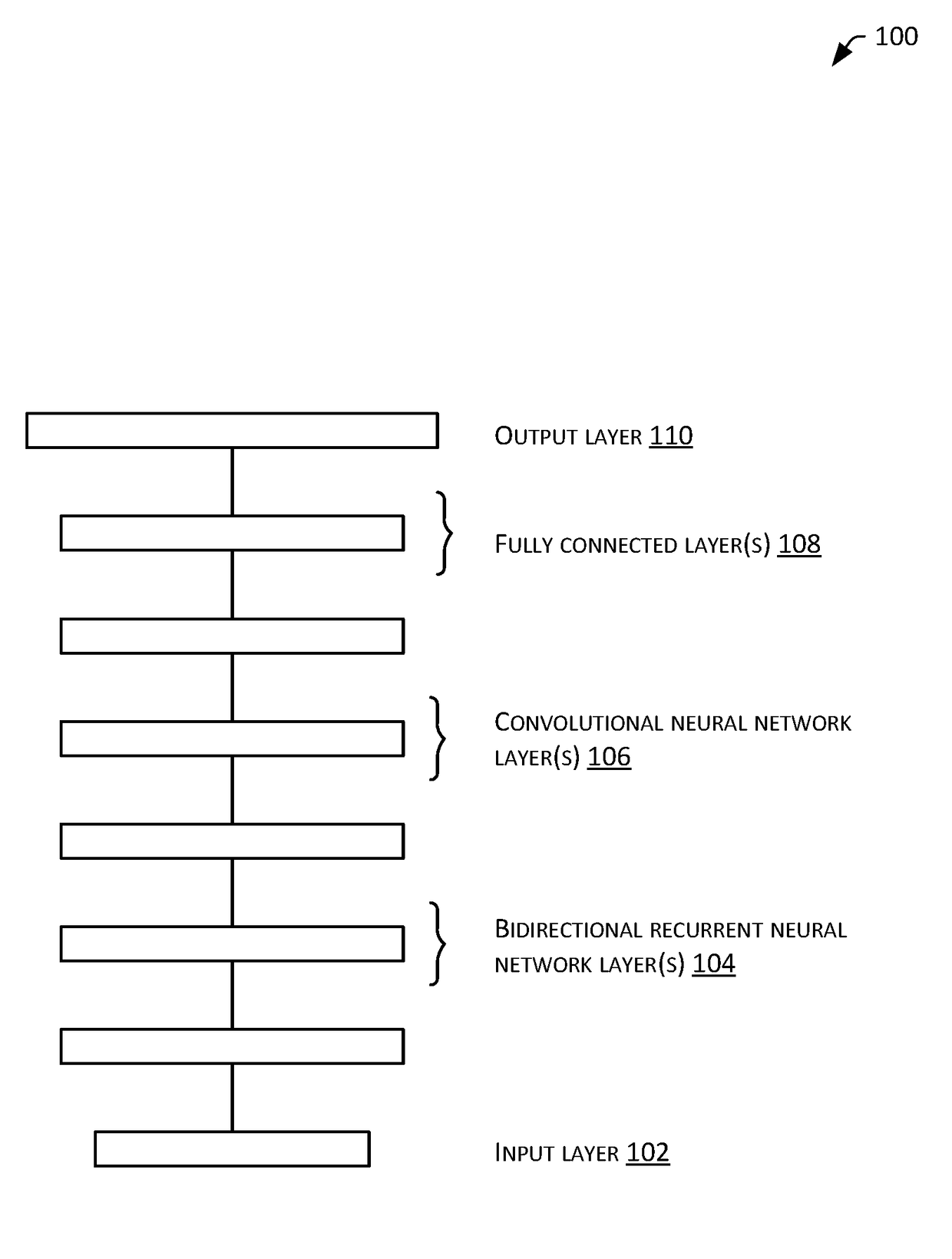

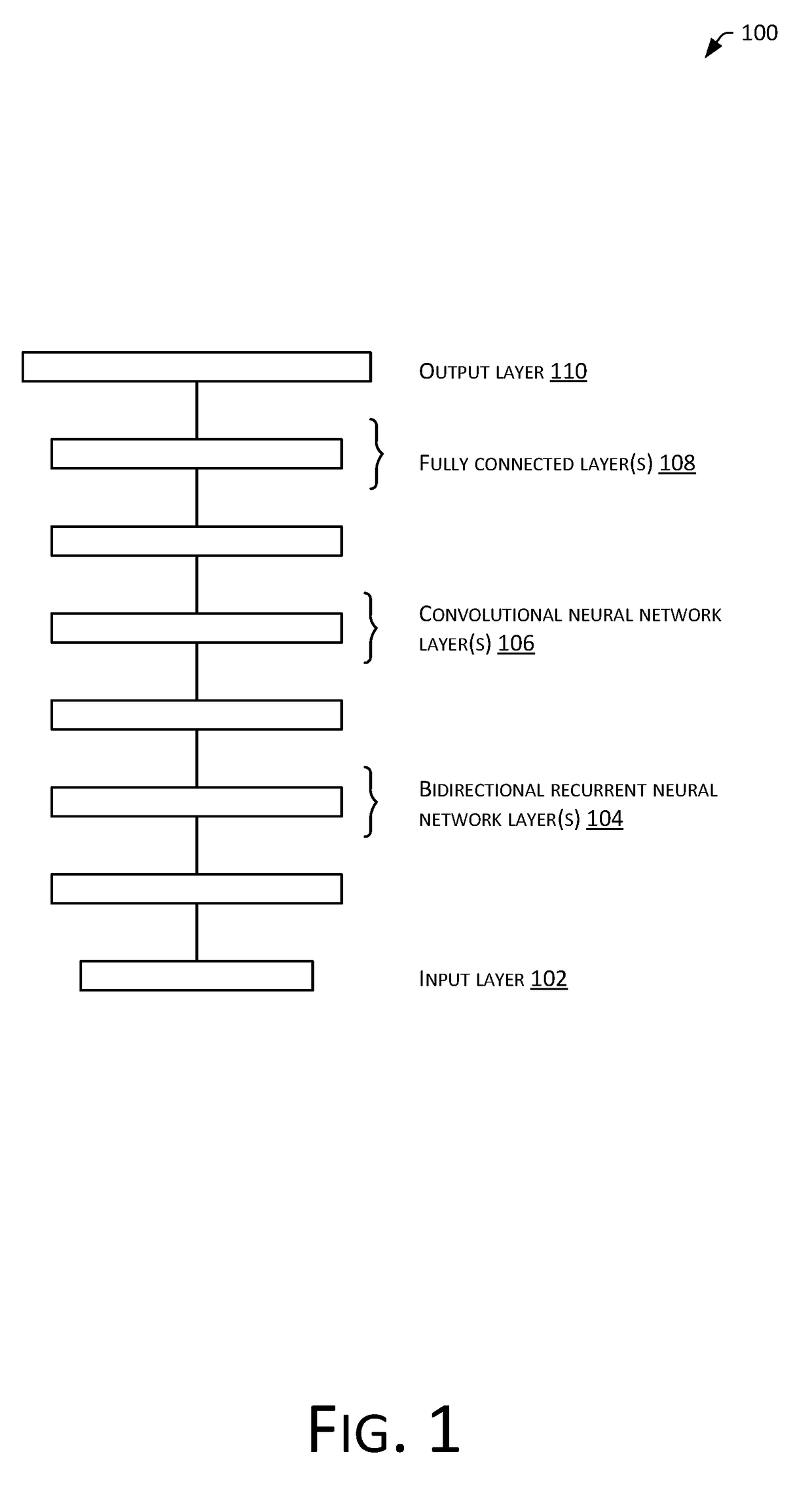

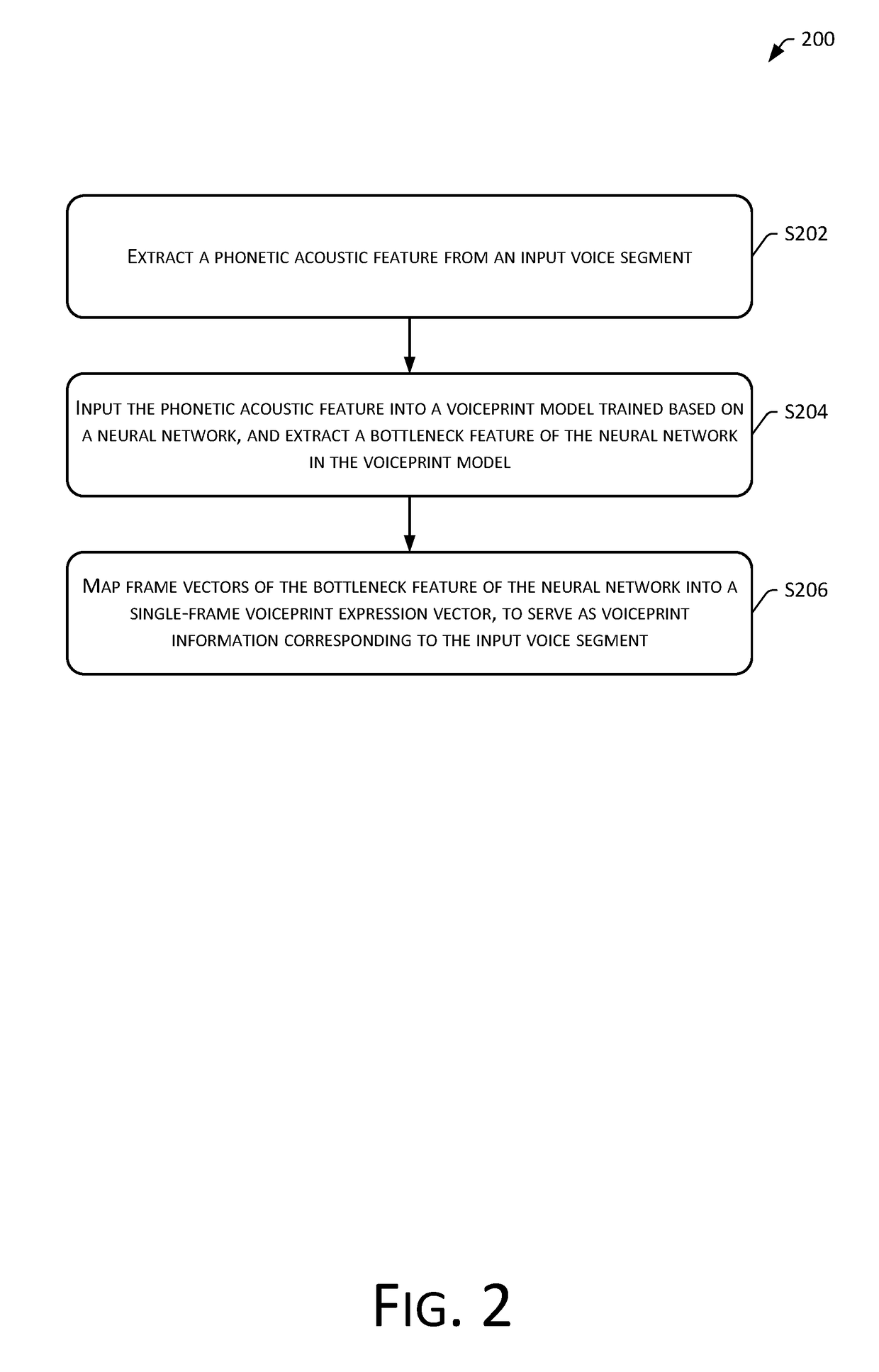

Neural network-based voiceprint information extraction method and apparatus

Active | US20170358306A1 | Simple process | Easy extraction | Speech recognition | Pattern recognition | Model extraction

A method and apparatus for extracting voiceprint information based on a neural network are disclosed. The method includes: extracting phonetic acoustic features from an input voice segment; inputting the acoustic features into a voiceprint model trained on a neural network, and extracting the bottleneck features of the neural network in the voiceprint model; and mapping the frame vectors of the bottleneck features into a single-frame voiceprint expression vector, which serves as the voiceprint information of the input voice segment. Because the voiceprint information is extracted with a neural-network-trained voiceprint model, the extraction process is relatively simple, and short voice segments are handled better.

Owner:ALIBABA GRP HLDG LTD
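The final mapping, from per-frame bottleneck vectors to one voiceprint vector, can be sketched in Python. Mean pooling followed by L2 normalization and cosine scoring is an assumed choice here; the abstract only says the frame vectors are mapped into a single-frame vector, and the neural network producing the bottleneck features is not modeled.

```python
import math


def utterance_vector(frames):
    """Average bottleneck frame vectors into one utterance-level vector, then L2-normalize.

    Mean pooling is an assumption; the patent does not state the mapping used.
    """
    dim = len(frames[0])
    mean = [sum(f[d] for f in frames) / len(frames) for d in range(dim)]
    norm = math.sqrt(sum(v * v for v in mean))
    return [v / norm for v in mean] if norm > 0 else mean


def voiceprint_score(a, b):
    """Cosine similarity of two unit-length voiceprint vectors."""
    return sum(x * y for x, y in zip(a, b))


enrolled = utterance_vector([[1.0, 0.2], [3.0, -0.2]])  # mean [2.0, 0.0] -> [1.0, 0.0]
probe = utterance_vector([[0.9, 0.1], [1.1, -0.1]])
print(voiceprint_score(enrolled, probe))
```

Pooling over frames is what makes the output length independent of the segment duration, which is why short segments can still be scored.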

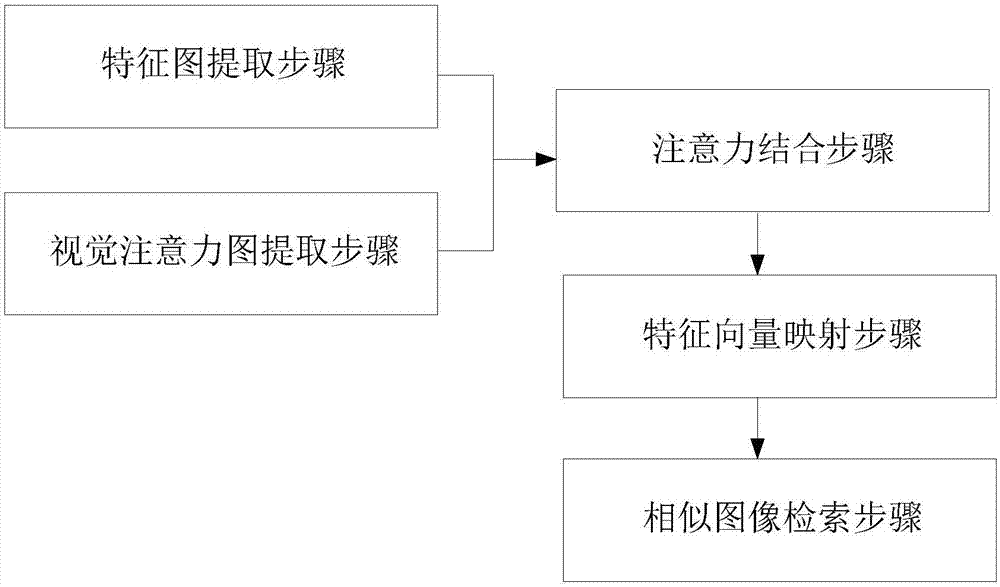

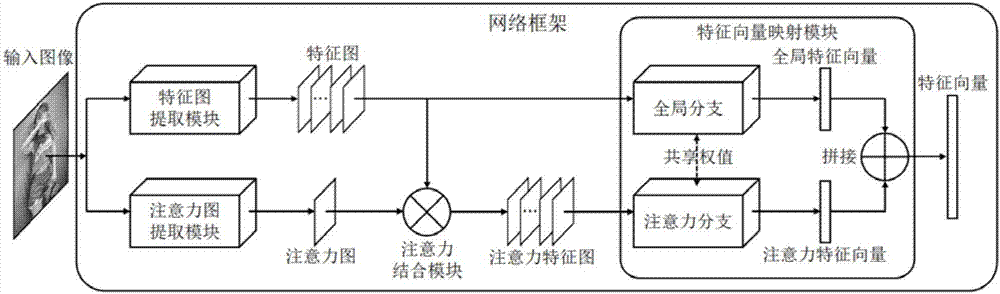

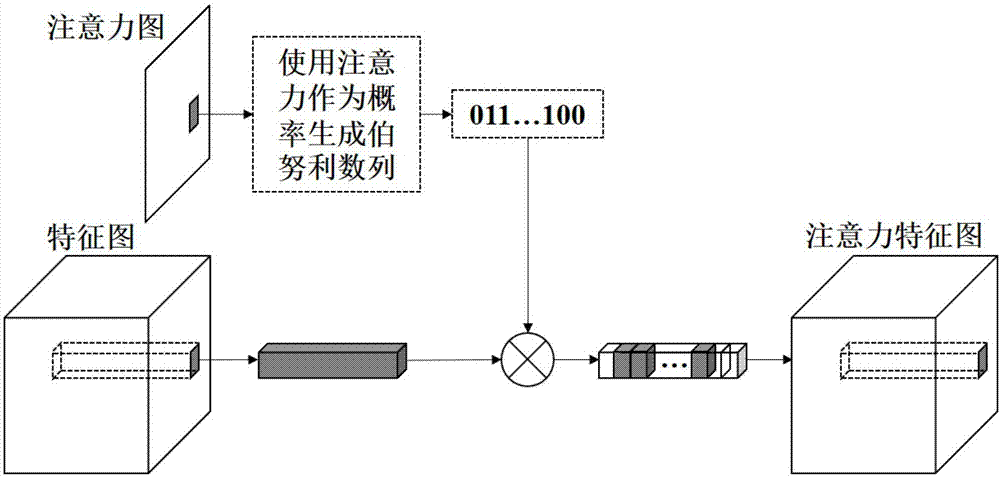

High-precision clothing image retrieval method and system based on visual attention model

Active | CN107291945A | High Precision Image Retrieval | Improve accuracy | Special data processing applications | Neural learning methods | Feature vector | Model extraction

The invention provides a high-precision clothing image retrieval method and system based on a visual attention model. The method includes the following steps. Feature map extraction: for the input image to be retrieved, a deep neural network extracts a fixed-size feature map of floating-point numbers. Attention map extraction: for the same image, a fully convolutional deep network extracts an attention map. Attention combination: the feature map is combined with the attention map to obtain an attention feature map. Feature vector mapping: a deep neural network maps the feature map and the attention feature map to a fixed-length image feature vector. Similar image retrieval. The system includes modules corresponding to each step. By extracting attention features with the visual attention model and combining them with global features into multi-perspective features, the method and system achieve high-precision image retrieval.

Owner:上海媒智科技有限公司

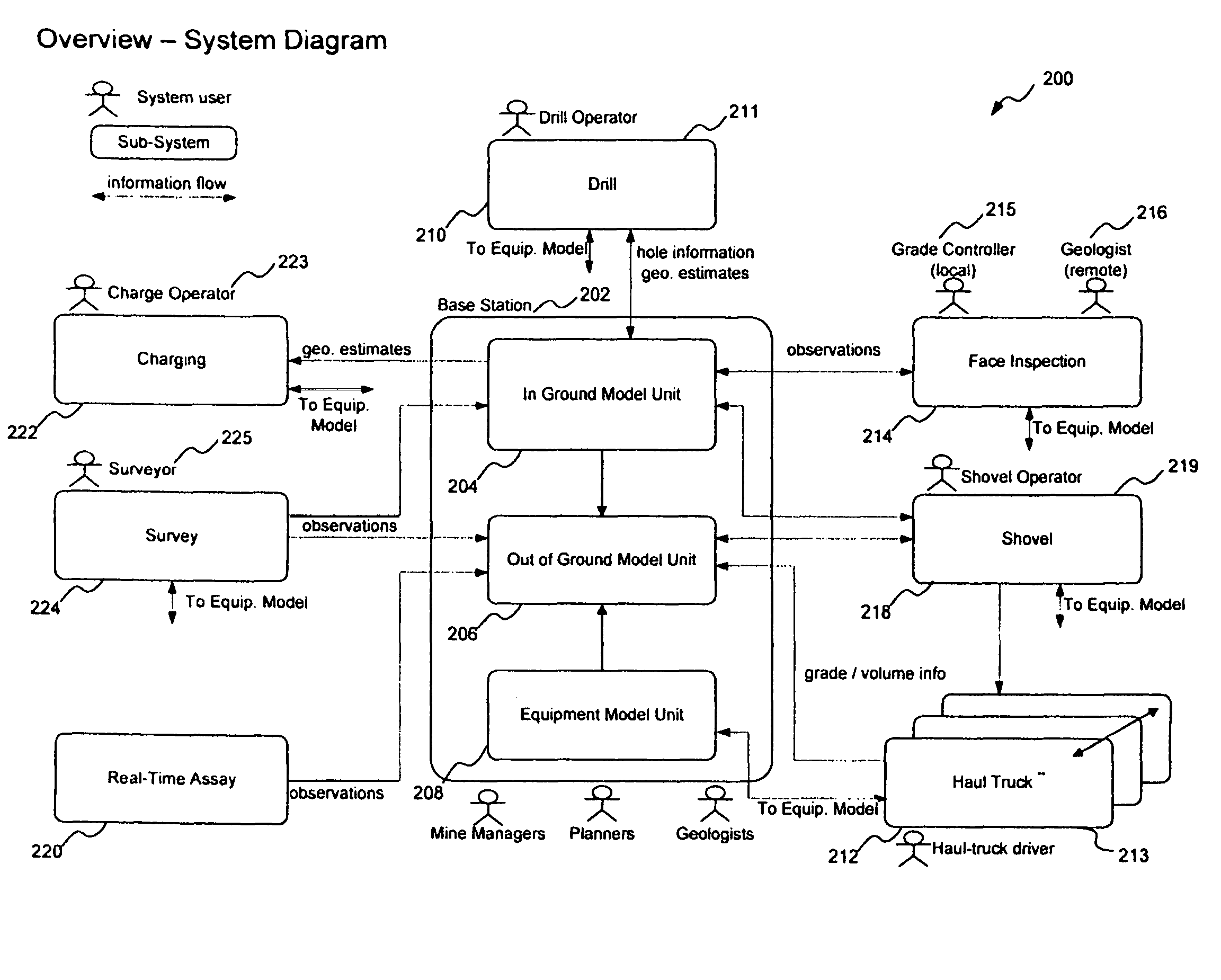

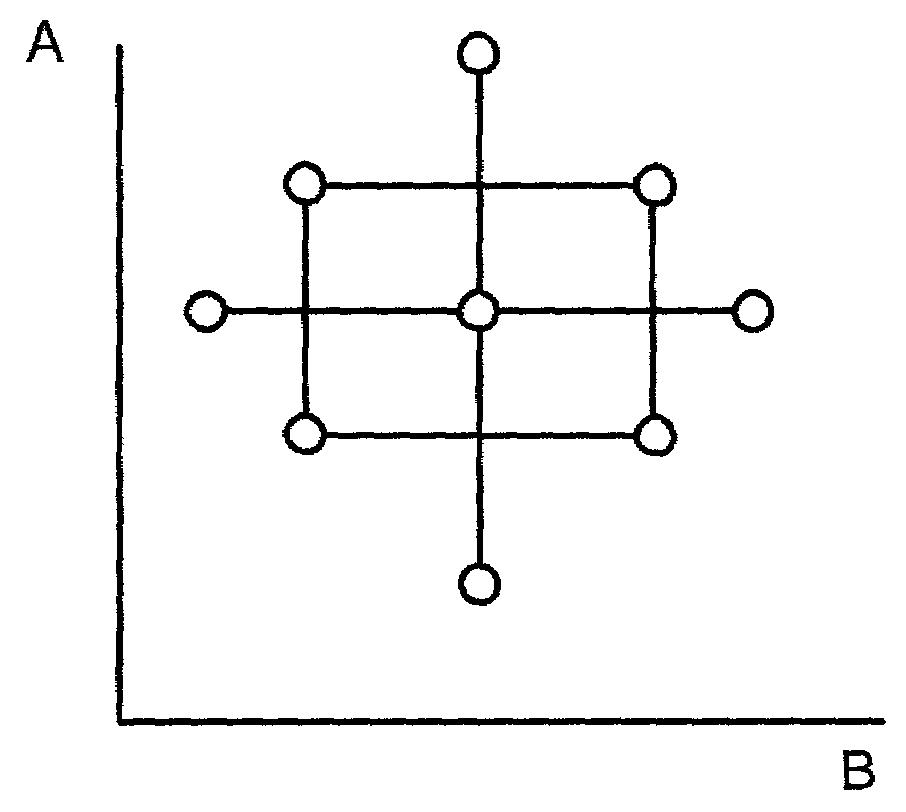

Method and system for exploiting information from heterogeneous sources

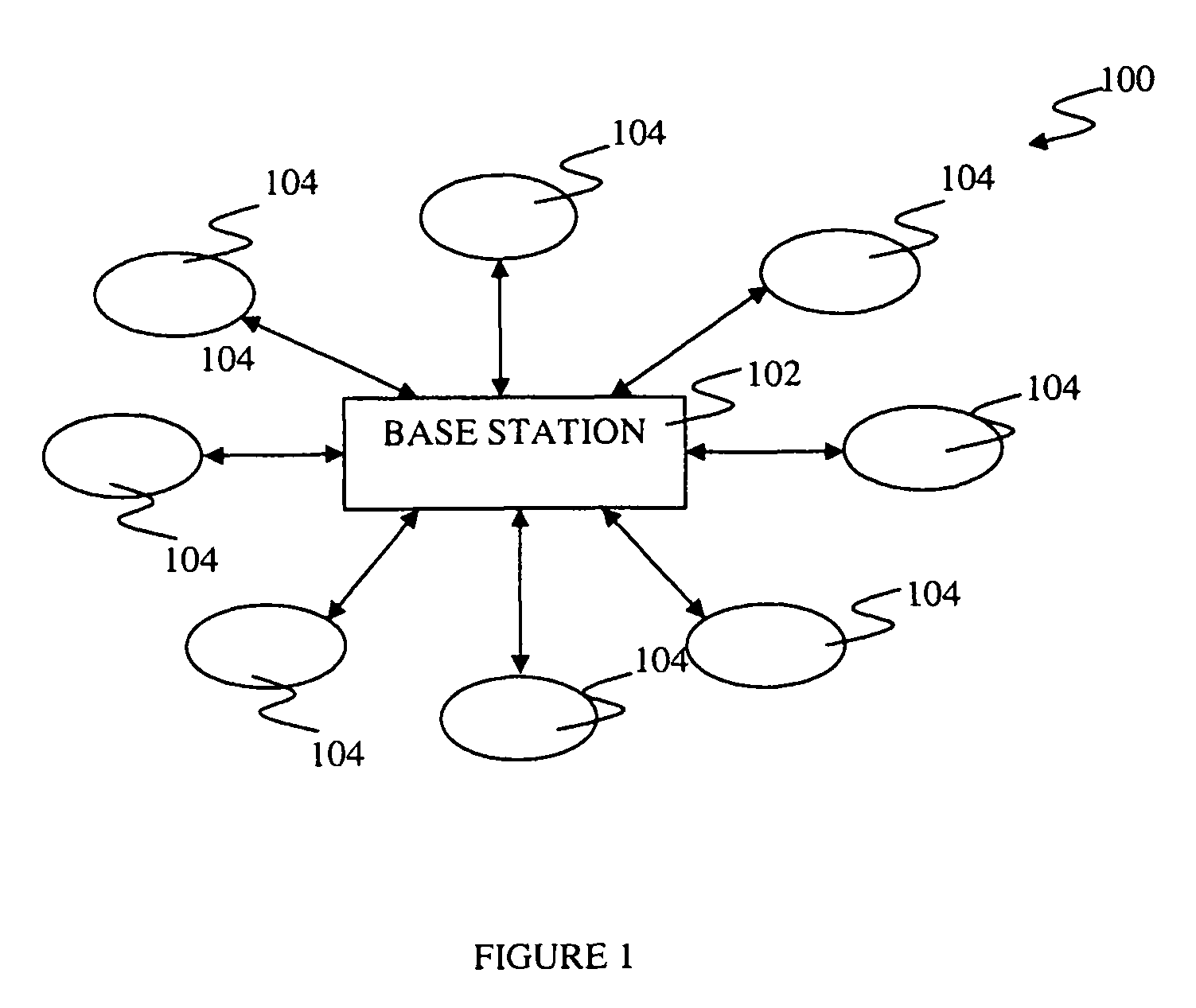

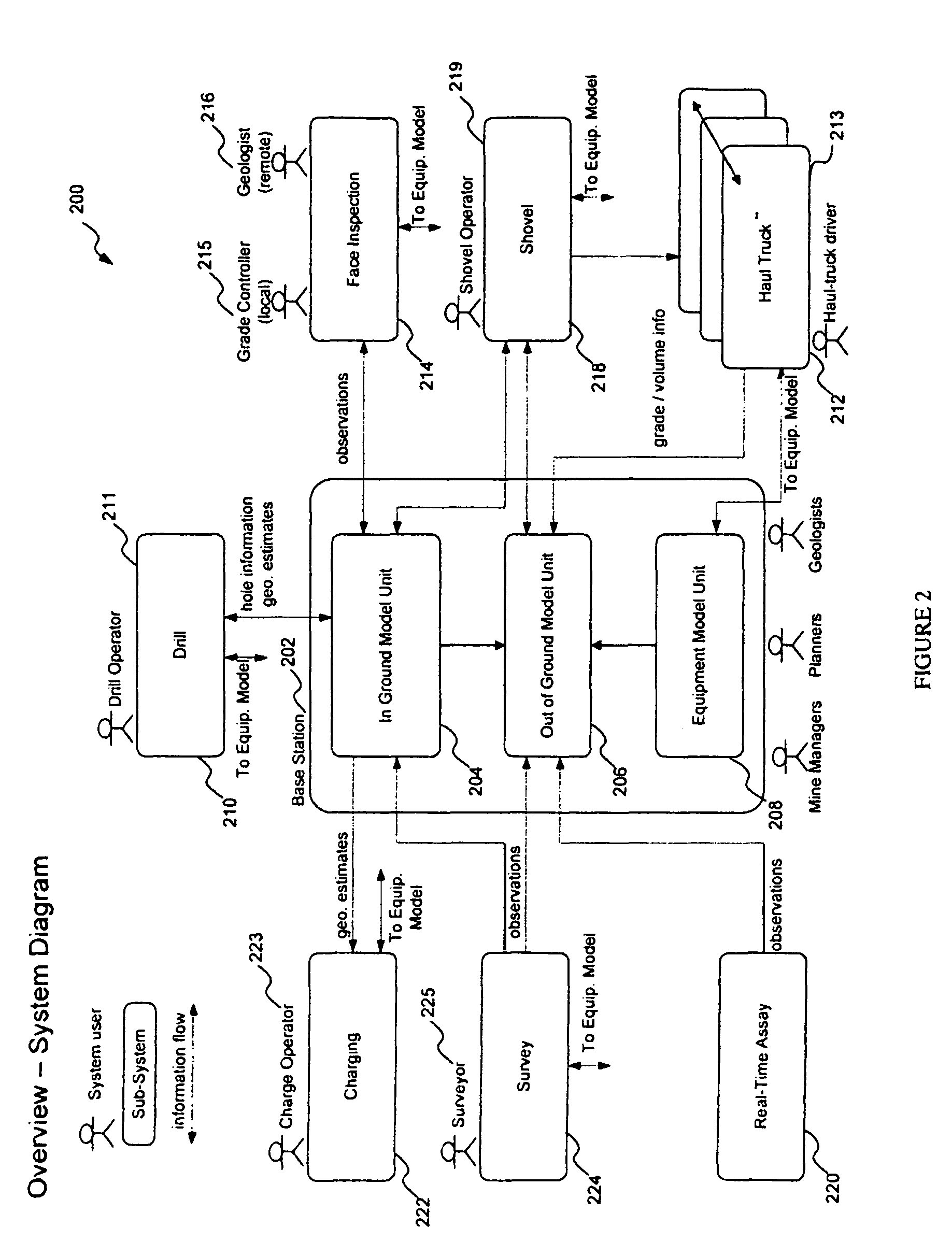

A system and method are described for generating a model of an environment in which a plurality of equipment units are deployed to extract at least one resource from the environment. The system comprises a pre-extraction modeling unit configured to receive data from a first plurality of heterogeneous sensors in the environment and to fuse the data into a pre-extraction model. An equipment modeling unit is configured to receive equipment data relating to the plurality of equipment units and to combine the equipment data into an equipment model. A post-extraction modeling unit is configured to receive data from a second plurality of sensors and to fuse the data into a post-extraction model. Information from the pre-extraction model, the equipment model and/or the post-extraction model can be communicated to the equipment units for use in controlling their operation in the environment.

Owner:TECH RESOURCES PTY LTD +1

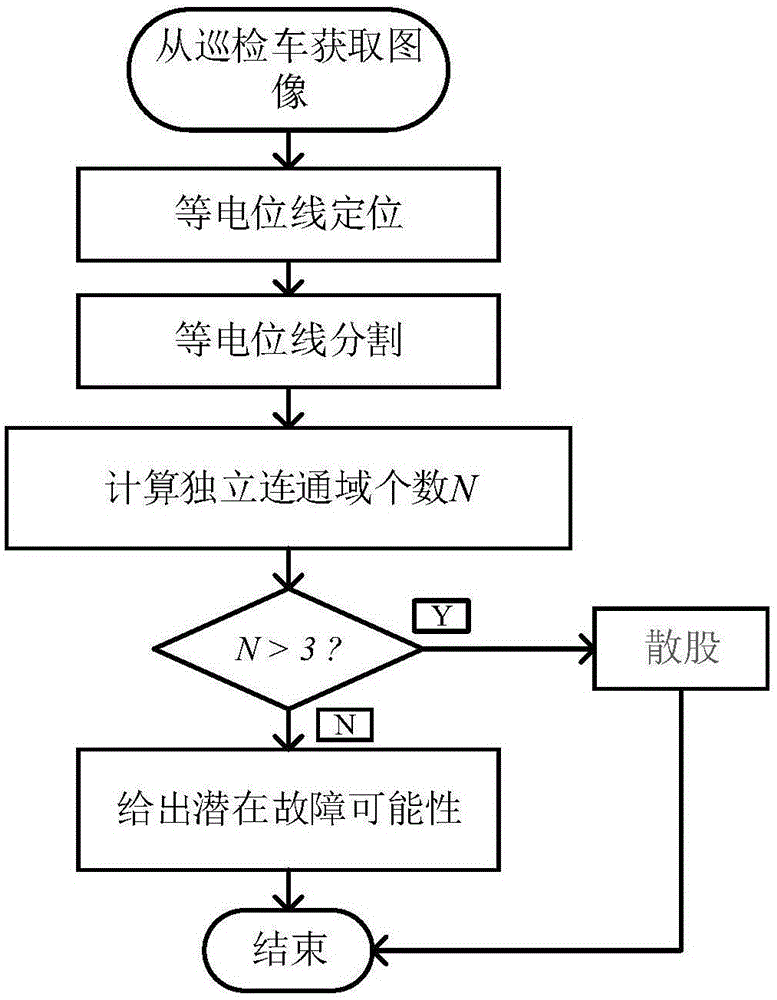

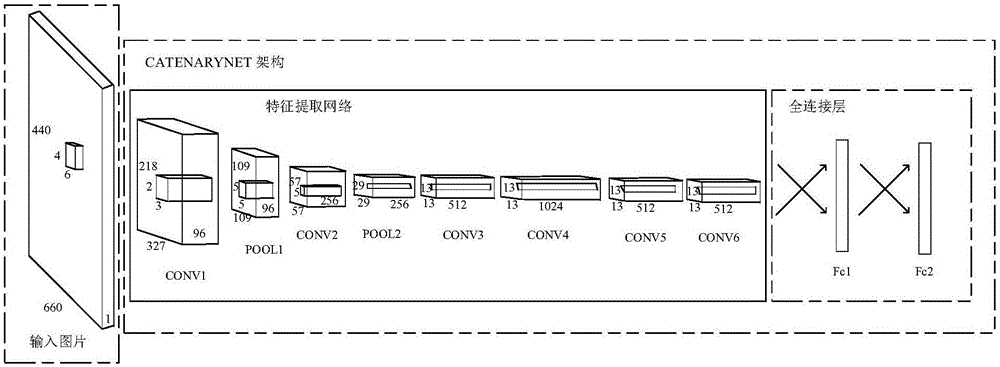

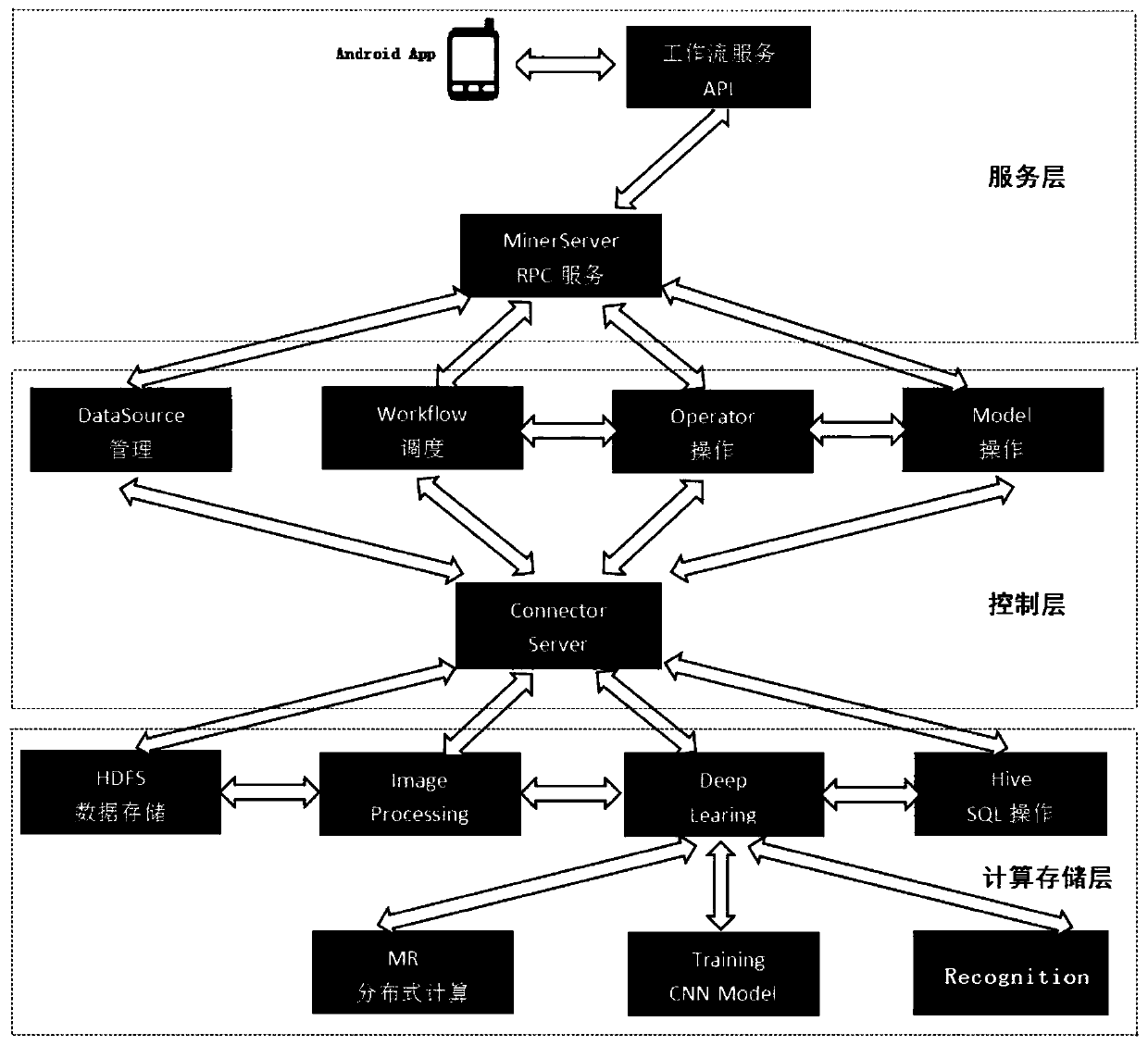

High speed railway catenary fault diagnosis method based on deep convolution neural network

Inactive | CN107437245A | Precise positioning | The segmentation result is accurate | Image enhancement | Image analysis | Nerve network | Model extraction

The invention discloses a high-speed railway catenary fault diagnosis method based on a deep convolutional neural network. The method comprises the following steps: acquiring a two-dimensional grayscale image of the high-speed railway catenary support device; pre-training the deep convolutional neural network on a catenary training set and then training it within Faster R-CNN; using the trained model to extract and segment the equipotential line in the grayscale image, obtaining an equipotential line region image; processing that region image in sequence by adjusting brightness and contrast, performing recursive Otsu pre-segmentation, and applying ICM/MPM (iterated conditional modes / maximization of the posterior marginals) segmentation followed by erosion and dilation to obtain the equipotential line pixels; extracting the maximum connected domain and counting the number N of independent connected domains in the equipotential line pixel region; and, if N is greater than m, judging that a strand-separation fault has occurred in that part of the equipotential line. The method accurately locates the equipotential line, improves fault diagnosis accuracy, and meets actual production needs.

Owner:SOUTHWEST JIAOTONG UNIV
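The final decision rule (threshold, count connected domains, compare N with m) can be sketched in Python. This is a simplified illustration: it applies a single Otsu threshold instead of recursive Otsu plus ICM/MPM segmentation, skips erosion and dilation, assumes equipotential line pixels are brighter than the background, and uses 4-connectivity; the value of `m` is a hypothetical parameter.

```python
from collections import deque


def otsu_threshold(pixels, levels=256):
    """Otsu's method: gray level t maximizing between-class variance (class 0 is <= t)."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))
    w0 = sum0 = 0
    best_t, best_var = 0, -1.0
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        mu0, mu1 = sum0 / w0, (sum_all - sum0) / w1
        between = w0 * w1 * (mu0 - mu1) ** 2  # proportional to between-class variance
        if between > best_var:
            best_t, best_var = t, between
    return best_t


def count_components(mask):
    """Number of 4-connected regions of True pixels, found by breadth-first search."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and not seen[r][c]:
                count += 1
                seen[r][c] = True
                queue = deque([(r, c)])
                while queue:
                    y, x = queue.popleft()
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
    return count


def strand_fault(image, m):
    """Flag a strand fault when the thresholded line splits into more than m regions."""
    t = otsu_threshold([p for row in image for p in row])
    mask = [[p > t for p in row] for row in image]
    return count_components(mask) > m


image = [
    [10, 200, 10, 200],
    [10, 200, 10, 200],
    [10, 10, 10, 10],
]
print(strand_fault(image, m=1))  # True: two separate bright regions
```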

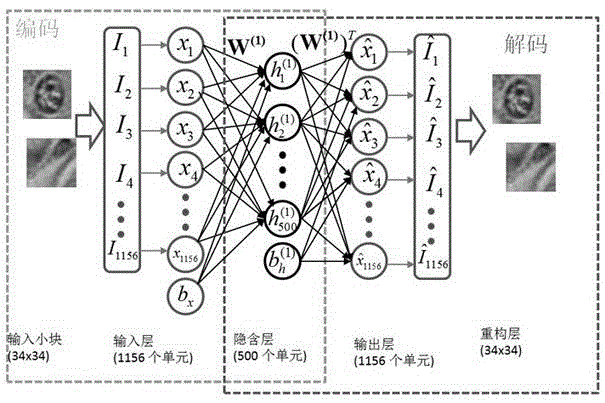

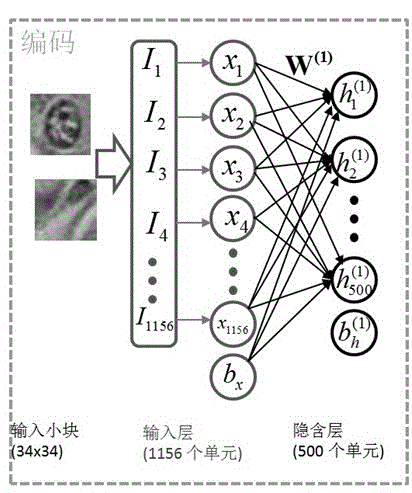

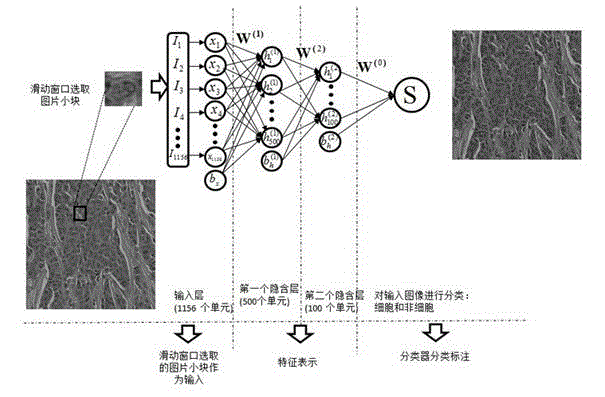

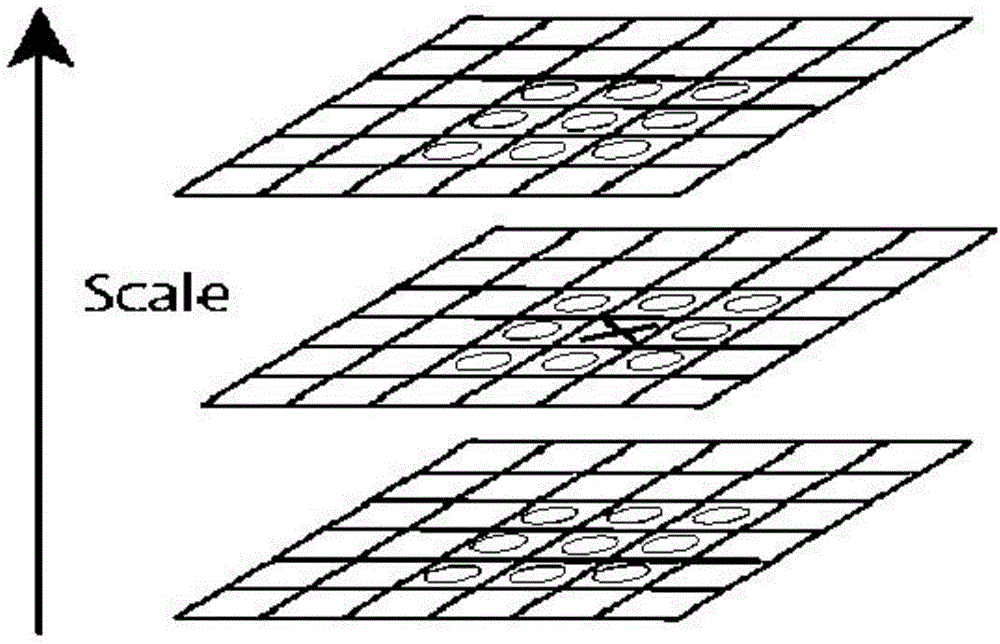

Cell detection method based on sliding window and depth structure extraction features

Active | CN104346617A | Improve detection accuracy | Improve accuracy | Character and pattern recognition | Model extraction | Feature extraction

The invention discloses a cell detection method based on a sliding window and features extracted by a deep structure. Cells are detected automatically by extracting features with a deep model and then applying a sliding window to the pathological section image. The method comprises the following steps: partitioning the section image into blocks, training a stacked sparse autoencoder as the feature extraction model, training the detector, scanning the large image with the sliding window, and labeling cell positions. Using the large section image as the search object and combining the detector with the sliding window, the method finds cell positions more accurately, quickly and completely, and also detects some inconspicuous cells well. The automatic cell detection method can help clinicians quantitatively evaluate digital pathological sections and make clinical diagnoses accurately and rapidly, reducing diagnostic differences between observers, or for one observer at different times.

Owner:NANJING UNIV OF INFORMATION SCI & TECH
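The scanning step can be sketched in Python as a generic sliding-window loop. Here `score_fn` stands in for the trained detector (in the patent, a detector trained on stacked sparse autoencoder features), and `mean_intensity` is a toy scorer used only for illustration; window size, stride and threshold are hypothetical values.

```python
def sliding_windows(height, width, win, stride):
    """Yield the top-left corner of every win x win window that fits in the image."""
    for r in range(0, height - win + 1, stride):
        for c in range(0, width - win + 1, stride):
            yield r, c


def detect_cells(image, win, stride, score_fn, threshold):
    """Scan a 2-D image and return (row, col, score) for windows the detector accepts."""
    hits = []
    for r, c in sliding_windows(len(image), len(image[0]), win, stride):
        patch = [row[c:c + win] for row in image[r:r + win]]
        score = score_fn(patch)
        if score >= threshold:
            hits.append((r, c, score))
    return hits


def mean_intensity(patch):
    """Toy scorer standing in for the trained detector."""
    values = [v for row in patch for v in row]
    return sum(values) / len(values)


image = [
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
print(detect_cells(image, win=2, stride=2, score_fn=mean_intensity, threshold=5))
# [(2, 2, 9.0)]
```

A smaller stride finds cells that straddle window borders at the cost of more detector calls; overlapping hits would then typically be merged, for example with non-maximum suppression.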

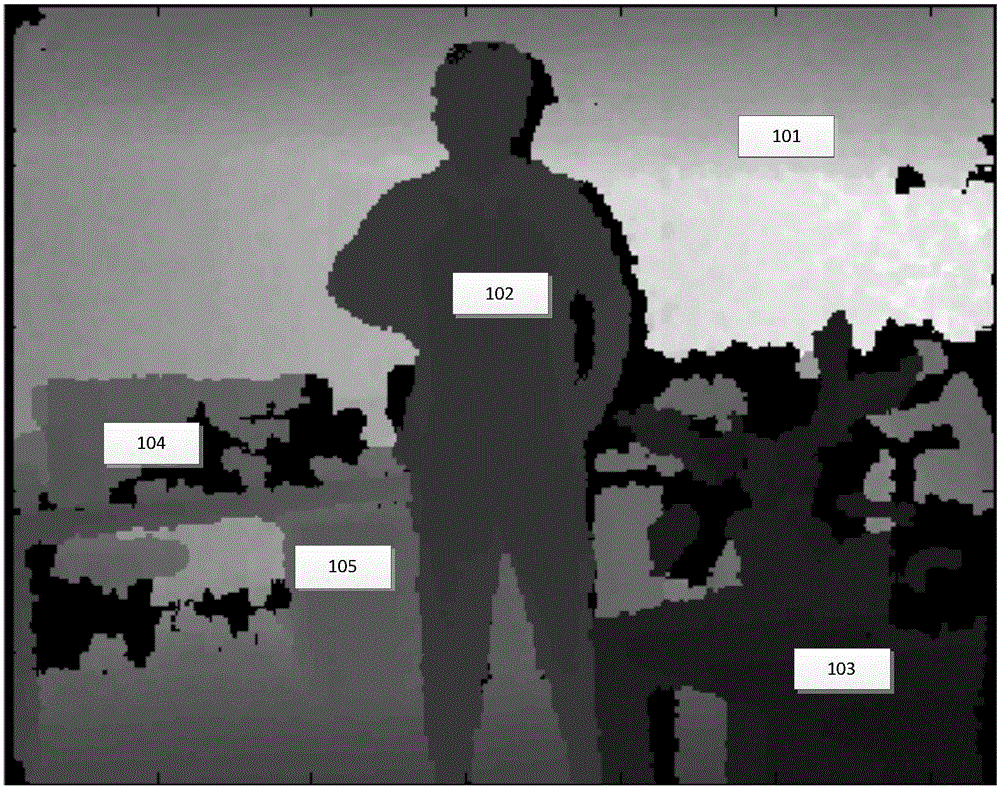

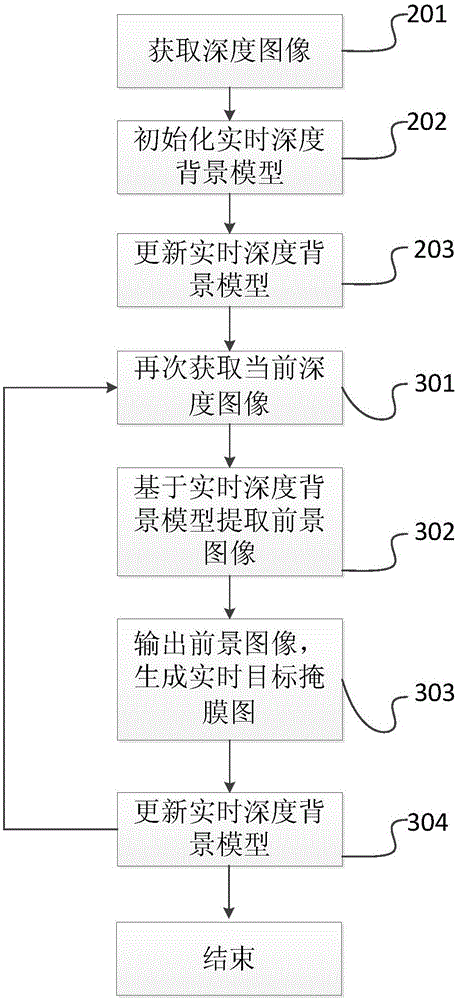

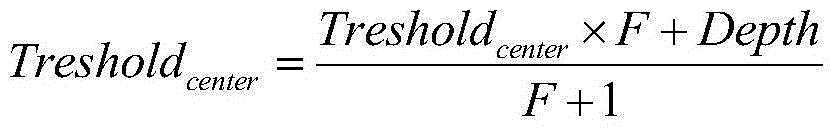

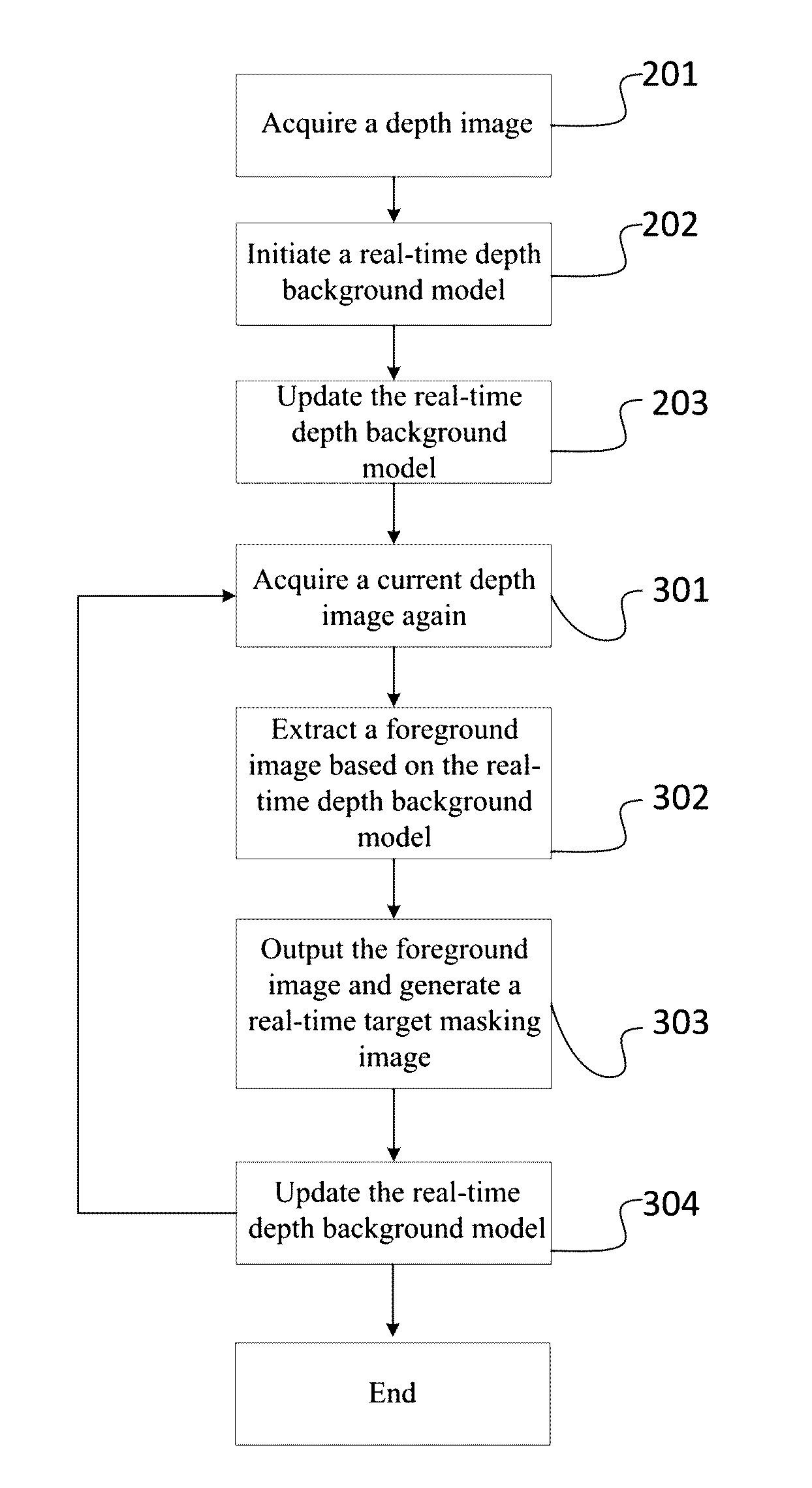

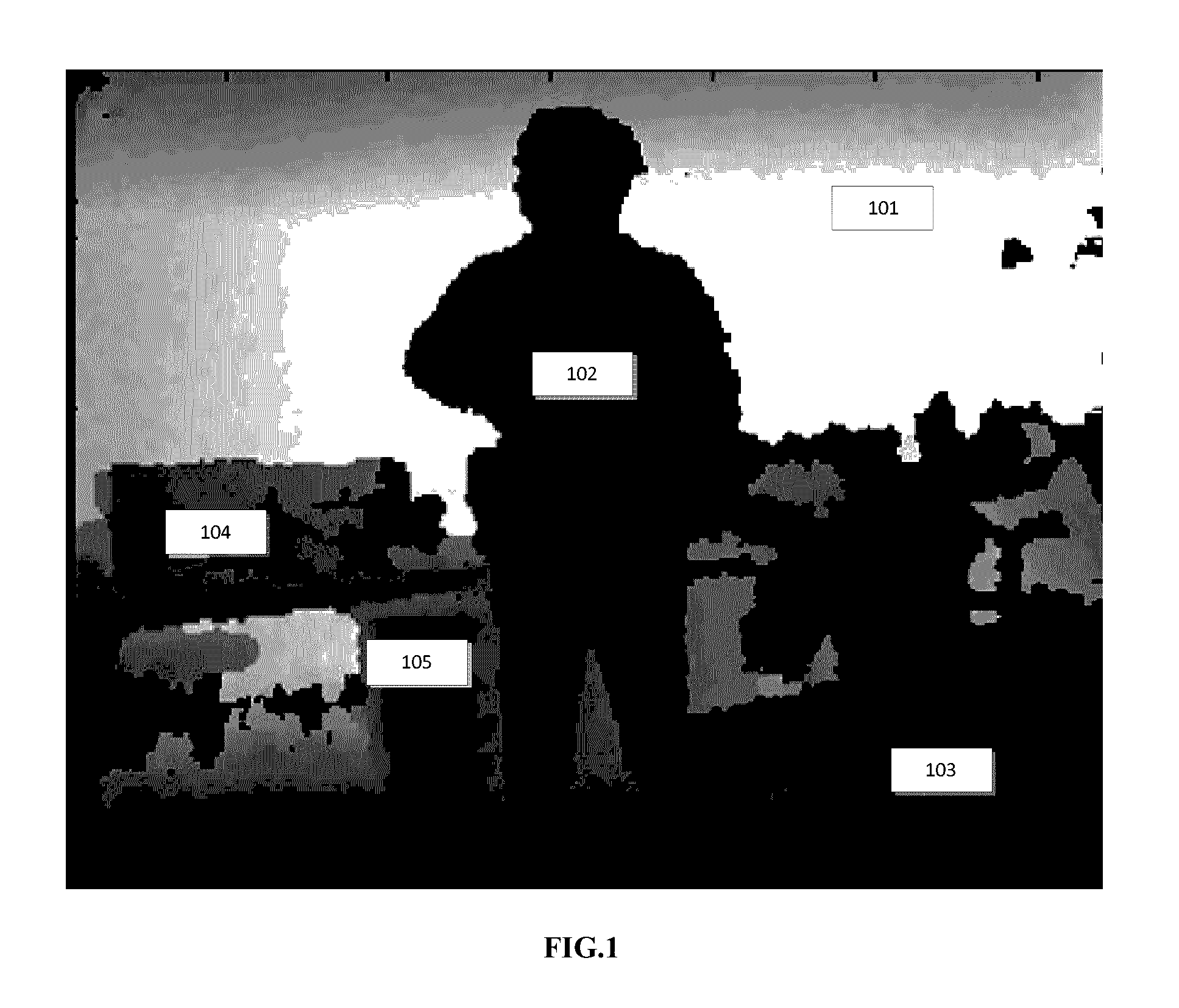

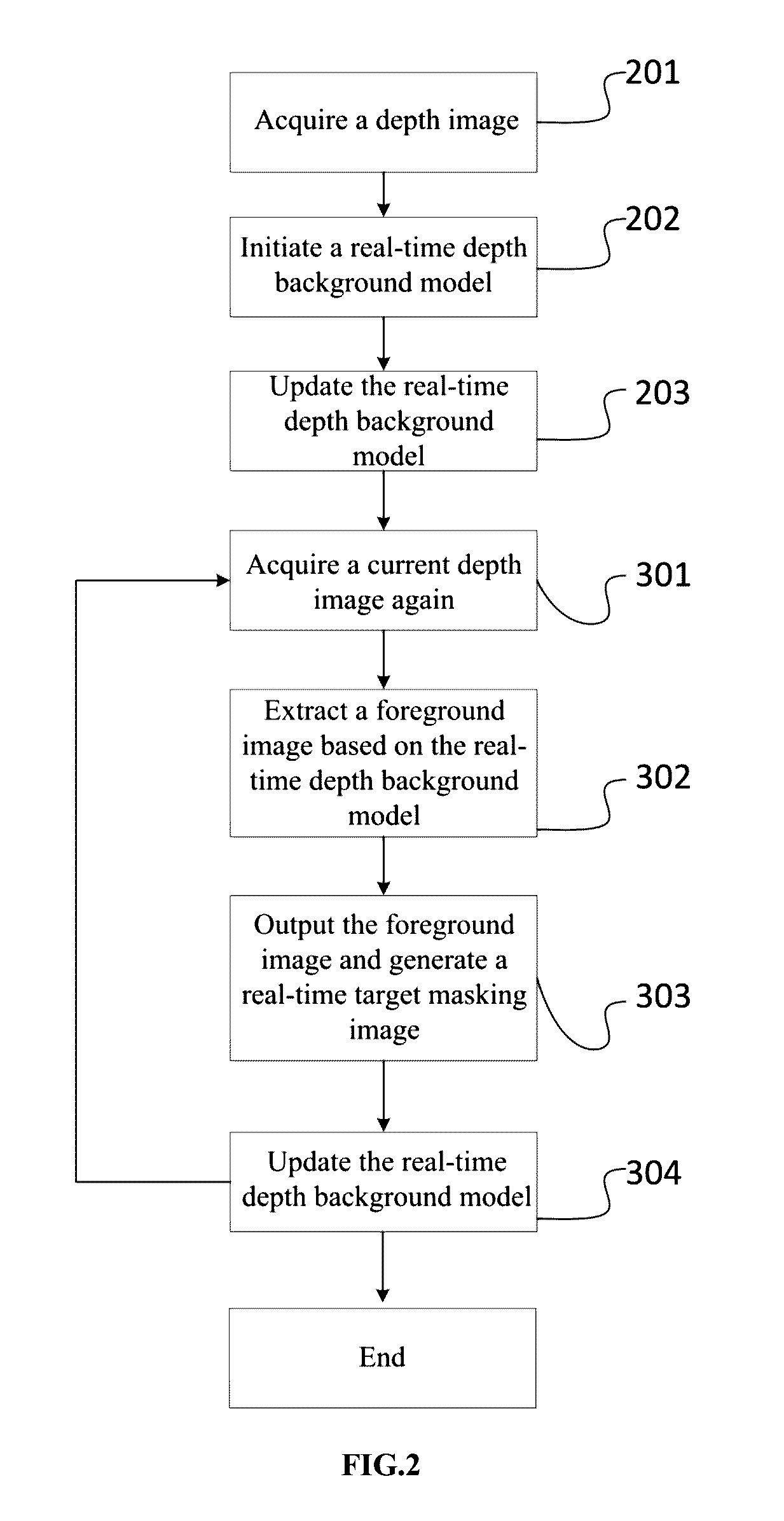

Background modeling and foreground extraction method based on depth map

Active | CN105005992A | Increased operational complexity | Maintain stability | Image enhancement | Image analysis | Model extraction | Depth map

The present invention relates to an image background modeling and foreground extraction method based on a depth map, comprising: step 1, acquiring a depth image that characterizes the distance between objects and the camera; step 2, initializing a real-time depth background model; step 3, updating the real-time depth background model; step 4, acquiring the current depth image characterizing the distance between the objects and the camera; step 5, extracting a foreground image from the current depth image based on the real-time depth background model; step 6, outputting the foreground image and generating a real-time target mask image; and step 7, updating the real-time depth background model by updating the code group information of each pixel according to the real-time target mask image. Compared with known color-image modeling methods, the method offers greater stability, high efficiency and better handling of positional relationships, and it requires no initial modeling of the scene, which simplifies implementation and substantially improves overall performance.

Owner:BEIJING HUAJIE IMI TECH CO LTD
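Steps 5 to 7 (extract foreground, build a mask, update the model only where the mask says background) can be sketched in Python. This is a simplified, hypothetical stand-in for the per-pixel code groups: each pixel keeps a single `[low, high]` depth codeword, and the tolerance value and millimetre units are assumptions.

```python
class DepthBackground:
    """Per-pixel depth background holding one [low, high] codeword per pixel.

    Simplified stand-in for the per-pixel code groups; single-codeword layout and
    tolerance are assumptions, not details from the patent.
    """

    def __init__(self, first_depth, tolerance=50):
        self.tol = tolerance
        self.low = [row[:] for row in first_depth]
        self.high = [row[:] for row in first_depth]

    def foreground_mask(self, depth):
        """True where a pixel's depth lies outside its background range +/- tolerance."""
        return [[not (self.low[r][c] - self.tol <= d <= self.high[r][c] + self.tol)
                 for c, d in enumerate(row)]
                for r, row in enumerate(depth)]

    def update(self, depth, mask):
        """Widen codewords only where the target mask marks the pixel as background."""
        for r, row in enumerate(depth):
            for c, d in enumerate(row):
                if not mask[r][c]:
                    self.low[r][c] = min(self.low[r][c], d)
                    self.high[r][c] = max(self.high[r][c], d)


bg = DepthBackground([[1000, 1000], [1000, 1000]], tolerance=50)  # depths in mm
frame = [[1020, 1000], [600, 1000]]  # an object appears at the lower-left pixel
mask = bg.foreground_mask(frame)
print(mask)  # [[False, False], [True, False]]
bg.update(frame, mask)
```

Masking the update is what keeps a person standing still from being absorbed into the background model.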

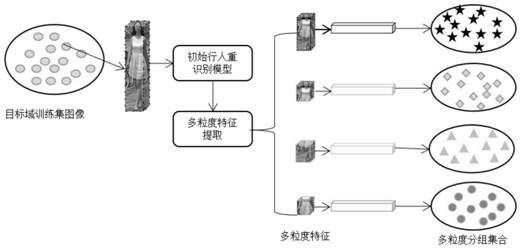

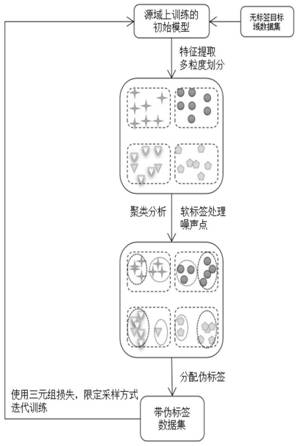

Unsupervised cross-domain self-adaptive pedestrian re-identification method

Active | CN111967294A | Improve learning effect | Improve adaptability | Character and pattern recognition | Neural learning methods | Data set | Model extraction

The invention discloses an unsupervised cross-domain adaptive pedestrian re-identification method, comprising the following steps: S1, pre-training an initial model on the source domain; S2, using the initial model to extract multi-granularity features of the target domain, generating multi-granularity feature group sets, and calculating a distance matrix for each group set; S3, performing cluster analysis on the distance matrices to obtain in-cluster points and noise points, and estimating hard pseudo labels for the samples in clusters; S4, according to the clustering result, estimating a soft pseudo label for each sample to handle the noise points, and updating the data set; S5, retraining the model on the updated data set until it converges; S6, repeating steps S2 to S5 for a preset number of iterations; and S7, inputting the test set into the model to extract multi-granularity features and obtaining the final re-identification result from feature similarity. By using both the source and target domains to mine the natural similarity of the target-domain data, the method improves model accuracy on the unlabeled target domain and reduces the model's dependence on labels.

Owner:NANCHANG UNIV
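Steps S3 and S4 can be sketched in Python on a precomputed distance matrix. DBSCAN is used here because it naturally separates in-cluster points from noise points, but the abstract does not name the clustering algorithm, so that choice is an assumption; so is the soft-label rule (a softmax over clusters of the negative mean distance to each cluster's members). `eps`, `min_pts` and `temperature` are illustrative values.

```python
import math


def dbscan(dist, eps, min_pts):
    """DBSCAN on a precomputed distance matrix; returns one label per sample (-1 = noise)."""
    n = len(dist)
    labels = [None] * n
    cluster = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        neighbors = [j for j in range(n) if dist[i][j] <= eps]
        if len(neighbors) < min_pts:
            labels[i] = -1  # noise for now; may later become a border point
            continue
        cluster += 1
        labels[i] = cluster
        seeds = [j for j in neighbors if j != i]
        while seeds:
            j = seeds.pop()
            if labels[j] == -1:
                labels[j] = cluster  # border point: joins the cluster, not expanded
                continue
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_neighbors = [k for k in range(n) if dist[j][k] <= eps]
            if len(j_neighbors) >= min_pts:  # j is a core point: keep expanding
                seeds.extend(k for k in j_neighbors if labels[k] is None or labels[k] == -1)
    return labels


def soft_labels(dist, labels, temperature=1.0):
    """Per sample, a softmax over clusters of -(mean distance to the cluster's members)."""
    clusters = sorted({lab for lab in labels if lab >= 0})
    if not clusters:
        return [{} for _ in labels]
    members = {c: [j for j, lab in enumerate(labels) if lab == c] for c in clusters}
    out = []
    for i in range(len(labels)):
        logits = [-sum(dist[i][j] for j in members[c]) / len(members[c]) / temperature
                  for c in clusters]
        top = max(logits)
        exps = [math.exp(v - top) for v in logits]
        out.append({c: e / sum(exps) for c, e in zip(clusters, exps)})
    return out


positions = [0.0, 1.0, 2.0, 10.0, 11.0, 12.0, 5.5]  # 1-D stand-ins for feature vectors
dist = [[abs(a - b) for b in positions] for a in positions]
labels = dbscan(dist, eps=1.5, min_pts=2)
soft = soft_labels(dist, labels)
print(labels)   # [0, 0, 0, 1, 1, 1, -1]
print(soft[6])  # the noise sample leans toward the nearer cluster 0
```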

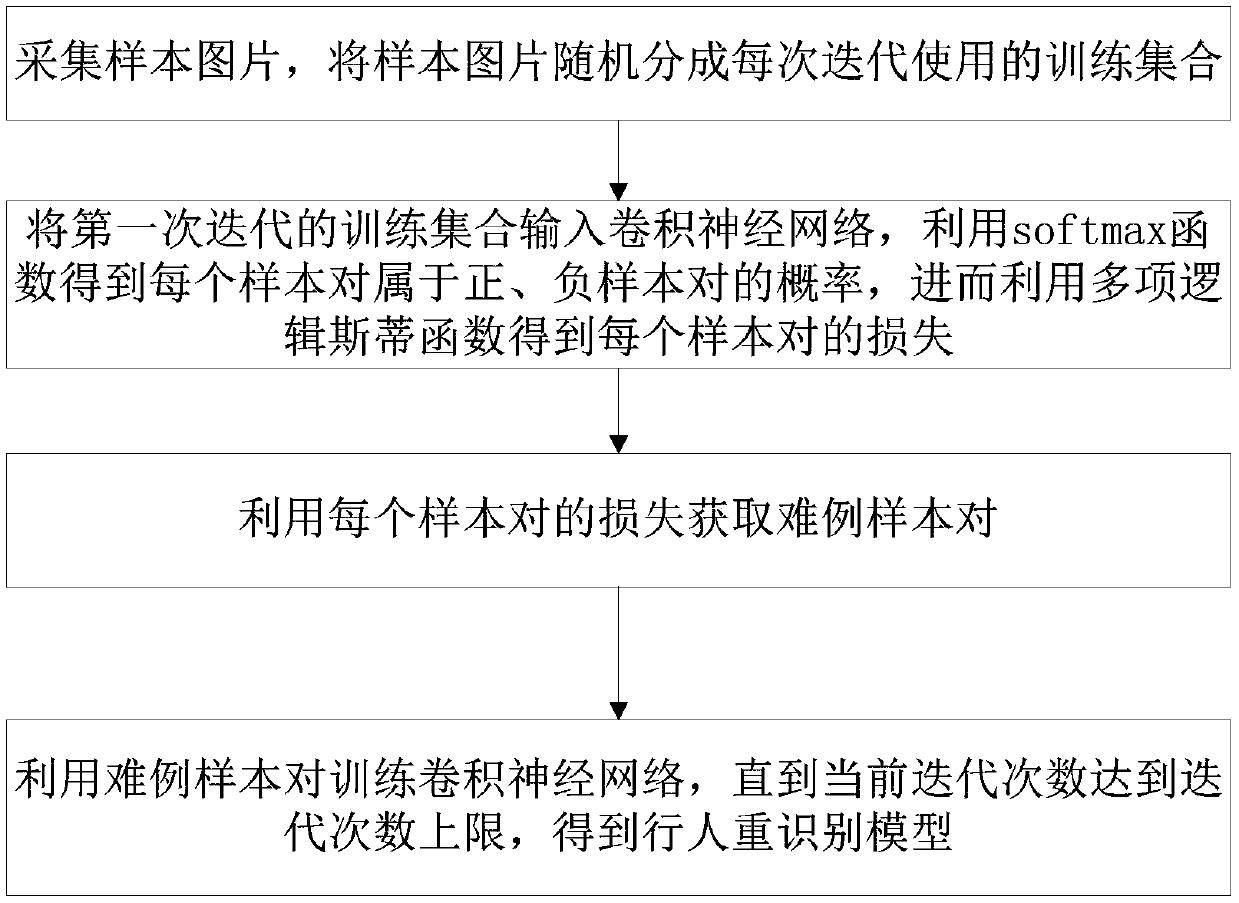

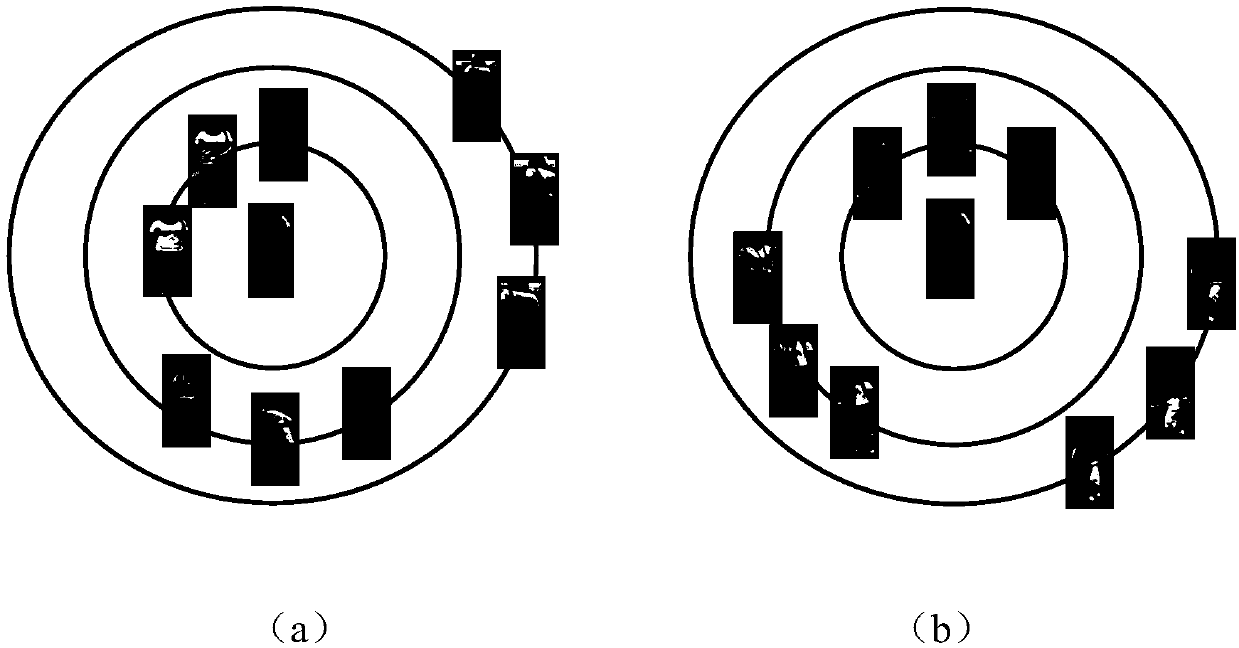

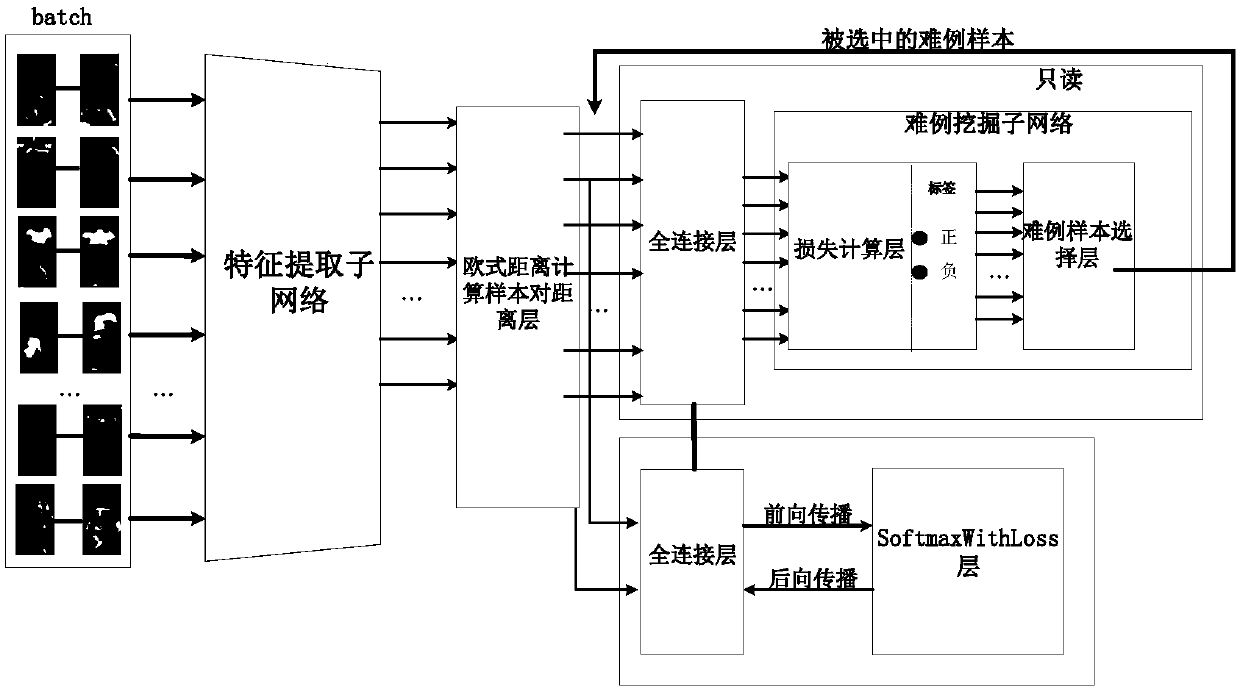

Pedestrian re-identification model, method and system for adaptive difficulty mining

Inactive | CN108647577A | Choose science | Prevent overfitting | Character and pattern recognition | Model extraction | Sample image

The invention discloses a pedestrian re-identification model, method and system with adaptive hard sample mining. The identification method comprises: randomly dividing the sample images into a training set for each iteration and feeding it into a convolutional neural network; obtaining, with a softmax function, the probability that each sample pair is a positive or a negative pair, and then obtaining the loss of each sample pair with a multinomial logistic loss; selecting hard sample pairs according to these losses; and training the convolutional neural network on the hard sample pairs until the iteration count reaches its upper limit, which yields the pedestrian re-identification model. The model then extracts the features of each image in the set to be identified, and the sample pairs in that set are ranked by similarity. The model, method and system avoid over-fitting and under-fitting and achieve high recognition accuracy.

Owner:HUAZHONG UNIV OF SCI & TECH
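The loss-then-select logic can be sketched in Python. Each pair gets two logits (negative, positive); a softmax over them followed by the multinomial logistic loss gives the per-pair loss, and pairs whose loss exceeds the batch mean are kept as hard pairs. The mean-loss threshold is an assumed adaptive rule, not the patent's criterion, and the CNN that would produce the logits is not modeled.

```python
import math


def pair_losses(logits, labels):
    """Multinomial logistic loss of the softmax over [negative, positive] logits per pair.

    logits: list of (negative_logit, positive_logit); labels: 1 = positive pair, 0 = negative.
    """
    losses = []
    for (s_neg, s_pos), y in zip(logits, labels):
        top = max(s_neg, s_pos)
        log_z = top + math.log(math.exp(s_neg - top) + math.exp(s_pos - top))
        losses.append(log_z - (s_pos if y == 1 else s_neg))  # -log p(true class)
    return losses


def hard_pairs(losses):
    """Indices of pairs whose loss exceeds the batch mean (assumed adaptive rule)."""
    mean = sum(losses) / len(losses)
    return [i for i, loss in enumerate(losses) if loss > mean]


logits = [(0.0, 3.0), (2.0, 0.0), (0.0, 0.5), (1.0, -1.0)]
labels = [1, 1, 0, 0]
losses = pair_losses(logits, labels)
print(hard_pairs(losses))  # [1, 2]
```

Because the threshold moves with each batch, the number of pairs kept shrinks as the network improves, which is one way to make the mining "adaptive".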

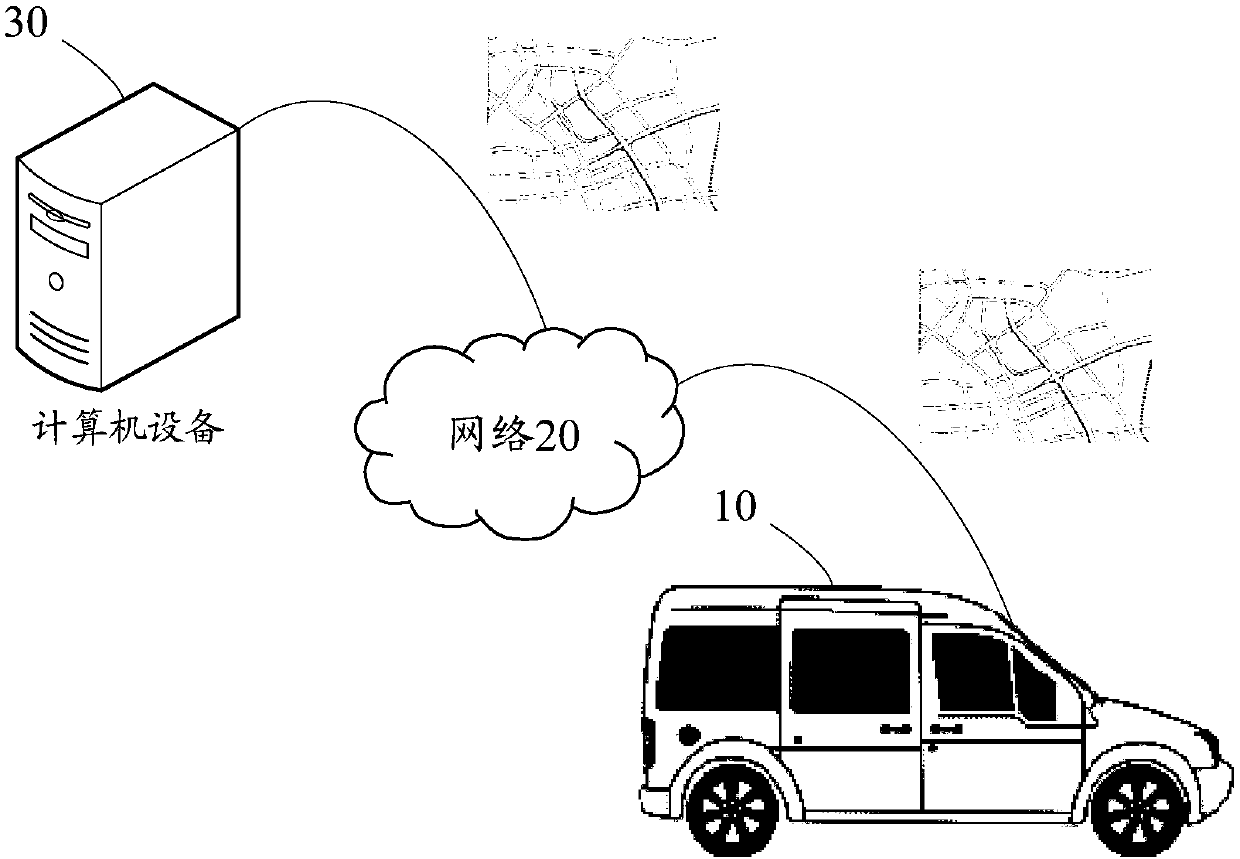

Ground mark extraction method, model training method, equipment and storage medium

Active | CN108564874A | Improve efficiency | Improve accuracy | Image enhancement | Image analysis | Point cloud | Model extraction

The invention discloses a ground mark determination method, comprising: acquiring a point cloud grayscale map that contains a road section map; running a mark extraction network model, which extracts the ground marks contained in the road section map, to obtain ground mark information from the road section map; and determining the target ground marks among the ground marks according to the ground mark information. The ground mark information includes information on each ground mark extracted by the mark extraction model, and the ground marks are driving indication markings on the road surface of the road section. Because the ground mark information is obtained by the mark extraction network model and the ground marks are determined from it, the method improves the efficiency and accuracy of determining ground marks in the road section map.

Owner:LINKTECH NAVI TECH +1

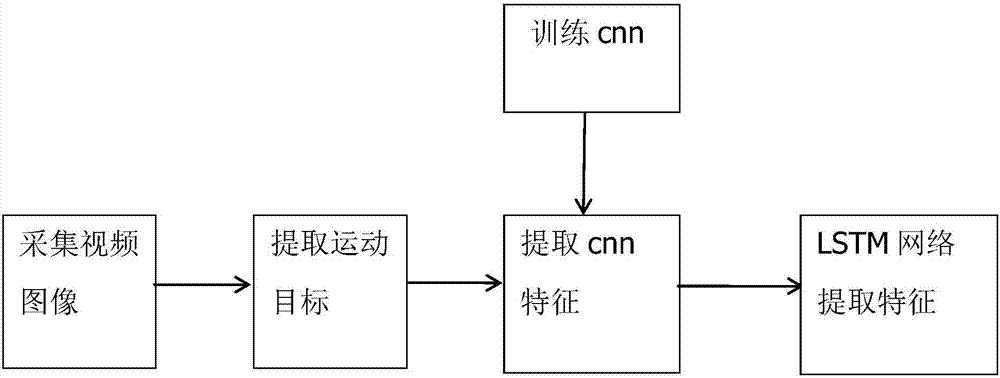

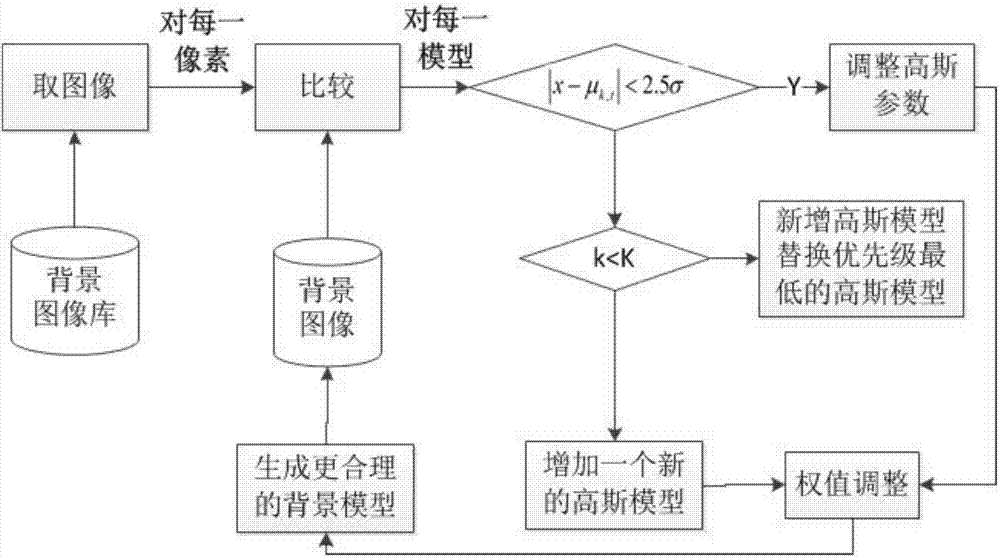

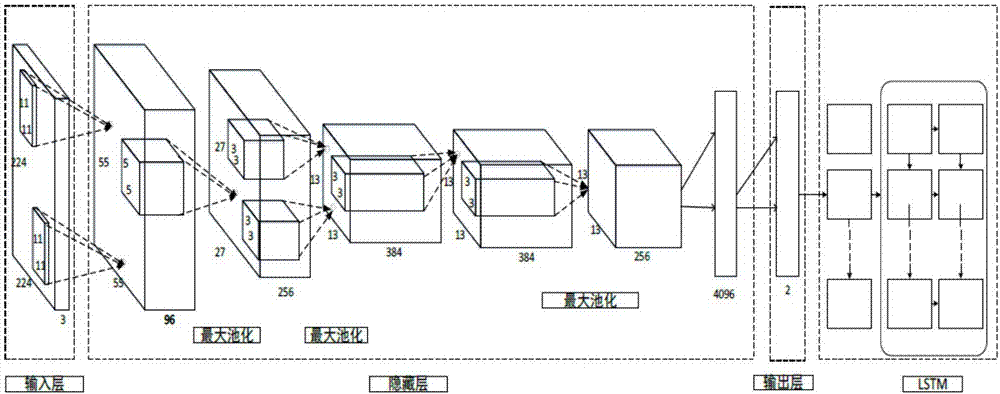

Gaussian background modeling and recurrent neural network combined vehicle type classification method

Active | CN107133974A | Easy to detect | High precision | Image enhancement | Image analysis | Model extraction | Feature extraction

Provided is a vehicle type classification method combining Gaussian background modeling with a recurrent neural network. A Gaussian mixture model extracts moving objects, which are sent to the recurrent neural network for feature extraction, and whether a moving object is a vehicle, and of which type, is determined from the vector output by the recurrent neural network (RNN). Specifically, the Gaussian mixture model performs background modeling on the video sequence to detect moving object areas, a CNN classifies the detected areas, and the classification results are fed into the RNN to obtain the final classification, determining whether the object is a passenger car, a truck, or not a vehicle. Combining Gaussian background modeling with a recurrent neural network makes the method highly robust and can substantially improve vehicle detection and vehicle type recognition precision.

Owner:NANJING UNIV

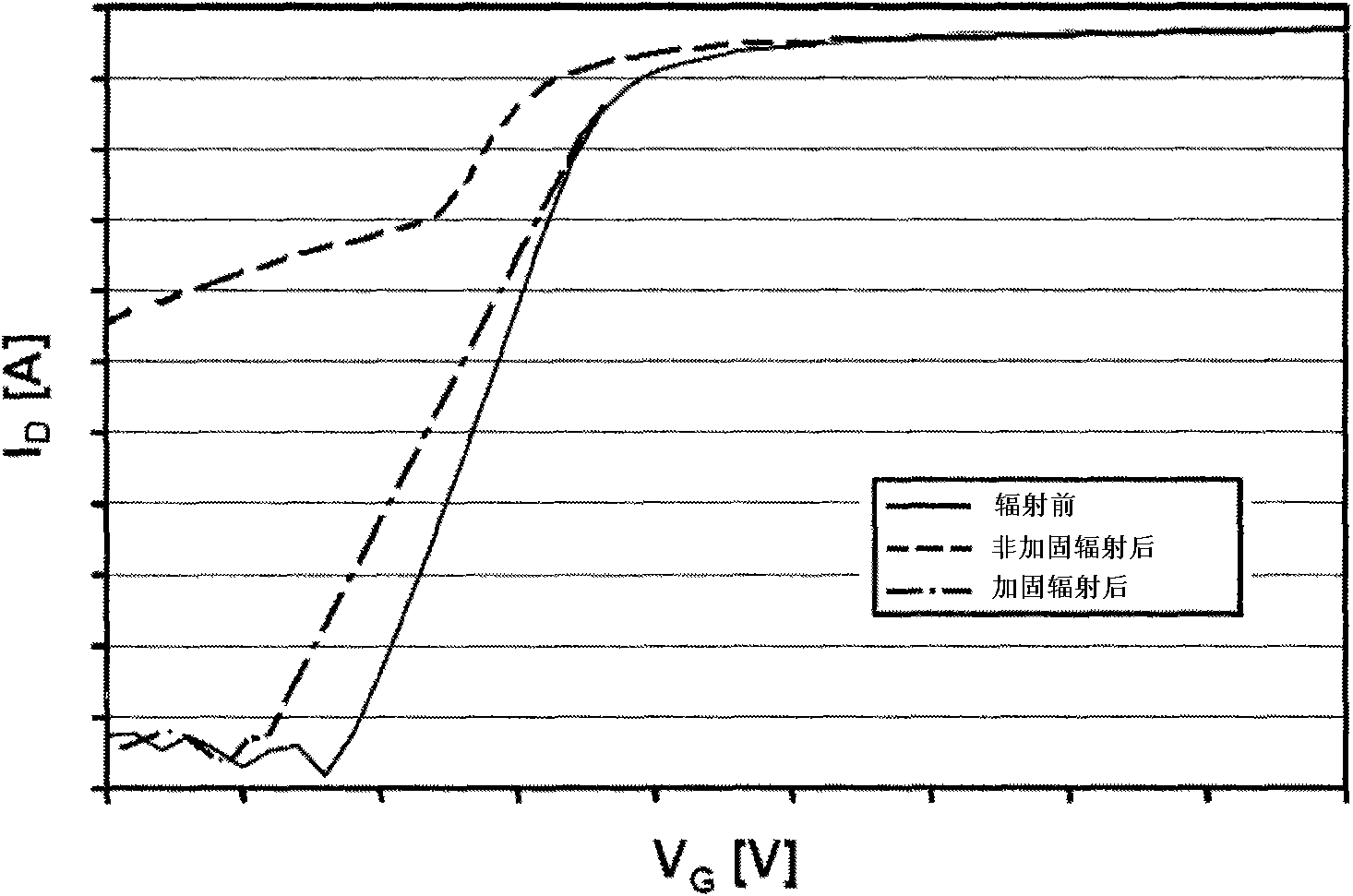

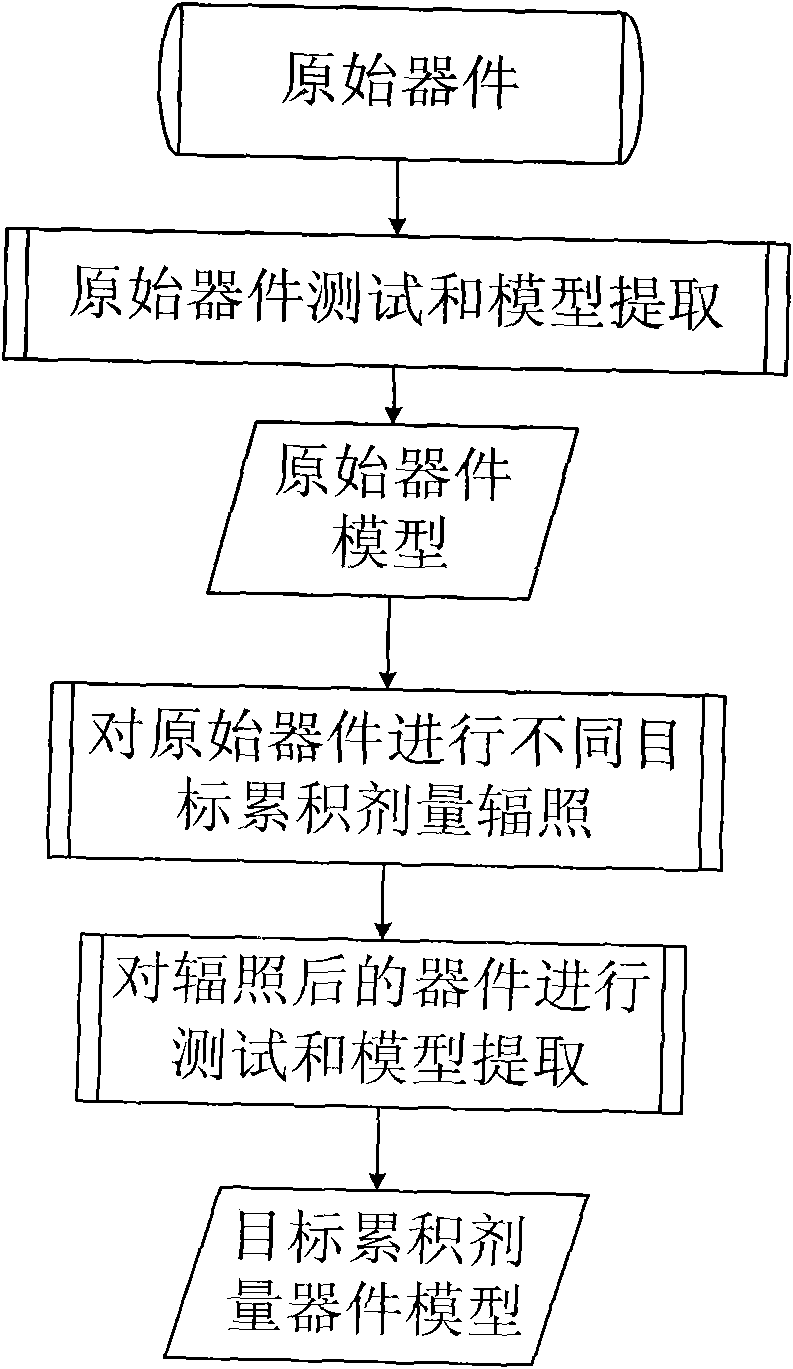

A device modeling method in relation to total dose radiation

Inactive | CN101551831A | Solve modeling problems | High precision | Special data processing applications | Model extraction | Computational physics

A device modeling method relating to total dose radiation. First, an original electronic device is designed, tested and subjected to model extraction to obtain the model of the original device; second, the original device is irradiated to different target cumulative doses and subjected to model extraction to obtain the device model at each target cumulative dose; finally, the original device model and the device models at all target cumulative doses together constitute the total-dose-radiation device model. Through testing and parameter extraction of the original device and of the devices irradiated at all target cumulative doses, the invention provides accurate modeling of both radiation-hardened and unhardened devices. It also adds radiation dose as a variable to the device model, so that the performance of circuits irradiated at different doses can be accurately predicted through simulation, raising circuit design efficiency and success rate.

Owner:BEIJING MXTRONICS CORP +1

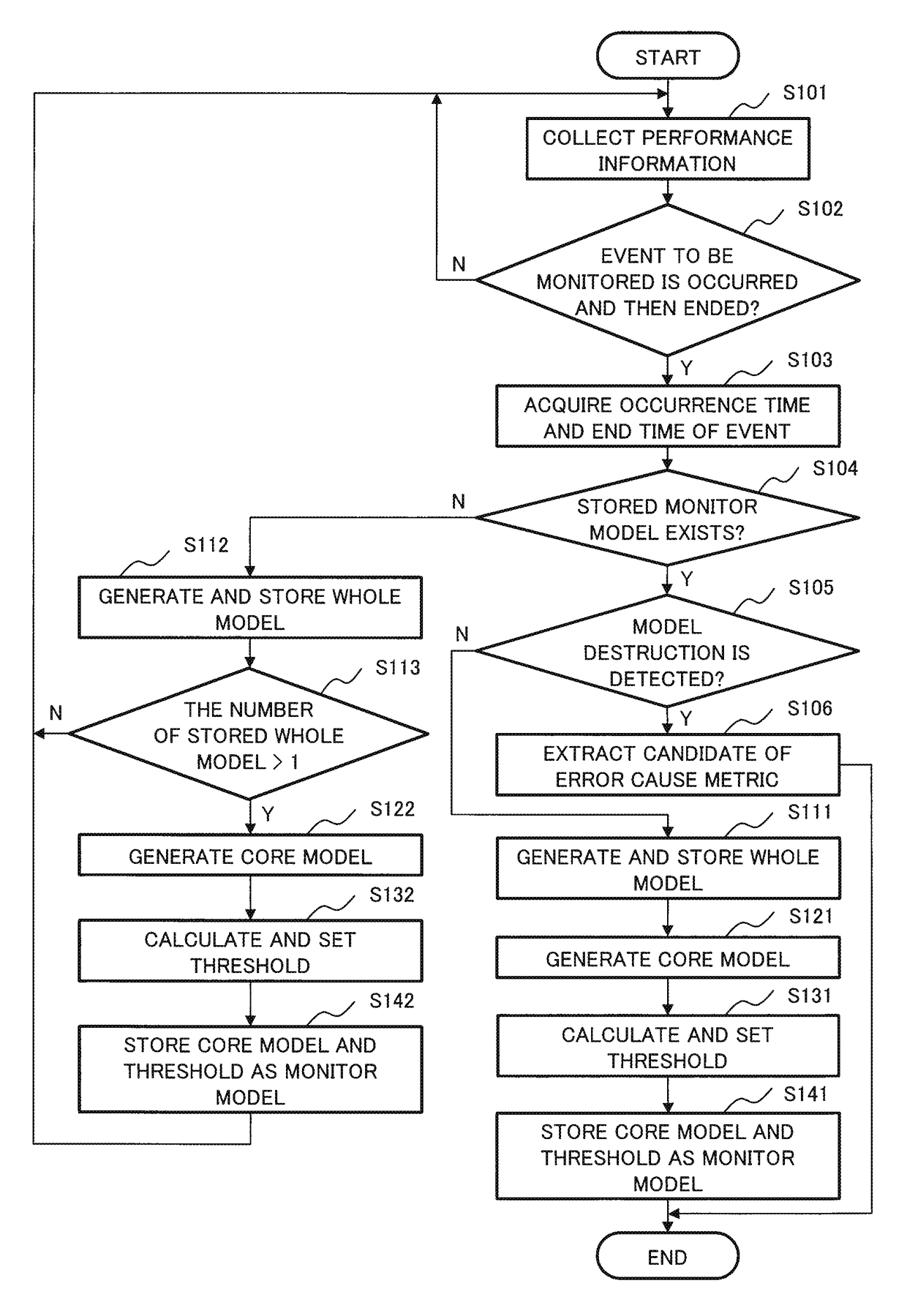

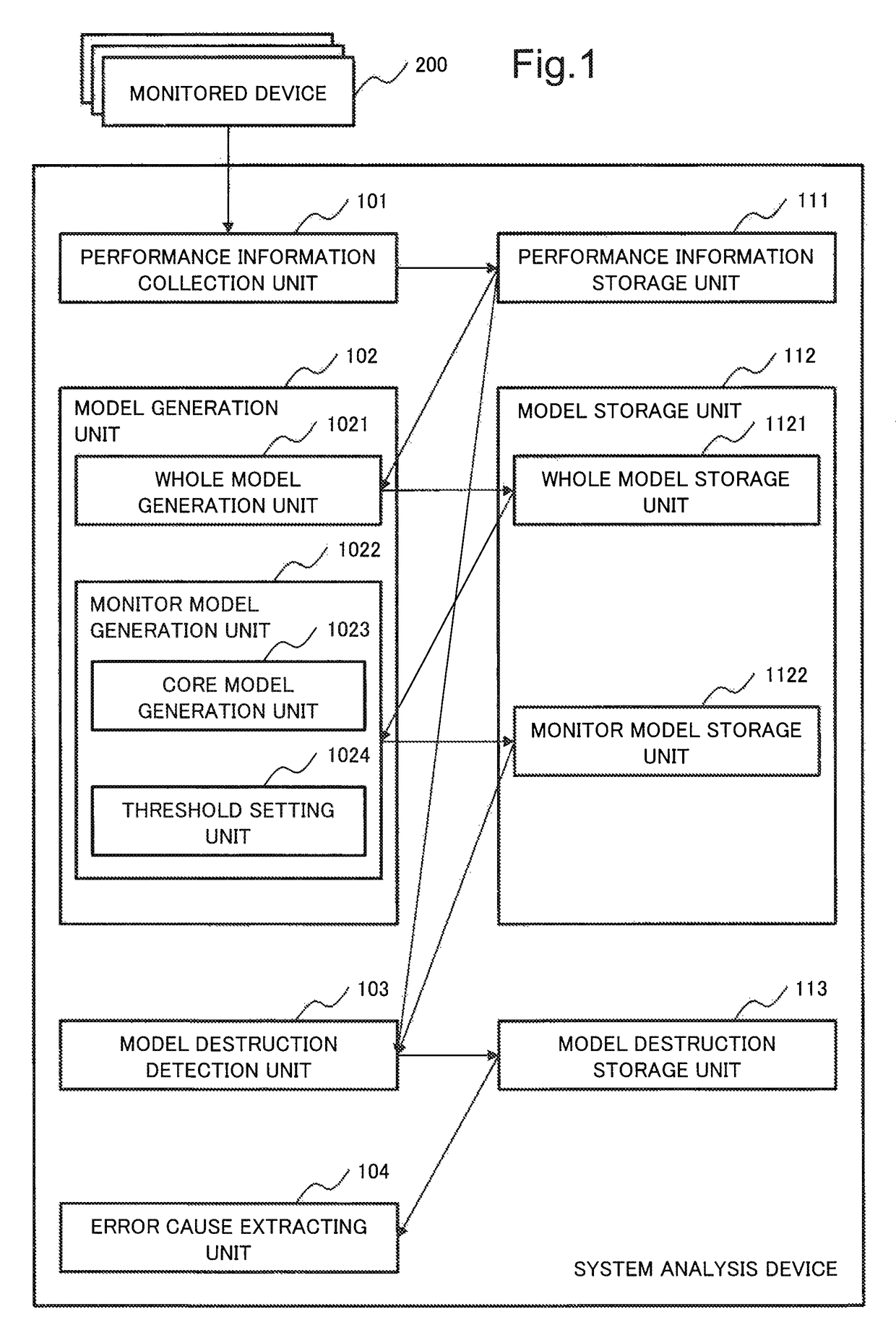

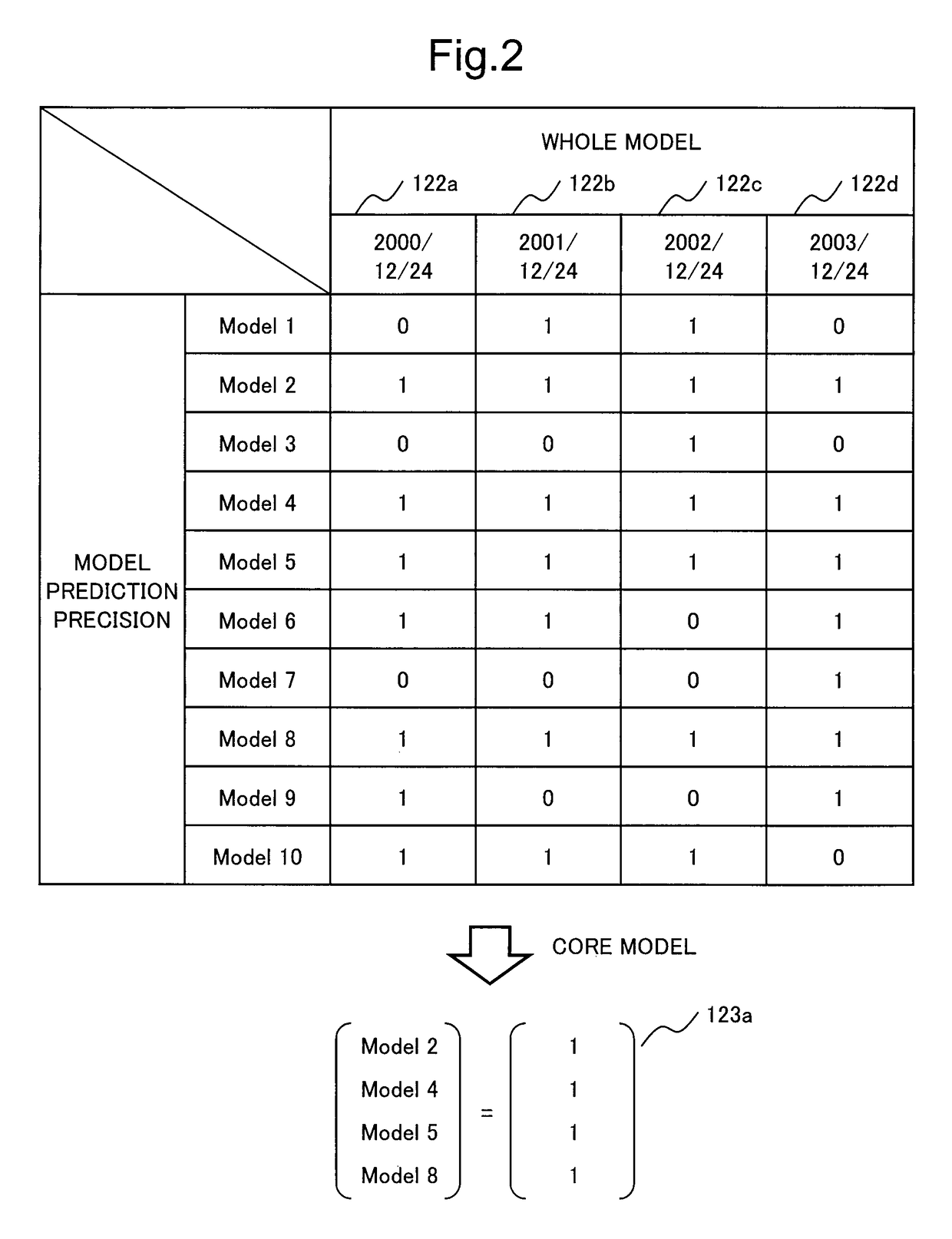

System analysis device, system analysis method and system analysis program

Active | US9658916B2 | Correction can not be performed | Improve accuracy | Fault response | Hardware monitoring | Systems analysis | Model extraction

Owner:NEC CORP

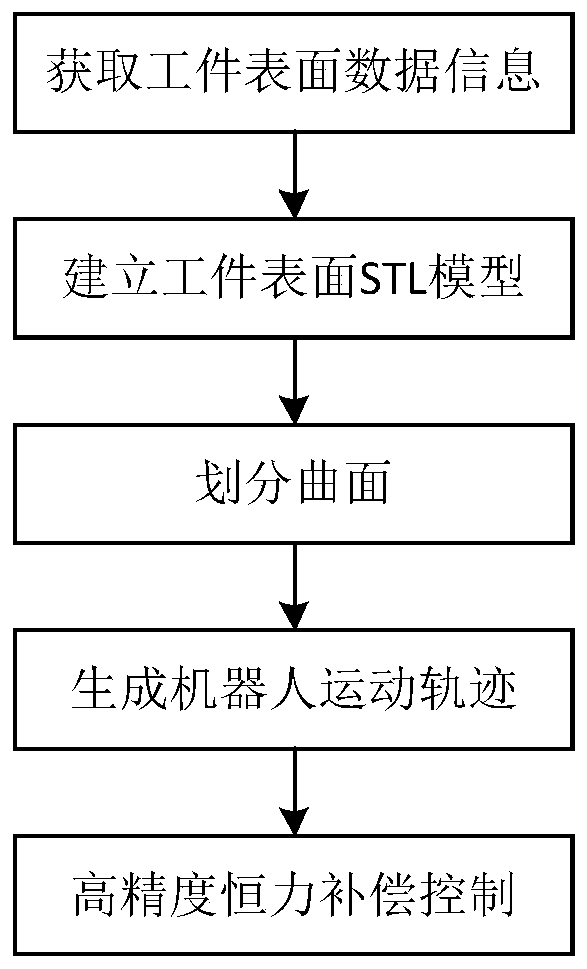

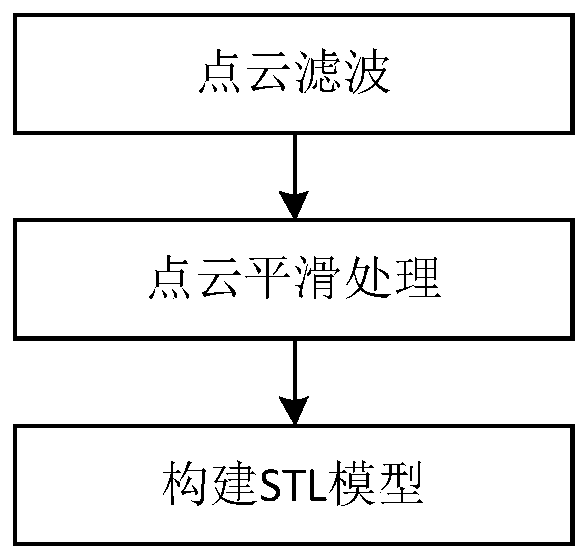

Industrial robot high-precision constant-force grinding method based on curved surface self-adaption

Inactive | CN111055293A | Improve effectiveness | Improve versatility | Programme-controlled manipulator | Model extraction | Data information

The invention discloses a high-precision constant-force polishing method for industrial robots based on curved surface self-adaption. The method comprises: acquiring scan sample-point data of the surface of the workpiece to be polished by line structured-light scanning, and obtaining an ordered point cloud model of the workpiece; establishing an STL model of the workpiece surface through point cloud preprocessing; extracting and using the geometric and topological features of that STL model to divide the curved surface of the workpiece into a number of planes without holes; constructing a feature frame from the STL model and generating the robot polishing trajectory with a cutting-plane projection method; and, during robot grinding, achieving constant-force grinding control from real-time force feedback. The method accomplishes constant-force grinding of arbitrary curved surfaces, improves the adaptability of the grinding method to curved surfaces and the grinding precision, and thereby raises the intelligence and automation level of robot grinding systems.

Owner:SOUTHEAST UNIV
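The real-time force-feedback loop can be illustrated with a minimal Python sketch of incremental (integral-type) force regulation. The contact is modeled as a linear spring, contact force = stiffness × penetration depth, and each control cycle nudges the tool's normal-direction depth by `gain` times the force error. The gain, stiffness and spring model are hypothetical; the abstract only states that constant force is achieved from real-time force feedback.

```python
def regulate_force(target, stiffness, gain, steps):
    """Incremental force regulation against a toy linear-spring contact model.

    Each cycle the depth changes by gain * (target - measured force); with this plant
    the force error shrinks by a factor (1 - gain * stiffness) per cycle, so the loop
    converges when 0 < gain * stiffness < 2.
    """
    depth, force = 0.0, 0.0
    history = []
    for _ in range(steps):
        error = target - force                   # force-sensor feedback vs. set point
        depth = max(depth + gain * error, 0.0)   # the tool cannot pull on the surface
        force = stiffness * depth
        history.append(force)
    return history


forces = regulate_force(target=10.0, stiffness=2000.0, gain=1e-4, steps=200)
print(round(forces[0], 6), round(forces[-1], 6))  # 2.0 10.0
```

A real surface varies in local stiffness and position along the trajectory, which is why the loop runs continuously rather than setting a fixed tool offset.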

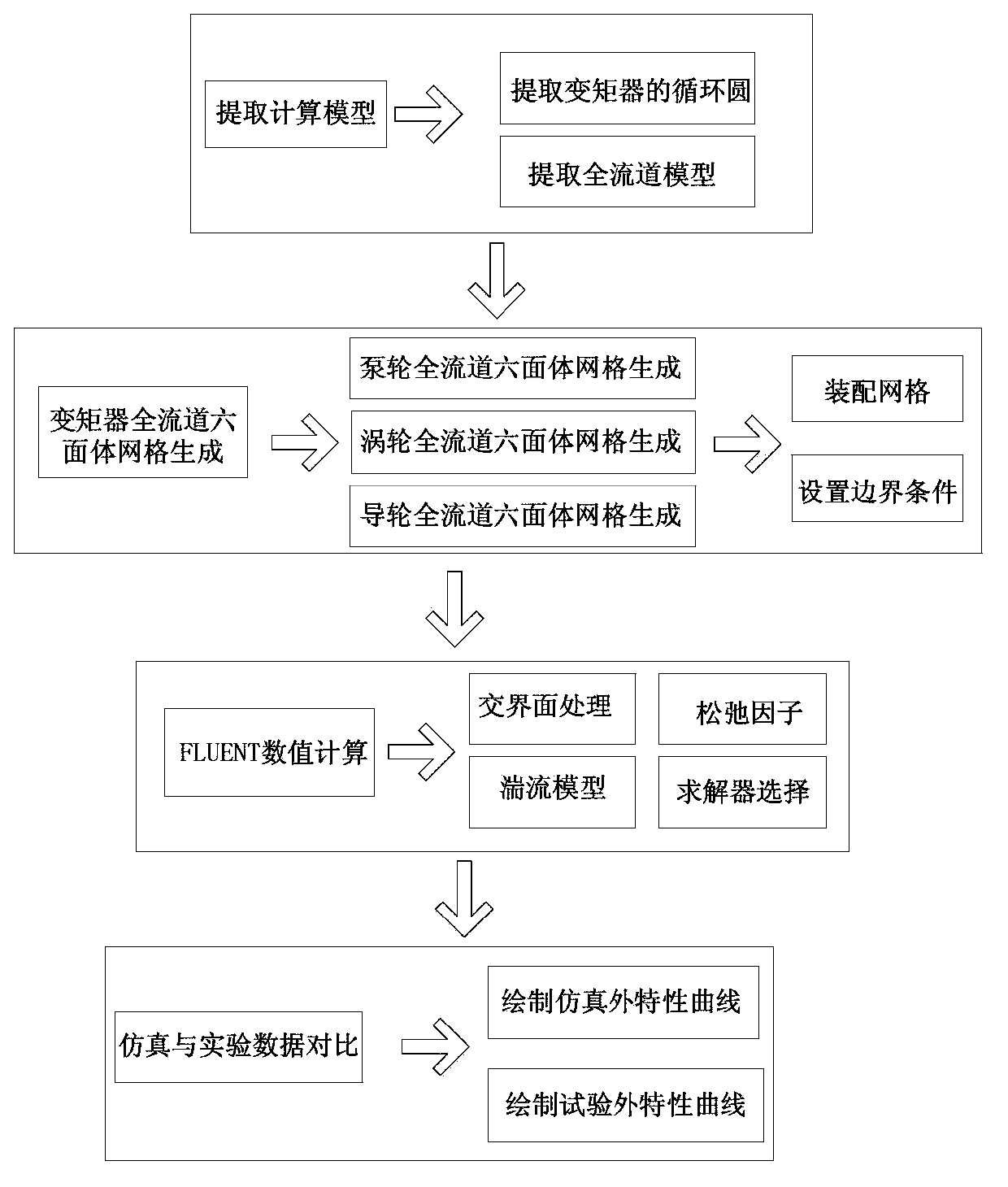

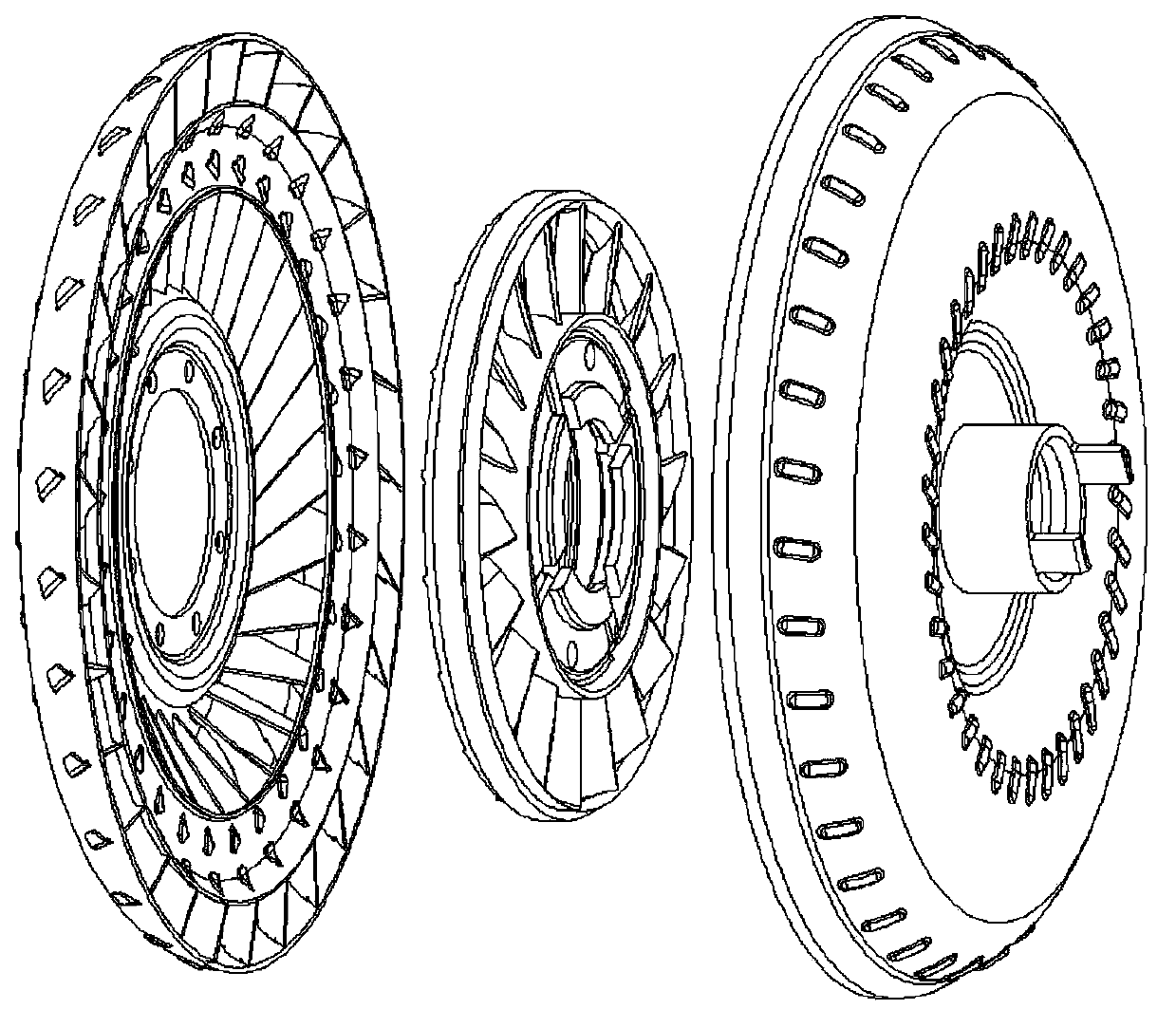

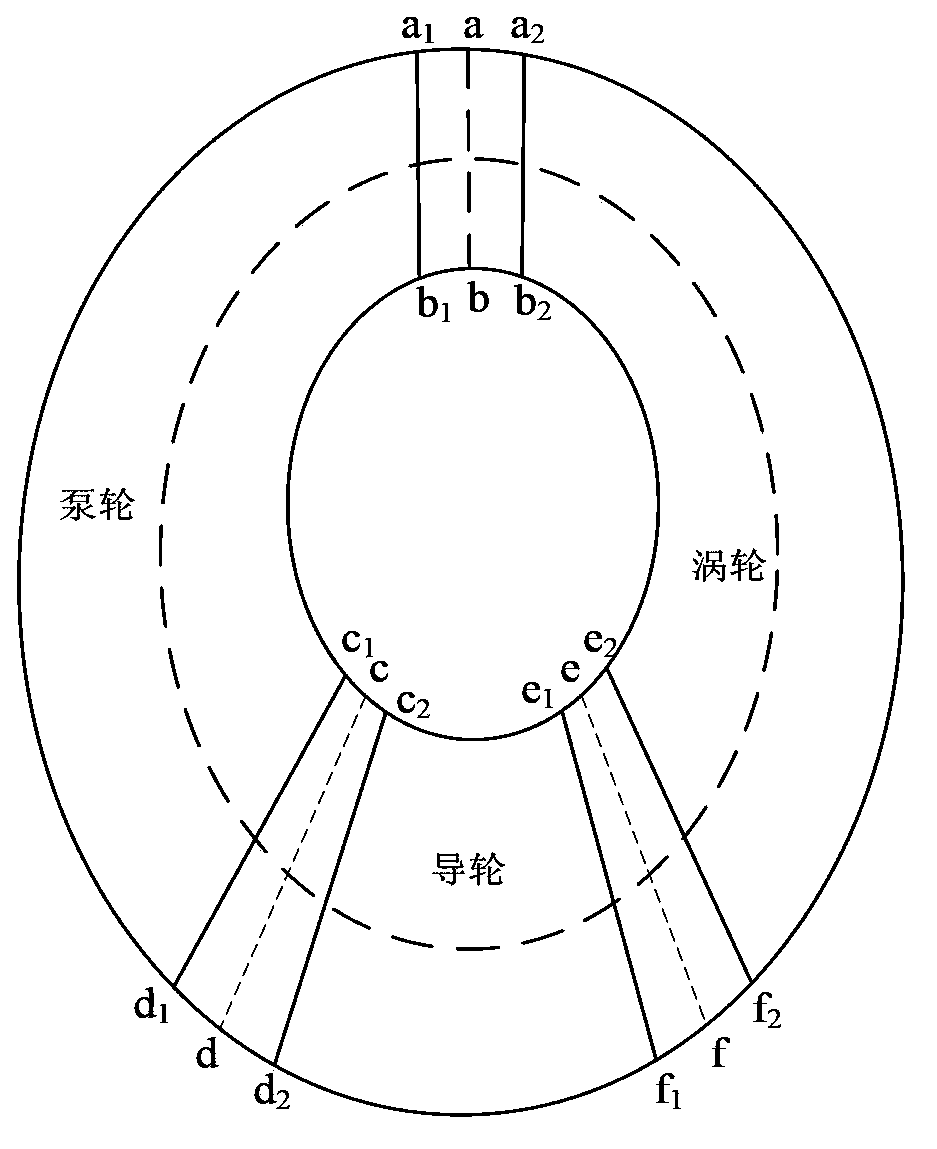

Method for predicting performance of hydraulic torque converter through simulation

Inactive | CN103366067A | Create quickly and accurately | High precision | Special data processing applications | 3D modelling | Engineering | Turbine

The invention discloses a method for predicting the performance of a hydraulic torque converter through simulation, aiming to solve the problems of low accuracy, large mesh counts and heavy computation in torque converter performance prediction. The method comprises the following steps: 1, extracting the calculation models, namely the circulating circle, the full-passage model and the single-passage model of the torque converter; 2, generating the full-passage hexahedral mesh models, namely a guide wheel (stator) full-passage hexahedral mesh model and a pump wheel and turbine full-passage hexahedral mesh model, then assembling the meshes by importing the pump wheel and turbine model and the guide wheel model in sequence into computational fluid dynamics (CFD) pre-processing software and setting the boundary conditions; 3, performing the numerical solution with Fluent by importing the assembled full-passage mesh file into the commercial CFD software Fluent; and 4, comparing the simulation results with experimental data.

Owner:JILIN UNIV

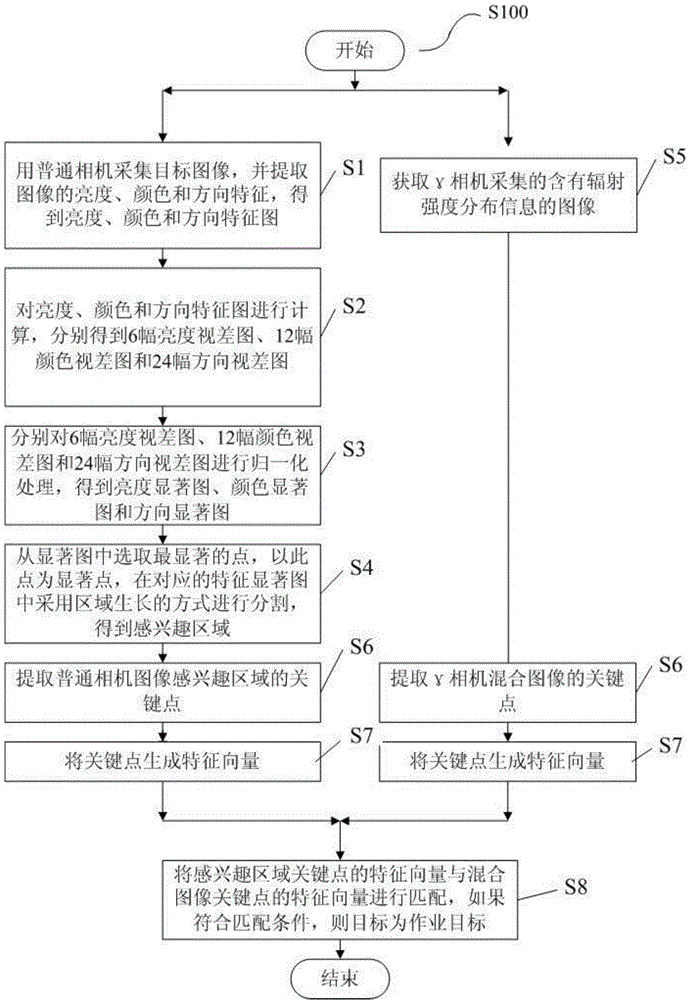

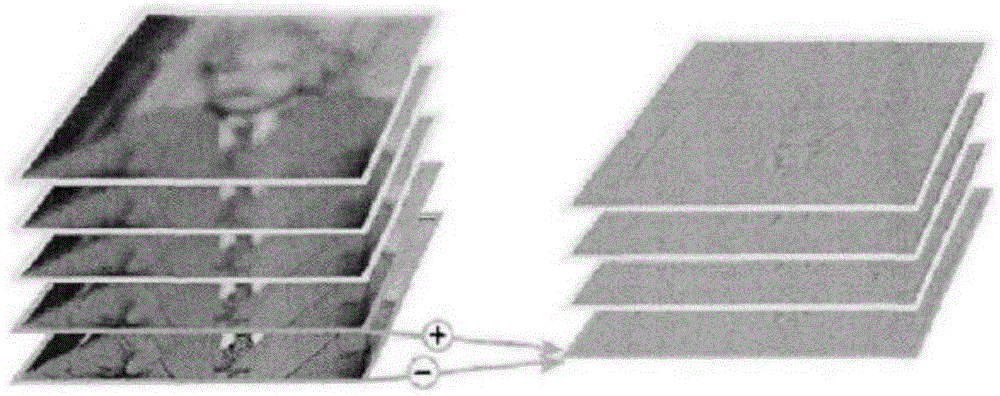

Visual attention mechanism-based method for detecting target in nuclear environment

The invention discloses a visual attention mechanism-based method for detecting a target in a nuclear environment. The method includes: extracting the brightness, color and orientation features of images collected by an ordinary camera to obtain three feature saliency maps; performing weighted fusion of these saliency maps to obtain a weighted saliency map; obtaining regions of interest from the weighted saliency map and extracting features from them; extracting features of the gamma camera mixed image; and using the SIFT method to fuse the regions of interest with the mixed image and detect the position of the target. The method uses a bottom-up, data-driven attention model to extract multiple regions of interest, which greatly reduces the computation of the later matching process. It then combines these regions of interest with a top-down, task-driven attention model to build a bidirectional visual attention model, which can greatly improve the detection precision and processing efficiency for target areas in the images, while the matching process eliminates interference from unrelated regions in the scene, giving the extracted operation target better robustness and accuracy.

Owner:四川核保锐翔科技有限责任公司

Background modeling and foreground extraction method based on depth image

Active | US9478039B1 | High operating requirements | Reduced stability | Image enhancement | Image analysis | Coding block | Color image

The present invention relates to an image background modeling and foreground extraction method based on a depth image, comprising: step 1, acquiring a depth image representing the distance from objects to a camera; step 2, initializing a real-time depth background model; step 3, updating the real-time depth background model; step 4, acquiring a current depth image representing the distance from the objects to the camera; step 5, extracting a foreground image of the current depth image based on the real-time depth background model; step 6, outputting the foreground image and generating a real-time target masking image; and step 7, updating the real-time depth background model, where the code block information of each pixel in the real-time depth background model is updated according to the real-time target masking image. The invention offers stability, high efficiency and superior handling of positional relationships that well-known color-image modeling methods cannot match, and it does not require initial modeling of a scene, which simplifies implementation and greatly improves overall performance.

Owner:NANJING HUAJIE IMI TECH CO LTD
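The seven steps above can be illustrated with a simplified per-pixel depth background model. This is a stand-in under stated assumptions, not the patent's code-block model: depth is in millimeters, 0 marks a missing reading, a pixel counts as a target when it is closer than the background by more than `tol`, and the blending rate `alpha` and tolerance `tol` are hypothetical values.

```python
from typing import List

Depth = List[List[float]]  # depth in millimeters; 0 means "no valid reading"
Mask = List[List[int]]     # 1 = foreground (target), 0 = background


def init_background(first_frame: Depth) -> Depth:
    """Step 2 (simplified): seed the per-pixel background depth from one frame."""
    return [row[:] for row in first_frame]


def extract_foreground(frame: Depth, background: Depth, tol: float) -> Mask:
    """Steps 5-6 (simplified): a valid pixel is foreground when it lies closer to
    the camera than the modeled background by more than `tol`."""
    return [[1 if d > 0 and b - d > tol else 0 for d, b in zip(frow, brow)]
            for frow, brow in zip(frame, background)]


def update_background(frame: Depth, background: Depth, mask: Mask,
                      alpha: float = 0.1, tol: float = 50.0) -> Depth:
    """Step 7 (simplified): update the model per pixel, guided by the target mask."""
    updated = []
    for frow, brow, mrow in zip(frame, background, mask):
        row = []
        for d, b, m in zip(frow, brow, mrow):
            if d == 0 or m:
                row.append(b)  # no reading, or a target pixel: keep the model
            elif d - b > tol:
                row.append(d)  # a farther surface was revealed: adopt it at once
            else:
                row.append((1 - alpha) * b + alpha * d)  # slow running average
        updated.append(row)
    return updated


bg = init_background([[1000, 1000], [1000, 1000]])
frame = [[500, 1000], [1500, 0]]  # a target at (0, 0), a dropout at (1, 1)
mask = extract_foreground(frame, bg, tol=50.0)
print(mask)  # [[1, 0], [0, 0]]
bg = update_background(frame, bg, mask)
```

Because a depth background is the farthest stable surface, adopting any clearly farther reading lets the model recover even if the first frame contained targets, which is one plausible way to avoid a separate initial scene-modeling pass.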

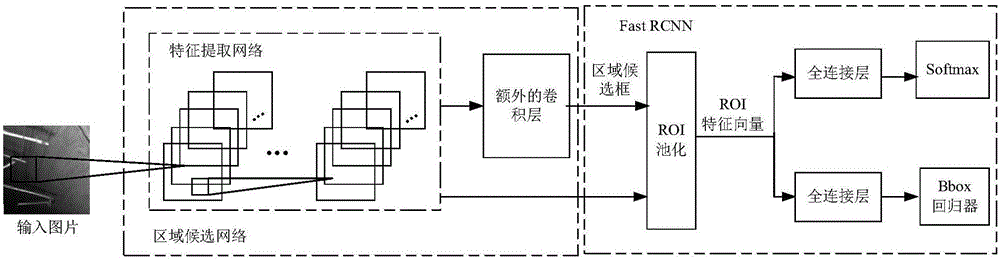

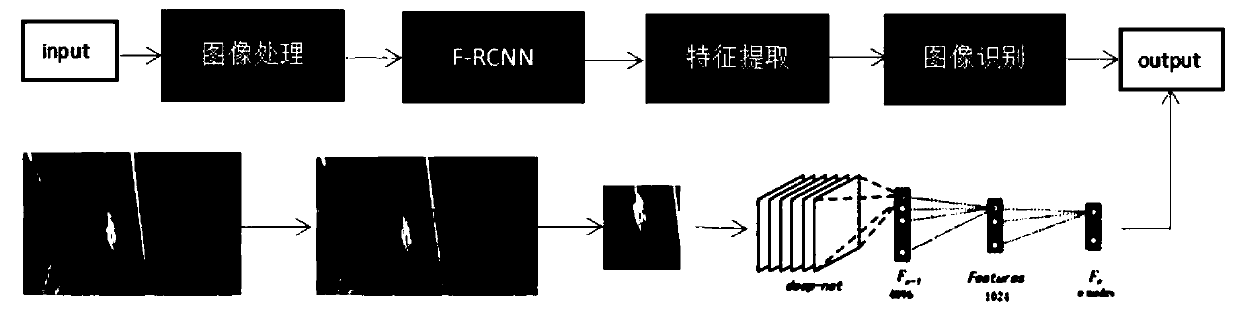

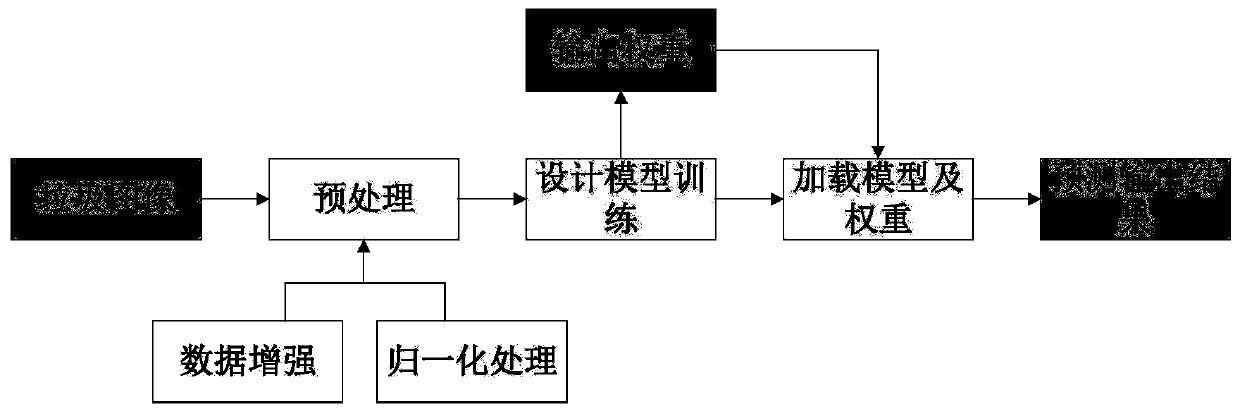

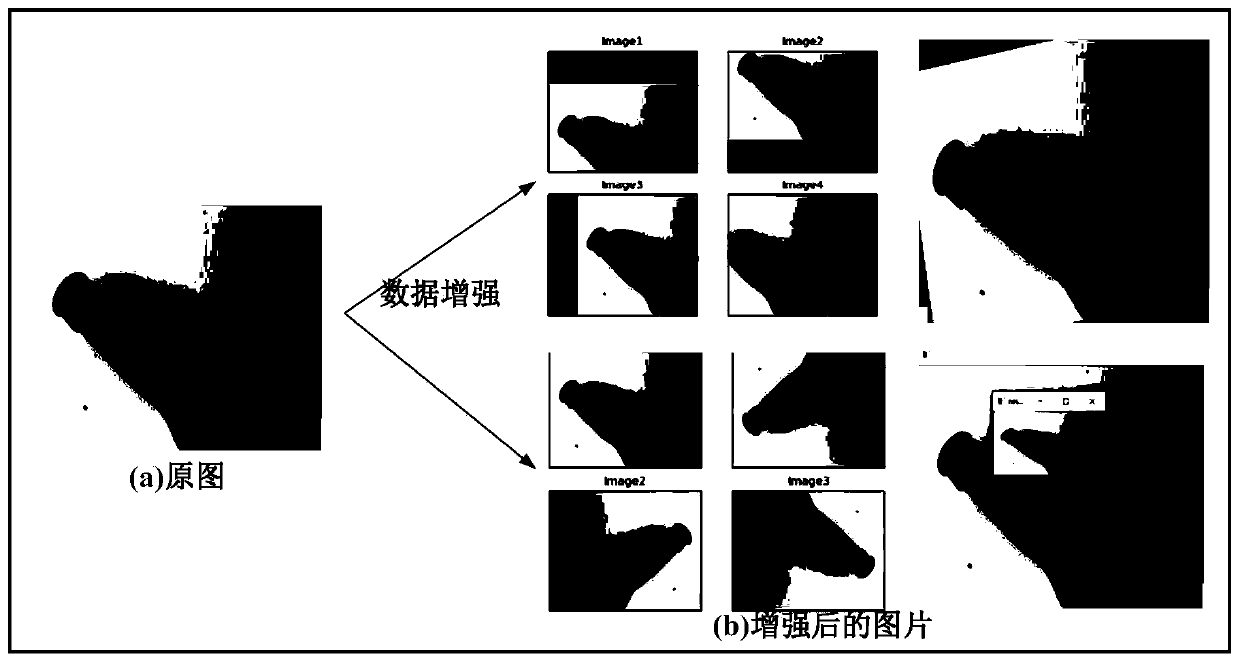

CNN and transfer learning-based disease intelligent identification method and system

Active | CN110148120A | Reduce distractions; Low image quality requirements; Image enhancement; Image analysis; Diseased plant; Learning based

The invention provides a CNN and transfer learning-based intelligent disease identification method and system, which reduces interference from the image background, achieves high recognition accuracy with a limited number of samples, and offers higher operating efficiency for multi-class classification of training samples. The disease image identification method comprises the following steps: image preprocessing: normalizing the image size, quickly locating the disease region with Fast R-CNN, and eliminating background interference; image feature extraction: extracting image features with a triplet similarity measurement model, then performing weighted fusion with SIFT features used as compensating features; and disease classification and recognition: learning a first image feature from normal plant images with a deep convolutional neural network, learning a second image feature from diseased plant images with transfer learning, and finally performing classification and recognition by combining the first and second image features.

Owner:AGRI INFORMATION & RURAL ECONOMIC INST SICHUAN ACAD OF AGRI SCI
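The two feature-level ideas in the abstract above, a triplet similarity measurement model and weighted fusion with SIFT features, can be sketched in plain Python. The sketch assumes Euclidean distance, a margin of 1.0, and fusion by concatenating L2-normalized, weighted vectors; the default weights 0.7/0.3 and all function names are hypothetical. In practice the embeddings would come from the CNN and the SIFT descriptors from a library such as OpenCV; Fast R-CNN localization and the transfer-learning classifier are omitted.

```python
import math
from typing import List, Sequence


def l2_normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit Euclidean length (zero vectors are returned unchanged)."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n > 0 else list(v)


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor, positive, negative, margin: float = 1.0) -> float:
    """Triplet margin loss: zero once the same-class (positive) embedding is closer
    to the anchor than the other-class (negative) one by at least `margin`."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


def fuse_features(deep, sift, w_deep: float = 0.7, w_sift: float = 0.3) -> List[float]:
    """Weighted fusion: concatenate the L2-normalized deep and SIFT-based vectors,
    each scaled by its (hypothetical) weight."""
    return ([w_deep * x for x in l2_normalize(deep)]
            + [w_sift * x for x in l2_normalize(sift)])


print(triplet_loss([0, 0], [0, 1], [3, 4]))  # 0.0: positive is already closer by > margin
print(triplet_loss([0, 0], [3, 4], [0, 1]))  # 5.0: 5 - 1 + 1
print(fuse_features([3, 4], [0, 2], 0.5, 0.5))  # [0.3, 0.4, 0.0, 0.5]
```

Normalizing each part before weighting keeps one descriptor from dominating the fused vector merely because its raw values are larger.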

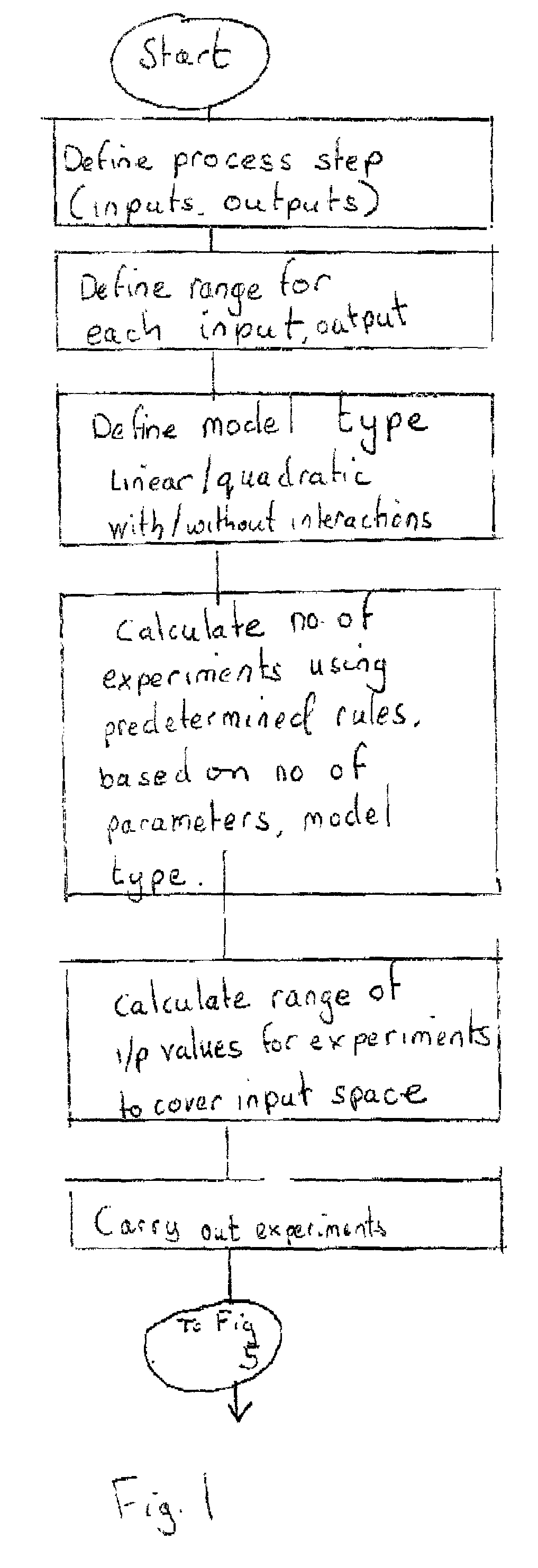

Model predictive control (MPC) system using DOE-based model

Inactive | US7092863B2 | Simulator control; Analogue computers for electric apparatus; Automatic control; Control system

Owner:ADA ANALYTICS ISRAEL

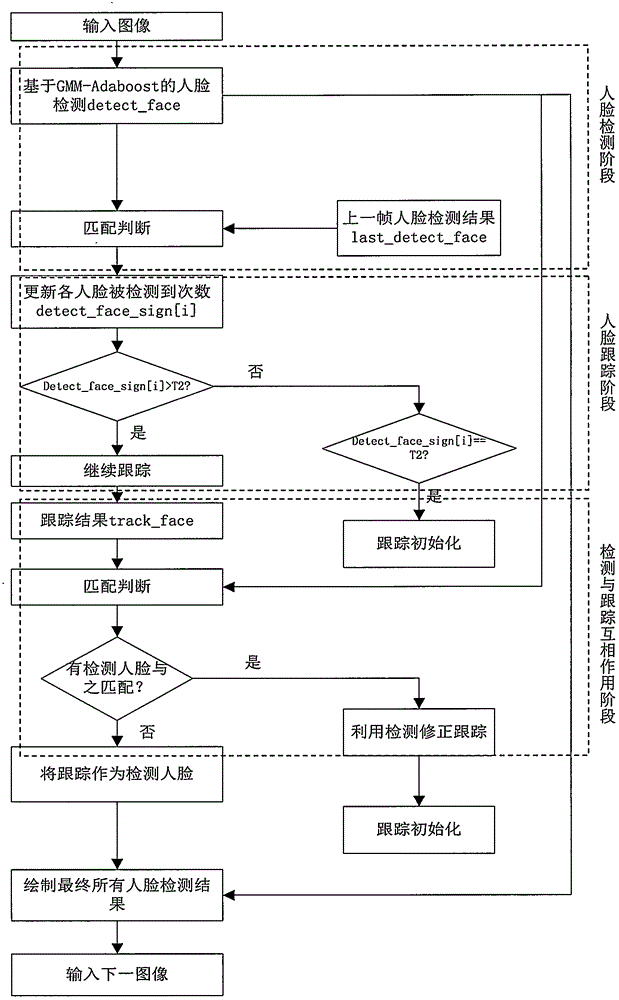

Multi-face detecting and tracking method

Inactive | CN104834916A | Detection time is short; Meet adaptability; Character and pattern recognition; Face detection; Model extraction

The invention provides a multi-face detection and tracking method in the technical field of artificial intelligence. To handle multiple faces in surveillance video, faces are detected by combining Haar features with an AdaBoost classifier. The procedure comprises the following steps: (1) for a continuous video sequence, a Gaussian mixture model extracts the moving foreground as the first-class region of interest, and a region centered on each face detected in the previous frame outside the motion region is taken as the second-class region of interest; face detection is then performed on both classes of region; (2) the mean shift method is used for multi-target tracking, adaptively updating and tracking multiple faces in the same video; (3) the multi-target tracking algorithm of step (2) is combined with the detection algorithm of step (1) to form a hybrid multi-target tracking and face detection algorithm. The method reduces missed detections caused by changes in face orientation or facial expression, raises the detection rate, and meets the real-time requirements of surveillance systems.

Owner:SHANGHAI SOLAR ENERGY S&T +1
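Step (2) above, mean shift tracking, can be illustrated on a 2-D weight map. The sketch assumes the weights come from something like a color-histogram back-projection of a tracked face (the abstract does not say), uses a fixed square window, and tracks a single face; a multi-face tracker would run one such search per face, seeded from the detections of step (1). The Haar/AdaBoost detector and the Gaussian mixture foreground model are not shown.

```python
from typing import List, Tuple

Weights = List[List[float]]


def mean_shift(weights: Weights, start: Tuple[float, float], half_size: int,
               max_iter: int = 20, eps: float = 1e-3) -> Tuple[float, float]:
    """Move a (2*half_size+1)-pixel square window to the weighted centroid of the
    pixels it covers, repeating until the center moves less than `eps`."""
    rows, cols = len(weights), len(weights[0])
    cy, cx = start
    for _ in range(max_iter):
        y0, y1 = max(0, round(cy) - half_size), min(rows - 1, round(cy) + half_size)
        x0, x1 = max(0, round(cx) - half_size), min(cols - 1, round(cx) + half_size)
        total = sy = sx = 0.0
        for y in range(y0, y1 + 1):
            for x in range(x0, x1 + 1):
                w = weights[y][x]
                total += w
                sy += w * y
                sx += w * x
        if total == 0:
            break  # the window holds no target evidence; stop where we are
        ny, nx = sy / total, sx / total
        converged = abs(ny - cy) < eps and abs(nx - cx) < eps
        cy, cx = ny, nx
        if converged:
            break
    return cy, cx


# A 3x3 block of target evidence centered at (6, 6) in a 10x10 map.
weights = [[0.0] * 10 for _ in range(10)]
for y in range(5, 8):
    for x in range(5, 8):
        weights[y][x] = 1.0
print(mean_shift(weights, start=(4, 4), half_size=2))  # (6.0, 6.0)
```

The window first sees only part of the blob and moves to (5.5, 5.5), then covers all of it and settles on the true center, which is the climbing behavior that lets mean shift follow a face between frames.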

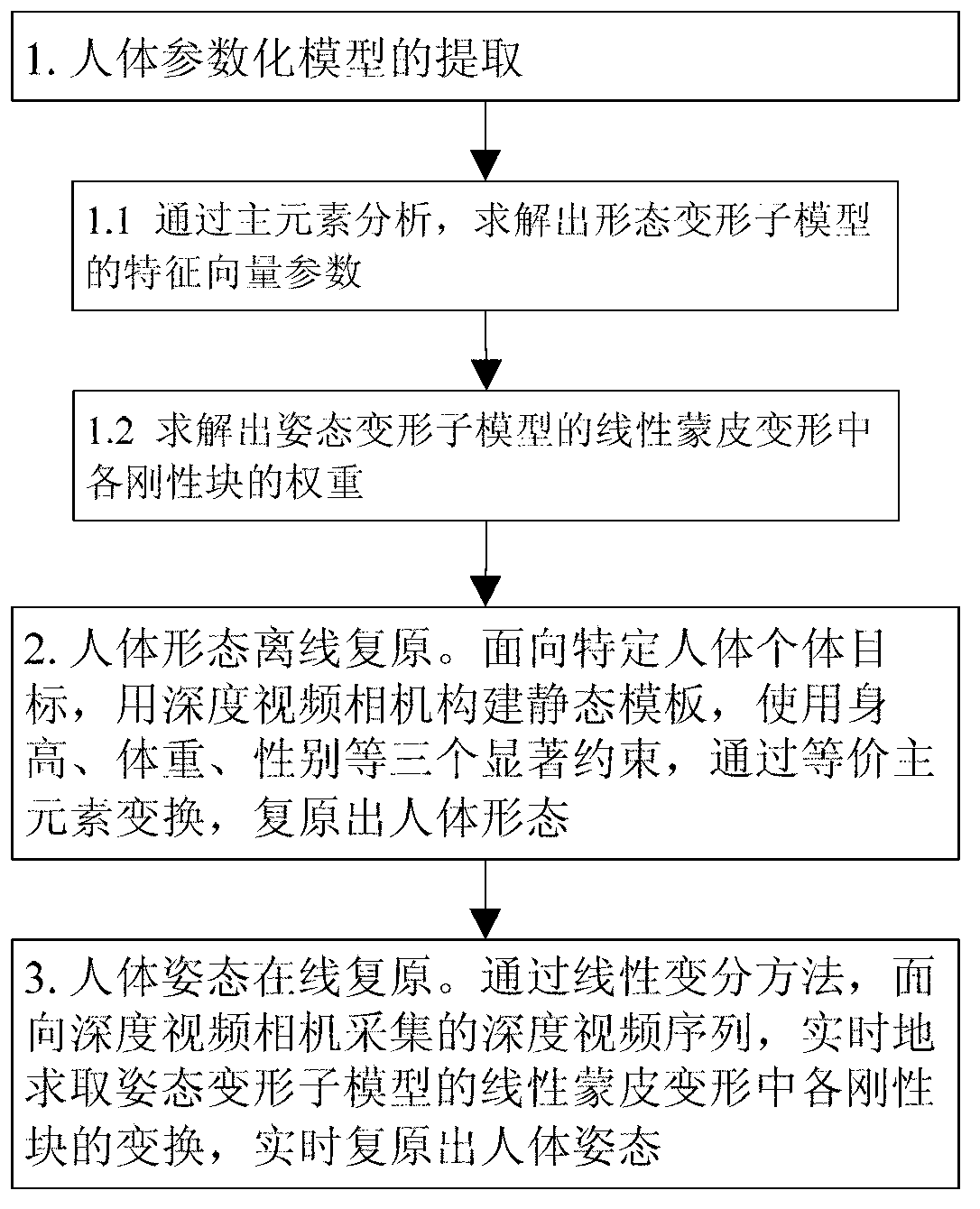

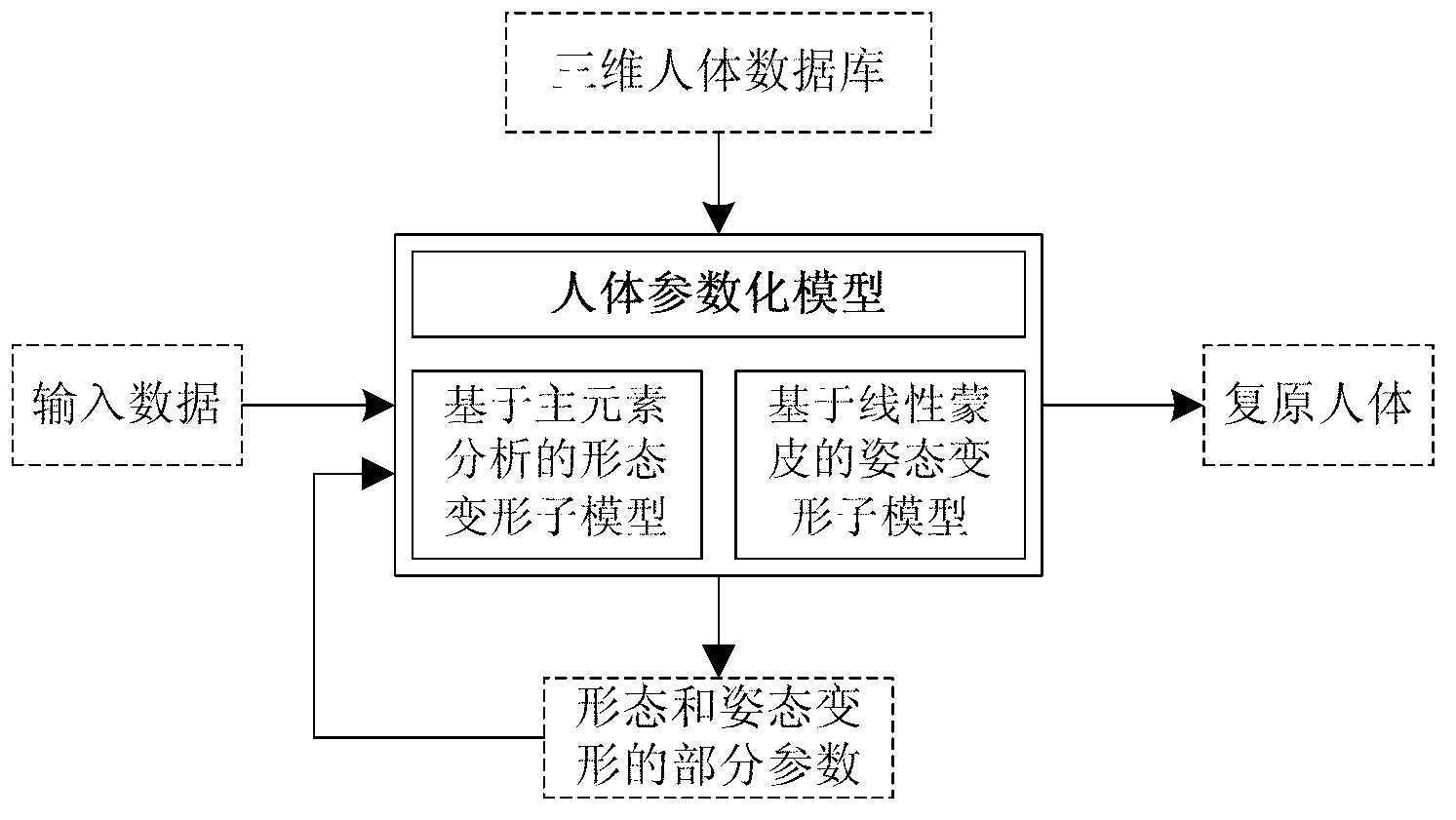

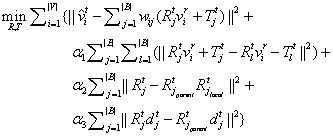

Mark-point-free real-time restoration method of three-dimensional human form and gesture

Active | CN103268629A | Guaranteed online recovery; Real-time recovery implementation; 3D-image rendering; Feature vector; Model extraction

The invention relates to a marker-free method for real-time recovery of three-dimensional human form (body shape) and gesture (pose). The method operates on monocular depth video and achieves real-time recovery of body shape and pose with the aid of a parameterized human body model. It comprises the steps of: 1) human body parameterized model extraction, in which the model is decomposed into a shape deformation sub-model and a pose deformation sub-model, the model is trained on a human body database to solve for part of its parameters, the feature vector parameters of the shape deformation sub-model are solved, and the weight of each rigid block in the linear skinning deformation of the pose deformation sub-model is solved; 2) offline recovery of body shape; and 3) online recovery of body pose, in which a linear deformation and variational method is used to solve, in real time, for the change of each rigid block in the linear skinning deformation of the pose deformation sub-model, so that the pose of the human body is recovered online.

Owner:Zhongdian Puxin (Beijing) Technology Development Co., Ltd. (中电普信(北京)科技发展有限公司)
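The per-rigid-block weights in step 1 above belong to linear skinning, in which each vertex moves as a weighted blend of the rigid transforms of the blocks it is attached to. A minimal 2-D sketch follows, assuming rotation-plus-translation transforms and weights that sum to 1 per vertex; the 3-D case replaces the angle with a 3x3 rotation matrix. Solving for the weights and the per-frame transforms from depth video, which is the substance of the patent, is not shown.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Rigid = Tuple[float, float, float]  # (rotation angle in radians, tx, ty)


def transform(t: Rigid, p: Point) -> Point:
    """Apply one rigid block's rotation, then translation, to a 2-D point."""
    angle, tx, ty = t
    c, s = math.cos(angle), math.sin(angle)
    x, y = p
    return (c * x - s * y + tx, s * x + c * y + ty)


def linear_blend_skinning(rest: Sequence[Point], weights: Sequence[Sequence[float]],
                          blocks: Sequence[Rigid]) -> List[Point]:
    """Deform each rest-pose vertex as the weighted sum of its positions under every
    rigid block's transform; each vertex's weights are expected to sum to 1."""
    posed = []
    for v, w in zip(rest, weights):
        x = y = 0.0
        for wj, tj in zip(w, blocks):
            px, py = transform(tj, v)
            x += wj * px
            y += wj * py
        posed.append((x, y))
    return posed


blocks = [(0.0, 0.0, 0.0), (0.0, 2.0, 0.0)]  # block 0 fixed, block 1 shifted by (2, 0)
rest = [(1.0, 0.0), (1.0, 1.0)]
weights = [[0.5, 0.5], [0.0, 1.0]]  # vertex 0 shared by both blocks, vertex 1 on block 1
print(linear_blend_skinning(rest, weights, blocks))  # [(2.0, 0.0), (3.0, 1.0)]
```

The shared vertex lands halfway between where each block would carry it, which is what smooths the surface at joints between rigid blocks.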

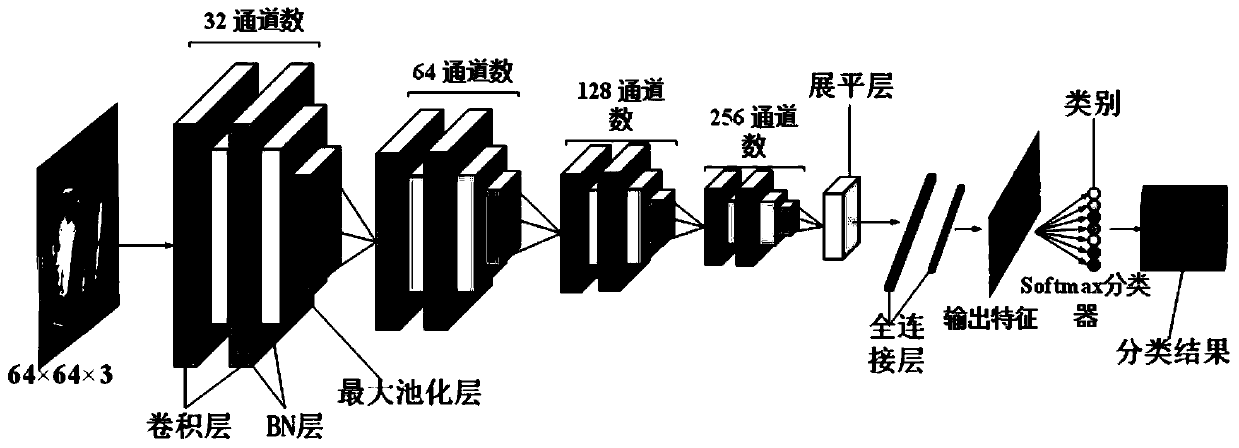

Garbage classification method based on hybrid convolutional neural network

Active | CN111144496A | Enhanced ability to extract features; Garbage sorting results are good; Waste collection and transfer; Character and pattern recognition; Computation complexity; Feature Dimension

The invention discloses a garbage classification method based on a hybrid convolutional neural network, in the technical field of garbage classification and recycling. The method addresses the low classification accuracy and long training time of existing methods. The hybrid convolutional neural network model flexibly combines convolutional layers, batch normalization (BN), max pooling layers, and fully connected layers, with BN applied to every convolutional layer and every fully connected layer; this further strengthens the model's feature extraction, lets each layer work to full effect, and yields relatively good classification results. Taking advantage of the regularizing effect of the BN layers, max pooling layers are added where appropriate to summarize features and reduce feature dimensionality, which improves representational capacity. The model fits well, converges quickly, has few parameters and low computational complexity, and shows clear advantages over a traditional convolutional neural network. The model is trained with an SGDM (SGD with momentum) + Nesterov optimizer and reaches a classification accuracy of 92.6% on images. The method can be applied to household garbage classification.

Owner:QIQIHAR UNIVERSITY
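Two building blocks named in the abstract above, batch normalization and SGD with Nesterov momentum, can be sketched without a framework. The learning rate, momentum, and toy inputs are illustrative assumptions, since the abstract gives neither the architecture nor the hyperparameters; inference-mode BN (running statistics) is omitted. The update rule follows the Nesterov form used, for example, by PyTorch's `torch.optim.SGD` with `nesterov=True` and zero dampening.

```python
import math
from typing import List


def batch_norm(batch: List[List[float]], gamma: List[float], beta: List[float],
               eps: float = 1e-5) -> List[List[float]]:
    """Training-mode batch normalization: rows are samples, columns are features.
    Each feature is shifted to zero mean and scaled to unit (biased) variance over
    the mini-batch, then rescaled by gamma and shifted by beta."""
    n, d = len(batch), len(batch[0])
    mean = [sum(row[j] for row in batch) / n for j in range(d)]
    var = [sum((row[j] - mean[j]) ** 2 for row in batch) / n for j in range(d)]
    return [[gamma[j] * (row[j] - mean[j]) / math.sqrt(var[j] + eps) + beta[j]
             for j in range(d)]
            for row in batch]


def sgd_nesterov_step(params: List[float], grads: List[float], buffers: List[float],
                      lr: float = 0.1, momentum: float = 0.9) -> None:
    """One in-place SGD-with-momentum step in the Nesterov form:
    buffer = momentum * buffer + grad; param -= lr * (grad + momentum * buffer)."""
    for i, g in enumerate(grads):
        buffers[i] = momentum * buffers[i] + g
        params[i] -= lr * (g + momentum * buffers[i])


print(batch_norm([[1.0, 10.0], [3.0, 30.0]], gamma=[1.0, 1.0], beta=[0.0, 0.0]))
# approximately [[-1.0, -1.0], [1.0, 1.0]]

# Minimize f(x) = x^2 (gradient 2x) starting from x = 1.
x, buf = [1.0], [0.0]
for _ in range(100):
    sgd_nesterov_step(x, [2 * x[0]], buf)
print(abs(x[0]) < 1e-3)  # True
```

BN puts both features on the same scale even though the second is ten times larger, which is one reason it speeds up convergence; the Nesterov step evaluates the momentum "look-ahead" through the updated buffer.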

Brain electrical signal independent component extraction method based on convolution blind source separation

Active | CN104700119A | Improve features; Improve accuracy; Character and pattern recognition; Model extraction; Computation complexity

The invention discloses a method for extracting independent components of electroencephalogram (EEG) signals based on convolutive blind source separation. The concrete steps are: building an EEG independent component extraction system based on convolutive blind source separation, comprising an analog-to-digital (AD) sampling module, a short-time Fourier transform module, a frequency-domain instantaneous blind source separation module, an ordering adjustment module, and an inverse short-time Fourier transform module; sampling the EEG signals with the AD sampling module; transforming the EEG signals from the time domain to the frequency domain with the short-time Fourier transform module; separating the instantaneous mixtures in the frequency domain with the frequency-domain instantaneous blind source separation module; reordering the independent components in the vector of each frequency bin with the ordering adjustment module (that is, resolving the permutation ambiguity between bins); and transforming the frequency-domain separation results into time-domain independent components with the inverse short-time Fourier transform module. The method extracts independent components of EEG signals under a realistic convolutive mixing model using a frequency-domain convolutive blind source separation algorithm; it is simple to implement, separates well, and has low computational complexity.

Owner:BEIJING MECHANICAL EQUIP INST
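The ordering adjustment step above is needed because per-bin instantaneous separation returns its components in an arbitrary order (and scale) in every frequency bin. One common heuristic, assumed here because the abstract does not name one, aligns each bin by correlating the amplitude envelopes of its separated outputs with those of the neighboring, already aligned bin. The sketch shows only that alignment, brute-forcing all orderings (fine for a few sources); the STFT, the per-bin separation, and the inverse STFT are omitted.

```python
import math
from itertools import permutations
from typing import List, Sequence

Envelope = Sequence[float]  # magnitude of one separated output over STFT frames


def correlation(a: Envelope, b: Envelope) -> float:
    """Pearson correlation of two equal-length envelopes (0.0 if either is constant)."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = math.sqrt(sum((x - ma) ** 2 for x in a))
    sb = math.sqrt(sum((y - mb) ** 2 for y in b))
    return cov / (sa * sb) if sa > 0 and sb > 0 else 0.0


def align_permutations(envelopes: List[List[Envelope]]) -> List[List[Envelope]]:
    """envelopes[f][k] is output k of the separation in frequency bin f. Walk up the
    bins and reorder each bin's outputs so that output k correlates best with
    output k of the previously aligned bin."""
    aligned = [list(envelopes[0])]
    for outputs in envelopes[1:]:
        ref = aligned[-1]
        k = len(outputs)
        best = max(permutations(range(k)),
                   key=lambda p: sum(correlation(ref[i], outputs[p[i]]) for i in range(k)))
        aligned.append([outputs[i] for i in best])
    return aligned


s1 = [1.0, 5.0, 1.0, 5.0, 1.0]
s2 = [4.0, 4.0, 1.0, 1.0, 4.0]
# Bin 1 has its outputs swapped; bin 2 is rescaled (the other BSS ambiguity).
bins = [[s1, s2], [s2, s1], [[2 * v for v in s1], [2 * v for v in s2]]]
fixed = align_permutations(bins)
print(fixed[1] == [s1, s2])  # True
```

Correlation is scale-invariant, so the rescaled bin 2 is still matched correctly; fixing the scale is a separate step in a full frequency-domain BSS pipeline.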

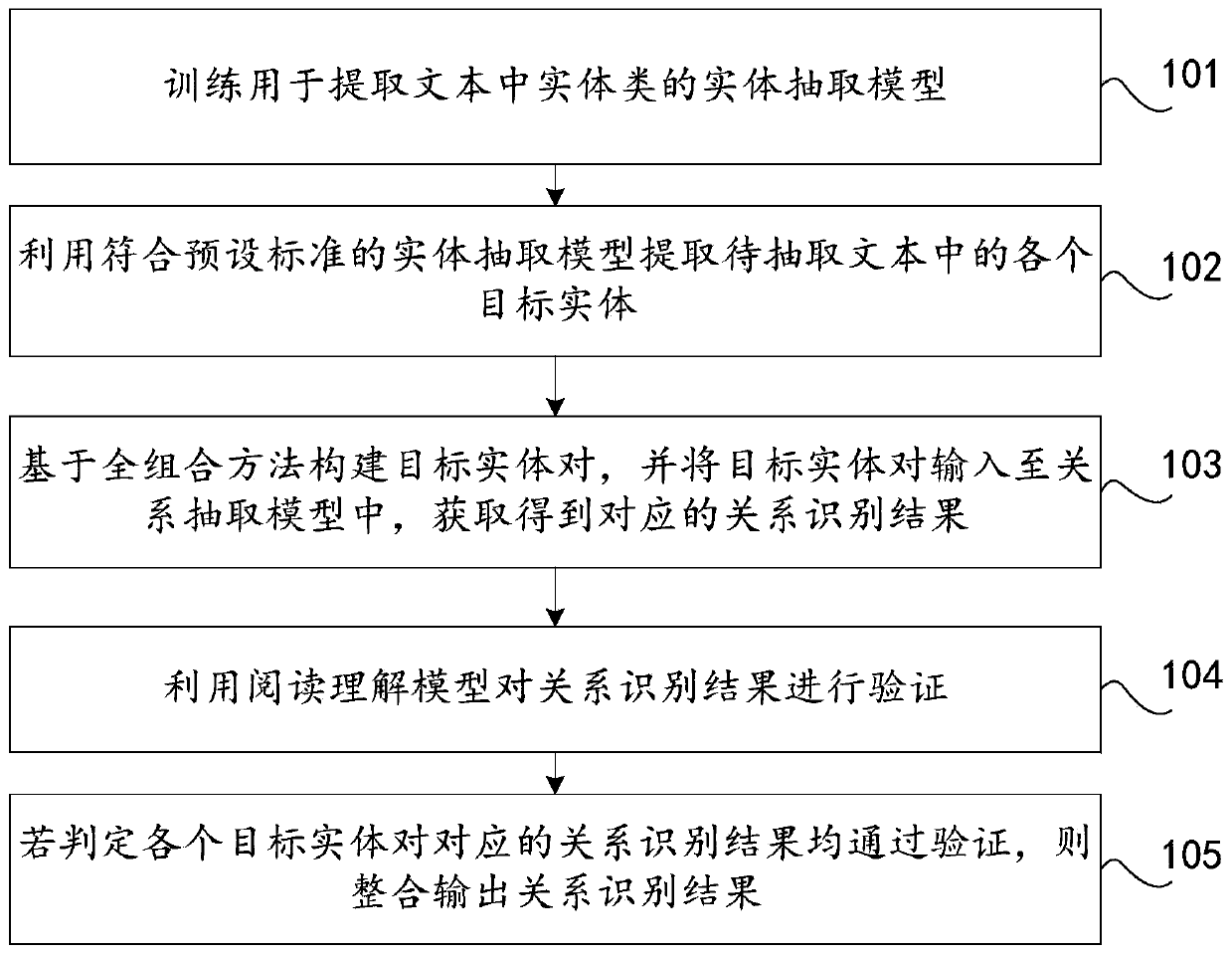

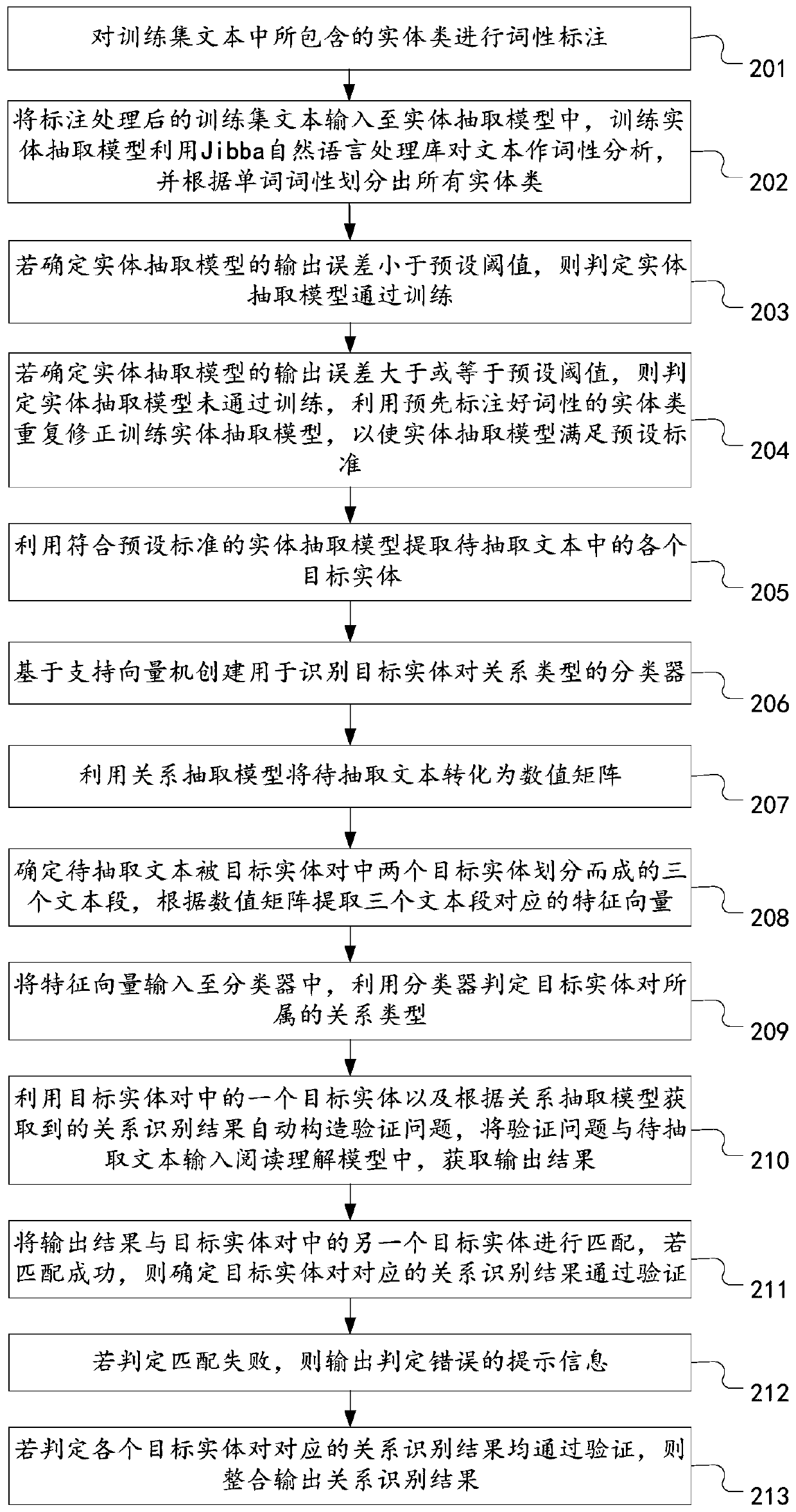

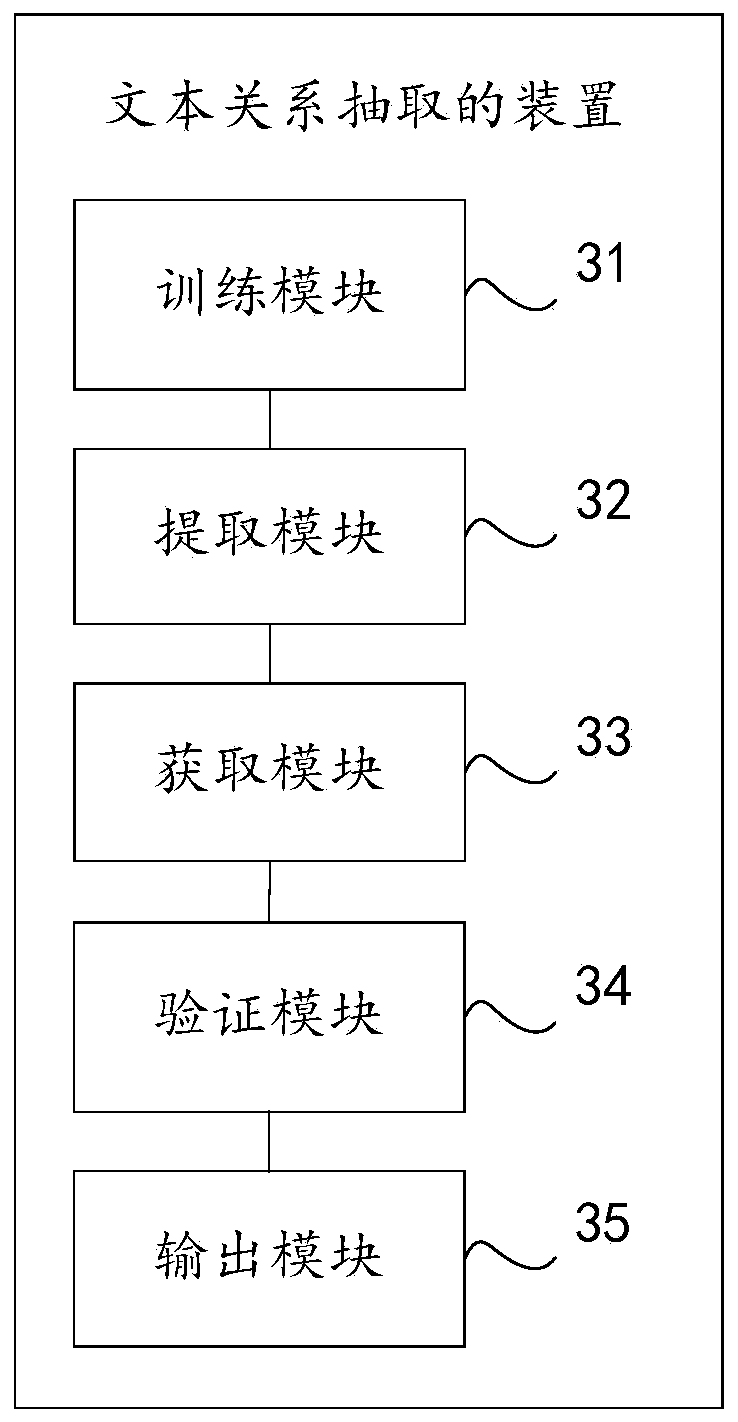

Text relationship extraction method and device, computer equipment and storage medium

Pending | CN111324743A | Guaranteed accuracy; Quick search; Special data processing applications; Text database clustering/classification; Model extraction; Engineering

The invention discloses a text relationship extraction method and device, computer equipment, and a storage medium in the technical field of computers, addressing the heavy workload and low efficiency of text relationship extraction. The method comprises: training an entity extraction model for extracting entity classes in text; extracting each target entity in the text to be processed with an entity extraction model that meets a preset standard; constructing target entity pairs by full combination and inputting each pair into a relationship extraction model to obtain the corresponding relationship identification result; verifying the relationship identification results with a reading comprehension model; and, if the relationship identification results for the target entity pairs pass verification, integrating and outputting them. The method and device are suited to extracting relationships from text.

Owner:PING AN TECH (SHENZHEN) CO LTD
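The pairing, relationship identification, and verification steps above can be sketched as a small pipeline. It assumes that "full combination" means every ordered (head, tail) pair, since relations are usually directional, and it replaces the trained relationship extraction model and reading comprehension model with hypothetical toy callables; only the control flow is meant to be illustrative.

```python
from itertools import permutations
from typing import Callable, List, Optional, Tuple

Entity = Tuple[str, str]  # (surface text, entity class)
RelationModel = Callable[[Entity, Entity], Optional[str]]
Verifier = Callable[[Entity, str, Entity], bool]


def extract_relations(entities: List[Entity], relation_model: RelationModel,
                      verifier: Verifier) -> List[Tuple[str, str, str]]:
    """Pair every ordered (head, tail) combination of extracted entities, ask the
    relation model for a label, and keep only results the verifier accepts."""
    results = []
    for head, tail in permutations(entities, 2):
        relation = relation_model(head, tail)
        if relation is not None and verifier(head, relation, tail):
            results.append((head[0], relation, tail[0]))
    return results


# Hypothetical stand-ins for the trained relation model and the reading-comprehension verifier.
def toy_relation_model(head: Entity, tail: Entity) -> Optional[str]:
    return "works_for" if head[1] == "PER" and tail[1] == "ORG" else None


def toy_verifier(head: Entity, relation: str, tail: Entity) -> bool:
    return head[0] != "Bob"  # pretend verification fails for Bob's candidate relation


entities = [("Alice", "PER"), ("Acme", "ORG"), ("Bob", "PER")]
print(extract_relations(entities, toy_relation_model, toy_verifier))
# [('Alice', 'works_for', 'Acme')]
```

Enumerating all pairs grows quadratically with the number of entities, which is why a cheap first-pass relation model followed by a heavier verifier on the surviving candidates is a reasonable split.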

© 2025 PatSnap. All rights reserved.