1263 results about "Feature Dimension" patented technology

Specialized DesignElementDimension to hold Features. (caMAGE)

Method for manufacturing high density non-volatile magnetic memory

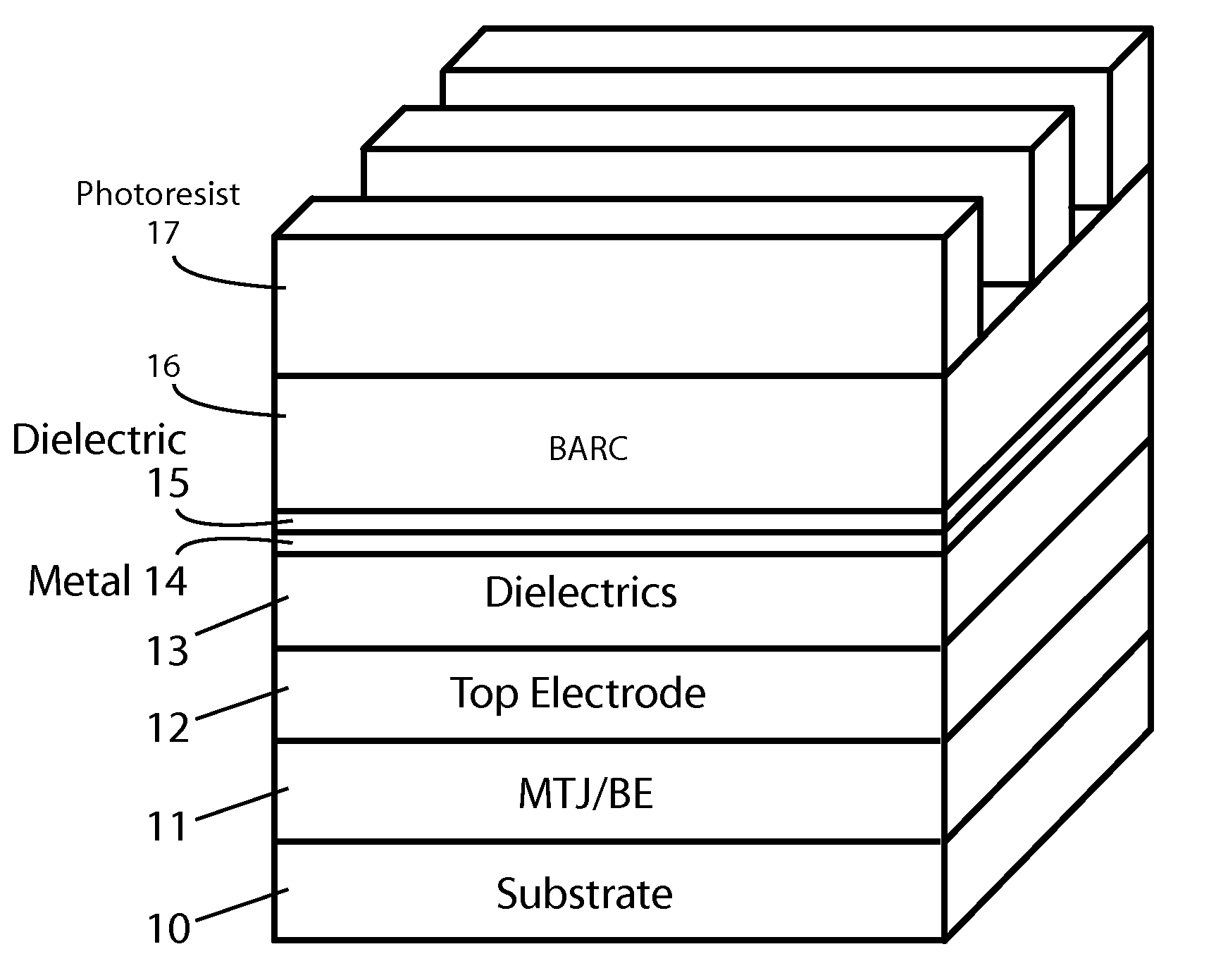

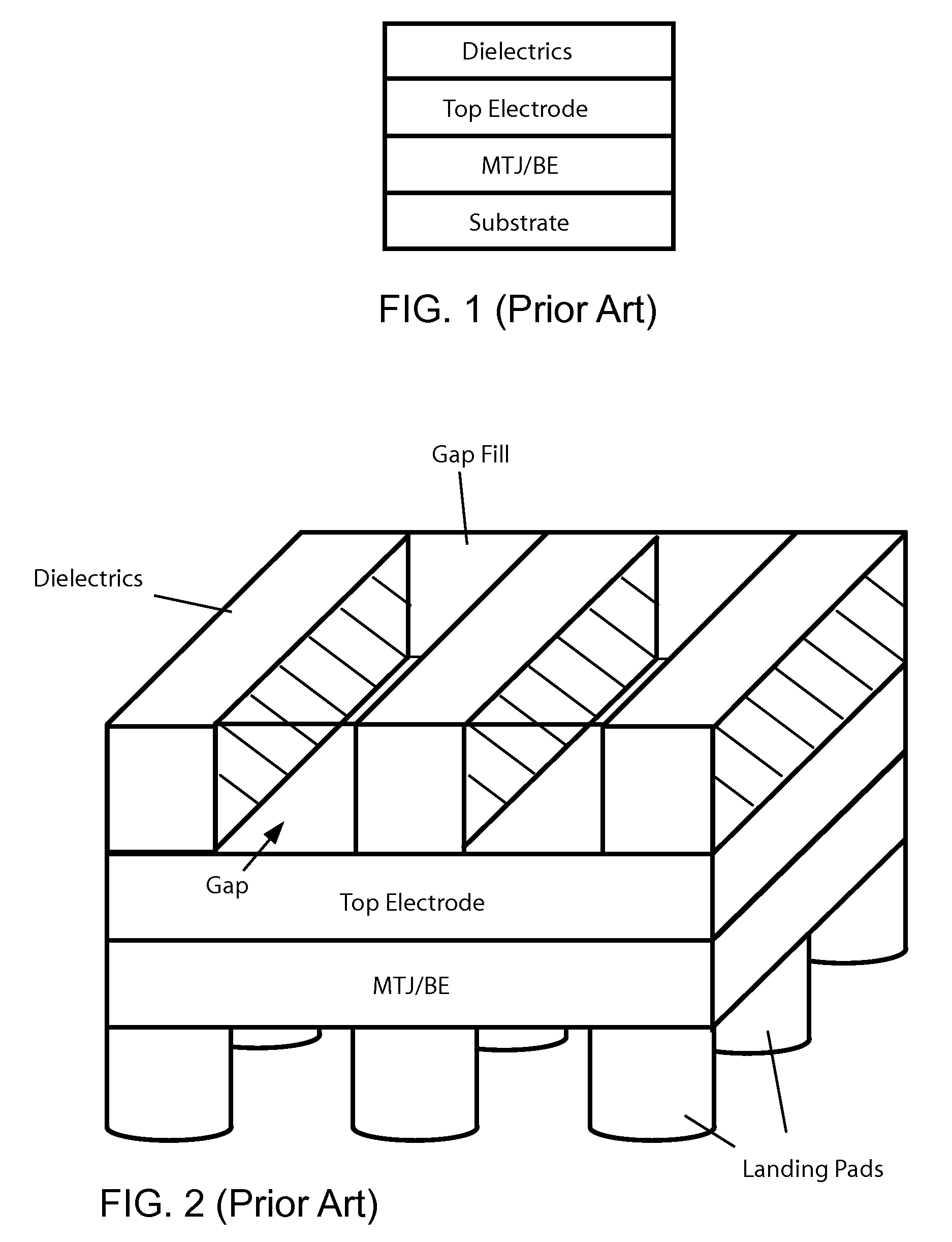

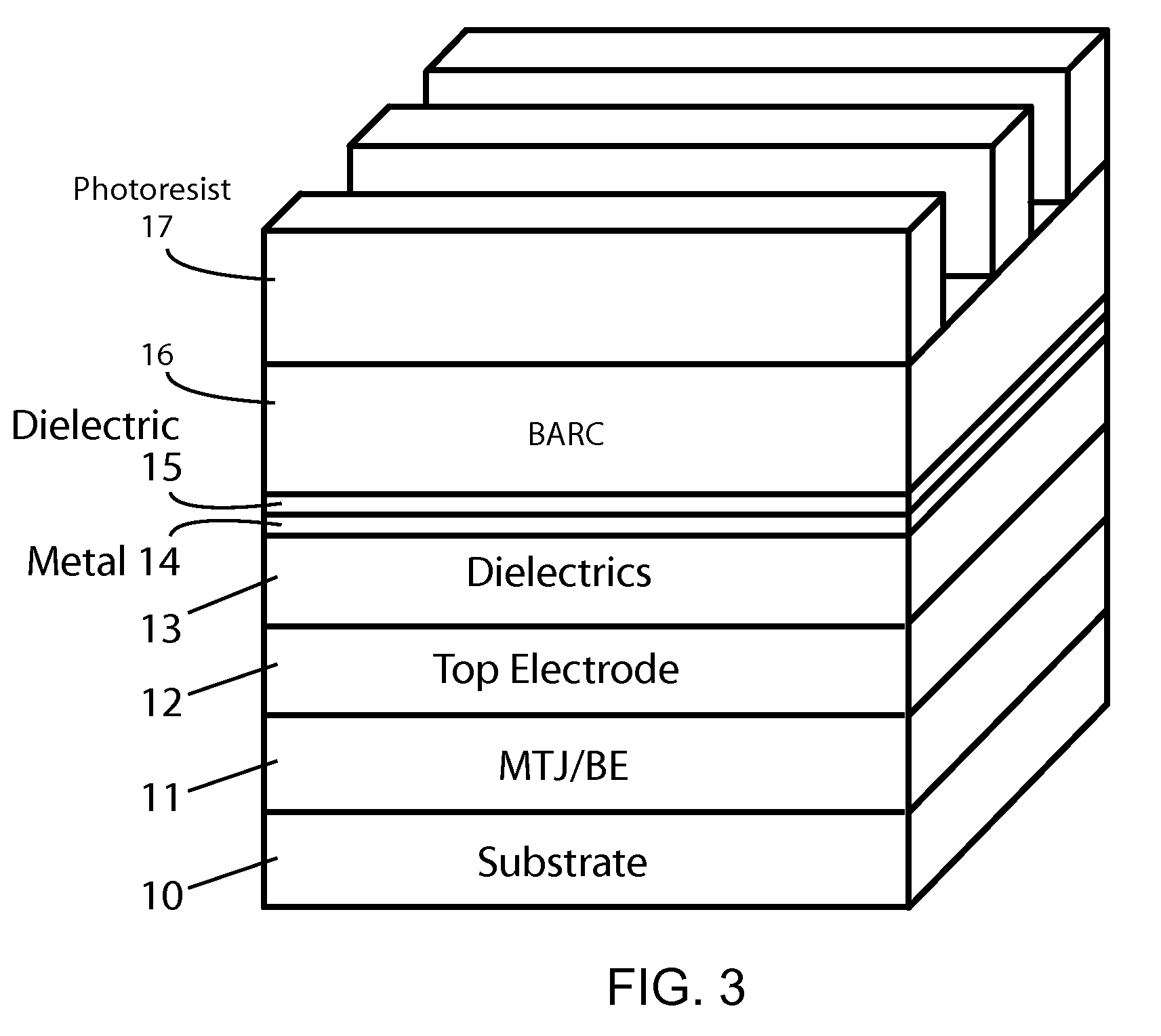

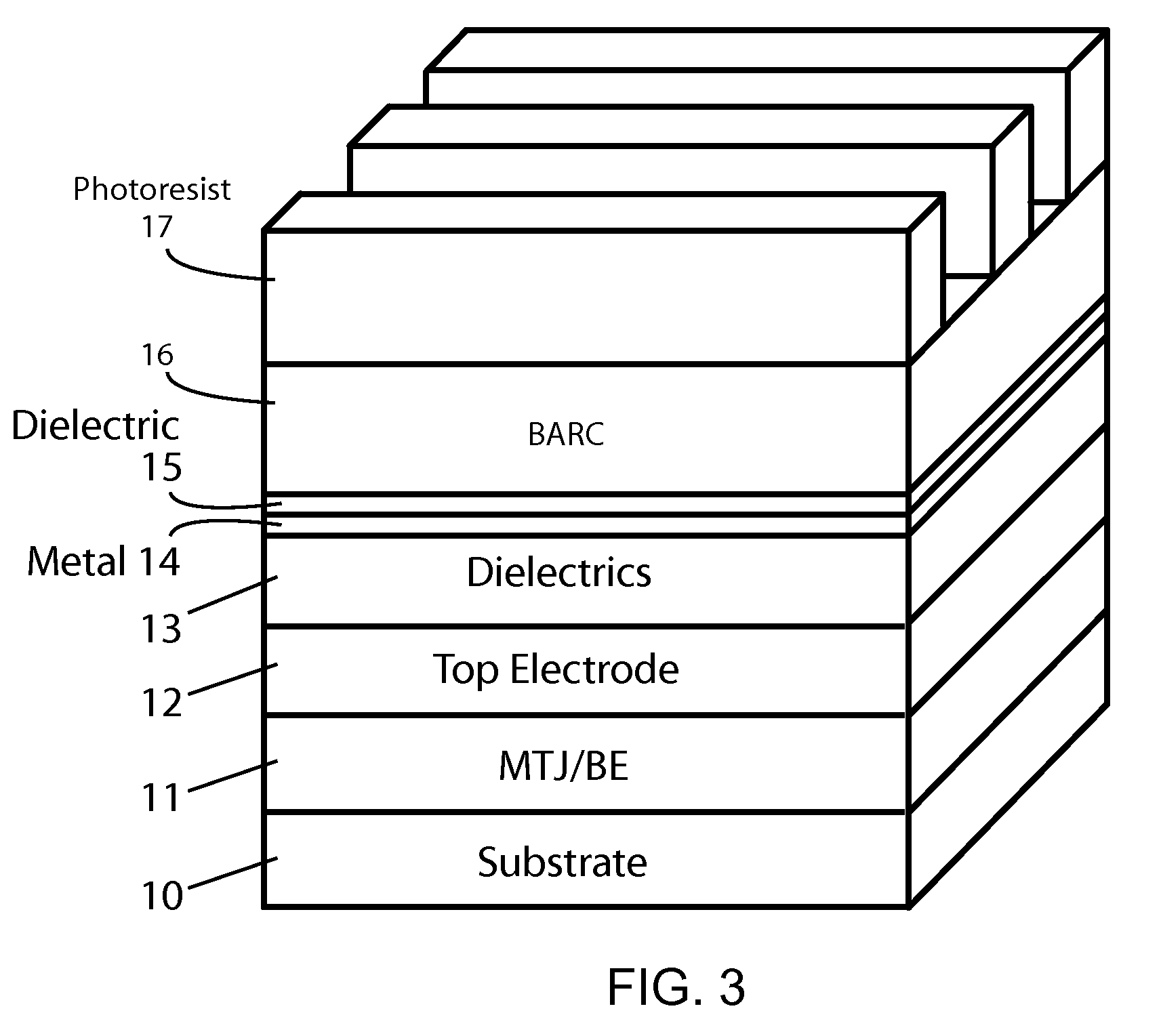

Active | US20130244344A1 | Reduce programming current, Reduced dimension, Nanomagnetism, Nanoinformatics, Feature Dimension, Lithographic artist

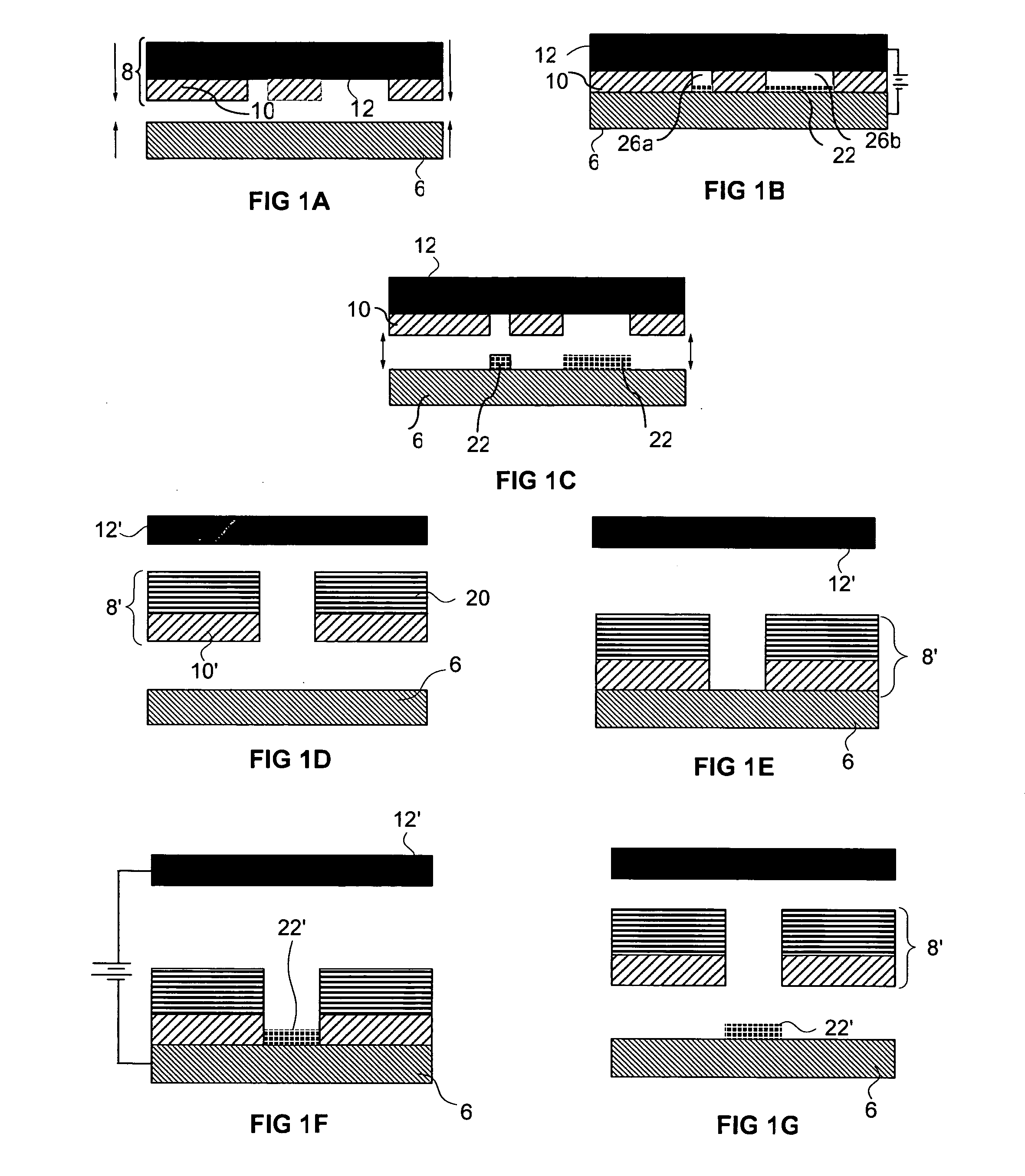

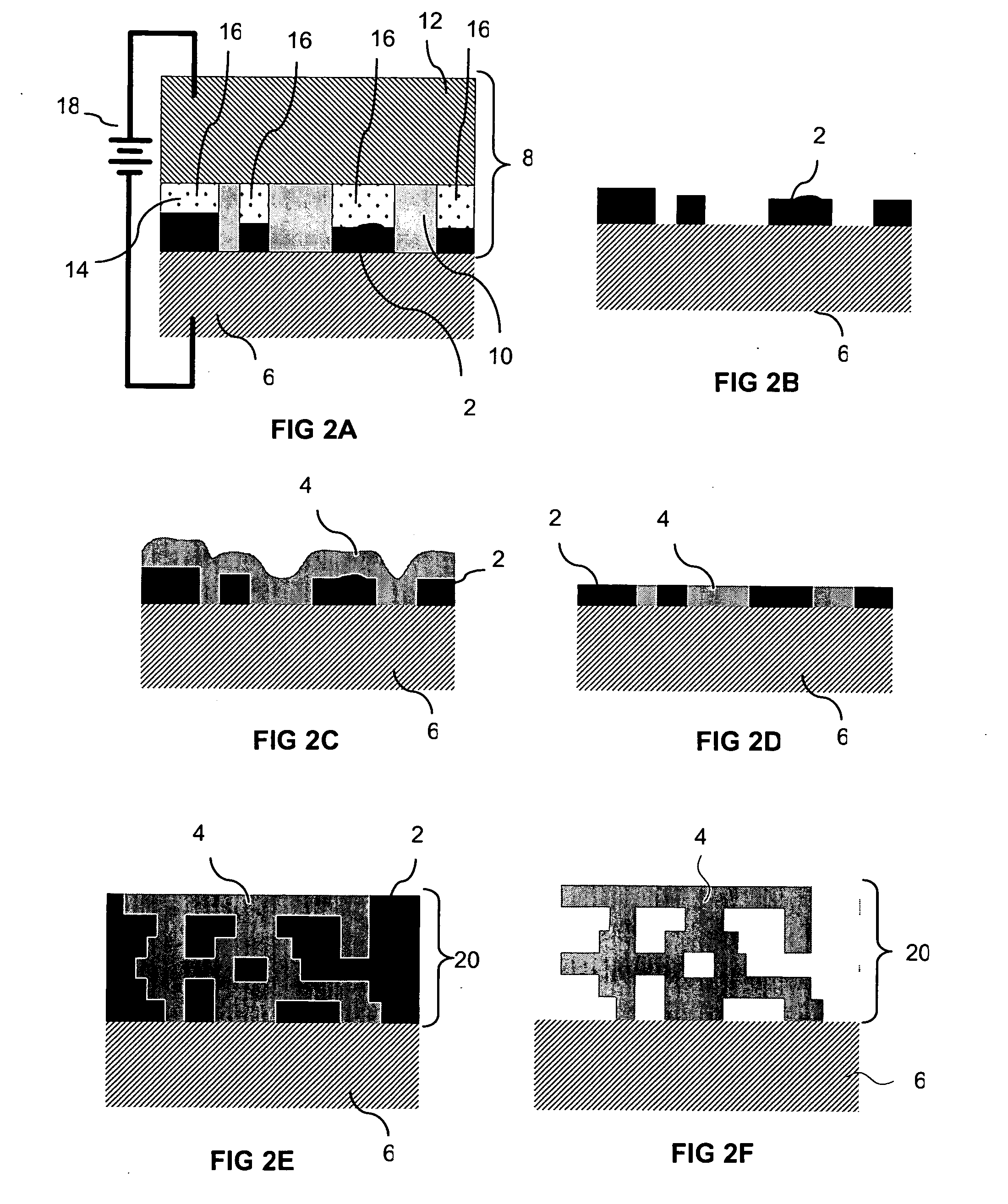

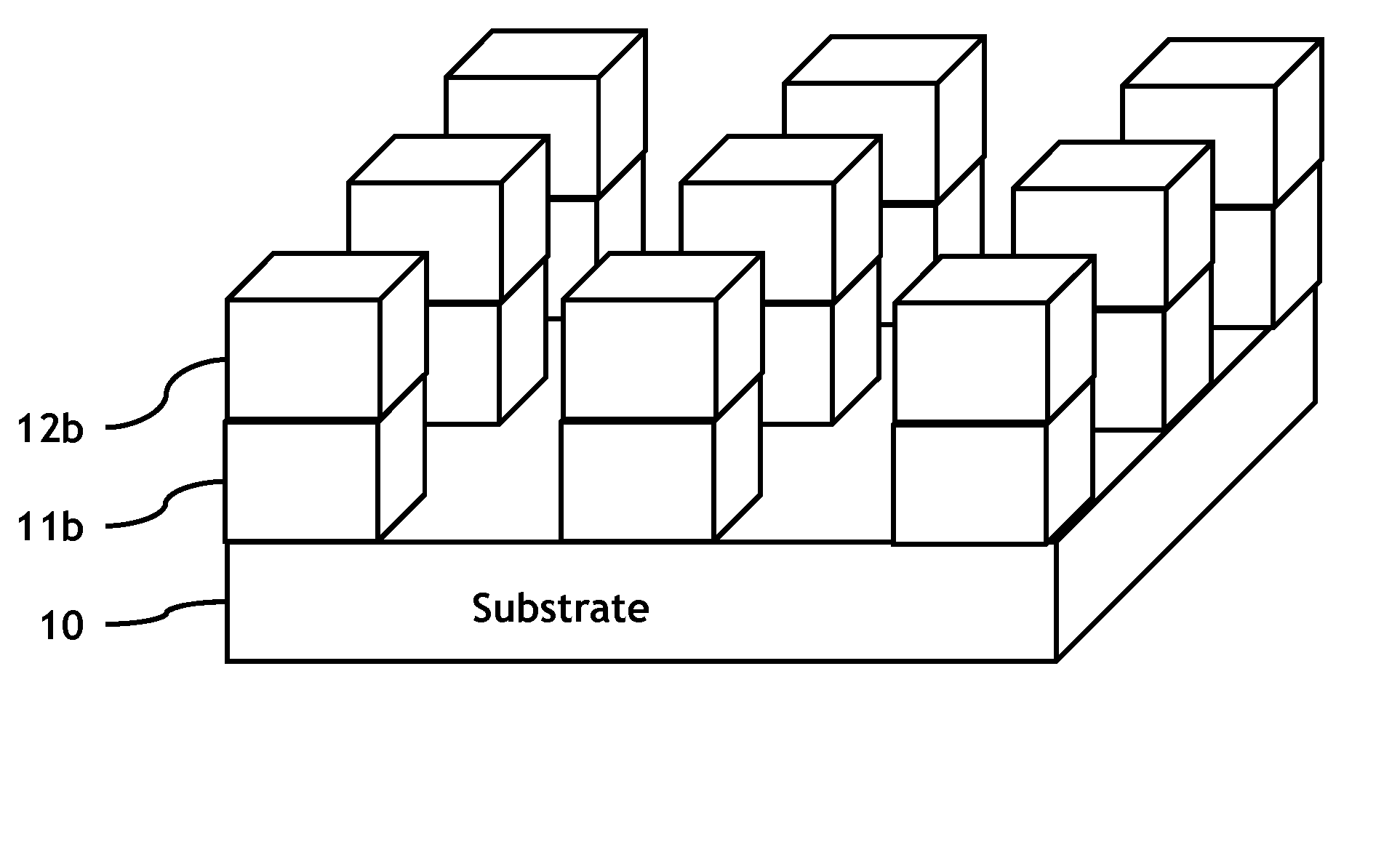

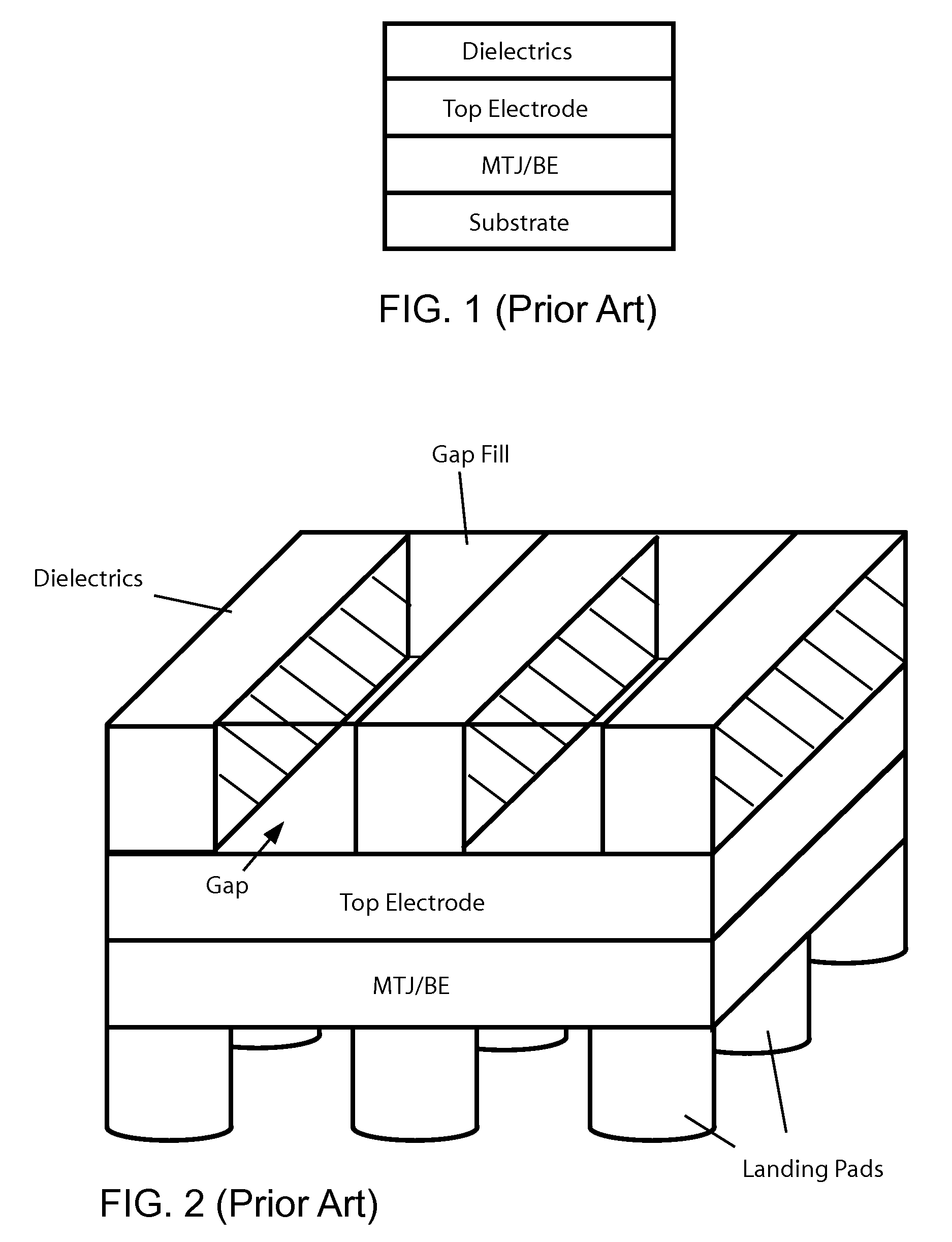

Methods of fabricating MTJ arrays using two orthogonal line patterning steps are described. Embodiments are described that use a self-aligned double patterning method for one or both orthogonal line patterning steps to achieve dense arrays of MTJs with feature dimensions of one half of the minimum photolithography feature size (F). In one set of embodiments, the materials and thicknesses of the stack of layers that provide the masking function are selected so that, after the initial set of mask pads has been patterned, a sequence of etching steps progressively transfers the mask pad shape through the multiple mask layers and down through all of the MTJ cell layers to form the complete MTJ pillars. In another set of embodiments, the MTJ/BE stack is patterned into parallel lines before the top electrode layer is deposited.

Owner:AVALANCHE TECH
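
The pitch-halving idea behind self-aligned double patterning (SADP) can be illustrated with idealized one-dimensional geometry. This sketch is not taken from the patent: it assumes mandrels printed at the tightest lithographic pitch 2F, trimmed to width F/2, coated with sidewall spacers of thickness F/2, and then stripped. The function name `sadp_spacer_lines` and the flow itself are illustrative assumptions.

```python
def sadp_spacer_lines(num_mandrels, F):
    """Return (left, right) edges of the spacer lines left by an idealized SADP flow.

    Assumed flow (illustrative only, not from the patent):
      1. mandrels are printed at pitch 2F and trimmed to width F/2;
      2. a conformal spacer of thickness F/2 forms on each mandrel sidewall;
      3. the mandrels are stripped, leaving two spacer lines per mandrel.
    """
    half = F / 2
    lines = []
    for i in range(num_mandrels):
        mandrel_left = 2 * F * i             # mandrel i sits at its printed pitch position
        mandrel_right = mandrel_left + half  # trimmed mandrel width is F/2
        lines.append((mandrel_left - half, mandrel_left))    # spacer on the left sidewall
        lines.append((mandrel_right, mandrel_right + half))  # spacer on the right sidewall
    return lines


if __name__ == "__main__":
    F = 40.0  # hypothetical minimum lithographic feature size in nm
    for left, right in sadp_spacer_lines(2, F):
        print(f"line from {left:6.1f} to {right:6.1f} nm (width {right - left:.1f} nm)")
```

Under these assumptions each spacer line is F/2 wide and the lines repeat every F, half the printed pitch. Repeating the line patterning in the orthogonal direction, as the abstract describes, would leave pillar footprints of roughly F/2 by F/2 where the two line sets intersect.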

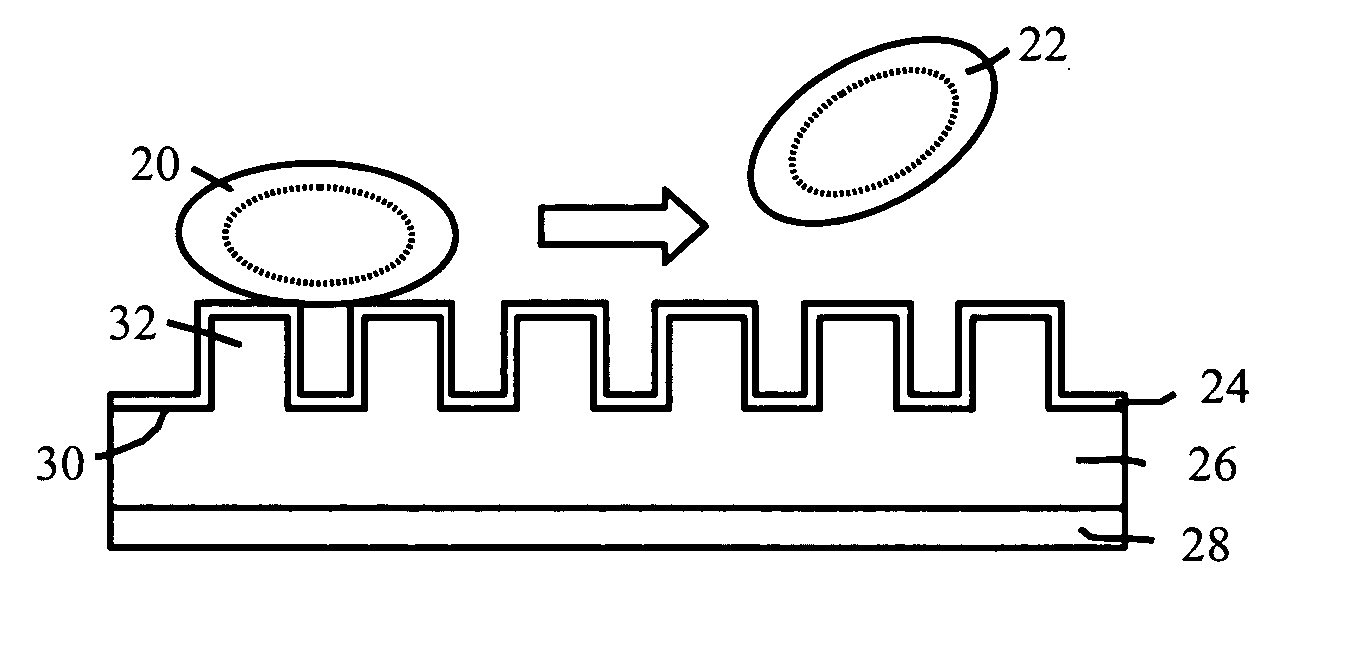

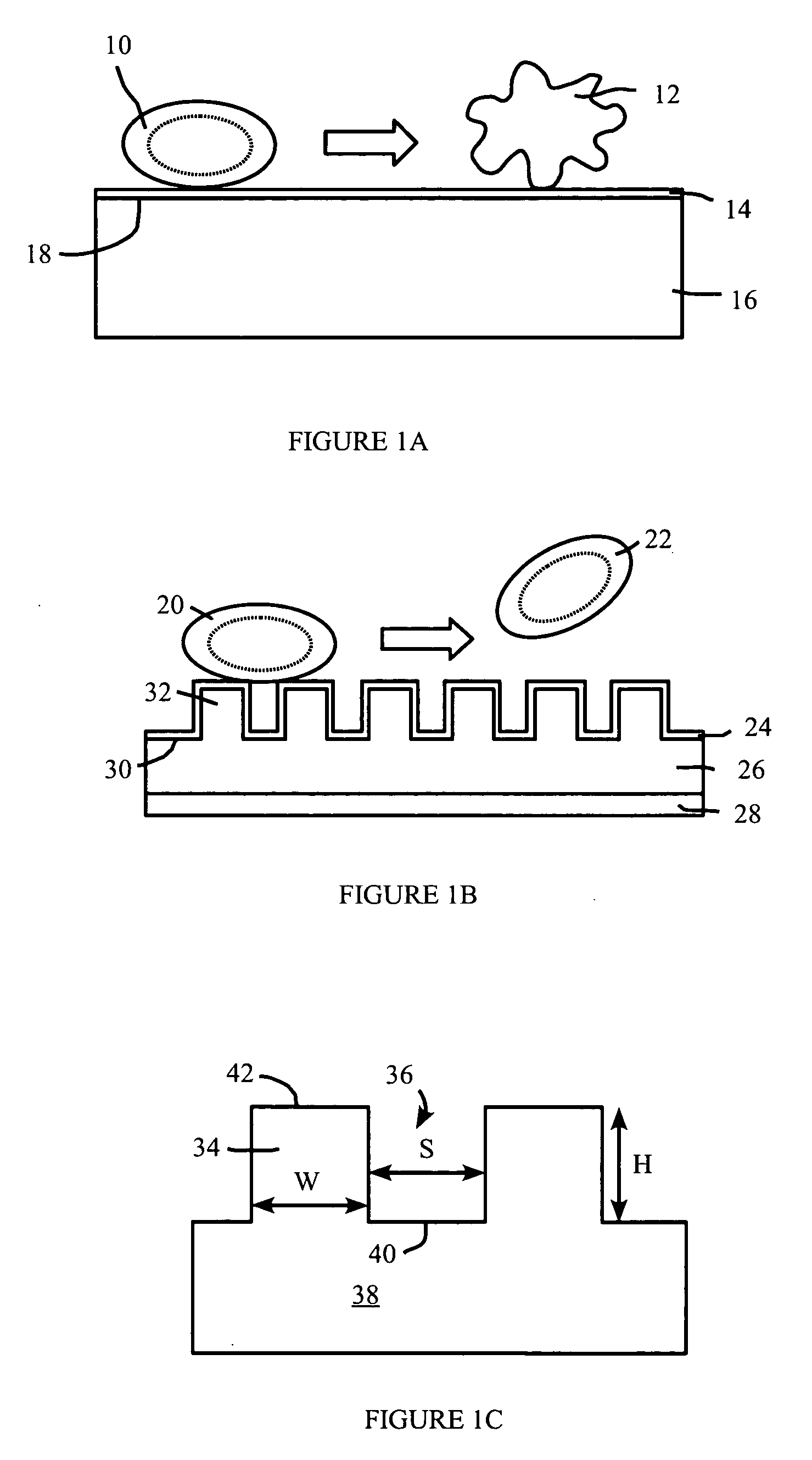

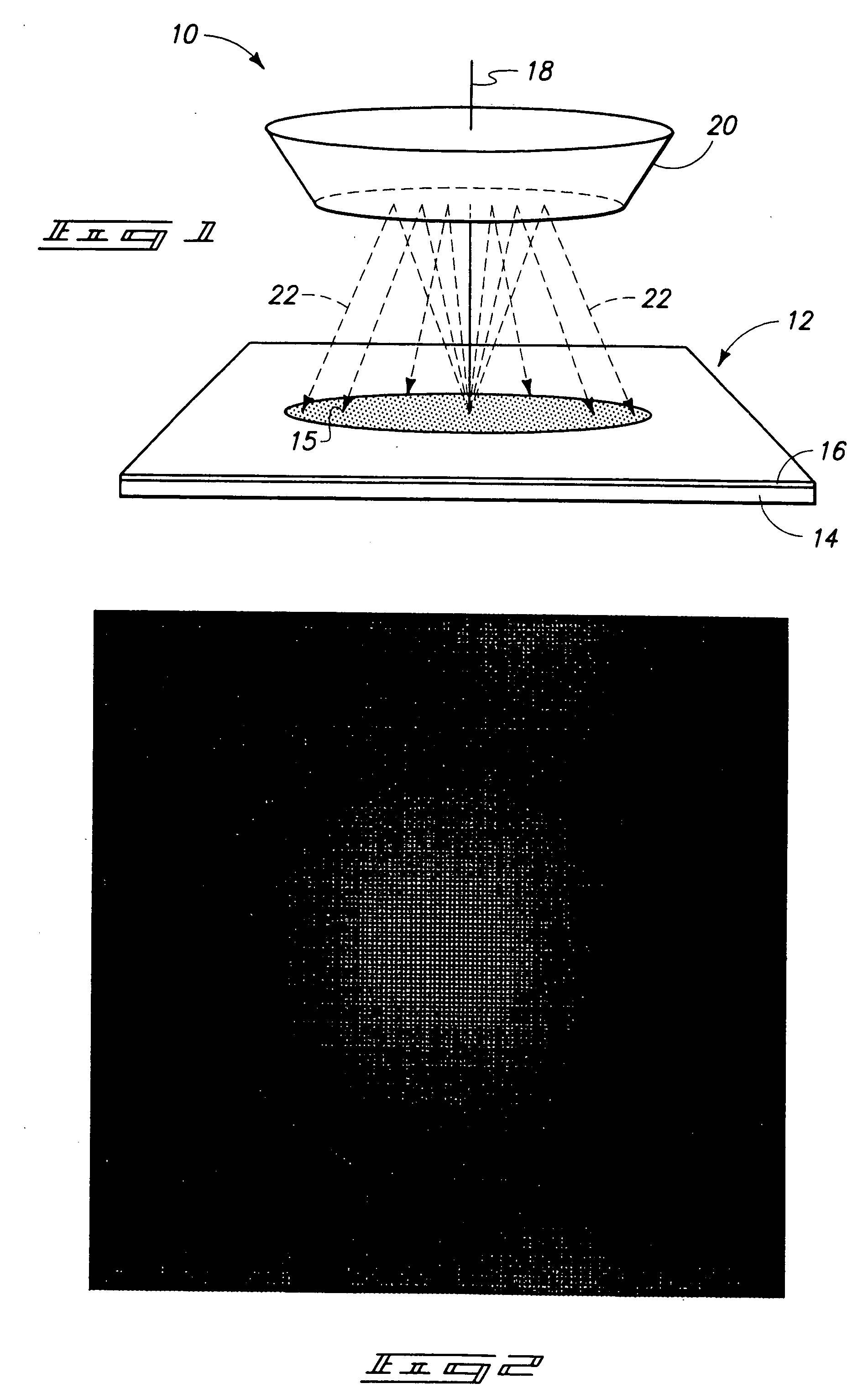

Anti-adhesive surface treatments

A surface providing reduced adhesion to formed elements, having an element dimension such as formed element diameter, has a plurality of topographic features. The topographic features have a feature dimension less than the dimension of the formed element so as to reduce the accessible area of the surface available to the formed element for adhesion to the surface. The topographic features may include protrusions, such as pillars.

Owner:PENN STATE RES FOUND
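
The area-reduction argument can be made concrete with a simple geometric model. Assuming (this is not stated in the abstract) a square lattice of cylindrical pillars of diameter d at pitch p, with the formed element resting only on the flat pillar tops, the fraction of the surface available for adhesion is πd²/(4p²). The helper names and numbers below are hypothetical.

```python
import math


def pillar_contact_fraction(pillar_diameter, pitch):
    """Fraction of a flat footprint lying on pillar tops (square lattice of round pillars)."""
    if not 0 < pillar_diameter <= pitch:
        raise ValueError("need 0 < diameter <= pitch (pillars must not overlap)")
    # One pillar top of area pi*d^2/4 per unit cell of area p^2.
    return math.pi * pillar_diameter ** 2 / (4 * pitch ** 2)


def contact_area(element_diameter, pillar_diameter, pitch):
    """Approximate contact area under a round element much larger than the pitch."""
    footprint = math.pi * element_diameter ** 2 / 4
    return footprint * pillar_contact_fraction(pillar_diameter, pitch)


if __name__ == "__main__":
    # Hypothetical numbers: an 8 um element on 1 um pillars at 2 um pitch.
    print(f"{pillar_contact_fraction(1.0, 2.0):.3f}")  # fraction of area touched
    print(f"{contact_area(8.0, 1.0, 2.0):.2f} um^2 vs {math.pi * 16:.2f} um^2 on a flat surface")
```

The model only holds when the pillars are smaller than the element, the condition the abstract states, and when the element does not sag into the gaps between pillars.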

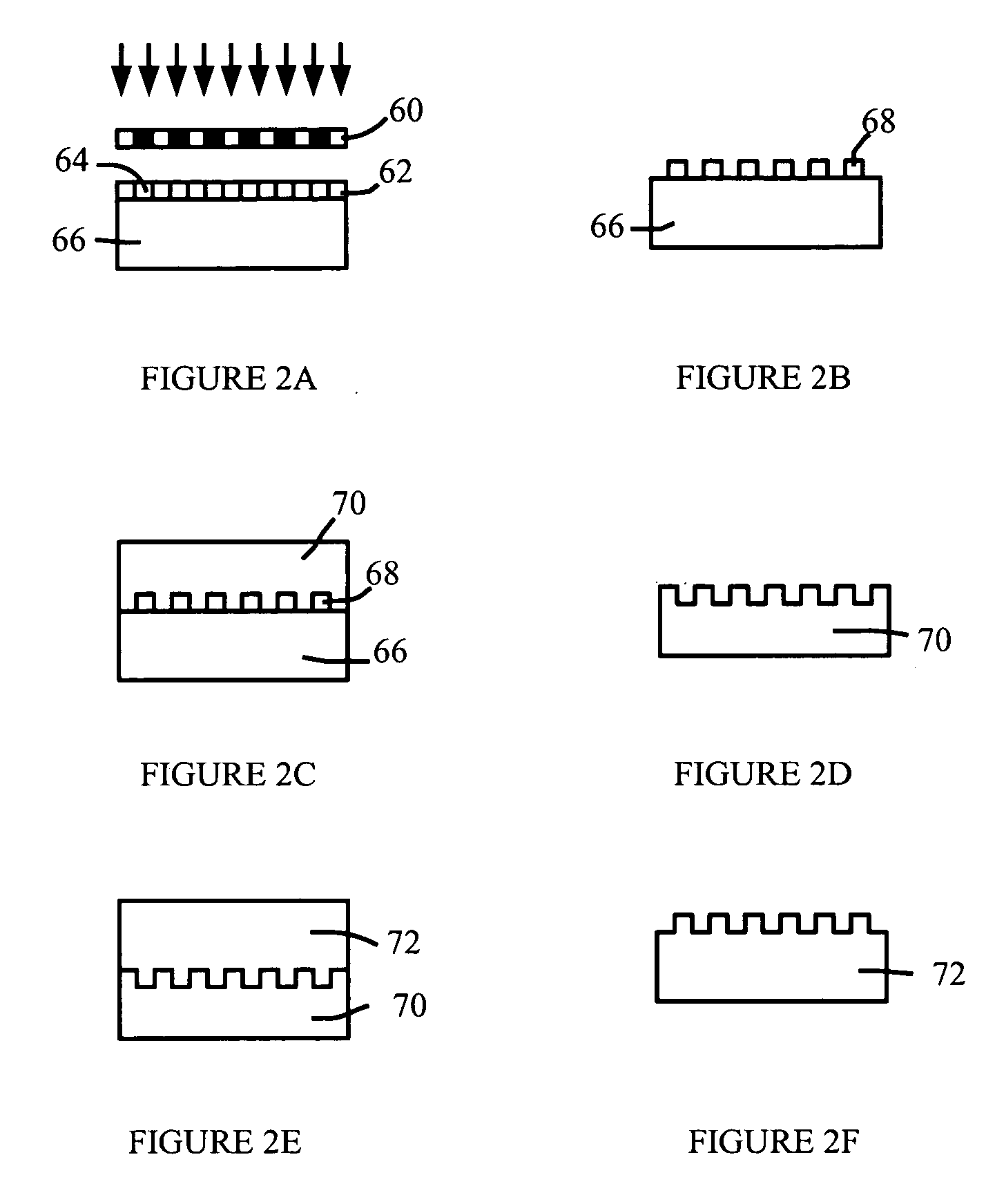

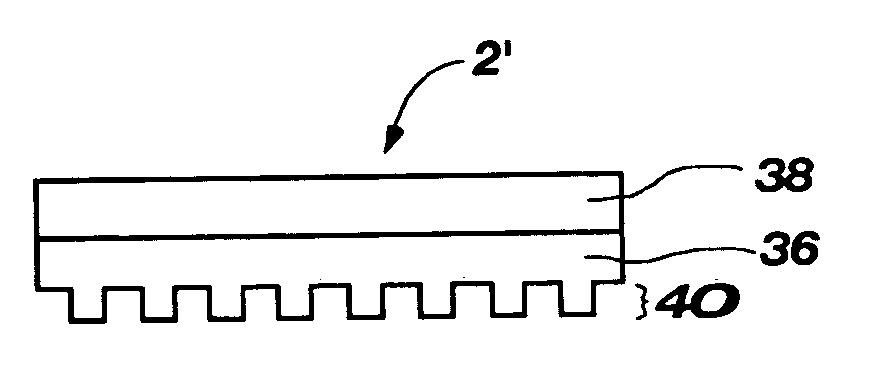

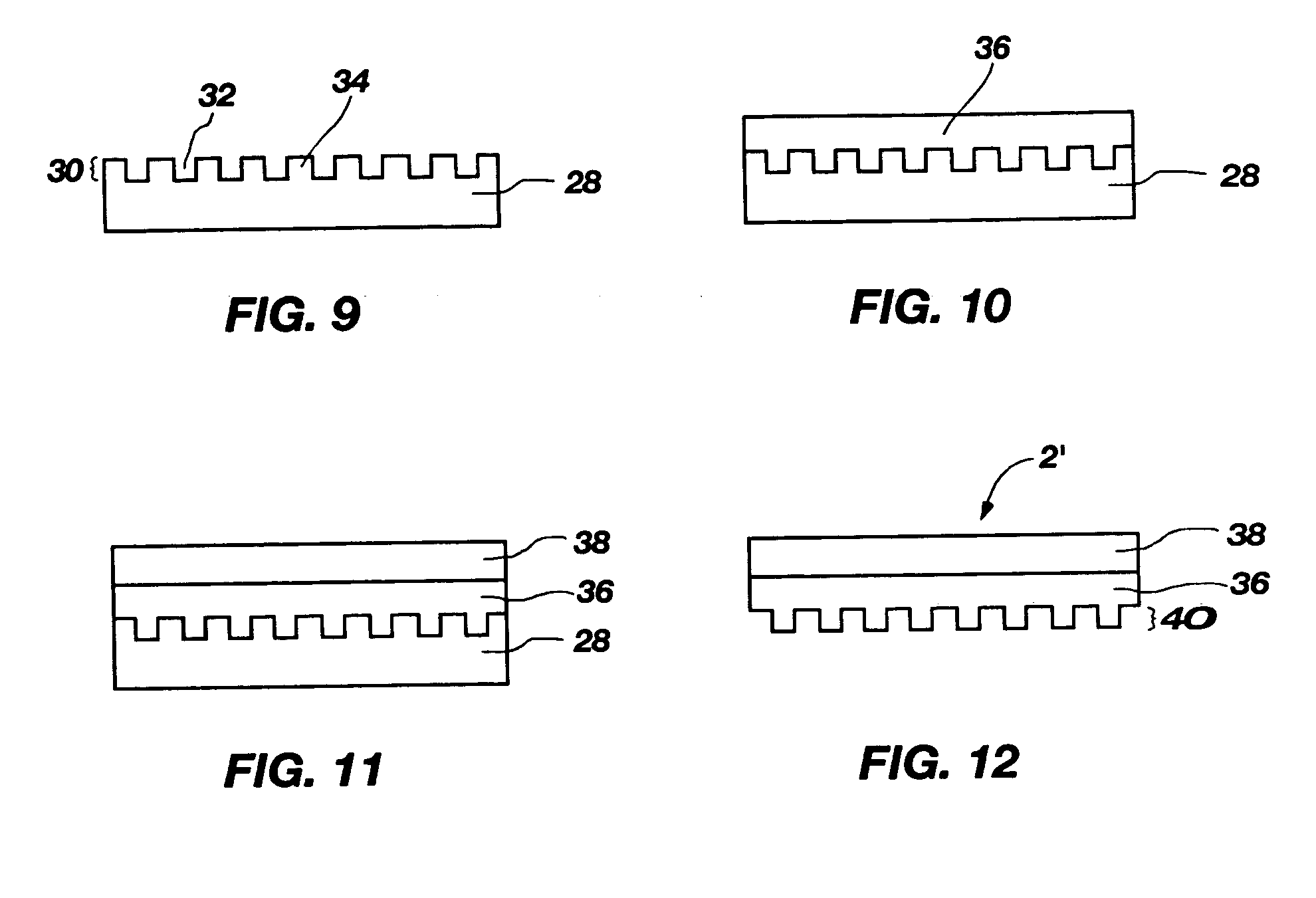

Methods of making templates for use in imprint lithography and related structures

A method of forming a template for use in imprint lithography. The method comprises providing an ultraviolet (“UV”) wavelength radiation transparent layer and forming a pattern in the UV transparent layer by photolithography. The pattern may be formed by anisotropically etching the UV transparent layer and may have feature dimensions of less than approximately 100 nm, such as less than approximately 45 nm. An additional embodiment of the method comprises providing a UV opaque layer having a first pattern therein, forming a first UV transparent layer in contact with the first pattern of the UV opaque layer, forming a second UV transparent layer in contact with the first UV transparent layer, and removing the UV opaque layer to form the template. An intermediate template structure for use in imprint lithography is also disclosed. In other embodiments, a template that is opaque to UV wavelength radiation and a method of forming the same are disclosed.

Owner:MICRON TECH INC

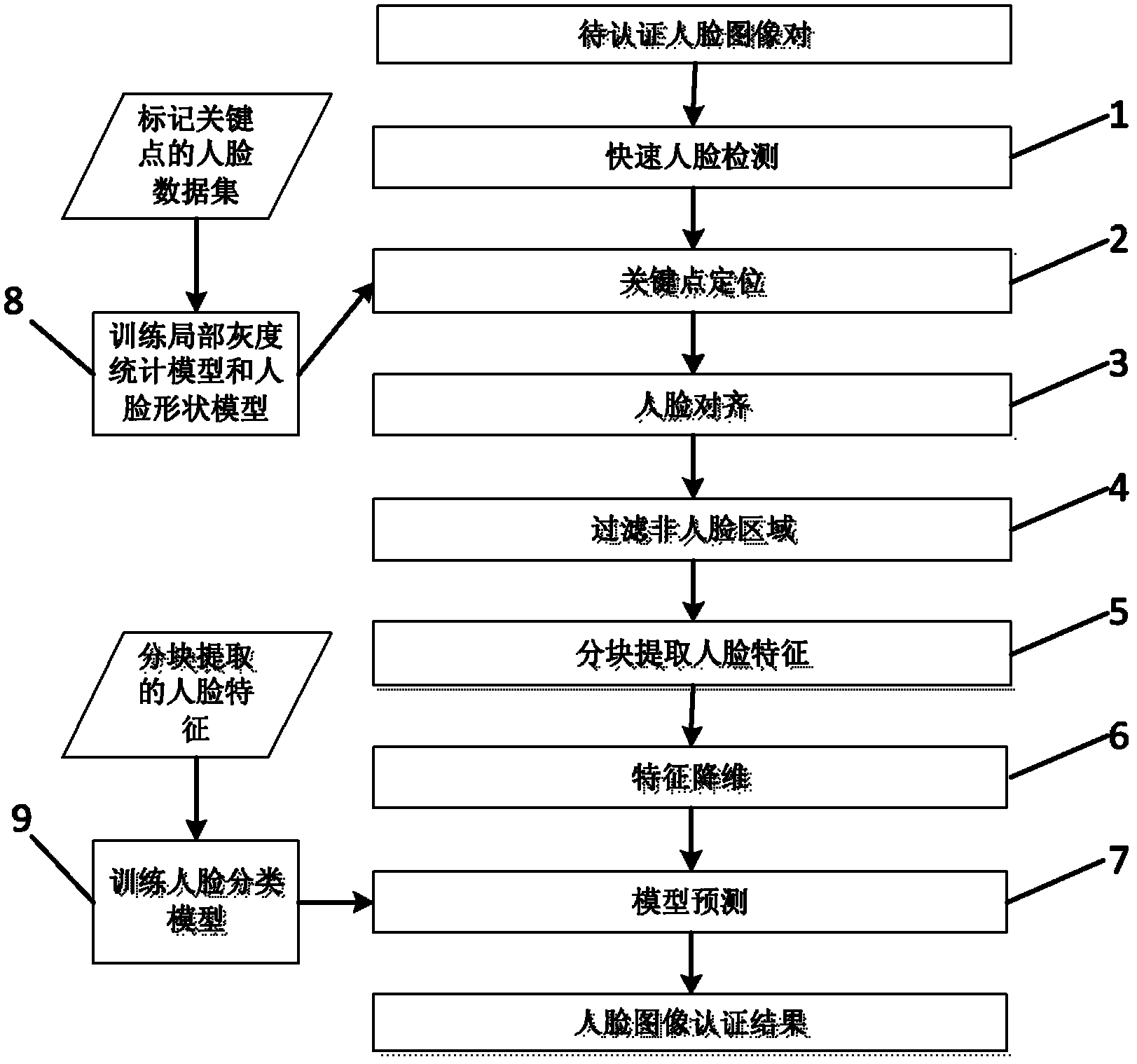

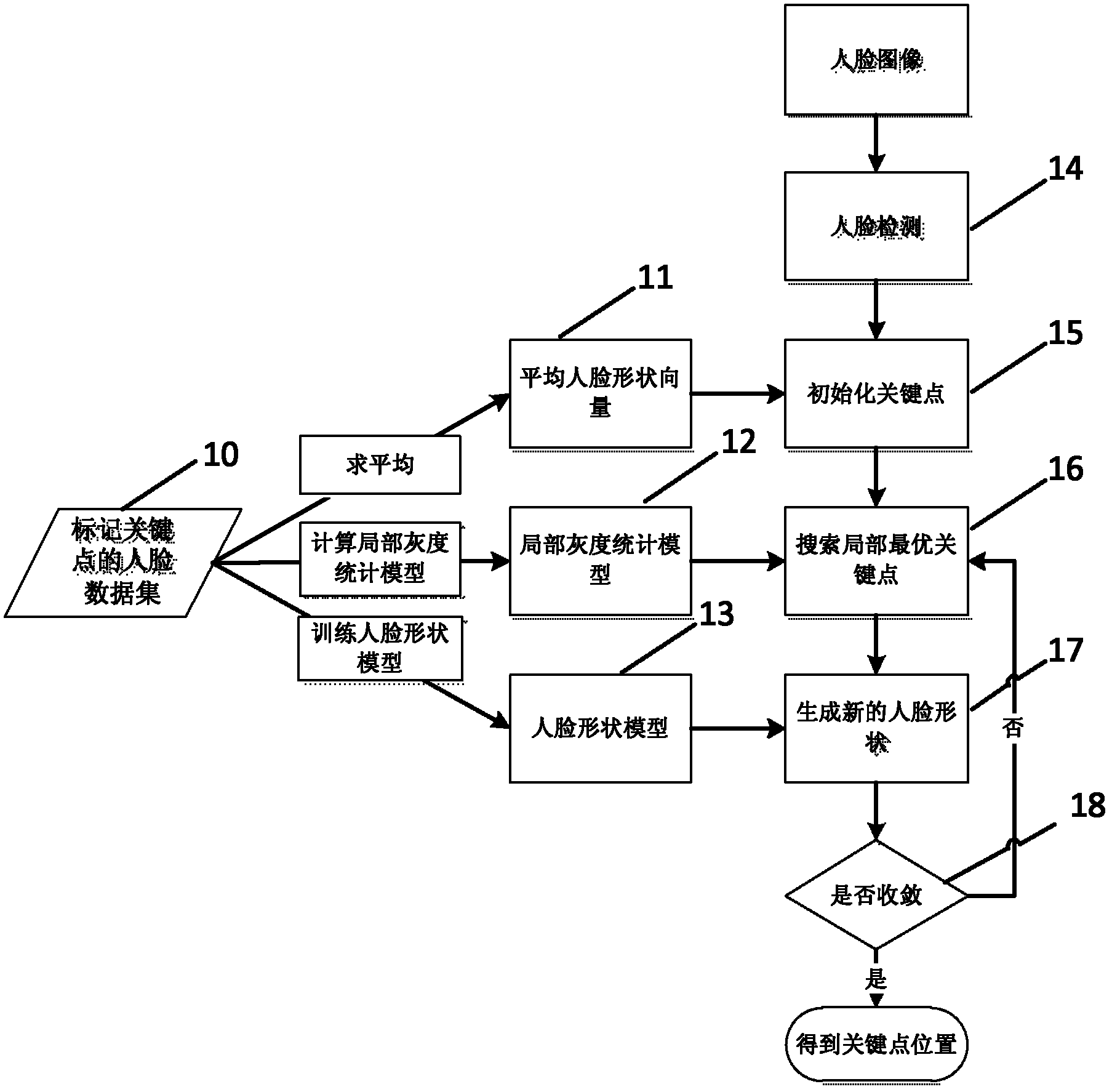

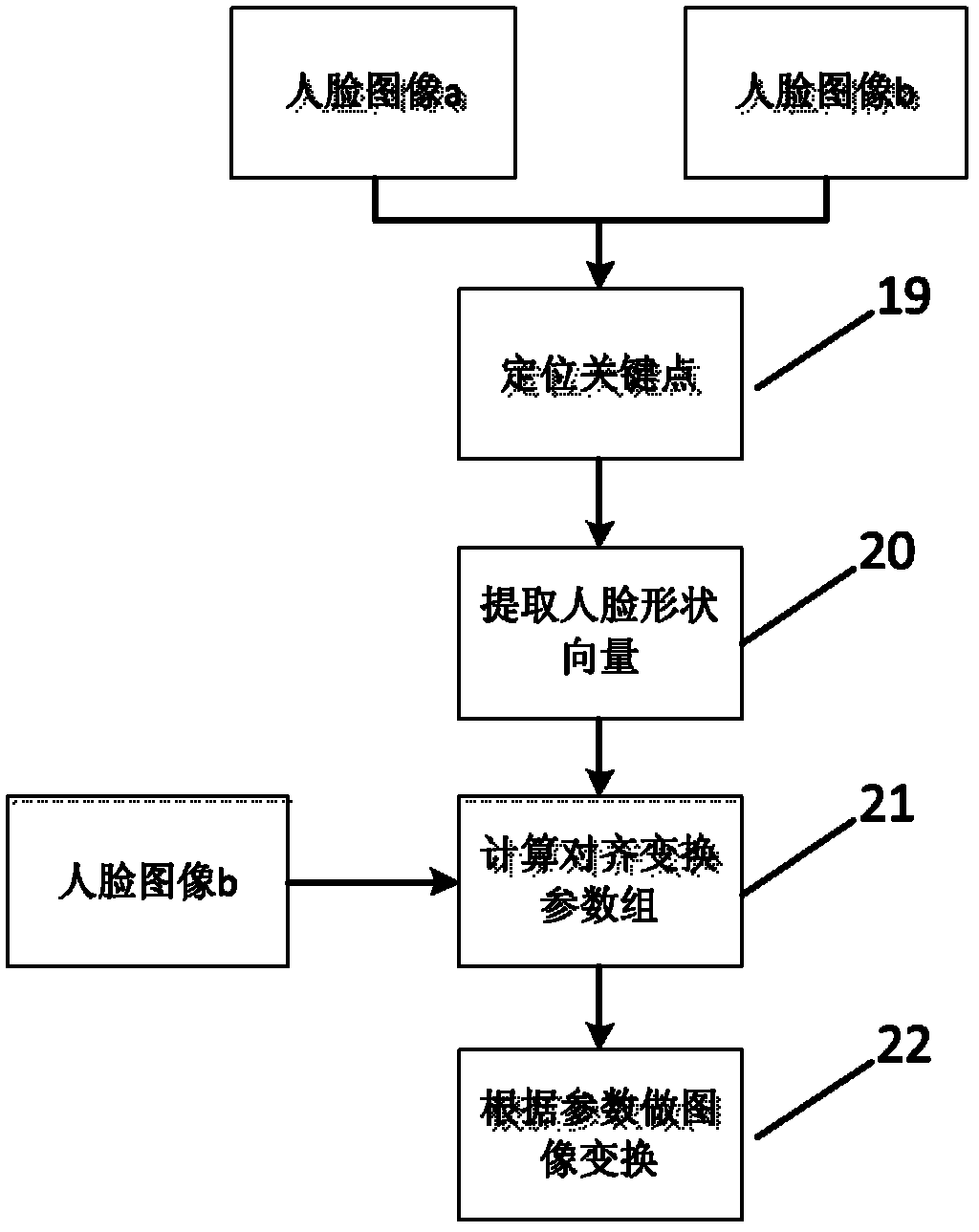

Multi-pose and cross-age oriented face image authentication method

Inactive | CN102663413A | The location correspondence is clear, Eliminate the effects of, Character and pattern recognition, Face detection, Feature Dimension

The invention discloses a multi-pose, cross-age face image authentication method. The method comprises the following steps: rapid face detection, key point localization, face alignment, non-face area filtering, block-wise face feature extraction, feature dimension reduction, and model prediction. The method performs face alignment, automatically corrects multi-pose face images, and improves the accuracy of the algorithm; furthermore, its feature extraction and dimension reduction modules are robust to age-related changes in the face, giving the method high practical value.

Owner:中盾信安科技(江苏)有限公司

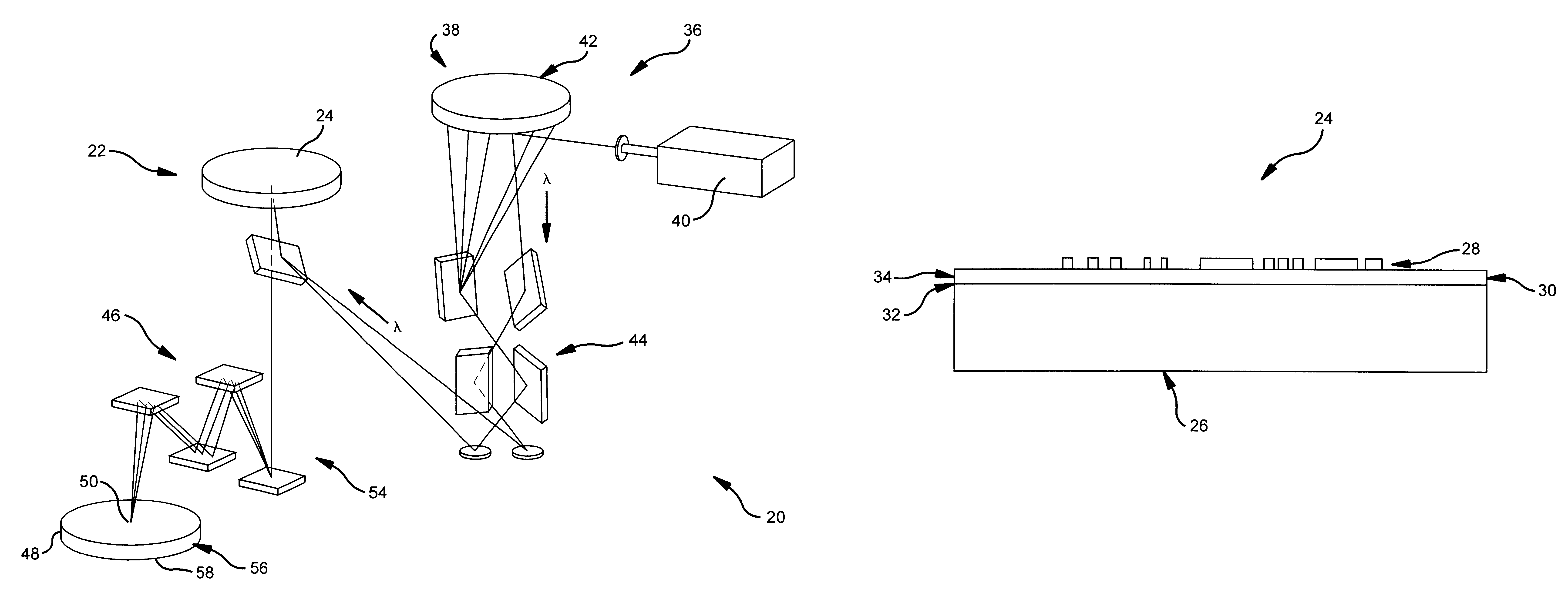

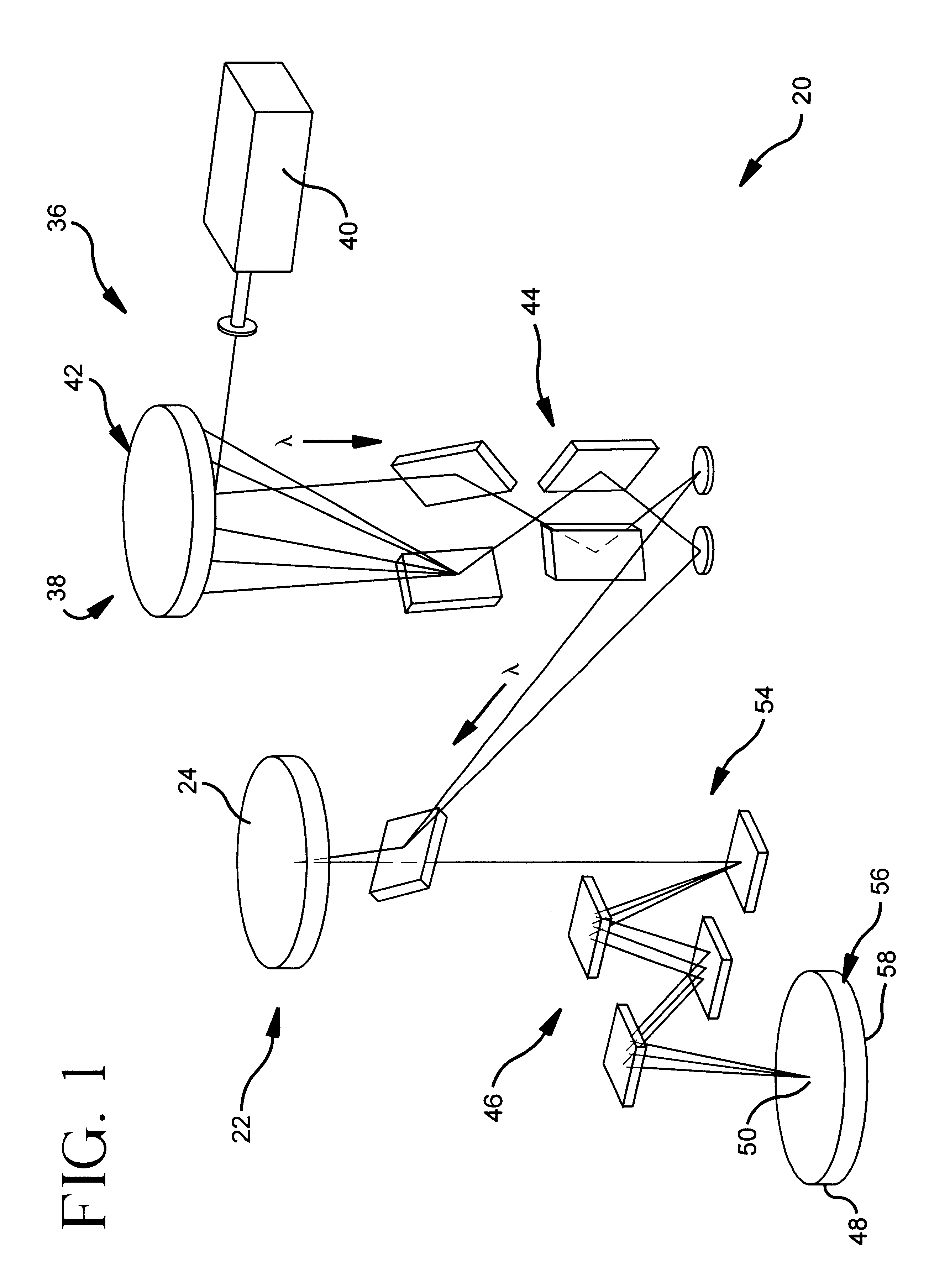

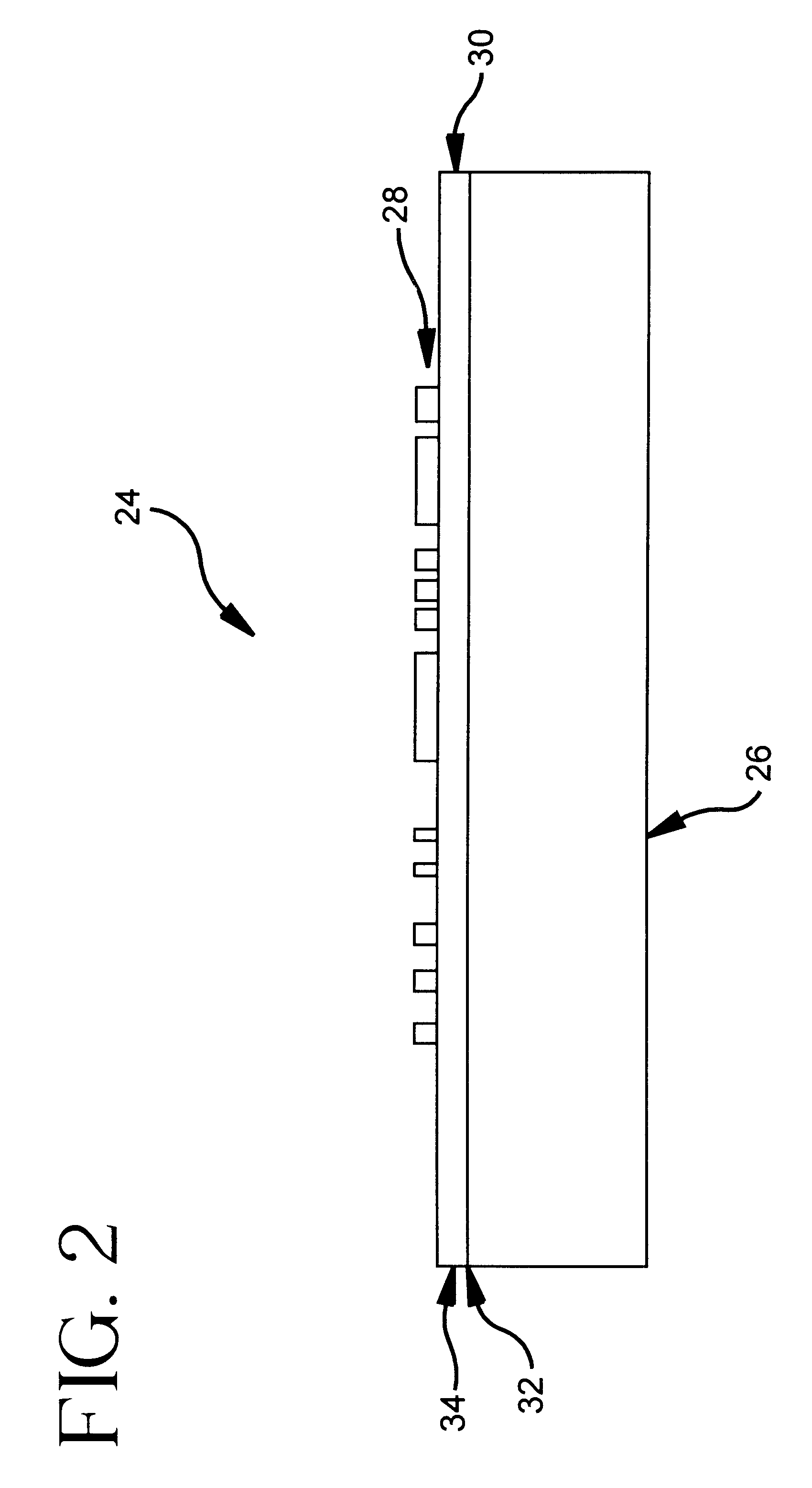

Extreme ultraviolet soft x-ray projection lithographic method and mask devices

Inactive | US6465272B1 | Nanoinformatics, Handling using diffraction/refraction/reflection, Ti doping, Soft x ray

The present invention relates to reflective masks and their use for reflecting extreme ultraviolet soft x-ray photons, enabling extreme ultraviolet soft x-ray projection lithographic methods and systems for producing integrated circuits and forming patterns with extremely small feature dimensions. The projection lithographic method includes providing an illumination sub-system for producing and directing extreme ultraviolet soft x-ray radiation λ from an extreme ultraviolet soft x-ray source, and providing a mask sub-system illuminated by the radiation λ produced by the illumination sub-system; providing the mask sub-system includes providing a patterned reflective mask that forms a projected mask pattern when illuminated by radiation λ. Providing the patterned reflective mask includes providing a Ti-doped high-purity SiO2 glass wafer whose defect-free surface has an Ra roughness ≤ 0.15 nm and carries a reflective multilayer coating, with a patterned absorbing overlay on top of that coating. The method further includes providing a projection sub-system and a print-media subject wafer having a radiation-sensitive surface, wherein the projection sub-system projects the mask pattern from the patterned reflective mask onto the radiation-sensitive wafer surface.

Owner:CORNING INC

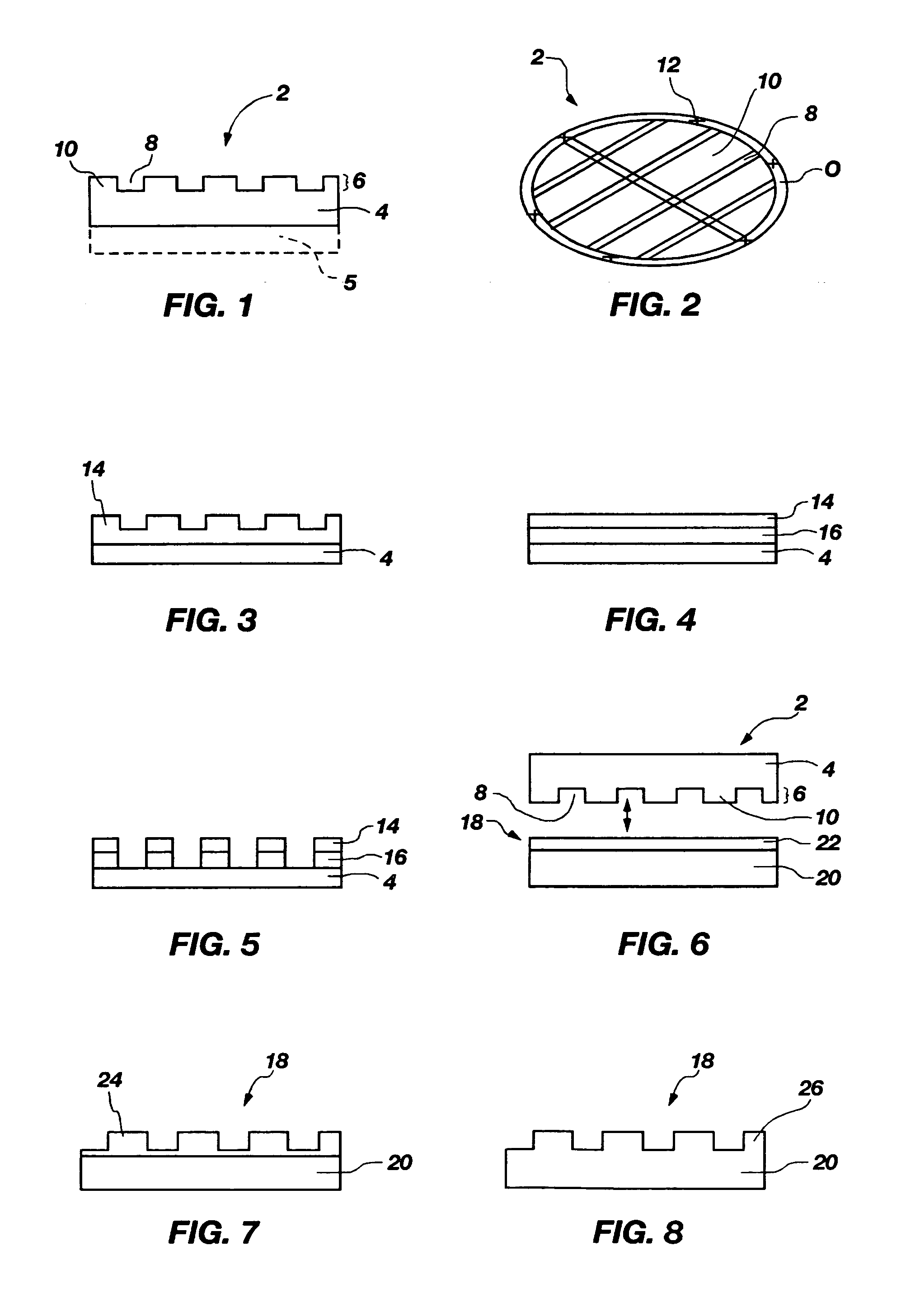

Three-dimensional structures having feature sizes smaller than a minimum feature size and methods for fabricating

Active | US20050126916A1 | Small feature size, Improve efficiency, Additive manufacturing apparatus, Duplicating/marking methods, Feature Dimension, Computer science

Owner:MICROFAB

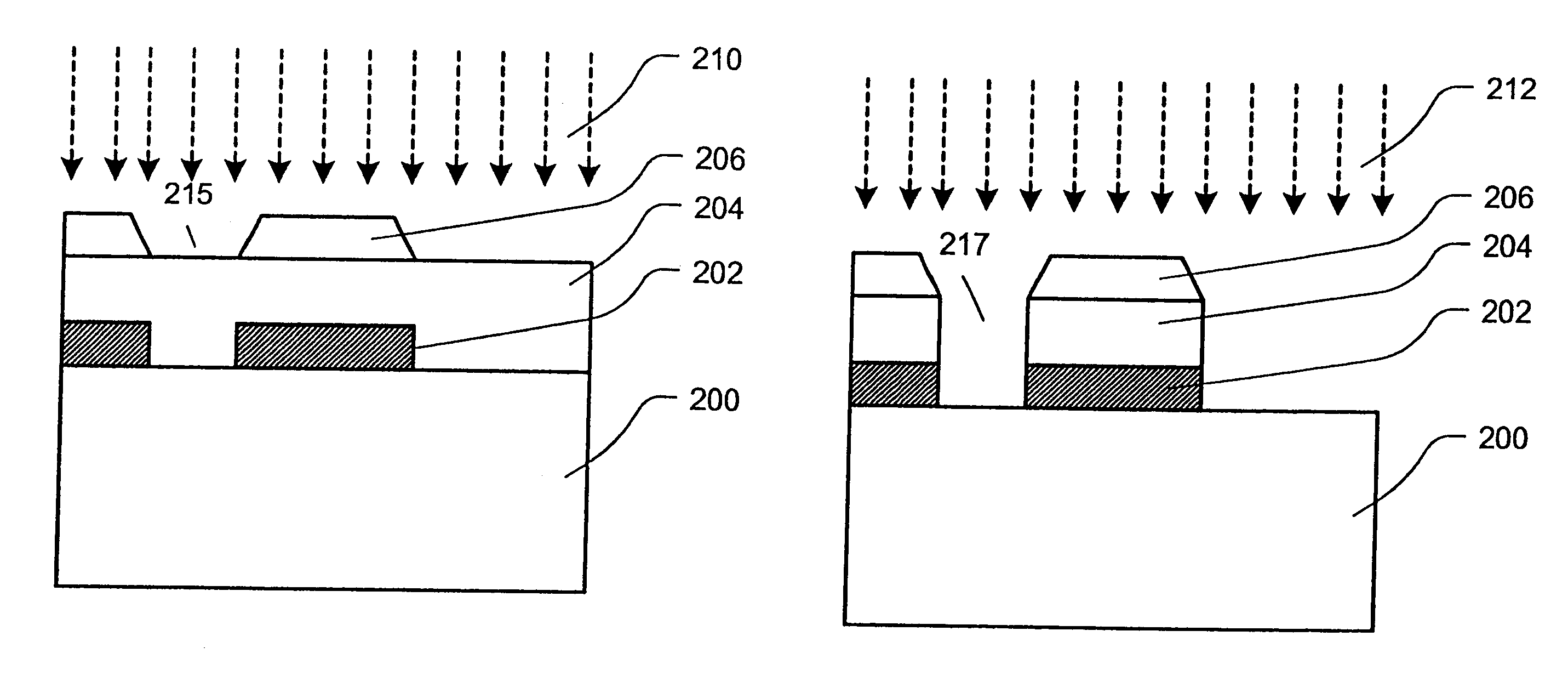

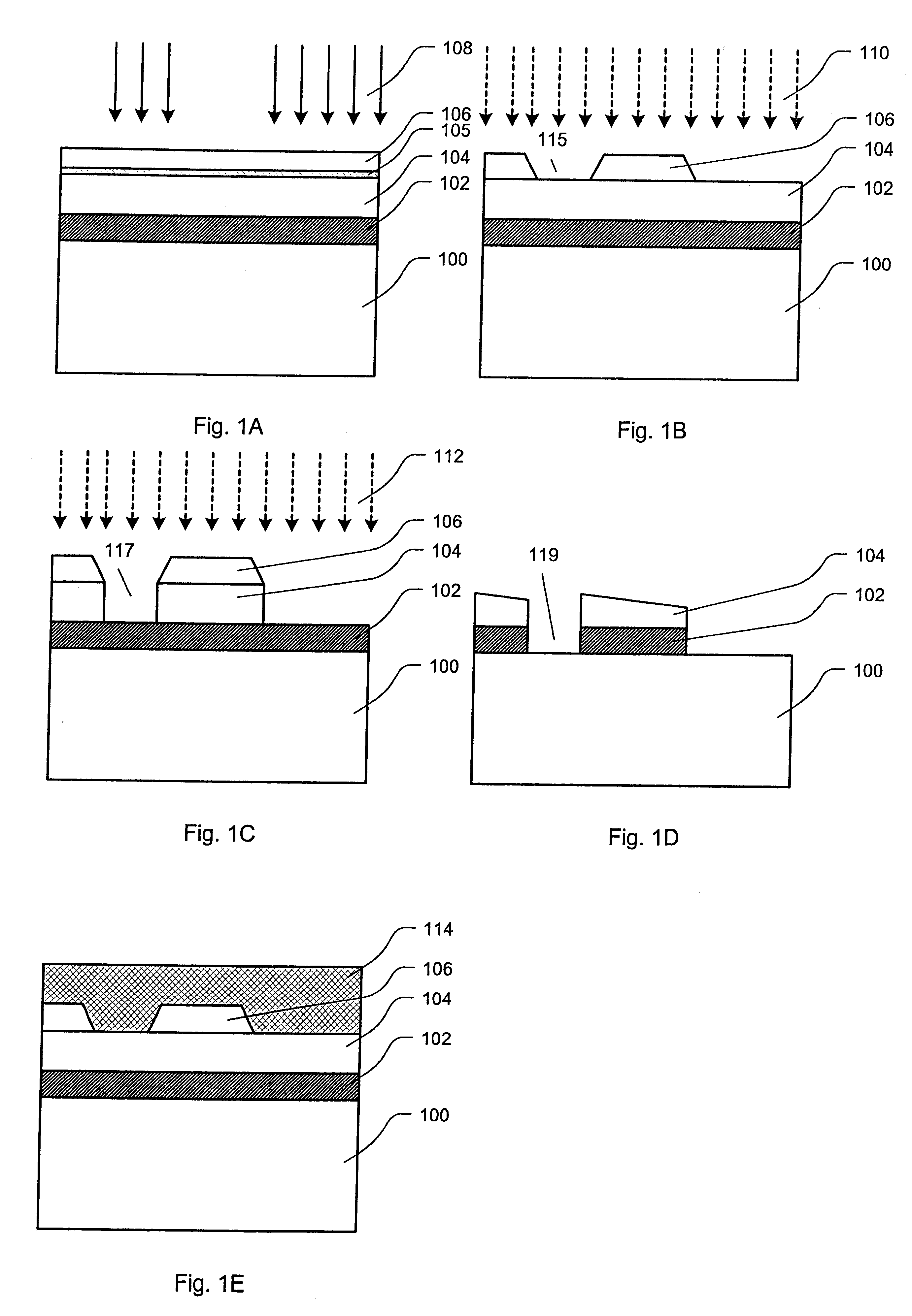

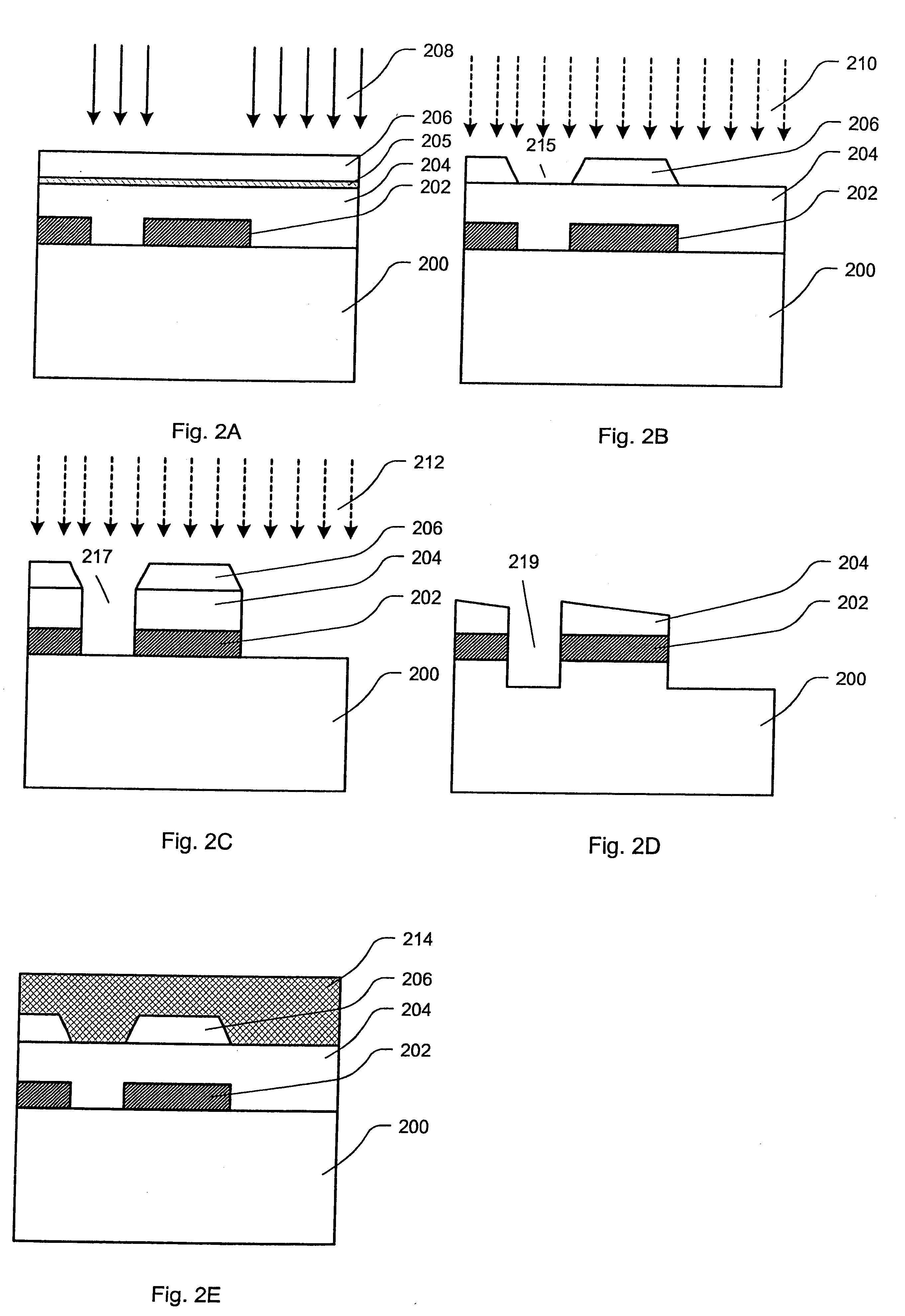

Method for manufacturing high density non-volatile magnetic memory

Active | US8802451B2 | Total current drop, Reduced dimension, Nanomagnetism, Nanoinformatics, Feature Dimension, High density

Methods of fabricating MTJ arrays using two orthogonal line patterning steps are described. Embodiments are described that use a self-aligned double patterning method for one or both orthogonal line patterning steps to achieve dense arrays of MTJs with feature dimensions of one half of the minimum photolithography feature size (F). In one set of embodiments, the materials and thicknesses of the stack of layers that provide the masking function are selected so that, after the initial set of mask pads has been patterned, a sequence of etching steps progressively transfers the mask pad shape through the multiple mask layers and down through all of the MTJ cell layers to form the complete MTJ pillars. In another set of embodiments, the MTJ/BE stack is patterned into parallel lines before the top electrode layer is deposited.

Owner:AVALANCHE TECH

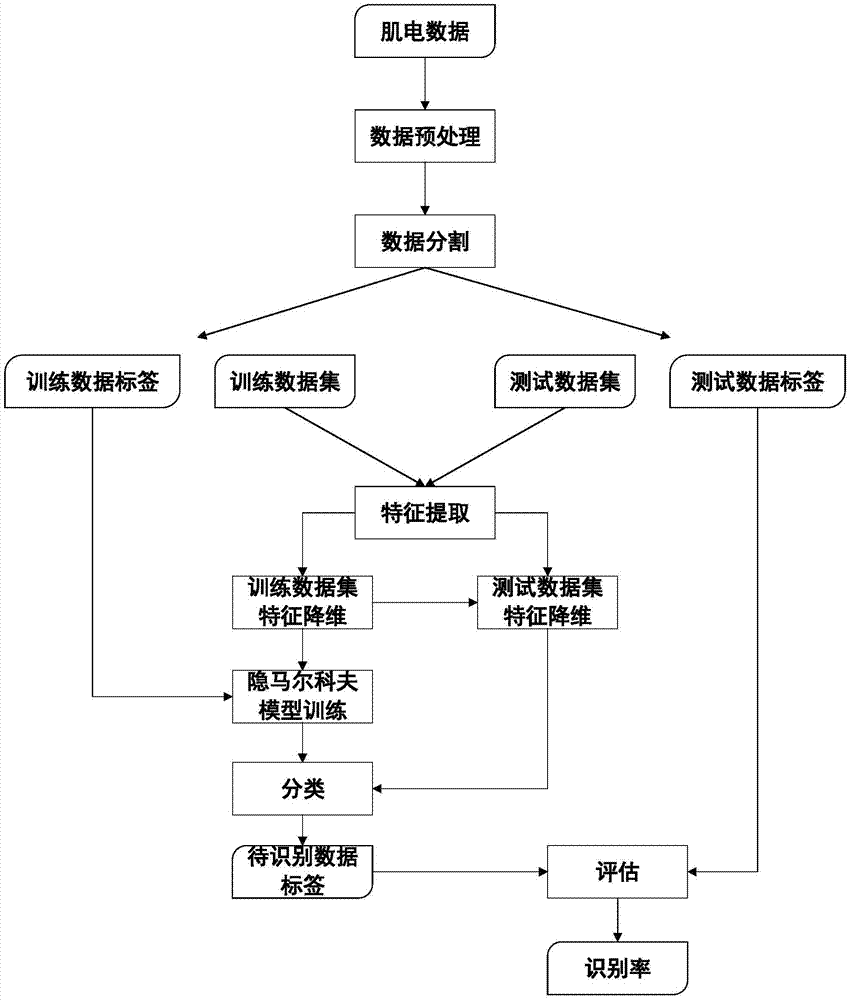

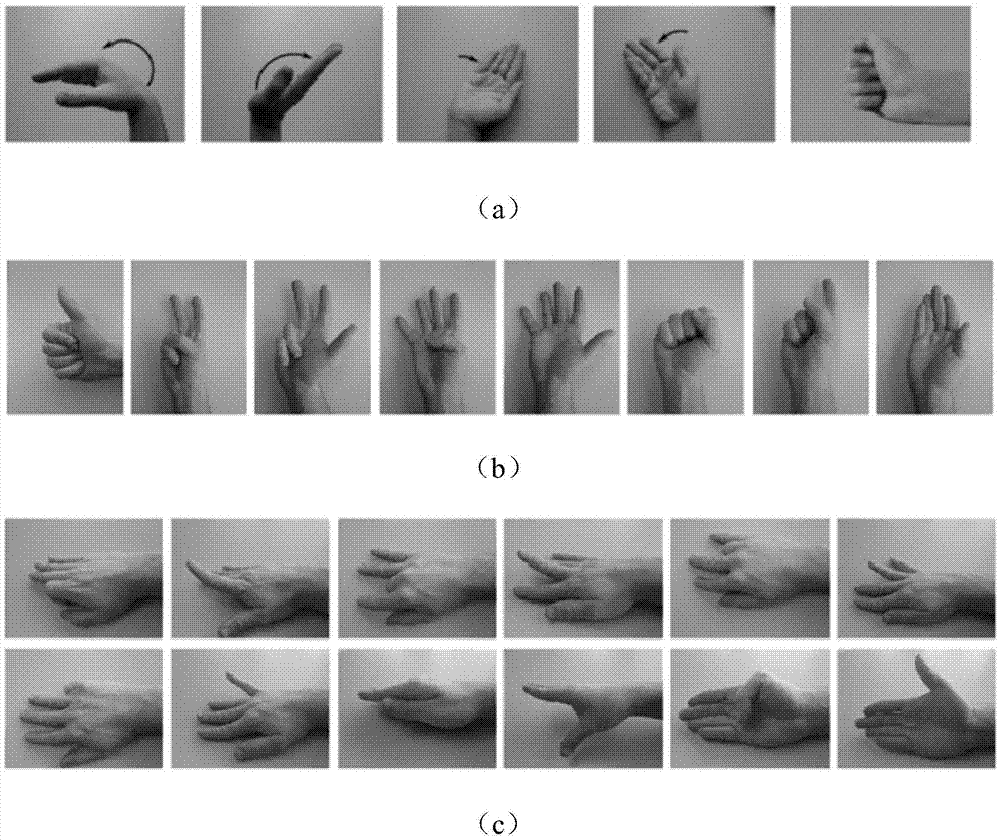

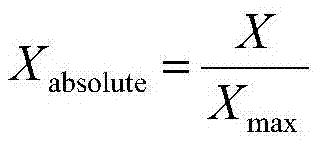

Electromyographic signal gesture recognition method based on hidden Markov model

Inactive | CN105446484A | Easy to identify, Accurate identification, Input/output for user-computer interaction, Graph reading, Data ingestion, Feature vector

The invention discloses an electromyographic (EMG) signal gesture recognition method based on a hidden Markov model (HMM). The method comprises the following steps: applying smoothing filtering to the EMG signals; extracting a multi-feature set from each window of data using a sliding window, then normalizing the feature vectors and reducing their dimension with the minimum-redundancy maximum-relevance (mRMR) criterion; designing three classes of HMM classifiers and optimizing their parameters; training the classifier models with the HMM parameters and the training data; and feeding test data into the trained models, where the class whose HMM outputs the maximum likelihood is taken as the recognized class. In this method, three classes of common HMM classifiers are applied to a new feature set. With this HMM-based classification, different gestures of the same subject are recognized accurately, and gestures of different subjects are recognized with relatively high accuracy.

Owner:ZHEJIANG UNIV
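
The final classification step, scoring a window against one HMM per gesture and picking the largest likelihood, can be sketched with the standard forward algorithm. This is an illustration under assumptions not found in the abstract: emissions are discrete symbols (for example, feature windows already vector-quantized into codebook indices), and the model parameters below are hypothetical rather than trained.

```python
import math


def _log(p):
    """Natural log that maps probability 0 to -inf instead of raising."""
    return math.log(p) if p > 0 else -math.inf


def log_sum_exp(values):
    """Numerically stable log(sum(exp(v) for v in values))."""
    m = max(values)
    if m == -math.inf:
        return -math.inf
    return m + math.log(sum(math.exp(v - m) for v in values))


def log_likelihood(obs, start, trans, emit):
    """Forward algorithm: log P(obs | model) for a discrete-emission HMM.

    start[i]    = P(first hidden state is i)
    trans[i][j] = P(next state is j | current state is i)
    emit[i][k]  = P(observed symbol k | state i)
    """
    n = len(start)
    alpha = [_log(start[i]) + _log(emit[i][obs[0]]) for i in range(n)]
    for symbol in obs[1:]:
        alpha = [
            log_sum_exp([alpha[i] + _log(trans[i][j]) for i in range(n)])
            + _log(emit[j][symbol])
            for j in range(n)
        ]
    return log_sum_exp(alpha)


def classify(obs, models):
    """Label of the model (one HMM per gesture) with the highest likelihood."""
    return max(models, key=lambda label: log_likelihood(obs, *models[label]))


if __name__ == "__main__":
    # Hypothetical 2-state models over a 2-symbol codebook: (start, trans, emit).
    models = {
        "fist": ([0.6, 0.4], [[0.7, 0.3], [0.4, 0.6]], [[0.9, 0.1], [0.7, 0.3]]),
        "open_hand": ([0.5, 0.5], [[0.8, 0.2], [0.3, 0.7]], [[0.2, 0.8], [0.3, 0.7]]),
    }
    print(classify([0, 0, 1, 0], models))  # fist
```

Working in log space keeps long windows from underflowing; a continuous-feature version would replace the `emit` table with, for example, Gaussian densities.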

Automatic vehicle body color recognition method of intelligent vehicle monitoring system

Active | CN102184413A | Overcome the effects of color recognition, Improve recognition accuracy, Character and pattern recognition, Template matching, Feature Dimension

The invention discloses an automatic vehicle body color recognition method for an intelligent vehicle monitoring system. The method comprises the following steps: first, detecting a feature region representative of the vehicle body color according to the position of the license plate and the texture features of the vehicle body; then, performing color space conversion and vector space quantization on the pixels of the body feature region, and extracting normalized fuzzy-histogram bin features from the quantized vector space; reducing the dimension of the resulting high-dimensional features with LDA (Linear Discriminant Analysis); performing subspace analysis for the various body colors, then recognizing the body color in each subspace using the parameters of an offline-trained classifier, with either multi-feature template matching or an SVM (Support Vector Machine); and finally, correcting easily confused, low-reliability colors according to the initial recognition reliability and prior knowledge of colors, to obtain the final body color recognition result. The method is applicable in daylight, at night, and in bright sunshine, and offers fast recognition and high recognition accuracy.

Owner:ZHEJIANG DAHUA TECH CO LTD

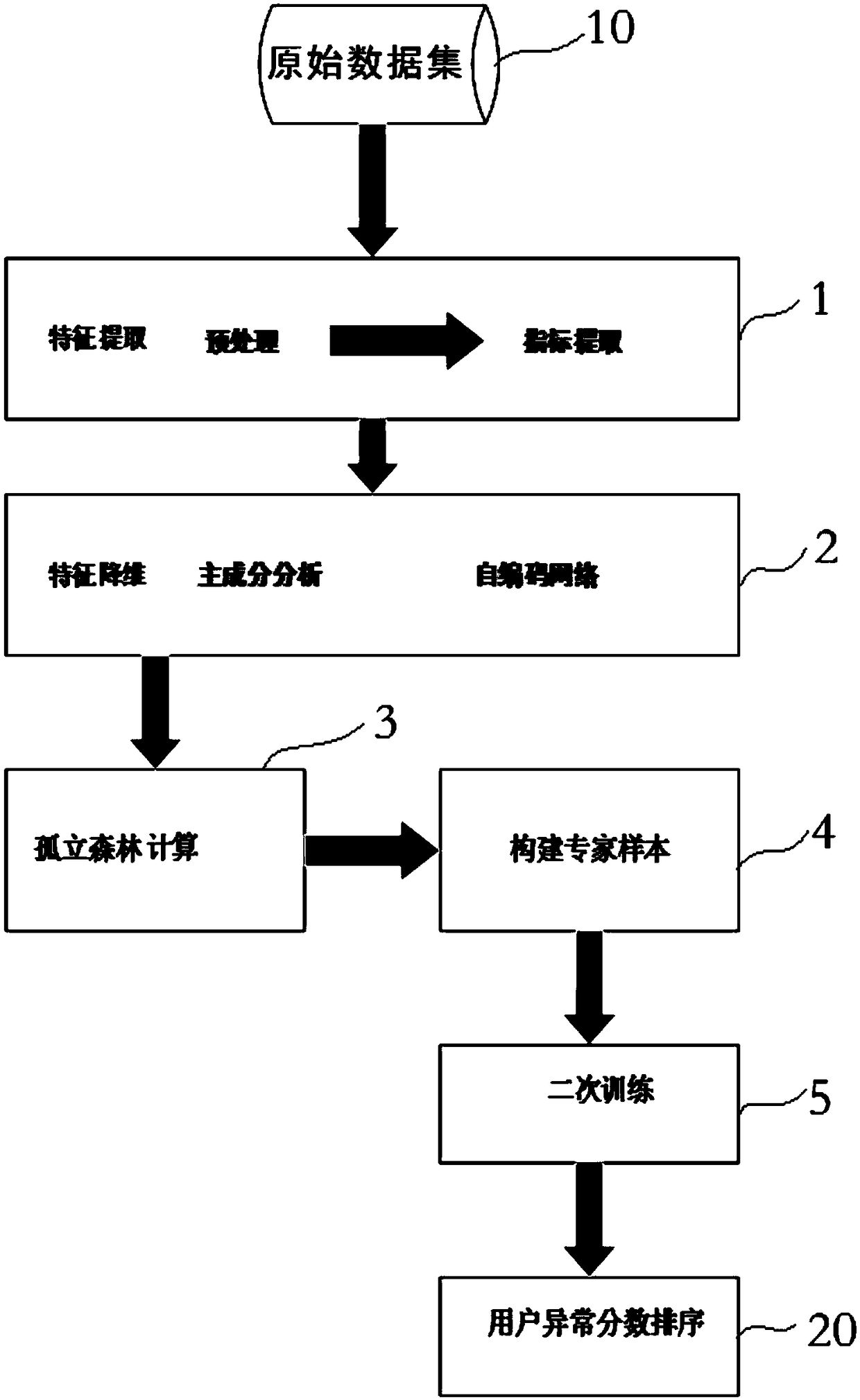

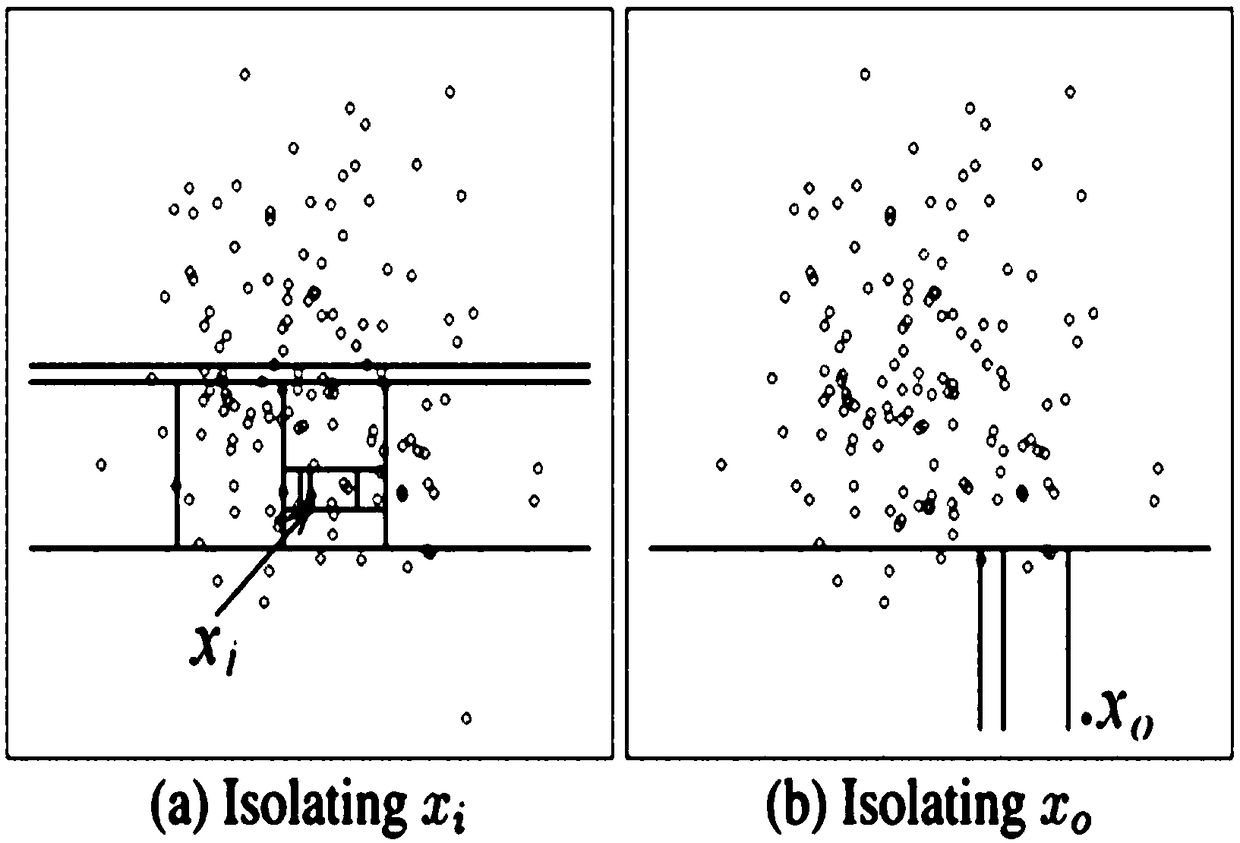

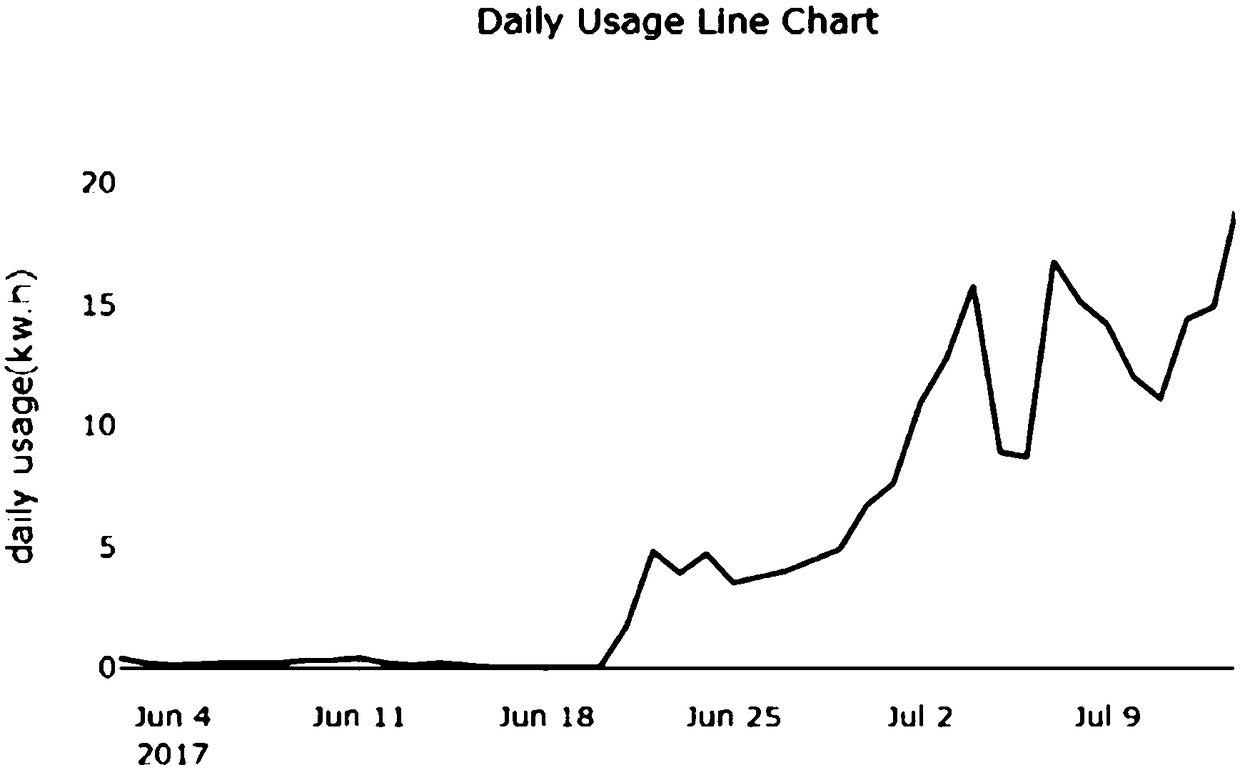

A power consumption data anomaly detection model based on isolation forest algorithm

Inactive | CN108985632A | Easy to handle, Improve efficiency, Resources, Ac network circuit arrangements, Feature Dimension, Feature set

The invention discloses a power consumption data anomaly detection model based on the isolation forest algorithm. The model comprises a feature extraction module, a feature dimension reduction module, an isolation forest computation module, an expert sample module, and a secondary training module. The feature extraction module extracts the time series of each user's power consumption data from the original data set as an initial feature set, then applies non-dimensionalization and feature selection to it; the feature dimension reduction module uses principal component analysis and an autoencoder network to reduce the dimension of the initial feature set and obtain an effective feature set; and the isolation forest computation module uses the isolation forest algorithm to calculate an outlier score for each user to determine whether that user's data are abnormal. The model is an unsupervised power data anomaly detection model: it can quickly process large amounts of data, copes with a lack of training samples, and better meets the practical needs of the electric power department.

Owner:SHANGHAI MUNICIPAL ELECTRIC POWER CO
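
The outlier-scoring step can be sketched in plain Python. This follows the standard isolation-forest formulation (random axis-aligned splits, average path length E[h(x)], score s = 2^(-E[h(x)]/c(ψ)) with ψ the subsample size) rather than anything specific to the patent, and it omits the patent's PCA/autoencoder reduction and expert-sample retraining. All function names and parameter defaults are illustrative.

```python
import math
import random

EULER_GAMMA = 0.5772156649


def c(n):
    """Average path length of an unsuccessful BST search among n points (normalizer)."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0  # exact value; the harmonic-number approximation is poor here
    return 2.0 * (math.log(n - 1) + EULER_GAMMA) - 2.0 * (n - 1) / n


def build_tree(points, depth, max_depth, rng):
    """Grow one isolation tree with random-feature, random-threshold splits."""
    if depth >= max_depth or len(points) <= 1:
        return ("leaf", len(points))
    dim = rng.randrange(len(points[0]))
    lo = min(p[dim] for p in points)
    hi = max(p[dim] for p in points)
    if lo == hi:  # chosen feature is constant here, so stop splitting
        return ("leaf", len(points))
    split = rng.uniform(lo, hi)
    left = [p for p in points if p[dim] < split]
    right = [p for p in points if p[dim] >= split]
    return ("node", dim, split,
            build_tree(left, depth + 1, max_depth, rng),
            build_tree(right, depth + 1, max_depth, rng))


def path_length(x, node, depth=0):
    """Depth at which x lands, plus c(size) for points left unseparated in the leaf."""
    if node[0] == "leaf":
        return depth + c(node[1])
    _, dim, split, left, right = node
    return path_length(x, left if x[dim] < split else right, depth + 1)


def anomaly_scores(data, n_trees=100, sample_size=256, seed=0):
    """Isolation-forest scores in (0, 1]; values near 1 suggest anomalies."""
    if len(data) < 2:
        raise ValueError("need at least two points")
    rng = random.Random(seed)
    psi = min(sample_size, len(data))
    max_depth = math.ceil(math.log2(psi))
    trees = [build_tree(rng.sample(data, psi), 0, max_depth, rng) for _ in range(n_trees)]
    norm = c(psi)
    return [
        2.0 ** (-sum(path_length(x, t) for t in trees) / n_trees / norm)
        for x in data
    ]


if __name__ == "__main__":
    # Hypothetical reduced features: a tight cluster of normal users plus one outlier.
    cluster = [(i * 0.1, j * 0.1) for i in range(5) for j in range(5)]
    data = cluster + [(10.0, 10.0)]
    scores = anomaly_scores(data)
    print(max(range(len(data)), key=scores.__getitem__), round(max(scores), 3))
```

Because scoring needs no labels, this matches the abstract's point that the model is unsupervised; a threshold on the score (not given in the abstract) would turn it into an abnormal/normal decision.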

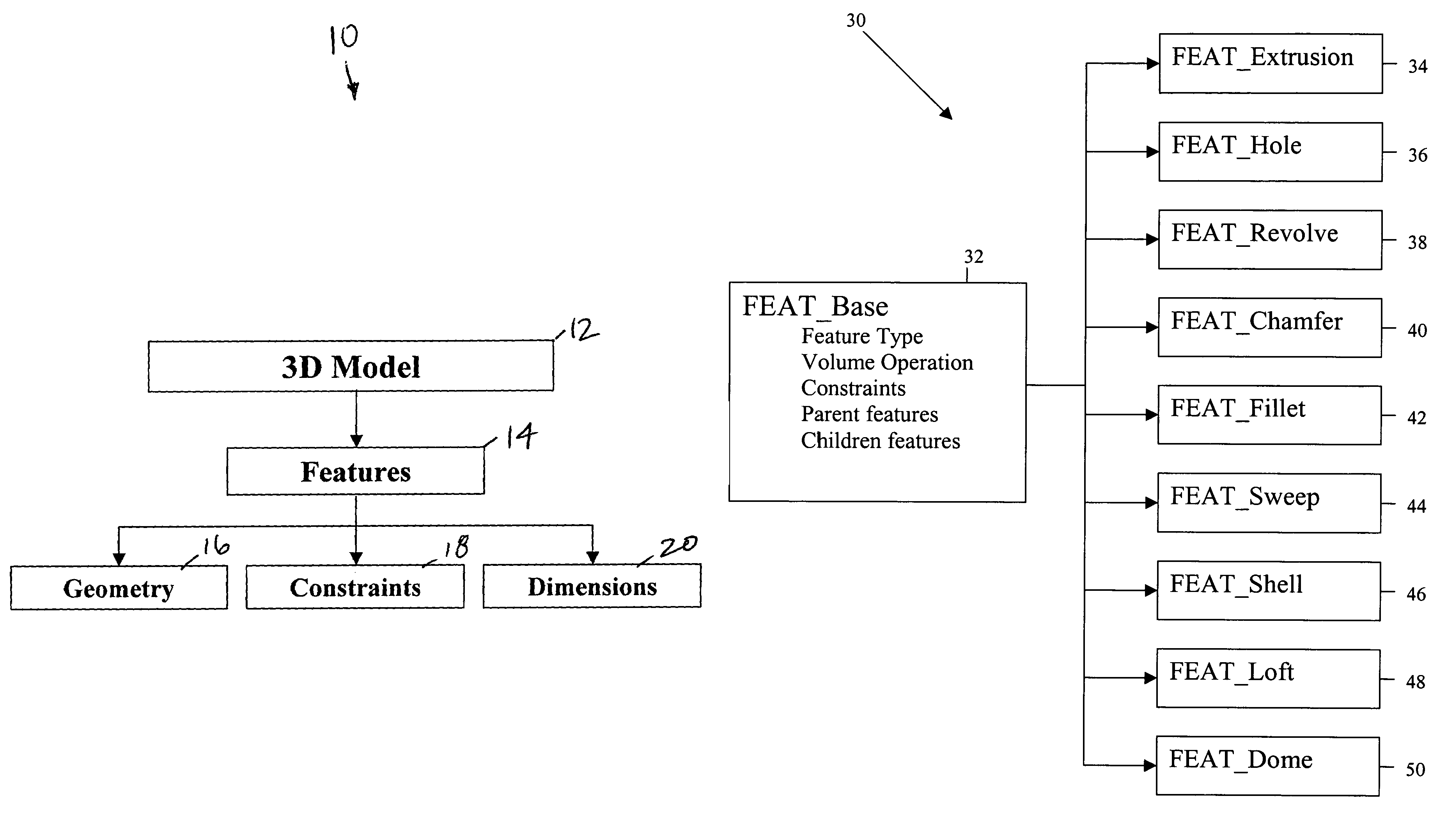

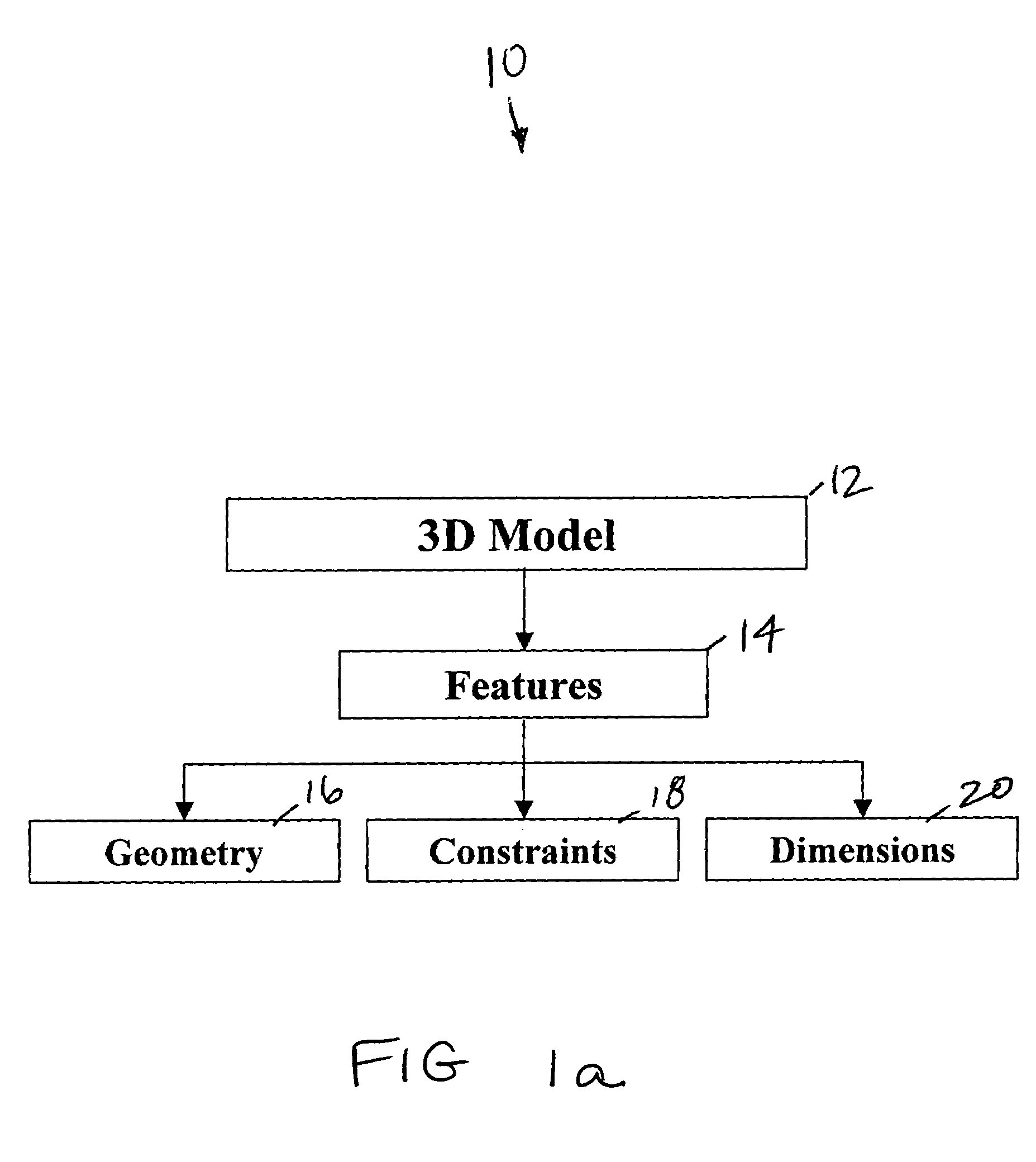

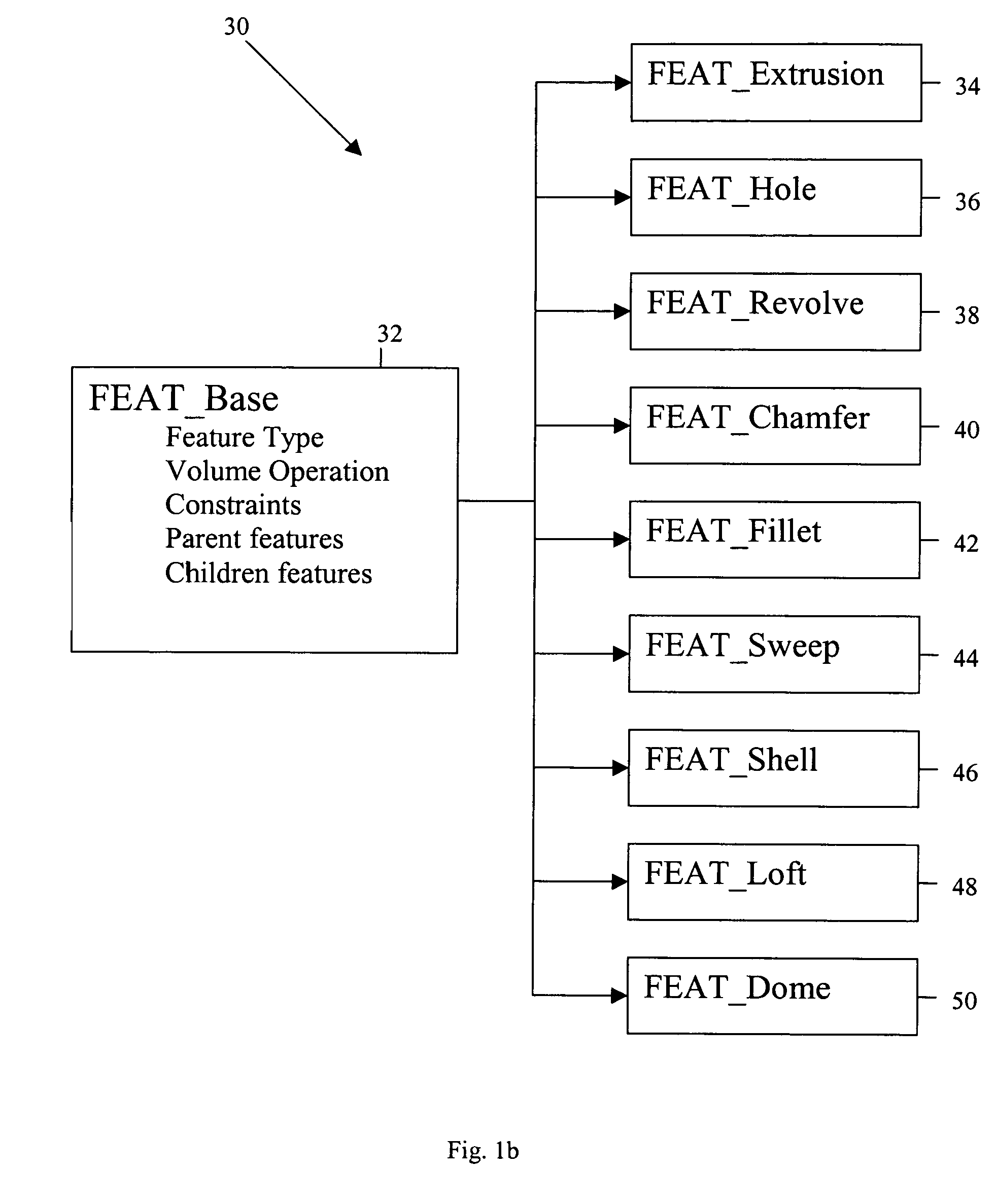

System and method for creating and updating a three-dimensional model and creating a related neutral file format

The present invention discloses a method for building, defining, and storing features in an application neutral format comprising building a feature based on a feature class, wherein the feature class comprises feature geometry, feature constraints, and feature dimensions, defining the built feature as a geometric representation of an individual feature type, and storing the representation in a binary file format.

Owner:IMAGECOM

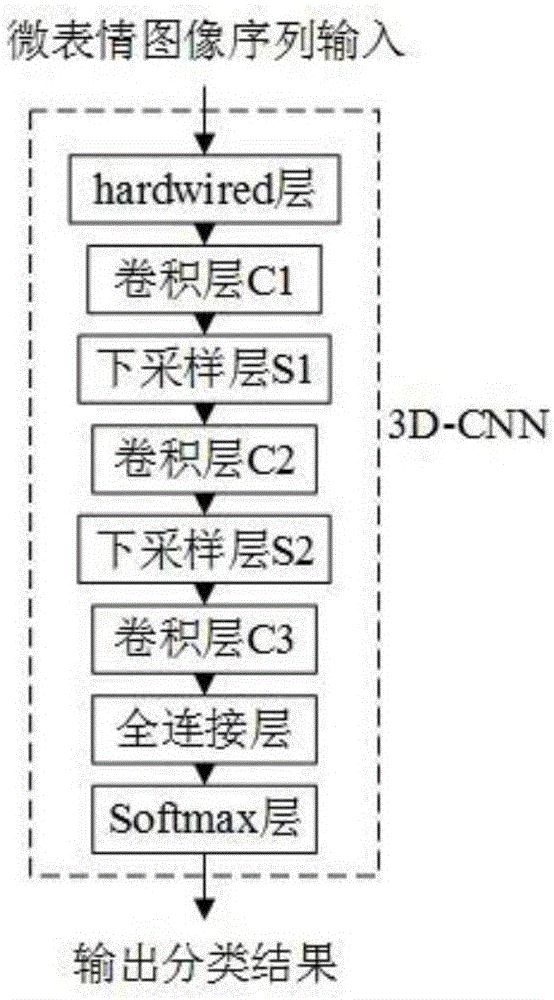

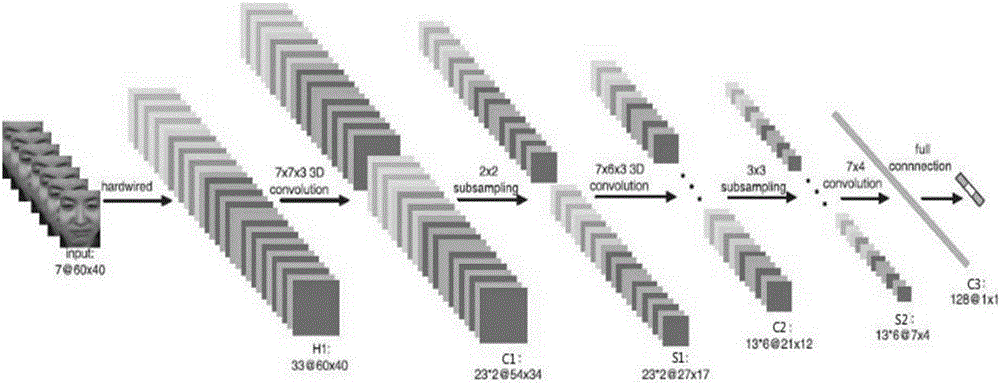

Micro expression recognition method based on 3D convolutional neural network

Active | CN106570474A | Improve robustness, Reduce complexity, Neural architectures, Acquiring/recognising facial features, Feature Dimension, Hybrid neural network

The invention relates to a micro-expression recognition method based on a 3D convolutional neural network. Using a constructed 3D convolutional neural network (3D-CNN) model, five classes of micro-expressions, namely happiness, disgust, depression, surprise, and others, can be recognized effectively. The method is simple and efficient: it does not require a separate series of processes such as feature extraction, feature dimension reduction, and classification on the sample data, which greatly reduces the difficulty of preprocessing. Through local receptive fields and weight sharing, the number of parameters the network must train is reduced, greatly lowering the complexity of the algorithm. In addition, the down-sampling operation of the down-sampling layers enhances the robustness of the network, so that a certain degree of image distortion can be tolerated.

Owner:NANJING UNIV OF POSTS & TELECOMM
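
The parameter saving from weight sharing, and the shrinking effect of down-sampling, can be checked with a little shape arithmetic. The clip size, kernel size, and filter count below are hypothetical, not taken from the patent; the formulas are the usual ones for strided, optionally padded convolution and pooling windows.

```python
def conv3d_output_shape(in_shape, kernel, stride=(1, 1, 1), padding=(0, 0, 0)):
    """(depth, height, width) after a 3D convolution or pooling window."""
    return tuple(
        (size + 2 * pad - k) // s + 1
        for size, k, s, pad in zip(in_shape, kernel, stride, padding)
    )


def conv3d_param_count(in_channels, out_channels, kernel):
    """Trainable parameters of a 3D conv layer: one shared kernel per
    (input, output) channel pair plus one bias per output channel.
    The count does not depend on the clip size because weights are shared."""
    kd, kh, kw = kernel
    return kd * kh * kw * in_channels * out_channels + out_channels


if __name__ == "__main__":
    clip = (16, 64, 64)  # hypothetical: 16 grayscale frames of 64x64 pixels
    conv = conv3d_output_shape(clip, (3, 3, 3))
    pooled = conv3d_output_shape(conv, (2, 2, 2), stride=(2, 2, 2))
    print(conv, pooled)                          # (14, 62, 62) (7, 31, 31)
    print(conv3d_param_count(1, 32, (3, 3, 3)))  # 896
```

For comparison, a fully connected layer producing the same 32 × 14 × 62 × 62 outputs from the 16 × 64 × 64 input would need on the order of 10^11 weights, which is the complexity reduction the abstract attributes to weight sharing.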

Image search method and apparatus

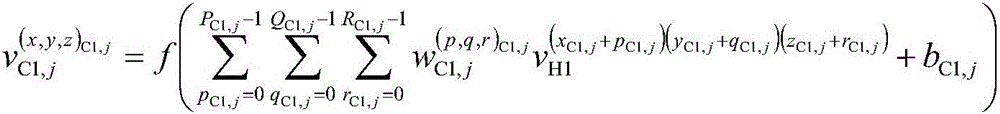

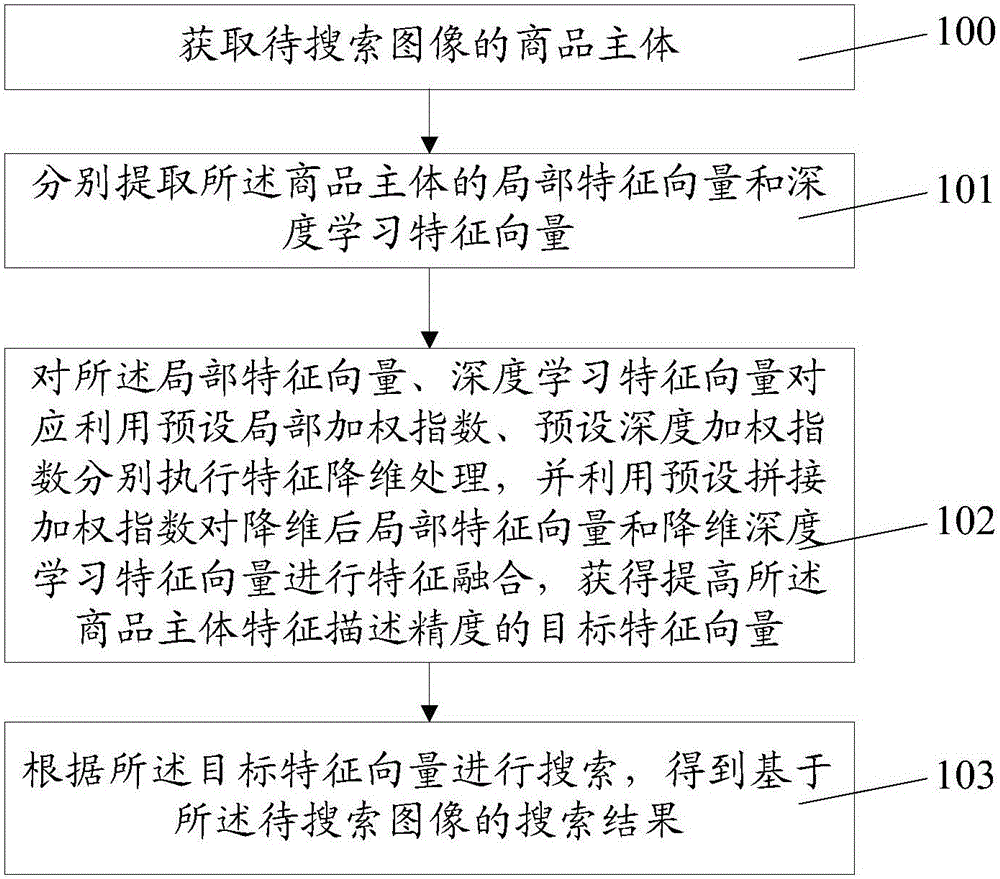

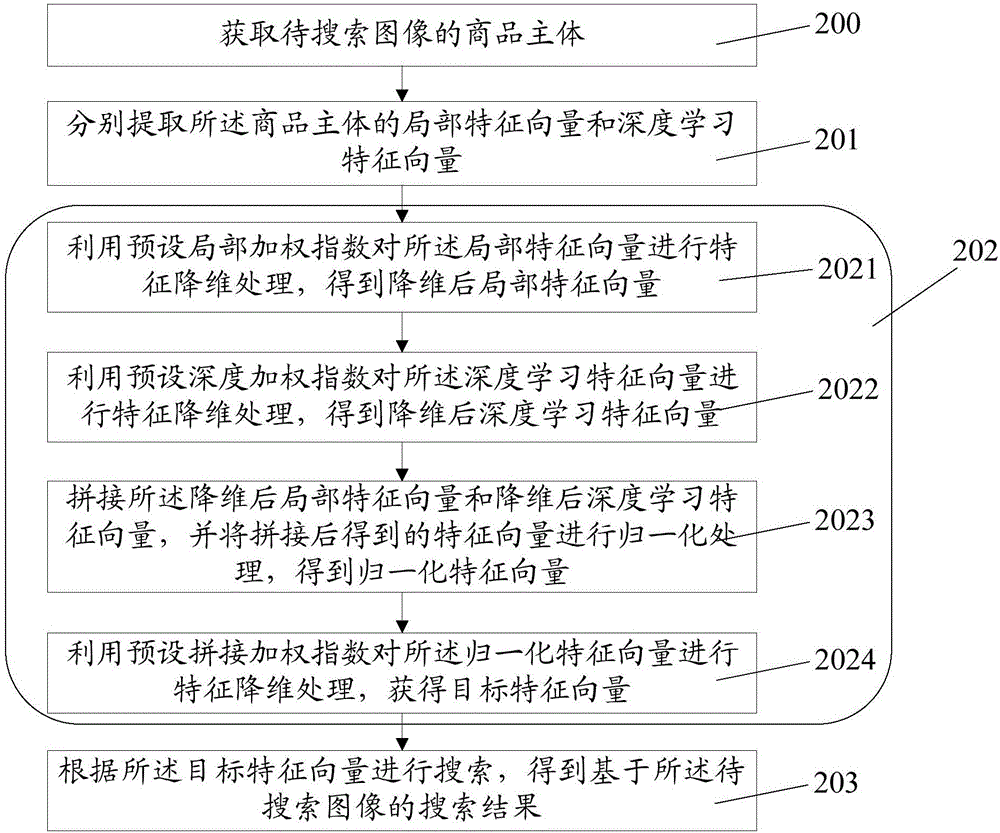

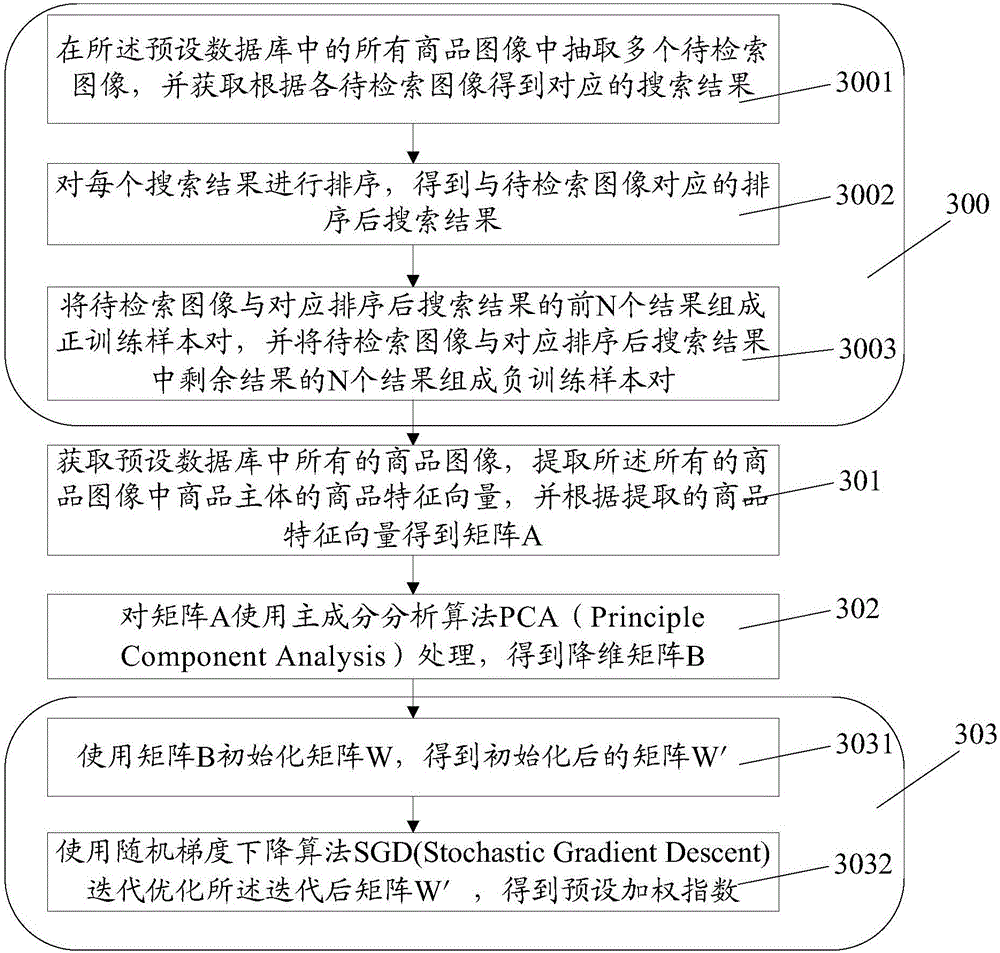

Active | CN106326288A | Strong combination, Improve characterization ability, Special data processing applications, Feature Dimension, Dimensionality reduction

The invention discloses an image search method and apparatus. The image search method comprises the steps of: obtaining a target region of interest of a to-be-searched image; extracting a local feature vector and a deep learning feature vector of the target region of interest; performing feature dimension reduction on the local feature vector and the deep learning feature vector using a preset locally-weighted index and a preset depth-weighted index, respectively, and fusing the two reduced vectors using a preset concatenation-weighted index to obtain a target feature vector that describes the target region of interest more precisely; and performing a search according to the target feature vector to obtain a search result for the to-be-searched image.

Owner:ALIBABA GRP HLDG LTD

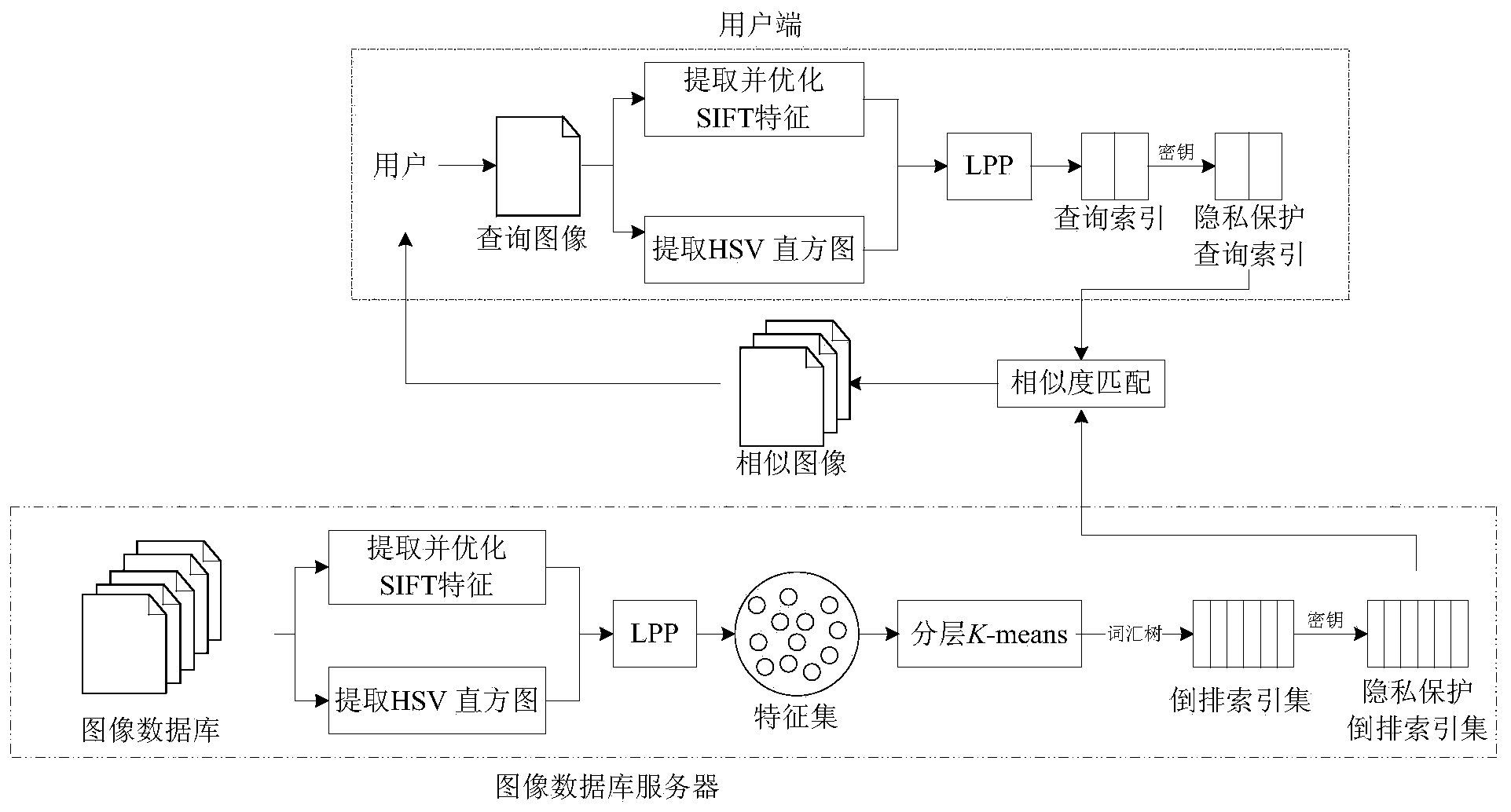

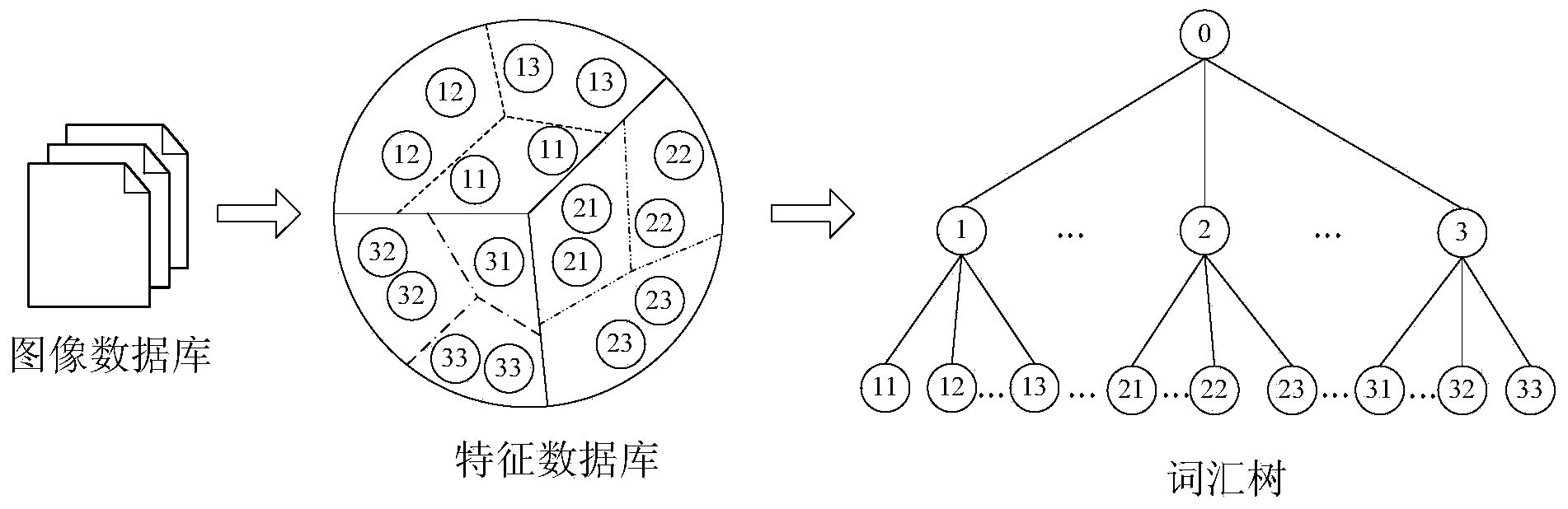

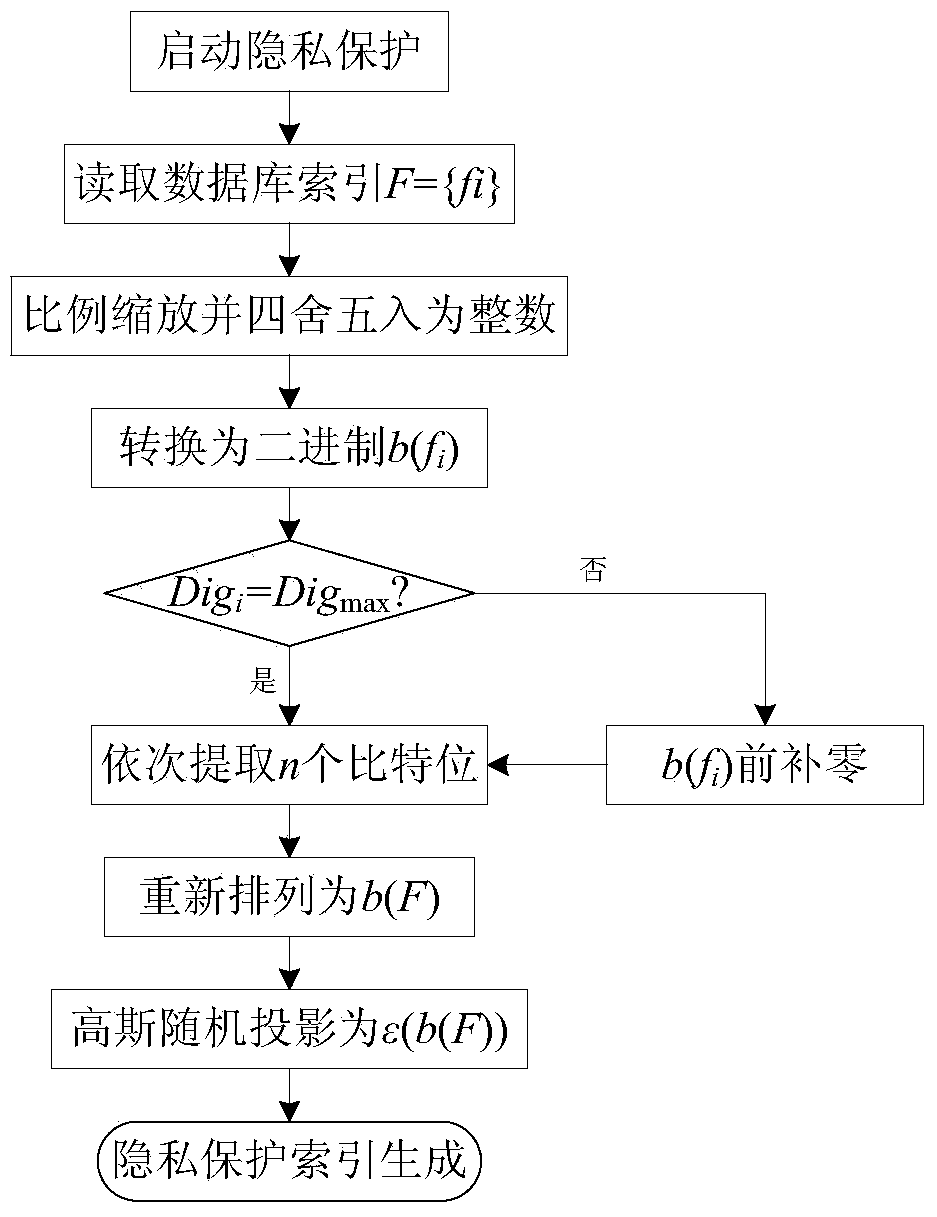

Privacy-protection index generation method for mass image retrieval

Active | CN104008174A | Improve performance, Reduce the number, Character and pattern recognition, Program/content distribution protection, Feature Dimension, Scale-invariant feature transform

The invention discloses a privacy-protection index generation method for mass image retrieval. It addresses the privacy protection problem in mass image retrieval by building privacy protection into the retrieval process. The method establishes an image index with privacy protection, so that users' private information is kept secure while retrieval performance is maintained. The method comprises: first, extracting and optimizing SIFT (Scale-Invariant Feature Transform) features and HSV (Hue, Saturation, Value) color histograms, reducing the feature dimension with locality preserving projections (a manifold dimension reduction method), and constructing a vocabulary tree from the dimension-reduced feature data. The vocabulary tree is used to construct an inverted index structure, which reduces the number of features, speeds up plaintext-domain image retrieval, and improves retrieval performance. The method is characterized in that privacy protection is added on top of the plaintext-domain retrieval framework: the inverted index is doubly encrypted using binary random codes and random projections, yielding an image index with privacy protection.

Owner:数安信(北京)科技有限公司
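
Once SIFT/HSV descriptors have been quantized into visual words by the vocabulary tree, the inverted index itself is a mapping from each word to the images that contain it. The sketch below shows only that plaintext index and an idf-weighted voting query; it assumes quantization is already done, omits the patent's locality preserving projection and its encryption of the index with binary random codes and random projections, and uses hypothetical function names and data.

```python
import math
from collections import Counter, defaultdict


def build_inverted_index(image_words):
    """Map each visual-word id to the set of image ids containing it."""
    index = defaultdict(set)
    for image_id, words in image_words.items():
        for word in words:
            index[word].add(image_id)
    return index


def search(query_words, index, num_images, top_k=3):
    """Rank images by the idf-weighted number of visual words shared with the query."""
    scores = Counter()
    for word in set(query_words):
        postings = index.get(word)
        if not postings:
            continue  # word never seen in the database
        idf = math.log(num_images / len(postings))  # rarer words count more
        for image_id in postings:
            scores[image_id] += idf
    return [image_id for image_id, _ in scores.most_common(top_k)]


if __name__ == "__main__":
    # Hypothetical database: image id -> visual-word ids from the vocabulary tree.
    db = {"img_a": [1, 2, 3], "img_b": [3, 4], "img_c": [5, 6]}
    index = build_inverted_index(db)
    print(search([1, 2, 4], index, num_images=len(db), top_k=2))  # ['img_a', 'img_b']
```

Only images sharing at least one word with the query are ever touched, which is why an inverted index speeds up retrieval over a large collection.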

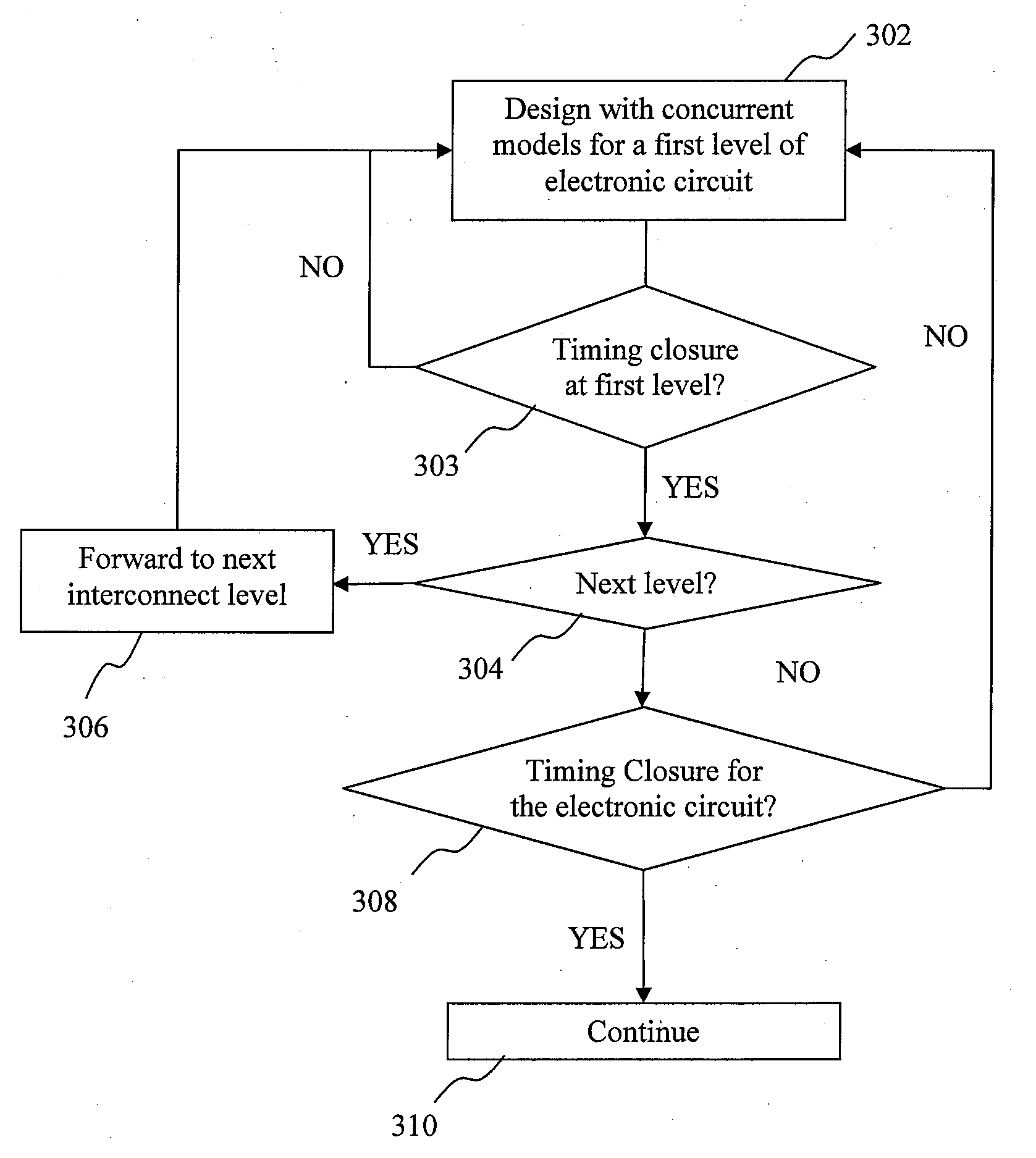

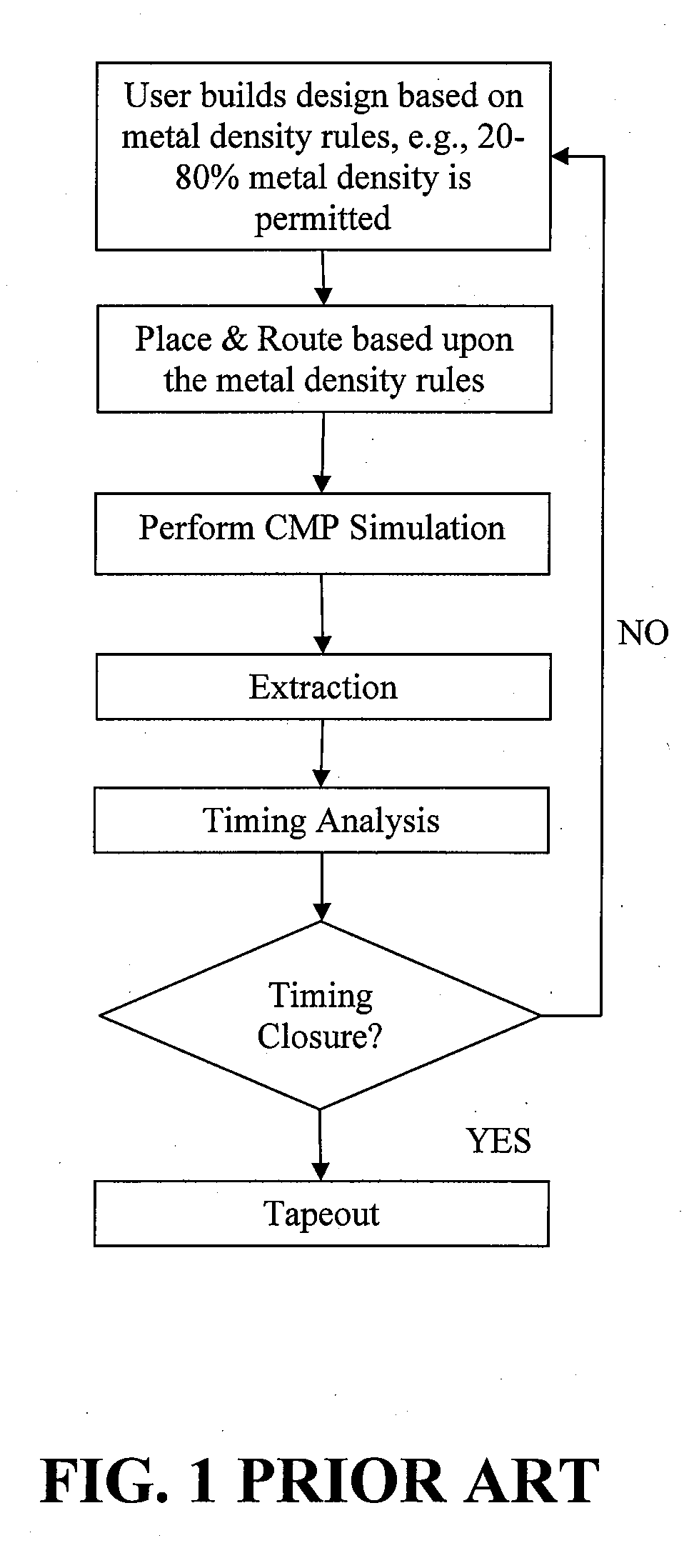

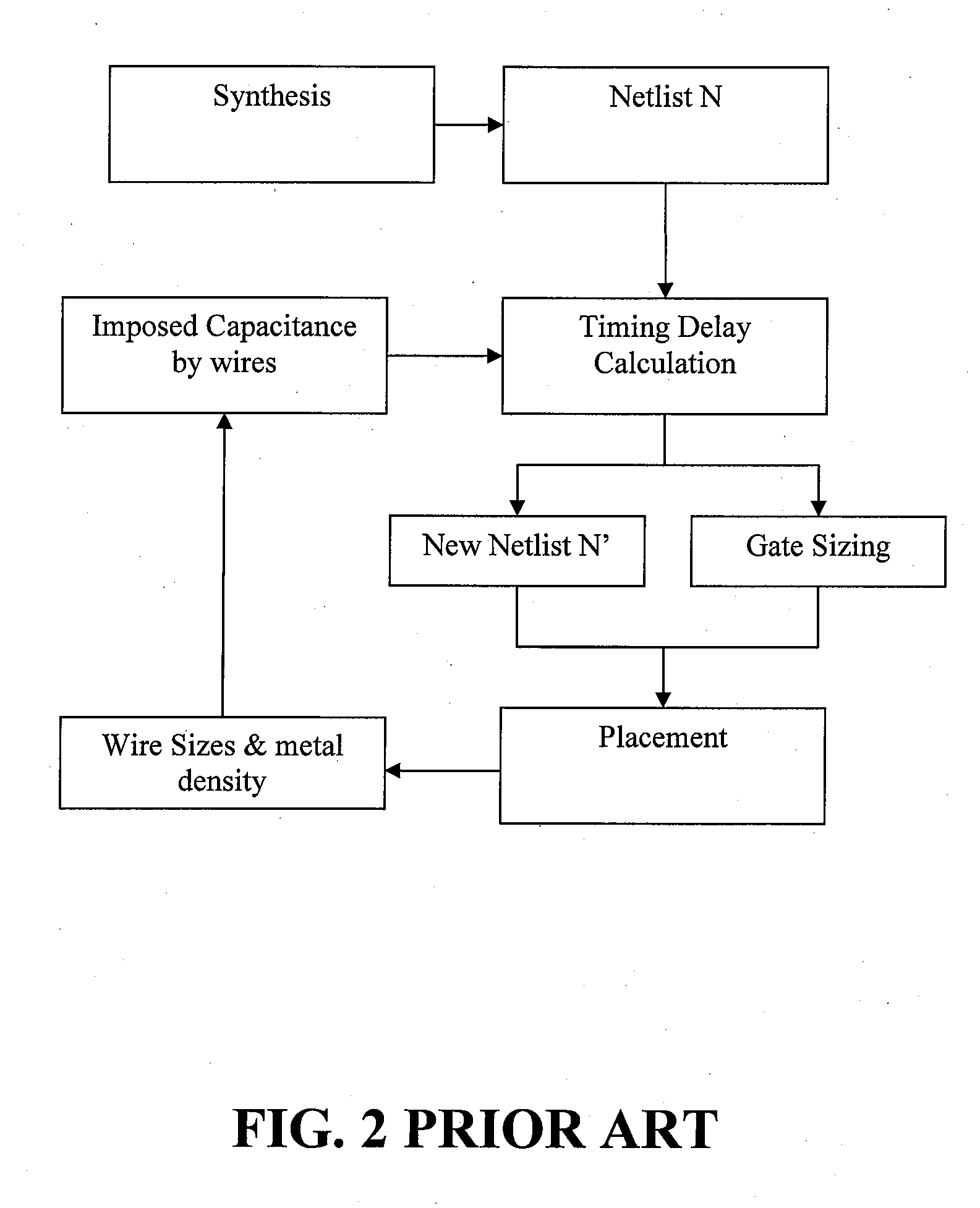

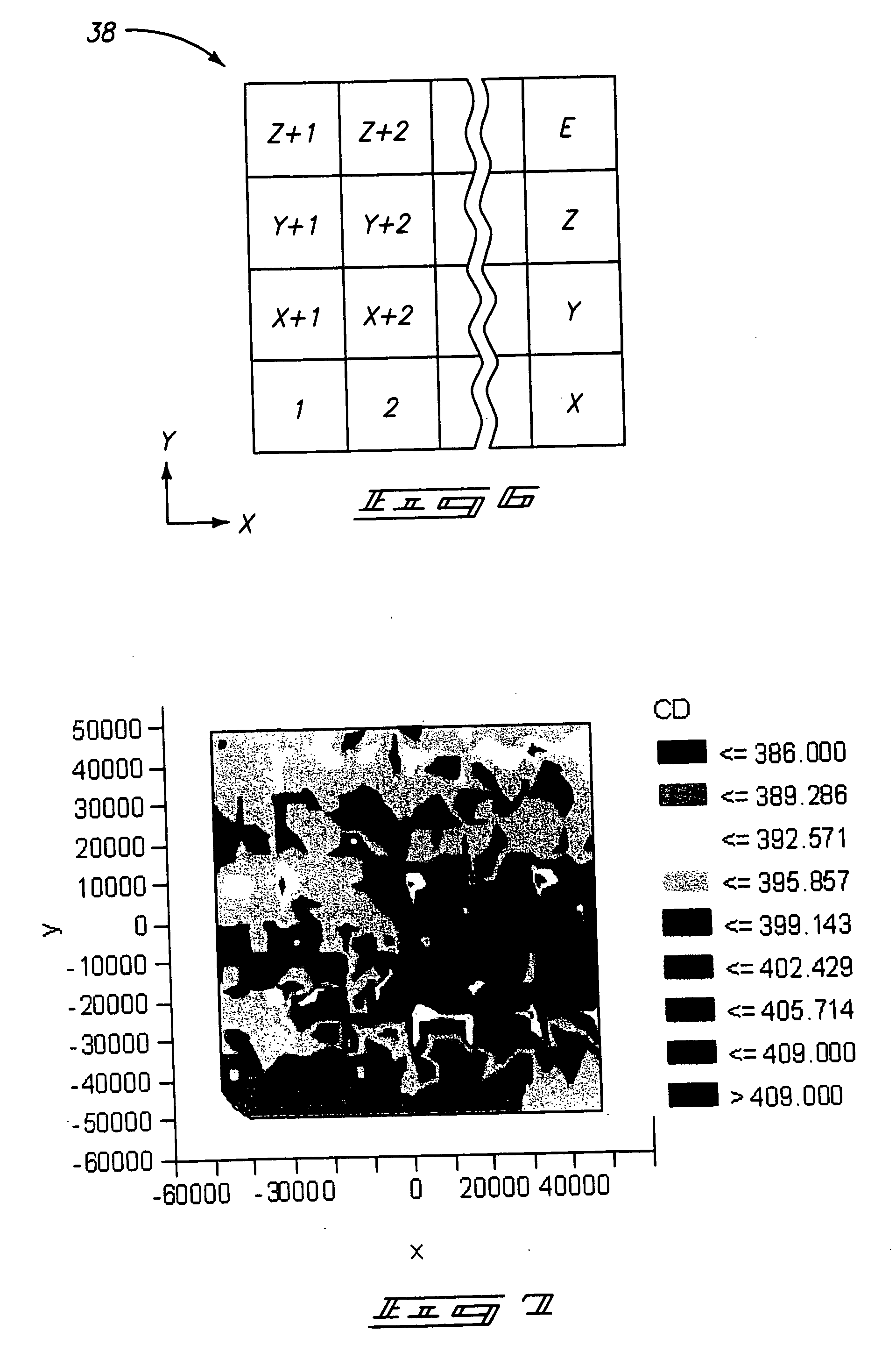

Method, system, and computer program product for timing closure in electronic designs

Active | US20080163148A1 | Effective and accurate methodology, Accurate prediction, Design optimisation/simulation, CAD circuit design, Metrology, Computer architecture

Disclosed is an improved method, system, and computer program product for timing closure with concurrent models for fabrication, metrology, lithography, and / or imaging processing analyses for electronic designs. Some embodiments of the present invention disclose a method for timing closure with concurrent process model analysis in which a design tool with such concurrent models generates a design for the one or more interconnect levels. The method or system then analyzes the effects of the concurrent models to predict feature dimension variations based upon the concurrent models. The method or system then modifies the design files to reflect the variations and determines one or more parameters based upon the concurrent models. One embodiment then determines the impact of concurrent models upon the electrical and timing performance.

Owner:CADENCE DESIGN SYST INC

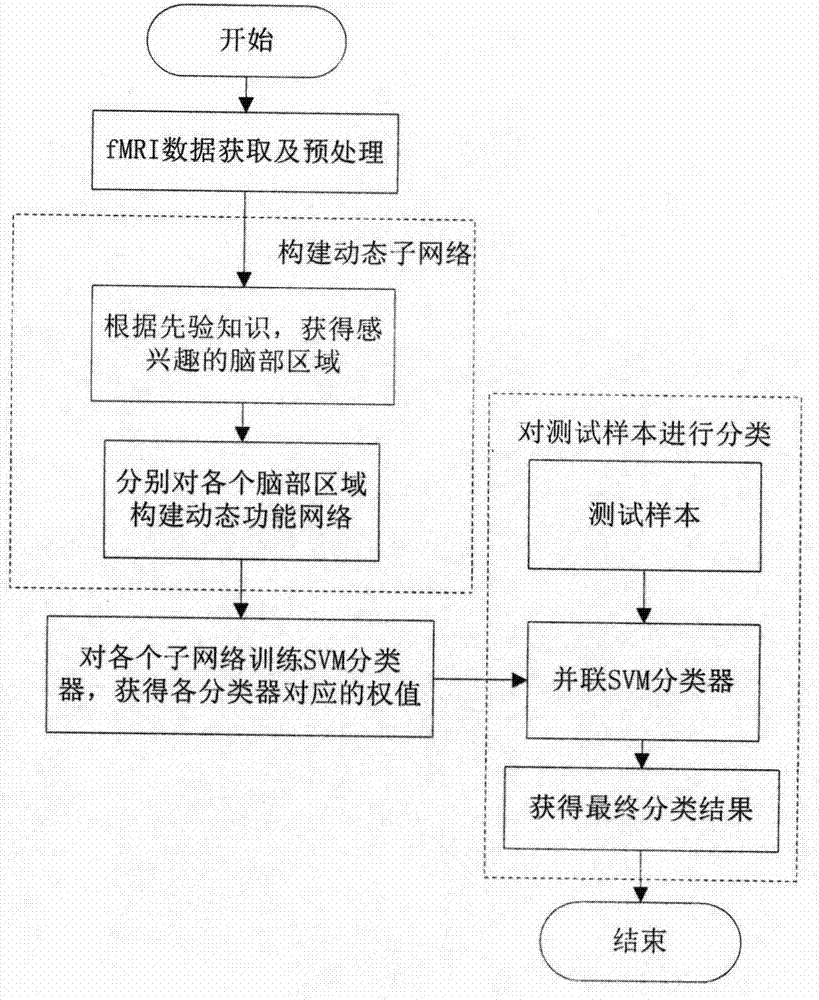

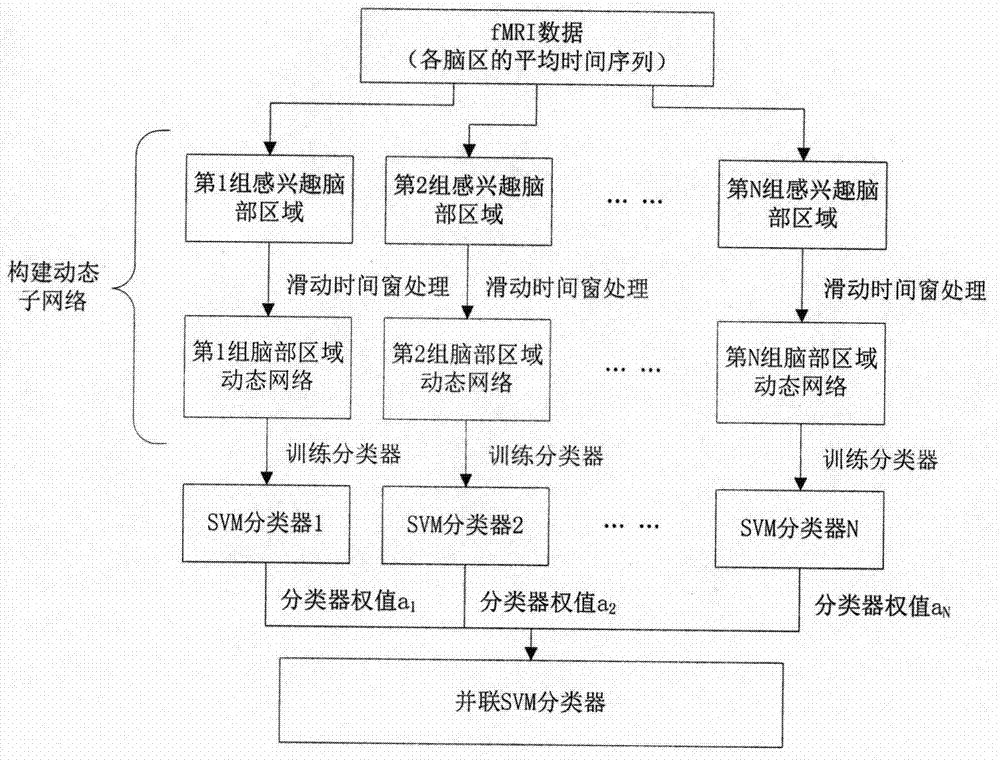

fMRI dynamic brain function sub-network construction and parallel SVM weighted recognition method

Inactive | CN104715261A | Reduce feature dimension, Accurate classification, Character and pattern recognition, Feature Dimension, Algorithm

The invention discloses an fMRI dynamic brain function sub-network construction and parallel SVM weighted recognition method, comprising the steps of: (1) preprocessing the data; (2) extracting the time series of each brain region; (3) selecting brain regions of interest; (4) constructing dynamic brain function sub-networks for all the brain regions; (5) training a classifier for each sub-network; (6) assigning weights to the sub-classifiers to form a parallel SVM classifier; and (7) classifying unknown samples. Compared with a traditional static functional network, the constructed dynamic brain function networks add information along the time dimension; prior knowledge is used to construct dynamic sub-networks over different brain regions of interest, reducing the feature dimension while retaining useful information; an SVM classifier is trained for each sub-network, and the parallel SVM classifier is formed by weighting each sub-classifier according to its recognition rate, so that the brain regions are weighted and classified as a whole and the classifier is more robust.

Owner:NANJING UNIV OF TECH
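
Step (6), combining sub-network SVMs with weights derived from their recognition rates, might look like the following. Normalizing the rates into weights and fusing signed votes are assumptions for illustration; the abstract does not give the exact weighting formula.

```python
def weighted_vote(sub_decisions, recognition_rates):
    """Fuse sub-network SVM outputs into a single +1 / -1 label.

    sub_decisions     : signed SVM decision values, one per brain sub-network
    recognition_rates : each sub-classifier's recognition rate on validation data
    """
    total = sum(recognition_rates)
    weights = [r / total for r in recognition_rates]  # normalize weights to sum to 1
    fused = sum(w * (1 if d >= 0 else -1) for w, d in zip(weights, sub_decisions))
    return 1 if fused >= 0 else -1


if __name__ == "__main__":
    decisions = [0.8, -0.3, -0.1]  # hypothetical outputs of three sub-network SVMs
    print(weighted_vote(decisions, [0.95, 0.40, 0.40]))  # 1
    print(weighted_vote(decisions, [0.60, 0.60, 0.60]))  # -1
```

With equal rates the rule reduces to a plain majority vote; unequal weights let one reliable sub-network outvote two weaker ones.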

Open domain video natural language description generation method based on multi-modal feature fusion

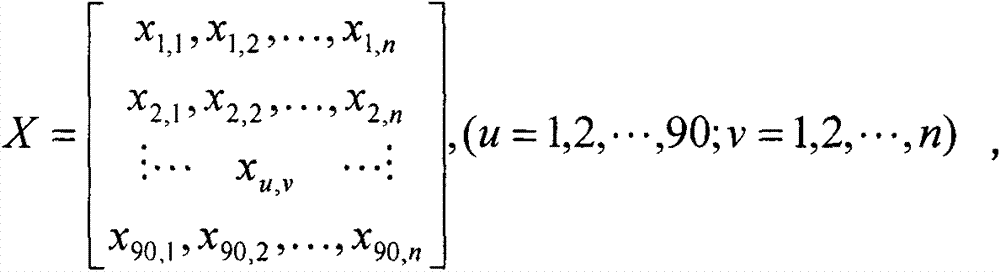

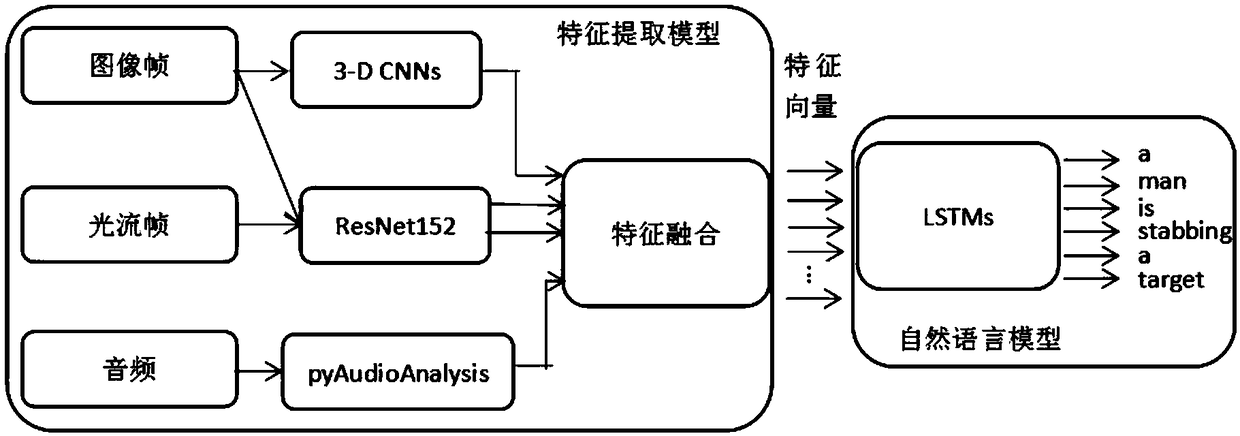

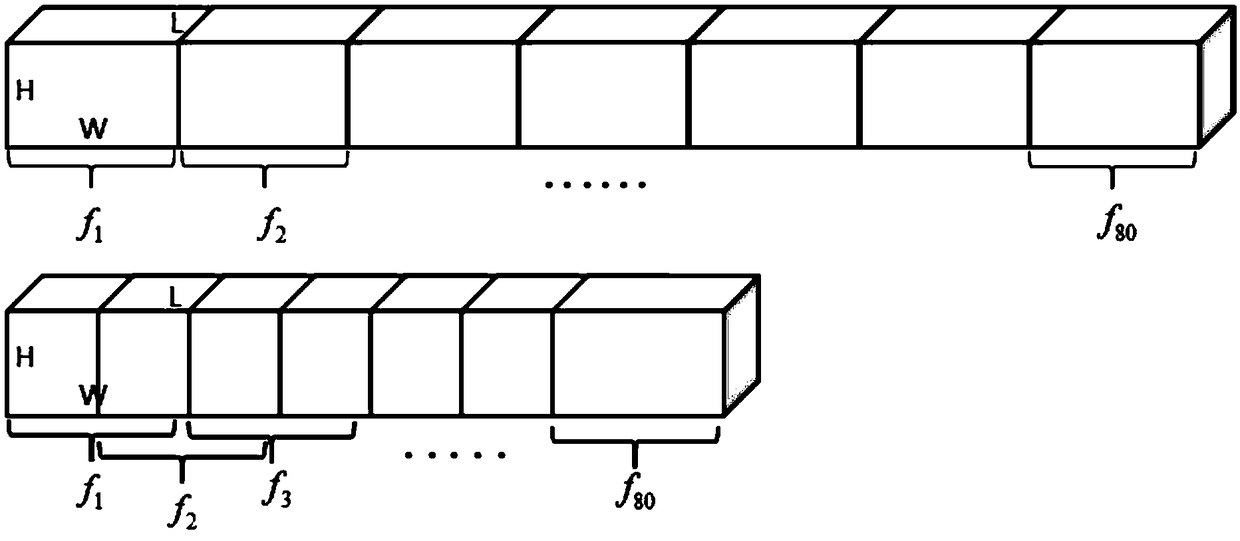

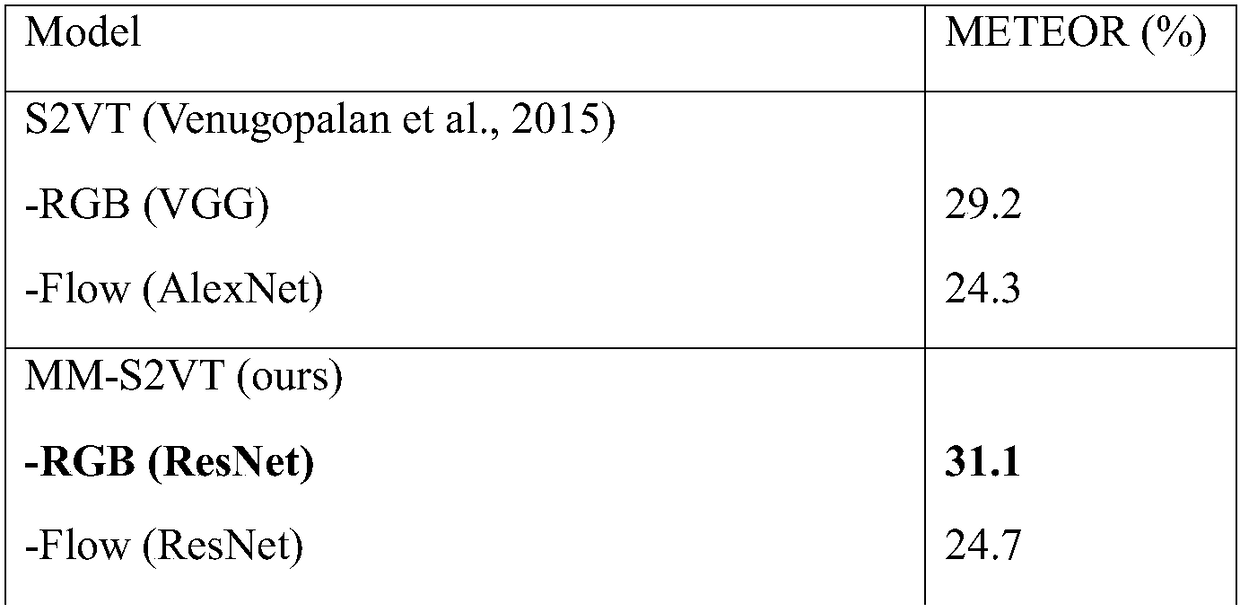

Active | CN108648746A | Improve robustness, High speed, Character and pattern recognition, Speech recognition, Feature Dimension, Rgb image

The invention discloses an open-domain video natural language description method based on multi-modal feature fusion. A deep convolutional neural network model is used to extract RGB image features and grayscale optical flow image features, and video spatio-temporal information and audio information are added to form a multi-modal feature system. When the C3D feature is extracted, the overlap between consecutive frame blocks fed into the three-dimensional convolutional neural network model is adjusted dynamically, which addresses the limited size of the training data and makes the method robust to the length of the videos it processes; the audio information compensates for deficiencies in the visual information; and finally, the multi-modal features are fused. The method uses data standardization to bring the feature values of each modality into a fixed range, solving the problem of differing feature value scales, and uses PCA to reduce the feature dimension of each modality while effectively retaining 99% of the important information, which avoids training failures caused by excessively high dimension. The method effectively improves the accuracy of the generated open-domain video description sentences and is highly robust to different scenes, people, and events.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS
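
The two preprocessing steps named in the abstract, scaling each modality's features into a fixed range and keeping enough PCA components to retain 99% of the information, reduce to small computations. Min-max scaling to [0, 1] and measuring "information" as explained variance are assumptions; the eigenvalues in the example are hypothetical, and computing them from the covariance matrix is left to a linear-algebra library.

```python
def min_max_scale(column, low=0.0, high=1.0):
    """Linearly map one feature column into [low, high]."""
    lo, hi = min(column), max(column)
    if hi == lo:  # constant feature: map everything to the lower bound
        return [low] * len(column)
    return [low + (v - lo) * (high - low) / (hi - lo) for v in column]


def components_for_variance(eigenvalues, target=0.99):
    """Smallest k such that the k largest PCA eigenvalues explain >= target of total variance."""
    ordered = sorted(eigenvalues, reverse=True)
    total = sum(ordered)
    running = 0.0
    for k, value in enumerate(ordered, start=1):
        running += value
        if running / total >= target:
            return k
    return len(ordered)


if __name__ == "__main__":
    print(min_max_scale([2, 4, 6]))                         # [0.0, 0.5, 1.0]
    print(components_for_variance([60, 25, 10, 4.5, 0.5]))  # 4
```

In this example five dimensions shrink to four while 99.5% of the variance is kept; on real multi-modal features the reduction is typically much larger.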

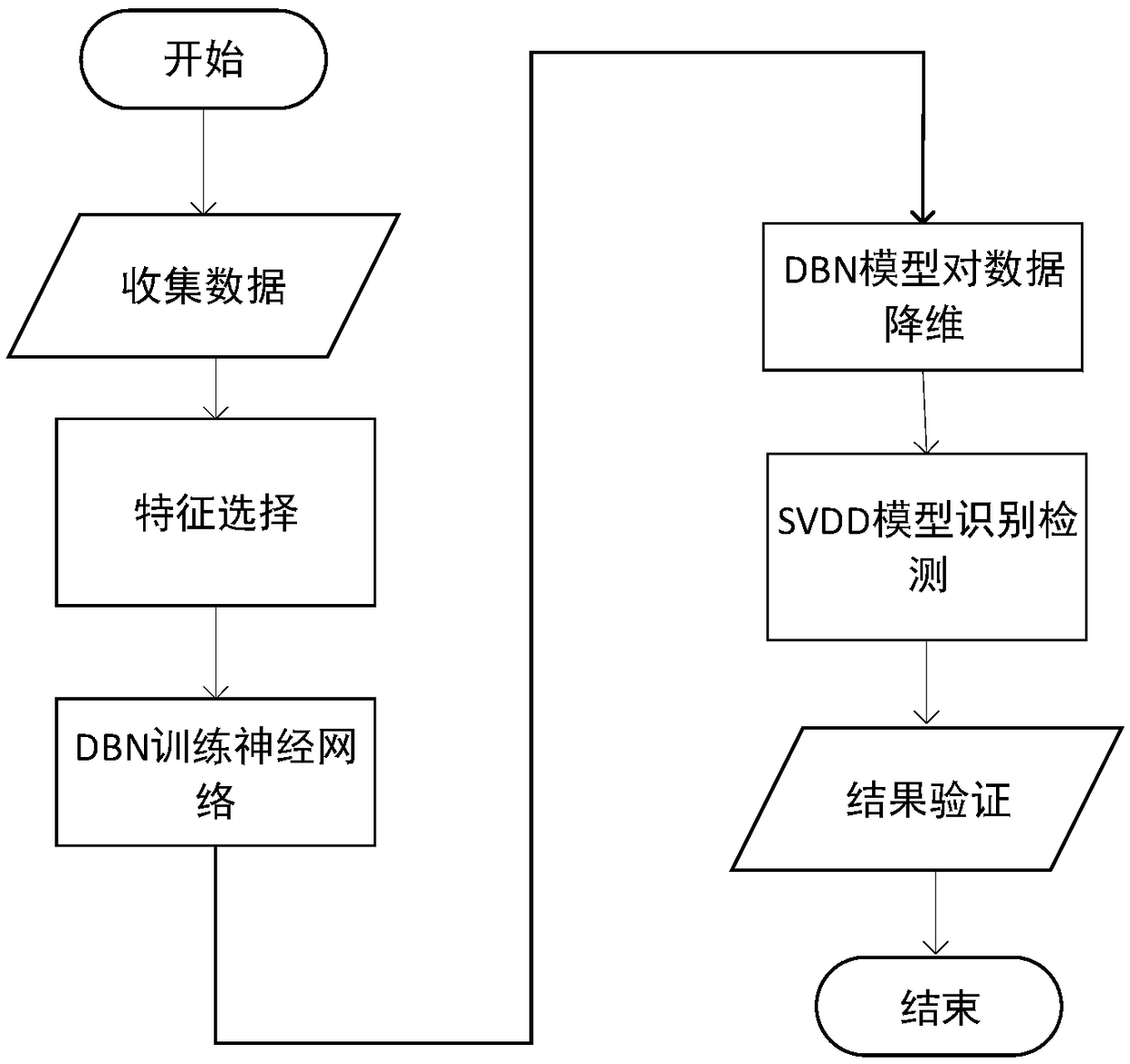

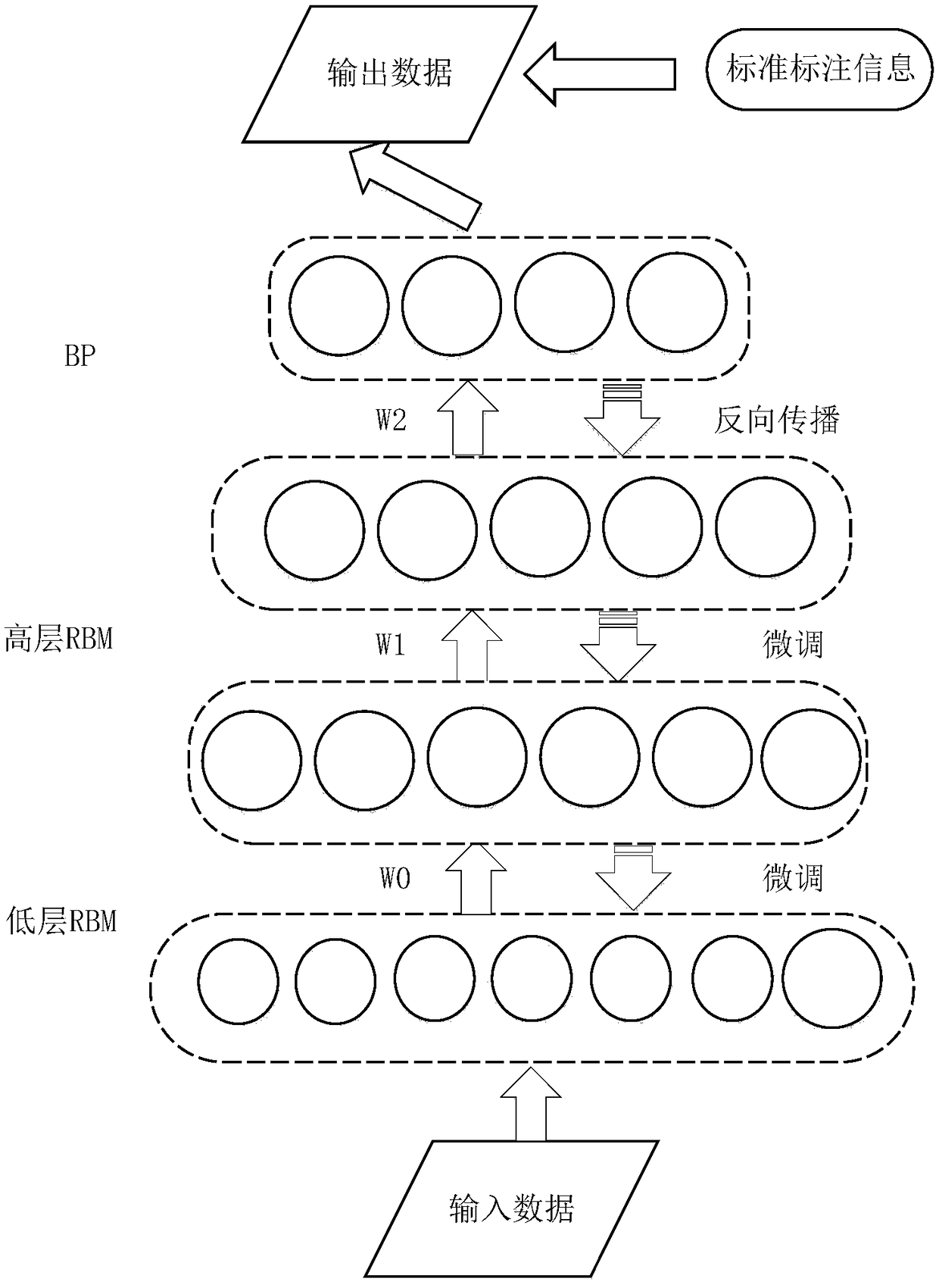

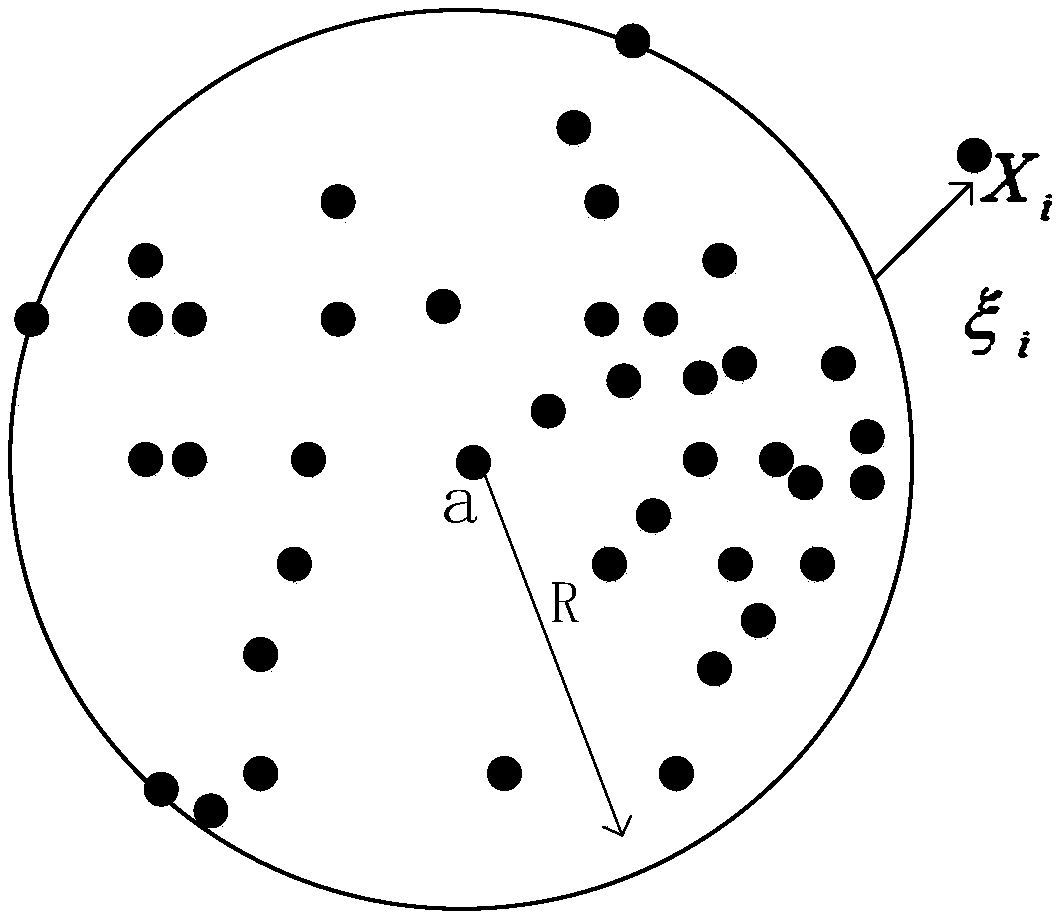

APT attack detection method based on deep belief network-support vector data description

Inactive | CN108848068A | Implement attack detection, Good dimensionality reduction effect, Data switching networks, Restricted Boltzmann machine, Feature Dimension

The invention discloses an advanced persistent threat (APT) attack detection method based on deep belief network-support vector data description. A deep belief network (DBN) is used for feature dimension reduction and extraction of good feature vectors, and support vector data description (SVDD) is used for data classification and detection. In the DBN training stage, after a standard data set is obtained, the DBN model performs feature dimension reduction: after the initial dimension reduction, each higher-level restricted Boltzmann machine (RBM) receives the simple representation passed up from the lower-level RBM so as to learn a more abstract and complex representation, and back propagation through a BP neural network repeatedly adjusts the weights until data with good features are extracted. The data processed by the DBN are divided into a training set and a test set and provided to the SVDD for training and detection, yielding the detection result. The method is suitable for unsupervised detection of attack data with large volume and high-dimensional features, fits APT attack detection, and can achieve good detection results.

Owner:SHANGHAI MARITIME UNIVERSITY

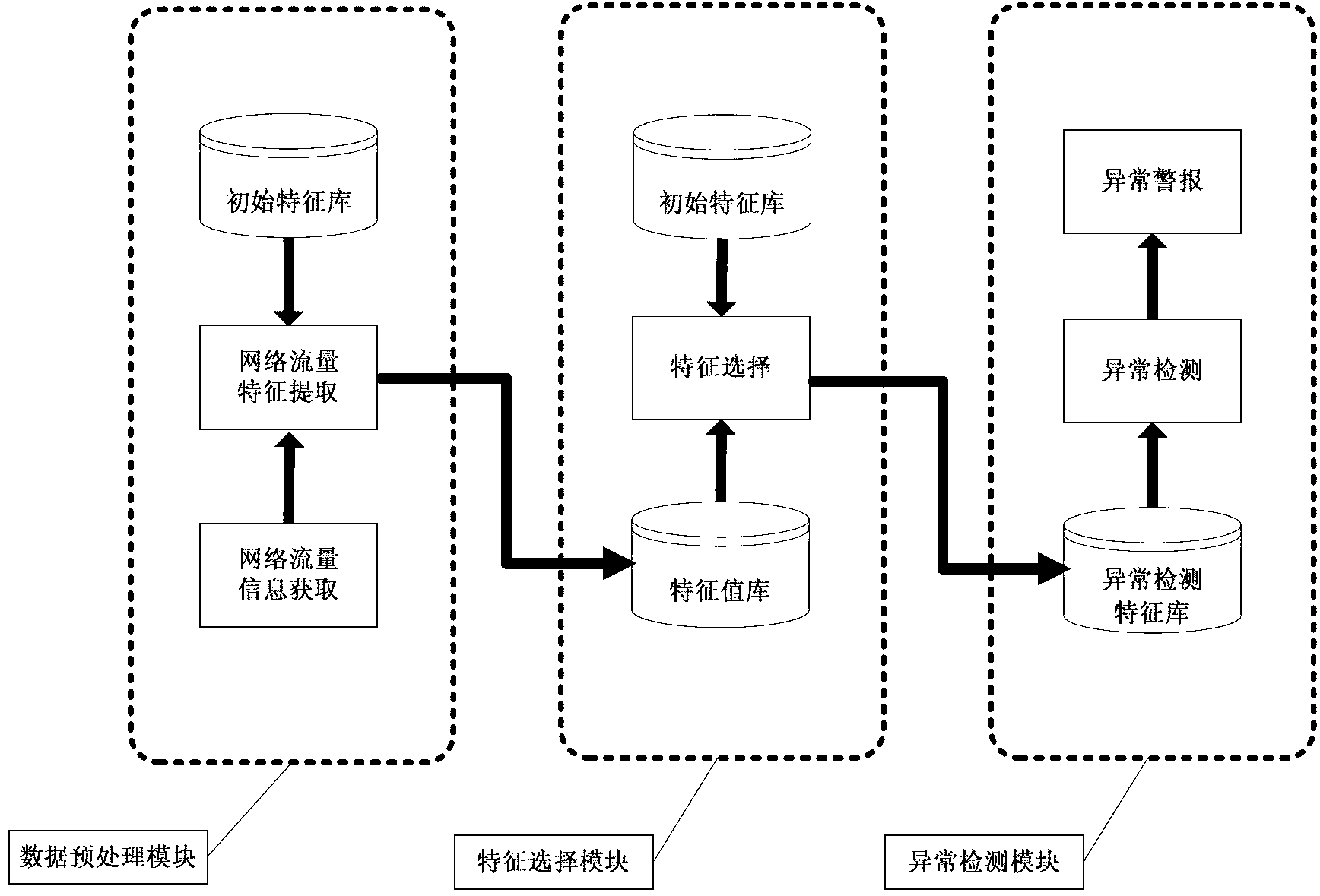

Anomaly detection method based on network flow analysis

Active | CN103023725A | Improve performance, Reduce the dimensionality of traffic features, Data switching networks, Feature Dimension, Feature set

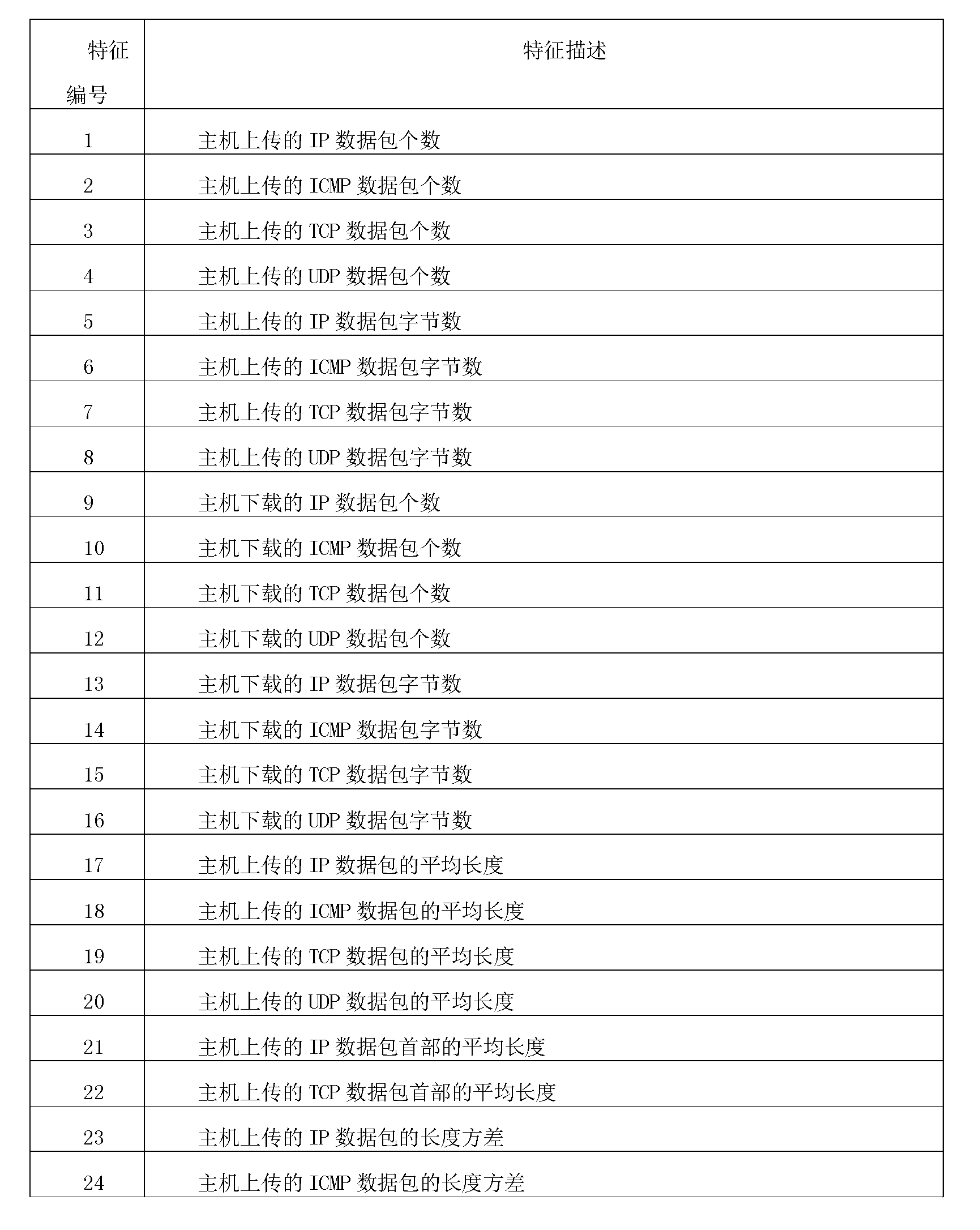

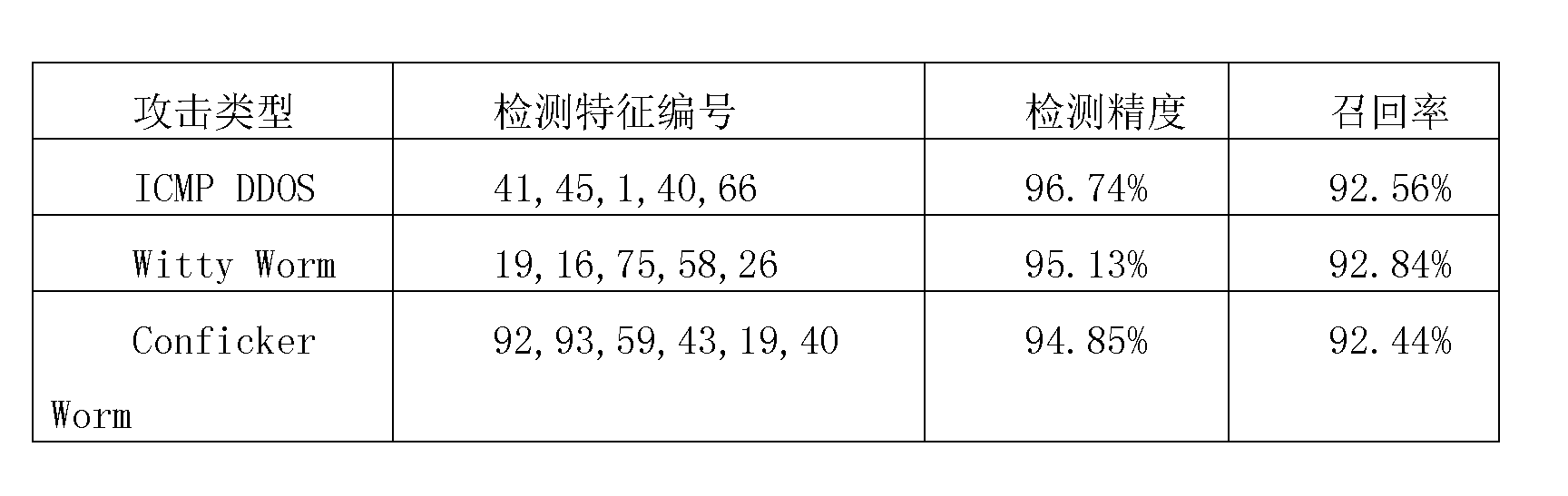

The invention discloses an anomaly detection method based on network flow analysis. A complete initial feature set of network flows is obtained by deep analysis of IP (Internet Protocol) data packets, which fundamentally improves the performance of an anomaly detection system. A feature subset for anomaly detection is selected dynamically according to the type of network anomaly; finally, a Bayes classifier predicts the class of an unknown sample from that feature subset, and an alert is raised if the prediction indicates that the sample is abnormal. A data preprocessing module processes the raw data; a feature selection module selects the appropriate feature subset for each type of anomaly; and an anomaly detection module raises an alert when an anomaly is found. With the dynamic feature selection algorithm, the optimal feature subset can be selected for each type of anomaly, reducing the dimension of the flow features used for detection and improving detection accuracy.

Owner:BEIJING UNIV OF TECH

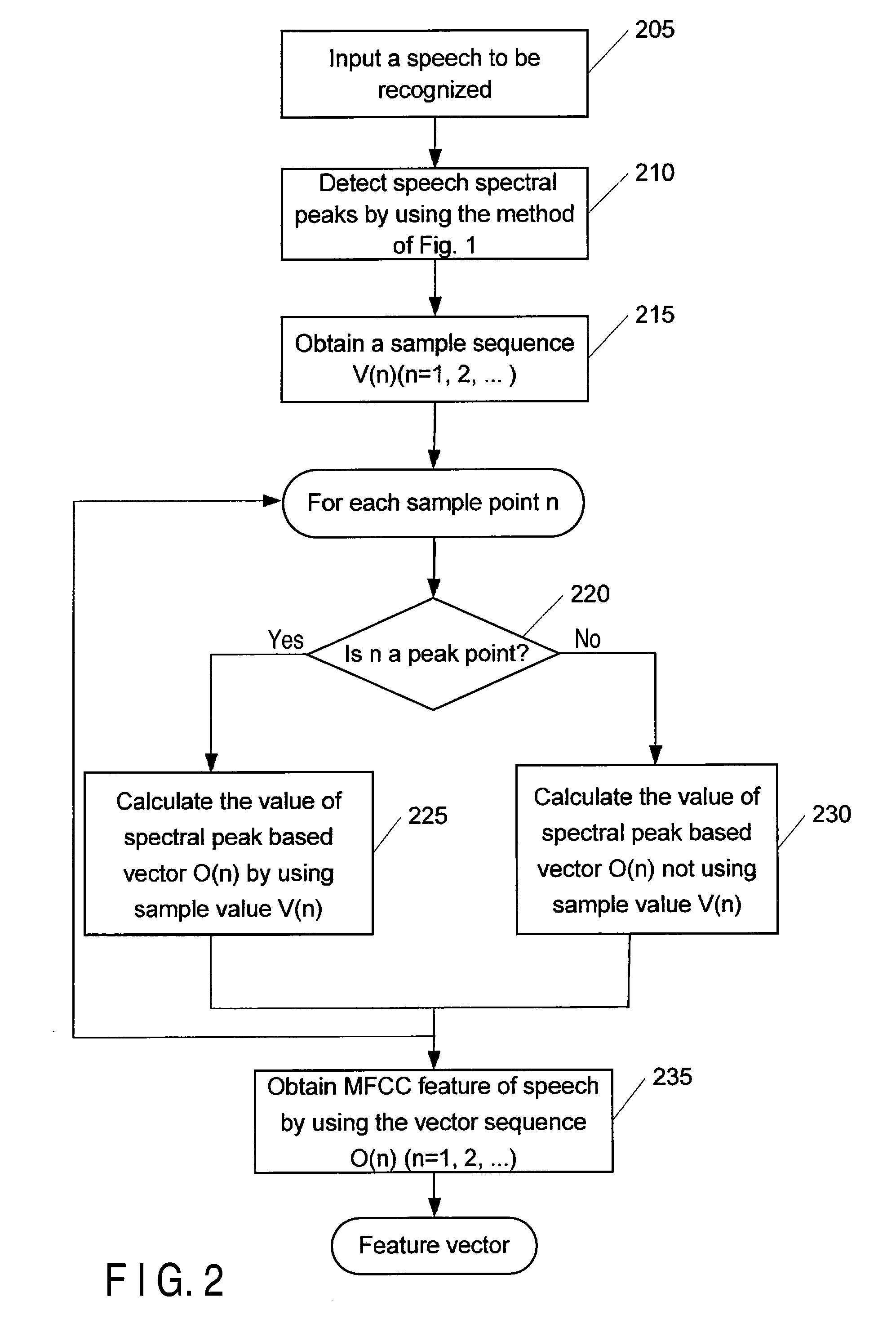

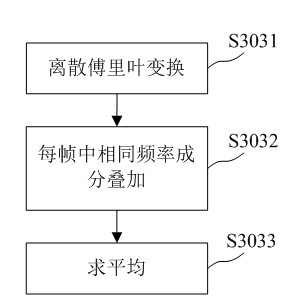

Detection of speech spectral peaks and speech recognition method and system

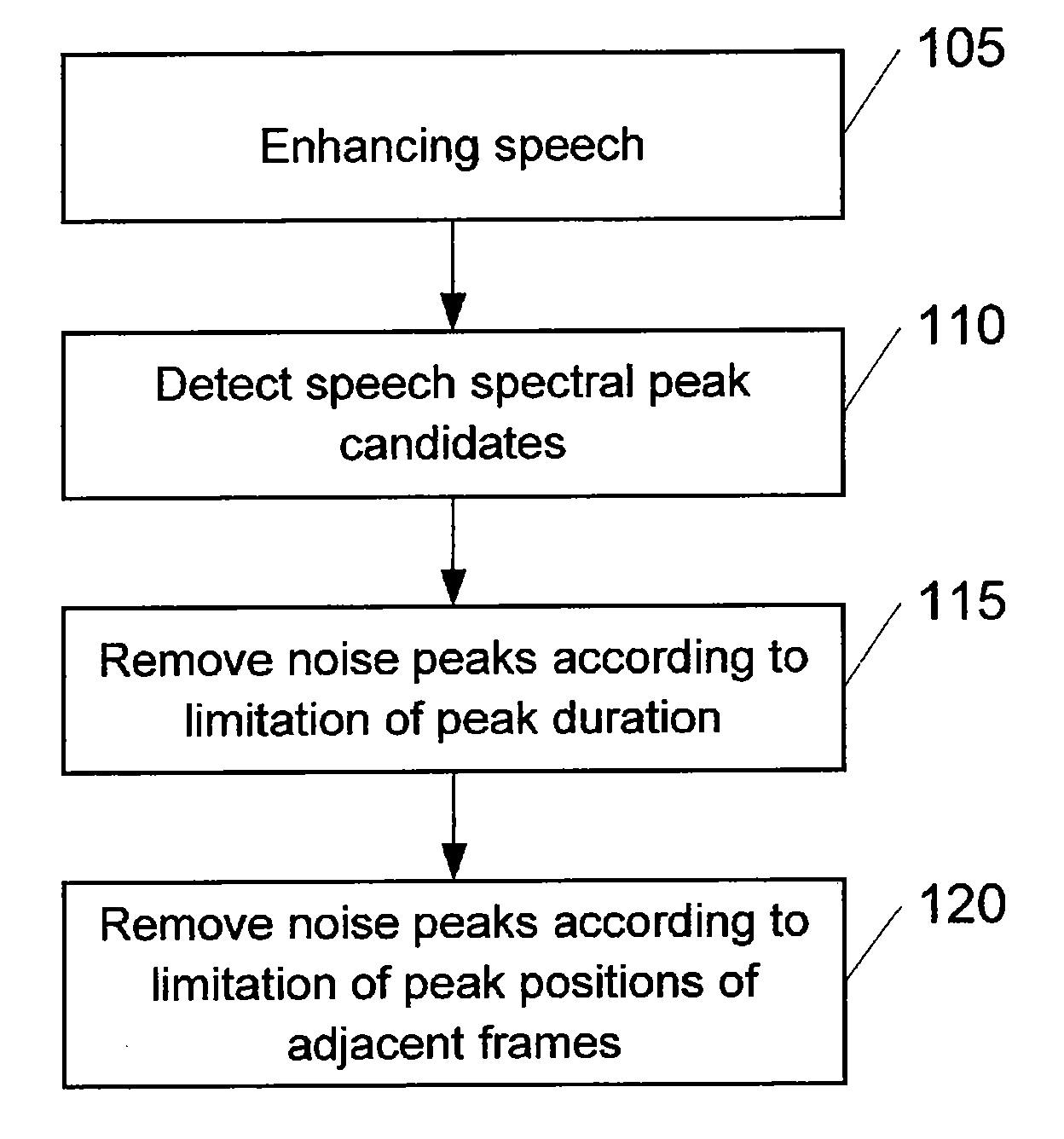

Inactive | US20090177466A1 | Improve Noise Robustness, Remove noise peaks, Speech analysis, Frequency spectrum, Feature Dimension

The present invention provides a method and apparatus for detecting speech spectral peaks, and a speech recognition method and system. The method for detecting speech spectral peaks comprises detecting speech spectral peak candidates from the power spectrum of the speech, and removing noise peaks from the candidates according to peak duration and/or peak positions in adjacent frames. By removing noise peaks with these constraints on peak duration and adjacent frames, reliable speech spectral peaks can be obtained. Furthermore, because the MFCC features of the speech are extracted from the energy values of the speech spectral peaks rather than, as in the conventional technique, from the sample sequence of the whole power spectrum, the noise robustness of speech recognition is enhanced without increasing the speech feature dimension.

Owner:KK TOSHIBA
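
The duration-based removal of noise peaks can be sketched on a toy spectrogram. The local-maximum test, the bin tolerance used to decide that a peak recurs in an adjacent frame, and the minimum run of three frames are assumptions; the abstract does not give these values, and the later MFCC computation from peak energies is not shown.

```python
def frame_peaks(spectrum):
    """Indices of local maxima in one frame's power spectrum (peak candidates)."""
    return [
        k for k in range(1, len(spectrum) - 1)
        if spectrum[k] > spectrum[k - 1] and spectrum[k] >= spectrum[k + 1]
    ]


def persistent_peaks(frames, min_duration=3, tolerance=1):
    """Keep candidates that recur within `tolerance` bins of the same position over at
    least `min_duration` consecutive frames; shorter-lived peaks are treated as noise."""
    candidates = [frame_peaks(f) for f in frames]

    def near(k, t):
        return any(abs(k - j) <= tolerance for j in candidates[t])

    kept = []
    for t, peaks in enumerate(candidates):
        frame_kept = []
        for k in peaks:
            start, end = t, t
            while start > 0 and near(k, start - 1):              # extend run backwards
                start -= 1
            while end < len(frames) - 1 and near(k, end + 1):  # extend run forwards
                end += 1
            if end - start + 1 >= min_duration:
                frame_kept.append(k)
        kept.append(frame_kept)
    return kept


if __name__ == "__main__":
    # Hypothetical 4-frame spectrogram: a stable peak at bin 2, a one-frame blip at bin 5.
    frames = [
        [0, 1, 5, 1, 0, 0, 0, 0],
        [0, 1, 5, 1, 0, 4, 0, 0],
        [0, 1, 5, 1, 0, 0, 0, 0],
        [0, 1, 5, 1, 0, 0, 0, 0],
    ]
    print(persistent_peaks(frames))  # [[2], [2], [2], [2]]
```

The blip at bin 5 is a valid local maximum in its frame but has no neighbor in adjacent frames, so the duration constraint discards it.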

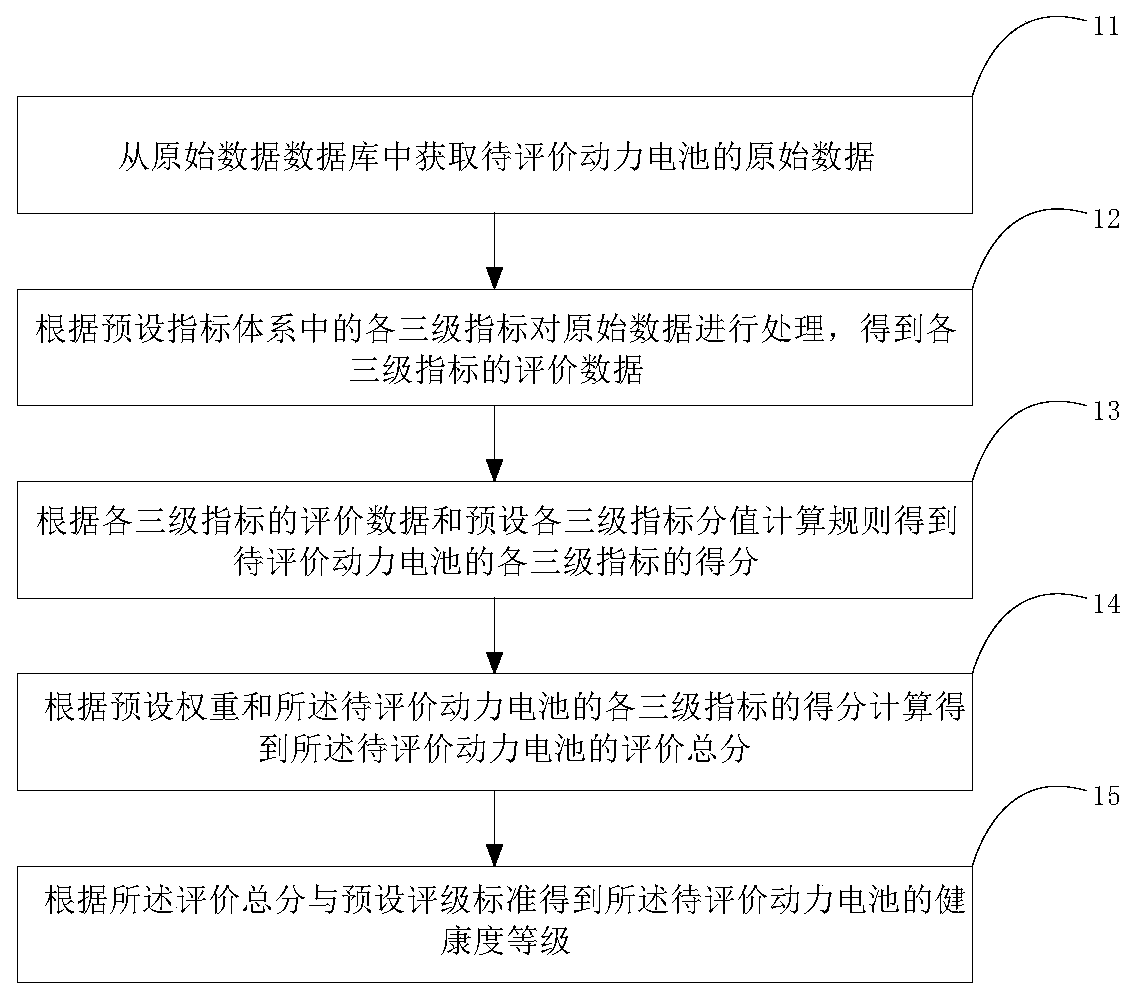

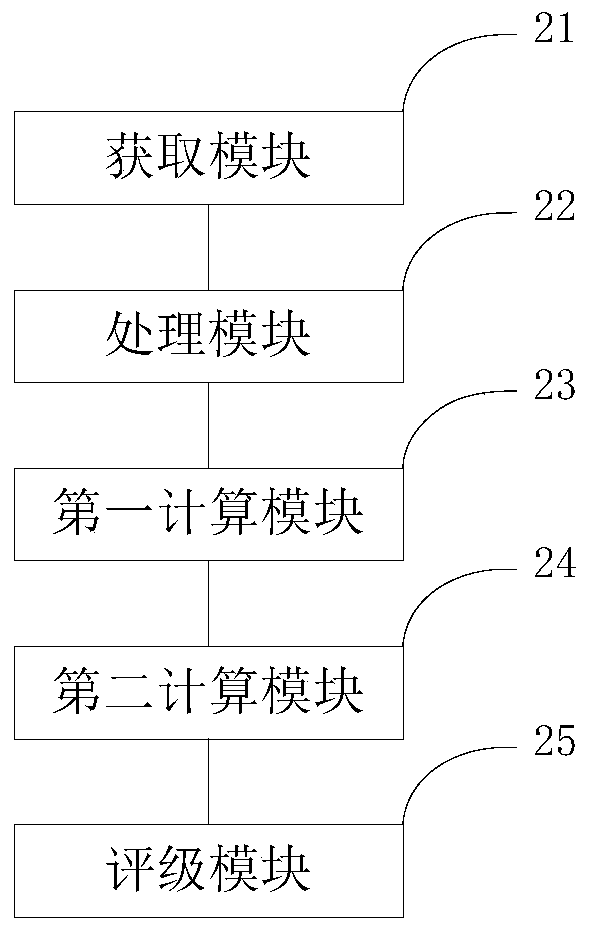

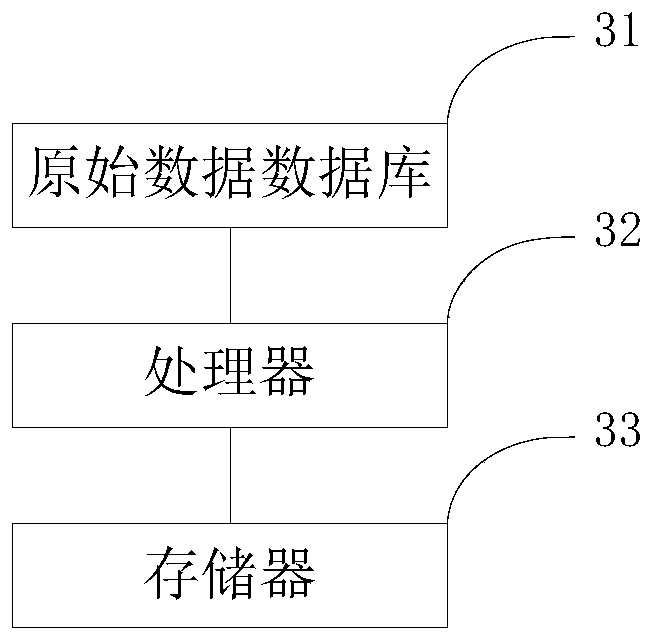

Power battery health degree evaluation method, device and system

The invention relates to the field of electric vehicle performance evaluation, in particular to a power battery health degree evaluation method, device and system. The method comprises the steps of: obtaining original data of a to-be-evaluated power battery from an original data database; processing the original data to obtain evaluation data for each level of index; obtaining a score for each third-level index of the power battery according to that index's evaluation data and a preset third-level index score calculation rule; calculating a total evaluation score for the power battery according to preset weights and the scores of each level of index; and obtaining the health degree grade of the power battery according to the total evaluation score and a preset rating standard. Because the first-level indexes include a battery usage environment feature dimension and a driving behavior feature dimension, both the usage scenario of the power battery and the influence of driving behavior on its health are taken into account, so the health degree of the power battery can be evaluated more accurately.

Owner:优必爱信息技术(北京)有限公司
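
The scoring chain (index scores, a weighted total, then a grade from a rating table) can be sketched as a weighted sum followed by a threshold lookup. The index names, weights, and grade thresholds below are hypothetical; the patent applies preset rules at several index levels, and this sketch shows a single level.

```python
def weighted_total(scores, weights):
    """Weighted sum of index scores; weights are assumed to sum to 1."""
    return sum(weights[name] * score for name, score in scores.items())


def health_grade(total, rating_table):
    """Return the grade of the first (min_score, grade) row the total reaches.

    rating_table must be sorted from the highest minimum score downwards.
    """
    for min_score, grade in rating_table:
        if total >= min_score:
            return grade
    return rating_table[-1][1]  # below every threshold: lowest grade


if __name__ == "__main__":
    # Hypothetical first-level index scores (0-100) and weights.
    scores = {"use_environment": 80, "driving_behavior": 60, "electrical_performance": 90}
    weights = {"use_environment": 0.3, "driving_behavior": 0.3, "electrical_performance": 0.4}
    total = weighted_total(scores, weights)
    print(round(total, 2), health_grade(total, [(85, "A"), (70, "B"), (0, "C")]))  # 78.0 B
```

The same weighted sum can be applied level by level, turning third-level scores into second-level and then first-level scores before the final grade lookup.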

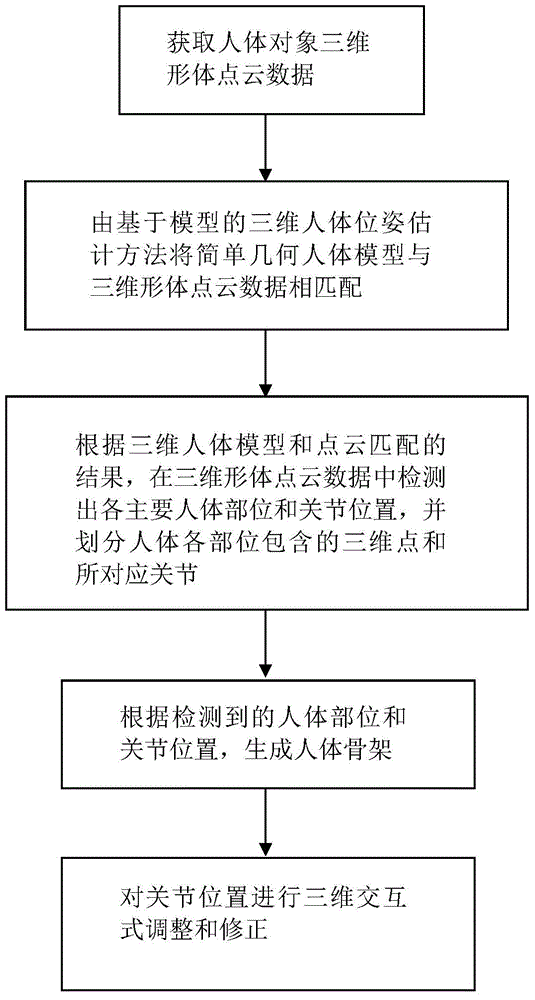

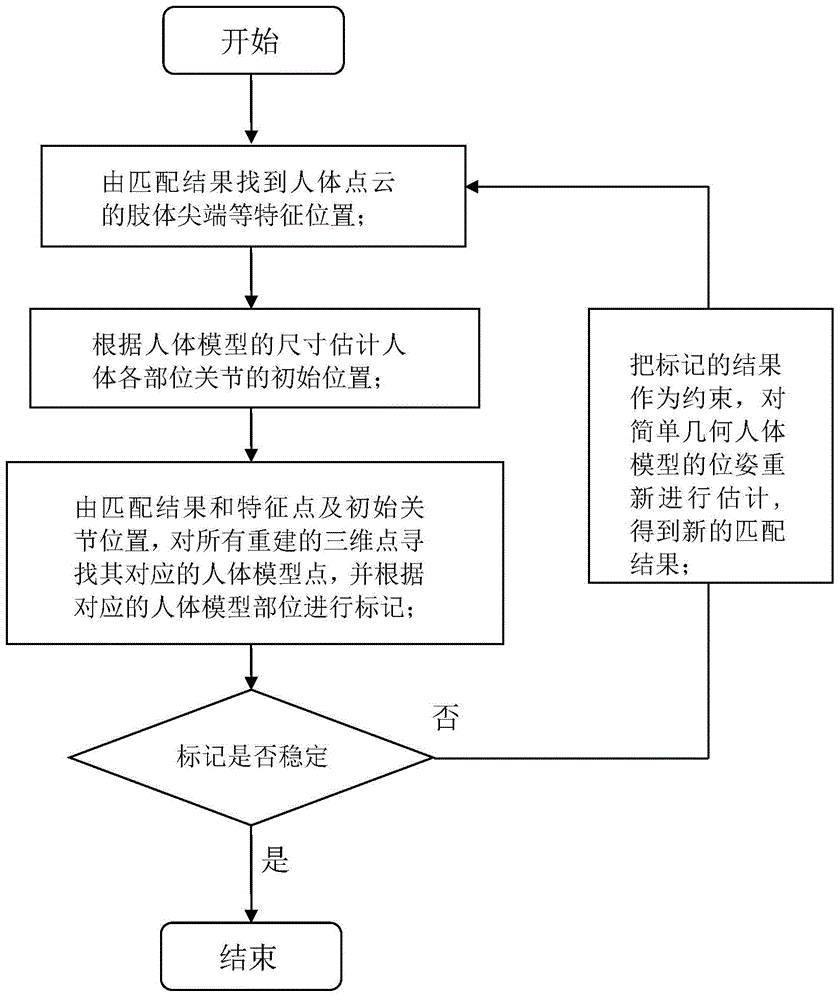

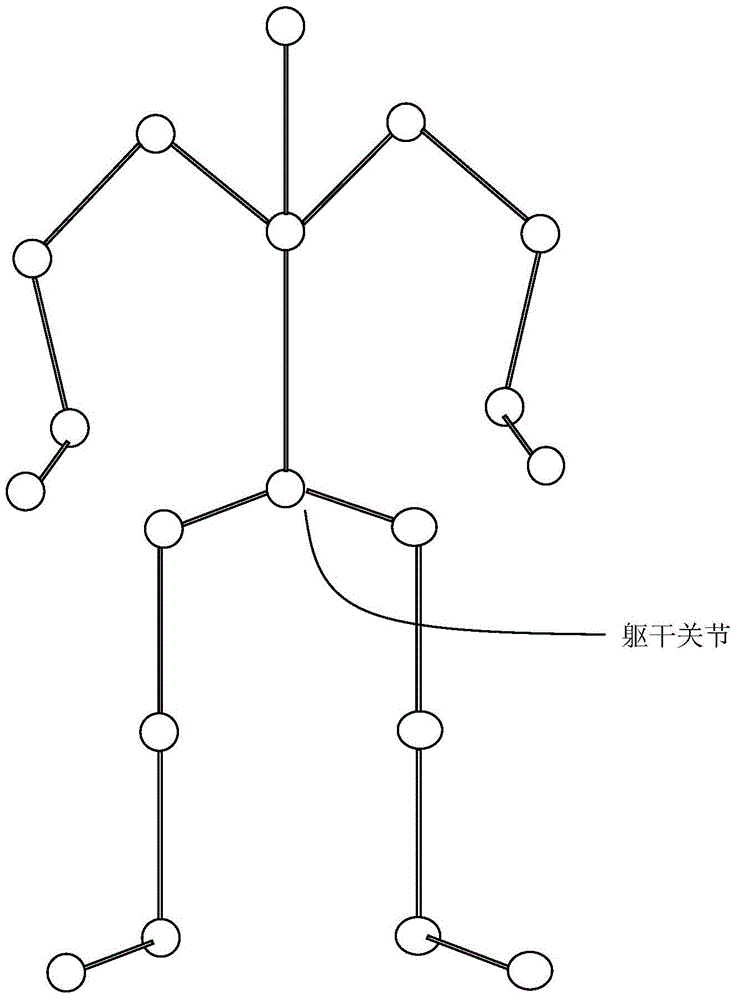

Method for creating object-oriented customized three-dimensional human body model

The invention discloses a method for creating an object-oriented customized three-dimensional human body model. The method comprises the following steps: acquiring images of a human subject at the same moment from different angles with a plurality of synchronized cameras, and reconstructing a three-dimensional feature point cloud of the human body from the images by a three-dimensional reconstruction method; matching a simple three-dimensional human body model to the three-dimensional feature point cloud using a model-based pose estimation method; according to the matching result between the model and the point cloud, detecting all main body parts and joint positions and segmenting the human body into its parts; generating, from the detected body part and joint positions, a human skeleton for driving the body model; and adjusting and correcting the joint positions. The method solves the problem that existing three-dimensional human body models match specific human subjects poorly and adapt poorly to them, and can create a three-dimensional human body model that fully matches the characteristic dimensions of the human subject.

Owner:NAT UNIV OF DEFENSE TECH

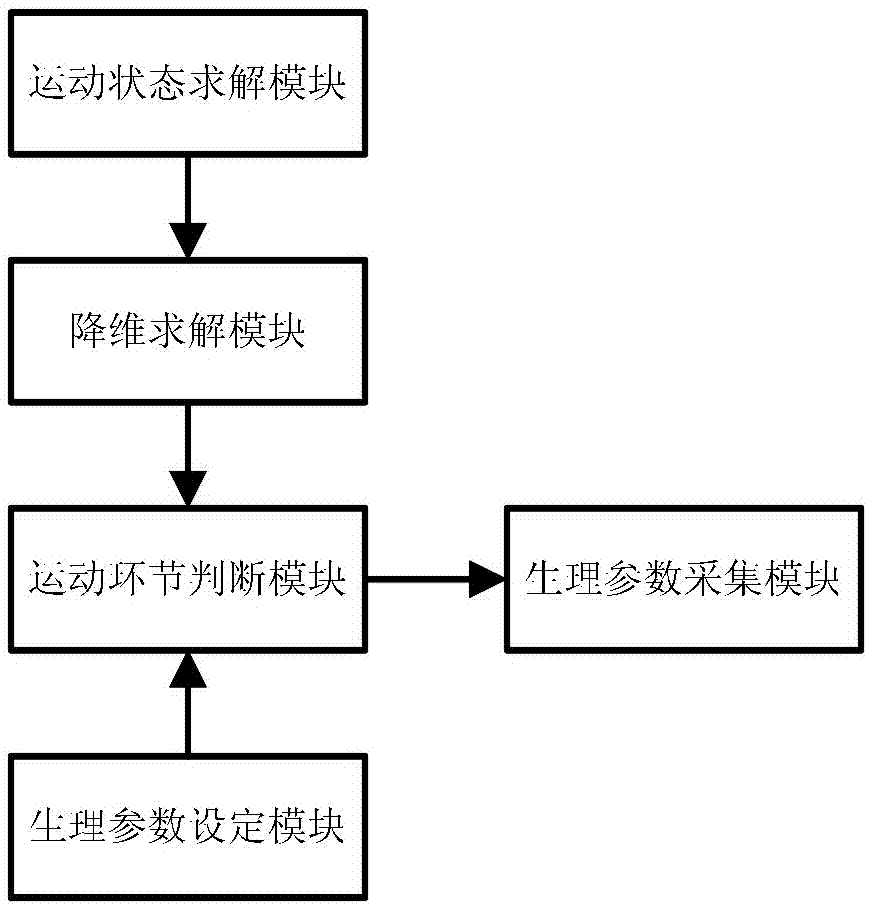

Wearable device based on neural network adaptive health monitoring

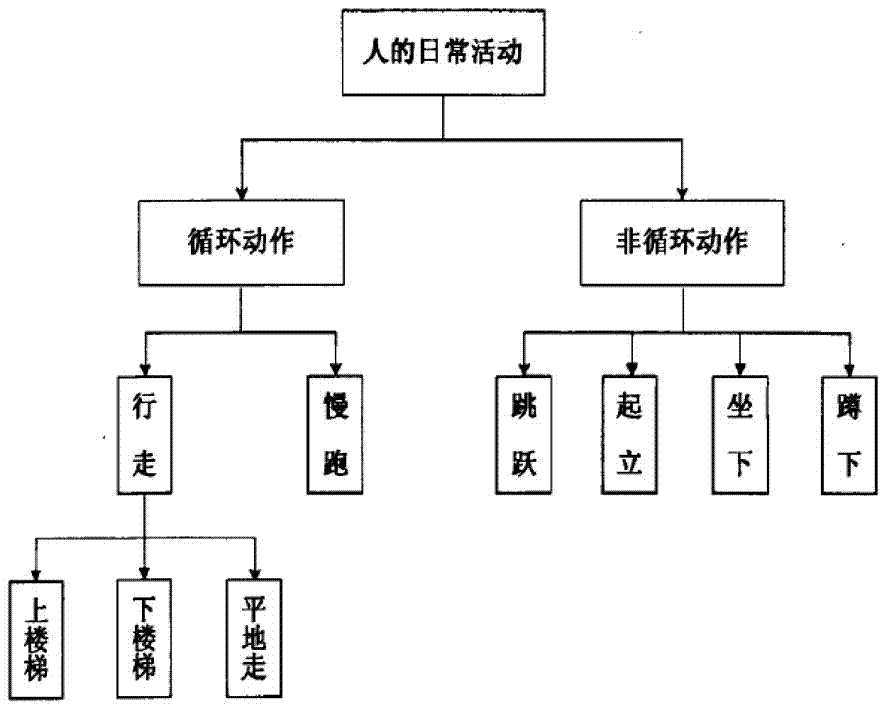

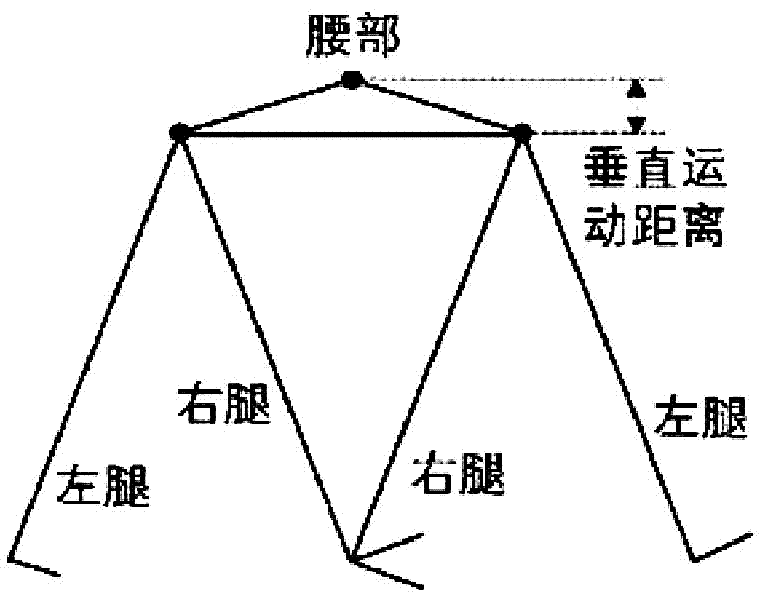

Active | CN106971059A | High reference value, Reduce exercise, Medical data mining, Health-index calculation, Feature Dimension, Angular velocity

The invention discloses a wearable device for adaptive health monitoring based on a neural network. The wearable device comprises an exercise state solving module, an exercise stage judgment module, a dimension reduction solving module, a physiological parameter setting module, and a physiological parameter collection module. The exercise state solving module collects the data output by an acceleration sensor, filters out high-frequency noise by wavelet transform, and classifies the user's exercise state; the exercise stage judgment module subdivides the exercise state using angular velocity to obtain subdivided exercise stages; and the dimension reduction solving module uses an optimized SVM to hierarchically perform further fine classification of partial pathological features, combining data from different levels. In this device, samples reduced to different feature dimensions are integrated in the analysis stage, the amount of exercise is greatly reduced, correctness is ensured by emphasizing the verification stage, the medical reference value of the obtained physiological parameters is increased, and standby capability (power consumption) is improved under the same conditions.

Owner:陈晨

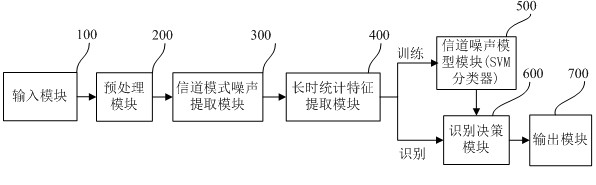

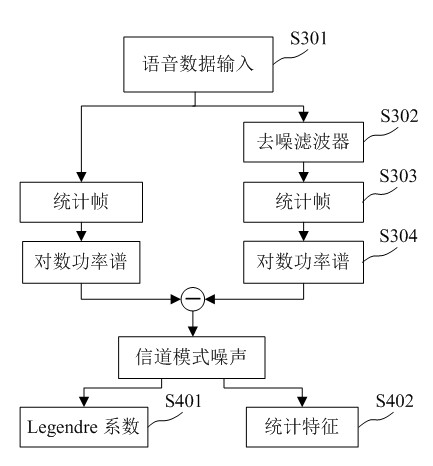

Record replay attack detection method and system based on channel mode noise

Inactive | CN102436810A | Reduce feature dimension, Improve efficiency, Digital data information retrieval, Speech recognition, Computation complexity, Feature Dimension

The invention relates to the technical fields of intelligent speech signal processing, pattern recognition, and artificial intelligence, and in particular to a method and system for detecting record-replay attacks in a speaker recognition system based on channel mode noise. The invention discloses a simpler and more efficient record-replay attack detection method for speaker recognition systems, comprising the following steps: (1) inputting a voice signal to be recognized; (2) preprocessing the voice signal; (3) extracting the channel mode noise from the preprocessed voice signal; (4) extracting a long-term statistical feature based on the channel mode noise; and (5) classifying the long-term statistical feature with a channel noise classification decision model. Because detection is based on channel mode noise, the extracted feature dimension, the computational complexity, and the recognition error rate are all low; the security of the speaker recognition system is therefore greatly improved, and the method and system are easier to apply in practice.

Owner:SOUTH CHINA UNIV OF TECH

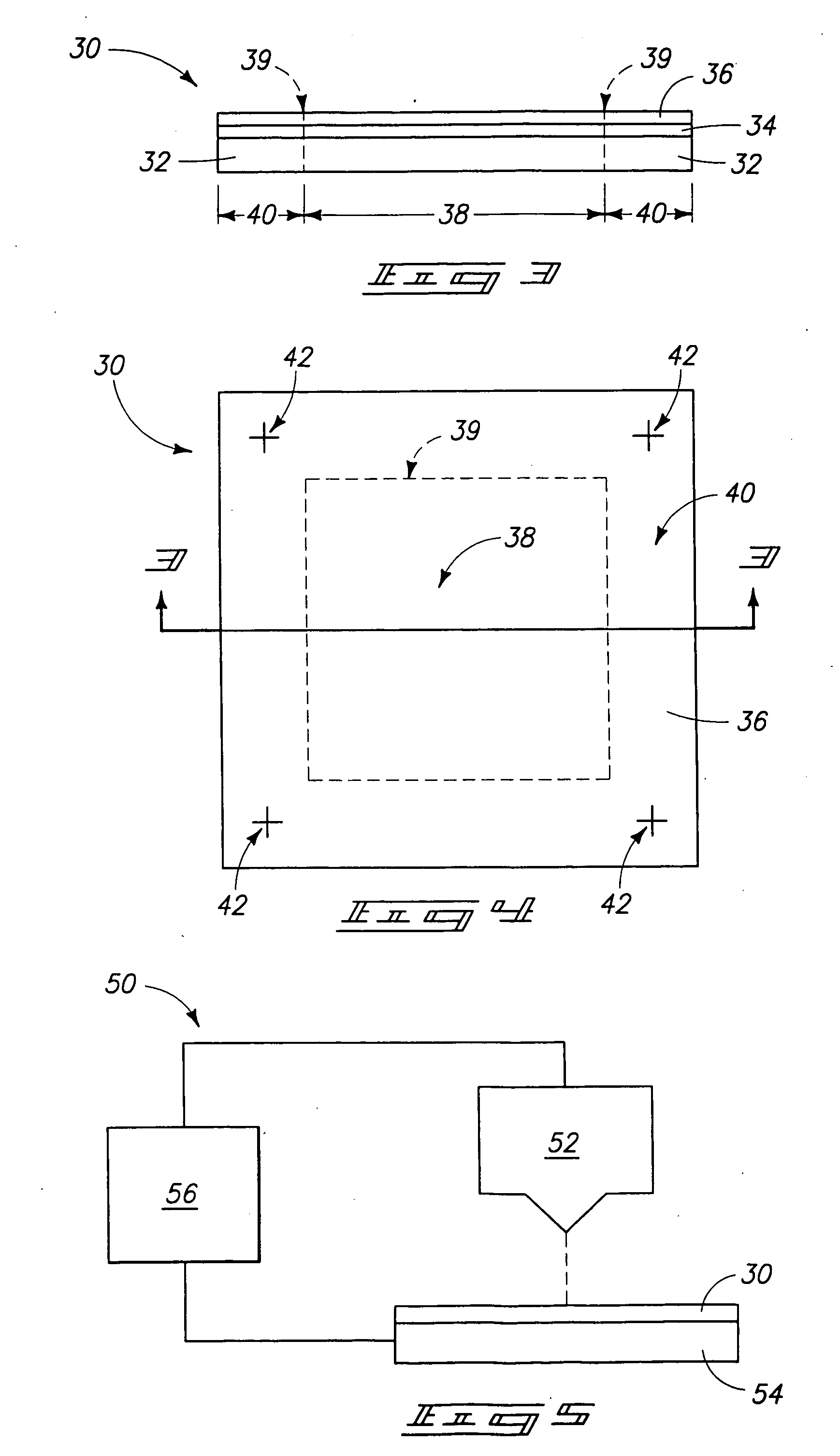

Reticle and direct lithography writing strategy

The present invention relates to the preparation of patterned reticles to be used as masks in the production of semiconductor and other devices. Methods and devices are described that use resist and transfer layers over a masking layer on a reticle. The methods and devices produce small feature dimensions in masks and phase shift masks. In many cases, the methods described for masks are also applicable to direct writing on other workpieces with similarly small features, such as semiconductor, cryogenic, magnetic and optical microdevices.

Owner:ASML NETHERLANDS BV

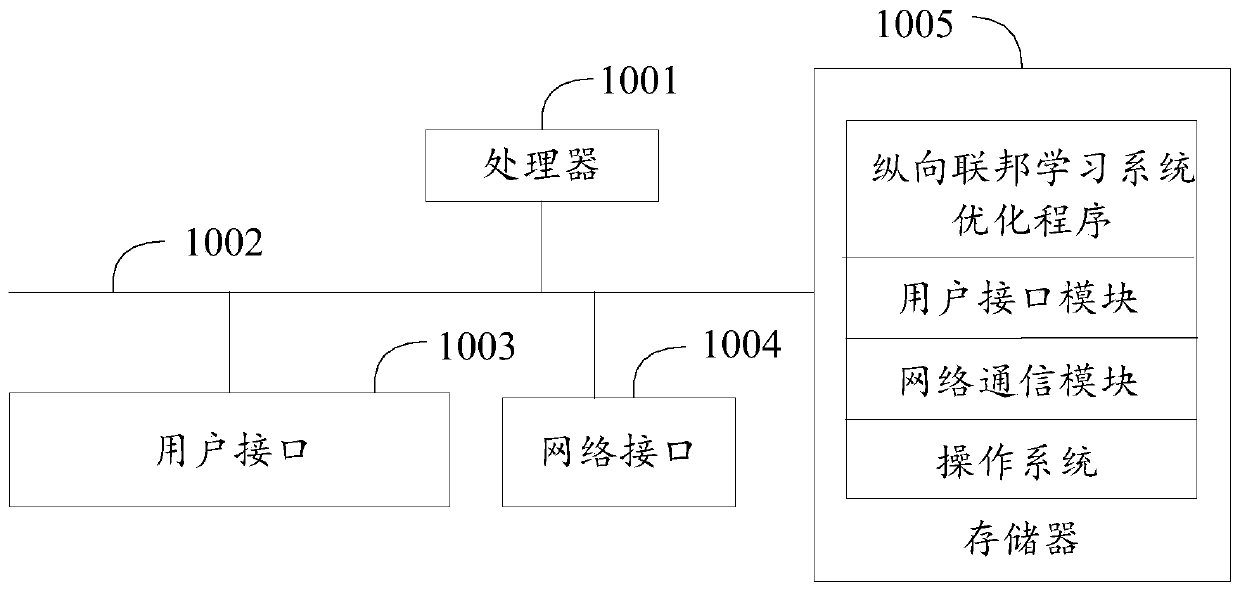

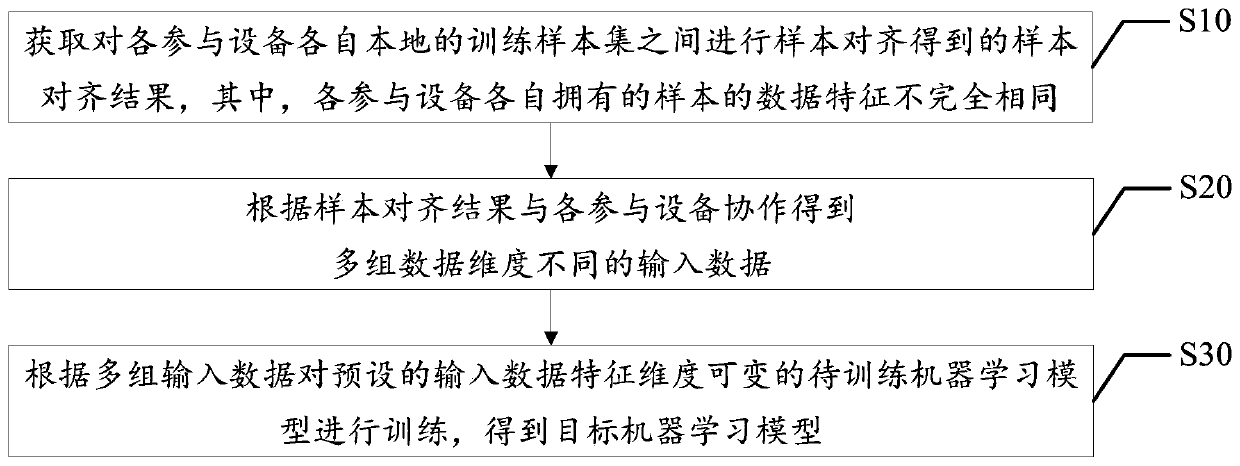

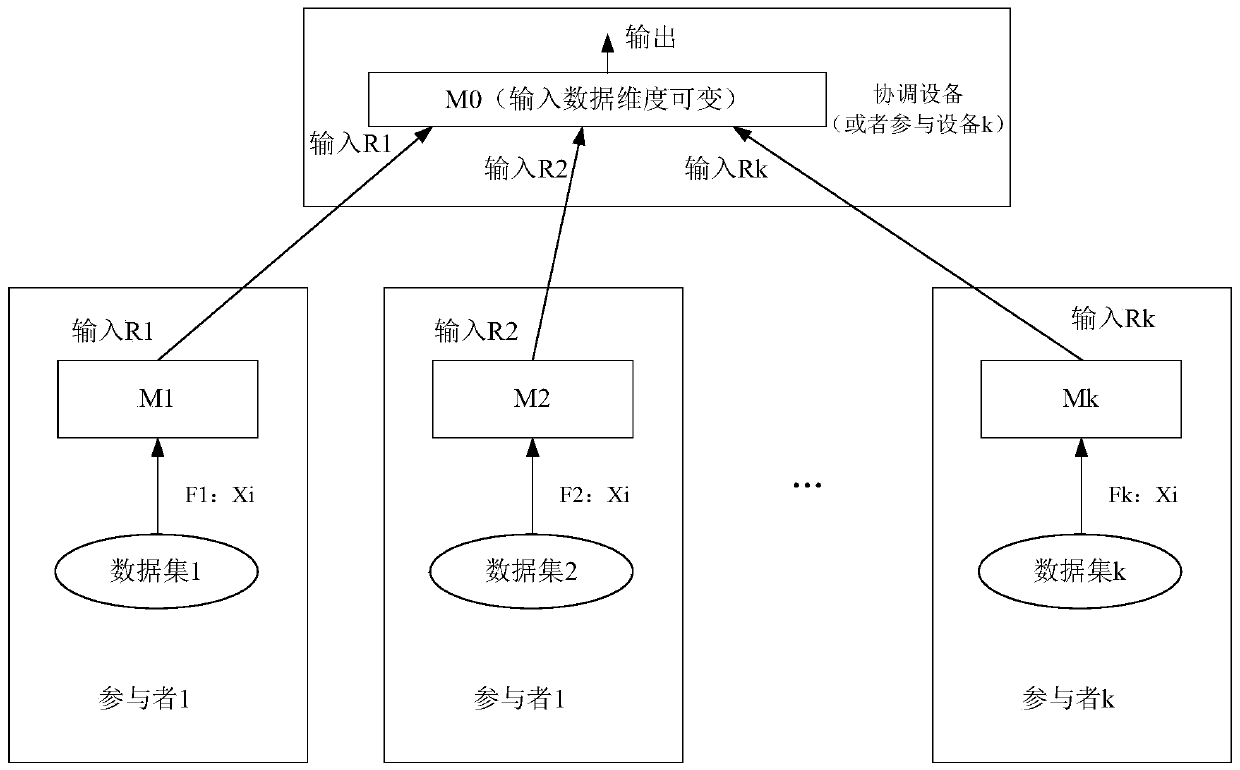

Longitudinal federated learning system optimization method, apparatus and device and readable storage medium

The invention discloses a longitudinal federated learning system optimization method, apparatus and device and a readable storage medium. The optimization method comprises the following steps: obtaining a sample alignment result produced by aligning the local training sample sets of all participating devices, where the samples held by the participating devices do not have completely identical data features; according to the sample alignment result, cooperating with each participating device to obtain multiple groups of input data with different data dimensions; and training a preset machine learning model with a variable input feature dimension on the multiple groups of input data to obtain a target machine learning model. With this method, a participant in longitudinal federated learning can use the trained model independently, without the cooperation of the other participants, which expands the range of applications of longitudinal federated learning.
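The alignment and grouping steps can be illustrated with a plaintext sketch. This is an assumption-laden illustration, not the patented protocol: alignment is shown as an ordinary set intersection of sample IDs (real longitudinal federated learning would use a privacy-preserving intersection so that no party sees another's IDs), and "multiple groups of input data with different data dimensions" is assumed to mean one group per non-empty subset of participants. The function names and data layout are hypothetical.

```python
from itertools import combinations


def align_samples(parties):
    """Hypothetical helper: sorted sample IDs present at every party.

    `parties` maps a party name to {sample_id: feature_list}. A plain set
    intersection only illustrates the *result* of alignment; it is not a
    privacy-preserving protocol.
    """
    id_sets = [set(data) for data in parties.values()]
    return sorted(set.intersection(*id_sets))


def input_groups(parties, aligned_ids):
    """Hypothetical helper: one input group per non-empty subset of parties.

    Each group concatenates the subset's features for every aligned sample,
    so groups differ in feature dimension. A model trained on all groups
    can later be fed a single party's features alone.
    """
    names = sorted(parties)
    groups = {}
    for k in range(1, len(names) + 1):
        for subset in combinations(names, k):
            groups[subset] = [
                [x for name in subset for x in parties[name][sid]]
                for sid in aligned_ids
            ]
    return groups


# Usage with made-up data: party A has 1 feature, party B has 2
parties = {
    "A": {1: [0.1], 2: [0.2], 3: [0.3]},
    "B": {2: [5.0, 6.0], 3: [7.0, 8.0], 4: [9.0, 9.0]},
}
ids = align_samples(parties)            # [2, 3]
groups = input_groups(parties, ids)
print(groups[("A", "B")])               # [[0.2, 5.0, 6.0], [0.3, 7.0, 8.0]]
```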

Owner:WEBANK (CHINA)

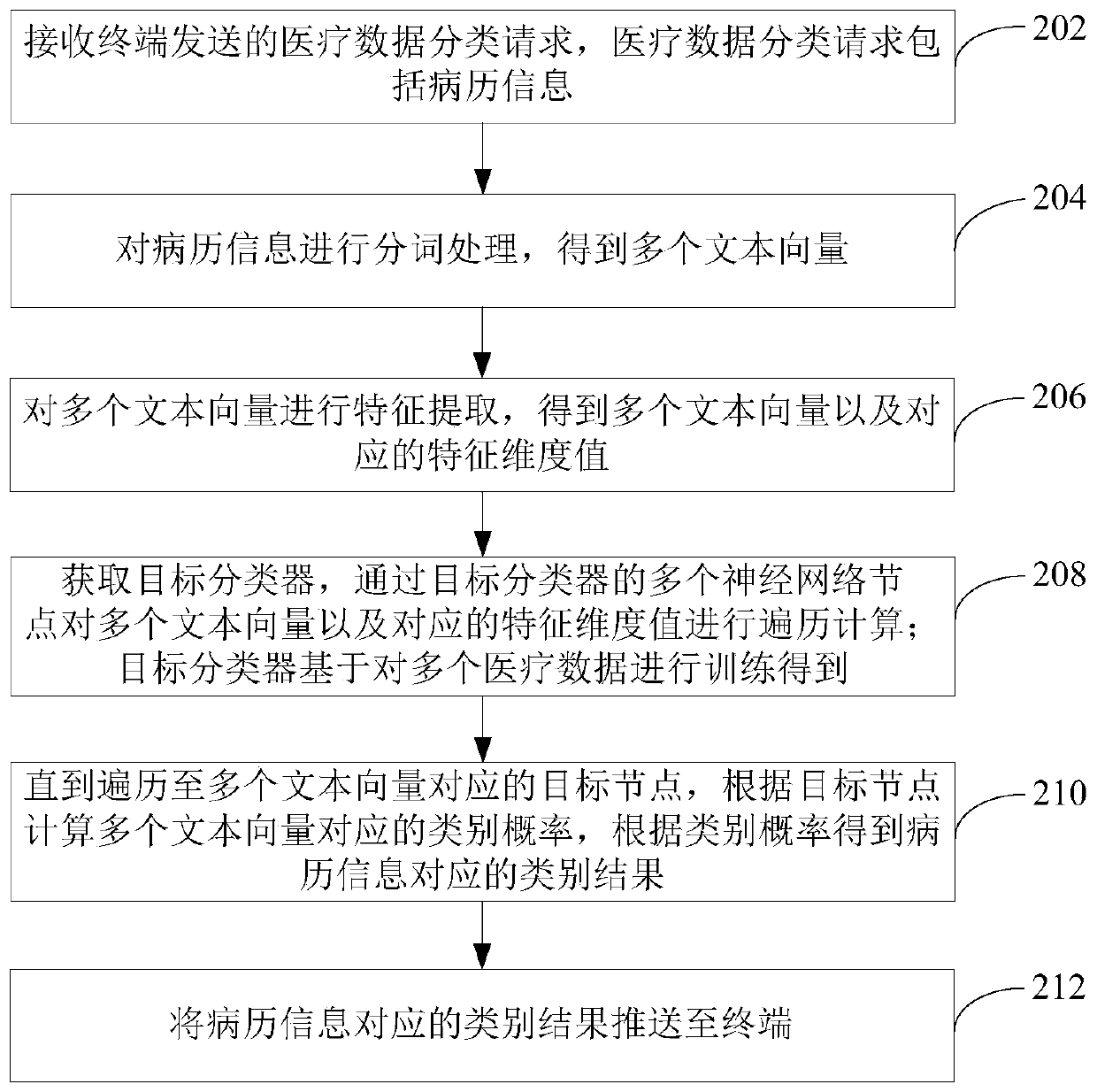

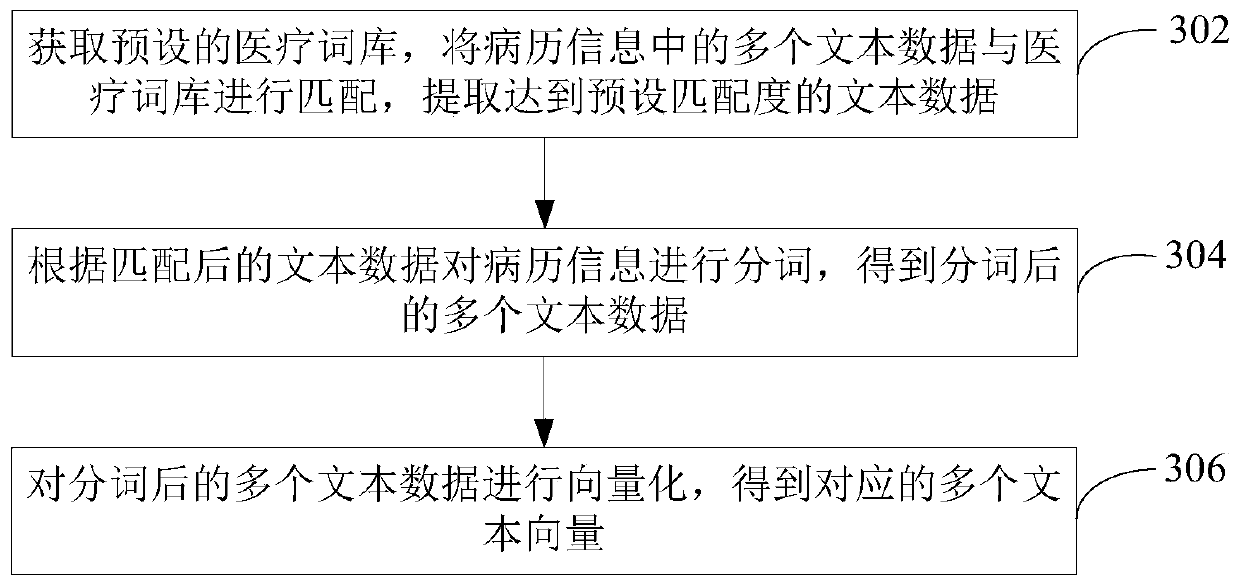

Medical data classification method and device based on machine learning and computer equipment

Active · CN110021439A · Improve processing efficiency · Improve classification accuracy · Medical data mining · Ensemble learning · Medical record · Feature Dimension

The invention relates to a medical data classification method and device based on machine learning, and to computer equipment. The method comprises the following steps: receiving a medical data classification request sent by a terminal, the request containing case history information; performing word segmentation on the case history information to obtain a plurality of text vectors; performing feature extraction on the text vectors to obtain the text vectors and their corresponding feature dimension values; obtaining a target classifier trained on multiple items of medical data, and traversing the text vectors and their feature dimension values through the neural network nodes of the target classifier until the target nodes corresponding to the text vectors are reached; calculating the category probability of the text vectors from the target nodes and determining the category of the case history information from that probability; and pushing the category result for the case history information to the terminal. The method can effectively improve the accuracy of medical data classification.

Owner:PING AN TECH (SHENZHEN) CO LTD

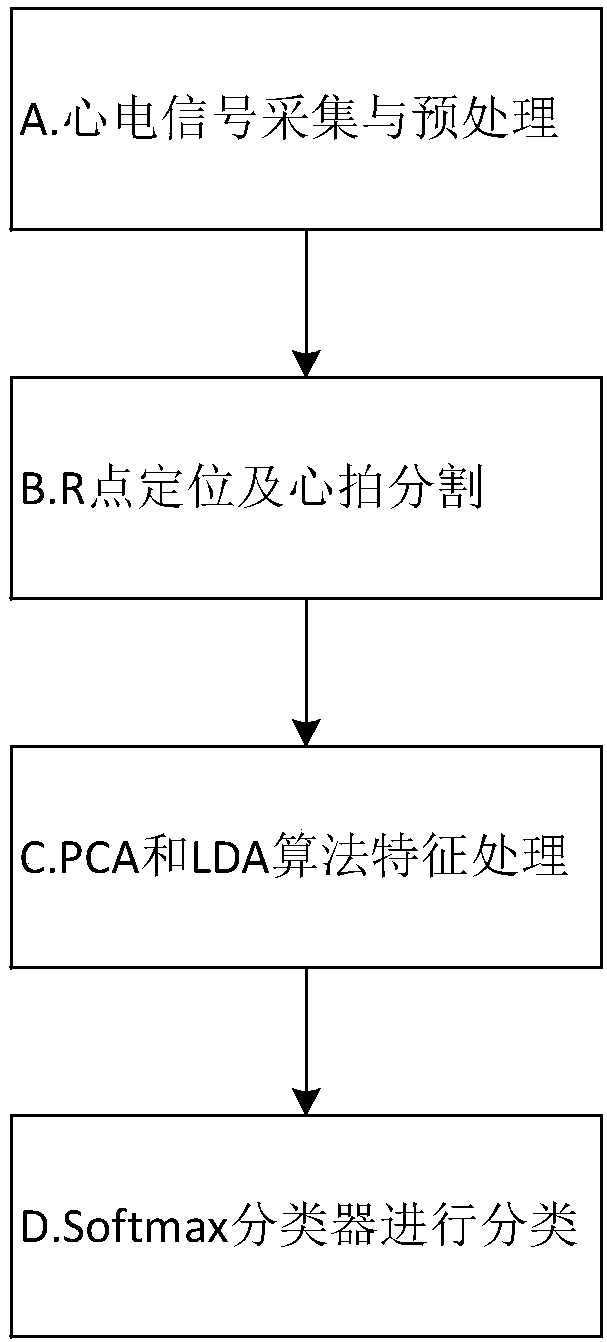

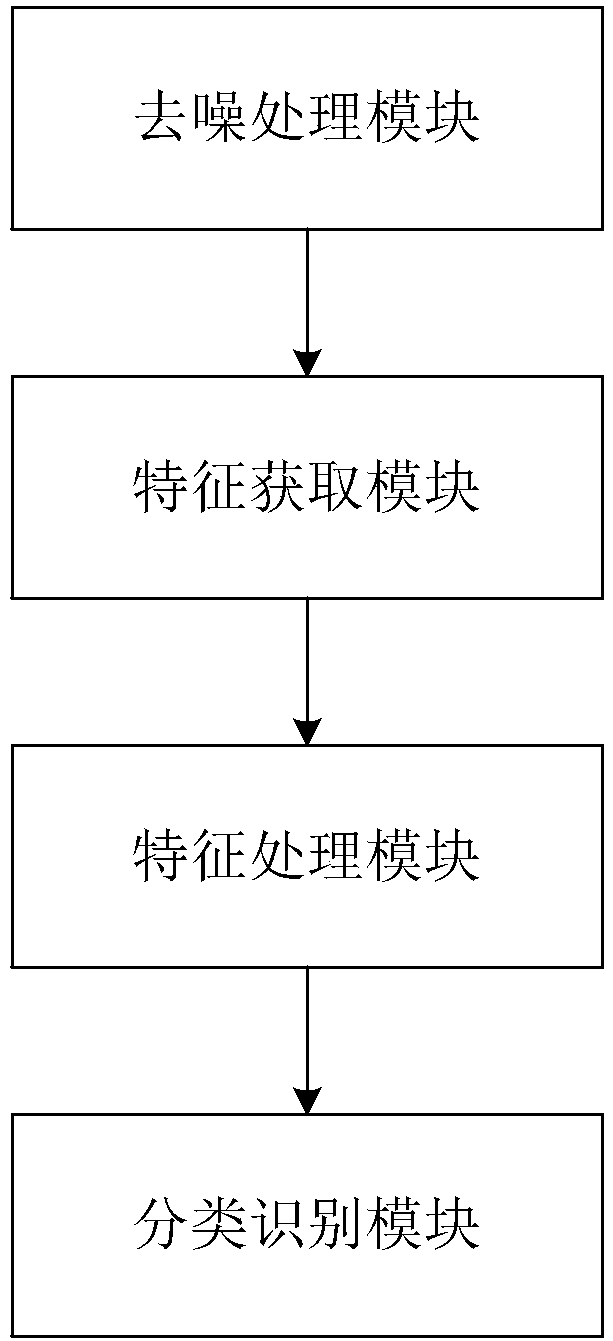

Electrocardiosignal identity recognition method based on PCA and LDA analysis and electrocardiosignal identity recognition system based on PCA and LDA analysis

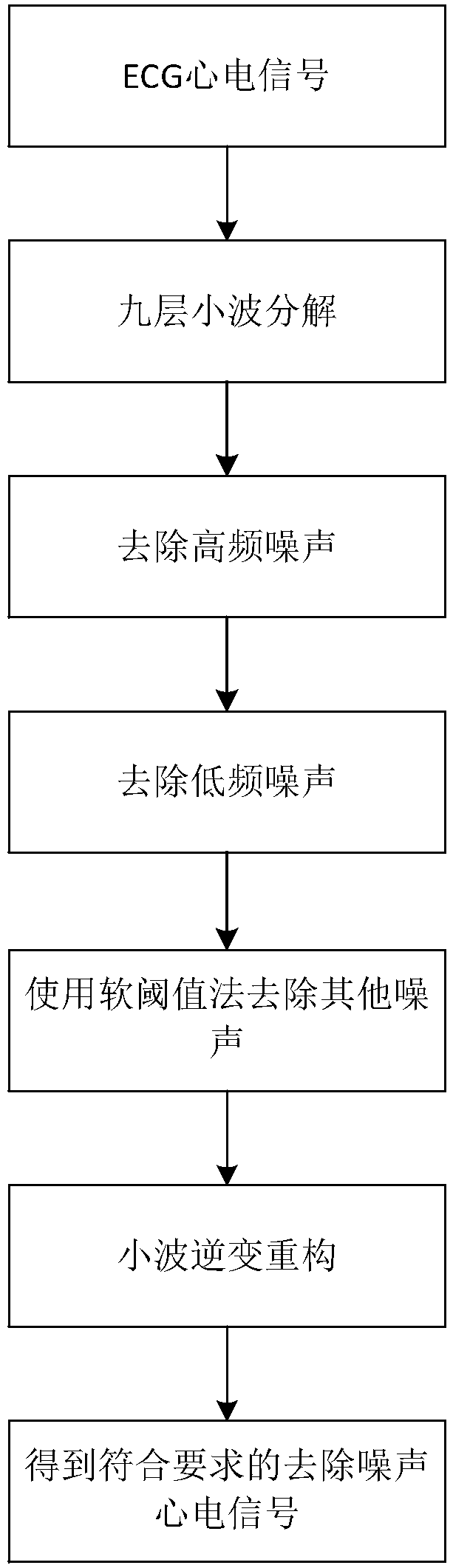

Inactive · CN108537100A · Reduce the dimensionality of signal features · Small amount of calculation · Physiological signal biometric patterns · Feature vector · ECG signal

The invention provides an electrocardiosignal (ECG) identity recognition method and system based on PCA and LDA analysis. The method comprises the following steps: acquiring and preprocessing ECG signals, that is, acquiring and denoising them; locating the R-wave peak points in the denoised ECG signals, segmenting the heartbeats, and constructing the corresponding morphological feature vectors; performing PCA and LDA analysis on these feature vectors to obtain the final feature vectors to be recognized; and, using a training-set heartbeat feature database and a test-set heartbeat feature database built from the final feature vectors, applying the classifier for matching verification to complete identity recognition. The method and system reduce the signal feature dimension, lighten the computational burden of the subsequent Softmax classification, and improve classification accuracy, so they can be applied effectively to ECG-based identity recognition.
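The R-peak locating and heartbeat segmentation steps can be sketched with a simple detector. The abstract does not name the detection algorithm, so a threshold-plus-local-maximum rule is swapped in here; `threshold`, `min_gap` and the window lengths `before`/`after` are hypothetical parameters that would depend on the sampling rate. The PCA/LDA and Softmax stages are not shown.

```python
def detect_r_peaks(ecg, threshold, min_gap):
    """Hypothetical R-peak detector (not the patent's method).

    A sample is a candidate peak if it reaches `threshold` and is a local
    maximum. Candidates closer than `min_gap` samples to the previous peak
    are merged, keeping the larger one.
    """
    peaks = []
    for i in range(1, len(ecg) - 1):
        if ecg[i] >= threshold and ecg[i] >= ecg[i - 1] and ecg[i] > ecg[i + 1]:
            if peaks and i - peaks[-1] < min_gap:
                if ecg[i] > ecg[peaks[-1]]:
                    peaks[-1] = i  # replace the smaller nearby peak
            else:
                peaks.append(i)
    return peaks


def segment_beats(ecg, peaks, before, after):
    """Cut a fixed window [p - before, p + after) around each R peak.

    Peaks too close to either end of the signal are skipped, so every
    returned beat has the same length and can serve as a feature vector.
    """
    return [ecg[p - before:p + after]
            for p in peaks
            if p - before >= 0 and p + after <= len(ecg)]


# Usage on a made-up, already-denoised trace with two beats
ecg = [0, 0, 1, 5, 1, 0, 0, 0, 1, 6, 1, 0, 0]
peaks = detect_r_peaks(ecg, threshold=3, min_gap=3)   # [3, 9]
print(segment_beats(ecg, peaks, before=2, after=3))   # [[0, 1, 5, 1, 0], [0, 1, 6, 1, 0]]
```

Fixed-length windows matter for the next stage: PCA and LDA require every heartbeat feature vector to have the same dimension.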

Owner:JILIN UNIV +1

Face authentication method and device

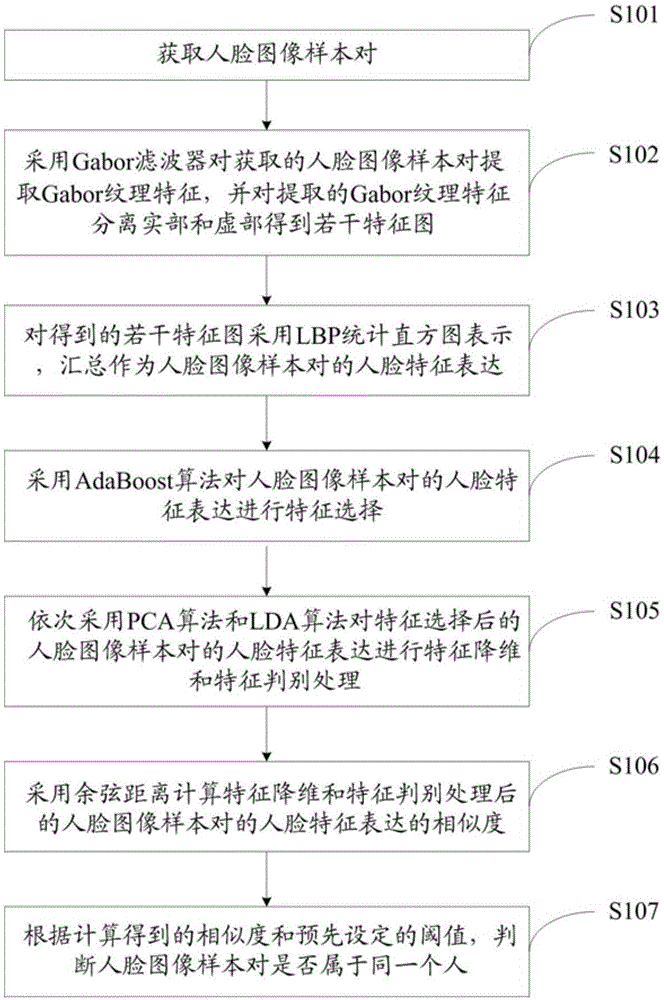

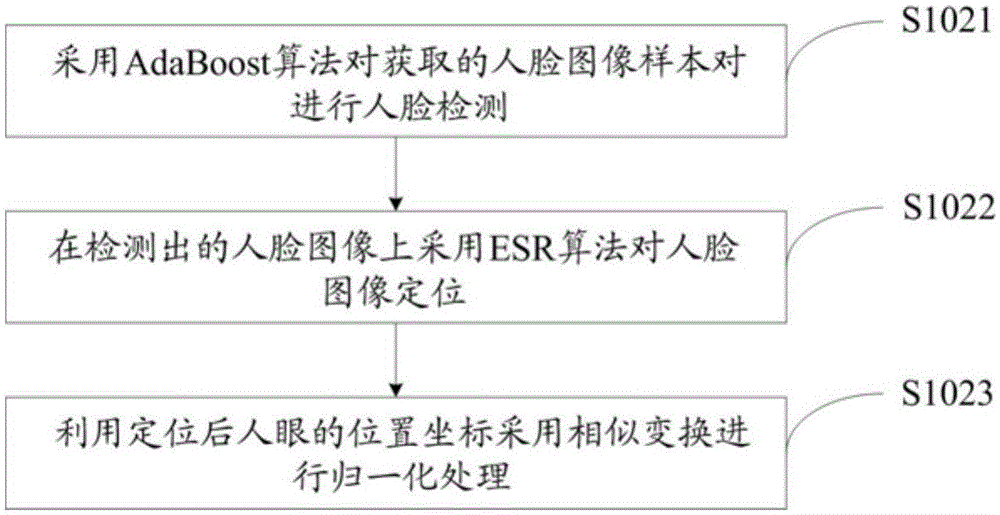

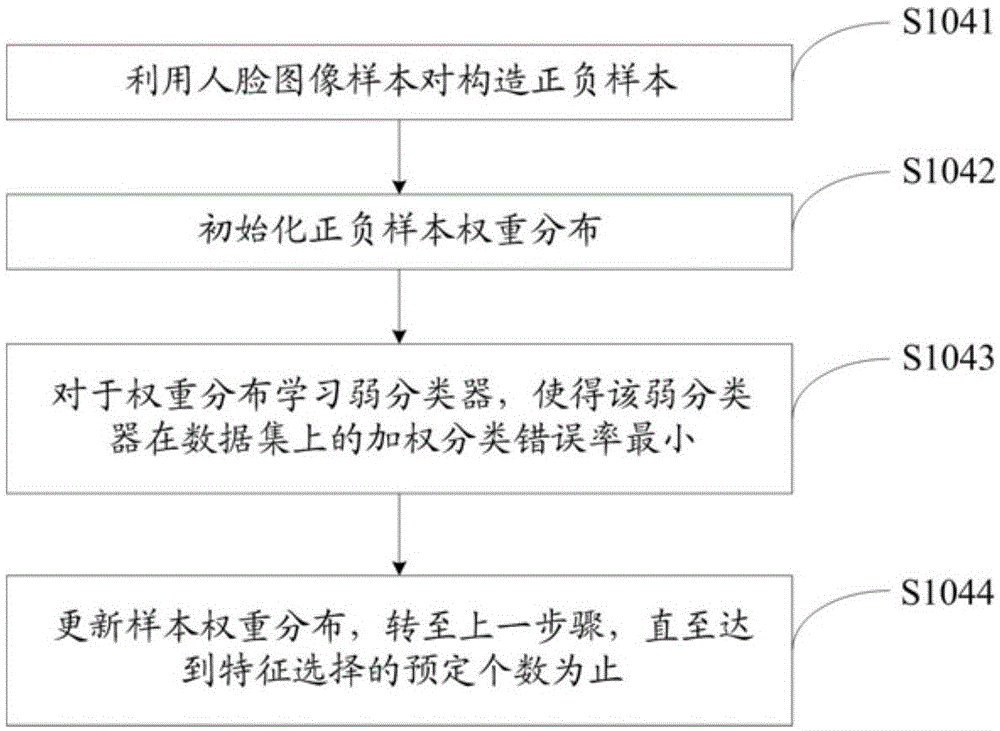

Active · CN105138972A · Solve small-sample problem · Reduce time complexity · Character and pattern recognition · Feature Dimension · Image processing

The invention provides a face authentication method and device in the field of image processing and pattern recognition. The method comprises the following steps: extracting Gabor texture features from an acquired face image sample pair with a Gabor filter, and separating the real and imaginary parts of the extracted features to obtain a plurality of feature maps; summarizing the feature maps with LBP (Local Binary Pattern) statistical histograms to form the face feature representation of the image pair; performing feature selection on this representation with the AdaBoost (Adaptive Boosting) algorithm; and applying the PCA (Principal Component Analysis) algorithm and then the LDA (Linear Discriminant Analysis) algorithm to the selected features for feature dimension reduction, discriminant processing and related steps. Compared with the prior art, the method fully extracts sample texture information, requires few samples, runs in a short time, and has low space complexity.
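The LBP summarization step can be illustrated with the basic 3x3 operator applied to a single feature map, assumed here to be one of the Gabor real or imaginary maps already computed and stored as a list of rows. The abstract does not specify the LBP variant; the clockwise bit order starting at the top-left neighbour is a convention chosen for this sketch, and block-wise or uniform-pattern histograms are not shown. Function names are hypothetical.

```python
def lbp_code(img, r, c):
    """Hypothetical helper: basic 8-neighbour LBP code of interior pixel (r, c).

    Each neighbour whose value is >= the centre sets one bit. Neighbours are
    visited clockwise starting at the top-left, giving bits 0..7.
    """
    center = img[r][c]
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    code = 0
    for bit, (dr, dc) in enumerate(offsets):
        if img[r + dr][c + dc] >= center:
            code |= 1 << bit
    return code


def lbp_histogram(img):
    """256-bin histogram of LBP codes over all interior pixels of one map."""
    hist = [0] * 256
    for r in range(1, len(img) - 1):
        for c in range(1, len(img[0]) - 1):
            hist[lbp_code(img, r, c)] += 1
    return hist


# Usage: a 3x3 map has one interior pixel
gabor_map = [[1, 2, 3],
             [4, 5, 6],
             [7, 8, 9]]
print(lbp_code(gabor_map, 1, 1))  # 120 (bits 3, 4, 5, 6 set)
```

Concatenating such histograms across all Gabor maps would yield the high-dimensional representation that the AdaBoost selection and PCA/LDA stages then reduce.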

Owner:BEIJING EYECOOL TECH CO LTD +1

Methods of forming mask patterns, methods of correcting feature dimension variation, microlithography methods, recording medium and electron beam exposure system

Inactive · US20060183025A1 · Reduce the impact · Alleviate deflection effect · Electric discharge tubes · Photomechanical exposure apparatus · Feature Dimension · Reticle

The invention includes methods of forming reticles. A mask blank is provided having a plurality of regions defined within a main-field area. Exposure to an electron beam is initiated at an initial locus within an interior region of the main-field. The invention includes a method of correcting feature dimension variation. A mask blank is patterned utilizing a first dose correction component and feature dimension variance is determined. The variance is utilized to determine a second correction component which is added to the first dose correction component to create an enhanced dose correction. The invention includes a recording medium and a system comprising the recording medium. The medium contains programming configured to cause processing circuitry to: access data defining a design pattern; obtain error data pertaining to feature dimension variation; generate correction data; produce data defining a corrective pattern; and apply the corrective pattern during an exposure event.
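The dose-correction arithmetic can be sketched under an explicit assumption that the abstract does not state: the feature dimension is taken to vary linearly with dose, with a hypothetical `sensitivity` (dimension change per unit dose) converting the measured dimension variance into the second correction component. The enhanced correction is then the sum of the two components, as the abstract describes. Names and numbers are illustrative only.

```python
def second_correction(measured_cd, target_cd, sensitivity):
    """Hypothetical second correction component.

    Assumes dimension changes linearly with dose, so the dose change that
    cancels the measured variance is -(measured - target) / sensitivity.
    """
    return -(measured_cd - target_cd) / sensitivity


def enhanced_dose(first_correction, measured_cd, target_cd, sensitivity):
    """Enhanced dose correction = first component + variance-based second component."""
    return first_correction + second_correction(measured_cd, target_cd, sensitivity)


# Usage with made-up numbers: a feature printed 2 nm too wide, where
# +1 dose unit widens features by 20 nm, needs the dose lowered by 0.1
print(enhanced_dose(1.10, measured_cd=102.0, target_cd=100.0, sensitivity=20.0))
```

In practice the variance would be mapped per region of the main field, and the relationship between dose and dimension is generally not linear over large corrections.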

Owner:MICRON TECH INC

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com