Pedestrian re-identification method based on multi-region feature extraction and fusion

A pedestrian re-identification method based on multi-region feature extraction and fusion, applied in the field of computer vision. It addresses the low overall matching accuracy of existing pedestrian re-identification methods; the design is reasonable and improves matching accuracy.

Embodiment Construction

[0039] Embodiments of the present invention will be described in further detail below in conjunction with the accompanying drawings.

[0040] A pedestrian re-identification method based on multi-region feature extraction and fusion, comprising the following steps:

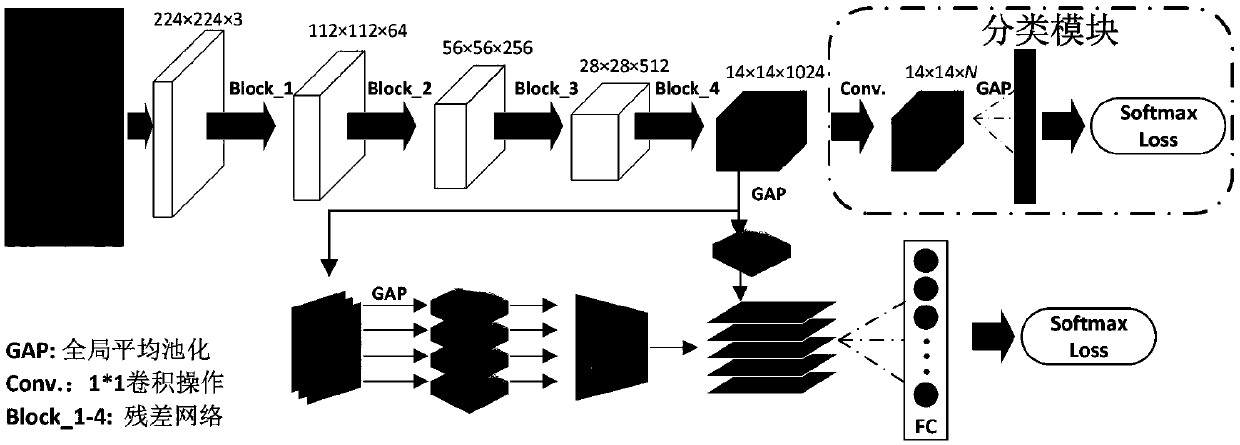

[0041] Step 1. Use a residual network to extract global features, and during the training phase add a pedestrian identity classification module that is used to extract and optimize the global features.

[0042] A traditional convolutional neural network uses a fully connected layer to map the convolutional features to a feature vector whose dimension equals the number of pedestrian categories. Because every node in the fully connected layer is connected to every node in the previous layer, the number of parameters is large. As shown in Figure 1, the classification module (Classification Structure) in the present invention uses 1×1 convolution to implement the feature mapping, and all neurons share weight parameter...
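The parameter saving described in [0042] can be illustrated with a minimal Python sketch. It is not the patent's implementation: the function names, the feature-map size (2048 channels, 8×4 spatial positions) and the class count (751) are illustrative assumptions, and the sketch further assumes that the fully connected baseline acts on the flattened feature map while the 1×1 convolution reuses one weight vector per class at every spatial position.

```python
def fc_param_count(channels, height, width, num_classes):
    """Weights of a fully connected layer on the flattened C*H*W feature map
    (assumed baseline; biases ignored)."""
    return channels * height * width * num_classes


def conv1x1_param_count(channels, num_classes):
    """Weights of a 1x1 convolution: one C-dimensional vector per class,
    shared across all spatial positions (biases ignored)."""
    return channels * num_classes


def conv1x1(feature_map, weights):
    """Apply a 1x1 convolution in plain Python.

    feature_map: nested lists shaped [C][H][W]
    weights:     nested lists shaped [K][C]
    returns:     nested lists shaped [K][H][W]
    """
    num_channels = len(feature_map)
    height = len(feature_map[0])
    width = len(feature_map[0][0])
    return [
        [
            [
                # Same weight vector weights[k] is reused at every (y, x).
                sum(weights[k][c] * feature_map[c][y][x]
                    for c in range(num_channels))
                for x in range(width)
            ]
            for y in range(height)
        ]
        for k in range(len(weights))
    ]


if __name__ == "__main__":
    # Illustrative sizes only (hypothetical, not taken from the patent).
    print(fc_param_count(2048, 8, 4, 751))
    print(conv1x1_param_count(2048, 751))
```

Under these assumed sizes, the 1×1 convolution needs a factor of H×W (here 32) fewer weights than the fully connected mapping, because the weights do not depend on spatial position.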
