4501 results about "Structured light" patented technology

Structured light is the process of projecting a known pattern (often grids or horizontal bars) onto a scene. The way these patterns deform when striking surfaces allows vision systems to calculate the depth and surface information of the objects in the scene, as in structured light 3D scanners.
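A minimal sketch of the depth calculation, assuming a rectified projector-camera pair that can be treated like a stereo rig: a pattern feature emitted from a known projector column is seen at a shifted camera column, and depth follows from the standard triangulation relation Z = f·B/d. The function name and the focal length, baseline, and column values are hypothetical.

```python
def depth_from_disparity(focal_px: float, baseline_m: float,
                         projector_col: float, camera_col: float) -> float:
    """Triangulate the depth of one projected pattern feature.

    Assumes a rectified projector-camera pair, so the feature's shift along
    the row is the disparity d and depth is Z = f * B / d.
    """
    disparity = projector_col - camera_col
    if disparity <= 0:
        raise ValueError("disparity must be positive for this geometry")
    return focal_px * baseline_m / disparity


# Hypothetical rig: 600 px focal length, 7.5 cm baseline, 30 px shift.
print(depth_from_disparity(600.0, 0.075, 350.0, 320.0))  # approximately 1.5 (metres)
```

Larger shifts mean closer surfaces, which is why the deformation of a projected stripe encodes the shape of the surface it lands on.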

An articulated structured-light-based laparoscope

The present invention provides a structured-light-based system for providing a 3D image of at least one object within a field of view within a body cavity, comprising: a. an endoscope; b. at least one camera located in the endoscope's proximal end, configured to provide, in real time, at least one 2D image of at least a portion of said field of view by means of said at least one lens; c. a light source configured to illuminate, in real time, at least a portion of said at least one object within at least a portion of said field of view with at least one time- and space-varying predetermined light pattern; d. a sensor configured to detect light reflected from said field of view; and e. a computer program which, when executed by data processing apparatus, is configured to generate a 3D image of said field of view.

Owner:TRANSENTERIX EURO SARL

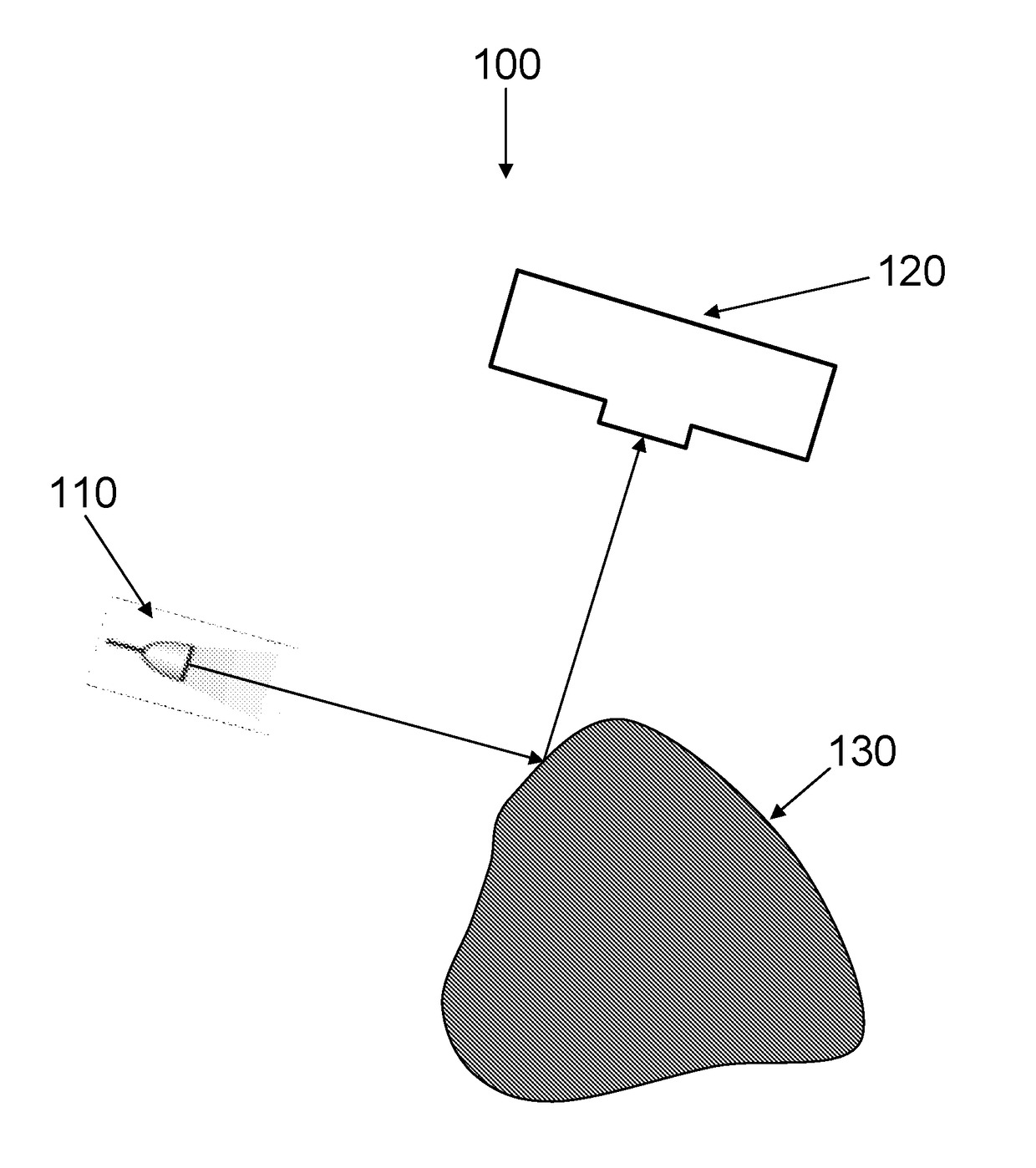

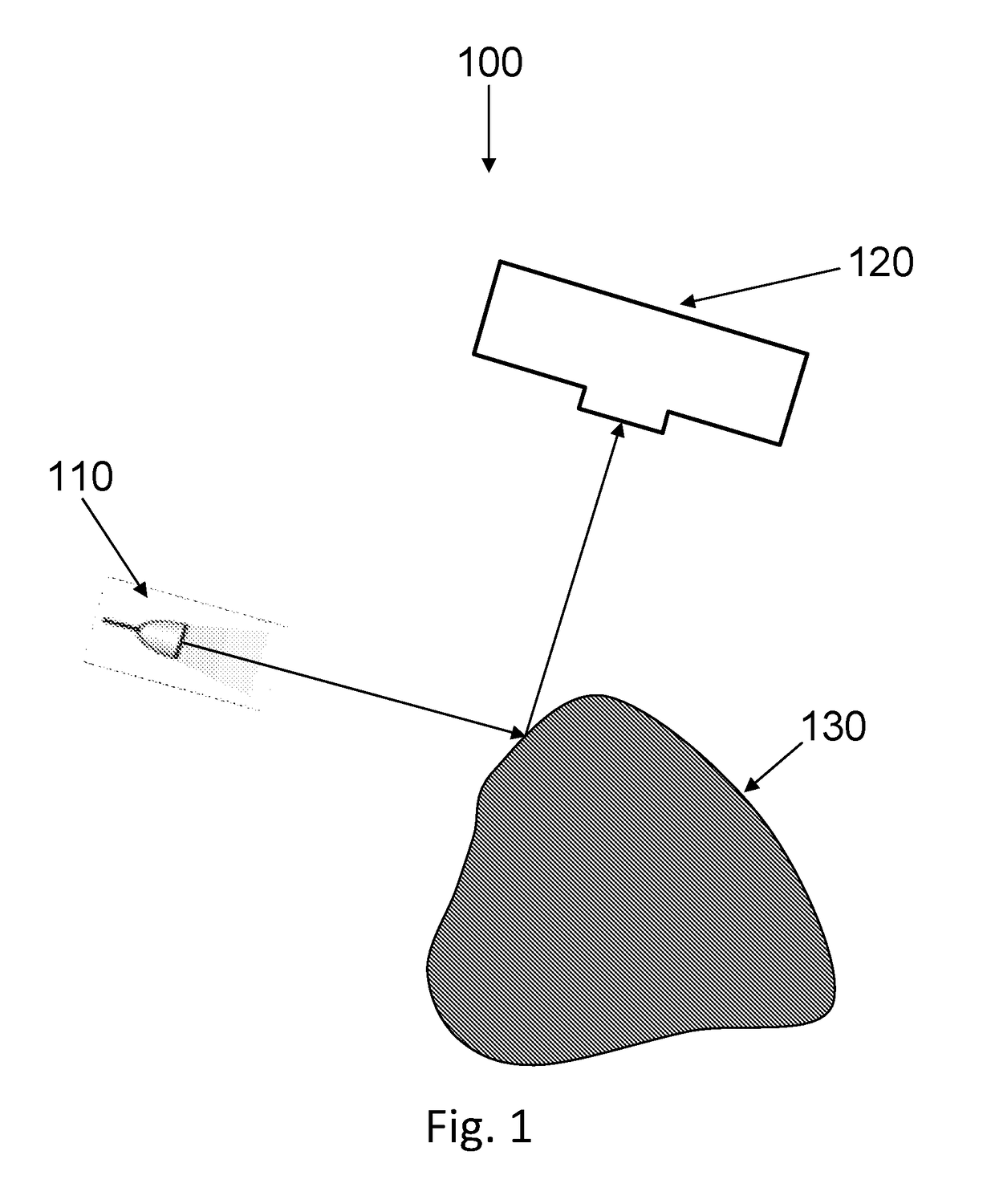

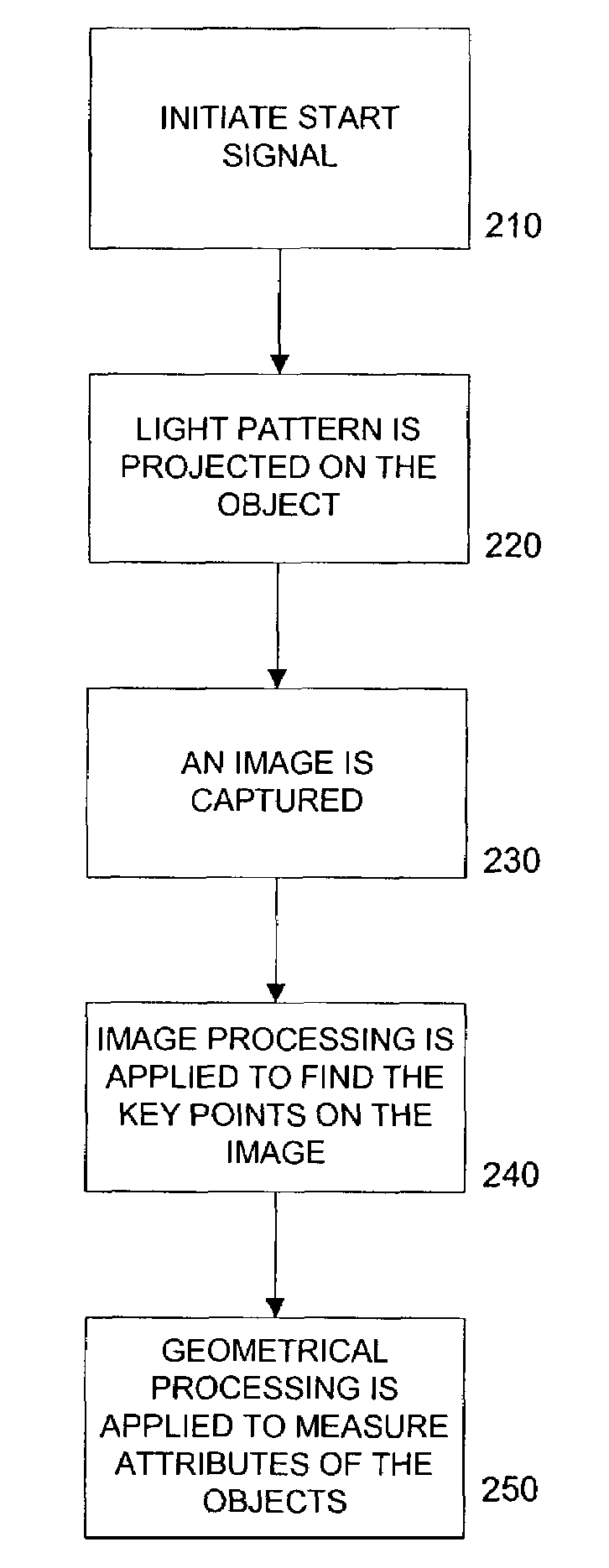

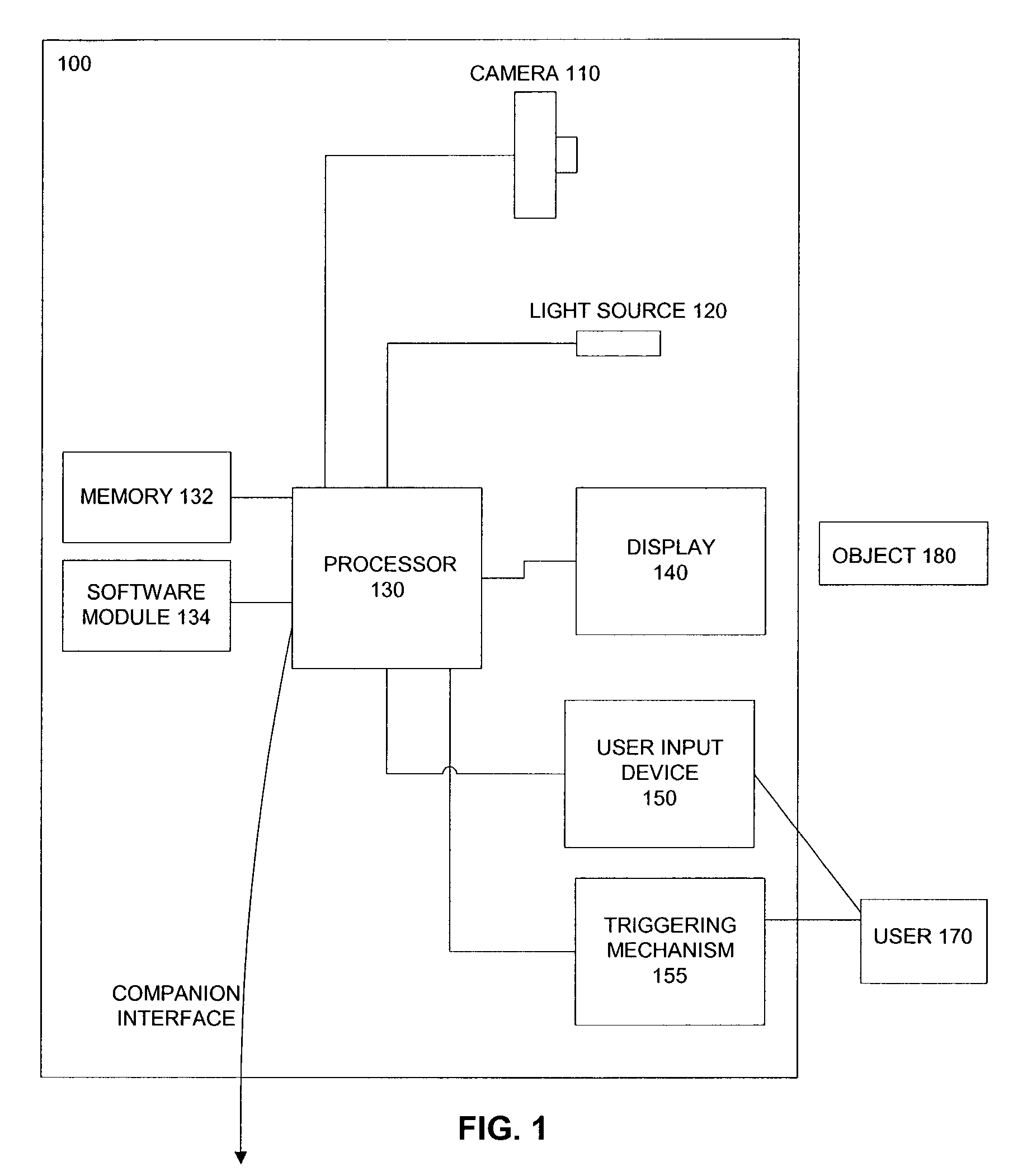

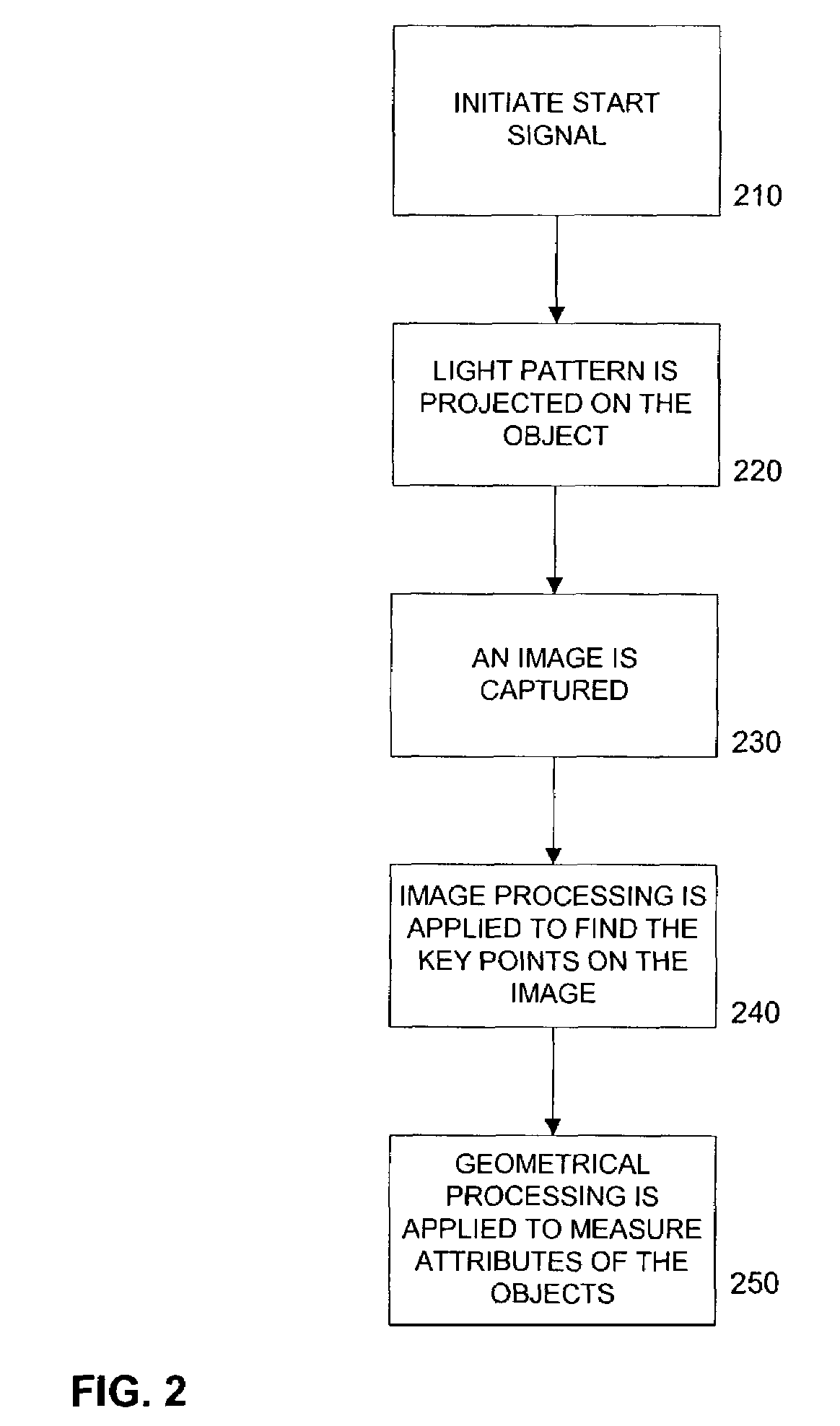

Optical methods for remotely measuring objects

A class of measurement devices can be made available using a family of projection patterns together with image processing and computer vision algorithms. The proposed system involves a camera system and either one or more structured light sources or a special pattern already drawn on the object under measurement. The camera system uses computer vision and image processing techniques to measure the real length of the projected pattern. The method can be extended to measure the volumes of boxes or angles on planar surfaces.
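The abstract does not say how pixel measurements become real lengths. One simple possibility, assumed here purely for illustration, projects two parallel laser dots with a known physical spacing: on a fronto-parallel surface their pixel separation fixes a metres-per-pixel scale, which then converts any other pixel length on that surface. The setup and all names are hypothetical.

```python
import math


def pixel_distance(p, q):
    """Euclidean distance in pixels between two (x, y) image points."""
    return math.hypot(q[0] - p[0], q[1] - p[1])


def measure_length(dot_a, dot_b, dot_spacing_m, end_1, end_2):
    """Real length of a segment on the same fronto-parallel surface as two laser dots.

    Assumes the dots come from parallel beams a known distance apart, so their
    pixel separation gives a metres-per-pixel scale at that surface.
    """
    metres_per_pixel = dot_spacing_m / pixel_distance(dot_a, dot_b)
    return metres_per_pixel * pixel_distance(end_1, end_2)


# Hypothetical image: dots 80 px apart for a 5 cm spacing, segment 240 px long.
print(measure_length((100, 200), (180, 200), 0.05, (90, 300), (330, 300)))  # approximately 0.15
```

On a tilted surface the scale varies across the image, so this shortcut no longer holds there.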

Owner:MICROSOFT TECH LICENSING LLC

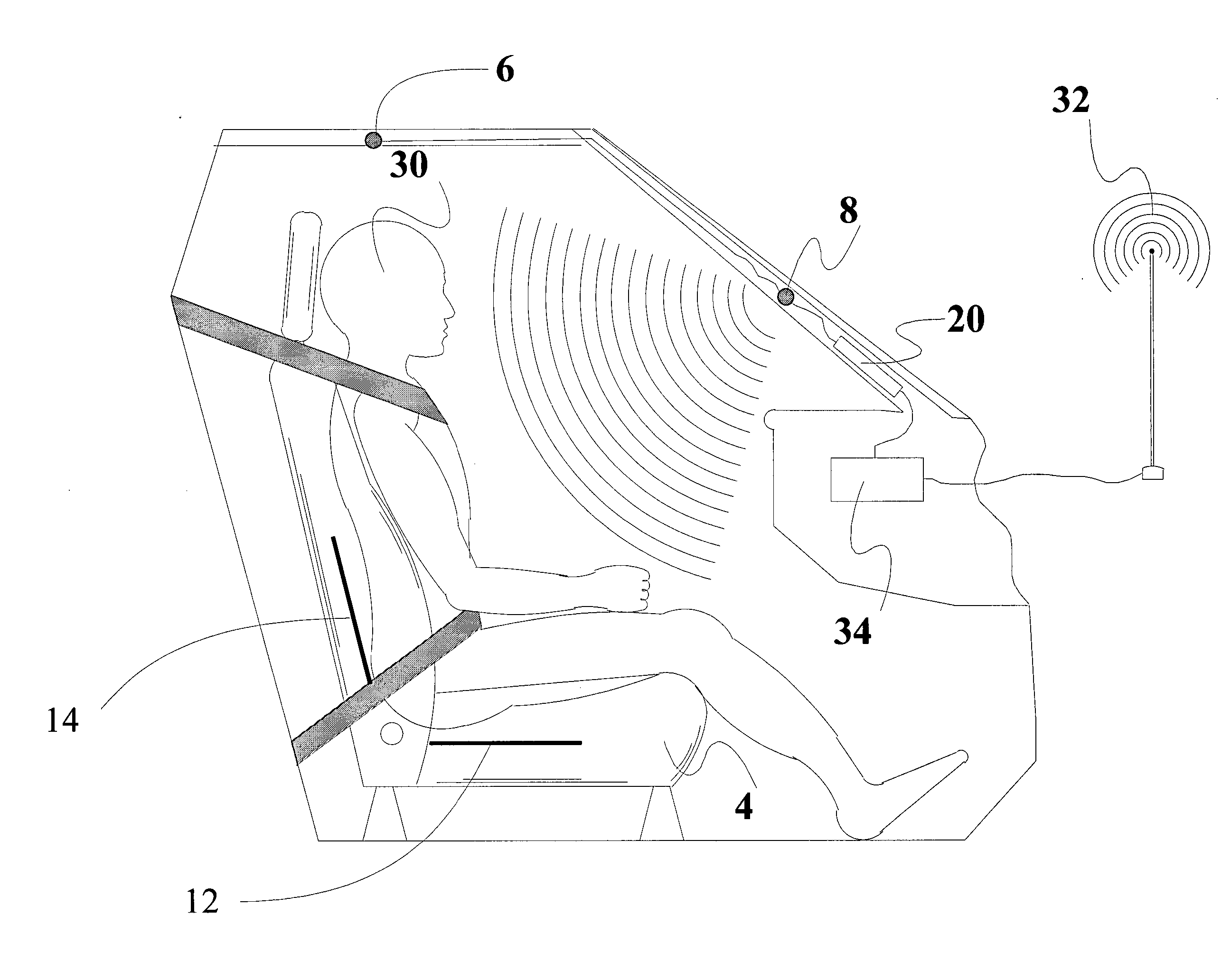

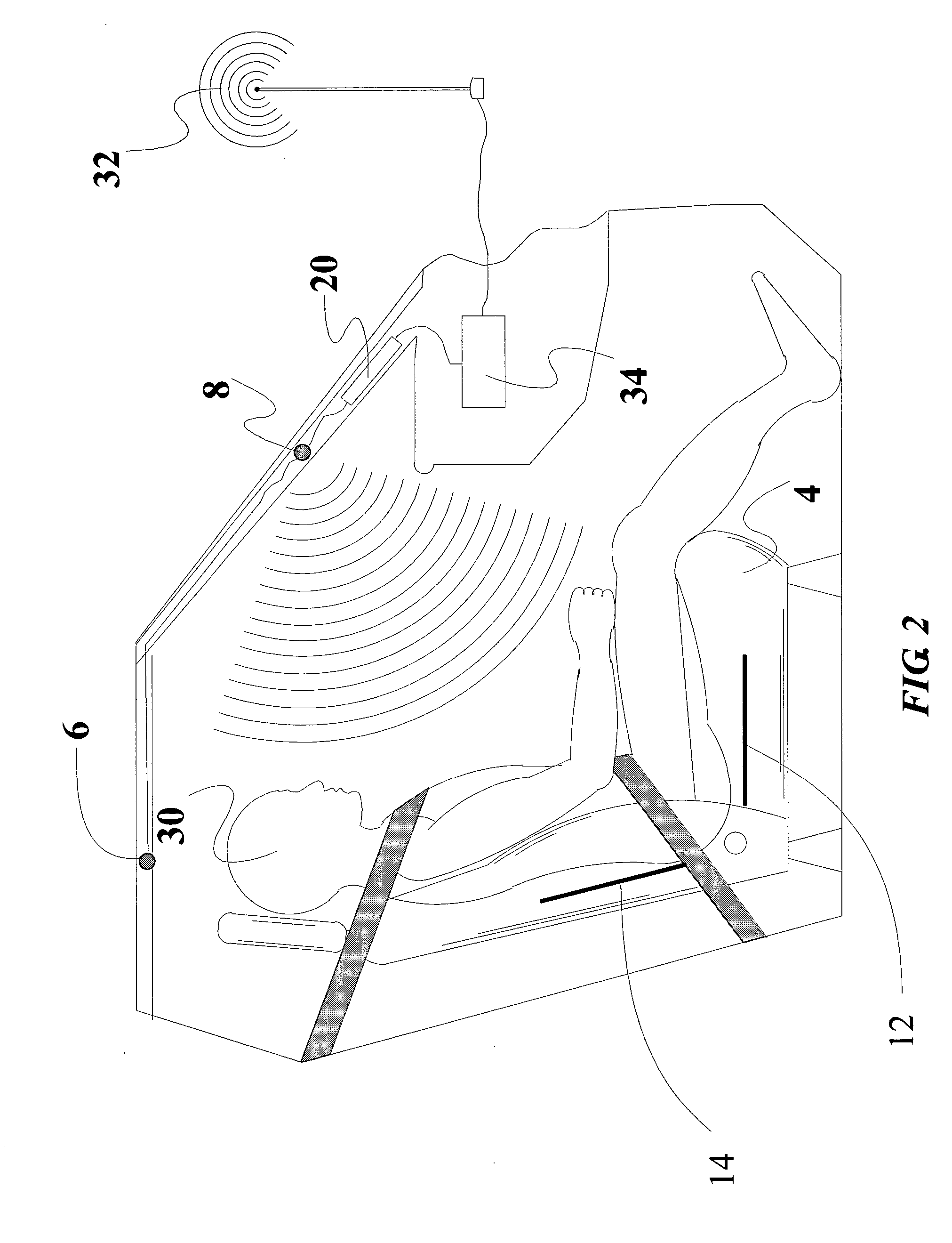

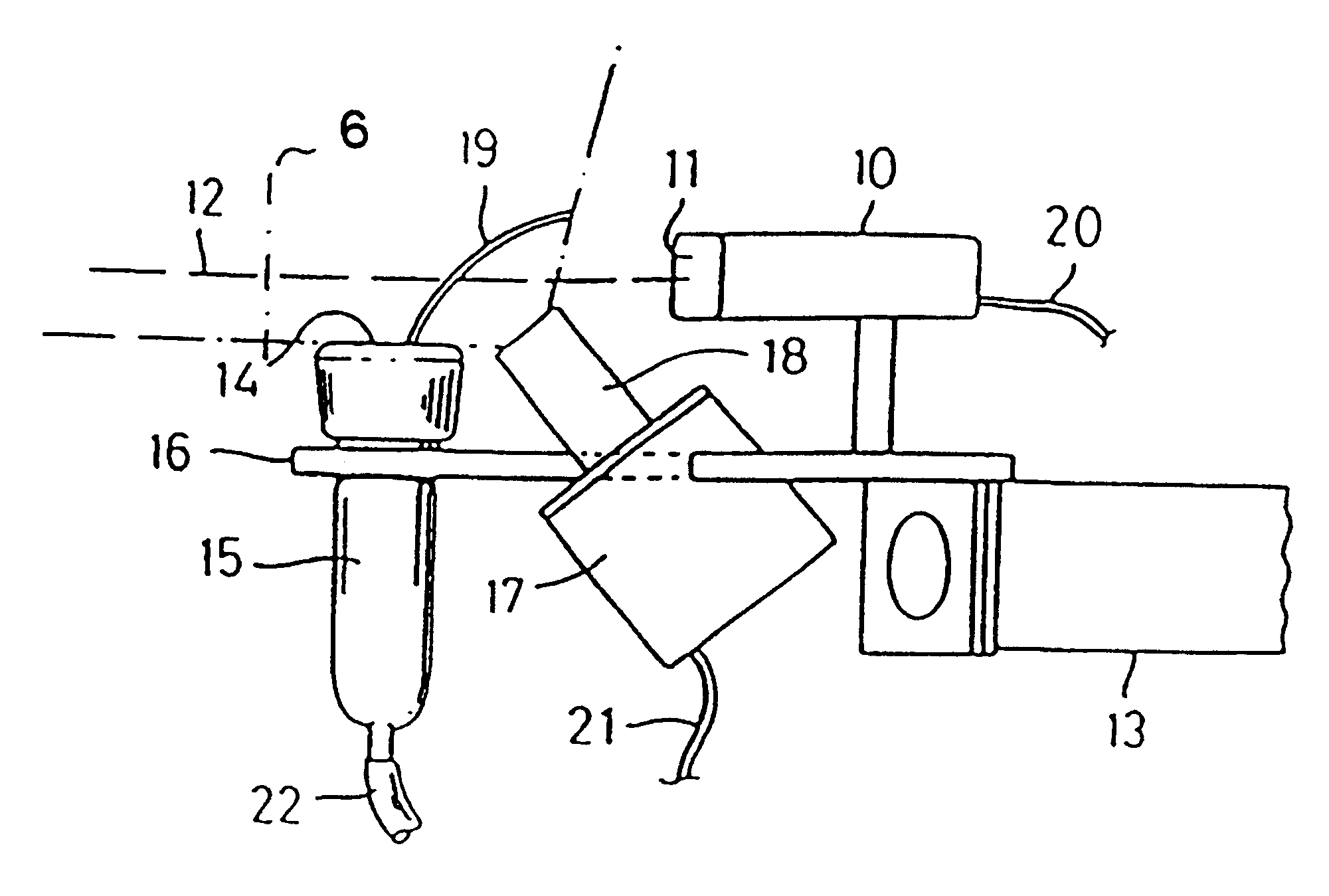

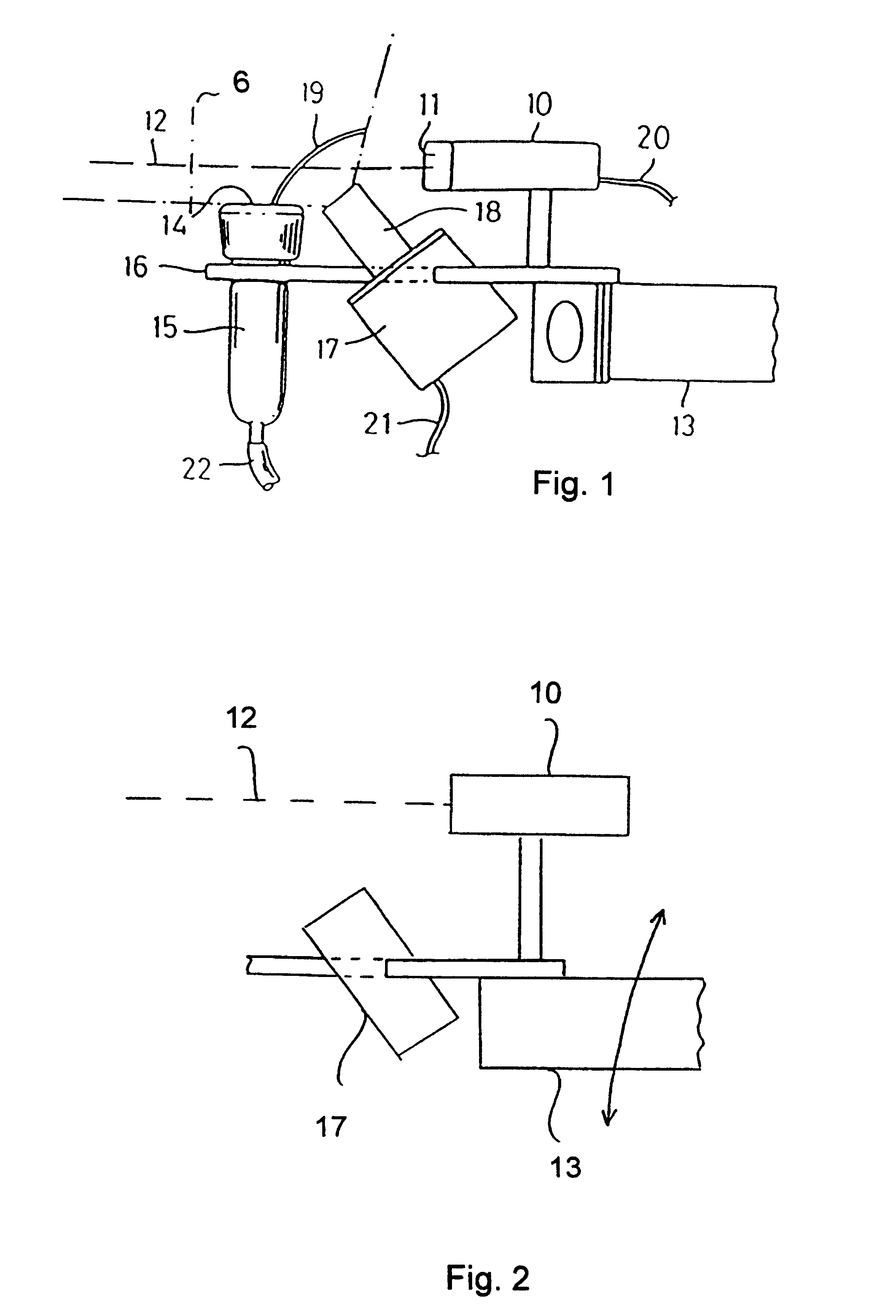

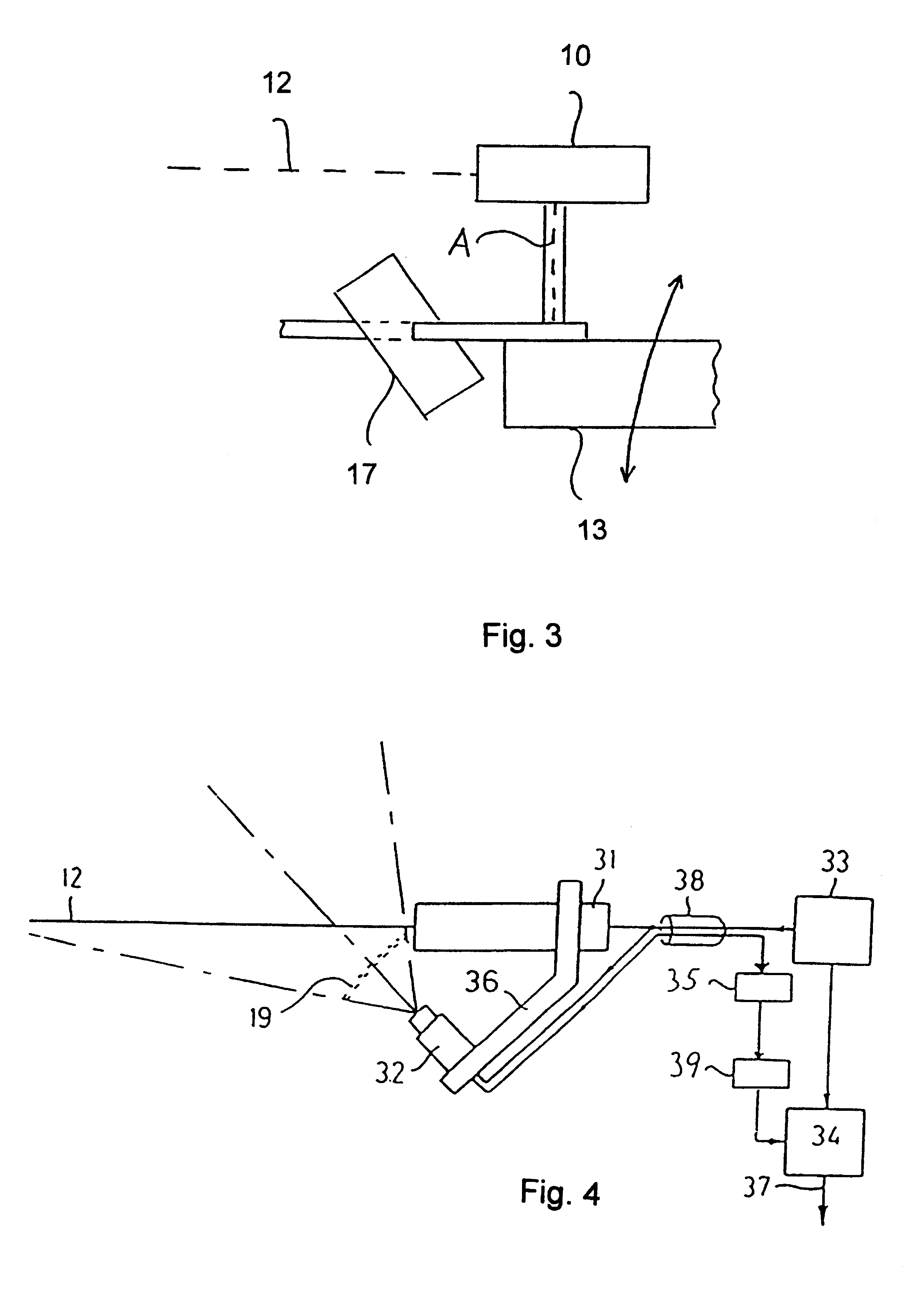

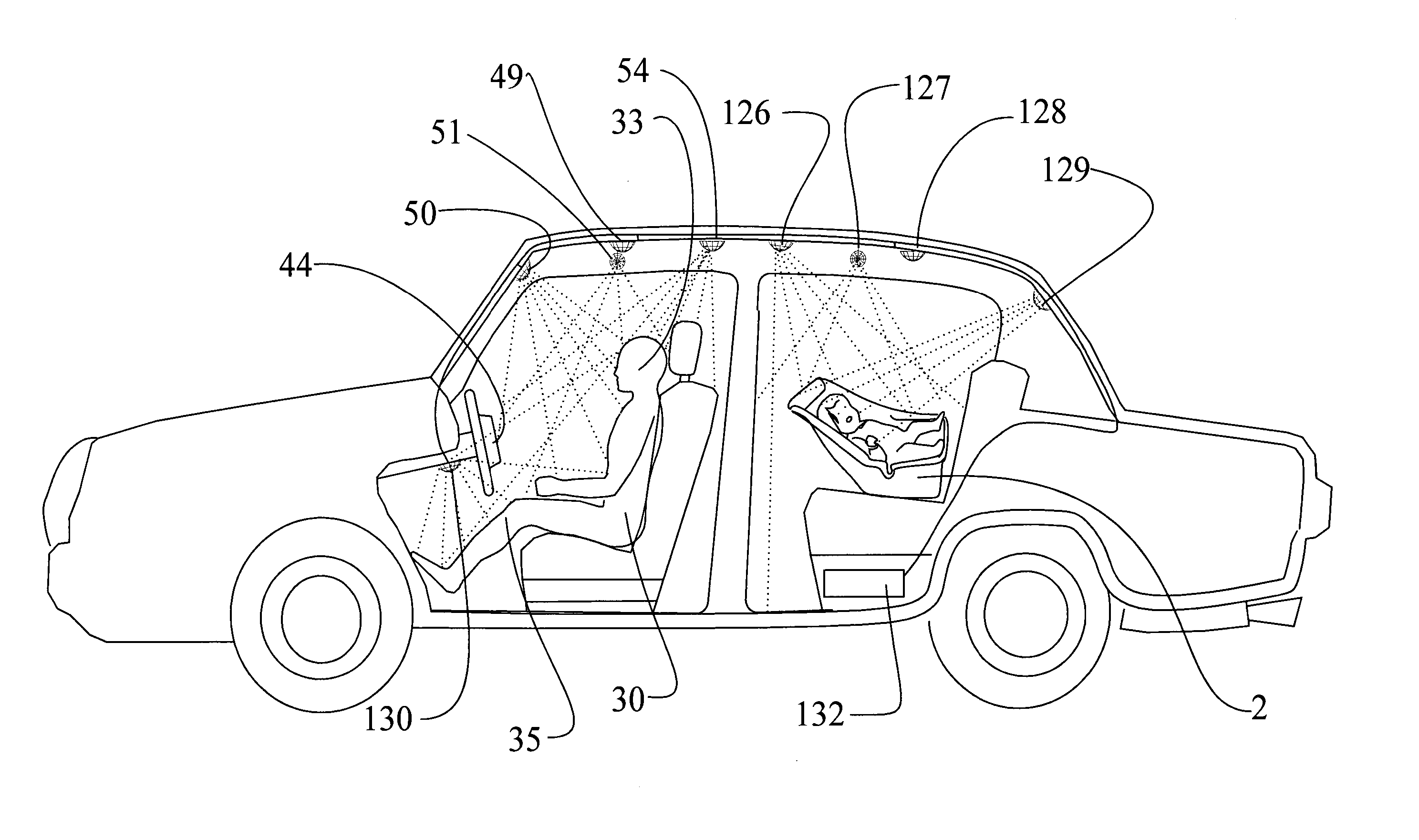

Method and arrangement for obtaining information about vehicle occupants

Status: Inactive | Patent: US20050131607A1 | Tags: Digital data processing details, Anti-theft devices, Structured light, Region of interest

Arrangement and method for obtaining information about a vehicle occupant in a compartment of the vehicle in which a light source is mounted in the vehicle, structured light is projected into an area of interest in the compartment, rays of light forming the structured light originating from the light source, reflected light is detected at an image sensor at a position different than the position from which the structured light is projected, and the reflected light is analyzed relative to the projected structured light to obtain information about the area of interest. The structured light is designed to appear as if it comes from a source of light (virtual or actual) which is at a position different than the position of the image sensor.

Owner:AMERICAN VEHICULAR SCI

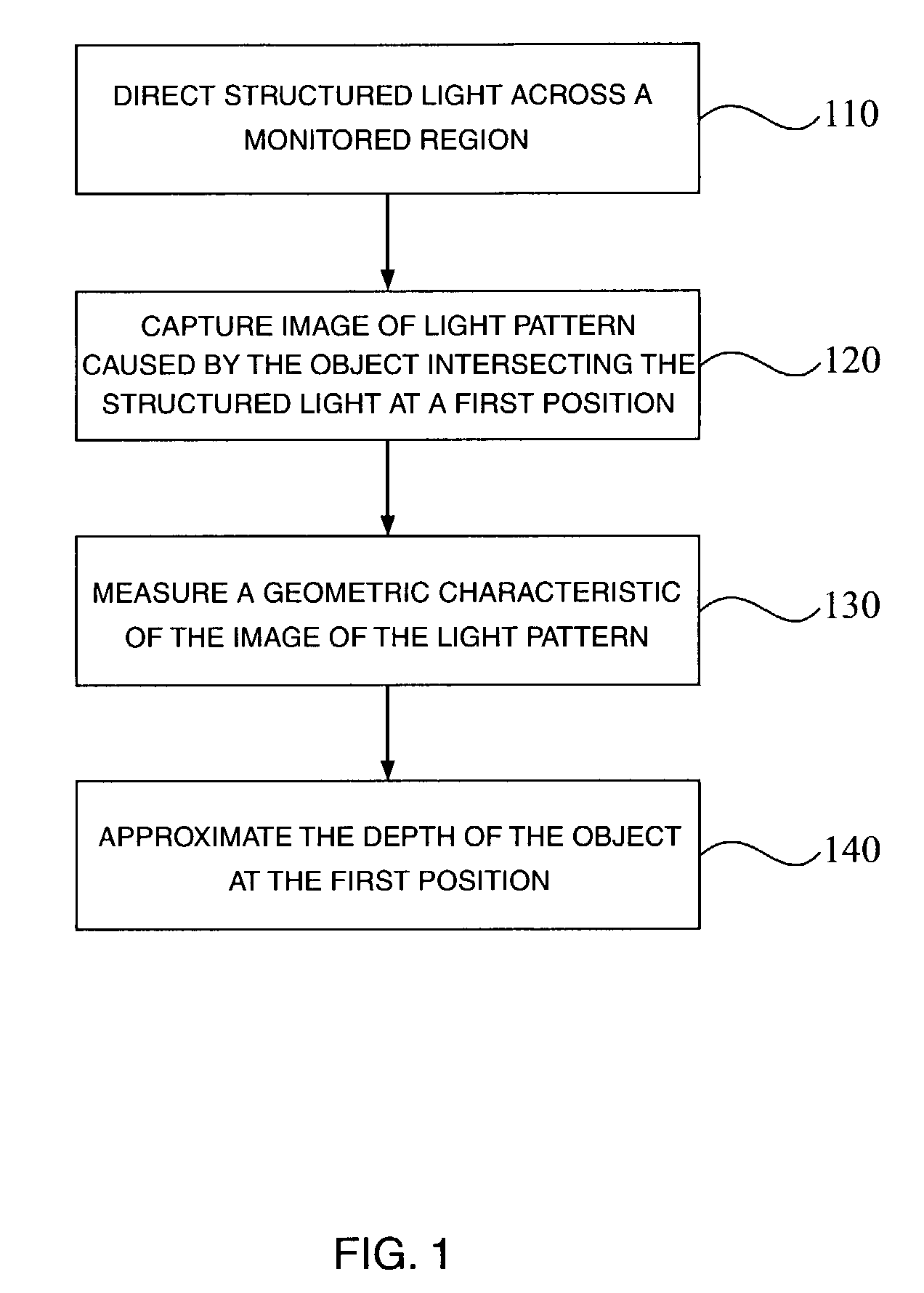

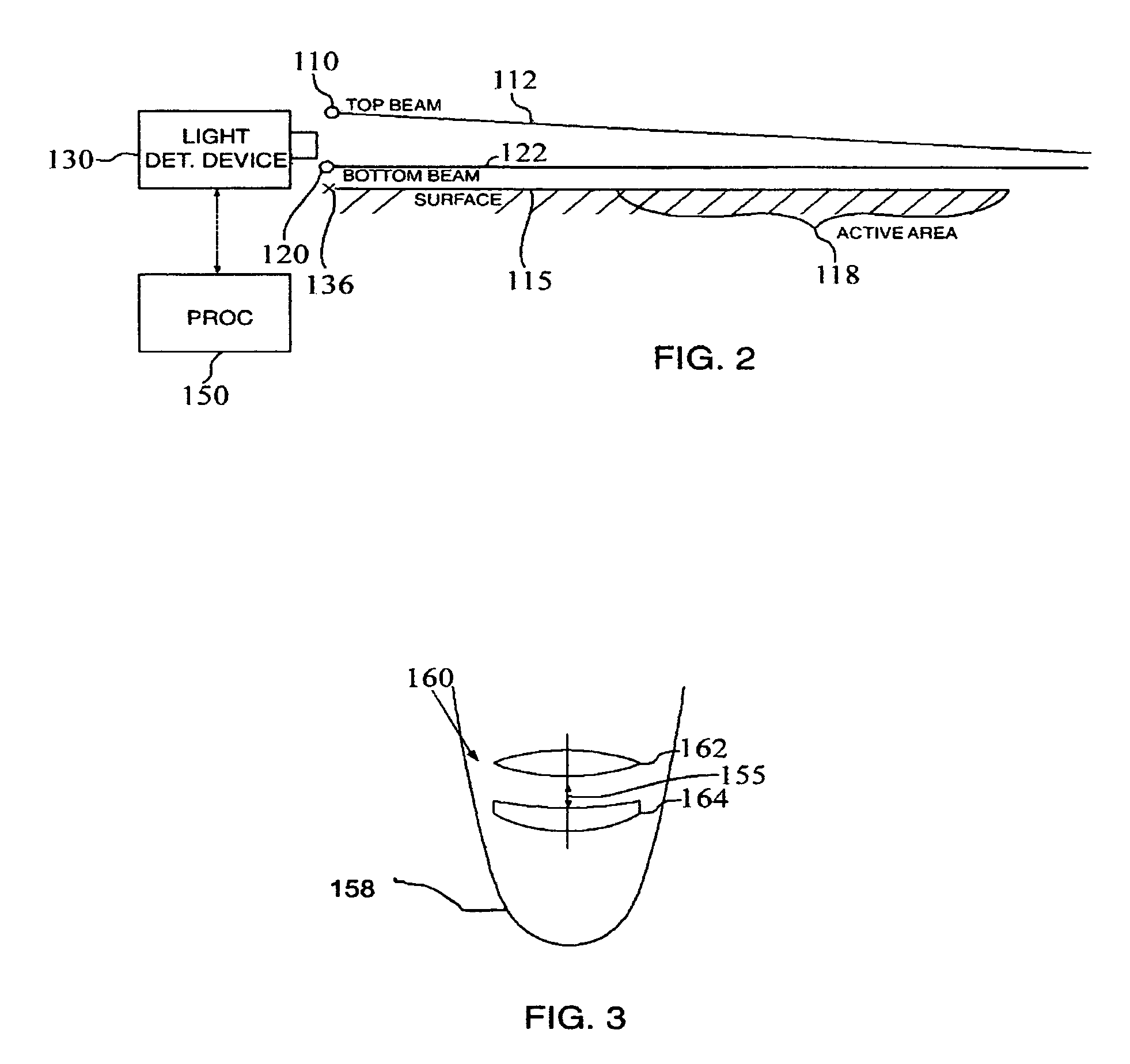

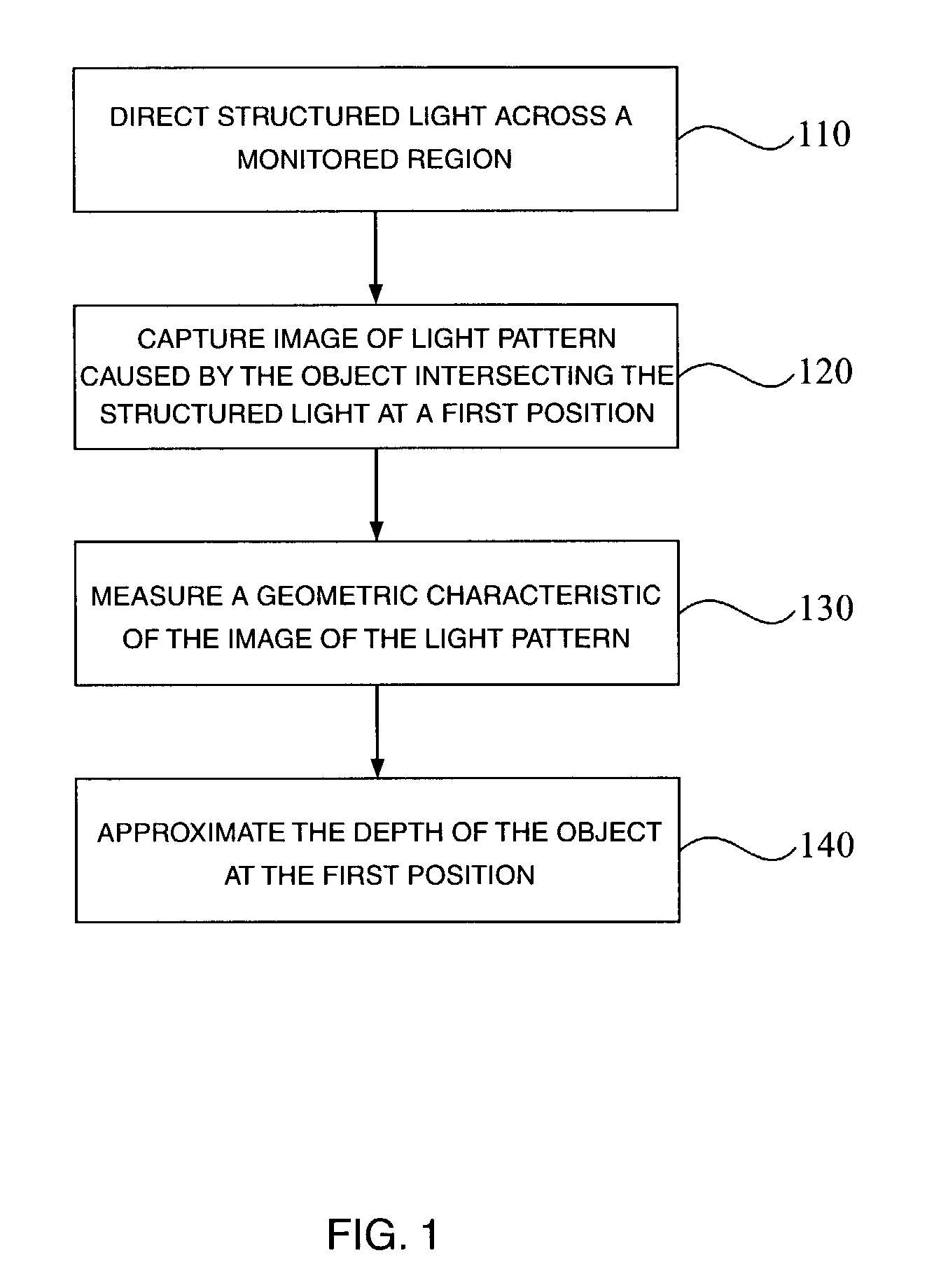

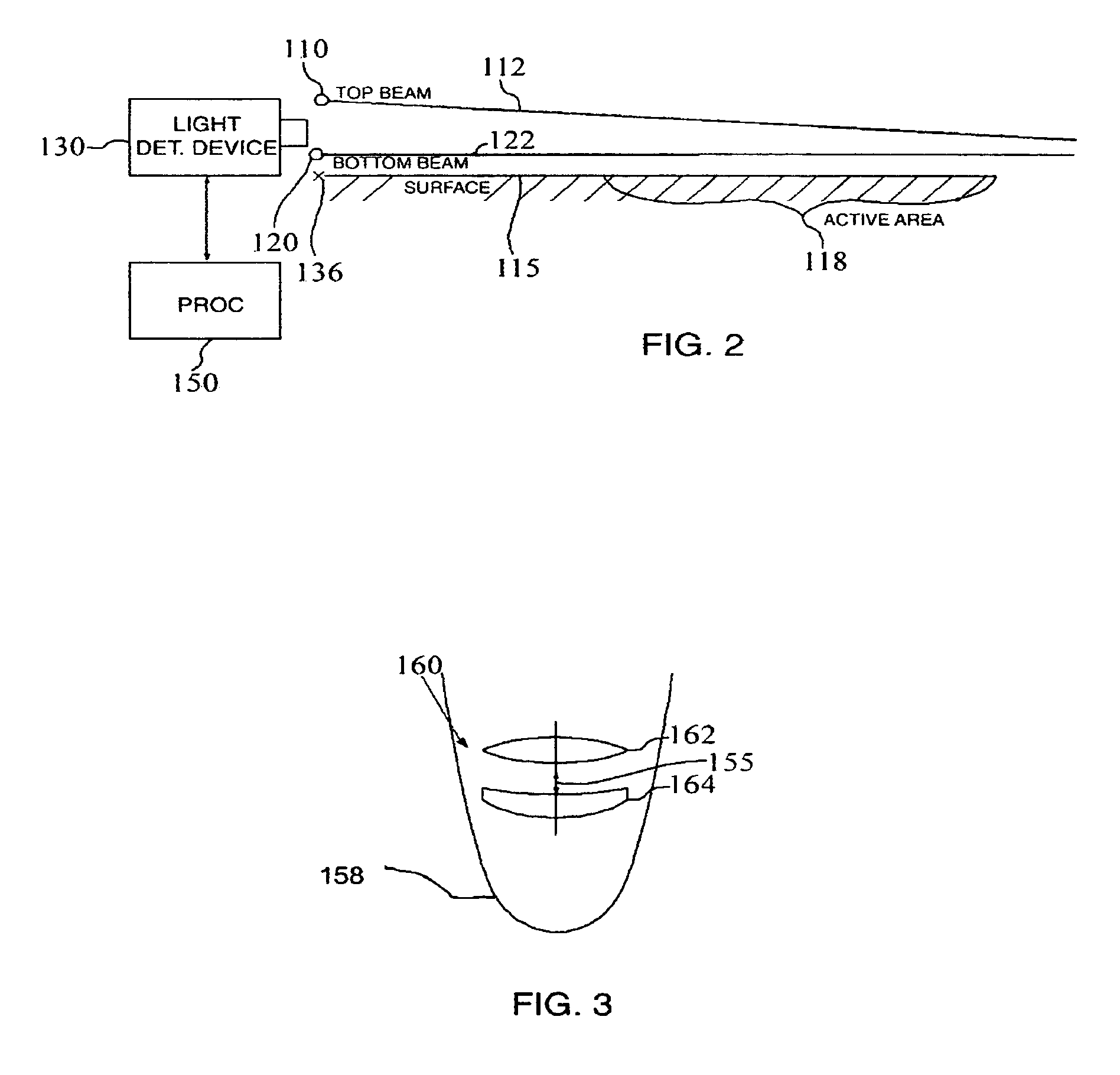

Method and apparatus for approximating depth of an object's placement onto a monitored region with applications to virtual interface devices

Status: Inactive | Patent: US7006236B2 | Tags: Small size, Easy to separate, Digital data processing details, Cathode-ray tube indicators, Structured light

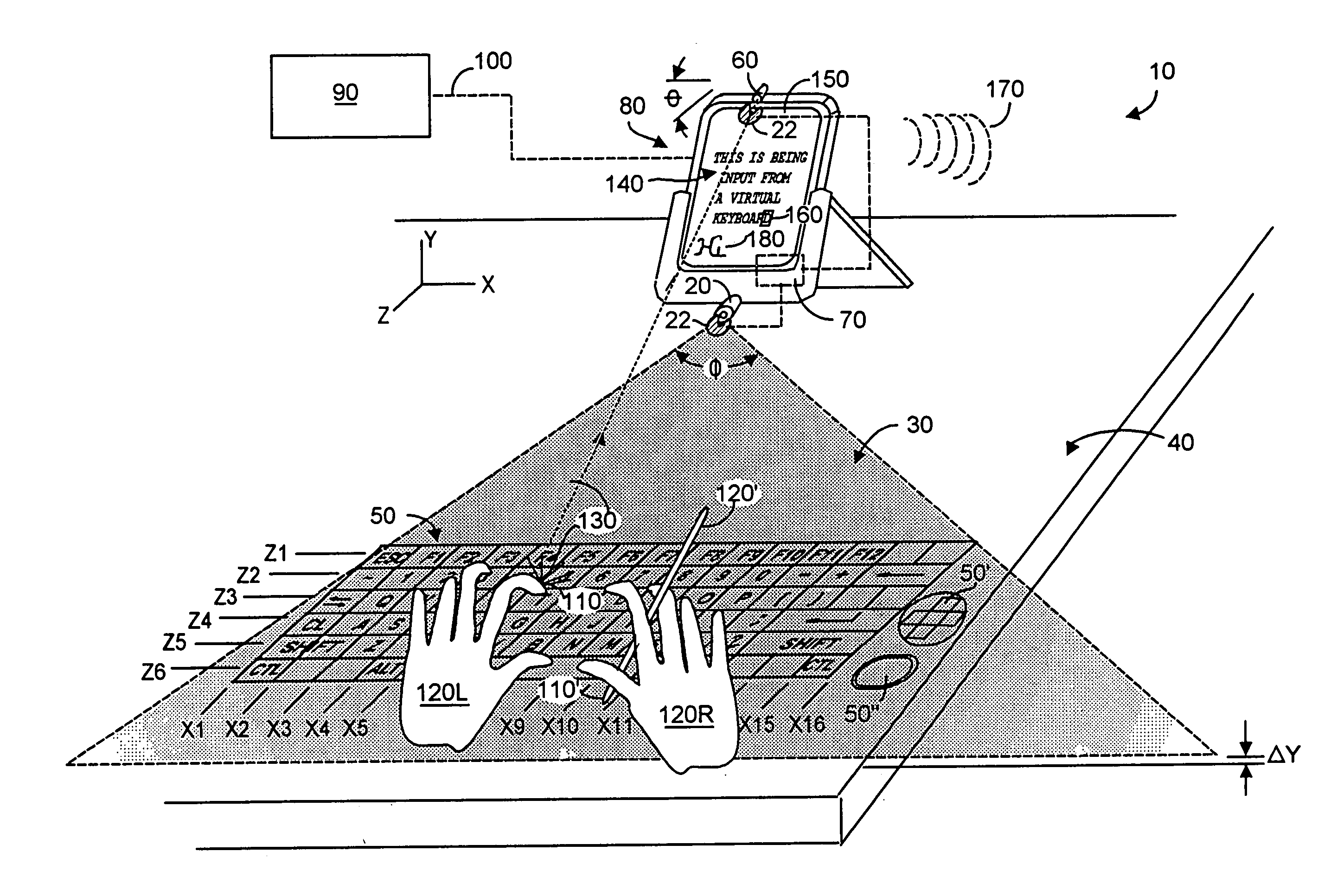

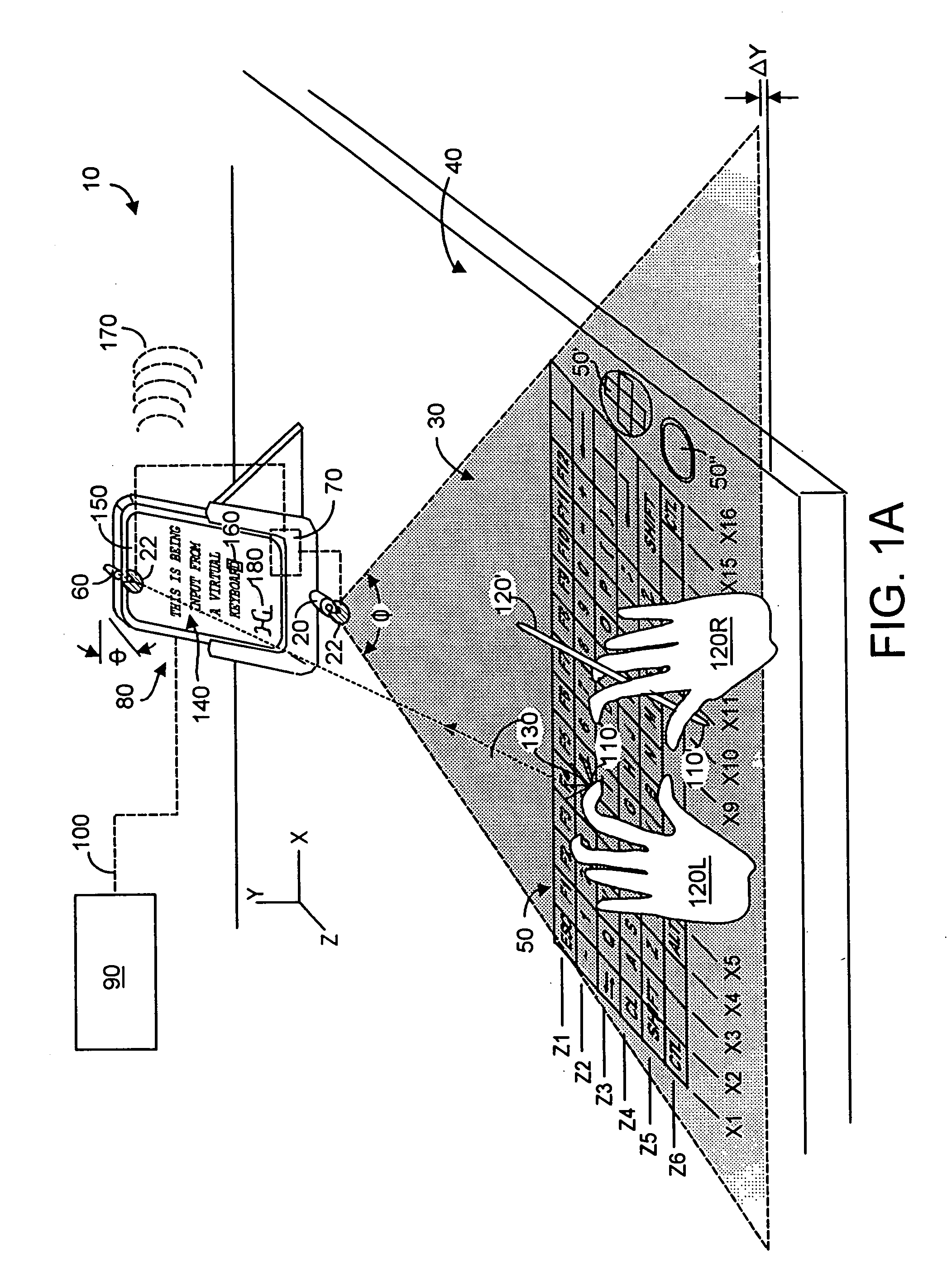

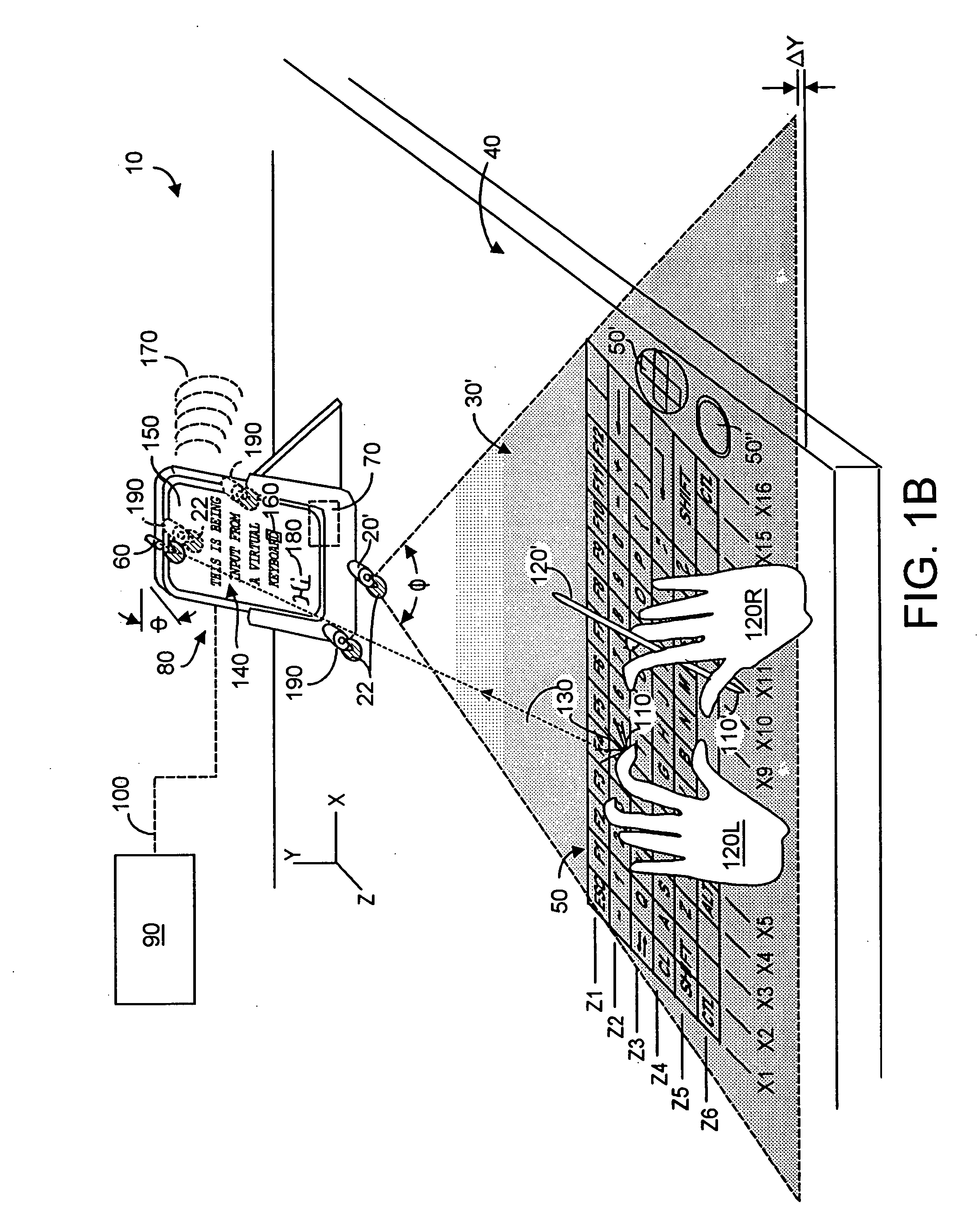

Structured light is directed across a monitored region. When an object is placed at a first position in the monitored region, it intersects the structured light, and an image is captured of the light pattern that forms on the object. A geometric characteristic of the image of the light pattern is identified; this characteristic varies with the depth of the first position relative to where the image is captured. The depth of the first position is approximated from the measured geometric characteristic.
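The abstract leaves the geometric characteristic unspecified. As an assumed example, the sketch below uses the apparent width of the captured stripe and approximates depth by linear interpolation in a calibration table recorded at known depths; the table values and function name are invented for illustration.

```python
import numpy as np

# Hypothetical calibration: apparent stripe width (px) recorded at known depths (mm).
CAL_WIDTH_PX = np.array([4.0, 6.0, 9.0, 14.0])
CAL_DEPTH_MM = np.array([400.0, 300.0, 200.0, 100.0])


def approximate_depth(width_px: float) -> float:
    """Linearly interpolate depth from the measured stripe width.

    np.interp needs increasing sample points, so the table is sorted by width;
    widths outside the table are clamped to the nearest calibrated depth.
    """
    order = np.argsort(CAL_WIDTH_PX)
    return float(np.interp(width_px, CAL_WIDTH_PX[order], CAL_DEPTH_MM[order]))


print(approximate_depth(7.5))  # approximately 250
```

A table lookup avoids modelling the optics explicitly; the price is a calibration pass at several known depths.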

Owner:HEILONGJIANG GOLDEN JUMPING GRP

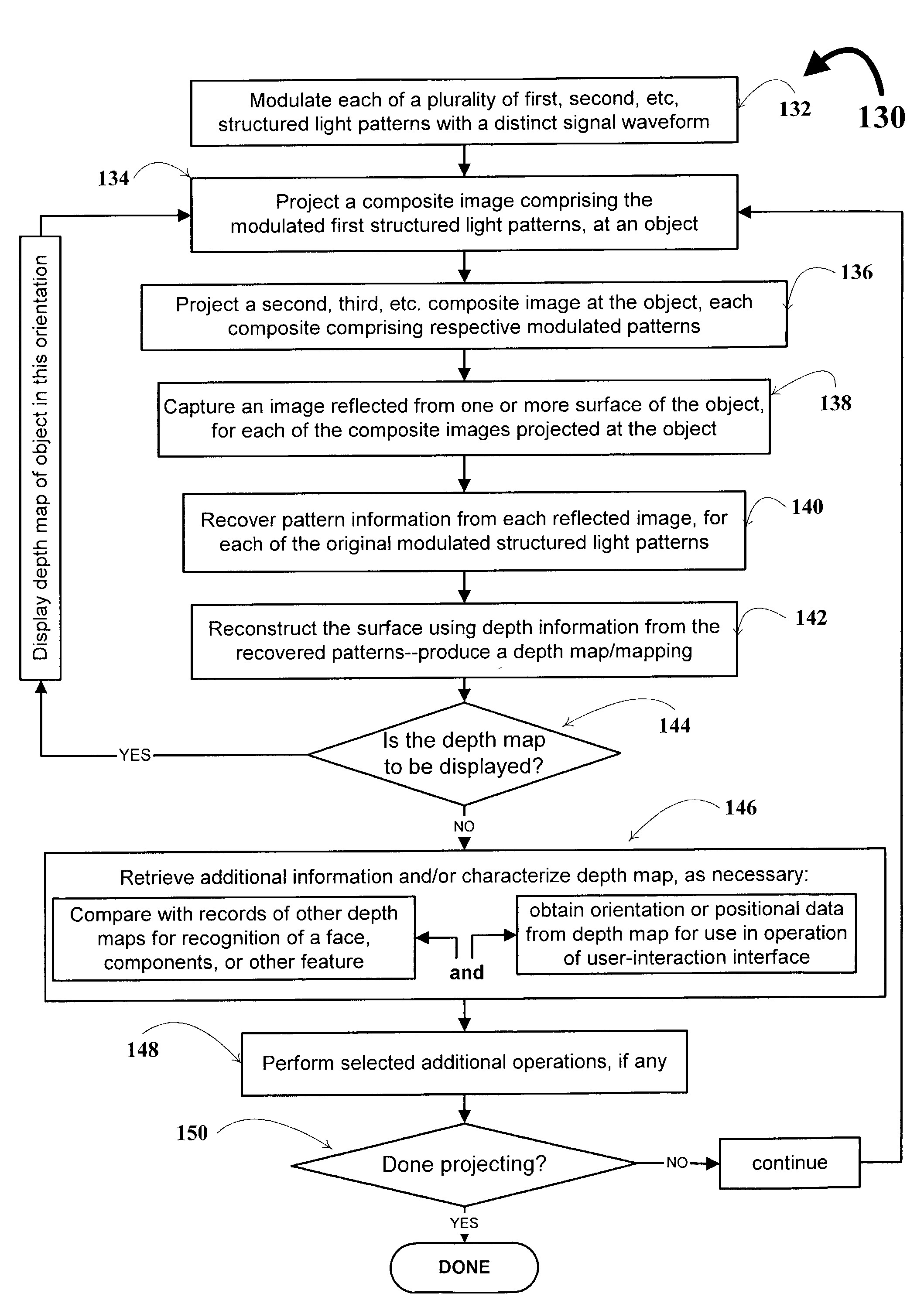

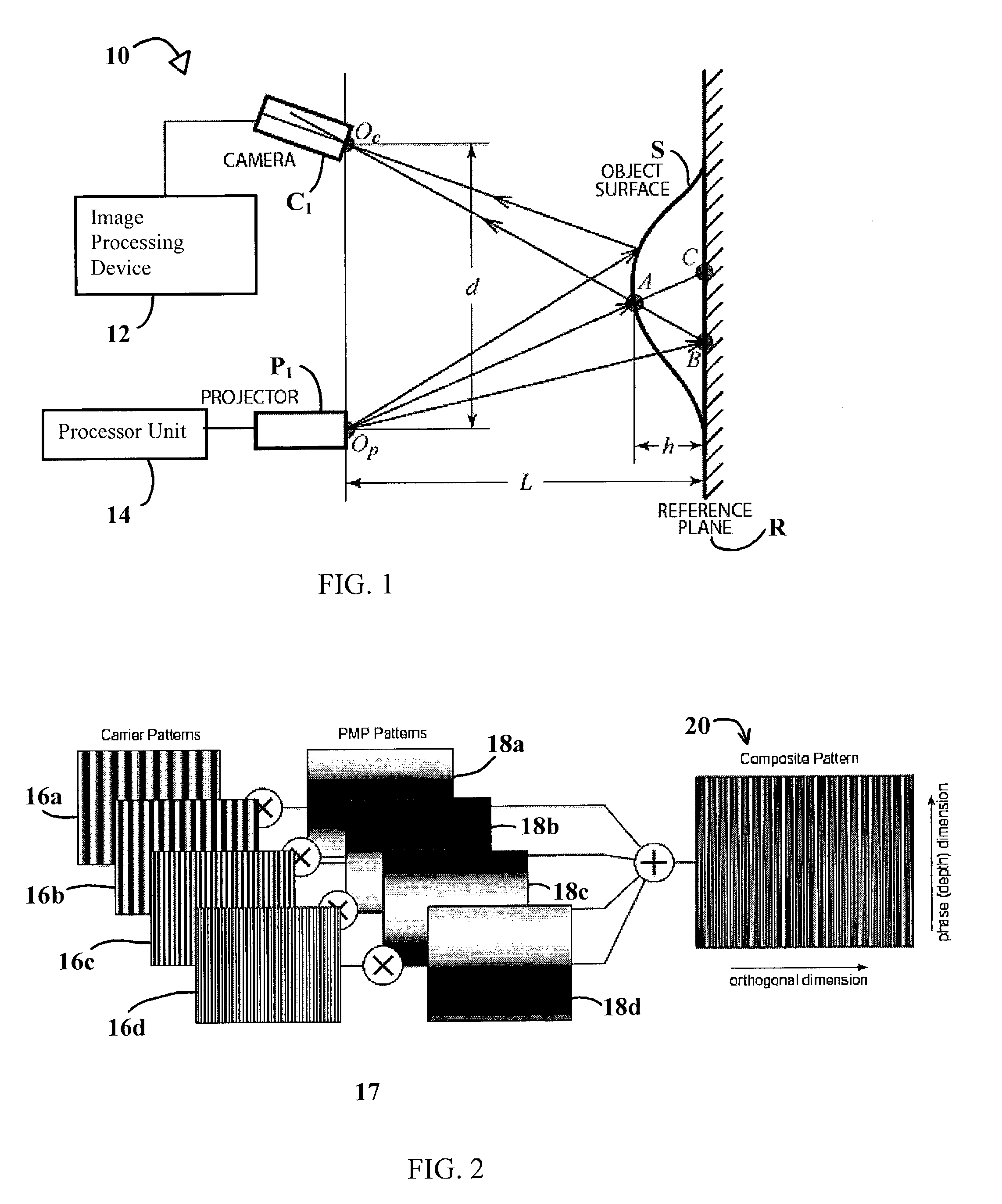

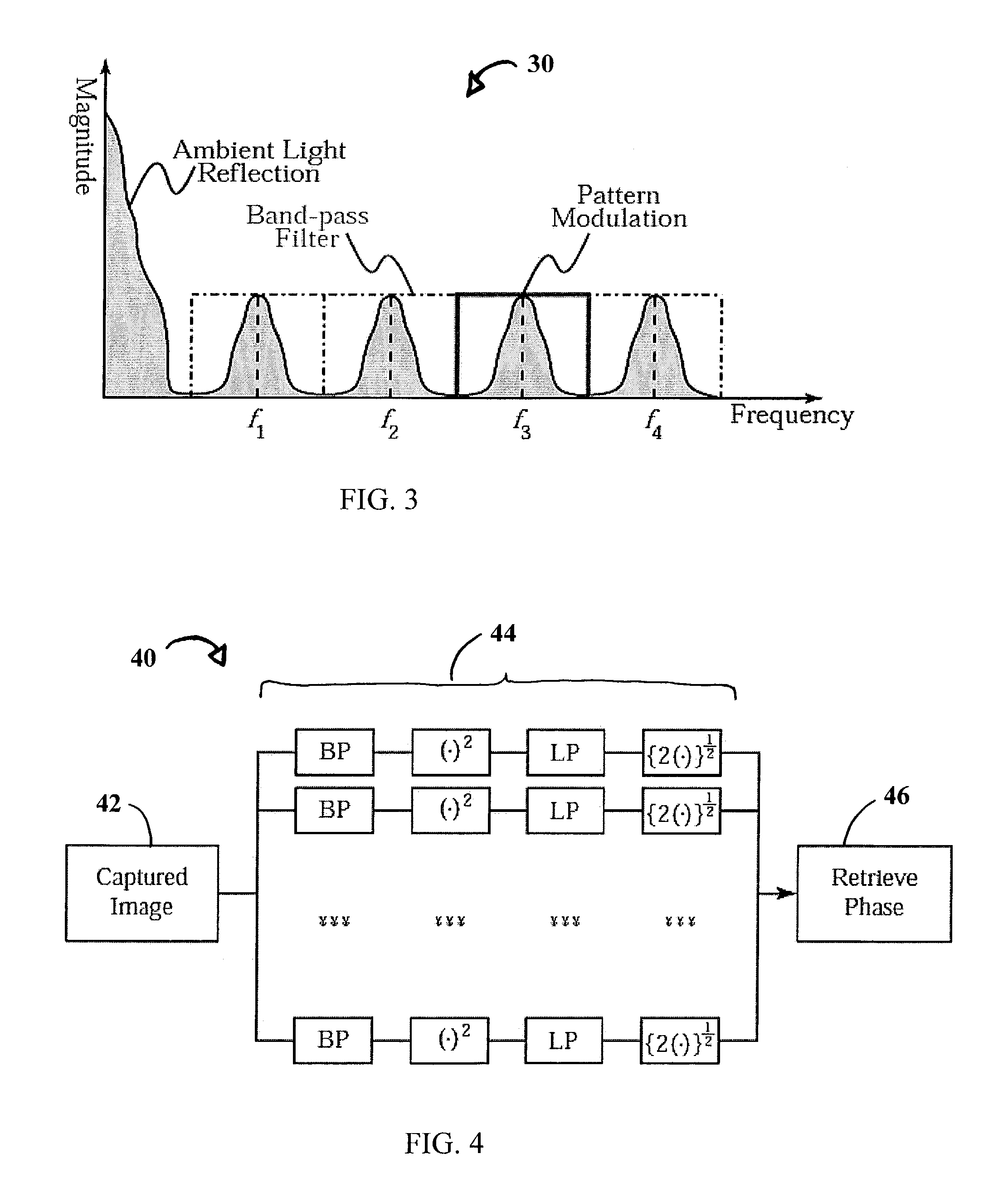

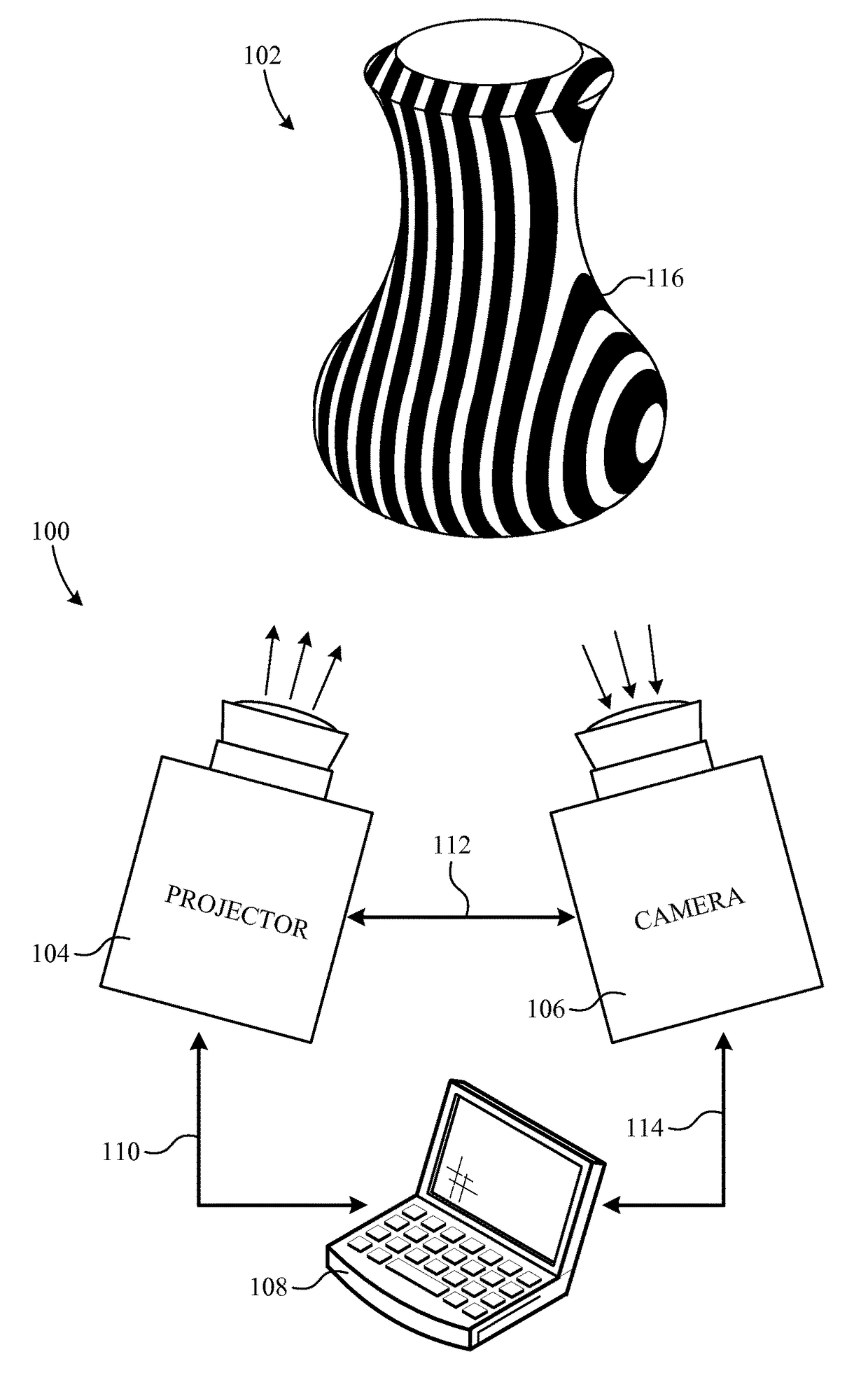

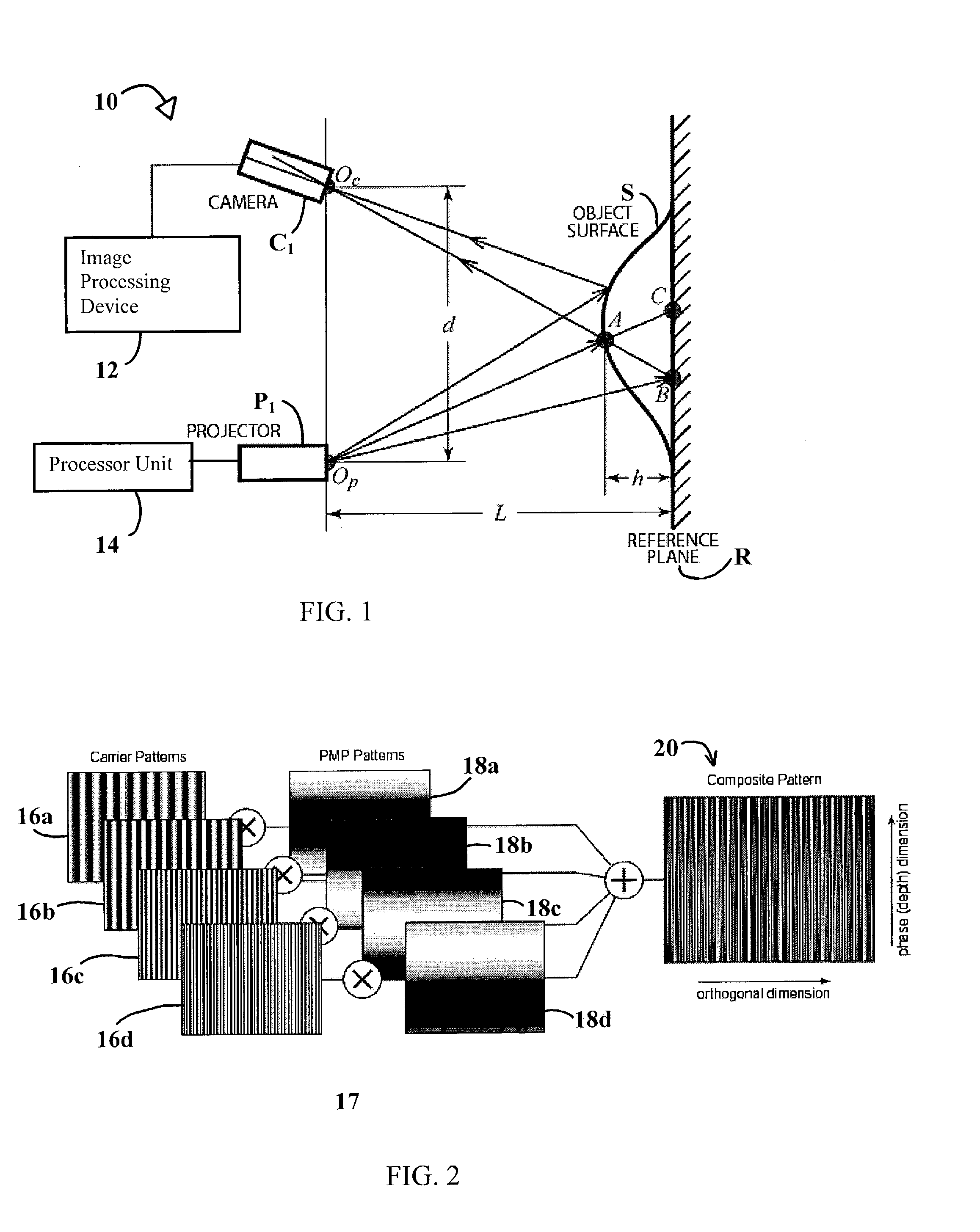

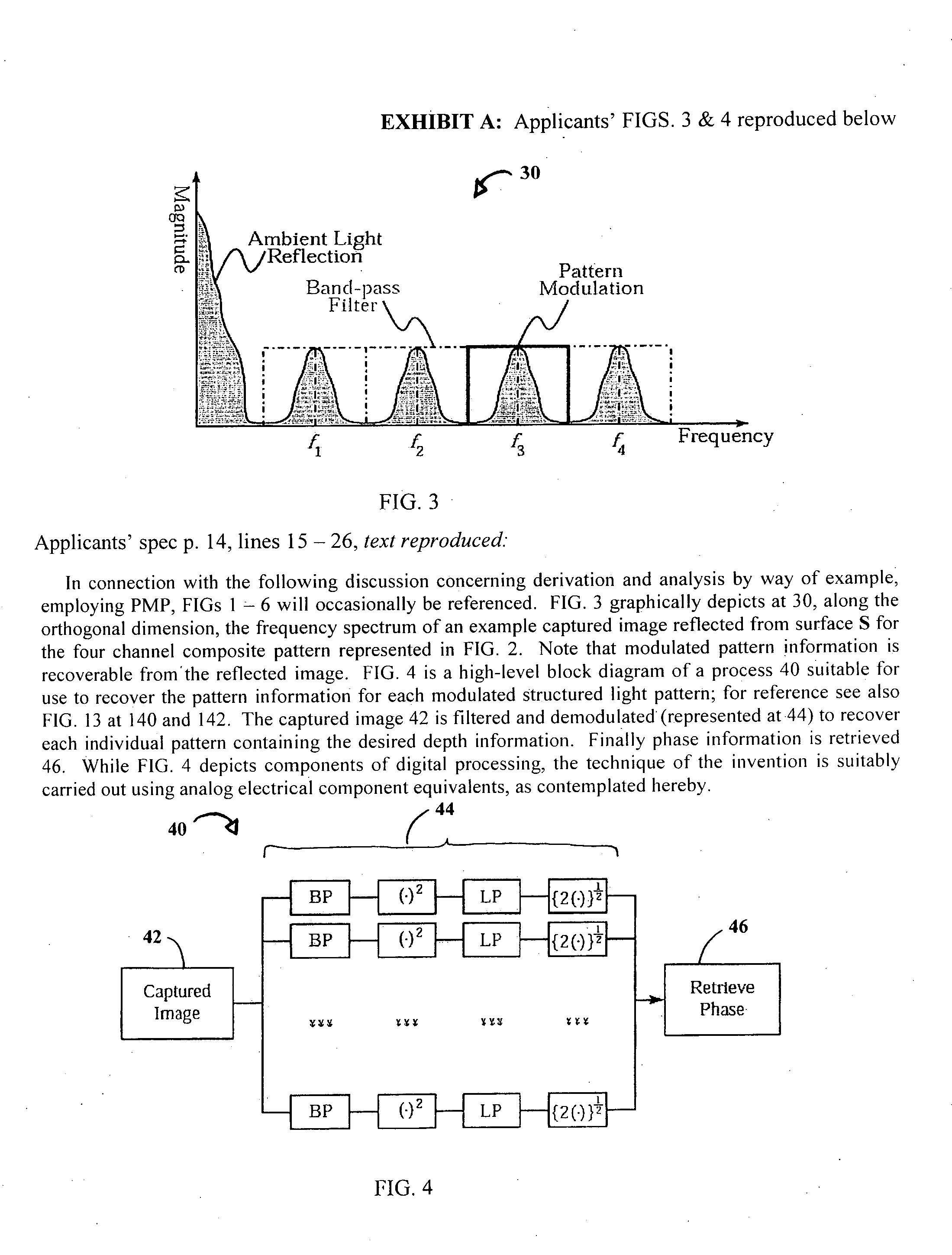

System and technique for retrieving depth information about a surface by projecting a composite image of modulated light patterns

Status: Inactive | Patent: US7440590B1 | Tags: More detailed and large depth mapping, Limited bandwidth, Projectors, Cathode-ray tube indicators, Interaction interface, Telecollaboration

A technique, with an associated system and program code, for retrieving depth information about at least one surface of an object. Core features include: projecting at the object a composite image comprising a plurality of modulated structured light patterns; capturing an image reflected from the surface; and recovering pattern information from the reflected image for each of the modulated structured light patterns. Pattern information is preferably recovered for each modulated structured light pattern used to create the composite by performing a demodulation of the reflected image. The surface can be reconstructed by using depth information from the recovered patterns to produce a depth map. Each signal waveform used to modulate a respective structured light pattern is distinct from each of the other signal waveforms used to modulate the other structured light patterns of a composite image; these signal waveforms may be selected from suitable types in any combination of distinct signal waveforms, provided the waveforms used are uncorrelated with respect to each other. The depth map can be utilized in a host of applications, for example: displaying a 3-D view of the object; a virtual reality user-interaction interface with a computerized device; recognition and comparison techniques for faces, other animal features, or inanimate objects for security or identification purposes; and 3-D video teleconferencing/telecollaboration.
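A simplified, one-dimensional sketch of the demodulation idea, under assumptions not taken from the patent: along each row, every pattern value rides on its own cosine carrier with a distinct whole number of cycles per row, plus a constant offset so the projected intensity stays non-negative. Such carriers are mutually orthogonal (uncorrelated) over the row, so correlating the captured row with each carrier recovers that pattern's value. Function names are hypothetical, and a real system would also have to handle surface reflectance, noise, and filtering.

```python
import numpy as np


def composite_row(values, carrier_cycles, n_samples, offset=1.0):
    """Build one row of a composite pattern: offset + sum of value * carrier."""
    n = np.arange(n_samples)
    row = np.full(n_samples, offset, dtype=float)
    for value, cycles in zip(values, carrier_cycles):
        row += value * np.cos(2 * np.pi * cycles * n / n_samples)
    return row


def demodulate_row(row, carrier_cycles):
    """Recover each pattern's value by correlating the row with its carrier.

    With distinct integer cycle counts between 1 and n/2 - 1, the carriers
    are orthogonal over the row, so the offset and the other patterns
    contribute nothing to each correlation.
    """
    row = np.asarray(row, dtype=float)
    n_samples = row.size
    n = np.arange(n_samples)
    return [2.0 / n_samples * float(row @ np.cos(2 * np.pi * cycles * n / n_samples))
            for cycles in carrier_cycles]


row = composite_row([0.2, 0.5, 0.1], carrier_cycles=[3, 7, 12], n_samples=64)
print(demodulate_row(row, [3, 7, 12]))
```

The printed values match the three input pattern values up to floating-point rounding.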

Owner:UNIV OF KENTUCKY RES FOUND

Method and apparatus for approximating depth of an object's placement onto a monitored region with applications to virtual interface devices

Status: Inactive | Patent: US7050177B2 | Tags: Small size, Easy to separate, Digital data processing details, Cathode-ray tube indicators, Computer science, Structured light

Structured light is directed across a monitored region. When an object is placed at a first position in the monitored region, it intersects the structured light, and an image is captured of the light pattern that forms on the object. A geometric characteristic of the image of the light pattern is identified; this characteristic varies with the depth of the first position relative to where the image is captured. The depth of the first position is approximated from the measured geometric characteristic.

Owner:HEILONGJIANG GOLDEN JUMPING GRP

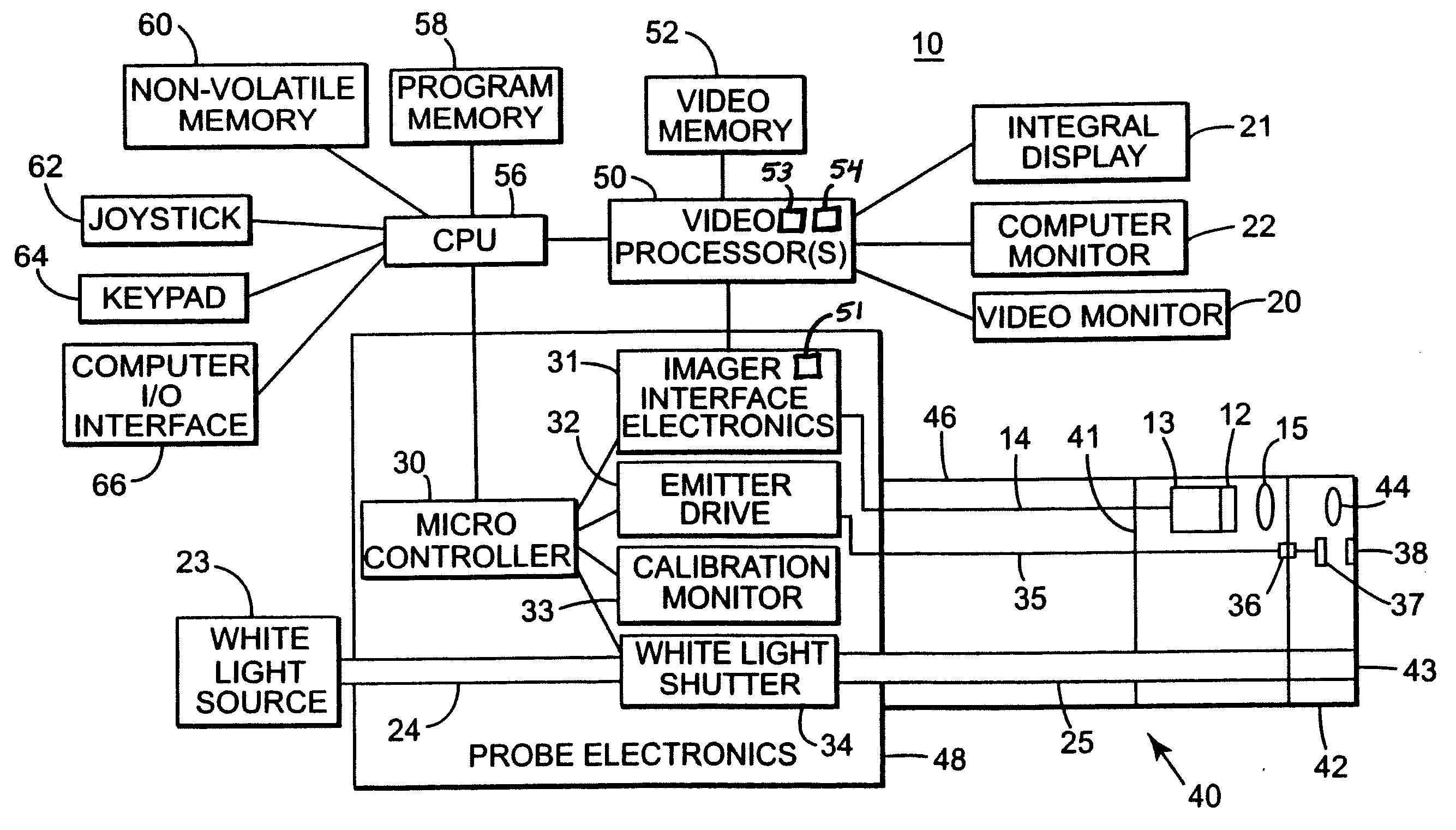

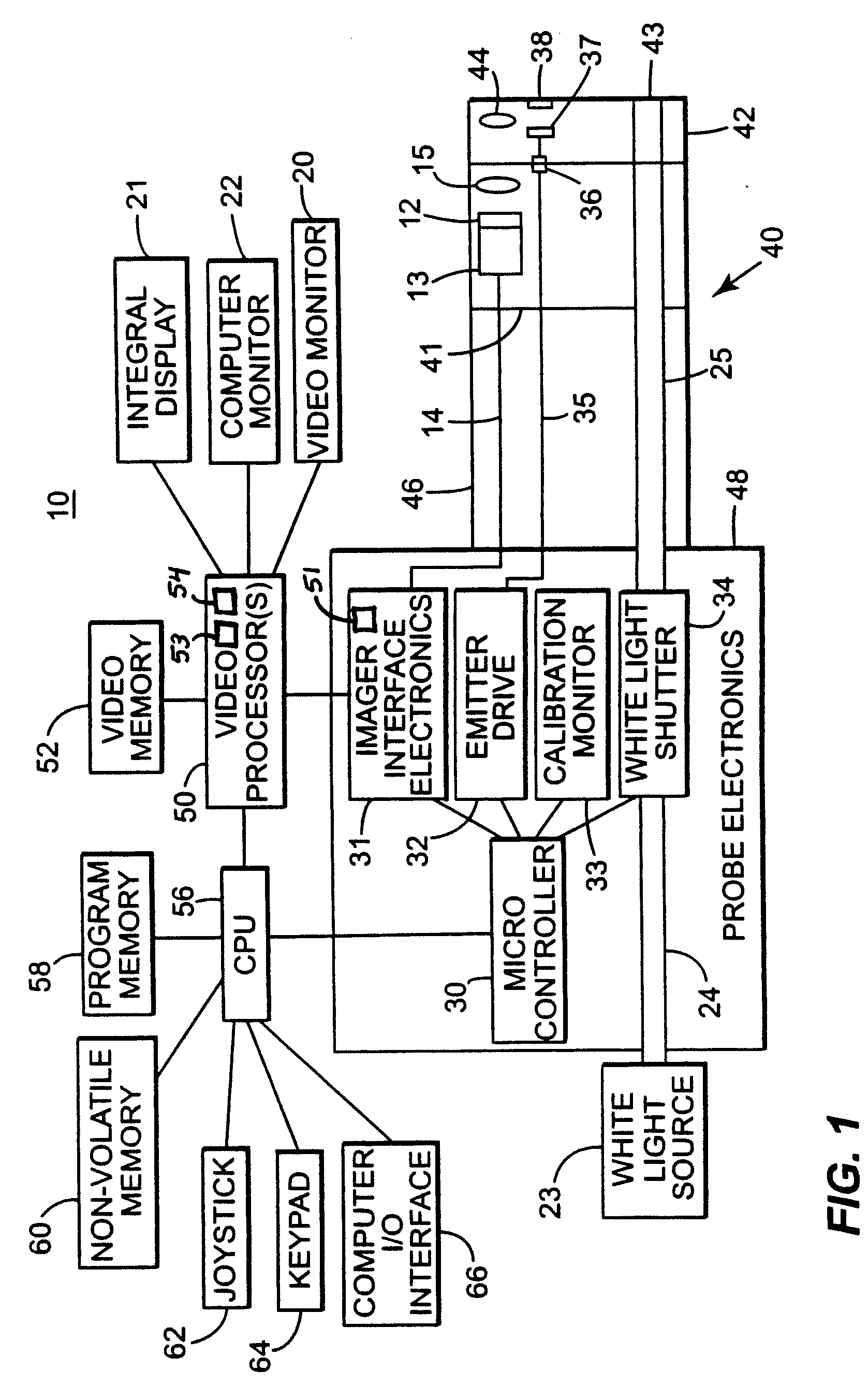

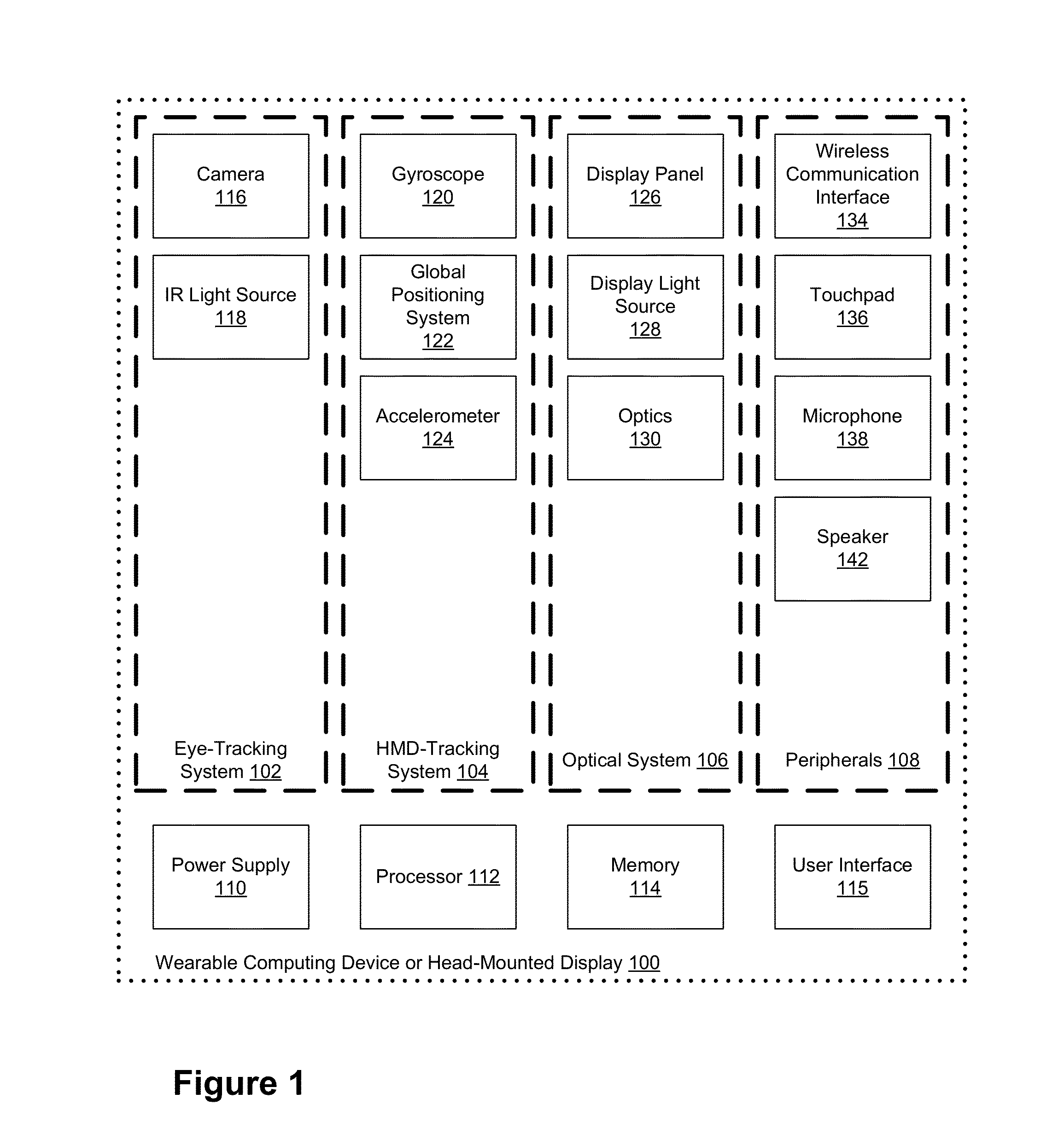

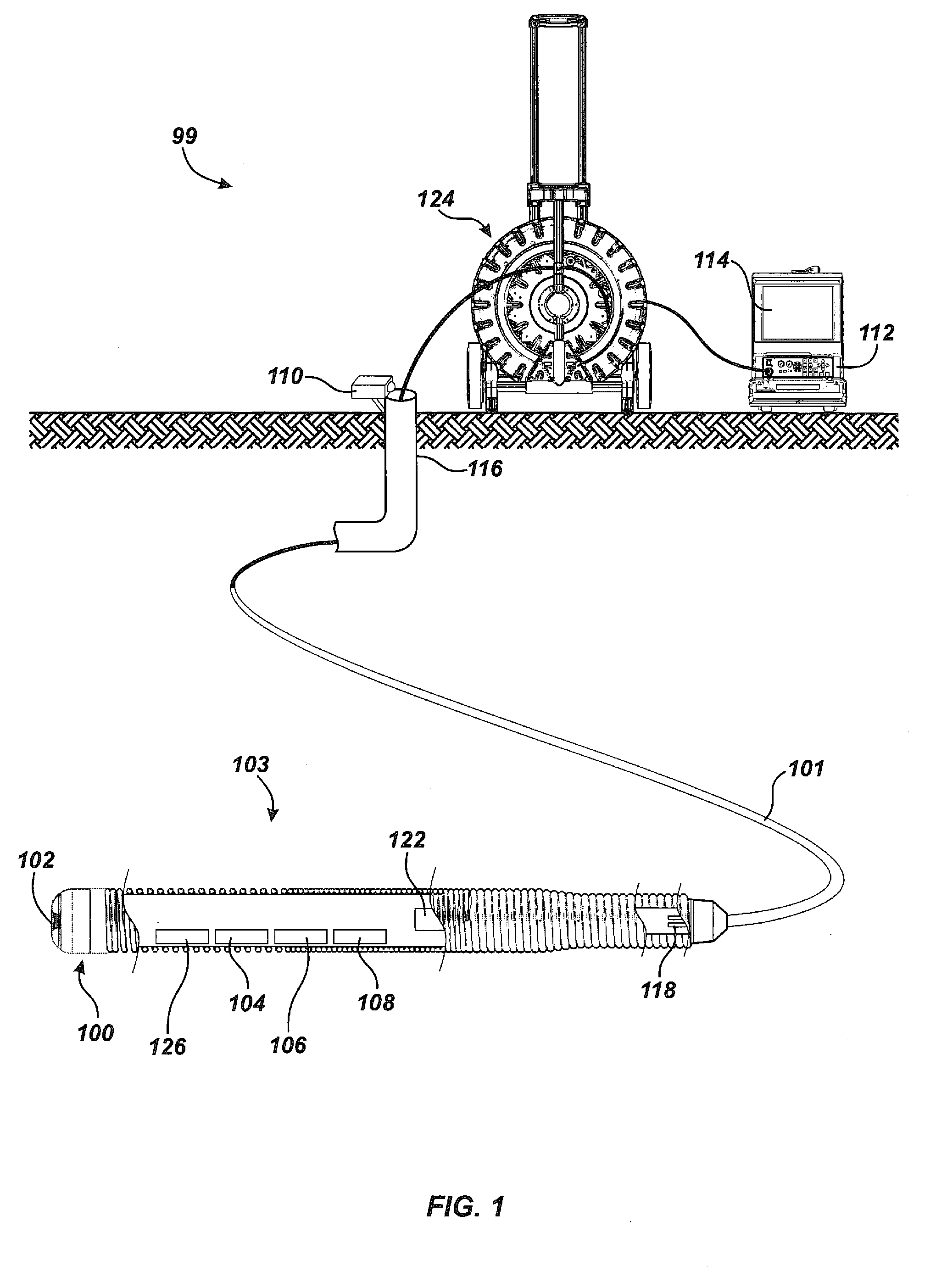

System aspects for a probe system that utilizes structured-light

Status: Active | Patent: US20090225333A1 | Tags: Easy to use, Image analysis, Material analysis by optical means, System configuration, Structured light

A probe system includes an imager and an inspection light source. The probe system is configured to operate in an inspection mode and a measurement mode. During inspection mode, the inspection light source is enabled. During measurement mode, the inspection light source is disabled, and a structured-light pattern is projected. The probe system is further configured to capture at least one measurement mode image. In the at least one measurement mode image, the structured-light pattern is projected onto an object. The probe system is configured to utilize pixel values from the at least one measurement mode image to determine at least one geometric dimension of the object. A probe system configured to detect relative movement between a probe and the object between captures of two or more of a plurality of images is also provided.

Owner:BAKER HUGHES OILFIELD OPERATIONS LLC

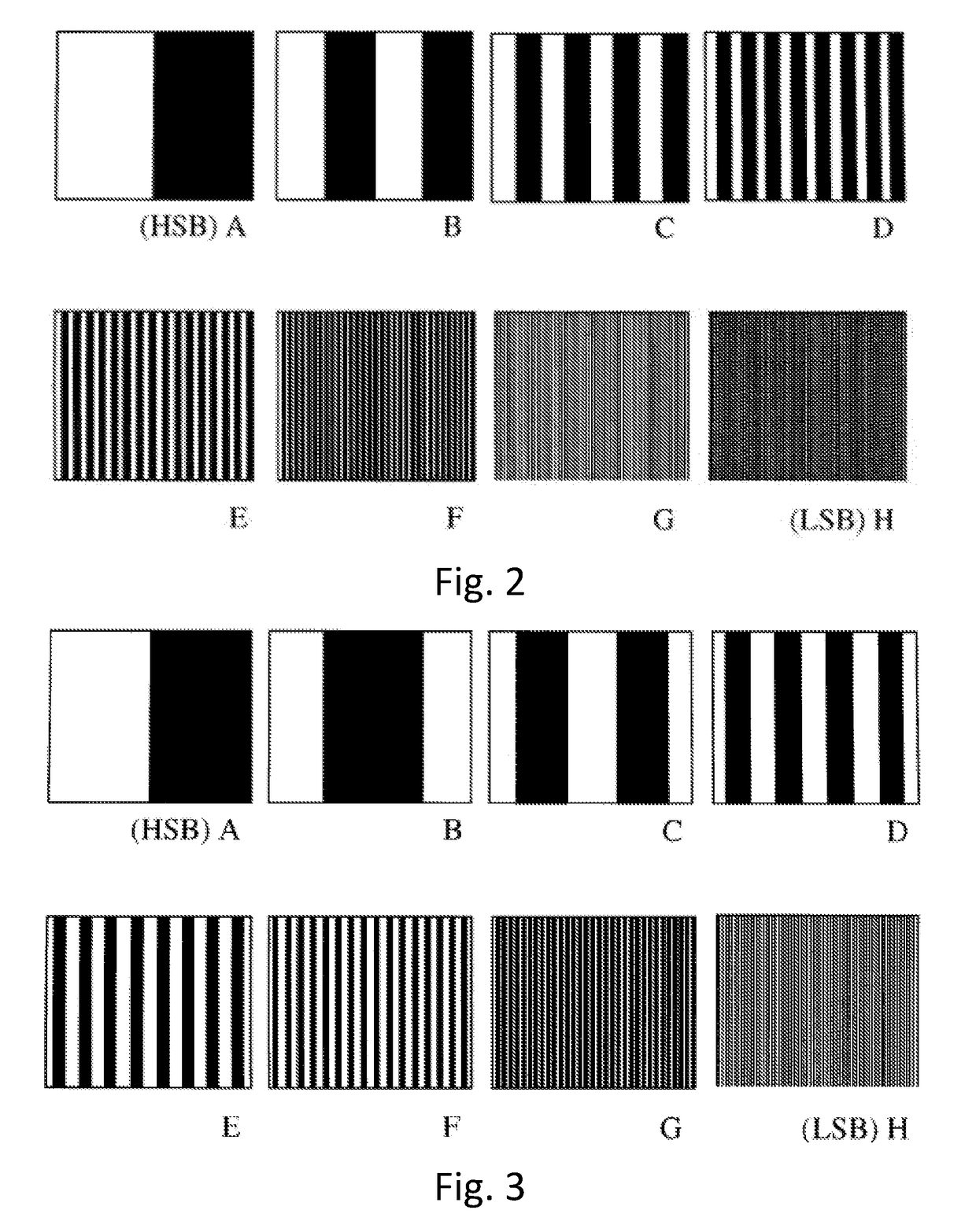

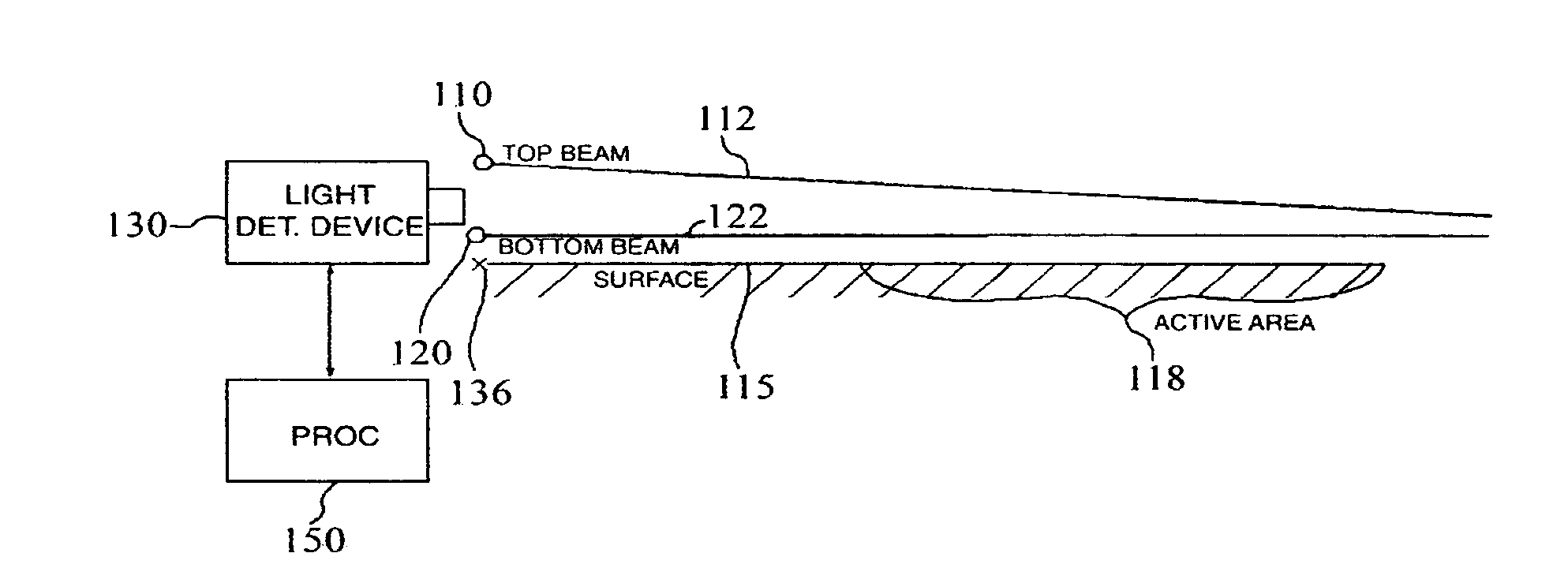

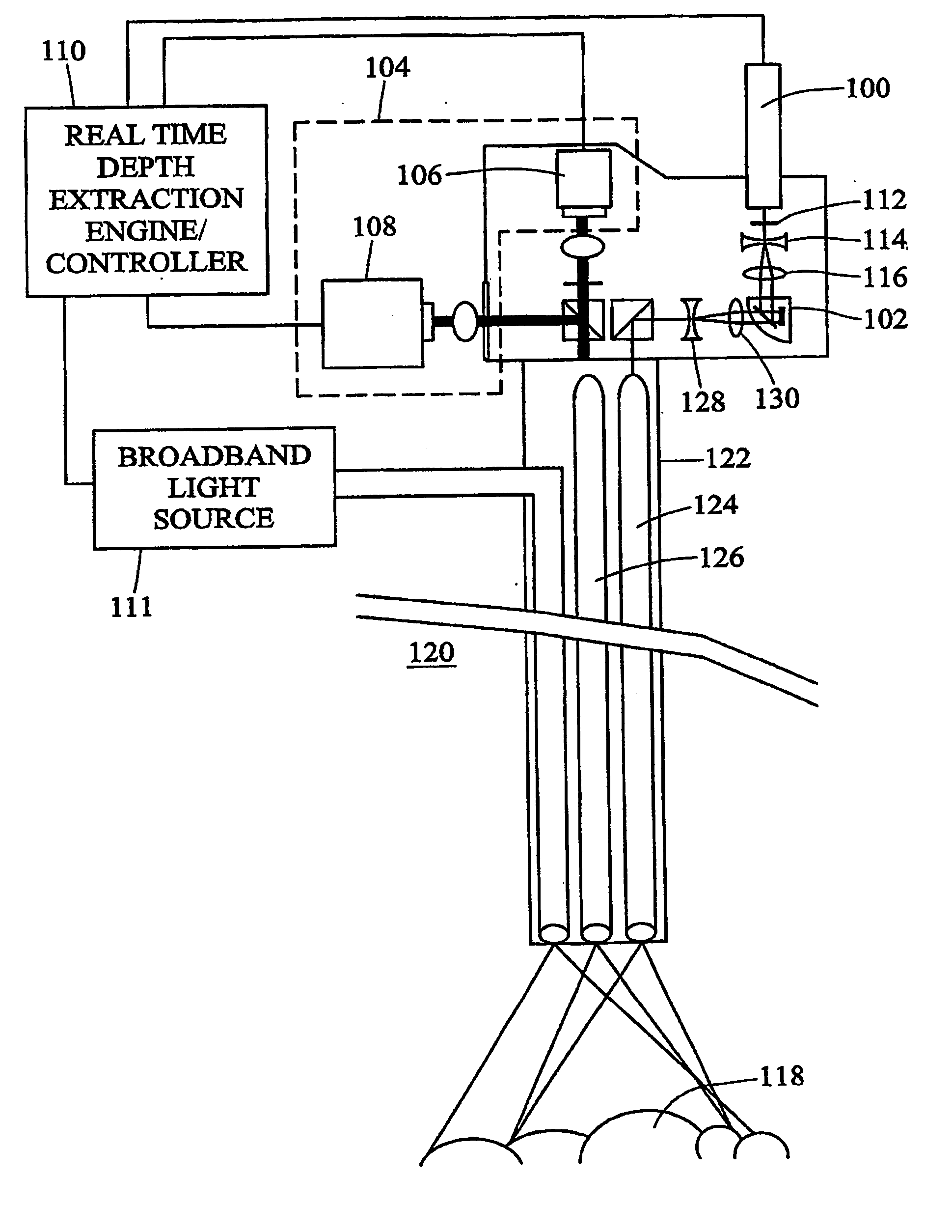

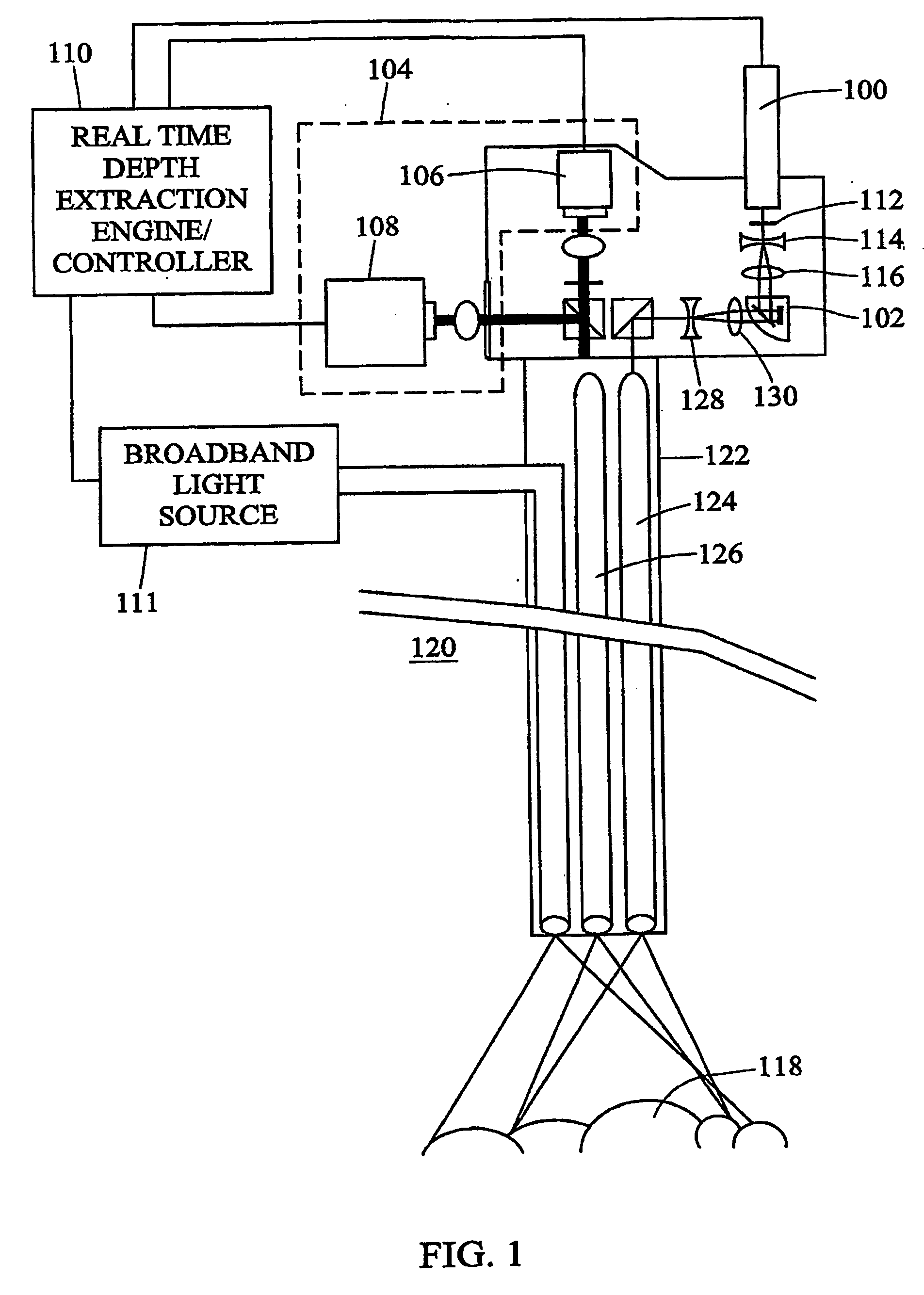

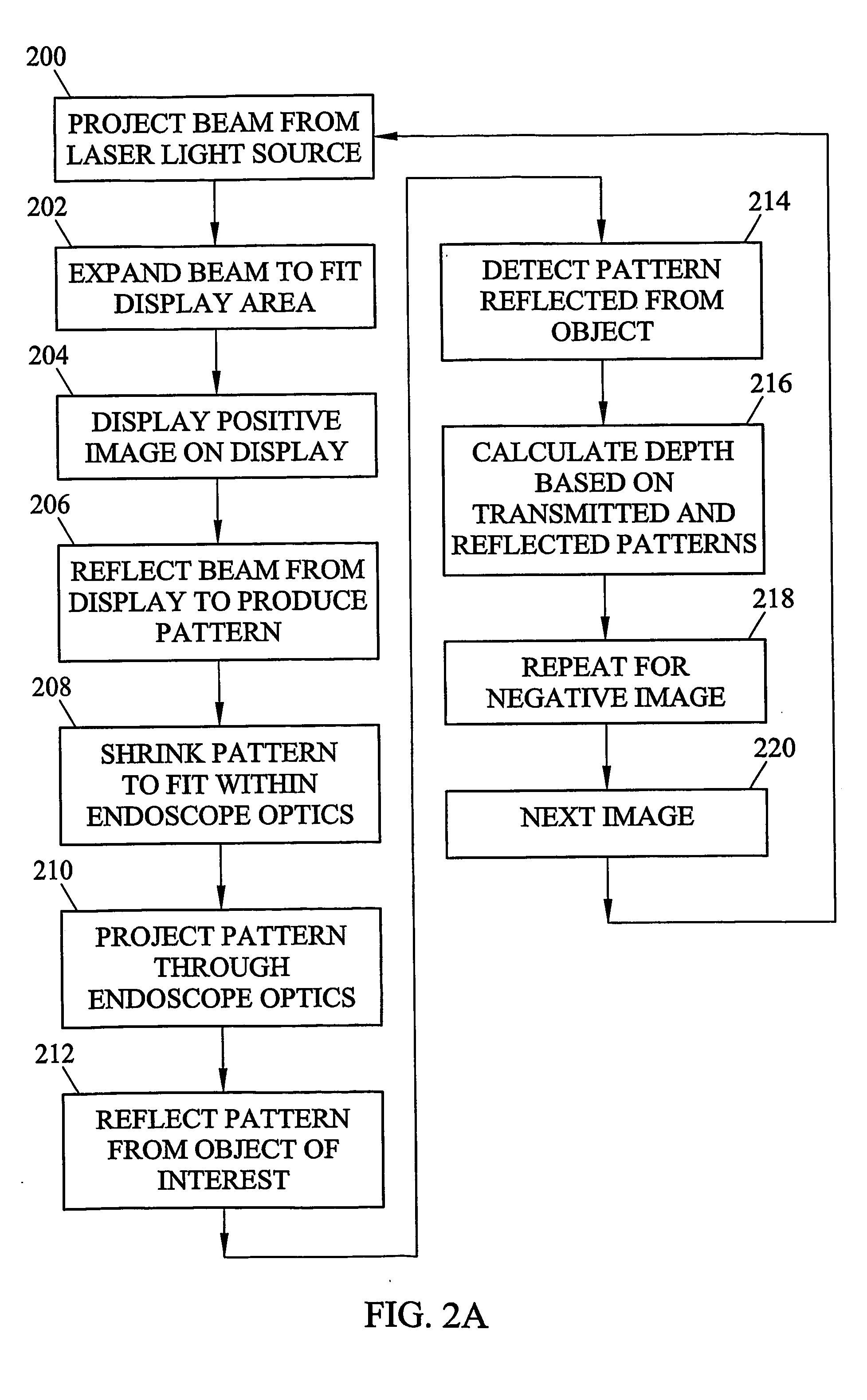

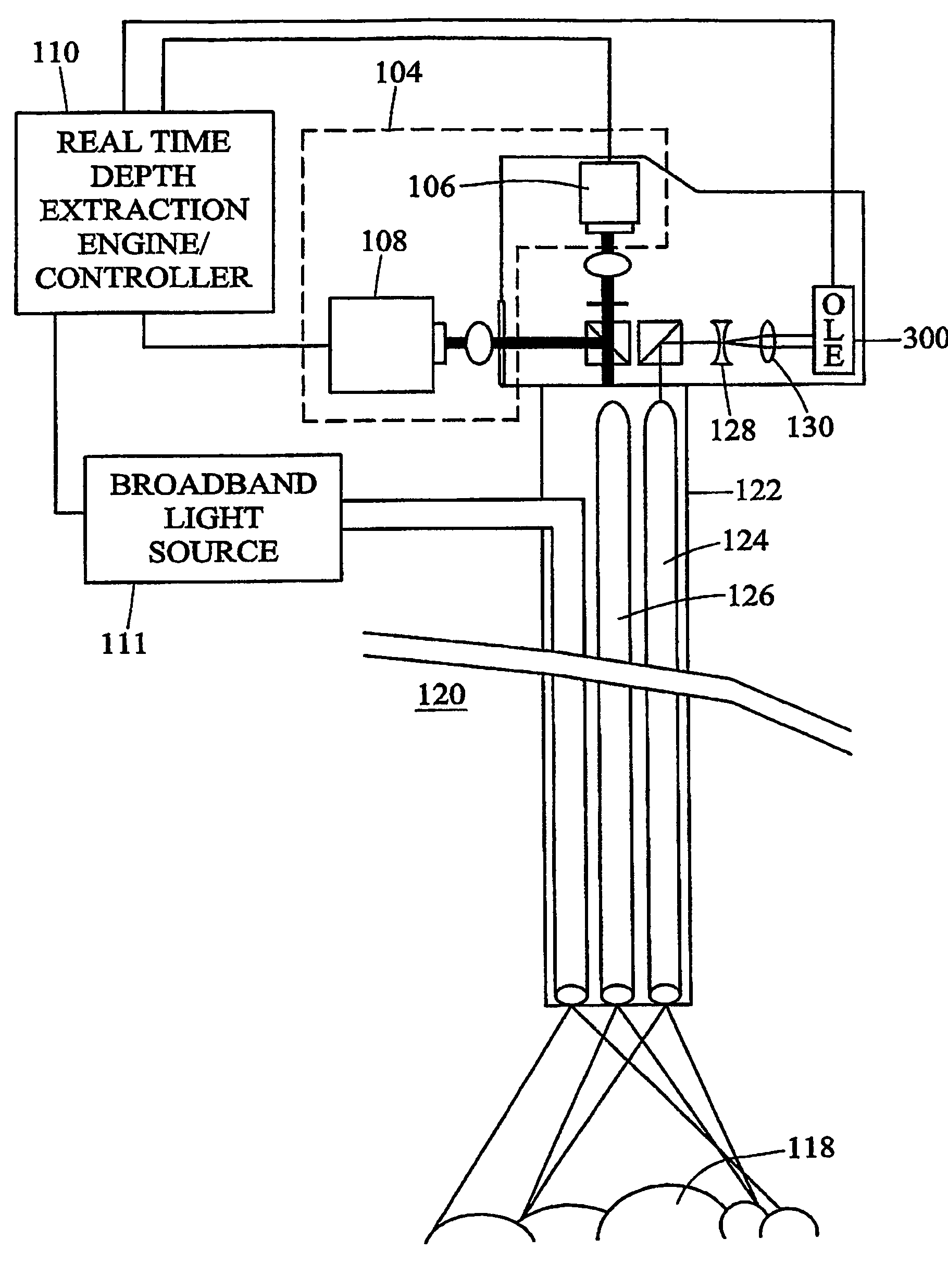

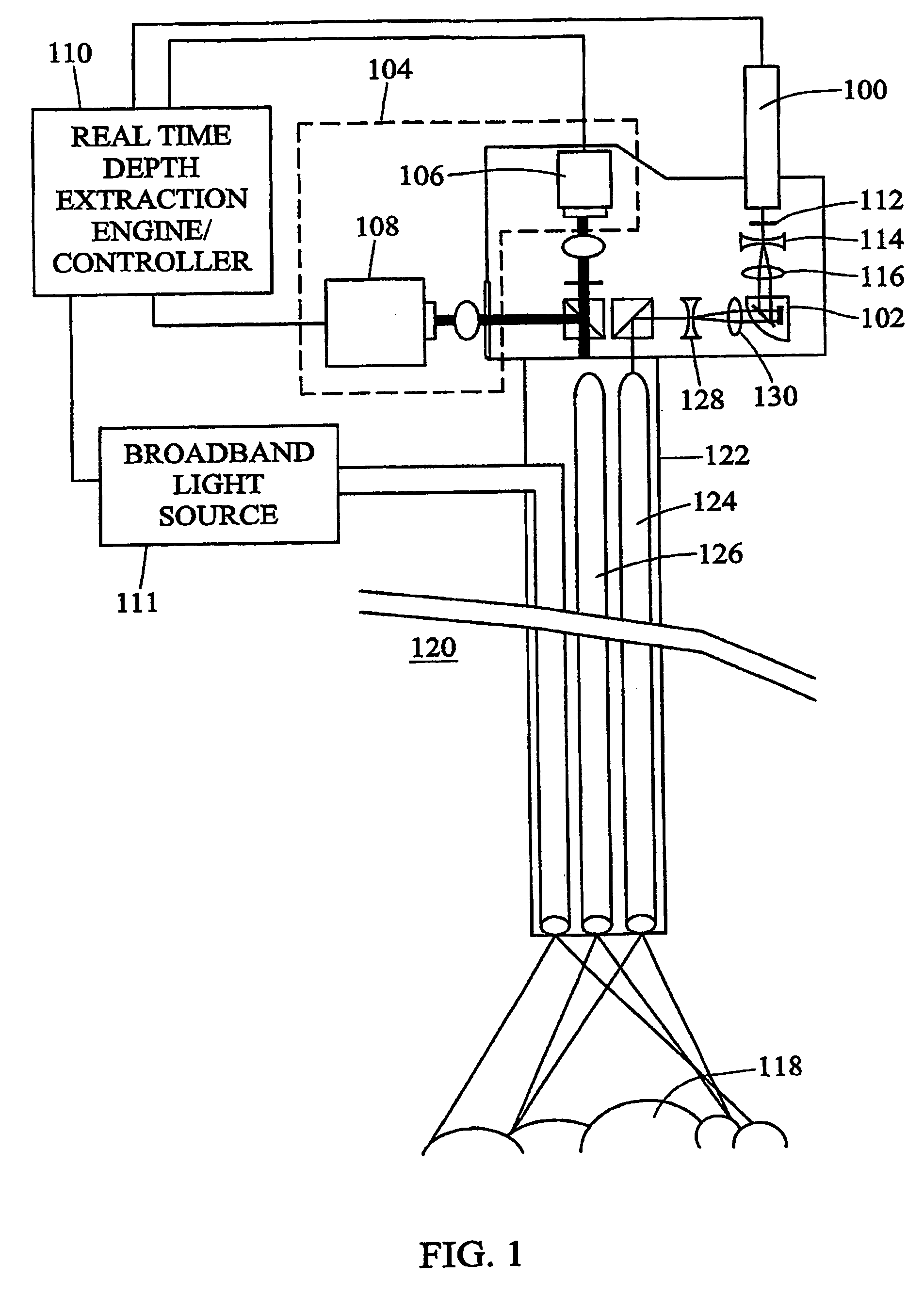

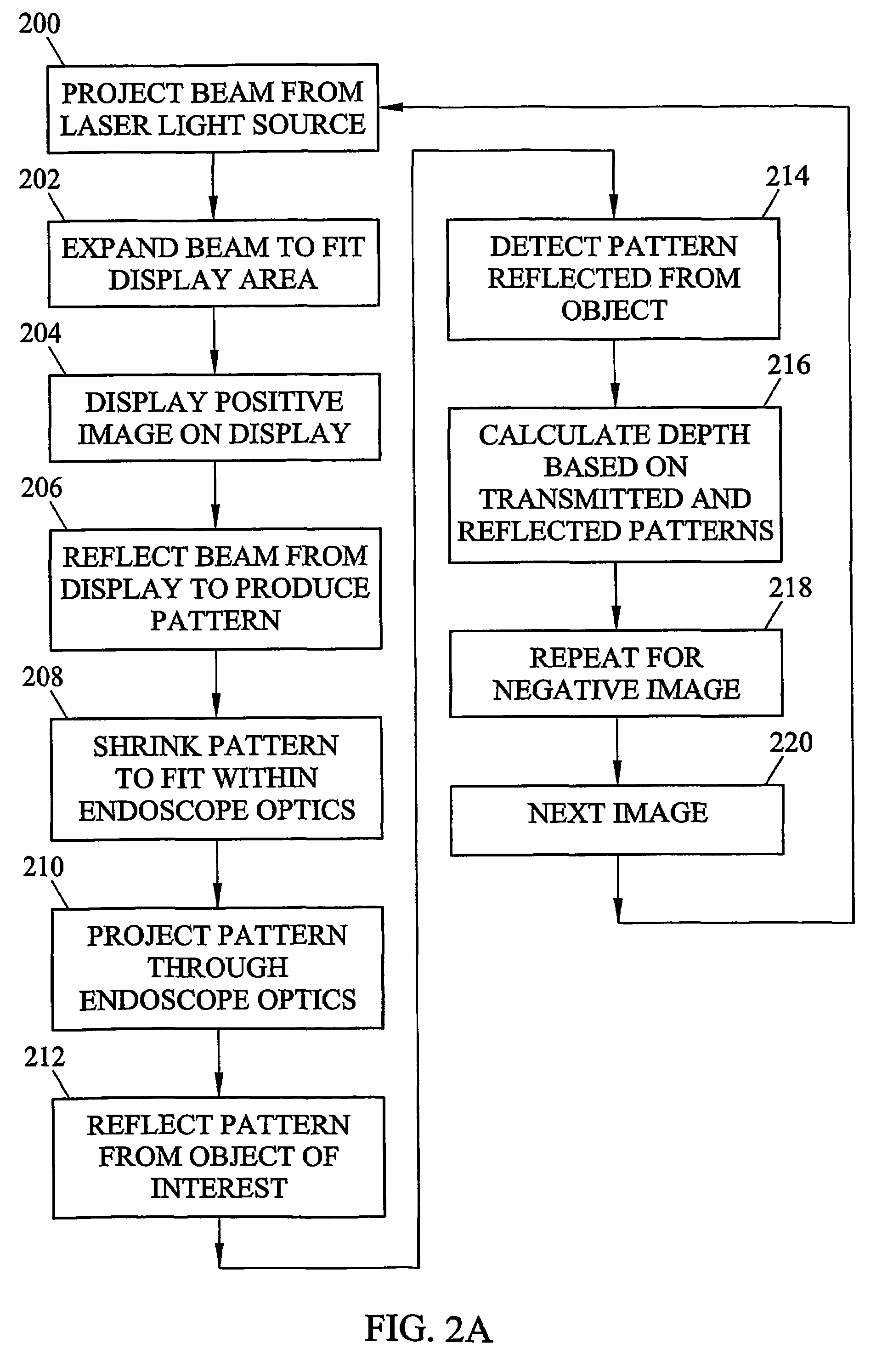

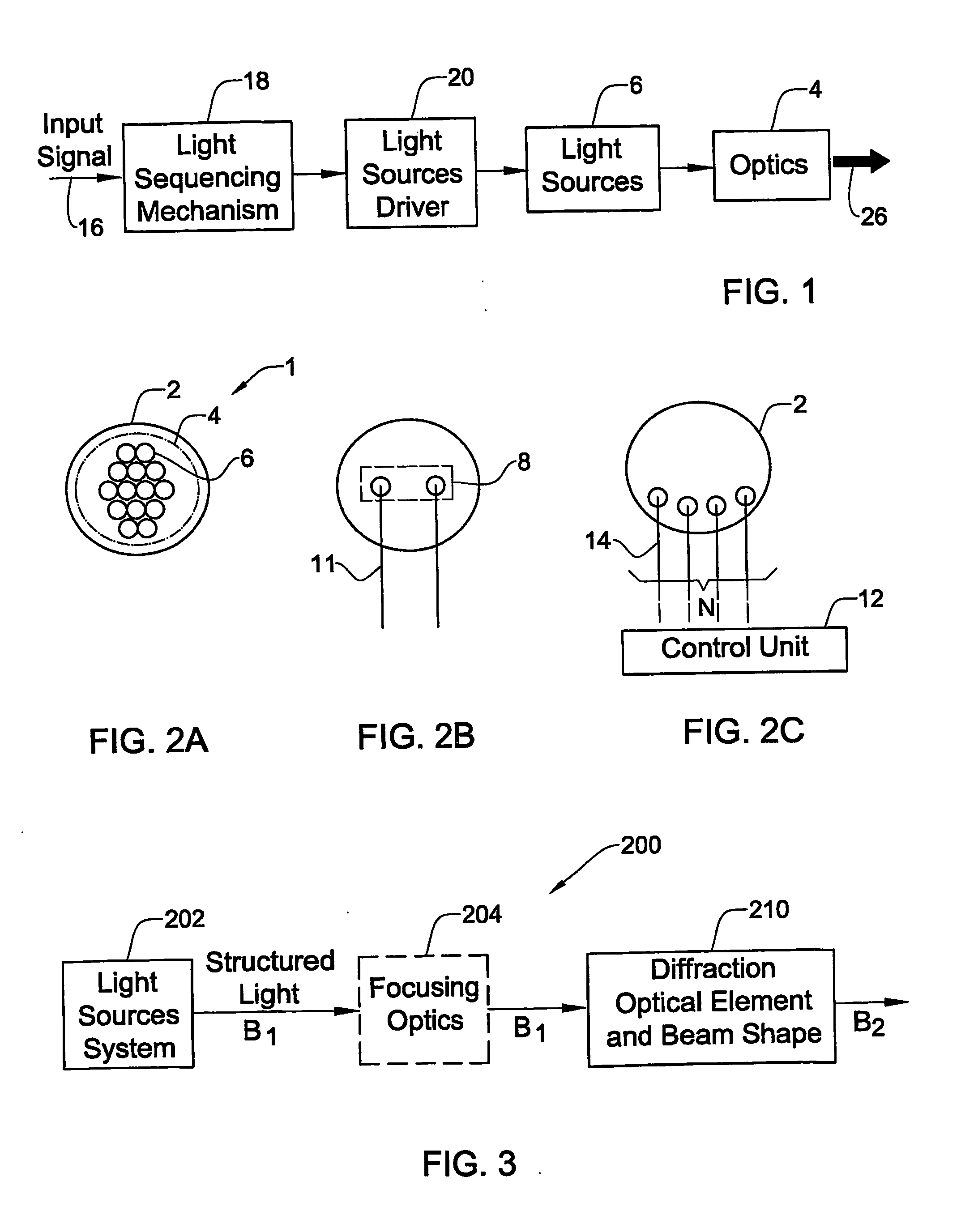

Methods and systems for laser based real-time structured light depth extraction

Status: Inactive | Patent: US20050219552A1 | Tags: Photonic efficiency, Improve efficiency, Image analysis, Surgery, Low speed, Image resolution

Laser-based methods and systems for real-time structured light depth extraction are disclosed. A laser light source (100) produces a collimated beam of laser light. A pattern generator (102) generates structured light patterns including a plurality of pixels. The beam of laser light emanating from the laser light source (100) interacts with the patterns to project the patterns onto the object of interest (118). The patterns are reflected from the object of interest (118) and detected using a high-speed, low-resolution detector (106). A broadband light source (111) illuminates the object with broadband light, and a separate high-resolution, low-speed detector (108) detects broadband light reflected from the object (118). A real-time structured light depth extraction engine/controller (110) extracts depth information based on the transmitted and reflected patterns and the reflected broadband light.

Owner:THE UNIV OF NORTH CAROLINA AT CHAPEL HILL

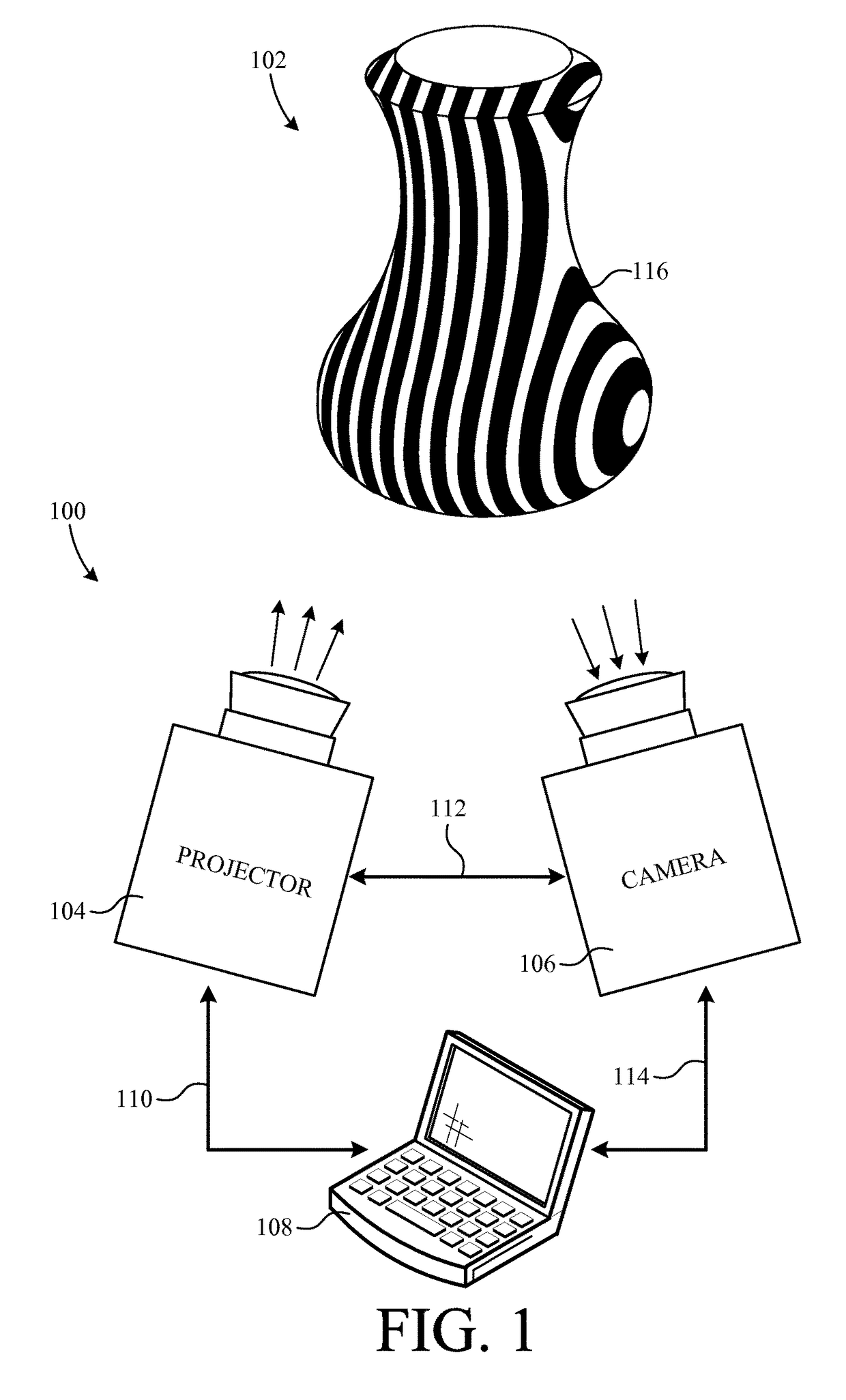

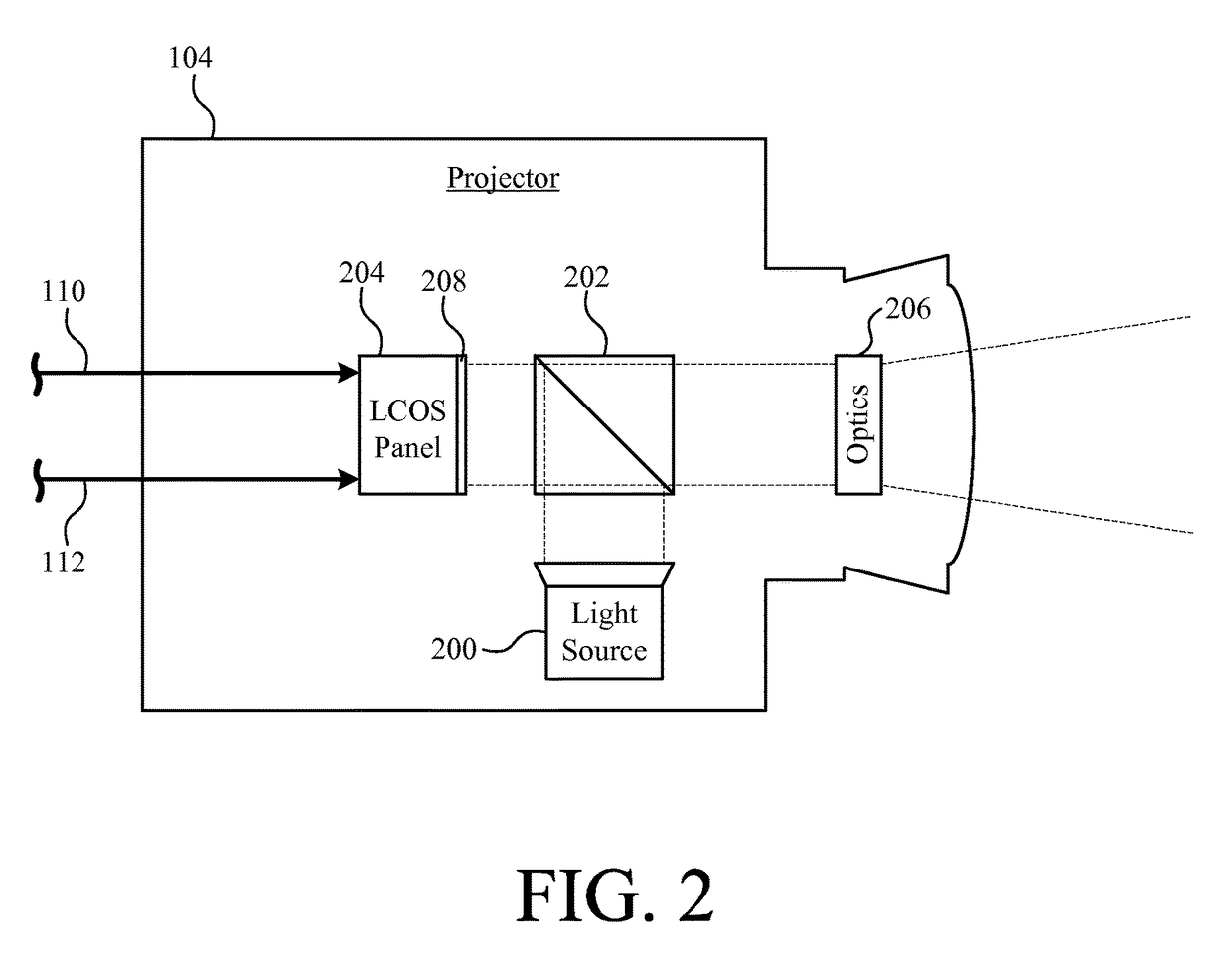

Application specific, dual mode projection system and method

Status: Active | Patent: US9759554B2 | Tags: Facilitates configuring the on-chip pattern generator, Saving complexity, Television system details, Static indicating devices, Dual mode, Display device

A projector panel includes a pixel display, a display controller, and a pattern generator. The pattern generator is operative to output pixel data indicative of at least one application-specific predetermined pattern. In a particular embodiment, the projector panel is a liquid-crystal-on-silicon panel. In another particular embodiment, the projector panel is adapted for selective use in either structured light projection systems or conventional video projection systems.

Owner:OMNIVISION TECH INC

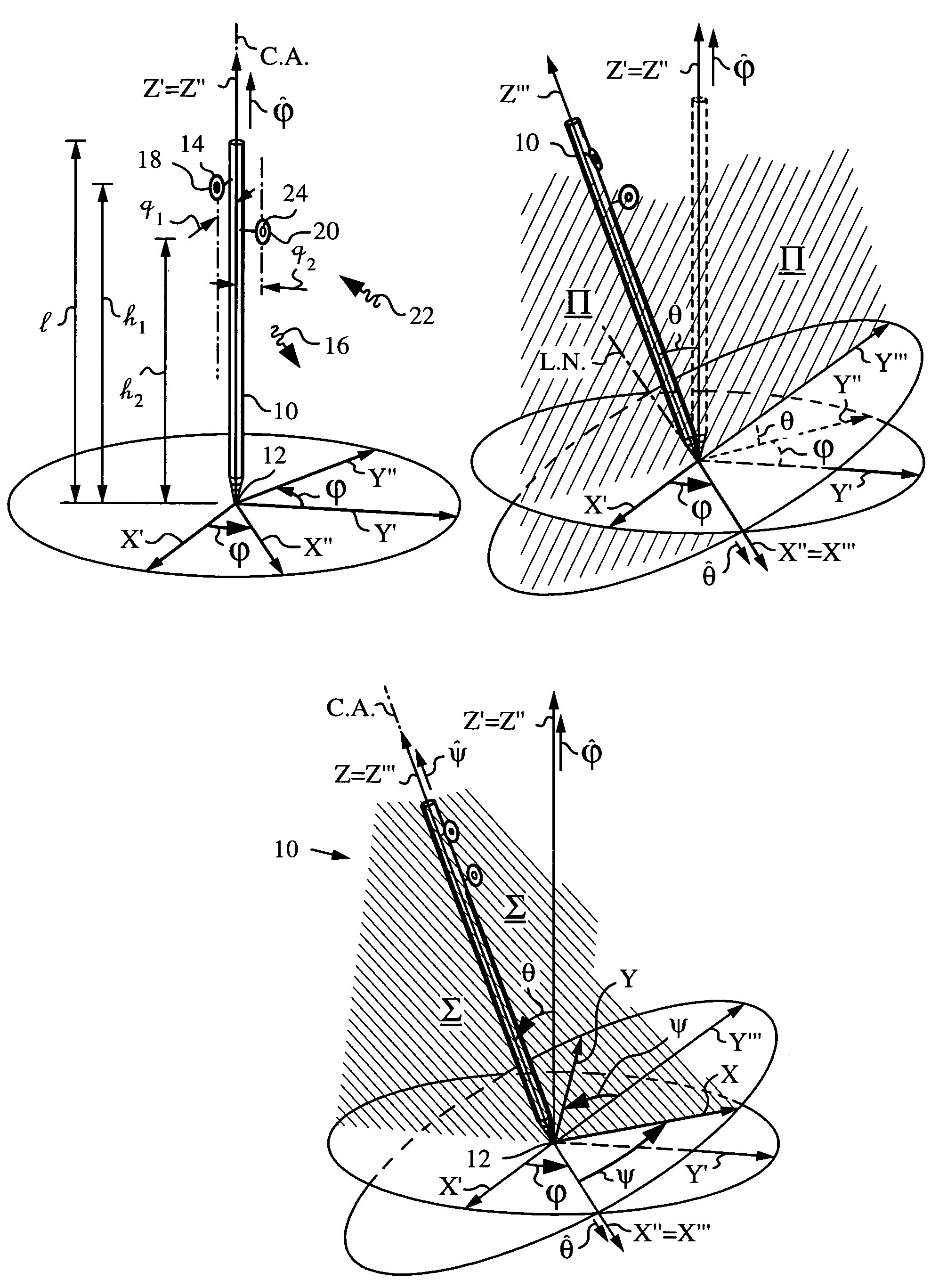

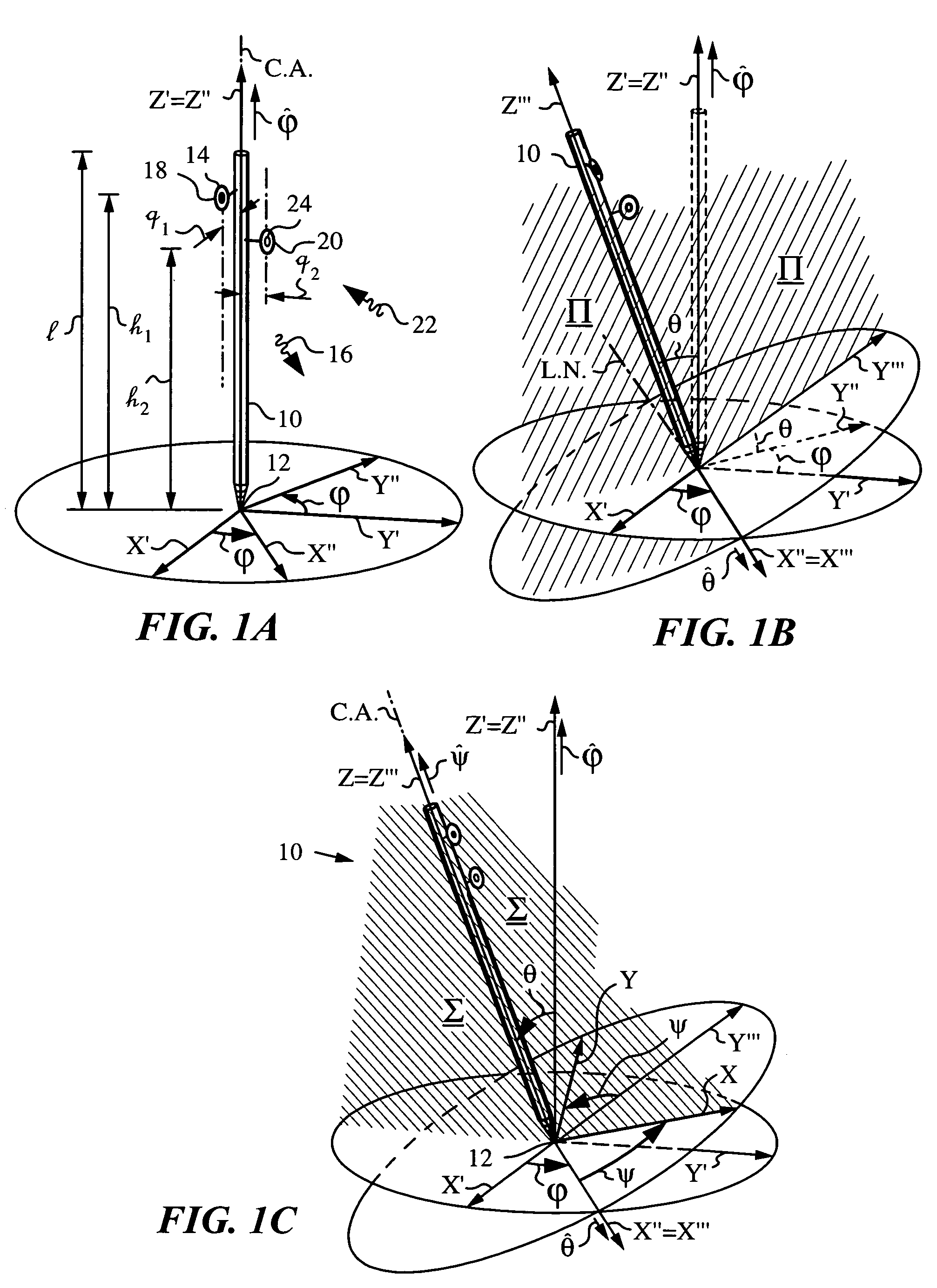

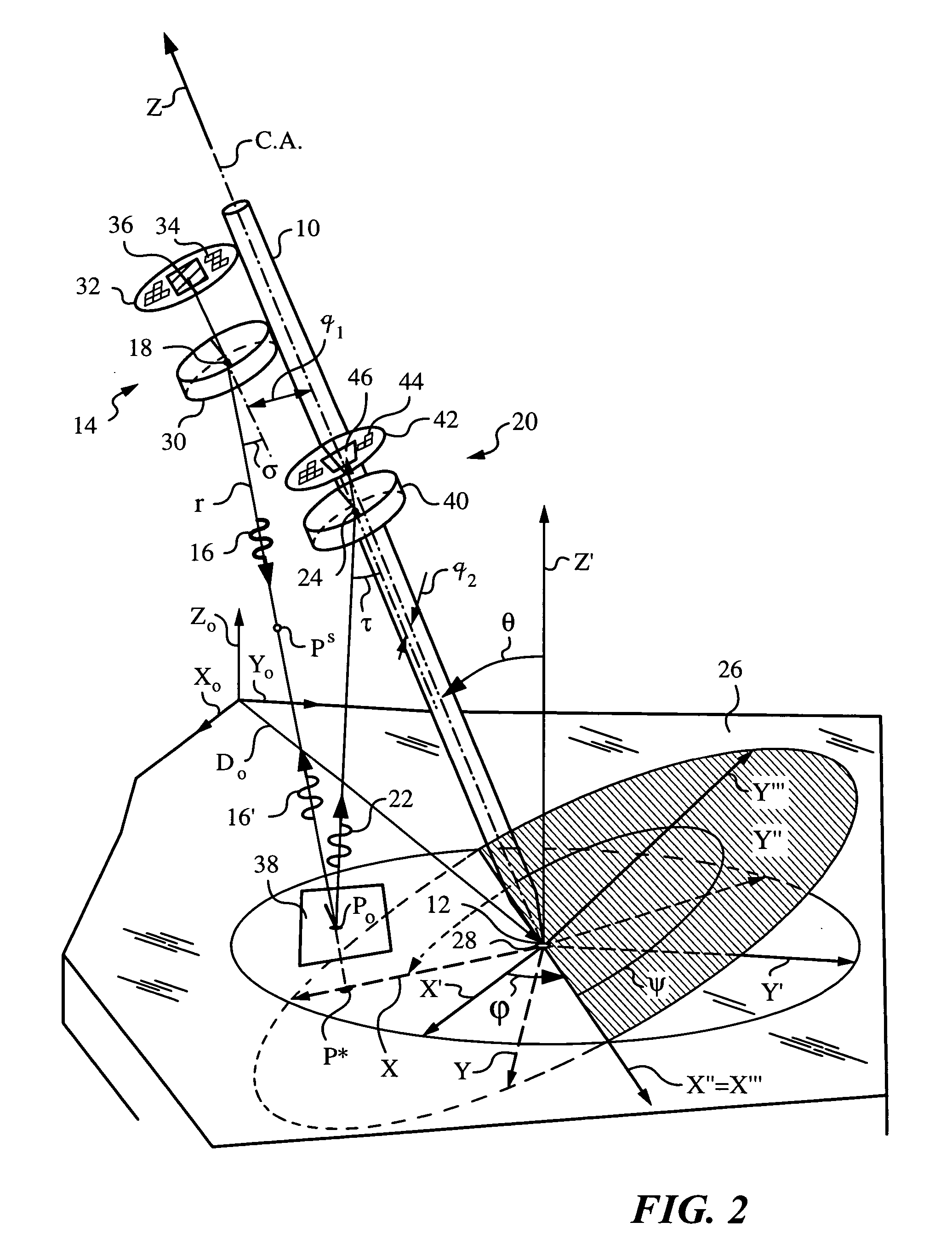

Apparatus and method for determining orientation parameters of an elongate object

An apparatus and method employing principles of stereo vision for determining one or more orientation parameters and especially the second and third Euler angles θ, ψ of an elongate object whose tip is contacting a surface at a contact point. The apparatus has a projector mounted on the elongate object for illuminating the surface with a probe radiation in a known pattern from a first point of view and a detector mounted on the elongate object for detecting a scattered portion of the probe radiation returning from the surface to the elongate object from a second point of view. The orientation parameters are determined from a difference between the projected and detected probe radiation such as the difference between the shape of the feature produced by the projected probe radiation and the shape of the feature detected by the detector. The pattern of probe radiation is chosen to provide information for determination of the one or more orientation parameters and can include asymmetric patterns such as lines, ellipses, rectangles, polygons or the symmetric cases including circles, squares and regular polygons. To produce the patterns the projector can use a scanning arrangement or a structured light optic such as a holographic, diffractive, refractive or reflective element and any combinations thereof. The apparatus is suitable for determining the orientation of a jotting implement such as a pen, pencil or stylus.
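A much-simplified illustration of how a shape difference can encode orientation, assuming a collimated beam of circular cross-section: on a surface tilted by an angle θ relative to the beam, the circular spot becomes an ellipse whose minor-to-major axis ratio is cos θ. The patent's projector-detector geometry, which it uses to recover such shapes from a second point of view, is not modeled; the footprint axes are assumed to be already measured in the surface plane, and the function name is hypothetical.

```python
import math


def tilt_from_footprint(major_axis: float, minor_axis: float) -> float:
    """Angle (radians) between a collimated circular beam and the surface normal.

    Assumes the footprint's axes are measured in the plane of the surface:
    the minor axis equals the beam diameter and the major axis is stretched
    by 1 / cos(tilt), so minor / major = cos(tilt).
    """
    if not 0 < minor_axis <= major_axis:
        raise ValueError("expected 0 < minor_axis <= major_axis")
    return math.acos(minor_axis / major_axis)


print(math.degrees(tilt_from_footprint(2.0, 1.0)))  # approximately 60
```

This recovers only the magnitude of the inclination; the direction of the ellipse's major axis would indicate which way the surface tilts.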

Owner:ELECTRONICS SCRIPTING PRODS

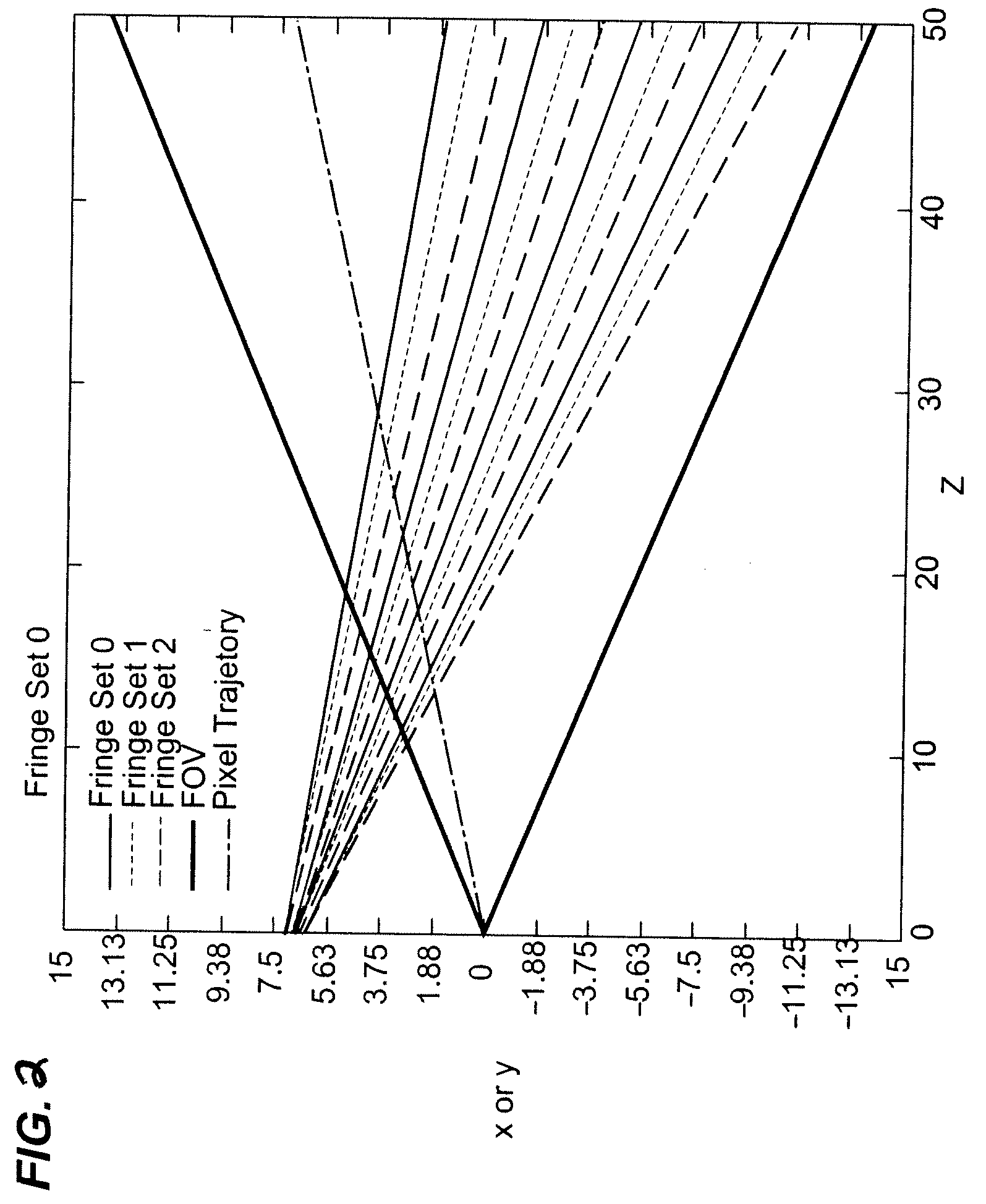

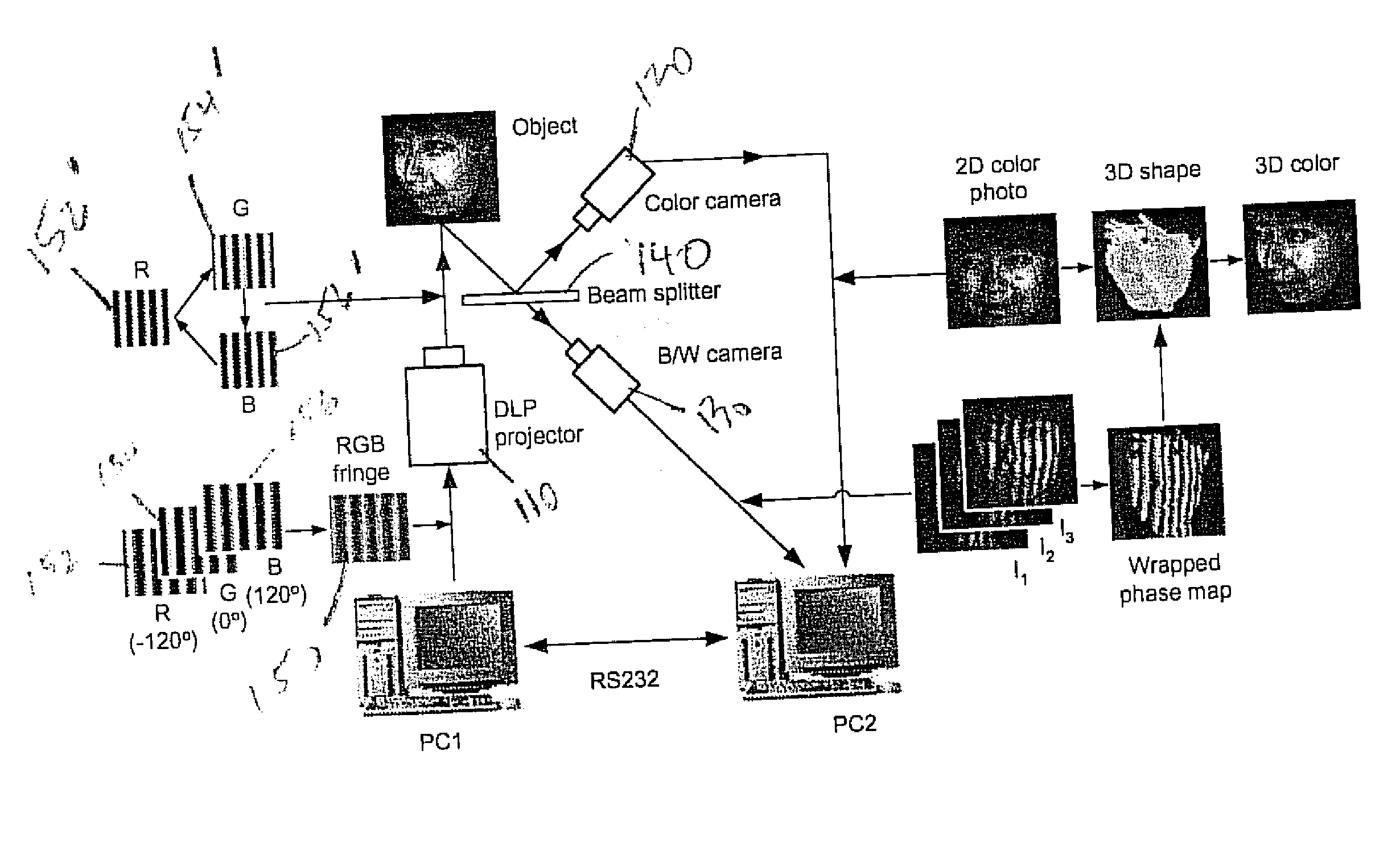

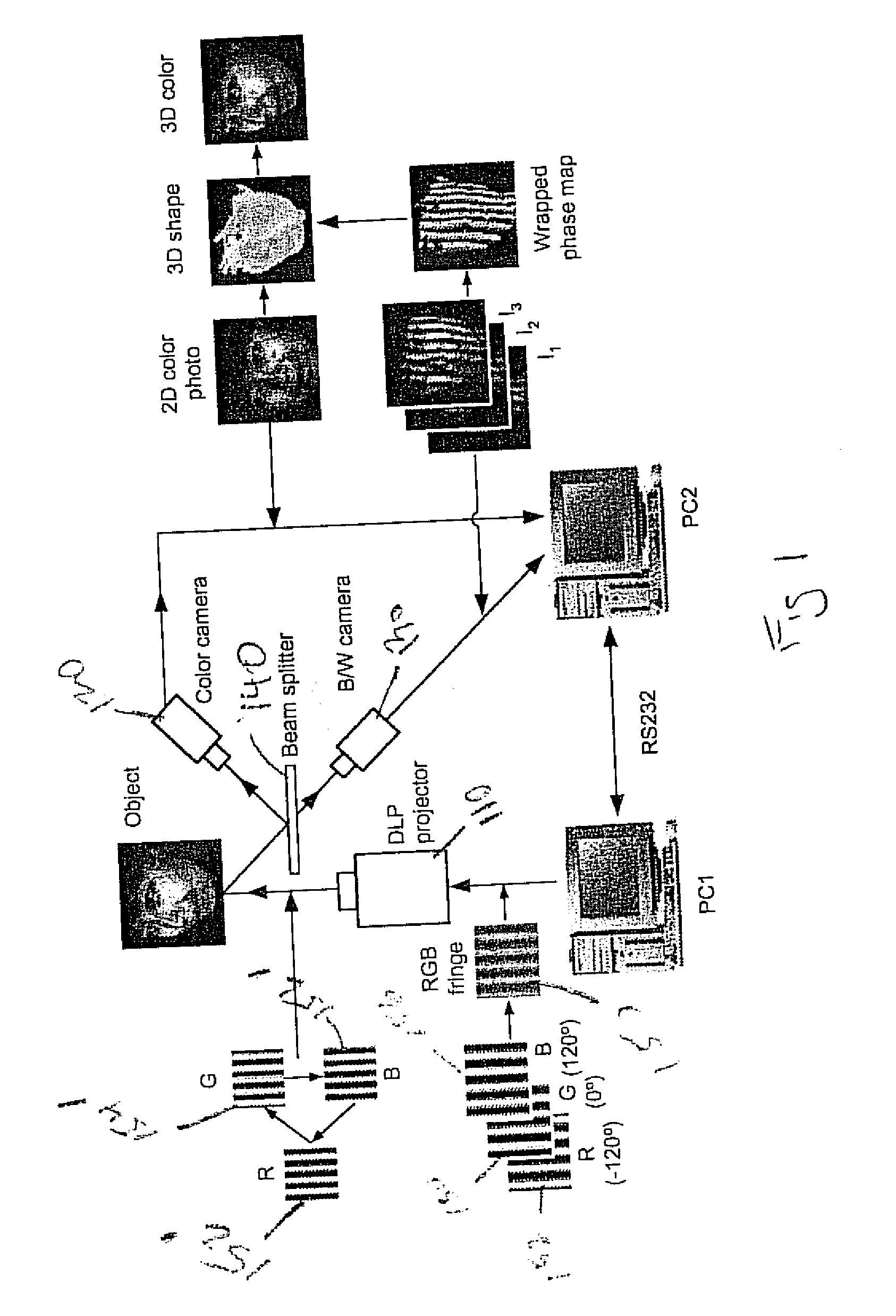

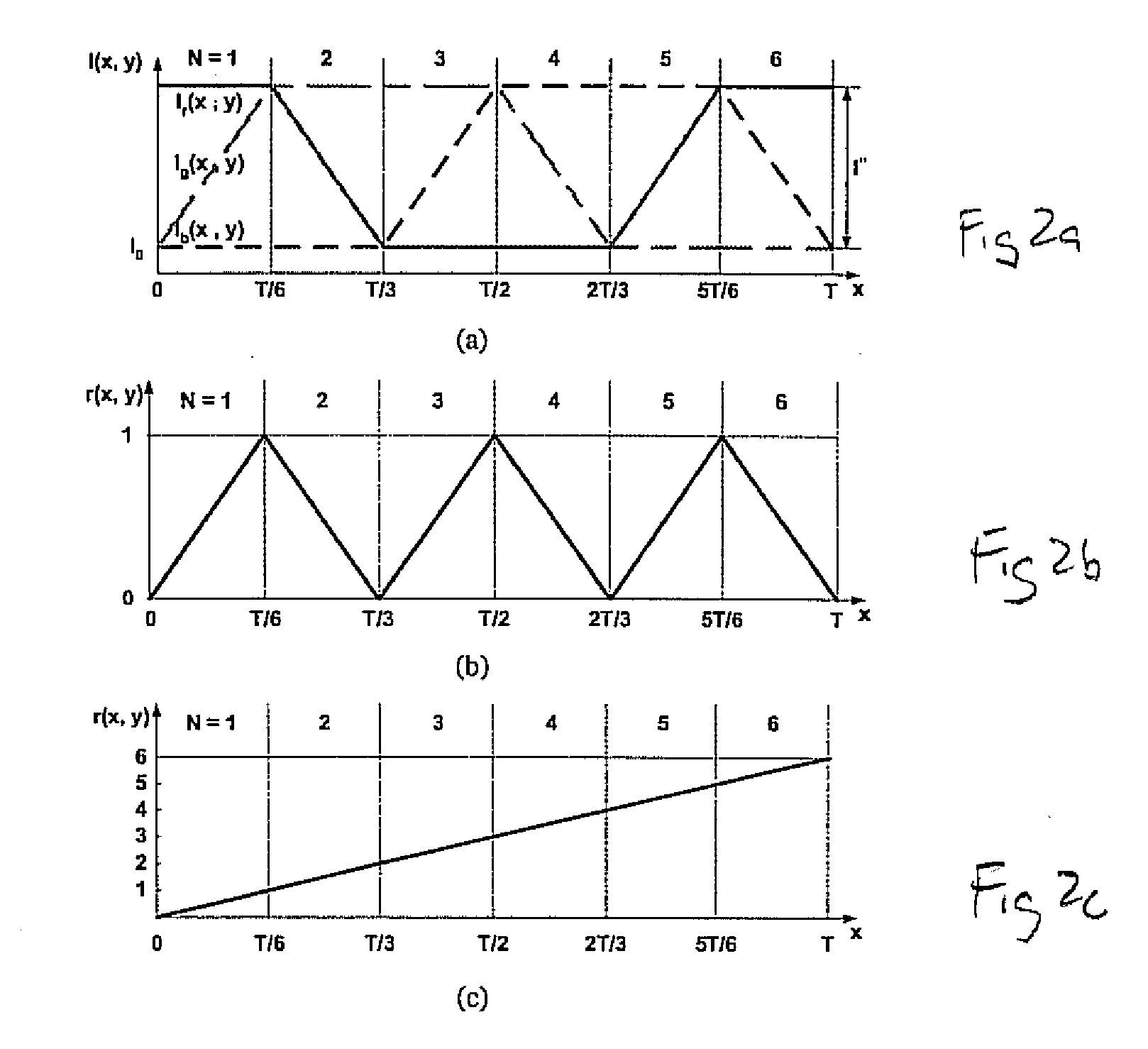

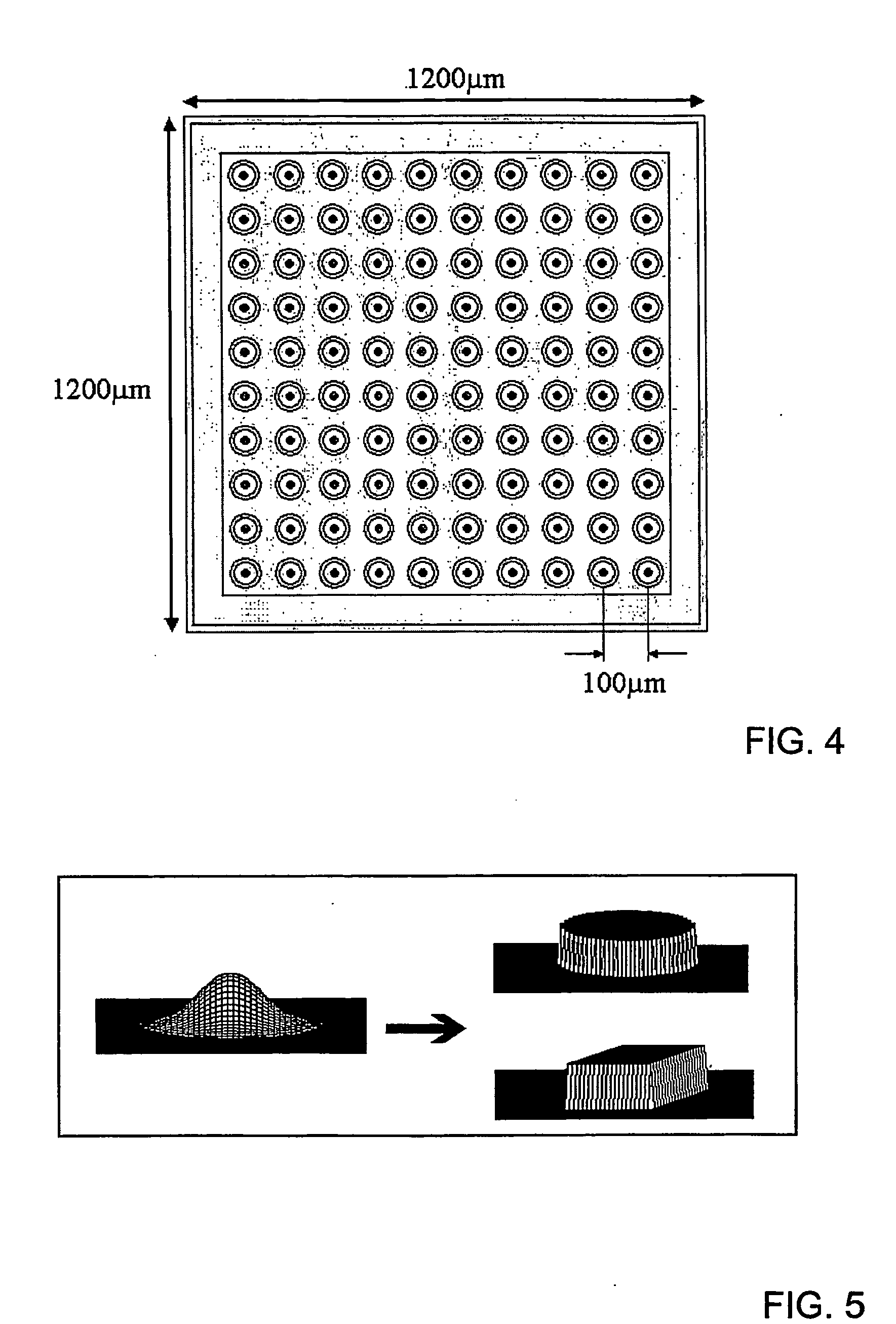

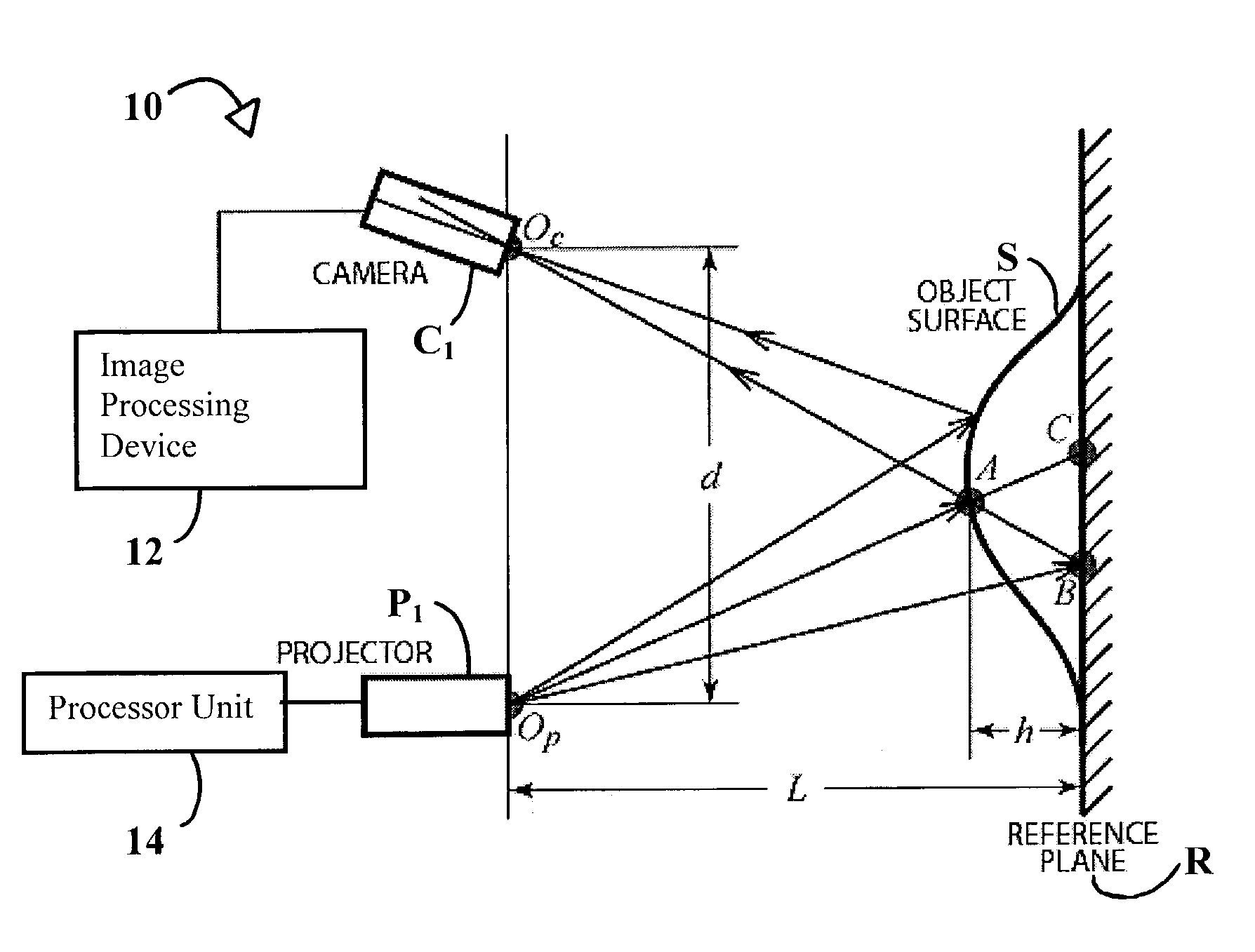

3D shape measurement system and method including fast three-step phase shifting, error compensation and calibration

Status: Inactive | Patent: US20070115484A1 | Tags: Improve system speed, Facilitates establishment of coordinate relationship, Using optical means, 3D shapes, Phase shifted

A structured light system for object ranging/measurement is disclosed that implements a trapezoidal-based phase-shifting function with intensity ratio modeling, using sinusoidal intensity-varied fringe patterns to compensate for defocus error. The structured light system includes a light projector constructed to project onto an object at least three sinusoidal intensity-varied fringe patterns, each phase-shifted with respect to the others; a camera for capturing the at least three phase-shifted fringe patterns as they are reflected from the object; and a system processor in electrical communication with the light projector and camera for generating the at least three fringe patterns, shifting the patterns in phase, and providing the patterns to the projector. The projector projects the at least three phase-shifted fringe patterns sequentially, the camera captures the patterns as reflected from the object, and the system processor processes the captured patterns to generate object coordinates.
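For reference, the sketch below implements the standard three-step sinusoidal phase-shifting formula with shifts of -2π/3, 0, and +2π/3. It is not the patent's trapezoidal-based intensity-ratio method, and it omits the patent's error compensation and calibration; the function name is hypothetical. The result is a wrapped phase in (-π, π] that still needs unwrapping before it can be converted to coordinates.

```python
import numpy as np


def wrapped_phase_three_step(i1, i2, i3):
    """Wrapped phase from three fringe images shifted by -2*pi/3, 0, +2*pi/3.

    With I_k = A + B*cos(phi + delta_k):
      I1 - I3         = sqrt(3) * B * sin(phi)
      2*I2 - I1 - I3  = 3 * B * cos(phi)
    so phi = atan2(sqrt(3) * (I1 - I3), 2*I2 - I1 - I3).
    """
    i1, i2, i3 = (np.asarray(x, dtype=float) for x in (i1, i2, i3))
    return np.arctan2(np.sqrt(3.0) * (i1 - i3), 2.0 * i2 - i1 - i3)


# Synthetic fringes with background A = 0.5 and modulation B = 0.4.
phi = np.linspace(-3.0, 3.0, 7)
shift = 2 * np.pi / 3
i1 = 0.5 + 0.4 * np.cos(phi - shift)
i2 = 0.5 + 0.4 * np.cos(phi)
i3 = 0.5 + 0.4 * np.cos(phi + shift)
print(wrapped_phase_three_step(i1, i2, i3))
```

The background A and modulation B cancel in the ratio, which is why three images suffice per pixel.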

Owner:THE RES FOUND OF STATE UNIV OF NEW YORK

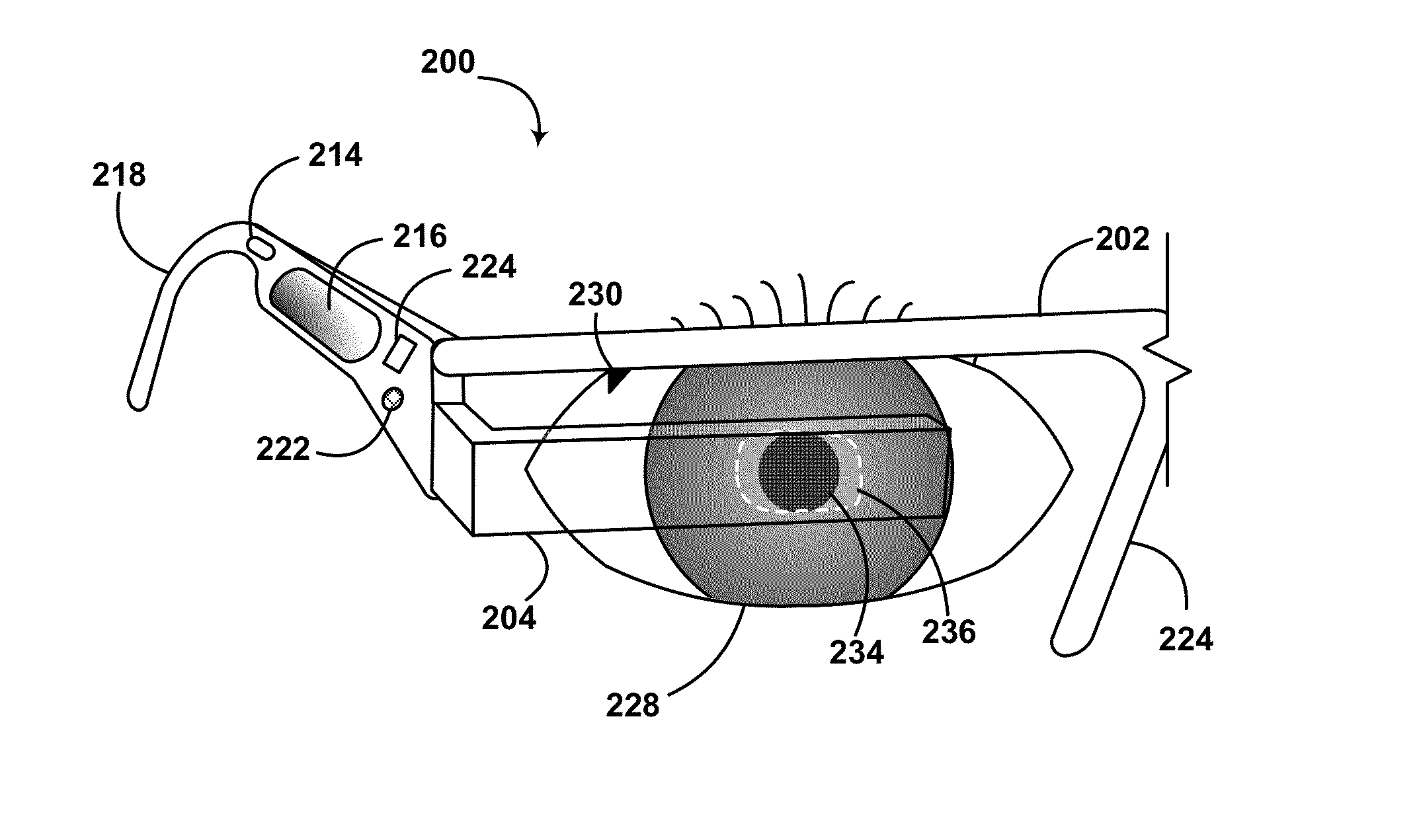

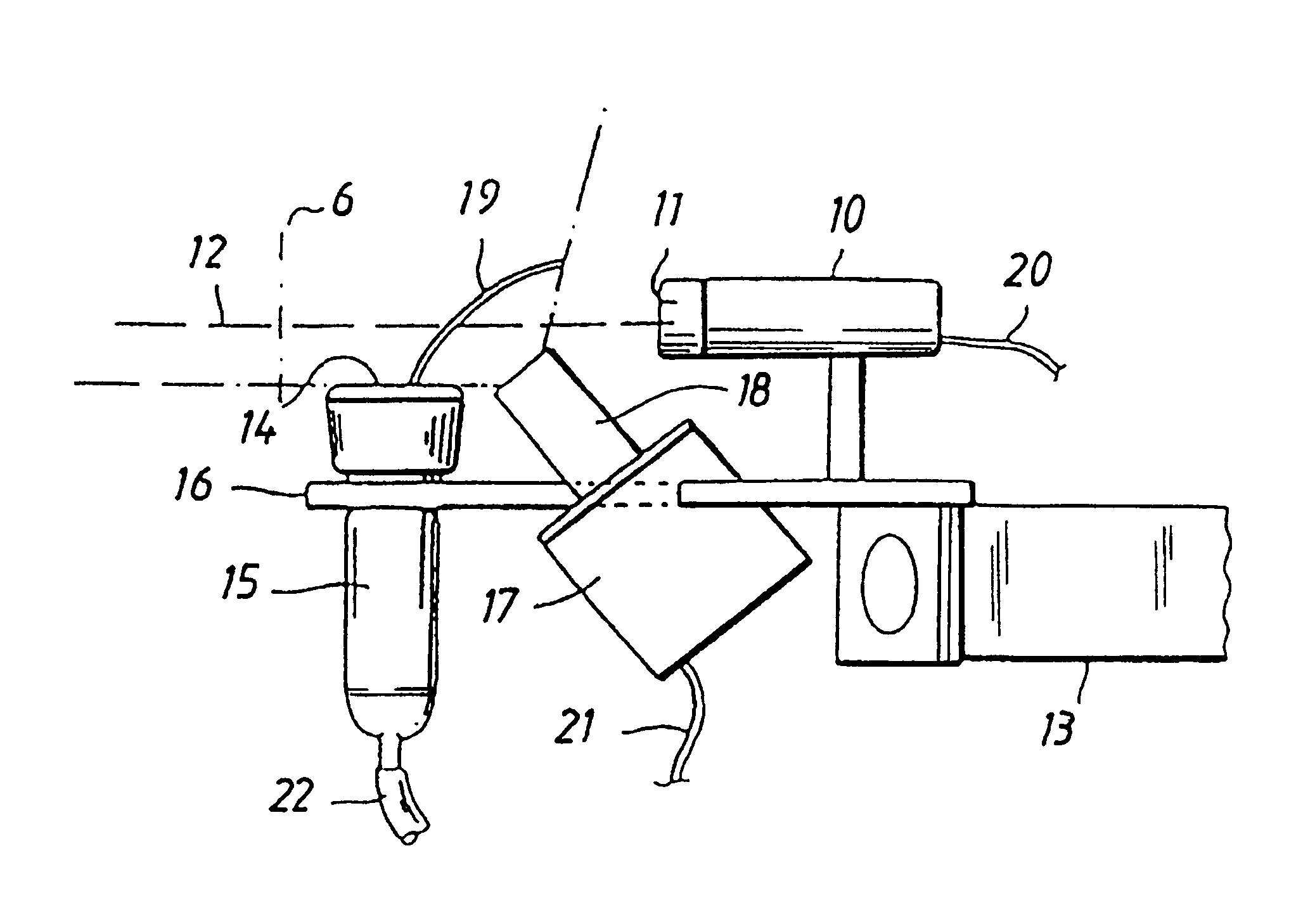

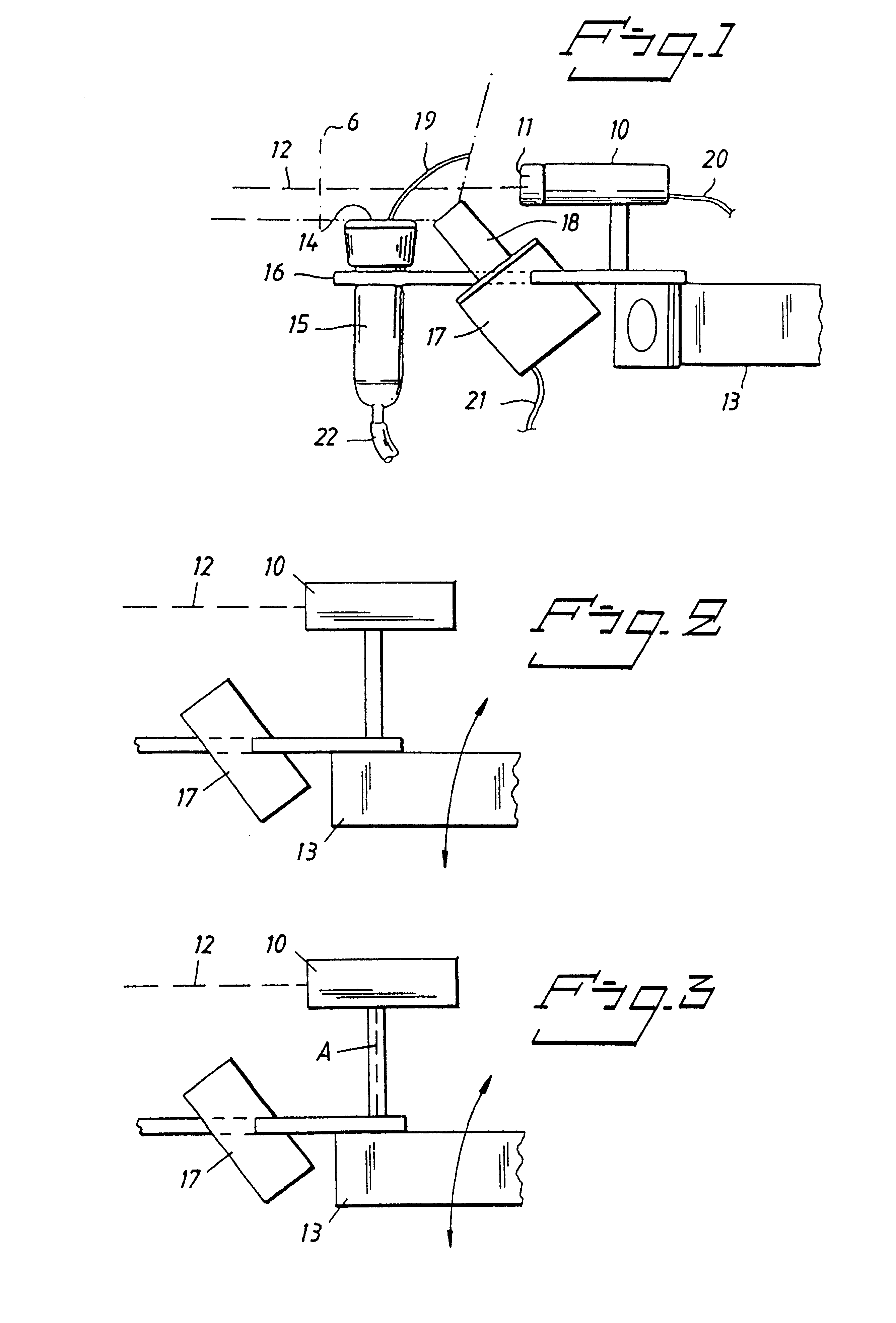

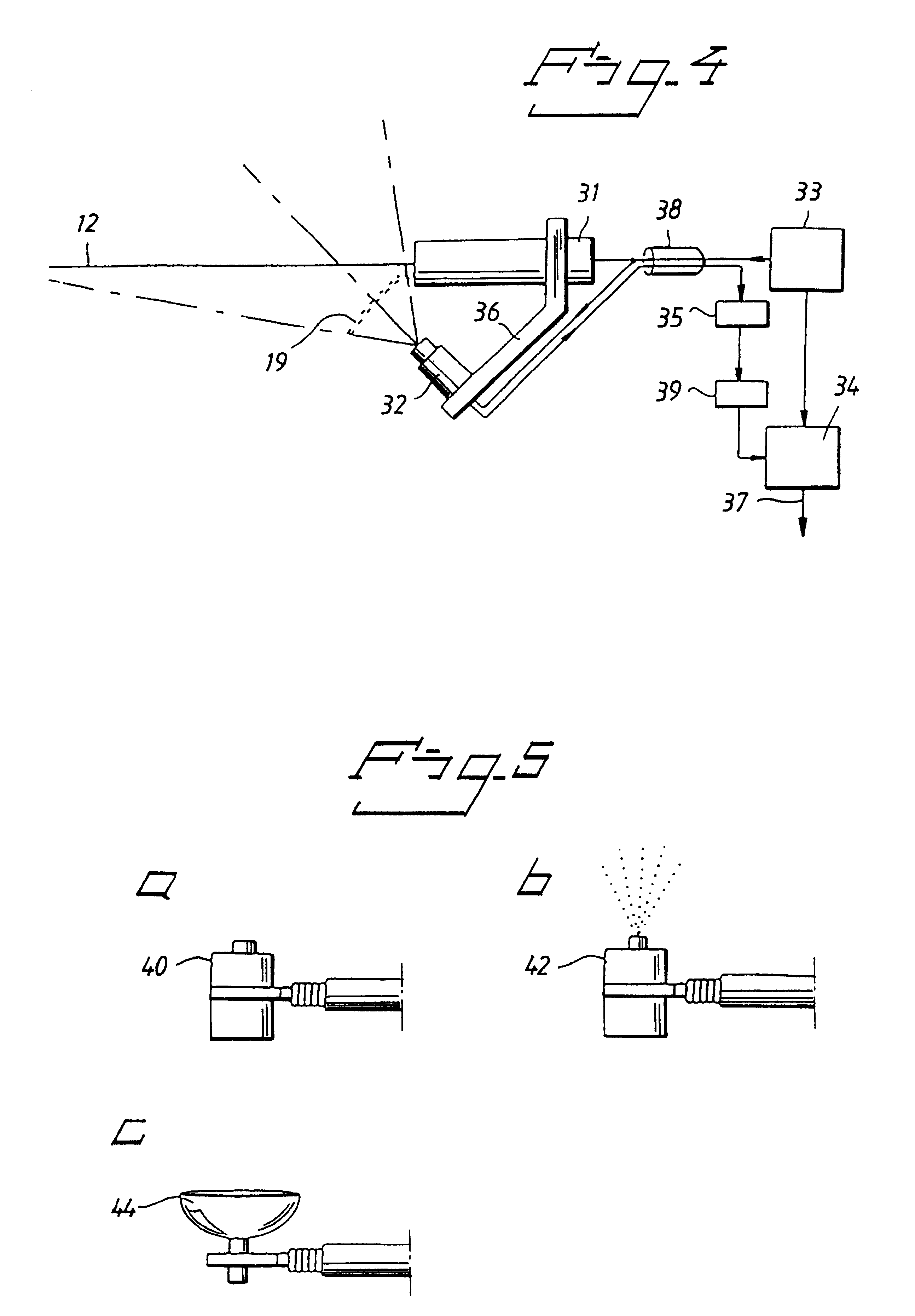

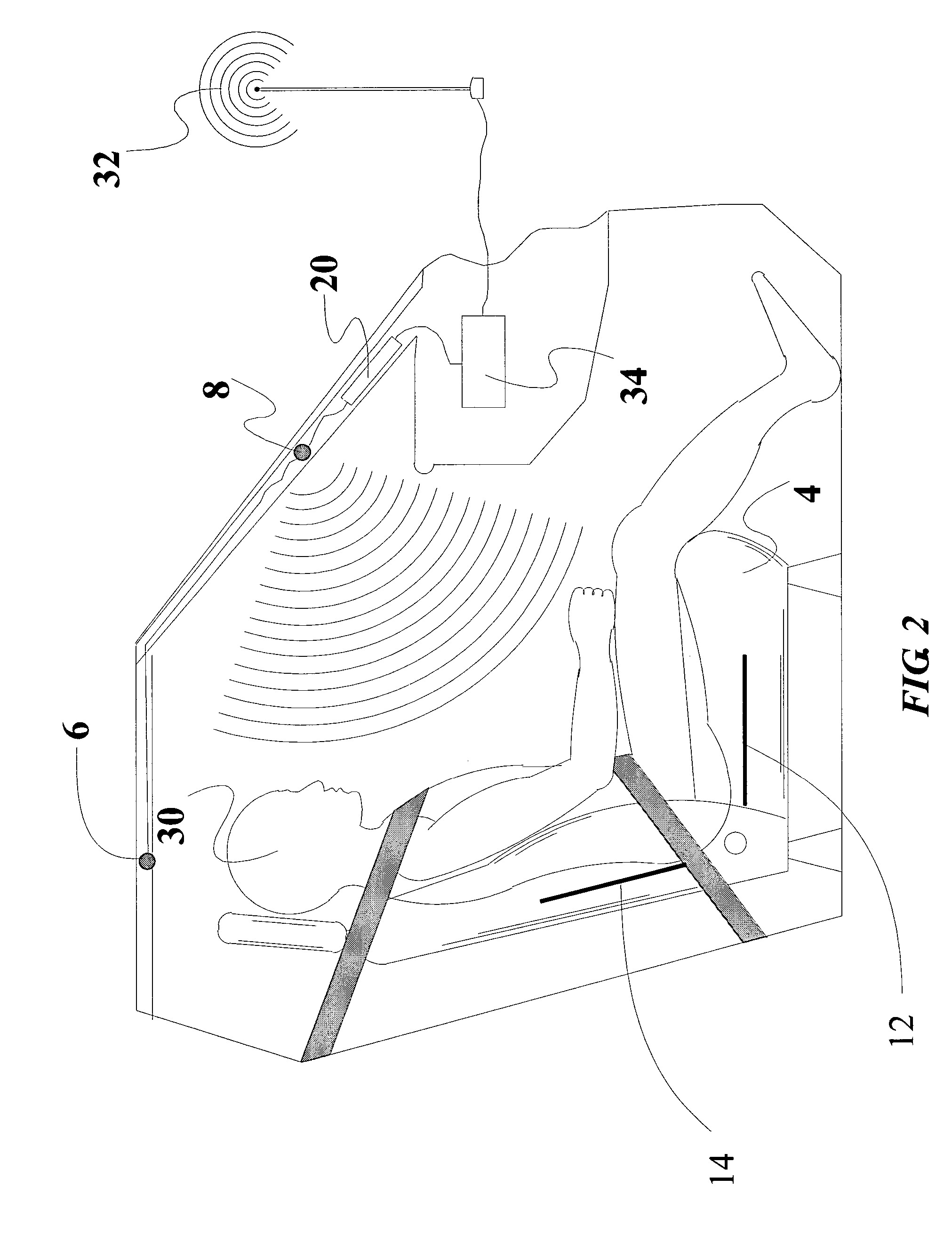

Apparatus and method for recognizing and determining the position of a part of an animal

Status: Inactive | Patent: US6234109B1 | Tags: Easy to separate, Easy to distinguish, Image analysis, Catheters, Computer science, Image capture

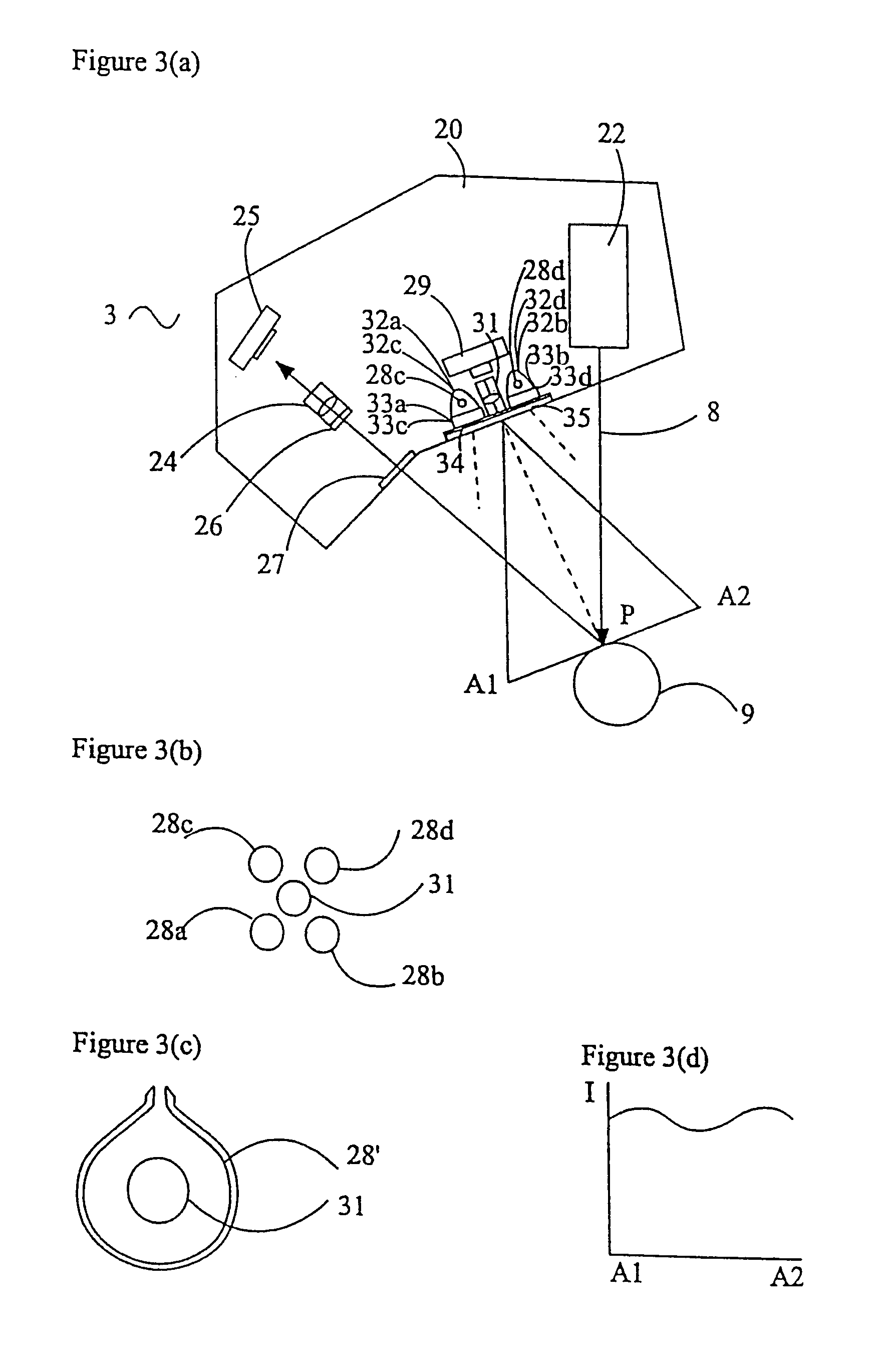

The present invention relates to an apparatus and a method for recognizing and determining the position of a part of an animal. The apparatus comprises: a source of structured light for illuminating a region expected to contain at least one such part, in such a way that an object illuminated by the light will have at least one illuminated area with a very distinct outline in at least one direction; an image capture and processor device arranged to capture and process at least one image formed by the light; and a control device to determine whether the illuminated object is the part by comparing the image of the illuminated object to reference criteria defining different objects and, if the illuminated object is established to be the part of the animal, to establish its position. An animal-related device and a device to guide the animal-related device towards the position of the part are also provided.

Owner:DELAVAL HLDG AB

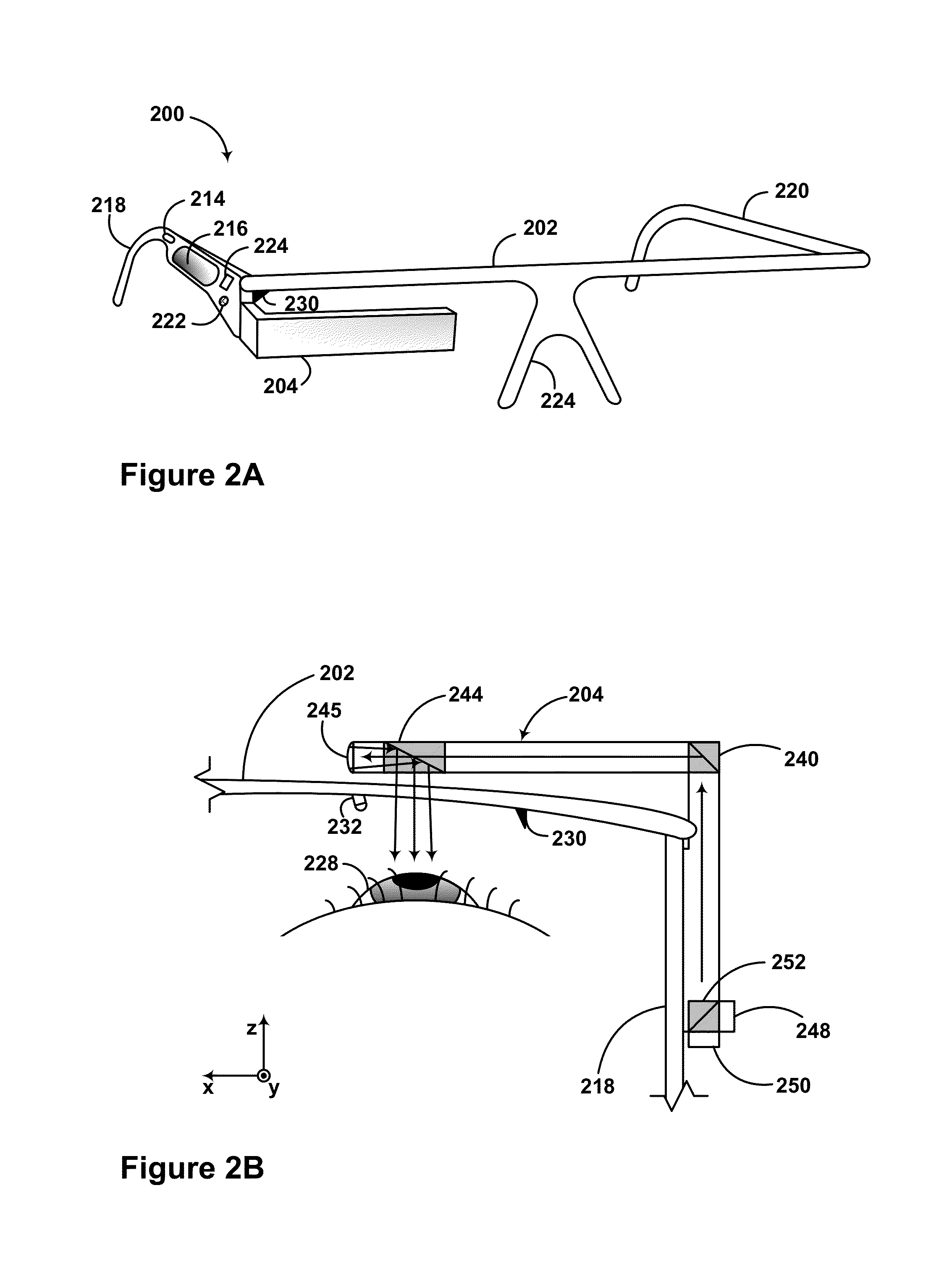

Method and system for input detection using structured light projection

Exemplary methods and systems provide for tracking an eye. An exemplary method may involve causing a pattern comprising at least one line to be projected onto an eye, and receiving data regarding deformation of the at least one line of the pattern. The method further includes correlating the data to iris, sclera, and pupil orientation to determine a position of the eye, and causing an item on a display to move in correlation with the eye position.

Owner:GOOGLE LLC

Methods and systems for laser based real-time structured light depth extraction

Status: Inactive | Patent: US7385708B2 | Tags: Photonic efficiency, Focus, Image analysis, Surgery, Image resolution, Broadband light source

Owner:THE UNIV OF NORTH CAROLINA AT CHAPEL HILL

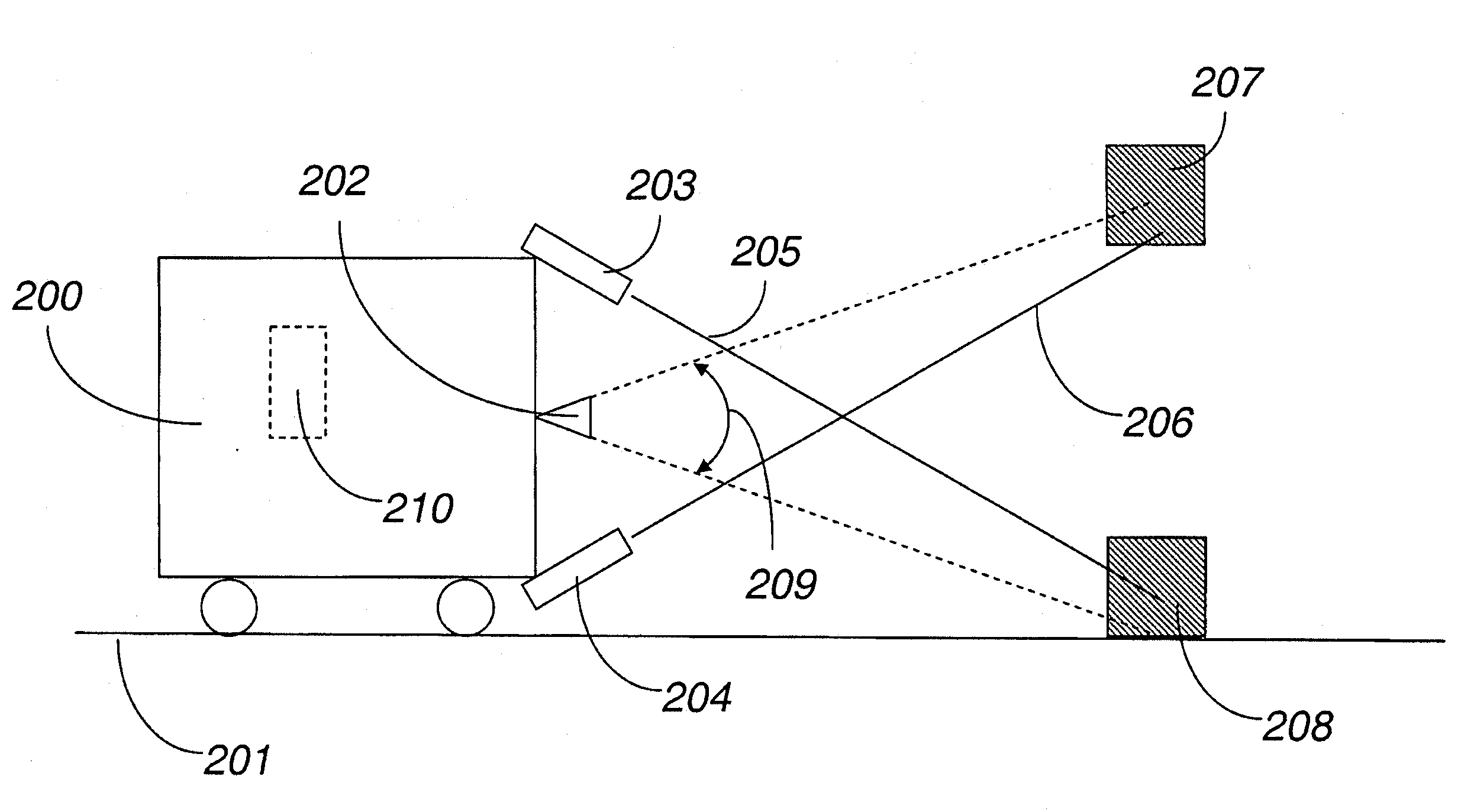

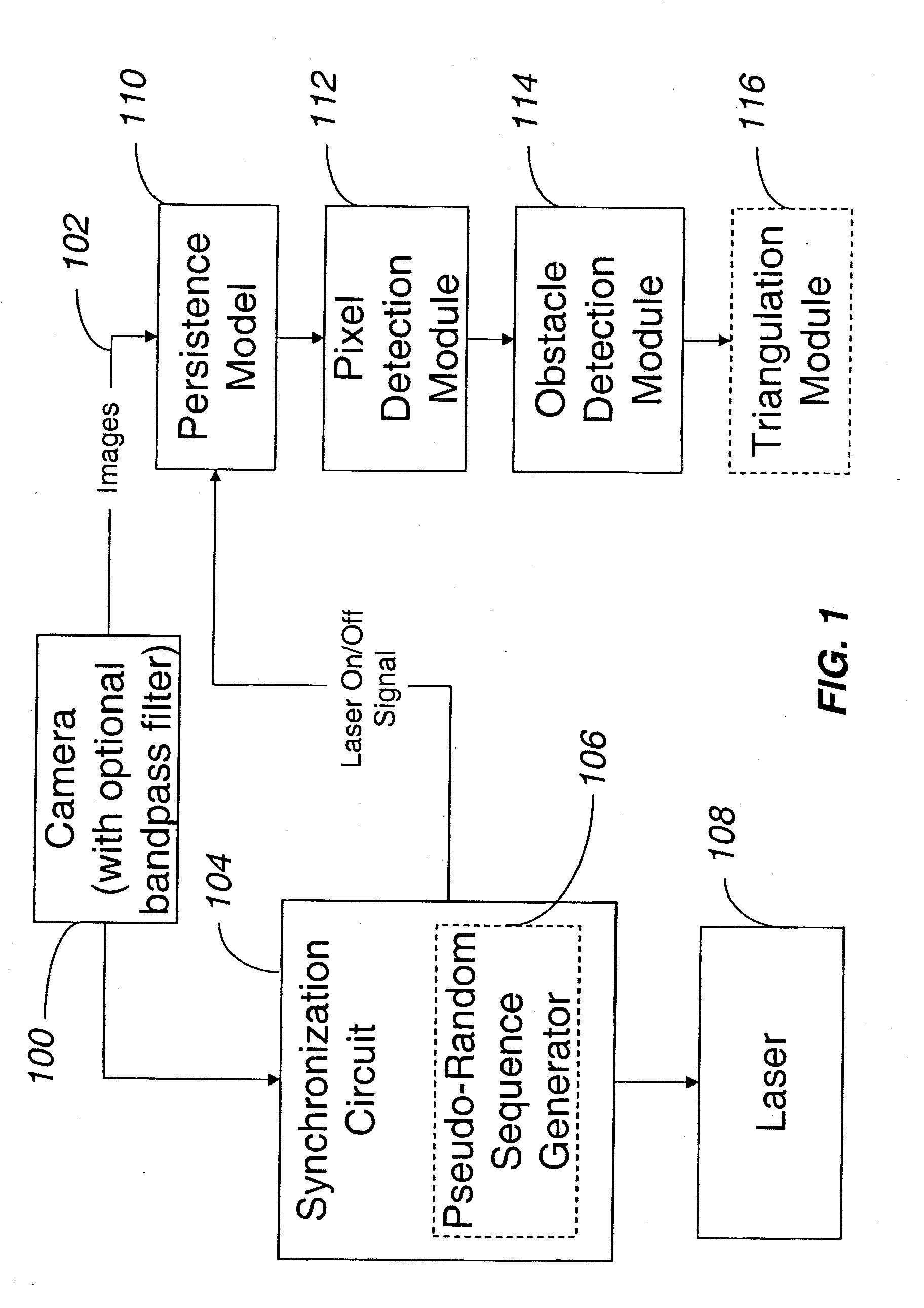

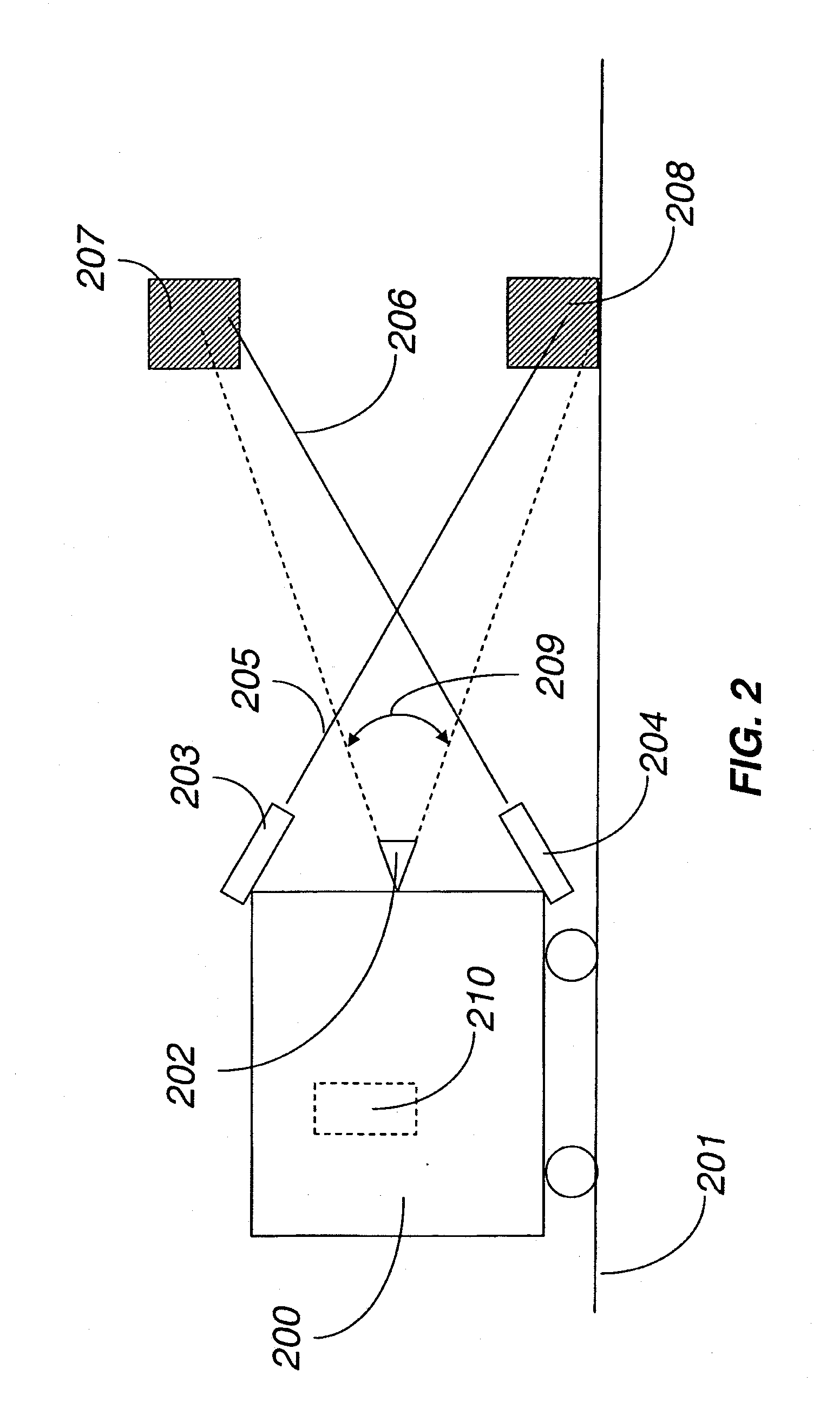

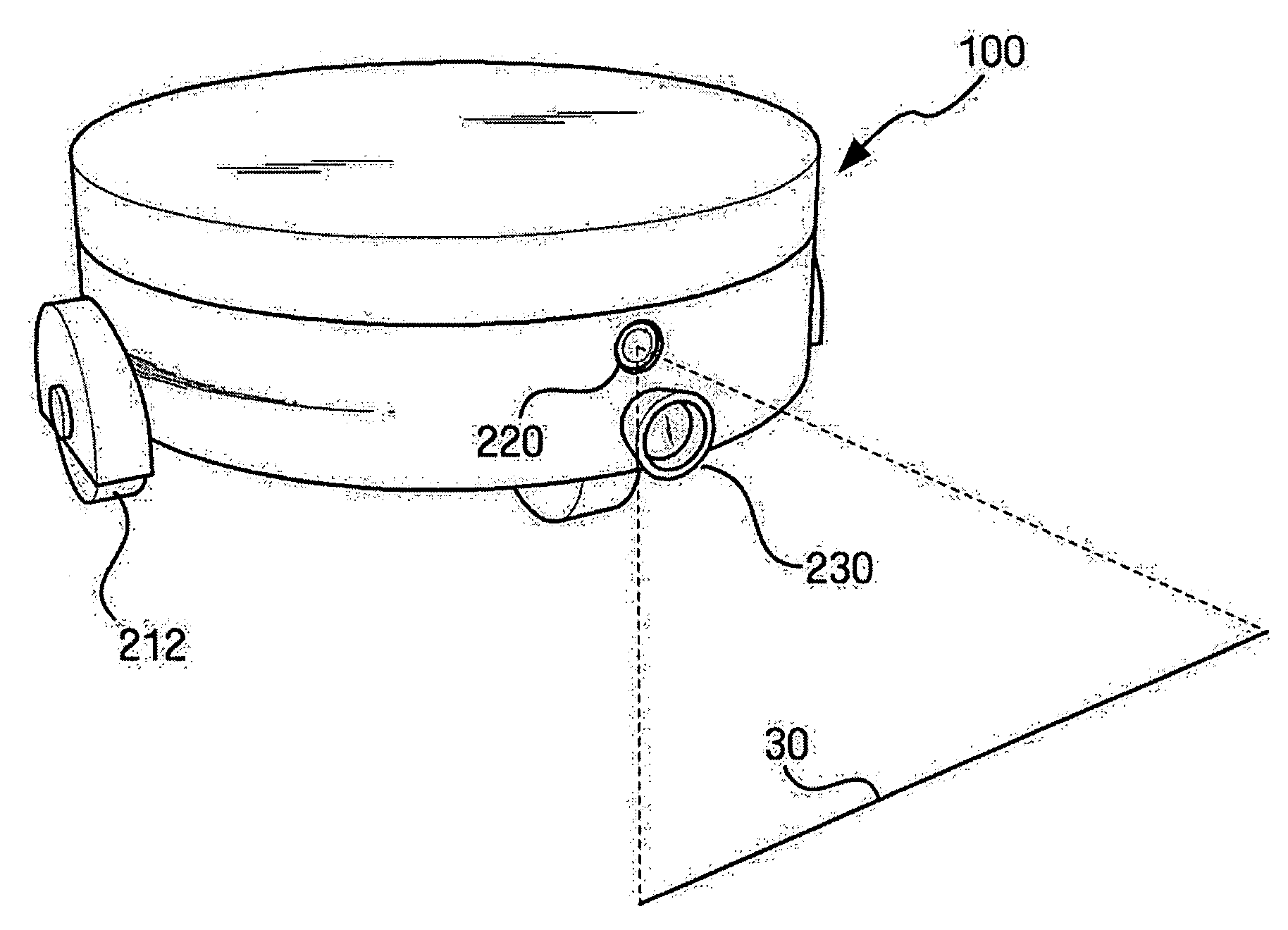

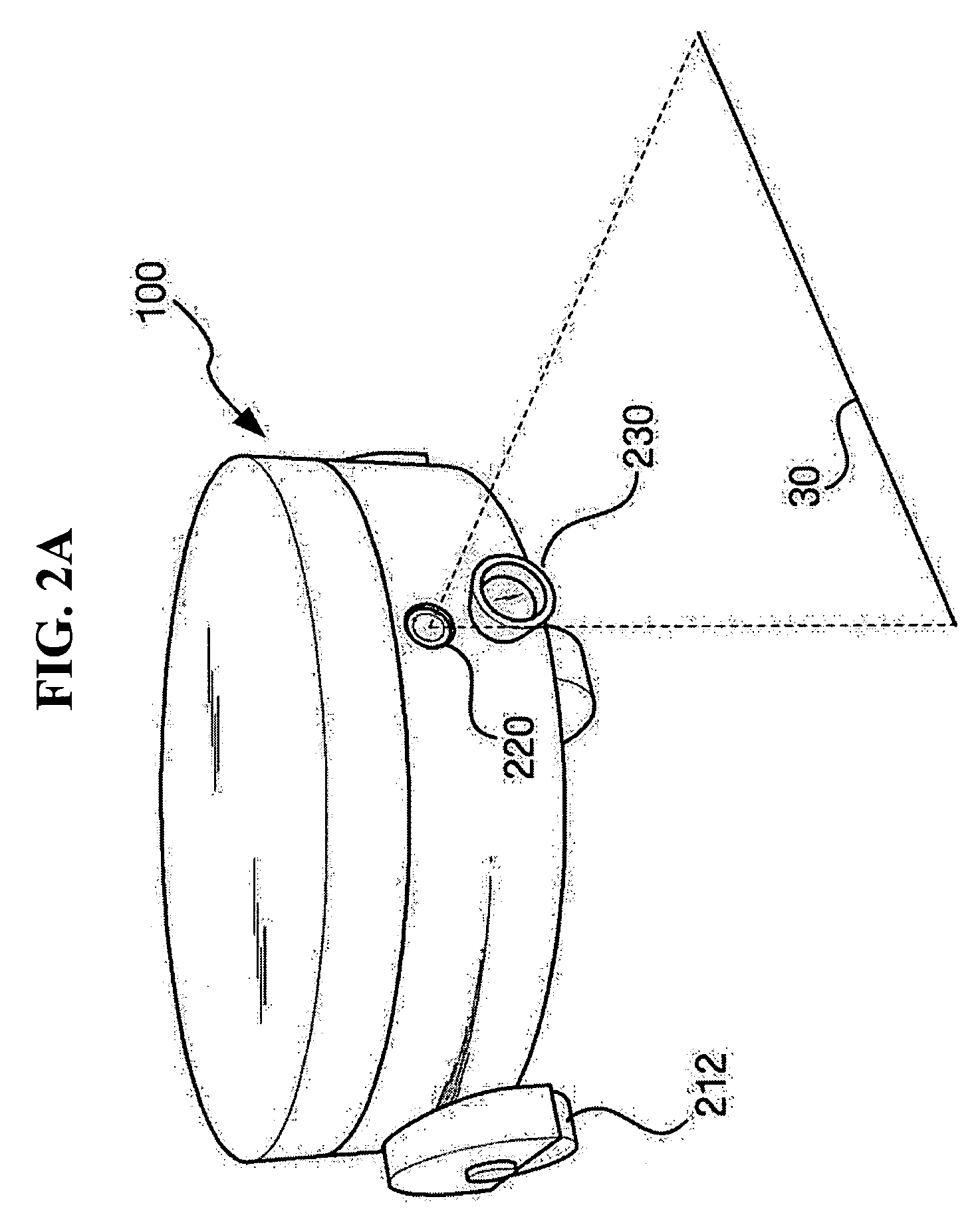

Methods and systems for obstacle detection using structured light

Status: Active | Patent: US20150168954A1 | Tags: Robust detection, Improve accuracy, Programme control, Computer control, Processing element, Vision sensor

An obstacle detector that operates while a mobile robot is in motion is disclosed. The detector preferably includes at least one light source configured to project pulsed light in the path of the robot; a visual sensor for capturing a plurality of images of light reflected from the path of the robot; a processing unit configured to extract the reflections from the images; and an obstacle detection unit configured to detect an obstacle in the path of the robot based on the extracted reflections. In the preferred embodiment, the reflections of the projected light are extracted by subtracting pairs of images in which each pair includes a first image captured with the at least one light source on and a second image captured with the at least one light source off, and then combining images of two or more extracted reflections to suppress the background.
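A minimal sketch of the on/off subtraction described above, with NumPy arrays standing in for grayscale frames. The abstract does not say how extracted reflections are combined; a pixel-wise minimum is assumed here because it keeps only light that appears in every difference image, which suppresses residue surviving a single subtraction (for example, from motion between frames). Function names and frame values are hypothetical.

```python
import numpy as np


def extract_reflection(frame_on, frame_off):
    """Light-on frame minus light-off frame; ambient light common to both cancels.

    Frames are cast to a signed type first because uint8 subtraction would
    wrap around instead of going negative.
    """
    diff = frame_on.astype(np.int32) - frame_off.astype(np.int32)
    return np.clip(diff, 0, None)


def combine_reflections(reflections):
    """Pixel-wise minimum over several extracted reflections (assumed combining rule)."""
    return np.stack(reflections).min(axis=0)


# Hypothetical 3x3 frames: ambient level 50, projected light at the centre pixel,
# and a spurious bright pixel (e.g. from motion) in the first pair only.
off = np.full((3, 3), 50, dtype=np.uint8)
on_1 = off.copy()
on_1[1, 1] = 150
on_1[0, 0] = 130
on_2 = off.copy()
on_2[1, 1] = 150

combined = combine_reflections([extract_reflection(on_1, off), extract_reflection(on_2, off)])
print(combined)
```

Only the centre pixel survives with value 100; the spurious pixel present in one pair alone is suppressed.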

Owner:IROBOT CORP

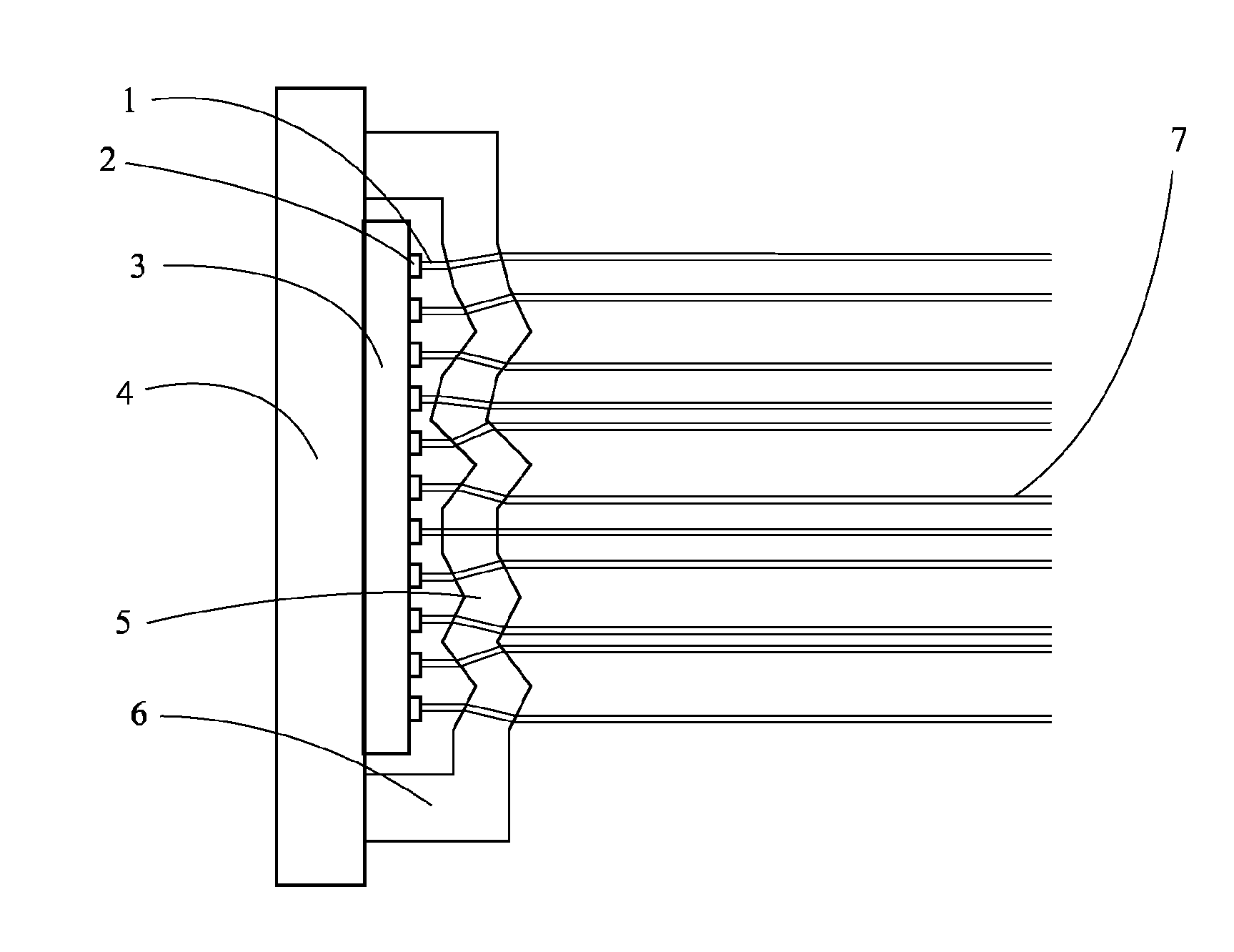

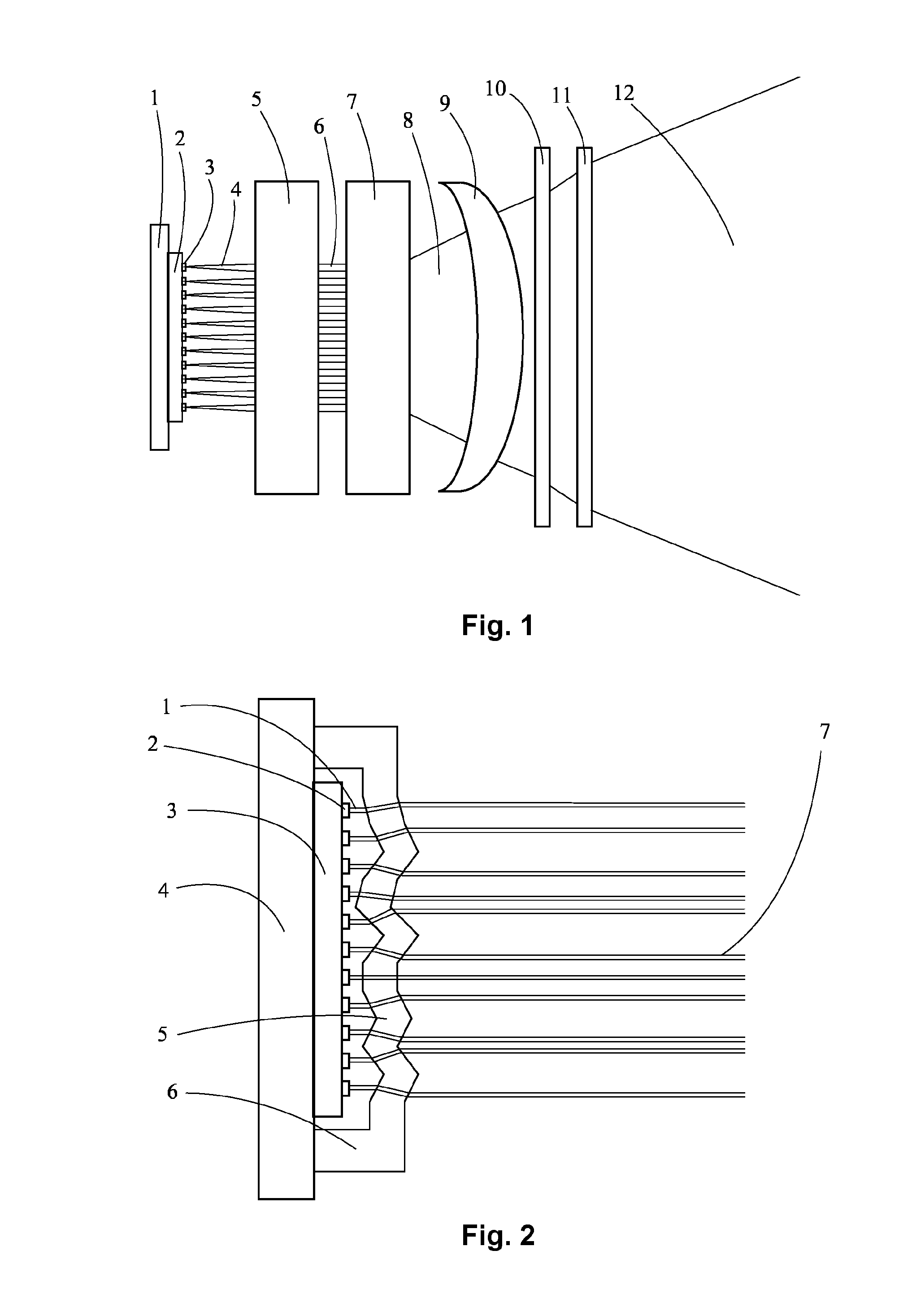

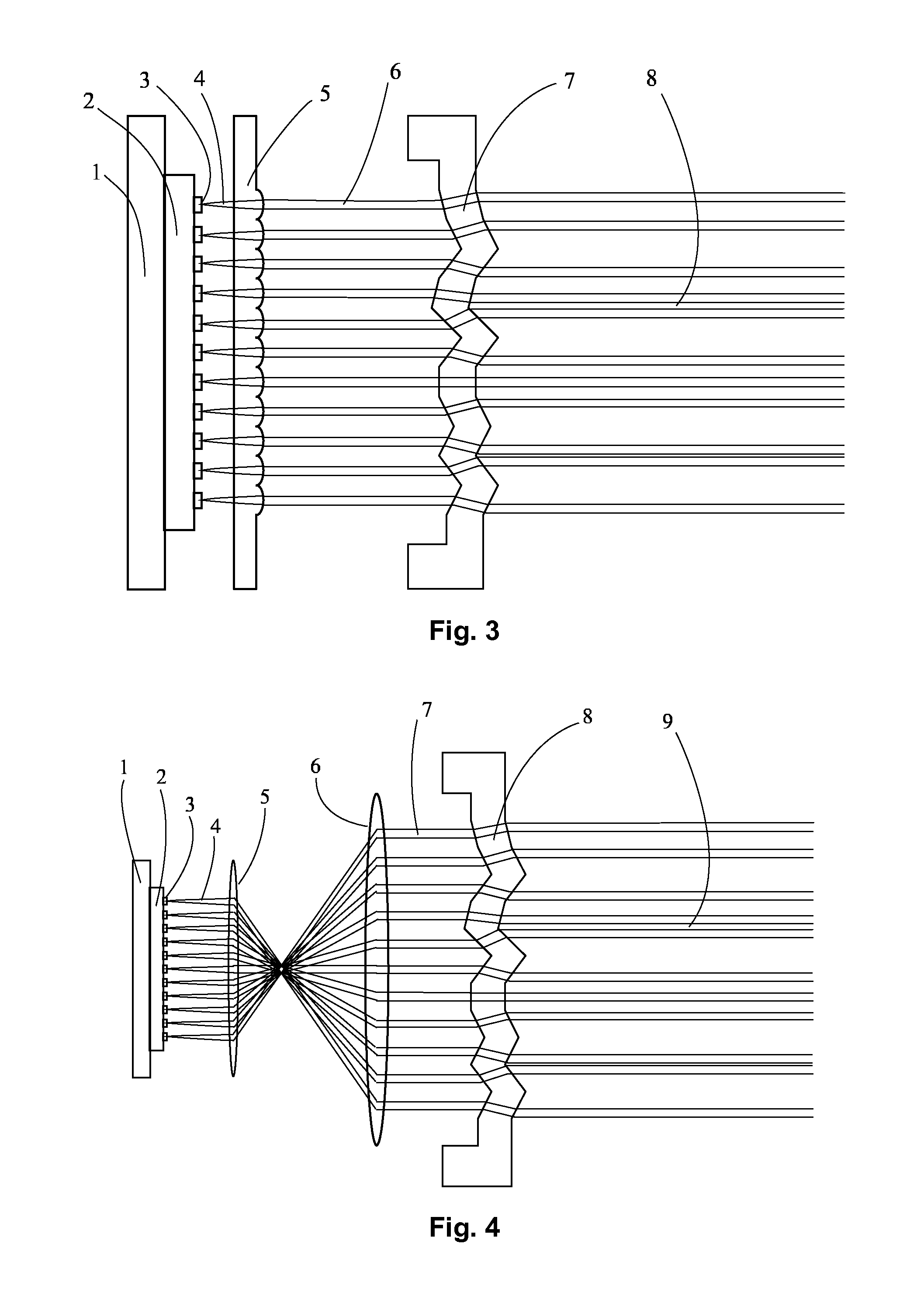

Optical system generating a structured light field from an array of light sources by means of a refracting or reflecting light structuring element

Status: Active | Patent: US20150355470A1 | Tags: Maximum flexibility, Increase flexibility, Laser details, Using optical means, Light beam, Optoelectronics

An optical system for the generation of a structured light field comprises an array of light sources and a structuring unit separate from said array of light sources, said structuring unit being refractive or reflective and transforming the output of that array of light sources into a structured light illumination by collimating the light beam of each individual light source and directing each beam into the scene under vertical and horizontal angles that can be arbitrarily chosen by refraction or reflection.

Owner:IEE INT ELECTRONICS & ENG SA

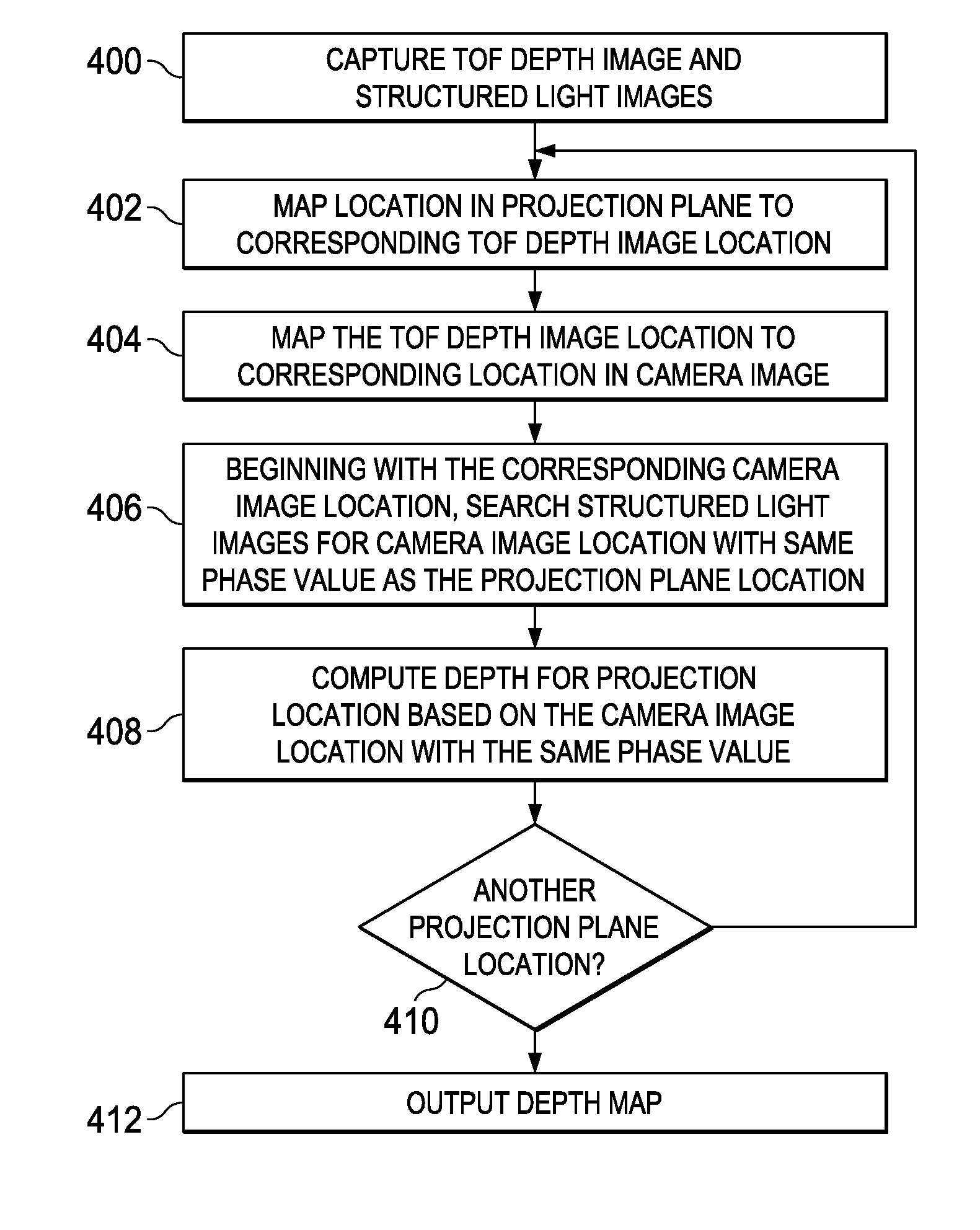

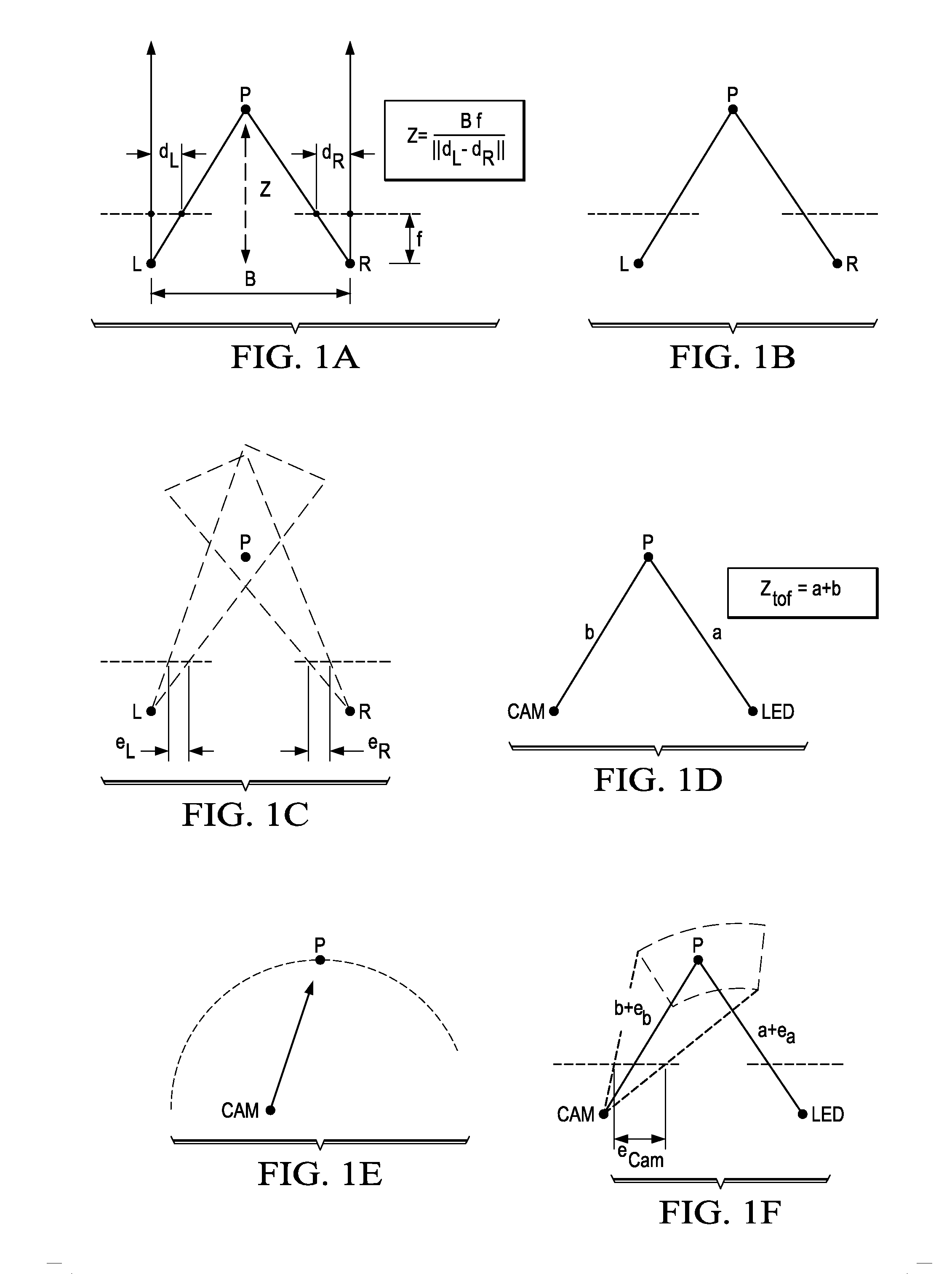

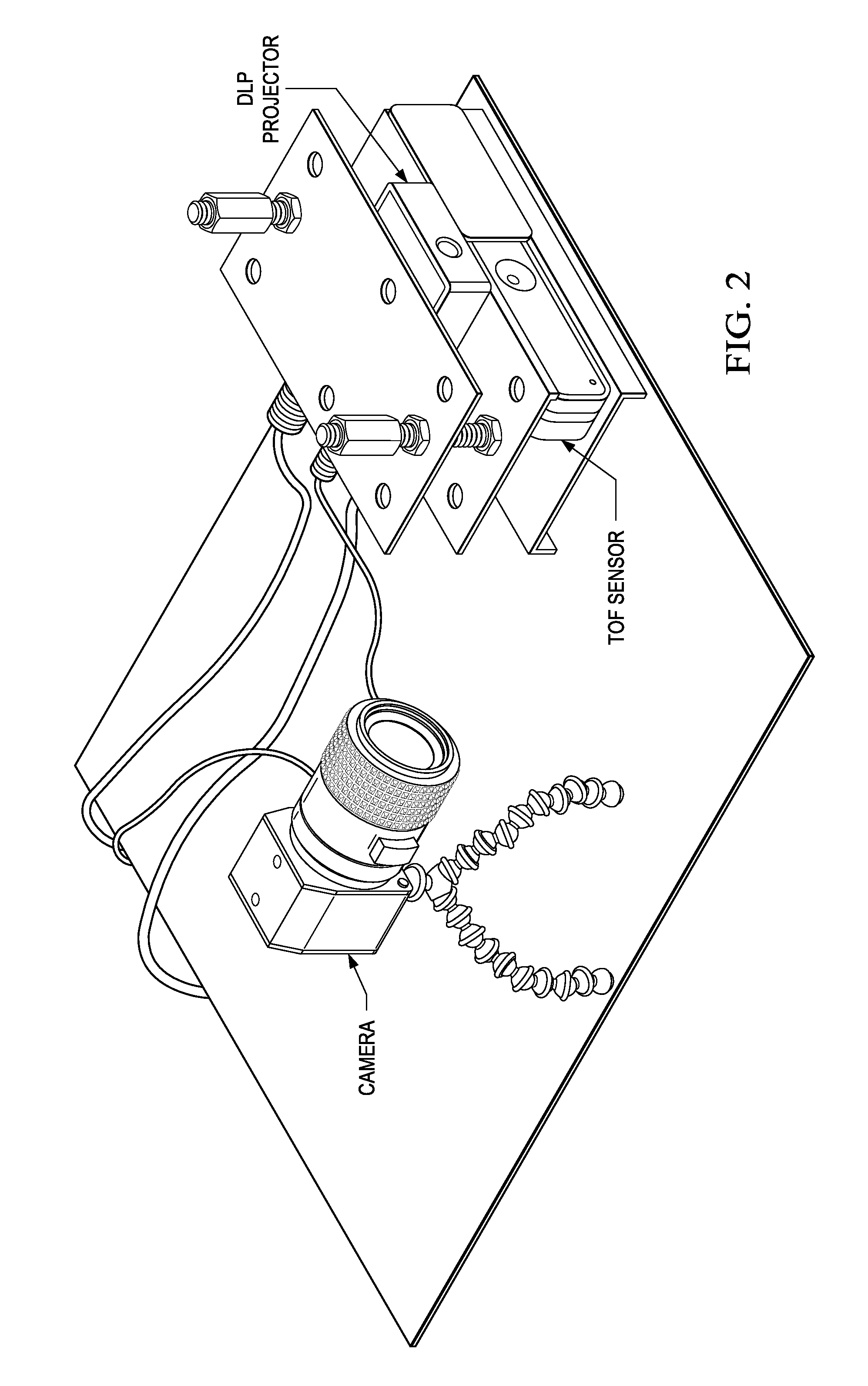

Time-of-Flight (TOF) Assisted Structured Light Imaging

A method for computing a depth map of a scene in a structured light imaging system including a time-of-flight (TOF) sensor and a projector is provided that includes capturing a plurality of high frequency phase-shifted structured light images of the scene using a camera in the structured light imaging system, generating, concurrently with the capturing of the plurality of high frequency phase-shifted structured light images, a time-of-flight (TOF) depth image of the scene using the TOF sensor, and computing the depth map from the plurality of high frequency phase-shifted structured light images wherein the TOF depth image is used for phase unwrapping.
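The core of the unwrapping step can be sketched as follows, assuming the TOF depth has already been converted into a coarse absolute phase for each pixel (that conversion depends on system calibration and is not shown). For each pixel, the code adds the multiple of 2π that brings the wrapped structured-light phase closest to the TOF-derived reference; this works as long as the reference error is smaller than π. Names and values are hypothetical.

```python
import numpy as np


def unwrap_with_reference(wrapped_phase, reference_phase):
    """Unwrap structured-light phase using a coarse absolute reference phase.

    For each pixel, picks the integer k that makes wrapped + 2*pi*k closest to
    the reference; correct whenever the reference error is below pi.
    """
    wrapped = np.asarray(wrapped_phase, dtype=float)
    reference = np.asarray(reference_phase, dtype=float)
    k = np.round((reference - wrapped) / (2 * np.pi))
    return wrapped + 2 * np.pi * k


# Hypothetical values: true phase, its wrapped version, and a noisy TOF-derived reference.
true_phase = np.array([0.5, 7.0, 20.0, 33.3])
wrapped = np.angle(np.exp(1j * true_phase))  # wraps into (-pi, pi]
reference = true_phase + np.array([0.4, -0.9, 1.2, -0.3])
print(unwrap_with_reference(wrapped, reference))
```

The division of labour matches the abstract: the high-frequency structured-light phase supplies precision, while the coarse TOF measurement only has to pick the right fringe order.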

Owner:TEXAS INSTR INC

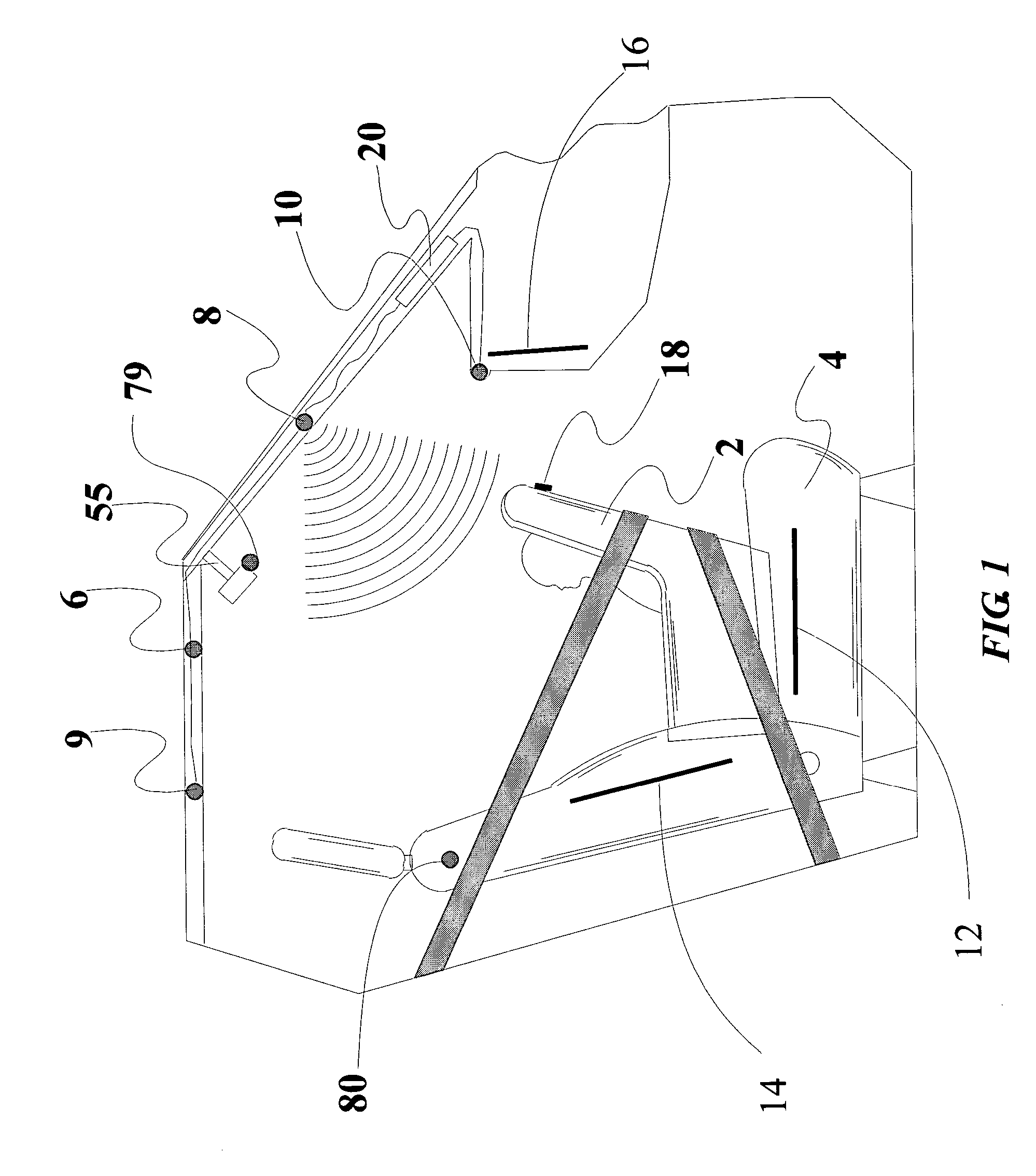

Apparatus and method for recognizing and determining the position of a part of an animal

Status: Inactive | Patent: US6227142B1 | Tags: Easy to identify, Quality improvement, Catheters, Using optical means, Image signal, Signal processing

The present invention relates to an apparatus and a method for recognizing and determining the position of a part of an animal. The apparatus comprises a source of structured light for illuminating a region expected to contain at least one such part, in such a way that an object illuminated by the light, simultaneously or discretely in time, is partitioned into at least two illuminated areas, each pair of illuminated areas being separated by a non-illuminated area; an image capture device arranged to capture at least one image formed by the light and provide an image signal; an image signal processing device to respond to the captured image signal; and a control device to determine whether the illuminated object is the part by comparing the image of the illuminated object to reference criteria defining different objects and, if the illuminated object is established to be the part of the animal, to establish its position. The apparatus further comprises an animal-related device and a device to guide the animal-related device towards the position of the part.

Owner:DELAVAL HLDG AB

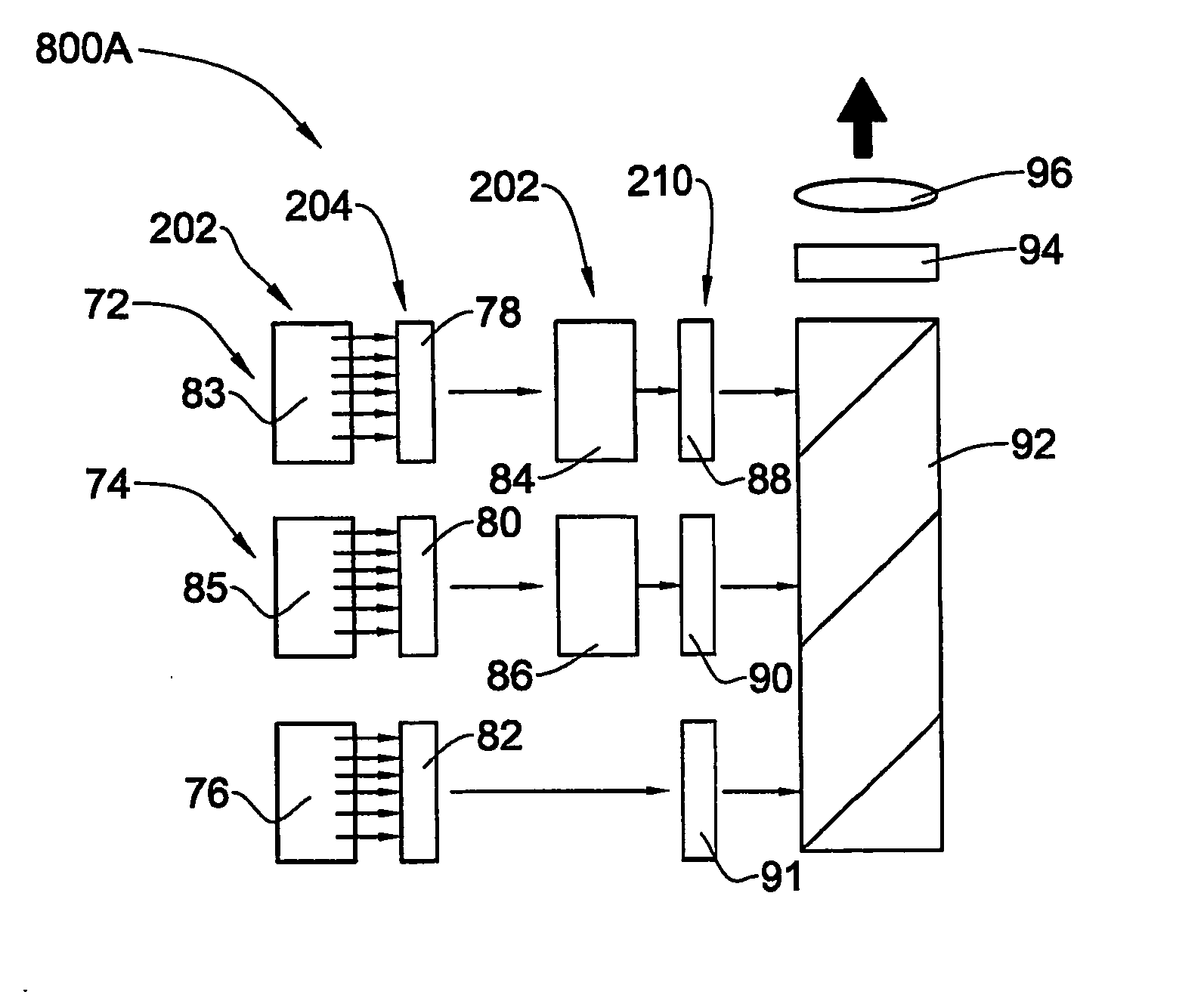

Optical System and Method for Use in Projection Systems

Status: Inactive | Patent: US20070273957A1 | Tags: Reduce physical size, Improve and optimize projection system, Semiconductor laser optical device, Diffraction gratings, Light beam, Projection system

An optical system and method are presented to produce a desired illuminating light pattern. The system comprises a light source system configured and operable to produce structured light in the form of a plurality of spatially separated light beams, and a beam shaping arrangement. The beam shaping arrangement is configured as a diffractive optical unit configured and operable to carry out at least one of the following: (i) combining an array of the spatially separated light beams into a single light beam, thereby significantly increasing the intensity of the illuminating light; (ii) affecting the intensity profile of the light beam to provide illuminating light with a substantially rectangular, uniform intensity profile.

Owner:EXPLAY

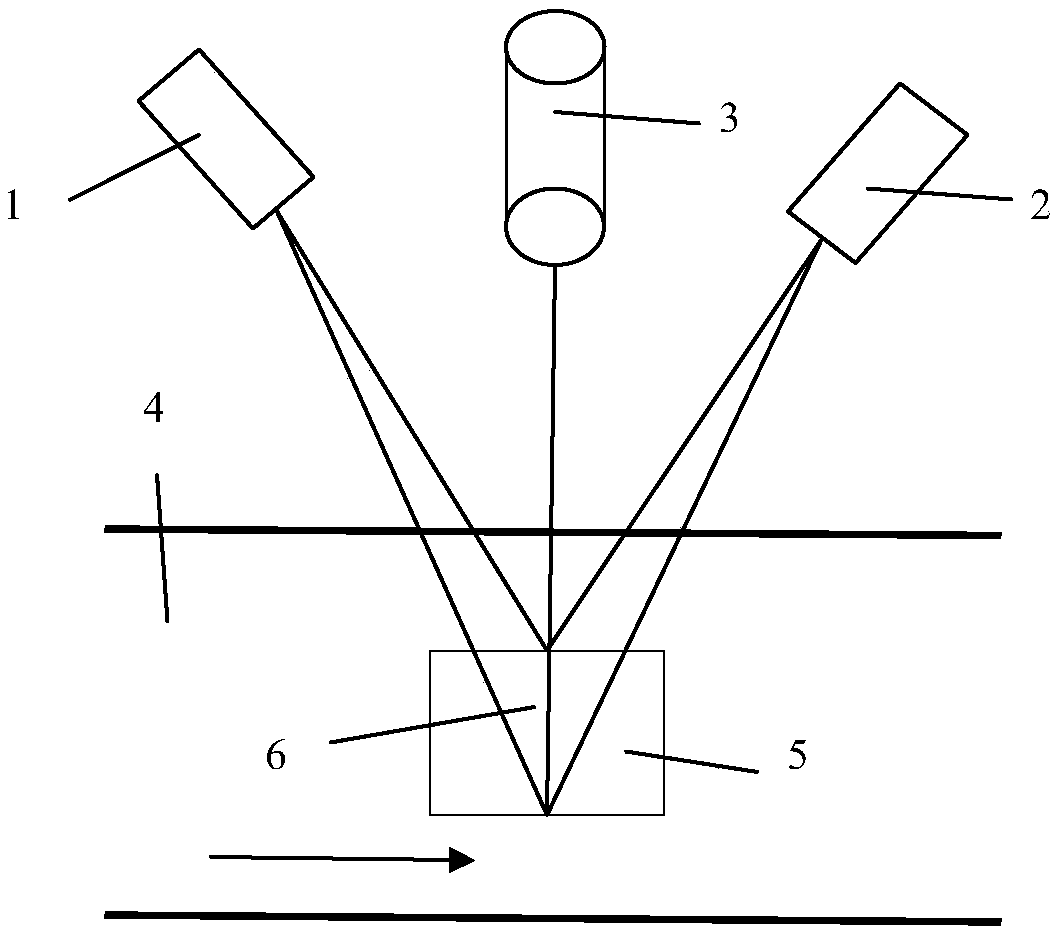

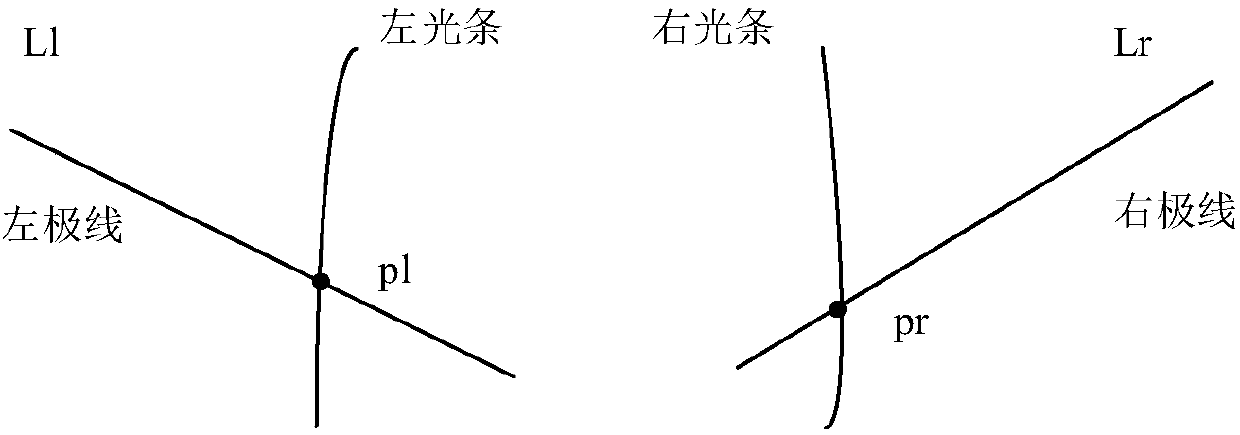

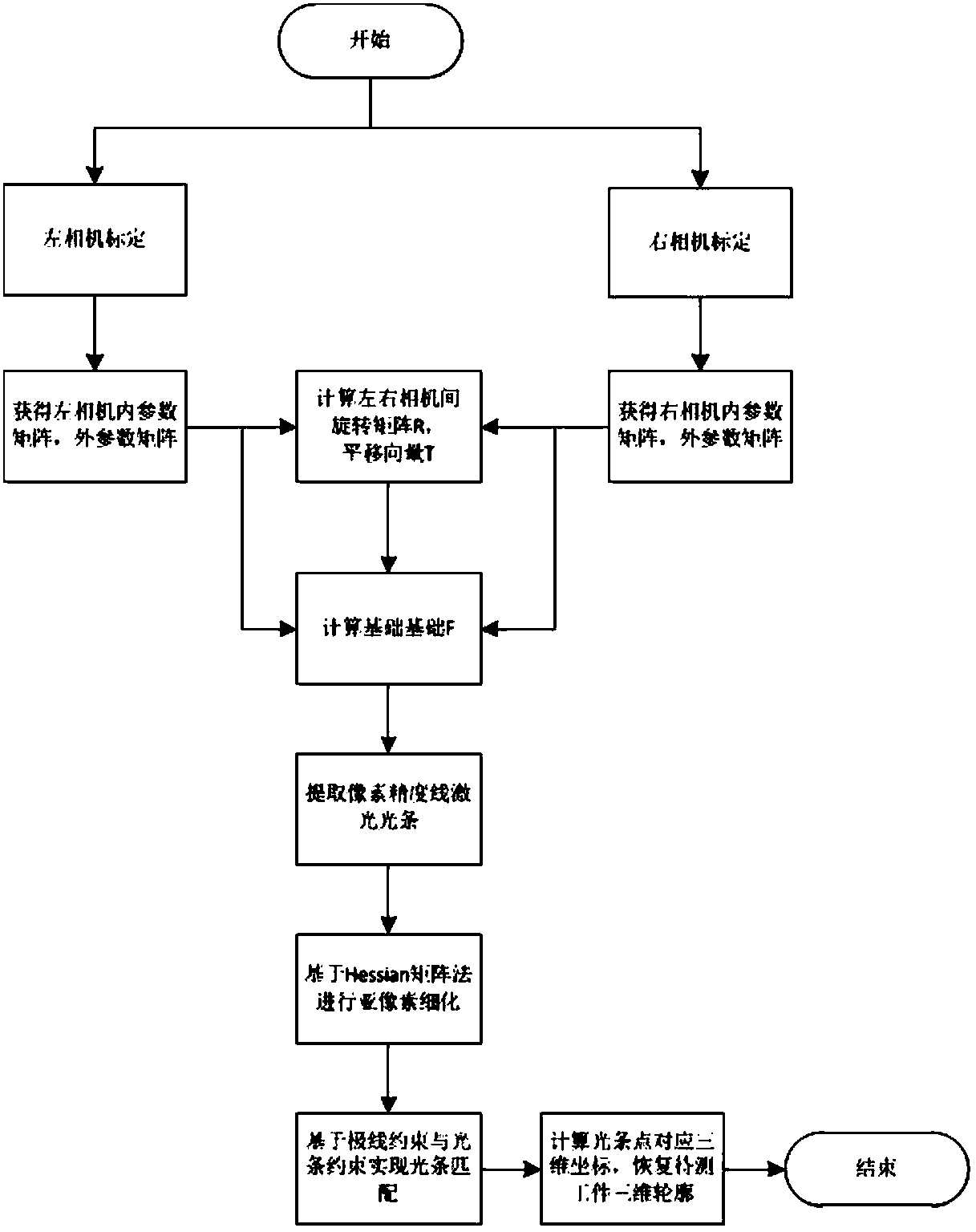

Binocular stereo vision three-dimensional measurement method based on line structured light scanning

Status: Inactive | Patent: CN107907048A | Tags: Reduce the difficulty of matching, Improve robustness, Using optical means, Three dimensional measurement, Laser scanning

The invention discloses a binocular stereo vision three-dimensional measurement method based on line structured light scanning. First, stereo calibration is performed on binocular industrial cameras; laser light bars are projected with a line laser; left and right laser light bar images are acquired; light bar center coordinates are extracted with sub-pixel accuracy using a Hessian matrix method; the light bars are matched according to the epipolar constraint; and a laser plane equation is calculated. Second, a line laser scanning image of the workpiece to be measured is acquired, its image coordinates are extracted, the world coordinates of the workpiece are calculated by combining the binocular camera calibration parameters with the laser plane equation, and the three-dimensional surface topography of the workpiece is recovered. Compared with a common three-dimensional measurement system combining a monocular camera and line structured light, the method avoids complicated laser plane calibration. Compared with the traditional stereo vision method, it reduces the difficulty of stereo matching in binocular stereo vision while ensuring measurement accuracy, and improves the robustness and usability of a visual three-dimensional measurement system.
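The laser plane equation step can be illustrated with an ordinary least-squares plane fit, assuming the light-bar center points have already been triangulated into 3D from the calibrated left and right images (the Hessian-based center extraction and epipolar matching are not shown). The plane normal is the direction of least spread of the centered points, which an SVD provides directly. This is a generic fitting technique, not necessarily the one used in the patent; names and data are hypothetical.

```python
import numpy as np


def fit_plane(points):
    """Least-squares plane n . X + d = 0 through N >= 3 non-collinear 3D points.

    The unit normal n is the right singular vector of the centred points with
    the smallest singular value (the direction of least spread).
    """
    pts = np.asarray(points, dtype=float)
    centroid = pts.mean(axis=0)
    _, _, vt = np.linalg.svd(pts - centroid)
    normal = vt[-1]
    return normal, -float(normal @ centroid)


# Hypothetical light-bar points lying on the plane z = 0.5 * x + 2.
xs, ys = np.meshgrid(np.linspace(-1.0, 1.0, 3), np.linspace(-1.0, 1.0, 3))
pts = np.column_stack([xs.ravel(), ys.ravel(), 0.5 * xs.ravel() + 2.0])
normal, d = fit_plane(pts)
print(np.abs(pts @ normal + d).max())  # residuals close to zero
```

With noisy real points, the same fit minimizes the sum of squared perpendicular distances to the plane.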

Owner:CHANGSHA XIANGJI HAIDUN TECH CO LTD

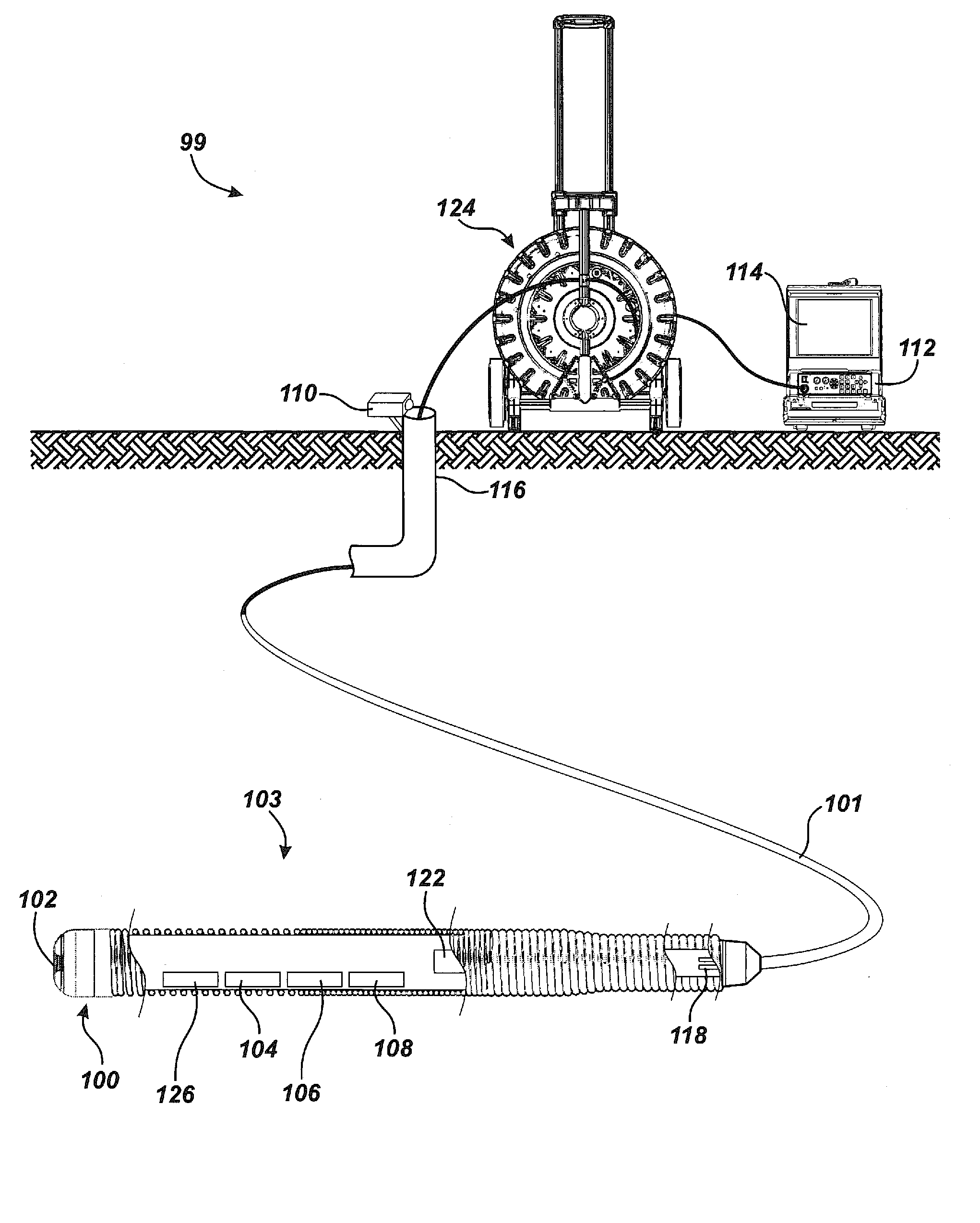

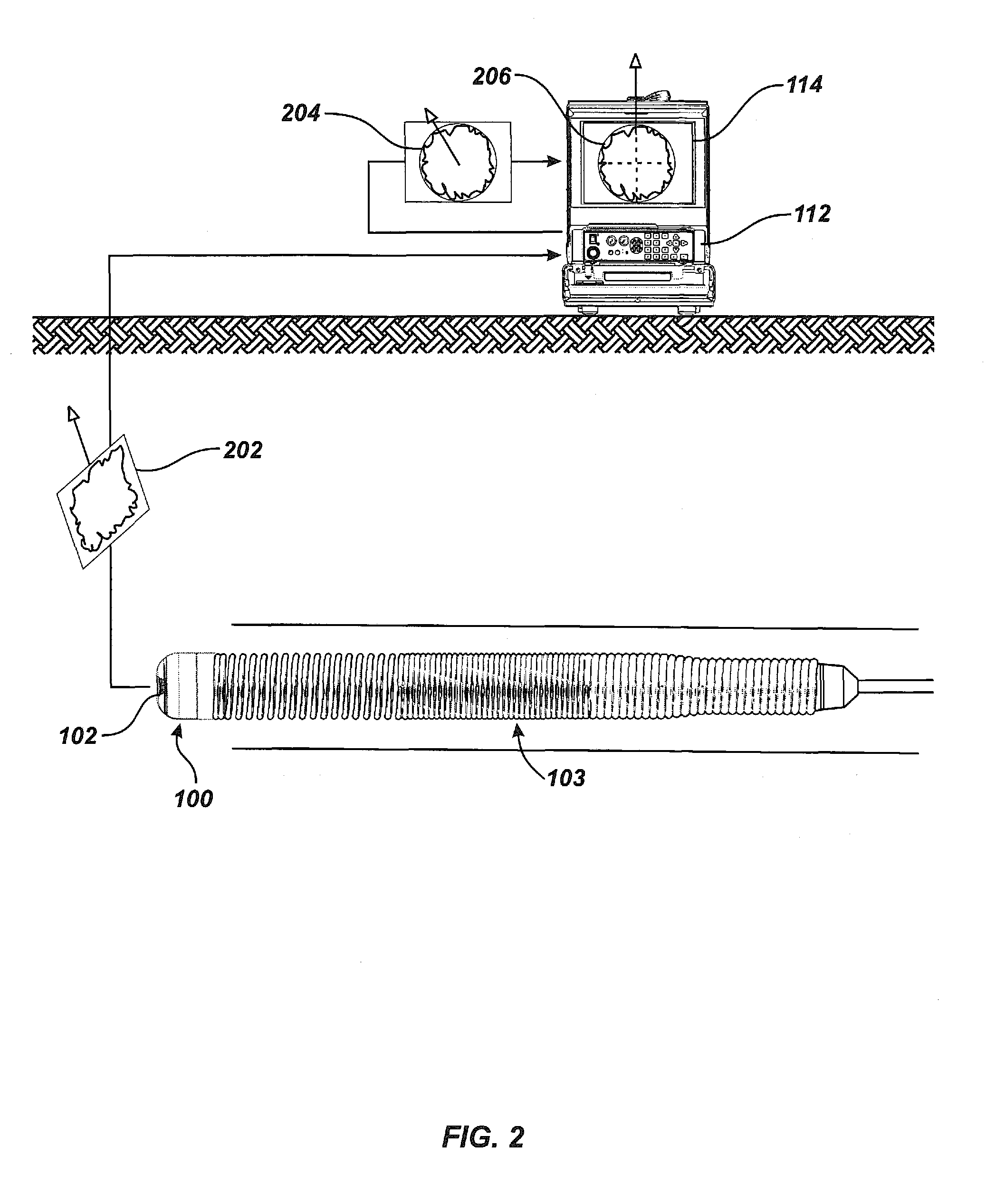

Pipe mapping system

Status: Active | Patent: US8547428B1 | Tags: Simple method, Television system details, Image enhancement, Data connection, Three axis accelerometer

A pipe inspection system employing a camera head assembly incorporating multiple local condition sensors, an integral dipole Sonde, a three-axis compass, and a three-axis accelerometer. The camera head assembly terminates a multi-channel push-cable that relays local condition sensor and video information to a processor and display subsystem. A cable storage structure includes data connection and wireless capability with tool storage and one or more battery mounts for powering remote operation. During operation, the inspection system may produce a two- or three-dimensional (3D) map of the pipe or conduit from local condition sensor data and video image data acquired from structured light techniques or LED illumination.

Owner:SEESCAN

Scanning apparatus and method

Status: Inactive | Patent: US7313264B2 | Tags: Shorten the time, Low cost, Character and pattern recognition, Using optical means, Computer graphics (images), Structured light

A scanning apparatus and method for generating computer models of three-dimensional objects comprising means for scanning the object to capture data from a plurality of points on the surface of the object so that the scanning means may capture data from two or more points simultaneously, sensing the position of the scanning means, generating intermediate data structures from the data, combining intermediate data structures to provide the model; display, and manually operating the scanning apparatus. The signal generated is structured light in the form of a stripe or an area from illumination sources such as a laser diode or bulbs which enable data for the position and color of the surface to be determined. The object may be on a turntable and may be viewed in real time as rendered polygons on a monitor as the object is scanned.

Owner:3D SCANNERS LTD

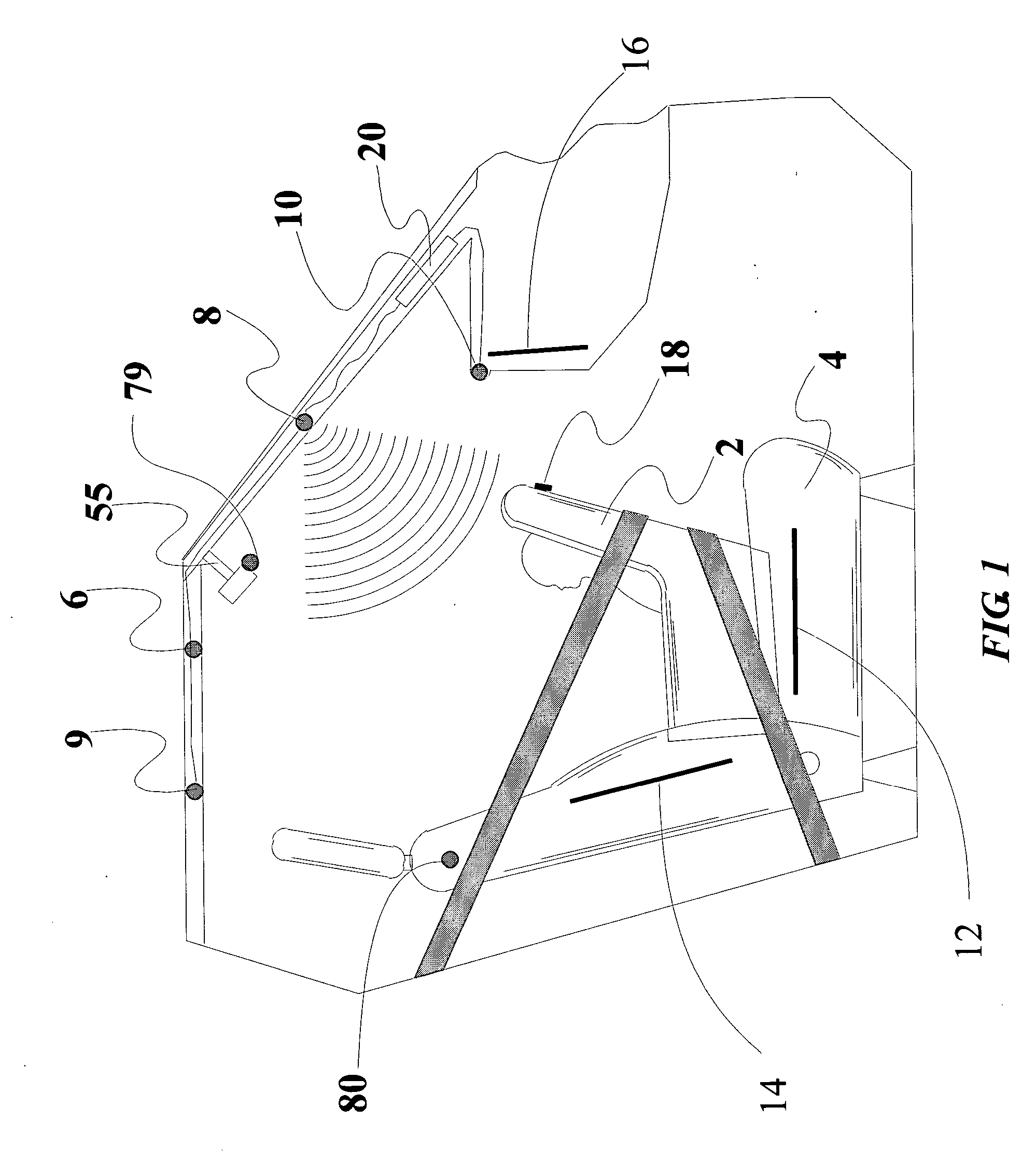

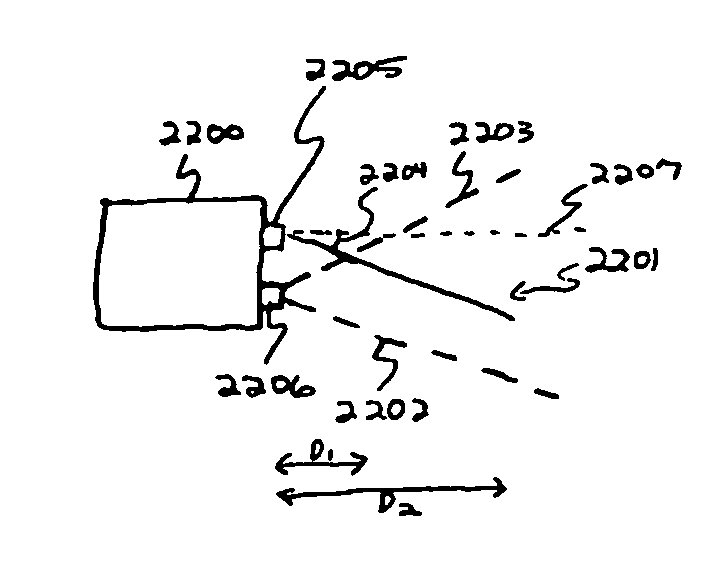

Quasi-three-dimensional method and apparatus to detect and localize interaction of user-object and virtual transfer device

Status: Inactive | Patent: US20050024324A1 | Tags: Fine surface, Input/output for user-computer interaction, Character and pattern recognition, Triangulation, Three dimensional method

A system used with a virtual device inputs or transfers information to a companion device, and includes two optical systems OS1, OS2. In a structured-light embodiment, OS1 emits a fan beam plane of optical energy parallel to and above the virtual device. When a user-object penetrates the beam plane of interest, OS2 registers the event. Triangulation methods can locate the virtual contact, and transfer user-intended information to the companion system. In a non-structured active light embodiment, OS1 is preferably a digital camera whose field of view defines the plane of interest, which is illuminated by an active source of optical energy. Preferably the active source, OS1, and OS2 operate synchronously to reduce effects of ambient light. A non-structured passive light embodiment is similar except the source of optical energy is ambient light. A subtraction technique preferably enhances the signal / noise ratio. The companion device may in fact house the present invention.

Owner:TOMASI CARLO +1

System and technique for retrieving depth information about a surface by projecting a composite image of modulated light patterns

Status: Inactive | Patent: US20080279446A1 | Tags: Reduce system cost, Information can be reduced, Using optical means, Acquisition of 3D object measurements, Interaction interface, Telecollaboration

Owner:UNIV OF KENTUCKY RES FOUND

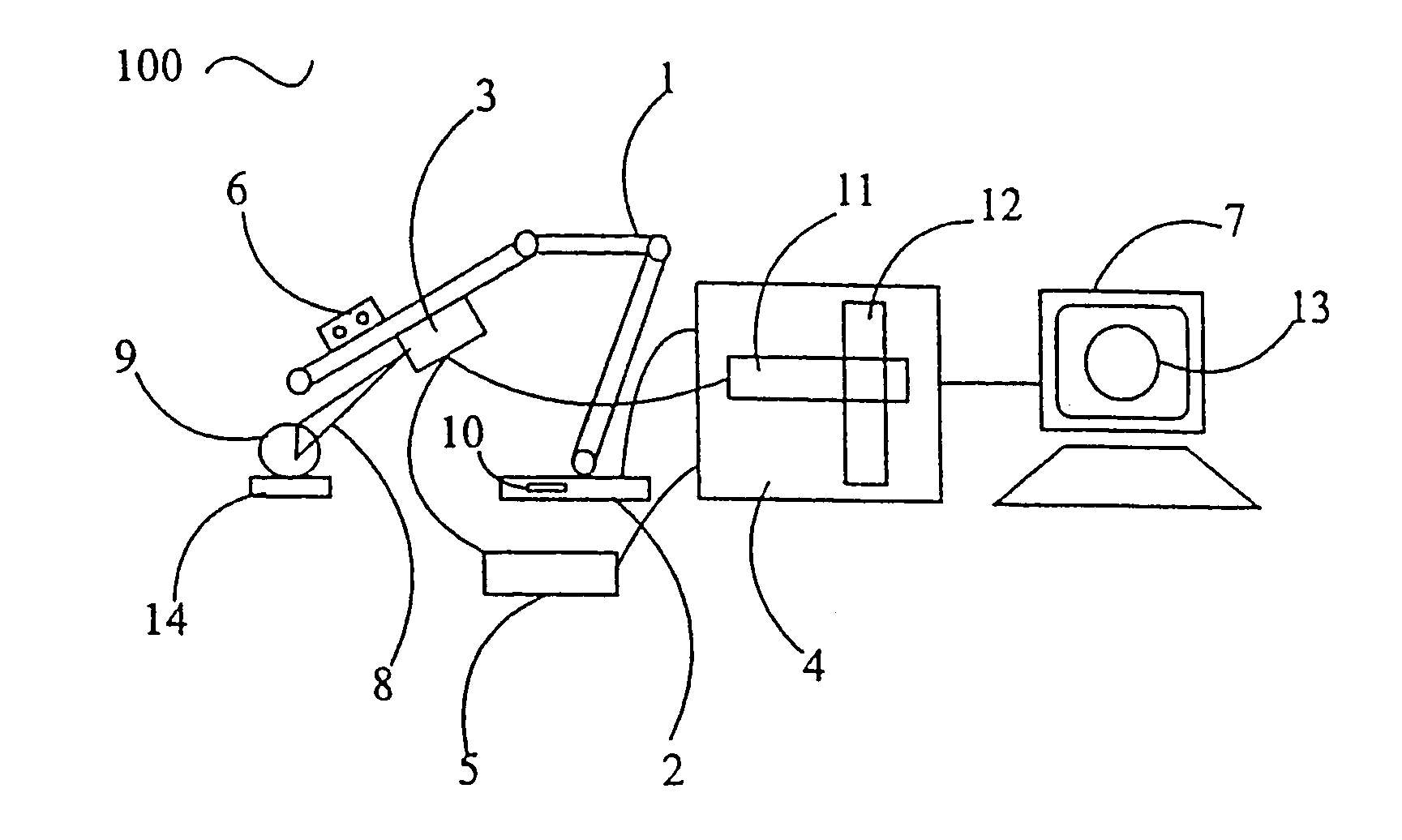

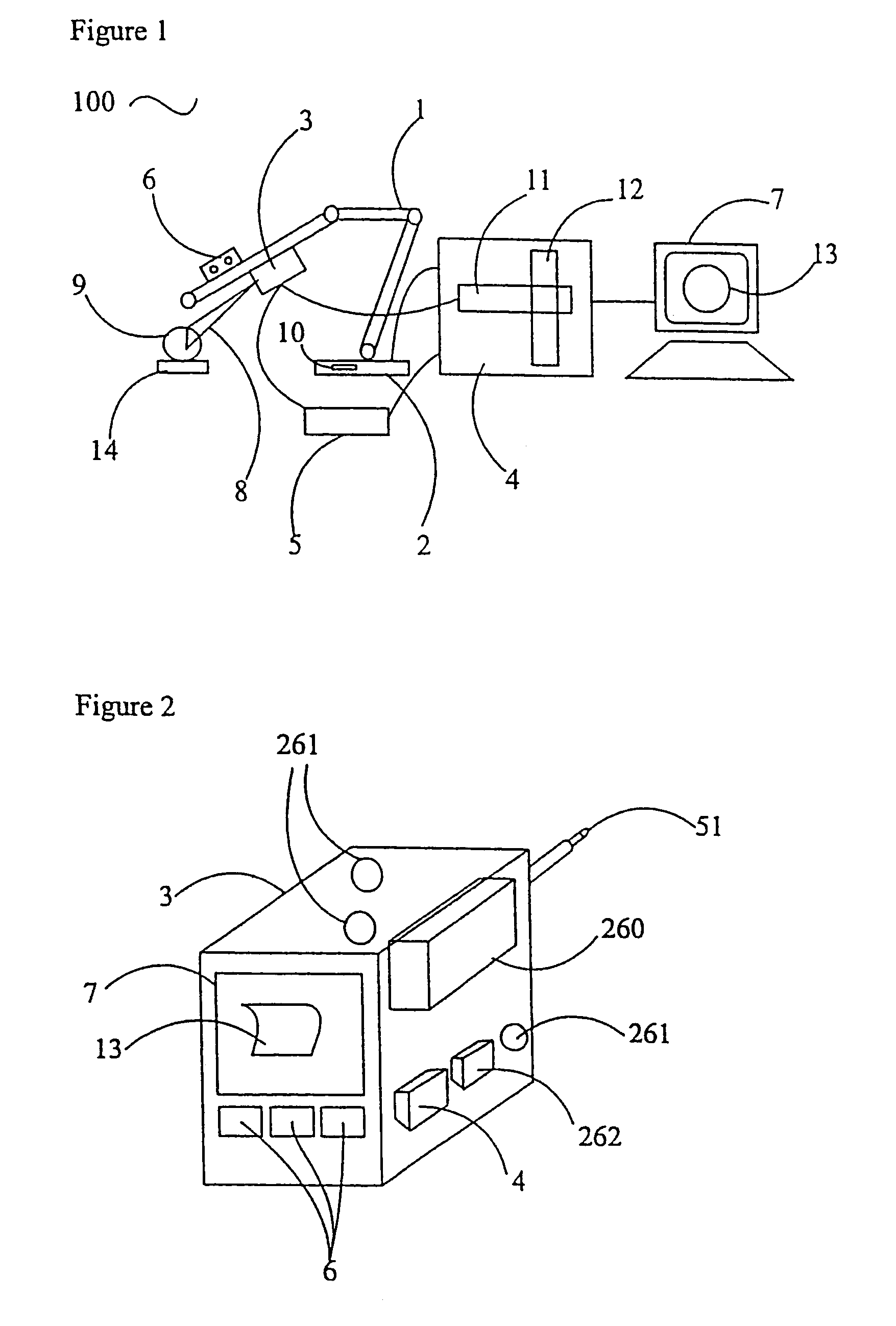

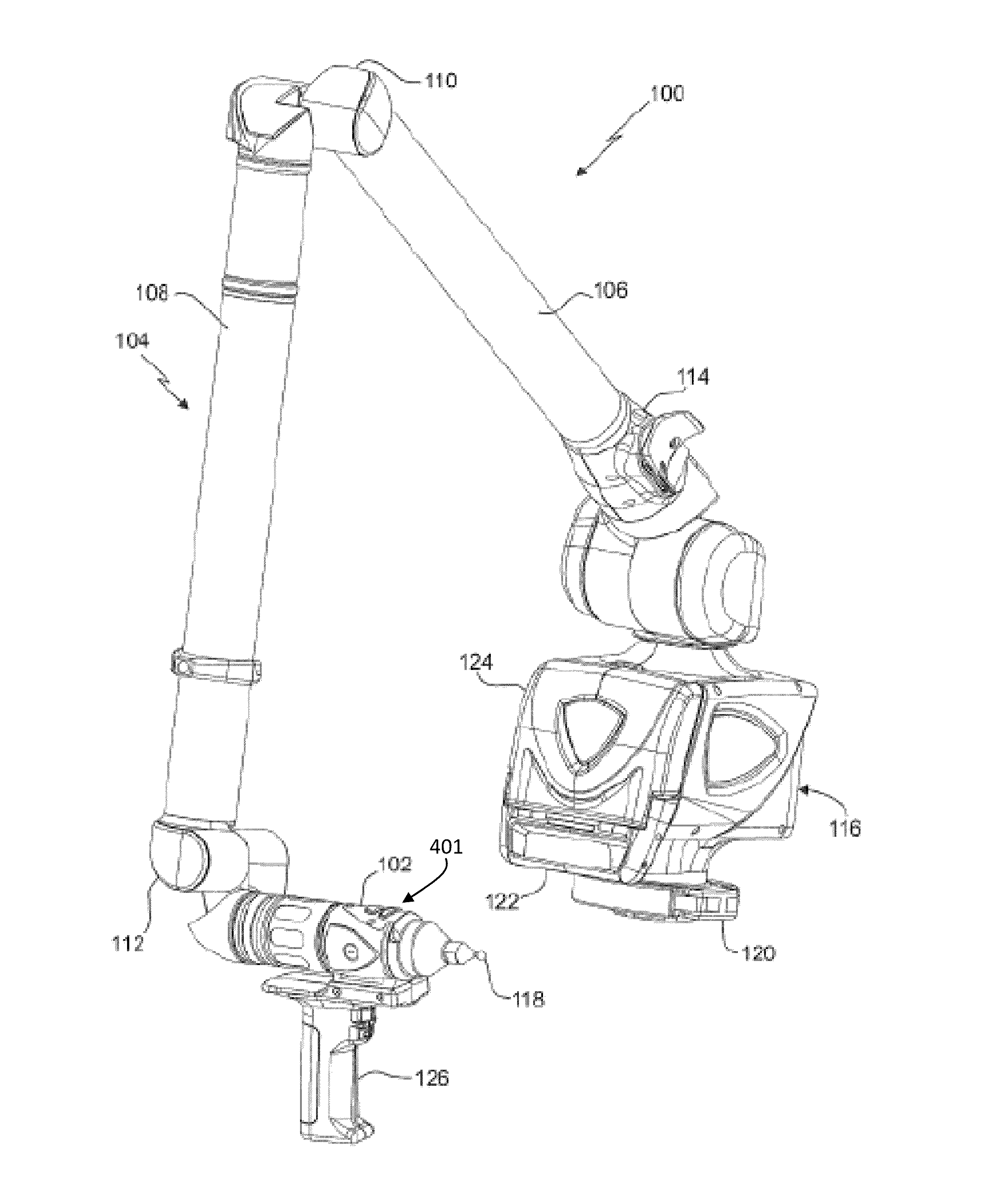

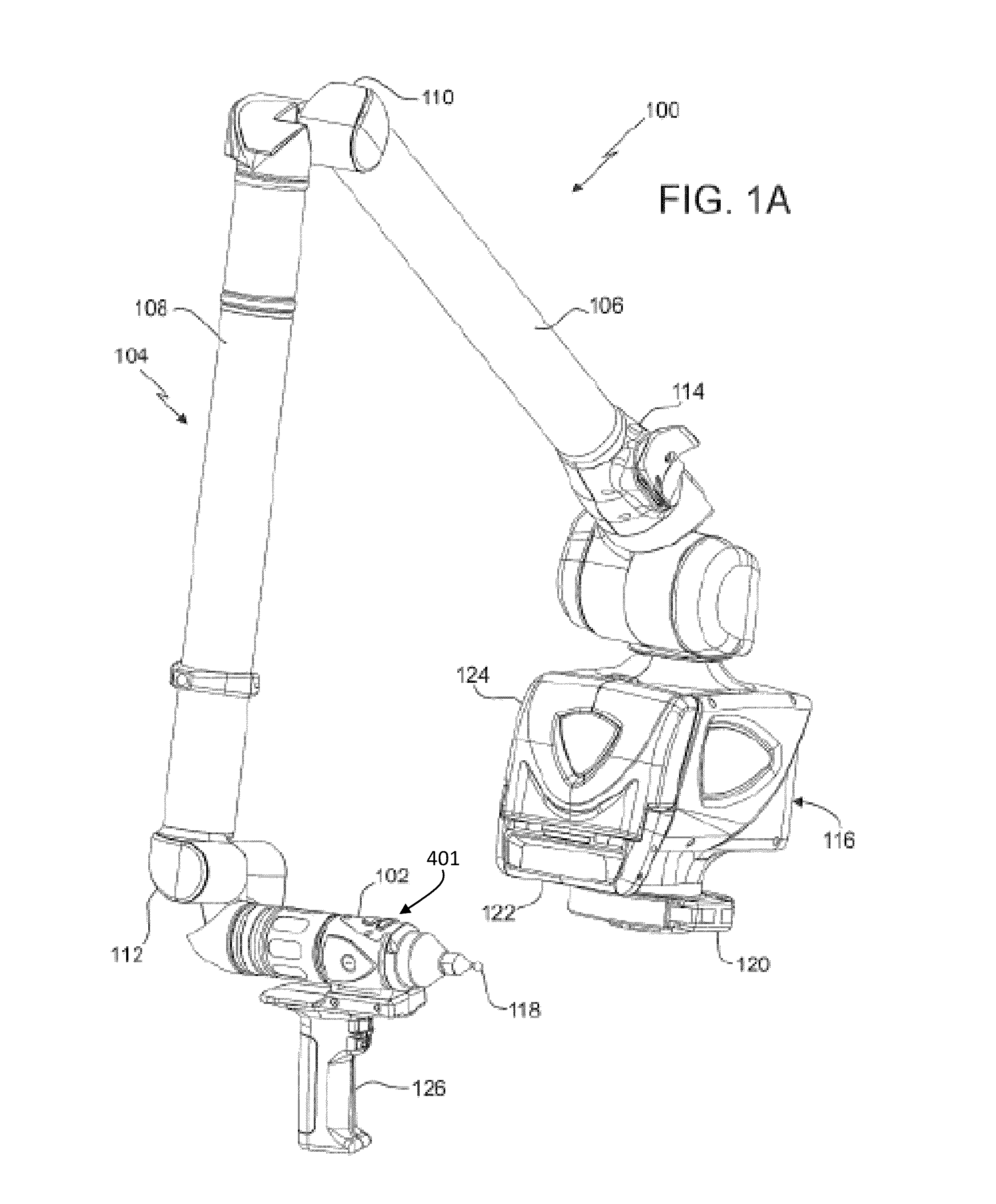

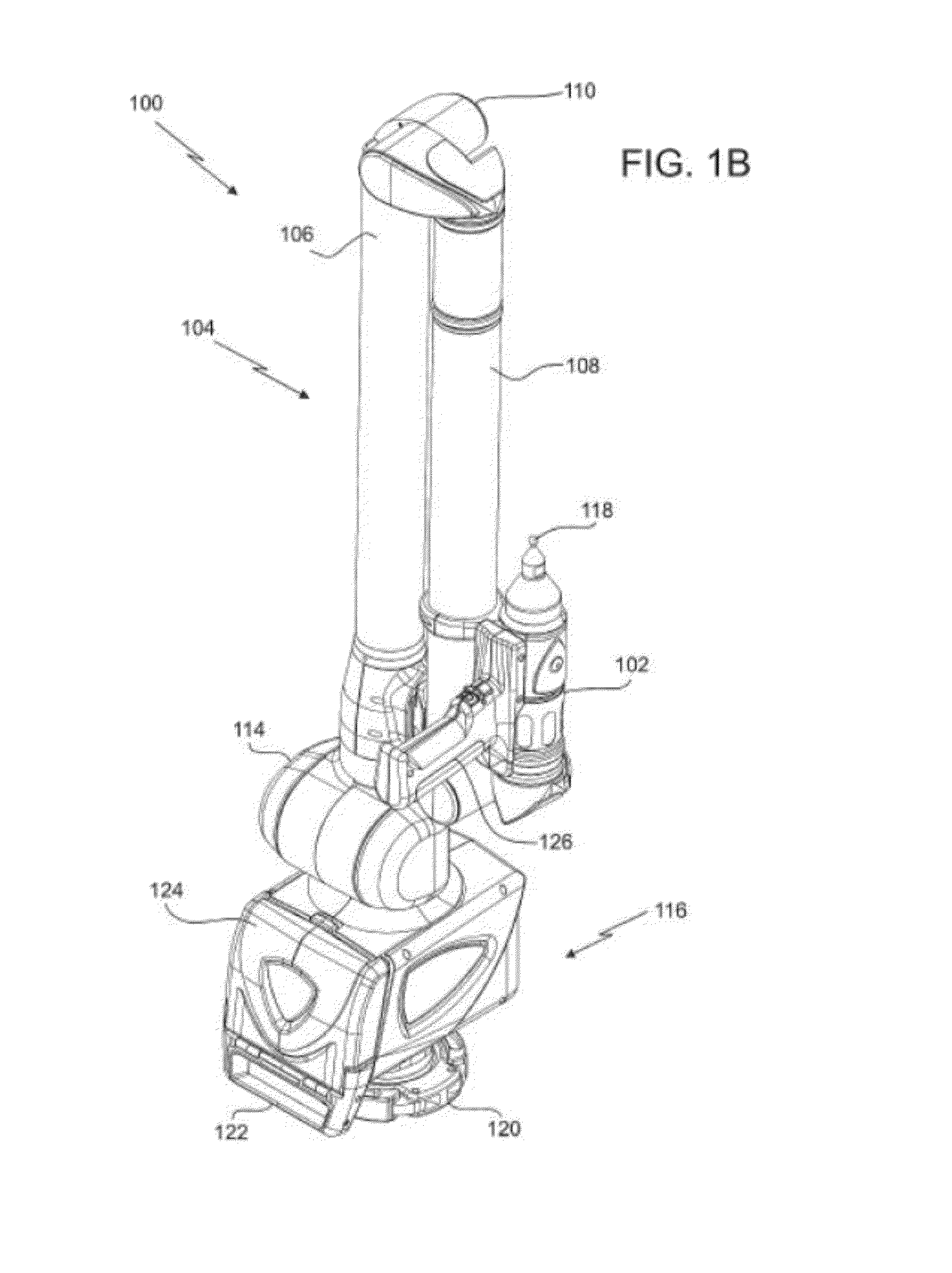

Coordinate measurement machines with removable accessories

Status: Active | Patent: US20130125408A1 | Tags: Programme control, Using optical means, Coordinate-measuring machine, Computer science

A portable articulated arm coordinate measuring machine is provided. The coordinate measuring machine includes a base with an arm portion. A probe end is coupled to an end of the arm portion distal from the base. A device is configured to emit coded structured light onto an object to determine the three-dimensional coordinates of a point on the object.
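The abstract does not say which code the structured light uses. A binary-reflected Gray code is one common choice and is assumed here: each projected stripe pattern shows one bit of every projector column's Gray code, so the bits a camera pixel observes across the pattern sequence decode back to the column that illuminated it. All names are hypothetical.

```python
def gray_encode(column: int) -> int:
    """Binary-reflected Gray code of a projector column index."""
    return column ^ (column >> 1)


def gray_decode(code: int) -> int:
    """Invert the Gray code by XOR-ing all right shifts of the code."""
    column = 0
    while code:
        column ^= code
        code >>= 1
    return column


def pattern_bits(column: int, n_bits: int) -> list[int]:
    """Bit shown at this projector column in each of n_bits stripe patterns (MSB first)."""
    code = gray_encode(column)
    return [(code >> (n_bits - 1 - i)) & 1 for i in range(n_bits)]


def decode_column(observed_bits: list[int]) -> int:
    """Projector column from the bits a camera pixel observed (MSB first)."""
    code = 0
    for bit in observed_bits:
        code = (code << 1) | bit
    return gray_decode(code)


print(decode_column(pattern_bits(37, n_bits=10)))  # 37
```

Gray codes are popular here because neighbouring columns differ in exactly one pattern, so a pixel straddling a stripe edge is off by at most one column.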

Owner:FARO TECH INC

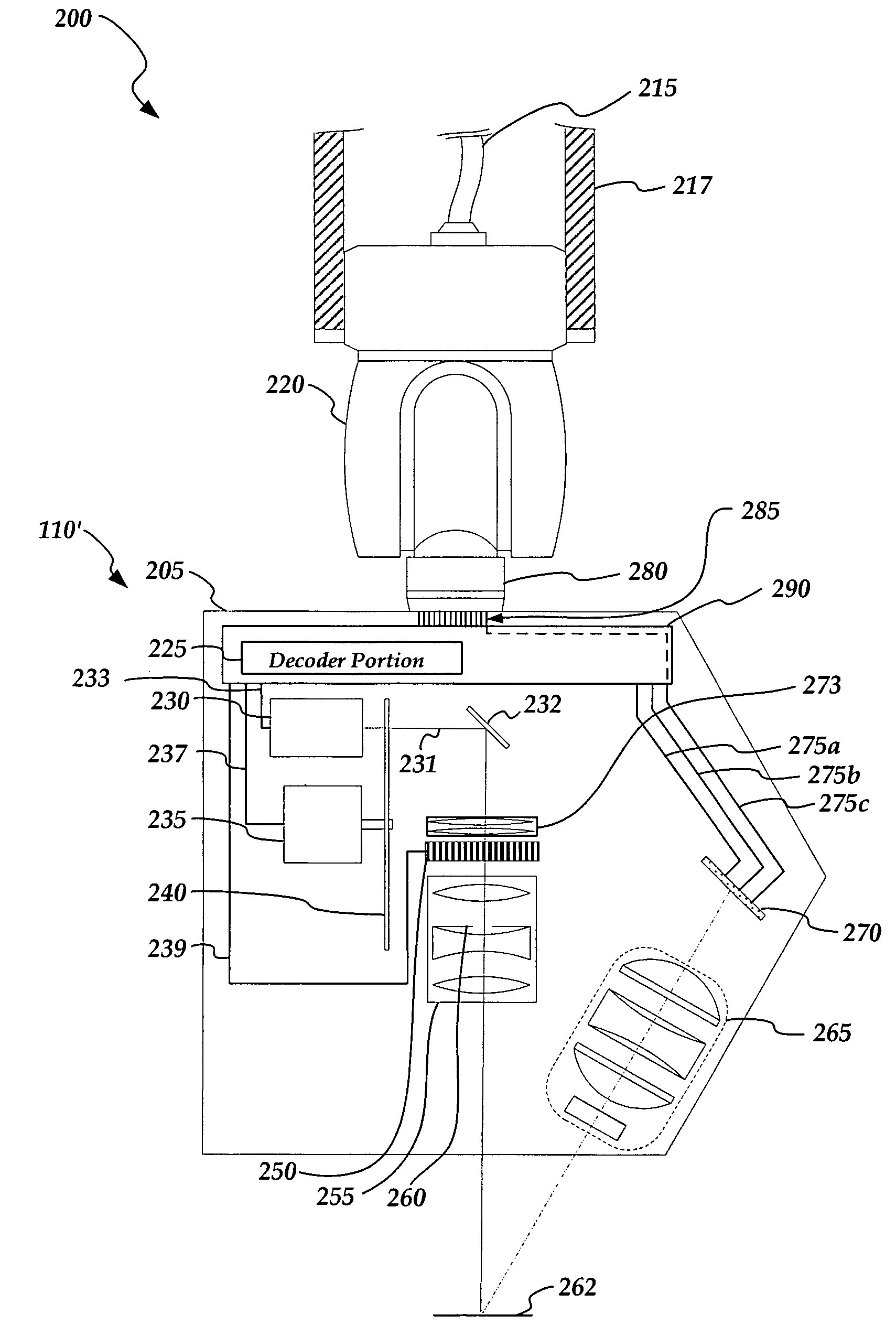

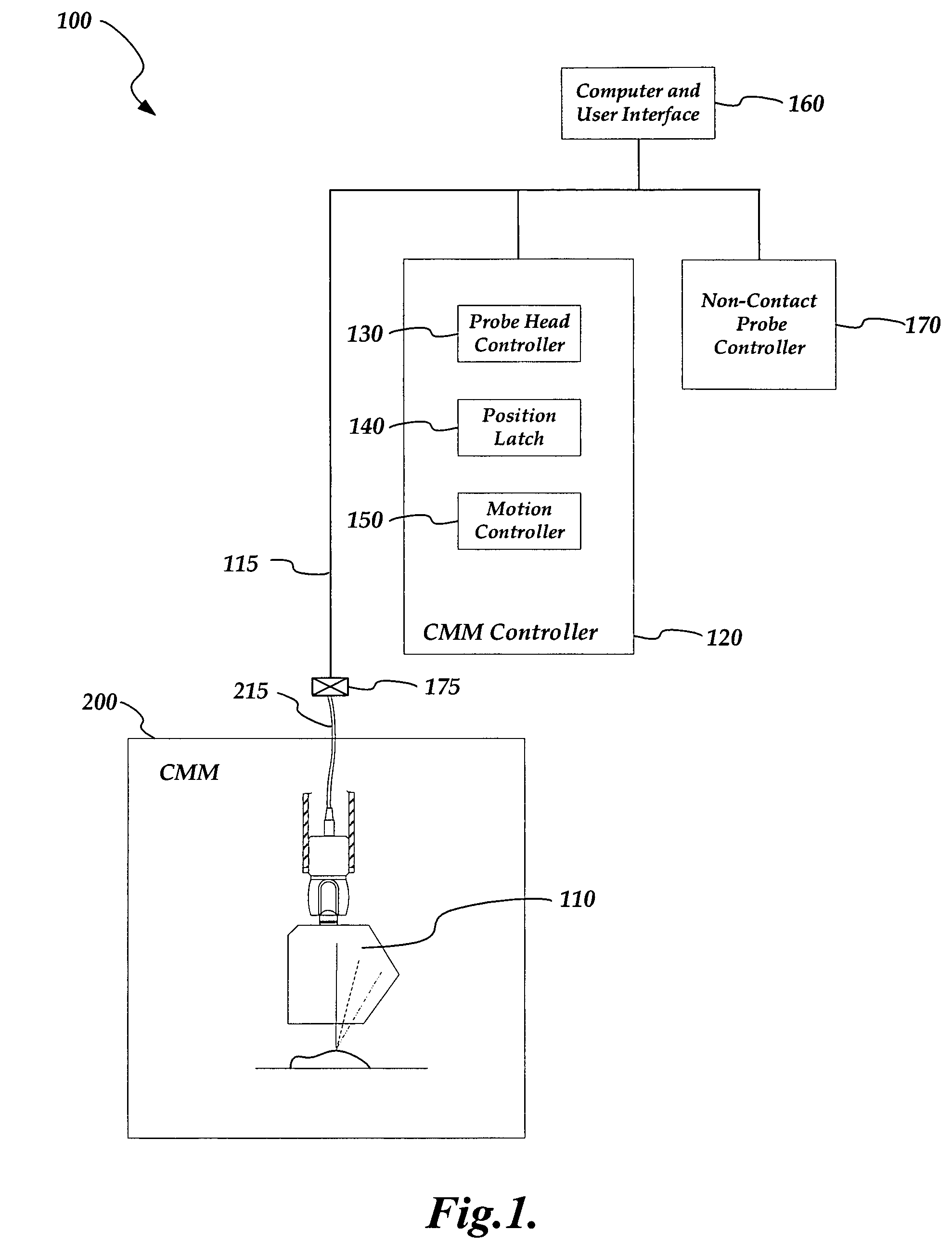

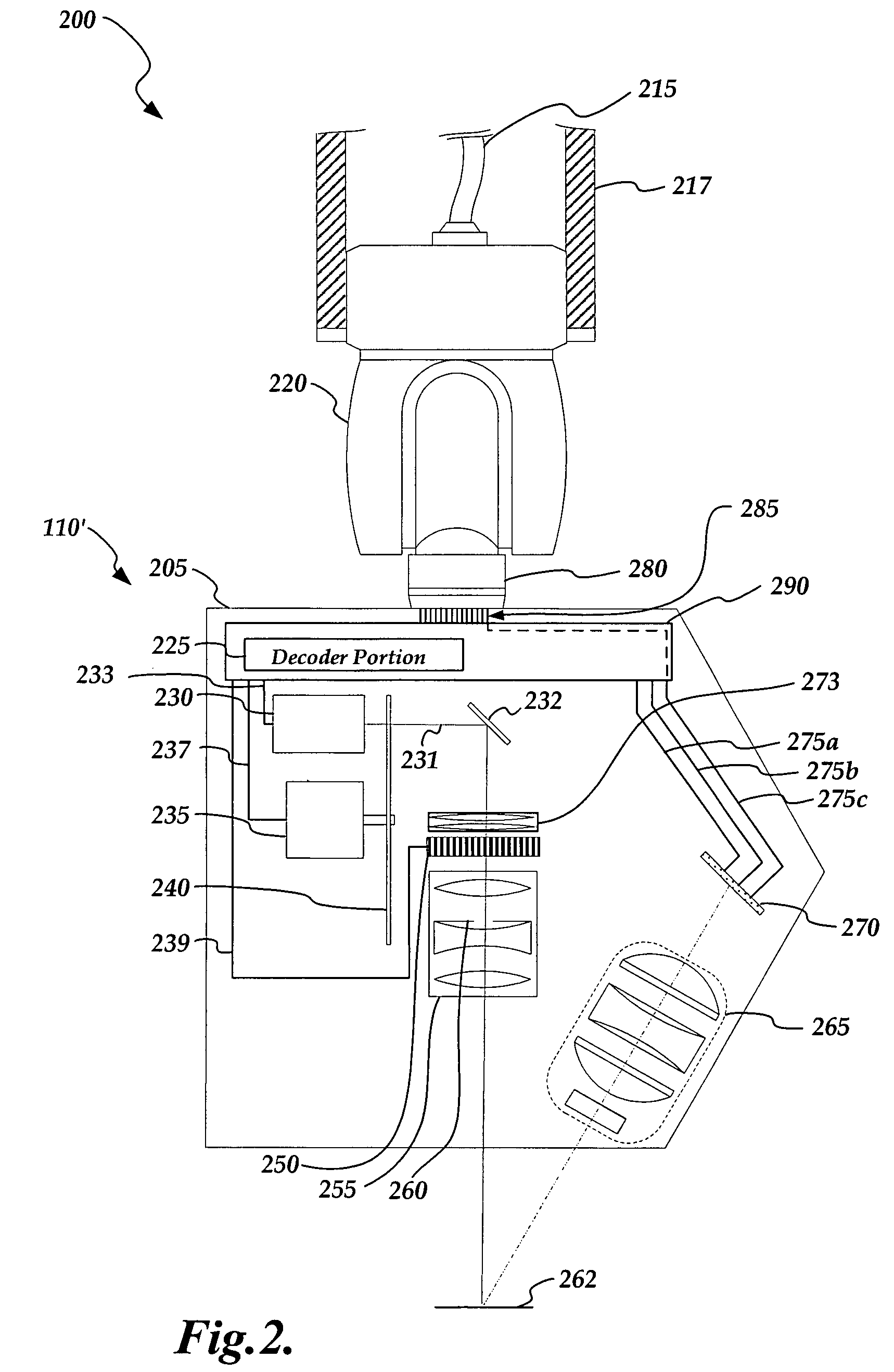

Non-contact probe control interface

Status: Active | Patent: US7652275B2 | Tags: Existing systems can be upgraded more easily, Easy to upgrade, Image analysis, Optical rangefinders, Spatial light modulator, Control signal

A probe control interface is provided for a structured light non-contact coordinate measuring machine probe. Portions of a video control signal for controlling the grey level of selected rows of pixels of a spatial light modulator of the probe can be decoded into control signals for additional probe components or functions that have been added to increase the measuring capabilities or versatility of the non-contact probe. By providing the additional probe component control signals in this manner, a versatile structured light non-contact probe system can be made compatible with a standard probe head autojoint system (e.g. a Renishaw™ type system), thus allowing the probe to be automatically exchanged with other standard probes and allowing existing systems to use the non-contact probe more easily. Various aspects of the probe control interface allow for relatively simple, compact, lightweight and robust implementation.

Owner:MITUTOYO CORP

Method of detecting object using structured light and robot using the same

Status: Active | Patent: US20070267570A1 | Tags: Automatic obstacle detection, Travelling automatic control, Height difference, Structured light

A method of detecting an object using structured light, and a robot using the same, are disclosed. The method of detecting a floor object using structured light includes measuring the height difference between a position onto which a specified structured light is projected and a reference position, and detecting the floor object using the measured height difference.
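Once the structured light has been triangulated into heights along the projected stripe, the detection rule in this abstract reduces to a comparison with the reference (floor) height. A minimal sketch; the 10 mm threshold and the function name are assumptions, and flagging drops below the floor as well as raised objects is an illustrative choice.

```python
import numpy as np


def detect_floor_object(heights_mm, reference_mm=0.0, threshold_mm=10.0):
    """Flag stripe samples whose height differs from the reference by more than threshold_mm.

    Positive differences suggest an object on the floor; negative ones a drop
    below the reference plane.
    """
    difference = np.asarray(heights_mm, dtype=float) - reference_mm
    return np.abs(difference) > threshold_mm


print(detect_floor_object([0.0, 2.0, 15.0, -20.0, 5.0]).tolist())  # [False, False, True, True, False]
```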

Owner:SAMSUNG ELECTRONICS CO LTD

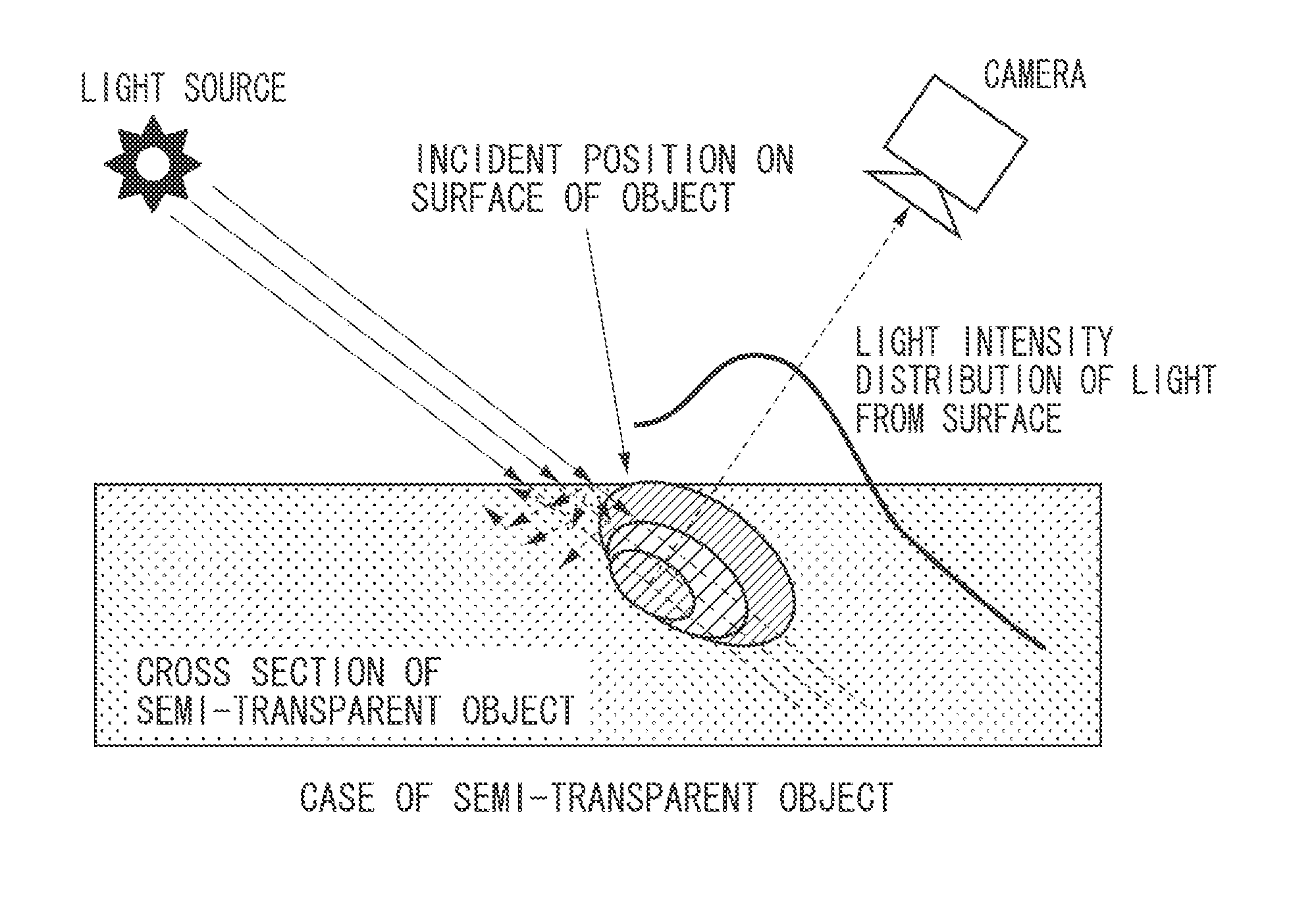

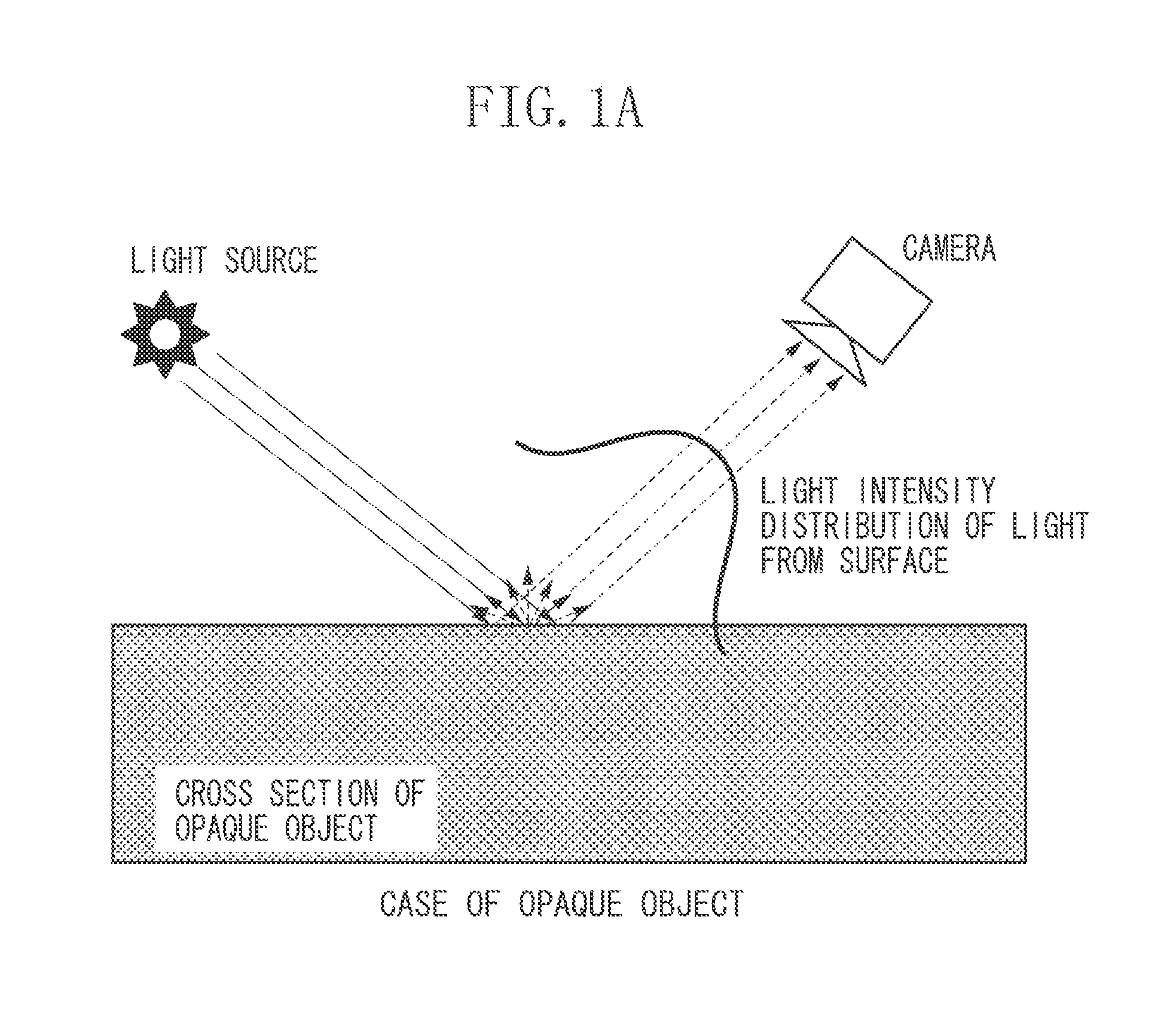

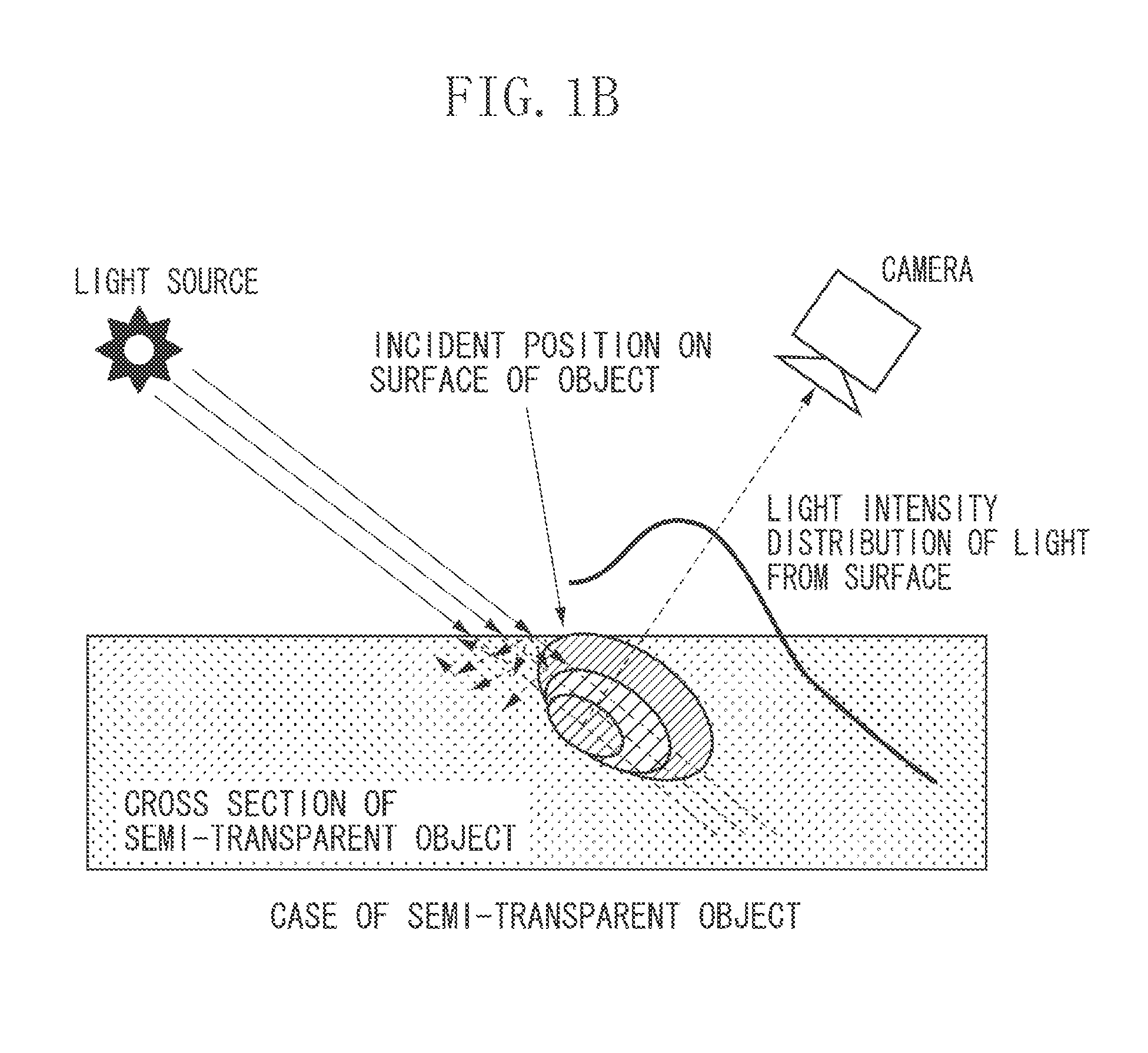

Information processing apparatus and information processing method

Status: Active | Patent: US20120316820A1 | Tags: High precision, Using optical means, Testing/calibration of speed/acceleration/shock measurement devices, Information processing, Object based

An information processing apparatus includes an acquisition unit configured to acquire a plurality of positions on a surface of a measurement target object using information of light reflected from the measurement target object on which structured light is projected, a position of a light source of the structured light, and a position of a light reception unit configured to receive the reflected light and acquire the information of the reflected light, a calculation unit configured to acquire at least one of a position and a direction on the surface of the measurement target object based on the plurality of the positions, and a correction unit configured to correct at least one of the plurality of the positions based on information about an error in measurement for acquiring the plurality of the positions and at least one of the position and the direction on the surface of the measurement target object.

Owner:CANON KK

Object detection system

Status: Inactive | Patent: US20070019181A1 | Tags: Wide field of view, High resolution, Optical rangefinders, Road vehicles traffic control, Triangulation, Home robot

An object detection system utilizing one or more thin, planar structured light patterns projected into a volume of interest, along with digital processing hardware and one or more electronic imagers looking into the volume of interest. Triangulation is used to determine the intersection of the structured light pattern with objects in the volume of interest. Applications include navigation and obstacle avoidance systems for autonomous vehicles (including agricultural vehicles and domestic robots), security systems, and pet training systems.
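The triangulation step for a thin planar light pattern can be sketched as a ray-plane intersection, assuming a calibrated pinhole camera and a light plane already expressed in the camera frame. A pixel where the imager sees the light back-projects to a ray, and the object point is where that ray meets the plane. The intrinsics, plane, and function name are hypothetical.

```python
import numpy as np


def triangulate_on_light_plane(pixel, K, plane_normal, plane_d):
    """Intersect the camera ray through `pixel` with the light plane n . X + d = 0.

    Everything is expressed in the camera frame (camera centre at the origin);
    K is the 3x3 pinhole intrinsic matrix. Returns the 3D point on the plane.
    """
    u, v = pixel
    ray = np.linalg.solve(np.asarray(K, dtype=float), np.array([u, v, 1.0]))
    n = np.asarray(plane_normal, dtype=float)
    denom = float(n @ ray)
    if abs(denom) < 1e-12:
        raise ValueError("camera ray is parallel to the light plane")
    t = -plane_d / denom
    if t <= 0:
        raise ValueError("intersection lies behind the camera")
    return t * ray


# Hypothetical setup: 500 px focal length, principal point (320, 240), and a light
# sheet in the plane y = 0.2 m (camera y axis pointing down): n = (0, 1, 0), d = -0.2.
K = [[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]]
print(triangulate_on_light_plane((320.0, 290.0), K, (0.0, 1.0, 0.0), -0.2))
```

For this pixel the ray direction is (0, 0.1, 1), so it meets the sheet 2 m in front of the camera.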

Owner:SINCLAIR KENNETHH +2


© 2025 PatSnap. All rights reserved.