46 results about "Low image quality requirements" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

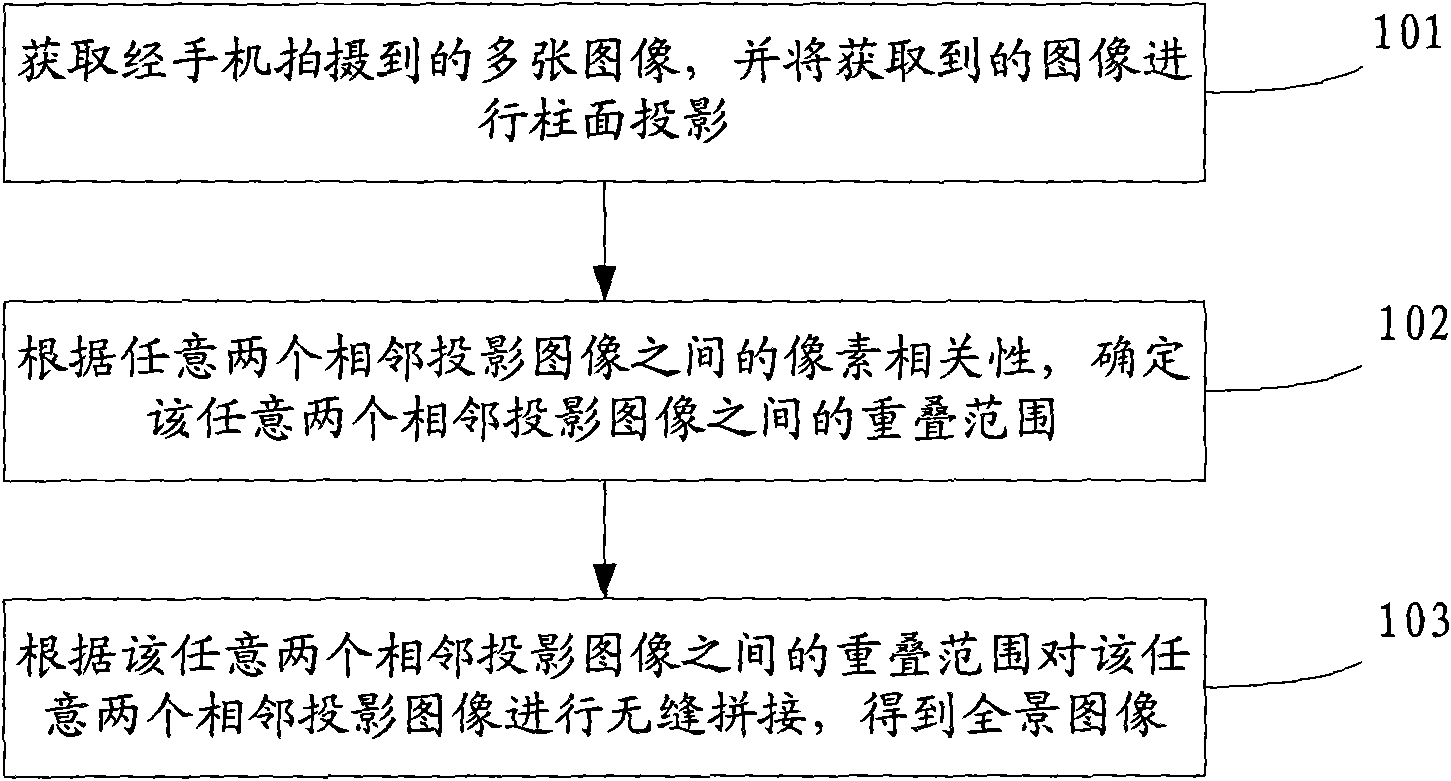

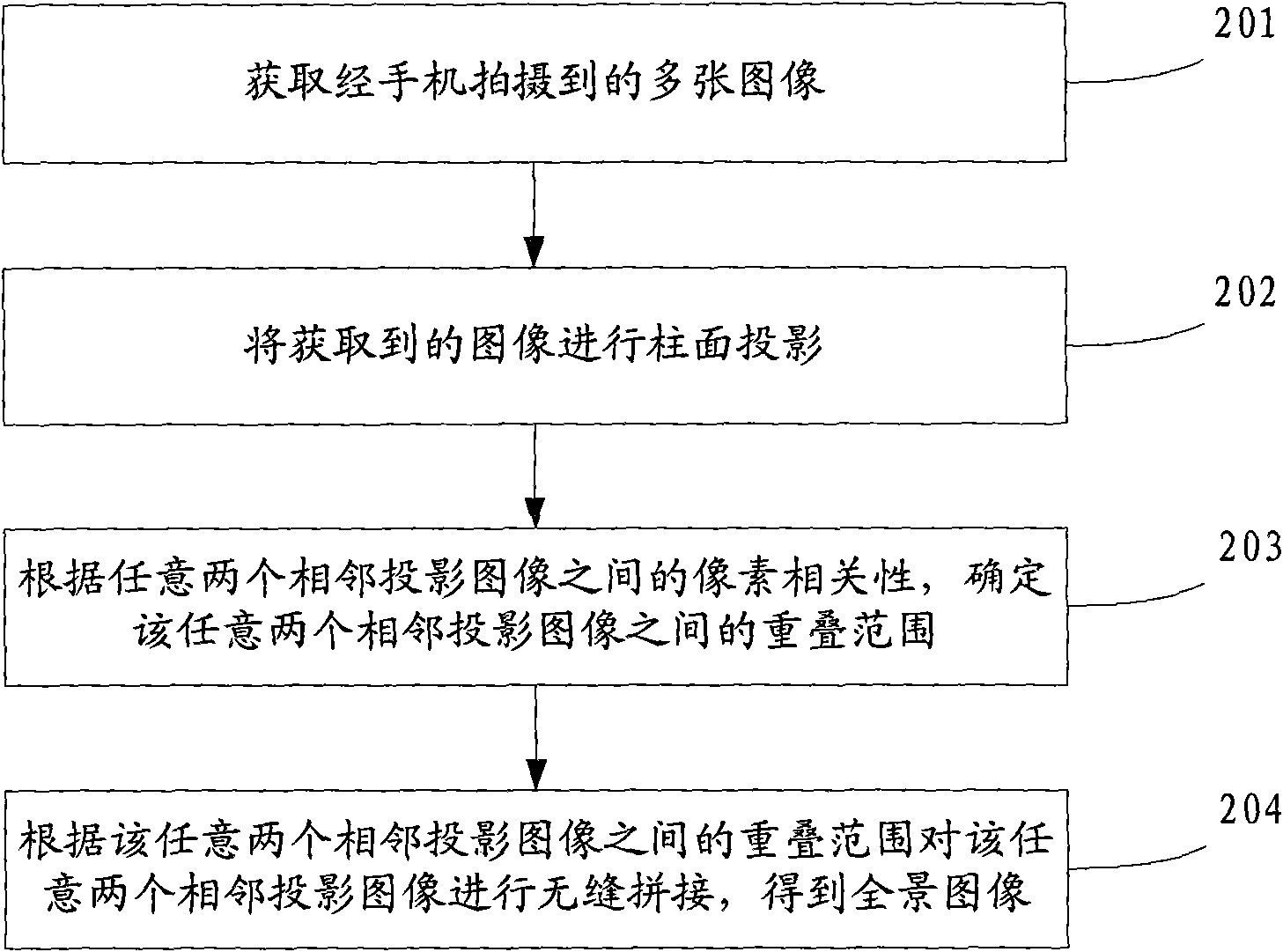

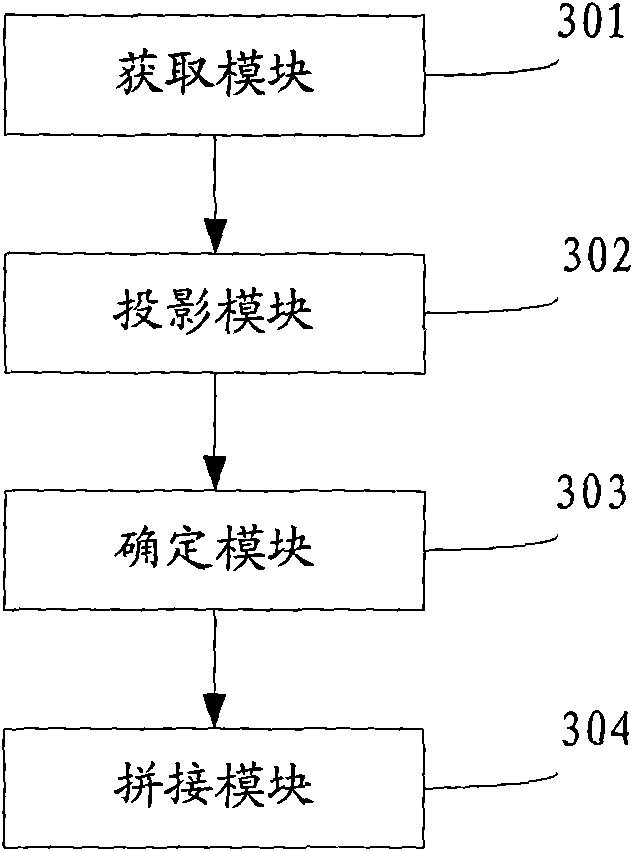

Method and device for generating panoramic image

Inactive | CN101895693A | Easy to generate | Low image quality requirements | Television system details | Color television details | Imaging processing | Imaging quality

The invention discloses a method and device for generating a panoramic image, in the technical field of image processing. The method comprises the following steps: obtaining a plurality of images shot with a mobile phone and performing cylindrical projection on them; determining the overlapping range between each pair of adjacent projected images from the pixel coherence between them; and seamlessly joining each pair of adjacent projected images according to that overlapping range to obtain the panoramic image. The device comprises an obtaining module, a projecting module, a determining module and a joining module. Because the panorama is generated by cylindrically projecting the mobile-phone images and joining them once the overlap between adjacent images has been determined, the invention simplifies panorama generation and lowers the image-quality requirement, which makes panoramic images easier to adopt in applications.

Owner:北京高森明晨信息科技有限公司
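
The overlap-search and joining steps above can be sketched in Python. This is a minimal sketch under stated assumptions, not the patented implementation: images are grayscale lists of rows with equal height, the overlap is purely horizontal, and "pixel coherence" is approximated by the mean absolute difference of the overlapping columns (the abstract does not define the measure). The cylindrical projection step is omitted, and `find_overlap` and `stitch` are hypothetical names.

```python
def find_overlap(left, right, min_overlap=1):
    """Return the overlap width (columns) that minimises the mean absolute
    difference between the right edge of `left` and the left edge of `right`."""
    height, lw = len(left), len(left[0])
    max_overlap = min(lw, len(right[0]))
    best_width, best_cost = min_overlap, float("inf")
    for width in range(min_overlap, max_overlap + 1):
        total = sum(
            abs(left[y][lw - width + x] - right[y][x])
            for y in range(height)
            for x in range(width)
        )
        cost = total / (height * width)
        if cost < best_cost:  # keep the first (smallest) width on ties
            best_width, best_cost = width, cost
    return best_width


def stitch(left, right, width):
    """Join two images, linearly blending the `width` overlapping columns."""
    lw = len(left[0])
    out = []
    for y in range(len(left)):
        row = list(left[y][: lw - width])
        for x in range(width):
            alpha = (x + 1) / (width + 1)  # weight shifts from left to right image
            row.append((1 - alpha) * left[y][lw - width + x] + alpha * right[y][x])
        row.extend(right[y][width:])
        out.append(row)
    return out


left = [[1, 2, 3, 4, 5], [2, 3, 4, 5, 6]]
right = [[4, 5, 6, 7], [5, 6, 7, 8]]
w = find_overlap(left, right)
panorama = stitch(left, right, w)
print(w, len(panorama[0]))  # 2 7
```

The linear blend is one simple way to make the seam less visible; the abstract only says the joining is "seamless" and does not state how.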

Blood vessel diameter measuring method based on digital image processing technology

Inactive | CN101984916A | Improve computing efficiency | Low image quality requirements | Diagnostic recording/measuring | Sensors | Imaging quality | Line fitting

The invention provides a blood vessel diameter measuring method based on digital image processing technology. The method comprises: (1) enhancing the blood vessel image with a Gaussian matched filter; (2) normalizing and binarizing the image; (3) thinning the image using mathematical morphology and extracting the vessel skeleton; and (4) fitting a straight line to obtain the slope of a vessel segment, counting the vessel pixels along the direction perpendicular to the vessel, and converting that count into the vessel diameter using the dot pitch. The invention is used to measure blood vessel diameters in medical images; it is computationally efficient, has low requirements on image quality, and is accurate.

Owner:HARBIN ENG UNIV
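
Step (4) can be sketched as follows, assuming a binary vessel image (1 = vessel) as a list of rows, skeleton points as `(x, y)` pairs, and a dot pitch given in physical units per pixel. One deliberate swap: instead of an ordinary least-squares slope, the sketch fits the line orientation by total least squares so that vertical vessels (infinite slope) are also handled. `principal_angle` and `vessel_diameter` are hypothetical names.

```python
import math


def principal_angle(points):
    """Orientation (radians) of the best-fit line through skeleton points,
    using total least squares so vertical vessels are handled too."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mx) ** 2 for p in points)
    syy = sum((p[1] - my) ** 2 for p in points)
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points)
    return 0.5 * math.atan2(2 * sxy, sxx - syy)


def vessel_diameter(binary, skeleton_pts, center, dot_pitch):
    """Count vessel pixels along the normal through `center`, times the pitch."""
    theta = principal_angle(skeleton_pts)
    nx, ny = -math.sin(theta), math.cos(theta)  # unit normal to the vessel axis
    cx, cy = center
    h, w = len(binary), len(binary[0])
    count = 1  # the centre pixel itself
    for sign in (1, -1):  # walk outwards on both sides of the centre line
        step = 1
        while True:
            x = round(cx + sign * step * nx)
            y = round(cy + sign * step * ny)
            if not (0 <= x < w and 0 <= y < h) or binary[y][x] == 0:
                break
            count += 1
            step += 1
    return count * dot_pitch


# A horizontal vessel three pixels thick, with an assumed pitch of 0.5 units/pixel.
binary = [[1 if 2 <= y <= 4 else 0 for x in range(10)] for y in range(7)]
skeleton = [(x, 3) for x in range(10)]
print(vessel_diameter(binary, skeleton, (5, 3), 0.5))  # 1.5
```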

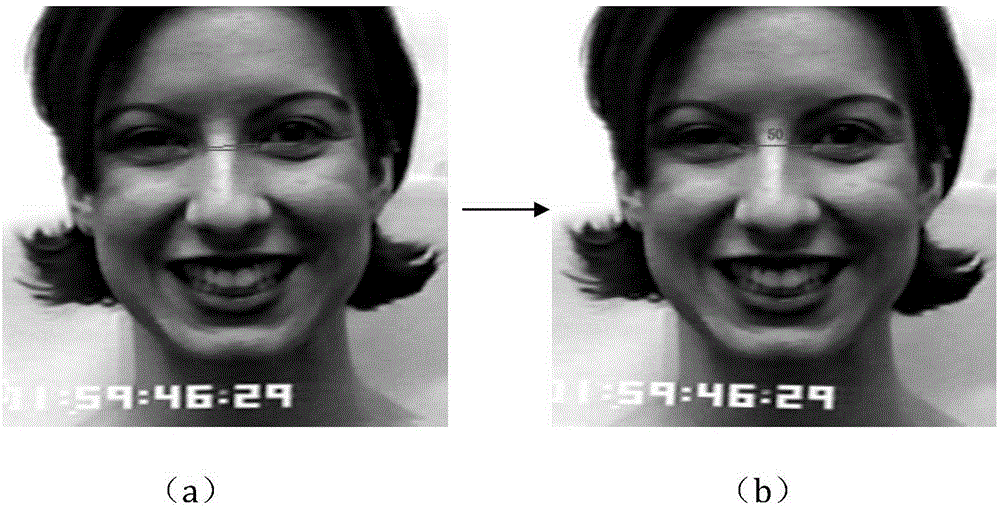

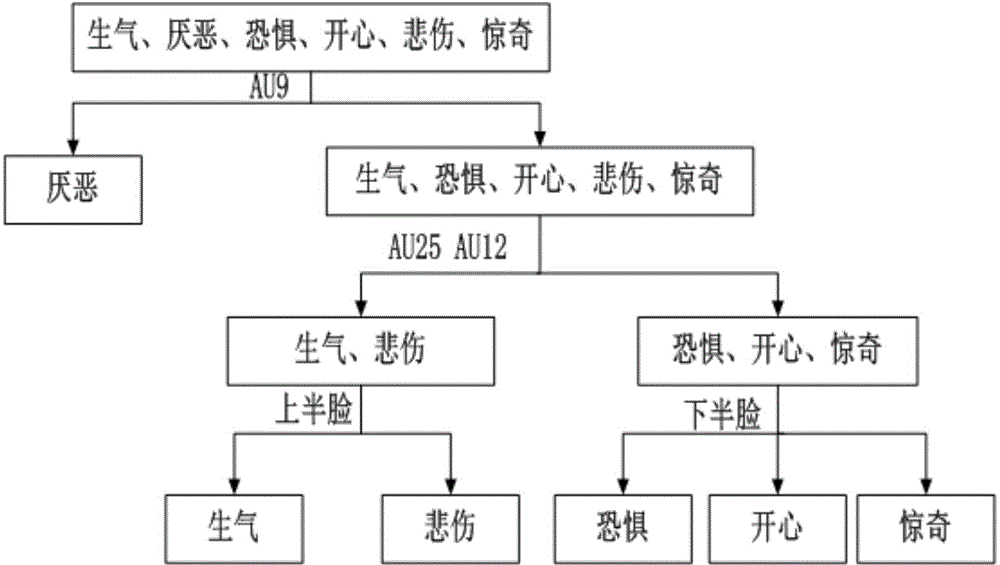

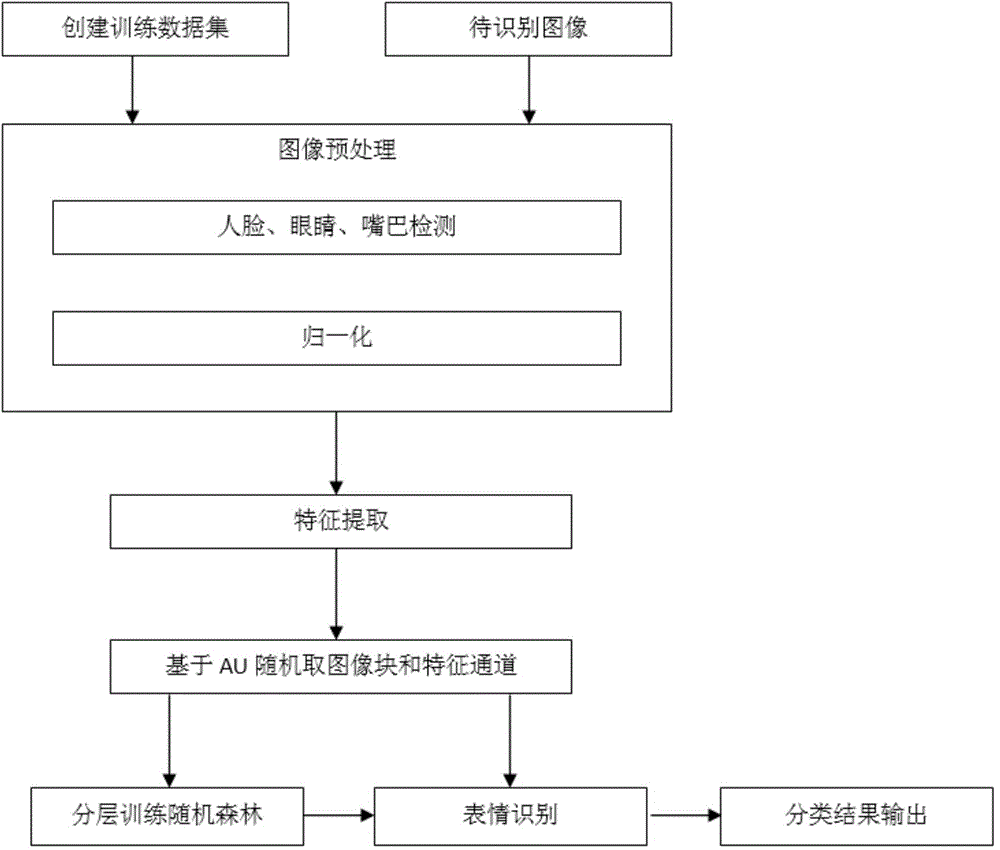

Motion unit layering-based facial expression recognition method and system

Active | CN104680141A | Reduce difficulty | High precision | Character and pattern recognition | Image resolution | Random forest

The invention discloses a motion unit layering-based facial expression recognition method and system. The method classifies expressions in three layers. First, the area just above the nose is extracted as the first-layer classification area, and the expression is roughly classified using whether the AU9 motion unit is detected as the criterion of the first-layer classifier. Next, the lip area is extracted as the second-layer classification area, and the first-layer result is refined using whether the AU25 and AU12 motion units are detected as the criterion of the second-layer classifier. Finally, the upper-half and lower-half face areas are extracted as the third-layer classification areas, and a fine classification is performed on the basis of the second-layer result. The invention further provides a system that implements the method. Because features of the representative expression areas are extracted according to the AU layered structure and combined with layer-by-layer random forest classification, both the accuracy and the speed of expression recognition are improved, and the method and system are particularly suitable for low-resolution images.

Owner:HUAZHONG NORMAL UNIV

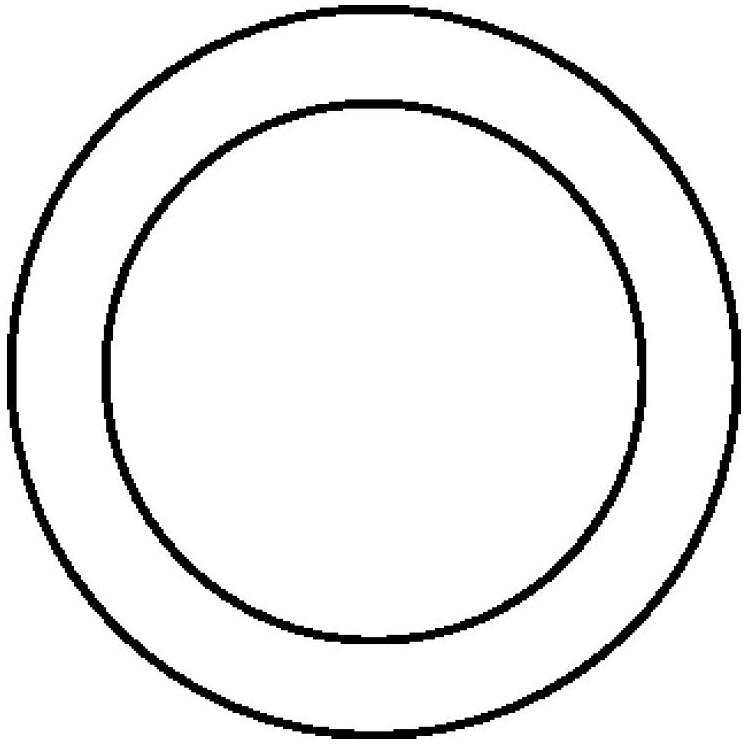

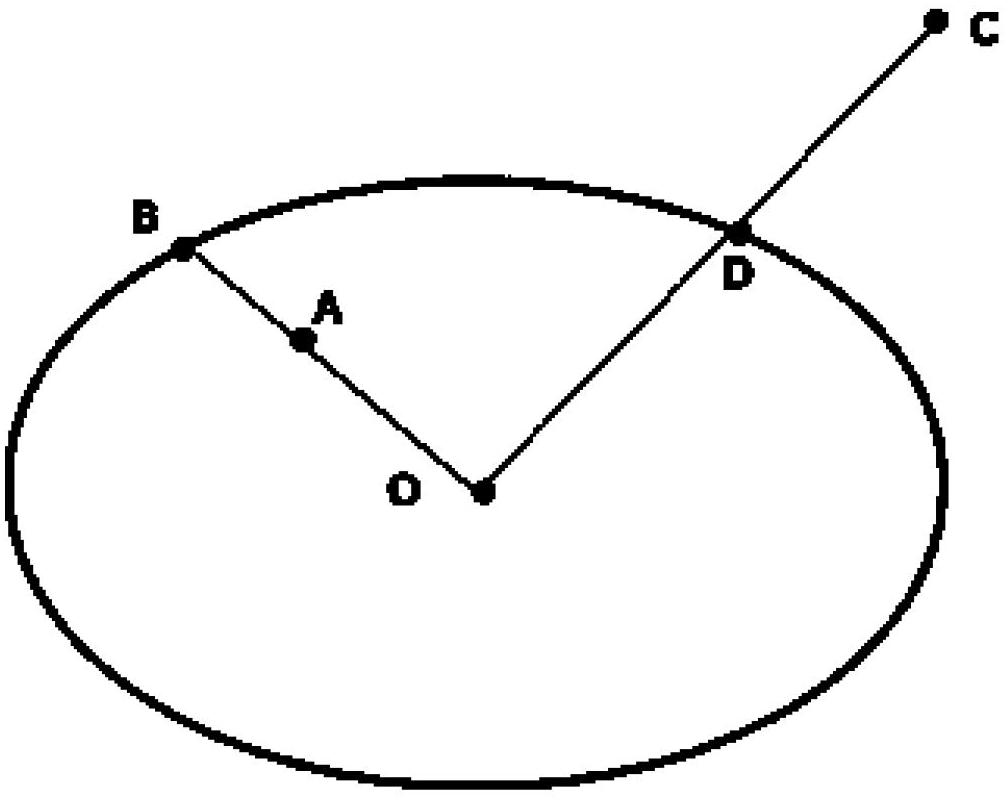

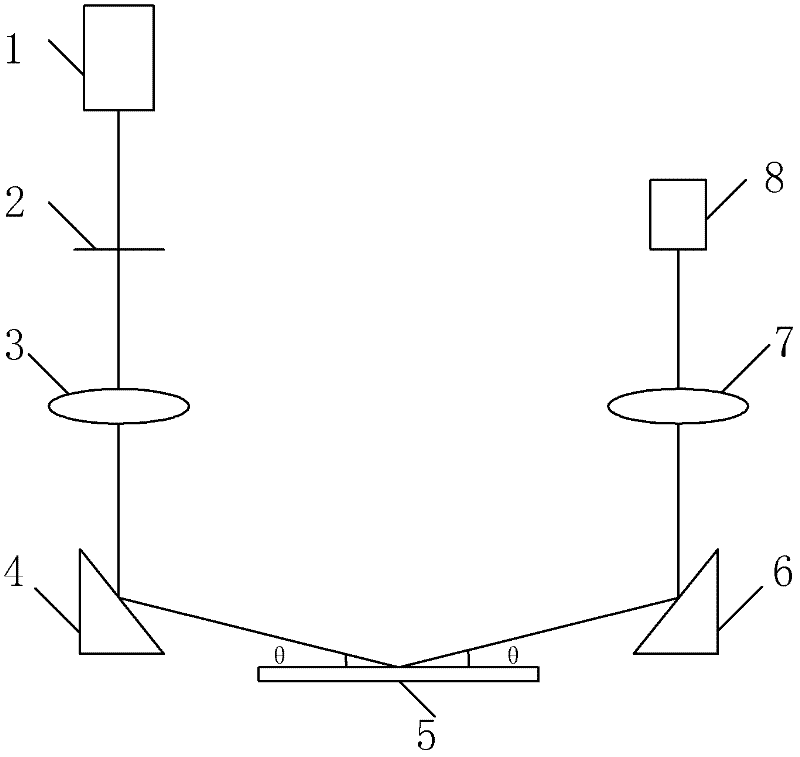

Robot vision locating method based on round road sign imaging analysis

The invention discloses a robot vision locating method based on circular road sign imaging analysis, in the technical field of robot vision location. The method comprises the following steps: designing and placing a circular road sign; identifying the circular road sign, which involves three procedures, namely fitting edges of specific colors, fitting an ellipse with RANSAC (Random Sample Consensus), and verifying the sign; and finally locating the mobile robot. The method needs only one road sign to complete location, uses a simple road-sign pattern and a short detection process, works under outdoor lighting conditions, and locates the robot simply and quickly.

Owner:TSINGHUA UNIV

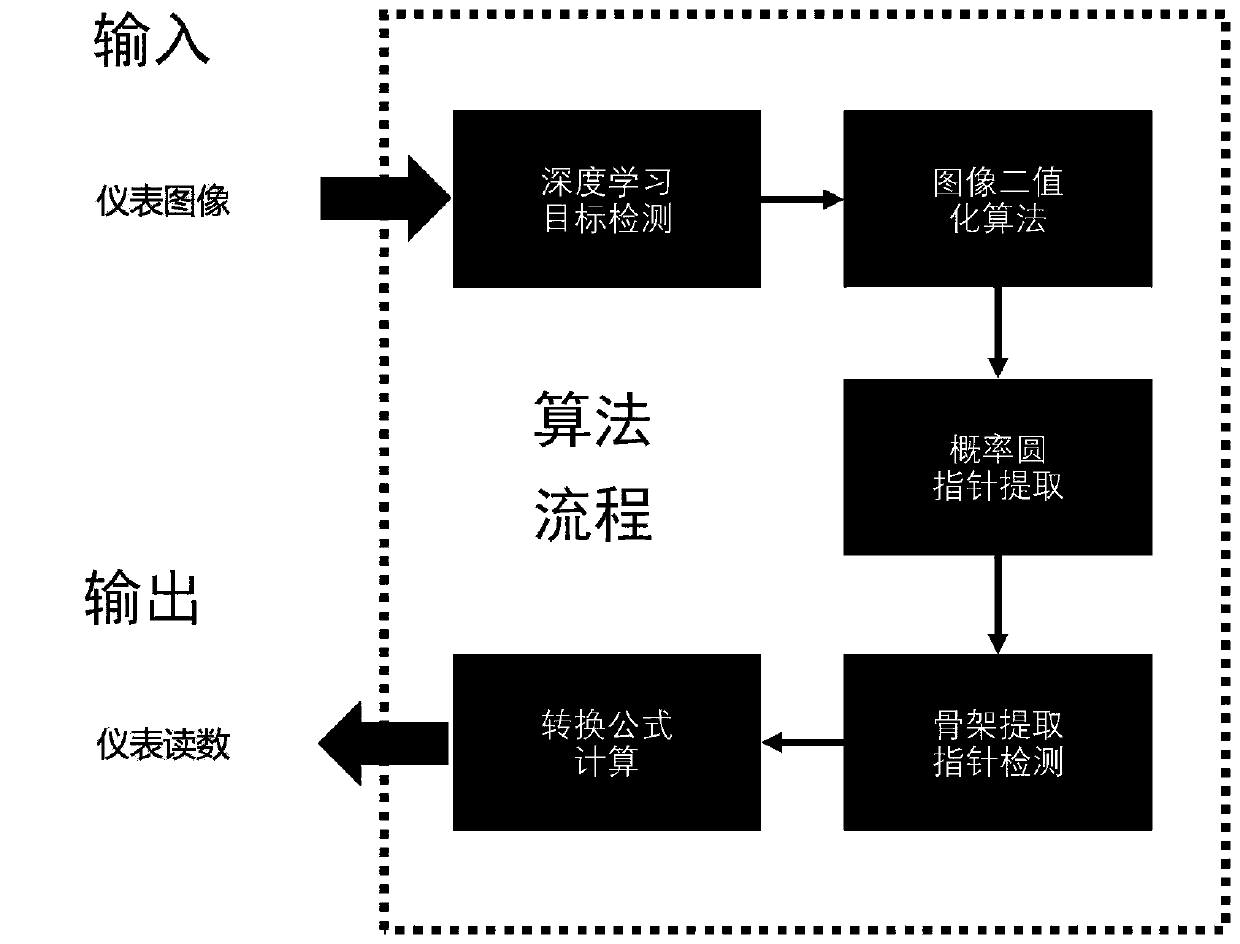

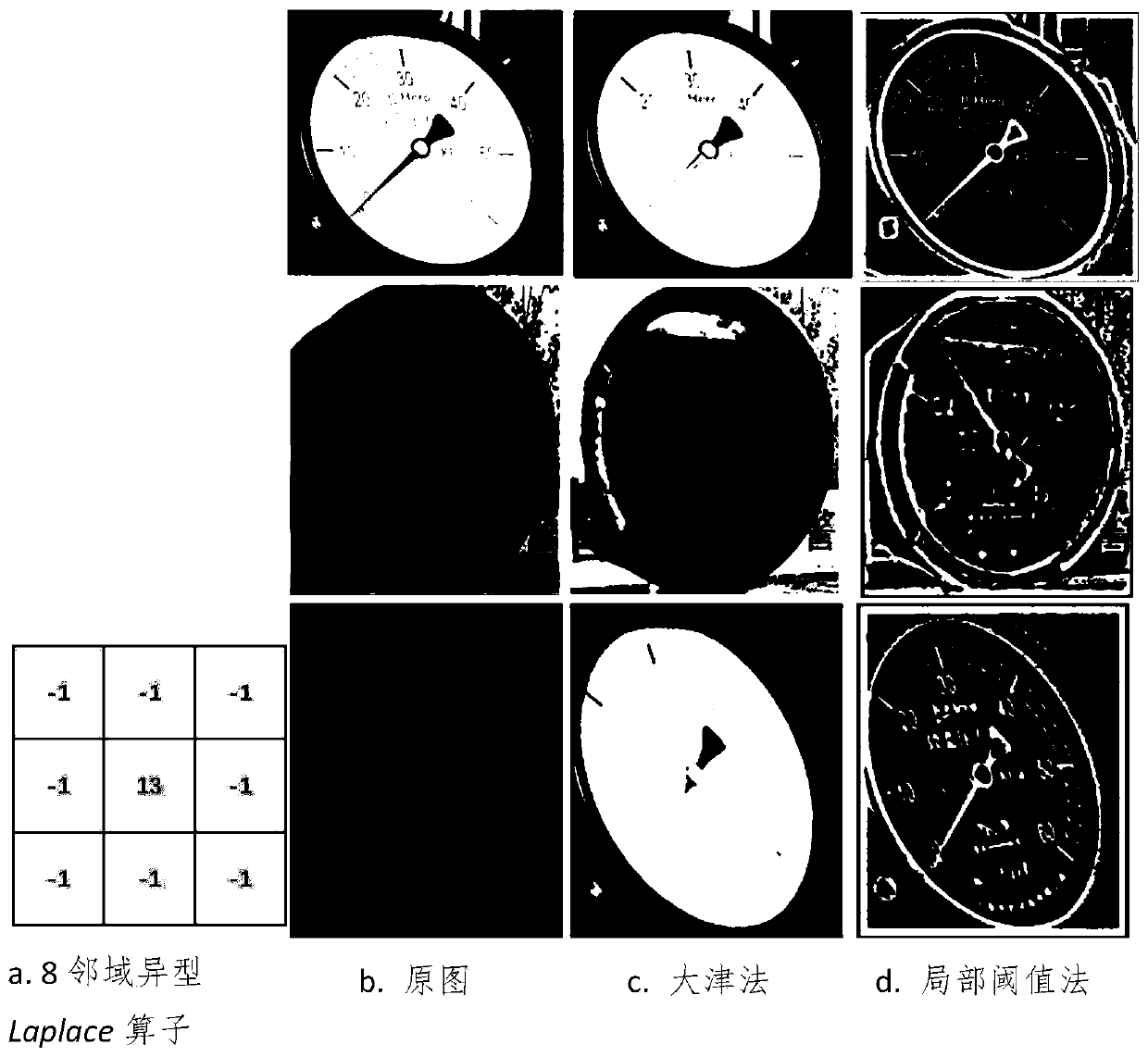

Pointer instrument detection and reading identification method based on mobile robot

Active | CN110807355A | Improve detection accuracy | Improve the detection accuracy of small-scale targets | Image enhancement | Image analysis | Engineering | Contrast enhancement

The invention relates to a pointer instrument detection and reading identification method based on a mobile robot. The method comprises the following steps: obtaining a deep neural network detection model M for pointer instruments; moving the mobile robot, which carries a camera, to a designated place to capture an original environment image S containing the instrument; feeding S as system input to the model M, which detects whether an instrument is present in S and frames its position; cropping the image inside the frame and resizing it to a set height without changing the aspect ratio, the result being denoted J; performing contrast enhancement on J, the result being denoted E; performing local adaptive threshold segmentation on E to obtain an inverted binary image B; extracting the pointer based on a probability circle; extracting the central straight line L of the pointer part as the pointing information of the instrument pointer; and establishing a coordinate system based on a probability circle center projection algorithm and obtaining the reading by an angle method.

Owner:TIANJIN UNIV
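
The final "angle method" step can be illustrated with linear interpolation of the pointer angle between the angles of the minimum and maximum scale marks. This is a sketch assuming a dial whose values increase clockwise, with the pivot centre and the two end-mark angles already known (in the patent the centre comes from the probability-circle step, which is not shown). `pointer_reading` and the example gauge are hypothetical.

```python
import math


def pointer_reading(center, tip, start_deg, end_deg, min_val, max_val):
    """Reading of a clockwise dial from the pointer line (centre -> tip).

    Angles are measured counter-clockwise from the +x axis after flipping the
    image y axis; start_deg/end_deg are the angles of the min/max scale marks."""
    dx = tip[0] - center[0]
    dy = center[1] - tip[1]  # image y grows downwards, so flip it
    pointer_deg = math.degrees(math.atan2(dy, dx))
    sweep = (start_deg - pointer_deg) % 360  # clockwise angle from the min mark
    total = (start_deg - end_deg) % 360      # clockwise span of the whole scale
    return min_val + sweep / total * (max_val - min_val)


# A hypothetical 0-1.6 MPa gauge whose scale runs clockwise from 225 deg to -45 deg.
print(round(pointer_reading((100, 100), (100, 50), 225, -45, 0.0, 1.6), 3))  # 0.8
```

Working modulo 360 keeps the arithmetic valid when the scale crosses the 0-degree direction, which is common for 270-degree gauges.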

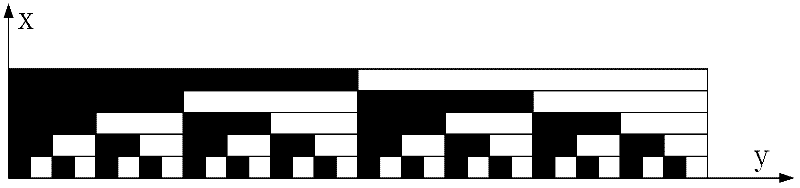

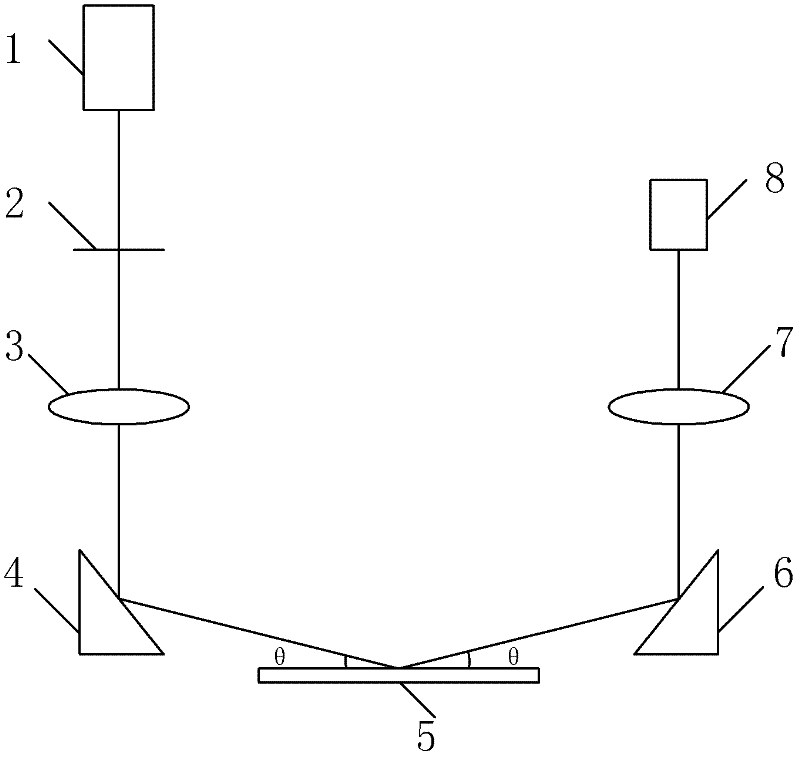

Focal plane detection device for projection lithography

Inactive | CN102243138A | Low image quality requirements | Lower requirement | Photomechanical exposure apparatus | Microlithography exposure apparatus | Lithographic artist | Grating

The invention provides a focal plane detection device for projection lithography. Light emitted by an illumination source irradiates an absolute encoded grating; the light modulated by the grating passes through a projection imaging system and is imaged onto the surface to be detected via a first reflector; after reflection from that surface, the light enters a focus detection mark amplification system via a second reflector and is received by a detector. A change in the height of the surface to be detected changes the absolute encoded grating image received by the detector, and the absolute code of the grating image corresponding to that height is extracted, which completes the detection of the surface height. Because the device uses an absolute encoded grating instead of the traditional grating slit, and shortens the coding period by increasing the number of code bits of the grating, the focus detection range is enlarged and the focus detection accuracy is improved.

Owner:INST OF OPTICS & ELECTRONICS - CHINESE ACAD OF SCI

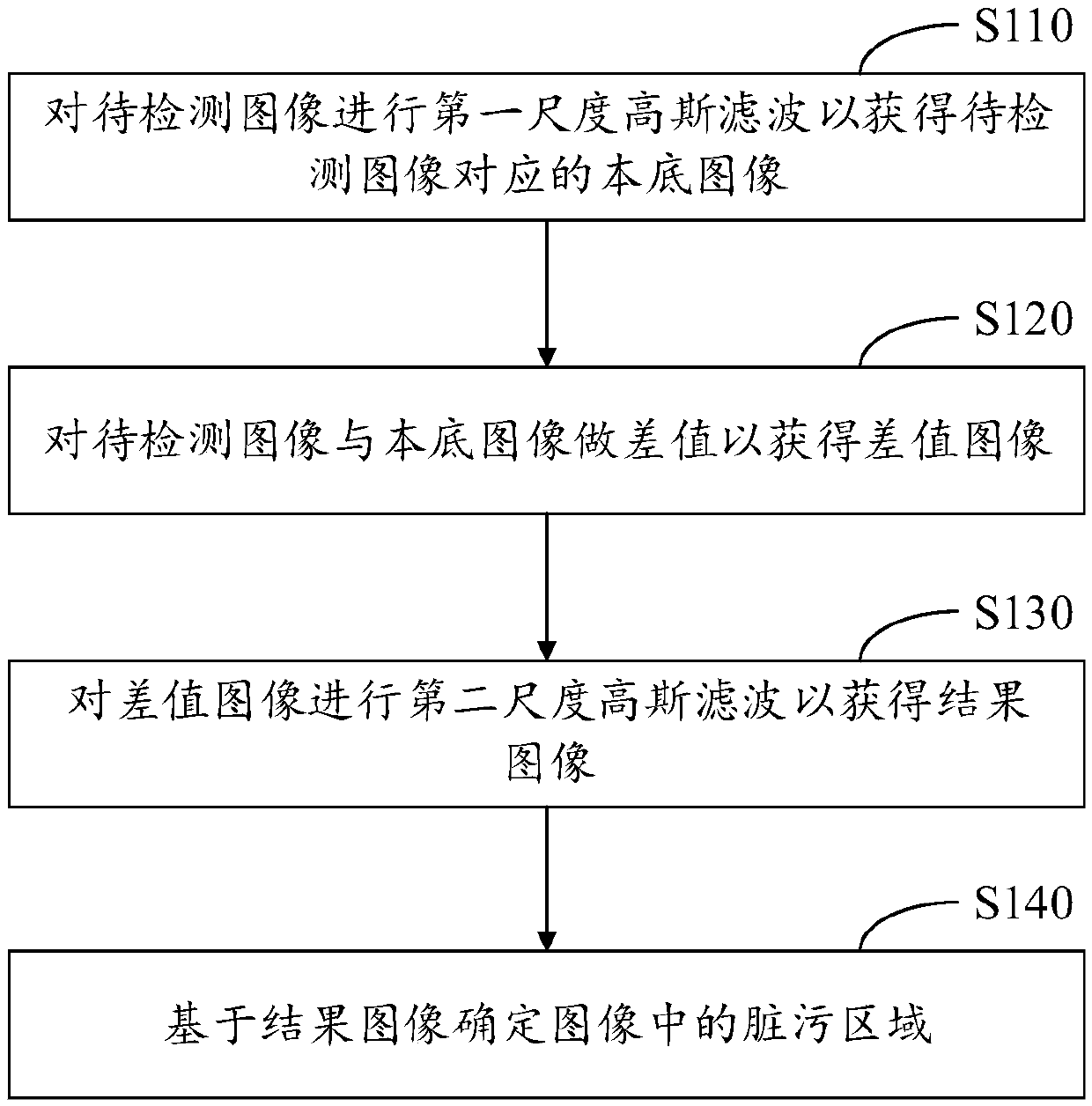

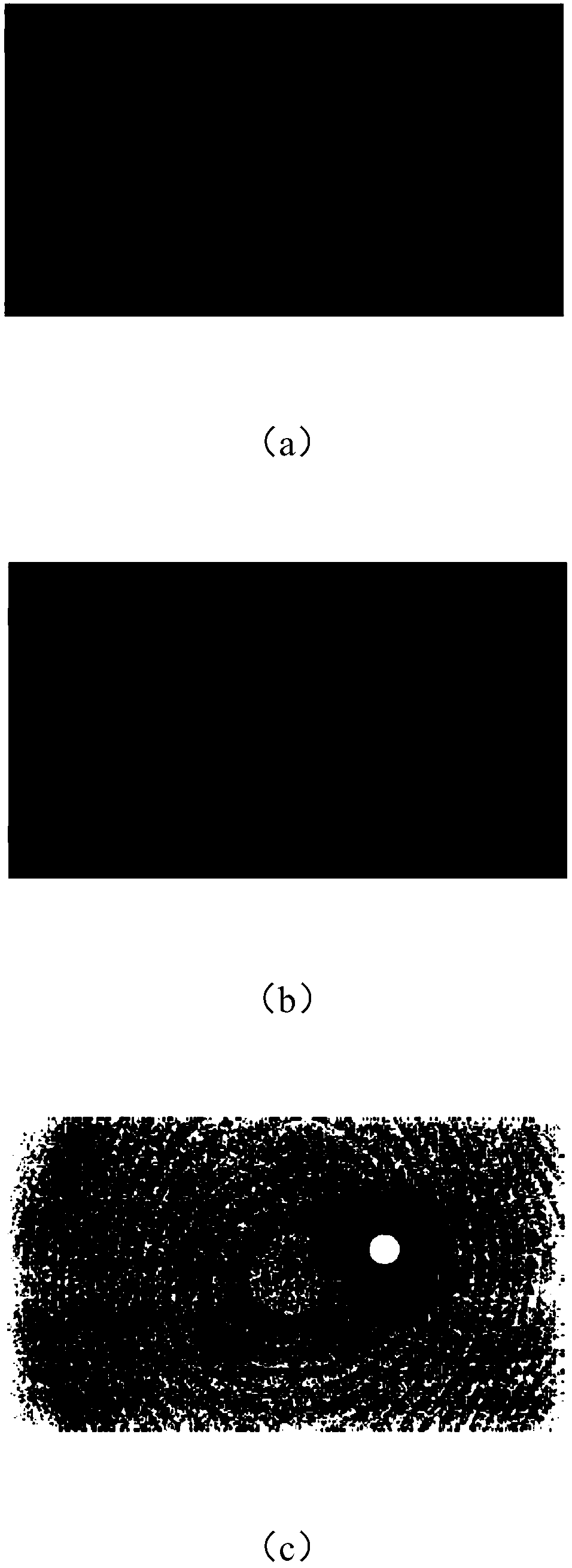

Method, apparatus and electronic device for detecting dirty areas in an image

Active | CN109523527A | Improve usability | Improve processing speed | Image enhancement | Image analysis | Electric devices | Background image

Disclosed are a method, apparatus and electronic device for detecting dirty areas in an image. The method includes: applying a first-scale Gaussian filter to the image to be detected to obtain a corresponding background image; computing the difference between the image to be detected and the background image to obtain a difference image; applying a second-scale Gaussian filter to the difference image to obtain a resultant image, the scale of the second filter being smaller than that of the first; and determining the dirty area in the image based on the resultant image. This improves the sensitivity, ease of use, processing speed and environmental adaptability of dirt detection.

Owner:BEIJING HORIZON ROBOTICS TECH RES & DEV CO LTD
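
The two-scale filtering pipeline above can be sketched in pure Python. Assumptions, not taken from the abstract: the image is a grayscale list of rows, dirt is darker than its surroundings (so the difference is taken as background minus image), borders are handled by clamping, and the sigma values and final threshold are hypothetical. Function names are illustrative.

```python
import math


def gaussian_kernel(sigma):
    """Normalised 1-D Gaussian kernel truncated at about 3 sigma."""
    radius = max(1, int(3 * sigma))
    k = [math.exp(-(i * i) / (2 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(k)
    return [v / total for v in k]


def blur(img, sigma):
    """Separable Gaussian blur of a 2-D list, clamping coordinates at the border."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    h, w = len(img), len(img[0])
    rows = [[sum(k[j] * img[y][min(max(x + j - r, 0), w - 1)] for j in range(len(k)))
             for x in range(w)] for y in range(h)]
    return [[sum(k[j] * rows[min(max(y + j - r, 0), h - 1)][x] for j in range(len(k)))
             for x in range(w)] for y in range(h)]


def detect_dirty(img, sigma_bg=4.0, sigma_fine=1.0, threshold=10.0):
    """Return a 0/1 mask of dirty pixels (assumes dirt is darker than background)."""
    background = blur(img, sigma_bg)                      # first, larger scale
    diff = [[background[y][x] - img[y][x] for x in range(len(img[0]))]
            for y in range(len(img))]
    result = blur(diff, sigma_fine)                       # second, smaller scale
    return [[1 if v > threshold else 0 for v in row] for row in result]


# A bright 20x20 frame with a dark 3x3 smudge in the middle.
img = [[50 if 9 <= y <= 11 and 9 <= x <= 11 else 200 for x in range(20)]
       for y in range(20)]
mask = detect_dirty(img)
print(mask[10][10], mask[0][0])  # 1 0
```

The large-scale blur estimates the slowly varying background, so subtracting it leaves mainly small local defects; the smaller second blur suppresses pixel noise in that difference before thresholding.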

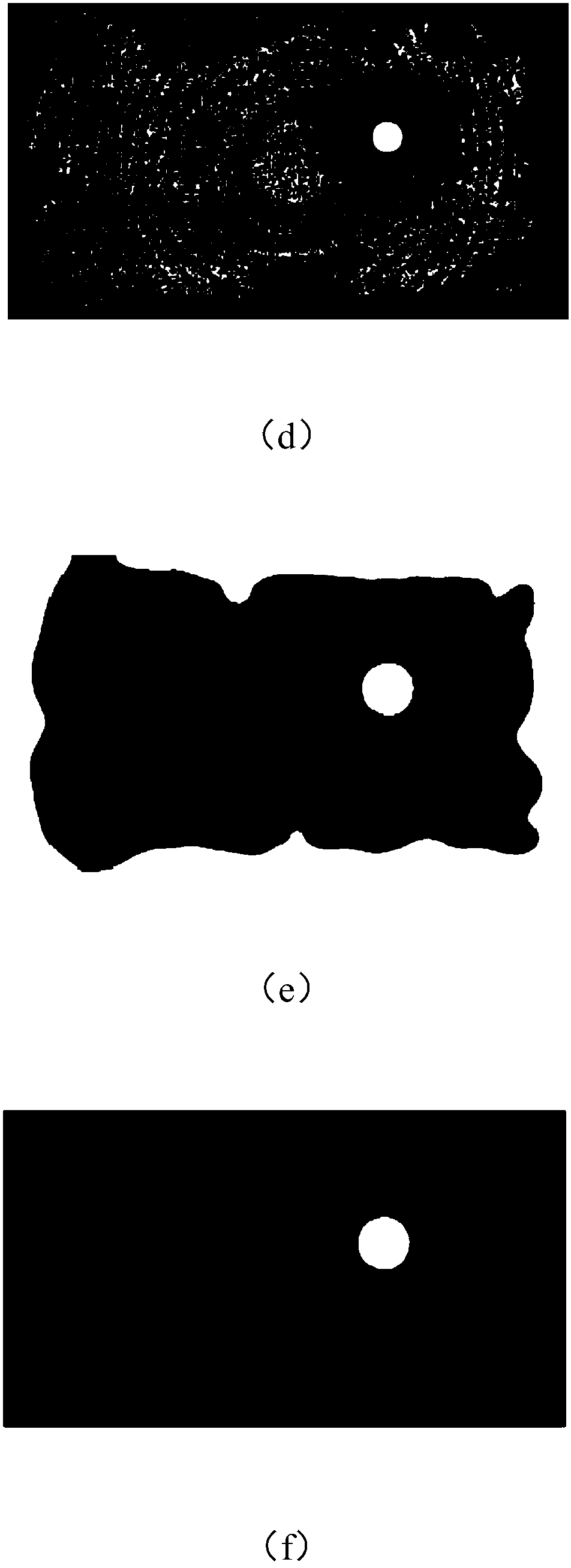

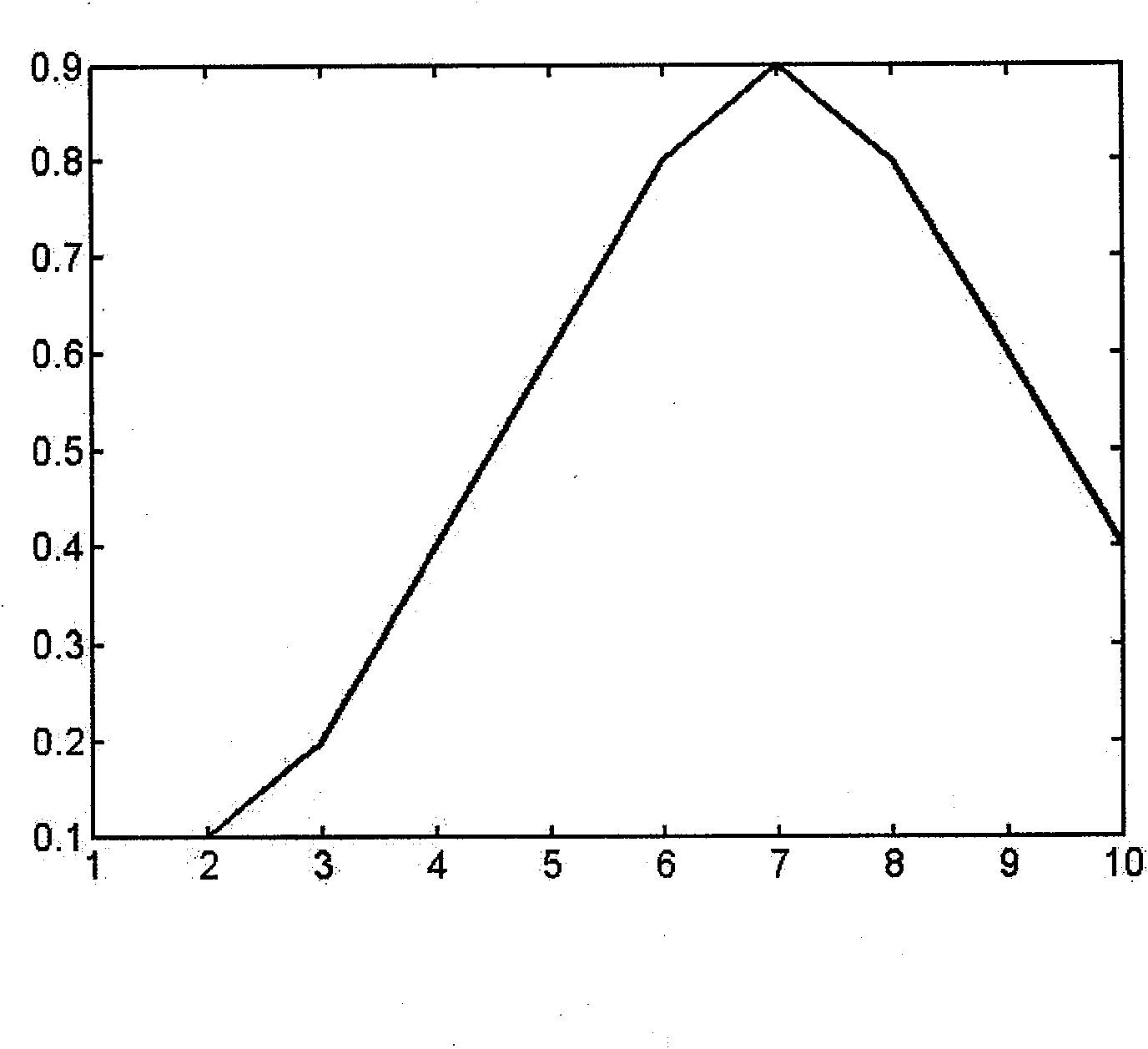

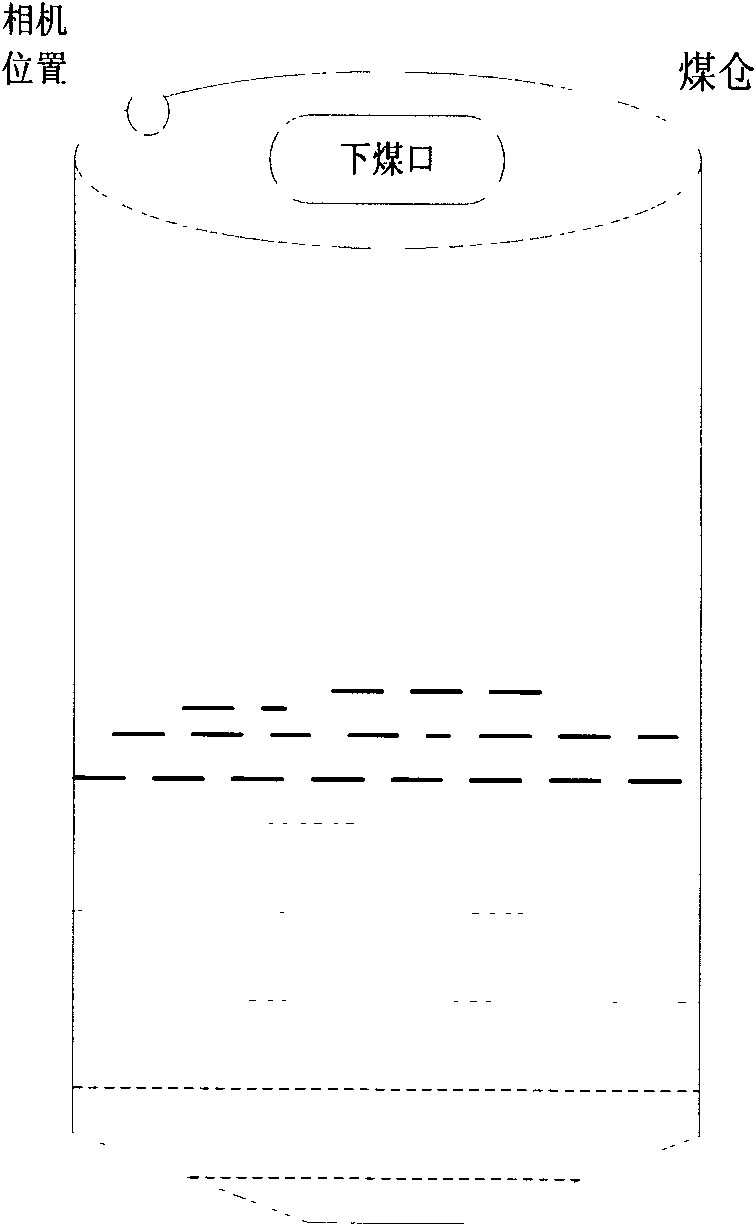

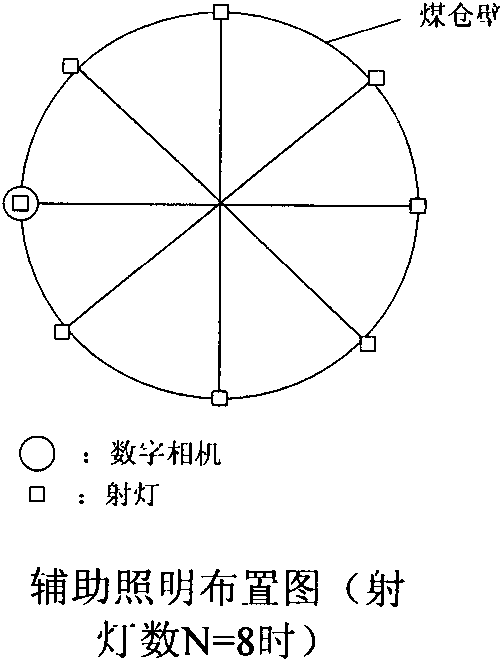

Method for detecting bin level based on image entropy

Inactive | CN102116658A | Improve adaptability | Low image quality requirements | Machines/engines | Level indicators | Image based | Digital camera

The invention provides a method for detecting a bin level by image processing, using a digital camera and auxiliary lighting equipment. The method comprises the following steps: dividing the bin's range into grades by summarizing the characteristics of the bin's imaging environment and analyzing how the image entropy of defocused images is distributed over the full range of the bin; controlling the lens focus of the digital camera by a program so that the camera captures images grade by grade; preprocessing the images and extracting the feature textures of the material; and calculating the image entropy of the processed images, from which the bin level value is finally obtained through analysis and calculation.

Owner:CHINA UNIV OF MINING & TECH (BEIJING)
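
The image entropy used above is commonly the Shannon entropy of the gray-level histogram. The sketch below assumes that definition and a grayscale image stored as a list of rows; how the patent maps entropy values to bin-level grades is not described in the abstract, so only the entropy computation is shown. `image_entropy` is a hypothetical name.

```python
import math
from collections import Counter


def image_entropy(pixels):
    """Shannon entropy (bits) of the gray-level histogram of a 2-D image."""
    counts = Counter(v for row in pixels for v in row)
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


print(image_entropy([[0, 255], [255, 0]]))  # 1.0
```

A well-focused image of textured material spreads over more gray levels than a defocused one, so its entropy tends to be higher; that contrast is what makes entropy usable as a focus-dependent measure.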

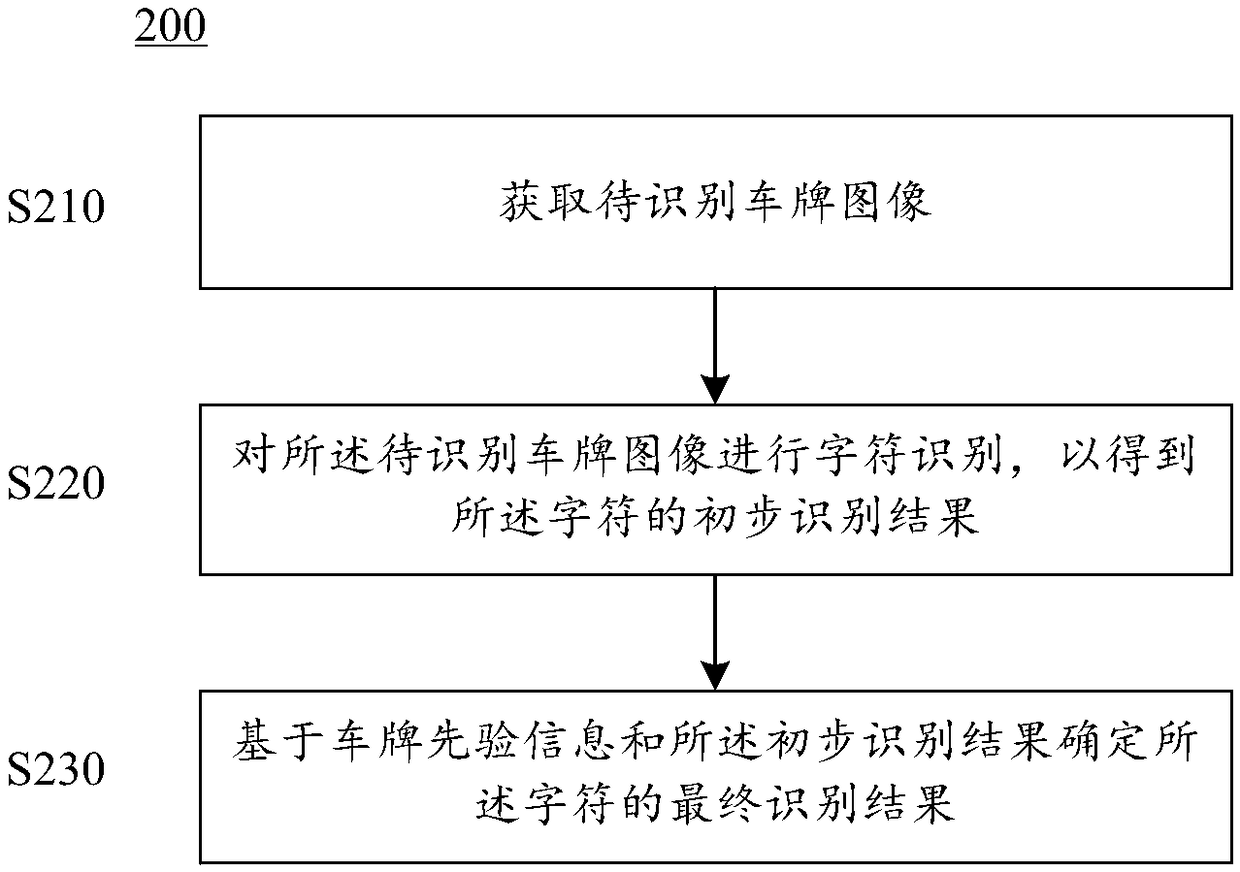

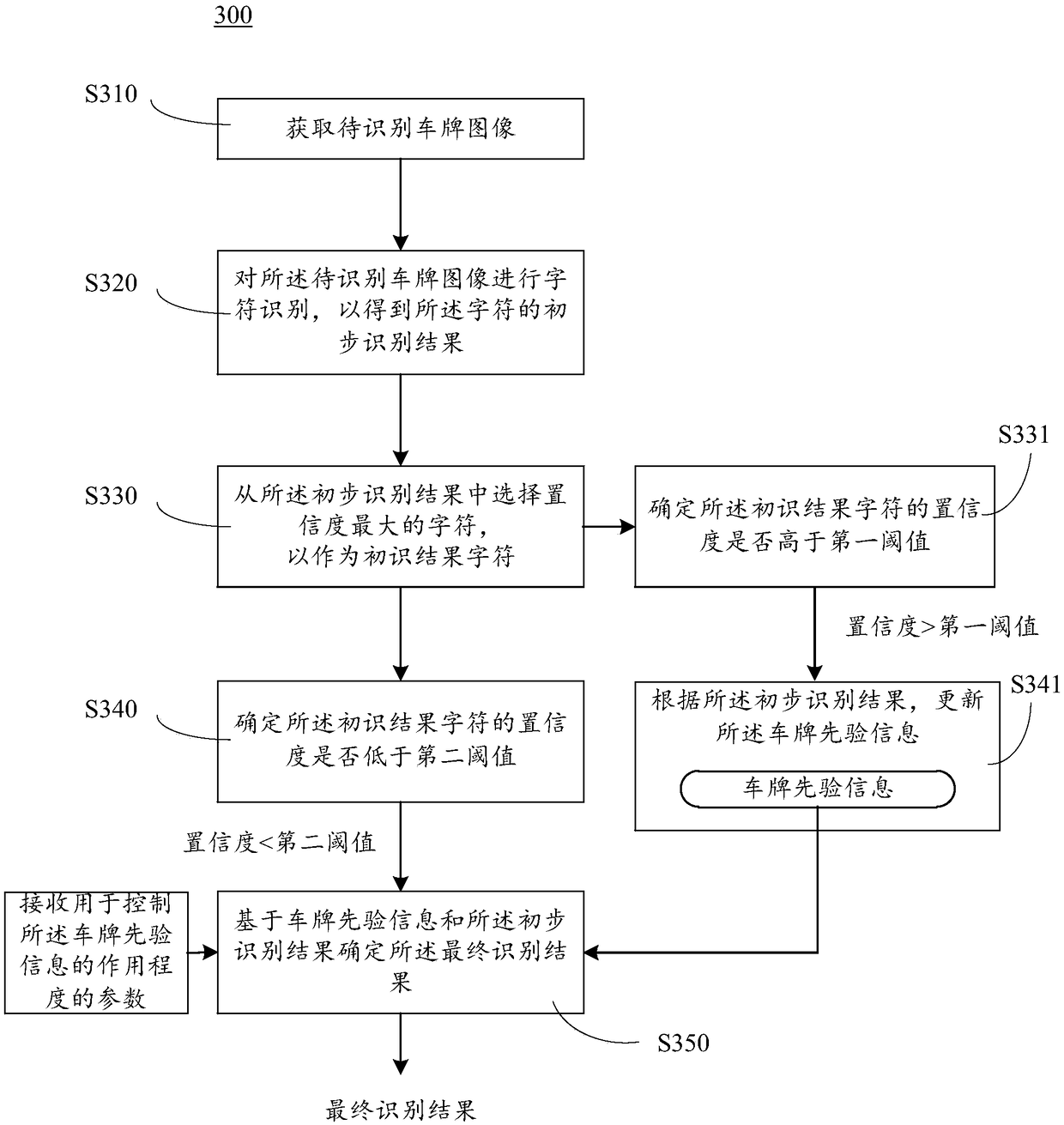

License plate recognition method, device and system, and storage medium

Active | CN108875746A | Improve recognition accuracy | Reduce heavy dependence | Character and pattern recognition | Prior information | Imaging quality

Embodiments of the present invention provide a license plate recognition method, device and system, and a storage medium. The method comprises: obtaining an image on which license plate recognition is to be performed; performing character recognition on the image to obtain a preliminary recognition result for the characters; and determining the final recognition result based on license plate prior information and the preliminary result. Because the prior information is used to determine the final result, this scheme reduces the heavy dependence of license plate recognition on image quality: even when the image quality is poor, a good recognition result can still be obtained, which markedly improves license plate recognition accuracy.

Owner:BEIJING KUANGSHI TECH
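
One simple way to combine a preliminary result with prior information is to keep, at each character position, the highest-scoring candidate that the plate format allows. The sketch assumes the recognizer returns `(character, score)` candidates per position and that the prior is a set of allowed characters per position; the letter/digit layout in the example is hypothetical and not taken from any real plate standard. `apply_prior` is a hypothetical name.

```python
import string

LETTERS = set(string.ascii_uppercase)
DIGITS = set(string.digits)


def apply_prior(candidates, allowed):
    """Pick, per position, the best-scoring candidate permitted by the prior.

    candidates: list (one per position) of [(char, score), ...]
    allowed:    list (one per position) of sets of permitted characters
    Falls back to the raw top candidate if no candidate satisfies the prior."""
    result = []
    for pos_cands, allowed_set in zip(candidates, allowed):
        valid = [(ch, s) for ch, s in pos_cands if ch in allowed_set] or pos_cands
        result.append(max(valid, key=lambda cs: cs[1])[0])
    return "".join(result)


# Low-quality image: '8' vs 'B' and '0' vs 'O' are confused by the recogniser.
cands = [[("8", 0.6), ("B", 0.4)], [("0", 0.7), ("O", 0.3)], [("1", 0.9)]]
print(apply_prior(cands, [LETTERS, LETTERS, DIGITS]))  # BO1
```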

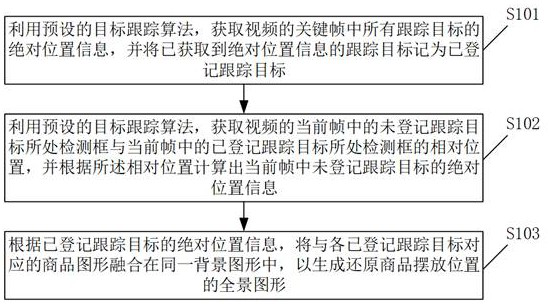

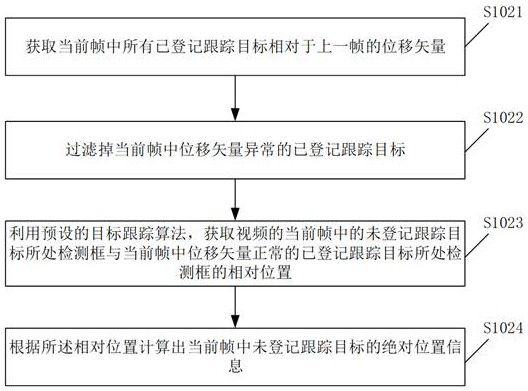

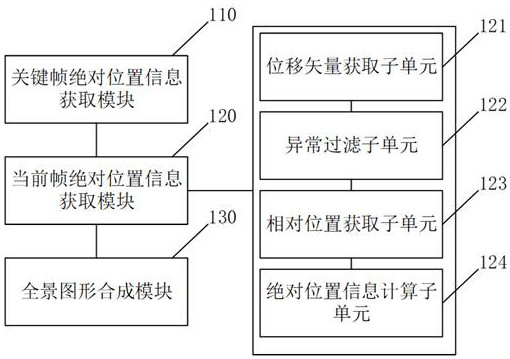

Commodity placement position panorama generation method based on video target tracking

Active | CN112037267A | Low image quality requirements | Improve efficiency | Image enhancement | Details involving processing steps | Category recognition | Imaging quality

The invention discloses a commodity placement position panorama generation method and device based on video target tracking, which use deep learning to detect, track and recognize commodity positions in a video. The method exploits two facts: the relative positions of static targets do not change, and the tracked target corresponding to the same commodity appears in multiple frames of the video. This improves the accuracy of commodity position and category identification, lowers the image-quality requirement for the commodity in each individual frame, and improves the efficiency and quality of panorama synthesis. Shooting conditions need not be strict; for example, the video can be shot while moving up, down, left or right, which lowers the shooting requirements and speeds up the data acquisition that precedes panorama synthesis. The generated panorama can be a two-dimensional or a three-dimensional graph as required, so more diverse display forms are available.

Owner:GUANGZHOU XUANWU WIRELESS TECH CO LTD

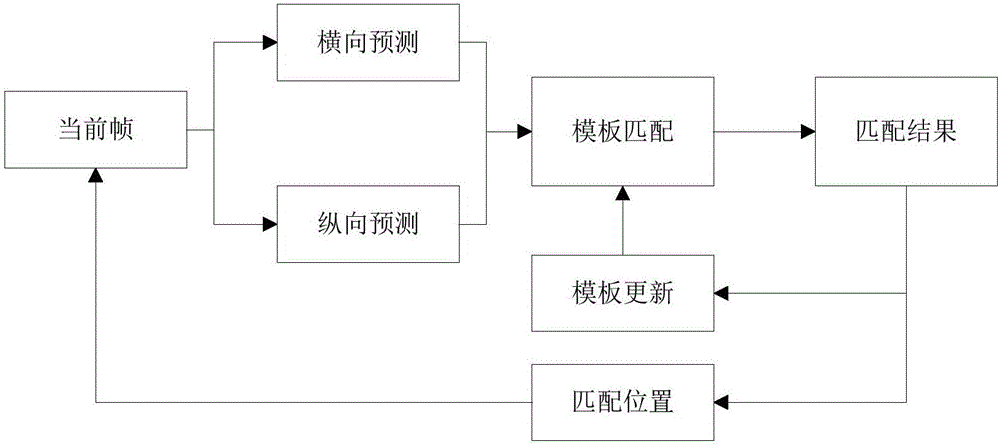

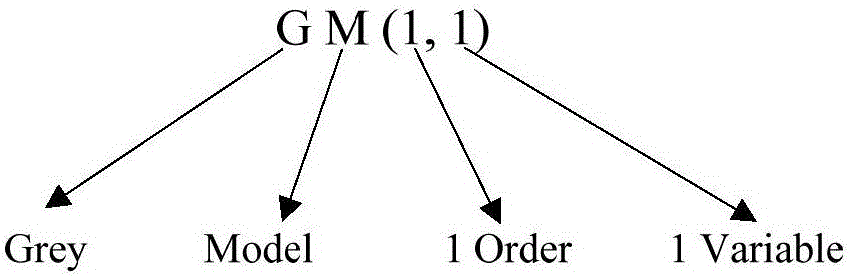

Target tracking method fusing template matching and grey prediction

Inactive | CN106780554A | Improve accuracy | Improve real-time performance | Image enhancement | Image analysis | Template matching | Self adaptive

The invention discloses a target tracking method fusing template matching and grey prediction. The method first predicts the position of the target in the image with a grey prediction GM(1,1) model, and then calculates the exact position of the target by template matching. Position prediction greatly reduces the search range, so the method meets real-time requirements. During matching, a sequential similarity detection method with an adaptive threshold is used: the accumulated sum of absolute errors is compared with the threshold, which reduces the amount of calculation and lets the threshold adapt well to the matching process. Finally, a weighted template updating method is adopted to handle changes in target scale and environment, which improves the robustness of the tracking method.

Owner:NANJING UNIV OF SCI & TECH
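
The prediction stage can be sketched with the standard GM(1,1) model: accumulate the series, fit the development coefficient `a` and grey input `b` by least squares on the background values, and invert the accumulation to forecast the next value. The sketch assumes a short, positive, non-constant history such as the target's recent x coordinates (a constant series gives `a = 0` and would divide by zero); the template-matching and adaptive-threshold stages are not shown, and `gm11_next` is a hypothetical name.

```python
import math


def gm11_next(x0):
    """Fit a GM(1,1) grey model to a positive sequence and predict the next value."""
    n = len(x0)
    x1 = [sum(x0[: k + 1]) for k in range(n)]             # accumulated series (1-AGO)
    z = [0.5 * (x1[k] + x1[k - 1]) for k in range(1, n)]  # background values
    y = x0[1:]
    m = n - 1
    # Least squares for x0(k) + a * z(k) = b, solved via the 2x2 normal equations.
    sz, szz = sum(z), sum(v * v for v in z)
    sy, szy = sum(y), sum(p * q for p, q in zip(z, y))
    det = m * szz - sz * sz
    a = (sz * sy - m * szy) / det    # development coefficient
    b = (szz * sy - sz * szy) / det  # grey input

    def x1_hat(k):                   # time-response function, k = 0, 1, 2, ...
        return (x0[0] - b / a) * math.exp(-a * k) + b / a

    return x1_hat(n) - x1_hat(n - 1)  # inverse accumulation gives the forecast


# Hypothetical x coordinates of a tracked target over the last five frames.
print(gm11_next([120, 126, 133, 139, 146]))
```

The predicted coordinate would then centre a reduced search window for template matching.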

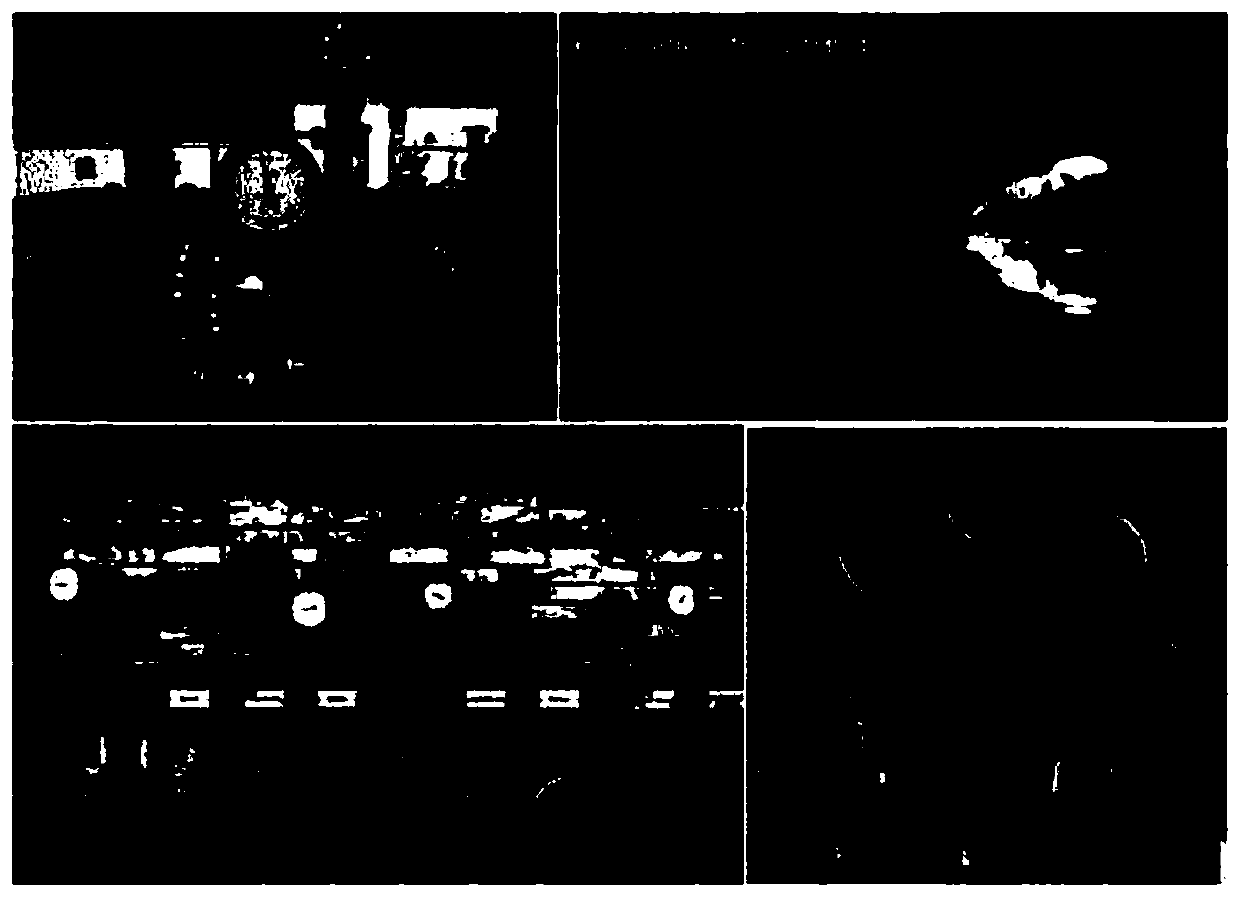

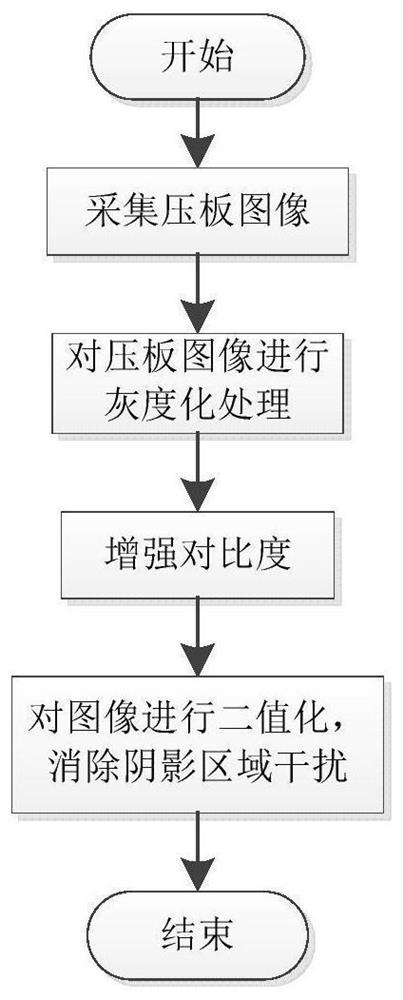

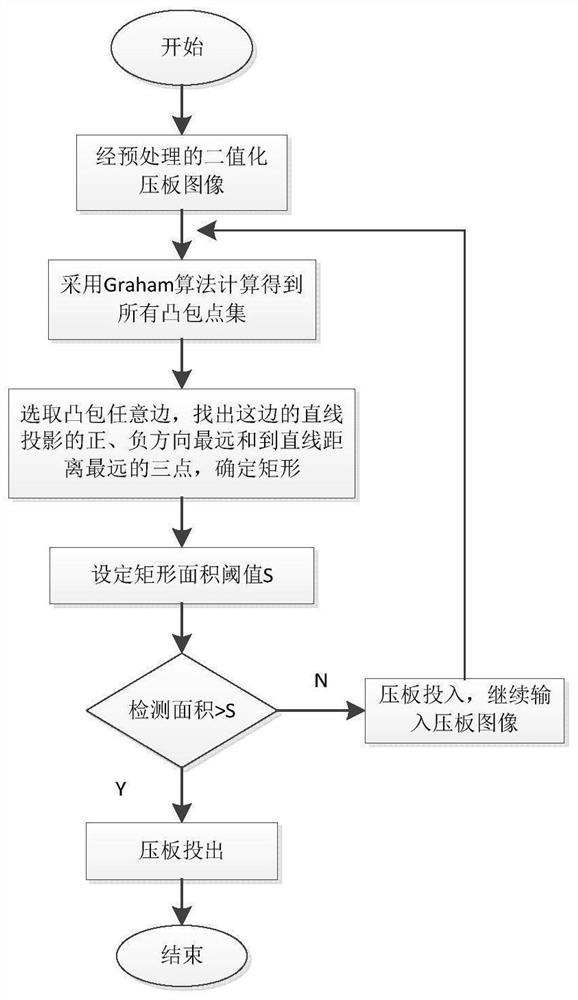

Protection pressing plate state identification method based on image processing shadow removal optimization

Pending | CN111915509A | Accurately identify the running status | Reduce the impact | Image enhancement | Image analysis | Color image | Imaging processing

The invention discloses a protection pressing plate state identification method based on image processing shadow removal optimization. The method converts a color image of the protection pressing plates into a gray-scale image, applies contrast enhancement and binarization to it, and eliminates shadow regions; obtains the convex hull of each protection pressing plate switch with the Graham algorithm, encloses each convex hull in a rectangle following the minimum enclosing rectangle principle, and computes the rectangle's area; and compares that area with a set threshold: if the area is greater than the threshold, the plate is judged to be switched out; otherwise, it is judged to be switched in. The method effectively reduces shadow interference, does not require high-quality acquired images, is robust, and accurately identifies the operating state of the pressing plates in the image.

Owner:CHINA THREE GORGES UNIV
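
The hull, rectangle and threshold steps can be sketched as follows, assuming the input is the list of `(x, y)` foreground pixel coordinates of one switch after binarization and shadow removal. The minimum-area rectangle is found by trying each hull edge as a rectangle side, relying on the known property that an optimal rectangle has a side collinear with a hull edge. The threshold value and all function names are hypothetical.

```python
import math


def cross(o, a, b):
    """z-component of (a - o) x (b - o); > 0 means a counter-clockwise turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def graham_scan(points):
    """Convex hull (counter-clockwise) of 2-D points via Graham scan."""
    pts = sorted(set(points), key=lambda p: (p[1], p[0]))
    if len(pts) < 3:
        return pts
    pivot = pts[0]  # lowest y, then lowest x
    rest = sorted(
        pts[1:],
        key=lambda p: (math.atan2(p[1] - pivot[1], p[0] - pivot[0]),
                       (p[0] - pivot[0]) ** 2 + (p[1] - pivot[1]) ** 2),
    )
    hull = [pivot]
    for p in rest:
        while len(hull) >= 2 and cross(hull[-2], hull[-1], p) <= 0:
            hull.pop()  # drop points causing a clockwise turn or collinearity
        hull.append(p)
    return hull


def min_rect_area(hull):
    """Area of the minimum enclosing rectangle; one side lies on a hull edge."""
    if len(hull) < 3:
        return 0.0
    best = float("inf")
    for i in range(len(hull)):
        (px, py), (qx, qy) = hull[i], hull[(i + 1) % len(hull)]
        length = math.hypot(qx - px, qy - py)
        ux, uy = (qx - px) / length, (qy - py) / length  # edge direction
        us = [x * ux + y * uy for x, y in hull]           # extent along the edge
        vs = [-x * uy + y * ux for x, y in hull]          # extent across the edge
        best = min(best, (max(us) - min(us)) * (max(vs) - min(vs)))
    return best


def plate_state(switch_pixels, area_threshold):
    """'switched out' if the enclosing-rectangle area exceeds the threshold."""
    area = min_rect_area(graham_scan(switch_pixels))
    return "switched out" if area > area_threshold else "switched in"


diamond = [(2, 0), (4, 2), (2, 4), (0, 2), (2, 2)]
print(round(min_rect_area(graham_scan(diamond)), 6))  # 8.0
```

For the rotated square in the example, the minimum rectangle (area 8) is half the area of its axis-aligned bounding box (16), which is why the orientation search matters for tilted switches.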

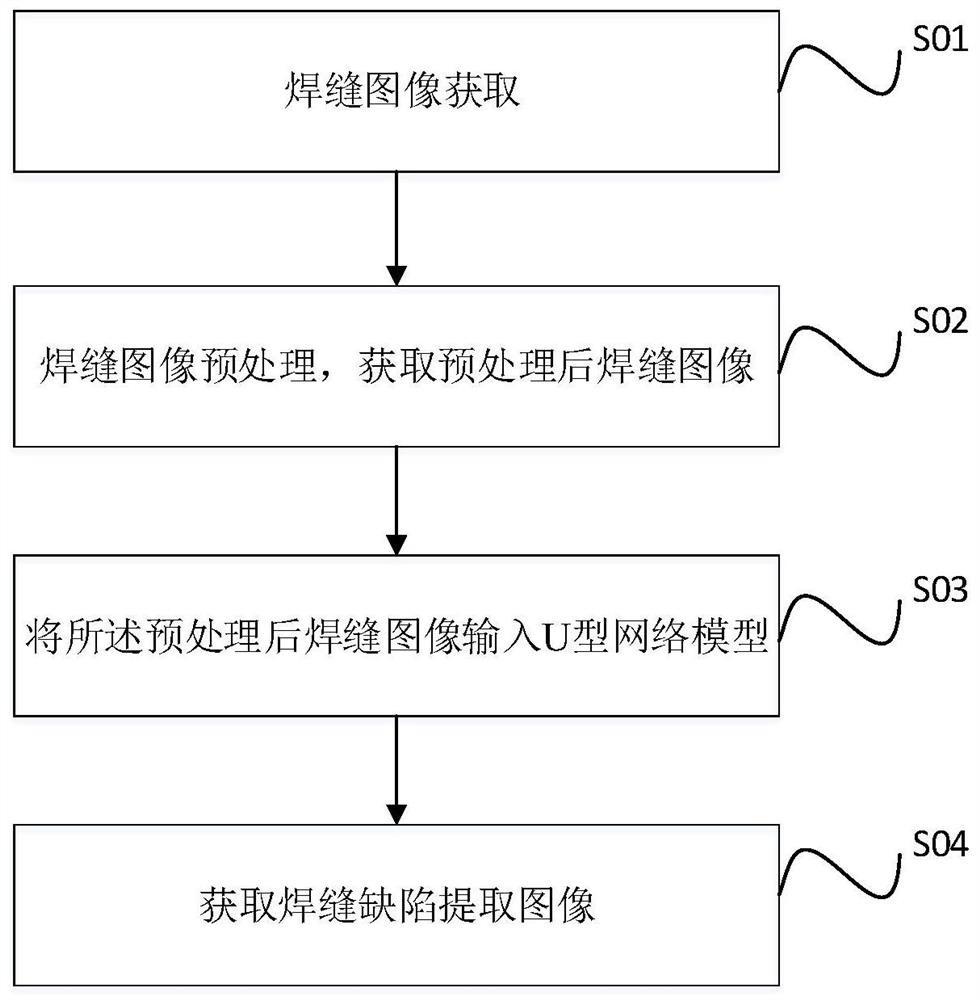

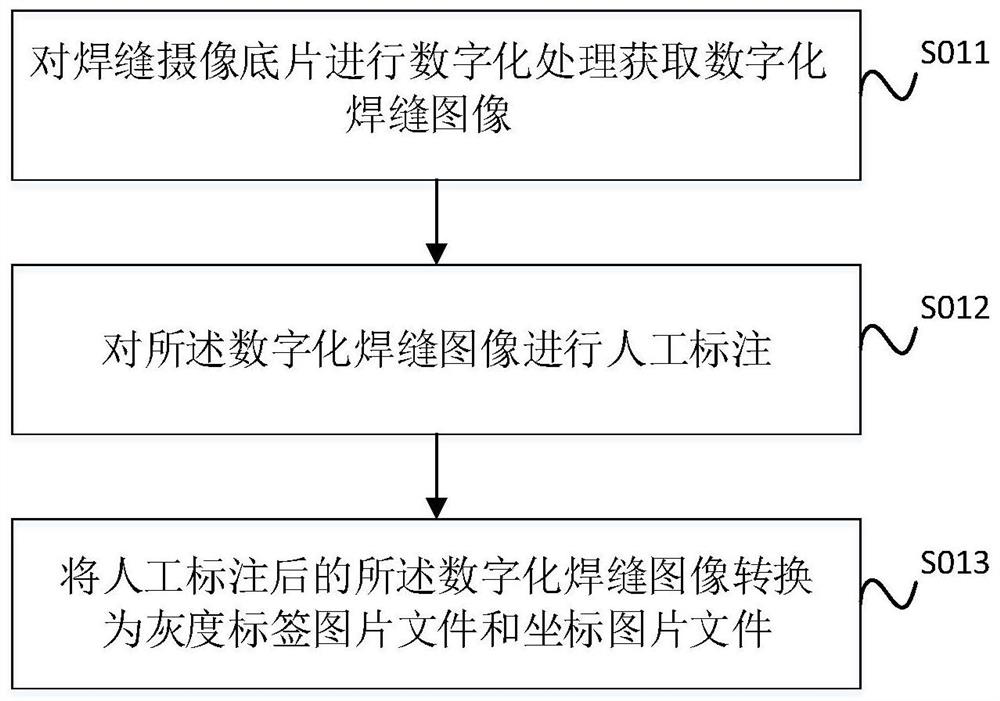

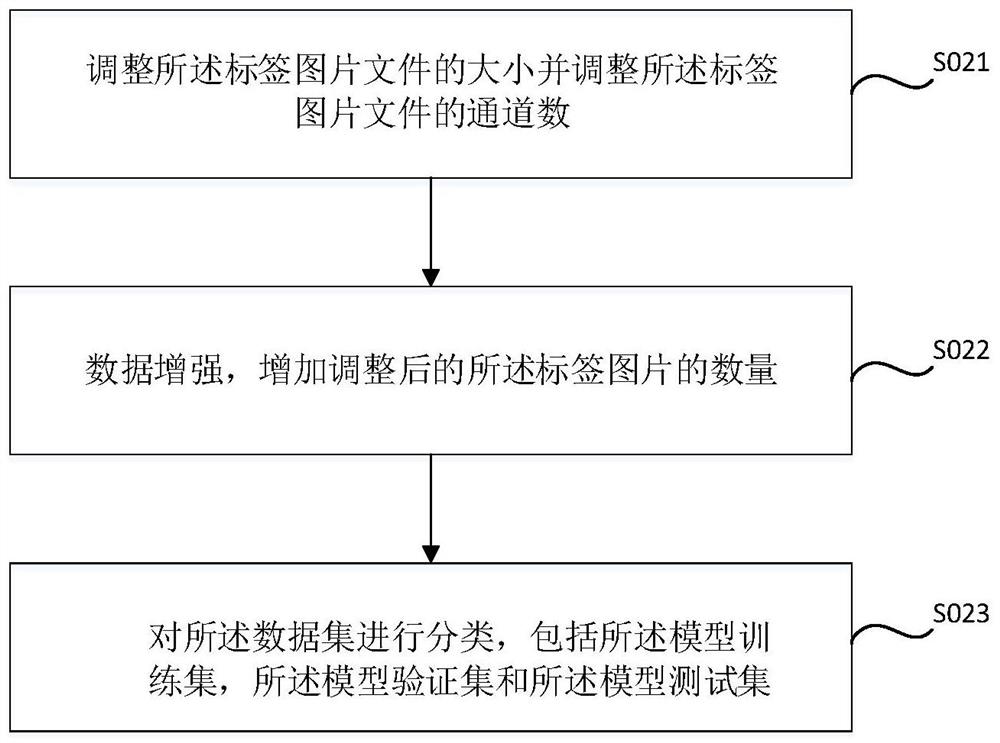

Automatic weld defect extraction method

Pending | CN112215907A | Easy to identify | Increase usage | Image enhancement | Image analysis | Pattern recognition | Network output

The invention discloses an automatic weld defect extraction method. The method comprises the following steps: acquiring a weld image; preprocessing the weld image; and inputting the preprocessed weld image into a U-shaped network model to obtain a weld seam extraction image. The U-shaped network model comprises a network input end, a network output end, a down-sampling part and an up-sampling part. The down-sampling part consists of an encoder based on ResNet34, and the up-sampling part consists of a decoder based on U-Net; the two parts are joined by skip connections. The network input end takes an image with the number of channels expected by ResNet34 as its input, and its output is connected to the input of the down-sampling part; the output end of the up-sampling part converts the result into a single-channel image and outputs it.

Owner:SHANGHAI DIANJI UNIV

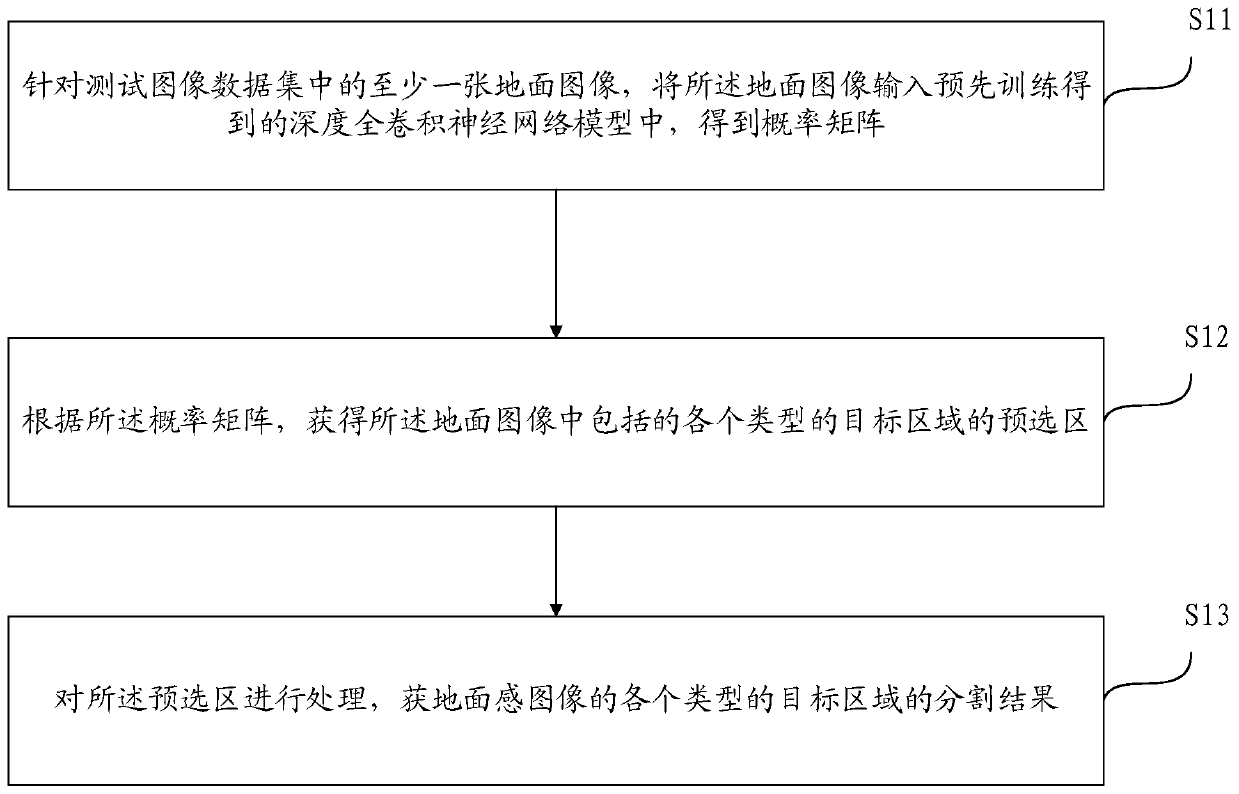

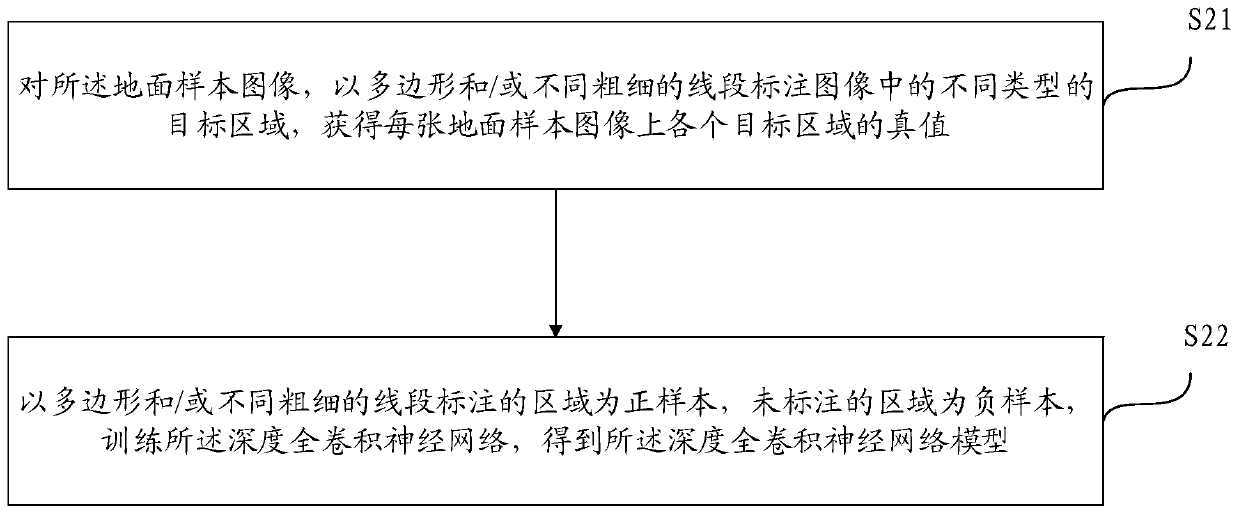

Target area segmentation method and device in ground image

Pending | CN111582004A | Not susceptible to image quality effects | Low image quality requirements | Character and pattern recognition | Data set | Imaging quality

The invention discloses a method and device for segmenting target areas in a ground image. The method comprises: for at least one ground image in a test image data set, inputting the ground image into a pre-trained deep fully convolutional neural network model to obtain a probability matrix, where each element represents the probability that the corresponding pixel of the ground image belongs to at least one type of target area, and the model is obtained by training on different types of target regions in a plurality of ground sample images from an image sample set; obtaining, from the probability matrix, a pre-selected region for each type of target area in the ground image; and processing the pre-selected regions to obtain a segmentation result for each type of target area. Because each pixel of the ground image is classified by the deep fully convolutional neural network, different target areas are segmented accurately, the implementation is simple, and the method is not easily affected by imaging quality.

Owner:ALIBABA GRP HLDG LTD
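
Turning the probability matrix into per-class pre-selected regions can be sketched as a per-pixel argmax with a minimum-probability cut-off. This is one plausible reading, not the patent's stated procedure: the network output is assumed to be an H x W x C nested list of class probabilities, the `min_prob` value is hypothetical, and the later processing of the regions is not shown. `preselect_regions` is a hypothetical name.

```python
def preselect_regions(prob, min_prob=0.5):
    """Per-pixel argmax over class probabilities; pixels whose best probability
    is below `min_prob` are left as background. Returns {class: [(y, x), ...]}."""
    regions = {}
    for y, row in enumerate(prob):
        for x, probs in enumerate(row):
            best = max(range(len(probs)), key=probs.__getitem__)
            if probs[best] >= min_prob:
                regions.setdefault(best, []).append((y, x))
    return regions


prob = [[[0.9, 0.1], [0.2, 0.8]],
        [[0.4, 0.45], [0.6, 0.4]]]
print(preselect_regions(prob))  # {0: [(0, 0), (1, 1)], 1: [(0, 1)]}
```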

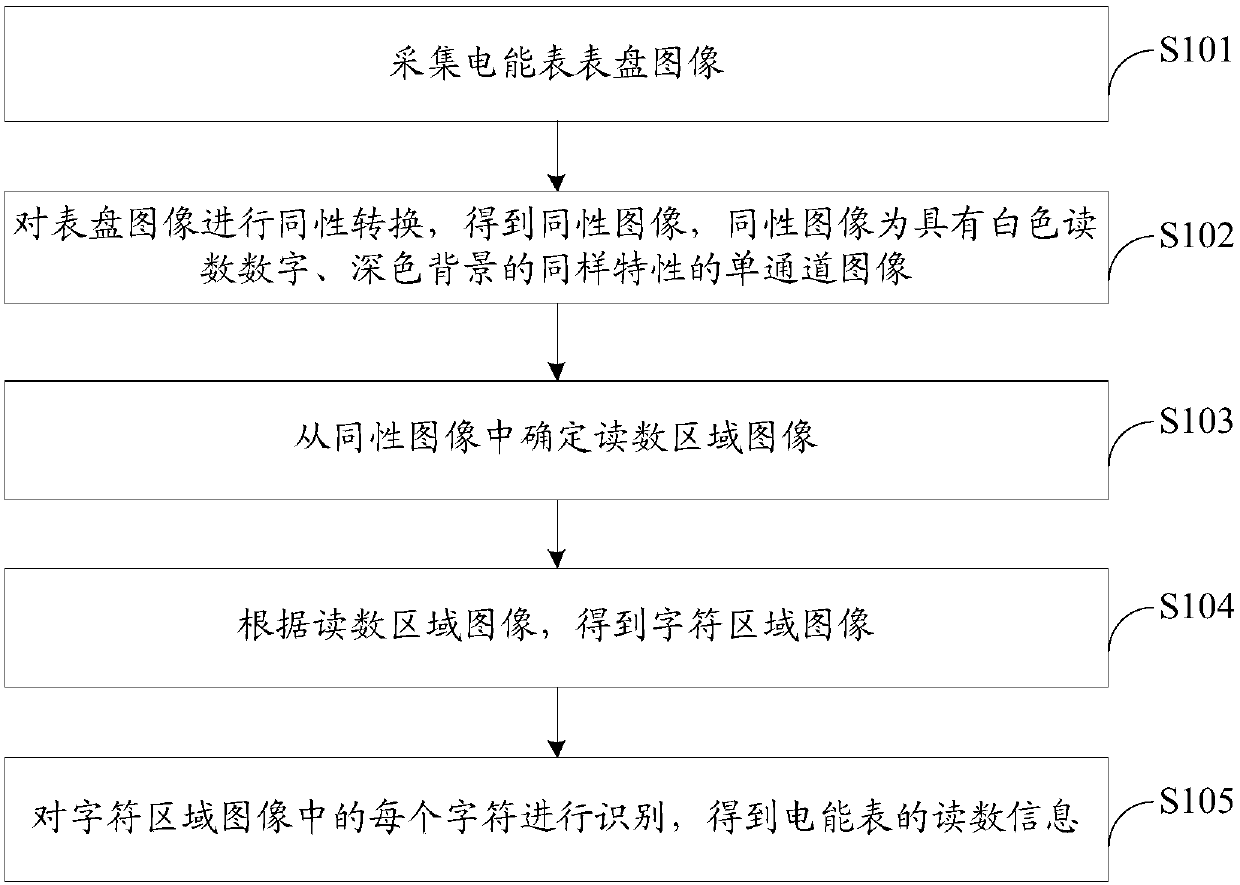

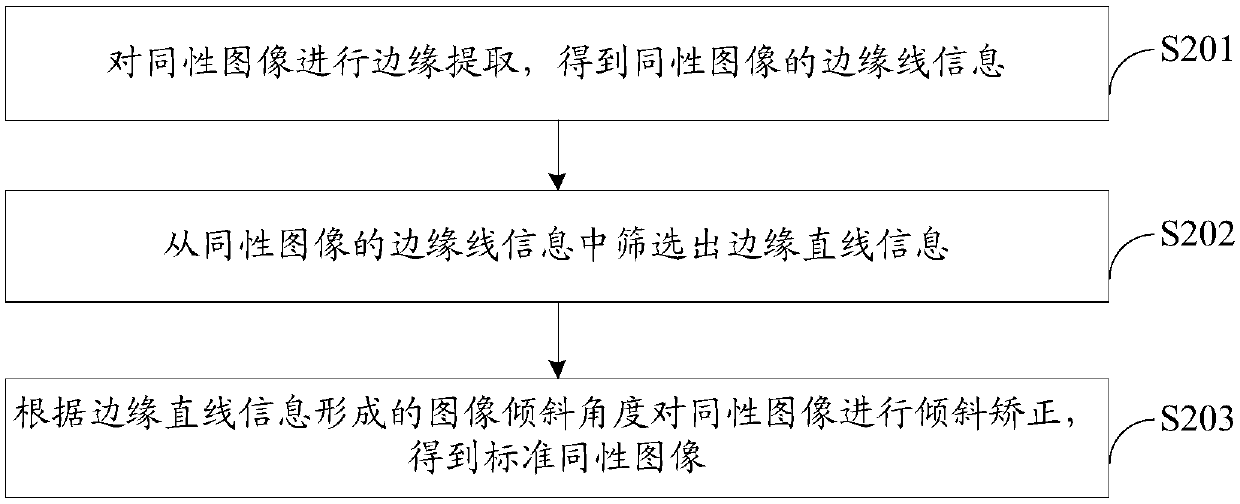

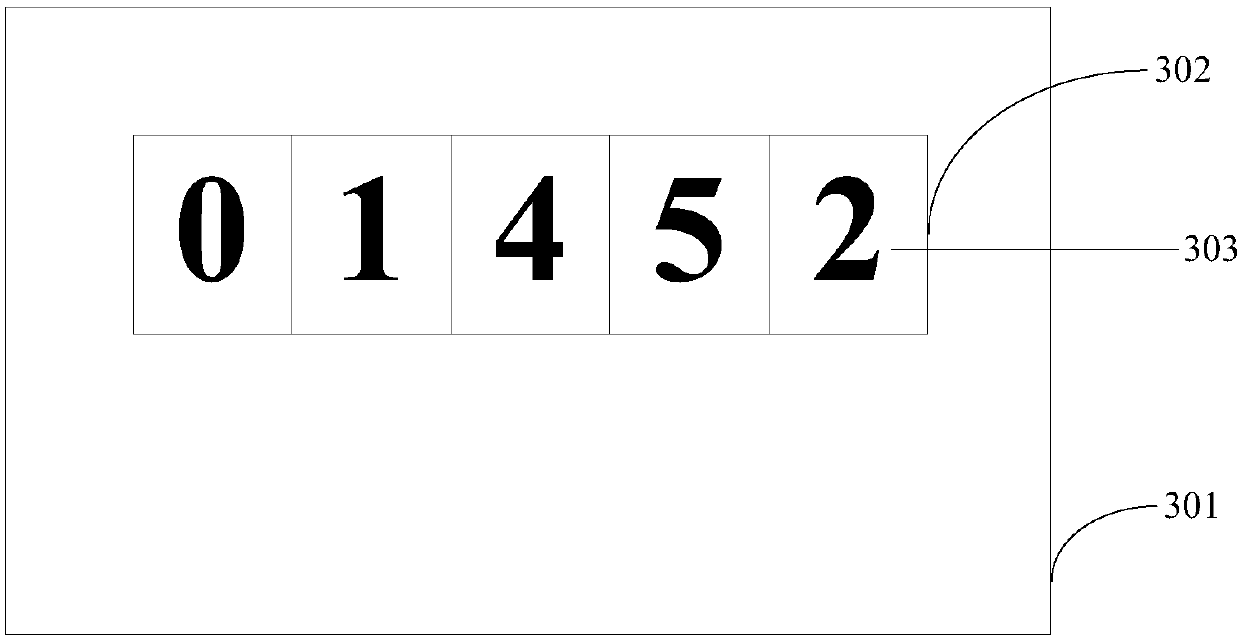

Meter reading method and device based on image recognition

Inactive | CN110263778A | Low image quality requirements | Avoid color inconsistencies | Image enhancement | Image analysis | Dark color | Electric energy

The embodiment of the invention discloses a meter reading method based on image recognition. The method comprises the following steps: acquiring a meter image of an electric energy meter; performing homogeneity conversion on the meter image to obtain a homogeneity image, which is a single-channel image in which the reading digits are always white on a dark background; determining a reading area image from the homogeneity image; obtaining a character area image from the reading area image; and recognizing each character in the character area image to obtain the reading of the electric energy meter.

Owner:CHINA MOBILE M2M +1

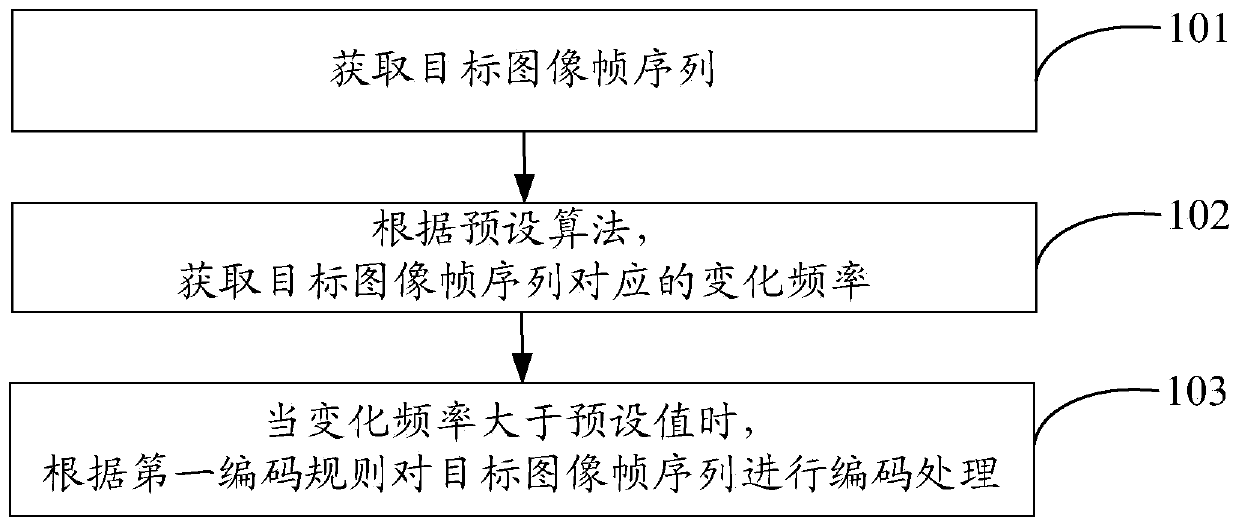

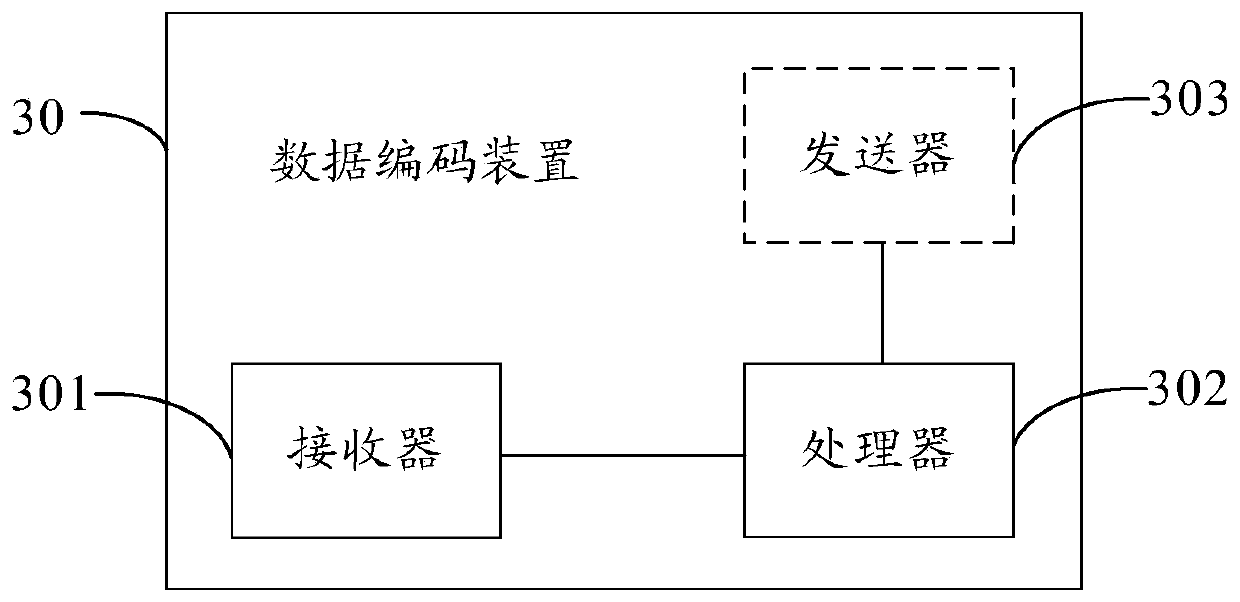

Data encoding method and device

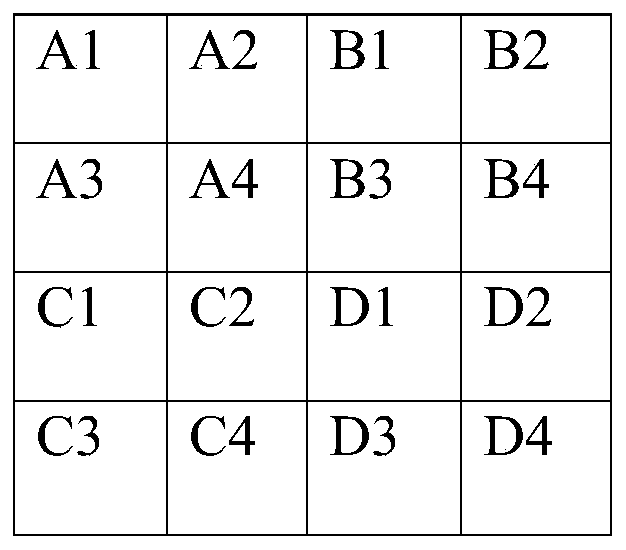

Pending | CN110740316A | Low image quality requirements | Reduce sharpness | Digital video signal modification | Frame sequence | Algorithm

The invention provides a data encoding method and device in the technical field of electronic information, which address the waste of resources when encoding image frames. The technical scheme comprises: generating a target image frame sequence after acquiring the display image of a terminal device; calculating the change frequency of the target image frame sequence with a preset algorithm; and encoding the target image frame sequence according to a first encoding rule when the change frequency is greater than a preset value, or according to a second encoding rule when it is less than or equal to the preset value. The disclosure is used for image encoding.

Owner:XIAN WANXIANG ELECTRONICS TECH CO LTD
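
The rule selection can be sketched as below. The abstract does not say what the "preset algorithm" is, so the change frequency here is a hypothetical stand-in: the fraction of consecutive frame pairs in which at least one pixel changes by more than a tolerance. Frames are flat lists of pixel values, the preset value is hypothetical, and the two rules are returned only as labels since their contents are not described. Function names are illustrative.

```python
def change_frequency(frames, pixel_tol=0):
    """Fraction of consecutive frame pairs that differ in at least one pixel
    by more than `pixel_tol` (a hypothetical stand-in for the preset algorithm)."""
    if len(frames) < 2:
        return 0.0
    changed = sum(
        1
        for prev, curr in zip(frames, frames[1:])
        if any(abs(a - b) > pixel_tol for a, b in zip(prev, curr))
    )
    return changed / (len(frames) - 1)


def choose_encoding_rule(frames, preset_value=0.5):
    """First rule when the frequency exceeds the preset value, else the second."""
    return "first" if change_frequency(frames) > preset_value else "second"


static = [[10, 10, 10]] * 5              # e.g. an idle desktop
video = [[i, i, i] for i in range(5)]    # content that changes every frame
print(choose_encoding_rule(static), choose_encoding_rule(video))  # second first
```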

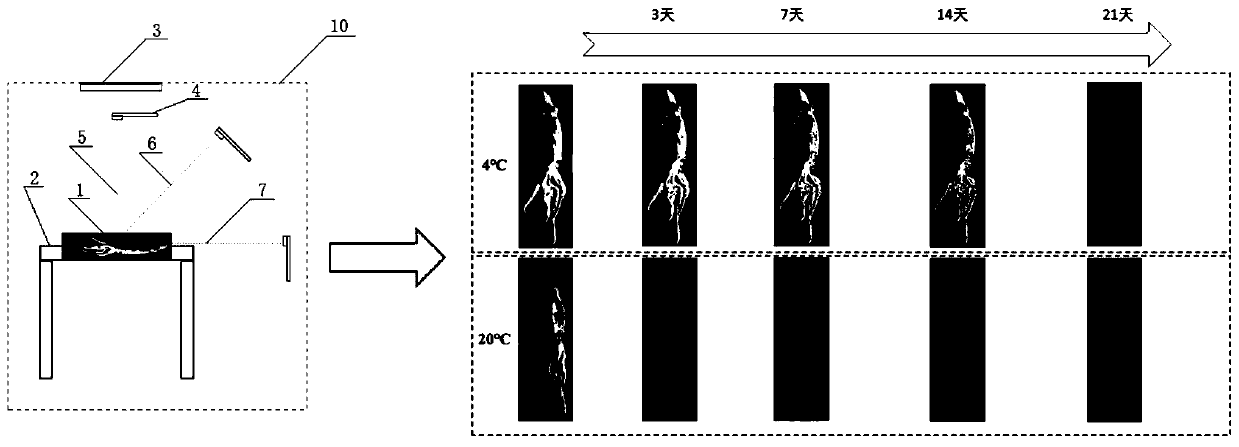

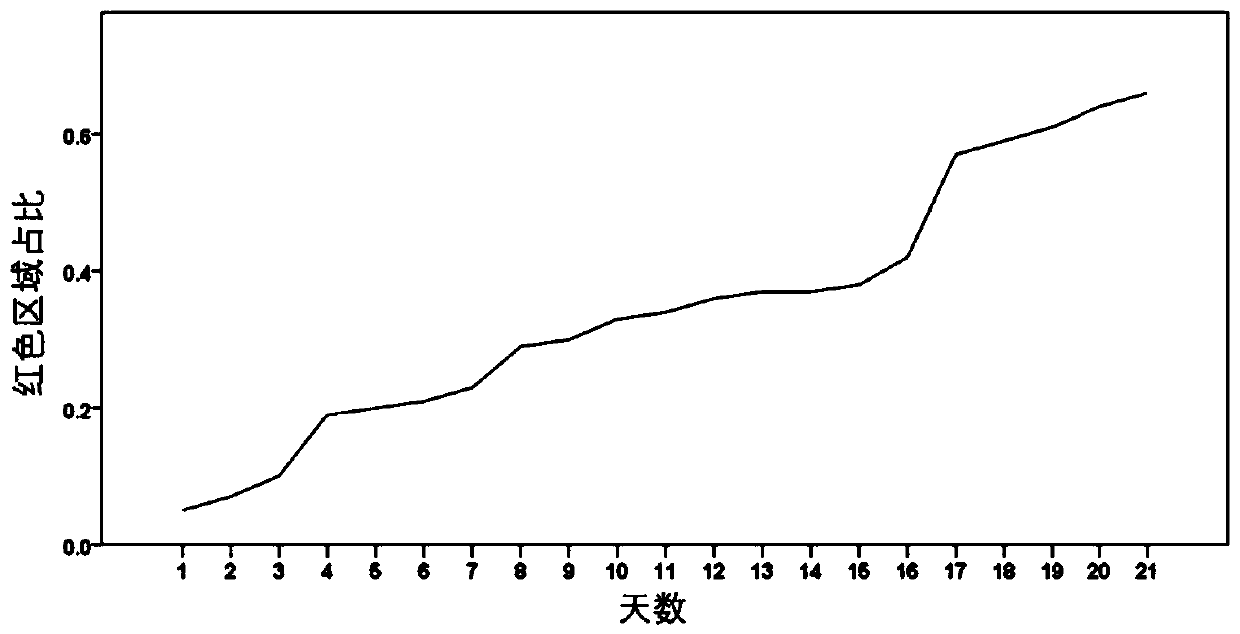

Squid freshness identification method based on color space transformation and pixel clustering

Pending | CN110322434A | Overcoming pollution | Overcome destruction | Image analysis | Character and pattern recognition | Imaging processing | Dynamic monitoring

The invention discloses a squid freshness identification method based on color space transformation and pixel clustering. The method comprises the following steps: thawing and cleaning frozen squid to prepare squid samples; spreading each sample on a workbench within the irradiation area of an auxiliary light source and photographing it from different angles with imaging equipment to obtain original squid images; preprocessing the images to obtain test images; and applying color space transformation and pixel clustering to each test image, extracting its red decay area, calculating the ratio of the red decay area to the total surface area of the squid, dynamically analyzing changes in meat quality, and monitoring the decay rate. By applying color space transformation and pixel clustering to the squid images to obtain the area of the spoiled region and relating it to the total surface area, the method achieves non-contact, non-destructive dynamic monitoring and identification of squid freshness under different storage durations and temperatures.

Owner:ZHEJIANG ACADEMY OF AGRICULTURE SCIENCES

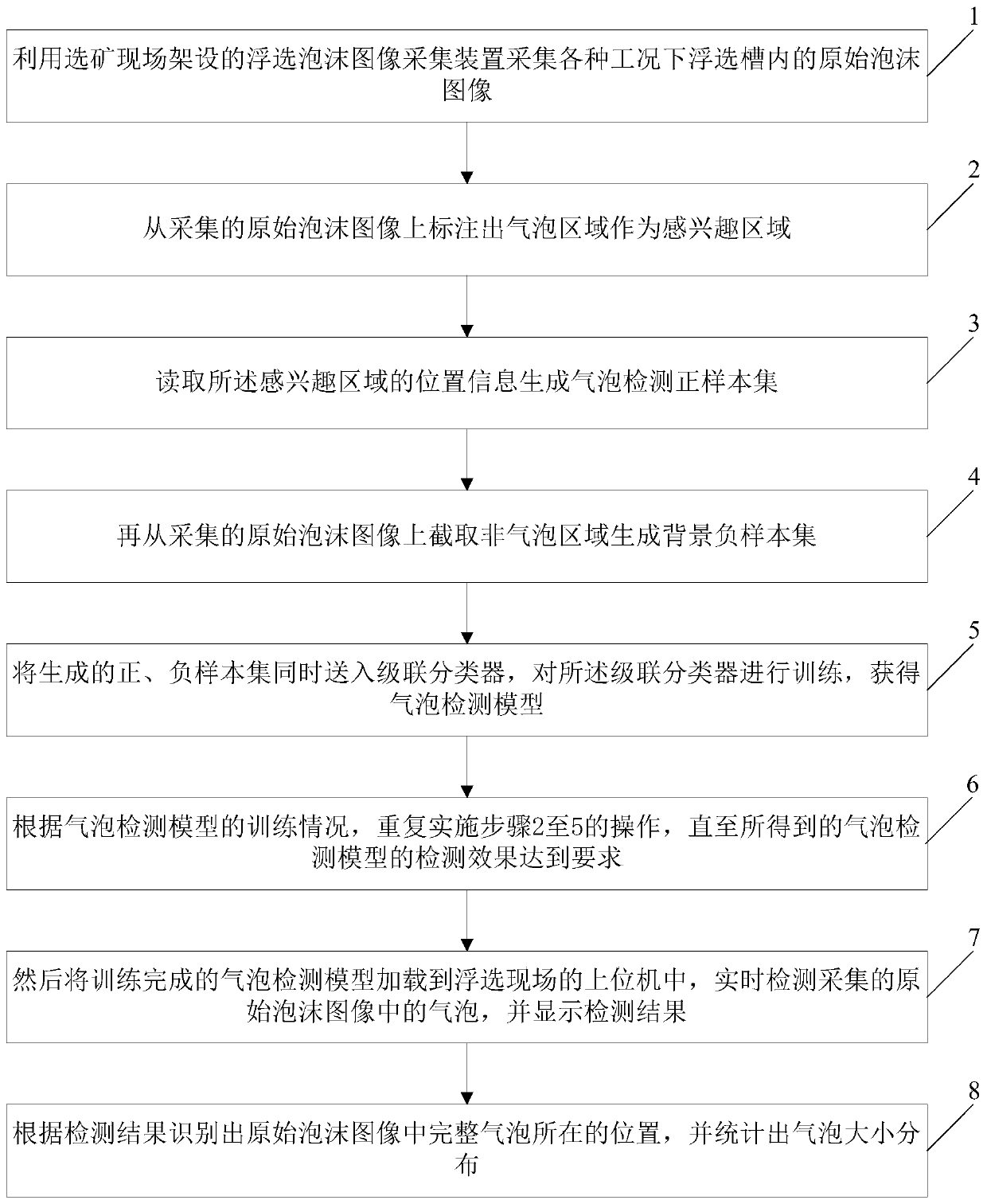

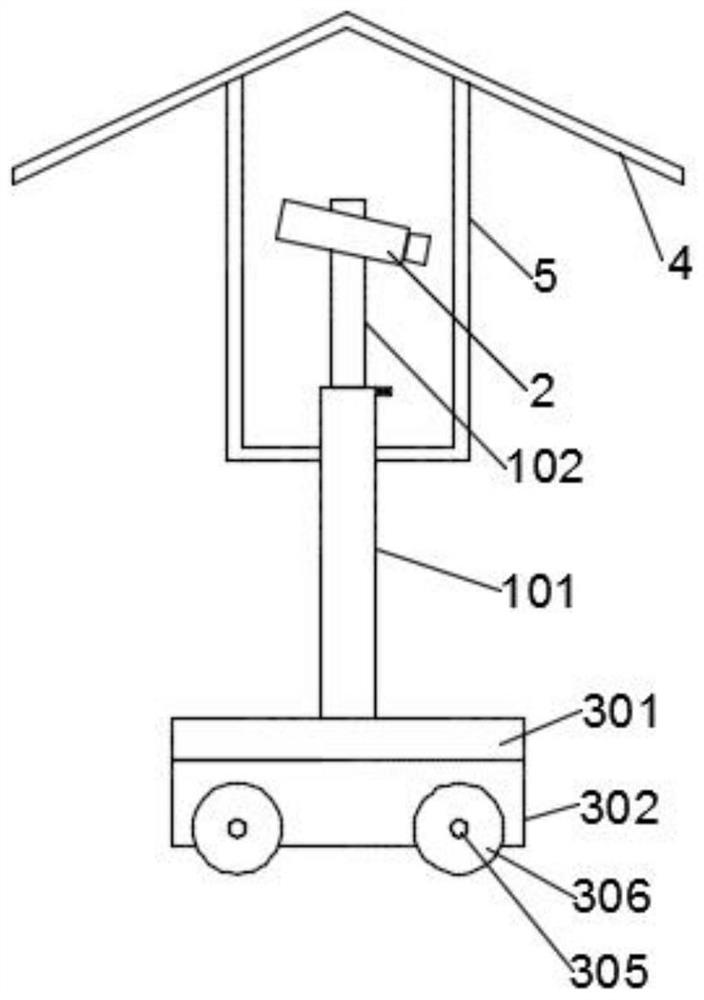

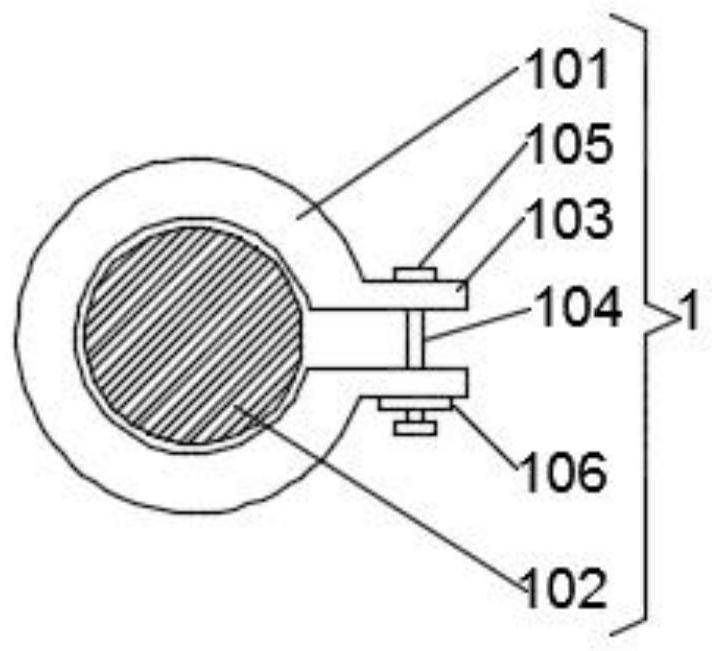

Flotation bubble identification method based on cascade classifier

Active | CN111259972A | Accurate identification | Low image quality requirements | Character and pattern recognition | Manufacturing computing systems | Positive sample | Algorithm

The invention discloses a flotation bubble recognition method based on a cascade classifier. The method comprises the following steps: collecting original bubble images in a flotation tank under various working conditions; marking bubble regions in the collected images as regions of interest to generate a positive sample set for bubble detection; cropping non-bubble areas from the collected images to generate a negative background sample set; feeding the positive and negative sample sets together into a cascade classifier to obtain a bubble detection model; loading the trained model into an upper computer at the flotation site, detecting bubbles in the collected images in real time, and displaying the detection results; and locating complete bubbles in the original froth image from the detection results and computing the bubble size distribution. The invention has low requirements on image quality, is not affected by bright spots, color patches, collapse and similar features on the bubble surface, and identifies bubbles quickly and accurately.

Owner:BGRIMM MACHINERY & AUTOMATION TECH CO LTD +1

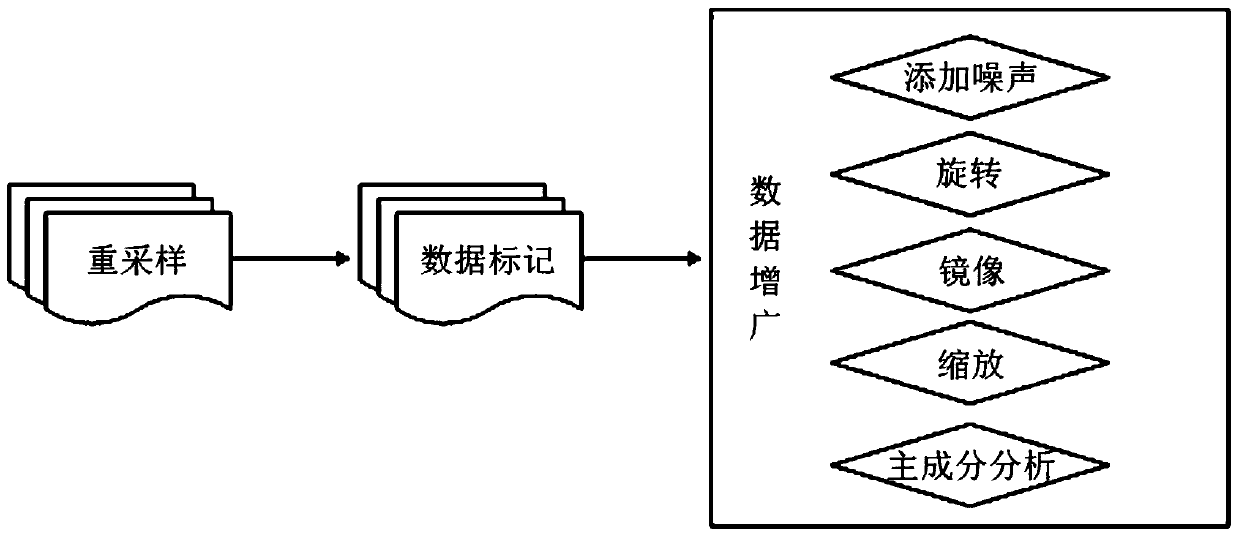

Crop pest intelligent identification method

Pending | CN112580513A | Low image quality requirements | Reduce difficulty and cost | Television system details | Character and pattern recognition | Agricultural crops | Biology

The invention belongs to the technical field of disease and insect pest identification and discloses an intelligent identification method for crop diseases and insect pests. The method comprises the following steps: S1, setting a regional range and obtaining basic information on the crops in that range over multiple time periods; S2, acquiring image data and meteorological data within the range; S3, acquiring from the image data first feature information reflecting the physiological features of the crops; S4, judging whether the physiological features of the crops in the range are normal in the current time period, and if so, ending the process, otherwise proceeding to step S5; S5, identifying a suspected area with abnormal physiological features and generating a crop resource image; S6, acquiring second feature information reflecting the physiological features of the crops; S7, comparing and judging whether the physiological features of the crops in the suspected area are normal, and if so, ending the process, otherwise proceeding to step S8; and S8, making the final judgment manually. The method is low in cost and accurate in judgment.

Owner:福州引凤惠农科技服务有限公司

Smoke and fire detection method based on video

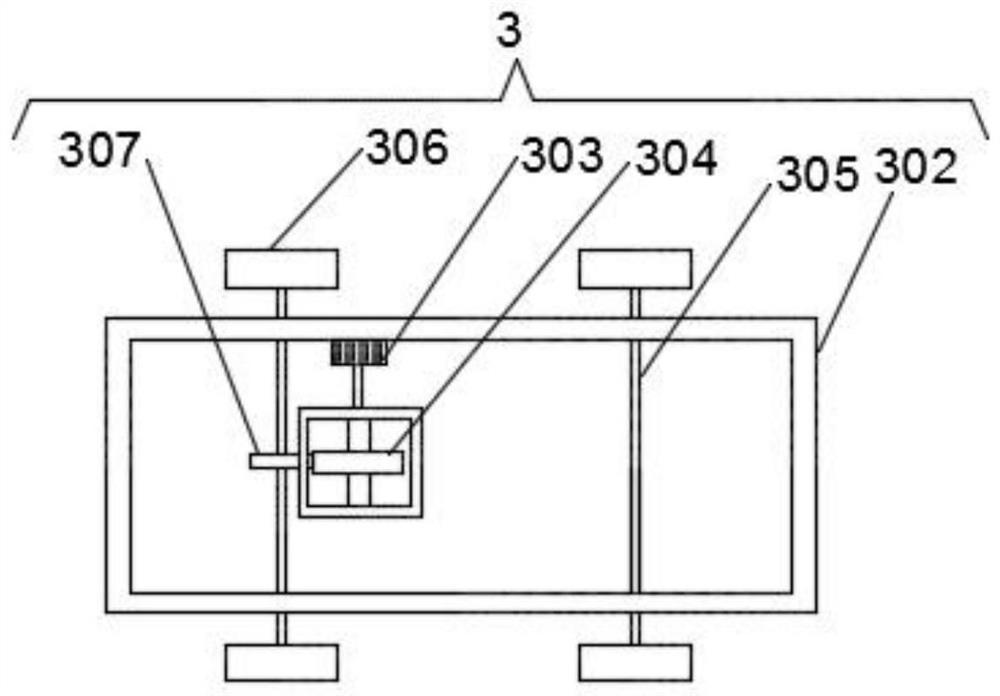

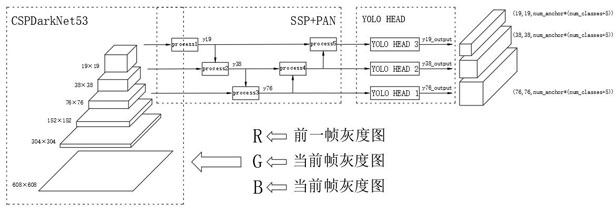

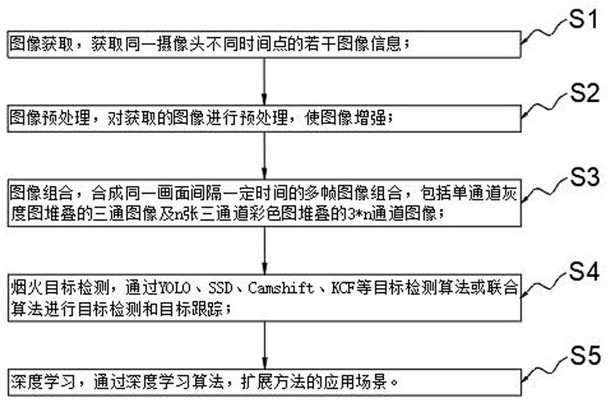

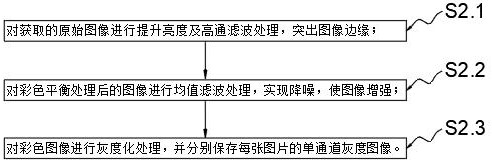

Pending | CN113239860A | Reduce false alarm rate | Low image quality requirements | Image enhancement | Image analysis | Color image | Imaging quality

The invention relates to the technical field of image processing, in particular to a video-based smoke and fire detection method. The method includes image acquisition, image preprocessing, image combination, smoke and fire target detection, deep learning and similar steps. By combining smoke and fire motion information with the strengths of deep learning, the three-channel color image input of the deep-learning target detector is replaced by a multi-channel image formed by combining images taken by the same camera at different time points. Moving smoke and fire targets that may be present are then detected and tracked with multiple target detection algorithms, or a combination of two or more algorithms. This effectively reduces false alarms, lowers the method's image-quality requirement, improves detection accuracy and broadens the application scenarios of smoke and fire detection, so the method can be applied effectively to environmental safety monitoring.

Owner:中建材信息技术股份有限公司 +2

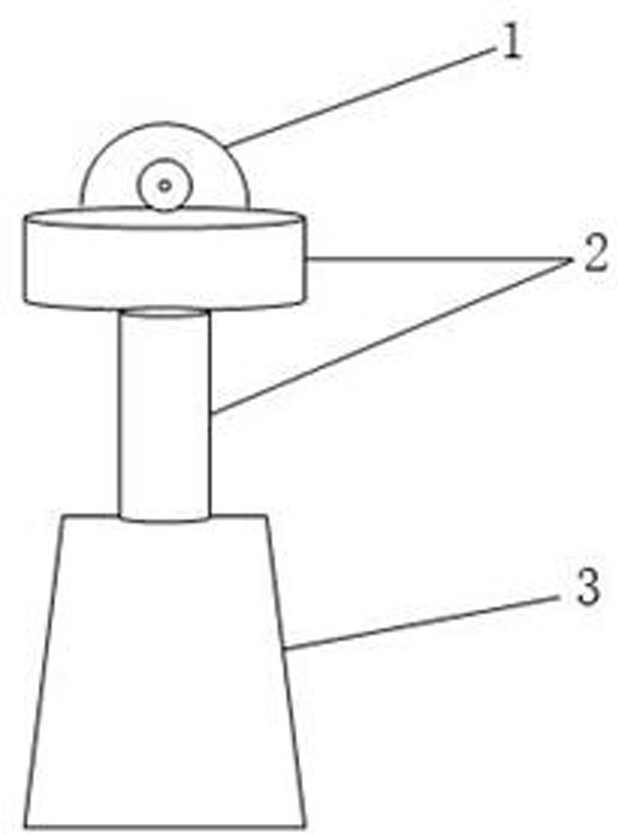

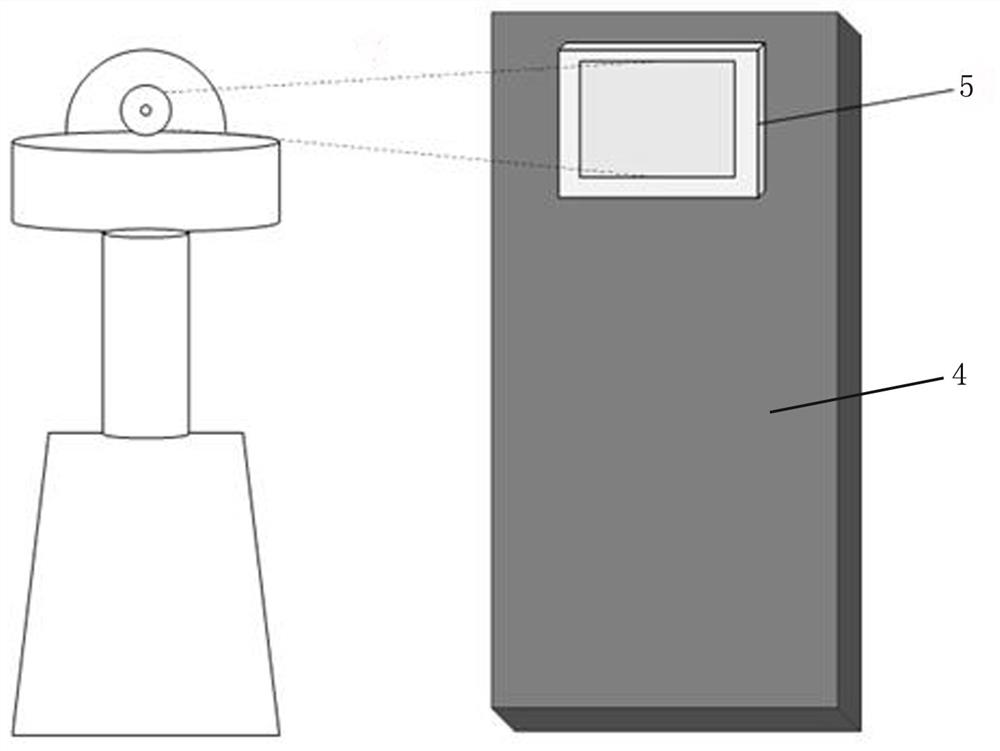

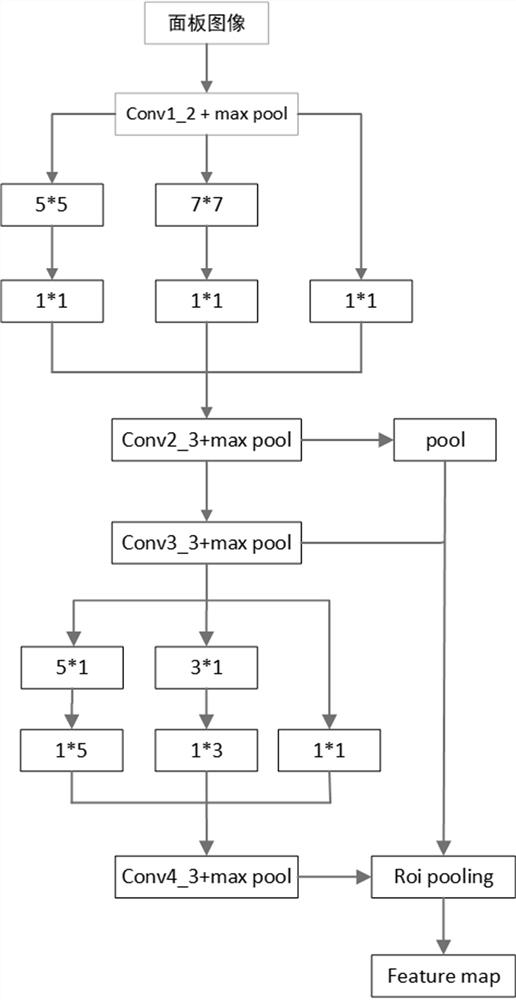

Intelligent collection method for machine room equipment display panel data

Pending | CN111738264A | Precise positioning | Enhance light adaptability | Kernel methods | Character and pattern recognition | Data set | Text detection

The invention discloses an intelligent collection method for machine room equipment display panel data, which comprises the following steps: S1, a robot acquires images of a plurality of display panels as a training data set; S2, the training data set is input into an improved master-rcnn algorithm, and a text detection model is obtained by training; S3, the robot collects an image of a display panel in real time and inputs it into the text detection model trained in step S2, which automatically marks all texts to obtain detection boxes and outputs the position coordinates and size of every detection box in the display panel image; S4, the ROI image inside each detection box is extracted and preprocessed, and the extracted digit skeleton images are kept as a training sample set; S5, an SVM classifier is trained on the training sample set and used to classify and recognize individual digits; and S6, the digits are concatenated into a character string, which is output to a client for display. Data is collected automatically, labor costs are reduced, and the operation and maintenance efficiency of the data center is improved.

Owner:杭州优云科技有限公司

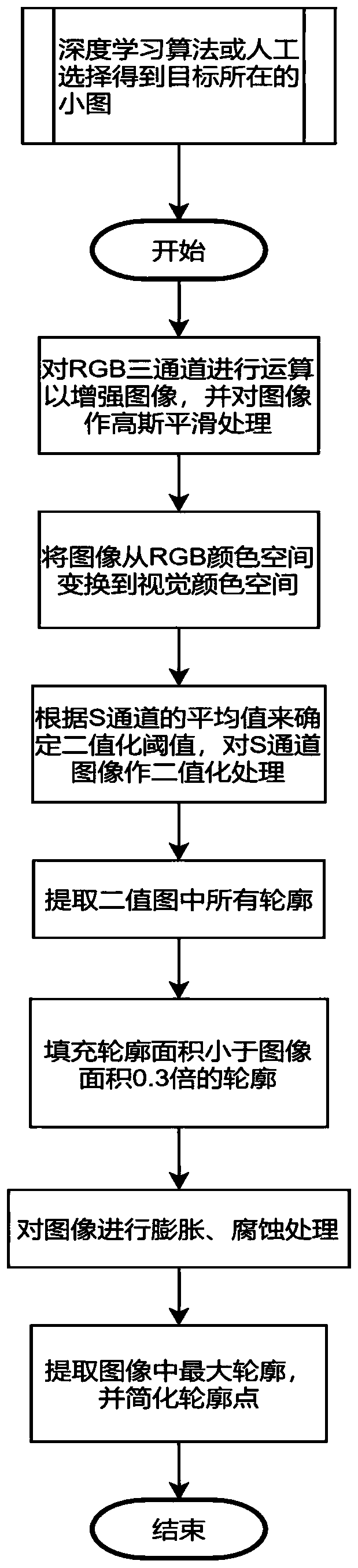

Method for batch extraction of similar target contours with single color in aerial images

Pending | CN111368854A | Improve accuracy | Low image quality requirements | Scene recognition | Pattern recognition | Computer graphics (images)

The invention provides a method for batch extraction of single-colored similar target contours in aerial images, which addresses the low efficiency of batch-extracting the contours of similar targets that appear repeatedly in aerial photos when the targets have similar colors. The method comprises the following steps: (1) reading the small image containing a target from the original image and enhancing its saturation differences; (2) converting the color space, generating a binary image, and preprocessing the binary image for contour extraction; and (3) extracting the object with the largest outer contour in the binary image as the contour of the target. The method is highly universal, the algorithm is simple, the calculation is efficient, and the results are concise.

Owner:东南数字经济发展研究院
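
Step (3) can be sketched by labelling 4-connected foreground regions of the binary image, keeping the largest one, and taking its contour as the pixels that touch the background. Choosing the region by pixel count rather than by outer-contour length is a simplifying assumption, and `largest_component` and `boundary` are hypothetical names.

```python
from collections import deque


def largest_component(binary):
    """Pixels [(y, x), ...] of the largest 4-connected foreground region."""
    h, w = len(binary), len(binary[0])
    seen = [[False] * w for _ in range(h)]
    best = []
    for sy in range(h):
        for sx in range(w):
            if binary[sy][sx] and not seen[sy][sx]:
                comp, queue = [], deque([(sy, sx)])
                seen[sy][sx] = True
                while queue:  # breadth-first flood fill
                    y, x = queue.popleft()
                    comp.append((y, x))
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                        if 0 <= ny < h and 0 <= nx < w and binary[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                if len(comp) > len(best):
                    best = comp
    return best


def boundary(component):
    """Contour pixels: component pixels with a 4-neighbour outside the component."""
    cells = set(component)
    return sorted(
        (y, x) for y, x in cells
        if any((y + dy, x + dx) not in cells for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)))
    )


blob = [[1, 0, 0, 0, 0],
        [0, 0, 1, 1, 1],
        [0, 0, 1, 1, 1],
        [0, 0, 1, 1, 1]]
comp = largest_component(blob)
print(len(comp), len(boundary(comp)))  # 9 8
```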

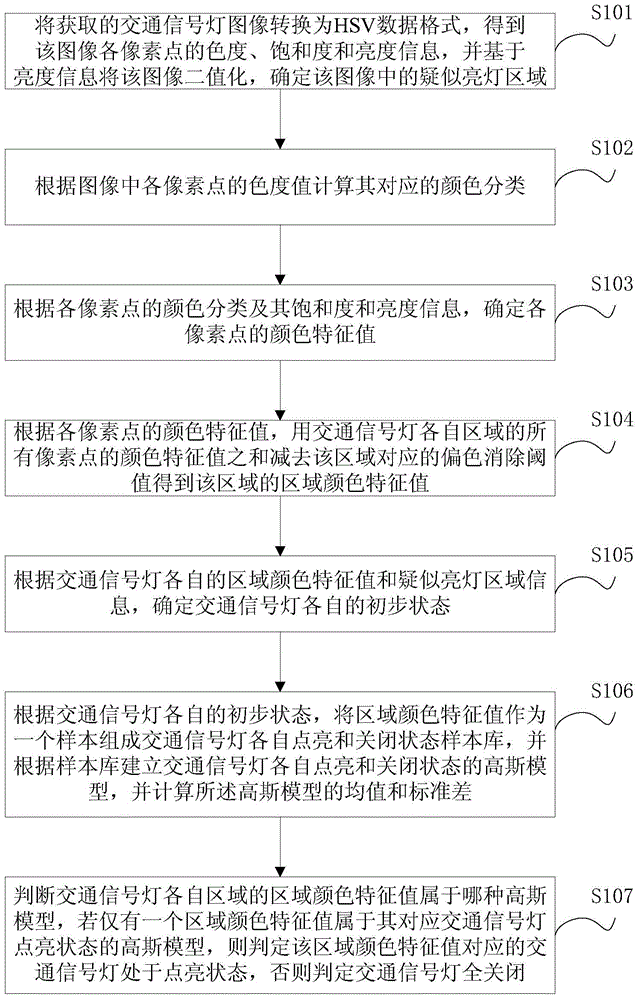

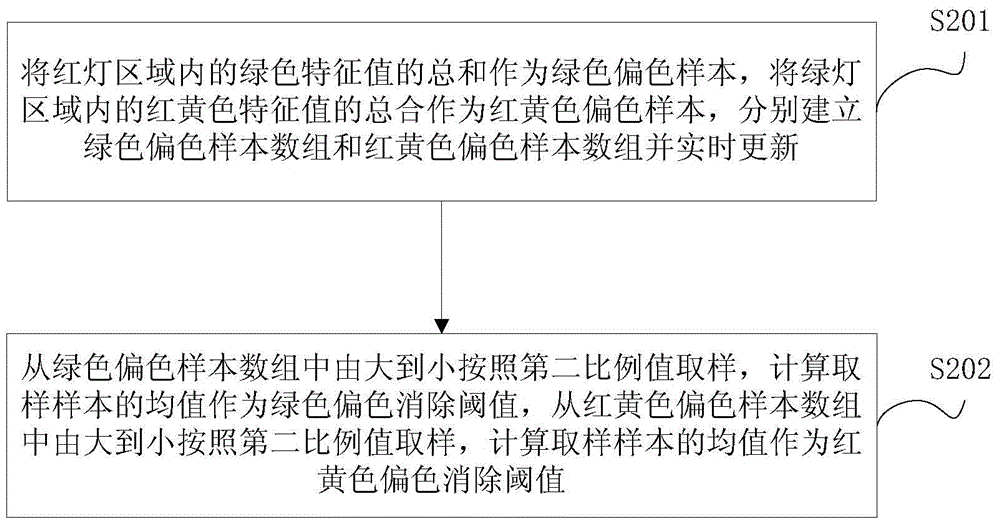

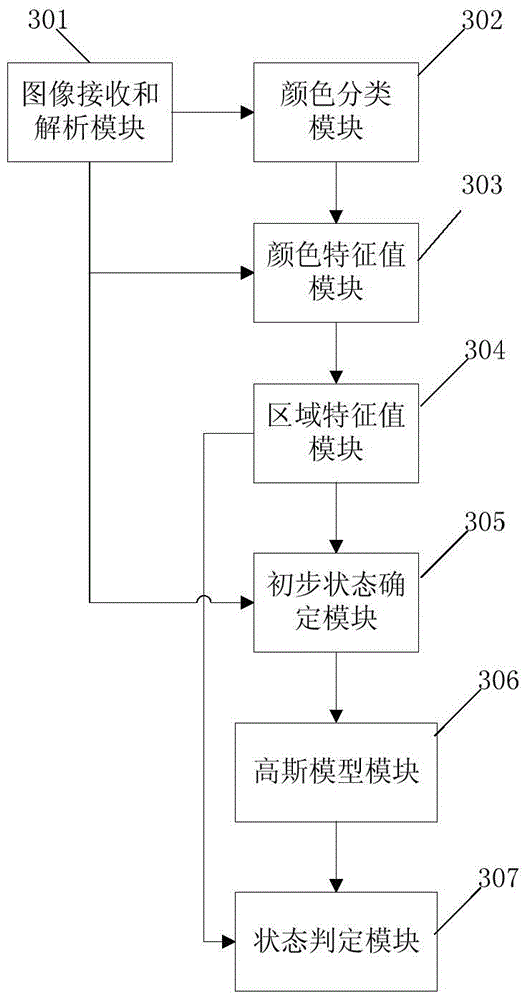

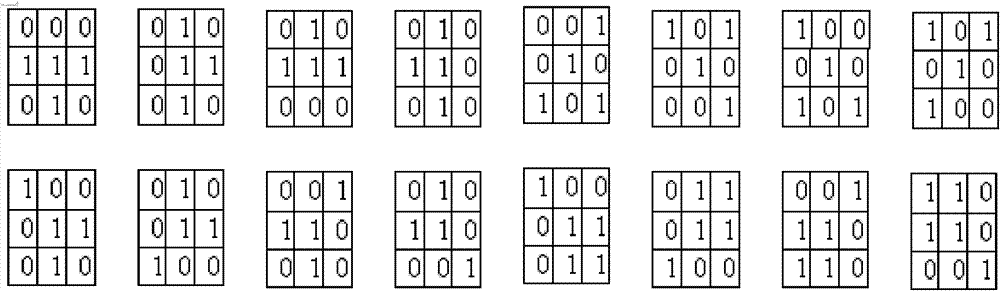

A method and device for detecting traffic lights based on video

Active | CN103488987B | Low image quality requirements | Image enhancement | Character and pattern recognition | Traffic signal | Image conversion

The invention discloses a video-based method and device for detecting traffic lights. The method converts an acquired image into HSV (Hue, Saturation, Value) format to obtain the hue, saturation and value (brightness) of each pixel; binarizes the image on the basis of the brightness information to determine suspected light areas; determines the color class of each pixel from its hue and then the pixel's color feature value; determines the color feature value of each traffic light area from the color feature values of its pixels; judges the preliminary states of the traffic lights and establishes an on/off state sample library and corresponding Gaussian models for each light; and finally judges the states of the traffic lights. The invention further discloses a device for realizing the method. The method and device have low requirements on image quality and adapt well to complex scenes.

Owner:ZHEJIANG UNIVIEW TECH CO LTD
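
The HSV conversion and per-pixel color classification can be sketched with Python's standard `colorsys` module, followed by a majority vote over a candidate lamp region. The brightness and saturation cut-offs and the hue ranges for red, yellow and green are hypothetical values chosen for illustration; the patent's Gaussian state models are not shown, and the function names are illustrative.

```python
import colorsys
from collections import Counter


def classify_pixel(r, g, b, v_min=0.6, s_min=0.4):
    """Map an 8-bit RGB pixel to 'red'/'yellow'/'green', or None if not a lit lamp."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    if v < v_min or s < s_min:
        return None  # too dark or too washed out (hypothetical thresholds)
    deg = h * 360
    if deg < 20 or deg >= 340:
        return "red"
    if 30 <= deg < 70:
        return "yellow"
    if 90 <= deg < 200:
        return "green"
    return None


def region_color(pixels):
    """Majority color class over a candidate lamp region, ignoring unlit pixels."""
    votes = Counter(c for c in (classify_pixel(*p) for p in pixels) if c is not None)
    return votes.most_common(1)[0][0] if votes else None


print(classify_pixel(255, 0, 0), classify_pixel(0, 255, 0), classify_pixel(40, 40, 40))
# red green None
```

Red wraps around 0 degrees of hue, which is why it needs two ranges.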

Blood vessel diameter measuring method based on digital image processing technology

Inactive | CN101984916B | Improve computing efficiency | Low image quality requirements | Diagnostic recording/measuring | Sensors | Dot pitch | Imaging quality

The invention provides a blood vessel diameter measuring method based on digital image processing technology. The method comprises: (1) enhancing the blood vessel image with a Gaussian matched filter; (2) normalizing and binarizing the image; (3) thinning the image using mathematical morphology and extracting the vessel skeleton; and (4) fitting a straight line to obtain the slope of a vessel segment, counting the vessel pixels along the direction perpendicular to the vessel, and converting that count into the vessel diameter using the dot pitch. The invention is used to measure blood vessel diameters in medical images; it is computationally efficient, has low requirements on image quality, and is accurate.

Owner:HARBIN ENG UNIV

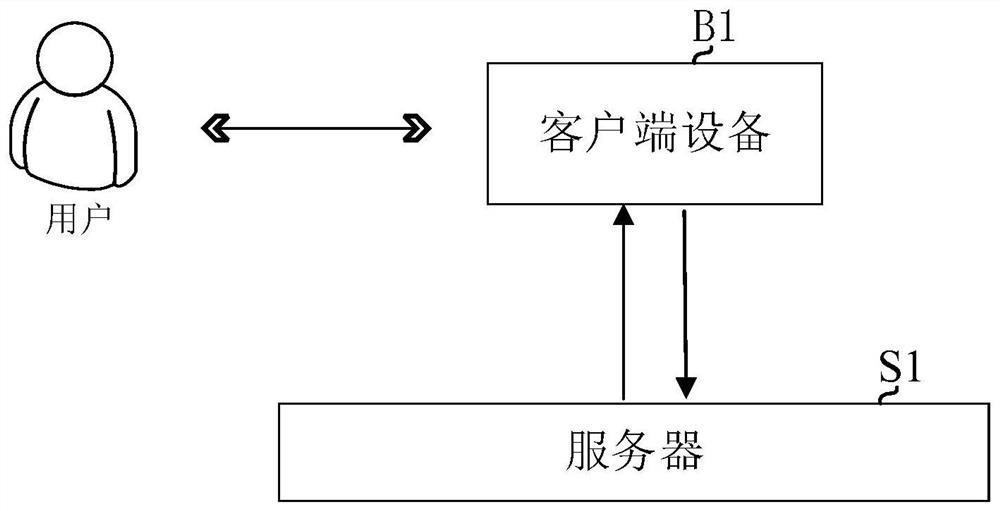

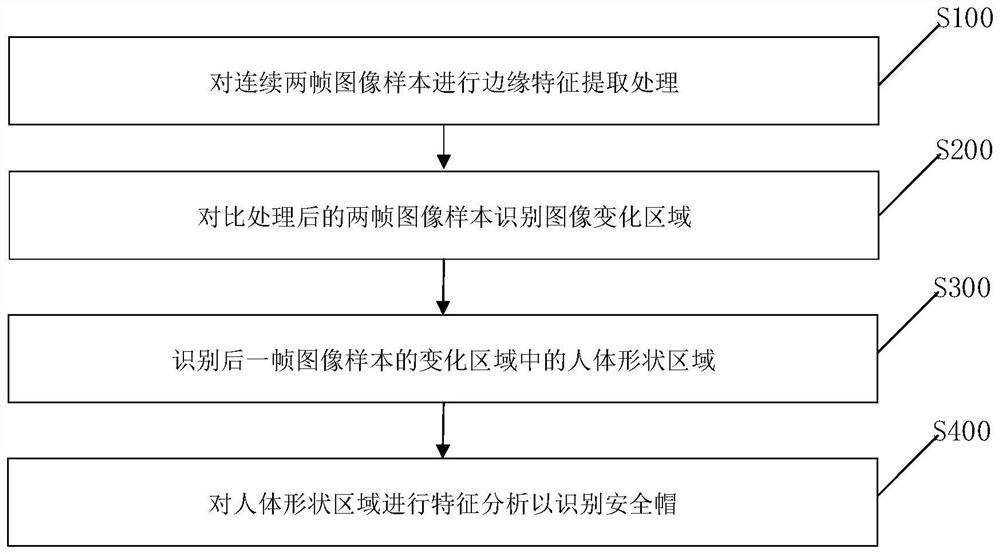

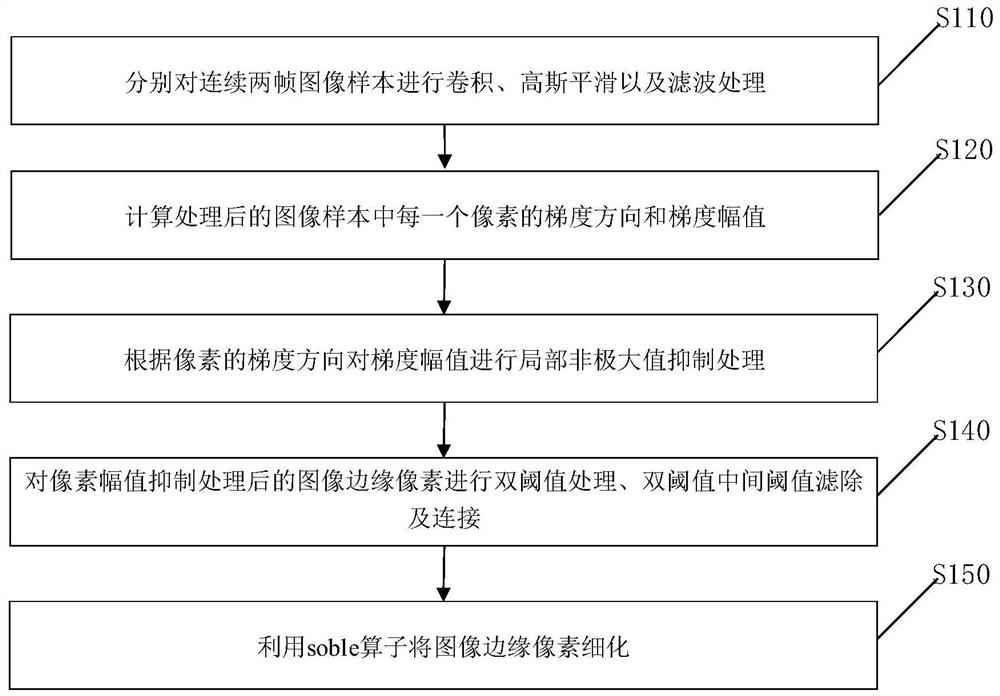

Low-resolution image safety helmet recognition method and device

Pending | CN112329554A | Low image quality requirements | Universal adaptability | Biometric pattern recognition | Feature extraction | Imaging quality

The invention provides a low-resolution image safety helmet recognition method and device. The method comprises: performing edge feature extraction on two consecutive image frames; comparing the two processed frames to identify the region that changed; identifying a human-shaped region within the changed region of the later frame; and analyzing the features of that human-shaped region to recognize the safety helmet. Because the method compares the changed regions of two consecutive frames using edge feature extraction together with color and shape analysis, its image quality requirements are low: helmets can be identified even at very low resolution, the hardware needed for analysis is reduced, and the method is broadly applicable and adaptable.
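The frame-comparison stage can be sketched as follows: build an edge map for each of two consecutive grayscale frames and report the bounding box of the pixels whose edge state changed. This is a minimal sketch under stated assumptions: the forward-difference gradient and the `threshold` of 30 are hypothetical stand-ins for the patent's unspecified edge extractor, frames are lists of rows of gray levels, and the later human-shape and helmet color/shape analysis is not reproduced.

```python
def edge_map(gray, threshold=30):
    """Binary edge map from a forward-difference gradient magnitude.

    A deliberately simple, hypothetical edge extractor: |dx| + |dy| >= threshold.
    The last row and column have no forward neighbor and are left as 0.
    """
    h, w = len(gray), len(gray[0])
    edges = [[0] * w for _ in range(h)]
    for y in range(h - 1):
        for x in range(w - 1):
            gx = gray[y][x + 1] - gray[y][x]
            gy = gray[y + 1][x] - gray[y][x]
            if abs(gx) + abs(gy) >= threshold:
                edges[y][x] = 1
    return edges


def change_region(prev_gray, next_gray, threshold=30):
    """Bounding box (x0, y0, x1, y1) of pixels whose edge state differs
    between the two frames, or None if the edge maps are identical."""
    a = edge_map(prev_gray, threshold)
    b = edge_map(next_gray, threshold)
    changed = [(x, y) for y, row in enumerate(a)
               for x, v in enumerate(row) if v != b[y][x]]
    if not changed:
        return None
    xs = [x for x, _ in changed]
    ys = [y for _, y in changed]
    return min(xs), min(ys), max(xs), max(ys)
```

The bounding box would then be the search area for the human-shape step.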

Owner:通辽发电总厂有限责任公司 +1

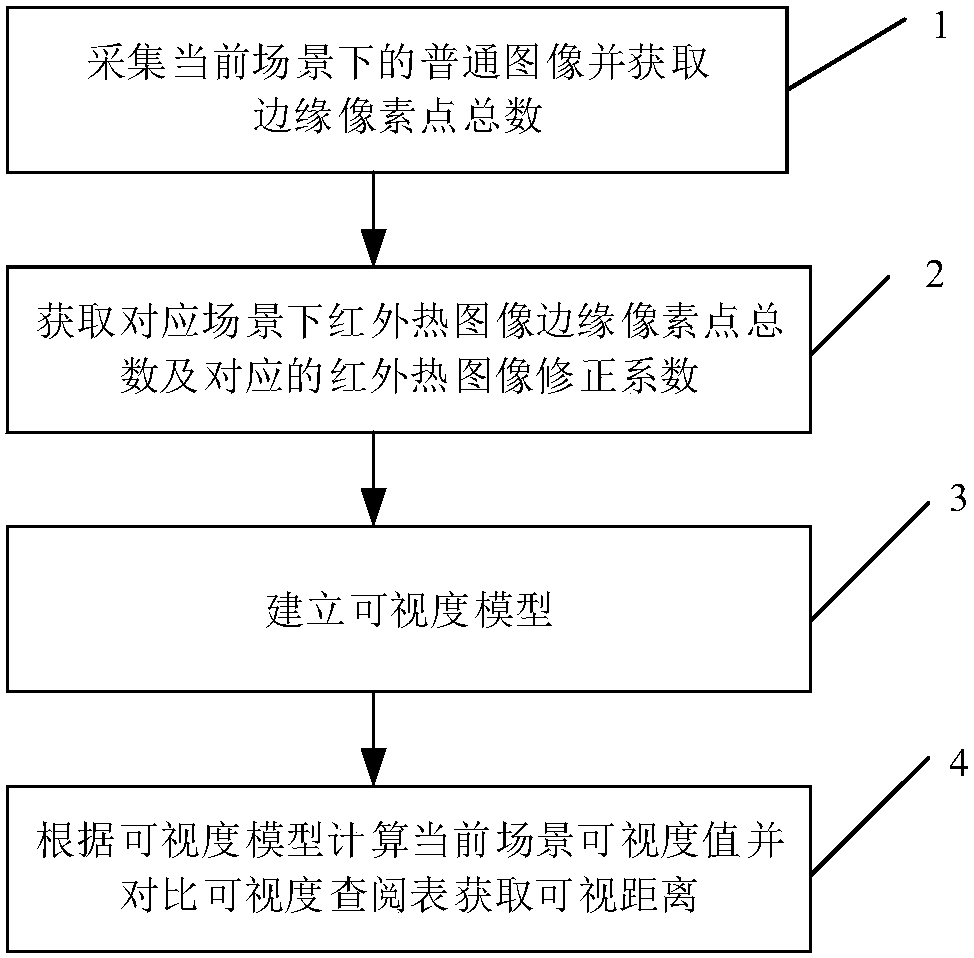

A visual distance detection method based on image processing

Active | CN105894500B | Simplified Image Processing Algorithms | Low image quality requirements | Image enhancement | Image analysis | Visibility | Imaging processing

The invention relates to a visual distance detection method based on image processing. The method comprises: acquiring an ordinary image of the current scene and counting its edge pixels; obtaining the number of edge pixels in an infrared thermal image of the same scene together with the corresponding infrared thermal image correction coefficient; establishing a visibility model and calculating the visibility of the current scene from it; and obtaining the corresponding visual distance by comparison with a stored visibility lookup table, which maps standard visibility values to visual distances for different scenes. Compared with the prior art, the method is simple, has lower image quality requirements, and achieves higher detection precision.
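The visibility calculation and table lookup can be sketched in Python. The abstract does not give the visibility model, so `visibility_index` below uses a hypothetical ratio of ordinary-image edge pixels to corrected infrared edge pixels, purely for illustration; the table contents and the nearest-entry comparison are likewise assumptions, not the patent's stored table.

```python
def visibility_index(visible_edges, infrared_edges, ir_correction):
    """Hypothetical visibility model: edge pixels in the ordinary image divided
    by corrected edge pixels in the infrared thermal image, clamped to 1.0."""
    if infrared_edges <= 0 or ir_correction <= 0:
        raise ValueError("infrared edge count and correction must be positive")
    return min(1.0, visible_edges / (ir_correction * infrared_edges))


def visual_distance(visibility, table):
    """Return the distance paired with the standard visibility value closest
    to `visibility`.

    table: list of (standard_visibility, distance_m) pairs for one scene
    (illustrative values only).
    """
    _, distance = min(table, key=lambda entry: abs(entry[0] - visibility))
    return distance


# Example with an invented single-scene table.
TABLE = [(0.2, 50), (0.5, 200), (0.8, 1000), (1.0, 5000)]
v = visibility_index(4500, 10000, 1.0)  # 0.45 under this hypothetical model
print(visual_distance(v, TABLE))
```

Keeping one such table per scene, for example in a dict keyed by a scene name, would mirror the abstract's per-scene mapping; the scene keys and values would be invented here as well.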

Owner:TONGJI UNIV

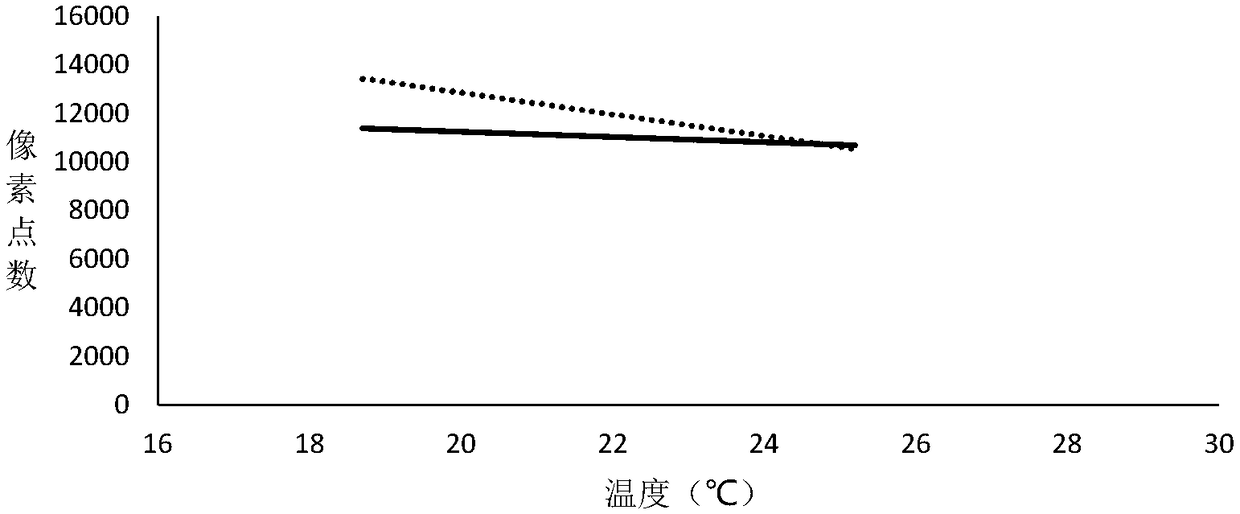

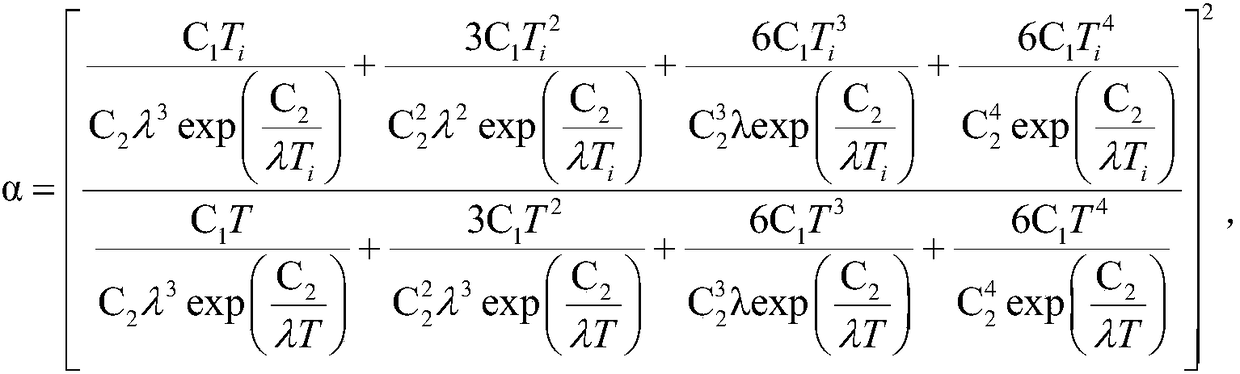

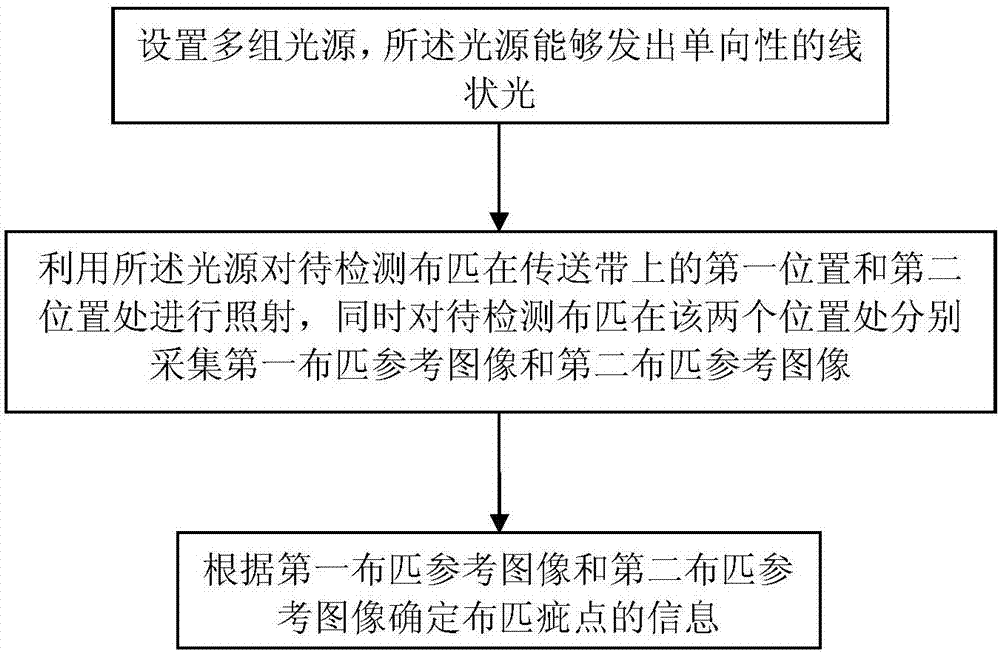

Real-time detection method for defect points of cloth

Active | CN107014824A | Low image quality requirements | Reduce computation | Material analysis by optical means | Engineering

The invention provides a real-time detection method for defect points in cloth that improves detection efficiency while maintaining accuracy. In particular, it addresses cloth smaller than the standard size that is to be printed or jet-printed with a complex pattern: after multiple printing or jetting passes, misalignment caused by mechanical slippage and similar factors makes the pattern difficult to locate accurately, so the pattern and the defect points cannot be reliably distinguished. While guaranteeing detection accuracy for defect points 1 mm or more higher than the surrounding region, the method can inspect cloth in real time as it moves on the conveyor belt, with high detection speed and high detection accuracy.

Owner:江苏荣旭纺织有限公司

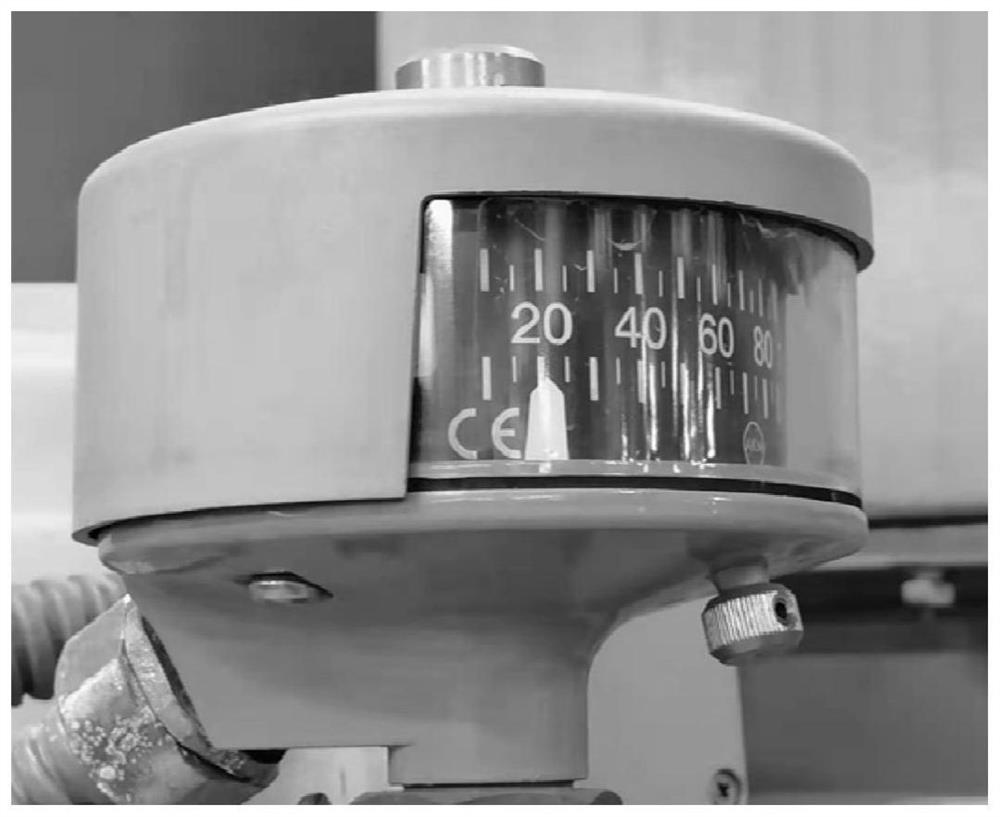

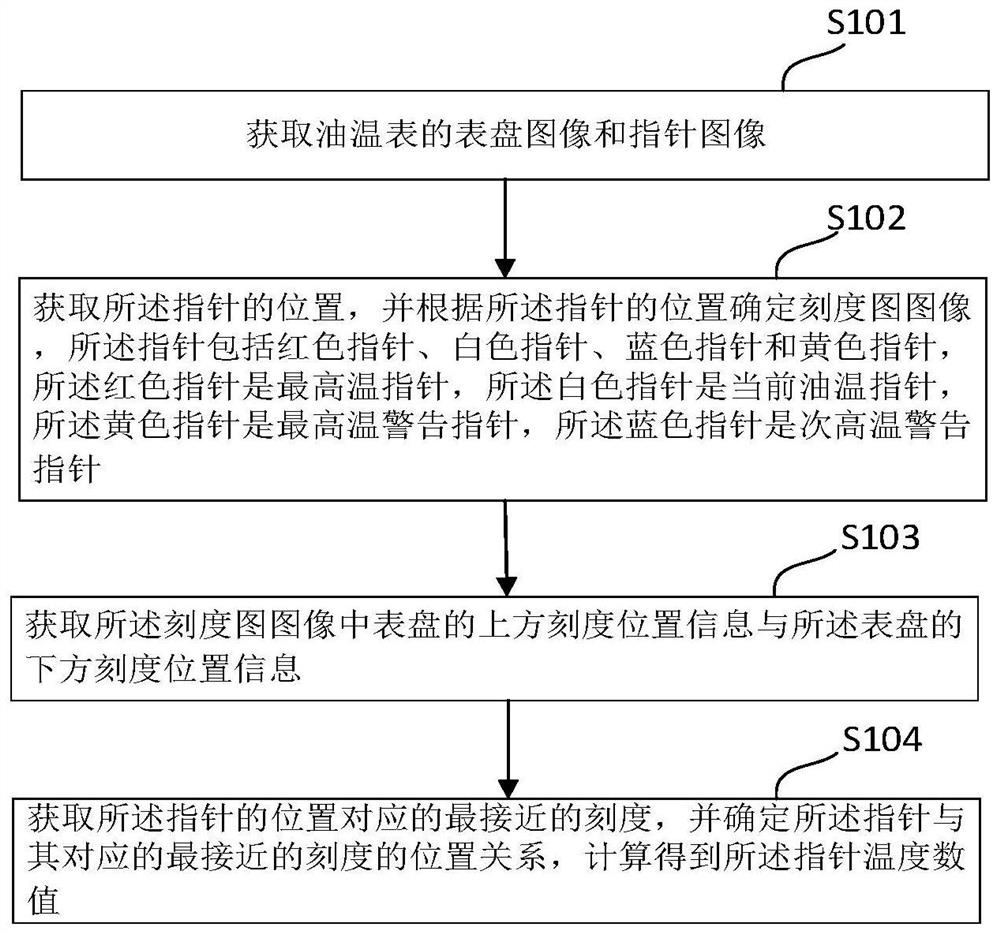

Transformer arc-shaped oil temperature meter reading method and device based on machine vision

Pending | CN113780142A | Low image quality requirements | Easy to read work | Image enhancement | Image analysis | Engineering | Mechanical engineering

The invention relates to a machine-vision-based method for reading a transformer arc-shaped oil temperature meter. The method comprises the following steps: S1, obtaining a dial image of the meter and recording the scales and pointers in the dial image; S2, determining the position of each pointer through color recognition and determining the scale image from the pointer positions; S3, determining the position of each scale mark through grayscale recognition; S4, using the positions of the pointers and scale marks, finding the scale mark closest to each pointer by a distance method; and S5, obtaining the temperature indicated by each pointer from its closest scale mark. Pointers and scales on the dial are recognized through color recognition and matched by position using the distance method, so the positional relationship between the scales and the pointers of the arc-shaped meter is read accurately, and the scale value of each pointer is accurately calculated and converted into the corresponding temperature.
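Steps S4 and S5 reduce to a nearest-neighbor lookup once pointer and scale-mark positions are known. The Python sketch below assumes those positions were already extracted in S2 and S3 and are given as pixel coordinates paired with hypothetical temperature labels; Euclidean distance is assumed for the "distance method".

```python
import math


def pointer_temperature(pointer_xy, scales):
    """Return the temperature of the scale mark nearest to the pointer (S4, S5).

    pointer_xy: (x, y) pixel position of a pointer tip.
    scales: list of ((x, y), temperature) pairs for detected scale marks.
    """
    _, temperature = min(scales, key=lambda s: math.dist(pointer_xy, s[0]))
    return temperature


def read_meter(pointers, scales):
    """Map each detected pointer to a temperature via its nearest scale mark."""
    return [pointer_temperature(p, scales) for p in pointers]
```

Snapping to the nearest mark, as the abstract describes, limits the reading to the mark resolution; interpolating between the two nearest marks would be a possible refinement but is not what the abstract states.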

Owner:SOUTH CHINA NORMAL UNIVERSITY

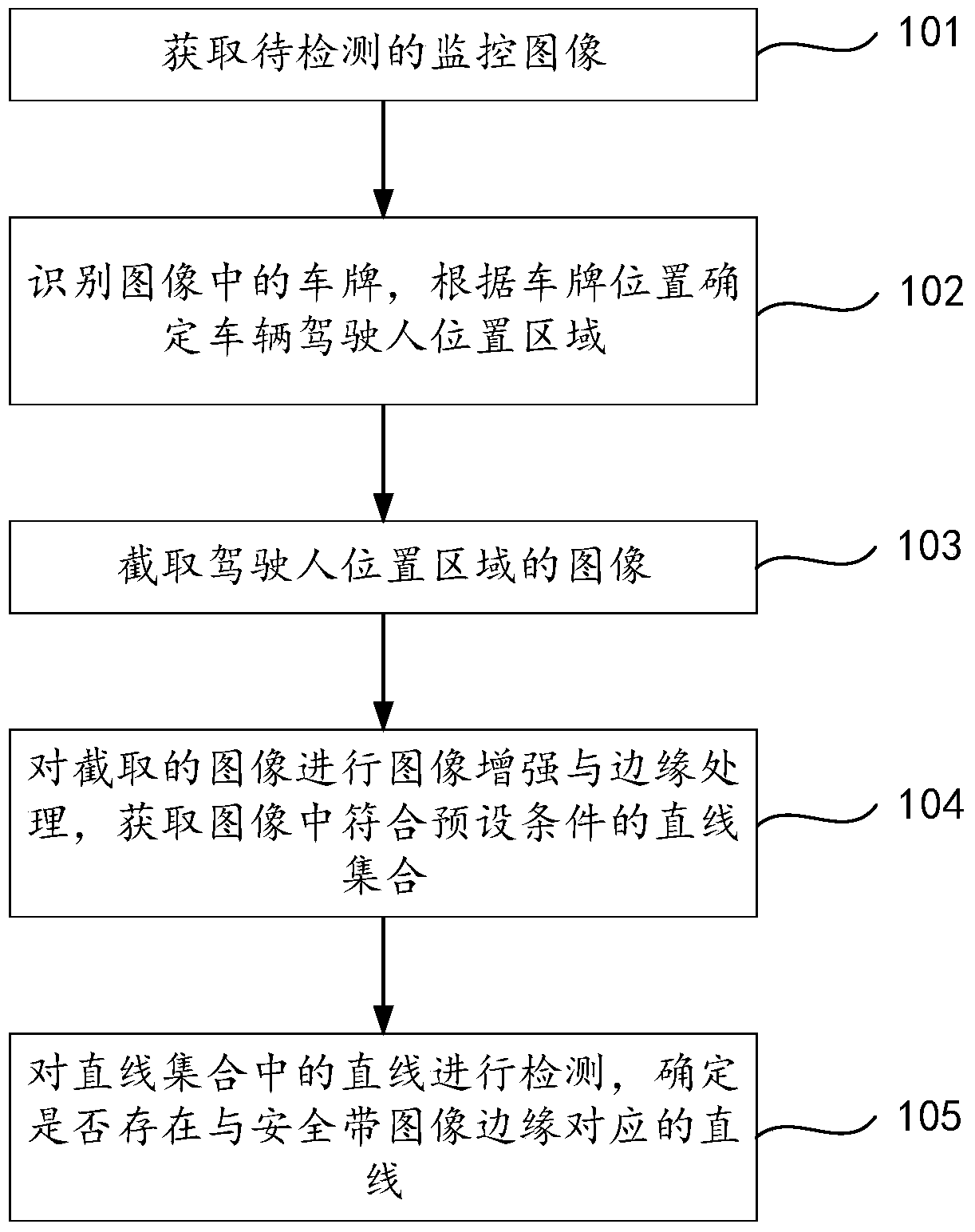

Seat belt detection method based on surveillance image

Active | CN105809171B | Low image quality requirements | Improve detection efficiency | Character and pattern recognition | Video monitoring | Imaging quality

Owner:CHENGDU IDEALSEE TECH

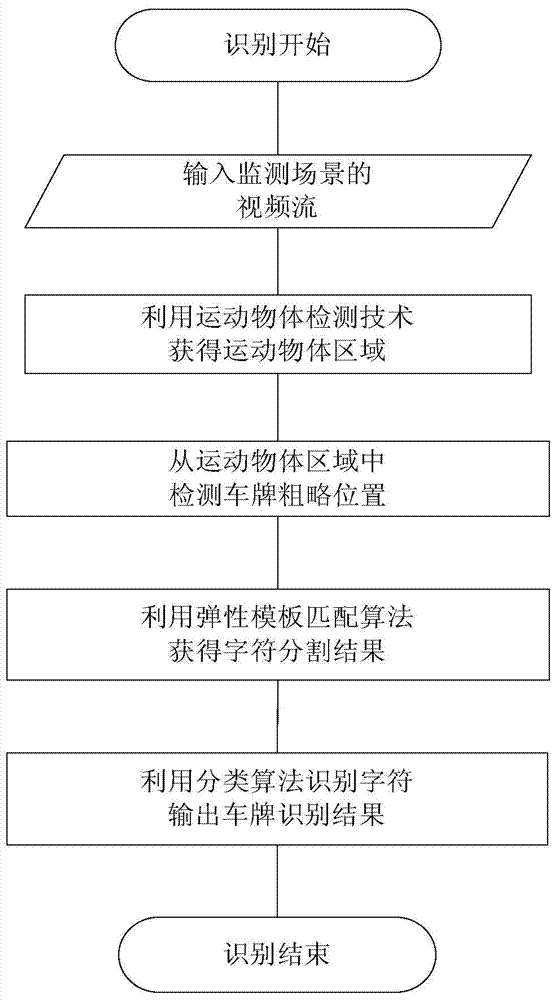

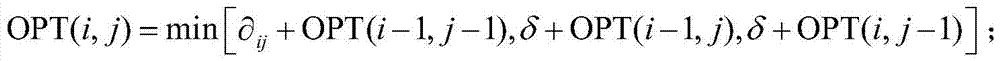

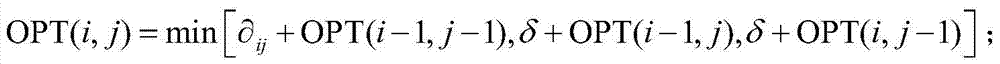

License Plate Character Segmentation Method Based on Elastic Template Matching Algorithm

Active | CN104408454B | Shorten the time | Well described scale changes | Character recognition | Template matching | Imaging quality

The invention describes a license plate character segmentation method based on an elastic template matching algorithm. It defines an ideal elastic template that can describe the scale changes of license plates in video, and formulates character segmentation as an optimization problem: the license plate image is matched against the ideal template and the globally optimal match is found, which effectively handles many of the problems of traditional license plate character segmentation. The position of each character in the license plate image is determined from that character's prior position in the template, so it is not affected by the projections of special characters, uneven illumination, or weather noise. Moreover, the segmentation algorithm can be applied to the binarized projection sequence, and matching is extremely fast, adding no time to character recognition. The invention has lower imaging quality requirements and is suitable for real-time recognition of license plate characters.
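A simplified form of template matching on the binarized projection sequence can be sketched as an exhaustive search over template scale and offset. This is not the patent's elastic matching formulation, which the abstract does not detail: the template (character intervals normalized to 0..1), the scoring rule (projection mass inside character boxes minus mass outside them) and the integer search grid are all assumptions made for illustration. Because every scale and offset in the given range is tried, the result is the global optimum over that grid.

```python
def place(template, scale, offset):
    """Map normalized template intervals (0..1) to pixel column ranges [start, end)."""
    return [(round(offset + a * scale), round(offset + b * scale)) for a, b in template]


def score(projection, boxes):
    """Projection mass inside the character boxes minus the mass outside them."""
    covered = set()
    inside = 0
    for start, end in boxes:
        for x in range(max(start, 0), min(end, len(projection))):
            if x not in covered:  # count each column once even if boxes overlap
                covered.add(x)
                inside += projection[x]
    return inside - (sum(projection) - inside)


def segment(projection, template, min_scale, max_scale):
    """Exhaustive search over integer scales and offsets.

    projection: per-column foreground counts of the binarized plate image.
    Returns the best character boxes, or [] if no placement fits.
    """
    best = None
    for scale in range(min_scale, max_scale + 1):
        for offset in range(0, len(projection) - scale + 1):
            boxes = place(template, scale, offset)
            s = score(projection, boxes)
            if best is None or s > best[0]:  # strict '>' keeps the first of ties
                best = (s, boxes)
    return best[1] if best else []
```

Ties are resolved in favor of the smallest scale and offset found first, since only a strictly better score replaces the current best.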

Owner:HOPE CLEAN ENERGY (GRP) CO LTD

© 2025 PatSnap. All rights reserved.