743 results about "Robot vision" patented technology

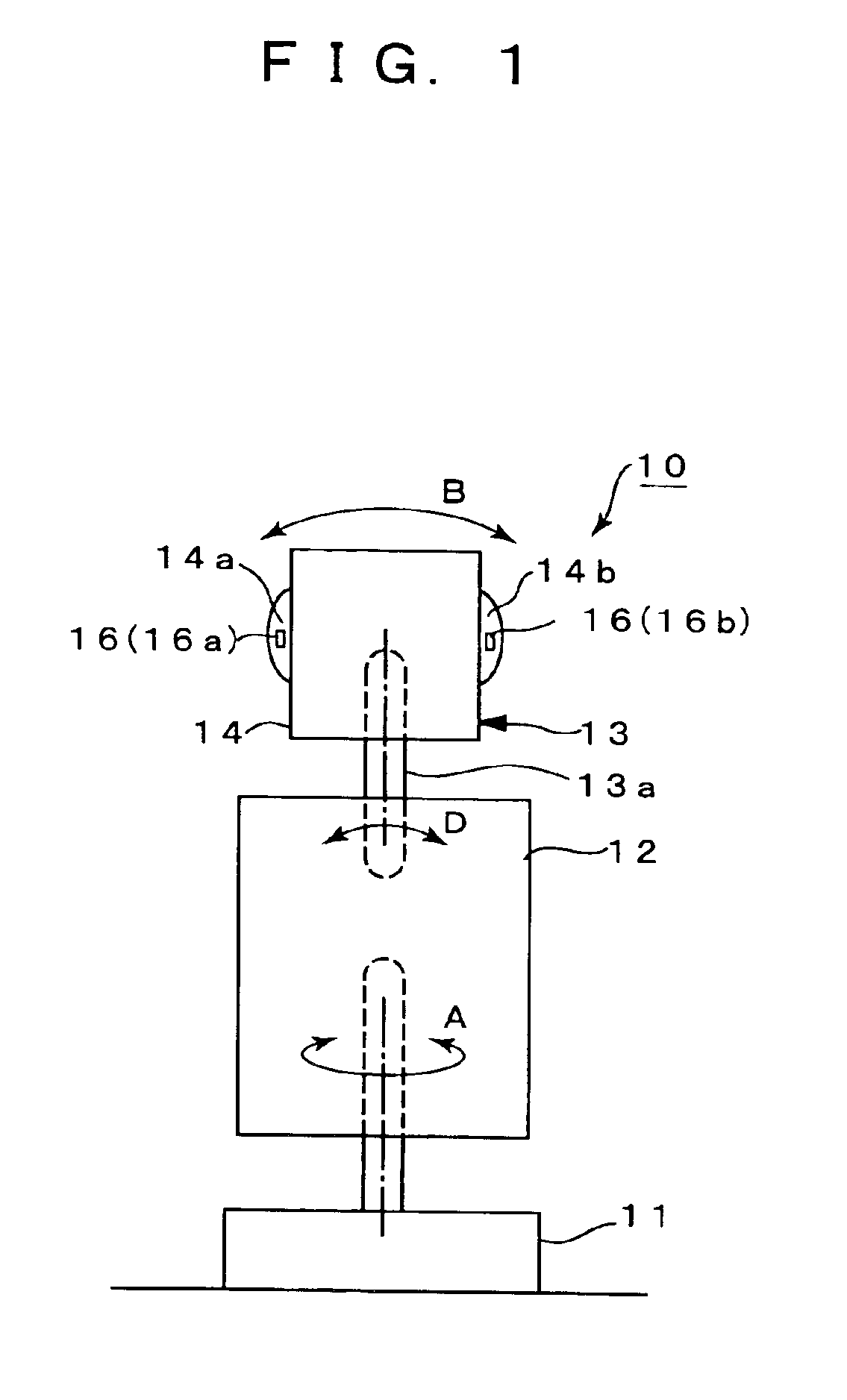

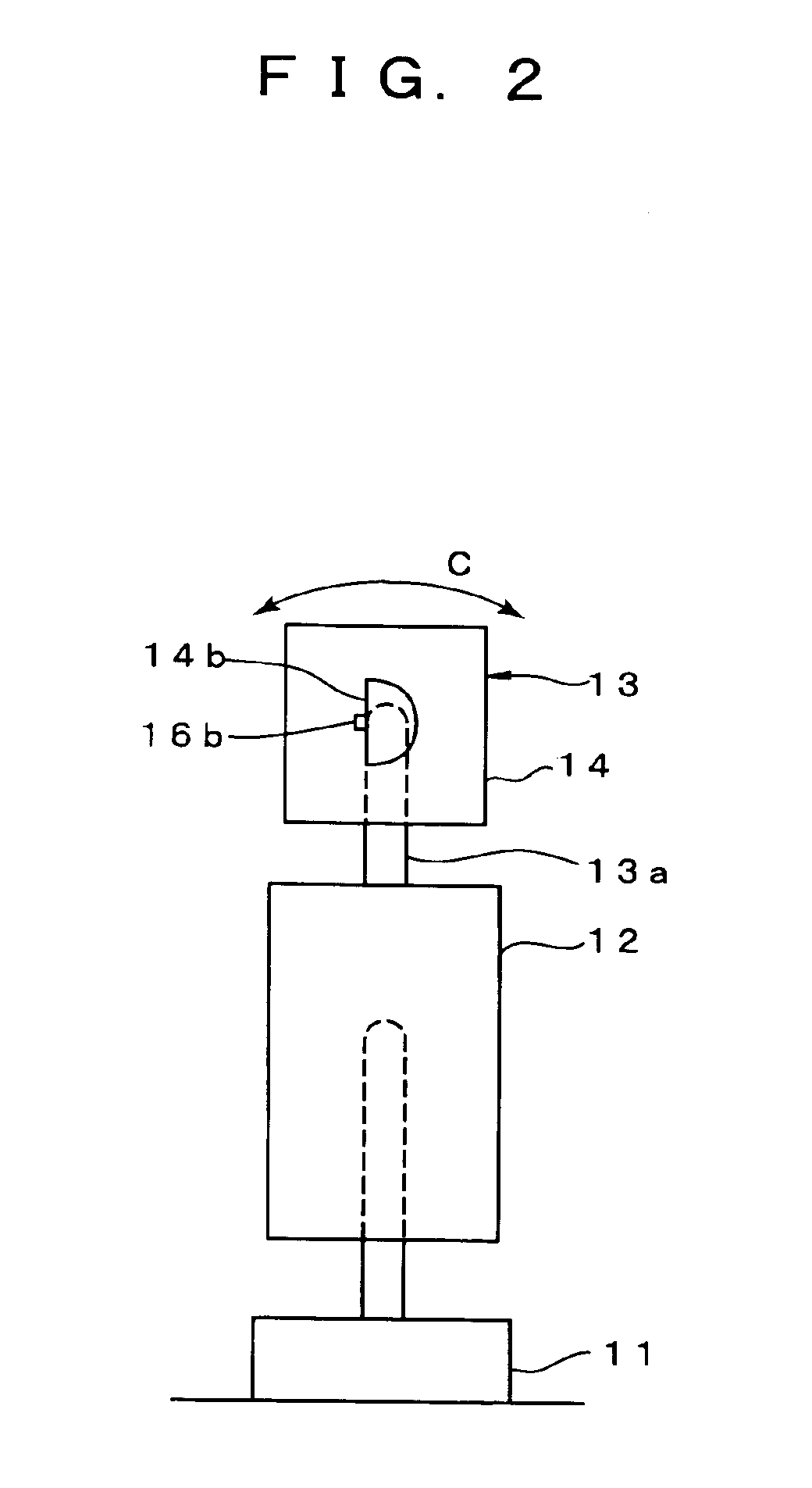

Robotics visual and auditory system

Inactive · US20090030552A1 · Accurate collection · Accurately localize · Programme control · Computer control · Sound source separation · Phase difference

A robotics visual and auditory system is provided with an auditory module (20), a face module (30), a stereo module (37), a motor control module (40), and an association module (50) that controls these modules. Using accurate sound source direction information from the association module (50), the auditory module (20) collects sub-bands whose interaural phase difference (IPD) or interaural intensity difference (IID) falls within a predetermined range. It does so with an active direction pass filter (23a) whose pass range, in keeping with auditory characteristics, is narrowest in the frontal direction and widens as the angle increases to the left and right. The module then separates sound sources by reconstructing the waveform of each source, performs speech recognition on the separated signals using a plurality of acoustic models (27d), integrates the recognition results from each acoustic model with a selector, and selects the most reliable result.

Owner:JAPAN SCI & TECH CORP

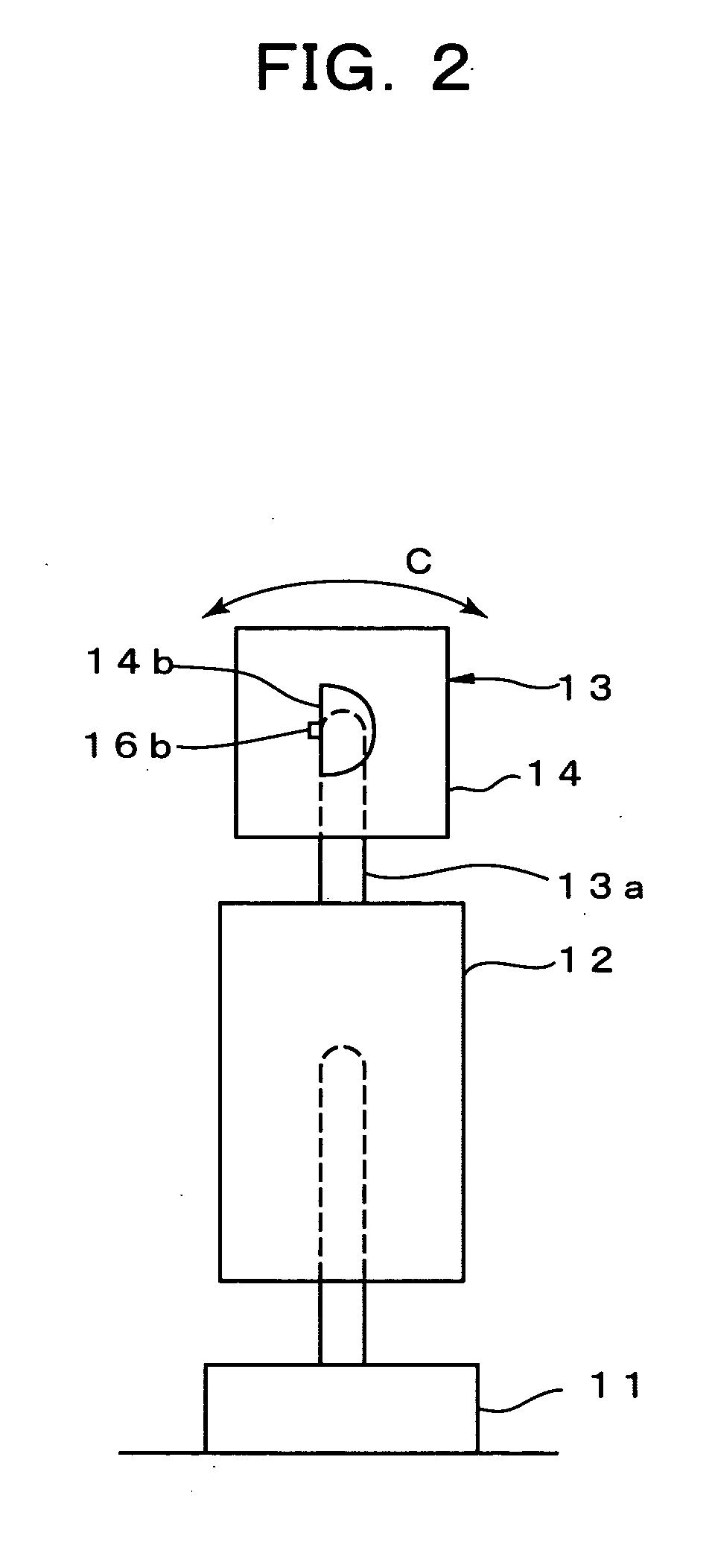

High-resolution polarization-sensitive imaging sensors

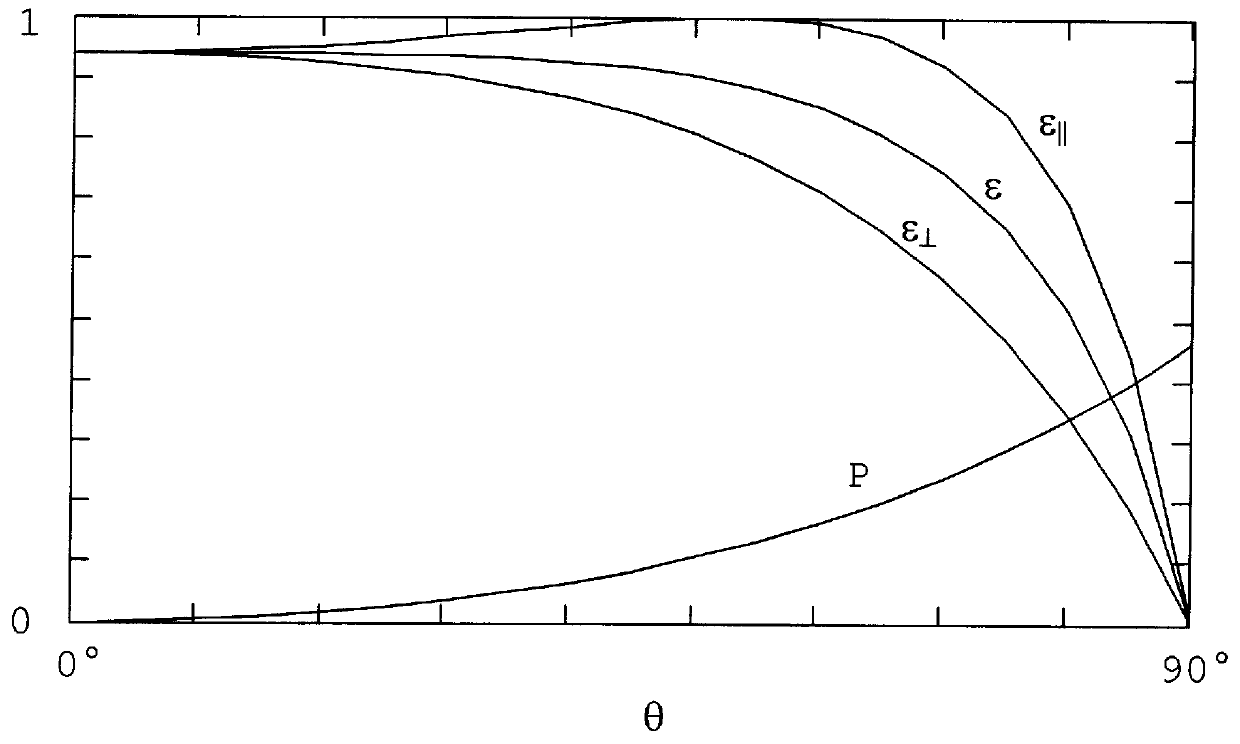

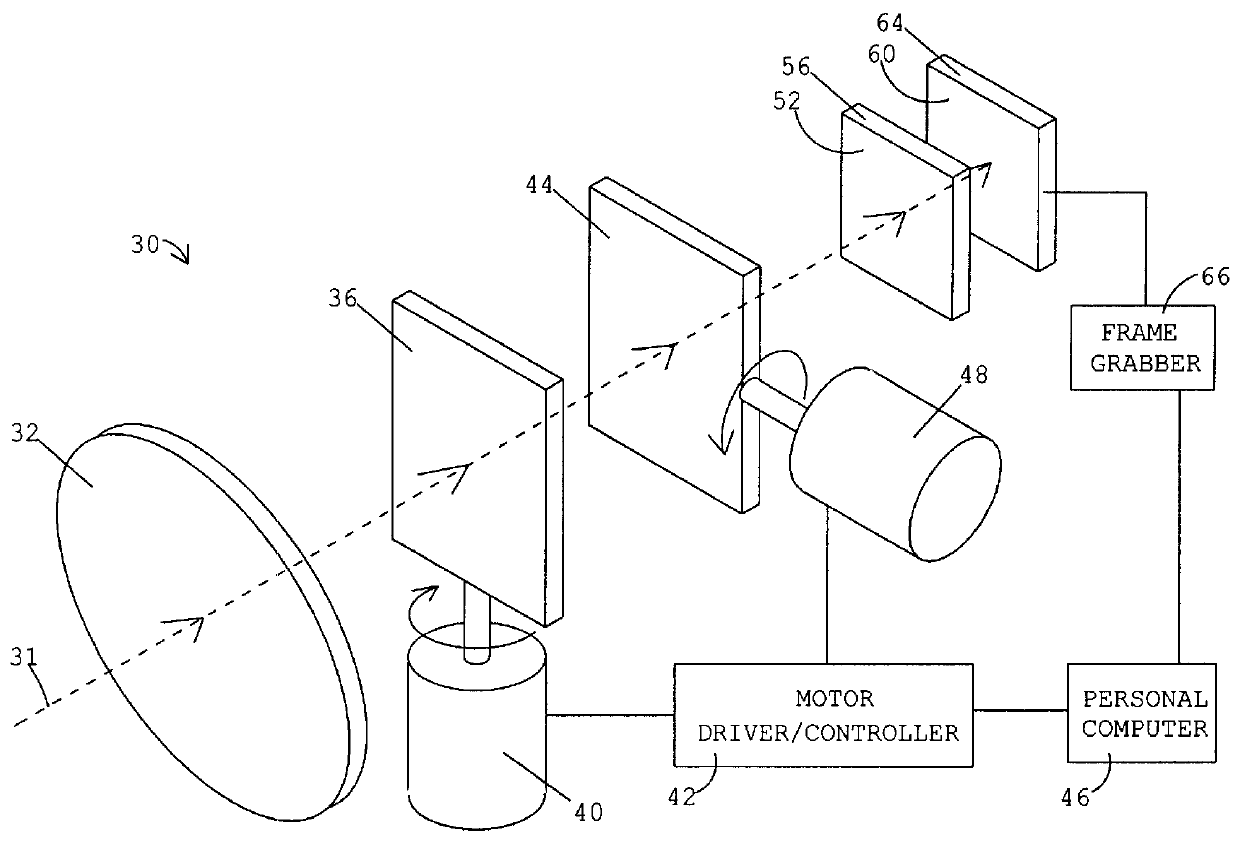

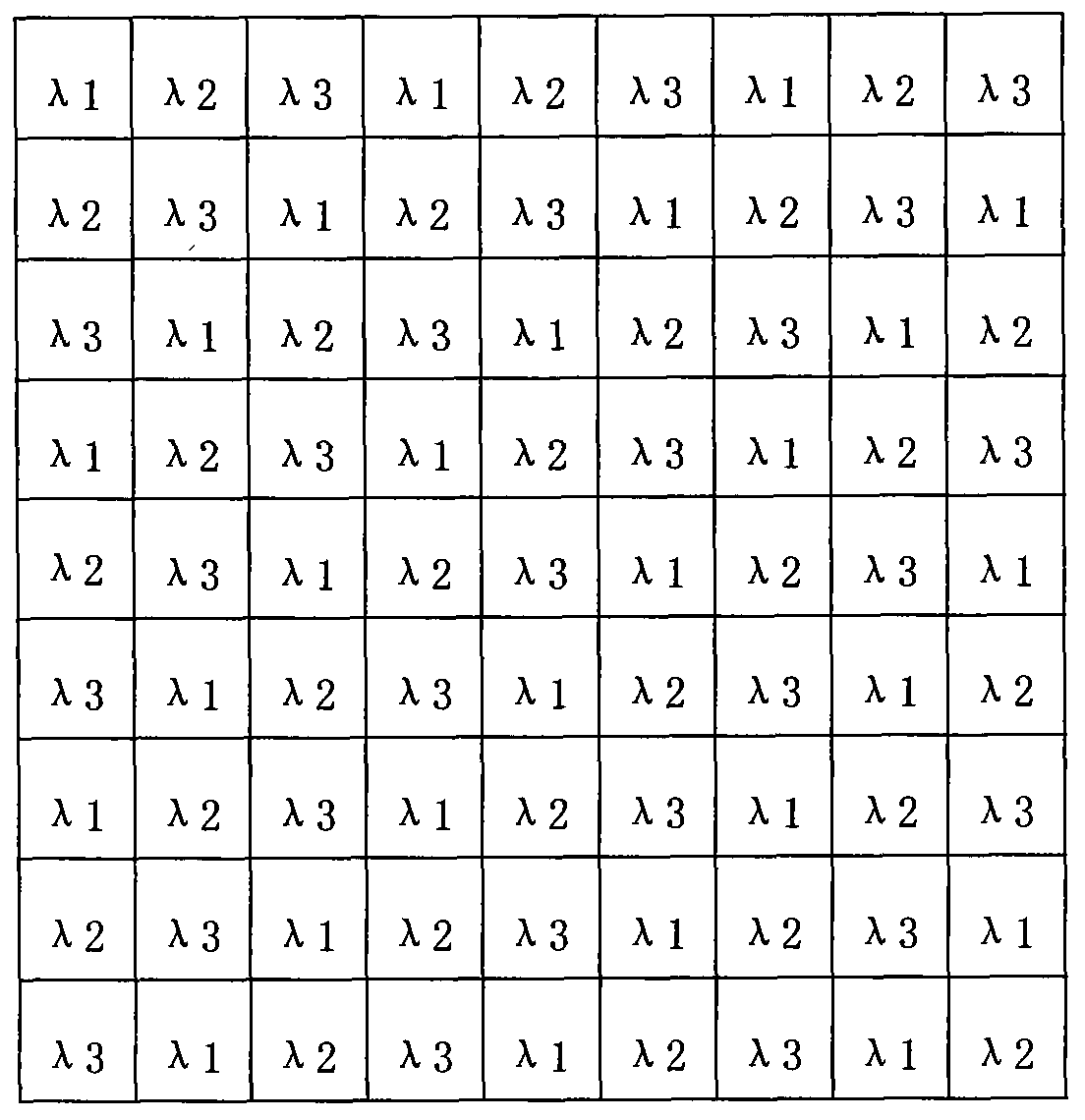

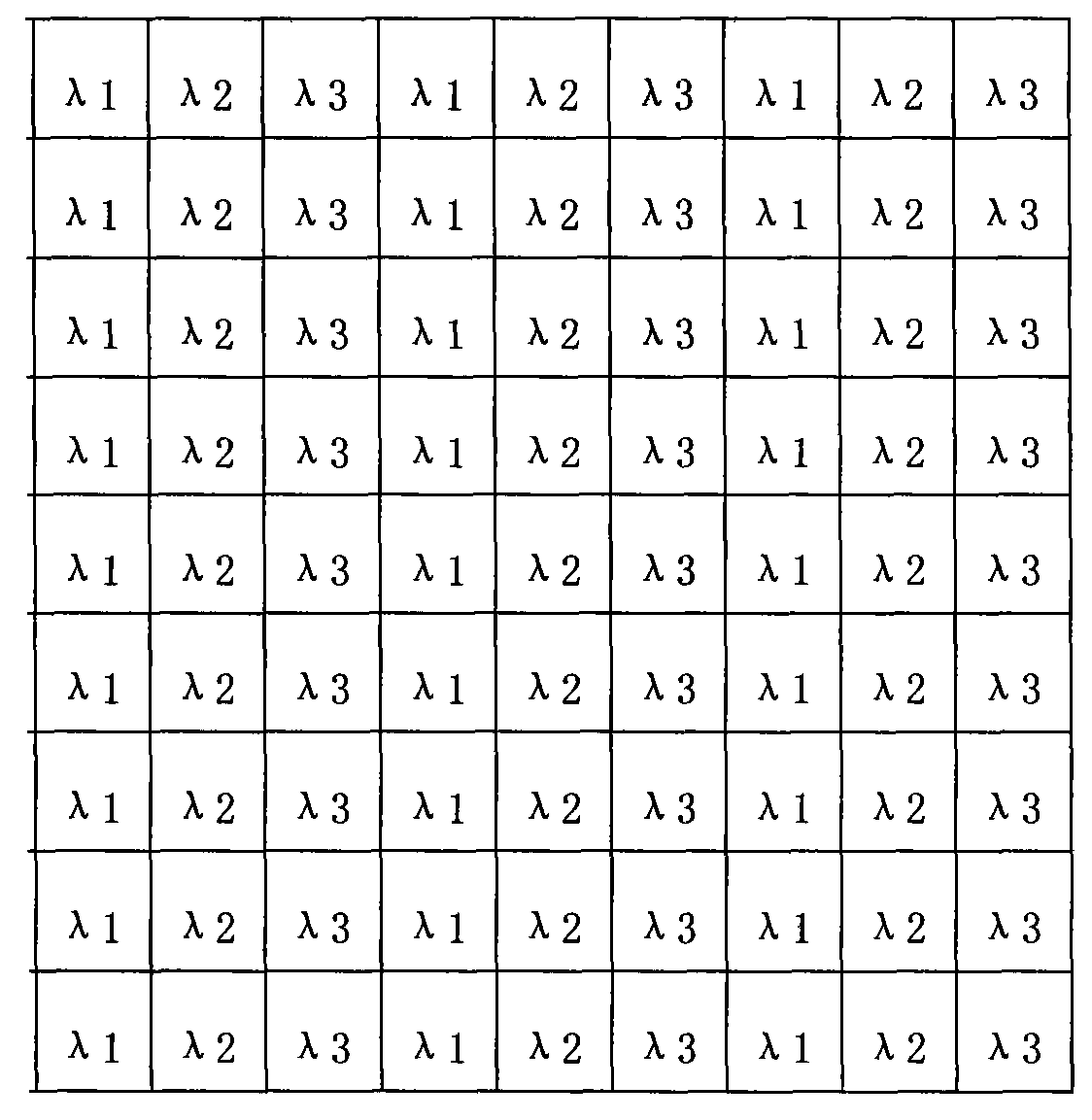

An apparatus and method to determine the surface orientation of objects in a field of view is provided by utilizing an array of polarizers and a means for microscanning an image of the objects over the polarizer array. In the preferred embodiment, a sequence of three image frames is captured using a focal plane array of photodetectors. Between frames the image is displaced by a distance equal to a polarizer array element. By combining the signals recorded in the three image frames, the intensity, percent of linear polarization, and angle of the polarization plane can be determined for radiation from each point on the object. The intensity can be used to determine the temperature at a corresponding point on the object. The percent of linear polarization and angle of the polarization plane can be used to determine the surface orientation at a corresponding point on the object. Surface orientation data from different points on the object can be combined to determine the object's shape and pose. Images of the Stokes parameters can be captured and viewed at video frequency. In an alternative embodiment, multi-spectral images can be captured for objects with point source resolution. Potential applications are in robotic vision, machine vision, computer vision, remote sensing, and infrared missile seekers. Other applications are detection and recognition of objects, automatic object recognition, and surveillance. This method of sensing is potentially useful in autonomous navigation and obstacle avoidance systems in automobiles and automated manufacturing and quality control systems.

Owner:THE UNITED STATES OF AMERICA AS REPRESENTED BY THE SECRETARY OF THE NAVY
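The abstract above says that three frames are combined to recover intensity, percent of linear polarization, and polarization angle. The following is a minimal sketch of that arithmetic, assuming ideal linear polarizers oriented at 0°, 60°, and 120° (the abstract does not state the orientations) and the standard relation I(θ) = ½(S0 + S1·cos 2θ + S2·sin 2θ). The function name `stokes_from_three_frames` is hypothetical.

```python
import math


def stokes_from_three_frames(i0, i60, i120):
    """Recover linear Stokes quantities for one pixel.

    Assumption (not from the abstract): the three samples were taken
    behind ideal linear polarizers at 0, 60 and 120 degrees.
    Returns (intensity S0, degree of linear polarization, angle in radians).
    """
    s0 = (2.0 / 3.0) * (i0 + i60 + i120)
    s1 = (2.0 / 3.0) * (2.0 * i0 - i60 - i120)
    s2 = (2.0 / math.sqrt(3.0)) * (i60 - i120)
    # Degree of linear polarization; guard against a dark pixel.
    dolp = math.hypot(s1, s2) / s0 if s0 > 0 else 0.0
    # Angle of the polarization plane, in (-pi/2, pi/2].
    angle = 0.5 * math.atan2(s2, s1)
    return s0, dolp, angle
```

Applied per pixel across the three registered frames, this would yield the intensity, polarization, and angle images the abstract describes; a real sensor would also need calibration for non-ideal polarizers.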

Apparatus and methods for training of robots

Active · US20160096272A1 · Programme-controlled manipulator · Autonomous decision making process · Vision based · Characteristic space

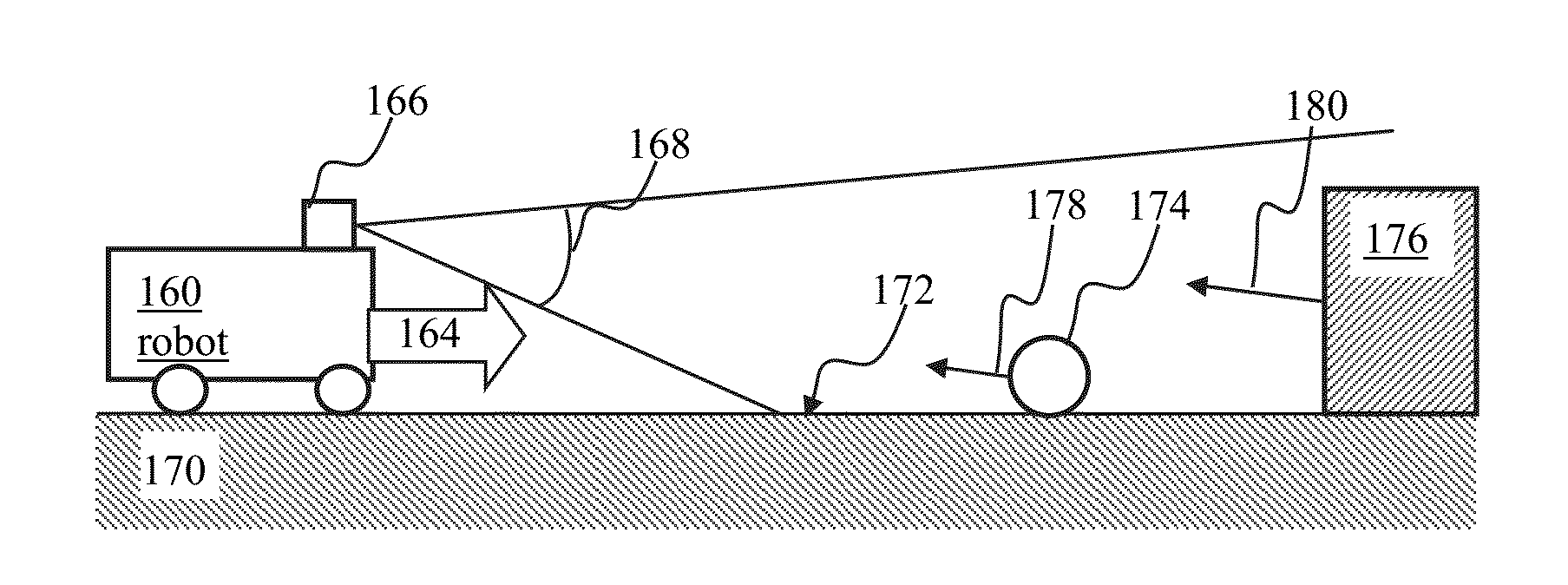

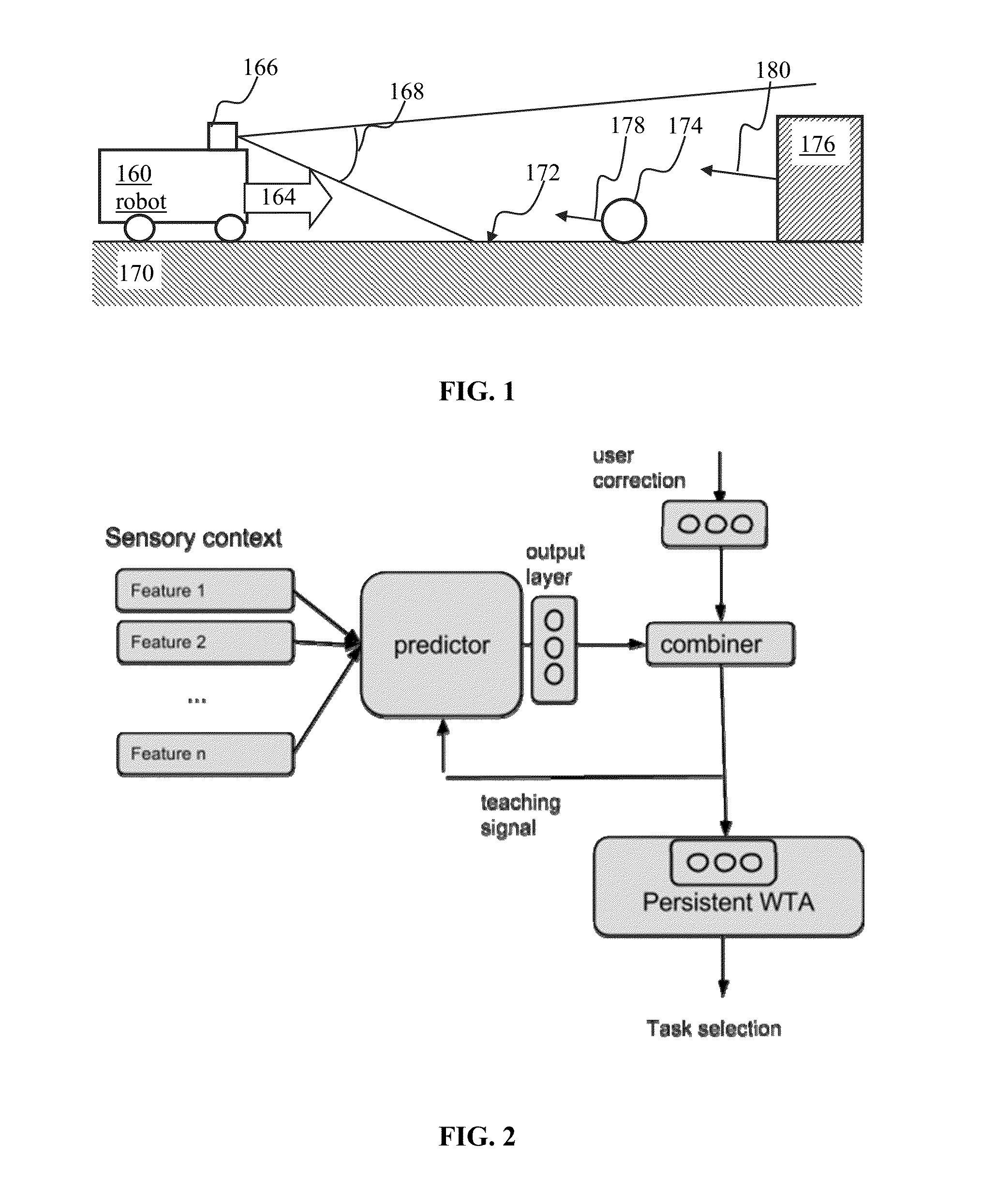

A random k-nearest neighbors (RKNN) approach may be used for a regression or classification model in which the input includes the k closest training examples in the feature space. The RKNN process may use video images as input to predict a motor command for controlling navigation of a robot. In some implementations of robotic vision-based navigation, the input space may be high-dimensional and highly redundant. When visual inputs are augmented with data of another modality that has fewer dimensions (e.g., audio), the visual data may overwhelm the lower-dimension data. The RKNN process may partition available data into subsets comprising a given number of samples from the lower-dimension data. Outputs associated with individual subsets may be combined (e.g., averaged). The number of neighbors, the subset size, and/or the number of subsets may be selected to trade off speed against accuracy of the prediction.

Owner:BRAIN CORP
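One way to read the RKNN abstract above is sketched below. It is an interpretation, not the patented procedure: each subset draws a few random visual dimensions, always appends every dimension of the low-dimensional modality so that it is not swamped, runs plain k-NN regression, and the per-subset outputs are averaged. All function and parameter names are hypothetical.

```python
import random


def knn_predict(train_x, train_y, query, k):
    """Average the targets of the k training rows nearest to the query."""
    ranked = sorted(
        range(len(train_x)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(train_x[i], query)),
    )
    nearest = ranked[:k]
    return sum(train_y[i] for i in nearest) / len(nearest)


def rknn_predict(visual, extra, targets, query_visual, query_extra,
                 k=3, n_subsets=5, subset_size=4, seed=0):
    """Random-subset k-NN regression (illustrative sketch).

    visual / query_visual: high-dimensional features (e.g. image pixels).
    extra / query_extra:   low-dimensional features (e.g. audio).
    """
    rng = random.Random(seed)
    n_dims = len(query_visual)
    outputs = []
    for _ in range(n_subsets):
        # Pick a random subset of visual dimensions for this predictor.
        dims = rng.sample(range(n_dims), min(subset_size, n_dims))
        # Every subset keeps all low-dimensional features.
        train = [[row[d] for d in dims] + list(ext)
                 for row, ext in zip(visual, extra)]
        query = [query_visual[d] for d in dims] + list(query_extra)
        outputs.append(knn_predict(train, targets, query, k))
    # Combine the per-subset outputs by averaging.
    return sum(outputs) / len(outputs)
```

Raising `n_subsets` or `subset_size` costs more distance computations per query, which is the speed versus accuracy trade-off the abstract mentions.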

Method and computer-based system for non-probabilistic hypothesis generation and verification

Inactive · US20040103108A1 · Data processing applications · Digital data processing details · Algorithm · Theoretical computer science

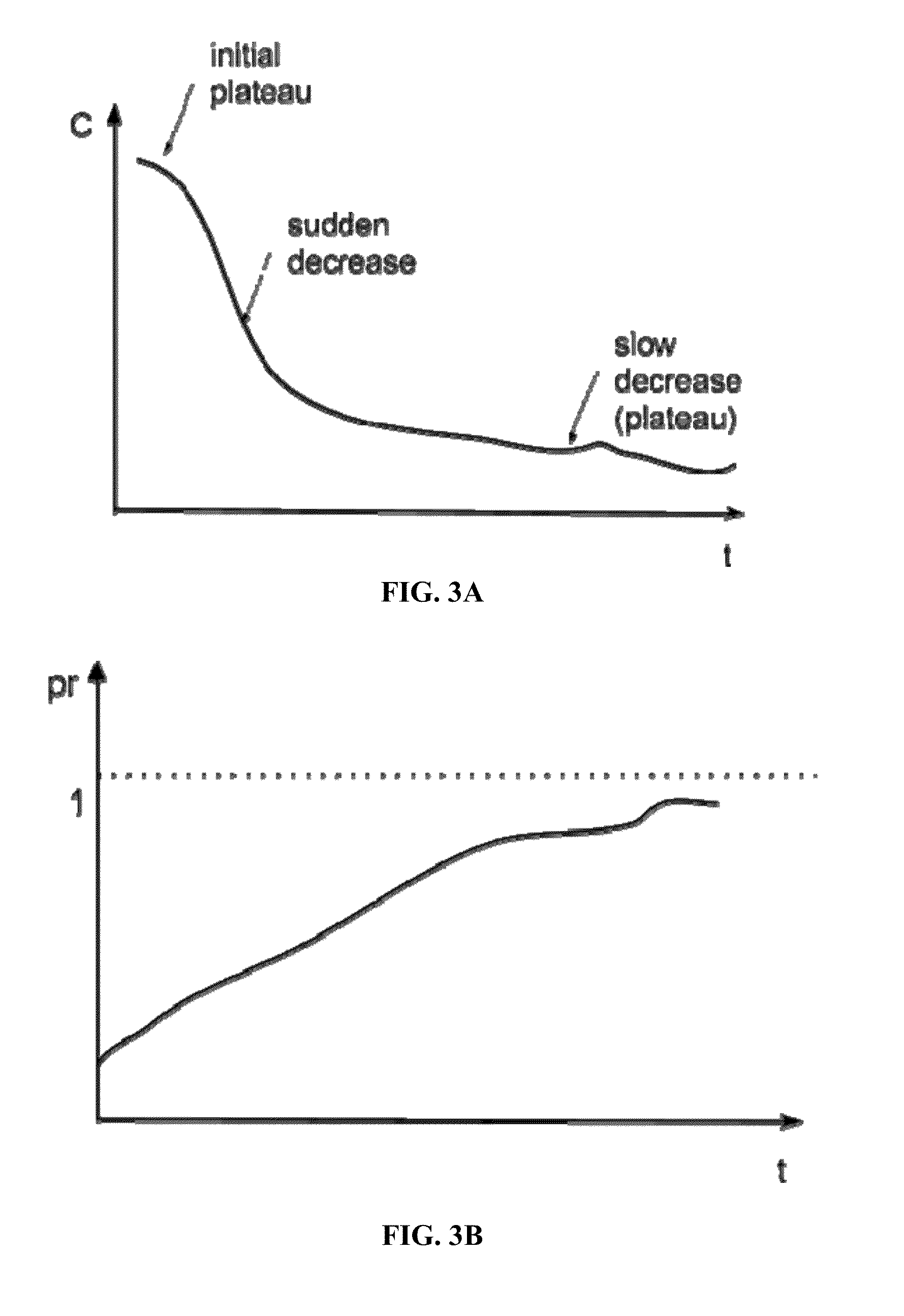

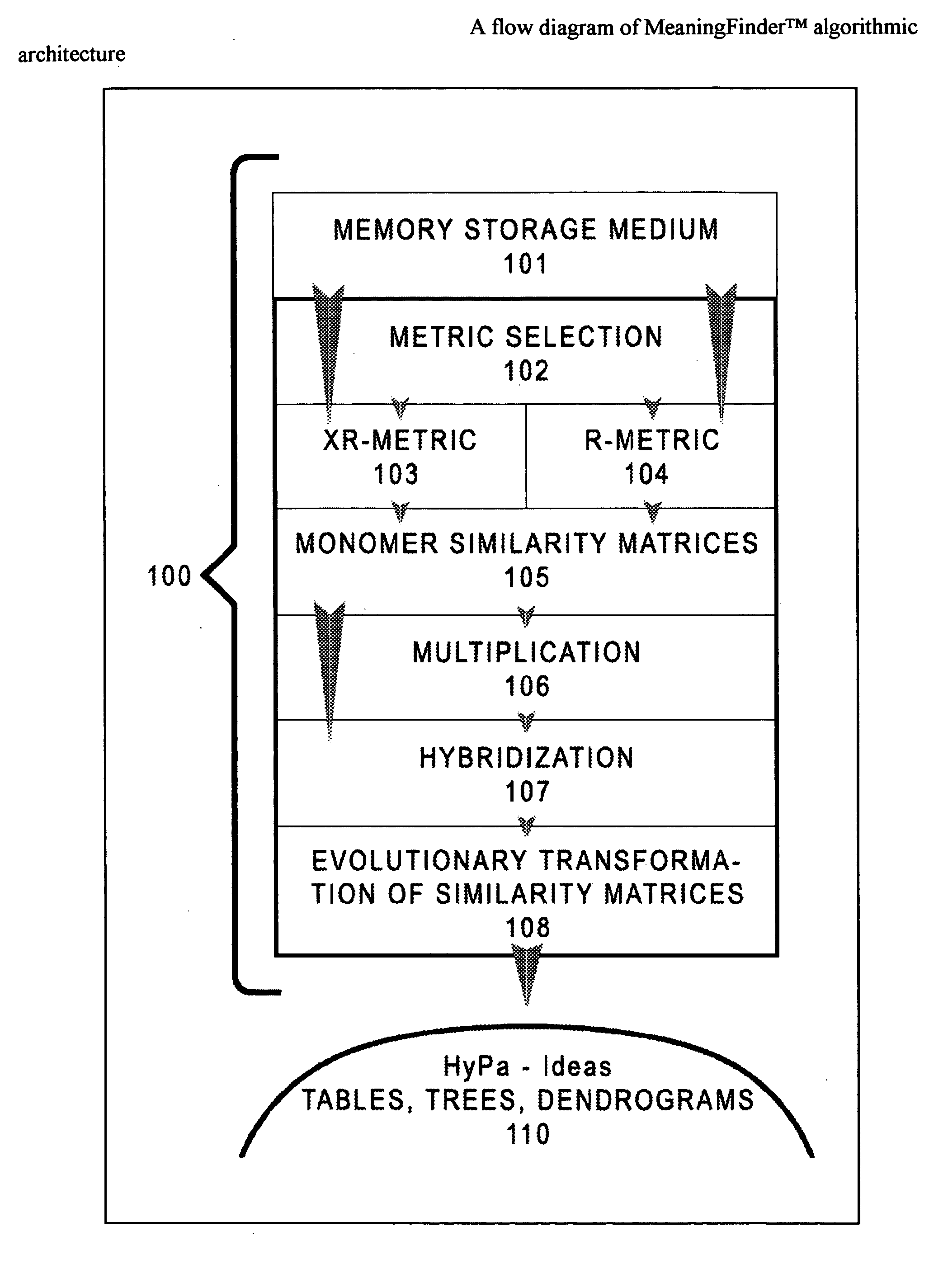

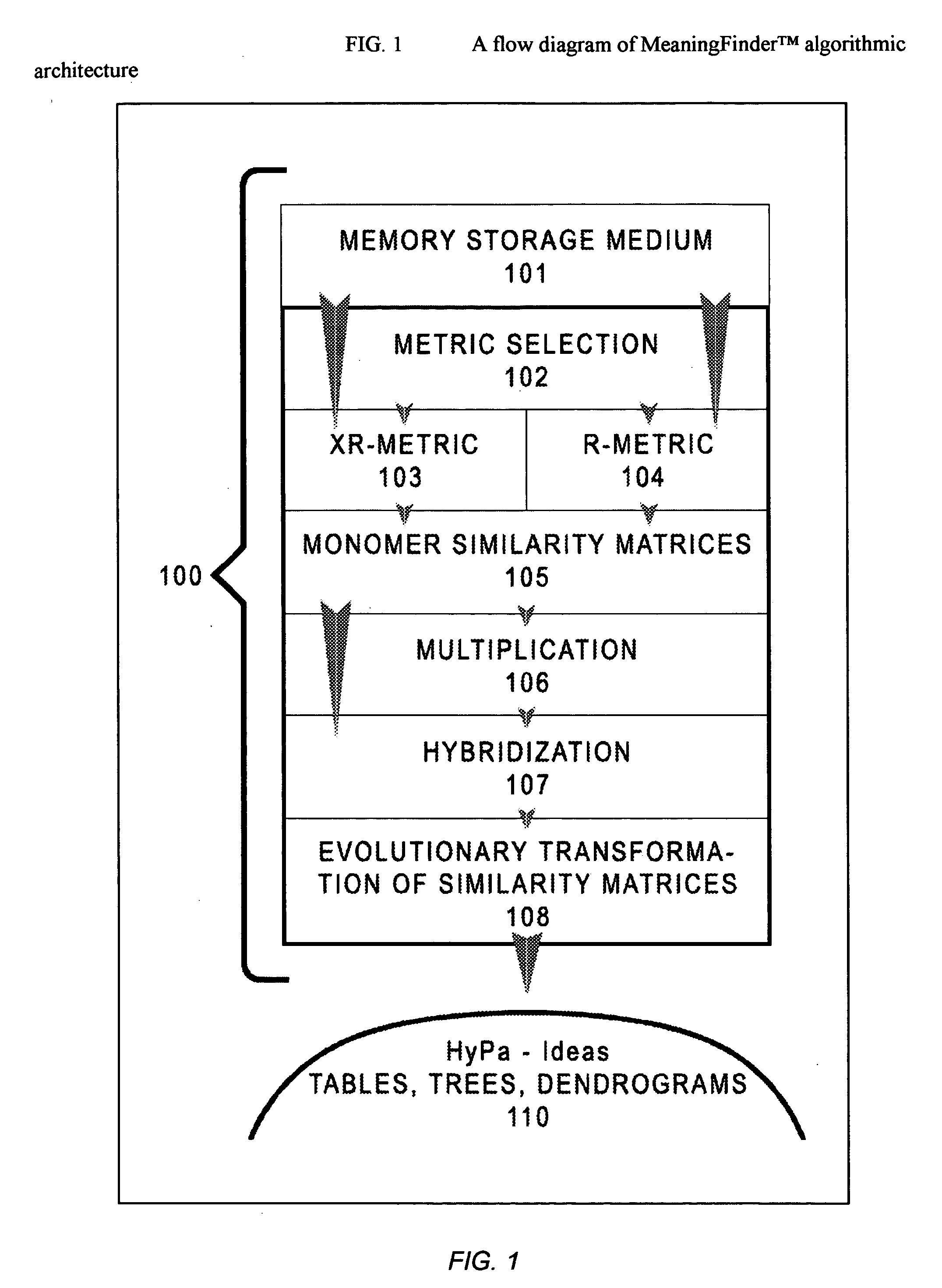

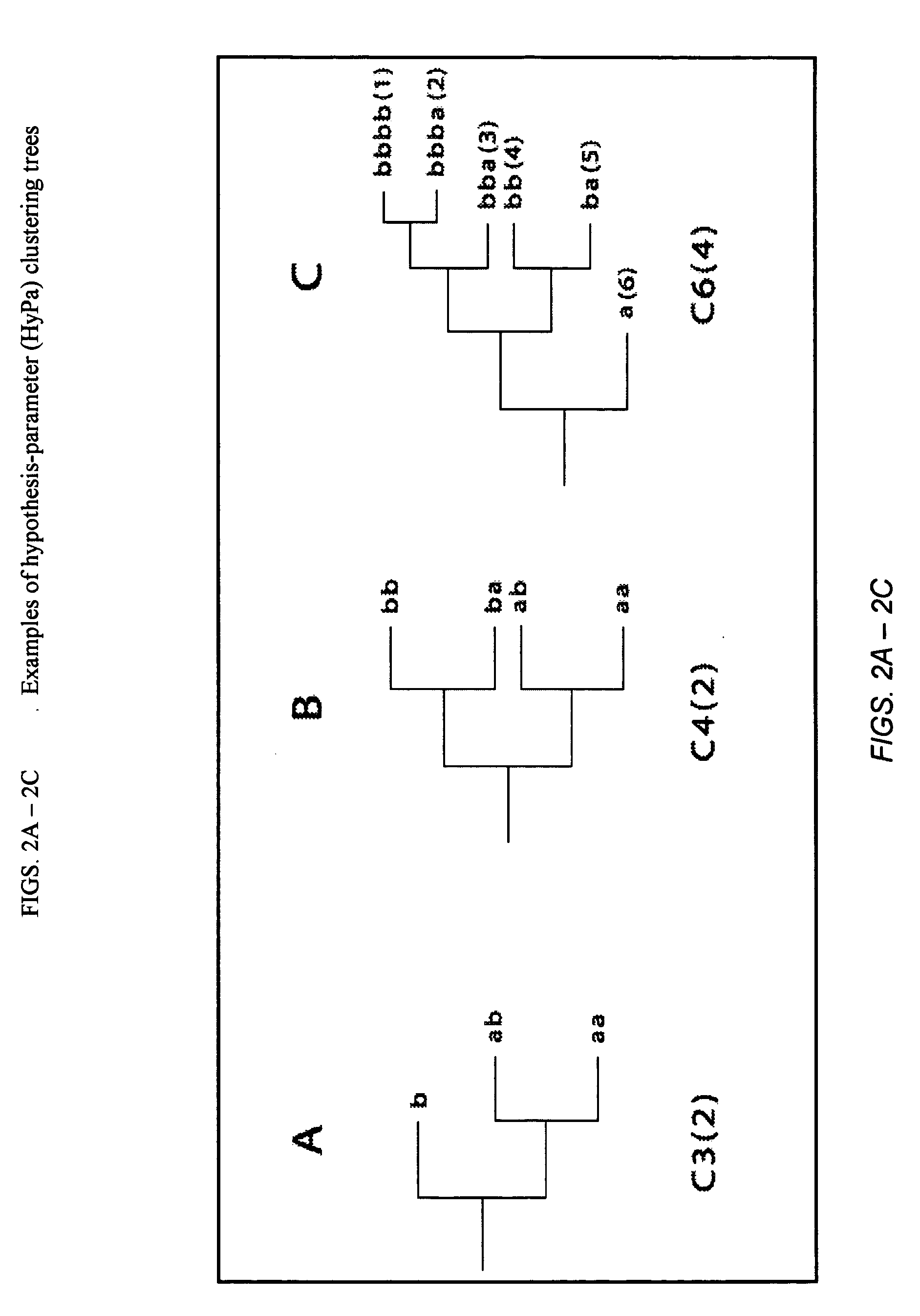

The invention provides a method, apparatus and algorithm for data processing that allows for hypothesis generation and the quantitative evaluation of its validity. The core procedure of the method is the construction of a hypothesis-parameter, acting as an "ego" of the non-biological reasoning system. A hypothesis-parameter may be generated either based on totality of general knowledge facts as a global description of data, or by a specially designed "encapsulation" technique providing for generation of hypothesis-parameters in unsupervised automated mode, after which a hypothesis-parameter is examined for the concordance with a totality of parameters describing objects under analysis. The hypothesis examination (verification) is done by establishing a number of copies of a hypothesis-parameter that may adequately compensate for the rest of existing parameters so that the clustering could rely on a suggested hypothesis-parameter. The method of this invention is based on the principle of the information thyristor and represents its practical implementation. This invention can be used as a universal computer-based recognition system in robotic vision, intelligent decision-making and machine-learning.

Owner:AIDO LLC

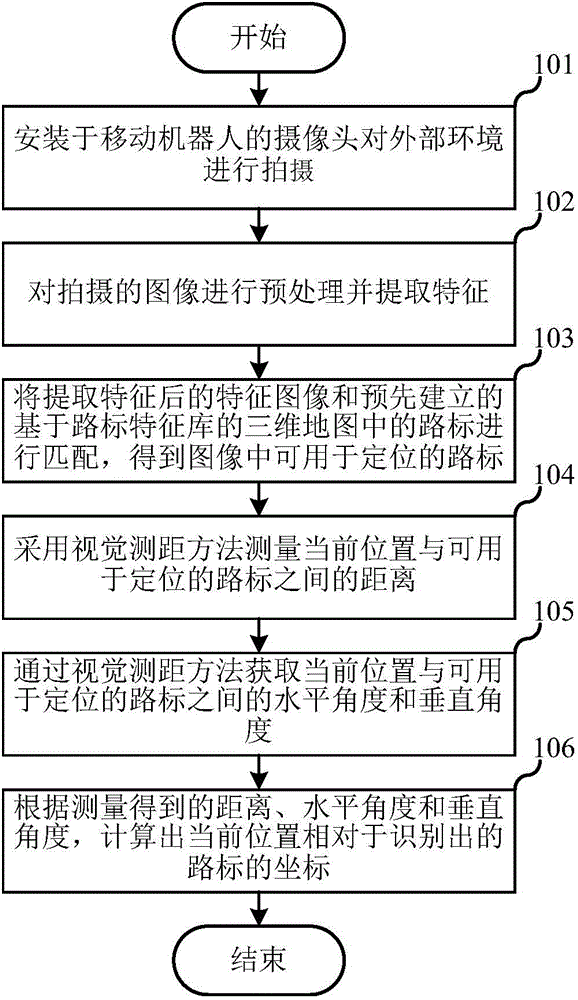

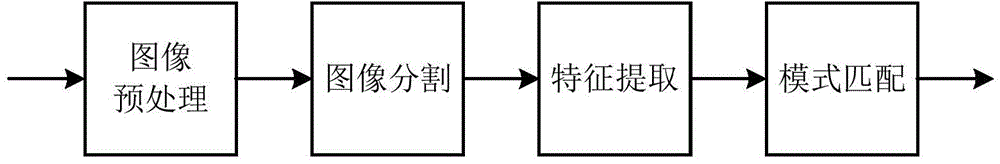

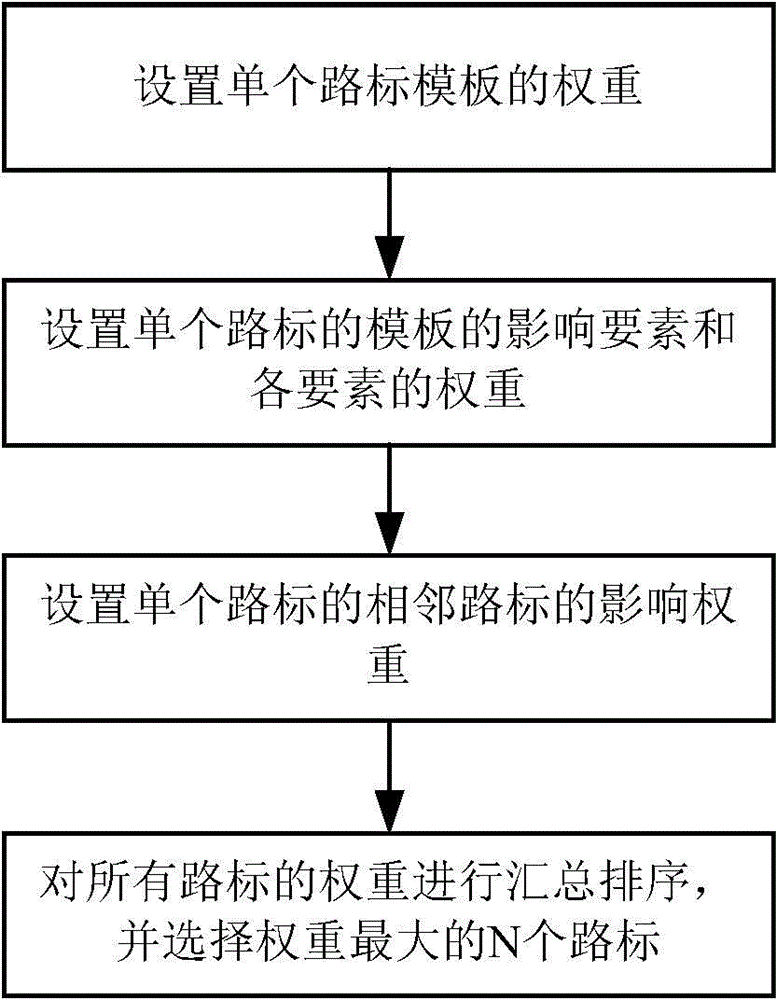

Indoor location method and indoor location system

Active · CN105841687A · Low cost · Reduce in quantity · Navigation instruments · Picture interpretation · Feature extraction · Computer science

The invention relates to the field of indoor navigation and discloses an indoor location method and an indoor location system. Visual road signs (artificial or natural) are deployed at fixed indoor positions. During location, a mobile robot photographs its surroundings, and the images undergo preprocessing and feature extraction. The resulting feature image is matched against the road signs in a road sign feature library to find a road sign in the image that can be used for location. A visual distance measurement scheme based on that road sign then locates the mobile robot in real time. In the embodiment, the visual road signs are passive: a road sign emits no wireless signal and is inexpensive. Because the visual distance measurement scheme needs only one road sign within the robot's field of view, the method and system require fewer road signs, which reduces the deployment cost of indoor navigation.

Owner:SHANGHAI ZHISHENG NETWORK TECH CO LTD
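The abstract above does not say how the visual distance measurement works. A common way to range a single landmark of known size is the pinhole camera model, sketched here under that assumption. The function name, the parameter names, and the requirement that the sign's physical height and the camera's focal length in pixels are known are all assumptions for illustration.

```python
def locate_sign(focal_px, sign_height_m, sign_height_px, u_px, cx_px):
    """Estimate a road sign's position relative to the camera.

    Pinhole model: sign_height_px = focal_px * sign_height_m / depth.
    Returns (depth along the optical axis, lateral offset), in metres.
    """
    depth = focal_px * sign_height_m / sign_height_px
    # Horizontal pixel offset from the principal point scales with depth.
    lateral = depth * (u_px - cx_px) / focal_px
    return depth, lateral


# Example: a 0.2 m tall sign that appears 40 px tall, 100 px right of centre.
depth, lateral = locate_sign(800.0, 0.2, 40.0, 420.0, 320.0)
```

Since the sign's indoor position is fixed and known, subtracting this camera-relative offset (after accounting for the robot's heading) would give the robot's position from one sign.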

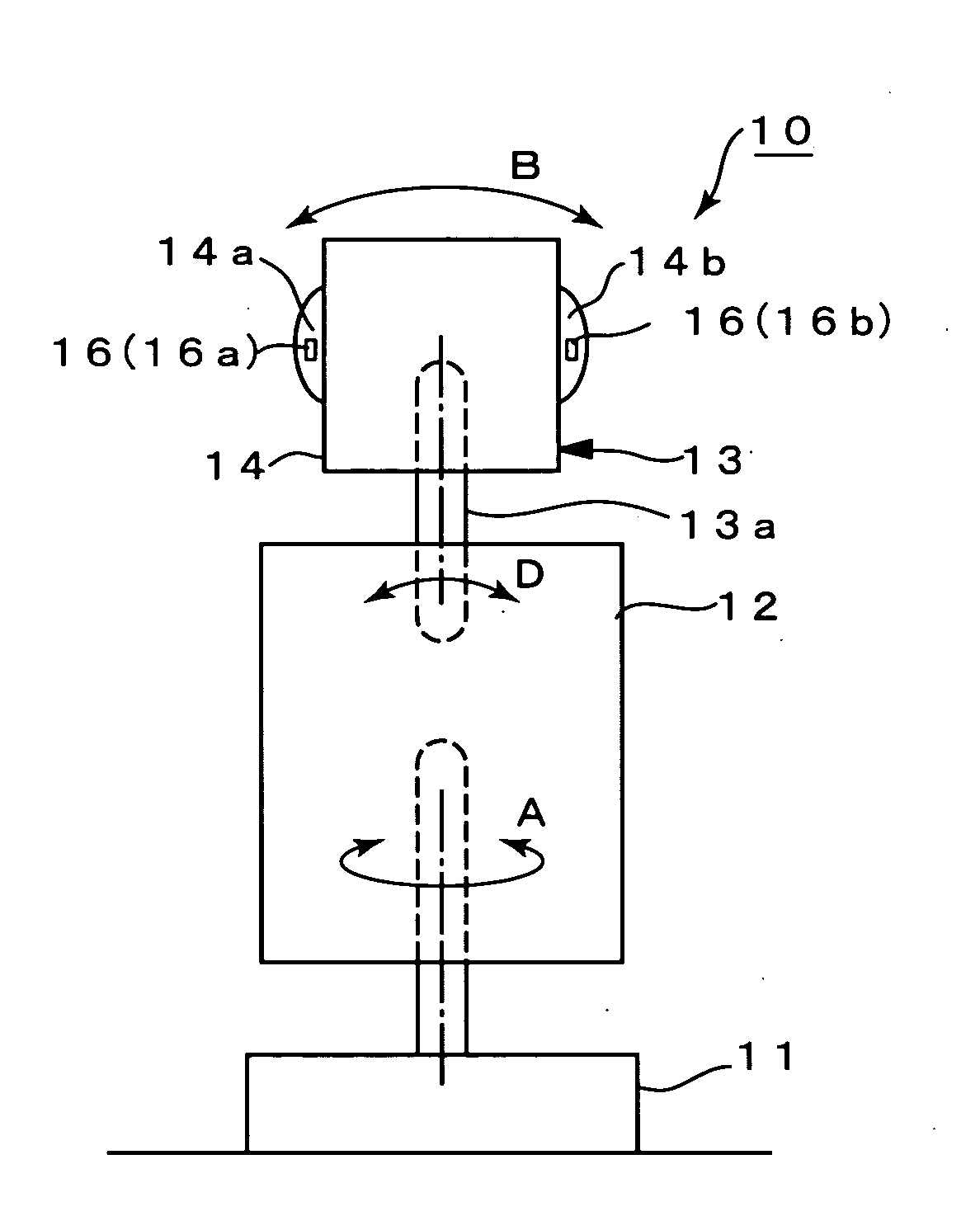

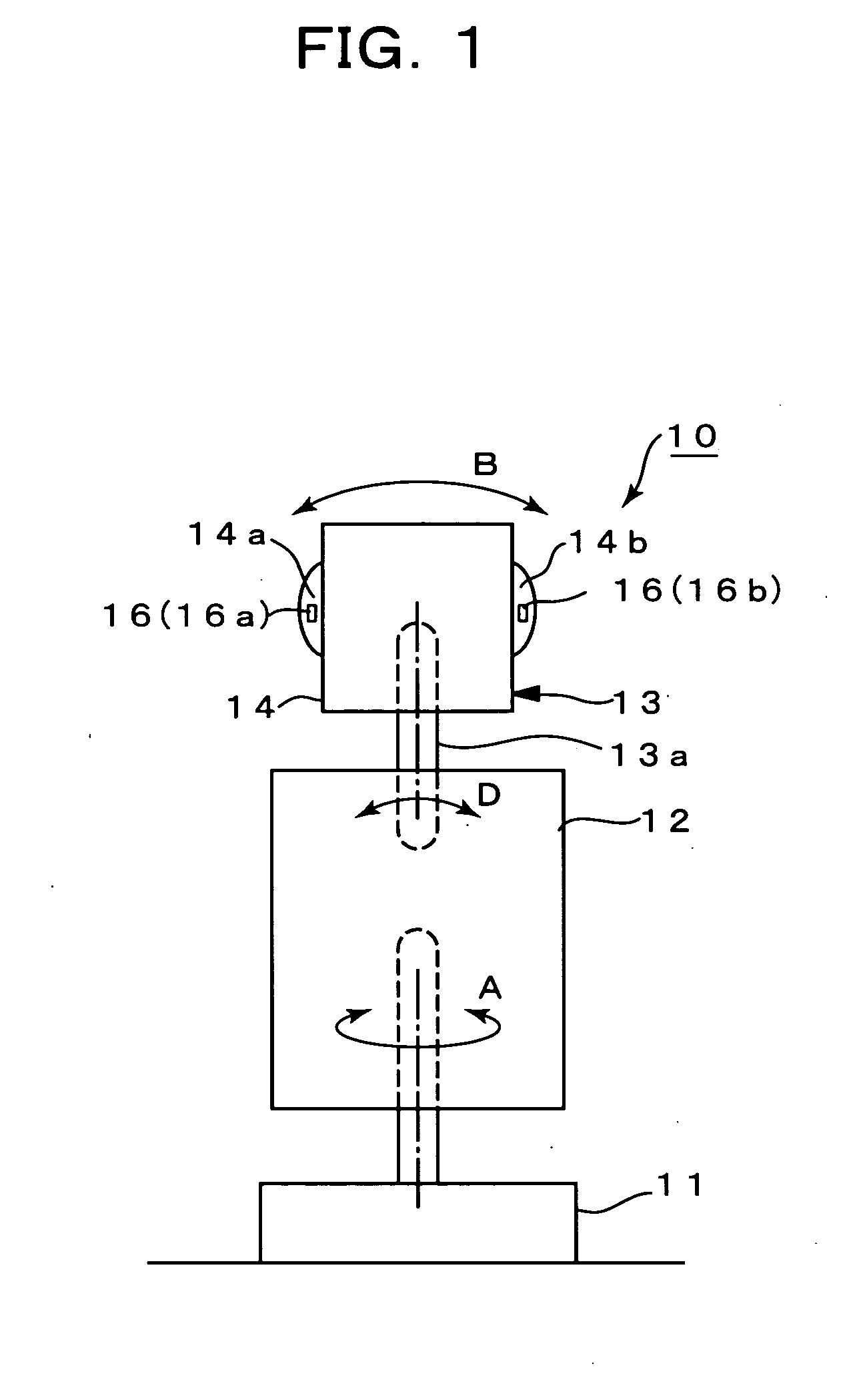

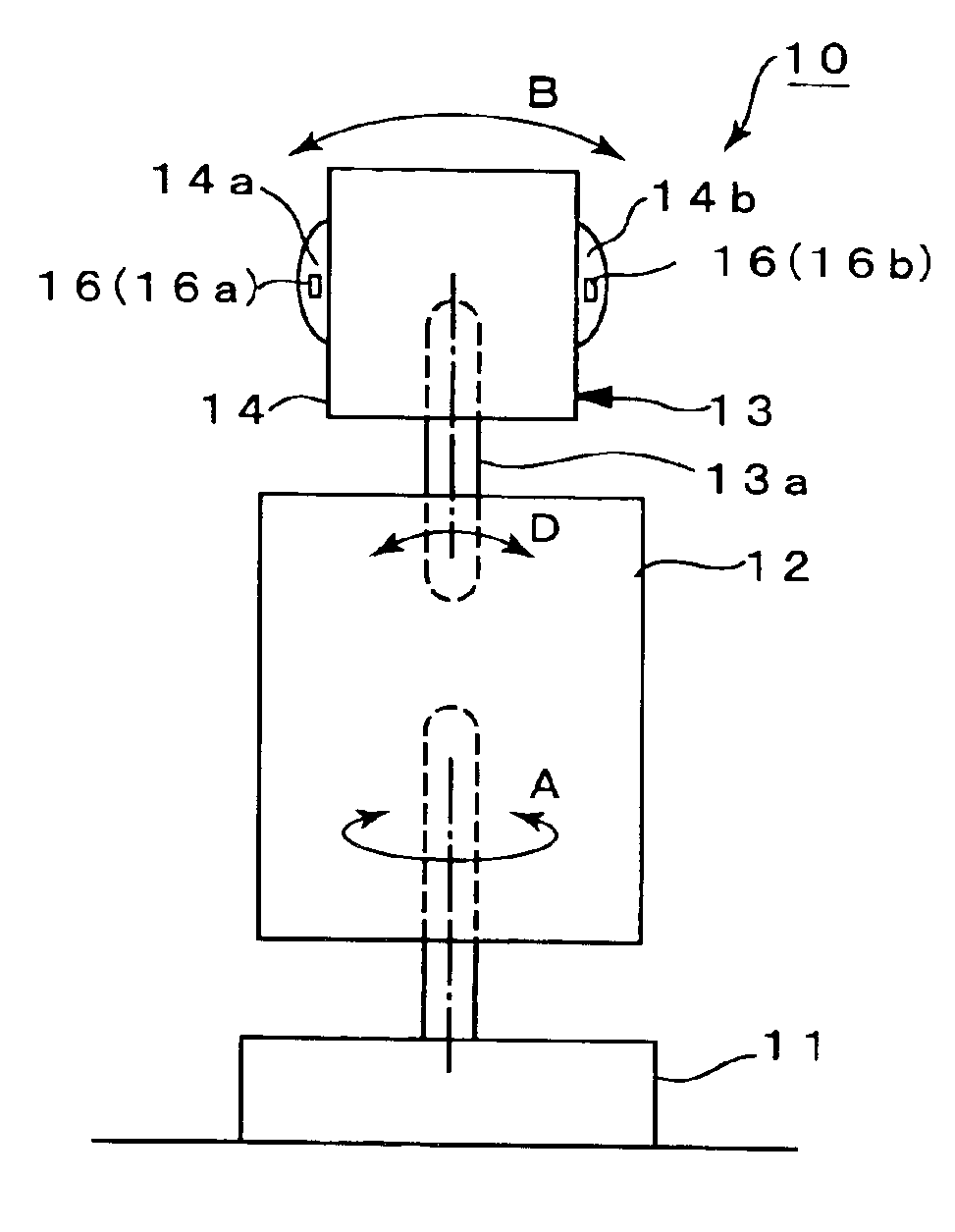

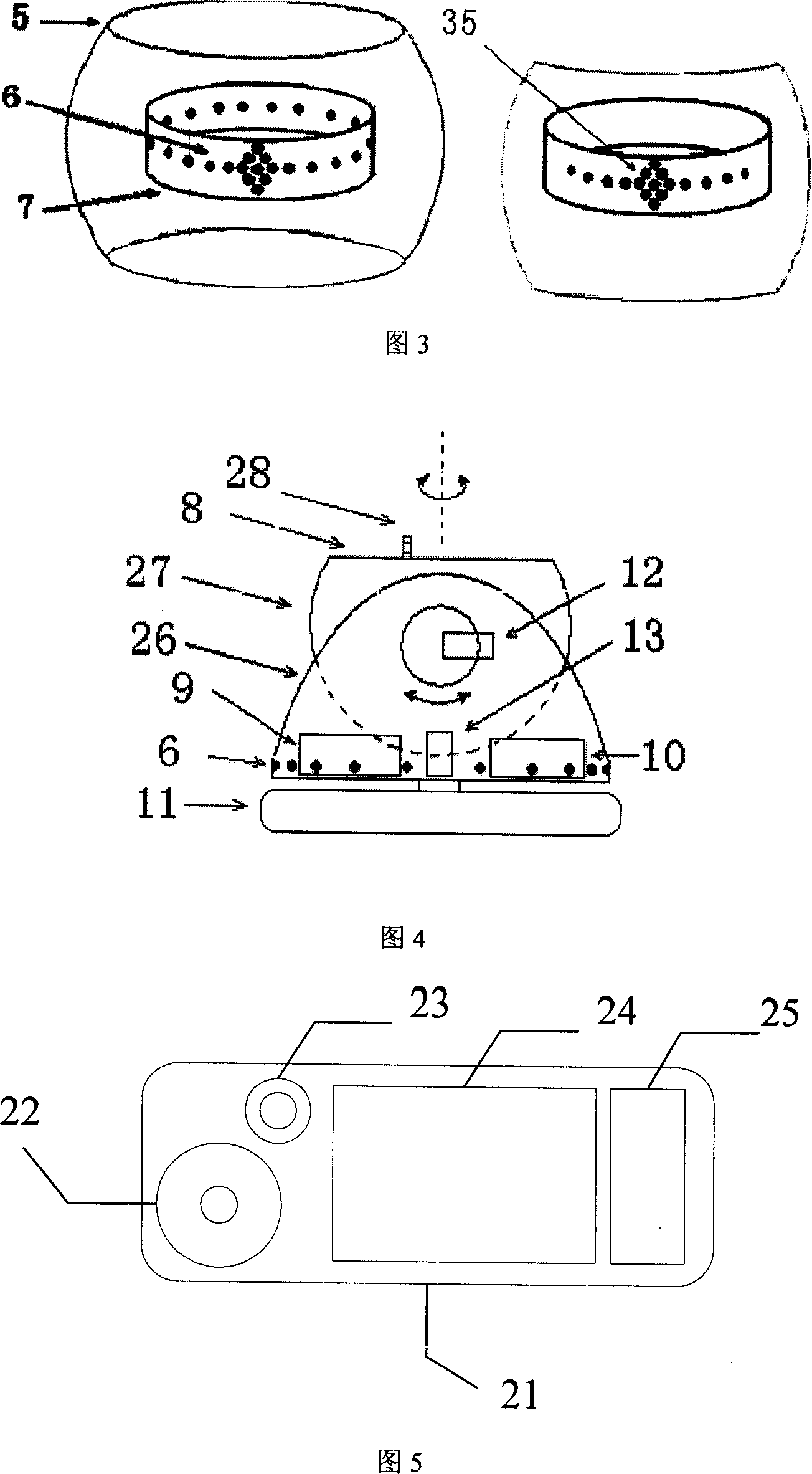

Robot audiovisual system

Disclosed is a robot visuoauditory system that processes data in real time to track an object by both vision and audition, integrates visual and auditory information about the object so that tracking is not lost, and visualizes the real-time processing. In the system, the audition module (20), in response to sound signals from microphones, extracts pitches, separates the sound sources from each other, and locates them so as to identify a sound source as at least one speaker, thereby extracting an auditory event (28) for each speaker. The vision module (30), on the basis of an image taken by a camera, identifies each speaker by face and locates that speaker, thereby extracting a visual event (39). The motor control module (40), which turns the robot horizontally, extracts a motor event (49) from the rotary position of the motor. The association module (60), which controls these modules, forms an auditory stream (65) and a visual stream (66) from the auditory, visual, and motor control events, and then associates these streams with each other to form an association stream (67). The attention control module (6) performs attention control to plan how the drive motor is controlled, for example upon locating the sound source for the auditory event and the face for the visual event, thereby determining the direction in which each speaker lies. The system also includes a display (27, 37, 48, 68) for displaying at least a portion of the auditory, visual, and motor information. The attention control module (64) servo-controls the robot on the basis of the association stream or streams.

Owner:JAPAN SCI & TECH CORP

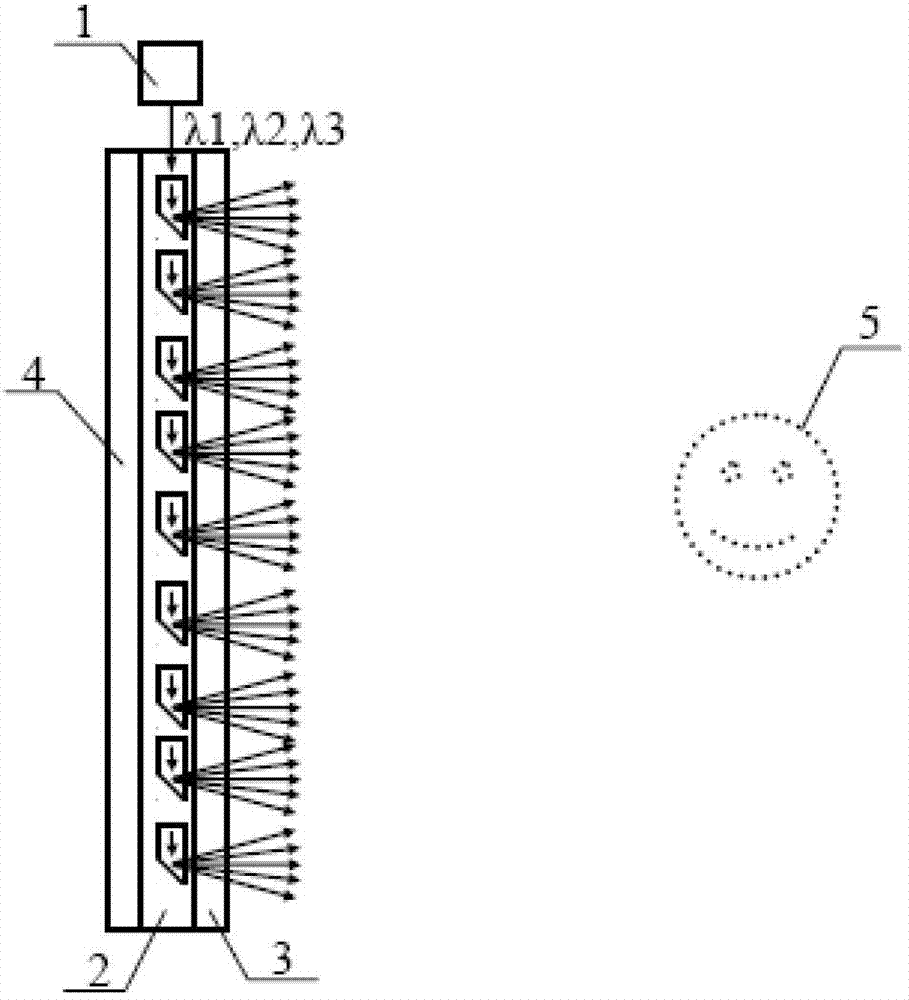

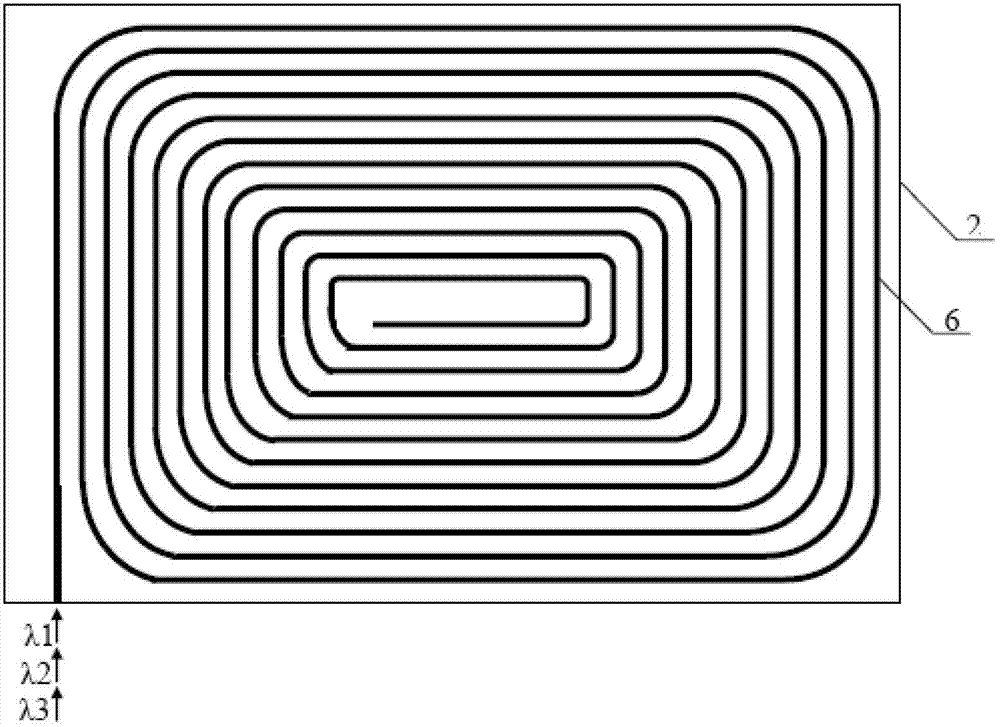

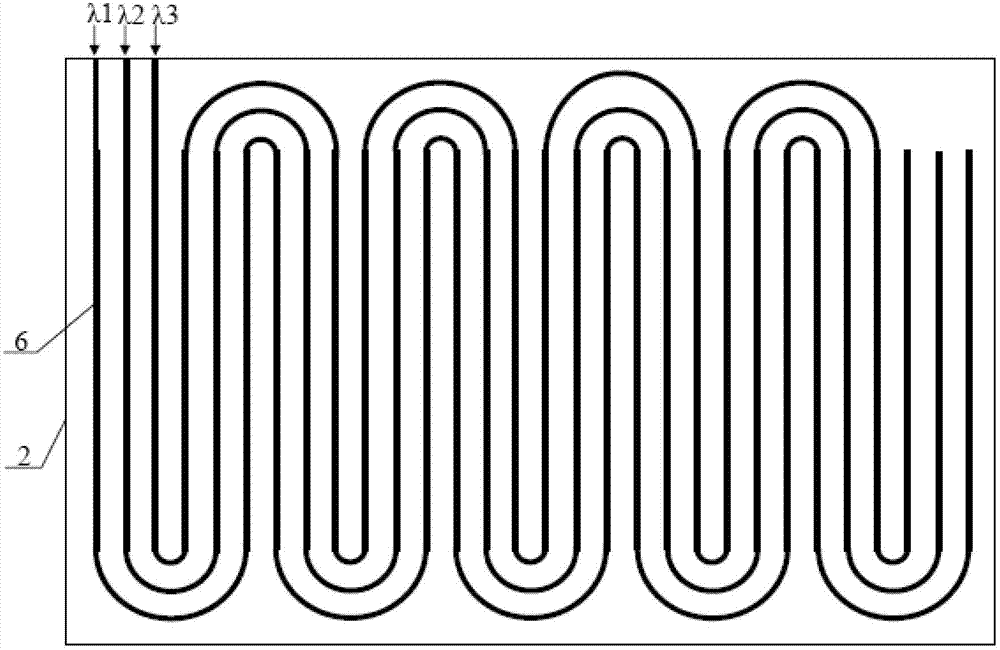

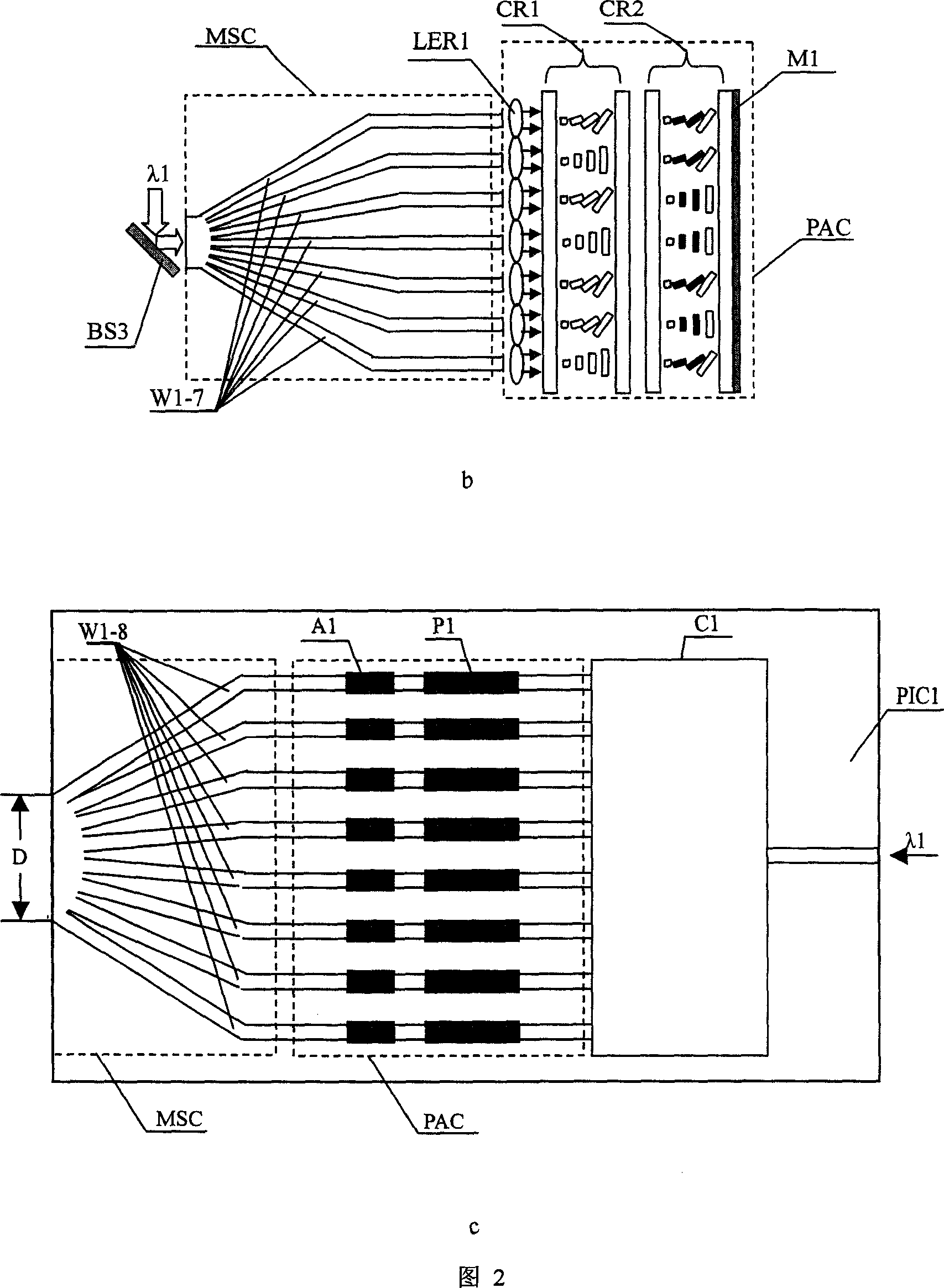

Three-dimensional imaging method and device utilizing planar lightwave circuit

Active · CN103207458A · Smear suppression · Realize regulation · Holographic optical components · Active addressable light modulator · Visual perception · Lightness

The invention discloses a three-dimensional imaging method and device utilizing a planar lightwave circuit. In the method, coherent light emitted from a coherent light source is converted into a two-dimensional array of point light sources whose positions are randomly distributed. The three-dimensional image is discretized into a large number of voxels, and the voxels are divided into several groups by brightness, from high to low. For each group, a phase adjustment for each point light source is calculated from the distance between that point light source and each voxel, so that the lightwaves from all point light sources arrive at the voxel in phase. These adjustments are accumulated for each point light source into a complex amplitude adjustment that generates all voxels of the group. The amplitude regulator and phase regulator of each point light source are then driven to generate each group of voxels by constructive interference. The imaging device consists of a coherent light source, the planar lightwave circuit, a conductive glass front panel, and a back driving circuit. The method and device can be widely applied to three-dimensional display for computers and televisions, three-dimensional human-machine interaction, robot vision, and similar fields.

Owner:李志扬

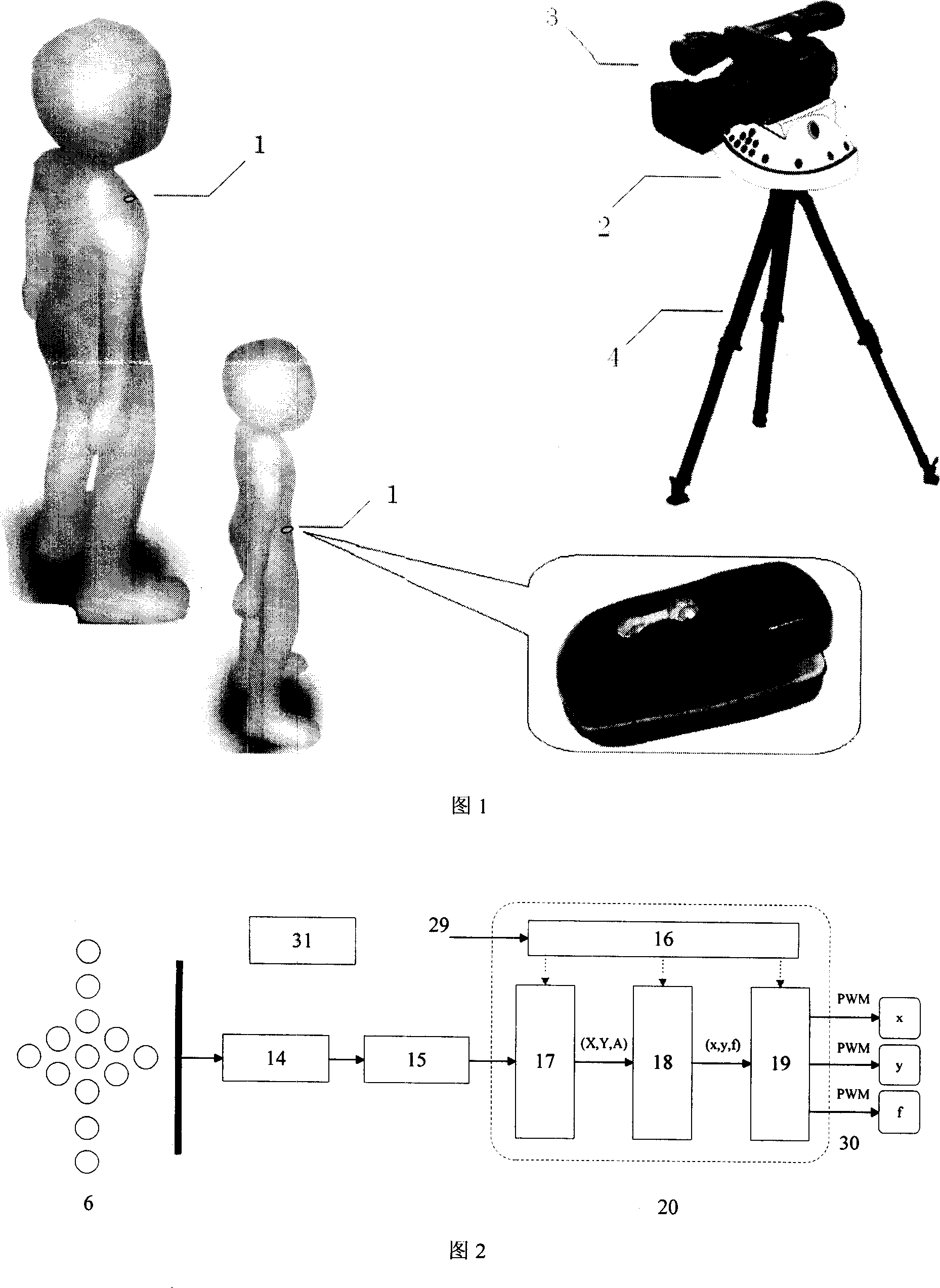

Active infrared tracking system

Inactive · CN101014097A · Cutting costs · Small amount of calculation · Television system details · Position fixation · Camera lens · Infrared

The invention relates to an intelligent tracking system. It discloses a system that focuses its mounted camera on one or more target objects by using active infrared positioning to determine the horizontal and vertical angles of a target object and its distance from the system. The invention distinguishes different target objects by the codes of their infrared emitters and computes the camera lens's horizontal, forward, and focus parameters to drive the corresponding servos.

Owner:马涛

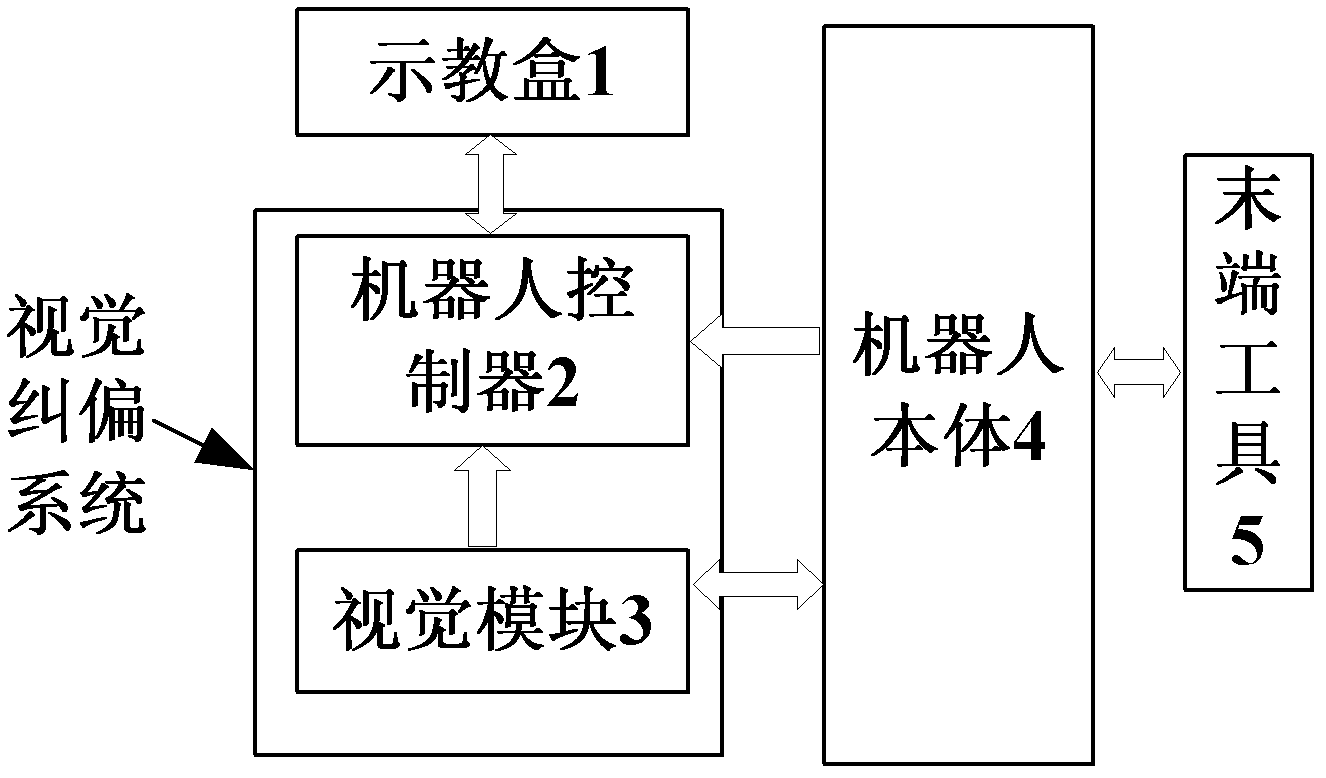

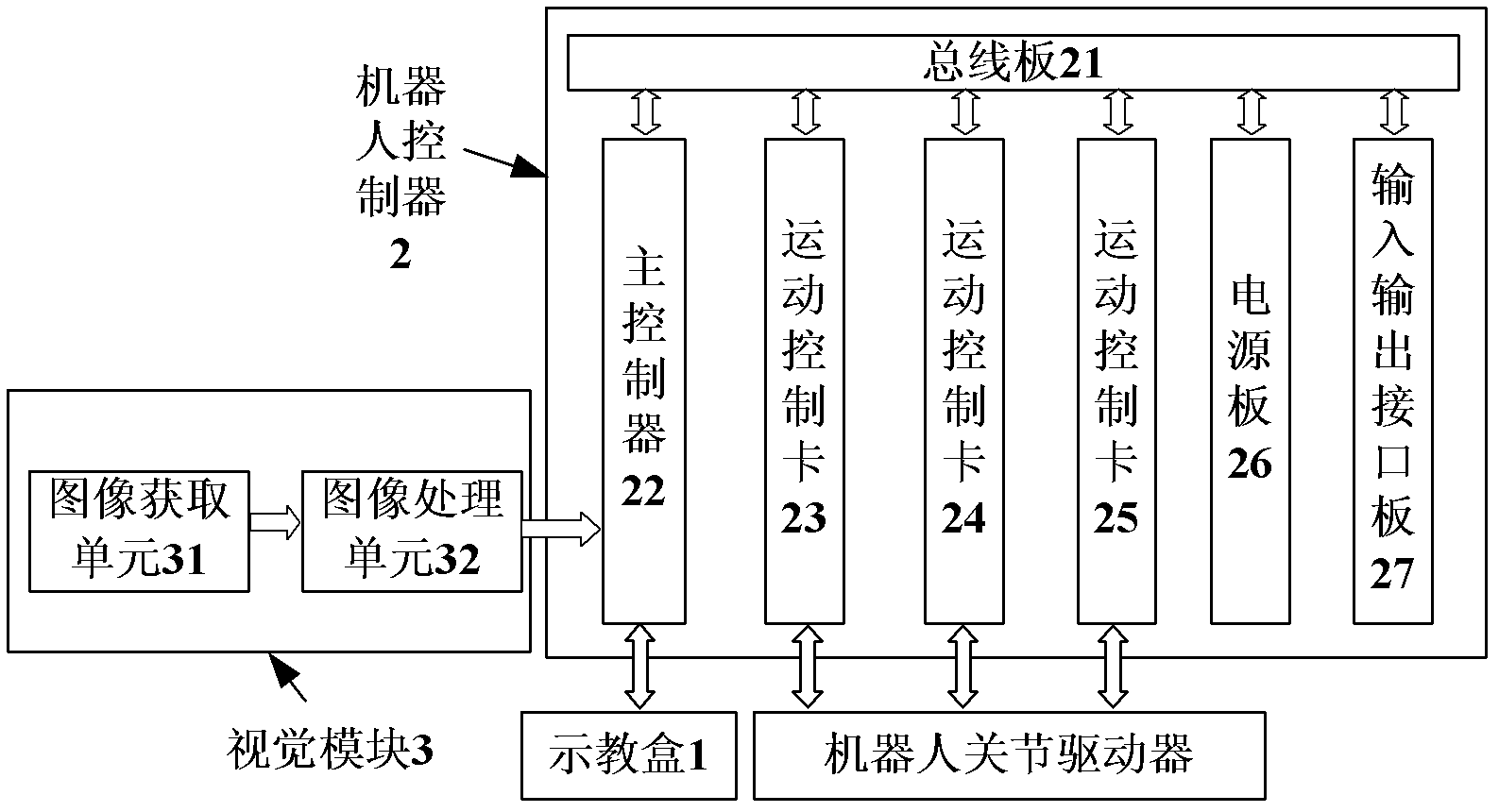

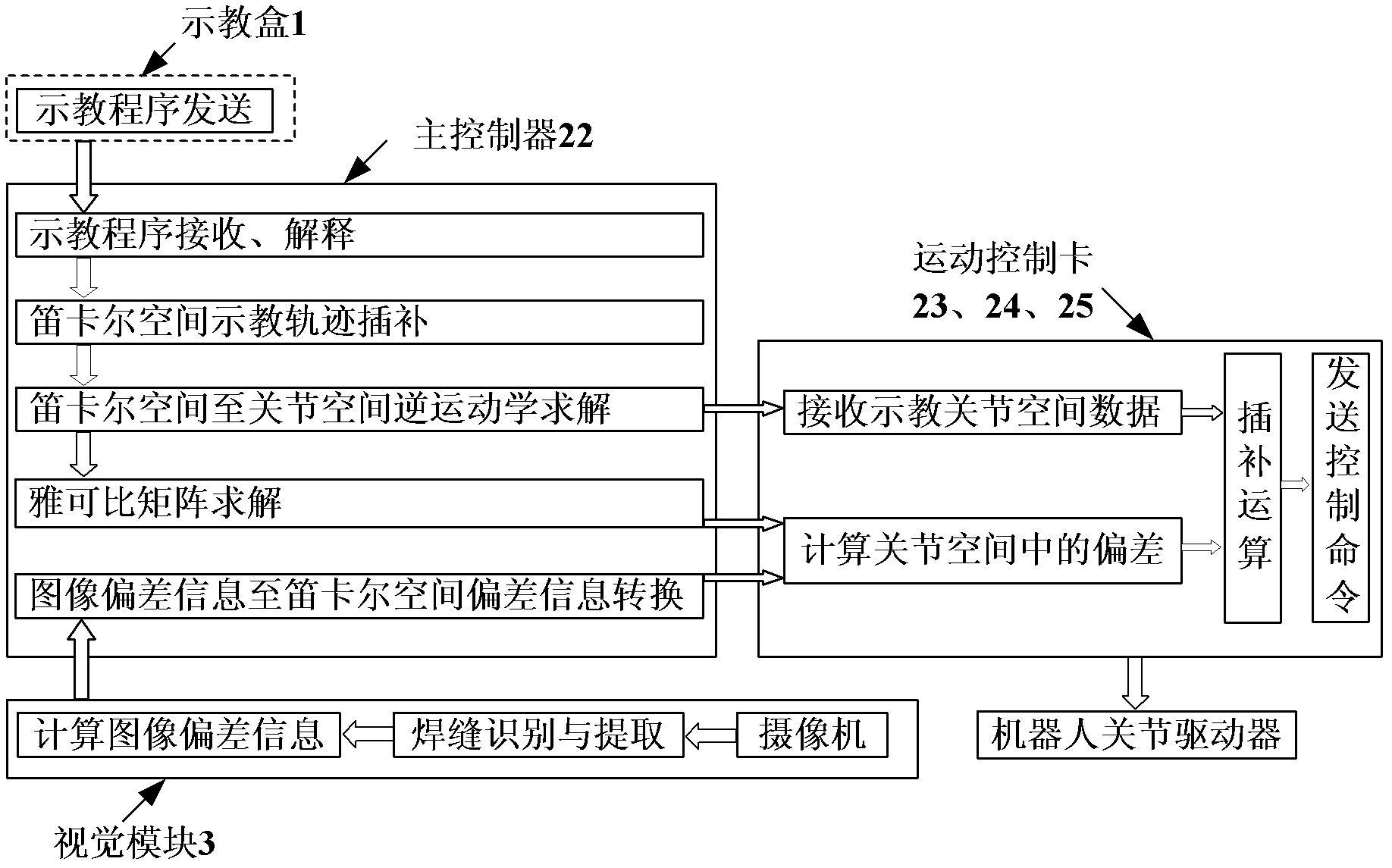

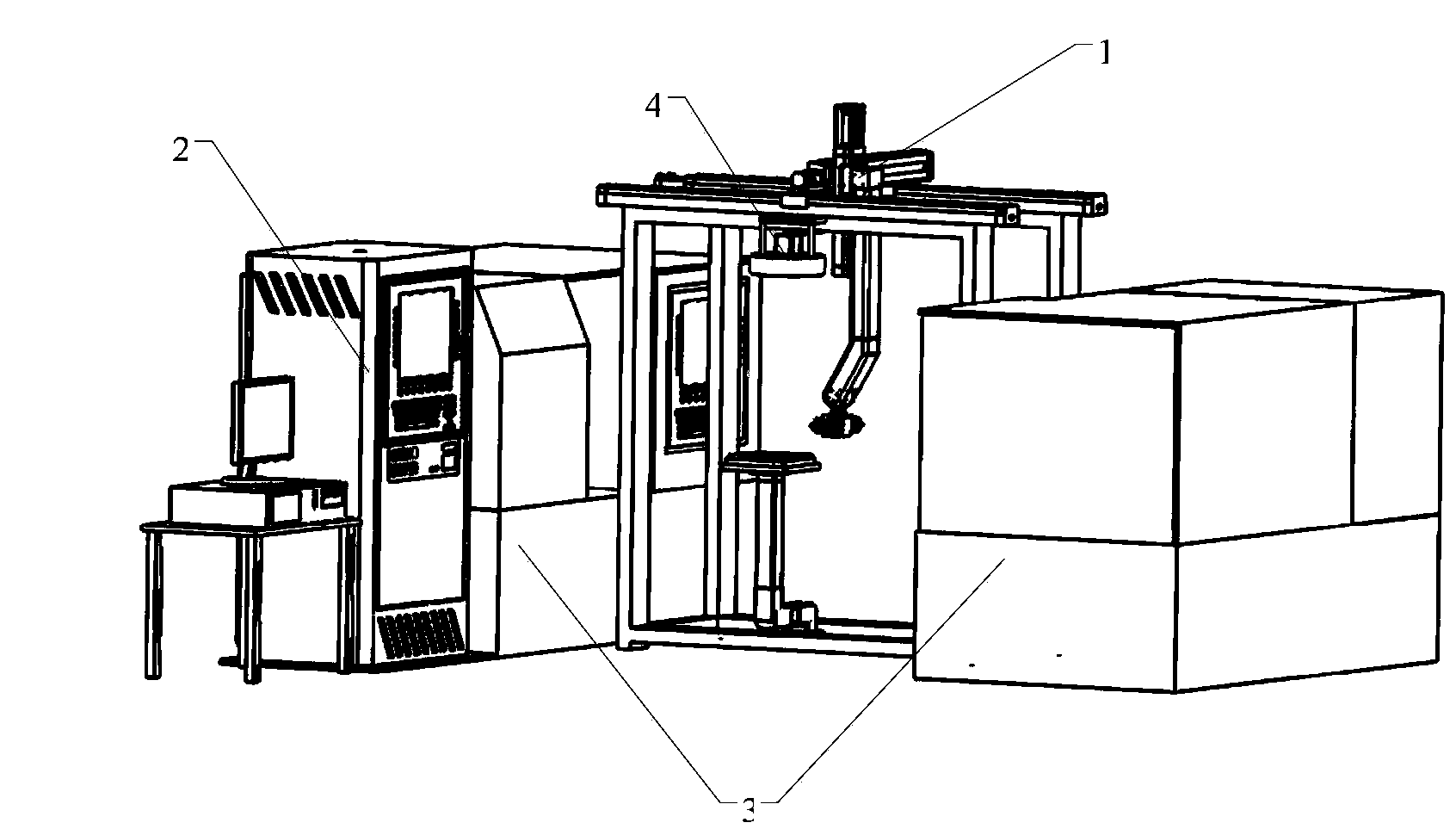

Visual real-time deviation rectifying system and visual real-time deviation rectifying method for robot

Active · CN102581445A · Real-time adjustment · Small distortion · Welding/cutting auxiliary devices · Arc welding apparatus · Time deviation · Visual perception

The invention discloses a visual real-time deviation rectifying system for a robot. The robot comprises a robot body (4) and an end tool (5) connected to the tail end of the robot body. The system comprises a visual module (3) and a robot controller (2), wherein the visual module (3) is electrically connected with the robot controller (2), and is used for acquiring target image information in real time, processing the acquired target image information so as to obtain position deviation information between the end tool (5) and a target, and sending the position deviation information to the robot controller (2); and the robot controller (2) is electrically connected with the robot body (4), and is used for generating a real-time servo command for controlling the end tool (5) according to the position deviation information from the visual module (3), and sending the servo command to the robot body (4) in real time so as to perform real-time deviation rectifying for the end tool (5).

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

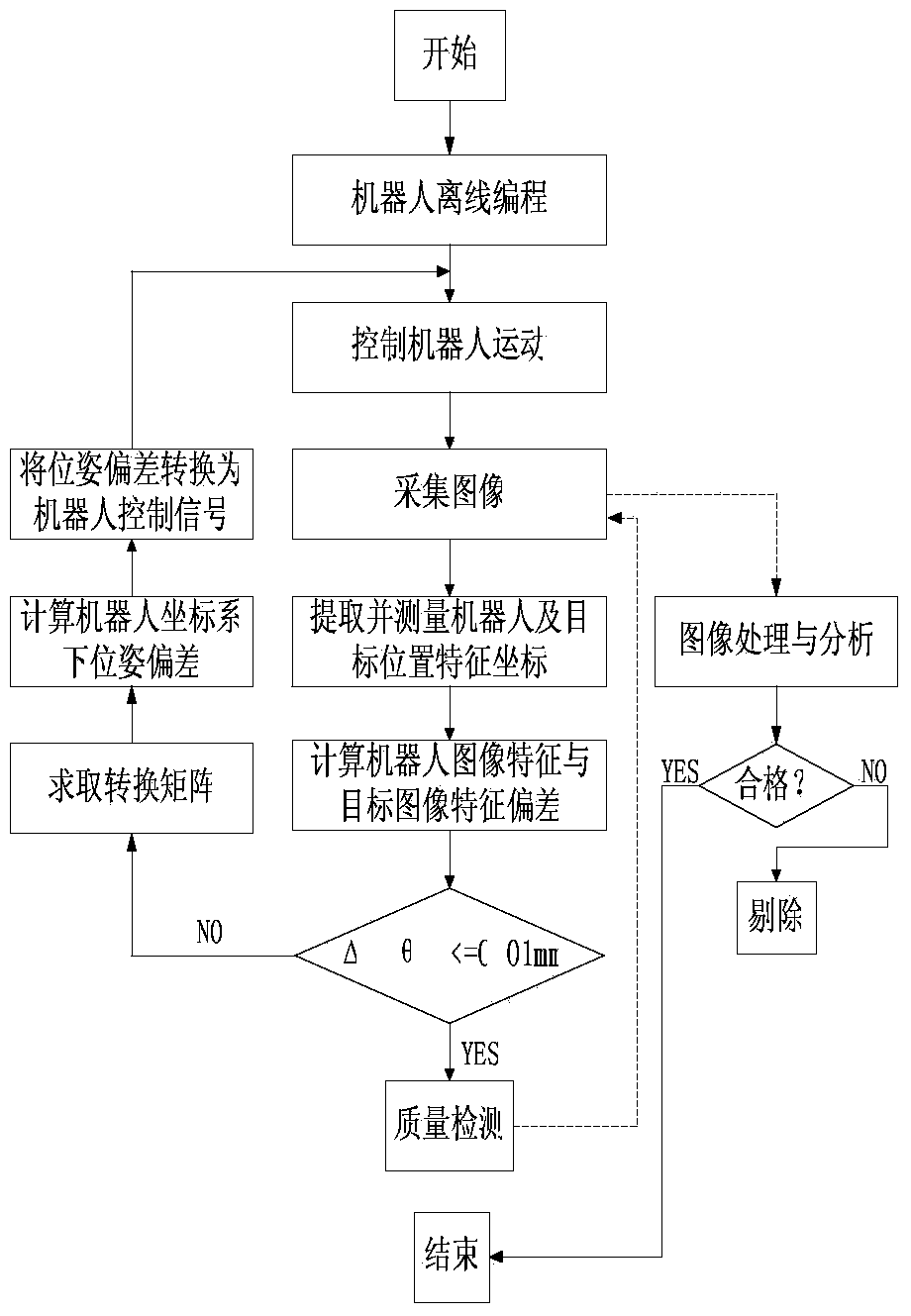

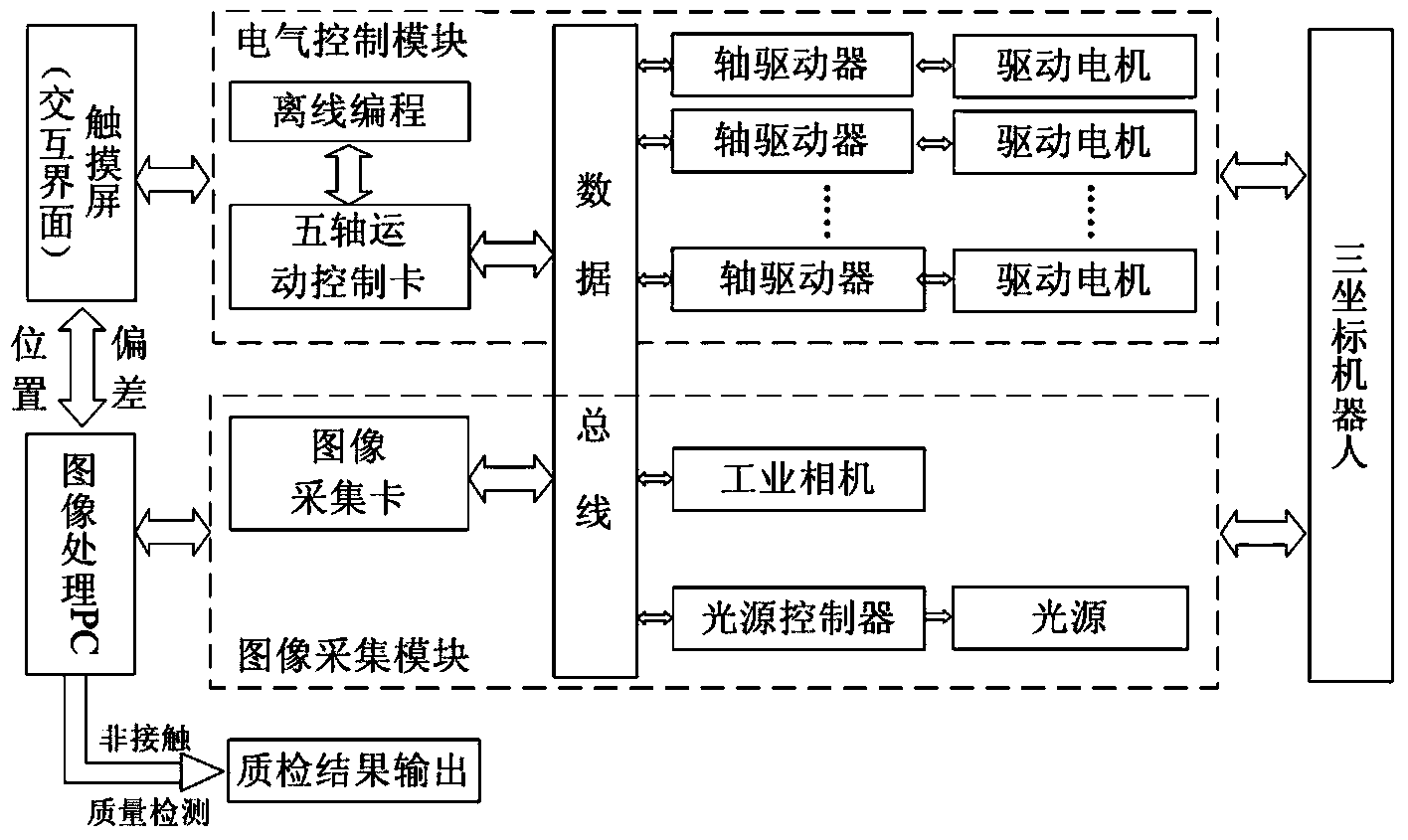

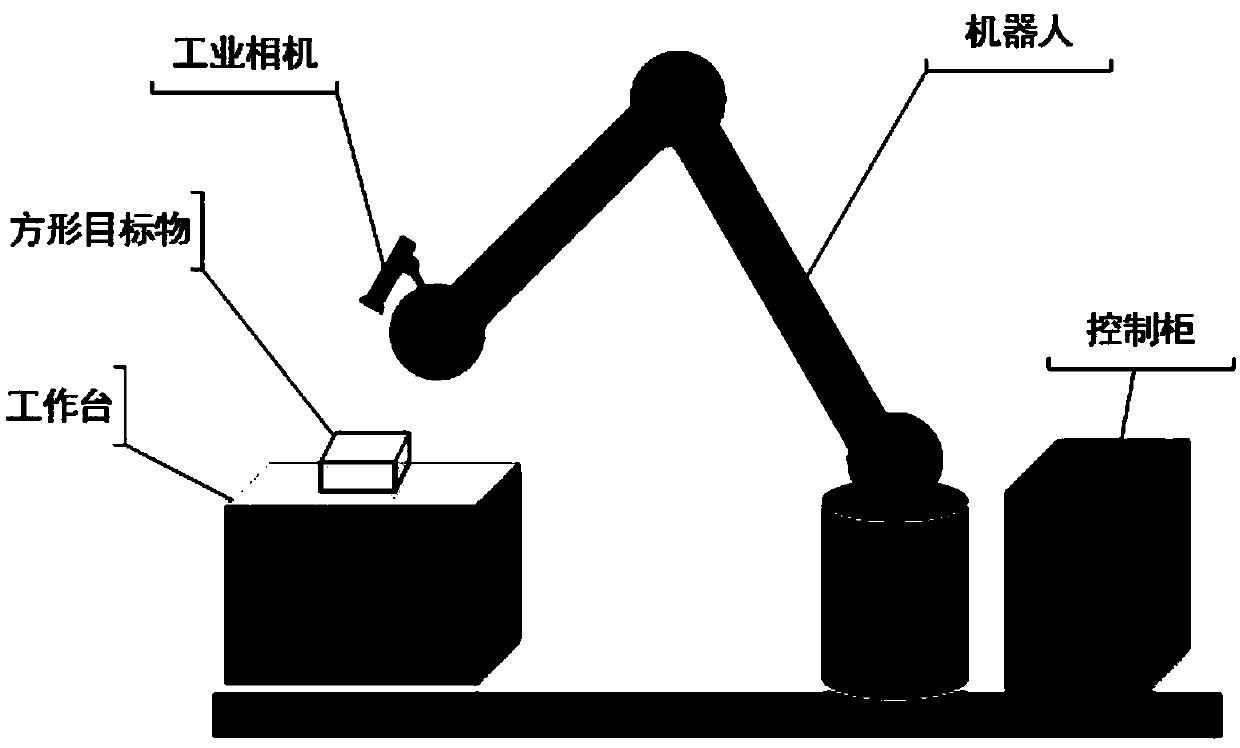

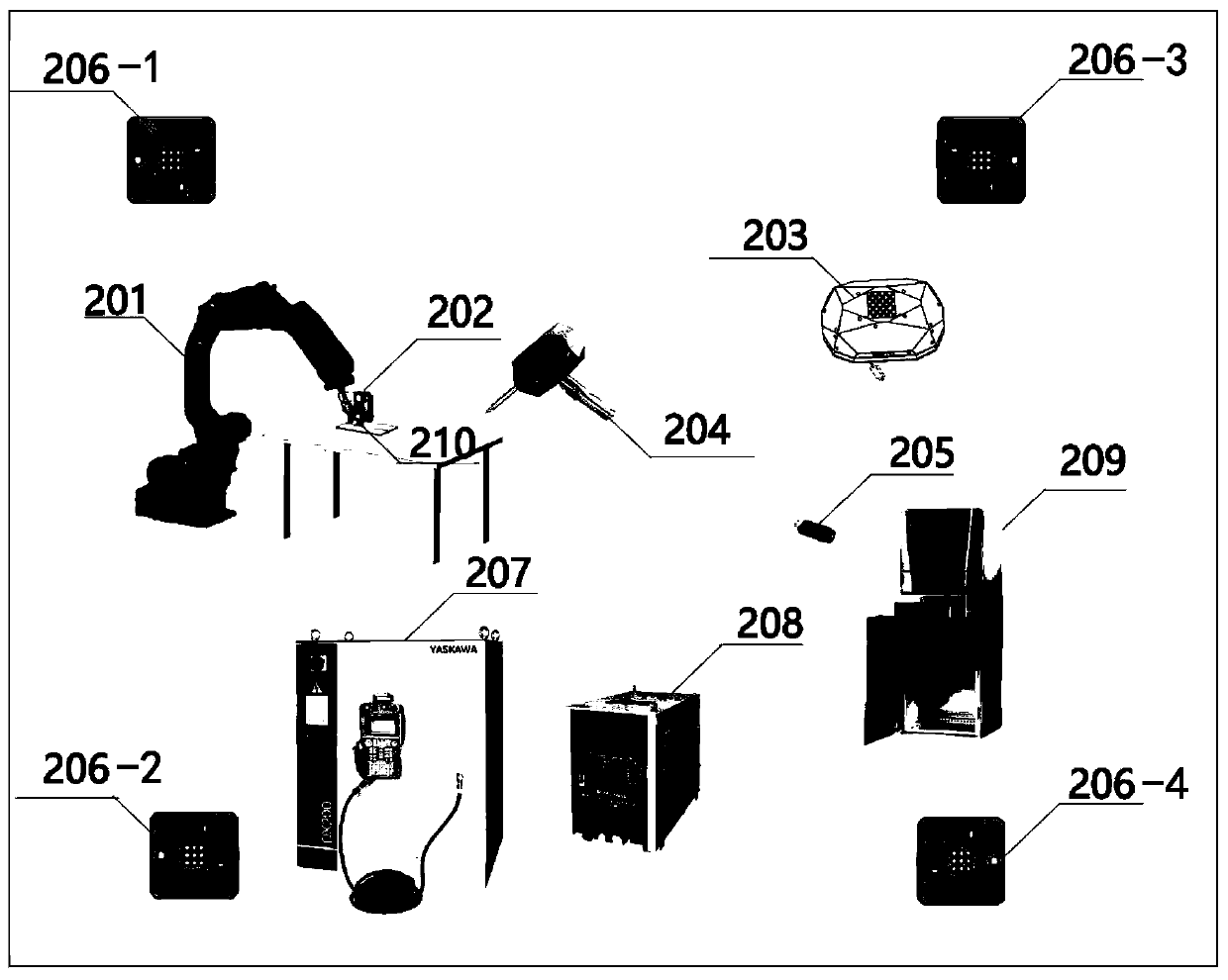

Robot system with visual servo and detection functions

Active · CN103406905A · Realize function · Improve motion control efficiency · Programme-controlled manipulator · Automatic control · Control signal

The invention discloses a robot system with visual servo and detection functions. The robot system comprises a robot, an image acquisition and image processing unit, a robot vision servo control unit, and a communication network unit that connects all the modules and carries data and signals among them. The robot vision servo control unit sends and receives the robot's control signals over the communication network unit, rapidly understands the surroundings, and constructs a vision feedback control model at the same time, so as to realize the vision identification and movement control functions of the robot. The movement control of the robot combines offline programming with robot vision servo control to automatically control the robot and its end effector, which improves the robot's movement control efficiency, repeat positioning precision, and flexibility and makes the robot system more intelligent. The robot control also provides non-contact quality detection based on robot vision; the robot system is simple in structure and convenient to operate.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

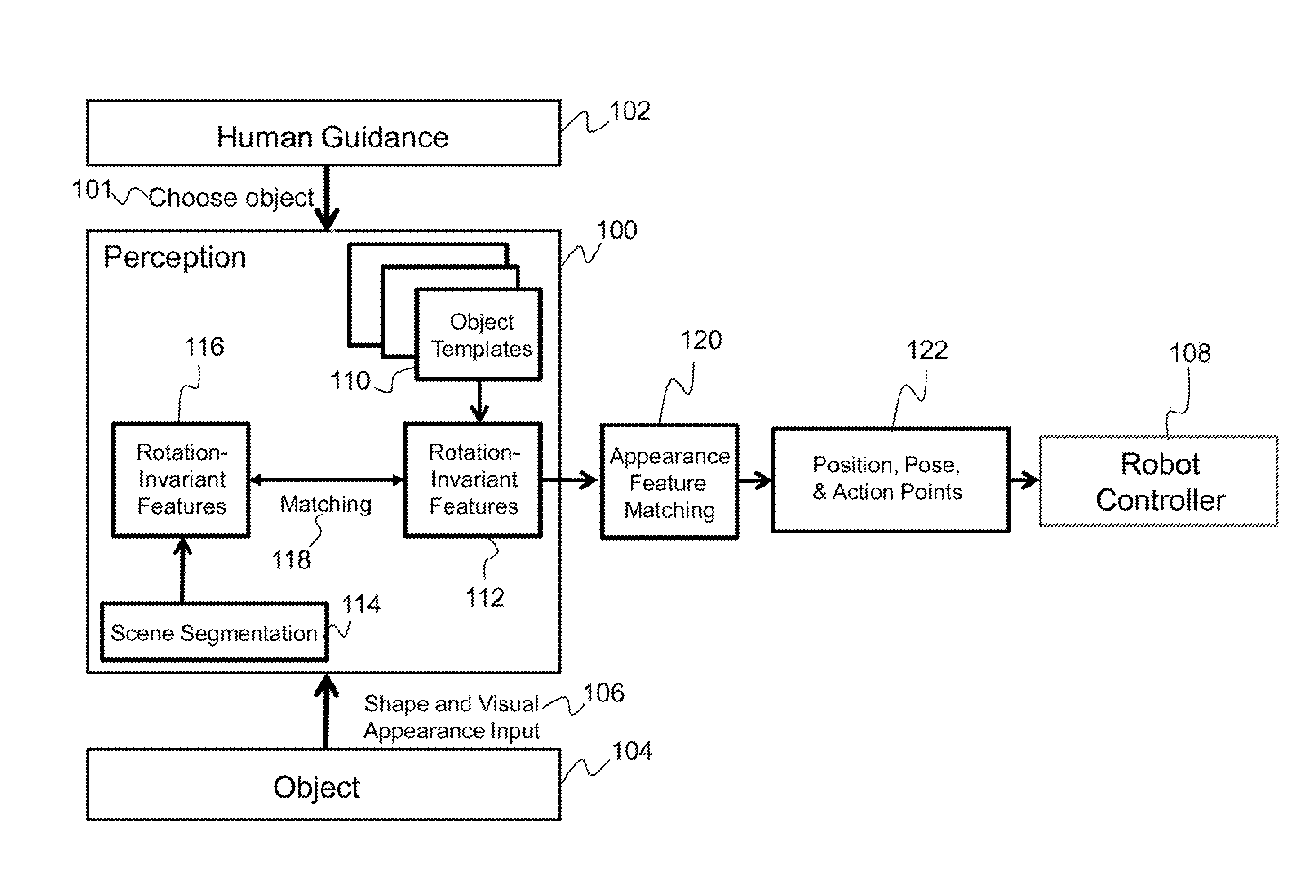

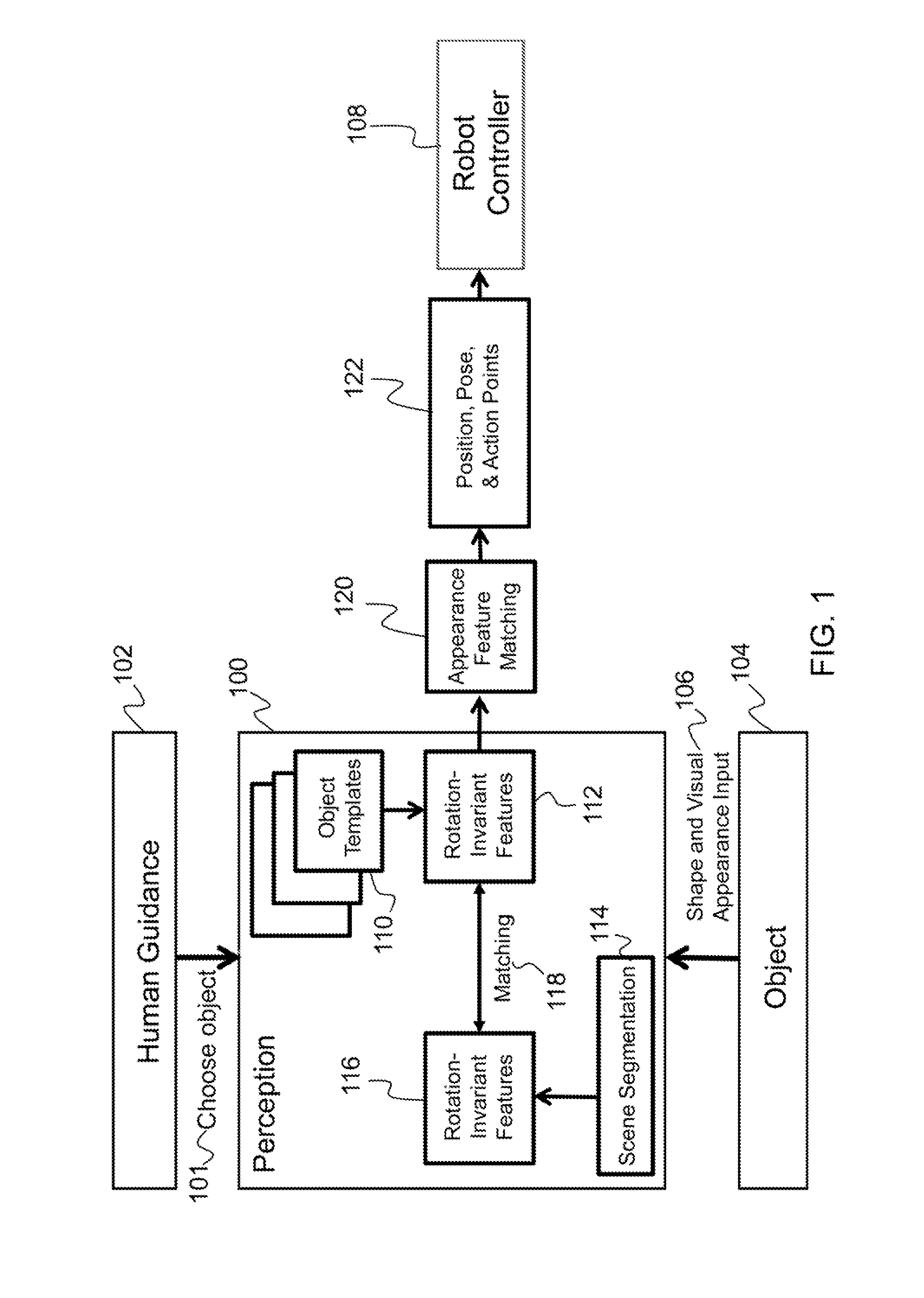

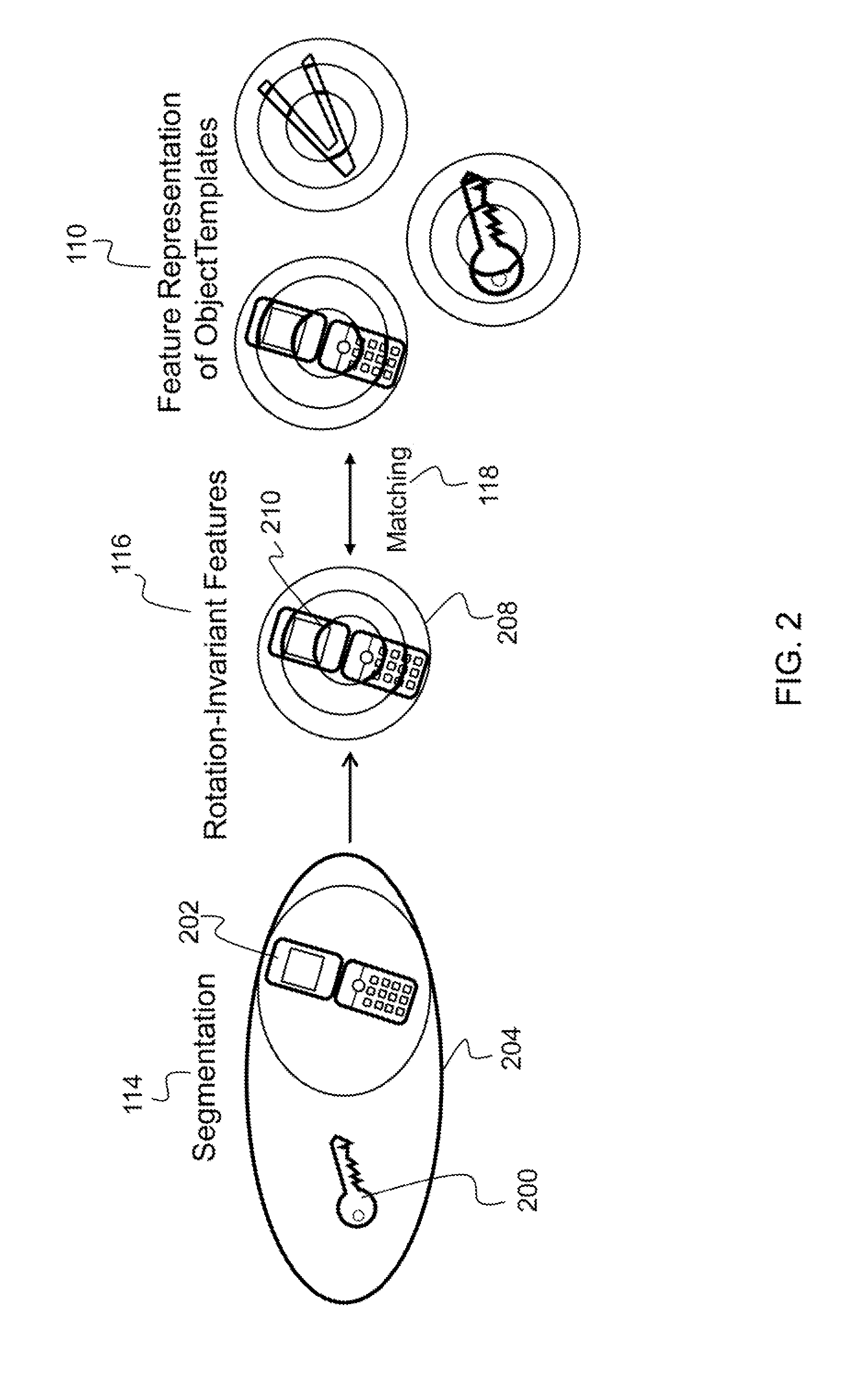

Robotic visual perception system

Described is a robotic visual perception system for determining a position and pose of a three-dimensional object. The system receives an external input to select an object of interest. The system also receives visual input from a sensor of a robotic controller that senses the object of interest. Rotation-invariant shape features and appearance are extracted from the sensed object of interest and a set of object templates. A match is identified between the sensed object of interest and an object template using shape features. The match between the sensed object of interest and the object template is confirmed using appearance features. The sensed object is then identified, and a three-dimensional pose of the sensed object of interest is determined. Based on the determined three-dimensional pose of the sensed object, the robotic controller is used to grasp and manipulate the sensed object of interest.

Owner:HRL LAB

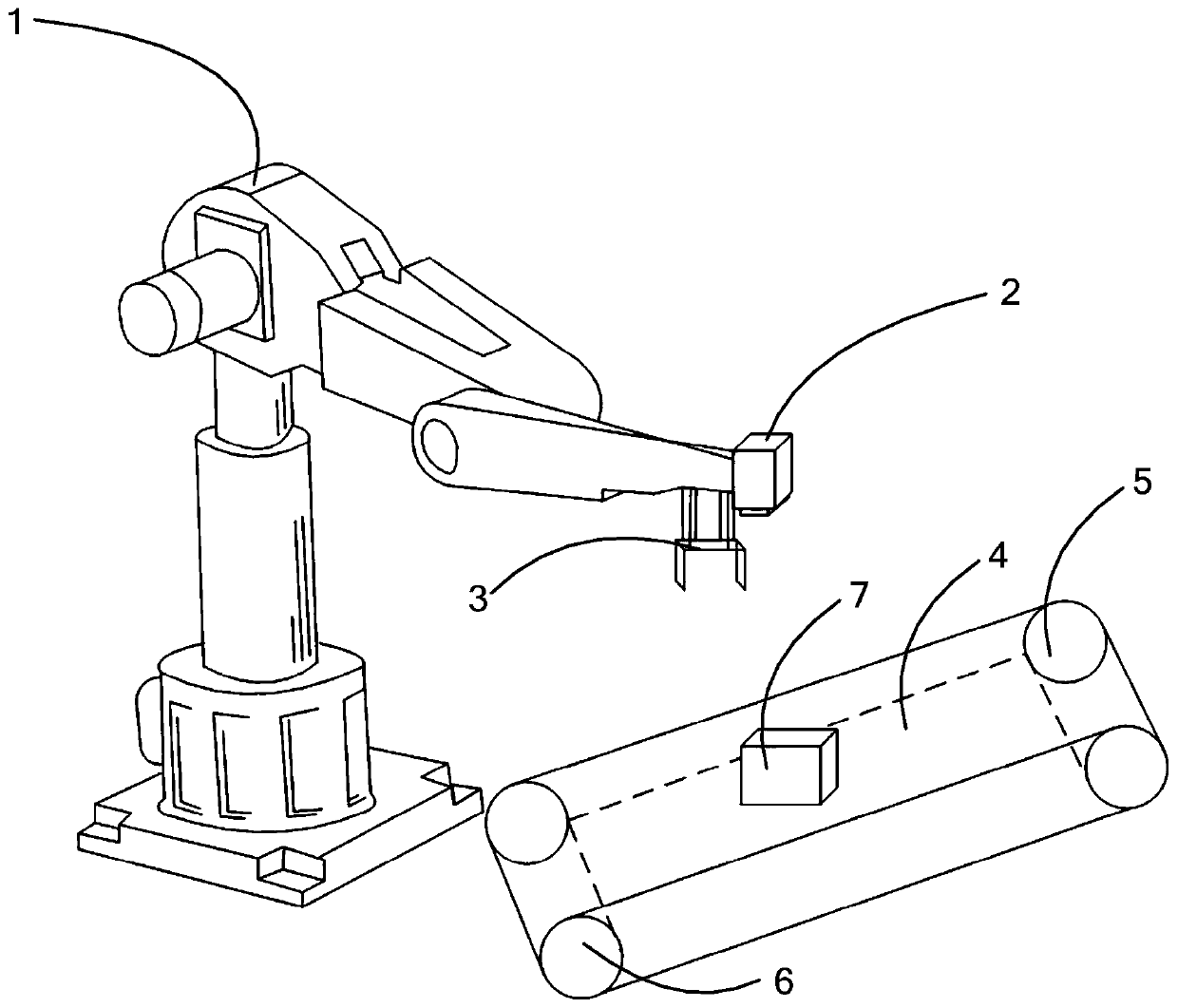

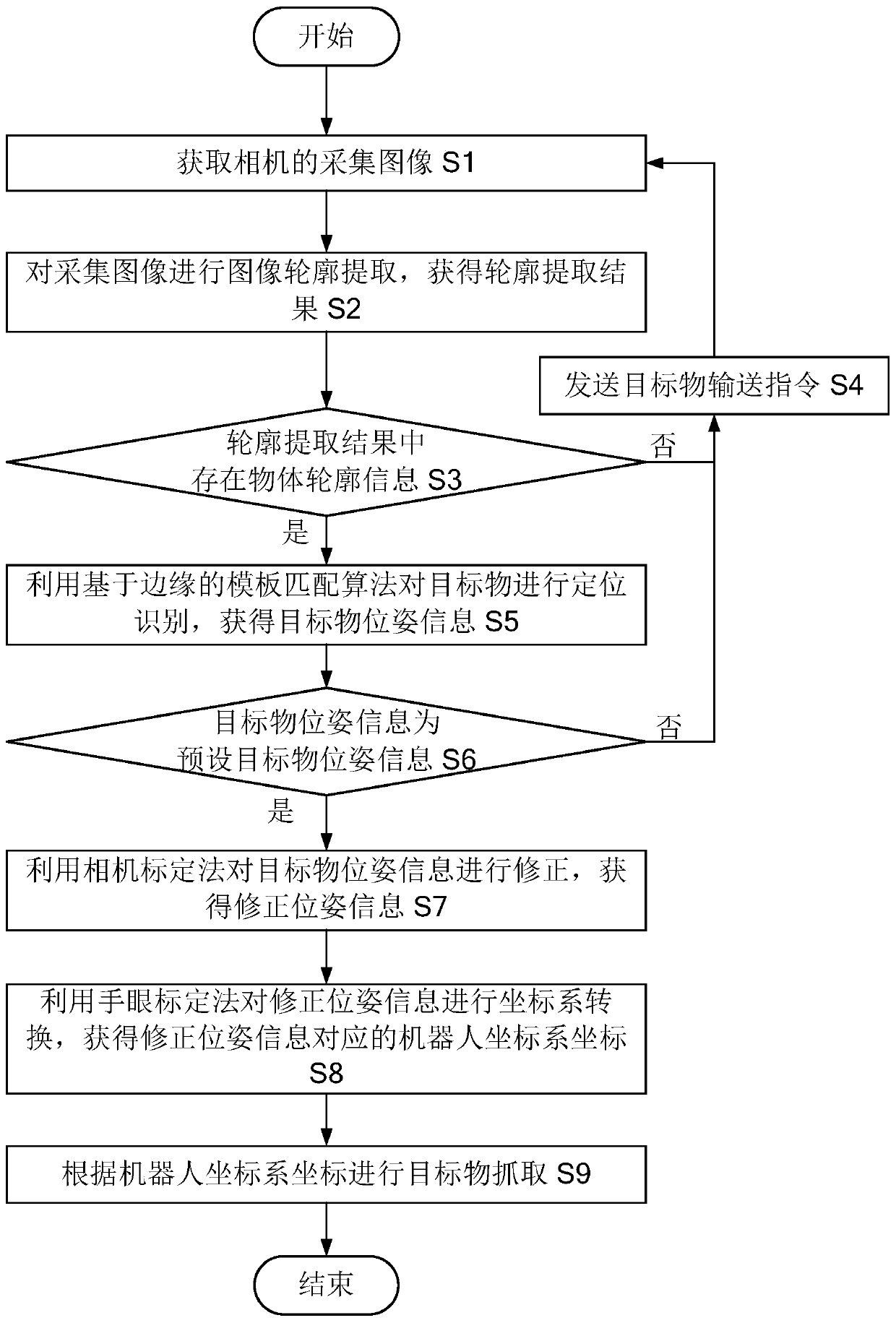

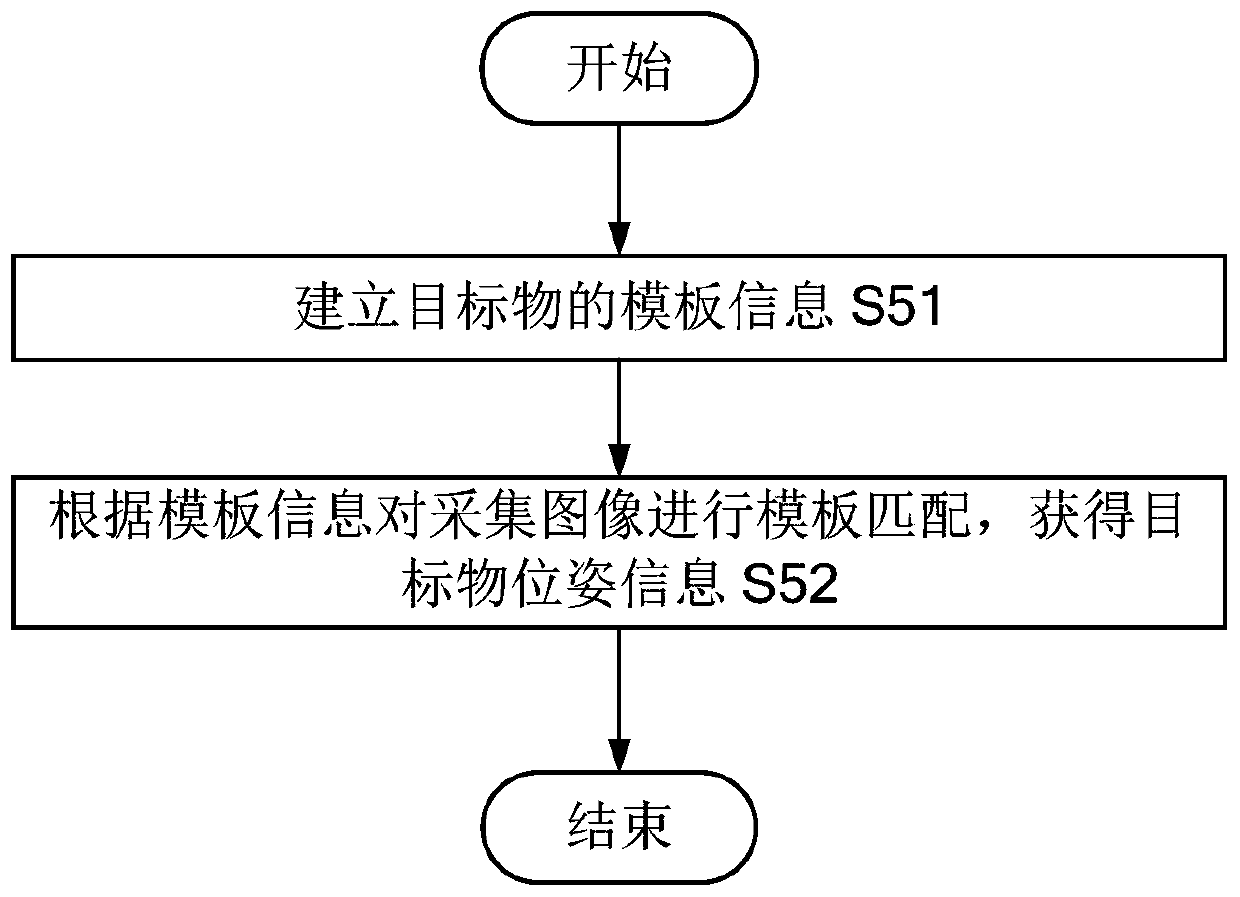

Industrial robot visual recognition positioning grabbing method, computer device and computer readable storage medium

Inactive · CN110660104A · Improve stability · High precision · Image enhancement · Programme-controlled manipulator · Computer graphics (images) · Engineering

The invention provides an industrial robot visual recognition positioning grabbing method, a computer device and a computer readable storage medium. The method comprises the steps that image contour extraction is performed on an acquired image; when the object contour information exists in the contour extraction result, positioning and identifying the target object by using an edge-based template matching algorithm; when the target object pose information is preset target object pose information, correcting the target object pose information by using a camera calibration method; and performing coordinate system conversion on the corrected pose information by using a hand-eye calibration method. The computer device comprises a controller, and the controller is used for implementing the industrial robot visual recognition positioning grabbing method when executing the computer program stored in the memory. A computer program is stored in the computer readable storage medium, and when the computer program is executed by the controller, the industrial robot visual recognition positioning grabbing method is achieved. The method provided by the invention is higher in recognition and positioning stability and precision.

Owner:GREE ELECTRIC APPLIANCES INC
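The last step of the method above, converting a pose from the camera frame to the robot frame, can be illustrated with a homogeneous transform. This is only a sketch: it assumes hand-eye calibration has already produced a 4x4 camera-to-base matrix, and the name `t_base_cam` and the example values are hypothetical.

```python
def transform_point(t_base_cam, p_cam):
    """Map a 3-D point from the camera frame into the robot base frame.

    t_base_cam: 4x4 homogeneous matrix (rows of lists), assumed to come
                from a prior hand-eye calibration.
    p_cam:      (x, y, z) of the located object in the camera frame.
    """
    hom = (p_cam[0], p_cam[1], p_cam[2], 1.0)
    # Only the first three rows are needed; the last row is [0, 0, 0, 1].
    return tuple(sum(row[j] * hom[j] for j in range(4))
                 for row in t_base_cam[:3])


# Example: camera rotated 90 degrees about z and offset by (1.0, 0.0, 0.5) m.
T_BASE_CAM = [
    [0.0, -1.0, 0.0, 1.0],
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.5],
    [0.0, 0.0, 0.0, 1.0],
]
grasp_point = transform_point(T_BASE_CAM, (0.2, 0.0, 0.3))
```

A full pose would carry orientation as well; that amounts to multiplying two 4x4 matrices instead of a matrix and a point.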

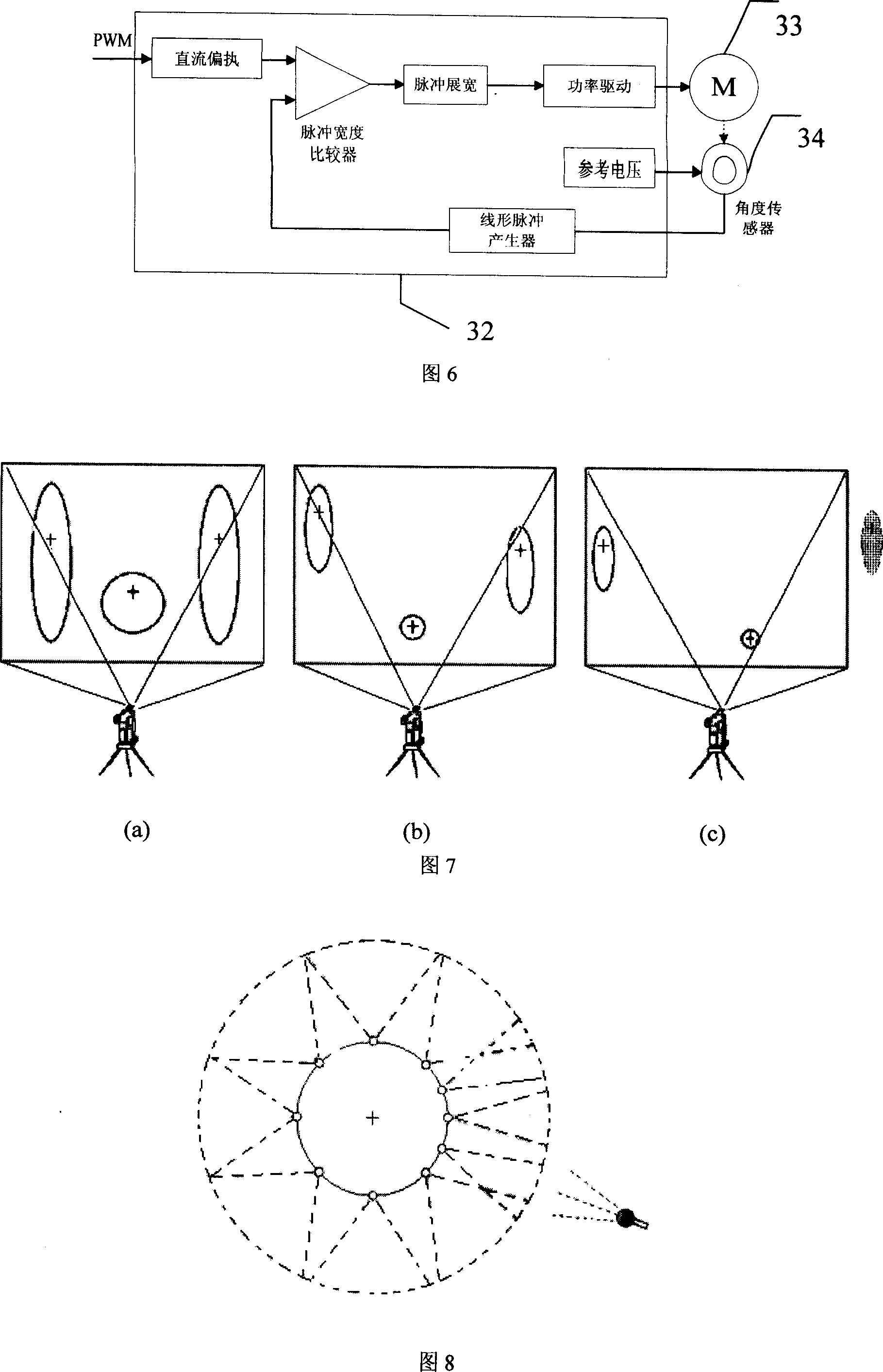

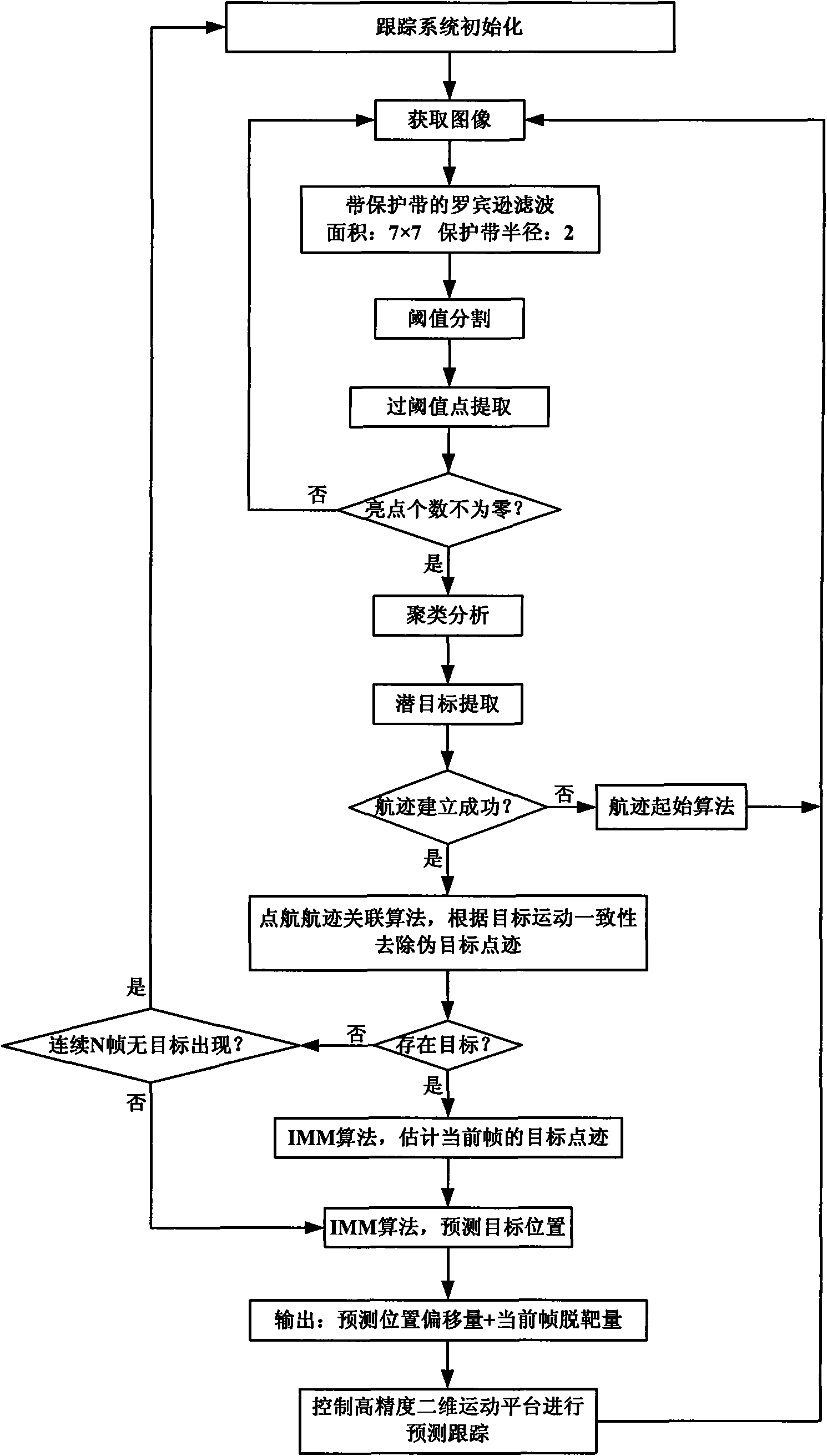

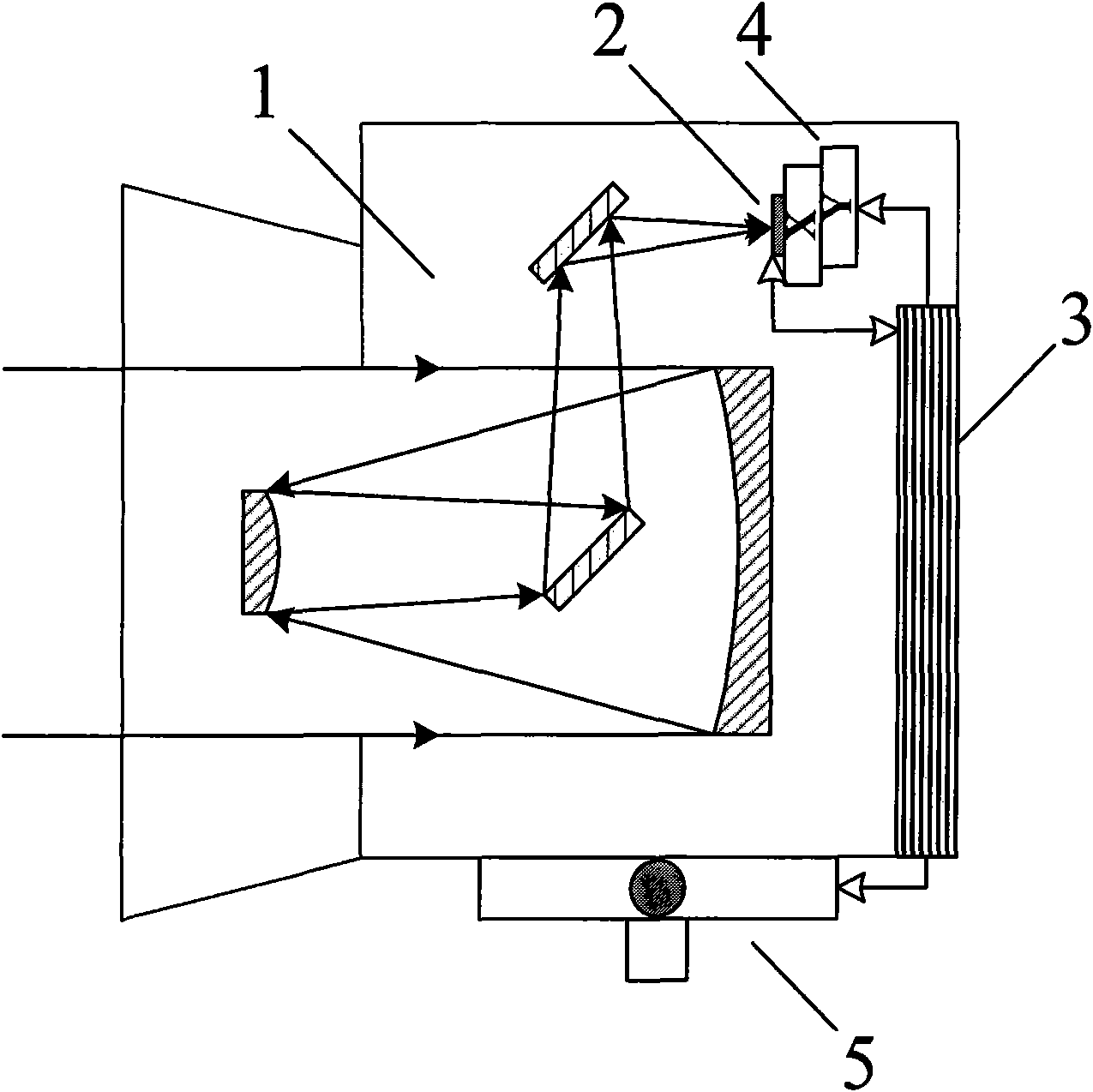

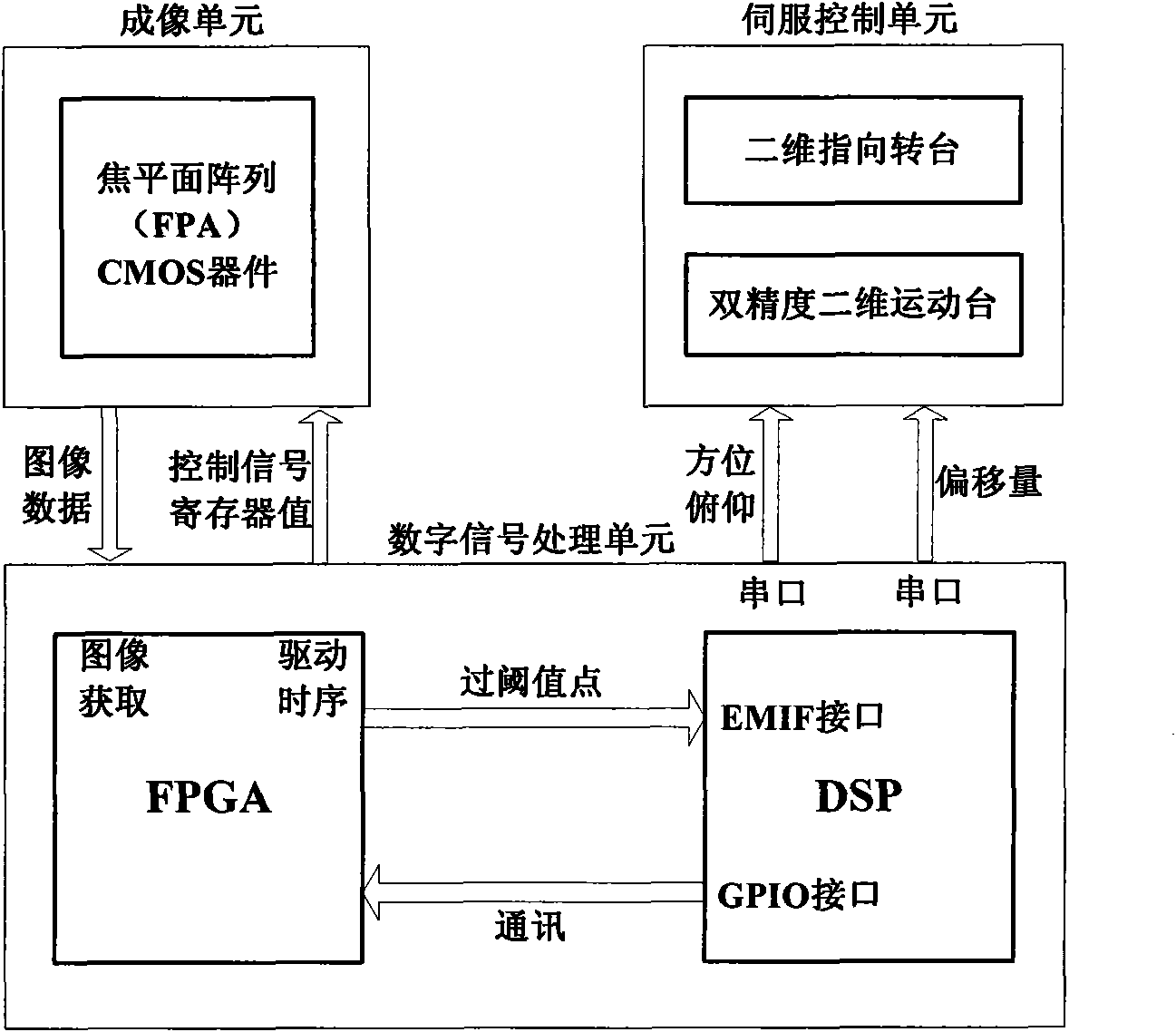

Real-time closed loop predictive tracking method of maneuvering target

Inactive · CN102096925A · Reliable tracking · Continuous and stable tracking · Image analysis · Prediction algorithms · Closed loop

The invention discloses a real-time closed-loop predictive tracking method for a maneuvering target. It is a closed-loop, real-time, self-adaptive processing method for online prediction and immediate tracking in an imaging tracking system for small maneuvering targets, and is mainly used in fields such as photoelectric imaging tracking, robot vision, and intelligent traffic control. With the method, a captured target is extracted to establish a flight track, the track is filtered, and the target's position at the next acquisition time is predicted. Processing runs online in real time on a high-performance platform with a DSP main processor and an FPGA coprocessor. A prediction algorithm that copes with target maneuvers at higher accuracy predicts the target's motion state in real time, and the prediction result drives a piezoelectric ceramic motor two-dimensional motion stage to carry out overcompensation, thereby realizing self-adaptive predictive tracking. The method overcomes the enlarged tracking error caused by system delay and still tracks continuously and stably when the target maneuvers or is temporarily occluded.

Owner:SHANGHAI INST OF TECHNICAL PHYSICS - CHINESE ACAD OF SCI
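The abstract above describes filtering a track and predicting the target position at the next acquisition time, but does not name the prediction algorithm. As a stand-in, the sketch below uses a one-dimensional alpha-beta filter, a simple fixed-gain tracker. The gains, the function name, and the choice of filter are assumptions, not the patented algorithm.

```python
def alpha_beta_track(measurements, dt=1.0, alpha=0.5, beta=0.1):
    """Filter a 1-D track and predict the position one sample ahead.

    Returns one look-ahead prediction per measurement after the first.
    A real tracker would run one filter per image axis.
    """
    x, v = measurements[0], 0.0  # initial position and velocity estimate
    predictions = []
    for z in measurements[1:]:
        x_pred = x + v * dt            # propagate the state to this sample
        residual = z - x_pred          # innovation: measured minus predicted
        x = x_pred + alpha * residual  # corrected position
        v = v + (beta / dt) * residual  # corrected velocity
        predictions.append(x + v * dt)  # where to point at the next sample
    return predictions
```

Driving the pointing stage with the look-ahead value rather than the latest measurement is what compensates for system delay; during a brief occlusion the loop could keep propagating `x + v * dt` without a correction.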

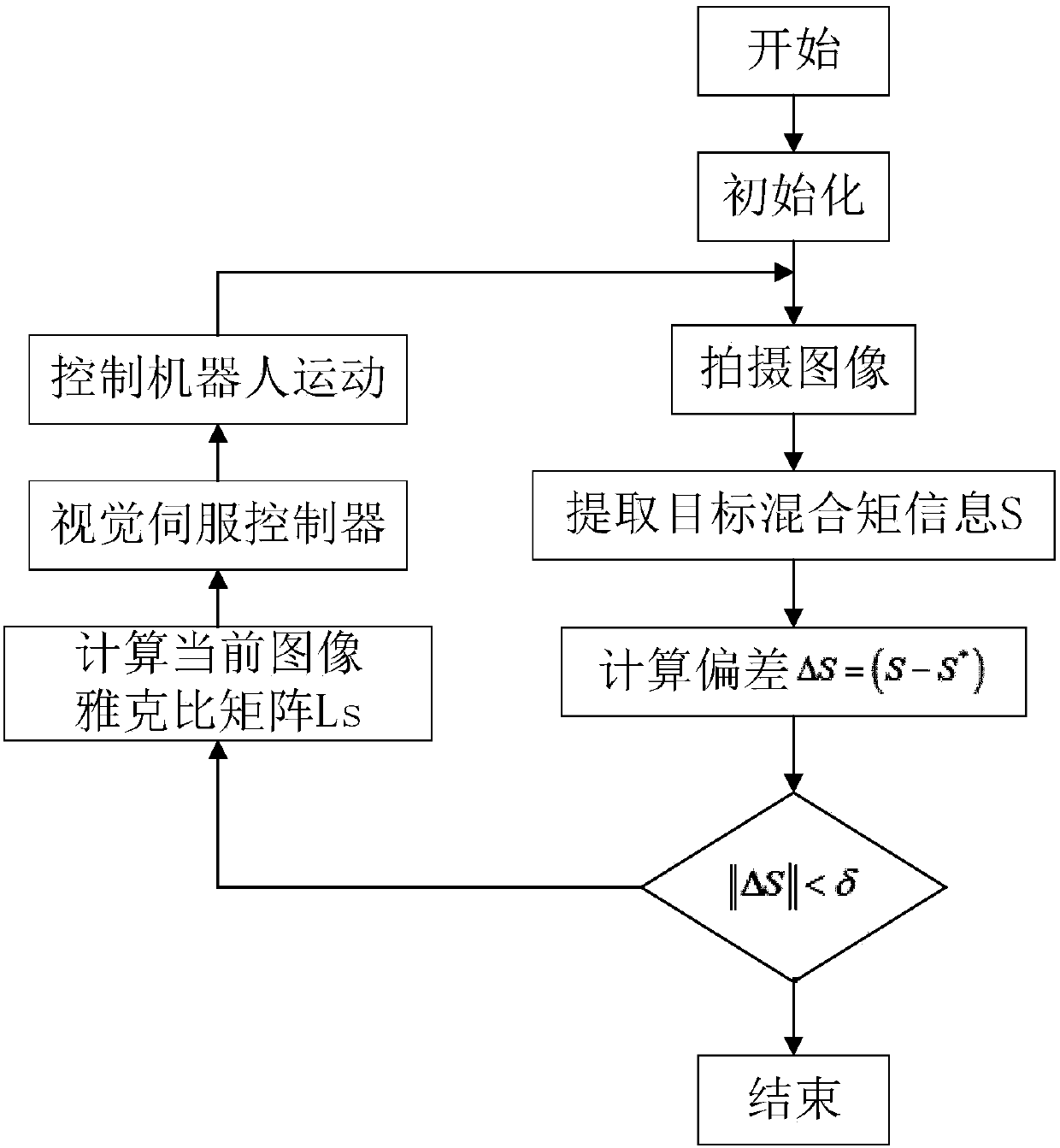

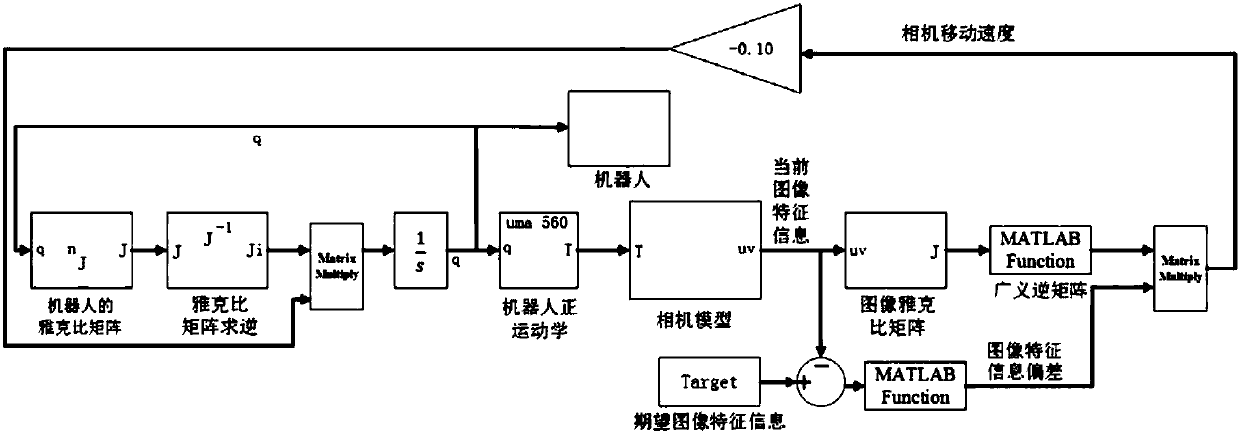

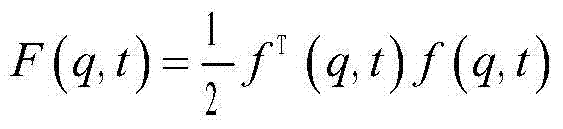

Robot vision servo control method based on image mixing moment

Active · CN107901041A · Improve work efficiency · Adaptable · Programme-controlled manipulator · Visual perception · Work space

The invention discloses a robot vision servo control method based on the image mixing moment. First, mixing moment features are constructed that correspond one-to-one with the spatial attitudes of the target object as imaged at the robot's expected pose. Then an image of the target object is acquired at an arbitrary attitude, the current mixing moment feature values are calculated, and the deviation of the mixing moment features is calculated from the expected image and the current image. If the deviation is smaller than a preset threshold, the robot has reached the expected pose. Otherwise, an image Jacobian matrix for the mixing moment features is derived and the vision servo controller moves the robot toward the expected pose until the feature deviation falls below the preset threshold, at which point the control process ends. The method introduces the image-domain mixing moment features corresponding to the robot's spatial movement track as the control input, completes vision servo control of an eye-in-hand robot system when the working space model is unknown, and can be widely applied to robot intelligent control based on machine vision.

Owner:CENT SOUTH UNIV
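The control loop in the abstract above (compare features, stop below a threshold, otherwise move using the image Jacobian) has the shape of classic image-based visual servoing. The sketch below shows that loop for two features and two degrees of freedom with the textbook law v = -gain · J⁻¹ · e. The mixing moment features themselves are not computed; the Jacobian is a made-up constant, and the "robot" is a toy linear model, all of which are assumptions for illustration.

```python
def solve_2x2(m, b):
    """Solve m * x = b for a 2x2 matrix using Cramer's rule."""
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    return ((m[1][1] * b[0] - m[0][1] * b[1]) / det,
            (m[0][0] * b[1] - m[1][0] * b[0]) / det)


def servo_to_target(features, desired, jacobian,
                    gain=0.5, threshold=1e-3, max_iters=200):
    """Iterate until the feature deviation drops below the threshold.

    Returns (final feature vector, number of control steps taken).
    """
    s = list(features)
    for step in range(max_iters):
        error = [si - di for si, di in zip(s, desired)]
        if max(abs(e) for e in error) < threshold:
            return s, step  # expected pose reached
        # Velocity command: v = -gain * J^-1 * error.
        v = [-gain * c for c in solve_2x2(jacobian, error)]
        # Toy plant (assumption): the features change by J * v each step.
        s = [si + sum(jacobian[i][j] * v[j] for j in range(2))
             for i, si in enumerate(s)]
    return s, max_iters


final, steps = servo_to_target([1.0, -2.0], [0.0, 0.0],
                               [[2.0, 0.5], [0.3, 1.5]])
```

With this toy plant the error shrinks by the factor `1 - gain` each step; on a real eye-in-hand system the Jacobian would be re-estimated as the camera moves.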

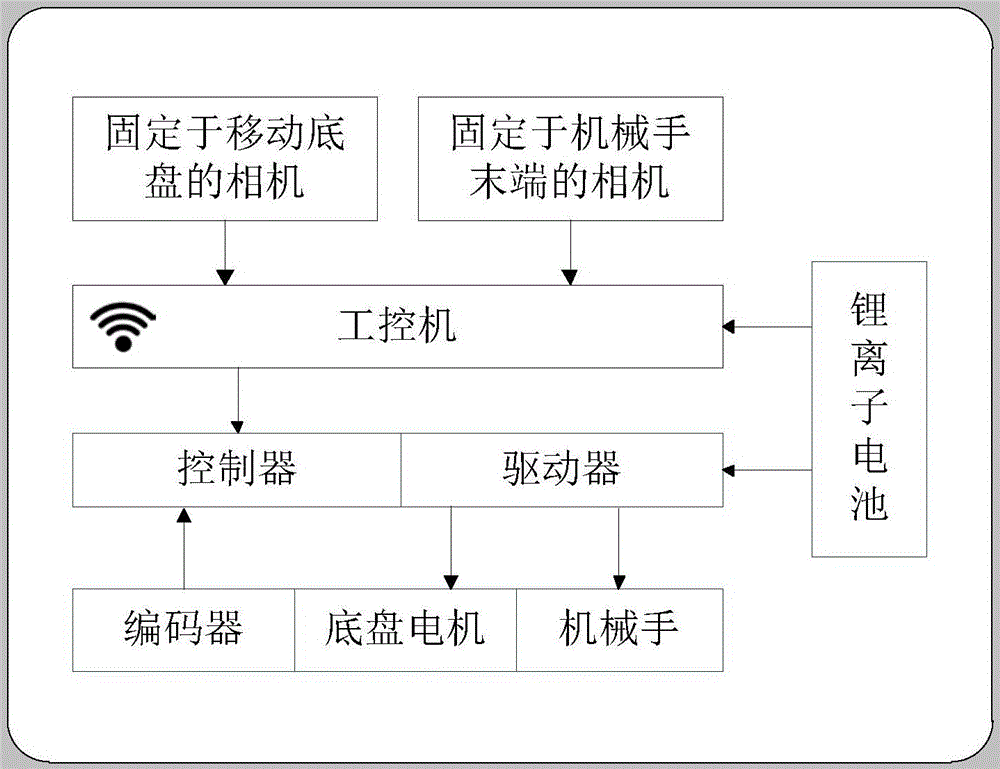

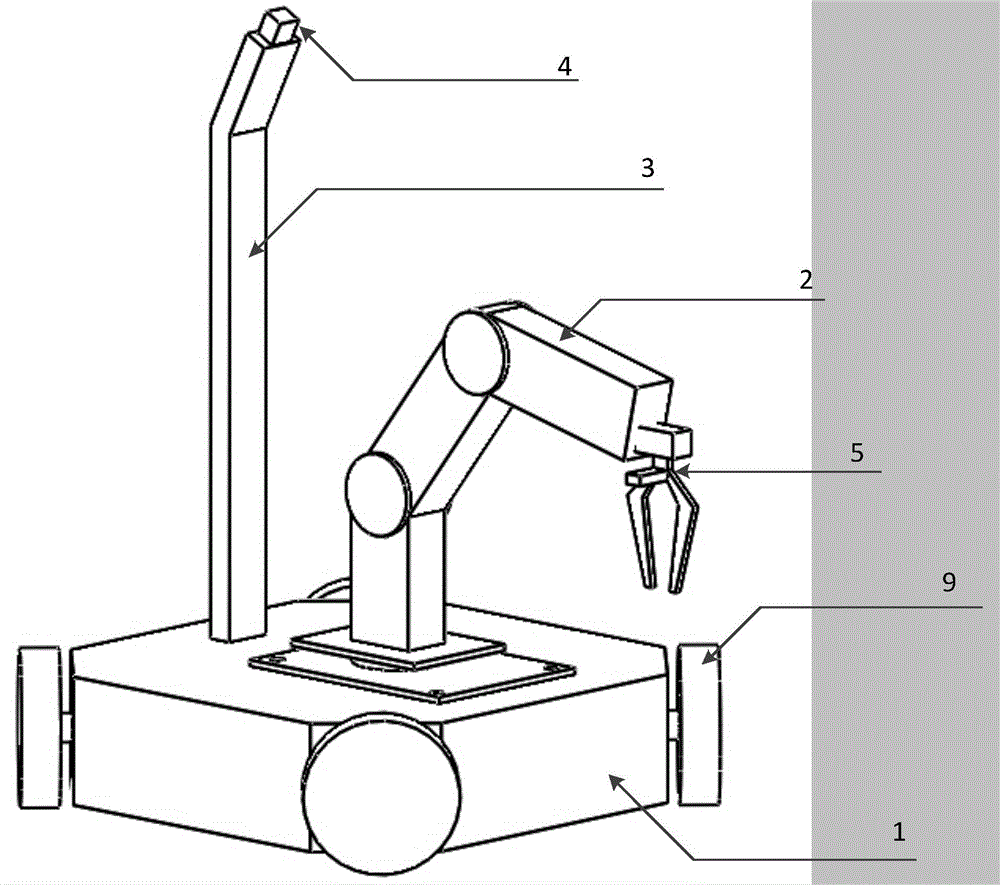

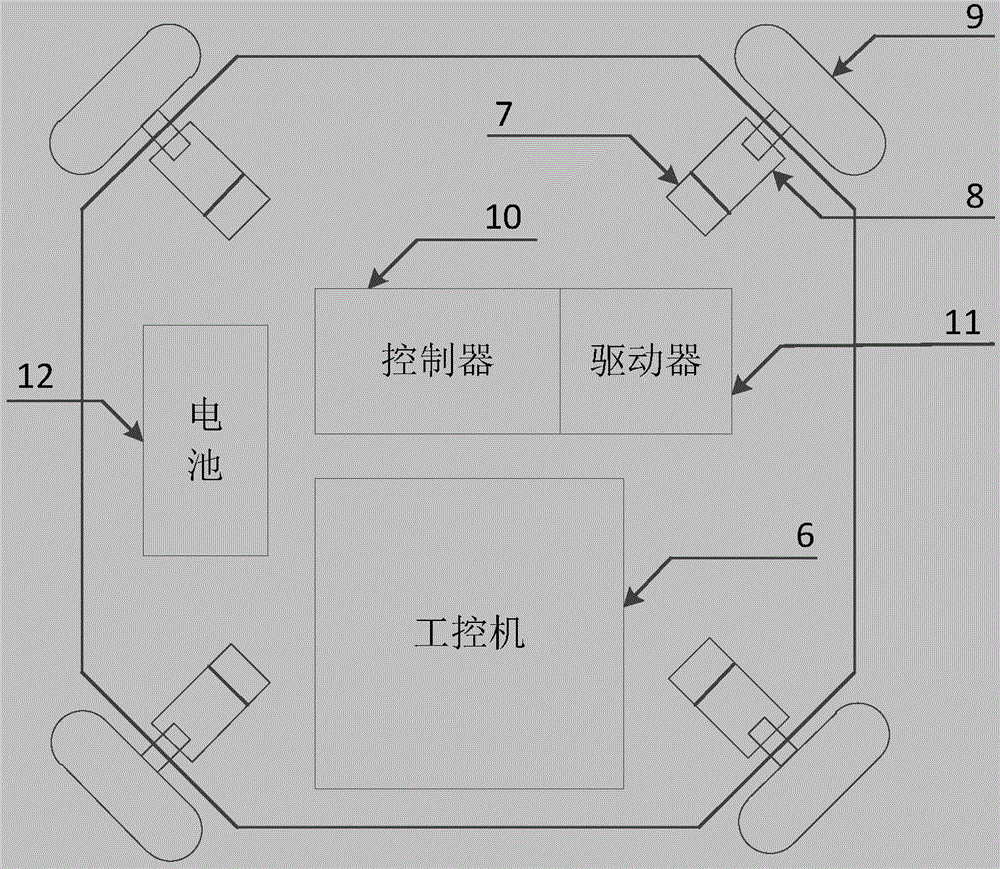

Mobile vision robot and measurement and control method thereof

Inactive · CN106607907A · Realize automatic control · High movement accuracy · Programme-controlled manipulator · Machine vision · Manipulator

The invention provides a mobile vision robot and a measurement and control method thereof. Positioning and state information of the mobile robot and a robot operating target is obtained through a machine vision detection method, and meanwhile, movement and other operation of the robot are controlled according to information provided by vision images; and the robot vision images are provided by two cameras, one camera is fixed to a mobile platform and used for acquiring images on the robot movement path, and the other camera is fixed to the tail end of a manipulator and used for acquiring detailed images of a manipulator operating target. According to the mobile vision robot and the measurement and control method thereof, the positioning and state information of the mobile robot and the specific target are calculated through an image distortion correcting and mode recognizing method according to the obtained image information, and then the robot is controlled to complete instructed action on a target object. Meanwhile, the invention provides a method for eliminating accumulative errors generated in the operating process of the robot through vision images. The mobile vision robot has the characteristics of high precision and high anti-interference capacity, complex environment support is not needed, and the mobile vision robot is suitable for various laboratories and factory environments.

Owner:XI AN JIAOTONG UNIV

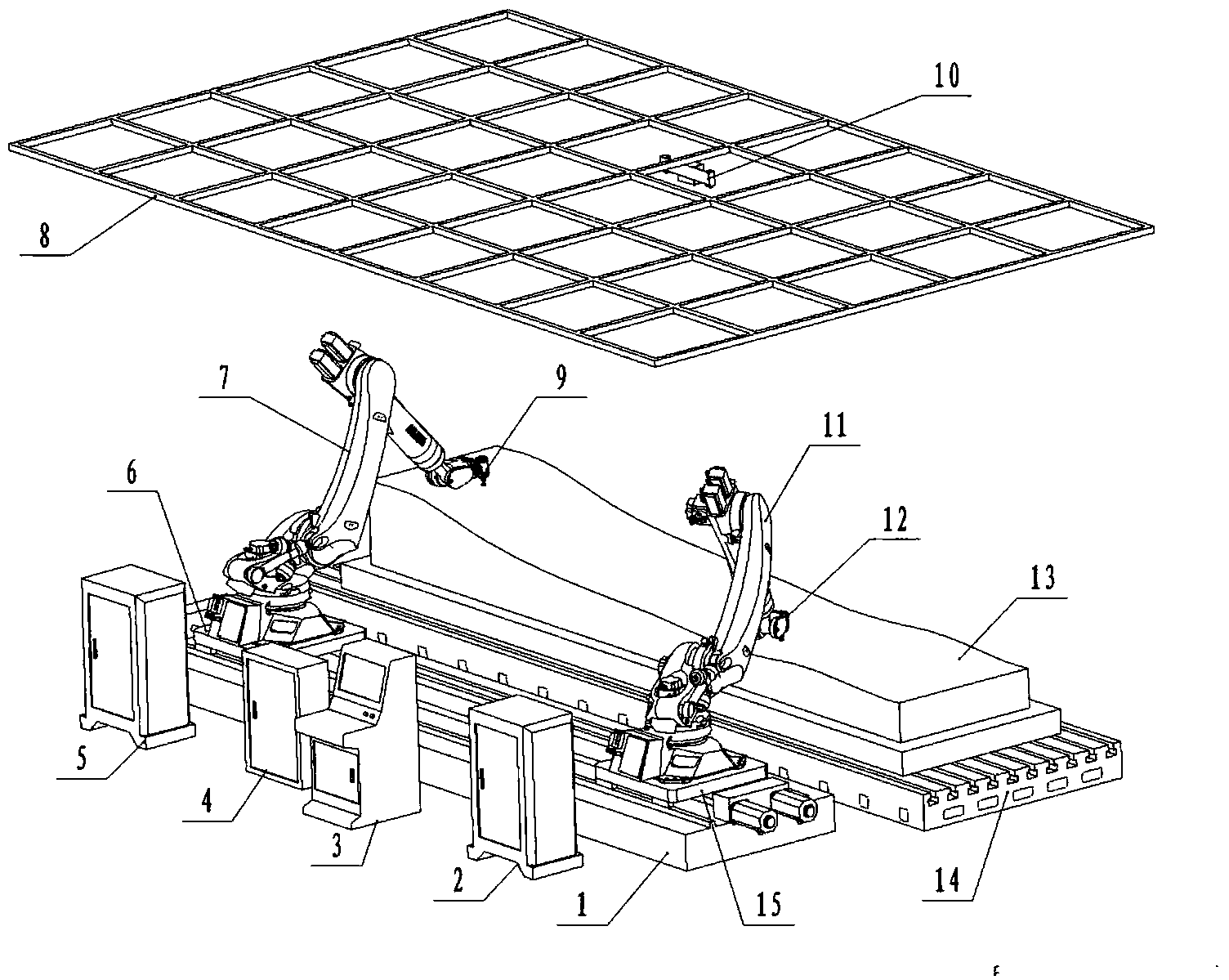

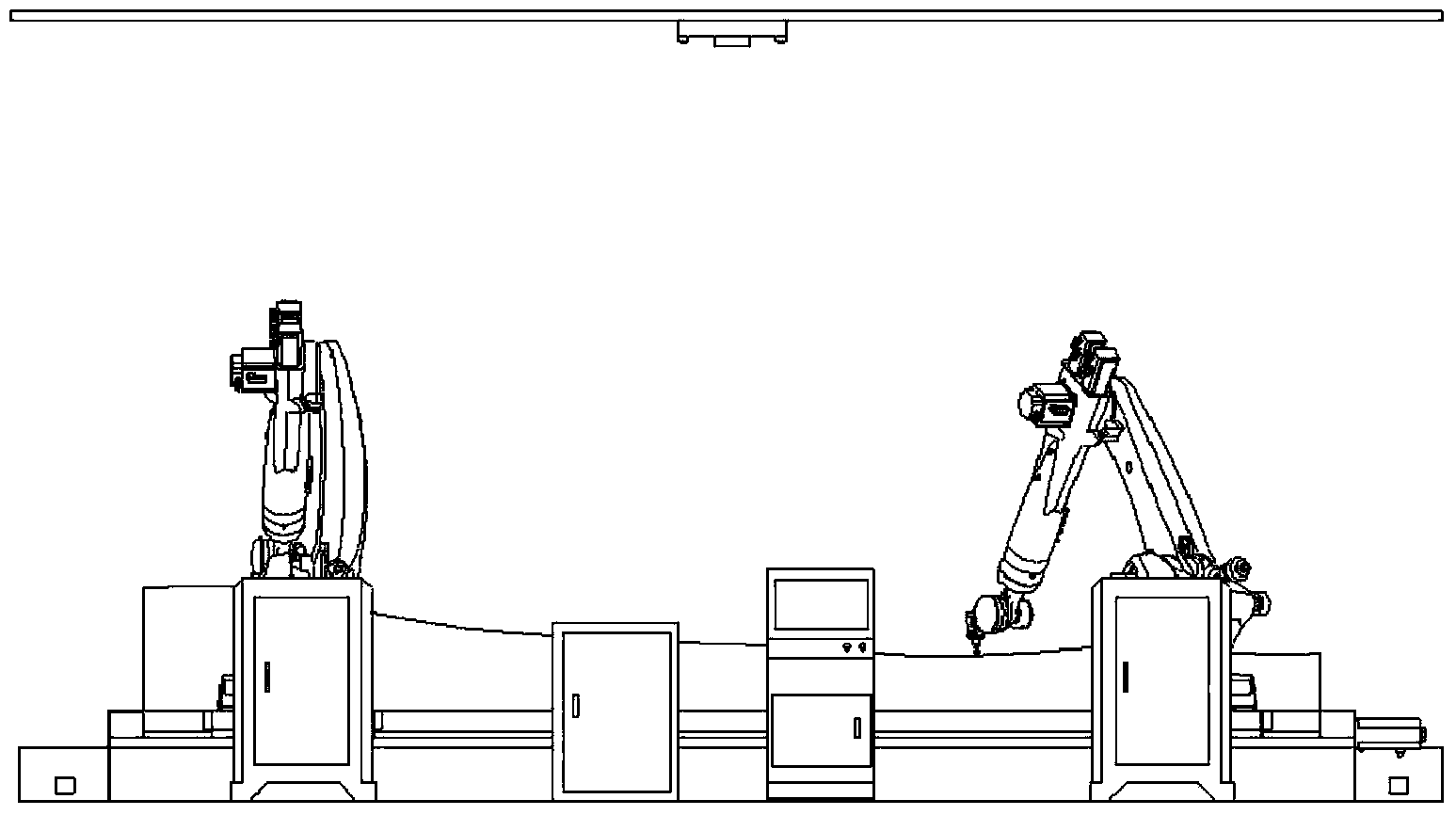

Robot parallel polishing system

Active · CN103862340A · Pressure controllable · Guaranteed grinding removal · Edge grinding machines · Grinding drives · Simulation · Self positioning

The invention discloses a robot parallel polishing system comprising a coarse polishing system, a fine polishing system, a base component, a system control cabinet, a pneumatic control cabinet, a robot vision self-positioning system, workpieces, and a working table. Workpieces with large free-form surfaces can be coarsely and finely polished by the two polishing systems at the same time, and two identical workpieces can also be polished simultaneously, so polishing accuracy is effectively guaranteed and polishing efficiency is improved. Before the system works, path generation software divides the polishing areas according to three-dimensional models of the workpieces and generates the polishing paths, and the robot vision self-positioning system rapidly and accurately calibrates the workpiece references. Two industrial robots then drive a pneumatic polishing head to carry out coarse and fine polishing of the polishing areas along the planned paths. Pneumatic compliant force control, normal polishing force control, and real-time path calibration compensation are used during polishing, which effectively guarantees the accuracy and consistency of the polishing quality.

Owner:中科君胜(深圳)智能数据科技发展有限公司

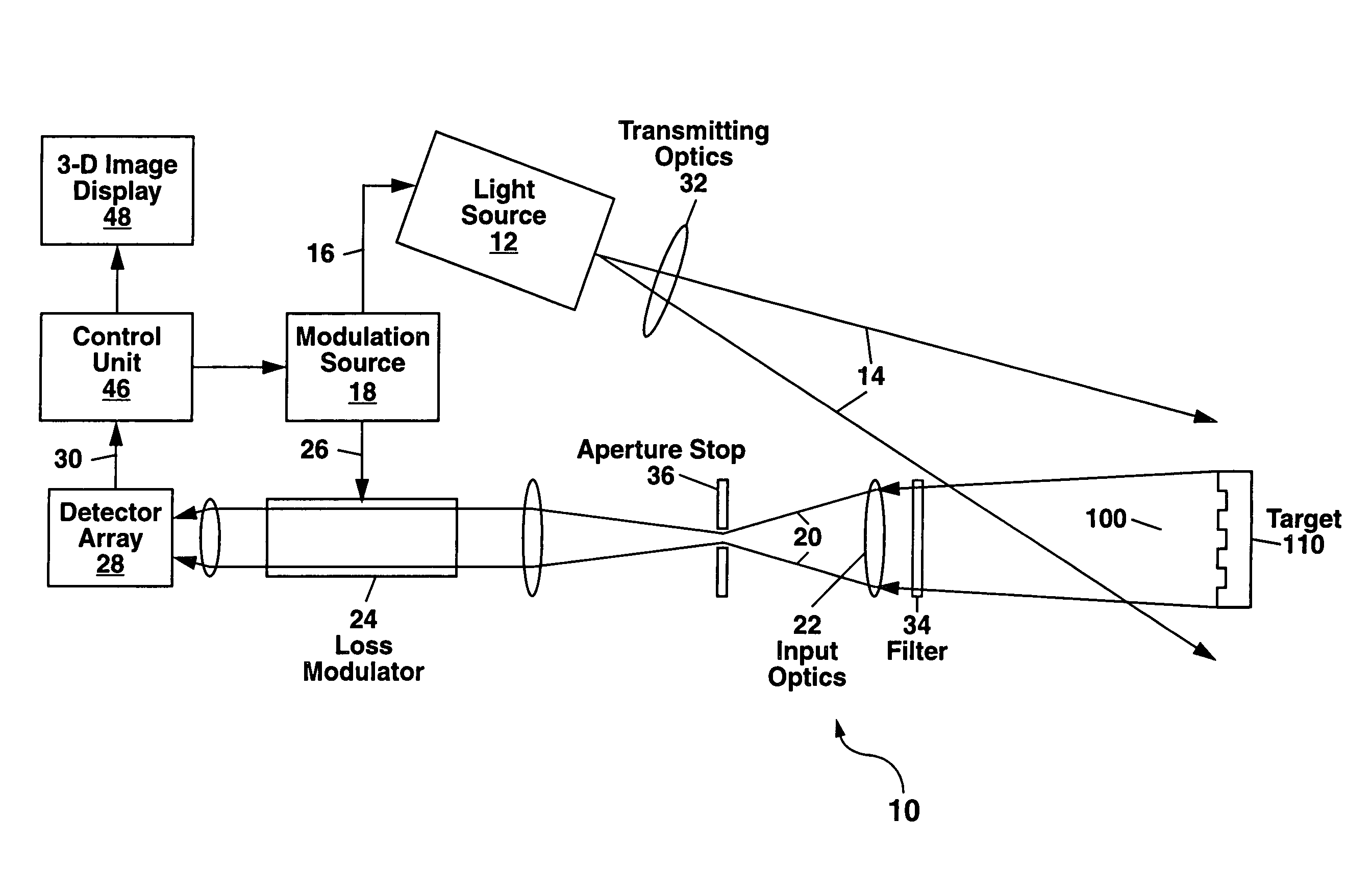

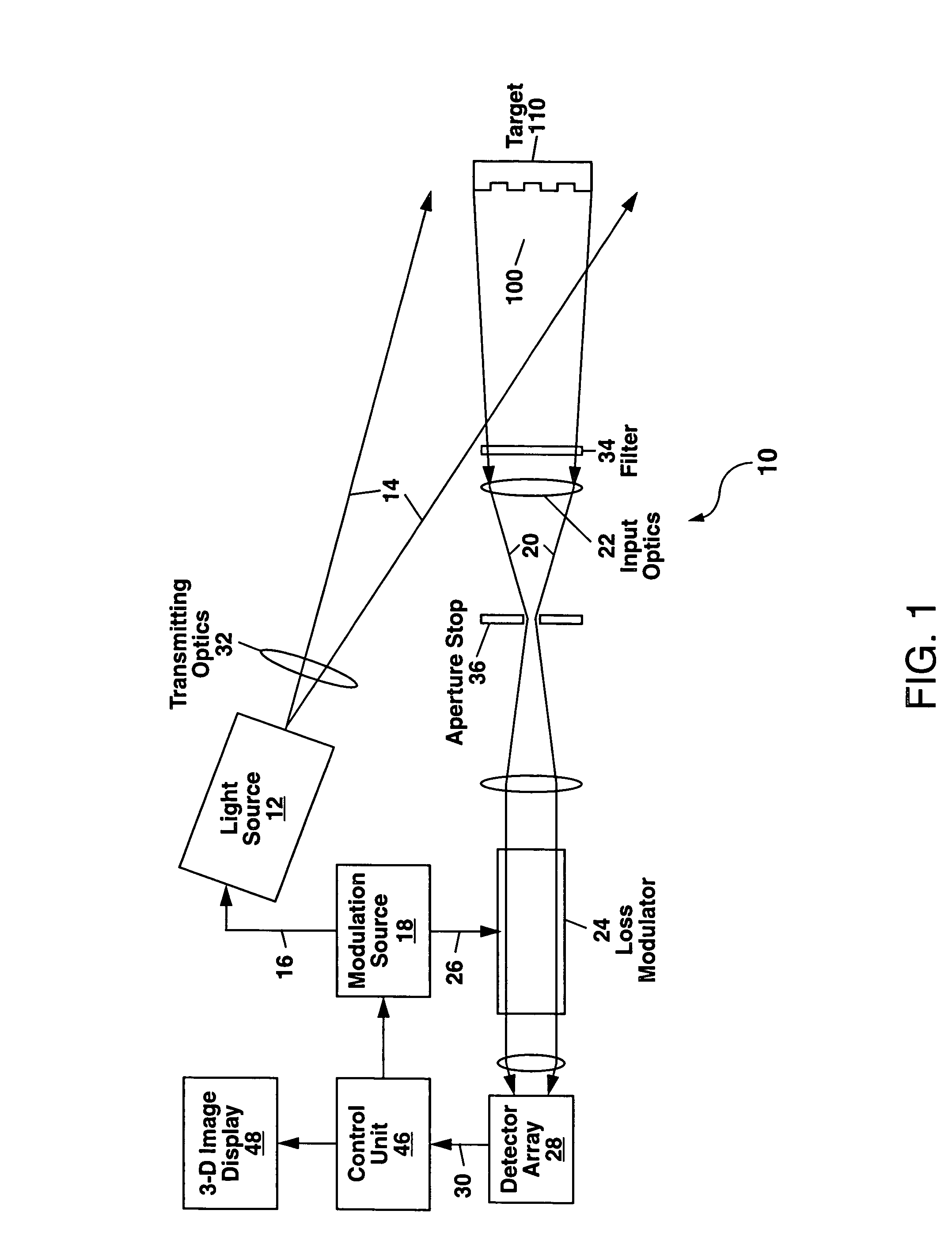

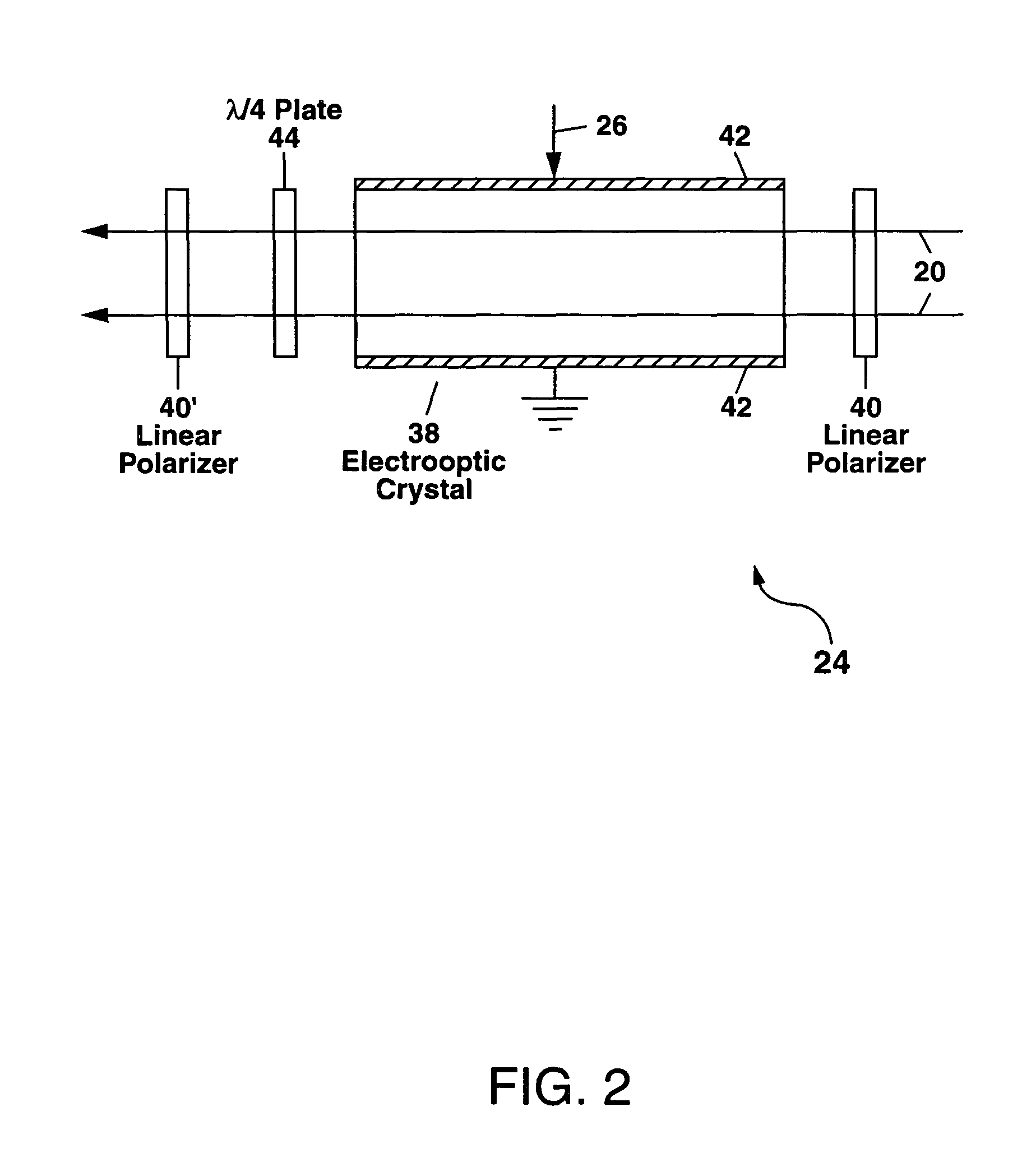

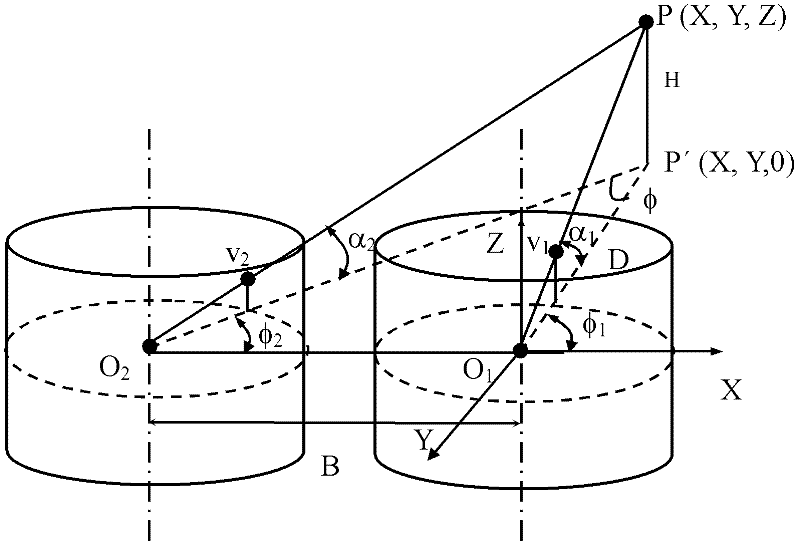

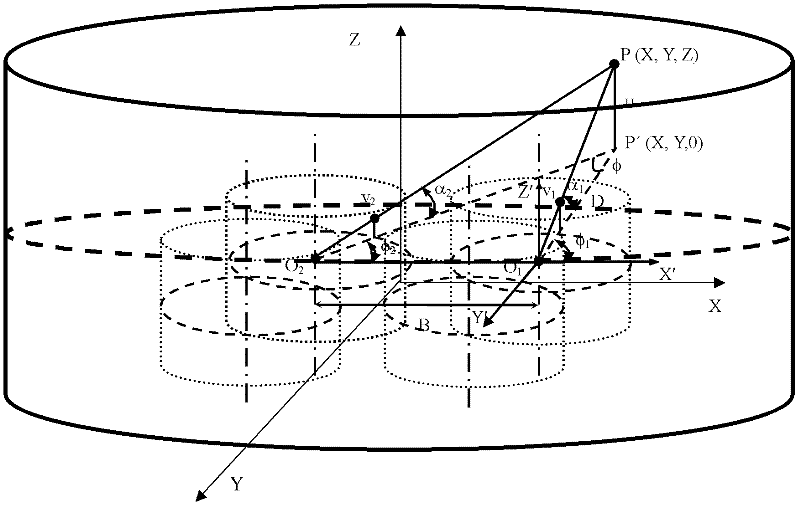

Scannerless laser range imaging using loss modulation

A scannerless 3-D imaging apparatus is disclosed which utilizes an amplitude modulated cw light source to illuminate a field of view containing a target of interest. Backscattered light from the target is passed through one or more loss modulators which are modulated at the same frequency as the light source, but with a phase delay δ which can be fixed or variable. The backscattered light is demodulated by the loss modulator and detected with a CCD, CMOS or focal plane array (FPA) detector to construct a 3-D image of the target. The scannerless 3-D imaging apparatus, which can operate in the eye-safe wavelength region 1.4-1.7 μm and which can be constructed as a flash LADAR, has applications for vehicle collision avoidance, autonomous rendezvous and docking, robotic vision, industrial inspection and measurement, 3-D cameras, and facial recognition.

Owner:NAT TECH & ENG SOLUTIONS OF SANDIA LLC
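For amplitude-modulated continuous-wave ranging of the kind described above, the measured phase shift of the modulation envelope maps to range through the standard relation r = c·φ / (4π·f). The sketch below shows that arithmetic only. It is the general textbook relation, not a formula quoted from the patent, and the function names are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def range_from_phase(phase_rad, mod_freq_hz):
    """Range for a round-trip modulation phase shift (radians)."""
    return C * phase_rad / (4.0 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz):
    """Largest range before the phase wraps past 2*pi."""
    return C / (2.0 * mod_freq_hz)


# Example: 10 MHz modulation wraps at roughly 15 m.
max_range_m = unambiguous_range(10e6)
```

The wrap-around explains the usual trade-off: a higher modulation frequency gives finer range resolution per unit of phase but a shorter unambiguous range.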

Panoramic three-dimensional photographing device

The invention discloses a panoramic three-dimensional photographing device built by integrating four omnidirectional vision sensors (ODVSs) with identical imaging parameters. The four ODVSs are connected through a plane so that their fixed single viewpoints lie on the same plane: four hyperboloid mirrors with identical parameters are fixed on a transparent glass face, and four cameras with identical intrinsic and extrinsic parameters are fixed on the same plane. A microprocessor performs three-dimensional imaging processing on the images of the four ODVSs and comprises a panoramic image reading and preprocessing unit, a perspective unfolding unit, and a panoramic three-dimensional image output unit. The device can be widely applied in fields such as robot vision, animated films, and games. It offers high cost performance, simple operation, and the ability to capture panoramic three-dimensional video images in real time.

Owner:ZHEJIANG UNIV OF TECH

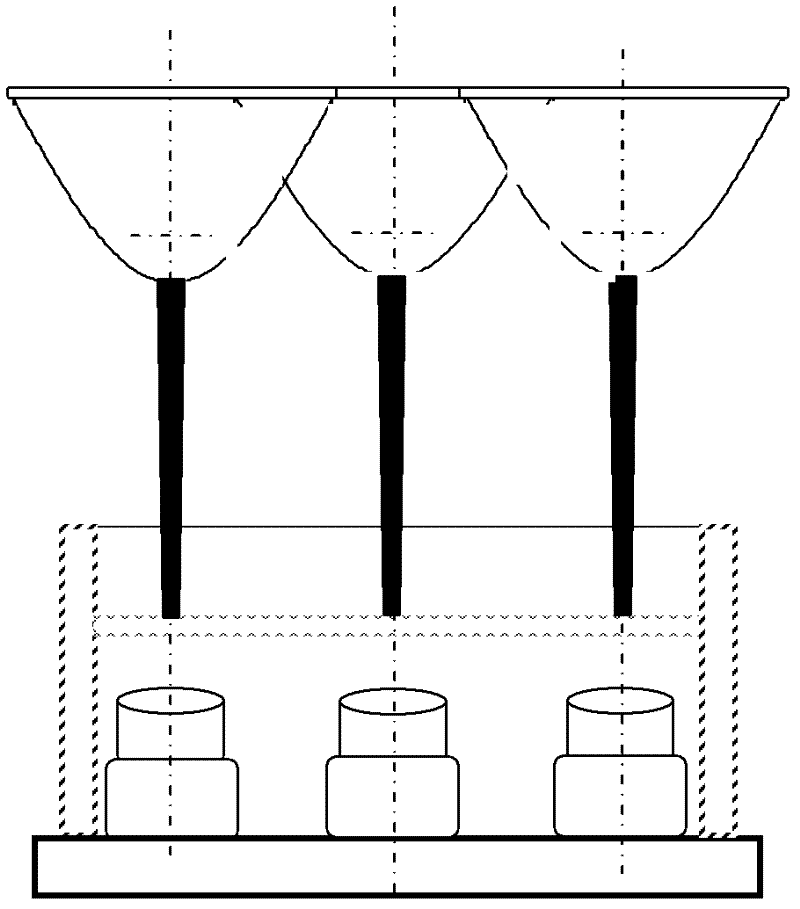

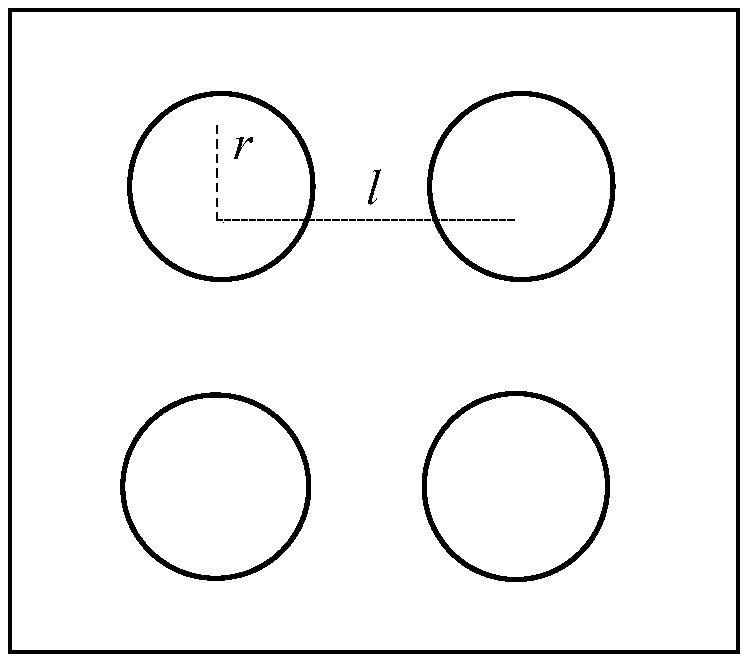

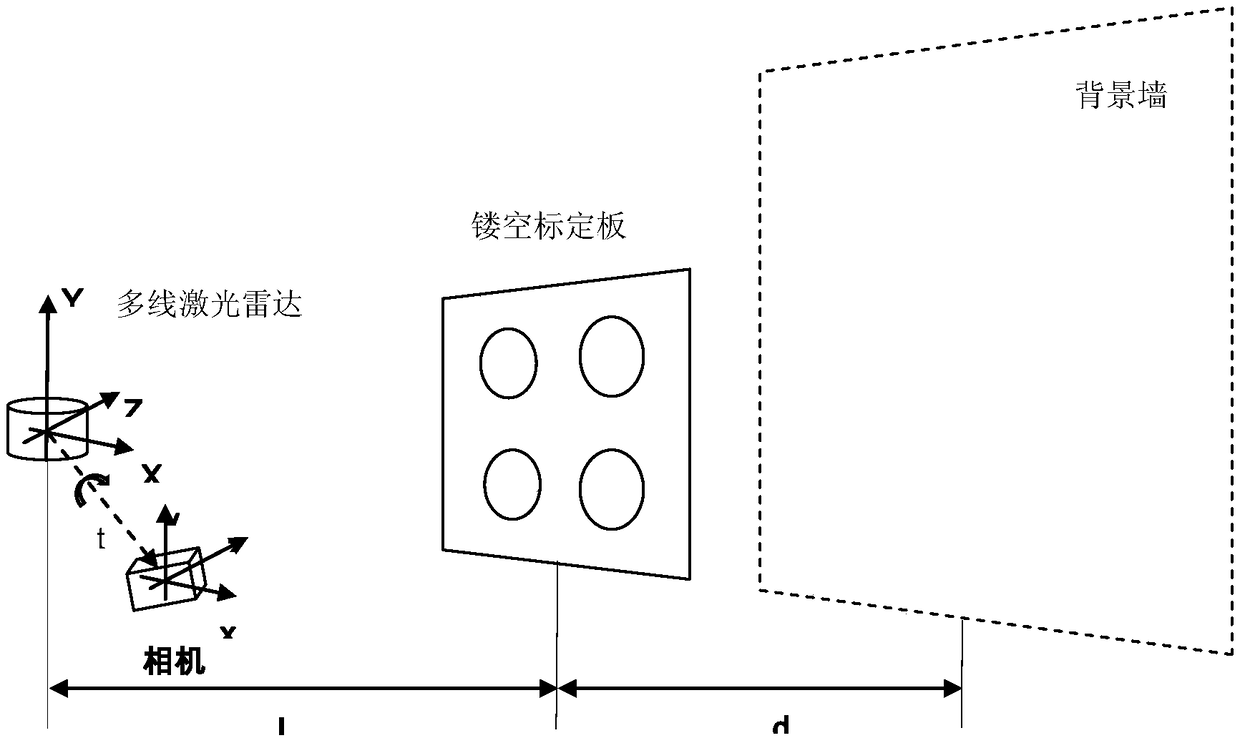

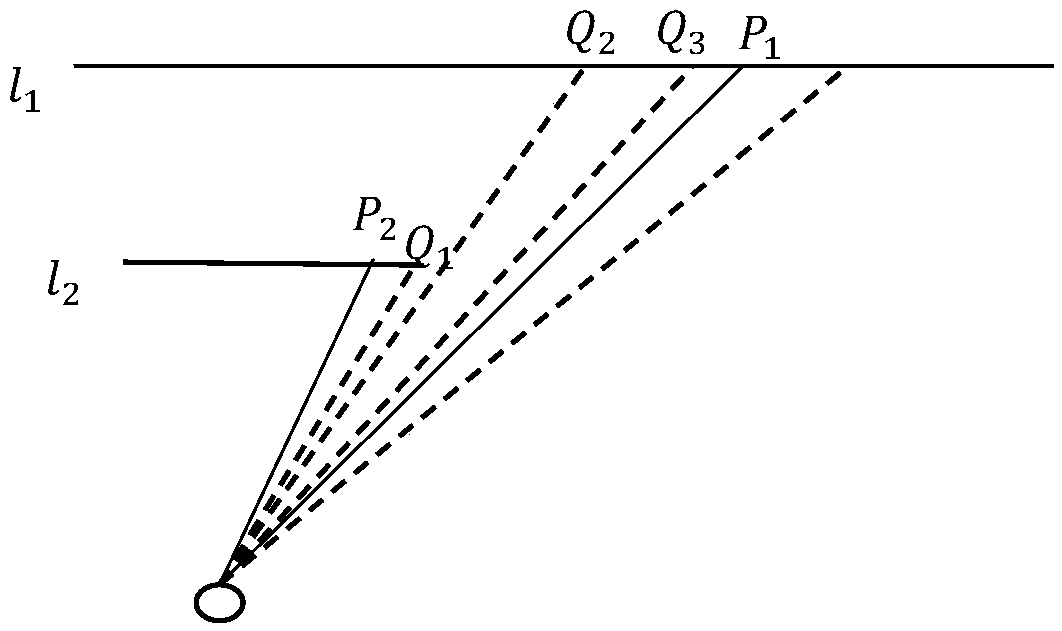

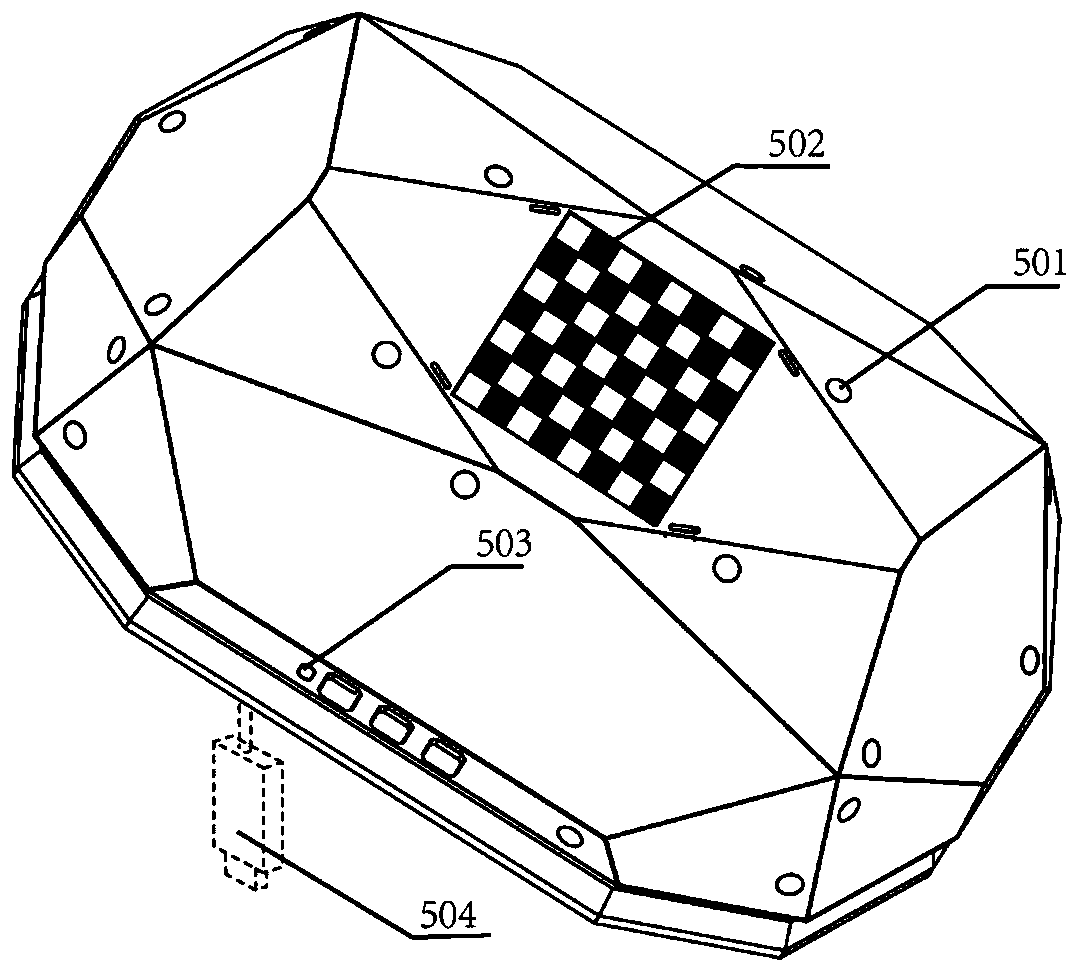

A multi-line lidar and camera joint calibration method based on fine radar scanning edge points

Active · CN109300162A · High precision extraction · Solve the lack of precision · Image enhancement · Image analysis · Calibration result · Visual perception

The invention relates to a multi-line lidar and camera joint calibration method based on fine radar scanning edge points, mainly in the technical fields of robot vision and multi-sensor fusion. Because of the limited resolution of lidar, scanned edge points are often not accurate enough, so calibration results are not accurate enough either. Exploiting the fact that lidar range changes abruptly at an edge, the invention searches and compares repeatedly and takes the point closer to the edge as the standard point. By detecting the circle in both the camera image and the radar edge points, the translation between the camera and the lidar is calculated according to the pinhole camera model. The calibration parameter C is then searched in the neighborhood of the obtained translation vector to find the calibration result that minimizes the projection error. The invention extracts the points swept by the lidar on the edge of the object with high precision, avoids the insufficient precision caused by the lidar's low resolution, and improves calibration precision.

Owner:ZHEJIANG UNIV OF TECH
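The edge-point idea in the lidar calibration abstract above rests on range changing abruptly at an object boundary. A minimal sketch of that detection on one scan line follows. The jump threshold and the rule of keeping the nearer (foreground) return at each jump are assumptions; the patent's repeated search-and-compare refinement is not reproduced.

```python
def find_edge_points(ranges, jump_threshold=0.5):
    """Return indices of likely object-edge returns on one lidar scan line.

    ranges: consecutive range readings in metres along the scan line.
    An edge is flagged wherever neighbouring readings differ by more than
    jump_threshold; the nearer of the two readings is kept, on the
    assumption that it lies on the foreground object.
    """
    edges = []
    for i in range(len(ranges) - 1):
        if abs(ranges[i + 1] - ranges[i]) > jump_threshold:
            edges.append(i if ranges[i] < ranges[i + 1] else i + 1)
    return edges


# Example: a board at 2 m in front of a wall at 5 m.
edge_indices = find_edge_points([5.0, 5.0, 2.0, 2.0, 2.0, 5.0])
```

Edge points collected this way across several scan lines could then be fitted to the calibration target's outline before comparing with the camera image.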

Industrial robot visual servo system and servo method and device

Inactive · CN110039523A · Realize real-time tracking · Optimize workflow · Programme-controlled manipulator · Welding/cutting auxiliary devices · Robot control · Real time tracking

The embodiment of the invention discloses an industrial robot visual servo system and a servo method and device. The industrial robot visual servo system comprises a six-axis robot, a weld joint tracker, a calibration object, a wireless handheld demonstrator, a USB wireless receiver, an infrared laser positioning base station, a robot control cabinet, a welding machine power supply control cabinet, an upper computer control cabinet, and an automatic wire feeding system. Rapid demonstration without programming is achieved, the problem of non-uniform incoming workpieces is avoided, and welding thermal deformation is tracked in real time. The working process of a traditional automated welding system is improved, and the operator no longer needs to use the demonstrator for complex demonstration programming. Because weld joint tracking uses a recognition technology based on deep learning, the universality of welding automation is greatly improved. In addition, welding path planning, demonstration, system calibration, and real-time weld tracking are fully integrated in the same upper computer interface, so the operator can conveniently carry out process operation and management.

Owner:北京无远弗届科技有限公司

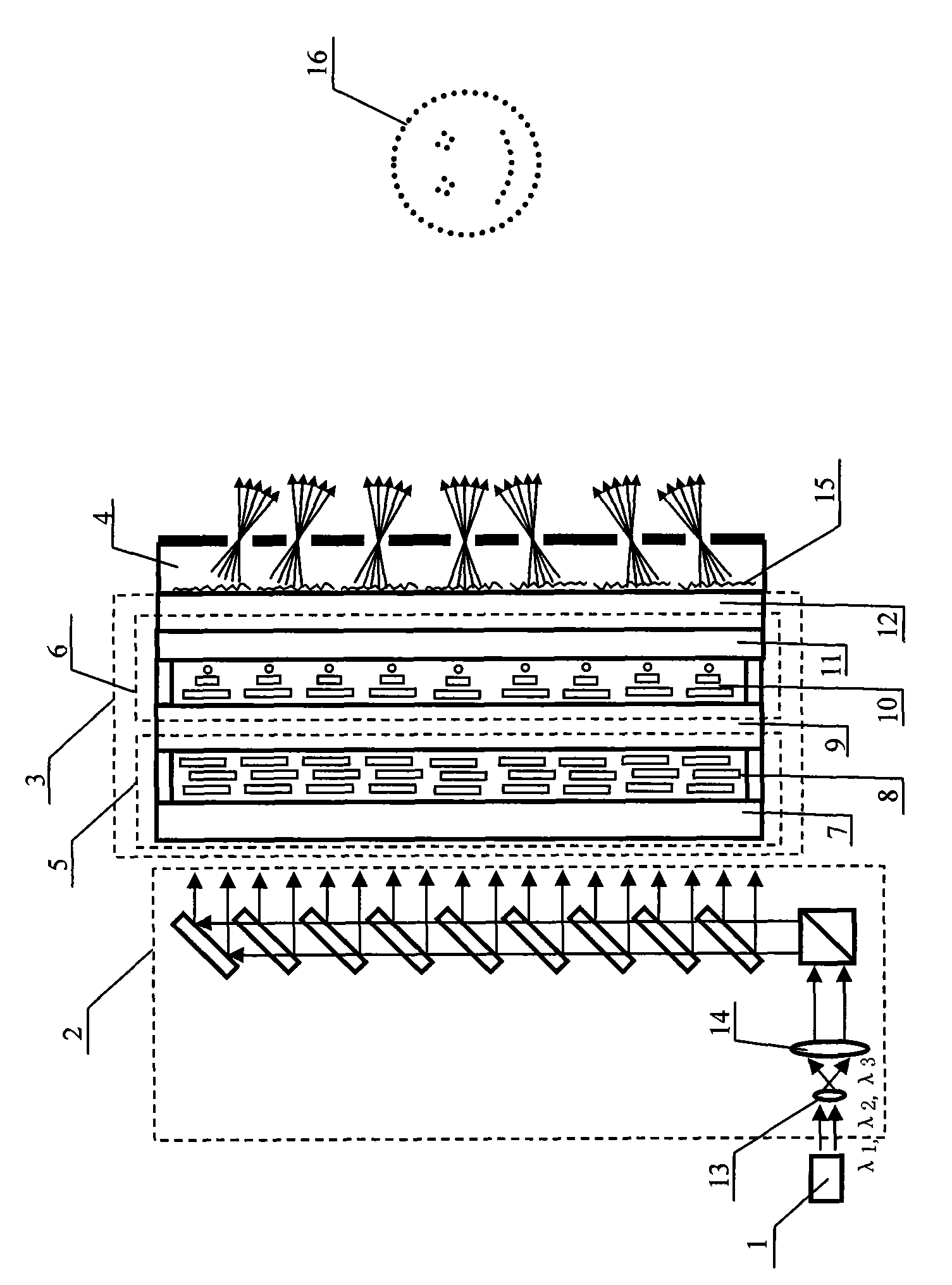

Three-dimensional display device based on random constructive interference principle

Active · CN102033413A · Improve energy utilization · Simple structure · Stereoscopic photography · Stereoscopic systems · Visual perception · Liquid crystal

The invention discloses a three-dimensional display device based on a random constructive interference principle. It uses an amplitude and phase regulator array to adjust the amplitude and phase of incident laser light, and uses the converging function of a reflective or transmissive holographic optical element, binary optical element, or tilted microlens to generate an array of coherent sub light sources at random positions, so that the coherent sub light sources interfere constructively to form a three-dimensional image. The device uses a gray scale liquid crystal panel together with partitioned lighting by red, green, and blue lasers to realize real-time color three-dimensional display, and can be widely used in fields such as three-dimensional display for computers and televisions, three-dimensional man-machine interaction, and robot vision.

Owner:李志扬

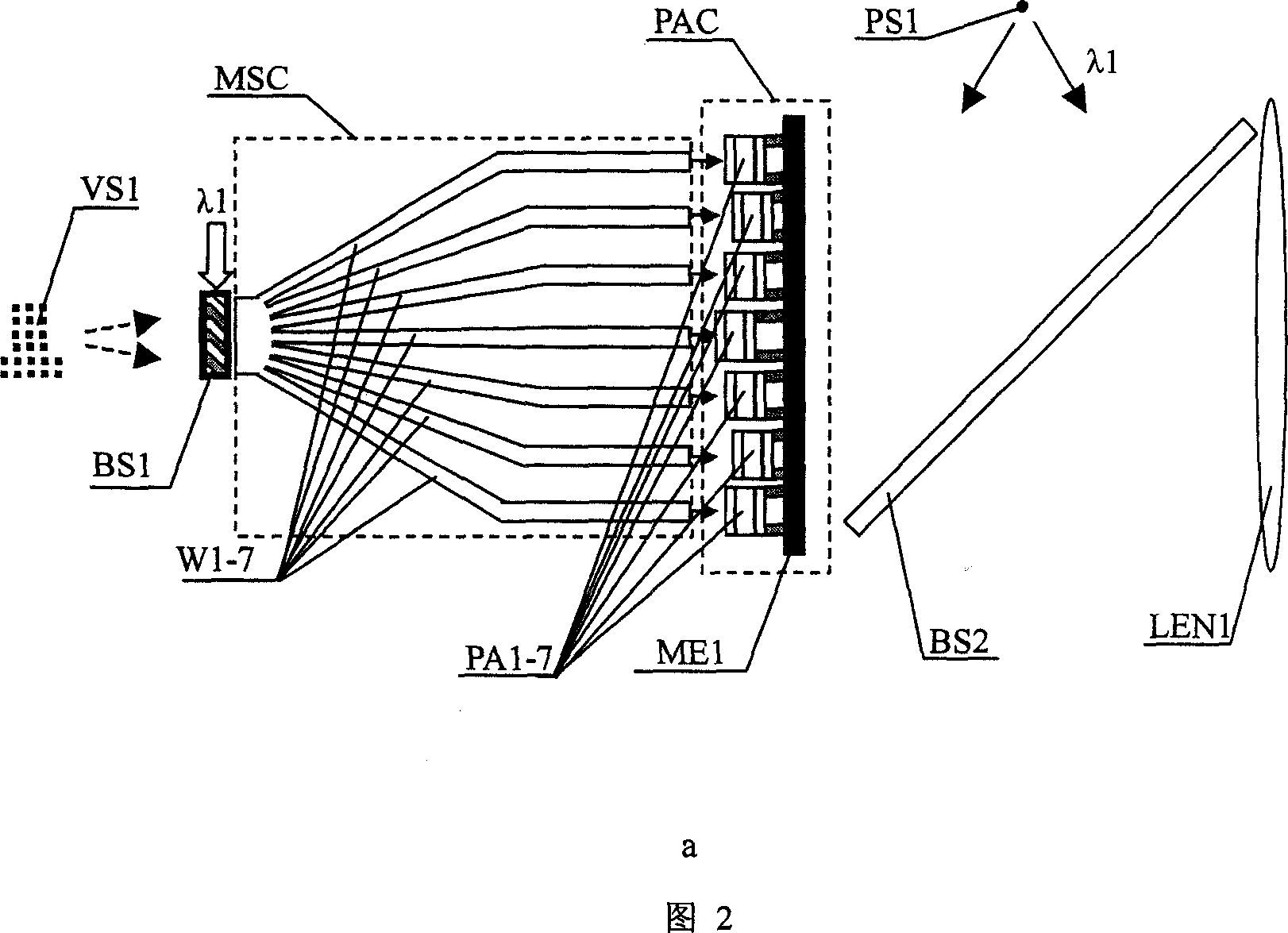

Active optical phase conjugating method and apparatus

Inactive · CN1932565A · Realize automatic and precise adjustment · Simple structure · Coupling light guides · Waveguide · Waveguide array

The invention relates to an active optical phase conjugation method and to an imaging device and optical switch based on it. A mode separation/integration converter is constructed from an optical waveguide array: at one end the waveguides are set close together so that their optical fields couple with each other, and at the other end they are set apart. The converter leads the incoming lightwave into the separated optical waveguides, and active optical phase conjugation is then achieved by adjusting the phase and amplitude. The method addresses imaging under demanding conditions such as large size, high quality, very fast focal variation, and long distance. It can therefore be used in human-computer interaction, robot optics, integrated circuit photolithography, information storage, military applications, energy, biology, and optical communication networks.

Owner:李志扬

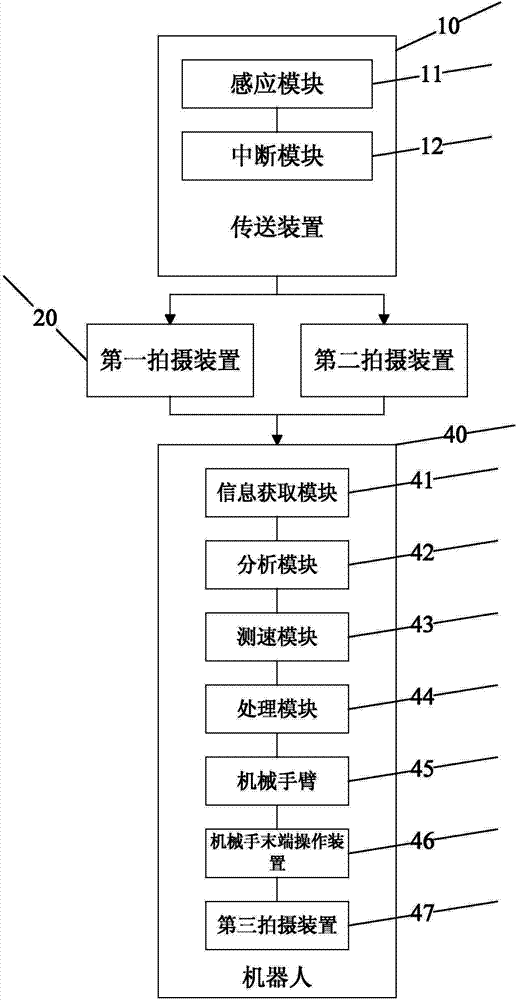

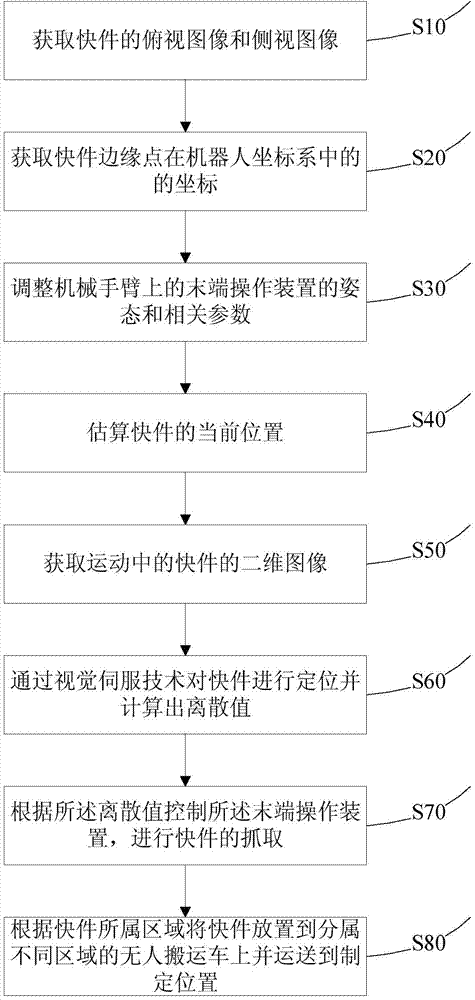

Express sorting method and system based on robot visual servo technology

The invention relates to an express parcel sorting method and system based on robot visual servo technology. The method includes: acquiring a top-view image and a side-view image of a parcel; from these images, obtaining the coordinates of the parcel's peripheral points, the area to which the parcel belongs, and the parcel's placement posture on the conveyor, so that the robot can estimate the parcel's current position; when the parcel enters the sorting zone, using the estimated current position and the relevant robot parameters to adjust the position of the mechanical arm, capturing a two-dimensional image of the moving parcel, positioning the parcel through visual servoing, and calculating a discrete value; and controlling the end effector according to the discrete value to grab the parcel.

Owner:SHENYANG SIASUN ROBOT & AUTOMATION

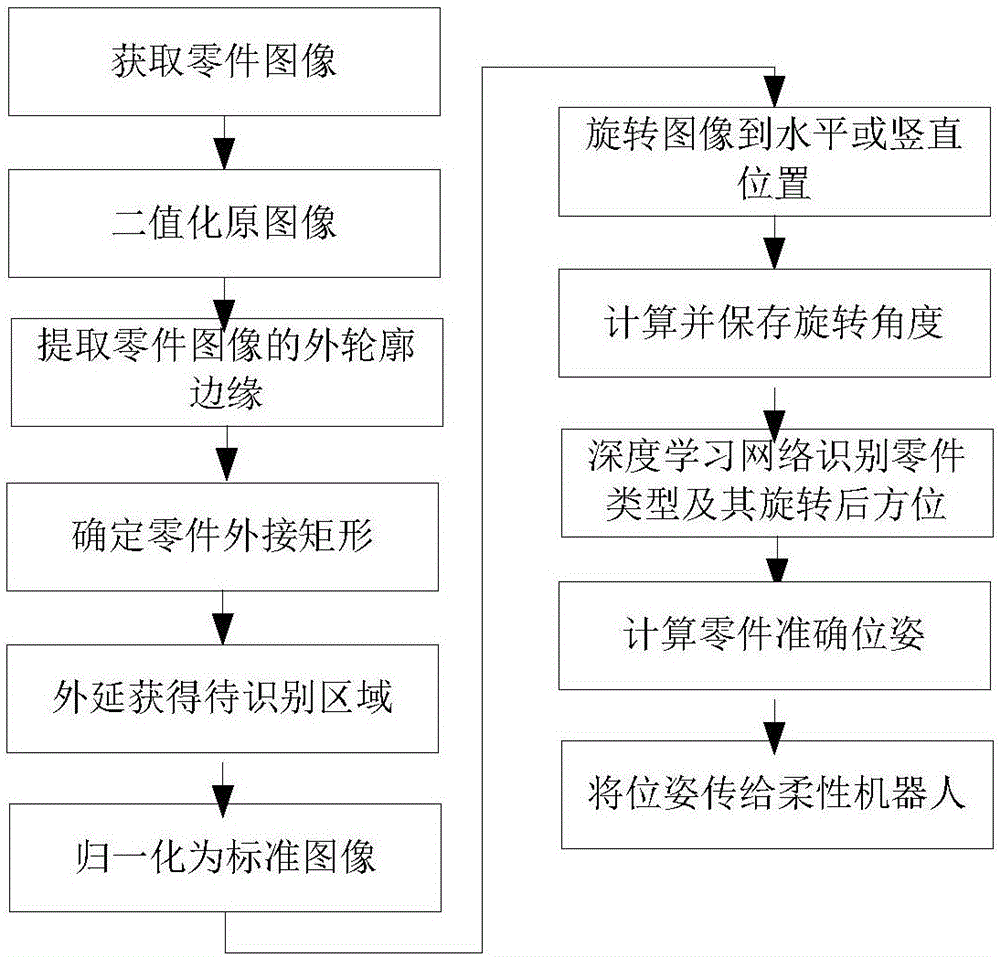

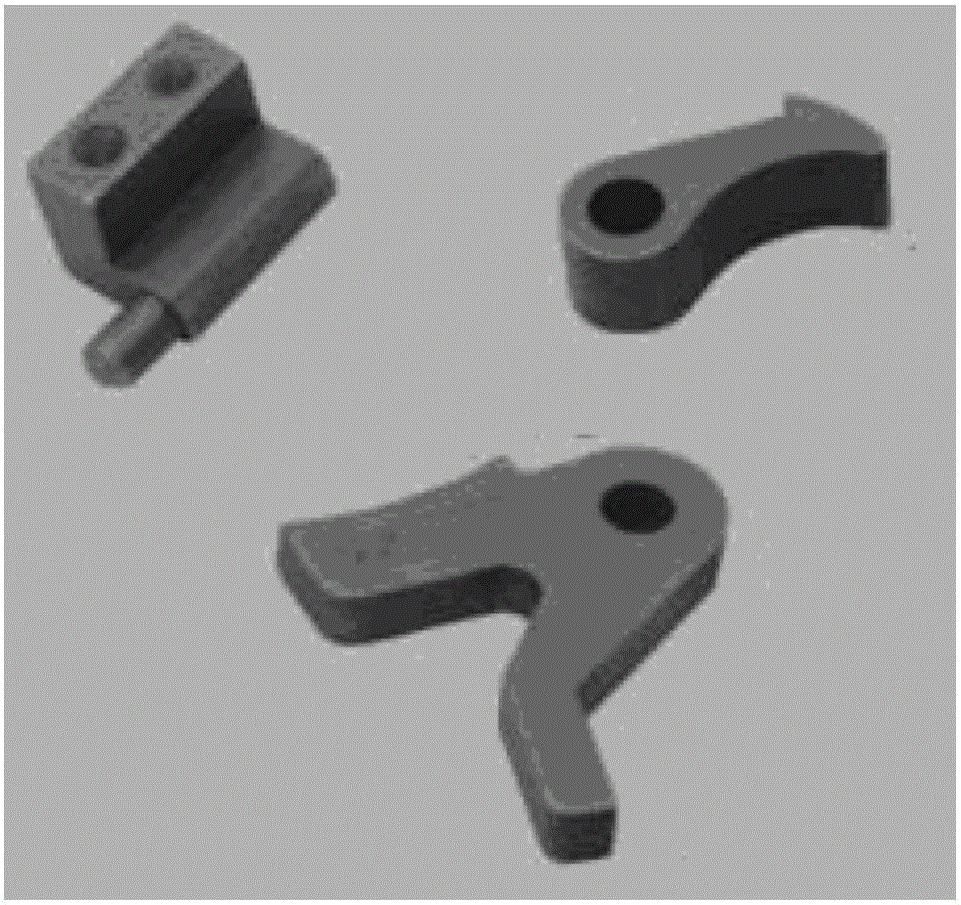

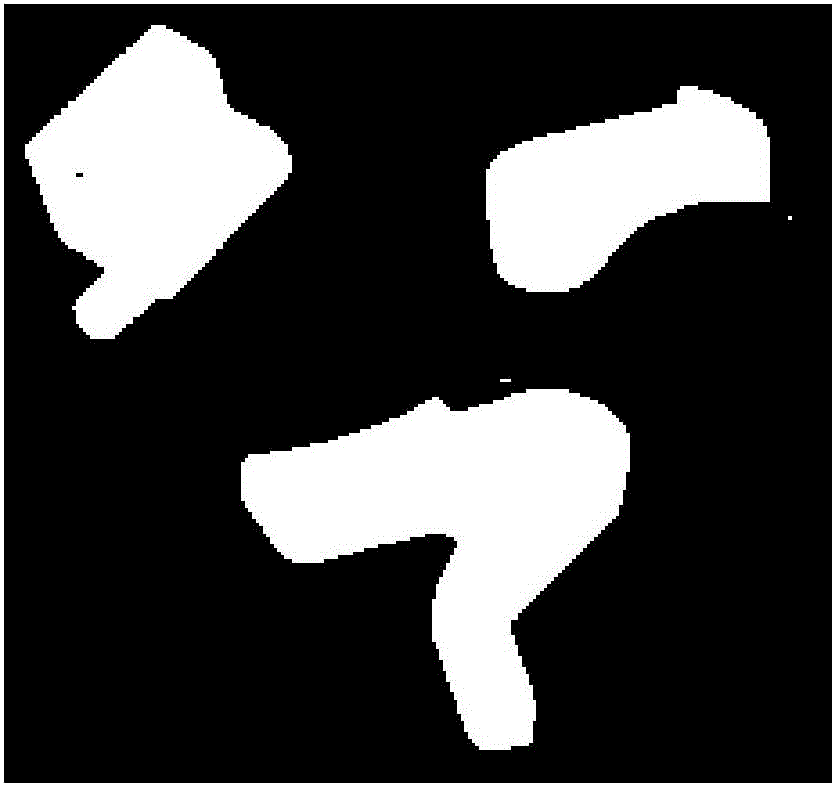

Flexible robot vision recognition and positioning system based on deep learning

Active | CN106709909A | Easy to identify | Precise positioning | Image enhancement | Image analysis | Pattern recognition | Learning network

The present invention discloses a flexible robot vision recognition and positioning system based on deep learning. The system is implemented in the following steps: obtaining an image of a part and binarizing it to extract the outer contour of the part; finding the circumscribed rectangle of the outer contour edge in the lateral direction, determining the to-be-recognized areas, and normalizing the areas to a standard image; rotating the standard image step by step at equal angles and finding the rotation angle alpha at which the circumscribed rectangle of the outer contour edge in the lateral direction has minimum area; using a deep learning network to extract the outer contour edge at rotation angle alpha and recognizing the part and its pose; and, from the rotation angle alpha and the pose, calculating the actual pose of the to-be-recognized part before rotation and transmitting the pose data to a flexible robot so that it can pick up the part. Because the deep learning network automatically extracts the contour shape features contained in the part image data layer by layer, the accuracy and adaptability of part recognition and positioning can be greatly improved under complicated conditions.

Owner:CHONGQING UNIV OF TECH
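
The equal-angle rotation search described in the abstract above can be illustrated with a minimal sketch. It assumes the outer contour is already available as a list of 2D points and that a one-degree step is acceptable; the function names, the step size, and the sample rectangle are illustrative assumptions and are not taken from the patent. The search covers only 0 to 90 degrees because the area of an upright bounding box repeats every 90 degrees.

```python
import math


def rotate_points(points, angle_deg):
    """Rotate 2D points counter-clockwise about the origin by angle_deg."""
    a = math.radians(angle_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    return [(x * cos_a - y * sin_a, x * sin_a + y * cos_a) for x, y in points]


def upright_box_area(points):
    """Area of the axis-aligned (upright) bounding rectangle of the points."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return (max(xs) - min(xs)) * (max(ys) - min(ys))


def find_min_area_angle(points, step_deg=1.0):
    """Sweep rotation angles in equal steps over [0, 90) degrees.

    Returns (alpha, area): the rotation angle at which the upright
    bounding rectangle is smallest, and that smallest area.
    """
    best_angle = 0.0
    best_area = upright_box_area(points)
    steps = int(round(90.0 / step_deg))
    for i in range(1, steps):
        angle = i * step_deg
        area = upright_box_area(rotate_points(points, angle))
        # Small tolerance so floating-point noise does not change the winner.
        if area < best_area - 1e-9:
            best_angle, best_area = angle, area
    return best_angle, best_area


# Made-up test contour: a 4 x 2 rectangle tilted by 30 degrees.
contour = rotate_points([(0, 0), (4, 0), (4, 2), (0, 2)], 30.0)
alpha, area = find_min_area_angle(contour)
print(alpha, round(area, 6))
```

For the tilted rectangle in the example, the sweep should settle on a further rotation of 60 degrees, which brings the rectangle upright with its original area of 8. A real system would run this on contour pixels from the binarized image and would likely use a library routine for the bounding rectangle.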

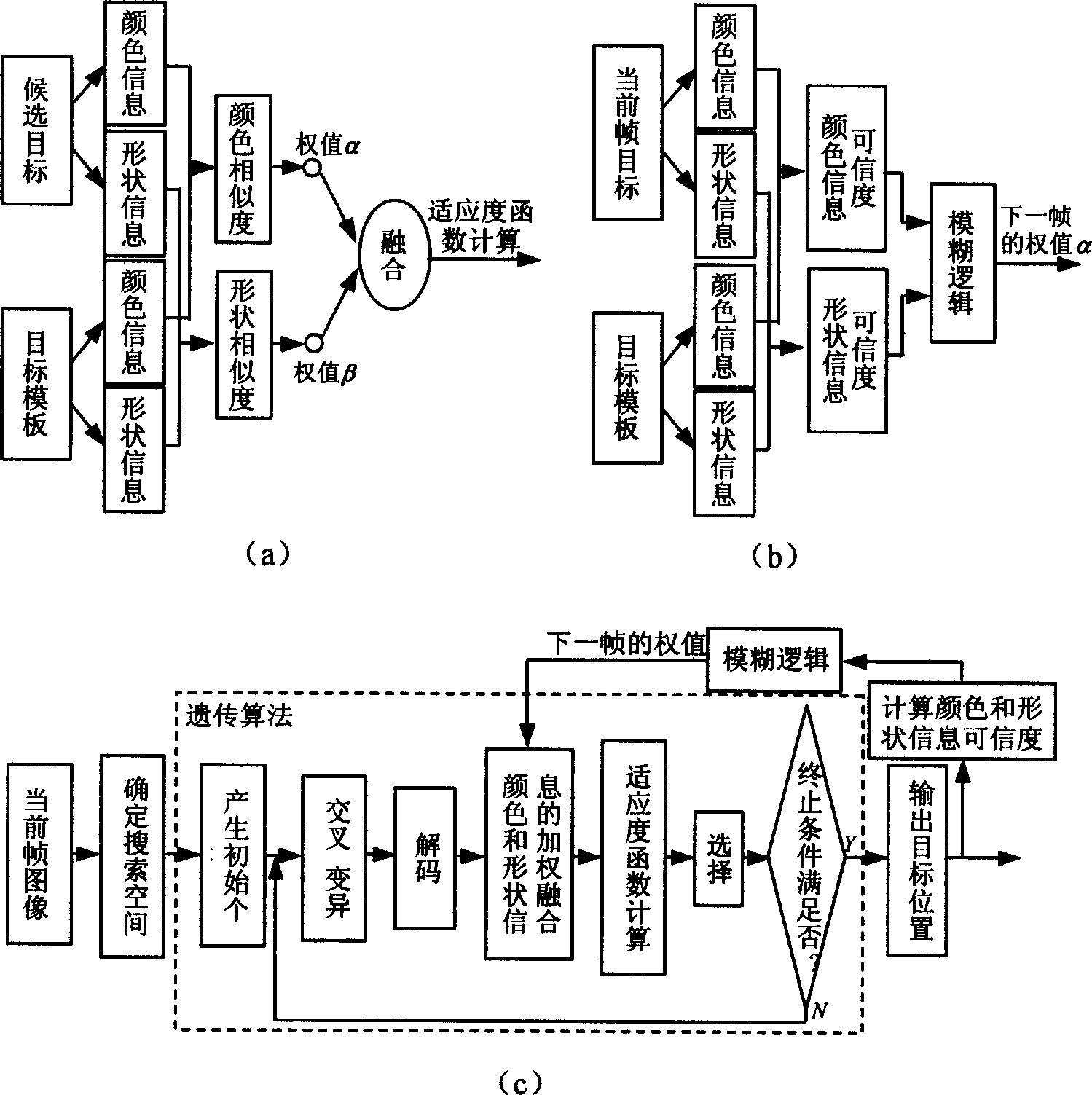

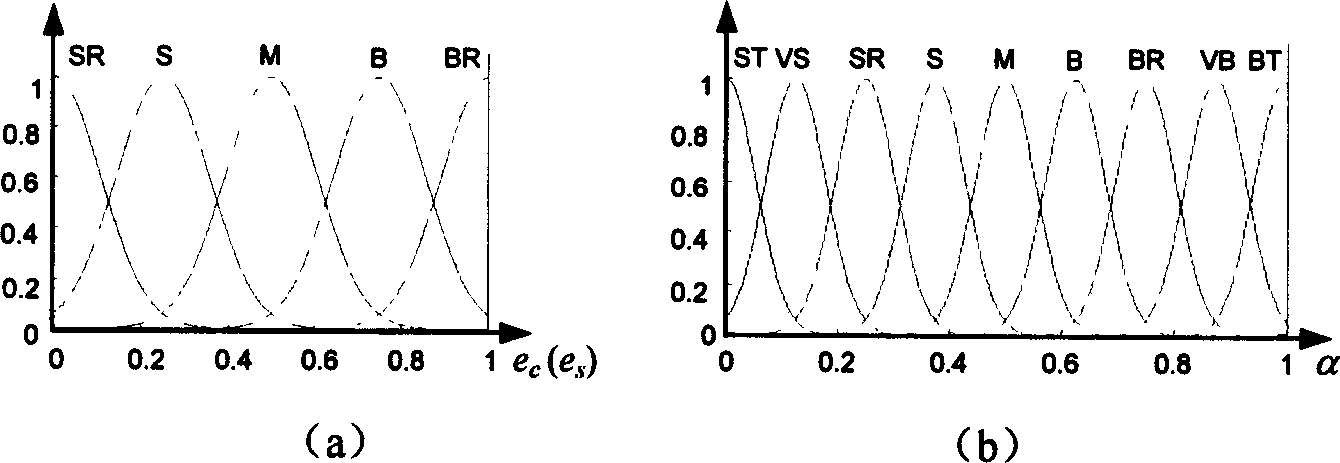

Adaptive video moving-target tracking method based on multi-feature information fusion

Inactive | CN1619593A | Improve reliability | Implementing Adaptive Weighted Fusion | Image analysis | Pattern recognition | Video monitoring

The adaptive video moving-target tracking method based on multi-feature information fusion can be widely used in computer vision, image processing, and pattern recognition. The method includes the following steps: first, extracting feature information of the target; then using a fuzzy logic method to adaptively fuse the various kinds of feature information, with the fused information describing the observation of the target; and finally using a genetic algorithm with heuristic search to find, within the candidate region of the current image, the candidate target most similar to the observation information of the target template.

Owner:SHANGHAI JIAO TONG UNIV
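
The idea of adaptively weighting several features before choosing the best candidate can be sketched as follows. This is not the patented method: the abstract names fuzzy logic for the fusion and a genetic algorithm for the search, whereas this sketch substitutes a plain normalized weighted average and an exhaustive search to keep the example short. The feature names, reliability values, and similarity scores are made-up assumptions.

```python
def fuse_similarities(similarities, reliabilities):
    """Weighted average of per-feature similarity scores.

    similarities:  dict feature name -> similarity to the template in [0, 1]
    reliabilities: dict feature name -> non-negative reliability weight
    """
    total = sum(reliabilities[name] for name in similarities)
    if total <= 0:
        raise ValueError("at least one feature needs a positive reliability")
    return sum(similarities[name] * reliabilities[name] / total
               for name in similarities)


def best_candidate(candidates, reliabilities):
    """Return (index, fused score) of the candidate most similar to the template.

    Exhaustive search stands in for the genetic algorithm named in the abstract.
    """
    scores = [fuse_similarities(c, reliabilities) for c in candidates]
    best_index = max(range(len(scores)), key=scores.__getitem__)
    return best_index, scores[best_index]


# Hypothetical candidates scored on two hypothetical features.
candidates = [
    {"color": 0.9, "edge": 0.2},
    {"color": 0.5, "edge": 0.8},
]

# When the edge feature is judged more reliable, the second candidate wins;
# when color is judged more reliable, the first one does.
print(best_candidate(candidates, {"color": 0.25, "edge": 0.75}))
print(best_candidate(candidates, {"color": 0.75, "edge": 0.25}))
```

The point of the sketch is only that changing the reliability weights changes which candidate is selected, which is the effect an adaptive fusion scheme relies on when one feature becomes unreliable, for example color under changing illumination.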

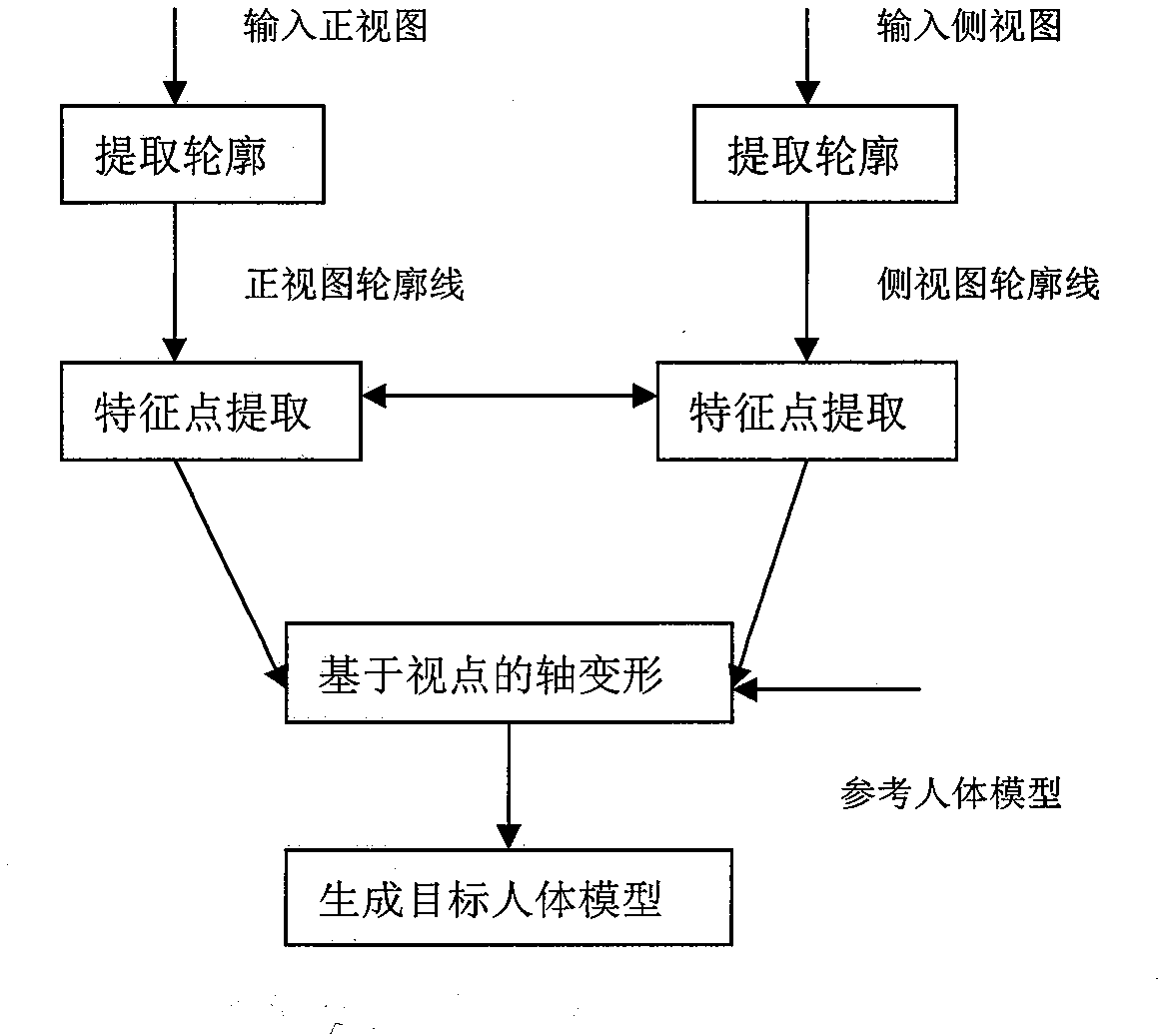

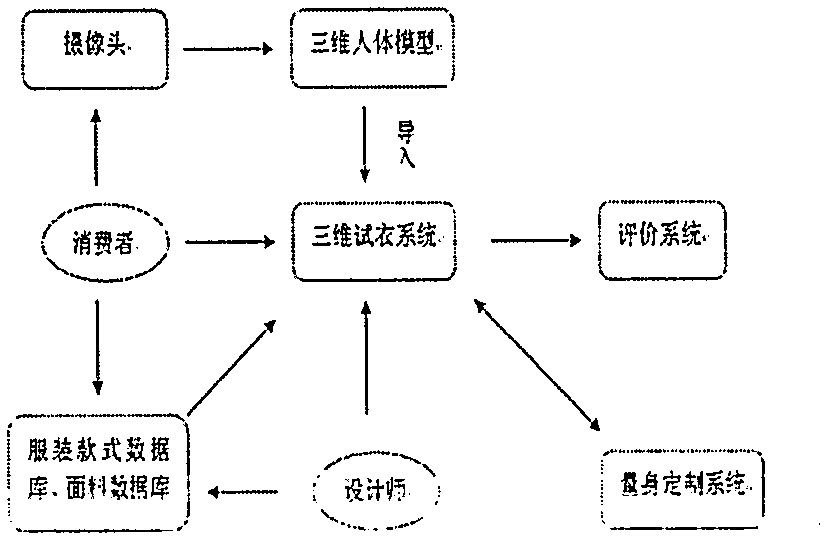

Three-dimensional virtual fitting system

Inactive | CN103106586A | Improve the design level | Improve efficiency | Commerce | Image data processing | Human body | Personalization

The invention discloses a three-dimensional virtual fitting system and relates to the technical fields of robot vision and digitized costume design. The system is characterized in that the object of three-dimensional virtual fitting is a three-dimensional human body model built by photographing and processing a real human body. A camera photographs the customer to obtain multiple groups of images; contour extraction and feature extraction are carried out on the images in a computer; and, based on axial deformation of viewpoints with a human body model as reference and taking further factors such as body type into account, a model of the customer's real body is obtained. The model is input into the three-dimensional fitting system. Through two-way selection, the customer and a designer determine the style, cloth, design, color, and so on of the clothes. These data are input into a 'custom-made system' to complete the costume design; an individual fashion sample is generated automatically and fed back into the three-dimensional fitting system, where a custom garment is sewn virtually and worn on the customer's body model to display the individual fitting effect intuitively. An evaluation system assigns corresponding scores, which can serve as a reference index for the customer and the designer. The design level and design efficiency of the costume are thereby greatly improved.

Owner:JIANGNAN UNIV

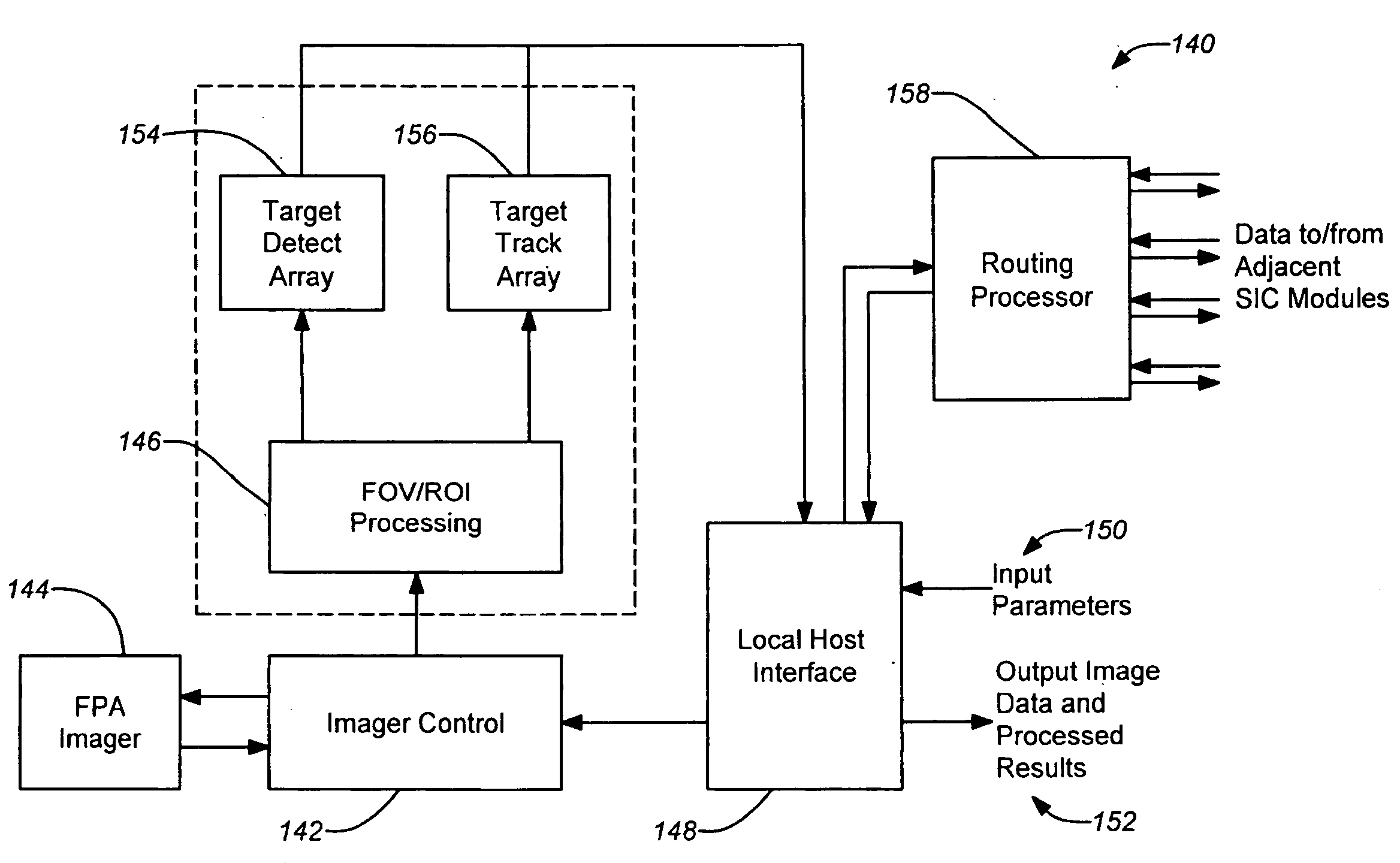

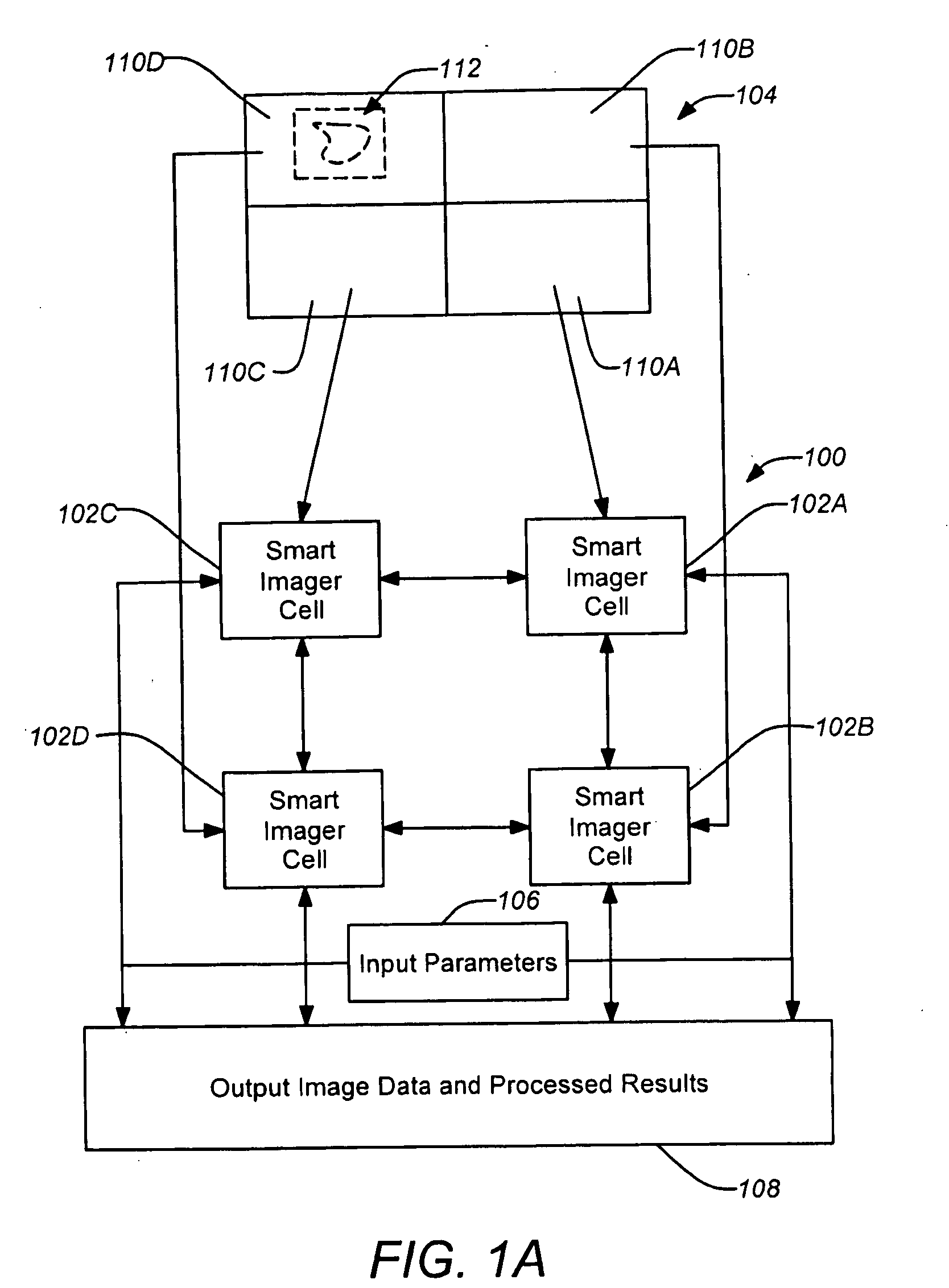

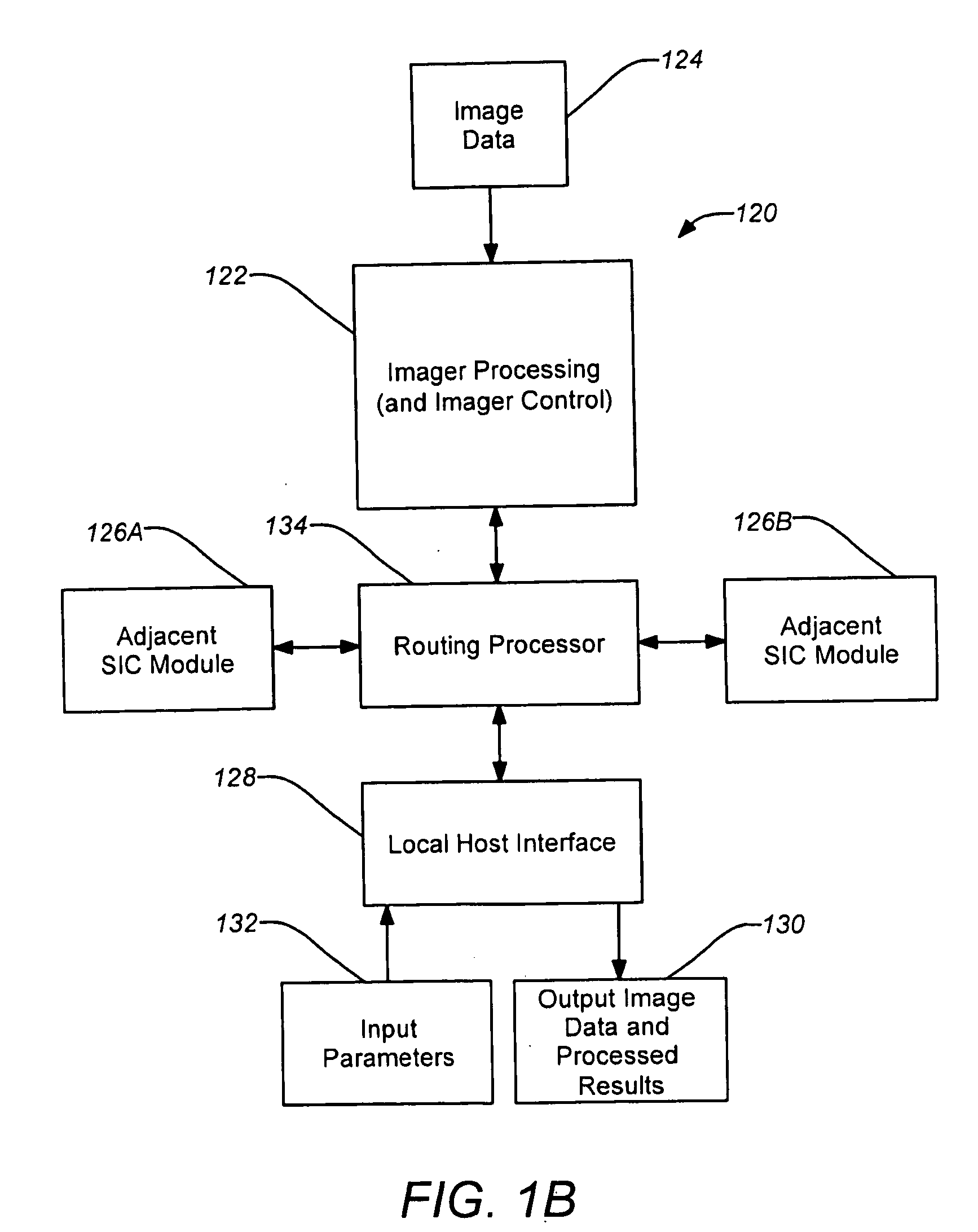

Synthetic foveal imaging technology

Inactive | US20090116688A1 | Rapid response | Image enhancement | Image analysis | Imaging processing | Application software

Apparatuses and methods are disclosed that create a synthetic fovea in order to identify and highlight interesting portions of an image for further processing and rapid response. Synthetic foveal imaging implements a parallel processing architecture that uses reprogrammable logic to implement embedded, distributed, real-time foveal image processing from different sensor types while simultaneously allowing for lossless storage and retrieval of raw image data. Real-time, distributed, adaptive processing of multi-tap image sensors with coordinated processing hardware used for each output tap is enabled. In mosaic focal planes, a parallel-processing network can be implemented that treats the mosaic focal plane as a single ensemble rather than a set of isolated sensors. Various applications are enabled for imaging and robotic vision where processing and responding to enormous amounts of data quickly and efficiently is important.

Owner:CALIFORNIA INST OF TECH

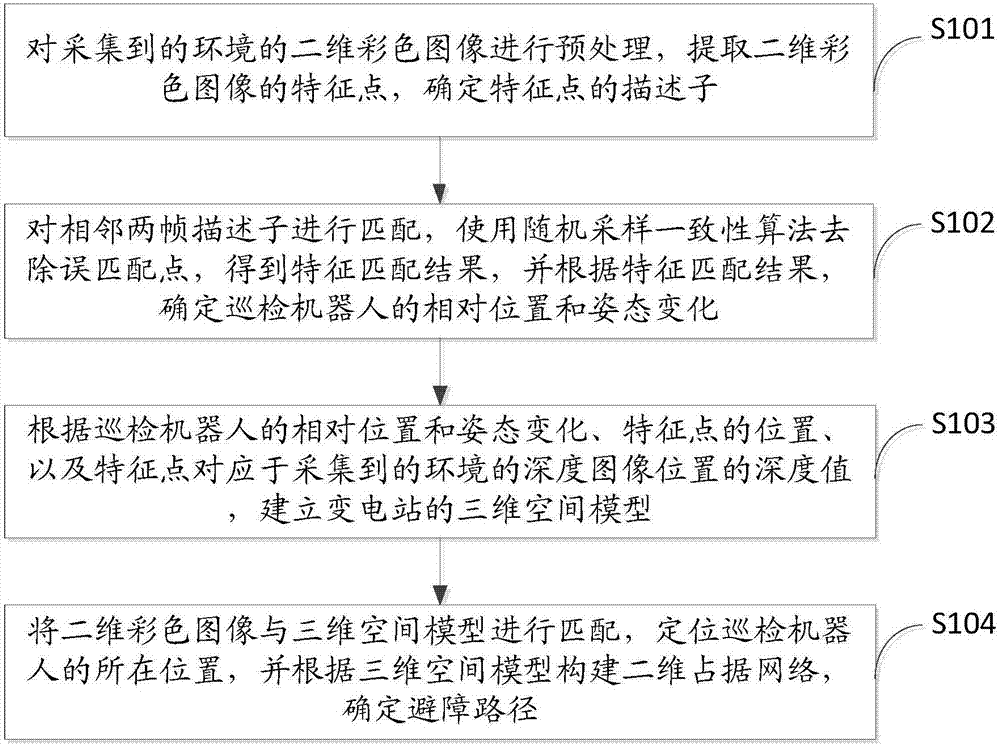

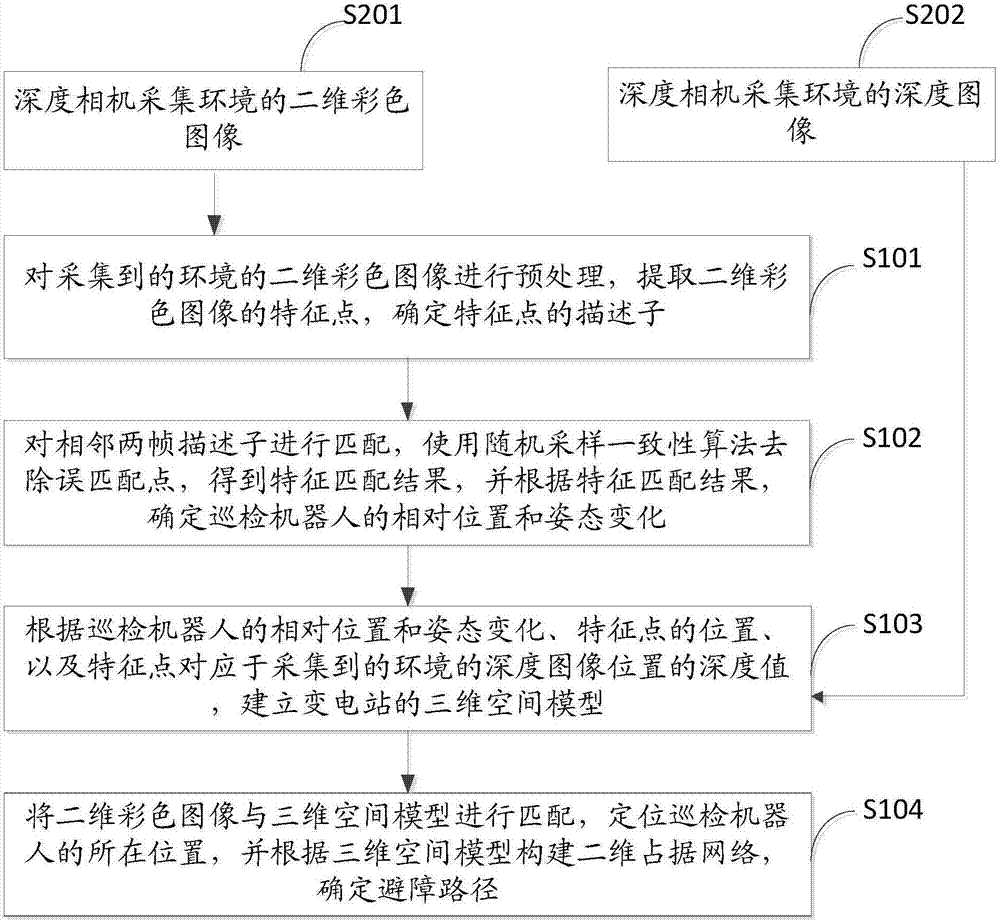

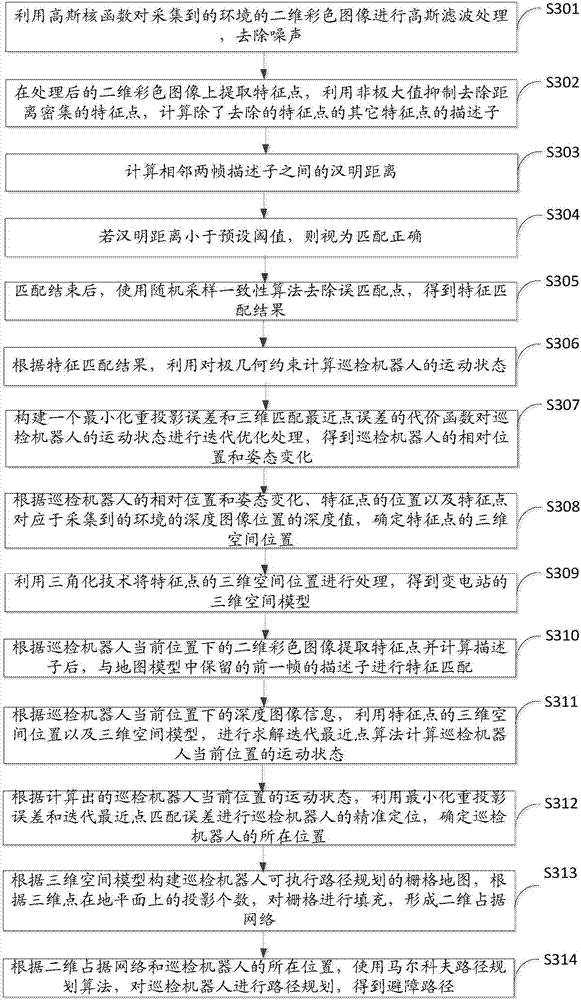

Vision localization and navigation method and system for inspection robot of transformer substation

Inactive | CN107167139A | Realize the navigation function | Easy to implement | Navigational calculation instruments | Information analysis | Three-dimensional space

The invention discloses a vision localization and navigation method and system for an inspection robot of a transformer substation. The method comprises the following steps: preprocessing a collected two-dimensional color image of the environment, extracting feature points, and determining descriptors of the feature points; matching the descriptors of two adjacent frames to obtain a feature matching result, and determining the relative position and posture change of the inspection robot; establishing a three-dimensional space model of the transformer substation from the relative position and posture change of the inspection robot, the positions of the feature points, and the depth values of the feature points at the corresponding depth image positions; and matching the two-dimensional color image with the three-dimensional space model, locating the inspection robot, constructing a two-dimensional occupancy grid, and determining an obstacle avoidance route. Based on image information analysis and the relevant algorithms, the position of the inspection robot can be accurately determined, obstacles can be detected automatically on a global scale, and the route can be planned according to the obstacles and their positions. The method is easy to implement, low in cost, stable, and precise.

Owner:GUANGDONG UNIV OF TECH
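
The last step of the abstract above, planning an obstacle avoidance route on a two-dimensional occupancy map, can be illustrated with a minimal sketch. The abstract does not say which planner the patent uses; breadth-first search on a 4-connected grid is assumed here purely as a simple stand-in, and the grid contents and function name are made up for illustration.

```python
from collections import deque


def plan_route(grid, start, goal):
    """Shortest 4-connected route on an occupancy grid, or None if blocked.

    grid:  list of rows; 0 marks a free cell, 1 marks an obstacle
    start: (row, col) of the robot
    goal:  (row, col) of the target
    """
    rows, cols = len(grid), len(grid[0])
    if grid[start[0]][start[1]] or grid[goal[0]][goal[1]]:
        return None
    parents = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start, then reverse.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in parents:
                parents[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


# Made-up map: a wall across the middle row with a gap at the right edge.
occupancy = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
print(plan_route(occupancy, (0, 0), (2, 0)))
```

In the example the route has to go around the wall through the gap in the last column. A practical planner would typically also inflate obstacles by the robot's radius and might use a weighted search such as A* instead.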

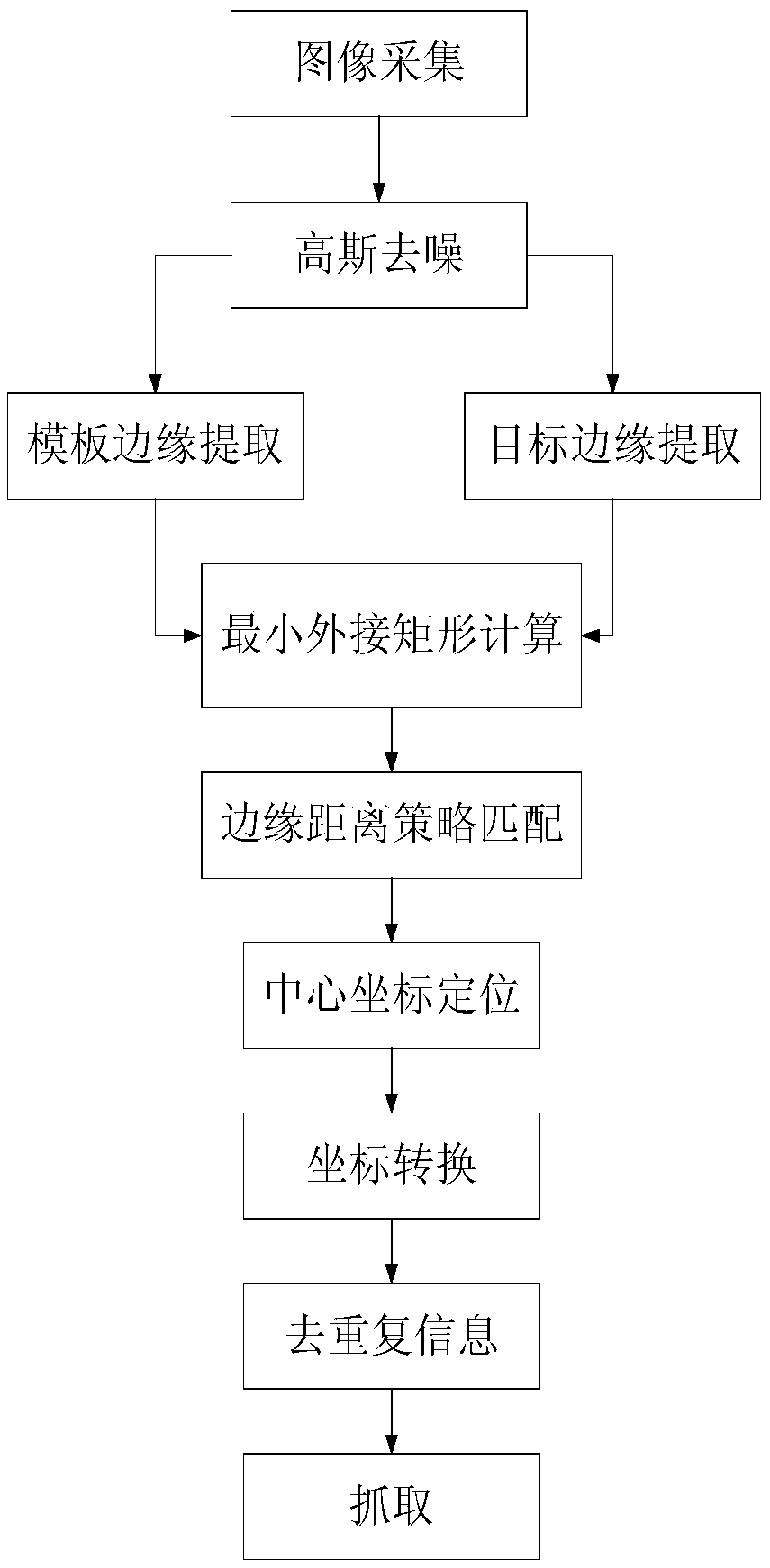

Visual guiding-based robot workpiece grabbing method

Inactive | CN110315525A | Reduce data volume | Strong targeting | Programme-controlled manipulator | Minimum bounding rectangle | Vision based

The invention provides a visual guiding-based robot workpiece grabbing method, and belongs to the field of robot visual recognition and positioning. The method comprises the following steps: (1) establishing templates of target workpieces in advance, collecting images of the target workpieces, and acquiring edge feature images of the templates and edge feature images of the target workpieces; (2) acquiring the deflection angle of each target workpiece relative to the corresponding template by utilizing the minimum enclosing rectangle of the edge feature image of the target workpiece, and establishing a compensation template set by utilizing the deflection angles and the edge feature images of the templates; (3) matching the edge feature images of the target workpieces against the compensation template set, recognizing the target workpieces, and acquiring the center coordinates of the target workpieces in a visual coordinate system; (4) converting the visual coordinate system to a robot user coordinate system; and (5) selecting a reference point, and uniquely identifying the same target workpiece by utilizing the time and the position to complete positioning and grabbing. The target workpieces can be accurately recognized by utilizing the method.

Owner:TIANJIN POLYTECHNIC UNIV
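
Step (4) of the abstract above, converting a workpiece center from the visual coordinate system to the robot user coordinate system, can be sketched as a planar similarity transform. The abstract does not give the calibration model, so this sketch assumes a camera looking straight down at the work plane, a uniform pixel scale, one rotation angle, and one offset; all parameter names and values below are hypothetical calibration results, not figures from the patent.

```python
import math


def vision_to_robot(point_px, scale_mm_per_px, theta_deg, offset_mm):
    """Map a pixel coordinate to robot user coordinates in millimetres.

    point_px:        (u, v) workpiece center in the image
    scale_mm_per_px: assumed uniform pixel size on the work plane
    theta_deg:       assumed rotation of the image axes relative to the robot axes
    offset_mm:       (x, y) position of the image origin in the robot frame
    """
    t = math.radians(theta_deg)
    x = point_px[0] * scale_mm_per_px
    y = point_px[1] * scale_mm_per_px
    robot_x = x * math.cos(t) - y * math.sin(t) + offset_mm[0]
    robot_y = x * math.sin(t) + y * math.cos(t) + offset_mm[1]
    return robot_x, robot_y


# Hypothetical calibration: 0.5 mm per pixel, axes rotated by 90 degrees,
# image origin located at (10 mm, 20 mm) in the robot user frame.
center = vision_to_robot((100, 0), 0.5, 90.0, (10.0, 20.0))
print(tuple(round(value, 6) for value in center))
```

With these made-up values, a point 100 pixels along the image u axis ends up 50 mm along the robot y axis from the image origin. A production system would normally estimate the transform from several calibration points and may need a full homography if the camera is not perpendicular to the work plane.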

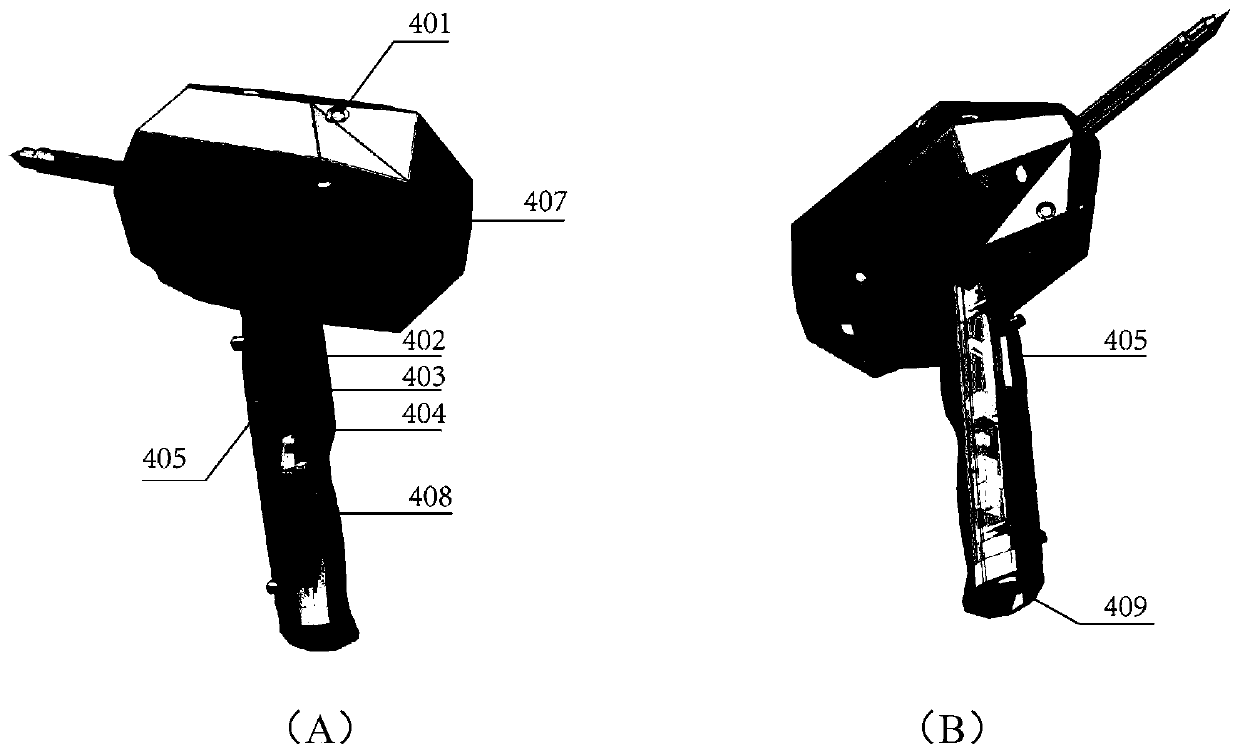

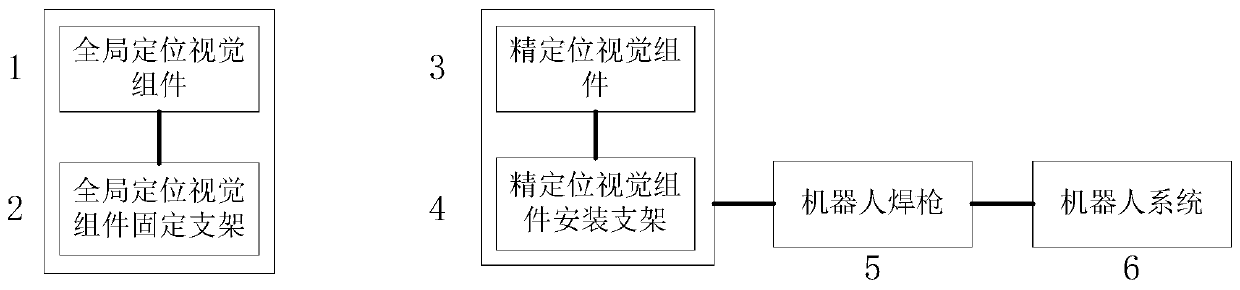

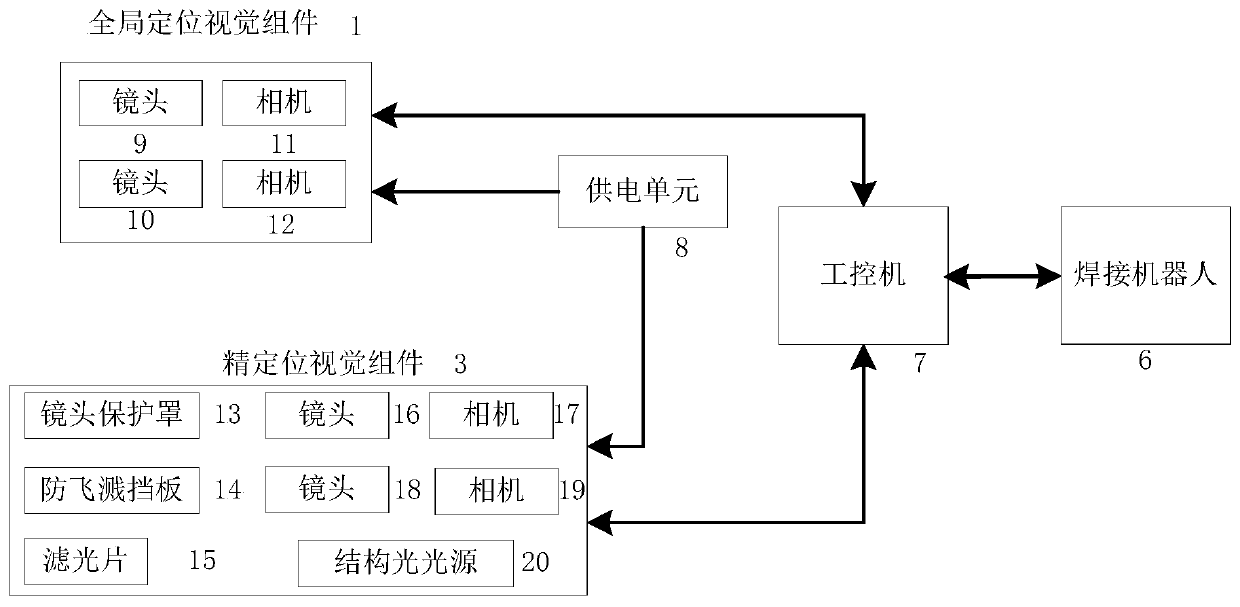

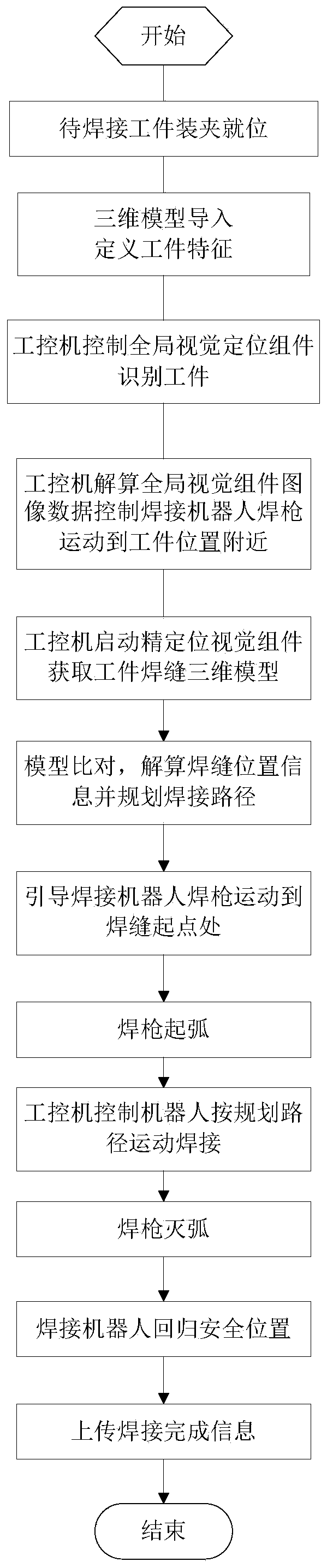

Welding robot vision assembly and measuring method thereof

Pending | CN110524580A | Addressing Adaptive Issues | Eliminate multiple manual calibration problems | Programme-controlled manipulator | Welding/cutting auxiliary devices | Robot vision | Robot control system

The invention discloses a welding robot vision assembly and a measuring method thereof. The measuring method comprises the following steps: an industrial personal computer controls a global positioning vision assembly to collect workpiece image information and sends the workpiece fine positioning measurement position information to a welding robot control system; the welding robot control system controls the welding gun of the welding robot to move a fine positioning vision assembly to a fine positioning measurement point; the industrial personal computer controls the fine positioning vision assembly to collect an image of the workpiece weld joint, calculates the coordinates of the starting point and the ending point of the weld joint, and automatically generates a welding path for the weld joint; and the industrial personal computer guides the welding gun to the starting point of the weld joint to strike the arc and weld, and to the ending point to extinguish the arc, completing the welding of the weld joint. The welding robot vision assembly has the advantages of requiring no teaching, high welding efficiency, high system tolerance, and high flexibility.

Owner:XIAN ZHONGKE PHOTOELECTRIC PRECISION ENG CO LTD


© 2025 PatSnap. All rights reserved.