Flexible robot vision recognition and positioning system based on deep learning

The invention applies robot vision and deep learning technology to a flexible robot visual recognition and positioning system. It addresses the poor validity and adaptability of artificial (hand-crafted) features and the poor adaptability and low accuracy of flexible robot identification and positioning.

Detailed Description of the Embodiments

[0058] The present invention will be further described in detail below in conjunction with the accompanying drawings.

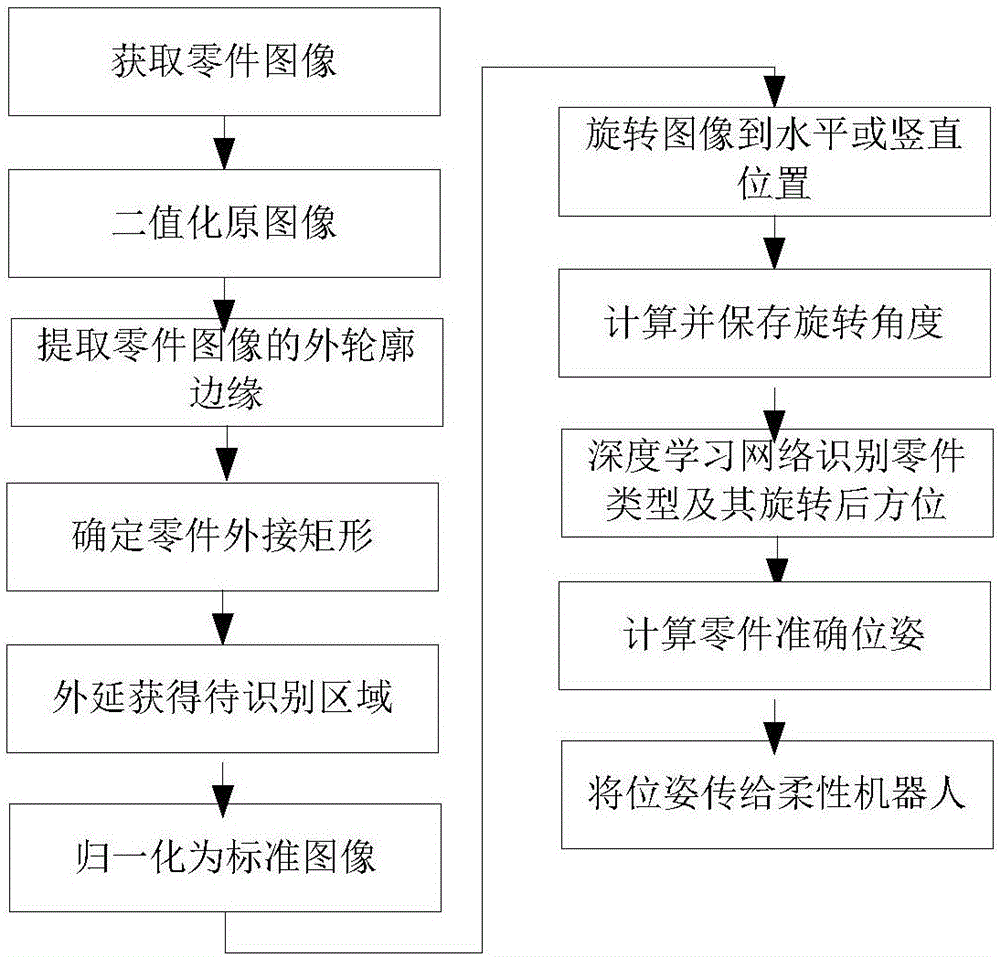

[0059] Figure 1 shows the process flow of the deep-learning-based flexible robot visual recognition and positioning system in this embodiment. Taking the identification and positioning of a certain type of part on a transmission (conveying) device as an example, the specific implementation process is as follows:

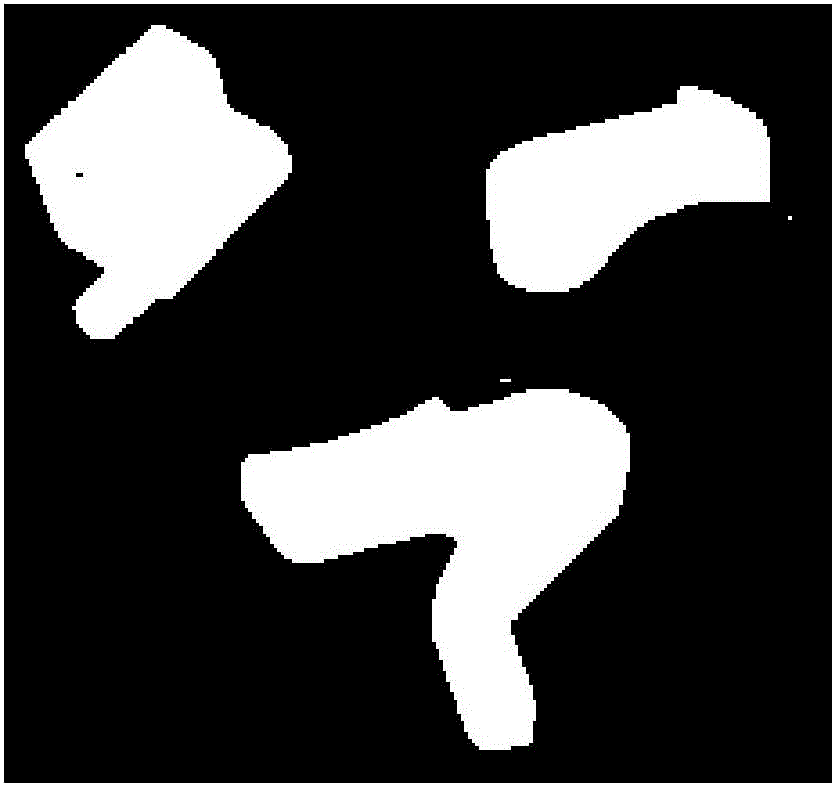

[0060] 1) Acquire the part image with a CCD camera, binarize the original image using a single-threshold method, and extract the outer contour edge of the part image with the Roberts operator;
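As a minimal sketch of step 1), the pure-Python code below binarizes a grayscale image with a single fixed threshold and then applies the 2×2 Roberts cross operator to mark edge pixels. The function names `binarize` and `roberts_edges`, the threshold value of 128, and the assumption that the backlit part is darker than the background are illustrative choices, not details taken from the patent.

```python
from typing import List

# A grayscale or binary image stored as rows of integer pixel values.
Image = List[List[int]]


def binarize(gray: Image, threshold: int) -> Image:
    """Single-threshold binarization.

    Assumes a backlit scene in which the part is darker than the background,
    so pixels below `threshold` become 1 (part) and all others become 0.
    """
    return [[1 if p < threshold else 0 for p in row] for row in gray]


def roberts_edges(binary: Image) -> Image:
    """Mark edge pixels with the Roberts cross operator.

    Kernels: Gx = [[1, 0], [0, -1]], Gy = [[0, 1], [-1, 0]].
    The response at (y, x) uses the 2x2 window whose top-left corner is (y, x);
    the last row and column have no full window and stay 0.
    """
    h, w = len(binary), len(binary[0])
    edges = [[0] * w for _ in range(h)]
    for y in range(h - 1):
        for x in range(w - 1):
            gx = binary[y][x] - binary[y + 1][x + 1]
            gy = binary[y][x + 1] - binary[y + 1][x]
            # |Gx| + |Gy| approximates the gradient magnitude; on a 0/1 image
            # a nonzero value means the 2x2 window mixes part and background.
            if abs(gx) + abs(gy) > 0:
                edges[y][x] = 1
    return edges


if __name__ == "__main__":
    # Hypothetical 5x5 test image: a dark 3x3 "part" on a bright background.
    gray = [
        [200, 200, 200, 200, 200],
        [200, 30, 30, 30, 200],
        [200, 30, 30, 30, 200],
        [200, 30, 30, 30, 200],
        [200, 200, 200, 200, 200],
    ]
    for row in roberts_edges(binarize(gray, threshold=128)):
        print(row)
```

For the 5×5 example the edge map is a closed, one-pixel-wide ring of 1s around the part. Because the operator marks every part/background transition, a real part with holes would also produce hole boundaries; keeping only the outer contour would need an extra step that is not shown here.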

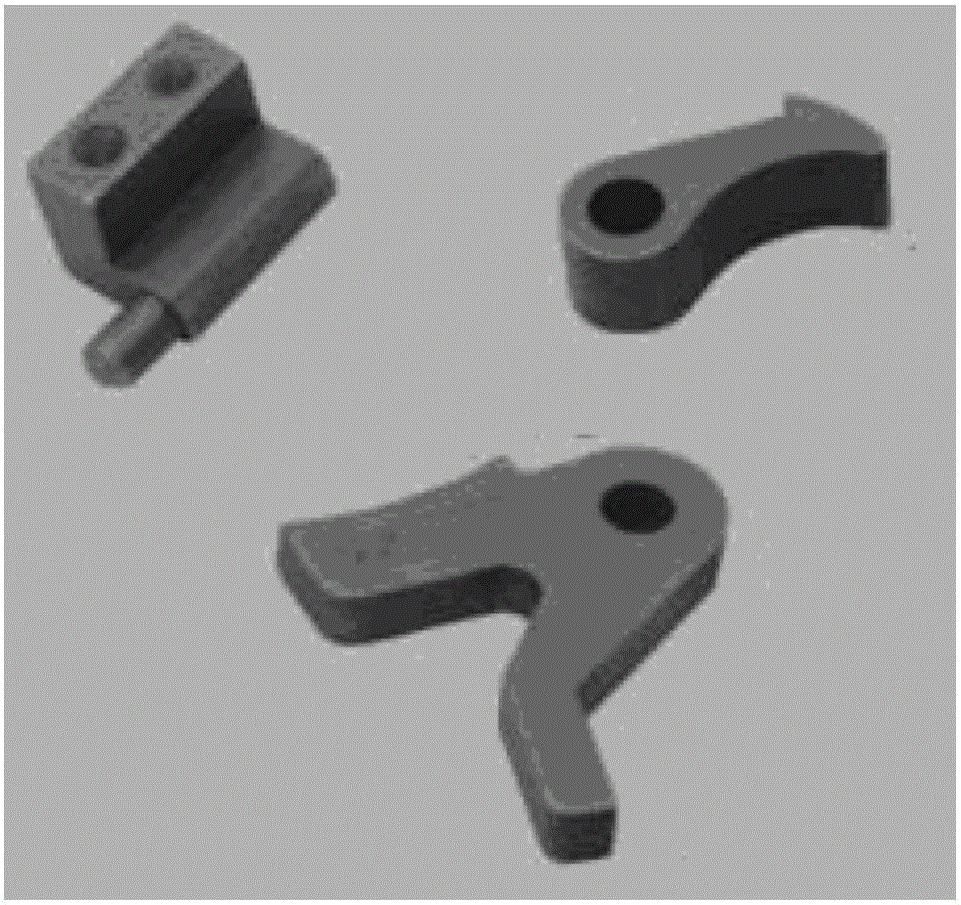

[0061] The system uses a Daheng DH-SV2001FM CCD camera, a Computar M1614-MP2 industrial lens, an EpVision EP-1394A dual-channel 1394A image acquisition card, and backlighting. Figure 2 shows an image of parts on the transmission device captured by the CCD camera. The par...
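The text does not state how the single threshold is chosen. With backlighting, the part typically appears as a dark silhouette against a bright background, which gives a roughly bimodal gray-level histogram. One common way to pick a threshold for such an image is Otsu's method, sketched below as an assumption rather than as the patent's procedure; the function name `otsu_threshold` and the 8-bit pixel range are illustrative.

```python
from typing import Sequence


def otsu_threshold(pixels: Sequence[int]) -> int:
    """Return the 8-bit threshold t that maximizes Otsu's between-class variance.

    Pixel values must be integers in 0..255. Pixels with value <= t form one
    class (the dark, backlit part) and pixels with value > t form the other
    (the bright background).
    """
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * hist[i] for i in range(256))

    w_dark = 0    # number of pixels with value <= t
    sum_dark = 0  # sum of their gray levels
    best_t, best_var = 0, -1.0
    for t in range(256):
        w_dark += hist[t]
        sum_dark += t * hist[t]
        if w_dark == 0:
            continue
        w_bright = total - w_dark
        if w_bright == 0:
            break
        mean_dark = sum_dark / w_dark
        mean_bright = (sum_all - sum_dark) / w_bright
        # Between-class variance, up to the constant factor 1 / total**2.
        var_between = w_dark * w_bright * (mean_dark - mean_bright) ** 2
        if var_between > best_var:
            best_t, best_var = t, var_between
    return best_t


if __name__ == "__main__":
    # Hypothetical backlit samples: dark part pixels and bright background pixels.
    samples = [20, 25, 30, 35] * 10 + [190, 200, 210] * 20
    t = otsu_threshold(samples)
    part_mask = [1 if p <= t else 0 for p in samples]
    print(t, sum(part_mask))
```

Otsu's method works best when the histogram is clearly bimodal, which is the condition backlighting aims to create; strongly uneven illumination would instead call for a local (adaptive) threshold.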
